48 results about how to "Improve Quantization Efficiency" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

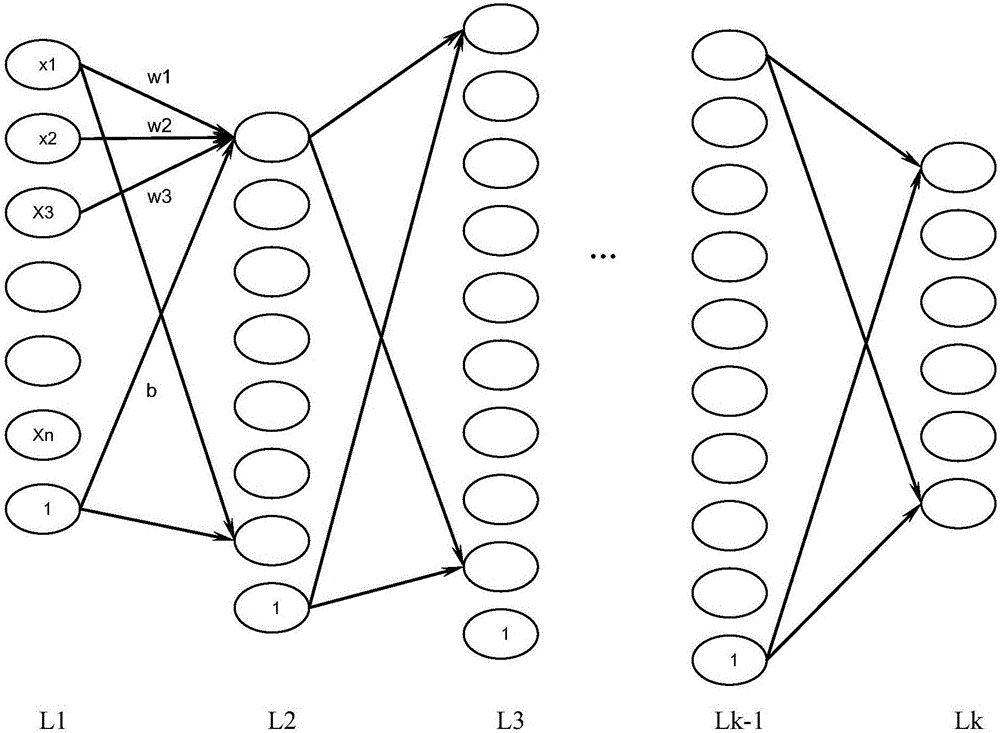

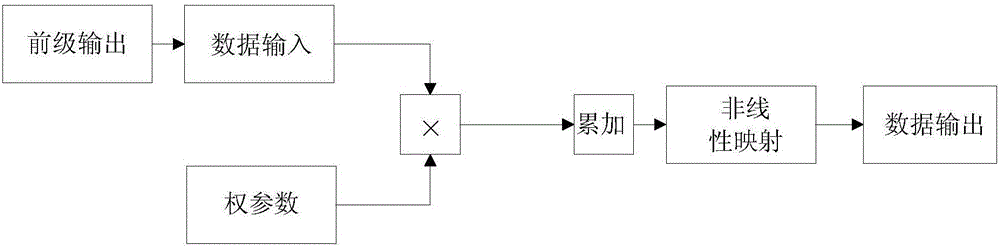

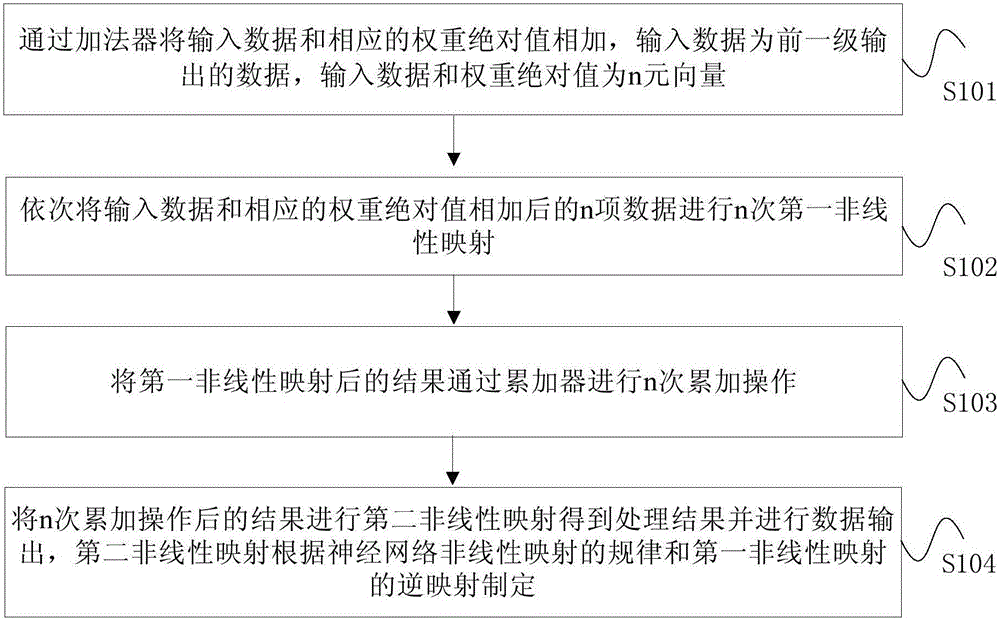

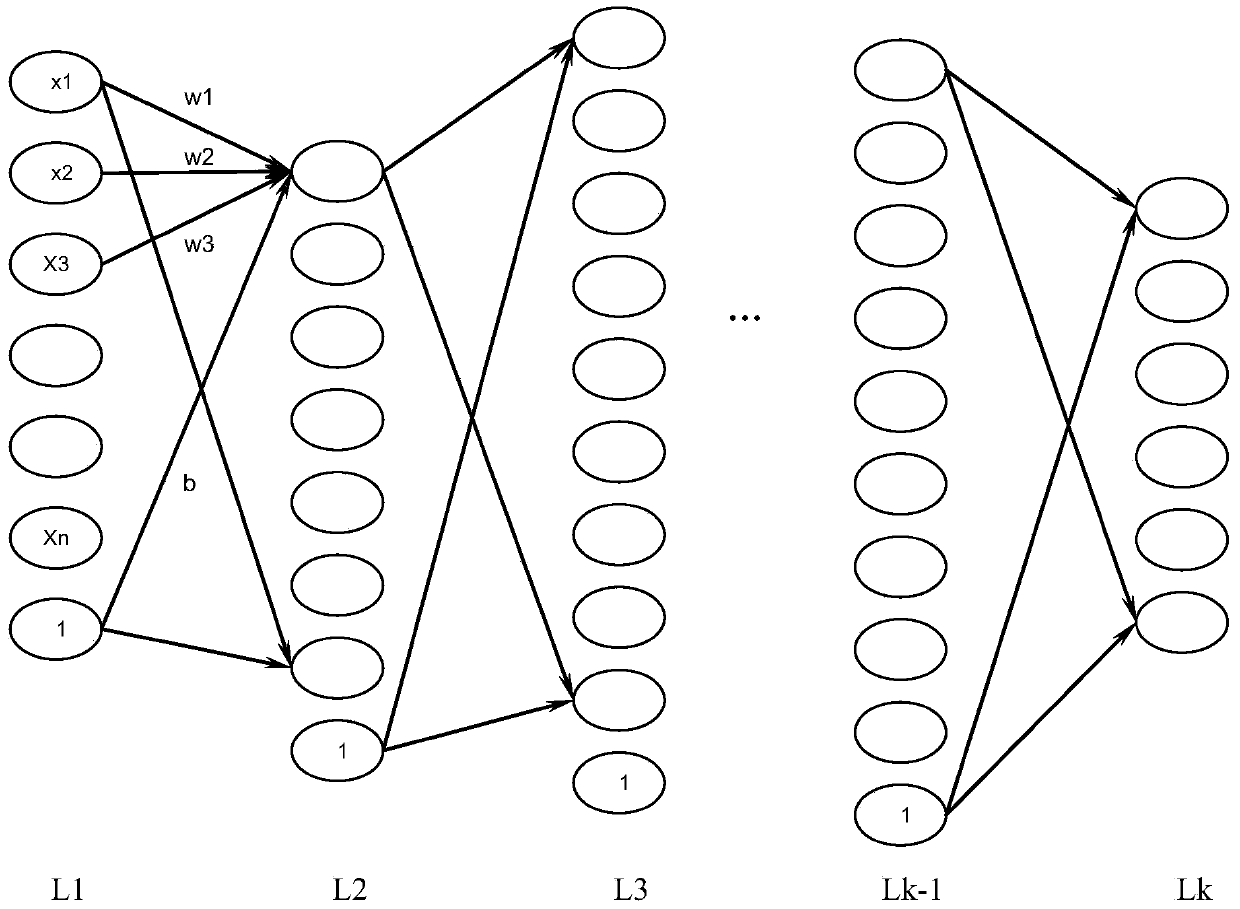

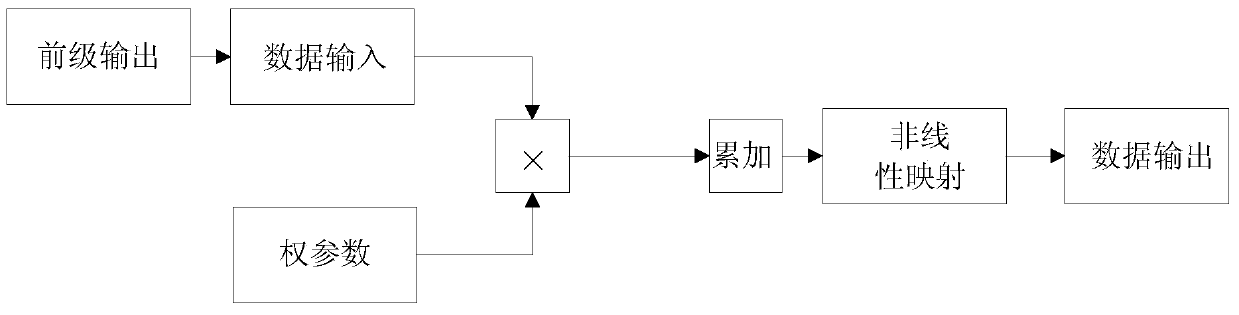

Data processing method of neural network processor and neural network processor

Active | CN105844330A | Reduce storage requirements | Reduce bandwidth requirements | Physical realisation | Network processor | Bandwidth requirement

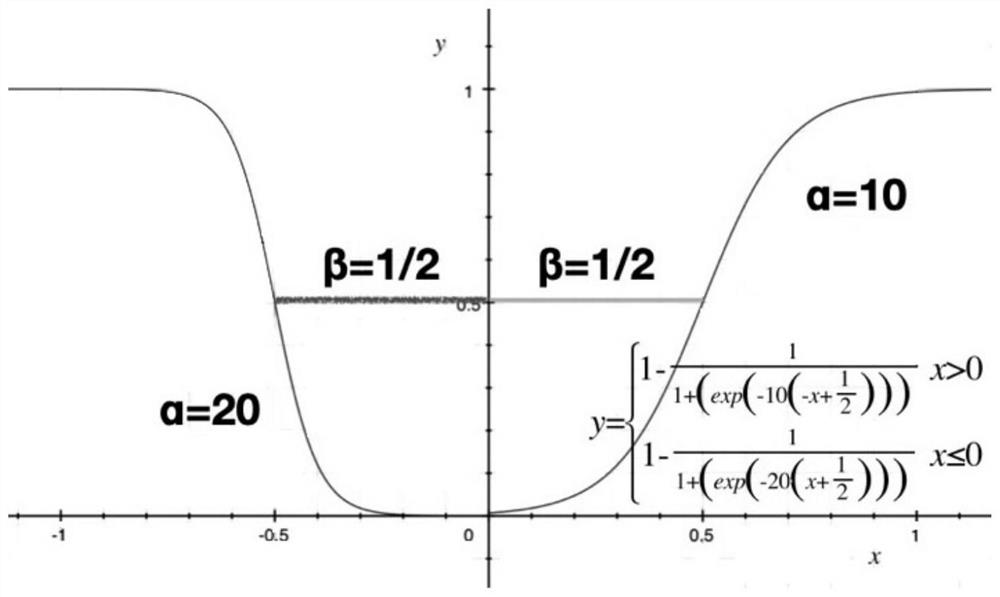

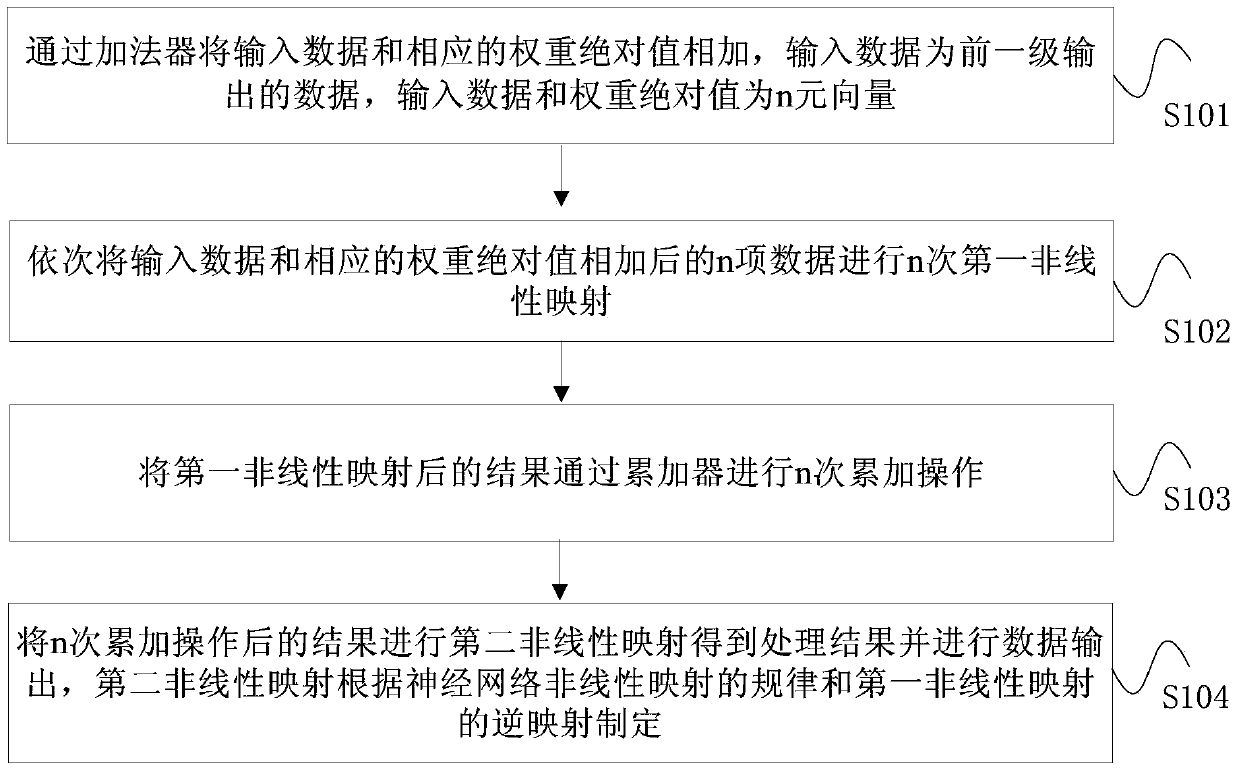

The invention provides a data processing method for a neural network processor, and a neural network processor. The method includes the following steps: an adder adds the input data to the corresponding weight absolute values, where the input data are the output of the previous stage and both the input data and the weight absolute values are n-element vectors; each of the n sums is passed through a first nonlinear mapping; an accumulator then accumulates the n mapped results, adding or subtracting each term as controlled by the sign bit of the weight; and the accumulated result is passed through a second nonlinear mapping to obtain the processing result, which is output. The second nonlinear mapping is formulated from the nonlinear mapping rule of the neural network and the inverse of the first nonlinear mapping. The method improves quantization efficiency and reduces the storage and bandwidth requirements of the data.

Owner:HUAWEI TECH CO LTD
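
The data path in the abstract above (adder, first mapping, sign-controlled accumulation, second mapping) can be sketched as follows. This is a minimal illustration, not the patented implementation: it assumes that inputs and weight magnitudes are stored as base-2 logarithms, that the first nonlinear mapping is `2**x`, and that the network nonlinearity is a sigmoid. None of these choices is stated in the abstract, and the function name `log_domain_neuron` is hypothetical.

```python
import math

def log_domain_neuron(log_inputs, log_abs_weights, weight_signs):
    """Adder -> first mapping -> signed accumulation -> second mapping.

    Assumptions (not from the abstract): values are stored as log2 of their
    linear magnitude, the first mapping is 2**x, and the activation is a sigmoid.
    weight_signs holds +1 or -1 for each weight.
    """
    acc = 0.0
    for x_log, w_log, sign in zip(log_inputs, log_abs_weights, weight_signs):
        summed = x_log + w_log        # adder: log2(x) + log2(|w|)
        product = 2.0 ** summed       # first nonlinear mapping (assumed 2**x)
        # accumulation with sign-bit-controlled add or subtract
        acc += product if sign >= 0 else -product
    activated = 1.0 / (1.0 + math.exp(-acc))   # assumed network nonlinearity
    # second mapping: activation followed by the inverse of the first mapping
    return math.log2(activated)
```

Because the adder works on logarithms, no multiplier is needed in this sketch, which is one plausible reading of how the scheme reduces hardware and storage cost.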

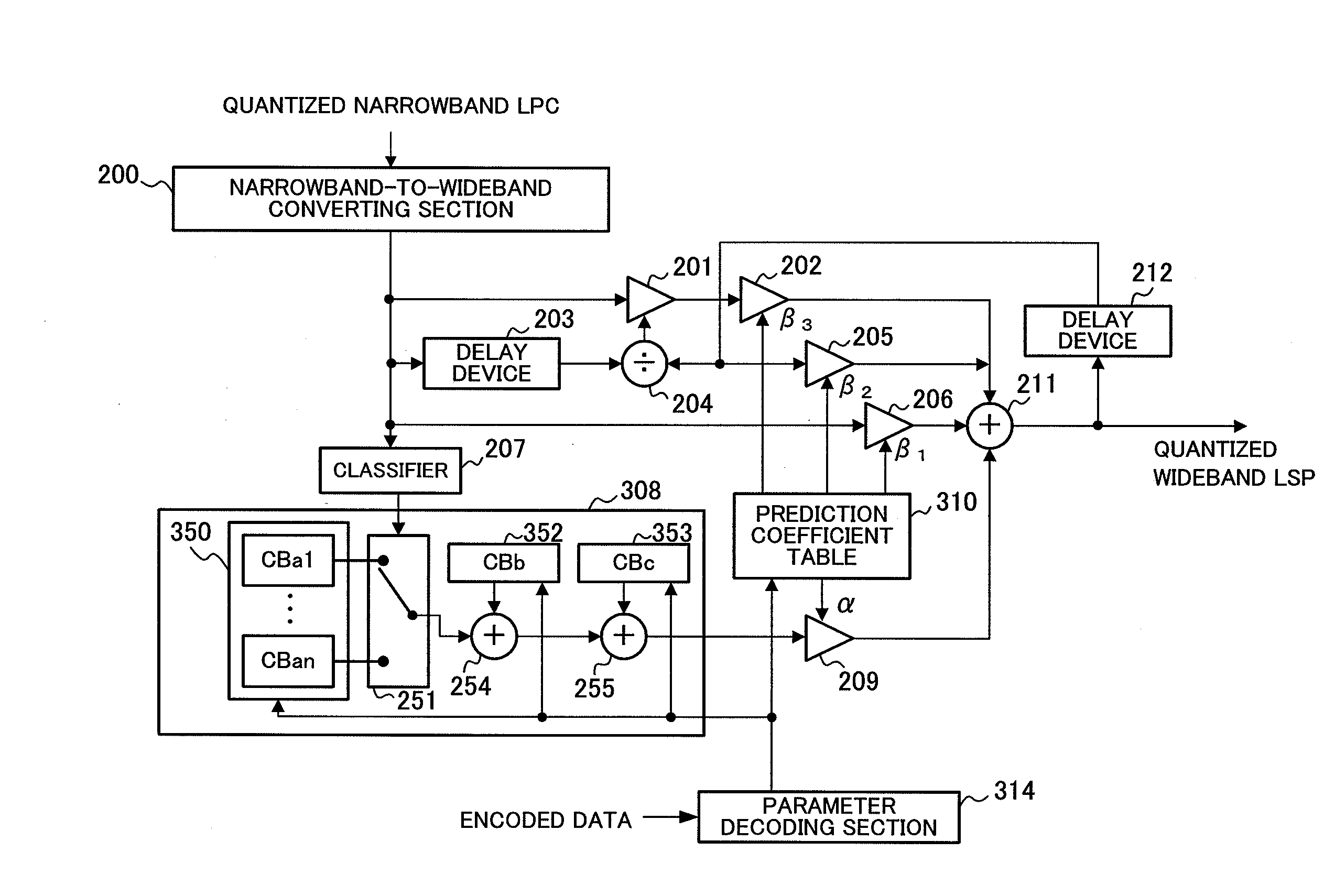

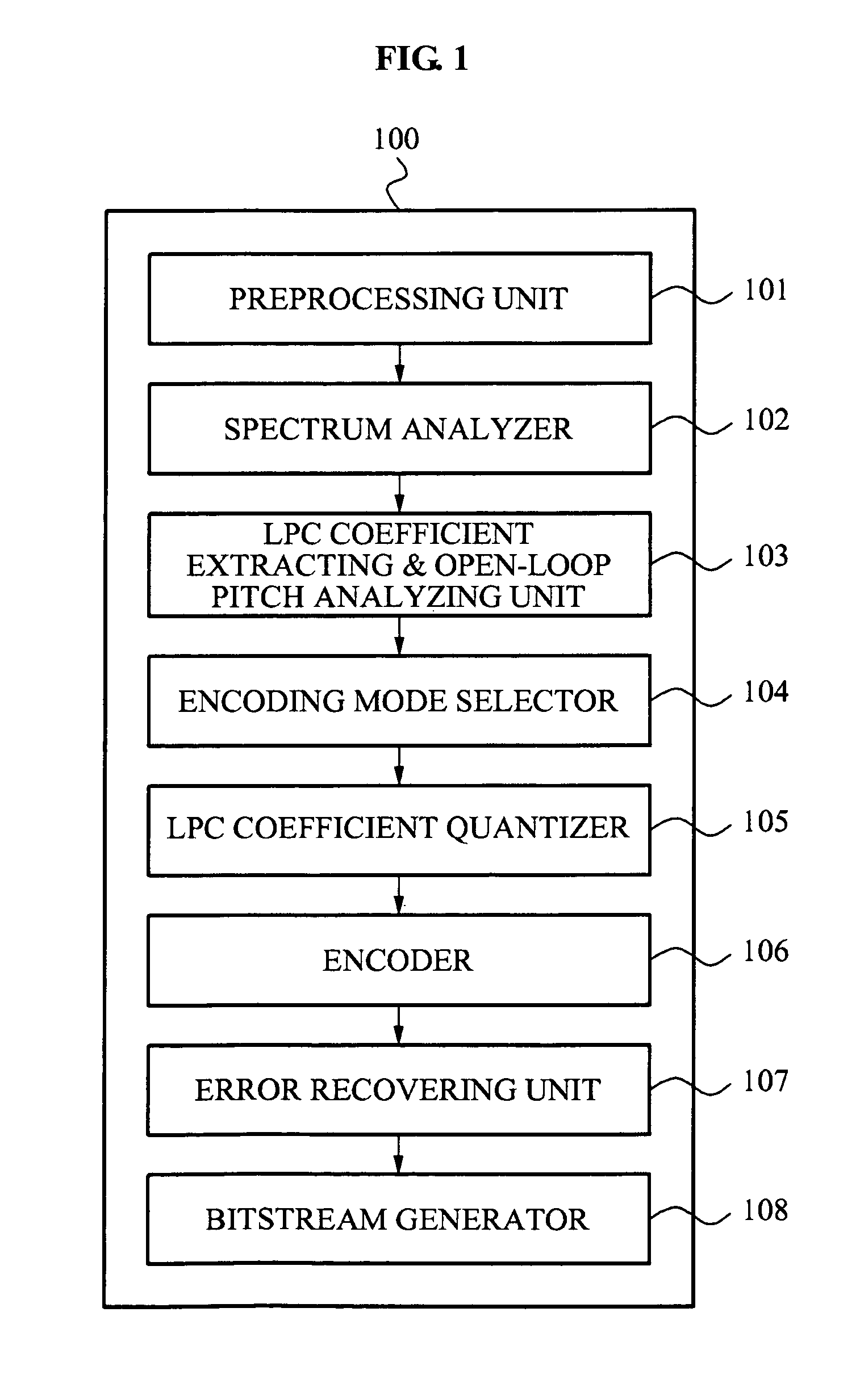

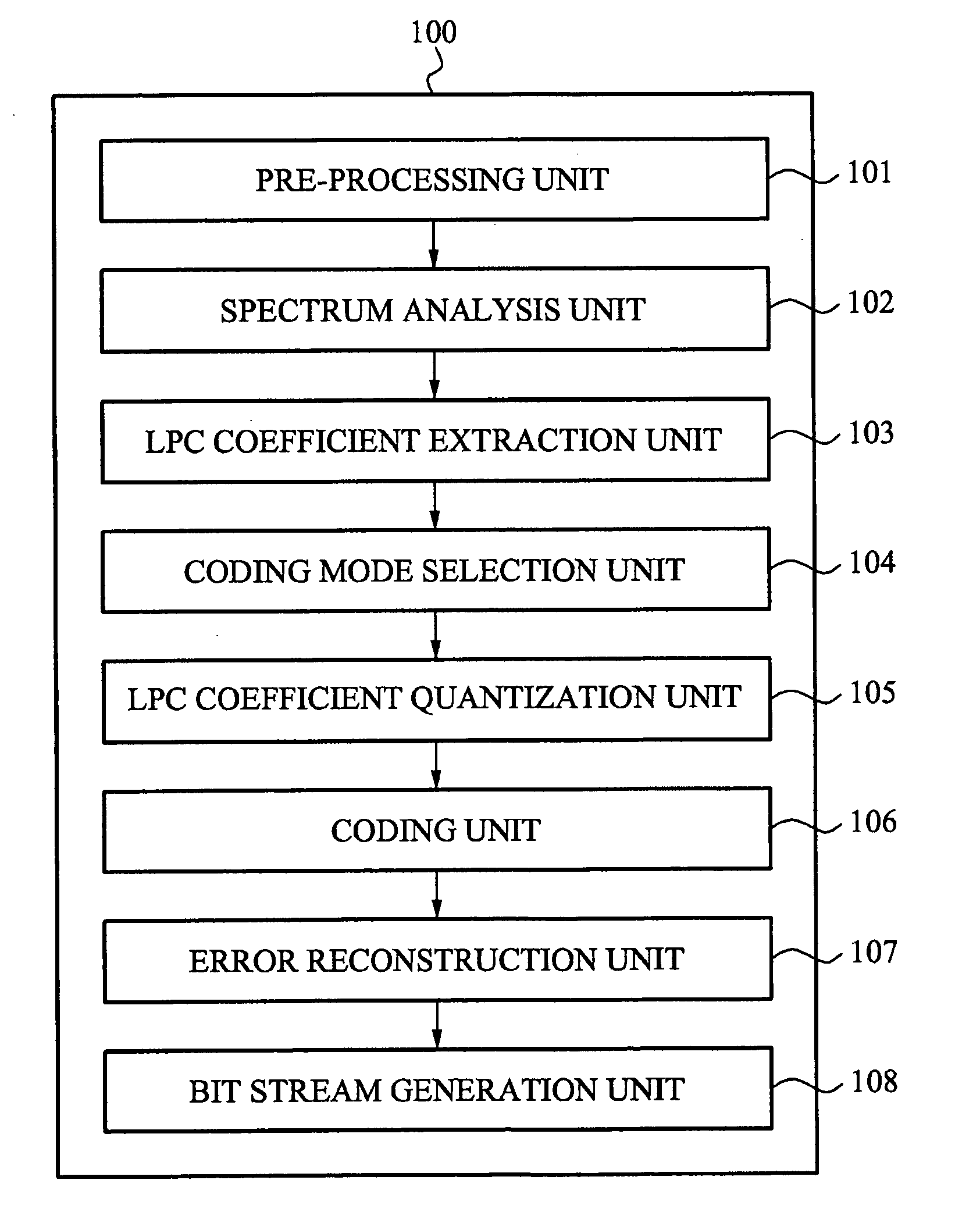

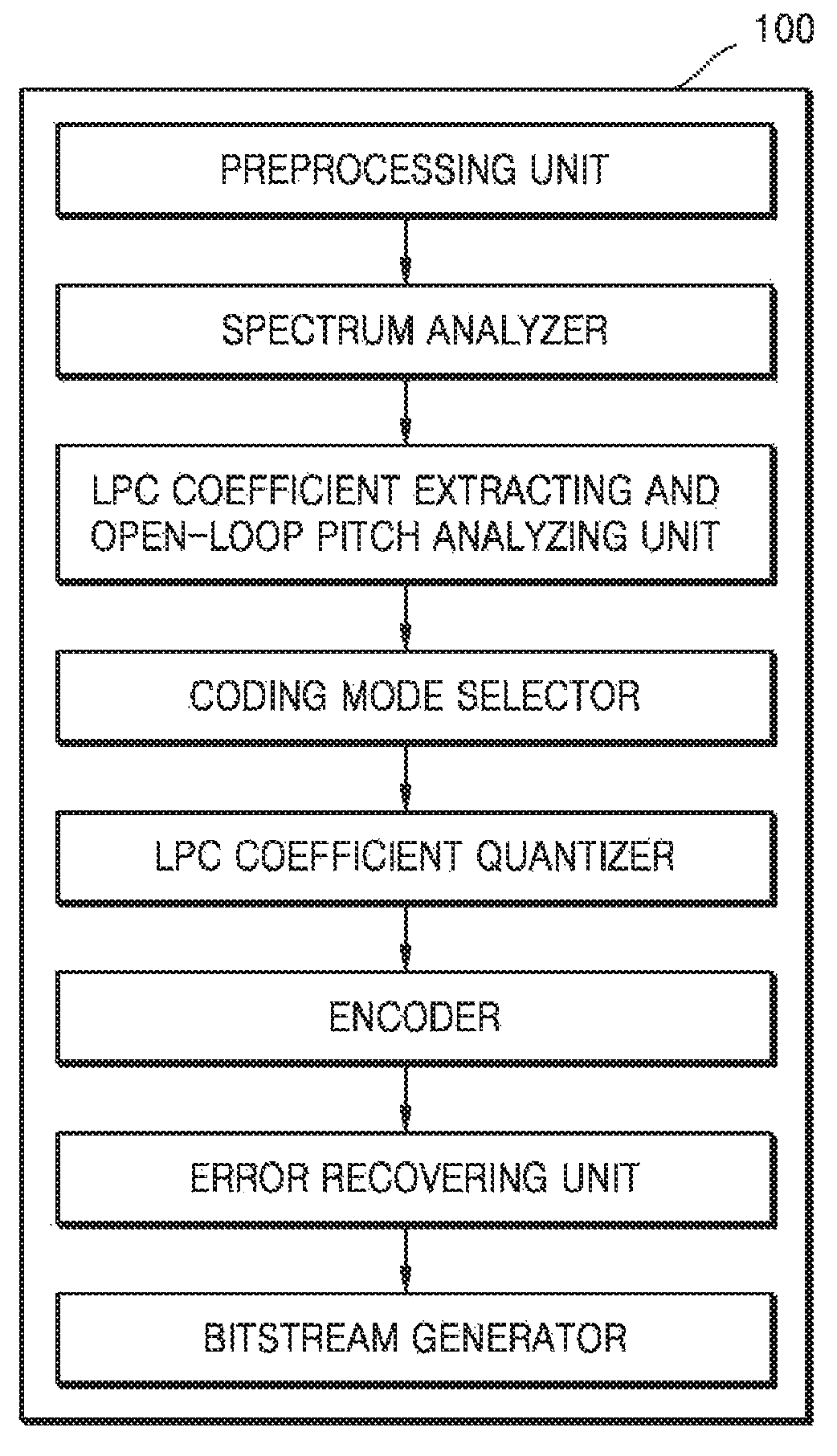

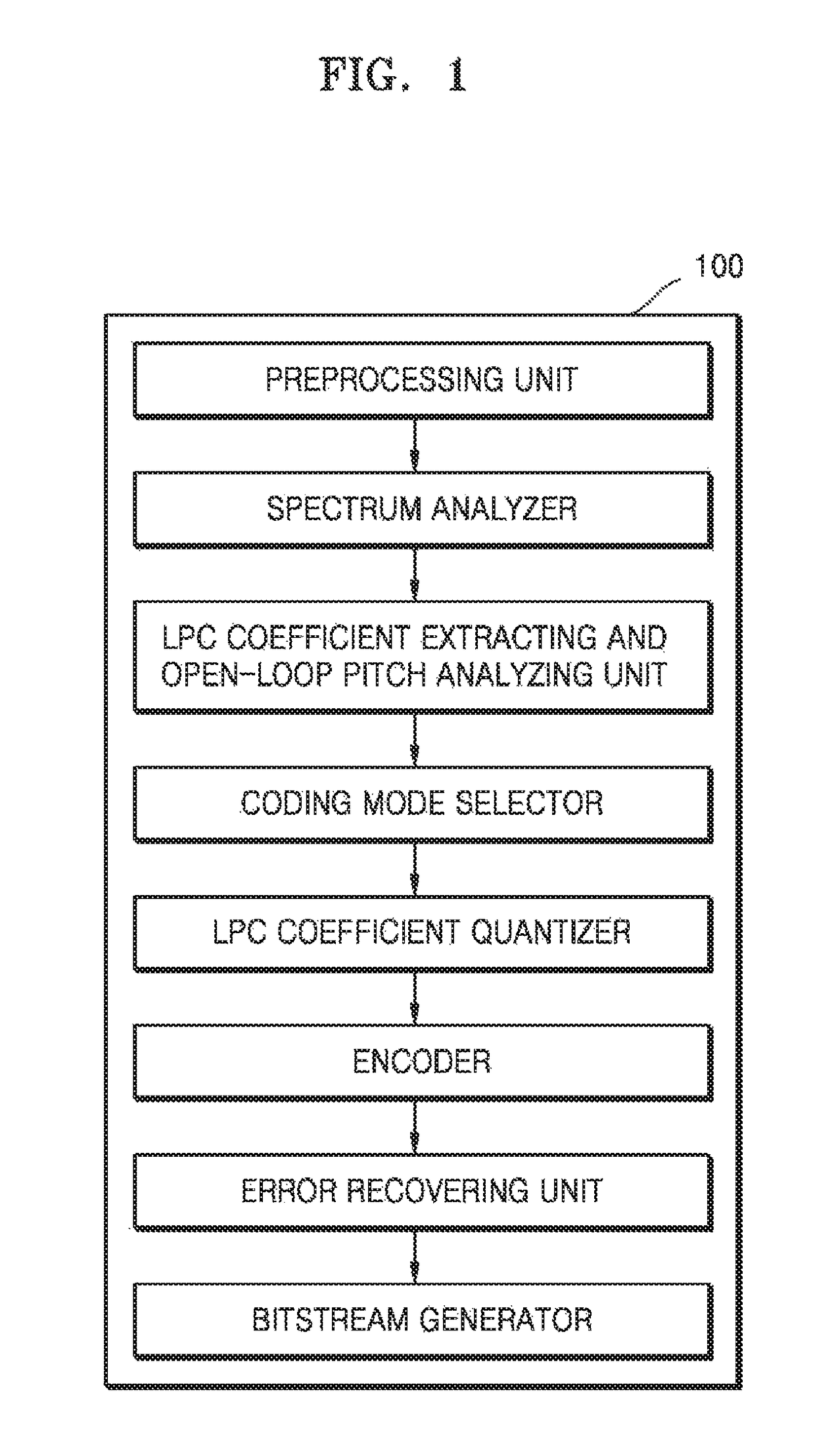

Scalable Encoding Apparatus, Scalable Decoding Apparatus, Scalable Encoding Method, Scalable Decoding Method, Communication Terminal Apparatus, and Base Station Apparatus

Active | US20080059166A1 | Improve Quantization Efficiency | Improve efficiency | Speech analysis | Computer architecture | Terminal equipment

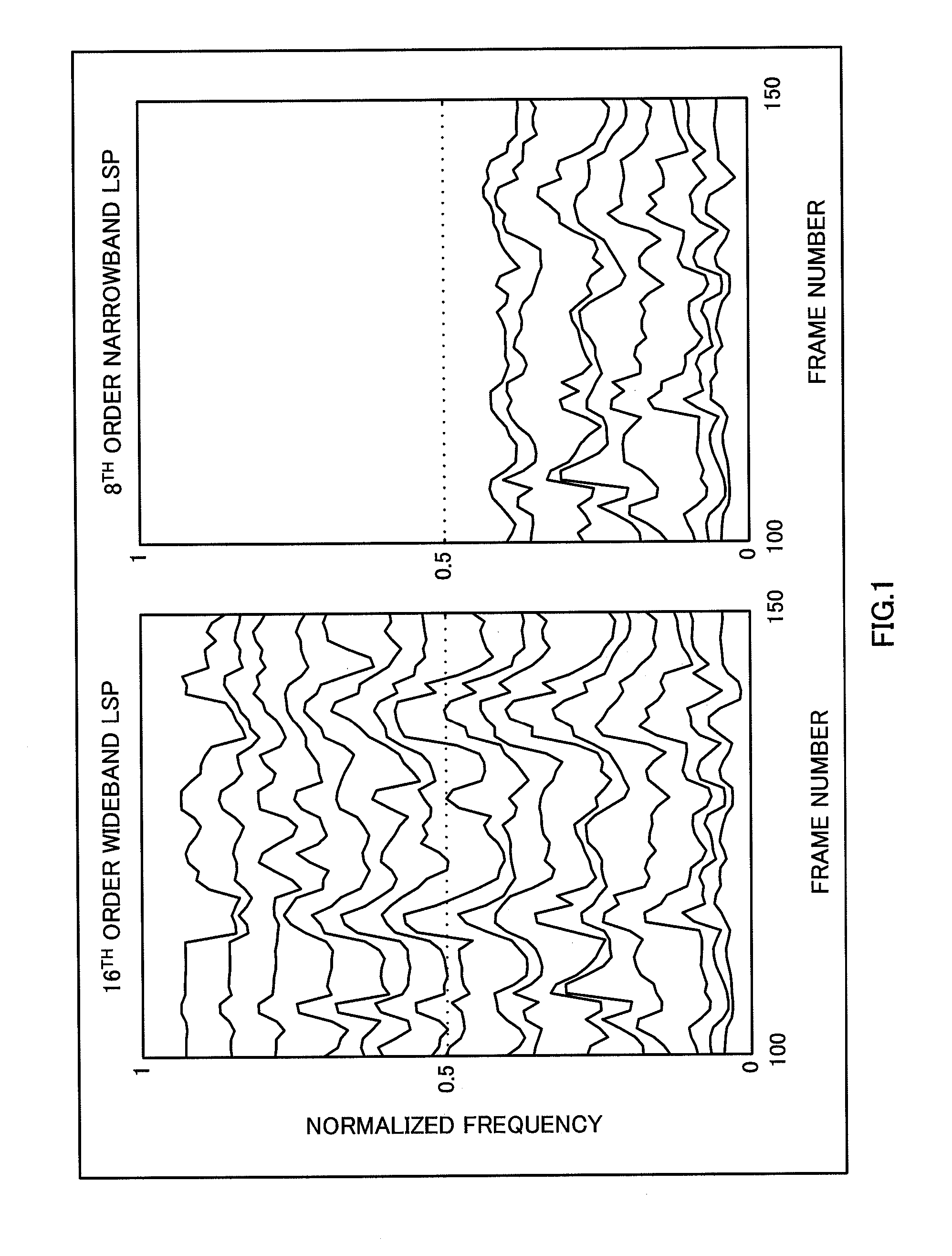

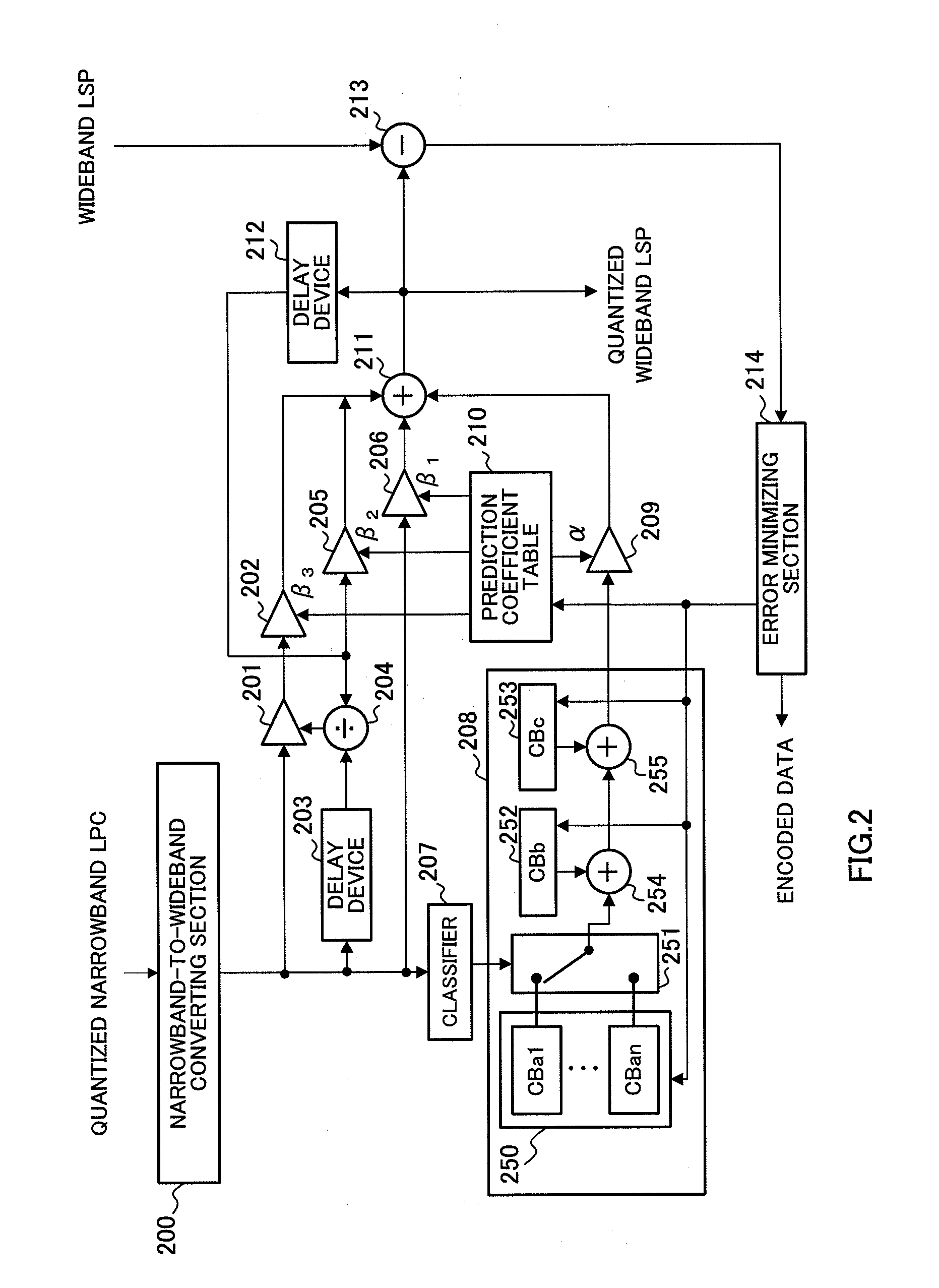

A scalable encoding apparatus, a scalable decoding apparatus and the like are disclosed which can achieve a band scalable LSP encoding that exhibits both a high quantization efficiency and a high performance. In these apparatuses, a narrow band-to-wide band converting part (200) receives and converts a quantized narrow band LSP to a wide band, and then outputs the quantized narrow band LSP as converted (i.e., a converted wide band LSP parameter) to an LSP-to-LPC converting part (800). The LSP-to-LPC converting part (800) converts the quantized narrow band LSP as converted to a linear prediction coefficient and then outputs it to a pre-emphasizing part (801). The pre-emphasizing part (801) calculates and outputs the pre-emphasized linear prediction coefficient to an LPC-to-LSP converting part (802). The LPC-to-LSP converting part (802) converts the pre-emphasized linear prediction coefficient to a pre-emphasized quantized narrow band LSP as wide band converted, and then outputs it to a prediction quantizing part (803).

Owner:III HLDG 12 LLC

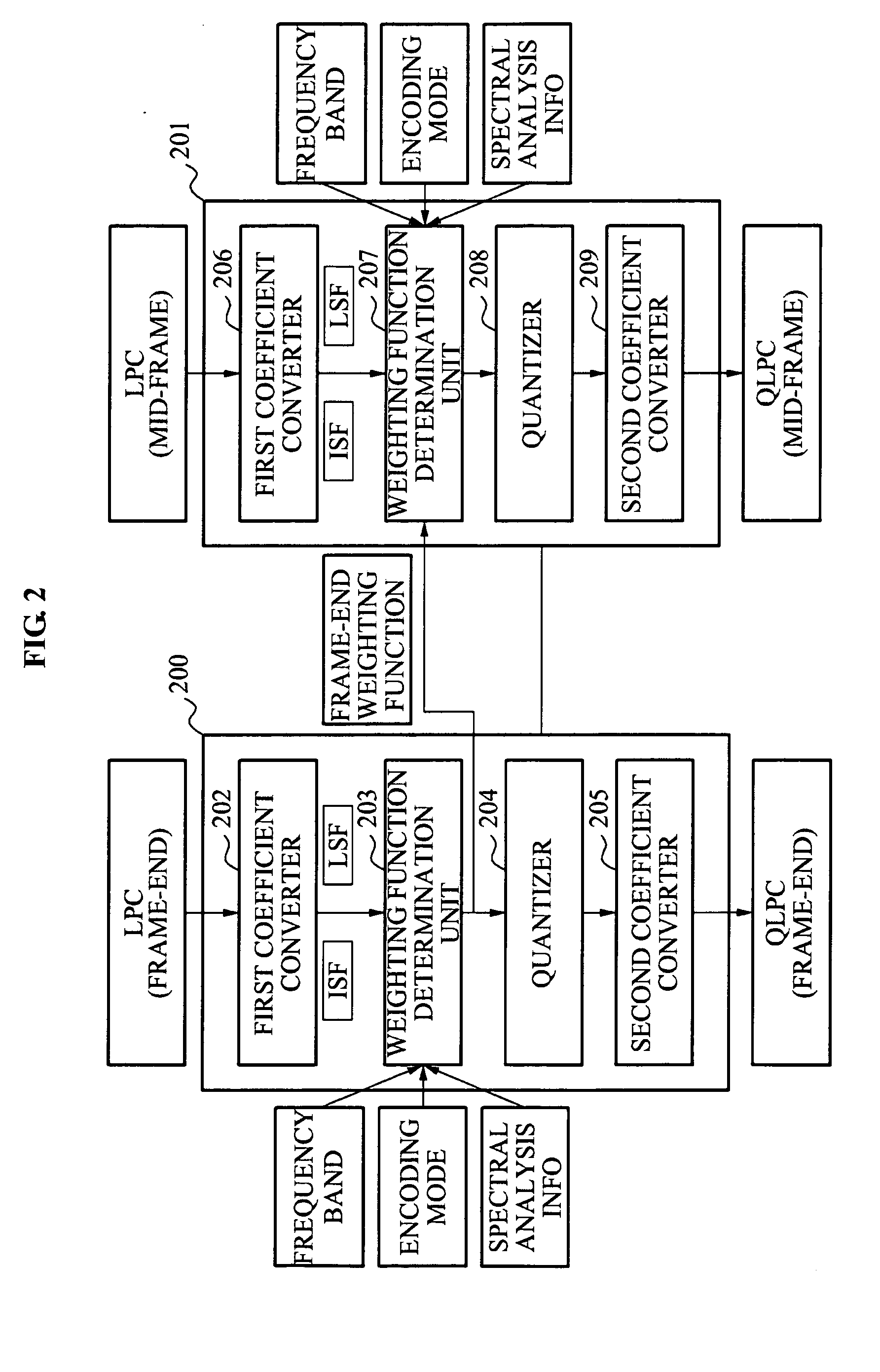

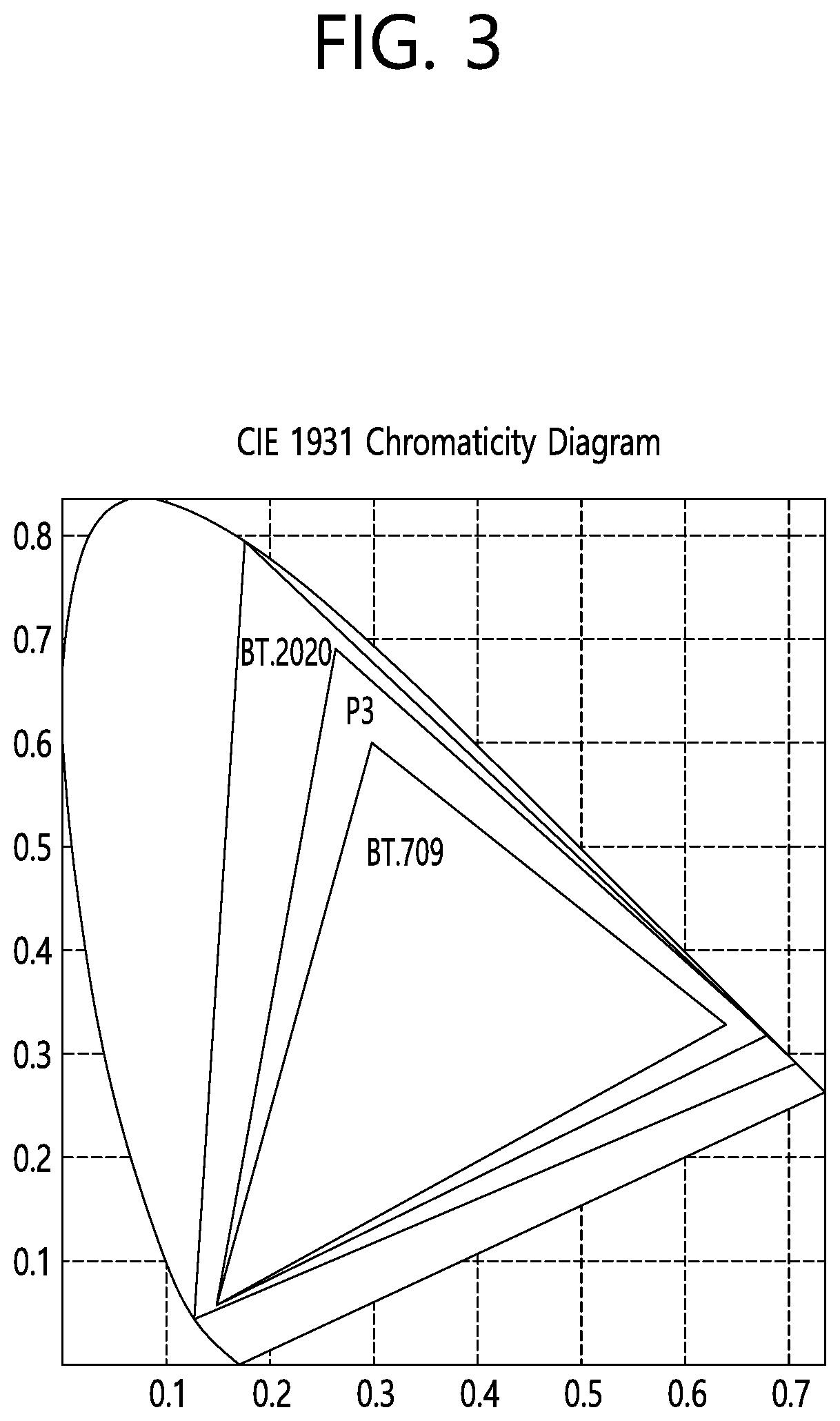

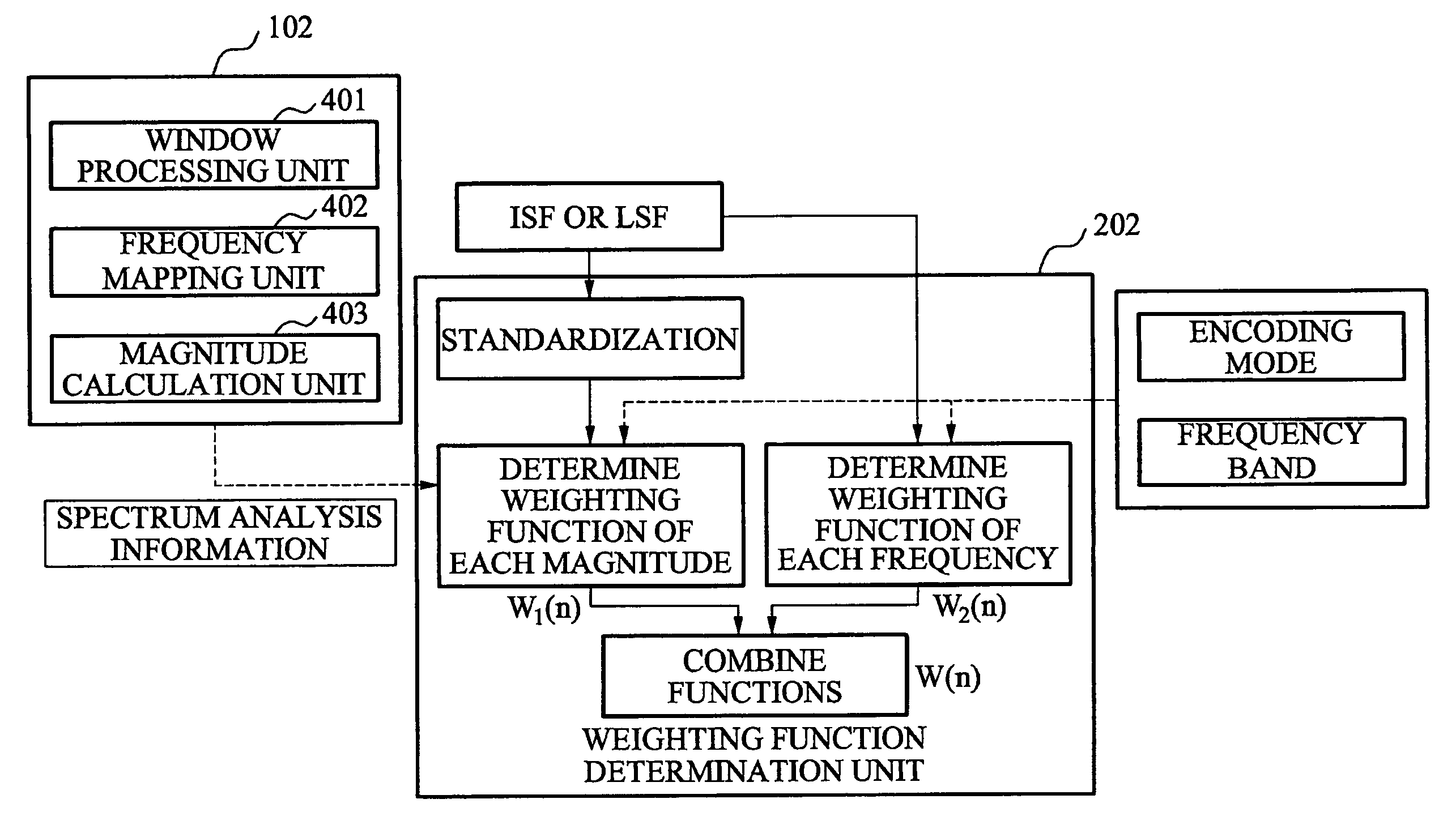

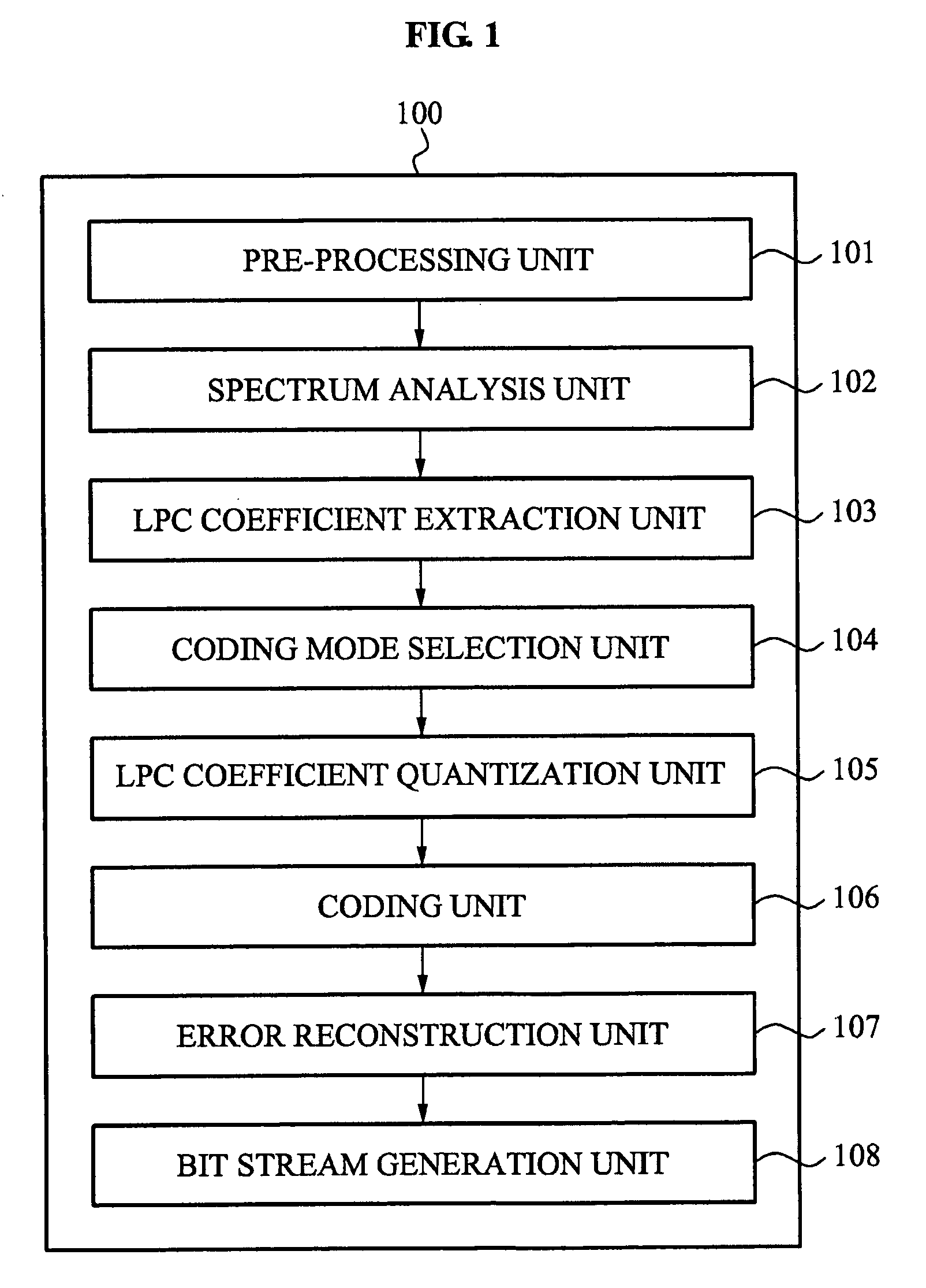

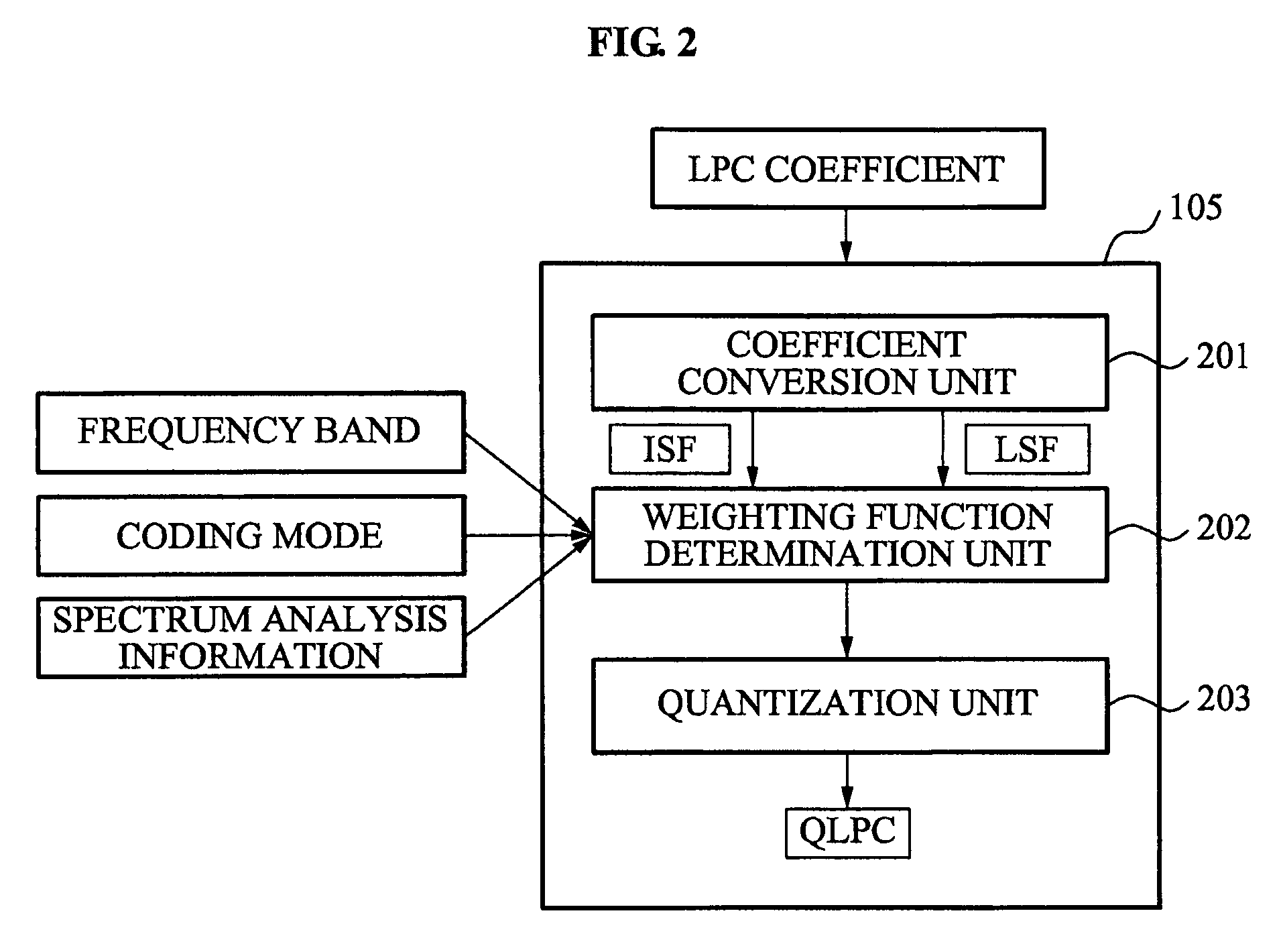

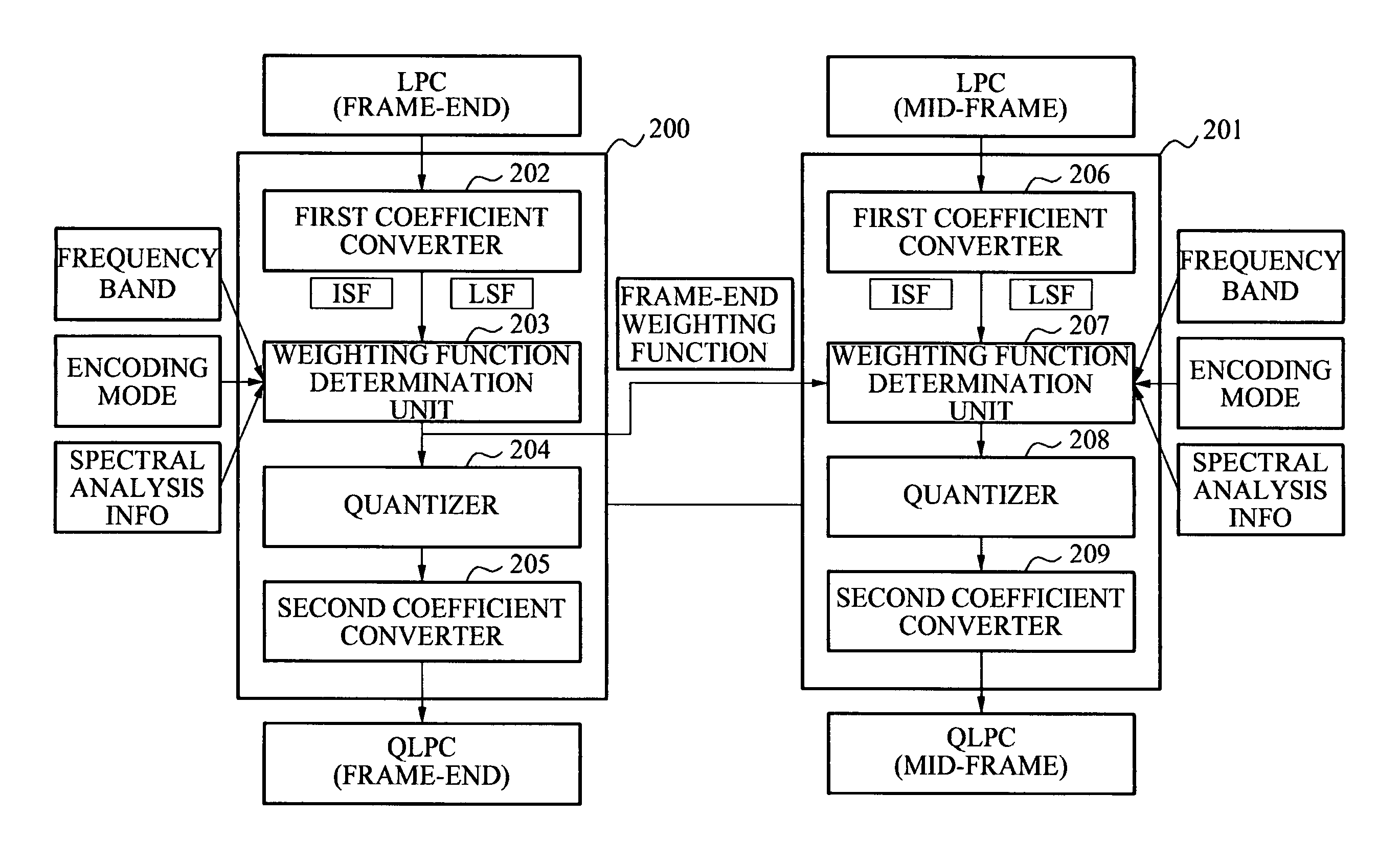

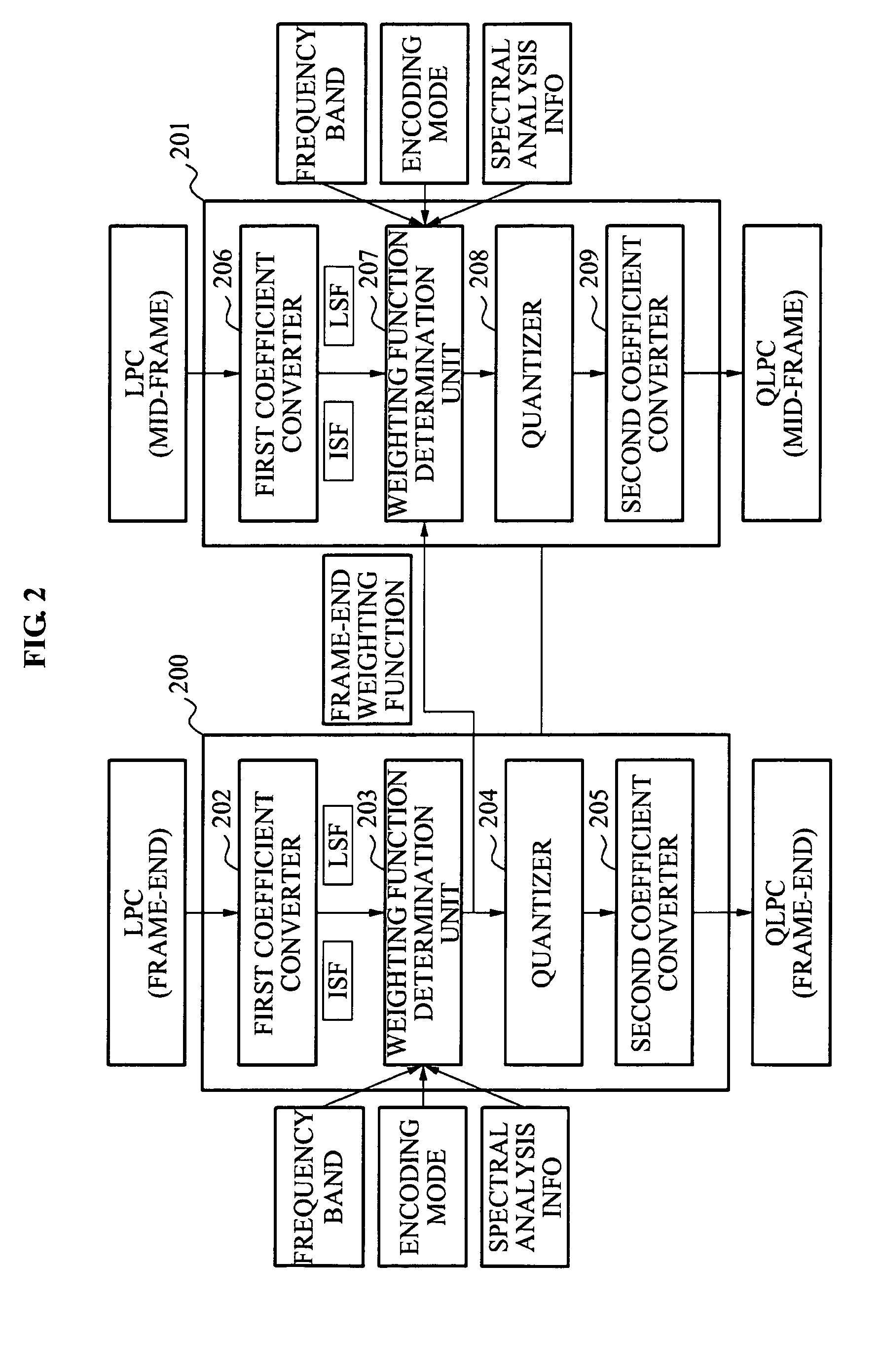

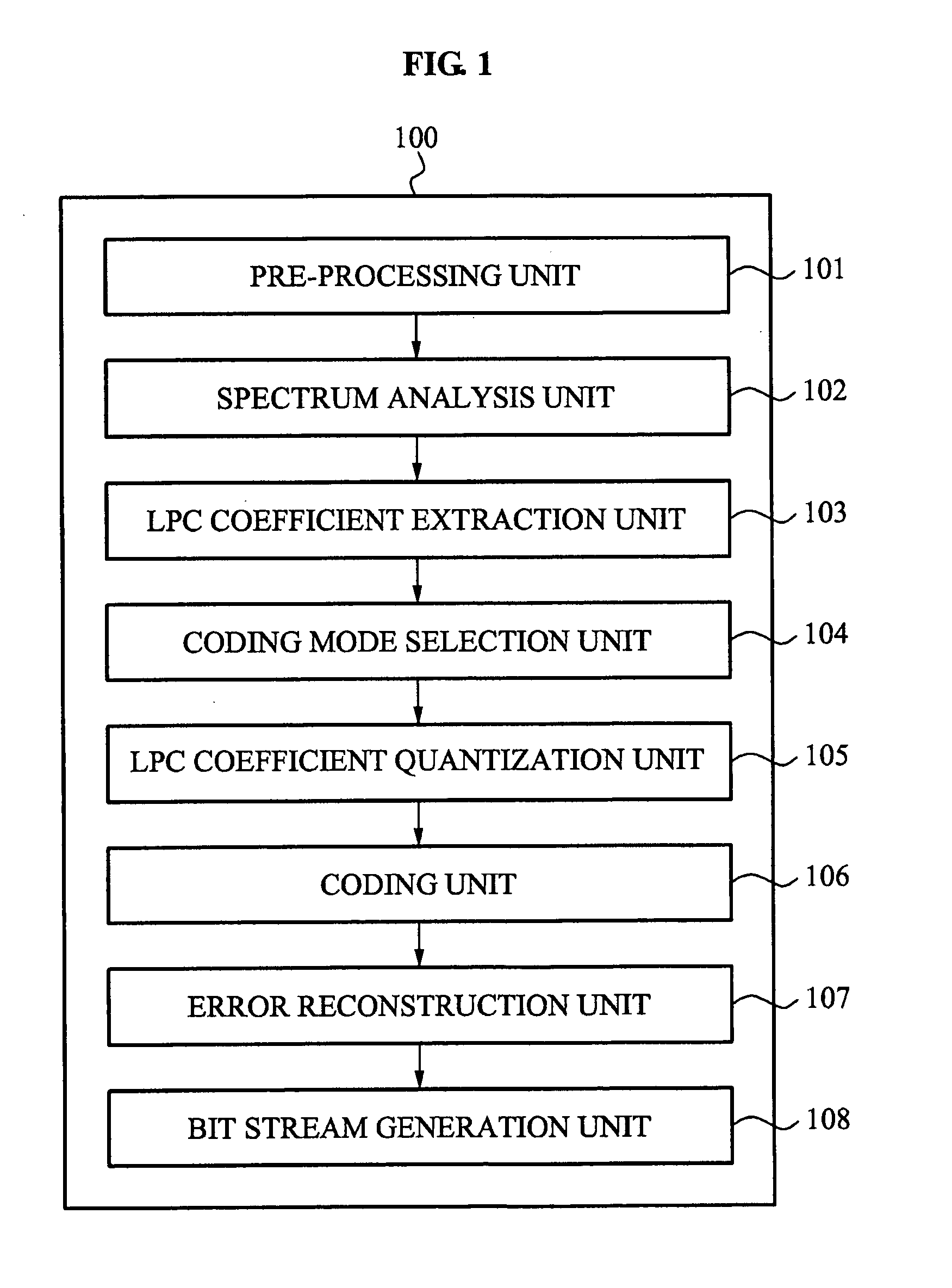

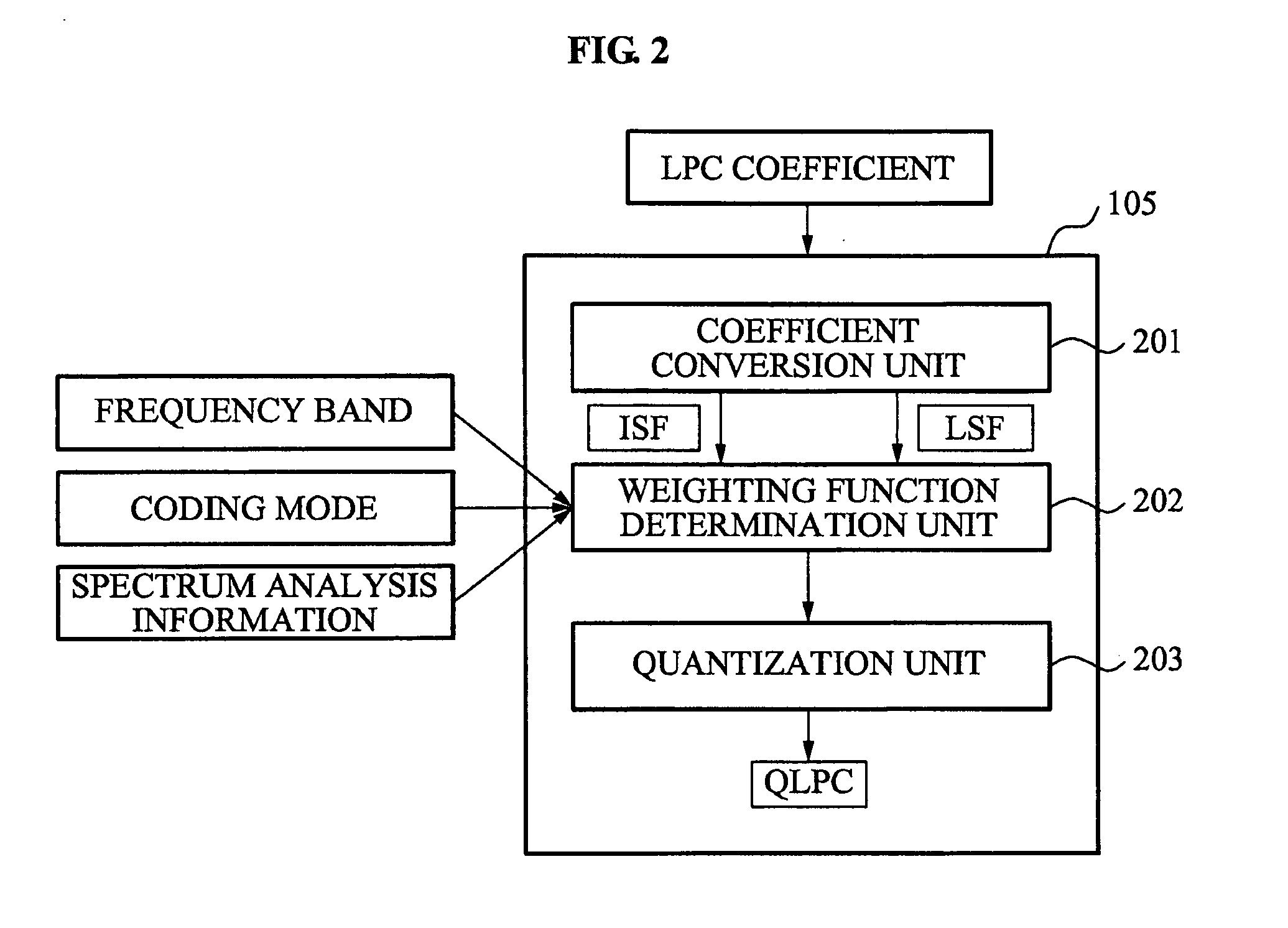

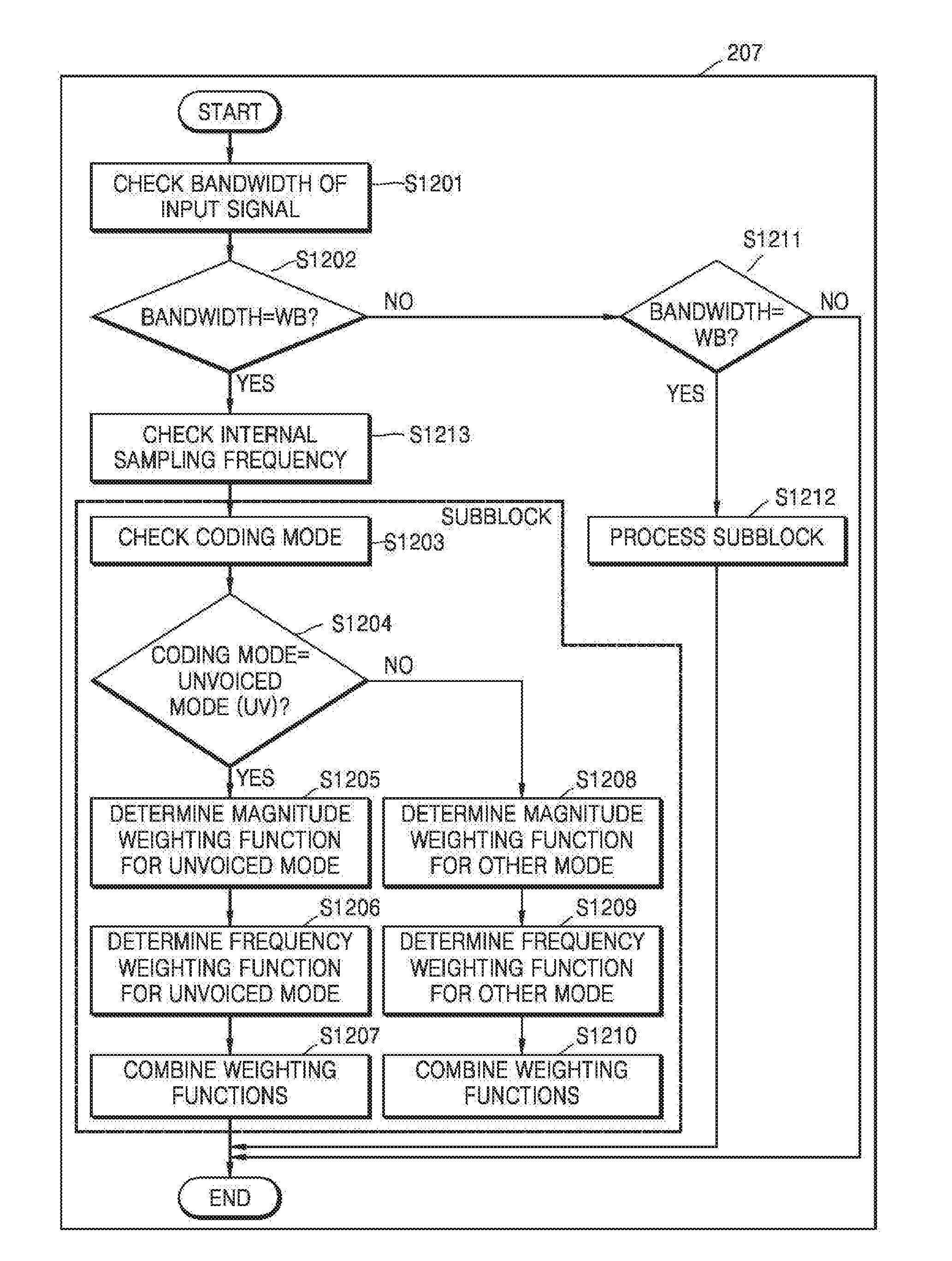

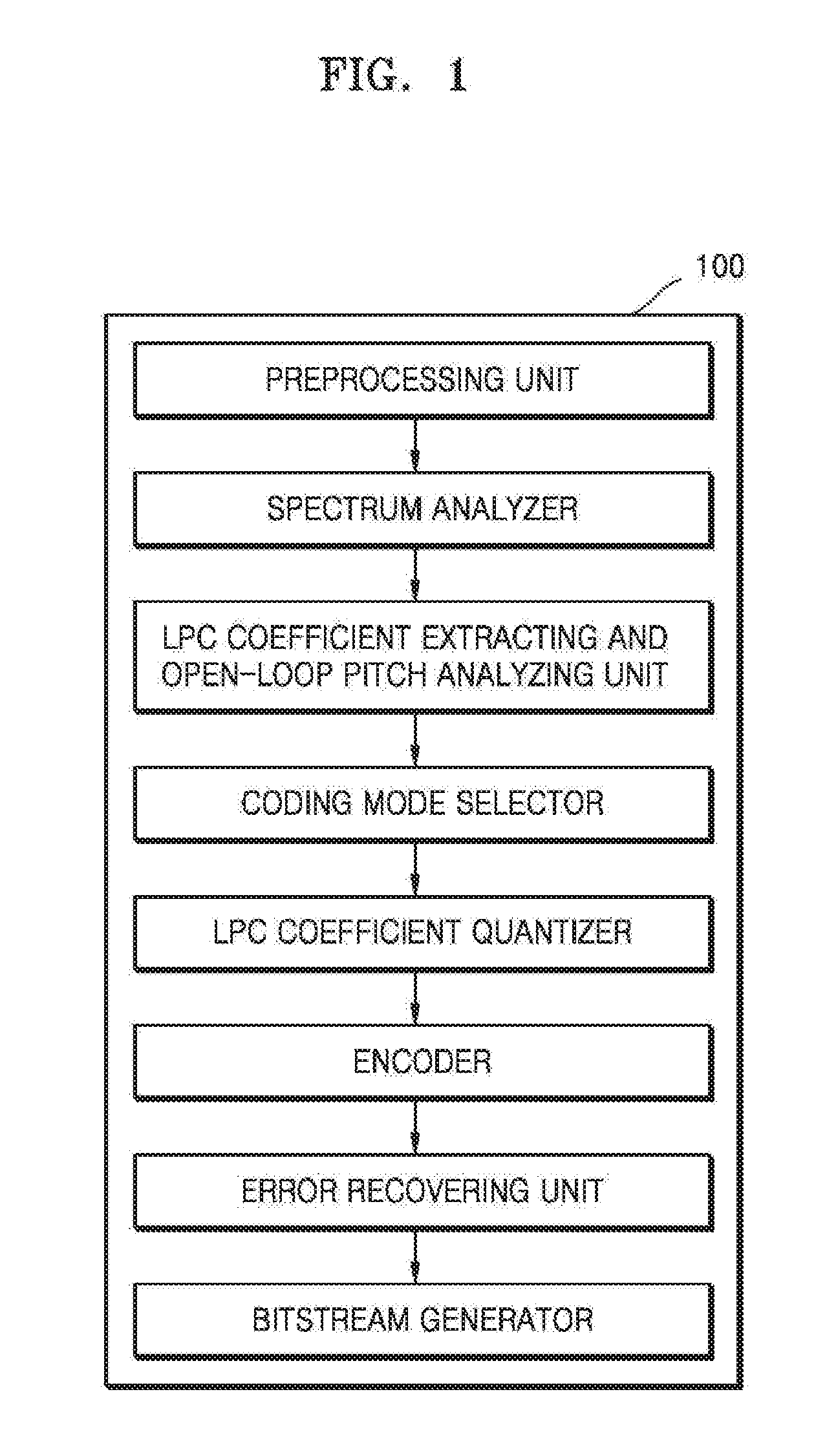

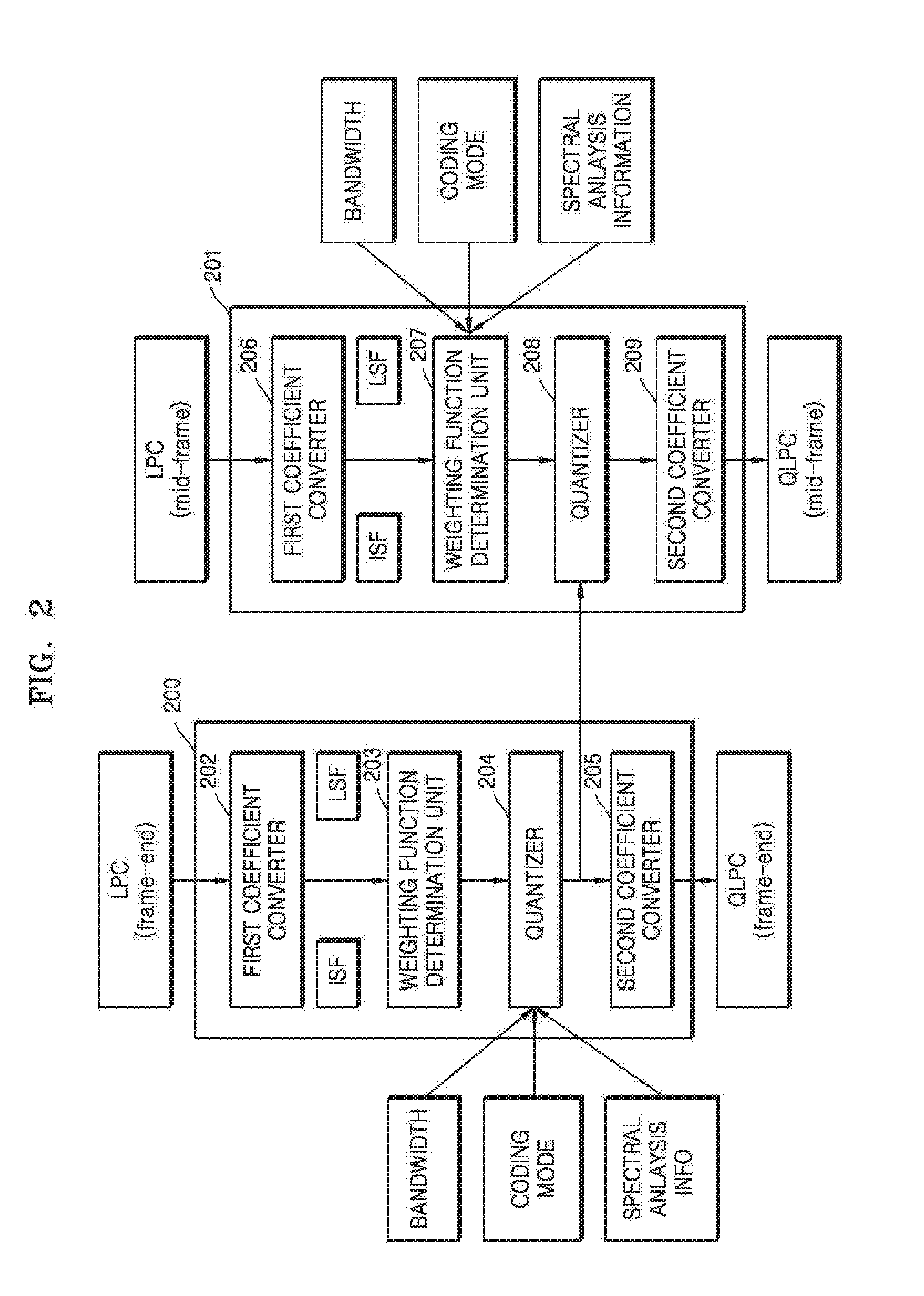

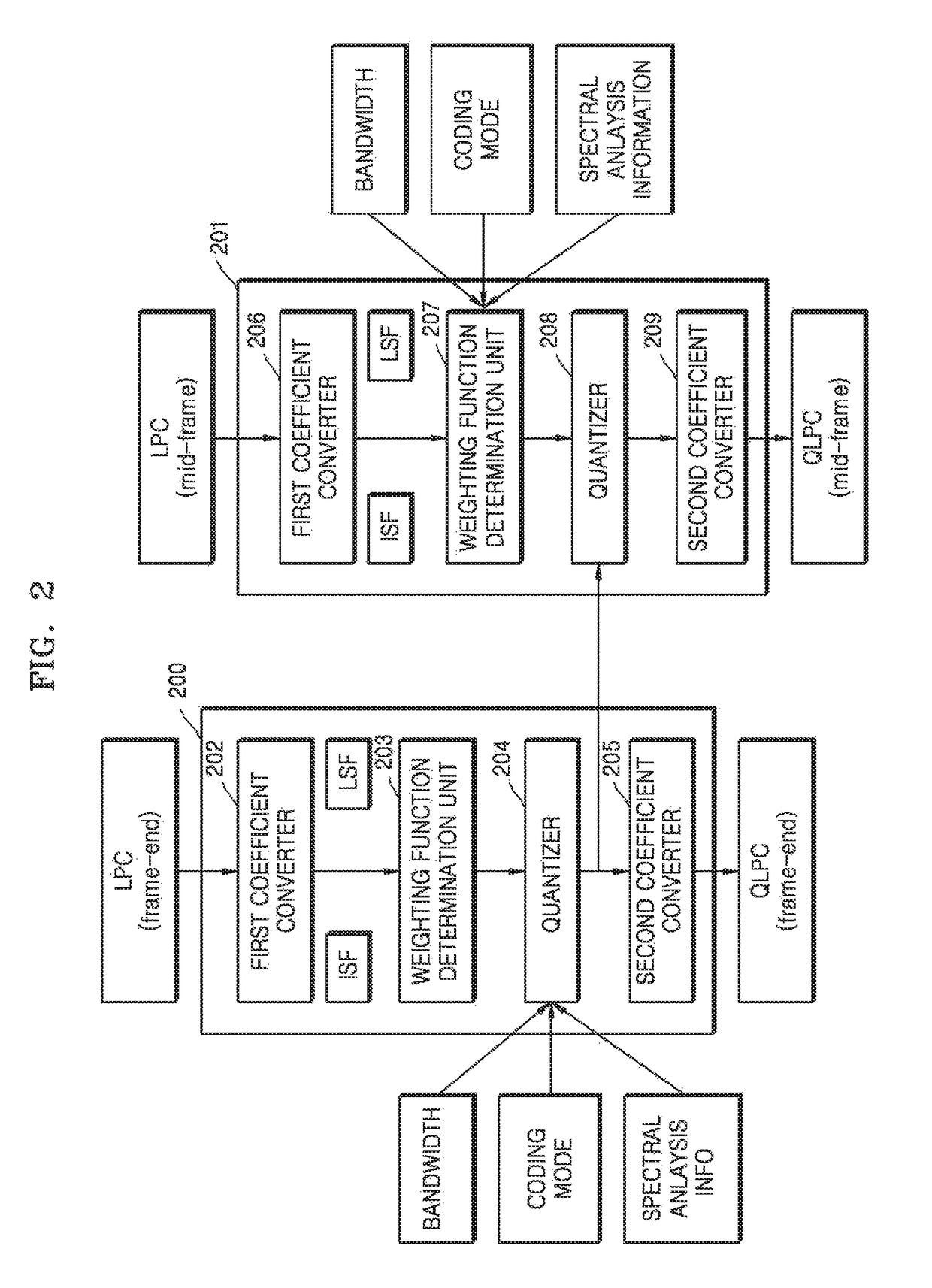

Apparatus and method for determining weighting function having low complexity for linear predictive coding (LPC) coefficients quantization

Active | US20120095756A1 | Improve Quantization Efficiency | Speech analysis | Linear predictive coding | Low complexity

Proposed are a low-complexity method and apparatus for determining a weighting function for quantizing a linear predictive coding (LPC) coefficient. The weighting function determination apparatus may convert an LPC coefficient of a mid-subframe of an input signal to one of an immittance spectral frequency (ISF) coefficient and a line spectral frequency (LSF) coefficient, and may determine a weighting function associated with an importance of the ISF coefficient or the LSF coefficient based on the converted ISF coefficient or LSF coefficient.

Owner:SAMSUNG ELECTRONICS CO LTD
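
To illustrate what a weighting function over LSF coefficients looks like, the sketch below uses the inverse harmonic mean heuristic, a commonly used weighting that emphasizes closely spaced LSFs (which tend to mark spectral peaks). The abstract does not give the patented weighting function, so this is a stand-in technique, and the names `ihm_weights` and `weighted_error` are hypothetical.

```python
import math

def ihm_weights(lsf):
    """Inverse-harmonic-mean weights for an ascending LSF vector in (0, pi).

    Stand-in heuristic, not the weighting function claimed in the patent.
    """
    padded = [0.0] + list(lsf) + [math.pi]
    return [
        1.0 / (padded[i] - padded[i - 1]) + 1.0 / (padded[i + 1] - padded[i])
        for i in range(1, len(padded) - 1)
    ]

def weighted_error(lsf, lsf_quantized, weights):
    """Weighted squared error a quantizer could minimize when picking a codeword."""
    return sum(w * (a - b) ** 2 for w, a, b in zip(weights, lsf, lsf_quantized))
```

A quantizer that minimizes `weighted_error` instead of the plain squared error spends its accuracy on the coefficients the weighting marks as important.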

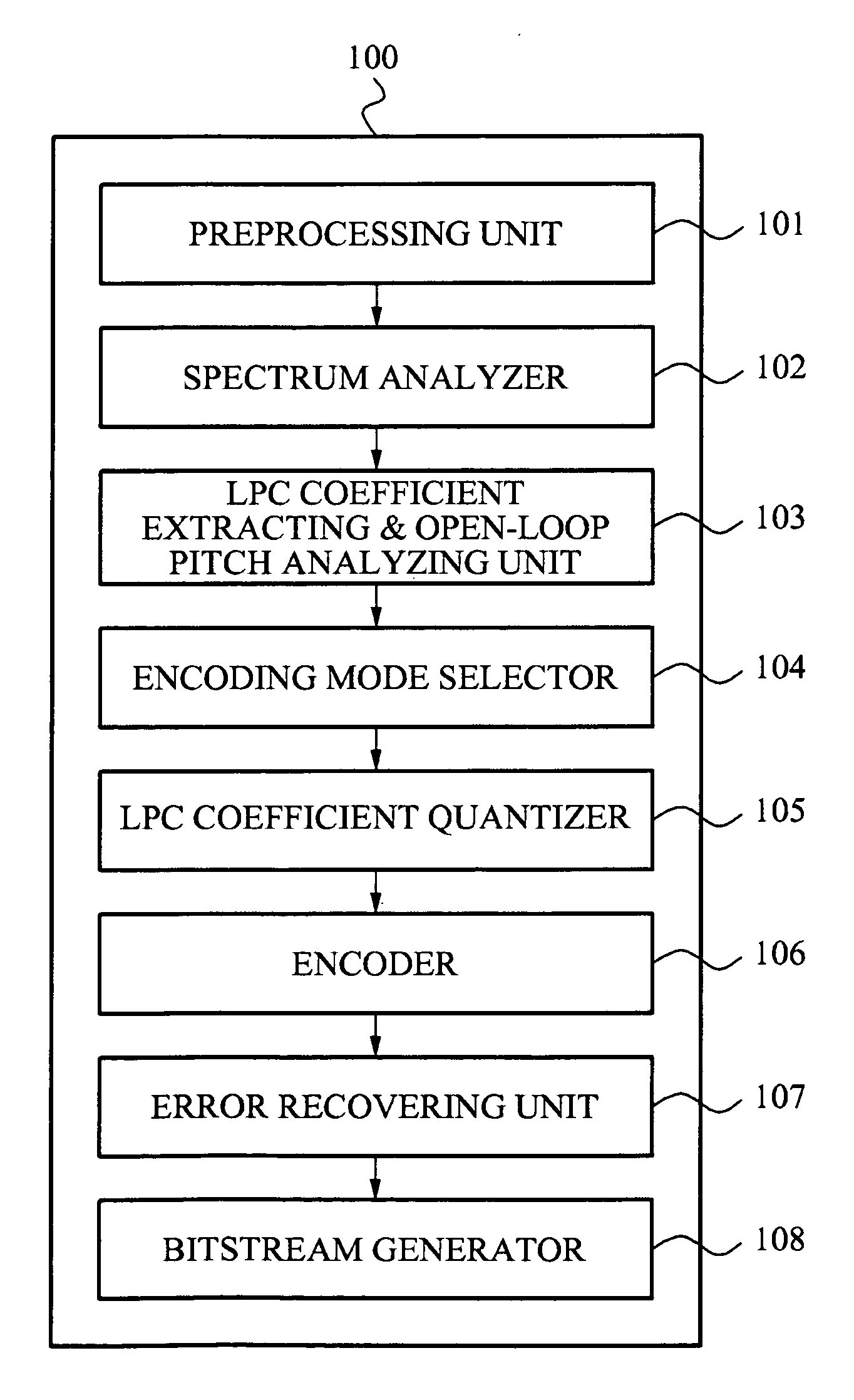

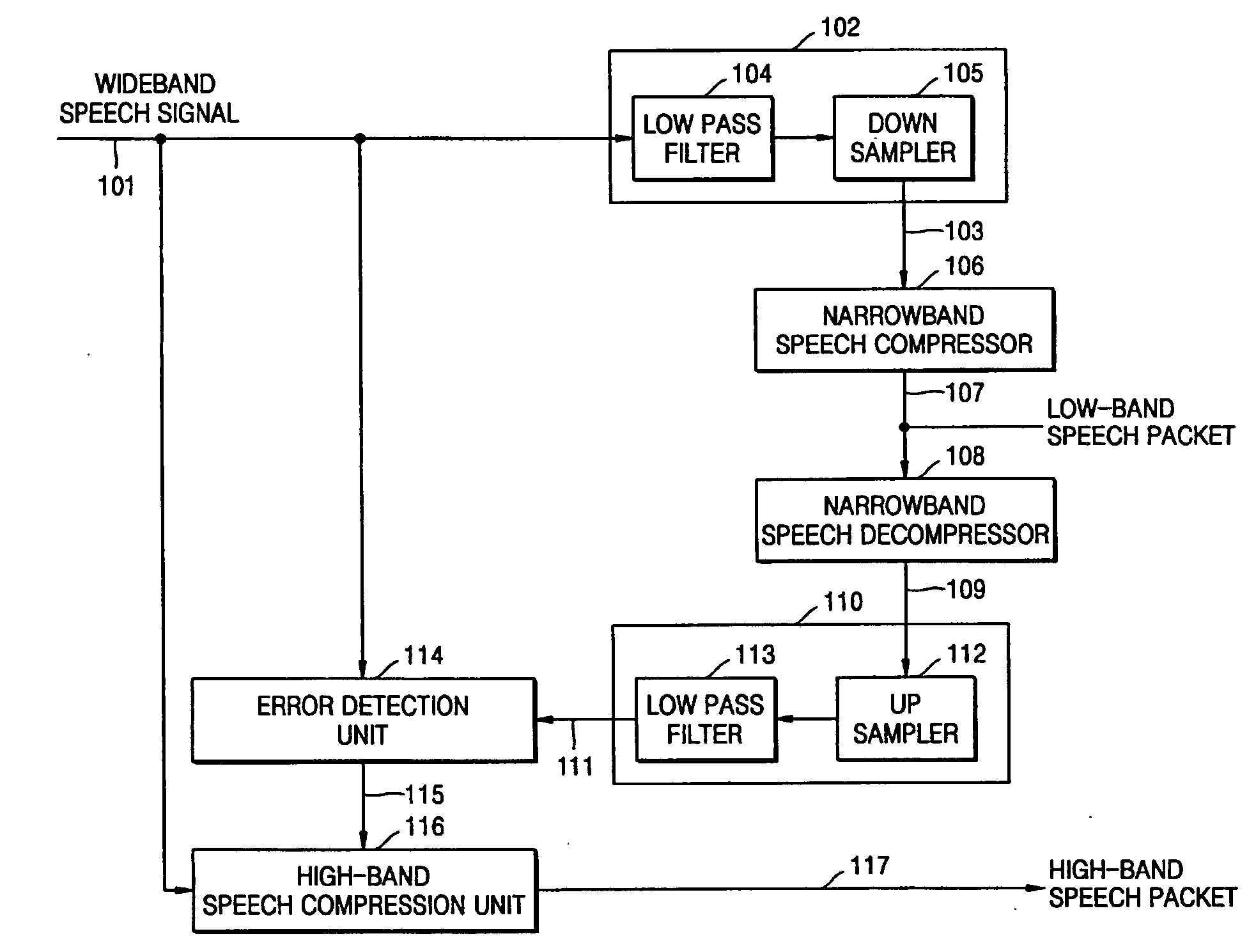

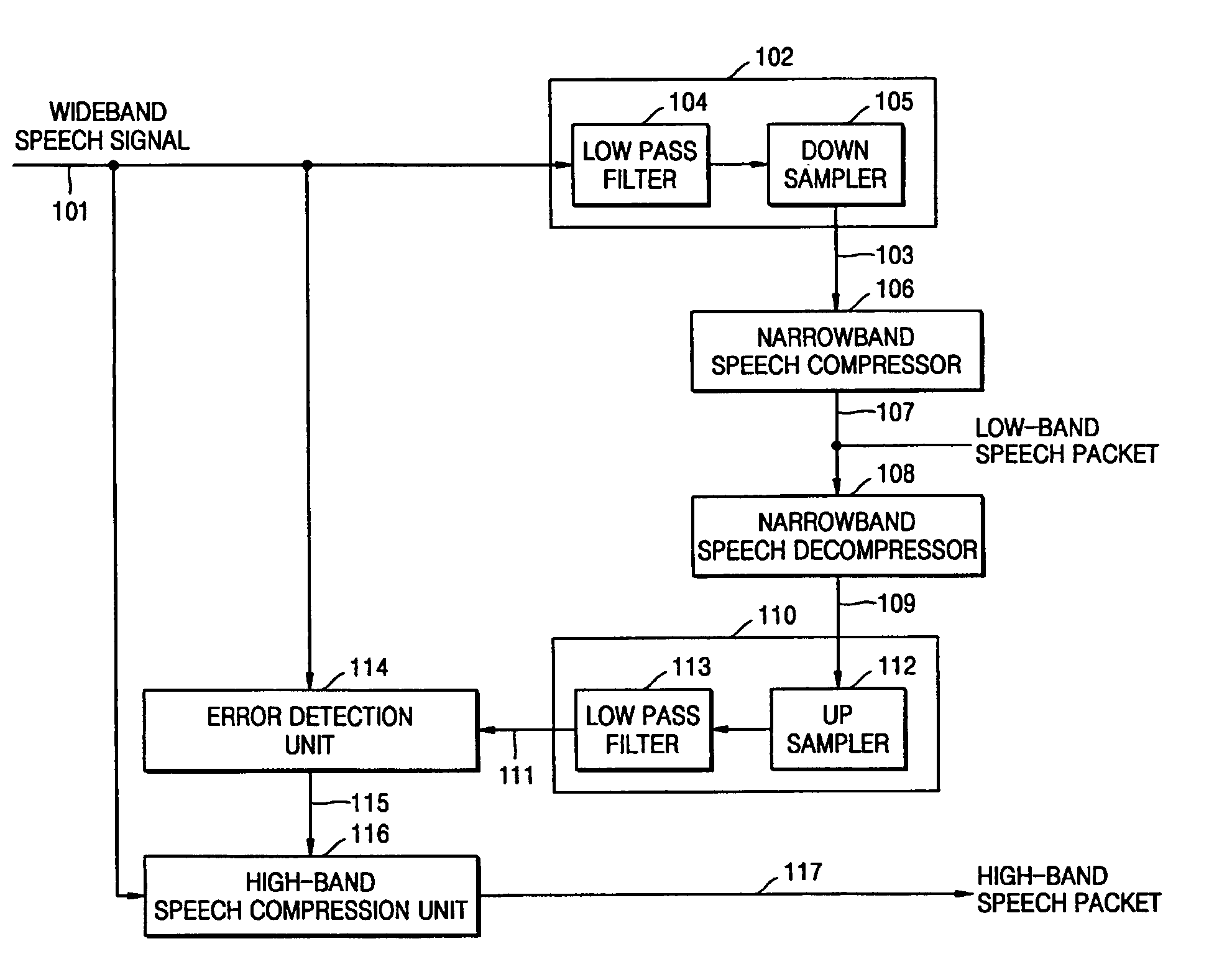

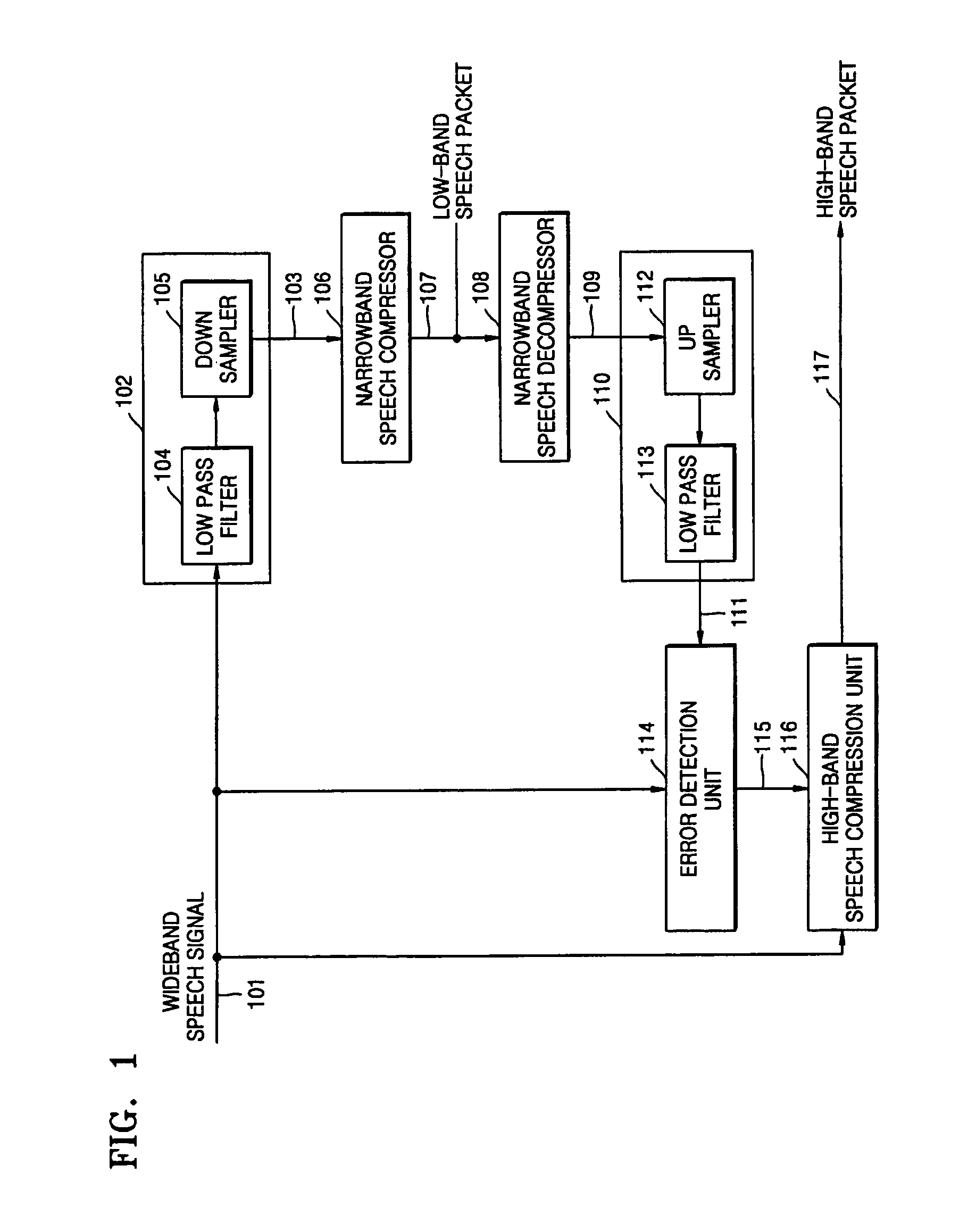

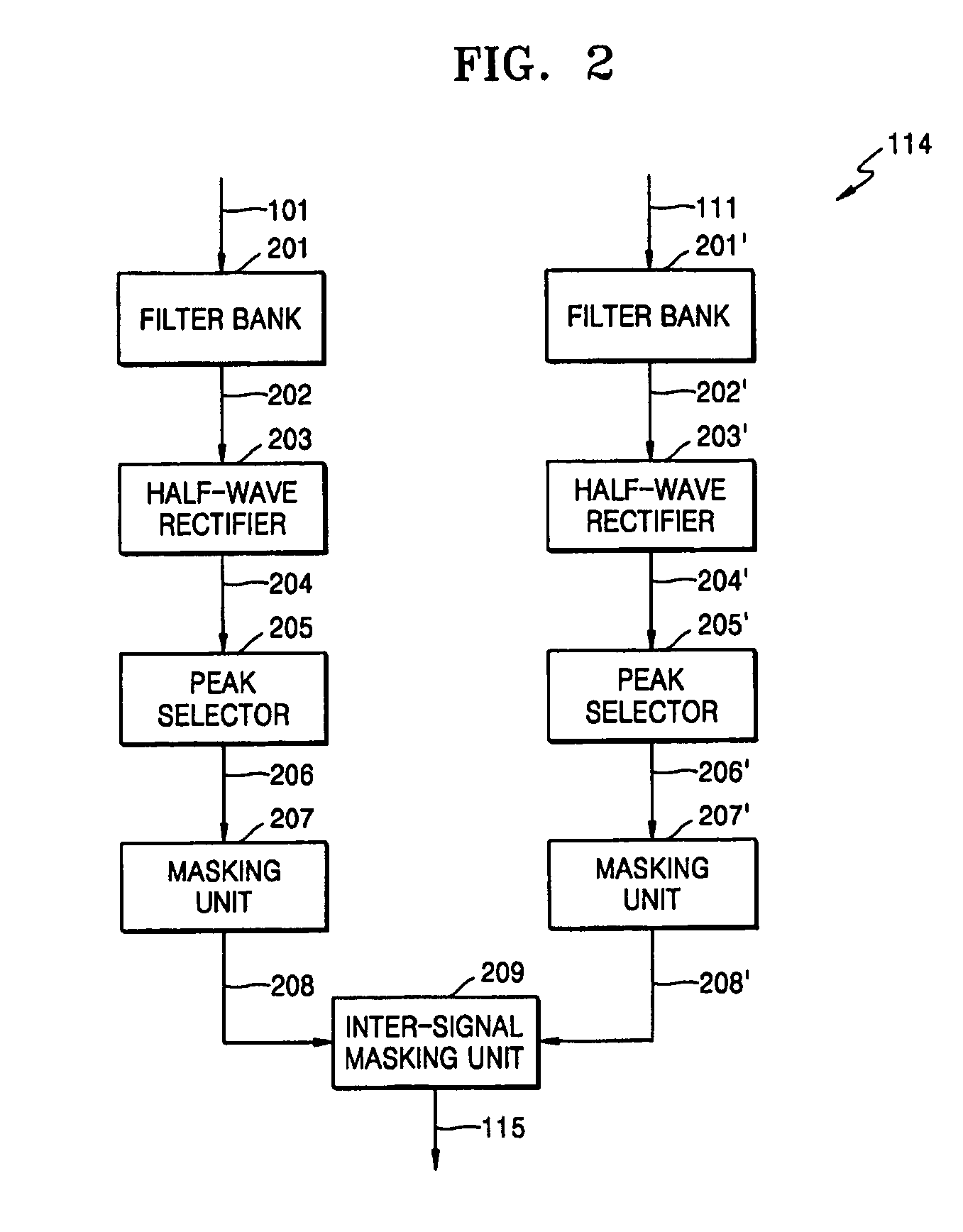

Speech compression and decompression apparatuses and methods providing scalable bandwidth structure

Inactive | US20050004794A1 | Improve Quantization Efficiency | Minimize distortion | Speech analysis | Code conversion | Speech sound | Narrowband speech

A speech compression apparatus including: a first band-transform unit transforming a wideband speech signal to a narrowband low-band speech signal; a narrowband speech compressor compressing the narrowband low-band speech signal and outputting a result of the compressing as a low-band speech packet; a decompression unit decompressing the low-band speech packet and obtaining a decompressed wideband low-band speech signal; an error detection unit detecting an error signal that corresponds to a difference between the wideband speech signal and the decompressed wideband low-band speech signal; and a high-band speech compression unit compressing the error signal and a high-band speech signal of the wideband speech signal and outputting the result of the compressing as a high-band speech packet.

Owner:SAMSUNG ELECTRONICS CO LTD

Speech signal compression and/or decompression method, medium, and apparatus

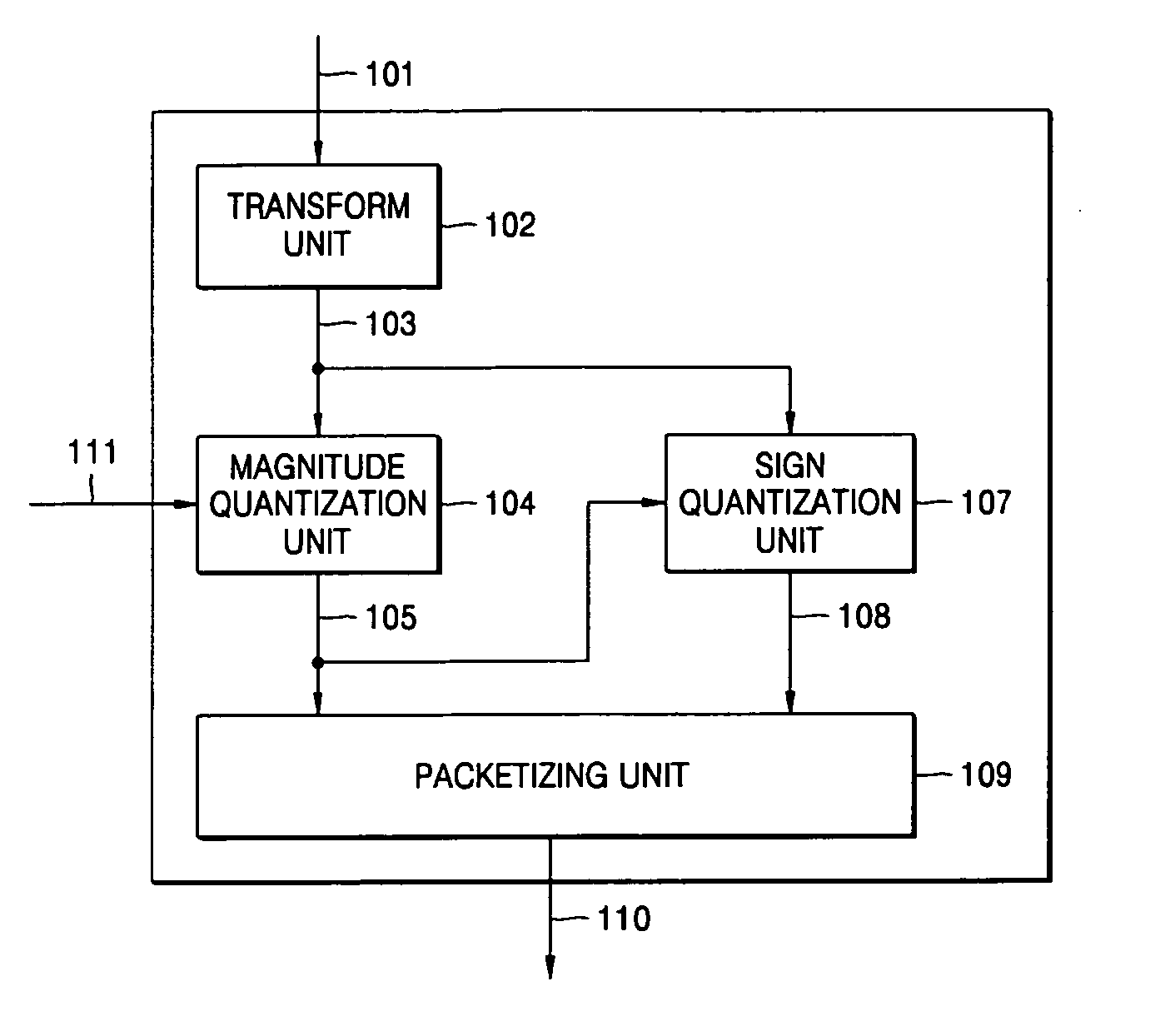

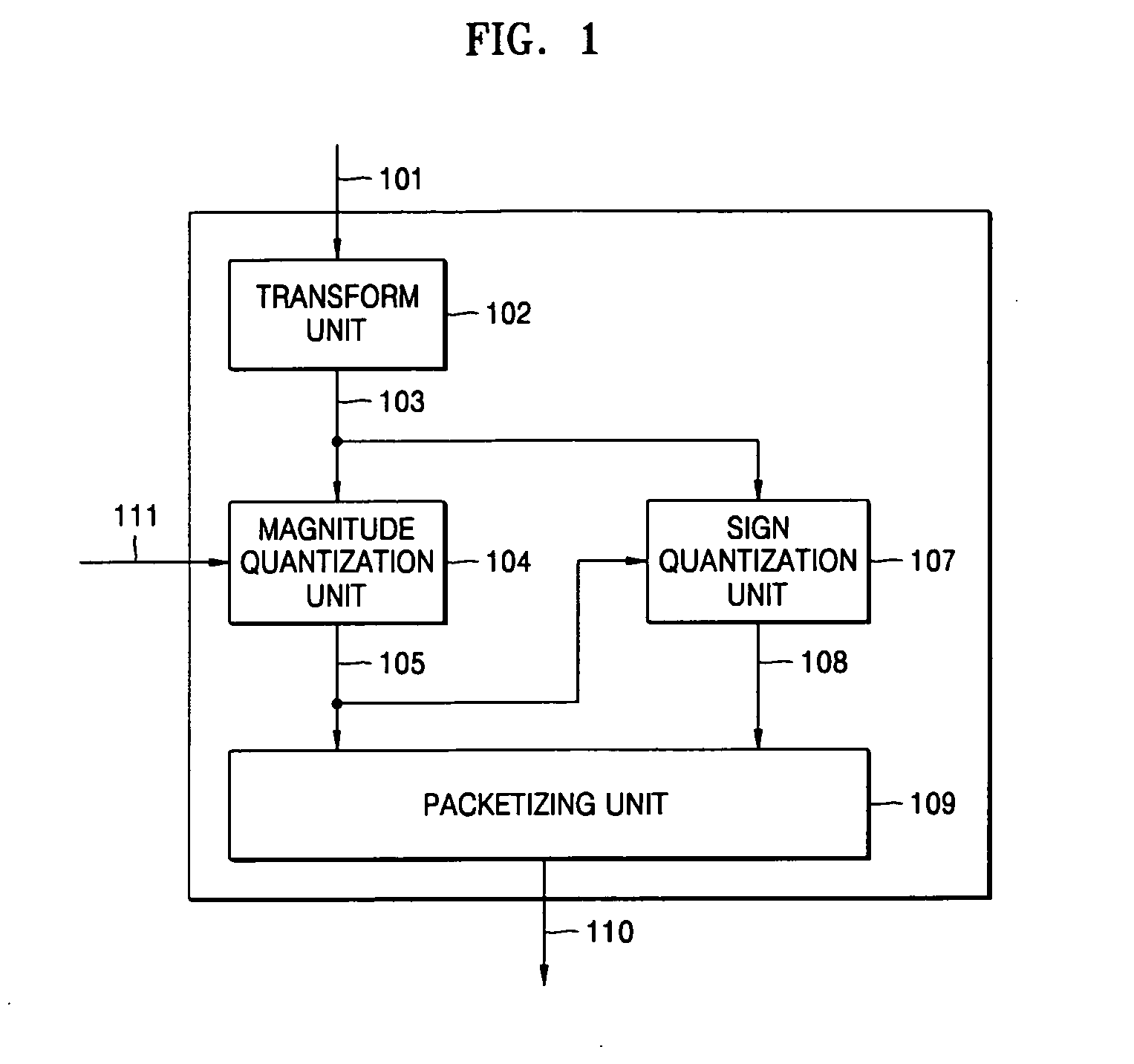

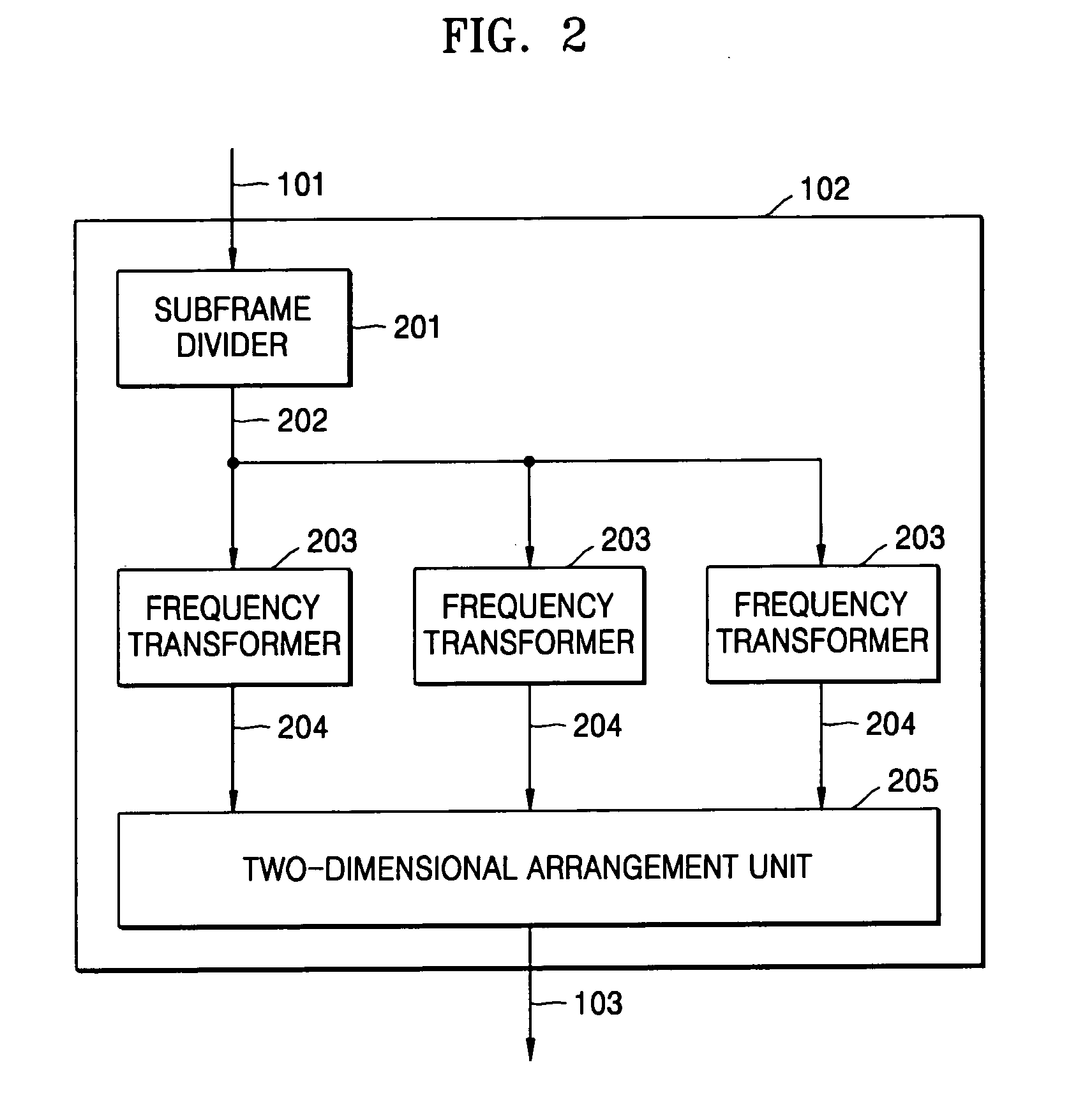

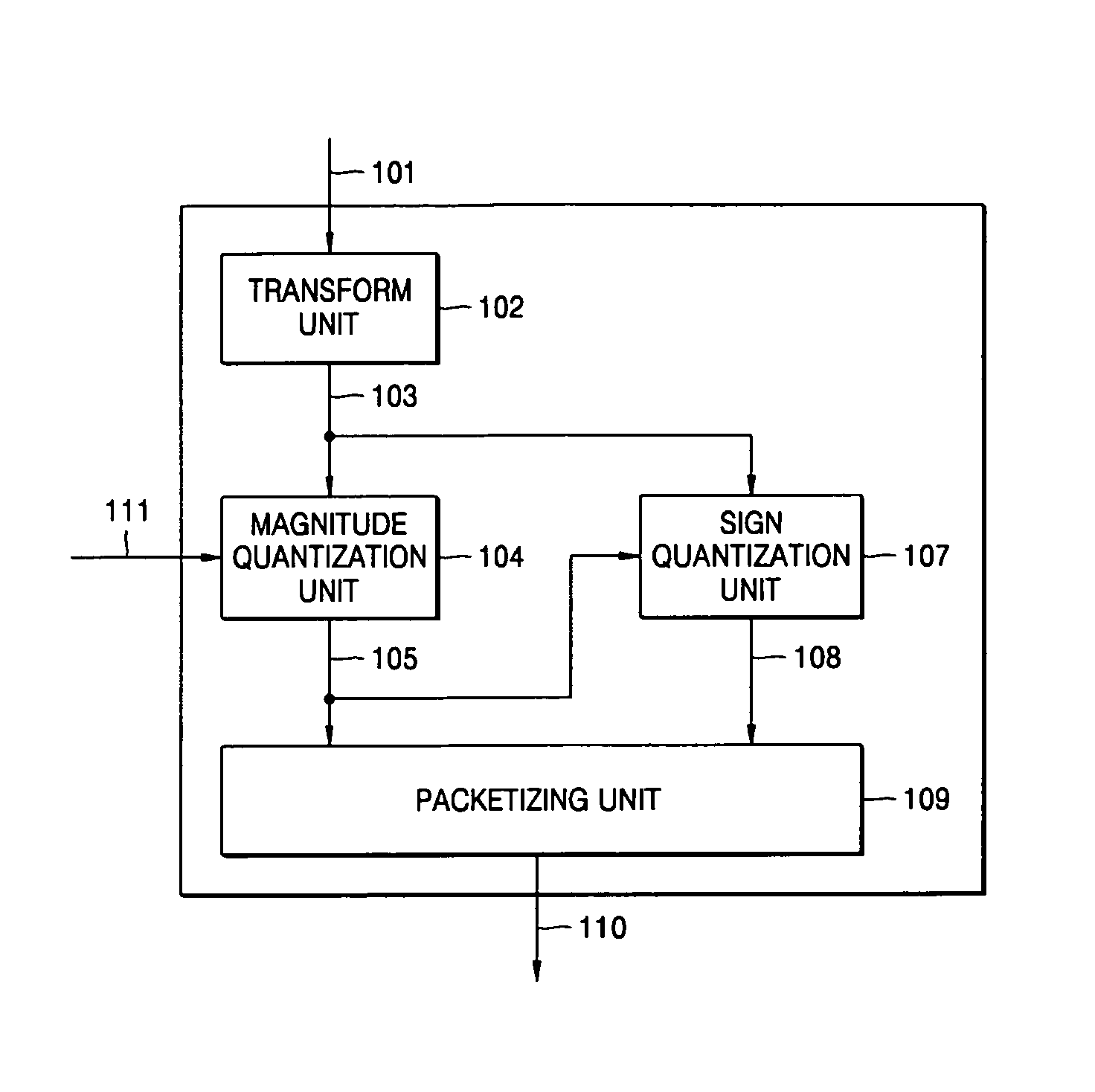

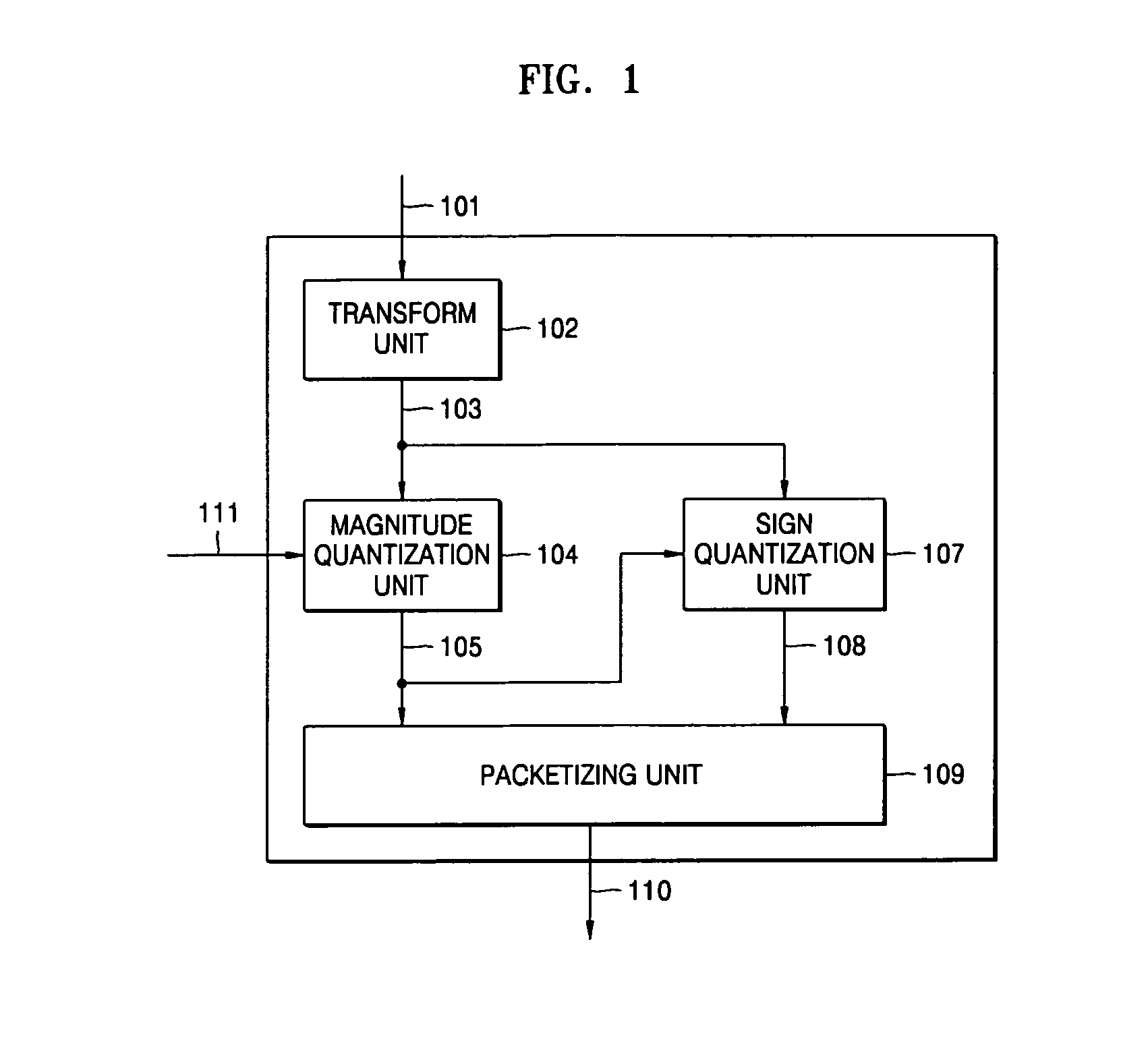

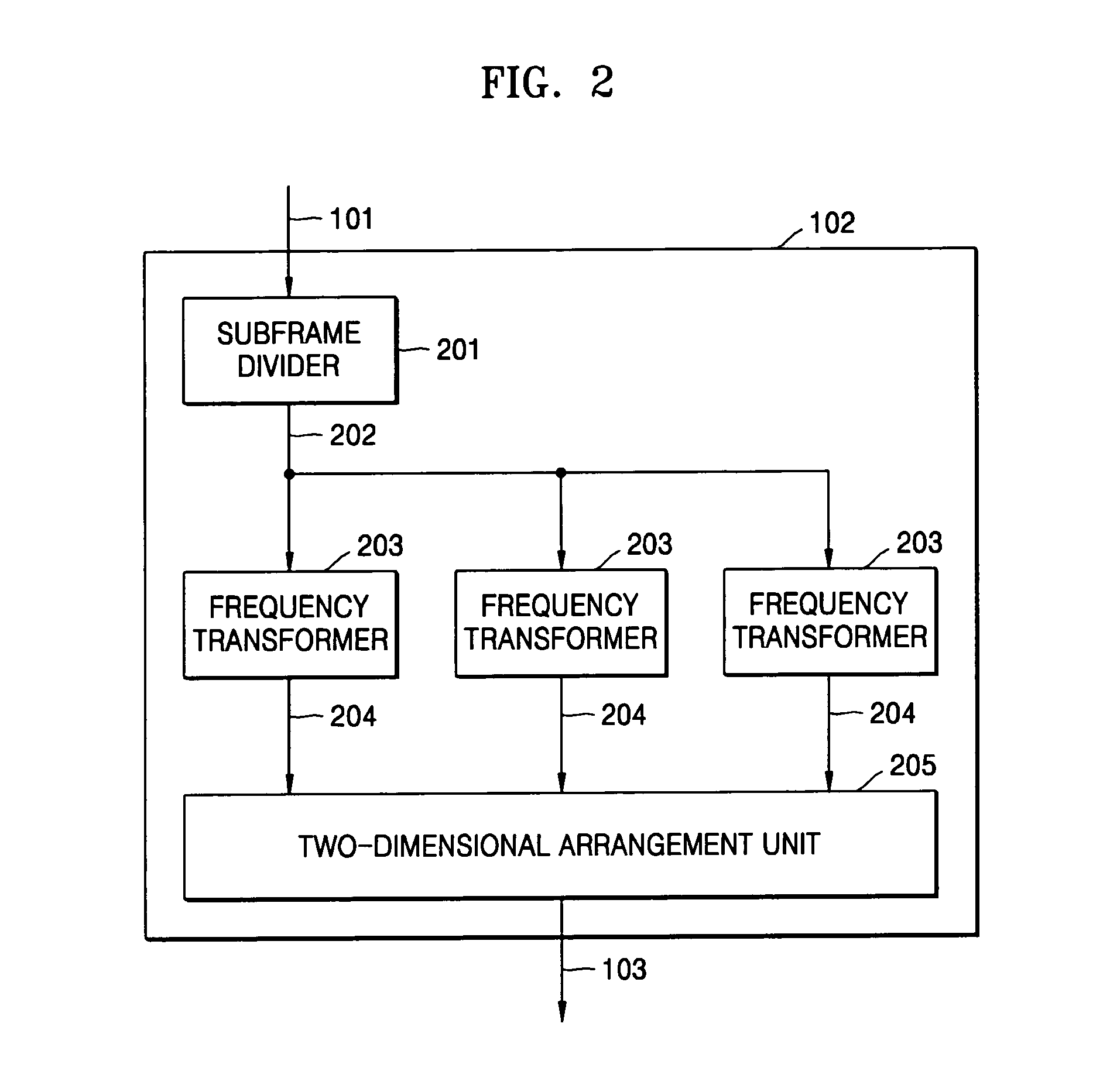

A speech signal compression and/or decompression method, medium, and apparatus in which the speech signal is transformed into the frequency domain for quantizing and dequantizing information of frequency coefficients. The speech signal compression apparatus includes a transform unit to transform a speech signal into the frequency domain and obtain frequency coefficients, a magnitude quantization unit to transform magnitudes of the frequency coefficients, quantize the transformed magnitudes and obtain magnitude quantization indices, a sign quantization unit to quantize signs of the frequency coefficients and obtain sign quantization indices, and a packetizing unit to generate the magnitude and sign quantization indices as a speech packet.

Owner:SAMSUNG ELECTRONICS CO LTD
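
The split into magnitude indices and sign indices described above can be sketched as follows. The magnitude transform (`log2(1 + |c|)`), the uniform step size and the function names are assumptions for illustration; the abstract does not specify them.

```python
import math

def quantize_coefficients(coeffs, step=0.25):
    """Return (magnitude_indices, sign_bits) for real frequency coefficients.

    Assumed magnitude transform: log2(1 + |c|), followed by a uniform quantizer.
    """
    mag_idx, sign_bits = [], []
    for c in coeffs:
        transformed = math.log2(1.0 + abs(c))       # transform the magnitude
        mag_idx.append(round(transformed / step))   # magnitude quantization index
        sign_bits.append(0 if c >= 0 else 1)        # sign quantization index
    return mag_idx, sign_bits

def dequantize_coefficients(mag_idx, sign_bits, step=0.25):
    """Invert the assumed transform and reapply the signs."""
    out = []
    for idx, s in zip(mag_idx, sign_bits):
        magnitude = 2.0 ** (idx * step) - 1.0
        out.append(-magnitude if s else magnitude)
    return out
```

Coding the sign separately lets the magnitude quantizer work on non-negative values only, so its levels are not wasted on a symmetric range.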

Neural network low-bit quantization method

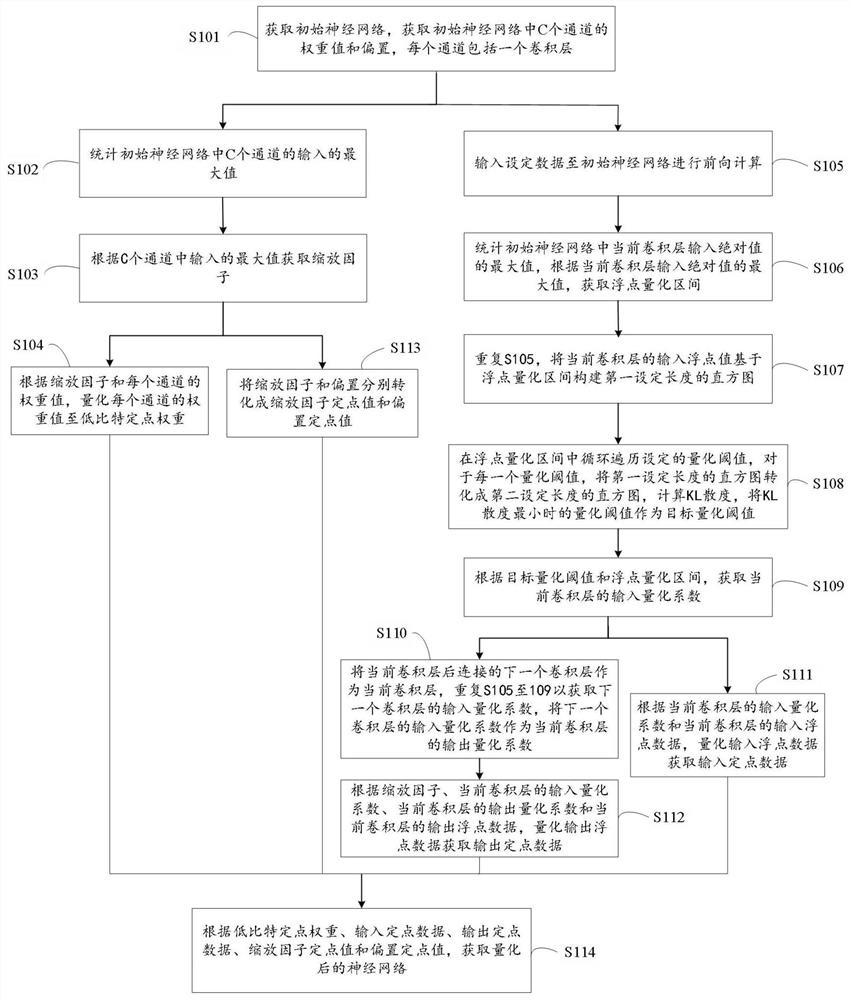

Pending | CN112381205A | Practical | Improve Quantization Efficiency | Neural architectures | Physical realisation | Algorithm | Quantized neural networks

The invention relates to a neural network low-bit quantization method. The weights of each channel of a neural network are quantized to low-bit fixed-point weights. The method further includes: obtaining an input quantization coefficient for the current convolution layer from the target quantization threshold and the floating-point quantization interval; taking the input quantization coefficient of the next convolution layer as the output quantization coefficient of the current convolution layer; quantizing the input floating-point data to obtain input fixed-point data; quantizing the output floating-point data to obtain output fixed-point data; converting the scaling factor and the bias into a scaling-factor fixed-point value and a bias fixed-point value, respectively; obtaining a quantized neural network model from the low-bit fixed-point weights, the input fixed-point data, the output fixed-point data, the scaling-factor fixed-point value and the bias fixed-point value; and applying the quantized neural network model to embedded equipment.

Owner:BEIJING TSINGMICRO INTELLIGENT TECH CO LTD
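
The first step above, quantizing each channel's weights to low-bit fixed point, might look like the sketch below. It assumes symmetric signed quantization with one scale per channel derived from the channel's maximum absolute weight; the abstract does not say how the scale is chosen, and `quantize_per_channel` is a hypothetical name.

```python
def quantize_per_channel(weights, bits=4):
    """Quantize each channel to signed `bits`-bit integers with its own scale.

    weights: list of channels, each a list of floats.
    Returns (integer_weights, scales). Symmetric max-abs scaling is an assumption.
    """
    qmax = 2 ** (bits - 1) - 1          # 7 for signed 4-bit
    q_weights, scales = [], []
    for channel in weights:
        max_abs = max(abs(w) for w in channel)
        scale = max_abs / qmax if max_abs > 0 else 1.0
        q_weights.append(
            [max(-qmax, min(qmax, round(w / scale))) for w in channel]
        )
        scales.append(scale)
    return q_weights, scales
```

Giving every channel its own scale keeps a channel with small weights from being crushed to zero by a scale chosen for a channel with large weights.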

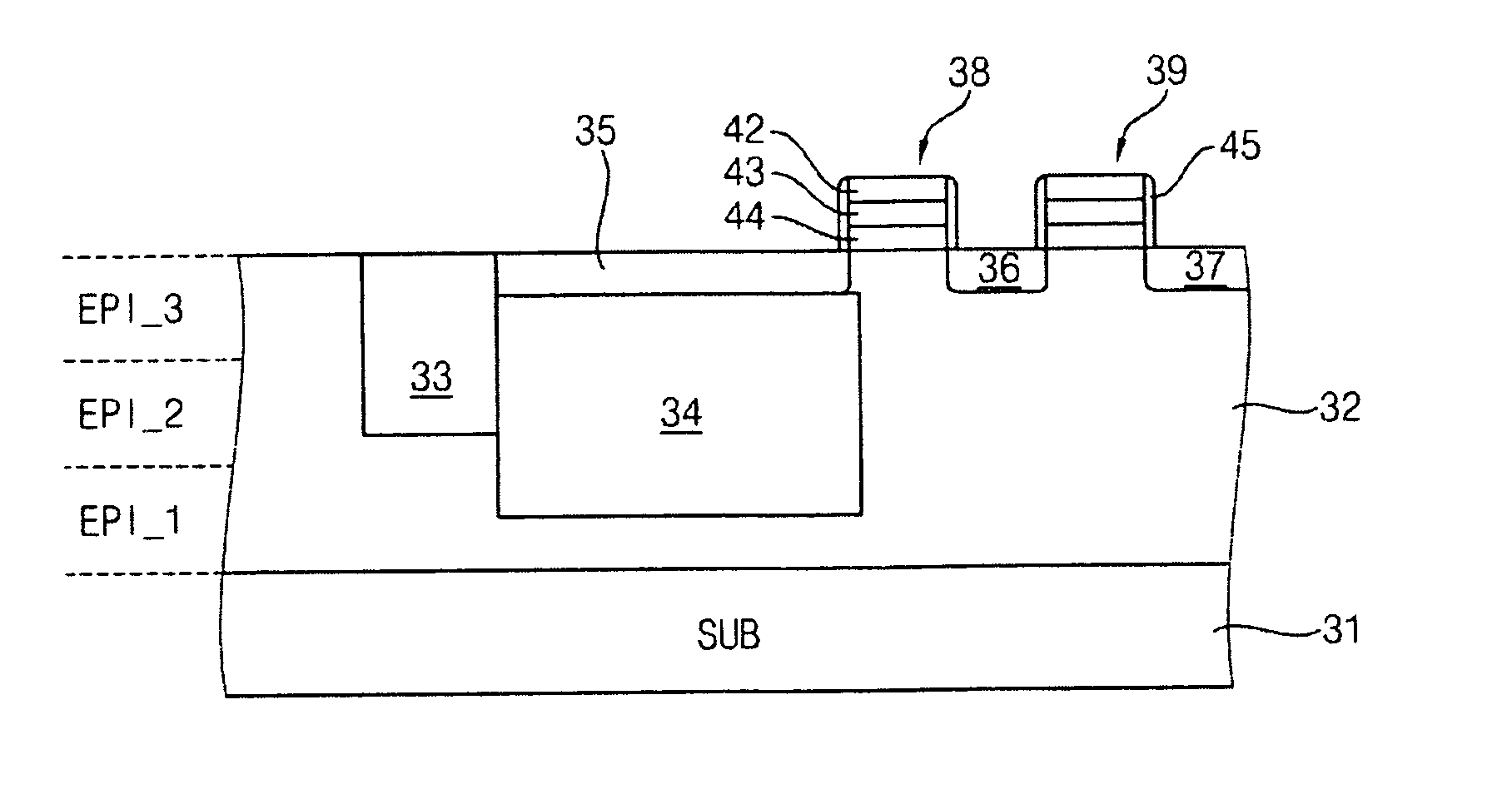

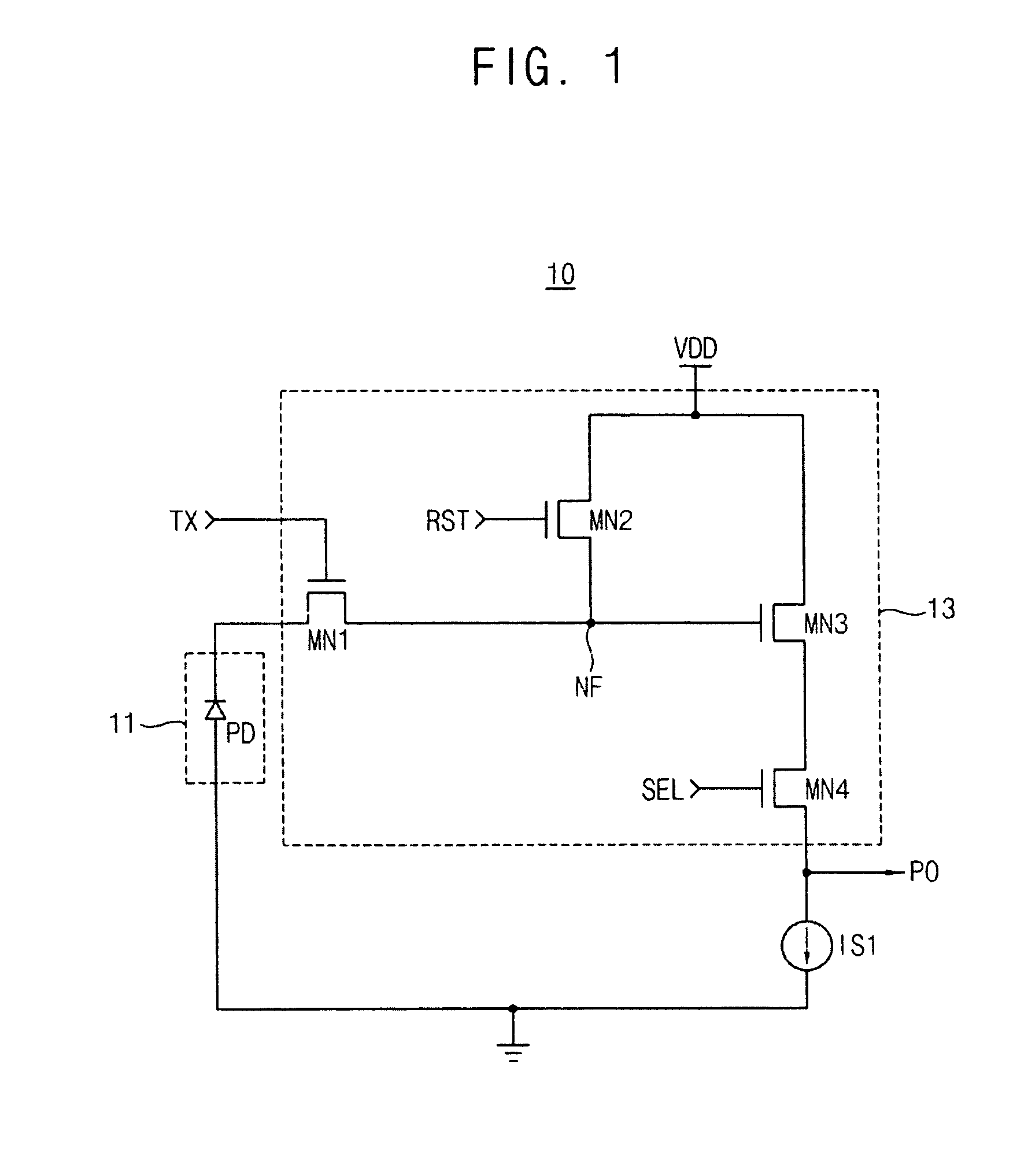

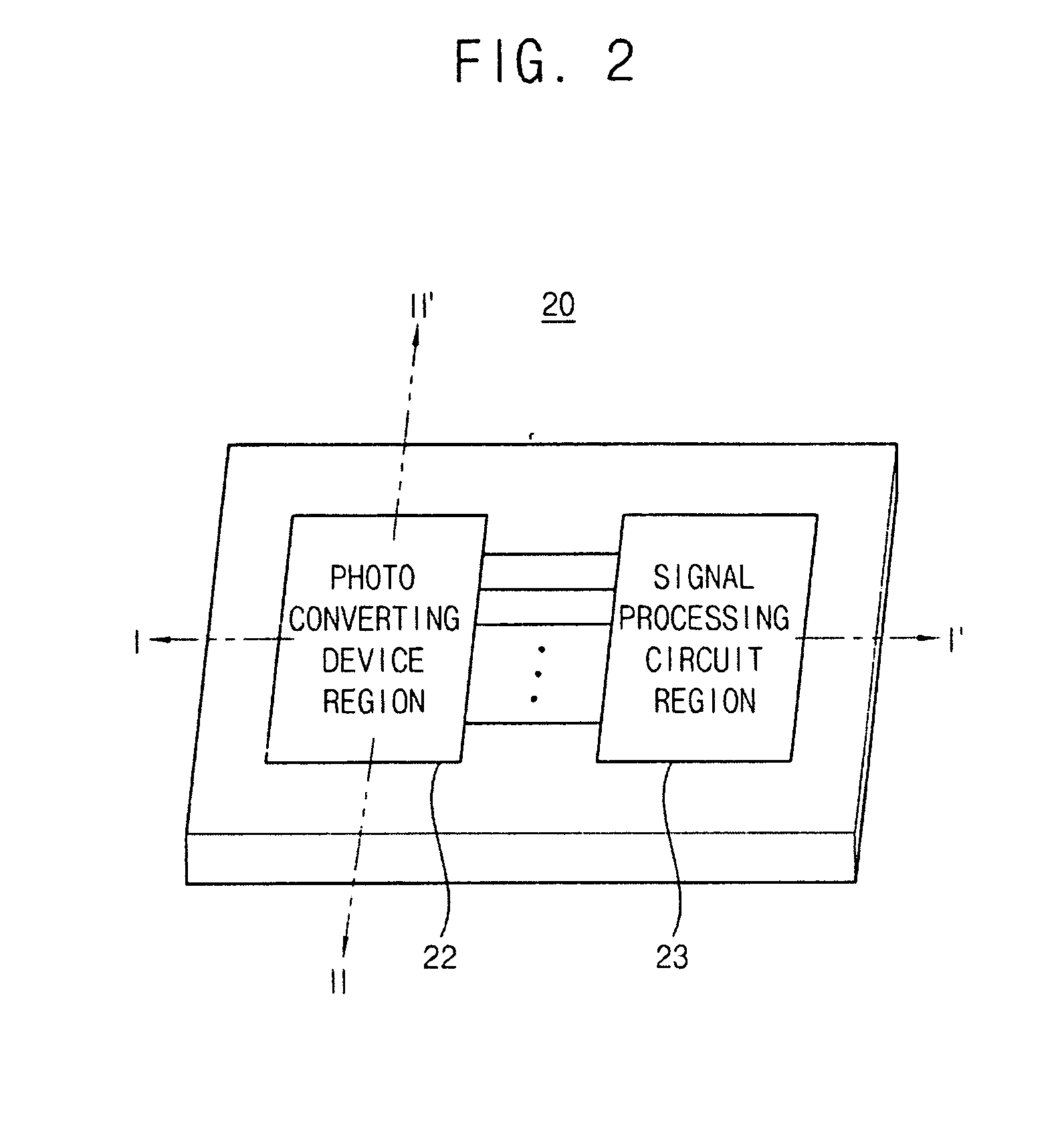

Image sensor including a pixel cell having an epitaxial layer, system having the same, and method of forming a pixel cell

Inactive | US20100065896A1 | Improve Quantization Efficiency | Reduce crosstalk | Solid-state devices | Semiconductor/solid-state device manufacturing | Computer science | Crosstalk

A pixel cell includes a substrate, an epitaxial layer, and a photo converting device in the epitaxial layer. The epitaxial layer has a doping concentration profile of embossing shape, and includes a plurality of layers that are stacked on the substrate. The photo converting device does not include a neutral region that has a constant potential in the vertical direction. Therefore, the image sensor including the pixel cell has high quantization efficiency, and a crosstalk between photo-converting devices is decreased.

Owner:SAMSUNG ELECTRONICS CO LTD

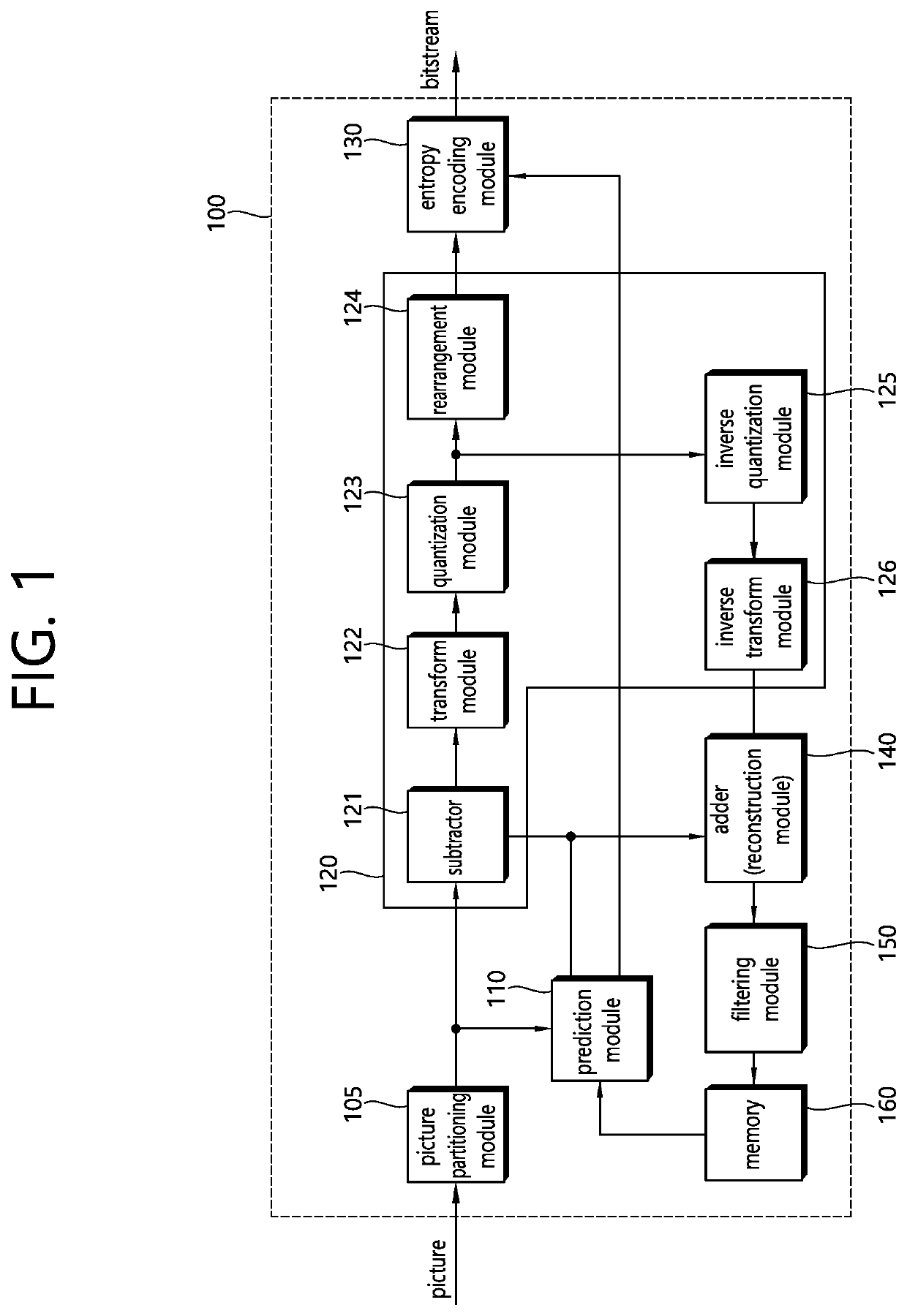

Image coding apparatus and method thereof based on a quantization parameter derivation

Active | US20200260084A1 | Improve compression efficiency | Improve Quantization Efficiency | Digital video signal modification | Algorithm | Image code

According to an embodiment of the present invention, a picture decoding method performed by a decoding apparatus is provided. The method comprises: decoding image information comprising information on a quantization parameter (QP), deriving an expected average luma value of a current block from neighboring available samples, deriving a quantization parameter offset (QP offset) for deriving a luma quantization parameter (luma QP) based on the expected average luma value and the information on the QP, deriving the luma QP based on the QP offset, performing an inverse quantization for a quantization group comprising the current block based on the derived luma QP, generating residual samples for the current block based on the inverse quantization, generating prediction samples for the current block based on the image information and generating reconstructed samples for the current block based on the residual samples for the current block and the prediction samples for the current block.

Owner:LG ELECTRONICS INC
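
The QP derivation chain in the abstract above (expected average luma, then QP offset, then luma QP) can be sketched as below. The offset table, the 0 to 63 clamping range and the function name are hypothetical; the abstract does not say how the offset is computed from the average luma.

```python
def derive_luma_qp(base_qp, neighbor_samples, offset_table, qp_min=0, qp_max=63):
    """Derive a luma QP from a signalled QP and neighboring luma samples.

    offset_table: list of (upper_bound, qp_offset) pairs in ascending order of
    upper_bound. Both the table and the clamping range are assumptions.
    """
    if not neighbor_samples:
        return max(qp_min, min(qp_max, base_qp))   # no neighbors: no offset
    expected_luma = sum(neighbor_samples) / len(neighbor_samples)
    qp_offset = 0
    for upper_bound, offset in offset_table:
        if expected_luma < upper_bound:
            qp_offset = offset
            break
    return max(qp_min, min(qp_max, base_qp + qp_offset))
```

For example, with the hypothetical 10-bit table `[(300, 3), (600, 0), (1024, -3)]`, a dark neighborhood raises the QP by 3 and a bright one lowers it by 3. Since the decoder derives the offset from samples it has already reconstructed, the offset itself would not need to be transmitted in this sketch.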

Apparatus and method determining weighting function for linear prediction coding coefficients quantization

Active | US9236059B2 | Improve Quantization Efficiency | Quality improvement | Speech analysis | Linear prediction coding | Linear prediction

An apparatus for determining a weighting function for quantizing linear prediction coding coefficients converts a linear prediction coding (LPC) coefficient of an input signal into one of a line spectral frequency (LSF) coefficient and an immittance spectral frequency (ISF) coefficient, and determines a weighting function associated with the importance of the ISF coefficient or the LSF coefficient using the converted ISF coefficient or the converted LSF coefficient.

Owner:SAMSUNG ELECTRONICS CO LTD

Speech compression and decompression apparatuses and methods providing scalable bandwidth structure

Inactive | US7624022B2 | Improve Quantization Efficiency | Minimize distortion | Speech analysis | Code conversion | Narrowband speech | Speech sound

A speech compression apparatus including: a first band-transform unit transforming a wideband speech signal to a narrowband low-band speech signal; a narrowband speech compressor compressing the narrowband low-band speech signal and outputting a result of the compressing as a low-band speech packet; a decompression unit decompressing the low-band speech packet and obtaining a decompressed wideband low-band speech signal; an error detection unit detecting an error signal that corresponds to a difference between the wideband speech signal and the decompressed wideband low-band speech signal; and a high-band speech compression unit compressing the error signal and a high-band speech signal of the wideband speech signal and outputting the result of the compressing as a high-band speech packet.

Owner:SAMSUNG ELECTRONICS CO LTD

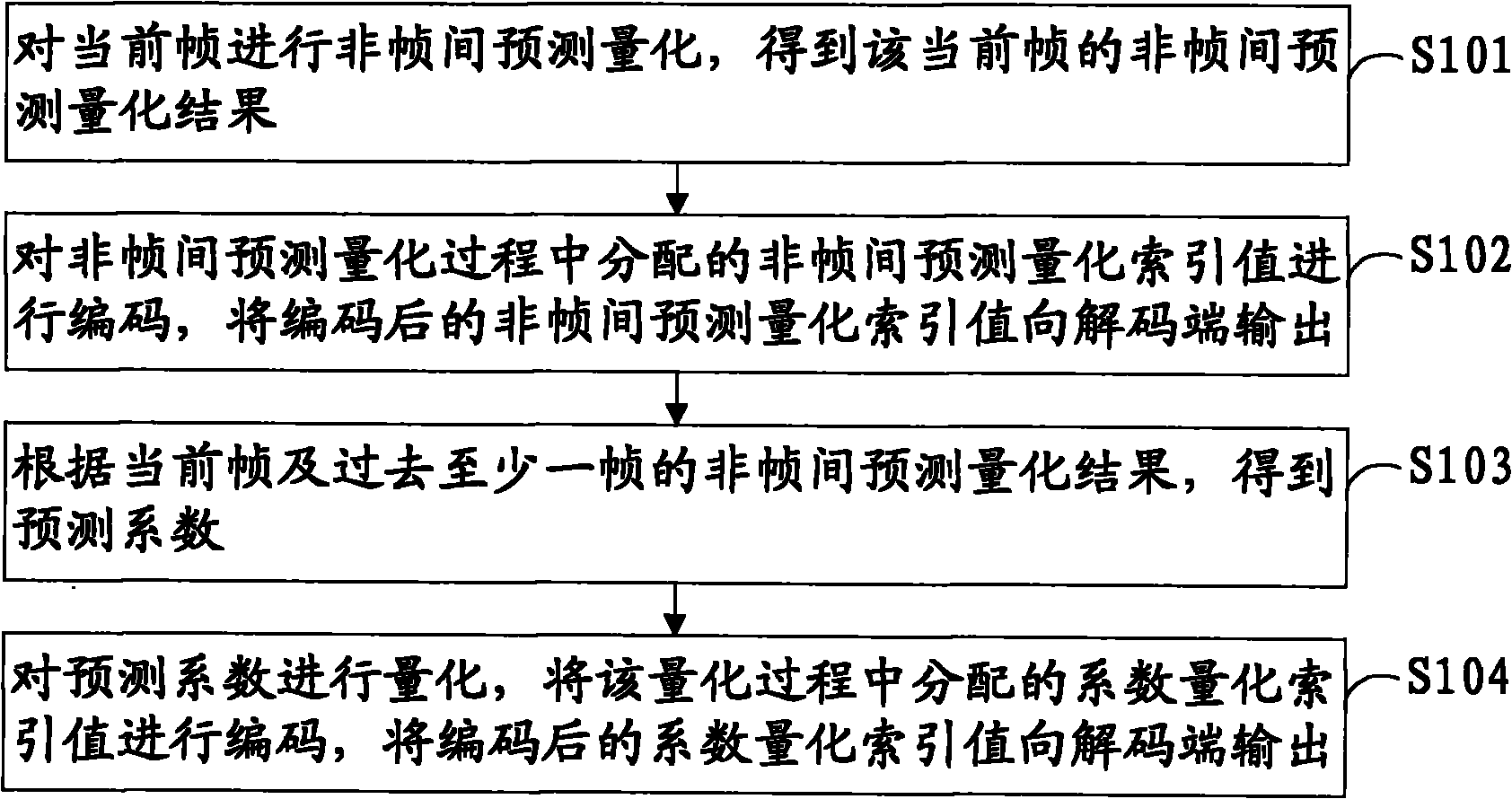

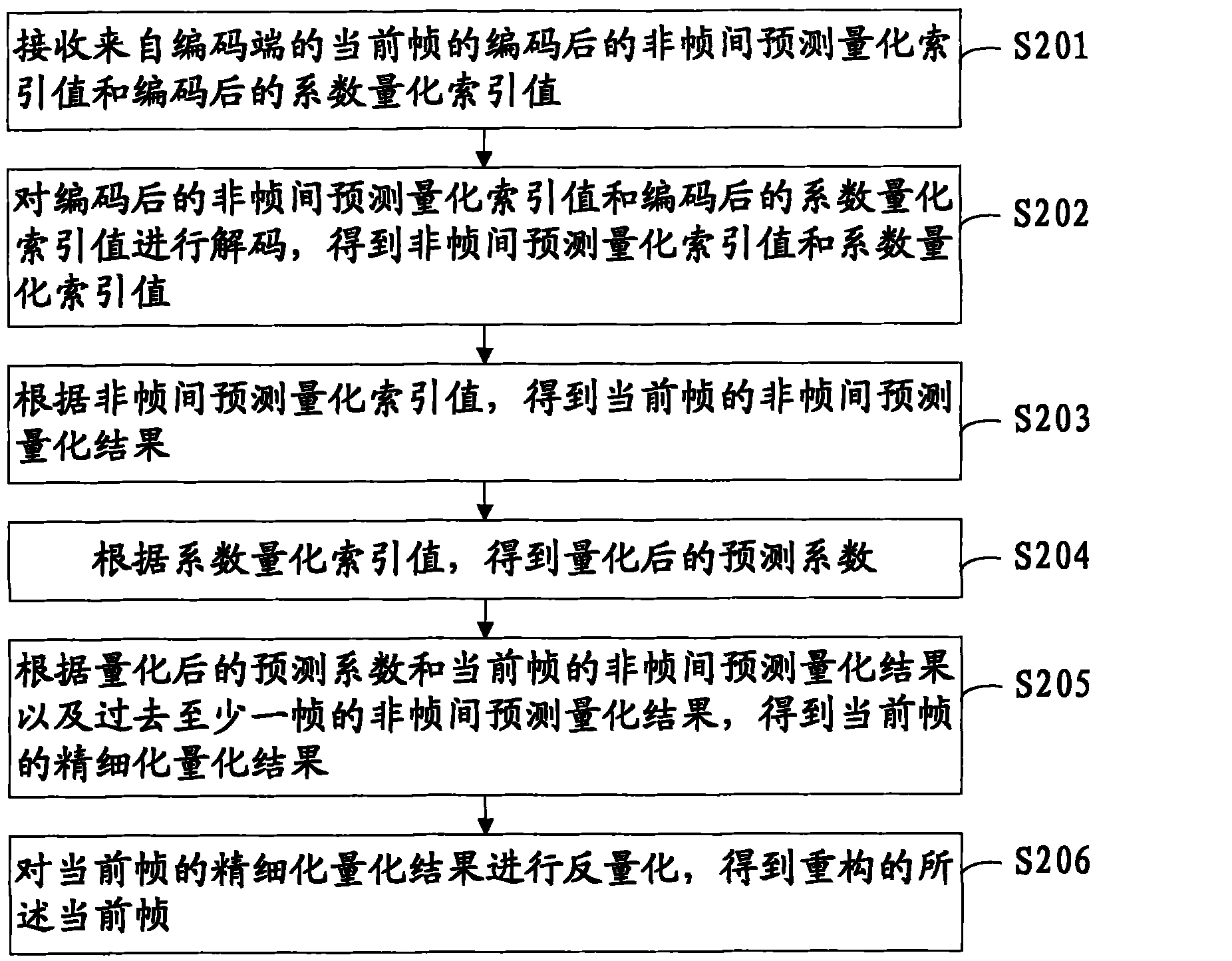

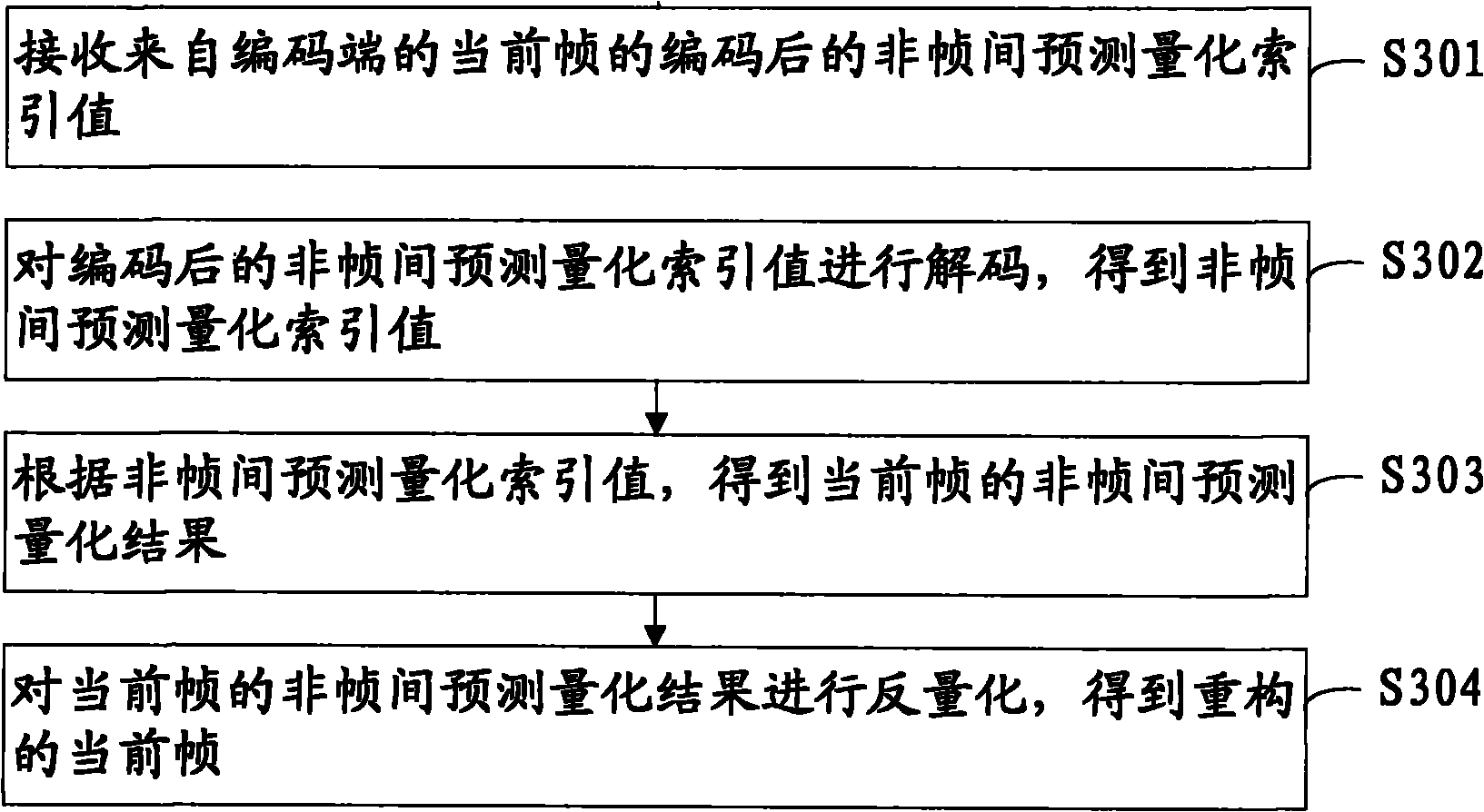

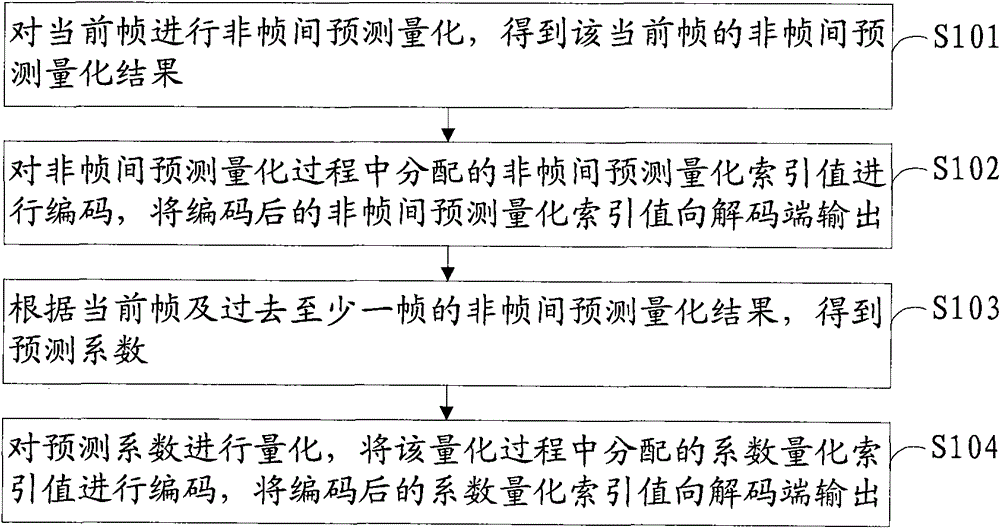

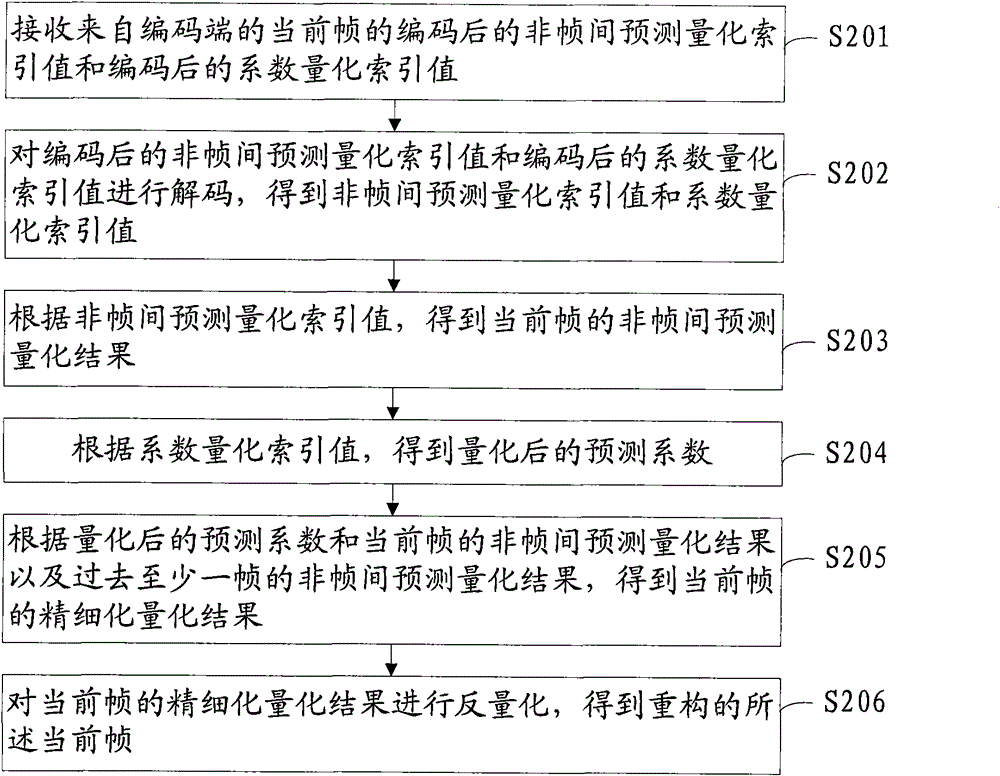

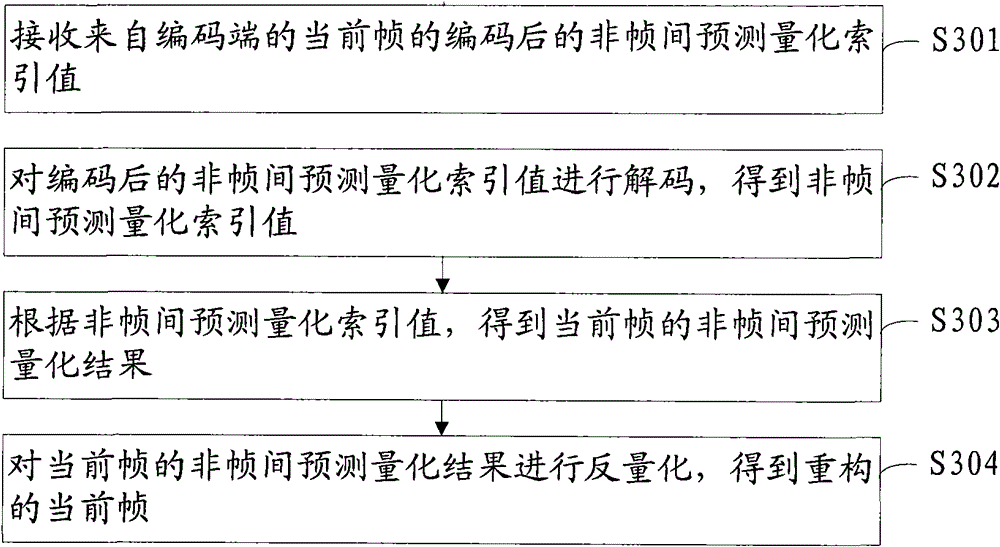

Quantitative coding/decoding method and device

Active | CN102098057A | Remove inter-frame correlation | Improve Quantization Efficiency | Code conversion | Coefficient quantization | Packet loss

The embodiment of the invention provides a quantization coding/decoding method and device in the field of communication. The method and device avoid the error propagation caused by frame loss while still removing inter-frame correlation and increasing quantization efficiency, which improves error robustness and packet loss resistance. The method comprises the following steps: performing non-inter-frame prediction quantization on a current frame to obtain a non-inter-frame prediction quantization result for the current frame; coding the non-inter-frame prediction quantization index values assigned in that quantization process and outputting the coded index values to a decoding end; obtaining a prediction coefficient from the non-inter-frame prediction quantization results of the current frame and at least one past frame; and quantizing the prediction coefficient, coding the coefficient quantization index values assigned in the quantization process, and outputting the coded coefficient quantization index values to the decoding end.

Owner:HONOR DEVICE CO LTD

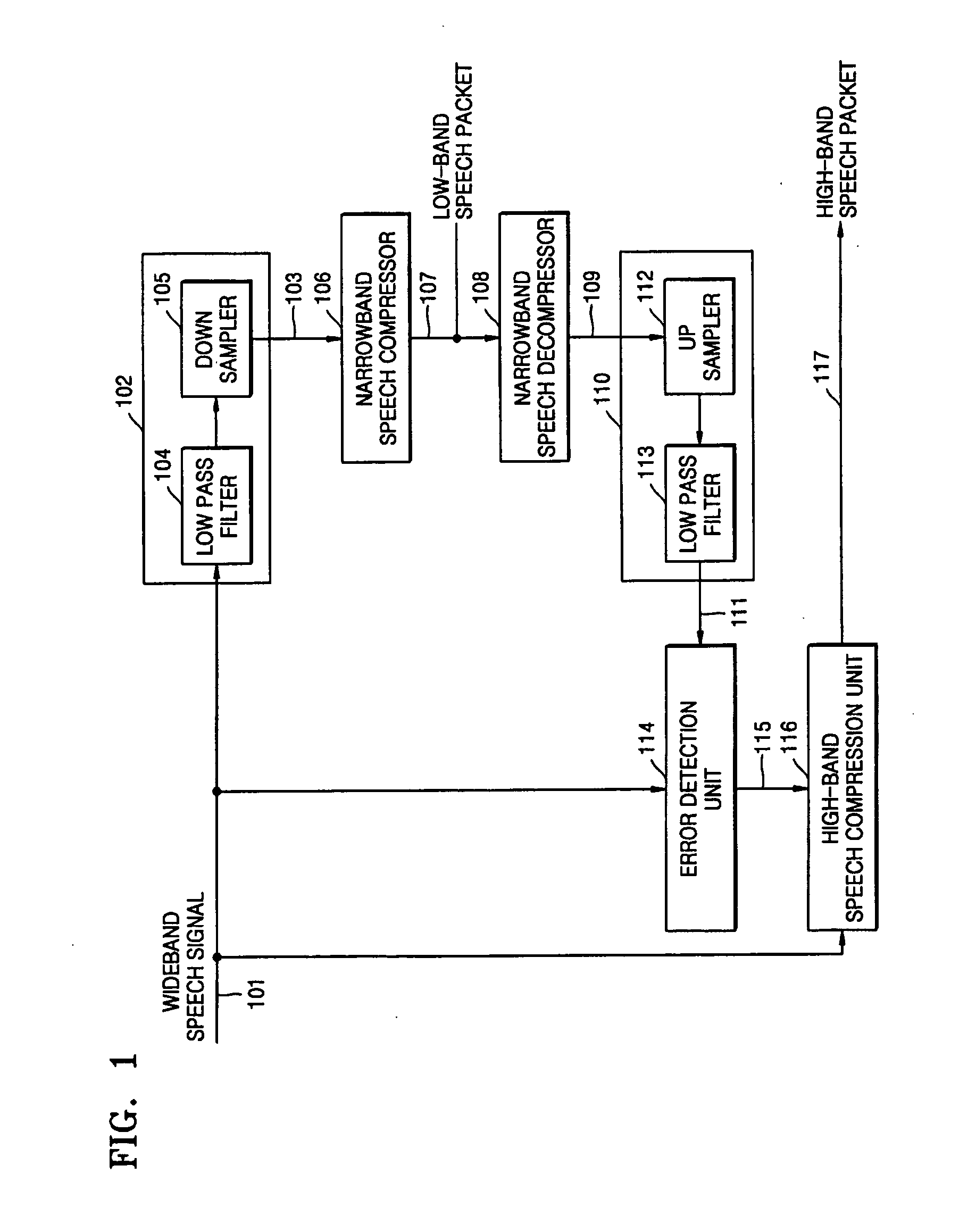

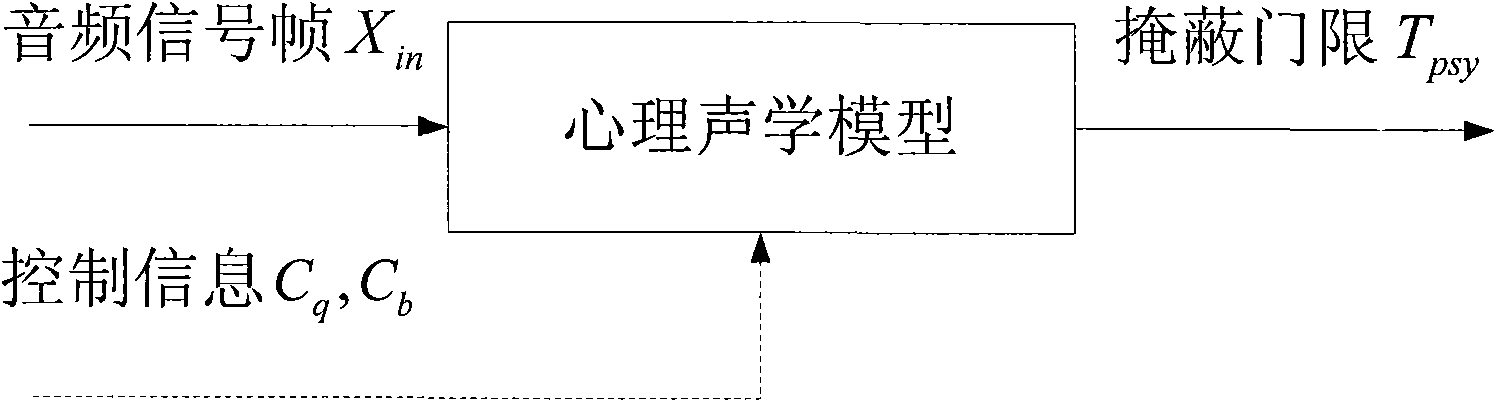

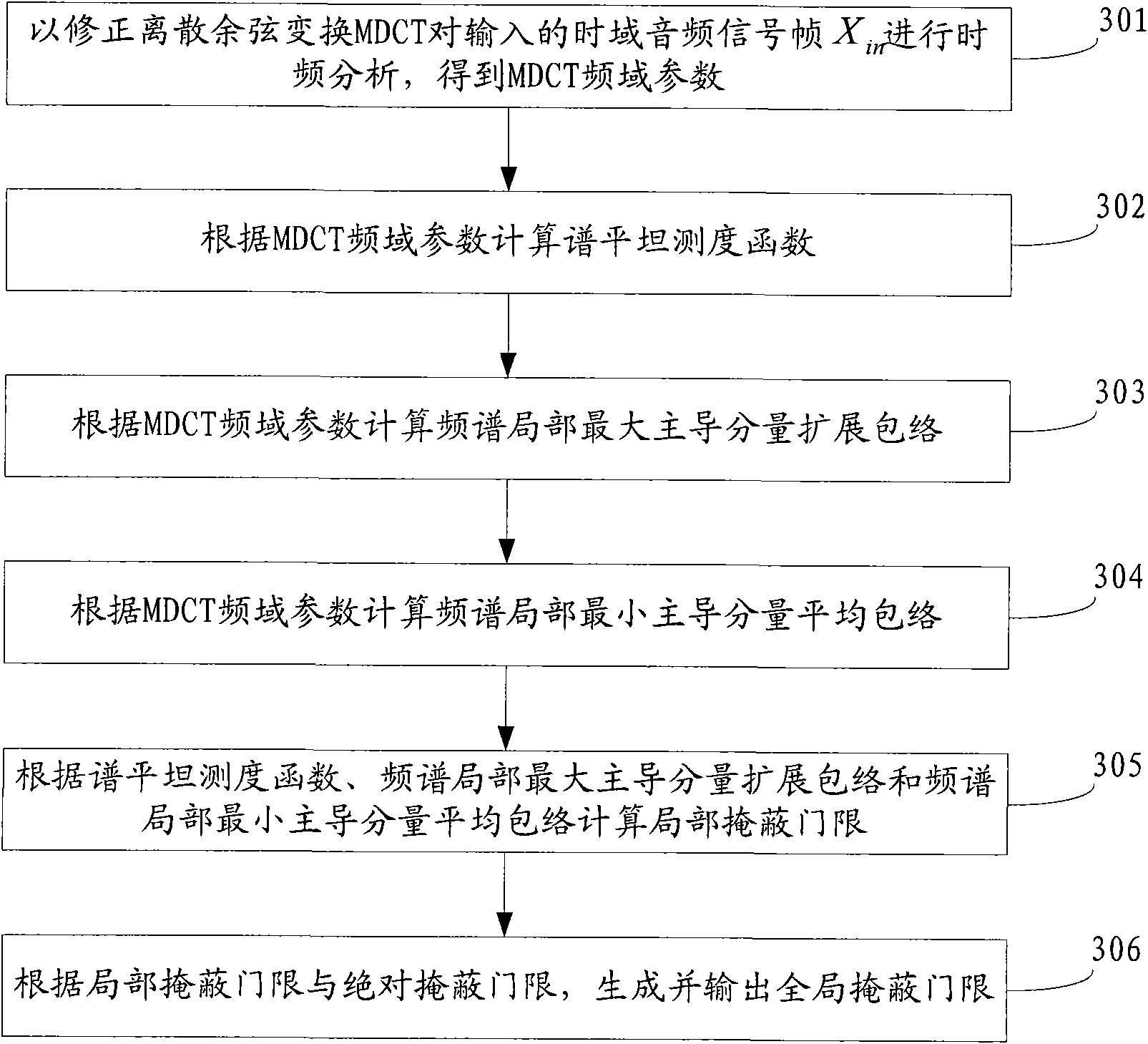

Method and device for generating psychoacoustic model

Inactive | CN102169694A | Reasonable distribution | Reduce complexity | Speech analysis | Time domain | Frequency spectrum

The invention discloses a method and device for generating a psychoacoustic model, belonging to the technical field of audio processing. The method comprises the following steps: applying a modified discrete cosine transform (MDCT) to an input time-domain audio signal frame to obtain MDCT frequency-domain parameters; computing, from the MDCT frequency-domain parameters, a spectral flatness measure function, a local-maximum dominant component extension envelope of the spectrum and a local-minimum dominant component average envelope of the spectrum; computing a local masking threshold from these three quantities; and generating and outputting a global masking threshold from the local masking threshold. Computing the local masking threshold through the spectral flatness measure function distinguishes the tonal masking characteristic of the audio signal from its non-tonal masking characteristic, so that quantization bits are allocated more reasonably and quantization efficiency is improved.

Owner:HUAWEI TECH CO LTD +1
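
The abstract names a spectral flatness measure but gives no formula. The sketch below uses the standard definition, the ratio of the geometric mean to the arithmetic mean of the power spectrum, which may differ from the patent's exact function. The name `spectral_flatness` and the `eps` guard are assumptions.

```python
import math

def spectral_flatness(mdct_coeffs, eps=1e-12):
    """Geometric mean over arithmetic mean of the power spectrum.

    Values near 1 indicate a noise-like (non-tonal) frame; values near 0
    indicate a tonal frame. Standard definition, assumed for illustration.
    """
    power = [c * c + eps for c in mdct_coeffs]   # eps avoids log(0)
    log_mean = sum(math.log(p) for p in power) / len(power)
    geometric_mean = math.exp(log_mean)
    arithmetic_mean = sum(power) / len(power)
    return geometric_mean / arithmetic_mean
```

A frame with a flat spectrum scores close to 1, while a frame dominated by a single strong coefficient scores close to 0, which is the tonal versus non-tonal distinction the abstract relies on.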

Apparatus and method for determining weighting function having for associating linear predictive coding (LPC) coefficients with line spectral frequency coefficients and immittance spectral frequency coefficients

Proposed are a low-complexity method and apparatus for determining a weighting function for quantizing a linear predictive coding (LPC) coefficient. The weighting function determination apparatus may convert an LPC coefficient of a mid-subframe of an input signal to one of an immittance spectral frequency (ISF) coefficient and a line spectral frequency (LSF) coefficient, and may determine a weighting function associated with an importance of the ISF coefficient or the LSF coefficient based on the converted ISF coefficient or LSF coefficient.

Owner:SAMSUNG ELECTRONICS CO LTD

Apparatus and method determining weighting function for linear prediction coding coefficients quantization

Active | US20110295600A1 | Improve quantization efficiency | Quality improvement | Speech analysis | A-weighting | Linear prediction

An apparatus for determining a weighting function for quantizing linear prediction coding coefficients converts a linear prediction coding (LPC) coefficient of an input signal into one of a line spectral frequency (LSF) coefficient and an immittance spectral frequency (ISF) coefficient, and determines a weighting function associated with the importance of the ISF coefficient or the LSF coefficient using the converted ISF coefficient or the converted LSF coefficient.

Owner:SAMSUNG ELECTRONICS CO LTD

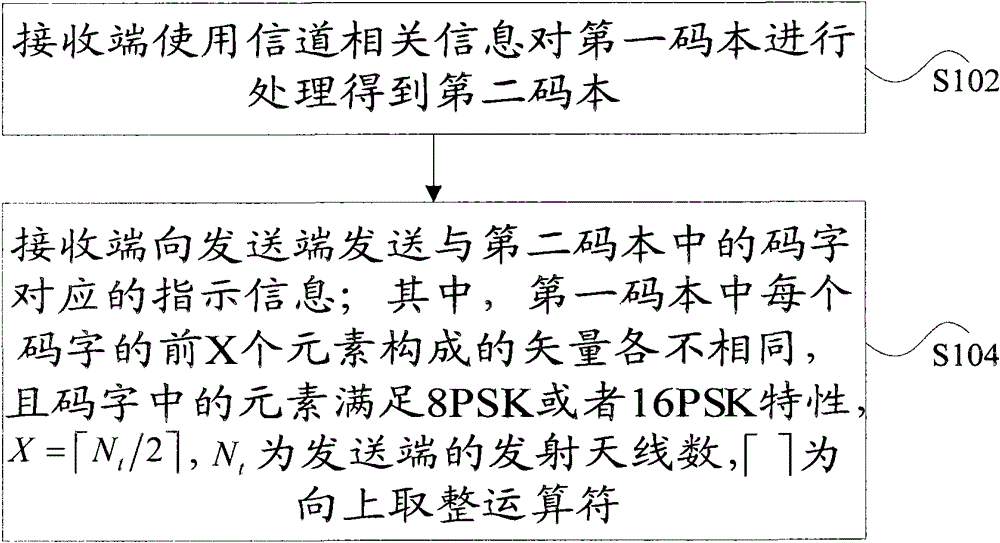

Method and device for feeding back channel quantitative information

Active | CN101826941A | Reduce similarity | Increase distance | Spatial transmit diversity | Error prevention/detection by diversity reception | Engineering | Channel correlation

The invention discloses a method and a device for feeding back channel quantization information. In the method, a receiving end processes a first codebook using channel-related information to obtain a second codebook, and transmits indication information corresponding to a codeword in the second codebook to a transmitting end. The vectors formed by the first X elements of each codeword in the first codebook differ from one another; the elements of each codeword satisfy the 8PSK or 16PSK characteristic; Nt is the number of transmitting antennas at the transmitting end; and the operator denotes rounding up. The method and the device reduce the similarity between codewords in the codebook (CB), increase the distance between every two codewords, and improve quantization efficiency.

Owner:江苏慧煦电子科技有限公司

4-bit quantization method and system of neural network

Pending | CN111882058A | Improve 4-bit quantization efficiency | Improve accuracy | Neural architectures | Neural learning methods | Algorithm | Engineering

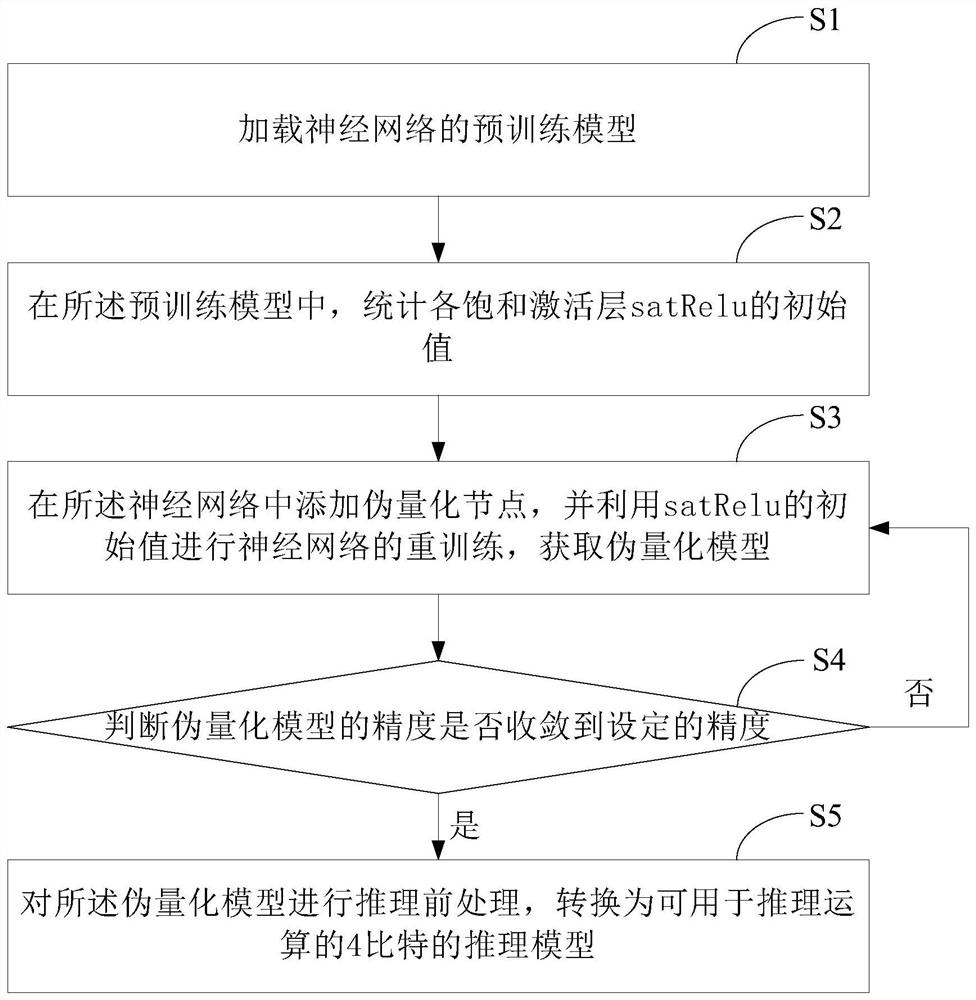

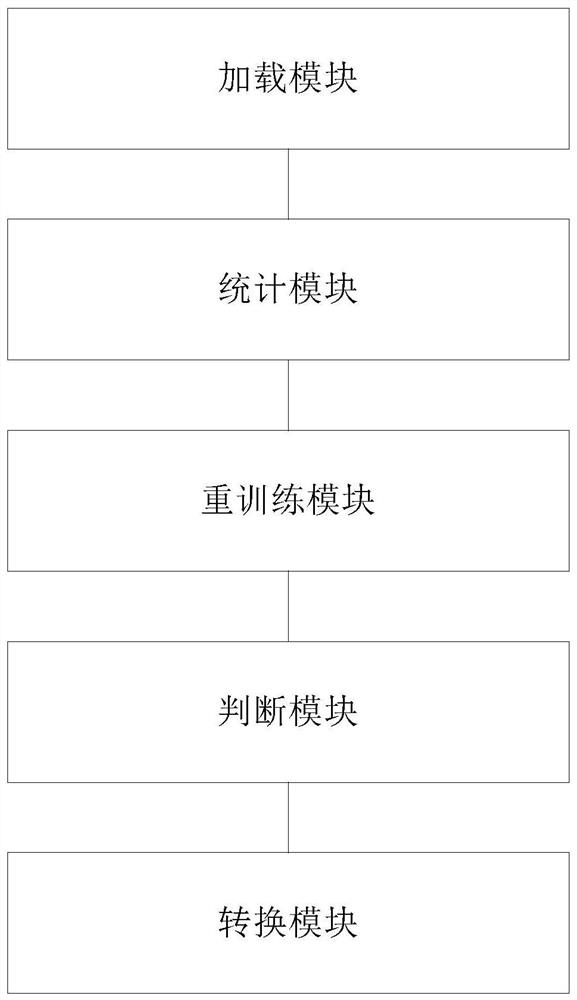

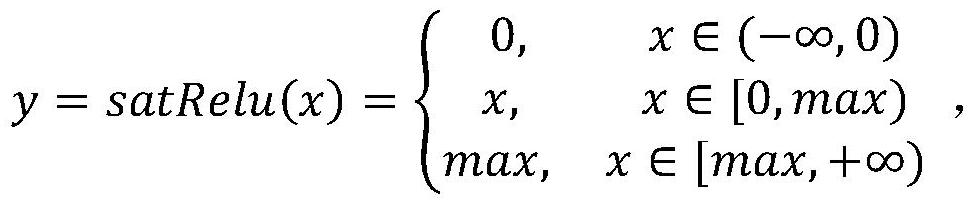

The invention discloses a 4-bit quantization method and system for a neural network. The method comprises: loading a pre-trained model of the neural network; collecting, in the pre-trained model, the initial value of each saturating activation layer (satRelu); adding pseudo-quantization nodes to the neural network and retraining it with the satRelu initial values to obtain a pseudo-quantization model; and judging whether the precision of the pseudo-quantization model has converged to the set precision. If so, inference preprocessing is applied to the pseudo-quantization model to convert it into a 4-bit inference model that can be used for inference; otherwise, the neural network is retrained again. The system mainly comprises a loading module, a statistics module, a retraining module, a judgment module and a conversion module. The method and system improve training efficiency while preserving the accuracy of the training result.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD
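
A pseudo-quantization node of the kind mentioned above typically quantizes a value and immediately dequantizes it, so the network trains against the rounding error while still computing in floating point. The sketch below shows the forward pass only, assuming an unsigned 4-bit grid over `[0, clip_max]`, where `clip_max` plays the role of the satRelu saturation value; the abstract does not specify these details, and `fake_quantize` is a hypothetical name.

```python
def fake_quantize(x, clip_max, bits=4):
    """Quantize-dequantize an activation onto a uniform grid in [0, clip_max].

    Forward pass only; the grid layout and rounding mode are assumptions.
    """
    steps = 2 ** bits - 1                    # 15 intervals for 4 bits
    scale = clip_max / steps
    clipped = min(max(x, 0.0), clip_max)     # saturating ReLU
    return round(clipped / scale) * scale    # snap to the nearest grid point
```

Once training has converged, the same scale can be used to convert the model to real 4-bit integer arithmetic, since every activation already lies on the grid.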

Weight function determination device and method for quantizing linear prediction coding coefficient

Active | US20160336018A1 | Improve Quantization Efficiency | Quality improvement | Speech analysis | Linear prediction coding | Algorithm

A weighting function determination method includes obtaining a line spectral frequency (LSF) coefficient or an immittance spectral frequency (ISF) coefficient from a linear predictive coding (LPC) coefficient of an input signal and determining a weighting function by combining a first weighting function based on spectral analysis information and a second weighting function based on position information of the LSF coefficient or the ISF coefficient.

Owner:SAMSUNG ELECTRONICS CO LTD

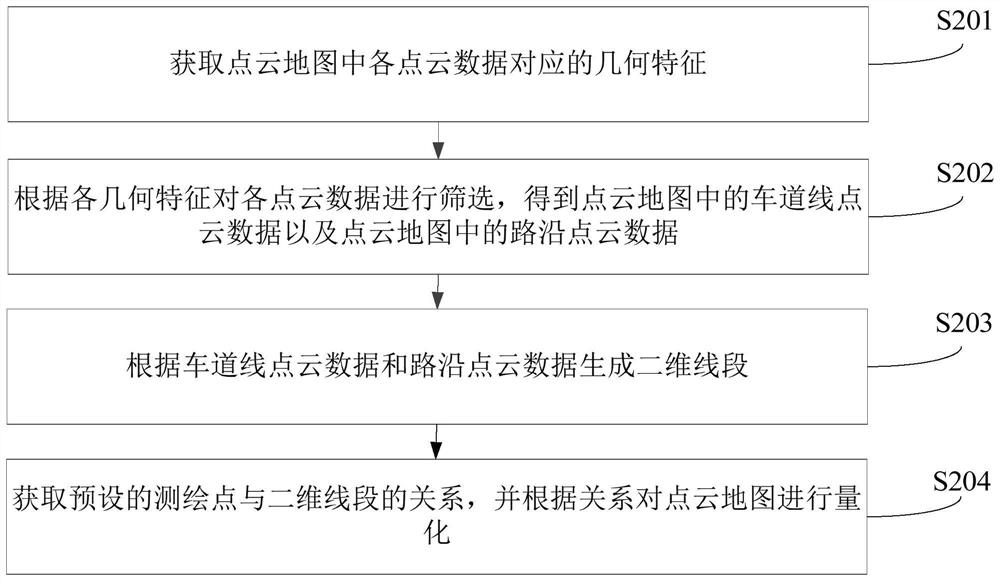

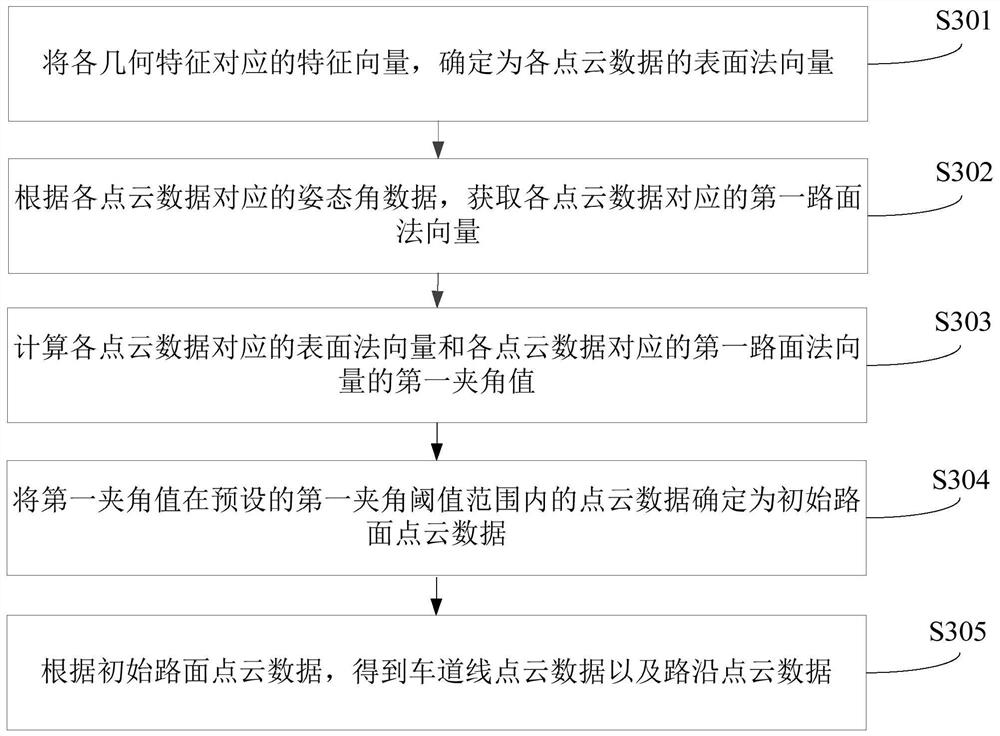

Point cloud map quantification method and device, computer equipment and storage medium

Active | CN111982152A | Improve efficiency | Improve accuracy | Measurement devices | Geographical information databases | Line segment | Computer device

The invention relates to a point cloud map quantification method and device, computer equipment and a storage medium. The method comprises: acquiring the geometrical characteristics of the point cloud data in a point cloud map; screening the point cloud data according to the geometrical characteristics to obtain the lane line point cloud data and the road edge point cloud data in the point cloud map; generating a two-dimensional line segment from the lane line point cloud data and the road edge point cloud data; and obtaining the relationship between a preset surveying and mapping point and the two-dimensional line segment, and quantifying the point cloud map according to that relationship. The method improves the efficiency of quantifying a point cloud map from the distance between its surveying and mapping points and the derived two-dimensional line segments.

Owner:GUANGZHOU WERIDE TECH LTD CO
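
The abstract quantifies the map from the distance between a surveying point and a two-dimensional line segment. A minimal sketch of that distance computation follows; the function name and the tuple-based point representation are assumptions.

```python
import math

def point_to_segment_distance(p, a, b):
    """Shortest distance from 2D point p to the segment with endpoints a and b."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        # Degenerate segment: both endpoints coincide.
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, then clamp to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))
```

Summarizing these distances over all surveying points (for example by their mean or maximum) would give one number describing how far the map deviates from the surveyed ground truth; which summary the patent uses is not stated.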

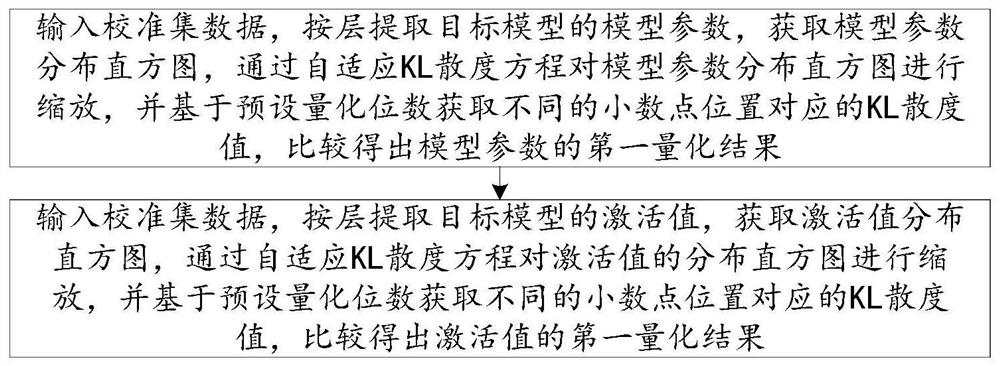

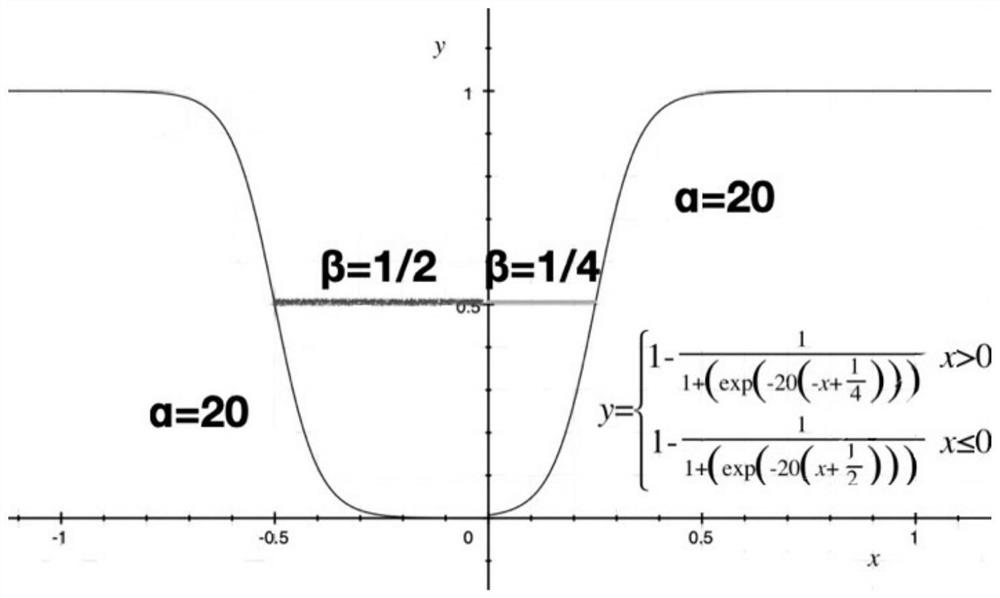

Fixed-point quantification method and device for deep learning model

Pending | CN113408696A | Guaranteed accuracy | Make up for the defect of only focusing on probability | Character and pattern recognition | Neural architectures | Algorithm | Self adaptive

The invention discloses a fixed-point quantization method and device for a deep learning model. Calibration data are input to a target model, and the model parameters and the activation values of the target model are taken in turn as the quantization objects. For each, the method inputs the calibration set data, extracts the quantization objects of the target model layer by layer, obtains a distribution histogram of the quantization object, scales the histogram through an adaptive KL divergence equation, obtains the KL divergence values corresponding to different decimal point positions for a preset quantization bit width, and compares them to obtain a first quantization result for the quantization object. This overcomes the limitation that the KL divergence algorithm attends only to probability, and optimizes the quantization result; while maintaining a certain precision of the quantized model, it greatly increases quantization speed, improves quantization efficiency and saves time.

Owner:珠海亿智电子科技有限公司
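
The search over decimal point positions described above can be sketched as follows. This version uses the plain KL divergence between the histogram of the original values and the histogram of the quantized values; the patent's adaptive KL equation and its histogram scaling step are not given in the abstract and are not reproduced here. All function names, the bin count and the 8-bit default are assumptions.

```python
import math
from collections import Counter

def quantize_fixed_point(x, frac_bits, total_bits=8):
    """Round x to a signed fixed-point grid with frac_bits fractional bits."""
    scale = 2 ** frac_bits
    qmin, qmax = -(2 ** (total_bits - 1)), 2 ** (total_bits - 1) - 1
    return max(qmin, min(qmax, round(x * scale))) / scale

def histogram(values, lo, hi, bins):
    """Normalized histogram of values over [lo, hi]."""
    width = (hi - lo) / bins
    counts = Counter(min(bins - 1, int((v - lo) / width)) for v in values)
    return [counts.get(i, 0) / len(values) for i in range(bins)]

def kl_divergence(p, q, eps=1e-9):
    """Plain KL(p || q) with a small eps so empty bins in q stay finite."""
    return sum(pi * math.log((pi + eps) / (qi + eps))
               for pi, qi in zip(p, q) if pi > 0)

def best_frac_bits(values, total_bits=8, bins=32):
    """Pick the decimal point position whose quantized histogram is closest."""
    lo, hi = min(values), max(values)
    if hi == lo:
        hi = lo + 1.0
    reference = histogram(values, lo, hi, bins)
    best = None
    for frac_bits in range(total_bits):
        quantized = [quantize_fixed_point(v, frac_bits, total_bits) for v in values]
        clipped = [min(hi, max(lo, q)) for q in quantized]   # keep inside bins
        kl = kl_divergence(reference, histogram(clipped, lo, hi, bins))
        if best is None or kl < best[1]:
            best = (frac_bits, kl)
    return best[0]
```

Too few fractional bits collapse many values onto the same grid point, and too many cause large values to saturate; both distort the histogram and raise the divergence, so the minimum marks a reasonable trade-off.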

Segmentation and tracking system and method based on self-learning using video patterns in video

Pending | US20220121853A1 | Exact match | Improve accuracy | Image analysis | Character and pattern recognition | Pattern recognition | Network processing unit

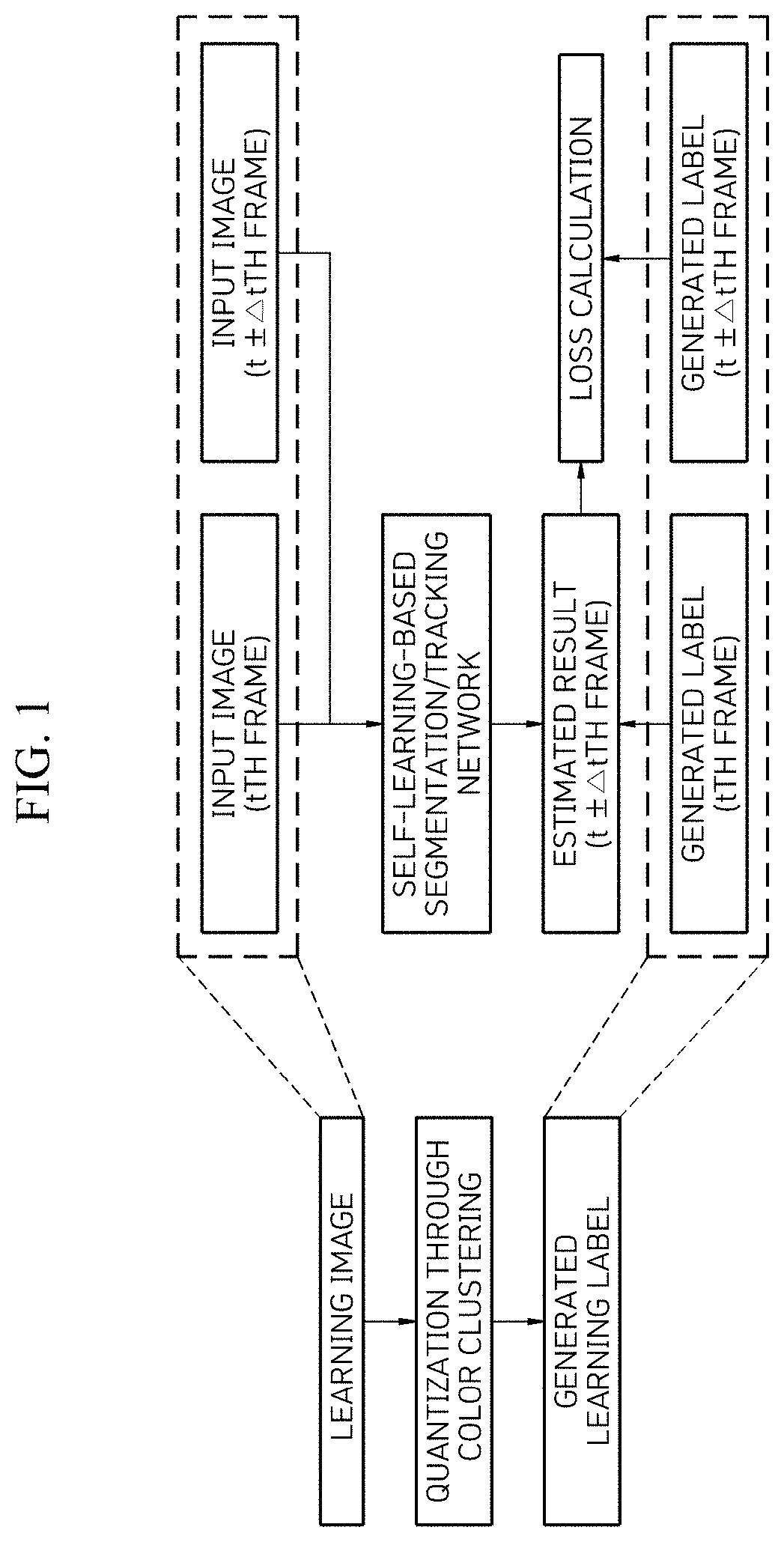

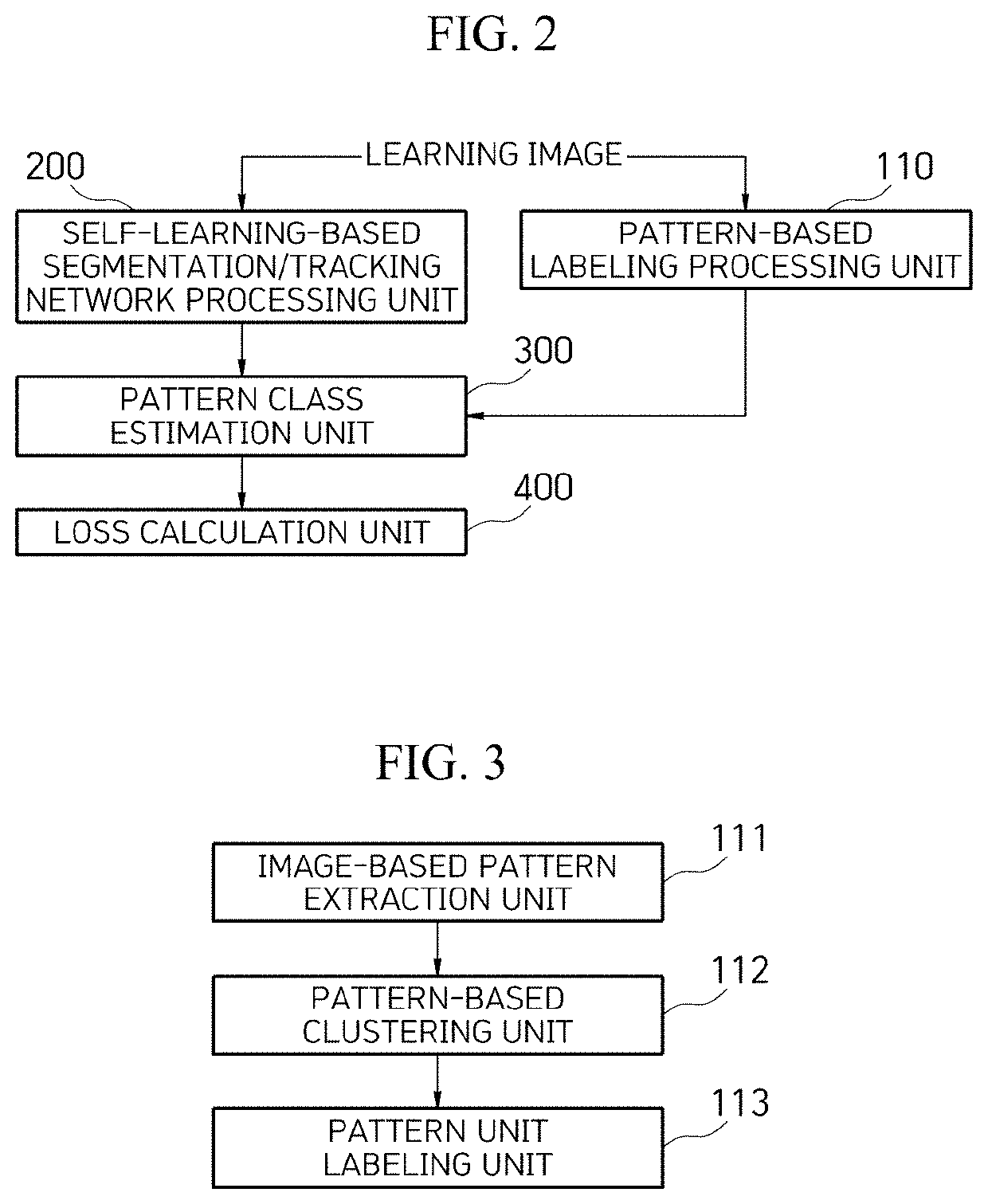

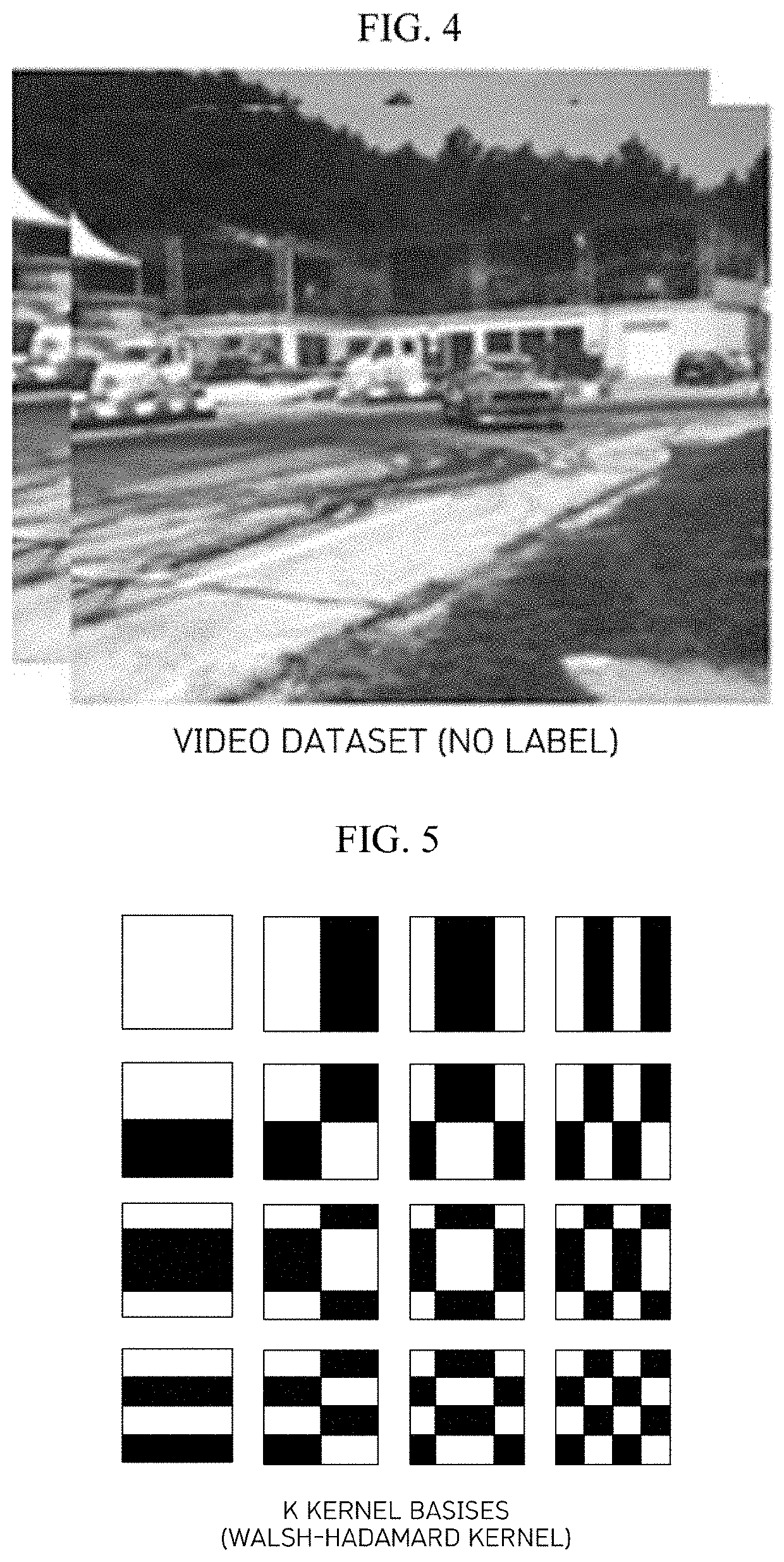

Provided is a segmentation and tracking system based on self-learning using video patterns in video. The present invention includes a pattern-based labeling processing unit configured to extract a pattern from a learning image and then perform labeling in each pattern unit to generate a self-learning label in the pattern unit, a self-learning-based segmentation / tracking network processing unit configured to receive two adjacent frames extracted from the learning image and estimate pattern classes in the two frames selected from the learning image, a pattern class estimation unit configured to estimate a current labeling frame through a previous labeling frame extracted from the image labeled by the pattern-based labeling processing unit and a weighted sum of the estimated pattern classes of a previous frame of the learning image, and a loss calculation unit configured to calculate a loss between a current frame and the current labeling frame by comparing the current labeling frame with the current labeling frame estimated by the pattern class estimation unit.

Owner:ELECTRONICS & TELECOMM RES INST

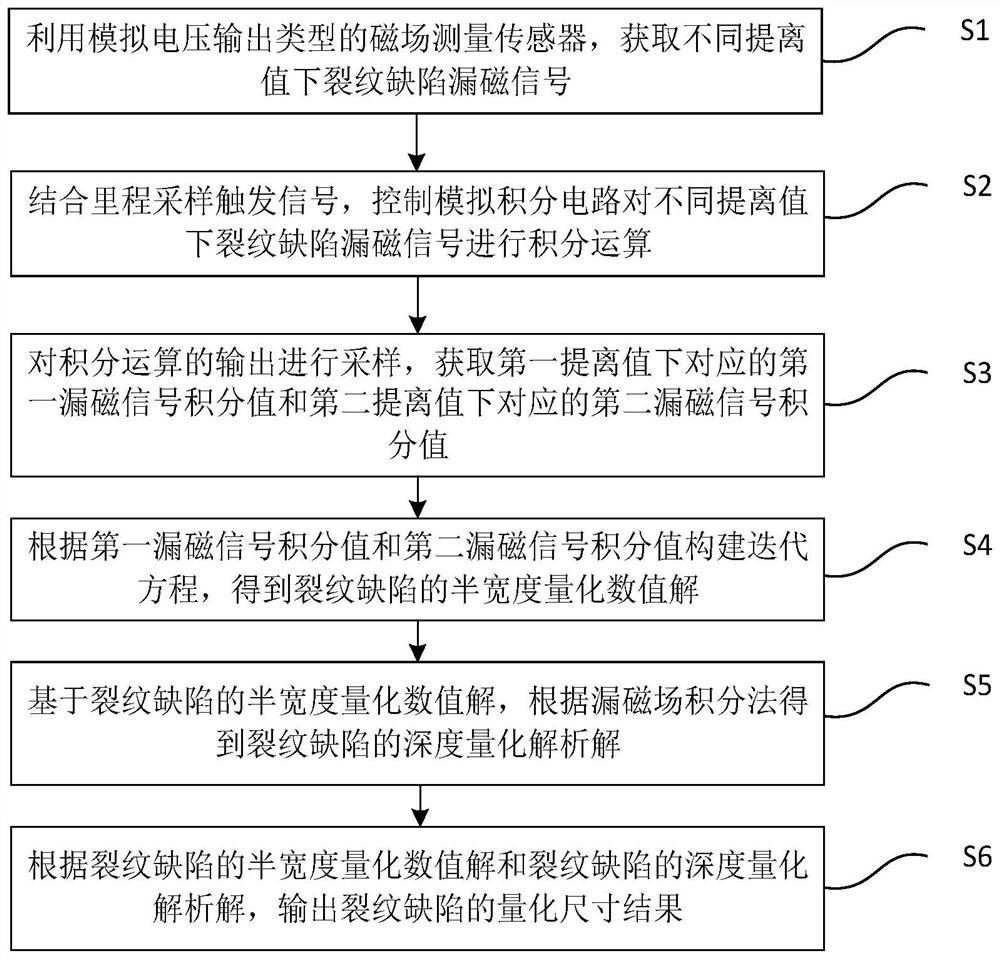

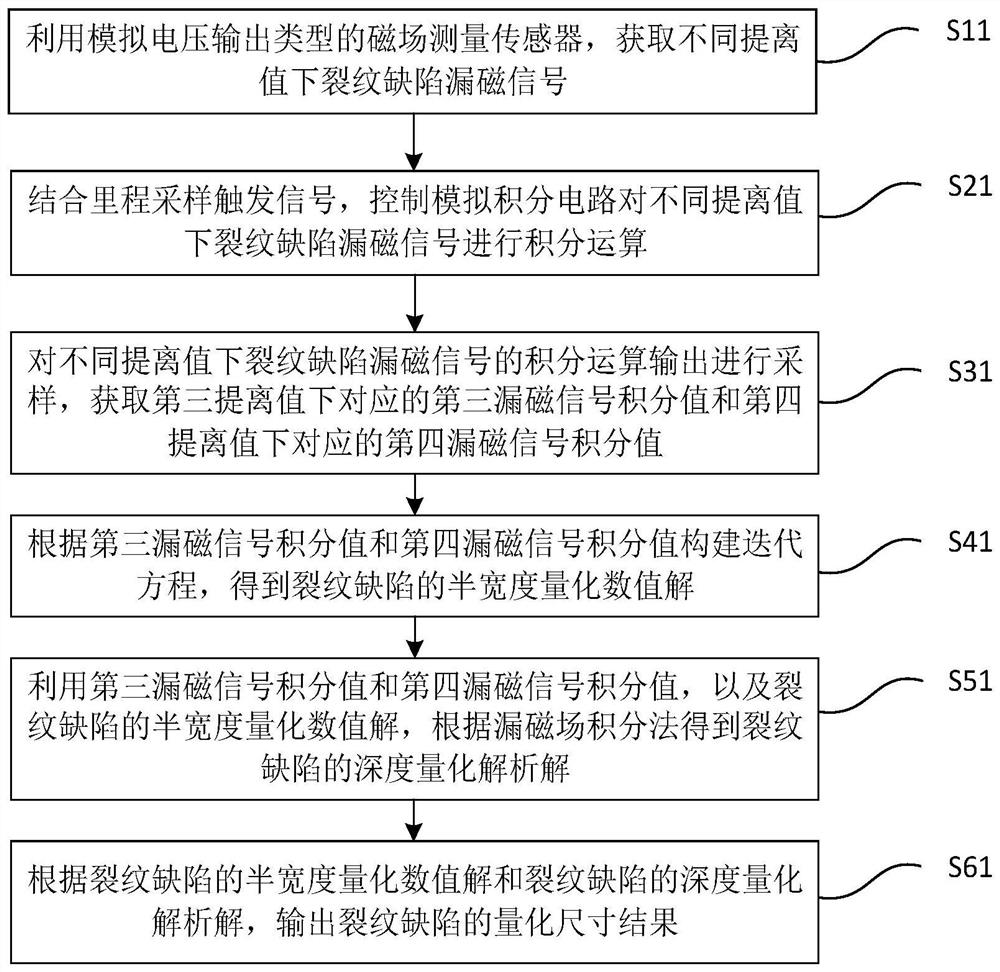

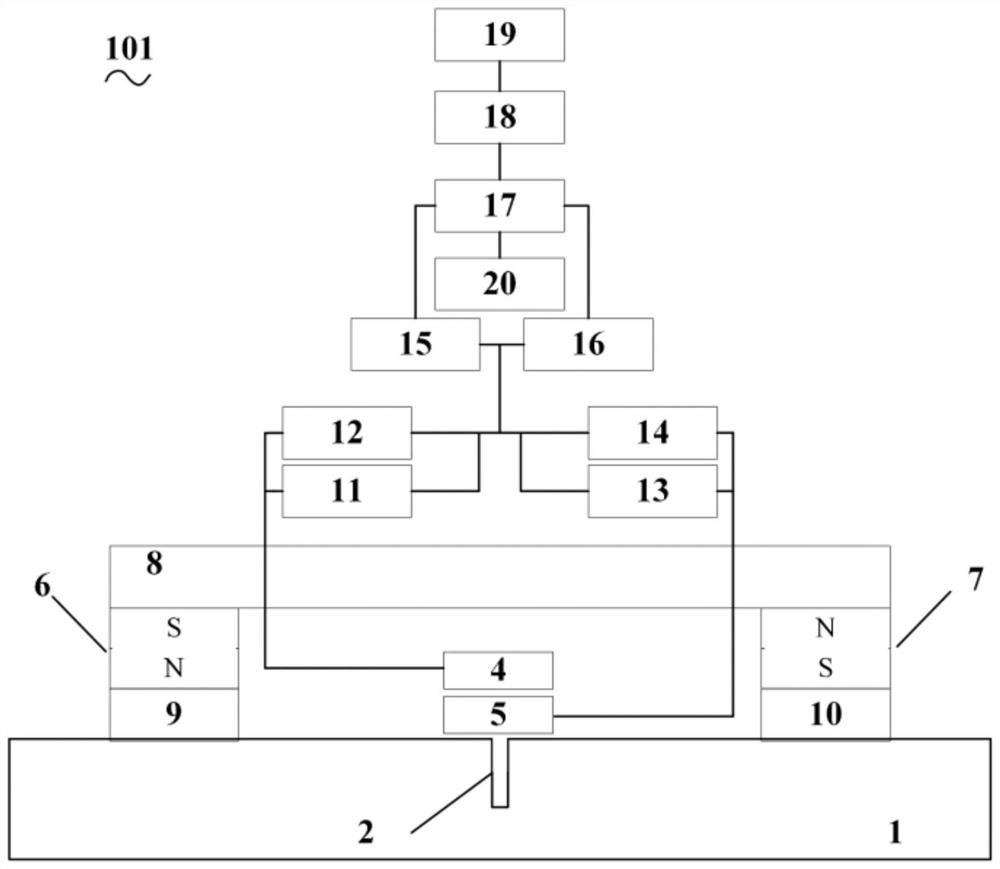

Crack defect quantification method and device based on magnetic flux leakage space integration

Pending | CN114636754A | High sizing efficiency | Improve Quantization Efficiency | Material magnetic variables | Computational physics | Analog signal

The invention discloses a crack defect quantification method and device based on magnetic flux leakage space integration. The method comprises the following steps: converting crack defect magnetic flux leakage signals under different lift-off values into analog voltage signals; carrying out integral operation on the crack defect magnetic flux leakage analog signals under different lift-off values by combining a mileage sampling trigger signal; sampling the output of the integral operation to obtain a first magnetic flux leakage signal integral value corresponding to the first lift-off value and a second magnetic flux leakage signal integral value corresponding to the second lift-off value; constructing an iterative equation to obtain a half-width quantitative numerical solution of the crack defect; obtaining a depth quantitative analytic solution of the crack defect according to a leakage magnetic field integration method; and outputting a quantitative size result of the crack defect. According to the invention, problems which may occur when a conventional magnetic flux leakage detection system directly samples a leakage magnetic field, such as leakage of crack magnetic flux leakage signals or loss of effective magnetic flux leakage signals, can be avoided; the theoretical model is clear, the calculation speed is high, the calculation result is accurate, and the crack defect size quantification efficiency is high.

Owner:TSINGHUA UNIV

Weight function determination device and method for quantizing linear prediction coding coefficient

Active | US10074375B2 | Improve Quantization Efficiency | Quality improvement | Speech analysis | Linear prediction coding | Algorithm

Owner:SAMSUNG ELECTRONICS CO LTD

Data processing method of neural network processor and neural network processor

Active | CN105844330B | Reduce storage requirements | Reduce bandwidth requirements | Physical realisation | Nerve network | Bandwidth requirement

The invention provides a data processing method for a neural network processor, and a neural network processor. The method includes the following steps: an adder adds the input data to the corresponding weight absolute values, where the input data are the output of the previous stage and both the input data and the weight absolute values are n-element vectors; each of the n sums is passed through a first nonlinear mapping; an accumulator then accumulates the n mapped results, adding or subtracting each term as controlled by the sign bit of the weight; and the accumulated result is passed through a second nonlinear mapping to obtain the processing result, which is output. The second nonlinear mapping is formulated from the nonlinear mapping rule of the neural network and the inverse of the first nonlinear mapping. The method improves quantization efficiency and reduces the storage and bandwidth requirements of the data.

Owner:HUAWEI TECH CO LTD

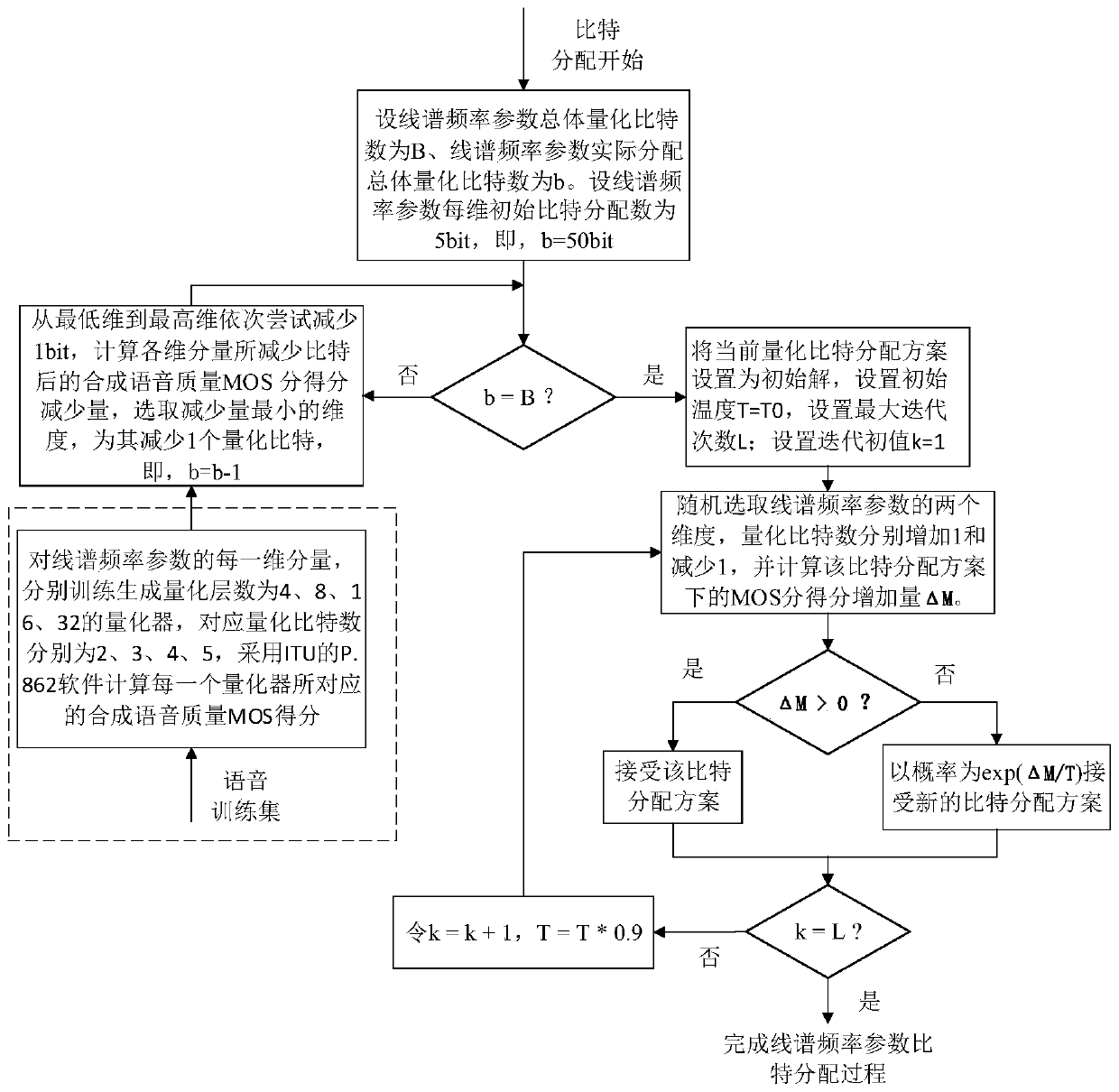

Linear spectral frequency parameter quantization bit allocation method and system

Active | CN110428847A | Improve Quantization Efficiency | Quality improvement | Speech analysis | Mean opinion score | Bit allocation

The invention discloses a linear spectral frequency parameter quantization bit allocation method and system. The method uses an objective speech mean opinion score (MOS) as the basis for choosing the bit allocation: an initial bit allocation is obtained by successively subtracting quantization bits and comparing the resulting MOS values, and a simulated annealing algorithm then searches for the optimal bit allocation. The method takes full account of how differences between the dimensions of the linear spectral frequency parameters affect the quality of the synthesized speech, and applies simulated annealing to search for a globally optimal solution, which further improves both the quantization efficiency of the linear spectral frequency parameters and the quality of the synthesized speech.

Owner:南京梧桐微电子科技有限公司
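
The simulated annealing search over bit allocations can be sketched as follows. Each move shifts one bit from one dimension to another, so the total bit budget stays fixed. The patent scores allocations with an objective MOS; this sketch takes an arbitrary `cost` callable instead (lower is better), and the cooling schedule and all names are assumptions.

```python
import math
import random

def anneal_bit_allocation(initial, cost, steps=2000, t0=1.0, cooling=0.995, seed=0):
    """Search for a low-cost bit allocation with the same total as `initial`.

    cost: callable mapping a list of per-dimension bit counts to a float.
    The geometric cooling schedule is an assumption for illustration.
    """
    rng = random.Random(seed)
    current, current_cost = list(initial), cost(initial)
    best, best_cost = list(current), current_cost
    temperature = t0
    for _ in range(steps):
        i, j = rng.sample(range(len(current)), 2)
        if current[i] > 0:
            candidate = list(current)
            candidate[i] -= 1             # take one bit from dimension i
            candidate[j] += 1             # give it to dimension j
            candidate_cost = cost(candidate)
            delta = candidate_cost - current_cost
            # Always accept improvements; accept worse moves with a
            # probability that shrinks as the temperature drops.
            if delta <= 0 or rng.random() < math.exp(-delta / temperature):
                current, current_cost = candidate, candidate_cost
                if current_cost < best_cost:
                    best, best_cost = list(current), current_cost
        temperature *= cooling
    return best, best_cost
```

As a stand-in for MOS, one could pass a distortion proxy such as `lambda bits: sum(s * 2.0 ** (-2 * b) for s, b in zip(sensitivities, bits))`, where `sensitivities` is a hypothetical per-dimension weight.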

Feedback method and device for channel quantization information

Active | CN101826941B | Reduce similarity | Increase distance | Spatial transmit diversity | Error prevention/detection by diversity reception | Engineering | Channel correlation

The invention discloses a method and a device for feeding back channel quantization information. In the method, a receiving end processes a first codebook using channel-related information to obtain a second codebook, and transmits indication information corresponding to a codeword in the second codebook to a transmitting end. The vectors formed by the first X elements of each codeword in the first codebook differ from one another; the elements of each codeword satisfy the 8PSK or 16PSK characteristic; Nt is the number of transmitting antennas at the transmitting end; and the operator denotes rounding up. The method and the device reduce the similarity between codewords in the codebook (CB), increase the distance between every two codewords, and improve quantization efficiency.

Owner:江苏慧煦电子科技有限公司

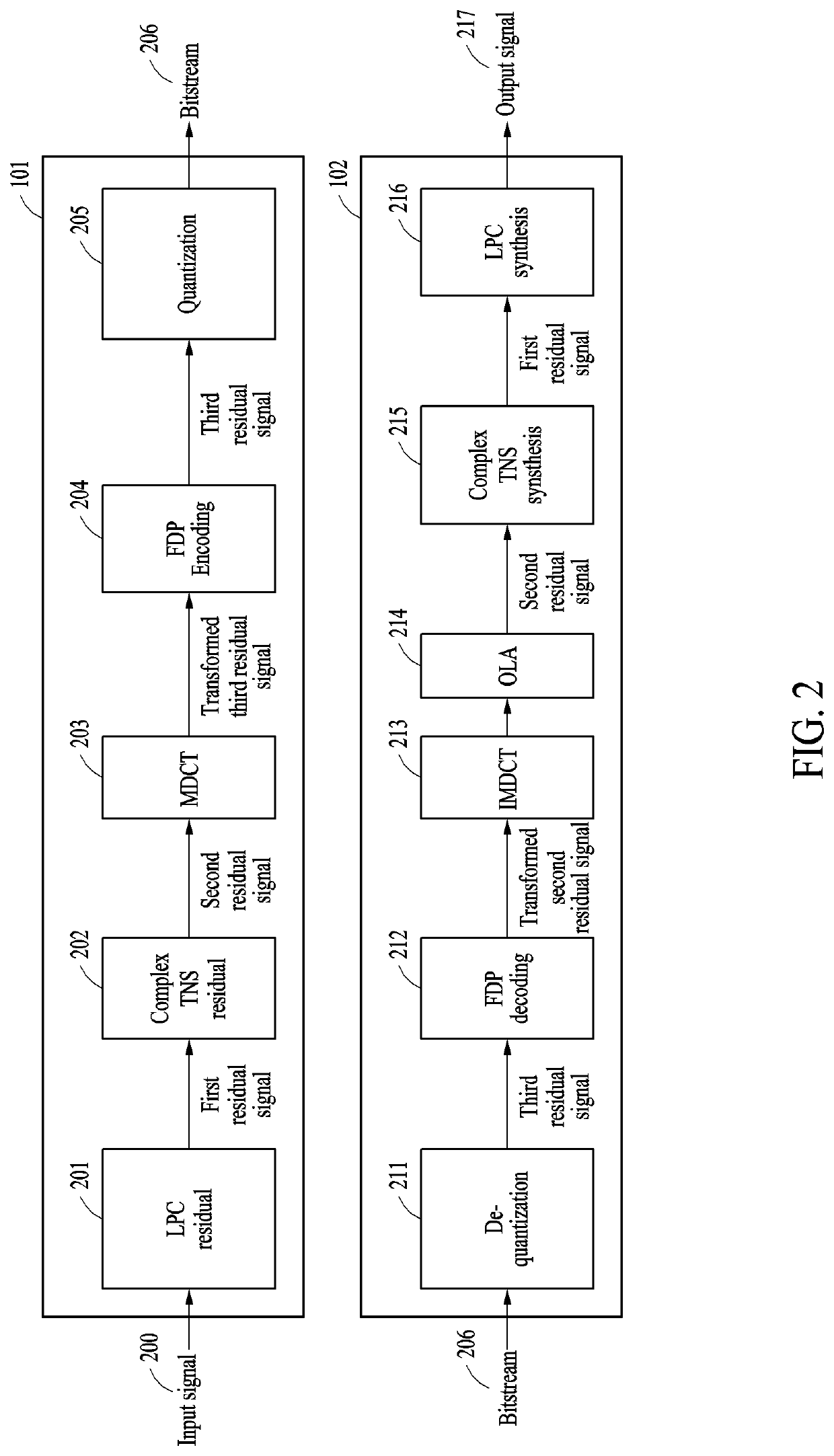

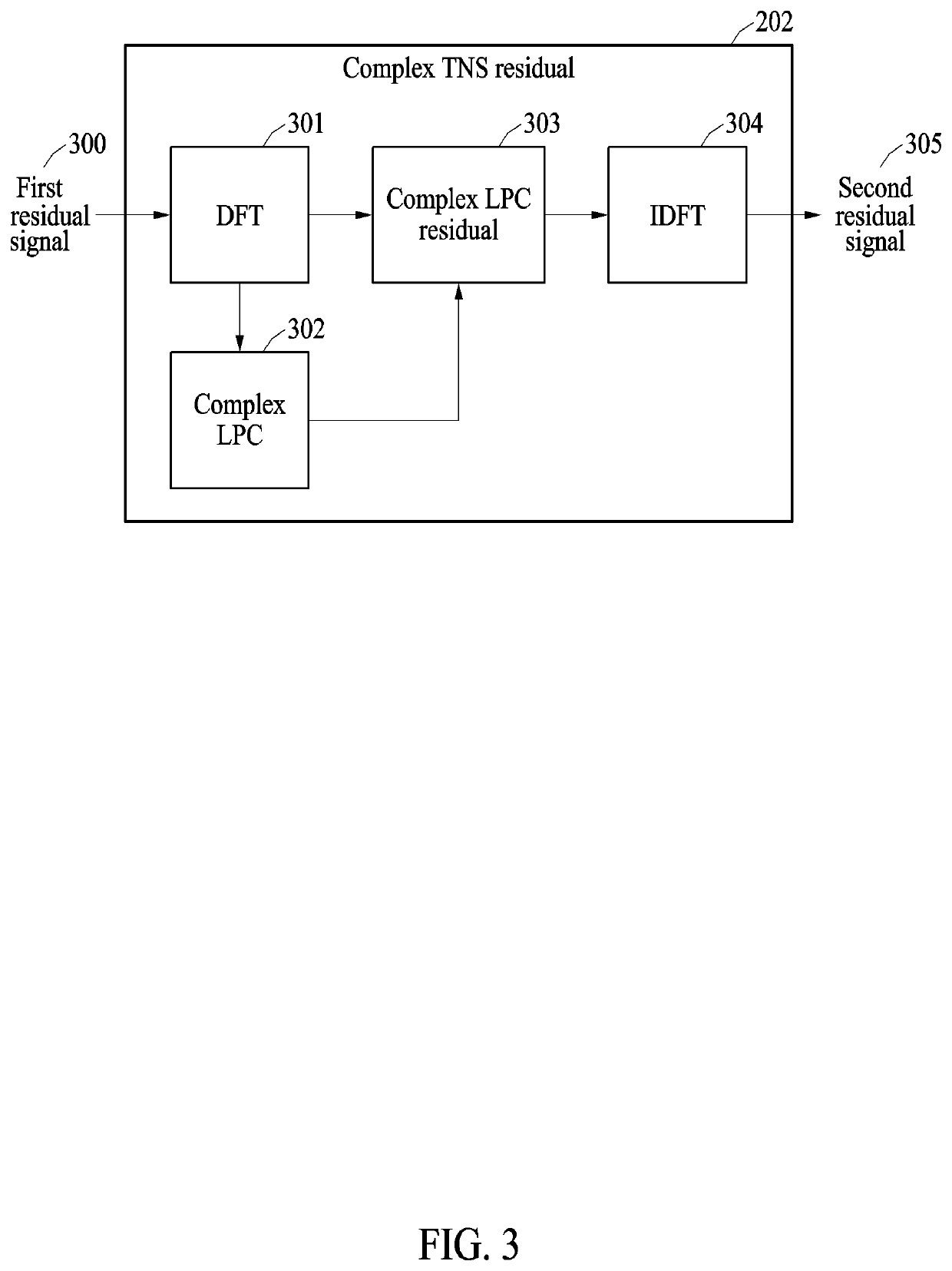

Method of generating residual signal, and encoder and decoder performing the method

Pending | US20220157326A1 | Improve Quantization Efficiency | Effective recovery | Speech analysis | Speech recognition | Frequency domain

A method of generating a residual signal performed by an encoder includes identifying an input signal including an audio sample, generating a first residual signal from the input signal using linear predictive coding (LPC), generating a second residual signal carrying less information than the first residual signal by transforming the first residual signal, transforming the second residual signal into a frequency domain, and generating a third residual signal carrying less information than the second residual signal from the transformed second residual signal using frequency-domain prediction (FDP) coding.
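As an illustration of the first stage only, the sketch below computes an LPC residual with the autocorrelation method and the Levinson-Durbin recursion. The predictor order, the test signal, and the function names are assumptions; the later transform and FDP stages are not shown because the abstract does not describe them in enough detail.

```python
import math

def autocorrelation(x, max_lag):
    """r[k] = sum over n of x[n] * x[n - k], for k = 0..max_lag."""
    return [sum(x[n] * x[n - k] for n in range(k, len(x)))
            for k in range(max_lag + 1)]

def levinson_durbin(r, order):
    """Solve for predictor coefficients a[1..order] so that
    x[n] is approximated by sum_k a[k] * x[n - k]."""
    a = [0.0] * (order + 1)
    err = r[0]
    for i in range(1, order + 1):
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / err
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= (1.0 - k * k)
    return a[1:]

def lpc_residual(x, order=2):
    """Return (coefficients, residual), where residual[n] = x[n] - prediction."""
    coeffs = levinson_durbin(autocorrelation(x, order), order)
    residual = []
    for n in range(len(x)):
        pred = sum(coeffs[k] * x[n - 1 - k]
                   for k in range(order) if n - 1 - k >= 0)
        residual.append(x[n] - pred)
    return coeffs, residual

# Made-up test signal: a damped sinusoid, which a 2nd-order predictor fits well.
signal = [0.95 ** n * math.cos(0.3 * n) for n in range(64)]
coeffs, first_residual = lpc_residual(signal, order=2)
```

The residual has lower energy than the input when the signal is predictable, which is what makes it cheaper to quantize in the later stages.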

Owner:ELECTRONICS & TELECOMM RES INST

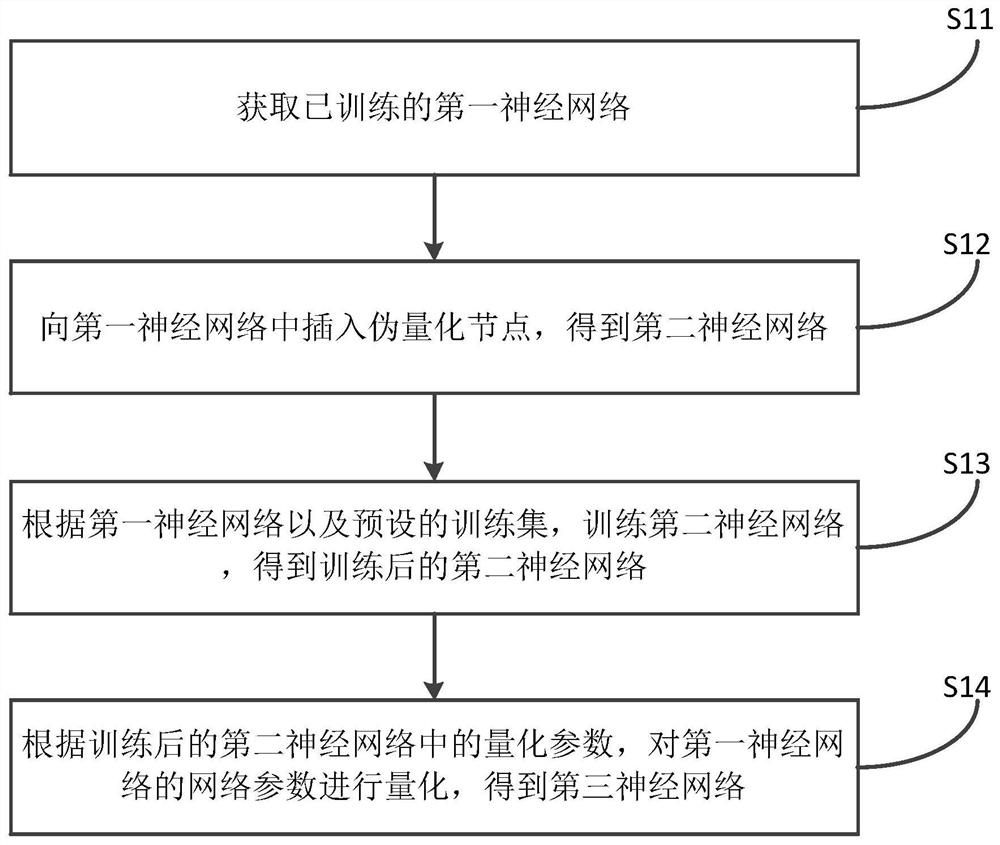

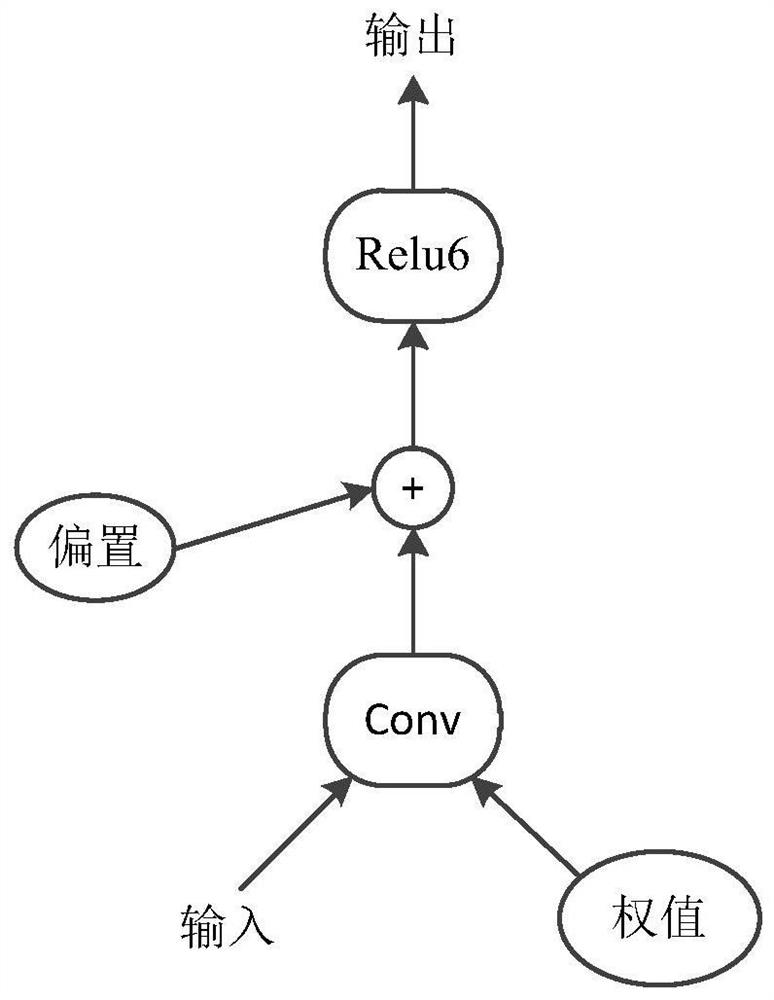

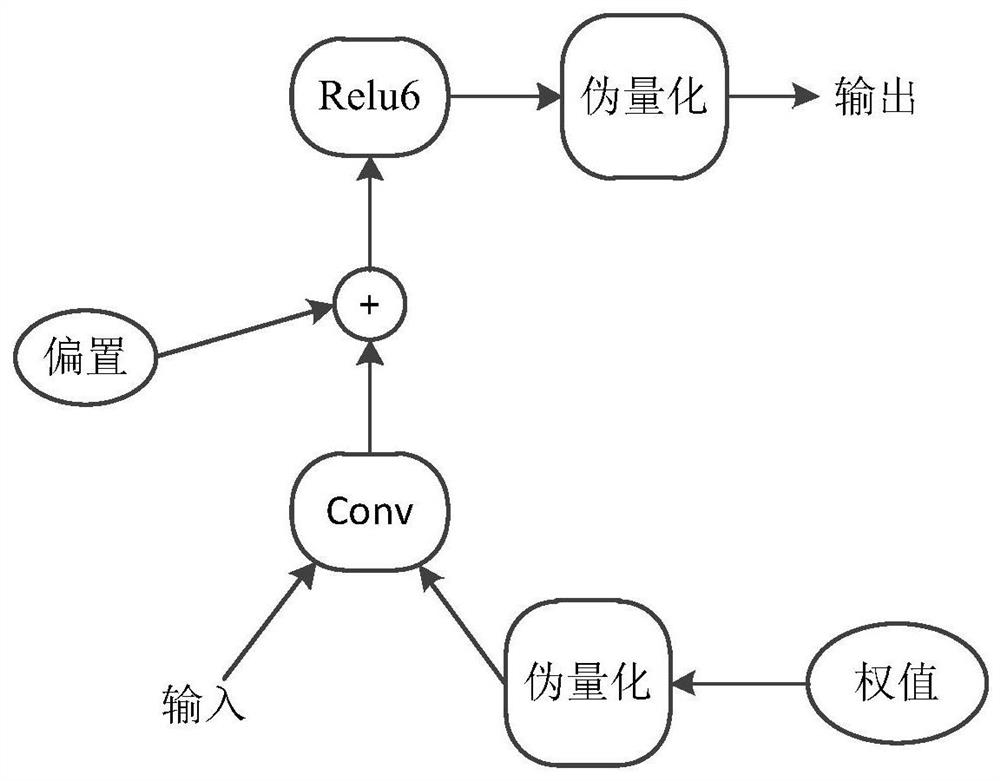

Network quantization method and device, electronic equipment and storage medium

Pending | CN112884144A | Improve Quantization Efficiency | Improve convenience | Neural architectures | Neural learning methods | Neural network nn | Electrical and Electronics engineering

The invention relates to a network quantization method and device, electronic equipment and a storage medium. The method comprises the following steps: acquiring a trained first neural network; inserting a pseudo quantization node into the first neural network to obtain a second neural network, wherein the pseudo quantization node comprises a quantization parameter to be trained, and the quantization parameter is used for quantizing a network parameter of the first neural network; training the second neural network according to the first neural network and a preset training set to obtain a trained second neural network; and quantizing the network parameter of the first neural network according to the quantization parameter in the trained second neural network to obtain a third neural network, wherein the data precision of the network parameter of the third neural network is lower than that of the first neural network. According to the embodiment of the invention, the efficiency, convenience and universality of neural network quantization can be improved, and the overhead of network quantization is reduced.
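A pseudo quantization node is commonly implemented as a quantize-then-dequantize operation. The sketch below shows that operation for a single scale parameter; the signed symmetric integer range, the 8-bit default, and the function names are assumptions, and the training of `scale` itself is not shown.

```python
def fake_quantize(x, scale, bits=8):
    """Pseudo quantization node: round x to the integer grid defined by
    `scale`, clamp to the signed `bits`-bit range, then map back to float."""
    qmax = 2 ** (bits - 1) - 1
    qmin = -qmax - 1
    q = max(qmin, min(qmax, round(x / scale)))
    return q * scale

def quantize_weights(weights, scale, bits=8):
    """Final step: store the network parameters as low-precision integers,
    using the scale learned with the pseudo quantization nodes in place."""
    qmax = 2 ** (bits - 1) - 1
    qmin = -qmax - 1
    return [max(qmin, min(qmax, round(w / scale))) for w in weights]

# Hypothetical values: `trained_scale` stands in for a learned parameter.
trained_scale = 0.1
weights = [0.26, -0.71, 100.0]
simulated = [fake_quantize(w, trained_scale) for w in weights]
int_weights = quantize_weights(weights, trained_scale)
```

During training the node keeps values in floating point so gradients can flow, while `quantize_weights` produces the reduced-precision parameters of the final network.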

Owner:SHANGHAI SENSETIME INTELLIGENT TECH CO LTD

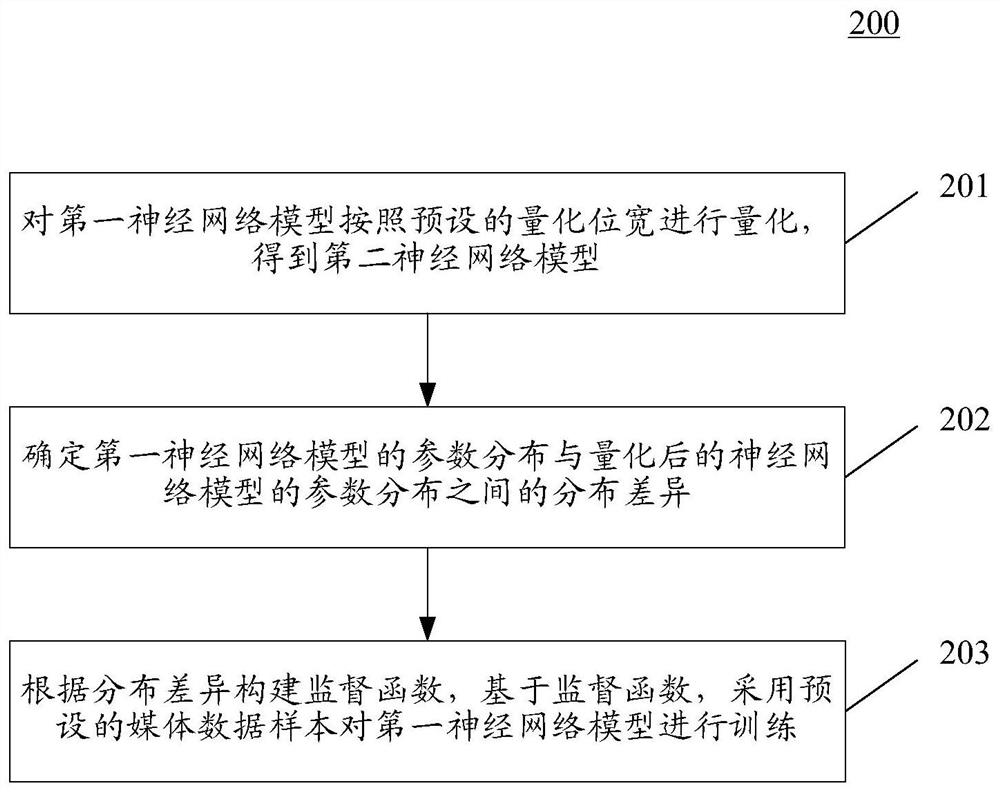

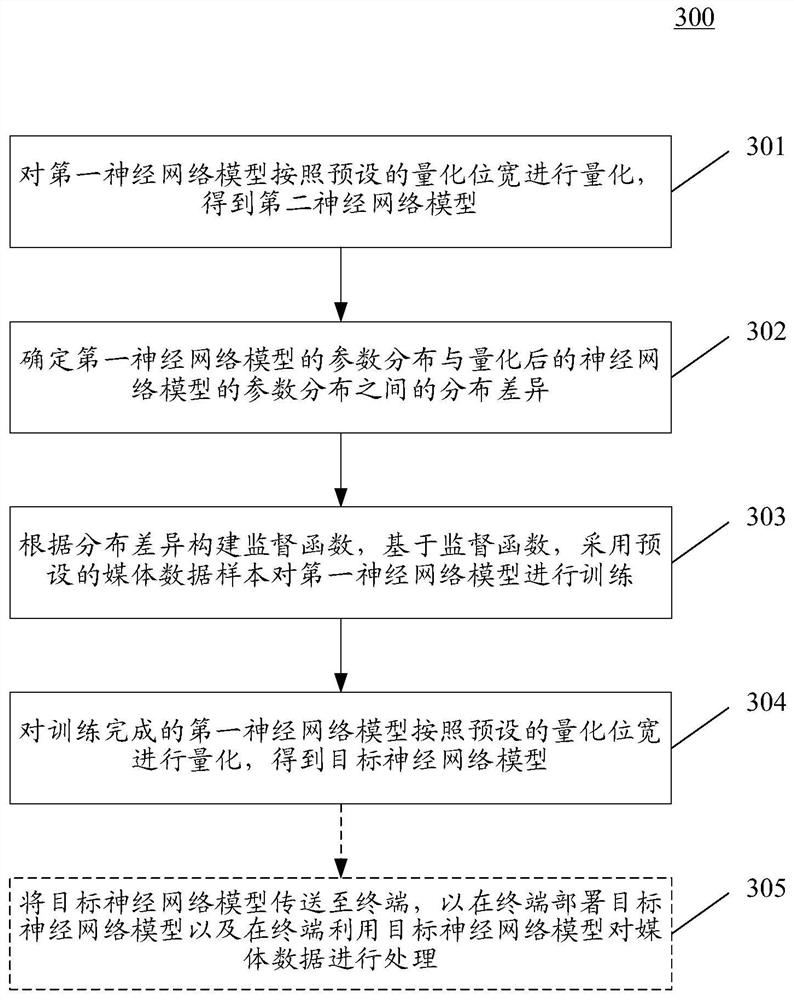

Neural network model training method and device

Pending | CN113361678A | Reduce precision loss | Simplified quantification method | Neural architectures | Engineering | Quantized neural networks

The invention relates to the field of artificial intelligence. The embodiment of the invention discloses a neural network model training method and device. The method comprises: quantizing a first neural network model according to a preset quantization bit width to obtain a second neural network model, wherein the parameter bit width of the first neural network model is larger than that of the second neural network model; determining a distribution difference between the parameter distribution of the first neural network model and the parameter distribution of the quantized neural network model; and constructing a supervision function according to the distribution difference, and training the first neural network model with a preset media data sample based on the supervision function. The method can be used to train a neural network model that is suitable for quantization.
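One way to picture the supervision function is as a task loss plus a penalty on the distribution difference. The abstract does not name the difference measure, so the histogram-based KL divergence, the symmetric uniform quantizer, the weight `lam`, and every function name below are assumptions.

```python
import math

def quantize(weights, bits):
    """Symmetric uniform quantization of parameters to `bits` bits."""
    levels = 2 ** (bits - 1) - 1
    w_max = max(abs(w) for w in weights) or 1.0
    step = w_max / levels
    return [max(-levels, min(levels, round(w / step))) * step for w in weights]

def histogram(values, lo, hi, bins):
    """Normalized histogram with a small floor so no bin is exactly zero."""
    counts = [1e-6] * bins
    width = (hi - lo) / bins
    for v in values:
        i = min(bins - 1, max(0, int((v - lo) / width)))
        counts[i] += 1
    total = sum(counts)
    return [c / total for c in counts]

def distribution_difference(weights, bits, bins=4):
    """Assumed measure: KL divergence between the histograms of the
    original parameters and of their quantized counterparts."""
    w_max = max(abs(w) for w in weights) or 1.0
    p = histogram(weights, -w_max, w_max, bins)
    q = histogram(quantize(weights, bits), -w_max, w_max, bins)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

def supervision_loss(task_loss, weights, bits=4, lam=0.1):
    """Task loss plus a weighted distribution-difference penalty."""
    return task_loss + lam * distribution_difference(weights, bits)

on_grid = distribution_difference([-1.0, 0.0, 1.0], bits=2)
off_grid = distribution_difference([-1.0, -0.4, 0.4, 1.0], bits=2)
```

Parameters that already sit on the quantization grid incur no penalty, so minimizing this loss pushes the full-precision model toward parameters that survive quantization with little change.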

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
