Fixed-point quantization method and device for deep learning model

A technology relating to deep learning and quantization methods, applied in the field of deep learning. It addresses slow inference in deep learning networks, increased model storage size, and the difficulty of deploying models in practical applications, with the effect of saving time and improving the efficiency and speed of quantization.

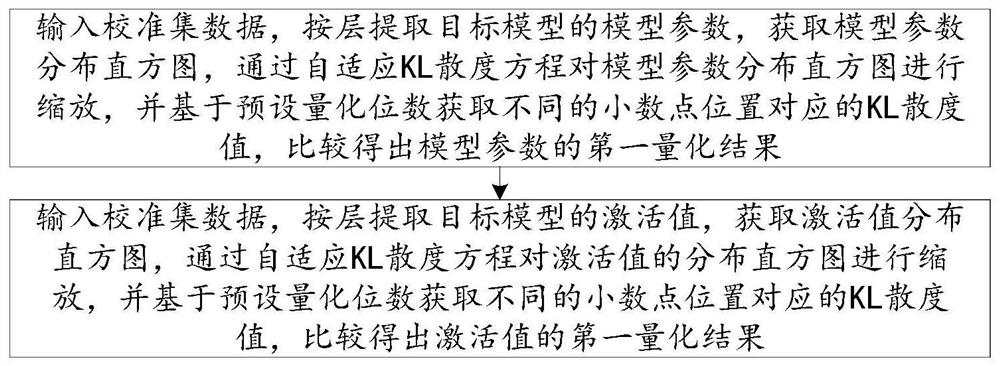

Detailed Description of the Embodiments

[0030] Embodiments of the present invention are described in detail below, and examples of them are shown in the drawings, in which the same or similar reference numerals designate the same or similar elements, or elements having the same or similar functions, throughout. The embodiments described below with reference to the figures are exemplary, serve only to explain the present invention, and should not be construed as limiting it.

[0031] In the description of the present invention, "several" means one or more, and "multiple" means two or more. "Greater than", "less than", "exceeding" and similar terms are understood as excluding the stated number, while "above", "below", "within" and similar terms are understood as including it. Where "first" and "second" are used, they serve only to distinguish technical features and are not to be understood as indicating or implying relative importance or implicitly indicating the number...

