180 results about "Network processing unit" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

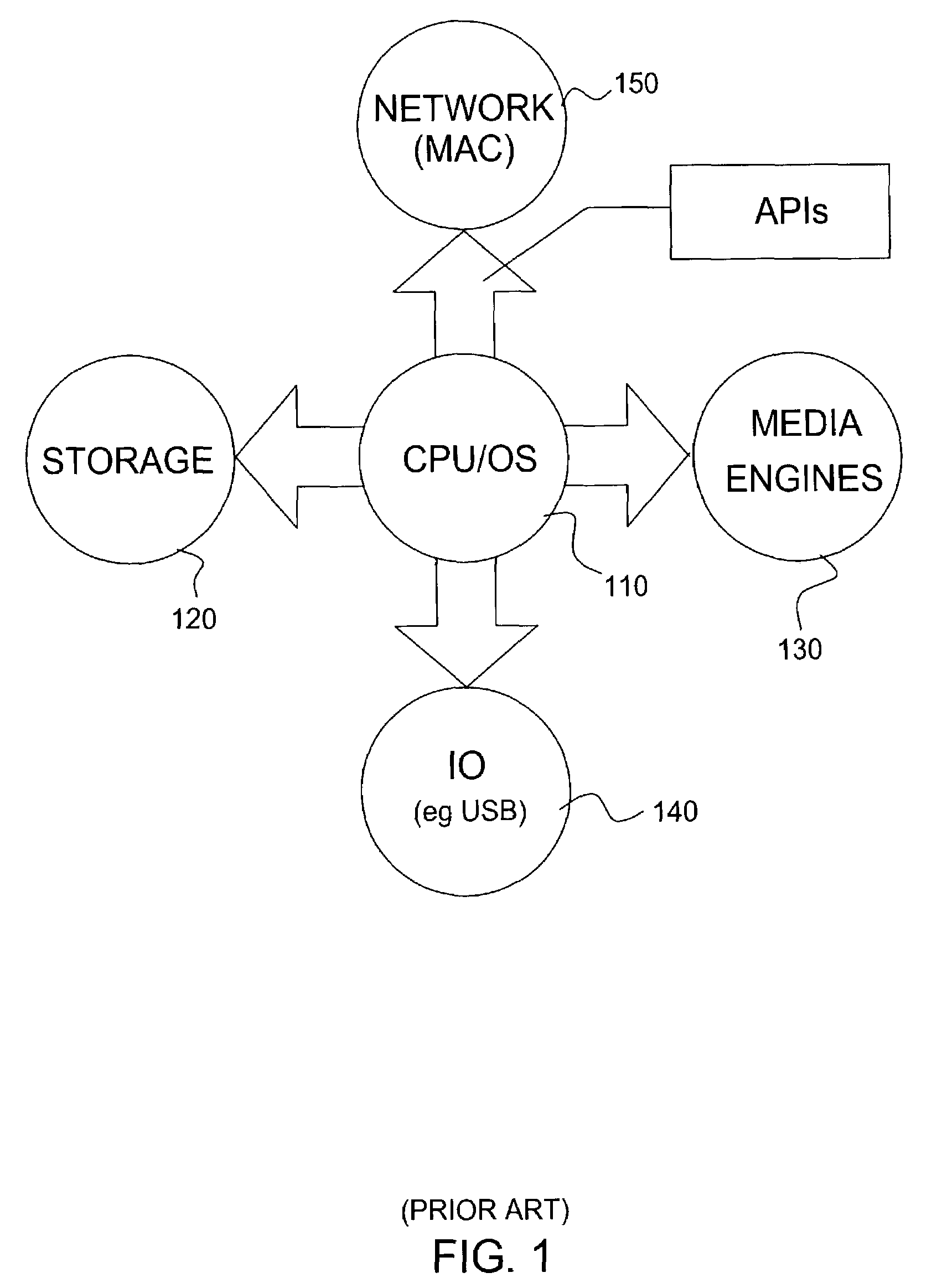

Network processors are typically software-programmable devices with generic characteristics similar to those of the general-purpose central processing units (CPUs) used in many different types of equipment and products.

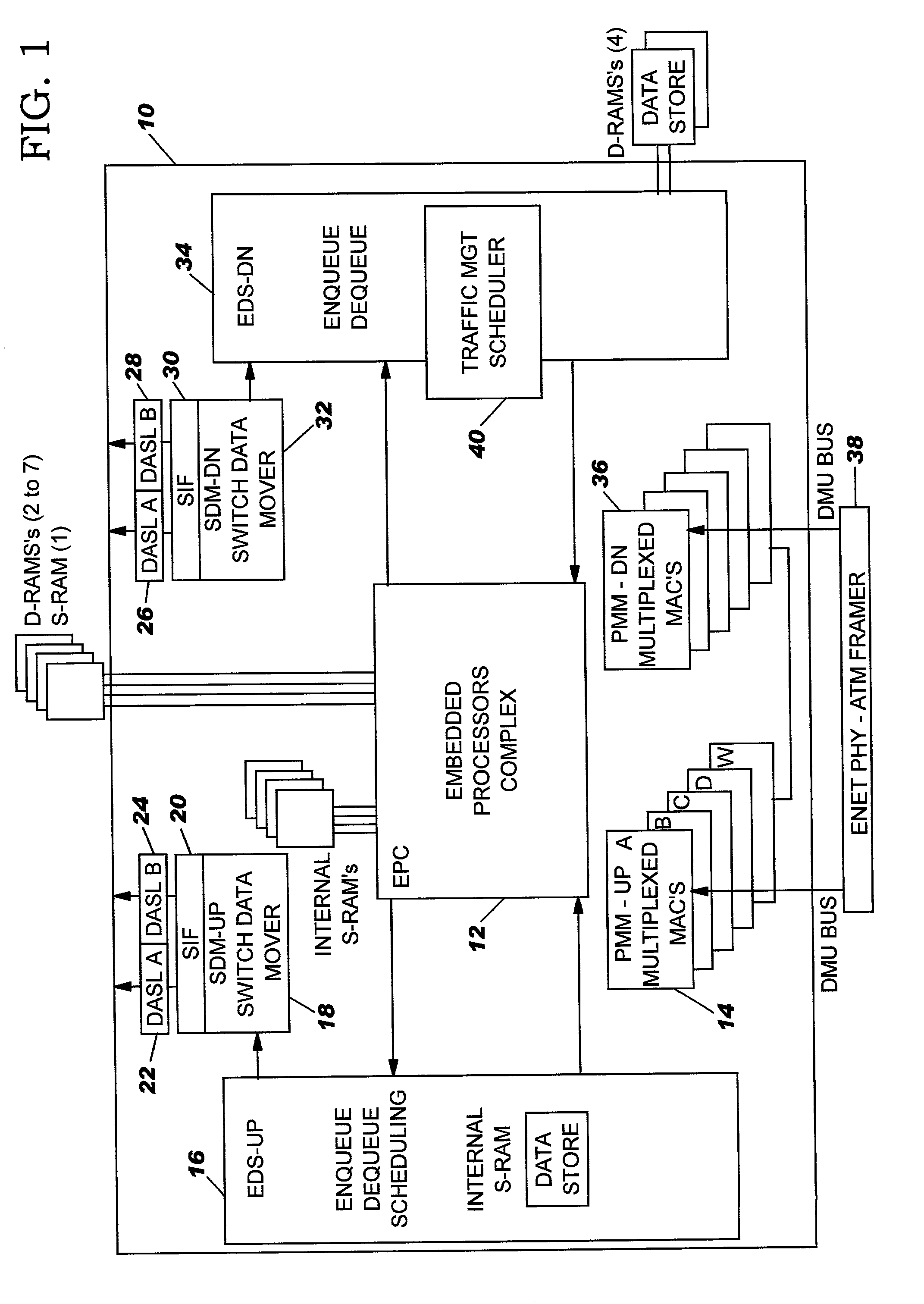

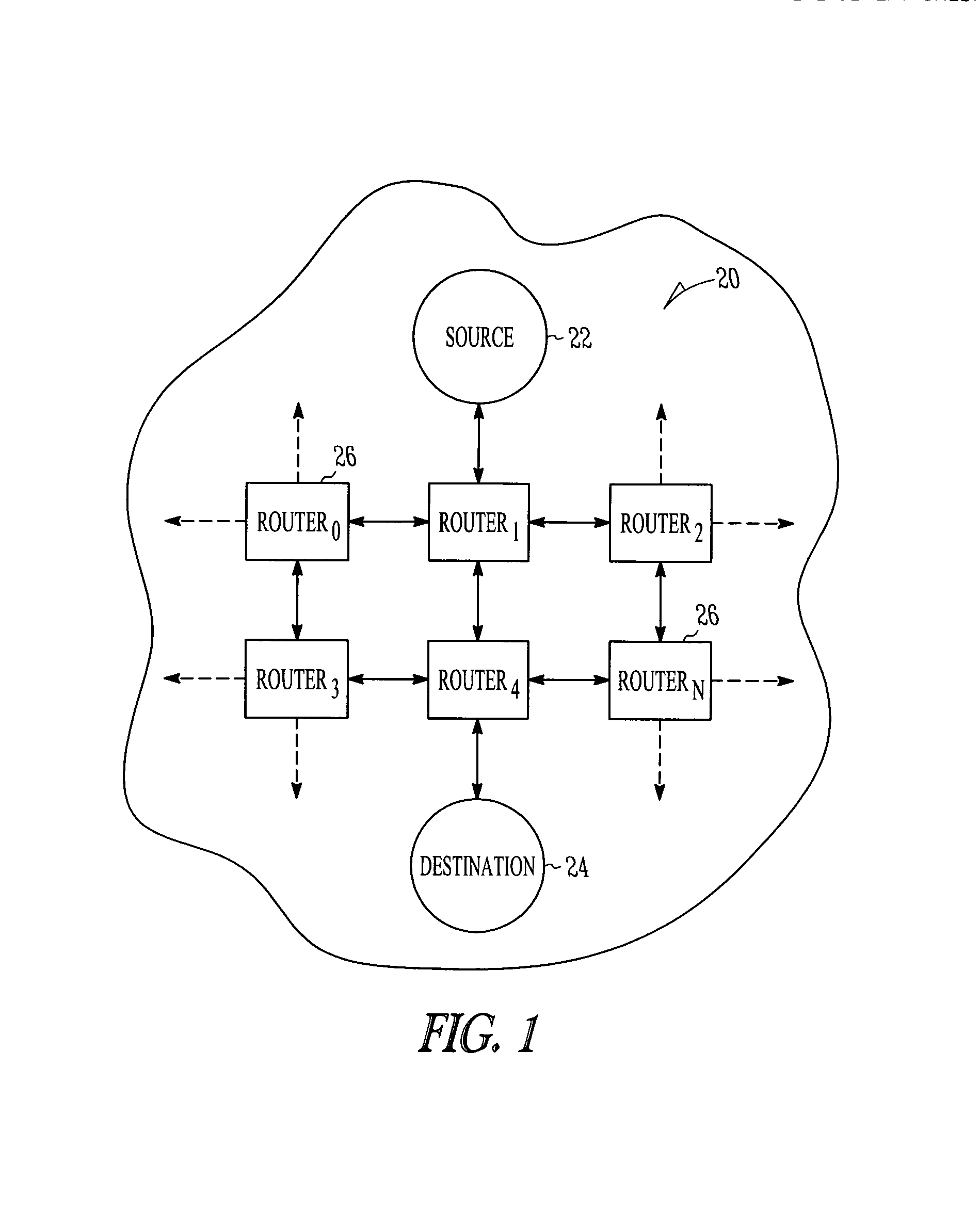

Packet routing and switching device

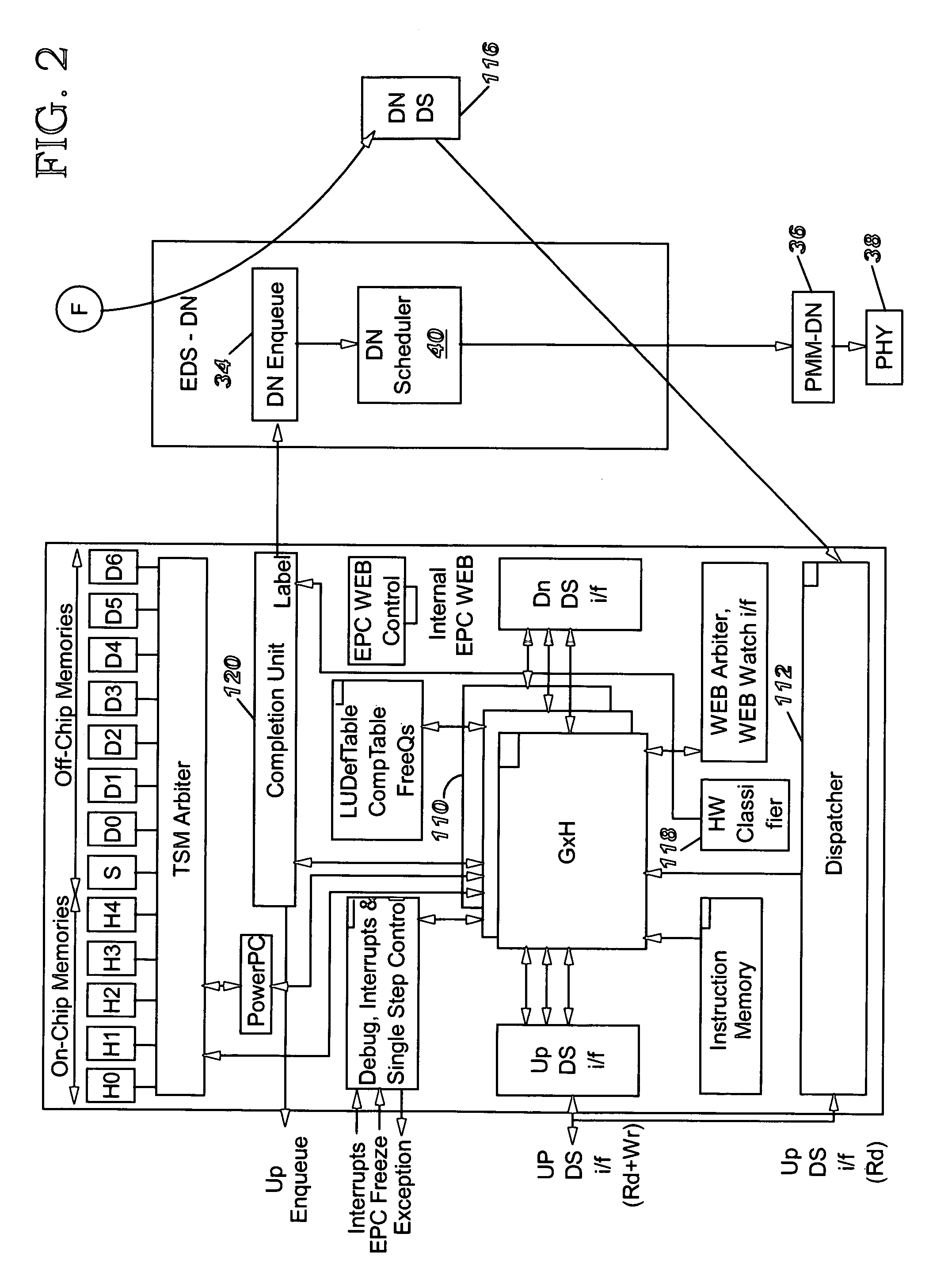

Inactive | US7382787B1 | Quick extraction, Time-division multiplex, Data switching by path configuration, Network processing unit, Systolic array

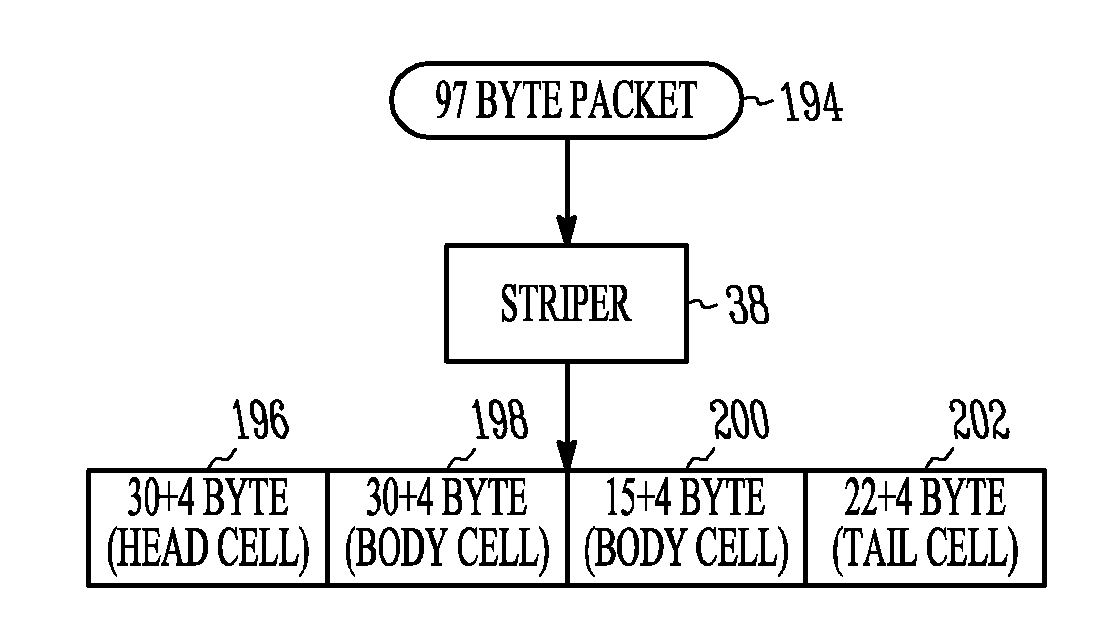

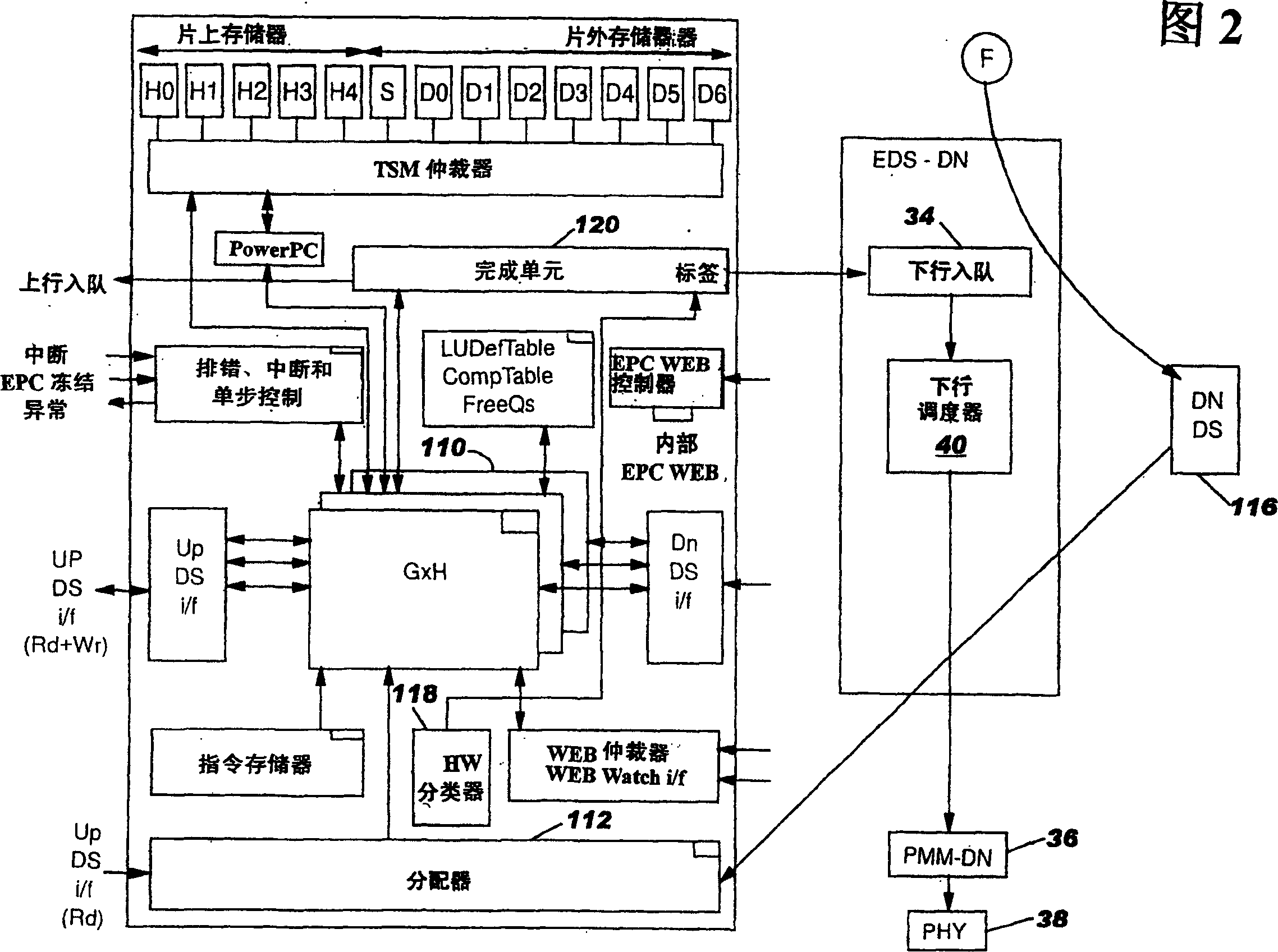

A method for routing and switching data packets from one or more incoming links to one or more outgoing links of a router. The method comprises receiving a data packet from the incoming link, assigning at least one outgoing link to the data packet based on the destination address of the data packet, and, after the assigning operation, storing the data packet in a switching memory based on the assigned outgoing link. The data packet is then extracted from the switching memory and transmitted along the assigned outgoing link. The router may include a network processing unit having one or more systolic array pipelines for performing the assigning operation.

Owner:CISCO TECH INC
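
The assign-then-store order described in this abstract can be sketched in Python. This is a minimal illustration under stated assumptions, not Cisco's implementation: the forwarding table, link names, and `Router` class are hypothetical, a plain longest-prefix scan stands in for the systolic array pipelines, and one FIFO per outgoing link stands in for the switching memory.

```python
import ipaddress
from collections import defaultdict, deque

# Hypothetical forwarding table: destination prefix -> outgoing link.
FORWARDING_TABLE = {
    ipaddress.ip_network("10.0.0.0/8"): "link-A",
    ipaddress.ip_network("10.1.0.0/16"): "link-B",
    ipaddress.ip_network("0.0.0.0/0"): "link-default",
}


def assign_outgoing_link(dst: str) -> str:
    """Assign an outgoing link by longest-prefix match on the destination."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in FORWARDING_TABLE if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)
    return FORWARDING_TABLE[best]


class Router:
    def __init__(self) -> None:
        # Switching memory, organised as one FIFO per assigned outgoing link.
        self.switching_memory = defaultdict(deque)

    def receive(self, packet: dict) -> None:
        # The link is assigned first; where the packet is stored depends on it.
        link = assign_outgoing_link(packet["dst"])
        self.switching_memory[link].append(packet)

    def transmit(self, link: str):
        # Extract the oldest stored packet for this link (None if none is waiting).
        queue = self.switching_memory[link]
        return queue.popleft() if queue else None
```

Because storage is keyed by the already-assigned link, the transmit side only has to drain its own queue and never inspects destination addresses again.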

Packet routing and switching device

Active | US8270401B1 | Quick extraction, Data switching by path configuration, Network connections, Network processing unit, Packet routing

Owner:CISCO TECH INC

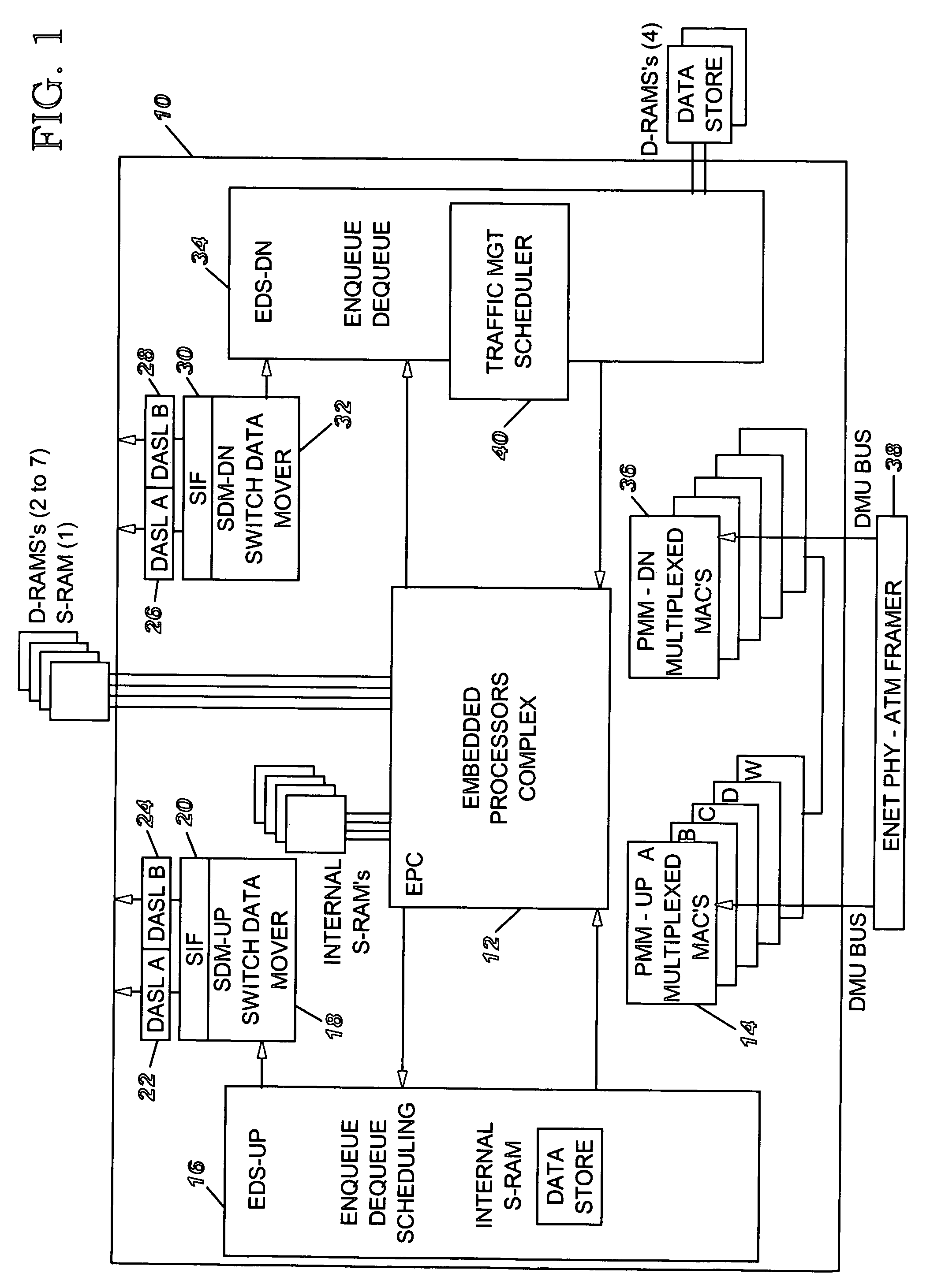

Method and system for network processor scheduling based on service levels

A system and method of moving information units from an output flow control toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit using a weighted fair queue, in which a flow's position in the queue is adjusted after each service based on a weight factor and the frame length. Interaction between different calendar types is used to provide minimum bandwidth, best-effort bandwidth, weighted fair queuing service, best-effort peak bandwidth, and maximum burst size specifications. The present invention permits different combinations of service that can be used to create different QoS specifications. The "base" services offered to a customer in the example described in this patent application are minimum bandwidth, best effort, peak, and maximum burst size (MBS), which may be combined as desired. For example, a user could specify minimum bandwidth plus best-effort additional bandwidth, and the system would provide this capability by placing the flow queue in both the NLS and WFQ calendars. When a flow queue is in multiple calendars, the system performs tests to determine when it must come out.

Owner:IBM CORP
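
The weighted-fair-queue repositioning described above can be sketched as follows. This is a hedged illustration, not IBM's scheduler: the abstract only says the position is adjusted using a weight factor and the frame length, so the rule `position += frame_length / weight` is an assumption, and the NLS and other calendars are not modelled.

```python
import heapq
from collections import deque


class WeightedFairQueue:
    """WFQ sketch: flows are served in order of a 'next service' position.

    After a flow is served, its position moves back by frame_length / weight
    (an assumed rule), so flows with larger weights are served more often.
    """

    def __init__(self) -> None:
        self.heap = []        # (position, tie_breaker, flow_id)
        self.frames = {}      # flow_id -> deque of pending frame lengths
        self.weights = {}     # flow_id -> weight factor
        self.counter = 0      # tie-breaker: equal positions are served FIFO
        self.current = 0.0    # position of the most recently served flow

    def _schedule(self, flow_id, position: float) -> None:
        heapq.heappush(self.heap, (position, self.counter, flow_id))
        self.counter += 1

    def enqueue(self, flow_id, frame_length: int, weight: float = 1.0) -> None:
        pending = self.frames.setdefault(flow_id, deque())
        if not pending:
            # An idle flow becomes active: place it at the current position.
            self.weights[flow_id] = weight
            self._schedule(flow_id, self.current)
        pending.append(frame_length)

    def dequeue(self):
        """Serve one frame; return (flow_id, frame_length), or None if empty."""
        if not self.heap:
            return None
        position, _, flow_id = heapq.heappop(self.heap)
        self.current = position
        frame_length = self.frames[flow_id].popleft()
        if self.frames[flow_id]:
            # Reposition: longer frames and smaller weights push the flow further back.
            self._schedule(flow_id, position + frame_length / self.weights[flow_id])
        return flow_id, frame_length
```

With two backlogged flows of equal frame length, one with weight 2 and one with weight 1, the heavier flow receives roughly twice as many services over any long window.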

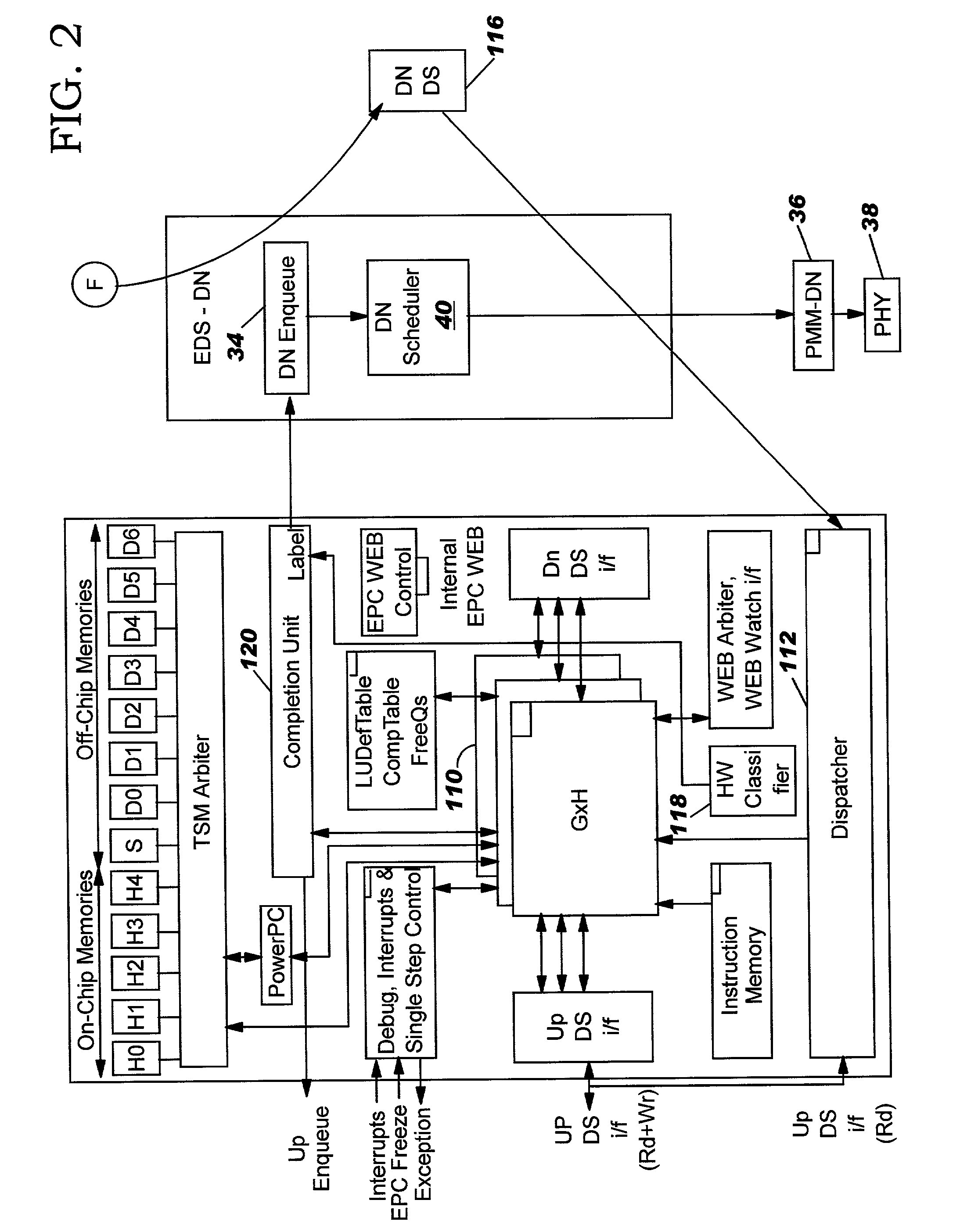

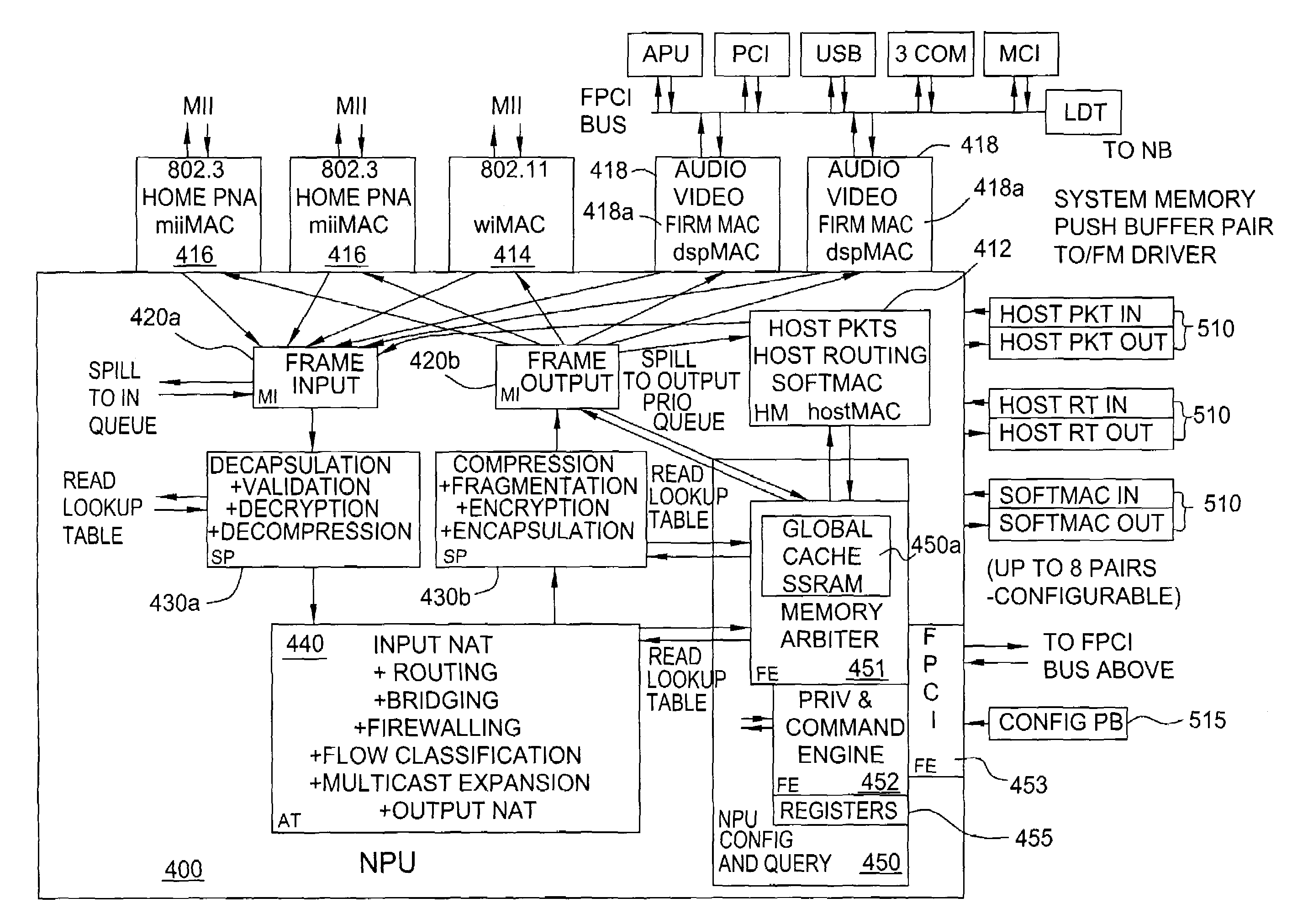

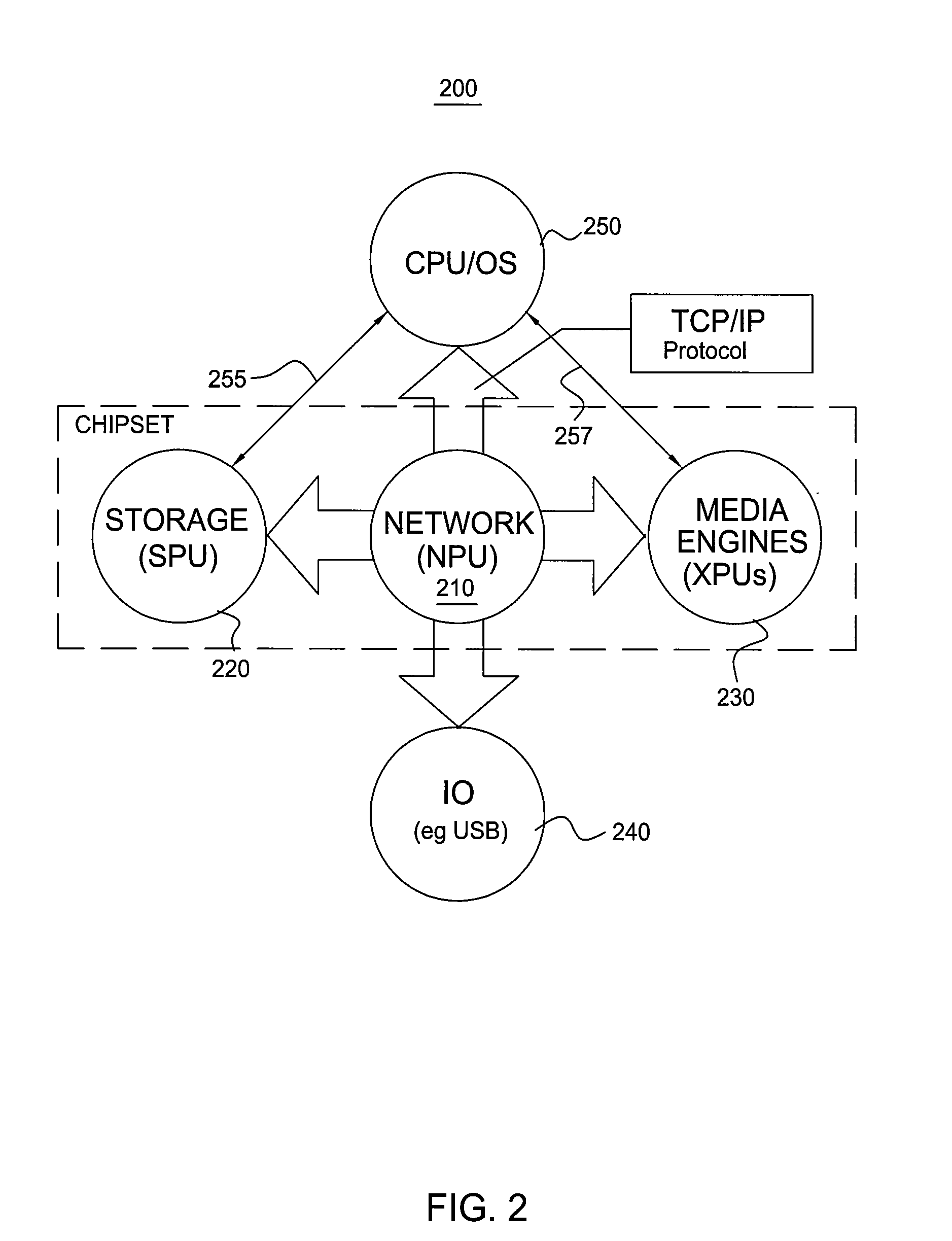

Internet protocol (IP) router residing in a processor chipset

Active | US7324547B1 | Effective service, Easily configure network, Loop networks, Network connections, TTEthernet, Network processing unit

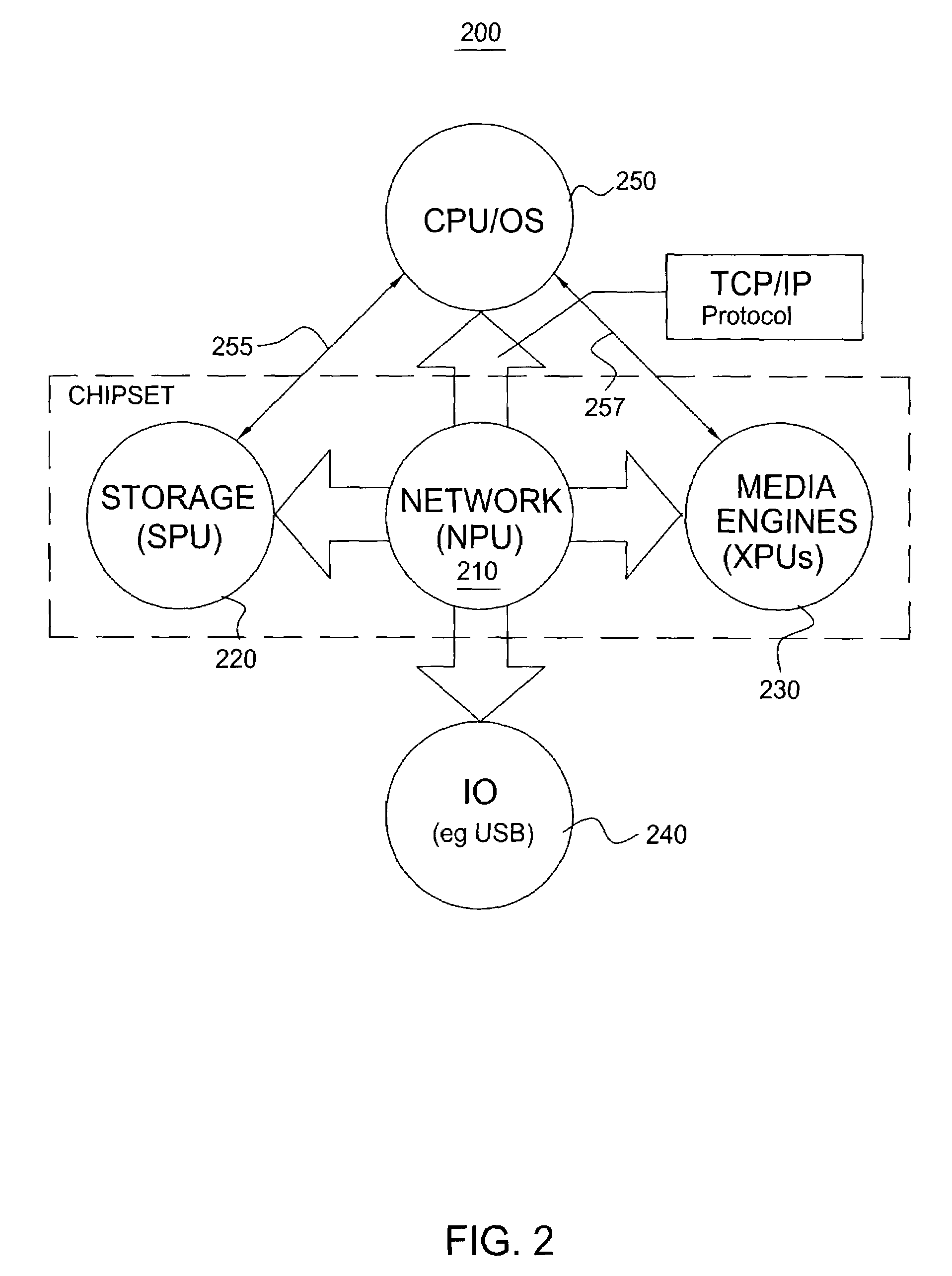

A novel network architecture that integrates the functions of an internet protocol (IP) router into a network processing unit (NPU) that resides in a host computer's chipset such that the host computer's resources are perceived as separate network appliances. The NPU appears logically separate from the host computer even though, in one embodiment, it is sharing the same chip.

Owner:NVIDIA CORP

Communication apparatus and data communication method

Active | US20060039287A1 | Efficient implementation, Error prevention, Transmission systems, Network processing unit, Arrival time

A communication apparatus includes an arrival time estimation unit, a connection selection unit, and a network processing unit. Transmission data is segmented into blocks, and the arrival time estimation unit estimates, for each block and for each of a plurality of connections, the time until that block arrives from the apparatus at a final reception terminal or a merging apparatus through a network. Based on the estimation result, the connection selection unit selects, for each block, the connection with the shortest arrival time. The network processing unit outputs each block to the network using the selected connection. A data communication method and a data communication program are also disclosed.

Owner:NEC CORP
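
The per-block selection rule can be sketched in Python. The abstract does not say how arrival time is estimated, so the model below (already-assigned backlog plus the new block, divided by bandwidth, plus latency) and the `Connection` fields are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Connection:
    name: str
    bandwidth_bps: float      # assumed estimate of the path's throughput
    latency_s: float          # assumed estimate of one-way delay
    queued_bytes: int = 0     # data already assigned to this connection

    def estimated_arrival(self, block_bytes: int) -> float:
        # Hypothetical model: drain the backlog plus this block, then add latency.
        return (self.queued_bytes + block_bytes) * 8 / self.bandwidth_bps + self.latency_s


def schedule_blocks(data: bytes, block_size: int, connections) -> list:
    """Segment data into blocks and send each over the connection with the
    shortest estimated arrival time."""
    plan = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        best = min(connections, key=lambda c: c.estimated_arrival(len(block)))
        best.queued_bytes += len(block)   # account for the newly assigned block
        plan.append(best.name)
    return plan
```

Updating `queued_bytes` after each choice is what spreads blocks over several connections: once the fast path's backlog grows, the slower path briefly offers the earlier arrival.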

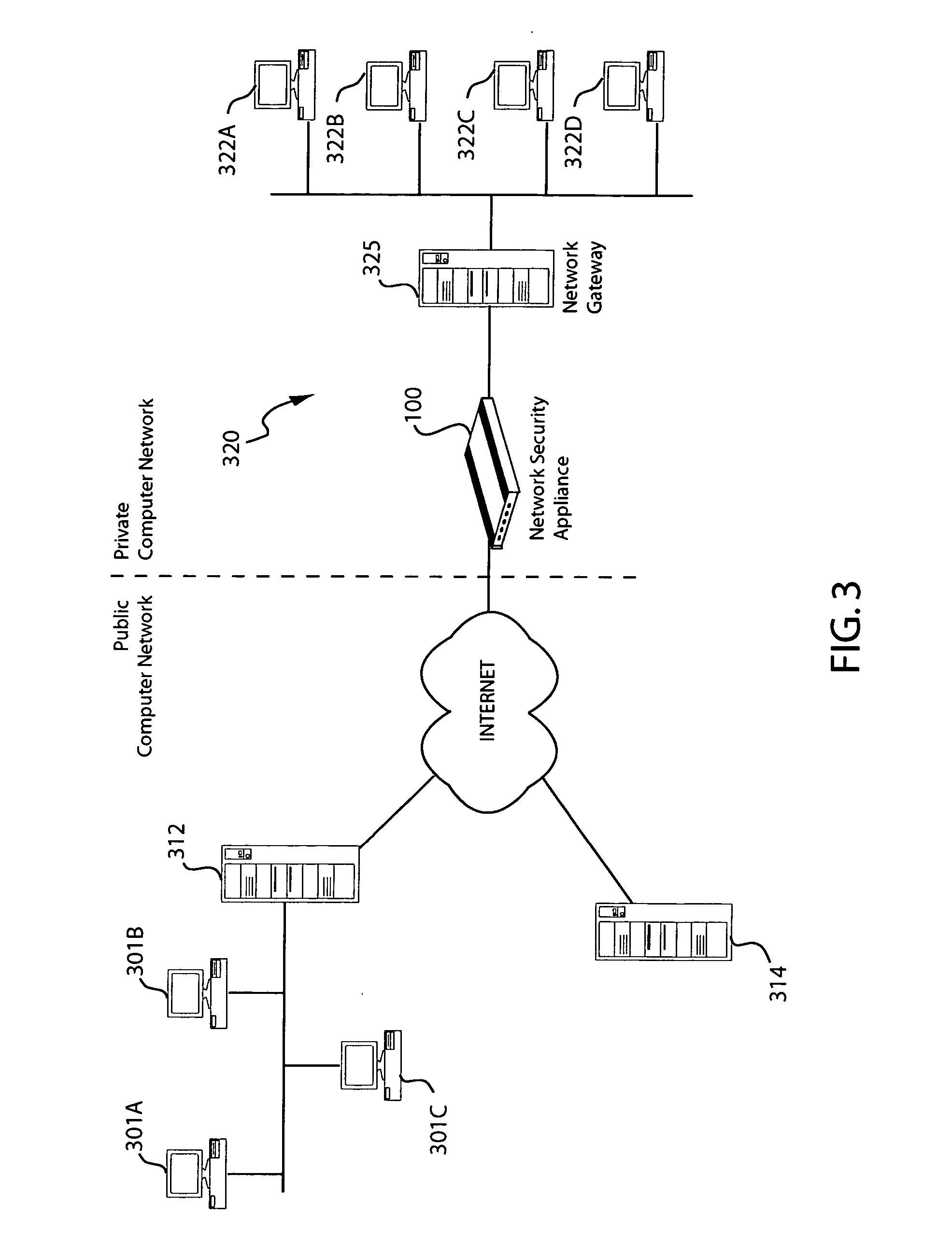

Method and apparatus for securing a computer network

Active | US20060206936A1 | Minimize impact, Memory loss protection, Error detection/correction, Data pack, General purpose

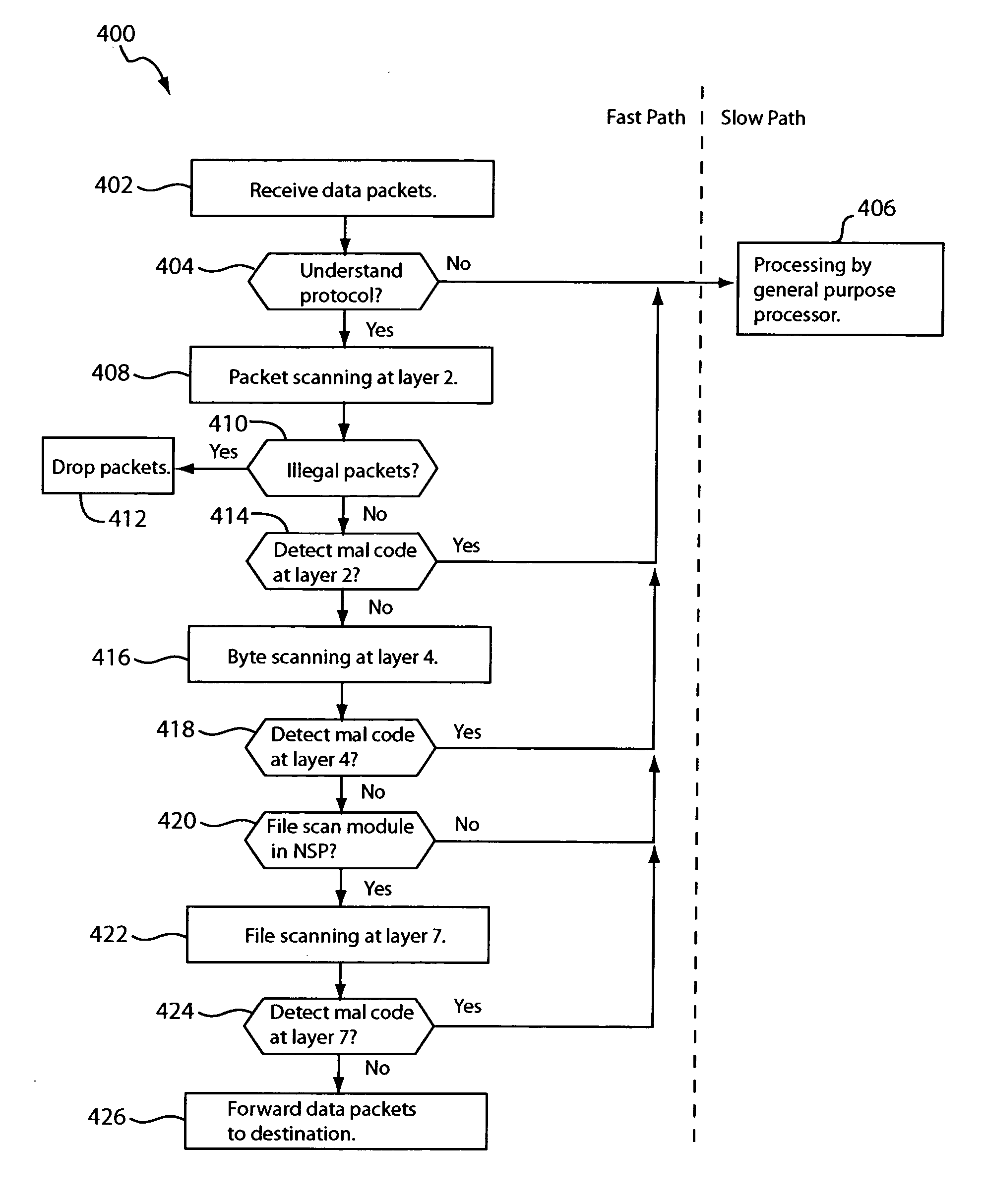

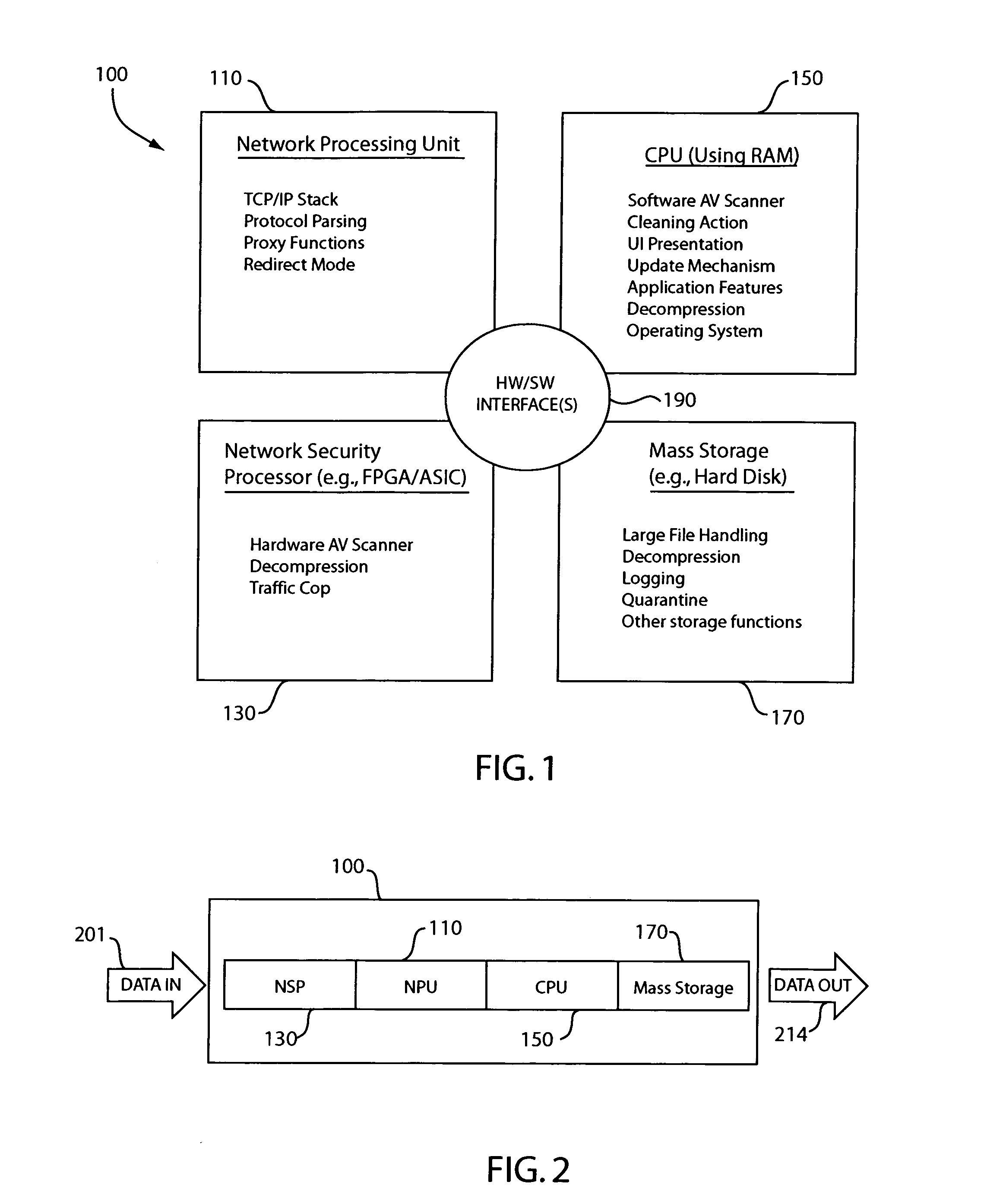

In one embodiment, a network security appliance includes a logic circuit, a network processing unit, and a general-purpose processor that together protect a computer network from malicious code, unauthorized data packets, and other network security threats. The logic circuit may include one or more programmable logic devices configured to scan incoming data packets at different layers of a multi-layer protocol, such as the seven-layer OSI model. The network processing unit may work in conjunction with the logic circuit to perform protocol parsing, to form higher-layer data units from the data packets, and to carry out other network-communication tasks. The general-purpose processor may execute software for functions not available from the logic circuit or the network processing unit. For example, the general-purpose processor may remove malicious code from infected data, or scan data for malicious code when the logic circuit is not configured to do so.

Owner:TREND MICRO INC

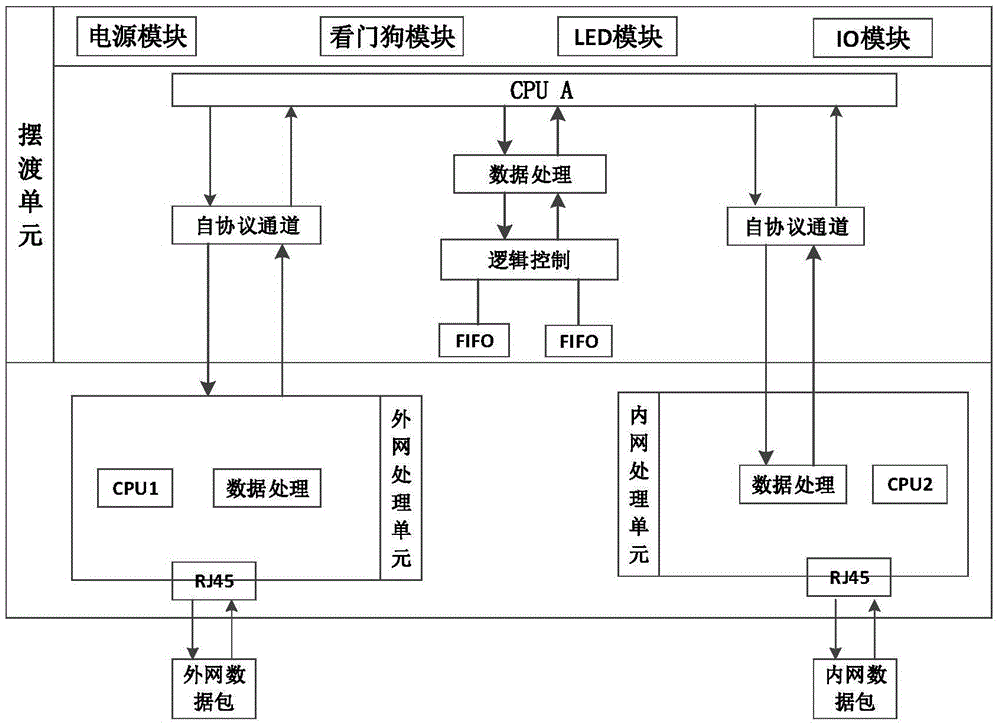

Unidirectional transmission internal and external network secure isolating gateway applicable to industrial control network

Inactive | CN105656883A | Ensure safety, Eliminate vulnerability, Transmission, Network processing unit, Public network

The invention discloses a unidirectional-transmission internal and external network secure isolating gateway applicable to an industrial control network, and belongs to the technical field of industrial control networks. The isolating gateway comprises an external network processing unit, a data ferry unit, and an internal network processing unit; the external network processing unit is connected to the internal network processing unit through the data ferry unit, so that the software and the hardware of the internal and external networks are isolated at the same time. The three units are completely independent and communicate through a self-defined protocol. The advantages of the isolating gateway are that it realizes unidirectional data transmission between the industrial control network and an upper-computer public network under physical isolation, that identity authentication and content filtering are controlled in a plug-in mode, and that the reliability and security of the industrial control network are ensured to the maximum extent.

Owner:AUTOMATION RES & DESIGN INST OF METALLURGICAL IND

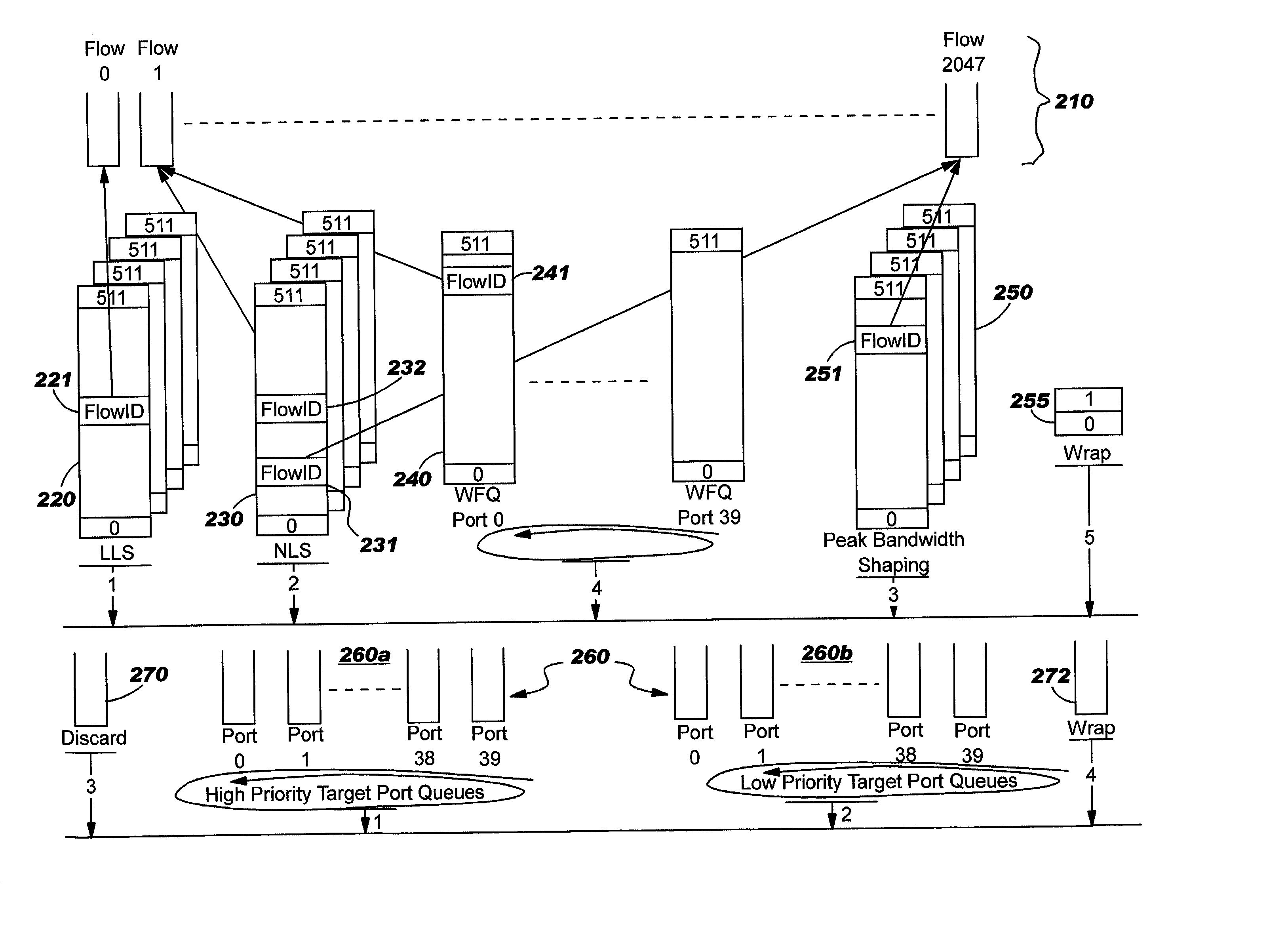

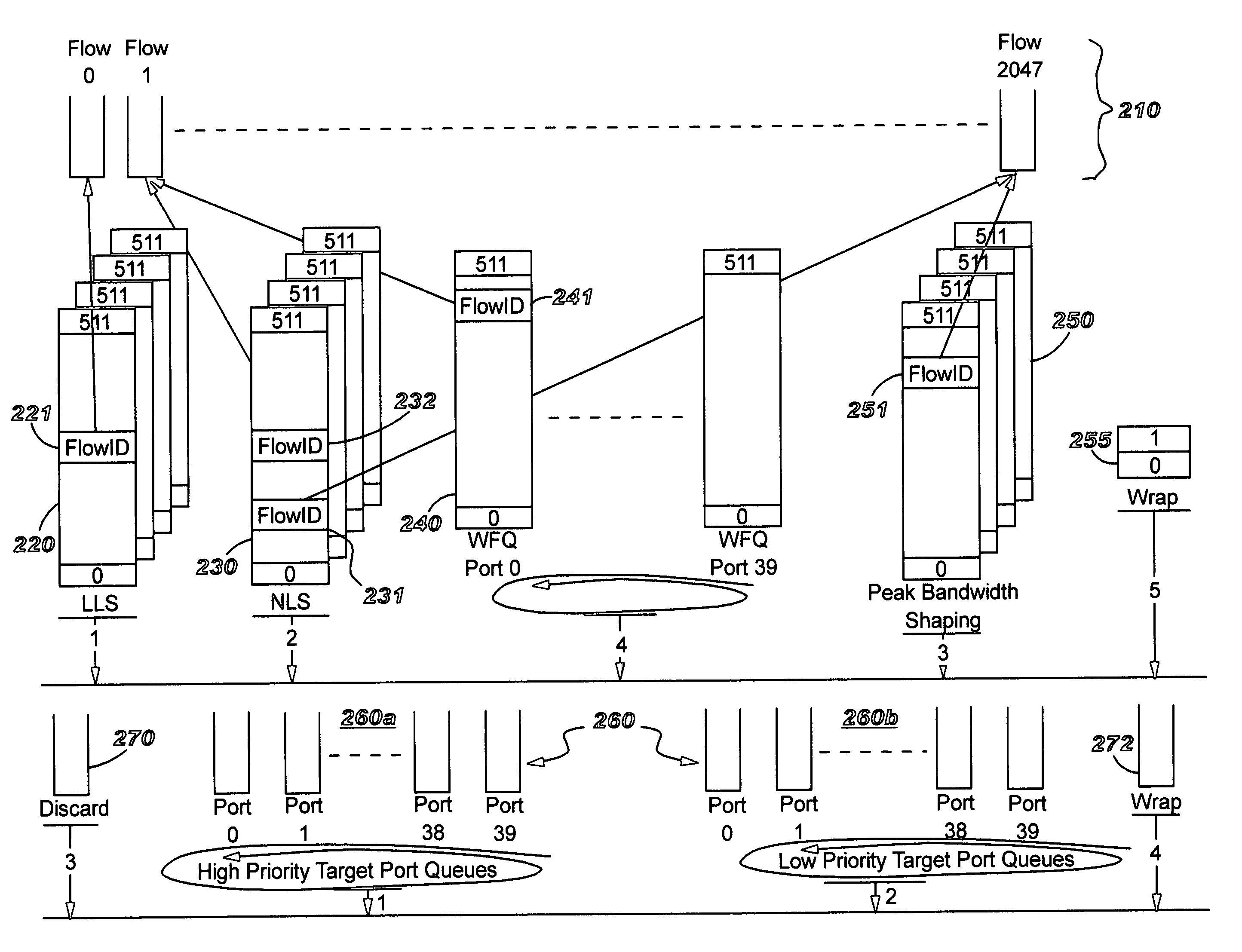

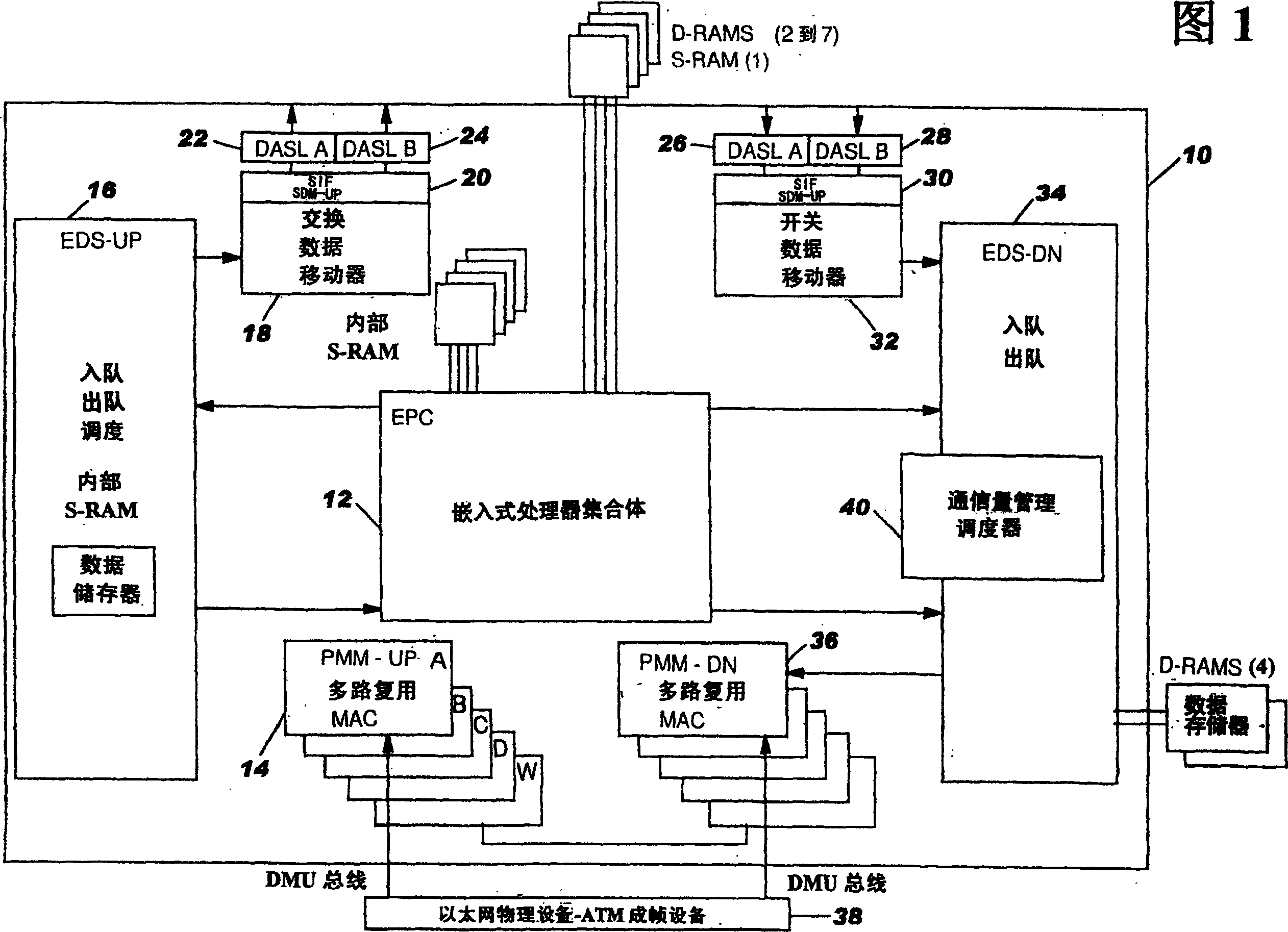

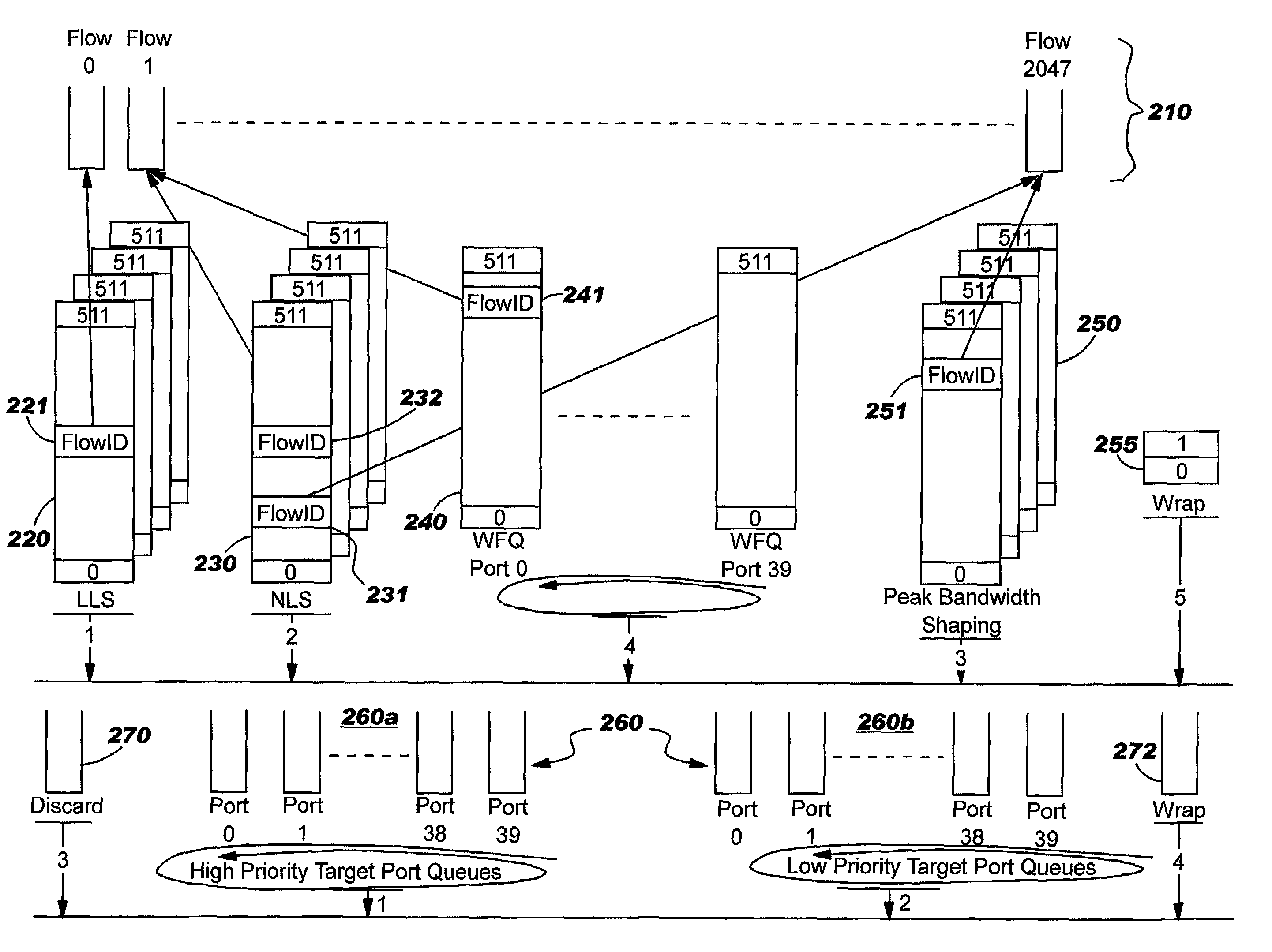

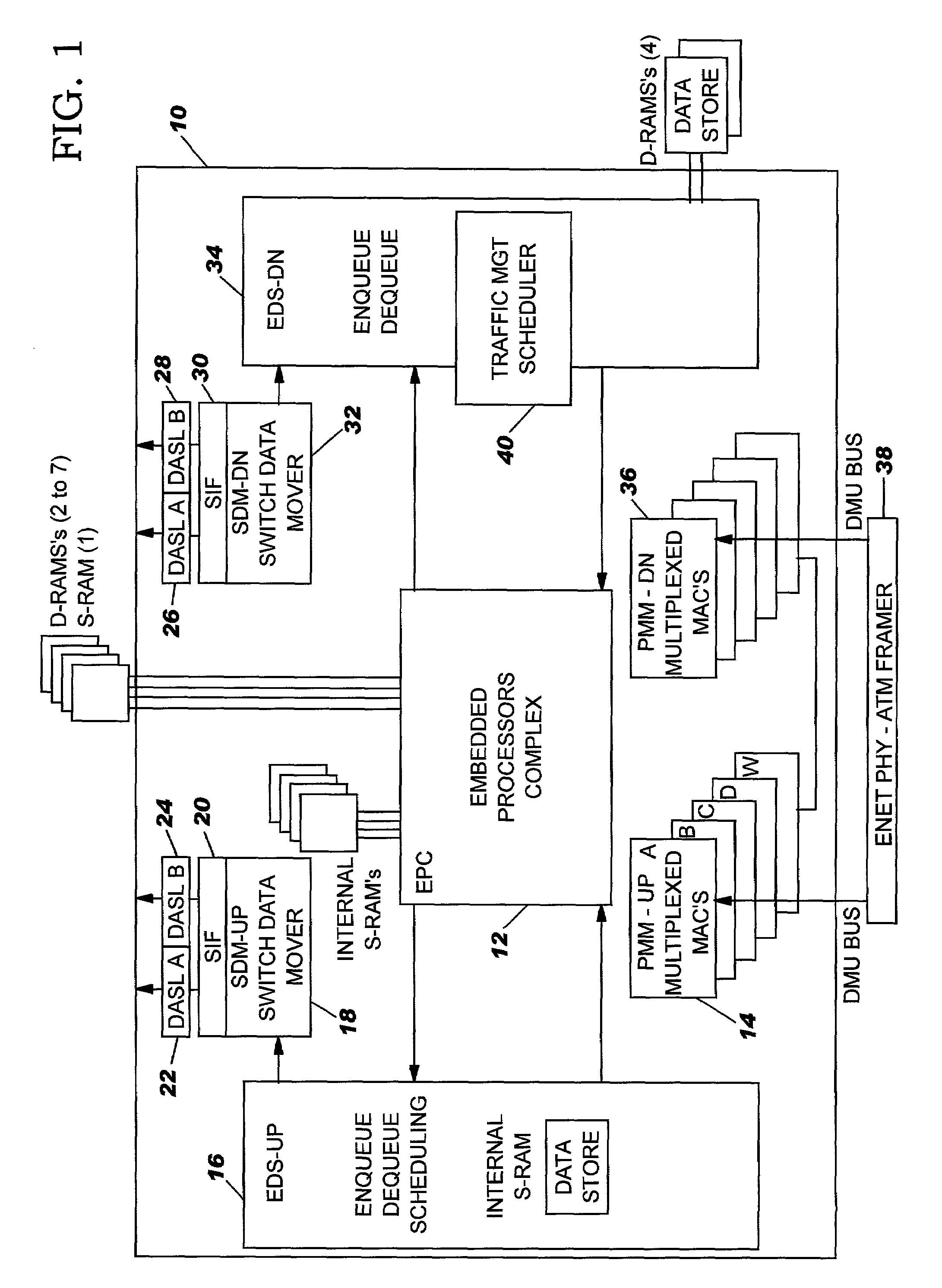

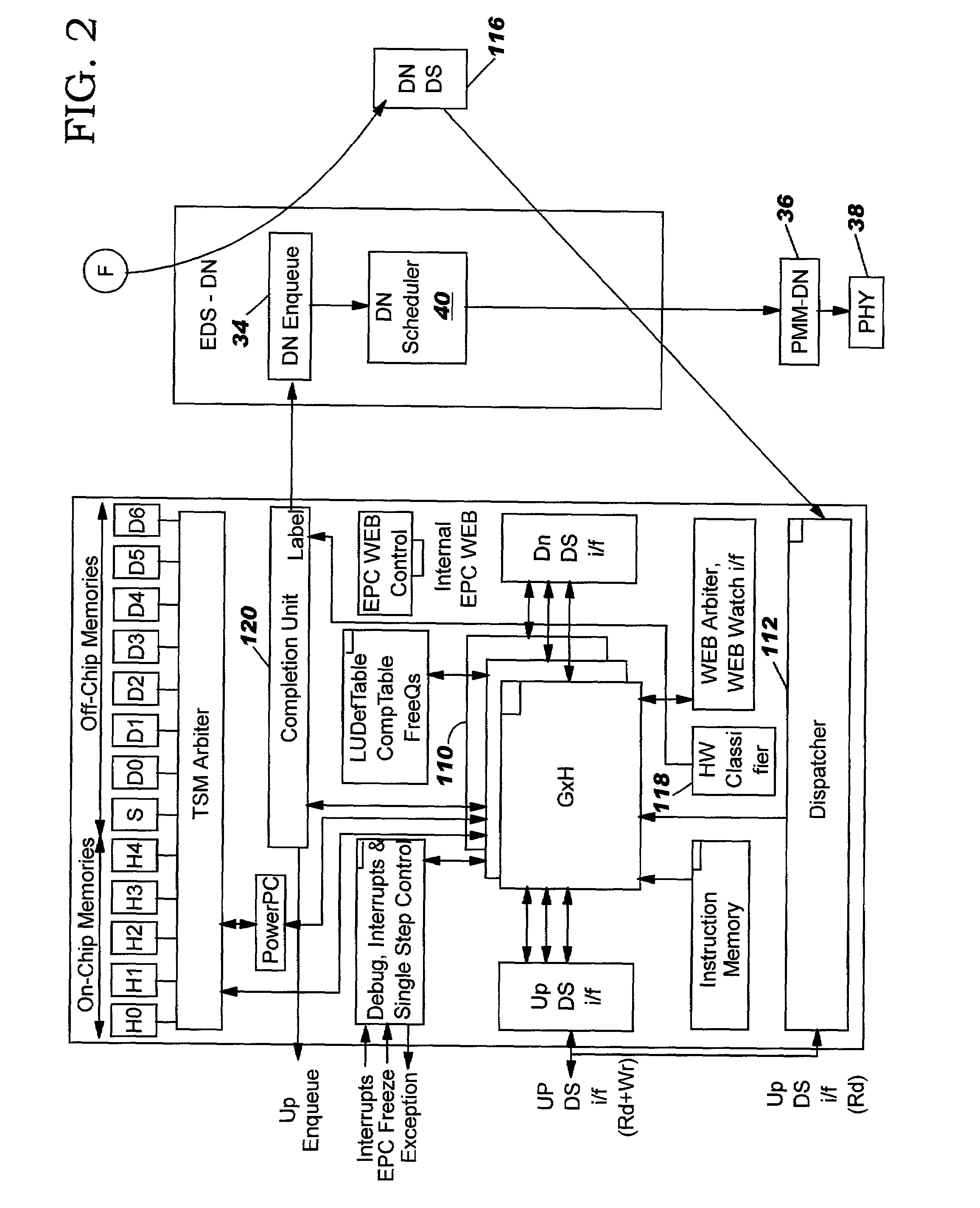

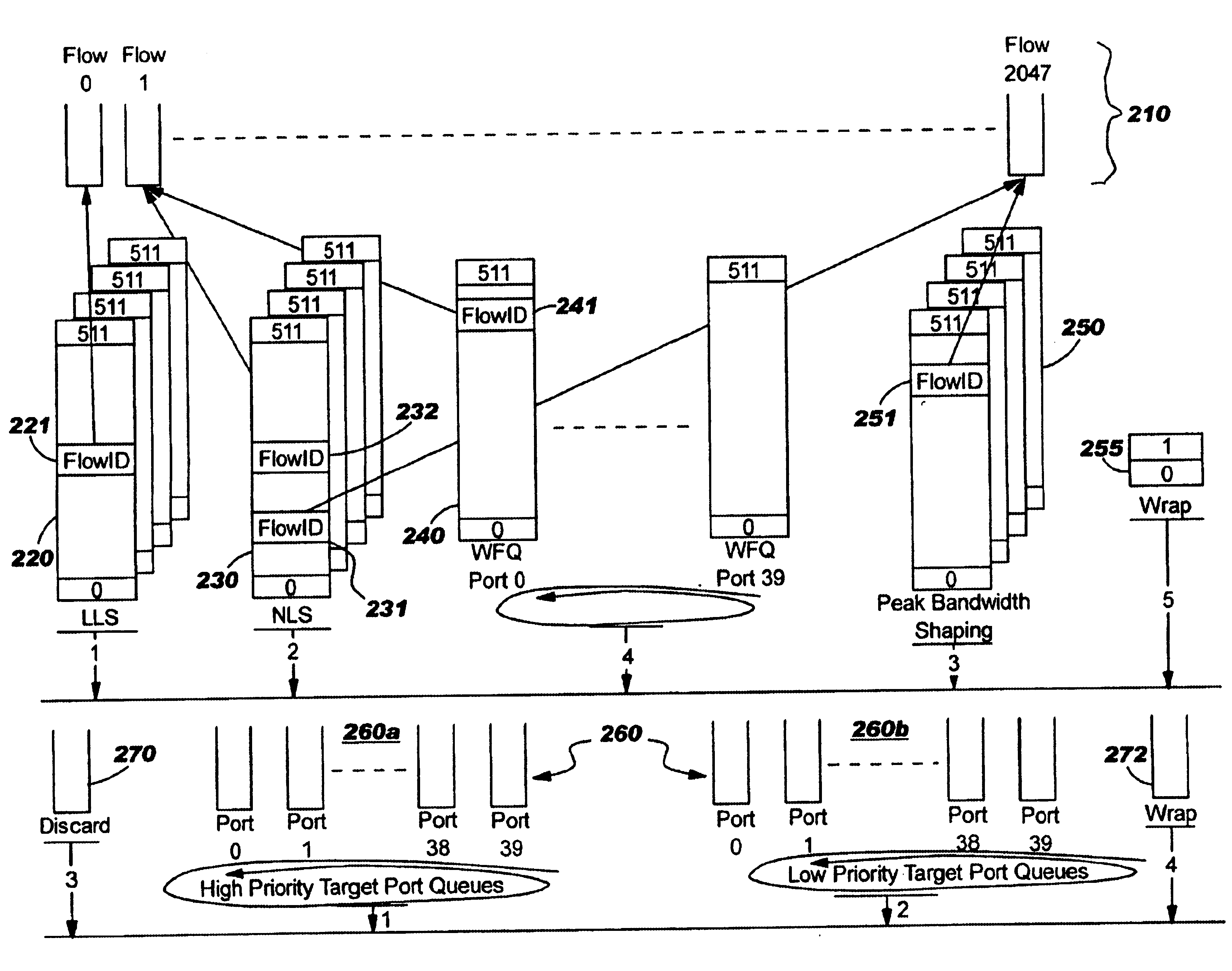

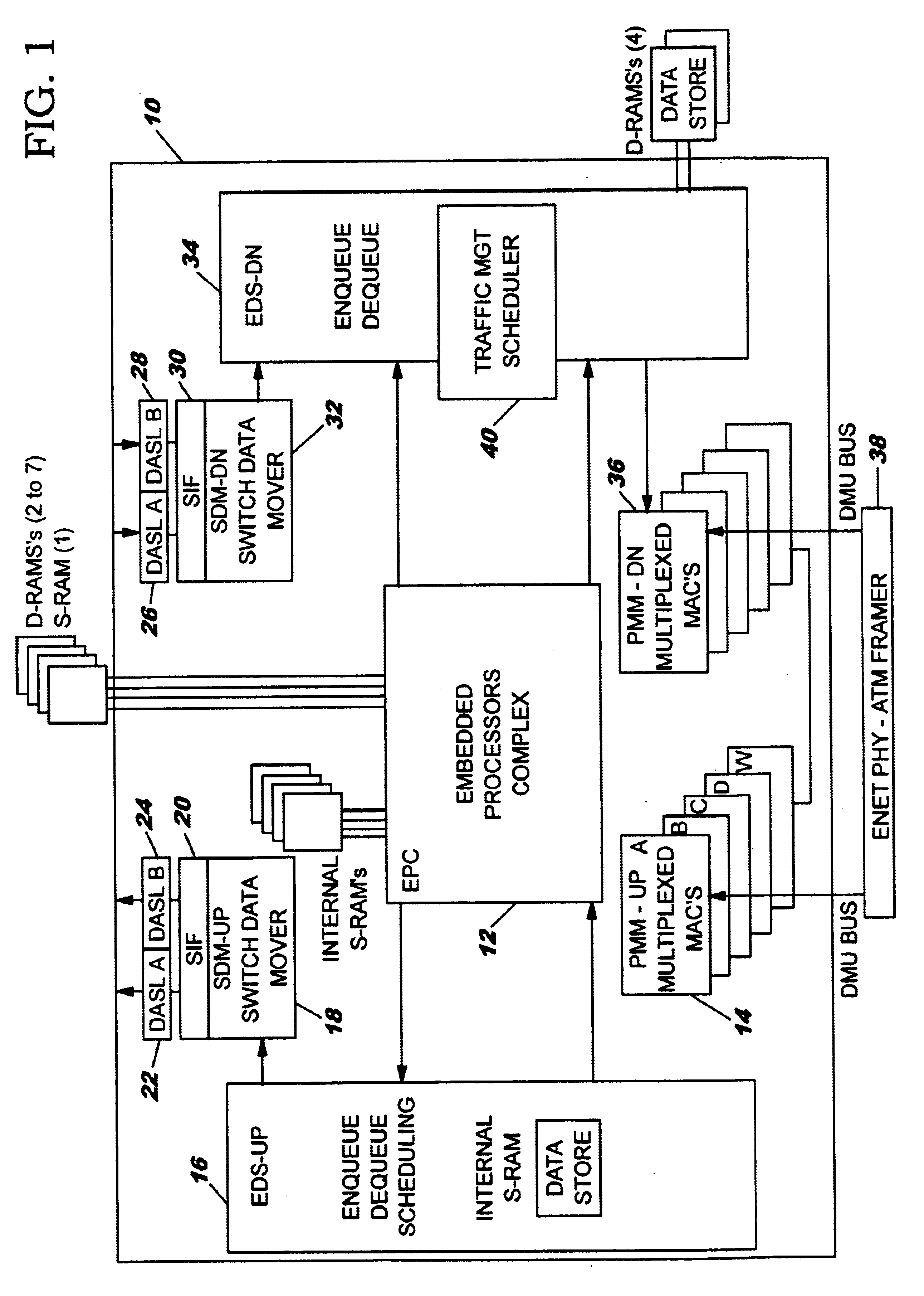

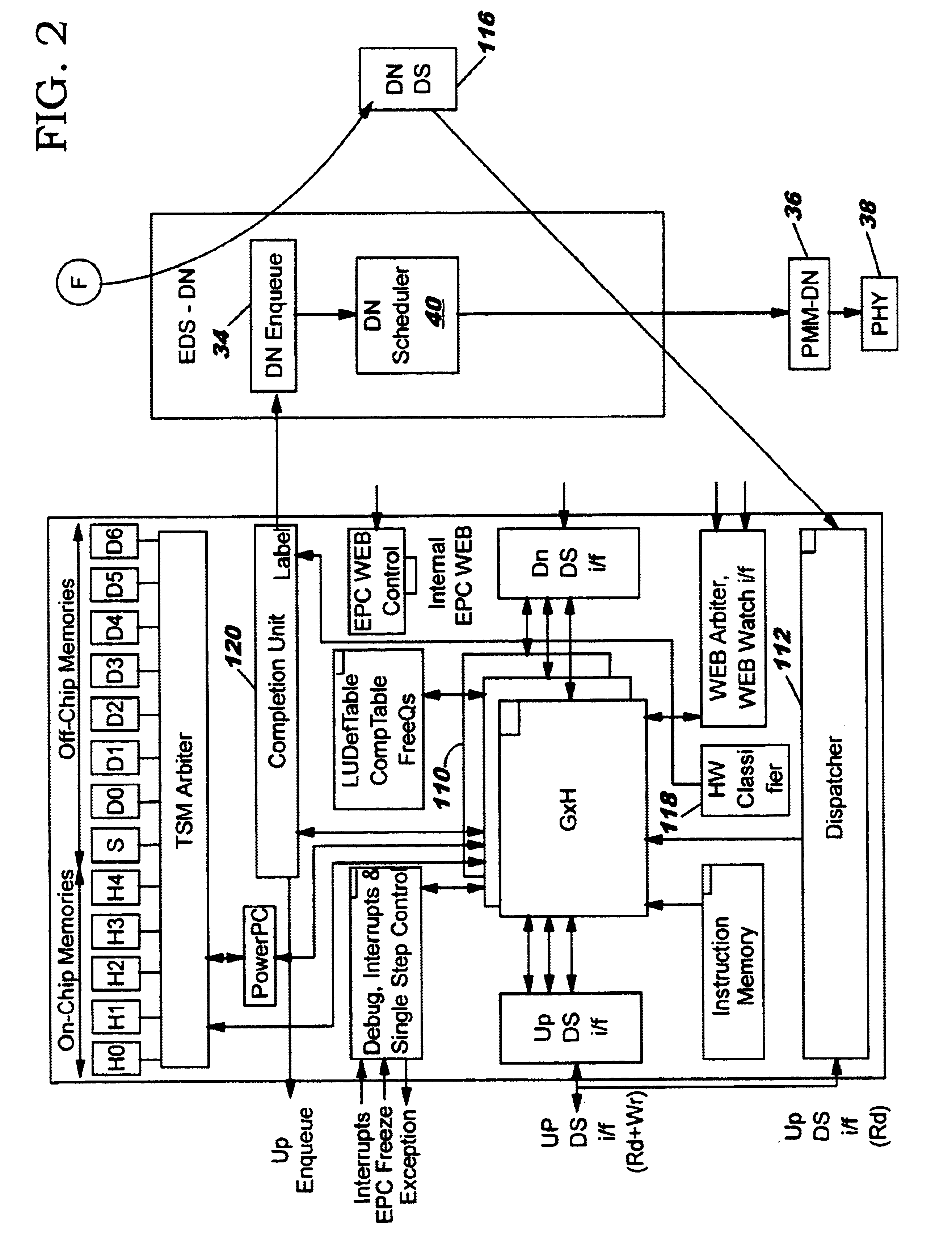

Method and system for network processor scheduling outputs using queueing

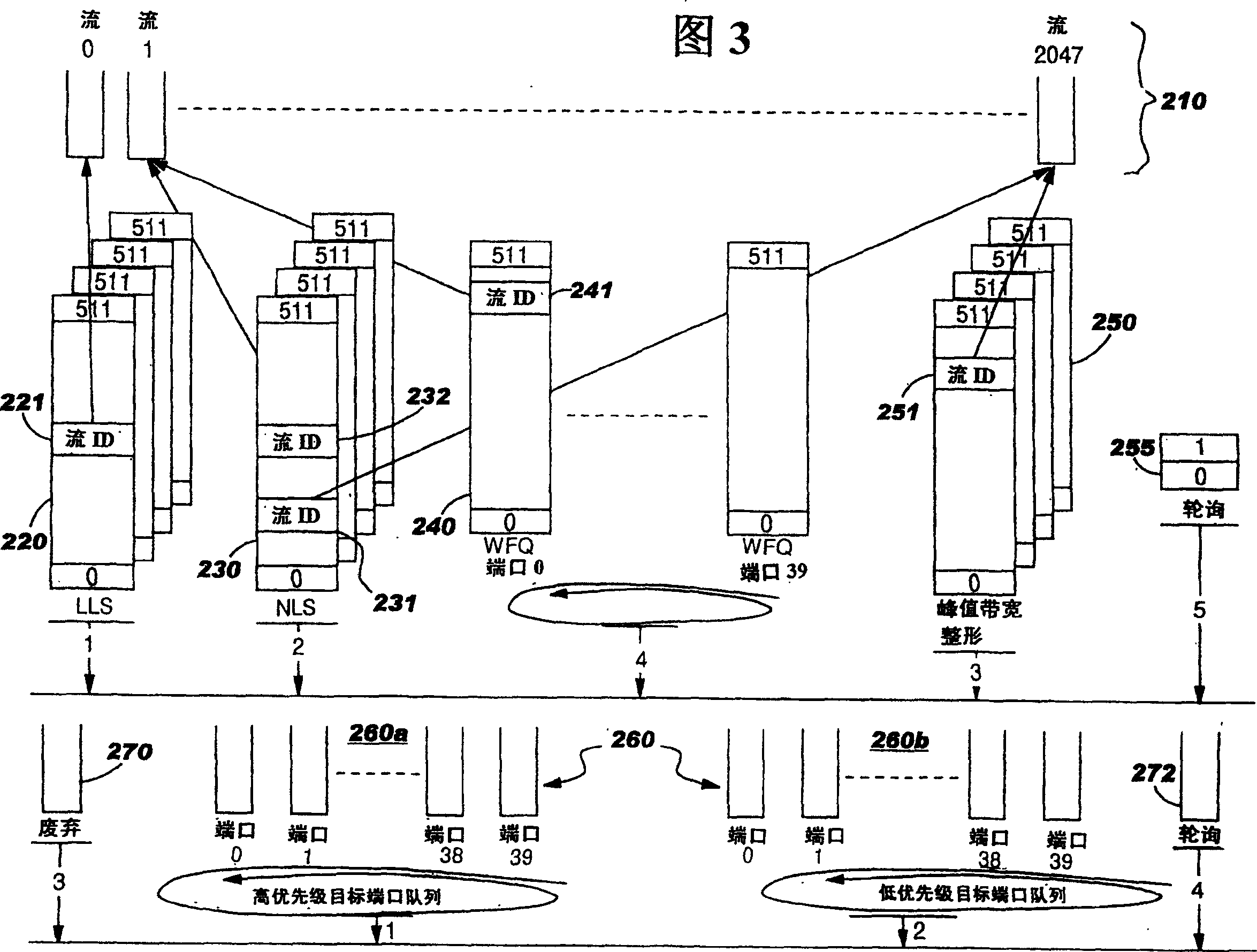

Inactive | US6952424B1 | Level of service, Guaranteed bandwidth, Data switching by path configuration, Store-and-forward switching systems, Network processing unit, Time segment

A system and method of moving information units from a network processor toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit according to stored priorities associated with the various sources of the information units. The priorities in the preferred embodiment include a low-latency service, a minimum bandwidth, weighted fair queueing, and a system for preventing a user from continuing to exceed his service levels over an extended period. The present invention includes a weighted fair queueing system for best-effort use of bandwidth not consumed by committed bandwidth, in which the position of a flow's next service is determined by the length of the frame and the weight of that flow. A “back pressure” system keeps a flow from being selected if its output cannot accept an additional frame because the current level of that port queue exceeds a threshold.

Owner:IBM CORP
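
The "back pressure" check can be sketched as a filter applied to whatever order the scheduler proposes. This is an assumed simplification: the function name, the flow-to-port mapping, and the queue-depth table are hypothetical, and the real scheduler's data structures are not described in the abstract.

```python
def select_next_flow(candidates, port_of_flow, port_queue_depth, threshold):
    """Return the first candidate whose output port can accept another frame.

    candidates: flow ids already ordered by the scheduler (e.g. WFQ order).
    A flow is skipped while its port queue depth exceeds `threshold`.
    """
    for flow in candidates:
        if port_queue_depth[port_of_flow[flow]] <= threshold:
            return flow
    return None  # every candidate is back-pressured in this cycle
```

Skipping rather than dequeuing keeps a back-pressured flow at its current position, so it regains service as soon as its port queue drains below the threshold.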

Method and apparatus for performing network processing functions

Active | US7188250B1 | Effective service, Easily configure network, Encryption apparatus with shift registers/memories, User identity/authority verification, Network processing unit, Network architecture

A novel network architecture that integrates the functions of an internet protocol (IP) router into a network processing unit (NPU) that resides in a host computer's chipset such that the host computer's resources are perceived as separate network appliances. The NPU appears logically separate from the host computer even though, in one embodiment, it is sharing the same chip.

Owner:NVIDIA CORP

Method And Apparatus For Performing Network Processing Functions

Inactive | US20080279188A1 | Lower latency, Easily configure network, Time-division multiplex, Data switching by path configuration, Network processing unit, Network architecture

Owner:NVIDIA CORP

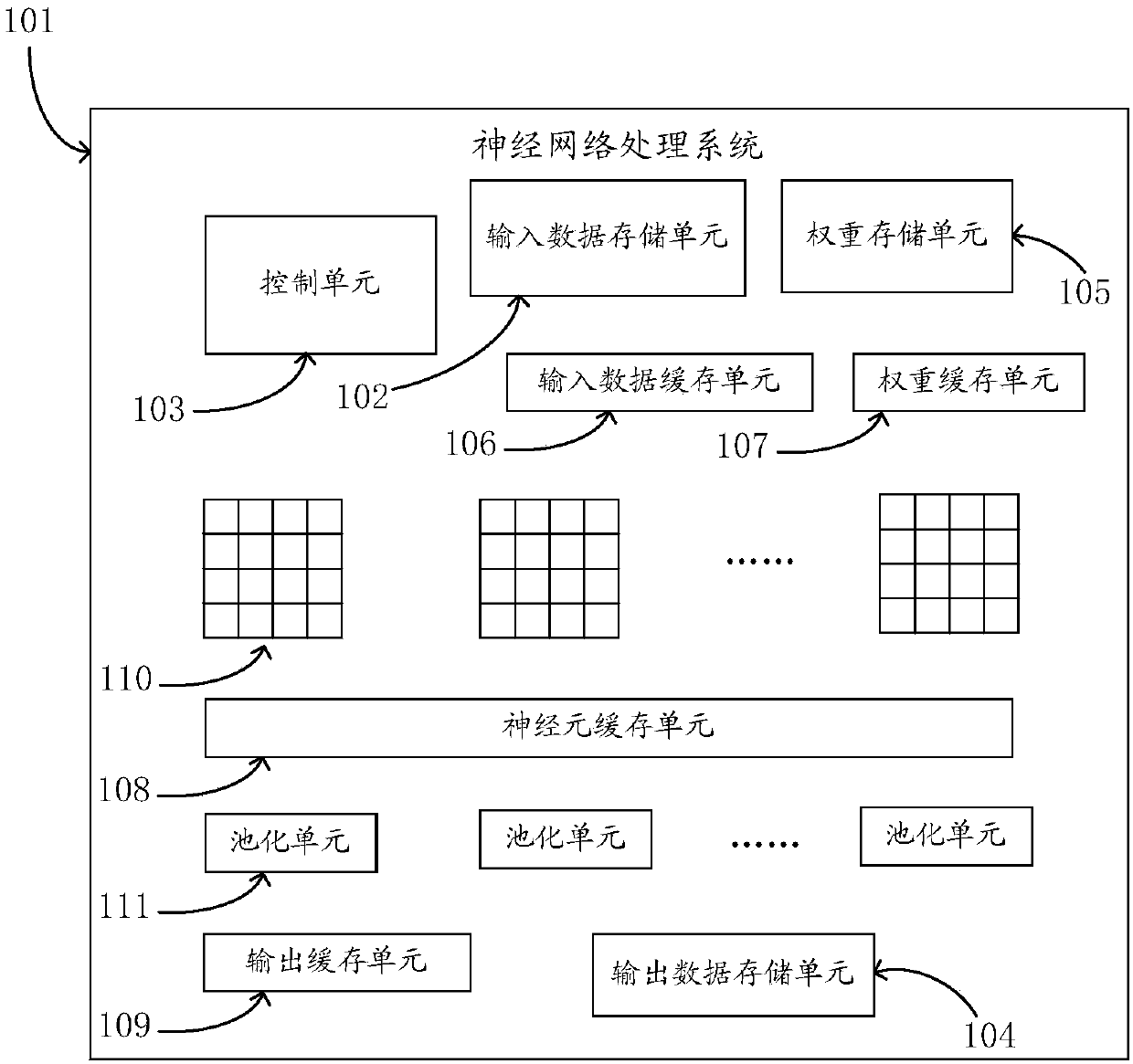

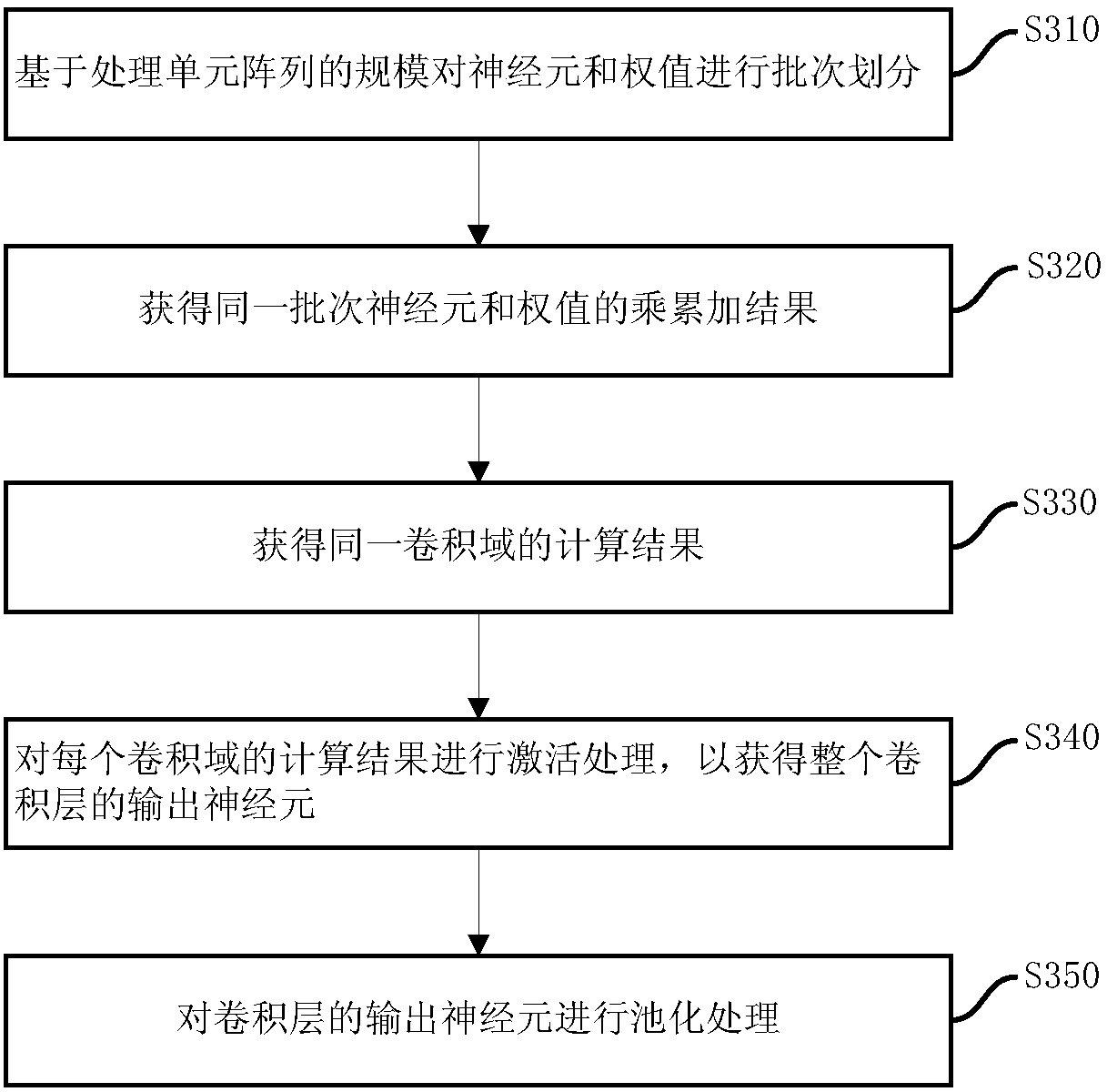

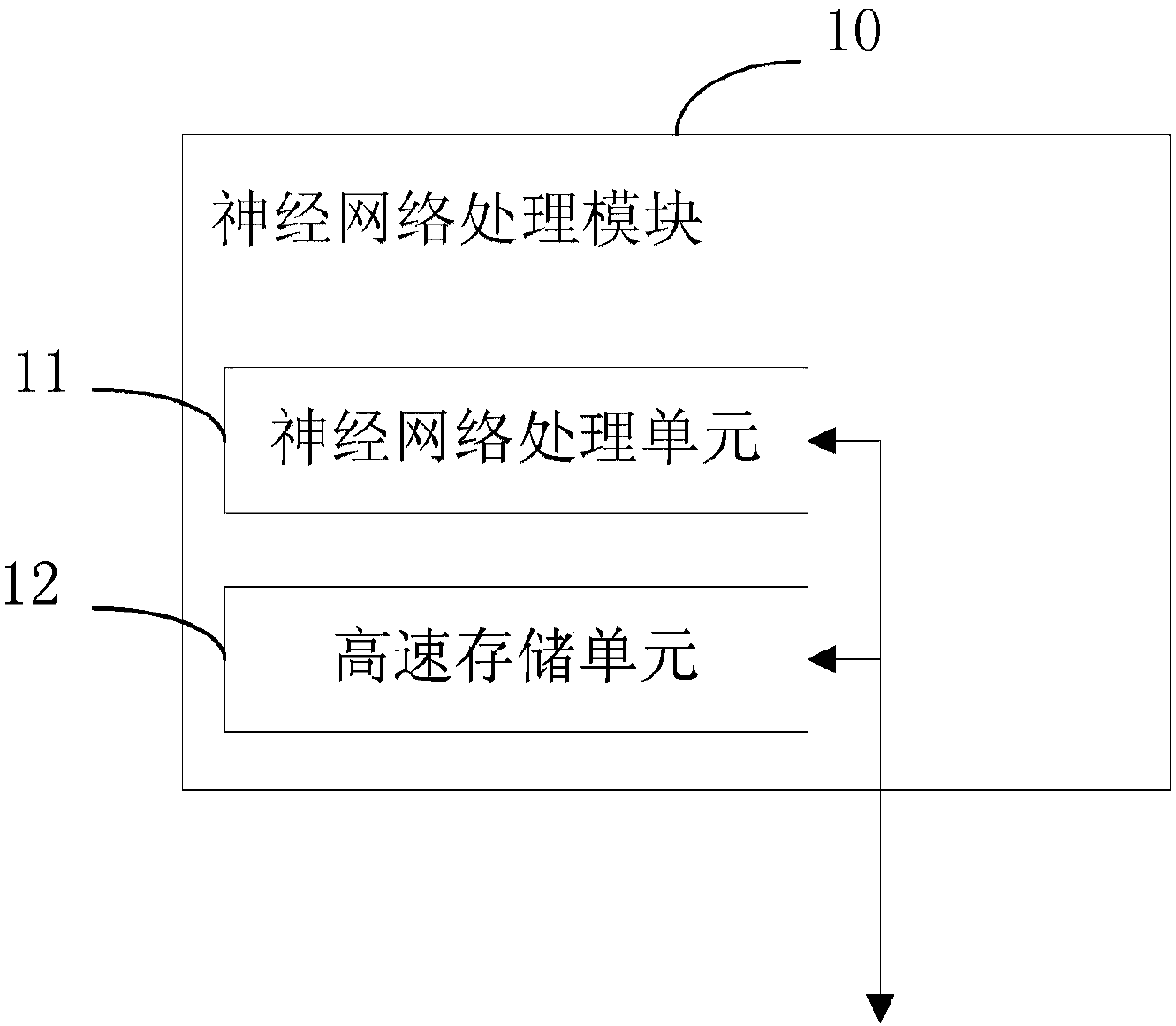

Neural network processing unit and processing system comprising same

Active | CN107844826A | Improve processing speed, Improve throughput, Neural architectures, Resource utilization, Network processing unit

The invention provides a neural network processing unit and a processing system comprising the same. The processing unit comprises a multiplying unit module with a multilevel structure forming a pipeline, used to multiply the neurons to be calculated by the weight values in a neural network; each level of the multiplying unit module completes one sub-operation of that multiplication. It also comprises a self-accumulator module which, based on a control signal, either accumulates the multiplication results of the multiplying unit module or outputs the accumulation result. The processing unit and the processing system can improve the computational efficiency and resource utilization of the neural network.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
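
The split of one multiplication into per-level sub-operations, followed by a control-driven accumulator, can be sketched as below. The bit-slice (shift-and-add) decomposition, the 8-bit weight width, and the control-signal names are assumptions chosen for illustration; the sketch runs the stages sequentially rather than overlapping them in time as a hardware pipeline would.

```python
def staged_multiply(neuron: int, weight: int, bits_per_stage: int = 4, stages: int = 2) -> int:
    """Multiply as a chain of sub-operations, one per pipeline level.

    Stage k multiplies `neuron` by the k-th `bits_per_stage`-bit slice of the
    weight and adds the shifted partial product to the running sum.
    """
    if not 0 <= weight < (1 << (bits_per_stage * stages)):
        raise ValueError("weight does not fit in the assumed bit width")
    mask = (1 << bits_per_stage) - 1
    running_sum = 0
    for stage in range(stages):
        shift = stage * bits_per_stage
        weight_slice = (weight >> shift) & mask
        running_sum += (neuron * weight_slice) << shift
    return running_sum


class SelfAccumulator:
    """Accumulates products or emits the total, depending on a control signal."""

    def __init__(self) -> None:
        self.total = 0

    def step(self, control: str, product: int = 0):
        if control == "accumulate":
            self.total += product
            return None
        if control == "output":
            # Emit the accumulated result and clear it for the next output neuron.
            result, self.total = self.total, 0
            return result
        raise ValueError(f"unknown control signal: {control!r}")
```

Feeding each neuron-weight product into `step("accumulate", ...)` and then calling `step("output")` yields one dot product, i.e. the pre-activation value of one output neuron.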

Image forming system, image forming apparatus, and image forming method

Active | US20090251724A1 | Improve performance, User identity/authority verification, Computer security arrangements, Service provision, Network processing unit

An image forming system includes a terminal apparatus and an image forming apparatus executing a process in response to a request from the terminal apparatus. The terminal apparatus sends user identification information to the image forming apparatus, receives from the image forming apparatus a token issued to a user logging into the image forming apparatus, and sends a service request associated with the token to the image forming apparatus. The image forming apparatus includes a network processing unit that communicates data using a predetermined protocol with the terminal apparatus; a login processing unit that permits the user to log in when the user identification information is valid and sends the token to the terminal apparatus; a determination unit that determines whether the token is valid upon receipt of the service request; and a service providing unit that executes a process designated by the service request when the token is valid.

Owner:KYOCERA DOCUMENT SOLUTIONS INC
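
The login-token-service exchange in this abstract can be sketched in Python. The class and method names and the in-memory credential store are hypothetical, the network processing unit's protocol handling is omitted, and a real device would store password hashes rather than plaintext.

```python
import secrets


class ImageFormingApparatus:
    def __init__(self, users: dict) -> None:
        self.users = users          # hypothetical user_id -> password store
        self.valid_tokens = {}      # issued token -> user_id

    def login(self, user_id: str, password: str):
        """Login processing unit: issue a token only for valid credentials."""
        stored = self.users.get(user_id)
        if stored is None or not secrets.compare_digest(stored, password):
            return None
        token = secrets.token_hex(16)
        self.valid_tokens[token] = user_id
        return token

    def handle_service_request(self, token: str, service: str) -> str:
        """Determination unit plus service providing unit."""
        user_id = self.valid_tokens.get(token)
        if user_id is None:
            return "rejected: invalid token"
        return f"{service} executed for {user_id}"
```

The terminal sends credentials once, then attaches only the token to each service request, so the apparatus validates requests by table lookup instead of re-checking credentials.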

Data processing apparatus, image registration method, and program product

Inactive | US20080158612A1 | Image enhancement, Digitally marking record carriers, Network processing unit, Image correction

A data processing apparatus for registering image data includes a network processing unit configured for acquiring image data through a network, an image correction unit for analyzing the image data, extracting a background image and a subject image from the image data, and correcting the extracted background image according to a correction condition, and a database unit for storing the corrected image data such that the image data is associated with the corrected image data.

Owner:RICOH KK

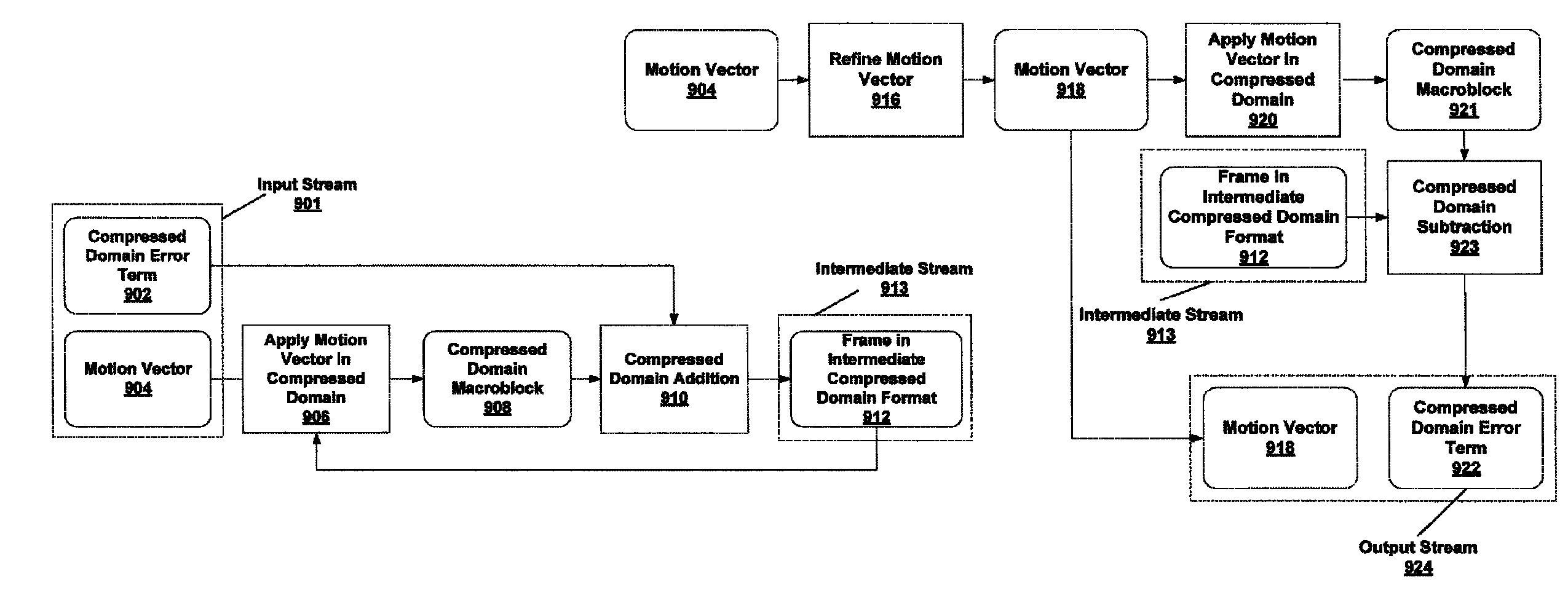

Architecture for combining media processing with networking

Systems and methods for processing media streams for transport over a network based on network conditions. An integrated circuit comprises a media processing unit coupled to receive feedback from a network processing unit. The media processing unit converts a media stream from a compressed input stream to a compressed output stream such that the compressed output stream has characteristics that are best suited for the network conditions. Network conditions can include, for example, characteristics of the network (e.g., latency or bandwidth) or characteristics of the remote playback devices (e.g., playback resolution). Changes in the network conditions can result in a change in the conversion process.

Owner:NXP USA INC
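
How network feedback might steer the conversion can be sketched as a profile choice. The abstract does not specify the decision logic, so the profile table, the 0.8 bandwidth headroom, and the function name are all hypothetical; the sketch only shows bandwidth and playback resolution jointly selecting the output stream's characteristics.

```python
# Hypothetical output profiles, best quality first: (name, bitrate in kbit/s, frame height)
PROFILES = [
    ("1080p", 6000, 1080),
    ("720p", 3000, 720),
    ("480p", 1200, 480),
    ("240p", 400, 240),
]


def choose_profile(available_kbps: float, display_height: int, headroom: float = 0.8) -> str:
    """Pick the best profile that fits the measured bandwidth and the playback device."""
    usable_kbps = available_kbps * headroom  # keep a margin for bandwidth jitter
    for name, bitrate_kbps, height in PROFILES:
        if bitrate_kbps <= usable_kbps and height <= display_height:
            return name
    return PROFILES[-1][0]  # nothing fits: fall back to the lowest profile
```

Re-running the choice whenever the network processing unit reports new conditions gives the behaviour the abstract describes: a change in network conditions changes the conversion.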

Power consumption information acquisition system safety isolation gateway and application method thereof

Inactive | CN106941494A | Guaranteed uptime, Perfect and effective safety protection measures, Transmission, Network processing unit, Computer terminal

The invention relates to a safety isolation gateway for a power consumption information acquisition system and an application method thereof. The safety isolation gateway comprises the following units:

- an internal network processing unit, used for receiving messages sent by an acquisition server, sending the packaged pure application data to an isolation exchange unit, and receiving from the isolation exchange unit the data transmitted by an external network processing unit;
- the external network processing unit, used for receiving messages sent by an acquisition terminal, sending the packaged pure application data to the isolation exchange unit, and receiving from the isolation exchange unit the data transmitted by the internal network processing unit;
- the isolation exchange unit, arranged between the internal and external network processing units and used for storing the pure application data they transmit, thereby realizing controllable exchange of pure application data between them;
- a code processing unit, used for carrying out code protocol inspection on the data processed by the isolation exchange unit in a pass-through mode, and for providing code examination and decryption services.

Owner:CHINA ELECTRIC POWER RES INST +1

Device and method for executing neural network calculation

Active | CN107688853A | Guaranteed normal operation, Solve the lack of processing power, Digital computer details, Neural architectures, Computer hardware, Network processing unit

The invention provides a device and method for executing neural network calculation. The device comprises an on-chip interconnection module and a plurality of neural network processing modules that communicate with the on-chip interconnection module; each neural network processing module can read and write data from other neural network processing modules through the on-chip interconnection module. In multi-core, multi-layer artificial neural network calculation, the calculation of each layer is divided among the neural network processing modules, each module computes its part to obtain that layer's calculation result data, and the modules then exchange the result data of each layer with one another.

Owner:CAMBRICON TECH CO LTD
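
The divide-compute-exchange cycle can be sketched for fully connected layers. The partitioning scheme (each core owns every `num_cores`-th output neuron), the absence of activation functions, and the function names are assumptions; the exchange step is modelled as every core receiving every neuron's result before the next layer starts.

```python
def layer_forward(activations, weights):
    """Reference single-core layer: out[j] = sum_i activations[i] * weights[j][i]."""
    return [sum(a * w for a, w in zip(activations, row)) for row in weights]


def multicore_forward(inputs, layers, num_cores):
    """Divide each layer's output neurons among cores, then exchange the results."""
    activations = list(inputs)
    for weights in layers:
        results = {}
        for core in range(num_cores):
            # Core `core` owns output neurons core, core + num_cores, ...
            for j in range(core, len(weights), num_cores):
                results[j] = sum(a * w for a, w in zip(activations, weights[j]))
        # Exchange step: every core receives every neuron's result for the next layer.
        activations = [results[j] for j in range(len(weights))]
    return activations
```

The result matches applying `layer_forward` layer by layer; only the assignment of work to cores changes, which is why the exchange is needed after every layer.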

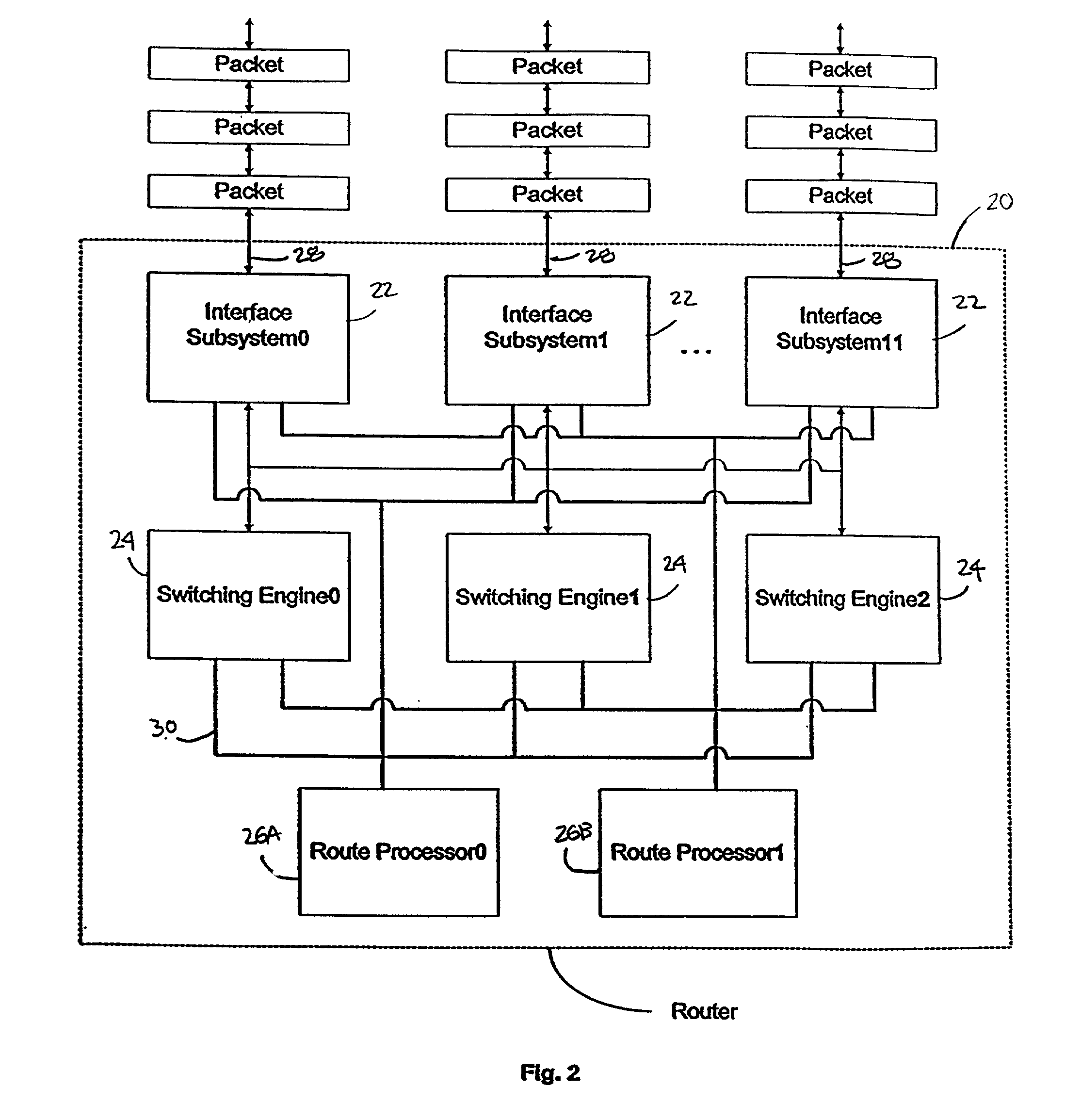

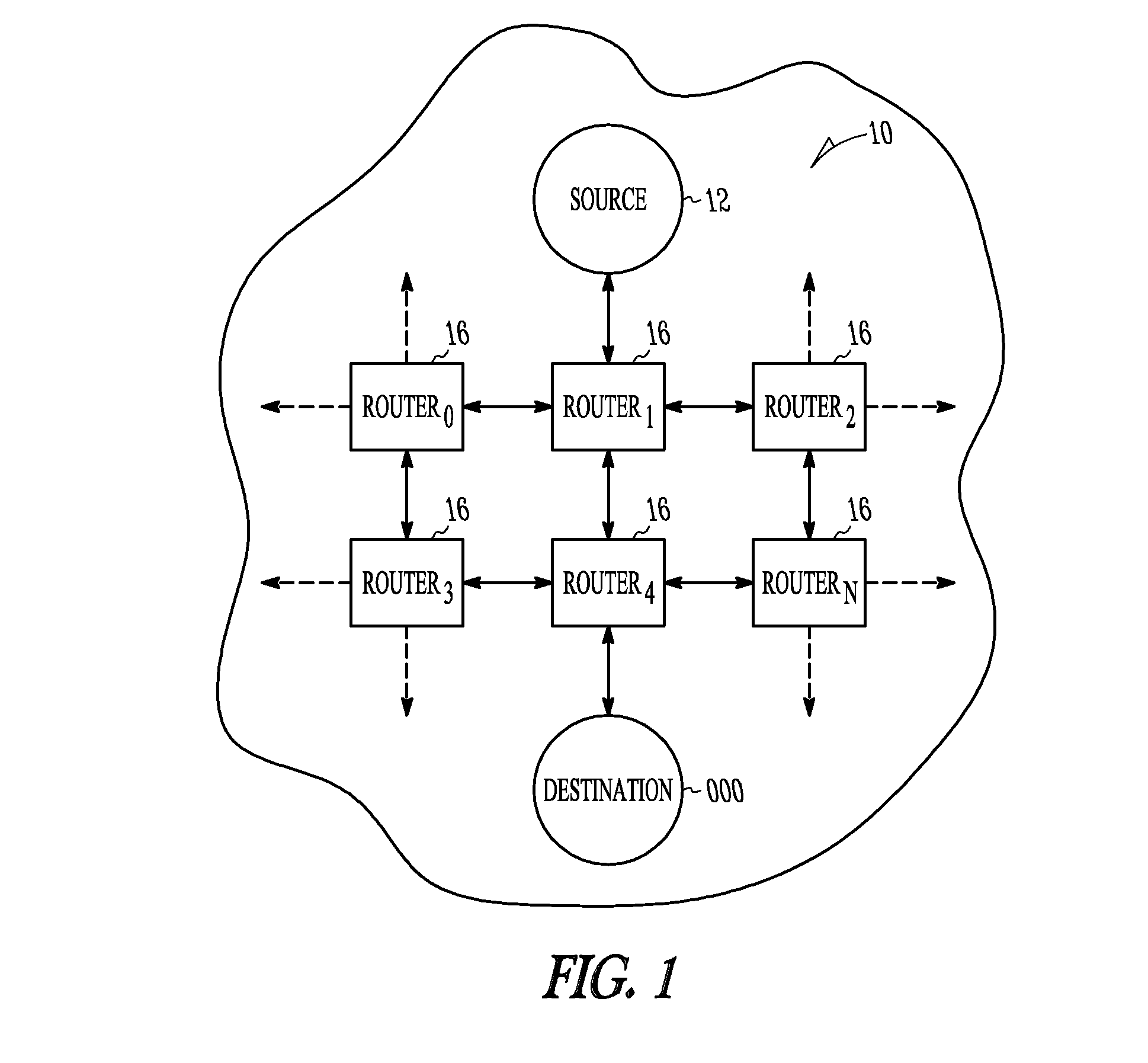

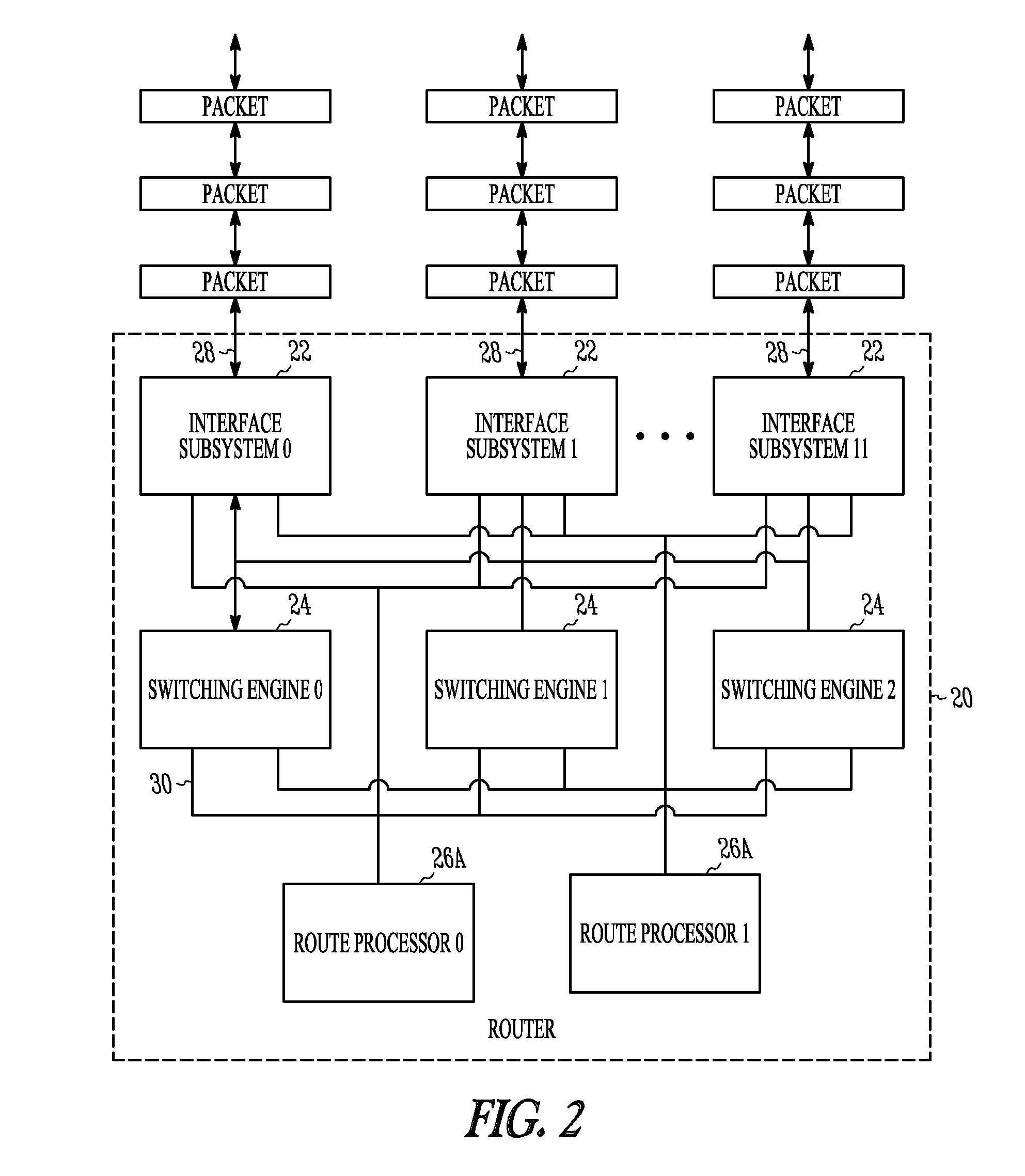

Redundant packet routing and switching device and method

Active | US7525904B1 | Error prevention, Frequency-division multiplex details, Network processing unit, Line card

A router and method therefor for routing and switching a packet from an incoming link to an outgoing link. The router may include a plurality of network processing units, a plurality of switching engines, and a plurality of connections between the network processing units and the switching engines that define a rotationally symmetric topology. The router may also include means for connecting the network processing units to the switching engines, as well as means for connecting the switching engines to a plurality of line card units. In one example, the line card units are connected to the switching engines in a full mesh topology.

Owner:CISCO TECH INC

Method and system for network processor scheduling outputs using disconnect/reconnect flow queues

Inactive | CN1642143A | Efficient use of, Fair use, Store-and-forward switching systems, Time-division multiplexing selection, High bandwidth, Network processing unit

A system and method of moving information units from a network processor toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit according to stored priorities associated with the various sources of the information units. The priorities in the preferred embodiment include a low latency service, a minimum bandwidth, a weighted fair queueing and a system for preventing a user from continuing to exceed his service levels over an extended period. The present invention includes a plurality of calendars with different service rates to allow a user to select the service rate which he desires. If a customer has chosen a high bandwidth for service, the customer will be included in a calendar which is serviced more often than if the customer has chosen a lower bandwidth.

Owner:INT BUSINESS MASCH CORP
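
The multiple-calendar idea can be sketched as calendars visited at different rates. The period-based visiting rule, the round-robin order within a calendar, and the names are assumptions; the sketch also lets several calendars be served in the same tick, which a real single-output scheduler would not do.

```python
from collections import Counter


def run_calendars(calendars: dict, ticks: int) -> Counter:
    """calendars: {name: (period, [flow ids])}.

    A calendar with period p is visited every p ticks; each visit serves the
    next flow in that calendar round-robin. A smaller period means a higher
    service rate, which is where a high-bandwidth customer would be placed.
    """
    served = Counter()
    cursors = {name: 0 for name in calendars}
    for tick in range(ticks):
        for name, (period, flows) in calendars.items():
            if flows and tick % period == 0:
                flow = flows[cursors[name] % len(flows)]
                cursors[name] += 1
                served[flow] += 1
    return served
```

Placing a customer in the period-1 calendar rather than the period-4 calendar is the sketch's equivalent of choosing a higher service rate.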

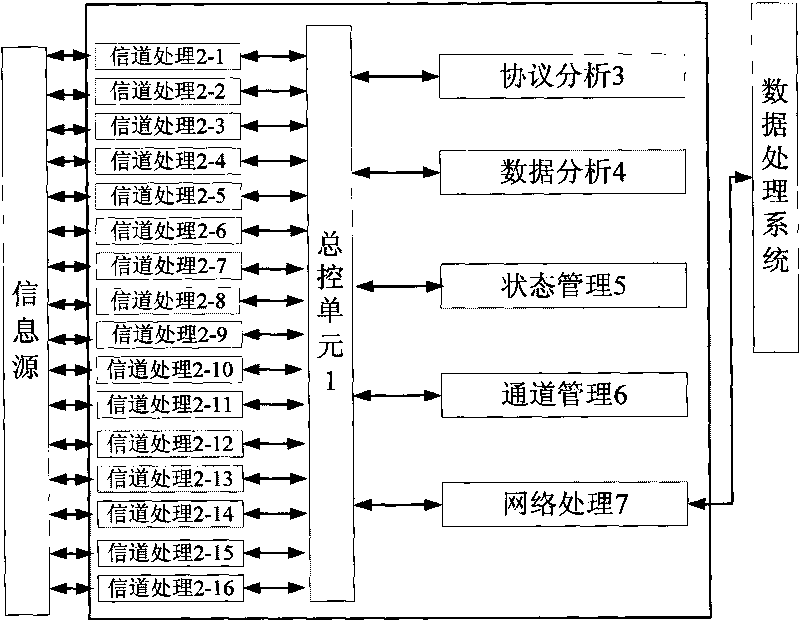

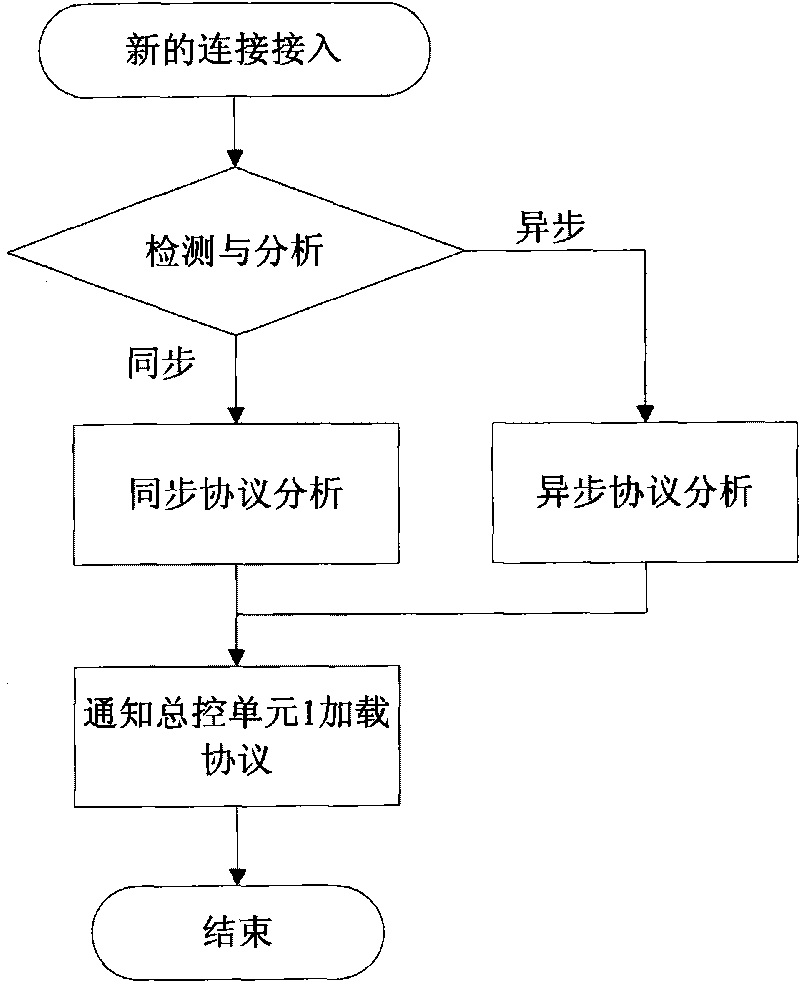

Multichannel intelligent data communication processing system

Inactive | CN101692675A | Improve real-time performance, Improve usability, Data switching networks, Network processing unit, Data access

The invention discloses a multichannel intelligent data communication processing system arranged between an information source and a data processing system, comprising a master control unit, a channel processing unit, a protocol analysis unit, a data analysis unit, a state management unit, a channel management unit, and a network processing unit. Addressing the interconnection of data processing systems with other data processing systems or remote data terminals, the invention provides a key technology for system data access. Because data access requires high real-time performance, usability, and reliability, the invention adopts design methods such as channel warm backup, protocol analysis, and data format analysis to realize multichannel synchronous and asynchronous transmission, plug and play, automatic protocol detection and analysis, automatic data format identification and conversion, and channel warm backup, thereby effectively providing intelligent data communication processing between data processing systems and other data processing systems or remote data terminals.

Owner:THE 28TH RES INST OF CHINA ELECTRONICS TECH GROUP CORP

Multi-domain network packet classification method based on network traffic

Active | CN1822567A | Limit adaptability, Improve performance, Data switching networks, Traffic capacity, Network processing unit

The present invention relates to the field of network filtering and monitoring. The method includes the following steps: receiving arriving network packets; collecting packet header information; computing and normalizing statistical properties of the network traffic; a computing unit obtaining a packet classification data structure from the configured rule set and the traffic statistics; a packet classification unit obtaining the packet header information and classifying packets using the classifier data structure produced by the computing unit; and a forwarding unit transmitting packets to output queues according to the classification result. The invention can be implemented on a general-purpose microprocessor platform or on a dedicated network processing unit platform. By combining the dynamic statistical properties of network traffic with the static structural properties of the rule set, it optimizes packet classification, raising the average classification rate by 80-400 per cent over comparable existing methods in China and abroad, and reducing memory requirements by 30-600 per cent.

Owner:CERTUS NETWORK TECHNANJING

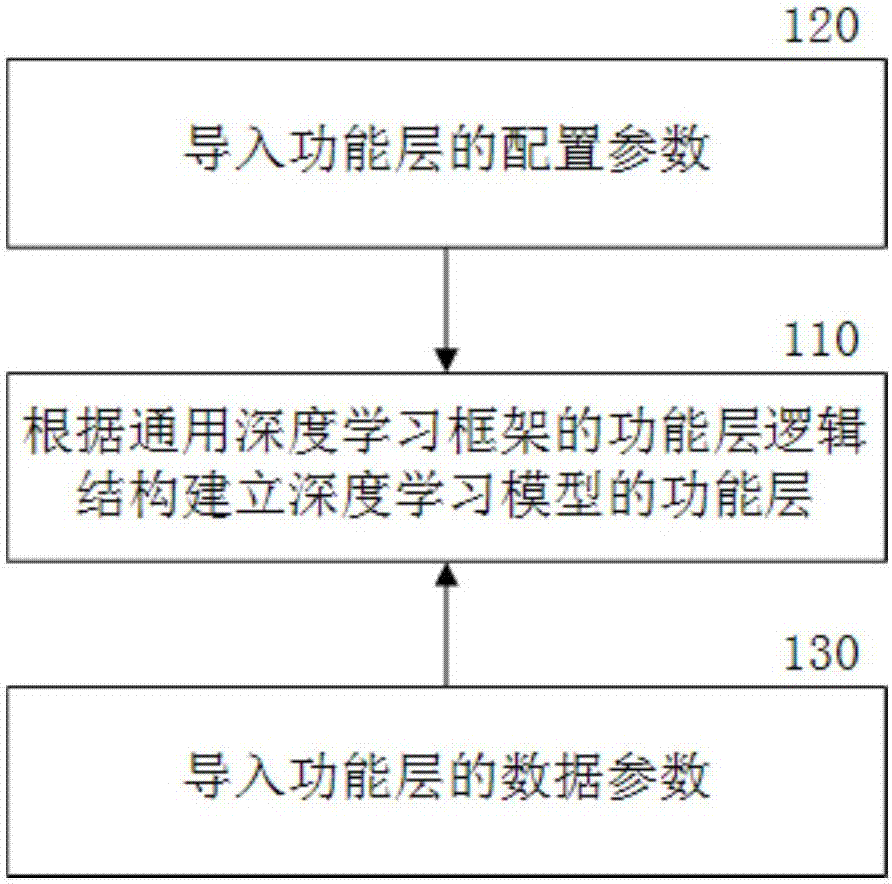

Efficient conversion method and device for deep learning model

Active | CN107480789A | Decreased structural correlation, Achieve early optimization, Fuzzy logic based systems, Algorithm, Network processing unit

An efficient conversion method for deep learning models, provided by an embodiment of the invention, addresses the low development efficiency and operating efficiency of deep learning models. The method includes the following steps: building a data standardization framework corresponding to an NPU (Neural-Network Processing Unit) model according to a general deep learning framework; using the data standardization framework to convert the parameters of a deep learning model into the standard parameters of the data standardization framework; and converting the standard parameters into the parameters of the NPU model. According to the invention, a unified data standardization framework is built for a specific processor according to the parameter structures of general deep learning frameworks. Using the unified data structure of this framework, standard data can be formed from the parameters of a deep learning model built with a general deep learning framework. As a result, data analysis by the processor depends much less on the structure of the deep learning model, and development of the processor's processing flow can be separated from development of the deep learning model. A corresponding efficient conversion device is also provided.

Owner:VIMICRO CORP

Intelligent tracking flight system and method of unmanned aerial vehicle (UAV)

Active | CN110456805A | Improve detection accuracy, Easy to detect, Attitude control, Position/course control in three dimensions, Network processing unit, Obstacle avoidance

The invention relates to an intelligent tracking flight system and method for a UAV, and belongs to the field of intelligent autonomous UAV navigation. The flight system comprises an image acquisition unit for acquiring images, a flight attitude measurement unit for measuring flight inertia, a neural network processing unit for neural network processing, and a control unit for controlling the flight motion of the UAV. An image collected by a camera at the front of the UAV serves as input, the input is recognized by a convolutional neural network, and an obstacle avoidance strategy is produced. During flight, the flight attitude measurement unit, based on the fast-responding tunnel magnetoresistance effect, is used to obtain key change positions of the UAV's flight attitude; the image acquisition unit is then started to capture key images, a three-dimensional model of the flight environment is formed, key position images are provided for the UAV's tracking flight path, and the overall safety of UAV tracking flight is improved.

Owner:深圳慈航无人智能系统技术有限公司 +1

Packet processing apparatus and method codex

Inactive | US7990971B2 | Deterioration in line speed, Shorten speed, Time-division multiplex, Data switching by path configuration, Network processing unit, Packet processing

A packet processing apparatus and method are provided. The packet processing apparatus changes the size of an input packet, analyzes the input packet to perform Layer 2-related processing, generates basic delivery headers for the input packet, processes the input packet (with the basic delivery headers inserted) according to its type, transforms the header of that packet, and forwards the header-transformed packet for delivery. Accordingly, various types of packets can be processed without adding separate processes to the packet processing apparatus. In addition, the utilization efficiency of the network processing unit can be optimized, increasing the packet processing rate and performance.

Owner:ELECTRONICS & TELECOMM RES INST

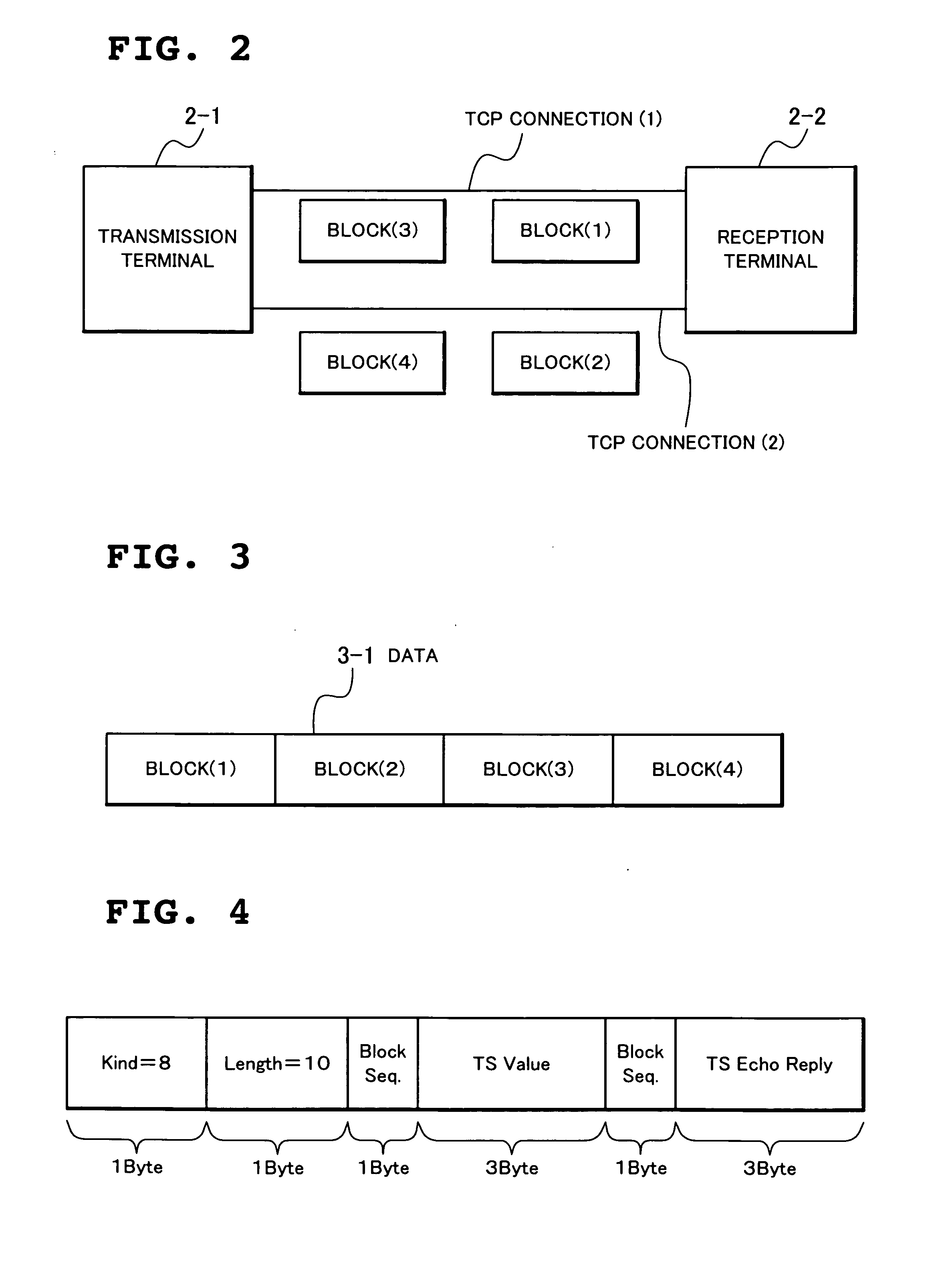

Communication device, and communication method and program thereof

Active | US20070071004A1 | Efficient implementation, Time-division multiplex, Data switching by path configuration, Original data, Network processing unit

In a communication device (1) which realizes communication with data distributed to a plurality of connections, a data division restoration processing unit (1-2), when transmitting data, receives data from an application processing unit (1-1), divides the data into an arbitrary number of blocks, stores information for restoring the block to original data within a TCP header and sends the data to a network processing unit (1-3) by using an arbitrary number of TCP connections, and when receiving data, refers to restoration information stored within the TCP header with respect to data of the plurality of TCP connections received from the network processing unit (1-3), identifies a divisional block, combines the blocks to restore data as of before division and sends the data to the application processing unit (1-1).

Owner:NEC CORP
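
The divide-tag-restore scheme can be sketched in Python. The patent places the restoration information within the TCP header; because ordinary stream sockets do not let an application write custom TCP header fields, this sketch instead prefixes each block with a small hypothetical header (message id, block index, block count) and distributes blocks round-robin over the connections.

```python
import struct

# Hypothetical restoration header: message id, block index, block count (network byte order).
HEADER = struct.Struct("!IHH")


def split_for_connections(message_id: int, data: bytes, block_size: int, num_connections: int):
    """Divide data into blocks, attach restoration info, spread over connections."""
    blocks = [data[i:i + block_size] for i in range(0, len(data), block_size)] or [b""]
    per_connection = [[] for _ in range(num_connections)]
    for index, block in enumerate(blocks):
        header = HEADER.pack(message_id, index, len(blocks))
        per_connection[index % num_connections].append(header + block)
    return per_connection


def restore(per_connection) -> bytes:
    """Read the restoration info from every received block and rebuild the data."""
    pieces = {}
    total = None
    for stream in per_connection:
        for segment in stream:
            _message_id, index, count = HEADER.unpack_from(segment)
            pieces[index] = segment[HEADER.size:]
            total = count
    if total is None or len(pieces) != total:
        raise ValueError("missing blocks")
    return b"".join(pieces[i] for i in range(total))
```

Because every block carries its own index and the total count, the receiver can rebuild the data regardless of which connection delivered which block, or in what order the connections are read.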

Method and system for network processor scheduling based on service levels

Inactive | US7123622B2 | Easy to use, Minimal overhead, Error prevention, Transmission systems, Maximum burst size, Coming out

A system and method of moving information units from an output flow control toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit using a weighted fair queue, in which a flow's position in the queue is adjusted after each service based on a weight factor and the frame length. Interaction between different calendar types is used to provide minimum bandwidth, best-effort bandwidth, weighted fair queuing service, best-effort peak bandwidth, and maximum burst size specifications. The present invention permits different combinations of service that can be used to create different QoS specifications. The “base” services offered to a customer in the example described in this patent application are minimum bandwidth, best effort, peak, and maximum burst size (MBS), which may be combined as desired. For example, a user could specify minimum bandwidth plus best-effort additional bandwidth, and the system would provide this capability by placing the flow queue in both the NLS and WFQ calendars. When a flow queue is in multiple calendars, the system performs tests to determine when it must come out.

Owner:IBM CORP

Anomaly detection method and system for network processing unit

Active | CN101976217A | Does not affect forwarding performance, Does not affect handling, Error detection/correction, Network processing unit, Anomaly detection

The invention discloses an anomaly detection method and system for a network processing unit. In the method, anomaly detection operation codes are added to the execution flow of each thread of the network processing unit; when the network processing unit runs the anomaly detection operation codes of the current thread, it writes a first mark to the mark position corresponding to that thread in an anomaly protection mark data area in shared memory. When a timer period expires, a coprocessing unit checks all the mark positions in the anomaly protection mark data area. If not all mark positions hold the first mark, each thread whose mark position does not hold the first mark is abnormal; if all mark positions hold the first mark, the coprocessing unit writes a second mark to all of them. Based on the characteristics of network processing unit software, the invention can detect thread anomalies effectively and in time, greatly improving the runtime fault detection capability of network equipment that uses a network processing unit as its core unit.

Owner:ZTE CORP
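
The mark-and-check protocol can be sketched with a list standing in for the shared-memory mark area. The mark values, class name, and method names are hypothetical, and the abstract does not say what happens to the marks after an anomaly is found, so this sketch leaves them untouched in that case.

```python
FIRST_MARK, SECOND_MARK = 1, 0   # hypothetical mark values


class AnomalyMonitor:
    def __init__(self, num_threads: int) -> None:
        # Simulated anomaly protection mark area in shared memory, one slot per thread.
        self.marks = [SECOND_MARK] * num_threads

    def thread_checkpoint(self, thread_id: int) -> None:
        """Run by the anomaly-detection code inserted into each NPU thread."""
        self.marks[thread_id] = FIRST_MARK

    def on_timer(self) -> list:
        """Coprocessor check when the timer period expires.

        Returns the ids of threads whose slot does not hold the first mark.
        """
        stalled = [t for t, mark in enumerate(self.marks) if mark != FIRST_MARK]
        if not stalled:
            # Every thread reported in: re-arm by writing the second mark everywhere.
            self.marks = [SECOND_MARK] * len(self.marks)
        return stalled
```

A thread that hangs never reaches its checkpoint, so its slot still holds the second mark at the next timer expiry and it is reported, without the checks adding work to the packet-forwarding path.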

Method and system for network processor scheduling outputs based on multiple calendars

Inactive | US6862292B1 | Level of service, Easy to use, Error prevention, Transmission systems, High bandwidth, Network processing unit

A system and method of moving information units from a network processor toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit according to stored priorities associated with the various sources of the information units. The priorities in the preferred embodiment include a low latency service, a minimum bandwidth, a weighted fair queueing and a system for preventing a user from continuing to exceed his service levels over an extended period. The present invention includes a plurality of calendars with different service rates to allow a user to select the service rate which he desires. If a customer has chosen a high bandwidth for service, the customer will be included in a calendar which is serviced more often than if the customer has chosen a lower bandwidth.

Owner:IBM CORP

Communication Device

Inactive | US20090177896A1 | Reduce power consumption, Volume/mass flow measurement, Data switching by path configuration, Main processing unit, Network processing unit

There is provided a communication device which can search for a desired communication device and request a service without regard to the power supply status of other communication devices on a network, while reducing power consumption. A communication device 100 includes a main processing unit 110 that processes the main service provided to other communication devices; a network processing unit 120 that transmits and receives request packets and response packets to and from other communication devices; and an integrated power supply unit 150 that can stop supplying power to the main processing unit 110 while remaining able to resume it, and that supplies power to the network processing unit 120. The network processing unit 120 is provided with an automatic responding unit 703, which determines whether it can respond to a received request packet by itself and, if so, transmits the response packet to the requesting communication device; and a power supply controlling unit 704, which, when such a response is not possible, controls a main-power supply unit 151 to supply power to the main processing unit 110.

Owner:PANASONIC CORP
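
The answer-alone-or-wake decision can be sketched in Python. Which requests the network processing unit can answer by itself is not listed in the abstract, so the `SELF_ANSWERABLE` set and the response strings are hypothetical.

```python
class CommunicationDevice:
    # Hypothetical request types the network processing unit can answer on its own.
    SELF_ANSWERABLE = {"search", "status"}

    def __init__(self) -> None:
        self.main_powered = False   # main processing unit powered down to save energy

    def handle_request(self, request_type: str) -> str:
        """Network processing unit: answer alone if possible, else wake the main unit."""
        if request_type in self.SELF_ANSWERABLE:
            # Automatic responding unit: reply without touching the main unit's power.
            return f"auto-response to {request_type}"
        # Power supply controlling unit: restore power to the main processing unit.
        self.main_powered = True
        return f"main unit handles {request_type}"
```

Discovery-style requests are served while the main unit stays off, which is how other devices can search the network without knowing which devices are currently asleep.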

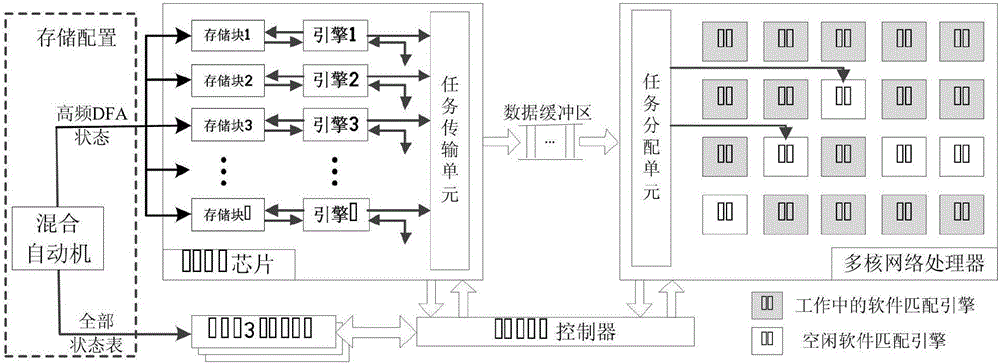

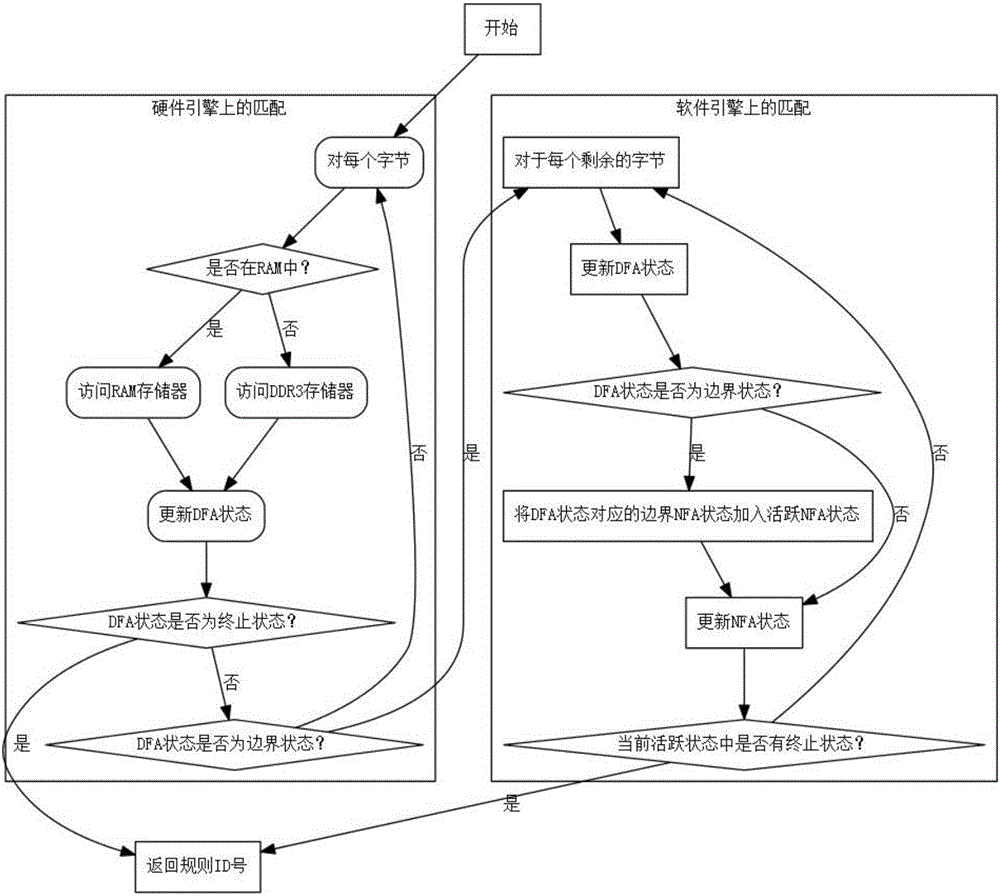

High speed regular expression matching hybrid system and method based on FPGA and NPU (field programmable gate array and network processing unit)

Active | CN106776456A | Heavy workload, Reduce workload, Digital computer details, Data switching networks, Double data rate, Software engine

The invention provides a high-speed regular expression matching hybrid system and method based on an FPGA and an NPU (field programmable gate array and network processing unit). The system is mainly composed of an FPGA chip and a multicore NPU: multiple parallel hardware matching engines are implemented on the FPGA chip, multiple software matching engines are instantiated on the NPU, and the hardware and software engines operate in a pipelined manner. The method comprises: first, constructing a two-level storage architecture from high-speed RAM (random-access memory) on the FPGA chip and off-chip DDR3 SDRAM (double-data-rate three synchronous dynamic random-access memory); second, compiling a regular expression rule set to generate a hybrid automaton; third, configuring the state table entries of the hybrid automaton; and fourth, processing network messages. The system and method greatly improve matching performance under complex rule sets, solving the problem of poor performance with such rule sets.

Owner:NAT UNIV OF DEFENSE TECH

Power transformer fault diagnosis device and method based on BP neural network algorithm

Inactive | CN107145675A | Guaranteed uptime, Extended service life, Design optimisation/simulation, Neural learning methods, Nerve network, Transformer

The invention relates to a power transformer fault diagnosis device and method based on a BP neural network algorithm. The device comprises a transformer fault diagnosis data import module, a fault type output module, and a data analysis module; the data analysis module contains a rough set processing unit, a normalization processing unit, a BP neural network processing unit, a device fault data interface module, and a data output interface module. The method uses rough sets to preprocess the BP neural network training set: it extracts features from the collected information to form a decision table, uses the rough set to extract rules and remove redundant attributes, and normalizes the data. Finally, the BP neural network is trained on the normalized sample data to diagnose the main factors of transformer faults, effectively ensuring normal transformer operation, extending service life, reducing staff workload, and reducing enterprise economic losses.

Owner:STATE GRID TIANJIN ELECTRIC POWER +1

© 2025 PatSnap. All rights reserved.