
144 patent results for "Video reconstruction"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

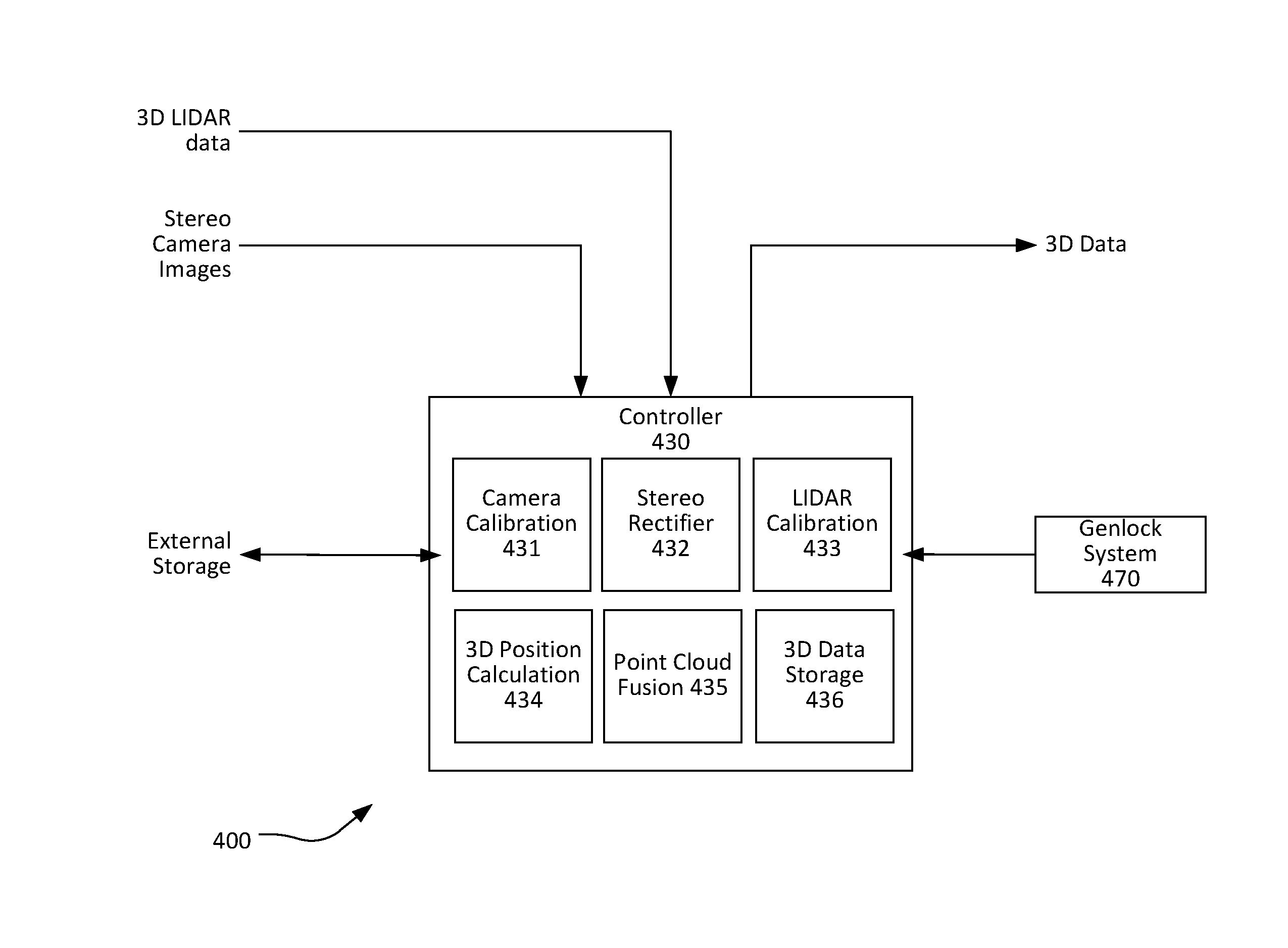

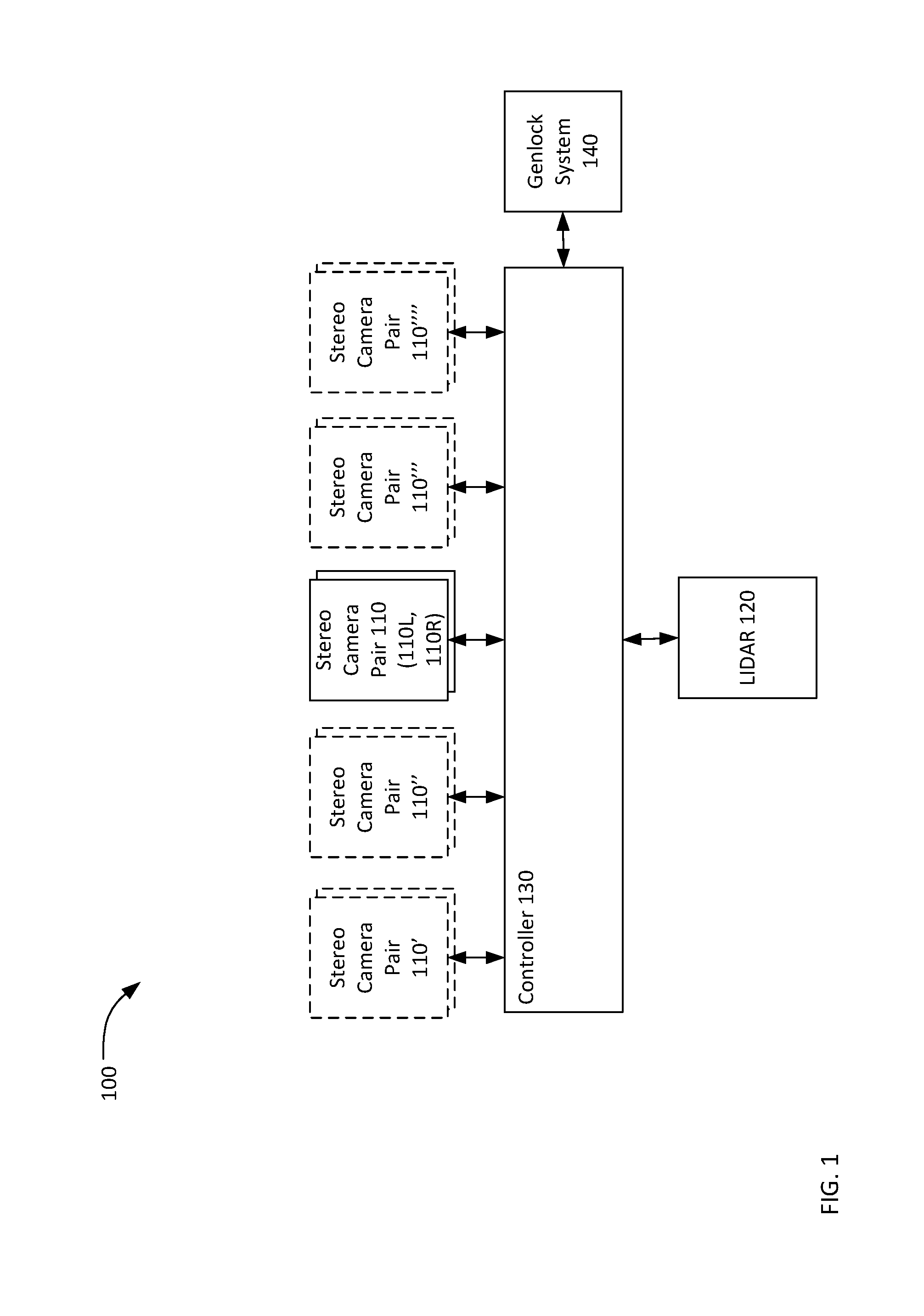

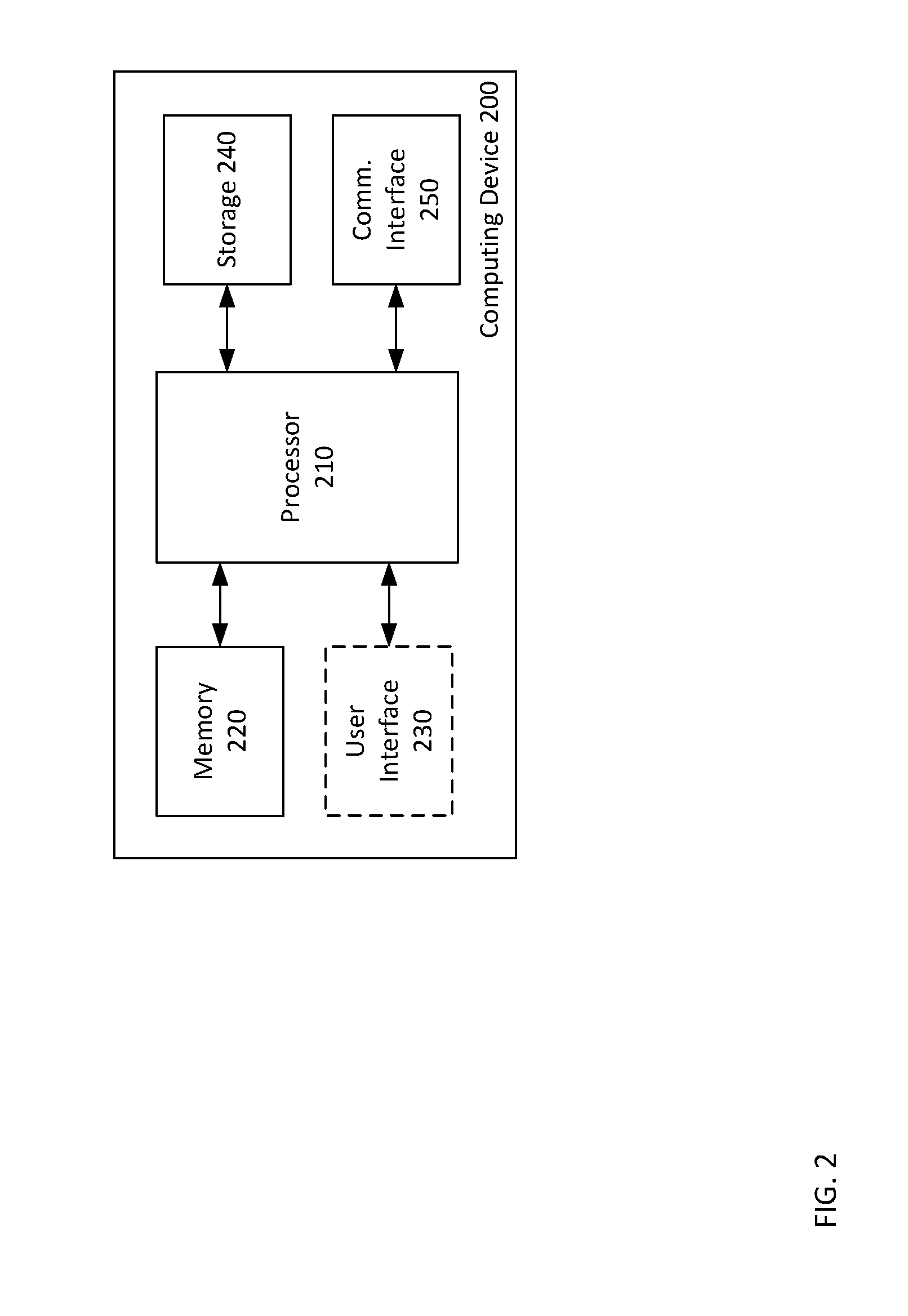

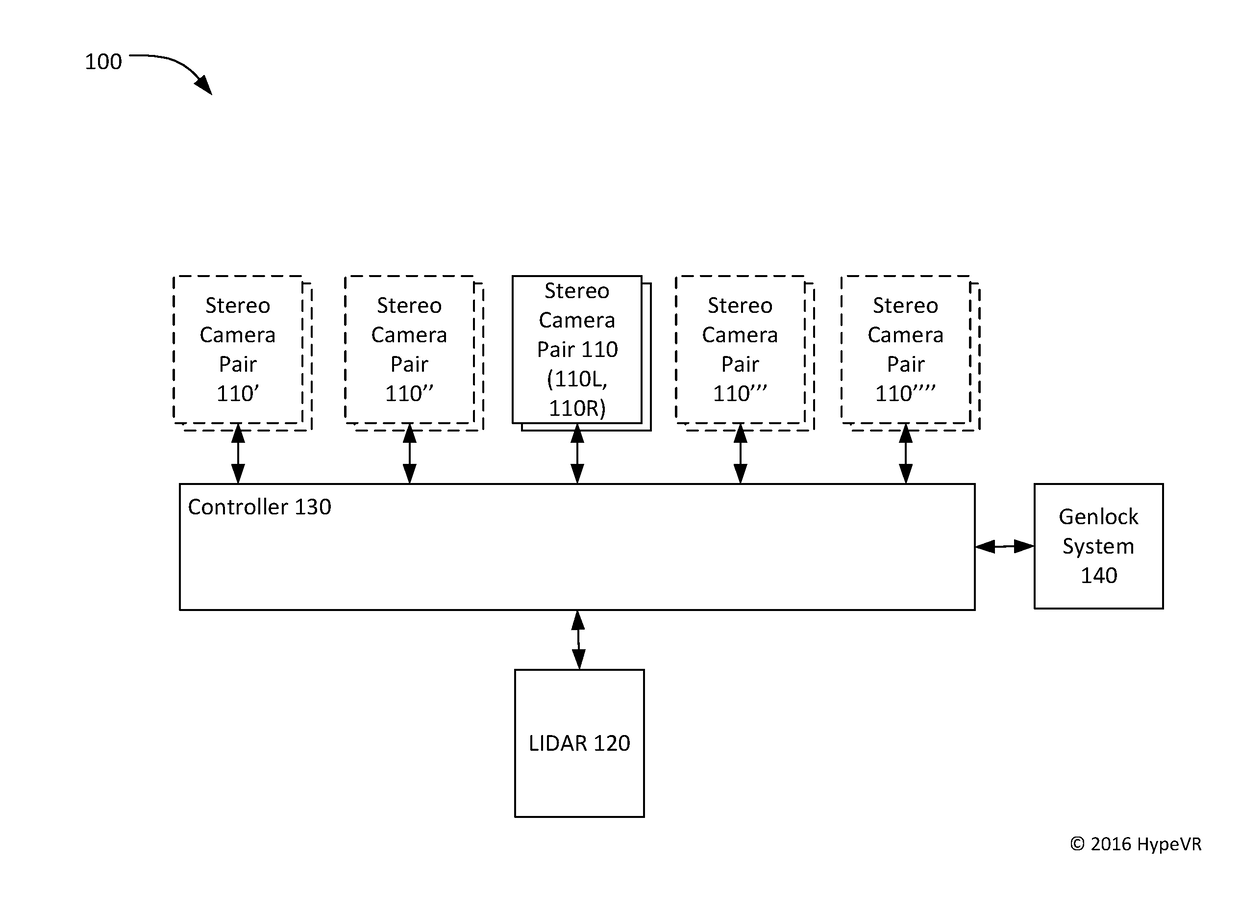

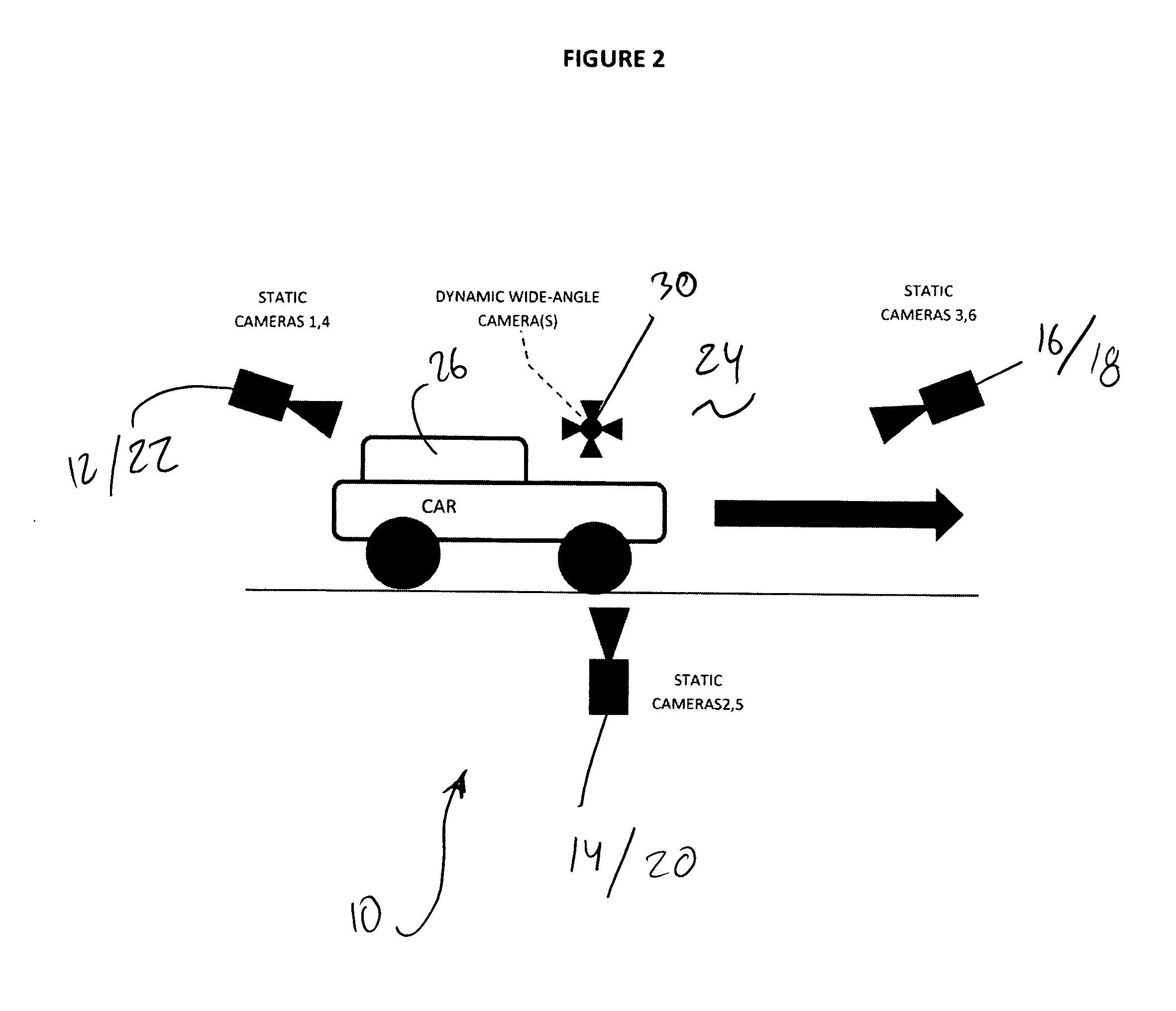

Lidar stereo fusion live action 3D model video reconstruction for six degrees of freedom 360° volumetric virtual reality video

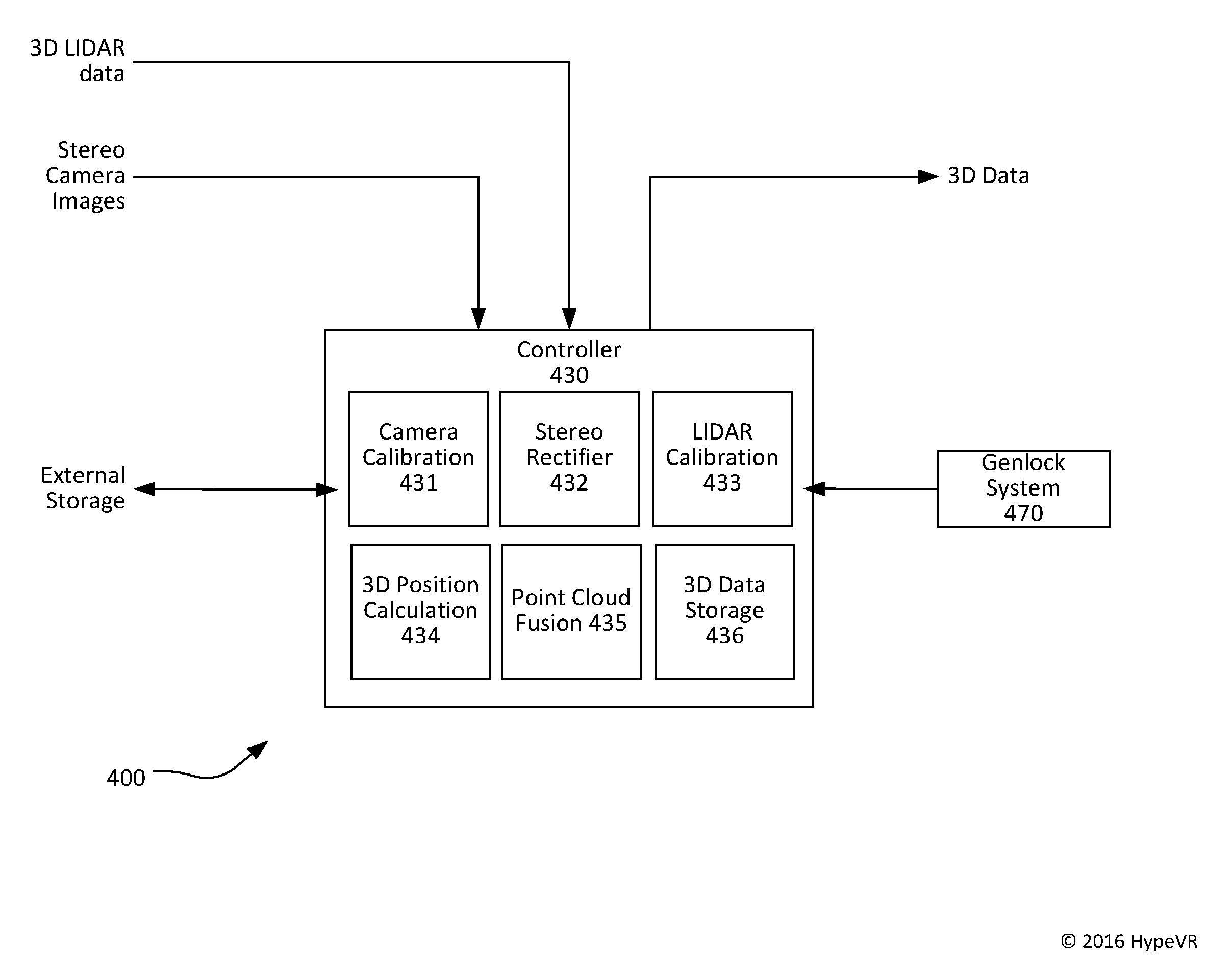

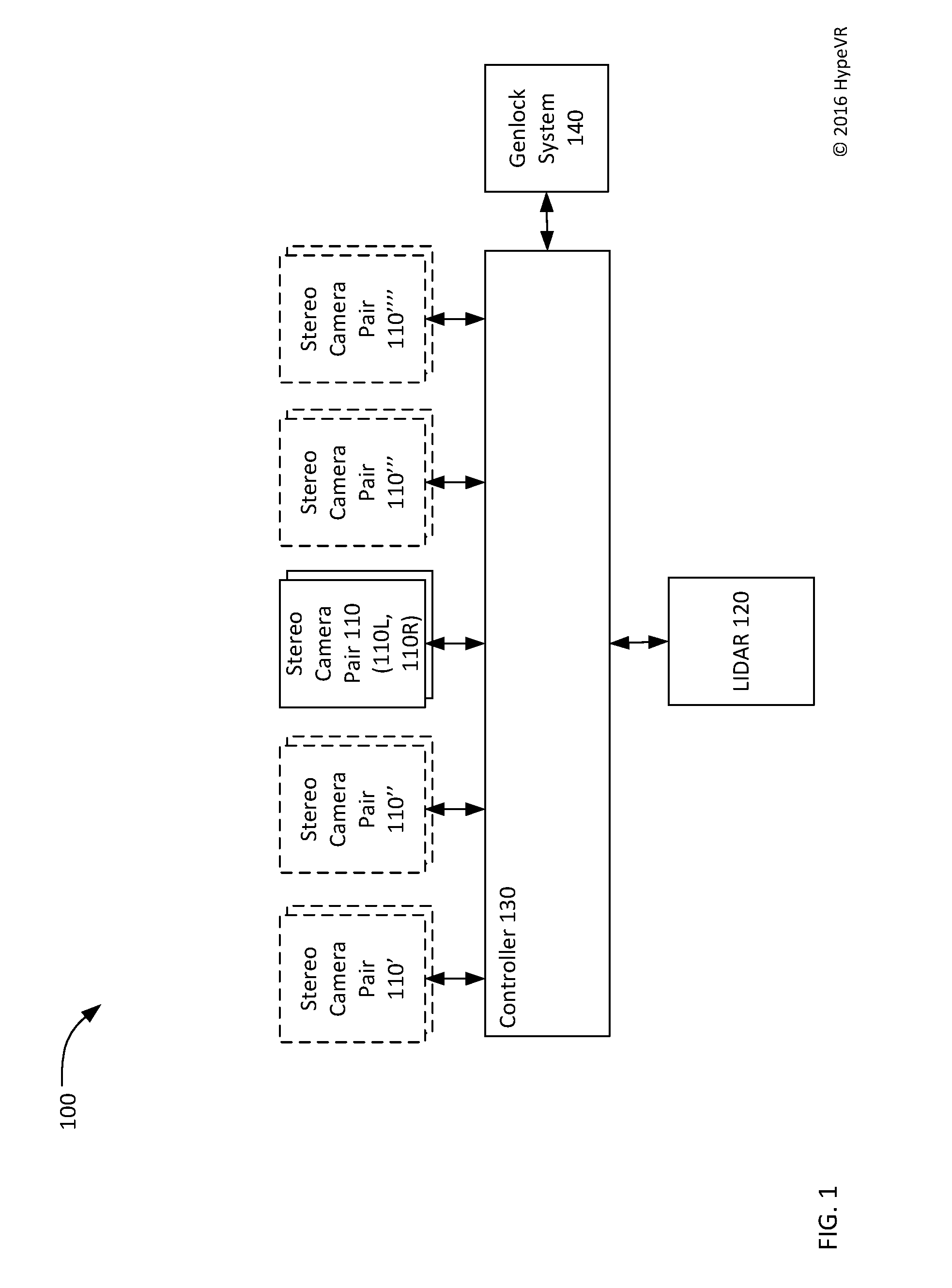

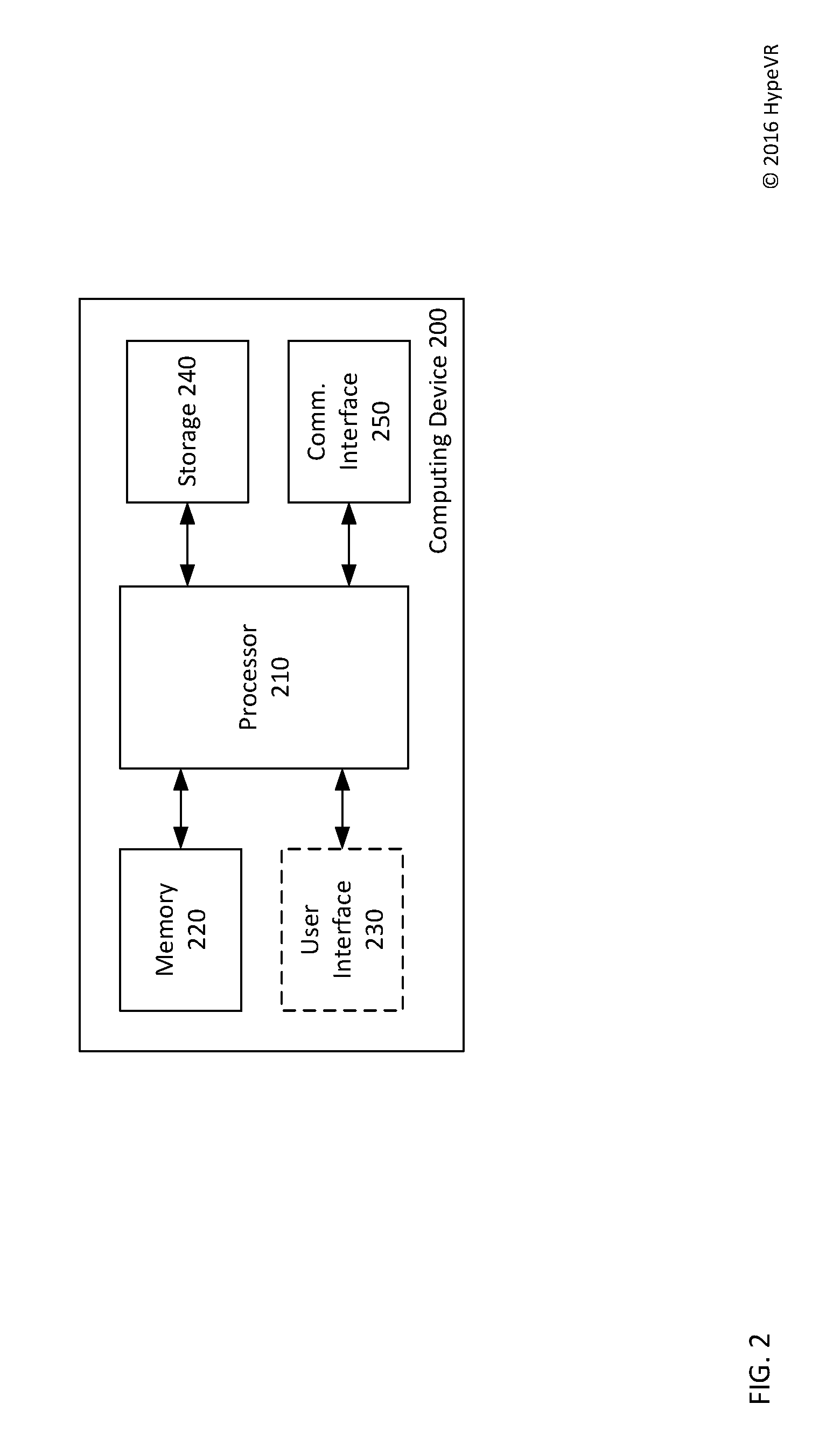

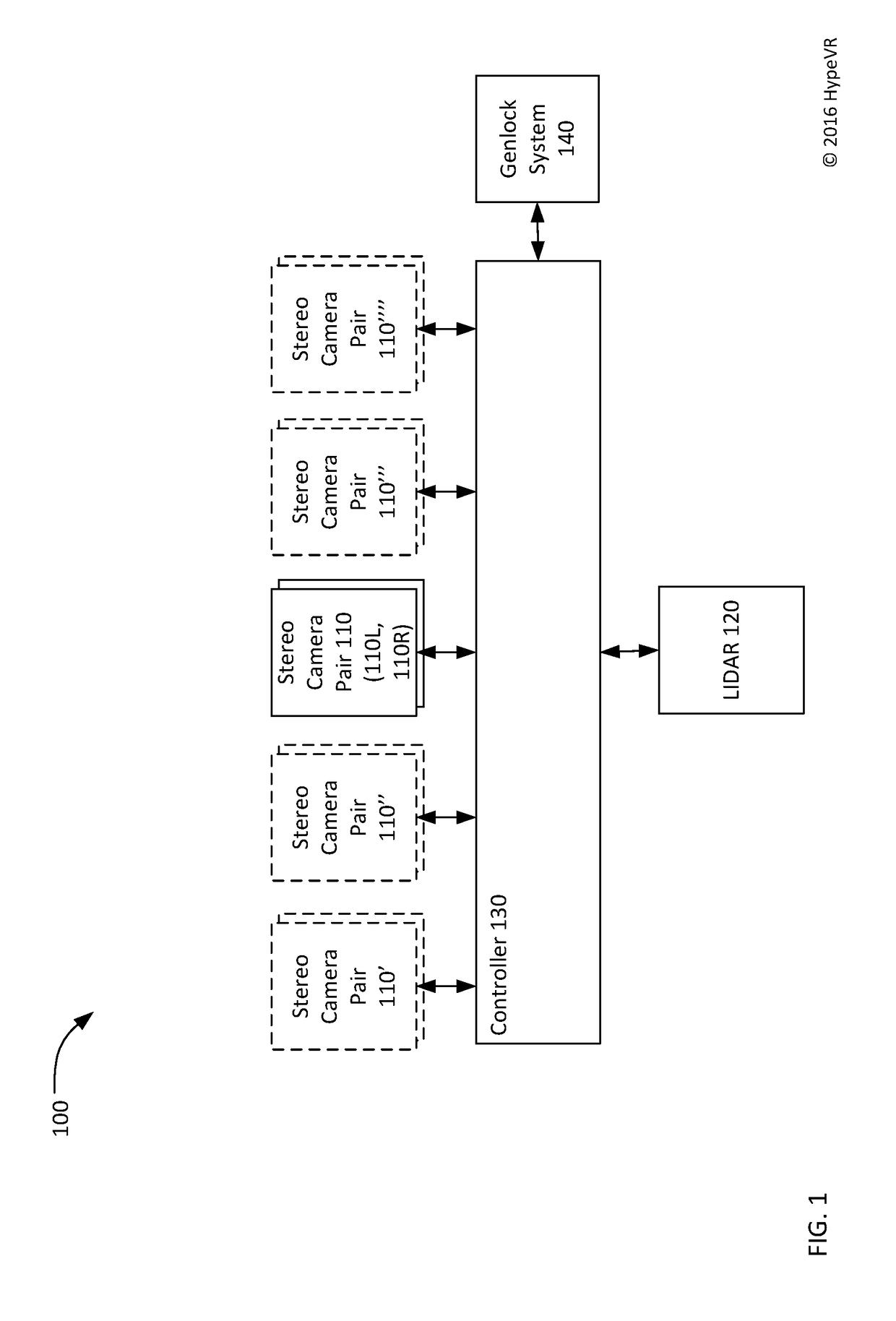

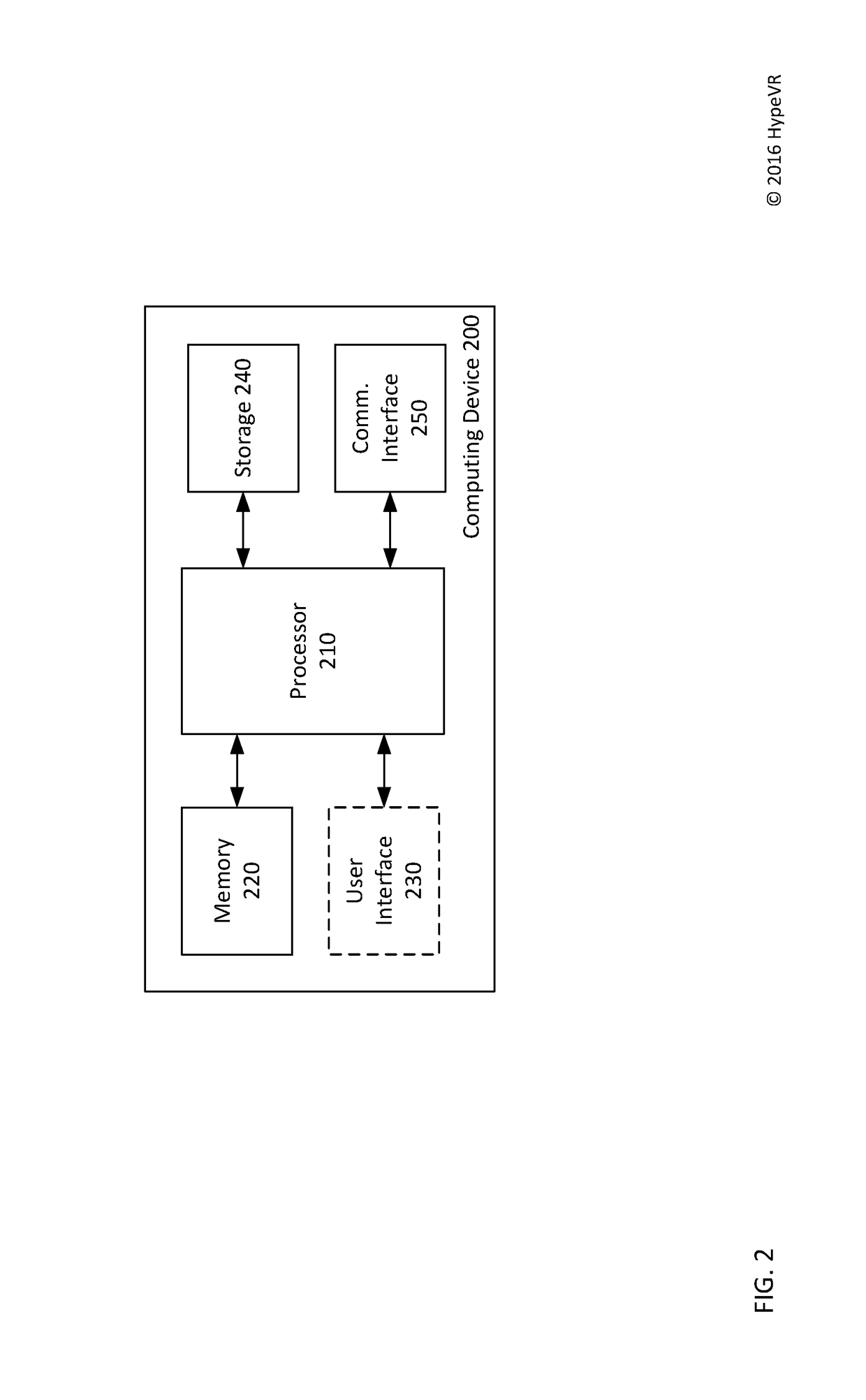

A system for capturing live-action three-dimensional video is disclosed. The system includes pairs of stereo cameras and a LIDAR that generate stereo images and three-dimensional LIDAR data, from which three-dimensional data for a space may be derived. A depth-from-stereo algorithm may be used to generate three-dimensional camera data for the space from the stereo images. This camera data may then be combined with the three-dimensional LIDAR data, with the LIDAR data taking precedence over the camera data, to generate three-dimensional data corresponding to the three-dimensional space.

Owner:HYPEVR
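The abstract states only that LIDAR data take precedence over stereo-derived depth. A minimal sketch of that rule, assuming (hypothetically) that both sources are already registered to one per-pixel depth grid and that a missing LIDAR return is stored as `None`; densifying sparse LIDAR points, which a real system would need, is not shown:

```python
from typing import List, Optional

DepthMap = List[List[Optional[float]]]

def fuse_depth(lidar: DepthMap, stereo: DepthMap) -> DepthMap:
    """Per-pixel fusion in which LIDAR depth takes precedence over stereo depth.

    Assumes both maps are registered to the same pixel grid and that a
    missing measurement is stored as None (a hypothetical convention).
    """
    return [
        # LIDAR value wins wherever it exists; stereo depth fills the gaps.
        [lid if lid is not None else st for lid, st in zip(lidar_row, stereo_row)]
        for lidar_row, stereo_row in zip(lidar, stereo)
    ]
```

For example, `fuse_depth([[2.0, None], [None, 5.0]], [[2.3, 3.1], [4.0, 4.8]])` keeps the two LIDAR readings and takes the other two pixels from stereo, giving `[[2.0, 3.1], [4.0, 5.0]]`.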

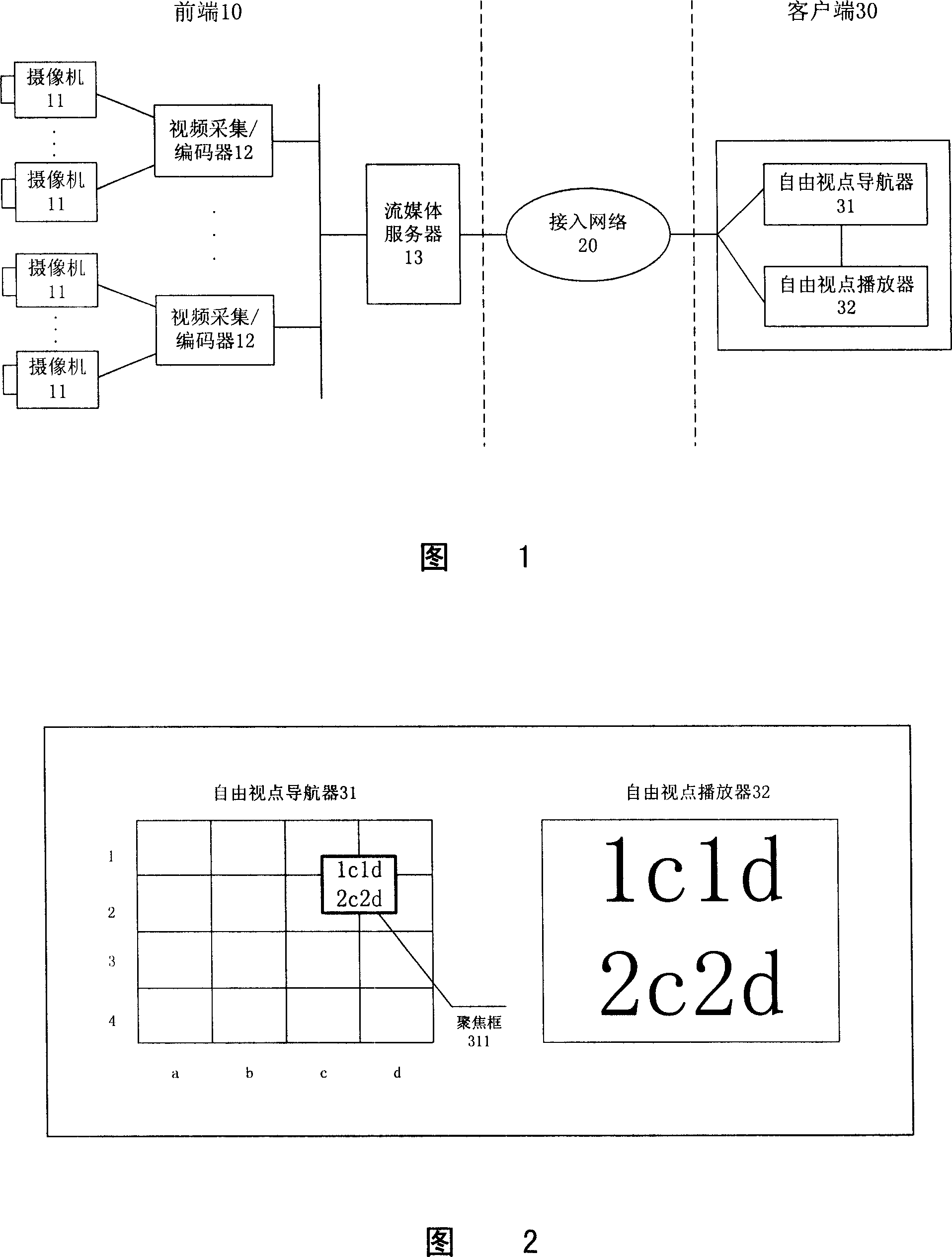

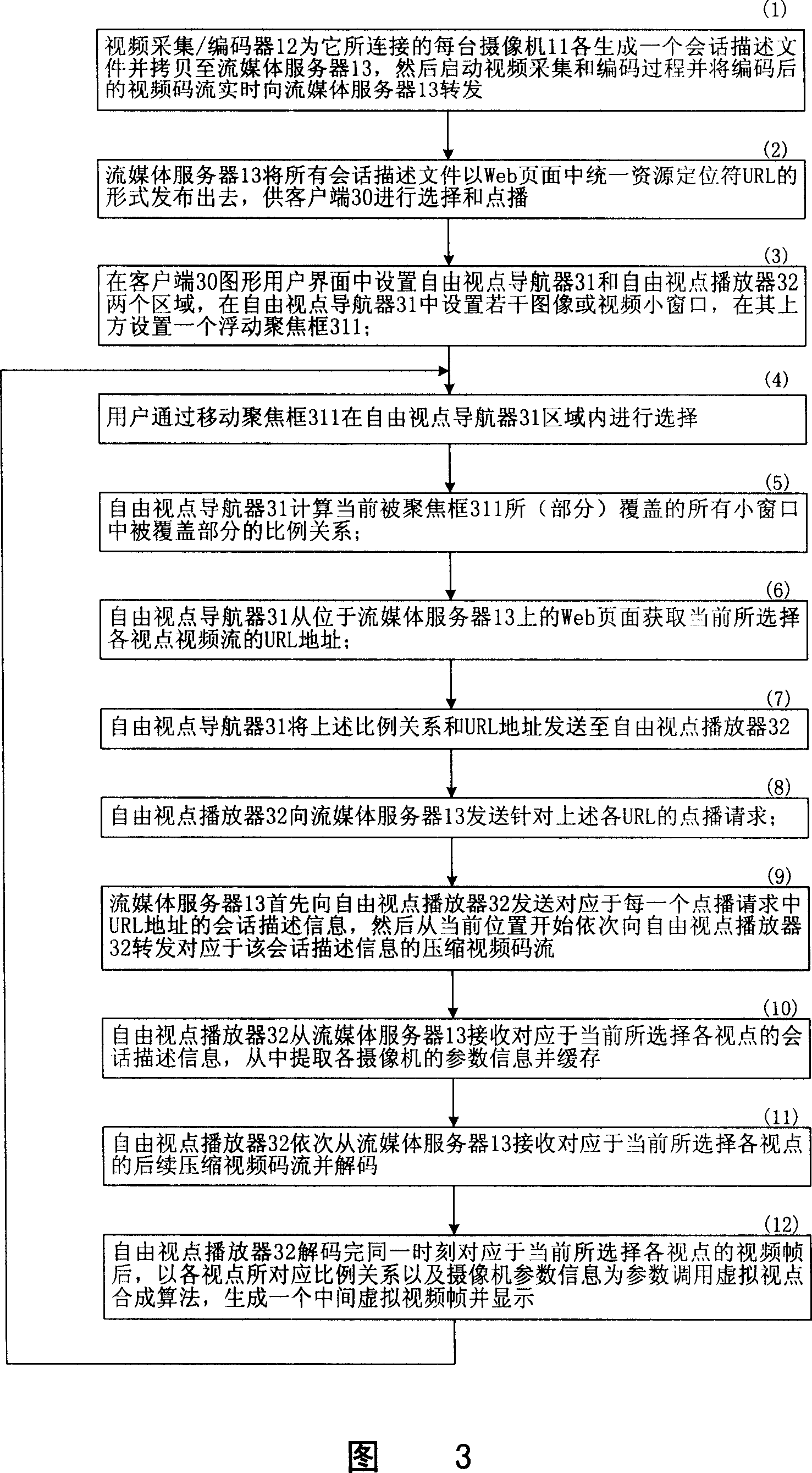

Free-viewpoint reconstruction method and system for multi-view video streaming

Status: Inactive | Publication: CN101014123A | Tags: Easy to handle; Lower requirement; Pulse modulation television signal transmission; Data switching by path configuration; Graphical user interface; Computer graphics (images)

The invention relates to a free-viewpoint reconstruction method and system for multi-view video streams, characterized as follows: a free-viewpoint navigator and a free-viewpoint player are provided in the graphical user interface of the client; the navigator contains several small windows and one floating focus frame; the user makes a selection in the free-viewpoint navigation area by moving the floating focus frame; the free-viewpoint player receives from the streaming media server the code streams of the viewpoints whose windows are covered by the current floating focus frame; and virtual viewpoint synthesis is then performed to generate an intermediate virtual viewpoint for display.

Owner:PEKING UNIV

Lidar stereo fusion live action 3D model video reconstruction for six degrees of freedom 360° volumetric virtual reality video

A system for capturing live-action three-dimensional video is disclosed. The system includes pairs of stereo cameras and a LIDAR for generating stereo images and three-dimensional LIDAR data from which three-dimensional data may be derived. A depth-from-stereo algorithm may be used to generate the three-dimensional camera data for the three-dimensional space from the stereo images and may be combined with the three-dimensional LIDAR data taking precedence over the three-dimensional camera data to thereby generate three-dimensional data corresponding to the three-dimensional space.

Owner:HYPEVR

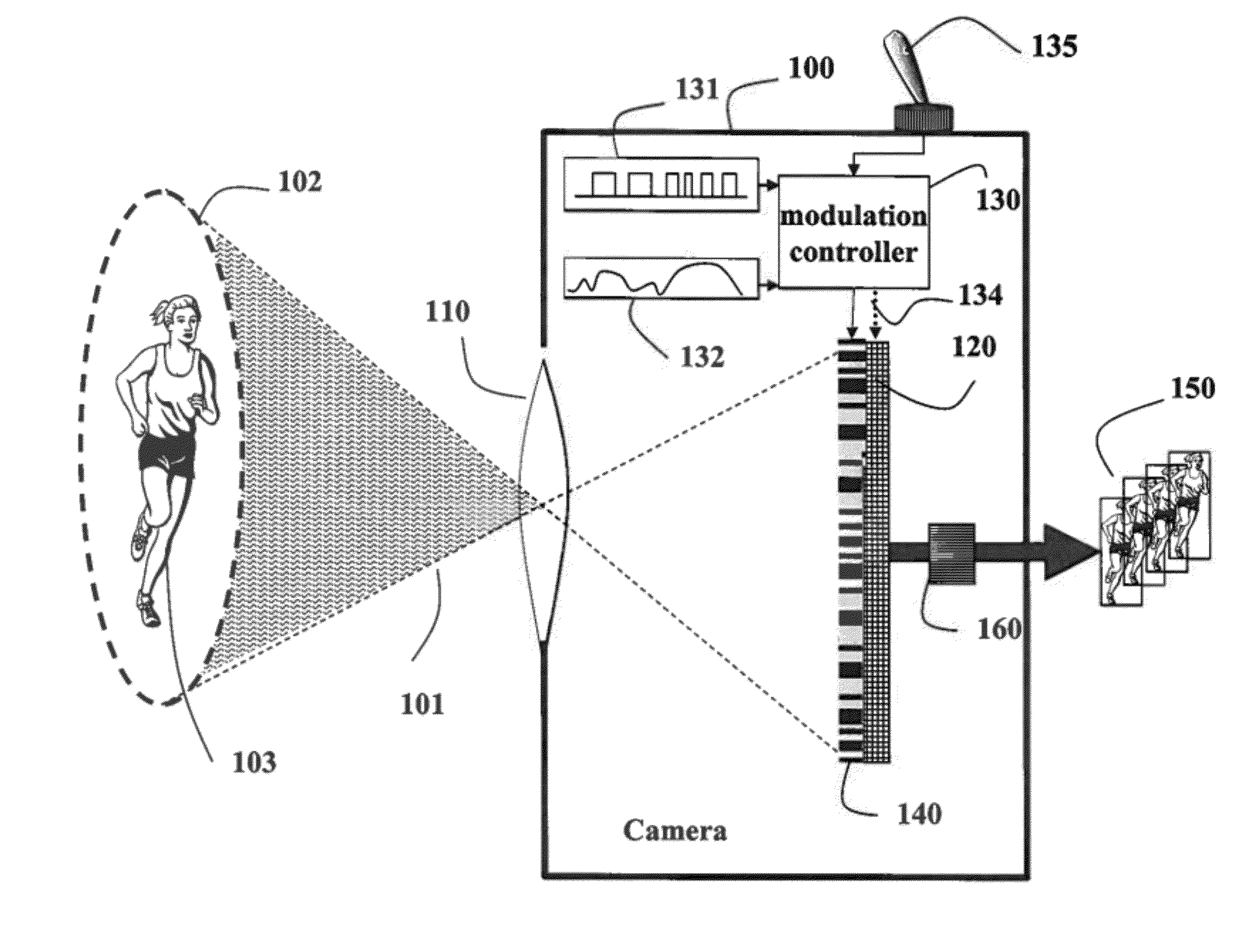

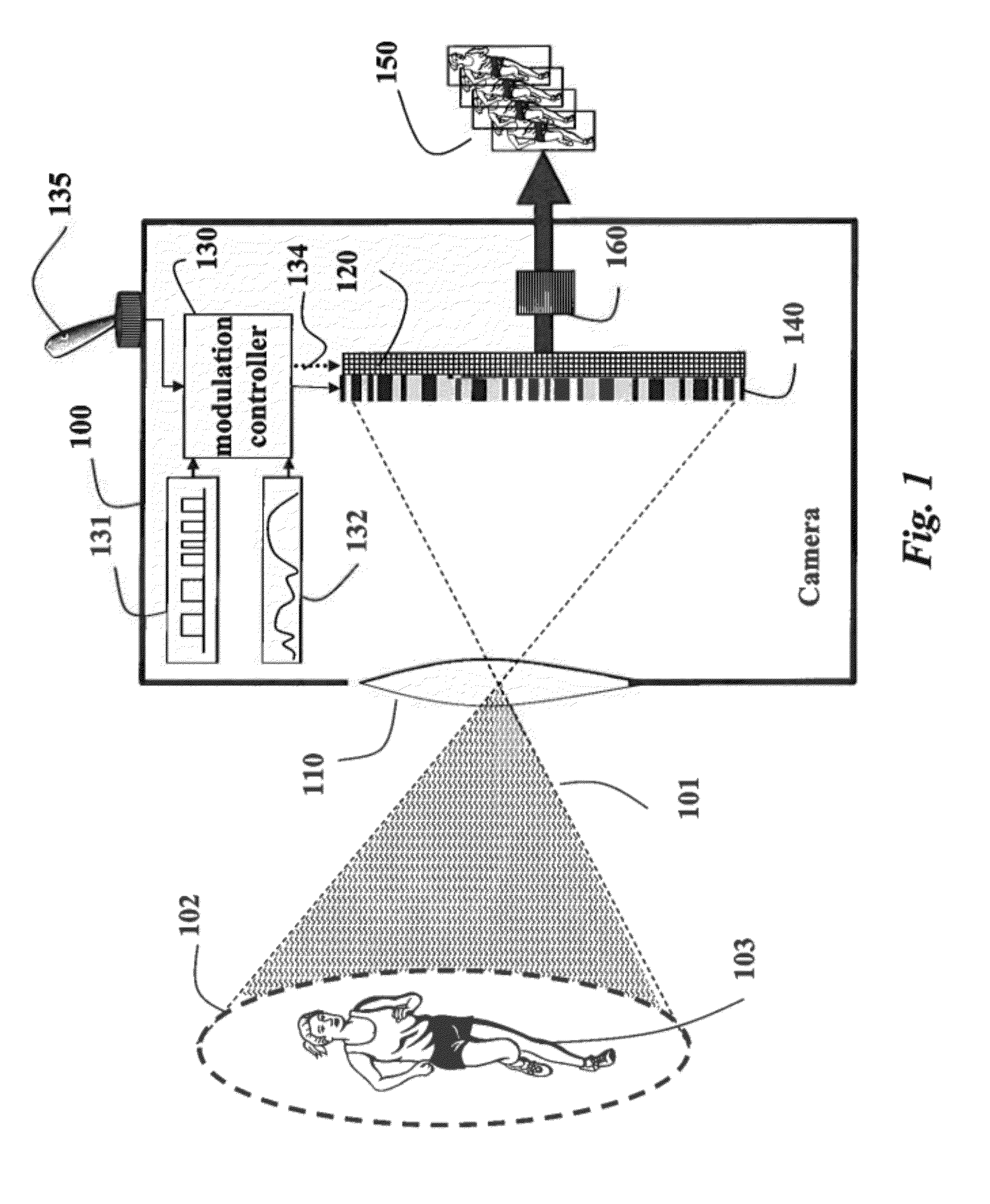

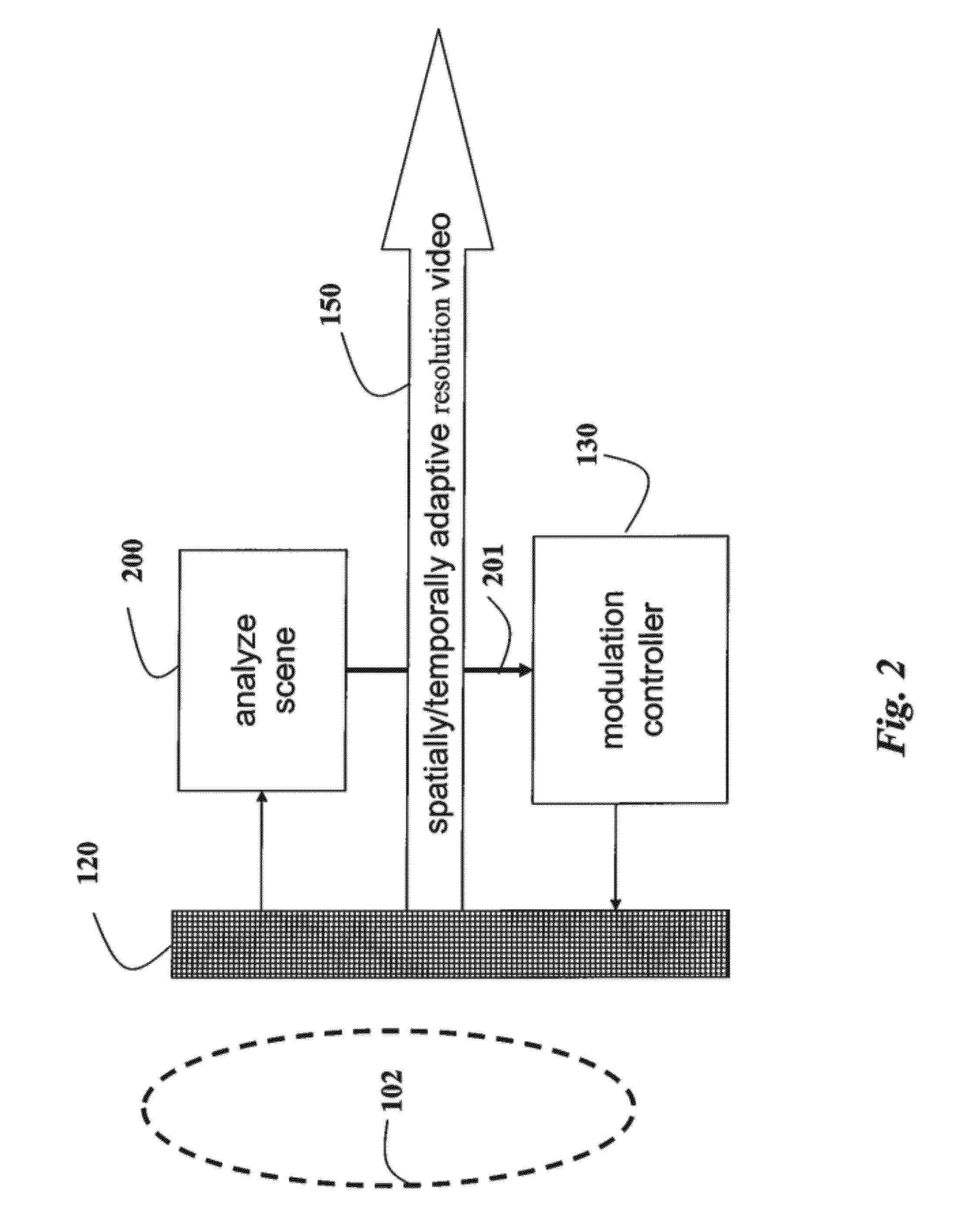

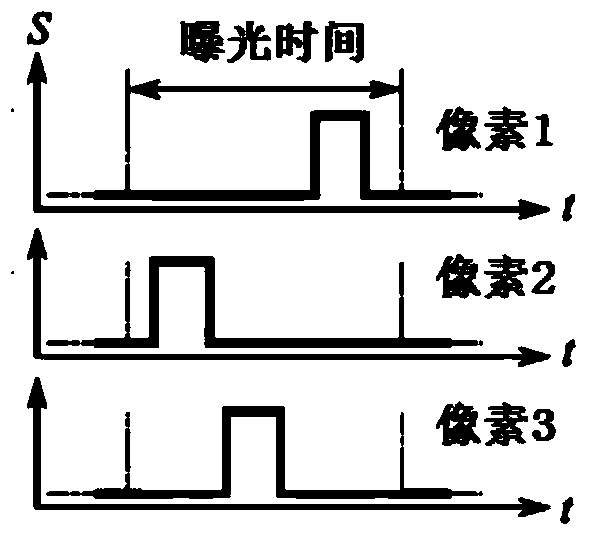

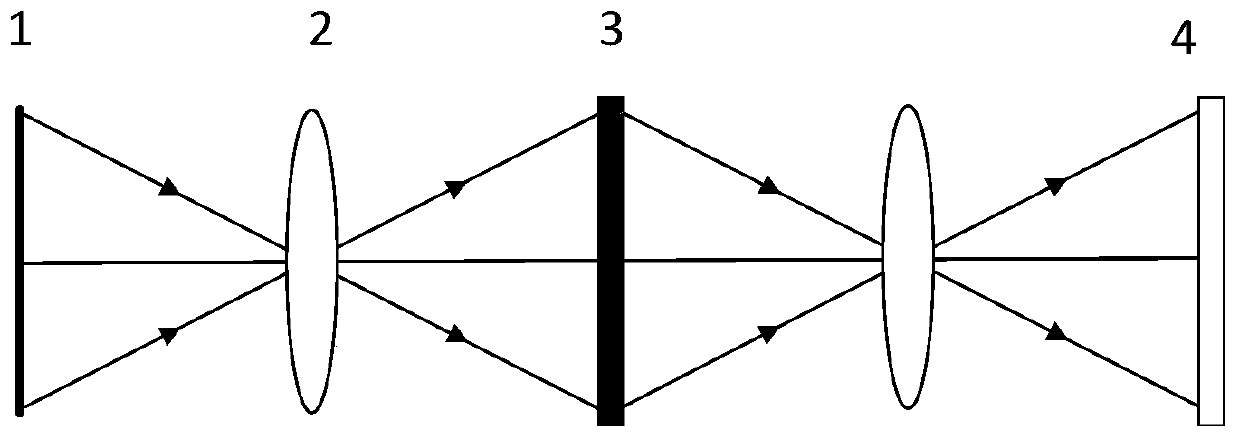

Programmable Camera and Video Reconstruction Method

Status: Inactive | Publication: US20120162457A1 | Tags: Mask pixel resolution; Television system details; Character and pattern recognition; Modulation function; Image resolution

A camera for acquiring a sequence of frames of a scene as a video includes a sensor with an array of sensor pixels. Individual sensor pixels are modulated by corresponding modulation functions while each frame of the video is acquired. The modulation can be performed by a transmissive or reflective mask arranged in the optical path between the scene and the sensor. The frames can be reconstructed to have a frame rate and spatial resolution substantially higher than the native frame rate and spatial resolution of the camera.

Owner:MITSUBISHI ELECTRIC RES LAB INC
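Per-pixel modulation is easiest to see through its forward model: each captured pixel integrates the high-speed scene only during the sub-intervals its code leaves open. This sketch assumes binary (open/closed) codes and discrete sub-frames; the patent's actual modulation functions are not specified here, and recovering the sub-frames from the coded frame would additionally need a prior-based (e.g. sparsity) solver, which is not shown.

```python
import random
from typing import List

def coded_exposure_frame(subframes: List[List[float]],
                         codes: List[List[int]]) -> List[float]:
    """Simulate one captured frame under per-pixel temporal modulation.

    subframes[t][p]: scene radiance at high-speed time step t, pixel p.
    codes[p][t]:     hypothetical binary modulation for pixel p (1 = open).
    Returns y[p] = sum over t of codes[p][t] * subframes[t][p].
    """
    return [
        sum(codes[p][t] * subframes[t][p] for t in range(len(subframes)))
        for p in range(len(codes))
    ]

def random_codes(num_pixels: int, num_steps: int, seed: int = 0) -> List[List[int]]:
    """Draw an independent random on/off pattern for every pixel."""
    rng = random.Random(seed)
    return [[rng.randint(0, 1) for _ in range(num_steps)] for _ in range(num_pixels)]
```

With three sub-frames `[[1, 2], [3, 4], [5, 6]]` and codes `[[1, 0, 1], [0, 1, 1]]`, pixel 0 sums sub-frames 0 and 2 and pixel 1 sums sub-frames 1 and 2, giving `[6, 10]`.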

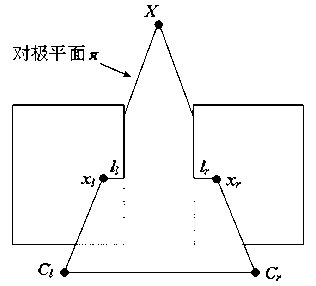

Quasi-three-dimensional reconstruction method for two-dimensional videos of static scenes

Status: Inactive | Publication: CN103236082A | Tags: Simplify the solution process; Fewer artifacts; Stereoscopic systems; 3D modelling; Parallax; Viewpoints

The invention discloses a quasi-three-dimensional reconstruction method for two-dimensional videos of static scenes, in the field of computer-vision three-dimensional video reconstruction. The method comprises the following steps: step A, extracting two-viewpoint image pairs from each frame of a two-dimensional video; step B, performing epipolar rectification on each two-viewpoint image pair; step C, using a globally optimized binocular stereo matching method to solve for the globally optimal disparity map of each rectified image pair; step D, applying the inverse rectification to the globally optimal disparity maps to obtain the disparity map corresponding to each frame of the three-dimensional video; step E, concatenating the disparity maps obtained in step D in video-frame order to form a disparity map sequence, and optimizing that sequence; and step F, combining the extracted video frames with their corresponding disparity maps, recovering virtual-viewpoint images by depth-image-based rendering (DIBR), and assembling those images into a virtual-viewpoint video. The method has low computational complexity and is simple and practical.

Owner:NANJING UNIV OF POSTS & TELECOMM
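Step F's depth-image-based rendering can be sketched on a single image row: each pixel is shifted horizontally by a fraction of its disparity to synthesize an intermediate view. This is a simplified forward-warping sketch under assumed conventions (rectified views, target position x - alpha * d(x), nearer pixels winning collisions, no hole filling), not the patent's exact renderer.

```python
from typing import List, Optional

def render_virtual_row(row: List[float], disparity: List[float],
                       alpha: float) -> List[Optional[float]]:
    """Forward-warp one image row to a virtual viewpoint (simplified DIBR).

    alpha in [0, 1] places the virtual camera between the source view (0)
    and the other view of the pair (1); this parameterization is assumed.
    When two pixels land on the same target, the one with the larger
    disparity (closer to the camera) wins. Disoccluded positions stay None.
    """
    width = len(row)
    out: List[Optional[float]] = [None] * width
    best_disp = [float("-inf")] * width
    for x in range(width):
        target = round(x - alpha * disparity[x])
        if 0 <= target < width and disparity[x] > best_disp[target]:
            out[target] = row[x]
            best_disp[target] = disparity[x]
    return out
```

With `alpha=0` the row is returned unchanged; with `alpha=1` the two near pixels of `[10, 20, 30, 40]` (disparity 2) overwrite the far background and leave two holes, giving `[30, 40, None, None]`.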

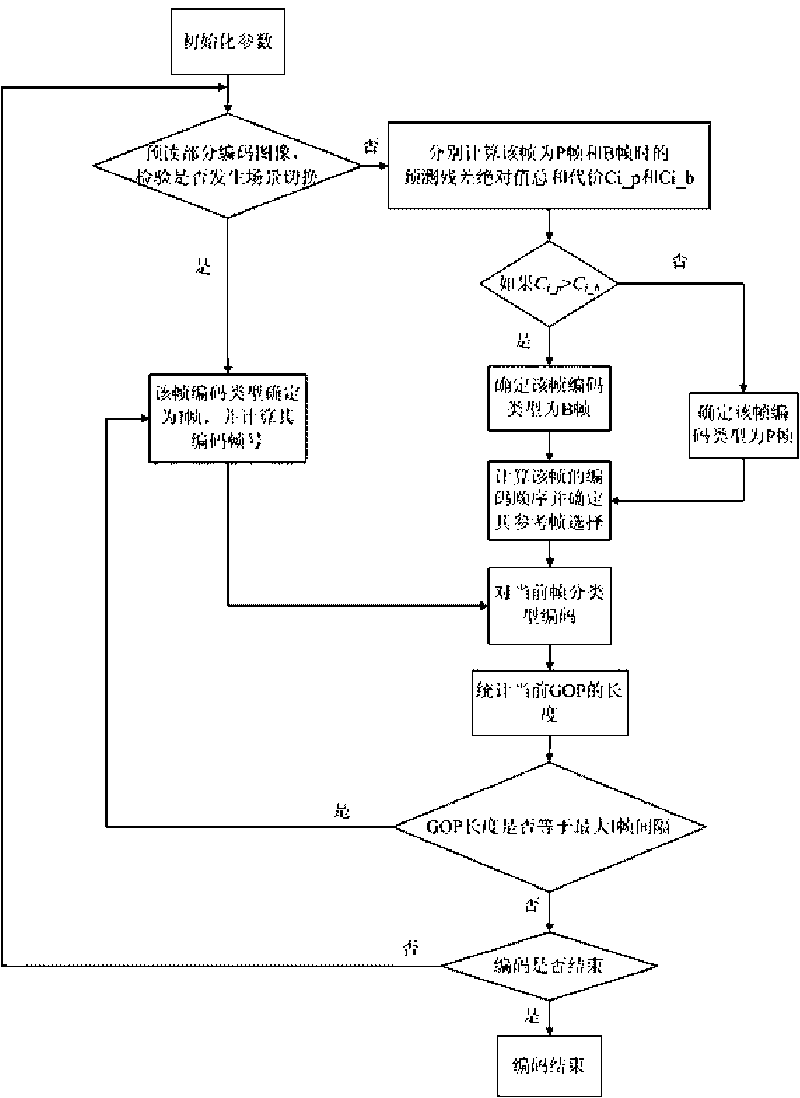

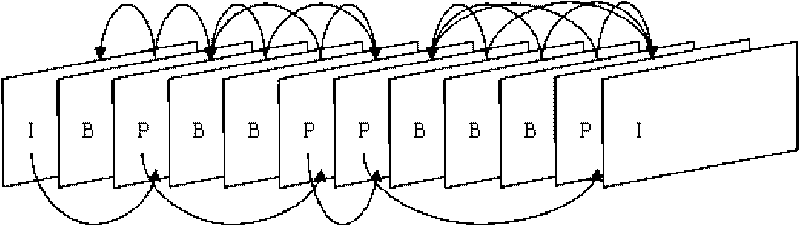

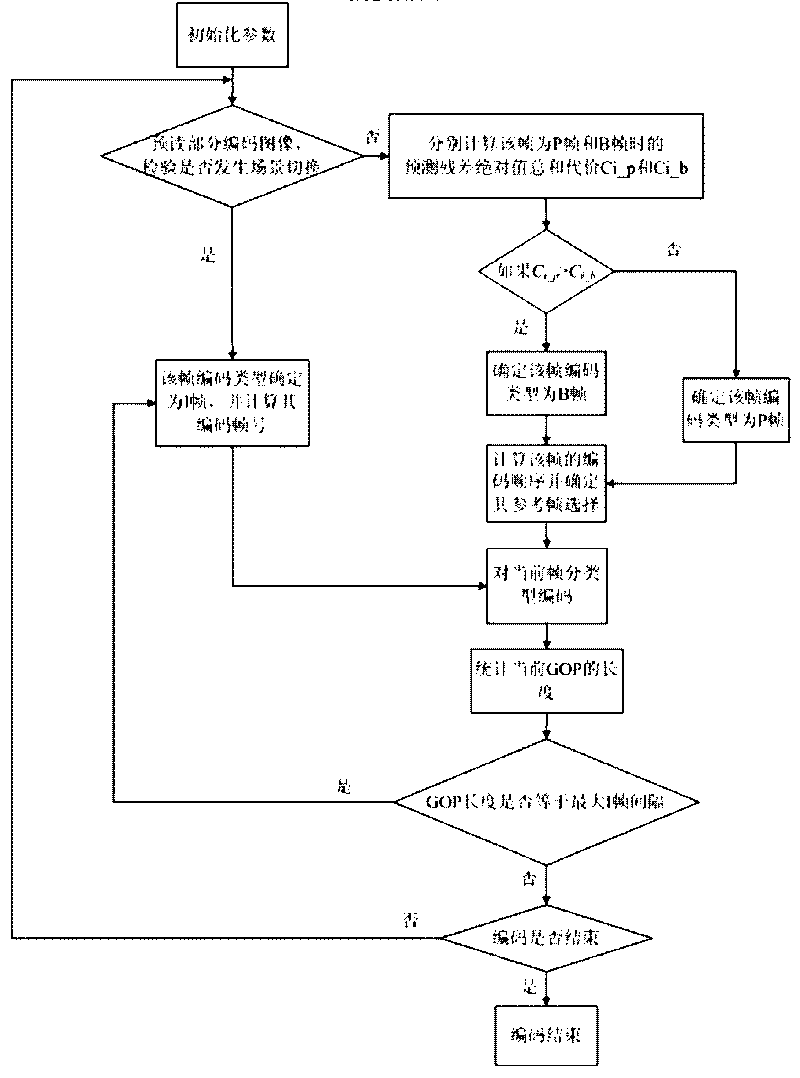

Adaptive frame structure-based AVS coding method

Status: Active | Publication: CN101720044A | Tags: Eliminate mosaic phenomenon; Improve stability; Television systems; Digital video signal modification; Pattern recognition; Video encoding

The invention relates to an adaptive frame structure-based AVS coding method, a method for processing video signals. The method reads the images to be coded frame by frame to detect scene switches, while analyzing the correlation and complexity of adjacent frames; it then determines the coding type of each image to be coded and adjusts the coding order of the images, which in turn determines the selection of reference frames. By taking the characteristics of the video sequence into account, the method maintains the compression efficiency of the video images, can greatly improve the subjective quality and stability of AVS-reconstructed video images, and eliminates the mosaic artifacts that AVS coding produces at scene switches or during violent motion. The method can be applied to video sequences containing one scene or multiple scenes.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD
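The scene-switch handling can be sketched as a frame-type decision driven by inter-frame difference. This is a simplification under stated assumptions: frames are flat lists of luma values, `scene_cut` and `gop_max` are hypothetical parameters, and only I/P decisions are made; the patent's complexity analysis and coding-order adjustment are not modelled.

```python
from typing import List

def mean_abs_diff(a: List[float], b: List[float]) -> float:
    """Mean absolute pixel difference between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def decide_frame_types(frames: List[List[float]], scene_cut: float,
                       gop_max: int = 12) -> List[str]:
    """Assign 'I' or 'P' to each frame.

    A frame is intra-coded when it is the first frame, when its difference
    from the previous frame exceeds `scene_cut` (treated as a scene switch),
    or when `gop_max` frames have passed since the last I-frame.
    """
    types: List[str] = []
    since_i = 0  # frames coded since the last I-frame
    for i, frame in enumerate(frames):
        scene_switch = i > 0 and mean_abs_diff(frame, frames[i - 1]) > scene_cut
        if i == 0 or scene_switch or since_i >= gop_max:
            types.append("I")  # no prediction across the cut
            since_i = 0
        else:
            types.append("P")  # predict from the previous frame
        since_i += 1
    return types
```

Avoiding prediction across a cut is what prevents the blocky "mosaic" residue the abstract mentions, since a P-frame referencing an unrelated scene would need a large, coarsely quantized residual.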

Lidar stereo fusion live action 3D model video reconstruction for six degrees of freedom 360° volumetric virtual reality video

A system for capturing live-action three-dimensional video is disclosed. The system includes pairs of stereo cameras and a LIDAR for generating stereo images and three-dimensional LIDAR data from which three-dimensional data may be derived. A depth-from-stereo algorithm may be used to generate the three-dimensional camera data for the three-dimensional space from the stereo images and may be combined with the three-dimensional LIDAR data taking precedence over the three-dimensional camera data to thereby generate three-dimensional data corresponding to the three-dimensional space.

Owner:HYPEVR

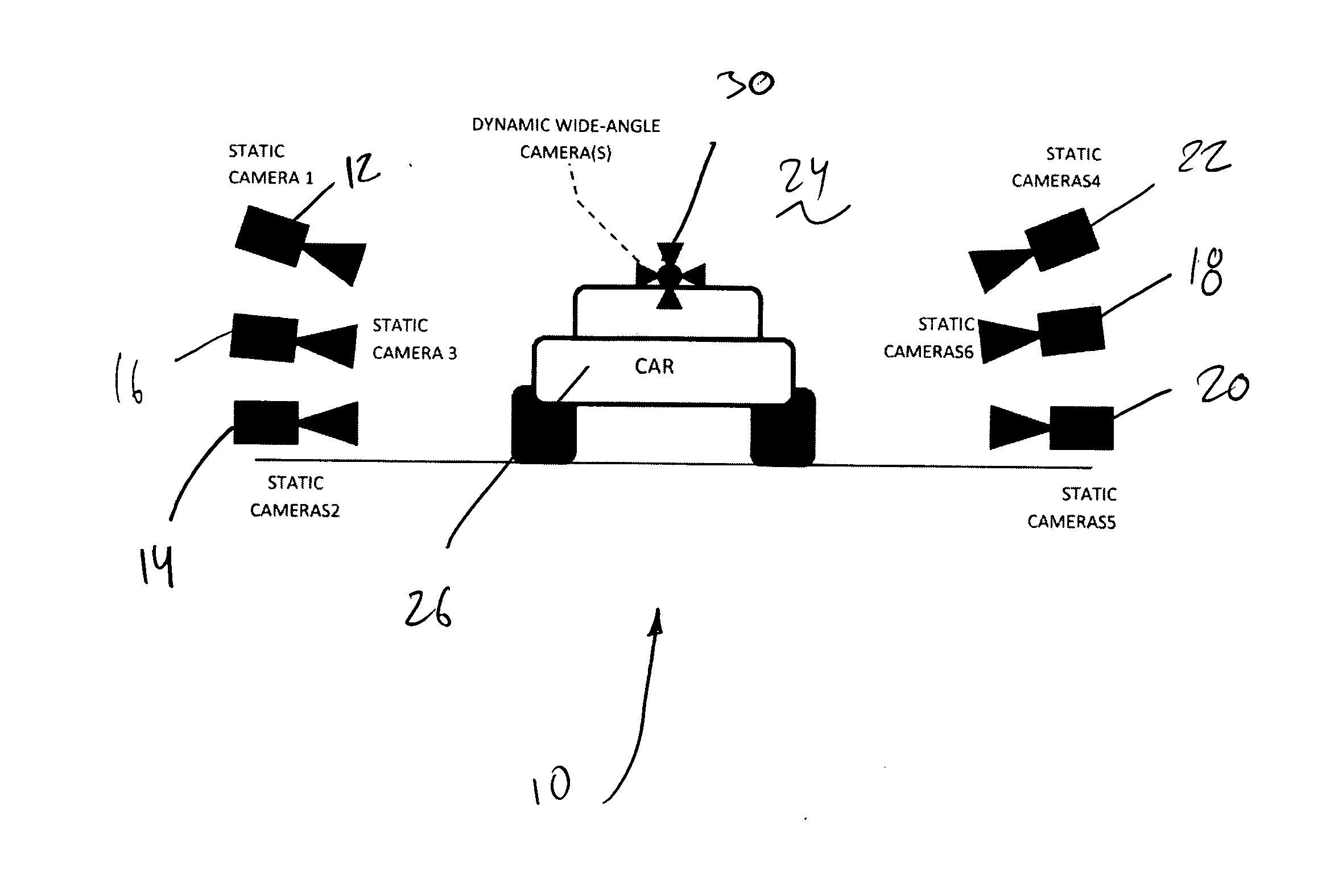

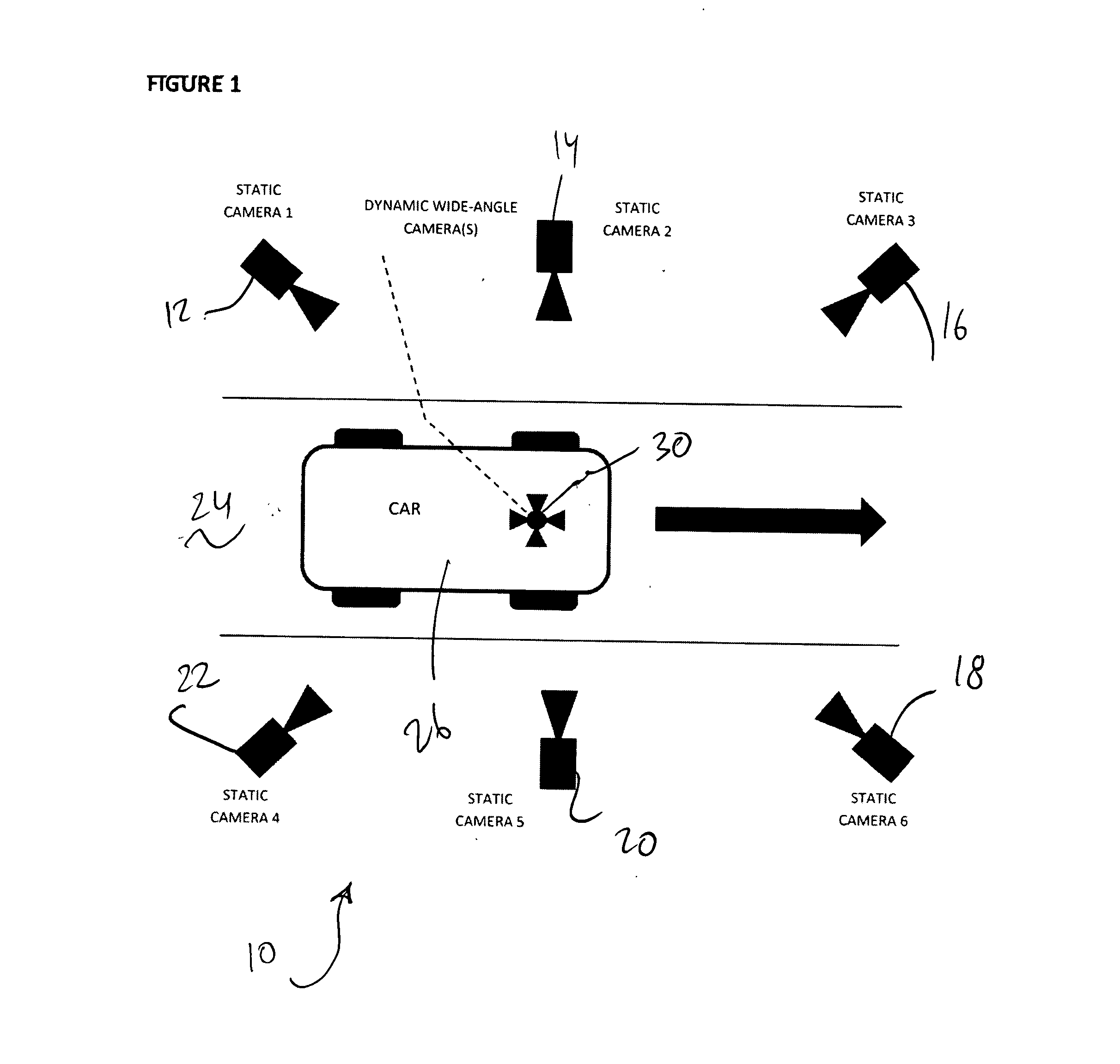

System and method for implementation of three dimensional (3D) technologies

Status: Inactive | Publication: US20140015832A1 | Tags: Eliminate errors; Time difference; Image analysis; Animation; Light reflection

The system and method of the present invention perform video reconstruction: they reconstruct animated three-dimensional scenes from a number of videos received from cameras that observe the scene from different positions and angles. Multiple video footages of the objects are taken from different positions. The footage is then filtered to remove noise (the result of light reflections and shadows). The system then restores the 3D model of the object and its texture, and also restores the positions of the dynamic cameras. Finally, the system maps the texture onto the 3D model.

Owner:KOZKO DMITRY +2
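Restoring a 3D model from several calibrated views rests on triangulation. As a minimal sketch (a standard technique, not necessarily the one the patent uses), the midpoint method below takes two camera centres and viewing-ray directions toward the same image feature and returns the point halfway between the rays' closest points; calibration and feature matching are assumed to be done already.

```python
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]

def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def triangulate_midpoint(c1: Vec, d1: Vec, c2: Vec, d2: Vec) -> Vec:
    """Midpoint triangulation of two viewing rays c + s * d."""
    w0 = [p - q for p, q in zip(c1, c2)]
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; depth is undetermined")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return tuple((u + v) / 2 for u, v in zip(p1, p2))
```

When the rays truly intersect (noise-free matches) the midpoint is the intersection itself; with noisy matches it splits the residual gap evenly between the two cameras.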

Fluid-solid interaction simulation method based on video reconstruction and SPH model

Status: Inactive | Publication: CN106446425A | Tags: Overcome limitations; Real fluid-solid coupling simulation effect; Design optimisation/simulation; Special data processing applications; Simulation; Surface level

The invention discloses a fluid-solid interaction simulation method based on video reconstruction and an SPH (smoothed particle hydrodynamics) model. The method includes the following steps: 1) using a shape-from-shading method to quickly reconstruct the geometry of the fluid surface in the video, obtaining a surface height field for each input frame; 2) combining the height fields with the shallow water equations and computing the velocity field on the fluid surface by minimizing an energy function; 3) using the surface geometry as a boundary constraint and discretizing it into a full three-dimensional volume to obtain volume data; 4) importing the volume data reconstructed from the video into an SPH simulation scene as its initial condition, where it interacts with other virtual objects in the scene. The method achieves high-accuracy data reconstruction, retains fluid surface details, and performs two-way interaction simulation between the reconstructed data and a physical simulation model, producing fluid animation closer to real conditions with low algorithmic complexity; the inventors describe it as highly innovative compared with related algorithms.

Owner:EAST CHINA NORMAL UNIV
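Step 3 turns the reconstructed surface into volume data that step 4 uses as the SPH initial condition. A minimal sketch, assuming a regular grid whose cell size equals the particle spacing (a simplification not stated in the abstract), fills each column of fluid below the reconstructed height with particles:

```python
from typing import List, Tuple

def seed_particles(height: List[List[float]],
                   spacing: float) -> List[Tuple[float, float, float]]:
    """Fill the fluid volume under a reconstructed height field with particles.

    height[i][j] is the surface height at grid cell (i, j), e.g. from the
    per-frame shape-from-shading step. Particles sit at cell centres in x/y
    and at z = spacing/2, 3*spacing/2, ... while z stays below the surface.
    """
    particles = []
    for i, row in enumerate(height):
        for j, h in enumerate(row):
            z = spacing / 2
            while z < h:
                particles.append((i * spacing, j * spacing, z))
                z += spacing
    return particles
```

The resulting list is only positions; an SPH solver would also need initial velocities, which the patent obtains from the velocity field computed in step 2.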

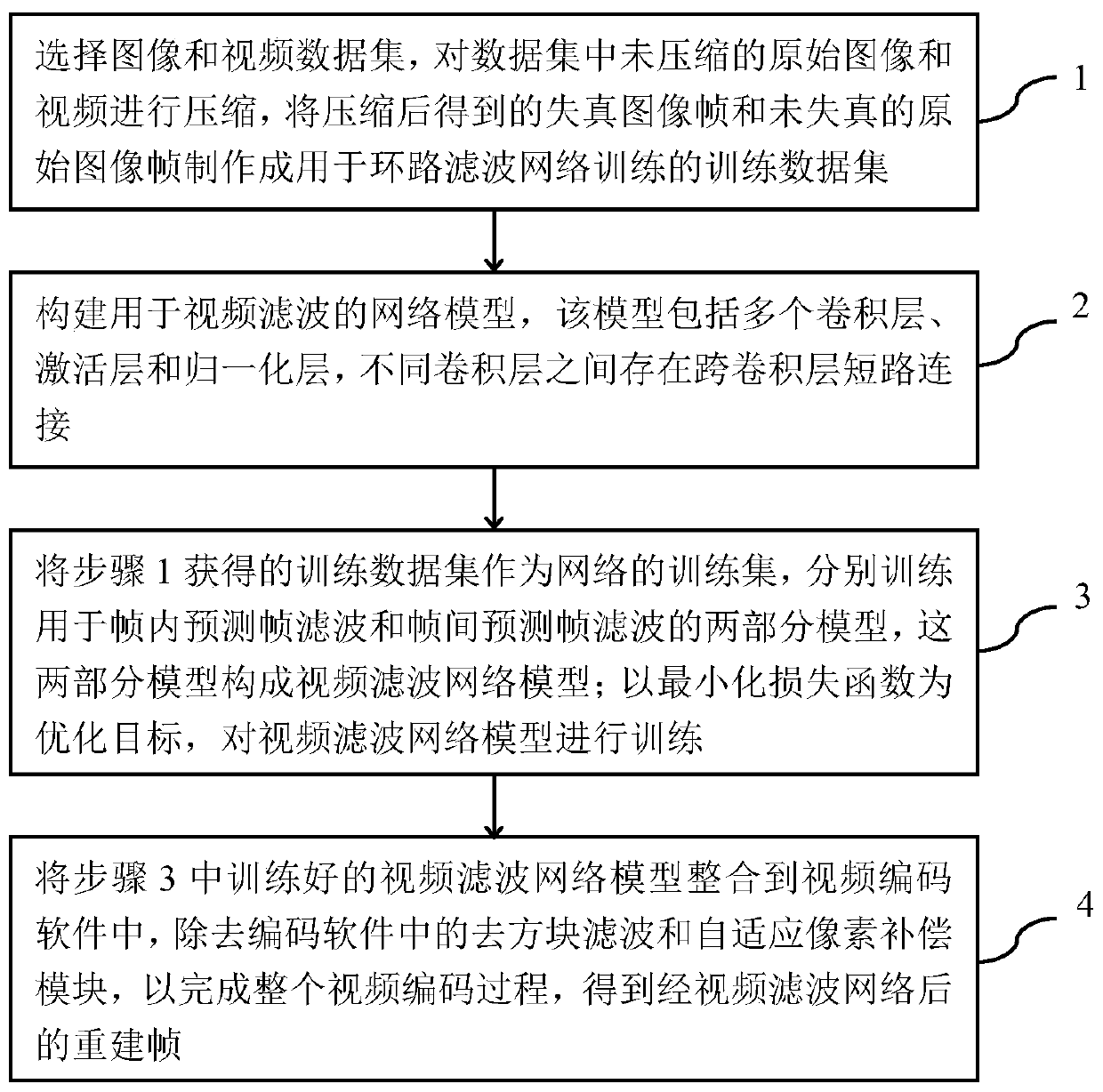

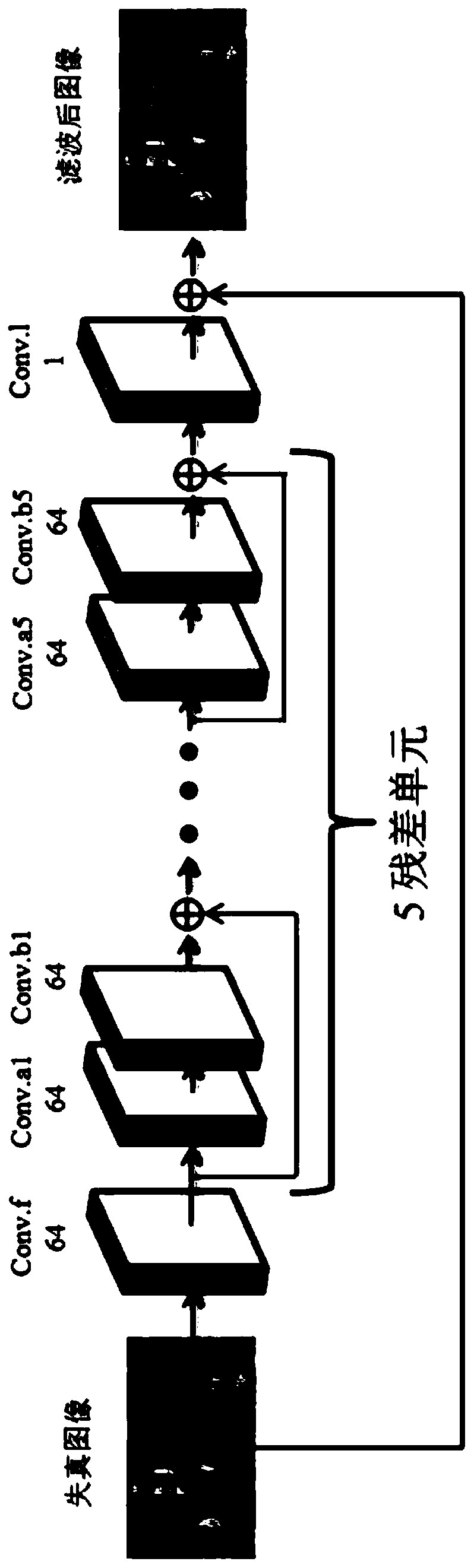

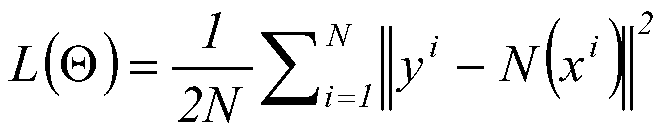

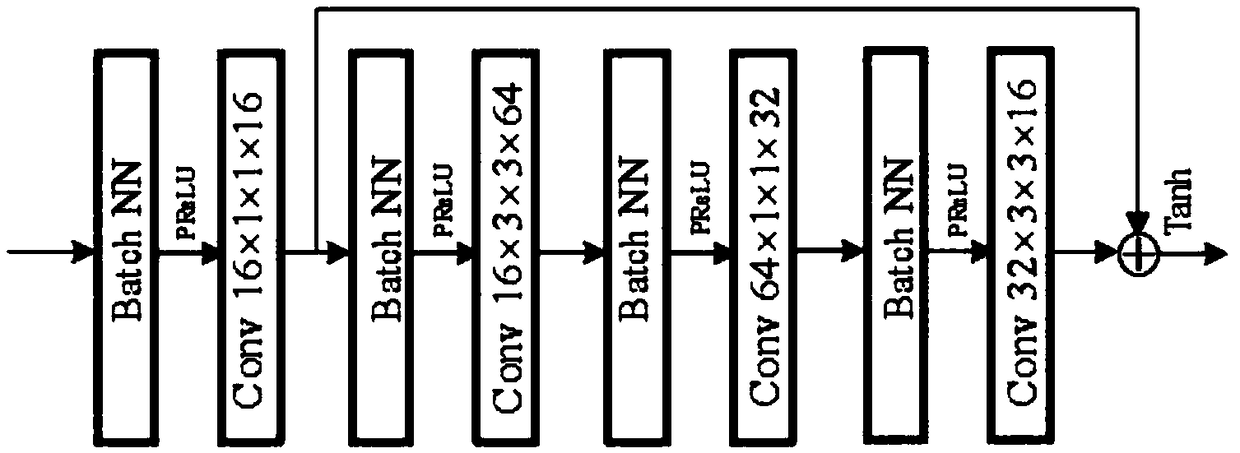

Video loop filter based on deep convolutional network

Status: Inactive | Publication: CN110351568A | Tags: Improve image quality; Improve accuracy; Digital video signal modification; Data set; Imaging quality

The invention discloses a video loop filtering method based on a deep convolutional network. The method comprises the following steps: step 1, building a training data set for the loop filtering network; step 2, constructing a network model for video filtering; step 3, using the training data set from step 1 to train two models, one for filtering intra-predicted frames and one for filtering inter-predicted frames, which together form the video filtering network model, trained with minimization of a loss function as the optimization objective; and step 4, integrating the video filtering network model trained in step 3 into video encoding software to complete the video encoding process and obtain reconstructed frames that have passed through the video filtering network. Compared with traditional filtering methods, the method improves the image quality of reconstructed video frames and the accuracy of inter-frame prediction, so the filtered frames have higher reconstruction quality and video coding efficiency is greatly improved.

Owner:TIANJIN UNIV
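The training setup (one model per prediction type, fitted on pairs of reconstructed and original frames by minimizing a loss) can be illustrated without a deep-learning framework. As a lightweight stand-in for the CNN, the sketch below fits a linear 3-tap filter per frame type by least squares on one-dimensional rows. This is a swapped-in, Wiener-style technique chosen for illustration, not the patent's network.

```python
from typing import Dict, List, Tuple

Row = List[float]

def _taps(row: Row, x: int) -> List[float]:
    """3-tap neighbourhood of position x with edge clamping."""
    n = len(row)
    return [row[max(x - 1, 0)], row[x], row[min(x + 1, n - 1)]]

def _solve(m: List[List[float]], v: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small linear system."""
    n = len(v)
    aug = [m[i][:] + [v[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):
        sol[r] = (aug[r][n] - sum(aug[r][k] * sol[k] for k in range(r + 1, n))) / aug[r][r]
    return sol

def train_filter(pairs: List[Tuple[Row, Row]]) -> List[float]:
    """Least-squares 3-tap filter mapping reconstructed rows to original rows."""
    ata = [[0.0] * 3 for _ in range(3)]  # normal equations: (A^T A) w = A^T b
    atb = [0.0] * 3
    for recon, orig in pairs:
        for x in range(len(recon)):
            f = _taps(recon, x)
            for i in range(3):
                atb[i] += f[i] * orig[x]
                for j in range(3):
                    ata[i][j] += f[i] * f[j]
    return _solve(ata, atb)

def apply_filter(row: Row, w: List[float]) -> Row:
    return [sum(wi * fi for wi, fi in zip(w, _taps(row, x))) for x in range(len(row))]

def train_per_frame_type(data: Dict[str, List[Tuple[Row, Row]]]) -> Dict[str, List[float]]:
    """One filter per prediction type, e.g. keys 'intra' and 'inter'."""
    return {kind: train_filter(pairs) for kind, pairs in data.items()}
```

Because the identity filter `[0, 1, 0]` is one of the candidates, the fitted filter can never do worse than no filtering on its training data; a CNN generalizes the same idea to nonlinear, content-adaptive corrections.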

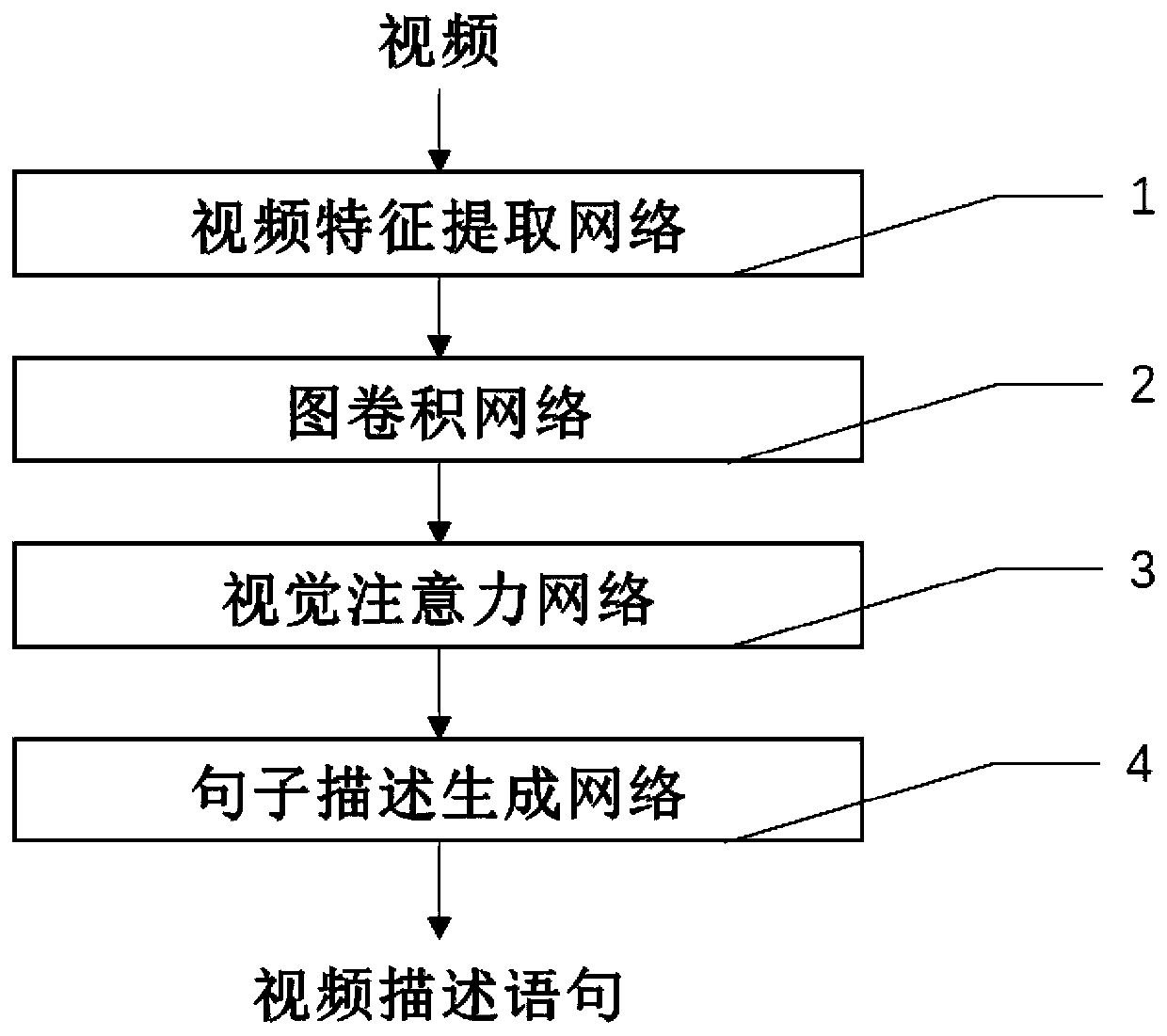

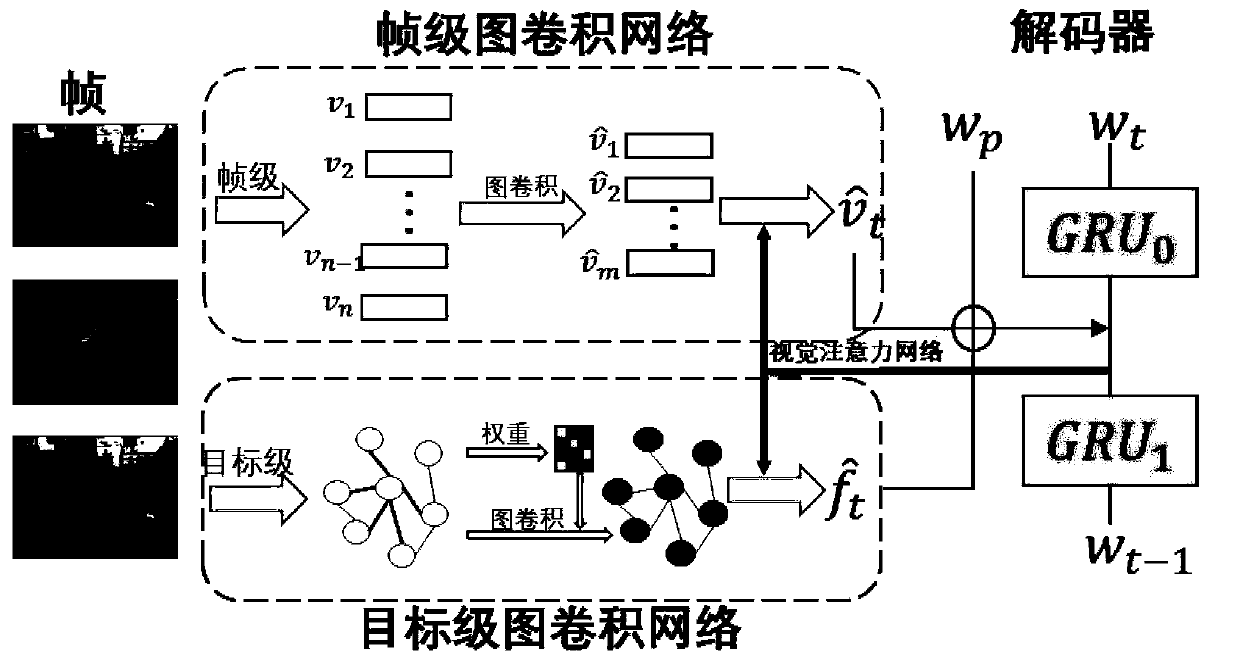

Video description generation system based on graph convolution network

Status: Pending | Publication: CN111488807A | Tags: Generate accurately; Improve experience; Character and pattern recognition; Neural architectures; Feature extraction; Video reconstruction

The invention belongs to the technical field of cross-media generation, and particularly relates to a video description generation system based on a graph convolutional network. The system comprises a video feature extraction network, a graph convolutional network, a visual attention network, and a sentence description generation network. The video feature extraction network samples the videos to obtain video features and outputs them to the graph convolutional network. The graph convolutional network reconstructs the video features according to their semantic relations and feeds them to the sentence description generation network, a recurrent neural network, which generates sentences from the reconstructed video features. Graph convolution is used to reconstruct the features of both the frame-level sequence and the object-level sequence in the videos, so the temporal and semantic information in the videos is fully used when descriptions are generated, making them more accurate. The invention is significant for video analysis and multimodal information research, can improve a model's understanding of visual information in video, and has wide application value.

Owner:FUDAN UNIV
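The graph-convolution step can be illustrated with the widely used propagation rule H' = ReLU(D^-1/2 (A + I) D^-1/2 H W) from Kipf and Welling's GCN. Whether the patent uses exactly this normalization is not stated; here the nodes stand for frame-level or object-level features, `adj` for their assumed semantic relations, and `weight` for a learned projection.

```python
import math
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def gcn_layer(adj: Matrix, feats: Matrix, weight: Matrix) -> Matrix:
    """One graph-convolution layer: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(adj)
    # Add self-loops so each node keeps part of its own feature.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # degree including the self-loop
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    out = matmul(matmul(norm, feats), weight)
    return [[max(0.0, v) for v in row] for row in out]  # ReLU
```

For two connected nodes with features `[[1.0], [3.0]]` and an identity weight, each node receives the symmetric-normalized average of both, `[[2.0], [2.0]]`; this neighbour mixing is what lets related frames and objects refine each other's features.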

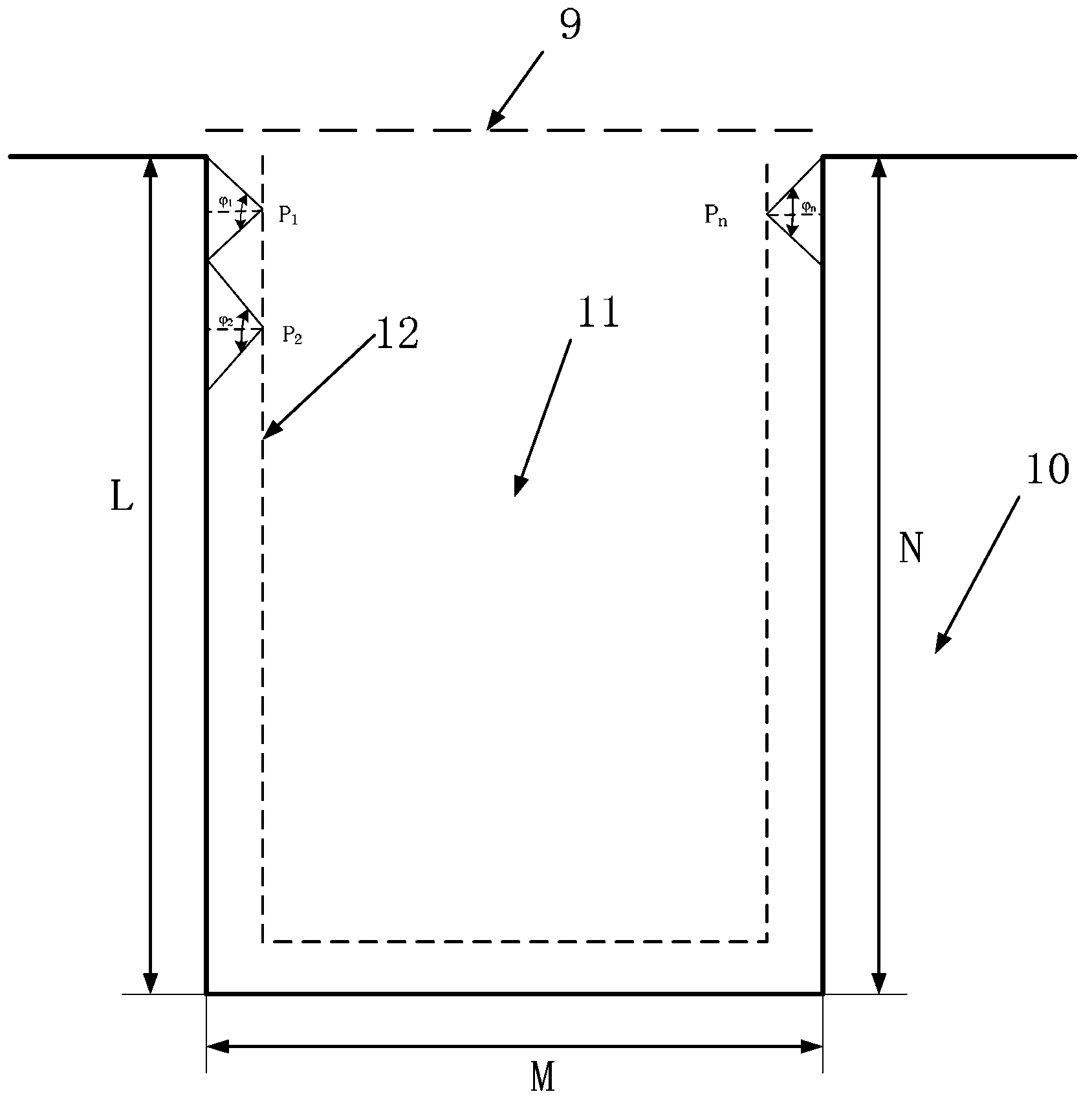

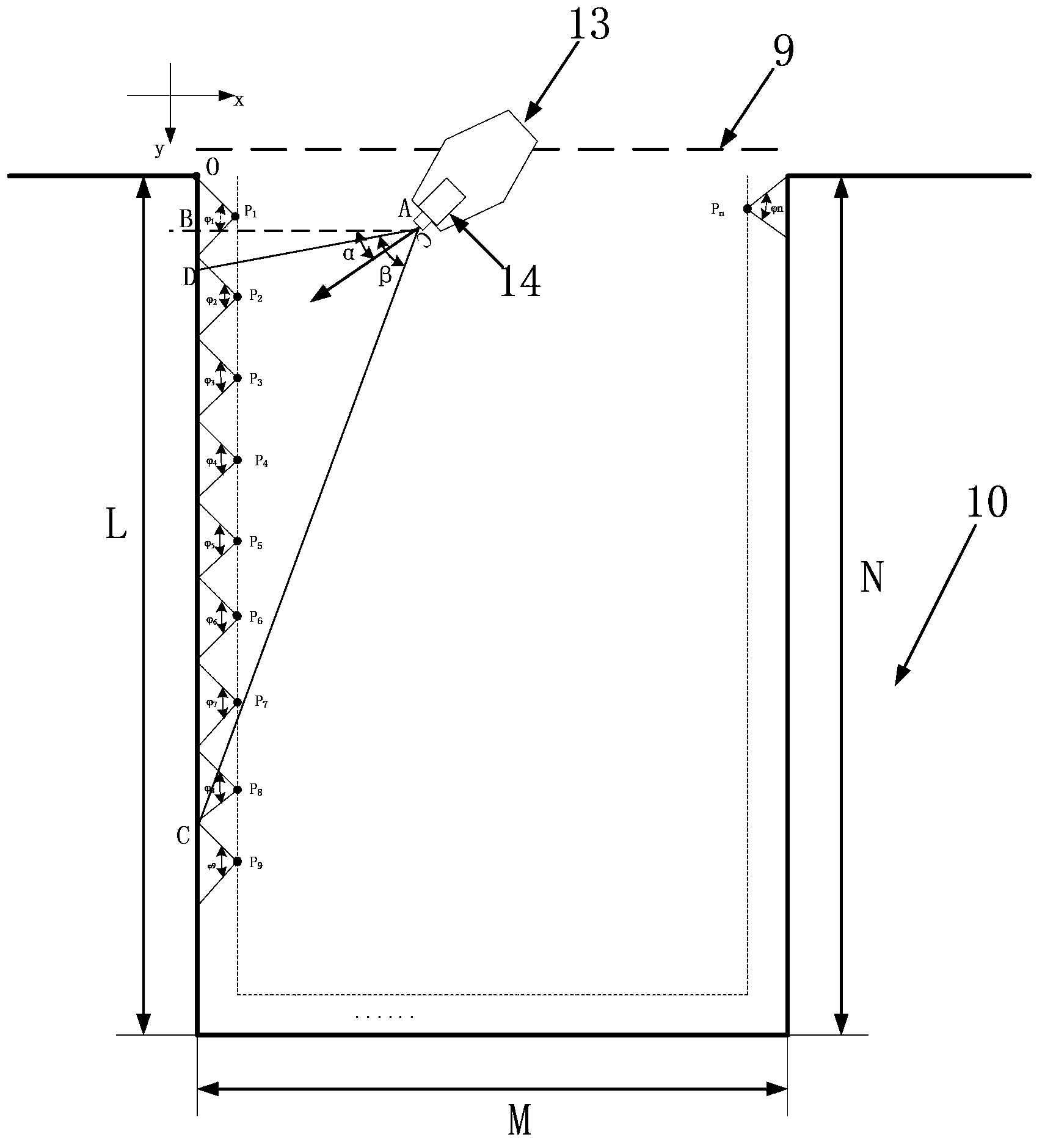

Navigation system for ships and warships entering and leaving port in night fog, and construction method thereof

Status: Inactive | Publication: CN103398710A | Tags: Save resources; Improve computing efficiency; Navigation instruments; Wireless video; Video reconstruction

The invention discloses a navigation system for ships and warships entering and leaving port in night fog, and a construction method thereof. The navigation system includes a port-perimeter coastline panorama system, a color video reconstruction system for night-fog conditions, a wireless request signal sending module, a wireless video signal sending module, a wireless video signal receiving module, an infrared camera imaging system, a video display module, and a differential global positioning system (GPS); the construction method covers the construction of the port-perimeter coastline panorama system and of the color video reconstruction system for night-fog conditions. Because panoramas of the port's surrounding coastline are stored in advance on ships and warships that frequently call at the port, these vessels can still see the detailed conditions of the port coastline without instructions from an onshore control center, and can enter and leave the port on foggy nights. The system and method make effective use of the vessels' differential GPS information to precisely determine their heading, speed, position, and other information, and can send them the maps with the best field of view.

Owner:DALIAN MARITIME UNIVERSITY

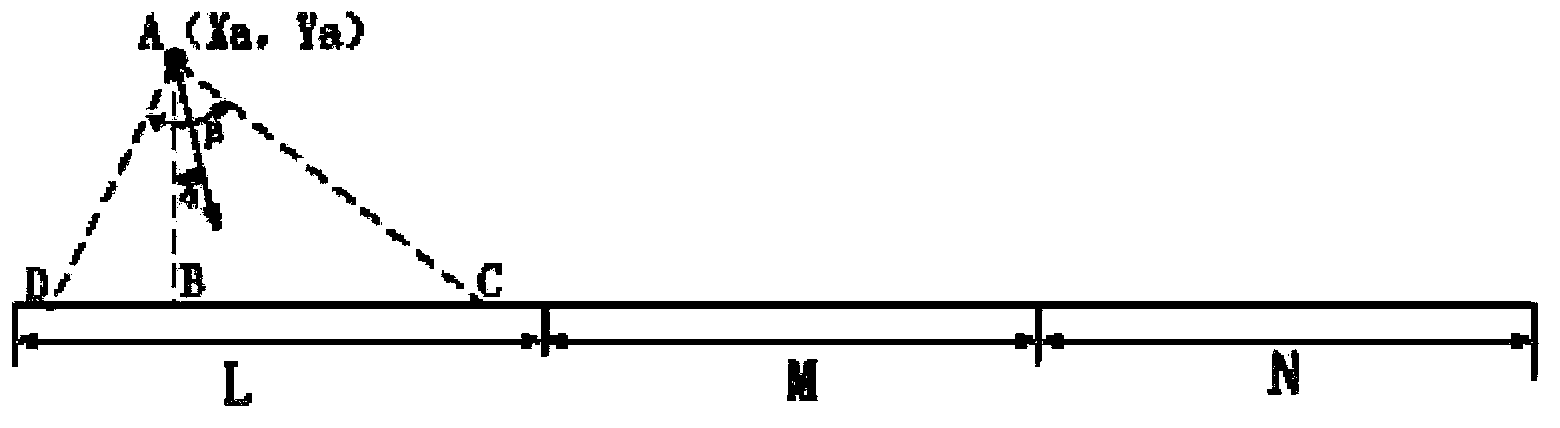

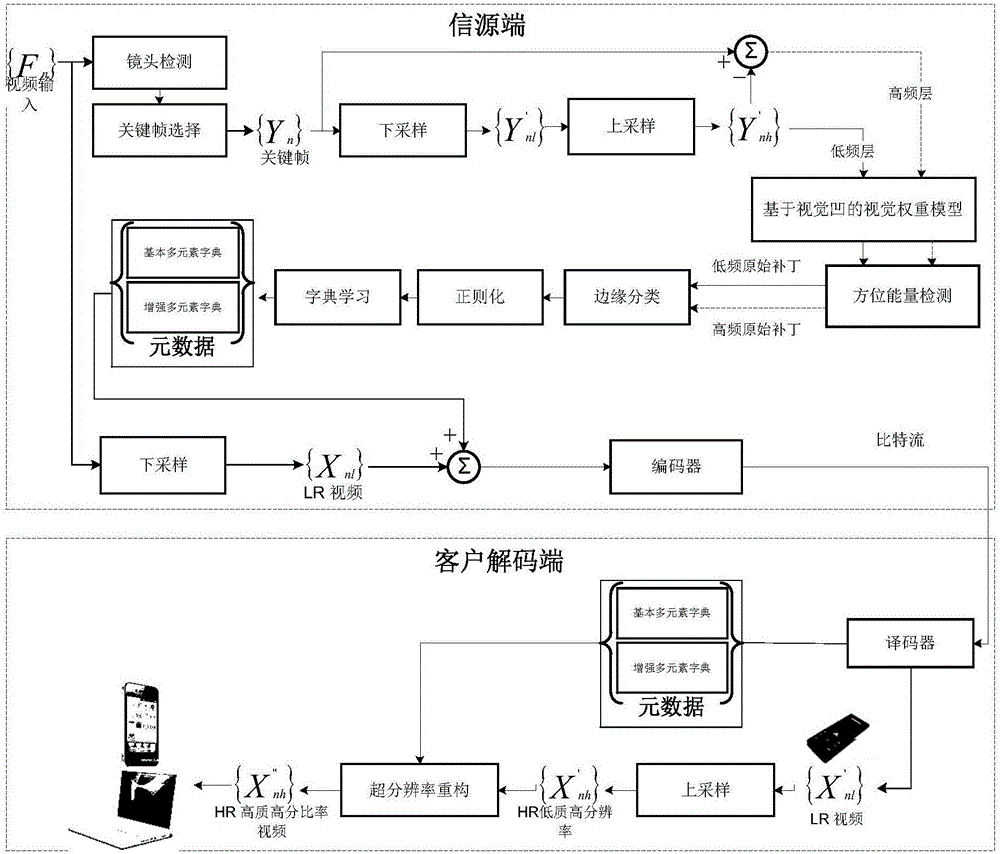

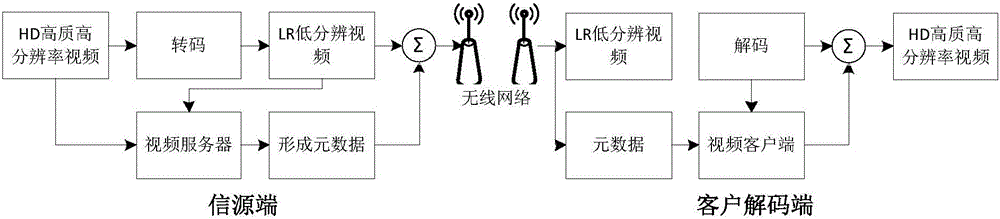

Nonuniform sparse sampling video super resolution method

Status: Inactive | Publication: CN106097251A | Tags: Implementing Differential Sparse Sampling; Reduce the number of atoms; Image enhancement; Geometric image transformation; Image resolution; Terminal equipment

The invention discloses a nonuniform sparse sampling video super-resolution method in the technical field of video super-resolution. The method involves key techniques including shot detection, key frame extraction, blurring and downsampling of the original images, building a nonuniform-sampling vision model, sparse dictionary construction, and video reconstruction. A nonuniform sparse sampling method based on foveated vision is used to sample the video sequence with differential sparsity, greatly reducing the number of dictionary atoms. A dual dictionary of high and low resolutions (a high- and low-resolution reference copy) is generated in real time, or computed in advance according to the screen resolution of the mobile terminal carried as metadata. A hardware-supported nonlinear mipmap interpolation method is used to simulate and generate foveated images in real time, giving results similar to those of the Gaussian pyramid method at lower computational overhead.

Owner:SHENZHEN INSTITUTE OF INFORMATION TECHNOLOGY
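Foveated sampling keeps full detail near the fixation point and progressively coarser detail in the periphery, which maps naturally onto mipmap levels. The sketch assigns each pixel a mipmap level from its distance to an assumed fixation point `(fx, fy)`; the log2 fall-off and `fovea_radius` are illustrative choices, not the patent's vision model.

```python
import math
from typing import List

def foveation_levels(width: int, height: int, fx: float, fy: float,
                     fovea_radius: float, max_level: int) -> List[List[int]]:
    """Per-pixel mipmap level that grows with distance from the fixation point.

    Level k stands for sampling at 1/2**k of full resolution, so detail is
    kept near (fx, fy) and discarded progressively in the periphery.
    """
    levels = []
    for y in range(height):
        row = []
        for x in range(width):
            eccentricity = math.hypot(x - fx, y - fy)
            level = int(math.log2(1.0 + eccentricity / fovea_radius))
            row.append(min(level, max_level))
        levels.append(row)
    return levels
```

Sampling each pixel from the mipmap level given here (with hardware trilinear filtering between levels) approximates a Gaussian-pyramid foveation at the cost of a texture lookup.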

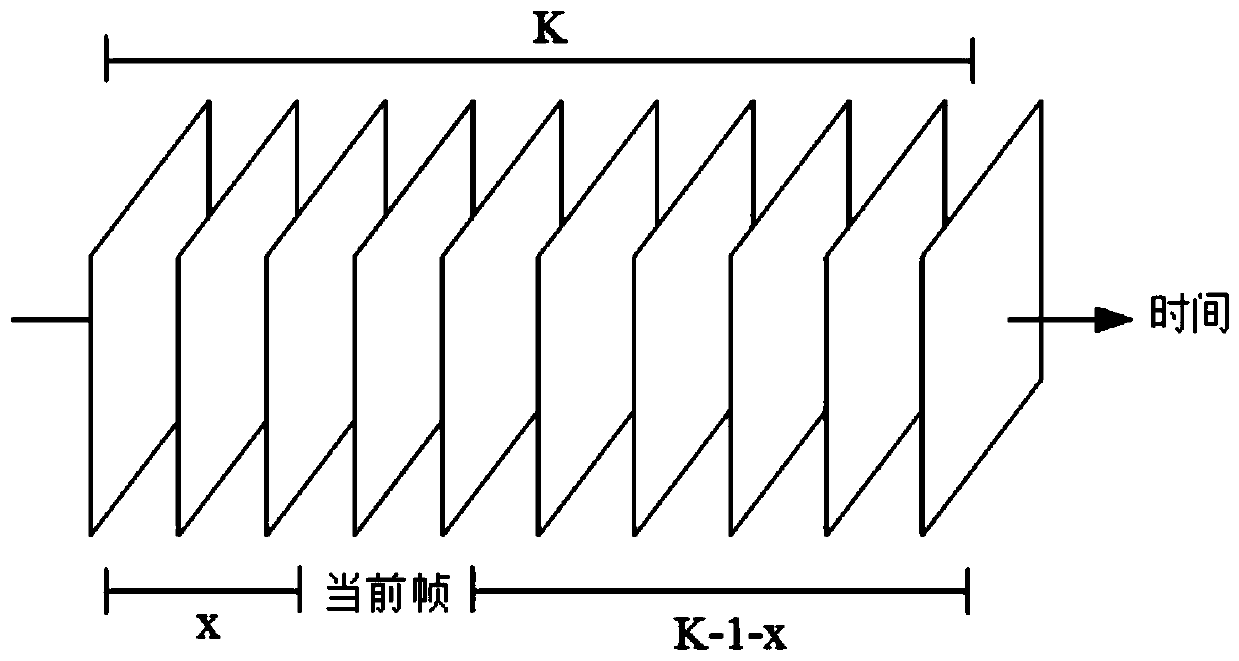

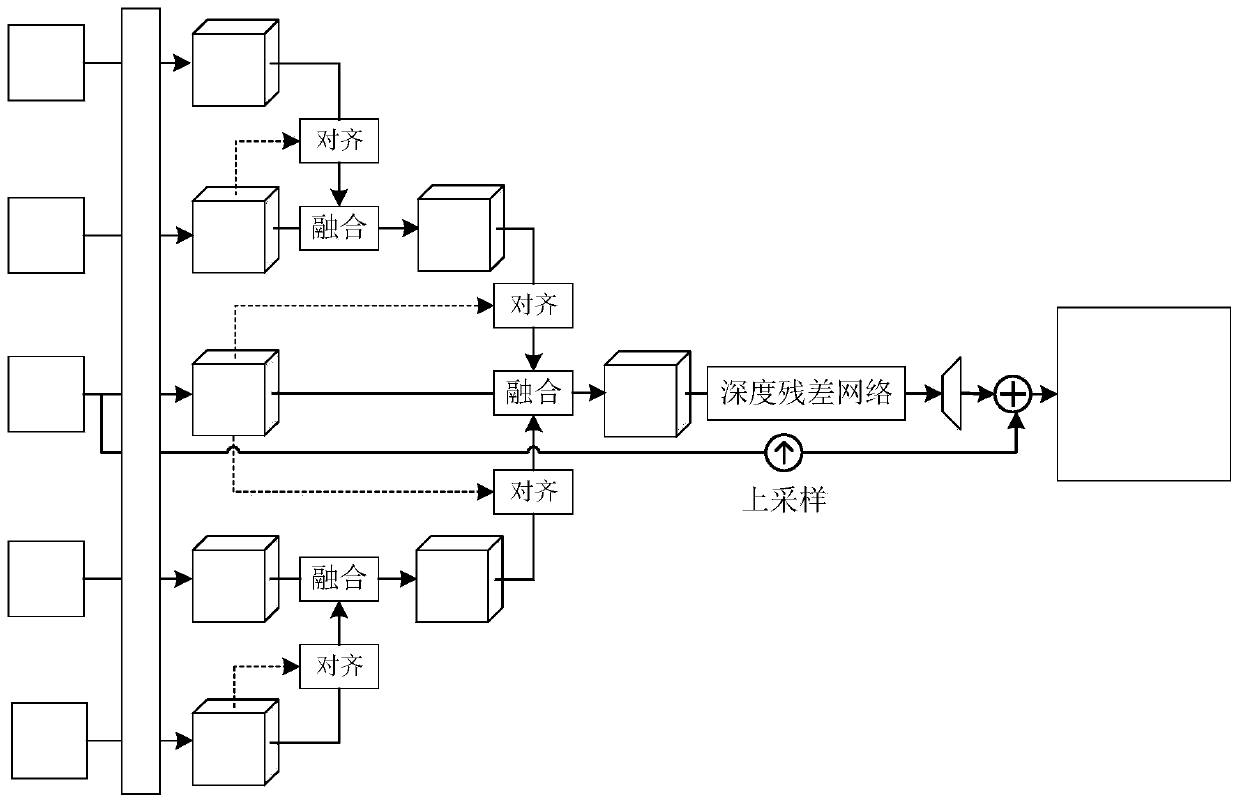

Variable-length input super-resolution video reconstruction method based on deep learning

Status: Active | Publication: CN111524068A | Tags: Improve reconstruction effect; Practical; Image enhancement; Image analysis; Feature extraction; Image resolution

The invention discloses a variable-length input super-resolution video reconstruction method based on deep learning. The method comprises the following steps: constructing training samples of random length to obtain a training set; establishing a super-resolution video reconstruction network model comprising a feature extractor, a gradual alignment-fusion module, a deep residual module, and a superposition module connected in sequence; training the network model with the training set to obtain a trained super-resolution video reconstruction network; and feeding the videos to be processed into the trained network in sequence to obtain the corresponding super-resolution reconstructed videos. The method uses a gradual alignment-fusion mechanism that aligns and fuses frame by frame, with each alignment operating only on two adjacent frames. The model can therefore handle longer temporal relationships and use more adjacent video frames, so the input carries more scene information and the reconstruction quality improves.

Owner:CHANGAN UNIV

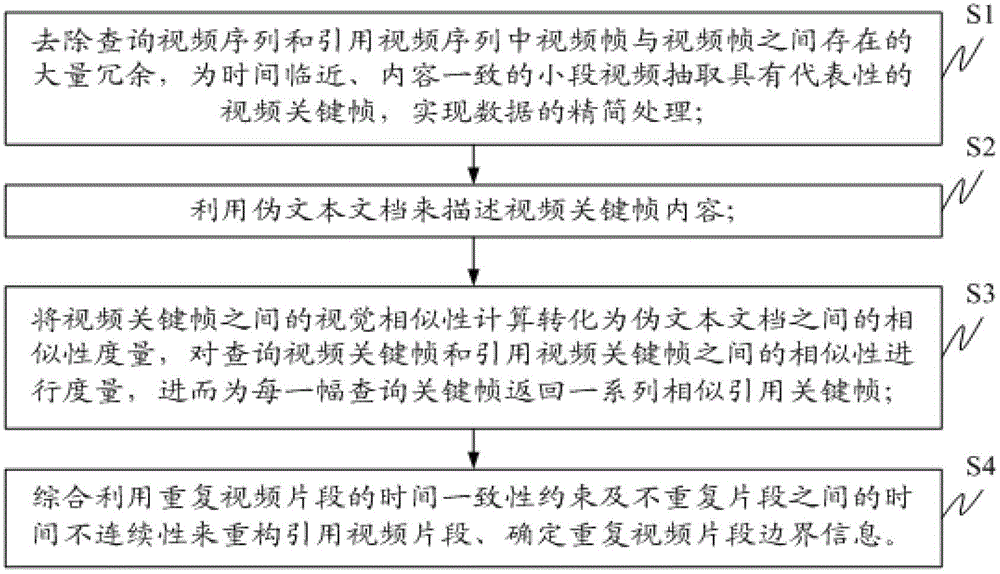

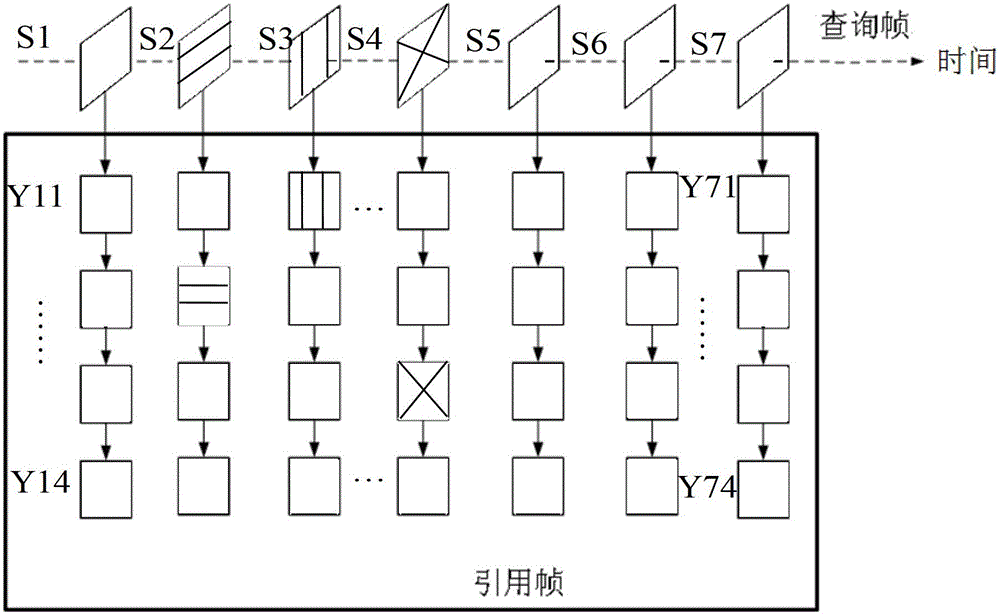

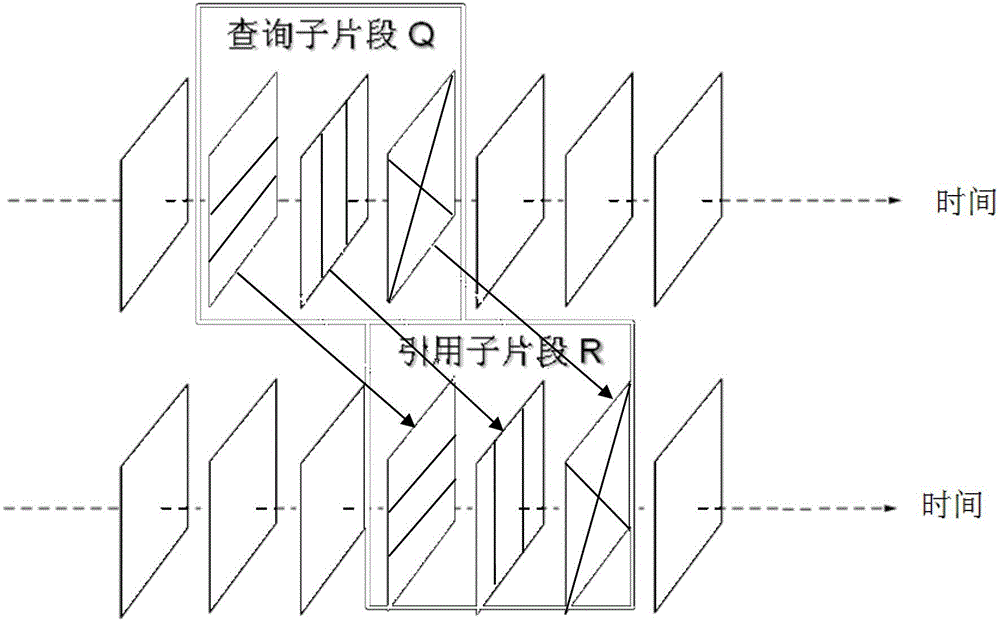

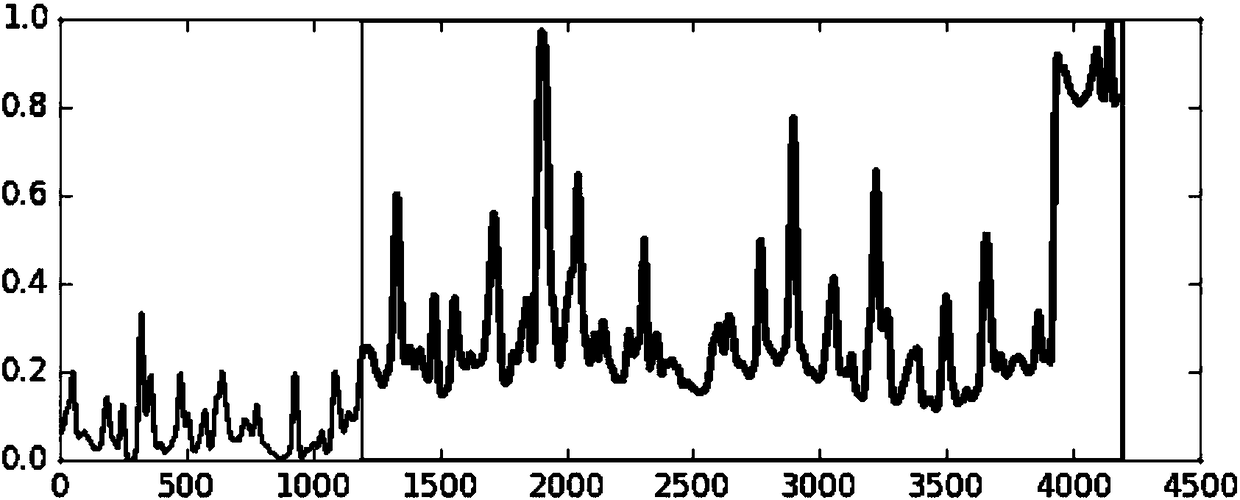

Method for locating repeated video segments based on video reconstruction

Status: Active | Publication: CN102750339A | Tags: Thin processing is achieved; Selective content distribution; Special data processing applications; Video reconstruction; Key frame

The invention discloses a method for locating repeated video segments based on video reconstruction. The method includes the following steps: removing the heavy redundancy between video frames in a query video sequence and a reference video sequence, and extracting representative key frames for short video segments that are close in time and consistent in content, thereby reducing the amount of data; describing the contents of the key frames with pseudo-text documents; converting the visual similarity computation between key frames into a similarity measurement between pseudo-text documents, measuring the similarity between query key frames and reference key frames, and returning a series of similar reference key frames for each query key frame; and jointly using the temporal-consistency constraint of repeated video segments and the temporal inconsistency between non-repeated segments to reconstruct the reference video segments and determine the boundaries of the repeated video segments. The method is applicable to media data mining and copyright protection.

Owner:BEIJING JIAOTONG UNIV
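The pseudo-text matching and temporal-consistency steps can be sketched with a bag-of-visual-words model: each key frame becomes a word-count document, documents are compared by cosine similarity, and matching pairs vote for a common temporal offset. The codebook, the 0.8 similarity cut-off and the offset-voting rule are assumptions for illustration.

```python
import math
from collections import Counter
from typing import List, Optional, Sequence, Tuple

def to_pseudo_text(descriptors: Sequence[Sequence[float]],
                   codebook: Sequence[Sequence[float]]) -> Counter:
    """Describe a key frame as a bag of 'visual words' (nearest codeword ids)."""
    words: Counter = Counter()
    for d in descriptors:
        nearest = min(range(len(codebook)),
                      key=lambda k: sum((a - b) ** 2 for a, b in zip(d, codebook[k])))
        words[nearest] += 1
    return words

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count documents."""
    dot = sum(count * b[w] for w, count in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def locate_repeat(query: List[Counter], ref: List[Counter],
                  min_sim: float = 0.8) -> Optional[Tuple[int, int, int]]:
    """Return (offset, first_ref_index, last_ref_index) of the repeated segment.

    Every similar (query, reference) pair votes for its temporal offset; the
    dominant offset enforces temporal consistency, and the matched frames
    along it give the segment boundaries in the reference video.
    """
    votes: Counter = Counter()
    for qi, q in enumerate(query):
        for ri, r in enumerate(ref):
            if cosine(q, r) >= min_sim:
                votes[ri - qi] += 1
    if not votes:
        return None
    offset = votes.most_common(1)[0][0]
    matched = [qi + offset for qi, q in enumerate(query)
               if 0 <= qi + offset < len(ref) and cosine(q, ref[qi + offset]) >= min_sim]
    return offset, matched[0], matched[-1]
```

Offset voting is what separates a true repeat (many matches at one consistent offset) from scattered coincidental matches, which spread their votes across many offsets.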

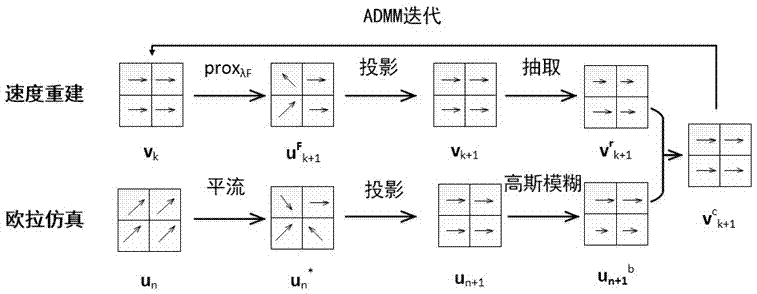

Fluid simulation method based on coupling video reconstruction with an Eulerian model

Status: Active | Publication: CN107085629A | Tags: Controllable details; In line with the real scene; Design optimisation/simulation; Animation; Prior information; Coupling

The invention discloses a fluid simulation method based on coupling video reconstruction with an Eulerian model. The method comprises the steps of 1) reconstructing the three-dimensional density field of the fluid for each frame of an input video; 2) solving the Navier-Stokes equations with an Eulerian method and updating the velocity and density fields of the fluid; 3) using the reconstructed density fields of two adjacent frames as prior information, and the result of the Eulerian method as a correction, to reconstruct the three-dimensional velocity field of the fluid; and 4) guiding the Eulerian fluid simulation with the reconstructed density and velocity fields to generate new animation effects. With this method, the density and velocity fields of the fluid can be reconstructed with relatively high precision, and by tightly coupling the reconstructed data with the fluid geometric model, a fluid animation closer to actual conditions can be obtained and controllable fluid details can be added.

Owner:EAST CHINA NORMAL UNIV
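Step 4 can be pictured as an Eulerian update followed by a pull toward the reconstruction. The 1D sketch below uses semi-Lagrangian advection (a standard Eulerian building block) and then blends in the reconstructed density with a hypothetical weight `alpha`; the blending rule is an assumption, since the abstract does not give the guidance formula, and pressure projection is omitted.

```python
import math
from typing import List

def advect(field: List[float], velocity: List[float], dt: float, dx: float) -> List[float]:
    """1D semi-Lagrangian advection: trace each cell back along the velocity
    and linearly interpolate the old field there (clamped at the borders)."""
    n = len(field)
    out = []
    for i in range(n):
        x = i - velocity[i] * dt / dx  # departure point in cell units
        x = min(max(x, 0.0), n - 1.0)
        i0 = int(math.floor(x))
        i1 = min(i0 + 1, n - 1)
        frac = x - i0
        out.append((1.0 - frac) * field[i0] + frac * field[i1])
    return out

def guided_step(density: List[float], velocity: List[float],
                recon_density: List[float], dt: float, dx: float,
                alpha: float) -> List[float]:
    """Advect the simulated density, then pull it toward the reconstructed one.

    alpha = 0 is pure simulation; alpha = 1 snaps to the reconstruction.
    """
    simulated = advect(density, velocity, dt, dx)
    return [(1.0 - alpha) * s + alpha * r for s, r in zip(simulated, recon_density)]
```

Intermediate `alpha` values trade fidelity to the captured video against the freedom to add simulated detail, which is the kind of controllability the abstract describes.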

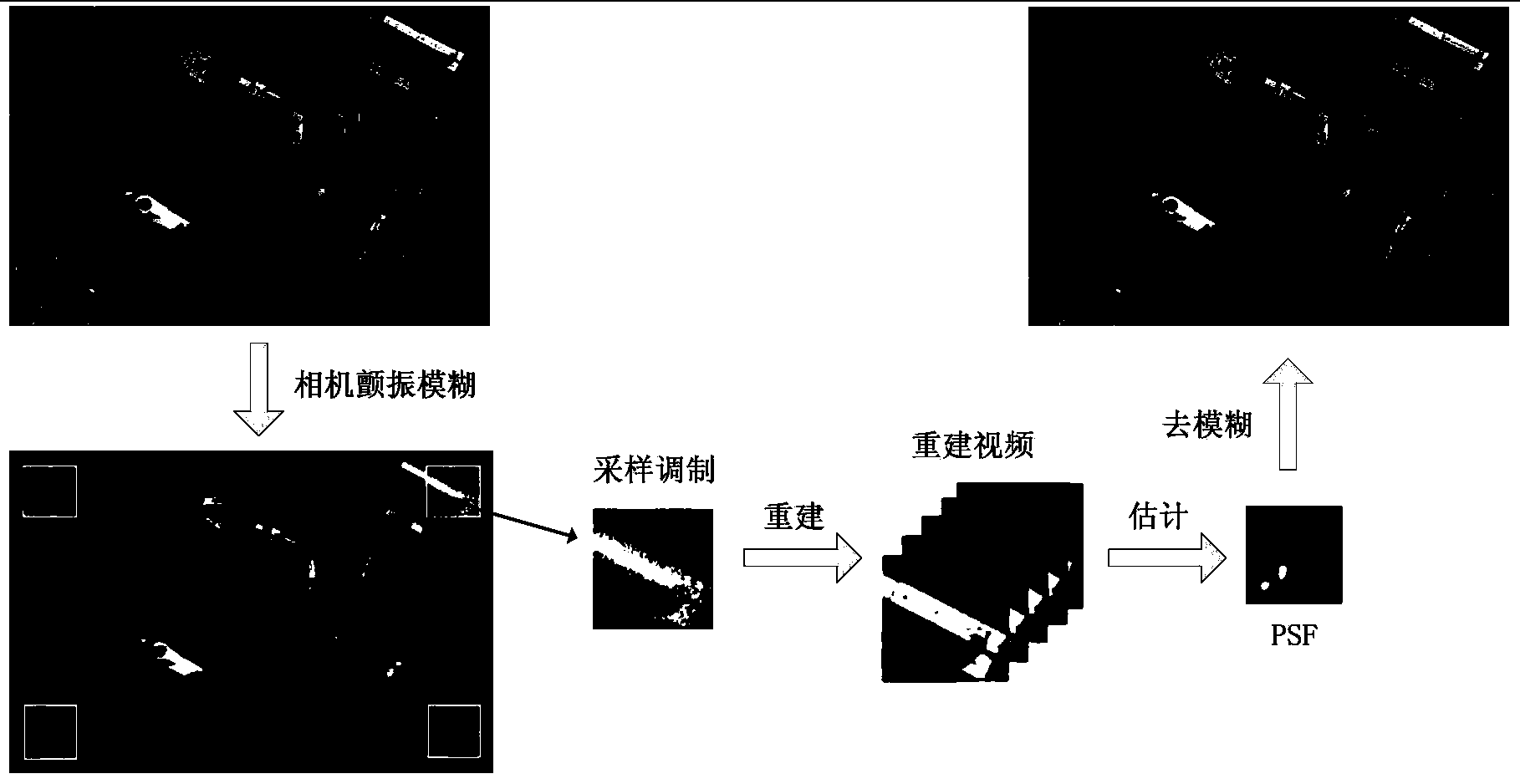

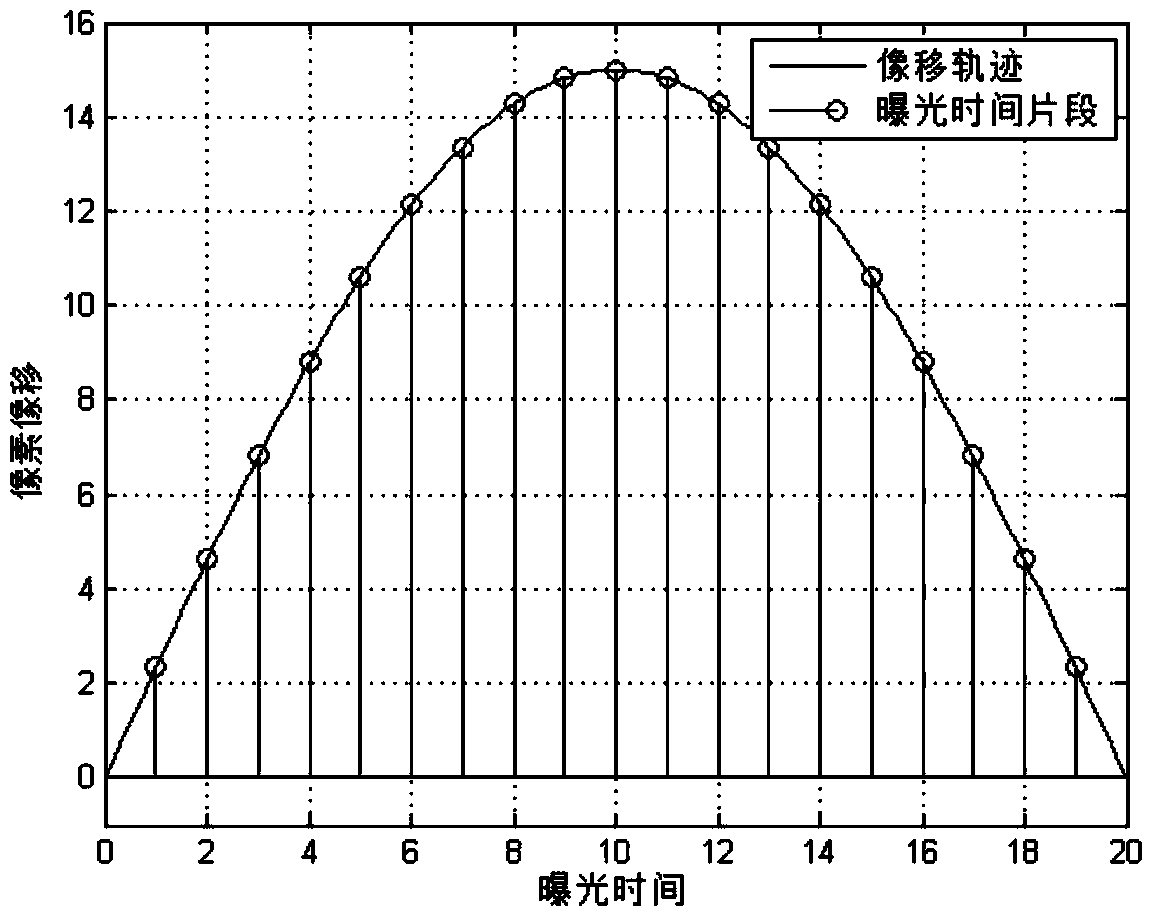

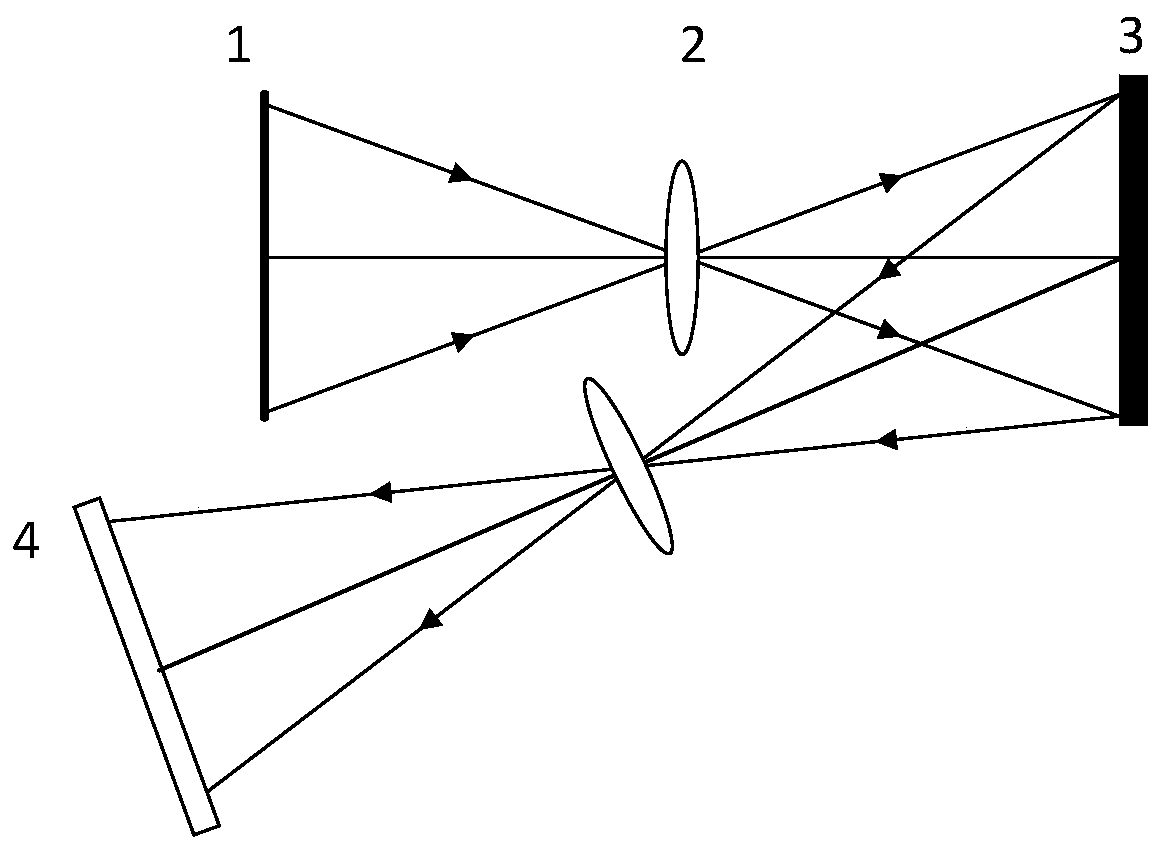

Vibration detection and remote sensing image recovery method based on single-exposure video reconstruction

Status: Inactive | Publication: CN104243837A | Tags: Improve reconstruction accuracy; Reduce time complexity; Image enhancement; Television system details; Restoration method; Image motion

The invention provides a vibration detection and remote sensing image recovery method based on single-exposure video reconstruction. The method divides the exposure time into several equal intervals; applies coded exposure to each pixel of several small blocks at the edge of the detector, following a single-exposure video reconstruction method based on compressed sensing, to obtain modulated blurred image blocks; reconstructs a sharp video from them with a reconstruction algorithm; estimates inter-frame image motion from the reconstructed video; estimates the point spread function; and finally deconvolves the unmodulated blurred image with the point spread function, removing the effect of platform vibration and yielding a sharp remote sensing image. The method addresses the long processing time and high complexity of traditional compressed-sensing-based image deblurring and achieves better real-time performance; it also achieves higher restoration accuracy than ordinary blind restoration methods.

Owner:ZHEJIANG UNIV
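The last two steps (motion estimate to point spread function, then deconvolution) can be sketched in one dimension. Assuming the exposure is split into equal sub-intervals and the estimated image motion gives an integer pixel offset for each, the PSF is the average of shifted impulses, and a Wiener filter with an assumed noise-to-signal ratio `nsr` inverts the blur. Circular boundaries and a naive O(N^2) DFT keep the example dependency-free; real images would use a 2D FFT.

```python
import cmath
from typing import List

def dft(x: List[complex]) -> List[complex]:
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * k * m / n) for k in range(n))
            for m in range(n)]

def idft(spec: List[complex]) -> List[float]:
    n = len(spec)
    return [(sum(spec[m] * cmath.exp(2j * cmath.pi * k * m / n) for m in range(n)) / n).real
            for k in range(n)]

def psf_from_motion(shifts: List[int], n: int) -> List[float]:
    """Blur kernel for equal sub-exposures displaced by integer `shifts` (pixels)."""
    psf = [0.0] * n
    for s in shifts:
        psf[s % n] += 1.0 / len(shifts)
    return psf

def circular_blur(signal: List[float], psf: List[float]) -> List[float]:
    """Circular convolution of a signal with the PSF (the forward blur model)."""
    n = len(signal)
    return [sum(psf[j] * signal[(k - j) % n] for j in range(n)) for k in range(n)]

def wiener_deconvolve(blurred: List[float], psf: List[float], nsr: float) -> List[float]:
    """Frequency-domain Wiener filter: X = B * conj(H) / (|H|^2 + nsr)."""
    b_spec, h_spec = dft(blurred), dft(psf)
    return idft([b * h.conjugate() / (abs(h) ** 2 + nsr) for b, h in zip(b_spec, h_spec)])
```

The `nsr` term keeps the division stable at frequencies where the motion PSF has little energy; with a PSF whose spectrum has no zeros and a tiny `nsr`, the sharp signal is recovered almost exactly.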

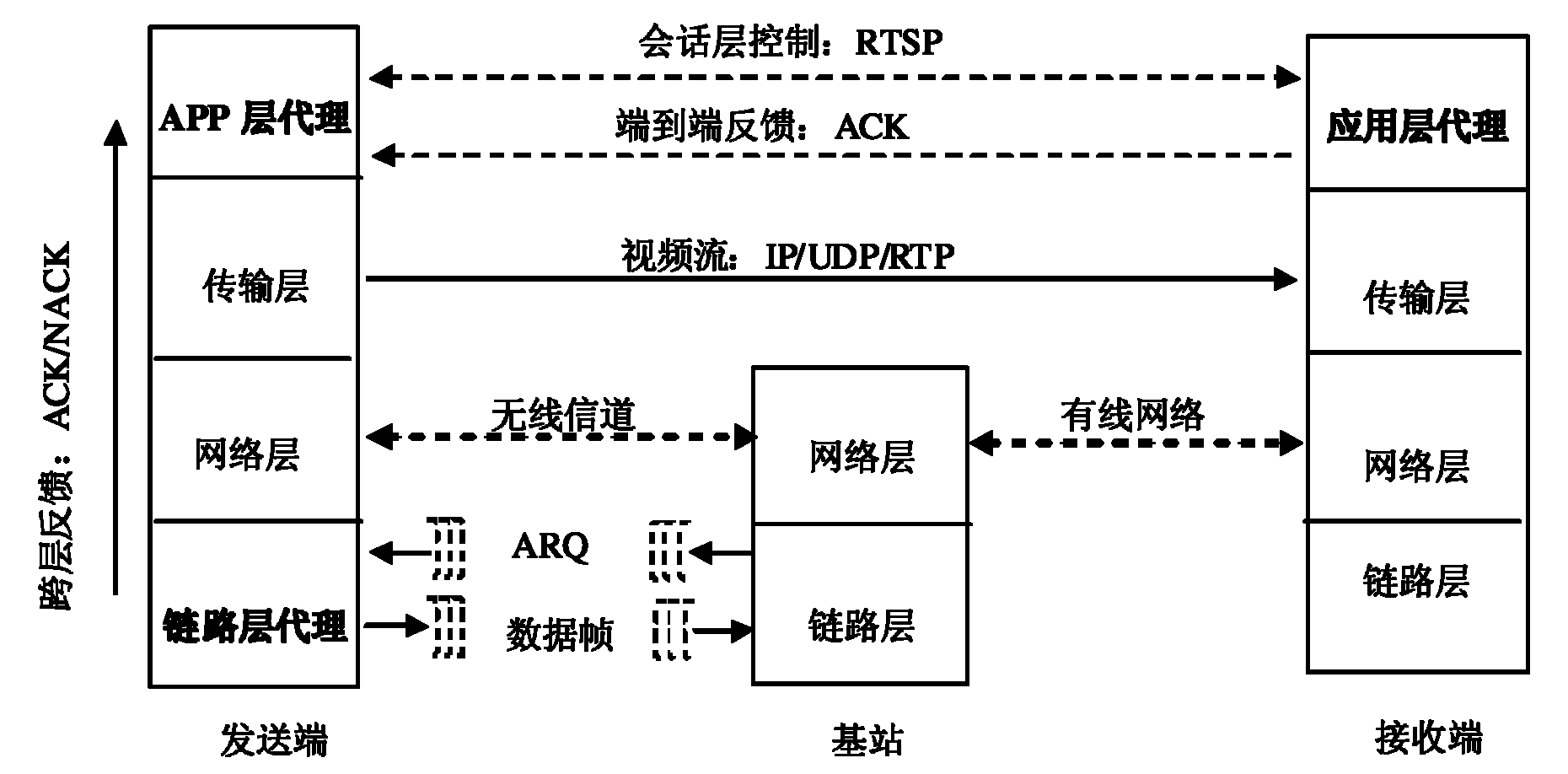

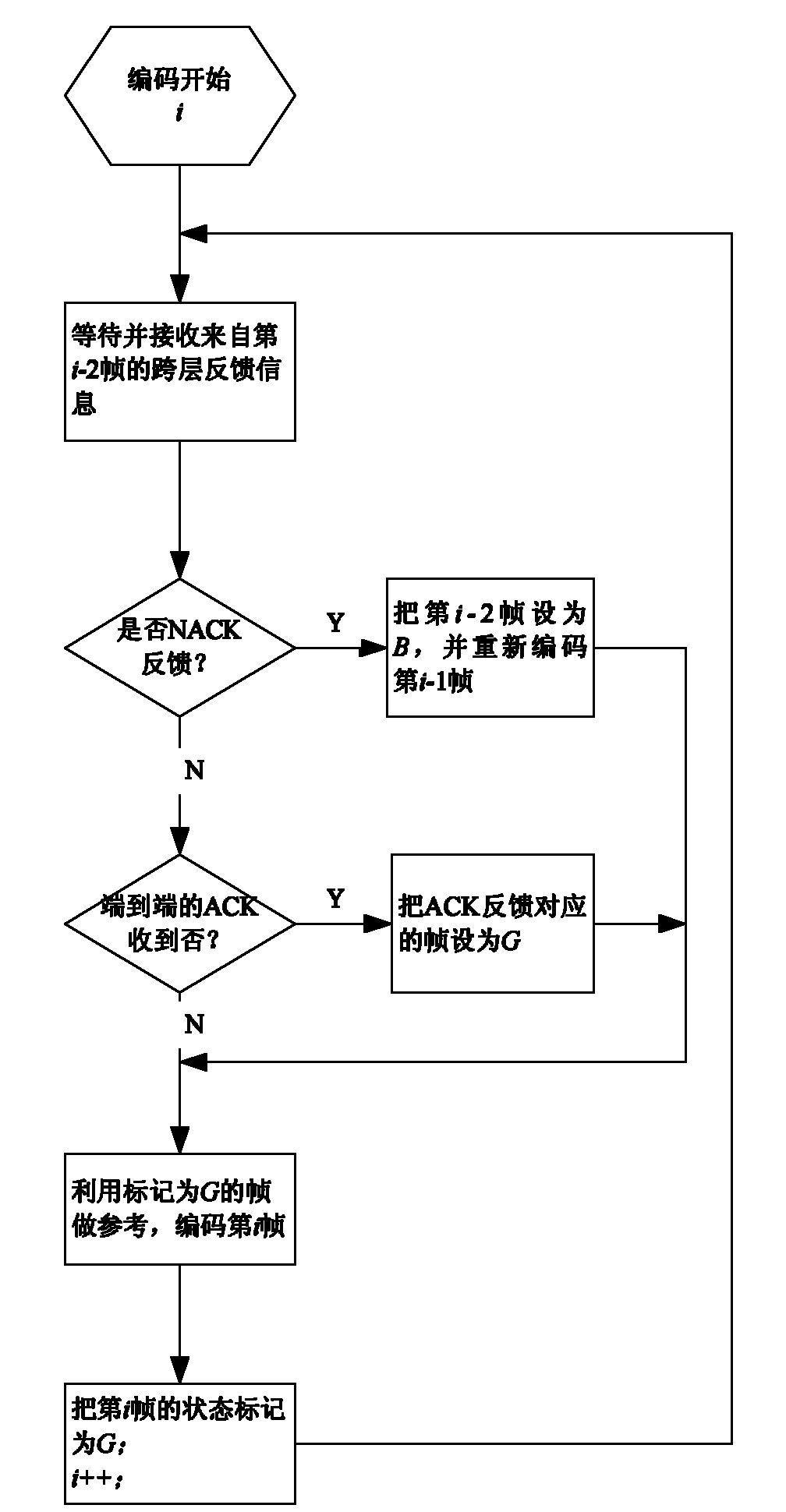

Frame loss prediction based cellular network uplink video communication QoS (Quality of Service) optimization method

Status: Inactive | Publication: CN101969372A | Tags: Reduced coding efficiency; Reduced fault tolerance; Error prevention/detection by using return channel; Network traffic/resource management; Quality of service; Information feedback

The invention relates to a frame-loss-prediction-based QoS (Quality of Service) optimization method for uplink video communication in cellular networks, in the field of communication technology. Building on the ARQ (Automatic Repeat Request) function of the base station's link layer, a link-layer proxy is designed at the link layer of the sending terminal to predict frame loss and detect timeout frame loss by counting ARQ retransmissions, and the link layer's frame-loss information is fed back to the application-layer encoder. Meanwhile, an application-layer proxy at the receiving end counts the reception status of all frames, and information about correctly received frames is fed back end to end to the sending encoder. The sending encoder marks the transmission state of each encoded frame according to the received cross-layer and end-to-end feedback, marking correctly transmitted frames as G, and restricts its reference frames during encoding to frames marked G, improving robustness to transmission errors during video reconstruction.

Owner:SHANGHAI JIAO TONG UNIV
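The reference-selection rule can be sketched as encoder-side bookkeeping that combines the two feedback paths. The class and method names, the "L" mark for predicted loss, and the fallback to intra coding when no G frame is available are assumptions; the abstract specifies only that references are limited to frames marked G.

```python
from typing import Dict, Optional

class ReferenceSelector:
    """Encoder-side bookkeeping: reference only frames confirmed as delivered.

    Marks are hypothetical labels: "G" = confirmed good (end-to-end ACK),
    "L" = predicted lost (link-layer ARQ retry limit reached).
    """

    def __init__(self, max_retransmissions: int) -> None:
        self.max_retransmissions = max_retransmissions
        self.marks: Dict[int, str] = {}

    def on_link_feedback(self, frame_id: int, retransmissions: int) -> None:
        # Cross-layer feedback: a frame that exhausted its ARQ retries is presumed lost.
        if retransmissions >= self.max_retransmissions and self.marks.get(frame_id) != "G":
            self.marks[frame_id] = "L"

    def on_end_to_end_ack(self, frame_id: int) -> None:
        # End-to-end feedback from the receiver-side application proxy.
        self.marks[frame_id] = "G"

    def reference_for(self, frame_id: int) -> Optional[int]:
        """Most recent earlier frame marked G, or None (then intra-code the frame)."""
        good = [f for f, m in self.marks.items() if m == "G" and f < frame_id]
        return max(good) if good else None
```

Referencing only acknowledged frames means a lost frame can never become the prediction source for later frames, so errors do not propagate along the prediction chain.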

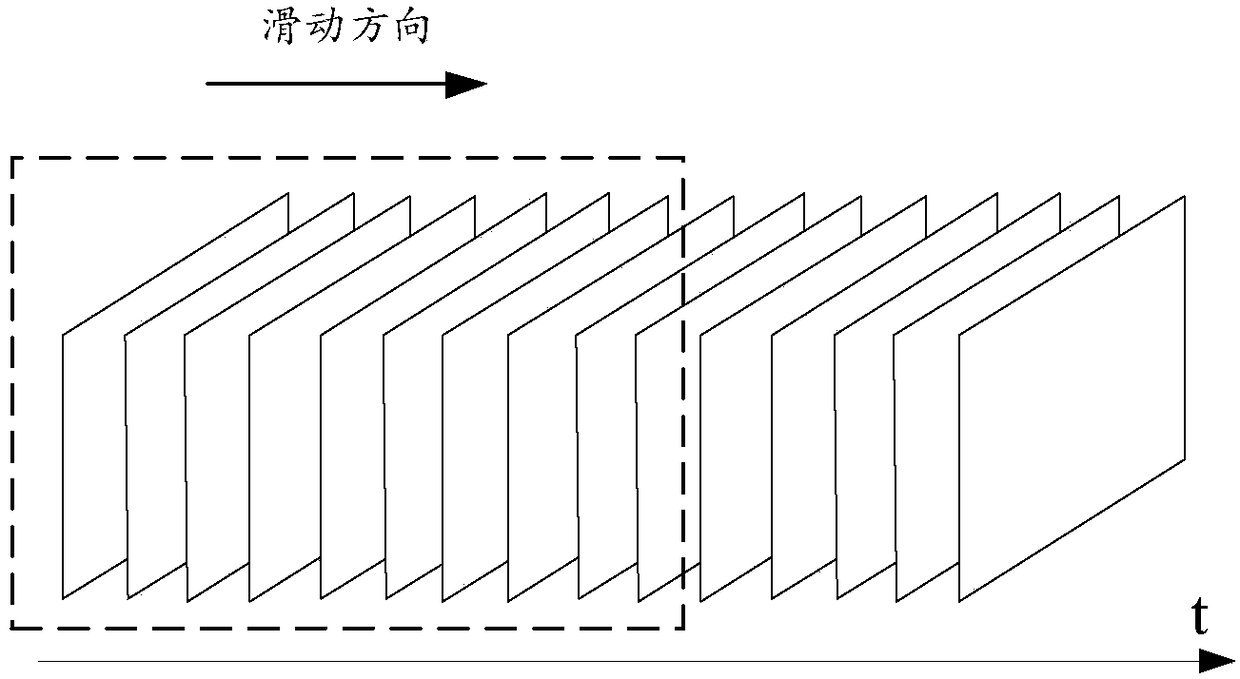

Time-space video compressed sensing method based on convolutional network

Status: Active | Publication: CN108923984A | Tags: Reduce in quantity; Improve equalization performance; Digital video signal modification; Data switching networks; Data set; Video reconstruction

The invention discloses a spatio-temporal video compressed sensing method based on a convolutional network, aimed mainly at the poor spatio-temporal balance of video compression and the poor real-time performance of video reconstruction in the prior art. The scheme comprises the following steps: preparing a training data set; designing the network structure of the spatio-temporal video compressed sensing method; writing training and testing files according to the designed network structure; training the network; and testing the network. The network uses an observation technique in which temporal and spatial compression are performed simultaneously, and a reconstruction technique that uses "spatio-temporal blocks" to strengthen spatio-temporal correlation. It not only achieves real-time video reconstruction but also yields reconstructions with good spatio-temporal balance and high, stable quality, and it can be used for compressed video transmission and subsequent video reconstruction.

Owner:XIDIAN UNIV
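The simultaneous space-time observation can be illustrated by stacking the frames of a block into one vector and taking each measurement as a weighted sum over all of its pixels, y = Phi x. The Gaussian random Phi below is an illustrative assumption; in the patent the observation is realized inside the convolutional network.

```python
import math
import random
from typing import List, Tuple

def measure_spacetime_block(block: List[List[float]], ratio: float,
                            seed: int = 0) -> Tuple[List[List[float]], List[float]]:
    """Compressively sample a space-time block in one joint measurement.

    block[t] is frame t of the block, flattened to a list of pixels. The
    frames are stacked into one vector, so every measurement mixes pixels
    across both space and time. Returns (Phi, y) with y = Phi x.
    """
    x = [p for frame in block for p in frame]
    m = max(1, round(ratio * len(x)))  # number of measurements
    rng = random.Random(seed)
    phi = [[rng.gauss(0.0, 1.0 / math.sqrt(m)) for _ in x] for _ in range(m)]
    y = [sum(a * b for a, b in zip(row, x)) for row in phi]
    return phi, y
```

A block of two 8-pixel frames sampled at `ratio=0.25` yields 4 measurements, each depending on all 16 pixels; a frame-by-frame scheme would instead spend 2 measurements per frame and could not exploit temporal redundancy.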

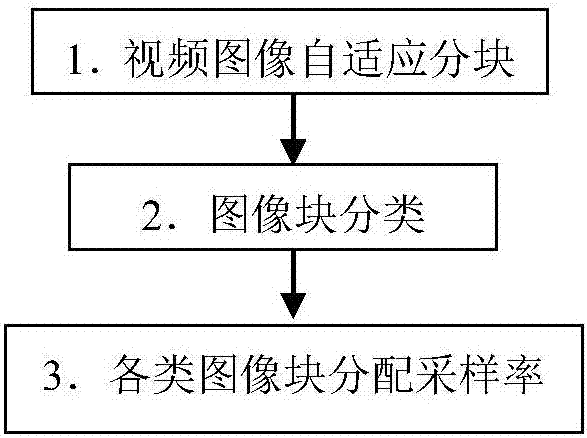

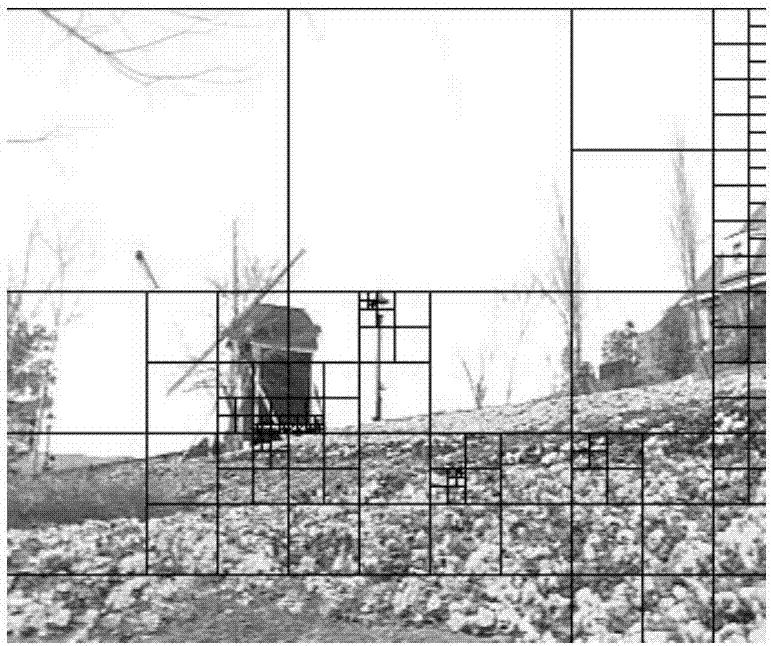

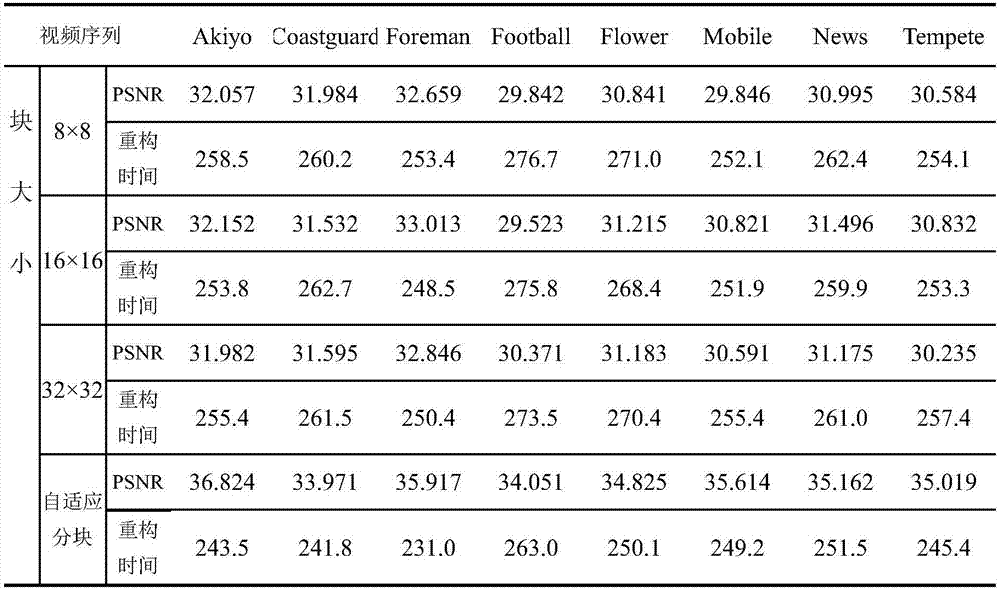

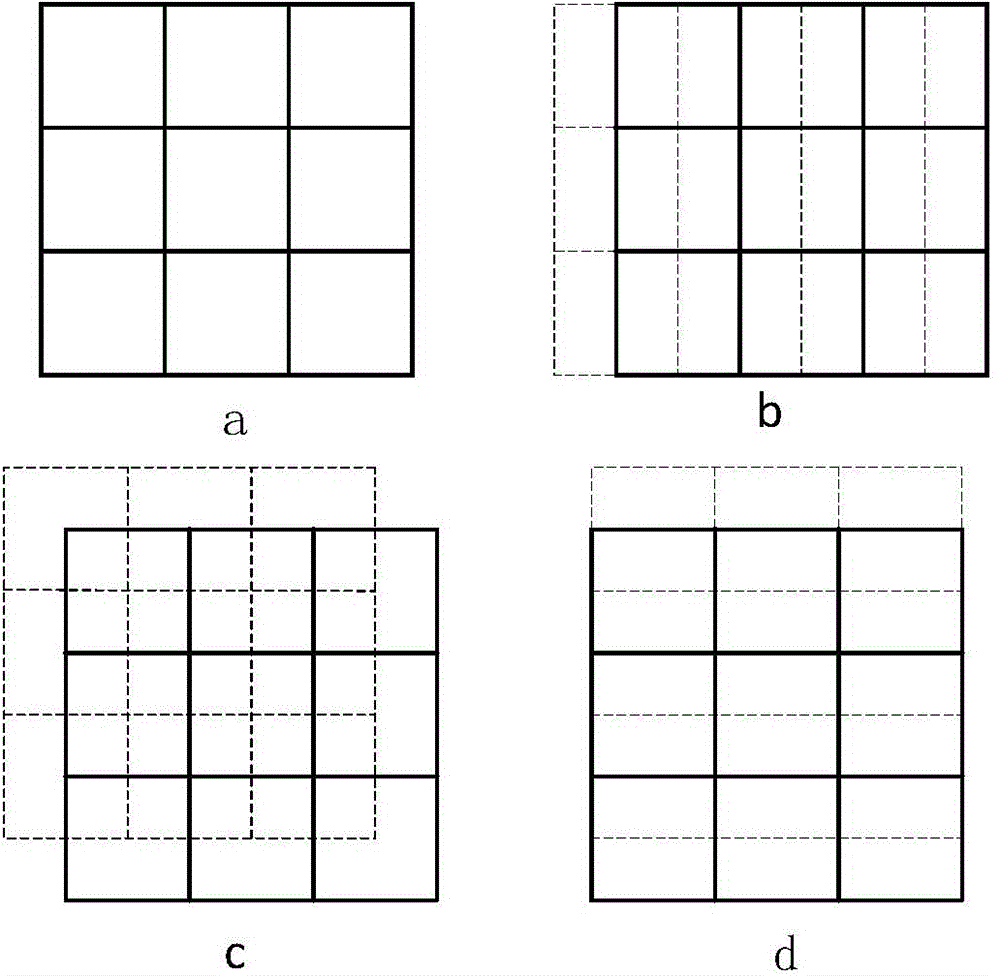

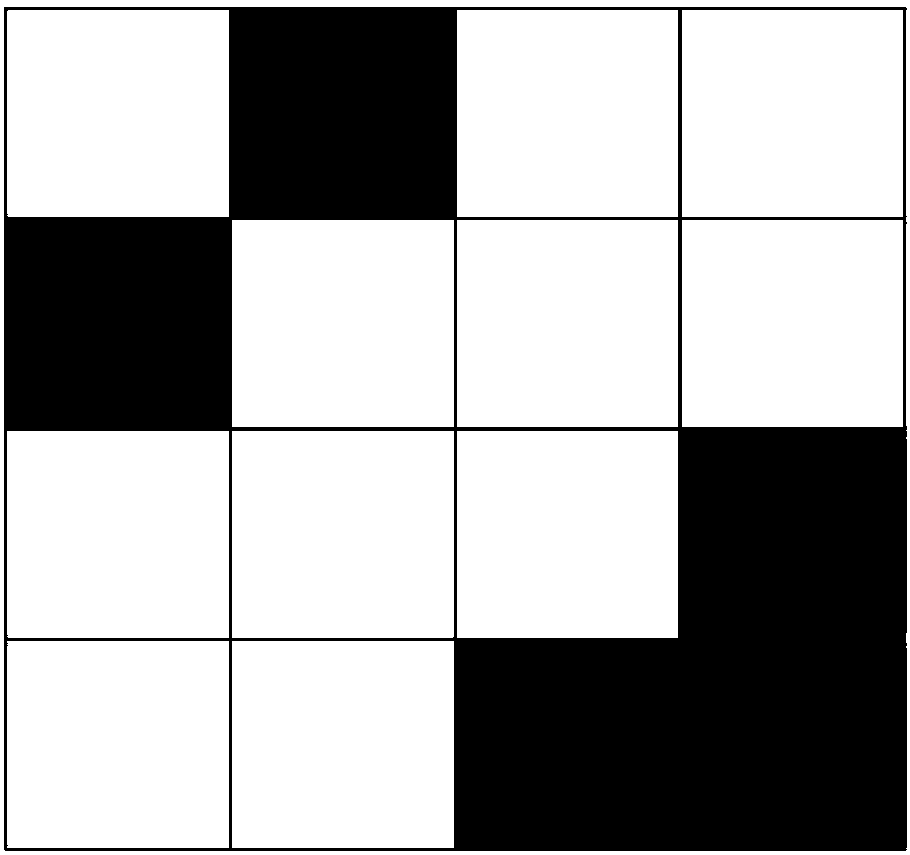

Video compression method based on adaptive partitioning and compressed sensing

Status: Active | Publication: CN106941609A | Tags: Improve reconstruction quality; Short reconstruction time; Digital video signal modification; Pattern recognition; Video reconstruction

The invention provides a video compression method based on adaptive partitioning and compressed sensing. The method comprises two steps: adaptively partitioning the video images, and classifying the image blocks and assigning sampling rates to each class. In the adaptive partitioning step, the gray-level difference between adjacent pixels of the reference frame image is used as the basis for block segmentation, and a partitioning threshold T is set. The mean gray-level difference between adjacent pixels of the current block is compared with the threshold, and the video image is adaptively partitioned using a quadtree algorithm, which effectively separates flat regions from detail and edge regions. On the basis of this partitioning, the inter-frame difference of the DCT coefficients of the video pixels is used to divide the image blocks of various sizes into three types: fast-changing blocks, transition blocks, and slowly changing blocks, and appropriate sampling rates are assigned to each type. The method gives good video reconstruction quality and short reconstruction time; under the same conditions, both are better than those of the uniform-partition compressed sensing method for video.

Owner:ZHEJIANG UNIV OF TECH
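The adaptive partitioning step can be sketched as a recursive quadtree: a square block is split into four whenever the mean absolute gray difference between adjacent pixels exceeds the threshold T. The minimum block size and the use of horizontal plus vertical neighbours are assumptions; the DCT-based block classification and sampling-rate assignment are not shown.

```python
from typing import List, Tuple

Image = List[List[float]]
Block = Tuple[int, int, int]  # (x, y, size)

def mean_adjacent_diff(img: Image, x: int, y: int, size: int) -> float:
    """Mean absolute gray difference between horizontally/vertically adjacent pixels."""
    total, count = 0.0, 0
    for r in range(y, y + size):
        for c in range(x, x + size):
            if c + 1 < x + size:
                total += abs(img[r][c] - img[r][c + 1])
                count += 1
            if r + 1 < y + size:
                total += abs(img[r][c] - img[r + 1][c])
                count += 1
    return total / count if count else 0.0

def quadtree_partition(img: Image, x: int, y: int, size: int,
                       threshold: float, min_size: int) -> List[Block]:
    """Split detailed blocks recursively; keep flat blocks whole."""
    if size <= min_size or mean_adjacent_diff(img, x, y, size) <= threshold:
        return [(x, y, size)]
    half = size // 2
    blocks: List[Block] = []
    for dy in (0, half):
        for dx in (0, half):
            blocks += quadtree_partition(img, x + dx, y + dy, half, threshold, min_size)
    return blocks
```

On a 4x4 frame whose only texture sits in the top-left quadrant, that quadrant is split down to single pixels while the three flat quadrants stay as 2x2 blocks, giving 7 blocks in total.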

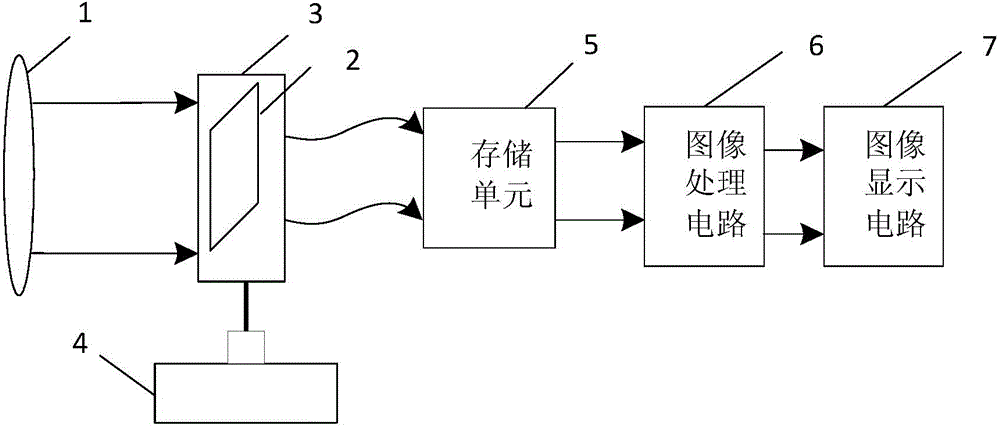

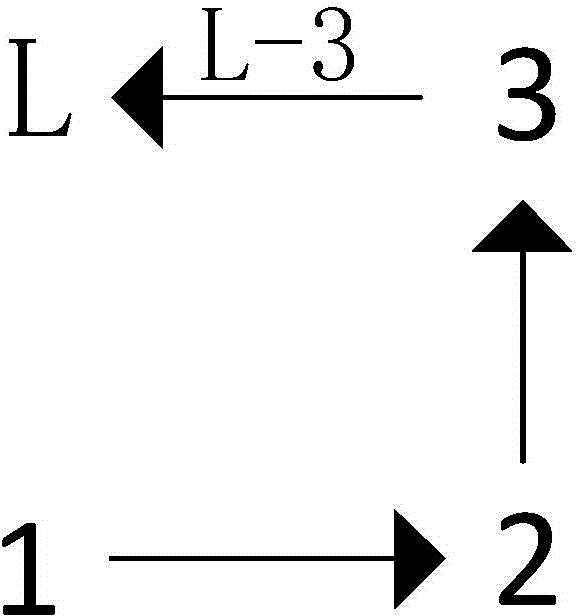

Staring super-resolution imaging device and method

Status: Inactive | Publication: CN104410789A | Tags: High resolution; Simple process; Television system details; Image enhancement; Imaging processing; Image resolution

The invention provides a staring super-resolution imaging device and method, aimed at the low resolution of existing imaging devices. The device comprises an imaging lens group (1), a detector (2), a detector driving platform (3), a driver (4), a storage device (5), an image processing circuit (6), and an image display circuit (7). The detector (2) is placed in the focal plane on the optical path of the imaging lens group (1), and the drive part of the driver (4) moves the detector driving platform (3) by a random magnitude in a random direction. The imaging method is implemented as follows: a displacement-count instruction is set; the driver (4) makes the detector (2) perform the corresponding number of random jitters; the optical signal is imaged onto the detector (2) through the imaging lens group (1), giving a number of low-resolution frames equal to the displacement count; and the low-resolution images are reconstructed with a variational Bayesian algorithm. The device and method are suitable for video reconstruction and satellite photography.

Owner:XIDIAN UNIV
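The patent reconstructs the jittered frames with a variational Bayesian algorithm. As a much simpler stand-in (shift-and-add, a different technique) that shows why sub-pixel jitter raises resolution, the 1D sketch below places each low-resolution sample at its known high-resolution position and averages overlaps; point sampling and known integer shifts on the fine grid are assumptions.

```python
from typing import List, Optional

def shift_and_add(frames: List[List[float]], shifts: List[int],
                  factor: int) -> List[Optional[float]]:
    """Fuse jittered low-resolution frames onto a finer grid (1D sketch).

    Sample i of frame k is assumed to come from high-resolution position
    i * factor + shifts[k], where shifts[k] is the known jitter in
    high-resolution pixels. Positions hit by several frames are averaged;
    positions never observed are left as None.
    """
    n_hr = len(frames[0]) * factor
    acc = [0.0] * n_hr
    cnt = [0] * n_hr
    for frame, s in zip(frames, shifts):
        for i, v in enumerate(frame):
            pos = i * factor + s
            if 0 <= pos < n_hr:
                acc[pos] += v
                cnt[pos] += 1
    return [a / c if c else None for a, c in zip(acc, cnt)]
```

Two frames offset by half a low-resolution pixel (`shifts=[0, 1]`, `factor=2`) fill every position of the doubled grid, whereas a single frame leaves every other position empty; a Bayesian method additionally handles detector blur, noise and unknown shifts.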

A method and apparatus for detecting video frames

Status: Active | Publication: CN109214253A | Tags: Easy access; Improve regularity; Character and pattern recognition; Neural architectures; Frame sequence; Video reconstruction

Embodiments of the present application disclose a video frame detection method and apparatus. The method comprises: obtaining a target video frame sequence; extracting video feature data from the target video frame sequence with a convolutional neural network model trained on a plurality of reference historical video frame sequences; performing video reconstruction from the video feature data to generate a reconstructed video frame sequence; and determining, based on the difference between the target video frame sequence and the reconstructed video frame sequence, whether an abnormal event exists in the target video frame sequence. The embodiments can improve the accuracy and efficiency of video frame detection.

Owner:ALIBABA GRP HLDG LTD
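The final decision step compares each target frame with its reconstruction: a model trained on normal history reconstructs normal frames well and abnormal ones poorly. A minimal sketch, assuming per-frame mean squared error as the difference value and a hypothetical fixed `threshold` (the abstract specifies neither):

```python
from typing import List, Tuple

Frame = List[float]

def frame_errors(target: List[Frame], recon: List[Frame]) -> List[float]:
    """Per-frame mean squared error between target and reconstructed frames."""
    return [sum((a - b) ** 2 for a, b in zip(t, r)) / len(t)
            for t, r in zip(target, recon)]

def detect_abnormal(target: List[Frame], recon: List[Frame],
                    threshold: float) -> Tuple[bool, List[int]]:
    """Flag frames whose reconstruction error exceeds the threshold."""
    errors = frame_errors(target, recon)
    flagged = [i for i, e in enumerate(errors) if e > threshold]
    return bool(flagged), flagged
```

The second return value lists the offending frame indices, which localizes the abnormal event in time as well as detecting it.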

Shunting coding method for digital video monitoring system and video monitoring system

Status: Inactive | Publication: CN101841709A | Tags: Meet monitoring needs; Reduce resolution; Closed circuit television systems; Digital video signal modification; Video monitoring; Digital video

The embodiment of the invention discloses a shunting coding method for a digital video monitoring system. The method comprises the following steps: decomposing a video into primary video frames and secondary video frames using a two-dimensional wavelet transform; coding the primary video frames to generate a primary code stream and primary video reconstruction frames; and, based on the primary video reconstruction frames, coding the secondary video frames to generate a secondary code stream. Correspondingly, the embodiment of the invention also discloses a video monitoring system comprising a shunting module, a primary code stream coding module, and a secondary code stream coding module. The shunting module decomposes the video into the primary and secondary video frames using the two-dimensional wavelet transform; the primary code stream coding module codes the primary video frames to generate the primary code stream and the primary video reconstruction frames; and the secondary code stream coding module codes the secondary video frames, based on the primary video reconstruction frames, to generate the secondary code stream. The invention enables a digital video monitoring system to meet monitoring requirements for videos of different resolutions and improves video compression coding efficiency.

Owner:广东中大讯通信息有限公司 (Guangdong Zhongda Xuntong Information Co., Ltd.) +2
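The shunting step splits each frame with a two-dimensional wavelet transform. As a sketch assuming a one-level Haar wavelet with averaging normalization (the patent does not name the wavelet), the low-pass band below would play the role of the primary, half-resolution frame and the three detail bands the secondary information; the inverse shows that both together restore the full frame.

```python
from typing import List, Tuple

Image = List[List[float]]

def haar2d(img: Image) -> Tuple[Image, Tuple[Image, Image, Image]]:
    """One-level 2D Haar split; assumes even width and height."""
    h, w = len(img) // 2, len(img[0]) // 2
    ll = [[0.0] * w for _ in range(h)]
    dh = [[0.0] * w for _ in range(h)]
    dv = [[0.0] * w for _ in range(h)]
    dd = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            a, b = img[2 * i][2 * j], img[2 * i][2 * j + 1]
            c, d = img[2 * i + 1][2 * j], img[2 * i + 1][2 * j + 1]
            ll[i][j] = (a + b + c + d) / 4  # primary: 2x2 average
            dh[i][j] = (a - b + c - d) / 4  # left-right detail
            dv[i][j] = (a + b - c - d) / 4  # top-bottom detail
            dd[i][j] = (a - b - c + d) / 4  # diagonal detail
    return ll, (dh, dv, dd)

def inverse_haar2d(ll: Image, details: Tuple[Image, Image, Image]) -> Image:
    """Rebuild the full-resolution frame from the primary and detail bands."""
    dh, dv, dd = details
    h, w = len(ll), len(ll[0])
    out = [[0.0] * (2 * w) for _ in range(2 * h)]
    for i in range(h):
        for j in range(w):
            s, x, y, z = ll[i][j], dh[i][j], dv[i][j], dd[i][j]
            out[2 * i][2 * j] = s + x + y + z
            out[2 * i][2 * j + 1] = s - x + y - z
            out[2 * i + 1][2 * j] = s + x - y - z
            out[2 * i + 1][2 * j + 1] = s - x - y + z
    return out
```

A monitor that only needs low resolution can decode the primary stream alone; adding the secondary stream restores full resolution, which matches the multi-resolution goal stated in the abstract.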

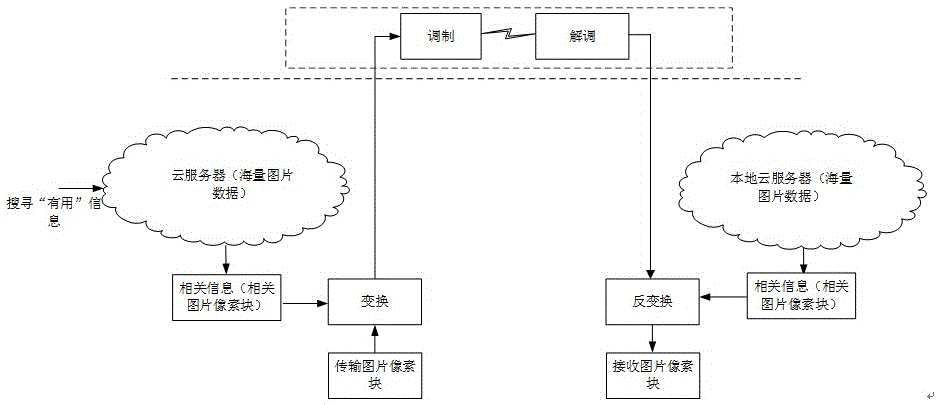

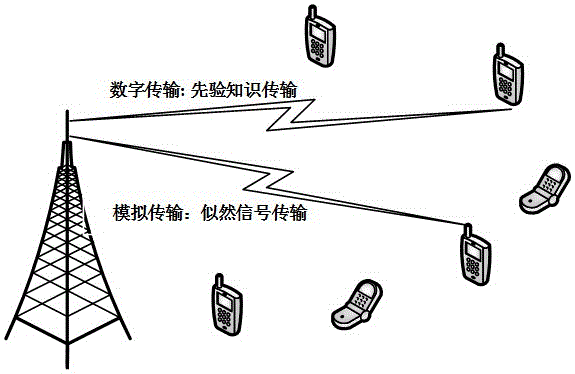

Big data aided video transmission method based on digital-analog hybrid

Status: Active | Publication: CN105657434A | Tags: Improve visual quality; Improve effectiveness; Digital video signal modification; Signal-to-noise ratio (imaging); Data aided

The invention relates to a big-data-aided video transmission method based on hybrid digital-analog transmission. The method comprises the following steps: step 1), for a wireless video transmission system that maximizes the signal-to-noise ratio, establishing a pseudo-analog video transmission framework, obtaining a minimum mean-square error estimate of the reconstructed video signal at the receiving end based on Bayesian inference, and acquiring the best prior knowledge; step 2), transmitting the signal from the transmitting end; and step 3), reconstructing the video at the receiving end. Assuming that the transmitting end and the receiving end share the same cloud data, the information in the big data most relevant to the transmitted signal is extracted and used to aid video reconstruction; the best prior knowledge is extracted by maximizing the signal-to-noise ratio at the receiving end, and a corresponding criterion is set at the transmitting end to decide whether to transmit the original video signal, thereby saving transmission bandwidth. Compared with the prior art, the invention makes full use of the information in massive cloud data relevant to the transmitted signal, enhancing the visual quality of the transmitted video.

Owner:TONGJI UNIV

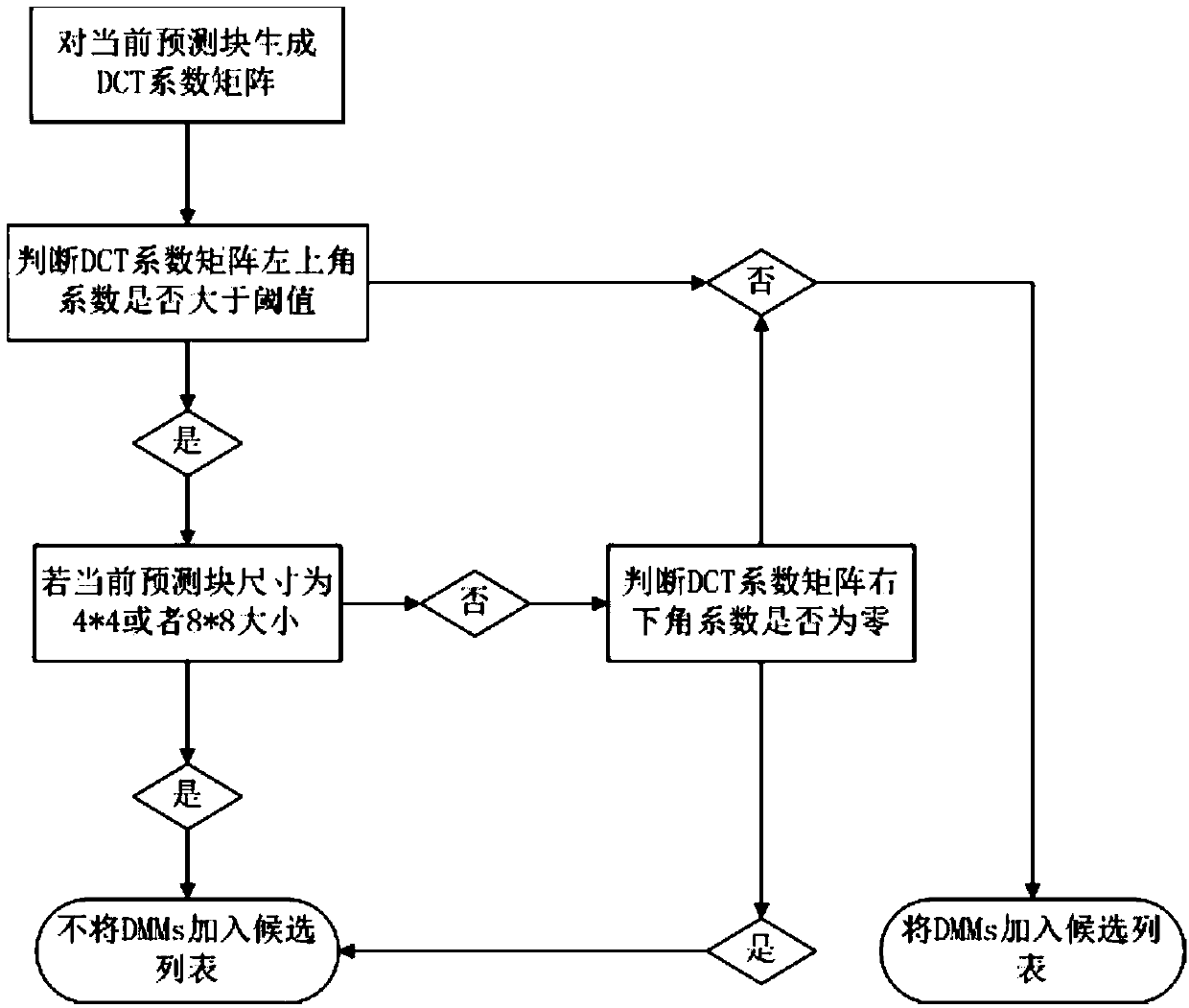

DCT-based 3D-HEVC fast intra-frame prediction decision-making method

Active | CN107864380A | Easy to distinguish | High speed | Digital video signal modification | Coding block | Computation complexity

The invention discloses a DCT-based fast intra-frame prediction decision method for 3D-HEVC. The method comprises the following steps: first, calculating the DCT coefficient matrix of the current prediction block by using the DCT formula; then, judging from the upper-left coefficients of the current coefficient block whether the block contains an edge, and further judging the same from its lower-right coefficients; and finally, deciding whether the depth modelling modes (DMMs) need to be added to the intra-frame prediction mode candidate list according to whether an edge is present. 3D-HEVC introduces depth maps to achieve better view synthesis, and for depth map intra-frame prediction coding the Joint Collaborative Team on 3D Video Coding Extension Development (JCT-3V) proposed four new intra-frame prediction modes for depth maps, the DMMs. Because the DCT compacts signal energy into a few coefficients, whether a coding block contains an edge can be clearly distinguished during 3D-HEVC depth map coding. The method has low computational complexity, short coding time, and good video reconstruction quality.
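The edge test can be sketched as follows, assuming an orthonormal 2-D DCT-II and a two-stage check: first the AC energy in the top-left (low-frequency) quadrant, then the energy in the bottom-right (high-frequency) quadrant. The quadrant split, the energy measure, and both thresholds are hypothetical choices for illustration; the patent's exact decision rule is not given in the abstract.

```python
import math
from typing import List

Block = List[List[float]]


def dct2(block: Block) -> Block:
    """Orthonormal 2-D DCT-II of a square block (direct O(N^4) form, fine for small blocks)."""
    n = len(block)

    def alpha(k: int) -> float:
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

    coeffs = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            s = 0.0
            for x in range(n):
                for y in range(n):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            coeffs[u][v] = alpha(u) * alpha(v) * s
    return coeffs


def should_test_dmm(block: Block, low_thresh: float = 10.0, high_thresh: float = 10.0) -> bool:
    """Return True if the block looks like it contains an edge, so DMMs are added as candidates."""
    c = dct2(block)
    n = len(c)
    h = n // 2
    # Stage 1: AC energy in the top-left quadrant (DC term excluded).
    low_energy = sum(c[u][v] ** 2 for u in range(h) for v in range(h) if (u, v) != (0, 0))
    if low_energy > low_thresh:
        return True
    # Stage 2: energy in the bottom-right (high-frequency) quadrant.
    high_energy = sum(c[u][v] ** 2 for u in range(h, n) for v in range(h, n))
    return high_energy > high_thresh
```

A flat depth block concentrates all its energy in the DC coefficient, so both stages fail and the DMM search can be skipped; a block with a depth discontinuity spreads energy into AC coefficients and keeps the DMMs in the candidate list.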

Owner:HANGZHOU DIANZI UNIV

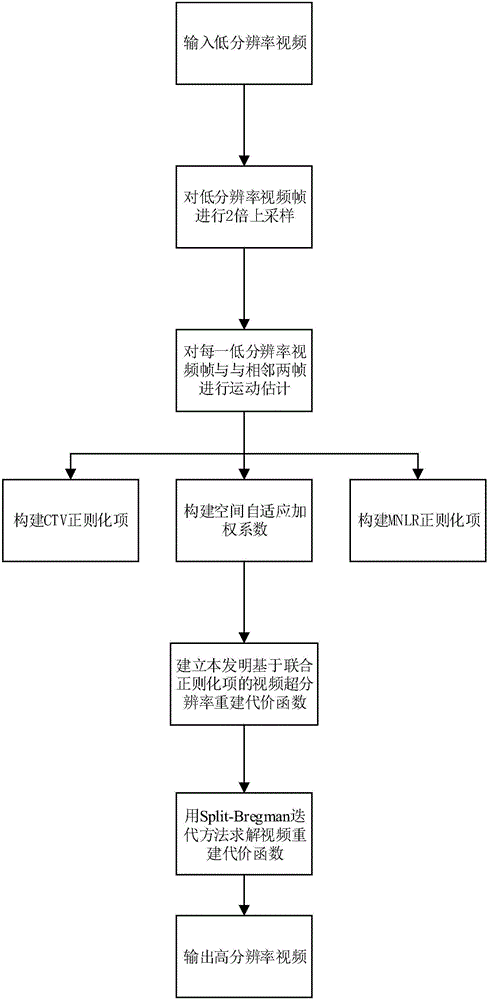

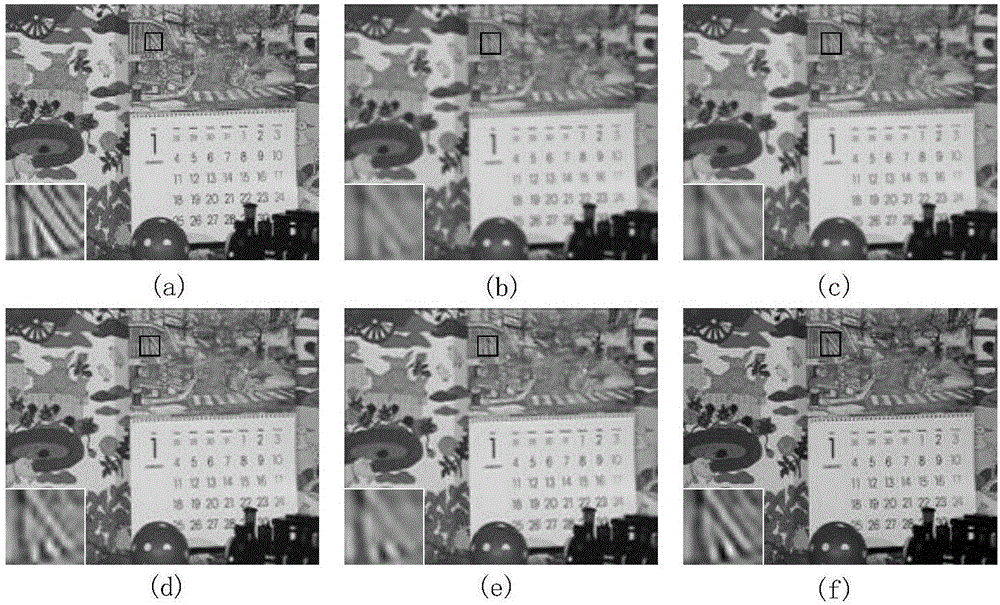

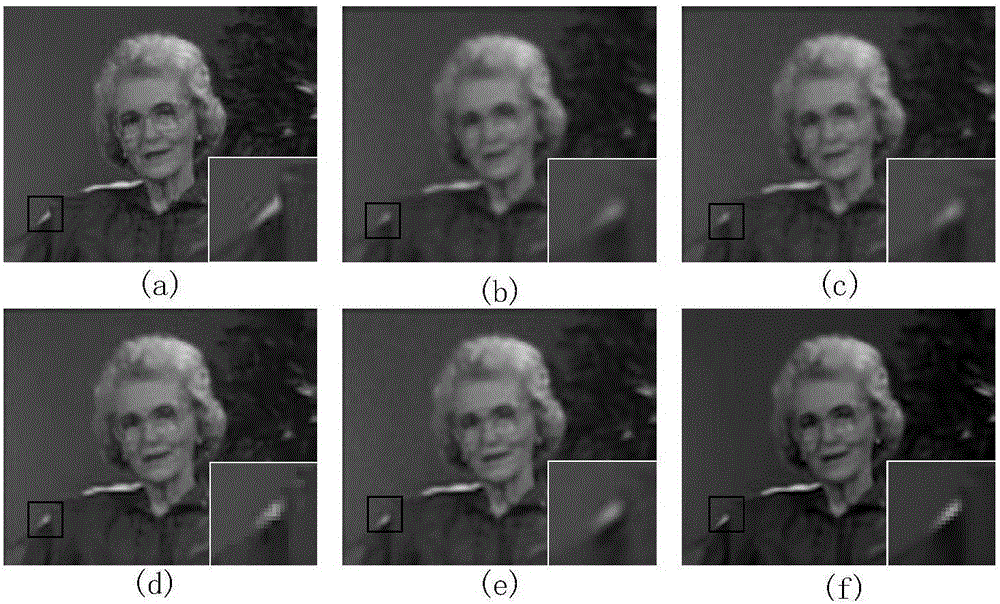

Joint regularization based video super-resolution reconstruction method

Active | CN106254720A | Television system details | Color television details | Pattern recognition | Reconstruction method

The invention discloses a joint-regularization-based video super-resolution reconstruction method, which comprises the steps of: building a low-resolution observation model; building a general video reconstruction cost function by a regularization-based least mean square method; constructing a compensation-based total variation (CTV) regularization term and assigning adaptive weighting coefficients to spatial regions so as to reduce the adverse effects of registration errors; constructing a multi-frame nonlocal low-rank (MNLR) regularization term; building a joint-regularization-based video super-resolution reconstruction cost function; and solving the cost function by the Split-Bregman iteration method to reconstruct high-resolution video. Video frames reconstructed by the method retain rich edge information and show almost no sawtooth (jagged-edge) artifacts. The reconstructed frames also show that the method suppresses noise well, and it scores highly on objective evaluation metrics. The invention therefore provides an effective video super-resolution reconstruction method.
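The abstract names the ingredients but not the formulas. A generic form consistent with that description, offered only as an assumed sketch, is the usual multi-frame observation model followed by a regularized cost:

```latex
\mathbf{y}_k = \mathbf{D}\,\mathbf{B}\,\mathbf{M}_k\,\mathbf{x} + \mathbf{n}_k, \qquad k = 1, \dots, K

\hat{\mathbf{x}} = \arg\min_{\mathbf{x}}
  \sum_{k=1}^{K} \left\| \mathbf{y}_k - \mathbf{D}\,\mathbf{B}\,\mathbf{M}_k\,\mathbf{x} \right\|_2^2
  + \lambda_1 \sum_{i} w_i \left\| (\nabla \mathbf{x})_i \right\|_2
  + \lambda_2 \sum_{j} \left\| \mathbf{R}_j \mathbf{x} \right\|_*
```

Here $\mathbf{y}_k$ is the $k$-th low-resolution frame, $\mathbf{x}$ the high-resolution frame, $\mathbf{M}_k$ the motion-compensation warp, $\mathbf{B}$ blur, $\mathbf{D}$ downsampling, and $\mathbf{n}_k$ noise. The second term stands in for CTV, with $w_i$ the adaptive regional weights meant to damp registration errors; the third stands in for MNLR, where the hypothetical operator $\mathbf{R}_j$ stacks nonlocal similar patches from several frames into a matrix whose nuclear norm $\|\cdot\|_*$ favors low rank. Split-Bregman would introduce auxiliary variables such as $\mathbf{d} = \nabla\mathbf{x}$ and solve the resulting subproblems alternately. The patent's actual terms and weights may differ.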

Owner:SICHUAN UNIV

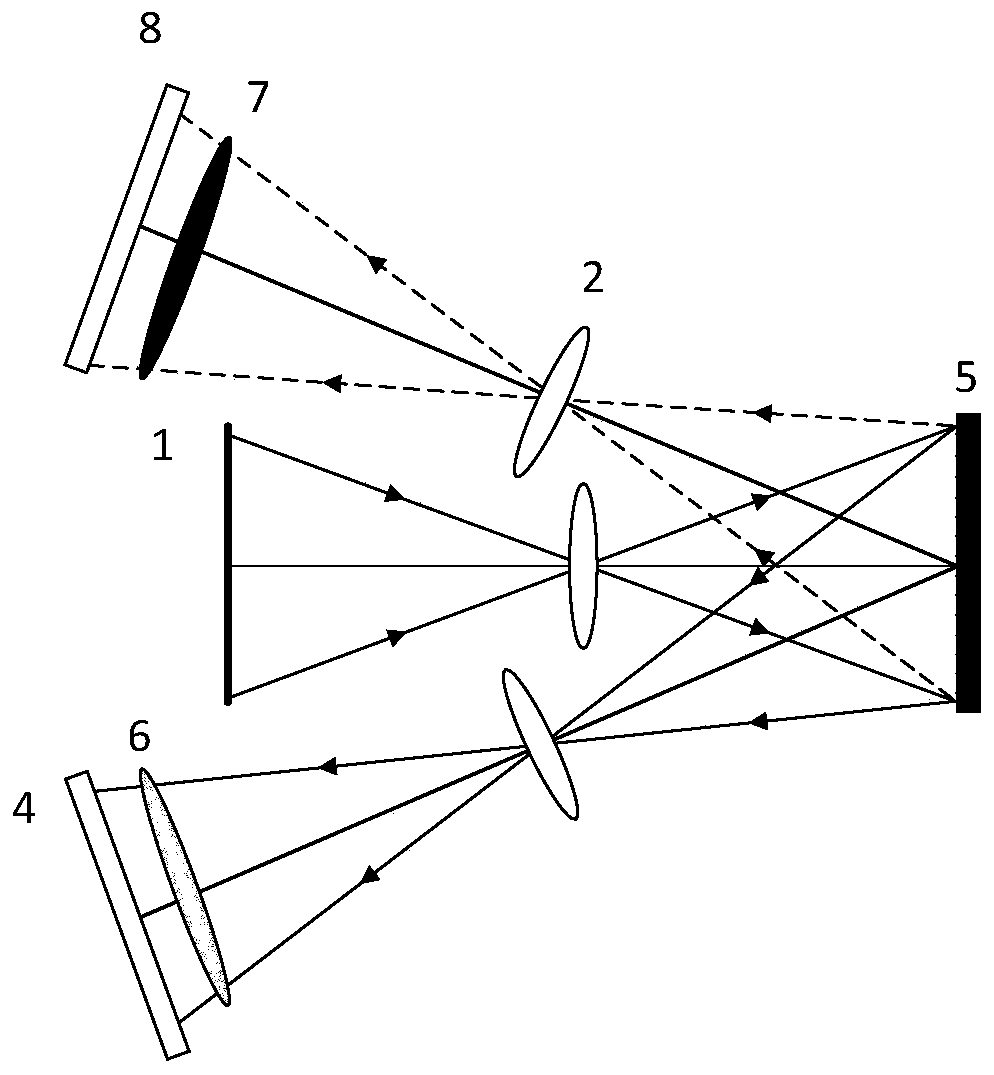

Dual-band time domain compressed sensing high-speed imaging method and device

Active | CN109828285A | Increase profit | Make full use of light energy | Electromagnetic wave reradiation | Optical elements | Low speed | Light energy

The invention provides a dual-band time-domain compressed sensing high-speed imaging method and device. For a high-speed moving object, the flipping behavior of the mirrors of a digital micromirror device (DMD) is exploited: the light reflected in the two mirror directions is used for imaging in the visible and infrared bands, respectively. Combined with a time-domain compressed sensing imaging method, detail information of the high-speed moving target in both bands is obtained simultaneously, and a compressed-sensing-based video reconstruction algorithm reconstructs the captured dual-band low-speed video into a dual-band high-speed video of the target. The method obtains a high-speed video of the moving target with more detail without increasing the data bandwidth of the imaging camera, thereby relaxing the strict requirements that high-speed imaging places on camera sensitivity and ultrahigh data bandwidth. Meanwhile, owing to the optical modulation characteristics of the DMD, the visible and infrared high-speed imaging light paths are obtained simultaneously without adding any extra device, so the light energy is fully used and energy utilization is improved.
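The measurement side of this scheme can be sketched as a coded-exposure forward model. The assumptions are that each DMD pixel sends light to the visible arm when its mask value is 1 and to the infrared arm when it is 0, so the two arms see complementary codes, and that each camera integrates T high-speed sub-frames into one low-speed snapshot. The function name is hypothetical. The compressed sensing solver that recovers the T sub-frames from each snapshot is not shown.

```python
from typing import List, Tuple

Frame = List[List[float]]


def dmd_dual_band_snapshots(vis_frames: List[Frame], ir_frames: List[Frame],
                            masks: List[List[List[int]]]) -> Tuple[Frame, Frame]:
    """Simulate one low-speed exposure of the visible and infrared cameras.

    vis_frames, ir_frames: T high-speed sub-frames of the scene in each band.
    masks: T binary DMD patterns; 1 = mirror tilted toward the visible arm,
           0 = tilted toward the infrared arm (assumed convention).
    """
    h, w = len(masks[0]), len(masks[0][0])
    vis = [[0.0] * w for _ in range(h)]
    ir = [[0.0] * w for _ in range(h)]
    for vf, irf, m in zip(vis_frames, ir_frames, masks):
        for y in range(h):
            for x in range(w):
                if m[y][x]:
                    vis[y][x] += vf[y][x]  # visible arm integrates the coded sub-frame
                else:
                    ir[y][x] += irf[y][x]  # complementary code lands on the infrared arm
    return vis, ir
```

Because every mirror state feeds one of the two cameras, no reflected light is discarded, which matches the abstract's point about using the light energy fully.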

Owner:BEIJING INSTITUTE OF TECHNOLOGY

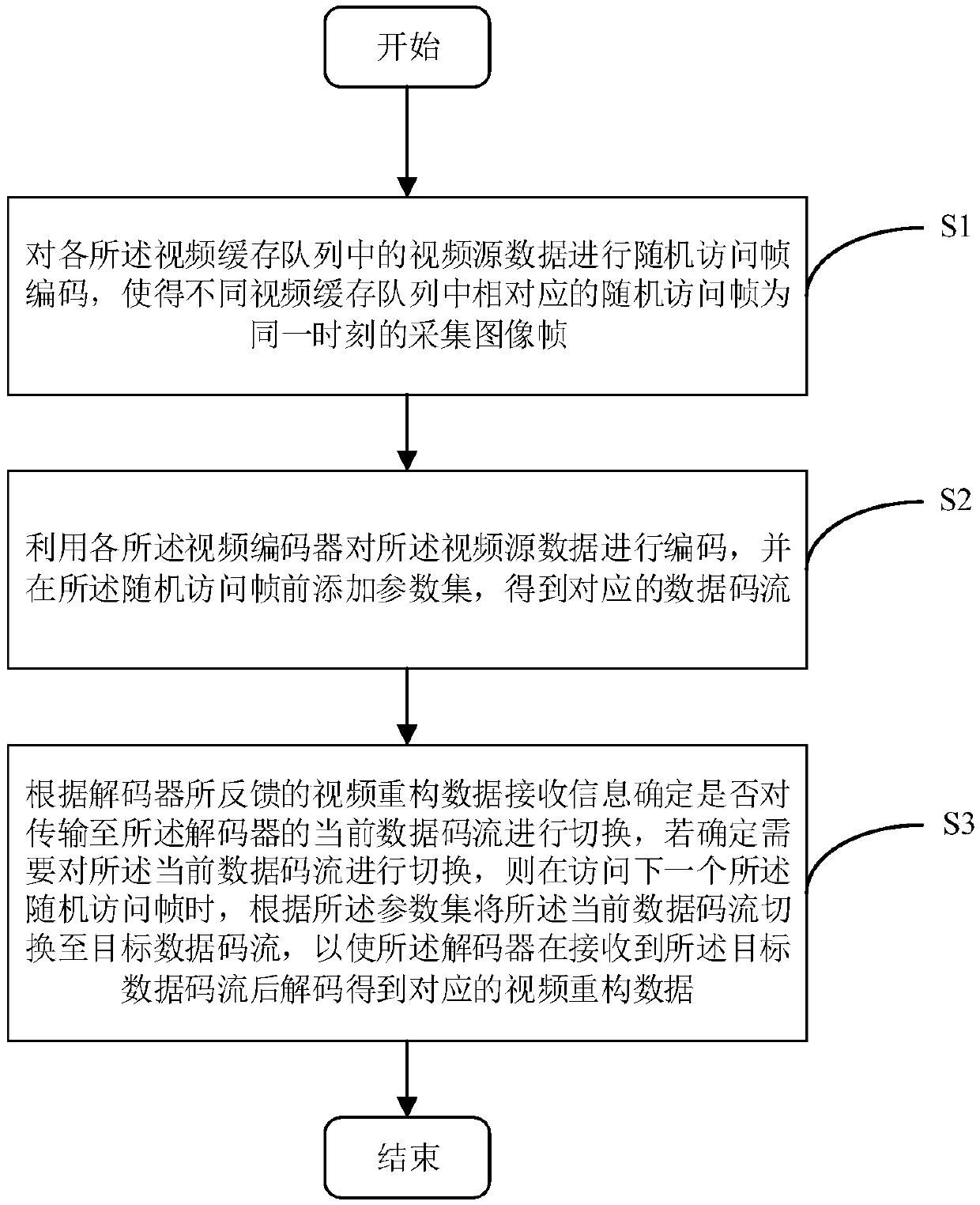

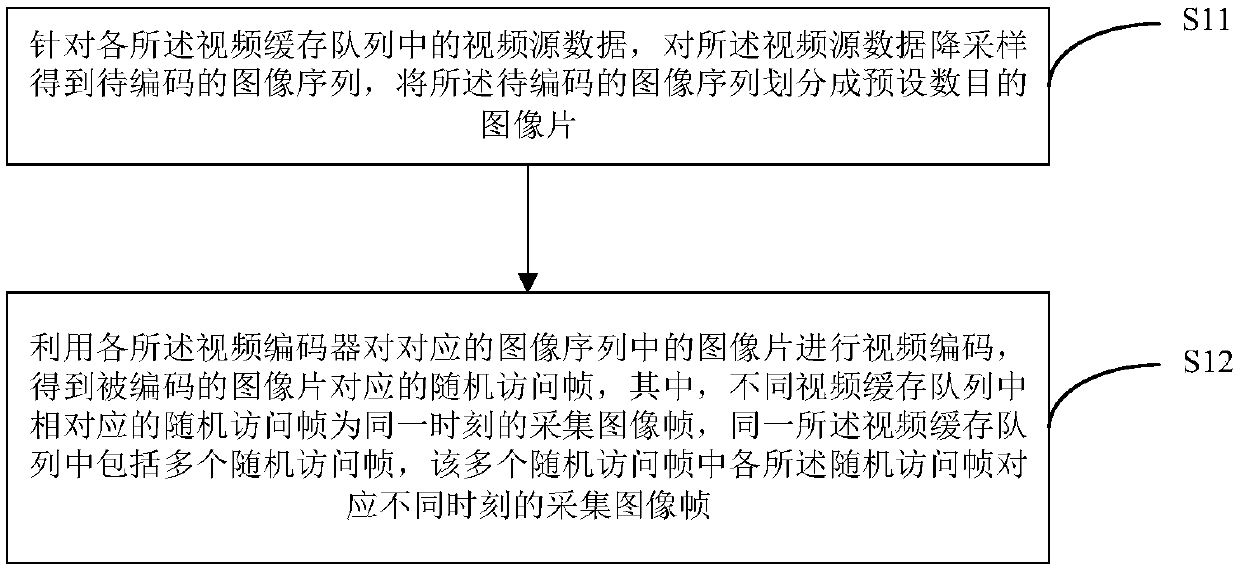

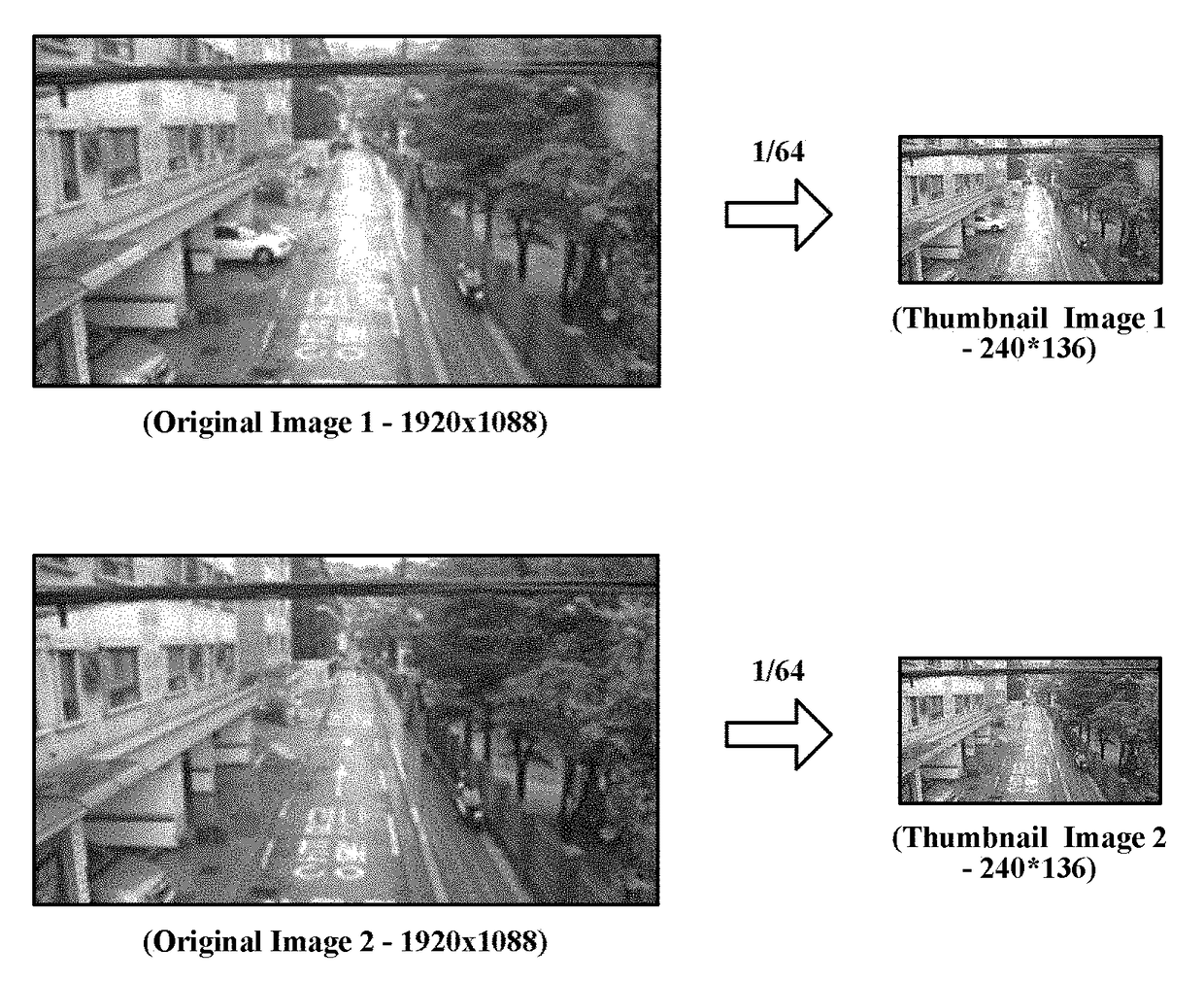

Video transmission method and device, electronic equipment and readable storage medium

The embodiment of the invention provides a video transmission method and device, electronic equipment, and a readable storage medium. Video source data in the video cache queues is encoded by a plurality of video encoders at the same time, and the corresponding random access frames in the different video cache queues are aligned so that they are image frames captured at the same moment. A parameter set is added containing the bit rate, spatial resolution, temporal resolution, and random access frame interval of the code stream produced by each video encoder, so that the sending end can keep sending data when the bandwidth fluctuates. The method comprises the following steps: receiving, from the decoder, packet loss information for the current decoded video reconstruction data and the network delay of that data reaching the decoder; and switching between code streams in real time in combination with the parameter set, so that the decoder decodes the selected code stream to obtain video reconstruction data that displays clearer and smoother pictures.
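A minimal sketch of the switching logic, under stated assumptions: each entry of the parameter set is modelled as a small record, the sender also has an estimate of available bandwidth (`available_kbps`, not mentioned in the abstract), and the loss and delay thresholds plus the halve-the-budget back-off are hypothetical policy choices. Switching is allowed only on a random access frame; because those frames are aligned across queues, the decoder can start the new stream cleanly there.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StreamParams:
    """One encoder's entry in the parameter set described in the abstract."""
    bitrate_kbps: int
    width: int         # spatial resolution
    height: int
    fps: float         # temporal resolution
    rap_interval: int  # frames between random access frames (assumed equal across streams)


def select_stream(streams: List[StreamParams], available_kbps: float,
                  loss_rate: float, delay_ms: float,
                  max_loss: float = 0.05, max_delay_ms: float = 200.0) -> StreamParams:
    """Hypothetical policy: pick the highest bit rate that fits the (possibly reduced) budget."""
    budget = available_kbps
    if loss_rate > max_loss or delay_ms > max_delay_ms:
        budget *= 0.5  # decoder feedback signals congestion: back off
    fitting = [s for s in streams if s.bitrate_kbps <= budget]
    if not fitting:
        return min(streams, key=lambda s: s.bitrate_kbps)  # fall back to the lightest stream
    return max(fitting, key=lambda s: s.bitrate_kbps)


def can_switch_at(frame_index: int, rap_interval: int) -> bool:
    """Only switch streams on an aligned random access frame."""
    return frame_index % rap_interval == 0
```

For example, with streams at 500, 1500, and 4000 kbps and 3000 kbps available, low loss selects the 1500 kbps stream; the switch then takes effect at the next frame index for which `can_switch_at` is true.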

Owner:ZHEJIANG UNIVIEW TECH CO LTD

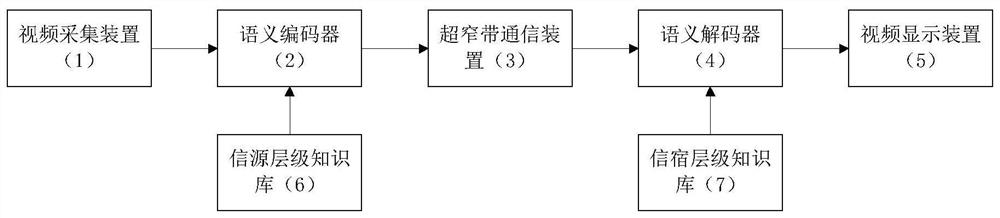

Video semantic communication method and system based on hierarchical knowledge expression

Active | CN113315972A | Avoid single-type semantic features | Improve integrity | Semantic analysis | Digital video signal modification | Semantic feature | Communication bandwidth

The invention provides a video semantic communication method based on hierarchical knowledge expression, mainly to solve the problems of incomplete semantic extraction, insufficient semantic representation capability, and redundant semantic description in the prior art. The implementation comprises the following steps: constructing a hierarchical knowledge base consisting of a multi-level signal sensing network, a semantic abstraction network, a semantic reconstruction network, and a video reconstruction network; collecting a video signal to be transmitted; extracting structured semantic features of the video signal with the signal sensing network and the semantic abstraction network of the hierarchical knowledge base, and transmitting the structured semantic features over an ultra-narrowband channel; and reconstructing the video signal from the structured semantic features with the semantic reconstruction network and the signal reconstruction network of the hierarchical knowledge base. By mining semantic features at different scales and representing the semantics as structured data, the method improves the completeness of semantic extraction, the semantic representation capability, and the utilization of communication bandwidth, and it can be applied to online conferences, human-computer interaction, and the intelligent Internet of Things.
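The neural networks themselves cannot be reproduced from the abstract, but the idea of sending structured semantics instead of pixels can be illustrated. The sketch below uses a hypothetical three-level record (scene, then objects, then attributes and relations) and serializes it as compact JSON; the schema and field names are assumptions for illustration, not the patent's format.

```python
import json
from typing import Any, Dict

# Hypothetical hierarchical semantic record for one frame:
# scene level -> object level -> attribute/relation level.
EXAMPLE_FRAME_SEMANTICS: Dict[str, Any] = {
    "scene": "meeting room",
    "objects": [
        {"id": 0, "label": "person", "bbox": [120, 80, 360, 700], "attributes": {"pose": "seated"}},
        {"id": 1, "label": "laptop", "bbox": [400, 500, 620, 640], "attributes": {"state": "open"}},
    ],
    "relations": [[0, "looking_at", 1]],
}


def encode_semantics(record: Dict[str, Any]) -> bytes:
    """Compact, deterministic serialization for a narrowband link."""
    return json.dumps(record, separators=(",", ":"), sort_keys=True).encode("utf-8")


def decode_semantics(payload: bytes) -> Dict[str, Any]:
    """Receiver side: recover the structured features to drive reconstruction."""
    return json.loads(payload.decode("utf-8"))
```

A record like this is a few hundred bytes, whereas an uncompressed 1920×1080 RGB frame is about 6.2 MB, which is why structured semantics can fit an ultra-narrowband channel; the receiver-side networks would then have to synthesize the pixels.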

Owner:XIDIAN UNIV

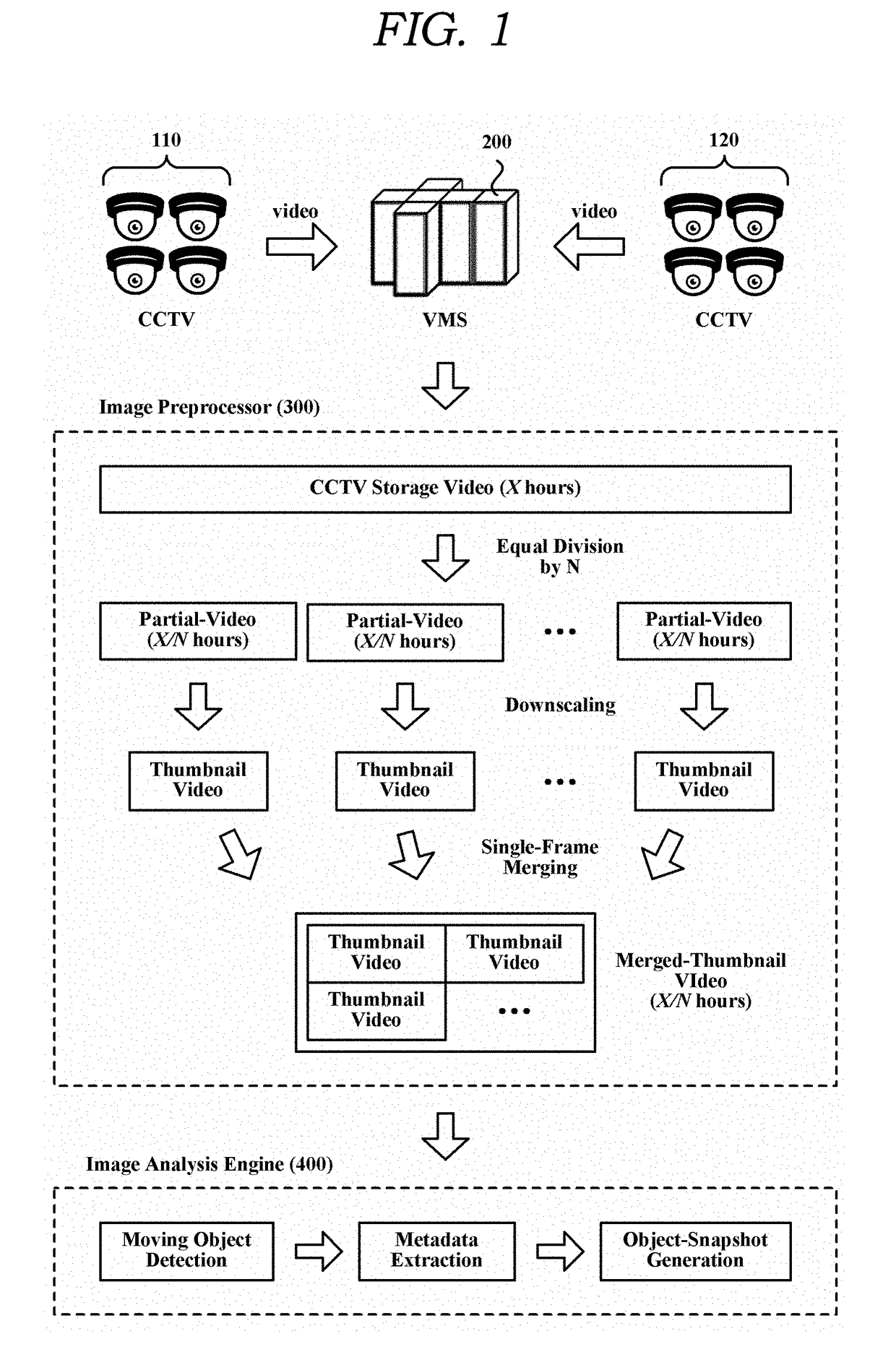

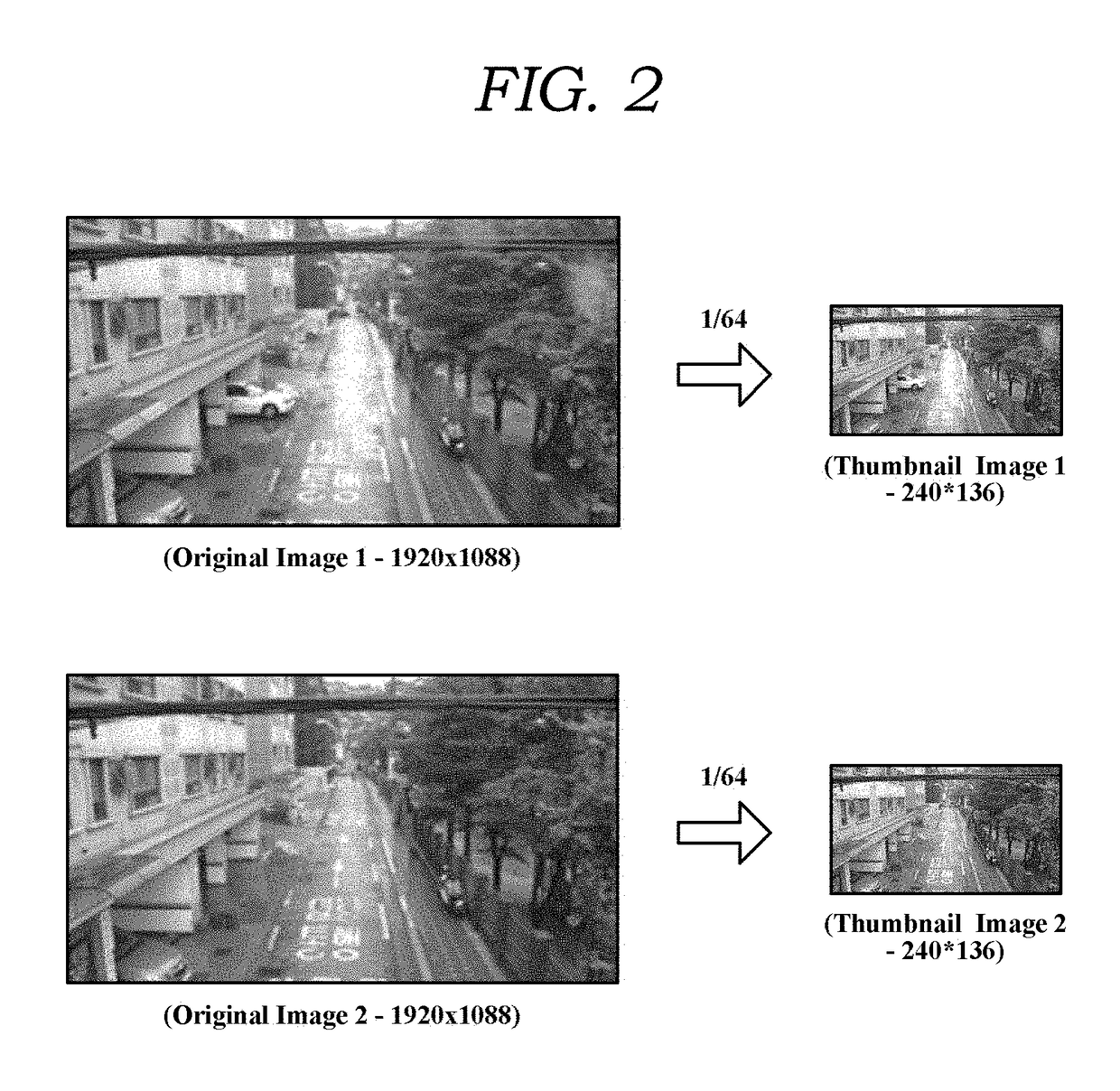

Method of Detecting a Moving Object by Reconstructive Image Processing

Active | US20180046866A1 | Reduce the amount required | Efficient search | Image analysis | Character and pattern recognition | Imaging processing | Video reconstruction

Disclosed is a method of detecting a moving object in video produced by a plurality of CCTV cameras. In the present invention, video reconstruction is applied to the stored CCTV video to significantly reduce the amount of video data, and image processing is then performed on the reconstructed stored video to detect moving objects and extract their metadata. According to the present invention, staff at an integrated control center can efficiently search the stored CCTV video for any potentially critical objects.
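The abstract does not specify the reconstruction step, so the sketch below stands in simple average pooling as the data-reducing step (an assumption) and uses plain frame differencing for detection. It then maps the detected bounding box back to original pixel coordinates as searchable metadata. The function names and the metadata fields are hypothetical.

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]


def reduce_frame(frame: Frame, factor: int) -> Frame:
    """Average-pool by `factor` (assumed stand-in for the data-reducing reconstruction)."""
    h, w = len(frame) // factor, len(frame[0]) // factor
    area = factor * factor
    return [[sum(frame[y * factor + dy][x * factor + dx]
                 for dy in range(factor) for dx in range(factor)) / area
             for x in range(w)]
            for y in range(h)]


def detect_motion(prev: Frame, curr: Frame, threshold: float) -> Optional[Tuple[int, int, int, int]]:
    """Bounding box (x0, y0, x1, y1) of pixels whose absolute change exceeds threshold, or None."""
    xs, ys = [], []
    for y, (row_p, row_c) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_p, row_c)):
            if abs(c - p) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)


def motion_metadata(prev: Frame, curr: Frame, factor: int, threshold: float, frame_index: int):
    """Detect on reduced frames, then express the box in original pixel coordinates."""
    box = detect_motion(reduce_frame(prev, factor), reduce_frame(curr, factor), threshold)
    if box is None:
        return None
    x0, y0, x1, y1 = box
    return {"frame": frame_index,
            "bbox": (x0 * factor, y0 * factor, (x1 + 1) * factor - 1, (y1 + 1) * factor - 1)}
```

Working on reduced frames cuts the per-frame processing roughly by `factor**2`, at the cost of box coordinates that are only accurate to a `factor`-pixel grid.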

Owner:INNODEP

© 2025 PatSnap. All rights reserved.