208 results about "Dispatch table" patented technology

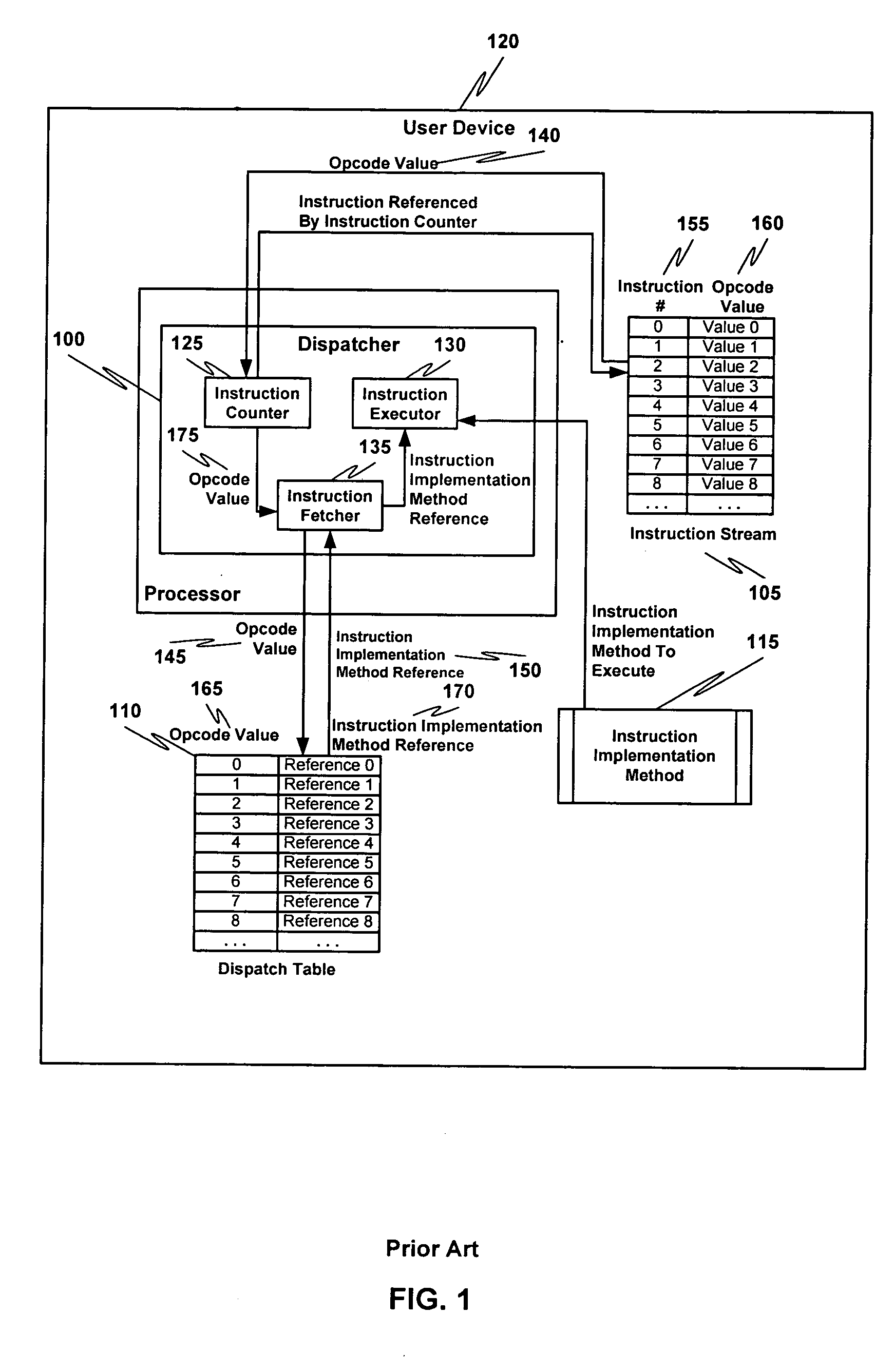

In computer science, a dispatch table is a table of pointers to functions or methods. Use of such a table is a common technique when implementing late binding in object-oriented programming.
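As a minimal sketch of the definition above, a dispatch table can be modeled in Python as a dictionary mapping a key to a function object. The operation names and the `call` helper are hypothetical and chosen only for illustration. Because the lookup happens at call time, replacing an entry changes behavior without touching the caller, which is the late-binding property mentioned above.

```python
from typing import Callable, Dict


def add(a: float, b: float) -> float:
    return a + b


def sub(a: float, b: float) -> float:
    return a - b


def mul(a: float, b: float) -> float:
    return a * b


# The dispatch table: each key maps to the function that implements it.
DISPATCH: Dict[str, Callable[[float, float], float]] = {
    "add": add,
    "sub": sub,
    "mul": mul,
}


def call(op: str, a: float, b: float) -> float:
    """Look up the handler for `op` at call time and invoke it."""
    try:
        handler = DISPATCH[op]
    except KeyError:
        raise ValueError(f"unknown operation: {op!r}") from None
    return handler(a, b)
```

The caller never names a concrete function, so new operations can be registered by adding entries to `DISPATCH` rather than by editing a chain of conditionals.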

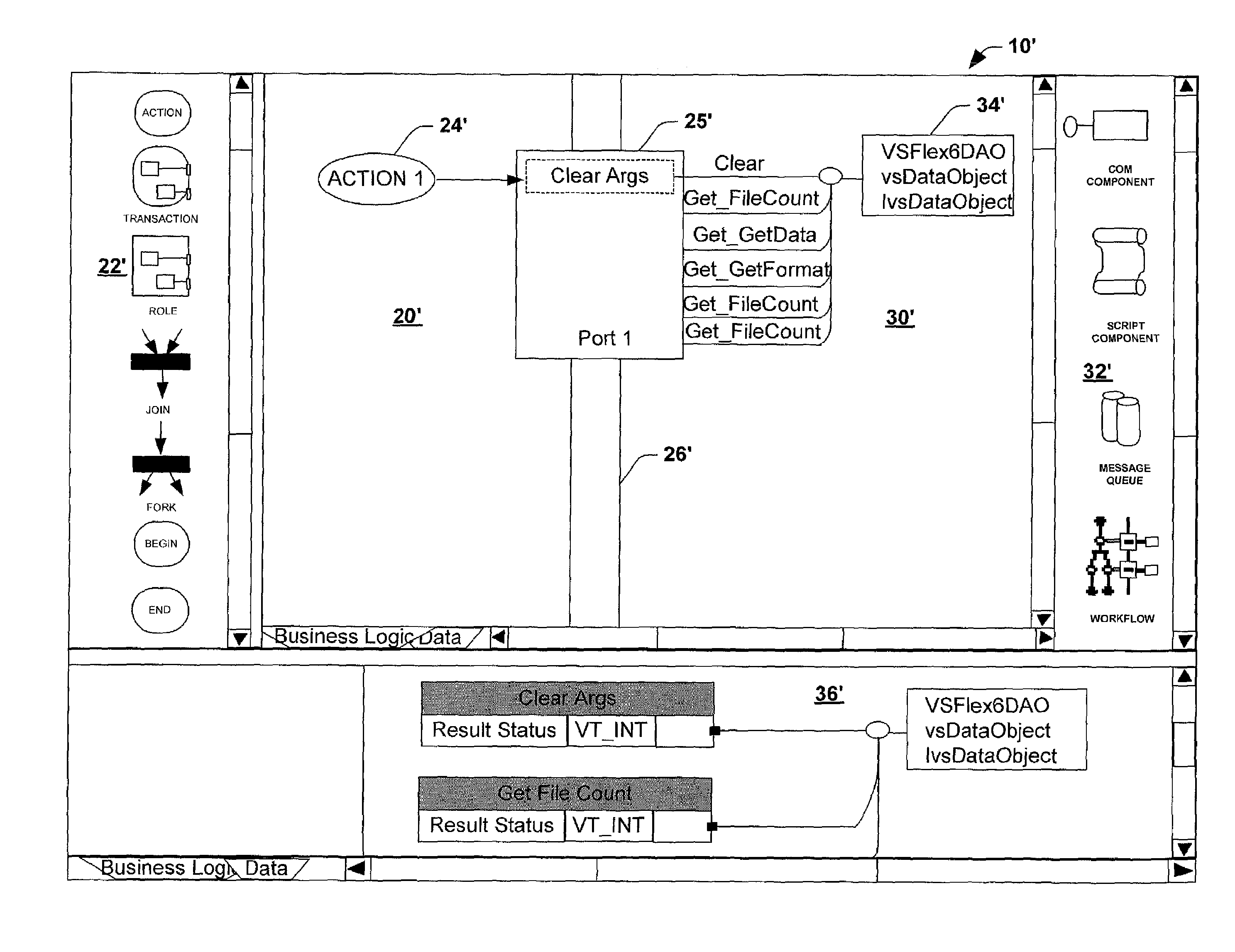

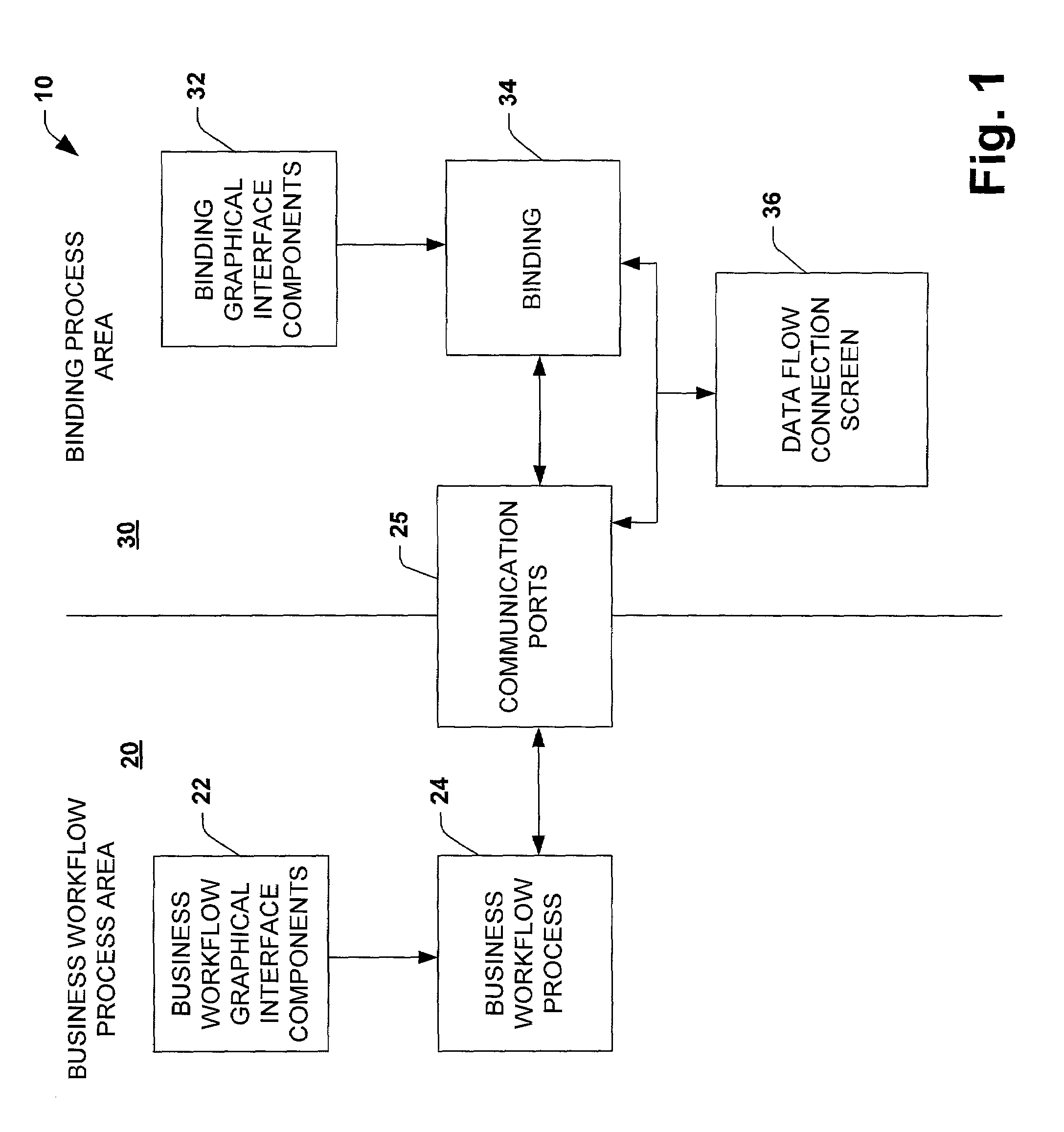

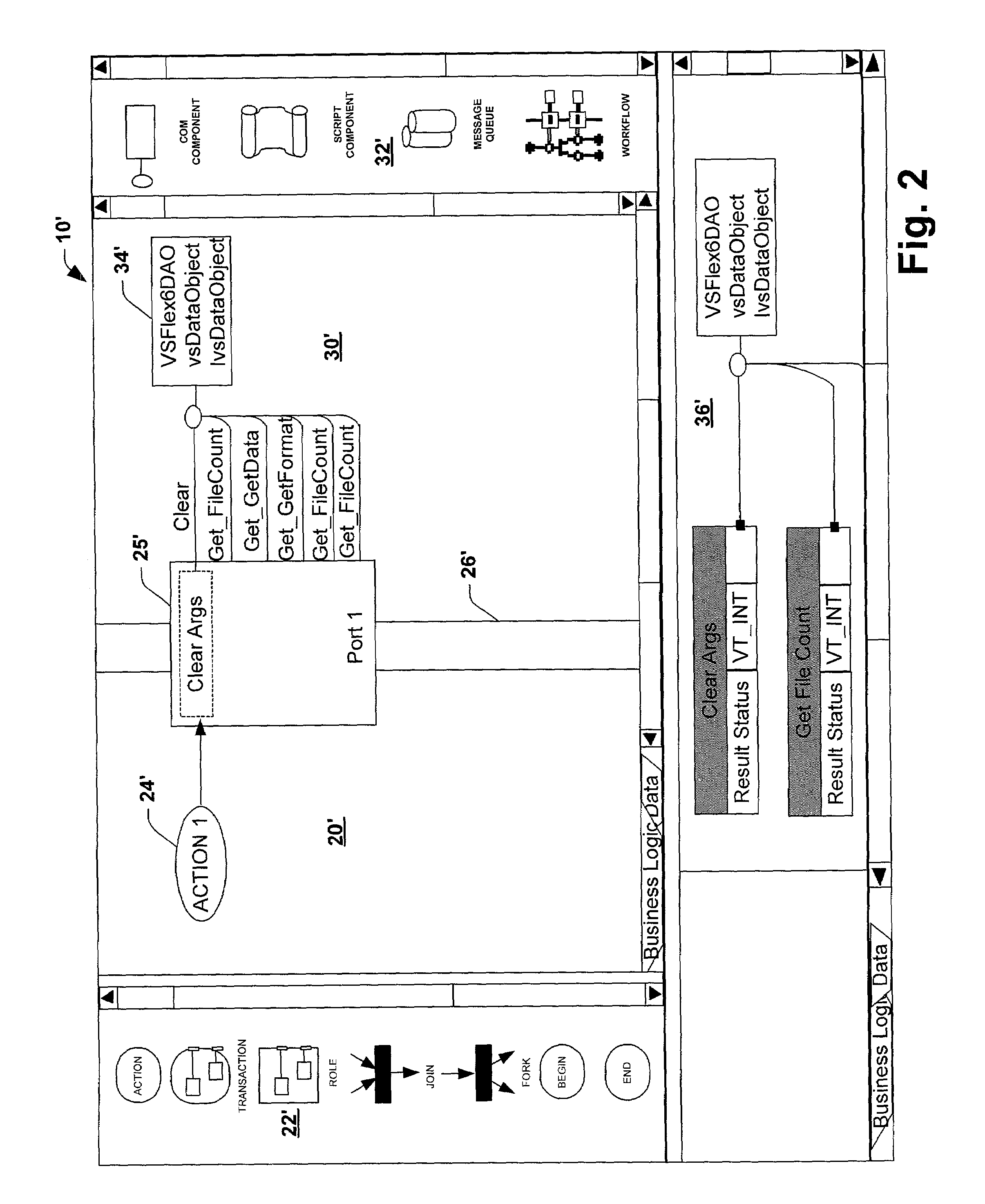

System and method utilizing a graphical user interface of a business process workflow scheduling program

Inactive | US7184967B1 | Promote model | Office automation | Programme total factory control | Software engineering | Business process

A graphical user interface (GUI) scheduler program is provided for modeling business workflow processes. The GUI scheduler program includes tools that allow a user to create a schedule for business workflow processes based on a set of rules defined by the GUI scheduler program. The rules help prevent deadlock from occurring within the schedule. The program provides tools for creating and defining message flows between entities. Additionally, the program provides tools that allow a user to define a binding between the schedule and components, such as COM components, script components, message queues and other workflow schedules. The scheduler program allows a user to define actions and group actions into transactions using simple GUI scheduling tools. The schedule can then be converted to executable code in a variety of forms such as XML, C, C+ and C++. The executable code can then be converted or interpreted for running the schedule.

Owner:MICROSOFT TECH LICENSING LLC

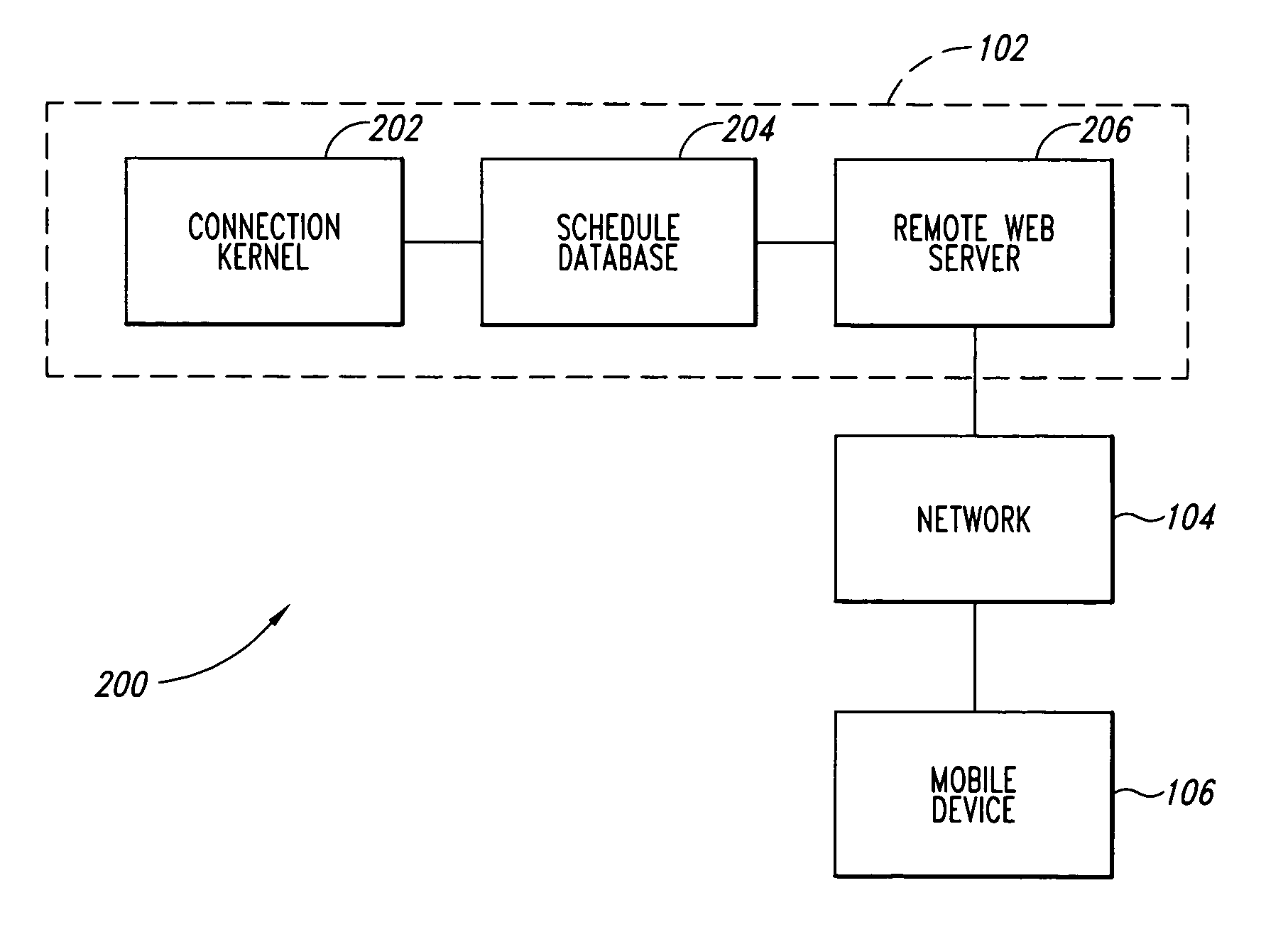

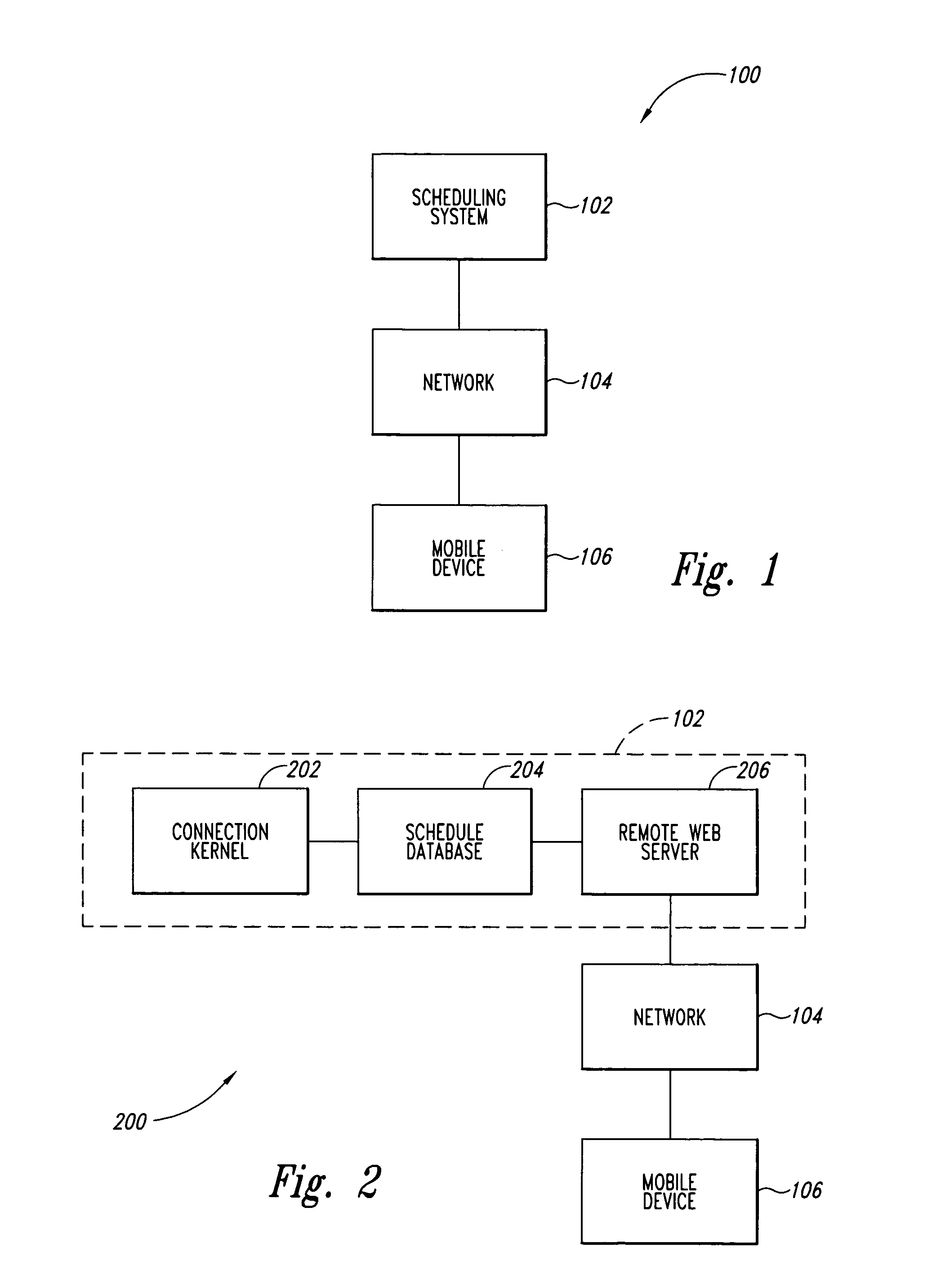

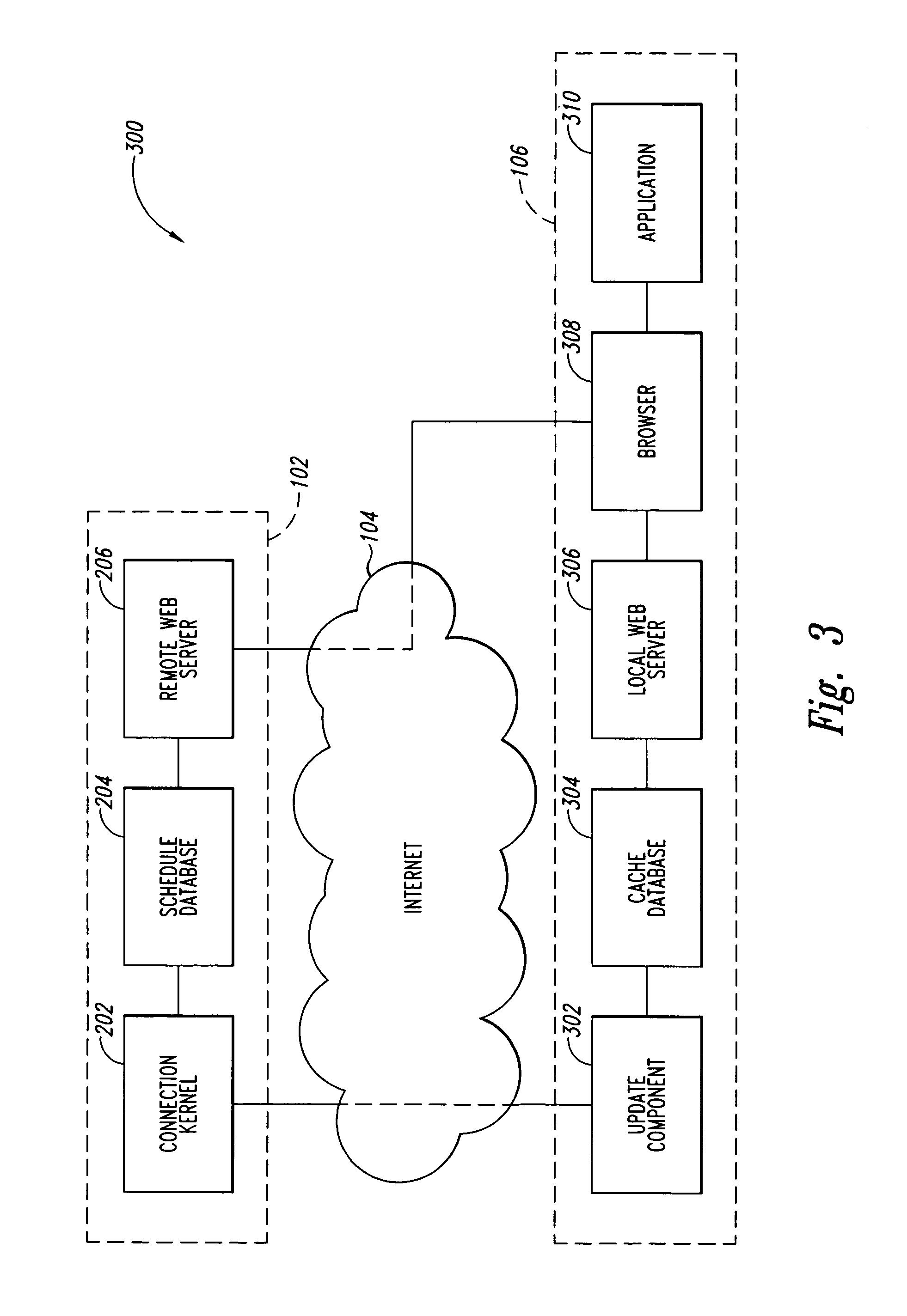

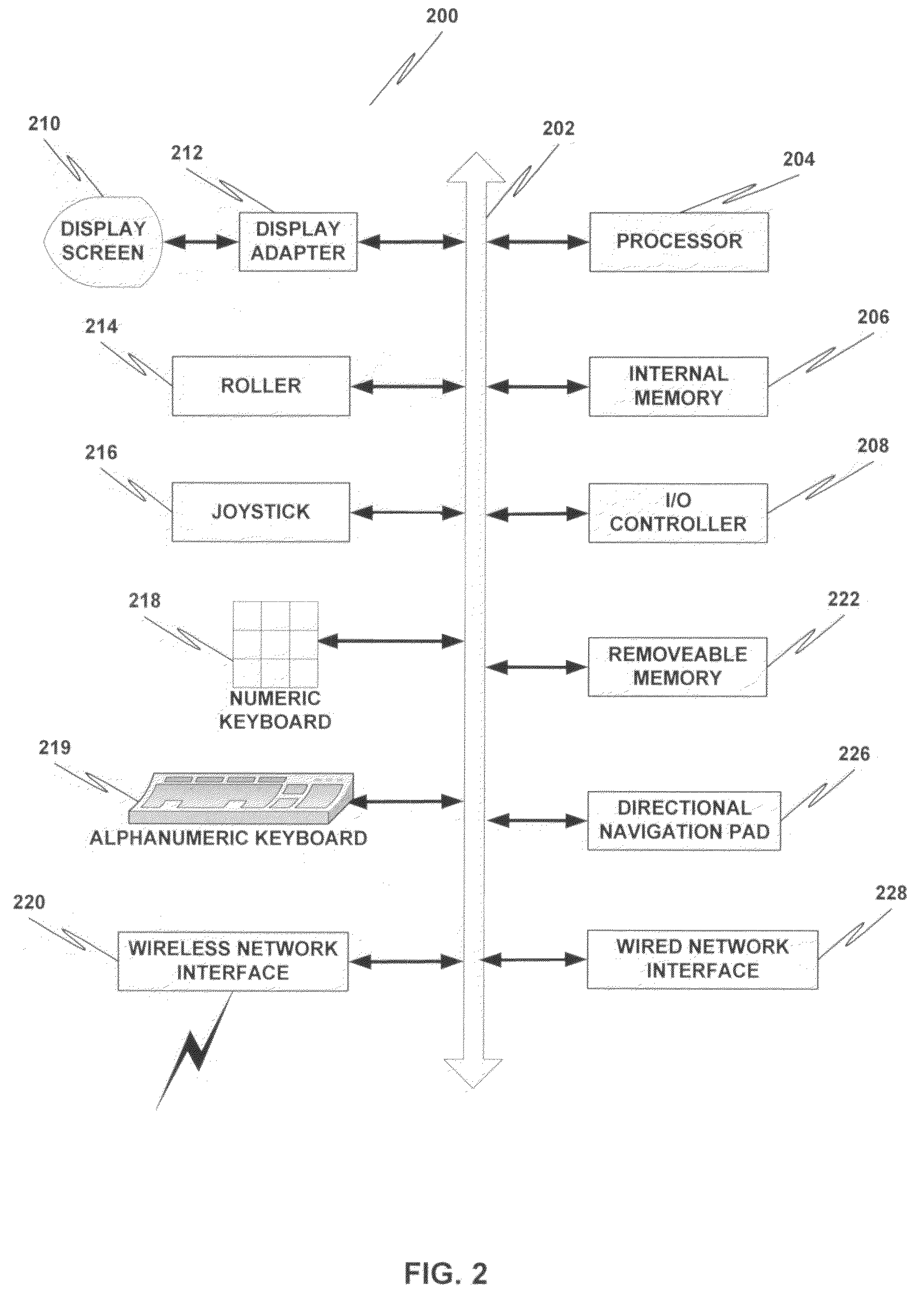

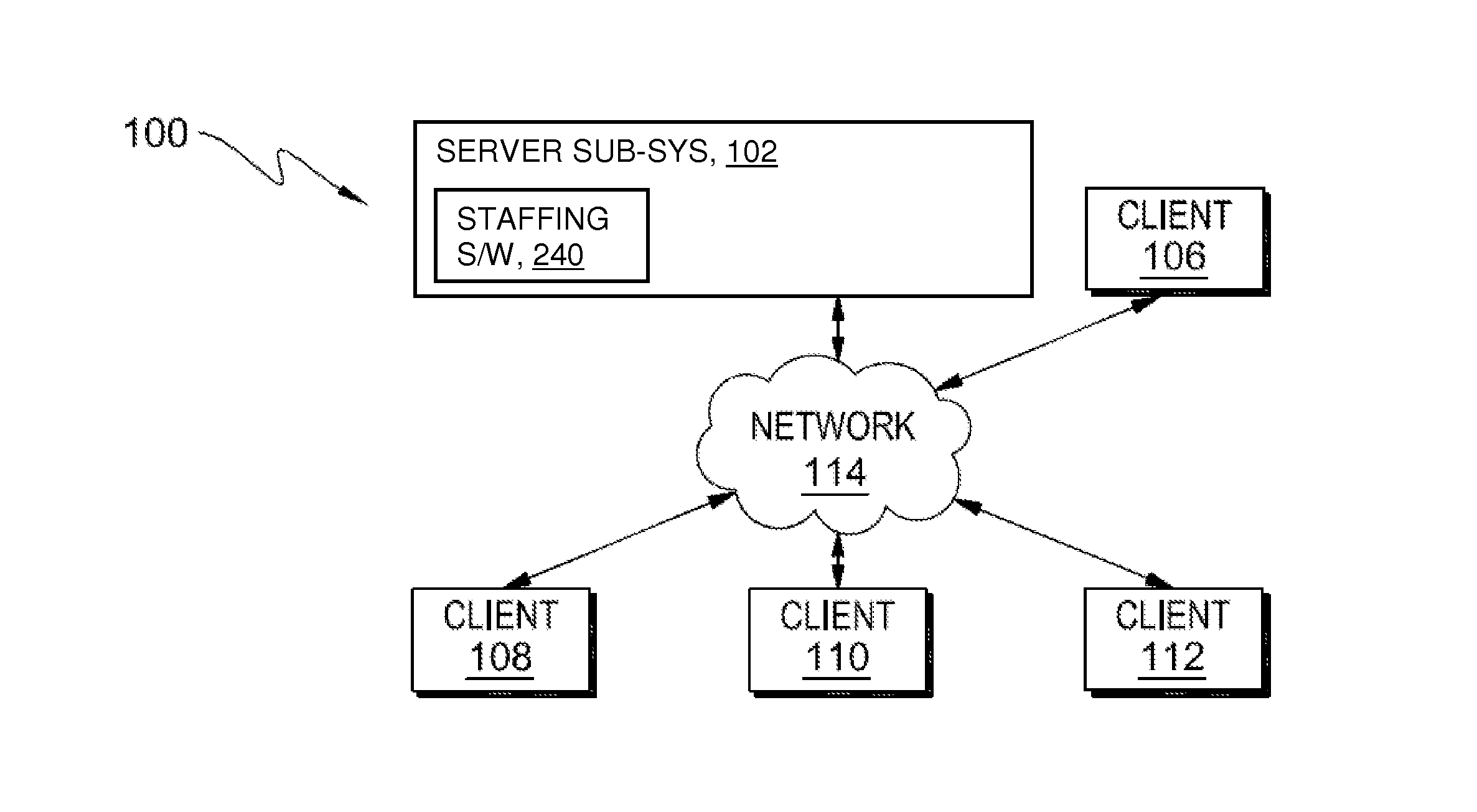

Systems and methods for enhancing connectivity between a mobile workforce and a remote scheduling application

Systems and methods for enhancing connectivity are discussed. An illustrative aspect of the invention includes a method for enhancing connectivity. The method includes scheduling an order to be performed by a worker into a schedule, accessing the schedule by a mobile device via a server on the Internet, and substituting the schedule by a proxy to allow an application on the mobile device to interact with the proxy when the mobile device is temporarily disconnected from the schedule.

Owner:HITACHI ENERGY SWITZERLAND AG

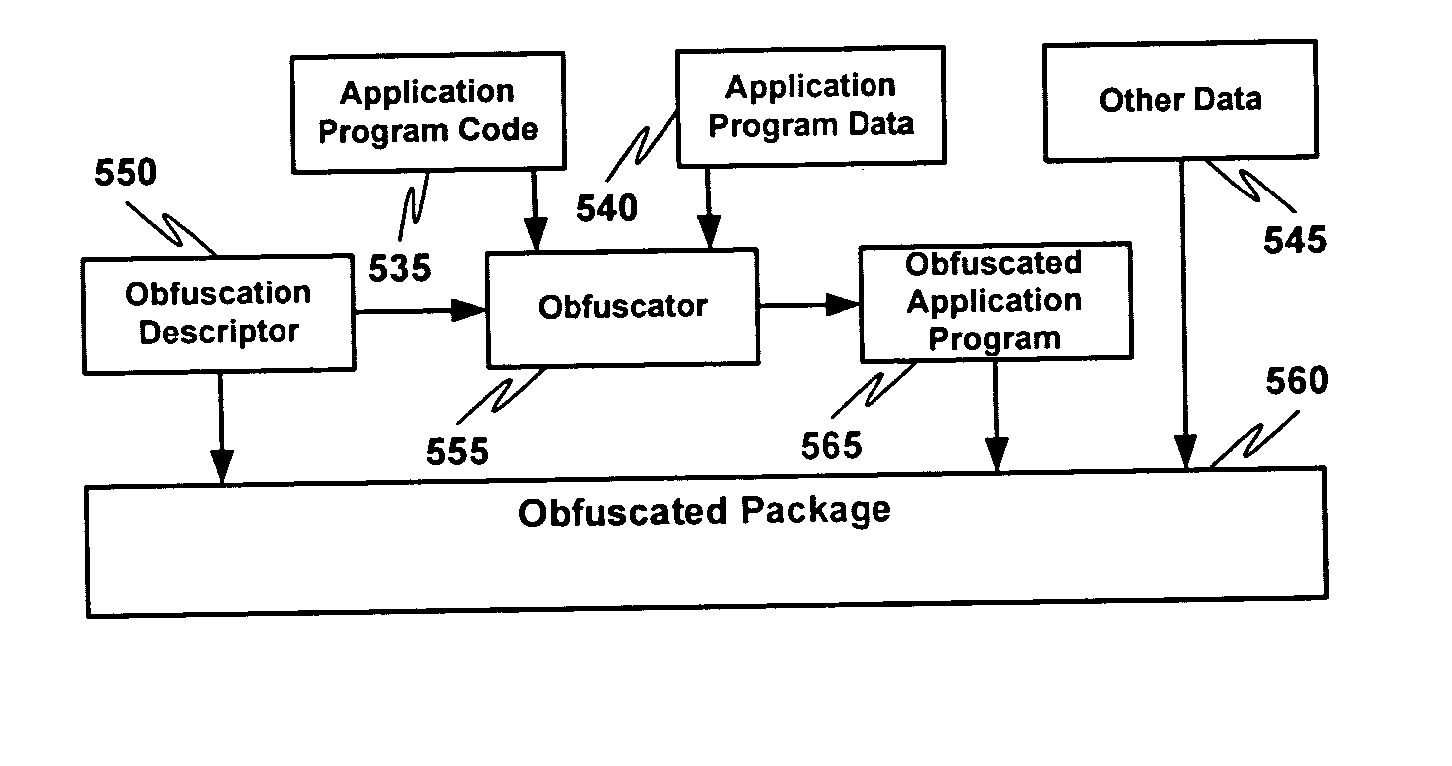

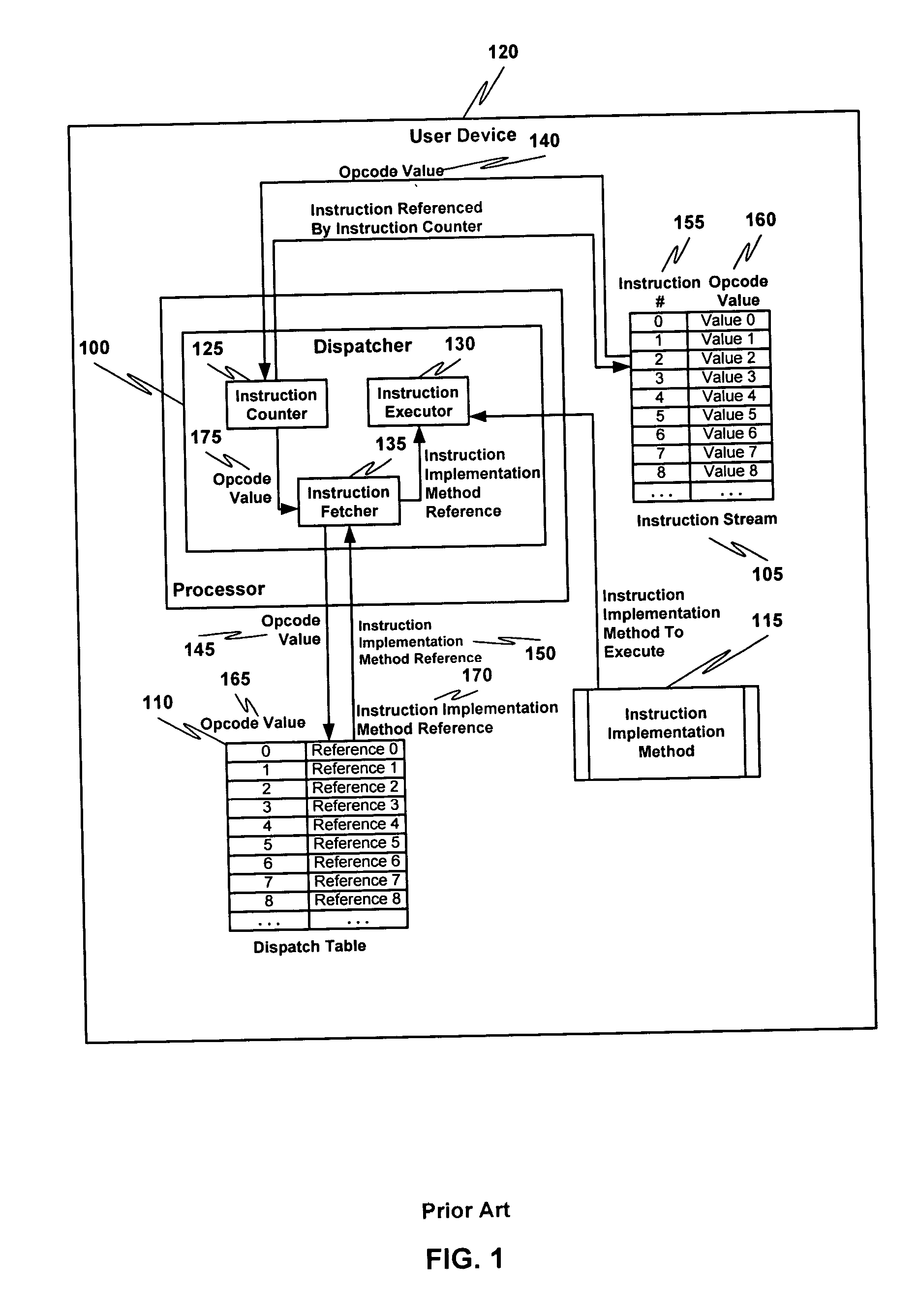

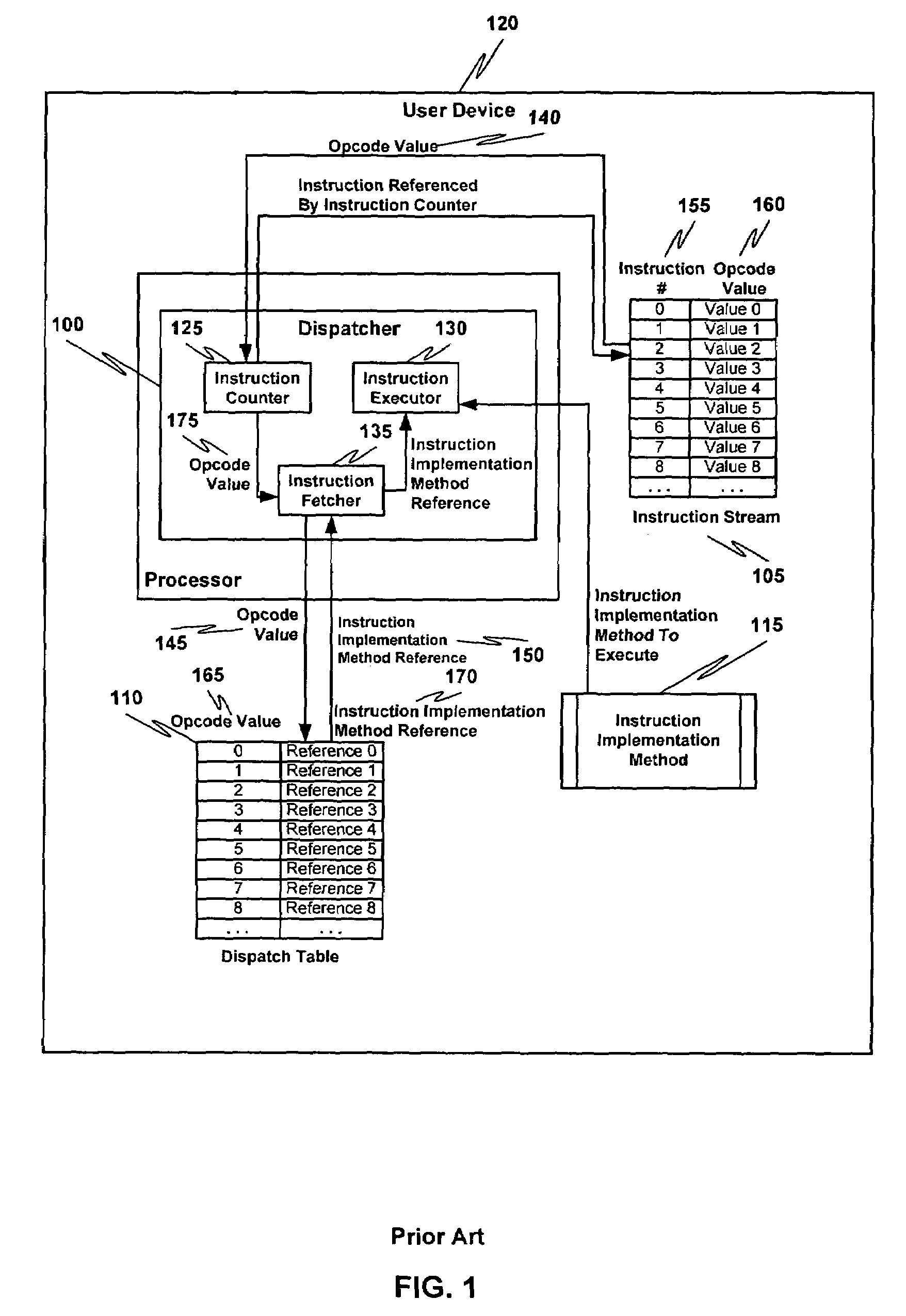

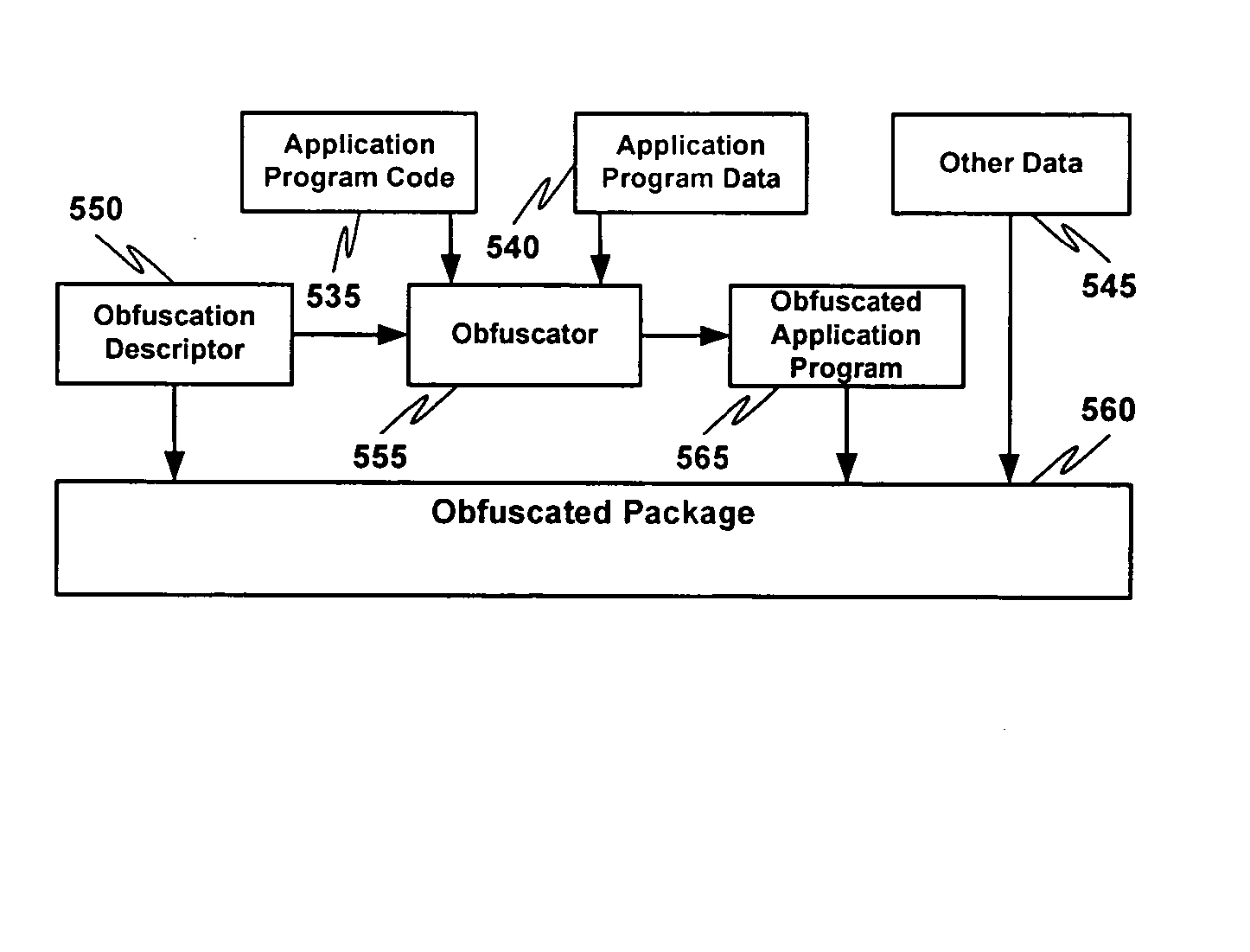

Multiple instruction dispatch tables for application program obfuscation

Active | US20050071652A1 | Key distribution for secure communication | Digital data processing details | Program instruction | Instruction set

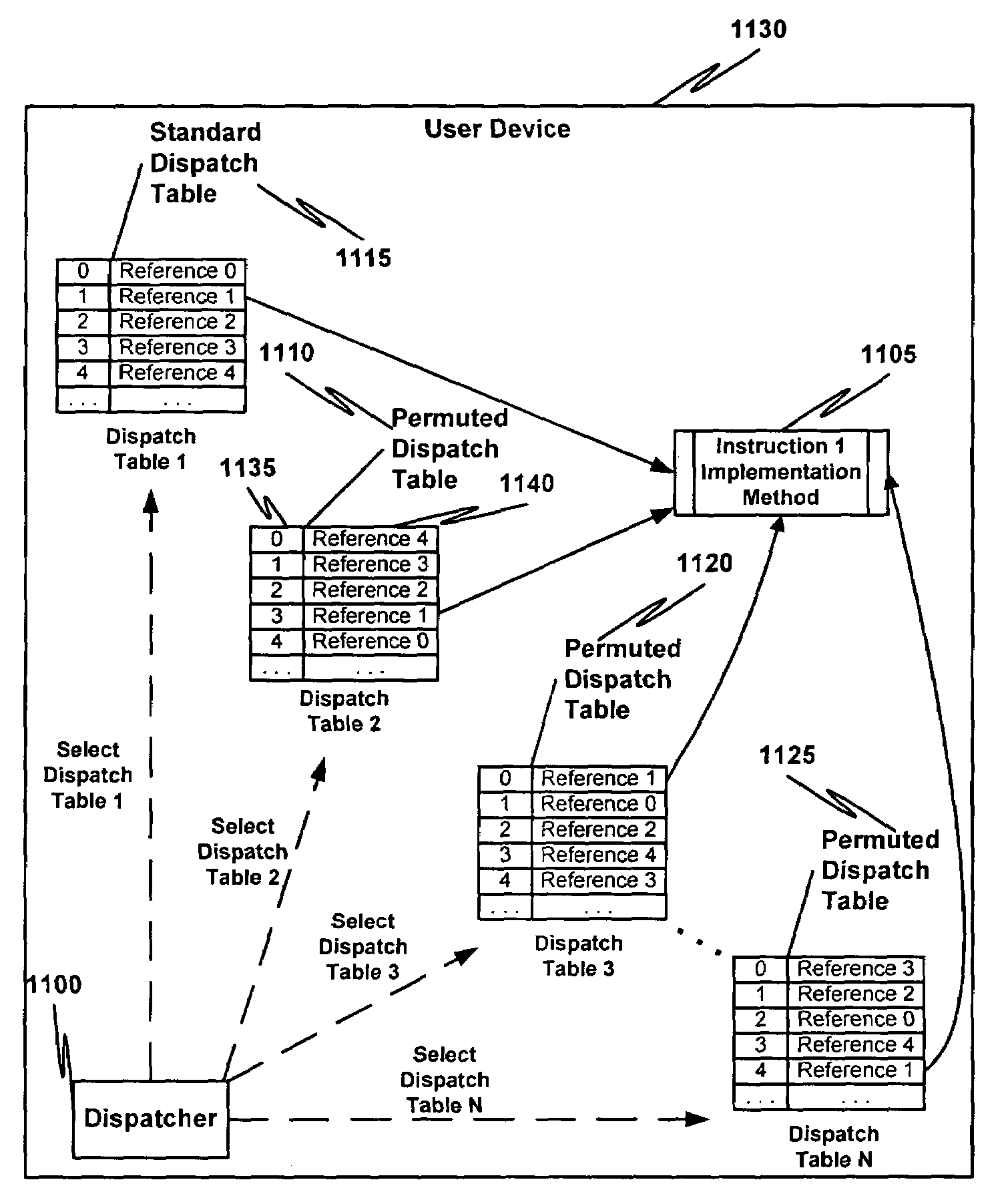

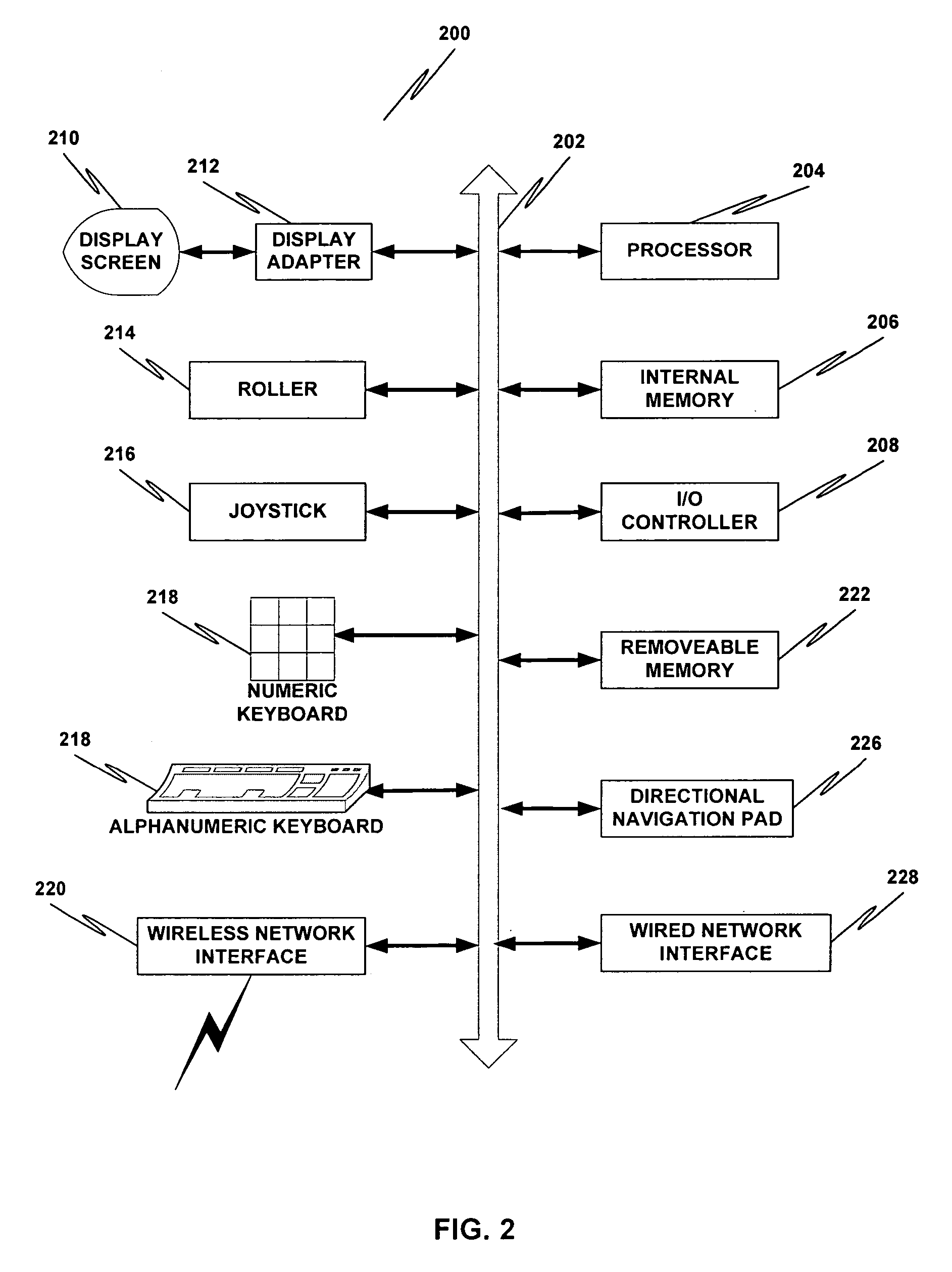

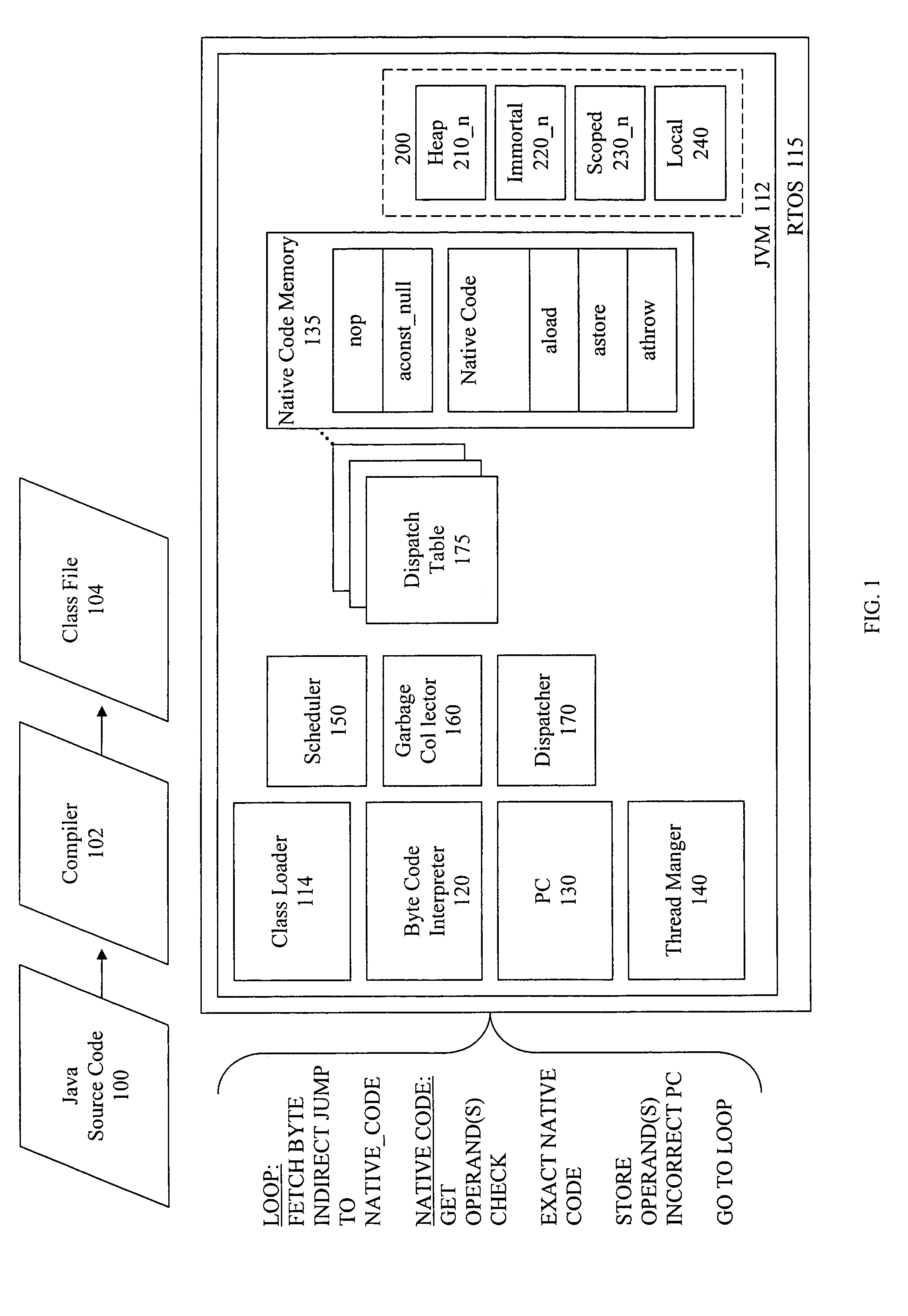

Obfuscating an application program comprises reading an application program comprising code, determining multiple dispatch tables associated with the application program, transforming the application program into application program code configured to utilize the dispatch tables during application program execution to determine the location of instruction implementation methods to be executed based at least in part on a current instruction counter value, and sending the application program code. Executing an obfuscated application program comprises receiving an obfuscated application program comprising at least one instruction opcode value encoded using one of multiple instruction set opcode value encoding schemes, receiving an application program instruction corresponding to a current instruction counter value, selecting an instruction dispatch table based at least in part on the current instruction counter value, and executing the application program instruction using the selected instruction dispatch table.

Owner:ORACLE INT CORP
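The idea in the abstract above, selecting one of several instruction dispatch tables from the current instruction counter value, can be sketched with a toy stack machine. Everything here is an assumption made for illustration: the three opcodes, the permutations, and the rule `pc % len(TABLES)` for choosing a table are hypothetical and are not taken from the patent.

```python
from typing import Callable, List, Tuple

Instr = Tuple[int, int]  # (opcode value, operand)


def op_push(stack: List[int], operand: int) -> None:
    stack.append(operand)


def op_add(stack: List[int], operand: int) -> None:
    b, a = stack.pop(), stack.pop()
    stack.append(a + b)


def op_mul(stack: List[int], operand: int) -> None:
    b, a = stack.pop(), stack.pop()
    stack.append(a * b)


# Canonical opcode values: 0 = push, 1 = add, 2 = mul.
HANDLERS: List[Callable[[List[int], int], None]] = [op_push, op_add, op_mul]

# Hypothetical encodings: in table t, encoded value e means canonical opcode perm[e].
PERMUTATIONS = [(0, 1, 2), (2, 0, 1), (1, 2, 0)]
TABLES = [[HANDLERS[c] for c in perm] for perm in PERMUTATIONS]


def select_table(pc: int) -> int:
    """Assumed selection rule: the instruction counter picks the table."""
    return pc % len(TABLES)


def obfuscate(program: List[Instr]) -> List[Instr]:
    """Re-encode each canonical opcode for the table used at its position."""
    return [
        (PERMUTATIONS[select_table(pc)].index(opcode), operand)
        for pc, (opcode, operand) in enumerate(program)
    ]


def run(encoded: List[Instr]) -> List[int]:
    """Execute an encoded program, switching dispatch tables per instruction."""
    stack: List[int] = []
    for pc, (value, operand) in enumerate(encoded):
        TABLES[select_table(pc)][value](stack, operand)
    return stack
```

In this sketch the same encoded value can mean different operations at different positions, so the encoded program cannot be read with a single opcode map.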

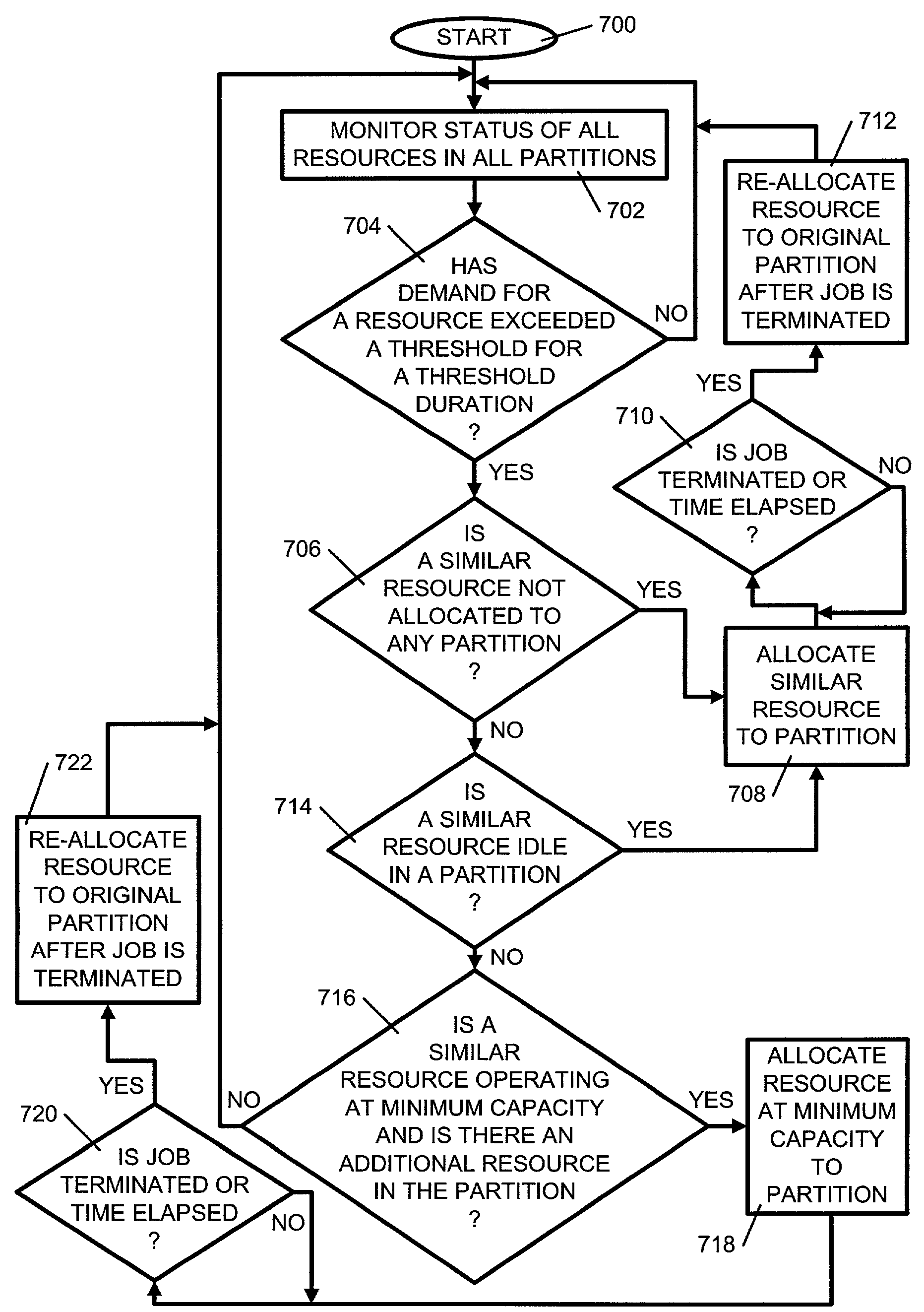

Apparatus and method of dynamically repartitioning a computer system in response to partition workloads

A method, system and apparatus for dynamically repartitioning a partitioned computer system in response to workload demands are provided. In one embodiment, monitoring software is used to monitor workloads on all resources in all the partitions. If a workload on a resource in a partition is determined to exceed a maximum threshold, a similar resource is allocated to the partition. The similar resource is preferably an unassigned or unallocated resource. However, resources from other partitions may also be used. In another embodiment, a workload schedule is stored in a workload profile. If a scheduled workload on any of the resources of a partition is expected to exceed a maximum threshold, additional similar resources will be allocated to the partition before the scheduled workload begins.

Owner:IBM CORP

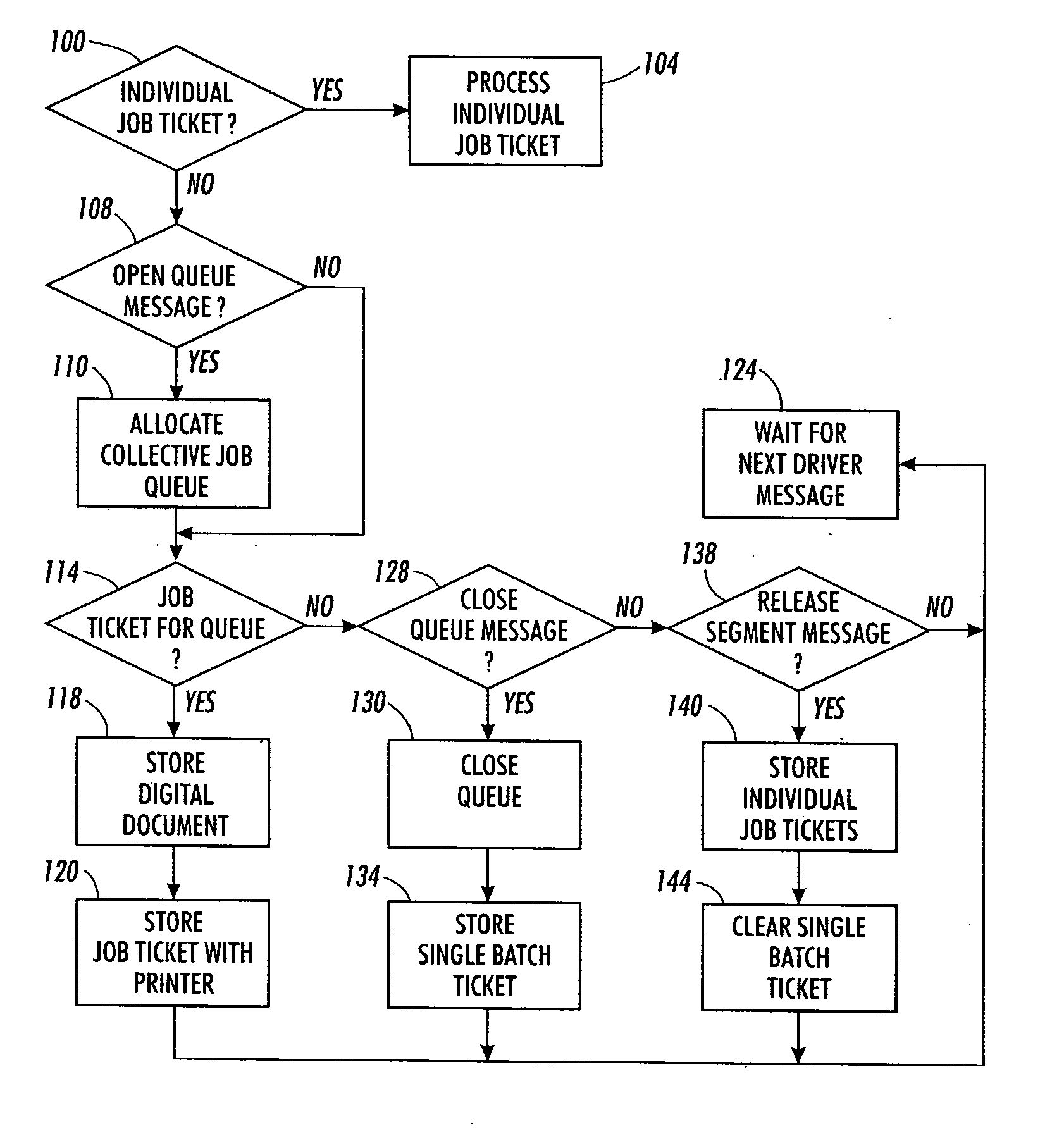

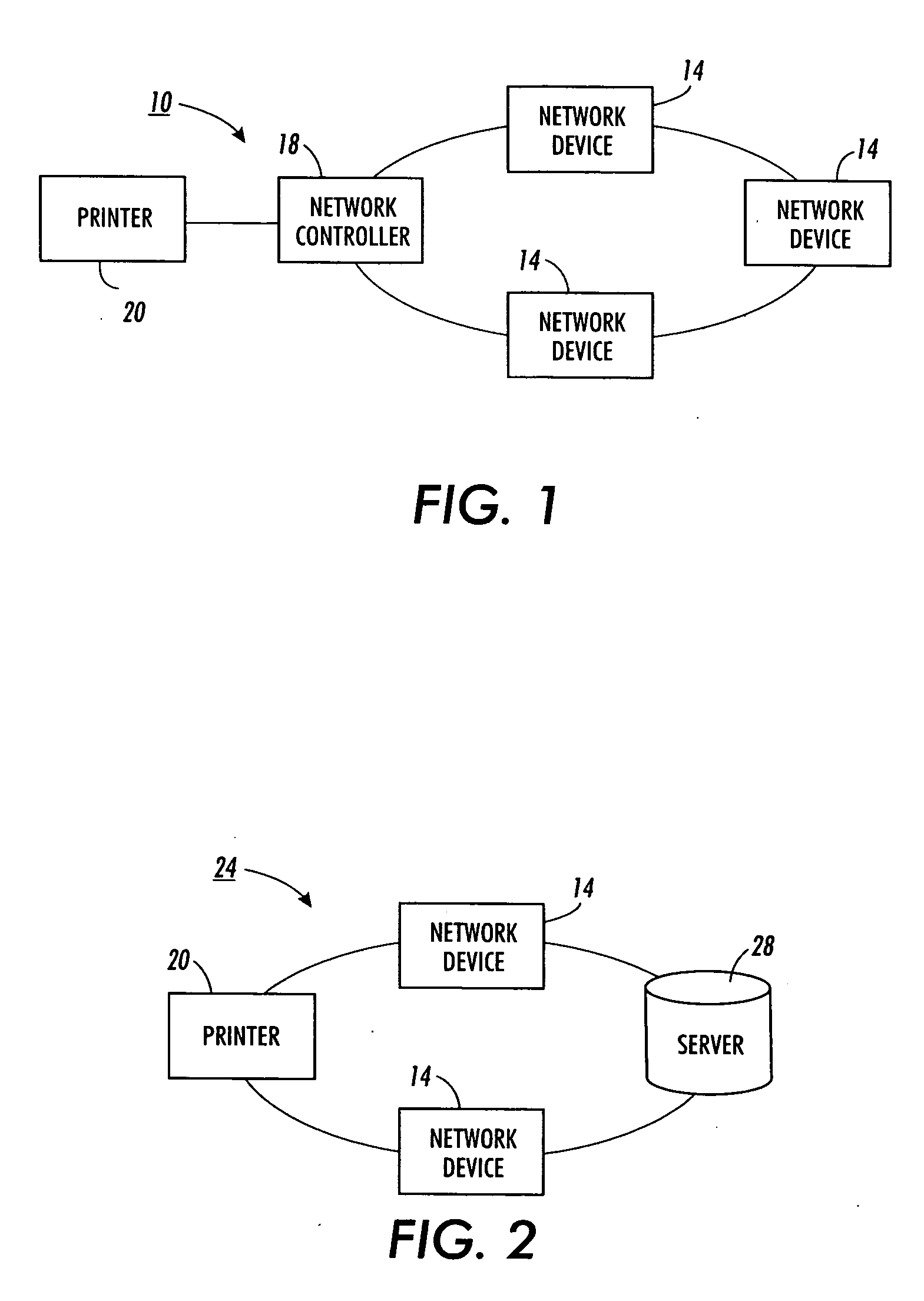

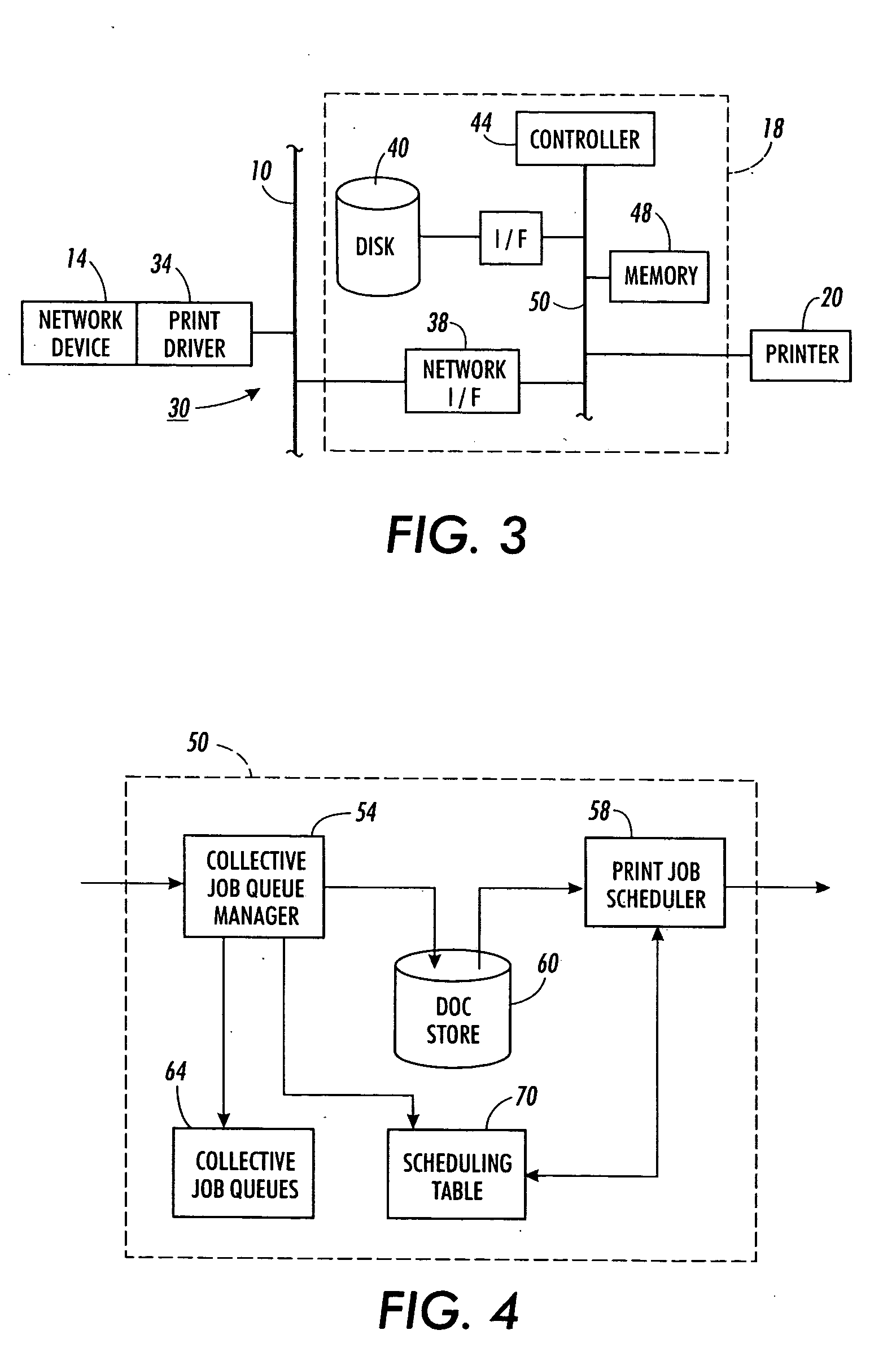

Method and system for managing print job files for a shared printer

Inactive | US20060033958A1 | Promote recovery | Efficient printing | Digitally marking record carriers | Visual presentation using printers | Computer science | Queue manager

A system and method enable a user to generate a single batch job ticket for a plurality of print job tickets. The system includes a print driver, a print job manager, and a print engine. The print driver enables a user to request generation of a collective job queue and to provide a plurality of job tickets for the job queue. The print job manager includes a collective job queue manager and a print job scheduler. The collective job queue manager collects job tickets for a job queue and generates a single batch job ticket for the print job scheduling table when the job queue is closed. The print job scheduler selects single batch job tickets in accordance with various criteria and releases the job segments to a print engine for contiguous printing of the job segments.

Owner:XEROX CORP

Multiple instruction dispatch tables for application program obfuscation

Active | US7353499B2 | Key distribution for secure communication | Digital data processing details | Program instruction | Scheduling instructions

Obfuscating an application program comprises reading an application program comprising code, determining multiple dispatch tables associated with the application program, transforming the application program into application program code configured to utilize the dispatch tables during application program execution to determine the location of instruction implementation methods to be executed based at least in part on a current instruction counter value, and sending the application program code. Executing an obfuscated application program comprises receiving an obfuscated application program comprising at least one instruction opcode value encoded using one of multiple instruction set opcode value encoding schemes, receiving an application program instruction corresponding to a current instruction counter value, selecting an instruction dispatch table based at least in part on the current instruction counter value, and executing the application program instruction using the selected instruction dispatch table.

Owner:ORACLE INT CORP

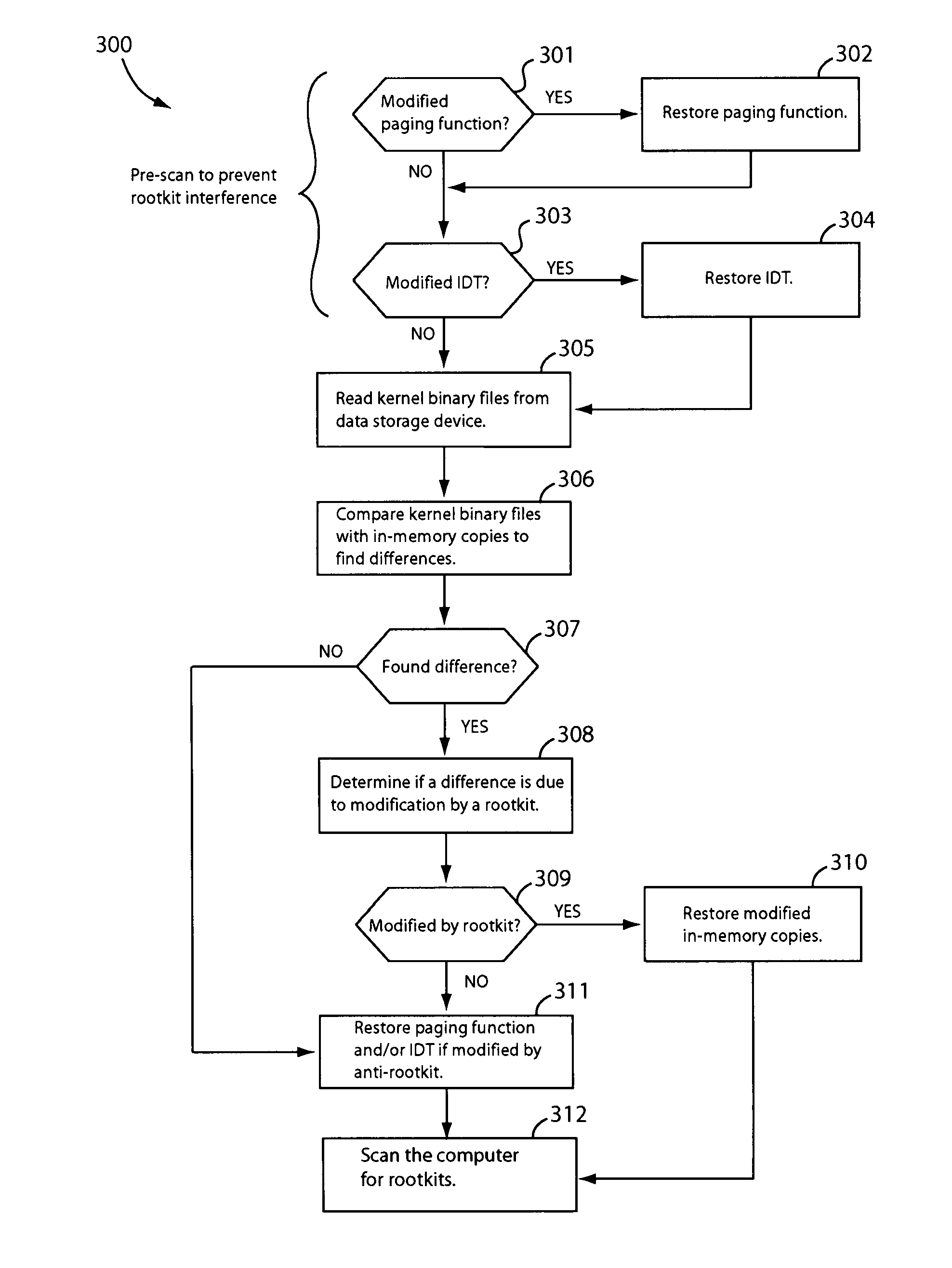

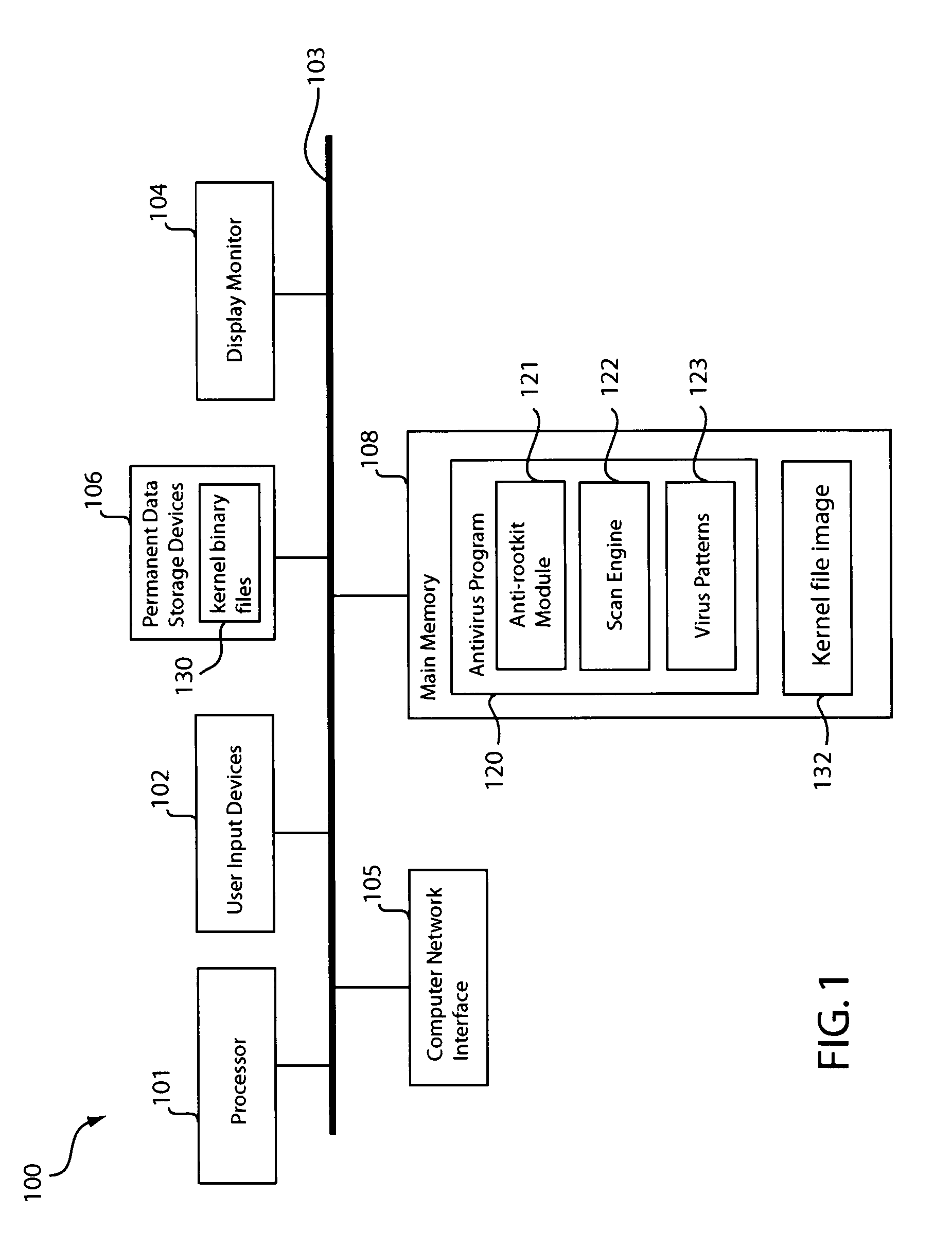

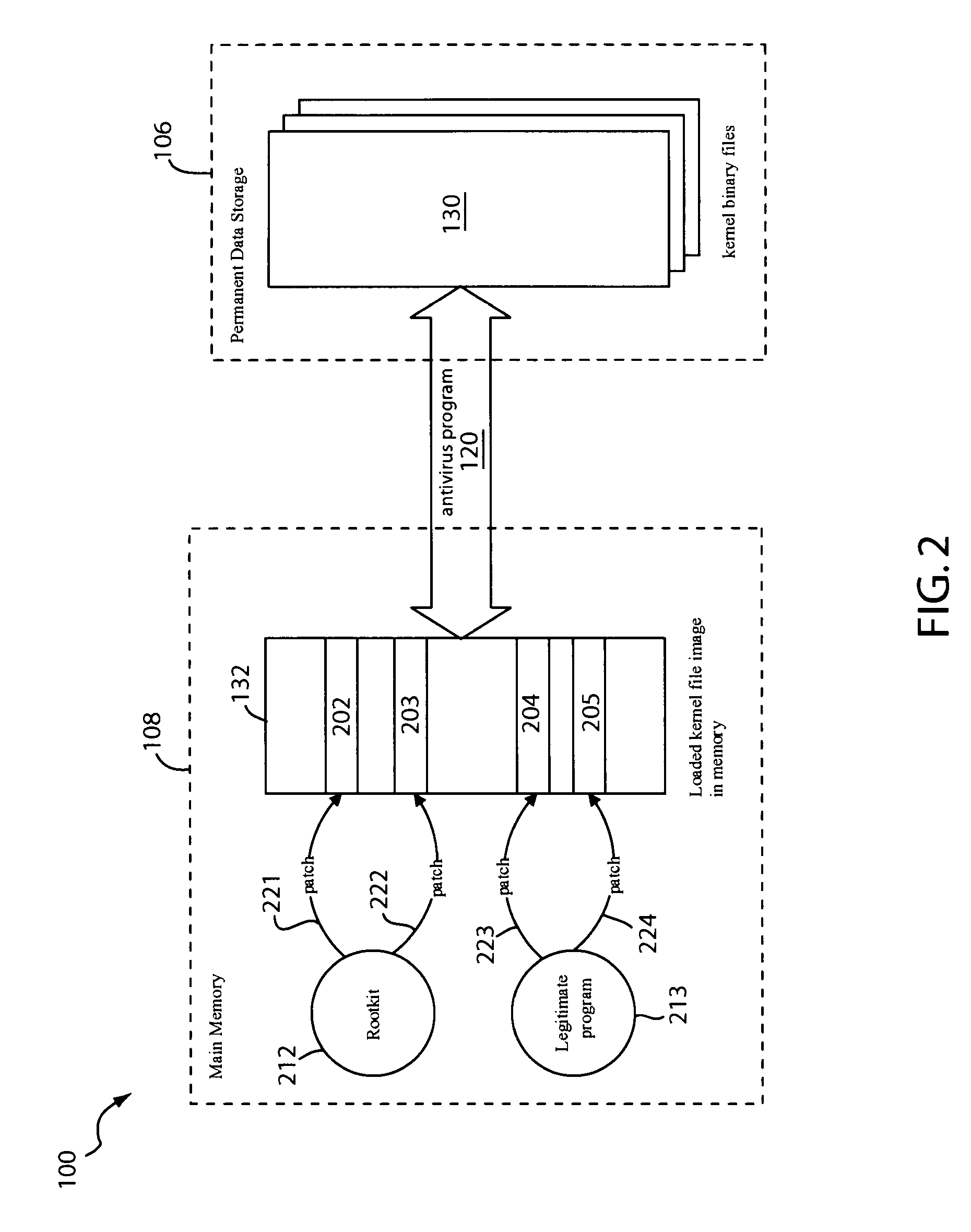

Method and apparatus for detecting and removing kernel rootkits

Active | US7802300B1 | Image interference | Memory loss protection | Digital data processing details | Operational system | Computer module

In one embodiment, an anti-rootkit module compares operating system kernel binary files to their loaded kernel file image in memory to find a difference between them. The difference may be scanned for telltale signs of rootkit modification. To prevent rootkits from interfering with memory access of the kernel file image, a pre-scan may be performed to ensure that paging functions and the interrupt dispatch table are in known good condition. If the difference is due to a rootkit modification, the kernel file image may be restored to a known good condition to disable the rootkit. A subsequent virus scan may be performed to remove remaining traces of the rootkit and other malicious codes from the computer.

Owner:TREND MICRO INC

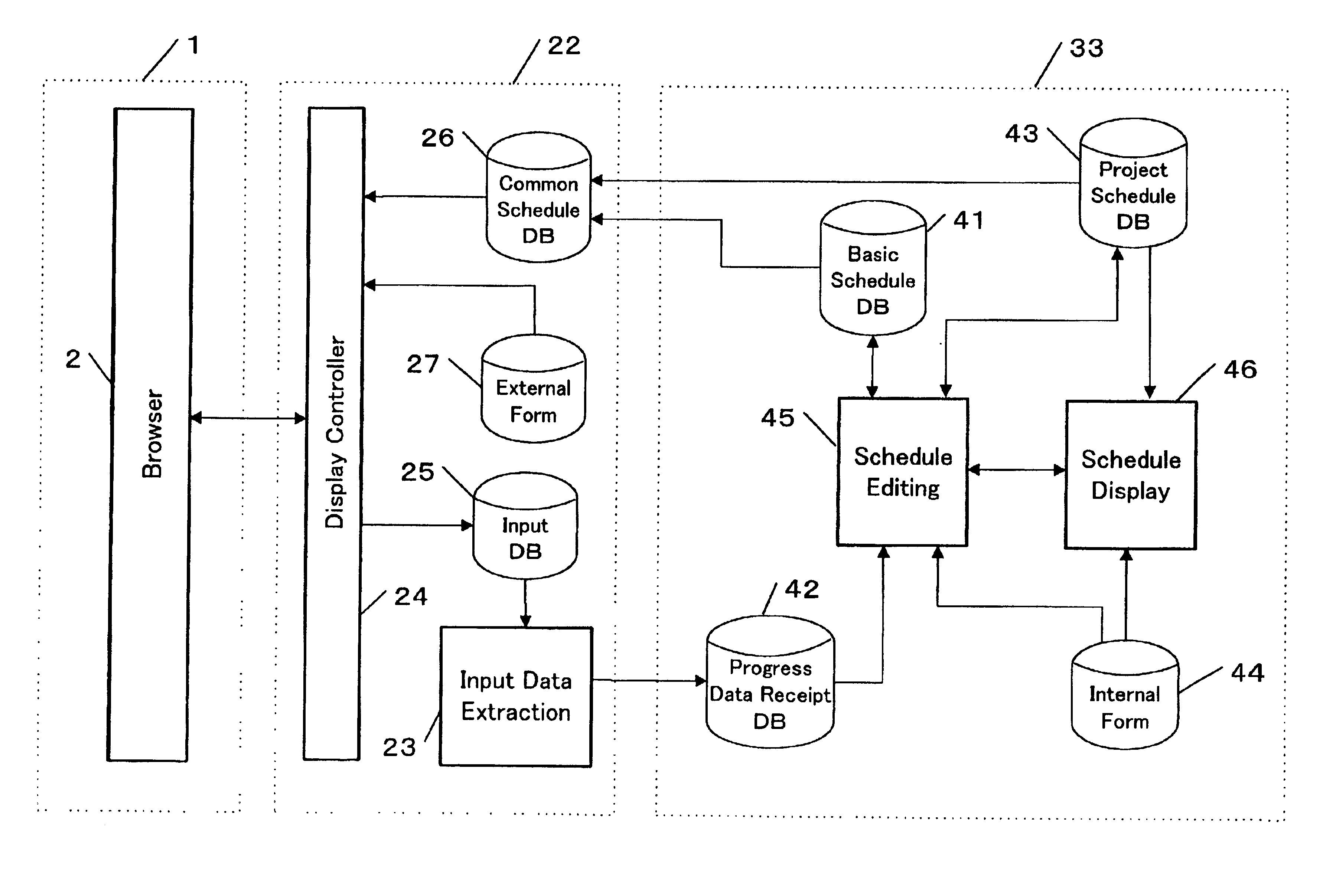

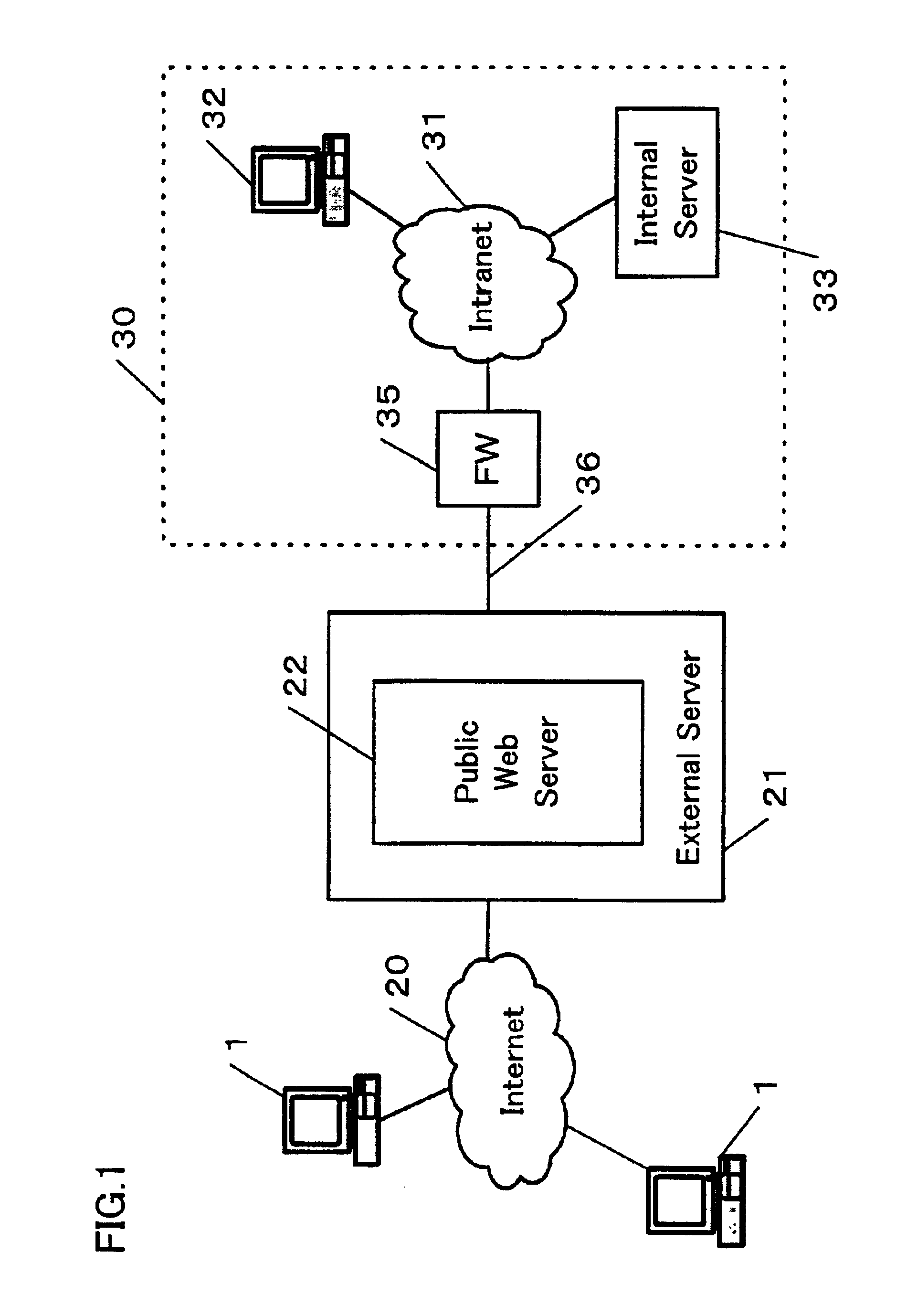

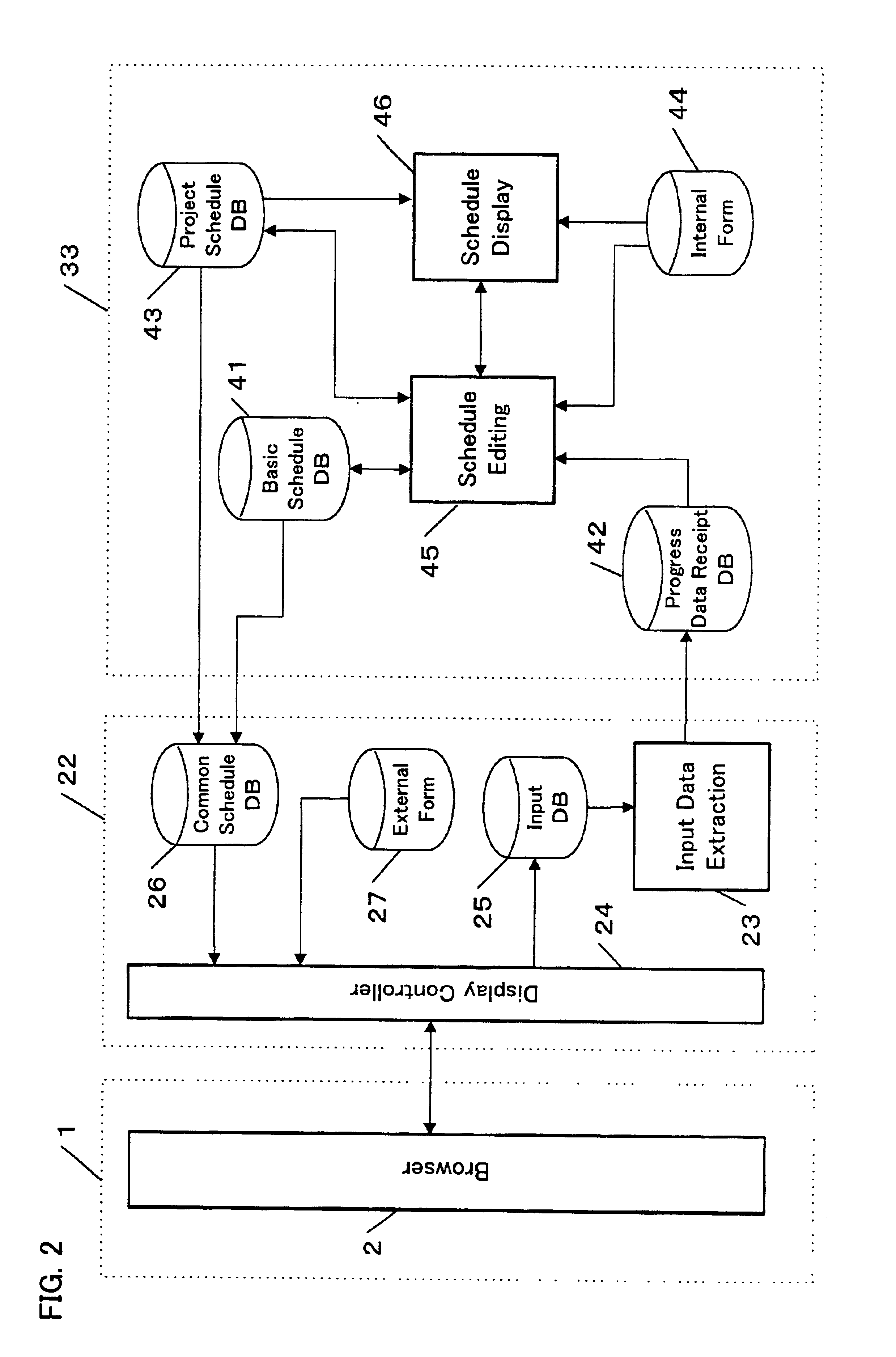

Schedule management system

Inactive | US6918089B2 | Easy to master | Easy to adjust | Cathode-ray tube indicators | Office automation | Time schedule | Network connection

A schedule management system in a managing party connects via a network to one or more managed parties. The system comprises a schedule table for storing a schedule created by the managing party. The created schedule is transferred to a common schedule table provided on a server. The server is provided outside the managing party. The system provides each of the managed parties with an inquiry means for querying the schedule stored in the common schedule table. When the schedule stored in the schedule table is modified for any reason, the modified schedule is transferred to the common schedule table. Thus, the managed party can view the latest schedule. Furthermore, each of the managed parties transfers modification data via the modification means. The system modifies the schedule stored in the schedule table with the received modification data. The system further displays the progress in a hierarchical format. The system compares the progress data with the schedule and displays the progress by a mark assigned in accordance with the comparison result.

Owner:HONDA MOTOR CO LTD

Permutation of opcode values for application program obfuscation

Active | US7415618B2 | Key distribution for secure communication | Data processing applications | Application software | Program obfuscation

Obfuscating an application program comprises reading an application program comprising code, transforming the application program code into transformed application program code that uses one of multiple opcode value encoding schemes of a dispatch table associated with the application program, and sending the transformed application program code. Executing an obfuscated application program comprises receiving an obfuscated application program comprising at least one instruction opcode value encoded using one of multiple instruction set opcode value encoding schemes, determining a dispatch table associated with the application program, and executing the application program using the associated dispatch table. The dispatch table corresponds to the one of multiple instruction set opcode value encoding schemes.

Owner:ORACLE INT CORP
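A rough sketch of the opcode-permutation idea in the abstract above: one encoding scheme is chosen for the whole program, and the executor builds the dispatch table that corresponds to that scheme. The opcode names, the use of `scheme_id` as a random seed, and all function names are assumptions made for illustration, not details from the patent.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple


def op_push(stack: List[int], arg: Optional[int]) -> None:
    stack.append(arg)


def op_add(stack: List[int], arg: Optional[int]) -> None:
    stack.append(stack.pop() + stack.pop())


def op_neg(stack: List[int], arg: Optional[int]) -> None:
    stack.append(-stack.pop())


CANONICAL: Dict[str, Callable[[List[int], Optional[int]], None]] = {
    "PUSH": op_push,
    "ADD": op_add,
    "NEG": op_neg,
}


def make_scheme(scheme_id: int) -> Dict[str, int]:
    """Derive a permuted opcode numbering from a scheme id (assumed: a seed)."""
    names = sorted(CANONICAL)
    values = list(range(len(names)))
    random.Random(scheme_id).shuffle(values)
    return dict(zip(names, values))


def make_dispatch_table(scheme: Dict[str, int]) -> list:
    """Build the table whose index is the permuted opcode value."""
    table: list = [None] * len(scheme)
    for name, value in scheme.items():
        table[value] = CANONICAL[name]
    return table


def obfuscate(program: List[Tuple[str, Optional[int]]], scheme_id: int):
    """Replace mnemonic opcodes with the values of the chosen scheme."""
    scheme = make_scheme(scheme_id)
    return scheme_id, [(scheme[name], arg) for name, arg in program]


def execute(scheme_id: int, code: List[Tuple[int, Optional[int]]]) -> List[int]:
    """Run encoded code using the dispatch table that matches its scheme."""
    table = make_dispatch_table(make_scheme(scheme_id))
    stack: List[int] = []
    for value, arg in code:
        table[value](stack, arg)
    return stack
```

The encoded program only runs correctly against the table built for the same scheme, which is the correspondence the abstract describes.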

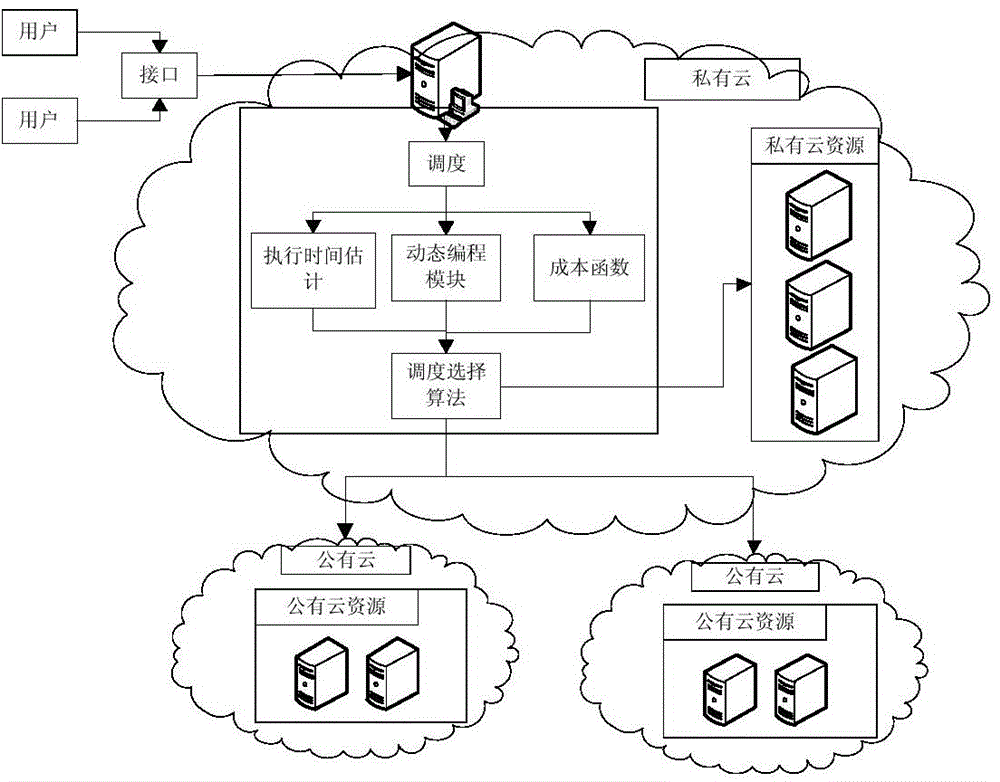

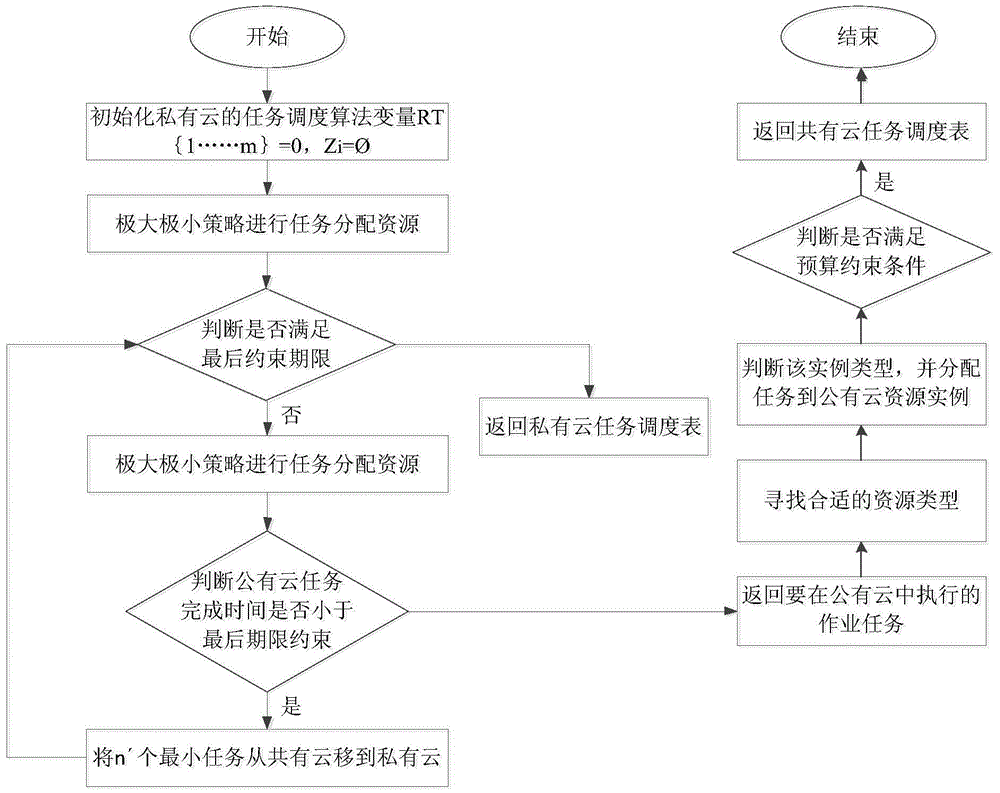

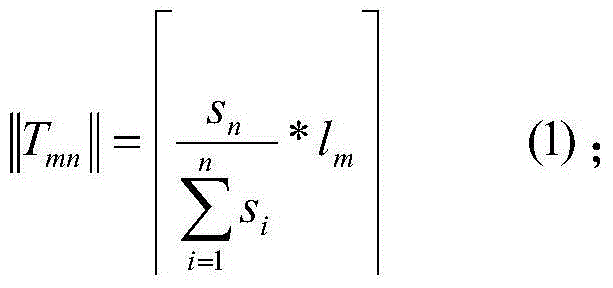

Multi QoS (quality of service)-constrained parallel task scheduling cost optimizing method under mixed cloud environment

Inactive | CN104102544A | Resource allocation | Concurrent instruction execution | Quality of service | Public resource

The invention relates to a multi QoS (quality of service)-constrained parallel task scheduling cost optimizing method under a mixed cloud environment. The method comprises the steps of task scheduling of a private cloud, task rescheduling and the minimizing of the leasing cost of public resources. In the task scheduling of the private cloud, resources are distributed for the tasks according to the improved maximal and minimum strategies, and a fast heuristic algorithm-TSOPR is provided. The task rescheduling step is finished by an RSD algorithm and comprises steps of deciding the task type distributed to a public cloud, judging whether the public cloud can meet the final time constraint and the budget constraint or not; generating a scheduling table for the task in the submitted operation by a system if all constraint conditions are met. The leasing cost of the public cloud resources can be minimized under the premise that the budget control constraint is met.

Owner:WUHAN UNIV OF TECH

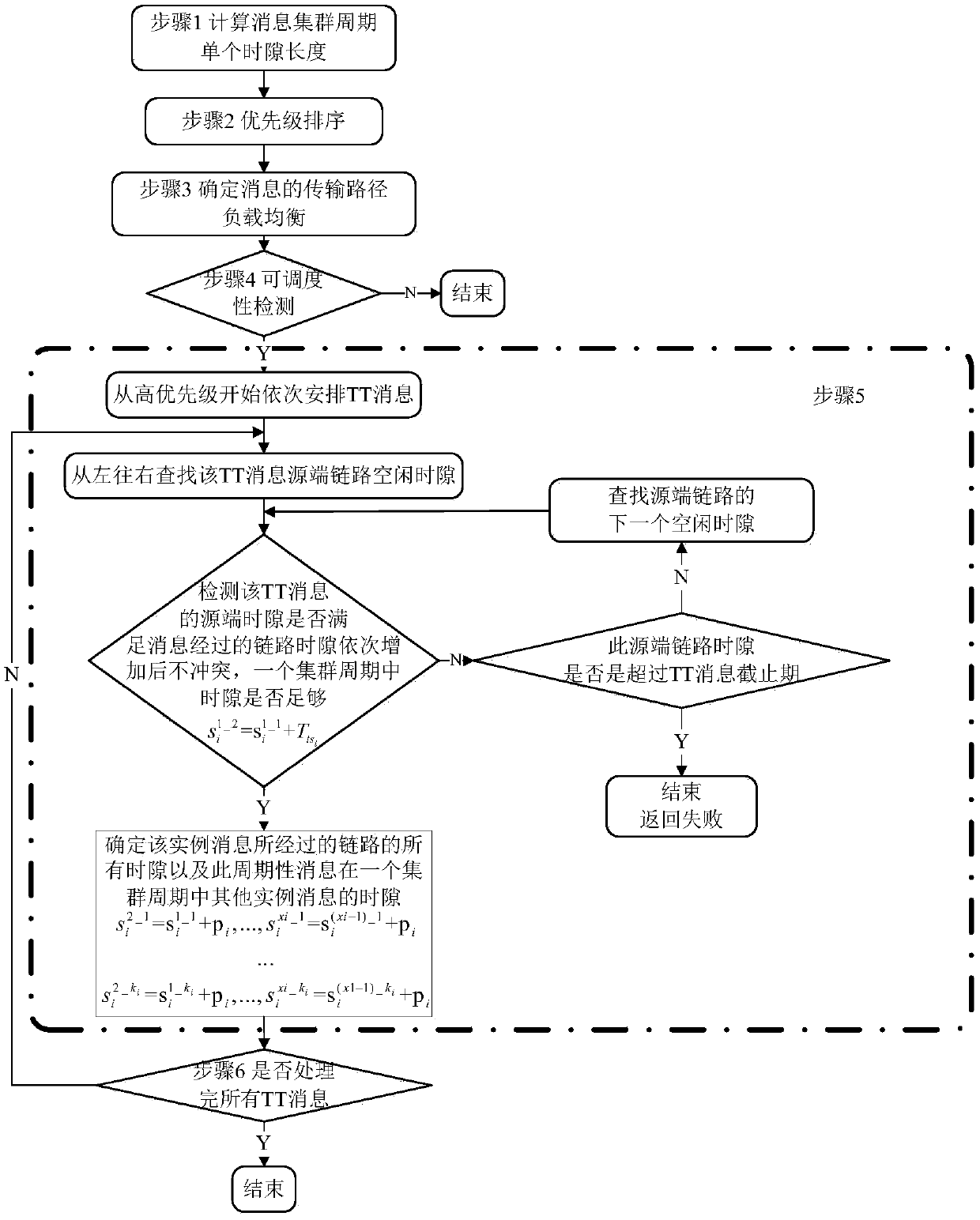

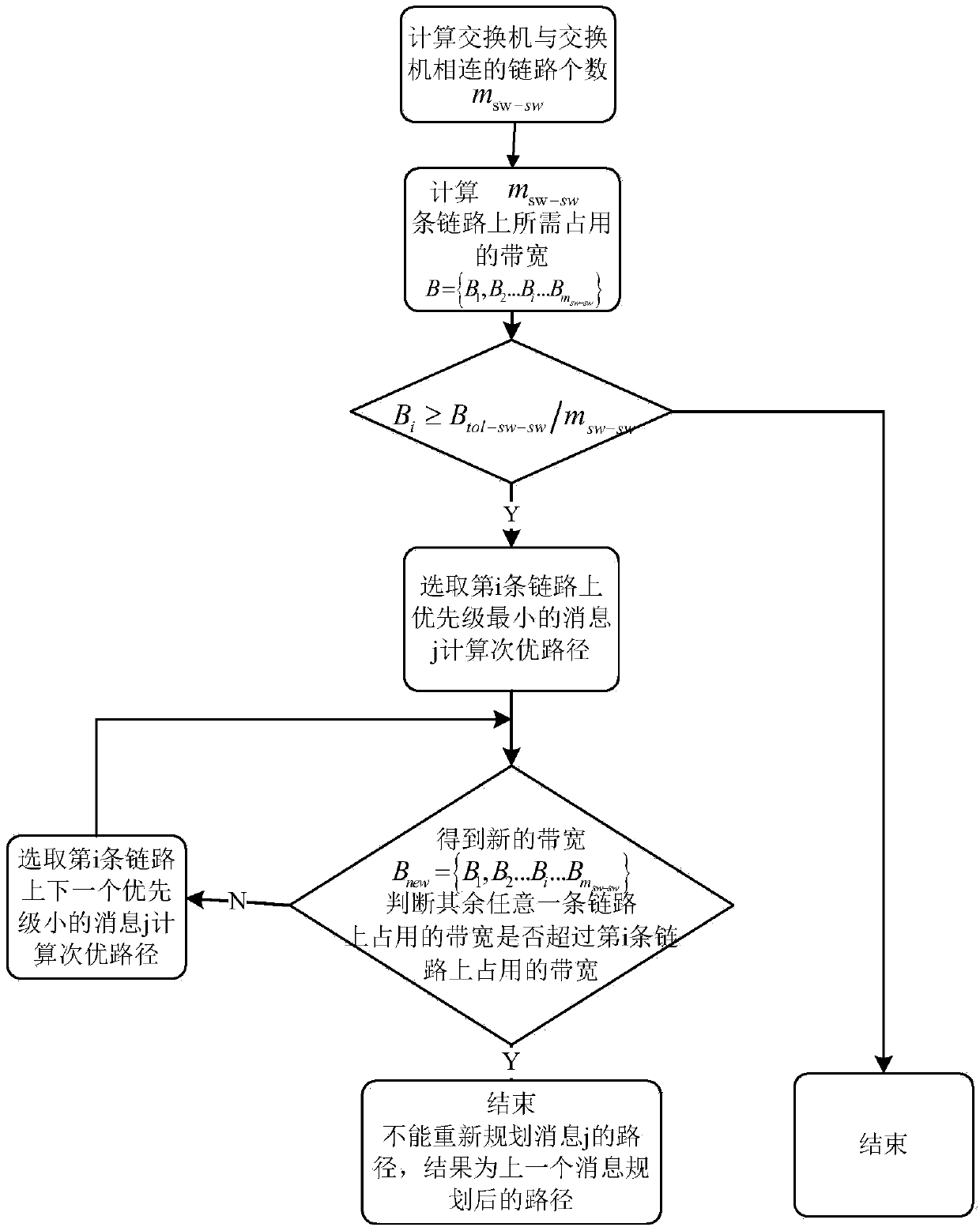

Method for business dispatching in time triggering FC network

Active | CN108777660A | Reduce space complexity | Meet the needs of real-time data configuration | Fibre transmission | Data switching networks | Channel network | Fibre Channel

The present invention discloses a method for business dispatching in a time triggering FC network, and relates to the field of an FC network. The method comprises the following steps of: establishing a network model, calculating a cluster period, and determining the length of a single time slot of each time triggering message; determining the priority of a TT message according to a certain rule; planning a link transmitting the TT message; detecting the schedulability of the TT message; selecting the TT message with the highest priority to arrange the time slot of the TT message; according to the periodicity and the transmission link of the TT message, arranging all other time slots; arranging the TT message of the next priority, and solving a time slot map meeting conflict-free and period sending of all the TT messages in all the links; and according to a whole network and total business time slot map, solving the sending and receiving time dispatching tables in each terminal and each exchange. The method ensures the transmitting and receiving of the message determinacy in the optical fiber channel network so as to meet the demand of real-time message dispatching in the complex application system and allow the upper-layer application system performance to be more determined and reliable.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA +1

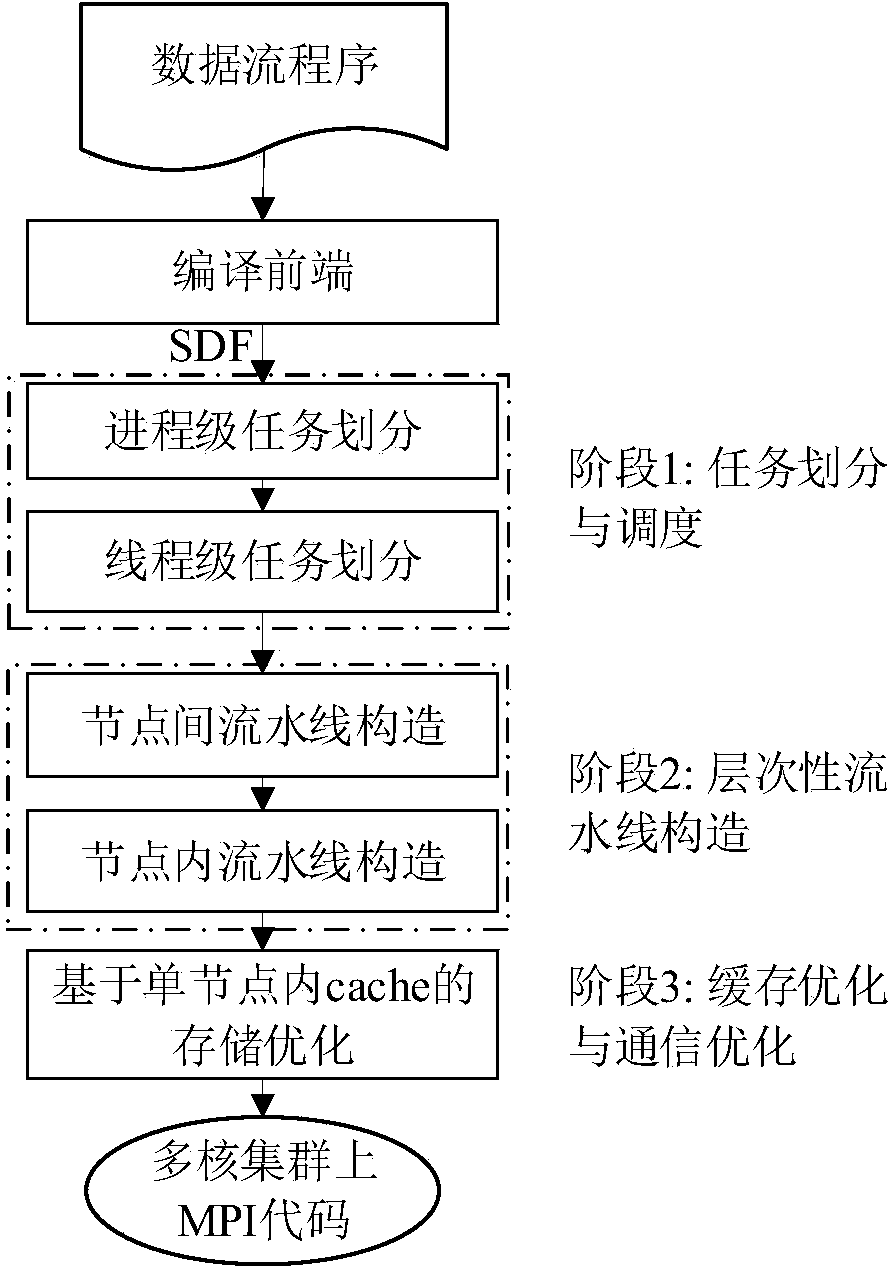

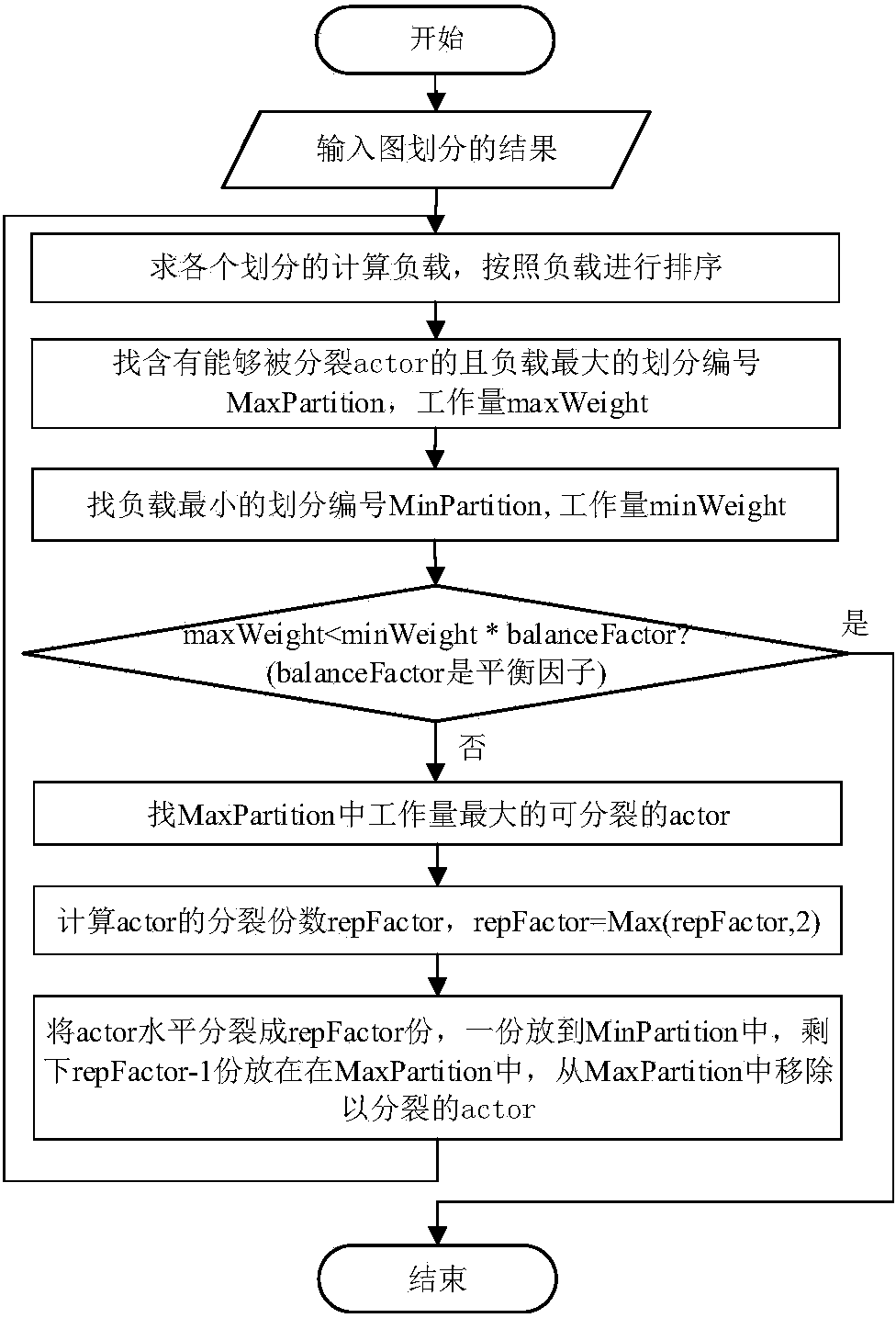

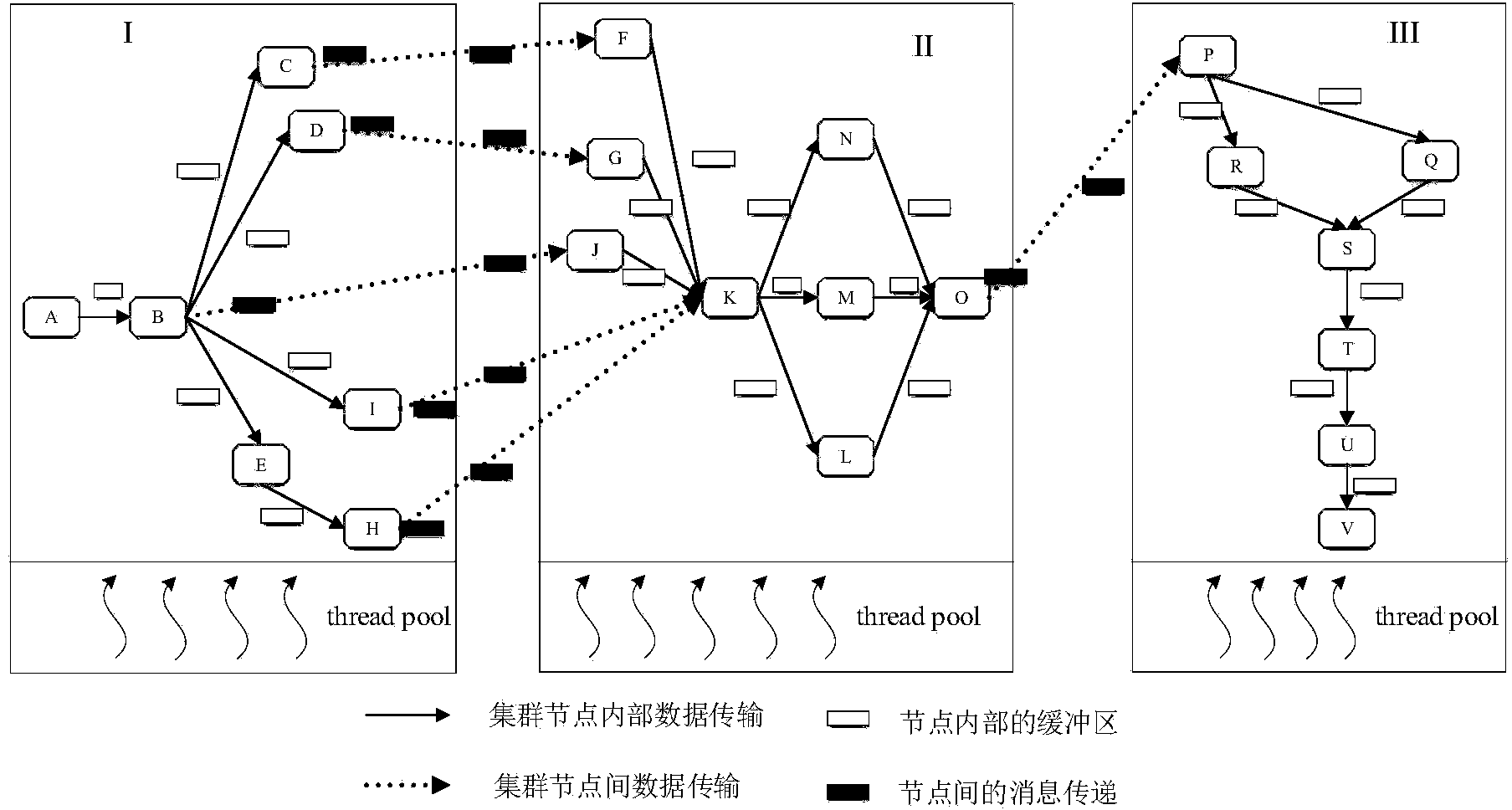

Data flow compilation optimization method oriented to multi-core cluster

Active | CN103970580A | Implementing a three-level optimization process | Improve execution performance | Resource allocation | Memory systems | Cache optimization | Data stream

The invention discloses a data flow compilation optimization method oriented to a multi-core cluster system. The data flow compilation optimization method comprises the following steps that task partitioning and scheduling of mapping from calculation tasks to processing cores are determined; according to task partitioning and scheduling results, hierarchical pipeline scheduling of pipeline scheduling tables among cluster nodes and among cluster node inner cores is constructed; according to structural characteristics of a multi-core processor, communication situations among the cluster nodes, and execution situations of a data flow program on the multi-core processor, cache optimization based on cache is conducted. According to the method, the data flow program and optimization techniques related to the structure of the system are combined, high-load equilibrium and high parallelism of synchronous and asynchronous mixed pipelining codes on a multi-core cluster are brought into full play, and according to cache and communication modes of the multi-core cluster, cache access and communication transmission of the program are optimized; furthermore, the execution performance of the program is improved, and execution time is shorter.

Owner:HUAZHONG UNIV OF SCI & TECH

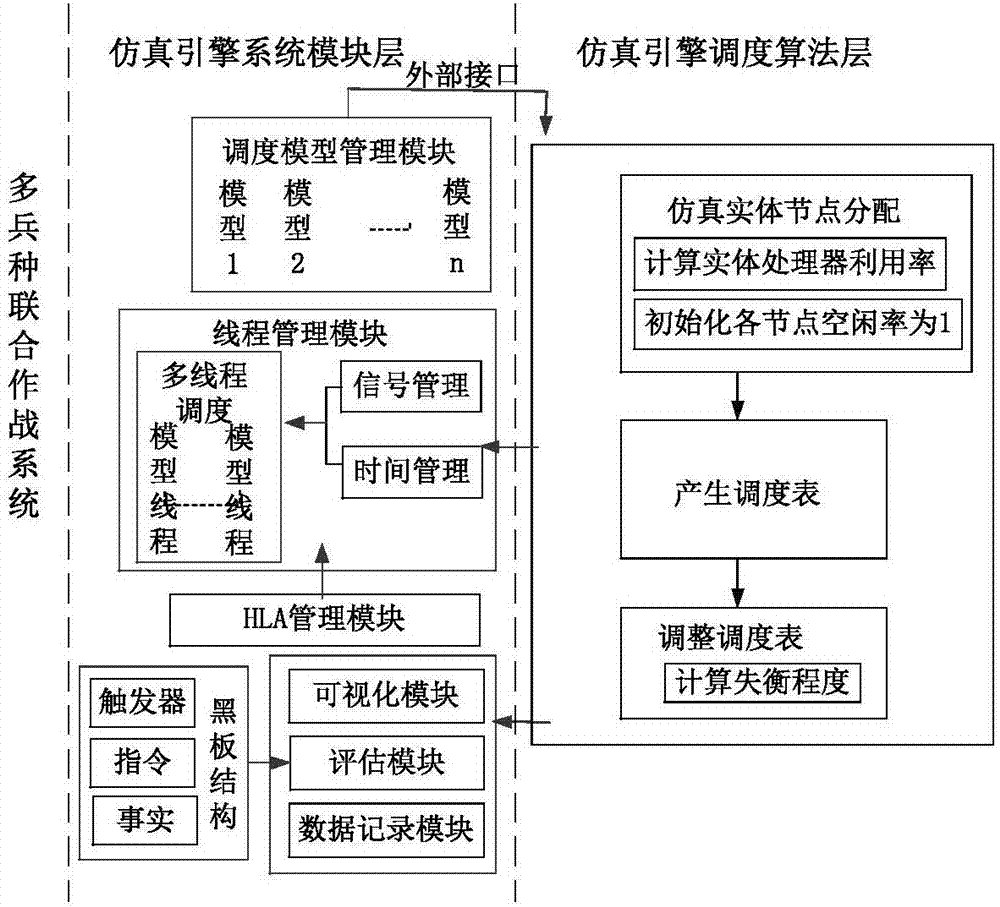

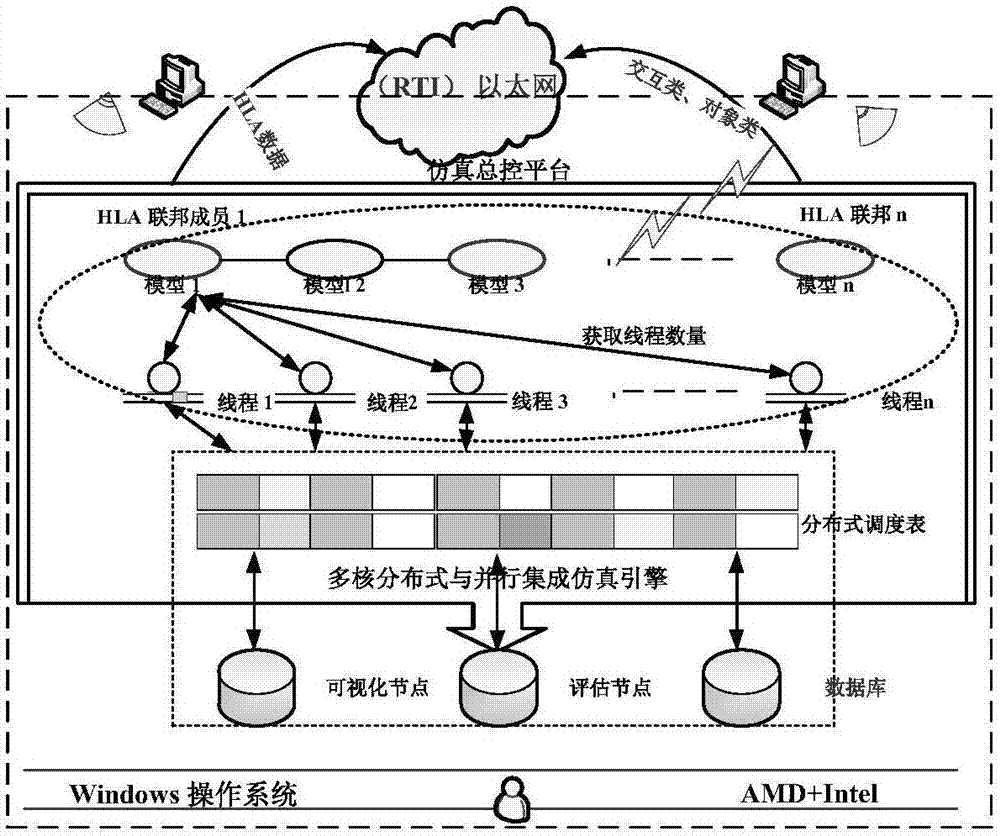

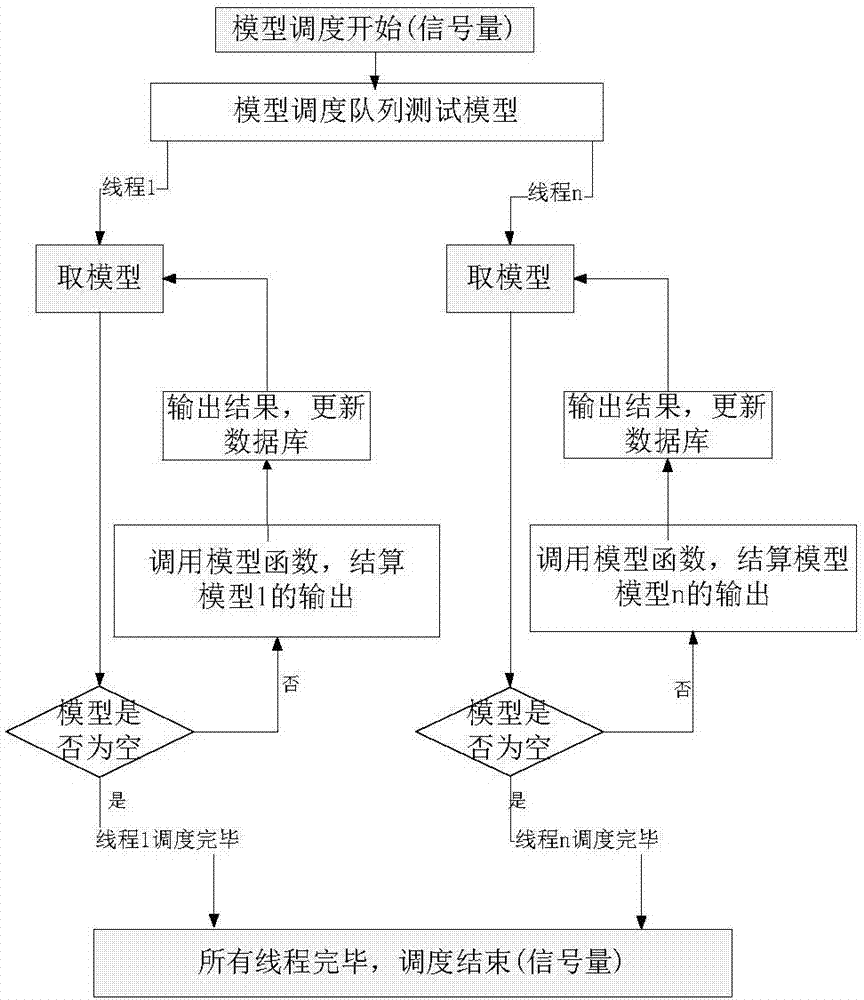

Multi-core parallel simulation engine system supporting joint operations

Active | CN107193639A | Solve the problem that real-time is vulnerable | Strong independence | Data processing applications | Software simulation/interpretation/emulation | NODAL | Physical model

The invention discloses a multi-core parallel simulation engine system supporting joint operations. The system solves the problem that the real-time performance of a traditional joint operation system is easily influenced when step length is used to forward logic time. The system includes a model scheduling management module, a thread management module, an external interface management module and a high-level architecture (HLA) management module. According to the system, target nodes are assigned for simulation entities to enable total computation amounts of models on each node to be equivalent; then through the model scheduling management module, a scheduling schedule of each node is generated based on a principle of load balancing, the simulation step length is assigned for the models, and during a simulation process, the scheduling schedule is adjusted and the simulation step length of the destroyed entities and generated new entities is adjusted. The system can autonomously divide the scheduling schedule according to operating cycles of the models and the system step length, allow the entities to use the different physical models or the behavior models according to needs for simulation, and support real-time scheduling of large-scale simulation and the high-fidelity operation models.

Owner:BEIHANG UNIV

Permutation of opcode values for application program obfuscation

Active | US20050071655A1 | Key distribution for secure communication | Data processing applications | Application scheduling | Instruction set

Obfuscating an application program comprises reading an application program comprising code, transforming the application program code into transformed application program code that uses one of multiple opcode value encoding schemes of a dispatch table associated with the application program, and sending the transformed application program code. Executing an obfuscated application program comprises receiving an obfuscated application program comprising at least one instruction opcode value encoded using one of multiple instruction set opcode value encoding schemes, determining a dispatch table associated with the application program, and executing the application program using the associated dispatch table. The dispatch table corresponds to the one of multiple instruction set opcode value encoding schemes.

Owner:ORACLE INT CORP

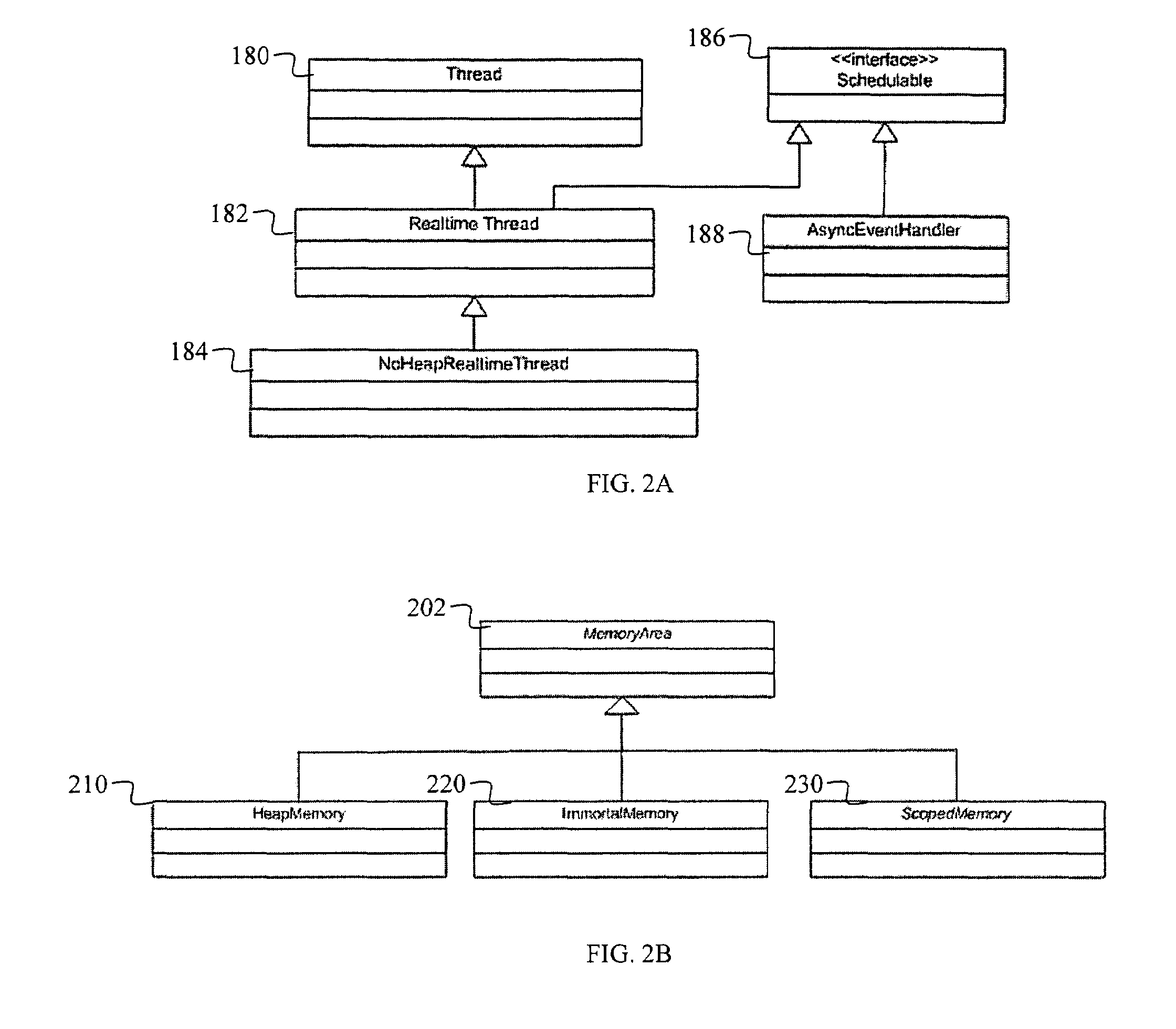

Multiple code sets for multiple execution contexts

Inactive | US7496897B1 | Specific program execution arrangements | Memory systems | Data processing system | State switching

The invention relates to optimizing software code execution during state transitions. The system handles changes in execution context using differential rule checking techniques. For instance, when a thread executing in a data processing system changes state, its new state may be subject to different rules than its previous state. To enforce these rules, the thread may be associated with software code that causes certain restrictions, such as memory restrictions, to be applied to the thread. In an interpretive environment, this can be implemented by detecting a state transition in an active thread, and responding to the state transition by associating the thread with a dispatch table that reflects its state change. The dispatch table may cause the thread to be associated with code that enforces those restrictions. In one example, different dispatch tables can be provided, each table reflecting a different state of a thread, and each causing a thread to be subject to different restrictions. In another example, the same dispatch table can be rewritten to accommodate the changed state of the thread.

Owner:TIMESYS
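The first example in the abstract above, one dispatch table per thread state, might look roughly like the following. This is only a sketch under stated assumptions: the two states, the single `STORE` opcode, the `allowed` address set, and the class names are all hypothetical.

```python
from typing import Callable, Dict, Iterable


class MemoryRestrictionError(Exception):
    """Raised when a restricted thread writes outside its allowed addresses."""


def store_unchecked(thread: "Thread", addr: int, value: int) -> None:
    thread.memory[addr] = value


def store_checked(thread: "Thread", addr: int, value: int) -> None:
    # Same operation, but this version enforces the state's memory restriction.
    if addr not in thread.allowed:
        raise MemoryRestrictionError(f"address {addr} is not writable in this state")
    thread.memory[addr] = value


# One dispatch table per thread state (state names are assumptions).
TABLES: Dict[str, Dict[str, Callable[..., None]]] = {
    "normal": {"STORE": store_unchecked},
    "restricted": {"STORE": store_checked},
}


class Thread:
    def __init__(self, allowed: Iterable[int]) -> None:
        self.memory: Dict[int, int] = {}
        self.allowed = set(allowed)
        self.set_state("normal")

    def set_state(self, state: str) -> None:
        """On a state transition, associate the thread with that state's table."""
        self.state = state
        self.dispatch = TABLES[state]

    def execute(self, opcode: str, *args: int) -> None:
        # No per-instruction state check: the active table already encodes the rules.
        self.dispatch[opcode](self, *args)
```

The design point illustrated is that the cost of rule checking is paid once at the state transition, when the table is swapped, rather than on every instruction.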

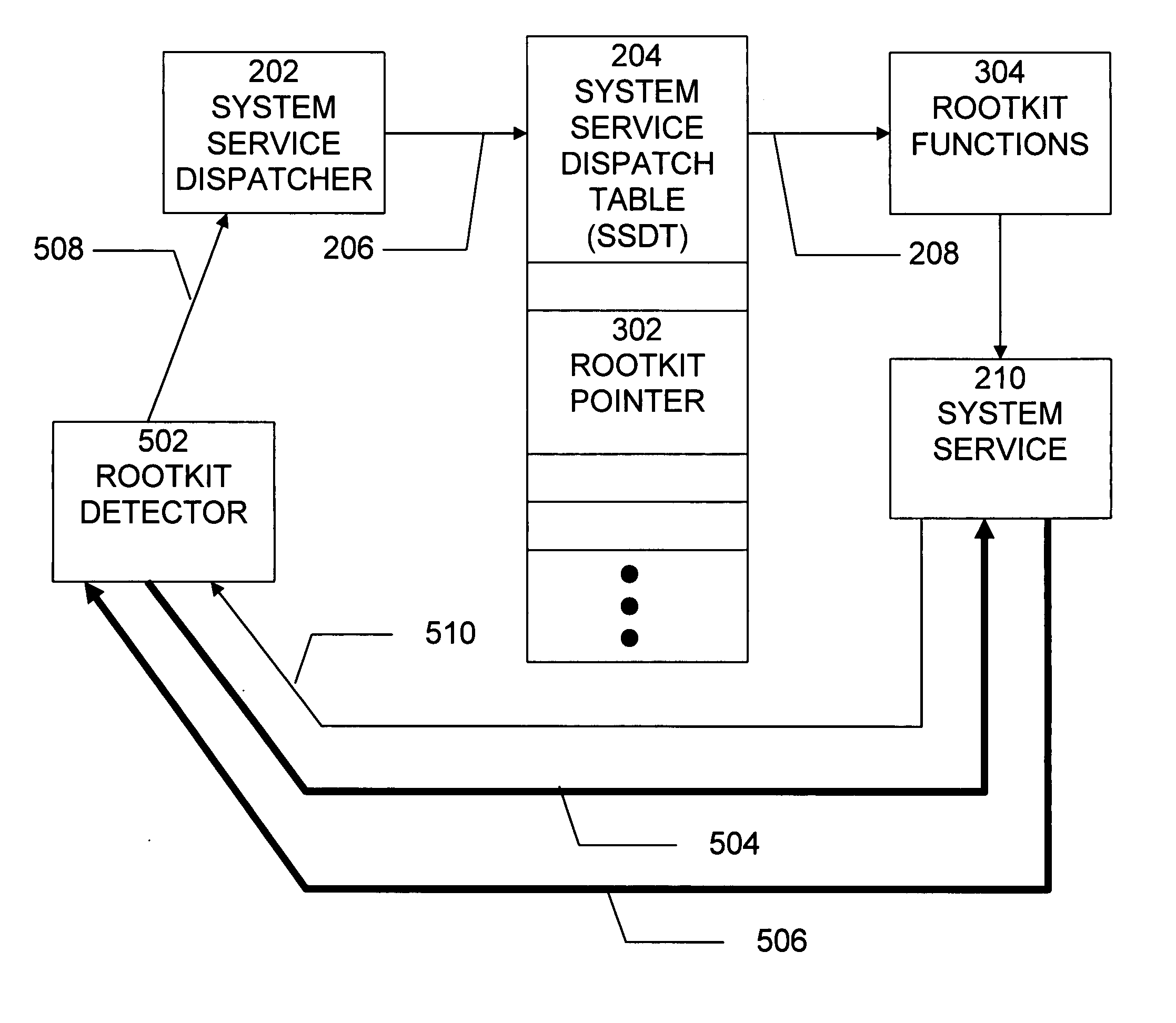

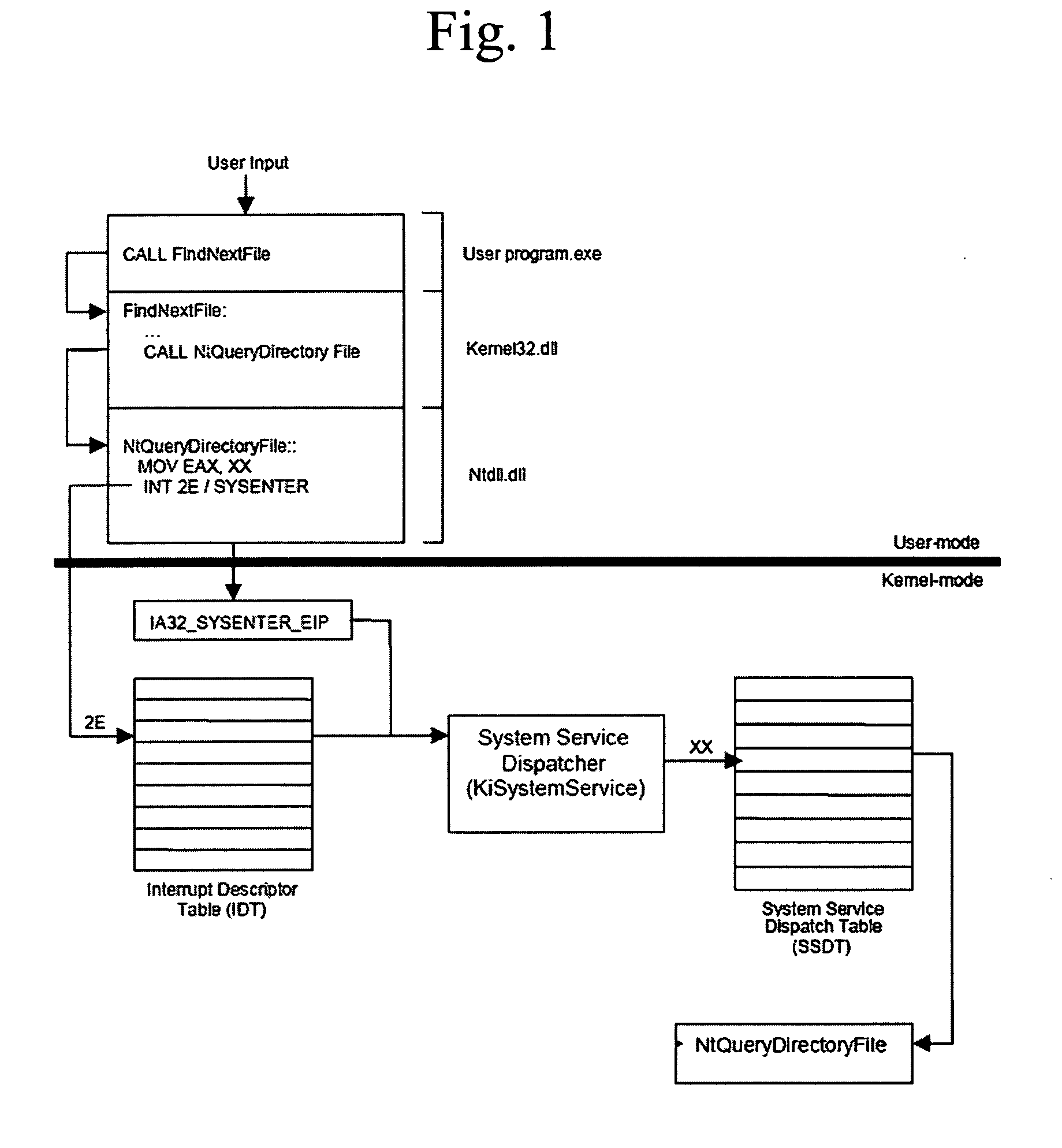

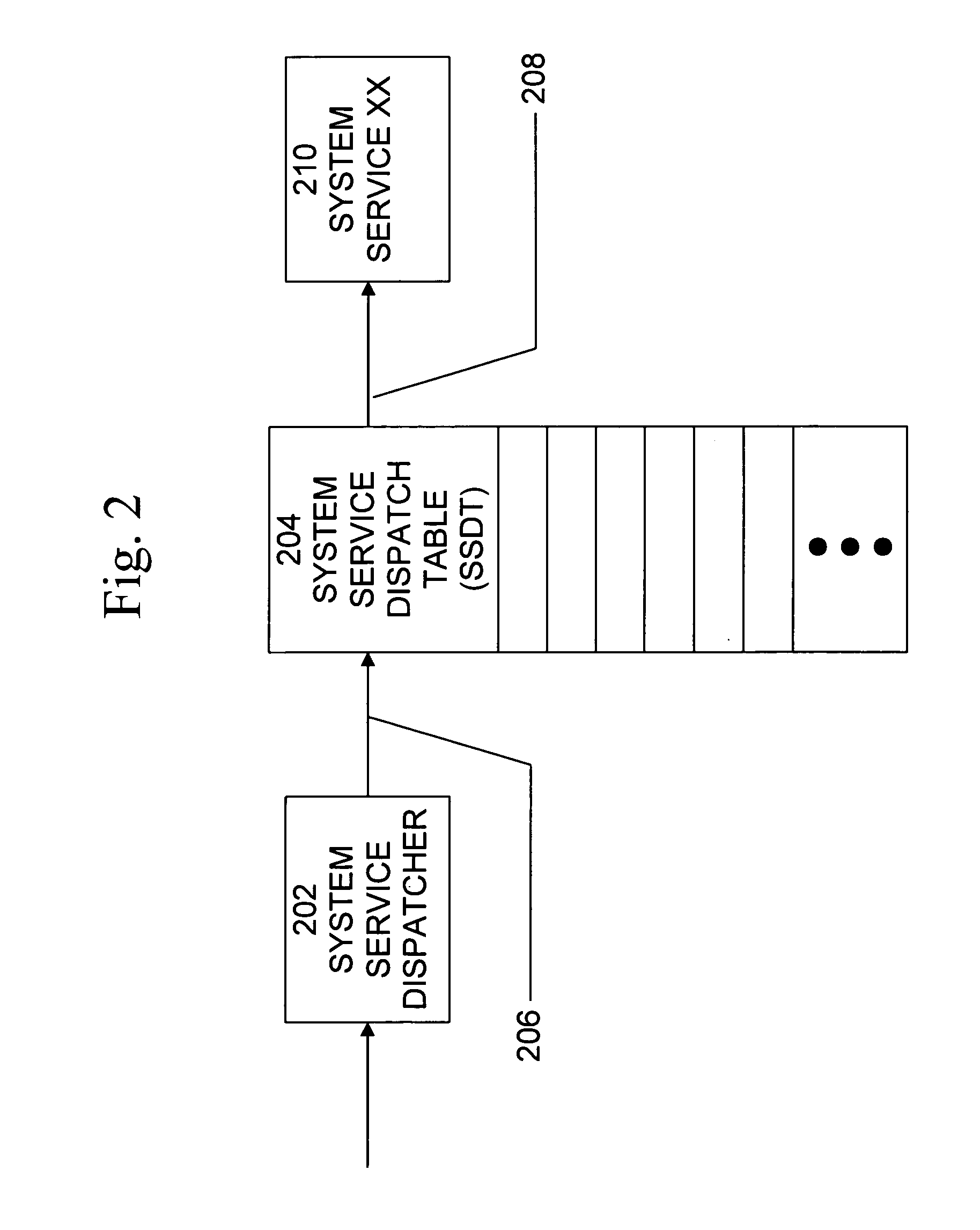

Method and system for detecting windows rootkit that modifies the kernel mode system service dispatch table

Active | US20080127344A1 | Memory loss protection | Error detection/correction | Software engineering | Application software

A method, system, and computer program product for detecting a kernel-mode rootkit that hooks the System Service Dispatch Table (SSDT) is secure, avoids false positives, and does not disable security applications. A method for detecting a rootkit comprises the steps of calling a function that accesses a system service directly, receiving results from calling the function that accesses the system service directly, calling a function that accesses the system service indirectly, receiving results from calling the function that accesses the system service indirectly, and comparing the received results from calling the function that accesses the system service directly and the received results from calling the function that accesses the system service indirectly to determine presence of a rootkit.

Owner:MCAFEE LLC
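The comparison step described in the abstract above can be illustrated with a user-space toy model. This is not kernel code and does not touch a real SSDT: the service table is a plain dictionary, the "direct" path is a separate reference to the same function, and the hook is a hypothetical filter that hides one process name.

```python
from typing import Callable, Dict, List


def real_list_processes() -> List[str]:
    """Stand-in for a system service; the process names are made up."""
    return ["init", "shell", "rootkit.exe"]


# Direct path: references the service implementation without the table.
DIRECT: Dict[str, Callable[[], List[str]]] = {"list_processes": real_list_processes}

# Indirect path: a mutable service table that a hook could overwrite.
service_table: Dict[str, Callable[[], List[str]]] = {
    "list_processes": real_list_processes,
}


def call_direct(name: str) -> List[str]:
    return DIRECT[name]()


def call_indirect(name: str) -> List[str]:
    return service_table[name]()


def install_hook(name: str) -> None:
    """Simulate a rootkit replacing a table entry with a filtering wrapper."""
    original = service_table[name]
    service_table[name] = lambda: [p for p in original() if "rootkit" not in p]


def looks_hooked(name: str) -> bool:
    """Compare the results of the direct and indirect calls."""
    return call_direct(name) != call_indirect(name)
```

A mismatch between the two results suggests that something sits between the caller and the service on the indirect path.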

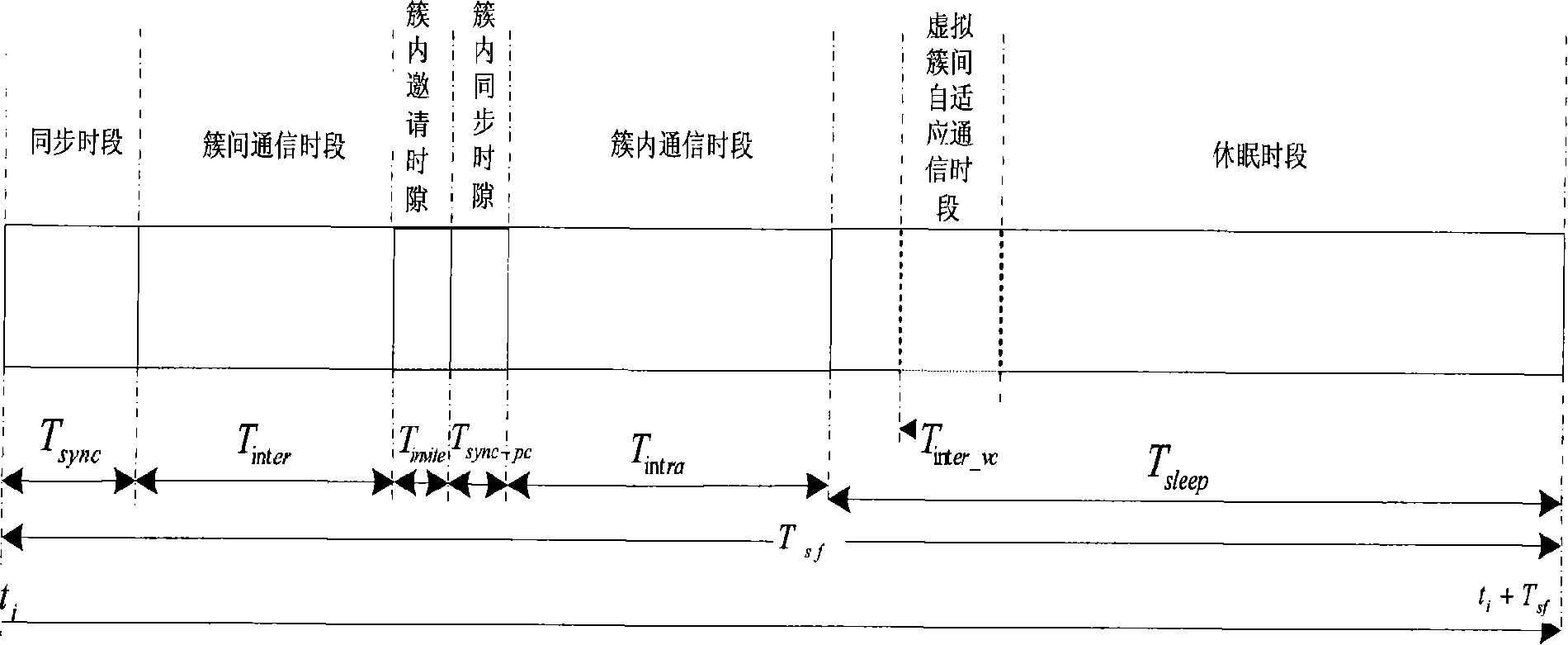

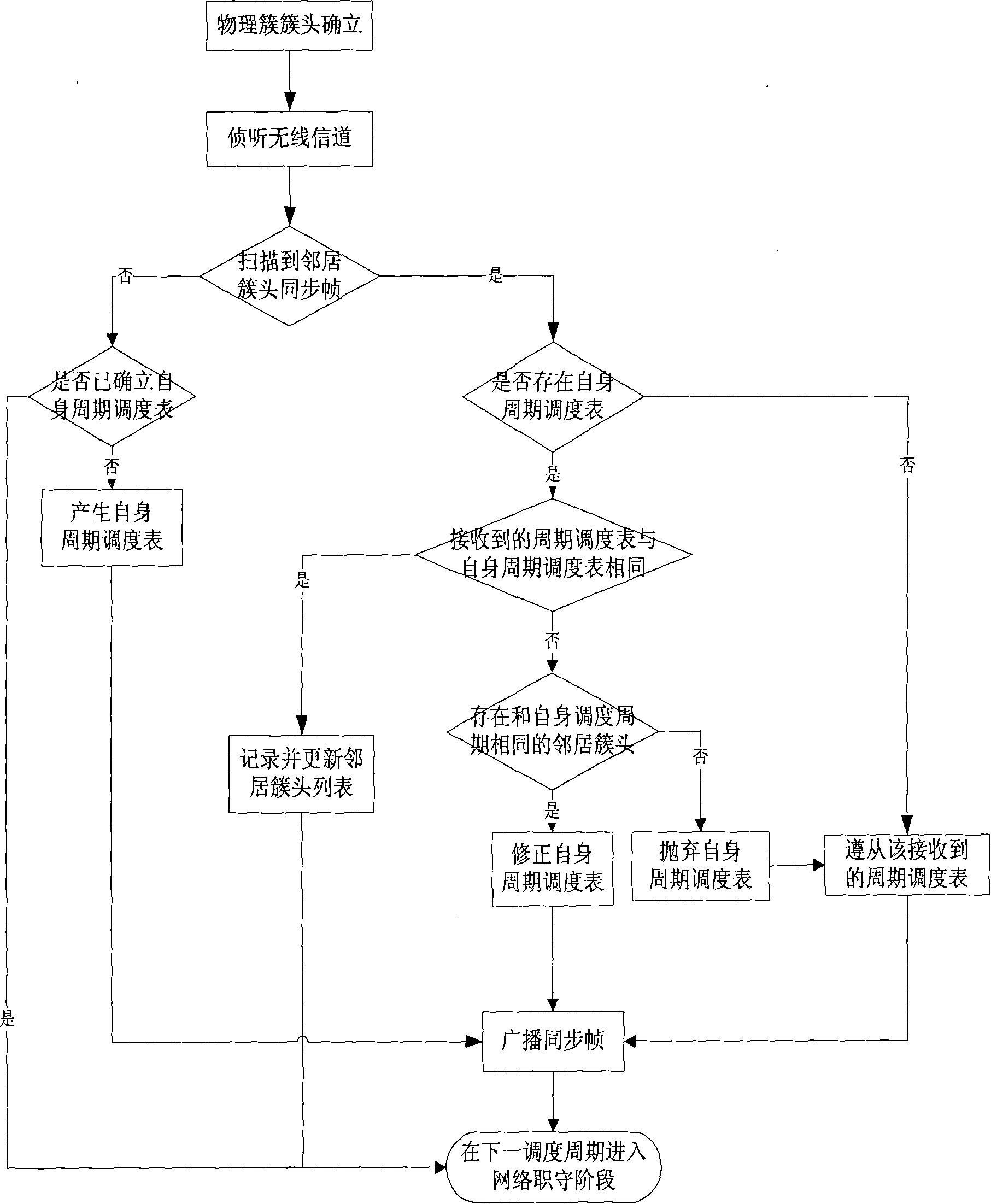

Double cluster based wireless sensor network distributed topology control method

Inactive | CN101184004A | Load balancing | Improve energy efficiency | Energy efficient ICT | Data switching by path configuration | Wireless sensor networking | Life time

The invention relates to a distributed topology control realization method for wireless sensor network based on double clustering, which comprises a physical clustering step and a virtual clustering step, wherein, the physical clustering step is determining the cluster head or cluster member status of each node, so as to form a physical cluster; the virtual clustering step adopts distributed competitive mode; the cluster head of the physical cluster can generate own cycle dispatch table or follow the cycle dispatch table which is generated by the adjacent cluster head, so that the cluster heads of the adjacent physical clusters can form a virtual clustering which is run periodically and slept synchronously, and the communication between the cluster heads can be realized through an adaptive communication mechanism. The invention aims at the characteristics of the wireless sensor network on energy limitation, application specificity, and random dense distribution of nodes; on the basis of meeting the specific QoS requirement, the network load balancing can be realized effectively; the network energy consumption efficiency can be enhanced and the life time of network system can be extended.

Owner:JIAXING WIRELESS SENSOR NETWORKS CENT CAS

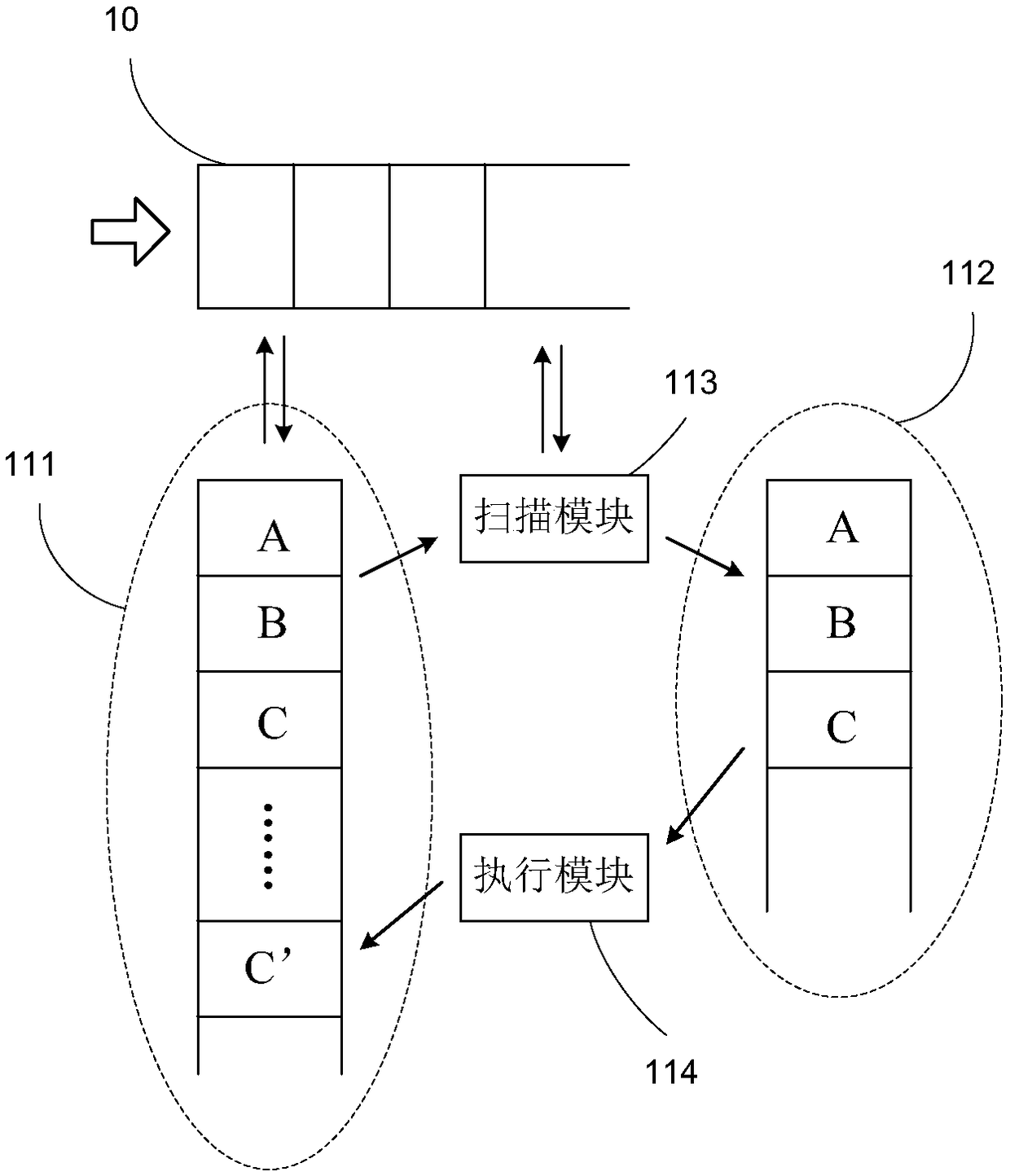

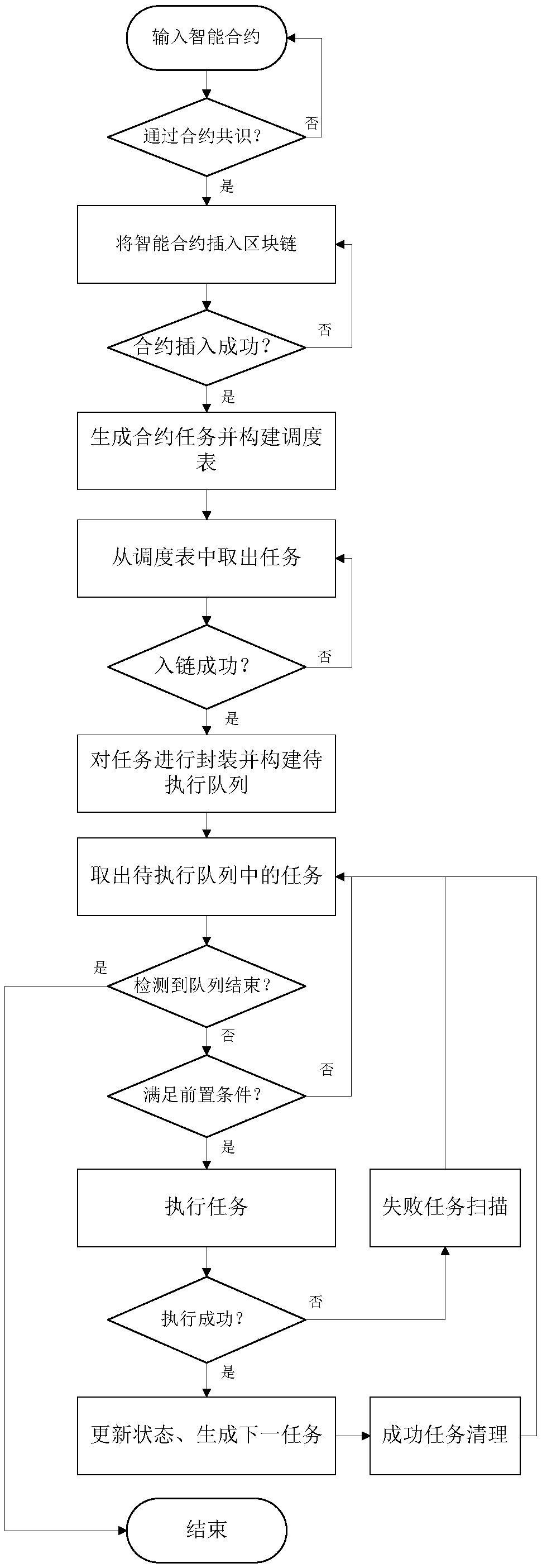

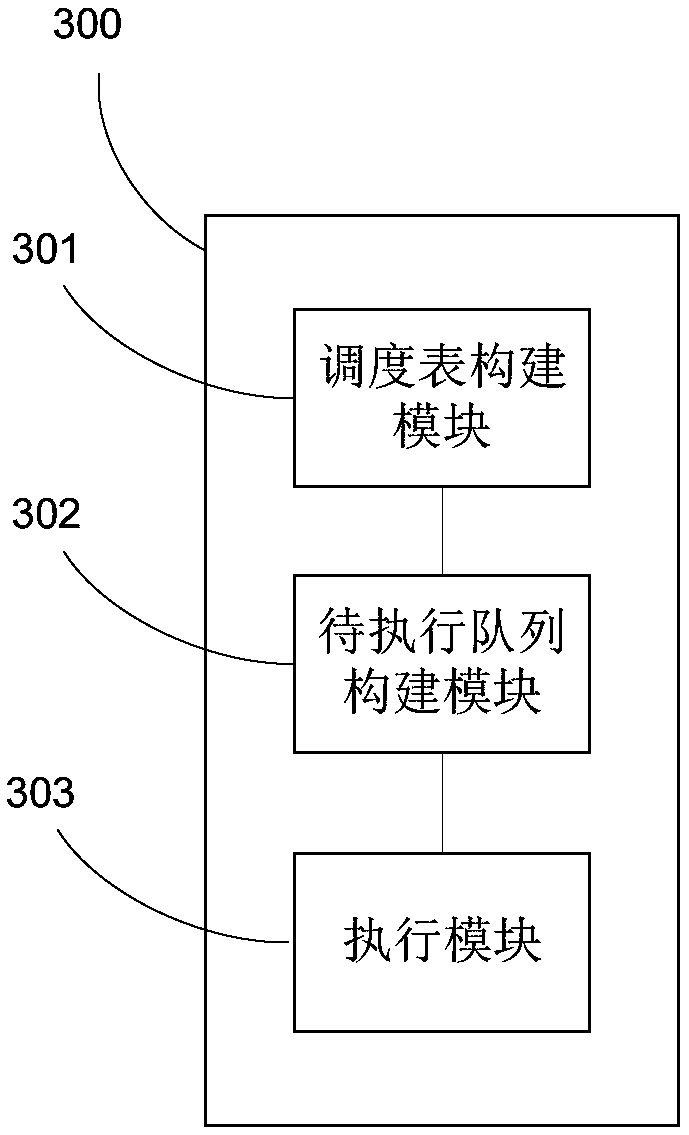

Task scheduling execution method and device based on smart contracts

Pending | CN108804096A | Improve execution efficiency | Enhance risk resistance | Interprogram communication | Visual/graphical programming | Smart contract | Dispatch table

The invention relates to a task scheduling execution method and device based on smart contracts. The task scheduling execution method comprises a scheduling table construction step, wherein multiple smart contracts are inserted into a block chain, contract tasks are generated based on the smart contracts inserted into the block chain, and the contract tasks are used to construct a scheduling table; a to-be-executed queue construction step, wherein the contract tasks in the scheduling table are encapsulated, and the encapsulated contract tasks are used to construct a to-be-executed queue; and an execution step, wherein the encapsulated contract tasks in the to-be-executed queue are sequentially executed according to the order in the to-be-executed queue. According to the task scheduling execution method and device based on the smart contracts, the execution efficiency of the smart contracts can be improved, and the risk resistance ability can be enhanced.

Owner:中思博安科技(北京)有限公司

Hardware assisted real-time scheduler using memory monitoring

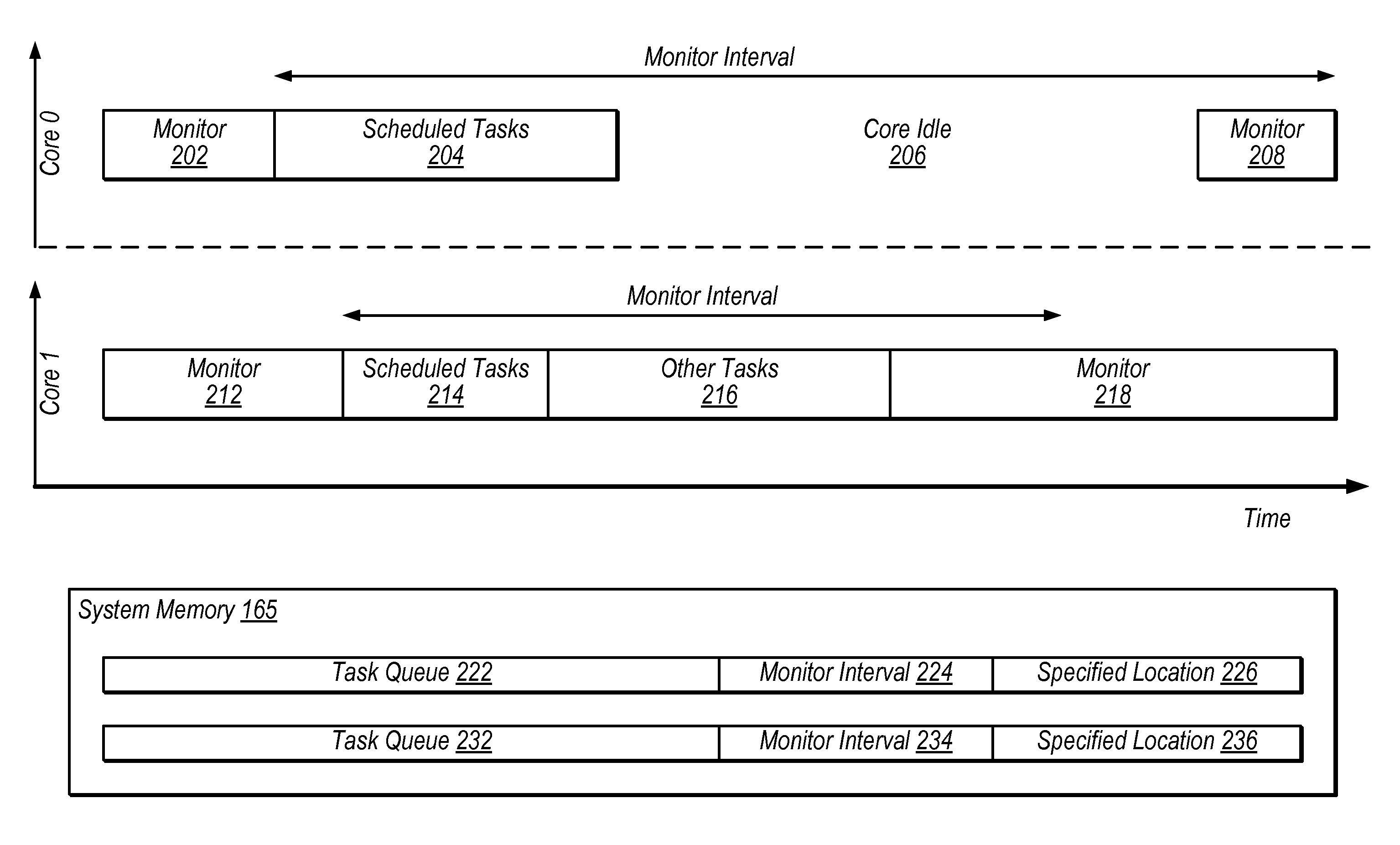

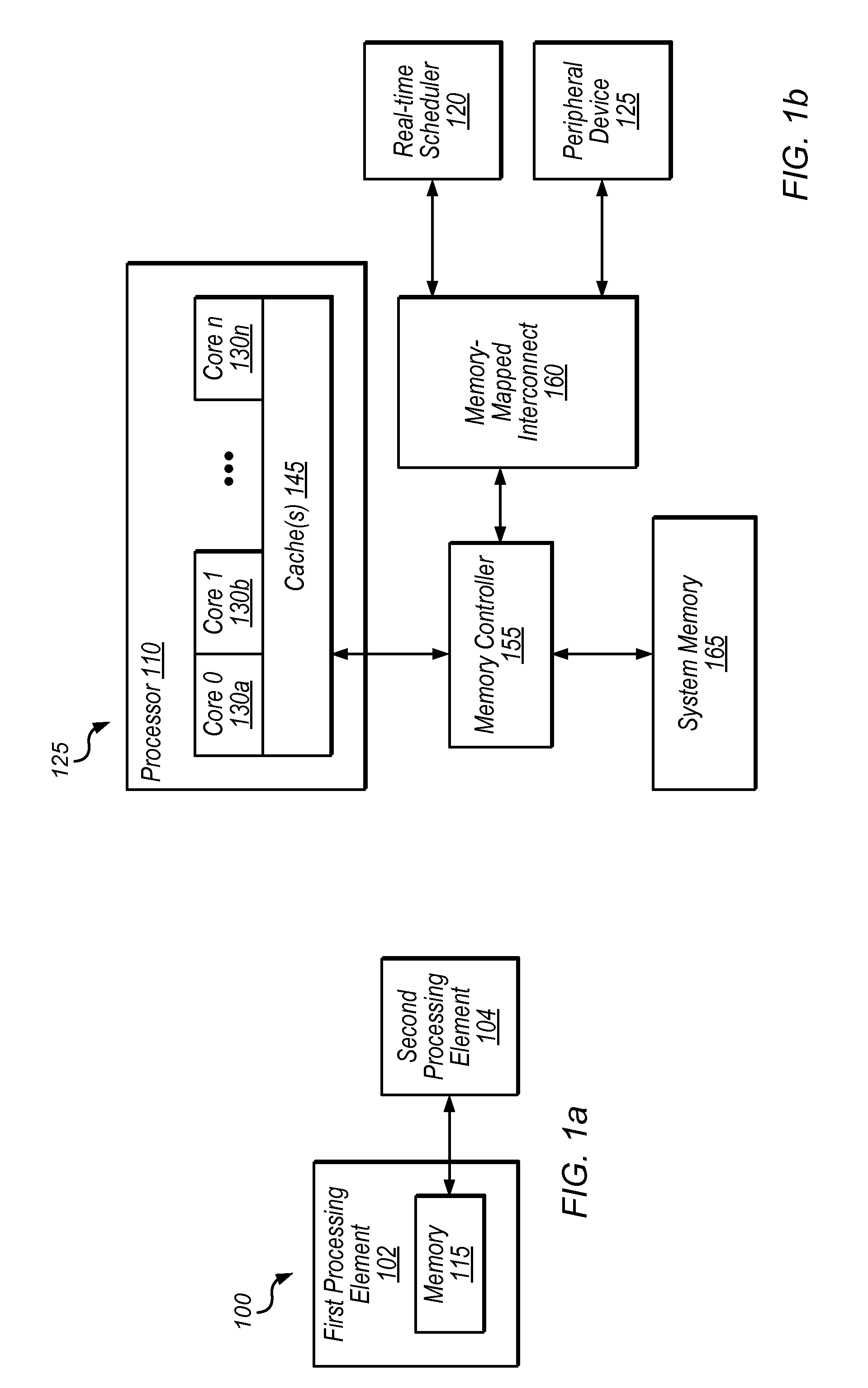

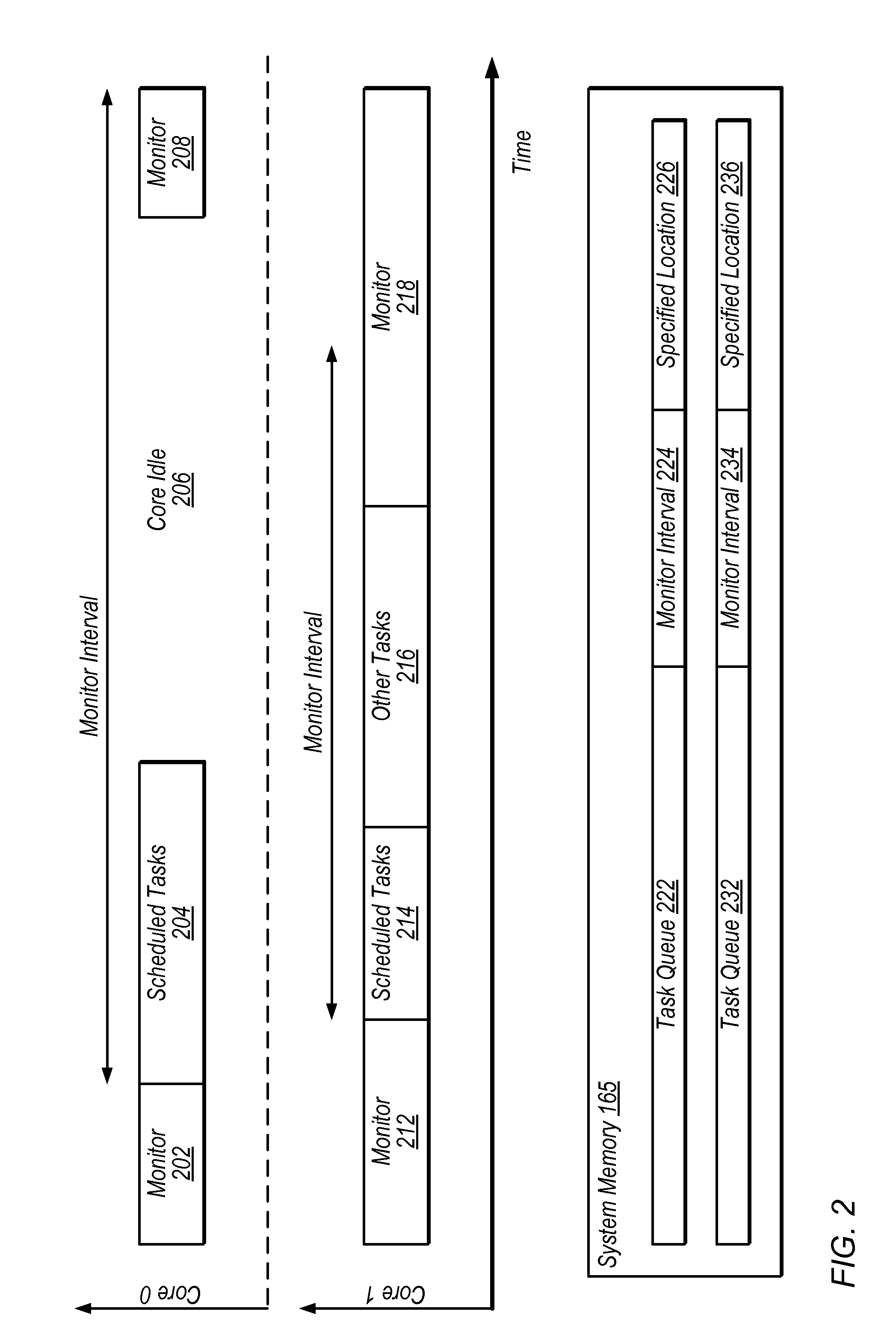

Active | US20140059553A1 | Reduce overhead | Reduce power consumption | Program synchronisation | Energy efficient computing | Processing element | Dispatch table

Apparatus and method for real-time scheduling. An apparatus includes first and second processing elements and a memory. The second processing element is configured to generate or modify a schedule of one or more tasks, thereby creating a new task schedule, and to write to a specified location in the memory to indicate that the new schedule has been created. The first processing element is configured to monitor for a write to the specified location in the memory and execute one or more tasks in accordance with the new schedule in response to detecting the write to the specified location. The first processing element may be configured to begin executing tasks based on detecting the write without invoking an interrupt service routine. The second processing element may store the new schedule in the memory.

Owner:NATIONAL INSTRUMENTS

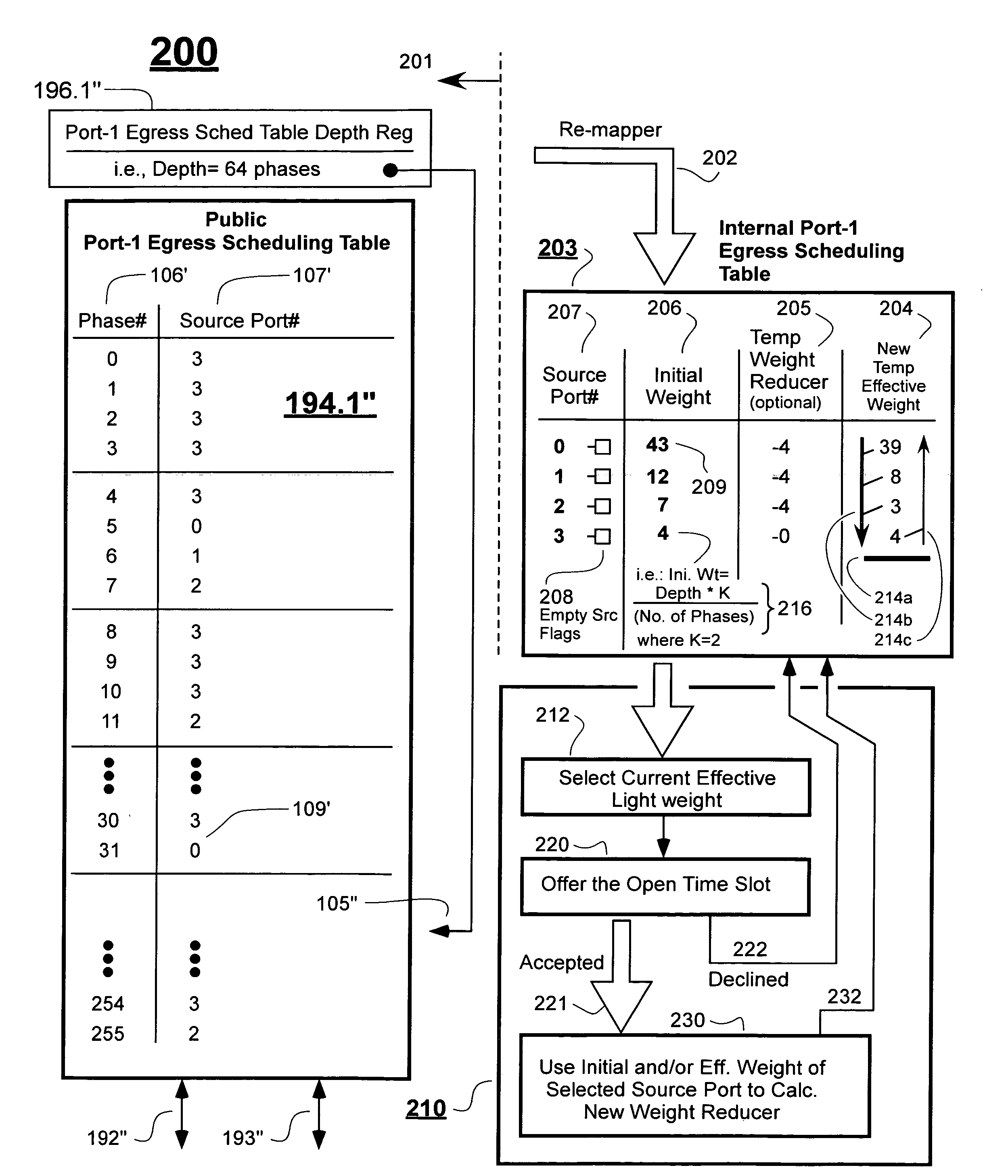

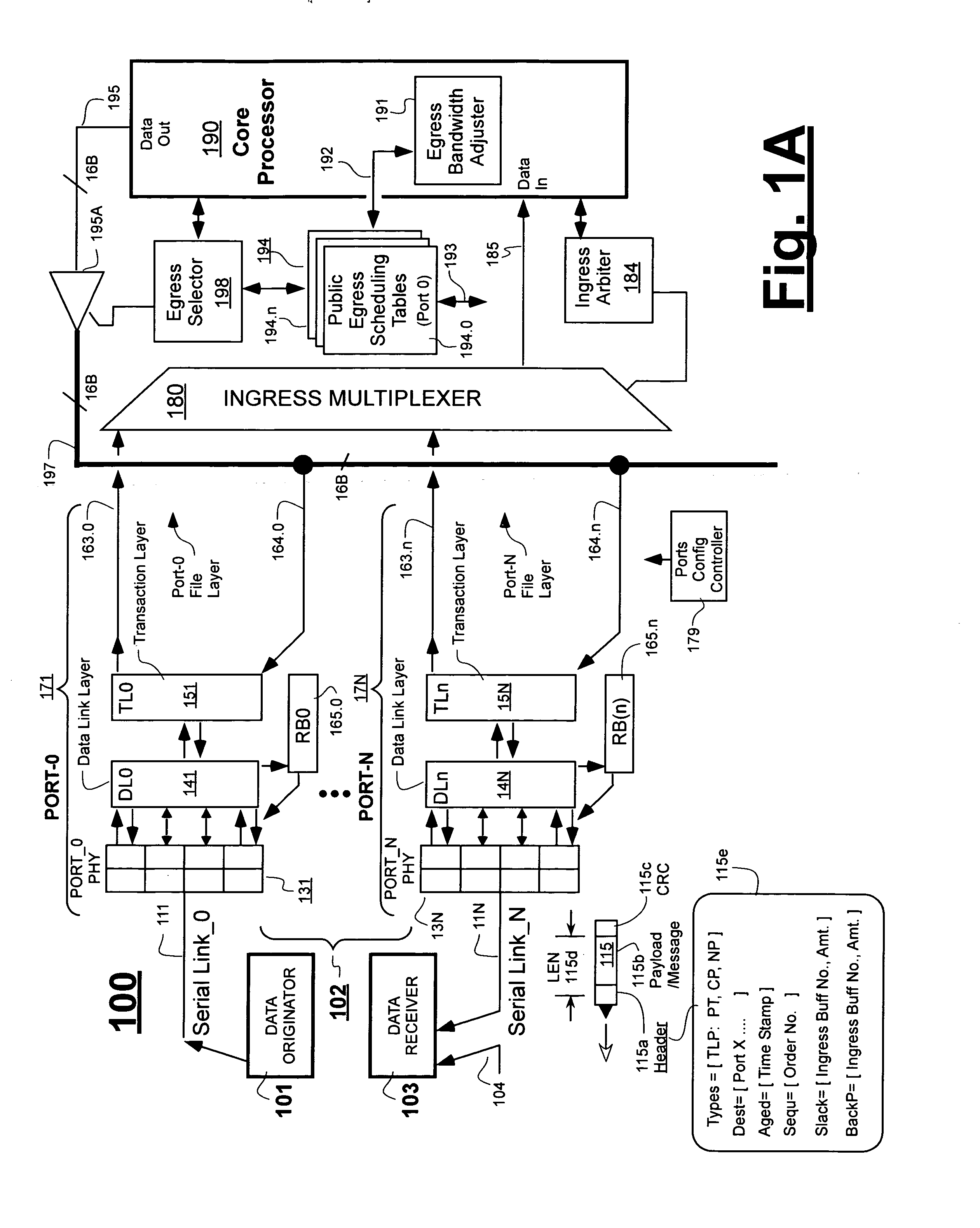

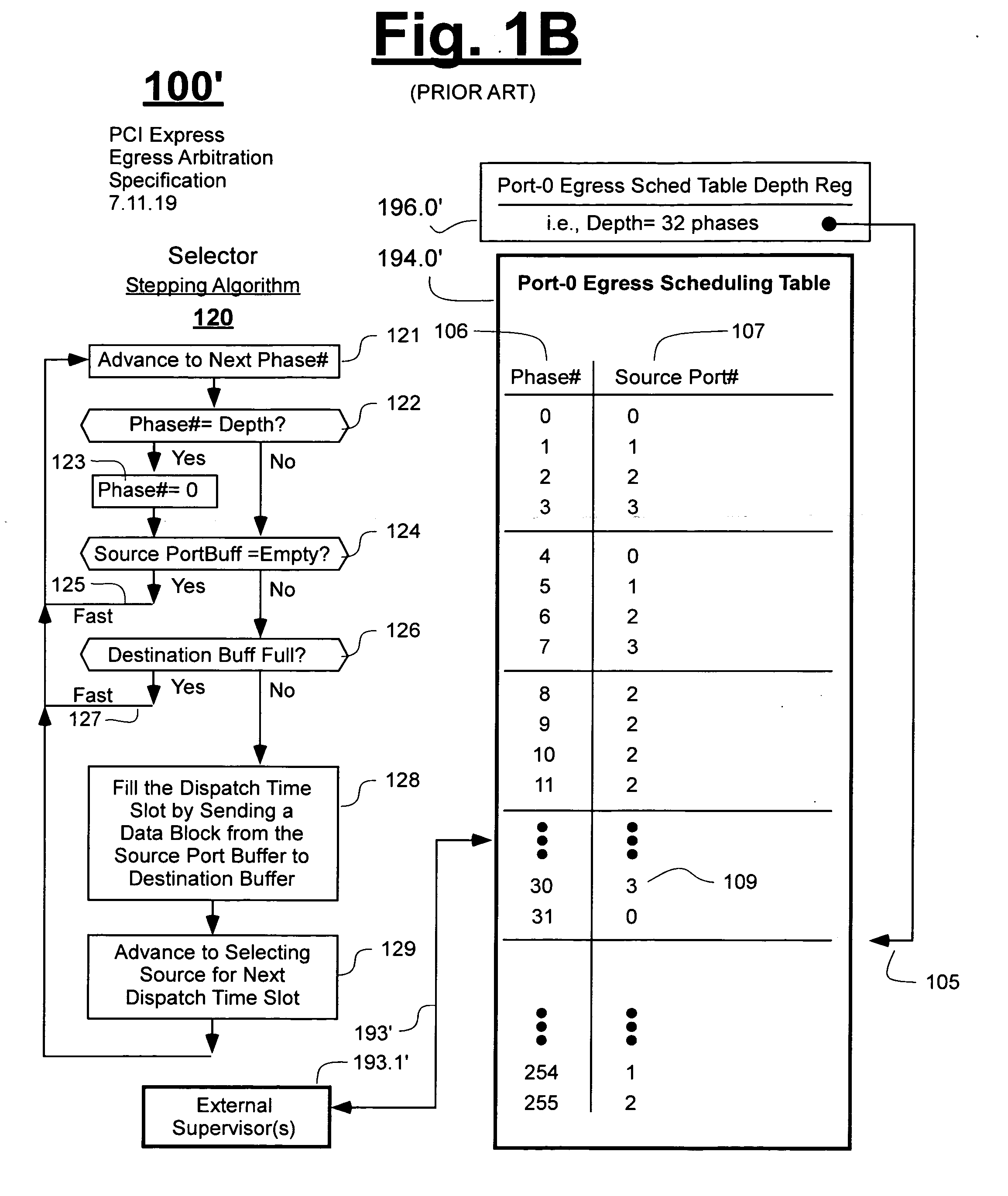

Method of improving over protocol-required scheduling tables while maintaining same

Active | US20080151753A1 | Less memory space | Different data structure | Error prevention | Frequency-division multiplex details | Data source | PCI Express

Public scheduling tables of PCI-Express network devices are remapped into private scheduling tables having different data structures. The private scheduling tables enable the construction of parallel-processing selection engines (ones with look-ahead selection capabilities) that are more compact in size than would have been possible with use of the data structures of the public scheduling tables. In one embodiment, residual weight values are re-shuffled so as to move each winner of an arbitration round away from a winner's proximity bar by a distance corresponding to an initial weight assigned to the winner. The initial weight can be proportional to the reciprocal of a bandwidth allocation assigned to each data source.

Owner:MICROSEMI STORAGE SOLUTIONS US INC

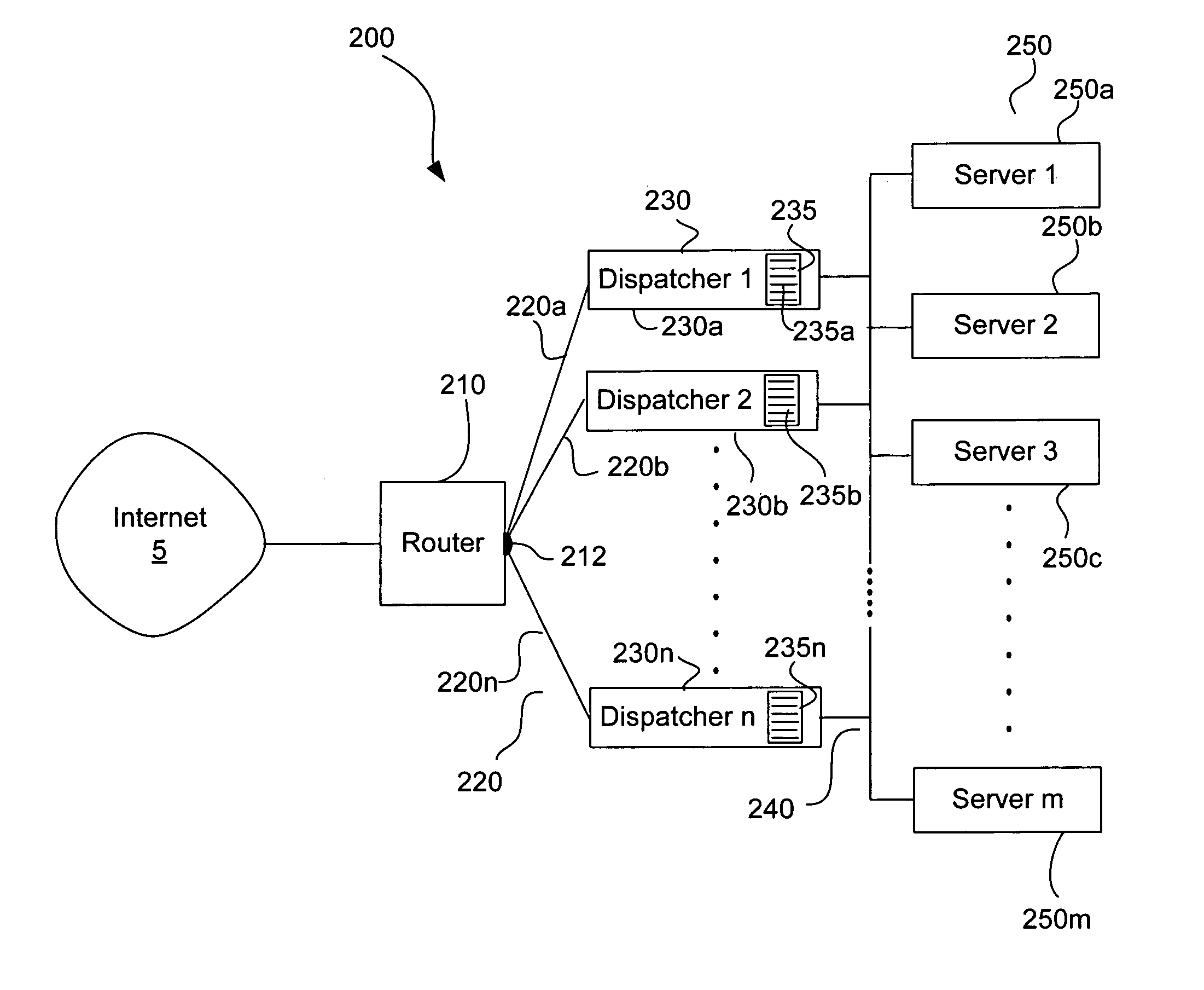

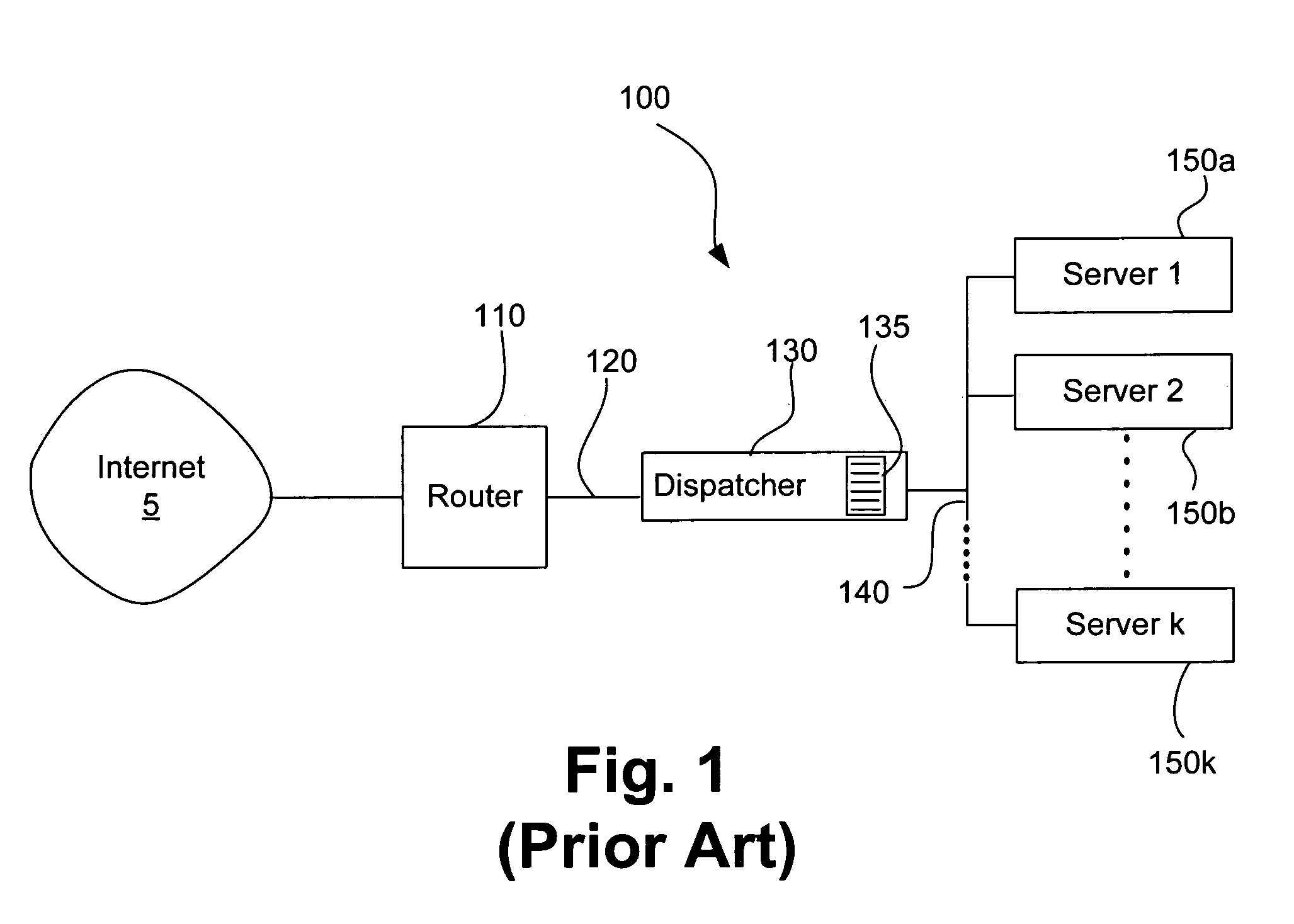

Apparatus and method for scalable server load balancing

Active | US7290059B2 | Resource allocation | Multiple digital computer combinations | Multiple point | System area network

A server hosting system including a server cluster and a plurality of dispatchers, the plurality of dispatchers providing multiple points of entry into the server cluster. The server cluster and plurality of dispatchers are interconnected by a network, such as a system area network. Each of the dispatchers maintains a local dispatch table, and the local dispatch tables of the plurality of dispatchers, respectively, share data over the network.

Owner:TAHOE RES LTD

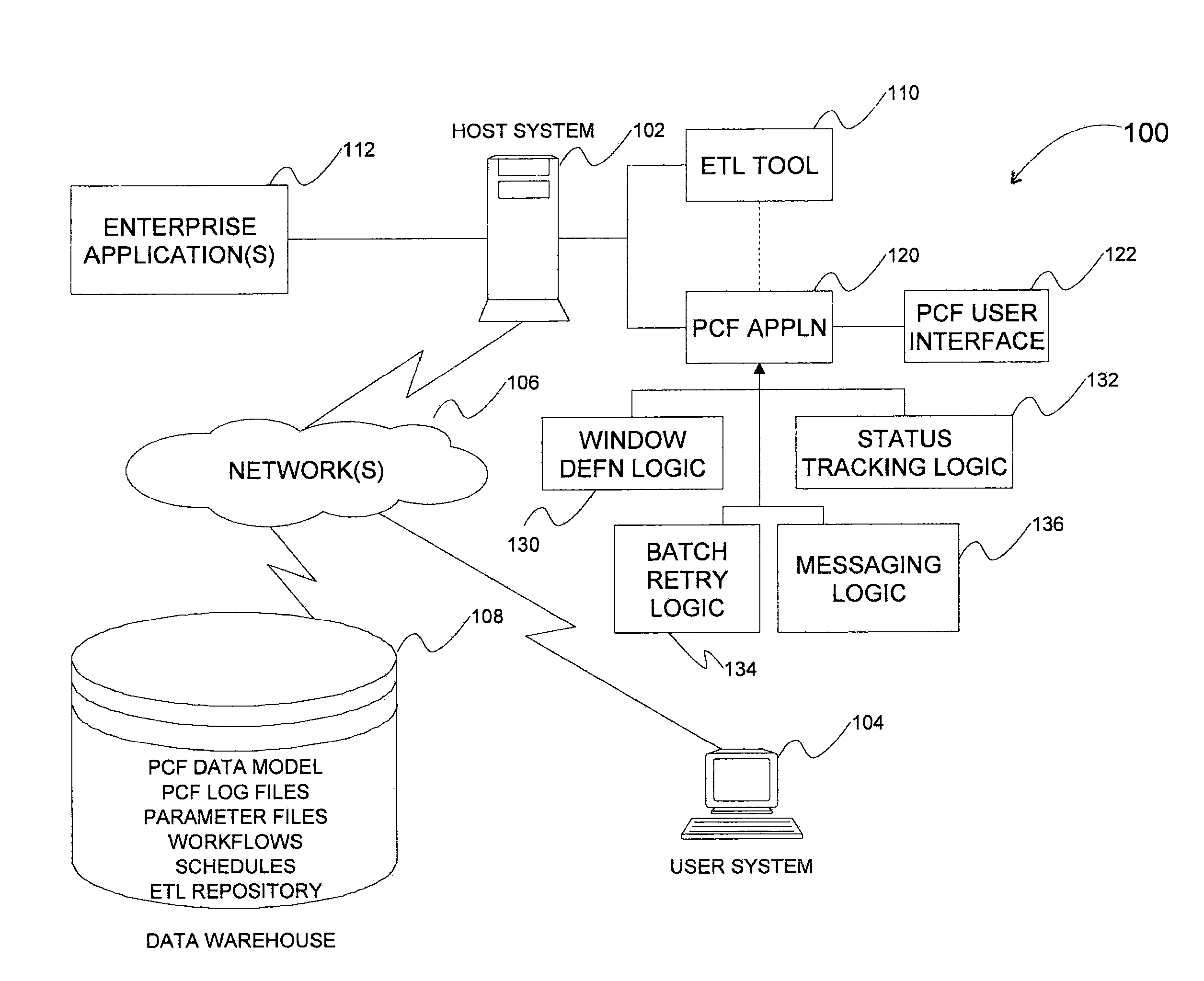

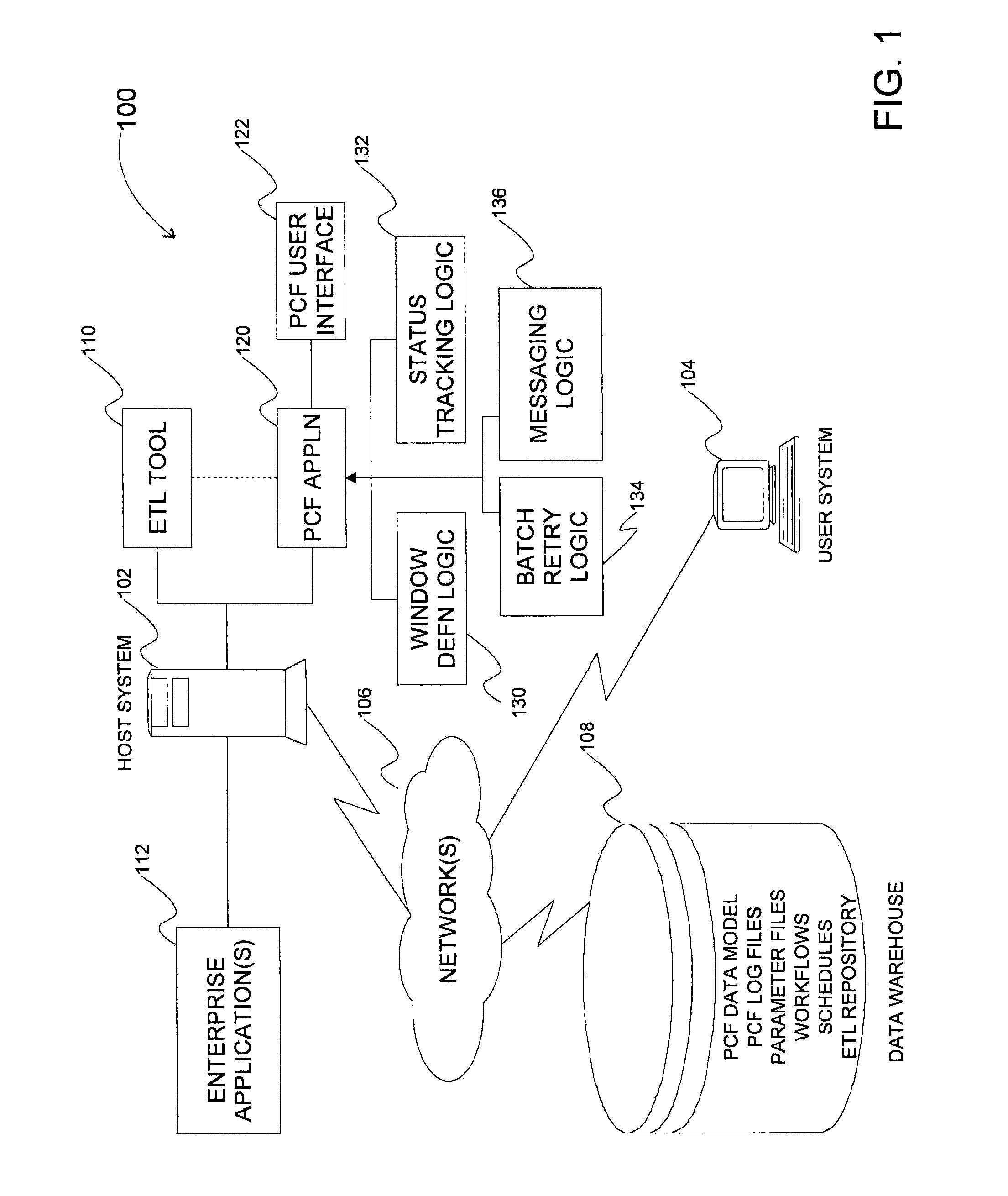

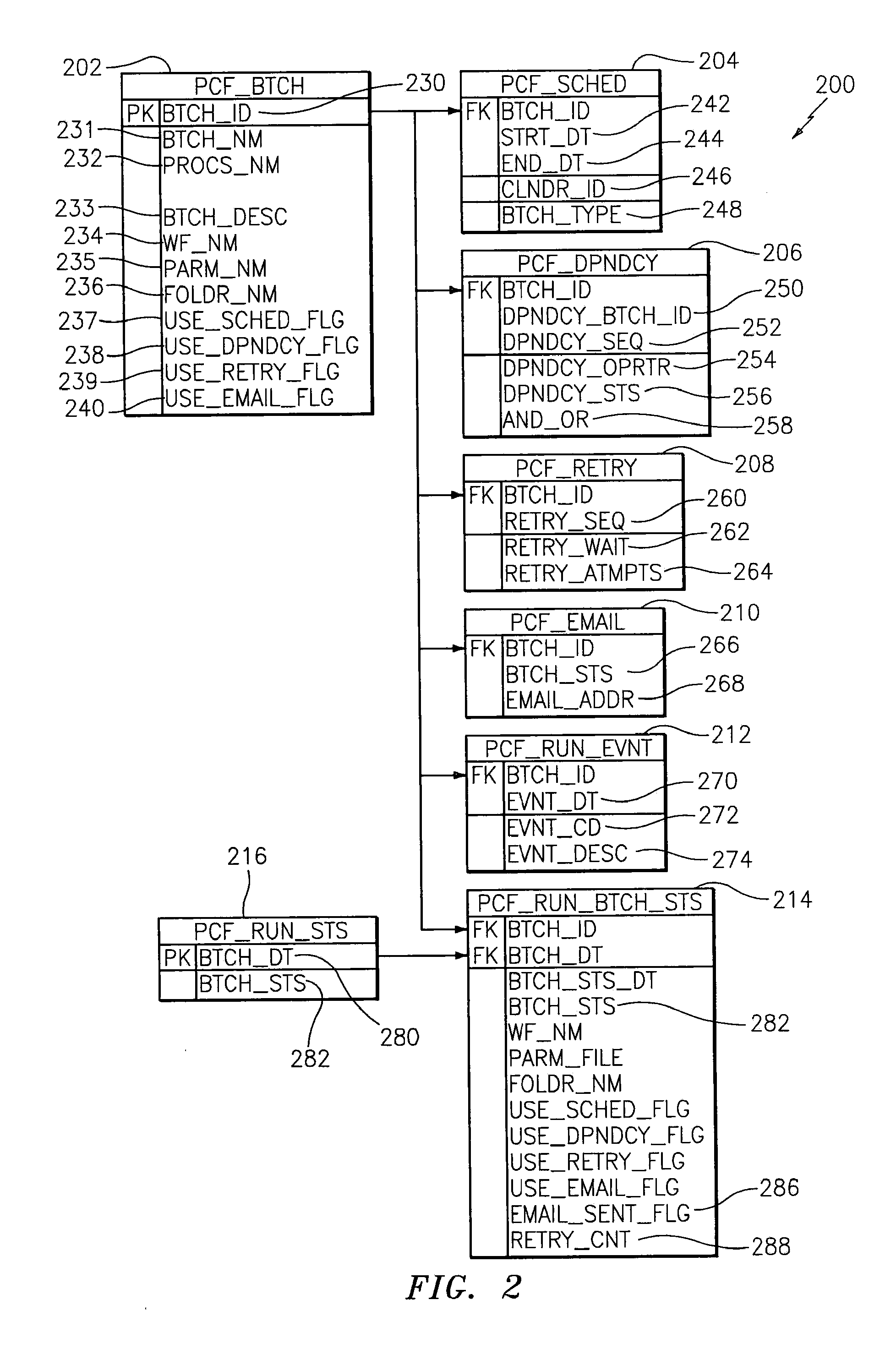

Methods, systems, and computer program products for managing batch operations in an enterprise data integration platform environment

Inactive | US20100153952A1 | Multiprogramming arrangements | Office automation | Batch processing | Batch operation

Methods, systems, and computer program products for managing batch operations are provided. A method includes defining a window of time in which a batch will run by entering a batch identifier into a batch table, the batch identifier specifying a primary key of the batch table and being configured as a foreign key to a batch schedule table. The time is entered into the batch schedule table. The method further includes entering extract-transform-load (ETL) information into the batch table. The ETL information includes a workflow identifier, a parameter file identifier, and a location in which the workflow resides. The method includes retrieving the workflow from memory via the workflow identifier and location, retrieving the parameter file, and processing the batch according to the process, workflow, and parameter file.

Owner:AT&T INTPROP I L P

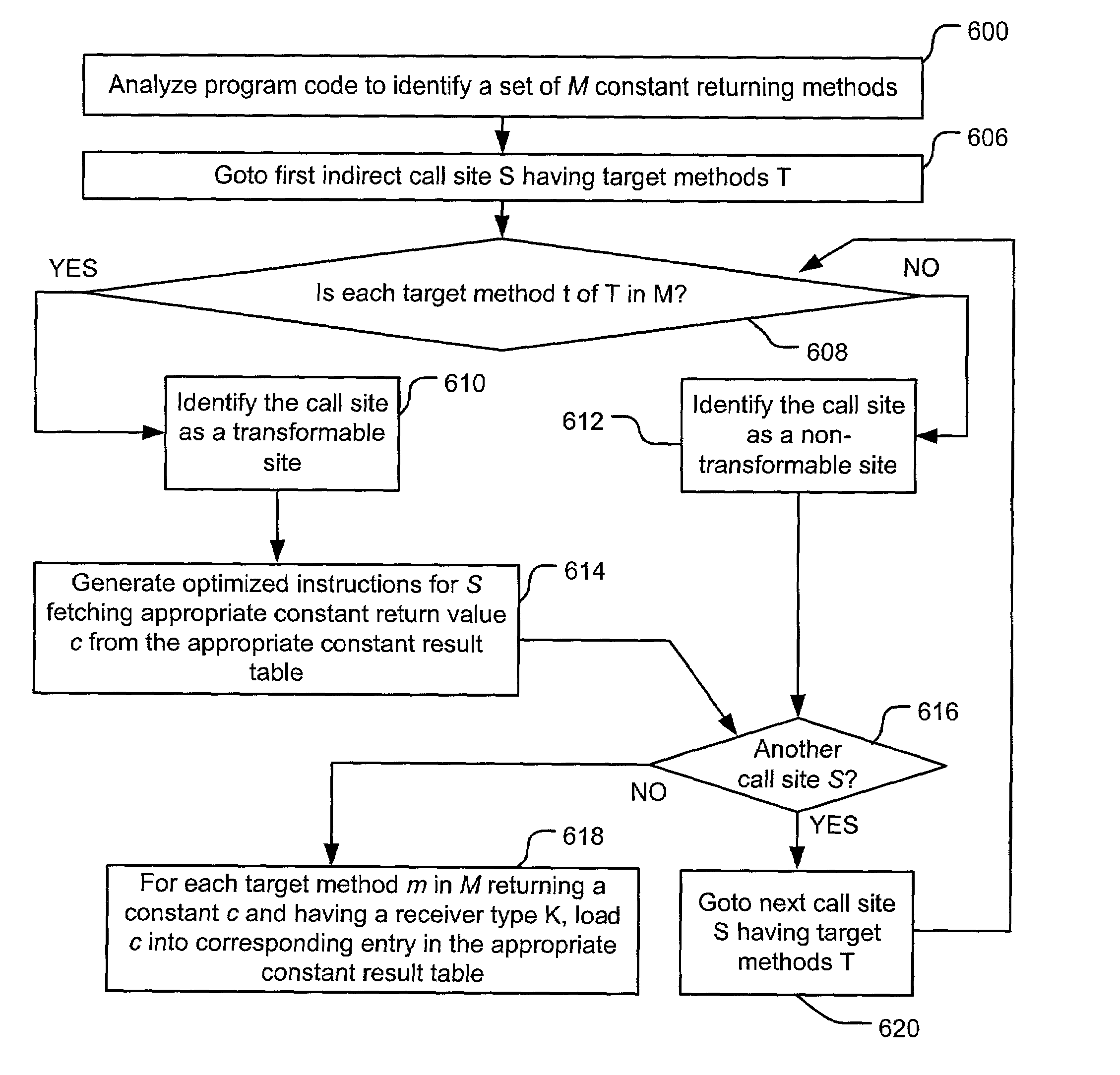

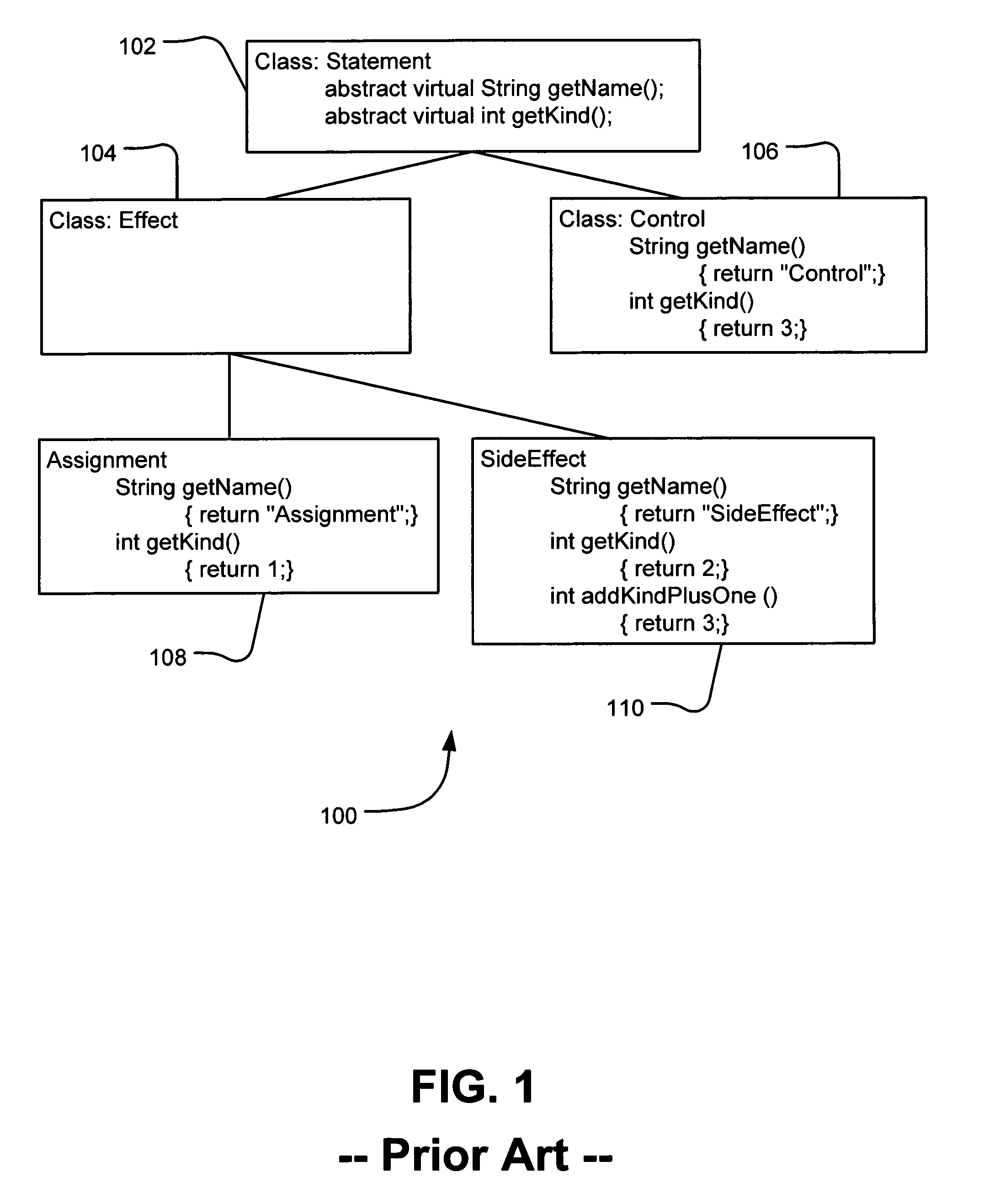

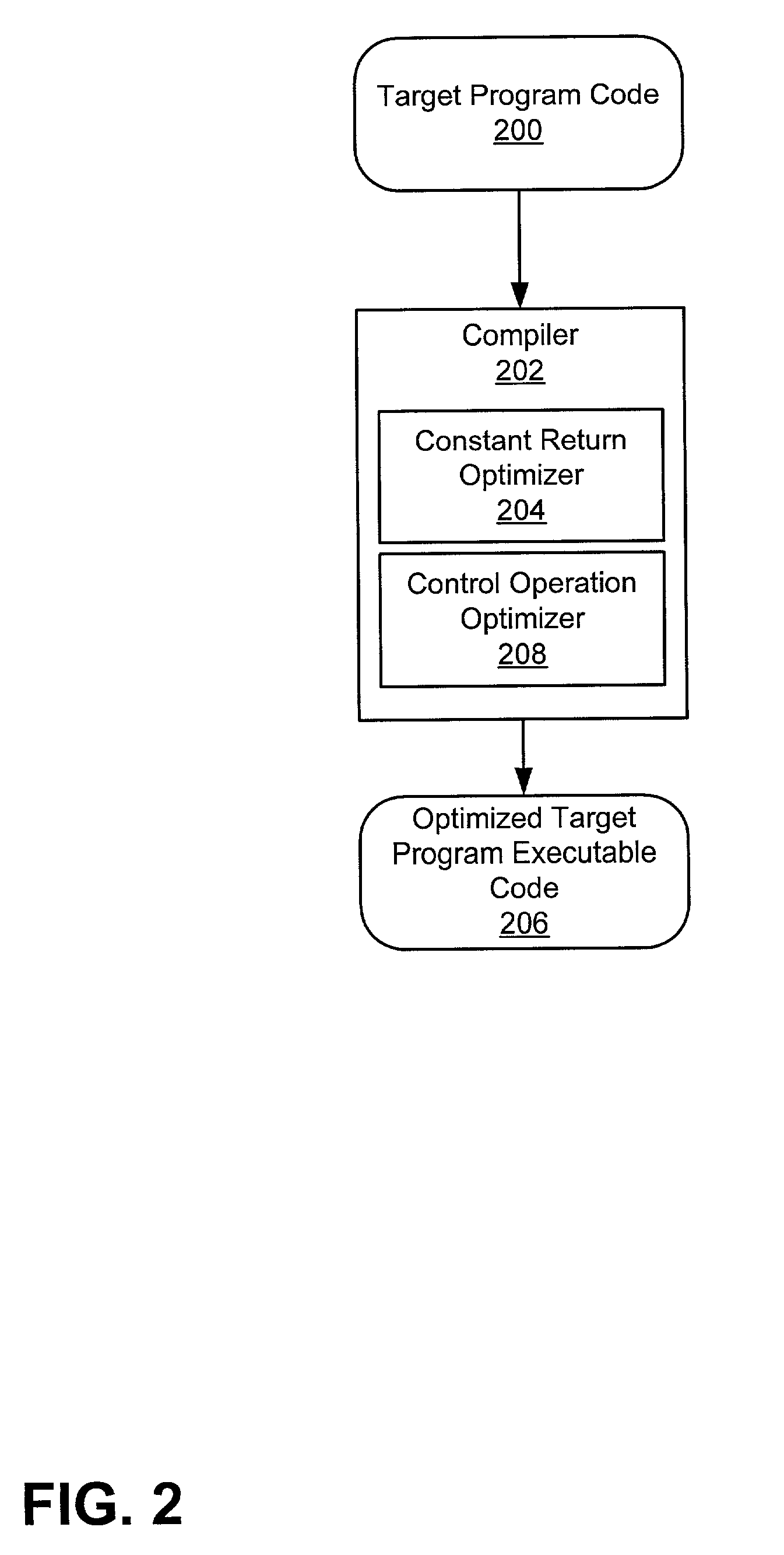

Constant return optimization transforming indirect calls to data fetches

Inactive | US7028293B2 | No side effect | Simple method | Software engineering | Specific program execution arrangements | Data ingestion | Call site

Indirect method invocation of methods that only return constant values is optimized using fetching operations and return constant tables. Such method calls can be optimized if all possible method calls via the call site instruction return a constant value and have no side effects. Each constant return value is loaded from a return constant table, and method invocation is eliminated. If all possible target methods in a program result in a constant return value and have no side effects, an associated virtual function dispatch table (vtable) may be used as the return constant table. Furthermore, control operation optimization may be applied to identify type constraints, based on one or more possible type-dependent constant return values from an indirect method invocation. An optimizer identifies and maps between a restricted set of values and an associated restricted set of types on which execution code may operate relative to a given control operation. The type constraints are used to optimize the associated execution code by filtering propagation of runtime type approximations for optimization.

Owner:MICROSOFT TECH LICENSING LLC
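To make the abstract above concrete, the following sketch contrasts an indirect call through a per-type method table with a plain fetch from a return constant table. The shape classes and table names are hypothetical. The constant table is filled here by calling each method once, purely for brevity; an actual optimizer would instead have to establish statically that every target returns a constant and has no side effects.

```python
from typing import Callable, Dict


class Shape:
    def sides(self) -> int:
        raise NotImplementedError


class Triangle(Shape):
    def sides(self) -> int:
        return 3


class Square(Shape):
    def sides(self) -> int:
        return 4


class Pentagon(Shape):
    def sides(self) -> int:
        return 5


# Stand-in for a virtual dispatch table: concrete type -> method implementation.
VTABLE: Dict[type, Callable[[Shape], int]] = {
    Triangle: Triangle.sides,
    Square: Square.sides,
    Pentagon: Pentagon.sides,
}

# Return constant table with the same keys, holding the constants themselves.
# Assumption: every target is known to be constant and free of side effects.
RETURN_CONSTANTS: Dict[type, int] = {cls: method(cls()) for cls, method in VTABLE.items()}


def sides_via_call(obj: Shape) -> int:
    """Unoptimized form: look up the method, then invoke it."""
    return VTABLE[type(obj)](obj)


def sides_via_fetch(obj: Shape) -> int:
    """Optimized form: the invocation is replaced by a data fetch."""
    return RETURN_CONSTANTS[type(obj)]
```

Both functions index by the same key, which mirrors the abstract's remark that the dispatch table slot itself may serve as the return constant table.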

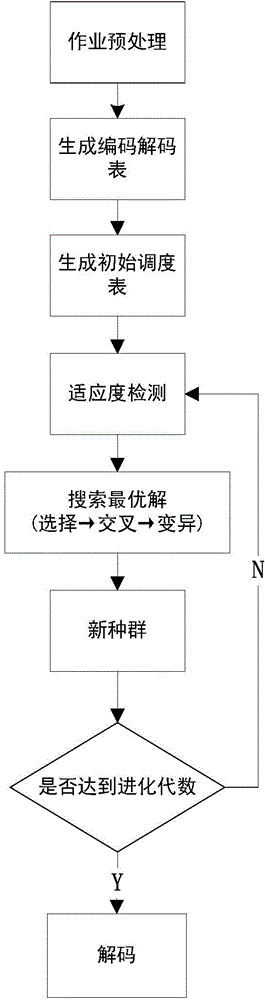

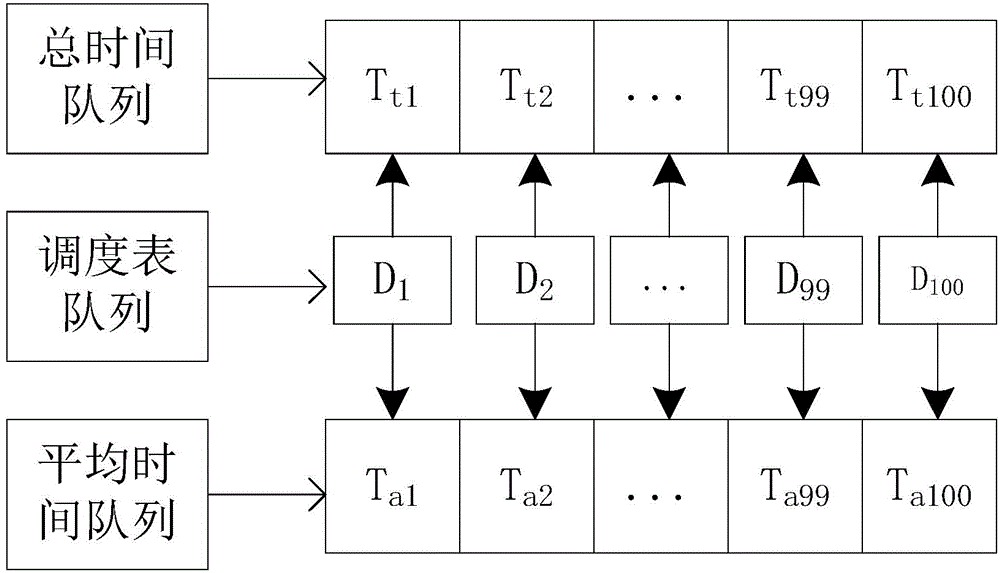

Hadoop job scheduling method based on genetic algorithm

Inactive | CN104572297A | Reduce the burden on | Ensure fairness | Resource allocation | Optimal scheduling | Genetic algorithm

The invention discloses a Hadoop job scheduling method based on a genetic algorithm. The Hadoop job scheduling method comprises the following steps: firstly, pre-processing work to generate an encoding and decoding table; secondly, generating initial scheduling tables of a plurality of executing work, and carrying out fitness detection sorting on the initial scheduling tables to obtain a scheduling table list; finally, carrying out genetic operation on the initial scheduling tables in the scheduling table list to form a final scheduling table list; taking the scheduling table ranked in the most front of the final scheduling table list as an optimal scheduling table; distributing tasks of different work to corresponding TaskTracker for execution according to the optimal scheduling table, so as to finish a Hadoop job scheduling task. According to the scheduling method, resources in a platform do not need to be pre-set before jobs are scheduled; dynamic acquisition, counting and distribution are carried out in a scheduling process and the burden of an administrator is alleviated; furthermore, the total finishing time of the work and the average finishing time of the work can be controlled by the scheduling method, so that the fairness of executing the work is guaranteed and the executing efficiency can also be ensured.

Owner:XI'AN POLYTECHNIC UNIVERSITY

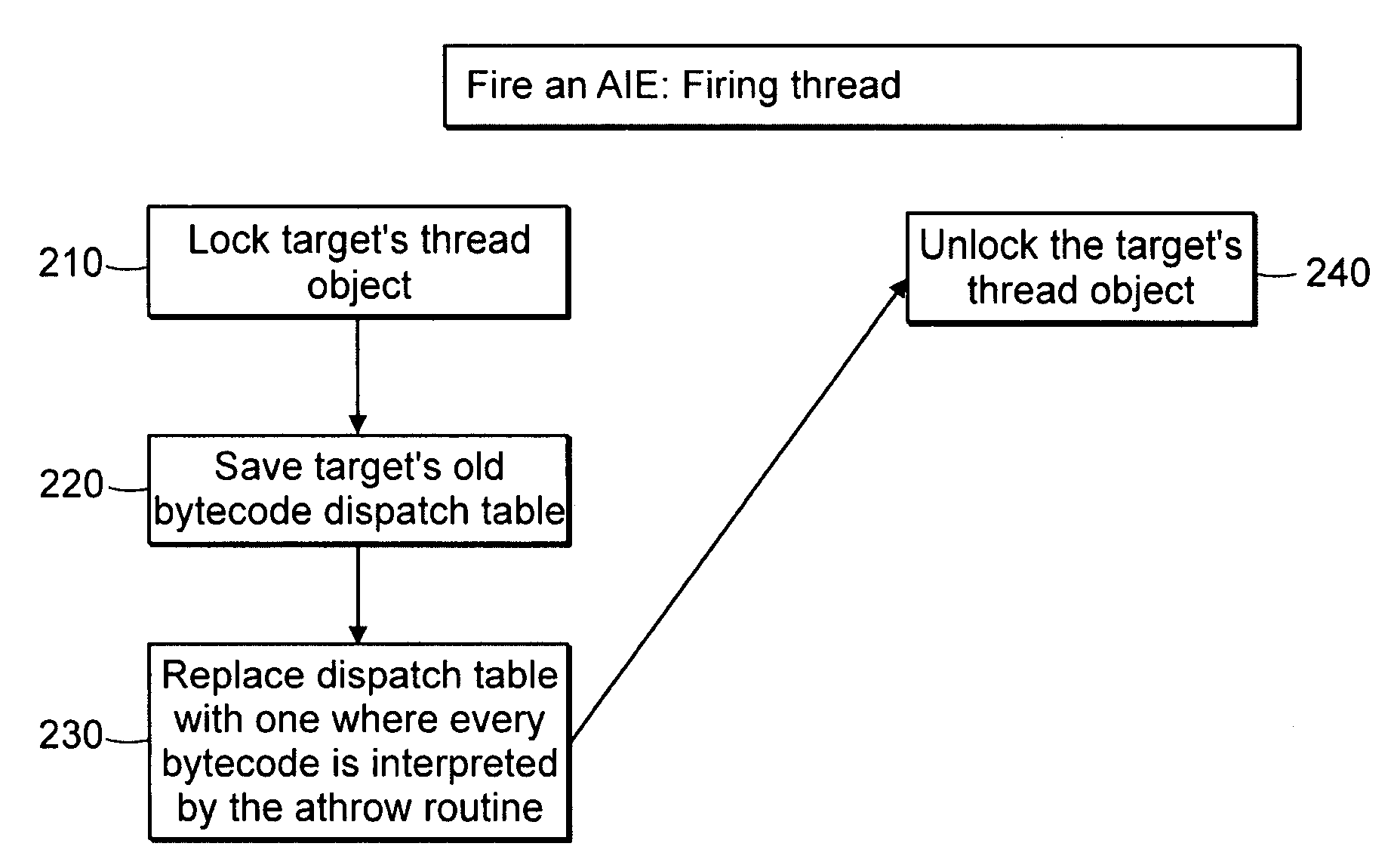

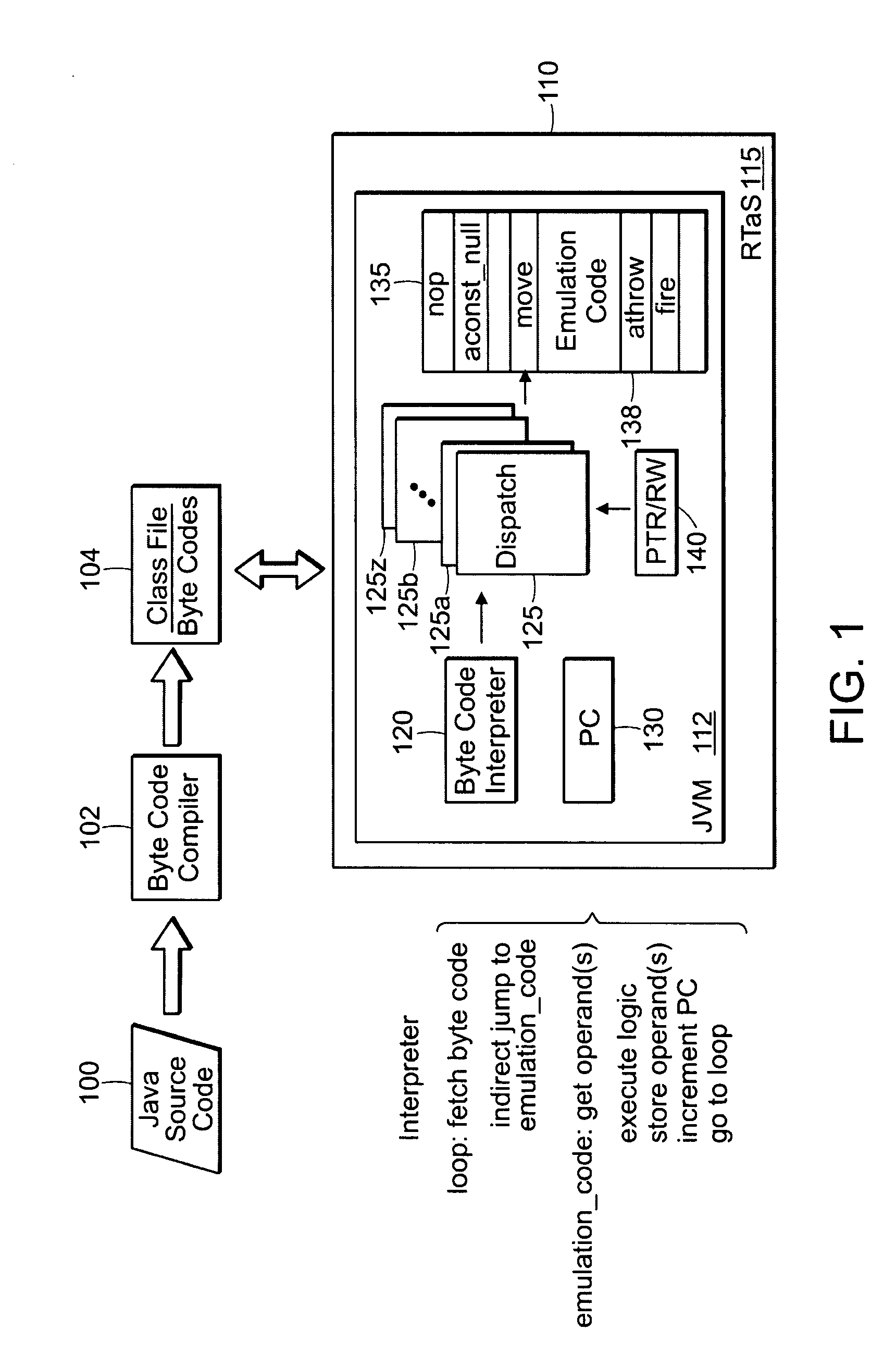

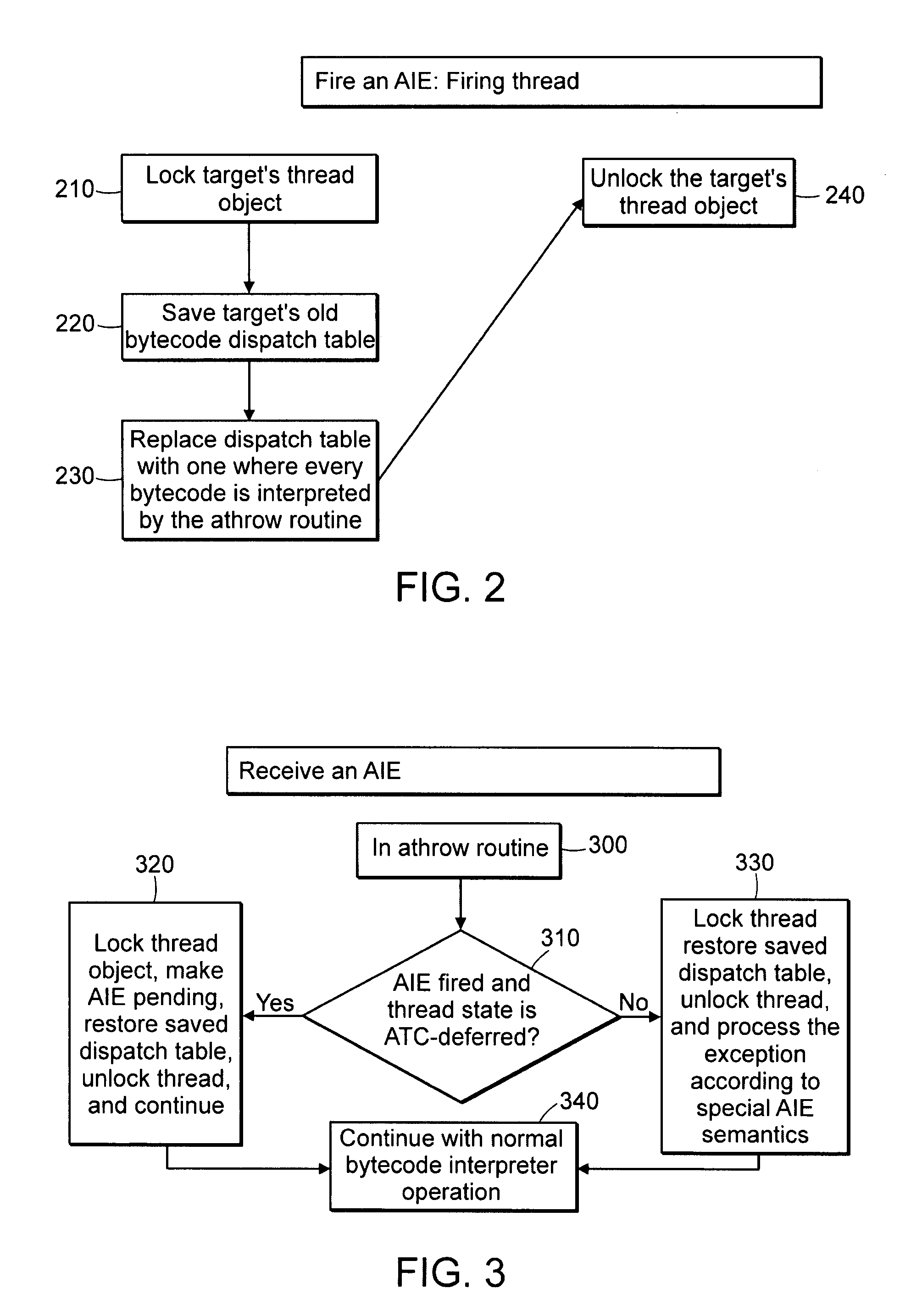

Techniques for exception handling by rewriting dispatch table elements

Inactive | US7296257B1 | Low cost | Conveniently and efficiently substitute | Error detection/correction | Digital computer details | Byte | Rewriting

A technique for implementing a data processor to determine if an exception has been thrown. Specifically, the technique may be used in an interpretive environment where a table known as a bytecode (opcode) dispatch table is used. The dispatch table contains addresses for code that implements each bytecode. When the interpreter executes normally, these addresses point to the basic target machine code for executing each bytecode. However, when an Asynchronously Interrupted Exception (AIE) is thrown, the dispatch table is repopulated so that all bytecodes point to routines that can handle the exception. These may point to a routine, such as an athrow, that has been extended to handle the special case of the AIE. Alternatively, the rewritten table can point to a routine that is specifically written to handle the firing of an AIE. The table contents are restored to their prior state once the exception handling is complete. The scheme may also be implemented without rewriting the table while a thread is in a state in which Asynchronous Transfer of Control (ATC) is deferred.

Owner:TIMESYS
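The table-rewriting scheme in the abstract above can be sketched with a toy interpreter. The two bytecodes, the `throw_aie` method, and the logging handler are assumptions made for illustration. The sketch simply discards the bytecode that was about to run when the handler fires, which is a simplification of whatever a real interpreter would do.

```python
from typing import Callable, Dict, List


class Interpreter:
    def __init__(self) -> None:
        self.stack: List[int] = []
        self.log: List[str] = []
        # Normal table: each bytecode points at its ordinary implementation.
        self.normal_table: Dict[str, Callable[[], None]] = {
            "PUSH1": self._push1,
            "ADD": self._add,
        }
        self.table = dict(self.normal_table)

    def _push1(self) -> None:
        self.stack.append(1)

    def _add(self) -> None:
        self.stack.append(self.stack.pop() + self.stack.pop())

    def _handle_aie(self) -> None:
        """Handle the asynchronous exception, then restore the prior table."""
        self.log.append("AIE handled")
        self.table = dict(self.normal_table)

    def throw_aie(self) -> None:
        """Repopulate the table so every bytecode points at the handler."""
        self.table = {opcode: self._handle_aie for opcode in self.normal_table}

    def step(self, opcode: str) -> None:
        # The loop never tests an "exception pending" flag; dispatch does the work.
        self.table[opcode]()
```

Because detection happens through the rewritten entries, the common path through `step` stays free of any per-bytecode exception check.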

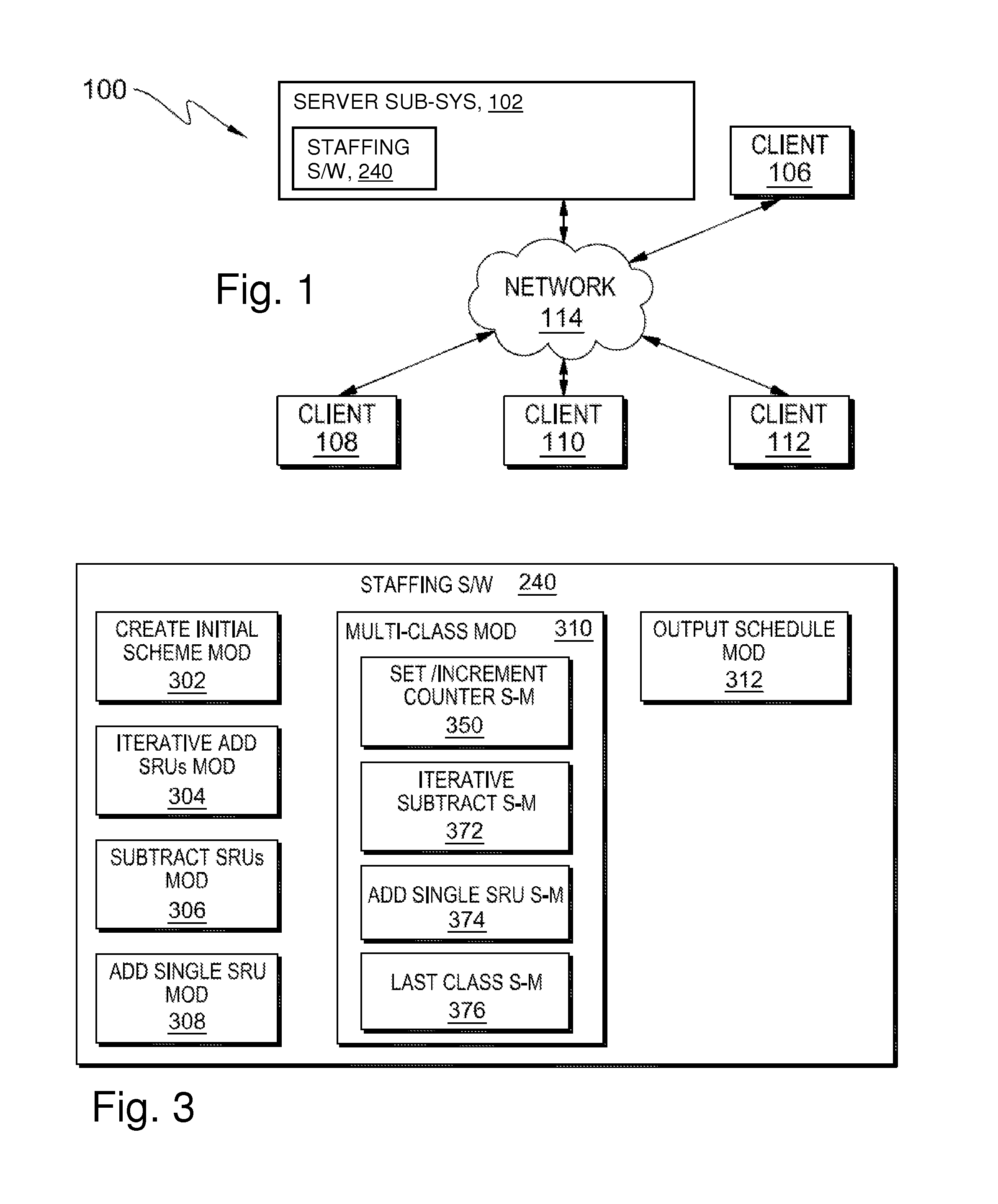

Optimizing staffing levels with reduced simulation

A method, system and software for determining a final staffing schedule in a ticketing system by scheduling a plurality of staffing resource units (SRUs) of multiple classes into one or more shift(s). The method includes finding a simulated staffing schedule for some sub-set of class(es), where the sub-set of class(es) is: (i) less than the total number of classes; and (ii) can accomplish all tasks that may come in during the shift(s) being scheduled. The schedule for the sub-set of classes is then converted into a schedule including SRUs of every class based on historical data relating to historical proportions between SRUs of various classes and / or incoming tasks of various types.

Owner:IBM CORP

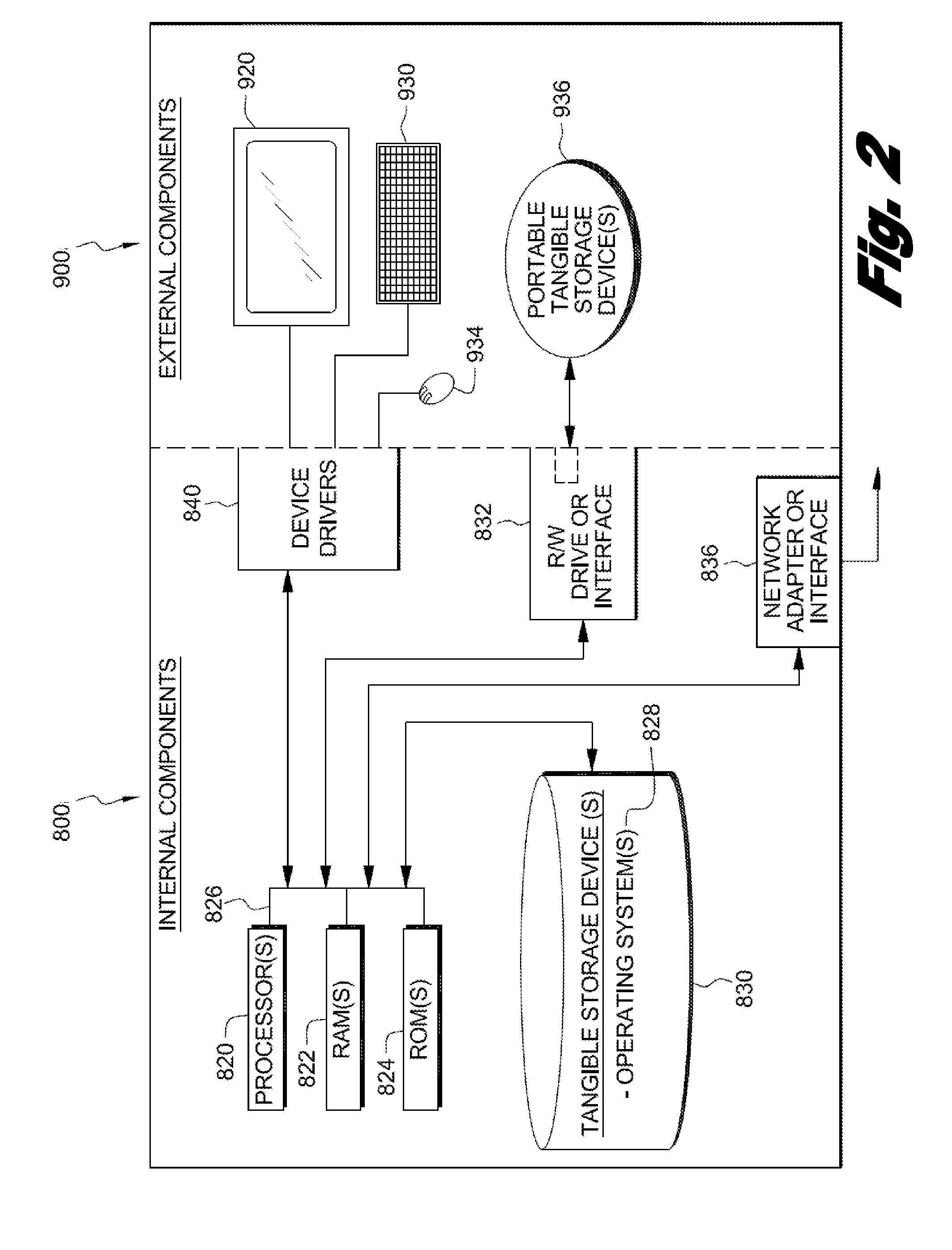

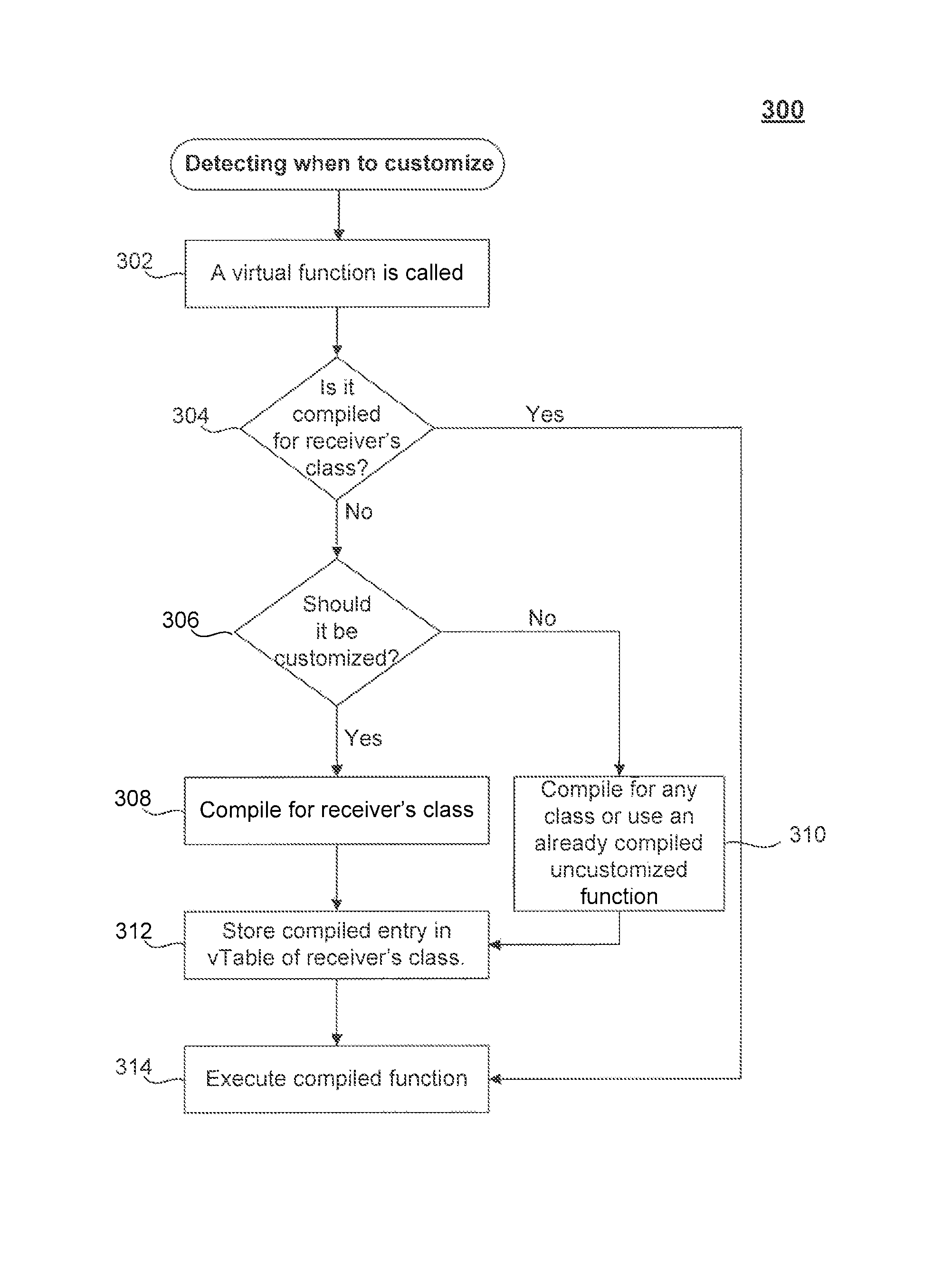

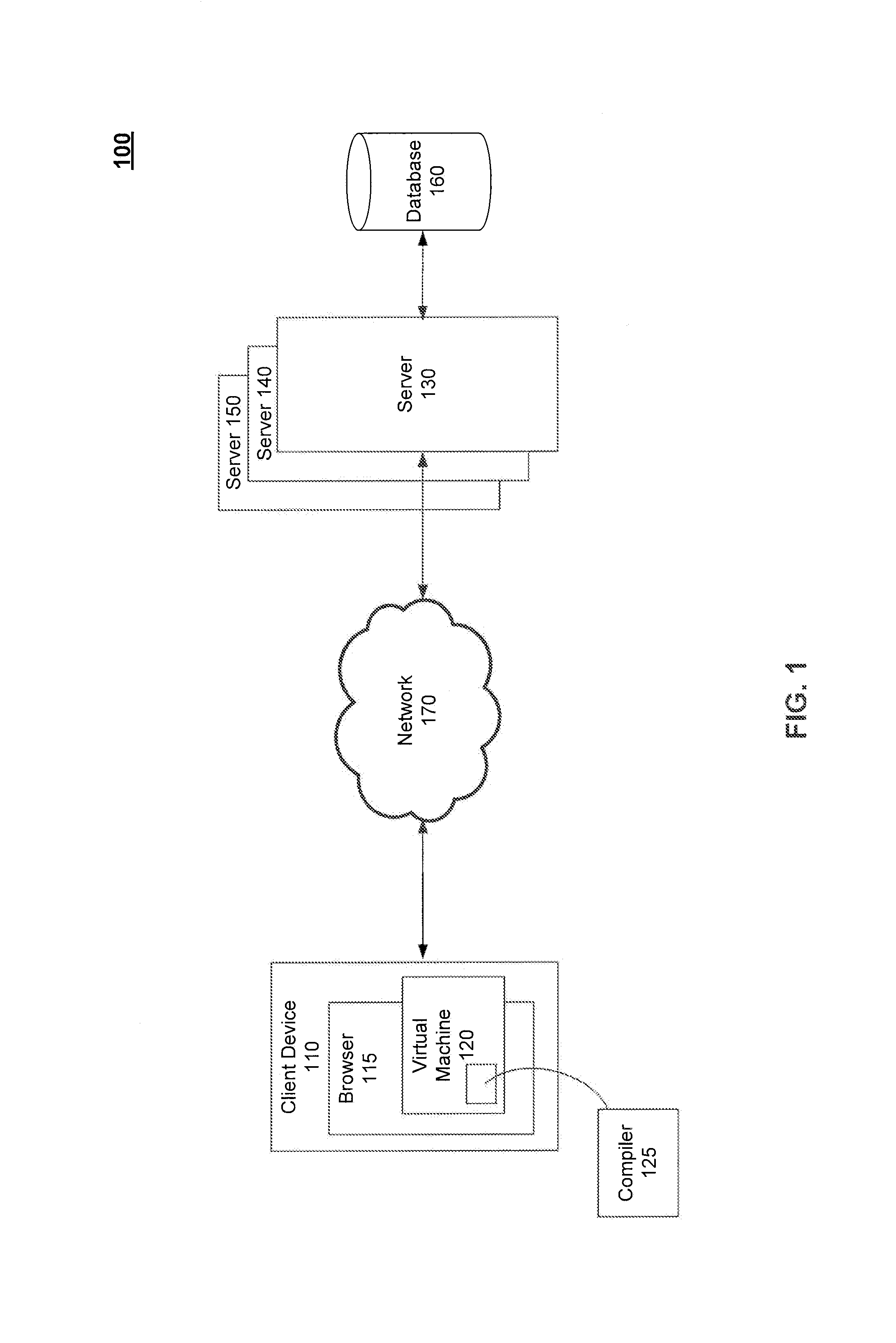

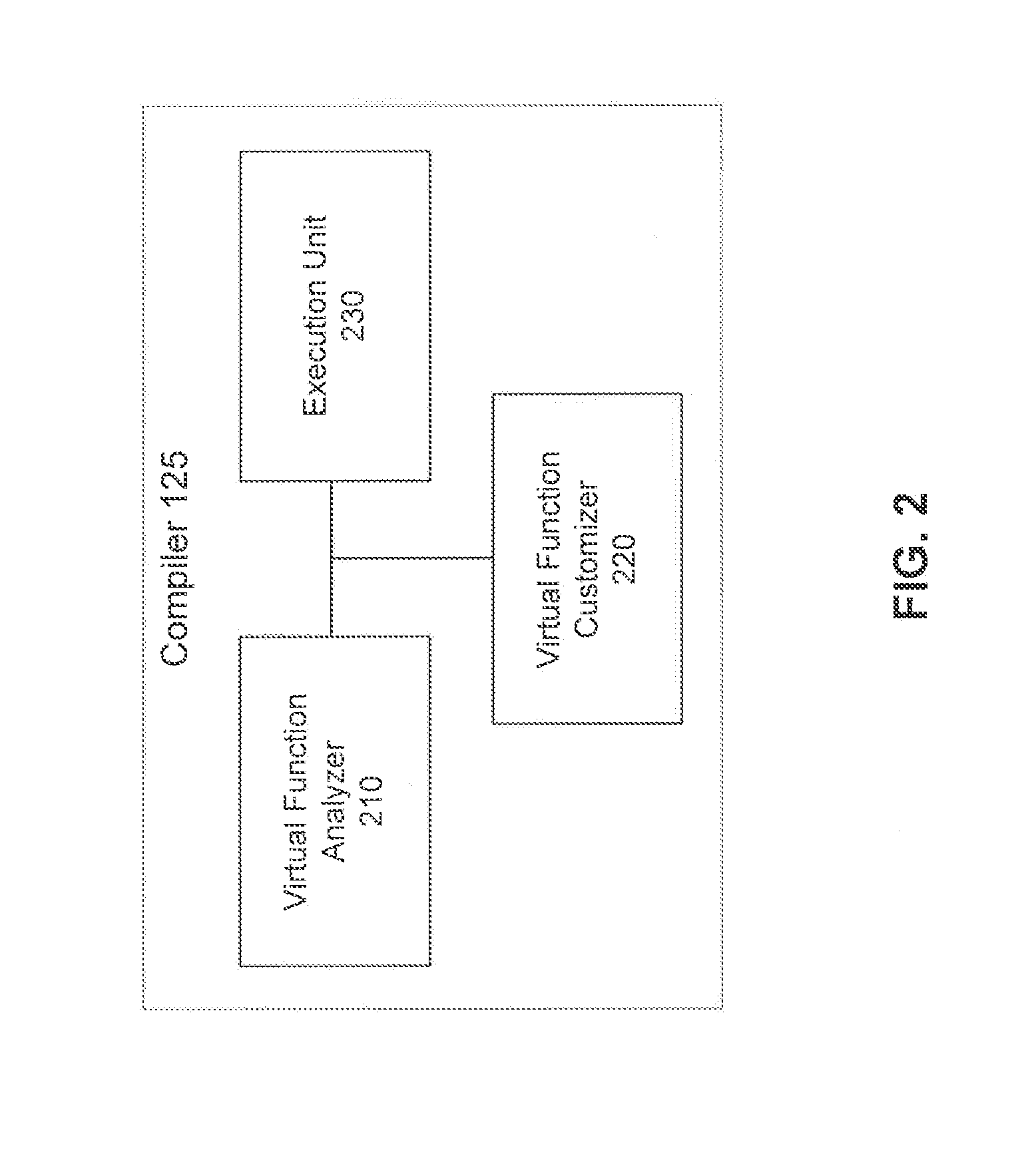

Optimizing object oriented programs using limited customization

A capability for limited customization that utilizes existing virtual dispatch table technology and allows selective customization is provided. Such a capability combines the use of virtual dispatch tables with both customized and non-customized code to reduce, or even eliminate, over-customization. Further, such a capability may employ a runtime system that decides which methods to customize based on several factors including, but not limited to, the size of a class hierarchy, the amount of available space for compiled code, and the amount of available time for compilation.
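A rough sketch of how virtual dispatch tables can mix customized and shared code follows. Everything in it is illustrative rather than taken from the patent: `compile_generic`, `compile_customized`, `build_vtables`, and the two knobs `code_budget` and `max_hierarchy` are hypothetical names, and the selection rule (customize in class order until the budget runs out) is a simplifying assumption standing in for the runtime's decision factors.

```python
from typing import Callable, Dict, List

Method = Callable[[dict], str]


def compile_generic(method: str) -> Method:
    """One shared body for all classes; inspects the receiver at call time."""
    return lambda obj: f"generic {method} for {obj['cls']}"


def compile_customized(method: str, cls: str) -> Method:
    """A body specialized for one receiver class (stands in for optimized code)."""
    return lambda obj: f"custom {method}@{cls}"


def build_vtables(
    classes: List[str],
    methods: List[str],
    code_budget: int,    # hypothetical: how many customized bodies fit in code space
    max_hierarchy: int,  # hypothetical: skip customization for larger hierarchies
) -> Dict[str, Dict[str, Method]]:
    generic = {m: compile_generic(m) for m in methods}
    allow = len(classes) <= max_hierarchy
    vtables: Dict[str, Dict[str, Method]] = {}
    for cls in classes:
        vtable: Dict[str, Method] = {}
        for m in methods:
            if allow and code_budget > 0:
                vtable[m] = compile_customized(m, cls)
                code_budget -= 1
            else:
                vtable[m] = generic[m]  # entry shares the non-customized code
        vtables[cls] = vtable
    return vtables


def dispatch(vtables: Dict[str, Dict[str, Method]], obj: dict, method: str) -> str:
    # The call site is identical whether the entry is customized or shared.
    return vtables[obj["cls"]][method](obj)
```

Because callers always go through the table, the runtime can choose per entry between a specialized body and the shared one without changing any call site.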

Owner:GOOGLE LLC

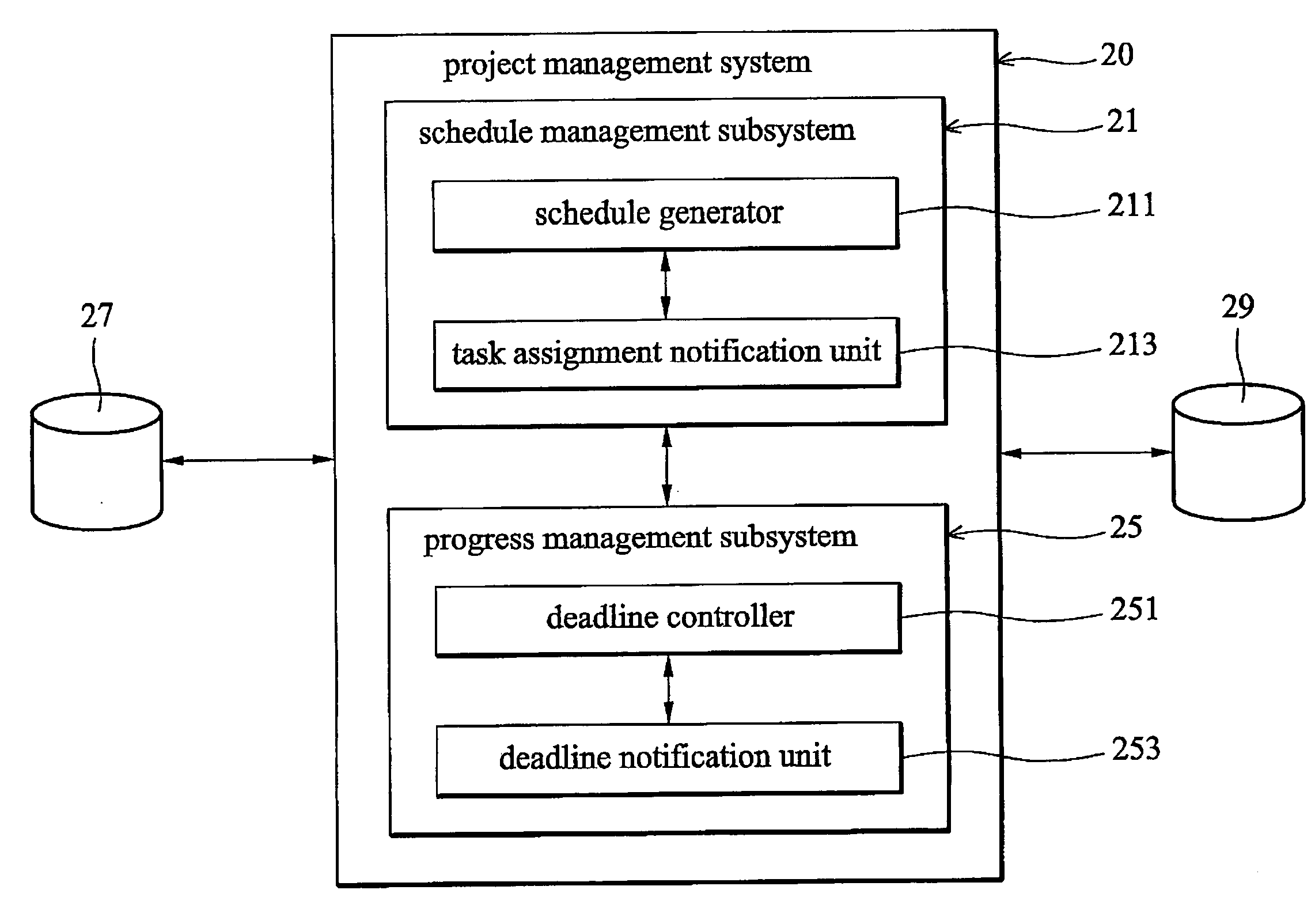

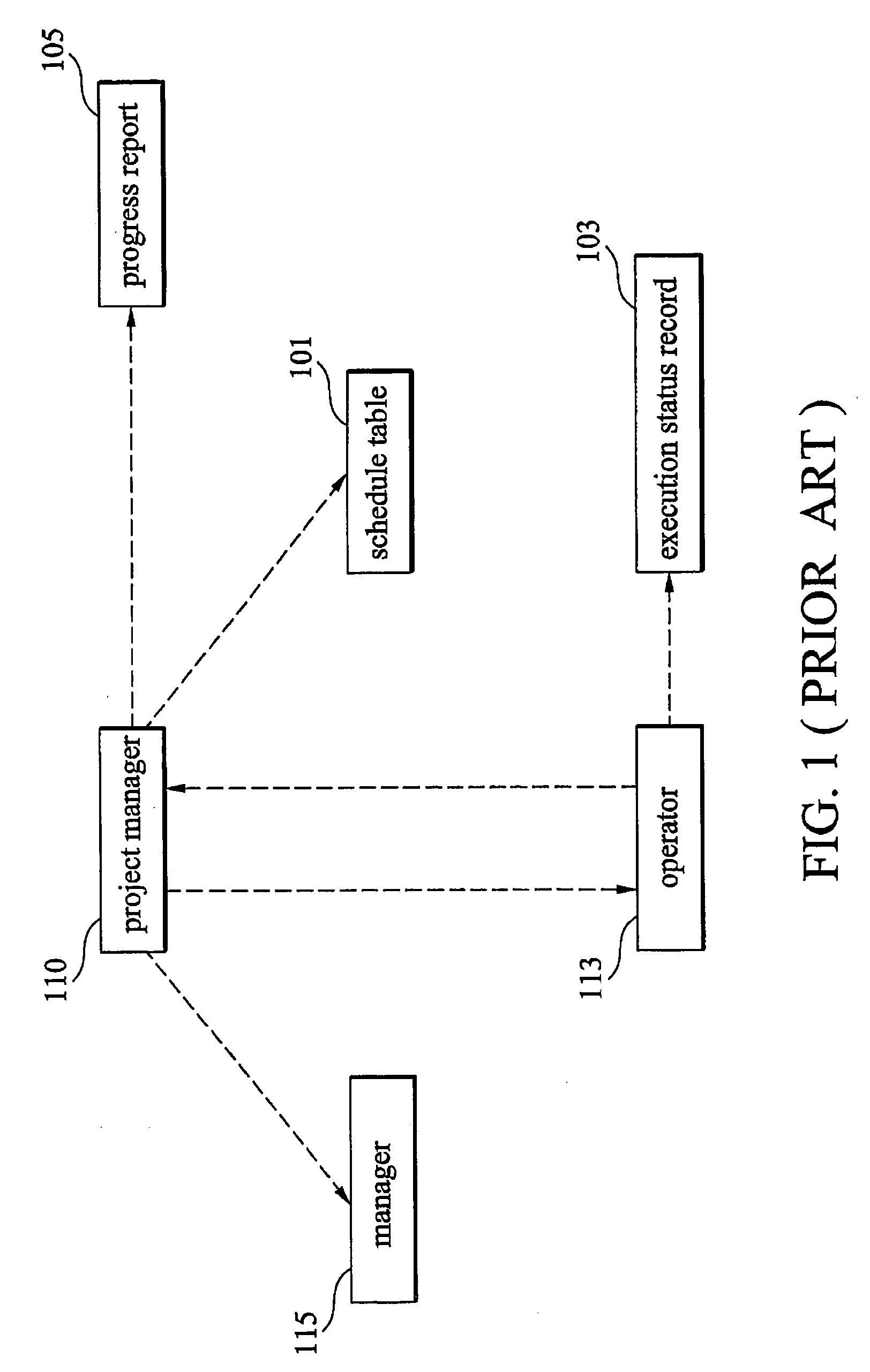

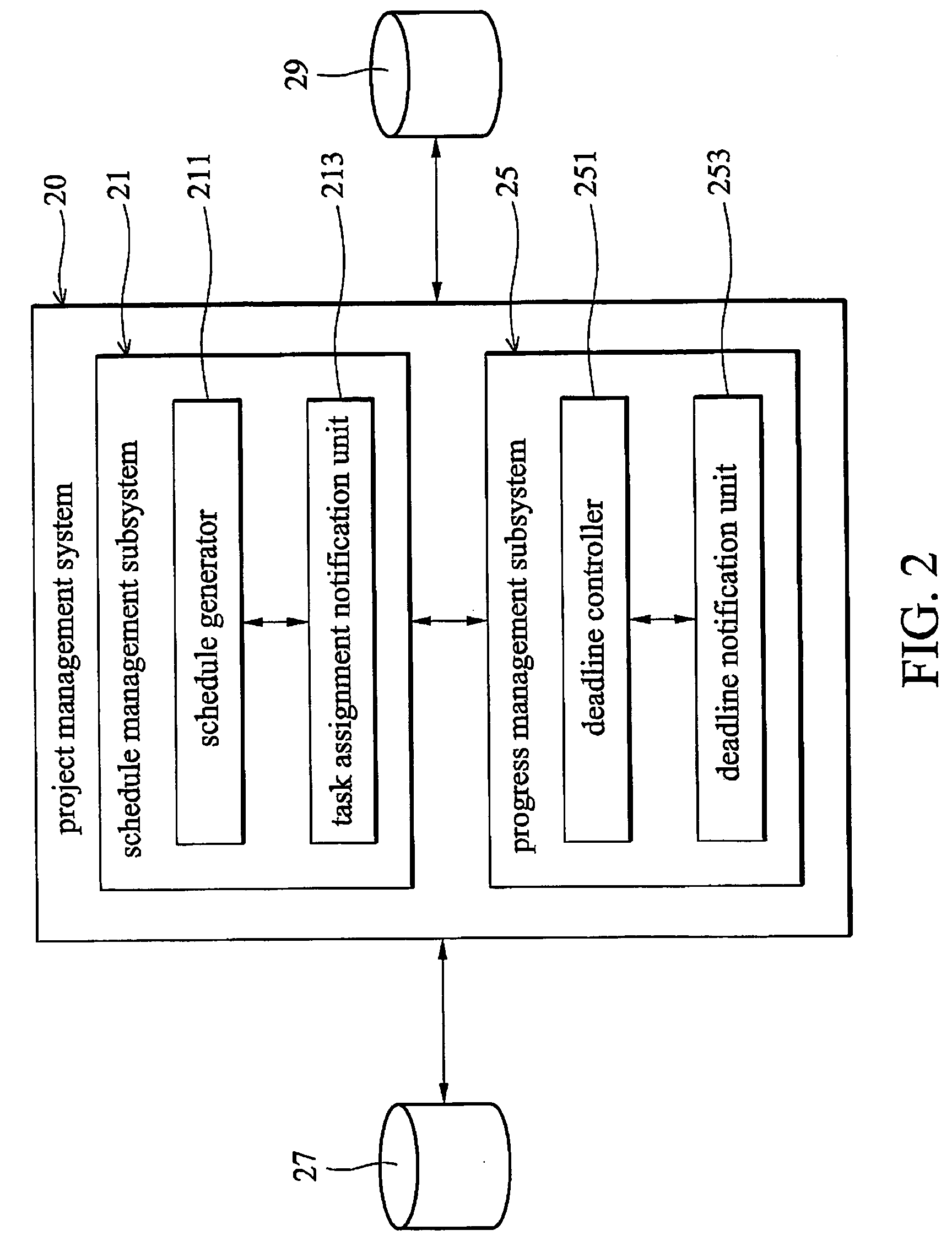

Project management system and method therefor

Inactive | US20060178921A1 | Low cost | Reduce transfer | Multiprogramming arrangements | Resources | Control table | Computer science

A project management system is disclosed. The project management system contains a schedule management subsystem and a progress management subsystem. The schedule management subsystem contains a schedule generator and a task assignment notification unit. The schedule generator generates a schedule table from project management information and task status information. The task assignment notification unit sends task assignment notices in accordance with the schedule table. The progress management subsystem, in communication with the schedule management subsystem, contains a task deadline controller and a deadline notification unit. The task deadline controller establishes a deadline control table in accordance with the task duration data. The deadline notification unit sends a deadline notice in accordance with the task deadline control table.

Owner:TAIWAN SEMICON MFG CO LTD

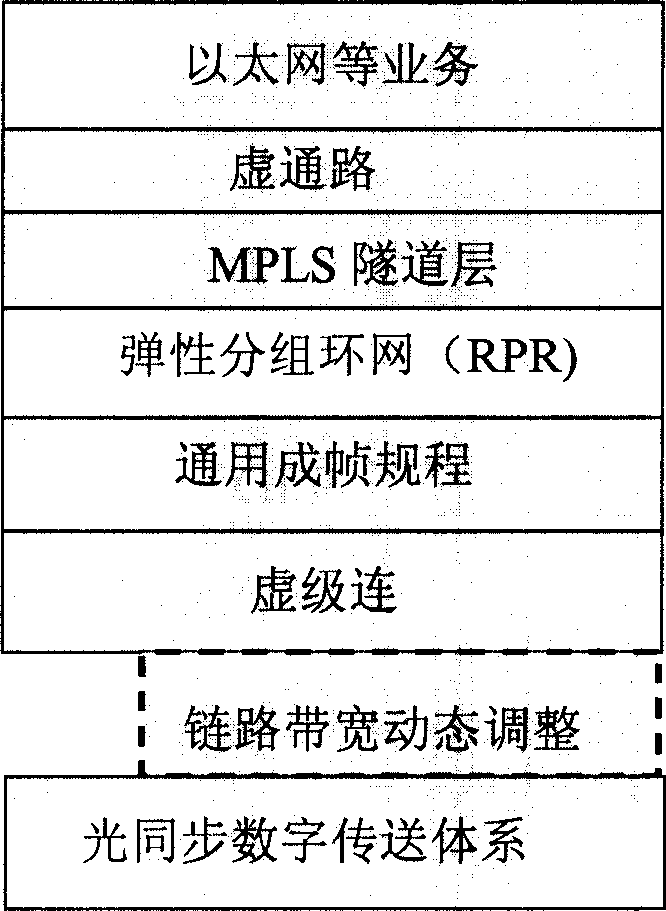

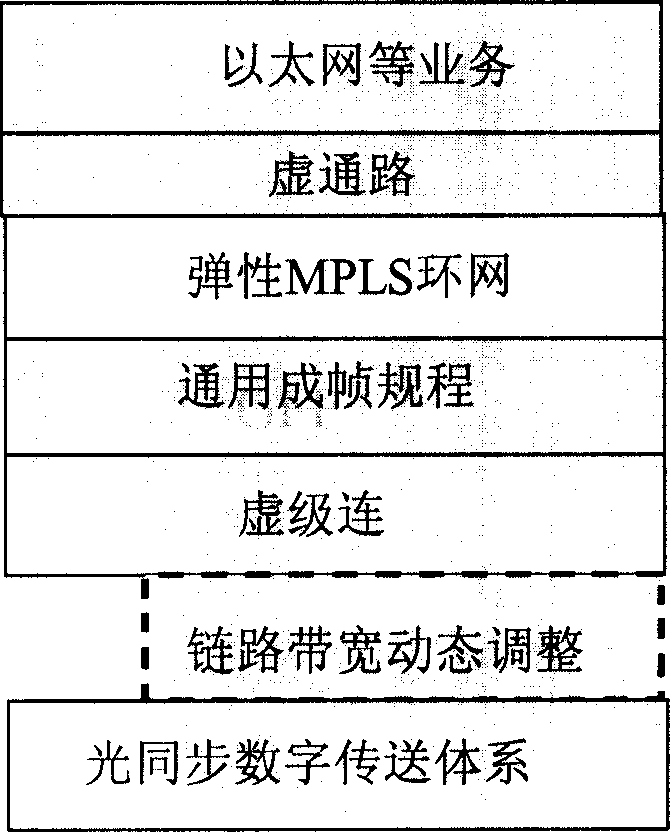

Ring net and method for realizing service

Inactive | CN1697416A | Improve efficiency | Easy to handle | Loop networks | Networks interconnection | Label switching | Multiple node

The ring network includes multiple nodes and physical links. The physical links are coupled to each node and carry each node's data over label-switched paths. Nodes send and receive data encapsulated in the standard Multiprotocol Label Switching (MPLS) frame format. Based on this ring network, the method of implementing services includes the following steps: building a dispatch list of label-switched paths at each node; the service source node determining the service destination node from the dispatch list; dispatching the service onto the ring network according to a prearranged algorithm; multiplexing services from different nodes over the same physical channel as label-switched paths; and dispatching the service off the ring network at the service destination node.

Owner:HUAWEI TECH CO LTD

Asynchronous concurrent processing method

Inactive | CN101170507A | Does not affect other operations | Quick response | Data switching networks | Dispatch table | Data bank

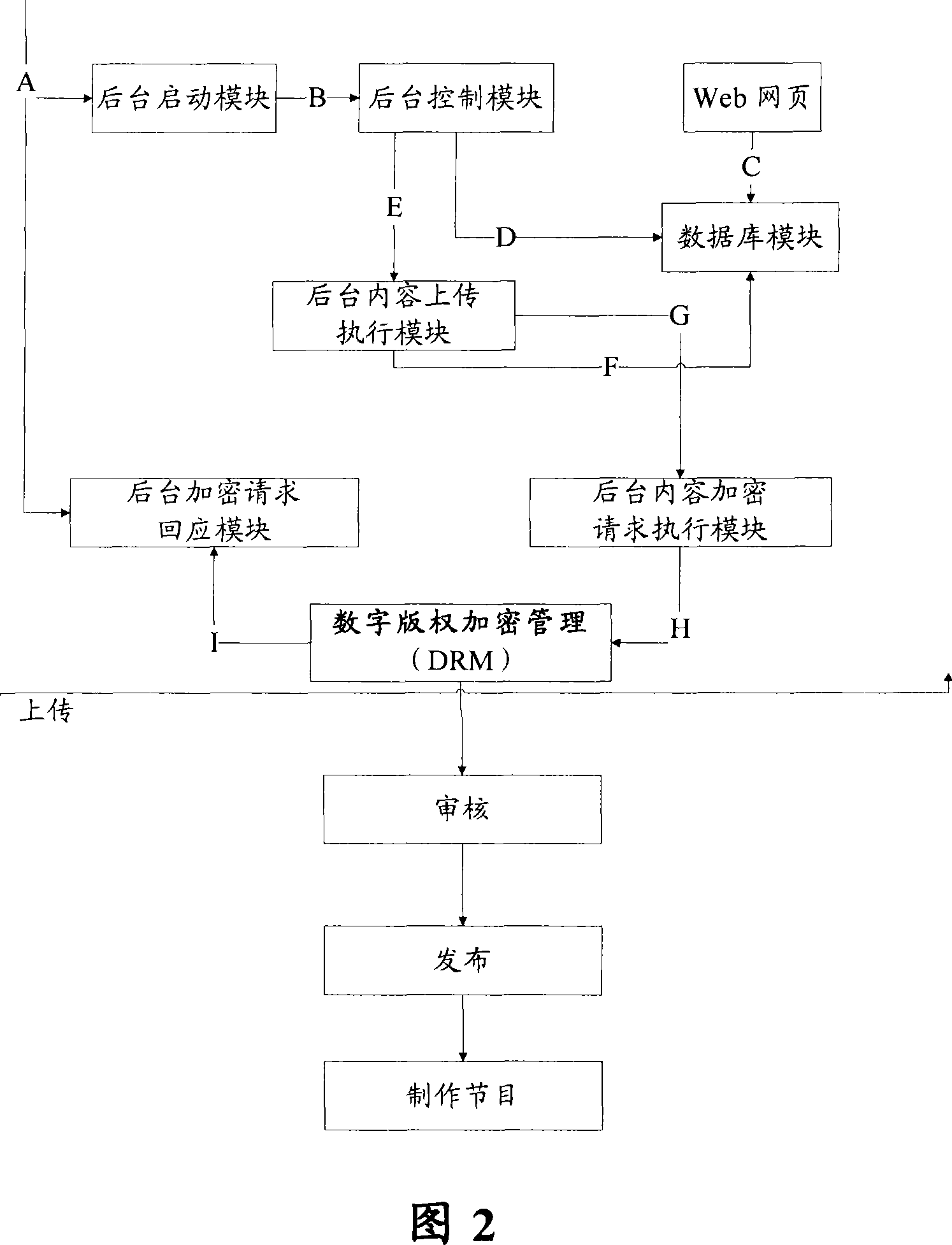

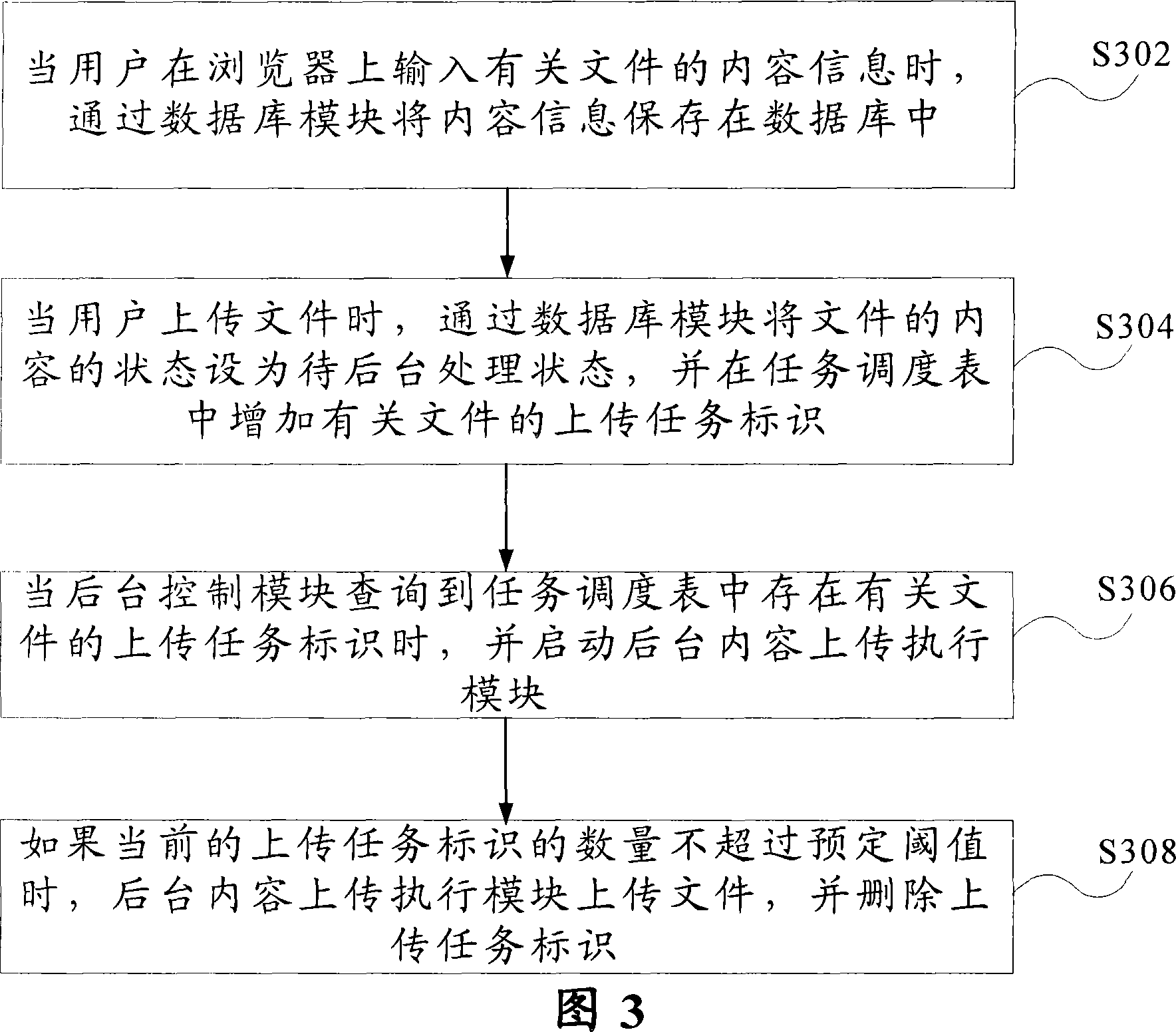

The invention provides an asynchronous, concurrent processing method for uploading files in batches through a content management system. The method includes the following steps. Step 1: when a user enters the content information for a file in a browser, the content information is saved in a database via a database module. Step 2: when the user uploads the file, the status of the file content is set to waiting for background processing, and an upload task indicator for the file is added to a task scheduling table. Step 3: when a background control module finds the file's upload task indicator in the task scheduling table, it starts a background content upload execution module. Step 4: if the number of current upload task indicators does not exceed a preset threshold, the background content upload execution module uploads the files and deletes the upload task indicators.
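The four steps can be sketched as a small in-memory model. This is only an illustration under stated assumptions: the `ContentManager` class, its method names, and the status strings are hypothetical; the background control module is modeled as a plain polling function rather than a real thread; and the behavior when the threshold is exceeded (here, simply deferring) is not specified in the abstract.

```python
from typing import Dict, List


class ContentManager:
    def __init__(self, threshold: int) -> None:
        self.threshold = threshold
        self.database: Dict[str, dict] = {}  # file id -> content info and status
        self.task_table: List[str] = []      # task scheduling table of upload task indicators
        self.uploaded: List[str] = []

    def save_content_info(self, file_id: str, info: str) -> None:
        # Step 1: persist the content information entered by the user.
        self.database[file_id] = {"info": info, "status": "draft"}

    def request_upload(self, file_id: str) -> None:
        # Step 2: mark the content as waiting and queue an upload task indicator.
        self.database[file_id]["status"] = "waiting"
        self.task_table.append(file_id)

    def background_poll(self) -> int:
        # Step 3: the background control module looks for pending indicators.
        if not self.task_table:
            return 0
        # Step 4: upload only while the indicator count is within the threshold.
        if len(self.task_table) > self.threshold:
            return 0  # assumption: defer; the abstract does not cover this case
        done = 0
        for file_id in list(self.task_table):
            self.uploaded.append(file_id)  # stands in for the real file transfer
            self.database[file_id]["status"] = "uploaded"
            self.task_table.remove(file_id)  # delete the upload task indicator
            done += 1
        return done
```

Since `request_upload` only records an indicator and returns, the user-facing request stays quick and the transfer itself happens whenever the background poll runs.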

Owner:STATE GRID CORP OF CHINA +4


© 2025 PatSnap. All rights reserved.