30 results about "Throughput (business)" patented technology

Throughput is the rate at which a product moves through a production process and is consumed by the end user, usually measured by sales or usage statistics. Most organizations aim to minimize investment in inputs and operating expenses while increasing the throughput of their production systems. Successful organizations seeking to gain market share strive to match throughput to the rate of market demand for their products.

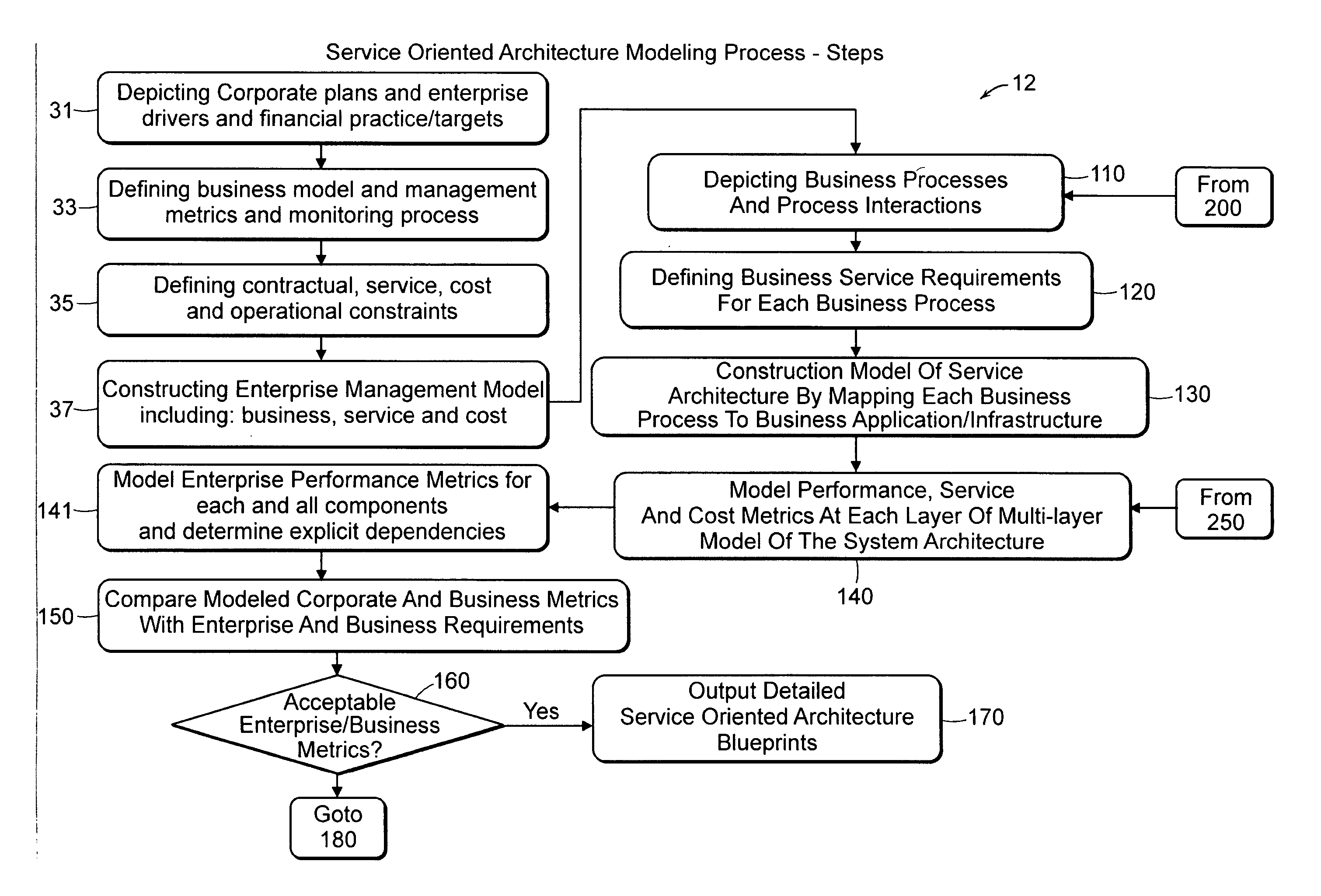

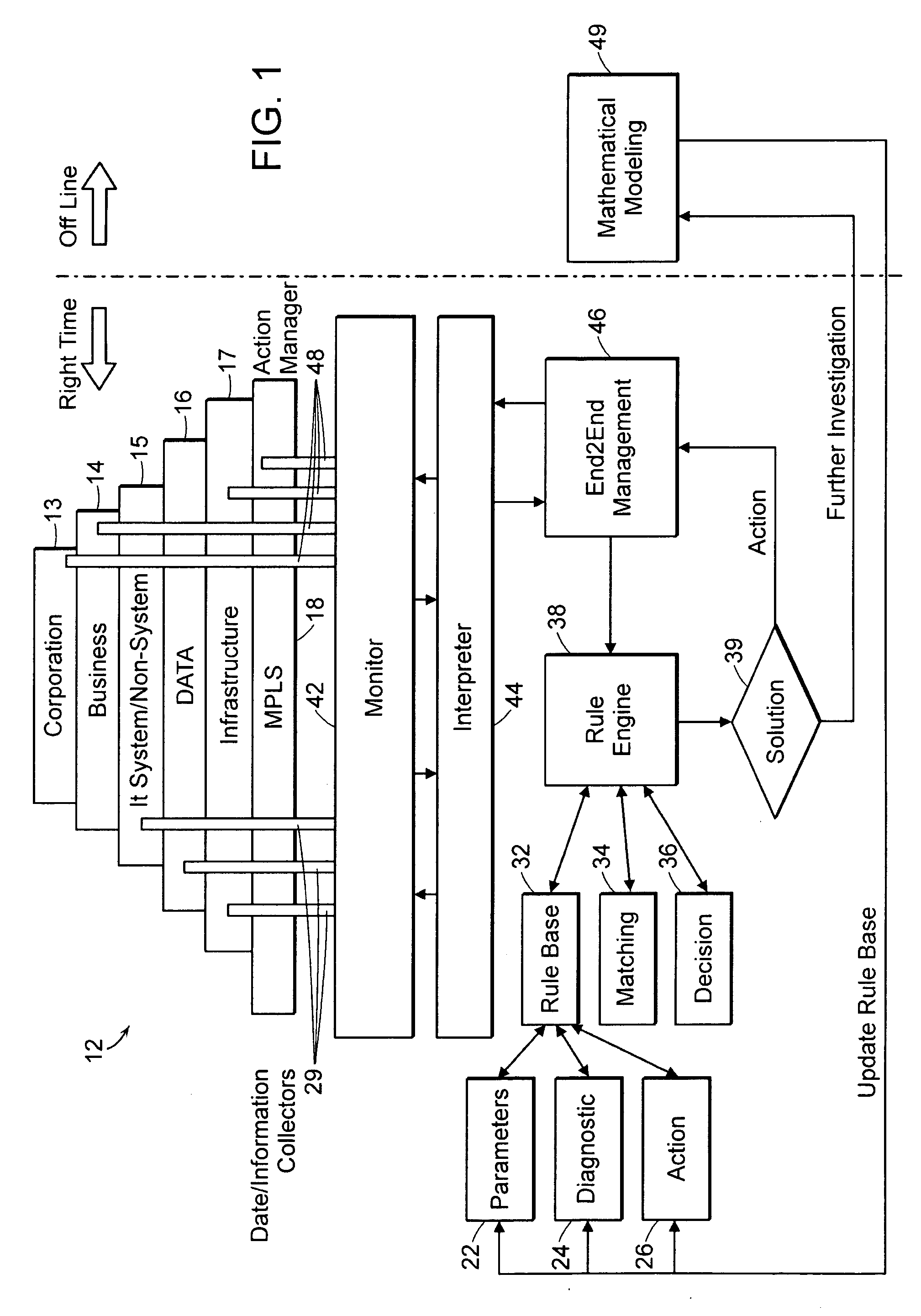

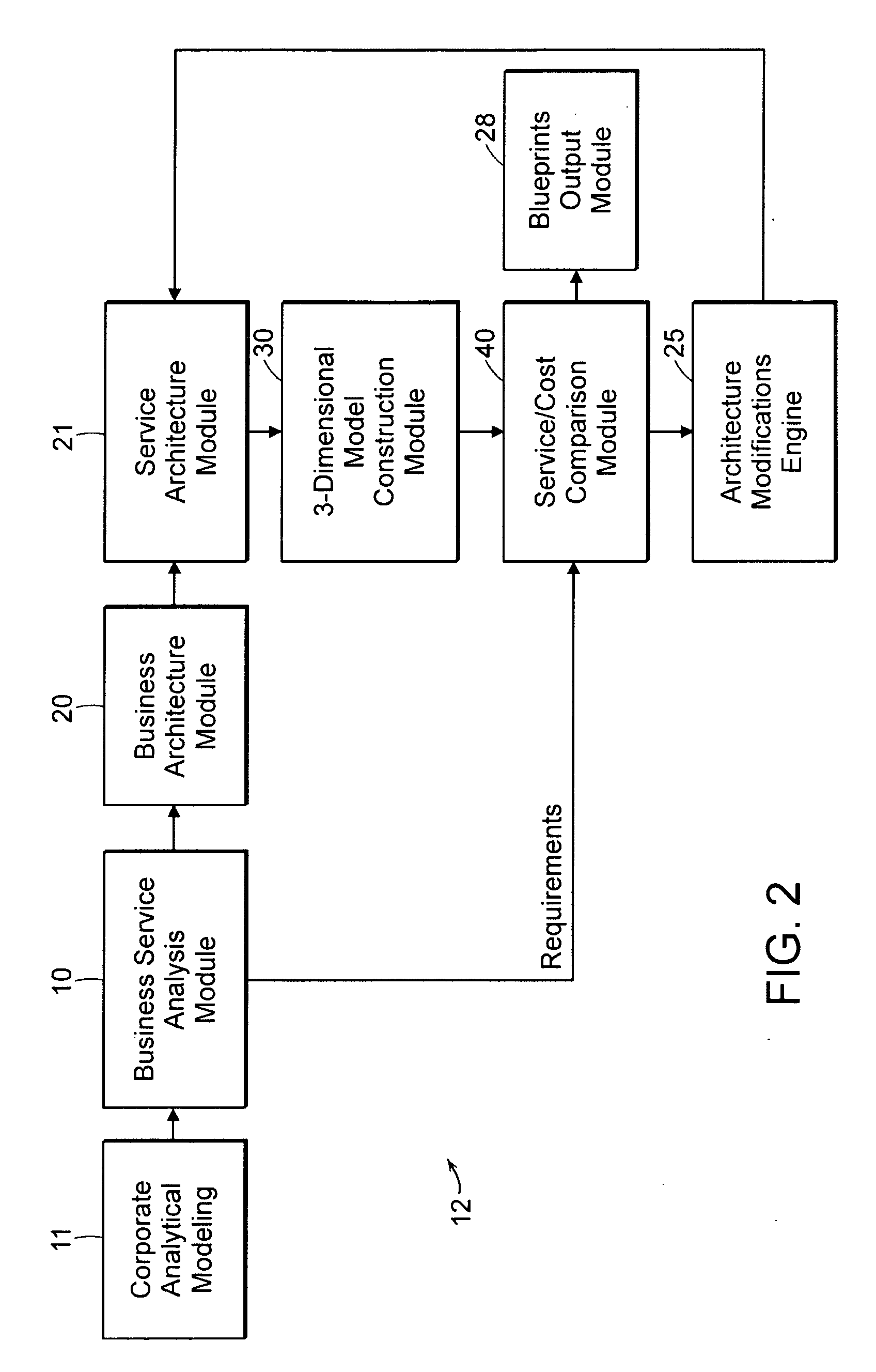

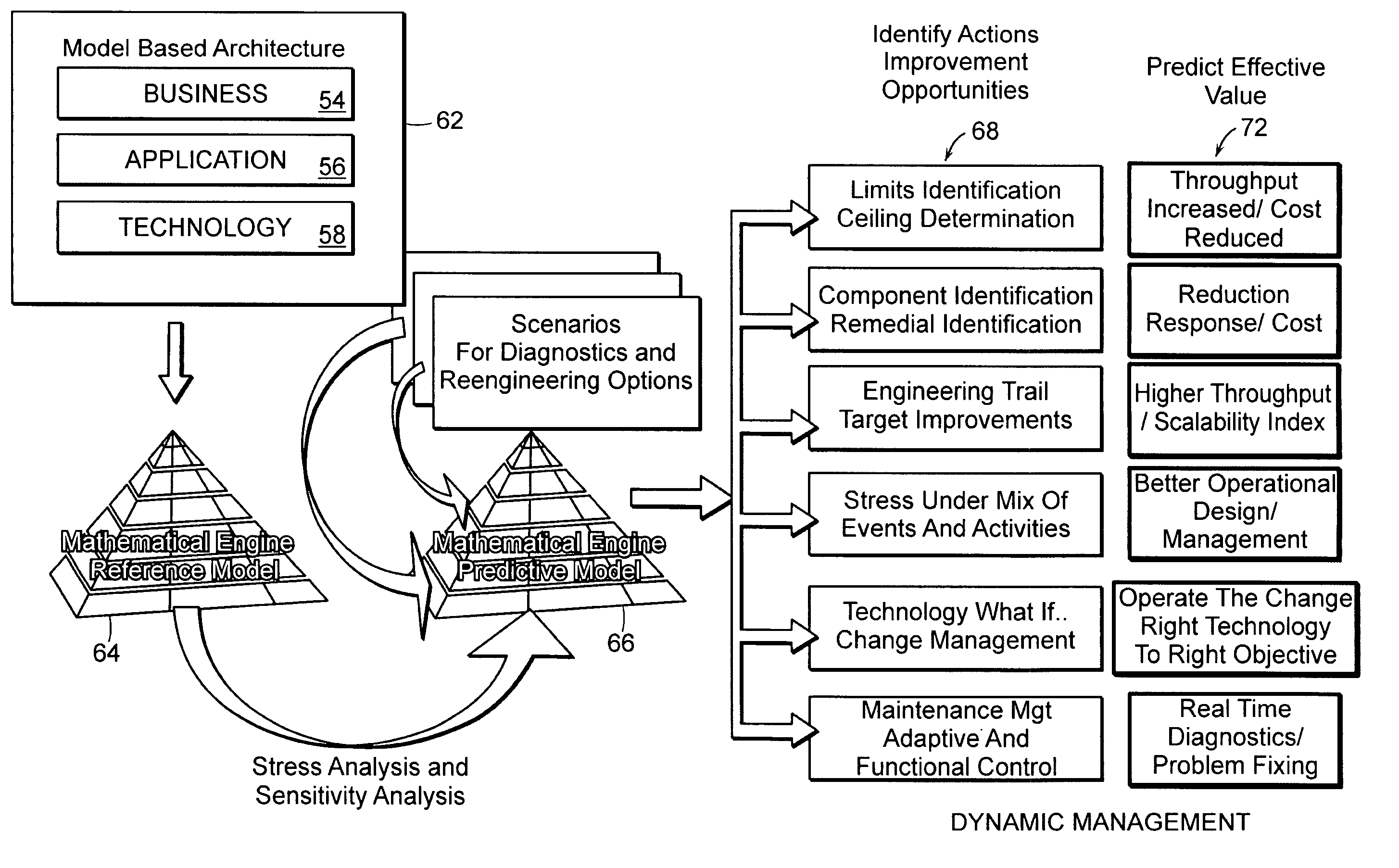

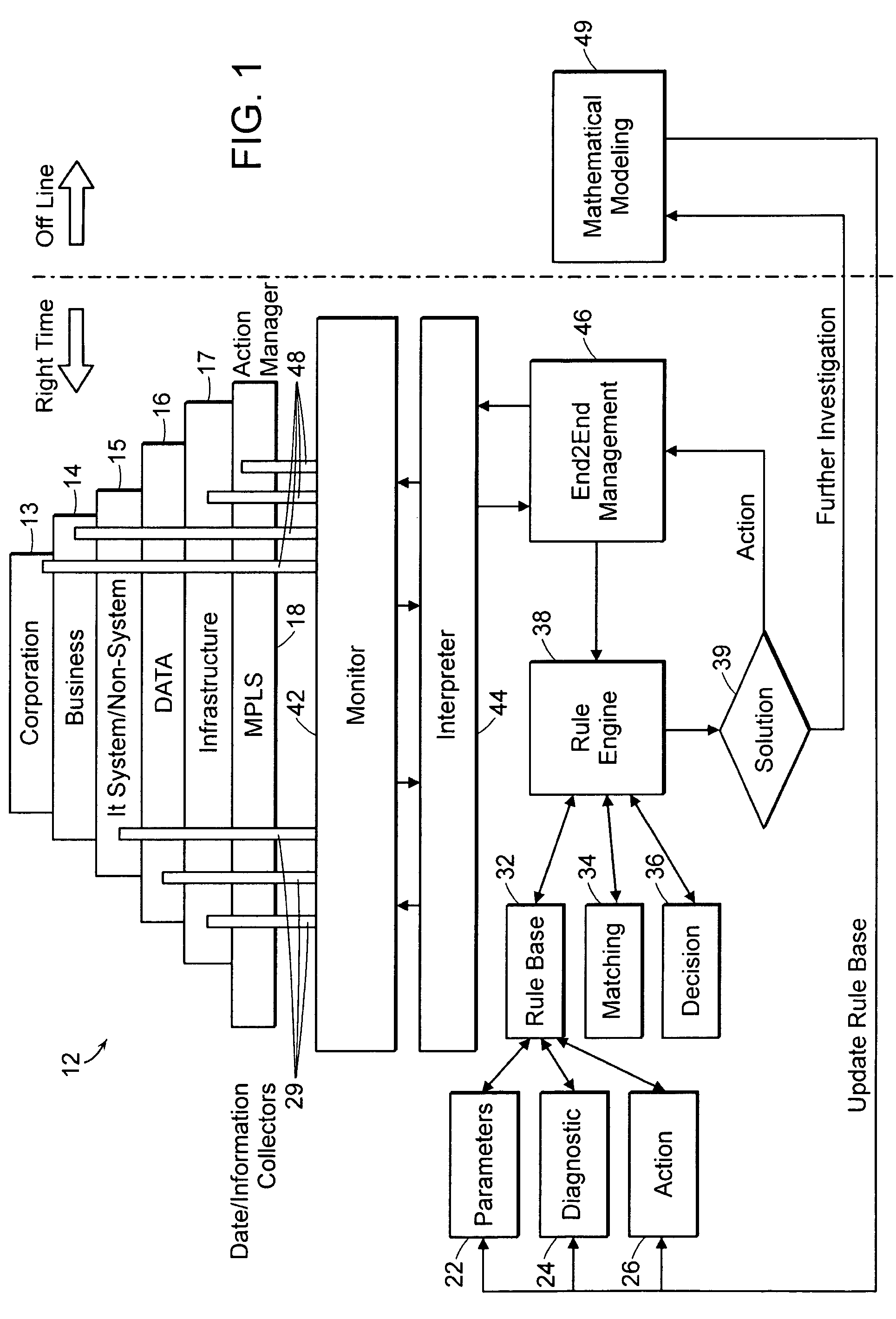

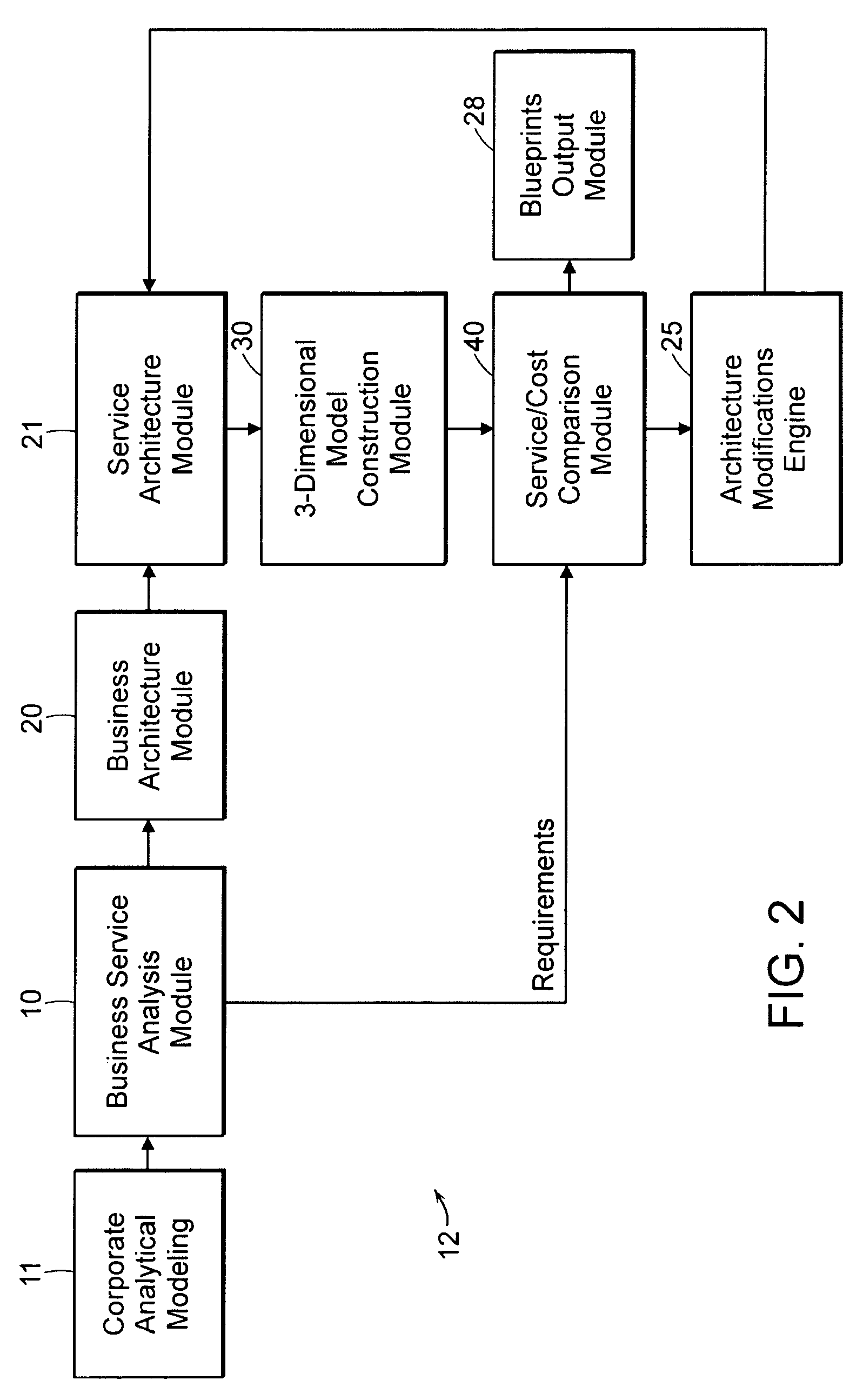

Automated system and method for service and cost architecture modeling of enterprise systems

An automated system and method are provided for system architects to model enterprise-wide architectures of information systems. From an initial model of a proposed system architecture, performance metrics are modeled and compared against a set of user-defined corporate and business requirements. The performance metrics include cost, quality of service and throughput. For unacceptable metrics, modifications to the system architecture are determined and proposed to the system architect. If accepted, the model of the system architecture is automatically modified and modeled again. Once the modeled performance metrics satisfy the corporate and business requirements, a detailed description of the system architecture derived from the model is output. The model of the system architecture also enables a business ephemeris, a precalculated table cross-referencing enterprise situations and remedies, to be formed. A rules engine employs the business ephemeris and provides indications to the enterprise user for optimizing or modifying components of the enterprise system architecture. Offline, a mathematical modeling member provides feedback to further update the business ephemeris and the rules base for the rules engine.

Owner:X ACT SCI INC

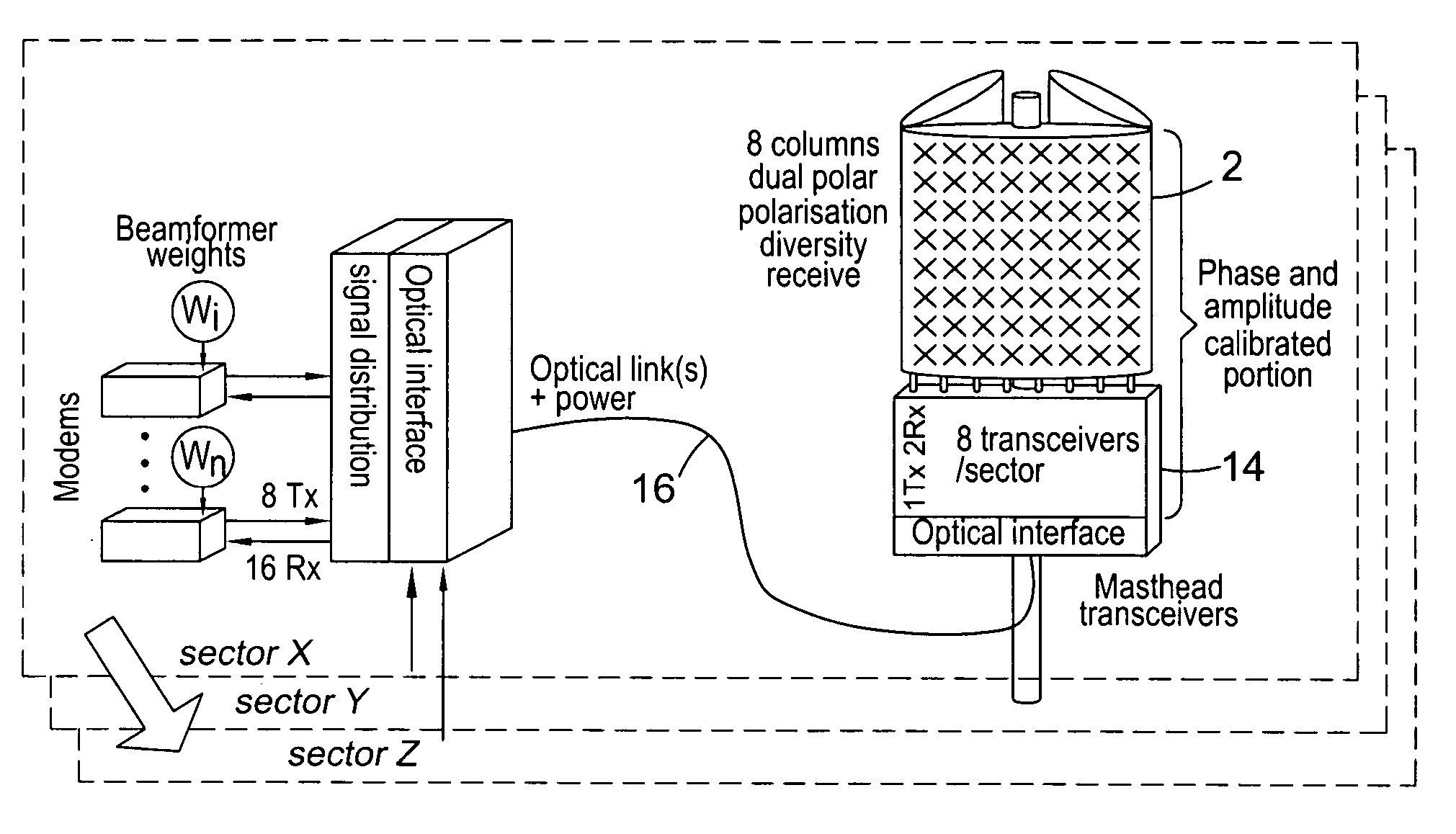

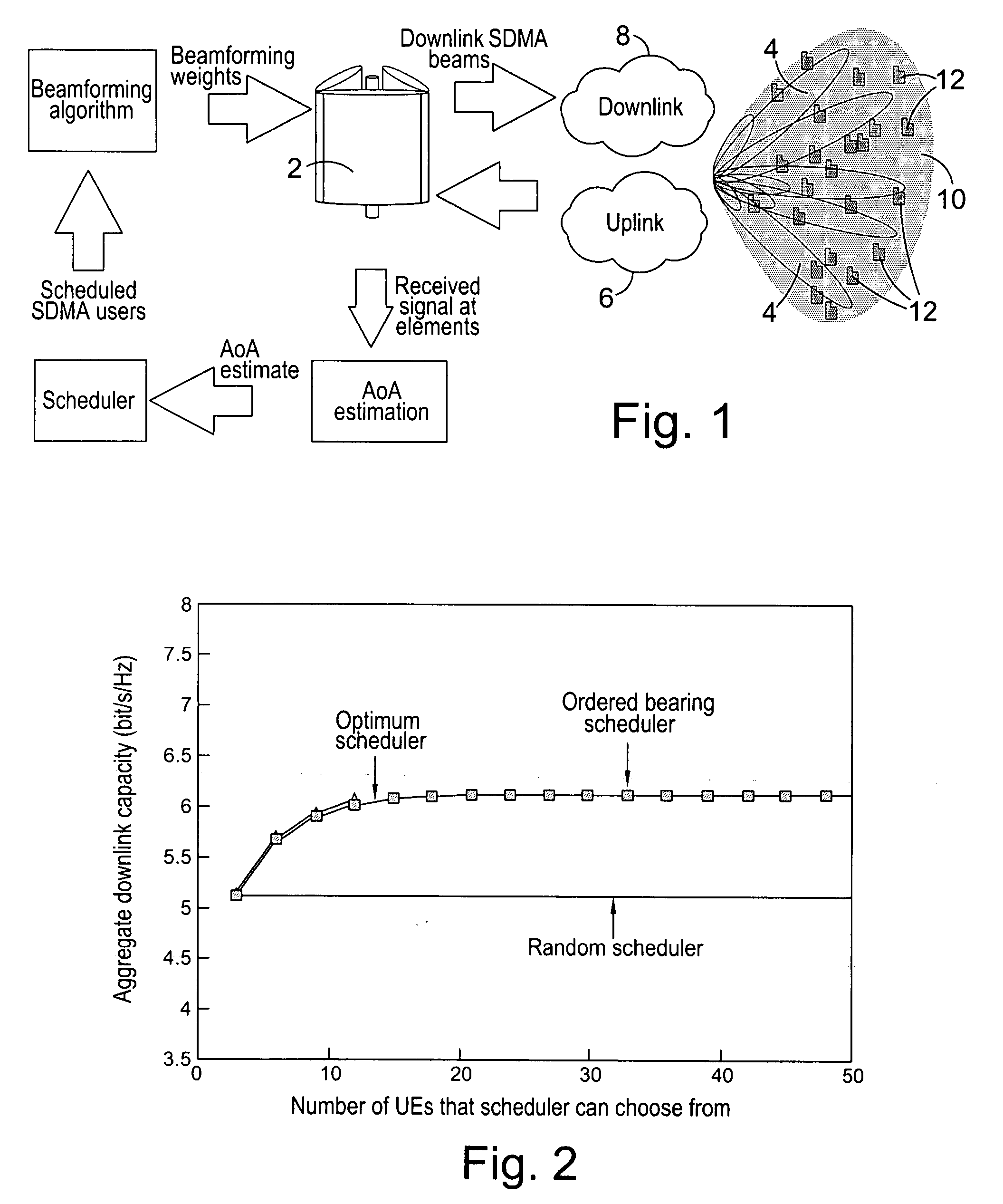

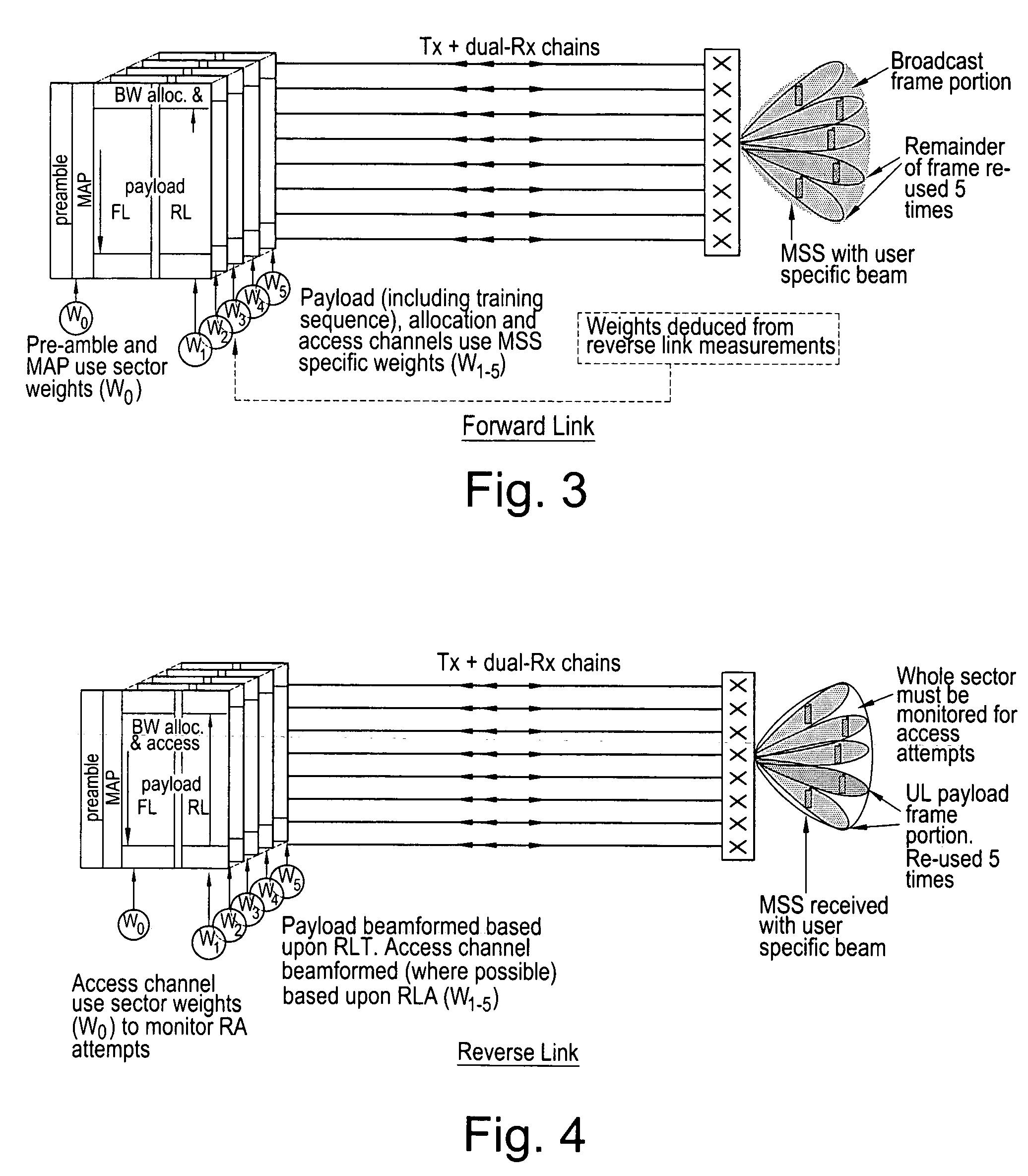

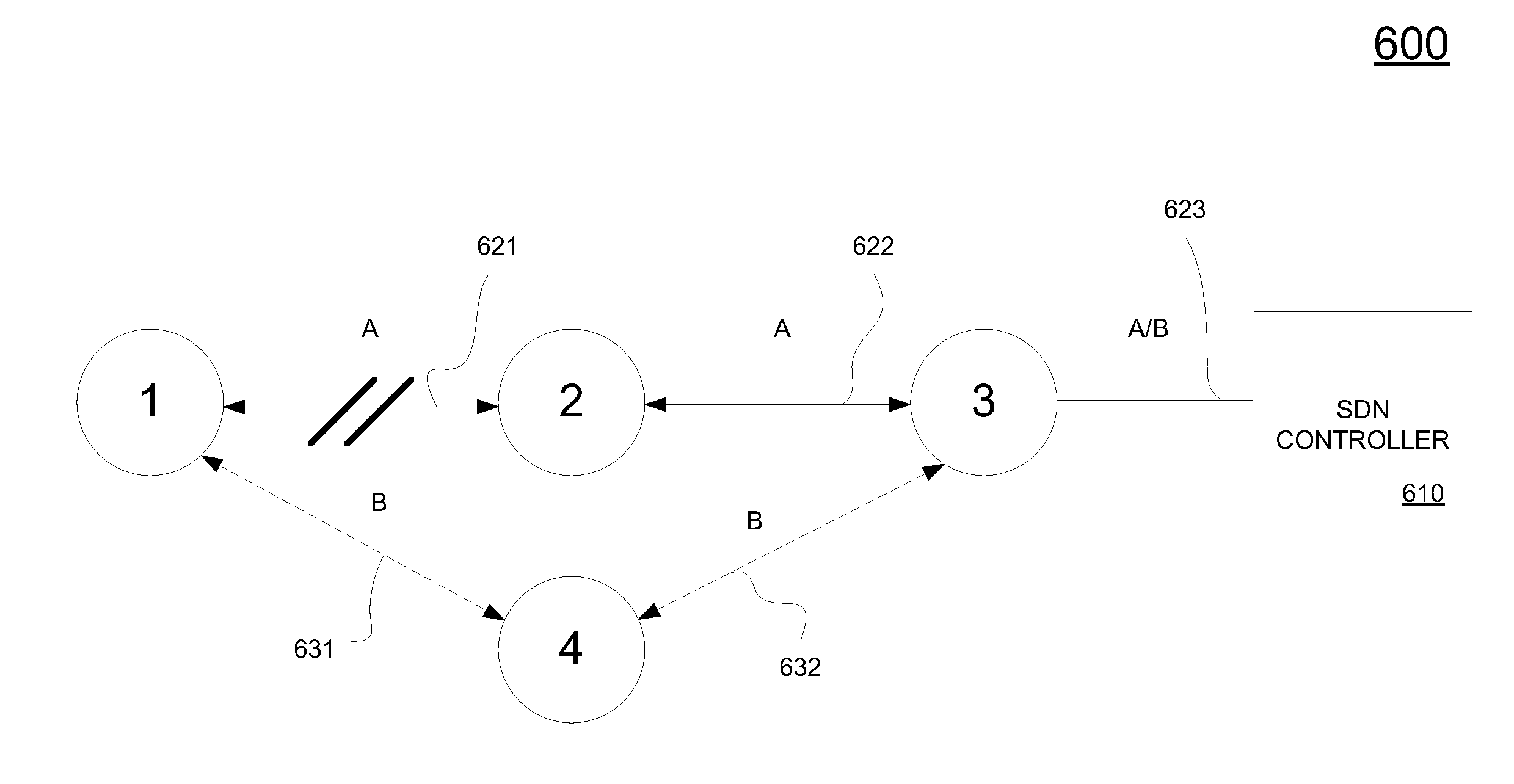

Downlink beamforming for broadband wireless networks

Active | US20060281494A1 | Multiplicative spectral efficiency gain | High traffic | Substation equipment | Radio transmission | Downlink beamforming | Frequency spectrum

Spatial Division Multiple Access (SDMA) offers multiplicative spectral efficiency gains in wireless networks. An adaptive SDMA beamforming technique is capable of increasing the traffic throughput of a sector, as compared to a conventional tri-cellular arrangement, by between 4 and 7 times, depending on the environment. This system uses an averaged covariance matrix of the uplink signals received at the antenna array to deduce the downlink beamforming solution, and is equally applicable to Frequency Division Duplex (FDD) and Time Division Duplex (TDD) systems. A scheduling algorithm enhances the SDMA system performance by advantageously selecting the users to be co-scheduled.

Owner:APPLE INC

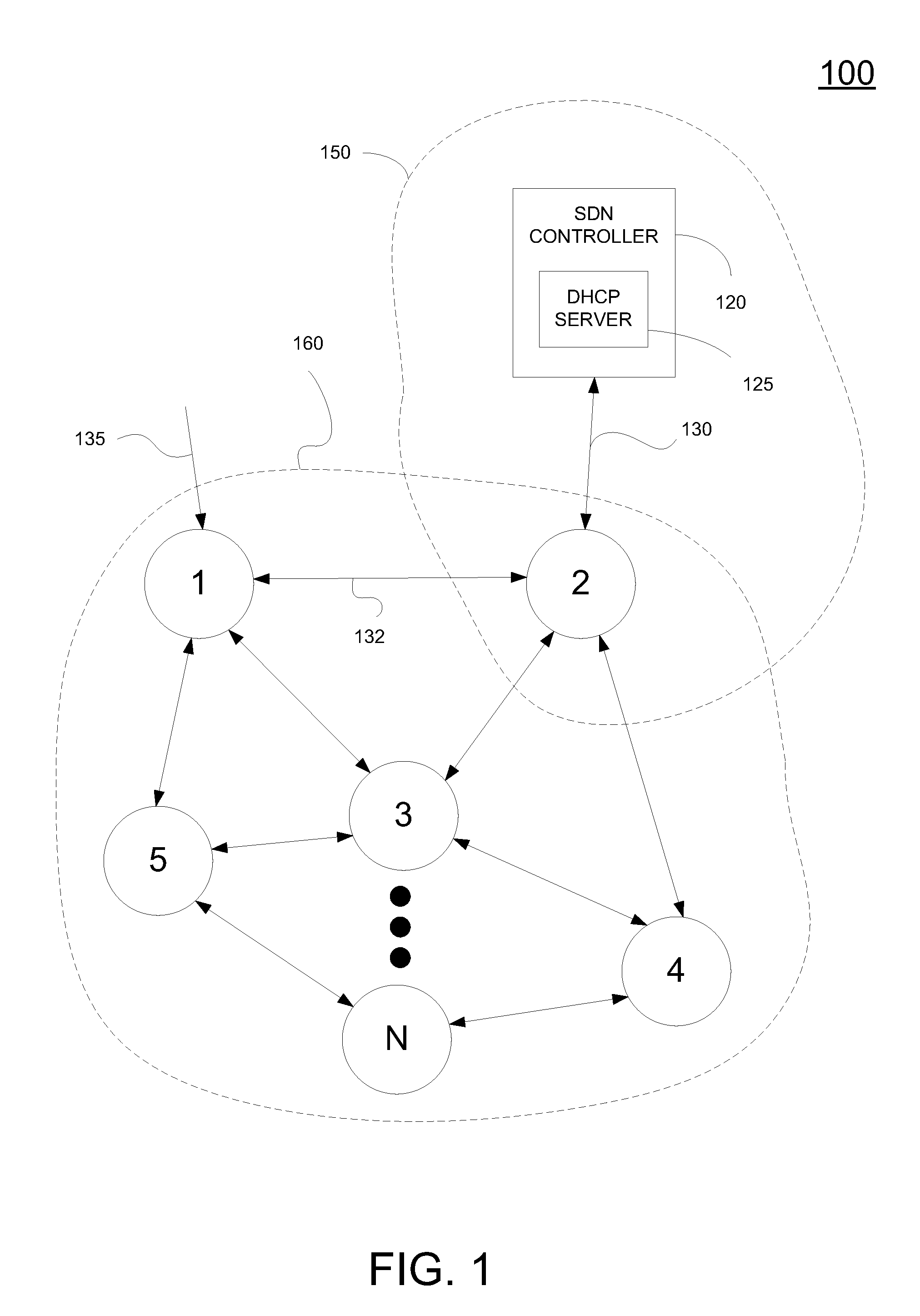

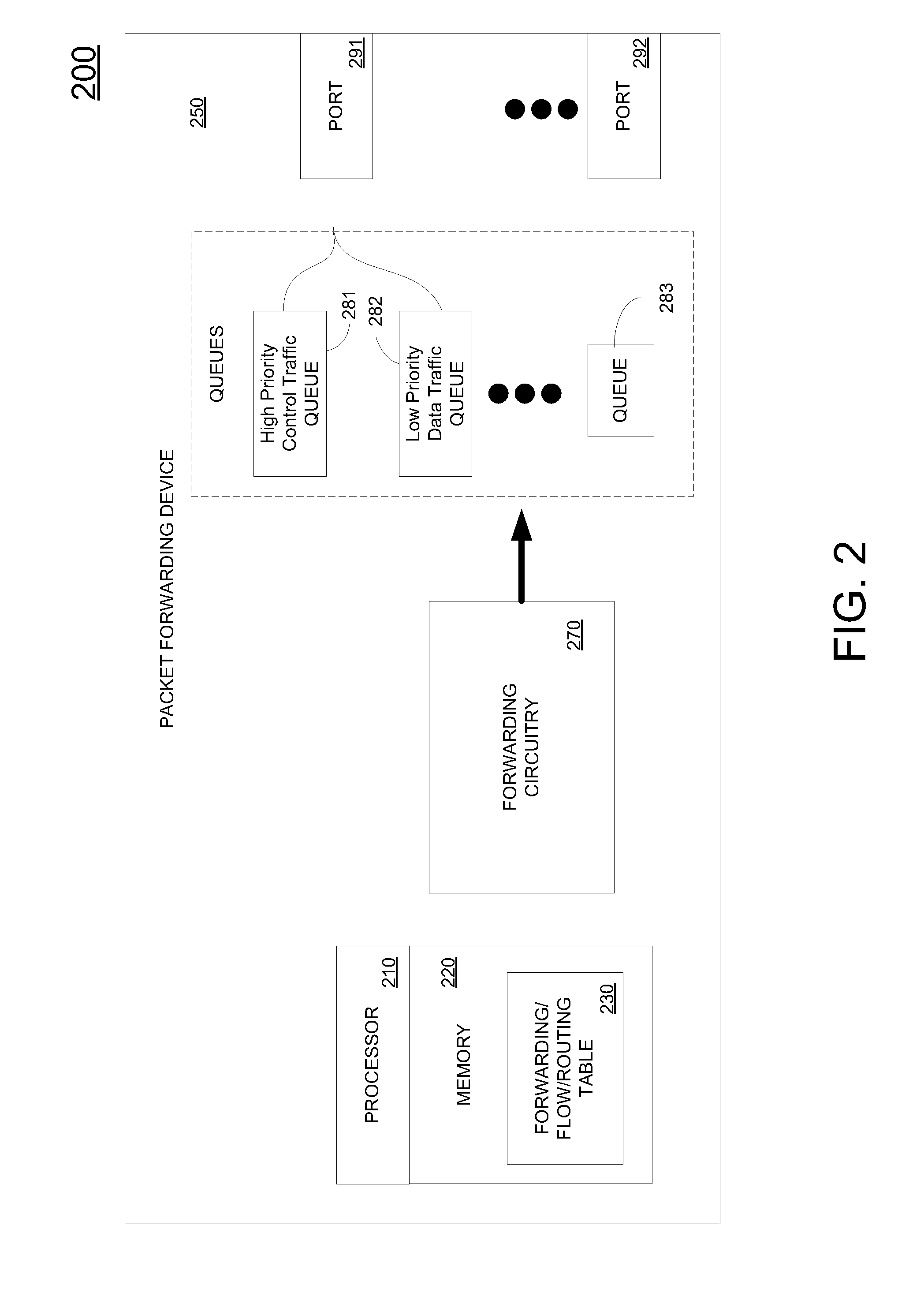

Method and system for providing QOS for in-band control traffic in an openflow network

Active | US20160197839A1 | Guaranteed throughput | Data switching by path configuration | OpenFlow | Network control

A method for guaranteeing control traffic throughput in an in-band network configured for delivering control and data traffic, and considering both local and global views of the communication network. The method includes determining an initial configuration for forwarding first control traffic from the packet forwarding device, wherein the initial configuration comprises a first in-band queue for receiving the first control traffic that is delivered over the control path to the controller via a port in the packet forwarding device, and a first bandwidth reserved for the first queue. The method includes performing handshaking with the controller by sending a request to the controller confirming the initial configuration using a network control protocol, and receiving a response from the controller in association with the request. The method includes confirming or modifying the initial configuration based on the response.

Owner:FUTUREWEI TECH INC

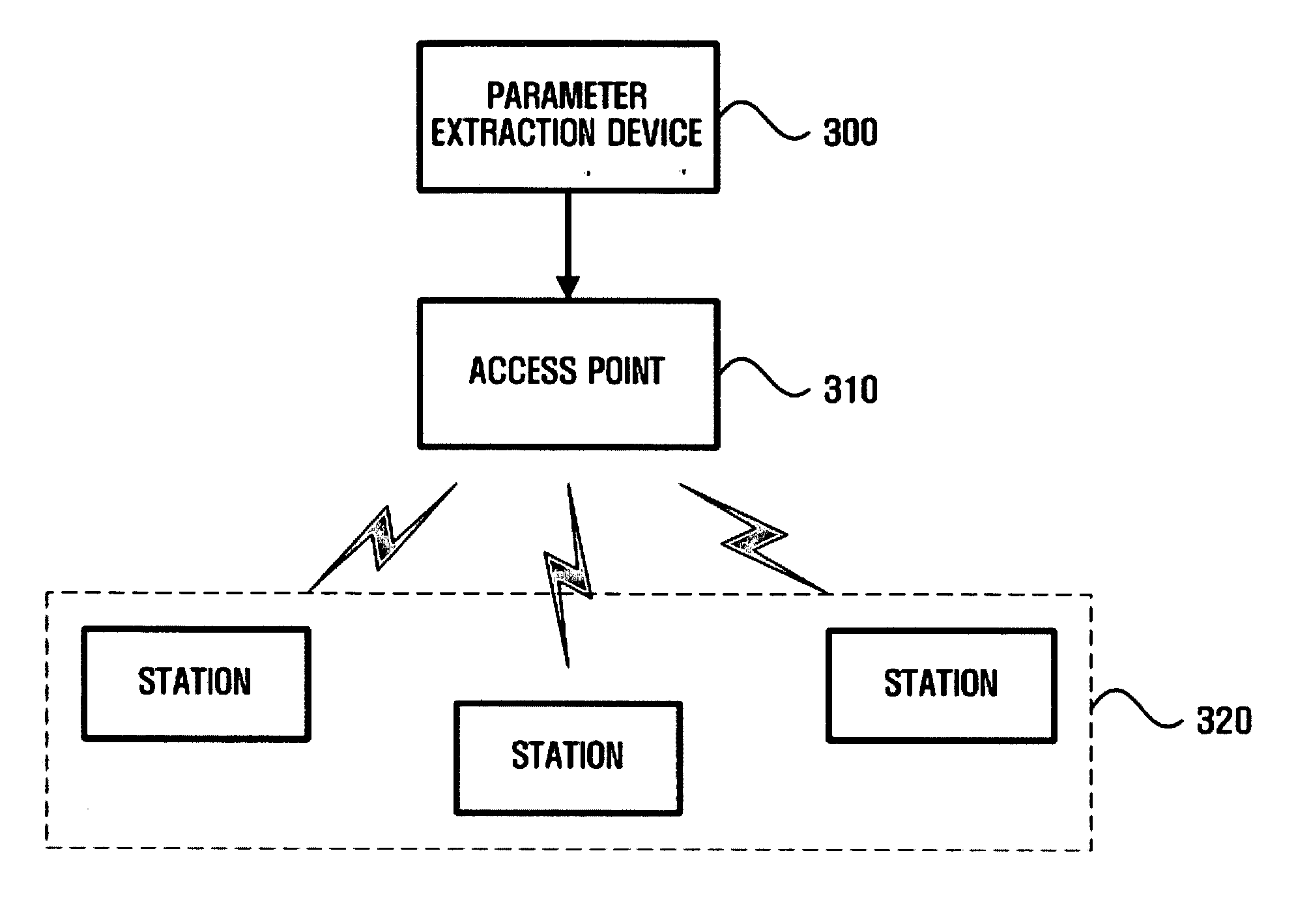

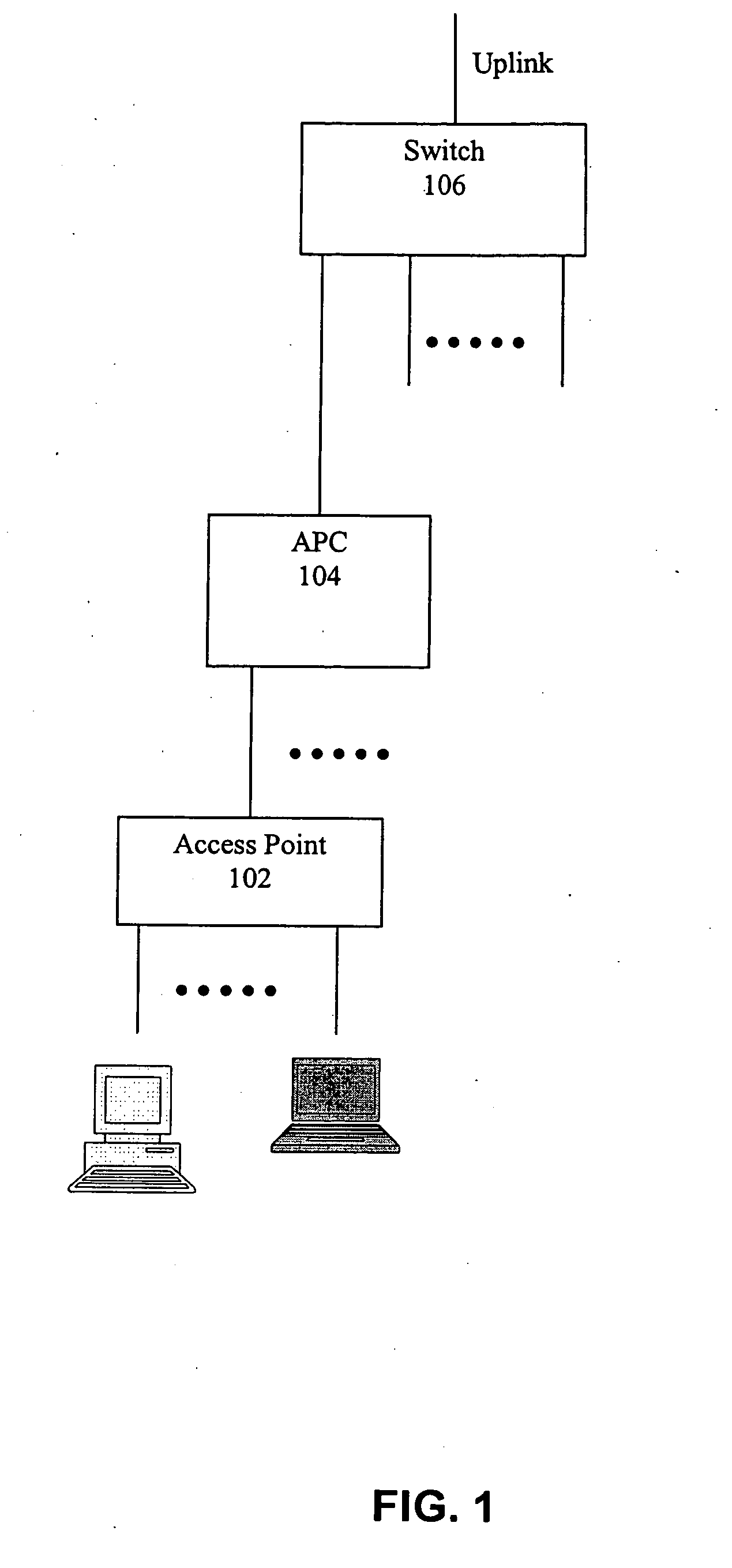

Apparatus and method for providing enhanced wireless communications

Inactive | US20060291402A1 | Convenient wireless communication | Communication throughput | Error prevention | Frequency-division multiplex details | Non real time | Traffic load

An apparatus and method for providing enhanced wireless communications, in which predetermined parameters are obtained by using an experiment in a wireless network environment to decrease traffic backoffs in real-time or increase traffic throughputs in non real-time, and the obtained parameters are used for access points and each station. The apparatus for providing enhanced wireless communications includes a storage unit for storing EDCA parameters and predetermined retrieval information corresponding to the EDCA parameters, the EDCA parameters decreasing traffic backoffs in real-time or increasing traffic throughputs in non real-time, a receiving unit for receiving information including at least one of access categories of data to be transmitted from each station connected to a network and a traffic load in real-time, a parameter retrieval unit for retrieving the EDCA parameters corresponding to the retrieval information selected by referring to the received information, and a transmitting unit for transmitting the retrieved EDCA parameters.

Owner:SAMSUNG ELECTRONICS CO LTD

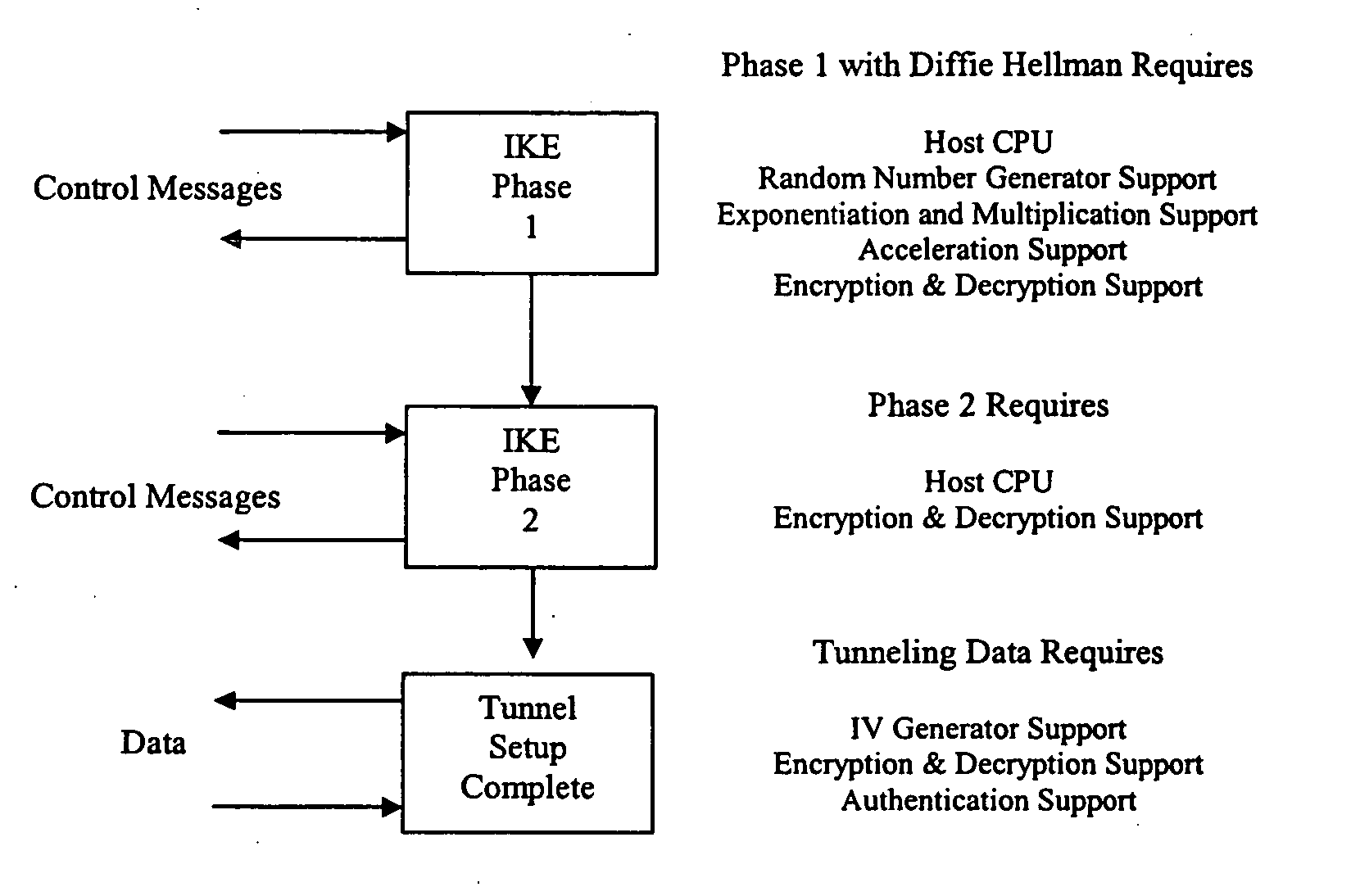

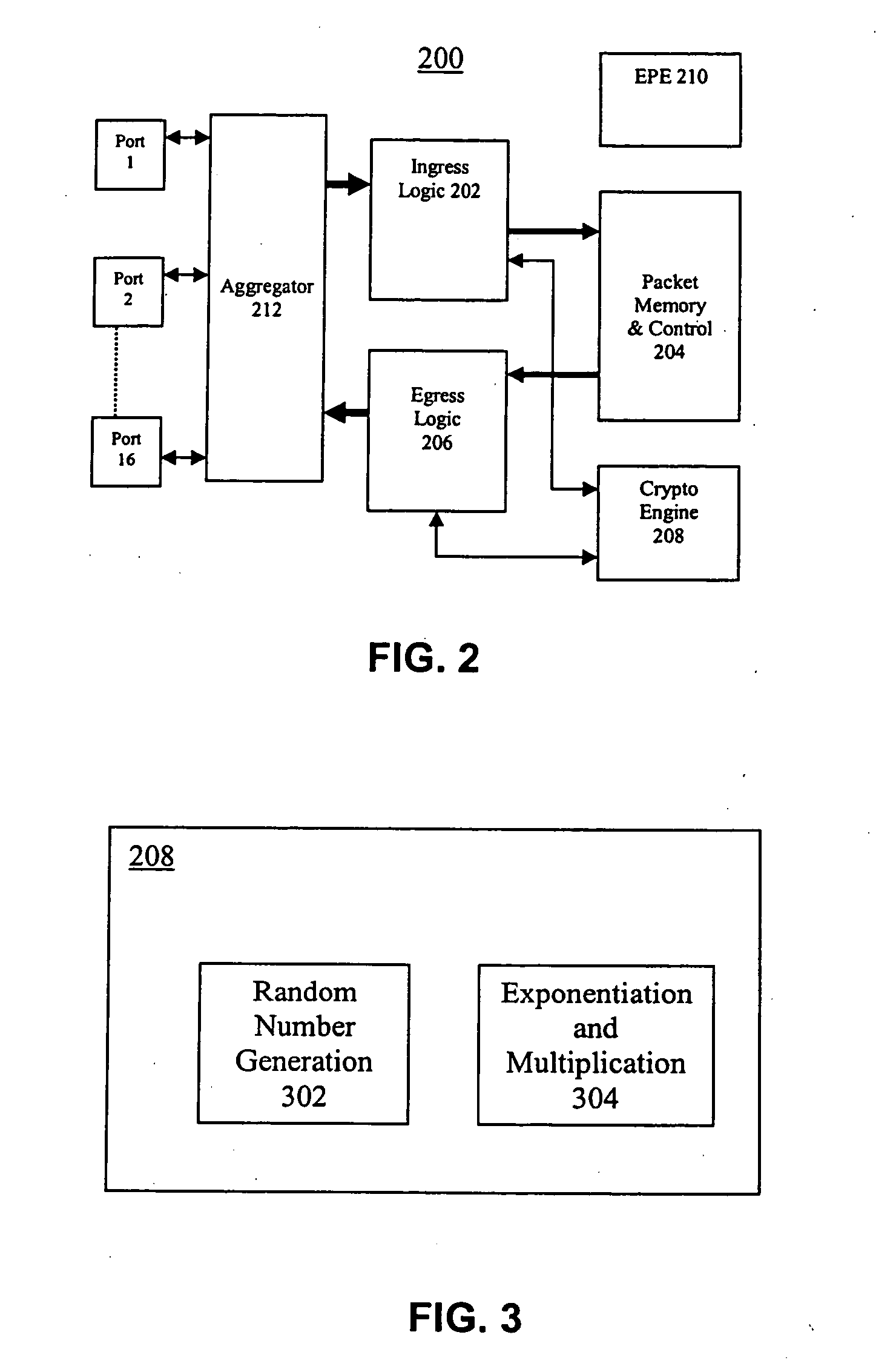

Hardware acceleration for Diffie Hellman in a device that integrates wired and wireless L2 and L3 switching functionality

Inactive | US20050063543A1 | Reduce throughput | Key distribution for secure communication | Network topologies | Extensibility | IPsec

An apparatus provides an integrated single chip solution to solve a multitude of WLAN problems, and especially Switching / Bridging, and Security. In accordance with an aspect of the invention, the apparatus is able to terminate secured tunneled IPSec, L2TP with IPSec, PPTP, SSL traffic. In accordance with a further aspect of the invention, the apparatus is also able to handle computation-intensive security-based algorithms such as Diffie Hellman without significant reduction in traffic throughput. The architecture is such that it not only resolves the problems pertinent to WLAN it is also scalable and useful for building a number of useful networking products that fulfill enterprise security and all possible combinations of wired and wireless networking needs.

Owner:SINETT CORP

Automated system and method for service and cost architecture modeling of enterprise systems

An automated system and method are provided for system architects to model enterprise-wide architectures of information systems. From an initial model of a proposed system architecture, performance metrics are modeled and compared against a set of user-defined corporate and business requirements, including cost, quality of service and throughput. For unacceptable metrics, modifications to the system architecture are determined and proposed to the system architect. If accepted, the model of the system architecture is automatically modified and modeled again. Once the modeled performance metrics satisfy the corporate and business requirements, a detailed description of the system architecture derived from the model is output. The model of the system architecture also enables a business ephemeris, a precalculated table cross-referencing enterprise situations and remedies, to be formed. A rules engine employs the business ephemeris and provides indications to the enterprise user for optimizing or modifying components of the enterprise system architecture.

Owner:X ACT SCI INC

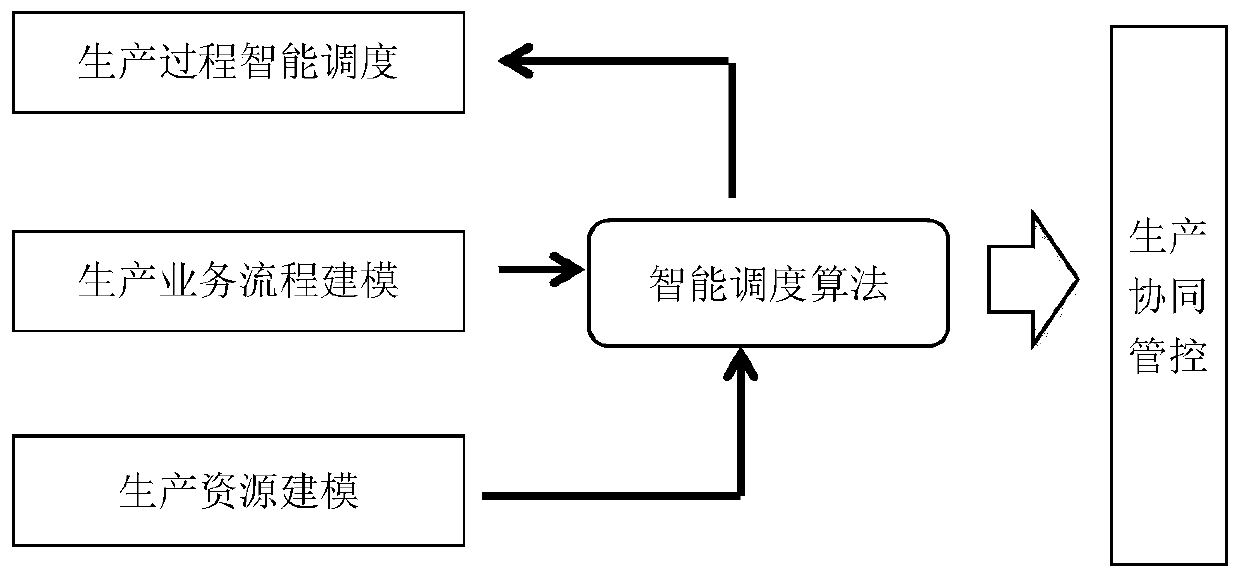

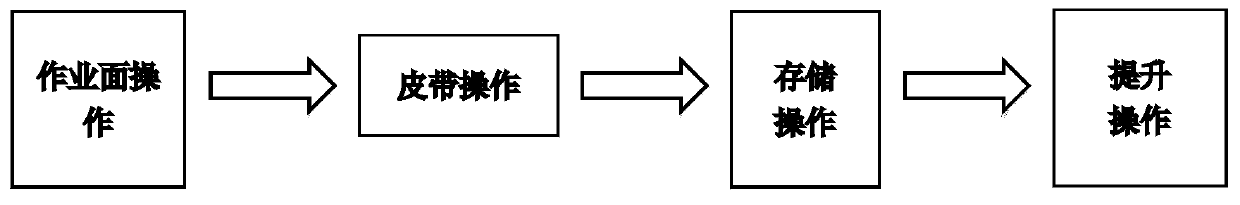

Resource scheduling method and system based on multiple process models

Active | CN111223001A | Realize the optimization of downhole scheduling | Design optimisation/simulation | Resources | Optimal scheduling | Operations research

The invention discloses a resource scheduling method and a system based on multiple process models. The method comprises the following steps: 1) establishing a mining production resource model for describing resource basic characteristics, functional characteristics and constraint characteristics of each production resource; wherein the production resources comprise process resources, discrete resources and batch resources; 2) establishing production business models including a process type business model, a discrete type business model and a batch type business model; 3) calculating constraint conditions on different position distribution by the intelligent scheduling module on the basis of the discrete service model; 4) planning a path and calculating the energy demand and the maximum throughput on the path by the intelligent scheduling module according to the constraint condition of each position and the upstream and downstream node information of the position node; and 5) calculating batch-type service output in the time sequence segmentation period by the intelligent scheduling module according to the batch-type service model, and selecting the minimum energy demand as the optimal scheduling planning route by taking the maximum throughput as the constraint condition, so that underground scheduling optimization can be realized.
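The final selection step of this abstract can be sketched as a constrained minimum: among candidate routes, keep those whose maximum throughput meets a required level, then take the one with the lowest energy demand. This is only an illustrative reading of the abstract. The `Route` type, the `pick_route` function, and the sample numbers are hypothetical and not taken from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """Hypothetical candidate route produced by the path-planning step."""
    name: str
    energy_demand: float   # energy needed along the path (arbitrary units)
    max_throughput: float  # maximum throughput the path can carry


def pick_route(routes, required_throughput):
    """Return the feasible route with the lowest energy demand, or None."""
    # Throughput acts as the constraint, energy demand as the objective.
    feasible = [r for r in routes if r.max_throughput >= required_throughput]
    return min(feasible, key=lambda r: r.energy_demand, default=None)


# Made-up sample data for illustration only.
routes = [
    Route("A", energy_demand=120.0, max_throughput=40.0),
    Route("B", energy_demand=90.0, max_throughput=25.0),
    Route("C", energy_demand=100.0, max_throughput=35.0),
]
best = pick_route(routes, required_throughput=30.0)
print(best.name if best else "no feasible route")
```

Route B is cheapest but cannot carry the required throughput, so the sketch falls back to the cheapest route that can.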

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI

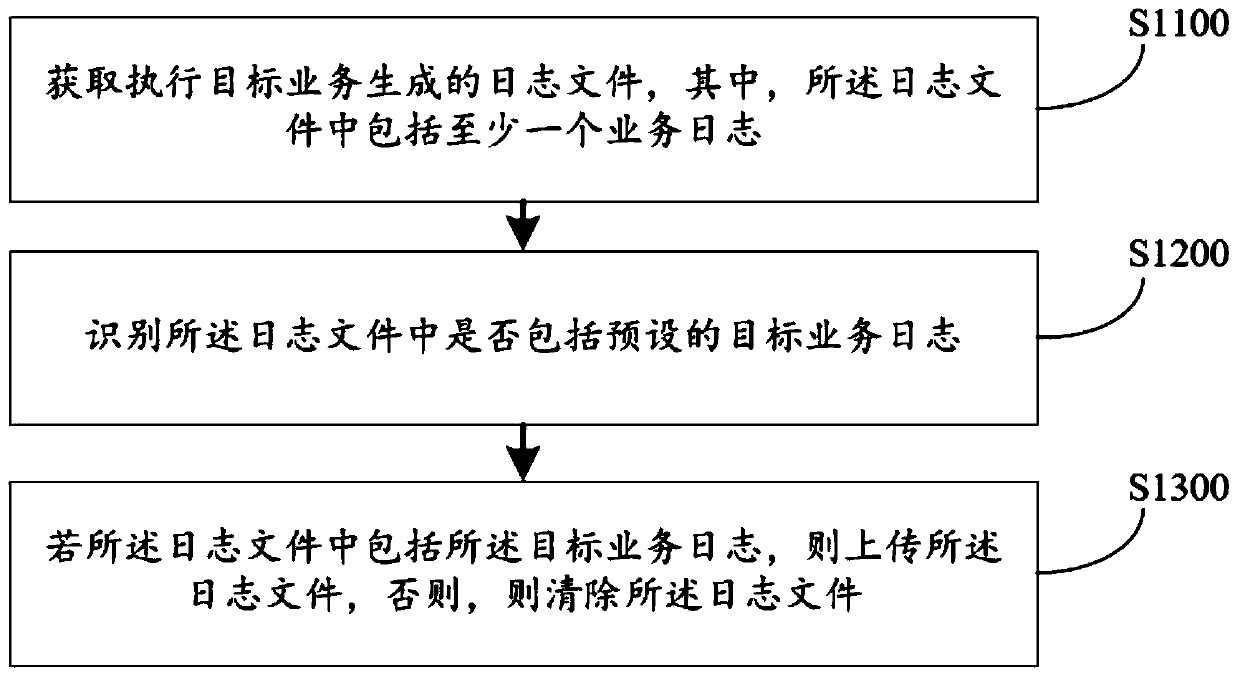

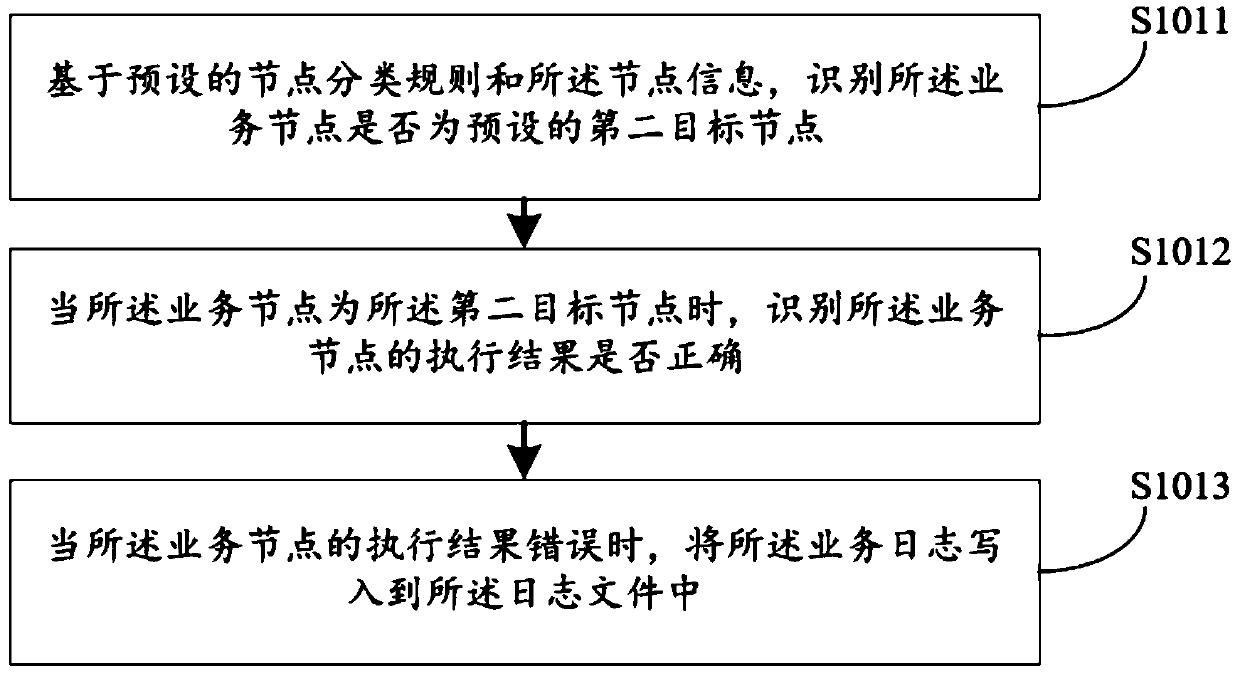

Log generation method and device, computer equipment and storage medium

Pending | CN111190871A | Improve usability | Reduce the impact | Data switching networks | Special data processing applications | Batch processing | Engineering

The embodiment of the invention discloses a log generation method and device, computer equipment and a storage medium. The method comprises: obtaining a log file generated by executing a target business, the log file comprising at least one business log; identifying whether the log file includes a preset target service log; and, if it does, uploading the log file, otherwise clearing it. Whether the log file contains the target service log serves as the upload condition: only log files containing the specified log are uploaded and stored, and other logs are cleared promptly and not retained. This improves throughput, enables batch submission and batch processing, and has very little impact on user service processing. Because the upload condition selectively controls which logs are kept, the log volume can be greatly reduced and log availability improved.
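The upload-or-clear decision described here can be sketched in a few lines. The function name `handle_log_file`, the substring match used to recognize the target service log, and the `upload`/`clear` callbacks are all assumptions made for illustration. The abstract does not say how the target log is identified or where files are uploaded.

```python
def handle_log_file(lines, target_marker, upload, clear):
    """Upload the log file if any line is the target service log, else clear it.

    `target_marker` is a hypothetical substring that identifies the preset
    target service log; `upload` and `clear` are caller-supplied callbacks.
    """
    if any(target_marker in line for line in lines):
        upload(lines)
        return "uploaded"
    clear()
    return "cleared"


# Illustrative stand-ins for real storage: collect uploads in a list.
stored = []
result = handle_log_file(
    ["INFO user login", "ORDER_PAID order=1"],
    target_marker="ORDER_PAID",
    upload=stored.append,
    clear=lambda: None,
)
print(result, len(stored))
```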

Owner:CHINA MOBILE (HANGZHOU) INFORMATION TECH CO LTD +1

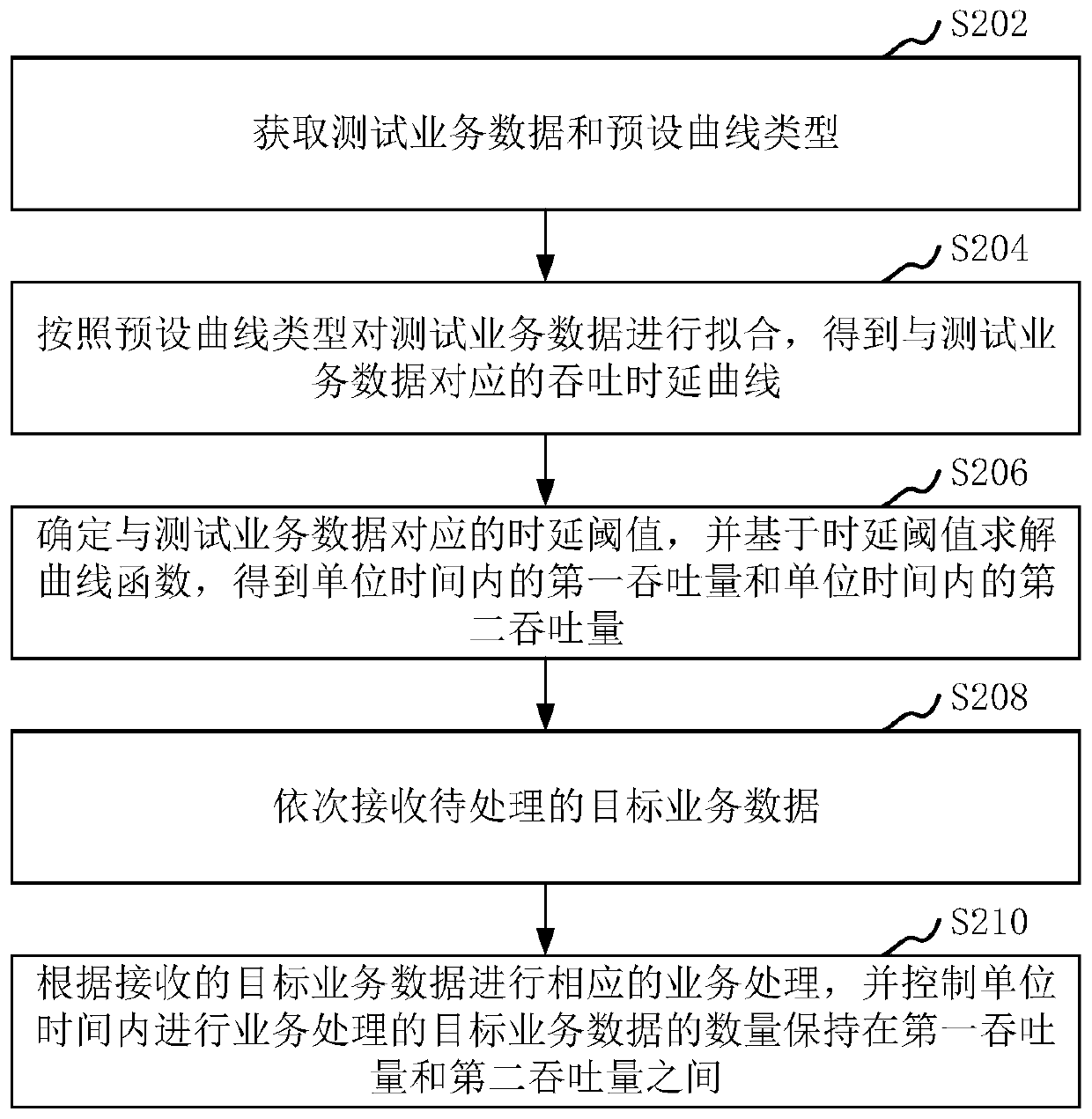

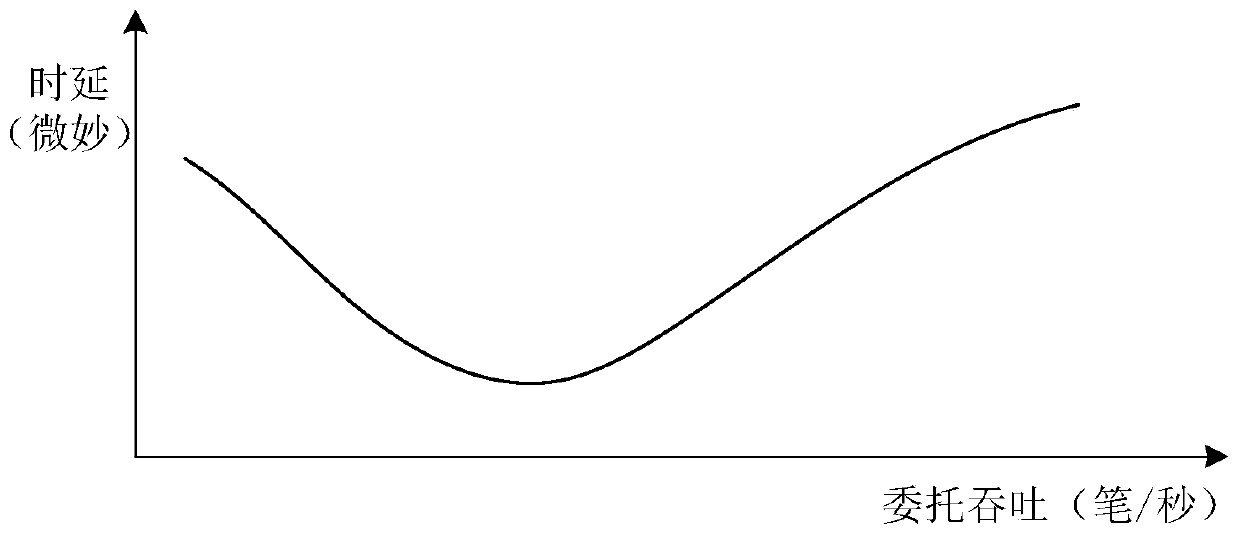

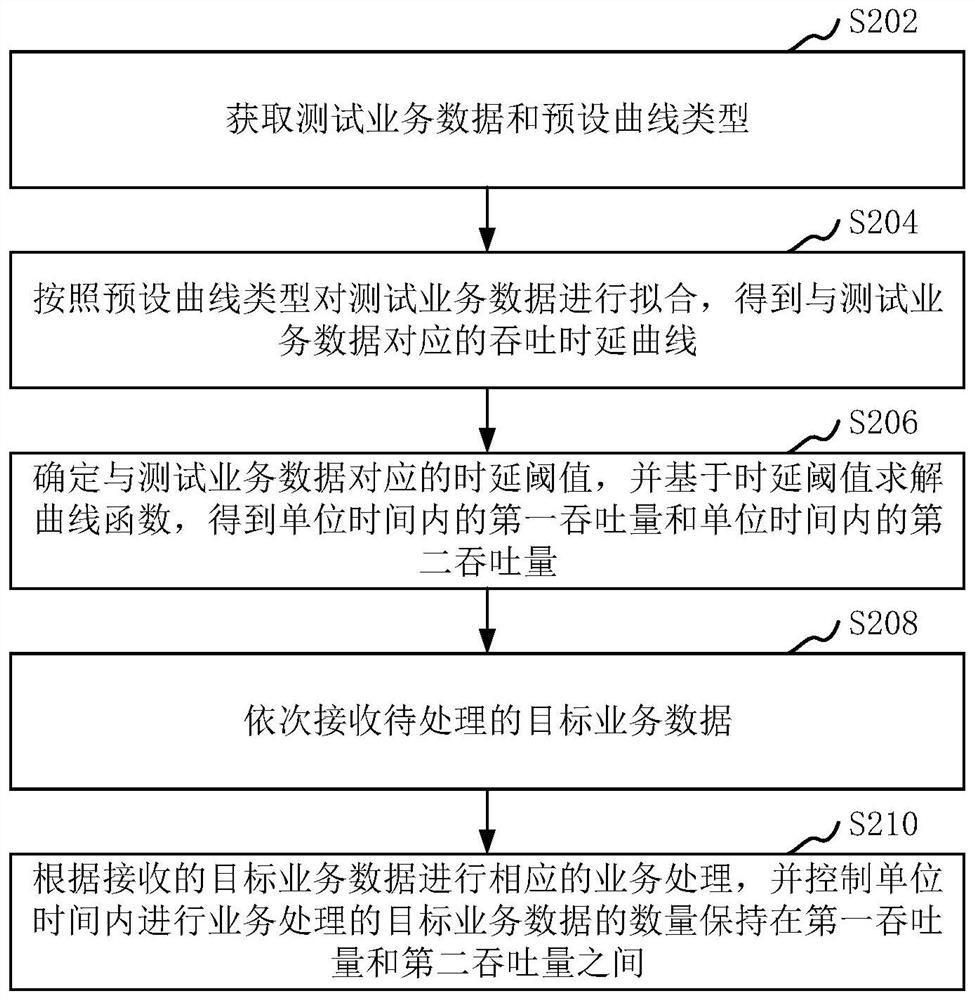

Business data control method and device, computer equipment and storage medium

The invention relates to a business data control method and device, computer equipment and a storage medium. The method comprises the following steps: acquiring test service data and a preset curve type; fitting the test service data according to the preset curve type to obtain a throughput-delay curve corresponding to the test service data; determining a delay threshold corresponding to the test service data, and solving the curve function based on the delay threshold to obtain a first throughput per unit time and a second throughput per unit time; sequentially receiving target service data to be processed; and performing the corresponding service processing on the received target service data while keeping the quantity of target service data processed per unit time between the first throughput and the second throughput. This method can stabilize the performance of the service data processing system.
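A minimal sketch of the fit-then-solve idea follows, assuming the preset curve type is a quadratic (the abstract does not name the curve type). It fits delay as a function of throughput by least squares, solves for the two throughputs at which the curve meets the delay threshold, and clamps a requested processing rate into that band. All function names and the sample measurements are hypothetical.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_quadratic(xs, ys):
    """Least-squares fit of y = a*x^2 + b*x + c via the normal equations."""
    s = [sum(x ** k for x in xs) for k in range(5)]              # sums of x^k
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    m = [[s[4], s[3], s[2]], [s[3], s[2], s[1]], [s[2], s[1], s[0]]]
    rhs = [t[2], t[1], t[0]]
    d = det3(m)
    coeffs = []
    for col in range(3):                                         # Cramer's rule
        mi = [row[:] for row in m]
        for r in range(3):
            mi[r][col] = rhs[r]
        coeffs.append(det3(mi) / d)
    return tuple(coeffs)                                         # (a, b, c)


def throughput_bounds(coeffs, delay_threshold):
    """Solve a*x^2 + b*x + c = delay_threshold for the two throughputs."""
    a, b, c = coeffs
    disc = b * b - 4 * a * (c - delay_threshold)
    if abs(a) < 1e-12 or disc < 0:
        raise ValueError("curve does not cross the delay threshold twice")
    roots = sorted(((-b - math.sqrt(disc)) / (2 * a),
                    (-b + math.sqrt(disc)) / (2 * a)))
    return roots[0], roots[1]


def clamp_rate(requested, low, high):
    """Keep the per-unit-time processing quantity between the two bounds."""
    return max(low, min(requested, high))


# Made-up test measurements: (throughput, delay) pairs.
throughputs = [10, 30, 50, 70, 90]
delays = [21, 9, 5, 9, 21]
low, high = throughput_bounds(fit_quadratic(throughputs, delays), delay_threshold=9)
```

Cramer's rule keeps the sketch dependency-free. A real implementation would more likely call a numerical library's fitting routine.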

Owner:深圳华锐分布式技术股份有限公司

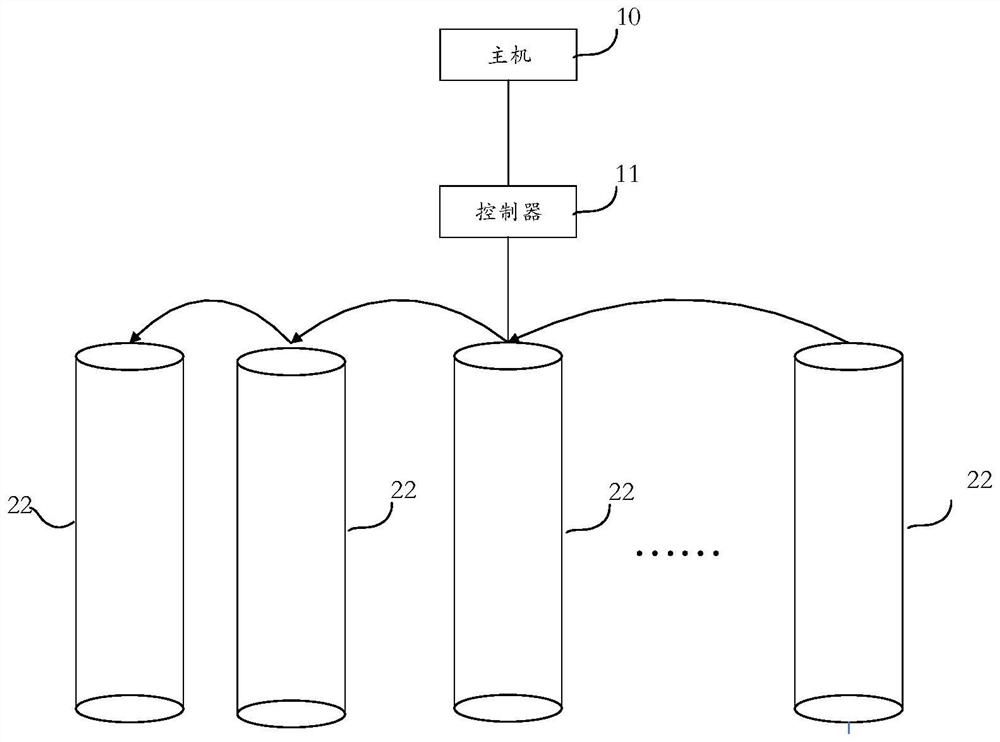

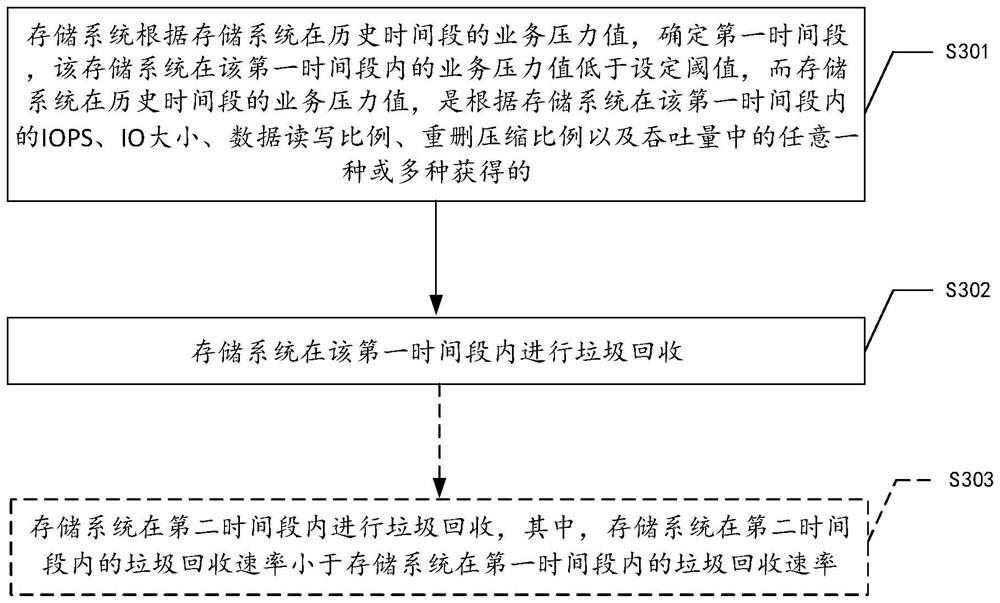

Garbage recycling method and device

Pending | CN113971137A | Input/output to record carriers | Memory addressing/allocation/relocation | Garbage collection | Reliability engineering

The invention provides a garbage recycling method. The storage system determines a first time period according to the business pressure value of the storage system in the historical time period, where the business pressure value of the storage system in the first time period is lower than a set threshold value, and the business pressure value of the storage system in the historical time period is obtained according to any one or more of IOPS, IO size, data read-write proportion, deduplication compression proportion and throughput; therefore, the storage system can carry out garbage collection in the first time period with the small business pressure value. Therefore, the storage system can have more blank logic block groups to support the storage system to quickly write a large amount of new data in other time periods, so that the influence of garbage collection in the other time periods on the IOPS and other performances of the host can be reduced, and the performance of the host when the host has a large amount of data write-in tasks is improved. In addition, the embodiment of the invention further provides a garbage recycling device.
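The window-selection step can be illustrated as follows, assuming the business pressure value has already been reduced to one number per observation. The abstract lists the inputs (IOPS, IO size, read-write proportion, and so on) but not how they are combined. The function name, the hour-of-day keys, and the sample values are hypothetical.

```python
def low_pressure_periods(history, threshold):
    """Return the periods whose mean historical pressure is below `threshold`.

    `history` maps a period label (here: hour of day) to the pressure values
    observed for that period in the past.
    """
    quiet = []
    for period, values in sorted(history.items()):
        if values and sum(values) / len(values) < threshold:
            quiet.append(period)
    return quiet


# Made-up historical pressure values, keyed by hour of day.
history = {0: [12, 15, 10], 3: [5, 7, 6], 12: [80, 95, 90], 22: [20, 18, 25]}
gc_hours = low_pressure_periods(history, threshold=20)
print(gc_hours)
```

Garbage collection would then be scheduled in the returned periods, leaving the busier periods free for host writes.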

Owner:HUAWEI TECH CO LTD

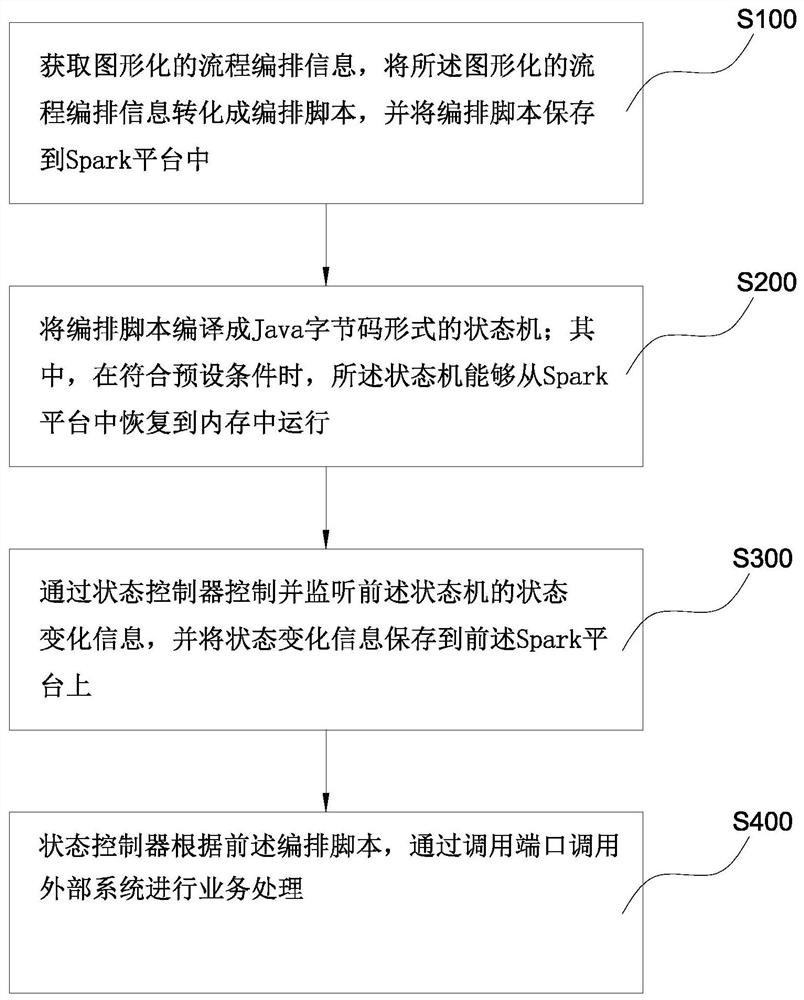

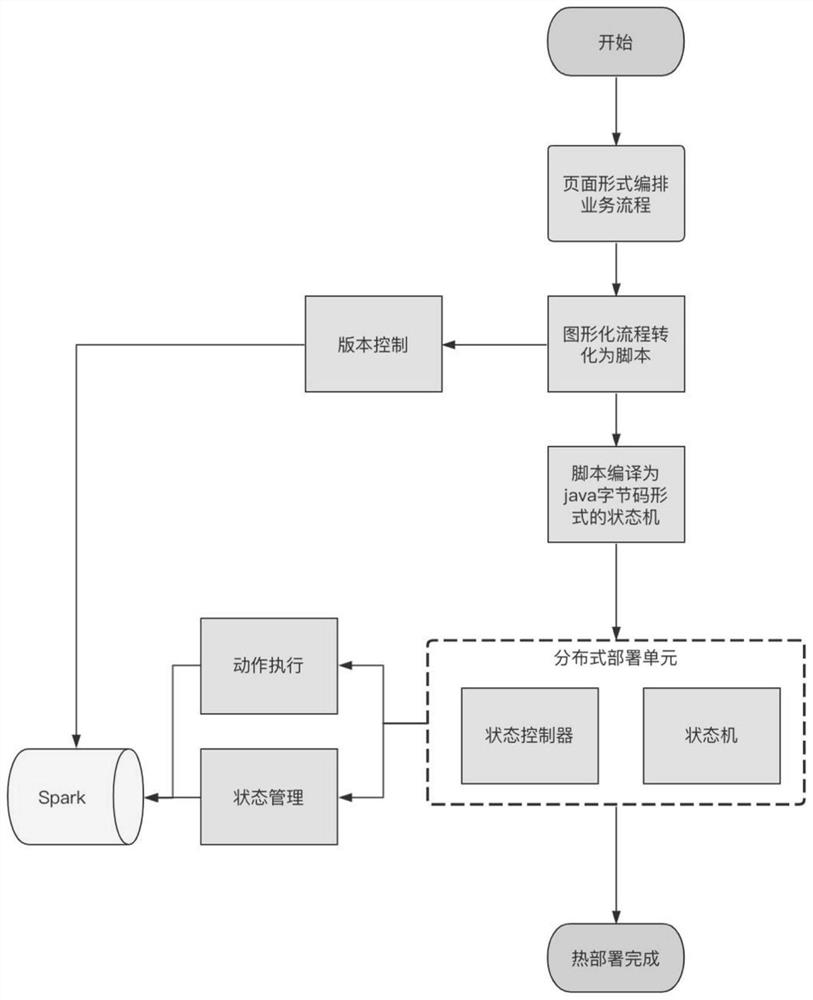

Process engine implementation method and system based on Spark and parallel memory computing

Pending | CN112379884A | Increase elasticity | Program initiation/switching | Database management systems | Data stream | Process (computing)

The invention discloses a process engine implementation method and system based on Spark and parallel memory computing, and relates to the technical field of process automation. The method comprises the following steps: acquiring graphical process arrangement information, converting it into an arrangement script, and storing the arrangement script in a Spark platform; compiling the arrangement script into a state machine in Java bytecode form, where the state machine can be restored from the Spark platform into memory for operation; controlling and monitoring state change information of the state machine through a state controller, and storing the state change information to the Spark platform; and enabling the state controller to call an external system through the calling port to perform service processing according to the arrangement script. The invention achieves distributed data flow automation and business flow automation at the same time, balances high throughput with immediate responsiveness, and is especially suitable for high-throughput scenarios.

Owner:李斌

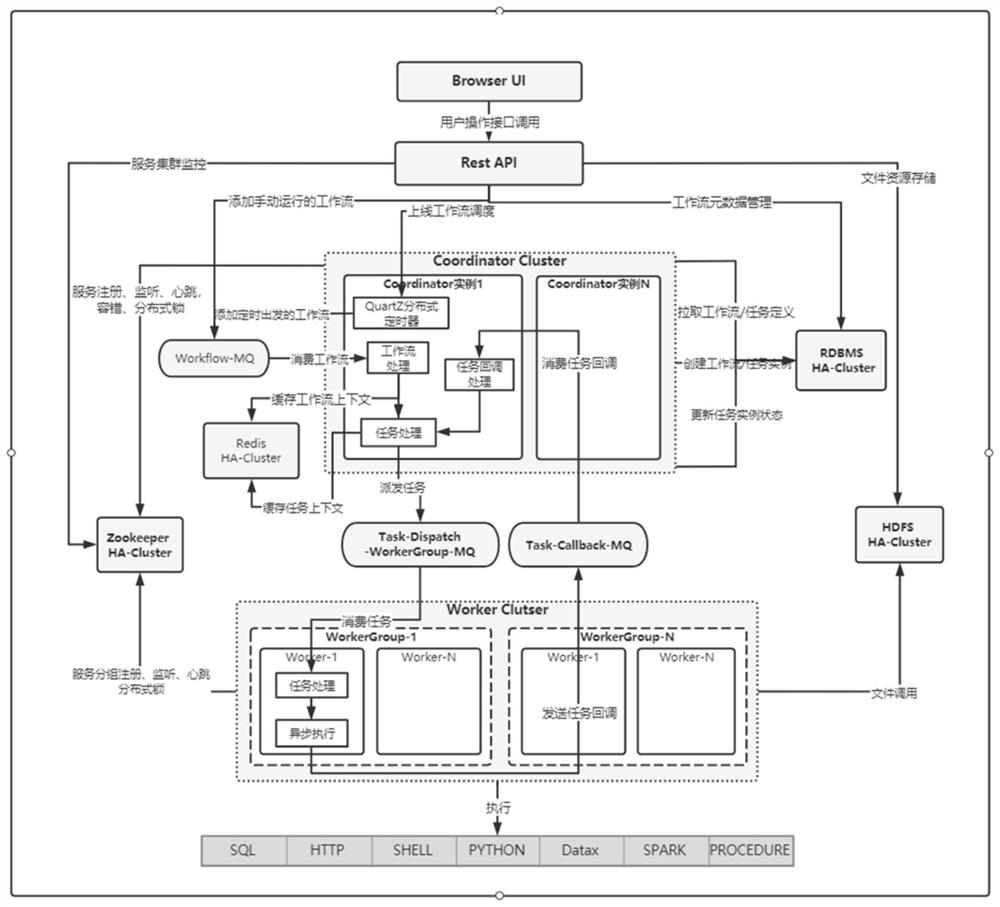

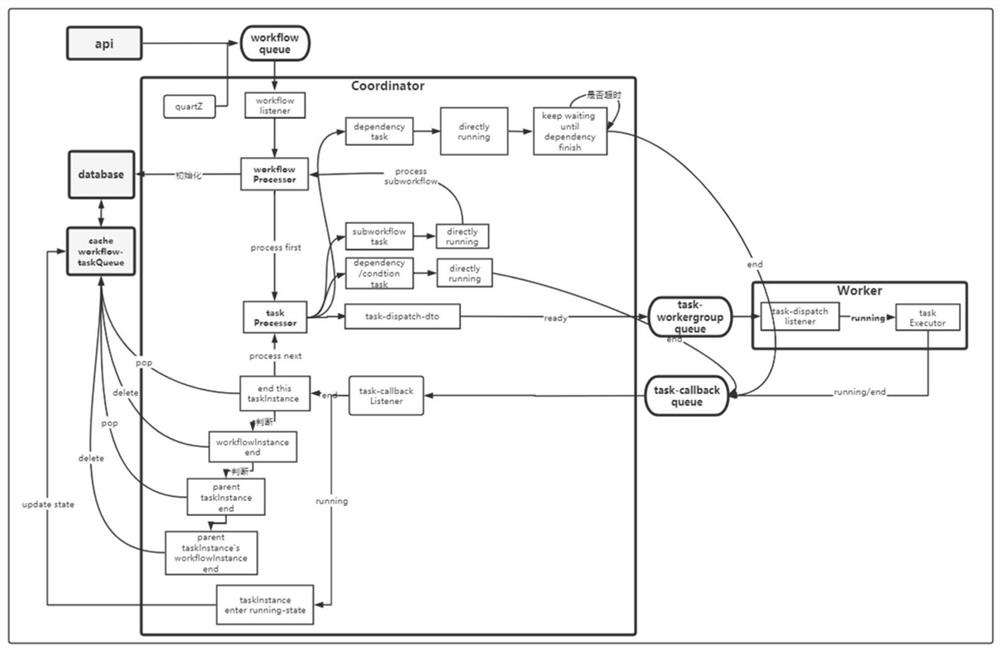

Loosely coupled distributed workflow coordination system and method

Pending | CN113821322A | Guaranteed order of priority | High precision | Program initiation/switching | Resource allocation | Message queue | Parallel computing

The invention discloses a loosely coupled distributed workflow coordination system and method, and the method comprises the steps: a user carries out definition, online, operation and maintenance of a workflow through calling an interface service API (Application Program Interface); a distributed workflow coordinator schedules the workflow in a timed manner by integrating a distributed timing engine Quartz, adds the workflow to a workflow distribution distributed message queue MQ, receives the workflow, processes the task dependency relationship of the workflow, and adds a business type task to be executed after coordination to the task distribution distributed message queue MQ; a distributed task actuator Worker receives each business type task from the task distribution distributed message queue MQ and executes the business type tasks, and the task execution result is called back to the distributed workflow coordinator through the task callback distributed message queue MQ; and finally, the Coordinator persistently stores a task execution result in a database for feeding back the result to a user. According to the method, Coordinator is focused on logic coordination processing, so that full decoupling of workflow coordination processing and task execution is ensured, and the throughput, expansibility and scalability of the system are improved.
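The decoupling described here, a coordinator that only resolves dependencies and a worker that only executes, can be sketched in a single process with two in-memory queues standing in for the distributed message queues. This is a toy illustration under that assumption: `run_workflow`, the task names, and the dictionary used in place of a database are all hypothetical, and the Quartz-based timed scheduling is omitted.

```python
from queue import Queue


def run_workflow(tasks, deps):
    """Run `tasks` (name -> callable) respecting `deps` (name -> prerequisites)."""
    task_queue, callback_queue = Queue(), Queue()
    results = {}        # stands in for the database of task results
    dispatched = set()

    def dispatch_ready():
        # Coordinator: queue every task whose prerequisites have all finished.
        for name in tasks:
            if name not in dispatched and all(d in results for d in deps.get(name, ())):
                task_queue.put(name)
                dispatched.add(name)

    dispatch_ready()
    while not task_queue.empty():
        # Worker: take a task, execute it, and report back via the callback queue.
        name = task_queue.get()
        callback_queue.put((name, tasks[name]()))
        # Coordinator: persist the result, then release newly unblocked tasks.
        done, value = callback_queue.get()
        results[done] = value
        dispatch_ready()
    return results


# Made-up three-step workflow.
tasks = {"extract": lambda: 1, "load": lambda: 3, "transform": lambda: 2}
deps = {"transform": {"extract"}, "load": {"transform"}}
order = list(run_workflow(tasks, deps))
print(order)
```

Because the coordinator never runs task code itself, workers could be scaled out independently, which is the property the abstract credits for the throughput gain.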

Owner:浙江数新网络有限公司

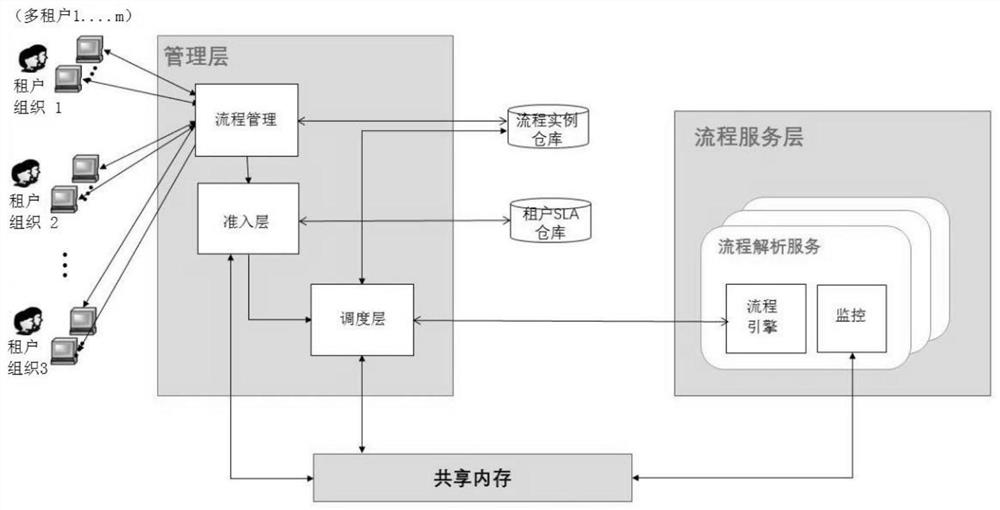

A method for load balancing scheduling of stateless cloud workflow based on SLA

Active | CN109861850B | Improve load balancing | Reduce request peak | Data switching networks | Engineering | Cloud workflow

The invention discloses an SLA-based load balancing scheduling method for stateless cloud workflows. Tenants select different SLA levels according to their business scenarios and process models, and the cloud workflow system provides each tenant with a request throughput service matching its level, so that process requests are served by grade. A shared memory is used to monitor engine load in real time and to track how process models are distributed across engines, which lowers the request peaks of the engine service while reducing the overall memory overhead of the engine cluster. The load balancing capability of the cloud workflow under a multi-tenant architecture is thereby improved, so that the process service provider can serve more tenants while still meeting each tenant's performance requirements for request throughput and for parsing and executing different process definitions.

Owner:SUN YAT SEN UNIV

Service data control method, device, computer equipment and storage medium

The present application relates to a service data control method, device, computer equipment and storage medium. The method includes: acquiring test service data and preset curve types. Fit the test service data according to the preset curve type to obtain the throughput delay curve corresponding to the test service data. Determining the delay threshold corresponding to the test service data, and solving the curve function based on the delay threshold, to obtain the first throughput per unit time and the second throughput per unit time. Receive the target business data to be processed in sequence. Perform corresponding service processing according to the received target service data, and control the amount of target service data for service processing per unit time to be kept between the first throughput and the second throughput. By adopting the method, the system performance of the service data processing system can be stabilized.

Owner:深圳华锐分布式技术股份有限公司

A Consortium Chain Master-Slave Multi-Chain Consensus Method Based on Tree Structure

Active | CN110245951B | Implement classification | Meet diverse business needs | Digital data protection | Payment protocols | Byzantine fault tolerance | Financial transaction

The invention discloses a tree-structured consortium chain master-slave multi-chain consensus method. The consortium chain consensus group is divided into upper and lower channels that are isolated from each other, which classifies and grades different digital assets and satisfies the privacy requirements of data isolation. Multiple channels are processed concurrently, which improves transaction performance and addresses the low throughput and high transaction delay of existing blockchains. A Byzantine fault-tolerant consensus algorithm based on threshold signatures under the master-slave multi-chain architecture solves the consistency problem caused by concurrent processing of classified digital assets, with low communication complexity and low signature verification complexity. The master-slave multi-chain structure breaks through the functional and performance constraints of a single chain, offers good high-concurrency transaction performance, and protects private data through isolation, meeting the diverse business needs of enterprises.

Owner:芽米科技(广州)有限公司

A stable and high-throughput asynchronous task processing method based on Kafka

Active | CN110377486B | Integrity guaranteed | Take advantage of | Program initiation/switching | Hardware monitoring | Task completion | Operating system

The invention relates to a Kafka-based asynchronous task processing method that achieves stable, high throughput. Asynchronous tasks are processed in a Kafka-based mode in which a management thread monitors the activity of the worker threads and, combined with a timed task, reports the execution status of asynchronous tasks at each monitoring interval. The method provides a handling mechanism for timed-out tasks and one for abnormal tasks, and adds monitoring of task consumption together with statistics on task execution time and task completion details, which are used to tune parameters. The statistics are written to the log so that users can analyze performance from it. The invention can greatly reduce the coupling between the system and the business system.

Owner:福建南威软件有限公司

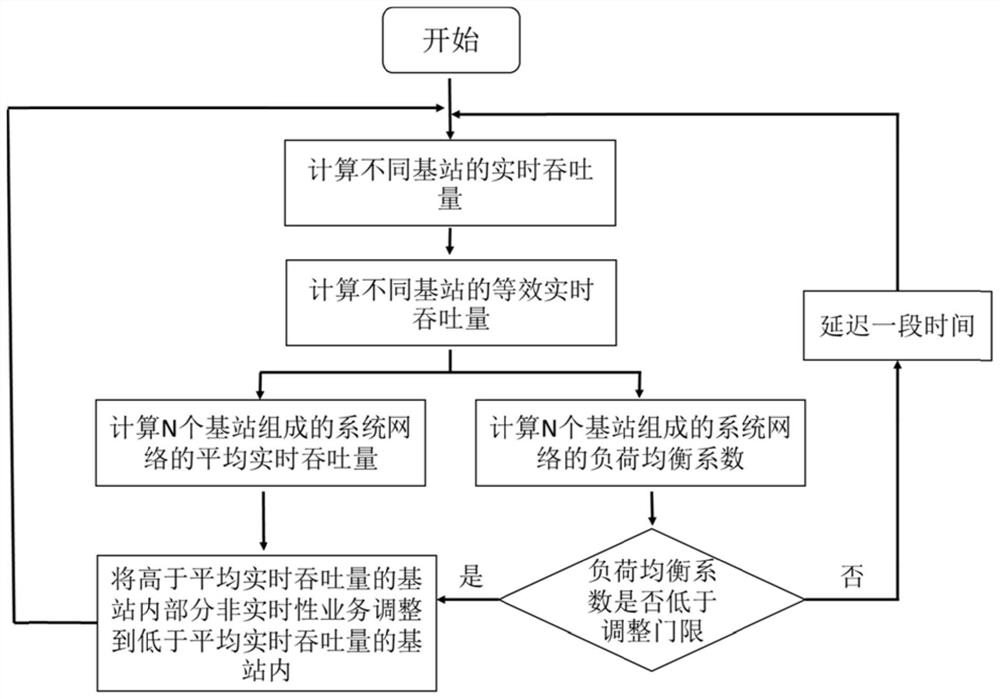

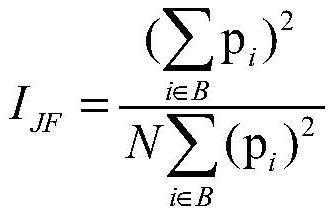

A service balancing method based on base station throughput capability in 5G network

Active | CN108632872B | Reduce throughput | Guaranteed experience | Network traffic/resource management | G-network | Equalization

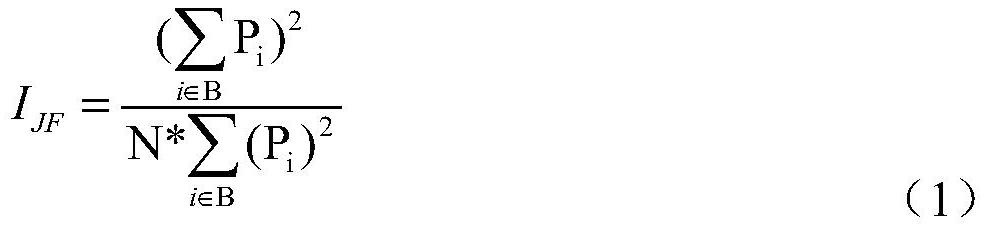

The invention discloses a service balancing method based on base station throughput capability in a 5G network, comprising the following steps: step 1, calculate the real-time throughput of the base station; step 2, calculate the average real-time throughput of the base station from its real-time throughput; step 3, calculate the load balancing coefficient I_JF of the network; step 4, at every interval T, recalculate the load balancing coefficient I_JF of the network and, according to this coefficient and the average real-time throughput of the network, preferentially adjust the non-real-time services in the base station.

Owner:CHINA INFORMATION CONSULTING & DESIGNING INST CO LTD

Three-dimensional log full-link monitoring system and method, medium and equipment

Pending | CN114189430A | Facilitate aggregated search | Realize monitoring | Transmission | Monitoring system | Data profiling

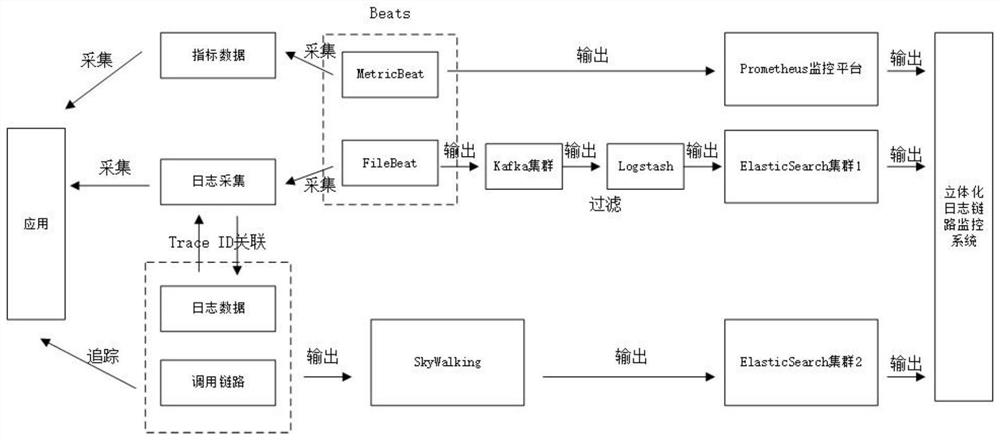

The invention provides a three-dimensional log full-link monitoring system and method, a medium and equipment, and the system comprises a log analysis platform which collects application logs, and carries out the filtering, desensitization, storage, query and alarm of the application logs; the full-link tracking platform is used for collecting related data based on an application performance monitoring system SkyWalking, and analyzing and diagnosing the performance bottleneck of the application under the distributed architecture; and the monitoring platform is used for monitoring infrastructures, application performance, middleware and business operation indexes and controlling performance conditions of each access system, including application response time, throughput, slow response and error details, JVM and middleware states and business indexes. According to the method, logs and full-link tracking are combined, end-to-end transactions are associated in a micro-service scene, problems can be quickly positioned and analyzed by directly searching the logs and tracking the call chain, and the problem positioning complexity is reduced.

Owner:IND BANK CO +1

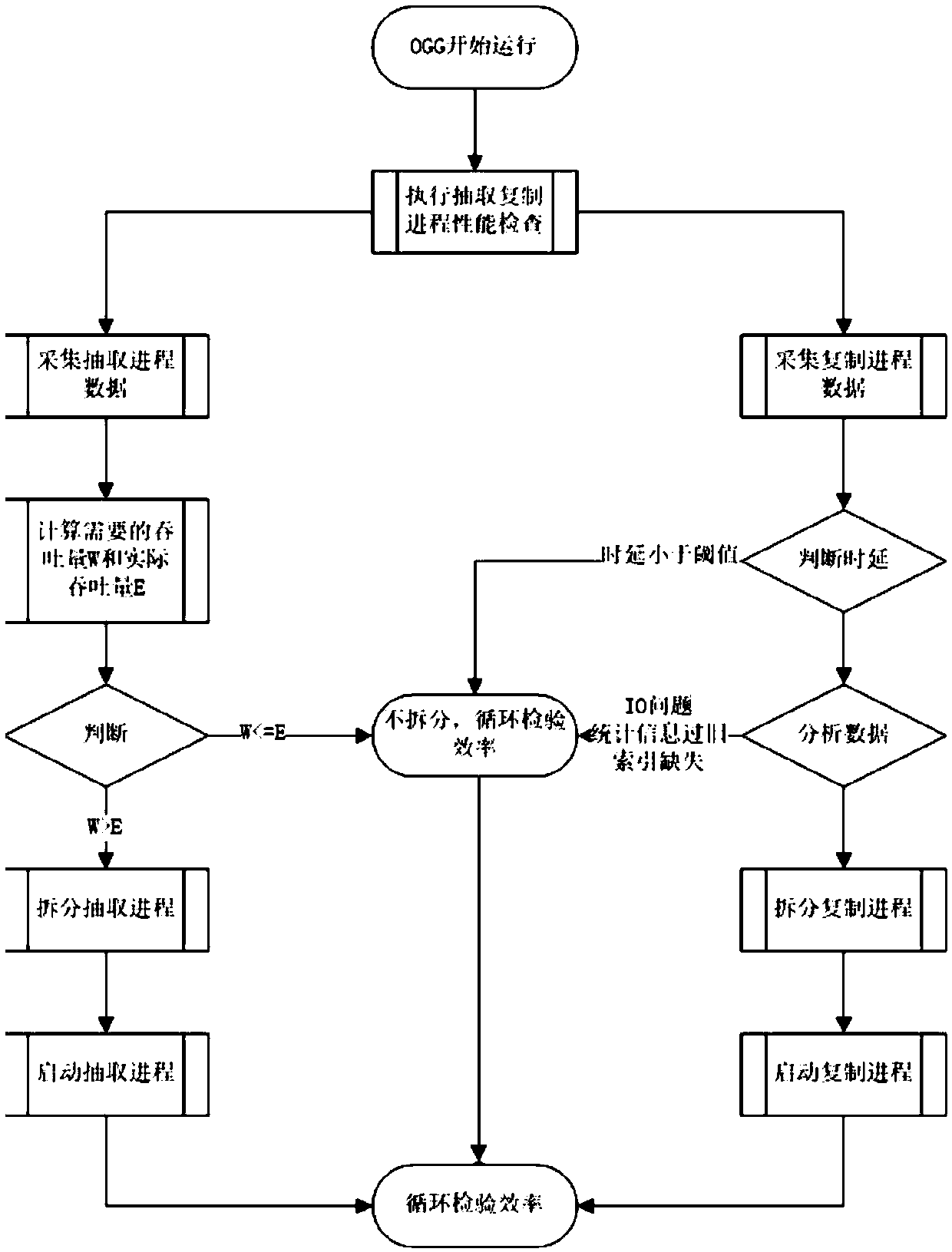

Method, device and equipment for distinguishing splitting process based on OGG technology

Pending | CN111352707A | Affect timeliness | Affect consistency | Program initiation/switching | Hardware monitoring | Data synchronization | Conversion coefficients

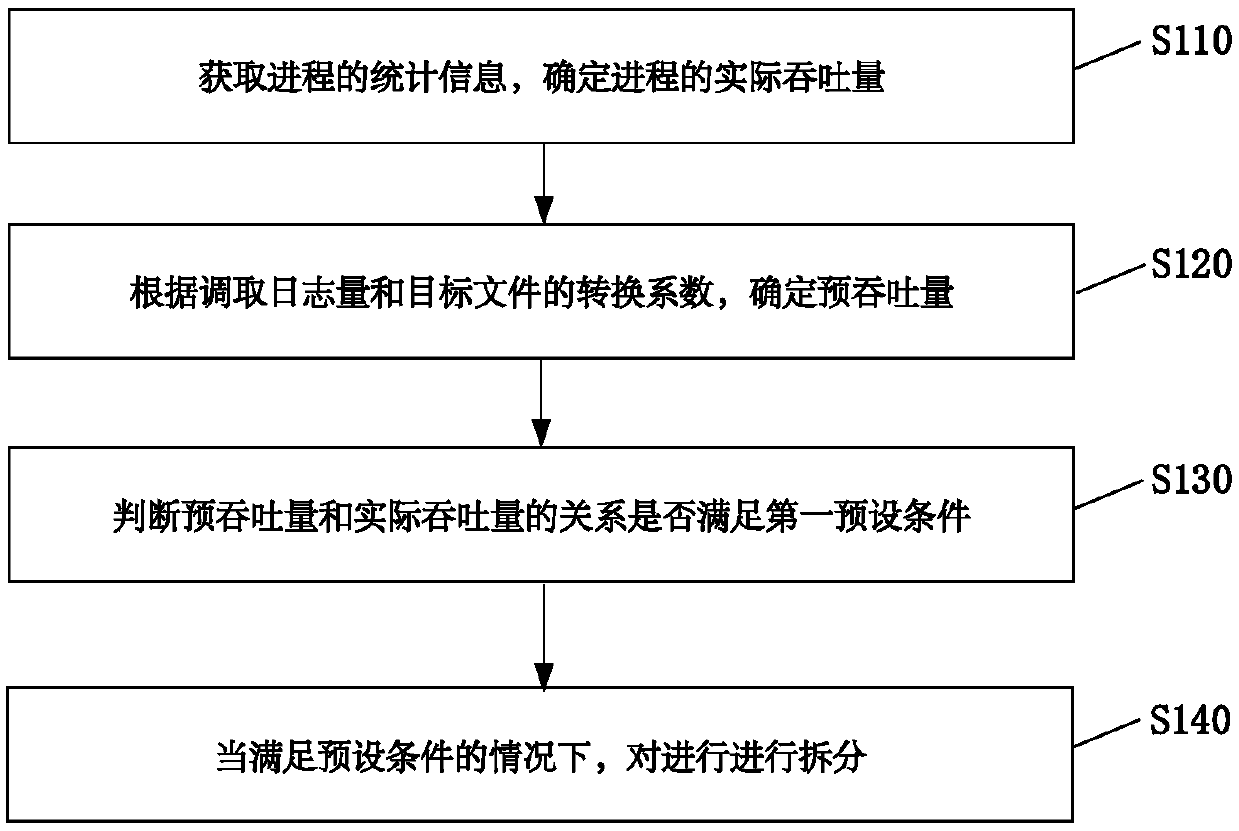

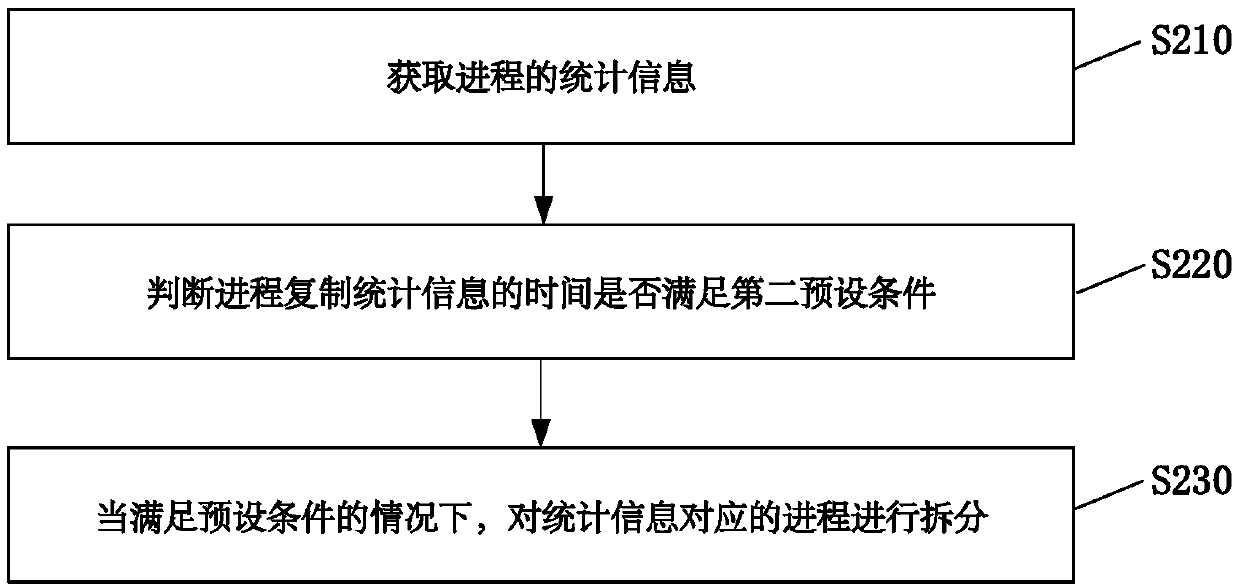

The embodiment of the invention provides a method, device and equipment for distinguishing a splitting process based on OGG technology. The method comprises: obtaining the statistical information of a process, which includes a target file, and determining the actual throughput of the process; determining a pre-throughput according to the called log quantity and the conversion coefficient of the target file; judging whether the relationship between the pre-throughput and the actual throughput meets a first preset condition; and splitting the process if the condition is met. By obtaining the statistical information and optimizing how processes are executed and split, a process based on OGG technology can respond in time to data changes caused by changes in the business system. This prevents data synchronization delays caused by sudden business changes from affecting the timeliness and consistency of the data, and reduces labor cost while improving the data extraction and replication efficiency of the OGG process.
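The split decision can be sketched as a comparison between an estimated and a measured throughput. Two assumptions are made here that the abstract does not confirm: the pre-throughput is taken as the product of the log quantity and the conversion coefficient, and the "first preset condition" is modeled as the ratio of pre-throughput to actual throughput exceeding a limit. The function name and the default `ratio_limit` are hypothetical.

```python
def should_split(log_count, conversion_coefficient, actual_throughput, ratio_limit=1.5):
    """Decide whether a replication process should be split.

    Assumed model: pre_throughput = log_count * conversion_coefficient, and
    the process is split when demand exceeds what it actually delivers by
    more than `ratio_limit`.
    """
    pre_throughput = log_count * conversion_coefficient
    if actual_throughput <= 0:
        # A stalled process with pending work is treated as needing a split.
        return pre_throughput > 0
    return pre_throughput / actual_throughput > ratio_limit


print(should_split(1000, 0.5, 200))
print(should_split(1000, 0.5, 400))
```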

Owner:CHINA MOBILE GROUP SICHUAN +1

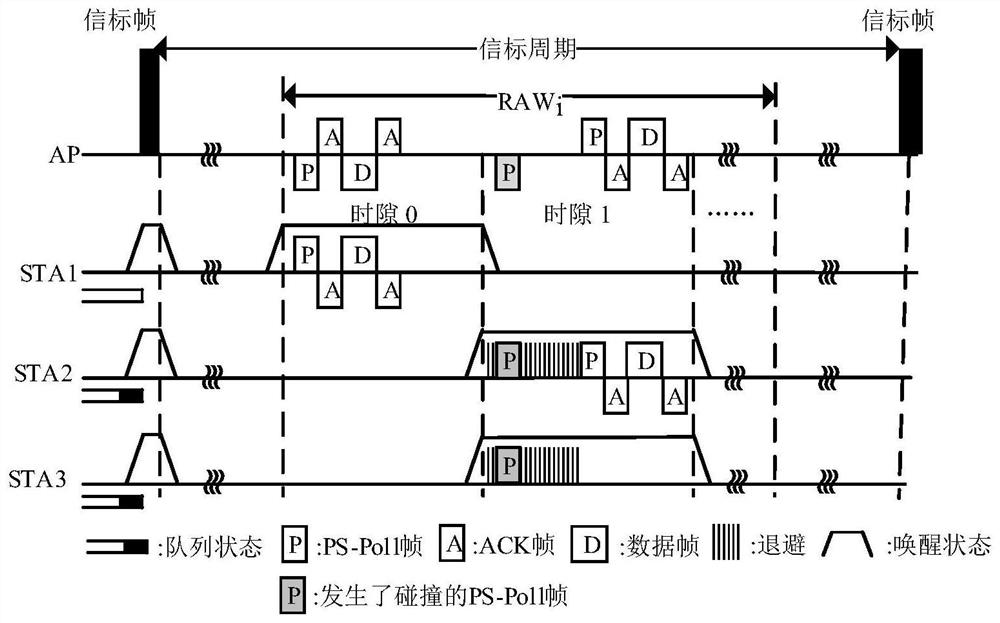

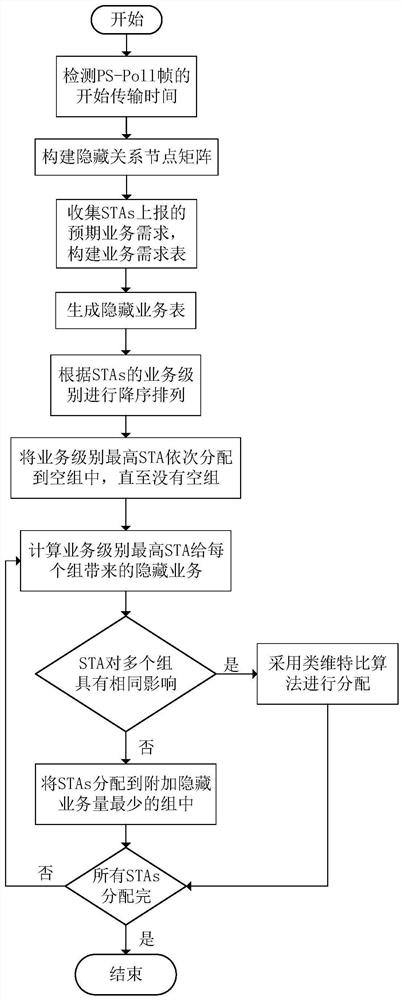

A workstation regrouping method in 5G network

Active | CN110337125B | Improve throughput | Guaranteed service quality | Network traffic/resource management | High level techniques | Workstation | G-network

The invention belongs to the technical field of mobile communication and specifically relates to a method for regrouping workstations in a 5G network. The PS-Poll frame start transmission time of every workstation is used as the indicator for detecting hidden node relationships in the network. After the access point obtains the PS-Poll frame transmission times of the workstations, it determines the hidden node relationships between them and builds a hidden node relationship matrix. The access point quantifies the expected service requirements reported by the workstations into service levels and builds an expected service demand table. The expected service demand table is integrated into the hidden node relationship matrix to form a hidden service table that captures the potential impact of hidden nodes, and the workstations are regrouped according to this table during each TBTT. The throughput of the STA regrouping method of the invention is 40% higher than that of the existing random grouping method.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

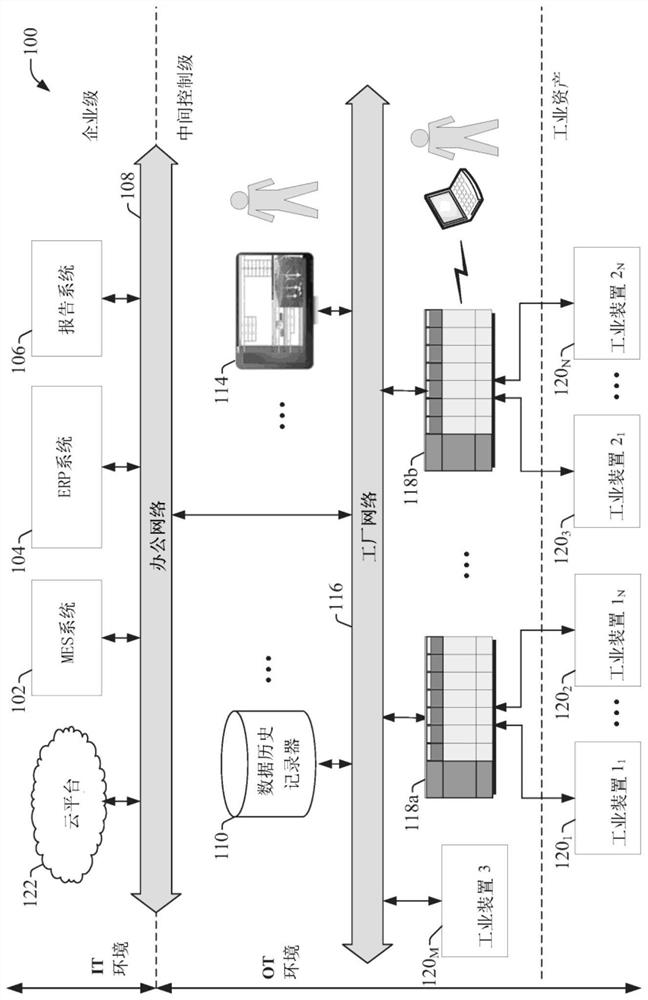

Contextualization of industrial data at the device level

The disclosure relates to contextualization of industrial data at the device level. An industrial device supports device-level data modeling that pre-models data stored in the device with known relationships, correlations, key variable identifiers, and other such metadata to assist higher-level analytic systems to more quickly and accurately converge to actionable insights relative to a defined business or analytic objective. Data at the device level can be modeled according to modeling templates stored on the device that define relationships between items of device data for respective analytic goals (e.g., improvement of product quality, maximizing product throughput, optimizing energy consumption, etc.). This device-level modeling data can be provided, together with the corresponding data tag values, to higher-level analytic systems, which discover insights into an industrial process or machine based on analysis of the data and its modeling data.

Owner:ROCKWELL AUTOMATION TECH

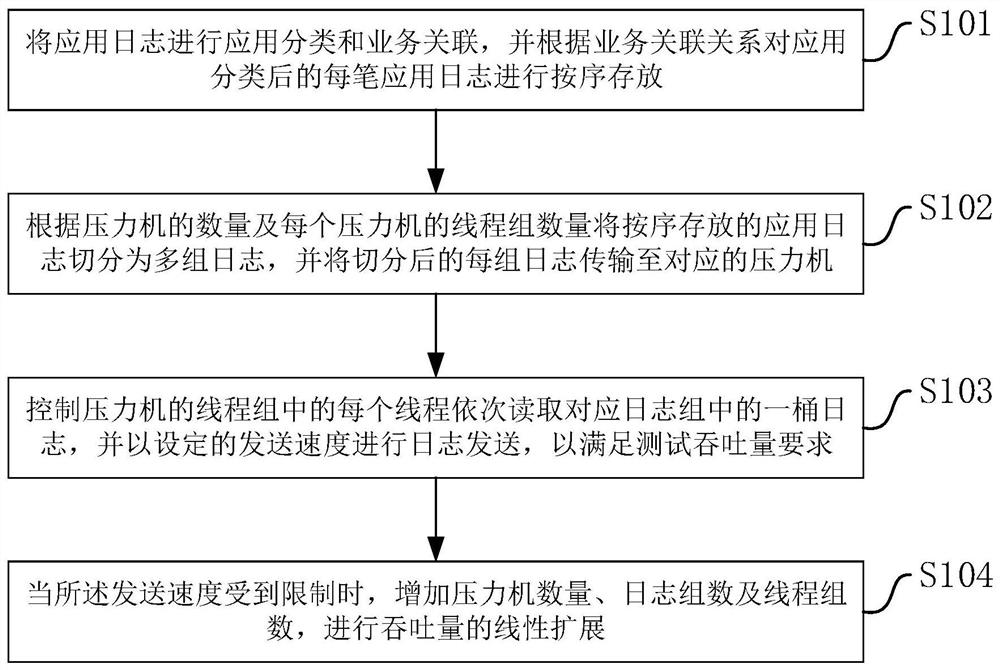

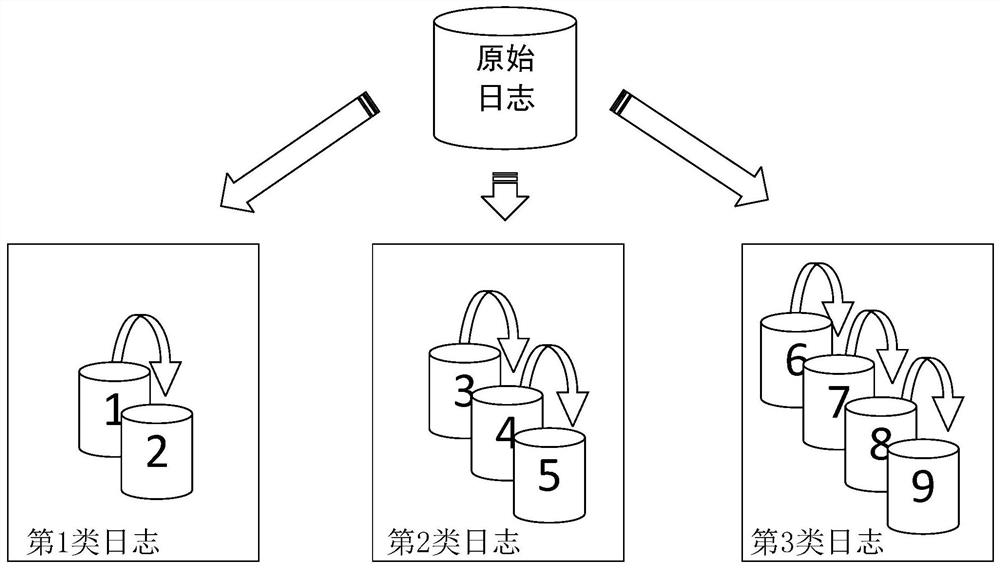

Association log playback method and device

Active | CN108829802B | Fast playback | Playback any specified | Hardware monitoring | Data switching networks | Database | Computer engineering

Owner:THE PEOPLES BANK OF CHINA NAT CLEARING CENT

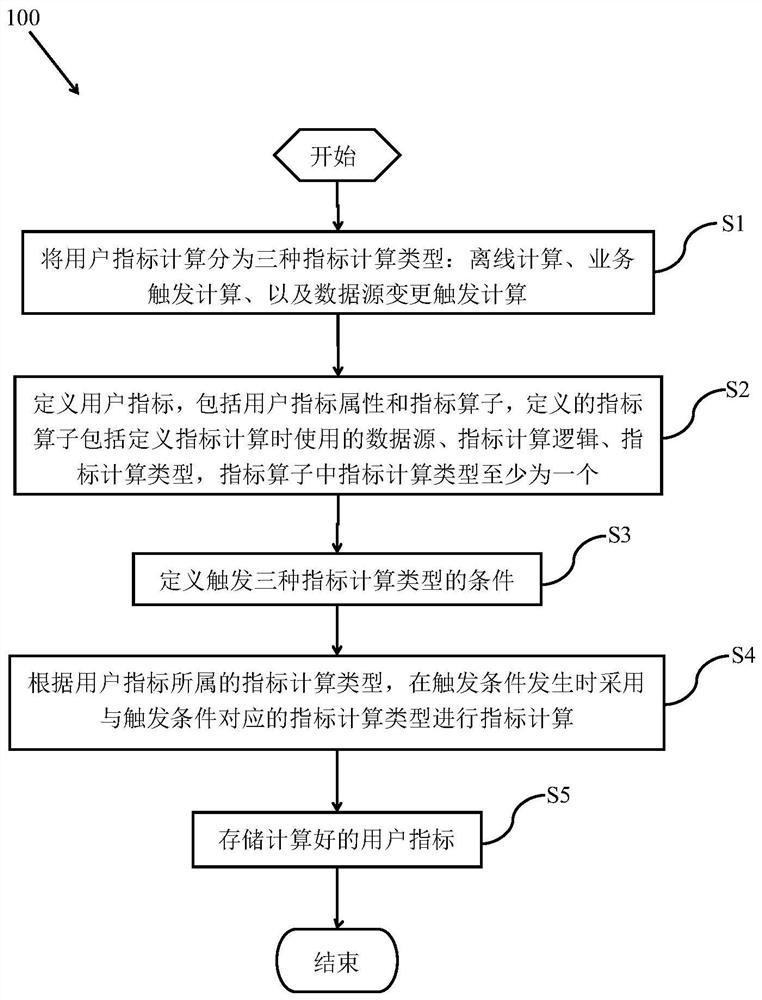

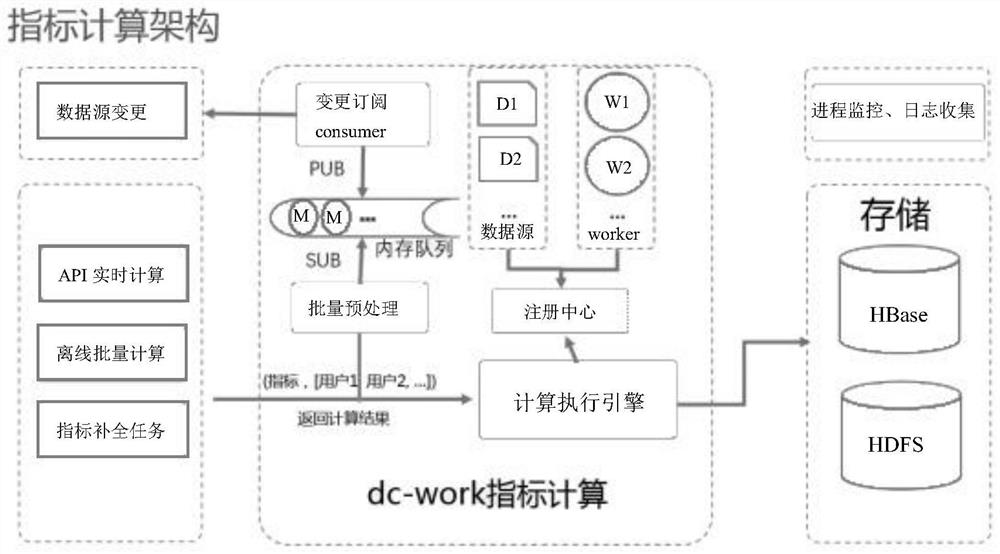

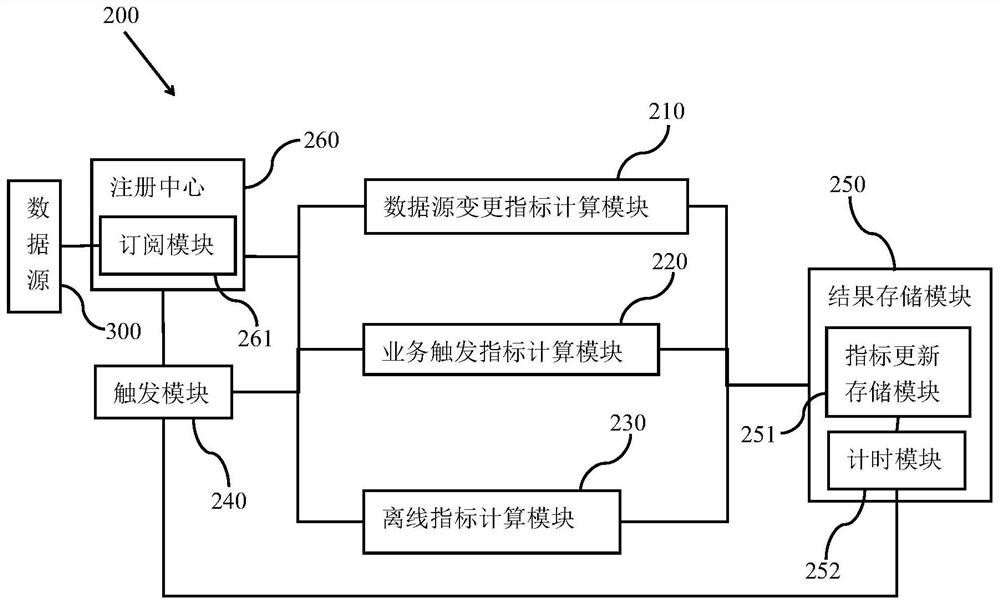

Distributed computing method and system for financial indicators

Active | CN108573348B | Improve computing efficiency | Guaranteed normal execution | Finance | Resources | Computational logic | Data source

The present invention provides a method and system for distributed calculation of user indicators in finance. The method includes: dividing the calculation of user indicators into three indicator calculation types, namely offline calculation, business-triggered calculation, and calculation triggered by data source changes; defining user indicators, including user indicator attributes and indicator operators, where an indicator operator defines the data source used in the calculation, the calculation logic, and the calculation type, and must specify at least one calculation type; defining trigger conditions for the three calculation types; when a trigger condition occurs, performing the indicator calculation using the calculation type that corresponds to that trigger condition and to which the user indicator belongs; and storing the calculated user indicator. The invention calculates indicators efficiently and addresses problems such as repeated indicator calculation, difficulty in adding or modifying indicator calculation logic, and serious indicator loss. It also effectively ensures the execution of high-quality and fast operators, and improves system stability and real-time throughput.

Owner:鑫涌算力信息科技(上海)有限公司

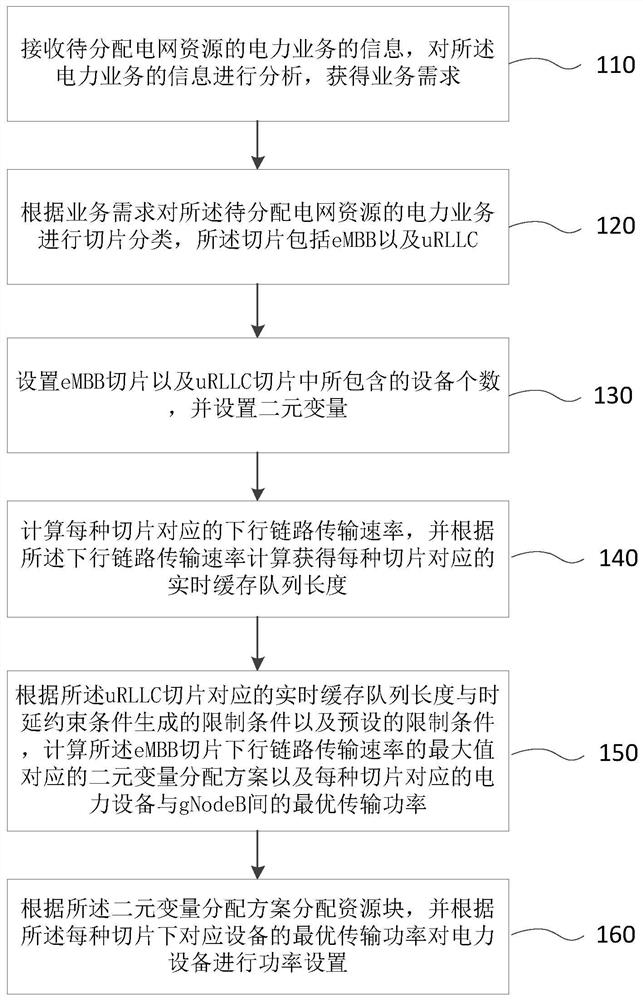

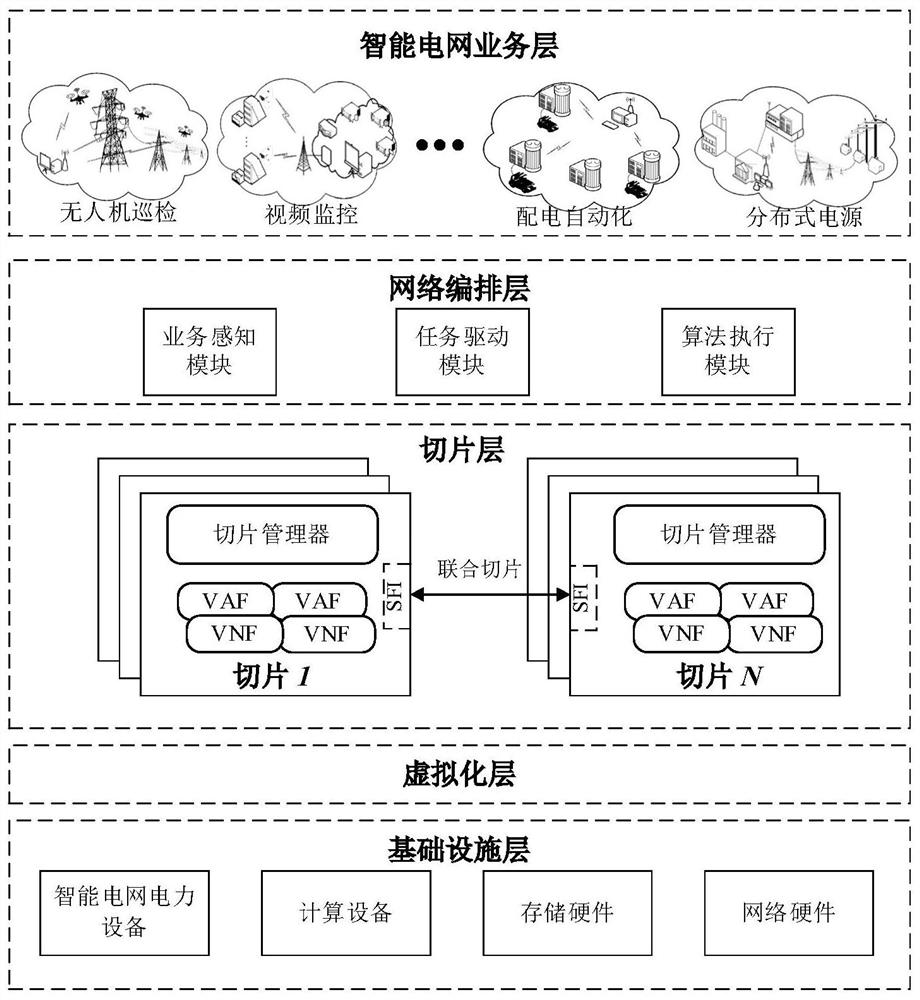

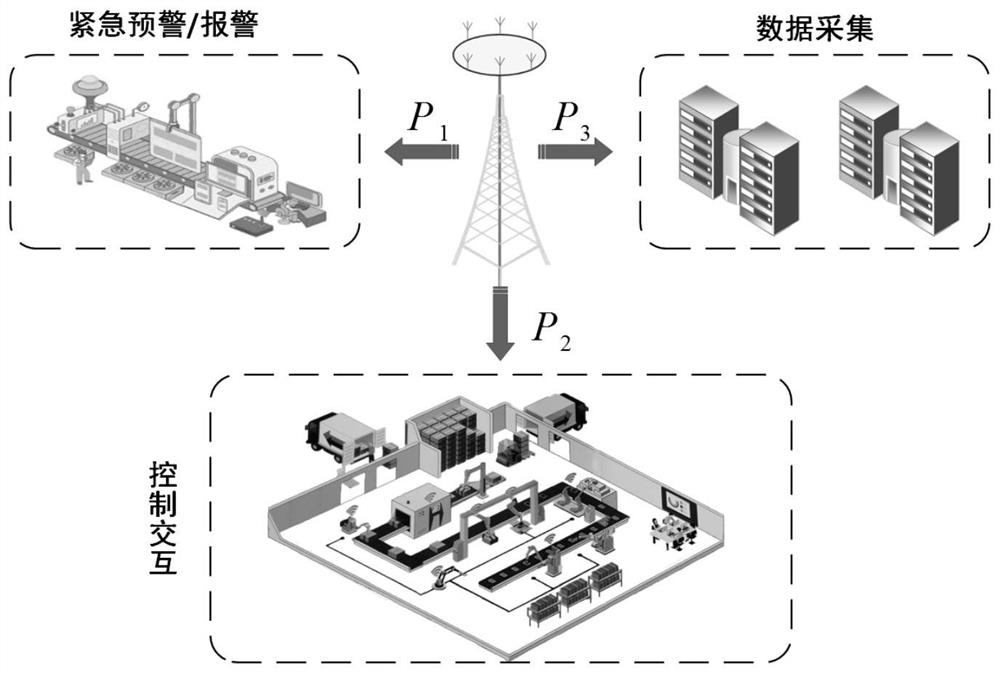

A smart grid resource management method and system based on delay and throughput

Active | CN110149646B | Easy resource management | Improve throughput | High level techniques | Wireless communication | Resource block | Electric power equipment

The invention discloses a smart grid resource management method and system based on delay and throughput. The method includes: receiving and analyzing information on power services to obtain service requirements; classifying the services into slices; calculating the downlink transmission rate corresponding to each slice, and from it the real-time buffer queue length corresponding to each slice; calculating the binary variable allocation scheme corresponding to the maximum downlink transmission rate of the eMBB slice, and the optimal transmission power between the electric equipment and the gNodeB corresponding to each slice; and setting the power for the electric equipment according to these results. By analyzing and confirming the delay and throughput constraints, the method and system allocate the resource blocks of the smart grid rationally and maximize throughput while guaranteeing the delay requirements, thereby optimizing smart grid resource management.

Owner:CHINA ELECTRIC POWER RES INST +1

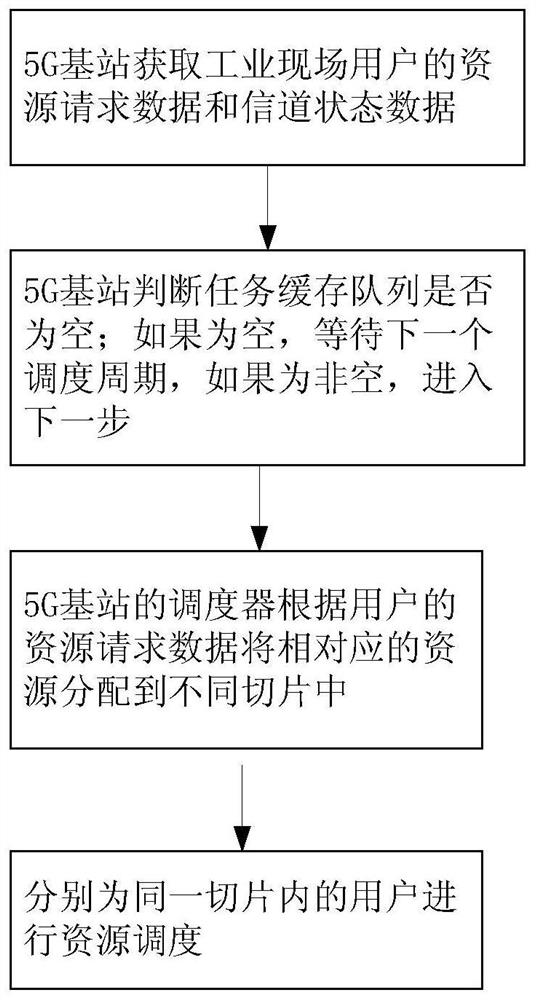

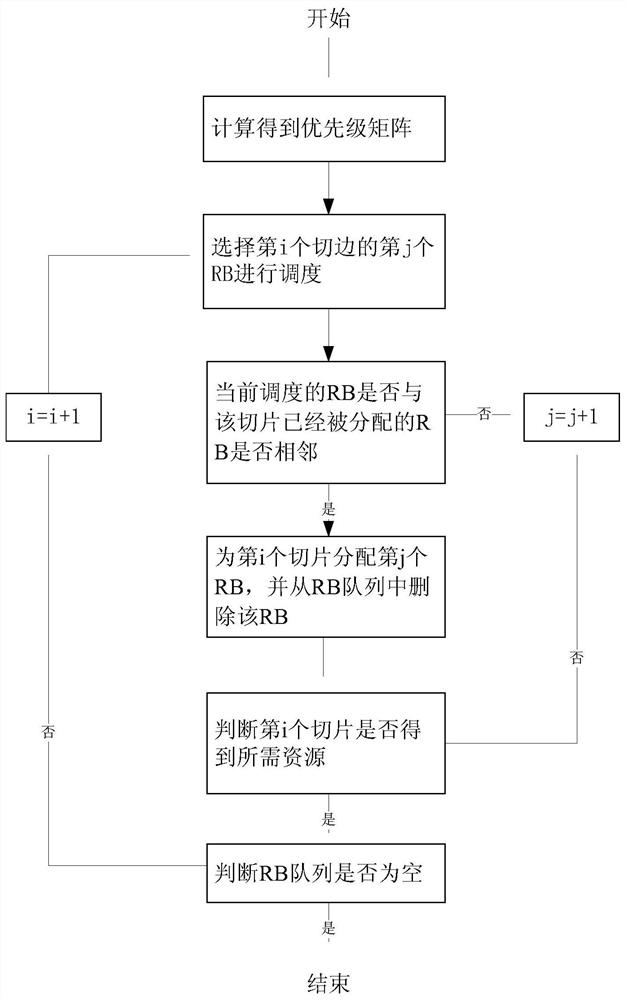

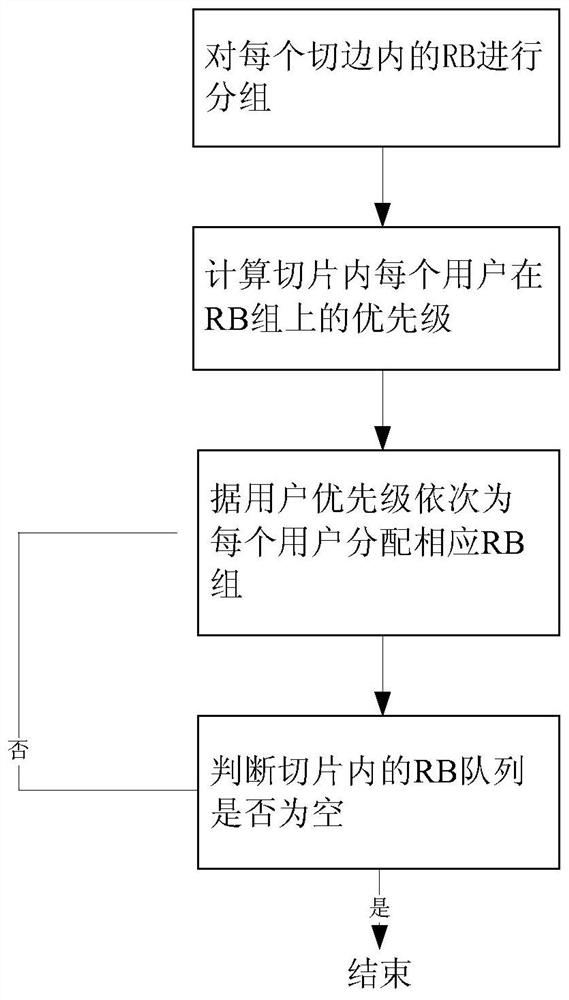

A multi-priority scheduling method for industrial site data based on 5G slicing

Active | CN111278052B | Guaranteed isolation effect | Improve throughput | Network traffic/resource management | Quality of service | Flexible scheduling

The invention discloses a multi-priority scheduling method for industrial field data based on 5G slices, including: S1: the 5G base station obtains resource request data and channel state data of industrial field users; S2: in the t-th scheduling period, the scheduler of the 5G base station determines whether the task cache queue is empty; if it is empty, it waits for the next scheduling period, and if not, it proceeds to S3; S3: the scheduler of the 5G base station allocates the corresponding resources to different slices according to the users' resource request data; S4: resources are scheduled for users within the same slice. The invention completes resource allocation for the three major 5G slices, ensures flexible scheduling and isolation of resources between slices, improves system throughput, and improves the fairness of scheduling across services with different quality-of-service requirements.

Owner:CHONGQING UNIV
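
Steps S2 to S4 of the method above can be sketched roughly as follows. This is an illustration under stated assumptions, not the patented scheduler: the slice names (URLLC, eMBB, mMTC) are taken as the usual three 5G service types, the strict priority order between slices and the first-come-first-served rule inside a slice are invented for the example, and `schedule_period` is a hypothetical name.

```python
def schedule_period(queue, total_rbs, priority=("URLLC", "eMBB", "mMTC")):
    """One scheduling period over (user, slice, requested_rbs) tuples.

    Returns {user: granted_rbs}; user names are assumed to be unique.
    """
    # S2: an empty task queue means we simply wait for the next period.
    if not queue:
        return {}

    # S3: sum the requests per slice, then give each slice a budget in
    # (assumed) priority order until the resource blocks run out.
    demand = {name: 0 for name in priority}
    for _user, slice_name, rbs in queue:
        demand[slice_name] += rbs
    budget = {}
    remaining = total_rbs
    for name in priority:
        budget[name] = min(demand[name], remaining)
        remaining -= budget[name]

    # S4: inside a slice, serve users in arrival order within its budget.
    grants = {}
    for user, slice_name, rbs in queue:
        granted = min(rbs, budget[slice_name])
        budget[slice_name] -= granted
        grants[user] = granted
    return grants


requests = [("a", "eMBB", 6), ("b", "URLLC", 3), ("c", "mMTC", 4)]
grants = schedule_period(requests, total_rbs=10)
```

Because each slice draws only from its own budget, a burst of requests in one slice cannot consume resource blocks already assigned to another, which is one simple way to read the isolation property the abstract mentions.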

Industrial device, method, and non-transitory computer readable medium

The invention provides an industrial device, a method, and a non-transitory computer readable medium. The industrial device supports device-level data modeling that pre-models data stored in the device with known relationships, correlations, key variable identifiers, and other such metadata to assist higher-level analytic systems to more quickly and accurately converge to actionable insights relative to a defined business or analytic objective. Data at the device level can be modeled according to modeling templates stored on the device that define relationships between items of device data for respective analytic goals (e.g., improvement of product quality, maximizing product throughput, optimizing energy consumption, etc.). This device-level modeling data can be exposed to higher level systems for creation of analytic models that can be used to analyze data from the industrial device relative to desired business objectives.

Owner:ROCKWELL AUTOMATION TECH

Smart factory-oriented random access resource optimization method and device

Active | CN113490184A | Data security | Easy access | Neural architectures | Machine learning | Quality of service | Smart factory

The invention discloses a smart factory-oriented random access resource optimization method and device. The method comprises the following steps: dividing the services into access priorities according to their delay sensitivity; training a local model at each local end with a reinforcement learning algorithm, where the reinforcement learning objective is to maximize the number of successfully accessed users while guaranteeing the quality-of-service requirements of the various services; aggregating the local model parameters of all local ends into a global model in the cloud with a federated learning algorithm, thereby establishing a shared machine learning model; and using the optimized shared model to allocate access resources, so that system throughput is maximized and the overall production efficiency of the factory is improved while the quality-of-service requirements of the various services are met. The invention can optimize resource utilization and improve network performance while meeting the delay requirements of the various services in industrial production.

Owner:UNIV OF SCI & TECH BEIJING
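
The cloud-side aggregation step described above can be illustrated with a sample-weighted parameter average in the style of FedAvg. The abstract does not say which aggregation rule the patent uses, so FedAvg is an assumption here, and `federated_average` and its arguments are hypothetical names.

```python
def federated_average(local_params, sample_counts):
    """Weighted average of per-client parameter vectors (FedAvg-style).

    local_params: one list of floats per local end, all of equal length.
    sample_counts: number of training samples seen by each local end.
    """
    total = sum(sample_counts)
    dim = len(local_params[0])
    return [
        sum(params[i] * n for params, n in zip(local_params, sample_counts)) / total
        for i in range(dim)
    ]


# Two hypothetical local models; the second saw three times as much data.
global_params = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

Only parameters leave each local end in this scheme, not the raw access data, which fits the data security benefit listed for this patent.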

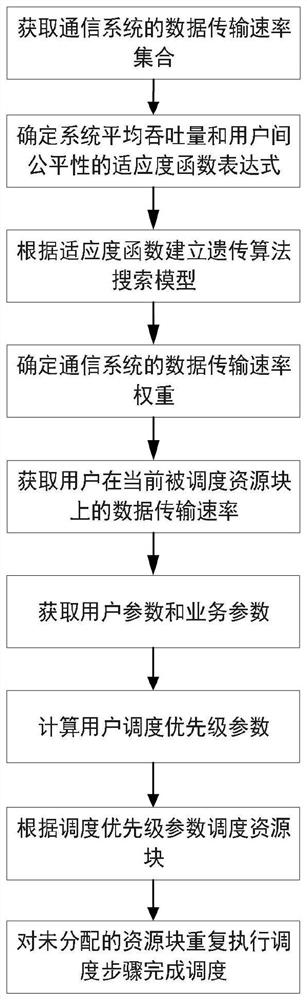

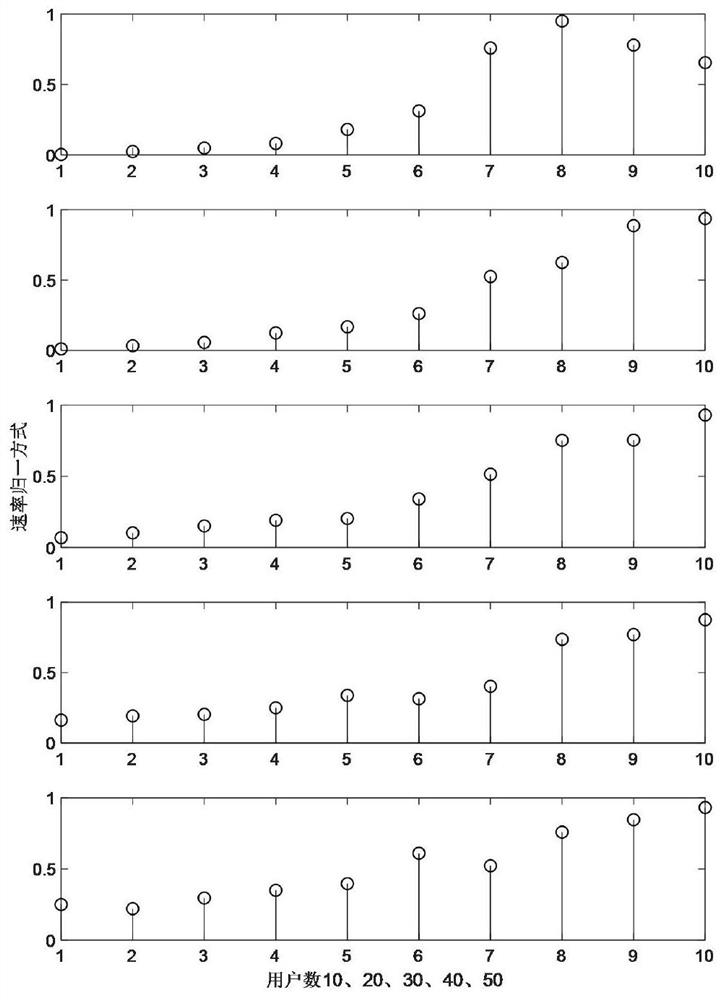

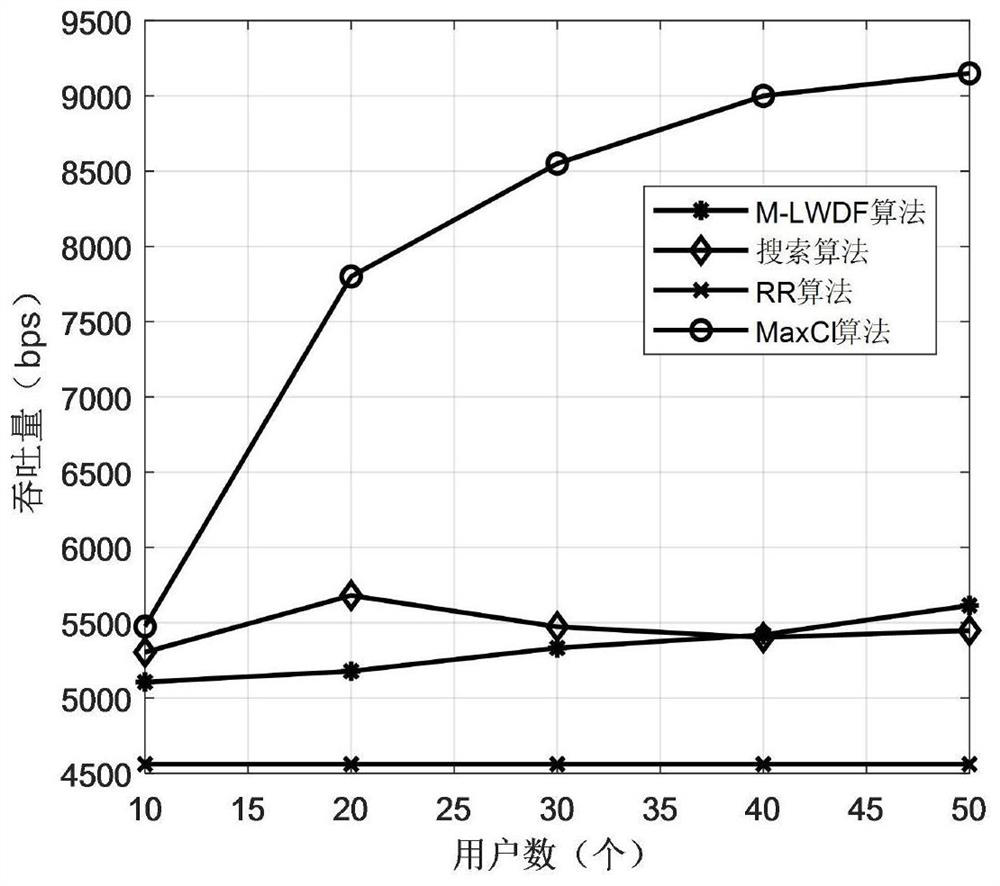

A Service Scheduling Method Based on Genetic Algorithm to Optimize Transmission Rate Weight

The invention discloses a service scheduling method that uses a genetic algorithm to optimize transmission rate weights. It mainly addresses two problems of existing methods: they struggle to weigh throughput and user fairness together, and they adapt poorly to different systems. The method includes: 1) obtaining the set of data transmission rates of the system; 2) determining a fitness function from the average system throughput and the fairness among users; 3) building a genetic algorithm search model from the fitness function; 4) using the search model to determine, by simulation, the optimal weight of each data transmission rate; 5) obtaining each user's data transmission rate, user parameters and service parameters; 6) calculating the scheduling priority of each user; 7) scheduling resource blocks according to the scheduling priority, and repeating the scheduling step for unallocated resource blocks until scheduling is complete. Because the genetic algorithm searches for the optimal weight of each data transmission rate and the fitness function can be changed flexibly, the service scheduling method adapts better to different systems.

Owner:XIDIAN UNIV
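
Steps 2) to 4) above can be sketched as a small genetic search. Everything specific below is an assumption made for illustration: the patent optimizes one weight per transmission rate, whereas this sketch searches a single scalar weight in [0, 1]; fairness is measured with Jain's index; and `simulate` is a hypothetical callback that returns the per-user rates and a peak rate for a given weight.

```python
import random


def jain_fairness(rates):
    """Jain's index: 1.0 for equal rates, 1/n when one user gets everything."""
    total = sum(rates)
    squares = sum(r * r for r in rates)
    return (total * total) / (len(rates) * squares) if squares else 0.0


def fitness(weight, simulate, alpha=0.5):
    """Blend normalized mean throughput with fairness (alpha is assumed)."""
    rates, peak = simulate(weight)
    mean_throughput = sum(rates) / len(rates) / peak
    return alpha * mean_throughput + (1 - alpha) * jain_fairness(rates)


def ga_search(simulate, pop_size=20, generations=30, seed=0):
    """Elitist GA over a scalar weight: blend crossover plus Gaussian mutation."""
    rng = random.Random(seed)
    pop = [rng.random() for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda w: fitness(w, simulate), reverse=True)
        elite = pop[: pop_size // 2]  # keep the better half unchanged
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            child = (a + b) / 2 + rng.gauss(0, 0.05)
            children.append(min(max(child, 0.0), 1.0))
        pop = elite + children
    return max(pop, key=lambda w: fitness(w, simulate))


def toy_simulate(weight):
    """Hypothetical two-user system: the weight shifts rate between users."""
    return [10 * weight + 1, 10 * (1 - weight) + 1], 11.0


best_weight = ga_search(toy_simulate)
```

In the toy system the total rate is constant, so the fitness is driven by fairness alone and the search should settle near a weight of 0.5. Swapping in a different `fitness` is all it takes to change the trade-off, which mirrors the flexibility the abstract claims.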

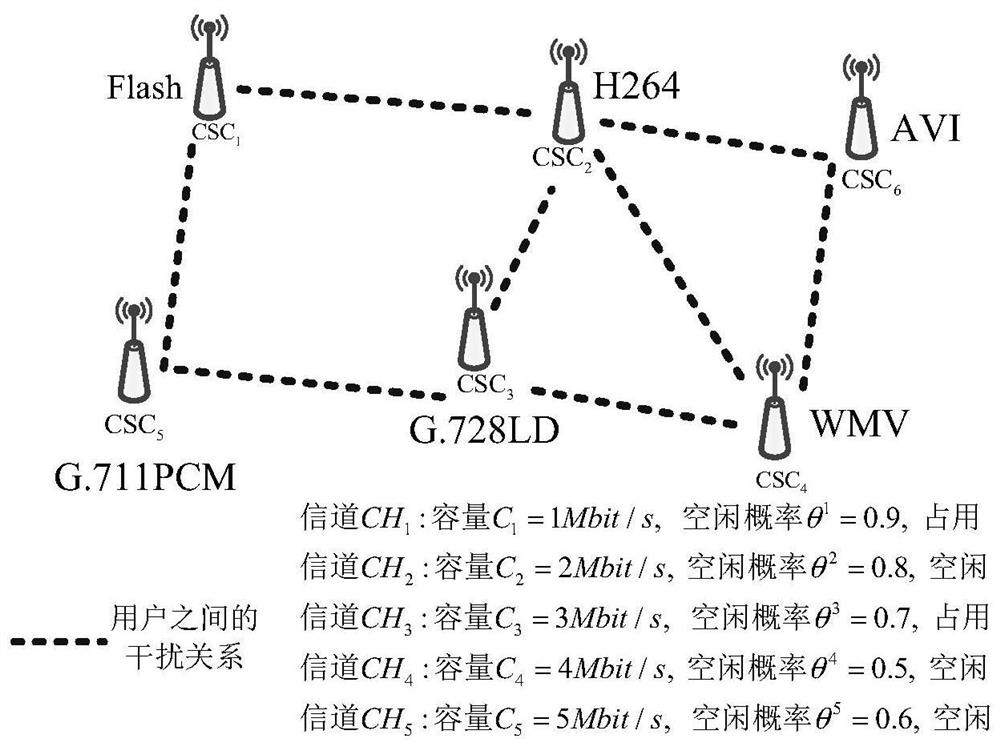

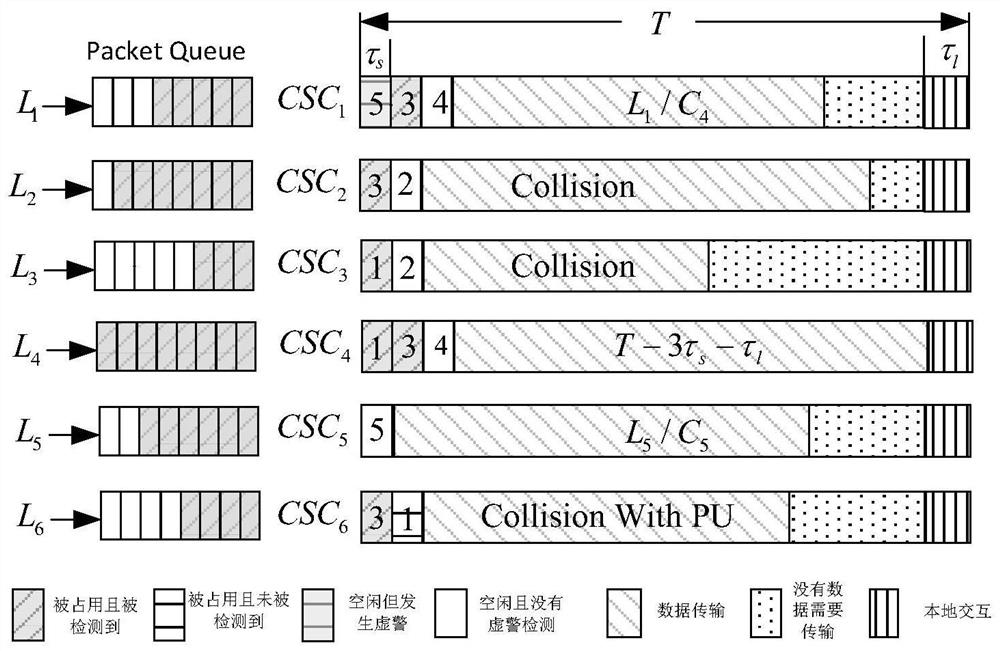

A Decision-Making Method for Distributed Service Matching Sequential Spectrum Access

Active | CN110856181B | Increase profit | Assess restriction | Network planning | Networked system | Distributed services

The invention discloses a decision-making method for distributed service matching sequential spectrum access, comprising the following steps: acquiring a target system and initializing and defining it; calculating the expected throughput of each user's sequential detection order; updating each user's sequential detection order based on that expected throughput; and, based on the updated decision, having each user sense channels in the selected sequential detection order, access an idle channel, and transmit data. Building on the heterogeneous characteristics of distributed users' service data, the invention adopts a distributed learning approach to system optimization, introduces a suitable local cooperation mechanism into the channel access method, and proposes a service-driven, locally cooperative distributed learning algorithm. At a small cost in local information exchange, the algorithm gradually converges to the globally optimal state, thereby optimizing the throughput of the network system.

Owner:YANGTZE NORMAL UNIVERSITY
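
The quantity at the center of the method above, the expected throughput of a sequential detection order, can be illustrated for a single user. The model below is an assumption, not the patent's: each channel is idle with a known probability, each sensing step costs a fixed fraction of the slot, and the user transmits on the first idle channel for the rest of the slot. The exhaustive search in `best_order` stands in for the patent's distributed learning update and only works for a handful of channels.

```python
from itertools import permutations


def expected_throughput(order, idle_prob, rate, sense_frac):
    """Expected throughput when channels are sensed in `order` until one is idle."""
    total = 0.0
    all_busy_so_far = 1.0  # probability that every earlier channel was busy
    for step, channel in enumerate(order, start=1):
        airtime = max(1.0 - step * sense_frac, 0.0)  # slot share left to transmit
        total += all_busy_so_far * idle_prob[channel] * rate[channel] * airtime
        all_busy_so_far *= 1.0 - idle_prob[channel]
    return total


def best_order(idle_prob, rate, sense_frac):
    """Brute-force the detection order with the highest expected throughput."""
    channels = range(len(idle_prob))
    return max(
        permutations(channels),
        key=lambda order: expected_throughput(order, idle_prob, rate, sense_frac),
    )


# Hypothetical channels: a fast but often busy one, and a slow always-idle one.
idle_prob = [0.5, 1.0]
rate = [10.0, 4.0]
order = best_order(idle_prob, rate, sense_frac=0.1)
```

Here trying the fast channel first pays off despite the extra sensing step, because the slow channel remains available as a fallback.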

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com