595 results about "Global model" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

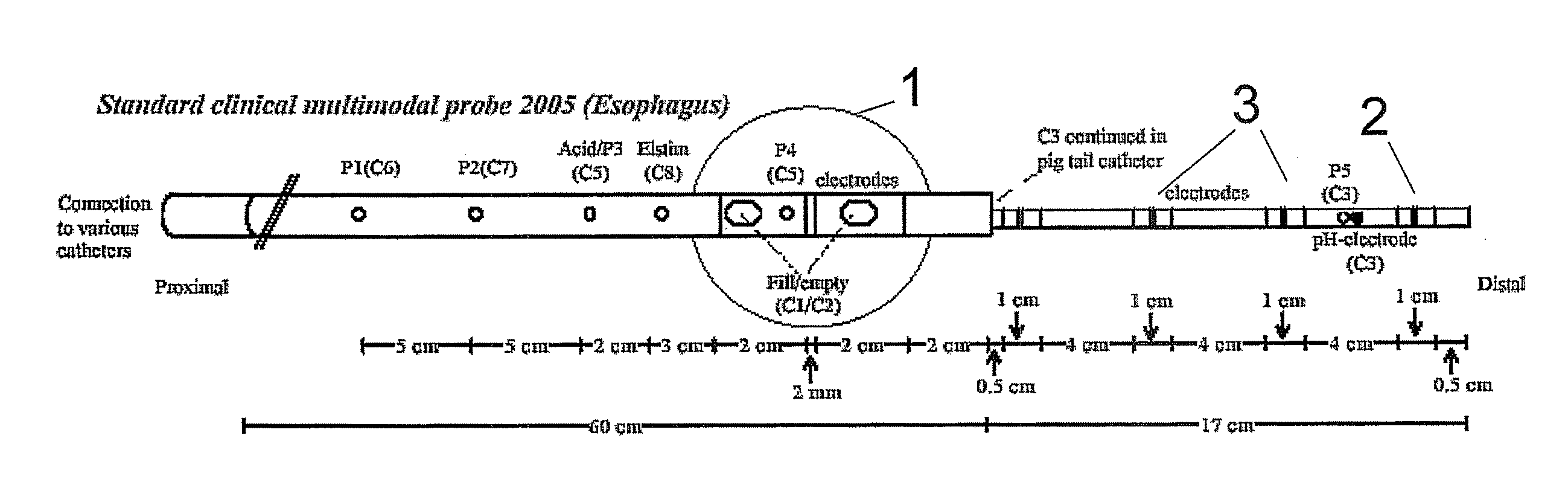

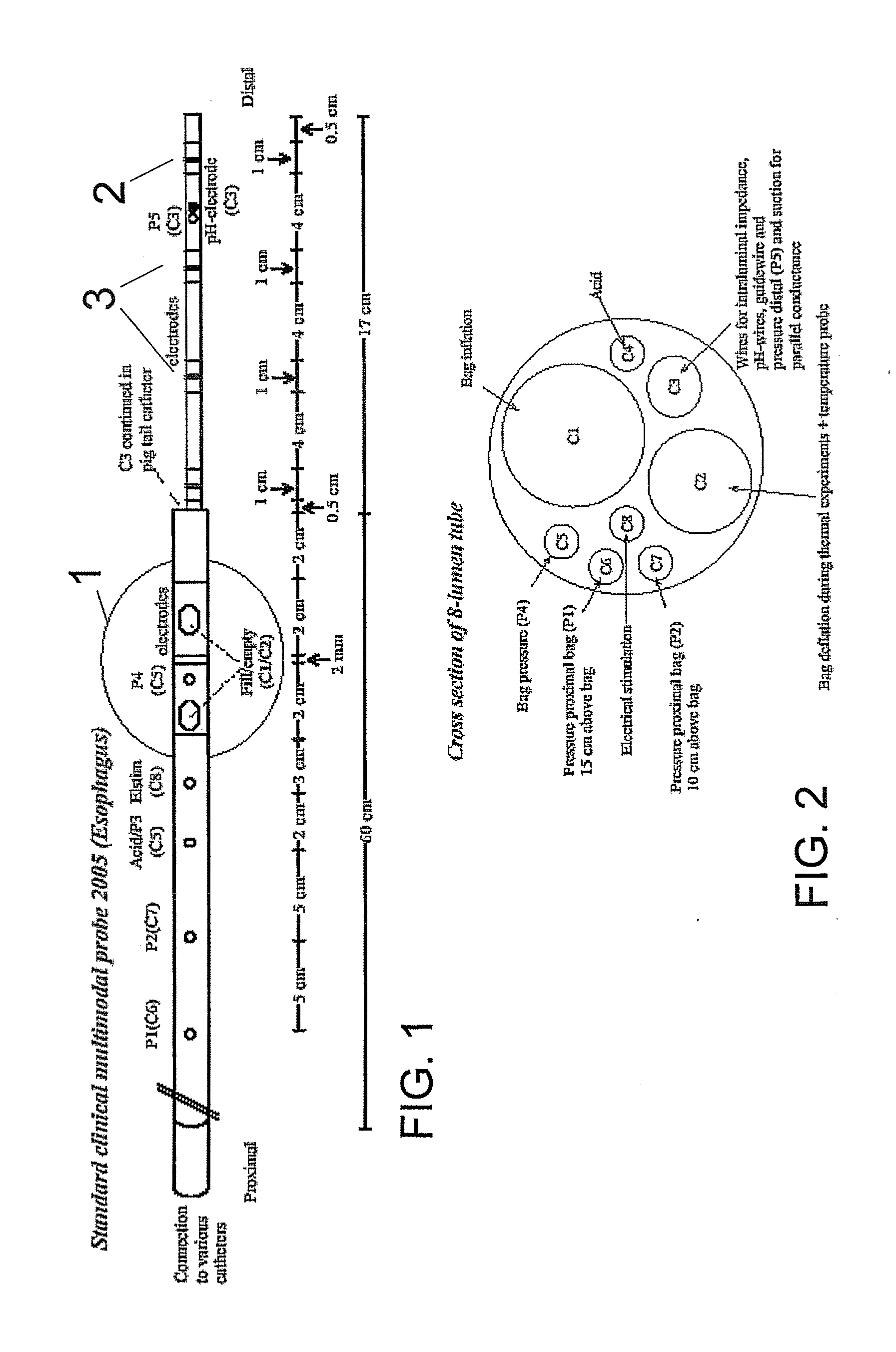

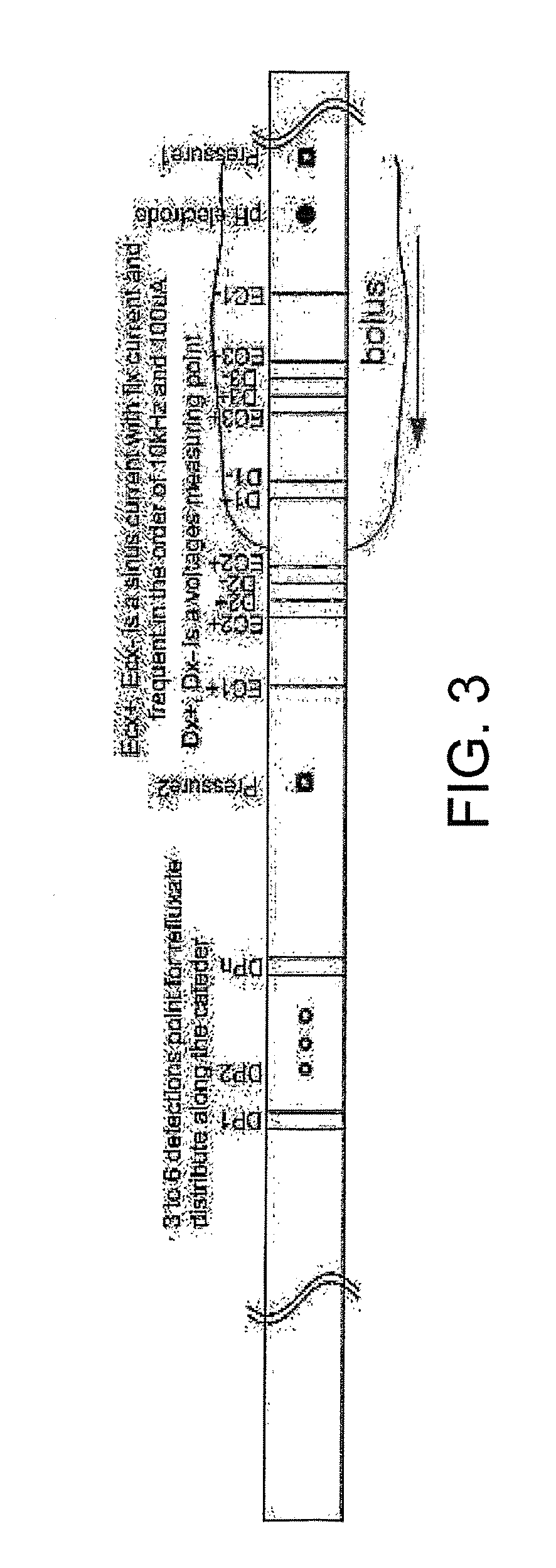

Apparatus and method for a global model of hollow internal organs including the determination of cross-sectional areas and volume in internal hollow organs and wall properties

Inactive | US20090062684A1 | Ease of evaluation, Correction error, Catheter, Diagnostic recording/measuring, Three vessels, Visceral organ

The present invention relates generally to medical measurement systems for evaluating organ function and understanding the mechanisms of symptoms and pain. The model takes into account a number of factors, such as the volume and properties of the fluid and of the surrounding tissue. Particular emphasis is placed on a multifunctional probe that can provide a number of measurements, including the volume of refluxate in the esophagus and the level to which it extends. The preferred embodiments of the invention relate to methods and apparatus for measuring the luminal cross-sectional areas of internal organs such as blood vessels, the gastrointestinal tract, the urogenital tract and other hollow visceral organs, and the volume of flow through the organ. The apparatus can also be used to determine the conductivity of the fluid in the lumen, and thereby the parallel conductance of the wall and the geometric and mechanical properties of the organ wall.

Owner:GREGERSEN ENTERPRISES 2005
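
The abstract does not give the measurement equations. Conductance-based sizing is often described with a parallel-conductance model. In that model, the conductance G measured between detection electrodes spaced L apart is the lumen term σA/L plus a wall (parallel) term G_p. The sketch below assumes that model. It also assumes that two fluids of known, different conductivity are measured at the same site, which gives two equations for the two unknowns A and G_p. The two-fluid approach and the function names are illustrative assumptions and are not taken from the patent.

```python
def lumen_area_and_parallel_conductance(g1, g2, sigma1, sigma2, spacing):
    """Solve G_i = sigma_i * A / L + G_p for lumen area A and wall conductance G_p.

    g1, g2: conductances (S) measured with fluids of conductivity sigma1, sigma2 (S/m)
    spacing: distance L (m) between the detection electrodes
    """
    if sigma1 == sigma2:
        raise ValueError("the two fluid conductivities must differ")
    area = spacing * (g1 - g2) / (sigma1 - sigma2)   # m^2
    g_parallel = g1 - sigma1 * area / spacing         # S
    return area, g_parallel


def segment_volume(areas, step):
    """Approximate volume (m^3) from cross-sectional areas sampled every `step` metres."""
    return sum(areas) * step


area, g_p = lumen_area_and_parallel_conductance(0.003, 0.007, 0.5, 1.5, 0.005)
print(area, g_p)  # approximately 2e-05 m^2 and 0.001 S
```

Once G_p is known, later single-fluid measurements at the same site can be corrected by subtracting it before dividing by σ/L.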

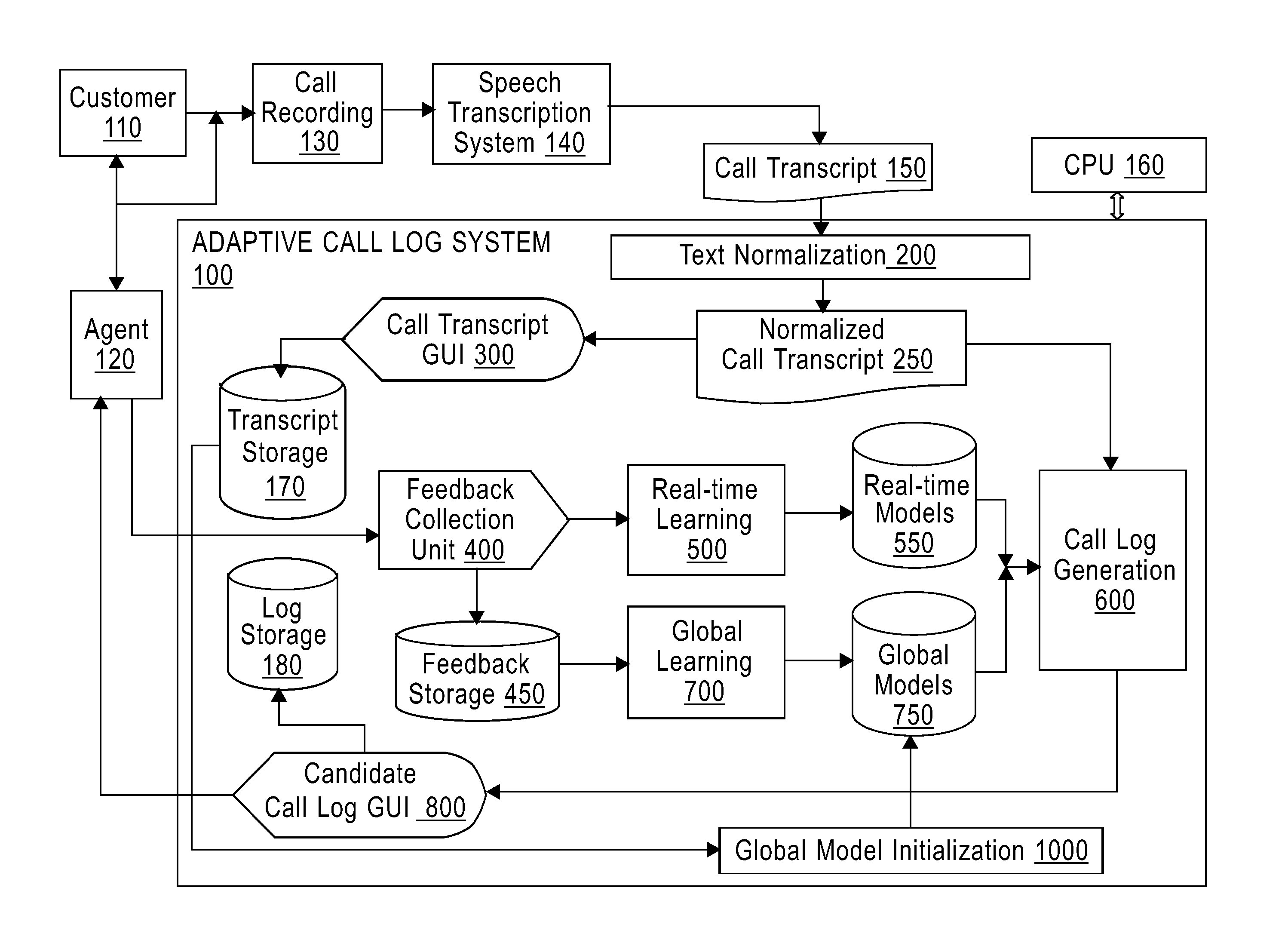

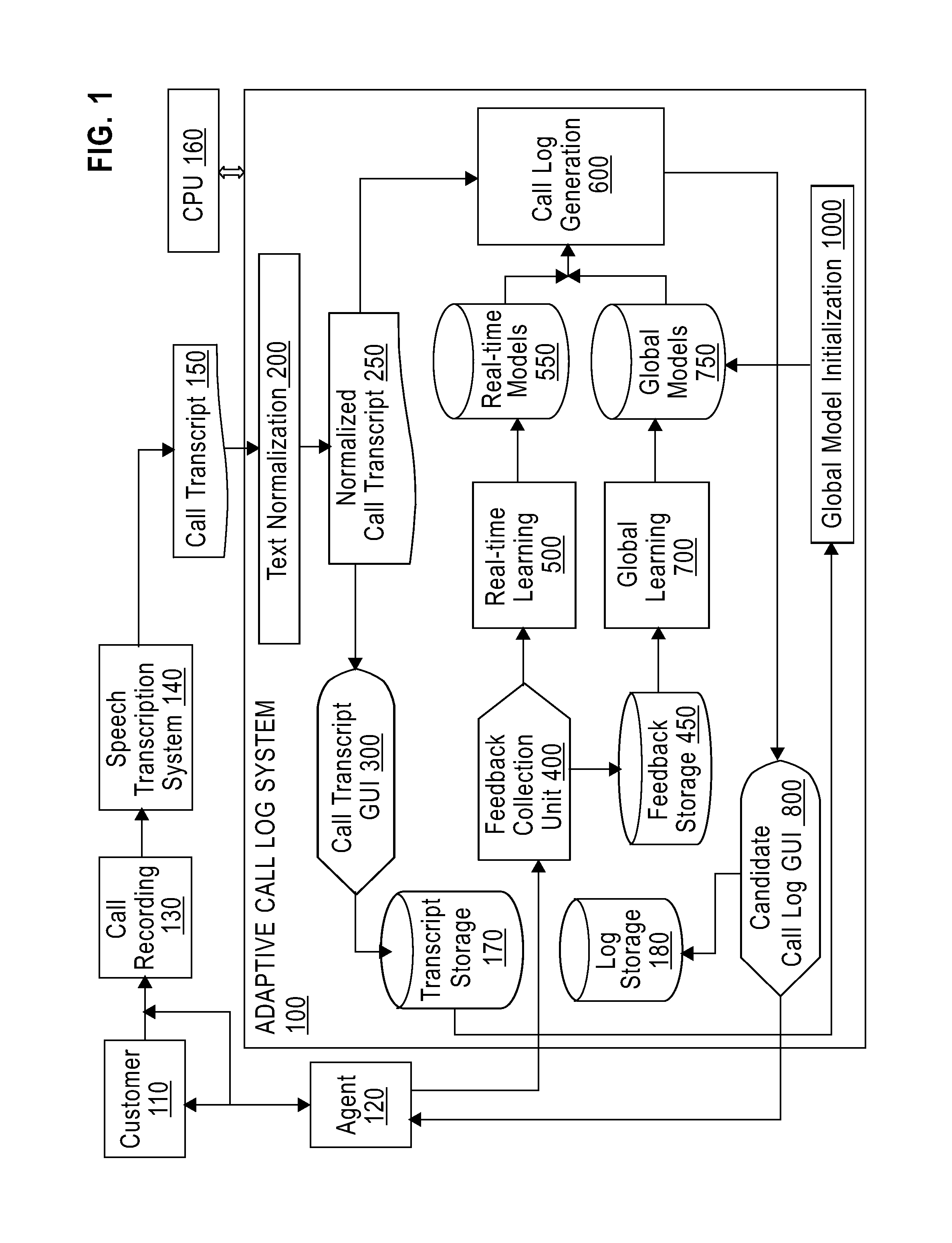

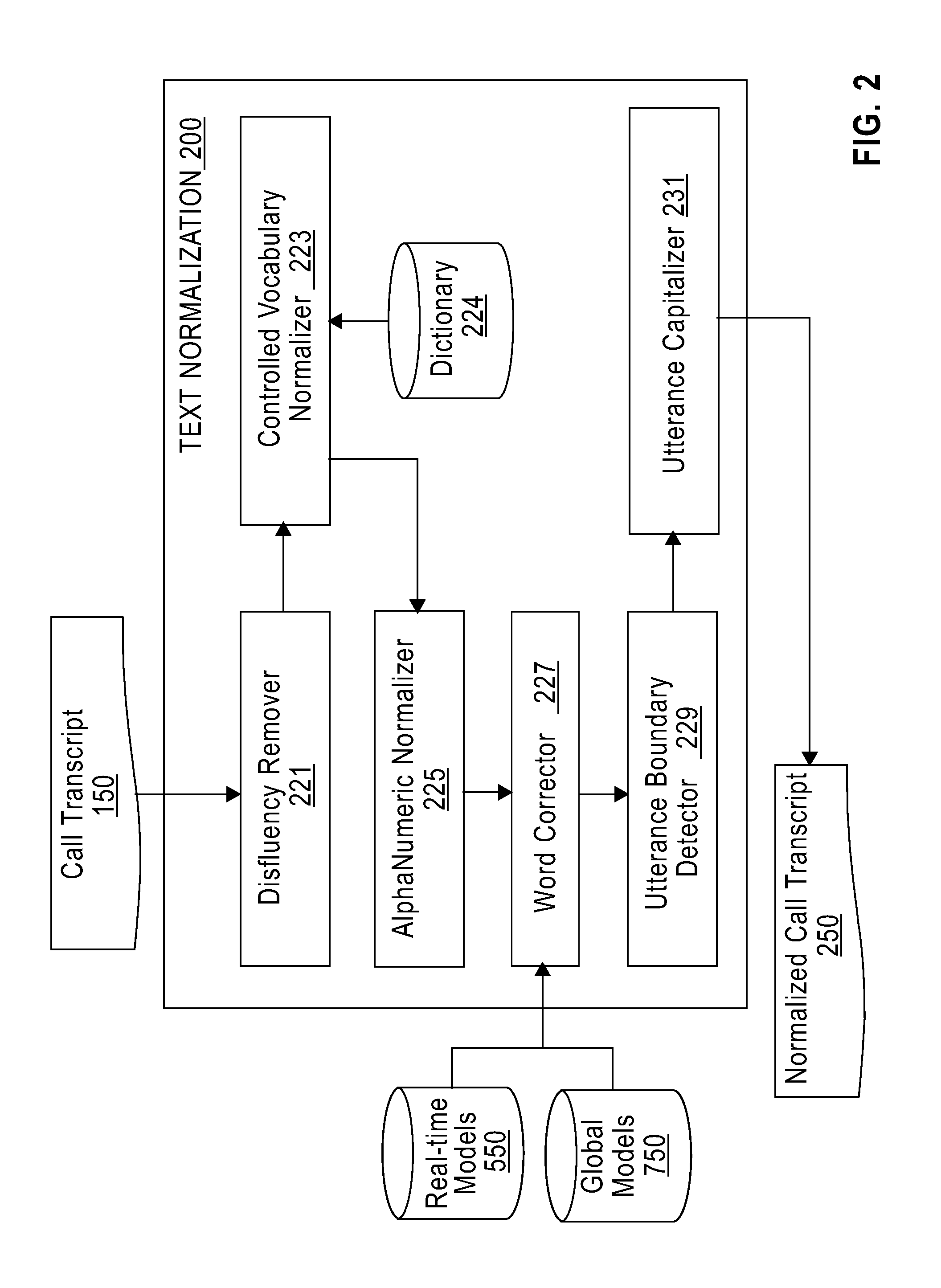

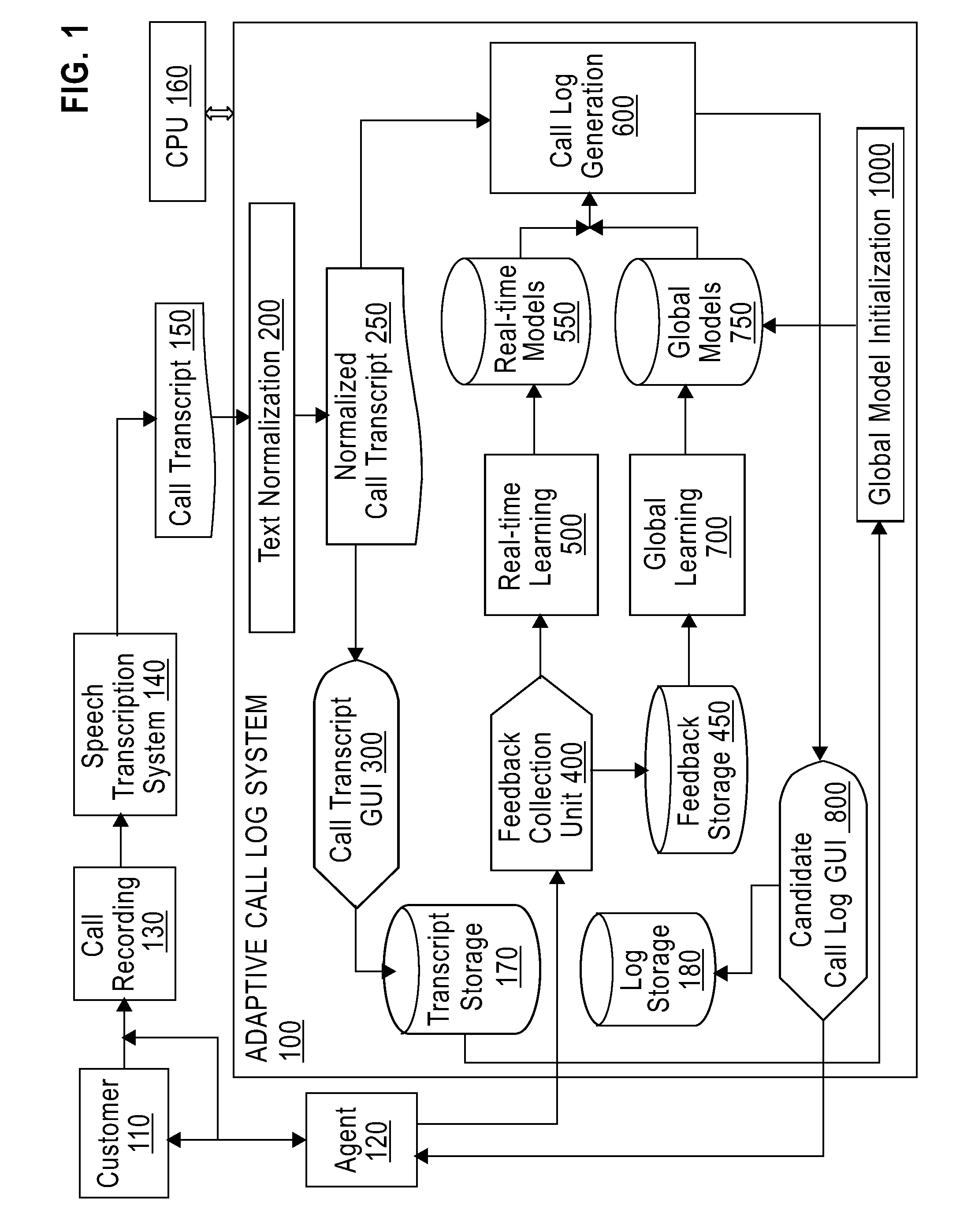

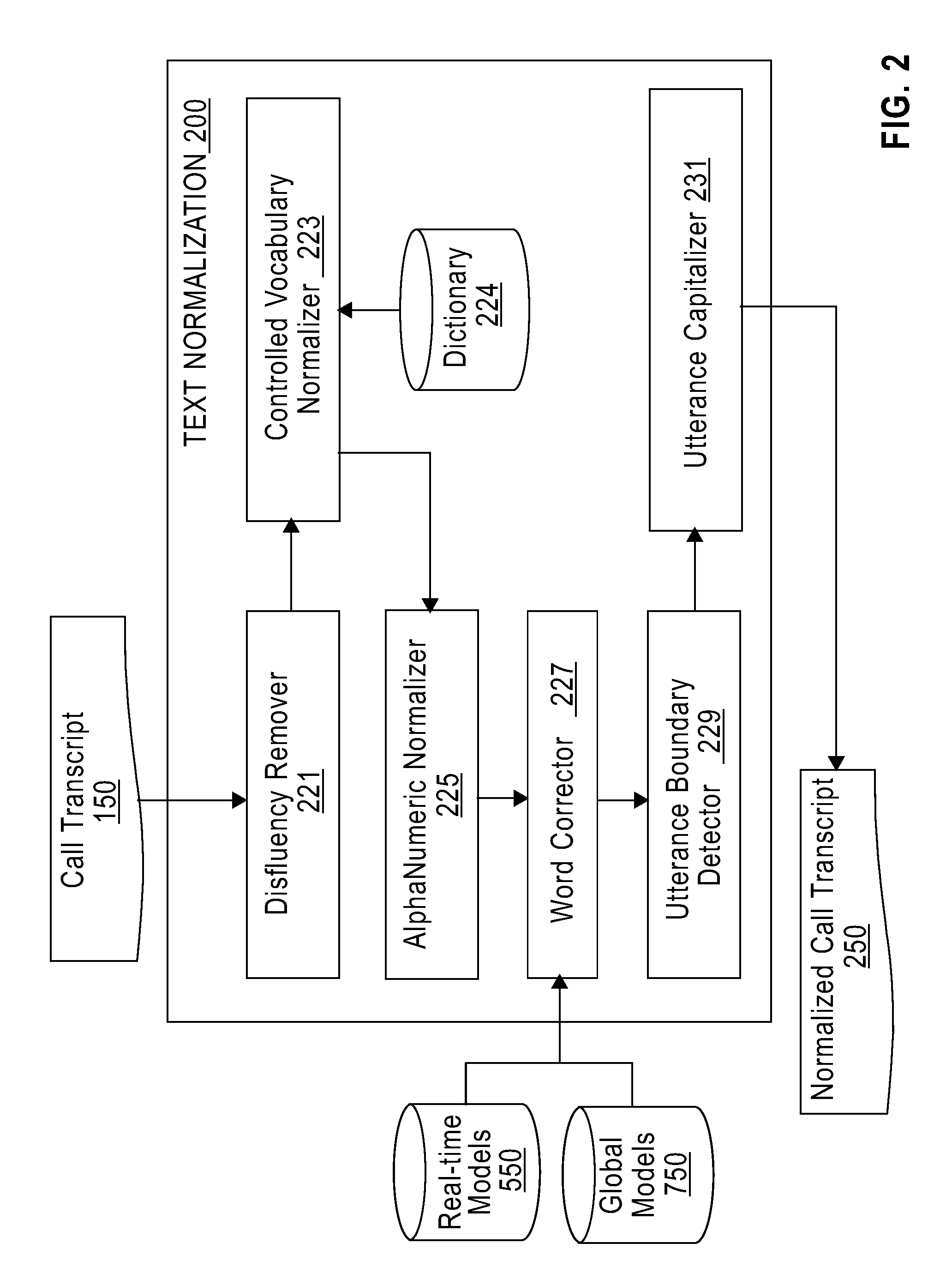

System And Method For Automatically Generating Adaptive Interaction Logs From Customer Interaction Text

Active | US20140153709A1 | Shorten the time, Facilitates correct generation, Special service for subscribers, Manual exchanges, Adaptive interaction, Contact center

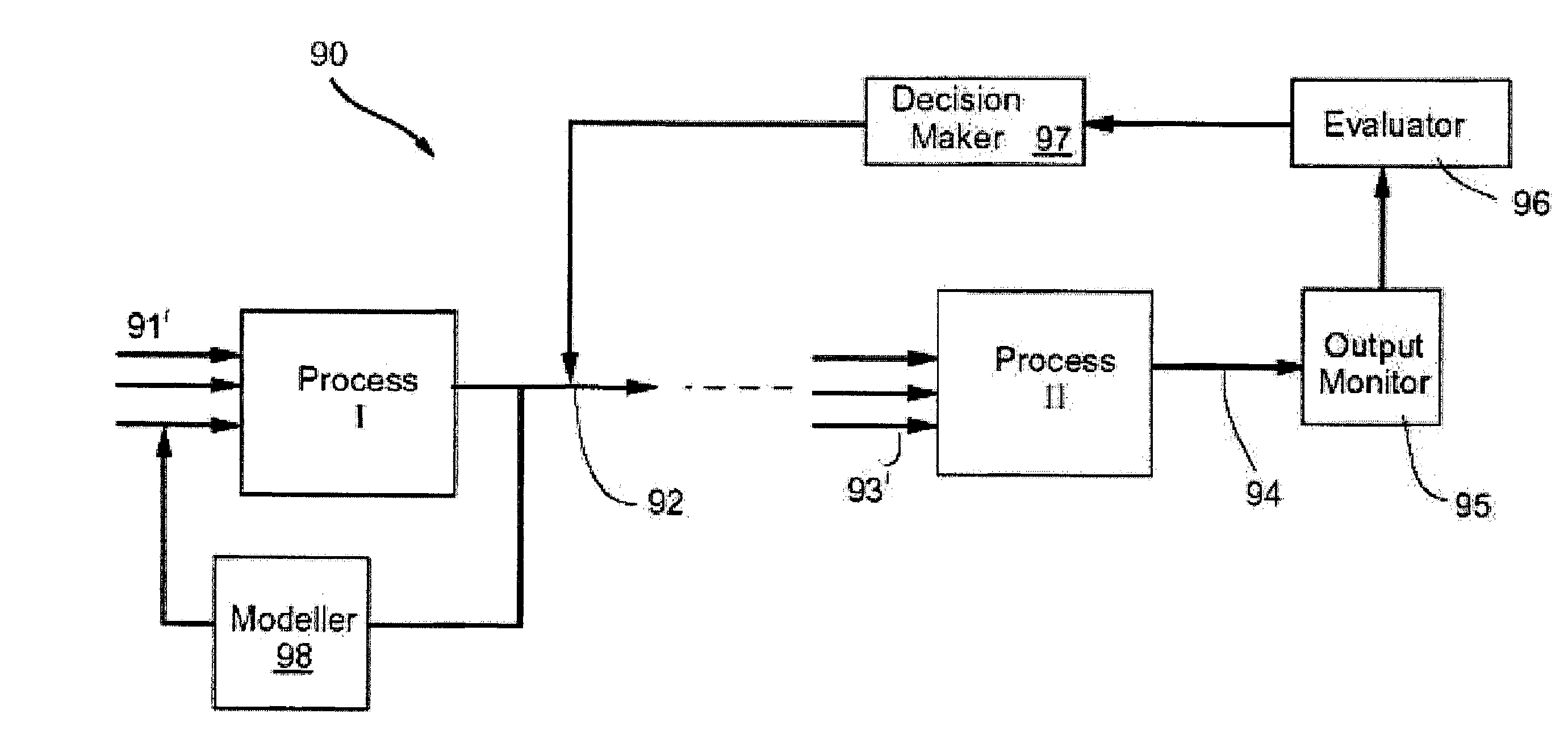

A system and method for providing an adaptive Interaction Logging functionality to help agents reduce the time spent documenting contact center interactions. In a preferred embodiment the system uses a pipeline comprising audio capture of a telephone conversation, automatic speech transcription, text normalization, transcript generation and candidate call log generation based on Real-time and Global Models. The contact center agent edits the candidate call log to create the final call log. The models are updated based on analysis of user feedback in the form of the editing of the candidate call log done by the contact center agents or supervisors. The pipeline yields a candidate call log which the agents can edit in less time than it would take them to generate a call log manually.

Owner:MICROSOFT TECH LICENSING LLC

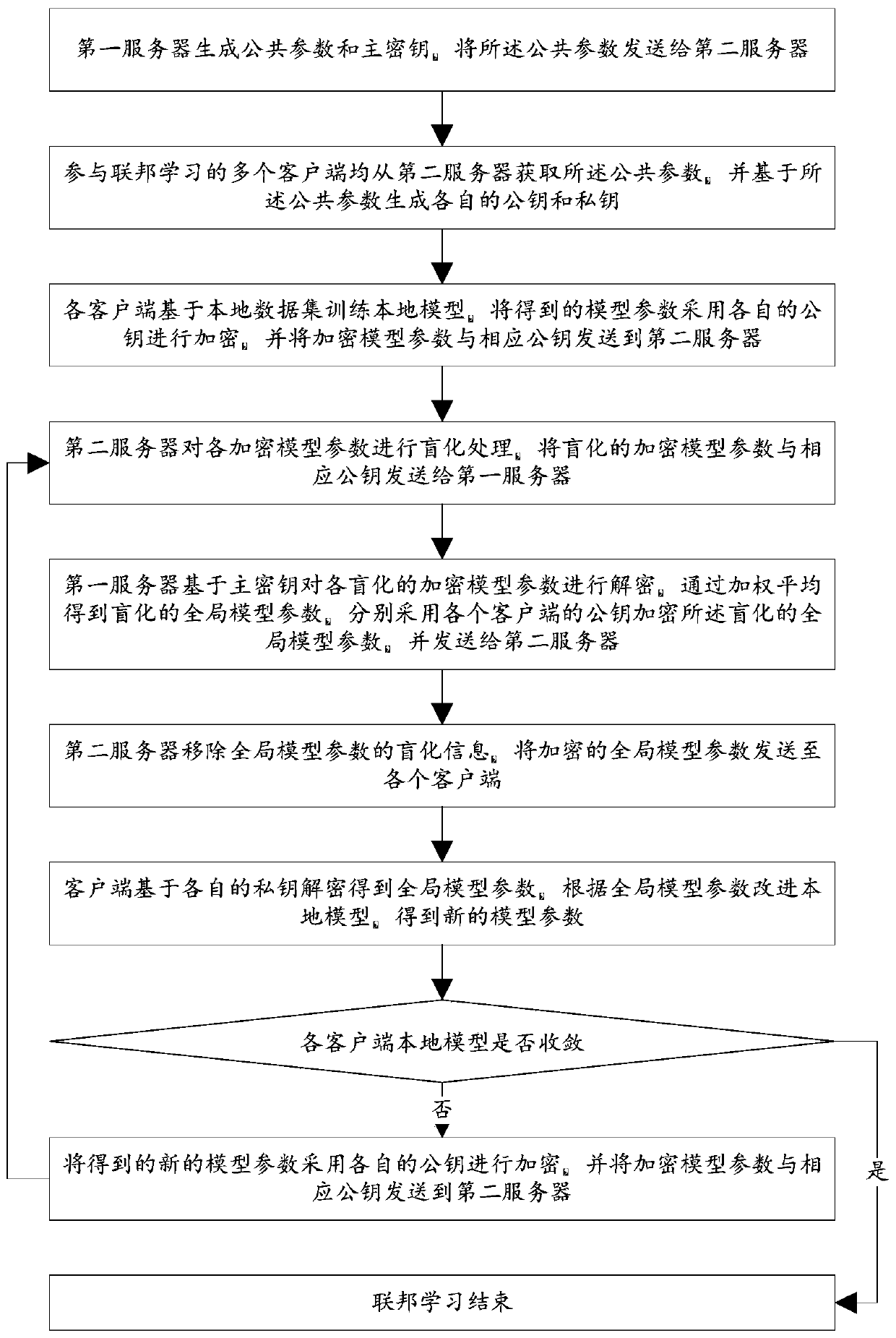

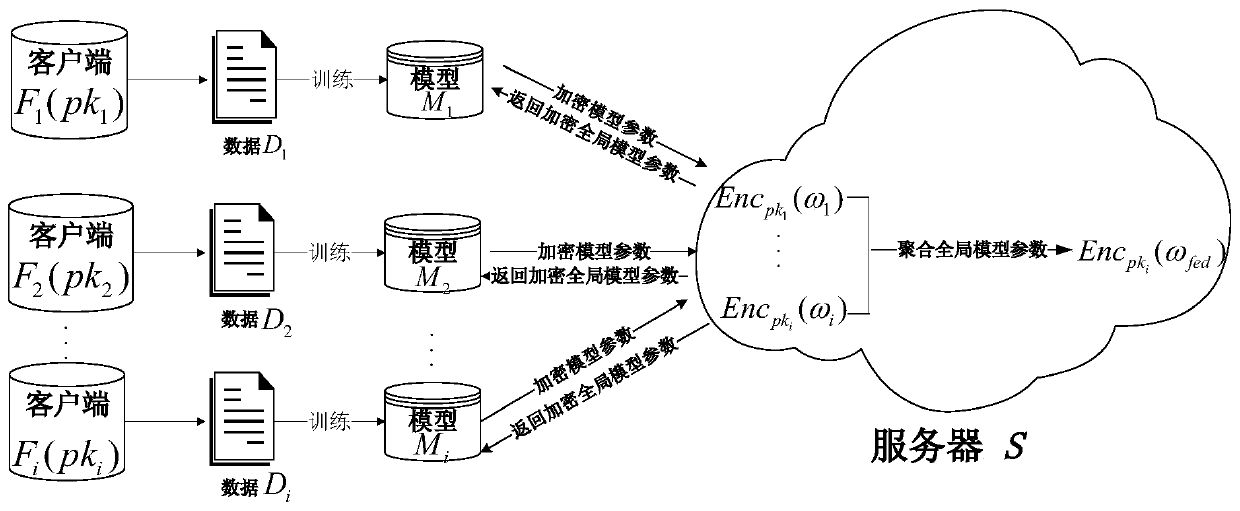

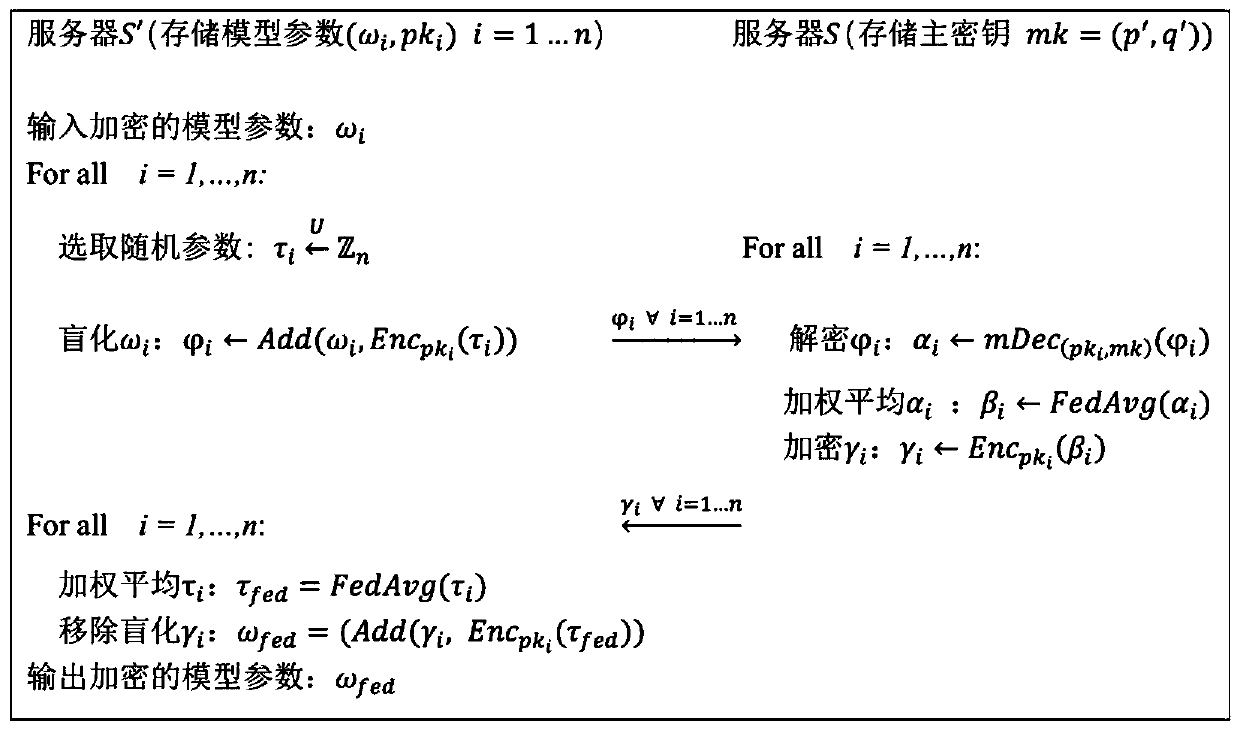

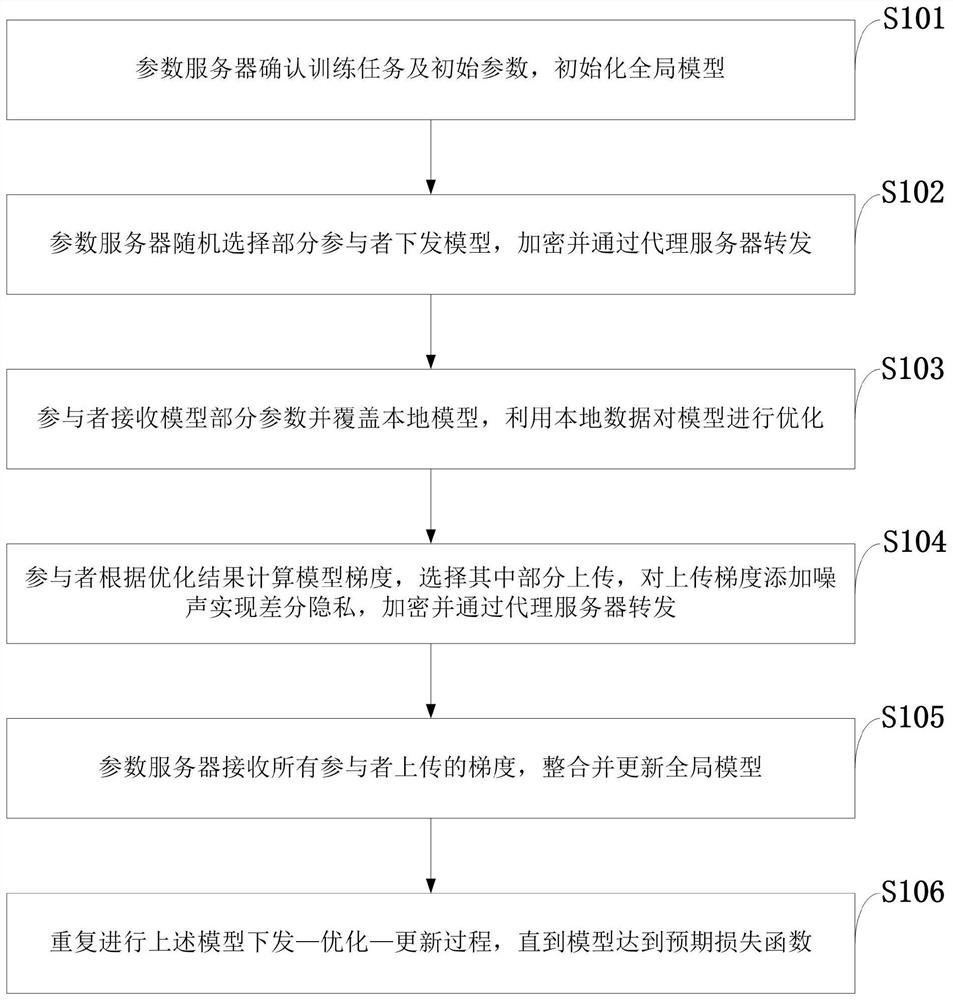

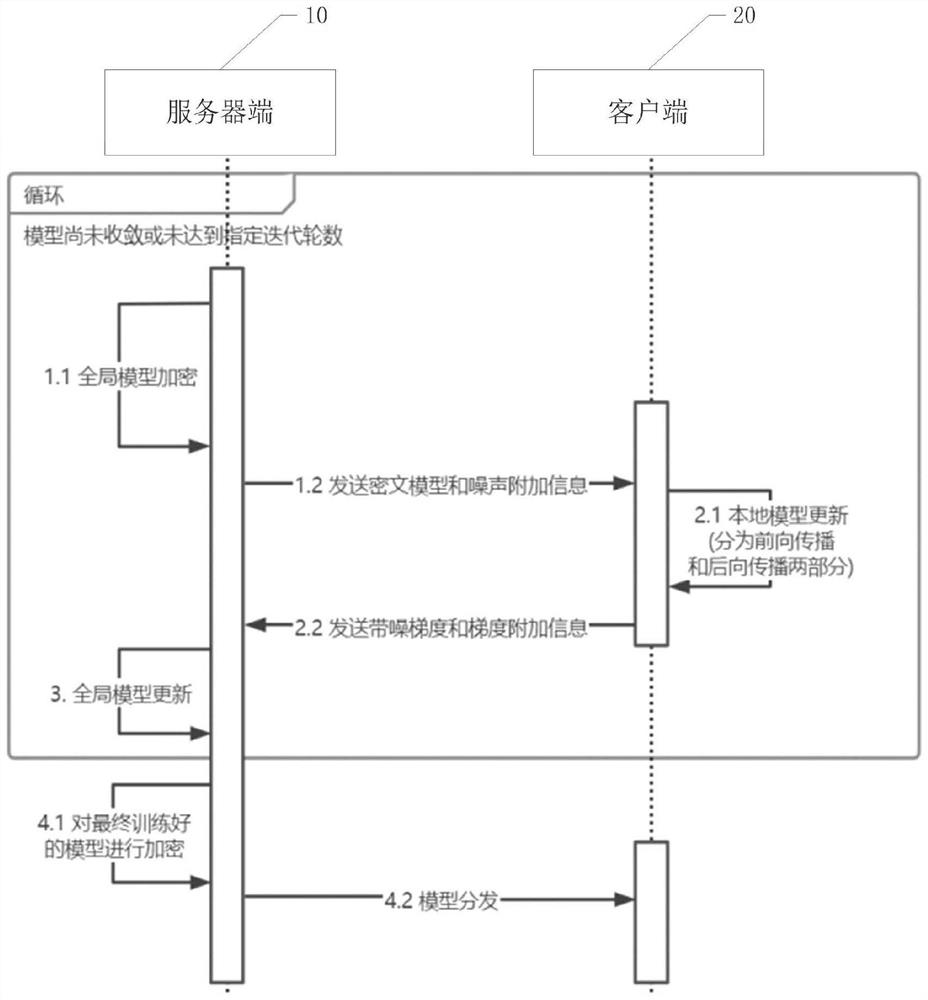

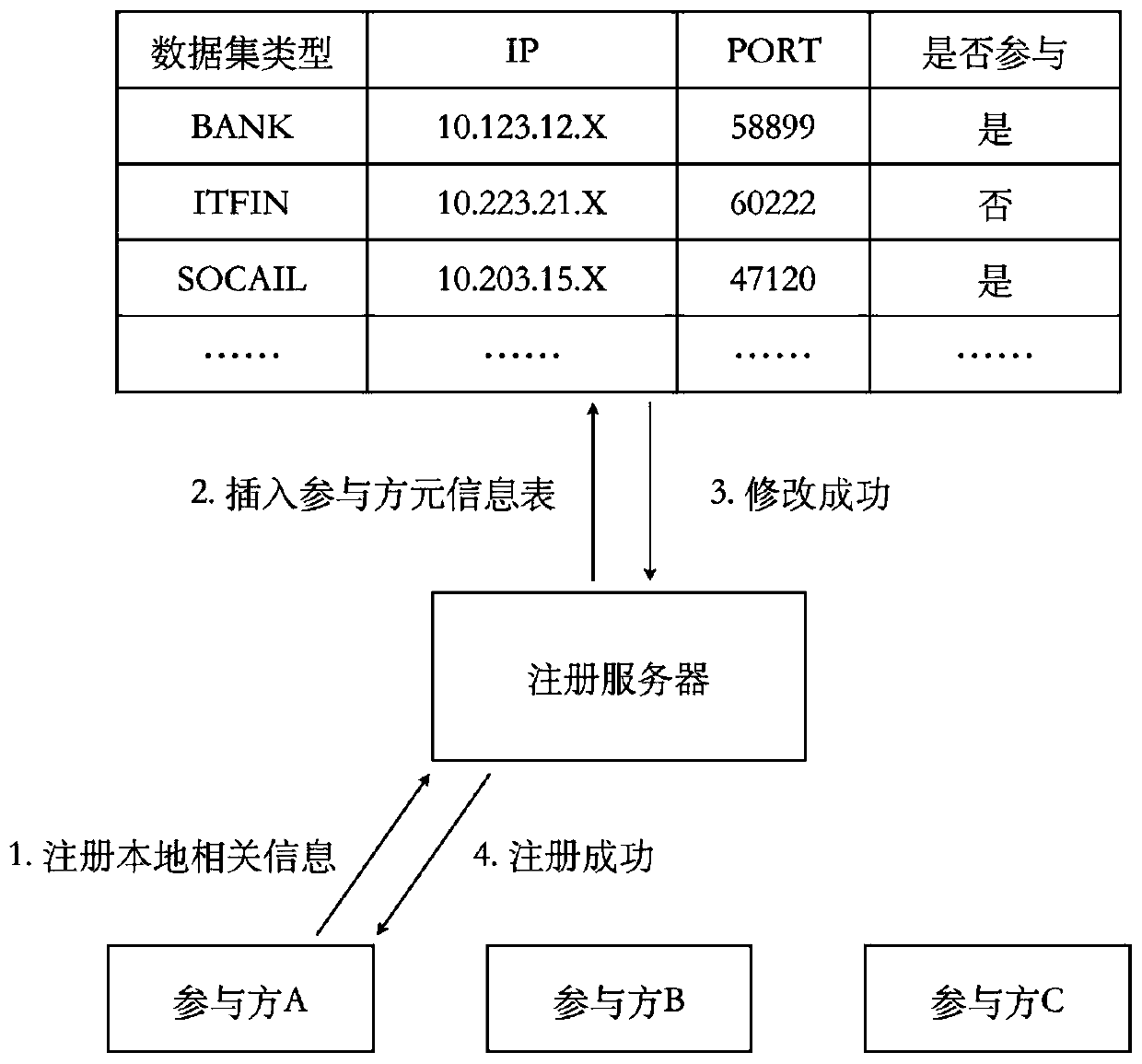

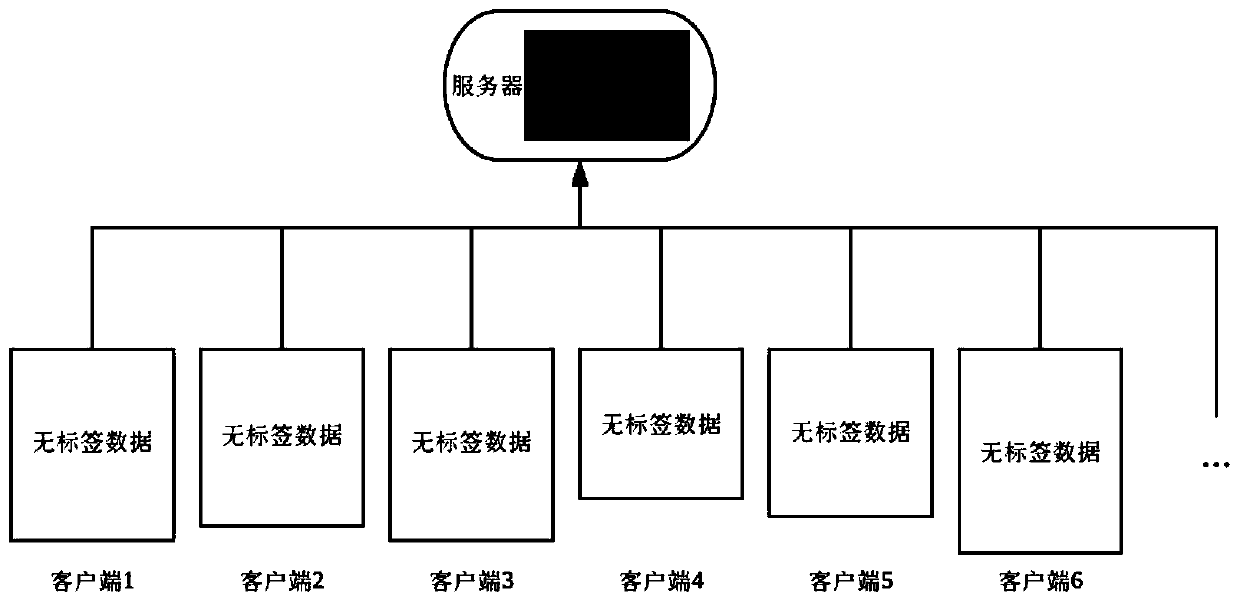

Federated learning training data privacy enhancement method and system

Active | CN110572253A | Ensure safety, Easy to join, Communication with homomorphic encryption, Model parameters, Master key

The invention discloses a federated learning training data privacy enhancement method and system. A first server generates a public parameter and a master secret key, and transmits the public parameter to a second server. Each of the clients participating in federated learning generates its own public/private key pair based on the public parameter. In each round, every client encrypts the model parameters obtained by local training with its public key and sends the encrypted parameters, together with its public key, to the first server via the second server. The first server decrypts them with the master key, obtains the global model parameters by weighted averaging, encrypts the result with each client's public key, and sends it back to each client via the second server. Each client decrypts the global model parameters with its private key and updates its local model. The process repeats until the clients' local models converge. By combining a dual-server architecture with multi-key homomorphic encryption, the method ensures the security of both the data and the model parameters.

Owner:UNIV OF JINAN
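
The patent pairs a dual-server setup with multi-key homomorphic encryption, and its first server decrypts before averaging. The sketch below swaps in something simpler: single-key Paillier encryption, an additively homomorphic scheme. With it, a server can compute a sample-weighted sum of client parameters without seeing any individual value, and only the key holder decrypts the aggregate. This is a toy that illustrates the aggregation arithmetic. It is not secure (the primes are tiny) and it is not the patented protocol. The fixed-point scale of 10^6 and all function names are assumptions.

```python
import math
import random

SCALE = 10**6  # assumed fixed-point scale for float parameters


def keygen(p=2**31 - 1, q=2**61 - 1):
    """Toy Paillier keys from two Mersenne primes; far too small to be secure."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because the generator is fixed to g = n + 1
    return n, (lam, mu, n)


def encrypt(n, m, rng):
    n2 = n * n
    r = rng.randrange(1, n)  # must be coprime to n; fails only with negligible probability
    return pow(n + 1, m, n2) * pow(r, n, n2) % n2


def decrypt(priv, c):
    lam, mu, n = priv
    return (pow(c, lam, n * n) - 1) // n * mu % n


def encode(x, n):
    return round(x * SCALE) % n  # negative values wrap around modulo n


def decode(v, n):
    return (v - n if v > n // 2 else v) / SCALE


def encrypted_weighted_sum(n, client_ciphertexts, weights):
    """Multiplying ciphertexts adds plaintexts; raising to w multiplies a plaintext by w."""
    n2 = n * n
    sums = []
    for j in range(len(client_ciphertexts[0])):
        acc = 1  # 1 is an encryption of 0 (with r = 1), a neutral starting value
        for vec, w in zip(client_ciphertexts, weights):
            acc = acc * pow(vec[j], w, n2) % n2
        sums.append(acc)
    return sums


rng = random.Random(0)  # use a cryptographically secure generator in practice
n, priv = keygen()
clients = [[0.5, -1.25], [1.0, 2.0], [-0.75, 0.0]]
weights = [10, 30, 60]  # e.g. local sample counts
cts = [[encrypt(n, encode(x, n), rng) for x in vec] for vec in clients]
global_params = [decode(decrypt(priv, c), n) / sum(weights)
                 for c in encrypted_weighted_sum(n, cts, weights)]
print(global_params)  # [-0.1, 0.475]
```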

System and Method for Automatically Generating Adaptive Interaction Logs from Customer Interaction Text

Active | US20100104087A1 | Reduce time they spend documenting, Facilitates correct generation, Special service for subscribers, Manual exchanges, Adaptive interaction, Contact center

A system and method for providing an adaptive Interaction Logging functionality to help agents reduce the time spent documenting contact center interactions. In a preferred embodiment the system uses a pipeline comprising audio capture of a telephone conversation, automatic speech transcription, text normalization, transcript generation and candidate call log generation based on Real-time and Global Models. The contact center agent edits the candidate call log to create the final call log. The models are updated based on analysis of user feedback in the form of the editing of the candidate call log done by the contact center agents or supervisors. The pipeline yields a candidate call log which the agents can edit in less time than it would take them to generate a call log manually.

Owner:NUANCE COMM INC

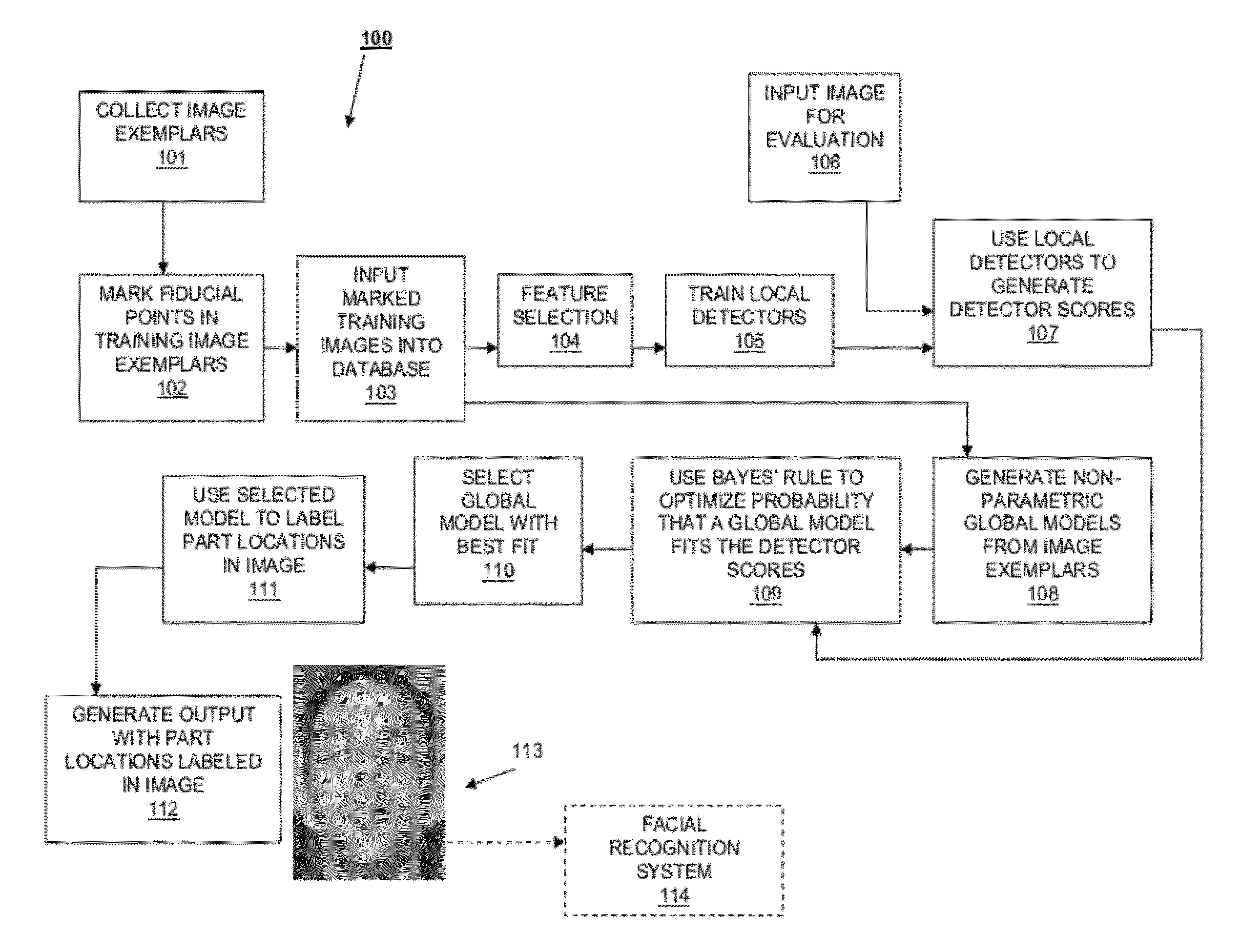

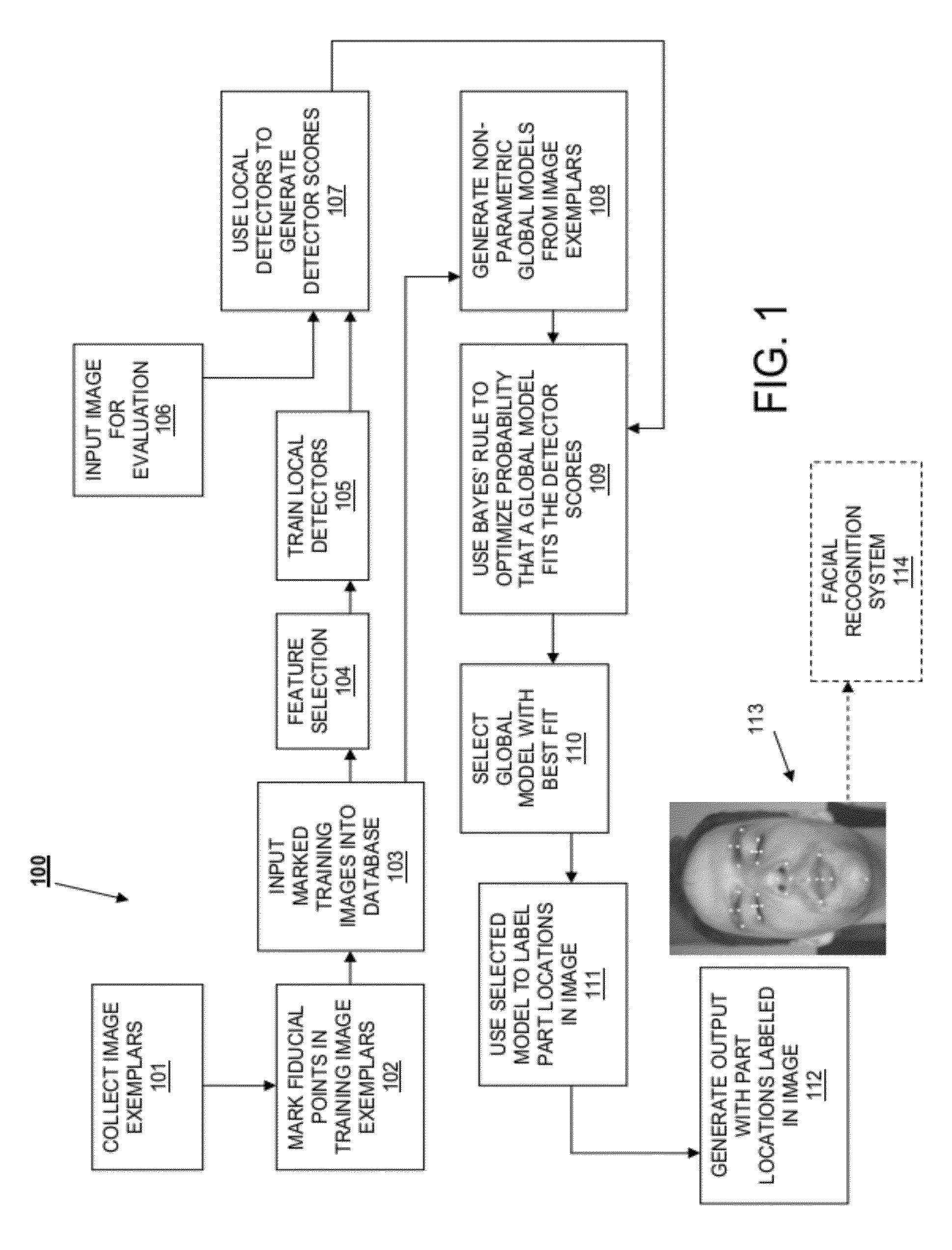

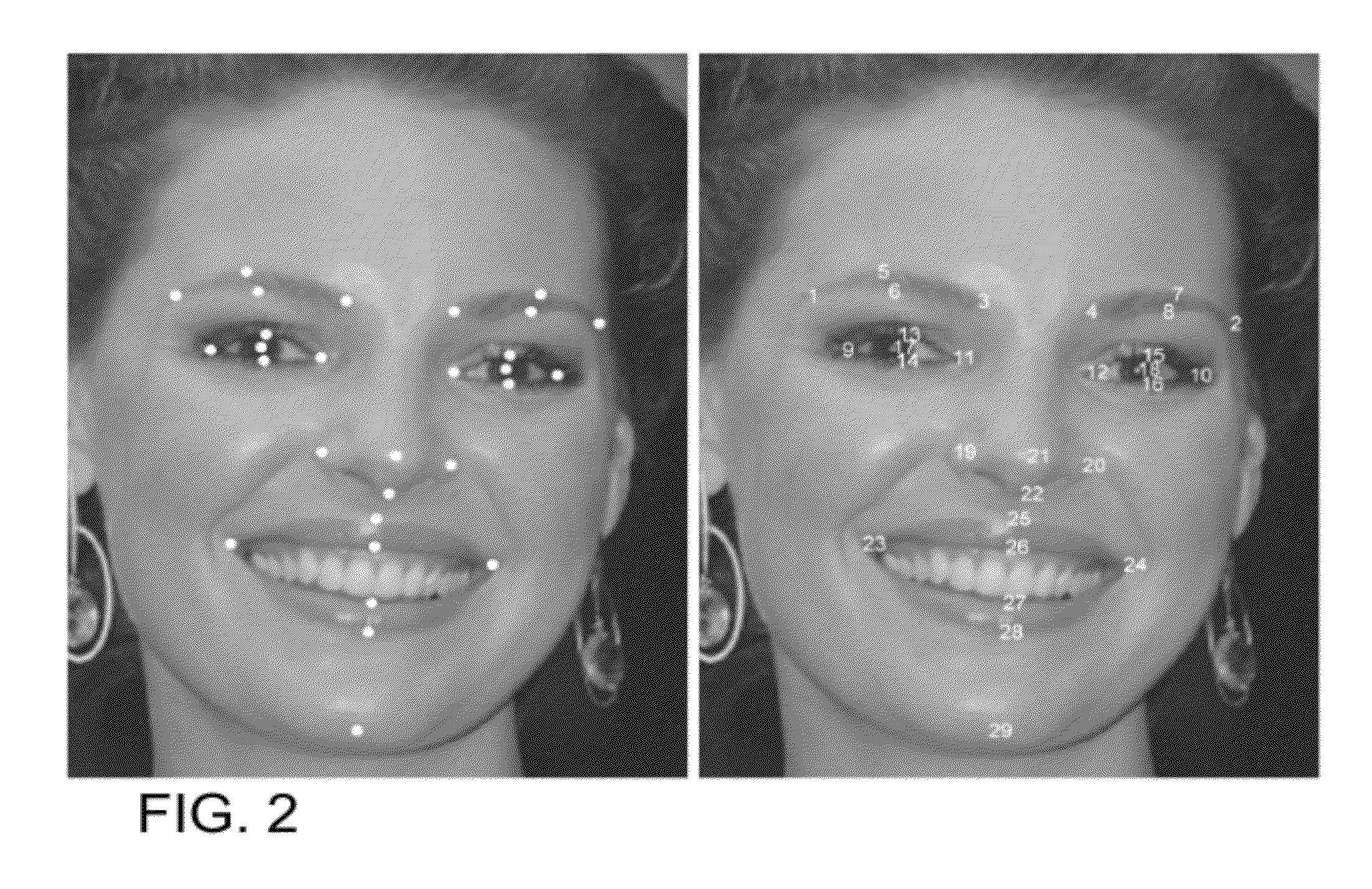

Method and System For Localizing Parts of an Object in an Image For Computer Vision Applications

A method is provided for localizing parts of an object in an image by training local detectors using labeled image exemplars with fiducial points corresponding to parts within the image. Each local detector generates a detector score corresponding to the likelihood that a desired part is located at a given location within the image exemplar. A non-parametric global model of the locations of the fiducial points is generated for each of at least a portion of the image exemplars. An input image is analyzed using the trained local detectors, and a Bayesian objective function is derived for the input image from the non-parametric model and detector scores. The Bayesian objective function is optimized using a consensus of global models, and an output is generated with locations of the fiducial points labeled within the object in the image.

Owner:KRIEGMAN BELHUMEUR VISION TECH
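
The abstract describes scoring each labeled exemplar's fiducial layout (a non-parametric global model) against local detector outputs, then combining the best-scoring layouts. The sketch below is a heavily simplified, translation-only version of that idea. It assumes that detector outputs arrive as per-part probability maps and that a layout's score is the sum of log detector scores at its translated fiducial points. The function name, the top_m parameter and the per-part median consensus are illustrative choices, not the patented Bayesian objective.

```python
import numpy as np


def localize_parts(score_maps, exemplars, top_m=3):
    """Translation-only consensus of exemplar layouts (simplified illustration).

    score_maps: (n_parts, H, W) detector probabilities, one map per part
    exemplars:  (n_exemplars, n_parts, 2) integer (row, col) fiducial layouts
    """
    n_parts, h, w = score_maps.shape
    log_maps = np.log(np.clip(score_maps, 1e-9, 1.0))
    parts = np.arange(n_parts)
    candidates = []
    for layout in exemplars:
        # every integer shift that keeps the whole layout inside the image
        for dr in range(-layout[:, 0].min(), h - layout[:, 0].max()):
            for dc in range(-layout[:, 1].min(), w - layout[:, 1].max()):
                pts = layout + np.array([dr, dc])
                score = log_maps[parts, pts[:, 0], pts[:, 1]].sum()
                candidates.append((score, pts))
    candidates.sort(key=lambda c: c[0], reverse=True)
    best = np.stack([pts for _, pts in candidates[:top_m]])
    return np.median(best, axis=0)  # per-part consensus of the best-scoring layouts


maps = np.full((2, 10, 10), 0.01)
maps[0, 2, 3] = maps[1, 2, 7] = 1.0  # detector peaks for part 0 and part 1
exemplars = np.array([[[0, 0], [0, 4]], [[0, 0], [1, 4]]])
print(localize_parts(maps, exemplars, top_m=1))
```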

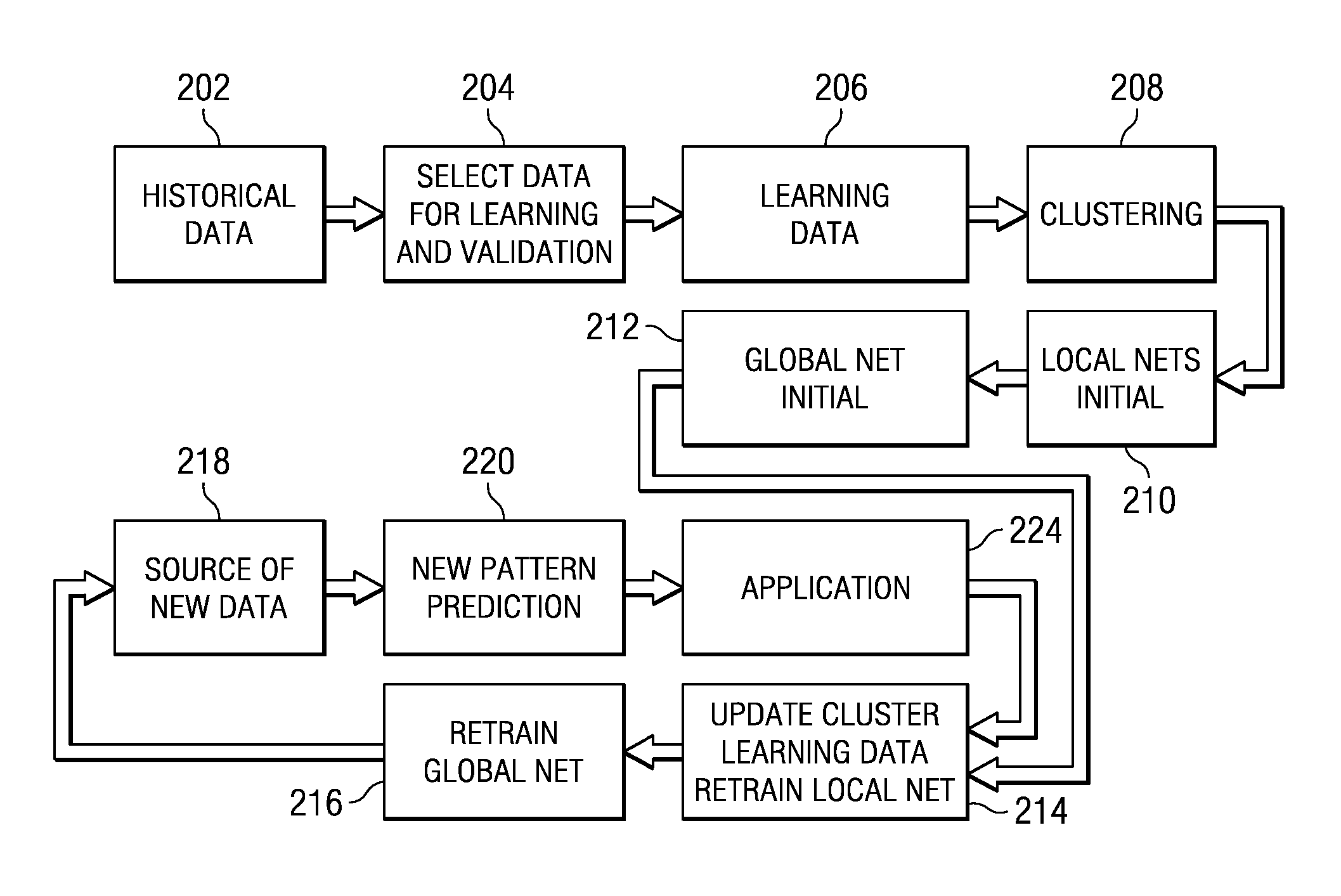

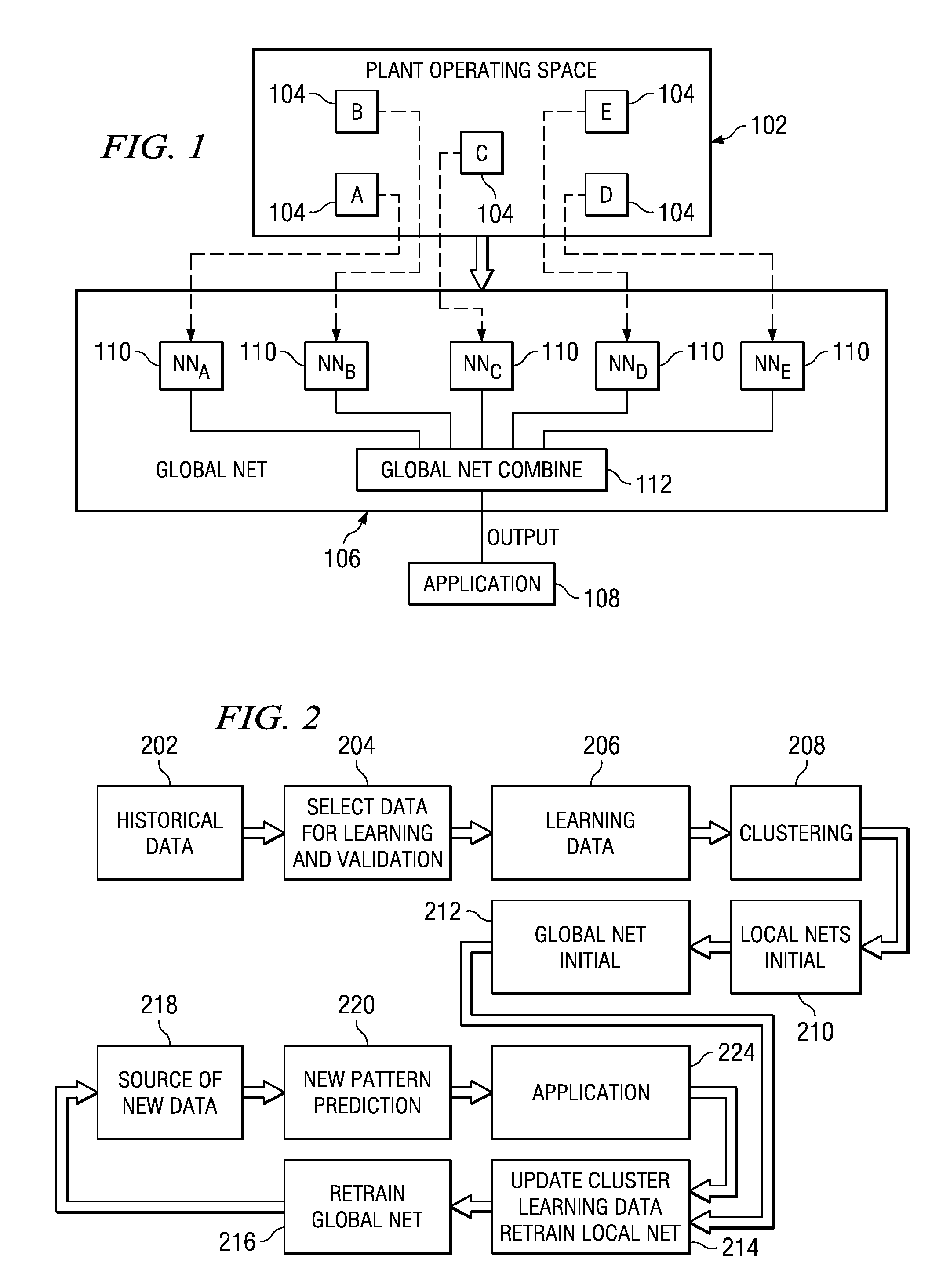

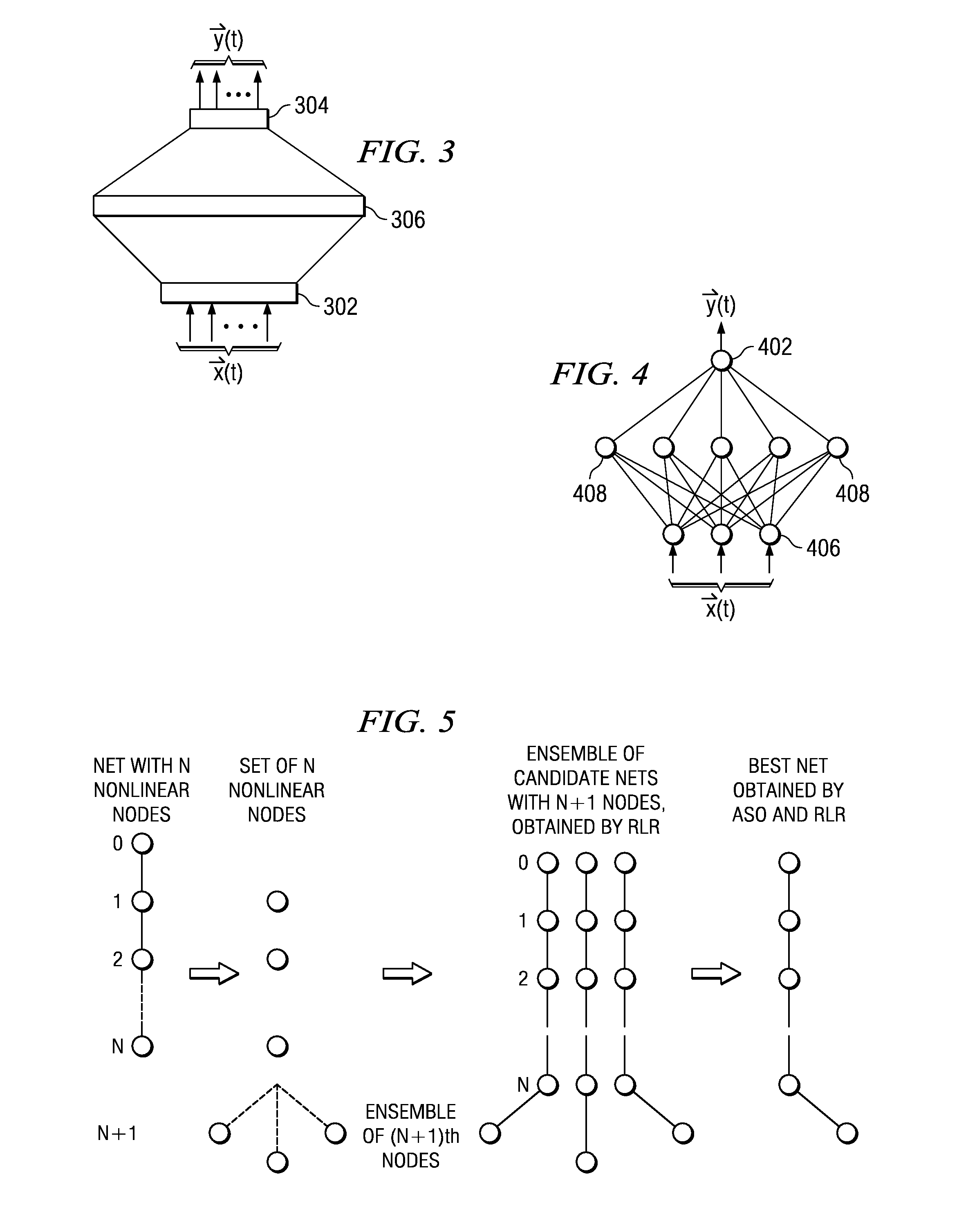

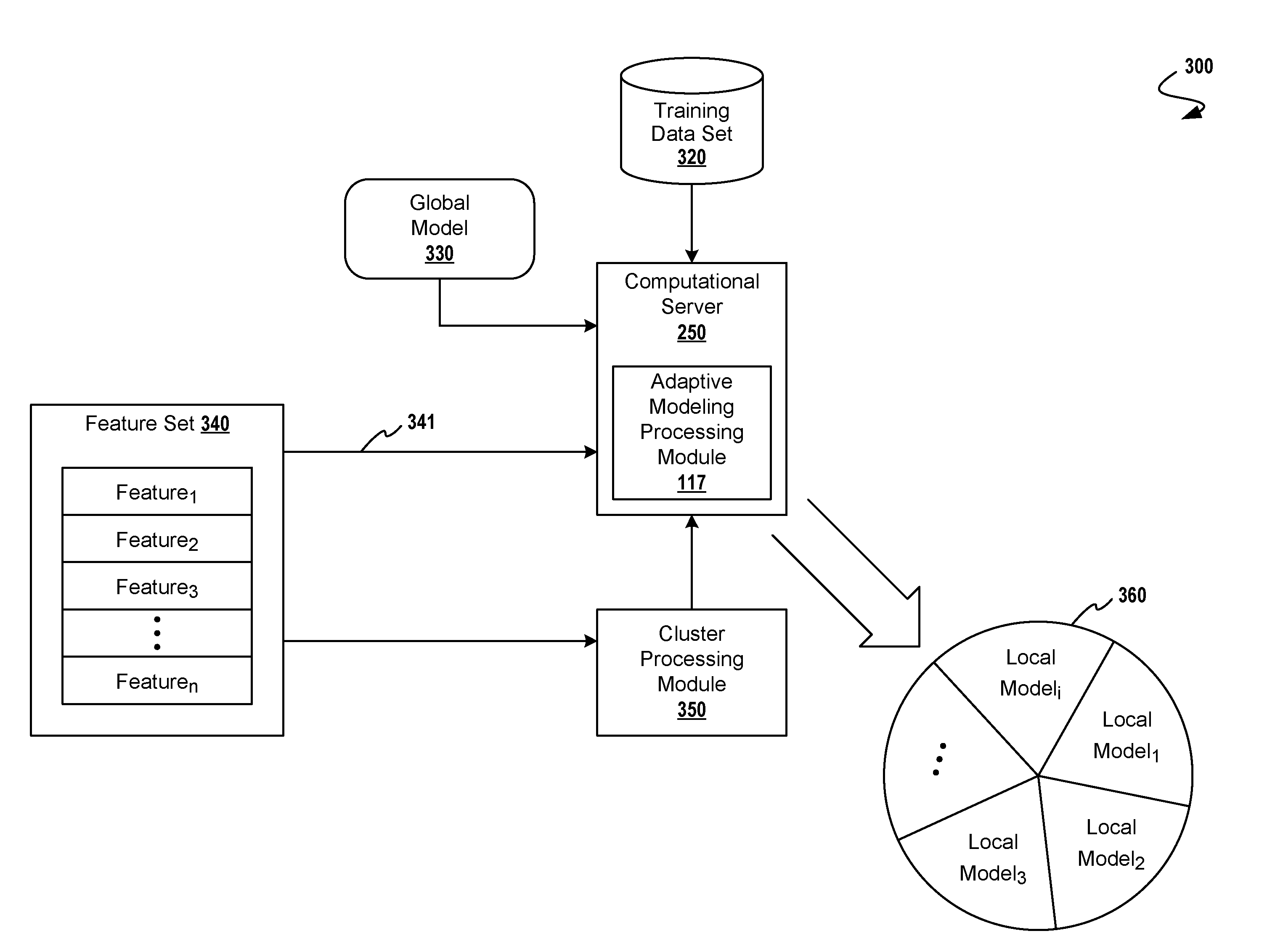

Neural network model with clustering ensemble approach

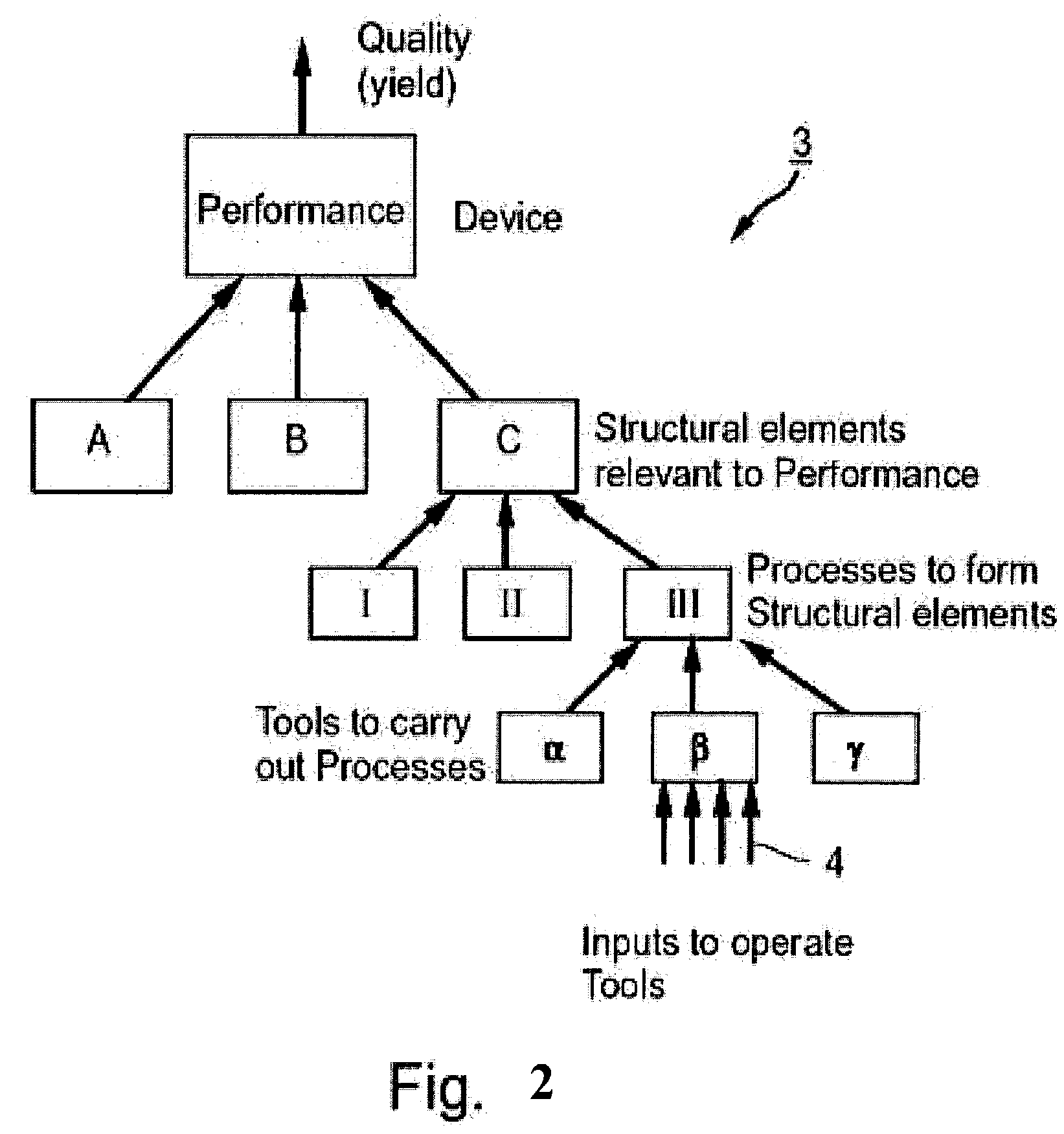

A predictive global model for modeling a system includes a plurality of local models, each having: an input layer for mapping into an input space, a hidden layer and an output layer. The hidden layer stores a representation of the system that is trained on a set of historical data, wherein each of the local models is trained on only a select and different portion of the set of historical data. The output layer is operable for mapping the hidden layer to an associated local output layer of outputs, wherein the hidden layer is operable to map the input layer through the stored representation to the local output layer. A global output layer is provided for mapping the outputs of all of the local output layers to at least one global output, the global output layer generalizing the outputs of the local models across the stored representations therein.

Owner:PEGASUS TECH INC
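
A minimal sketch of the local-plus-global structure, with three substitutions stated up front. First, ordinary least-squares linear models stand in for the neural-network local models. Second, the history is partitioned by sorting on the first input rather than by a clustering algorithm. Third, before the linear global output layer is fitted, each local output is scaled by a soft membership weight based on distance to that slice's centroid. The third point is an added assumption: without it, a linear combination of linear models would itself be just another linear model. Each local model sees only its own slice of the data. All names are hypothetical.

```python
import numpy as np


def _with_bias(X):
    return np.hstack([X, np.ones((len(X), 1))])


def _lstsq(X, y):
    w, *_ = np.linalg.lstsq(_with_bias(X), y, rcond=None)
    return w


def _gated_local_outputs(local_ws, centroids, temperature, X):
    """Local model outputs, each scaled by a soft membership of x in that model's slice."""
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    logits = -d2 / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    gates = np.exp(logits)
    gates /= gates.sum(axis=1, keepdims=True)
    outputs = np.column_stack([_with_bias(X) @ w for w in local_ws])
    return outputs * gates


def fit_global_model(X, y, n_parts=2, temperature=0.01):
    order = np.argsort(X[:, 0])
    slices = np.array_split(order, n_parts)  # disjoint portions of the history
    local_ws = [_lstsq(X[idx], y[idx]) for idx in slices]
    centroids = np.stack([X[idx].mean(axis=0) for idx in slices])
    z = _gated_local_outputs(local_ws, centroids, temperature, X)
    global_w = _lstsq(z, y)  # global output layer over the local outputs
    return local_ws, centroids, temperature, global_w


def predict_global(model, X):
    local_ws, centroids, temperature, global_w = model
    z = _gated_local_outputs(local_ws, centroids, temperature, X)
    return _with_bias(z) @ global_w


X = np.linspace(-1.0, 1.0, 41).reshape(-1, 1)
y = np.abs(X[:, 0])  # piecewise target that no single linear model can fit
model = fit_global_model(X, y)
```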

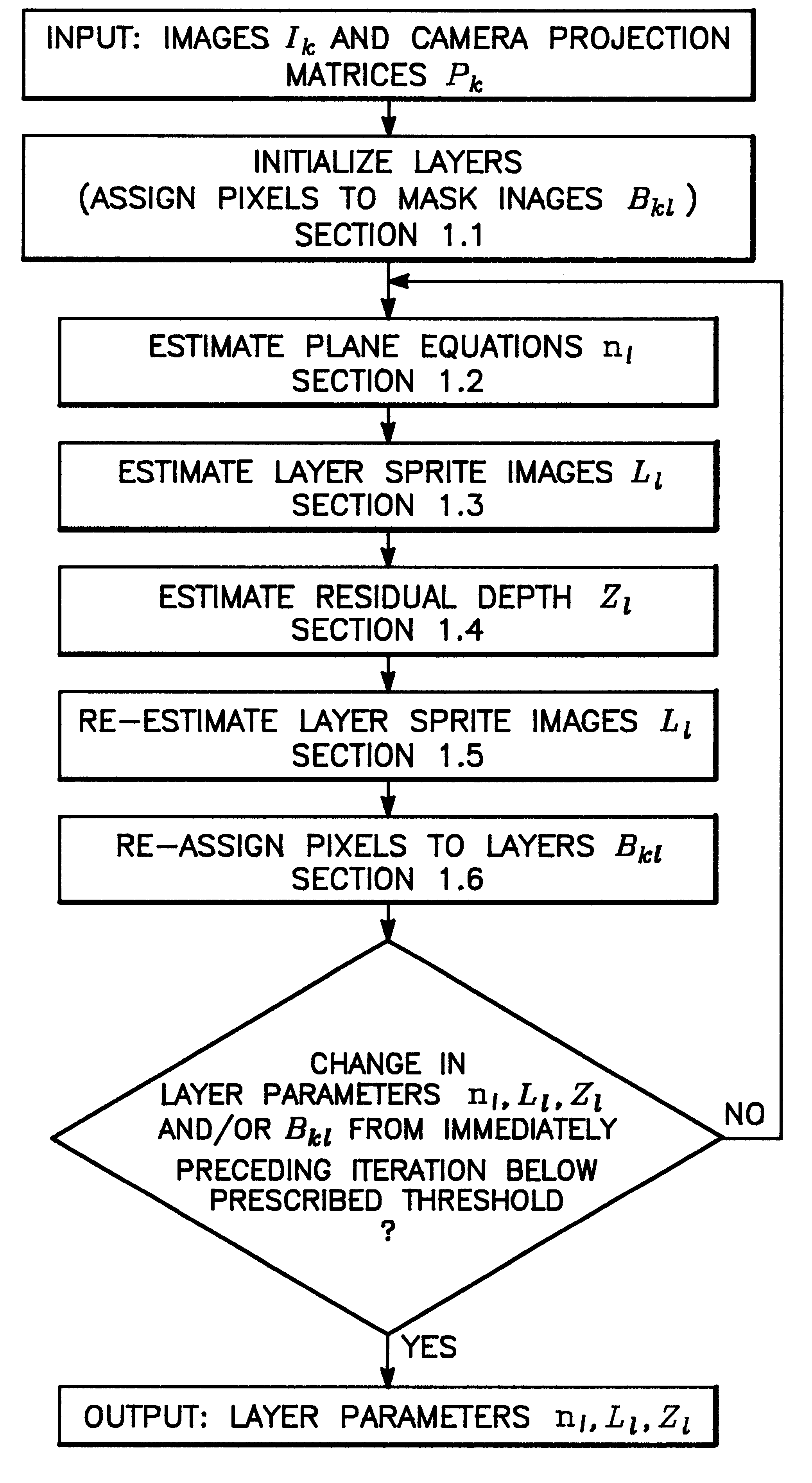

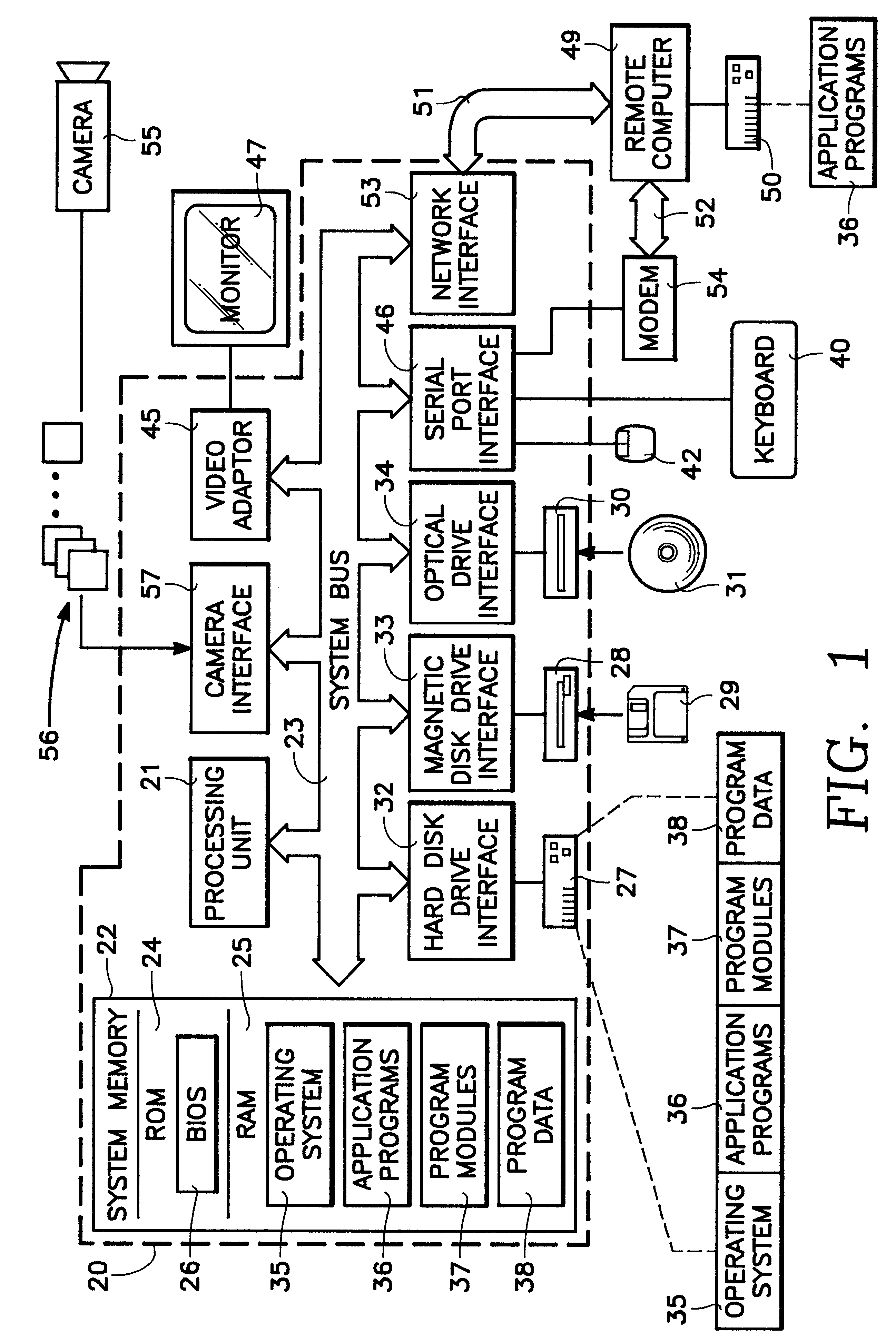

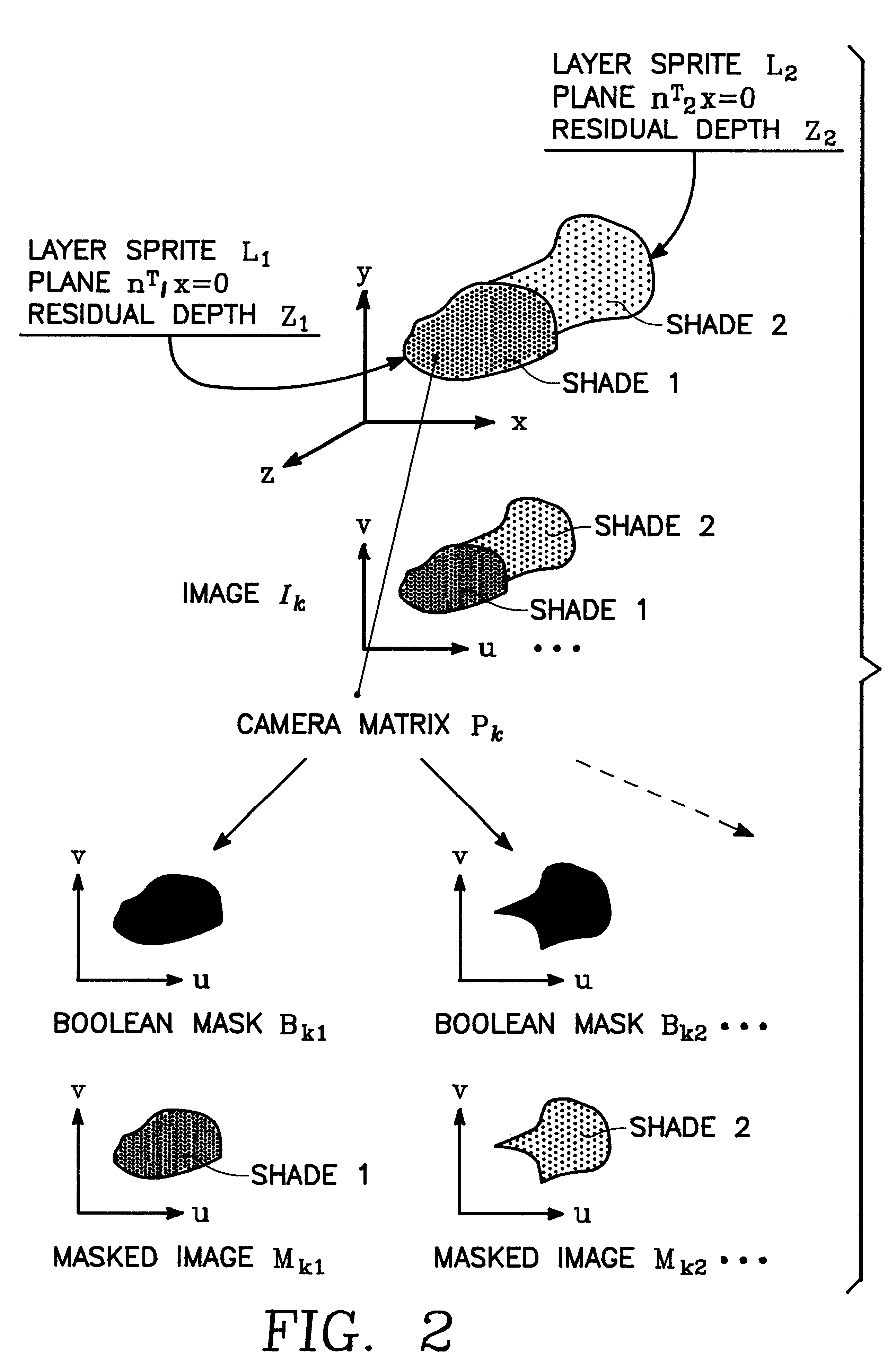

Stereo reconstruction employing a layered approach

Inactive | US6348918B1 | Improve performance, Depth accurate, Image enhancement, Image analysis, Pattern recognition, Computer graphics (images)

A system and method for extracting structure from stereo that represents the scene as a collection of planar layers. Each layer optimally has an explicit 3D plane equation, a colored image with per-pixel opacity, and a per-pixel depth value relative to the plane. Initial estimates of the layers are recovered using techniques from parametric motion estimation. The combination of a global model (the plane) with a local correction to it (the per-pixel relative depth value) imposes enough local consistency to allow the recovery of shape in both textured and untextured regions.

Owner:MICROSOFT TECH LICENSING LLC
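
The plane-plus-residual idea can be illustrated with a simplified parameterization. The sketch assumes that the layer's plane is expressed directly as a depth that varies linearly with pixel coordinates, Z(x, y) = a·x + b·y + c. The patent instead uses a full 3D plane equation with camera projection. The coefficients a, b, c and the function name are hypothetical. In untextured regions the residual can stay at zero, and the depth falls back to the plane.

```python
import numpy as np


def layer_depth(plane, residual):
    """Per-pixel depth of one layer: global plane model plus local correction."""
    a, b, c = plane
    h, w = residual.shape
    ys, xs = np.mgrid[0:h, 0:w]        # row (y) and column (x) indices
    plane_depth = a * xs + b * ys + c  # global model shared by the whole layer
    return plane_depth + residual      # per-pixel relative depth correction


depth = layer_depth((1.0, 2.0, 10.0), np.zeros((2, 3)))
print(depth[1, 2])  # 14.0
```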

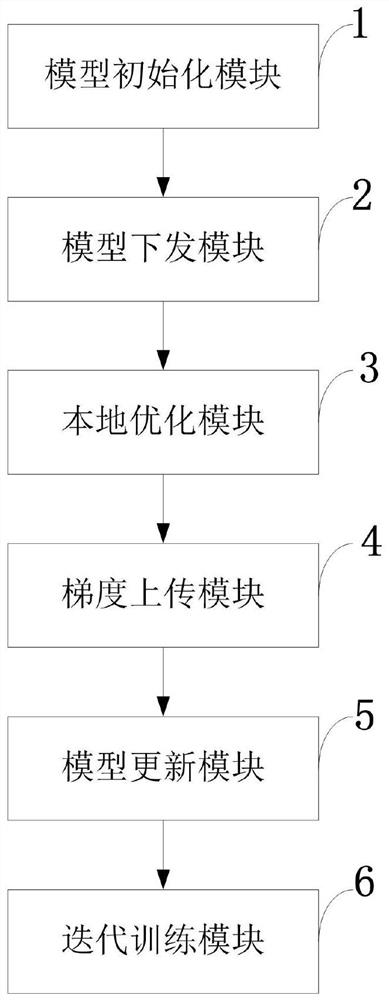

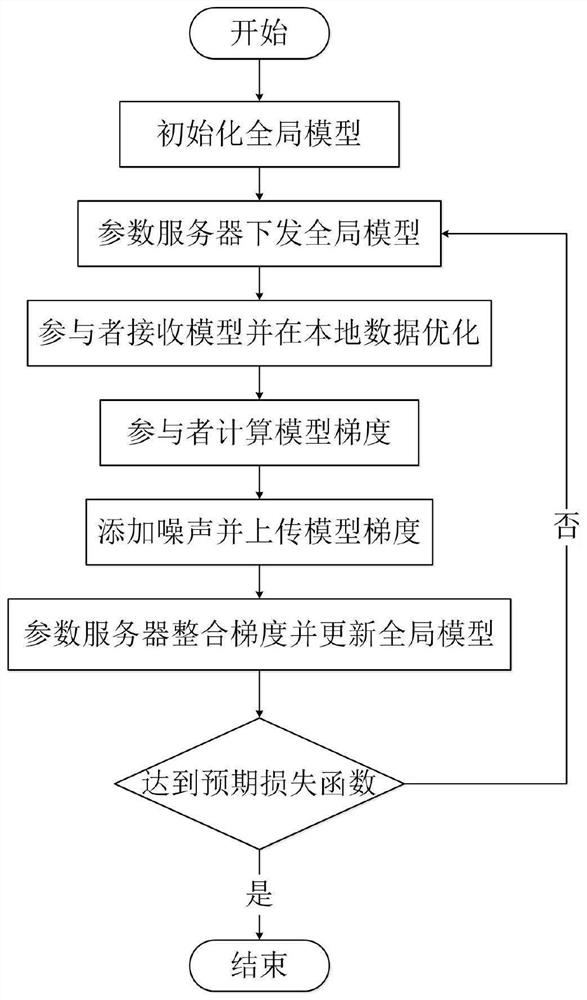

Federated learning information processing method and system, storage medium, program and terminal

Active | CN111611610A | Realize identity anonymity, Guaranteed separation effect, Digital data protection, Neural architectures, Information processing, Data privacy protection

The invention belongs to the technical field of wireless communication networks and discloses a federated learning information processing method and system, a storage medium, a program, and a terminal. A parameter server determines a training task and initial parameters, and initializes a global model. The parameter server randomly selects a subset of participants, encrypts the model parameters, and issues them to those participants through a proxy server. Each participant receives part of the model parameters, overwrites the corresponding part of its local model, and optimizes the model using its local data. The participant then computes the model gradient from the optimization result, selects part of the gradient for uploading, adds noise to it to achieve differential privacy, encrypts it, and forwards it through the proxy server. The parameter server receives the gradients from all participants and aggregates them to update the global model. This issue-train-update cycle repeats until the loss function reaches the expected value. The invention protects data privacy, reduces the communication overhead of the parameter server, and keeps participants anonymous.

Owner:XIDIAN UNIV
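
The abstract leaves open which part of the gradient is uploaded and how much noise is added. The sketch below assumes L2 clipping, top-k selection by magnitude, and Gaussian noise, and it omits the encryption and proxy-server forwarding. Calibrating noise_std to a formal (ε, δ) differential-privacy guarantee requires a privacy-accounting step that is not shown. All names and parameters are hypothetical.

```python
import numpy as np


def client_upload(grad, fraction, clip_norm, noise_std, rng):
    """Clip the gradient, keep the largest-magnitude fraction of entries, add Gaussian noise."""
    norm = np.linalg.norm(grad)
    if norm > clip_norm:
        grad = grad * (clip_norm / norm)  # bound each client's contribution
    k = max(1, int(round(fraction * grad.size)))
    idx = np.argsort(np.abs(grad))[-k:]  # indices of the k largest entries
    sparse = np.zeros_like(grad, dtype=float)
    sparse[idx] = grad[idx] + rng.normal(0.0, noise_std, size=k)
    return sparse


def server_update(global_w, uploads, lr):
    """Average the sparse noisy gradients and take one descent step."""
    return global_w - lr * np.mean(uploads, axis=0)


rng = np.random.default_rng(0)
up = client_upload(np.array([0.1, -3.0, 0.5, 2.0]), 0.5, 10.0, 0.0, rng)
print(up)  # noise disabled here: only the two largest-magnitude entries survive
```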

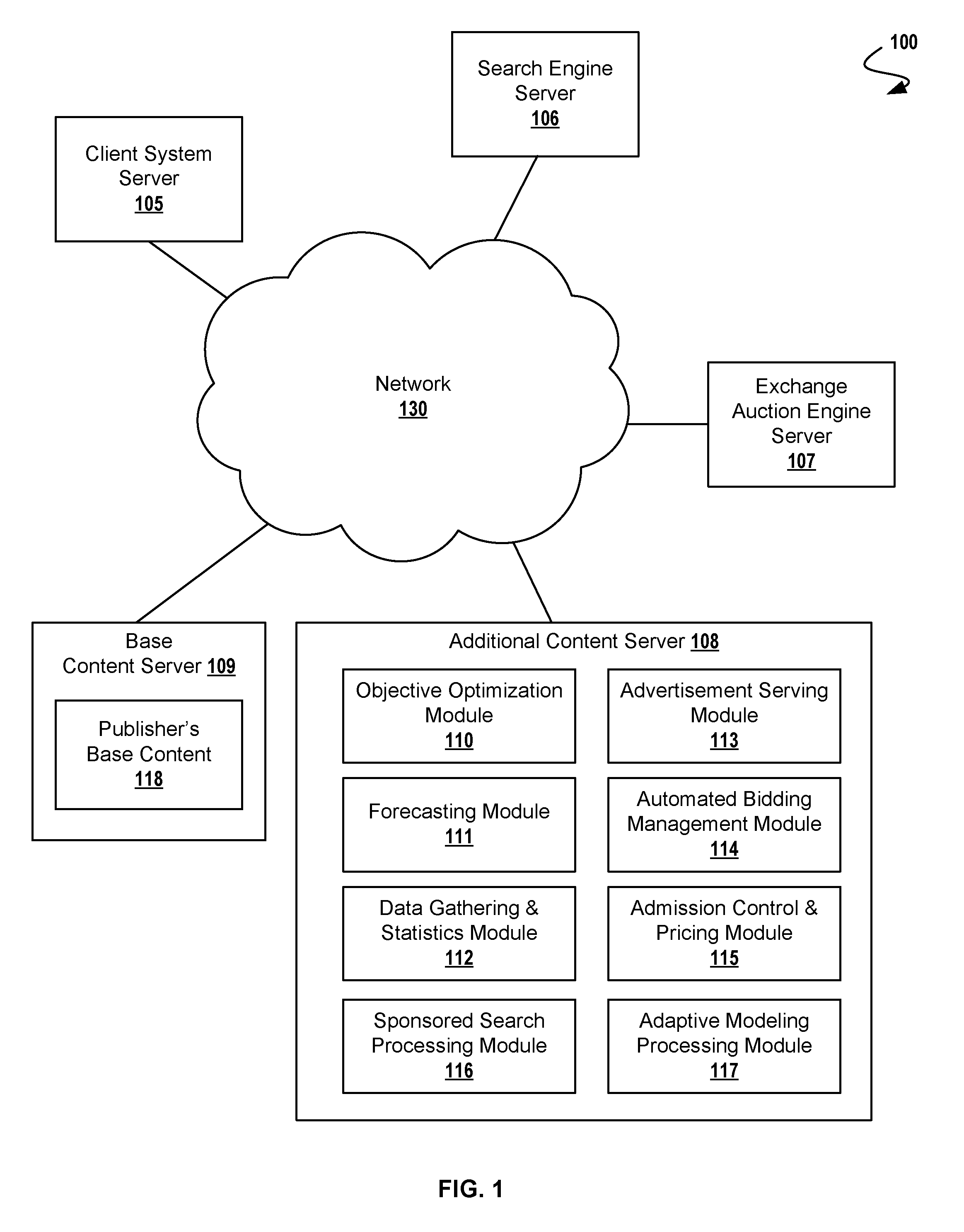

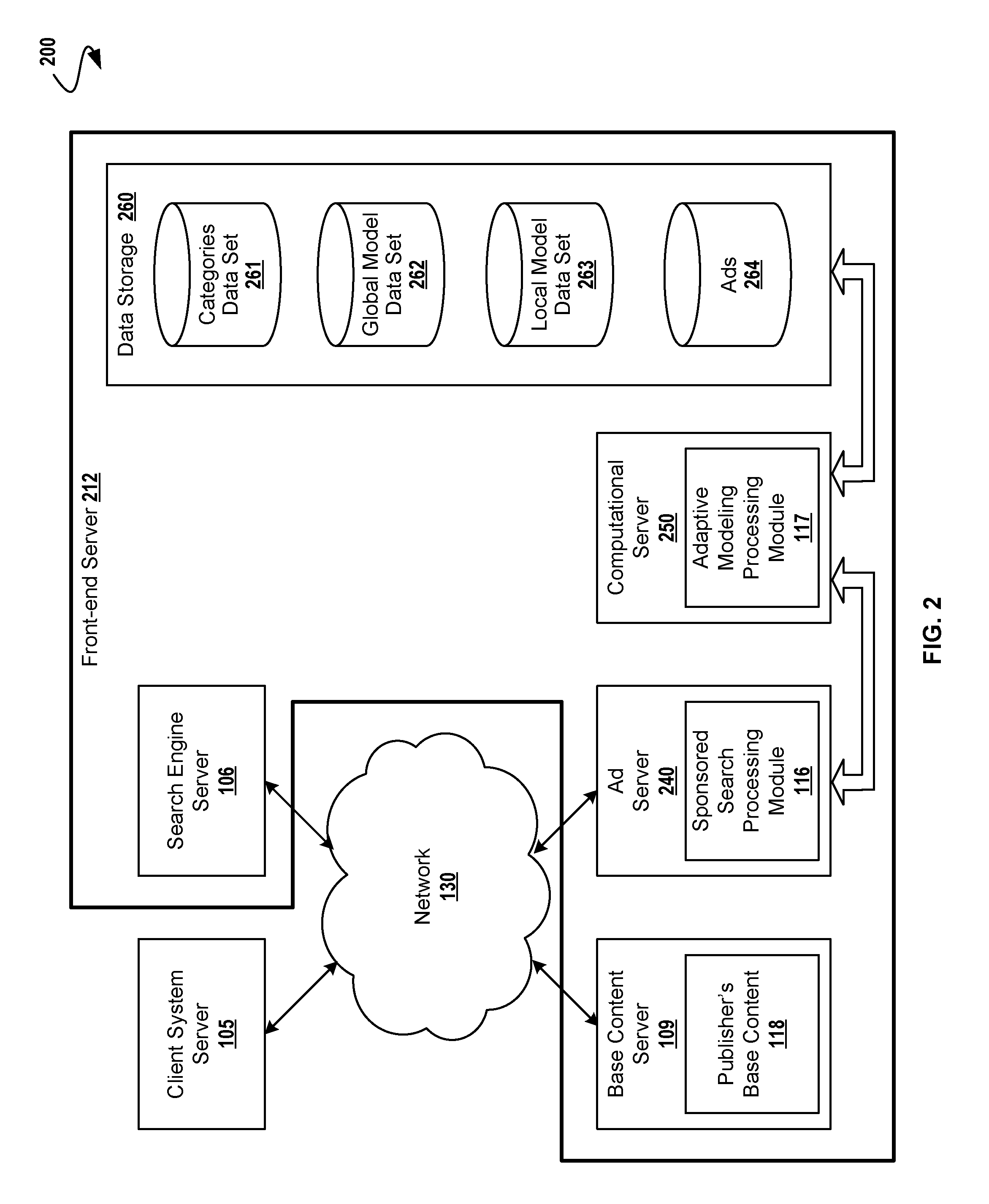

Estimating Probabilities of Events in Sponsored Search Using Adaptive Models

A machine-learning method estimates the probability of a click event in online advertising systems by computing and comparing an aggregated predictive model (a global model) with one or more data-wise sliced predictive models (local models). The method comprises receiving training data having a plurality of features stored in a feature set, and constructing a global predictive model that estimates the probability of a click event for the processed feature set. The method then partitions the data behind the global predictive model into one or more data-wise sliced training sets, trains a local model on each data-wise slice, and determines whether a particular local model estimates the probability of a click event for the feature set better than the global model. A given feature set may be collected from historical data and may comprise a feature vector for each of a plurality of query-advertisement pairs, along with a corresponding indicator that represents a click on the advertisement.

Owner:TWITTER INC
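
A minimal sketch of the global-versus-local comparison, using the simplest possible predictive model: a smoothed click-through rate. The rate is fitted once on all training data (the global model) and once per data slice (the local models). A slice keeps its local model only if that model has lower log loss on held-out data than the global model. The slice keys, the smoothing prior and the function names are illustrative assumptions; the patent's models are built on full feature vectors.

```python
import math
from collections import defaultdict


def fit_rate(labels, prior=0.5, strength=1.0):
    """Click-through rate with additive smoothing, so it never hits exactly 0 or 1."""
    return (sum(labels) + prior * strength) / (len(labels) + strength)


def mean_log_loss(p, labels):
    return -sum(math.log(p) if y else math.log(1.0 - p) for y in labels) / len(labels)


def select_models(train, valid):
    """train/valid: lists of (slice_key, clicked) pairs with clicked in {0, 1}."""
    global_p = fit_rate([y for _, y in train])
    train_by_slice, valid_by_slice = defaultdict(list), defaultdict(list)
    for key, y in train:
        train_by_slice[key].append(y)
    for key, y in valid:
        valid_by_slice[key].append(y)
    chosen = {}
    for key, labels in valid_by_slice.items():
        local_p = fit_rate(train_by_slice[key])
        if mean_log_loss(local_p, labels) < mean_log_loss(global_p, labels):
            chosen[key] = ("local", local_p)
        else:
            chosen[key] = ("global", global_p)
    return chosen


train = [("a", 1)] * 8 + [("a", 0)] * 2 + [("b", 1)] + [("b", 0)] * 9 + [("c", 1)] * 2
valid = [("a", 1)] * 3 + [("a", 0)] + [("b", 0)] * 3 + [("b", 1)] + [("c", 0)] * 2 + [("c", 1)]
choice = select_models(train, valid)
print({k: v[0] for k, v in sorted(choice.items())})  # {'a': 'local', 'b': 'local', 'c': 'global'}
```

Slice "c" shows why the comparison matters: two clicks in training make its local rate overconfident, so the global model wins on held-out data.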

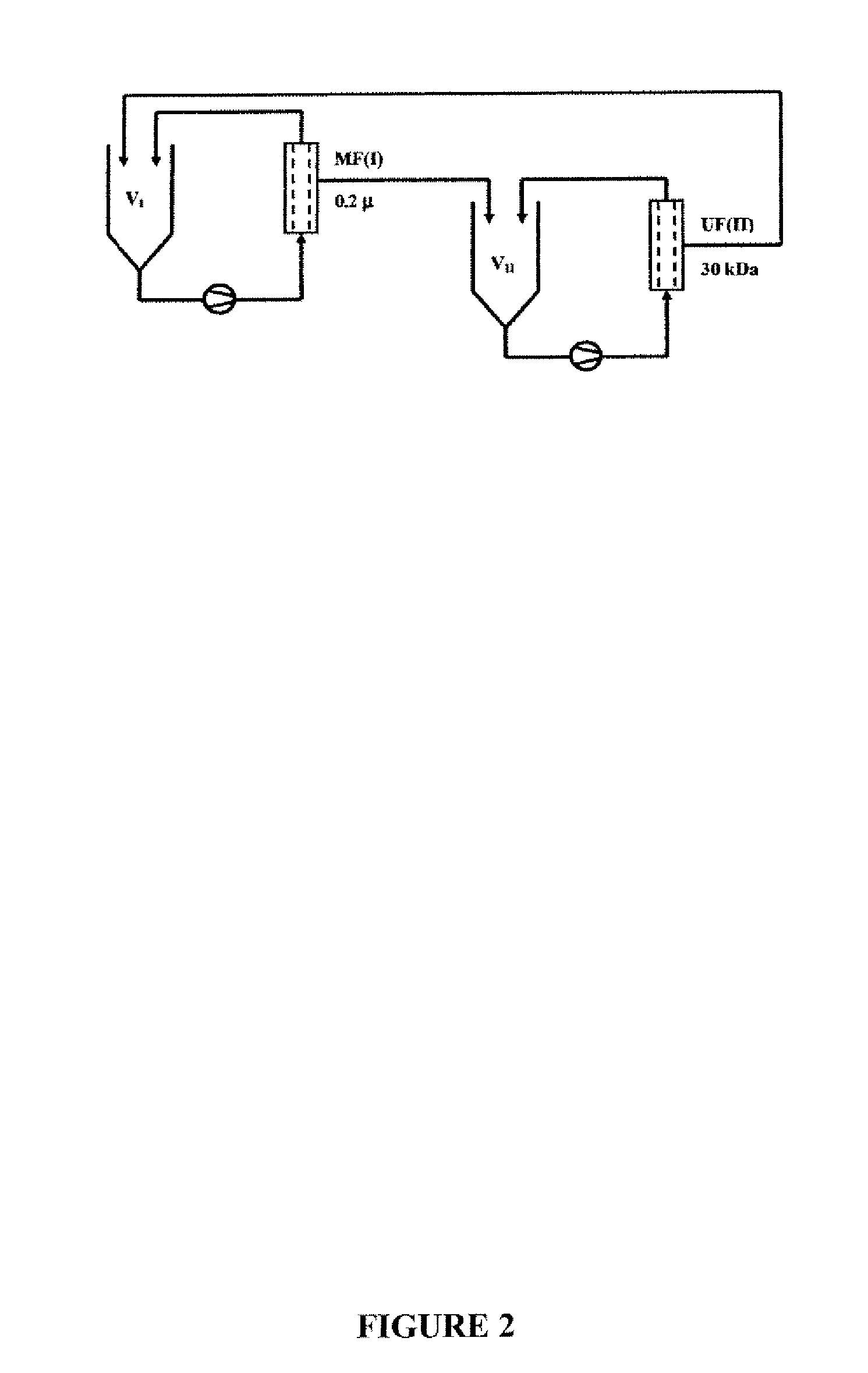

Global model for optimizing crossflow microfiltration and ultrafiltration processes

Inactive | US20080017576A1 | Realistic and rapid in silico MF/UF optimization, Fill in the blanks, Membranes, Ultrafiltration, Chemical physics, Sieving coefficient

The present invention is a method for optimizing operating conditions for yield, purity, or selectivity of target species, and/or processing time, for crossflow membrane filtration of target species in feed suspensions. This involves providing as input parameters: size distribution and concentration of particles and solutes in the suspension; suspension pH and temperature; physical and operating properties of membranes; and number and volume of reservoirs. The method also involves determining effective membrane pore size distribution; suspension viscosity, hydrodynamics, and electrostatics; pressure-independent permeation flux of the suspension and cake composition; pressure-independent permeation flux for each particle and overall observed sieving coefficient of each target species through cake deposit and pores; solving mass balance equations for all solutes; and iterating the mass balance equation for each solute at all possible permeation fluxes, thereby optimizing operating conditions. The invention also provides a computer-readable medium for carrying out the method of the present invention.

Owner:RENSSELAER POLYTECHNIC INST
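
The abstract lists many sub-models (pore size distribution, cake formation, electrostatics) that feed the mass balances. The sketch below shows only the final step, under strong simplifying assumptions: batch concentration mode with no diafiltration, and an observed sieving coefficient per solute that stays constant during a run. It integrates each solute's retentate mass balance as volume is removed, then repeats the calculation for a list of candidate fluxes. Here sieving_at is a hypothetical stand-in for the patent's flux-dependent sieving models. With a constant sieving coefficient S, the retained fraction should match the closed form (V/V0)^S, which the Euler loop approximates.

```python
def batch_concentration(c0, sieving, v0, v_final, flux, area, steps=10_000):
    """Integrate retentate mass balances while the volume drops from v0 to v_final.

    c0: dict solute -> initial concentration
    sieving: dict solute -> observed sieving coefficient (held constant in this sketch)
    flux: permeate flux (volume / area / time); area: membrane area
    """
    dv = (v0 - v_final) / steps
    v = v0
    mass = {s: c0[s] * v0 for s in c0}
    for _ in range(steps):
        for s in mass:
            # permeate volume dv carries solute at (sieving coefficient x retentate conc.)
            mass[s] -= sieving[s] * (mass[s] / v) * dv
        v -= dv
    retained = {s: mass[s] / (c0[s] * v0) for s in mass}  # fraction left in retentate
    duration = (v0 - v_final) / (flux * area)
    return retained, duration


def scan_fluxes(fluxes, sieving_at, **kwargs):
    """Evaluate each candidate flux; sieving_at(J) is a hypothetical flux-dependent model."""
    return {J: batch_concentration(sieving=sieving_at(J), flux=J, **kwargs) for J in fluxes}


retained, duration = batch_concentration({"protein": 1.0}, {"protein": 0.5}, 1.0, 0.25, 0.05, 1.5)
print(retained["protein"], duration)  # close to 0.25 ** 0.5 = 0.5, and about 10 time units
```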

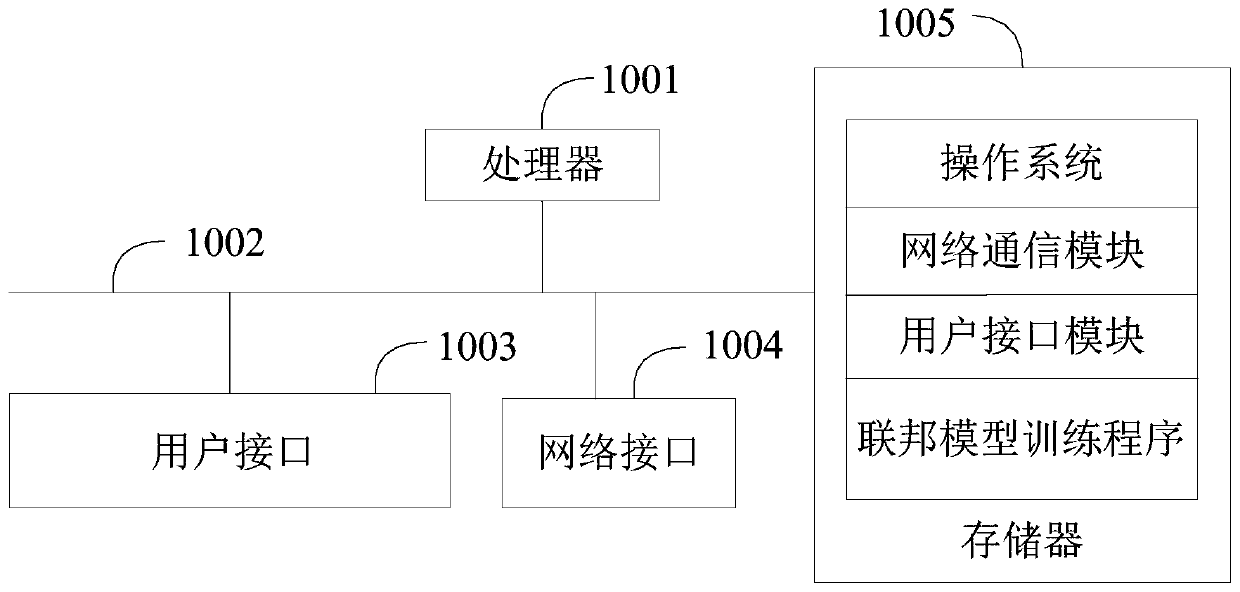

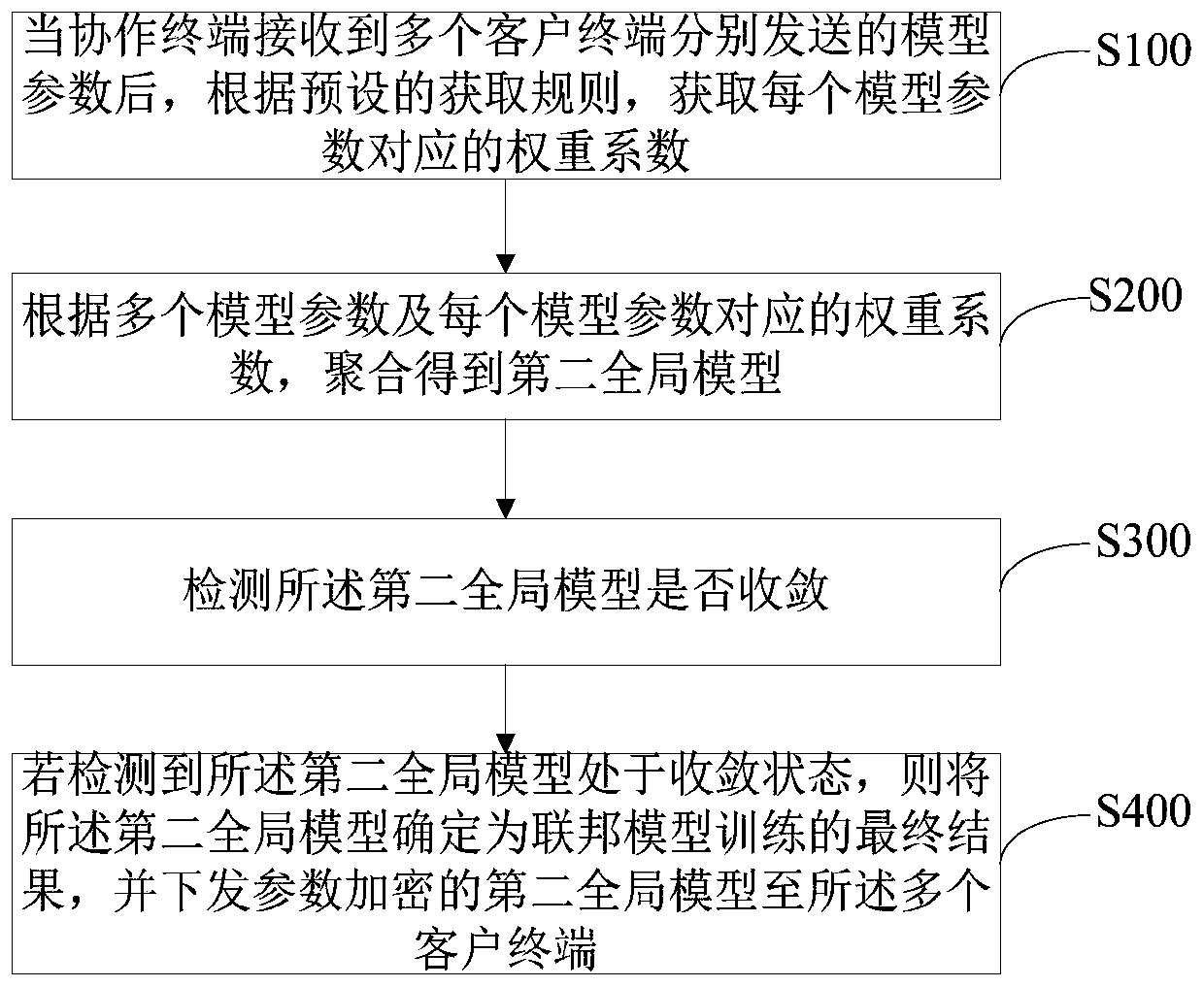

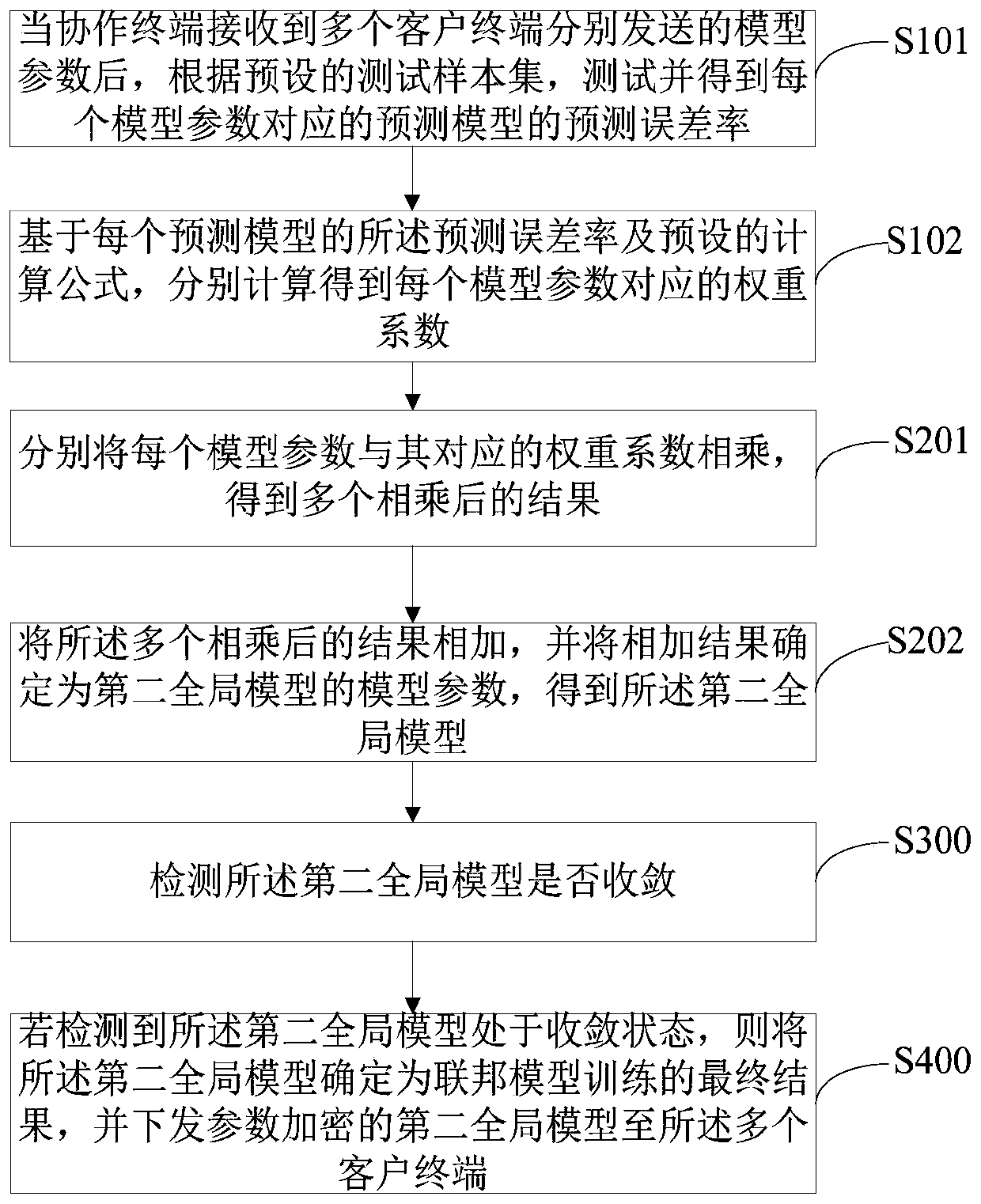

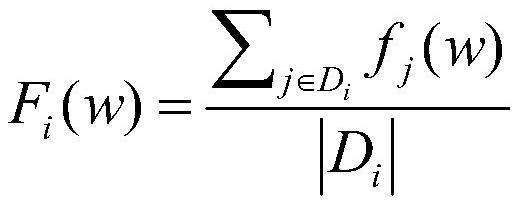

Joint model training method, system and device and computer readable storage medium

Pending | CN109871702A | Improve predictive performance, Avoid unsatisfactory results, Character and pattern recognition, Digital data protection, Weight coefficient, Algorithm

The invention discloses a federated model training method, system, device and computer-readable storage medium. After a cooperative terminal receives the model parameters sent by each of a plurality of client terminals, it obtains a weight coefficient for each set of model parameters according to a preset rule. It then aggregates the model parameters, weighted by these coefficients, into a second global model and checks whether the second global model has converged. If it has, the second global model is taken as the final result of federated model training and is issued, with encrypted parameters, to the client terminals. Because the cooperative terminal updates the global model according to each client terminal's model parameters and weight coefficient, the prediction performance of the federated model is improved.

Owner:WEBANK (CHINA)
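
The abstract does not state the preset rule for the weight coefficients or the convergence test. The sketch below assumes weights proportional to each client's sample count, and declares convergence when the global parameters move by less than a tolerance between rounds. Encryption of the issued parameters is omitted, and the function names are hypothetical.

```python
import numpy as np


def aggregate(client_params, weights):
    """Weighted average of client parameter vectors (weights need not sum to 1)."""
    w = np.asarray(weights, dtype=float)
    return np.tensordot(w / w.sum(), np.stack(client_params), axes=1)


def has_converged(prev_global, new_global, tol=1e-4):
    """Declare convergence when the global parameters barely move between rounds."""
    return float(np.linalg.norm(new_global - prev_global)) < tol


g = aggregate([np.array([1.0, 2.0]), np.array([3.0, 4.0])], [1, 3])
print(g)  # [2.5 3.5]
```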

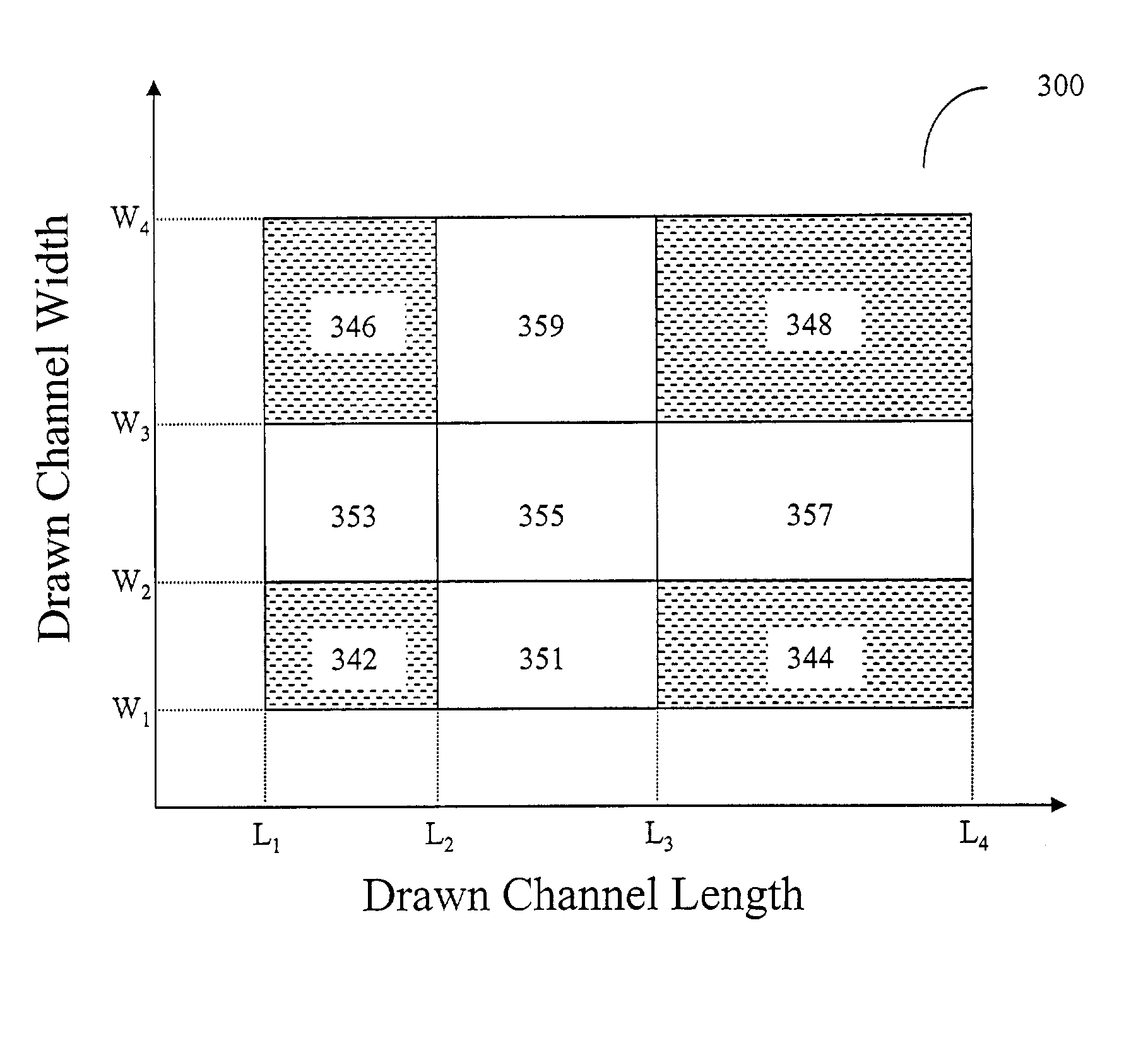

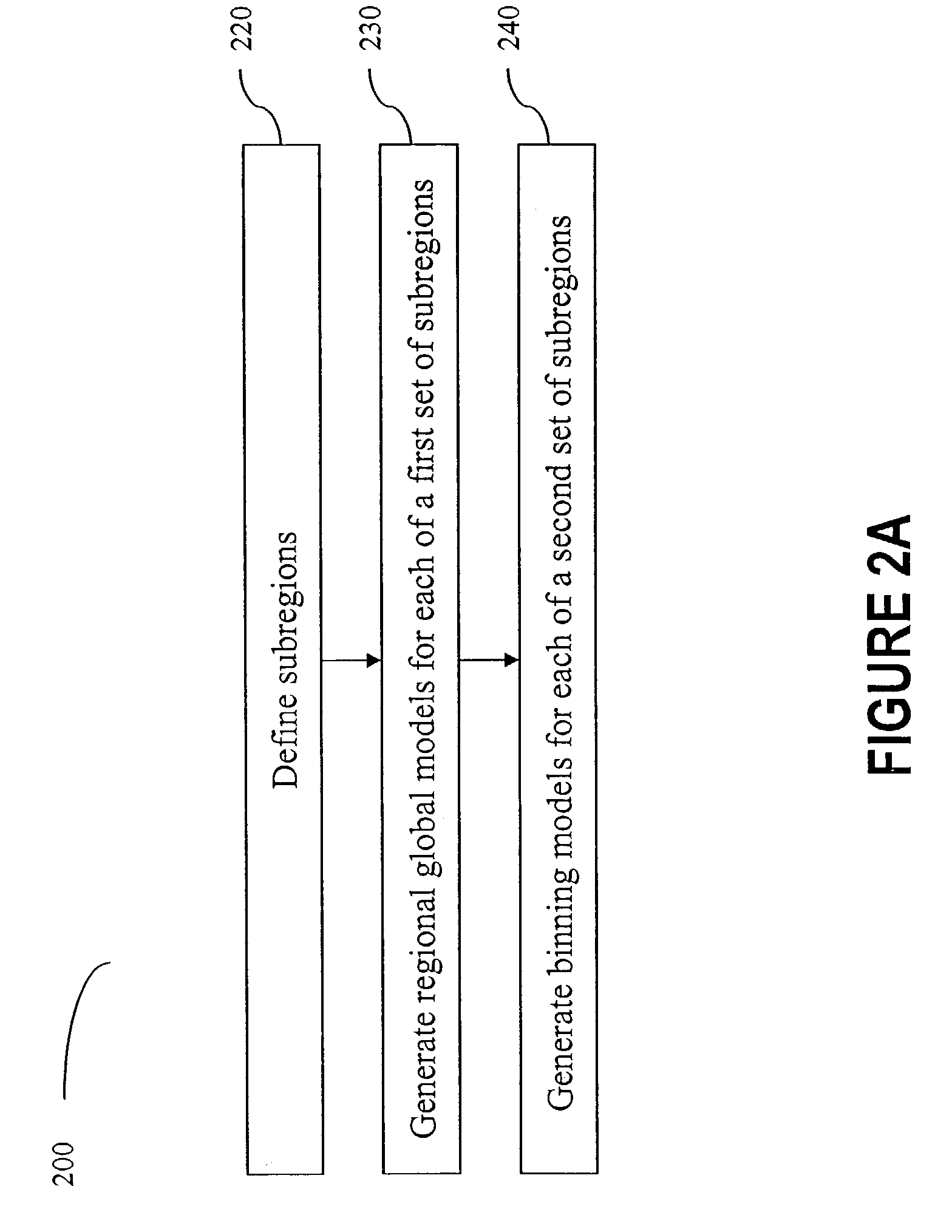

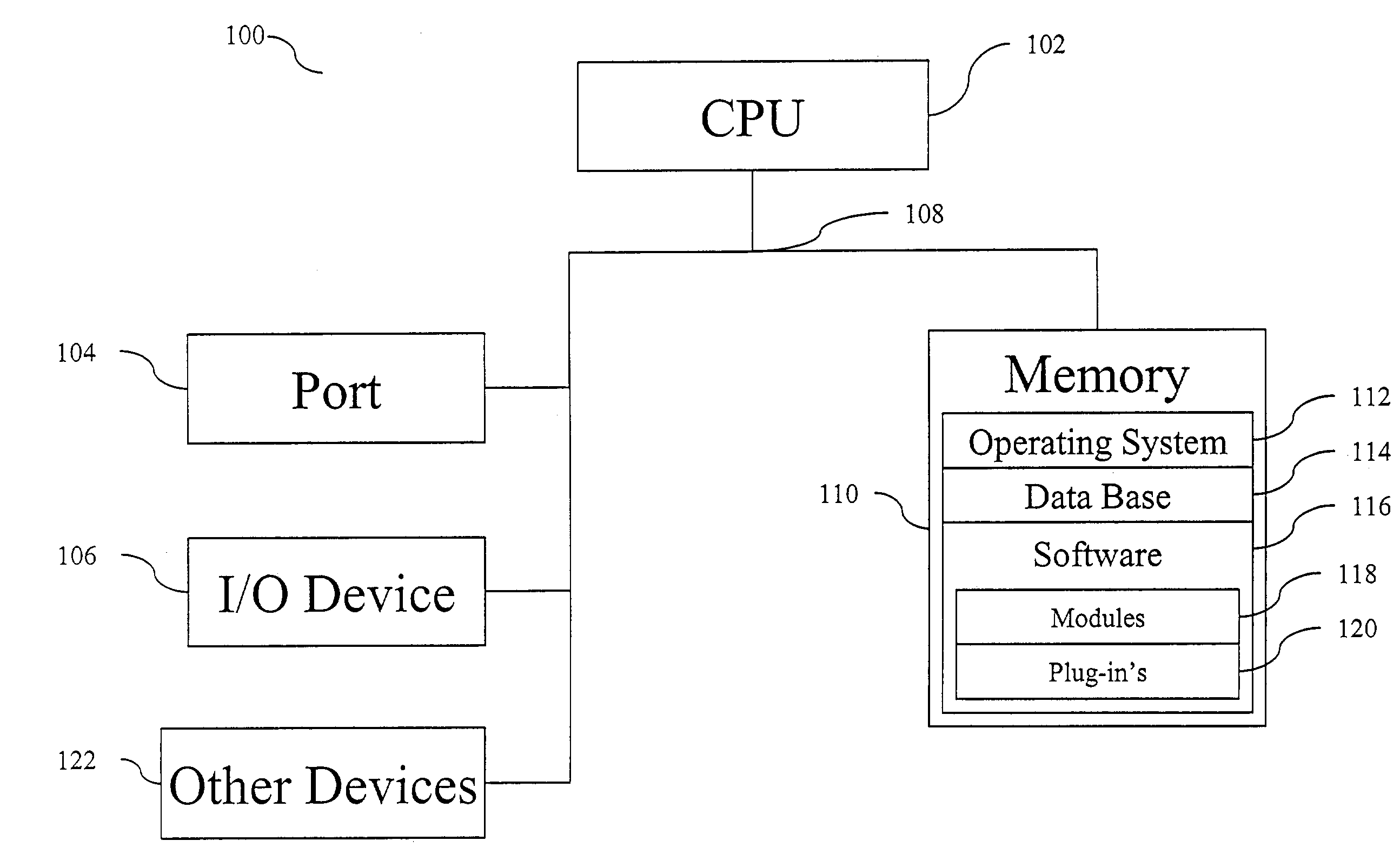

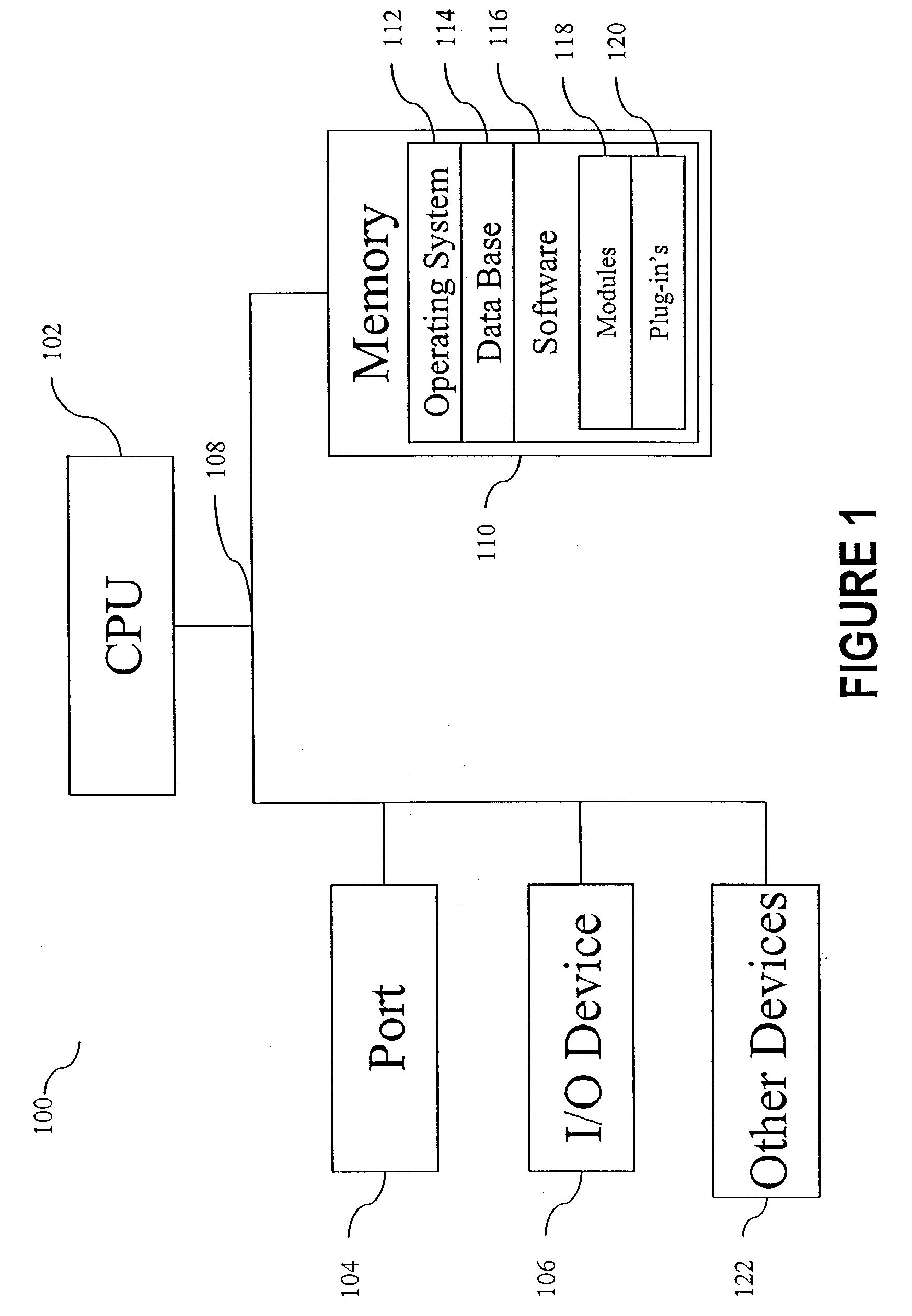

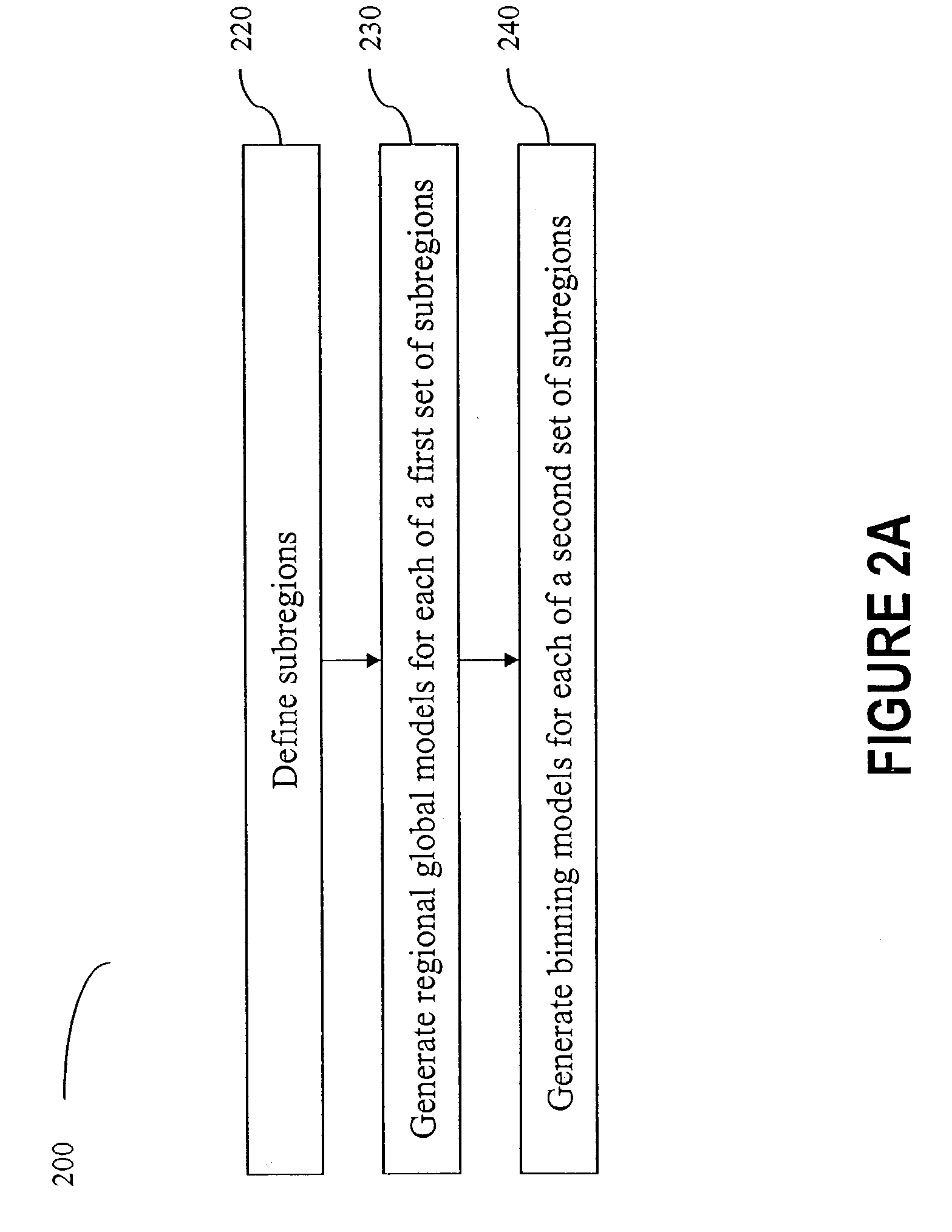

Method and apparatus for modeling devices having different geometries

Inactive | US7263477B2 | Detecting faulty computer hardware, Analogue computers for electric apparatus, Model parameters, Computer science

The present invention includes a method for modeling devices having different geometries, in which a range of interest for device geometrical variations is divided into a plurality of subregions, each corresponding to a subrange of device geometrical variations. The subregions are of a first type (G-type) and a second type, and each type includes one or more subregions. A regional global model is generated for each subregion of the first type, and a binning model is generated for each subregion of the second type. The regional global model for a G-type subregion uses one set of model parameters to cover the subrange of device geometrical variations corresponding to that subregion. The binning model for a subregion includes binning parameters that keep the model parameters continuous when the device geometry varies across two different subregions.

Owner:CADENCE DESIGN SYST INC
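
A simplified sketch of how a simulator might choose between the two model types. It assumes that each subregion is a rectangle in (length, width) and that a G-type subregion returns a single fixed parameter value. A binning subregion uses the BSIM-style binning form P = P0 + PL/L + PW/W + PP/(L·W). The regions, coefficients, the "B" type label and the function names are hypothetical. The example coefficients are chosen so that the binned value meets the regional value at L = 1, which is the continuity the abstract describes. In a real regional global model, the single parameter set feeds a geometry-dependent device equation rather than acting as a constant.

```python
def binned_parameter(coeffs, length, width):
    """BSIM-style binning: P = P0 + PL/L + PW/W + PP/(L*W)."""
    p0, pl, pw, pp = coeffs
    return p0 + pl / length + pw / width + pp / (length * width)


def model_parameter(length, width, regions):
    """Pick the subregion containing (length, width) and evaluate its parameter."""
    for r in regions:
        if r["l"][0] <= length < r["l"][1] and r["w"][0] <= width < r["w"][1]:
            if r["type"] == "G":
                return r["value"]  # regional global model: one parameter value
            return binned_parameter(r["coeffs"], length, width)
    raise ValueError("geometry outside the range of interest")


regions = [
    {"type": "G", "l": (1.0, 10.0), "w": (1.0, 10.0), "value": 0.5},
    {"type": "B", "l": (0.1, 1.0), "w": (1.0, 10.0), "coeffs": (0.4, 0.1, 0.0, 0.0)},
]
print(model_parameter(0.5, 2.0, regions), model_parameter(5.0, 2.0, regions))
```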

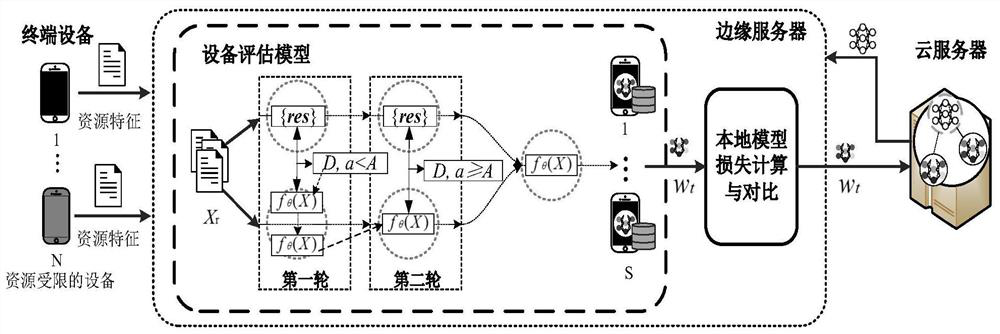

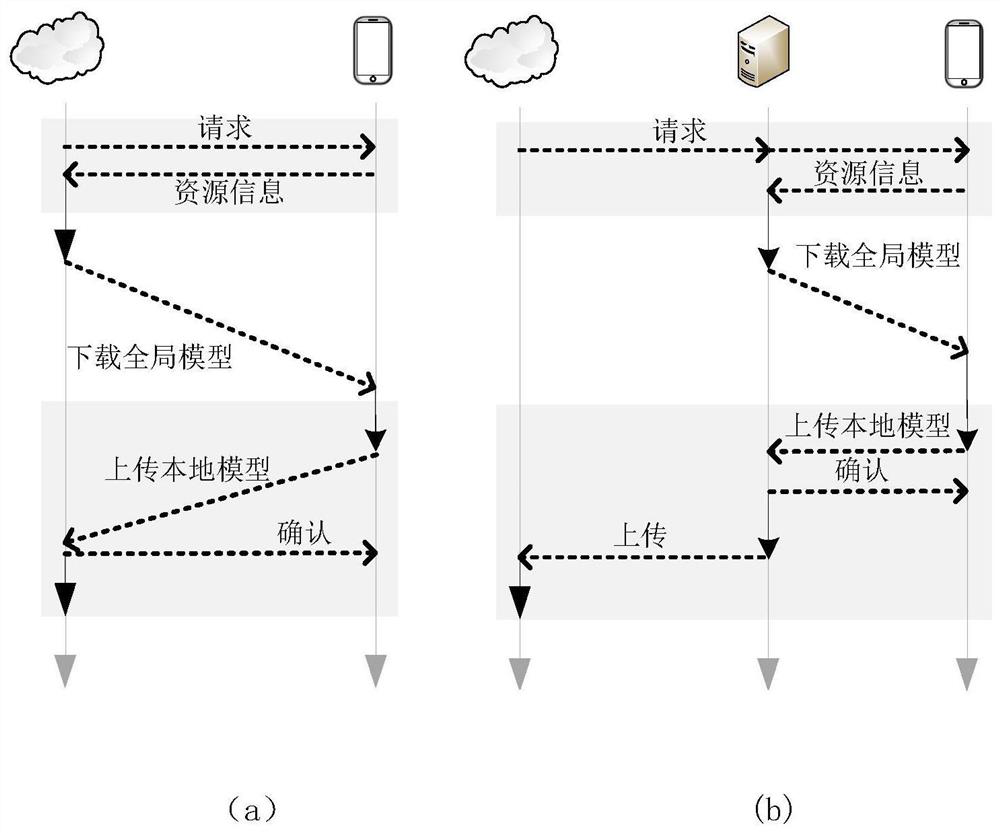

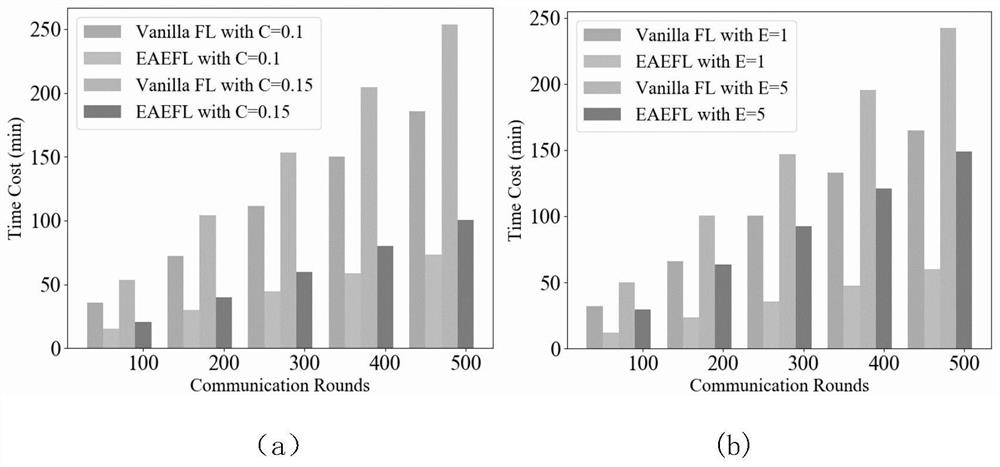

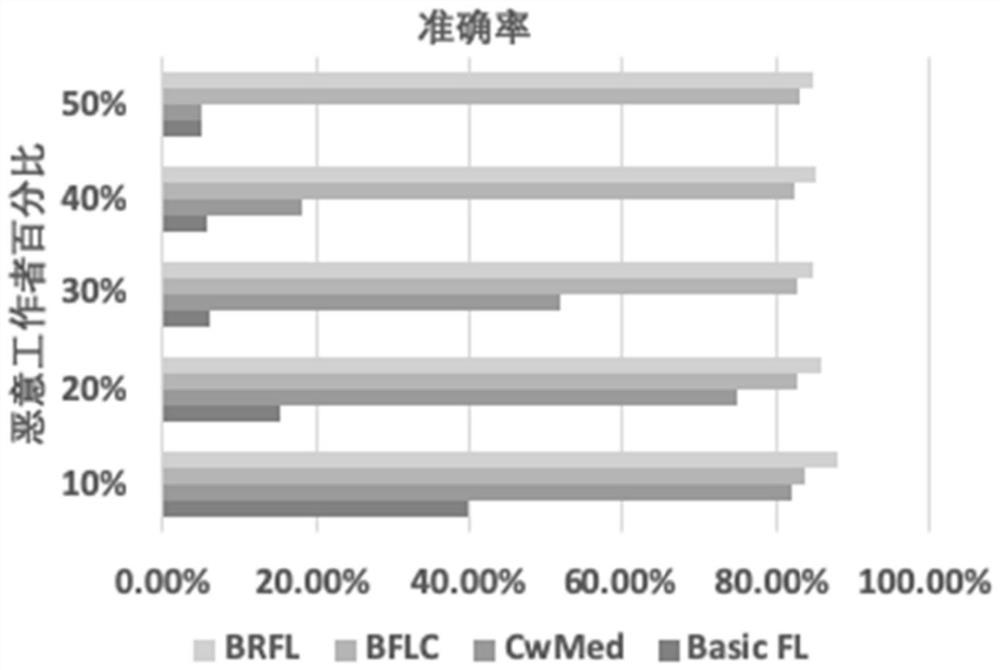

Equipment evaluation and federated learning importance aggregation method, system and equipment based on edge intelligence and readable storage medium

Active | CN112181666A | Reduce consumption, Reduce latency, Digital data information retrieval, Resource allocation, Edge server, Resource information

The invention provides an edge-intelligence-based device evaluation and federated learning importance aggregation method comprising the following steps. Cloud server initialization: the cloud server generates an initial model. Device evaluation and selection: an edge server receives resource information from terminal devices, generates a resource feature vector, and feeds it to the evaluation model. Local training: after selecting the smart devices, the edge server sends them the initial model forwarded from the cloud, and each device trains the initial model locally in federated learning to obtain a local model. Local model screening: each local model is sent to the edge server, which judges whether it is abnormal by comparing its loss value with that of the previous round's global model. Global aggregation: global aggregation is performed with the classical federated averaging algorithm. The method alleviates the training bottleneck caused by resource-constrained devices and improves the model aggregation effect, thereby reducing redundant training and communication consumption.

Owner:HUAQIAO UNIVERSITY
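
The screening and aggregation steps can be sketched together. The sketch assumes that all losses are measured on the same validation data and that a local model is "abnormal" when its loss exceeds the previous round's global loss by more than a tolerance. That rule, the tolerance and the names are assumptions. The surviving models are then combined by sample-weighted federated averaging (FedAvg).

```python
import numpy as np


def screen_and_aggregate(local_models, local_losses, sample_counts,
                         prev_global_loss, tolerance=0.0):
    """Drop local models whose loss is worse than last round's global model, then FedAvg."""
    keep = [i for i, loss in enumerate(local_losses) if loss <= prev_global_loss + tolerance]
    if not keep:
        raise ValueError("every local model was flagged as abnormal")
    counts = np.array([sample_counts[i] for i in keep], dtype=float)
    stacked = np.stack([local_models[i] for i in keep])
    return np.tensordot(counts / counts.sum(), stacked, axes=1), keep


models = [np.array([1.0, 1.0]), np.array([3.0, 3.0]), np.array([100.0, 100.0])]
new_global, kept = screen_and_aggregate(models, [0.4, 0.5, 2.0], [10, 30, 60], prev_global_loss=0.6)
print(kept, new_global)  # [0, 1] [2.5 2.5]
```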

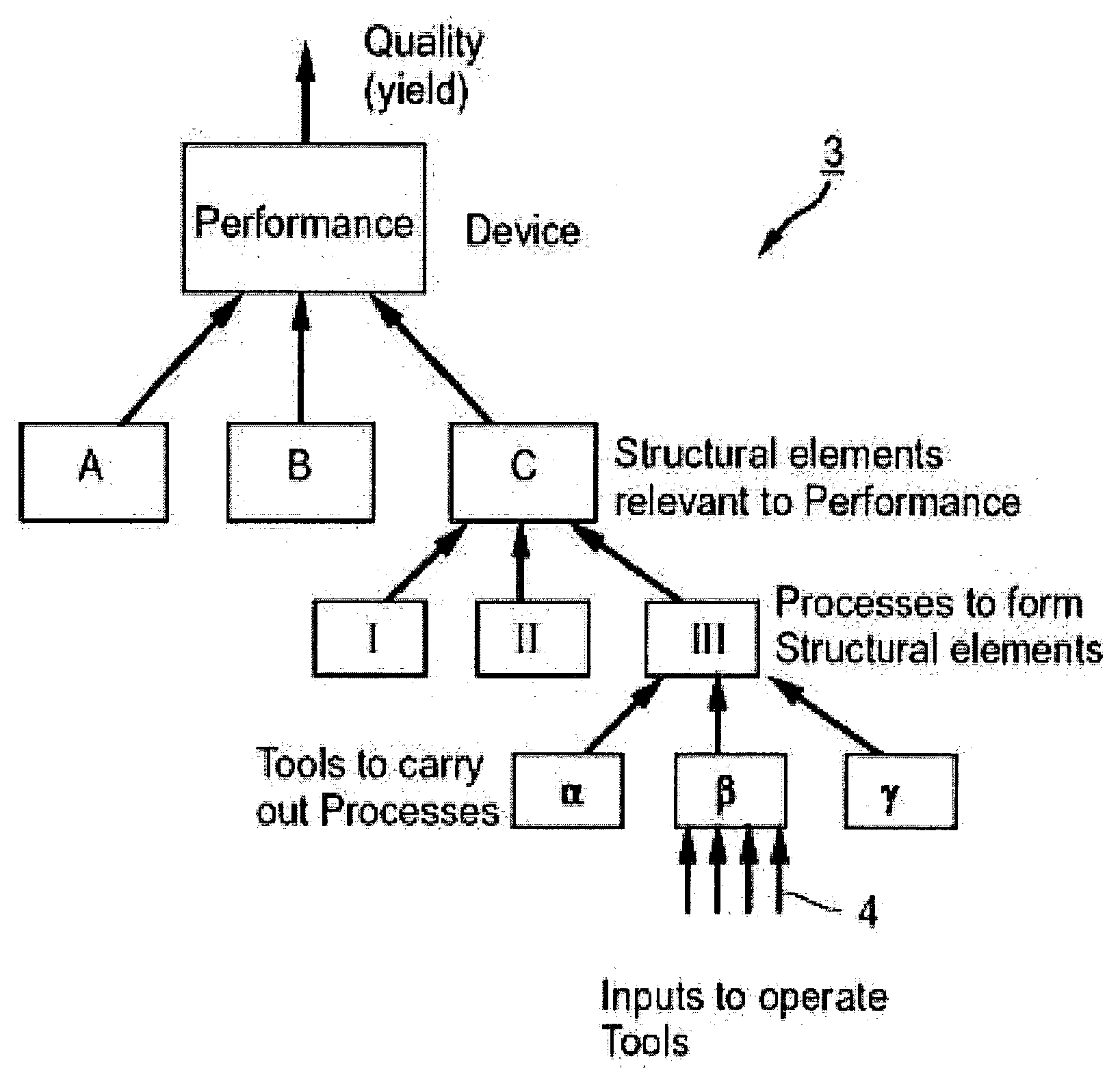

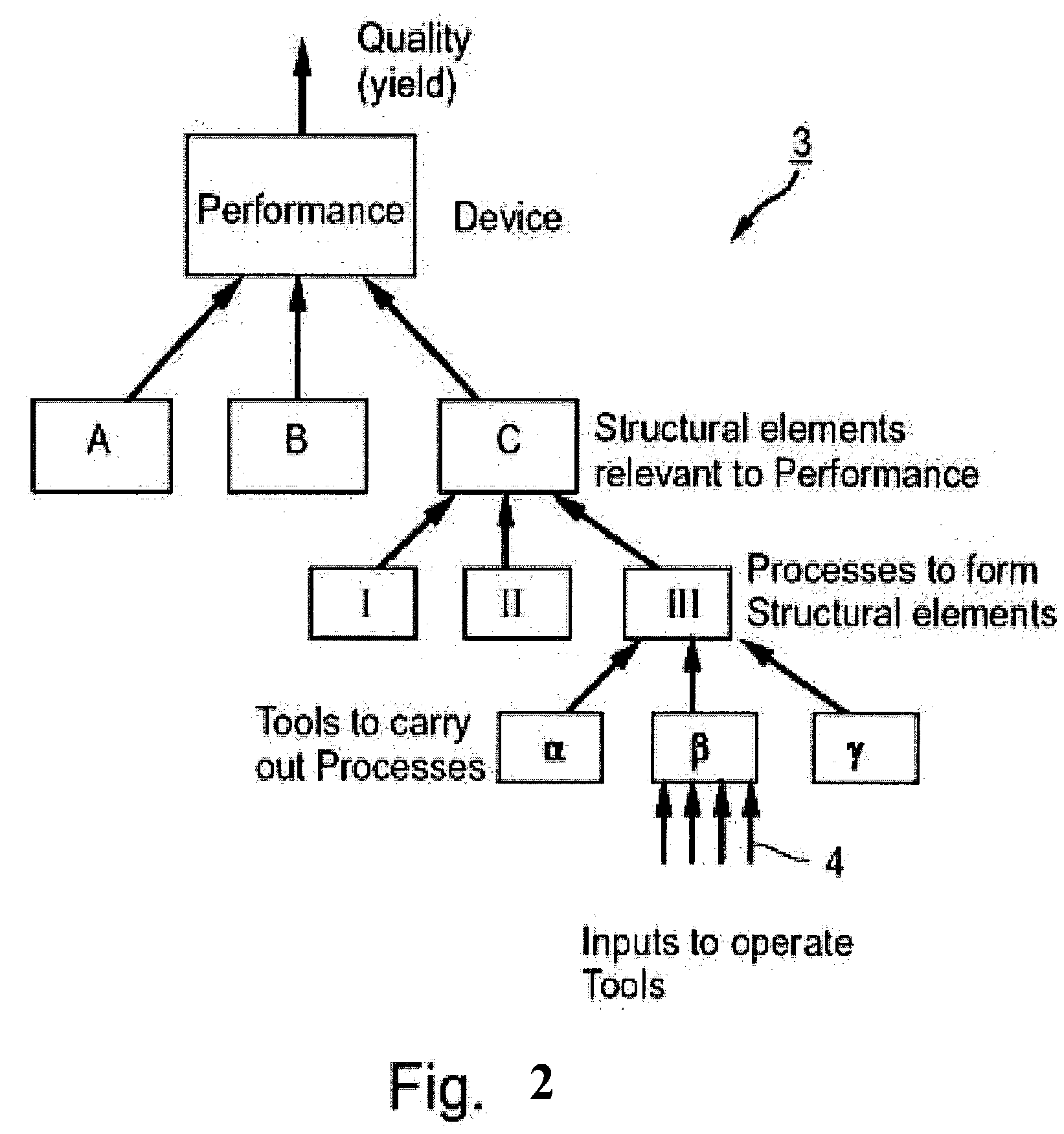

Method for dynamically targeting a batch process

Inactive | US7123978B2 | Improve product quality, Programme control, Electric controllers, Batch processing, Data mining

A method for controlling at least one characteristic of a product of an industrial batch process. The method comprises creating a hierarchical knowledge tree describing the process. A learning process then takes place, leading to the creation of a global model. During the execution of a batch process, the global model is applied to dynamically target the parameters of subsequent phases based on the phases already executed.

Owner:ADA ANALYTICS ISRAEL

Method and device for neural network machine learning model training

Pending | CN109754060A | Short cycle, Shorten the training period, Neural architectures, Special data processing applications, Model parameters, Machine learning

The invention discloses a method and device for training a neural network machine learning model, applied to a distributed computing framework that comprises a plurality of computing nodes. The training data are split in advance into as many slices as there are computing nodes participating in the computation. Each computing node obtains a training data slice, trains local model parameters, and transmits the trained local model parameters to a parameter server. The computing node then updates its local model parameters with the global model parameters returned by the parameter server and continues training. With this method, the speedup across multiple nodes can nearly reach the ideal linear value, and the model training period is greatly shortened.

Owner:ALIBABA GRP HLDG LTD
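
A single-process simulation of the described loop. It assumes linear regression with squared-error loss as the model and plain synchronous averaging at the parameter server. The node count, learning rate and function name are hypothetical. Each simulated node trains only on its own slice, and every node then restarts from the averaged parameters.

```python
import numpy as np


def train_distributed(X, y, n_nodes=4, rounds=200, lr=0.1, local_steps=1):
    """Simulate data-parallel training with a parameter server that averages node models."""
    slices = list(zip(np.array_split(X, n_nodes), np.array_split(y, n_nodes)))
    global_w = np.zeros(X.shape[1])
    for _ in range(rounds):
        local_ws = []
        for Xs, ys in slices:  # each node trains on its own slice only
            w = global_w.copy()
            for _ in range(local_steps):
                grad = 2.0 * Xs.T @ (Xs @ w - ys) / len(ys)  # squared-error gradient
                w -= lr * grad
            local_ws.append(w)
        global_w = np.mean(local_ws, axis=0)  # parameter server averages and returns
    return global_w


rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
y = X @ np.array([2.0, -1.0])
print(train_distributed(X, y))  # close to [2, -1]
```

With equal-sized slices and one local step per round, averaging the node models is equivalent to one full-batch gradient step. More local steps per round trade some of that equivalence for less communication.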

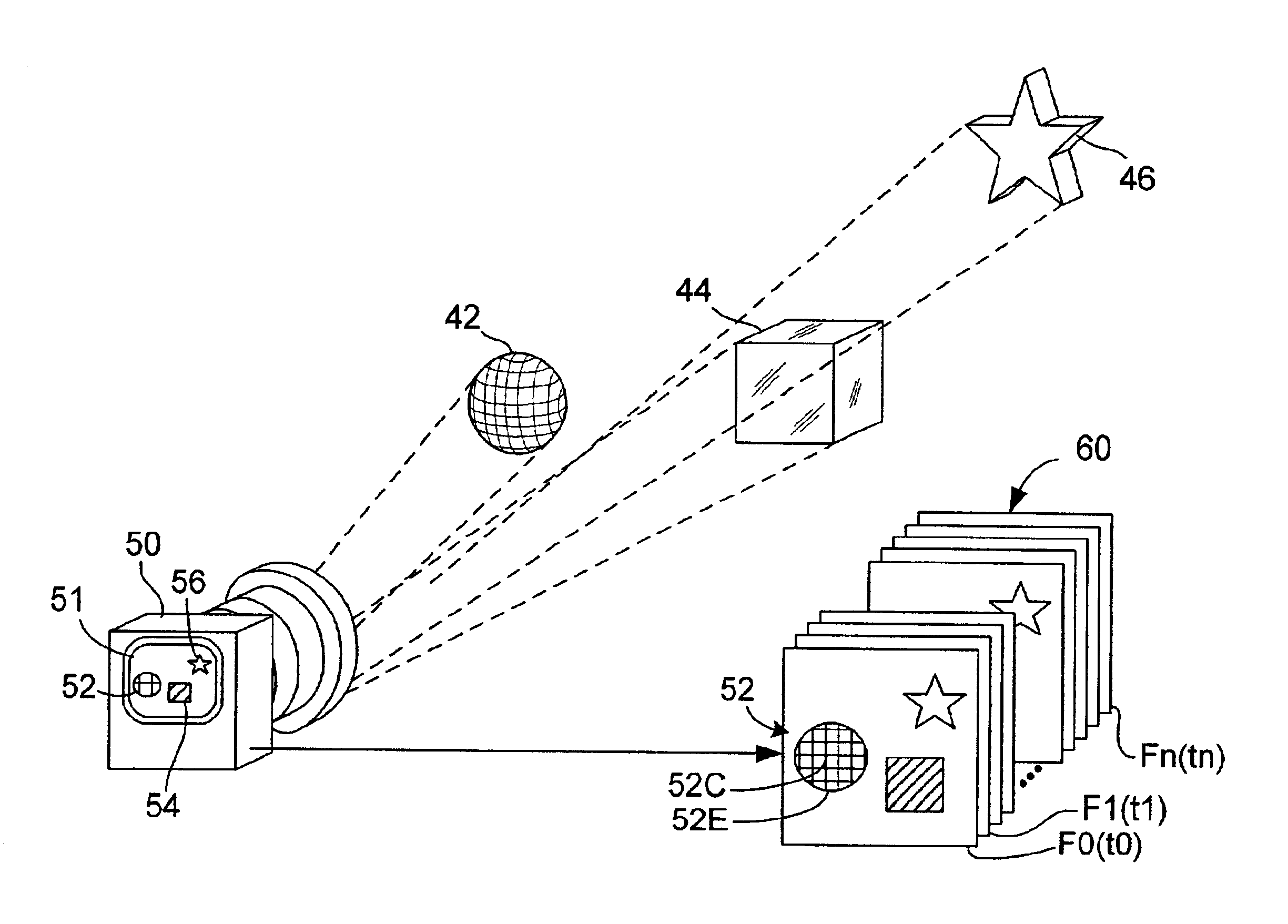

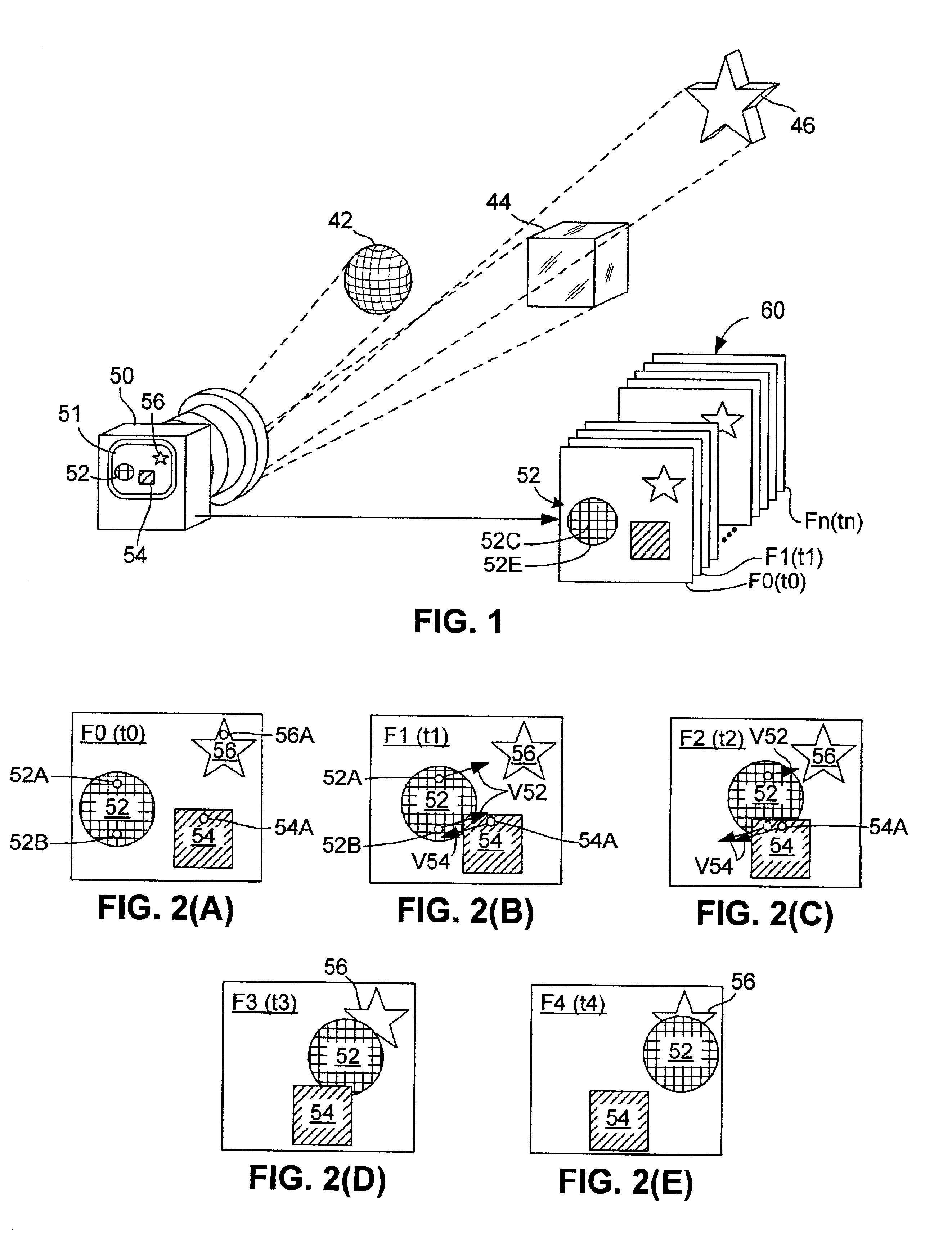

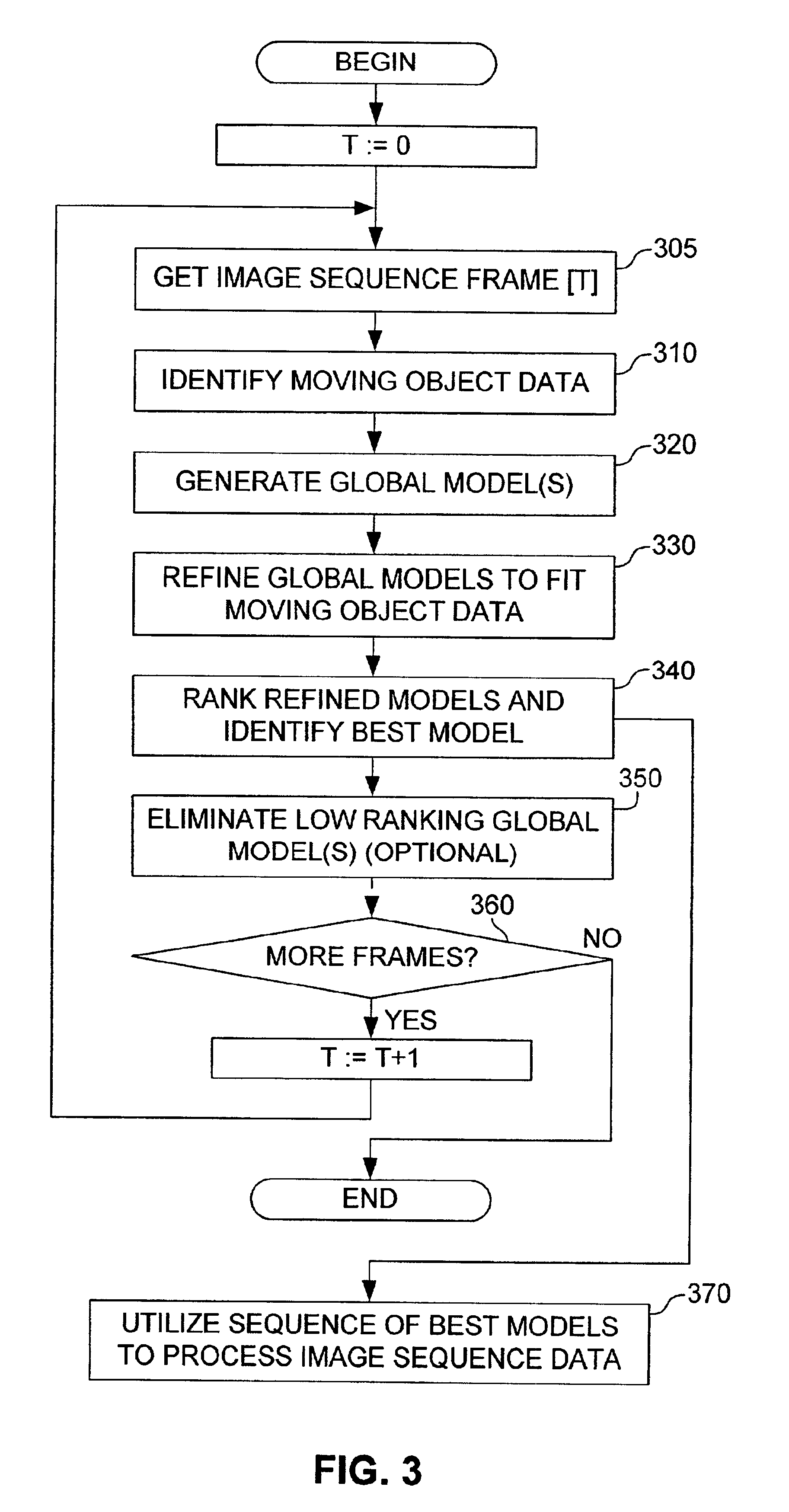

Visual motion analysis method for detecting arbitrary numbers of moving objects in image sequences

Inactive | US6954544B2 | Easy to analyze, Simplified representation, Image enhancement, Image analysis, Model selection, Model parameters

A visual motion analysis method that uses multiple layered global motion models to both detect and reliably track an arbitrary number of moving objects appearing in image sequences. Each global model includes a background layer and one or more foreground “polybones”, each foreground polybone including a parametric shape model, an appearance model, and a motion model describing an associated moving object. Each polybone includes an exclusive spatial support region and a probabilistic boundary region, and is assigned an explicit depth ordering. Multiple global models having different numbers of layers, depth orderings, motions, etc., corresponding to detected objects are generated, refined using, for example, an EM algorithm, and then ranked/compared. Initial guesses for the model parameters are drawn from a proposal distribution over the set of potential (likely) models. Bayesian model selection is used to compare/rank the different models, and models having relatively high posterior probability are retained for subsequent analysis.

Owner:XEROX CORP
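
The patent ranks candidate layered models with Bayesian model selection, but the abstract does not say how the evidence is computed. The sketch below swaps in the Bayesian information criterion (BIC), a common large-sample approximation to the log marginal likelihood. It combines the BIC with a prior over models and keeps the models whose approximate posterior exceeds a threshold. The model names, log-likelihoods, parameter counts and threshold are hypothetical.

```python
import math


def approximate_posteriors(models, n_obs):
    """models: name -> (max log-likelihood, number of parameters, prior probability > 0).

    Uses the BIC approximation log p(data | model) ~ logL - (k / 2) * log(n).
    """
    scores = {name: ll - 0.5 * k * math.log(n_obs) + math.log(prior)
              for name, (ll, k, prior) in models.items()}
    top = max(scores.values())
    z = sum(math.exp(s - top) for s in scores.values())
    return {name: math.exp(s - top) / z for name, s in scores.items()}


def retain(posteriors, threshold=0.05):
    """Names of models with high enough posterior, most probable first."""
    kept = [name for name, p in posteriors.items() if p >= threshold]
    return sorted(kept, key=posteriors.get, reverse=True)


models = {"1 object": (-100.0, 6, 0.5), "2 objects": (-90.0, 12, 0.5)}
post = approximate_posteriors(models, n_obs=100)
print(retain(post))  # ['1 object']
```

In this example the two-object model fits better, but its extra parameters cost more than the likelihood gain, so only the simpler model survives.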

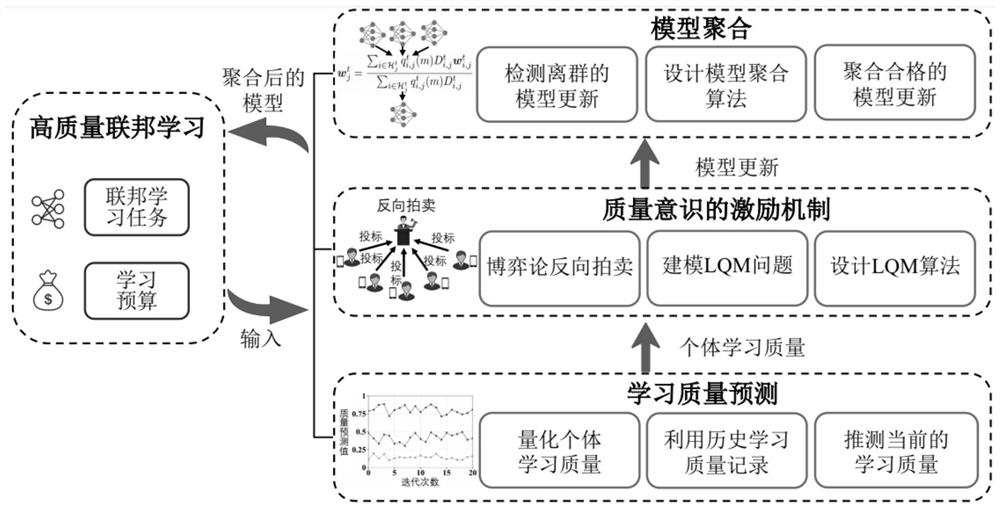

Quality-aware edge intelligent federated learning method and system

Active | CN111754000A | Quality improvement, Privacy protection, Ensemble learning, Commerce, Engineering, Data mining

The invention discloses a quality-aware edge intelligent federated learning method and system. A cloud platform formulates a federated learning quality optimization problem whose objective is to maximize, in each iteration, the sum of the aggregated model qualities of multiple learning tasks, and solves it as follows. In each iteration, the learning quality of each participating node is predicted from its historical learning quality records, where the learning quality of a node's training data is quantified by the reduction in the loss function value in that iteration. In each iteration, the cloud platform incentivizes nodes with high learning quality to participate in federated learning through a reverse auction mechanism, thereby allocating learning tasks and learning rewards. In each iteration, for each learning task, each participating node uploads its local model parameters to the cloud platform, which aggregates them into a global model. The method and system can provide richer data and more computing power for model training while protecting data privacy, thereby improving model quality.

Owner:TSINGHUA UNIV +1
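
The abstract does not give the auction rules. The sketch below predicts each node's quality as an exponential moving average of its past per-round loss reductions. The platform then greedily admits nodes in order of predicted quality per unit bid until a reward budget is spent. Payment rules that make a reverse auction truthful are not shown. The names, the smoothing factor and the greedy rule are hypothetical.

```python
def predict_quality(history, alpha=0.5):
    """Exponential moving average of a node's past per-round loss reductions (oldest first)."""
    if not history:
        return 0.0
    q = history[0]
    for reduction in history[1:]:
        q = alpha * reduction + (1 - alpha) * q
    return q


def select_nodes(bids, histories, budget):
    """Greedy reverse auction: admit nodes by predicted quality per unit bid within budget."""
    ranked = []
    for node, bid in bids.items():
        q = predict_quality(histories.get(node, []))
        if q > 0:
            ranked.append((q / bid, node, bid))
    ranked.sort(reverse=True)
    chosen, spent = [], 0.0
    for _, node, bid in ranked:
        if spent + bid <= budget:
            chosen.append(node)
            spent += bid
    return chosen


bids = {"A": 2.0, "B": 1.0, "C": 3.0}
histories = {"A": [0.4, 0.6], "B": [0.1, 0.3], "C": [0.9, 0.9]}
print(select_nodes(bids, histories, budget=4.0))  # ['C', 'B']
```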

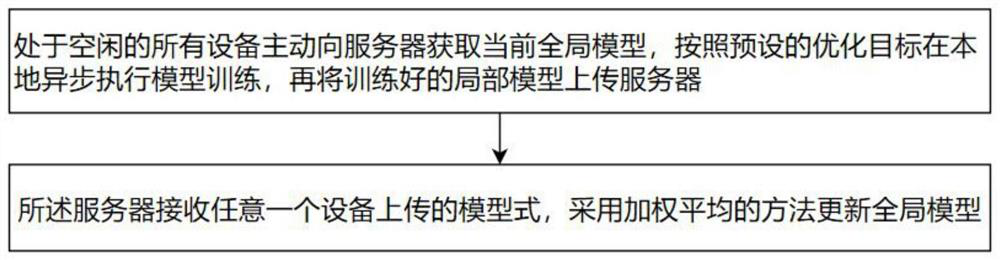

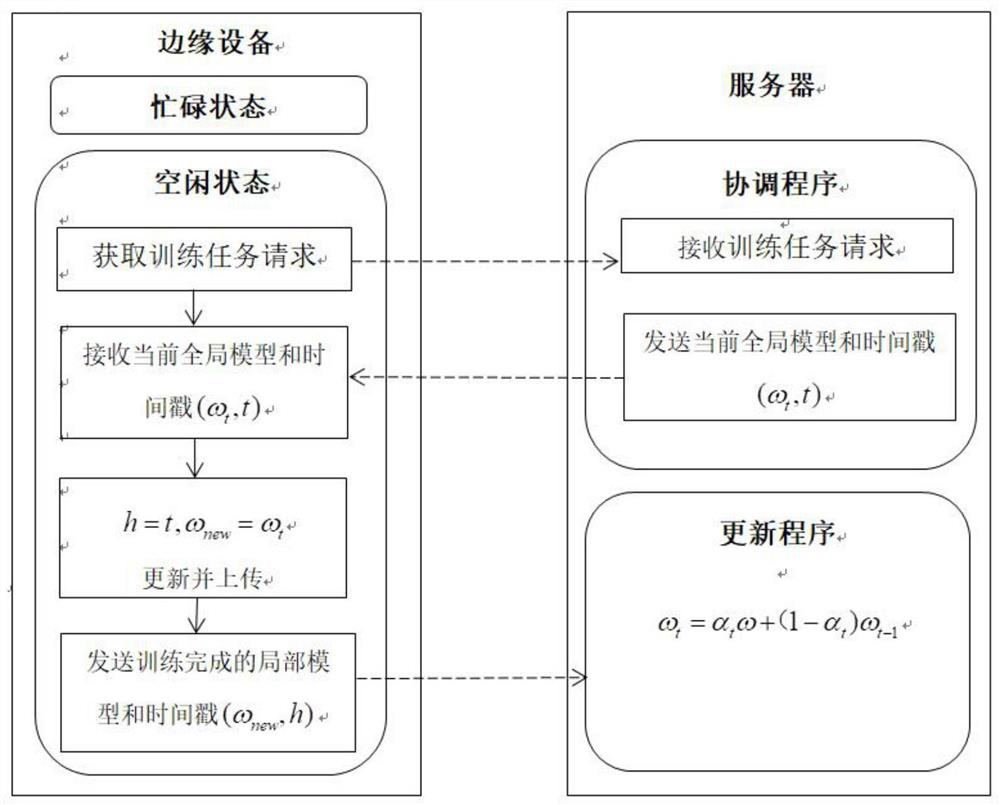

Edge computing-oriented federated learning method

Inactive | CN111708640A | Efficient training process, Make the most of free time, Resource allocation, Machine learning, Edge computing, Weighted average method

The invention provides an edge computing-oriented federated learning method in which every idle device actively fetches the current global model from a server, trains the model locally and asynchronously according to a preset optimization objective, and uploads the trained local model to the server. The server receives the model uploaded by any device and updates the global model by weighted averaging. The method thus combines asynchronous training with federated learning: in asynchronous federated optimization, all idle devices take part in model training and the server updates the global model through weighted averaging, so the idle time of all edge devices is fully used and model training is more efficient.

Owner:Suzhou Liandian Energy Development Co., Ltd. (苏州联电能源发展有限公司)
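
The abstract says the server folds each uploaded model into the global model by weighted averaging as soon as it arrives. The sketch below assumes a mixing weight that shrinks with staleness, meaning the number of global updates since the device pulled its copy. That rule is borrowed from asynchronous federated optimization in general and is not stated in the patent. The value of alpha and all names are hypothetical.

```python
import numpy as np


class AsyncServer:
    """Global model that accepts local models one at a time, in arrival order."""

    def __init__(self, w0, alpha=0.5):
        self.w = np.asarray(w0, dtype=float)
        self.version = 0
        self.alpha = alpha

    def pull(self):
        """An idle device fetches the current global model and its version number."""
        return self.w.copy(), self.version

    def push(self, local_w, base_version):
        """Weighted average of global and local model; stale updates count for less."""
        staleness = self.version - base_version
        a = self.alpha / (1.0 + staleness)
        self.w = (1.0 - a) * self.w + a * np.asarray(local_w, dtype=float)
        self.version += 1


server = AsyncServer([0.0, 0.0])
_, v1 = server.pull()
_, v2 = server.pull()          # a second device pulls the same version
server.push([2.0, 4.0], v1)    # fresh update, weight 0.5
server.push([4.0, 0.0], v2)    # one version stale, weight 0.25
print(server.w)  # [1.75 1.5 ]
```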

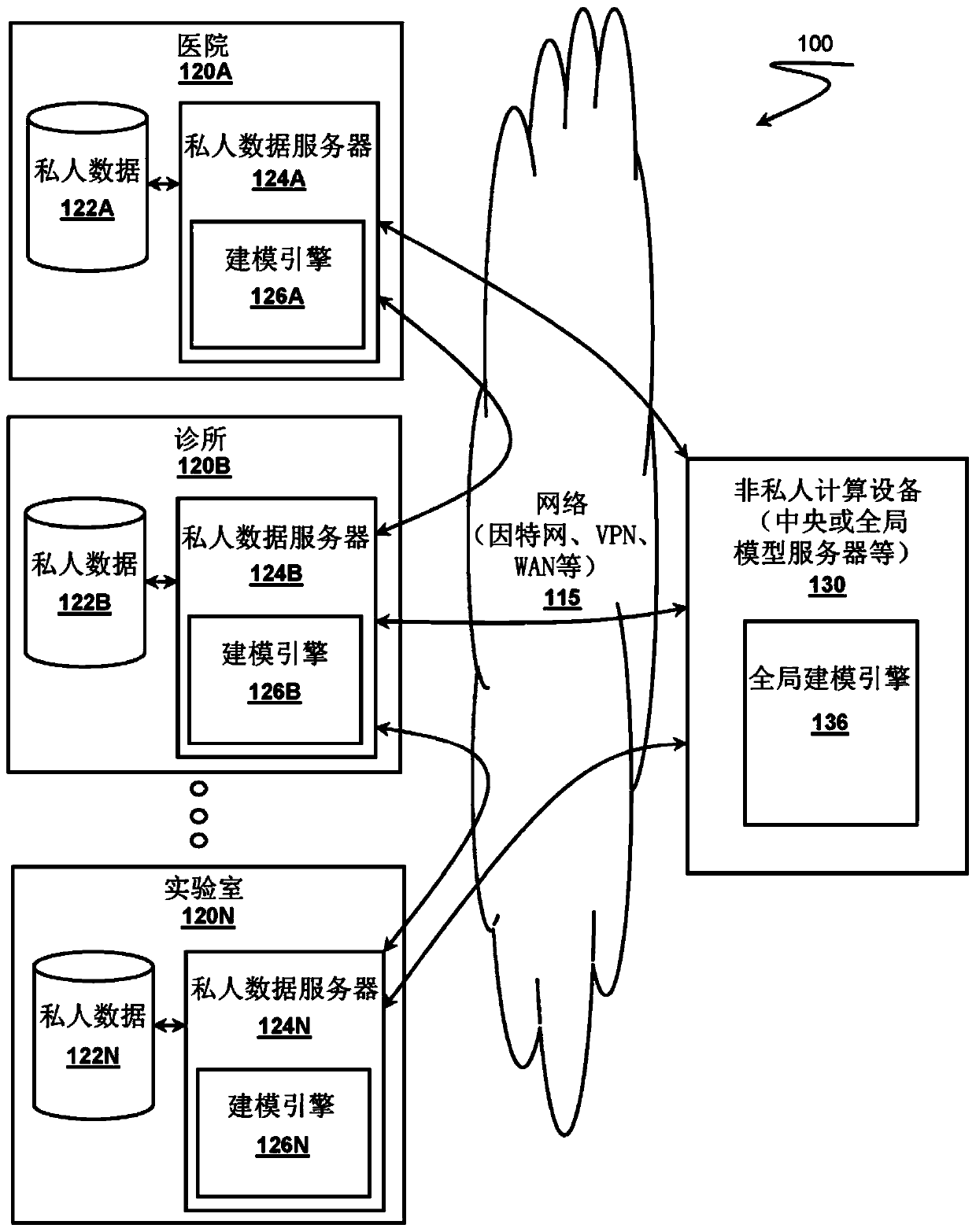

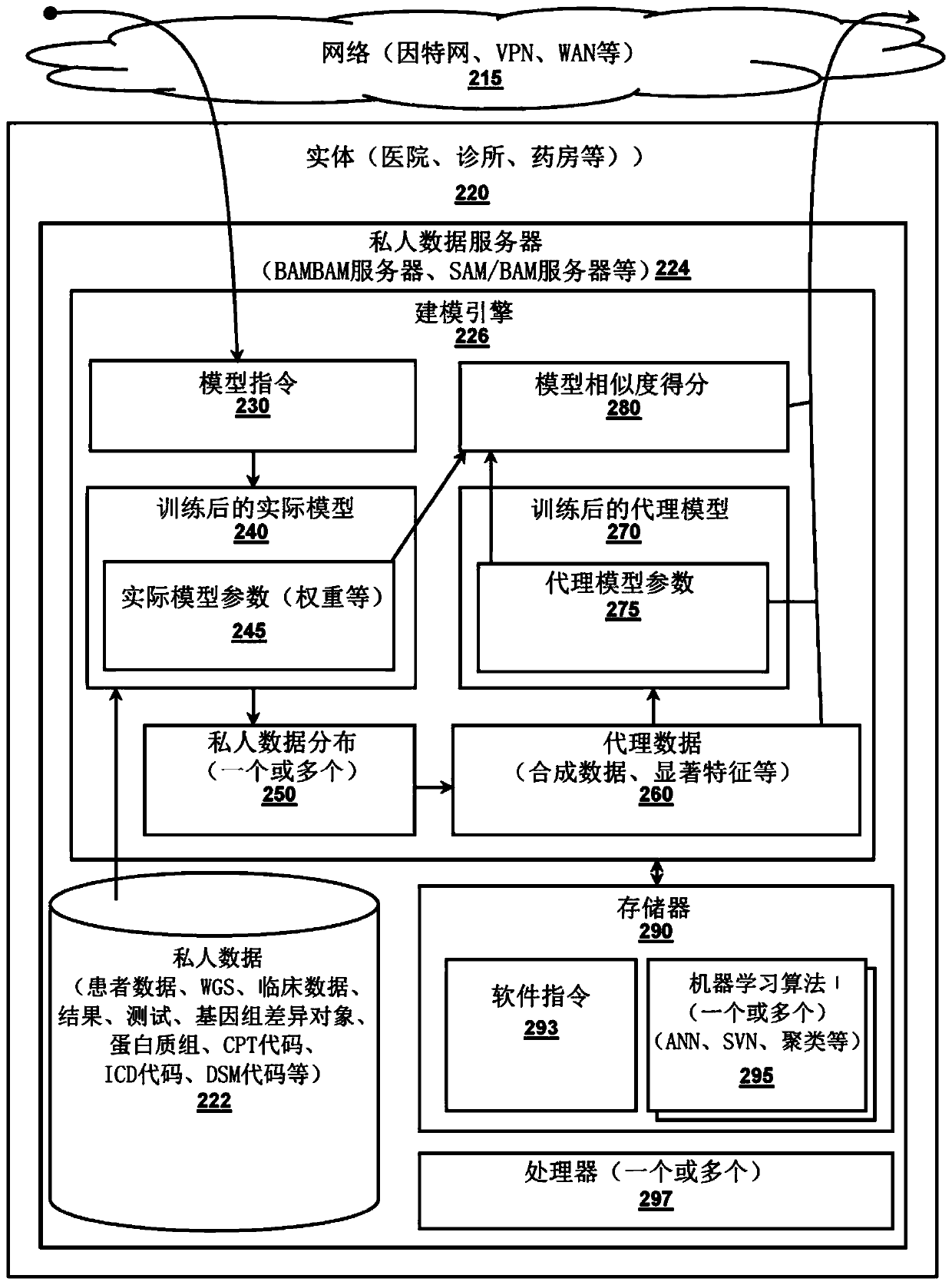

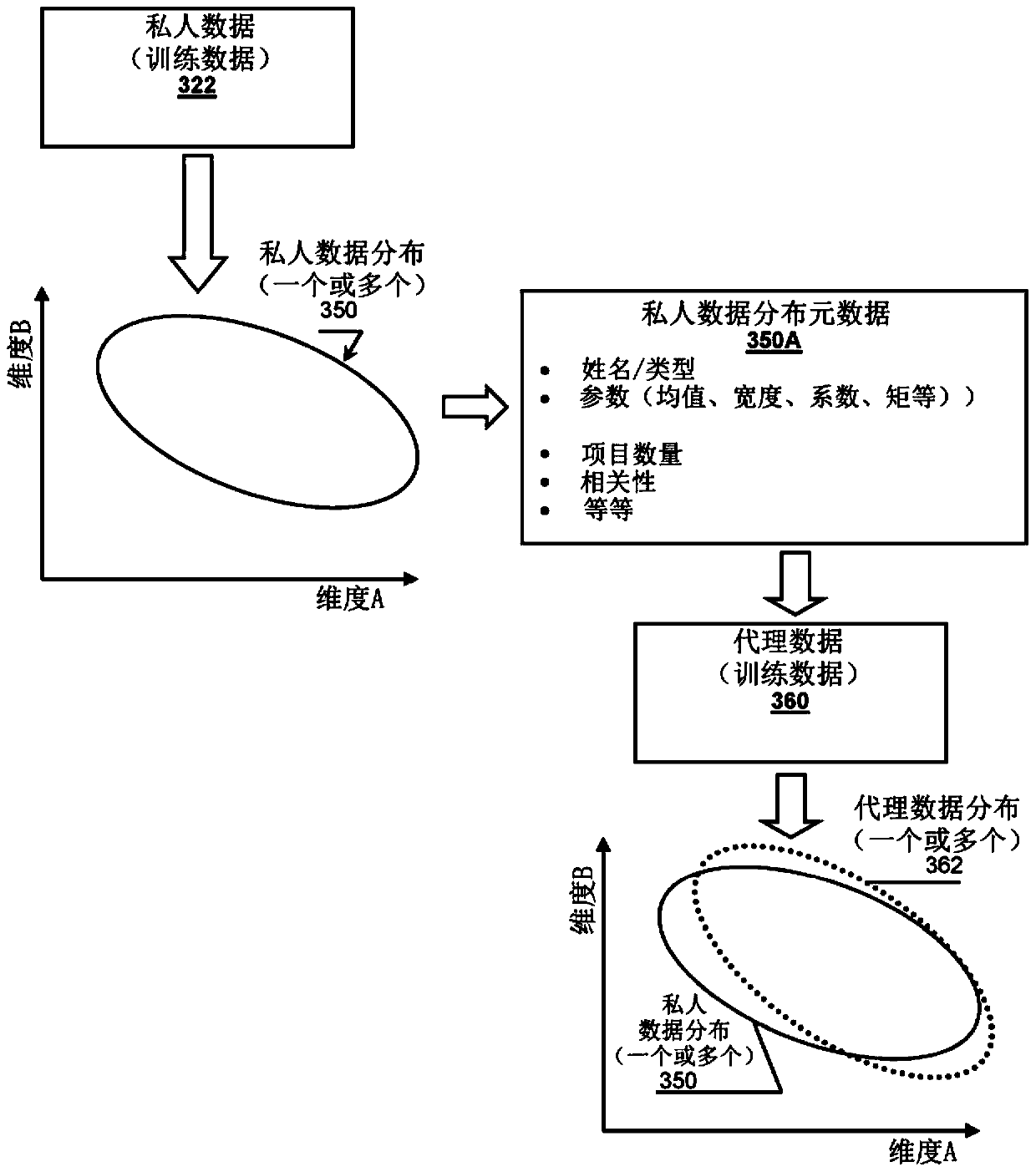

Distributed machine learning system, apparatus, and method

A distributed online machine learning system is presented. Contemplated systems include many private data servers, each having local private data. Researchers can request that relevant private data servers train implementations of machine learning algorithms on their local private data without requiring de-identification of the private data and without exposing the private data to unauthorized computing systems. The private data servers also generate synthetic or proxy data according to the data distributions of the actual data. The servers then use the proxy data to train proxy models. When the proxy models are sufficiently similar to the trained actual models, the proxy data, proxy model parameters, or other learned knowledge can be transmitted to one or more non-private computing devices. The learned knowledge from many private data servers can then be aggregated into one or more trained global models without exposing private data.

Owner:NantOmics, LLC (河谷生物组学有限责任公司) +1
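
One way to picture the proxy-data step, under assumptions that are not in the abstract. The private data are summarized by their mean and covariance, and proxy records are sampled from a multivariate Gaussian with those moments. A least-squares linear model is trained on both the real and the proxy data, and proxy parameters are released only when the two models are close. The similarity tolerance and all names are hypothetical, and matching moments does not by itself guarantee privacy.

```python
import numpy as np


def fit_linear(X, y):
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    return w


def make_proxy_data(X, y, n, rng):
    """Sample synthetic records from a Gaussian matching the private data's mean and covariance."""
    data = np.column_stack([X, y])
    sample = rng.multivariate_normal(data.mean(axis=0), np.cov(data, rowvar=False), size=n)
    return sample[:, :-1], sample[:, -1]


def proxy_release(X, y, rng, n_proxy=5000, tol=0.1):
    """Train actual and proxy models; report whether the proxy is close enough to share."""
    actual = fit_linear(X, y)
    Xp, yp = make_proxy_data(X, y, n_proxy, rng)
    proxy = fit_linear(Xp, yp)
    similar = np.linalg.norm(proxy - actual) <= tol * np.linalg.norm(actual)
    return bool(similar), proxy


rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2))
y = X @ np.array([1.5, -2.0]) + 0.1 * rng.normal(size=500)
shareable, proxy_params = proxy_release(X, y, rng)
print(shareable, proxy_params)  # only proxy_params would leave the private server
```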

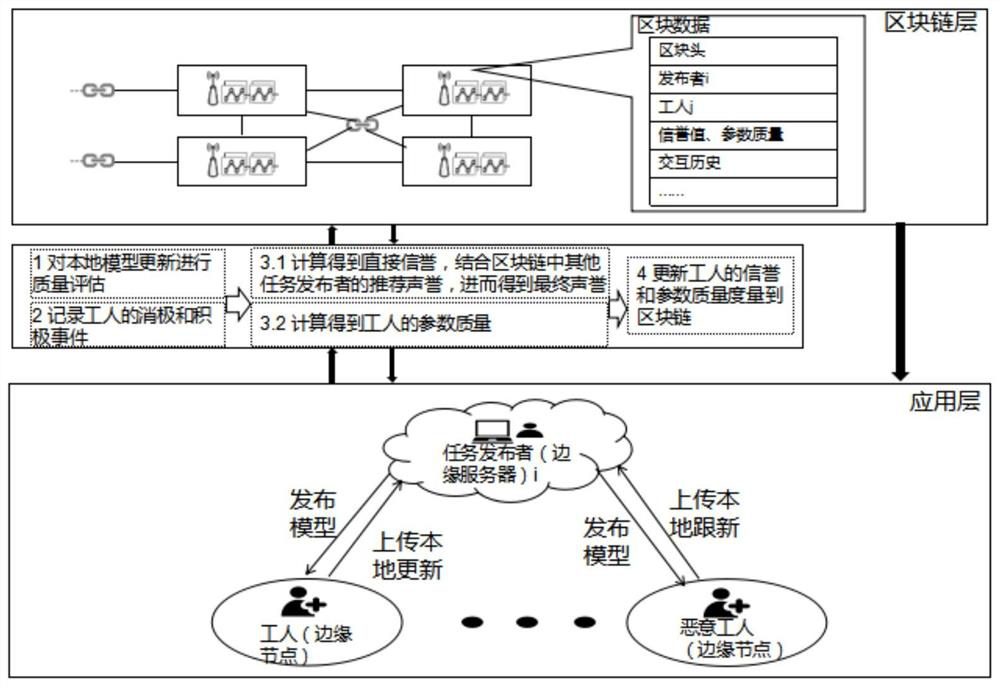

Secure data sharing method for the marine Internet of Things under an edge computing framework based on federated learning and blockchain technology

The invention discloses a secure data sharing method for the marine Internet of Things under an edge computing framework based on federated learning and blockchain technology. First, the parameter quality and reputation of the edge nodes are calculated, and edge nodes are selected. Second, the edge server issues an initial model to the selected edge nodes, each of which performs local training on its local data set. Then, the edge server updates the global model with the local training parameters collected from the edge nodes, trains the global model in each iteration, and updates the reputation and quality metrics. Finally, a consortium blockchain serves as a decentralized mechanism for effective, tamper-resistant reputation and quality management of workers. In addition, a reputation consensus mechanism is introduced into the blockchain so that the edge nodes recorded in it are of higher quality, which improves the overall model performance. The invention gives the marine Internet of Things edge computing framework more efficient data processing and stronger data protection.

Owner:DALIAN UNIV OF TECH

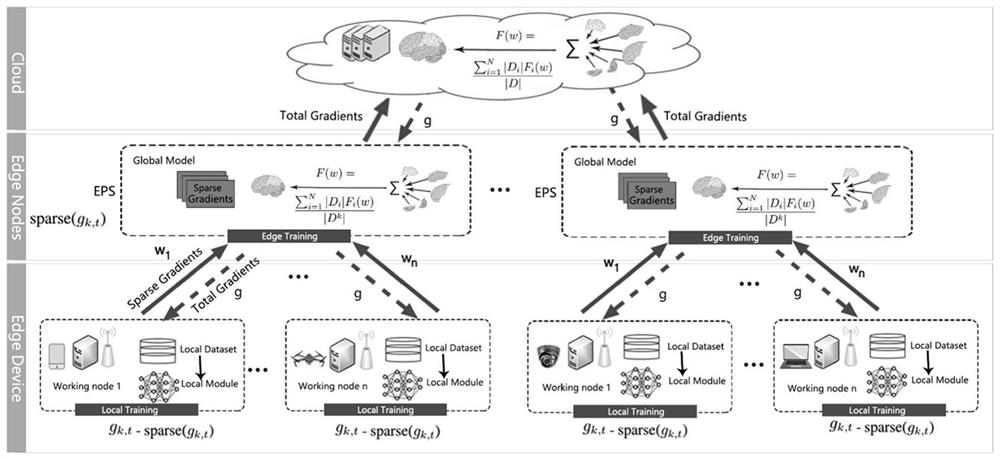

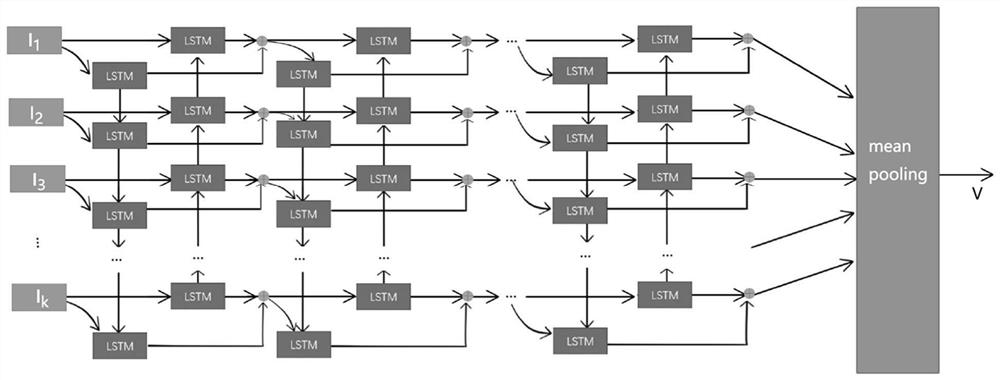

Federated learning computation offloading system and method based on a cloud-edge-end architecture

Inactive | CN112817653A | Make up for deficiencies, Accurate decision, Program initiation/switching, Resource allocation, Computation complexity, Edge node

The invention discloses a federated learning computation offloading and resource allocation system and method based on a cloud-edge-end architecture. Its aim is to make accurate decisions on computing task offloading and resource allocation without solving a combinatorial optimization problem, which greatly reduces computational complexity. Built on three-tier (cloud-edge-end) federated learning, it exploits both the proximity of edge nodes to terminals and the powerful core computing resources of the cloud, compensating for the limited computing resources of edge nodes. A local model is trained at each of multiple clients to predict offloading tasks. The edge periodically performs parameter aggregation to form a global model, and the cloud performs one aggregation after each round of periodic edge aggregation, until a global BiLSTM model is obtained at convergence. The global model can intelligently predict the information volume of each offloading task, which provides better guidance for computation offloading and resource allocation.

Owner:XI AN JIAOTONG UNIV
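
The BiLSTM offloading predictor is beyond a short example, but the two-level aggregation schedule can be sketched on its own. The sketch assumes that each edge node averages its clients' models after every local round, for edge_period rounds. The cloud then averages the edge models, weighted by each edge's total sample count. The toy local objective in the usage lines (each client pulls the model toward its own optimum) and all names are hypothetical.

```python
import numpy as np


def weighted_mean(models, weights):
    w = np.asarray(weights, dtype=float)
    return np.tensordot(w / w.sum(), np.stack(models), axes=1)


def cloud_edge_train(global_w, edges, local_step, cloud_rounds=20, edge_period=2):
    """edges: one list per edge node of (client_data, n_samples) pairs.

    local_step(w, client_data) returns the client's locally trained parameters.
    """
    for _ in range(cloud_rounds):
        edge_models, edge_sizes = [], []
        for clients in edges:
            w_edge = global_w.copy()
            for _ in range(edge_period):  # periodic aggregation at the edge
                updates = [local_step(w_edge, data) for data, _ in clients]
                w_edge = weighted_mean(updates, [n for _, n in clients])
            edge_models.append(w_edge)
            edge_sizes.append(sum(n for _, n in clients))
        global_w = weighted_mean(edge_models, edge_sizes)  # one cloud aggregation
    return global_w


def step(w, opt):
    return w - 0.5 * (w - opt)  # one gradient step on 0.5 * ||w - opt||^2


edges = [[(np.array([0.0, 0.0]), 1), (np.array([2.0, 0.0]), 1)],
         [(np.array([4.0, 4.0]), 2)]]
print(cloud_edge_train(np.zeros(2), edges, step))  # approaches [2.5, 2.0]
```

With this toy objective, the schedule converges to the sample-weighted mean of the client optima.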

Method and apparatus for modeling devices having different geometries

Inactive | US20050027501A1 | Analogue computers for electric apparatus, Detecting faulty computer hardware, Model parameters, Computer science

The present invention includes a method for modeling devices having different geometries, in which a range of interest for device geometrical variations is divided into a plurality of subregions, each corresponding to a subrange of device geometrical variations. The subregions are of a first type (G-type) and a second type, and each type includes one or more subregions. A regional global model is generated for each subregion of the first type, and a binning model is generated for each subregion of the second type. The regional global model for a G-type subregion uses one set of model parameters to cover the subrange of device geometrical variations corresponding to that subregion. The binning model for a subregion includes binning parameters that keep the model parameters continuous when the device geometry varies across two different subregions.

Owner:CADENCE DESIGN SYST INC

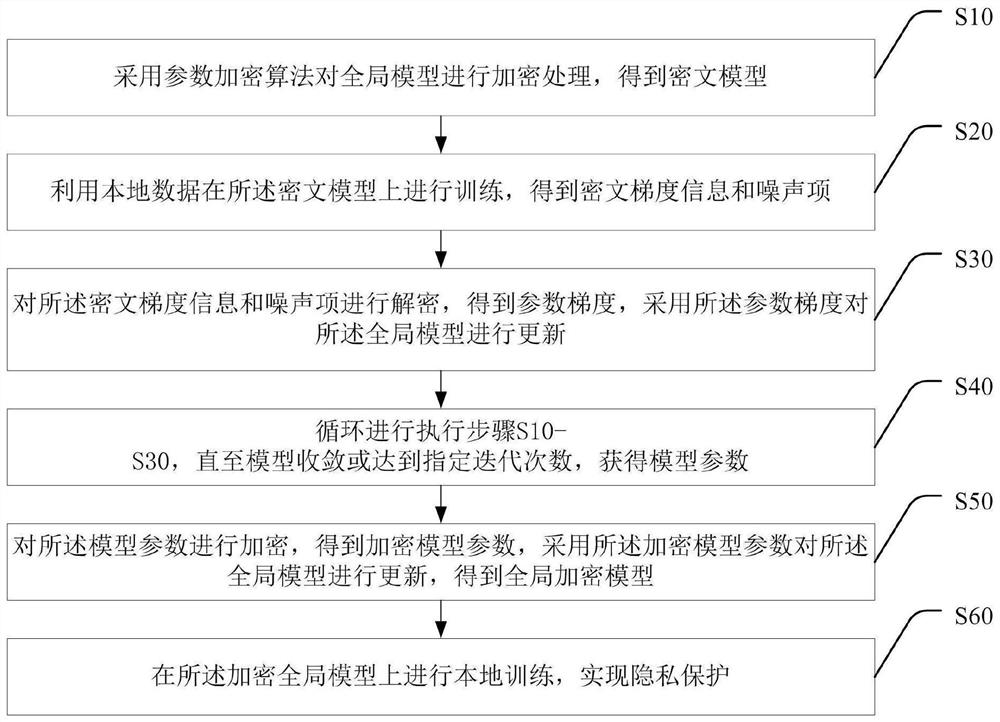

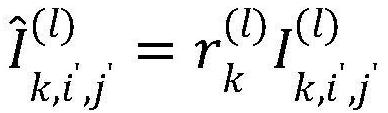

Privacy protection method and system based on federated learning, and storage medium

Pending | CN112199702A | Avoid getting, True Prediction Results, Digital data protection, Neural architectures, Algorithm, Ciphertext

The invention discloses a privacy protection method and system based on federated learning, and a storage medium. The method encrypts the global model with a parameter encryption algorithm to obtain a ciphertext model and trains on the ciphertext model with local data. It decrypts the resulting ciphertext gradient information and noise term to obtain the parameter gradient, then updates the global model with that gradient. These steps repeat until the model converges or reaches a specified number of iterations, yielding the model parameters. The model parameters are then encrypted, and the global model is updated with the encrypted parameters to obtain an encrypted global model, on which local training is performed to achieve privacy protection. The method effectively prevents semi-trusted federated learning participants from obtaining the real parameters of the global model and the outputs of intermediate models. At the same time, participants can still obtain real prediction results from the final trained encrypted model.

Owner:PENG CHENG LAB +1

Credit risk control system and method based on federation mode

The invention relates to big data technology and provides a credit risk control system and method based on a federated learning mode. The system comprises a heterogeneous data access layer for accessing and converting data; a data preprocessing layer for preprocessing raw data; a sample alignment layer for keeping the training samples of different data providers aligned; and a federated learning layer for training local models on each participant's local data and forming a global model after gradient aggregation. The invention provides a unified data access format, data preprocessing, and a federated-learning-based risk prediction model, addressing the challenges that data heterogeneity and privacy leakage pose to risk control. A central server does not need to take part in model training, which helps ensure that user privacy is not eavesdropped on. Several different participants can carry out risk control modeling jointly under a standardized modeling process, improving risk control capability and reducing costs for enterprises.

Owner:ZHEJIANG UNIV
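
The abstract does not say how the sample alignment layer matches records across data providers. A minimal sketch, assuming that the providers share a secret key and exchange keyed hashes (HMAC-SHA256) of their user IDs. Each side can then find the common IDs and order its rows identically without sending raw IDs. This is weaker than a proper private set intersection protocol, because anyone holding the key can test guessed IDs. The names and the key are hypothetical.

```python
import hashlib
import hmac


def blind(ids, key):
    """Map HMAC-SHA256 tokens to local IDs; only the tokens are shared."""
    return {hmac.new(key, i.encode("utf-8"), hashlib.sha256).hexdigest(): i for i in ids}


def aligned_ids(my_ids, their_tokens, key):
    """Local IDs present on both sides, in an order both parties can reproduce."""
    mine = blind(my_ids, key)
    common = sorted(mine.keys() & set(their_tokens))
    return [mine[t] for t in common]


key = b"shared-secret"  # hypothetical key agreed out of band
bank_ids = ["u1", "u2", "u3"]
telco_ids = ["u3", "u4", "u1"]
bank_order = aligned_ids(bank_ids, blind(telco_ids, key).keys(), key)
telco_order = aligned_ids(telco_ids, blind(bank_ids, key).keys(), key)
print(bank_order == telco_order, sorted(bank_order))  # True ['u1', 'u3']
```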

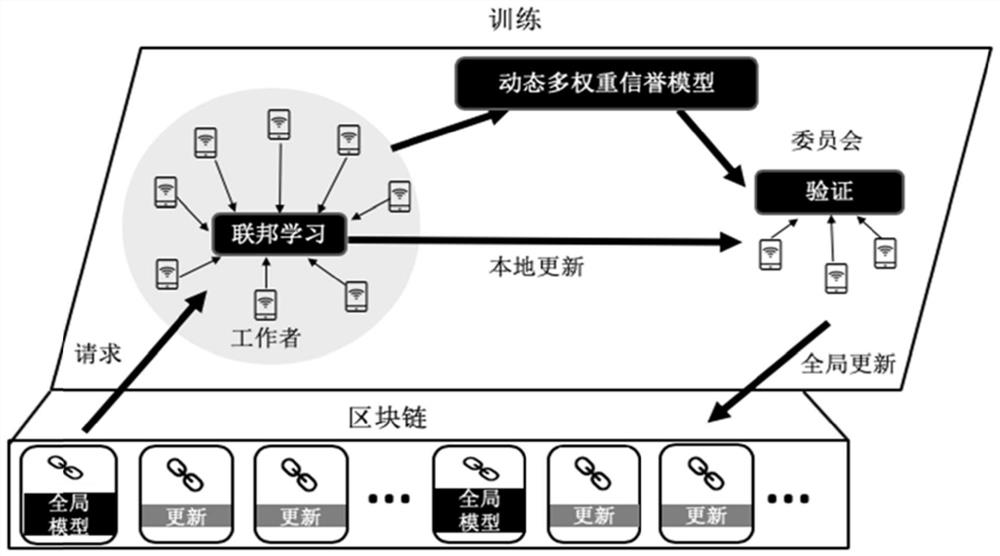

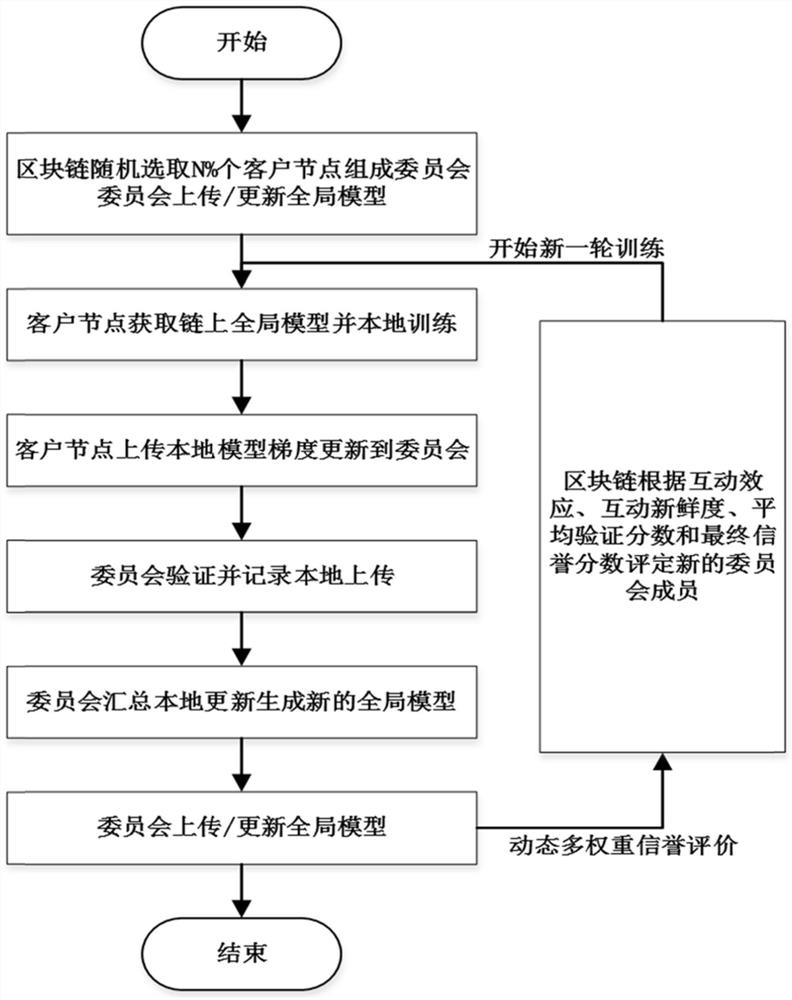

Trusted federated learning method, system, device and medium based on blockchain

The invention discloses a blockchain-based trusted federated learning method, system, device and medium. The method selects client nodes from the blockchain to form an initial committee and determines an initial shared global model. Each client node in the blockchain trains the initial shared global model to obtain its local model update, and the initial committee generates a target global model from the clients' local model updates. A target committee is then determined through a dynamic multi-weight reputation model and a new round of training begins, repeating until a target global model meeting the convergence requirement is obtained. By using blockchain technology to remove the central server, data are stored in a decentralized manner across the distributed client nodes, which improves the security of private data. The method, system and device can be widely applied in the blockchain field.

Owner:SOUTH CHINA NORMAL UNIVERSITY
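
The abstract does not define the dynamic multi-weight reputation model, so the following Python sketch is only one hypothetical reading of the committee mechanism: each node's reputation is a weighted sum of per-node metrics (the metric names and weights are invented for illustration), the highest-scoring nodes form the committee, and the committee accepts a local update only if the median of its members' scores reaches a threshold, then averages the accepted updates. No blockchain ledger or consensus protocol is modeled.

```python
from dataclasses import dataclass, field
from statistics import median
from typing import Callable, Dict, List, Optional

Update = List[float]


@dataclass
class Node:
    node_id: str
    # Hypothetical per-node metrics in [0, 1], e.g. past update quality or uptime
    metrics: Dict[str, float] = field(default_factory=dict)


def reputation(node: Node, weights: Dict[str, float]) -> float:
    """Multi-weight reputation: weighted sum of the node's metrics."""
    return sum(w * node.metrics.get(name, 0.0) for name, w in weights.items())


def select_committee(nodes: List[Node], weights: Dict[str, float],
                     size: int) -> List[Node]:
    """The `size` nodes with the highest reputation form the committee."""
    return sorted(nodes, key=lambda n: reputation(n, weights), reverse=True)[:size]


def committee_aggregate(updates: Dict[str, Update], committee: List[Node],
                        score: Callable[[Node, Update], float],
                        threshold: float) -> Optional[Update]:
    """Average the updates whose median committee score reaches `threshold`."""
    accepted = [u for u in updates.values()
                if median(score(member, u) for member in committee) >= threshold]
    if not accepted:
        return None  # no update passed review this round
    return [sum(vals) / len(accepted) for vals in zip(*accepted)]
```

With an odd-sized committee, median scoring means a minority of dishonest members cannot push a bad update through on their own; this is a design choice of the sketch, not something the abstract states.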

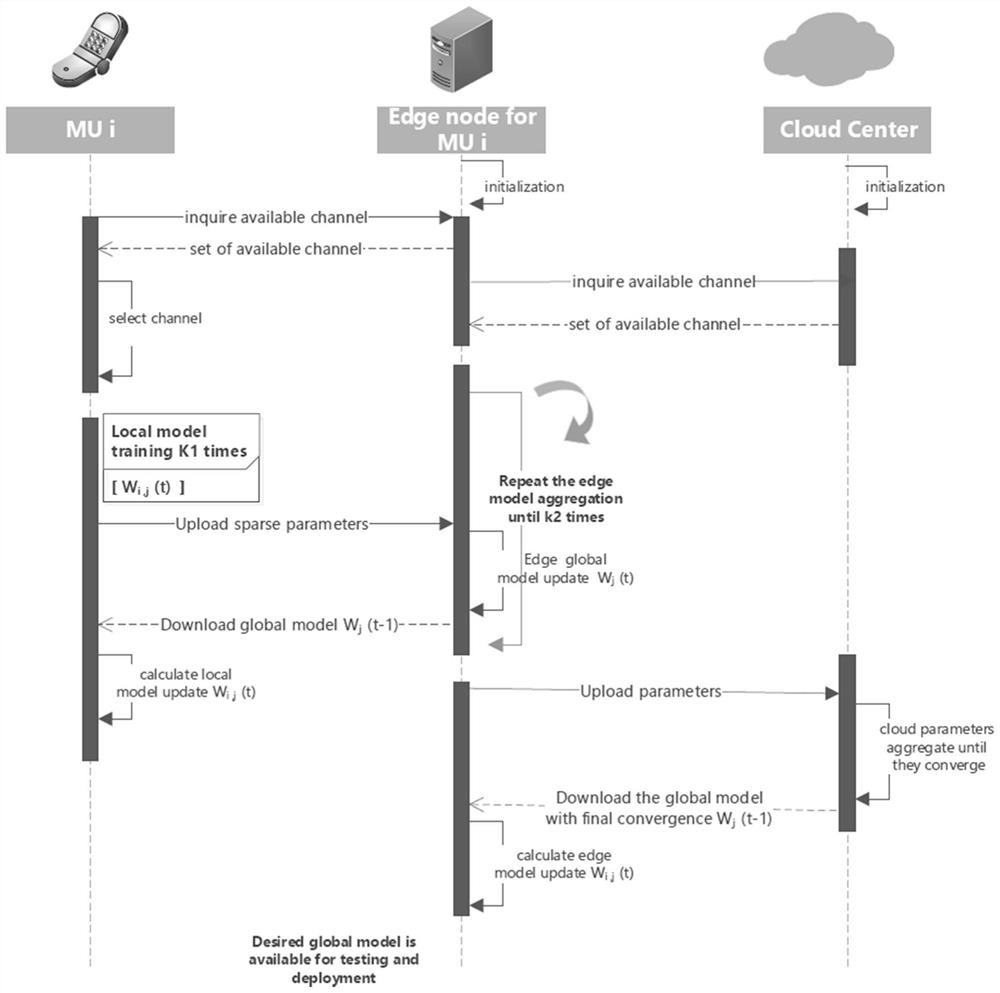

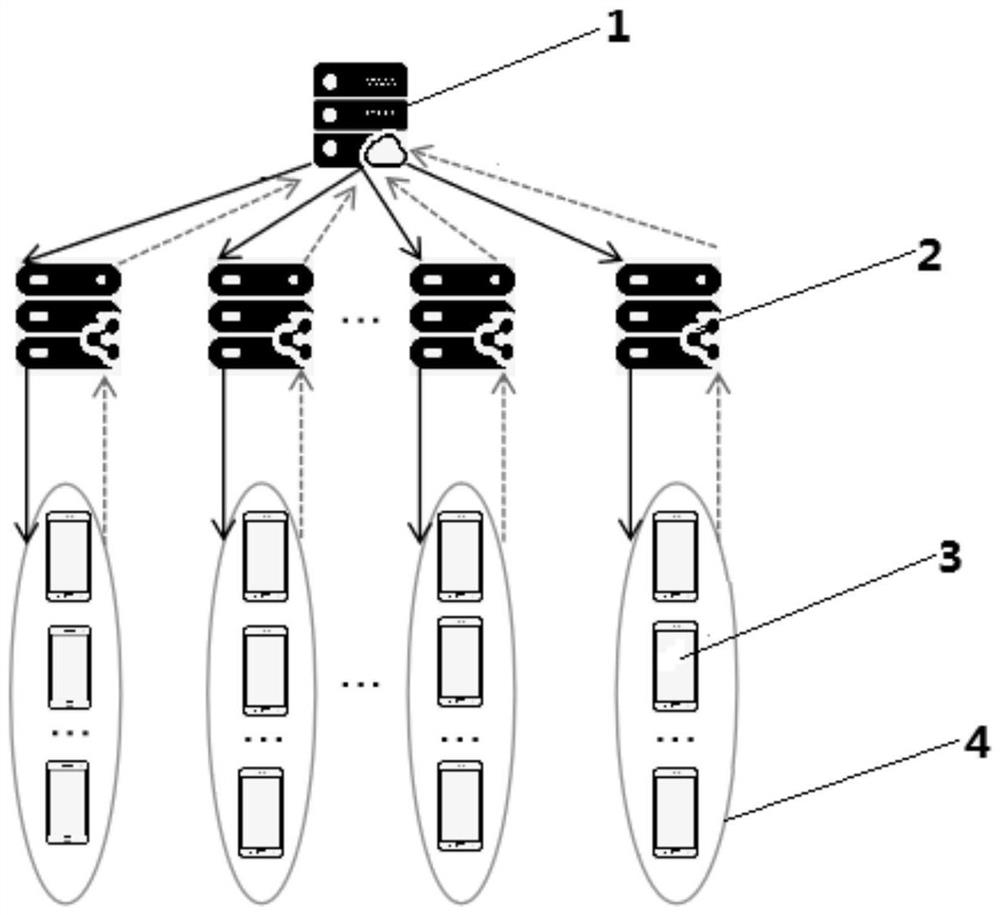

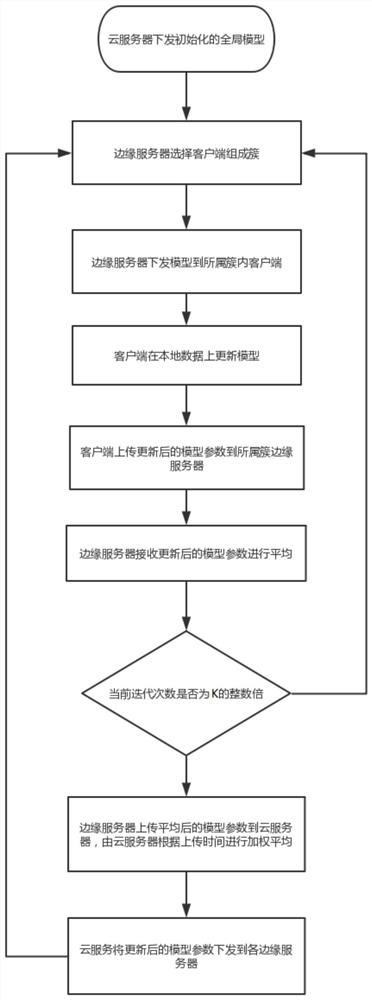

Hierarchical federated learning method and device based on asynchronous communication, terminal equipment and storage medium

Active · CN112532451A · Resolving heterogeneity · Improve training efficiency · Ensemble learning · Data switching networks · Asynchronous communication · Edge server

The invention provides a hierarchical federated learning method and device based on asynchronous communication, terminal equipment and a storage medium, and relates to the technical field of wireless communication networks. The method comprises the following steps: an edge server issues the global model to the clients in its cluster; each client updates the model using its local data and uploads it to the edge server of the cluster it belongs to; the edge server decides which clients in the cluster to update according to the clients' upload times; the edge server averages the received model parameters and, depending on the current client's number of updates, either uploads them asynchronously to the central server or issues them directly to the clients; and the central server performs weighted averaging on the parameters uploaded by the edge servers and issues them to the clients for training, until the local model converges or reaches the expected standard. The method executes federated learning tasks efficiently, reduces the communication cost of transmitting federated learning model parameters, dynamically selects the edge server each client connects to, and improves the overall training efficiency of federated learning.

Owner:ANHUI UNIVERSITY OF TECHNOLOGY
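
A minimal Python sketch of the two aggregation levels in the hierarchical scheme above: each edge server takes a plain average of its clients' parameters, and the central server takes a weighted average of the edge models. Weighting edges by their client count, and the hypothetical `upload_every` rule standing in for the abstract's "updating times" criterion, are assumptions made for illustration.

```python
from typing import List, Optional

Params = List[float]


def average(models: List[Params]) -> Params:
    """Element-wise mean of several parameter vectors."""
    return [sum(vals) / len(models) for vals in zip(*models)]


def weighted_average(models: List[Params], weights: List[float]) -> Params:
    total = sum(weights)
    return [sum(w * v for w, v in zip(weights, vals)) / total
            for vals in zip(*models)]


class EdgeServer:
    def __init__(self, name: str, upload_every: int) -> None:
        self.name = name
        self.upload_every = upload_every  # hypothetical: push to central every N edge rounds
        self.rounds = 0
        self.model: Optional[Params] = None
        self.num_clients = 0

    def aggregate(self, client_models: List[Params]) -> bool:
        """Average client models. Return True if this round's model goes to the
        central server, False if it is sent straight back to the clients."""
        self.model = average(client_models)
        self.num_clients = len(client_models)
        self.rounds += 1
        return self.rounds % self.upload_every == 0


def central_aggregate(edges: List[EdgeServer]) -> Params:
    """Weighted average of the available edge models, weighted by client count."""
    ready = [e for e in edges if e.model is not None]
    return weighted_average([e.model for e in ready], [e.num_clients for e in ready])
```

In a truly asynchronous deployment the central server would aggregate whichever edge models have arrived; the `model is not None` filter is a simplified stand-in for that.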

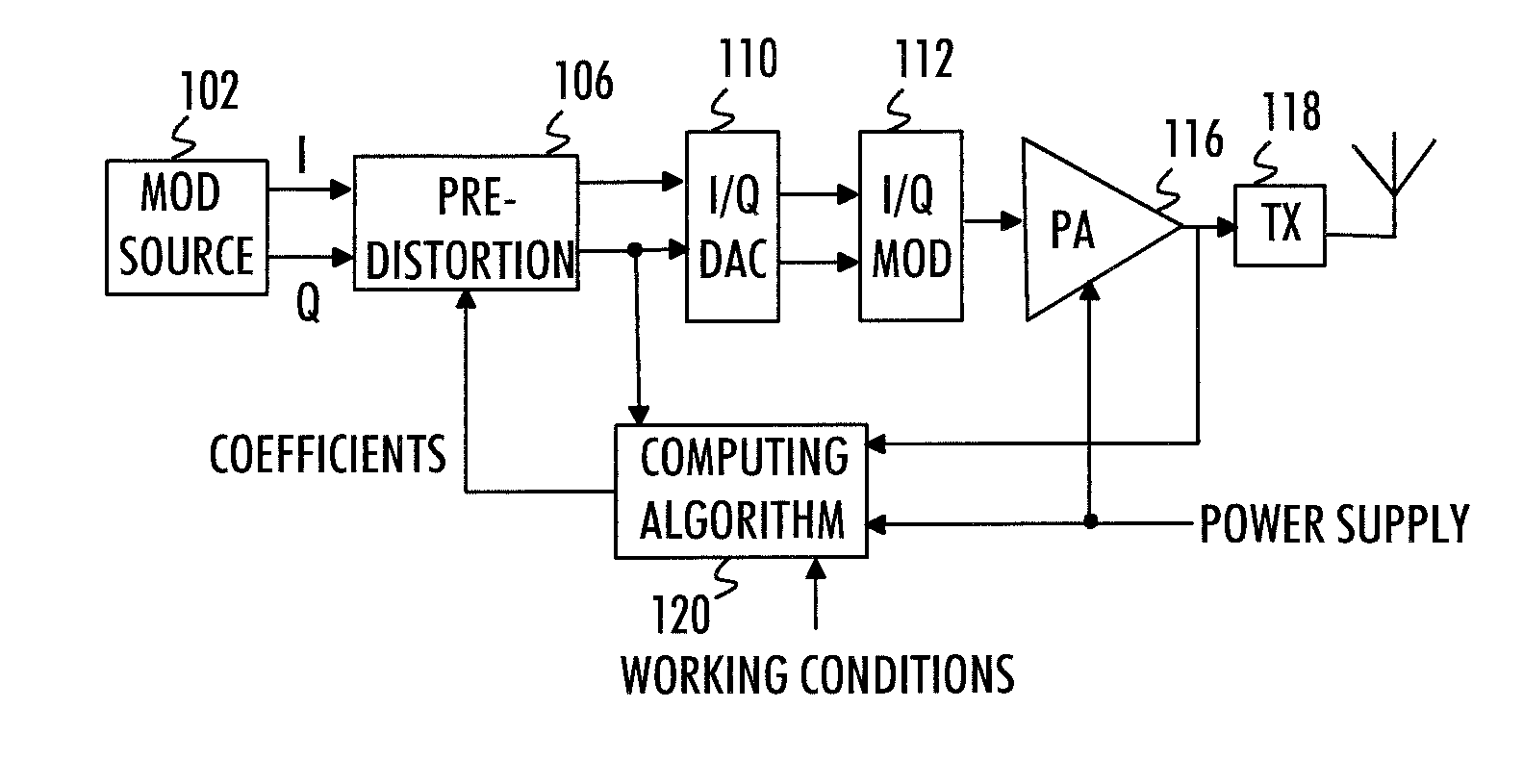

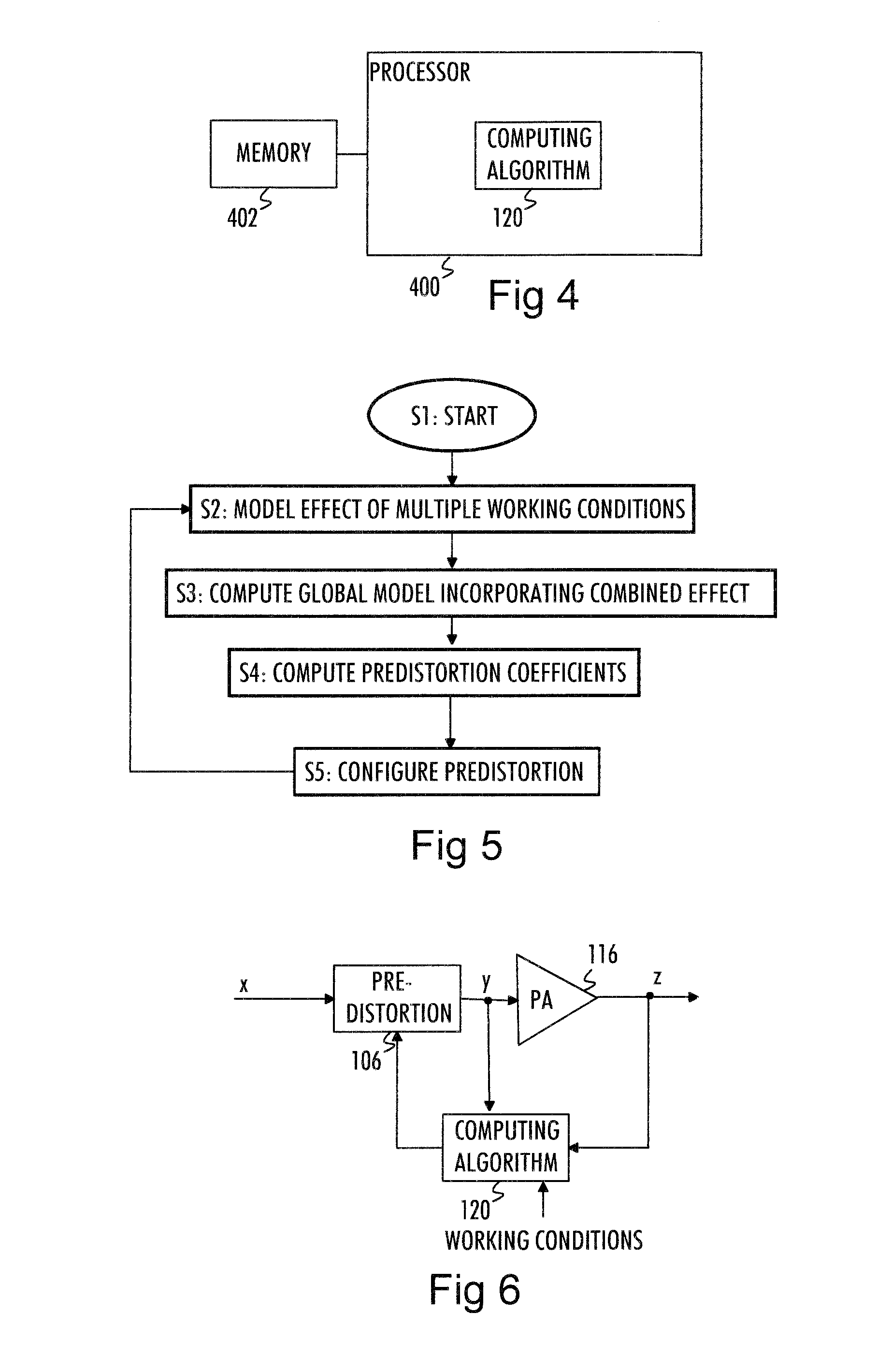

Signal Predistortion for Non-Linear Amplifier

Active · US20140292406A1 · Amplifier modifications to reduce non-linear distortion · Amplifiers with memory effect compensation · Audio power amplifier · Nonlinear amplifier

A method, apparatus, and computer program are provided for mathematically modeling the effect of a plurality of factors on the signal distortion caused by a non-linear amplifier. First, a global model is computed that incorporates the combined effect of the plurality of factors on the signal distortion caused by the non-linear amplifier. A transmission signal is then pre-distorted with coefficients derived from the global model before it is applied to the non-linear amplifier, thus compensating for the signal distortion the amplifier causes.

Owner:RPX CORP
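
The predistortion step can be illustrated with a memoryless odd-order polynomial predistorter in Python. Everything here is an assumption for illustration: the amplifier is a toy third-order compression model, and the coefficients are simply its first-order inverse, not coefficients derived from the patent's global model, which the abstract does not specify.

```python
from typing import List


def amplifier(x: complex, alpha3: complex = -0.1) -> complex:
    """Toy memoryless nonlinear amplifier: gain compression via a cubic term."""
    return x + alpha3 * x * abs(x) ** 2


def predistort(x: complex, coeffs: List[complex]) -> complex:
    """Odd-order polynomial predistorter: sum over k of coeffs[k] * x * |x|^(2k)."""
    return sum(c * x * abs(x) ** (2 * k) for k, c in enumerate(coeffs))


# First-order inverse of the toy amplifier: pre-expand by +0.1 * x * |x|^2
COEFFS = [1.0, 0.1]

if __name__ == "__main__":
    for x in (0.2, 0.5, 0.4 + 0.3j):
        raw_err = abs(amplifier(x) - x)
        dpd_err = abs(amplifier(predistort(x, COEFFS)) - x)
        print(f"{x}: error without DPD {raw_err:.5f}, with DPD {dpd_err:.5f}")
```

Practical predistorters usually add memory terms (delayed samples), in line with the "memory effect compensation" classification above; this sketch omits them.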

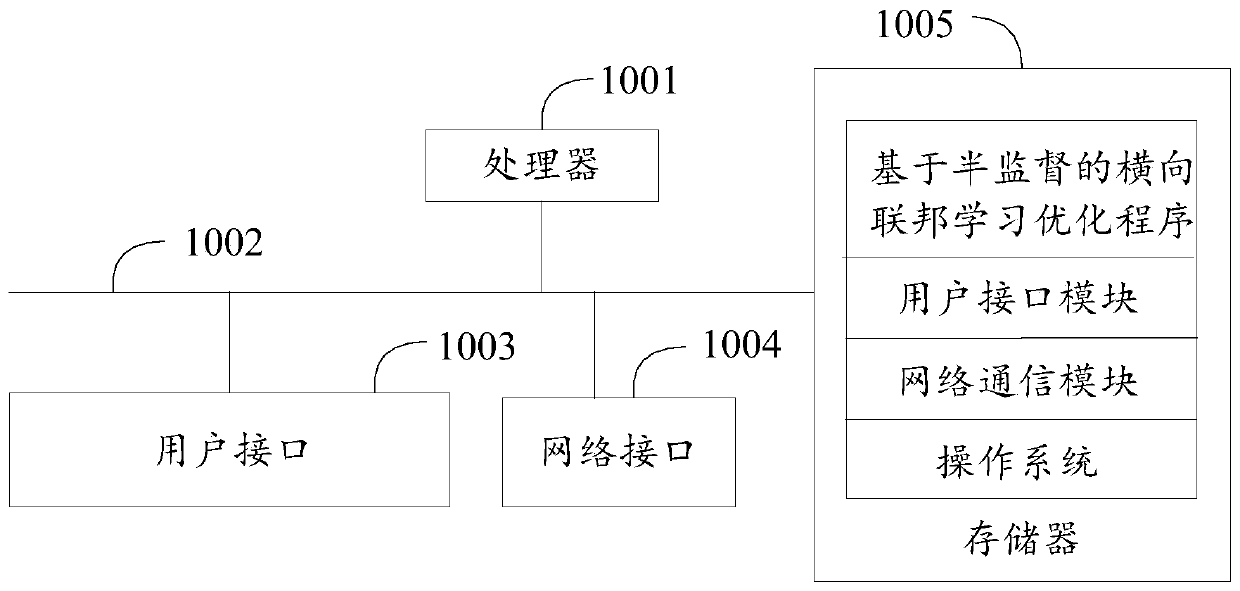

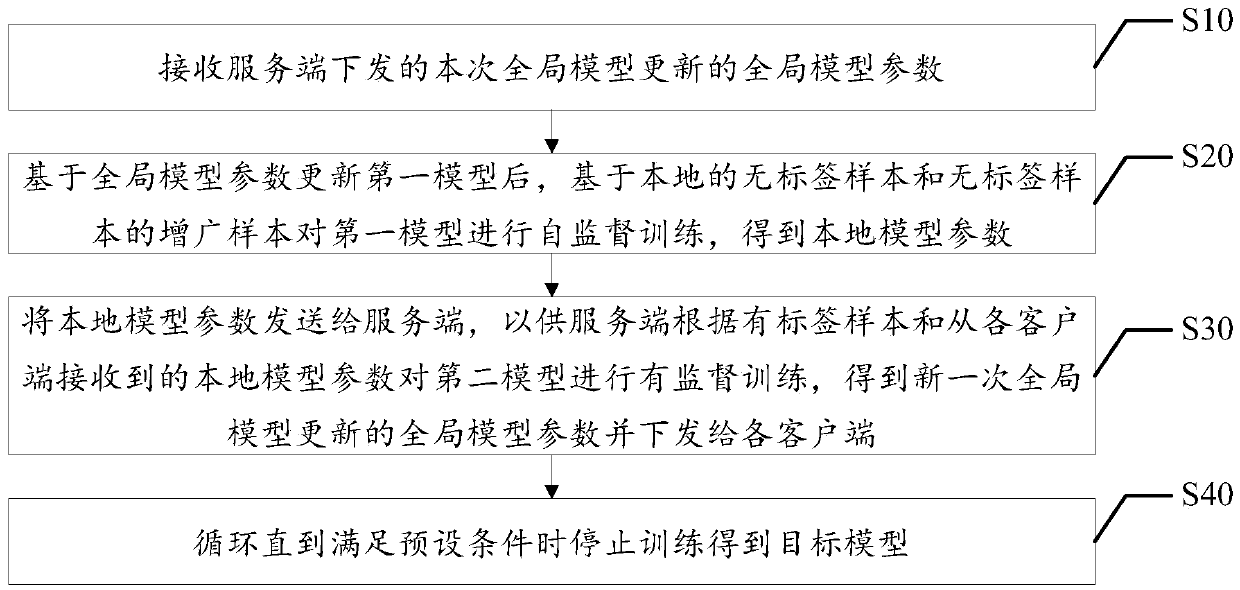

Horizontal federated learning optimization method and device based on semi-supervision, and storage medium

Pending · CN111275207A · Avoid wasting · Prevent deviation · Ensemble learning · Character and pattern recognition · Engineering · Labeled data

The invention discloses a horizontal federated learning optimization method and device based on semi-supervision, and a storage medium. The method comprises: receiving the global model parameters from the current global model update, issued by the server; after updating a first model with the global model parameters, performing self-supervised training on the first model using local unlabeled samples and augmented versions of those samples to obtain local model parameters; sending the local model parameters to the server, so that the server performs supervised training on a second model using its labeled samples and the local model parameters received from each client, obtains new global model parameters, and issues them to each client; and repeating this process until a preset condition is met, at which point training stops and the target model is obtained. The invention enables horizontal federated learning when the server holds only a small number of labeled samples and the clients hold no labeled data, so it suits real-world scenarios that lack labeled data and saves labor costs.

Owner:WEBANK (CHINA)
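
A Python sketch of the server-side step only, under simplifying assumptions: the clients' self-supervised training is not modeled, the "second model" is a linear regressor whose parameters start from the plain average of the clients' local parameters, and supervised training is plain SGD on squared error over the server's few labeled samples. The function names are hypothetical.

```python
from typing import List, Tuple

Params = List[float]
Labeled = Tuple[List[float], float]  # (features with a leading 1.0 bias term, target)


def average_params(client_params: List[Params]) -> Params:
    """Plain element-wise average of the clients' local model parameters."""
    return [sum(vals) / len(client_params) for vals in zip(*client_params)]


def predict(w: Params, x: List[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def server_round(client_params: List[Params], labeled: List[Labeled],
                 lr: float = 0.1, epochs: int = 200) -> Params:
    """Initialize the second model from client parameters, then fine-tune it
    with supervised SGD on the server's labeled samples."""
    w = average_params(client_params)
    for _ in range(epochs):
        for x, y in labeled:
            err = predict(w, x) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w  # new global model parameters, issued to every client
```

Here the clients' parameters only set the starting point and the labeled data does the fine-tuning; the abstract does not say how the real method weighs the two.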

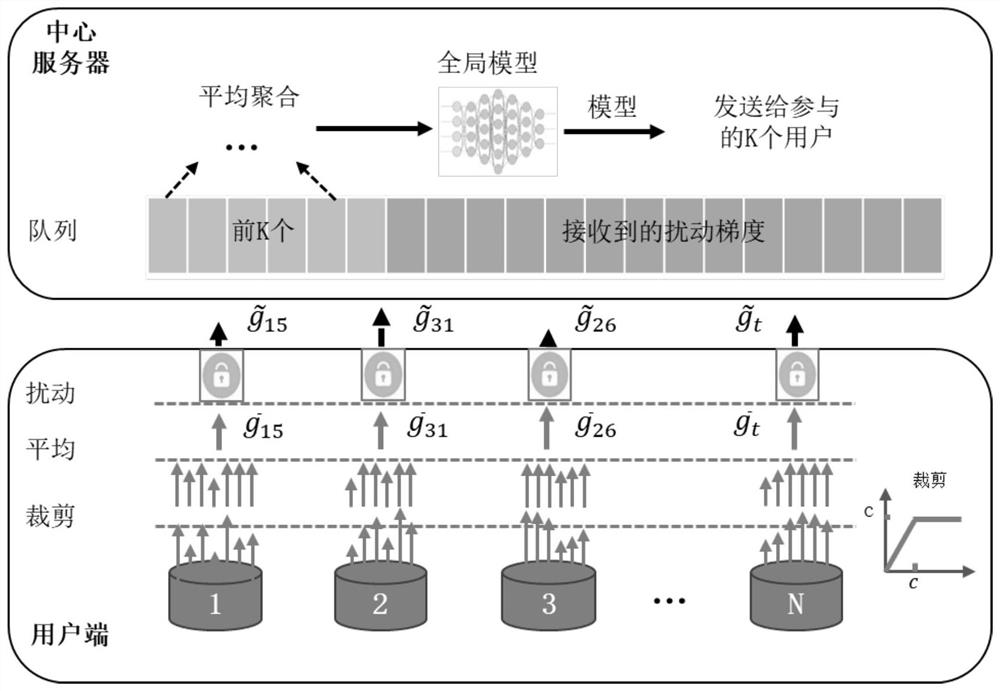

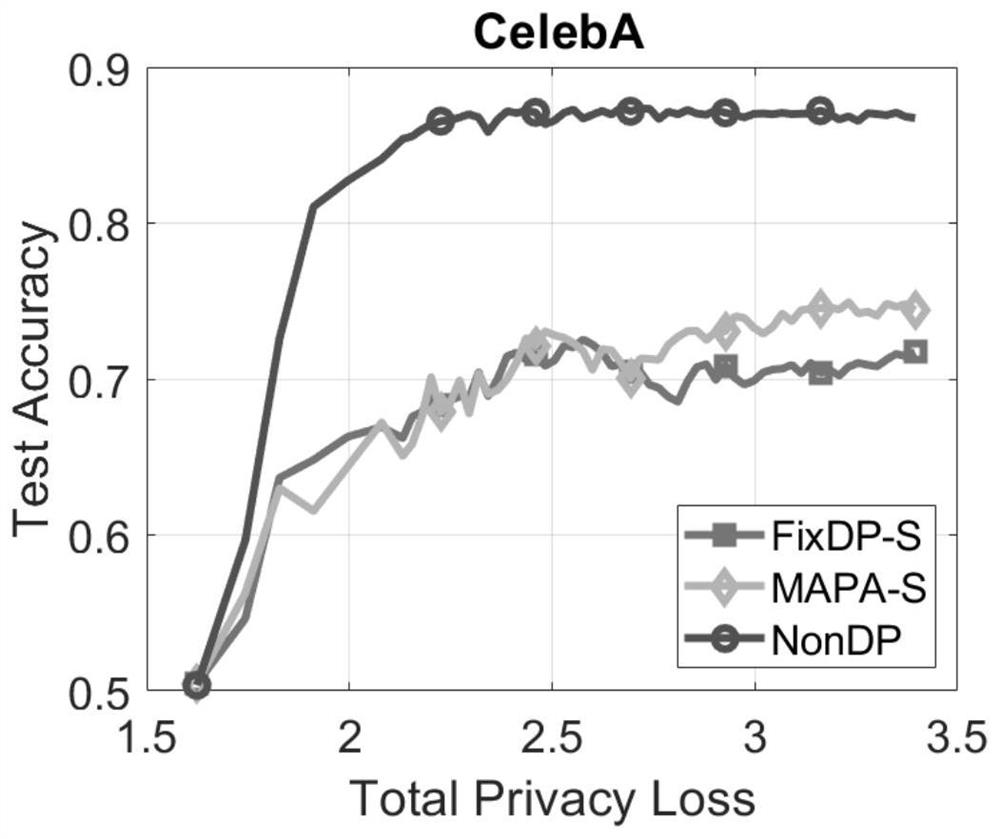

Self-adaptive asynchronous federated learning method with local privacy protection

Pending · CN112818394A · Improve learning efficiency · Enhance the ultimate utility · Digital data information retrieval · Digital data protection · Stochastic gradient descent · Privacy protection

The invention discloses a self-adaptive asynchronous federated learning method with local privacy protection, comprising the following steps: a central server initializes a global model and broadcasts the global model parameters, a gradient clipping threshold, a noise mechanism and a noise variance to all participating users; each user trains the global model on samples drawn from its local data, clips and perturbs the gradients one by one, and sends the perturbed gradients to the central server; the central server selects the first K perturbed gradients from a buffer queue, averages them, substitutes the averaged gradient into the stochastic gradient descent update rule to update the global model parameters, and adaptively adjusts the gradient clipping threshold, the noise variance and the learning rate according to the number of iterations in each preset stage; the central server then broadcasts the updated global model parameters, gradient clipping threshold and noise variance to the K users that participated in the last round of updating; and the local users and the central server repeat these operations until the number of global iterations reaches a given limit.

Owner:XI AN JIAOTONG UNIV
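
A minimal Python sketch of the privacy and aggregation mechanics in the abstract above. It assumes a Gaussian noise mechanism (the abstract leaves the mechanism as a broadcast parameter), expresses the noise level as a standard deviation `sigma` rather than a variance, and uses a simple staged schedule for the clipping threshold, noise level and learning rate; the stage boundaries and values are hypothetical. Clients clip each gradient to an L2 norm bound and add noise before sending; the server averages the first K gradients in its buffer queue and takes one SGD step.

```python
import math
import random
from collections import deque
from typing import Deque, List, Sequence, Tuple

Vector = List[float]
Stage = Tuple[int, Tuple[float, float, float]]  # (iteration bound, (clip_norm, sigma, lr))


def clip(g: Vector, clip_norm: float) -> Vector:
    """Scale g down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(v * v for v in g))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in g]


def client_gradient(per_sample_grads: List[Vector], clip_norm: float,
                    sigma: float, rng: random.Random) -> Vector:
    """Clip and perturb each per-sample gradient locally, then average them."""
    noisy = [[v + rng.gauss(0.0, sigma) for v in clip(g, clip_norm)]
             for g in per_sample_grads]
    return [sum(vals) / len(noisy) for vals in zip(*noisy)]


def server_step(weights: Vector, buffer: Deque[Vector], k: int, lr: float) -> Vector:
    """Average the first K perturbed gradients in the queue and apply one SGD step."""
    if not buffer:
        return weights
    batch = [buffer.popleft() for _ in range(min(k, len(buffer)))]
    avg = [sum(vals) / len(batch) for vals in zip(*batch)]
    return [w - lr * g for w, g in zip(weights, avg)]


def schedule(iteration: int, stages: Sequence[Stage]) -> Tuple[float, float, float]:
    """Return (clip_norm, sigma, lr) for the preset stage containing `iteration`."""
    for bound, params in stages:
        if iteration < bound:
            return params
    return stages[-1][1]
```

Clipping before adding noise bounds each sample's influence on what is sent, which is what allows the noise level to be calibrated for privacy; `schedule` is where the abstract's stage-wise adaptation would plug in.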

© 2025 PatSnap. All rights reserved.