1753 results about "Federated learning" patented technology

Federated learning is a machine learning technique for training on decentralized data. The core idea is that the training dataset remains in the hands of its producers (also known as workers), which helps preserve privacy and data ownership, while the model is shared between workers.

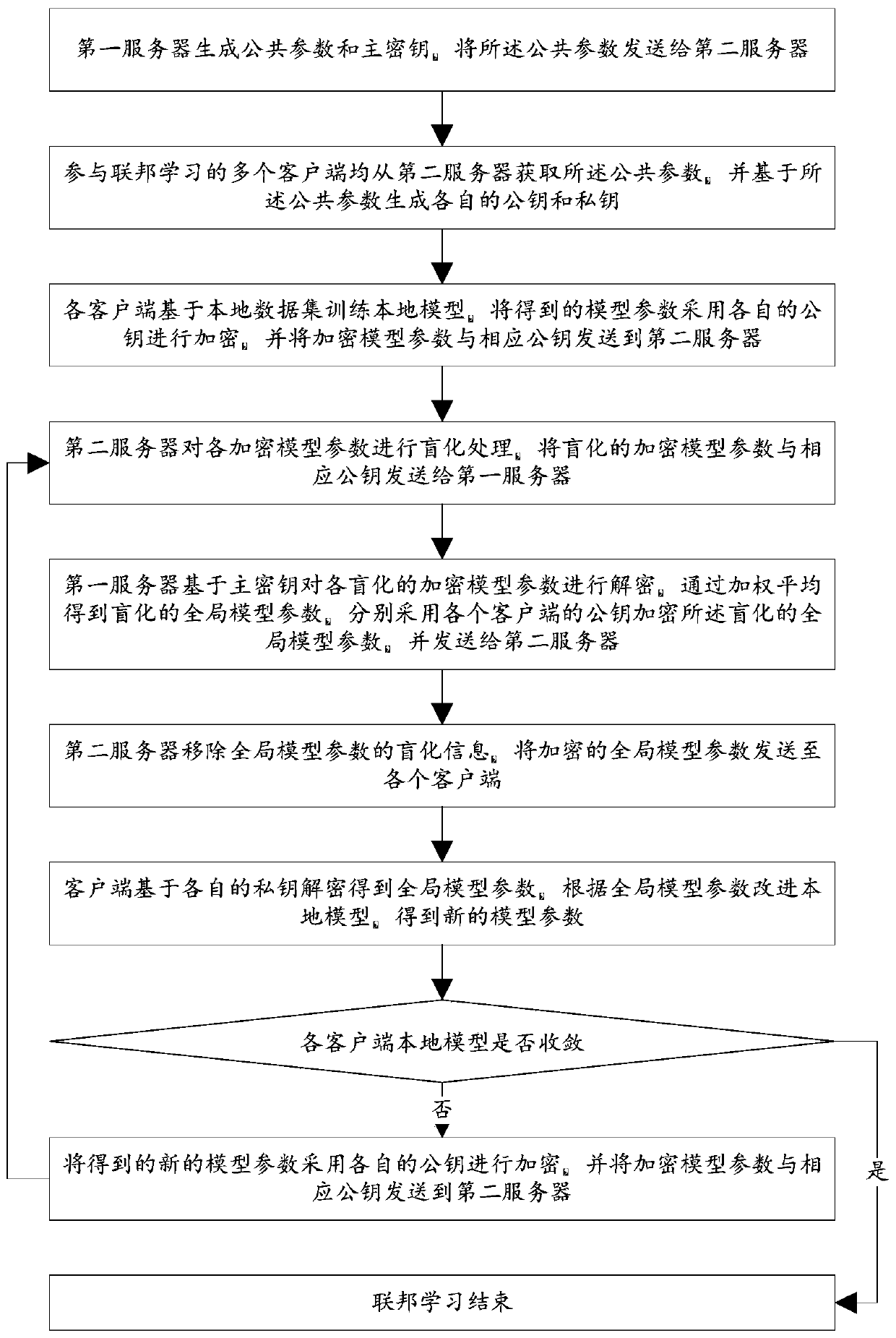

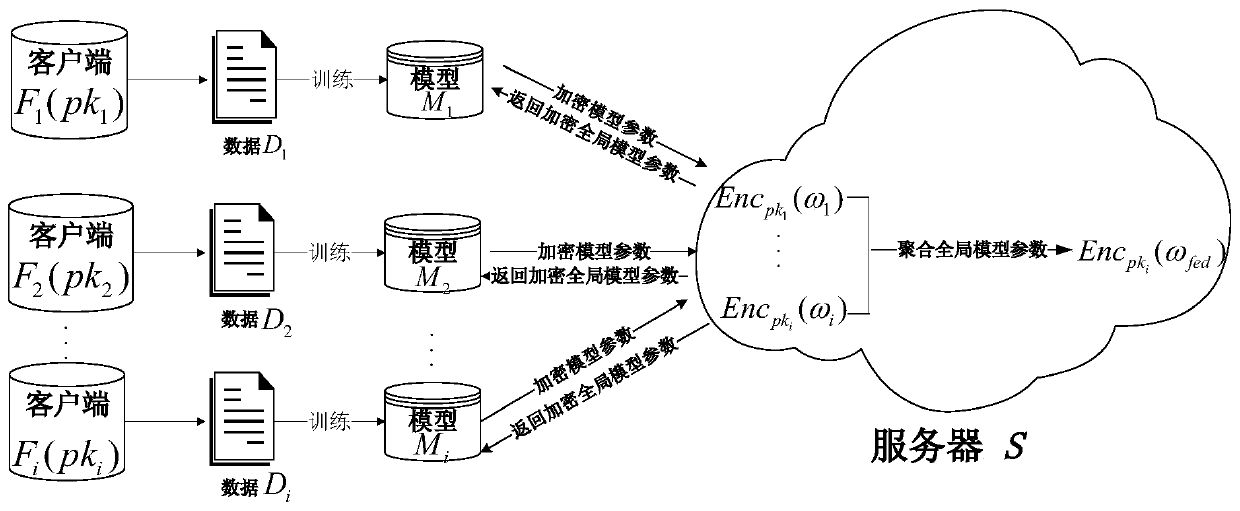

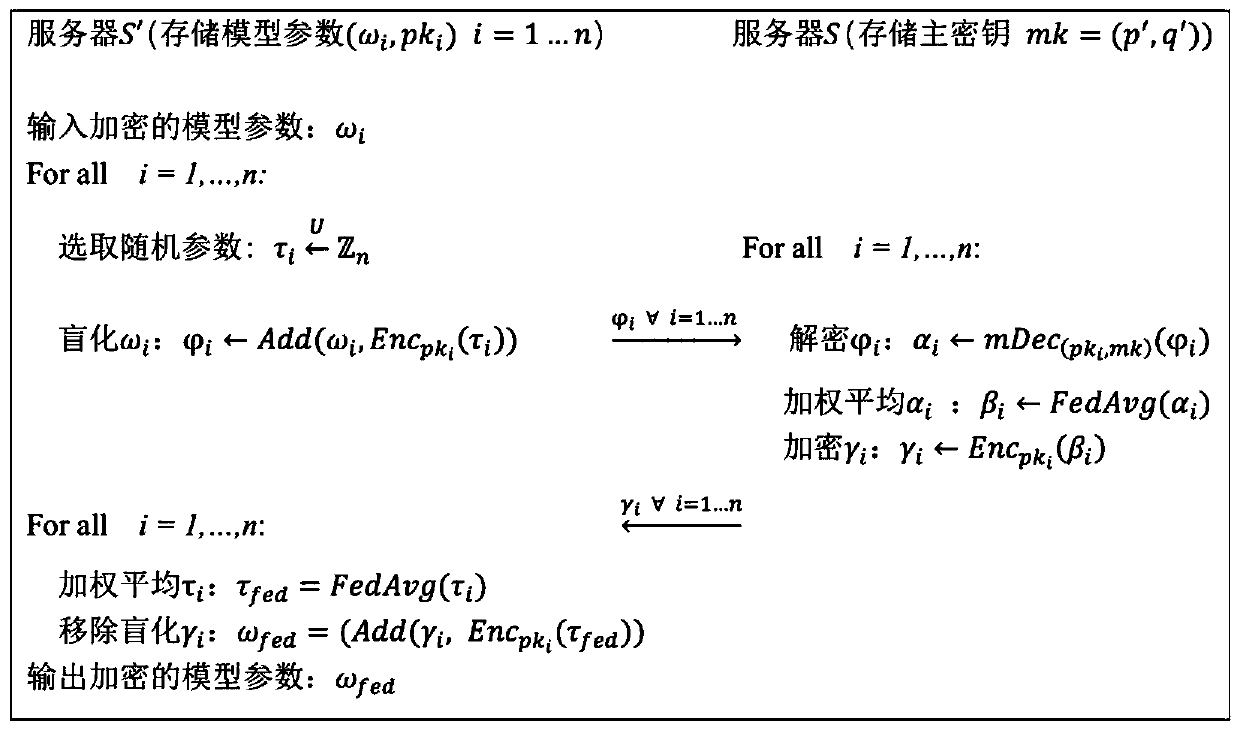

Federated learning training data privacy enhancement method and system

Active · CN110572253A · Ensure safety · Easy to join · Communication with homomorphic encryption · Model parameters · Master key

The invention discloses a method and system for enhancing the privacy of federated learning training data. A first server generates a public parameter and a master key and transmits the public parameter to a second server. The clients participating in federated learning generate their own public/private key pairs based on the public parameter. In each round, every client encrypts the model parameters obtained by local training with its own public key and sends the encrypted parameters, together with that public key, to the first server via the second server. The first server decrypts with the master key, obtains the global model parameters by weighted averaging, encrypts them with each client's public key, and sends them to each client via the second server. Each client decrypts with its private key to obtain the global model parameters and updates its local model. The process repeats until the clients' local models converge. By combining a dual-server mode with multi-key homomorphic encryption, the method ensures the security of the data and the model parameters.

Owner:UNIV OF JINAN

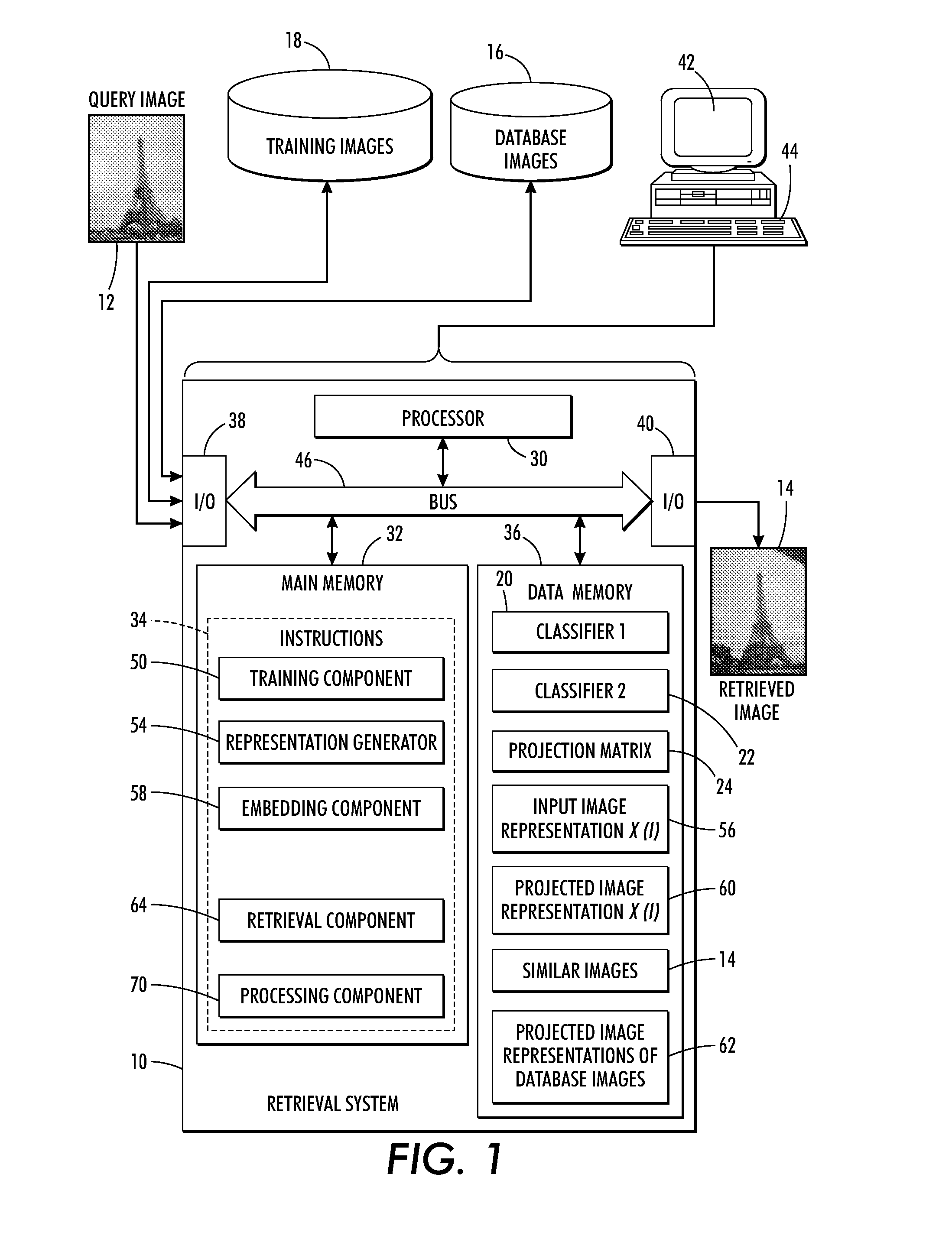

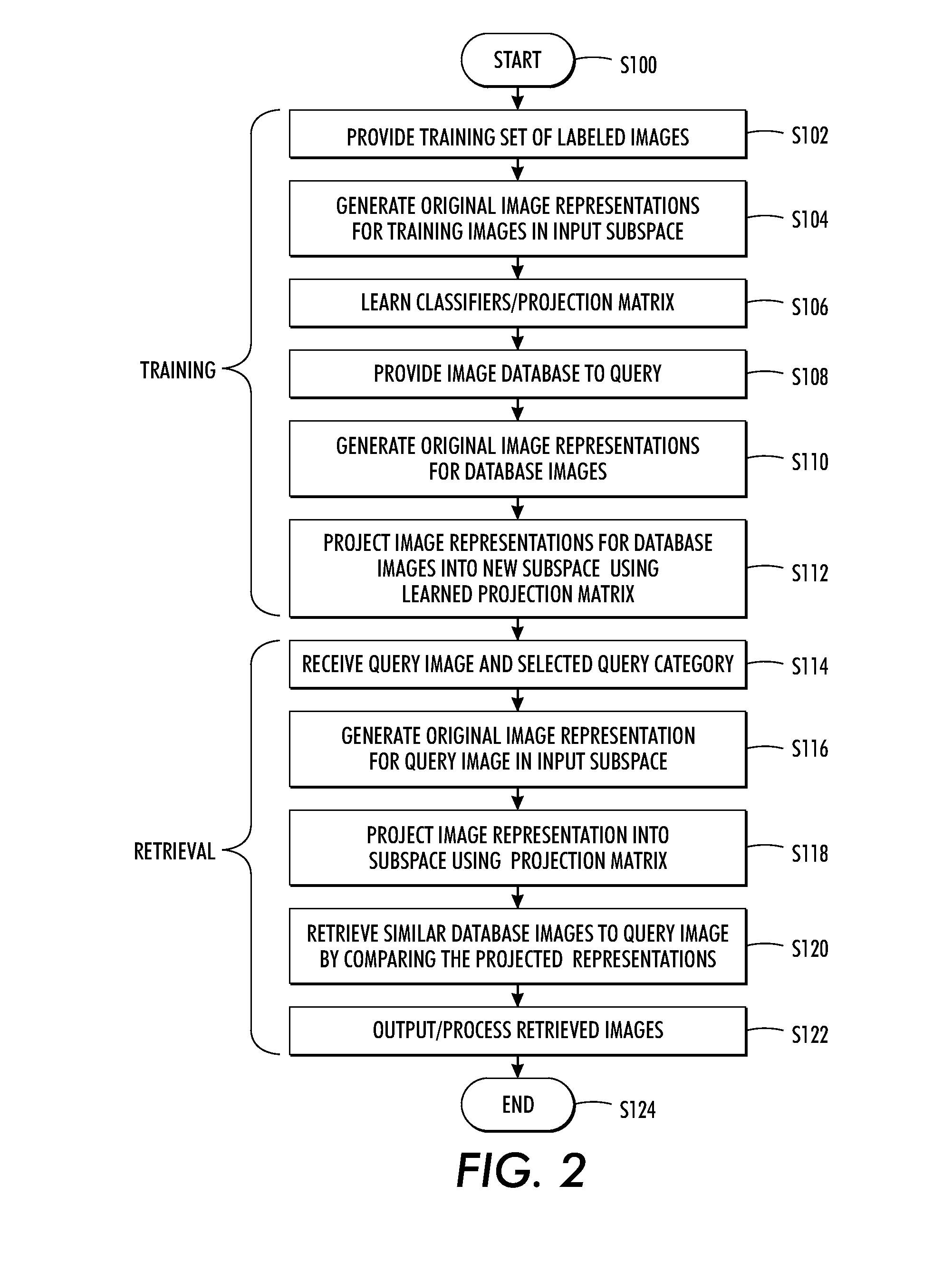

Retrieval system and method leveraging category-level labels

Inactive · US20130290222A1 · Digital data processing details · Digital computer details · Computer vision · Machine learning

An instance-level retrieval method and system are provided. A representation of a query image is embedded in a multi-dimensional space using a learned projection. The projection is learned using category-labeled training data to optimize a classification rate on the training data. The joint learning of the projection and the classifiers improves the computation of similarity / distance between images by embedding them in a subspace where the similarity computation outputs more accurate results. An input query image can thus be used to retrieve similar instances in a database by computing the comparison measure in the embedding space.

Owner:XEROX CORP

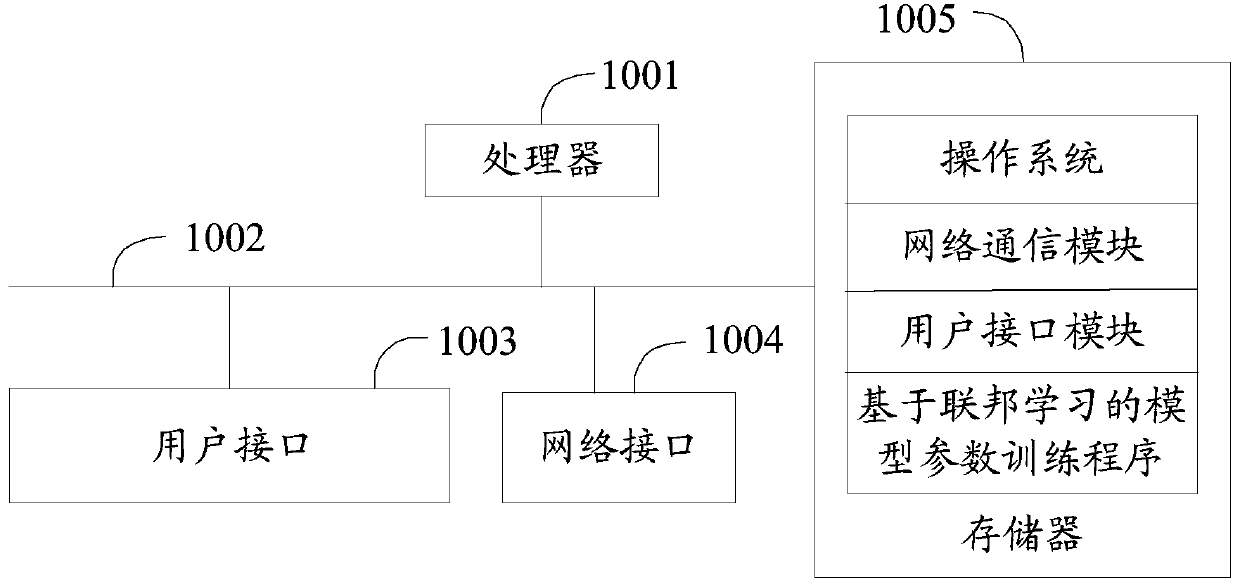

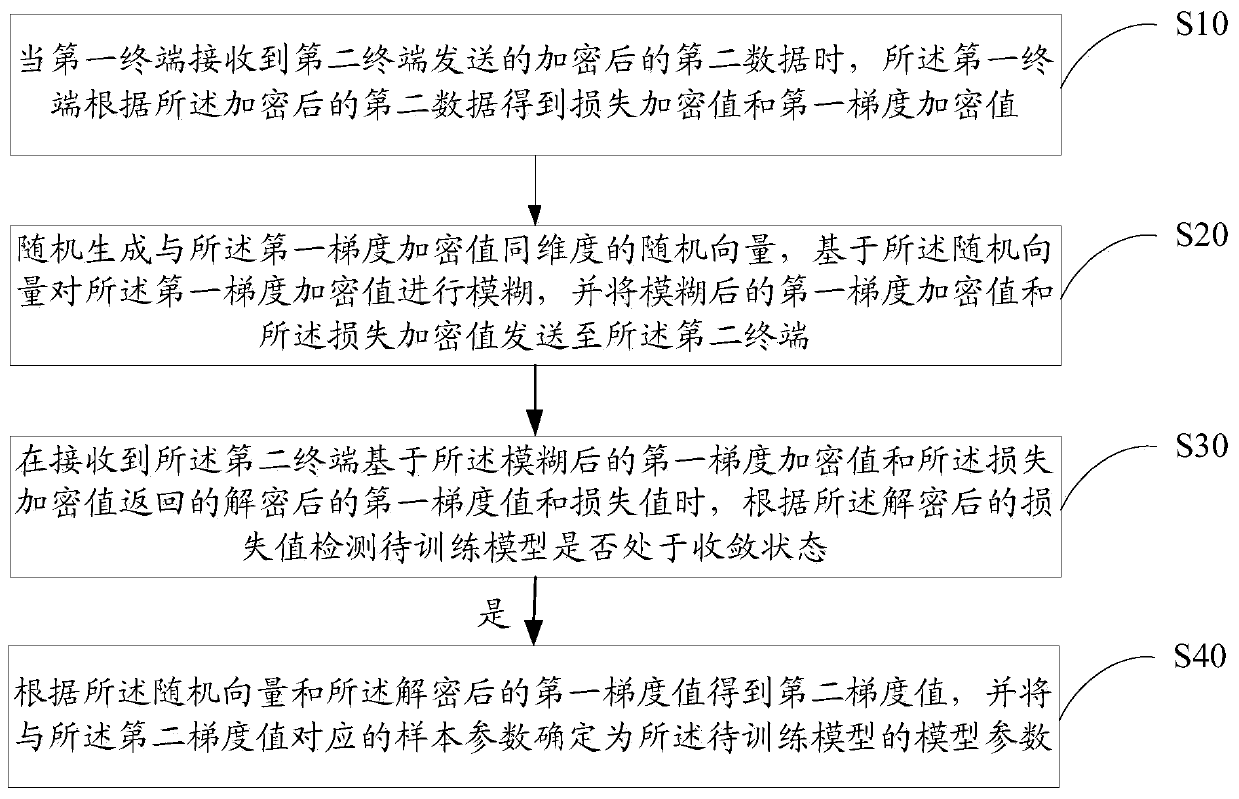

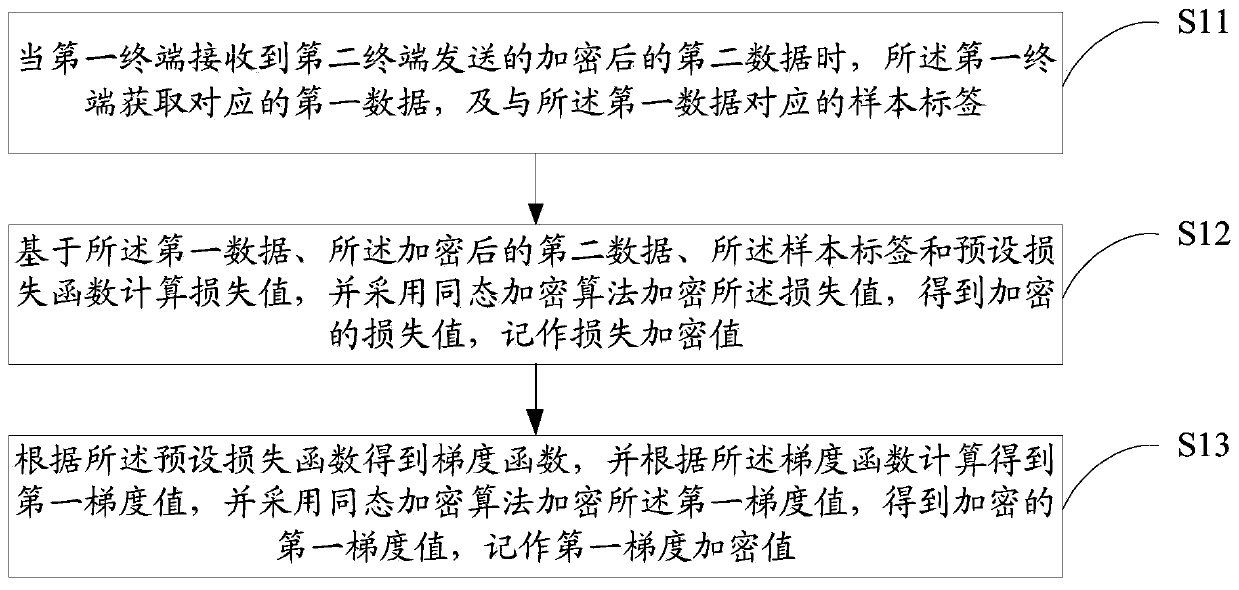

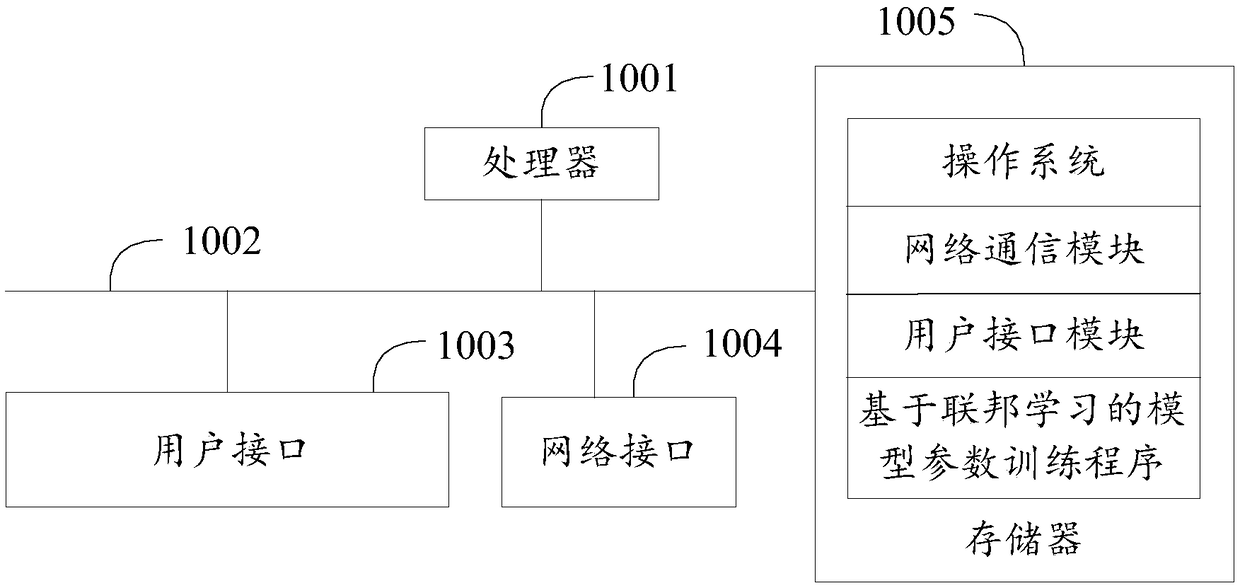

Model parameter training method and device based on federated learning, equipment and medium

Pending · CN109886417A · Solve application limitations · Won't leak · Public key for secure communication · Digital data protection · Trusted third party · Model parameters

The invention discloses a model parameter training method and device based on federated learning, as well as equipment and a medium. When a first terminal receives encrypted second data sent by a second terminal, it obtains a corresponding encrypted loss value and a first encrypted gradient value. It then generates a random vector with the same dimension as the first encrypted gradient value, masks the first encrypted gradient value with the random vector, and sends the masked gradient value and the encrypted loss value to the second terminal. When the decrypted first gradient value and loss value returned by the second terminal are received, the first terminal checks, according to the decrypted loss value, whether the model being trained has converged. If so, it obtains a second gradient value from the random vector and the decrypted first gradient value, and takes the sample parameter corresponding to the second gradient value as the model parameter. The method trains the model using only the data of the two federated parties, without a trusted third party, which avoids the associated application limitations.

Owner:WEBANK (CHINA)

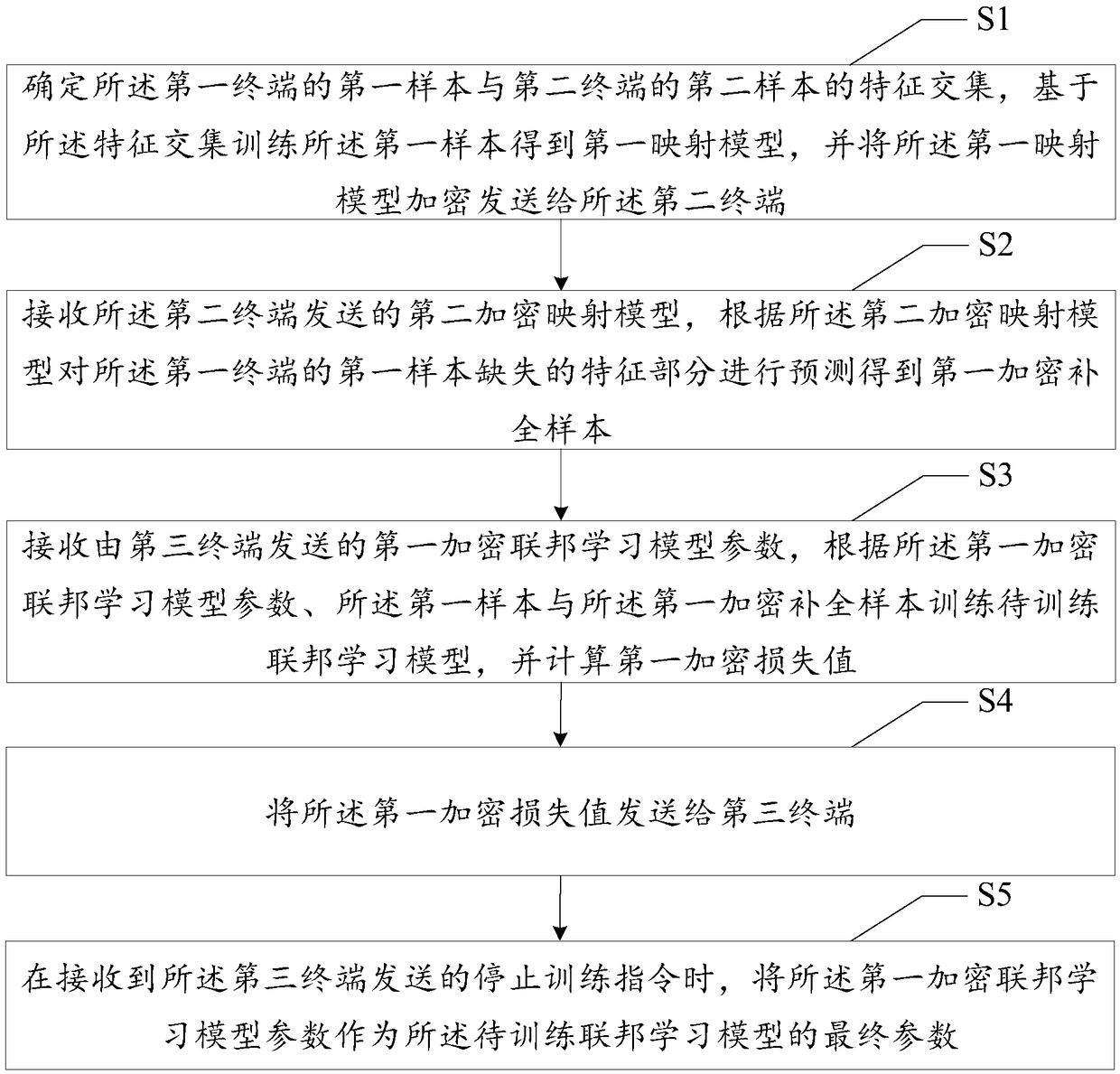

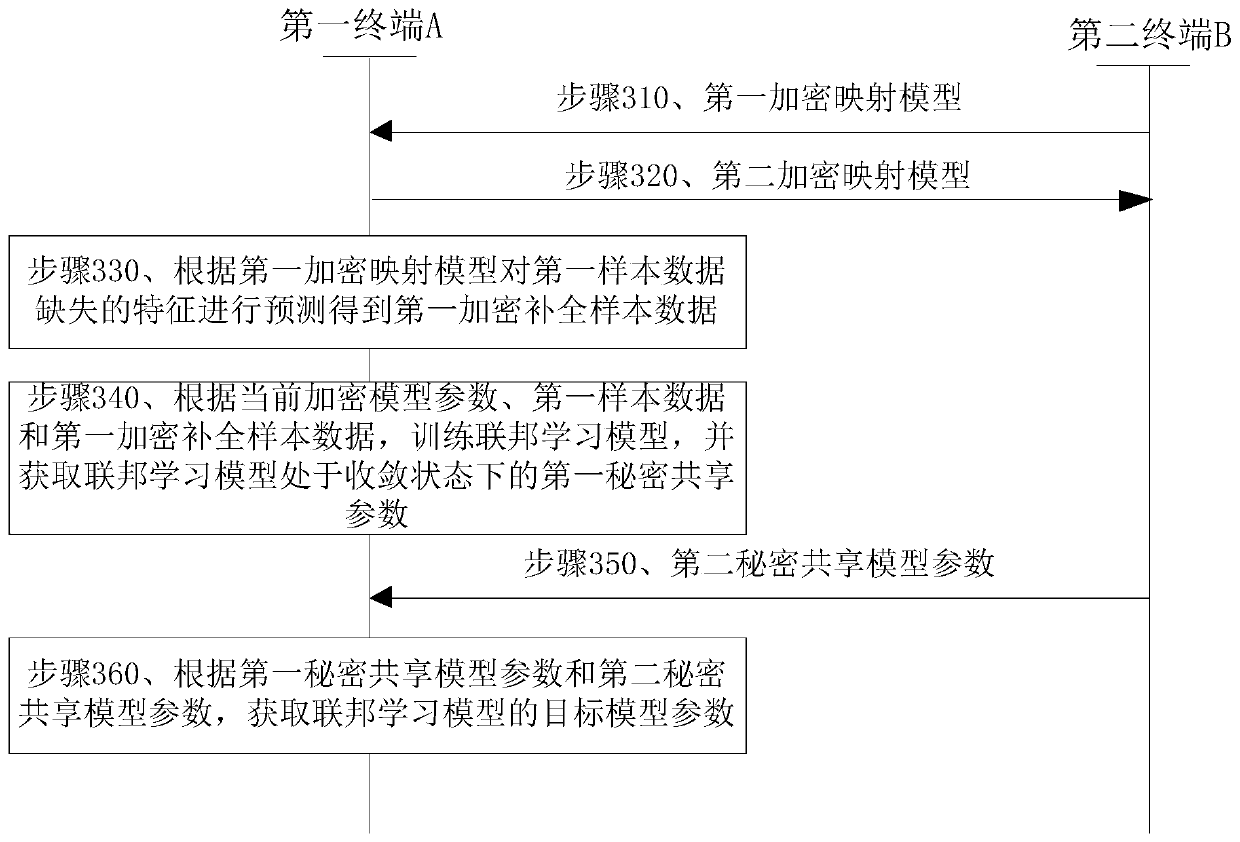

Model parameter training method, terminal, system and medium based on federated learning

Active · CN109492420A · Improve predictive ability · Feature Space Expansion · Ensemble learning · Character and pattern recognition · Characteristic space · Model parameters

The invention discloses a model parameter training method based on federated learning, together with a terminal, a system and a medium. The method determines the feature intersection of a first sample held by a first terminal and a second sample held by a second terminal, trains on the first sample based on the feature intersection to obtain a first mapping model, and sends the first mapping model to the second terminal. The first terminal receives a second encrypted mapping model sent by the second terminal and uses it to predict the missing feature part of the first sample, obtaining a first encrypted completed sample. It then receives a first encrypted federated learning model parameter sent by a third terminal, trains the federated learning model according to that parameter, calculates a first encrypted loss value, and sends the loss value to the third terminal. When a stop-training instruction from the third terminal is received, the first encrypted federated learning model parameter is taken as the final parameter of the federated learning model. The invention uses transfer learning to expand the feature space of the two federated parties, which improves the predictive ability of the federated model.

Owner:WEBANK (CHINA)

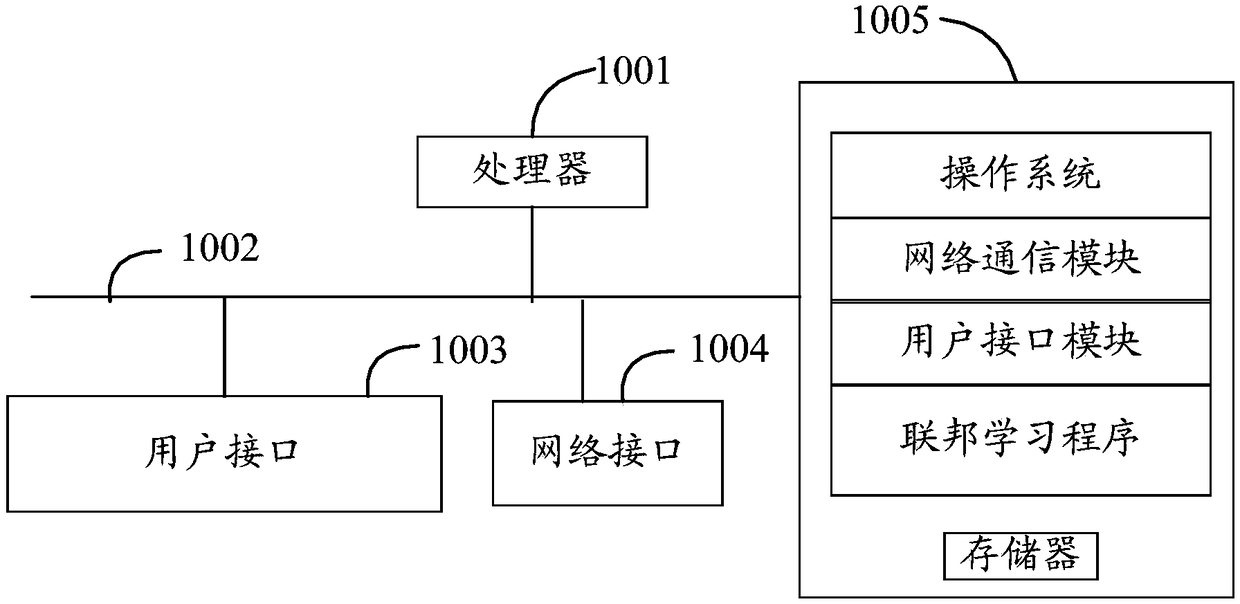

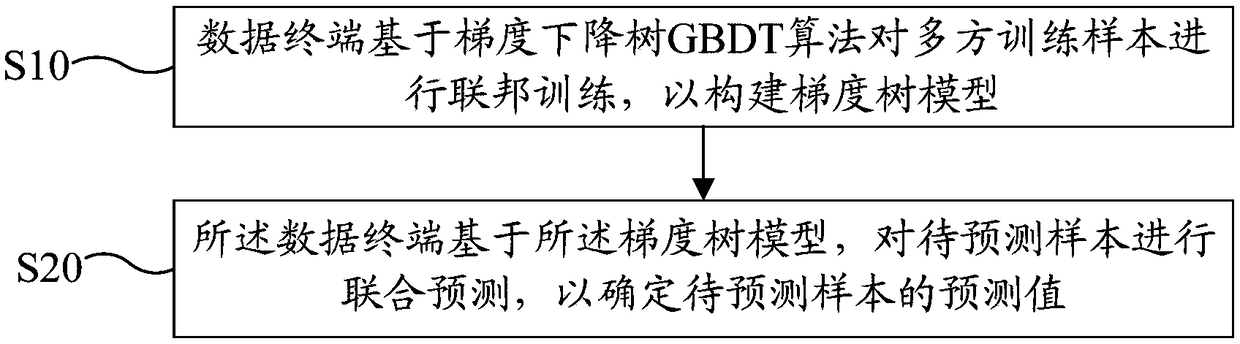

Federated learning method, system, and readable storage medium

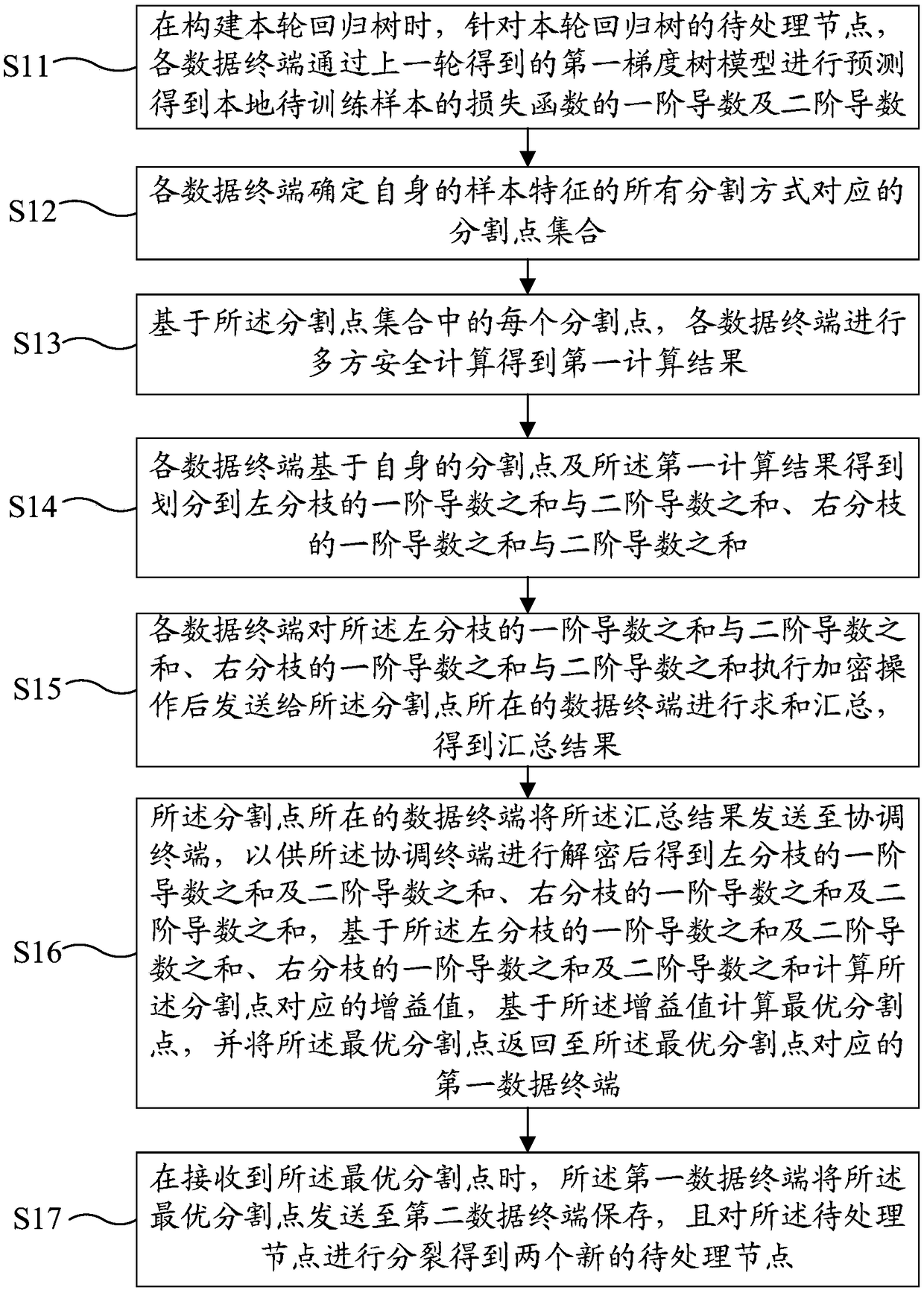

Active · CN109299728A · Solve the inefficiency of training · Achieve forecast · Character and pattern recognition · Information technology support system · Data terminal · Algorithm

The invention discloses a federated learning method, a system and a readable storage medium. Multiple data terminals perform federated training on multi-party training samples based on the gradient boosting decision tree (GBDT) algorithm to construct a gradient tree model. The gradient tree model comprises a plurality of regression trees, each regression tree comprises a plurality of partition points, each training sample comprises a plurality of features, and the features correspond one-to-one to the partition points. The data terminals then jointly predict a sample to be predicted based on the gradient tree model to determine its prediction value. Federated training with GBDT makes the approach suitable for scenarios with large data volumes and for the needs of real production environments.

Owner:WEBANK (CHINA)

Federated learning information processing method and system, storage medium, program and terminal

Active · CN111611610A · Realize identity anonymity · Guaranteed separation effect · Digital data protection · Neural architectures · Information processing · Data privacy protection

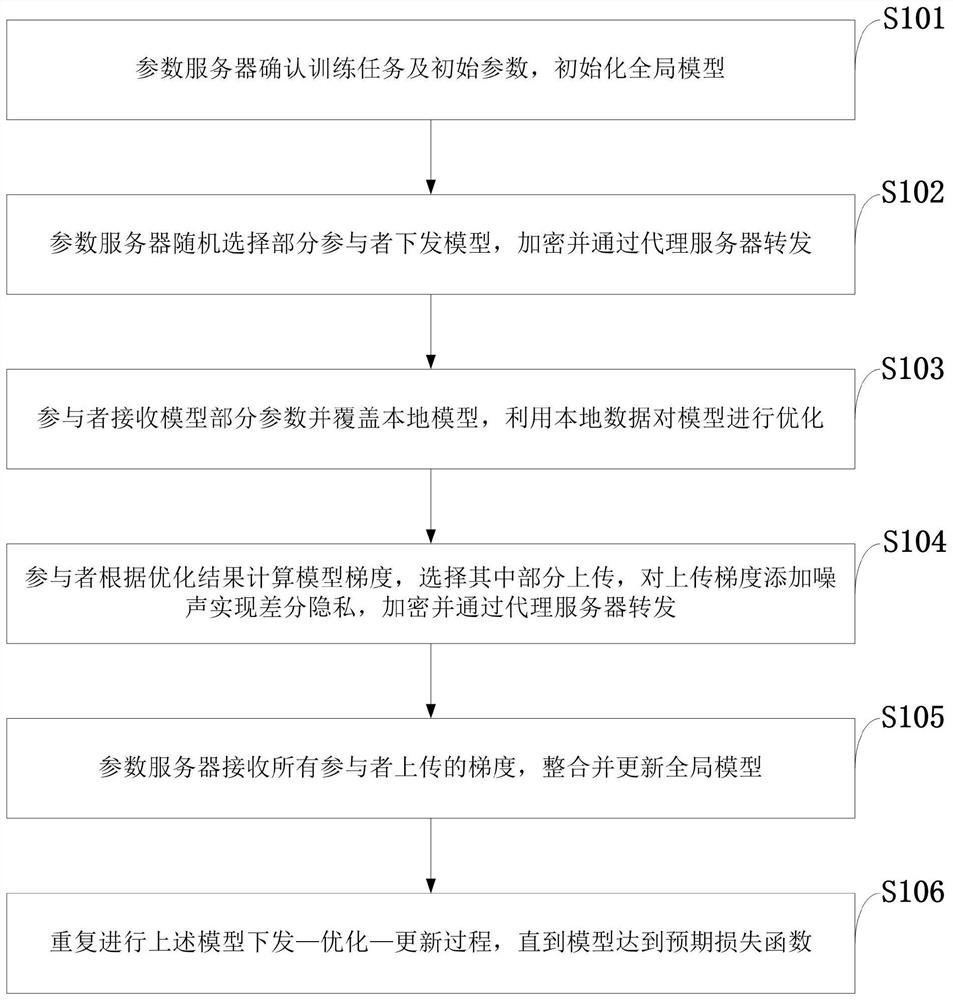

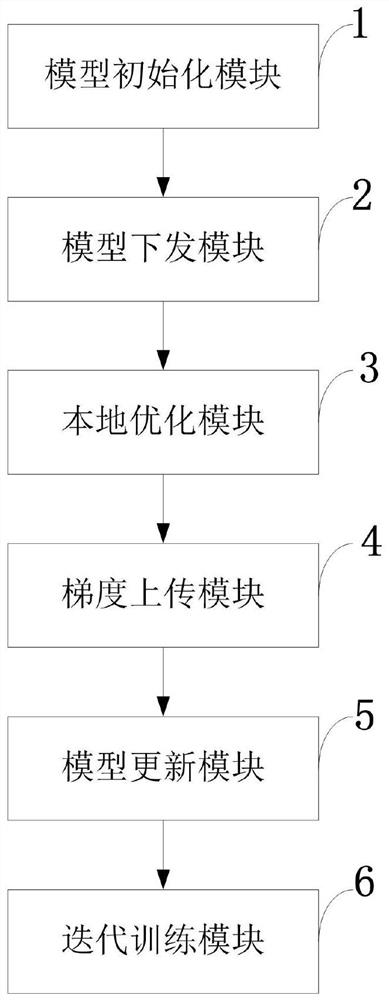

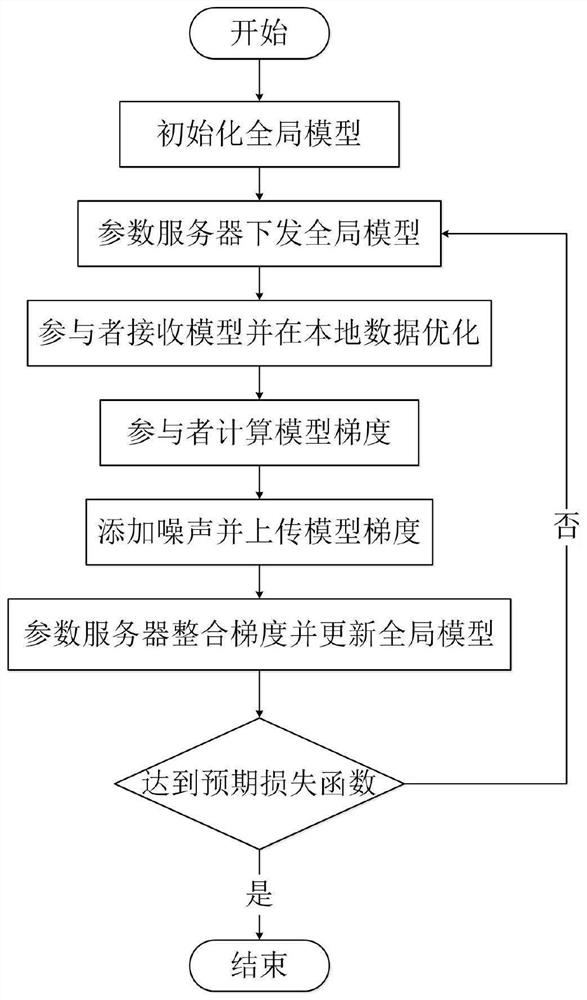

The invention belongs to the technical field of wireless communication networks and discloses a federated learning information processing method and system, a storage medium, a program, and a terminal. A parameter server confirms the training task and the initial parameters and initializes a global model. The parameter server randomly selects a subset of participants, encrypts the model parameters, and issues them to those participants through a proxy server. Each participant receives part of the model parameters, overwrites its local model with them, and optimizes the model using local data. The participant then calculates a model gradient from the optimization result, selects part of the gradient for upload, adds noise to the selected gradient to achieve differential privacy, encrypts it, and forwards it through the proxy server. The parameter server receives the gradients of all participants, aggregates them, and updates the global model. This issue-train-update cycle repeats until the expected loss is reached. The invention protects data privacy, reduces the communication overhead of the parameter server, and keeps participants anonymous.
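The "select part of the gradient, then add noise" step can be illustrated with a minimal sketch. The abstract does not say how the uploaded part is chosen or how the noise is calibrated, so the largest-magnitude (top-k) selection, the Gaussian noise, and the function name `select_and_perturb` are all assumptions made for illustration. A real differential-privacy mechanism would also clip the gradient and calibrate the noise to a privacy budget.

```python
import random

def select_and_perturb(gradient, k, noise_std, rng):
    """Keep the k largest-magnitude gradient entries and add Gaussian noise.

    Returns {index: noisy_value}; entries that are not selected are not uploaded.
    Top-k selection and Gaussian noise are assumptions, not the patent's method.
    """
    # Rank coordinates by absolute value and keep the top k.
    ranked = sorted(range(len(gradient)), key=lambda i: abs(gradient[i]), reverse=True)
    selected = sorted(ranked[:k])
    # Perturb only the coordinates that will be uploaded.
    return {i: gradient[i] + rng.gauss(0.0, noise_std) for i in selected}

rng = random.Random(0)
grad = [0.02, -1.5, 0.3, 0.9, -0.01]
upload = select_and_perturb(grad, k=2, noise_std=0.1, rng=rng)
print(sorted(upload))  # [1, 3]: the two largest-magnitude coordinates
```

Uploading only a few coordinates is also what would reduce the communication load on the parameter server in a scheme of this kind.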

Owner:XIDIAN UNIV

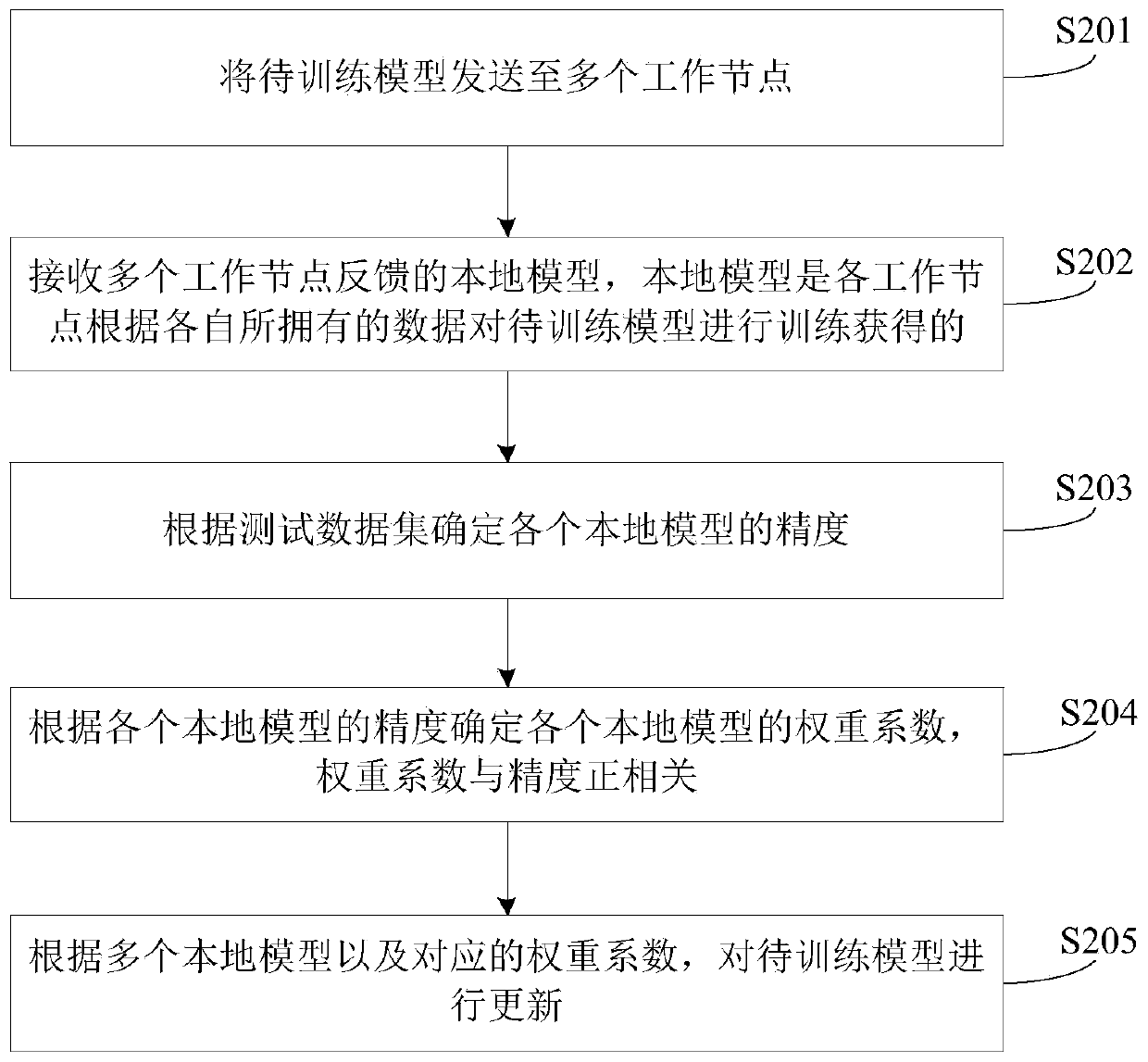

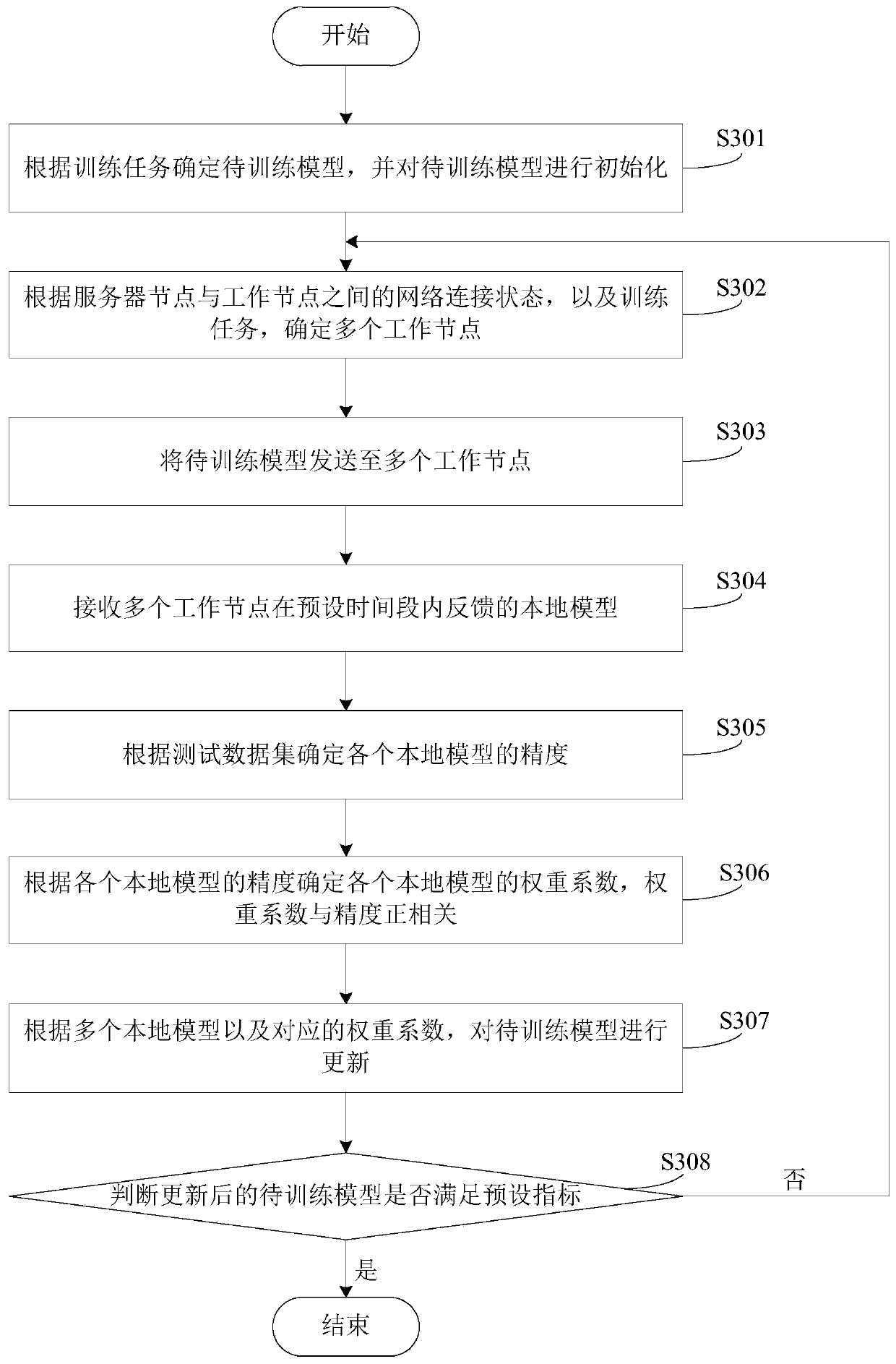

Model training method and device based on federated learning and server

Pending · CN110442457A · High precision · Reduce workload · Resource allocation · Character and pattern recognition · Pattern recognition · Data set

The embodiment of the invention provides a model training method and device based on federated learning, and a server. The method comprises: sending a to-be-trained model to a plurality of working nodes; receiving the local models fed back by the working nodes, where each local model is obtained by a working node training the to-be-trained model on its own data; determining the precision of each local model on a test data set; determining a weight coefficient for each local model according to its precision, where the weight coefficient is positively correlated with the precision; and updating the to-be-trained model according to the local models and their weight coefficients. By increasing the weight of high-precision local models and reducing the weight of low-precision ones, the method speeds up convergence of the to-be-trained model and improves its precision.
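A minimal sketch of precision-weighted aggregation follows. The abstract only requires the weight to be positively correlated with precision; making it directly proportional to the score is an assumption, as are the function name `aggregate_by_score` and the representation of a model as a flat list of floats.

```python
def aggregate_by_score(local_models, scores):
    """Average local parameter vectors with weights proportional to their scores.

    Proportional weighting is an assumed choice; any monotone mapping from
    score to weight would satisfy "positively correlated".
    """
    total = sum(scores)
    if total <= 0:
        raise ValueError("at least one local model must have a positive score")
    weights = [s / total for s in scores]
    dim = len(local_models[0])
    return [sum(w * m[j] for w, m in zip(weights, local_models)) for j in range(dim)]

models = [[1.0, 0.0], [3.0, 2.0]]   # two local models with two parameters each
scores = [0.25, 0.75]               # precision measured on the server's test set
global_model = aggregate_by_score(models, scores)
print(global_model)  # [2.5, 1.5]
```

The higher-scoring second model pulls the result toward its own parameters, which is the intended effect of the weighting.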

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL

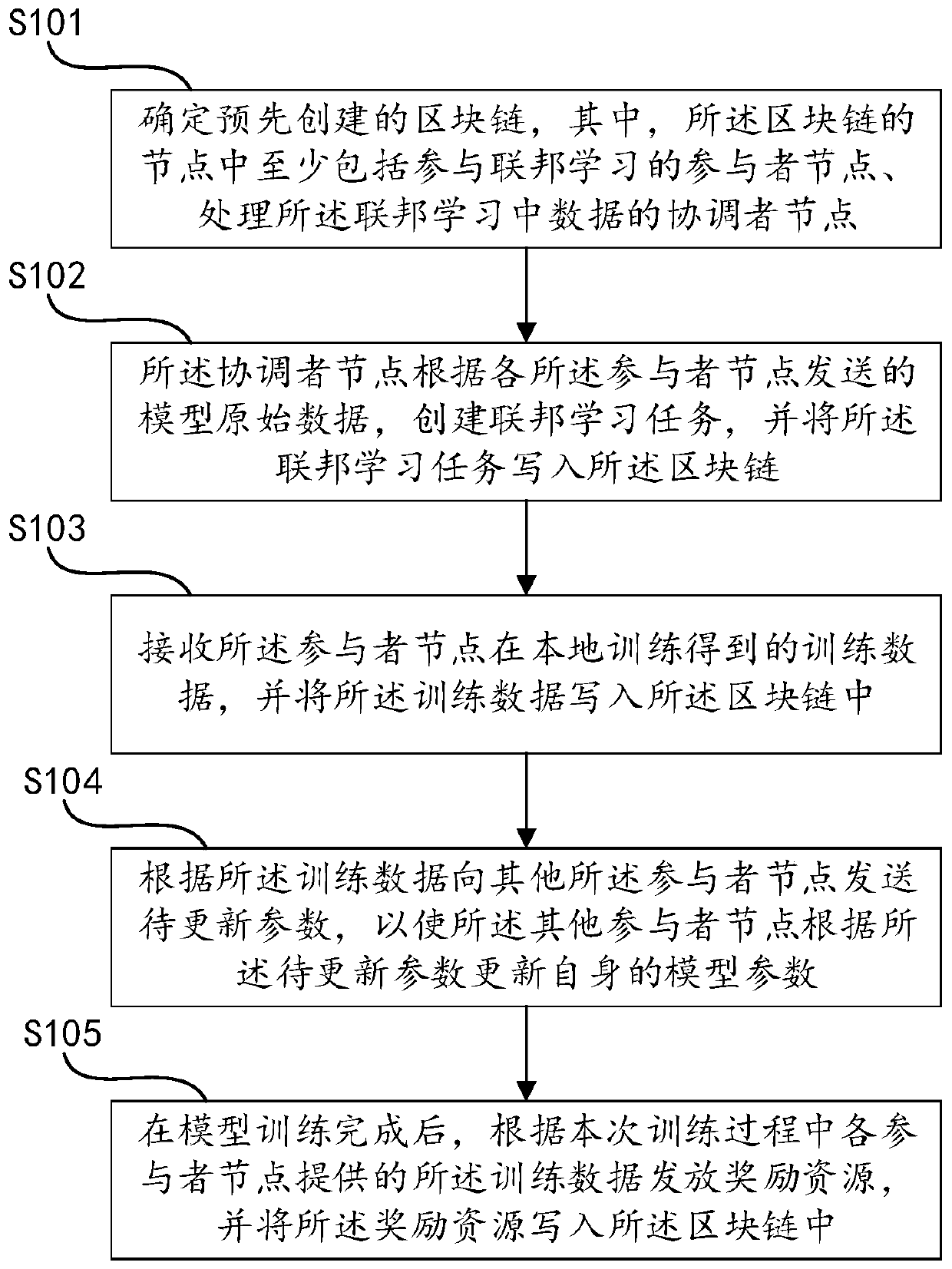

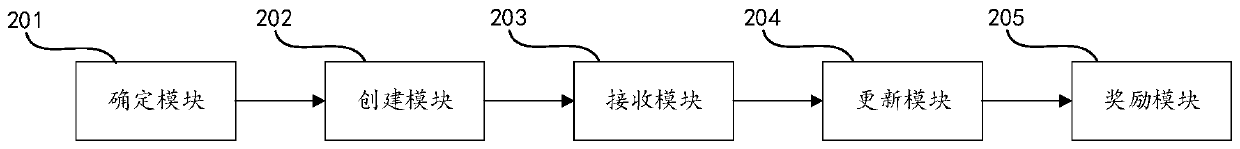

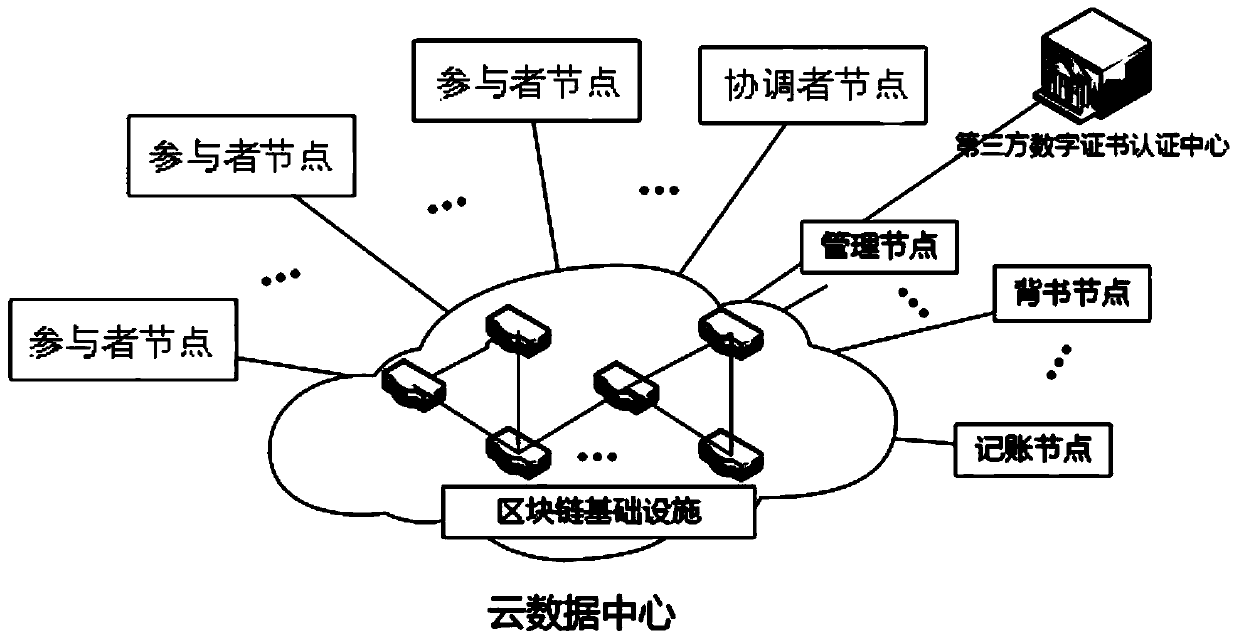

Federated learning method and device based on block chain

Inactive · CN111125779A · Low cost · Reduce operating costs · Digital data protection · Machine learning · Original data · Data operations

The invention discloses a federated learning method and device based on a blockchain. The method comprises: determining the blockchain; having a coordinator node create a federated learning task according to the original model data sent by each participant node; receiving the training data obtained by local training at the participant nodes; sending to-be-updated parameters to the other participant nodes according to the training data, so that those nodes update their own model parameters; and, after model training is completed, issuing reward resources according to the training data each participant node provided during training and writing the rewards to the blockchain. Compared with the traditional approach, the method addresses the mutual-trust problem among parties: all parties participating in federated learning jointly negotiate to generate the coordinator node, which improves the transparency of the process; the data of the whole federated learning process is recorded on the blockchain, which makes data operations traceable; and reward resources encourage all parties to participate actively.

Owner:INSPUR ARTIFICIAL INTELLIGENCE RES INST CO LTD SHANDONG CHINA

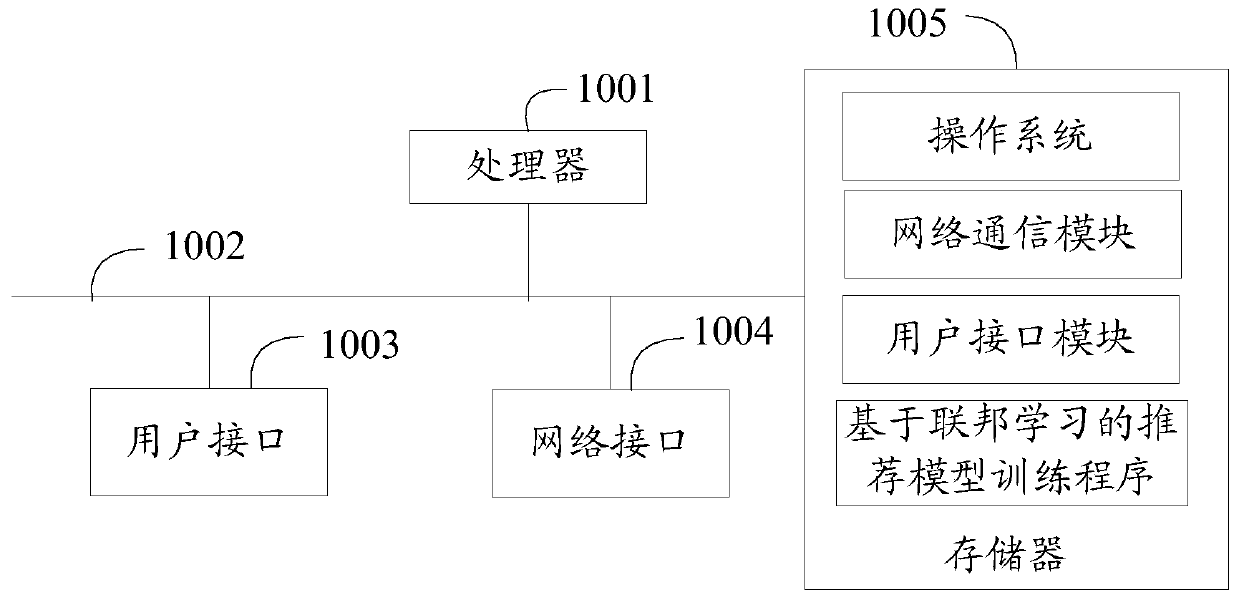

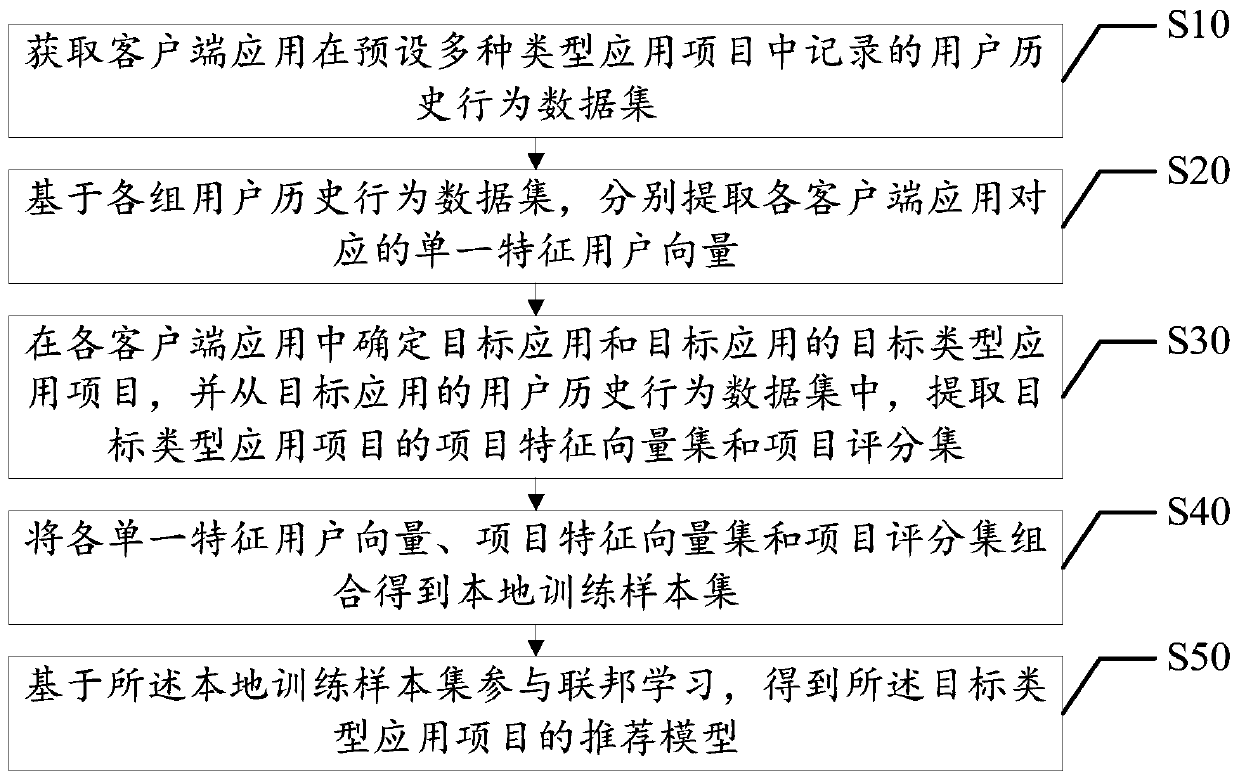

Federated learning-based recommendation model training method, terminal and storage medium

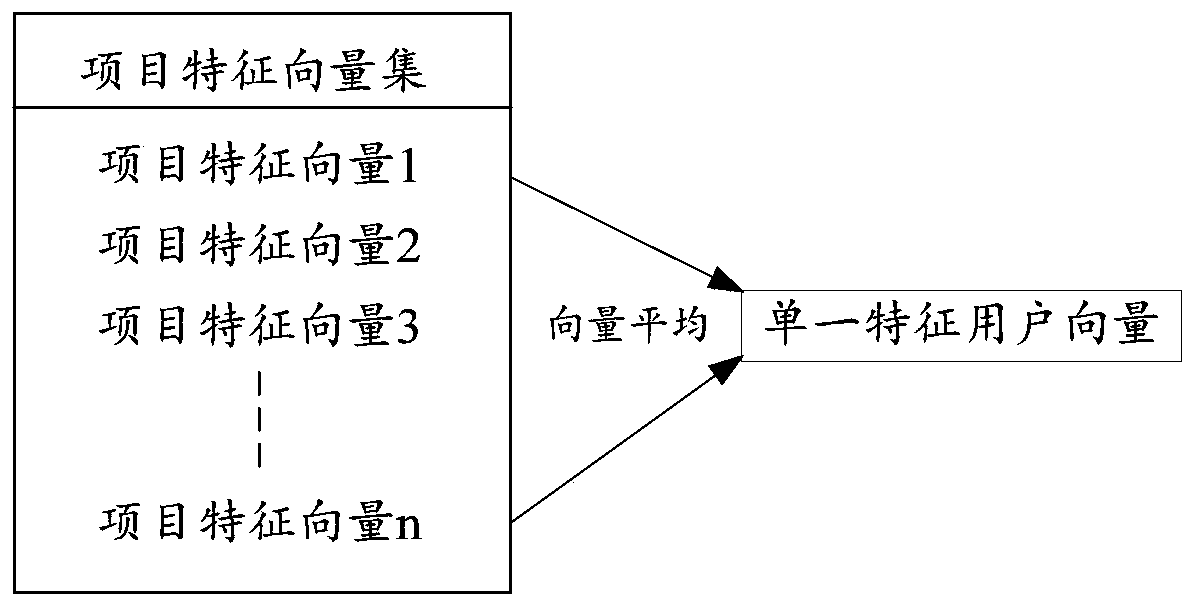

Pending · CN110297848A · Protect private data · Improve recommendation effect · Digital data information retrieval · Special data processing applications · Learning based · Feature vector

The invention discloses a federated learning-based recommendation model training method, a terminal and a storage medium. The method comprises: obtaining the user historical behavior data sets recorded by client applications in multiple preset types of application projects; extracting a single-feature user vector for each client application from its user historical behavior data set; extracting a project feature vector set and a project score set from the user historical behavior data set of the target application; combining the single-feature user vectors, the project feature vector set and the project score set to obtain a local training sample set; and participating in federated learning based on the local training sample set to obtain a recommendation model for the target type of application project. Training under a federated framework protects users' private data, and training on multi-scenario data lets the resulting recommendation model locate user preferences more accurately, which improves the recommendation effect.

Owner:WEBANK (CHINA)

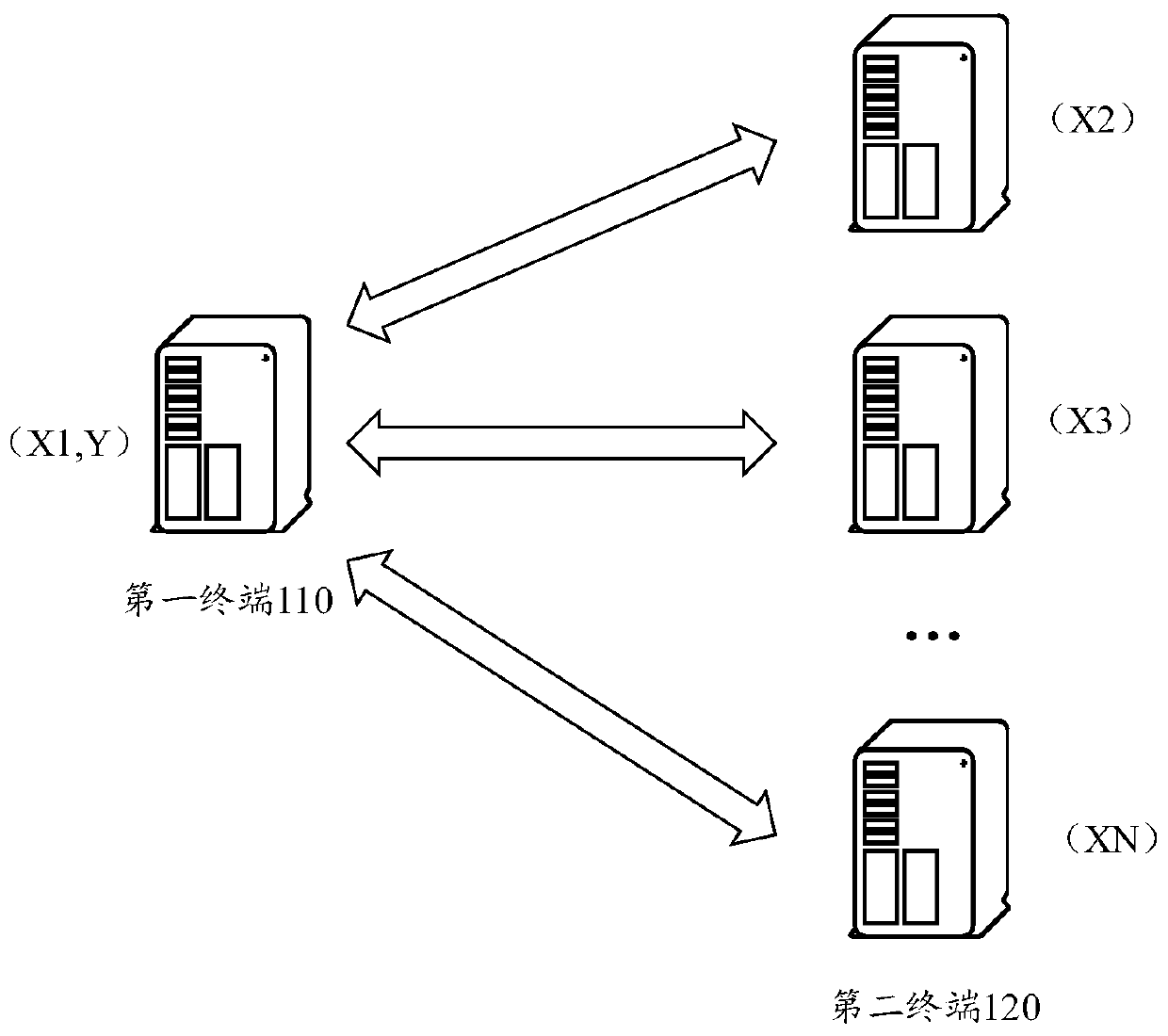

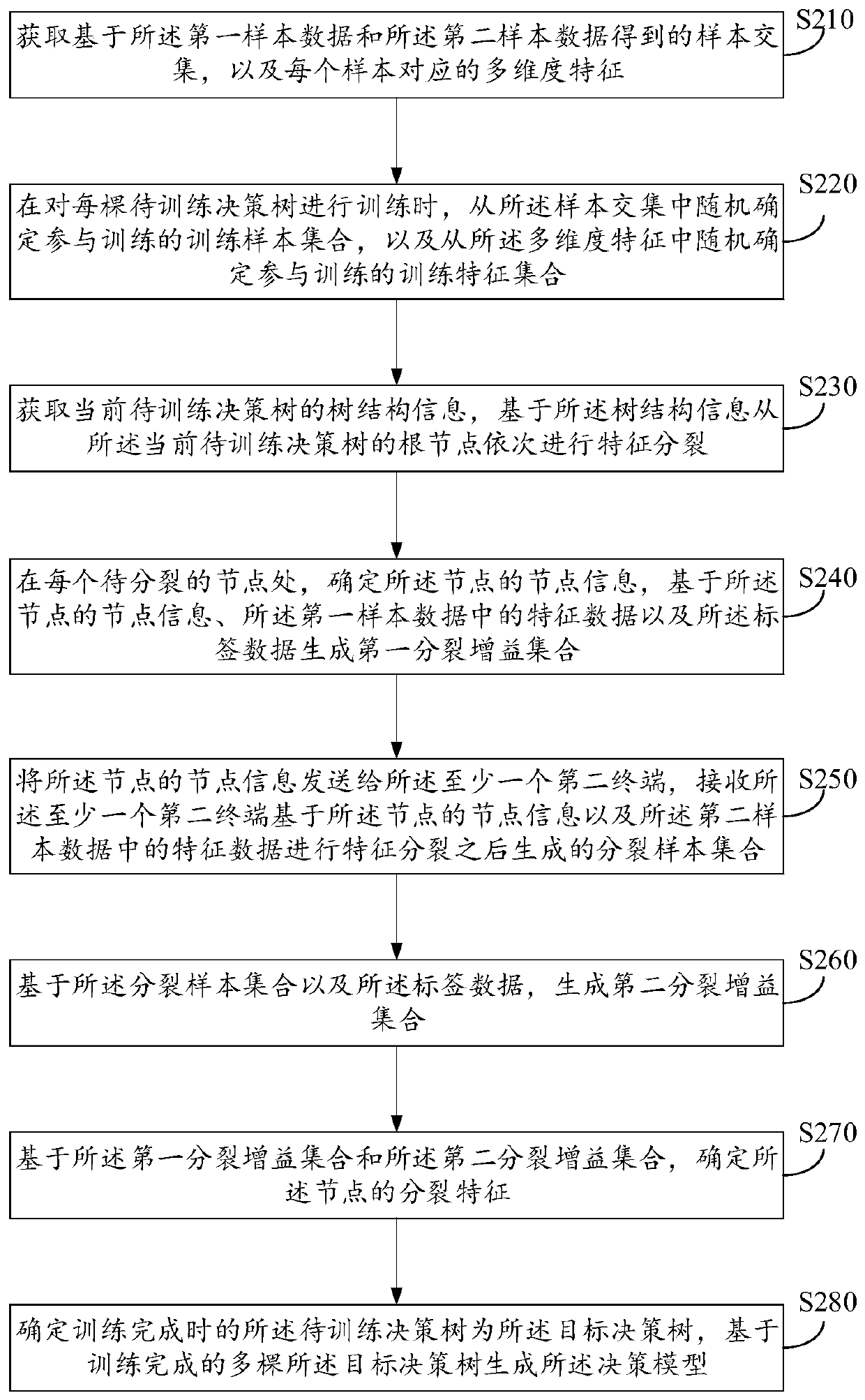

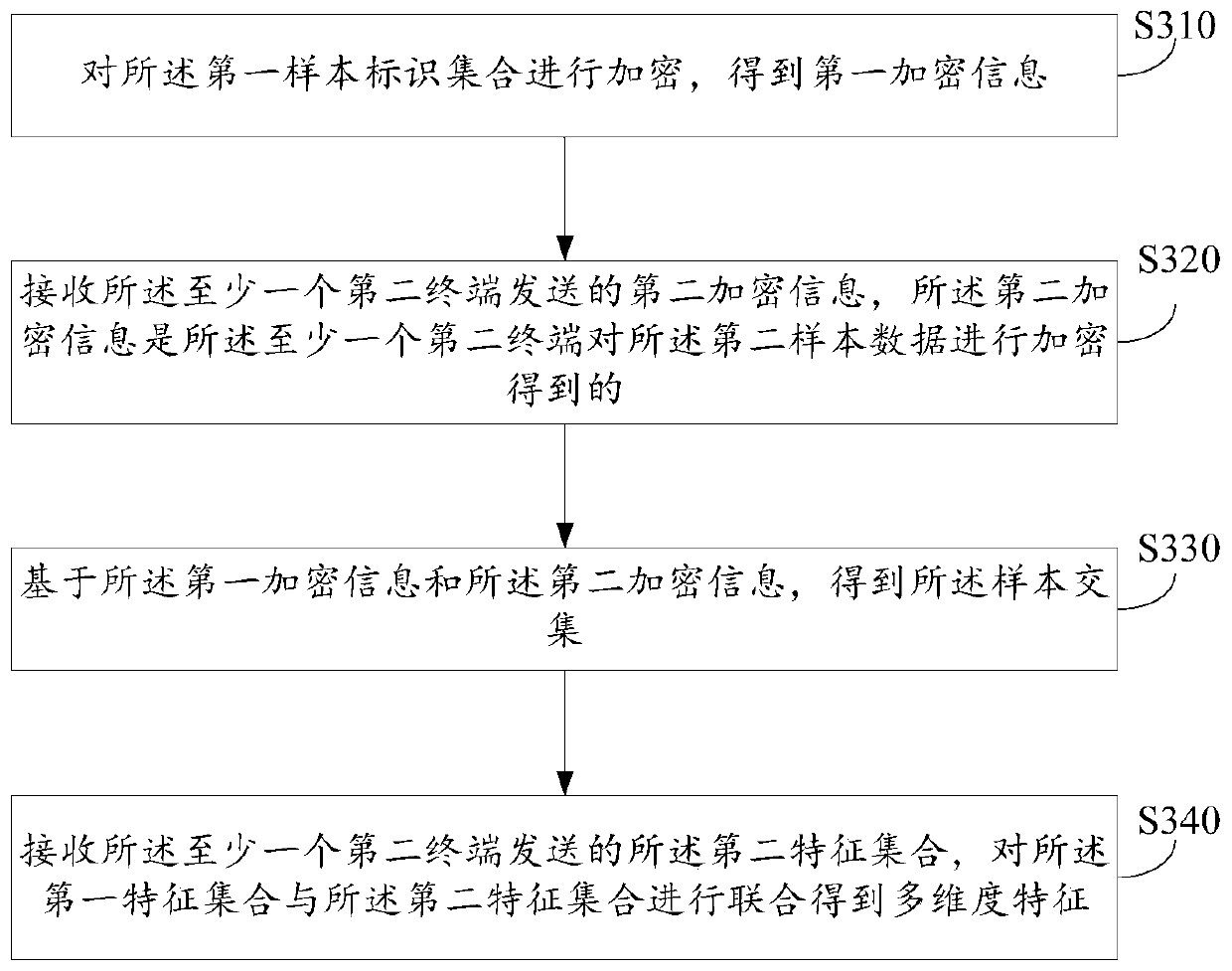

Decision model training method, prediction method and device based on longitudinal federated learning

Active · CN111598186A · Avoid safety hazards · Avoid leaked issues · Character and pattern recognition · Decision model · Feature set

The invention relates to a decision model training method, prediction method and device based on longitudinal federated learning. The training method comprises the steps that a training sample set participating in training and a training feature set are randomly determined; at each node of the current decision tree to be trained, determining node information of the node, and generating a first split gain set; receiving a split sample set generated by at least one second terminal; generating a second split gain set based on the split sample set and the label data; determining a splitting feature of the node based on the first splitting gain set and the second splitting gain set; and generating the decision model based on the plurality of trained target decision trees. According to the method and the device, the problem that the label data is leaked in the transmission process can be avoided, the safety of the node data of each participant is improved, and the data privacy of each participant node is protected.

Owner:TENCENT TECH (SHENZHEN) CO LTD

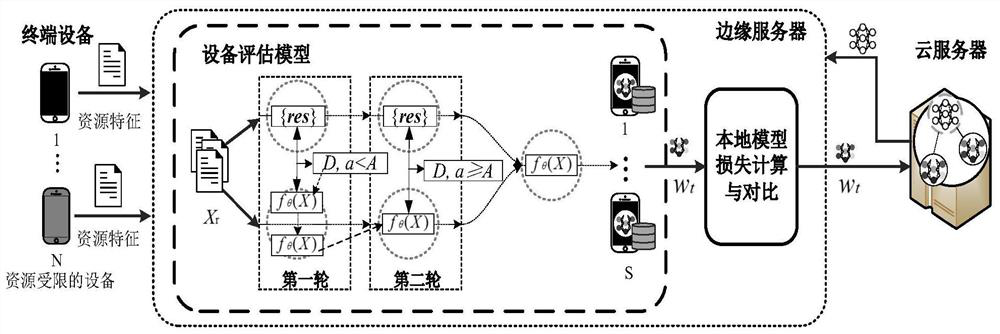

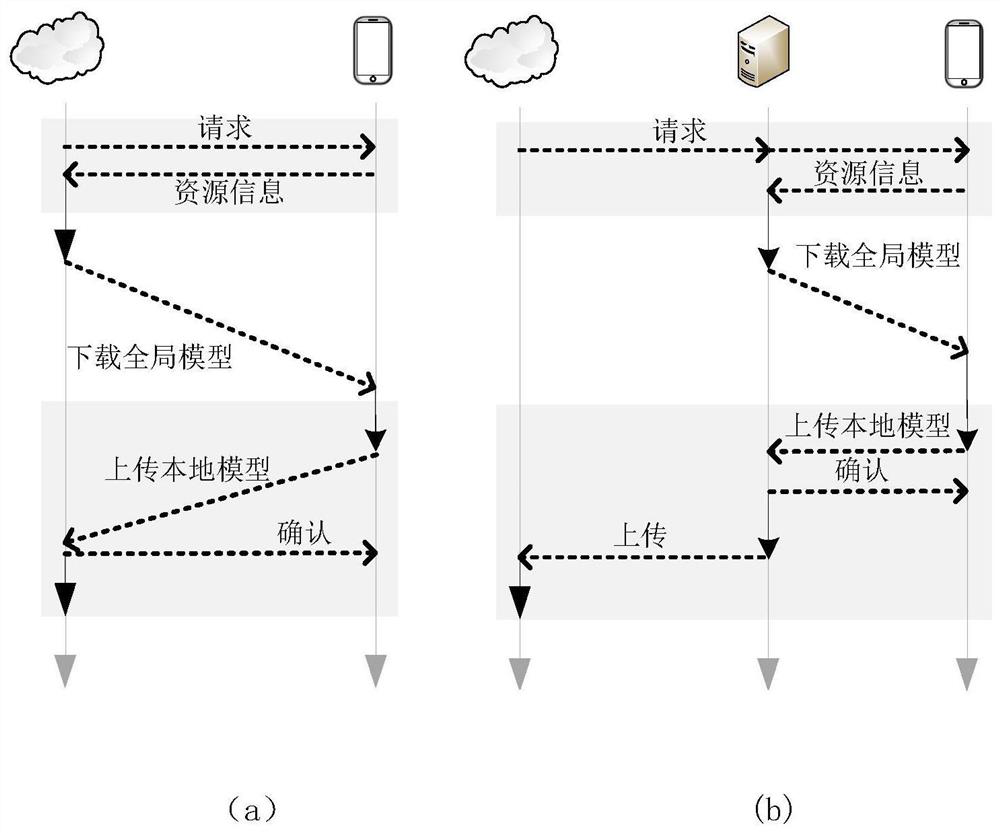

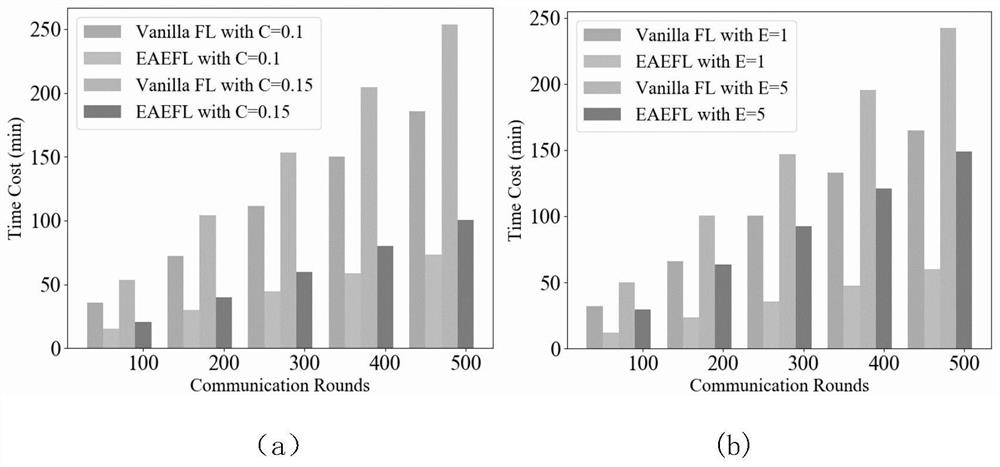

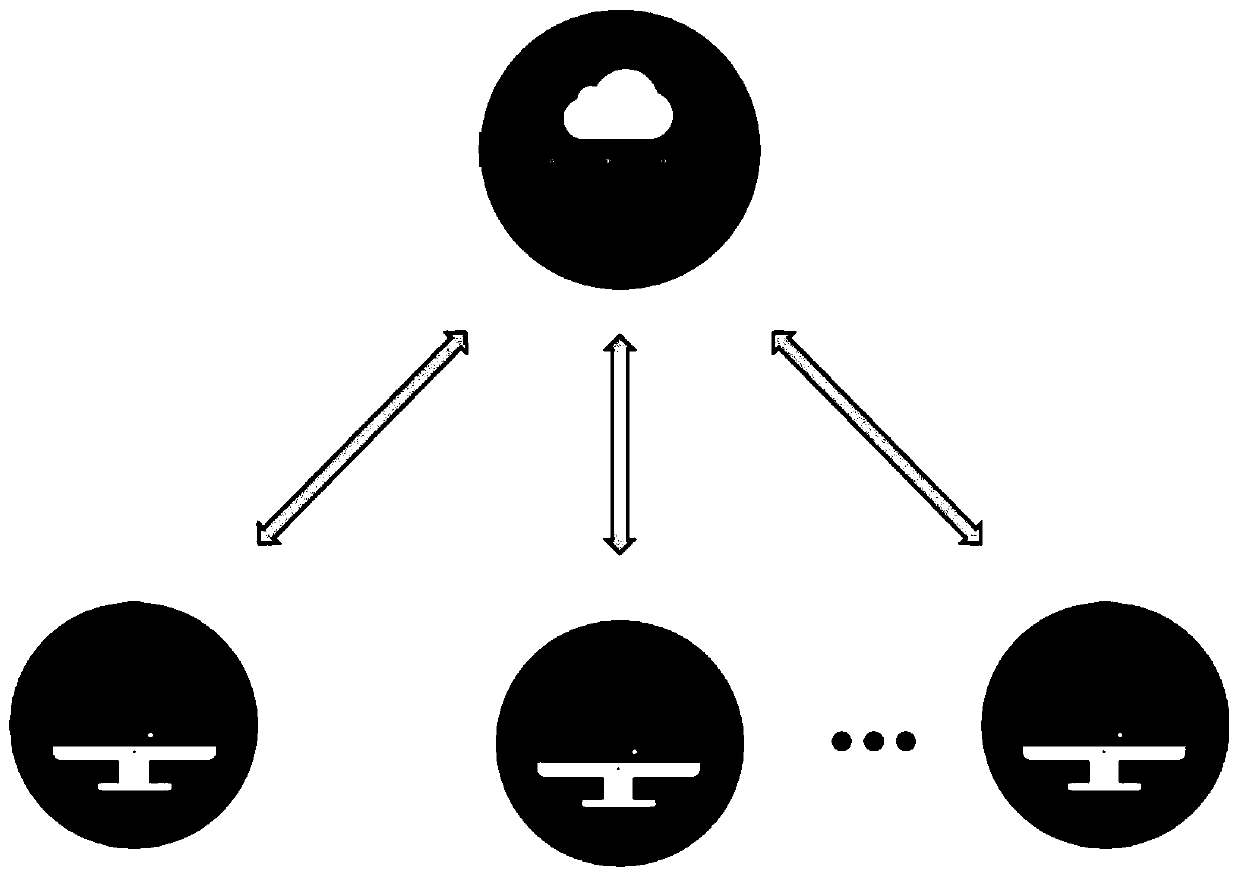

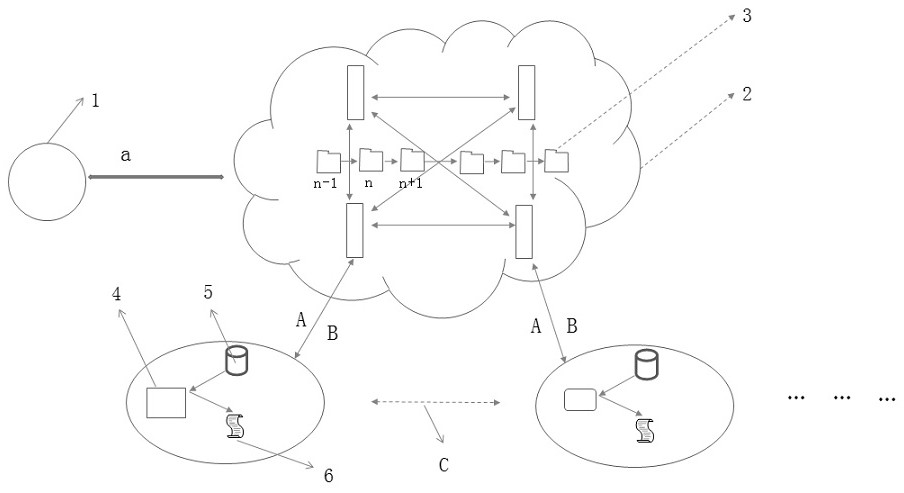

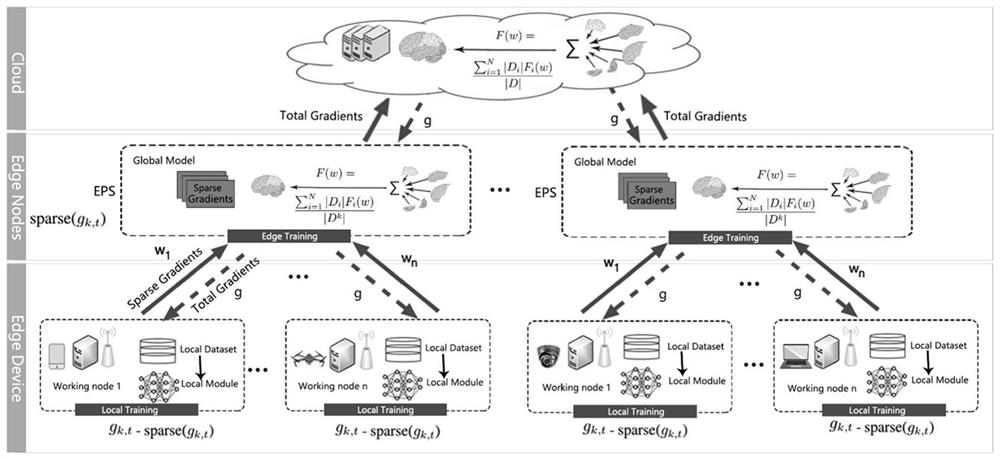

Equipment evaluation and federated learning importance aggregation method, system and equipment based on edge intelligence and readable storage medium

Active · CN112181666A · Reduce consumption · Reduce latency · Digital data information retrieval · Resource allocation · Edge server · Resource information

The invention provides an equipment evaluation and federated learning importance aggregation method based on edge intelligence, which comprises the following steps: (1) cloud server initialization: a cloud server generates an initial model; (2) equipment evaluation and selection: an edge server receives resource information from terminal equipment, generates a resource feature vector, and inputs the vector into an evaluation model; (3) local training: after the edge server selects the intelligent equipment, it sends the transferred initial model to that equipment, which trains the initial model locally in federated learning to obtain a local model; (4) local model screening: the local model is sent to the edge server, which judges whether it is an abnormal model by comparing its loss value with that of the previous round's global model; and (5) global aggregation: global aggregation is performed using the classical federated averaging algorithm. The method addresses the training bottleneck caused by resource-constrained equipment and improves the model aggregation effect, which reduces redundant training and communication consumption.
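Steps (4) and (5) can be sketched as follows. The abstract states that local and previous-round global loss values are compared but gives no decision rule, so the tolerance factor below is an assumption, as are the function name `screen_and_average` and the tuple layout of an update. The aggregation itself is ordinary federated averaging weighted by sample count.

```python
def screen_and_average(updates, prev_global_loss, tolerance=1.5):
    """Drop abnormal local models, then apply federated averaging.

    updates: list of (params, num_samples, loss) tuples, one per device.
    """
    # Assumed rule: a local model is abnormal if its loss exceeds the previous
    # global model's loss by more than the tolerance factor.
    kept = [(p, n) for p, n, loss in updates if loss <= tolerance * prev_global_loss]
    if not kept:
        return None  # nothing passed screening; the caller keeps the old global model
    total = sum(n for _, n in kept)
    dim = len(kept[0][0])
    # Federated averaging: weight each model by its number of training samples.
    return [sum(p[j] * n for p, n in kept) / total for j in range(dim)]

updates = [
    ([1.0, 1.0], 10, 0.40),
    ([3.0, 5.0], 30, 0.50),
    ([90.0, -70.0], 20, 4.00),  # loss far above the previous global loss
]
new_global = screen_and_average(updates, prev_global_loss=0.6)
print(new_global)  # [2.5, 4.0]
```

The third update is excluded before averaging, so its outlying parameters do not affect the new global model.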

Owner:HUAQIAO UNIVERSITY

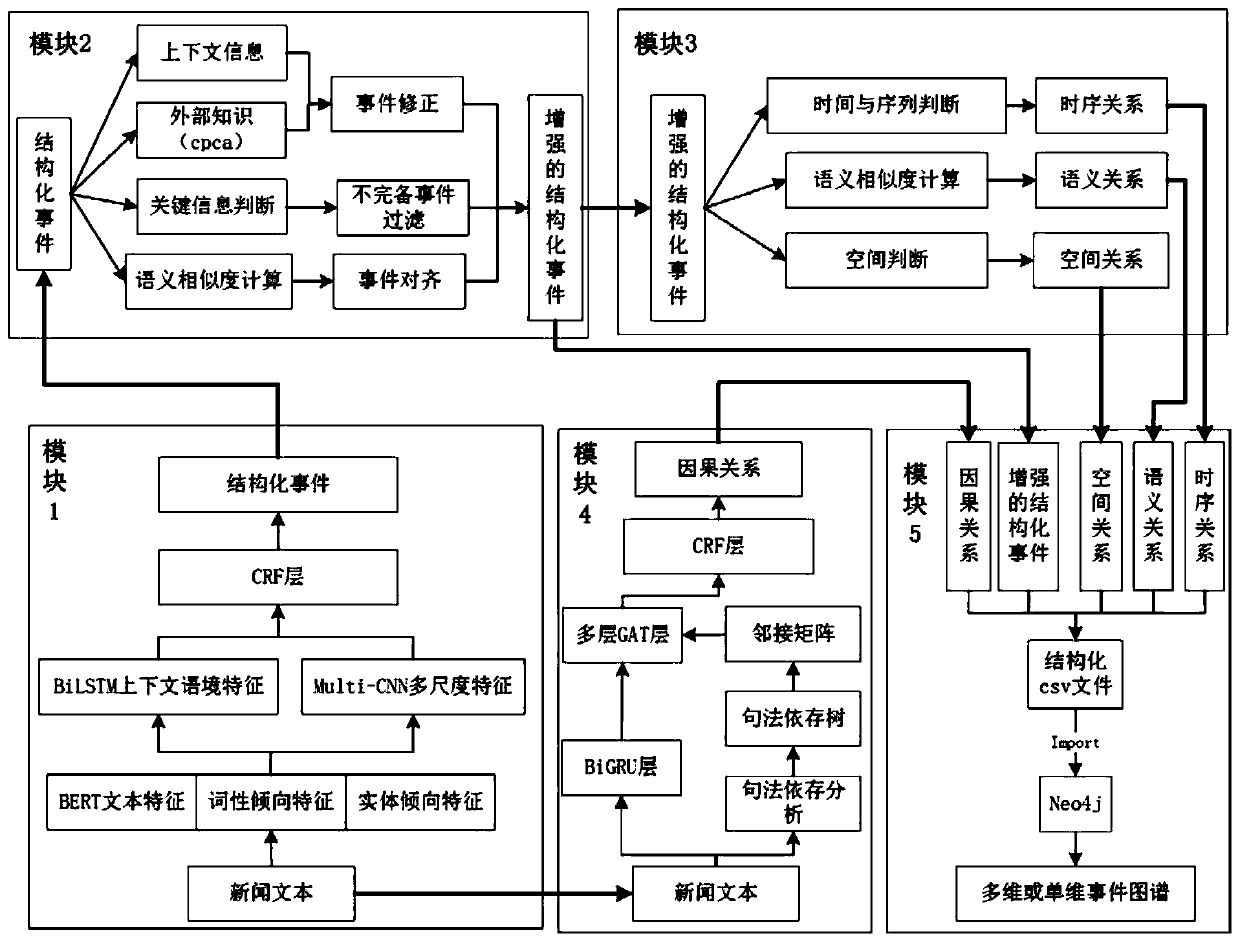

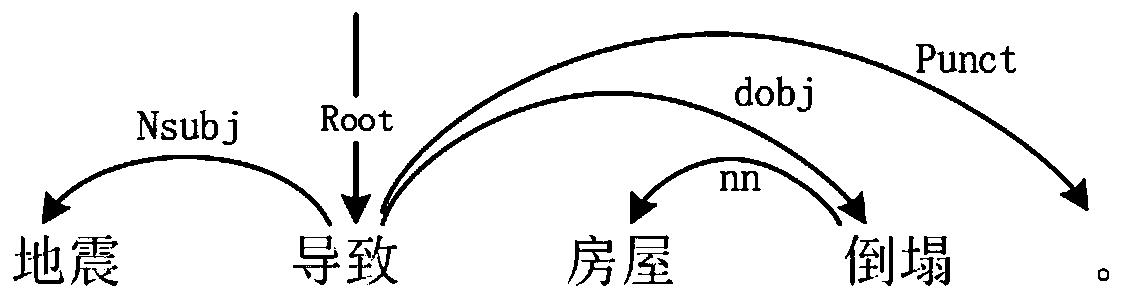

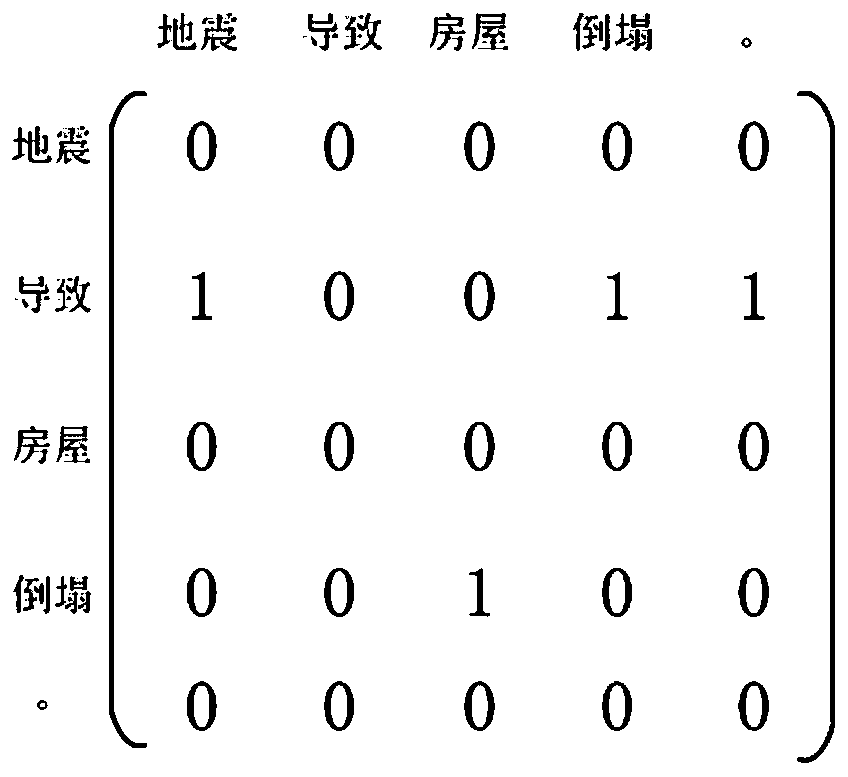

Event atlas construction system and method based on multi-dimensional feature fusion and dependency syntax

Active · CN111581396A · Overcoming the defects of the impact of the build · Improve the extraction effect · Semantic analysis · Neural architectures · Event graph · Engineering

The invention discloses an event atlas construction system and method based on multi-dimensional feature fusion and dependency syntax. The method is realized through joint learning of event extraction, event correction and alignment based on multi-dimensional feature fusion, relation extraction based on enhanced structured events, causal relation extraction based on dependency syntax and a graph attention network, and an event graph generation module. The event graph is constructed from the quintuple information of the enhanced structured events and the relations between events in four dimensions. This overcomes defects of the prior art, in which event representation is simple and depends on an NLP tool, the event relations are of a single type, and the influence of inter-event relations on event graph construction is not considered. The relations among events in the four dimensions can be combined freely according to different downstream tasks, and the structural characteristics of the event graph are learned in association with latent knowledge to assist downstream applications.

Owner:XI AN JIAOTONG UNIV

Model parameter training method and device based on federated learning

Active · CN110288094A · Improve experience · Protect private data · Machine learning · Pattern recognition · Model parameters

The invention discloses a model parameter training method and device based on federated learning. In the method, a first terminal receives a first encrypted mapping model sent by a second terminal and uses it to predict the missing features of the first sample data, obtaining first encrypted completed sample data. It trains a federated learning model according to the current encrypted model parameters, the first sample data and the first encrypted completed sample data, and obtains a first secret-shared loss value and a first secret-shared gradient value. If the federated learning model is detected to have converged, a target model parameter is obtained from the updated first secret-shared model parameter corresponding to the first secret-shared gradient value and a second secret-shared model parameter sent by the second terminal. Because it uses secret sharing, the training process of the federated learning model does not need the assistance of a second collaborator, which improves the user experience.
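The abstract does not specify which secret sharing scheme is used. As a generic illustration only, the sketch below shows additive secret sharing over integers, in which two parties can add their private values share by share without revealing them. The modulus, the function names `share` and `reconstruct`, and the fixed-point encoding of gradient values as integers are assumptions.

```python
import secrets

MODULUS = 2**61 - 1  # assumed modulus for this illustration

def share(value, n_parties):
    """Split an integer into n additive shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def reconstruct(shares):
    """Recover the secret by summing all shares mod MODULUS."""
    return sum(shares) % MODULUS

# Two parties each split an integer-encoded gradient value into two shares.
a_shares = share(1234, 2)
b_shares = share(4321, 2)
# Each party adds the shares it holds; neither sees the other's raw input.
sum_shares = [(a + b) % MODULUS for a, b in zip(a_shares, b_shares)]
print(reconstruct(sum_shares))  # 5555
```

Only the combined result is revealed when the summed shares are brought together, which is why a scheme of this kind can remove the need for a separate coordinating party.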

Owner:WEBANK (CHINA)

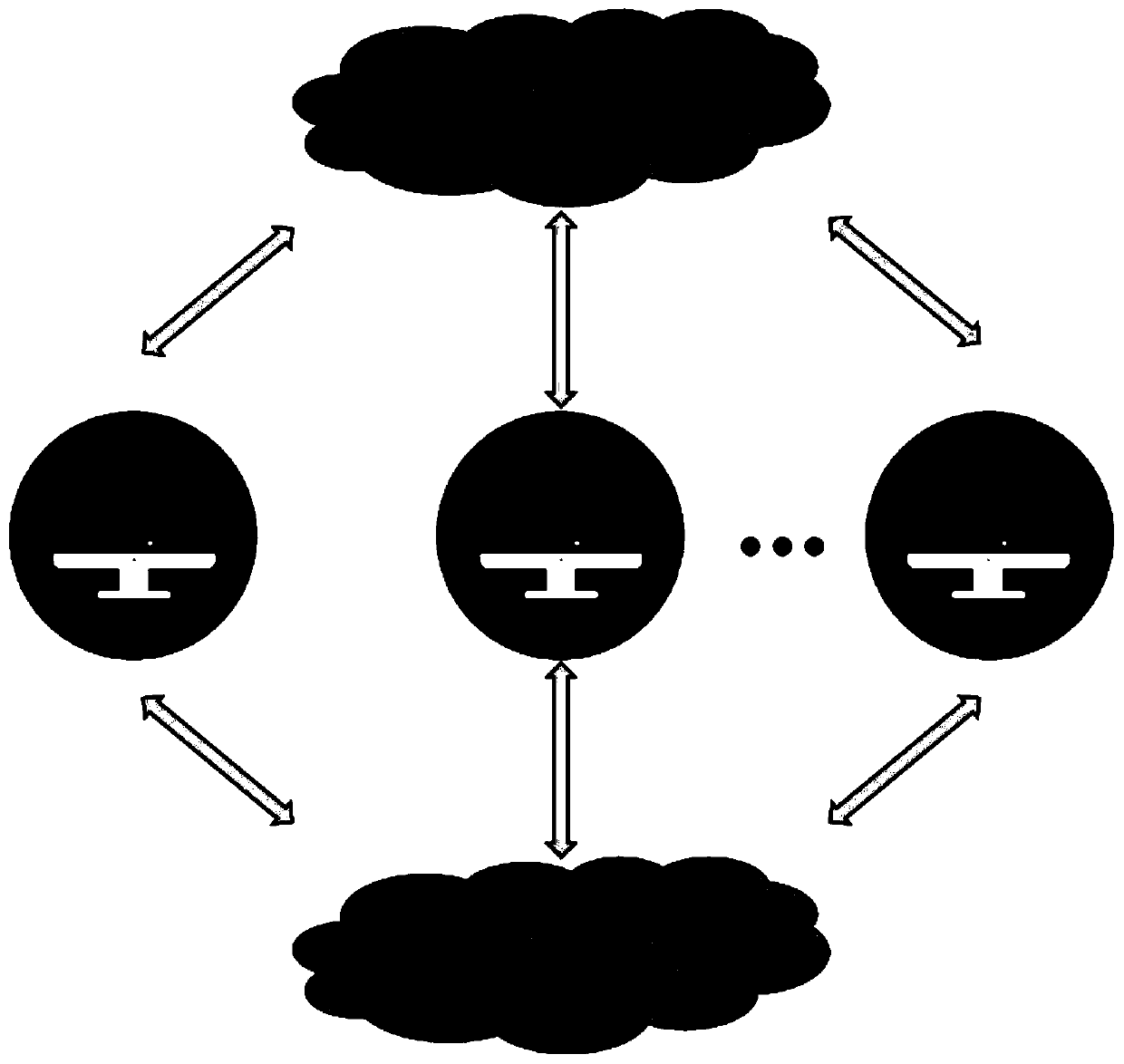

Federated learning system and method based on block chain

Active · CN111212110A · Achieve decentralization · Improve robustness · Machine learning · Data switching networks · Engineering · Smart contract

The invention provides a federated learning system and method based on a blockchain. The system comprises three modules: a model training module, which updates the machine learning model during federated learning and aggregates the model's change values; a smart contract module based on blockchain technology, which provides decentralized control and key management during federated learning; and a storage module based on the IPFS protocol, which provides a decentralized storage mechanism for the intermediate information produced during federated learning. All three modules run simultaneously on every node participating in federated learning. The whole system is fully decentralized: the failure or exit of any node does not prevent the other nodes from continuing federated learning, so the system is more robust.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV

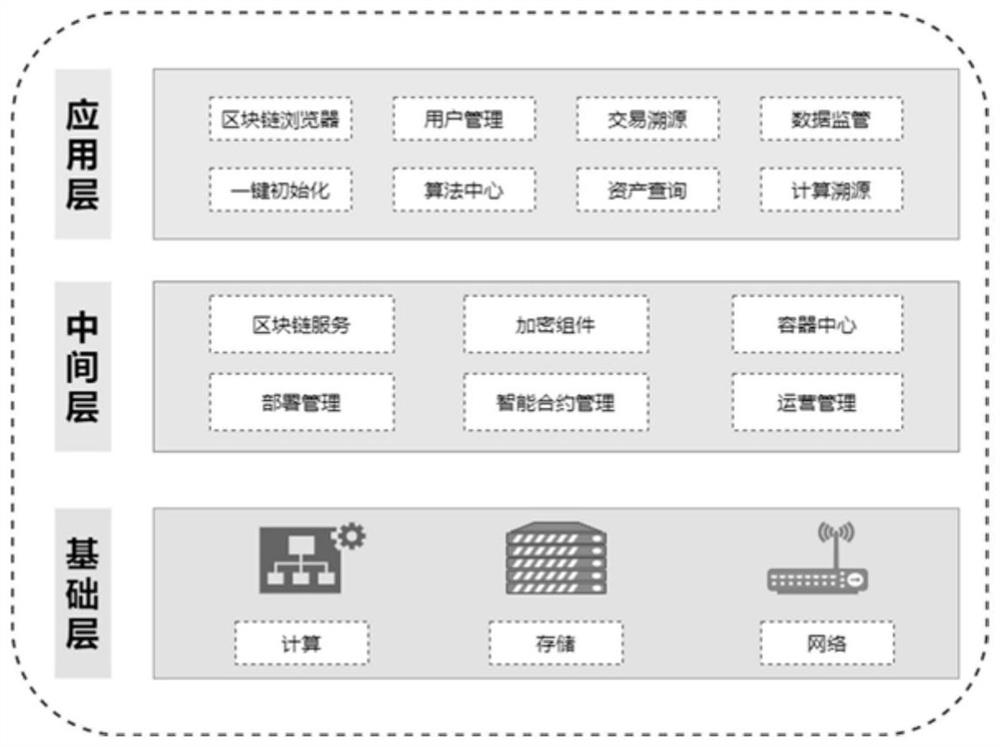

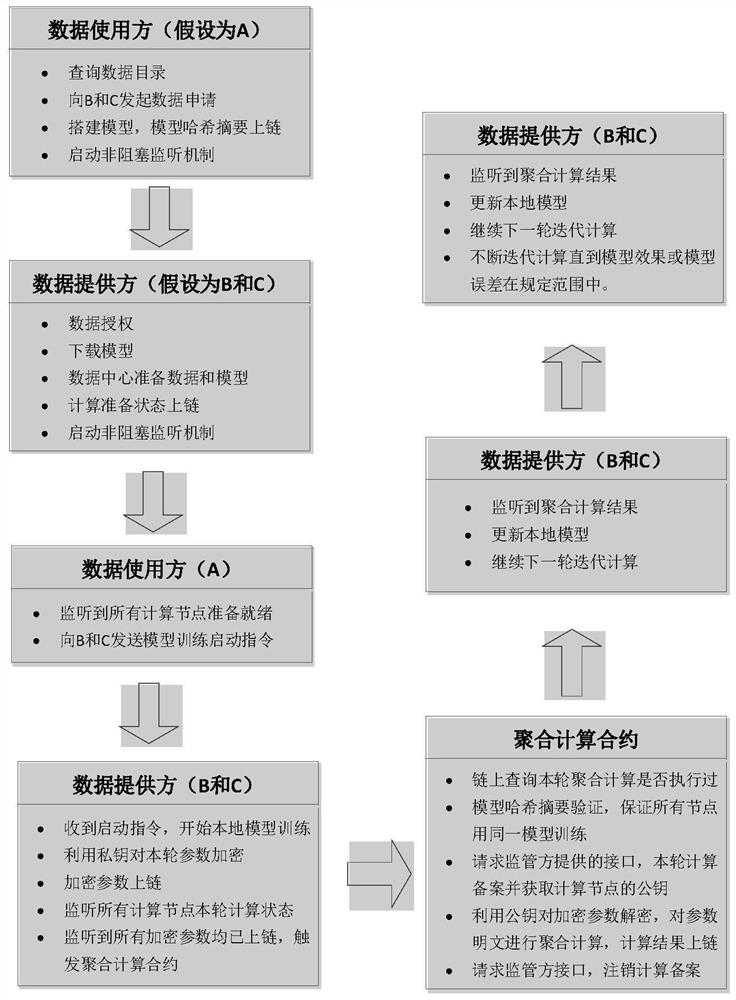

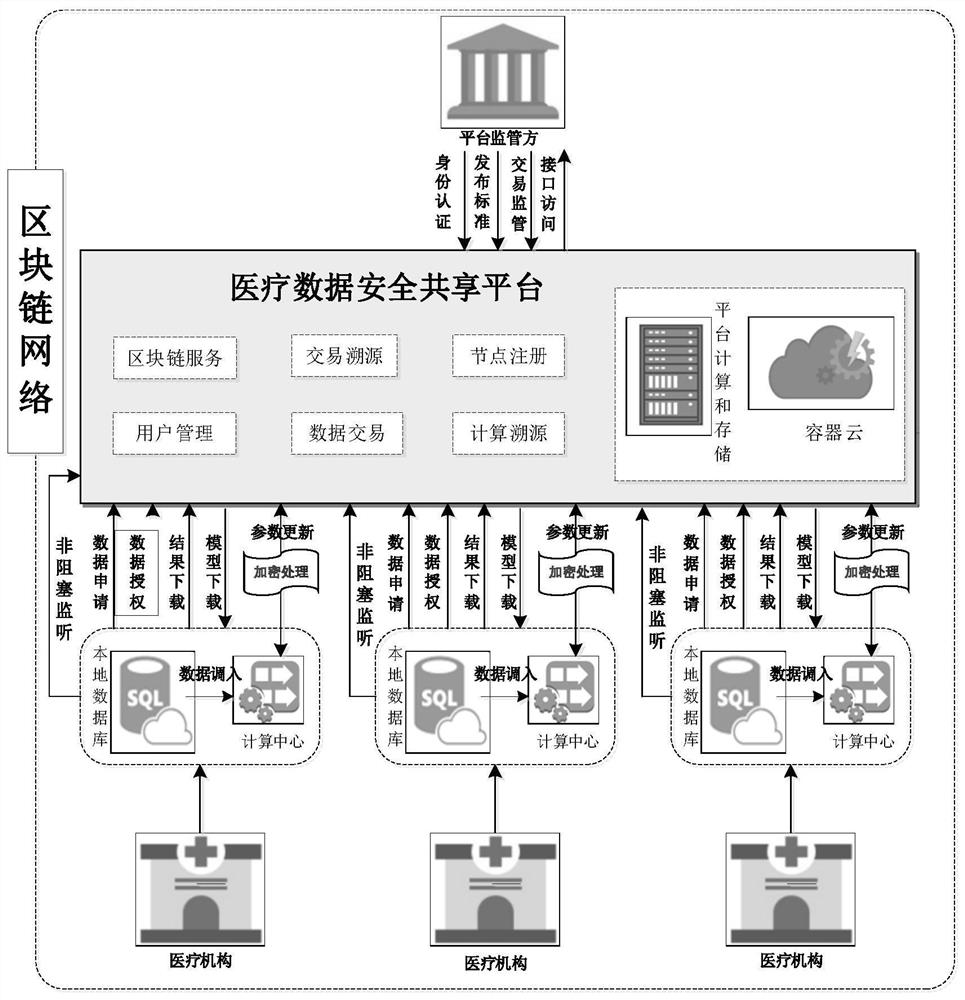

Medical data security sharing method based on block chain and federated learning

Active · CN111698322A · Prevent malicious tampering · Avoid inconsistencies · Medical equipment · Securing communication · Data provider · Original data

The invention discloses a medical data security sharing method based on a blockchain and federated learning. A data applicant can use data only after the data provider authorizes it on the chain. A data fingerprint (a hash digest) of the authorized data is written to the chain, which prevents the data inconsistency caused by malicious tampering with authorized data. Only the right to use the original data is shared throughout the process: a data user cannot obtain the data directly and can mine its value only through federated learning. In each iteration of federated learning, the model parameters and aggregation results are recorded on the chain as assets, which makes the federated learning computation credibly traceable. Every operation in the data sharing process is audited by a supervisor, including identity auditing, data checking and transaction detail auditing. Aggregation is carried out by a smart contract rather than a central server, which realizes decentralized federated learning and prevents nodes from receiving malicious aggregation results caused by a maliciously controlled central server.

Owner:福州数据技术研究院有限公司

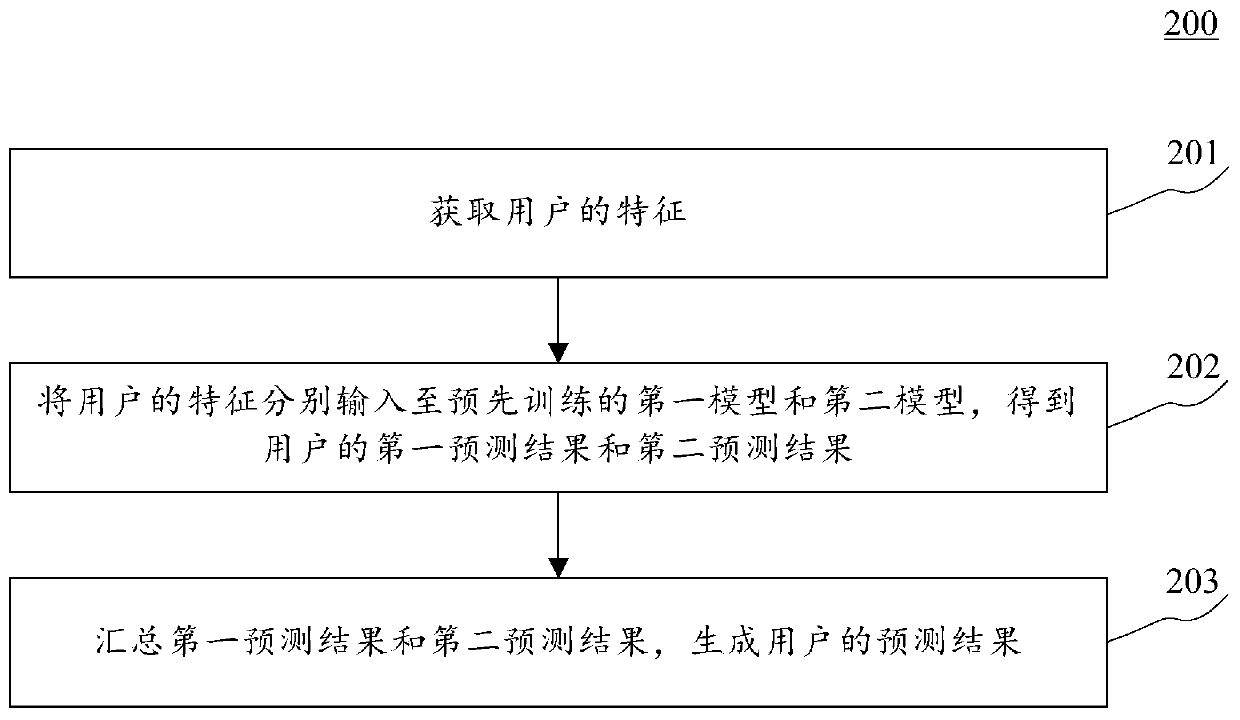

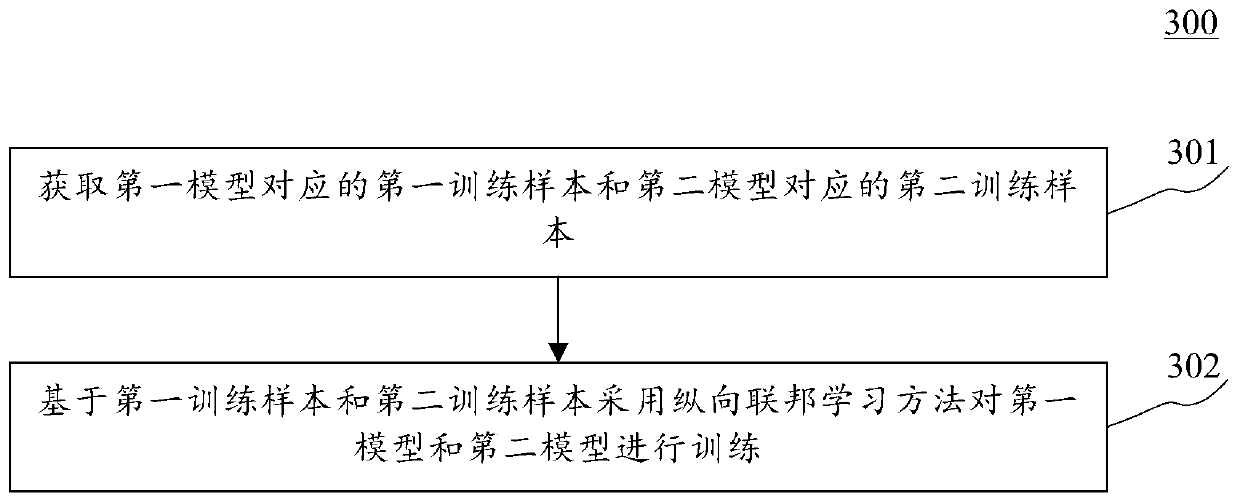

Method and device for predicting information

The embodiment of the invention discloses a method and a device for predicting information. A specific embodiment of the method comprises: obtaining features of a user; inputting the features into a pre-trained first model and a pre-trained second model to obtain a first prediction result and a second prediction result for the user, where the first model and the second model correspond to different types of institutions and are each trained with a longitudinal federated learning method on their respective training samples; and combining the first and second prediction results to generate a prediction result for the user. The embodiment relates to the field of cloud computing and improves the accuracy of information prediction by drawing on the predictions of both models.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

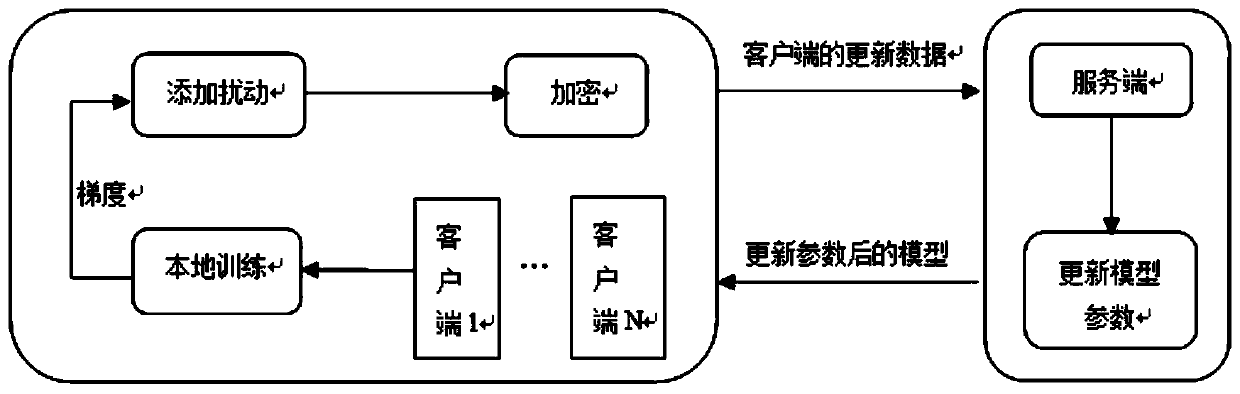

Edge computing privacy protection system and method based on joint learning

Active · CN110719158A · Guarantee authenticity · Solve protection problems · Digital data protection · Neural learning methods · Edge computing · Privacy protection

The invention discloses an edge computing privacy protection system and method based on joint learning. The system comprises a client and a server. The client performs local training, adds a perturbation to the updated parameters, encrypts them, and sends the encrypted parameters to the server. The server receives the encrypted data sent by the client, decrypts it, and updates the local parameters to update a deep learning model. The protection method comprises three steps: step 1, adding a perturbation to the parameters at the client; step 2, encrypting the data at the client; and step 3, decrypting the data at the server. With this method, each participant can submit data safely without needing any trusted aggregator. Noise is added to local updates in a distributed manner, and the perturbed updates are encrypted with the Paillier homomorphic cryptosystem. Security and performance analysis shows that the PPFL protocol guarantees both the privacy of client data and learning accuracy, resolving the conflict between privacy protection and learning accuracy.
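To show why the Paillier cryptosystem suits this kind of aggregation, here is a toy sketch of its additive homomorphism: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. The primes are tiny and insecure, updates are assumed to be encoded as small non-negative integers, and the function names are hypothetical. This is not the PPFL protocol itself, and a real deployment would use a vetted library with large keys.

```python
import math
import random

def keygen(p, q):
    """Build a Paillier key pair from two primes (toy sizes, insecure)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid for generator g = n + 1 (needs Python 3.8+)
    return n, (lam, mu)

def encrypt(n, m, rng):
    """Encrypt integer m (0 <= m < n) under public key n."""
    n2 = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    # With g = n + 1, g^m mod n^2 equals 1 + m*n mod n^2.
    return ((1 + m * n) * pow(r, n, n2)) % n2

def decrypt(n, priv, c):
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n

def add_encrypted(n, c1, c2):
    """Homomorphic addition: the product of ciphertexts encrypts the sum."""
    return (c1 * c2) % (n * n)

rng = random.Random(0)
n, priv = keygen(61, 53)
c_sum = add_encrypted(n, encrypt(n, 17, rng), encrypt(n, 25, rng))
print(decrypt(n, priv, c_sum))  # 42
```

The party holding the private key learns only the sum of the two updates, not the individual values, provided it sees only the combined ciphertext.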

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

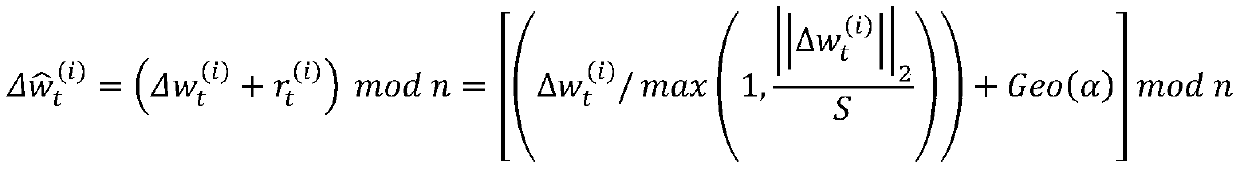

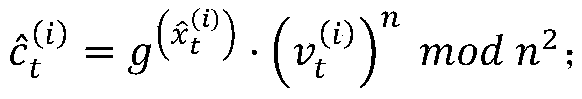

Decentralized federated machine learning method under privacy protection

Active · CN111600707A · Avoid paralysis · Improve performance · Key distribution for secure communication · User identity/authority verification · Attack · Privacy protection

The invention discloses a decentralized federated learning method under privacy protection. The method comprises a system initialization step, a model request and local parallel training step, a model parameter encryption and model sending step, a model receiving and recovery step, and a system updating step. Decentralization is achieved by randomly selecting participants to act as parameter aggregators, which overcomes defects of existing federated learning such as vulnerability to DoS attacks and the parameter server being a single point of failure. A PVSS (publicly verifiable secret sharing) protocol is combined with this strategy to protect participants' model parameters from model inversion attacks and membership inference attacks. The method also guarantees that different participants perform parameter aggregation in each training task, so that when an aggregator is untrusted or attacked, the system recovers by itself, which improves the robustness of federated learning. These functions are achieved while maintaining federated learning performance, and the security of the training environment is effectively improved.

Owner:SOUTH CHINA NORMAL UNIVERSITY

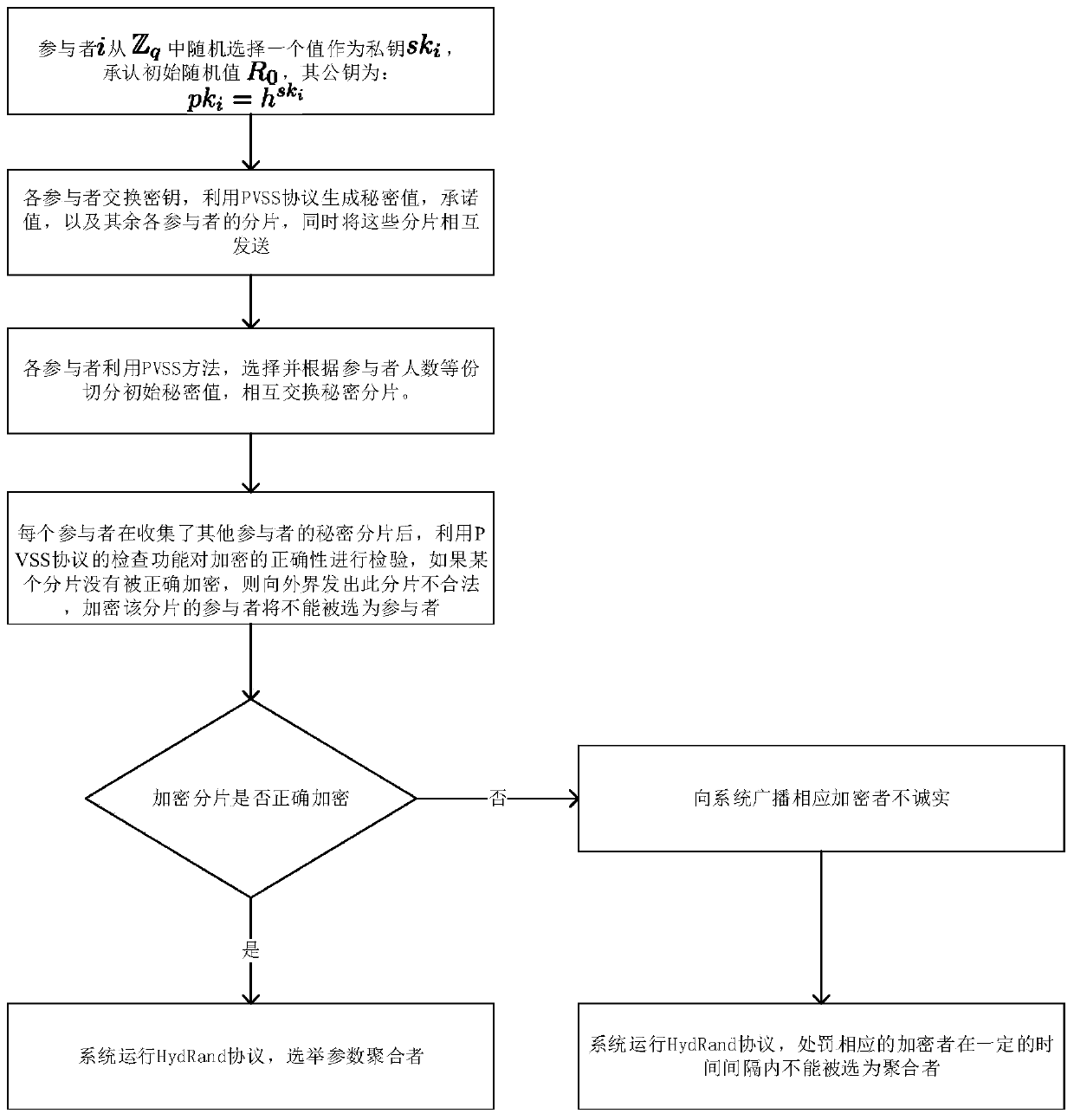

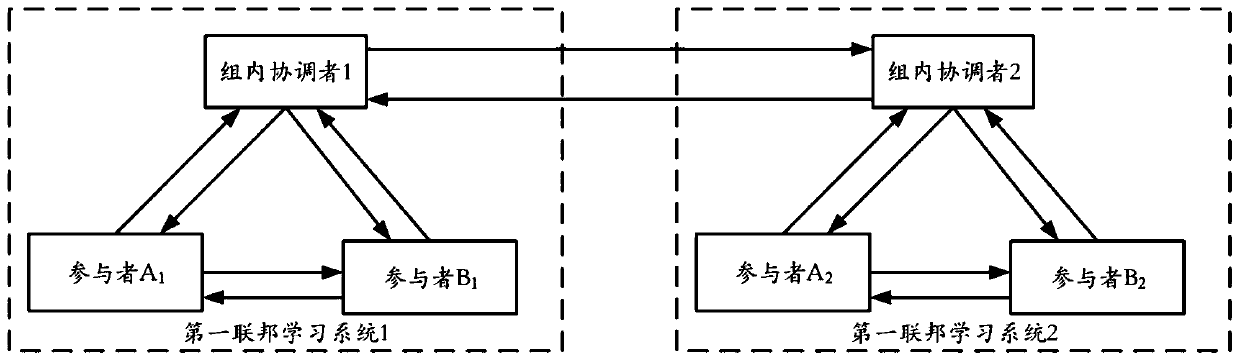

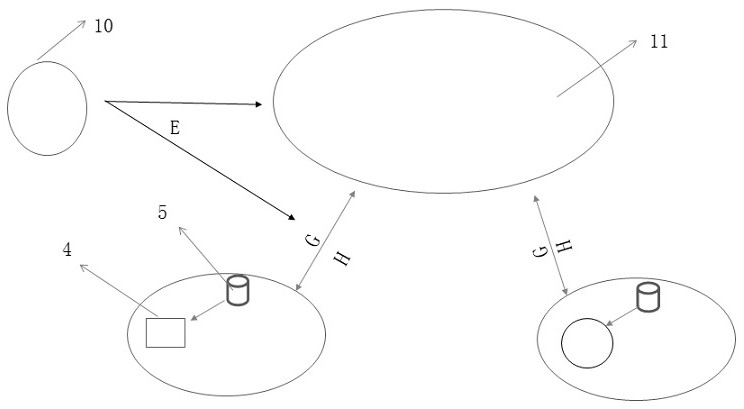

Hybrid federated learning method and architecture

The invention discloses a hybrid federated learning method and architecture. The method is suitable for training a federated learning model with multiple groups of participants. The method comprises: for each group, jointly training the group's first federated learning model on the data sets of the participants in that group; fusing the first federated learning models of all groups to obtain a second federated learning model, and sending the second federated learning model to the participants in each group; and, for each group, training on the second federated learning model and the data sets of the group's participants to obtain an updated first federated learning model, then returning to the fusing step until model training is finished. When applied to fintech, the method can improve the accuracy of a federated learning model.
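The two-level loop can be sketched as below. The abstract does not say how models are trained within a group or how the group models are fused, so plain averaging at both levels is an assumption, and `local_train` is a hypothetical stand-in for real training.

```python
def average(models):
    """Element-wise mean of equally sized parameter lists."""
    dim = len(models[0])
    return [sum(m[j] for m in models) / len(models) for j in range(dim)]

def local_train(model, data):
    # Hypothetical stand-in for training: move each parameter halfway
    # toward the participant's data value.
    return [w + 0.5 * (x - w) for w, x in zip(model, data)]

def hybrid_round(groups, second_model):
    """One outer round: train a first model per group, then fuse them."""
    first_models = []
    for group in groups:
        # Every participant starts from the current second model; the group's
        # first model is assumed to be the average of the participants' results.
        first_models.append(average([local_train(second_model, d) for d in group]))
    return average(first_models)  # fused second model (assumed: plain average)

groups = [[[2.0], [4.0]], [[10.0]]]  # two groups holding 2 and 1 participants
model = hybrid_round(groups, [0.0])
print(model)  # [3.25]
```

Calling `hybrid_round` repeatedly with its own output reproduces the "return to the fusing step" loop described in the abstract.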

Owner:WEBANK (CHINA)

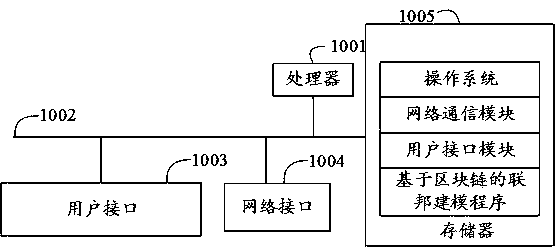

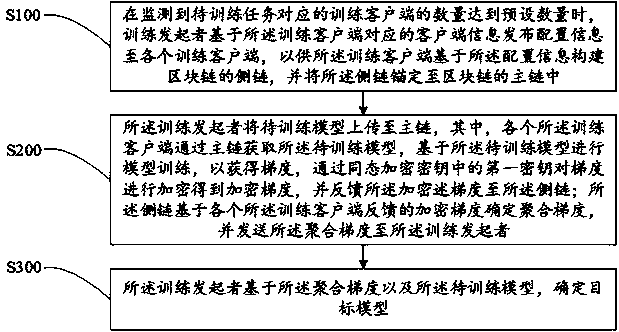

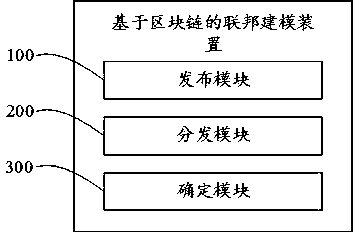

Federated modeling method, device and equipment based on block chain, and storage medium

Active · CN111552986A · Accuracy has no effect · Accuracy impact · Digital data protection · Neural architectures · ModelSim · Privacy protection

The invention discloses a federated modeling method, device and equipment based on a blockchain, and a storage medium. The method comprises the following steps: when the number of training clients is observed to reach a preset number, a training initiator issues configuration information to all training clients based on the client information corresponding to them; the training initiator uploads a to-be-trained model to a main chain; and the training initiator determines a target model based on the aggregated gradient and the to-be-trained model. Federated learning modeling is realized through the blockchain. While protecting the privacy of federated learning data, the method does not affect the accuracy of federated learning, and it improves the training effect and model precision. Model parameters such as gradients do not need to be modified in transmission, which balances privacy protection of those parameters against model convergence and model precision. Information leakage can be completely prevented, so the safety of data samples in federated learning is improved.

Owner:PENG CHENG LAB +1

Data processing method and system based on neural network and federated learning

Pending · CN110874484A · Easy to handle · Reduce computing load · Digital data protection · Neural architectures · Network output · Engineering

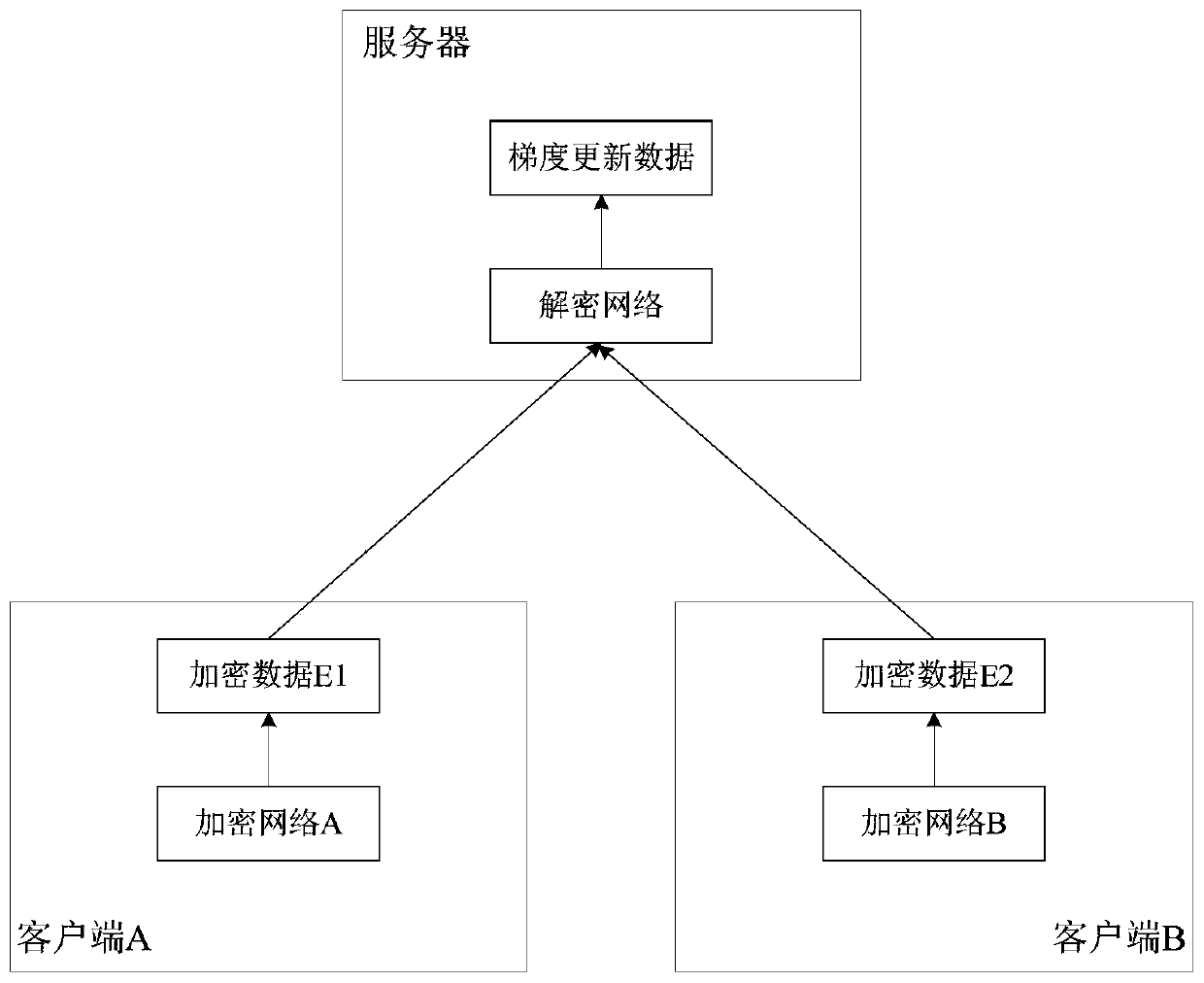

The embodiment of the invention provides a data processing method and system based on a neural network and federated learning. The method comprises the following steps: determining an encryption and decryption neural network according to a joint modeling task, where the network comprises M encryption neural networks and one decryption neural network; training the encryption and decryption neural network on randomly generated training data until it converges; sending the M trained encryption neural networks to M clients, so that each client produces encrypted data by feeding its local data and the model parameters of the joint modeling task into its encryption neural network; and receiving the encrypted data output by each client's encryption neural network, inputting it into the decryption neural network, and determining the gradient update data for the model parameters. The method realizes joint modeling while ensuring data privacy, is simple and convenient to operate, and improves data processing efficiency.

Owner:ZHONGAN INFORMATION TECH SERVICES CO LTD

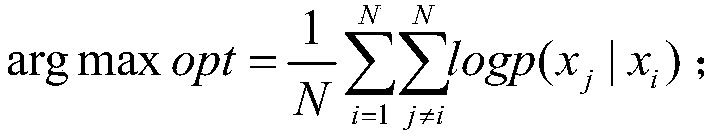

Sequence recommendation method based on user behavioral difference modeling

Active · CN108648049A · Operation forecast · Buying/selling/leasing transactions · Feature vector · Recommendation service

The present invention discloses a sequence recommendation method based on user behavioral difference modeling. The method comprises: acquiring historical behavior information of a user; calculating commodity feature vectors from the acquired historical behavior information; using the commodity feature vectors and a behavioral difference modeling method for sequence modeling, obtaining the user's current demand and historical preferences with two different neural network architectures; and, according to the user's current purchase demand and historical preferences, predicting the next commodity of interest to the user through joint learning, matching in the commodity vector space to find the commodities closest to the predicted result, and generating a commodity recommendation sequence. By modeling differences in users' time-series behavior, the method captures both the current demand and the long-term preferences behind a user's purchase decisions and provides accurate sequence recommendation services.

Owner:UNIV OF SCI & TECH OF CHINA

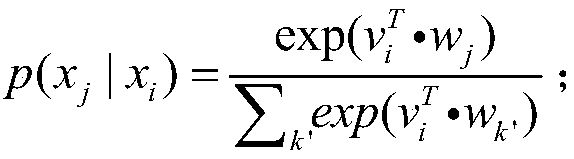

Quality-aware edge intelligent federated learning method and system

ActiveCN111754000AQuality improvementPrivacy protectionEnsemble learningCommerceEngineeringData mining

The invention discloses a quality-aware edge intelligent federated learning method and a quality-aware edge intelligent federated learning system. The method comprises the following steps: a cloud platform constructs a federated learning quality optimization problem by taking the maximum sum of aggregation model qualities of a plurality of learning tasks in each iteration as the optimization target, and solves the problem; in each iteration, the learning quality of participating nodes is predicted by utilizing historical learning quality records of the participating nodes, and the learning quality of the node training data is quantified by the reduction in the loss function value in each iteration; in each iteration, the cloud platform incentivizes nodes with high learning quality to participate in federated learning through a reverse auction mechanism, and learning tasks and learning rewards are distributed accordingly; in each iteration, for each learning task, each participating node uploads its local model parameters to the cloud platform, which aggregates them to obtain a global model. According to the method and the system, richer data and more computing power can be provided for model training while protecting data privacy, so that the quality of the model is improved.
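A minimal sketch of quality-aware node selection follows, under stated assumptions. The abstract does not give the prediction rule or the auction rule, so the choices here are illustrative only: quality is predicted as the mean of past loss reductions, nodes are ranked greedily by predicted quality per unit bid, and winners are simply paid their bid. The names `predict_quality` and `select_nodes` and the budget parameter are hypothetical.

```python
from statistics import mean

def predict_quality(history):
    """Predict learning quality as the mean of past loss reductions (assumed rule)."""
    return mean(history) if history else 0.0

def select_nodes(bids, histories, budget):
    """Greedy reverse auction sketch: best predicted quality per unit bid first.

    bids maps node -> asking price (must be positive); budget caps total payment.
    """
    ranked = sorted(
        bids,
        key=lambda node: predict_quality(histories.get(node, [])) / bids[node],
        reverse=True,
    )
    chosen, spent = [], 0.0
    for node in ranked:
        if spent + bids[node] <= budget:
            chosen.append(node)
            spent += bids[node]
    return chosen

# Hypothetical bids and historical loss reductions per node.
bids = {"a": 2.0, "b": 1.0, "c": 4.0}
histories = {"a": [0.4, 0.6], "b": [0.1, 0.1], "c": [0.9, 0.7]}
selected = select_nodes(bids, histories, budget=3.0)
```

With this budget, node `c` has high predicted quality but is too expensive once `a` is chosen, so the cheaper node `b` fills the remaining budget.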

Owner:TSINGHUA UNIV +1

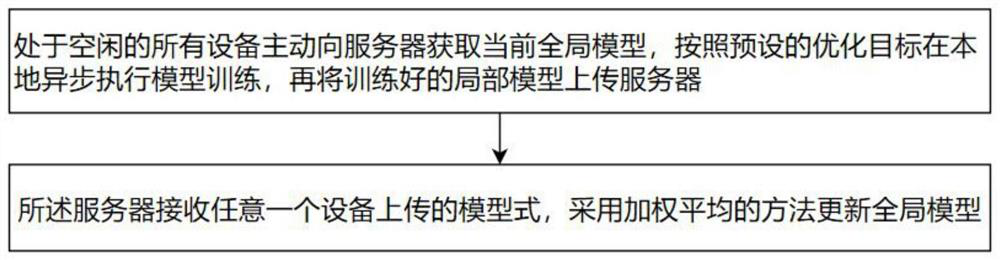

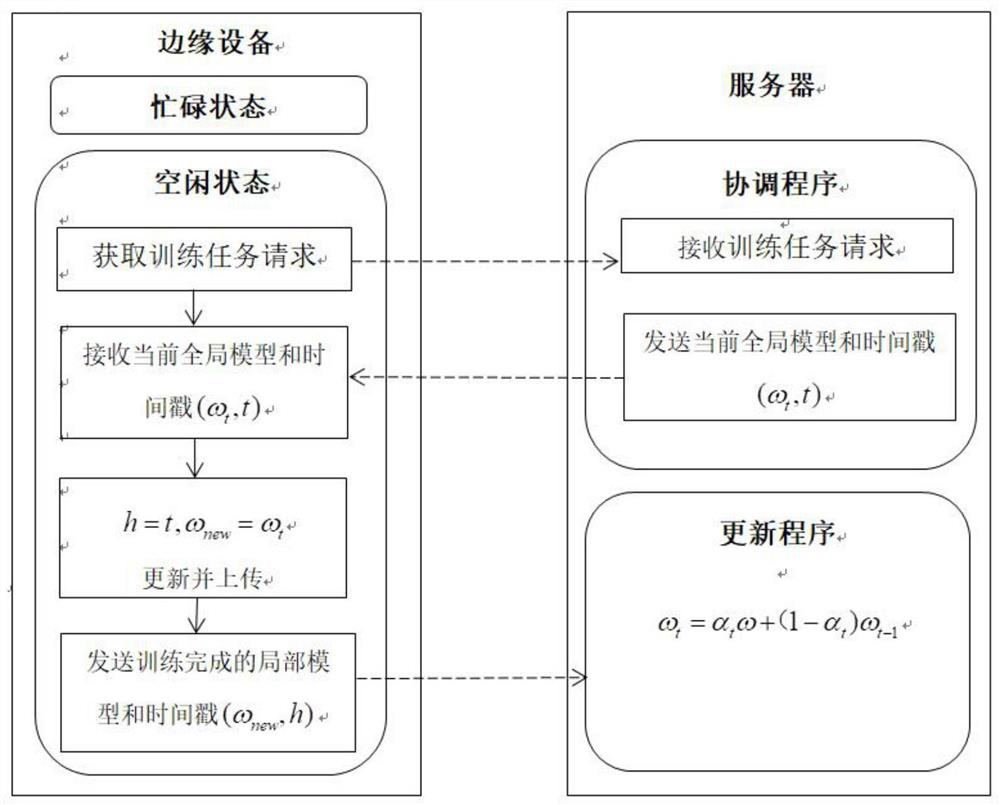

Edge computing-oriented federated learning method

InactiveCN111708640AEfficient training processMake the most of free timeResource allocationMachine learningEdge computingWeighted average method

The invention provides an edge computing-oriented federated learning method, which comprises the following steps: all idle devices actively acquire the current global model from a server, asynchronously execute model training locally according to a preset optimization target, and upload the trained local model to the server; and the server receives the model uploaded by any one device and updates the global model by a weighted average method. The method combines asynchronous training with federated learning: in asynchronous federated optimization, all idle devices are used for asynchronous model training and the server updates the global model through weighted averaging, so the idle time of all edge devices is fully utilized and model training is more efficient.
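The server-side step described above can be sketched as follows. This is a minimal illustration, not the patented procedure: the mixing weight `alpha` and the function name `async_update` are assumptions, and models are represented as plain lists of floats.

```python
def async_update(global_model, local_model, alpha=0.5):
    """Mix one uploaded local model into the global model by weighted average.

    alpha is a hypothetical mixing weight; the abstract does not specify it.
    """
    return [(1 - alpha) * g + alpha * l for g, l in zip(global_model, local_model)]

# The server applies an update as soon as any single device uploads,
# without waiting for the other devices.
global_model = [0.0, 0.0]
for local in ([1.0, 2.0], [3.0, 4.0]):
    global_model = async_update(global_model, local, alpha=0.5)
```

Because each upload is merged immediately, slow devices do not block fast ones, which is the efficiency argument the abstract makes.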

Owner:苏州联电能源发展有限公司

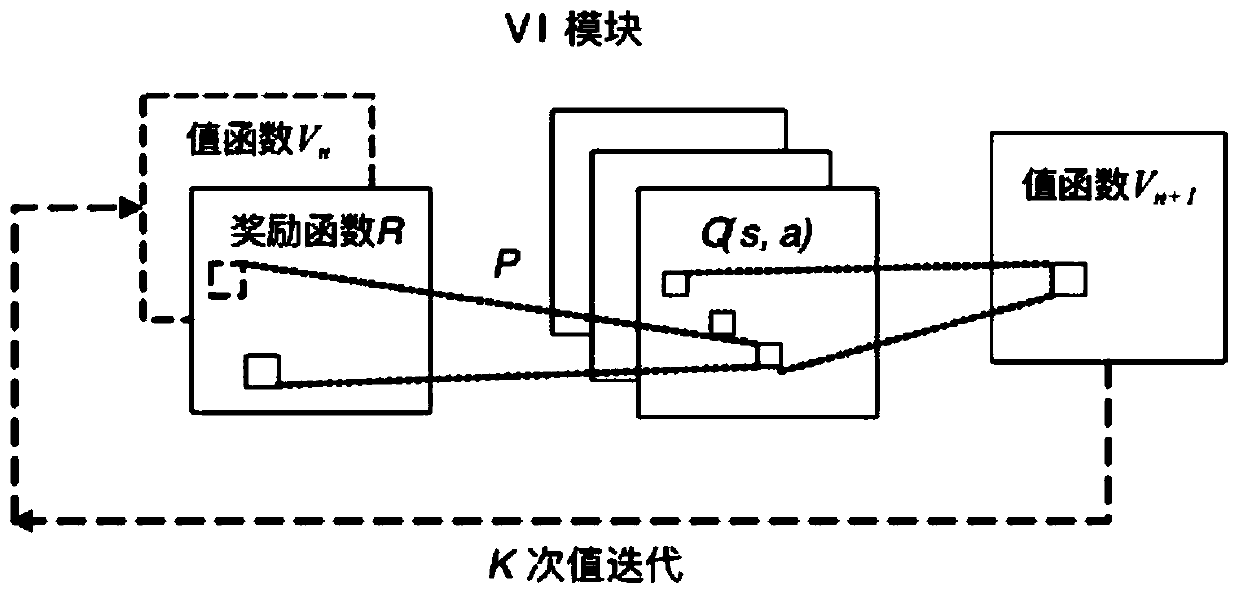

A cross-domain federated learning model and method based on a value iteration network

ActiveCN109711529AImprove forecast accuracyPrivacy protectionNeural architecturesNeural learning methodsNetwork structureData preparation

The invention discloses a cross-domain federated learning model and method based on a value iteration network. The model comprises: a data preparation unit, which takes the path planning field of a grid map as the training environment and takes the observation states of two different parts of the same map as the inputs of the two domains of federated learning; a Federated-VIN network establishing unit, which establishes a Federated-VIN network structure based on a value iteration network, wherein the VIN network structure constructs full connections between the value iteration modules of the source domain and the target domain and defines a new joint loss function over the two domains according to the newly constructed network; an iteration unit, which carries out forward calculation on the VI modules of the two domains during training, with value iteration performed multiple times through the VI modules; and a backward updating unit, which carries out backward calculation to update the network parameters, updating the VIN parameters and the full connection parameters of the two domains according to the joint loss function.

Owner:SUN YAT SEN UNIV

Data sharing method, computer equipment applying same and readable storage medium

ActiveCN111931242AAddress privacy breachesSafe and reliableEnsemble learningDigital data protectionData informationSoftware engineering

The invention discloses a data sharing method, computer equipment applying the same and a readable storage medium, and belongs to the technical field of data information security. According to the method, blockchain technology and federated learning technology are combined, a secure data sharing model based on blockchain and federated learning is constructed, and a basic data sharing process is designed; a working node selection algorithm based on the blockchain and node working quality is designed with reliable federated learning as the target; the consensus method of the blockchain is modified, and an incentive mechanism consensus algorithm based on model training quality is designed, so as to encourage excellent working nodes to work, simplify the consensus process and reduce the consensus cost. A differential privacy algorithm suitable for federated learning is selected with the goal of balancing data security and model utility. According to the invention, the problem of privacy leakage in the data sharing process can be solved; blockchain technology is combined into data sharing, so that the security and credibility of data are guaranteed; meanwhile, the efficiency of federated learning tasks is improved.
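The abstract does not say which differential privacy algorithm is selected. As one common possibility, and purely as an assumption for illustration, the sketch below clips a model update to a maximum L2 norm and adds Gaussian noise before it is shared. The names `privatize_update`, `clip_norm` and `noise_std` are hypothetical, and no privacy budget accounting is shown.

```python
import math
import random

def privatize_update(update, clip_norm, noise_std, rng=random):
    """Clip an update to L2 norm <= clip_norm, then add Gaussian noise per entry.

    This is a generic clip-and-noise sketch, not the algorithm of the patent.
    """
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale + rng.gauss(0.0, noise_std) for x in update]

# With noise_std=0.0 only the clipping is visible: [3, 4] has norm 5.
clipped = privatize_update([3.0, 4.0], clip_norm=1.0, noise_std=0.0)
```

Raising `noise_std` strengthens privacy at the cost of model utility, which is the trade-off the abstract refers to.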

Owner:ELECTRIC POWER RES INST OF STATE GRID ZHEJIANG ELECTRIC POWER COMPANY +1

Federated learning method and system based on batch size and gradient compression ratio adjustment

ActiveCN111401552APrivacy protectionProtection securityNeural architecturesNeural learning methodsEdge serverUplink transmission

The invention discloses a federated learning method and system based on batch size and gradient compression ratio adjustment, which are used for improving model training performance. The method comprises the following steps: in a federated learning scenario, enabling a plurality of terminals to share uplink wireless channel resources; completing the training of a neural network model together with an edge server based on the training data of each local terminal; in the model training process, enabling each terminal to calculate the gradient with a batch method in local computation and to compress the gradient before uplink transmission; and adjusting the batch size and the gradient compression ratio according to the computing power and the channel state of each terminal, so as to improve the convergence rate of model training while bounding the training time and not reducing the accuracy of the model.
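To make the idea of a gradient compression ratio concrete, here is a minimal sketch that keeps only the largest-magnitude fraction of gradient entries before upload. Top-k sparsification is an assumption chosen for illustration; the abstract does not name the compression scheme, and the function names are hypothetical.

```python
def compress_gradient(grad, ratio):
    """Keep the largest-magnitude fraction `ratio` of entries as {index: value}."""
    k = max(1, int(len(grad) * ratio))
    keep = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)[:k]
    return {i: grad[i] for i in keep}

def decompress_gradient(sparse, size):
    """Rebuild a dense gradient on the server, filling dropped entries with zero."""
    dense = [0.0] * size
    for i, value in sparse.items():
        dense[i] = value
    return dense

grad = [0.1, -2.0, 0.3, 1.5]
sparse = compress_gradient(grad, ratio=0.5)      # a terminal with a poor channel
restored = decompress_gradient(sparse, len(grad))
```

A terminal with a weaker channel could use a smaller `ratio`, while a terminal with more computing power could use a larger batch, which is the kind of per-terminal adjustment the abstract describes.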

Owner:ZHEJIANG UNIV

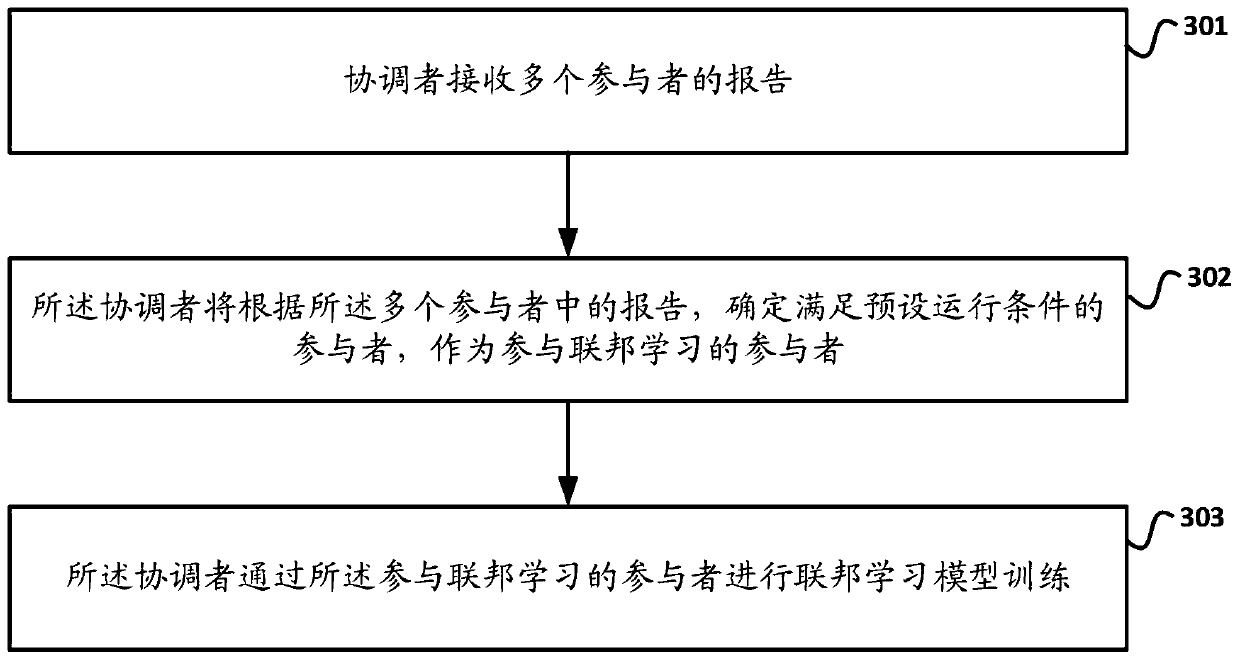

Federated learning method and device

PendingCN110598870ASolve the problem that the performance cannot meet the predetermined requirementsMachine learningStudy methodsKnowledge management

The invention discloses a federated learning method and device. The method comprises the steps that a coordinator receives reports from multiple participants, wherein each report represents the expected available resource condition of the participant; the coordinator determines, according to the reports, the participants meeting a preset condition as the participants taking part in federated learning; and the coordinator performs federated learning model training through those participants. When the method is applied to financial science and technology (Fintech), participants who do not meet the expected available resource conditions are removed as far as possible, so that when the coordinator performs federated learning through the selected participants, the influence of participant transmission efficiency on federated learning model performance is reduced.
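The coordinator's filtering step can be sketched as below. The fields of the report and the thresholds are assumptions made for illustration; the abstract only says that a report represents the participant's expected available resources and that a preset condition is checked.

```python
from dataclasses import dataclass

@dataclass
class Report:
    """Hypothetical resource report sent by a participant to the coordinator."""
    participant: str
    cpu_cores: int
    bandwidth_mbps: float

def select_participants(reports, min_cores, min_bandwidth):
    """Keep only participants whose reported resources meet the preset condition."""
    return [
        r.participant
        for r in reports
        if r.cpu_cores >= min_cores and r.bandwidth_mbps >= min_bandwidth
    ]

reports = [
    Report("p1", cpu_cores=8, bandwidth_mbps=50.0),
    Report("p2", cpu_cores=2, bandwidth_mbps=80.0),
    Report("p3", cpu_cores=4, bandwidth_mbps=5.0),
]
eligible = select_participants(reports, min_cores=4, min_bandwidth=10.0)
```

Here `p2` is dropped for too few cores and `p3` for too little bandwidth, so only `p1` takes part in the training round.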

Owner:WEBANK (CHINA)

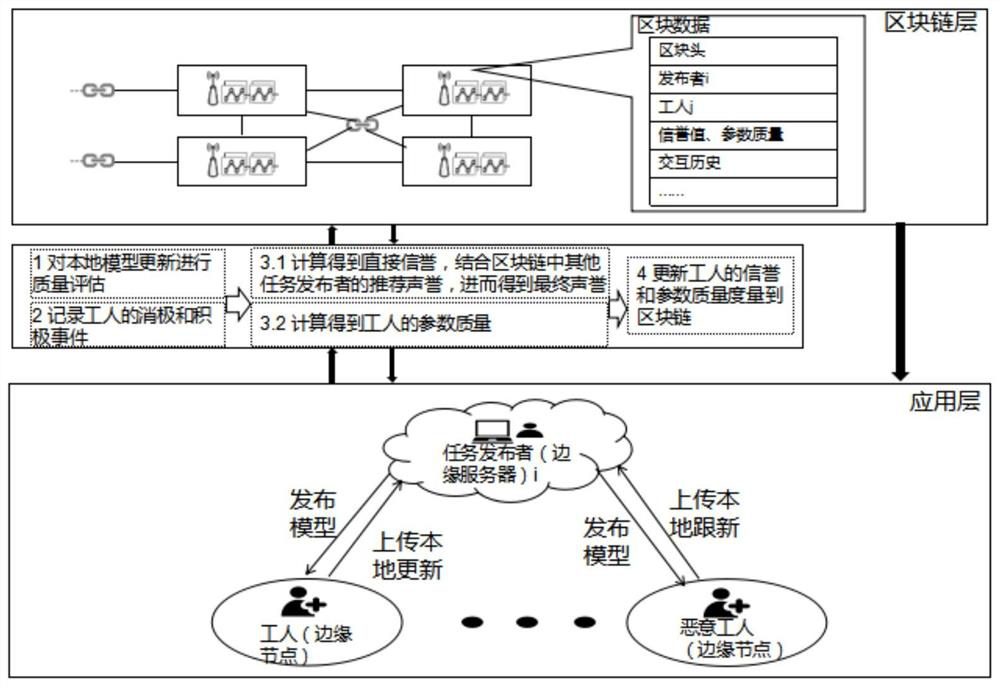

Marine Internet of Things data security sharing method under edge computing framework based on federated learning and block chain technology

The invention discloses a marine Internet of Things data security sharing method under an edge computing framework based on federated learning and blockchain technology. The method comprises the following steps: first, the parameter quality and reputation of edge nodes are calculated, and edge nodes are selected; second, the edge server issues an initial model to the selected edge nodes, and each edge node performs local training using its local data set; then, the edge server updates the global model using the local training parameters collected from the edge nodes, trains the global model in each iteration, and updates the reputation and quality metrics; and finally, a consortium blockchain is used as a decentralized method, and effective reputation and quality management of workers is achieved without repudiation or tampering. In addition, a reputation consensus mechanism is introduced into the blockchain, so that the edge nodes recorded in the blockchain are of higher quality and the overall model effect is improved. According to the invention, the marine Internet of Things edge computing framework has more efficient data processing and safer data protection capabilities.

Owner:DALIAN UNIV OF TECH

Federated learning computation offloading system and method based on cloud-edge-end

InactiveCN112817653AMake up for deficienciesAccurate decisionProgram initiation/switchingResource allocationComputation complexityEdge node

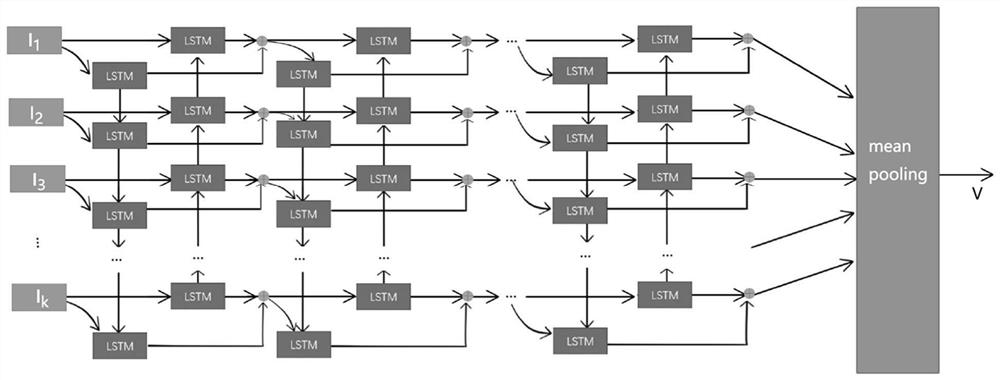

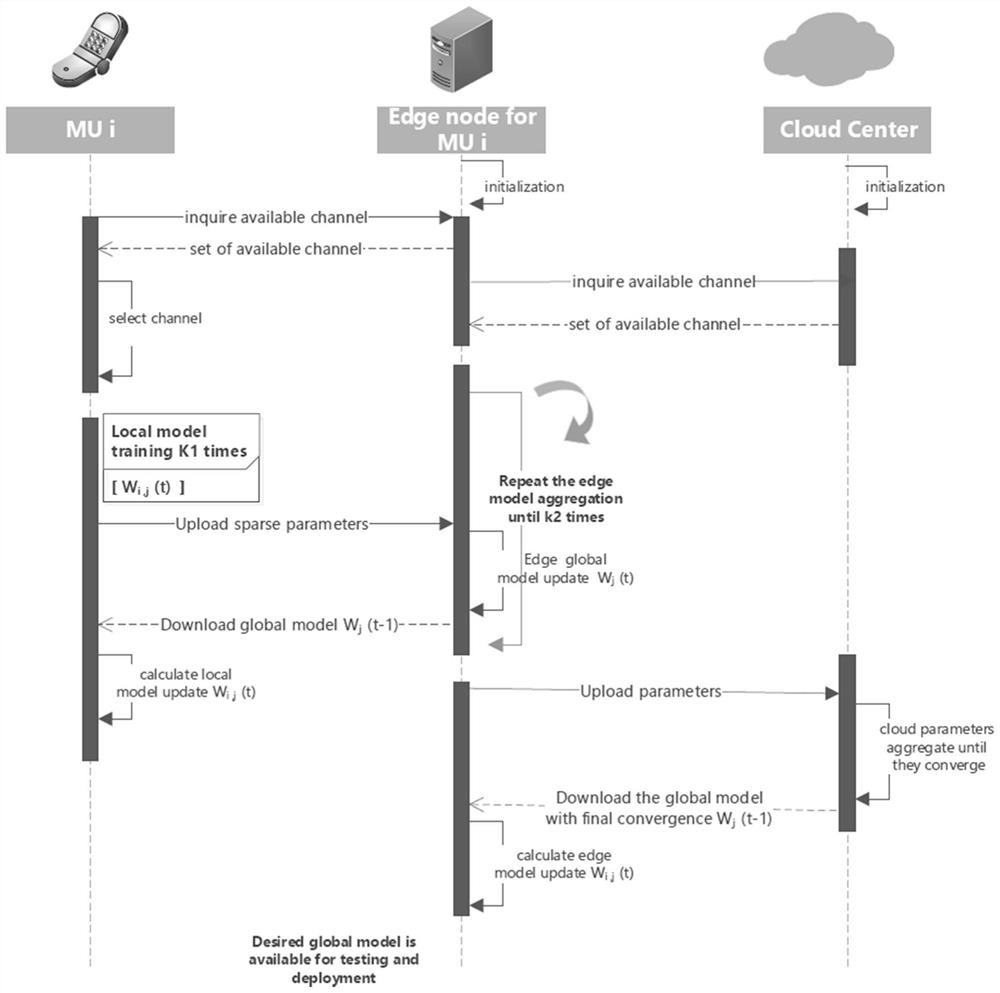

The invention discloses a federated learning computation offloading and resource allocation system and method based on cloud-edge-end, which aim to make accurate decisions for computing task offloading and resource allocation, eliminate the need to solve a combinatorial optimization problem, and greatly reduce the computational complexity. Based on cloud-edge-end three-layer federated learning, the proximity of edge nodes to terminals is exploited together with the powerful core computing resources of cloud computing, which solves the problem that the computing resources of the edge nodes are insufficient. A local model is trained at each of multiple clients to predict offloading tasks. Parameter aggregation is executed periodically at the edge, and the cloud executes one aggregation after each periodic edge aggregation, until a global BiLSTM model is formed through convergence; the global model can intelligently predict the information amount of each offloading task, thereby providing better guidance for computation offloading and resource allocation.
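The two-level aggregation described above (clients to edge, then edge to cloud) can be sketched as follows. This is a simplified illustration under assumptions: models are plain lists of floats, averaging is unweighted, and the edge names and values are made up. The BiLSTM model itself and the aggregation periods are not modeled.

```python
def average(models):
    """Element-wise unweighted average of equally sized parameter lists."""
    n = len(models)
    return [sum(values) / n for values in zip(*models)]

# Hypothetical client models grouped by the edge node they report to.
edge_groups = {
    "edge1": [[1.0, 2.0], [3.0, 4.0]],
    "edge2": [[5.0, 6.0], [7.0, 8.0]],
}

# Step 1: each edge node aggregates the models of its own clients.
edge_models = {edge: average(models) for edge, models in edge_groups.items()}

# Step 2: the cloud aggregates the edge models into a global model.
cloud_model = average(list(edge_models.values()))
```

In a fuller version each average would typically be weighted by the number of training samples behind each model, and the two steps would repeat until the global model converges.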

Owner:XI AN JIAOTONG UNIV
