32 results about "Sparse matrix multiplication" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

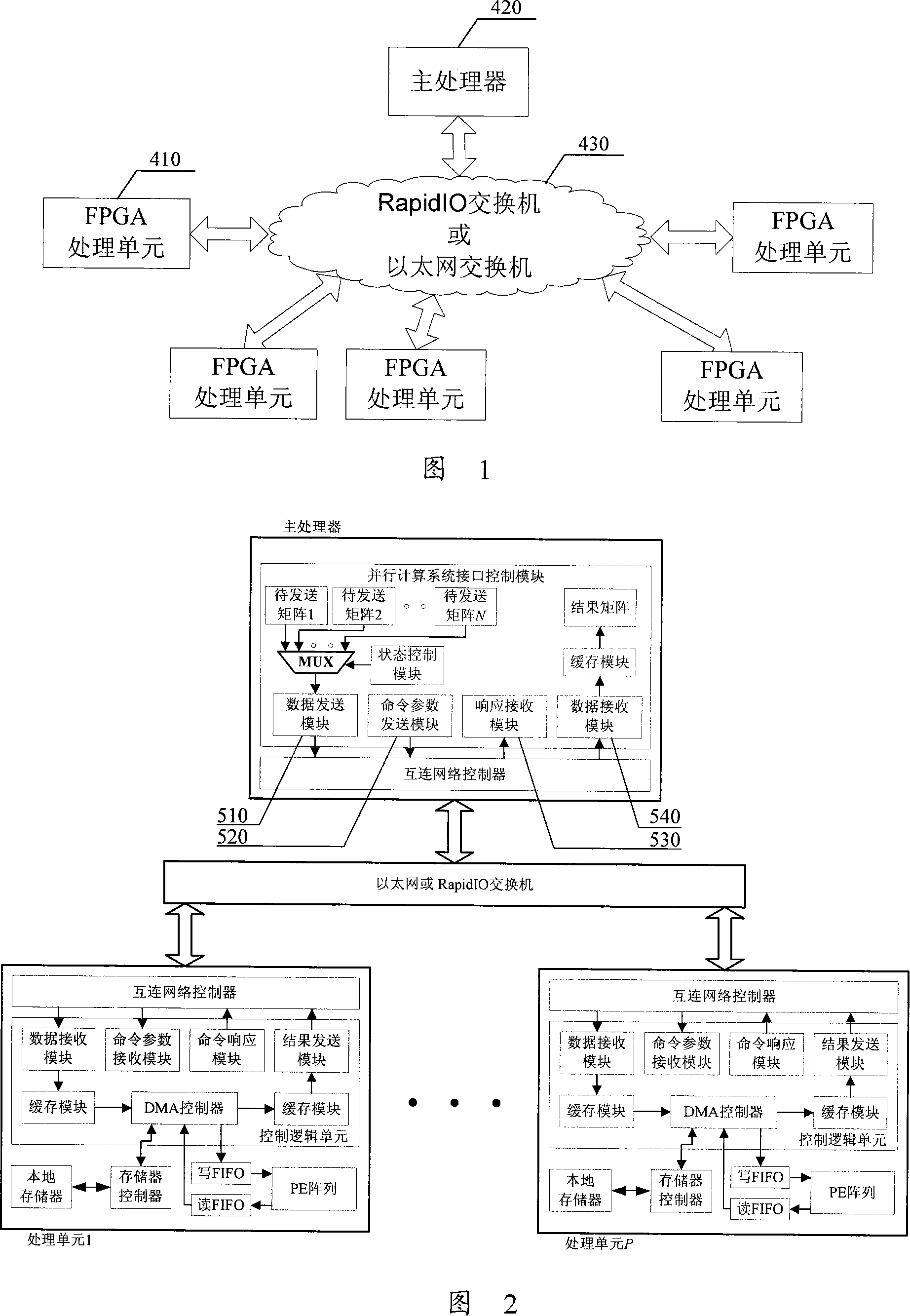

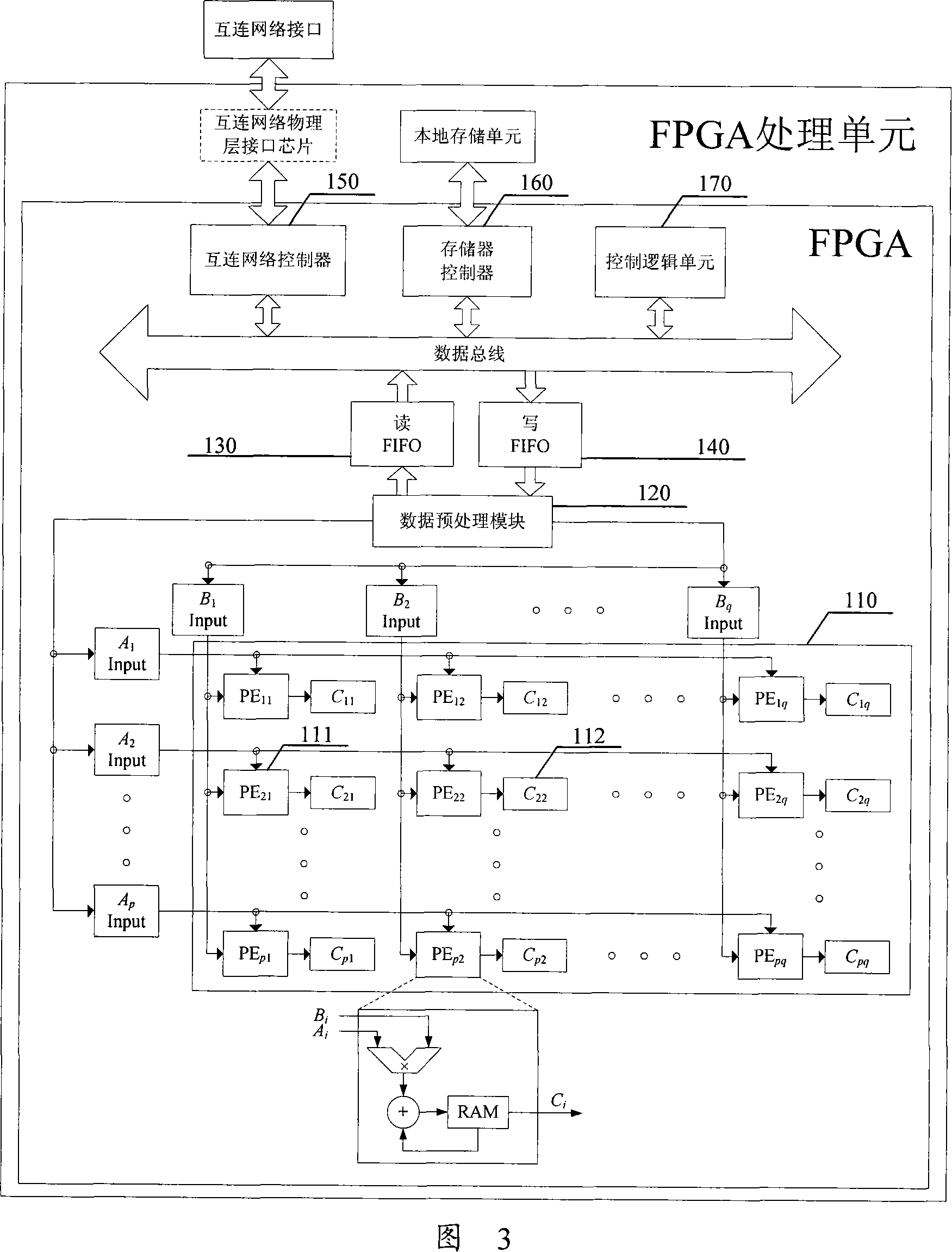

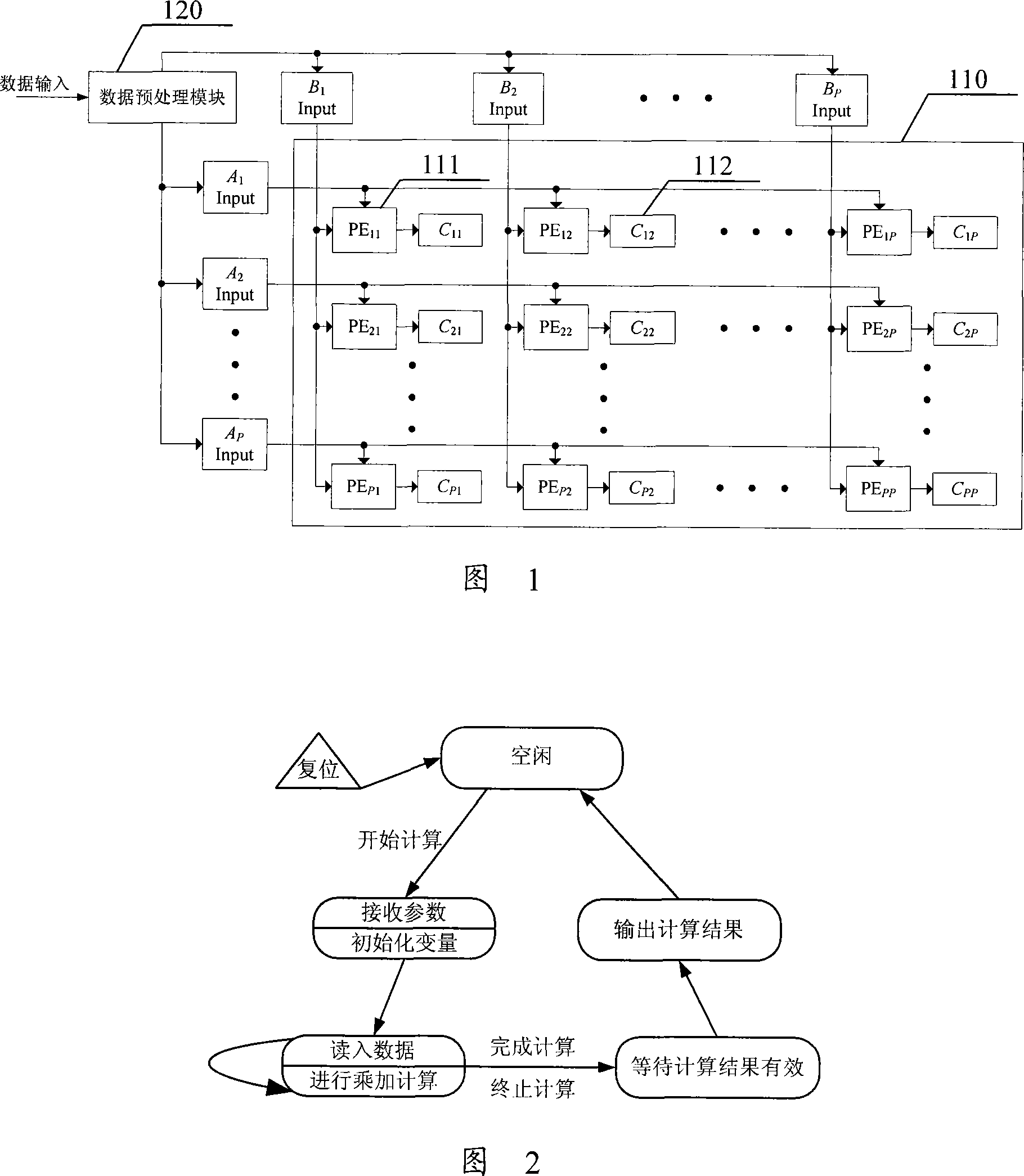

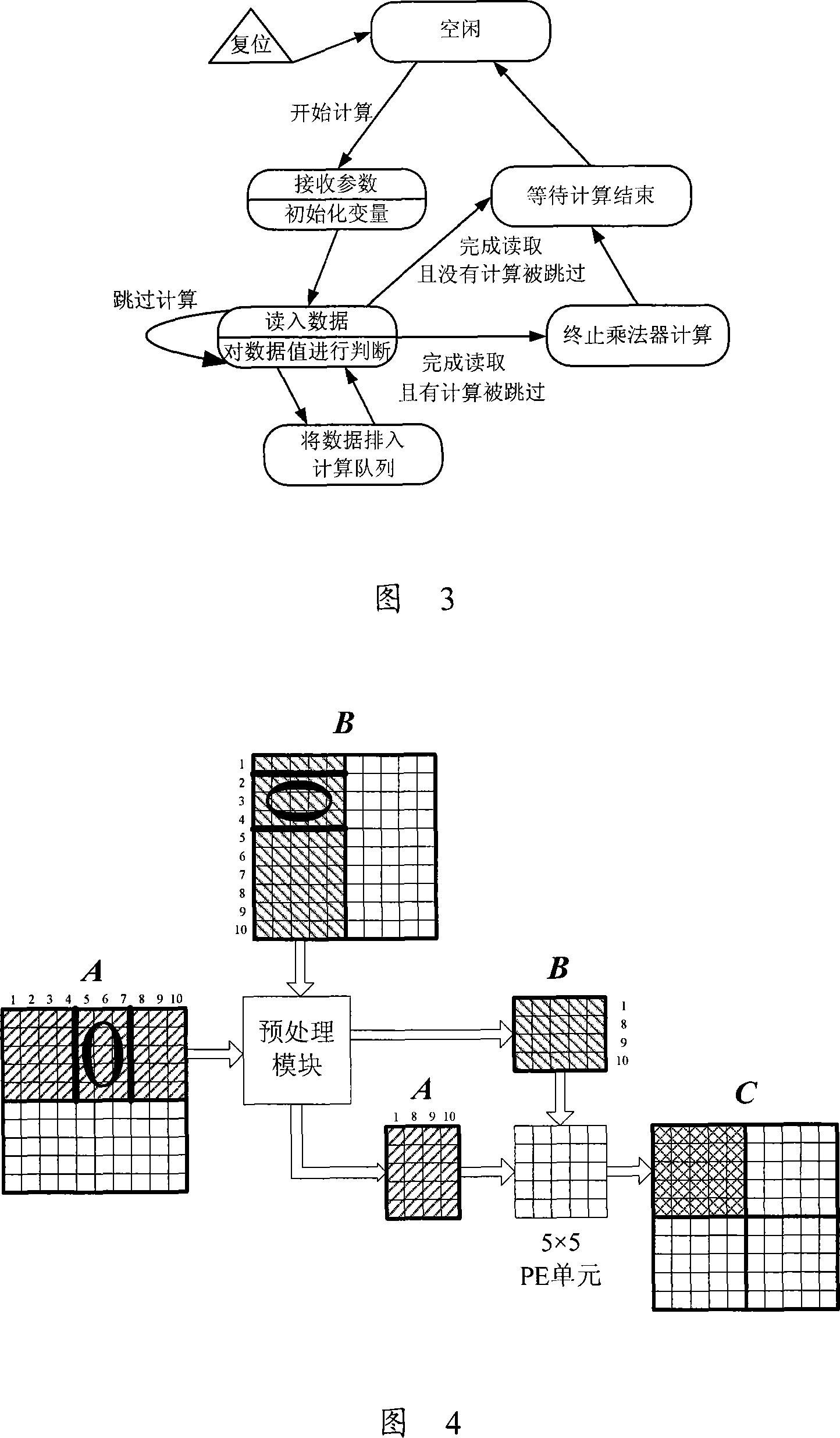

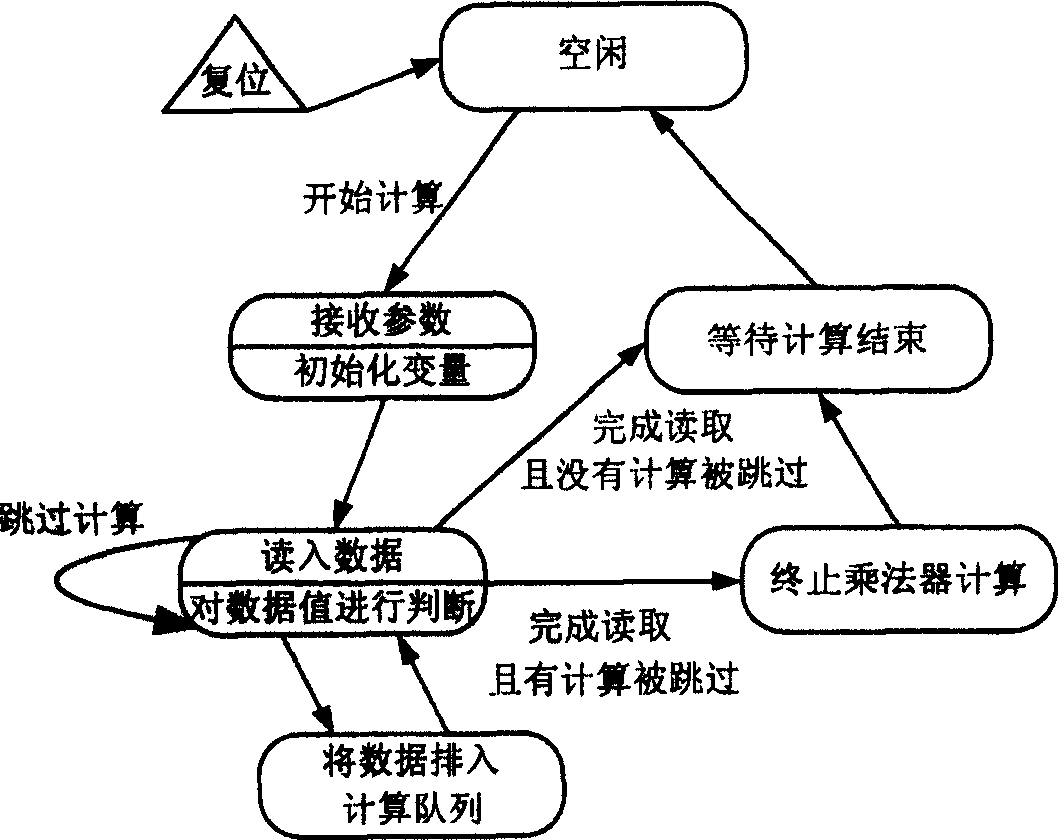

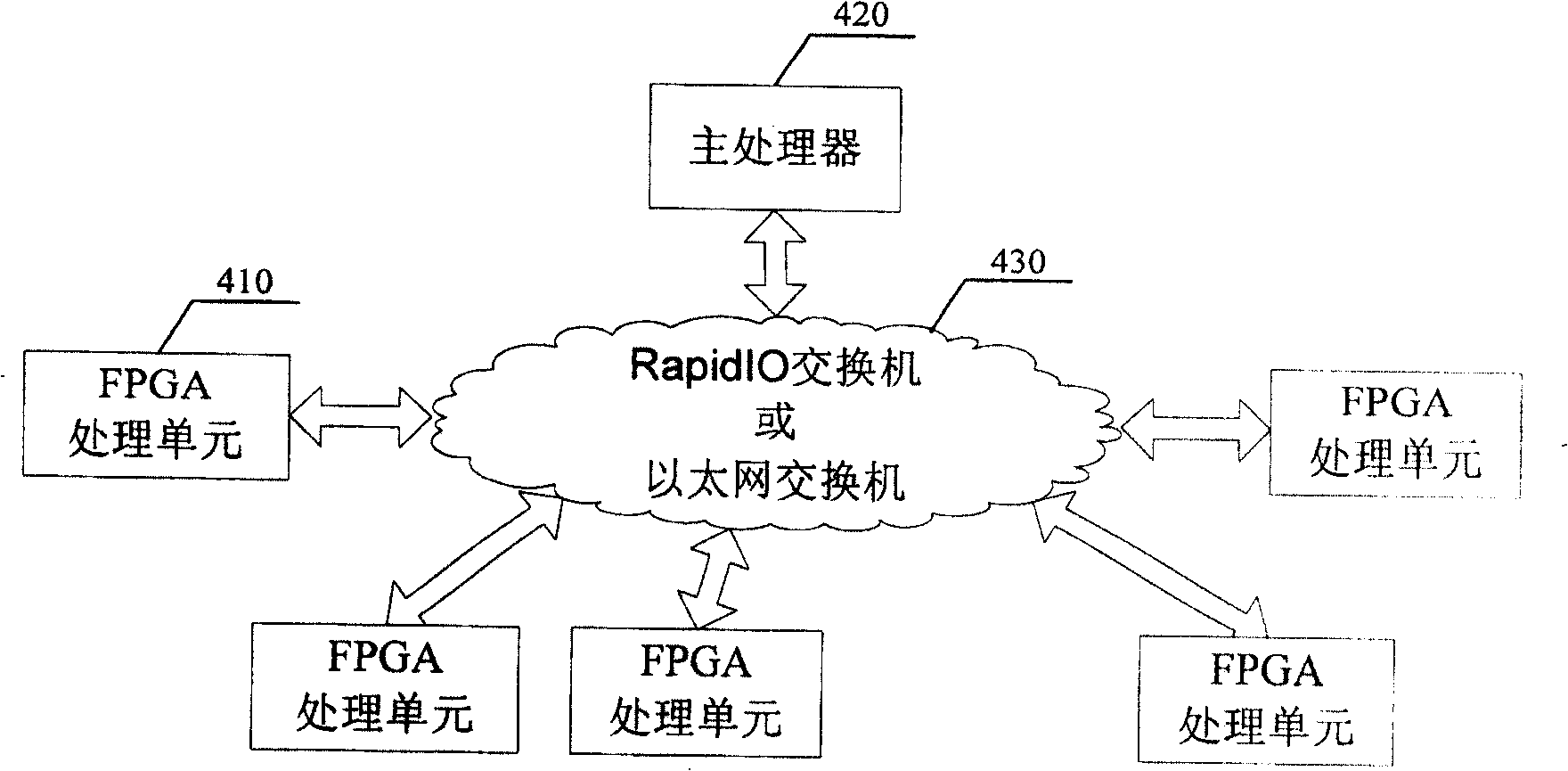

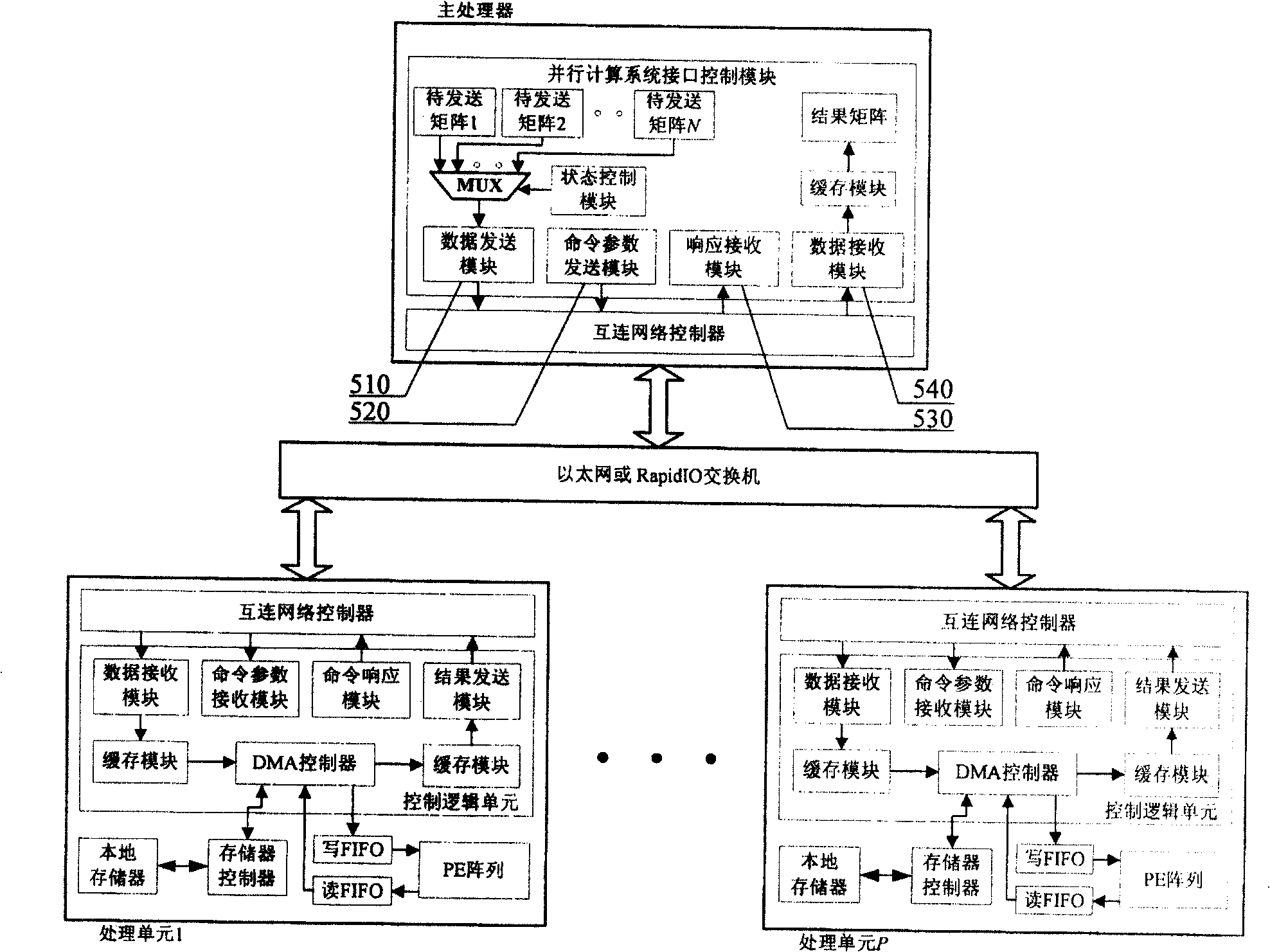

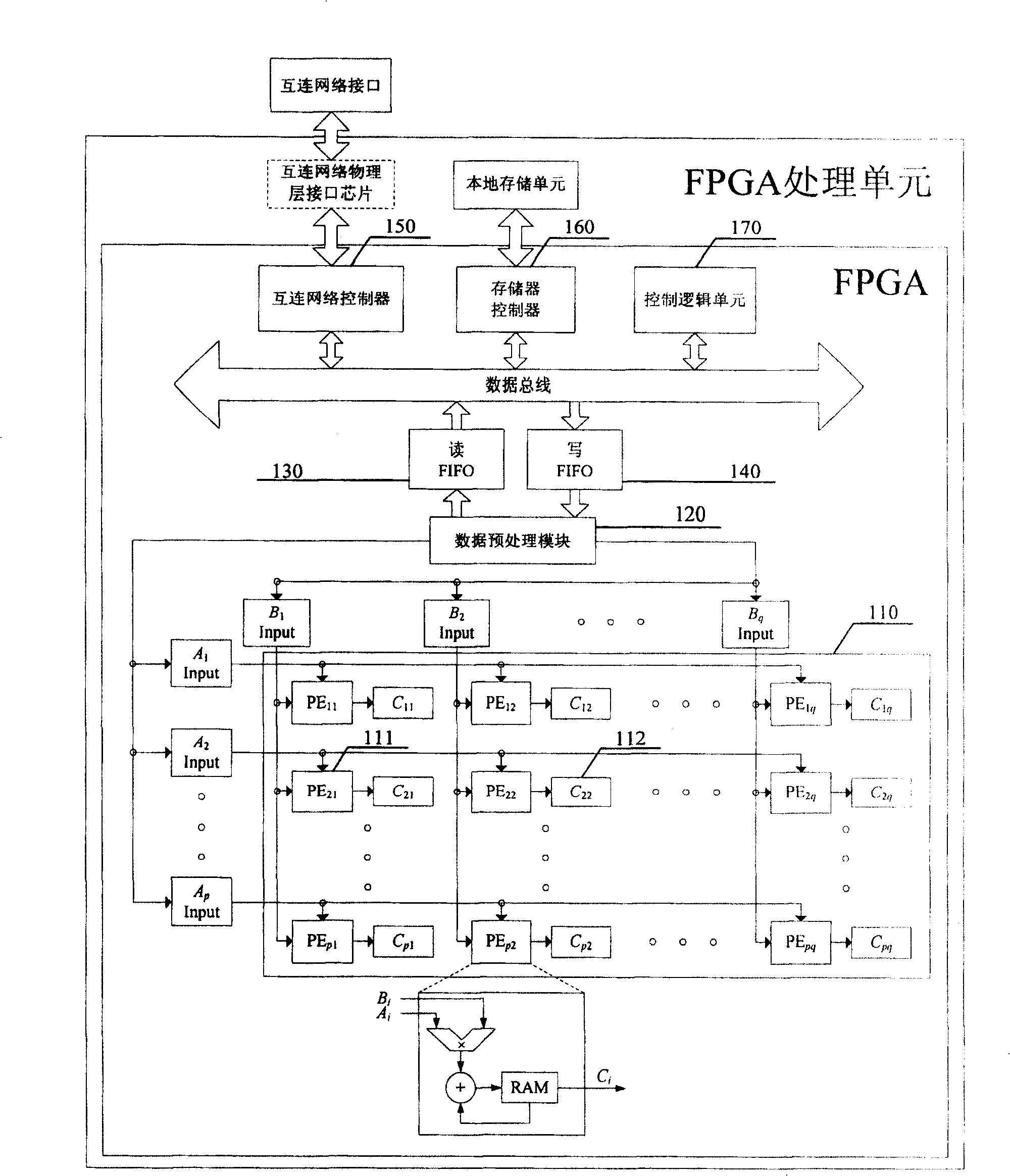

Matrix multiplication parallel computing system based on multi-FPGA

Inactive | CN101089840A | Reduce resource requirements; Improve computing power; Digital computer details; Data switching networks; Binary multiplier; Parallel algorithm

A multi-FPGA parallel matrix multiplication system uses FPGAs as processing units that perform dense matrix multiplication and add a sparse matrix multiplication capability. The units are connected over Ethernet in a star topology to form a master-slave distributed FPGA computing system. Ethernet multicast sends data once to all processing units that need the same data, and a parallel algorithm based on one-dimensional row-wise partitioning of the output matrix carries out the matrix multiplication in parallel, reducing the system's communication overhead.

Owner:ZHEJIANG UNIV
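
The row-wise one-dimensional partitioning of the output matrix can be illustrated with a small sequential sketch. It is not the patented system: plain Python stands in for the FPGA processing units, a loop stands in for concurrent execution, and the function names are hypothetical. Each unit computes a contiguous block of rows of C = AB, so it needs only its own rows of A plus all of B; B is the shared data that a multicast would deliver once to every unit.

```python
from typing import List

Matrix = List[List[float]]

def matmul_rows(a_rows: Matrix, b: Matrix) -> Matrix:
    """Work done by one processing unit: its block of A rows times all of B."""
    n = len(b[0])
    return [[sum(a_ik * b[k][j] for k, a_ik in enumerate(row)) for j in range(n)]
            for row in a_rows]

def row_partitioned_matmul(a: Matrix, b: Matrix, num_units: int) -> Matrix:
    """Split the output C = A @ B into contiguous row blocks, one block per unit.

    B is needed by every unit (the multicast data); each unit receives only
    its own rows of A and returns the matching complete rows of C.
    """
    m = len(a)
    block = -(-m // num_units)  # ceiling division
    c: Matrix = []
    for unit in range(num_units):
        rows = a[unit * block:(unit + 1) * block]
        if rows:
            c.extend(matmul_rows(rows, b))  # in hardware the units run concurrently
    return c
```

Because the partition is over output rows, no unit ever needs another unit's partial sums; the only data shared by all units is B.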

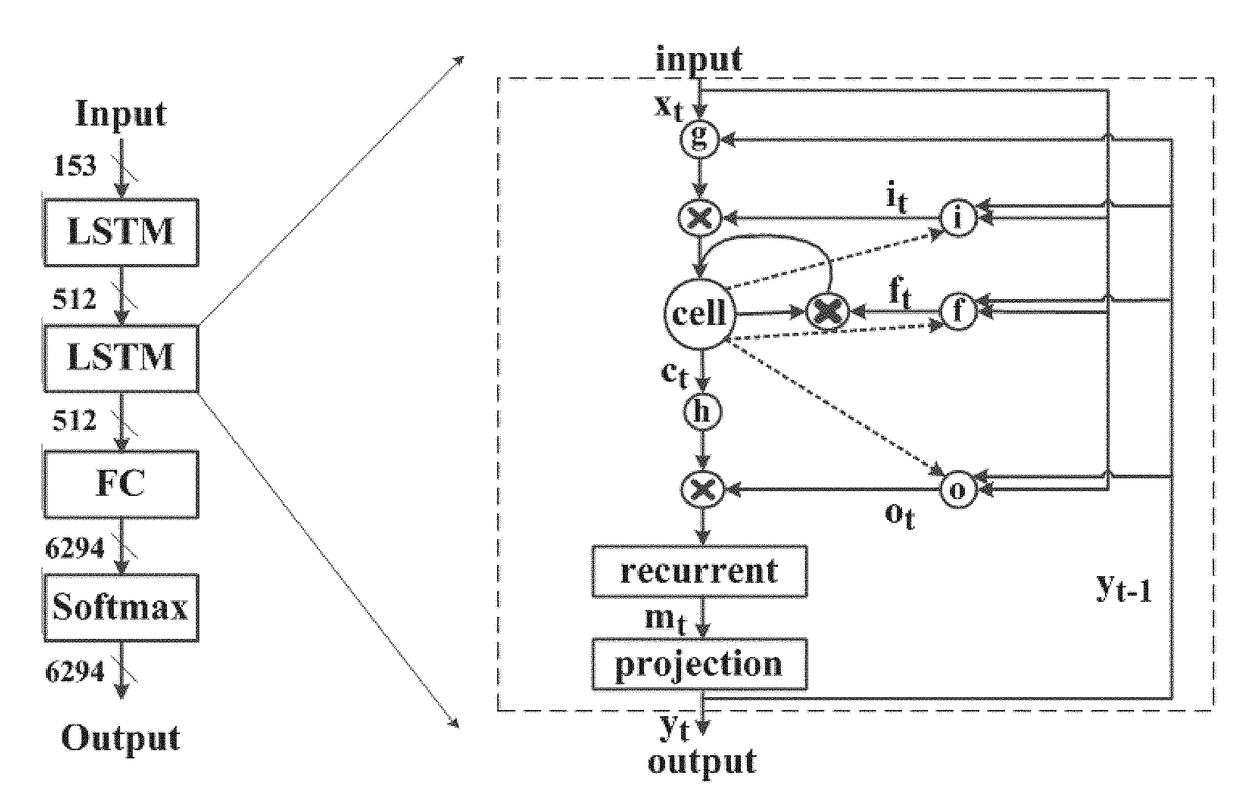

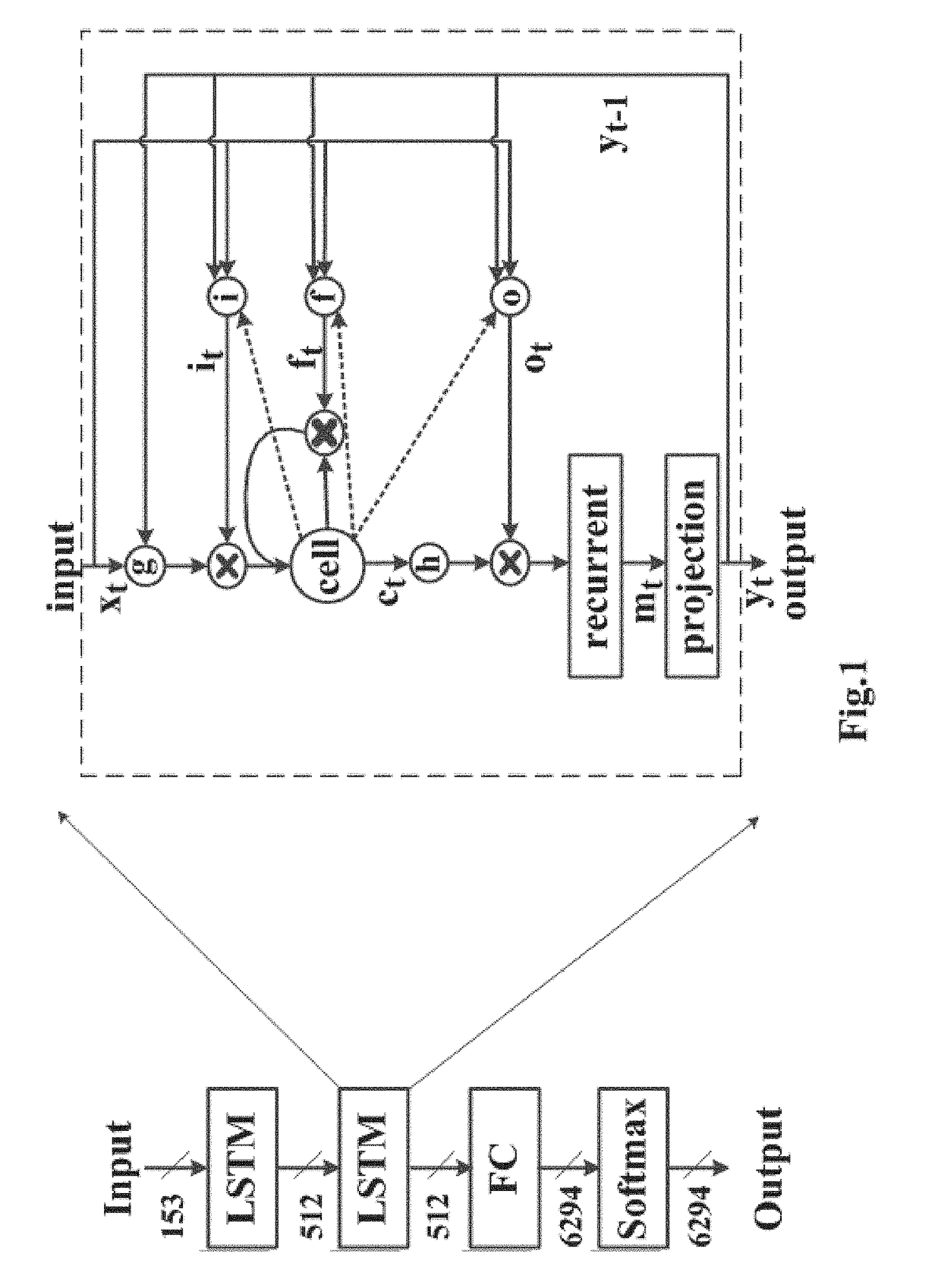

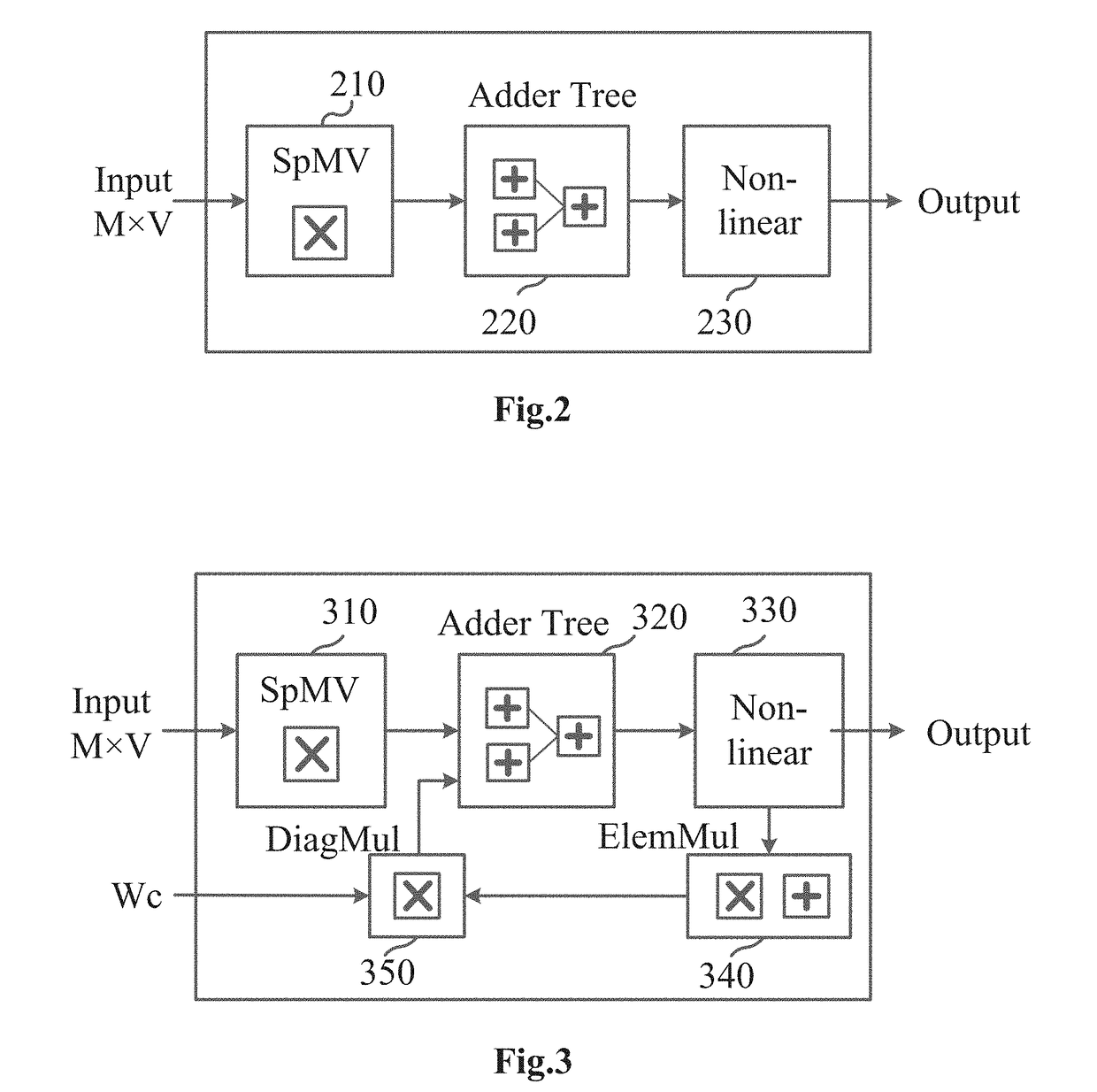

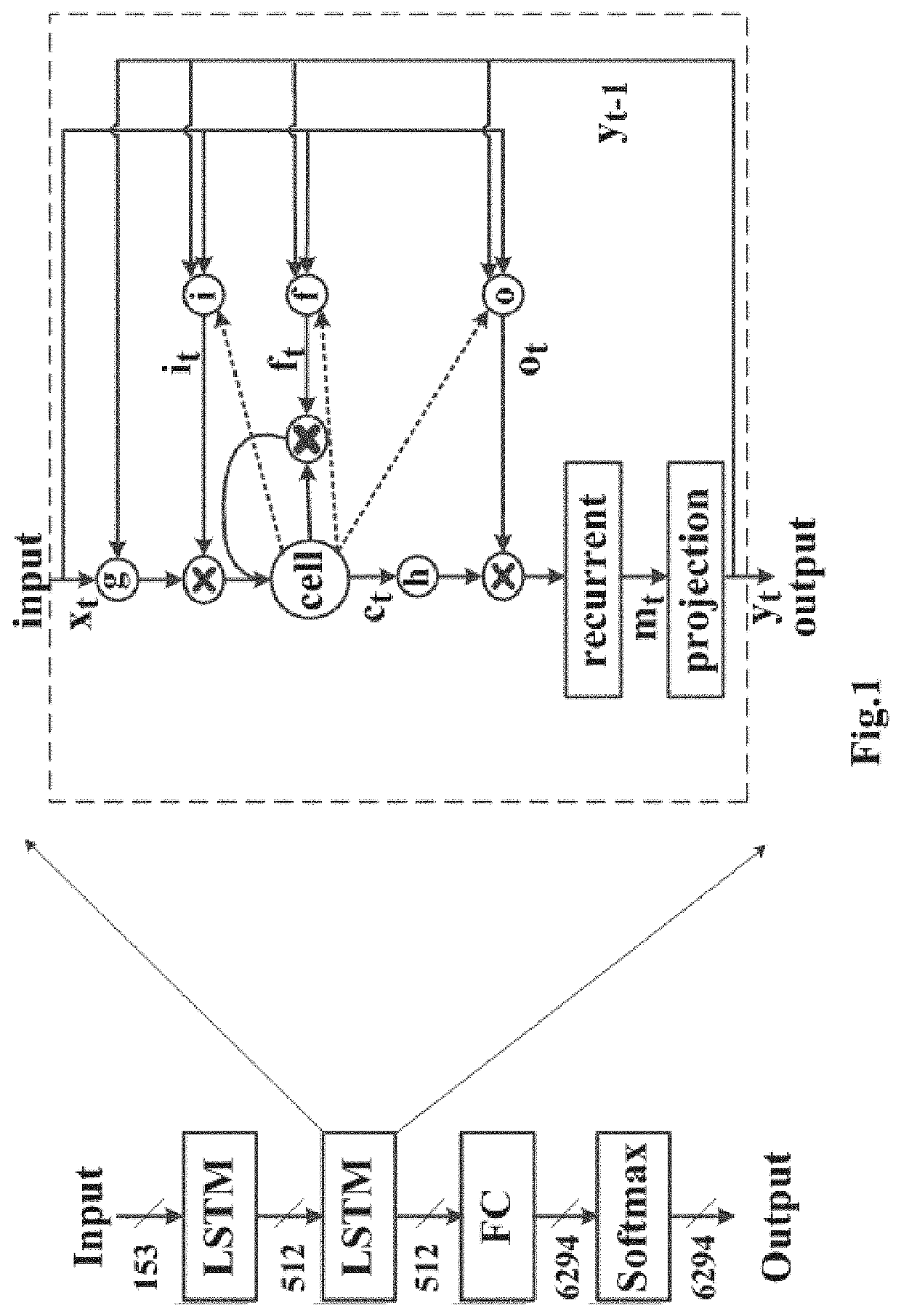

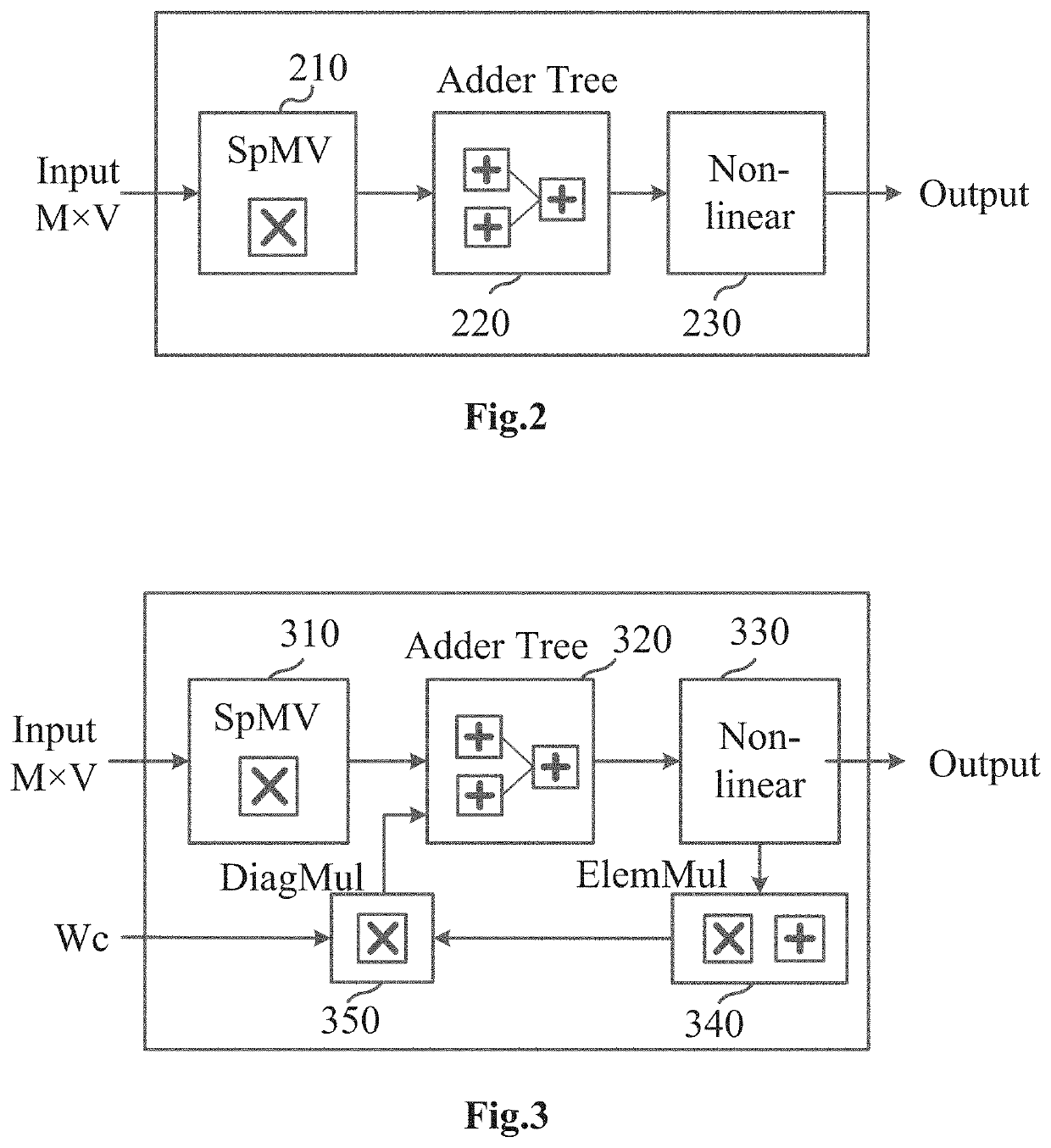

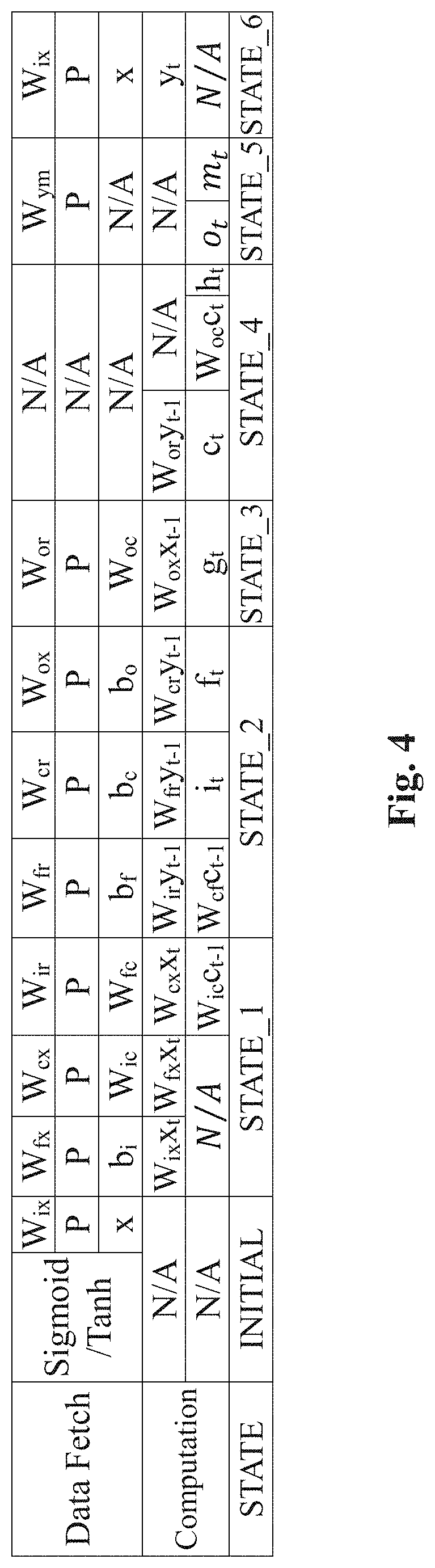

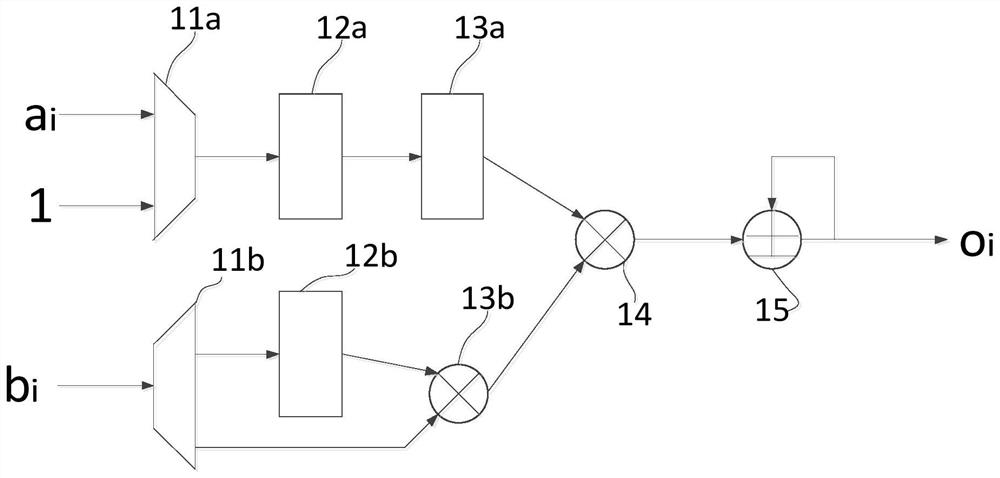

Hardware Accelerator for Compressed LSTM

Active | US20180174036A1 | Easy to operate; Improve forecast; Neural architectures; Physical realisation; Activation function; Computer module

A hardware accelerator for compressed Long Short-Term Memory (LSTM) networks is disclosed. The accelerator comprises a sparse matrix-vector multiplication module that multiplies each sparse matrix in the LSTM by its corresponding vector, sequentially producing a plurality of sparse matrix-vector multiplication results. An addition tree module accumulates these results to obtain an accumulated result, and a non-linear operation module passes the accumulated result through an activation function to generate the non-linear operation result. The accelerator uses a pipelined design so that data transfer and computation for the compressed LSTM overlap in time.

Owner:XILINX TECH BEIJING LTD
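
A minimal sketch of the three modules named in the abstract: sparse matrix-vector multiplication, an addition tree, and a non-linear (activation) stage. Assumptions not stated in the abstract: the sparse matrices are held in CSR form, the gate follows the usual LSTM form sigmoid(W_x x + W_h h + b), and pipelining is not modelled. All names are hypothetical.

```python
import math
from typing import List, Tuple

# Hypothetical CSR triple: (values, column indices, row pointers).
CSR = Tuple[List[float], List[int], List[int]]

def spmv(m: CSR, x: List[float]) -> List[float]:
    """Sparse matrix-vector product that touches only the stored non-zeros."""
    values, cols, row_ptr = m
    return [sum(values[p] * x[cols[p]] for p in range(row_ptr[r], row_ptr[r + 1]))
            for r in range(len(row_ptr) - 1)]

def adder_tree(vectors: List[List[float]]) -> List[float]:
    """Accumulate equal-length vectors by pairwise, tree-shaped addition."""
    level = vectors
    while len(level) > 1:
        nxt = [[a + b for a, b in zip(level[i], level[i + 1])]
               for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])  # an unpaired vector passes to the next level
        level = nxt
    return level[0]

def sigmoid(v: List[float]) -> List[float]:
    return [1.0 / (1.0 + math.exp(-t)) for t in v]

def lstm_gate(w_x: CSR, x: List[float], w_h: CSR, h: List[float],
              bias: List[float]) -> List[float]:
    """One gate: sigmoid(W_x x + W_h h + b) with sparse W_x and W_h."""
    partials = [spmv(w_x, x), spmv(w_h, h), bias]  # sparse MV module outputs
    return sigmoid(adder_tree(partials))           # addition tree, then non-linearity
```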

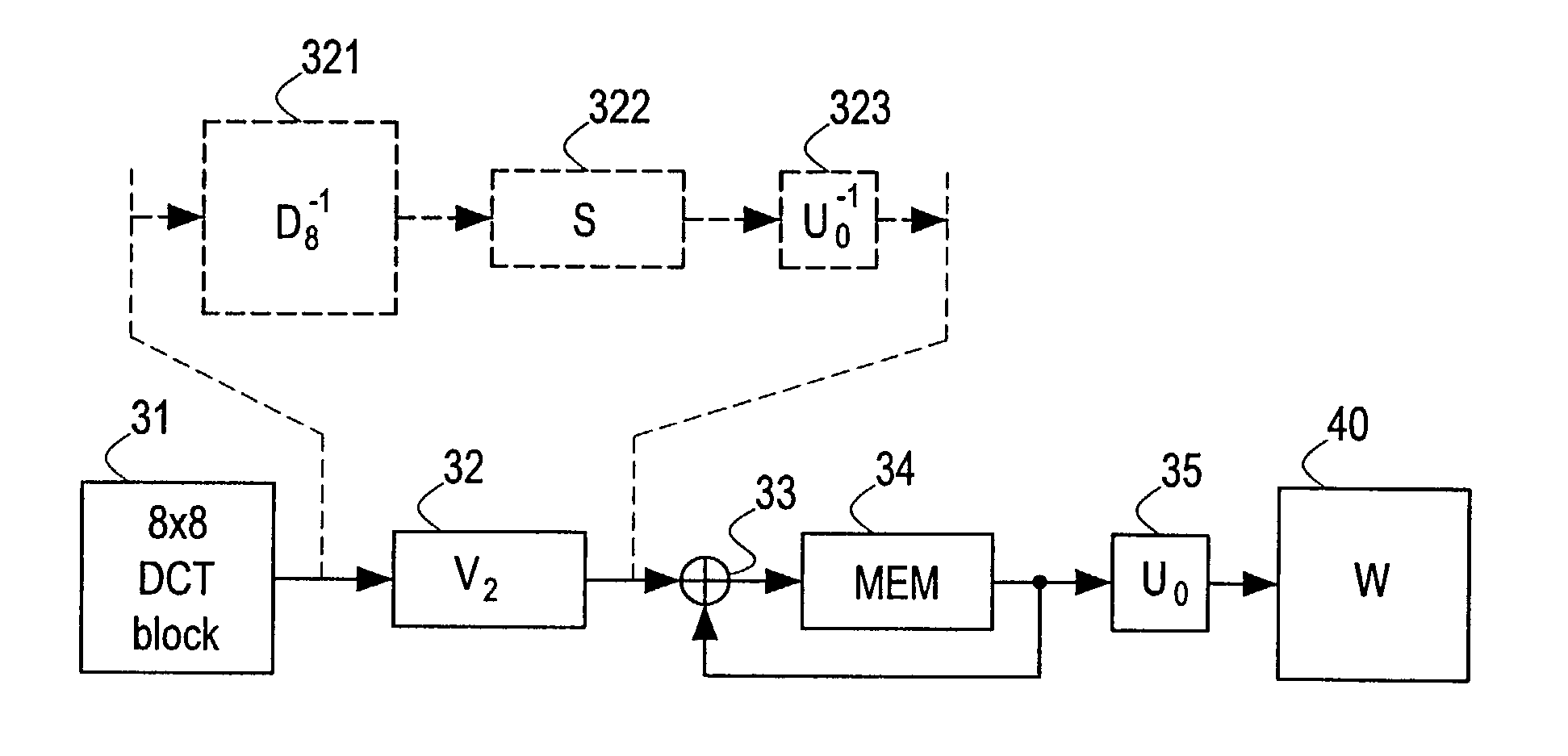

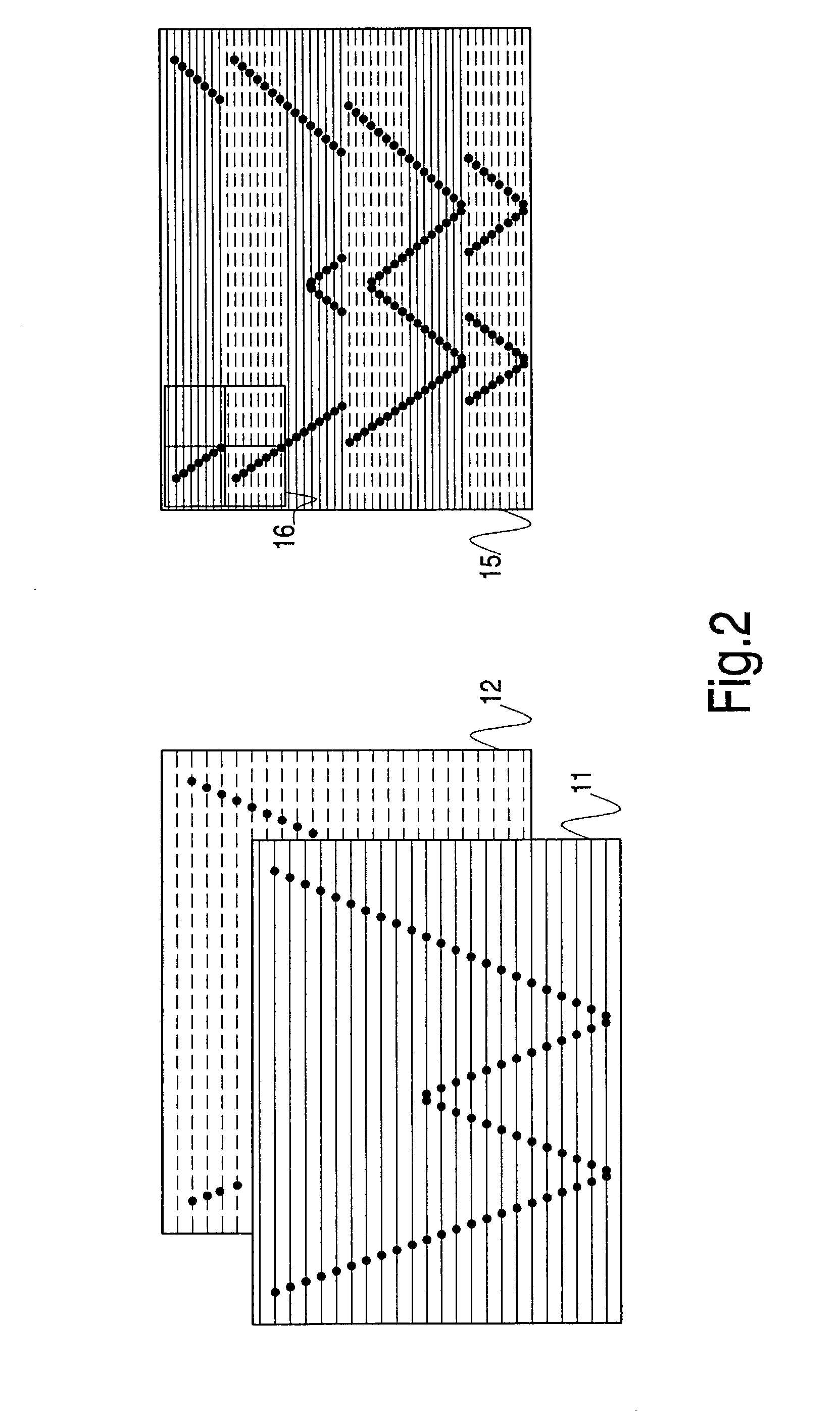

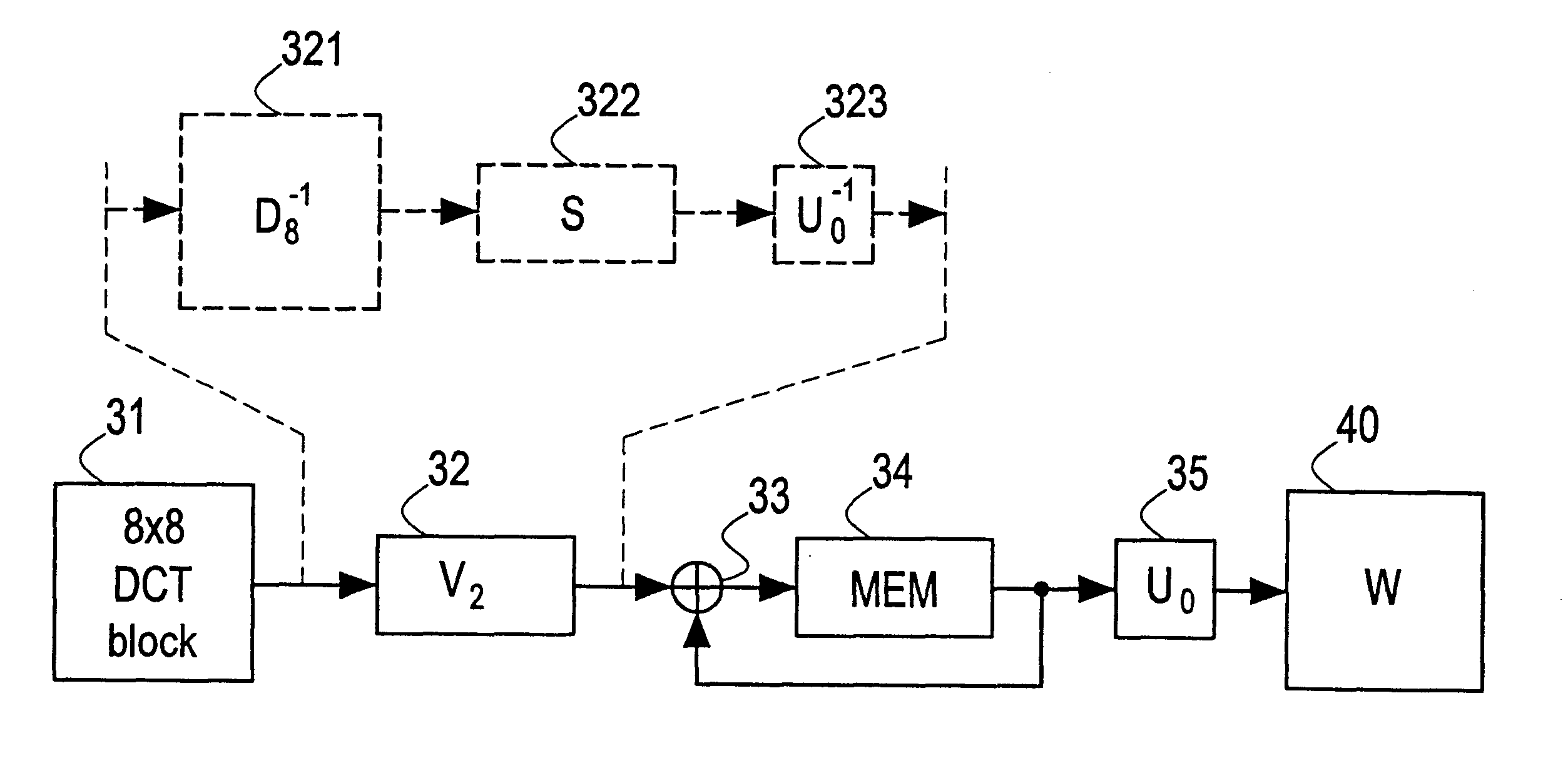

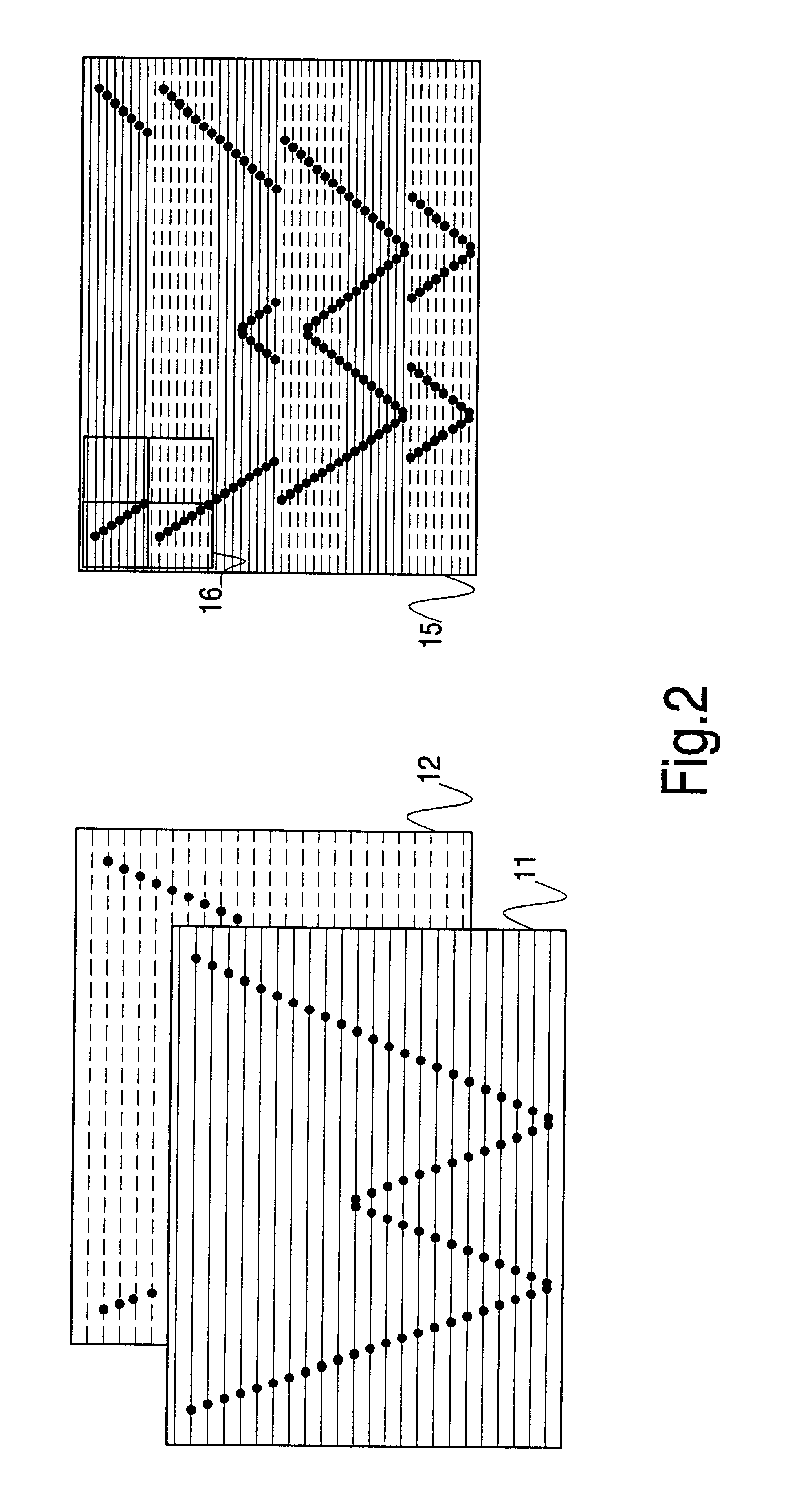

Adding fields of a video frame

Inactive | US20030043922A1 | Easy to implement; Matrix is sparse; Television system scanning details; Picture reproducers using cathode ray tubes; Video processing; Computer science

For some video processing applications, most notably watermark detection (40), it is necessary to add or average (parts of) the two interlaced fields which make up a frame. This operation is not trivial in the MPEG domain due to the existence of frame-encoded DCT blocks. The invention provides a method and arrangement for adding the fields without requiring a frame memory or an on-the-fly inverse DCT. To this end, the mathematically required operations of inverse vertical DCT (321) and addition (322) are combined with a basis transform (323). The basis transform is chosen to be such that the combined operation is physically replaced by multiplication with a sparse matrix (32). Said sparse matrix multiplication can easily be executed on-the-fly. The inverse basis transform (35) is postponed until after the desired addition (33, 34) has been completed.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

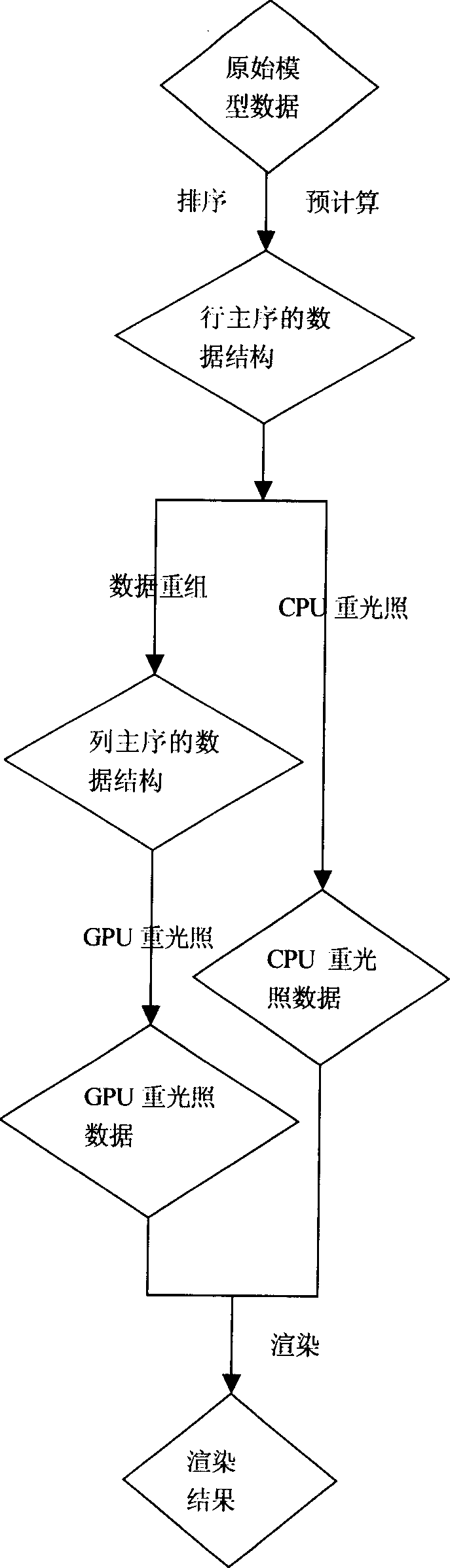

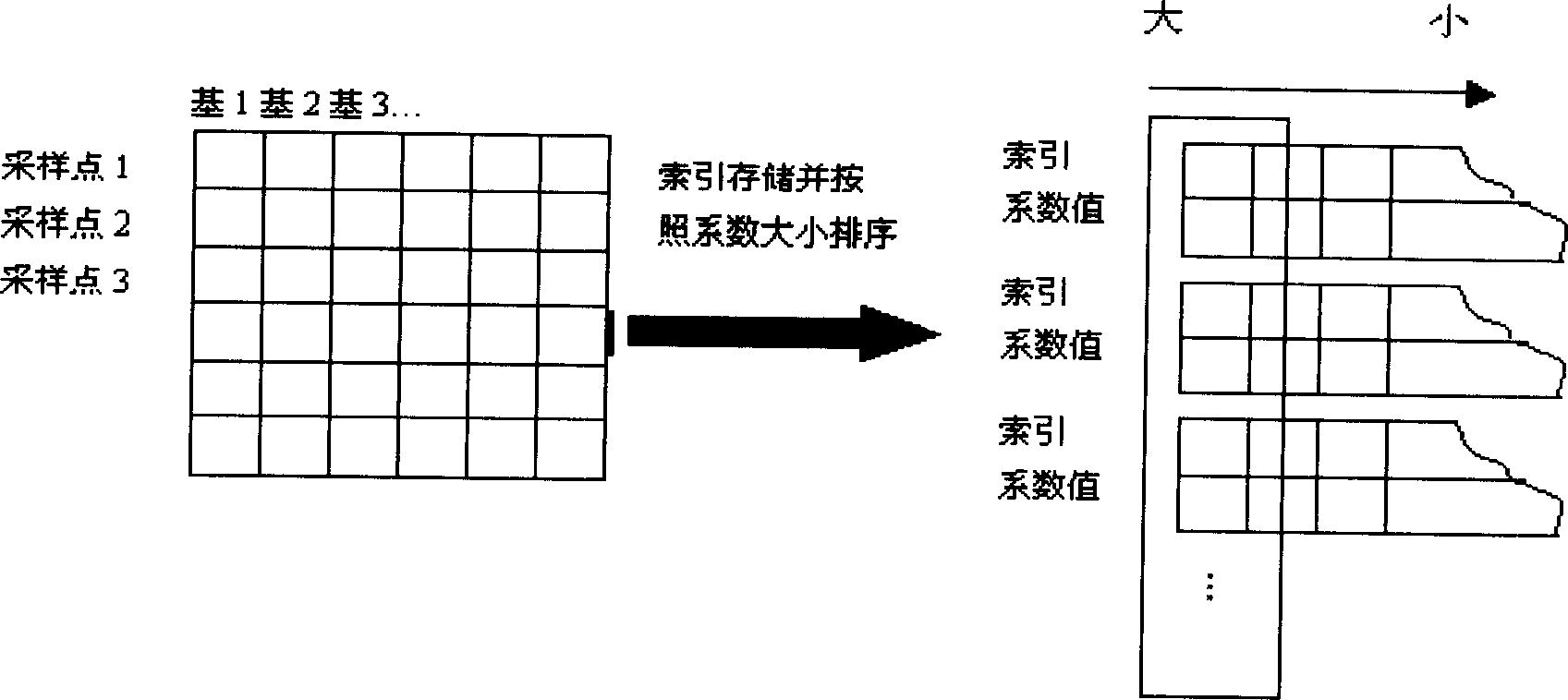

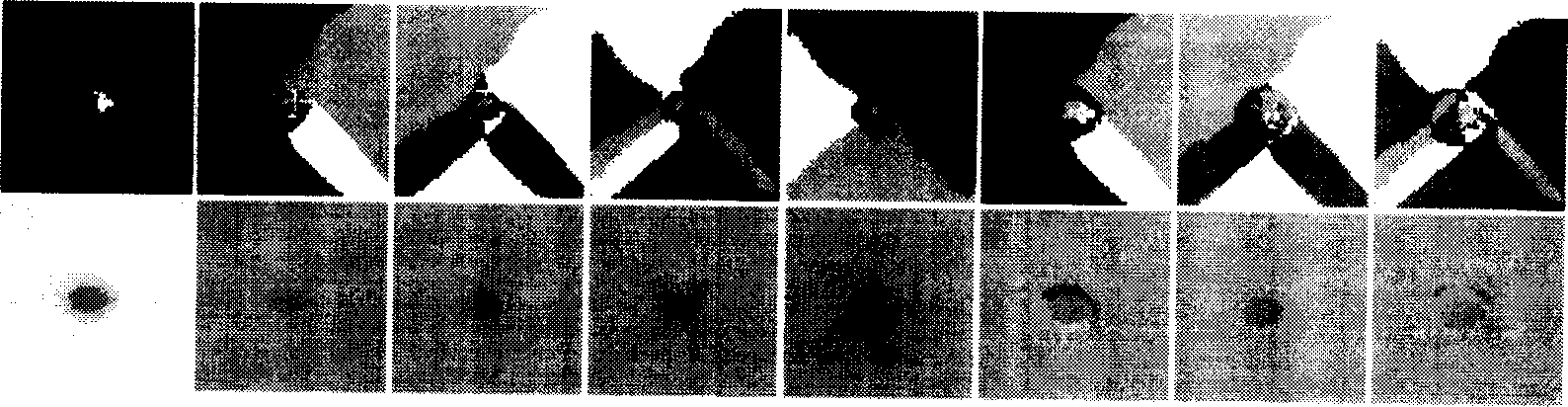

GPU-based method for precomputed radiance transfer of all-frequency shadows

Inactive | CN1889128A | Reduce usage; Rendering speed maintained; 3D-image rendering; Illuminance; Transfer matrix

A GPU-based method for precomputed radiance transfer (PRT) of all-frequency shadows: (1) the scene is lit with an environment map, giving the radiance transfer equation B = TL, where T is the radiance transfer matrix and L is the lighting matrix; (2) the transfer matrix T is precomputed; (3) T is wavelet-transformed and quantized for compression, yielding a sparse transfer matrix; (4) the sparse transfer matrix from (3) is rearranged so that its most important entries come first; (5) L is rapidly wavelet-transformed and quantized, yielding a sparse lighting matrix; (6) a fast sparse matrix multiplication of T and L is performed on the GPU to carry out relighting. The invention designs its data structures and algorithm around the GPU's parallel computing capability and achieves a good balance between CPU and GPU load and between rendering speed and rendering quality. It reduces memory usage while preserving rendering quality, greatly increases rendering speed, and achieves real-time rendering of all-frequency shadows.

Owner:BEIHANG UNIV
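
The core of steps (3), (5) and (6) can be sketched as follows: transform a row of T and the lighting vector L with a wavelet, keep only the largest coefficients, and evaluate B = TL as sparse dot products. Assumptions: the abstract does not name the wavelet, so an orthonormal Haar transform is used (orthonormality is what makes the wavelet-domain dot product equal the original one); quantization, the reordering in step (4), and the GPU are not modelled; the function names are hypothetical.

```python
import math
from typing import Dict, List

def haar(v: List[float]) -> List[float]:
    """Orthonormal 1D Haar wavelet transform; len(v) must be a power of two."""
    out = list(v)
    n = len(out)
    while n > 1:
        half = n // 2
        avg = [(out[2 * i] + out[2 * i + 1]) / math.sqrt(2) for i in range(half)]
        diff = [(out[2 * i] - out[2 * i + 1]) / math.sqrt(2) for i in range(half)]
        out[:n] = avg + diff  # coarse averages first, detail coefficients after
        n = half
    return out

def keep_largest(coeffs: List[float], k: int) -> Dict[int, float]:
    """Keep the k largest-magnitude non-zero coefficients as a sparse {index: value} map."""
    idx = sorted(range(len(coeffs)), key=lambda i: abs(coeffs[i]), reverse=True)[:k]
    return {i: coeffs[i] for i in idx if coeffs[i] != 0.0}

def relight(t_rows: List[Dict[int, float]], light_w: Dict[int, float]) -> List[float]:
    """B = T L in the wavelet domain: each output is a sparse-sparse dot product."""
    return [sum(val * light_w.get(j, 0.0) for j, val in row.items()) for row in t_rows]
```

Keeping all coefficients reproduces TL exactly (up to rounding); a smaller k gives an approximation, which is the speed versus quality trade-off the abstract refers to.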

Large-scale sparse matrix multiplication method based on mapreduce

Inactive | CN103106183A | Reduce restrictions; Calculation speed; Digital data processing details; Complex mathematical operations; Algorithm; Sparse matrix multiplication

The invention provides a MapReduce-based method for multiplying large-scale sparse matrices. Let the large-scale sparse matrices be A and B and their product be C. The method comprises the following steps: step 10, a MapReduce job transposes matrix A and outputs matrix A'; step 20, matrix B is converted from a coordinate-point storage format into a sparse-vector storage format and output as matrix B'; step 30, matrices A' and B' are joined and product components are computed, where the component Cij_k of Cij is the product of the element in column k of matrix A and the element in row k of matrix B; step 40, the product components are merged and Cij is obtained by accumulating the components Cij_k. Large-scale sparse matrix multiplication is thereby converted into basic operations suited to MapReduce, such as transposition, format conversion, joining, and merging, which overcomes the resource limits of performing large-scale sparse matrix multiplication on a single machine.

Owner:FUJIAN TQ DIGITAL
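
The join-then-accumulate structure of steps 30 and 40 can be simulated in memory. This sketch uses the common outer-product formulation (group all non-zeros by the shared index k, multiply within each group, then sum by output position); it does not reproduce the patent's transposition and storage-format conversion, and a dictionary stands in for MapReduce's shuffle. Names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

Entry = Tuple[int, int, float]  # (row, column, value) of a non-zero element

def map_phase(a: Iterable[Entry], b: Iterable[Entry]) -> Iterator[tuple]:
    """Key every non-zero by the shared index k: A[i][k] by column, B[k][j] by row."""
    for i, k, v in a:
        yield k, ("A", i, v)
    for k, j, v in b:
        yield k, ("B", j, v)

def reduce_products(groups: Dict[int, List[tuple]]) -> Iterator[tuple]:
    """For each k, emit the product components C_ij_k = A[i][k] * B[k][j]."""
    for items in groups.values():
        a_part = [(i, v) for tag, i, v in items if tag == "A"]
        b_part = [(j, v) for tag, j, v in items if tag == "B"]
        for i, av in a_part:
            for j, bv in b_part:
                yield (i, j), av * bv

def sparse_matmul(a: List[Entry], b: List[Entry]) -> Dict[Tuple[int, int], float]:
    groups: Dict[int, List[tuple]] = defaultdict(list)
    for key, value in map_phase(a, b):  # shuffle: group map output by key k
        groups[key].append(value)
    c: Dict[Tuple[int, int], float] = defaultdict(float)
    for ij, part in reduce_products(groups):  # merge: accumulate components per (i, j)
        c[ij] += part
    return dict(c)
```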

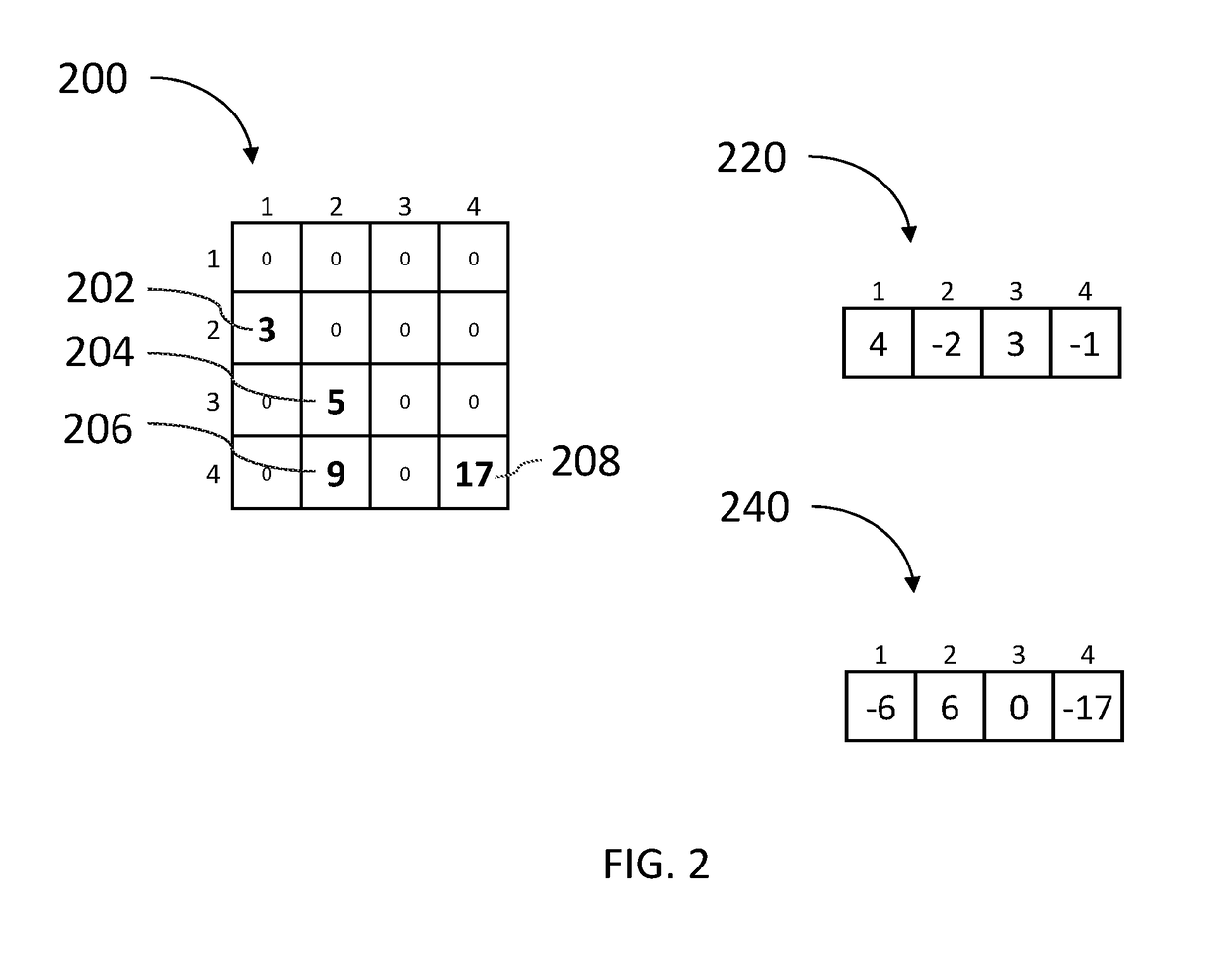

Sparse matrix multiplication in associative memory device

Active | US20180210862A1 | Digital data processing details; Complex mathematical operations; Algorithm; Sparse matrix multiplication

A method for multiplying a first sparse matrix by a second sparse matrix in an associative memory device includes storing multiplicand information related to each non-zero element of the second sparse matrix in a computation column of the associative memory device; the multiplicand information includes at least a multiplicand value. According to a first linear algebra rule, the method associates multiplier information related to a non-zero element of the first sparse matrix with each of its associated multiplicands, the multiplier information includes at least a multiplier value. The method concurrently stores the multiplier information in the computation columns of each associated multiplicand. The method, concurrently on all computation columns, multiplies a multiplier value by its associated multiplicand value to provide a product in the computation column, and adds together products from computation columns, associated according to a second linear algebra rule, to provide a resultant matrix.

Owner:GSI TECH
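
The two linear algebra rules can be illustrated with a sequential simulation. Assumptions, since the abstract leaves them abstract: the first rule pairs A[i][k] with the elements in row k of B; the second sums products that share an output position; and A is processed one row per round so that each computation column receives at most one multiplier at a time. The loops stand in for operations the device performs concurrently across columns; names are hypothetical.

```python
from typing import Dict, List, Tuple

Entry = Tuple[int, int, float]  # (row, column, value) of a non-zero element

def associative_spgemm(a: List[Entry], b: List[Entry]) -> Dict[Tuple[int, int], float]:
    # One computation column per non-zero of the second matrix B, holding its multiplicand.
    columns = [{"row": k, "col": j, "multiplicand": v} for k, j, v in b]
    result: Dict[Tuple[int, int], float] = {}
    for i in sorted({r for r, _, _ in a}):  # hypothetical schedule: one row of A per round
        multipliers = {k: v for r, k, v in a if r == i}
        # First rule (assumed): A[i][k] is stored in every column holding an element of row k of B.
        for col in columns:
            col["multiplier"] = multipliers.get(col["row"], 0.0)
        # Multiply in every column; the device does this concurrently.
        for col in columns:
            col["product"] = col["multiplier"] * col["multiplicand"]
        # Second rule (assumed): add products whose columns share the output position (i, j).
        for col in columns:
            if col["multiplier"] != 0.0:
                key = (i, col["col"])
                result[key] = result.get(key, 0.0) + col["product"]
    return result
```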

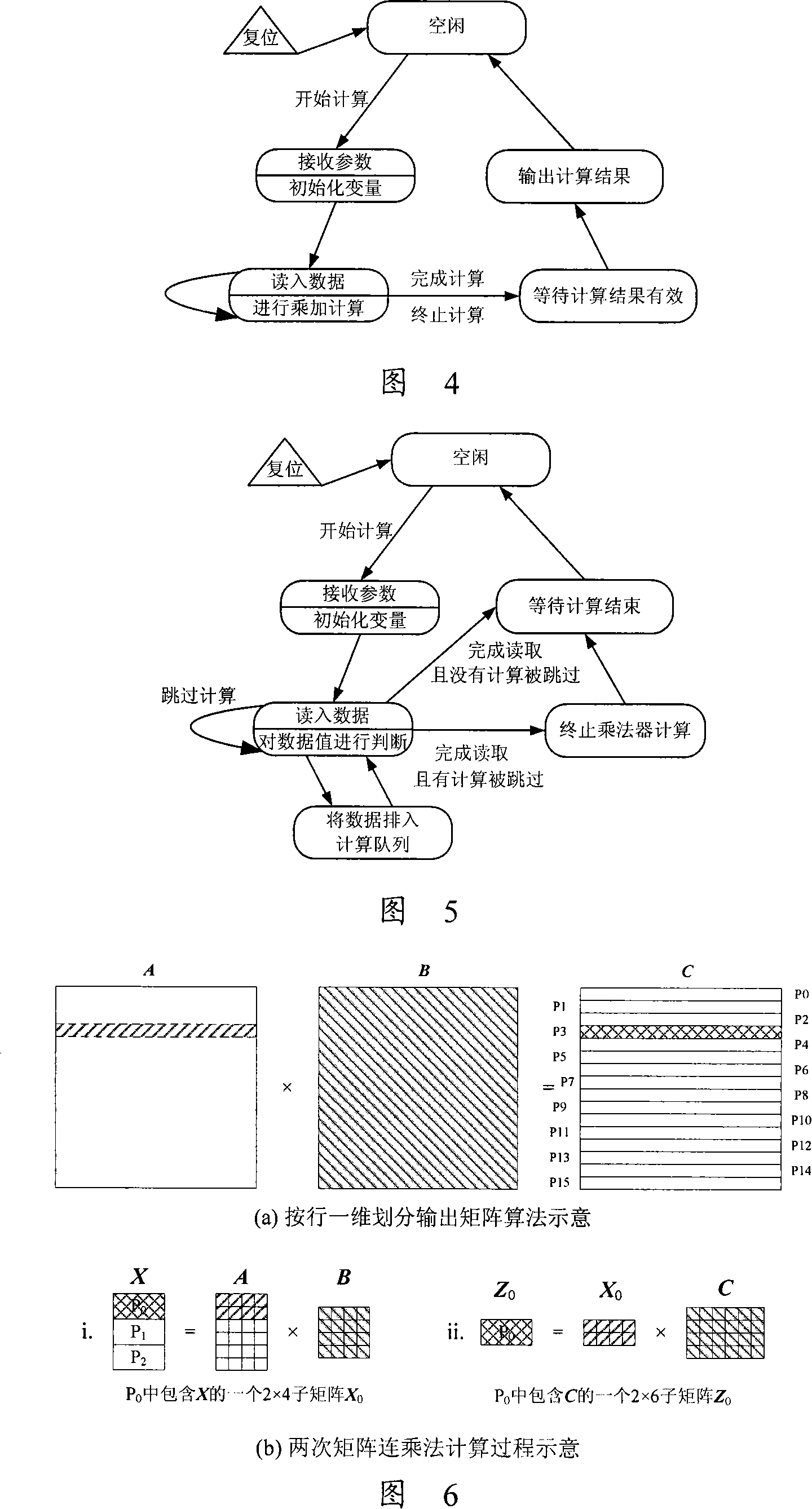

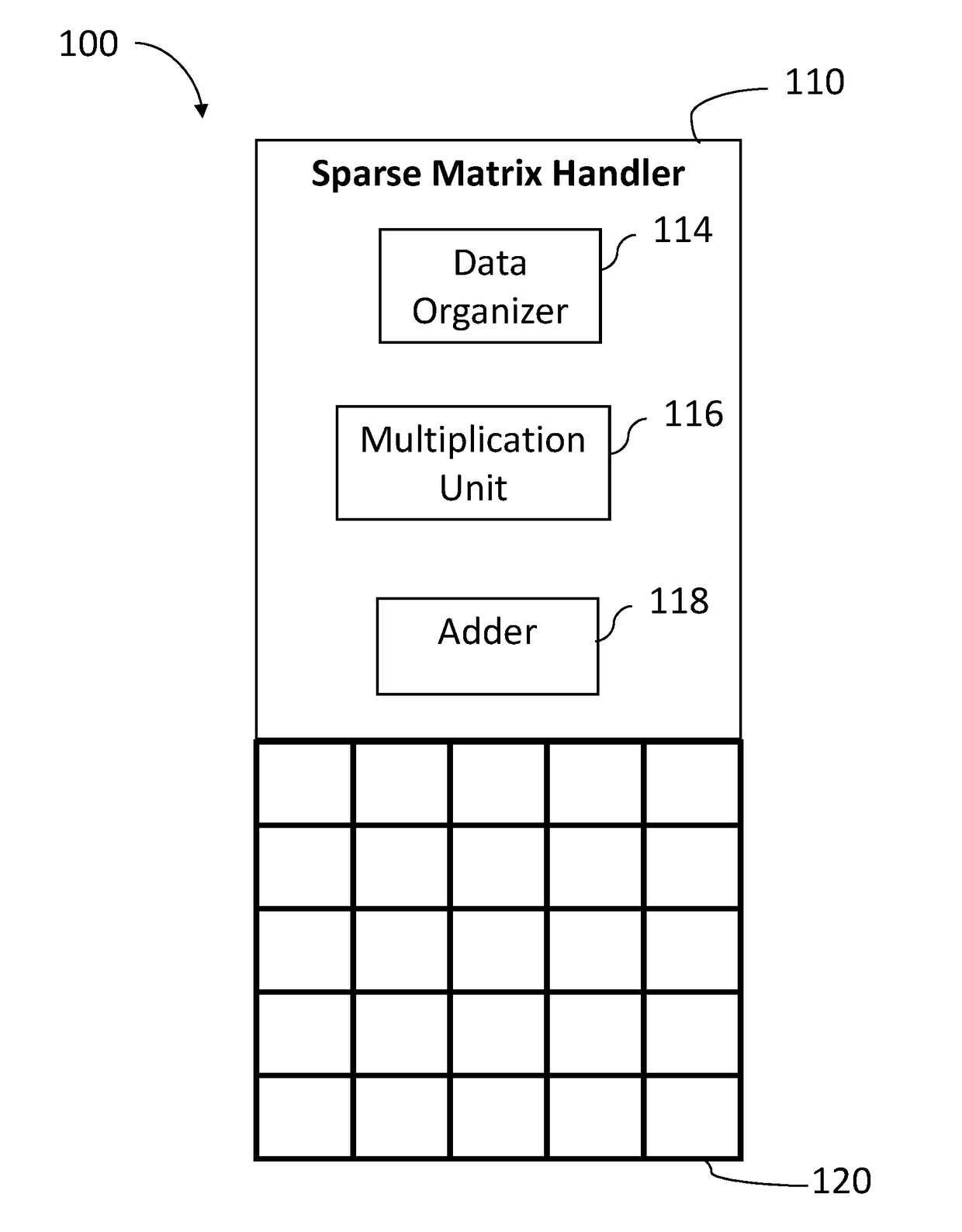

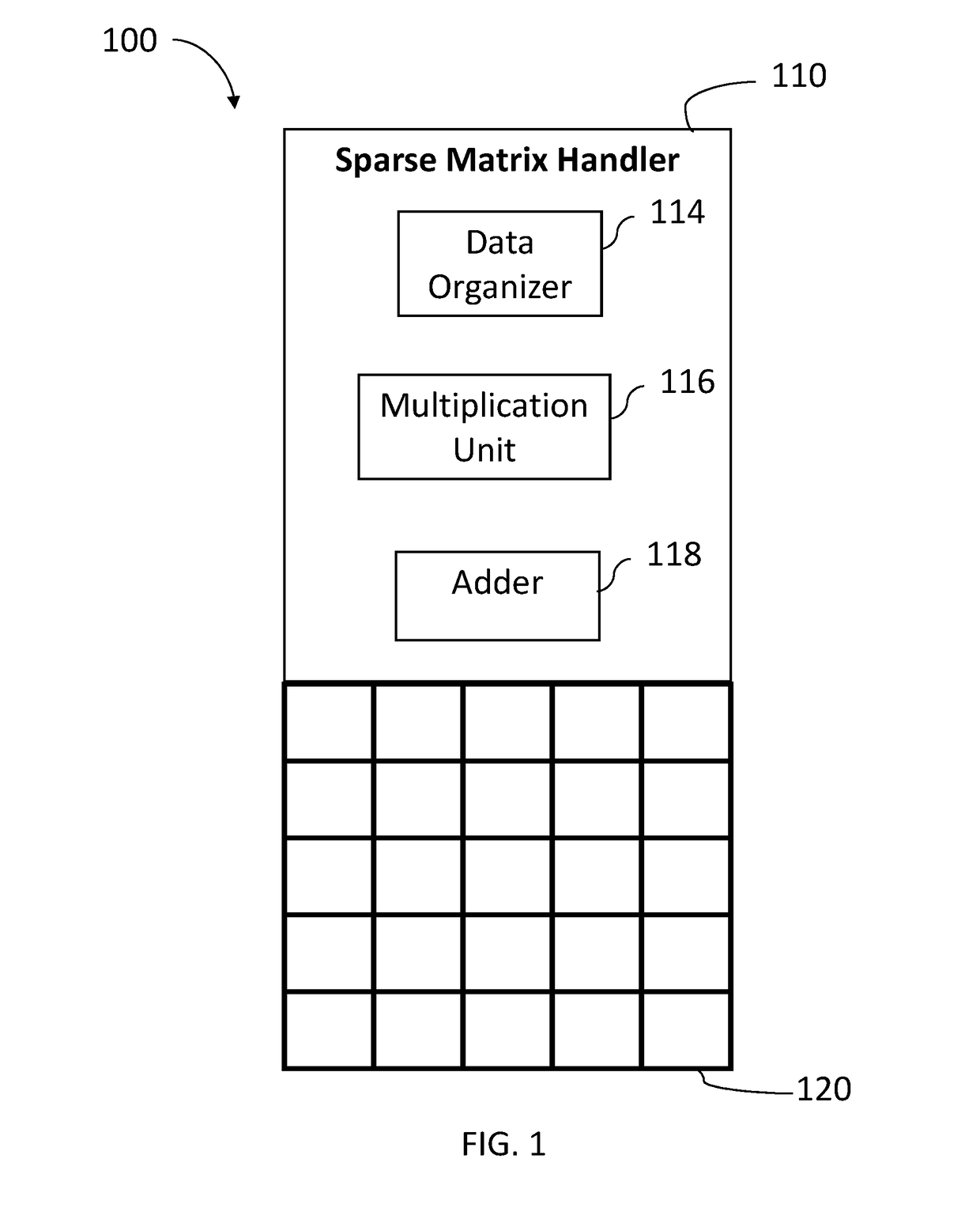

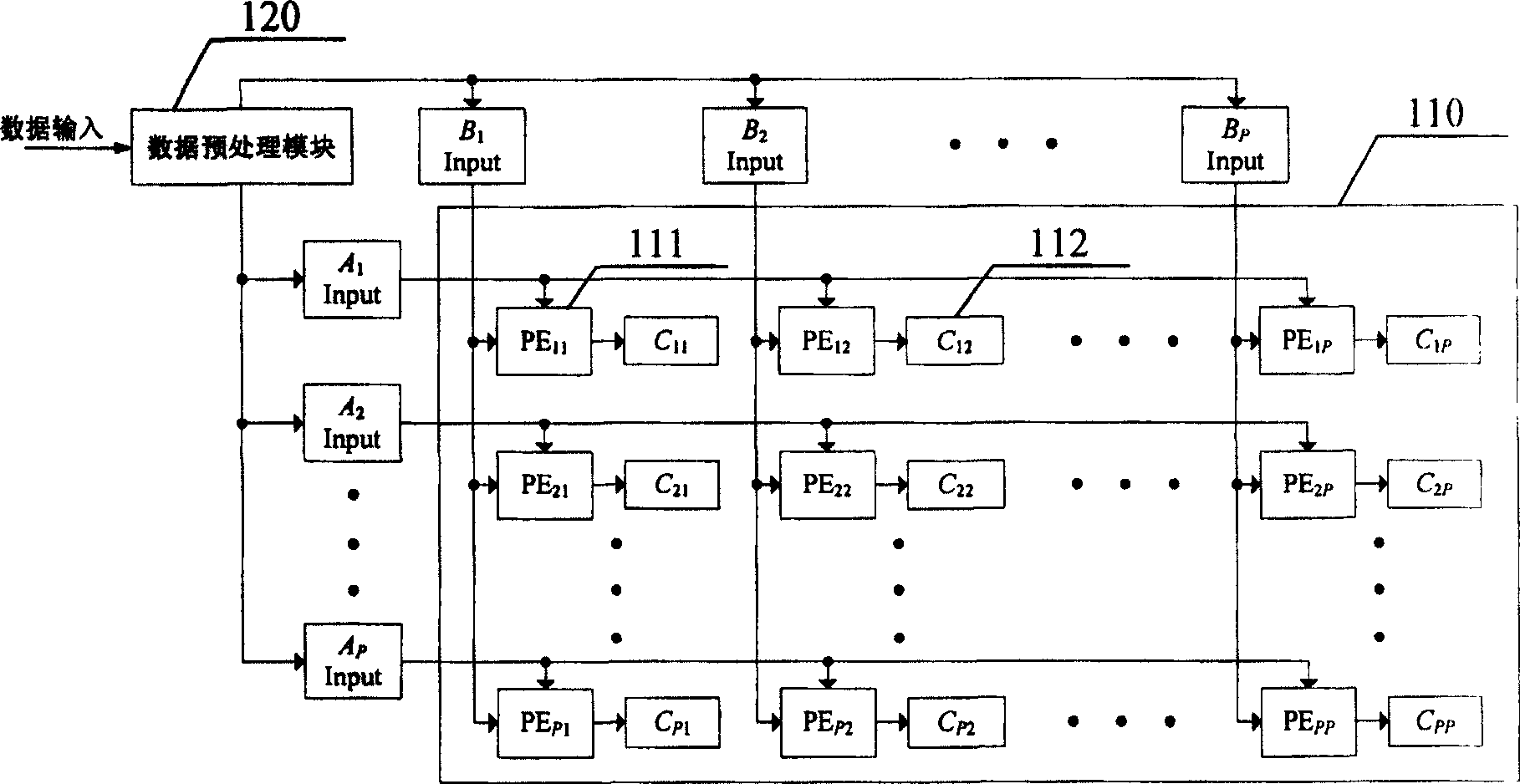

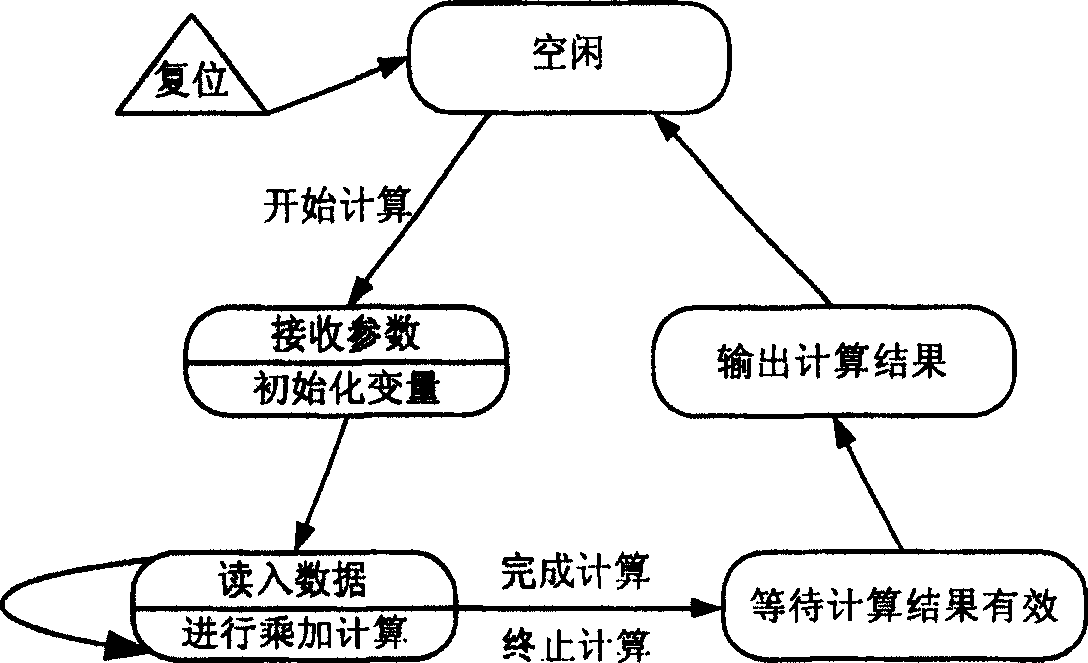

Matrix multiplier device based on single FPGA

The invention relates to a single-FPGA matrix multiplication device comprising P² processing elements (PEs) arranged in a P × P array, data input and output interfaces, and a data preprocessing unit. The device can perform both dense and sparse matrix multiplication with improved computing performance. The invention also relates to an FPGA-based matrix multiplication device.

Owner:ZHEJIANG UNIV

Matrix multiplier device based on single FPGA

Owner:ZHEJIANG UNIV

Method and device for realizing sparse matrix multiplication on reconfigurable processor array

Active | CN112507284A | Reduce configuration times; Reduce the number of memory accesses; Digital data processing details; Energy efficient computing; Computational science; Engineering

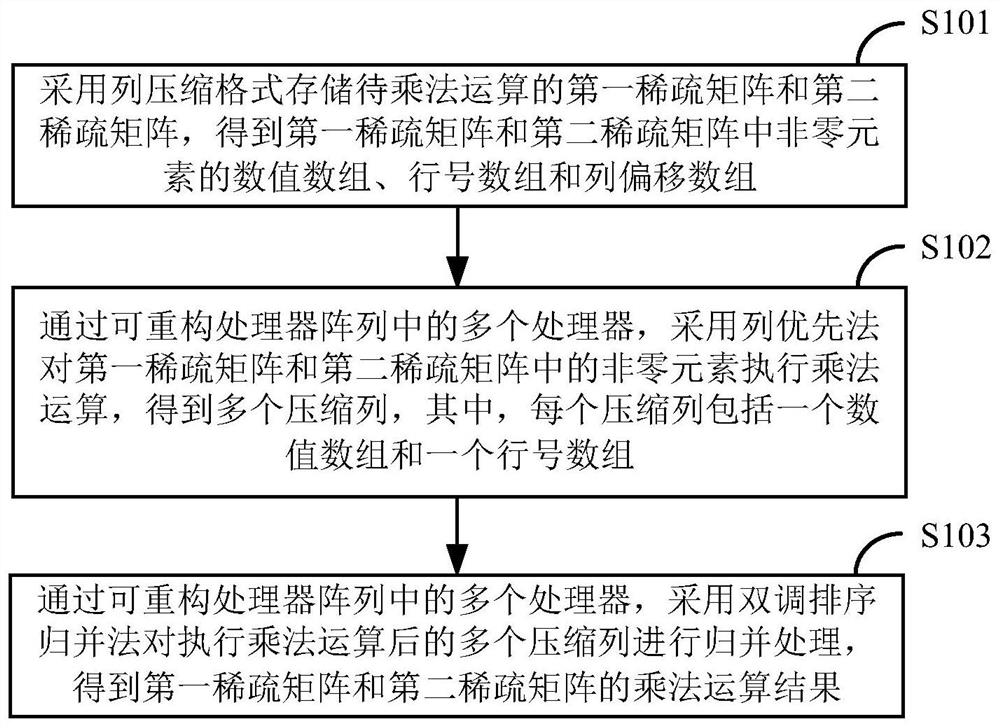

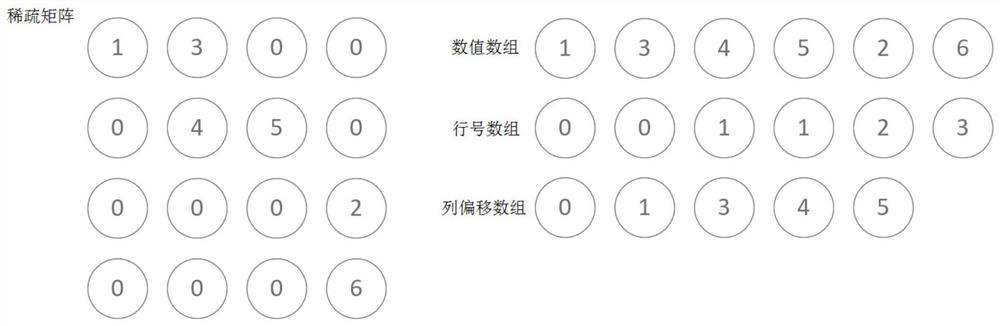

The invention discloses a method and device for performing sparse matrix multiplication on a reconfigurable processor array. The first and second sparse matrices to be multiplied are stored in compressed sparse column (CSC) format, giving, for each matrix, a value array, a row-index array, and a column-offset array of its non-zero elements. Multiple processors in the reconfigurable processor array multiply the non-zero elements of the two matrices in column-major order to produce a number of compressed columns, each consisting of a value array and a row-index array. The processors then merge these compressed columns using bitonic sorting and merging to obtain the product of the first and second sparse matrices. The invention thus enables efficient sparse matrix multiplication on a reconfigurable processor array.

Owner:TSINGHUA UNIV
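
A sequential sketch of the column-priority multiply and the sort-and-merge step, assuming both inputs are given as CSC triples (values, row indices, column offsets). Each non-zero B[k][j] scales column k of A into one compressed partial column; the partial columns for output column j are then combined by a bitonic sort (padded to a power of two) followed by summing entries that share a row index. Distribution across the processor array is not modelled, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

CSC = Tuple[List[float], List[int], List[int]]  # (values, row indices, column offsets)
Entry = Tuple[float, float]                     # (row index, value); row may be the inf pad

def bitonic_sort(seq: List[Entry], up: bool = True) -> List[Entry]:
    """Recursive bitonic sort; len(seq) must be a power of two."""
    if len(seq) <= 1:
        return list(seq)
    half = len(seq) // 2
    first = bitonic_sort(seq[:half], True)    # ascending half
    second = bitonic_sort(seq[half:], False)  # descending half, so the whole is bitonic
    return _bitonic_merge(first + second, up)

def _bitonic_merge(seq: List[Entry], up: bool) -> List[Entry]:
    if len(seq) <= 1:
        return list(seq)
    half = len(seq) // 2
    seq = list(seq)
    for i in range(half):  # compare-exchange at distance half
        if (seq[i] > seq[i + half]) == up:
            seq[i], seq[i + half] = seq[i + half], seq[i]
    return _bitonic_merge(seq[:half], up) + _bitonic_merge(seq[half:], up)

def merge_columns(pieces: List[Entry]) -> List[Entry]:
    """Sort partial-column entries by row, then add entries that share a row index."""
    size = 1
    while size < len(pieces):
        size *= 2
    padded = pieces + [(math.inf, 0.0)] * (size - len(pieces))
    merged: List[Entry] = []
    for row, val in bitonic_sort(padded):
        if row == math.inf:
            break  # padding sorts to the end
        if merged and merged[-1][0] == row:
            merged[-1] = (row, merged[-1][1] + val)
        else:
            merged.append((row, val))
    return merged

def spgemm_csc(a: CSC, b: CSC) -> List[List[Entry]]:
    """C = A @ B column by column; each output column is a list of (row, value)."""
    a_val, a_row, a_off = a
    b_val, b_row, b_off = b
    result = []
    for j in range(len(b_off) - 1):
        pieces: List[Entry] = []
        for p in range(b_off[j], b_off[j + 1]):
            k, scale = b_row[p], b_val[p]
            # Column k of A scaled by B[k][j] is one compressed partial column.
            pieces.extend((a_row[q], a_val[q] * scale) for q in range(a_off[k], a_off[k + 1]))
        result.append(merge_columns(pieces))
    return result
```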

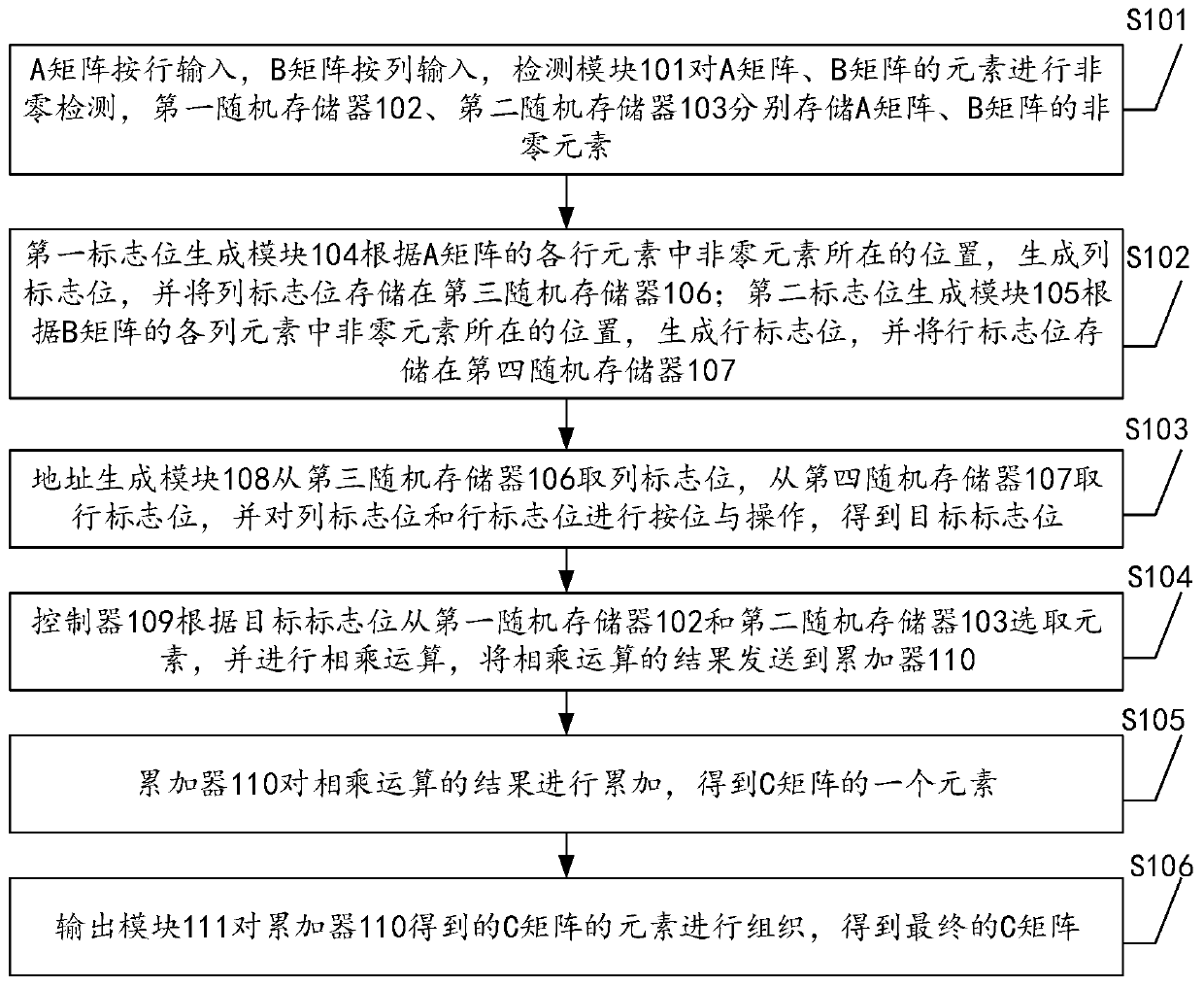

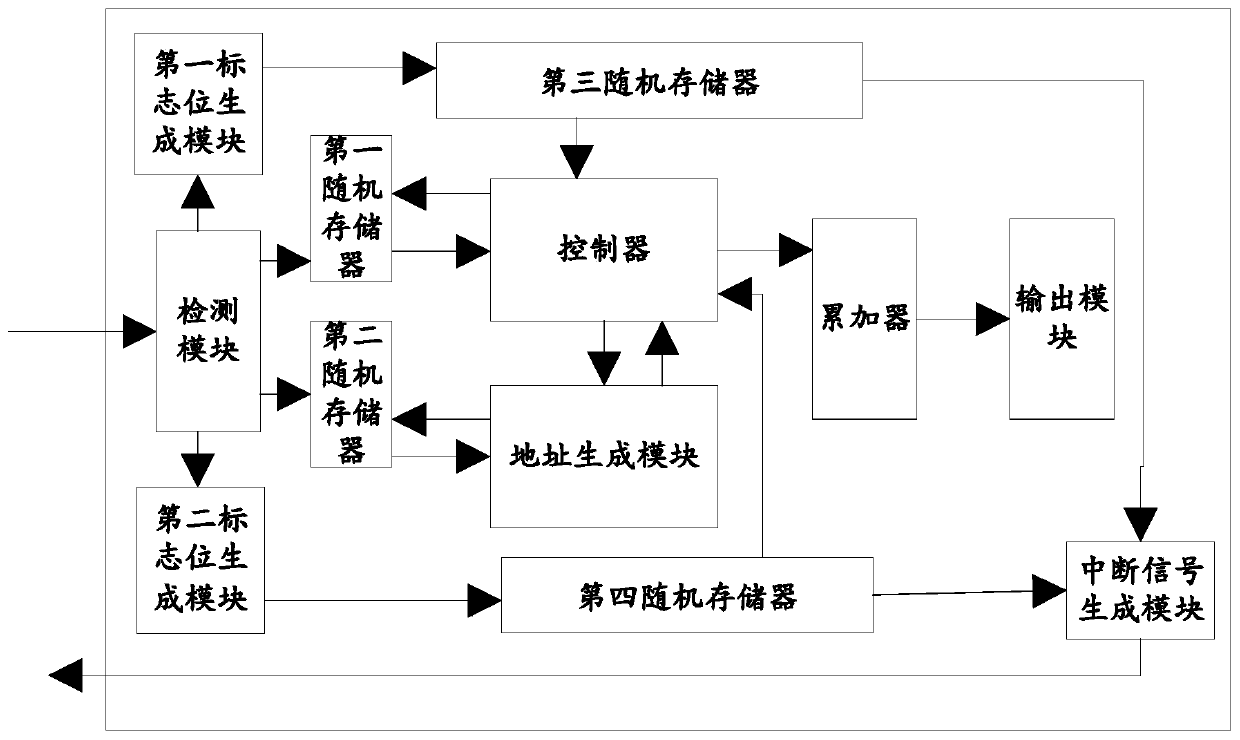

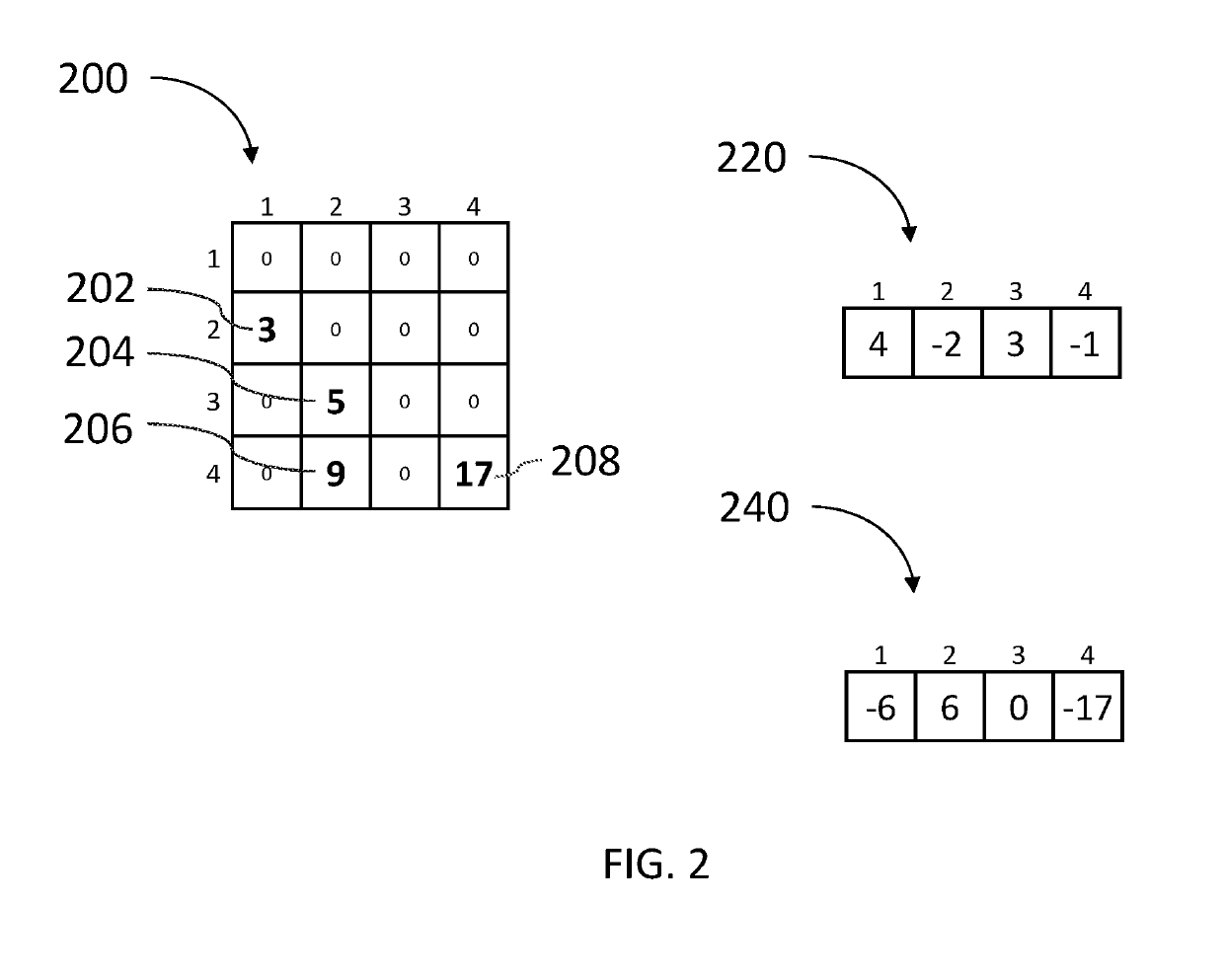

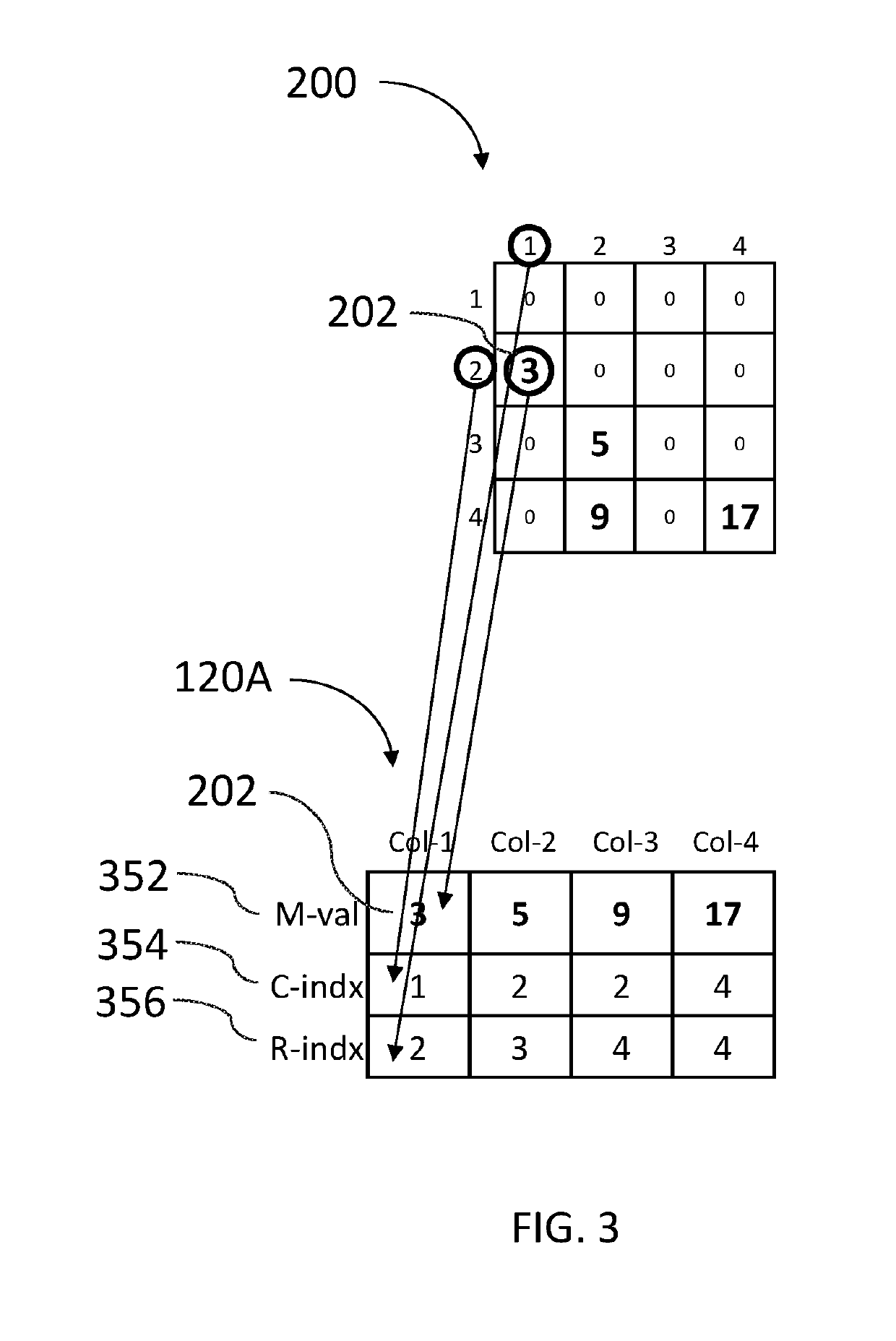

A circuit for realizing sparse matrix multiplication and an FPGA board

Inactive | CN109740116A | Avoid calculation; Multiplication boost; Architecture with single central processing unit; Complex mathematical operations; Bitwise operation; Parallel computing

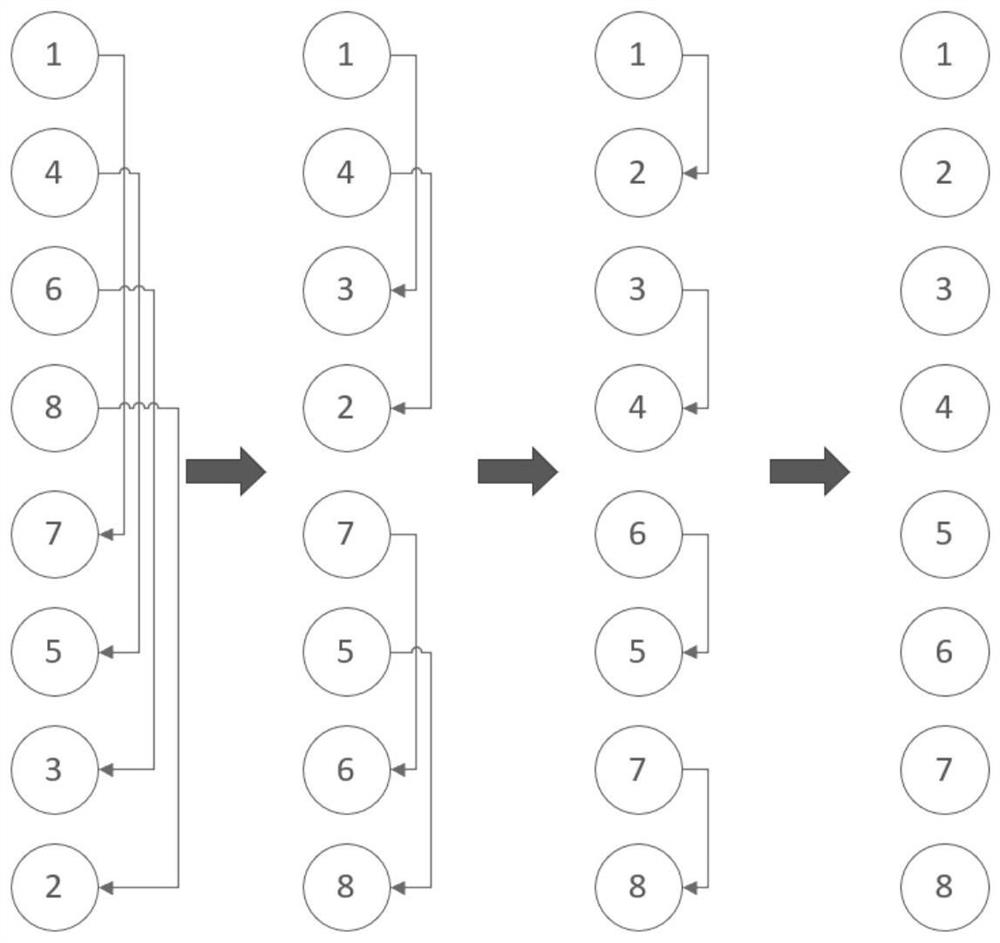

The invention discloses a circuit for sparse matrix multiplication made up of multiple cooperating modules. During multiplication of the sparse matrices, the circuit screens out their non-zero elements. It then performs a bitwise operation between the column flag bits of each row of the first matrix and the row flag bits of each column of the second matrix, and uses the resulting target flag bits to select, from the non-zero elements, only those that actually need to participate in the computation. This avoids computing with the large number of zero elements and with non-zero elements that do not contribute, significantly improving the efficiency of sparse matrix multiplication. The invention further provides an FPGA board whose effects correspond to those of the circuit.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD
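
The flag-bit selection can be mimicked in software. In this sketch (an illustration, not the patented circuit) each row of A gets a bitmask whose bit k marks a non-zero A[i][k], each column of B gets a bitmask whose bit k marks a non-zero B[k][j], and a bitwise AND yields the target flags: exactly the indices k whose products contribute. Python's unbounded integers stand in for fixed-width flag registers; names are hypothetical.

```python
from typing import List, Tuple

def row_flags(a: List[List[float]]) -> List[int]:
    """Column flag bits of each row of A: bit k is set when A[i][k] != 0."""
    return [sum(1 << k for k, v in enumerate(row) if v != 0) for row in a]

def col_flags(b: List[List[float]]) -> List[int]:
    """Row flag bits of each column of B: bit k is set when B[k][j] != 0."""
    return [sum(1 << k for k in range(len(b)) if b[k][j] != 0) for j in range(len(b[0]))]

def flagged_matmul(a: List[List[float]], b: List[List[float]]) -> Tuple[List[List[float]], int]:
    """Return (A @ B, number of multiplications actually performed)."""
    fa, fb = row_flags(a), col_flags(b)
    c = [[0] * len(b[0]) for _ in a]
    multiplies = 0
    for i, flags_i in enumerate(fa):
        for j, flags_j in enumerate(fb):
            target = flags_i & flags_j  # bitwise AND: k where both operands are non-zero
            k = 0
            while target:
                if target & 1:
                    c[i][j] += a[i][k] * b[k][j]
                    multiplies += 1
                target >>= 1
                k += 1
    return c, multiplies
```

For the 2 × 2 example in the test, a dense product would perform 8 multiplications; the flagged version performs 5.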

Sparse matrix multiplication in associative memory device

Active | US10489480B2 | Digital data processing details; Complex mathematical operations; Binary multiplier; Algorithm

A method for multiplying a first sparse matrix by a second sparse matrix in an associative memory device includes storing multiplicand information related to each non-zero element of the second sparse matrix in a computation column of the associative memory device; the multiplicand information includes at least a multiplicand value. According to a first linear algebra rule, the method associates multiplier information related to a non-zero element of the first sparse matrix with each of its associated multiplicands, the multiplier information includes at least a multiplier value. The method concurrently stores the multiplier information in the computation columns of each associated multiplicand. The method, concurrently on all computation columns, multiplies a multiplier value by its associated multiplicand value to provide a product in the computation column, and adds together products from computation columns, associated according to a second linear algebra rule, to provide a resultant matrix.

Owner:GSI TECH

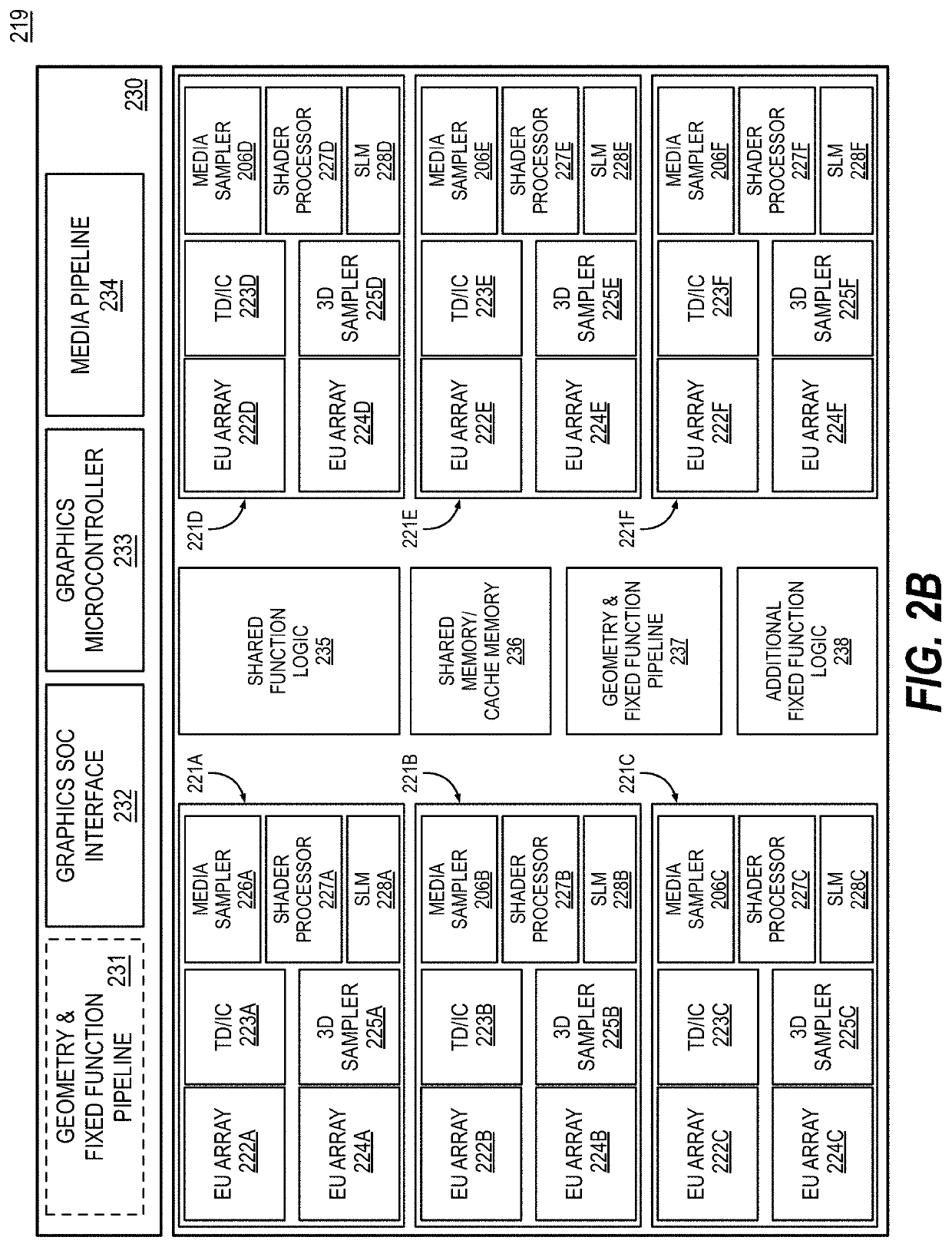

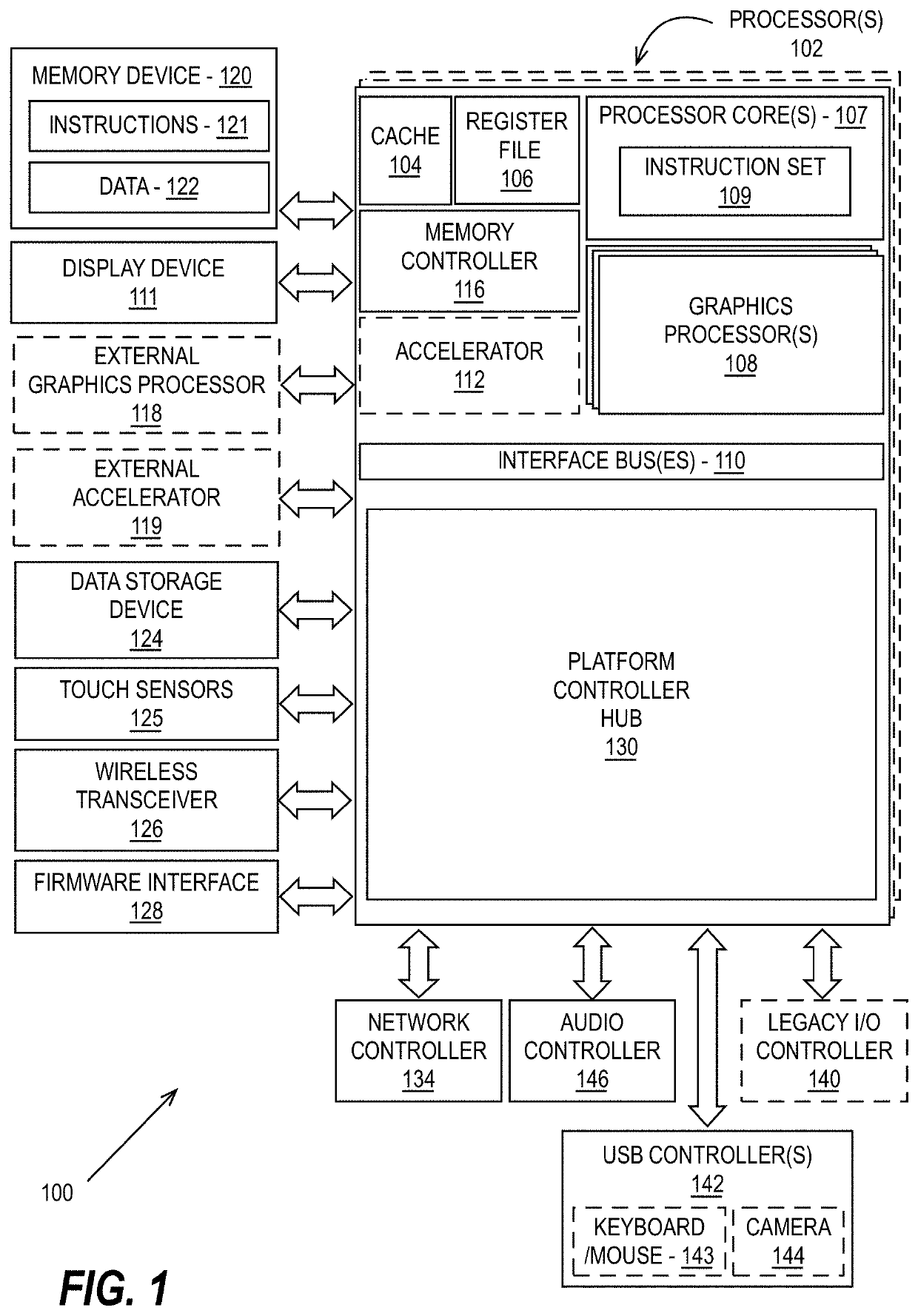

Sparse matrix multiplication acceleration mechanism

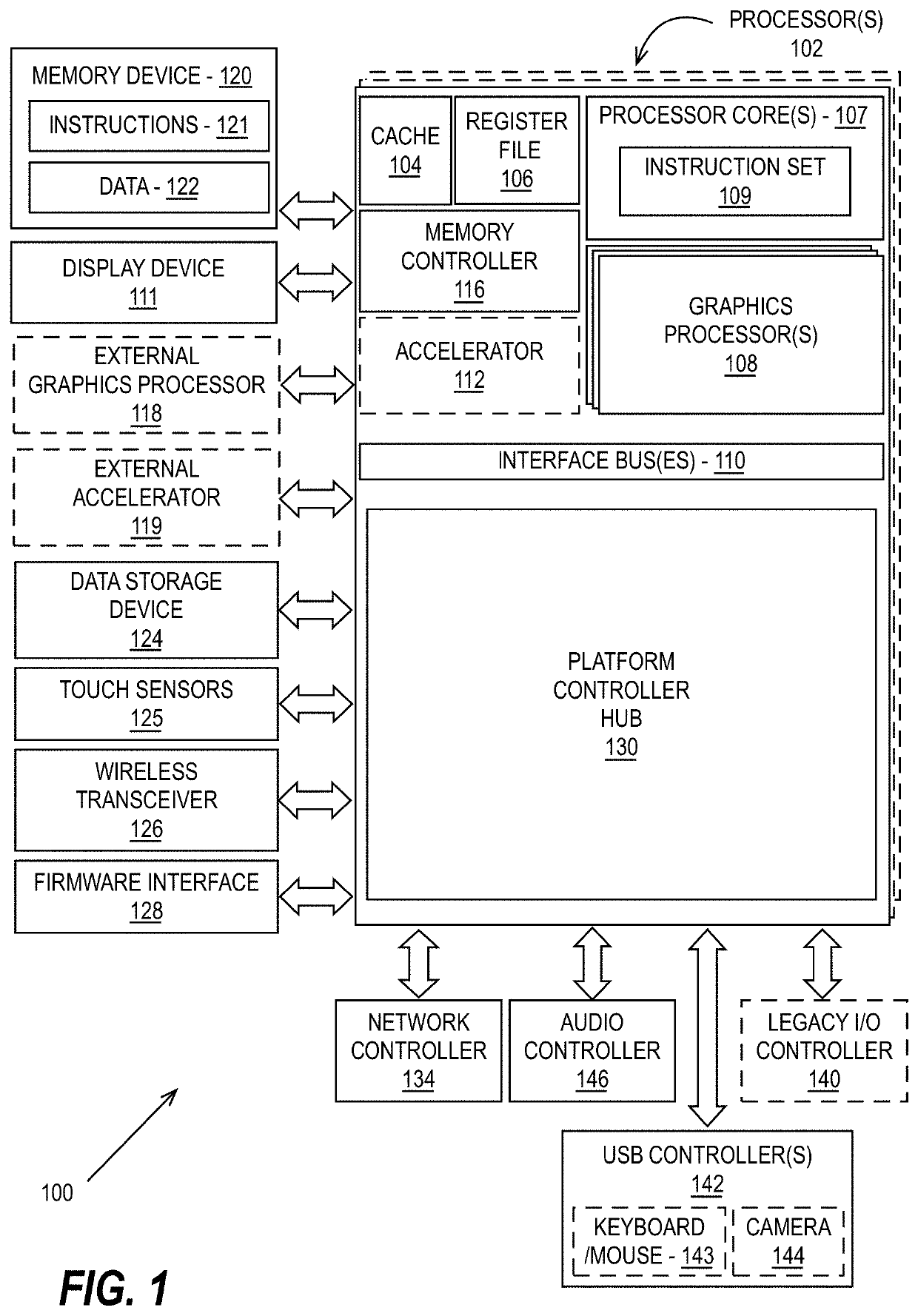

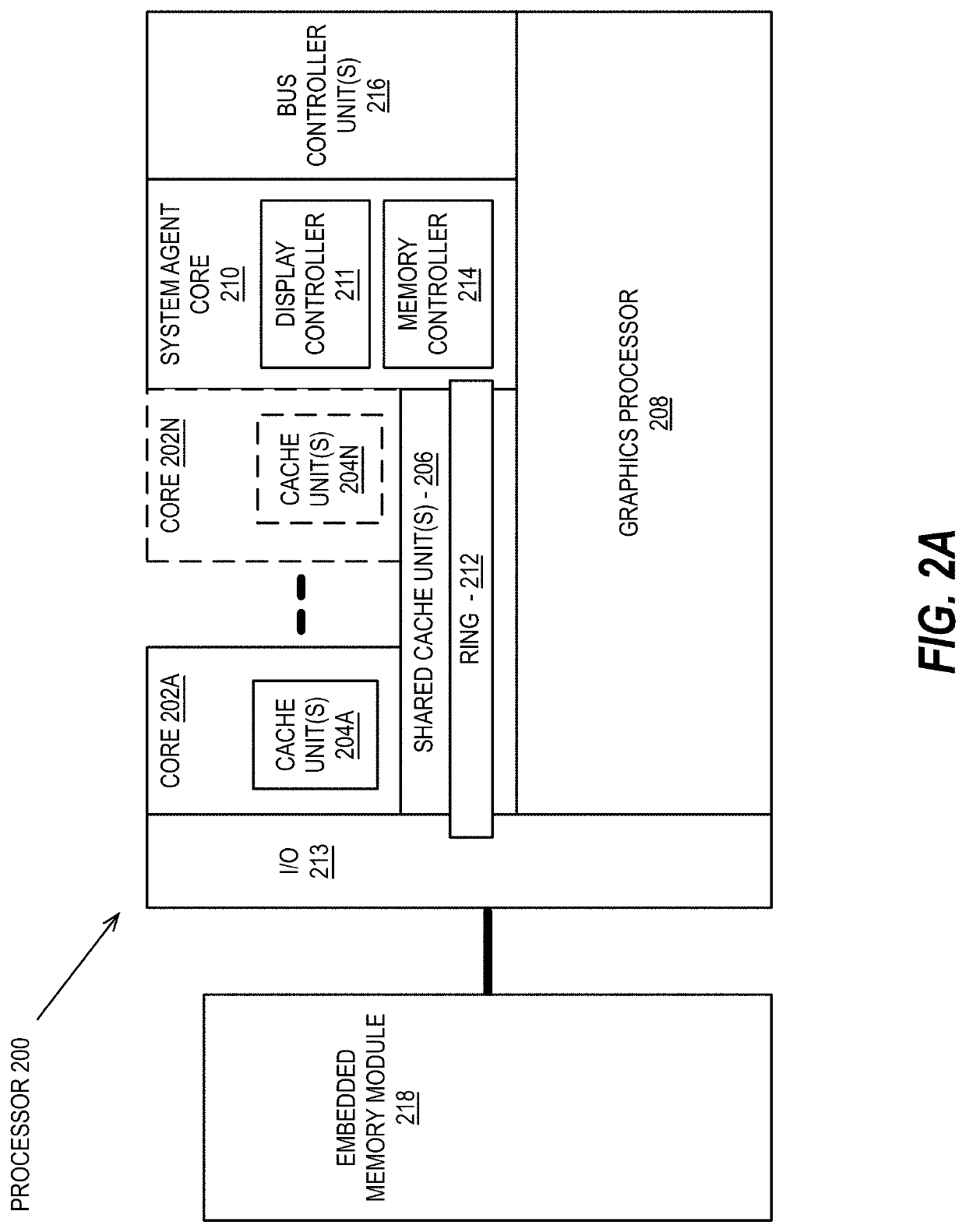

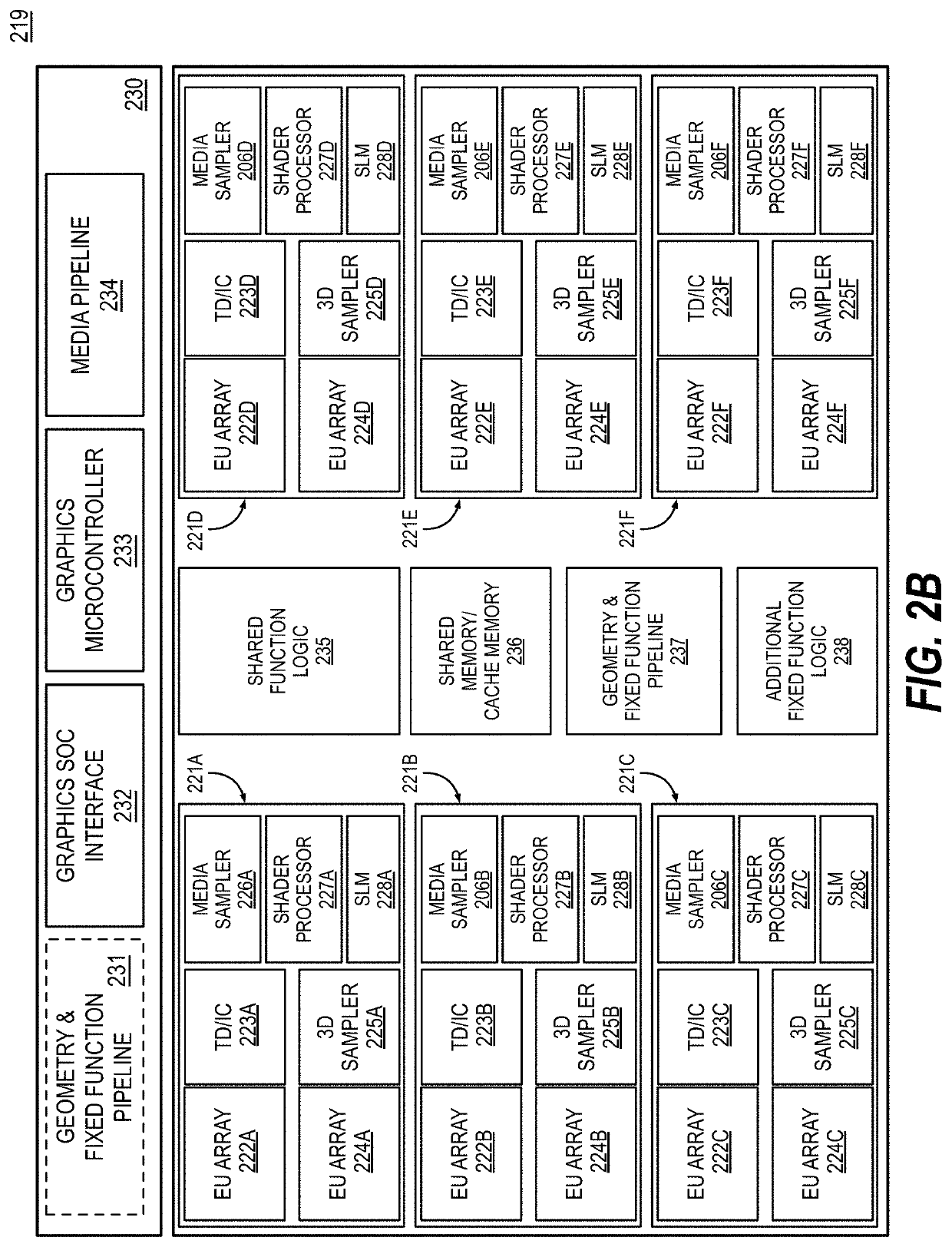

Active | US20210073318A1 | Digital data processing details; Processor architectures/configuration; Computational science; Data pack

An apparatus to facilitate acceleration of matrix multiplication operations. The apparatus comprises a systolic array including matrix multiplication hardware to perform multiply-add operations on received matrix data comprising data from a plurality of input matrices and sparse matrix acceleration hardware to detect zero values in the matrix data and perform one or more optimizations on the matrix data to reduce multiply-add operations to be performed by the matrix multiplication hardware.

Owner:INTEL CORP
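
One simple way zero-detection hardware can reduce multiply-add work is to gate the MAC whenever an operand is zero. The sketch below simulates an output-stationary systolic array cycle by cycle and skips any multiply-add with a zero operand; both the dataflow and this particular optimization are assumptions for illustration, since the abstract does not specify which optimizations are performed. The function name is hypothetical.

```python
from typing import List, Tuple

def systolic_matmul(a: List[List[float]], b: List[List[float]]) -> Tuple[List[List[float]], int, int]:
    """Return (A @ B, multiply-adds performed, multiply-adds skipped)."""
    m, kdim, n = len(a), len(b), len(b[0])
    c = [[0] * n for _ in range(m)]
    macs = skipped = 0
    # Output-stationary array: PE(i, j) holds C[i][j]; operands arrive skewed, so
    # PE(i, j) sees operand pair k at cycle t = i + j + k.
    for t in range(m + n + kdim - 2):
        for i in range(m):
            for j in range(n):
                k = t - i - j
                if 0 <= k < kdim:
                    x, y = a[i][k], b[k][j]
                    if x == 0 or y == 0:  # zero detected: gate the multiply-add
                        skipped += 1
                    else:
                        c[i][j] += x * y
                        macs += 1
    return c, macs, skipped
```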

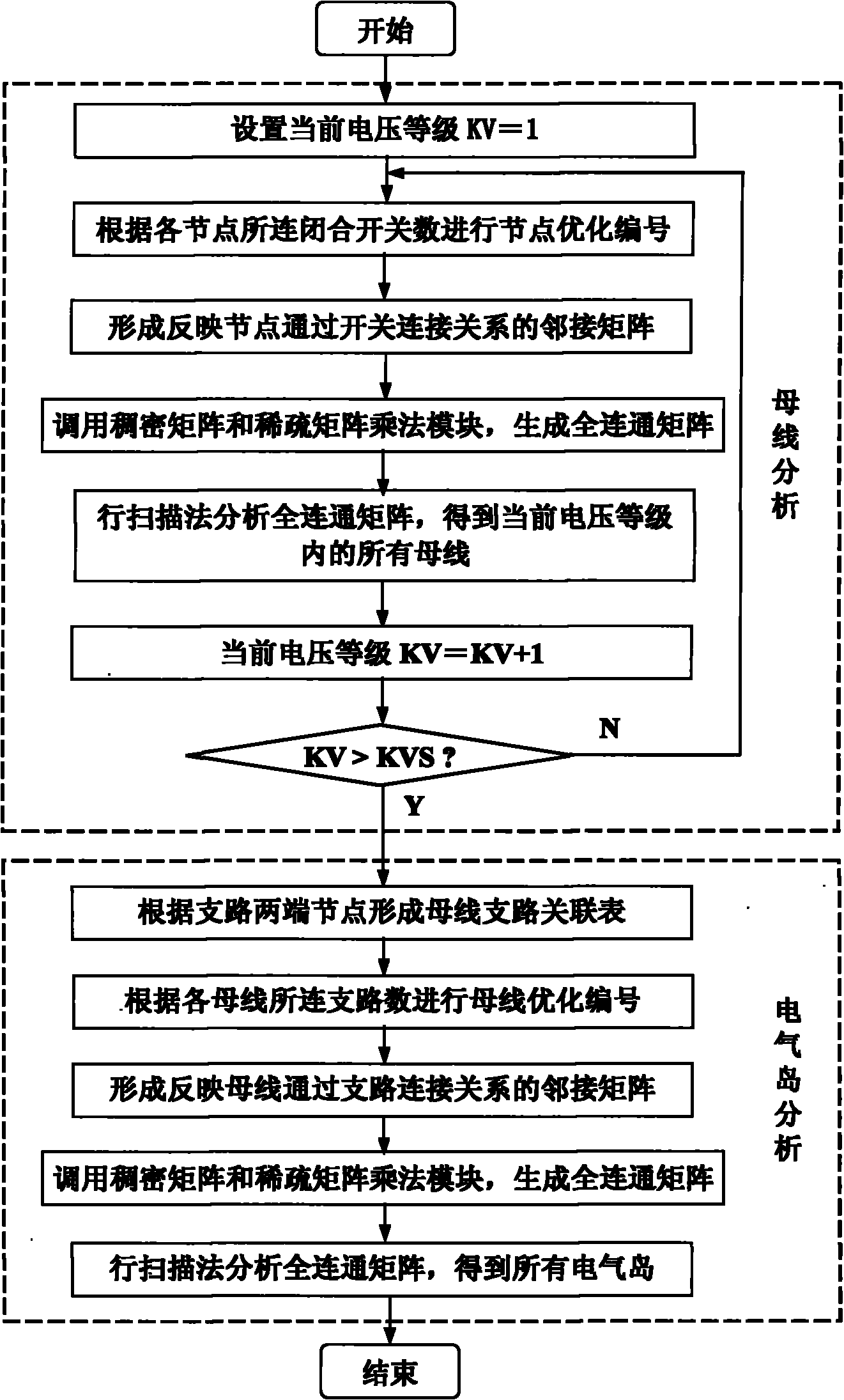

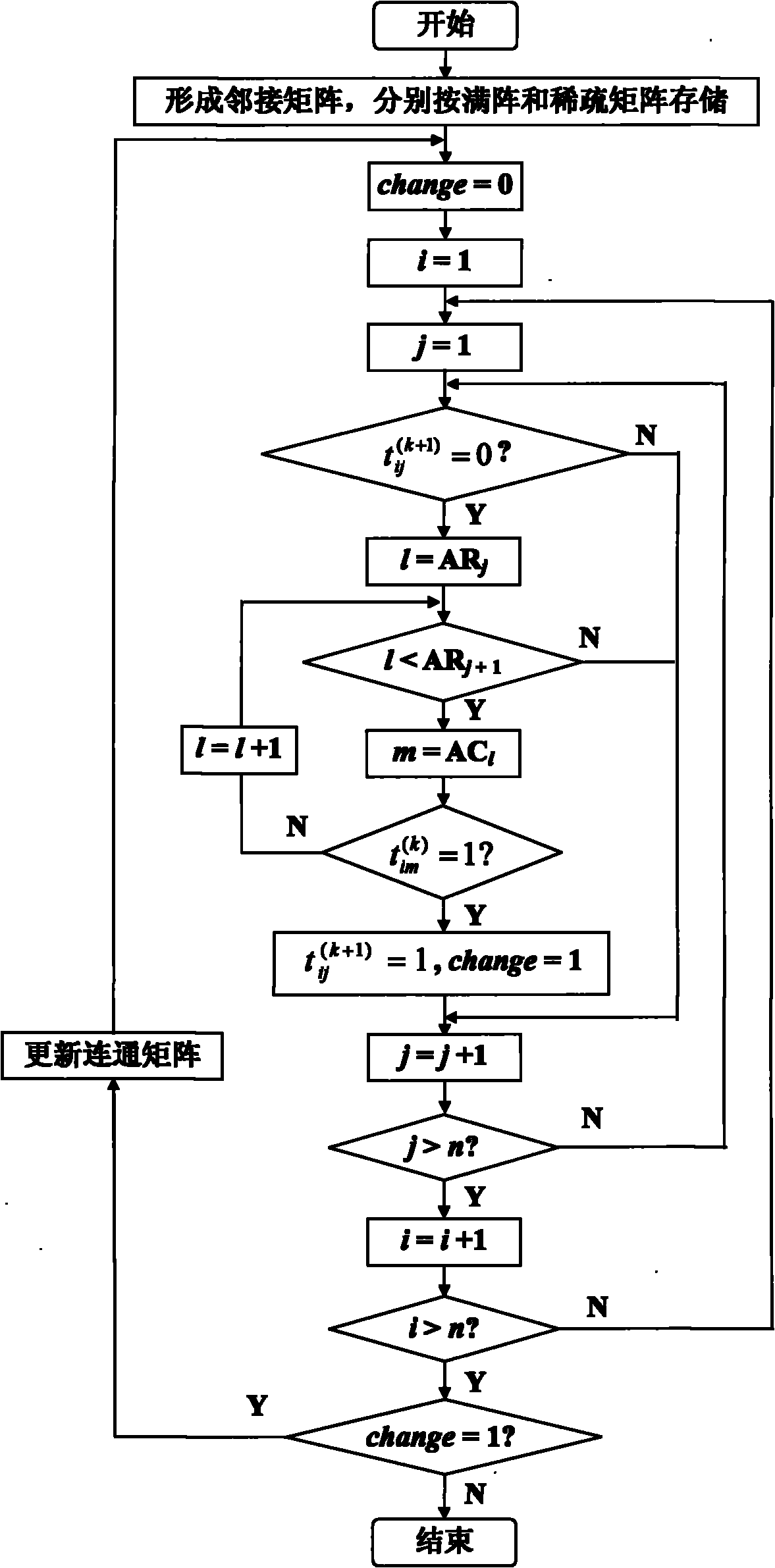

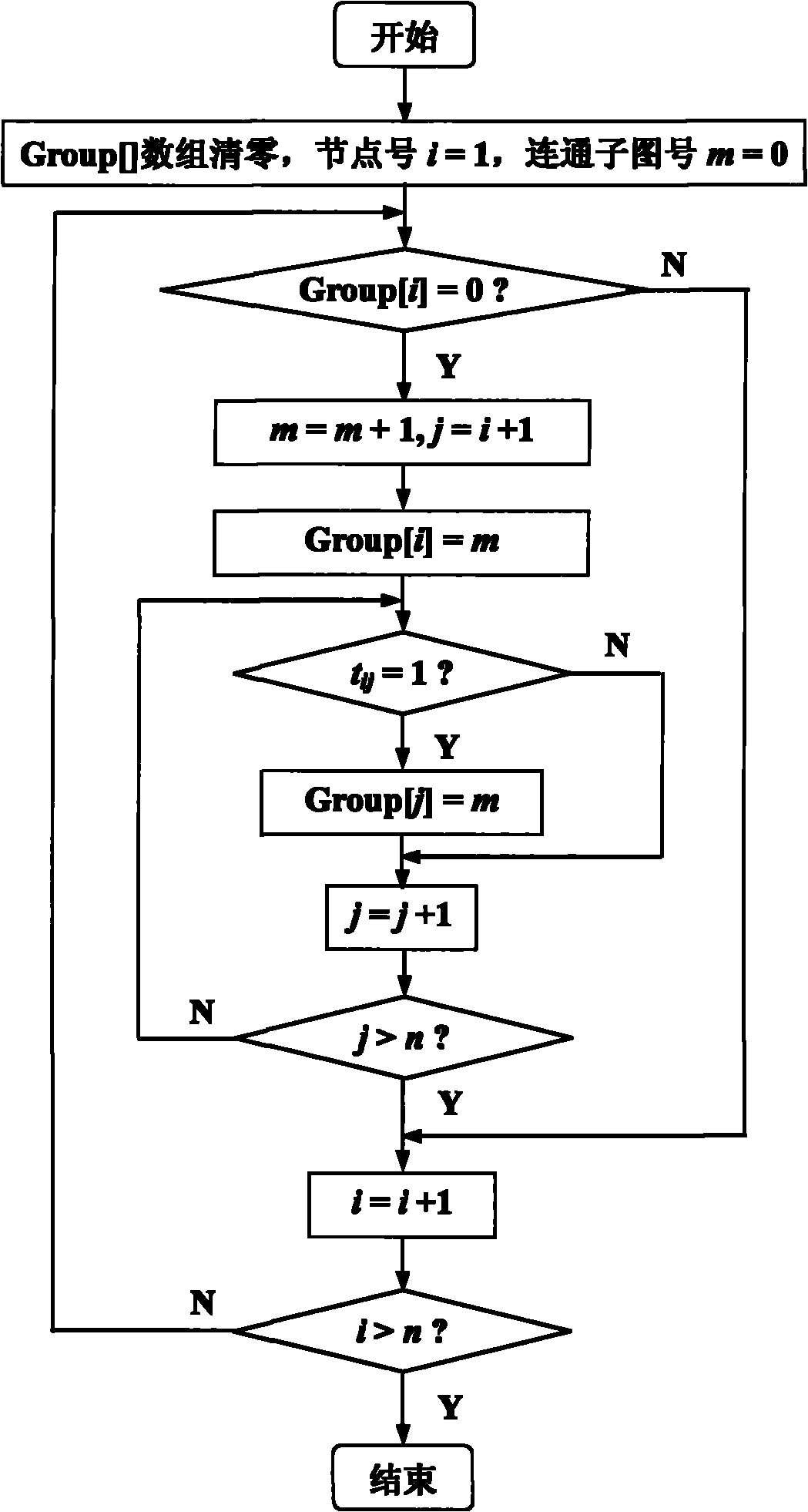

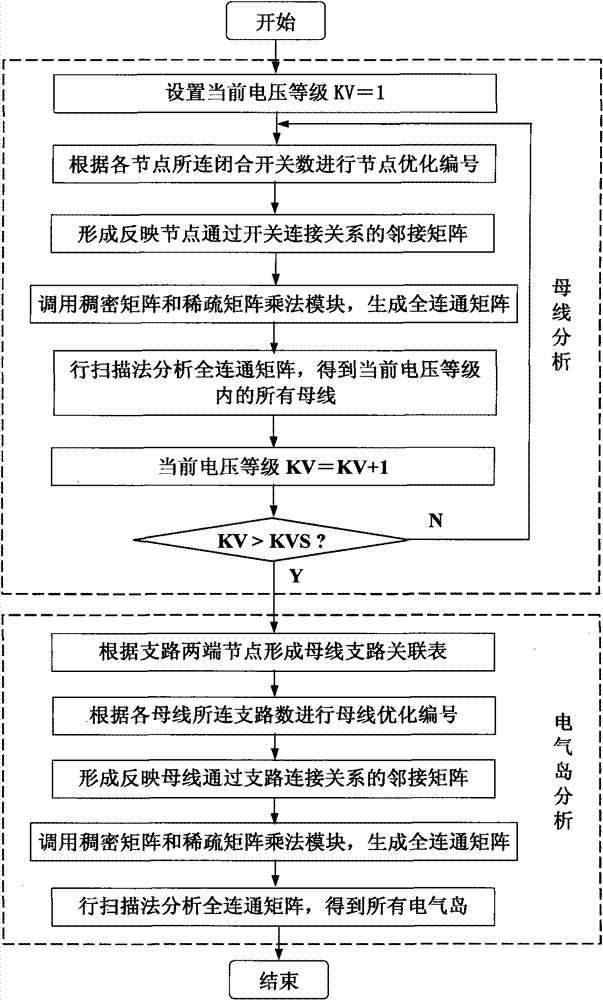

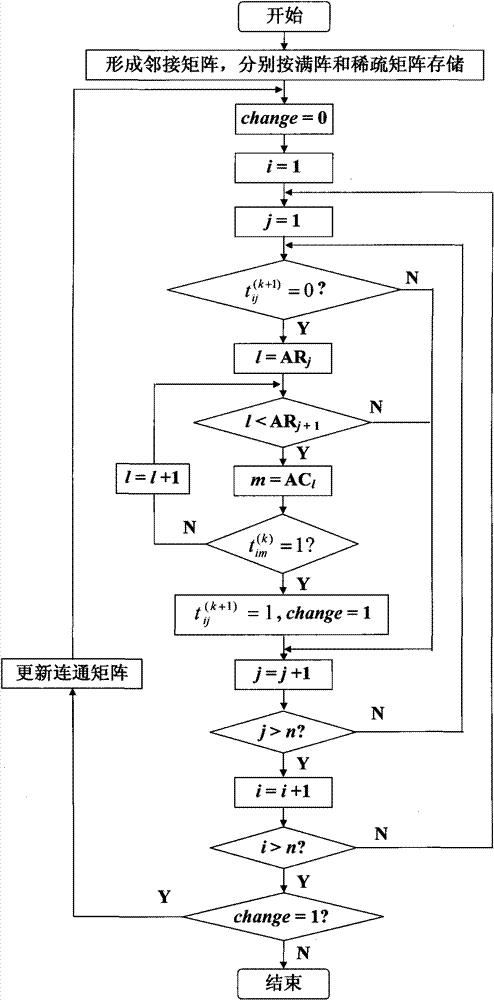

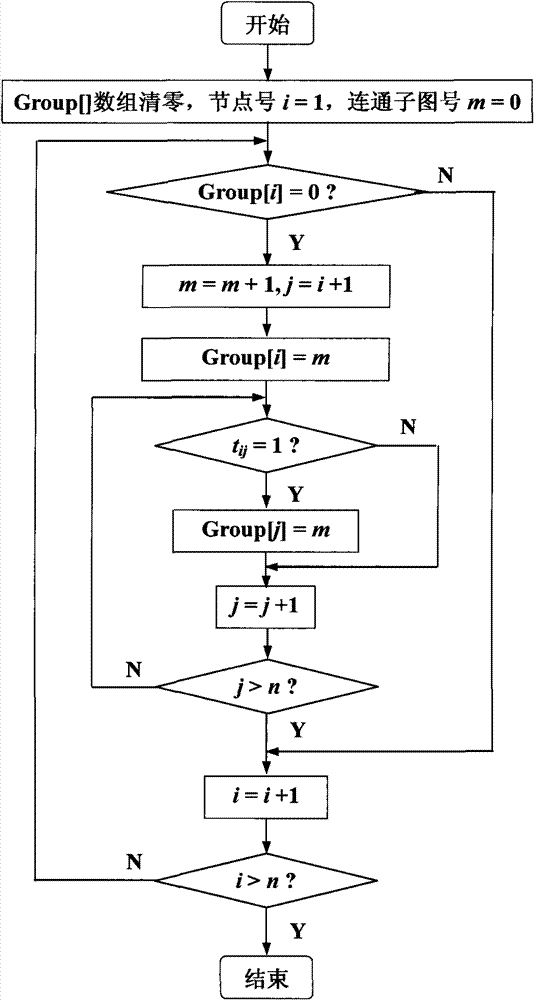

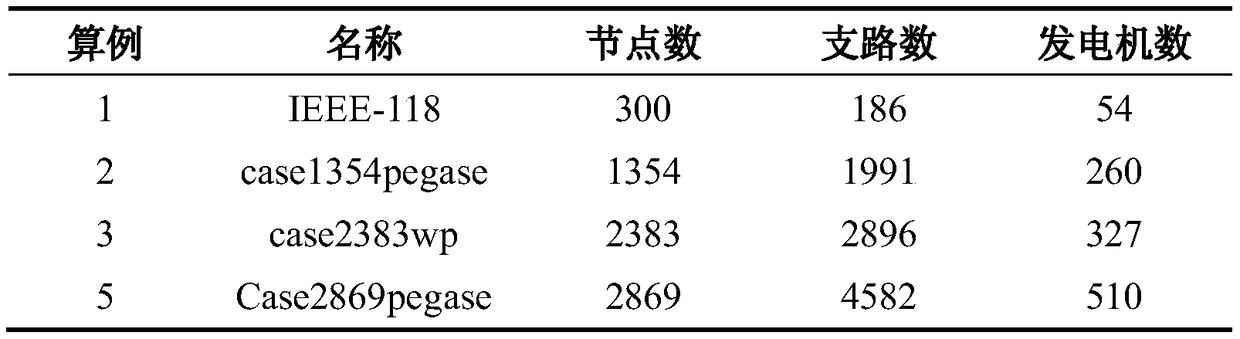

Sparse matrix method-based network topology analysis method for power system

Inactive | CN101976834A | Save memory; Clear concept; Special data processing applications; Information technology support system; Electric power system; Matrix method

The invention discloses a sparse-matrix-based network topology analysis method for a power system. The method applies sparse matrix techniques to the approach of obtaining the full connectivity matrix by repeatedly multiplying the adjacency matrix by itself. It retains the advantages of the matrix method, namely a clear concept and simple programming, while computing faster than the conventional matrix method. Using sparse matrix computation for the product of a full matrix and a sparse matrix greatly speeds up network topology analysis. Nodes are numbered in descending order of the number of closed switches connected to them, and buses in descending order of the number of branches connected to them, which reduces the amount of matrix multiplication. The adjacency matrices are stored in sparse form, saving computer memory.

Owner:DALIAN MARITIME UNIVERSITY
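
Obtaining the full connectivity matrix by self-multiplication of the adjacency matrix can be sketched as Boolean matrix squaring over sparse rows. Assumptions: nodes joined by a closed switch or branch are treated as adjacent, each row is stored as a set of column indices, and the node-ordering optimization is not modelled; the function name is hypothetical.

```python
from typing import Dict, Set

def full_connectivity(adj: Dict[int, Set[int]]) -> Dict[int, Set[int]]:
    """Square the Boolean matrix (I + A) until it stops changing.

    Each row is a set of the columns holding a 1, so only non-zeros are stored.
    Every node that appears as a neighbour must also be a key of adj.
    """
    reach = {u: {u} | set(nbrs) for u, nbrs in adj.items()}  # I + A
    while True:
        # Boolean product R * R: row u is the union of the rows of the nodes u reaches.
        squared = {u: set().union(*(reach[v] for v in row)) for u, row in reach.items()}
        if squared == reach:
            return reach
        reach = squared
```

Nodes whose rows are identical in the result belong to the same electrical island.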

Matrix multiplication parallel computing system based on multi-FPGA

Inactive | CN100449522C | Reduce resource requirements; Improve computing power; Digital computer details; Data switching networks; Binary multiplier; Parallel algorithm

A multi-FPGA parallel matrix multiplication system uses FPGAs as processing units that perform dense matrix multiplication and add a sparse matrix multiplication capability. The units are connected over Ethernet in a star topology to form a master-slave distributed FPGA computing system. Ethernet multicast sends data once to all processing units that need the same data, and a parallel algorithm based on one-dimensional row-wise partitioning of the output matrix carries out the matrix multiplication in parallel, reducing the system's communication overhead.

Owner:ZHEJIANG UNIV

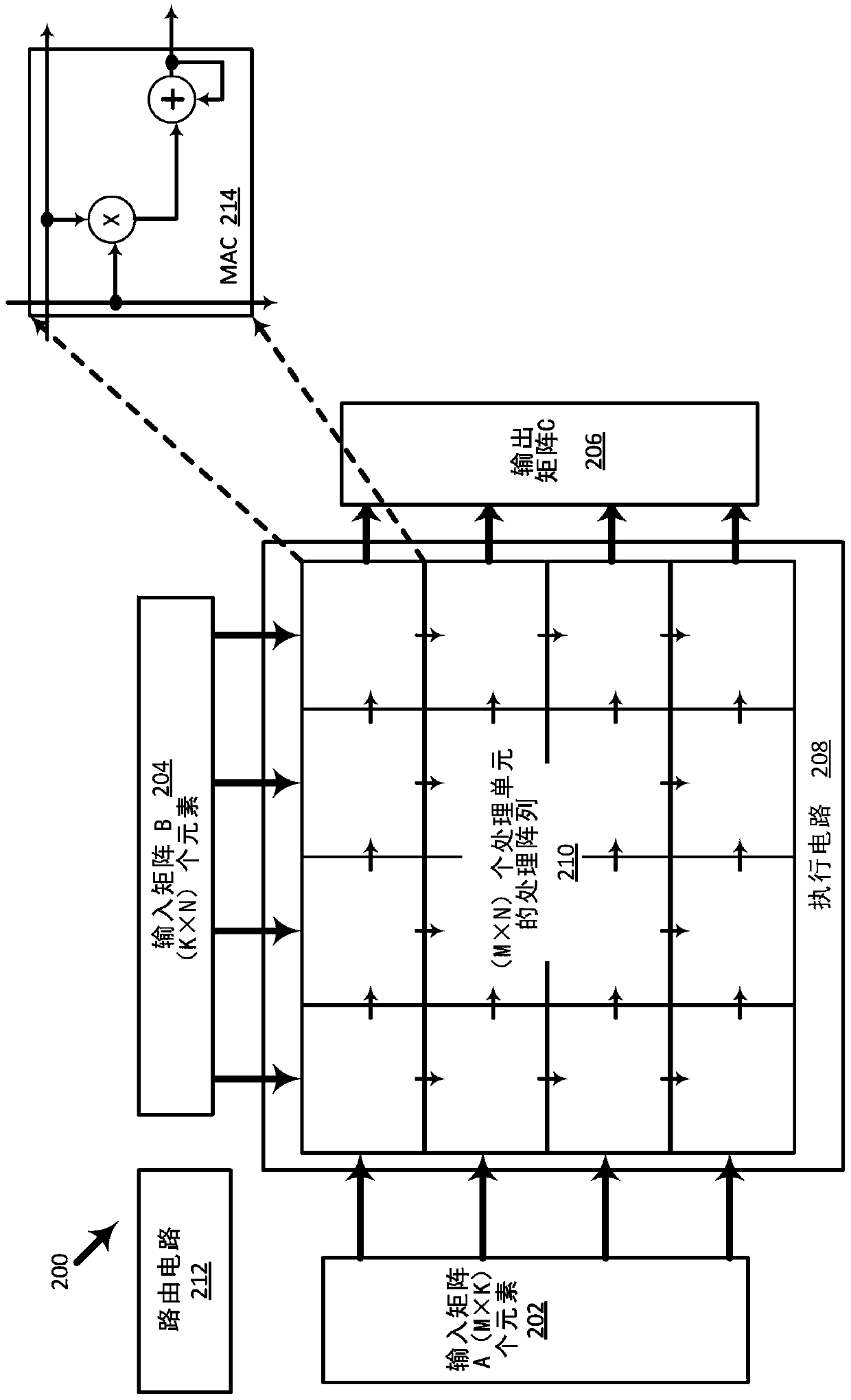

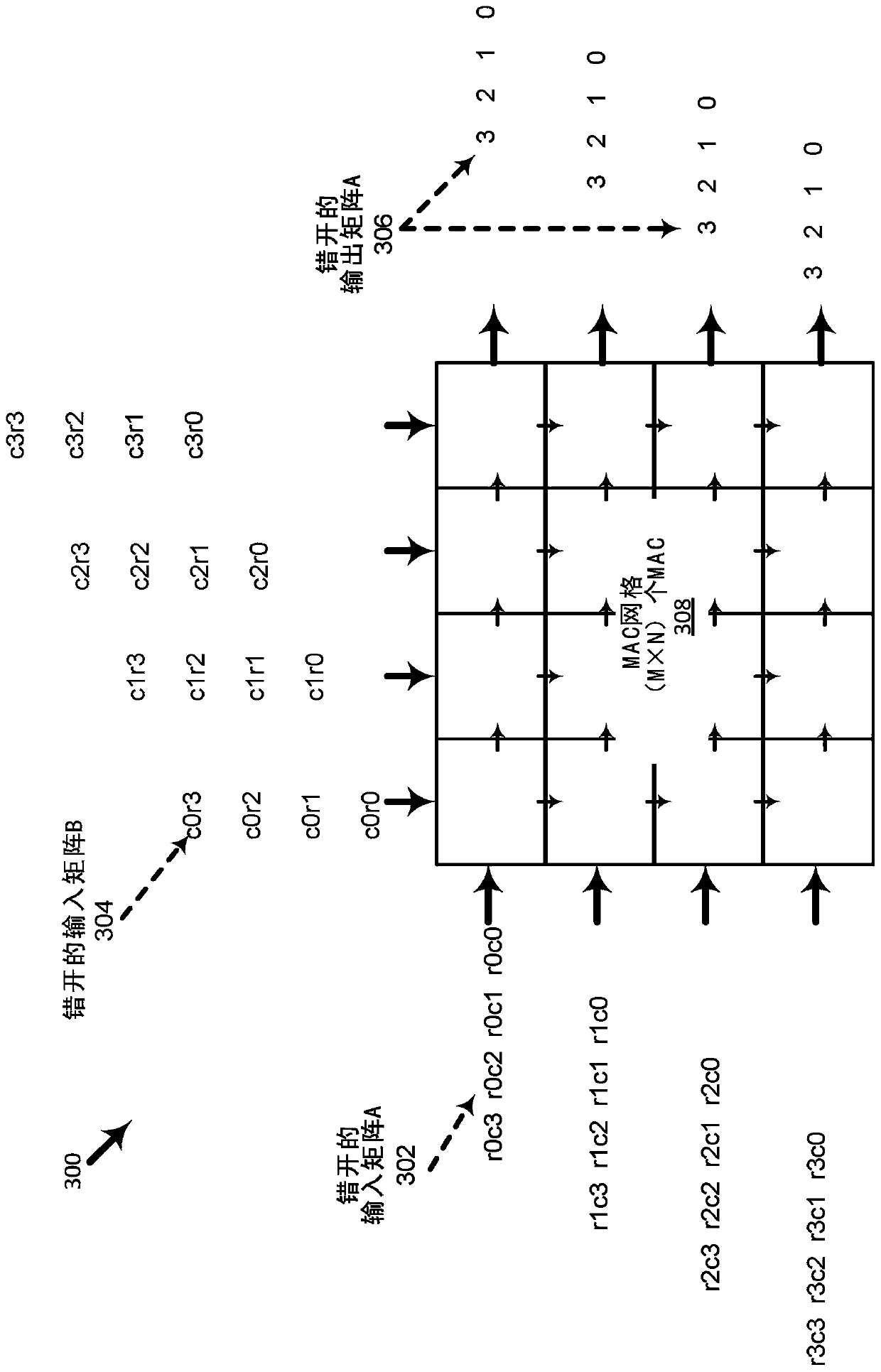

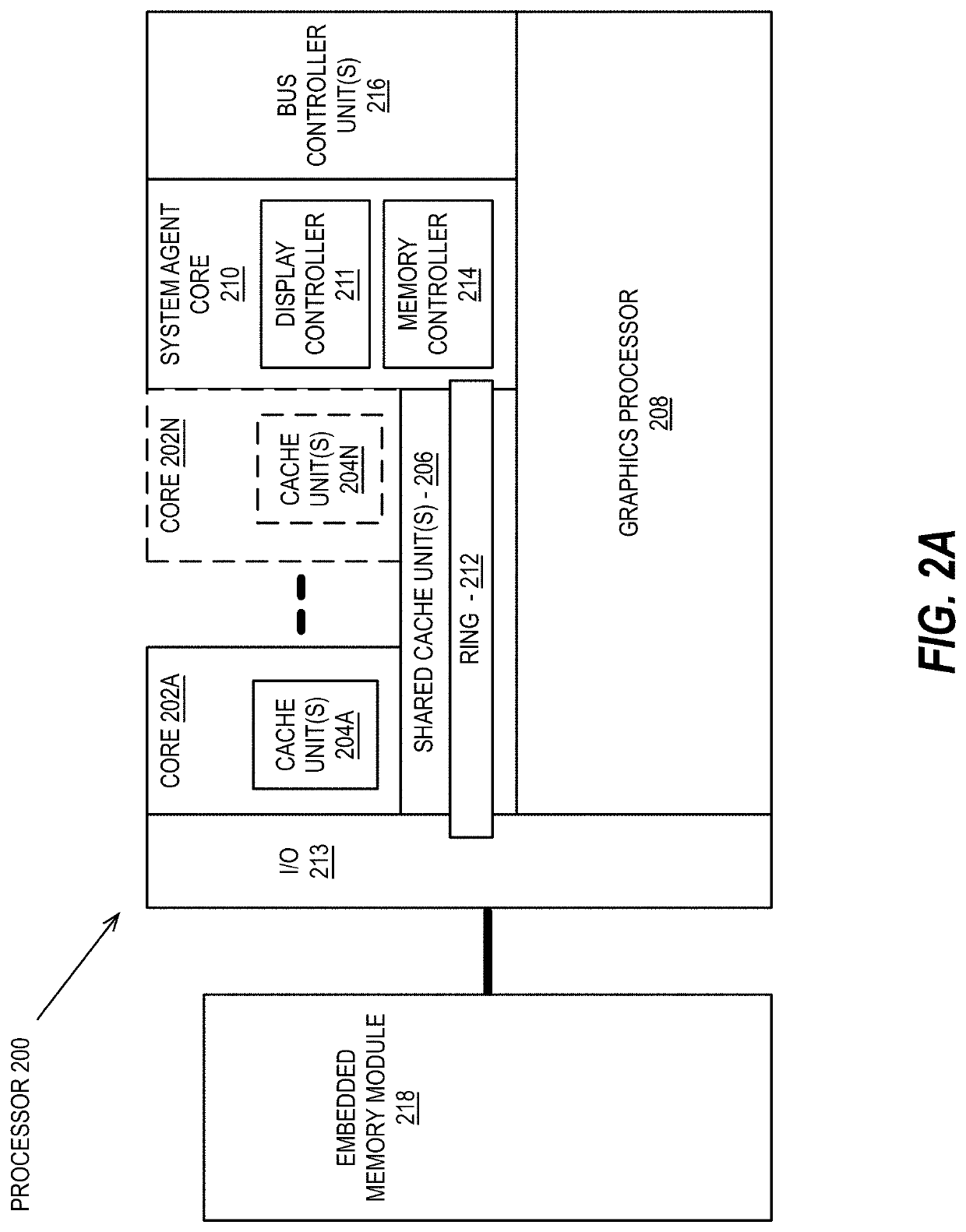

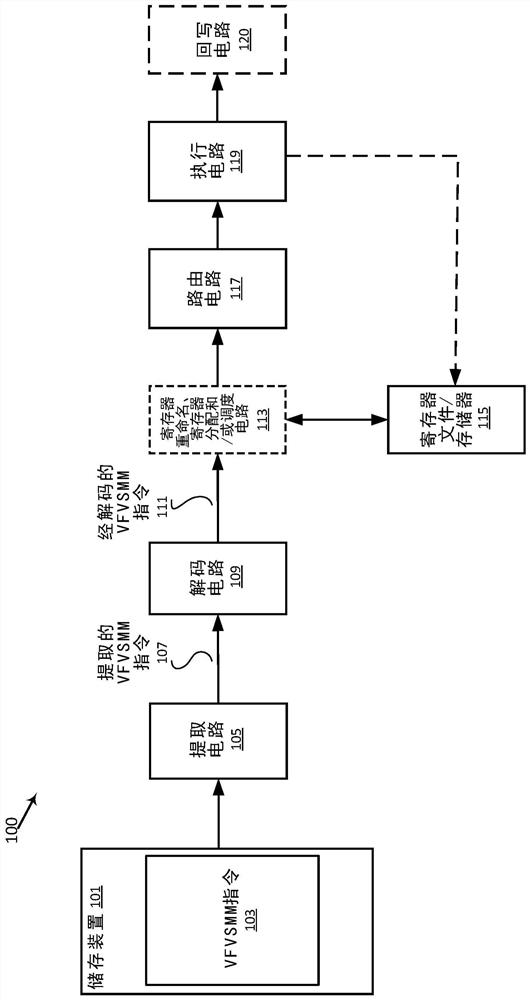

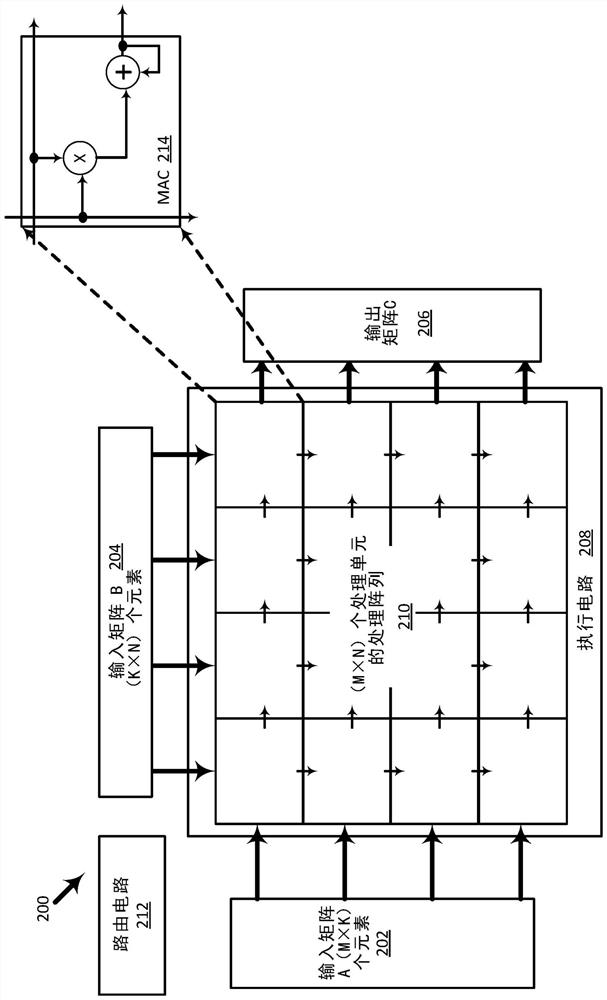

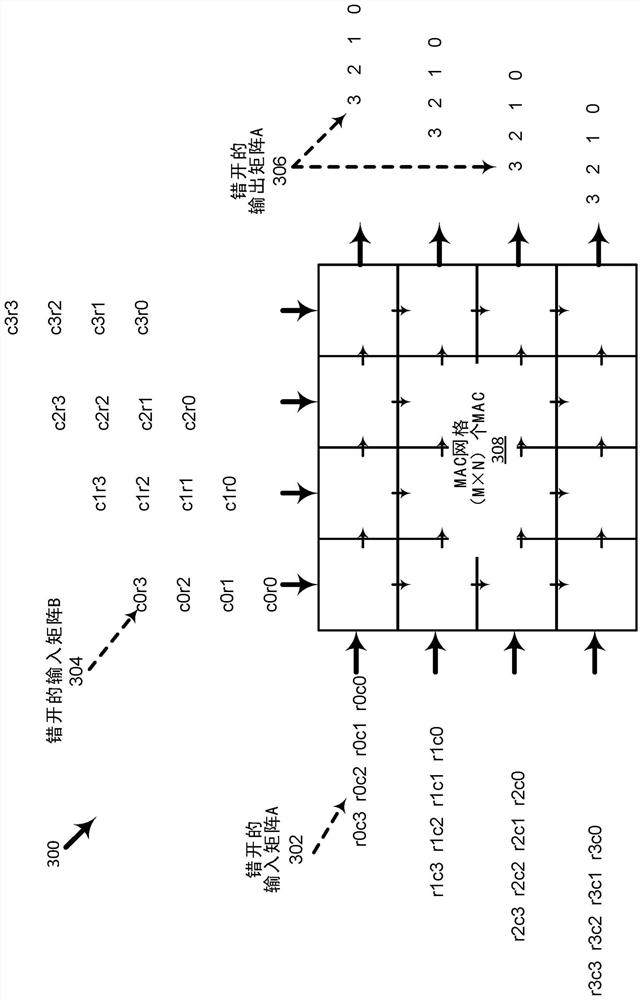

Variable format, variable sparsity matrix multiplication instruction

Pending | CN110580175A | Digital data processing details; Instruction analysis; Parallel computing; Matrix multiplication

Disclosed embodiments relate to a variable format, variable sparsity matrix multiplication (VFVSMM) instruction. In one example, a processor includes fetch and decode circuitry to fetch and decode a VFVSMM instruction specifying the locations of A, B, and C matrices having (M x K), (K x N), and (M x N) elements, respectively, and execution circuitry that, responsive to the decoded VFVSMM instruction, routes each row of the specified A matrix, staggering subsequent rows, into corresponding rows of an (M x N) processing array, and routes each column of the specified B matrix, staggering subsequent columns, into corresponding columns of the processing array. Each processing unit generates K products of A-matrix elements and matching B-matrix elements whose row address equals the column address of the A-matrix element, and accumulates each generated product with a corresponding C-matrix element.

Owner:INTEL CORP
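
The address-matching behaviour of each processing unit can be sketched as a merge of two sorted sparse streams: an A element is multiplied only by the B element whose row address equals its column address, and the product is accumulated into C. This is an illustration under assumptions: the staggered routing and timing are not modelled, dense and sparse ("variable format") inputs are both represented as address/value lists, and the names are hypothetical.

```python
from typing import List, Tuple

Stream = List[Tuple[int, float]]  # (address, value), sorted by address

def pe_accumulate(a_row: Stream, b_col: Stream, c_init: float = 0.0) -> float:
    """One processing unit: multiply A elements by B elements whose row address
    equals the A element's column address, accumulating onto C[i][j]."""
    acc = c_init
    p = q = 0
    while p < len(a_row) and q < len(b_col):
        ka, va = a_row[p]
        kb, vb = b_col[q]
        if ka == kb:      # addresses match: generate and accumulate a product
            acc += va * vb
            p += 1
            q += 1
        elif ka < kb:     # no matching B element for this A element
            p += 1
        else:             # no matching A element for this B element
            q += 1
    return acc

def vfvsmm(a_rows: List[Stream], b_cols: List[Stream], c: List[List[float]]) -> List[List[float]]:
    """C + A*B over an M x N grid of processing units (evaluated sequentially here)."""
    return [[pe_accumulate(a_rows[i], b_cols[j], c[i][j]) for j in range(len(b_cols))]
            for i in range(len(a_rows))]
```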

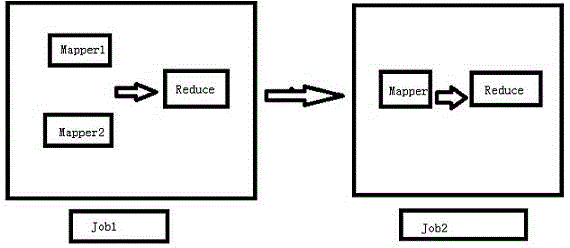

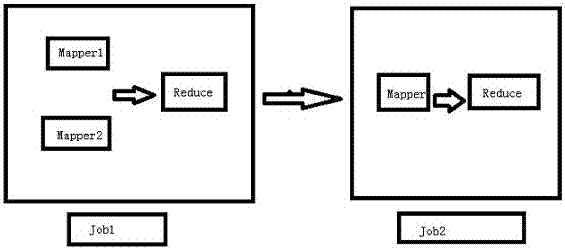

Super-large scale sparse matrix multiplication method based on mapreduce frame

Active | CN104462023A | Reduce performance; Shorten the time; Complex mathematical operations; Algorithm; Coefficient matrix

Disclosed is a super-large-scale sparse matrix multiplication method based on the MapReduce framework. The algorithm is implemented with two MapReduce jobs. The elements of matrices A and B are grouped so that the elements in row i of matrix A and the elements in row k of matrix B belong to the same reduce group, and within each group every element from matrix A is multiplied by every element from matrix B. Because super-large-scale sparse matrix multiplication needs only two MapReduce jobs, the number of algorithm steps and the running time are reduced, and the memory requirement is lowered: a machine only needs enough memory to hold each row of matrix A in a hashmap.

Owner:Alibaba (Beijing) Software Services Co., Ltd.

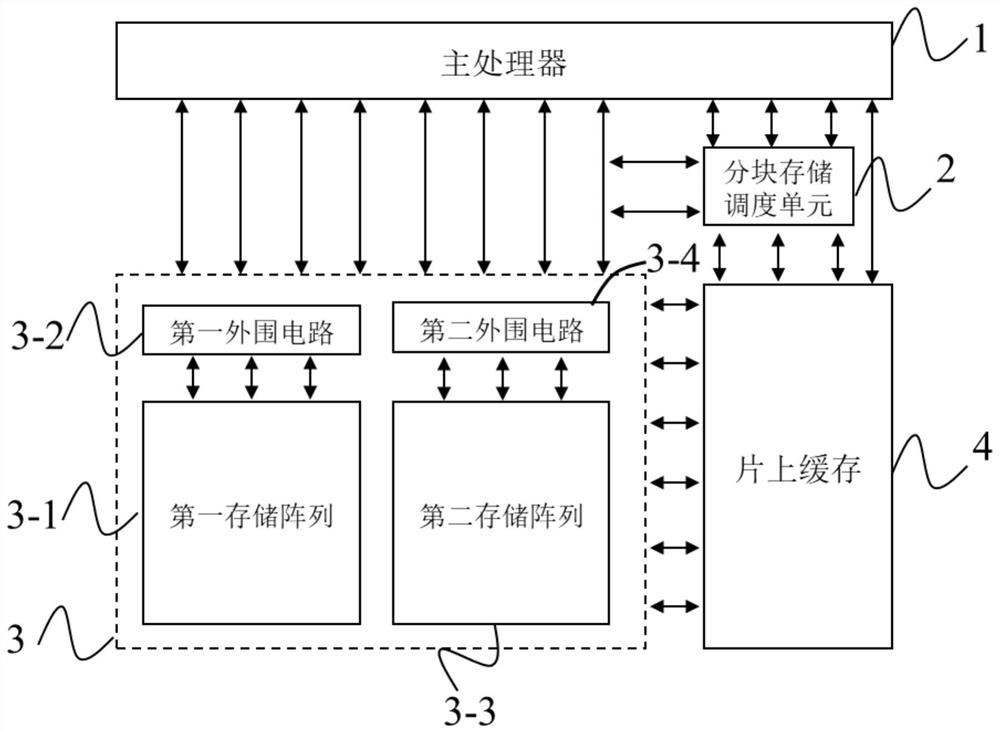

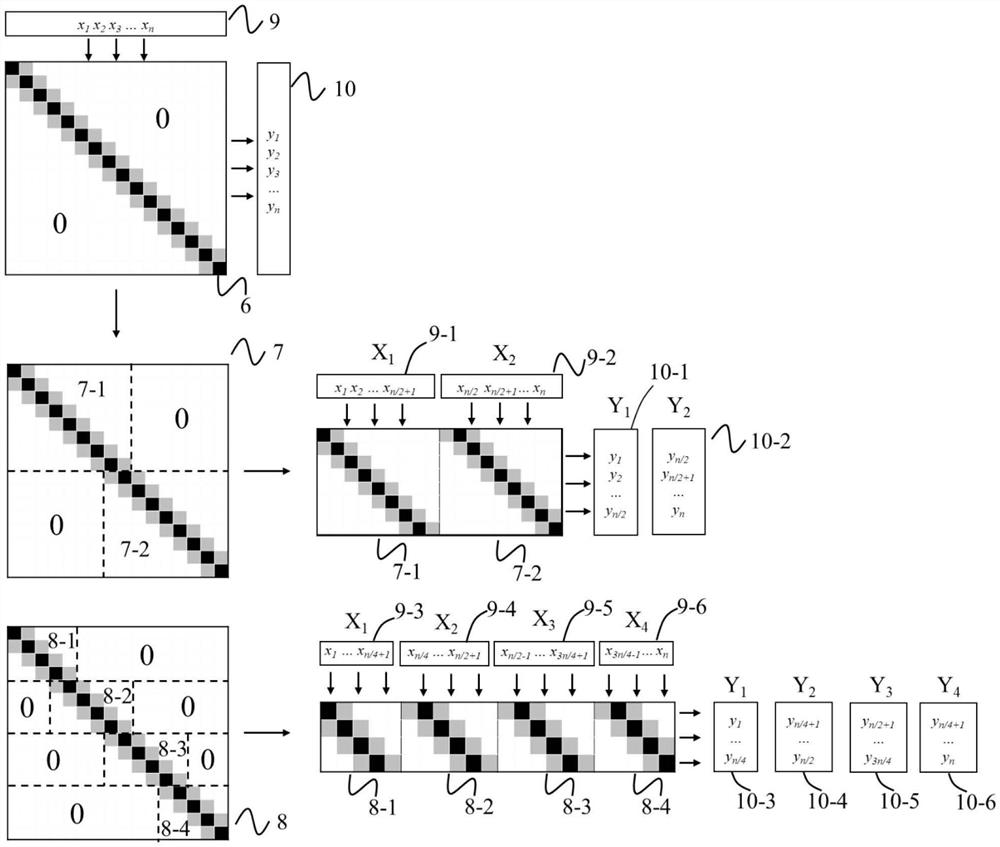

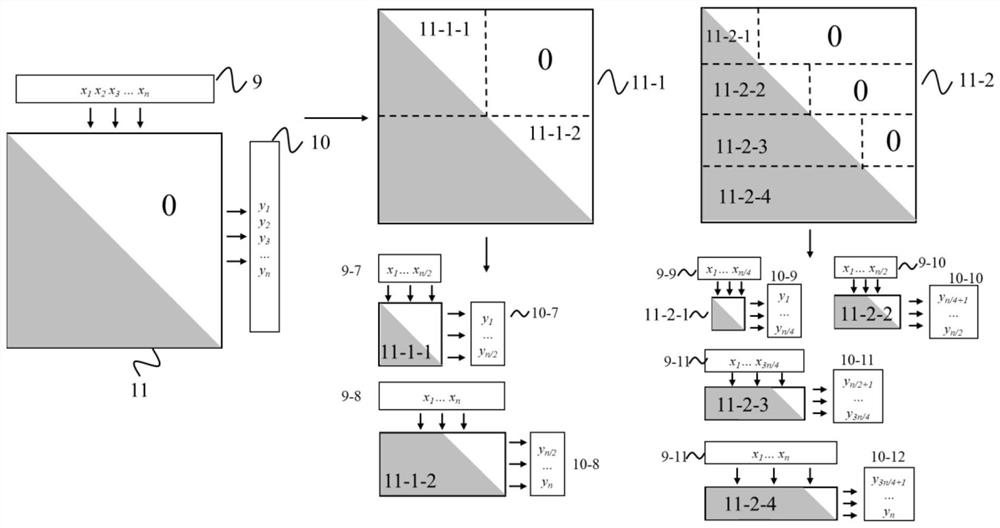

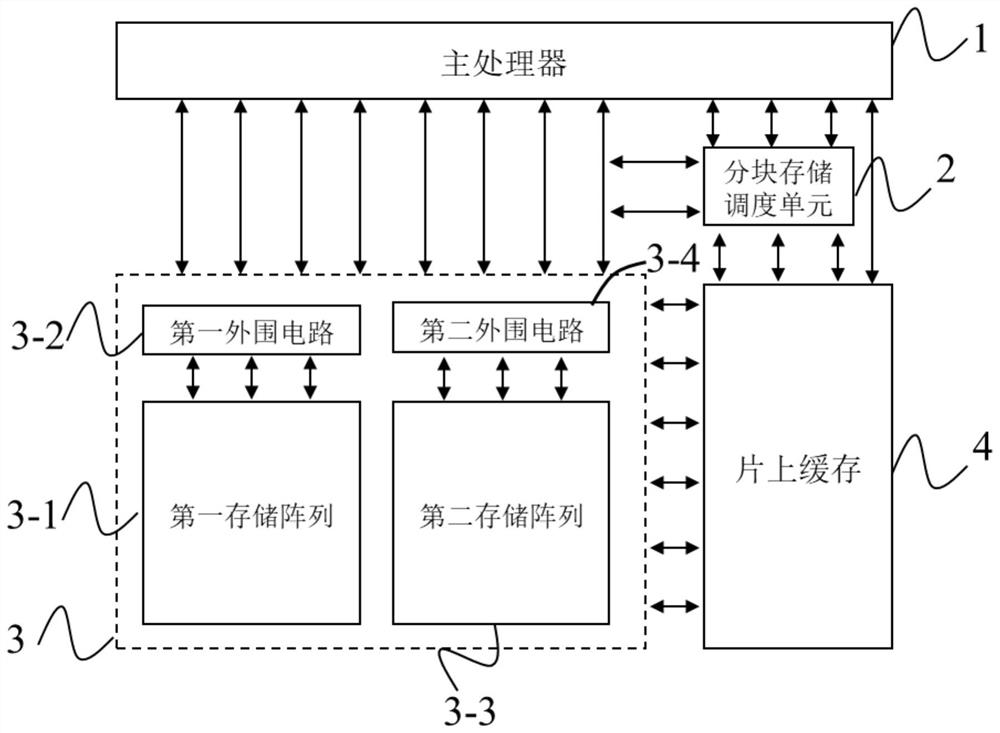

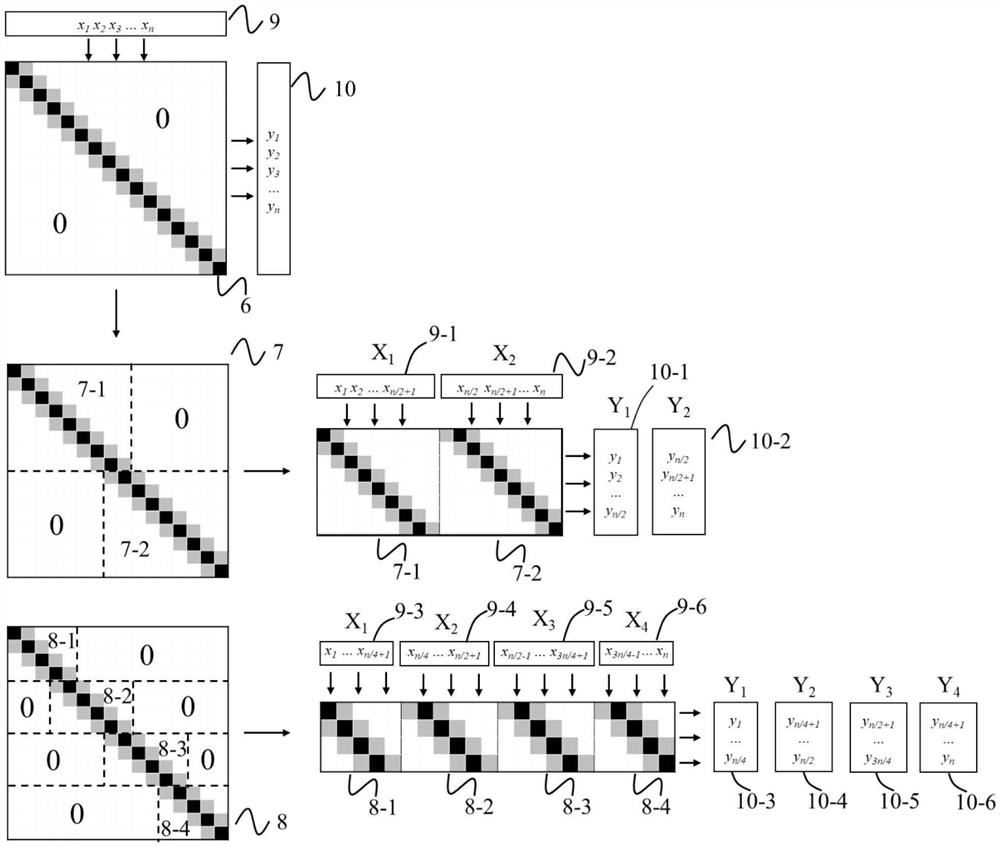

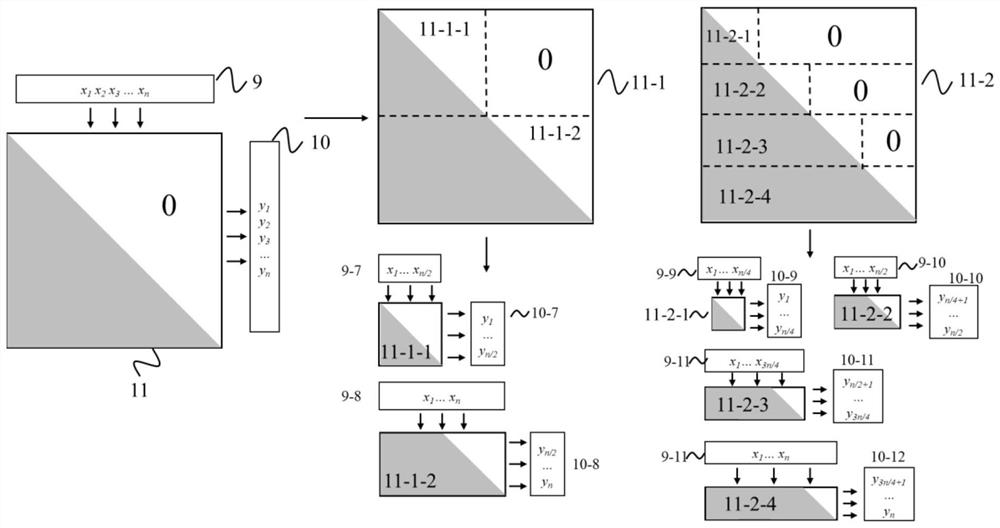

Sparse matrix storage and calculation system and method

The invention provides a sparse matrix storage and calculation system and method in the field of microelectronic devices. The system comprises: a first storage array that stores a coordinate index table of the non-zero elements of a sparse matrix; a second storage array that stores the elements of the sparse matrix and also serves as the in-situ computing kernel for sparse matrix multiplication; a block storage scheduling unit that partitions the sparse matrix into several sub-matrices, stores them in the second storage array in different compression formats, and builds the index table corresponding to the sparse matrix; and a second peripheral circuit that converts the vector into voltage signals and applies them to the bit lines or word lines corresponding to a sub-matrix of the sparse matrix, completing the multiplication of the sparse matrix by the vector.

Owner:HUAZHONG UNIV OF SCI & TECH
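
The block partitioning and index table can be sketched in software. Assumptions not in the abstract: the rule for choosing a compression format (dense storage for blocks at least half full, coordinate lists otherwise, with an arbitrary 0.5 threshold), a Python dictionary as the index table, and ordinary arithmetic in place of the analog voltage-driven array. All-zero sub-matrices are neither stored nor computed. Names are hypothetical.

```python
from typing import Dict, List, Tuple

Block = Tuple[str, object]  # ("dense", 2-D list) or ("coo", [(r, c, v), ...])

def build_blocks(matrix: List[List[float]], bs: int) -> Dict[Tuple[int, int], Block]:
    """Partition into bs x bs sub-matrices; index table maps block position to storage."""
    table: Dict[Tuple[int, int], Block] = {}
    for br in range(0, len(matrix), bs):
        for bc in range(0, len(matrix[0]), bs):
            block = [row[bc:bc + bs] for row in matrix[br:br + bs]]
            coords = [(r, c, v) for r, row in enumerate(block)
                      for c, v in enumerate(row) if v != 0]
            if not coords:
                continue  # all-zero sub-matrix: not stored and never computed
            density = len(coords) / (len(block) * len(block[0]))
            # Hypothetical format rule: dense storage for dense-ish blocks, coordinates otherwise.
            table[(br // bs, bc // bs)] = ("dense", block) if density >= 0.5 else ("coo", coords)
    return table

def blocked_spmv(table: Dict[Tuple[int, int], Block], x: List[float],
                 n_rows: int, bs: int) -> List[float]:
    y = [0] * n_rows
    for (br, bc), (fmt, data) in table.items():
        r0, c0 = br * bs, bc * bs
        if fmt == "dense":
            # Stands in for the analog array: the vector slice drives the whole block at once.
            for r, row in enumerate(data):
                y[r0 + r] += sum(v * x[c0 + c] for c, v in enumerate(row))
        else:
            for r, c, v in data:
                y[r0 + r] += v * x[c0 + c]
    return y
```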

Hardware accelerator for compressed LSTM

Active | US10691996B2 | Easy to operate; Improve forecast; Neural architectures; Physical realisation; Computer hardware; Computational science

A hardware accelerator for compressed Long Short-Term Memory (LSTM) networks is disclosed. The accelerator comprises a sparse matrix-vector multiplication module that multiplies each sparse matrix in the LSTM by its corresponding vector, sequentially producing a plurality of sparse matrix-vector multiplication results. An addition tree module accumulates these results to obtain an accumulated result, and a non-linear operation module passes the accumulated result through an activation function to generate the non-linear operation result. The accelerator uses a pipelined design so that data transfer and computation for the compressed LSTM overlap in time.

Owner:XILINX TECH BEIJING LTD

Adding fields of a video frame

Inactive | US6823006B2 | Easy to implement; Television system scanning details; Picture reproducers using cathode ray tubes; Video processing; Computer science

For some video processing applications, most notably watermark detection (40), it is necessary to add or average (parts of) the two interlaced fields which make up a frame. This operation is not trivial in the MPEG domain due to the existence of frame-encoded DCT blocks. The invention provides a method and arrangement for adding the fields without requiring a frame memory or an on-the-fly inverse DCT. To this end, the mathematically required operations of inverse vertical DCT (321) and addition (322) are combined with a basis transform (323). The basis transform is chosen to be such that the combined operation is physically replaced by multiplication with a sparse matrix (32). Said sparse matrix multiplication can easily be executed on-the-fly. The inverse basis transform (35) is postponed until after the desired addition (33, 34) has been completed.

Owner:KONINK PHILIPS ELECTRONICS NV

Sparse matrix multiplication acceleration mechanism

Active | US11188618B2 | Digital data processing details; Processor architectures/configuration; Computational science; Parallel computing

An apparatus to facilitate acceleration of matrix multiplication operations. The apparatus comprises a systolic array including matrix multiplication hardware to perform multiply-add operations on received matrix data comprising data from a plurality of input matrices and sparse matrix acceleration hardware to detect zero values in the matrix data and perform one or more optimizations on the matrix data to reduce multiply-add operations to be performed by the matrix multiplication hardware.

Owner:INTEL CORP

Sparse matrix method-based network topology analysis method for power system

Inactive | CN101976834B | Clear concept; Easy programming; Special data processing applications; Information technology support system; Algorithm; Matrix method

The invention discloses a sparse-matrix-based network topology analysis method for a power system. The method applies sparse matrix techniques to the approach of obtaining the full connectivity matrix by repeatedly multiplying the adjacency matrix by itself. It retains the advantages of the matrix method, namely a clear concept and simple programming, while computing faster than the conventional matrix method. Using sparse matrix computation for the product of a full matrix and a sparse matrix greatly speeds up network topology analysis. Nodes are numbered in descending order of the number of closed switches connected to them, and buses in descending order of the number of branches connected to them, which reduces the amount of matrix multiplication. The adjacency matrices are stored in sparse form, saving computer memory.

Owner:DALIAN MARITIME UNIVERSITY

Sparse matrix multiplication acceleration mechanism

Active | US20220171827A1 | Digital data processing details; Processor architectures/configuration; Computational science; Data pack

An apparatus to facilitate acceleration of matrix multiplication operations. The apparatus comprises a systolic array including matrix multiplication hardware to perform multiply-add operations on received matrix data comprising data from a plurality of input matrices and sparse matrix acceleration hardware to detect zero values in the matrix data and perform one or more optimizations on the matrix data to reduce multiply-add operations to be performed by the matrix multiplication hardware.

Owner:INTEL CORP

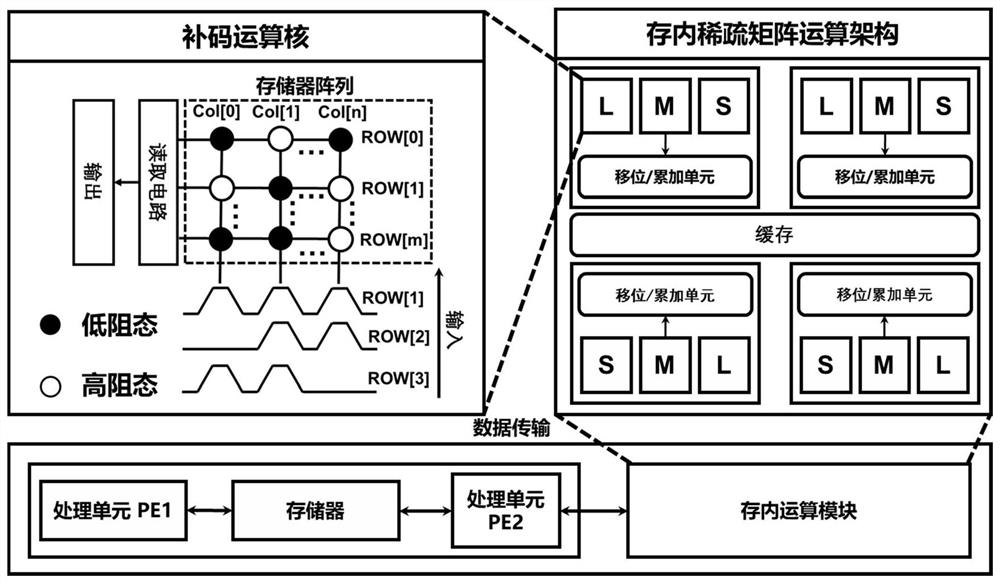

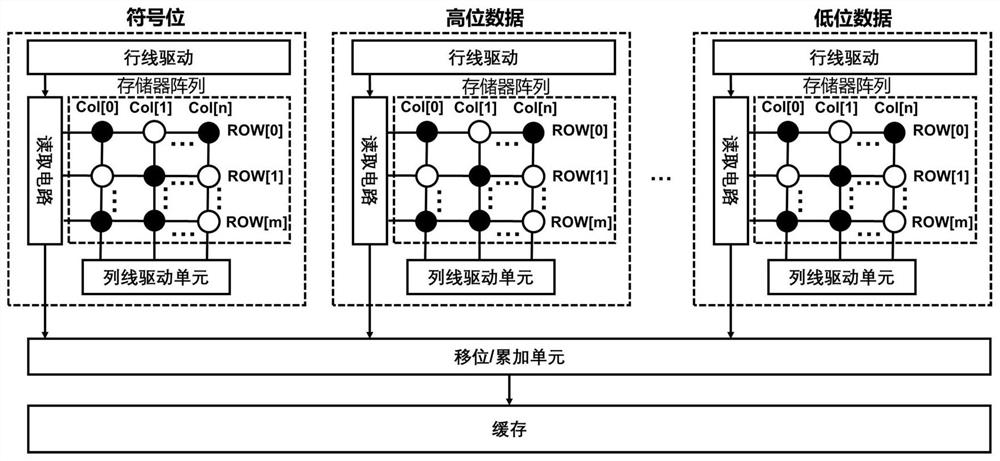

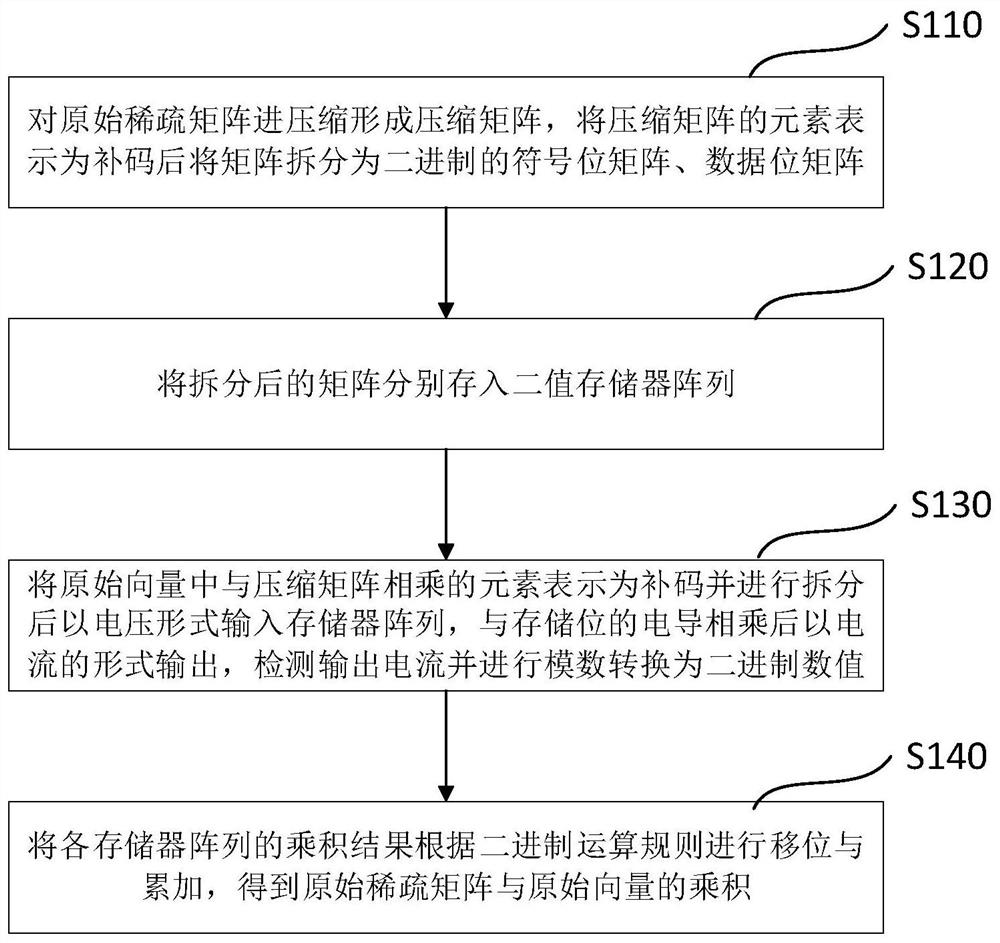

In-memory sparse matrix multiplication operation method, equation solving method and solver

Active | CN113870918A | Reduce power consumption; Save storage space; Digital data processing details; Digital storage; Computational science; Sign bit

The invention discloses an in-memory sparse matrix multiplication method, an equation solving method, and a solver. The multiplication method compresses the original sparse matrix into a compressed matrix, represents the elements of the compressed matrix in two's complement, and splits it into a binary sign-bit matrix and a binary data-bit matrix, each of which is stored in a binary memory array. The elements of the original vector that multiply the compressed matrix are likewise represented in two's complement and split; they are applied to the memory arrays as voltages, multiplied by the conductances of the storage cells, and output as currents, which are detected and converted from analog to digital to obtain binary values. The products from each memory array are then shifted and accumulated according to binary arithmetic rules to obtain the product of the original sparse matrix and the original vector. This method reduces storage space and therefore circuit power consumption, avoids low conductance values, and reduces computation error.

Owner:HUAZHONG UNIV OF SCI & TECH
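
The sign-bit/data-bit split and the final shift-and-accumulate can be checked with integer bit-slicing. In this sketch each bit plane of the matrix plays the role of one binary memory array and each bit plane of the vector one input pass; the sign bit carries a negative weight, as in two's complement. Assumptions: the compression step is omitted (the matrix is taken as already compressed), a bitwise count replaces the analog current and A/D conversion, and the names are hypothetical.

```python
from typing import List

def to_bits(value: int, width: int) -> List[int]:
    """Two's-complement bits, least significant first; bit width-1 is the sign bit."""
    u = value & ((1 << width) - 1)
    return [(u >> b) & 1 for b in range(width)]

def bit_weight(b: int, width: int) -> int:
    """Weight of bit b in two's complement: the sign bit counts negatively."""
    return -(1 << b) if b == width - 1 else (1 << b)

def bit_sliced_matvec(matrix: List[List[int]], vec: List[int], width: int) -> List[int]:
    m_bits = [[to_bits(v, width) for v in row] for row in matrix]
    x_bits = [to_bits(v, width) for v in vec]
    result = [0] * len(matrix)
    for mb in range(width):          # one binary array per matrix bit plane
        for xb in range(width):      # one binary input pass per vector bit plane
            weight = bit_weight(mb, width) * bit_weight(xb, width)  # shift amount and sign
            for r, row in enumerate(m_bits):
                # Binary dot product: the quantity an array output current would encode.
                count = sum(row[c][mb] & x_bits[c][xb] for c in range(len(vec)))
                result[r] += weight * count
    return result
```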

Variable format, variable sparsity matrix multiplication instruction

Pending | CN112099852A | Digital data processing details; Instruction analysis; Programming language; Parallel computing

Owner:INTEL CORP

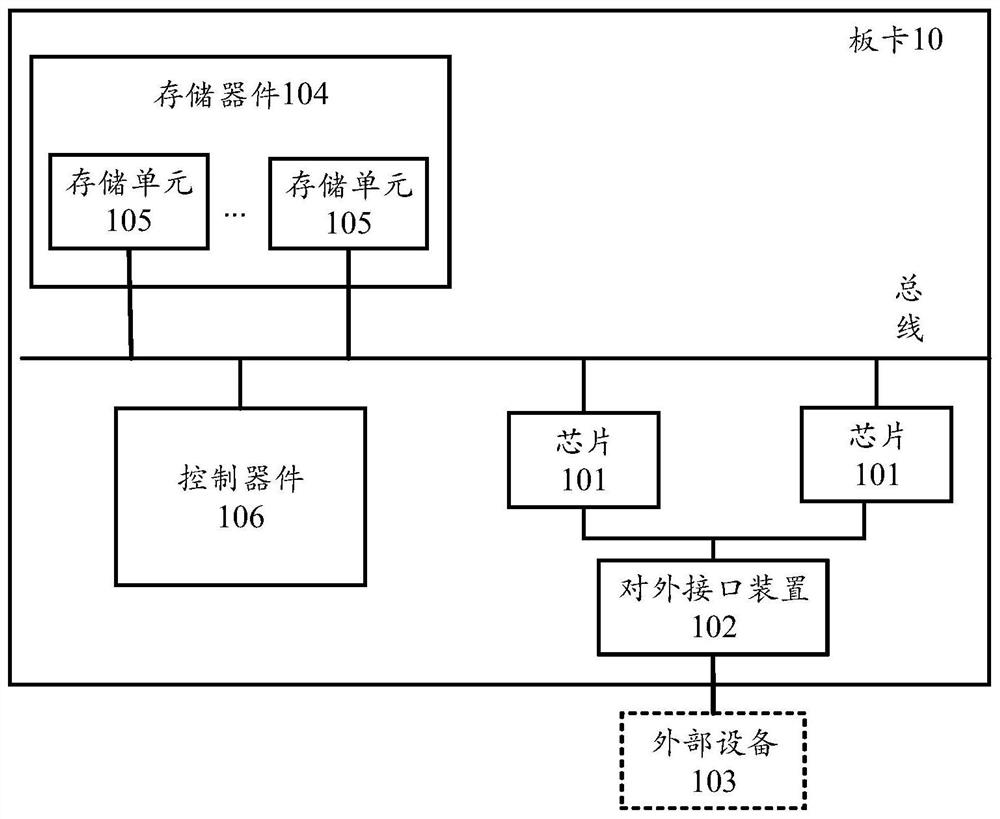

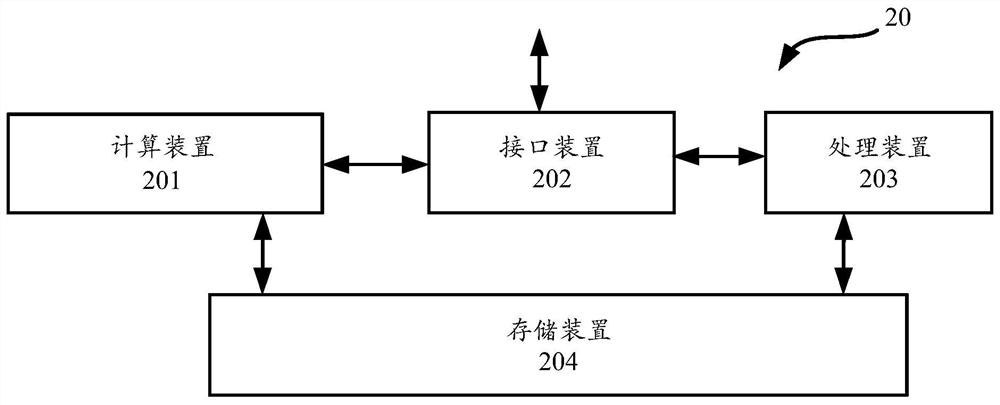

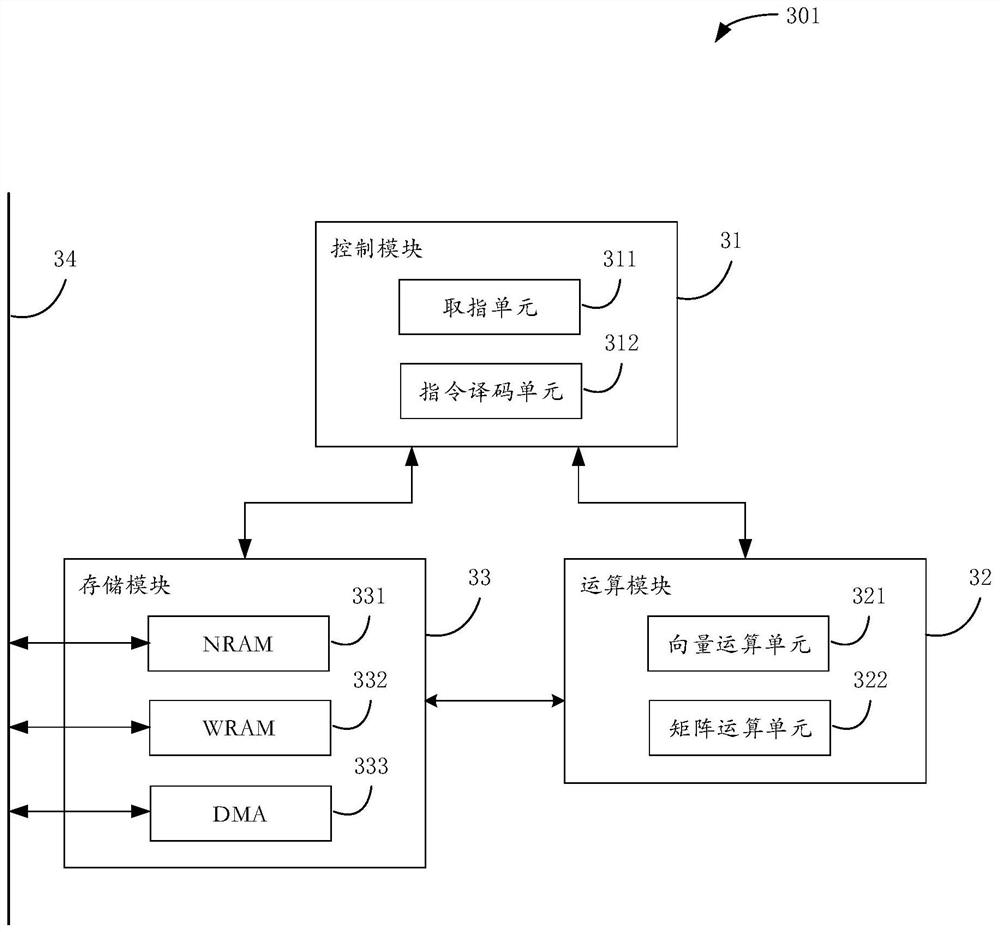

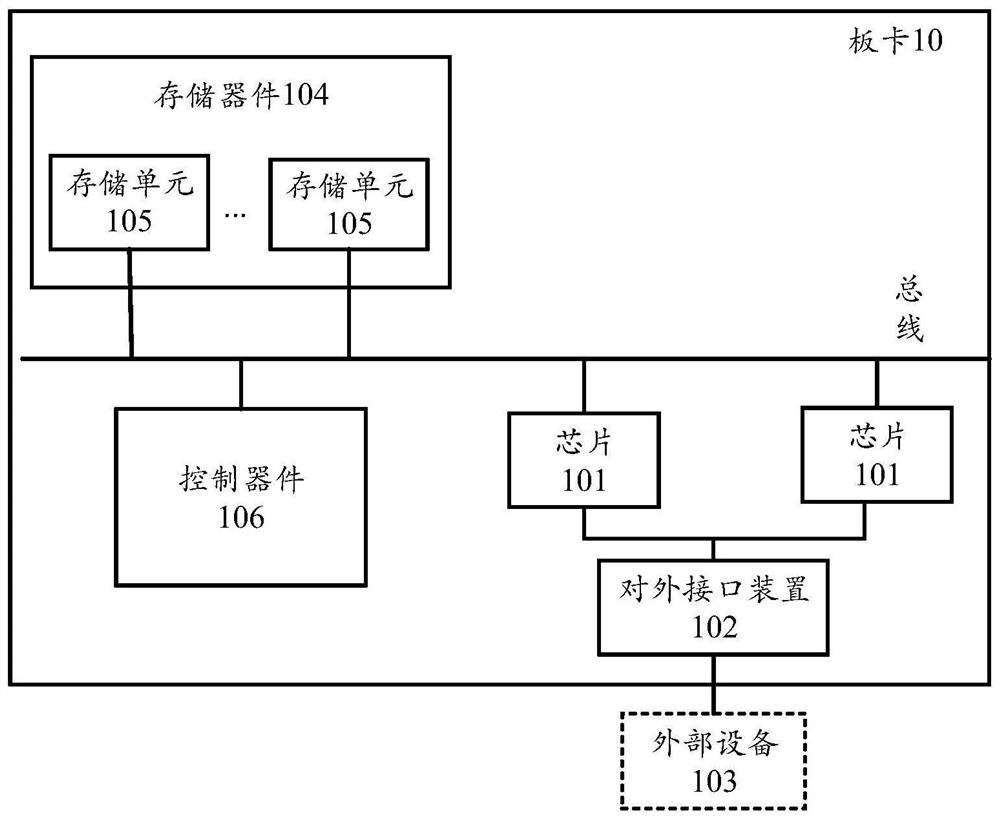

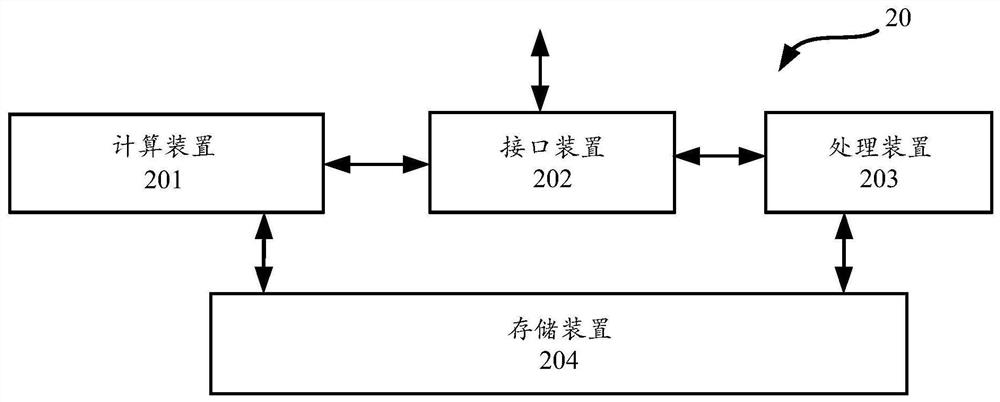

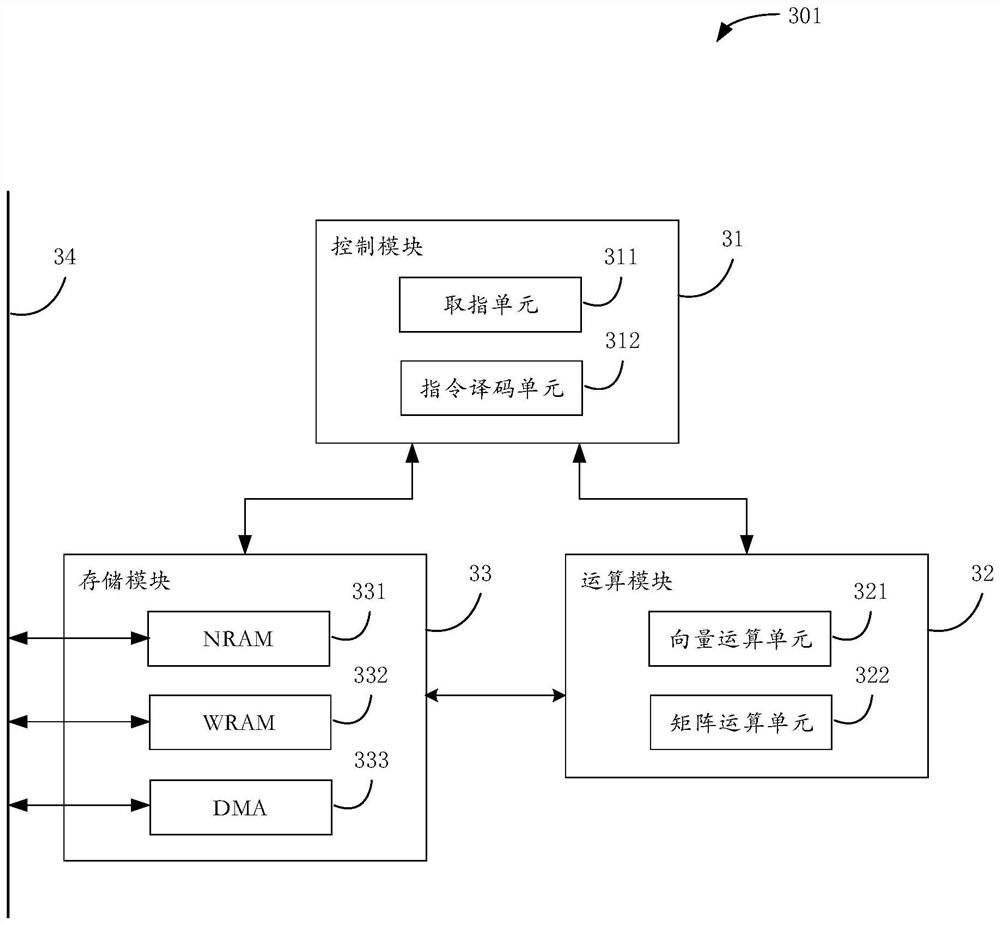

Matrix multiplication circuit and method and related product

Pending | CN114691083A | Improve processing efficiency; Reduce operational complexity; Digital data processing details; Physical realisation; Computer hardware; Computer architecture

The invention discloses a matrix multiplication circuit, a method of performing sparse matrix multiplication with the circuit, and related products. The matrix multiplication circuit may be implemented as a computing device within a combined processing device, which may also include an interface device and other processing devices. The computing device interacts with the other processing devices to jointly complete computing operations specified by a user. The combined processing device may further comprise a storage device connected to the computing device and the other processing devices and used to store their data. The disclosed scheme provides a circuit that supports sparse matrix multiplication, simplifying processing and improving the machine's processing efficiency.

Owner:ANHUI CAMBRICON INFORMATION TECH CO LTD

A method for multiplication of super-large-scale sparse matrix based on mapreduce framework

Active | CN104462023B | Reduce performance; Shorten the time; Complex mathematical operations; Frame based; Algorithm

A large-scale sparse matrix multiplication method based on the MapReduce framework. The algorithm is completed with two MapReduce jobs, and the elements of matrices A and B are grouped so that the elements of column i of matrix A and the elements of row k of matrix B enter the same reduce group, where each element from A is multiplied by each element from B in that group. The invention needs only two MapReduce jobs to multiply super-large-scale sparse matrices, reducing the algorithm's steps and running time. It also lowers the machine memory requirement, since the machine only needs to store each row of matrix A in a hashmap.

Owner:Alibaba (Beijing) Software Services Co., Ltd.

A sparse matrix storage and calculation system and method

The invention provides a sparse matrix storage and calculation system and method in the field of microelectronic devices. The system includes: a first storage array that stores the coordinate index table of the non-zero elements of the sparse matrix; a second storage array that stores the elements of the sparse matrix and also serves as the in-situ computing core for sparse matrix multiplication; a block storage scheduling unit that partitions the sparse matrix into several sub-matrices, stores each sub-matrix in the second storage array in a suitable compression format, and establishes the index table corresponding to the sparse matrix; and a second peripheral circuit that converts the vector into voltage signals and applies them to the bit lines or word lines corresponding to a sub-matrix of the sparse matrix, completing the multiplication of the sparse matrix by the vector.

Owner:HUAZHONG UNIV OF SCI & TECH

Matrix multiplication circuit and method and related product

Pending | CN114692074A | Improve processing efficiency; Reduce operational complexity; Complex mathematical operations; Computer hardware; Computer architecture

The invention discloses a matrix multiplication circuit, a method of performing sparse matrix multiplication with the circuit, and related products. The matrix multiplication circuit may be implemented as a computing device within a combined processing device, which may also include an interface device and other processing devices. The computing device interacts with the other processing devices to jointly complete computing operations specified by a user. The combined processing device may further comprise a storage device connected to the computing device and the other processing devices and used to store their data. The disclosed scheme provides a circuit that supports sparse matrix multiplication, simplifying processing and improving the machine's processing efficiency.

Owner:ANHUI CAMBRICON INFORMATION TECH CO LTD

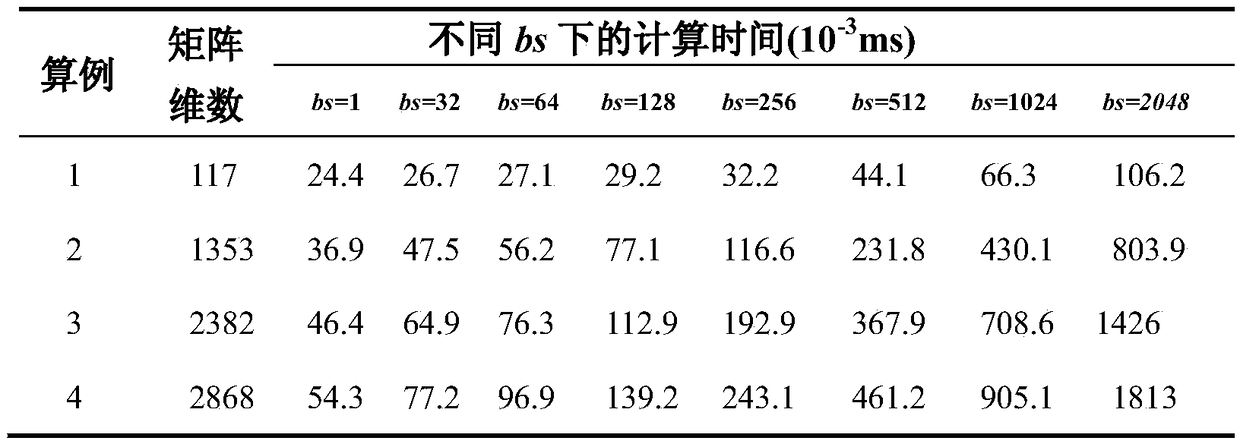

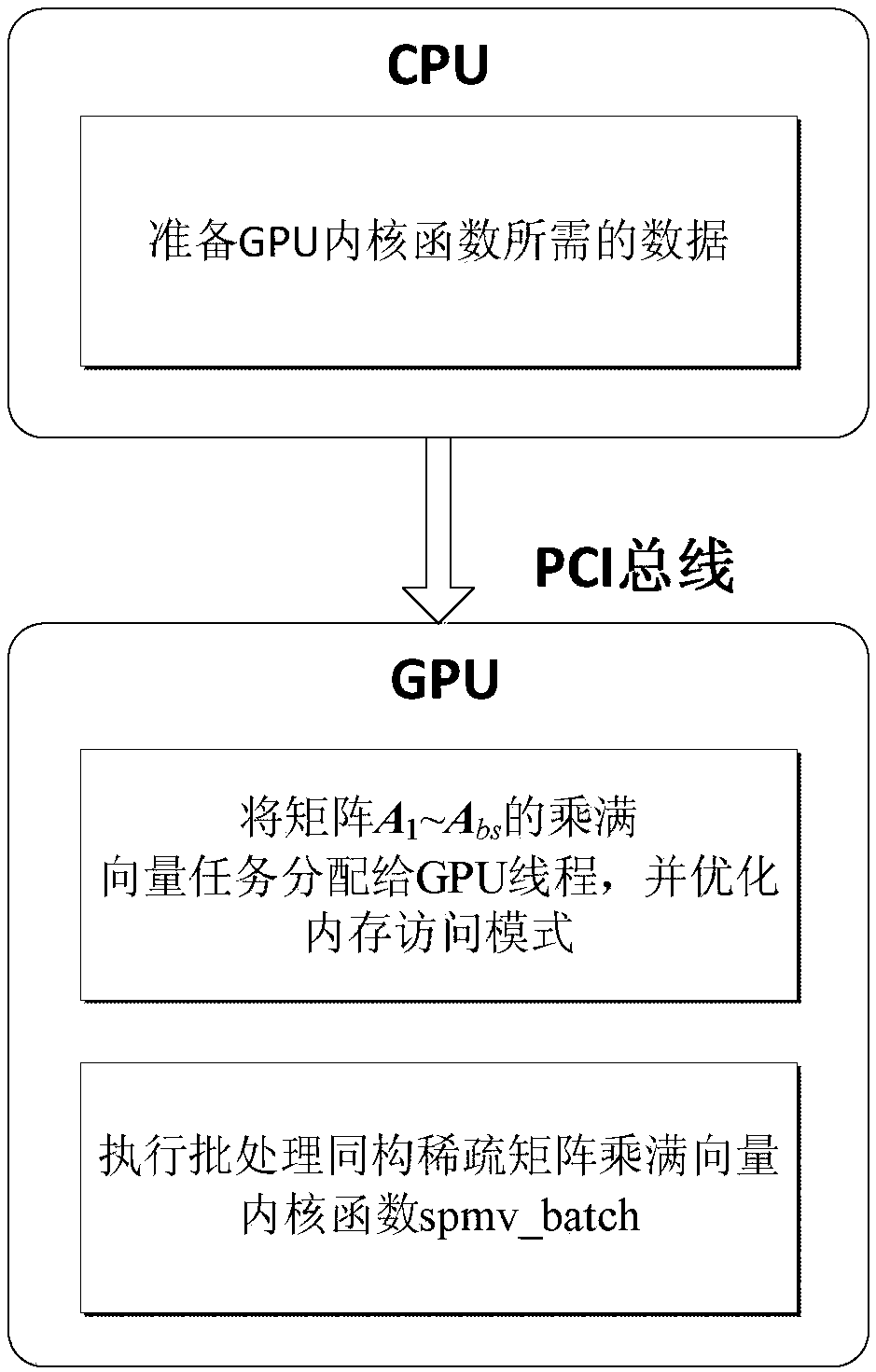

A GPU-accelerated batch processing method for multiplying full vectors by homogeneous sparse matrices

Active | CN106407158B | Improve parallelism; Improve efficiency; Processor architectures/configuration; Complex mathematical operations; Batch processing; Data transmission

The invention discloses a GPU-accelerated method for batch multiplication of isomorphic sparse matrices (matrices that share the same sparsity pattern) by dense vectors. The method comprises the following steps: (1) the CPU stores all matrices A_1 through A_bs in compressed sparse row (CSR) format; (2) the CPU transfers the data required by the GPU kernel function to the GPU; (3) the matrix-vector multiplication tasks for A_1 through A_bs are assigned to GPU threads, and the memory access pattern is optimized; and (4) the GPU executes the batch kernel function spmv_batch, which computes the isomorphic sparse matrix-dense vector products in parallel. In this method, the CPU controls the overall program flow and prepares the data, while the GPU performs the compute-intensive matrix-vector multiplication. Batch processing increases algorithmic parallelism and memory access efficiency, greatly reducing the time needed to multiply a batch of sparse matrices by dense vectors.

Owner:SOUTHEAST UNIV
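
Because isomorphic matrices share one sparsity pattern, the CSR row pointers and column indices can be stored once for the whole batch, with only the value arrays differing per matrix. The following CPU reference sketch borrows the kernel name spmv_batch from the abstract but is not the patented GPU kernel: the mapping of one (matrix, row) pair to one thread is an assumption, shown here as nested loops, and the memory-access optimization is not modelled.

```python
from typing import List

def spmv_batch(row_ptr: List[int], col_idx: List[int],
               values: List[List[float]], vectors: List[List[float]]) -> List[List[float]]:
    """y_b = A_b x_b for a batch of matrices sharing one CSR sparsity pattern.

    row_ptr and col_idx are stored once; values[b] holds the non-zeros of A_b
    in the same order as col_idx.
    """
    n_rows = len(row_ptr) - 1
    out = [[0.0] * n_rows for _ in values]
    # On a GPU, each (b, r) pair could be handled by its own thread.
    for b, (vals, x) in enumerate(zip(values, vectors)):
        for r in range(n_rows):
            acc = 0.0
            for p in range(row_ptr[r], row_ptr[r + 1]):
                acc += vals[p] * x[col_idx[p]]
            out[b][r] = acc
    return out
```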

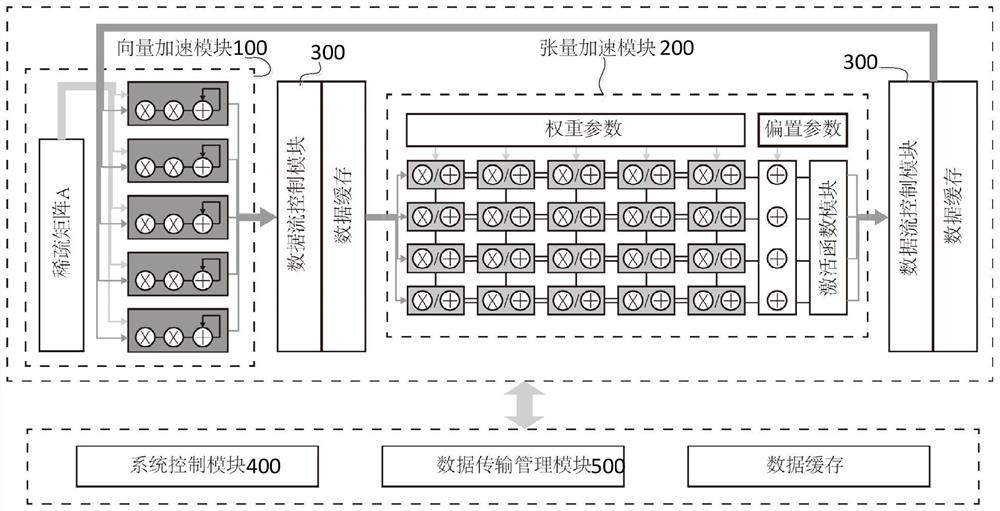

FPGA-based spatio-temporal graph neural network accelerator structure

Pending | CN114548391A | Acceleration and efficiency; Easy to handle; Neural architectures; Physical realisation; Activation function; Data stream

The invention discloses an FPGA-based spatio-temporal graph neural network accelerator structure with a parallel processing scheme built from two acceleration modules, one for tensors and one for vectors. The vector acceleration module performs sparse matrix multiplication or element-wise multiply-add operations, and the tensor acceleration module performs dense matrix multiplication, bias addition, and various activation functions. A system control module directs the tensor and vector acceleration modules to carry out their computations according to a preset computation time sequence, and a data flow control module controls the direction of data flow between the two modules so that computation proceeds in a loop. The overall architecture efficiently processes three-dimensional spatio-temporal graph neural networks and computes multiple functions in a simple way. It can accelerate multiple neural network types, so it is backward compatible with the acceleration of models such as graph convolutional networks (GCN) and gated recurrent units (GRU), giving it greater generality.

Owner:National Supercomputing Center in Shenzhen (Shenzhen Cloud Computing Center)
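
The division of labour between the two modules can be illustrated with a single graph-convolution layer: a sparse adjacency matrix multiplies the node features (the vector module's sparse matrix multiplication), then a dense weight multiplication, bias addition, and activation follow (the tensor module's work). The layer form ReLU(A·H·W + b), the dictionary-of-rows adjacency, and the names are assumptions for illustration; the accelerator's time sequence and data flow control are not modelled.

```python
from typing import Dict, List

def sparse_aggregate(adj: Dict[int, Dict[int, float]], h: List[List[float]]) -> List[List[float]]:
    """Vector-module role: sparse adjacency times dense node features, A @ H."""
    width = len(h[0])
    out = [[0.0] * width for _ in h]
    for i, row in adj.items():
        for j, a_ij in row.items():  # only stored (non-zero) edges are visited
            for f in range(width):
                out[i][f] += a_ij * h[j][f]
    return out

def dense_transform(h: List[List[float]], w: List[List[float]],
                    bias: List[float]) -> List[List[float]]:
    """Tensor-module role: dense H @ W, bias addition, then a ReLU activation."""
    return [[max(0.0, sum(h_row[k] * w[k][o] for k in range(len(w))) + bias[o])
             for o in range(len(bias))] for h_row in h]

def gcn_layer(adj: Dict[int, Dict[int, float]], h: List[List[float]],
              w: List[List[float]], bias: List[float]) -> List[List[float]]:
    return dense_transform(sparse_aggregate(adj, h), w, bias)
```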

© 2025 PatSnap. All rights reserved.