43 patent results for "Memory replacement"

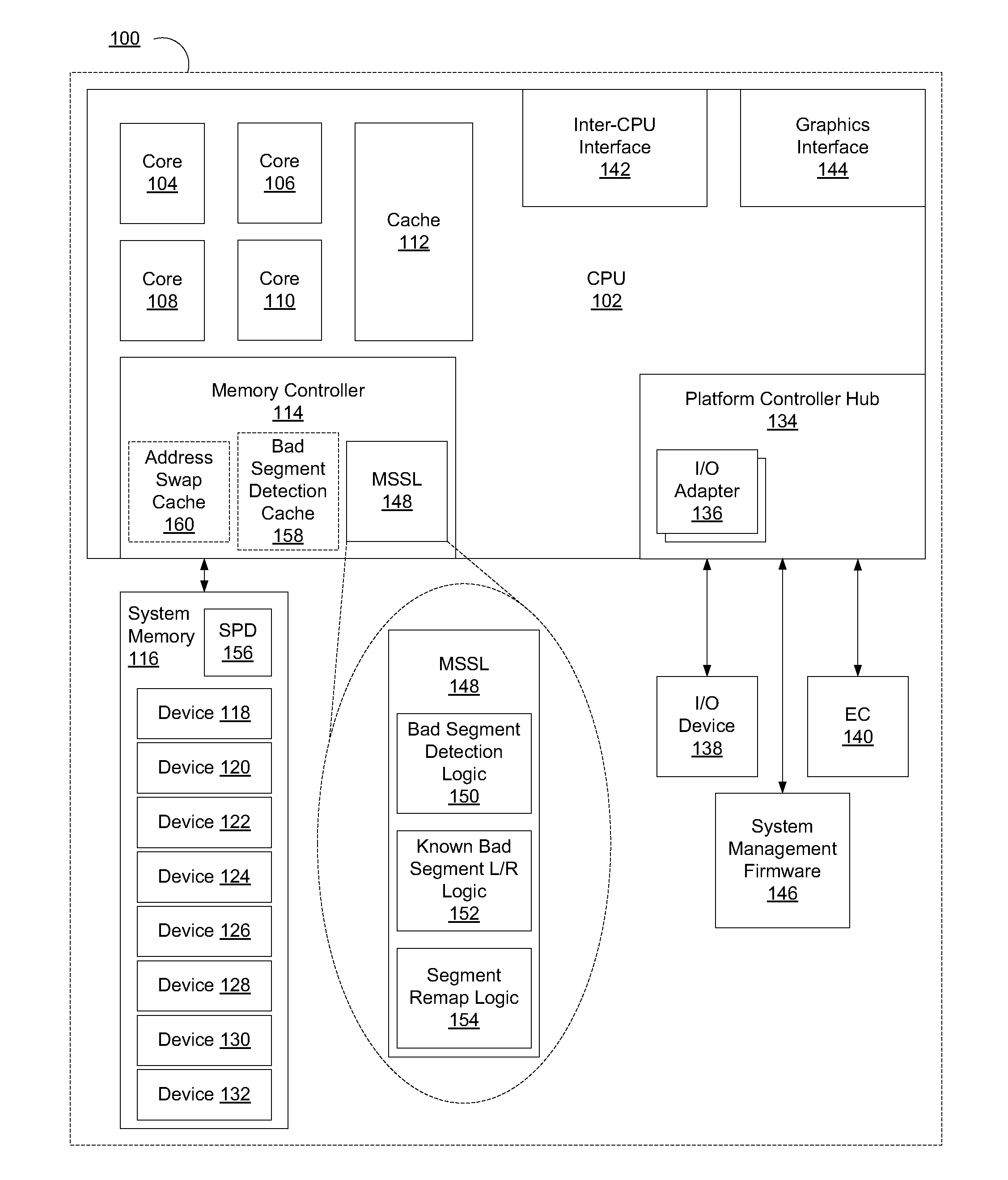

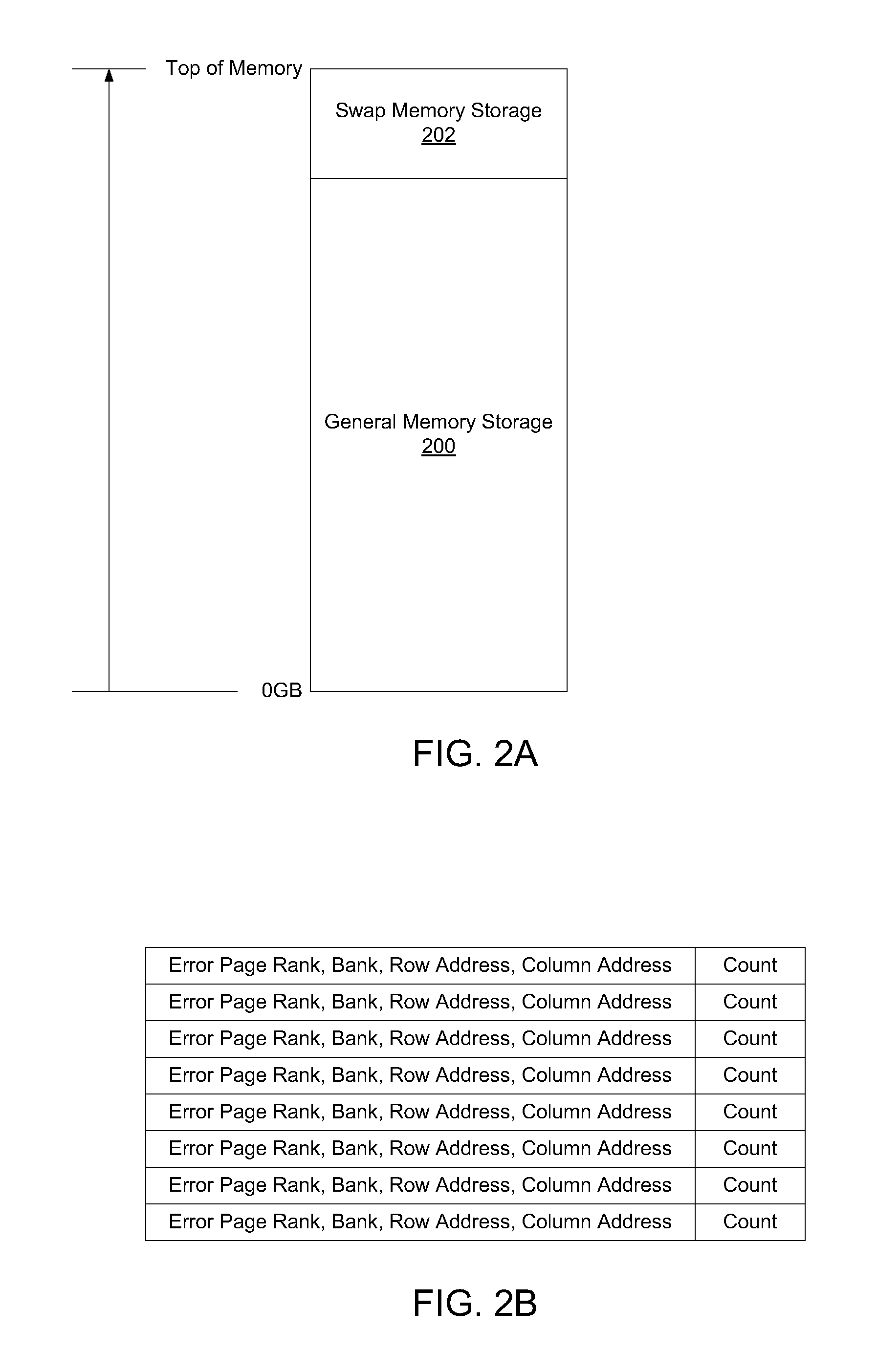

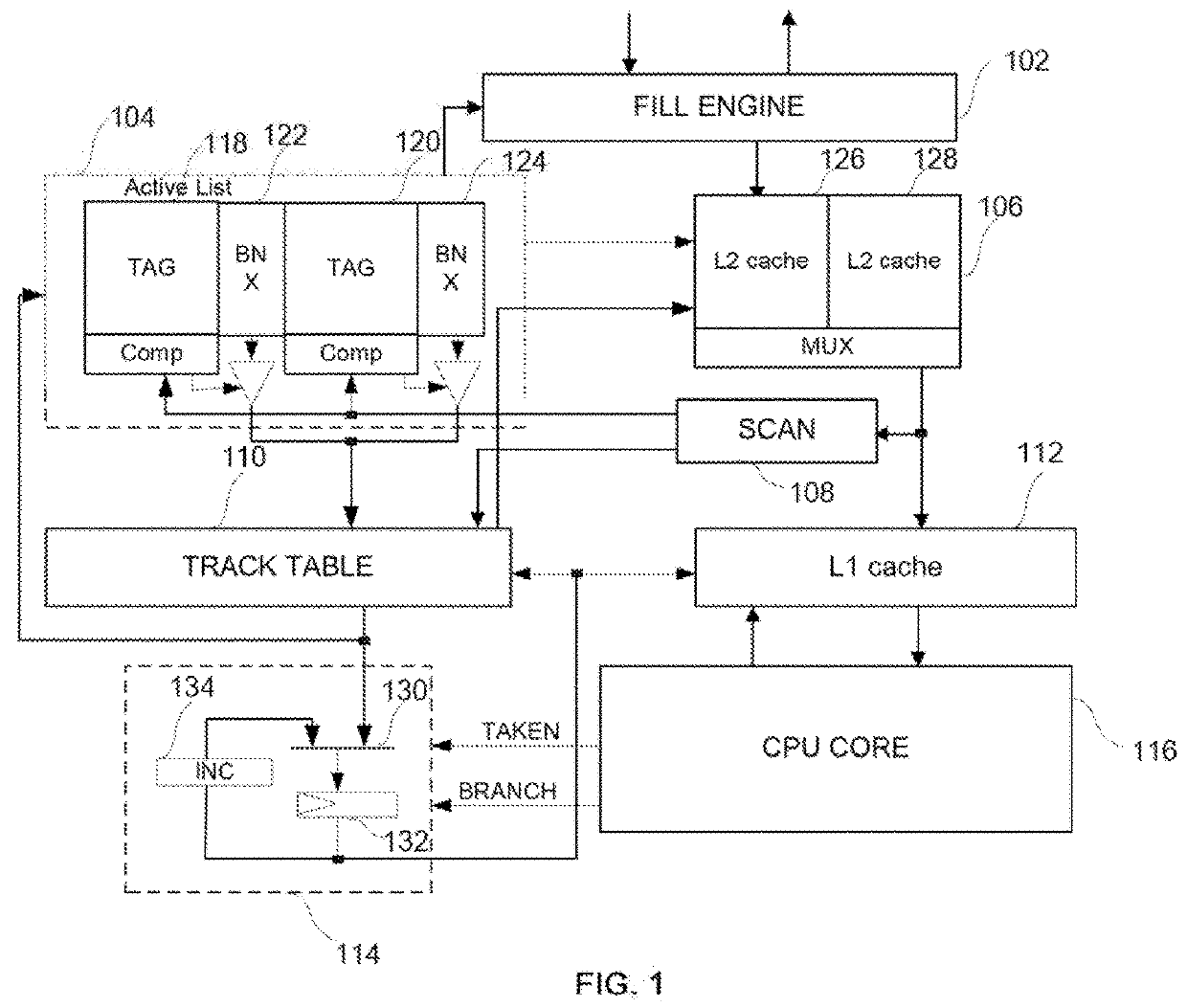

Dynamic physical memory replacement through address swapping

Inactive | US20120072768A1 | Memory addressing/allocation/relocation; Digital storage; Physical address; Memory replacement

An apparatus, system, method, and machine-readable medium are disclosed. In one embodiment, the apparatus includes an address swap cache and memory segment swap logic capable of detecting a reproducible fault at a first address targeting a memory segment. Once a fault is detected, the logic remaps the first address, which targets the faulty memory segment, to a second address targeting another memory segment, and stores the two addresses in an entry in the address swap cache. When the memory segment swap logic later receives a memory transaction targeting the first physical address, it uses that address to look up the address swap cache and determine whether an entry exists for the faulty address. If such an entry exists, the logic substitutes the second address for the first address in the memory transaction.

Owner:INTEL CORP
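The lookup-and-swap flow described in this abstract can be sketched as a small model. This is an illustrative sketch only: the class name `AddressSwapCache`, the pool of spare segment addresses, and the assumption that the caller only reports faults already confirmed as reproducible are hypothetical, not taken from the patent.

```python
class AddressSwapCache:
    """Hypothetical model: maps a faulty (first) address to a spare (second) address."""

    def __init__(self, spare_addresses):
        self.entries = {}                     # first (faulty) address -> second (spare) address
        self.spares = list(spare_addresses)   # pool of healthy segments (assumed to exist)

    def record_fault(self, faulty_addr):
        """Called once a fault is confirmed reproducible; remaps the faulty address."""
        if faulty_addr not in self.entries:
            if not self.spares:
                raise RuntimeError("no spare memory segments left")
            self.entries[faulty_addr] = self.spares.pop(0)
        return self.entries[faulty_addr]

    def translate(self, addr):
        """Look up a transaction's target; swap in the second address on a hit."""
        return self.entries.get(addr, addr)


swap = AddressSwapCache(spare_addresses=[0x9000, 0x9100])
swap.record_fault(0x1000)
print(hex(swap.translate(0x1000)))  # 0x9000
print(hex(swap.translate(0x2000)))  # 0x2000 (no entry, address unchanged)
```

In hardware the "dictionary" would be a small associative structure consulted on every transaction; the model only shows the remap-then-substitute logic.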

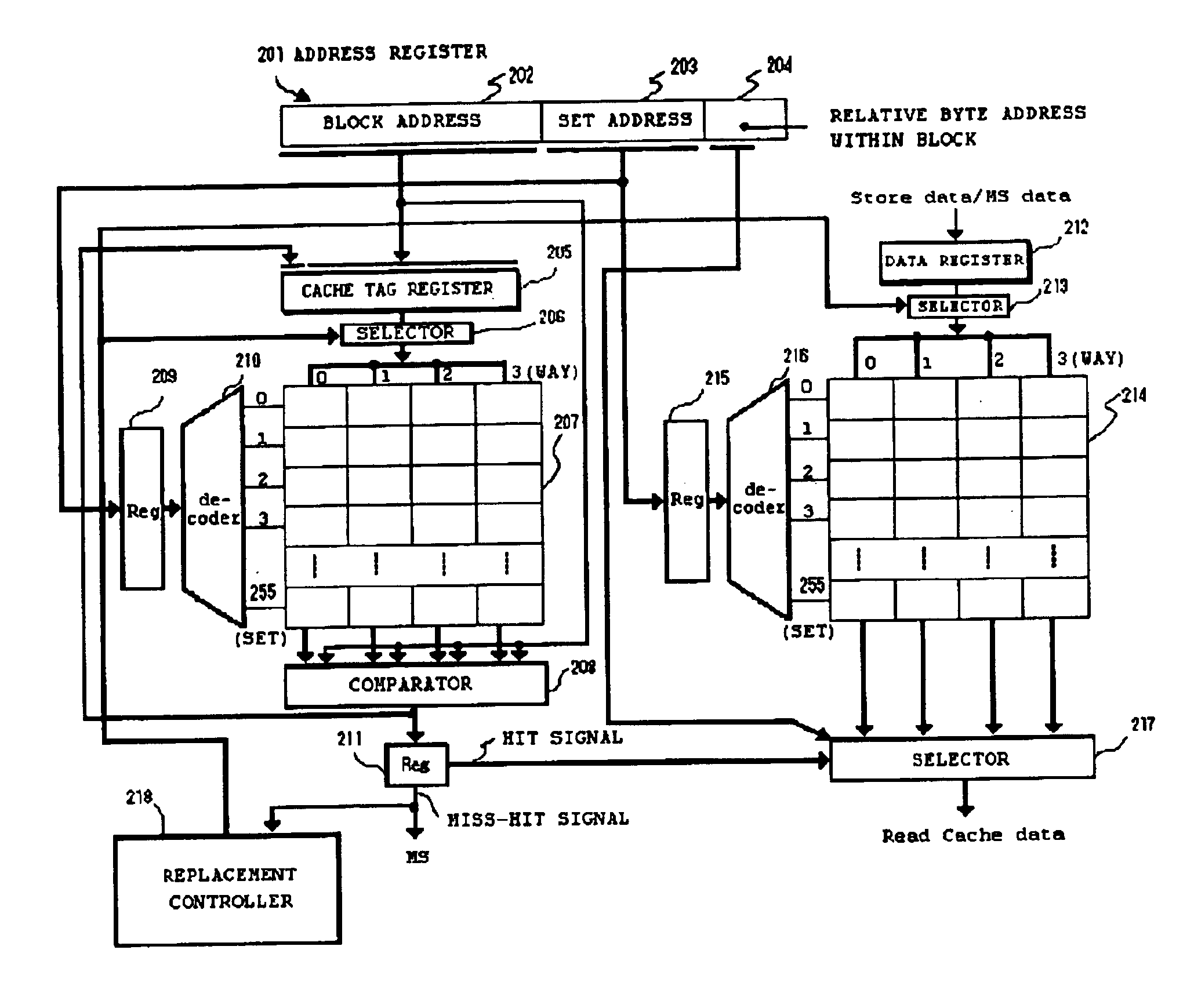

Shared cache memory replacement control method and apparatus

Inactive | US6877067B2 | Avoid it happening again; Reduce utilization; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Multi processor; Parallel computing

In a multiprocessor system in which multiple processors share an n-way set-associative cache memory, the ways of the cache memory are divided into groups, one group per processor. When a miss-hit occurs in the cache memory, one way is selected for replacement from the group belonging to the processor whose memory access caused the miss-hit. When a processor goes off-line, the ways in its group are redistributed to the group of an on-line processor so that the on-line processor can use them.

Owner:NEC CORP
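A single cache set with per-processor way groups can be sketched as follows. The round-robin split of ways and the LRU choice of victim inside a group are assumptions for illustration; the abstract only says that the victim comes from the requesting processor's group and that an off-line processor's ways are handed to an on-line one.

```python
class PartitionedCacheSet:
    """One set of an n-way set-associative cache whose ways are split per processor."""

    def __init__(self, n_ways, processors):
        self.lines = [None] * n_ways   # tag held in each way
        self.last_use = [0] * n_ways   # timestamps for an LRU victim choice (assumed policy)
        self.clock = 0
        # Divide the ways round-robin into one group per processor (assumed split).
        self.groups = {p: [] for p in processors}
        for way in range(n_ways):
            self.groups[processors[way % len(processors)]].append(way)

    def access(self, cpu, tag):
        self.clock += 1
        if tag in self.lines:          # hit: any way may be read
            way = self.lines.index(tag)
        else:                          # miss-hit: victim only from cpu's own group
            way = min(self.groups[cpu], key=lambda w: self.last_use[w])
            self.lines[way] = tag
        self.last_use[way] = self.clock
        return way

    def take_offline(self, cpu, heir):
        """Re-distribute an off-line processor's ways to an on-line processor."""
        self.groups[heir].extend(self.groups.pop(cpu))


s = PartitionedCacheSet(n_ways=4, processors=["cpu0", "cpu1"])
print(s.groups)  # {'cpu0': [0, 2], 'cpu1': [1, 3]}
```

Hits are allowed in any way, so sharing of read data is preserved; only the choice of replacement victim is confined to the group.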

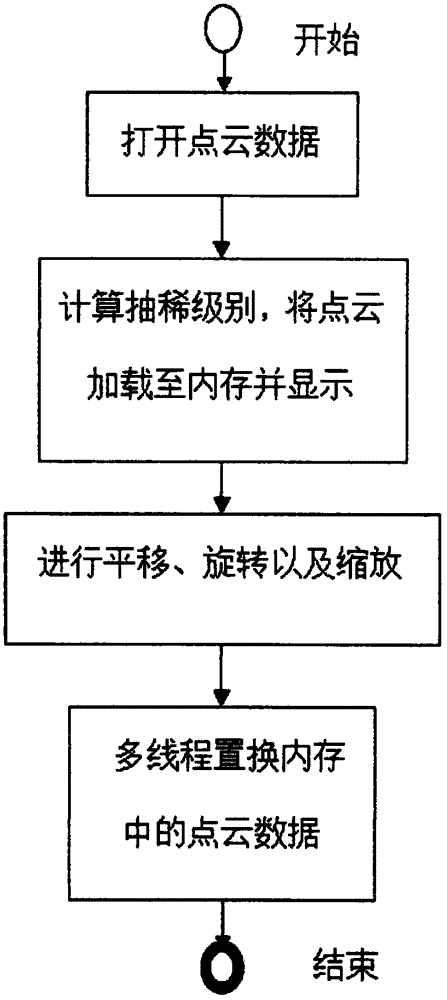

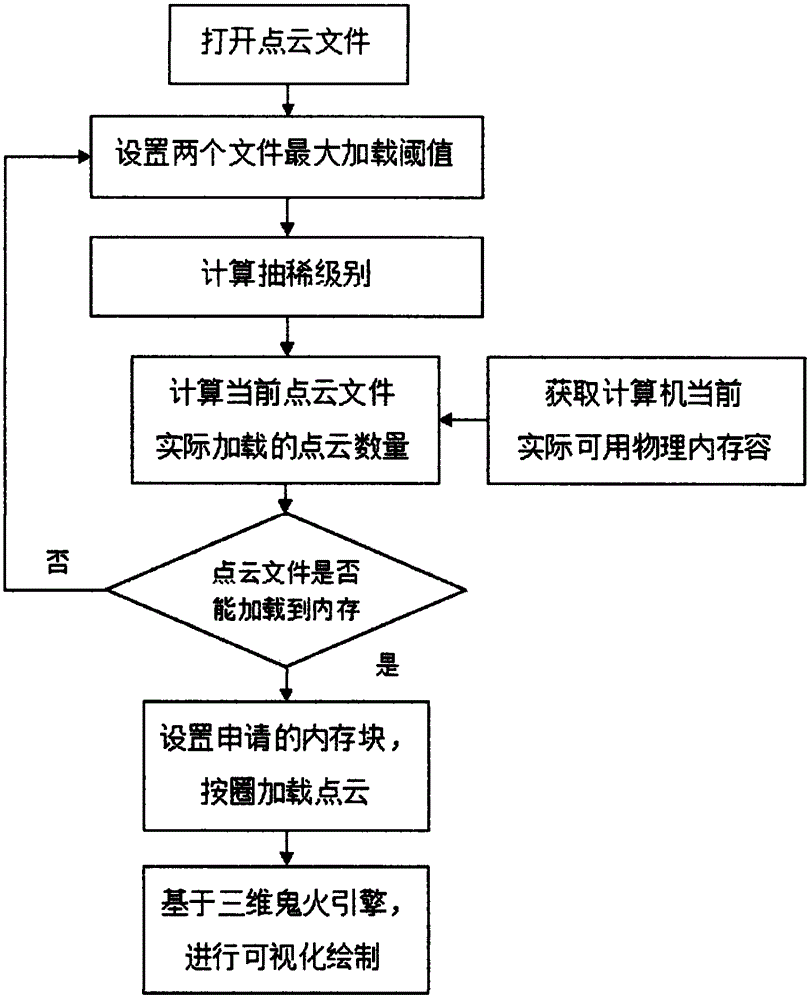

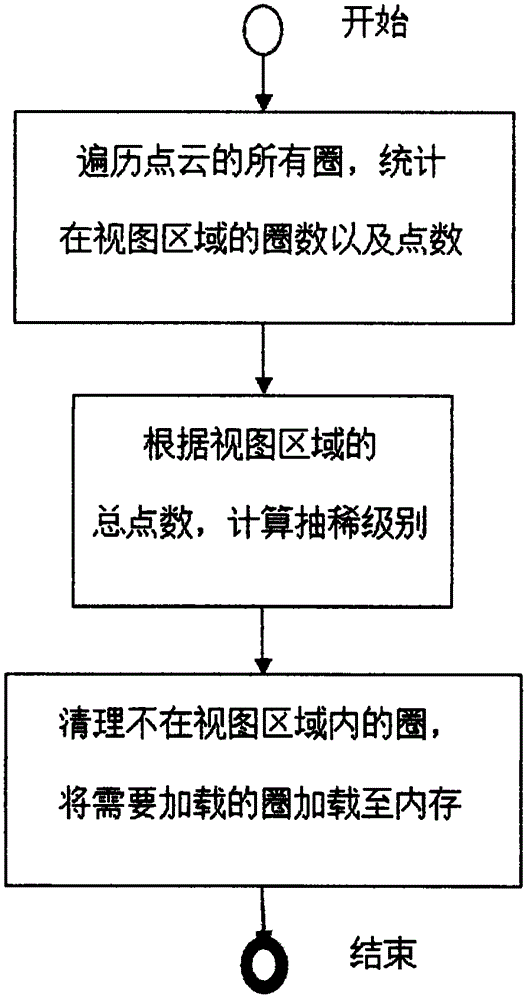

Method for dynamic browsing of vehicle-mounted mass point cloud data

Active | CN104391906A | Simple structure; Easy access; Photogrammetry/videogrammetry; Geographical information databases; Point cloud; Current point

The invention relates to a method for dynamically browsing vehicle-mounted mass point cloud data. Based on the principles of a vehicle-mounted laser measurement system, a custom fast-access point cloud format and a corresponding memory structure are defined. For a point cloud file in this format, the method comprises the following steps: setting a threshold for the maximum number of points loaded from a single file and calculating the thinning level of the current point cloud file; calculating the number of points actually loaded from the current file according to the thinning level and the file's total point count; judging, according to the computer's currently available physical memory, whether the point cloud file can be loaded into memory; when it can, resetting the threshold for the maximum number of points loaded from a single file; loading the point cloud file into memory using segmented memory mapping; and using multiple threads to perform real-time memory replacement and point cloud rendering according to the circle indexes and the point cloud data in memory, thereby enabling dynamic browsing of vehicle-mounted mass point cloud data. The method achieves better view display performance with shorter response times and solves the problem of dynamically browsing vehicle-mounted mass point cloud data on computers with ordinary configurations.

Owner:WUHAN HI TARGET DIGITAL CLOUD TECH CO LTD

Method and device for monitoring server memory and readable medium

Inactive | CN110674005A | Realize secondary filtration; To achieve the purpose of storm monitoring; Hardware monitoring; Redundant data error correction; Error check; Term memory

The invention discloses a method, device, and medium for monitoring server memory. The method comprises: receiving and counting error checking and correction (ECC) information; judging whether the number of ECC reports received within a continuous first preset duration reaches a first threshold; if it does, recording the log information in a black box log; judging whether the log information in the black box log reaches a preset standard within a continuous second preset duration; and if it does, recording the log information in the BMC system log to remind the user to replace the memory. The scheme provides secondary filtering of ECC monitoring. It avoids the waste, and the impact on customer services, of replacing memory because of a small number of ECC errors, while ensuring timely handling when a real fault occurs and keeping the system stable.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD
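The two-stage filter can be sketched with two sliding time windows. The class name `EccMonitor`, the window lengths and thresholds, and the interpretation of the "preset standard" as a count of black-box entries within the second window are all hypothetical; clearing each stage after it fires is also an assumption, made so that one burst is not logged repeatedly.

```python
from collections import deque


class EccMonitor:
    """Two-stage ECC filter (hypothetical thresholds and window lengths)."""

    def __init__(self, window1, threshold1, window2, threshold2):
        self.window1, self.threshold1 = window1, threshold1
        self.window2, self.threshold2 = window2, threshold2
        self.ecc_times = deque()  # stage 1: timestamps of received ECC reports
        self.blackbox = deque()   # stage 2: timestamps of black-box log entries
        self.bmc_log = []         # entries that prompt the user to replace memory

    @staticmethod
    def _trim(events, now, window):
        # Drop events that fall outside the continuous window ending at `now`.
        while events and now - events[0] >= window:
            events.popleft()

    def on_ecc(self, now):
        self._trim(self.ecc_times, now, self.window1)
        self.ecc_times.append(now)
        if len(self.ecc_times) >= self.threshold1:     # first filter: ECC burst
            self.ecc_times.clear()
            self._trim(self.blackbox, now, self.window2)
            self.blackbox.append(now)                  # record in the black box log
            if len(self.blackbox) >= self.threshold2:  # second filter ("preset standard")
                self.blackbox.clear()
                self.bmc_log.append(f"t={now}: ECC storm, consider replacing memory")
```

Isolated correctable errors never reach the BMC log; only repeated bursts within the second window do.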

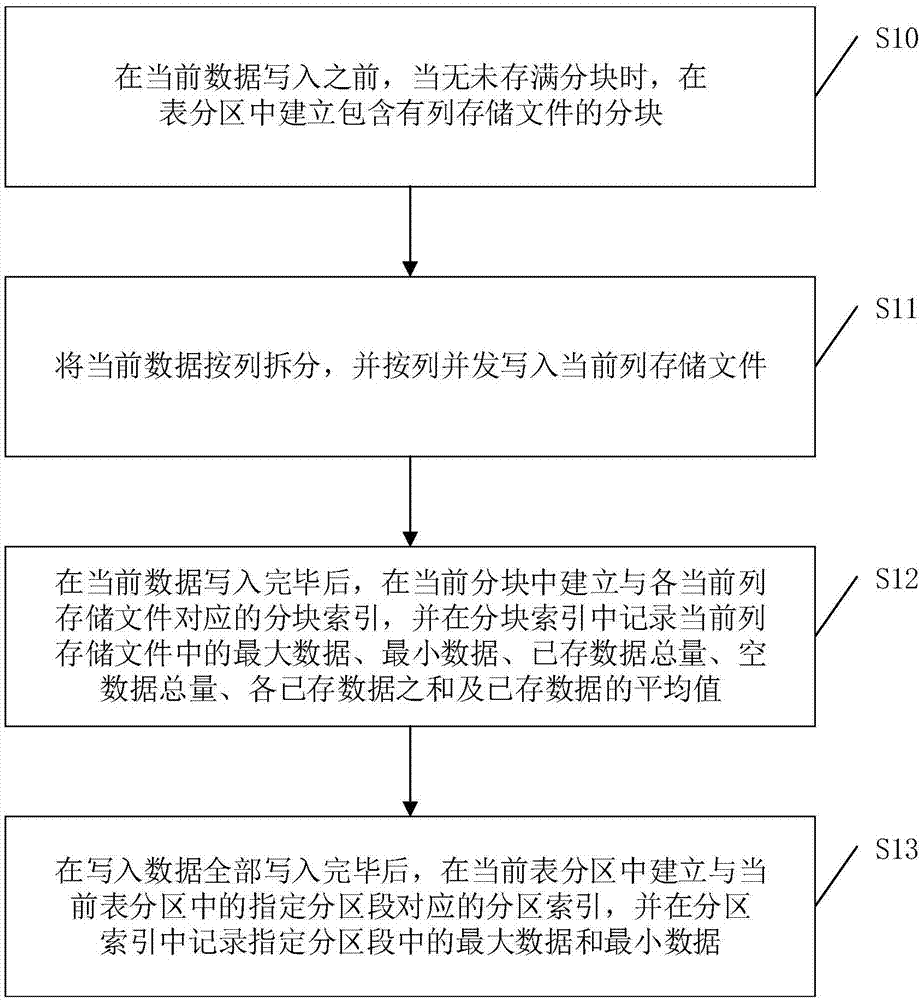

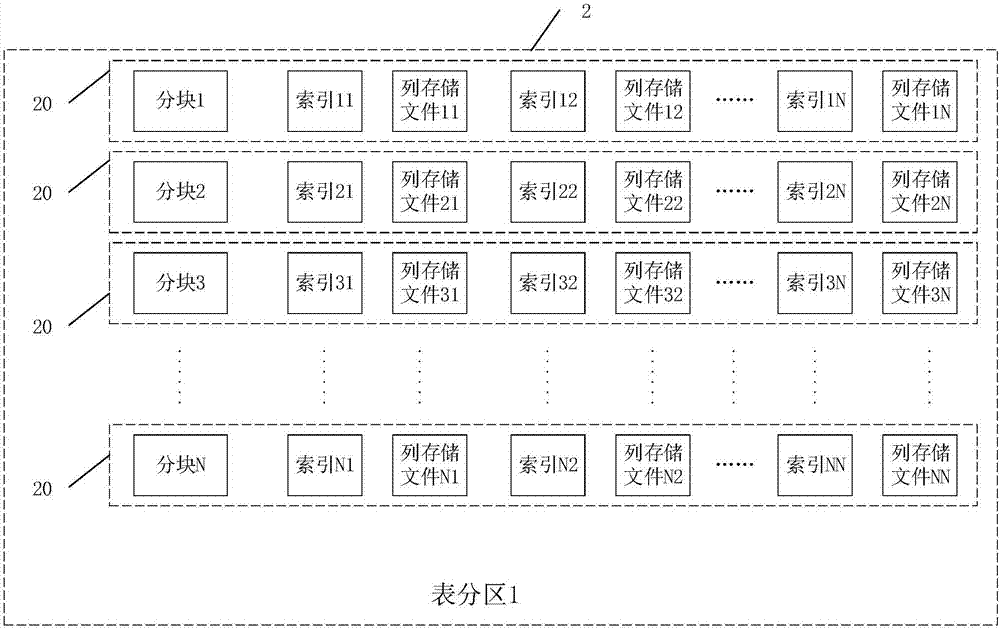

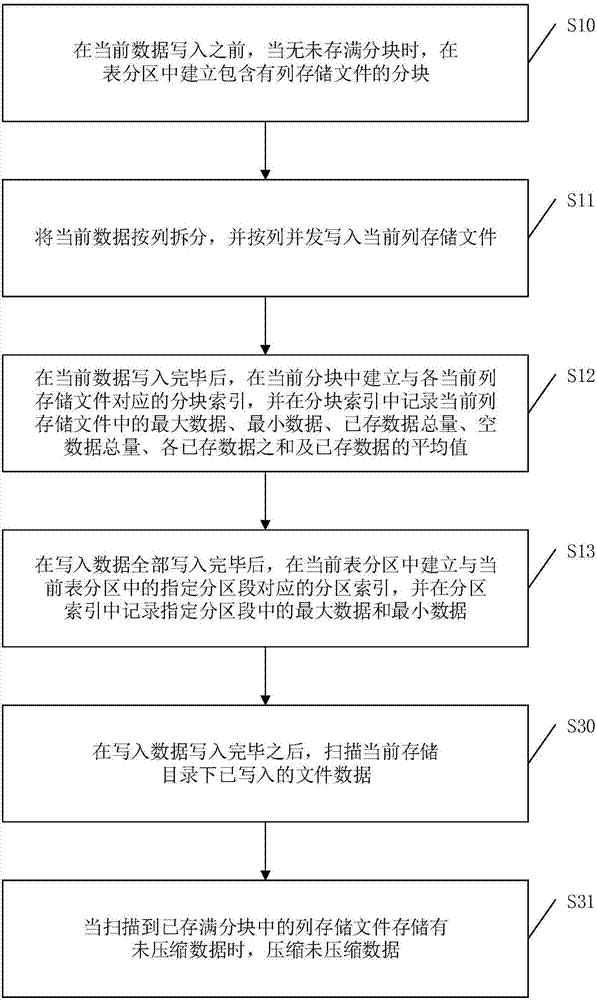

Data storage method and device

Active | CN107577436A | Improve query speed; Narrow your search; Input/output to record carriers; Special data processing applications; Data query; Data store

The invention discloses a data storage method. During data storage, the partition index stores only the maximum and minimum values of a designated partition section of a table partition, and the block index stores only the maximum value, minimum value, total count of stored values, count of empty (null) values, sum, and mean of the stored data in each column-store file. Both indexes therefore occupy very little storage space and can remain essentially resident in memory even when massive amounts of data are stored, which avoids the frequent memory replacement caused by huge index files. As a result, the partition index and the block index can be kept entirely in memory during massive data storage, improving data query speed. The invention also discloses a data storage device with the same advantages.

Owner:HANGZHOU SHIQU INFORMATION TECH CO LTD
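A per-block summary of the kind listed in the abstract, and how its min/max fields let a query skip blocks, can be sketched as below. The names `BlockIndexEntry`, `build_entry`, and `blocks_to_scan` are hypothetical, and counting nulls inside `count` is an assumed reading of "total quantity of stored data"; the partition index would keep only the minimum and maximum fields.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BlockIndexEntry:
    """Per-block statistics small enough to stay resident in memory."""
    maximum: float
    minimum: float
    count: int    # total number of stored values, nulls included (assumed meaning)
    nulls: int    # number of empty (null) values
    total: float  # sum of the non-null values
    mean: float   # mean of the non-null values


def build_entry(values: List[Optional[float]]) -> BlockIndexEntry:
    """Summarize one column-store block; assumes at least one non-null value."""
    present = [v for v in values if v is not None]
    s = sum(present)
    return BlockIndexEntry(
        maximum=max(present), minimum=min(present), count=len(values),
        nulls=len(values) - len(present), total=s, mean=s / len(present),
    )


def blocks_to_scan(index: List[BlockIndexEntry], lo: float, hi: float) -> List[int]:
    """Return only the blocks whose [minimum, maximum] range can overlap [lo, hi]."""
    return [i for i, e in enumerate(index) if e.maximum >= lo and e.minimum <= hi]
```

Because each entry is a handful of numbers regardless of block size, the whole index stays small, which is the property the abstract relies on.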

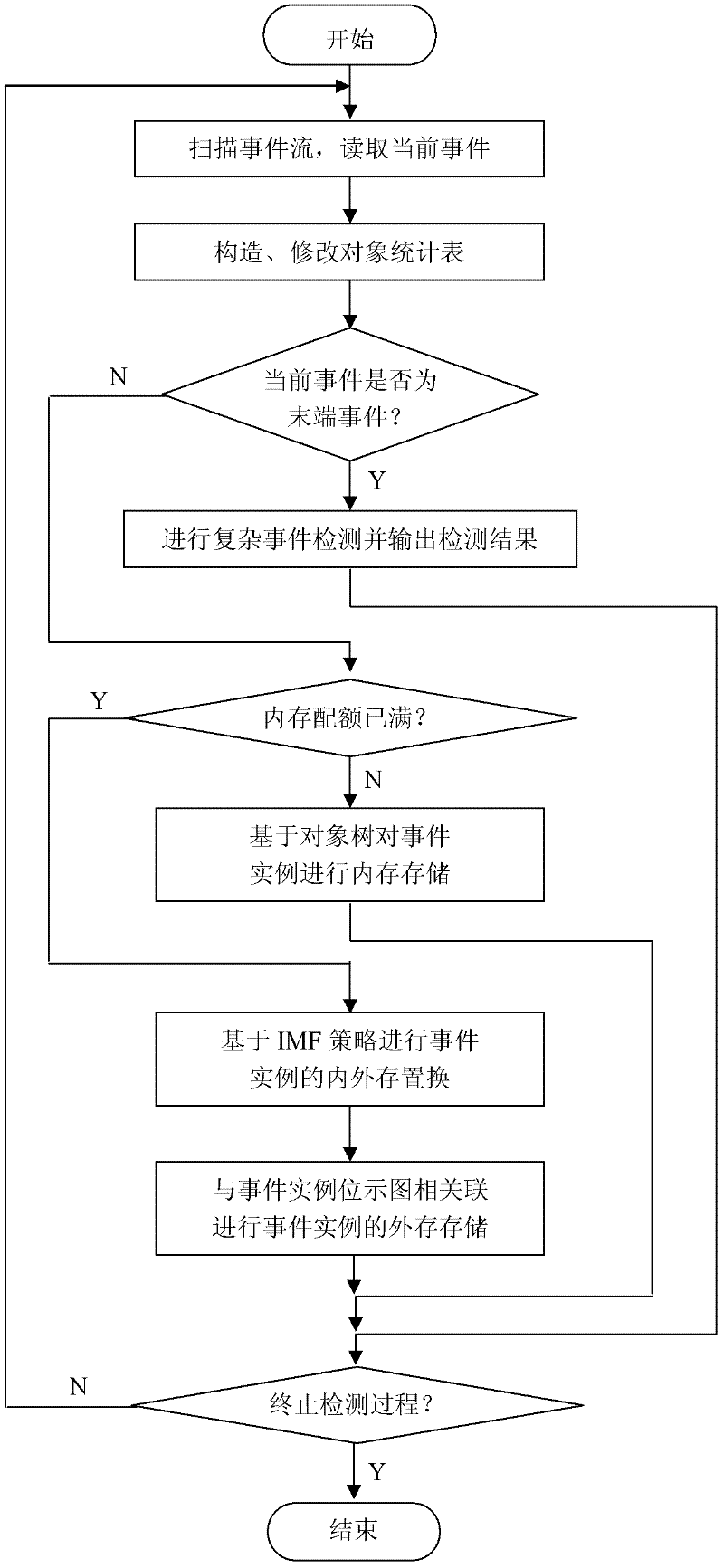

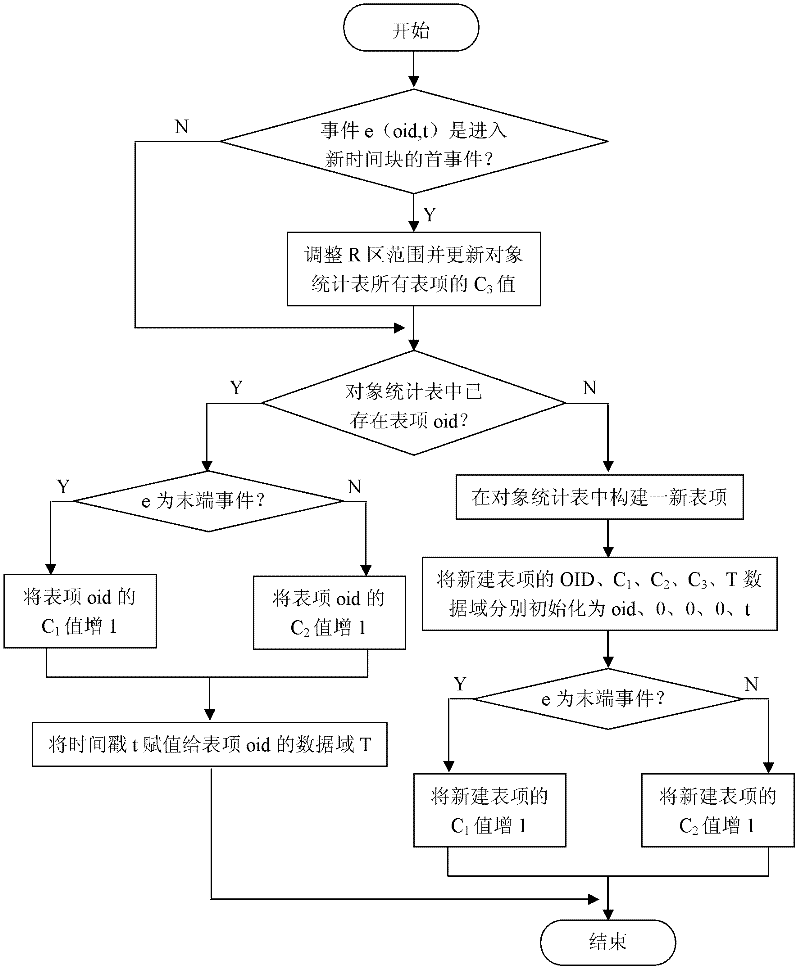

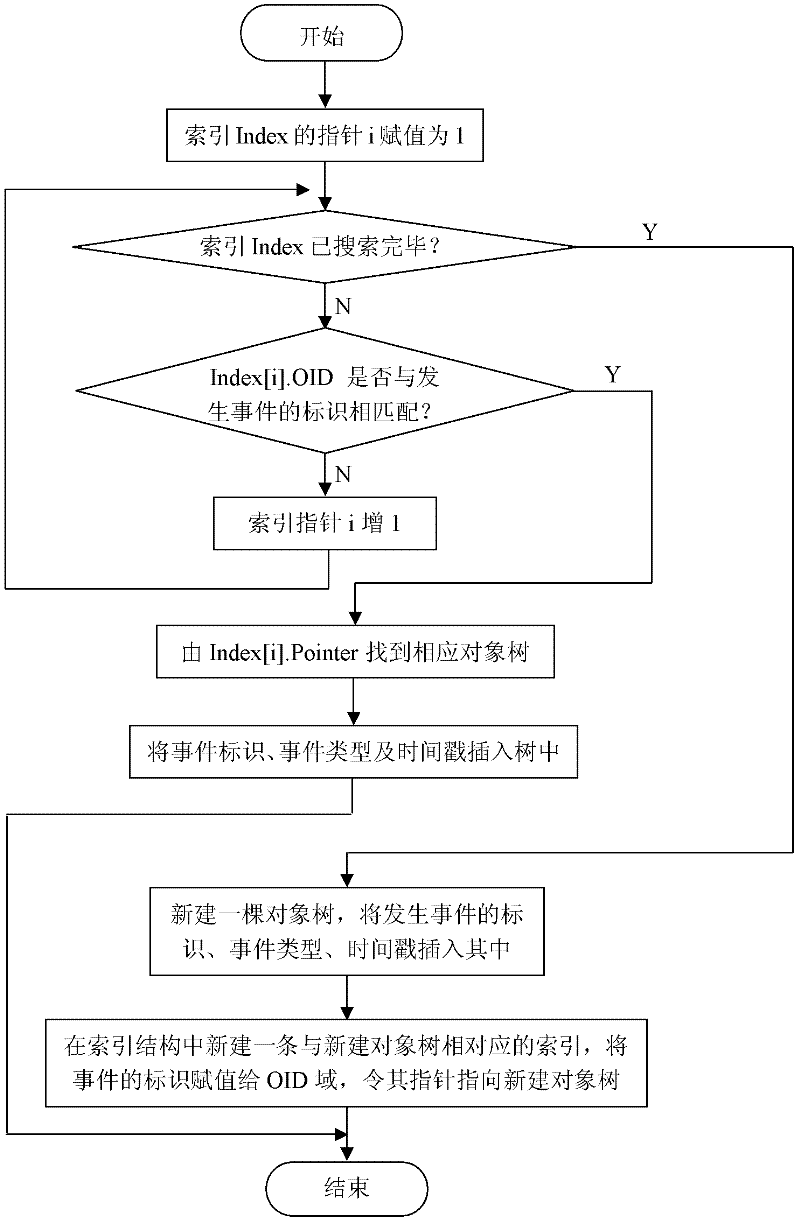

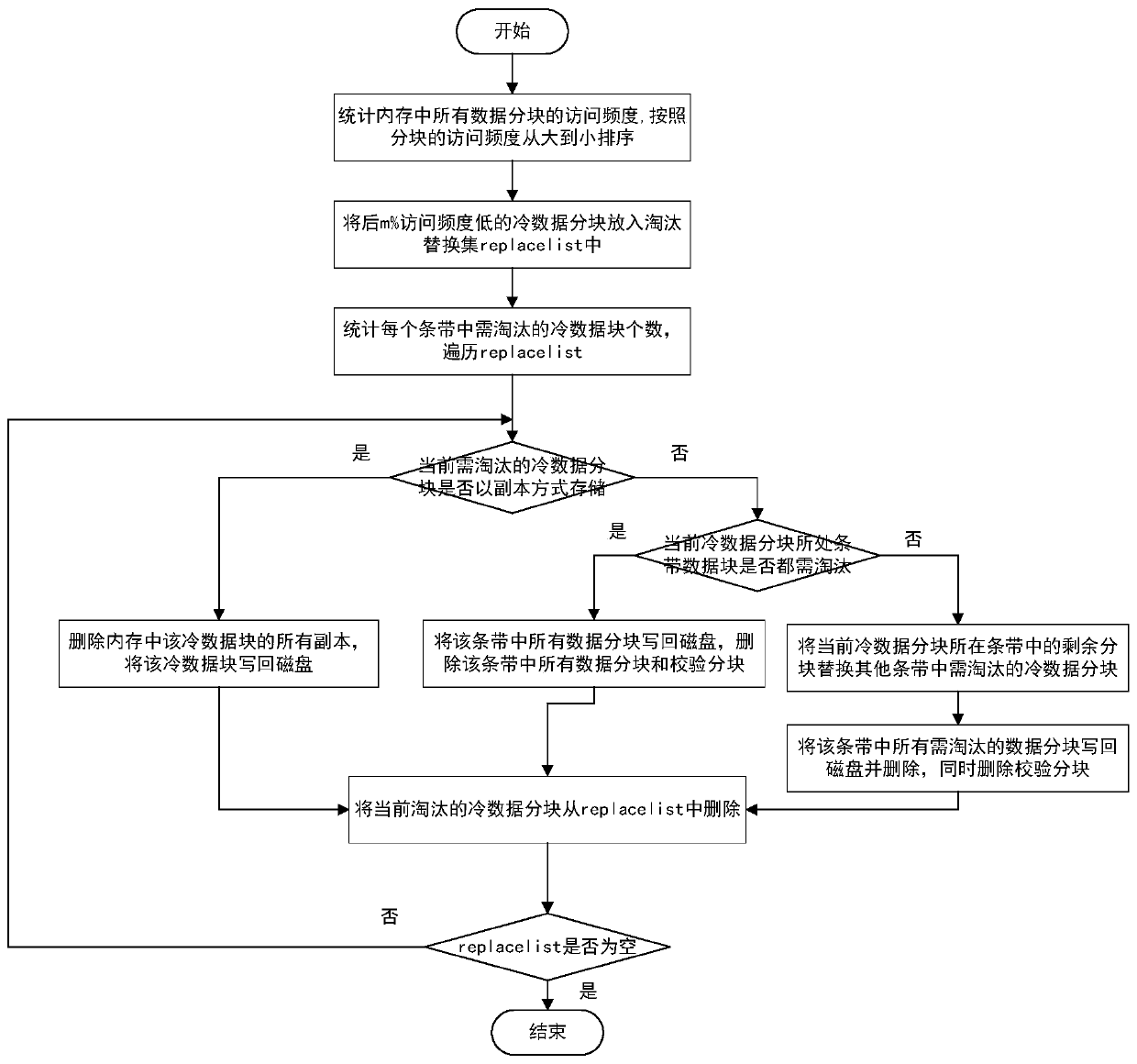

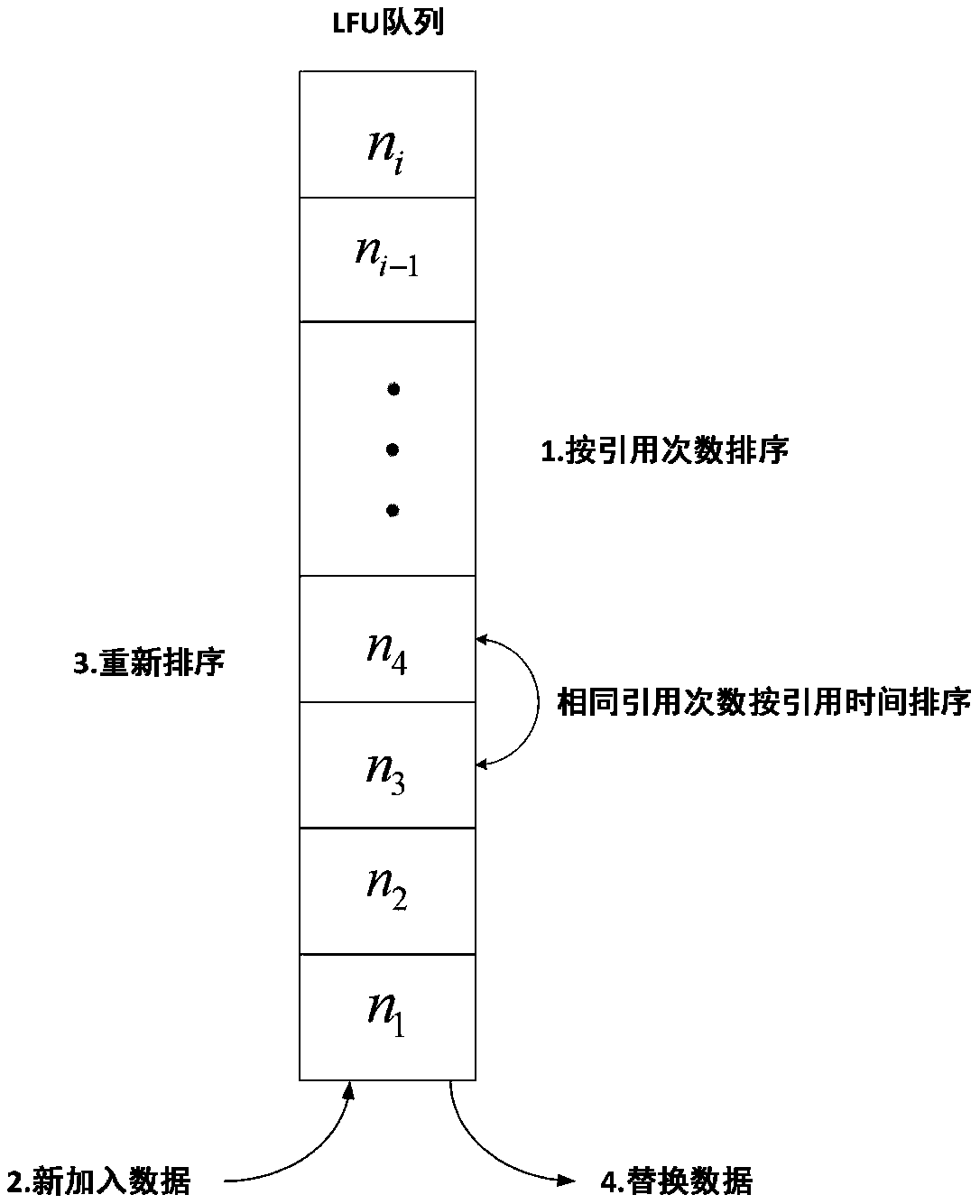

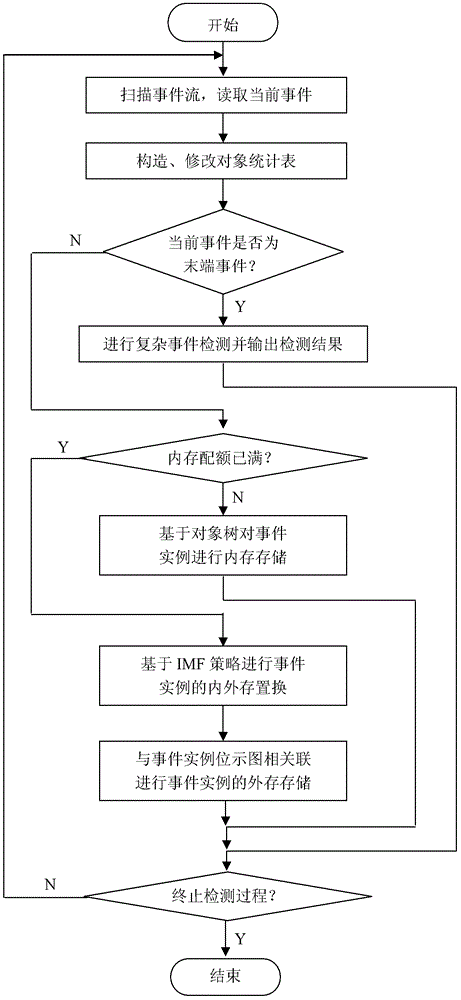

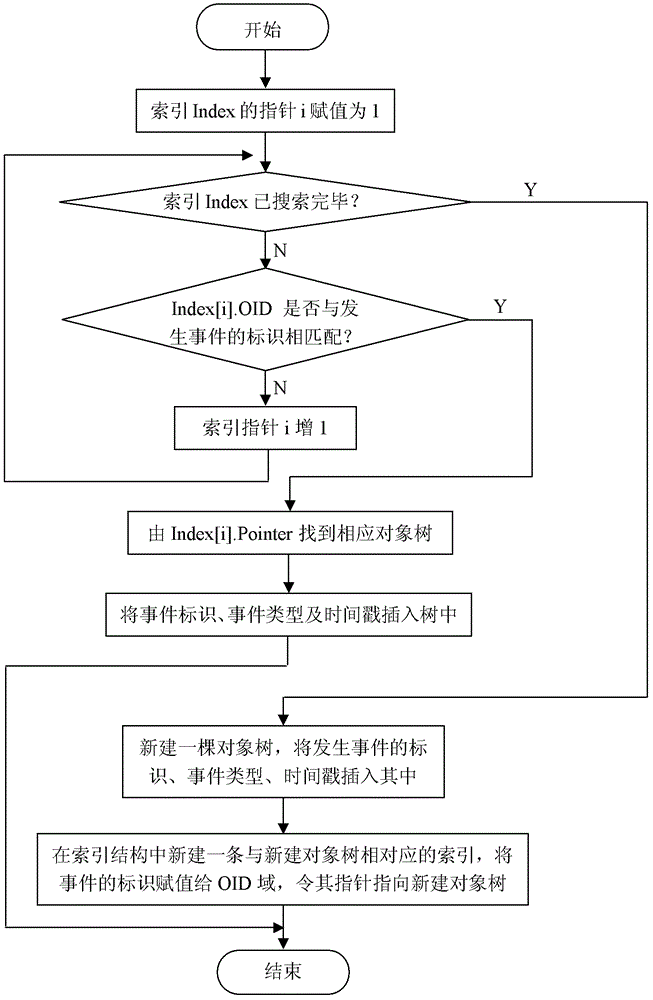

Complex event detection method on basis of IMF (instance matching frequency) internal and external memory replacement policy

Inactive | CN102339256A | Efficiency; Implement compressed storage; Memory addressing/allocation/relocation; Internal memory; Data mining

The invention relates to a complex event detection method based on an IMF (Instance Matching Frequency) internal and external memory replacement policy. As long as the user does not terminate detection, the event stream is scanned continuously: the current event is read, an object statistics table is constructed and updated, and the event is processed according to its type. If the current event is a terminal event, complex event detection is triggered and the detected sequences that match the user-defined pattern are output. If the current event is a non-terminal event and its internal memory quota is not full, the event instance is stored in internal memory using an object tree and its index. If the current event is a non-terminal event and its internal memory quota is full, event instances are swapped between internal and external memory according to the IMF policy, and the current event is associated with an event instance bitmap so that each event instance of the replaced object is stored in external memory. The method effectively supports complex event detection over large time scales and makes efficient use of space and processing time.

Owner:NORTHEASTERN UNIV
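The quota-then-spill step can be sketched as follows. This simplifies the method heavily and is hypothetical throughout: a single quota replaces the per-event-type quotas, a dictionary stands in for external storage, and IMF is approximated as the number of detected matches an object has taken part in; the object tree, index, and bitmap are omitted.

```python
from collections import defaultdict


class ImfEventStore:
    """Keeps event instances in memory up to a quota; spills the lowest-IMF object."""

    def __init__(self, quota):
        self.quota = quota
        self.memory = defaultdict(list)  # object id -> in-memory event instances
        self.disk = defaultdict(list)    # object id -> spilled instances (external storage stand-in)
        self.imf = defaultdict(int)      # object id -> instance matching frequency (assumed definition)

    def record_match(self, obj):
        """Call when an object's instances take part in a detected complex event."""
        self.imf[obj] += 1

    def _in_memory(self):
        return sum(len(v) for v in self.memory.values())

    def add_instance(self, obj, instance):
        if self._in_memory() >= self.quota:
            # Quota full: move every instance of the least-matched object to external storage.
            victim = min(self.memory, key=lambda o: self.imf[o])
            self.disk[victim].extend(self.memory.pop(victim))
        self.memory[obj].append(instance)
```

Evicting whole objects rather than single instances mirrors the abstract's "each event instance of a replaced object" being moved out together.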

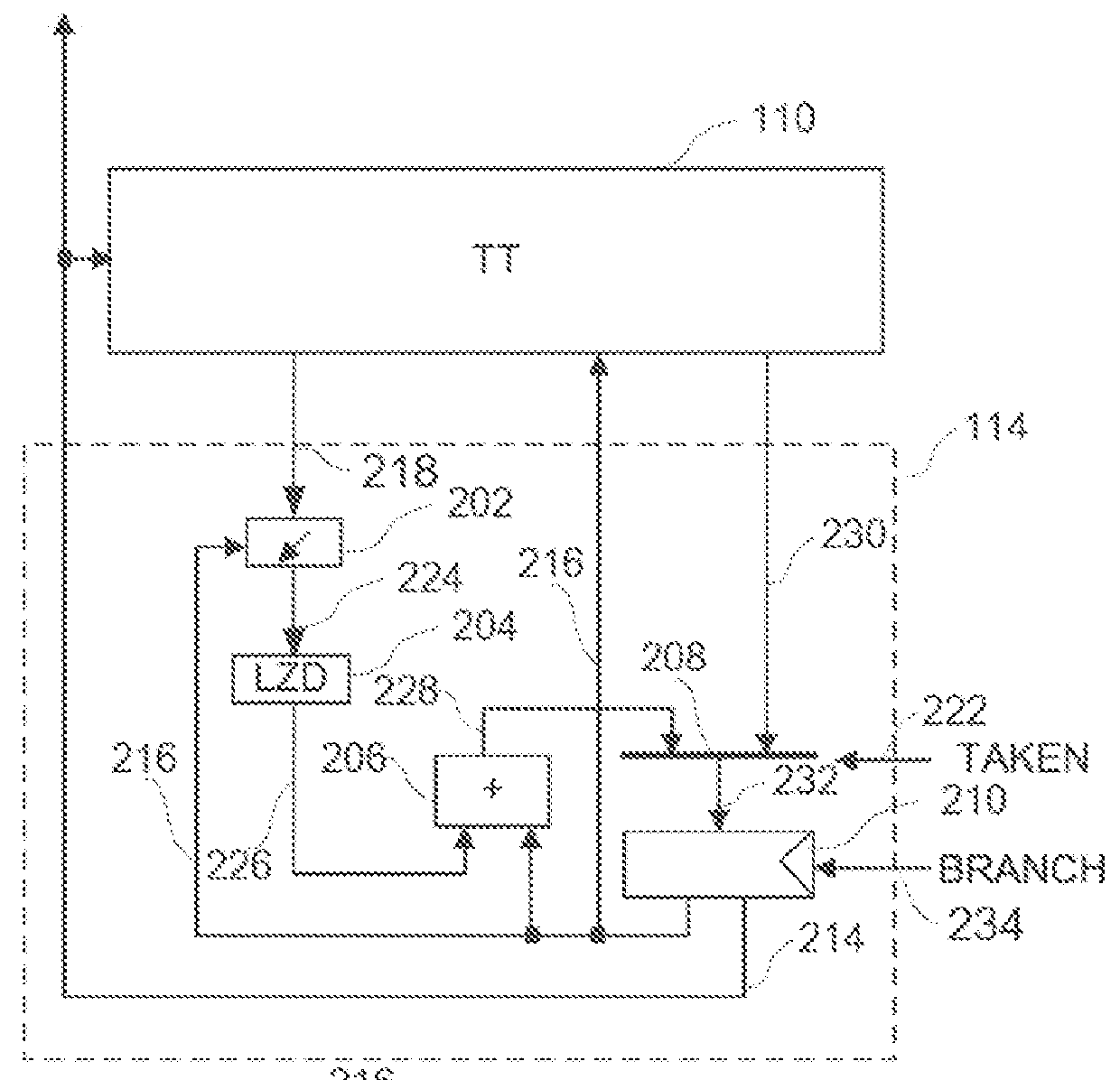

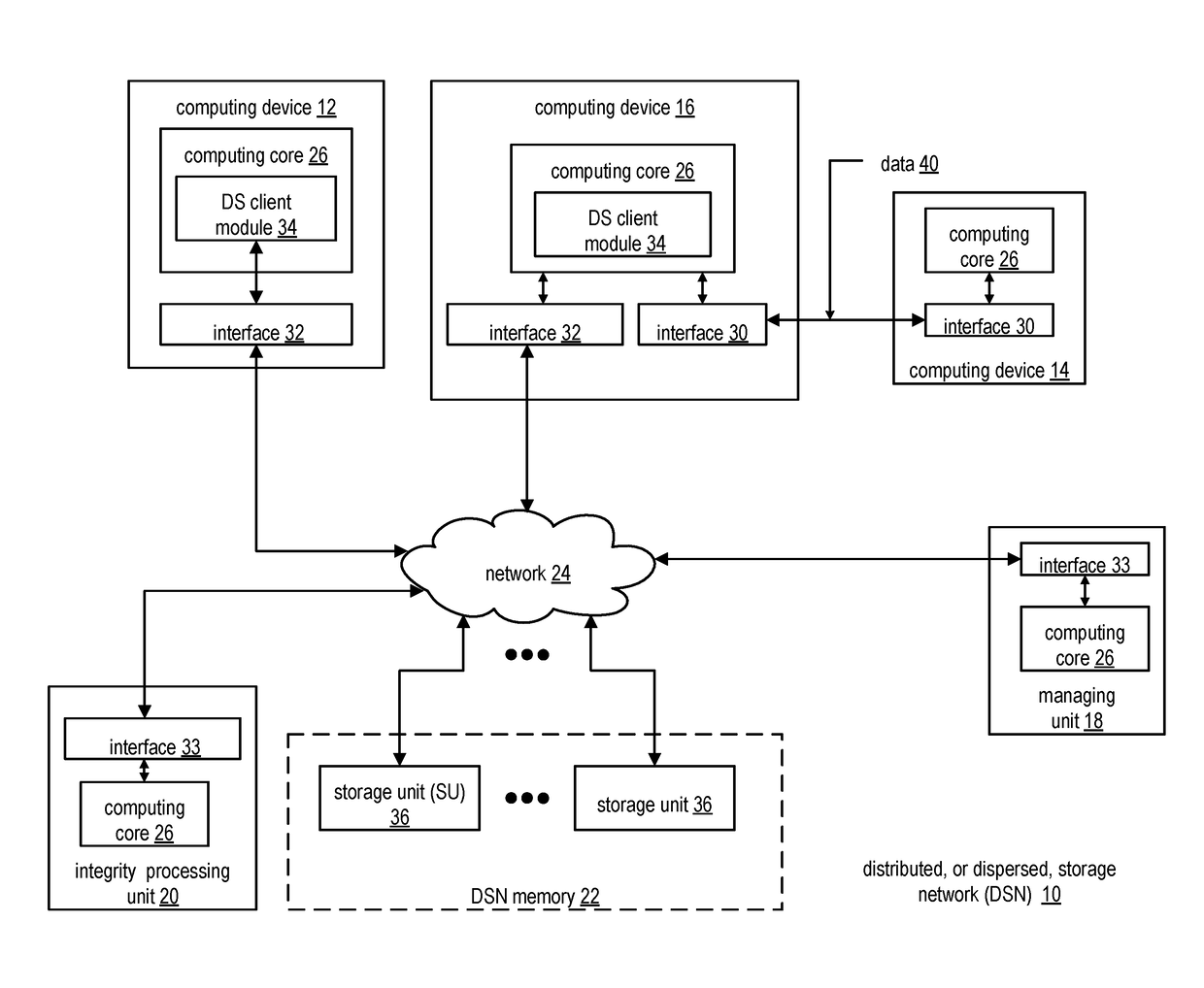

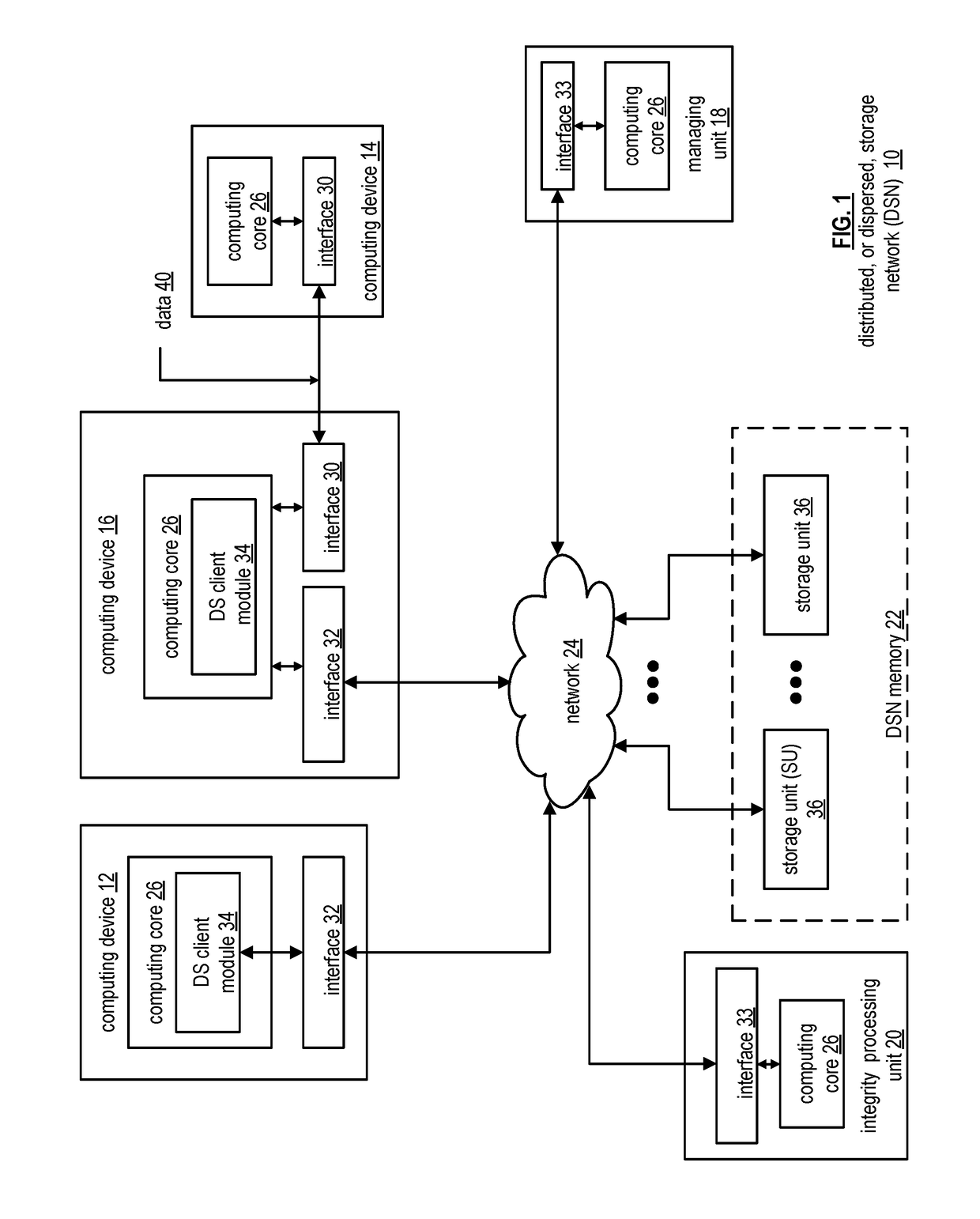

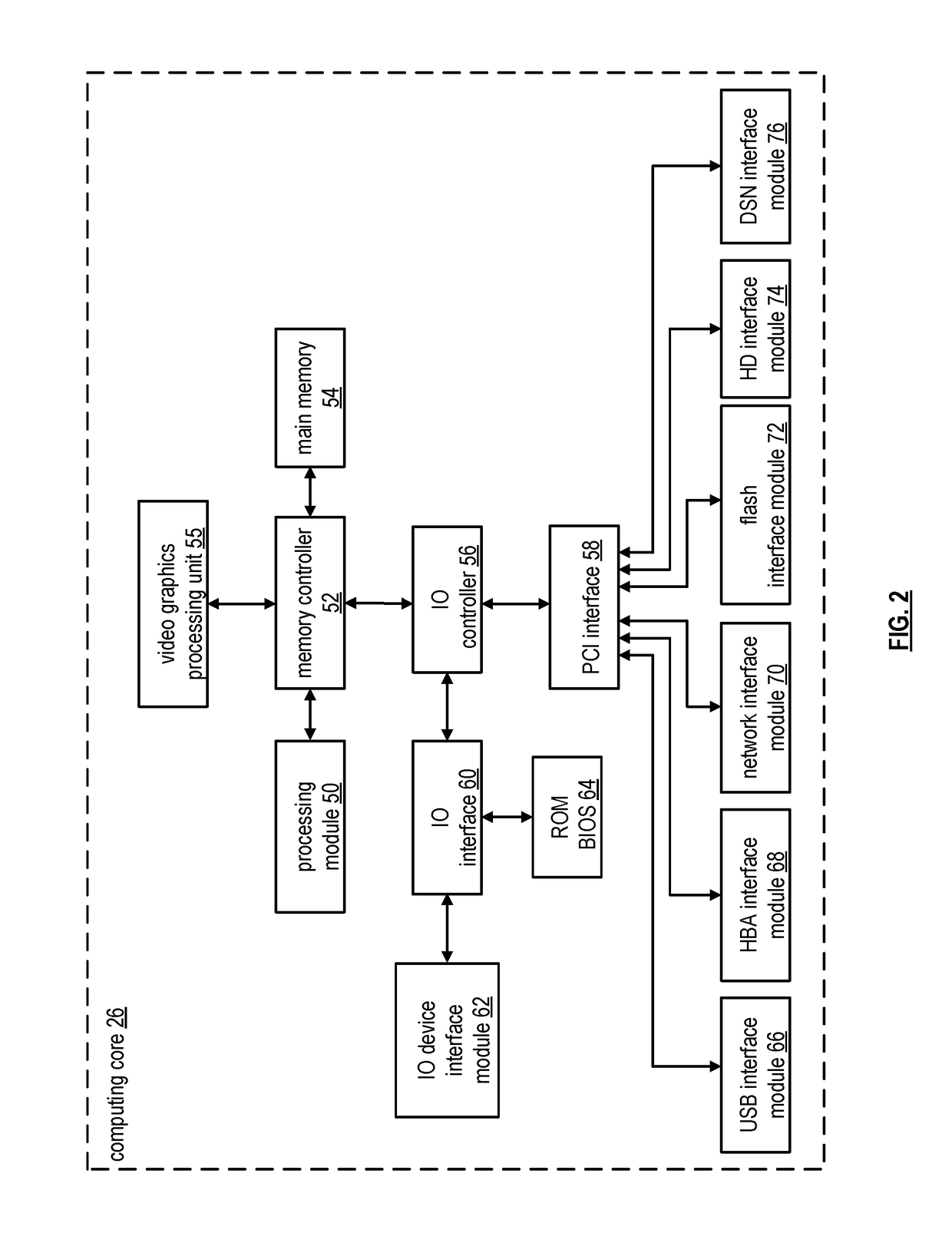

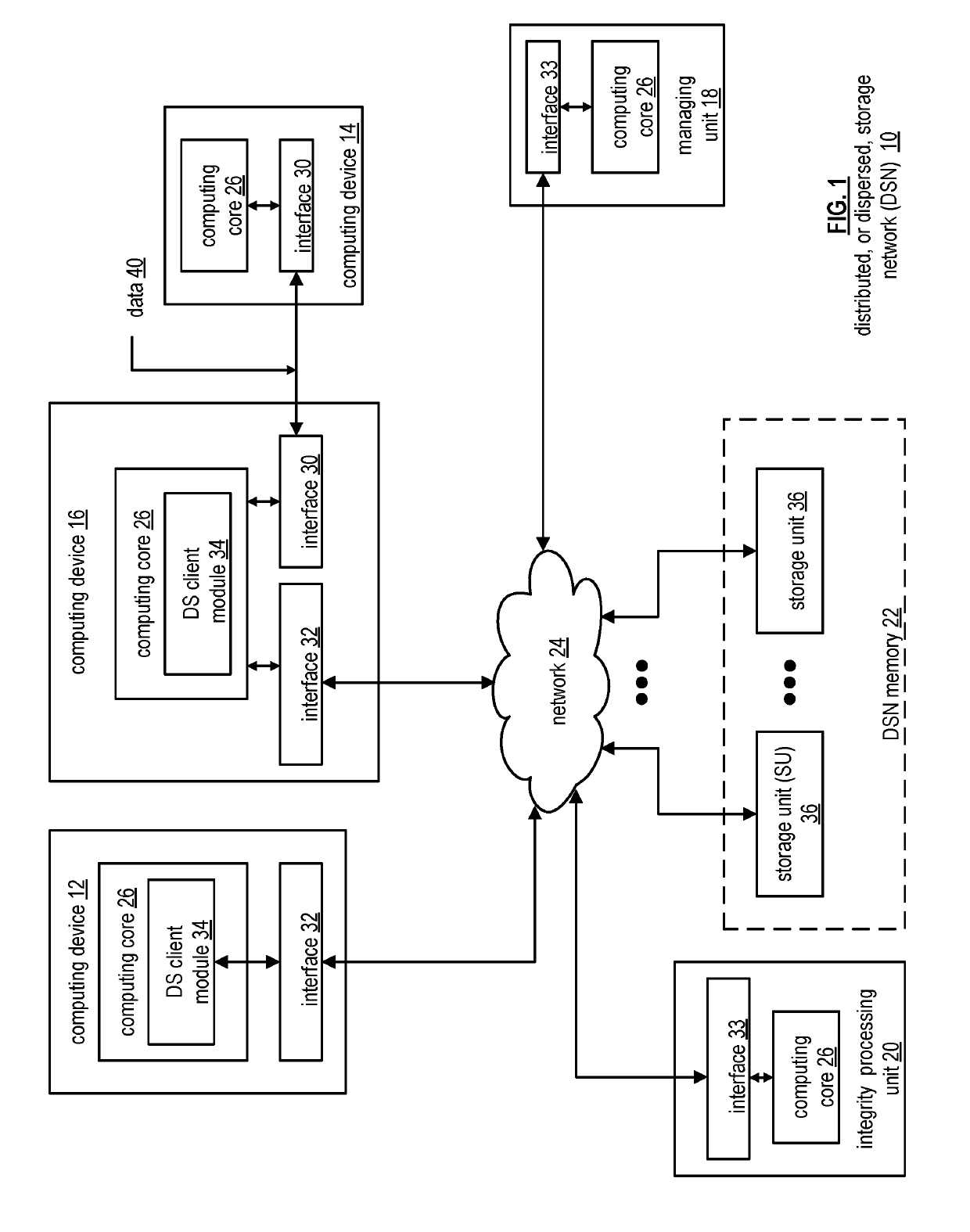

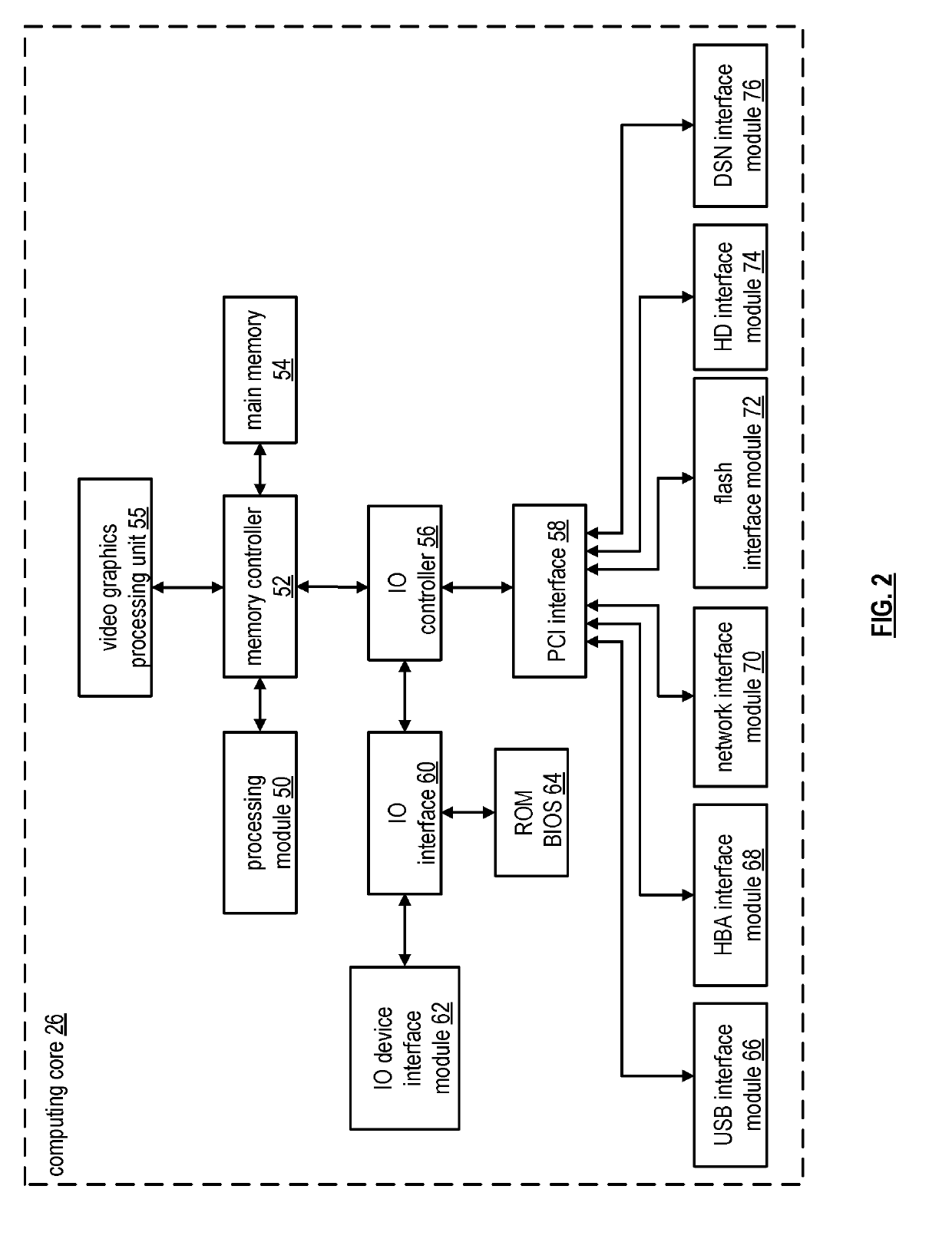

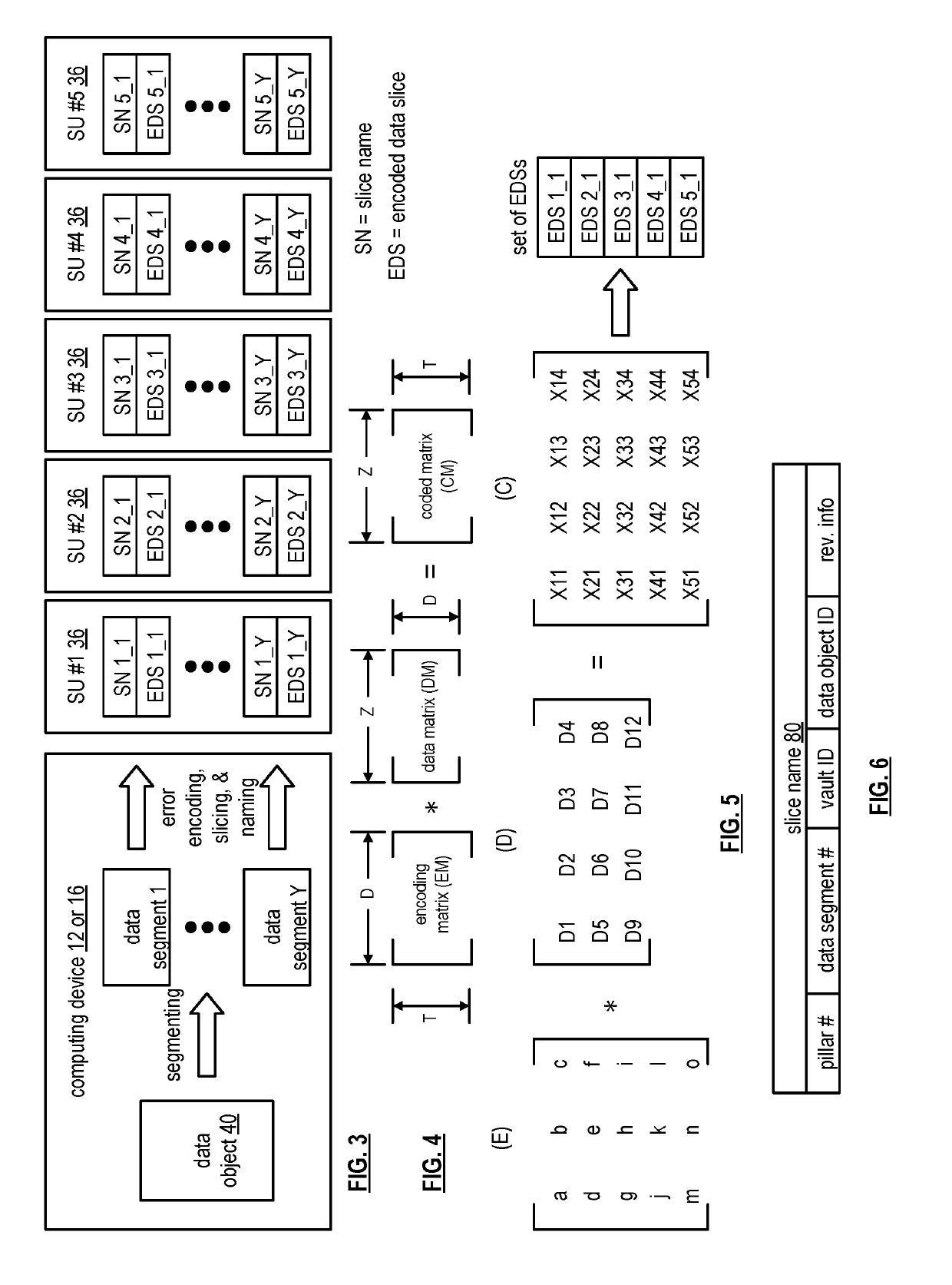

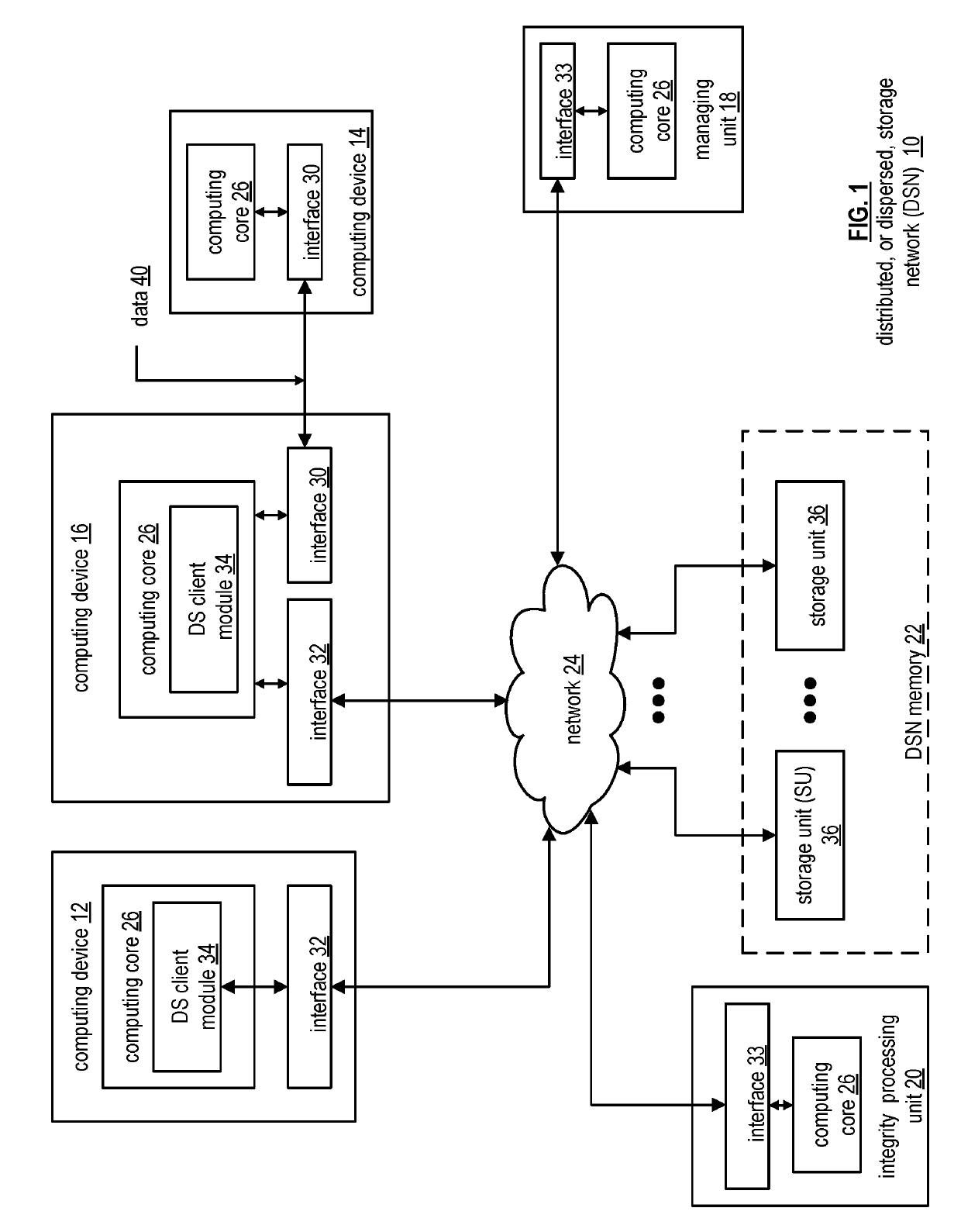

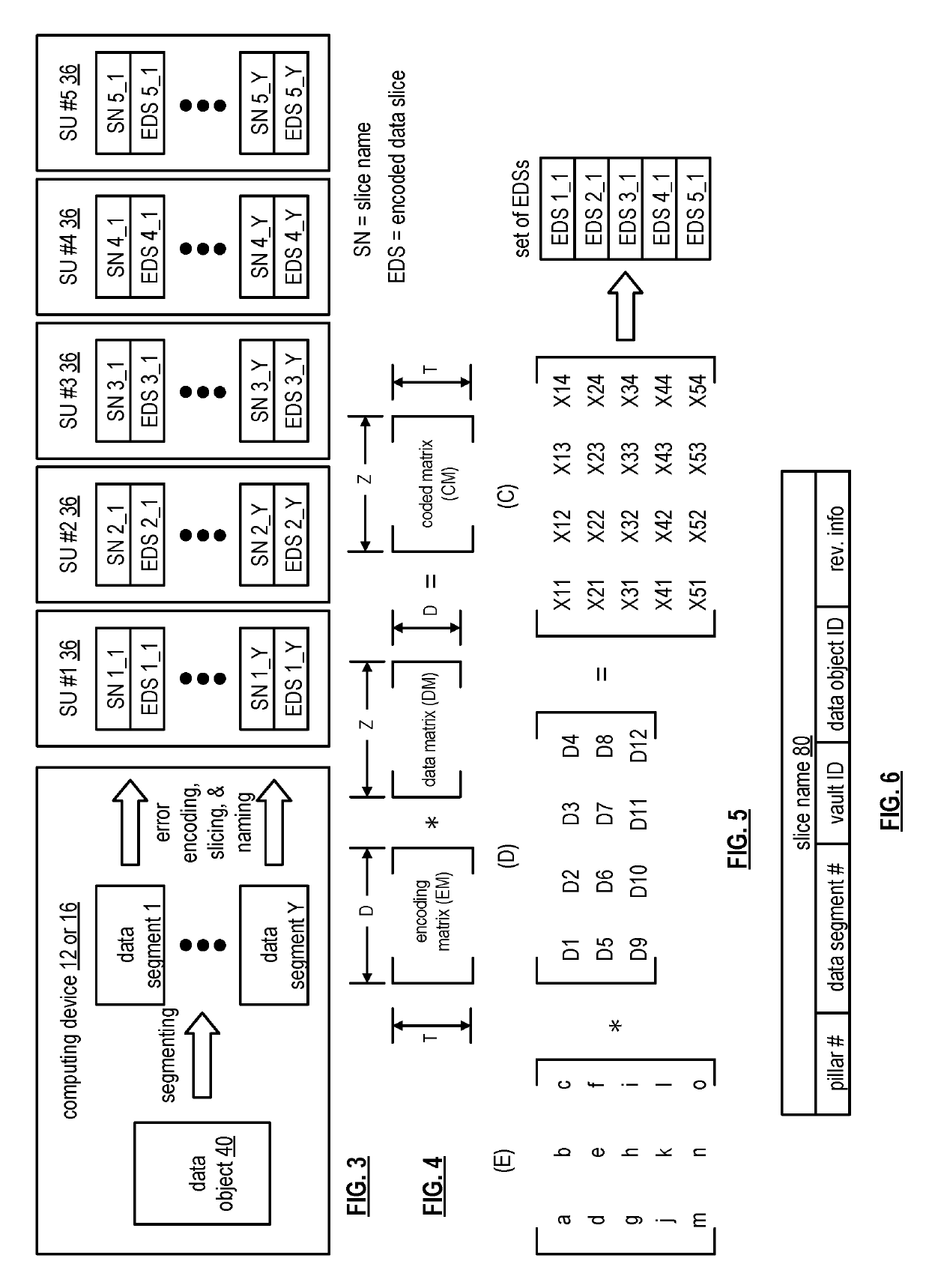

Prioritizing memory devices to replace based on namespace health

A computing device includes an interface configured to interface and communicate with a dispersed storage network (DSN), a memory that stores operational instructions, and processing circuitry operably coupled to the interface and to the memory. The processing circuitry is configured to execute the operational instructions to perform various operations and functions. The computing device detects memory error(s) associated with a plurality of sets of memory devices of sets of storage unit(s) (SU(s)) within the DSN that distributedly store a set of encoded data slices (EDSs). The computing device facilitates detection of EDS error(s) associated with the memory error(s). For a set of memory devices, the computing device establishes a corresponding memory replacement priority level and facilitates replacement of corresponding memory device(s) associated with the EDS error(s) based on the corresponding memory replacement priority level.

Owner:PURE STORAGE
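One way to turn "memory replacement priority level" into an ordering is sketched below. The scoring scheme is entirely hypothetical and is not the patent's method: it assumes each set of EDSs is encoded with a pillar width and a decode threshold, and ranks device sets by how many more slices could be lost before the data becomes unrecoverable. The names `DeviceSet`, `priority_level`, and `replacement_order` are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DeviceSet:
    name: str
    slices_lost: int    # EDS errors traced to this set's memory errors (per EDS set, assumed)
    memory_errors: int  # raw memory errors detected on the set's devices


def priority_level(ds: DeviceSet, width: int, threshold: int) -> int:
    """Hypothetical levels (0 = replace first).

    width: slices per EDS set; threshold: slices needed to rebuild the data.
    """
    margin = (width - ds.slices_lost) - threshold  # further slices that can be lost safely
    if margin <= 0:
        return 0                        # at the rebuild limit
    if margin == 1:
        return 1
    return 2 if ds.slices_lost else 3   # EDS errors present vs. memory-only errors


def replacement_order(sets: List[DeviceSet], width: int, threshold: int) -> List[str]:
    """Sort by priority level, breaking ties by the larger number of memory errors."""
    return [s.name for s in sorted(
        sets, key=lambda s: (priority_level(s, width, threshold), -s.memory_errors))]
```

Tying priority to remaining redundancy means replacement effort goes first to devices whose failure would actually risk data loss.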

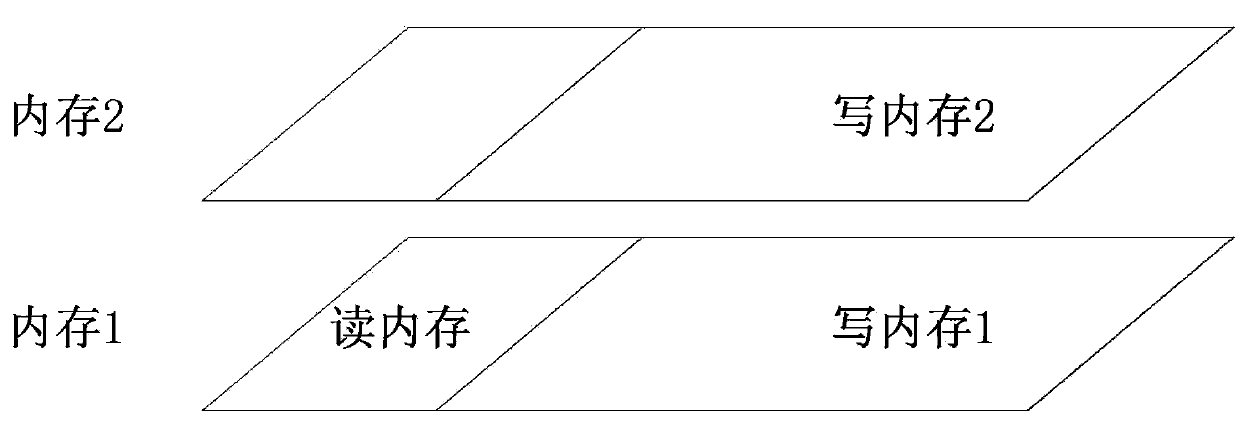

Data writing method based on memory replacement

Active | CN103744626A | Keep intact; Not easy to lose; Input/output to record carriers; Term memory; Memory replacement

The invention discloses a data writing method based on memory replacement, comprising the following steps: first, generating a memory structure in which the part of memory used for reading and writing data is designed independently, and integrating two integrated circuits of equal capacity; second, performing memory replacement: the system starts with a general model, and after it has run for a period of time and its related applications are fully loaded, a memory management unit tests the available stack capacity according to the replacement model set by the system; third, adding a certain amount of redundant stack area according to the actual capacity, testing the memory used for reading, and adding a certain amount of redundant read memory; fourth, mapping all remaining memory to write memory, which completes the memory replacement. Compared with the prior art, applying memory replacement when writing files makes the overall writing process smoother and improves write performance. The method is highly practical and easy to adopt.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

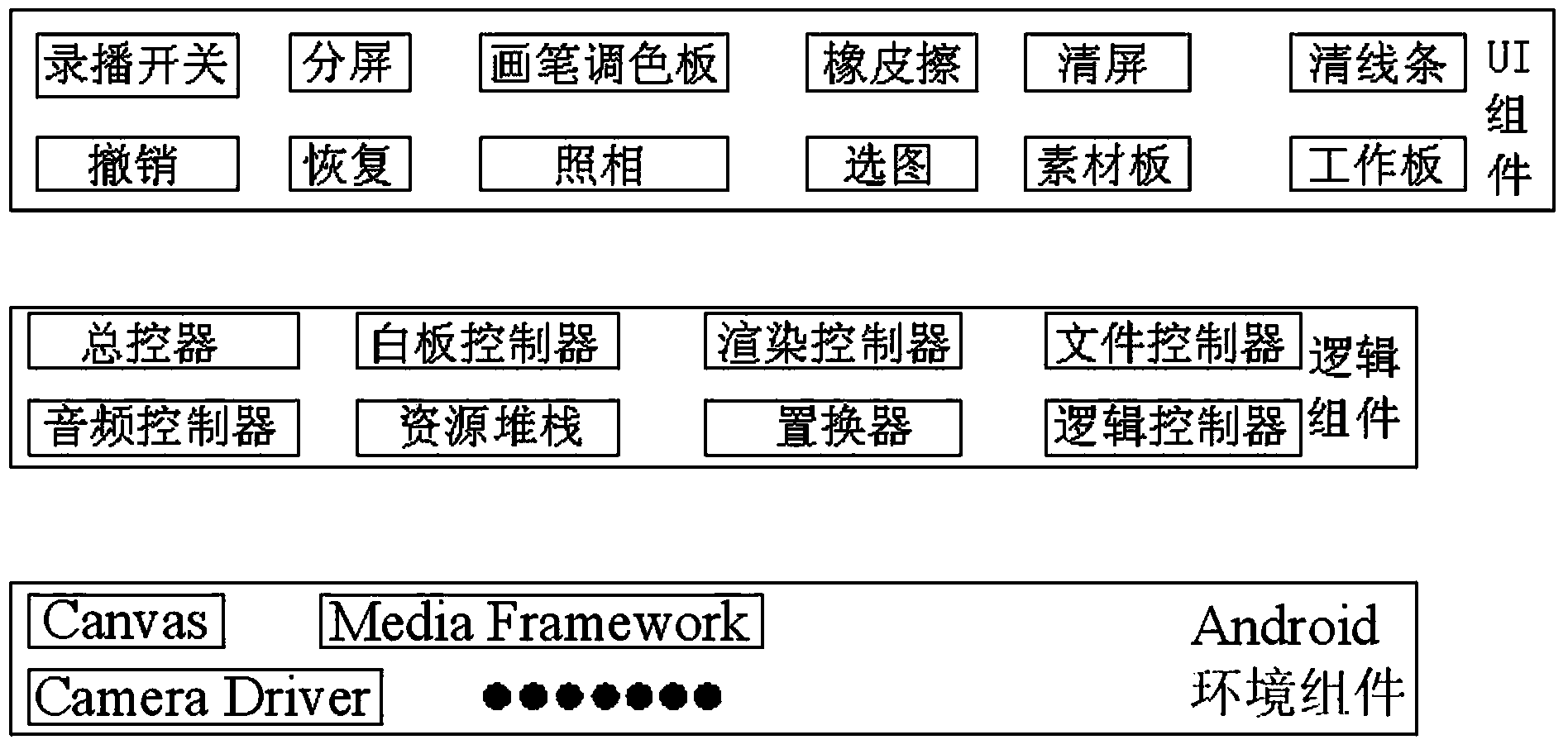

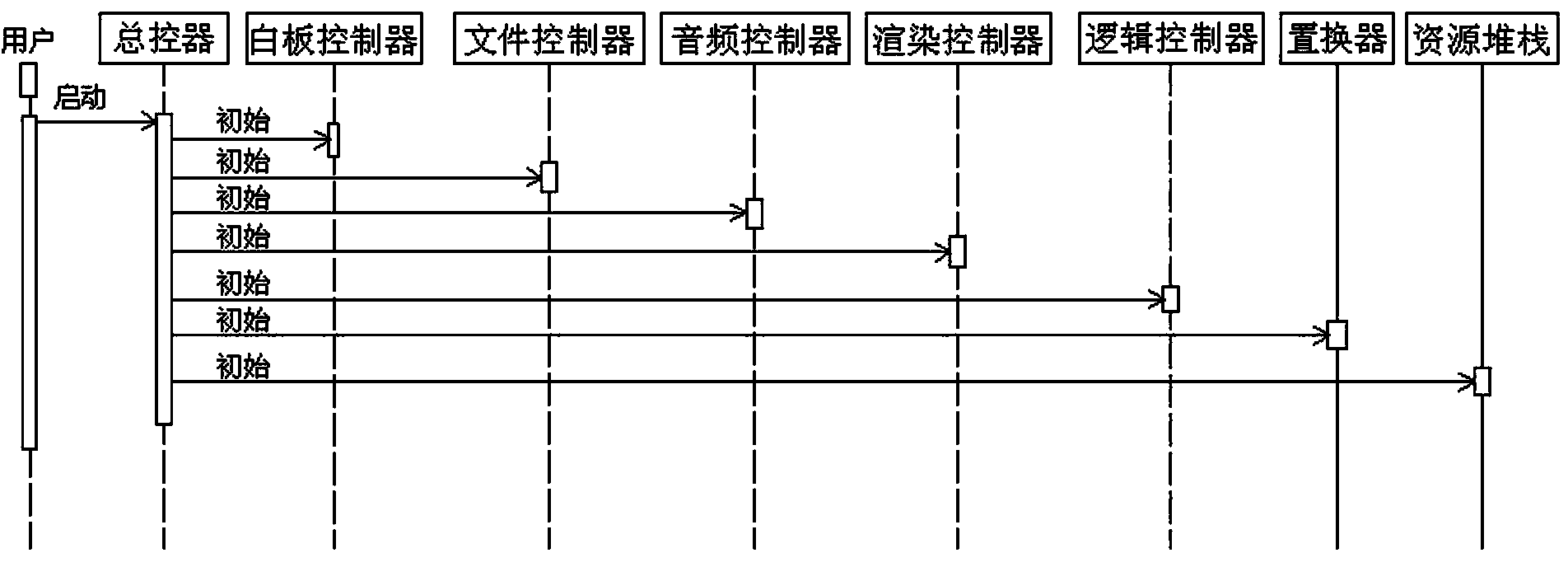

Micro-course recording method and engine adopting android system

Inactive | CN103838579A | Support import; Support undo; Specific program execution arrangements; Whiteboard; Master controller

The invention discloses a micro-course recording method and engine for the Android system. The engine mainly comprises a master controller, a whiteboard controller, a logic controller, a file controller, an audio controller, a rendering controller, a displacer, and a resource stack; each component other than the master controller is connected to the master controller. The whiteboard controller is also connected to the file controller, the rendering controller, and the logic controller; the resource stack is connected to the displacer and the logic controller; and the audio controller is connected to the master controller and the file controller. The recording method comprises: A, starting; B, recording; and C, storing, where step B comprises: B1, the user clicks to start recording; B2, monitoring actions and starting voice capture; B3, processing voice events; B4, processing touch events; B5, monitoring the memory stack arrays; B6, applying hard-disk caching and memory replacement when usage exceeds the set thresholds; B7, reading from or writing to the disk cache.

Owner:上海景界信息科技有限公司 (Shanghai Jingjie Information Technology Co., Ltd.)

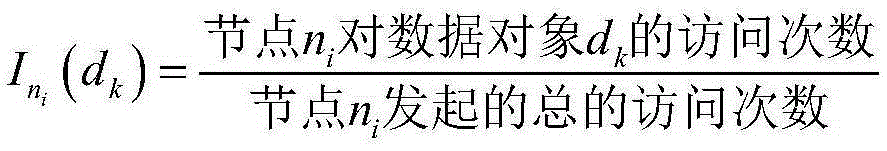

Mobile P2P network buffer memory replacement method

The invention discloses a mobile P2P network buffer memory (cache) replacement method based on data correlation. The value of each cached data item is calculated comprehensively by analyzing factors such as the correlation between data objects in the buffer memory, data importance, and the concentration of data information; the item with the lowest value is then evicted from the buffer memory and replaced. Compared with the prior art, the method takes full account of correlations between data, effectively improving the cache hit rate and reducing cache jitter.

Owner:STATE GRID CORP OF CHINA +1
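A value-based eviction of the kind described can be sketched as a weighted score. The weights, the 0-to-1 scales for importance and concentration, and the definition of correlation (share of the other cached items this item is related to) are hypothetical choices for illustration; the abstract does not give the formula.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class CachedItem:
    importance: float     # 0..1, application-assigned (assumed scale)
    concentration: float  # 0..1, information concentration (assumed scale)
    related: Set[str] = field(default_factory=set)  # ids of correlated data objects


def item_value(key: str, items: Dict[str, CachedItem],
               w_corr: float = 0.4, w_imp: float = 0.4, w_conc: float = 0.2) -> float:
    """Hypothetical weighted value; correlation = share of other cached items related to this one."""
    item = items[key]
    others = len(items) - 1
    corr = len(item.related & (items.keys() - {key})) / others if others else 0.0
    return w_corr * corr + w_imp * item.importance + w_conc * item.concentration


def insert_item(items: Dict[str, CachedItem], capacity: int,
                key: str, new: CachedItem) -> Optional[str]:
    """Insert `new`; if the cache is full, evict and return the lowest-value key."""
    evicted = None
    if key not in items and len(items) >= capacity:
        evicted = min(items, key=lambda k: item_value(k, items))
        del items[evicted]
    items[key] = new
    return evicted
```

Counting correlation among currently cached items rewards data that tends to be requested together, which is what lifts the hit rate compared with recency alone.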

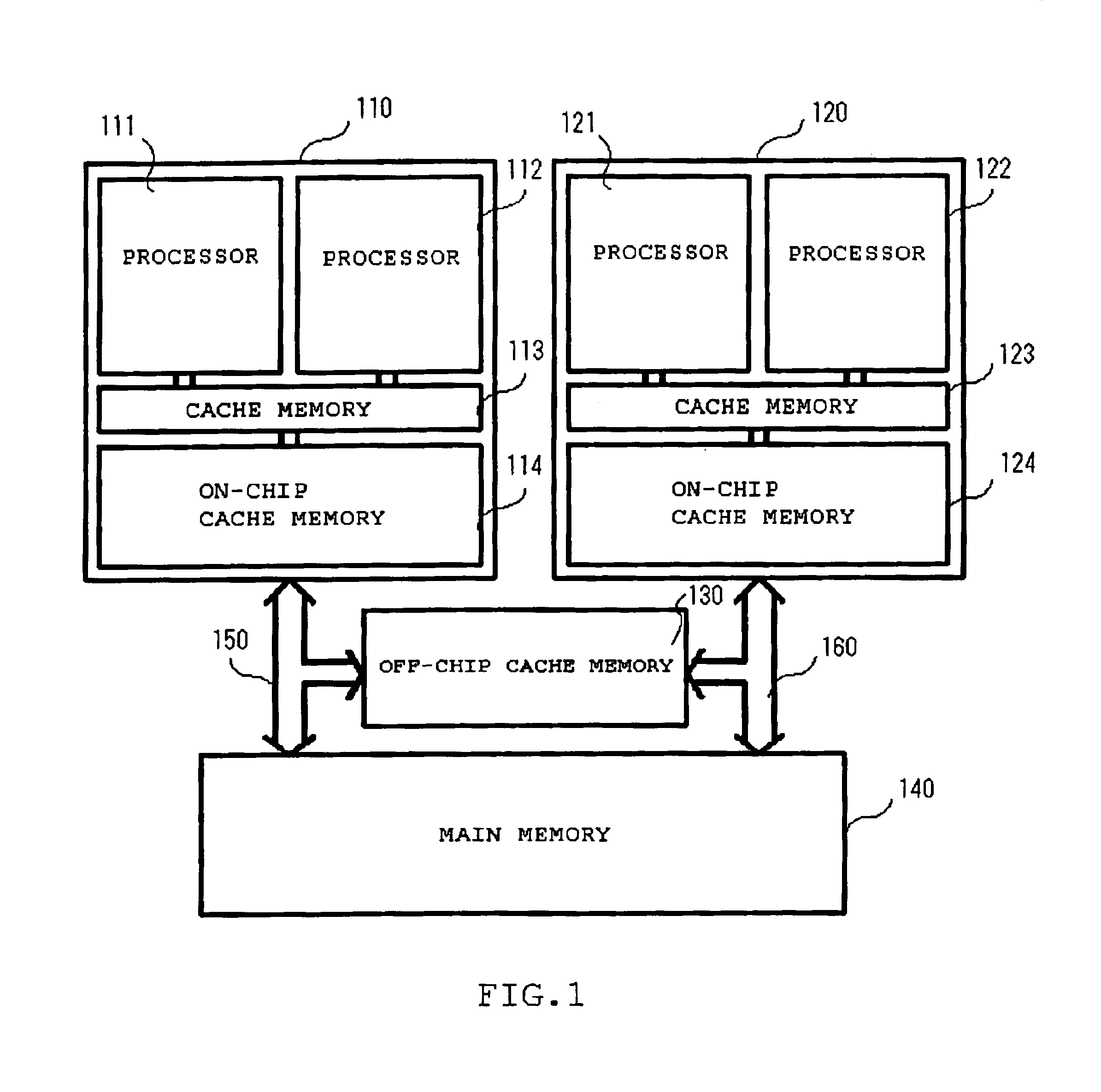

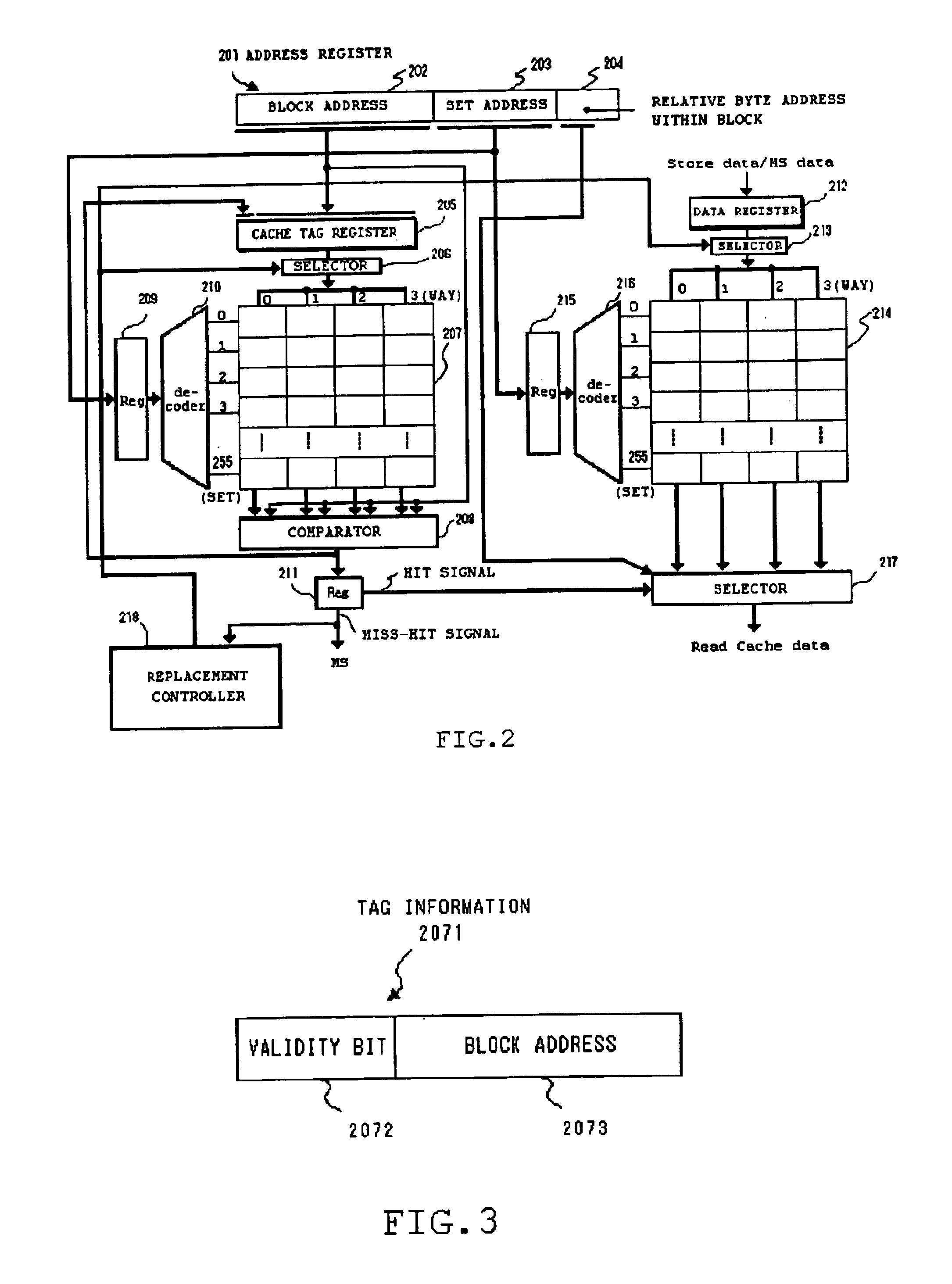

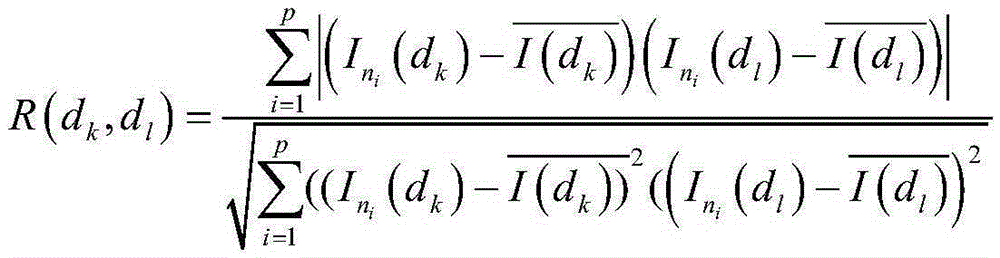

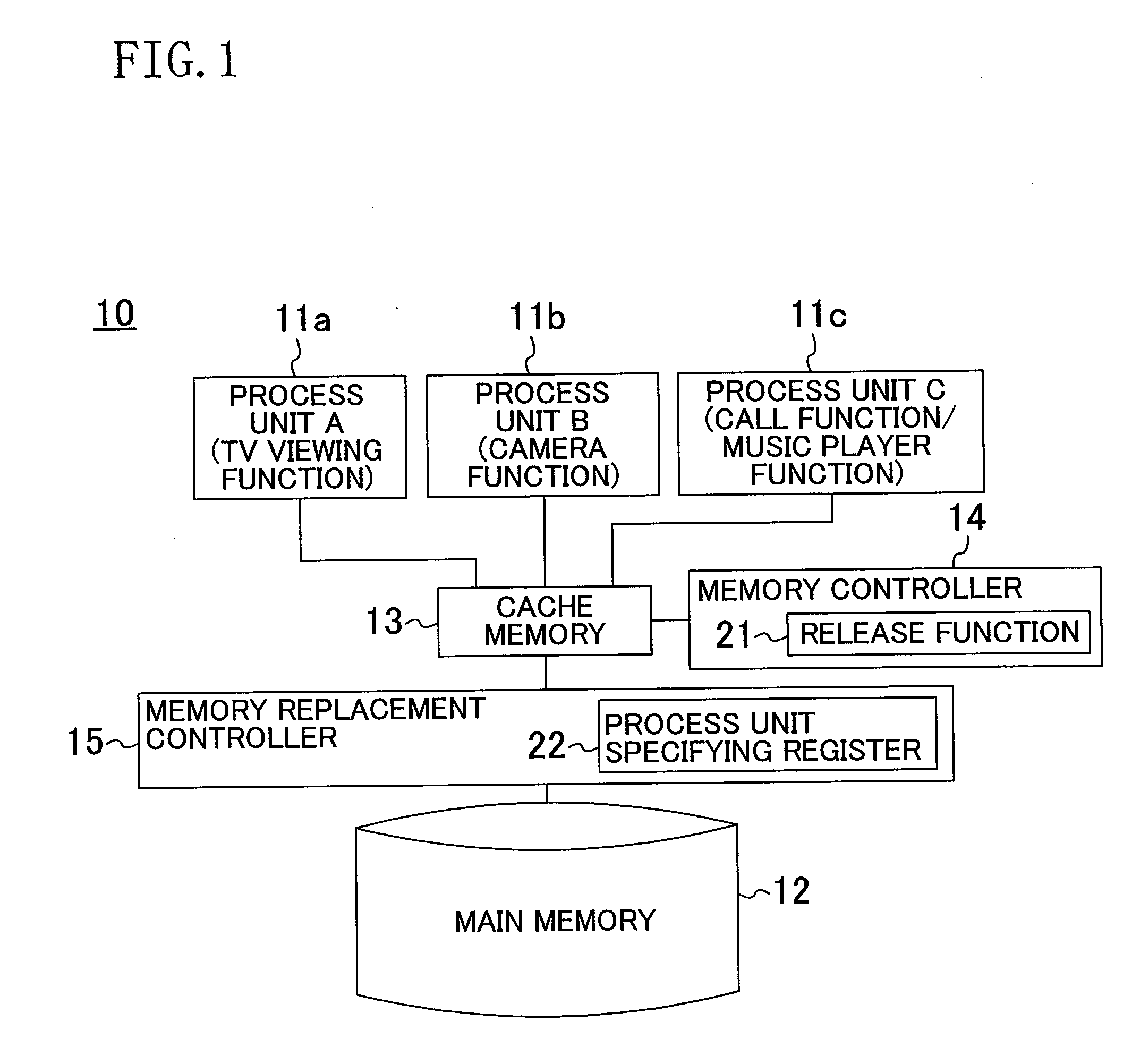

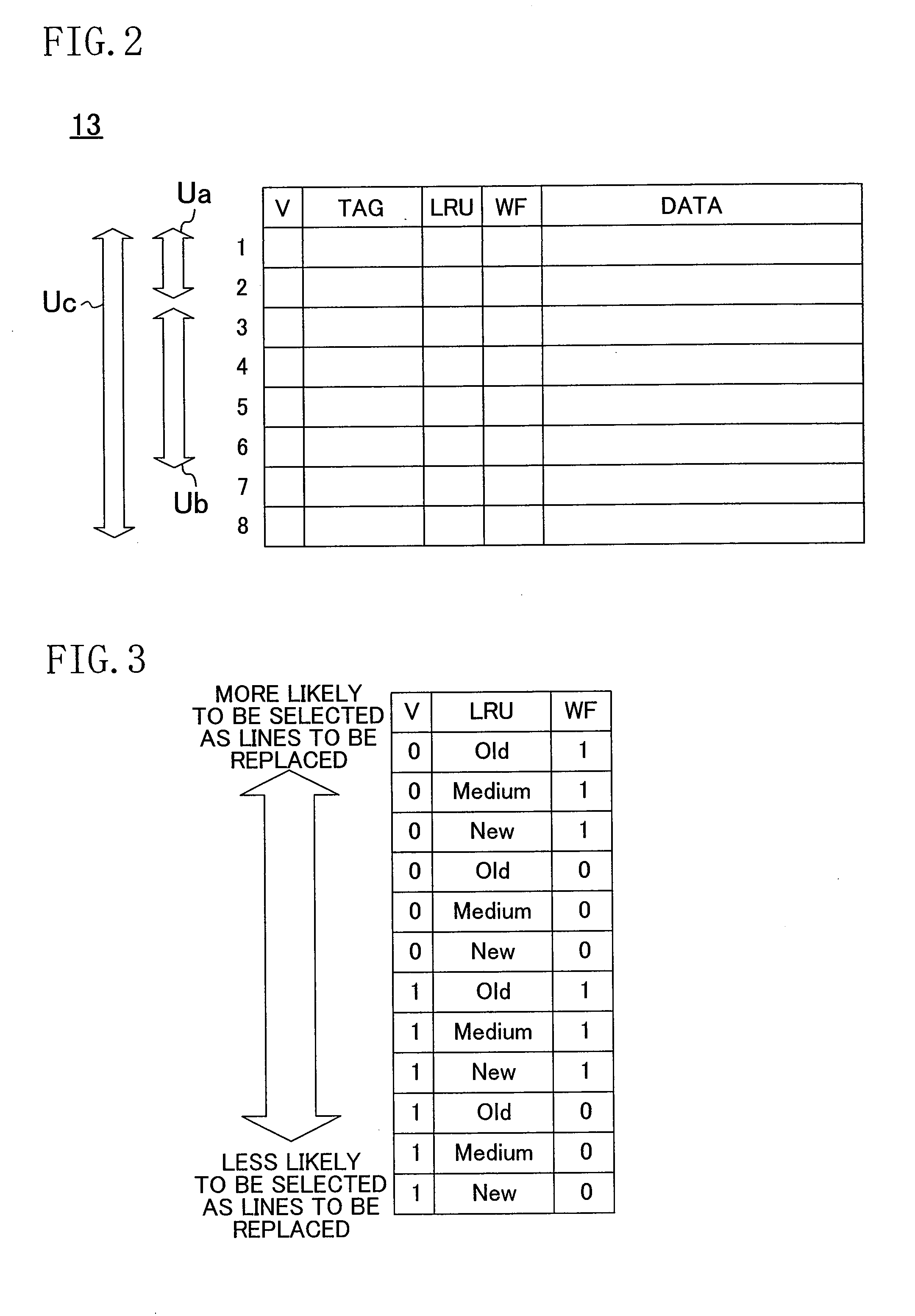

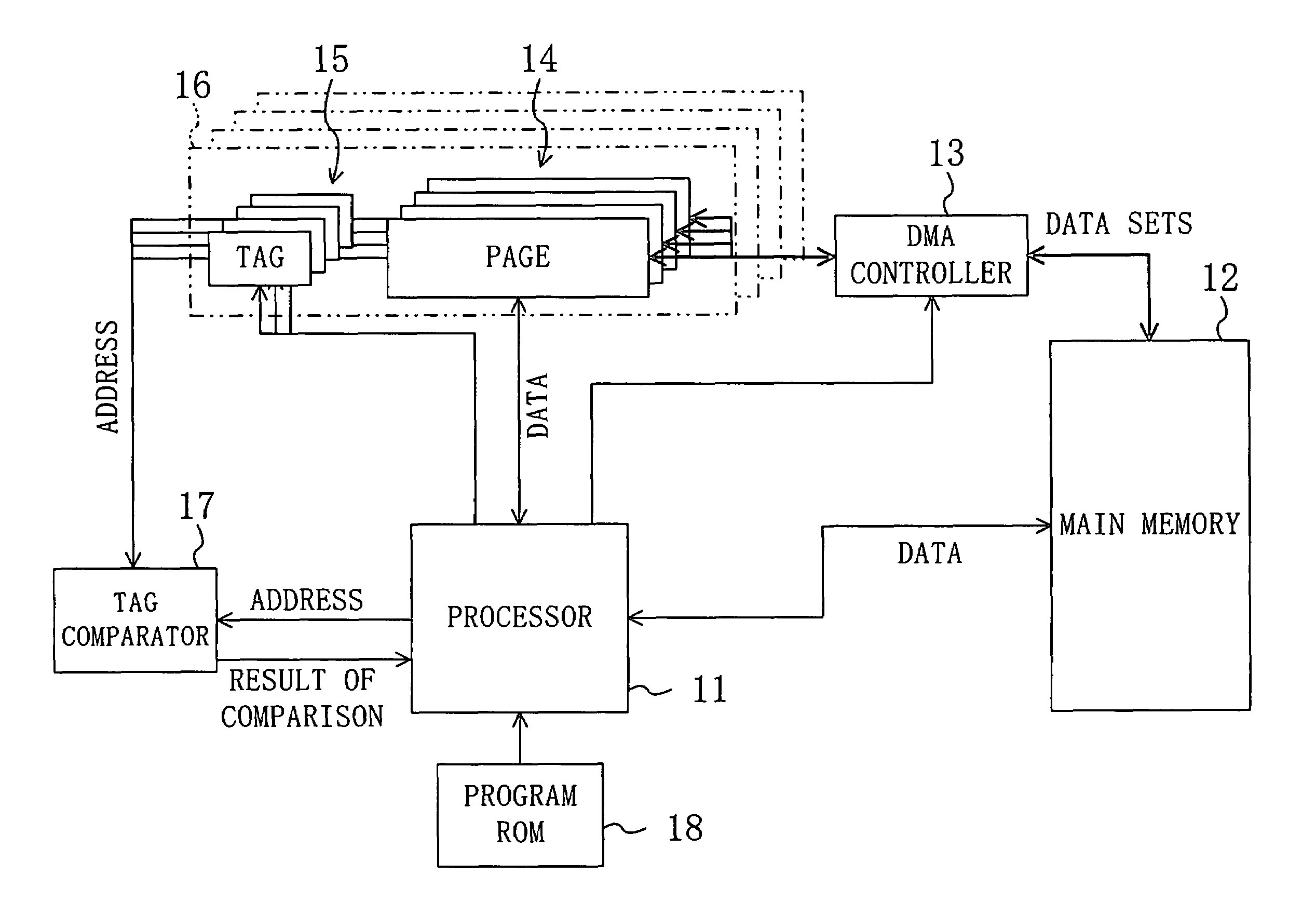

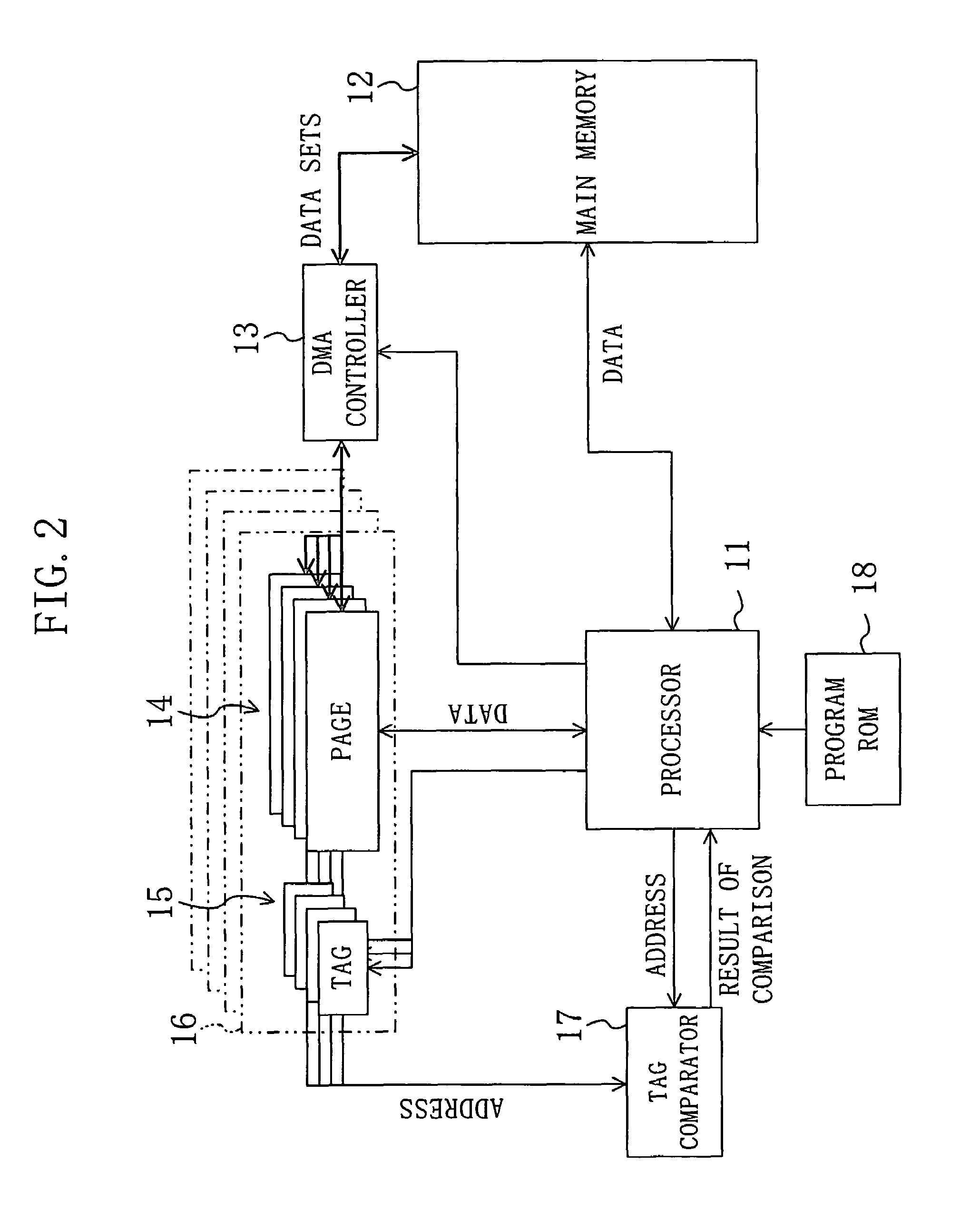

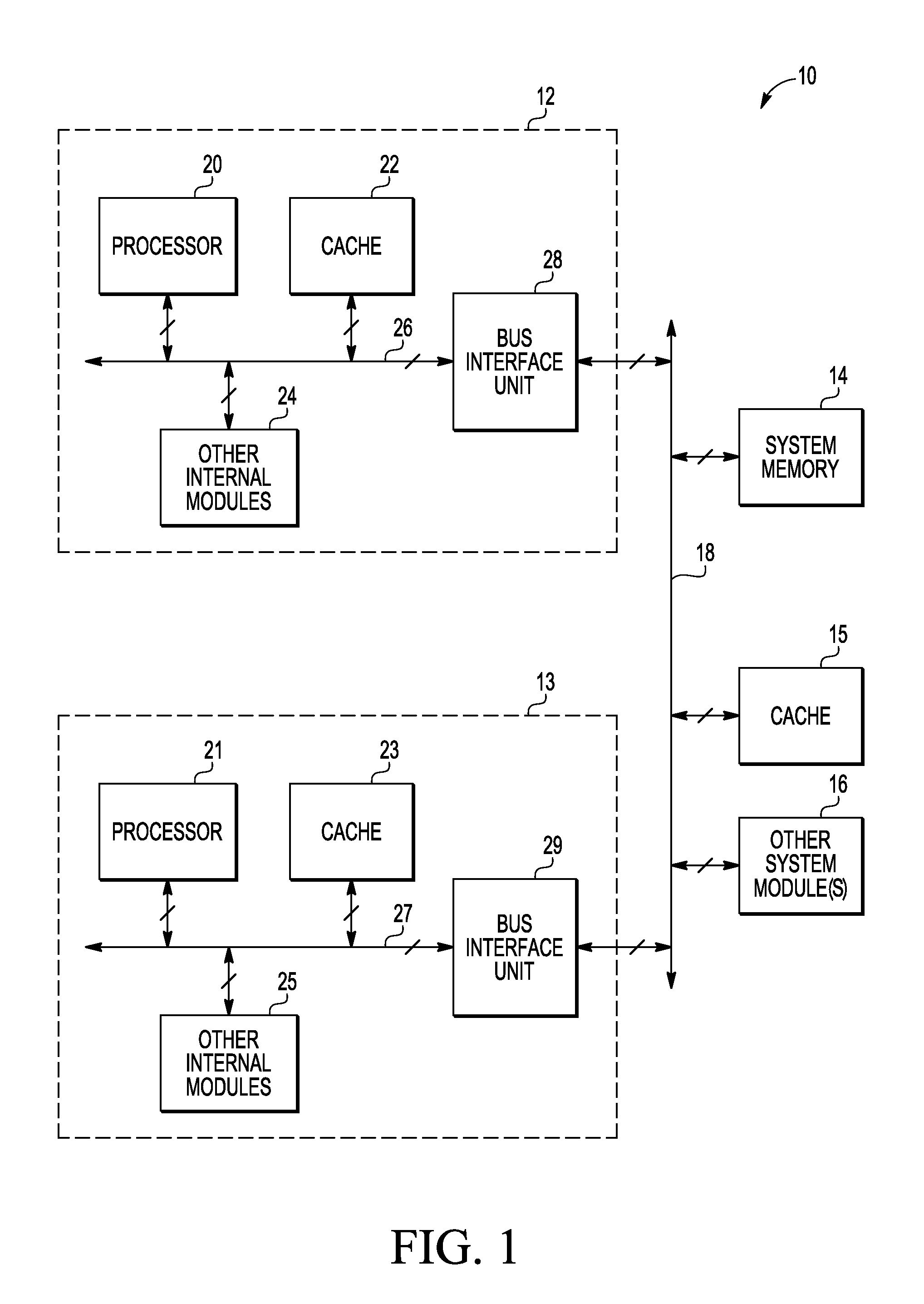

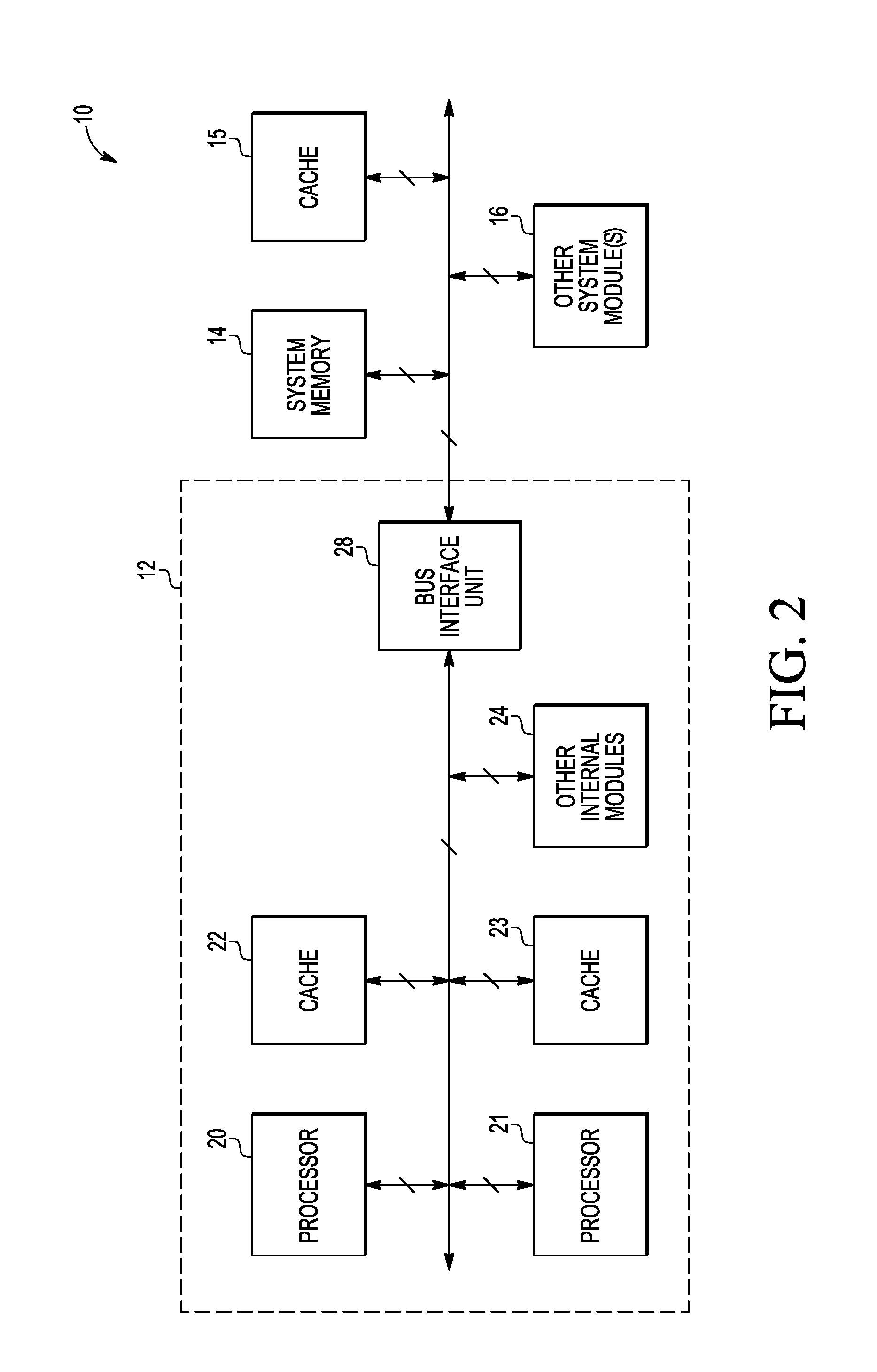

Computer system and method for controlling the same

Inactive | US20090198901A1 | Simple process; Create interference; Energy efficient ICT; Memory addressing/allocation/relocation; Parallel computing; Computerized system

A computer system includes a main memory for storing a large amount of data, a cache memory that can be accessed at a higher speed than the main memory, a memory replacement controller for controlling the replacement of data between the main memory and the cache memory, and a memory controller capable of allocating one or more divided portions of the cache memory to each process unit. The memory replacement controller stores priority information for each process unit, and replaces lines of the cache memory based on a replacement algorithm taking the priority information into consideration, wherein the divided portions of the cache memory are allocated so that the storage area is partially shared between process units, after which the allocated amounts of cache memory are changed automatically.

Owner:PANASONIC CORP

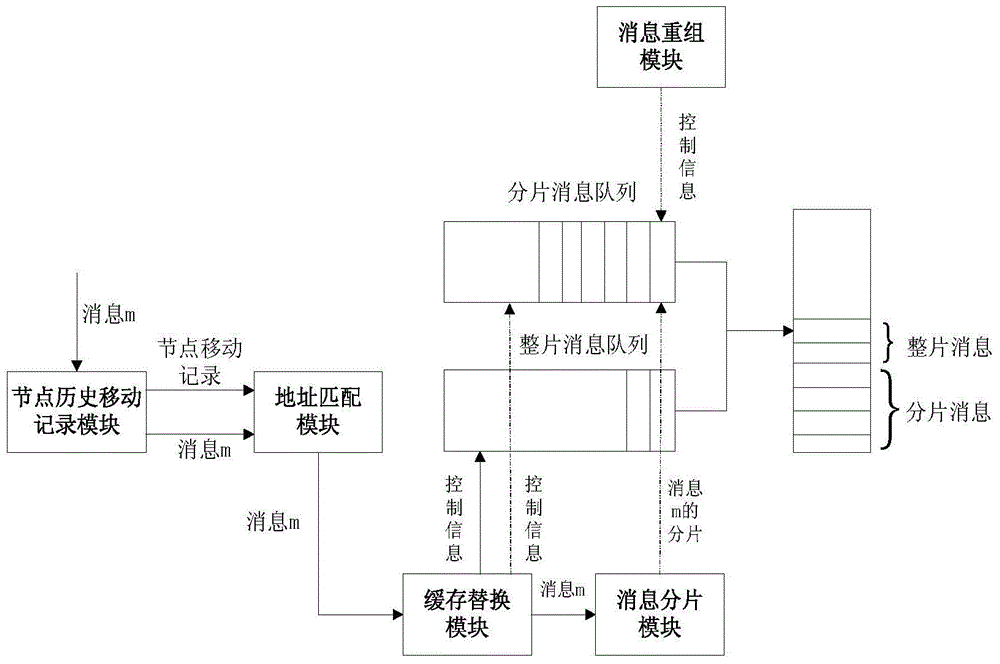

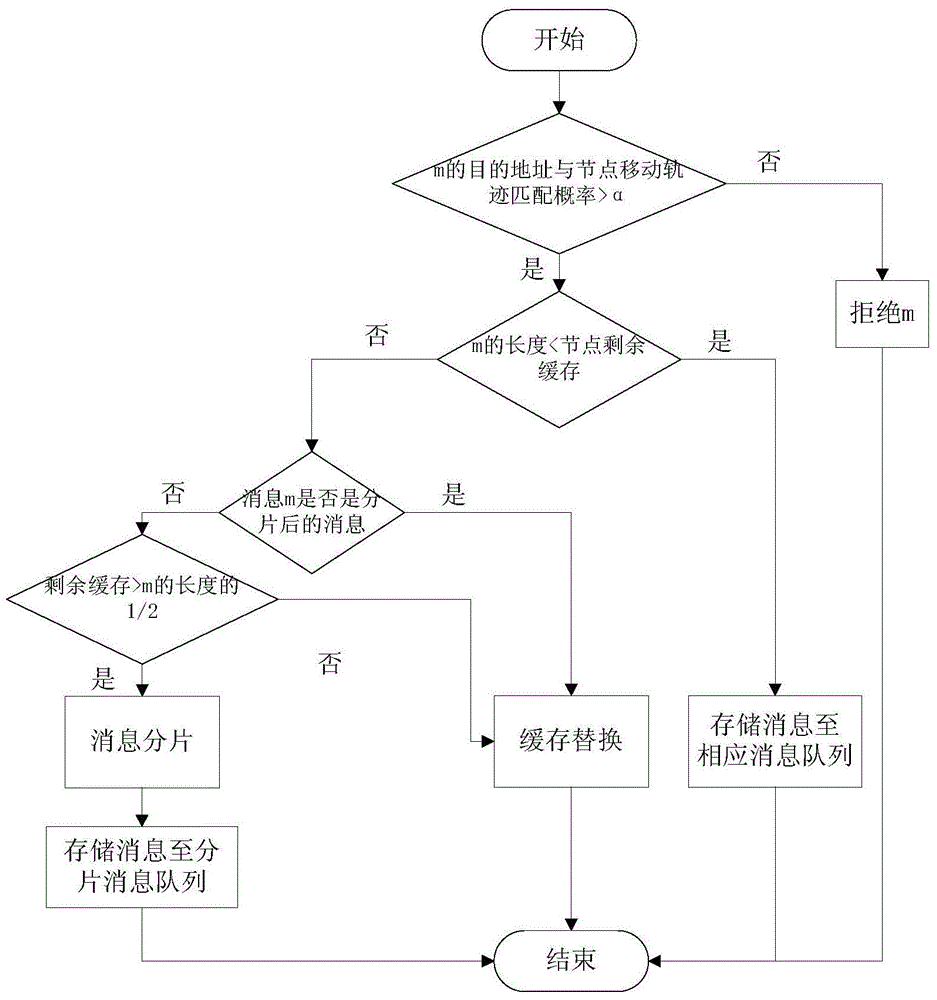

DTN (Delay/Disruption Tolerant Network) buffer memory management system and method based on message sharding and node cooperation

Active | CN104955075A | Improve transmission efficiency; Facilitate transmission; Wireless communication; Computer science; Memory replacement

The invention discloses a DTN buffer memory management system and method based on message sharding and node cooperation. A node historical movement record module sends a node's movement record and a message m to an address coupling module. The address coupling module calculates the coupling probability between the node's movement record and the destination address of message m; if the probability exceeds a threshold, message m is passed to a buffer memory replacement module. The buffer memory replacement module checks whether the length of message m is less than the node's remaining buffer memory. If so, message m is stored in the corresponding queue. If not, and message m is a whole message and the node's buffer memory is greater than half the length of message m, message m is sent to a message sharding module; otherwise, buffer memory replacement is performed for message m. The message sharding module splits message m, and a message recombination module reassembles the split messages. The method and system make effective use of node buffer memory and improve message transmission efficiency.

Owner:HARBIN ENG UNIV
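The branching performed by the buffer memory replacement module can be written as one decision function. This is a sketch under assumptions: the `Action` names are hypothetical, "buffer memory of the node" in the half-length test is read as the remaining free buffer, and the abstract does not say what happens when the coupling probability is at or below the threshold, so that case is simply labeled `SKIP`.

```python
from enum import Enum


class Action(Enum):
    SKIP = "not passed to the buffer replacement module (handling unspecified)"
    STORE = "store in the corresponding queue"
    SHARD = "send to the message sharding module"
    REPLACE = "perform buffer replacement for the message"


def handle_message(coupling_prob: float, coupling_threshold: float,
                   msg_len: int, is_whole: bool, free_buffer: int) -> Action:
    """Decision flow for message m as read from the abstract (assumptions noted above)."""
    if coupling_prob <= coupling_threshold:
        return Action.SKIP
    if msg_len < free_buffer:                      # fits in the remaining buffer
        return Action.STORE
    if is_whole and free_buffer > msg_len / 2:     # a shard of it would fit
        return Action.SHARD
    return Action.REPLACE
```

Sharding only whole messages avoids re-splitting fragments that an earlier node has already divided.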

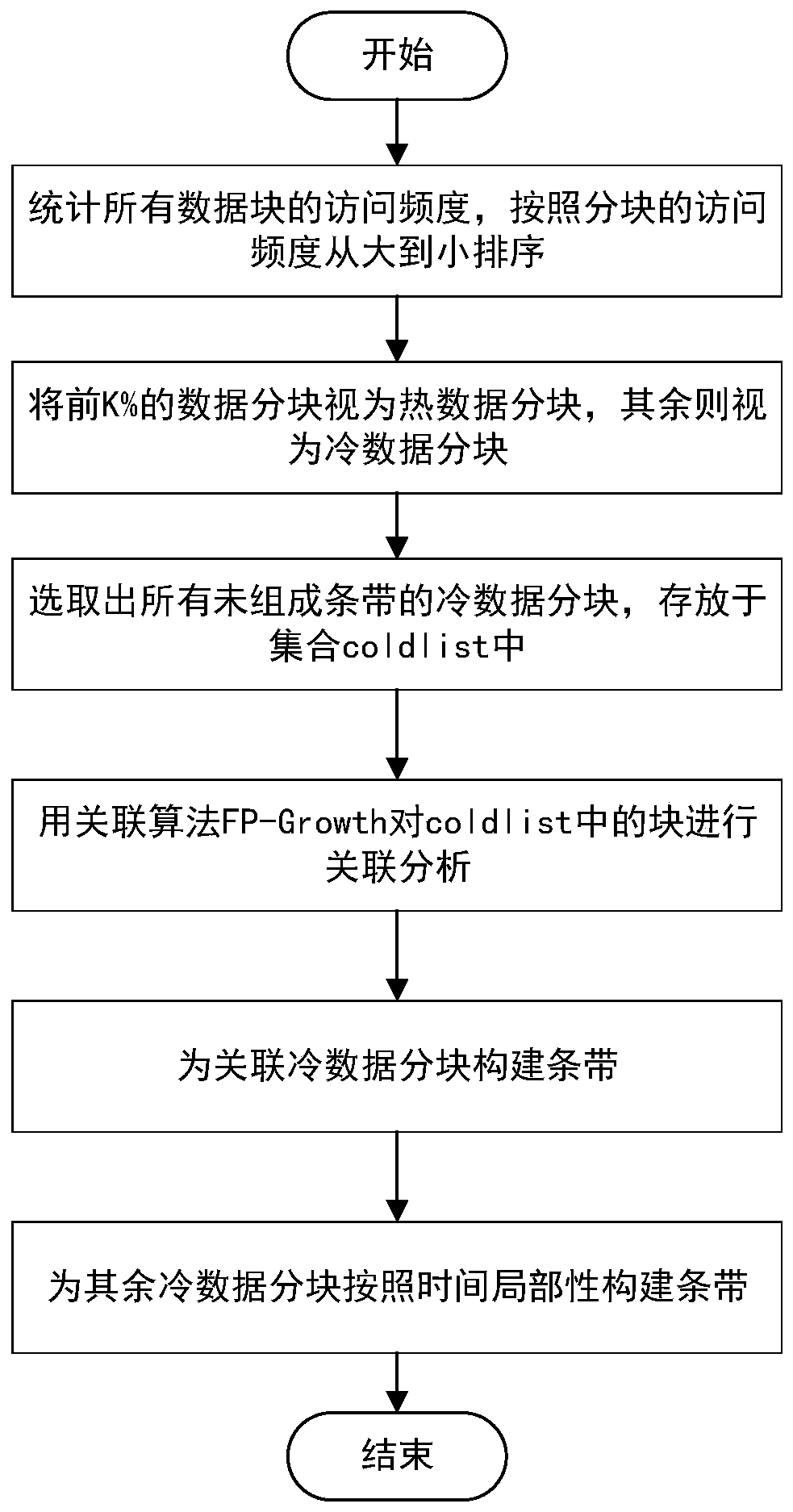

Data correlation-aware erasure code memory replacement method and equipment and memory system

Active | CN111444036A | Increase the probability of simultaneous elimination; Reduce access latency; Redundant data error correction; Parallel computing; Term memory

Owner:HUAZHONG UNIV OF SCI & TECH

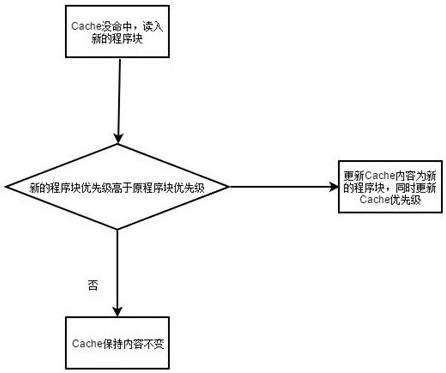

Cache memory replacement method, cache memory and computer system

Pending | CN112148640A | Improve hit rate; Avoid frequent replacement; Memory systems; Computer architecture; Parallel computing

The invention provides a cache memory replacement method, a cache memory, and a computer system. A sliding window matching the cache capacity k is used; the window corresponds to data blocks in main memory, and each cache block of the cache memory stores a main-memory data block covered by the window together with that block's priority mark. When an access to the current cache block misses, the priority of the newly accessed program block is checked, and the cache contents and priority are replaced only if the new program block has a higher priority than the current one. When a cache block is replaced, the bottom of the sliding window is moved to the miss address, and the main-memory data blocks covered by the window, together with their priorities, are stored in the cache memory.

Owner:SHENZHEN HANGSHUN CHIP TECH DEV CO LTD
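The priority-gated replacement can be sketched with a window of k consecutive block addresses. This model is hypothetical: `priority_of` stands in for the priority marks, and the priority compared on a miss is assumed to be the one recorded when the window was last moved, which is a simplification of "the priority of the current program block".

```python
class WindowCache:
    """Cache holding a window of k consecutive main-memory blocks (simplified model)."""

    def __init__(self, k, priority_of):
        self.k = k
        self.priority_of = priority_of  # block address -> priority (assumed to come from program marks)
        self.base = None                # bottom of the sliding window
        self.window_priority = None     # priority recorded when the window was last moved

    def access(self, addr):
        if self.base is not None and self.base <= addr < self.base + self.k:
            return "hit"
        p = self.priority_of(addr)
        if self.base is None or p > self.window_priority:
            # Replace only for a higher-priority block: move the window bottom to the miss address.
            self.base, self.window_priority = addr, p
            return "miss-replaced"
        return "miss-bypassed"          # served from main memory; cache left intact
```

Refusing replacement for lower-priority misses is what keeps a brief excursion into other code from flushing a hot loop, matching the tag "Avoid frequent replacement".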

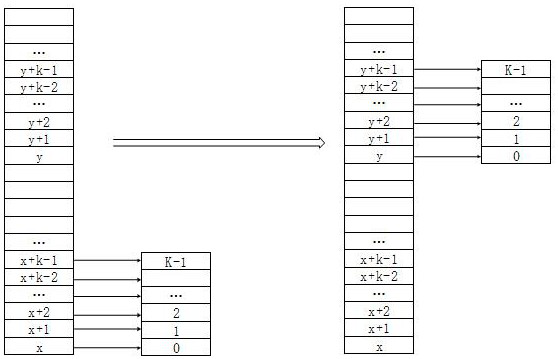

Memory replacement mechanism in semiconductor device

Inactive | US7502901B2 | Reduce transfer; Reduce power consumption; Energy efficient ICT; Memory addressing/allocation/relocation; Memory replacement; Semiconductor

A semiconductor device has a processor; a first memory unit accessed by the processor; a plurality of page memory units obtained by partitioning a second memory unit, which the processor can access faster than the first memory unit, such that each page memory unit has a storage capacity larger than a cache line; a tag that attaches to each page memory unit tag information indicating an address value in the first memory unit and priority information indicating a replacement priority; a tag comparator that, upon receiving an access request from the processor, compares the address value in the first memory unit with the tag information held by the tag; and a replacement control unit that replaces the contents of the page memory units.

Owner:SOCIONEXT INC

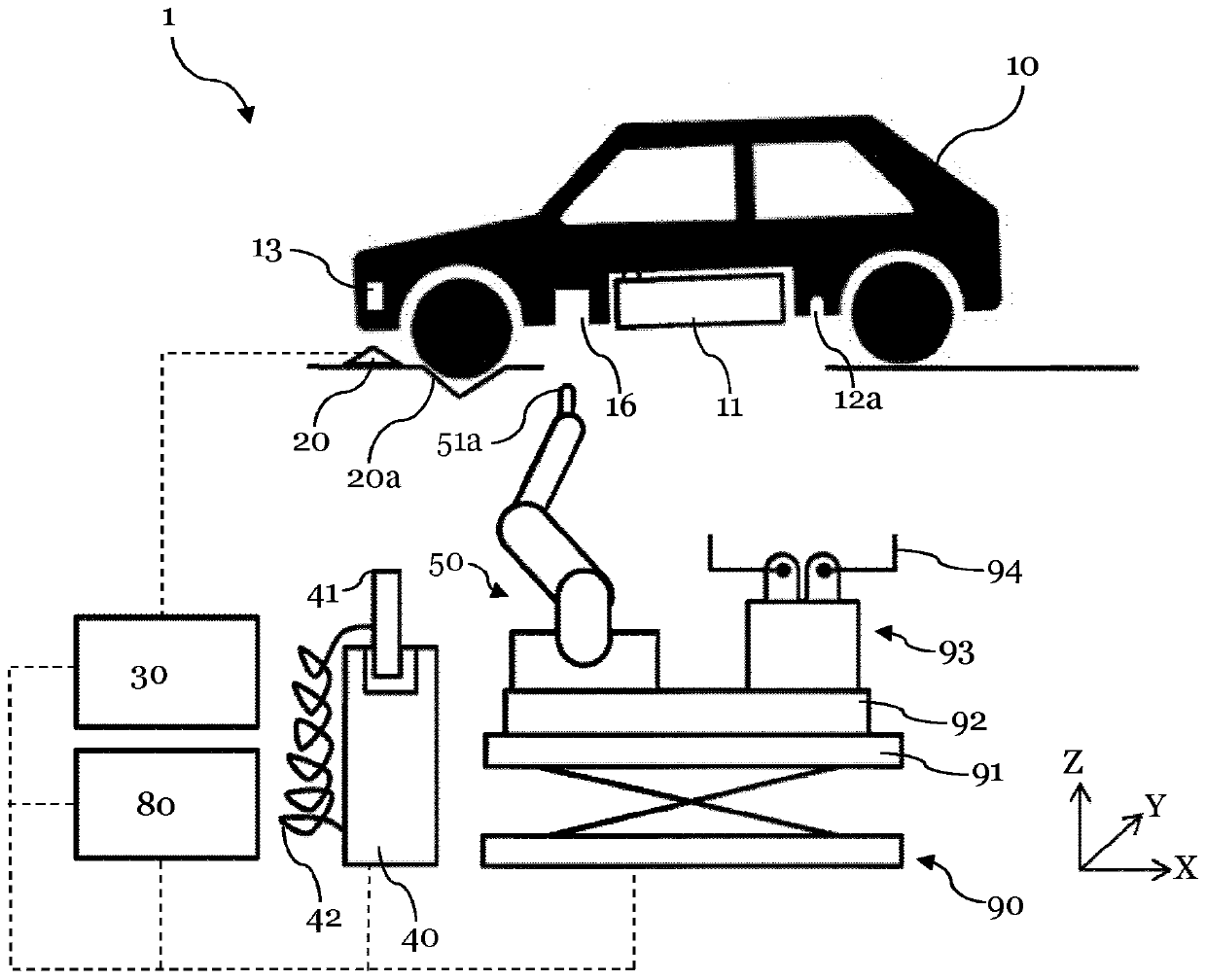

Method for exchanging a vehicle energy store and energy store exchange device

Active | CN107107880B | Accurate and fast positioning; Shorten the time; Charging stations; Gripping heads; Memory replacement; Energy storage

The invention relates to a method for exchanging at least one energy store of a vehicle and to an energy store exchanging device. The method includes at least method steps for ascertaining the vehicle type and providing vehicle data of the ascertained vehicle type. The vehicle data include data about the arrangement of the energy store in the vehicle. Furthermore, the position of the vehicle is ascertained based on at least one spatial reference point and at least one energy store of the vehicle is replaced.

Owner:KUKA SYSTEMS

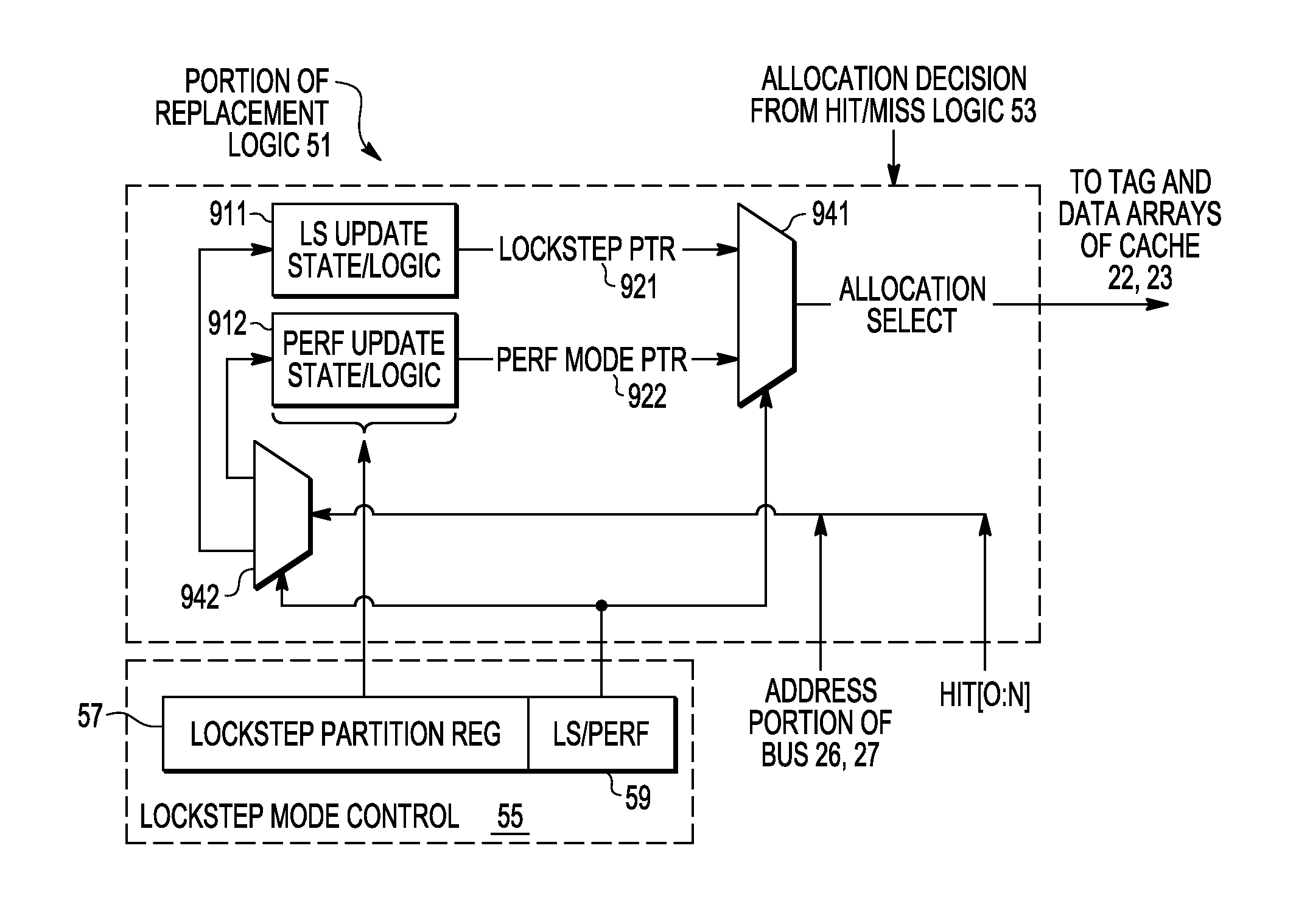

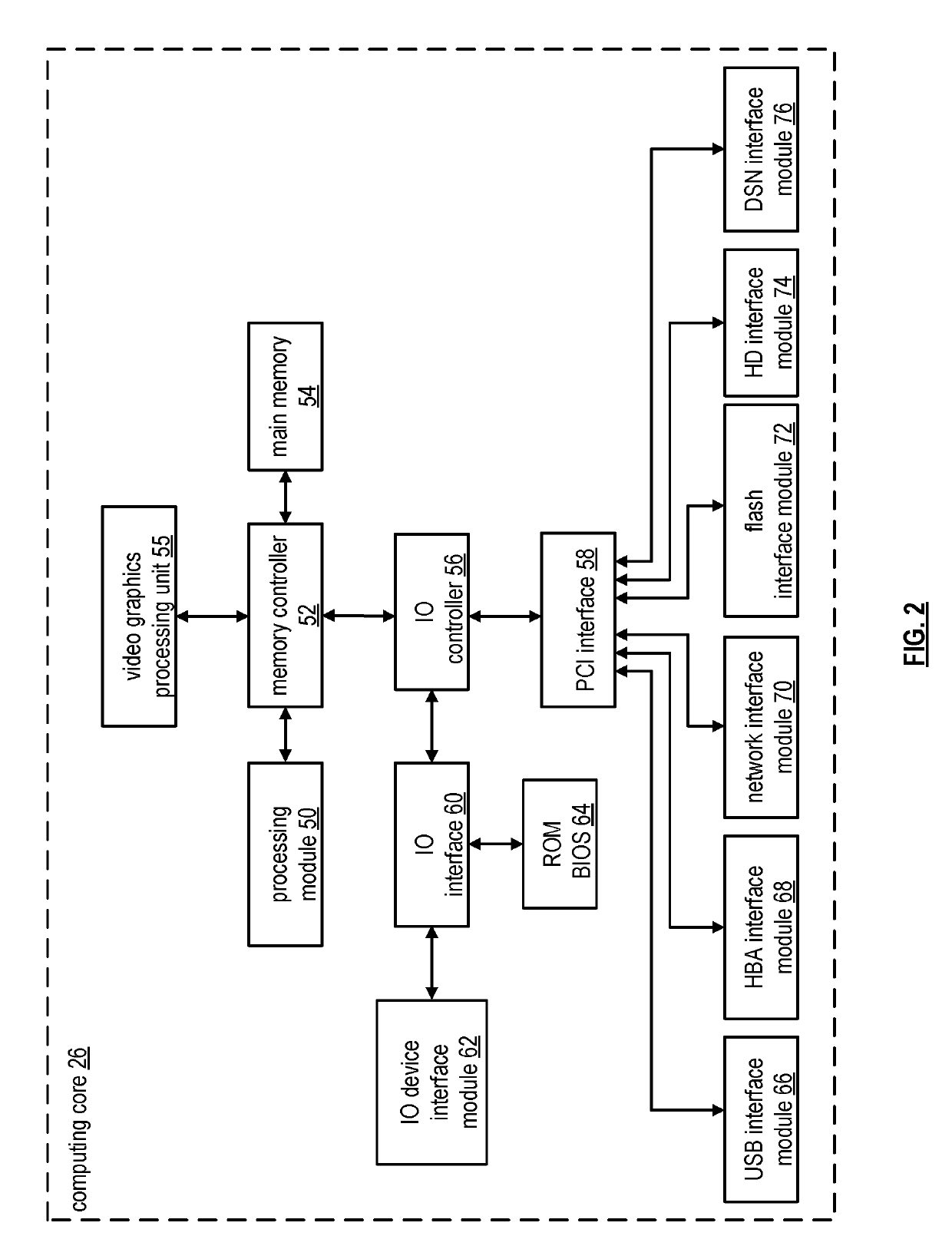

Dynamic lockstep cache memory replacement logic

Active | US9208036B2 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Lockstep; Theoretical computer science

Owner:NXP USA INC

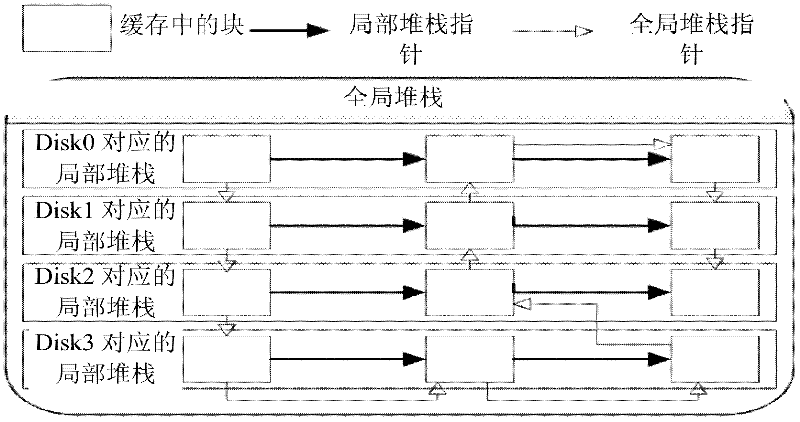

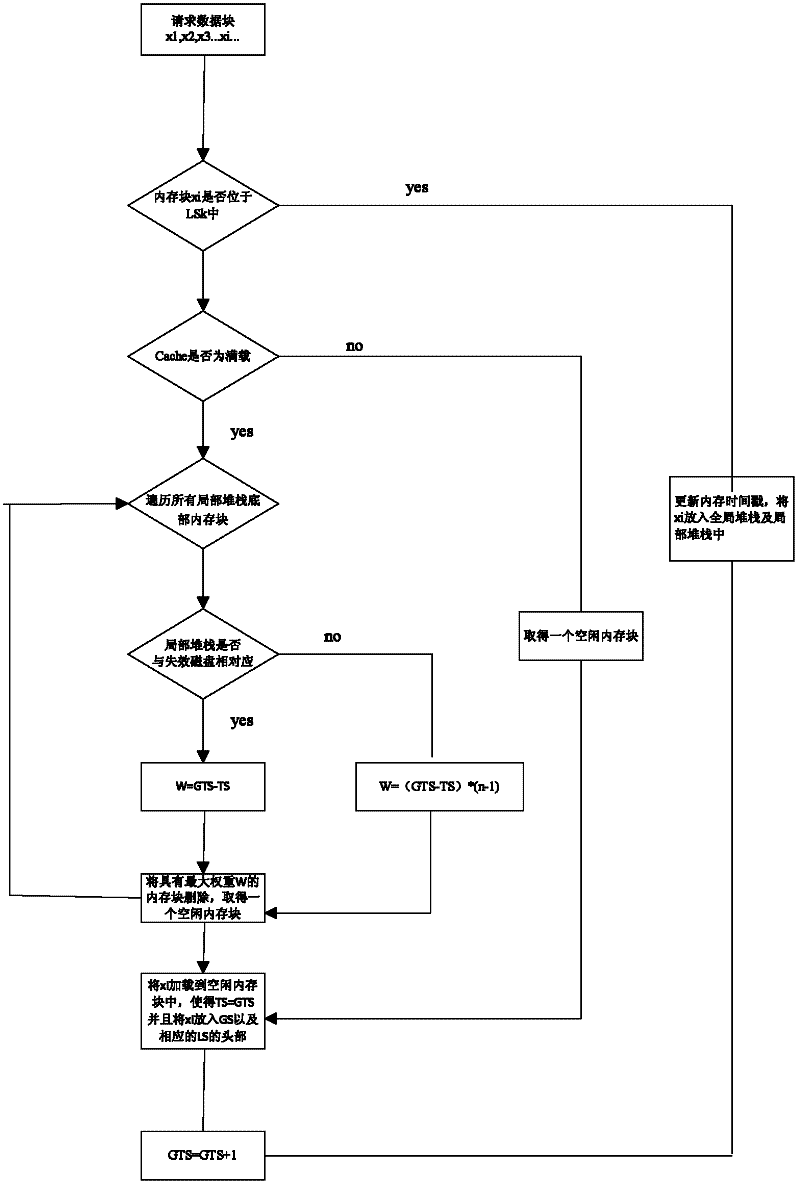

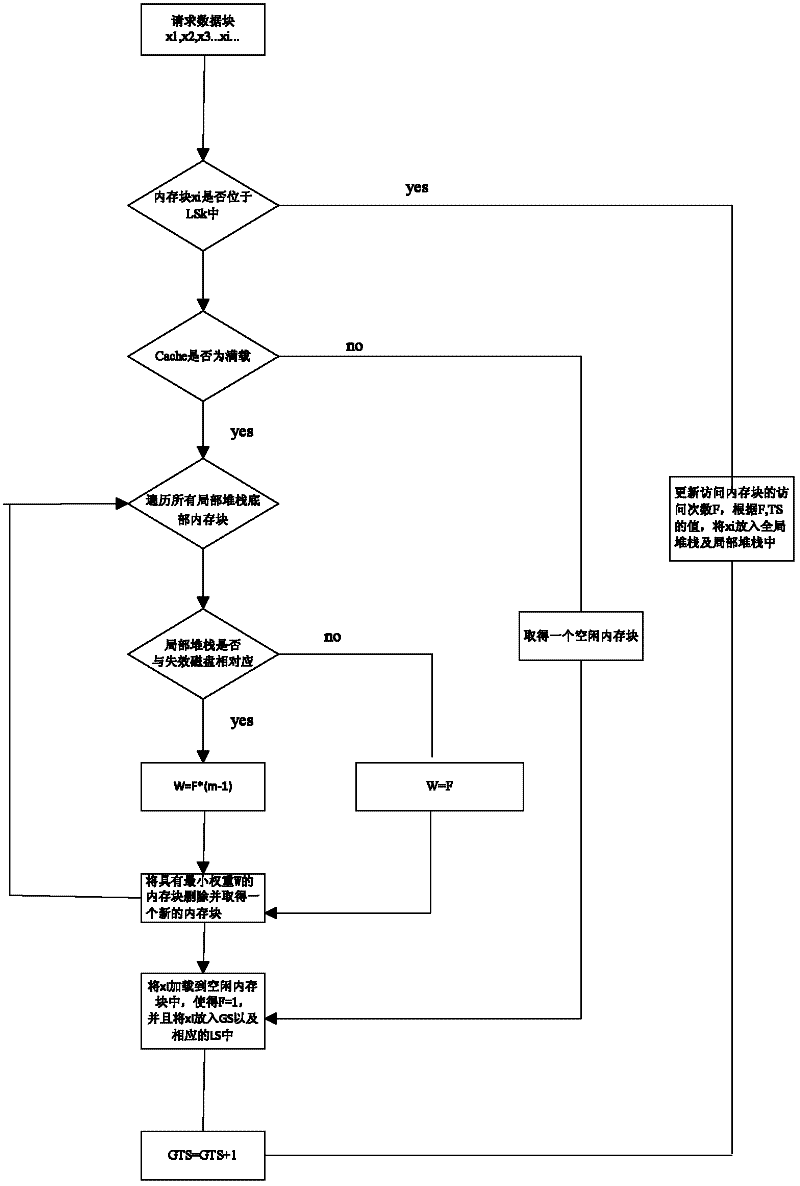

A Cache Memory Replacement Method with Failed Disk Priority

Active | CN102289354A | Easy to handle; Improve performance; Input/output to record carriers; Parallel computing; Minimum weight

The invention provides a cache memory replacement method based on VDF (Victim Disk First). The method comprises: calculating the weight of the bottom memory block in every local stack, using different weight calculation methods for the victim (failed) disk and for non-victim disks; deleting the memory block with the maximum or minimum weight among all local stacks; and placing the disk block in the memory block freed by the deletion. Because the weights of the victim disk's memory blocks are likely to be the maximum or minimum, the cached blocks of the victim disk are preferentially retained. Reducing how often cache misses must be served from the victim disk, whose data has to be reconstructed from the surviving disks, reduces the I/O request frequency on the surviving disks, and fewer I/O requests improve disk array performance.

Owner:HUAZHONG UNIV OF SCI & TECH
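The compare-only-the-bottoms step can be sketched with one LRU stack per disk. The weight formula is a hypothetical stand-in (recency, multiplied by a boost factor for the victim disk), and this sketch evicts the minimum-weight bottom block; the abstract says only that victim and non-victim weights are computed differently and that the maximum or minimum is deleted.

```python
from collections import OrderedDict


class VdfCache:
    """Per-disk LRU local stacks; eviction compares only each stack's bottom block."""

    def __init__(self, capacity, victim_disk, victim_factor=4.0):
        self.capacity = capacity
        self.victim_disk = victim_disk      # failed disk whose blocks are rebuilt on demand
        self.victim_factor = victim_factor  # hypothetical boost for victim-disk blocks
        self.stacks = {}                    # disk id -> OrderedDict(block -> last access time)
        self.clock = 0

    def _weight(self, disk, last_access):
        base = 1.0 / (self.clock - last_access + 1)   # more recent -> heavier (assumed)
        return base * self.victim_factor if disk == self.victim_disk else base

    def access(self, disk, block):
        self.clock += 1
        stack = self.stacks.setdefault(disk, OrderedDict())
        if block in stack:
            stack.move_to_end(block)        # move to the top of its local stack
        elif sum(len(s) for s in self.stacks.values()) >= self.capacity:
            bottoms = [(d, next(iter(s))) for d, s in self.stacks.items() if s]
            d, b = min(bottoms, key=lambda db: self._weight(db[0], self.stacks[db[0]][db[1]]))
            del self.stacks[d][b]           # delete the minimum-weight bottom block
        stack[block] = self.clock
```

With the boost, an older victim-disk block can outlive a newer block from a healthy disk, which is the retention effect the method aims for.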

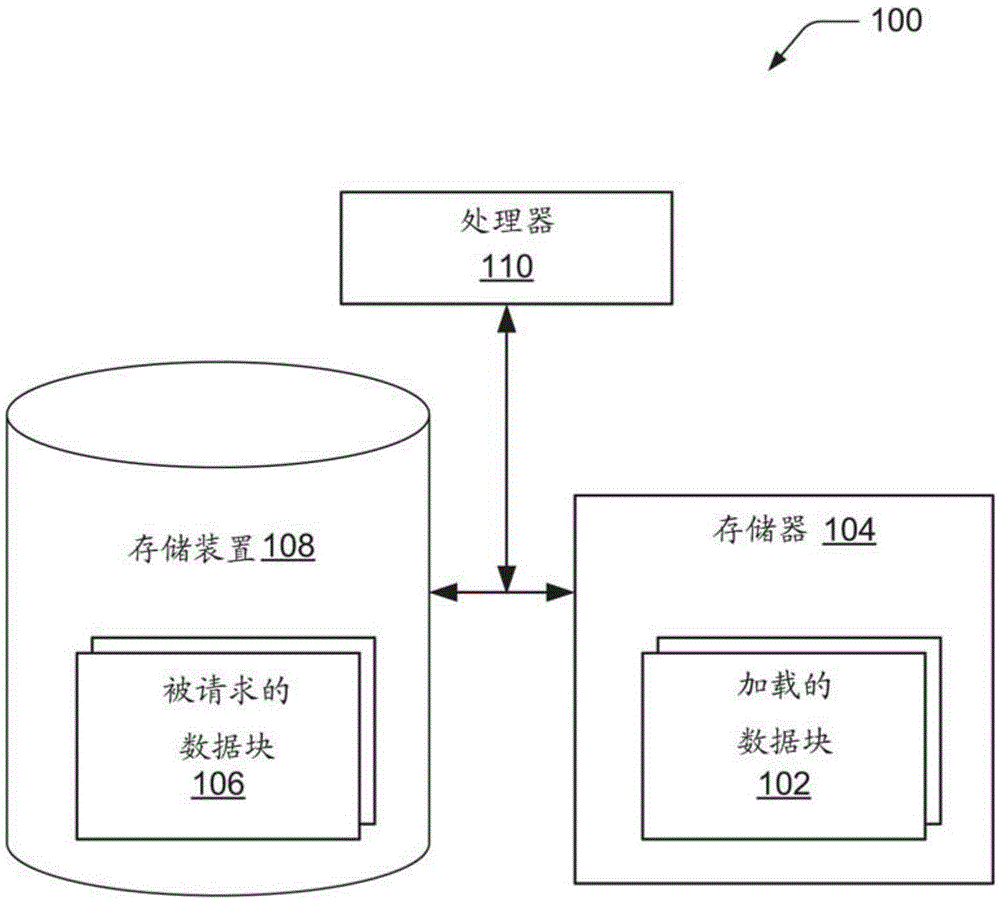

Intelligent memory block replacement

A framework for intelligent memory replacement of loaded data blocks by requested data blocks is provided. For example, various factors are taken into account to optimize the selection of loaded data blocks to be discarded from the memory, in favor of the requested data blocks to be loaded into the memory. In some implementations, correlations between the requested data blocks and the loaded data blocks are used to determine which of the loaded data blocks may become candidates to be discarded from memory.

Owner:SAP AG
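Correlation-aware selection of blocks to discard can be sketched as follows. The co-access counts, the symmetric correlation score, and the function name `choose_evictions` are hypothetical illustrations of "correlations between the requested data blocks and the loaded data blocks"; the SAP framework's actual factors are not given here.

```python
from typing import Dict, List, Tuple


def choose_evictions(loaded: List[str], requested: List[str],
                     co_access: Dict[Tuple[str, str], int], capacity: int) -> List[str]:
    """Pick the loaded blocks least correlated with the requested ones (hypothetical metric)."""
    overflow = len(set(loaded) | set(requested)) - capacity
    if overflow <= 0:
        return []                                   # everything fits, nothing to discard
    candidates = [b for b in loaded if b not in requested]

    def correlation(block: str) -> int:
        # Sum co-access counts in both directions against every requested block.
        return sum(co_access.get((block, r), 0) + co_access.get((r, block), 0)
                   for r in requested)

    return sorted(candidates, key=correlation)[:overflow]
```

Blocks often used together with the incoming request are kept, since discarding them would likely cause an immediate reload.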

High-performance instruction cache system and method

Active | US20180165212A1 | Improve performance; High speed; Memory architecture accessing/allocation; Memory systems; Instruction memory; Memory address

A high-performance instruction cache method based on extracting instruction information and storing it in a track table. The method allows every level of cache, including the last-level cache, to be read without tag matching, and lets the contents of the track table directly address instruction memories organized either as a track cache or as a set-associative cache. The method further includes a memory replacement method that uses the track table, a first memory containing multiple rows of instruction blocks, and a correlation table. The correlation table records the source addresses of the rows that index a target row, as well as the lower-level memory address of the target row. When a row of the first memory is replaced, the lower-level memory address of the target row replaces the target row's address in the source rows of the track table, thereby preserving the indexing relationships recorded in the track table despite the replacement.

Owner:SHANGHAI XINHAO MICROELECTRONICS

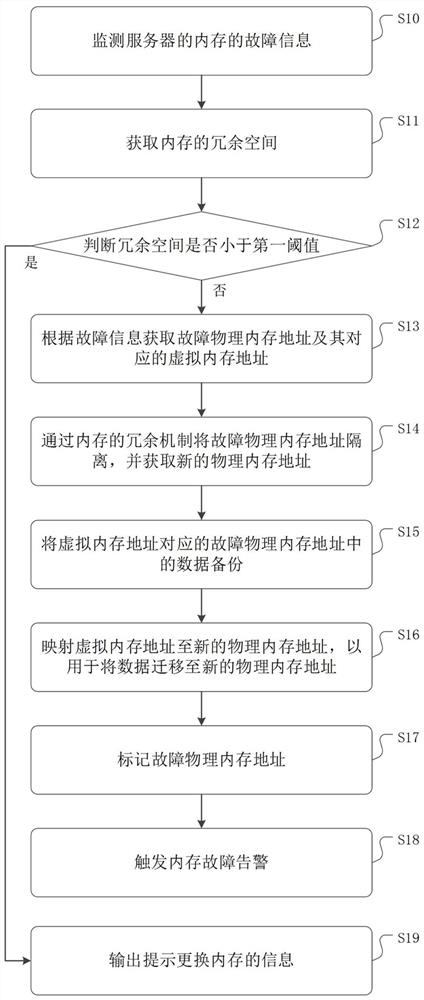

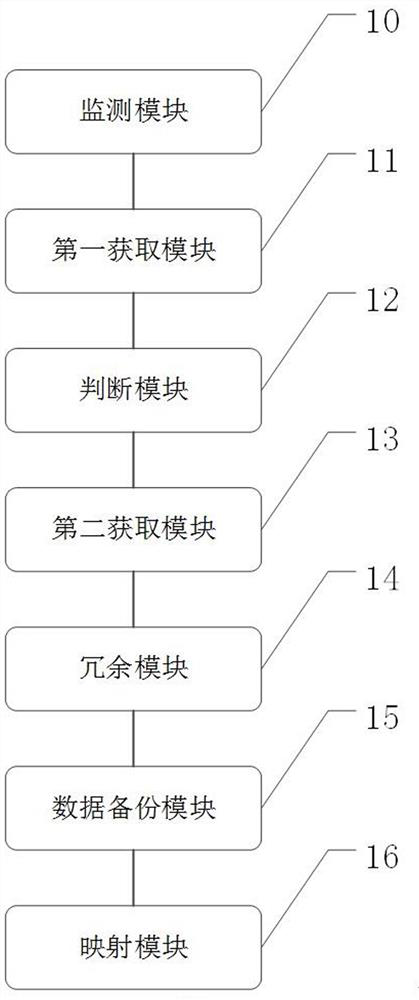

Memory fault processing method and device and computer readable storage medium

Inactive | CN114461436A | Reduce the probability of downtime; Low cost; Non-redundant fault processing; Memory address; Virtual memory

The invention discloses a memory fault processing method, device, and computer-readable storage medium in the field of computer technology. The method monitors memory fault information of a server to obtain the memory's redundant space and judges whether the redundant space is smaller than a first threshold. If not, the faulty physical memory address and the corresponding virtual memory address are obtained from the fault information; the faulty physical address is isolated through the memory's redundancy mechanism and a new physical memory address is obtained; and the data at the faulty physical address is backed up and the virtual address is remapped to the new physical address, migrating the data there. The faulty memory is thus permanently isolated by the redundancy mechanism, while changing the virtual memory mapping isolates it at the software level without losing the data it held. This effectively reduces downtime caused by memory faults, avoids unnecessary memory replacement, and lowers operation and maintenance costs.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD
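The isolate-copy-remap sequence can be sketched with a toy page table. `PageTable`, the 4-byte pages, and the use of free physical pages as the "redundant space" are hypothetical simplifications; a threshold of at least 1 is assumed so that a replacement page is always available.

```python
class PageTable:
    """Toy virtual-to-physical mapping used to illustrate fault isolation."""

    def __init__(self, physical_pages):
        self.mem = {p: bytearray(4) for p in physical_pages}  # toy 4-byte pages
        self.free = set(physical_pages)  # free pages stand in for redundant space (assumed)
        self.vmap = {}                   # virtual page -> physical page
        self.isolated = set()

    def map(self, vpage):
        ppage = min(self.free)
        self.free.remove(ppage)
        self.vmap[vpage] = ppage
        return ppage

    def handle_fault(self, bad_ppage, threshold):
        """Isolate bad_ppage and migrate its data; threshold is assumed to be >= 1."""
        if len(self.free) < threshold:
            return None                  # redundant space below the first threshold
        self.free.discard(bad_ppage)
        self.isolated.add(bad_ppage)     # never handed out again
        vpage = next((v for v, p in self.vmap.items() if p == bad_ppage), None)
        if vpage is None:
            return None                  # page held no live data
        new_ppage = min(self.free)
        self.free.remove(new_ppage)
        self.mem[new_ppage][:] = self.mem[bad_ppage]  # back up the data to the new page
        self.vmap[vpage] = new_ppage     # remap the virtual page: software-level isolation
        return new_ppage
```

Programs keep using the same virtual address throughout; only the mapping behind it changes.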

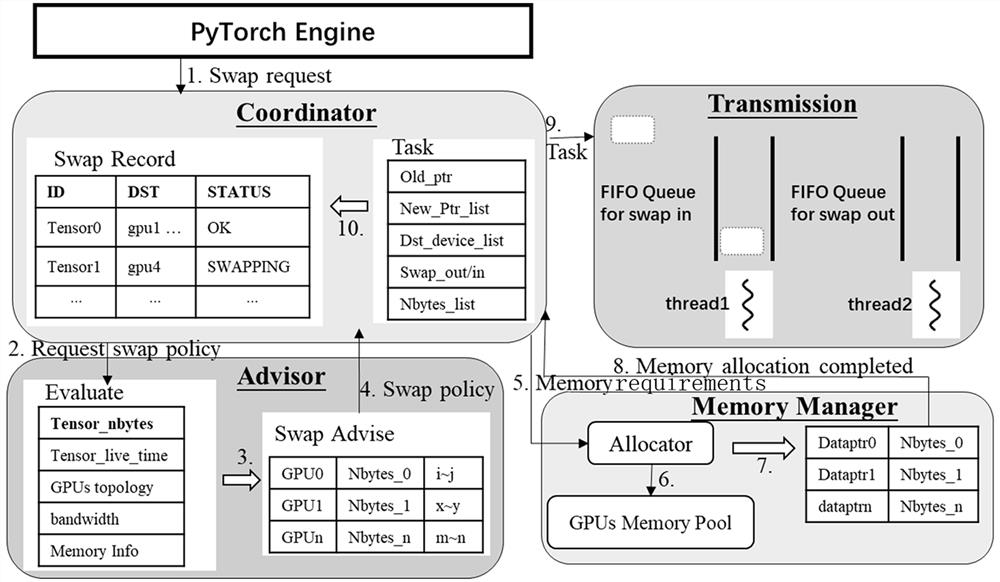

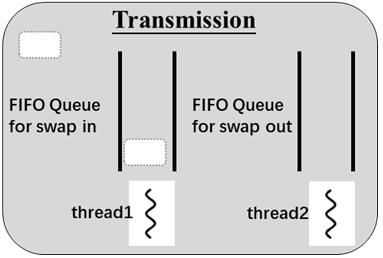

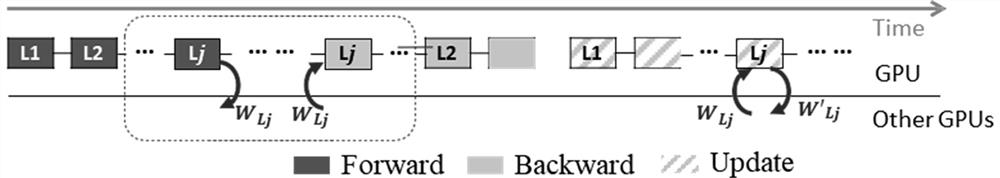

Method, system and device for efficient memory replacement between GPU devices and storage medium

Active | CN114860461A | Alleviate memory constraints; Hide transfer time; Resource allocation; Interprogram communication; Parallel computing; High memory

The invention discloses a method, system, device, and storage medium for efficient memory replacement between GPU devices. First, while effectively easing memory limits, data exchange operations (offloading and retrieval) run in parallel with model training, so no computational overhead is introduced and transfer time can be hidden. Second, inactive data on GPUs with a high memory load is offloaded to other GPUs and retrieved when needed, making full use of free memory across the system; multiple direct high-speed links between GPUs are aggregated to obtain high communication bandwidth, so memory usage is reduced more quickly and data is retrieved more promptly. Together, these measures greatly reduce the performance overhead introduced by memory compression and effectively relax the memory limits on model training, improving training efficiency.

Owner:UNIV OF SCI & TECH OF CHINA

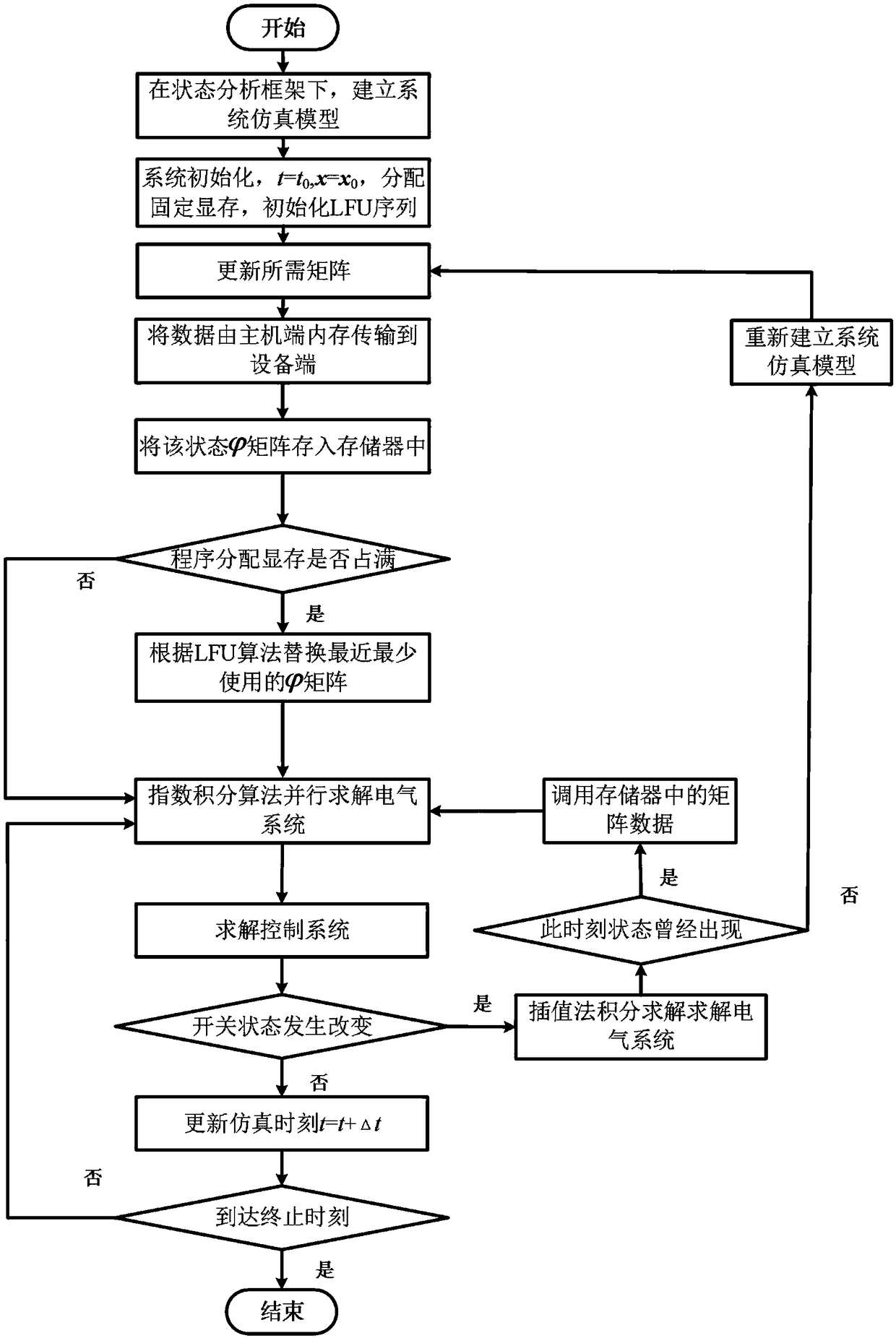

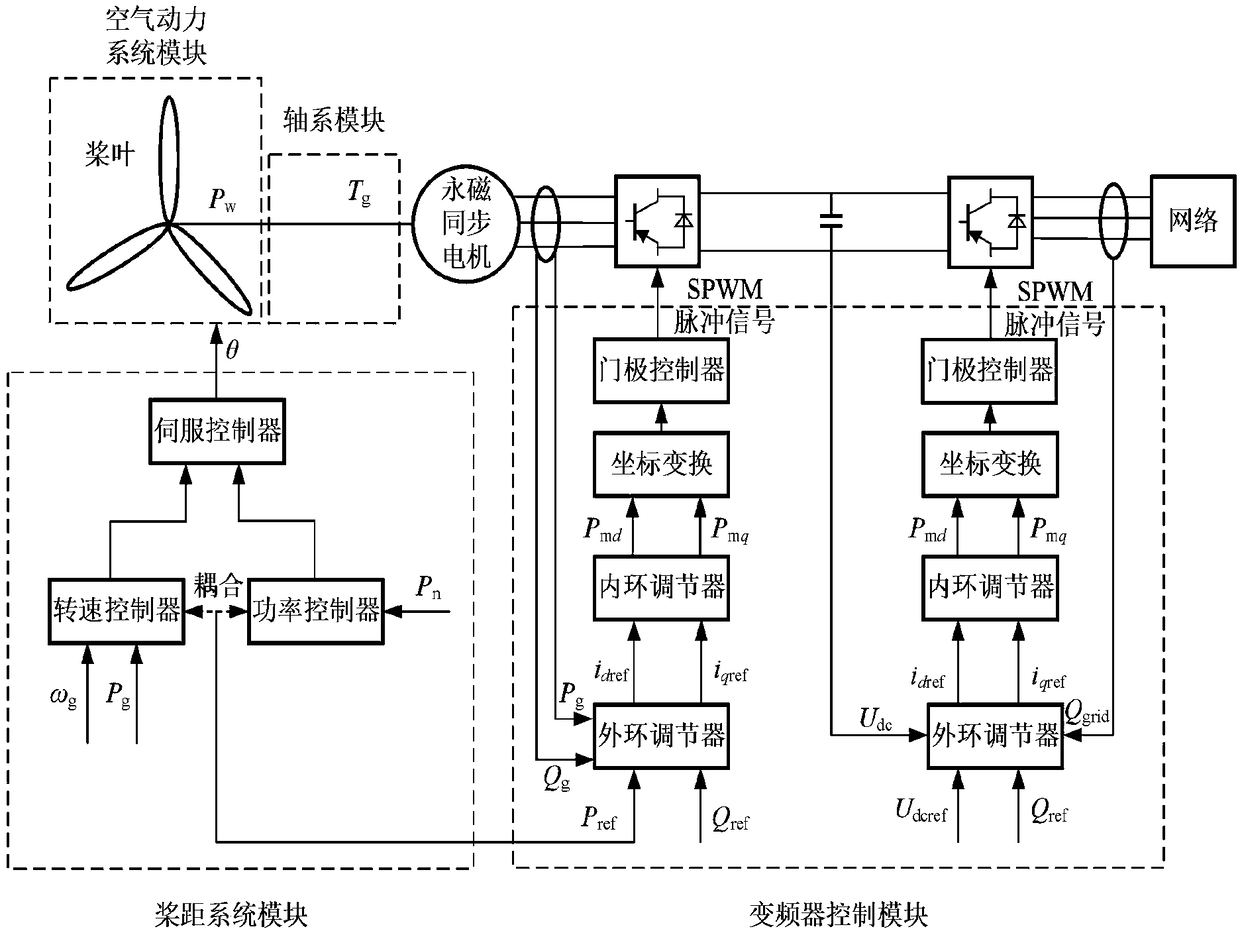

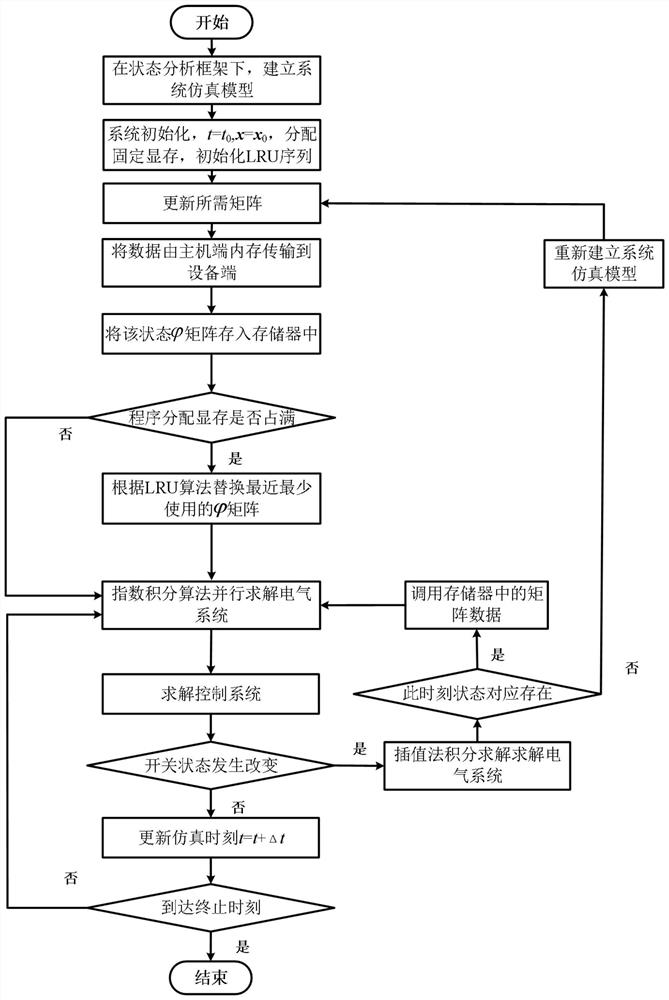

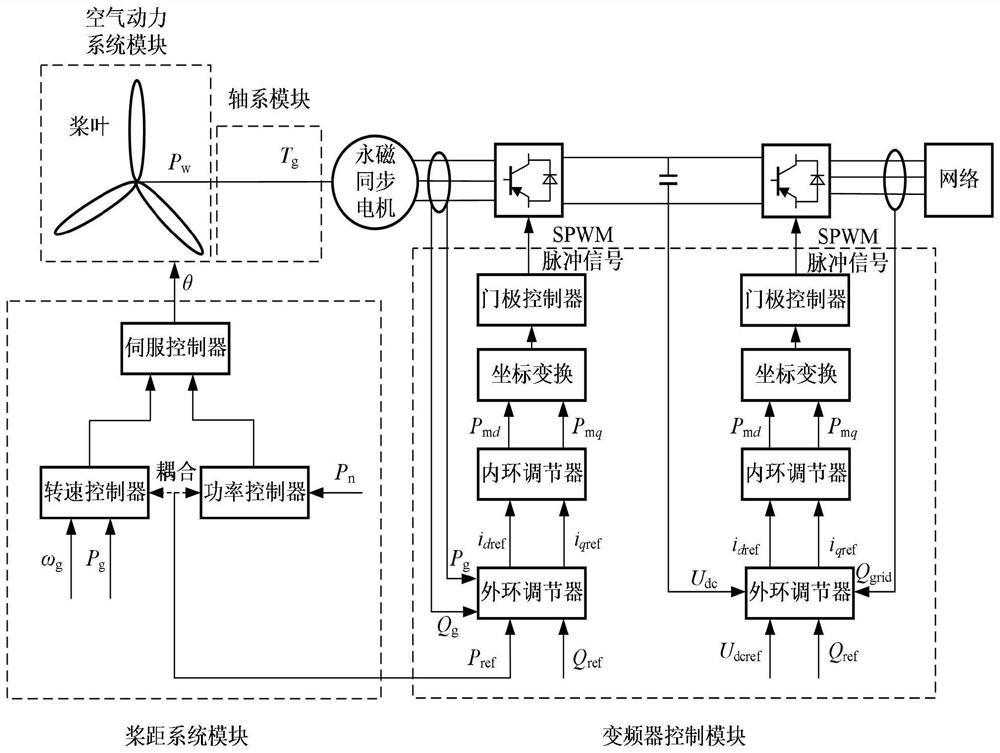

GPU memory management method for efficient transient simulation of power electronics

Active | CN109214059A | Reduce size; Simulation went well; Data processing applications; Design optimisation/simulation; Transient state; Graphics

The graphics processor memory management method of the present invention, for high-efficiency transient simulation of power electronics, addresses the conflict between limited computing resources and the computational demands of electromagnetic transient simulation of large-scale power systems. During simulation, rapid switching of multiple power electronic switches changes the system state frequently, so the state update matrix must be solved and stored many times, which leads to low computational efficiency and excessive use of computing resources. At the same time, as real power systems grow, the required simulation scale also increases. A memory replacement method based on the least frequently used algorithm is therefore adopted; with it, the simulation scale can be greatly increased at the cost of only a small reduction in simulation speed.

Owner:TIANJIN UNIV

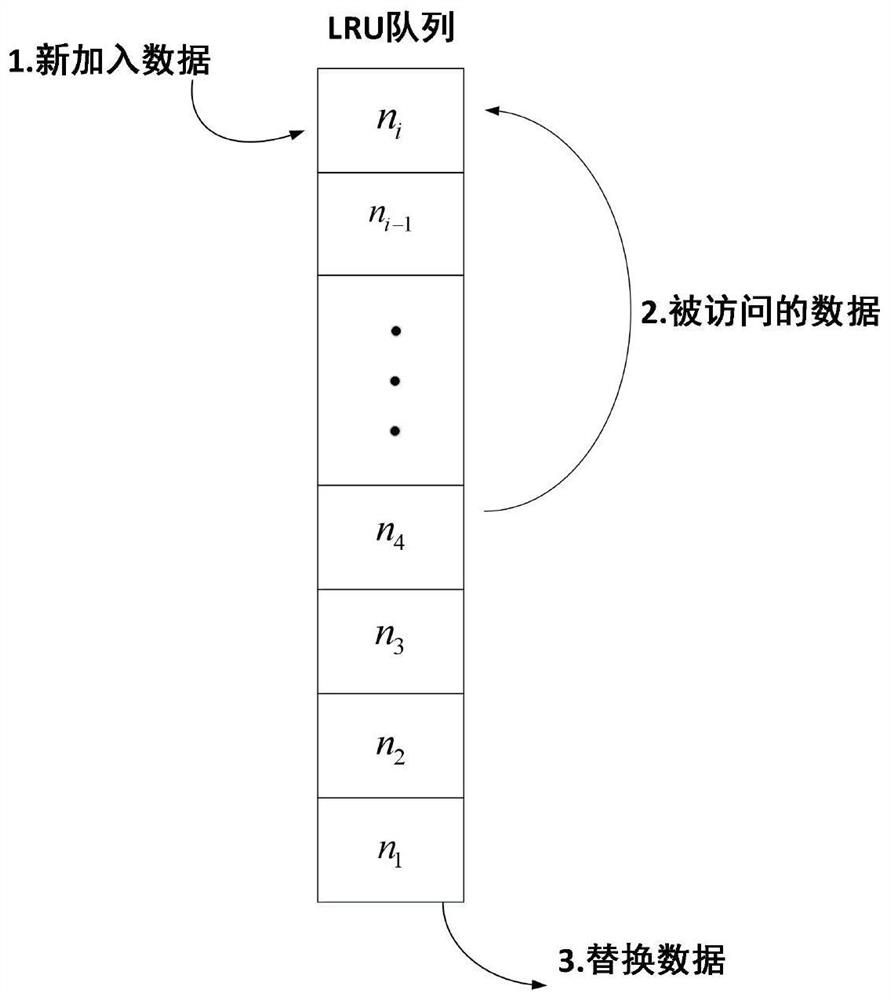

Graphics Processor Memory Management Method for Power Electronics Transient Simulation Acceleration

Active | CN109271250B | Reduce size; Resource allocation; Design optimisation/simulation; Graphics; Electric power system

A graphics processor memory management method for accelerating power electronic transient simulation, addressing the conflict between limited computing resources and the computational demands of electromagnetic transient simulation of large-scale power systems. During simulation, rapid switching of multiple power electronic switches changes the system state frequently, and the state update matrix must be solved and stored many times, which leads to low computing efficiency and excessive consumption of computing resources. A memory replacement method based on the least recently used algorithm ensures that electromagnetic transient simulation proceeds smoothly with limited computing resources, and it can greatly increase the simulation scale at the cost of very little simulation speed.

Owner:TIANJIN UNIV

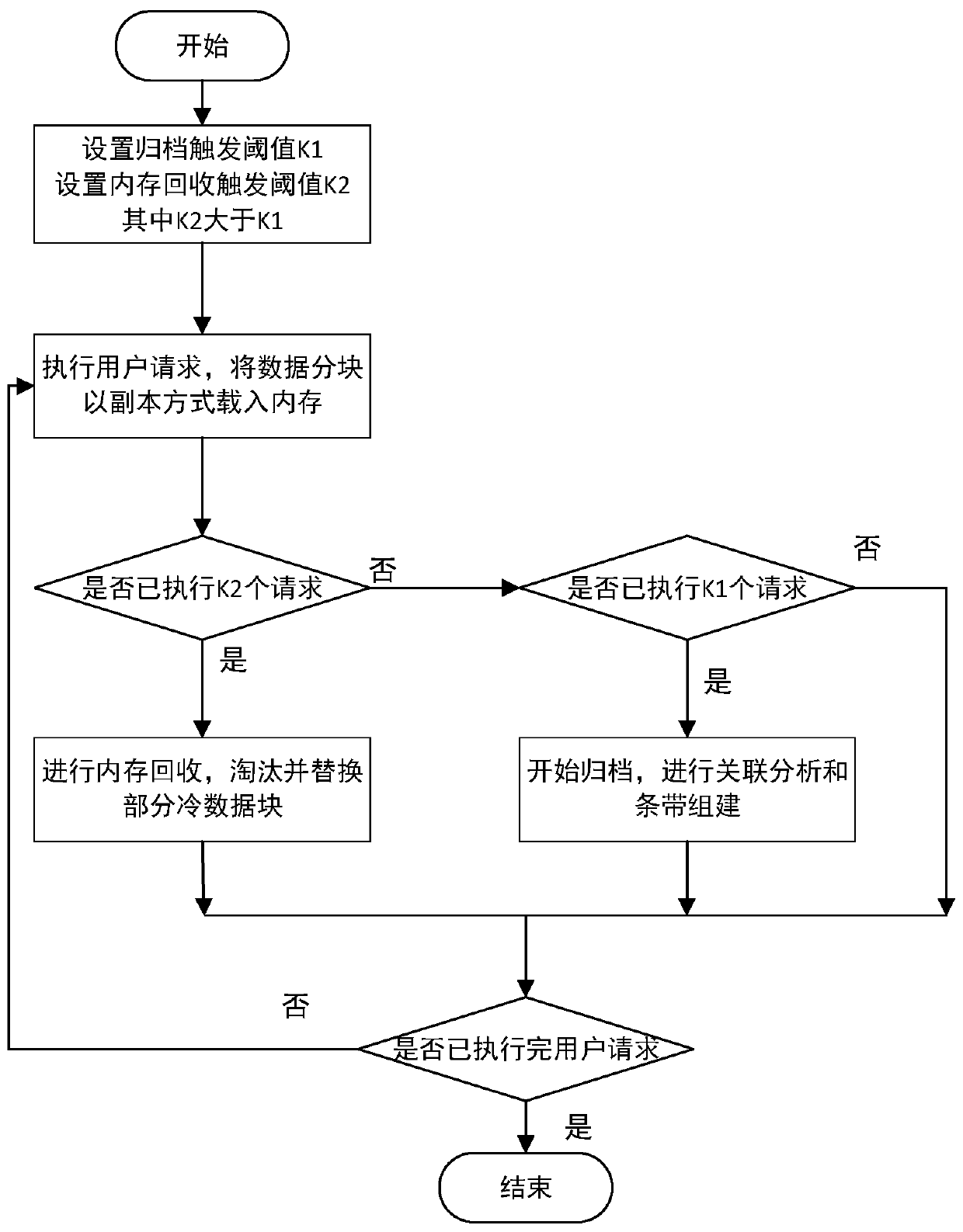

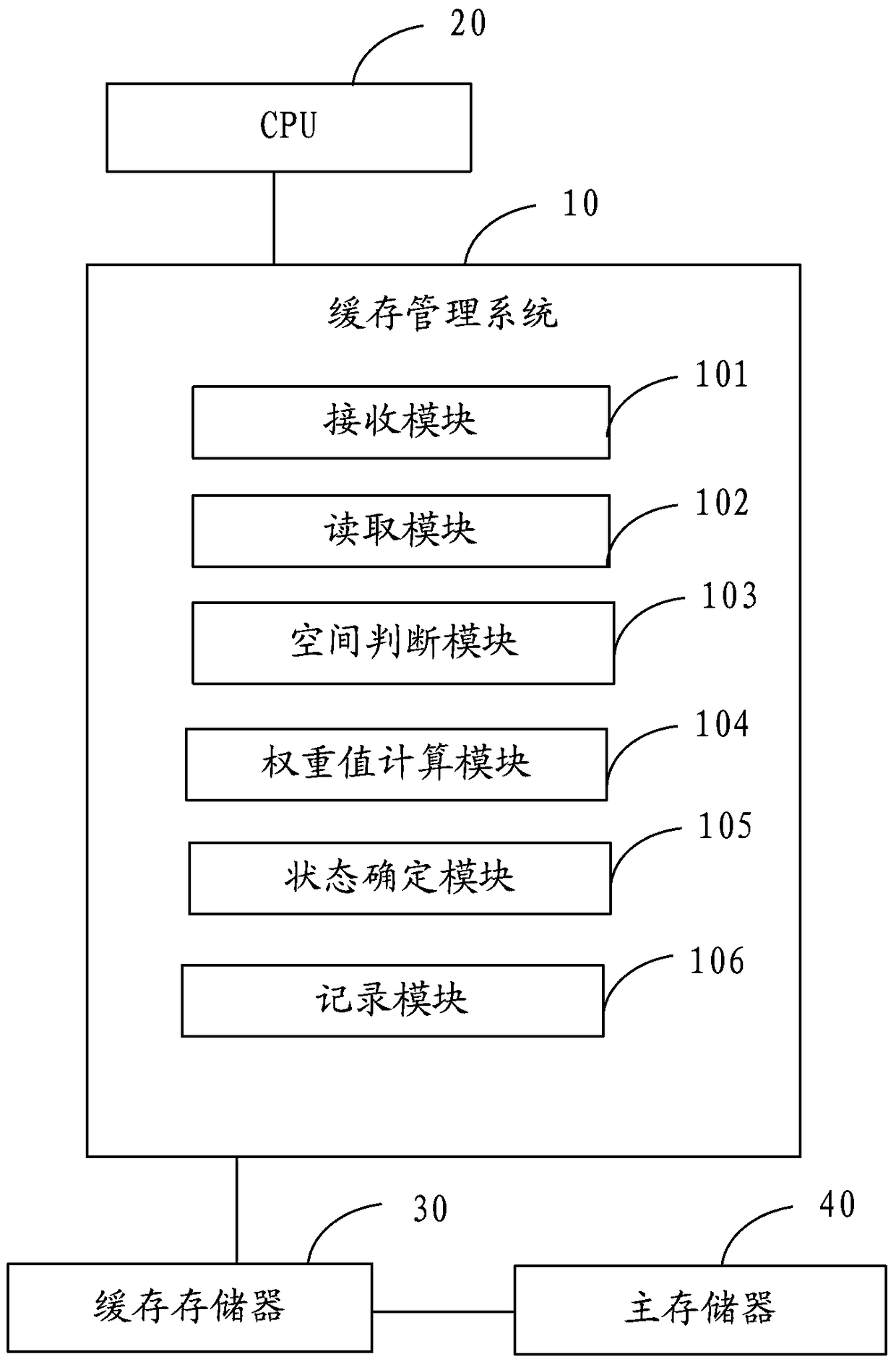

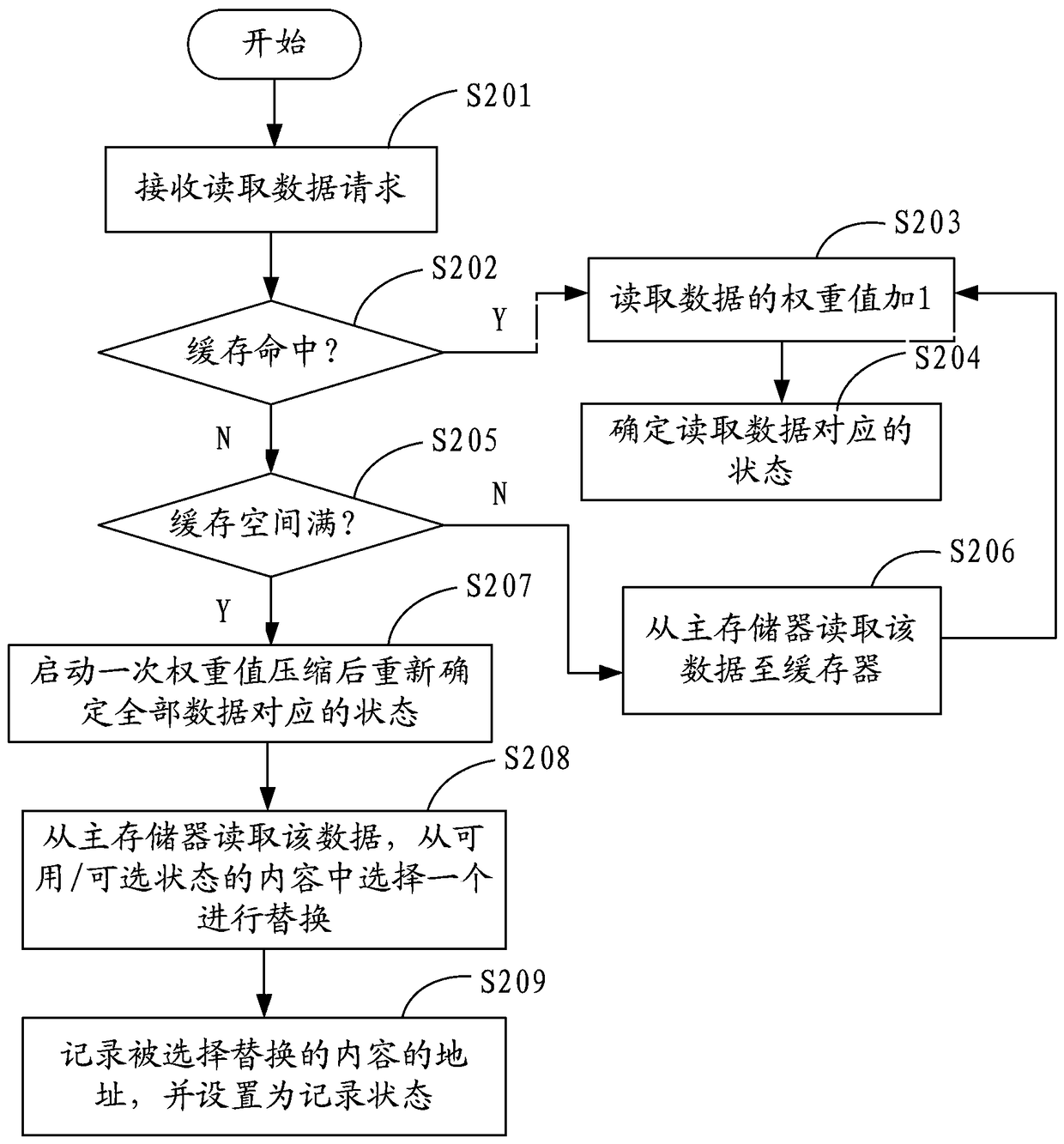

Data caching system and method

The invention provides a data cache system and method. The system is connected to a cache memory and a central processing unit (CPU), and each item of data in the cache memory is tagged with a weight value representing the number of times that data has been read from the cache memory. The system includes: a receiving module, used to receive a data read request sent by the CPU; a read module, used to read the corresponding data according to the request; and a weight value calculation module, used to increase by 1 the weight value of data read from the cache memory on a cache hit. When the cache memory is full, the next replacement randomly selects data with a weight value of zero for eviction. The data caching system and method of the present invention keep the most frequently used content, rather than the most recently used content, in the cache memory, making it faster and more effective to determine which content to replace.
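
A minimal sketch of the weight-value policy, assuming newly loaded data starts at weight 0 and that, when no zero-weight entry exists, the lowest-weight entry is evicted instead (the abstract does not cover that case). `WeightedCache` is hypothetical, and its `backing` dict stands in for main memory.

```python
import random


class WeightedCache:
    """Counts cache hits per entry; evicts a random zero-weight entry when full."""

    def __init__(self, capacity, backing, rng=None):
        self.capacity = capacity
        self.backing = backing  # hypothetical stand-in for main memory
        self.data = {}          # address -> cached value
        self.weight = {}        # address -> number of cache hits since loading
        self.rng = rng or random.Random()

    def read(self, addr):
        if addr in self.data:  # cache hit: weight + 1
            self.weight[addr] += 1
            return self.data[addr]
        if len(self.data) >= self.capacity:  # cache full: choose a victim
            zero = [a for a, w in self.weight.items() if w == 0]
            if zero:
                victim = self.rng.choice(zero)
            else:
                # Assumption: fall back to the lowest weight if no entry has weight 0.
                victim = min(self.weight, key=self.weight.get)
            del self.data[victim]
            del self.weight[victim]
        self.data[addr] = self.backing[addr]
        self.weight[addr] = 0  # assumption: new data starts at weight 0
        return self.data[addr]
```

Because weights only grow in this sketch, the zero-weight candidates are exactly the entries that have not been hit since they were loaded.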

Owner:WARECONN TECH SERVICE (TIANJIN) CO LTD

Complex event detection method based on an IMF (instance matching frequency) internal and external memory replacement policy

Inactive | CN102339256B | Efficiency | Implement compressed storage | Memory addressing/allocation/relocation | Internal memory | Data mining

The invention relates to a complex event detection method based on an IMF (Instance Matching Frequency) internal and external memory replacement policy. In the method, as long as the user does not terminate the detection process, the event stream is continuously scanned: the current event is read, an object statistics table is constructed and updated, and the event is handled according to its type. If the current event is a terminal event, a complex event detection process is triggered and the detected sequences that match a user-defined pattern are output. If the current event is a non-terminal event and its internal memory quota is not full, the event instance is stored in internal memory using an object tree and its index. If the current event is a non-terminal event and its internal memory quota is full, event instances are swapped between internal and external memory according to the IMF policy, and the current event is associated with an event instance bitmap so that each event instance of the replaced object is stored in external memory. The method effectively supports complex event detection over large time scales and makes efficient use of space and processing time.
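
A minimal sketch of the spill step, assuming IMF is tracked as a per-object count of how often that object's instances took part in a match, and simplifying the per-event internal memory quota to one shared quota. When the quota is full, all in-memory instances of the object with the lowest count move to external storage, mirroring the abstract's "event instance of the replaced object". `IMFEventStore` is hypothetical, a dict stands in for external memory, and the object tree, index, and bitmap are omitted.

```python
from collections import defaultdict


class IMFEventStore:
    """Spills all instances of the least-matched object to (simulated) external memory."""

    def __init__(self, quota):
        self.quota = quota                   # max event instances held in memory (simplified)
        self.memory = defaultdict(list)      # object id -> in-memory event instances
        self.external = defaultdict(list)    # object id -> spilled instances (stands in for disk)
        self.match_count = defaultdict(int)  # object id -> assumed instance matching frequency

    def in_memory_count(self):
        return sum(len(v) for v in self.memory.values())

    def add(self, obj, instance):
        if self.memory and self.in_memory_count() >= self.quota:
            # Replace the in-memory object whose instances have matched least often.
            victim = min(self.memory, key=lambda o: self.match_count[o])
            self.external[victim].extend(self.memory.pop(victim))
        self.memory[obj].append(instance)

    def record_match(self, obj):
        self.match_count[obj] += 1

    def instances(self, obj):
        # Detection still needs both spilled and in-memory instances of an object.
        return self.external.get(obj, []) + self.memory.get(obj, [])
```

In this sketch an object's instances are spilled together, so frequently matched objects stay resident while rarely matched ones move out as a unit.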

Owner:NORTHEASTERN UNIV LIAONING

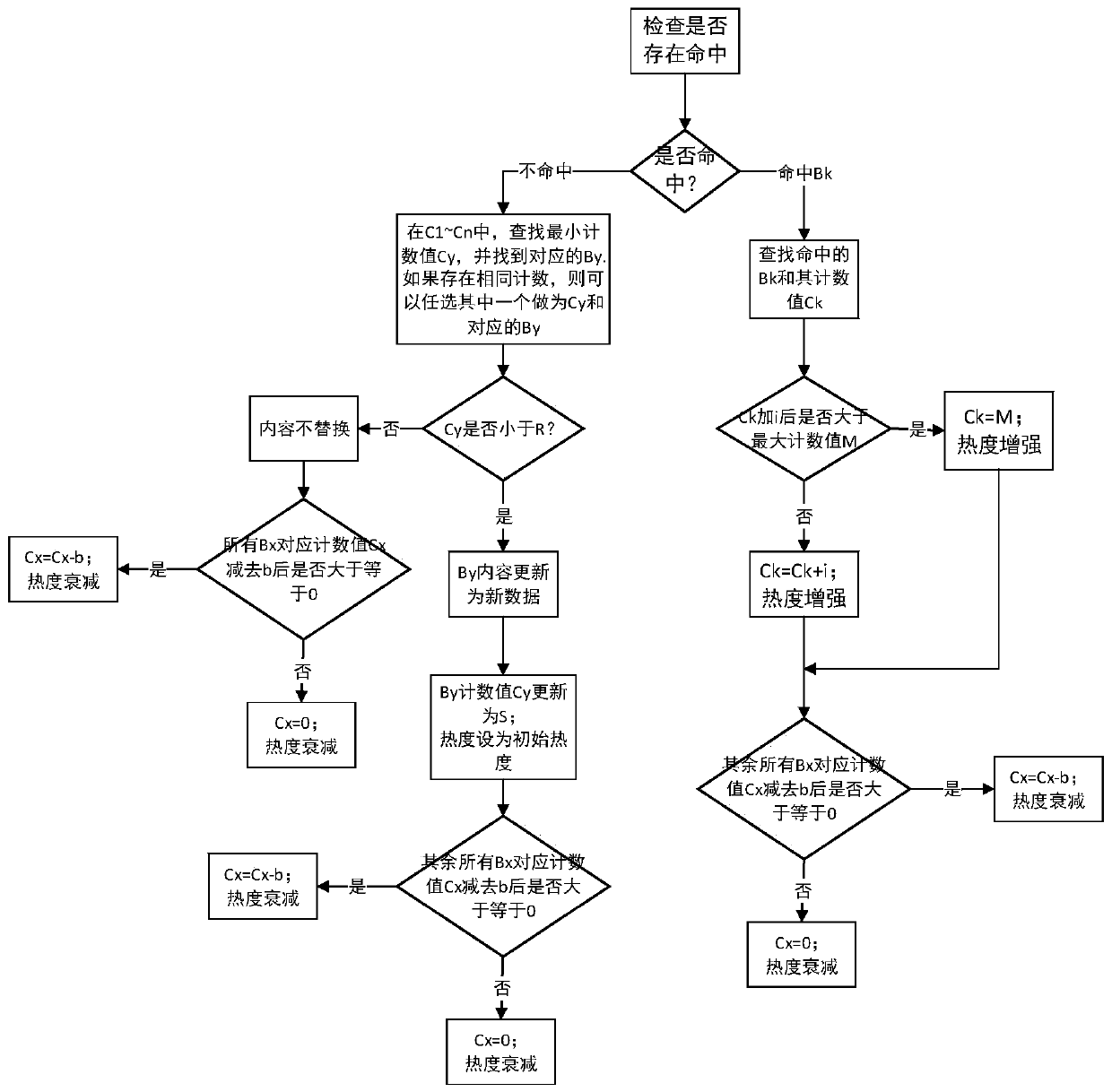

Cache memory replacement method and system based on use popularity

Active | CN110990300A | Replacement method is flexible | Improve versatility | Memory systems | Parallel computing | Access frequency

The invention discloses a cache memory replacement method and system based on use popularity. The method comprises the following steps: the Cache is divided into n Cache blocks, which together with their popularity values form a popularity comparison group; upon receiving a CPU data read request, it is determined whether the requested data is in the Cache. On a hit, the hit Cache block and its popularity value are looked up, the popularity value is increased according to a preset popularity enhancement factor, the popularity values of the other, un-hit Cache blocks are attenuated according to a popularity attenuation factor, and the CPU data is read from the hit Cache block. On a miss, the Cache block whose popularity value is the minimum and is less than or equal to the replacement threshold is found, the requested CPU data is loaded into that Cache block, and the popularity values of the remaining un-hit Cache blocks are attenuated according to the popularity attenuation factor. Because the access frequency of code in the Cache is tracked and a block is replaced only after its access popularity has decayed, the method can be adapted to different executing code through the popularity enhancement factor and popularity attenuation factor parameters while maintaining a high hit rate.
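
A minimal sketch of the popularity comparison group. The abstract does not fix these details, so the sketch assumes additive enhancement on a hit, multiplicative attenuation of un-hit blocks, a newly filled block starting at one enhancement step, and a bypass (no replacement) when every block is above the replacement threshold. `PopularityCache` and its parameter names are hypothetical.

```python
class PopularityCache:
    """n Cache blocks with popularity values; replace only blocks at or below a threshold."""

    def __init__(self, n_blocks, enhance=1.0, decay=0.5, threshold=1.0):
        self.tags = [None] * n_blocks       # address held by each Cache block
        self.popularity = [0.0] * n_blocks
        self.enhance = enhance              # added on a hit (assumed additive)
        self.decay = decay                  # multiplies un-hit blocks (assumed multiplicative)
        self.threshold = threshold          # replacement threshold

    def _attenuate_except(self, keep):
        for i in range(len(self.popularity)):
            if i != keep:
                self.popularity[i] *= self.decay

    def access(self, addr):
        """Return True on a hit, False on a miss."""
        if addr in self.tags:
            i = self.tags.index(addr)
            self.popularity[i] += self.enhance
            self._attenuate_except(i)
            return True
        i = min(range(len(self.popularity)), key=self.popularity.__getitem__)
        if self.popularity[i] <= self.threshold:
            self.tags[i] = addr
            self.popularity[i] = self.enhance  # assumption: new block starts at one step
            self._attenuate_except(i)
        else:
            # Assumption: every block is still "hot", so bypass the Cache for this read.
            self._attenuate_except(None)
        return False
```

With two blocks, `enhance=1.0`, `decay=0.5` and `threshold=0.5`, the access string A, B, A, C, D, A hits only on the repeated A's: C replaces the cooled-down B, while D is bypassed because both blocks are still above the threshold.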

Owner:TIH MICROELECTRONIC TECH CO LTD +1

© 2025 PatSnap. All rights reserved.