63 results about "Adder tree" patented technology

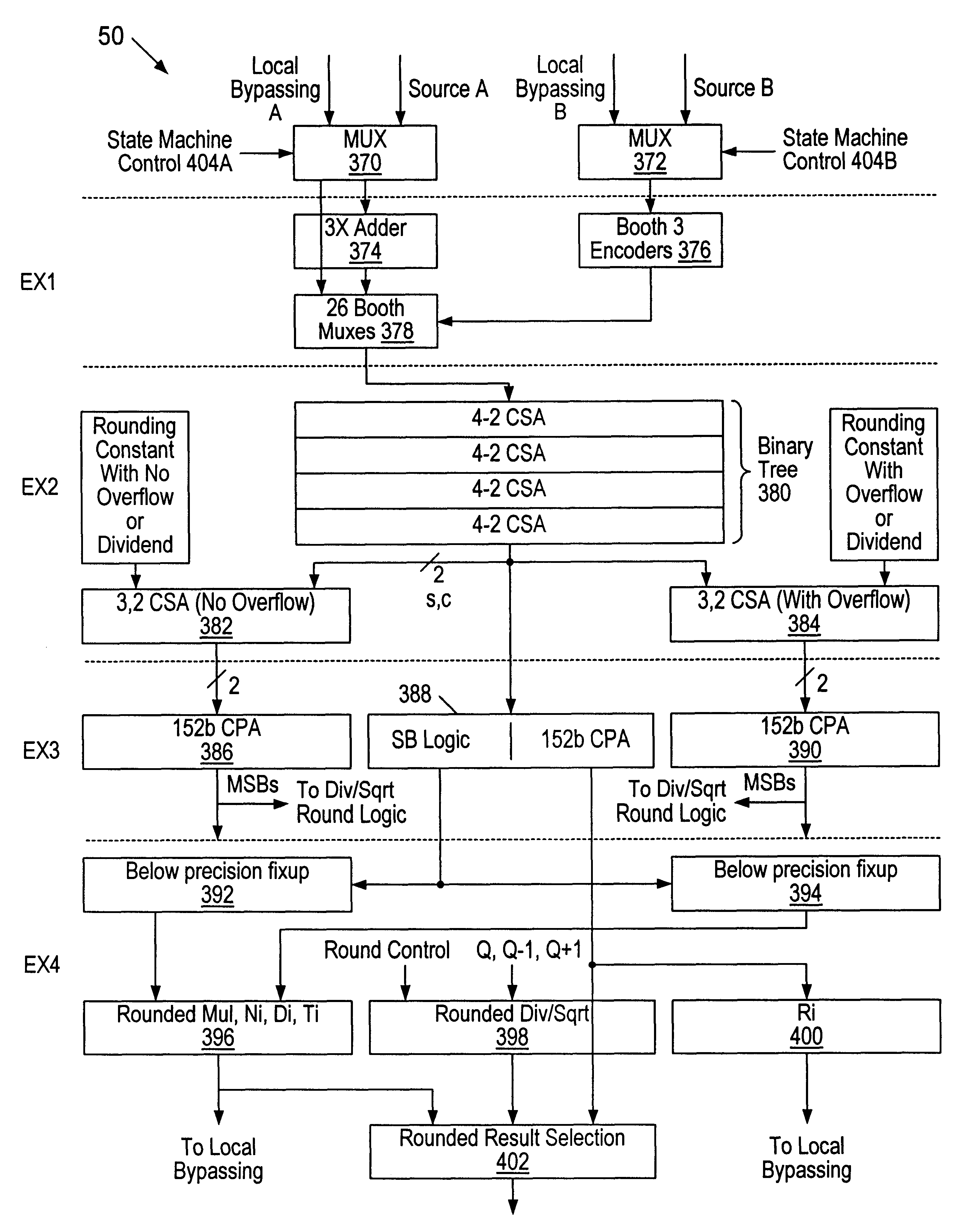

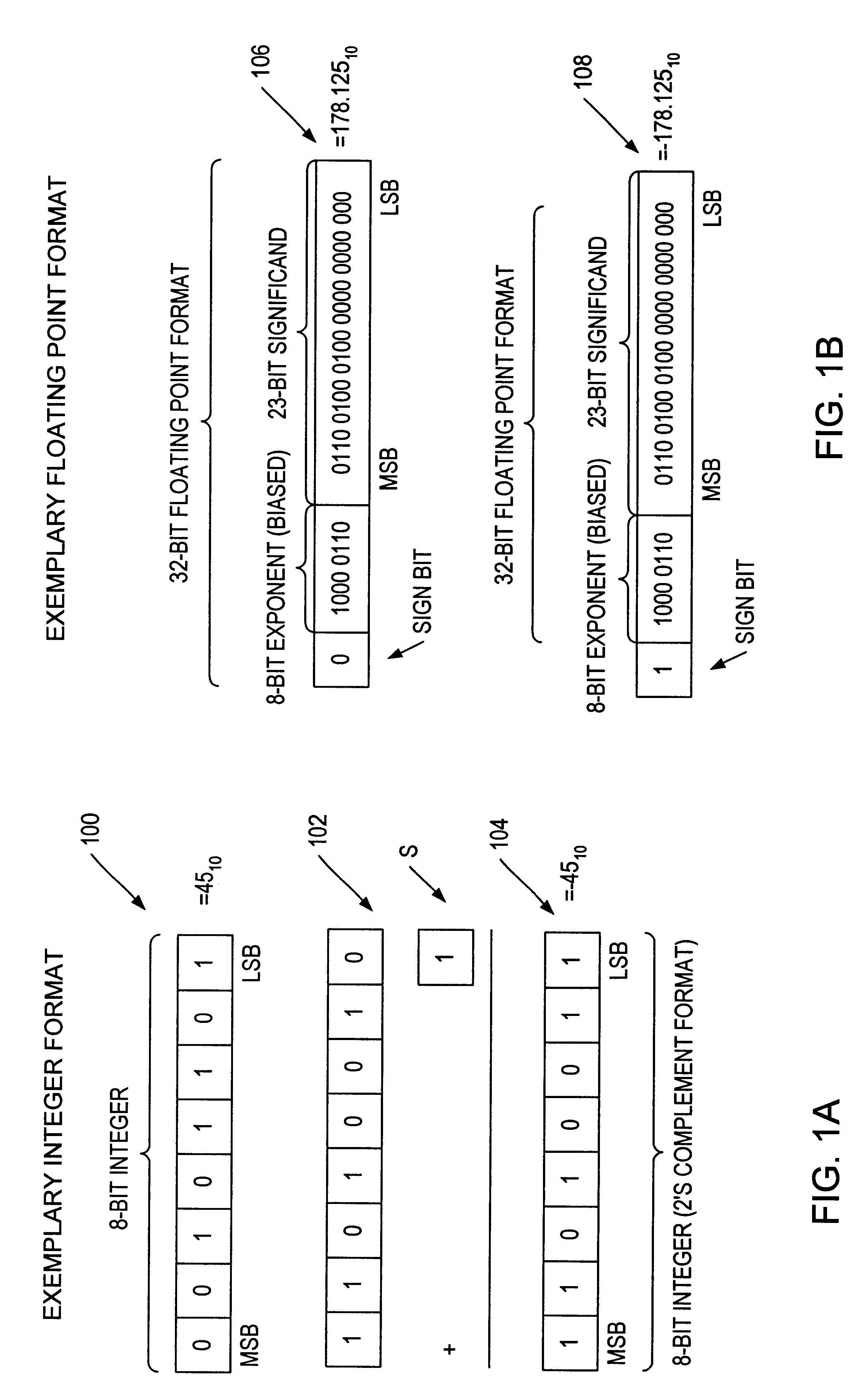

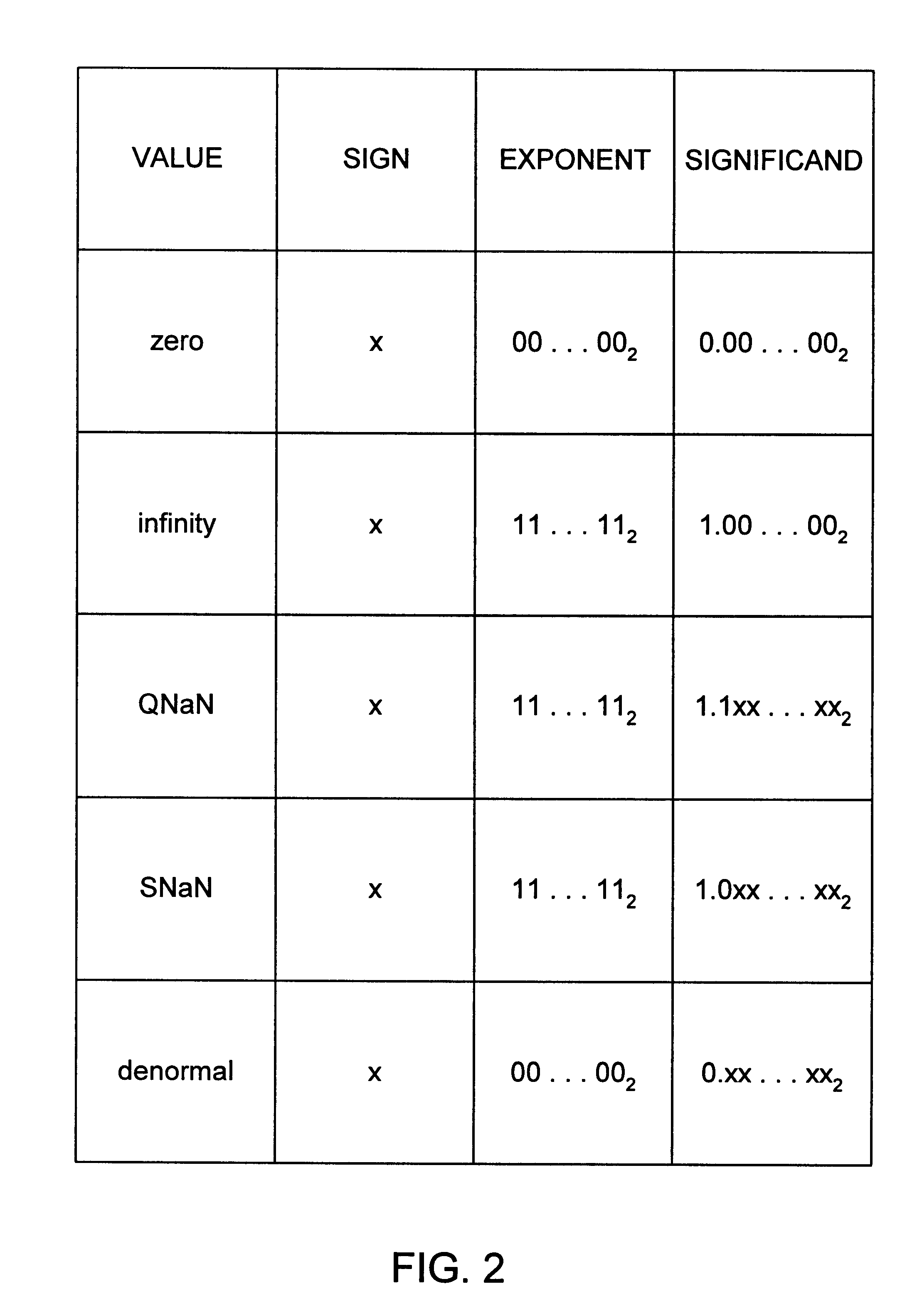

Shared FP and SIMD 3D multiplier

Inactive | US6490607B1 | Computations using contact-making devices | Runtime instruction translation | Computerized system | Theoretical computer science

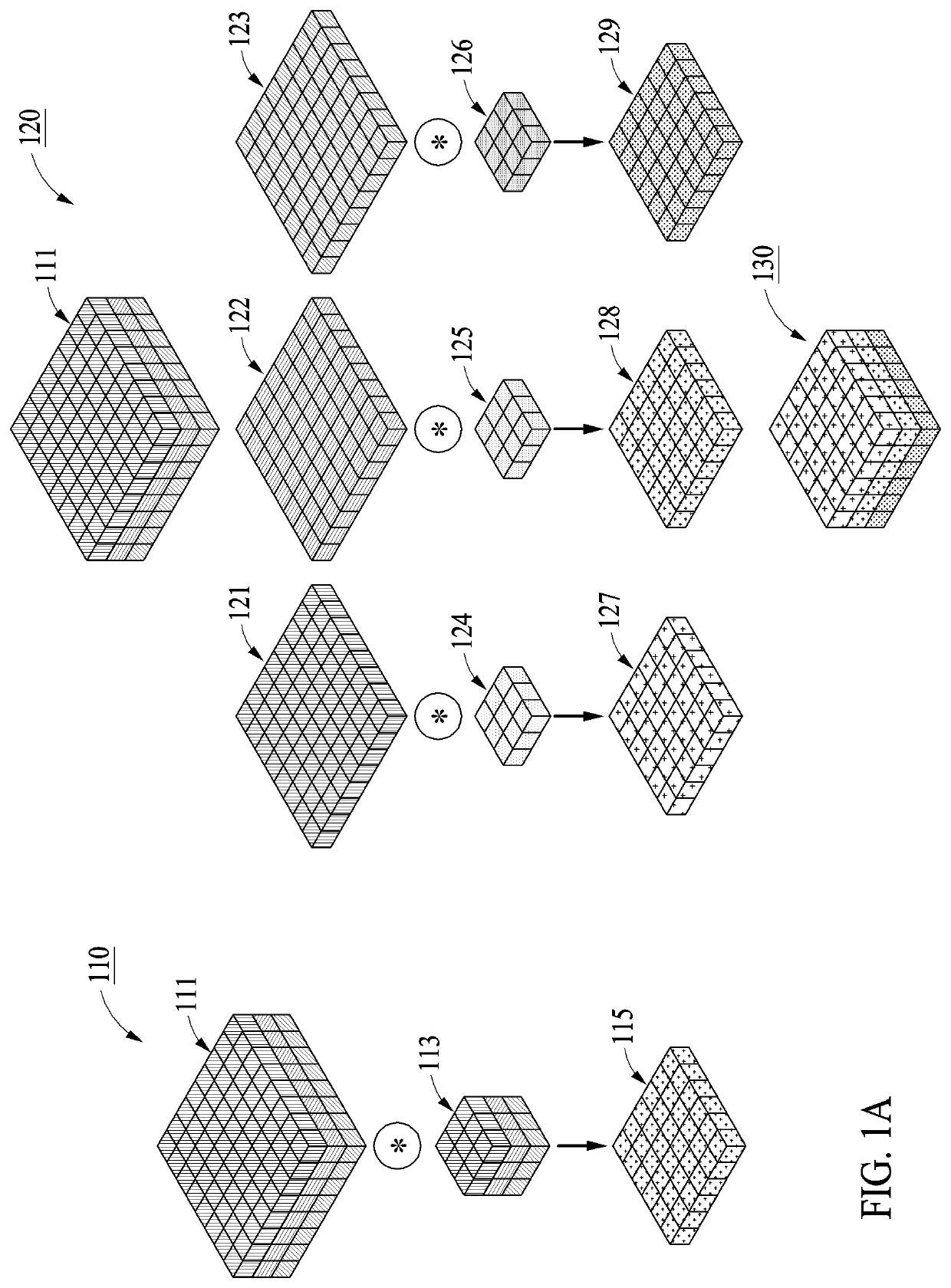

A multiplier configured to perform multiplication of both scalar floating point values (X×Y) and packed floating point values (i.e., X1×Y1 and X2×Y2). In addition, the multiplier may be configured to calculate X×Y−Z. The multiplier comprises selection logic for selecting source operands, a partial product generator, an adder tree, and two or more adders configured to sum the results from the adder tree to achieve a final result. The multiplier may also be configured to perform iterative multiplication operations to implement arithmetic operations such as division and square root. The multiplier may be configured to generate two versions of the final result, one assuming there is an overflow, and another assuming there is not an overflow. A computer system and method for performing multiplication are also disclosed.

Owner:ADVANCED SILICON TECH

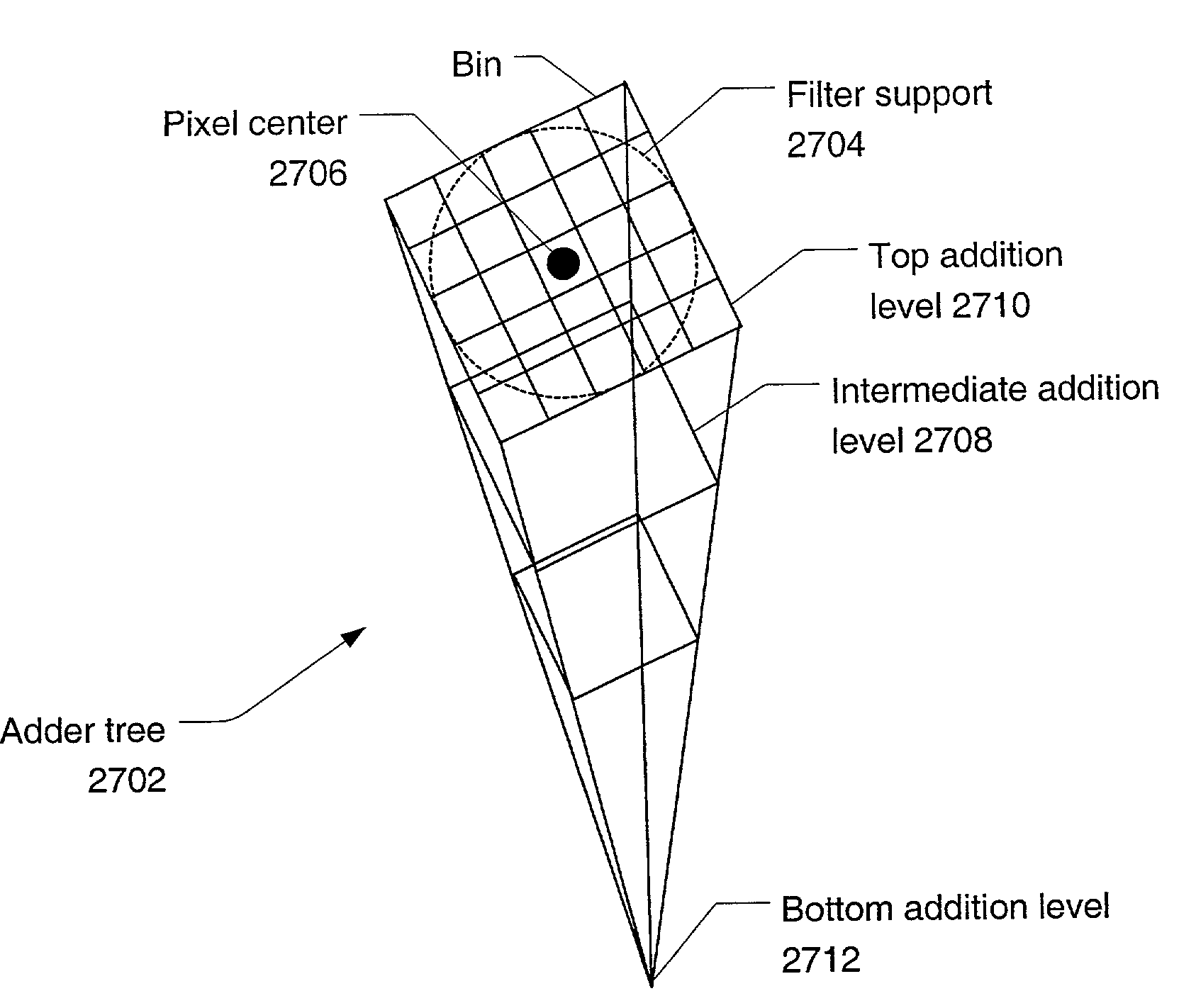

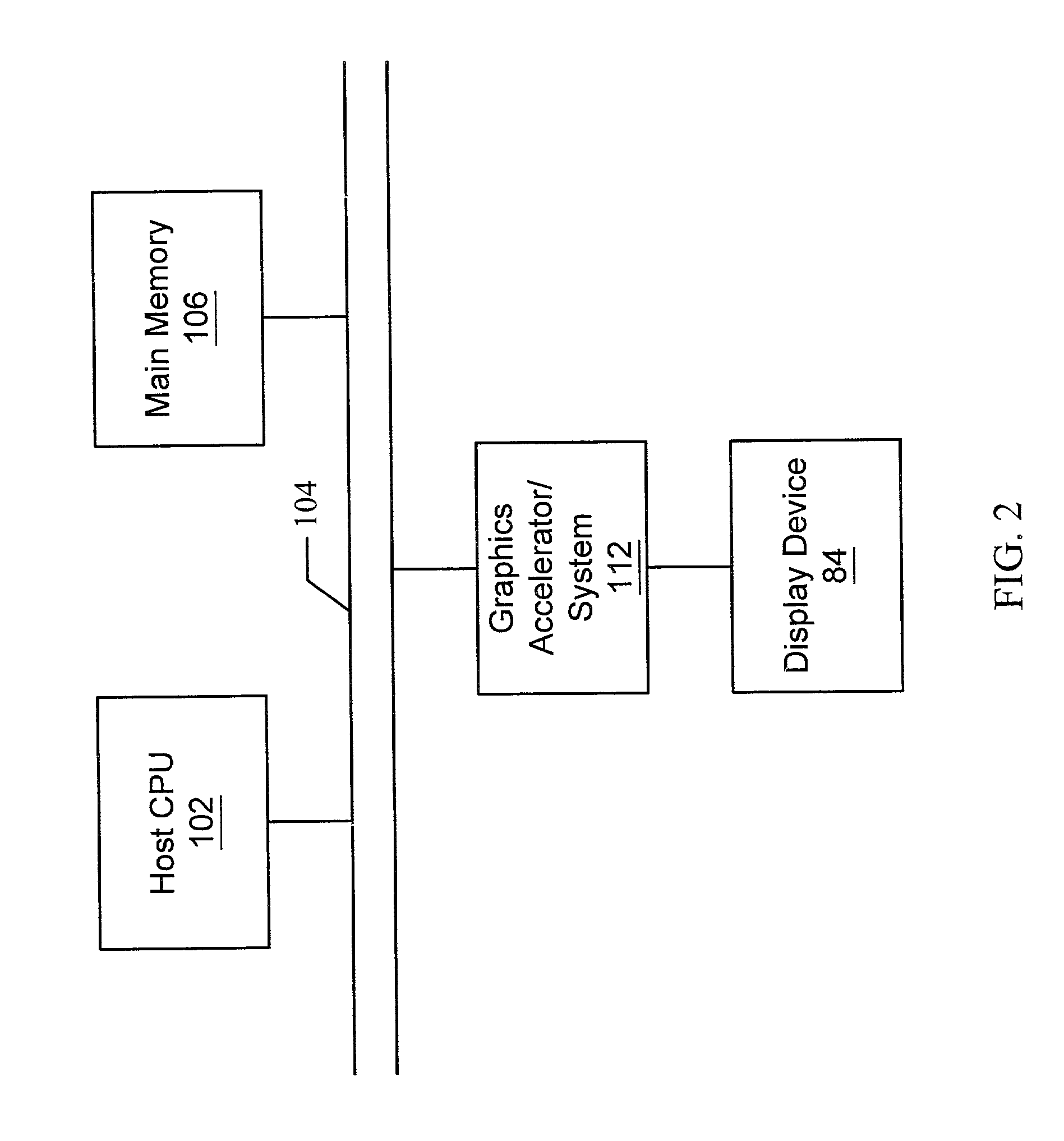

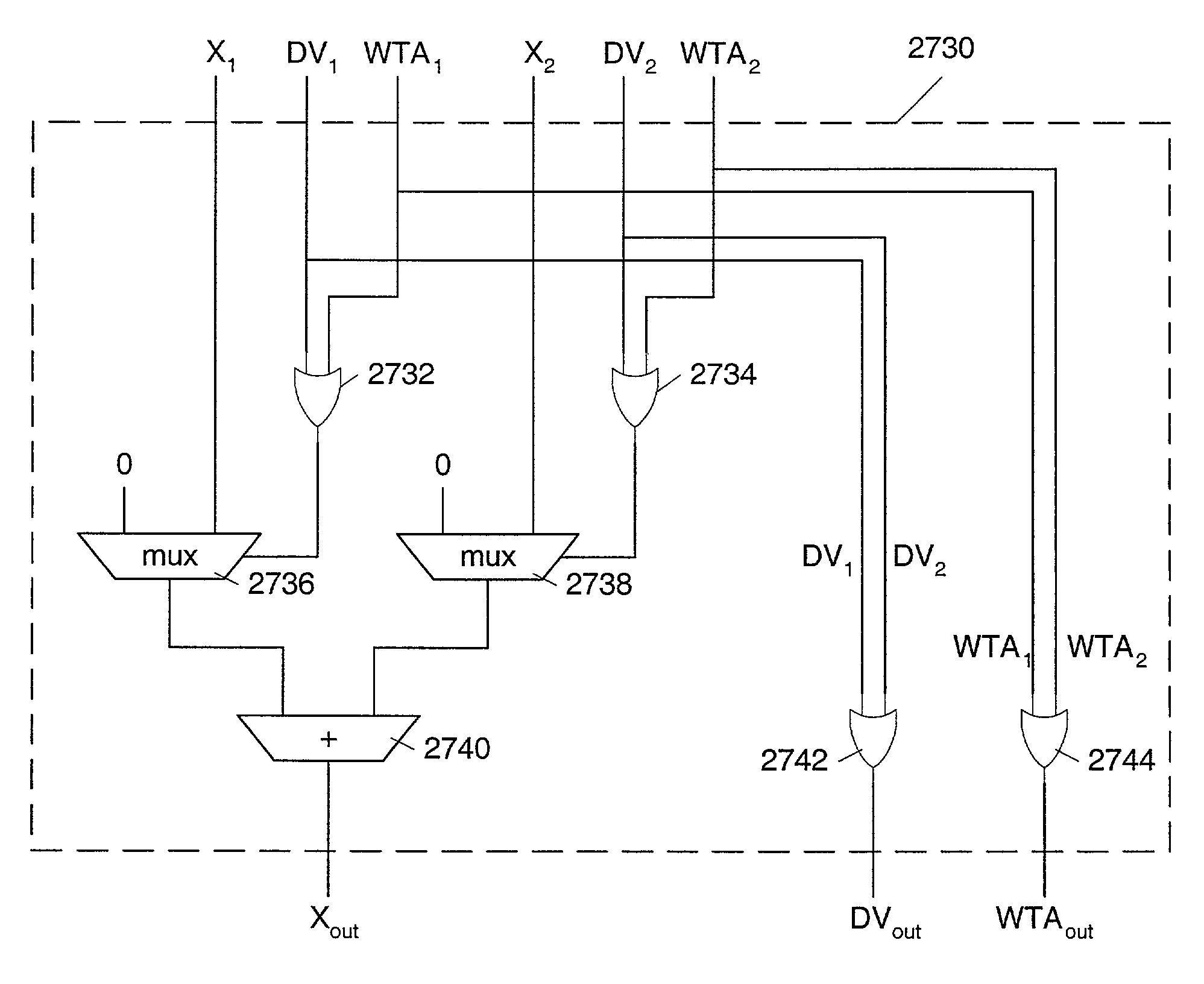

Graphics system with an improved filtering adder tree

Inactive | US20020015041A1 | Weight more | Less contribution | Computation using non-contact making devices | Digital computer details | Graphics | Graphic system

A sample-to-pixel calculation unit in a graphics system may comprise an adder tree. The adder tree includes a plurality of adder cells coupled in a tree configuration. Input values are presented to a first layer of adder cells. Each input value may have two associated control signals: a data valid signal and a winner-take-all signal. The final output of the adder tree equals (a) a sum of those input values whose data valid signals are asserted, provided that none of the winner-take-all signals are asserted, or (b) a selected one of the input values if one of the winner-take-all bits is asserted. The selected input value is the one whose winner-take-all bit is set. The adder tree may be used to perform sums of weighted sample attributes and/or sums of coefficient values as part of pixel value computations.
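The two output cases in this abstract can be illustrated with a small software model. This is only a sketch under stated assumptions, not the patented circuit: the function name `filtering_adder_tree`, the pairwise reduction order, and the rule that the leftmost winner prevails when several winner-take-all bits are set are all hypothetical choices made for the example.

```python
def filtering_adder_tree(values, valid, winner):
    """Model of an adder tree with data-valid and winner-take-all controls.

    Assumptions (not from the abstract): inputs are reduced pairwise, and if
    more than one winner-take-all bit is set, the leftmost winner prevails.
    """
    # Each node is (value, is_winner). Invalid, non-winner inputs contribute 0.
    nodes = [(v if (ok or w) else 0, w) for v, ok, w in zip(values, valid, winner)]
    if not nodes:
        return 0
    while len(nodes) > 1:
        nxt = []
        for i in range(0, len(nodes), 2):
            if i + 1 == len(nodes):
                nxt.append(nodes[i])          # odd node passes through
                continue
            (a, wa), (b, wb) = nodes[i], nodes[i + 1]
            if wa:
                nxt.append((a, True))         # winner overrides the sum
            elif wb:
                nxt.append((b, True))
            else:
                nxt.append((a + b, False))    # ordinary adder cell
        nodes = nxt
    return nodes[0][0]
```

With no winner bit set the function returns the sum of the valid inputs; with one winner bit set it returns that input unchanged.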

Owner:ORACLE INT CORP

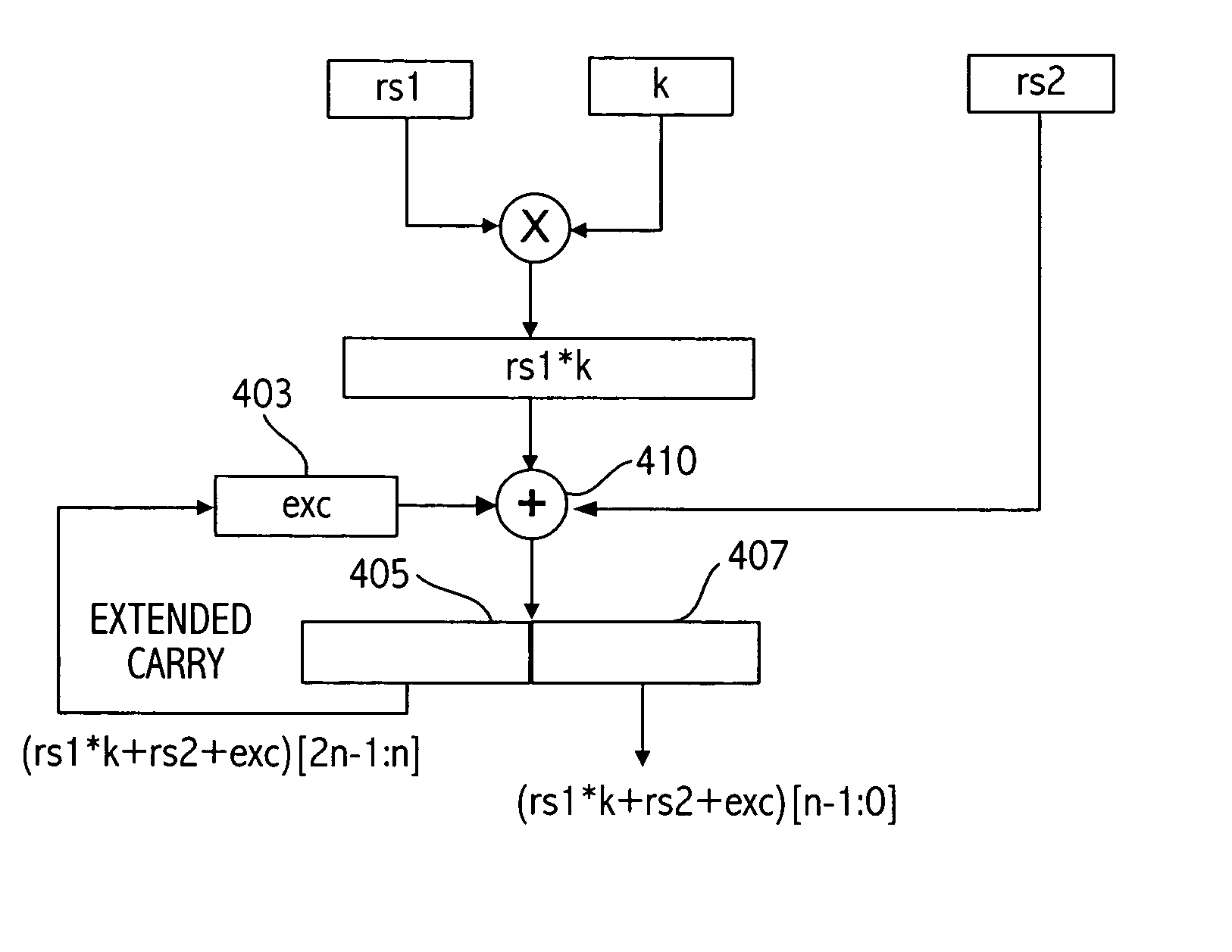

Method and apparatus for implementing processor instructions for accelerating public-key cryptography

Active | US20040267855A1 | Conditional code generation | Public key for secure communication | Parallel computing | Carry-save adder

In response to executing an arithmetic instruction, a first number is multiplied by a second number, and a partial result from a previously executed single arithmetic instruction is fed back from a first carry save adder structure generating high order bits of the current arithmetic instruction to a second carry save adder tree structure being utilized to generate low order bits of the current arithmetic instruction to generate a result that represents the first number multiplied by the second number summed with the high order bits from the previously executed arithmetic instruction. Execution of the arithmetic instruction may instead generate a result that represents the first number multiplied by the second number summed with the partial result and also summed with a third number, the third number being fed to the carry save adder tree structure.
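The arithmetic effect described here, a product summed with the high-order bits left over from the previous instruction, is the inner step of multi-word multiplication. The sketch below models only that arithmetic, not the carry-save hardware; the name `mul_by_word`, the 64-bit word size, and the little-endian digit layout are assumptions made for illustration.

```python
def mul_by_word(digits, b, word_bits=64):
    """Multiply a little-endian multi-word integer by a single word.

    Each step computes digit * b + high, where `high` holds the high-order
    bits of the previous step, mirroring the feedback the abstract describes.
    """
    mask = (1 << word_bits) - 1
    high = 0
    out = []
    for d in digits:
        t = d * b + high          # product plus fed-back high-order bits
        out.append(t & mask)      # low-order bits are this step's result word
        high = t >> word_bits     # high-order bits feed the next step
    out.append(high)
    return out
```

Reassembling the returned words gives the full product, which is why a single fused multiply-and-add-high step is useful for public-key arithmetic on large operands.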

Owner:ORACLE INT CORP

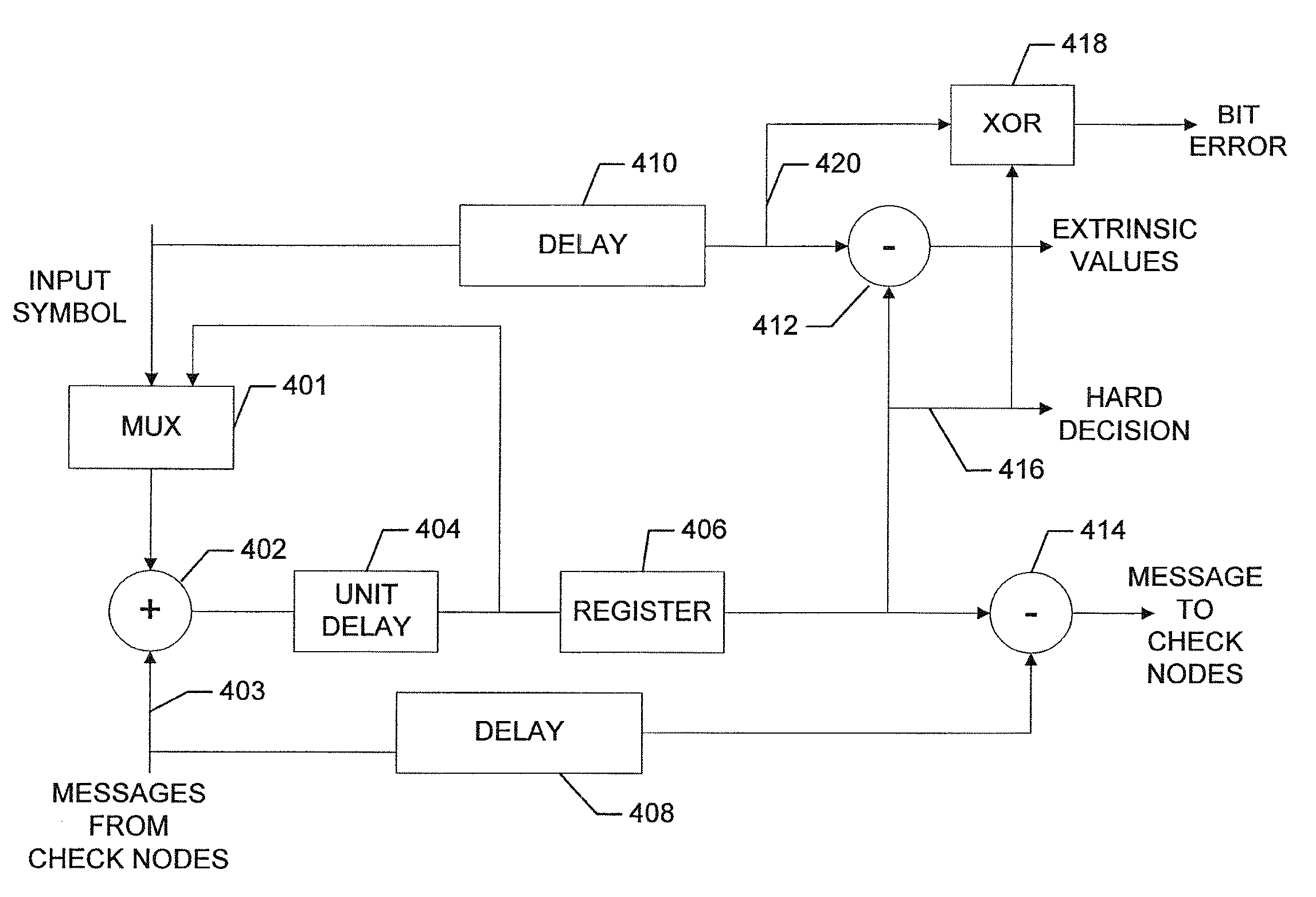

Bit error detector for iterative ECC decoder

Owner:SEAGATE TECH LLC

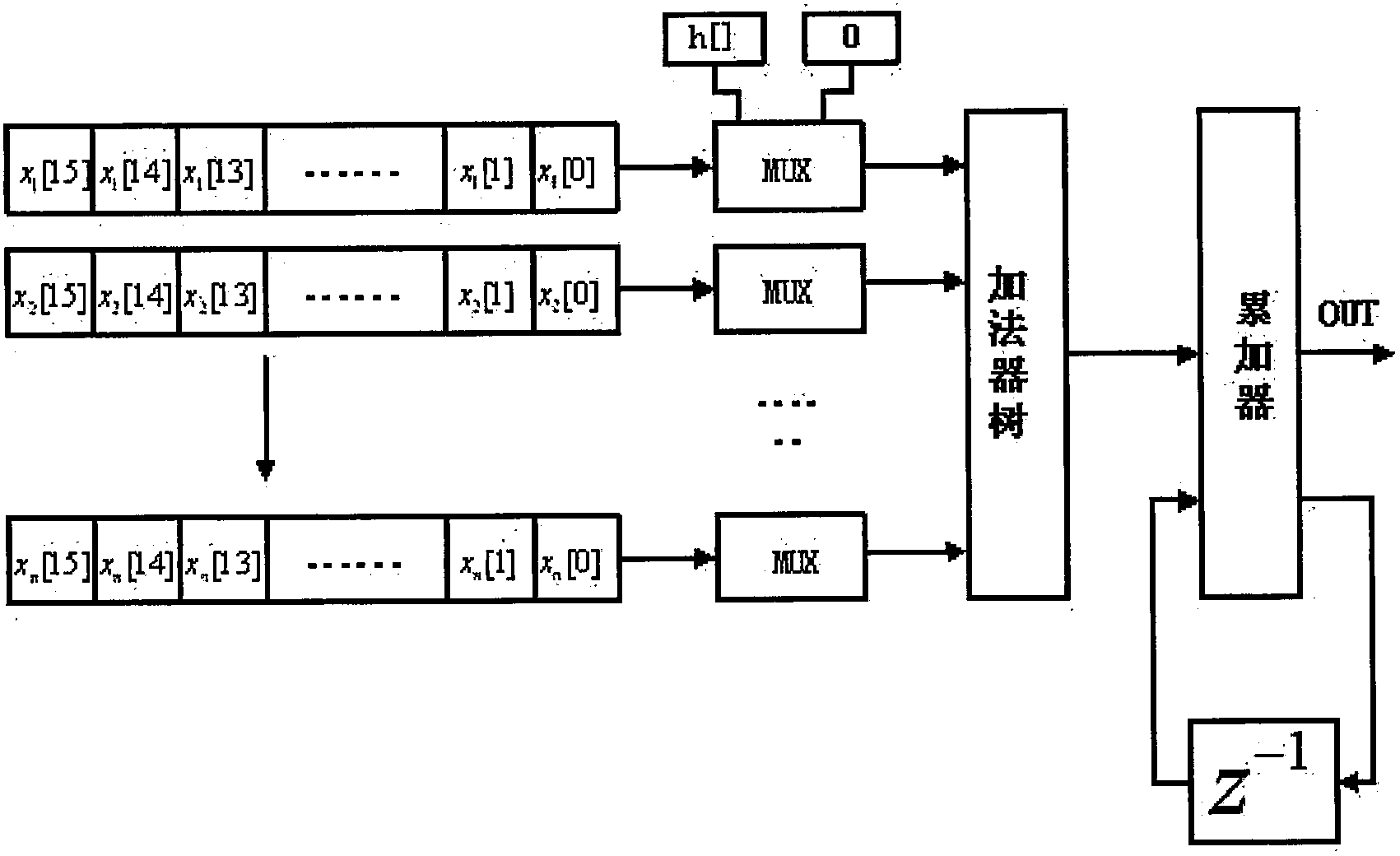

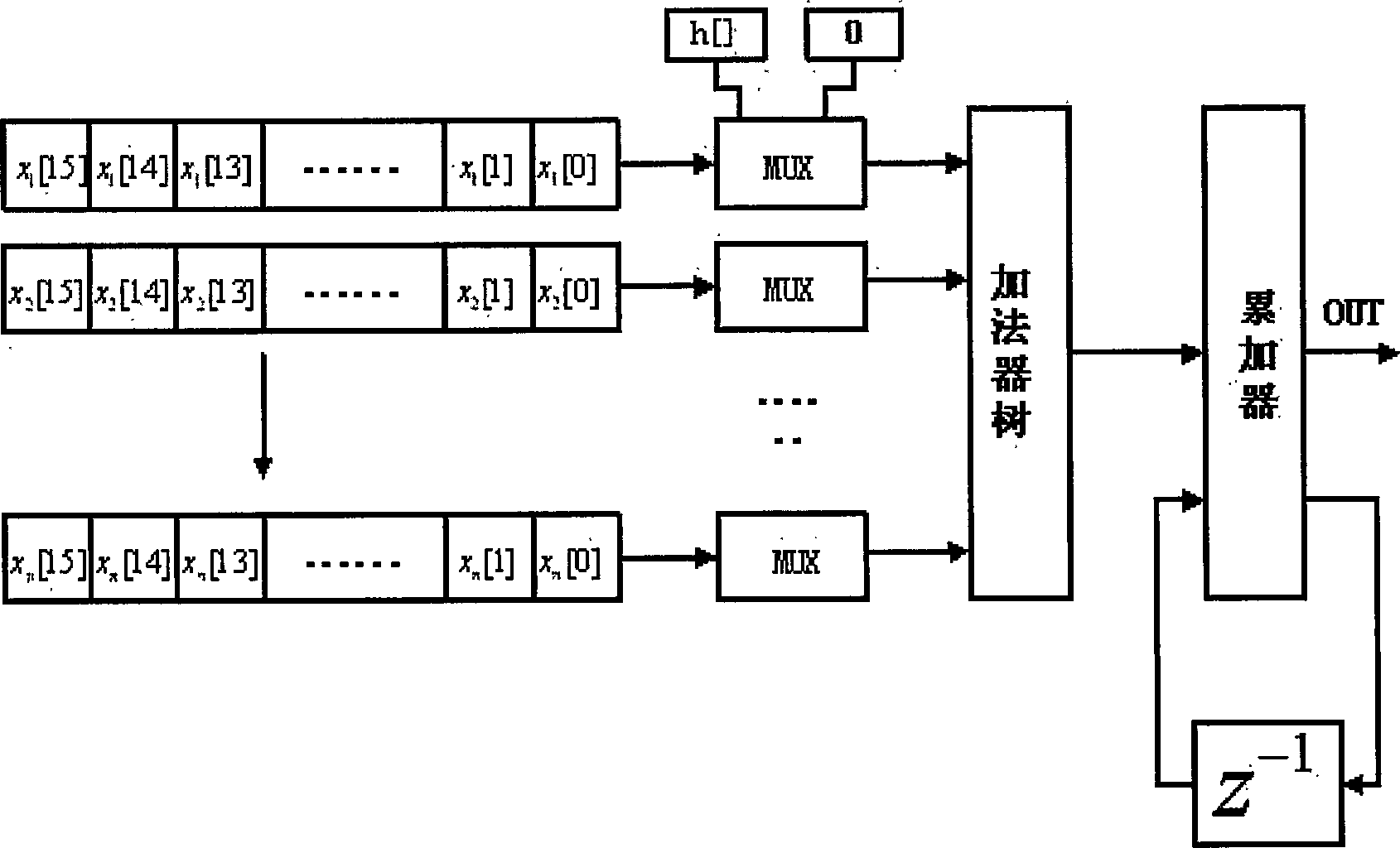

Signed multiply-accumulate algorithm method using adder tree structure

Inactive | CN102681815A | Reduce area | Realize multiply-accumulate calculation | Digital data processing details | Programmable logic device | Distributed algorithm

The invention relates to a hardware multiply-accumulate algorithm and particularly relates to a signed multiply-accumulate algorithm method using an adder tree structure. According to the method, data are divided into two parts, i.e. coefficient items and data items, by adopting a complement form, the data items are decomposed into bit units according to a binary principle, the coefficient items are subjected to binary multiplication with bits of the data items according to an addition allocation principle, and the products are subjected to binary accumulation so as to obtain a final output result. The signed multiply-accumulate algorithm method using the adder tree structure overcomes the disadvantage of fixed coefficients of the original DA distributed algorithm and does not need a large number of ROMs (Read Only Memories) to serve as a coefficient table, the occupied area of a chip is smaller, the multiply-accumulate calculation of signed numbers is realized, and the extension is convenient, so that the signed multiply-accumulate algorithm method is especially suitable for the realization of a programmable logic device.
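One way to read the bit-level decomposition is the following sketch. It assumes the data items are two's-complement values of a fixed width, so the sign bit carries a negative weight; the function name `signed_mac` and the default 8-bit width are hypothetical, and the per-bit sums stand in for the adder tree.

```python
def signed_mac(coeffs, data, width=8):
    """Multiply-accumulate by decomposing two's-complement data into bit planes.

    For each bit position, the coefficients whose data bit is 1 are summed
    (the adder-tree step); the plane sums are then weighted by powers of two,
    with a negative weight for the sign bit.
    """
    mask = (1 << width) - 1
    total = 0
    for bit in range(width):
        plane = sum(c for c, x in zip(coeffs, data) if ((x & mask) >> bit) & 1)
        weight = -(1 << bit) if bit == width - 1 else (1 << bit)
        total += weight * plane
    return total
```

Because the coefficients enter only through additions, they need not be fixed in advance, which matches the abstract's point about avoiding a ROM coefficient table.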

Owner:深圳市清友能源技术有限公司

Graphics system with an improved filtering adder tree

Inactive | US6989843B2 | Weight more | Less contribution | Computation using non-contact making devices | Digital computer details | Graphics | Control signal

Owner:ORACLE INT CORP

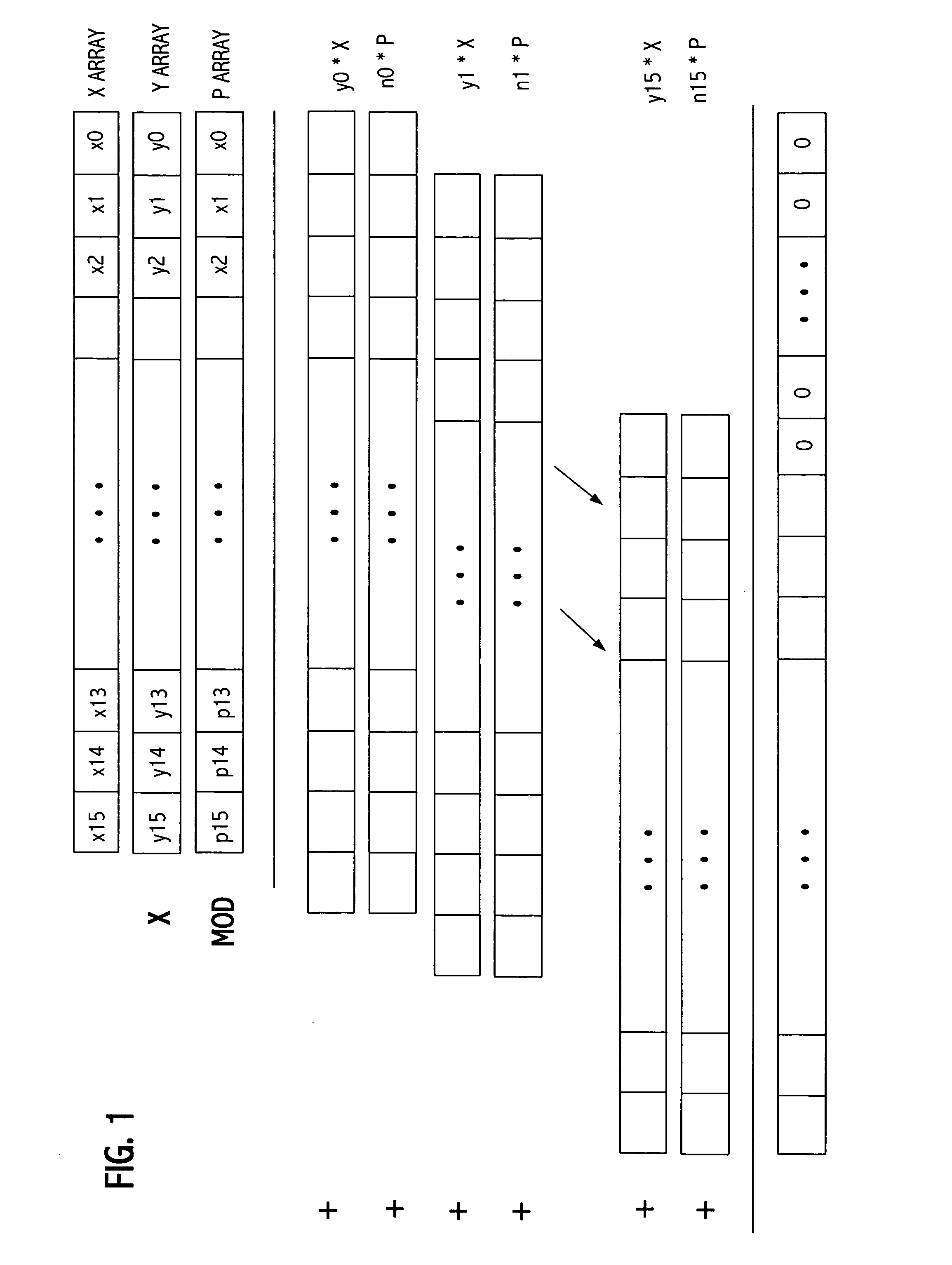

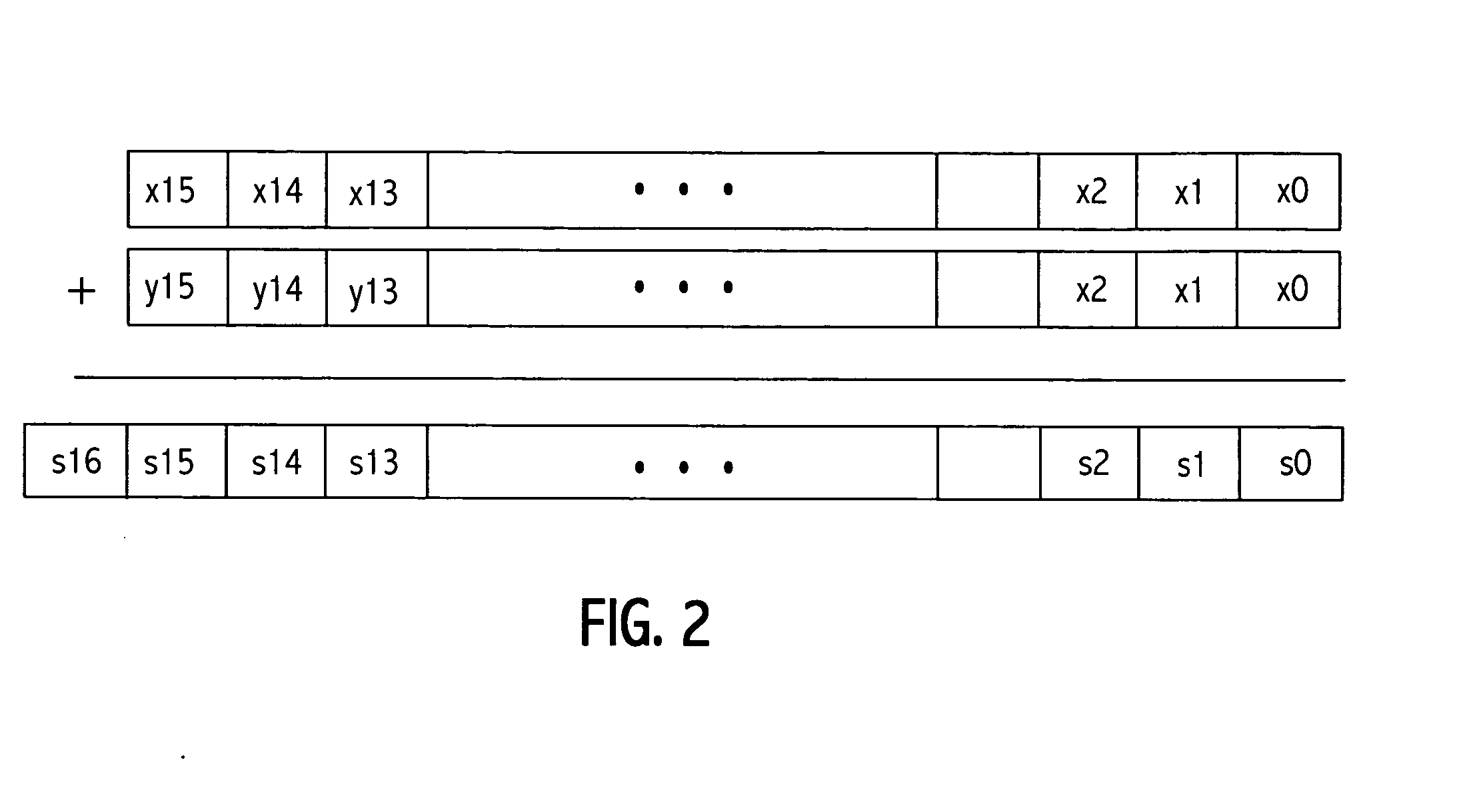

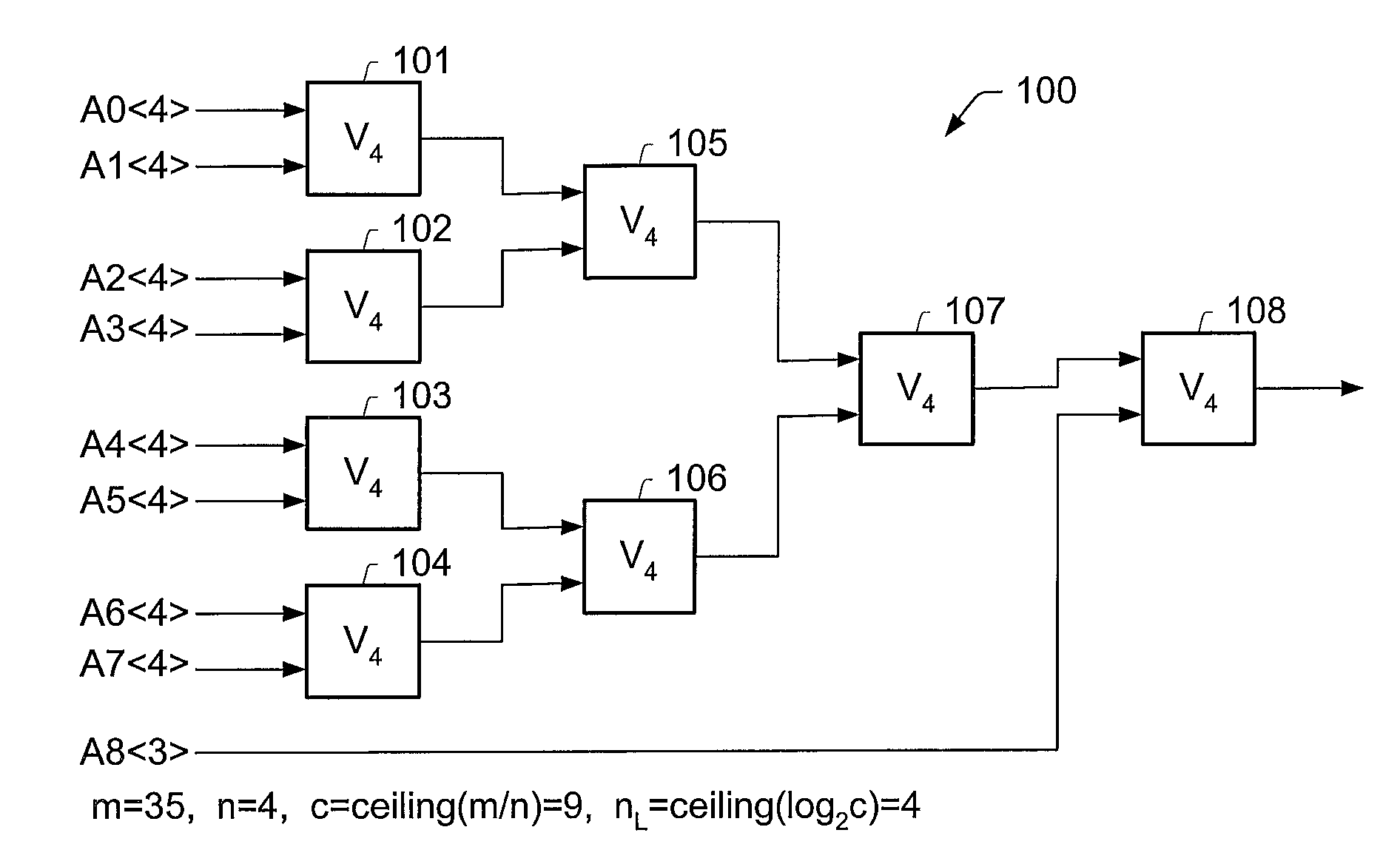

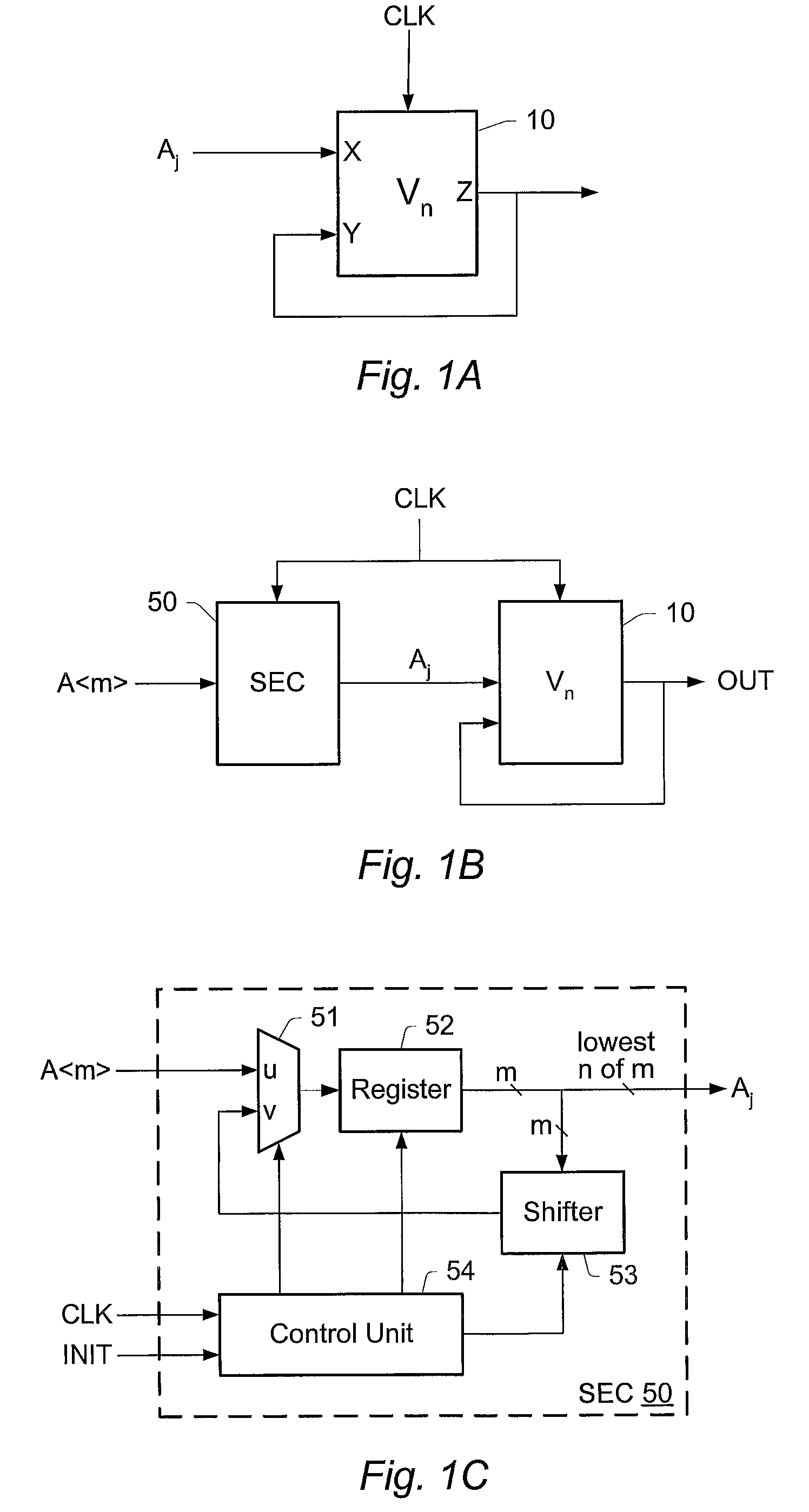

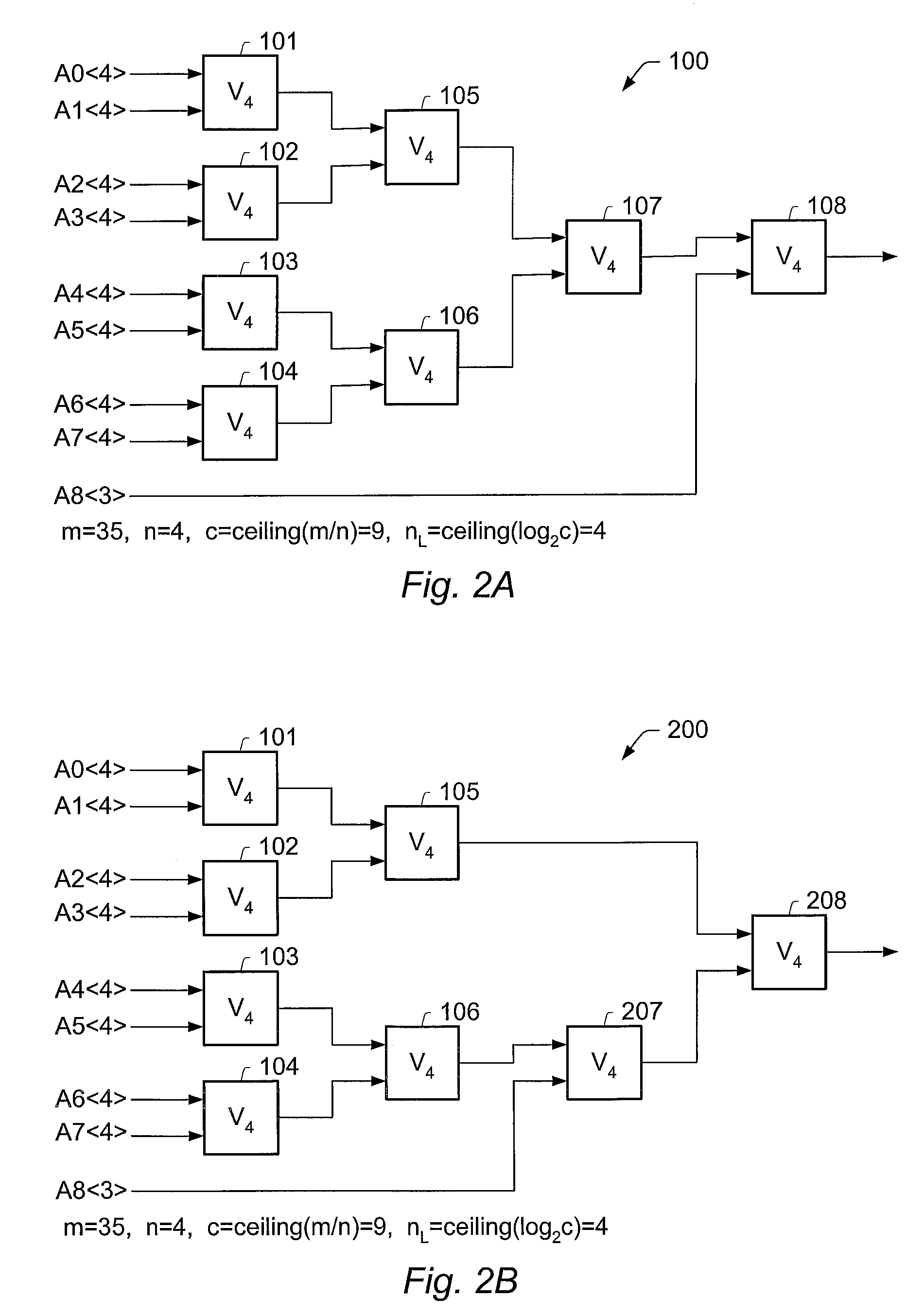

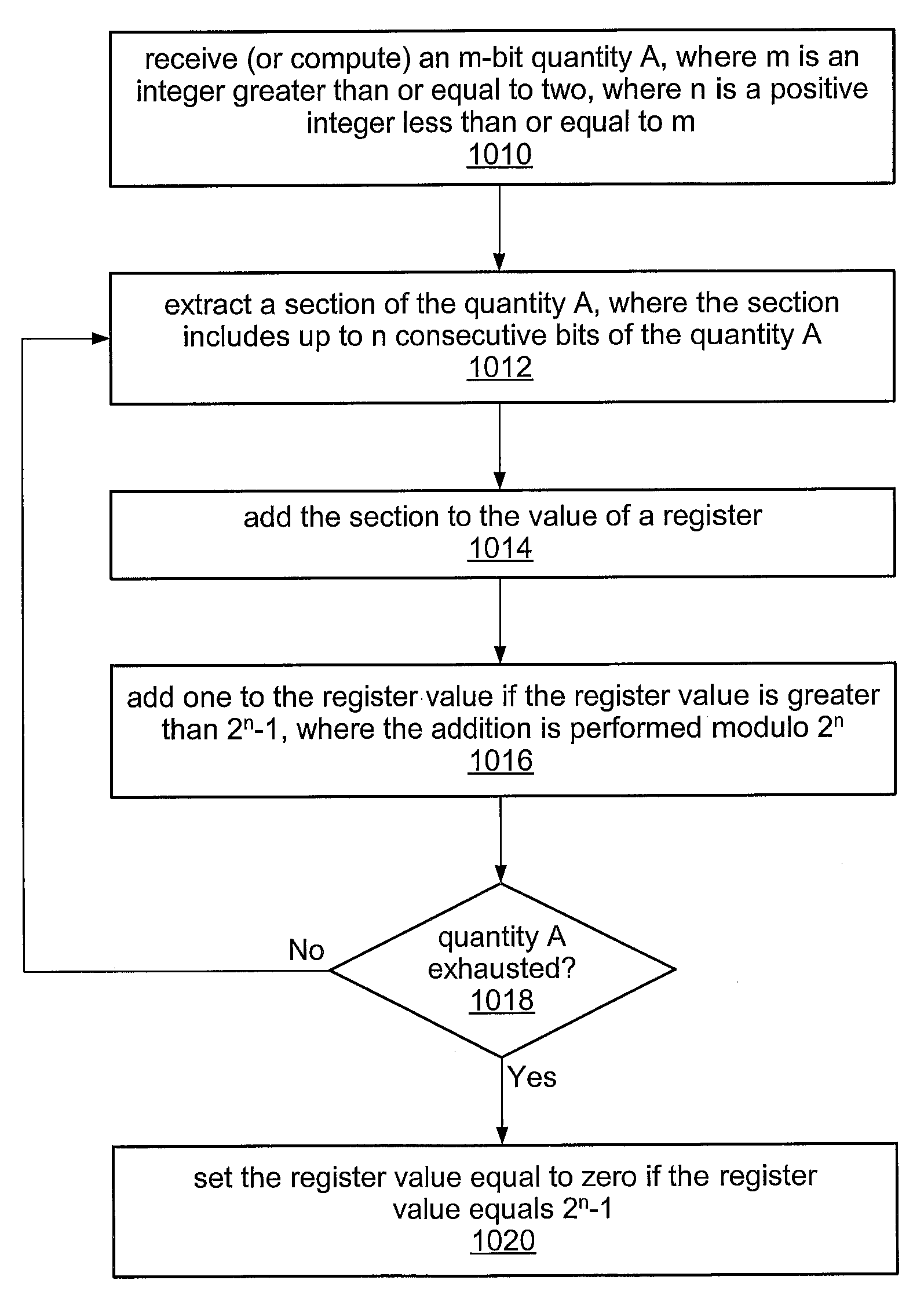

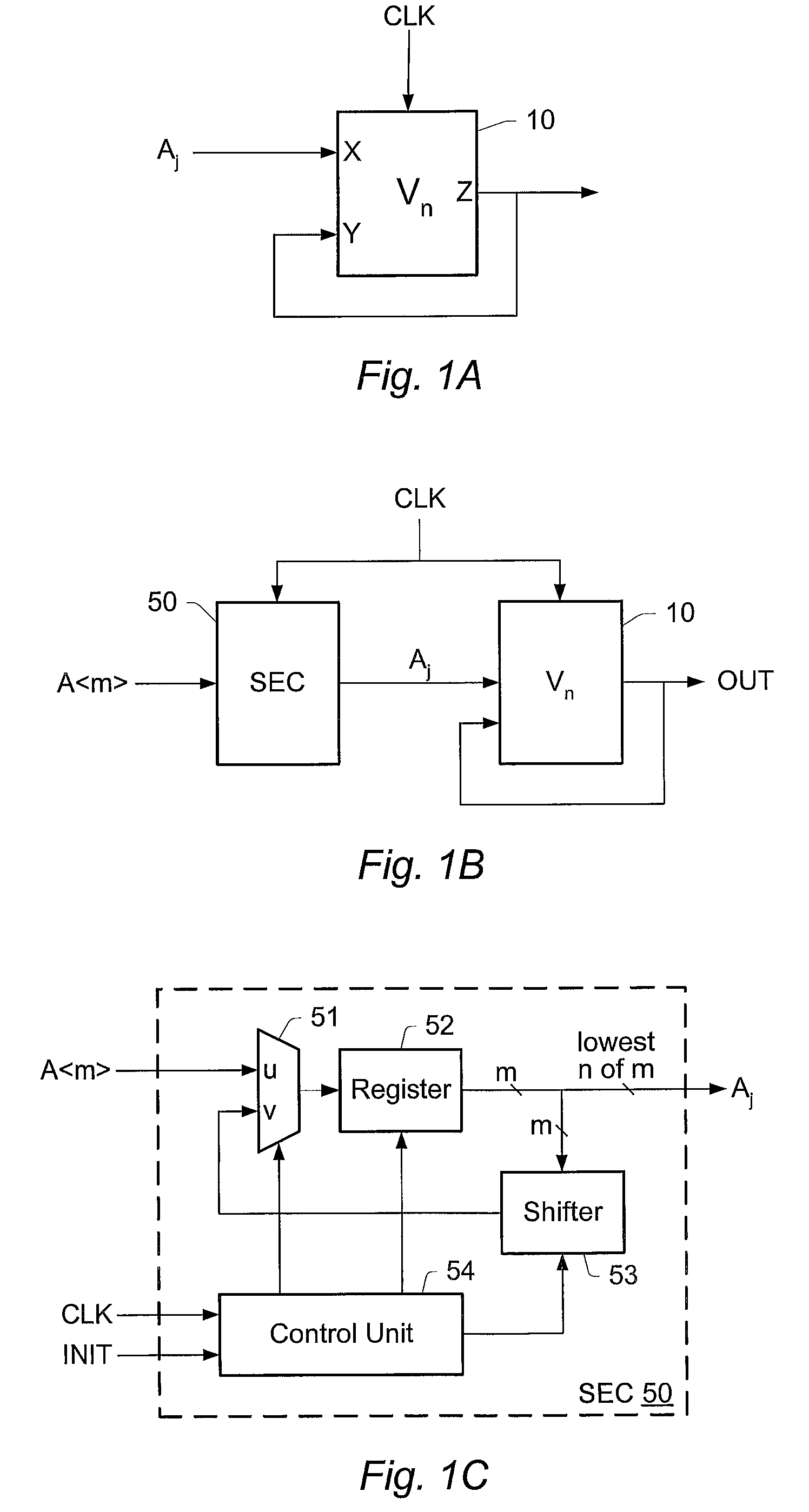

Efficient computation of the modulo operation based on divisor (2^n − 1)

Inactive | US7849125B2 | Computations using residue arithmetic | Computation using denominational number representation | Computer science | Modulo operation

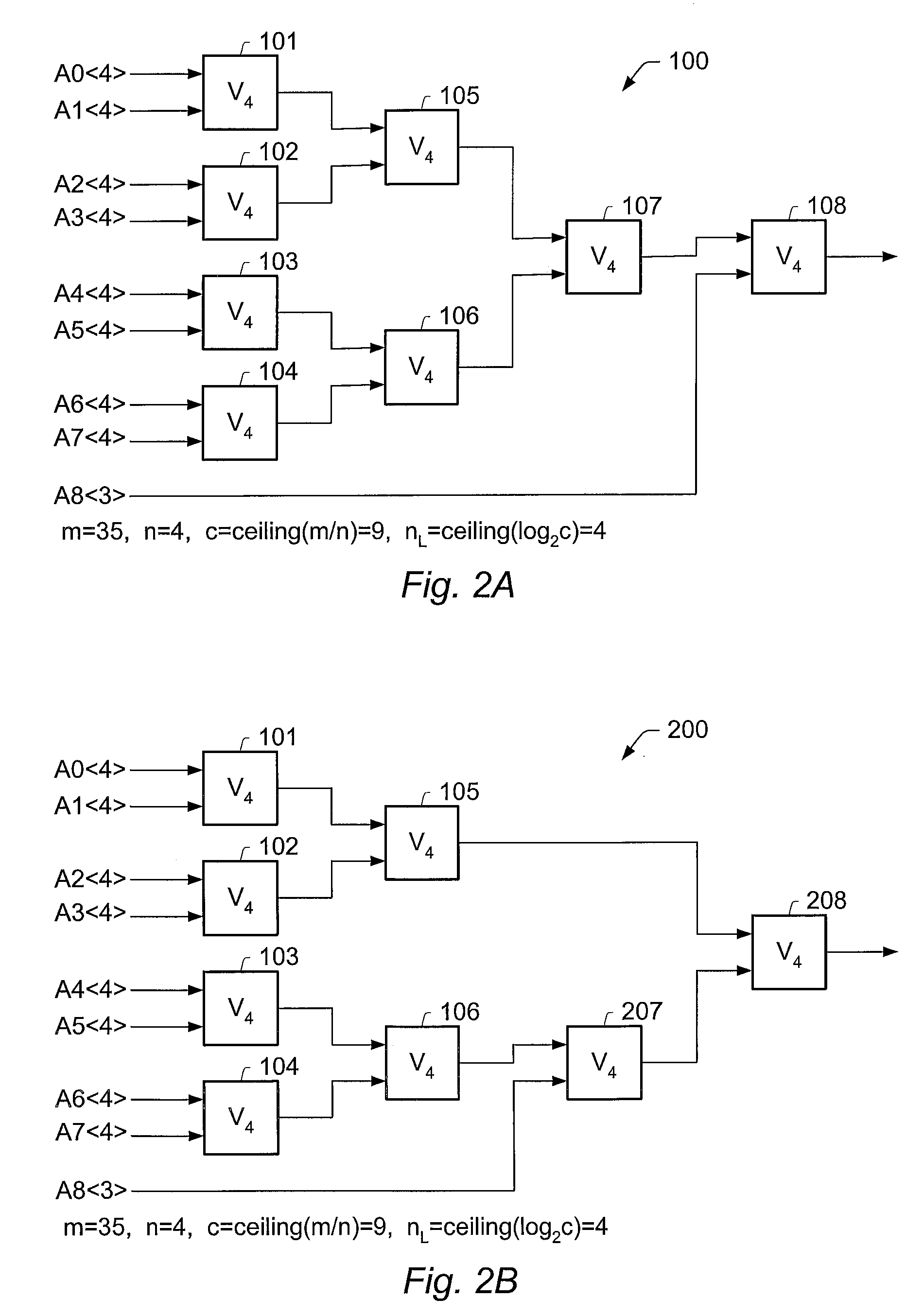

A system and method for computing A mod (2^n − 1), where A is an m bit quantity, where n is a positive integer, where m is greater than or equal to n. The quantity A may be partitioned into a plurality of sections, each being at most n bits long. The value A mod (2^n − 1) may be computed by adding the sections in mod (2^n − 1) fashion. This addition of the sections of A may be performed in a single clock cycle using an adder tree, or, sequentially in multiple clock cycles using a two-input adder circuit provided the output of the adder circuit is coupled to one of the two inputs. The computation A mod (2^n − 1) may be performed as a part of an interleaving / deinterleaving operation, or, as part of an encryption / decryption operation.
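The section-summing idea works because 2^n is congruent to 1 modulo 2^n − 1, so each n-bit section of A contributes its own value to the residue. A minimal sketch, assuming a non-negative integer A and reading the divisor as 2^n − 1 (the function name `mod_2n_minus_1` is hypothetical):

```python
def mod_2n_minus_1(a, n):
    """Compute a mod (2**n - 1) by adding n-bit sections of a.

    Assumes a >= 0 and n >= 1. The first loop plays the role of the adder
    tree; the second folds carries back in (end-around carry).
    """
    mask = (1 << n) - 1
    total = 0
    while a:
        total += a & mask     # add the next n-bit section
        a >>= n
    while total > mask:
        total = (total & mask) + (total >> n)   # fold the overflow back in
    return 0 if total == mask else total        # 2**n - 1 itself is congruent to 0
```

In hardware the first loop could be a single-cycle adder tree or a two-input adder used over several cycles; this sequential loop corresponds to the latter.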

Owner:INTEL CORP

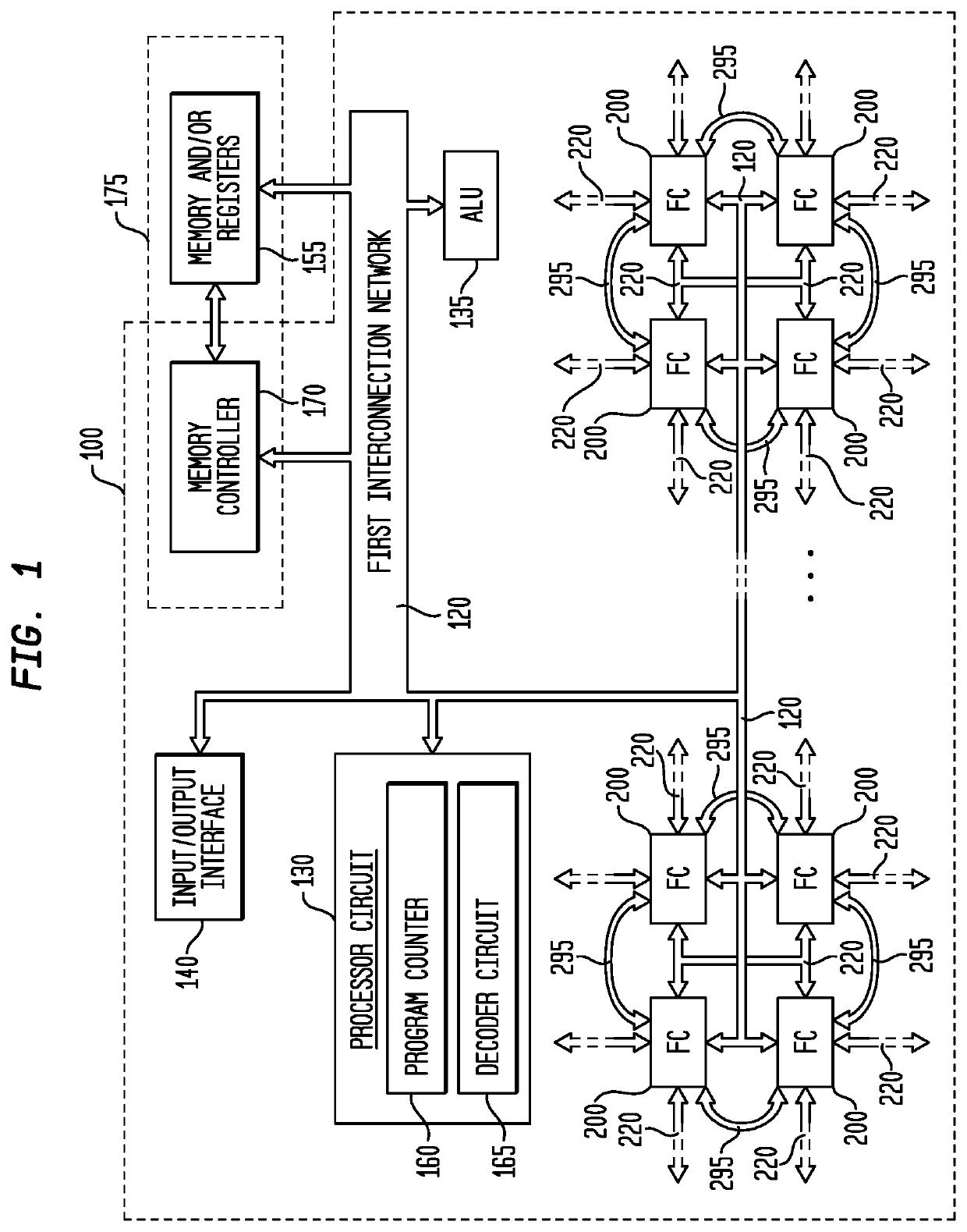

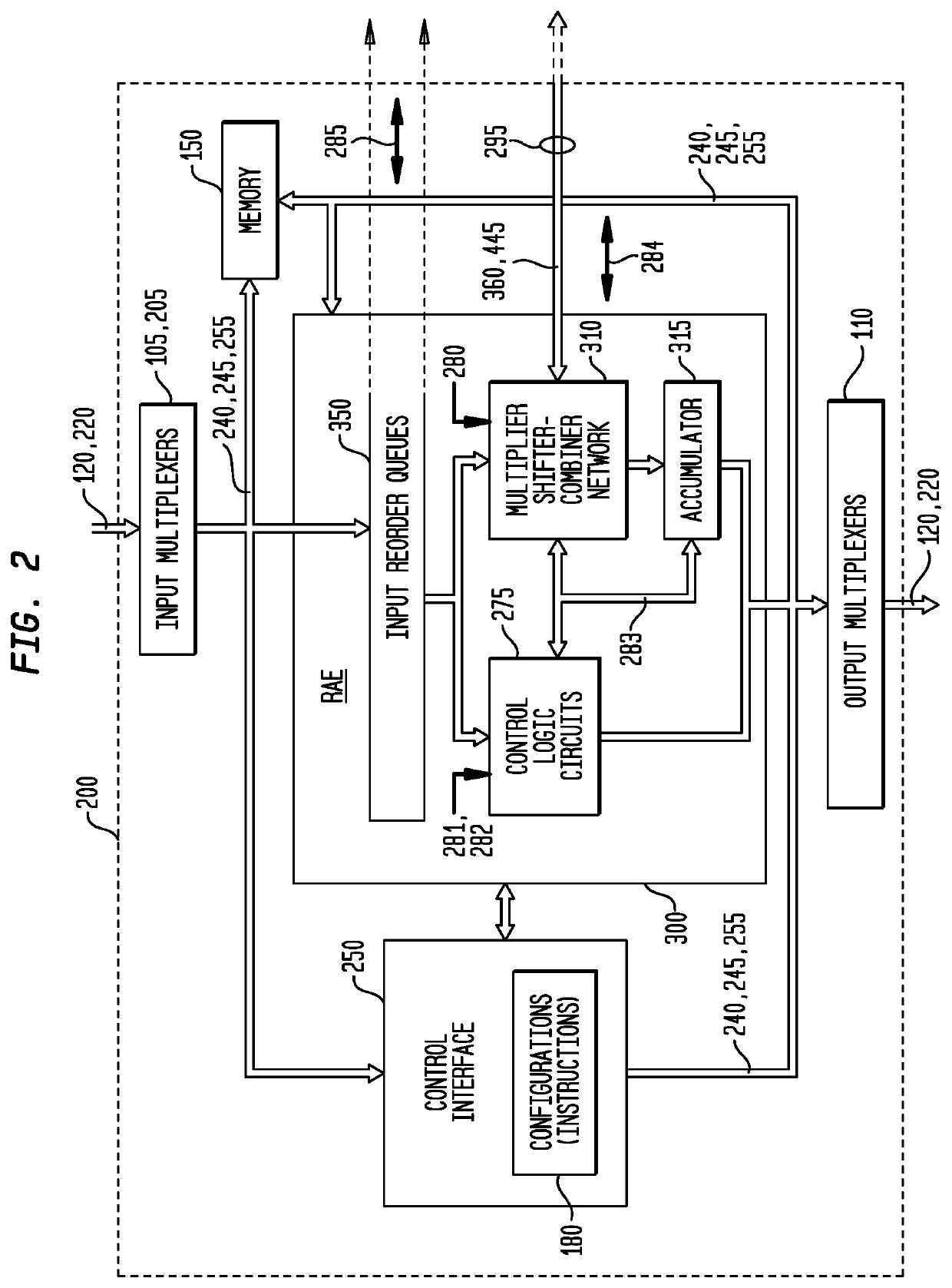

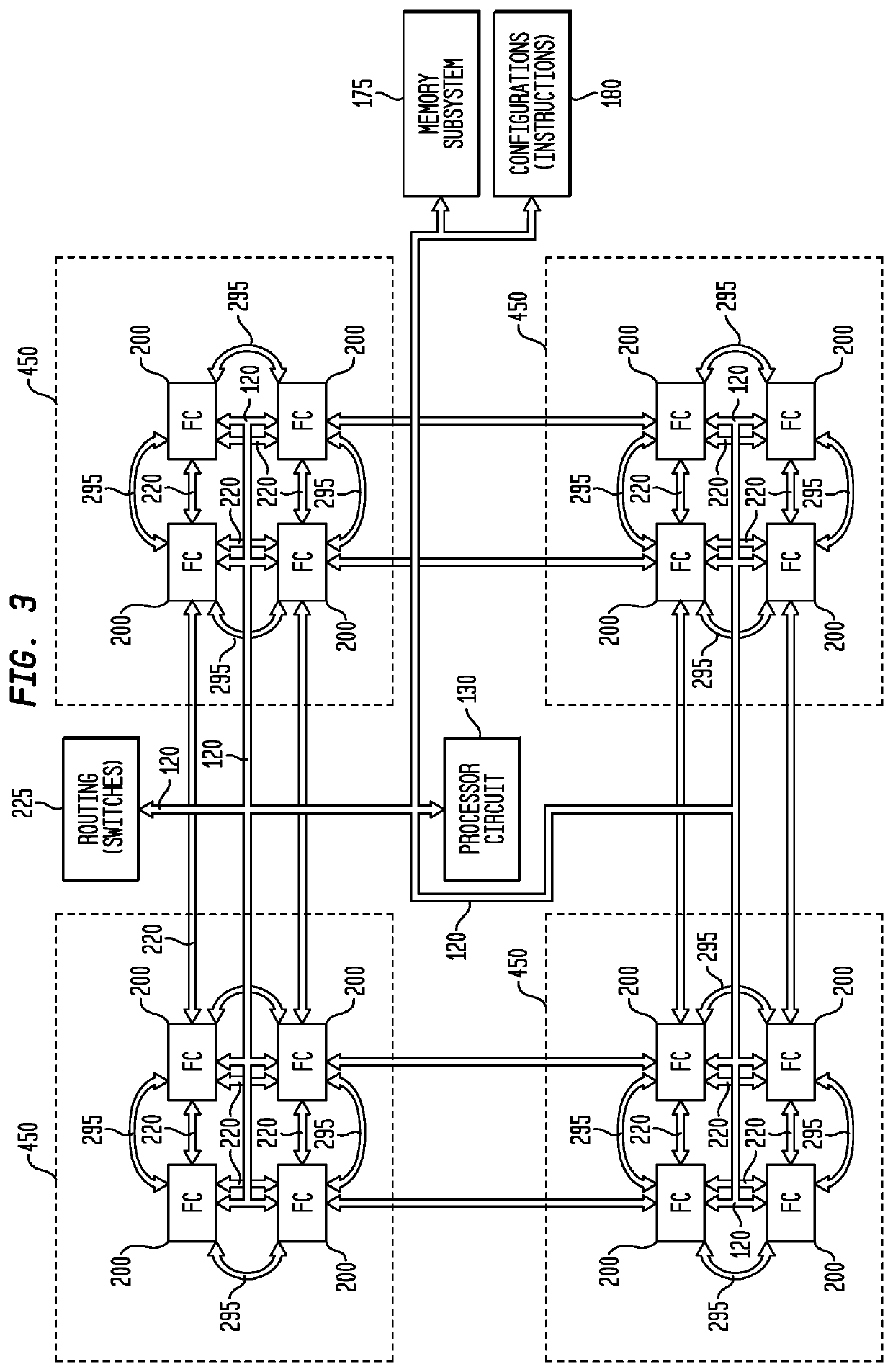

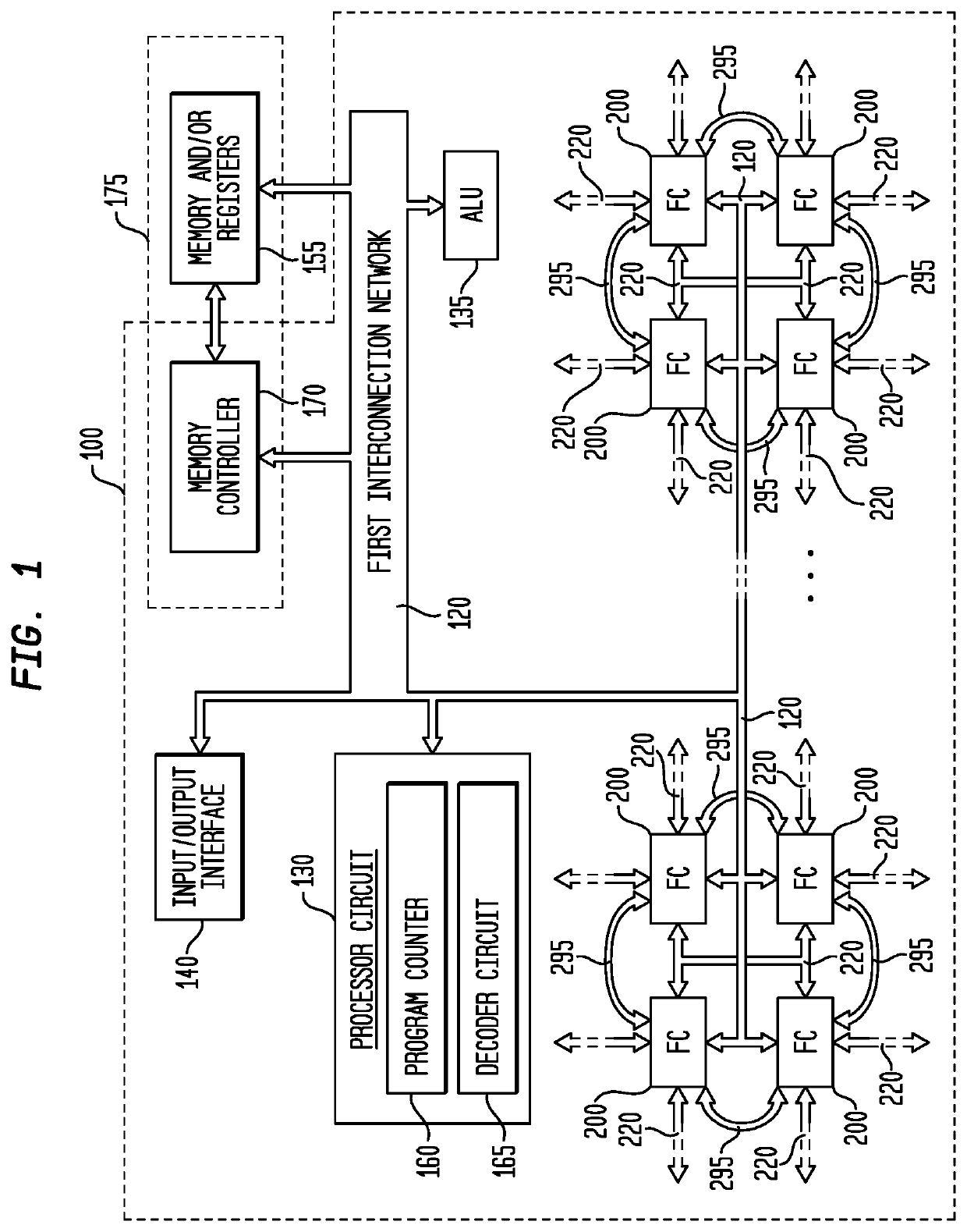

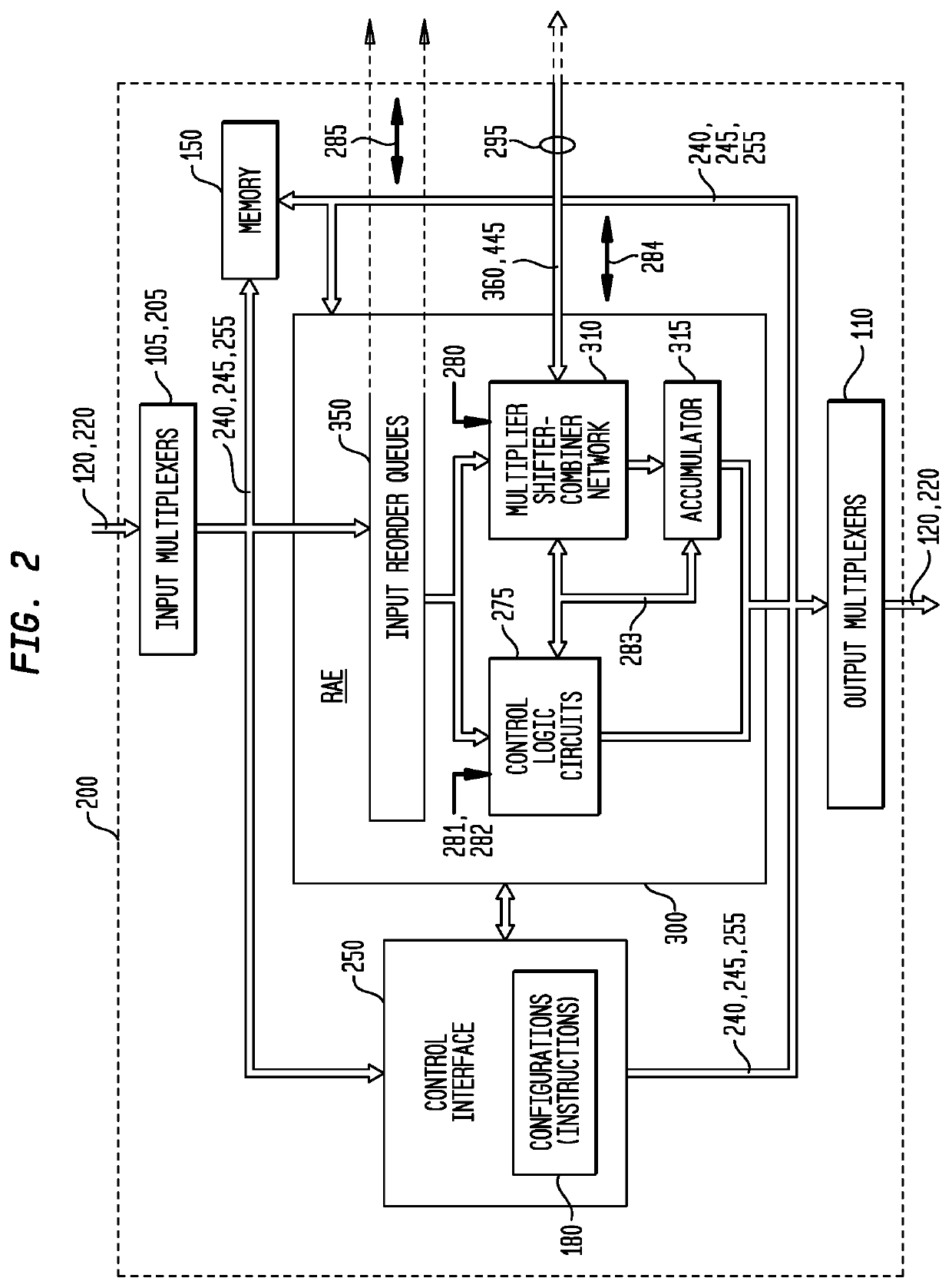

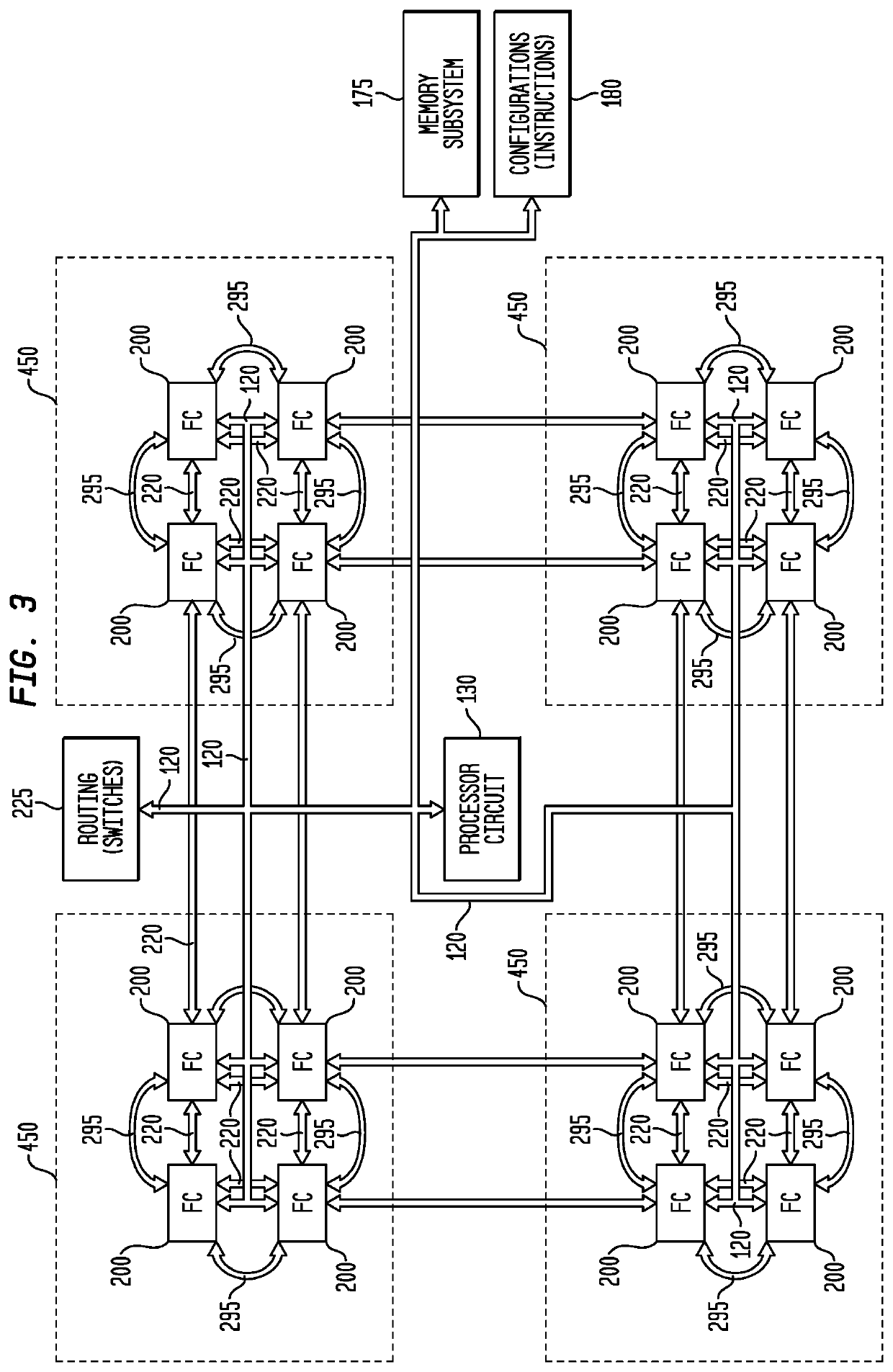

Reconfigurable Processor Circuit Architecture

Active | US20210073171A1 | Lower latency | Improve scalability | Exclusive-OR circuits | Program initiation/switching | Computer architecture | Binary multiplier

A representative reconfigurable processing circuit and a reconfigurable arithmetic circuit are disclosed, each of which may include input reordering queues; a multiplier shifter and combiner network coupled to the input reordering queues; an accumulator circuit; and a control logic circuit, along with a processor and various interconnection networks. A representative reconfigurable arithmetic circuit has a plurality of operating modes, such as floating point and integer arithmetic modes, logical manipulation modes, Boolean logic, shift, rotate, conditional operations, and format conversion, and is configurable for a wide variety of multiplication modes. Dedicated routing connecting multiplier adder trees allows multiple reconfigurable arithmetic circuits to be reconfigurably combined, in pair or quad configurations, for larger adders, complex multiplies and general sum of products use, for example.

Owner:CORNAMI INC

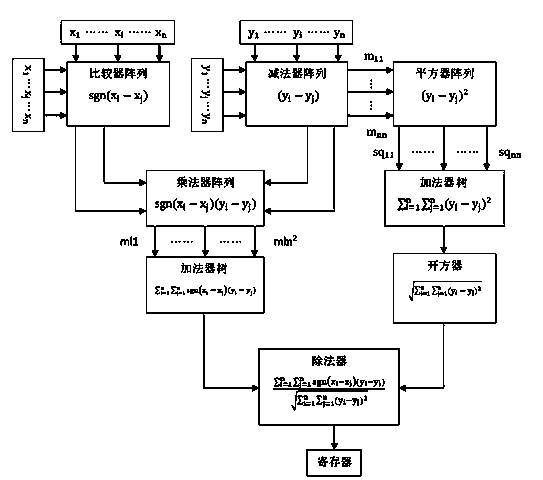

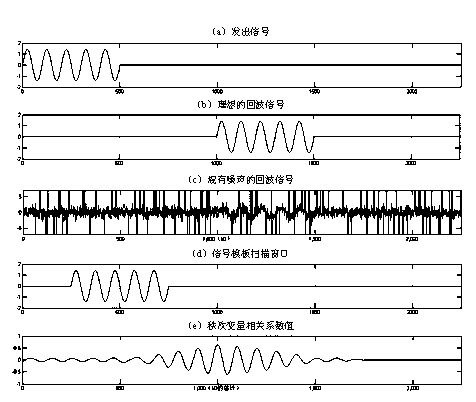

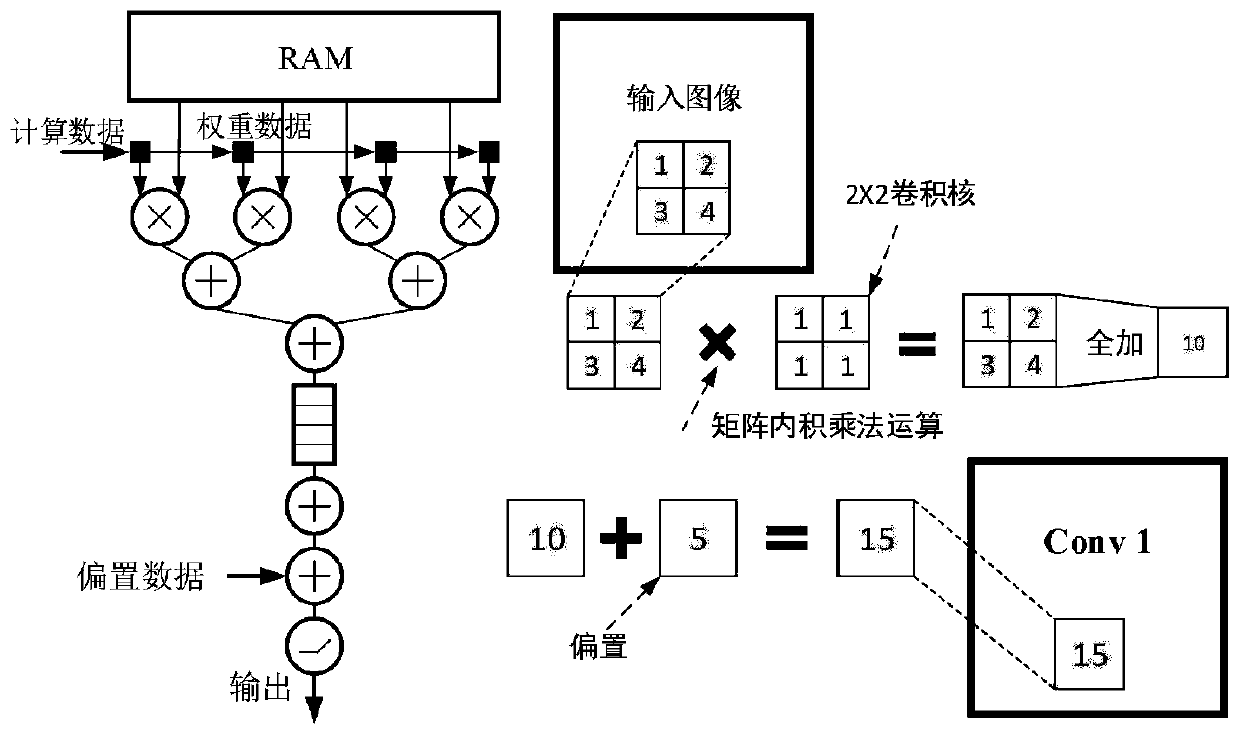

Pearson rank variable correlation coefficient based signal detection circuit and method

Inactive | CN104392086A | Improve robustness | Good performance | Special data processing applications | Correlation coefficient | Environmental noise

The invention relates to a Pearson's rank-variate correlation coefficient based signal detection circuit which comprises a comparator array, a subtracter array, a squarer array, a multiplier array, two adder trees, a square root extractor, a divider and a register. The comparator array, the subtracter array and the squarer array are each arranged as n*n matrices (n is the signal length), and each element of the matrices performs a comparison or subtraction operation; the multiplier array is arranged as n^2 2-input multipliers; the adder trees are arranged as n^2-input adders; the divider performs a 2-input division; and the register stores the result of the correlation operation. When the environmental noise contains an impulse noise component, the matched filter and the product-moment correlation coefficient essentially fail, whereas the Pearson's rank-variate correlation coefficient shows excellent robustness in such noise, including a mathematical expectation approaching the true value and a smaller standard deviation.

Owner:GUANGDONG UNIV OF TECH

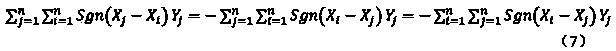

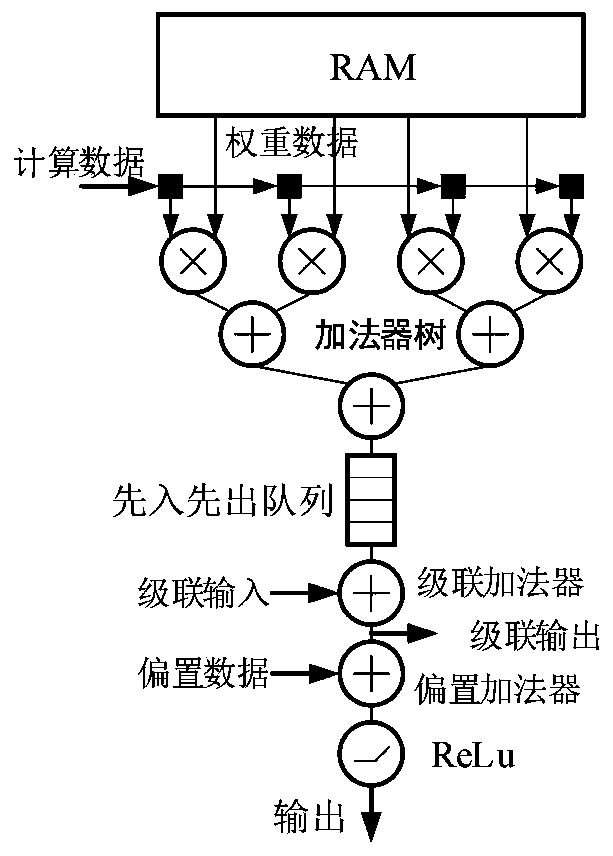

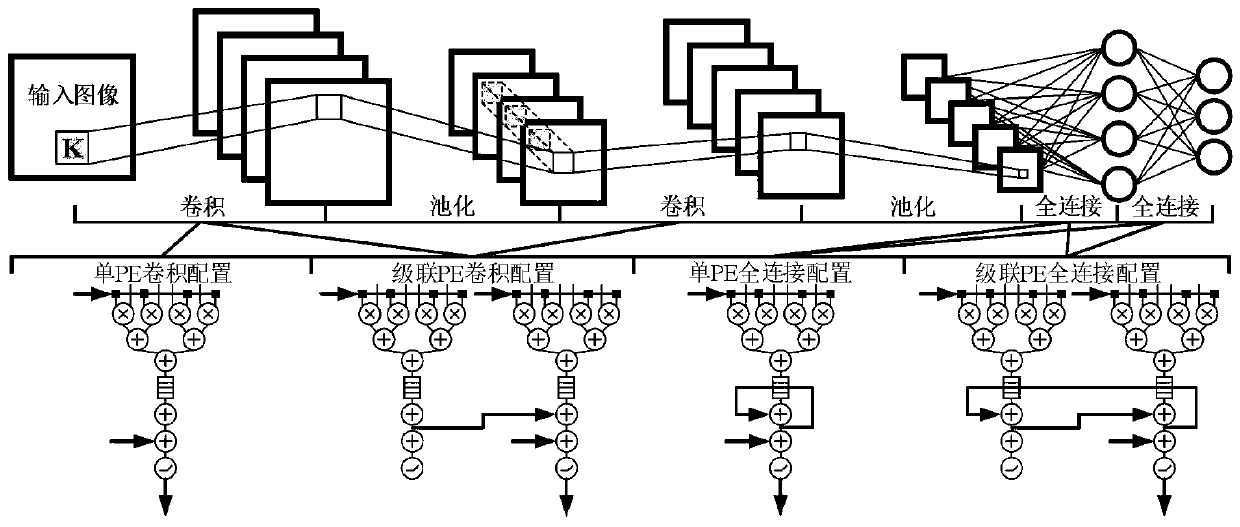

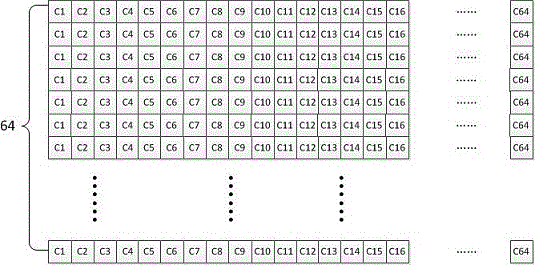

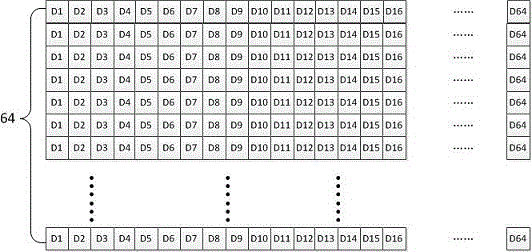

Universal computing circuit of neural network accelerator

Active | CN110807522A | Reduce inference time | Simple design | Neural architectures | Physical realisation | Activation function | Binary multiplier

The invention discloses a universal computing circuit of a neural network accelerator. The circuit is composed of m universal computing modules (PEs); any i-th universal computing module PE is composed of a RAM, 2n multipliers, an adder tree, a cascade adder, a bias adder, a first-in first-out queue and a ReLU activation function module. The single-PE convolution configuration, the cascaded-PE convolution configuration, the single-PE full-connection configuration and the cascaded-PE full-connection configuration are used to construct the calculation circuits of different neural networks. According to the invention, the universal computing circuit can be configured according to the variables of the neural network accelerator, so that the neural network can be built or modified more simply, conveniently and quickly, the inference time of the neural network is shortened, and the hardware development time of related deep research is reduced.

Owner:HEFEI UNIV OF TECH

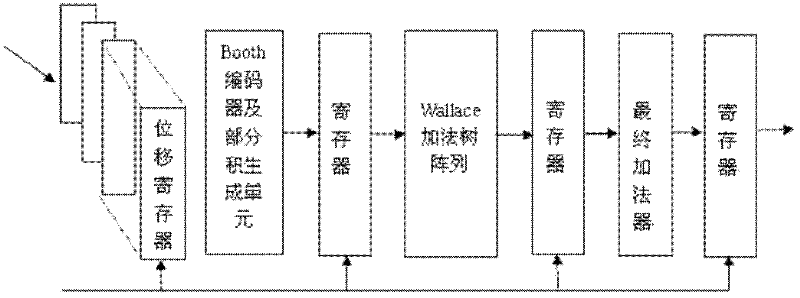

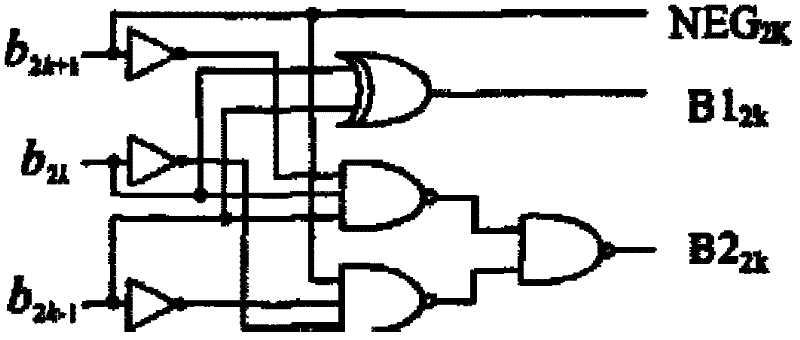

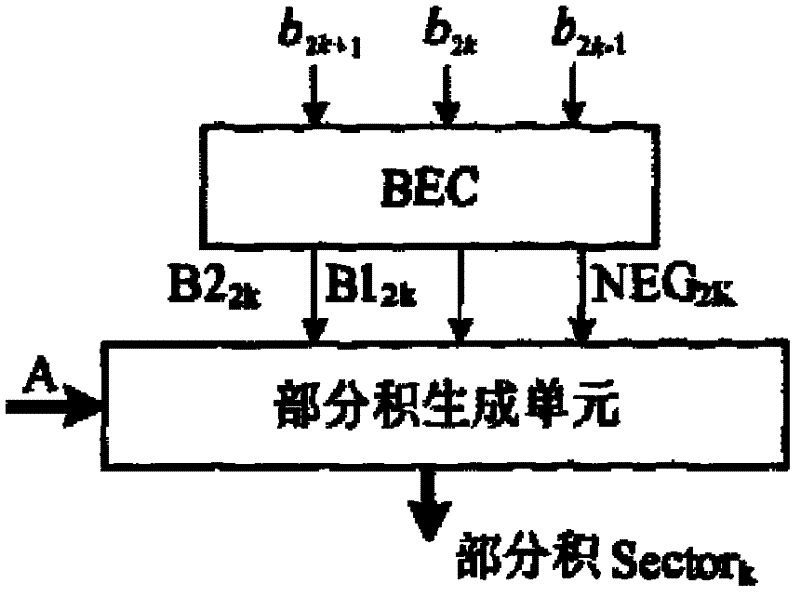

FPGA (field-programmable gate array)-based high-speed FIR (finite impulse response) digital filter

Inactive | CN102355232A | Easy to adjust | Applications for different occasions | Digital technique network | Data compression | Binary multiplier

An FPGA-based high-speed FIR digital filter is characterized in that an improved Booth coding mode and a partial product adder array module are used as the first stage of the pipeline design; a Wallace adder tree for compressing and adding 2M data is used as the second stage of the pipeline design; and a final adder is used as the third stage of the pipeline design. The order number and coefficient of the filter can be adjusted conveniently by reasonably partitioning a high-speed multiplier and combining the Wallace adder tree array through the pipeline technology, so that the FPGA-based high-speed FIR digital filter is applicable to different occasions and the operation speed is improved greatly.
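The first pipeline stage can be illustrated with radix-4 (modified) Booth recoding, which is assumed here to be what "improved Booth coding" refers to; the abstract does not say so explicitly. The names `booth_radix4_digits` and `booth_multiply` are hypothetical, and a plain `sum` stands in for the Wallace tree and final adder.

```python
def booth_radix4_digits(y, width=8):
    """Recode a signed `width`-bit multiplier into radix-4 digits in -2..2."""
    if width % 2:
        raise ValueError("width must be even")
    u = y & ((1 << width) - 1)        # two's-complement bit pattern of y
    digits, prev = [], 0
    for i in range(0, width, 2):
        b0 = (u >> i) & 1
        b1 = (u >> (i + 1)) & 1
        digits.append(b0 + prev - 2 * b1)   # digit from bits (i+1, i, i-1)
        prev = b1
    return digits


def booth_multiply(x, y, width=8):
    """Multiply x by a signed `width`-bit y using width/2 partial products."""
    partials = [d * x * (4 ** k) for k, d in enumerate(booth_radix4_digits(y, width))]
    return sum(partials)              # stand-in for Wallace tree + final adder
```

The recoding halves the number of partial products (four for an 8-bit multiplier), which is what shortens the adder tree that follows.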

Owner:BEIHANG UNIV

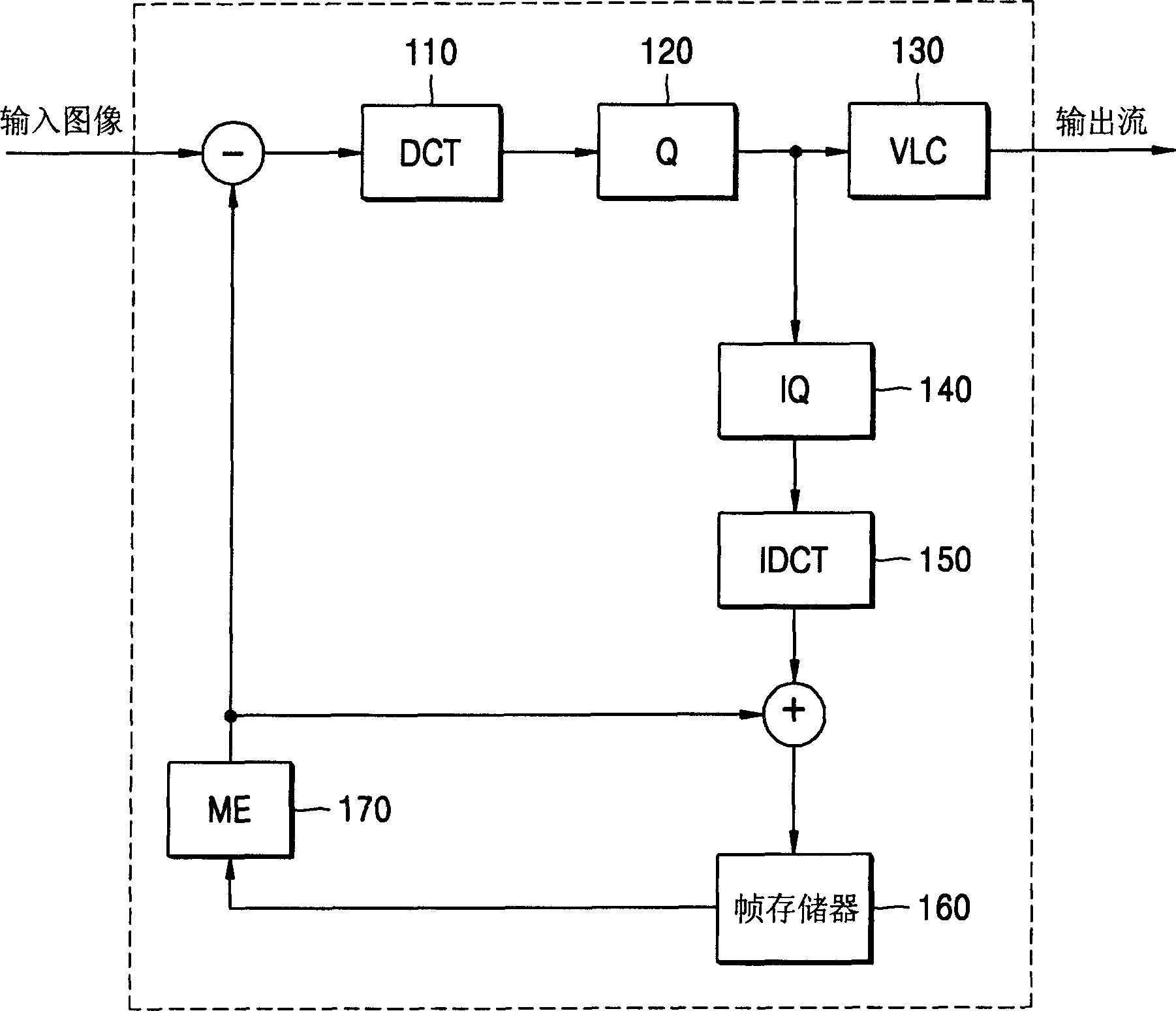

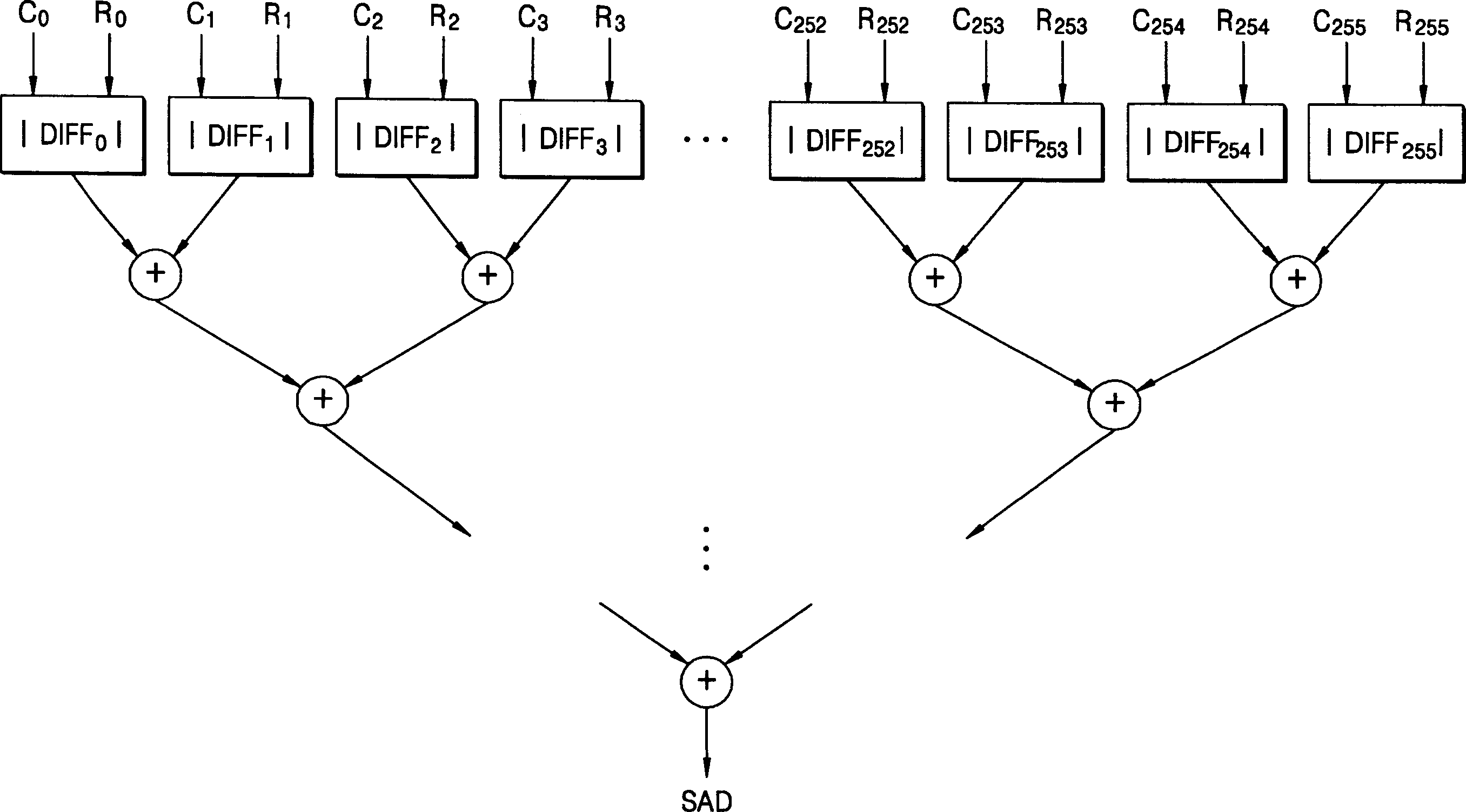

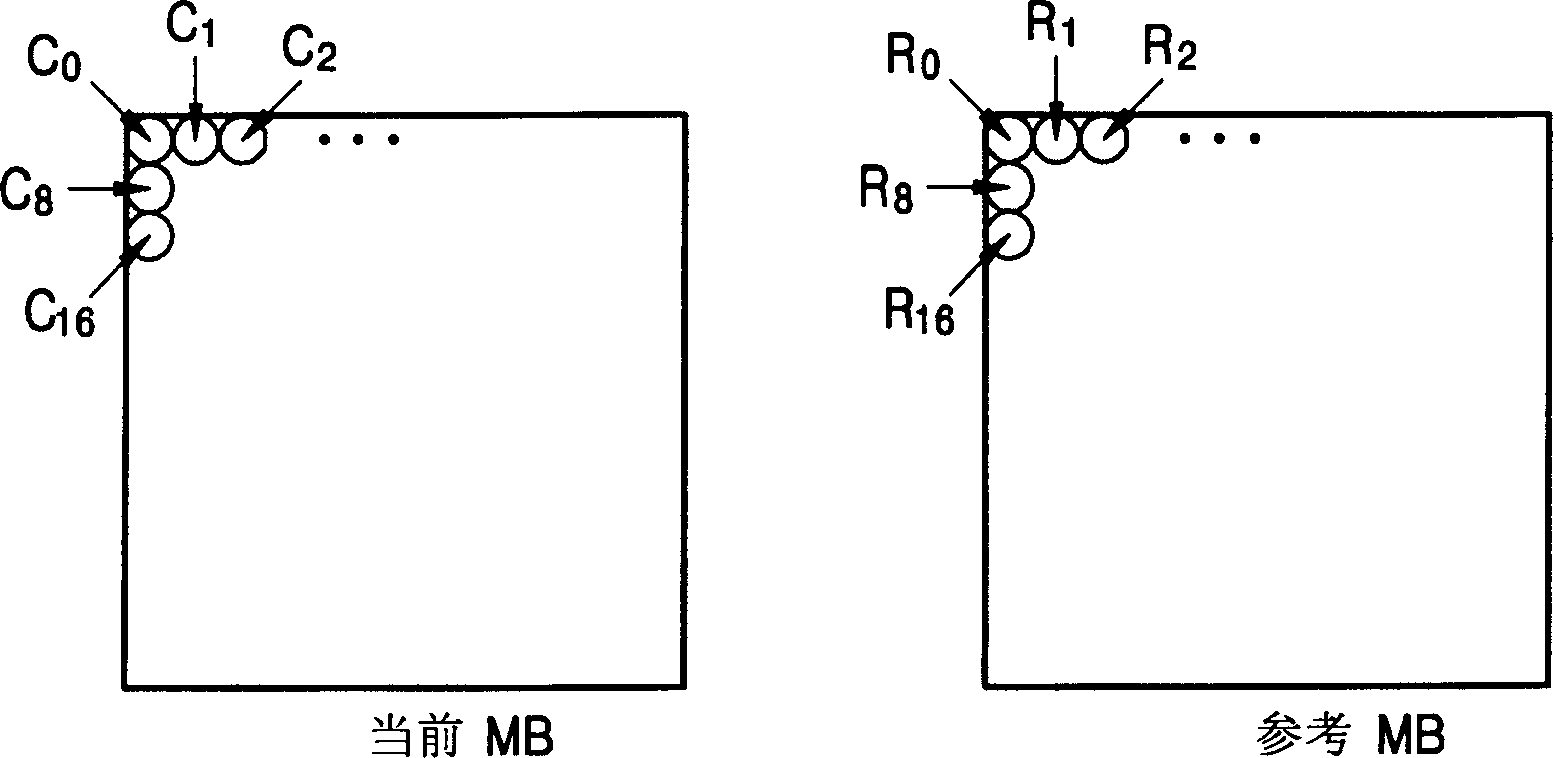

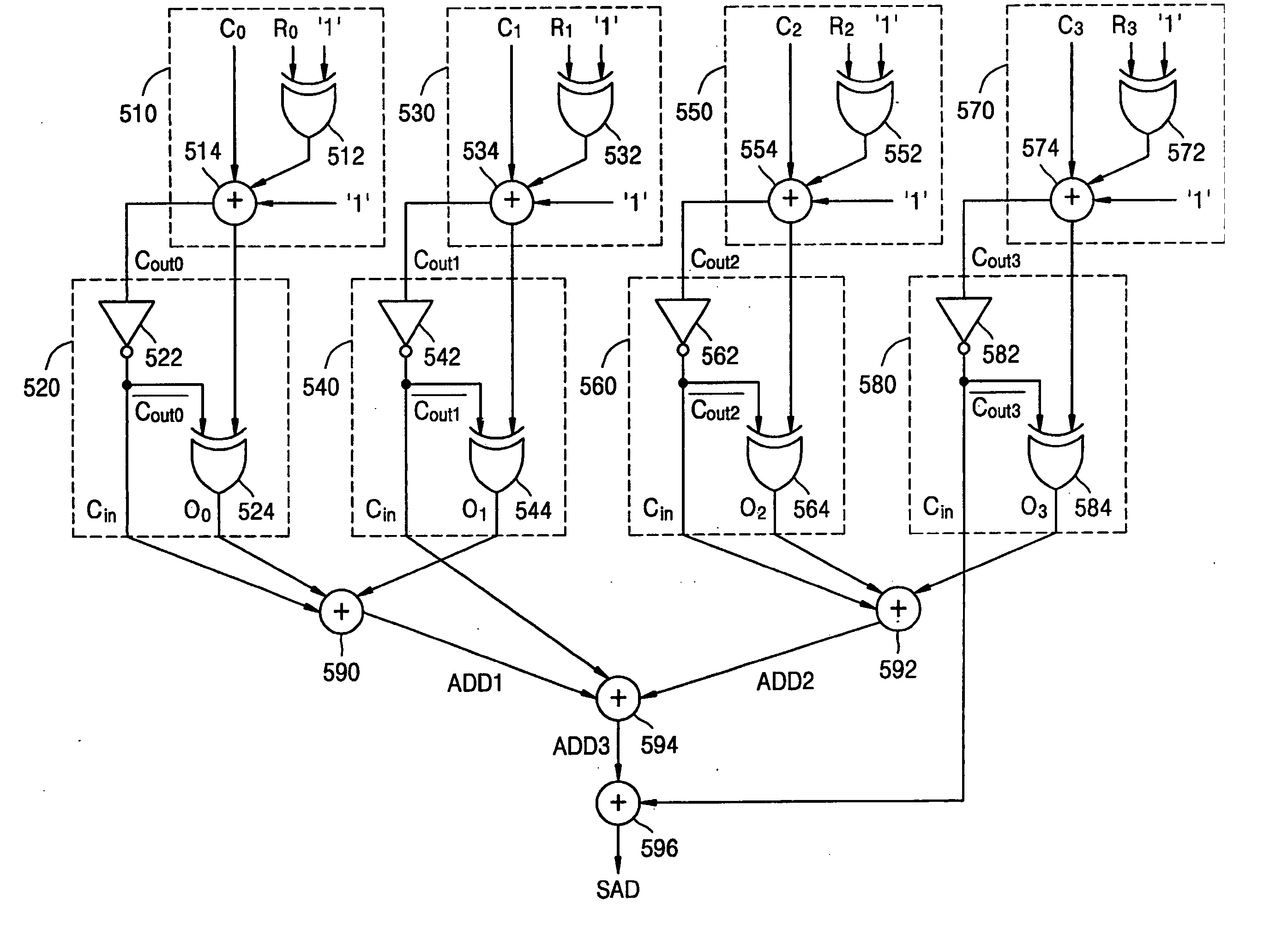

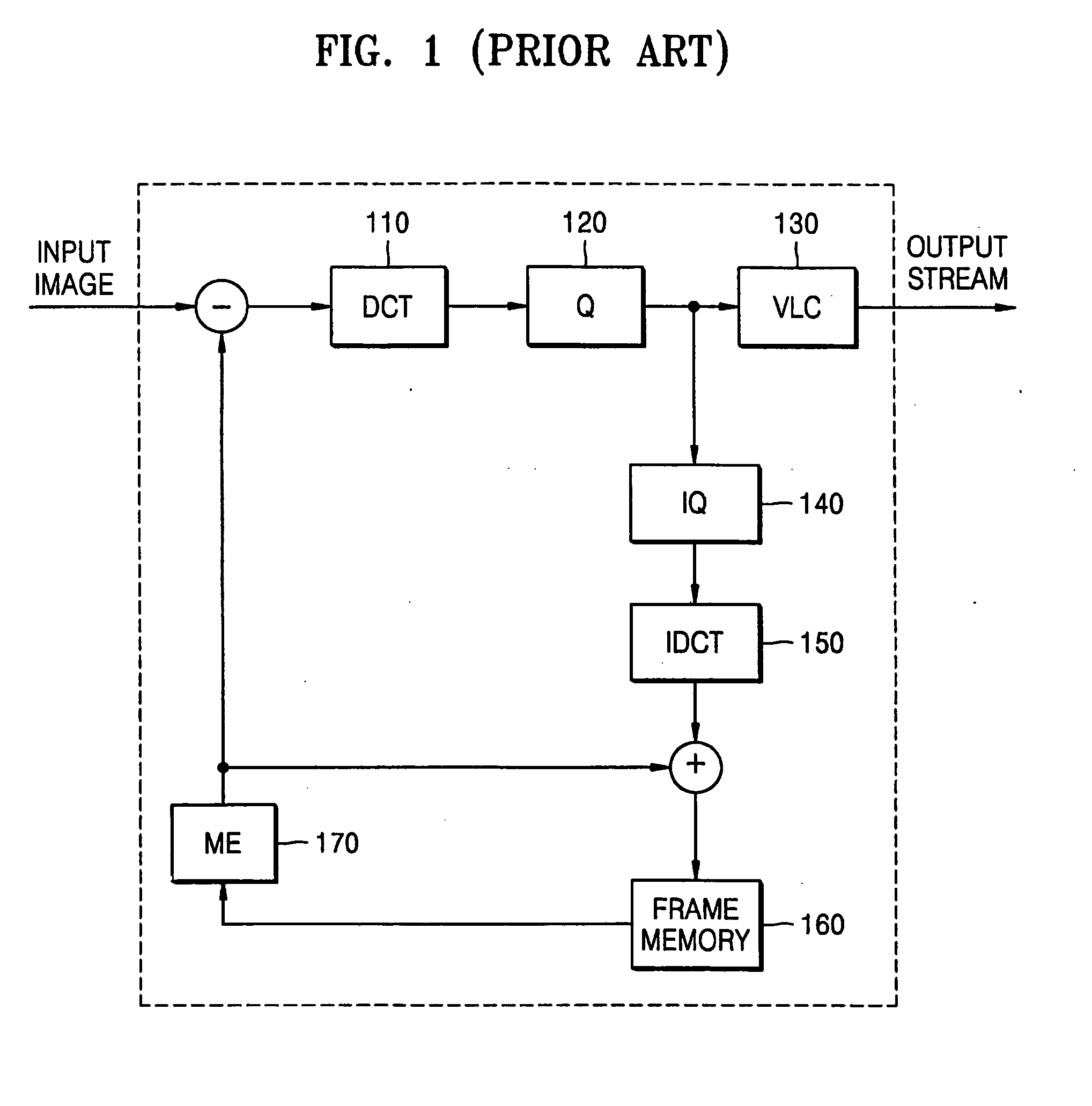

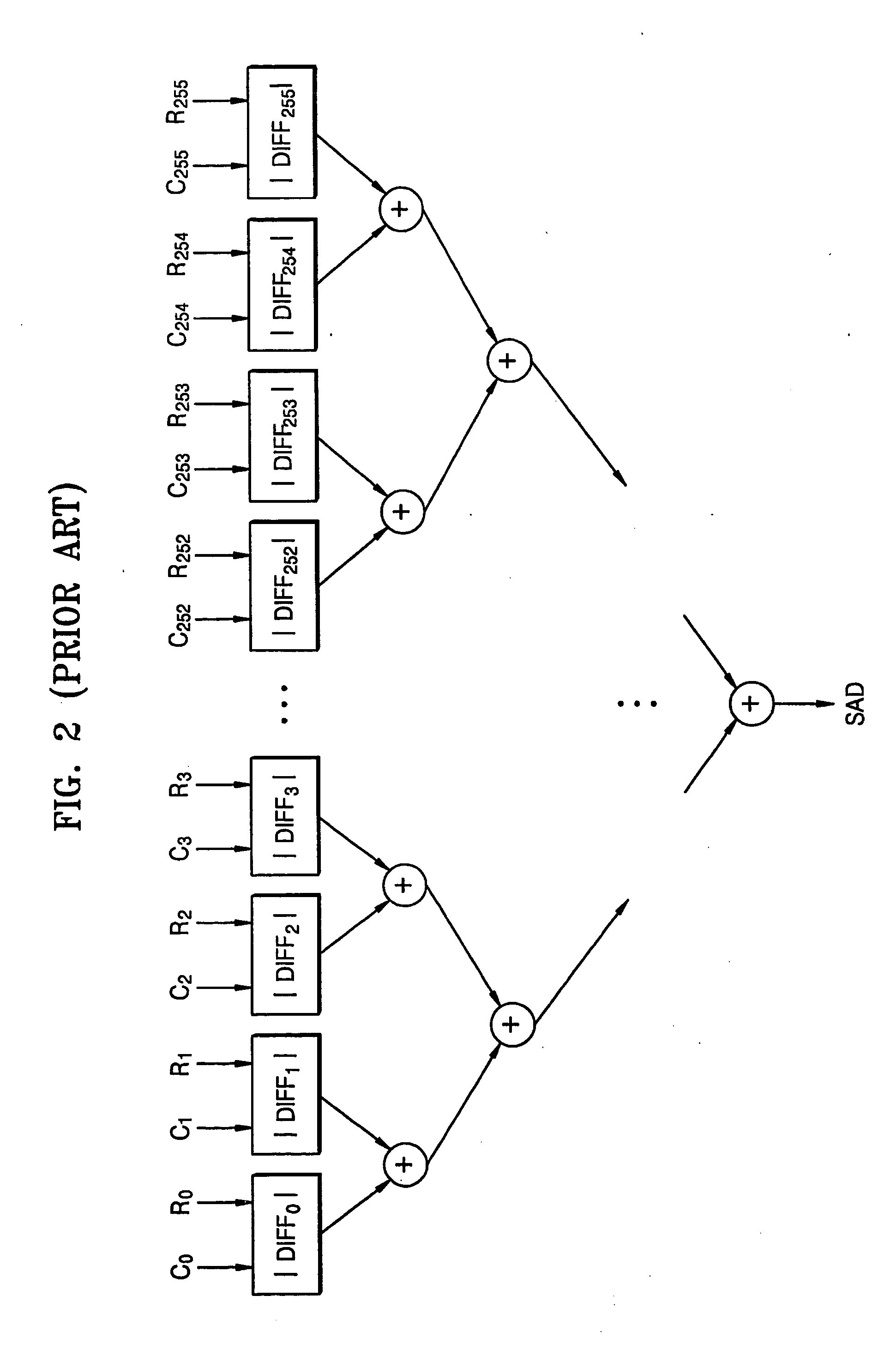

Apparatus for calculating absolute difference value, and motion estimation apparatus and motion picture encoding apparatus

Inactive | CN1625266A | Television systems | Digital video signal modification | Absolute difference | Motion estimation

An apparatus for calculating an absolute difference that promotes an efficient structure of a SAD calculation unit having a tree structure, and a motion estimation apparatus and a moving image encoding apparatus using the apparatus for calculating an absolute difference. The number of adders required for each absolute difference calculation unit can be reduced by performing calculation after inputting output carries output from a plurality of pseudo absolute difference calculation units to adders in the adder tree.

Owner:SAMSUNG ELECTRONICS CO LTD

EFFICIENT COMPUTATION OF THE MODULO OPERATION BASED ON DIVISOR (2^n − 1)

Inactive | US20080010332A1 | Computation using denominational number representation | Parallel computing | Hemt circuits

A system and method for computing A mod (2^n − 1), where A is an m bit quantity, where n is a positive integer, where m is greater than or equal to n. The quantity A may be partitioned into a plurality of sections, each being at most n bits long. The value A mod (2^n − 1) may be computed by adding the sections in mod (2^n − 1) fashion. This addition of the sections of A may be performed in a single clock cycle using an adder tree, or, sequentially in multiple clock cycles using a two-input adder circuit provided the output of the adder circuit is coupled to one of the two inputs. The computation A mod (2^n − 1) may be performed as a part of an interleaving / deinterleaving operation, or, as part of an encryption / decryption operation.

Owner:INTEL CORP

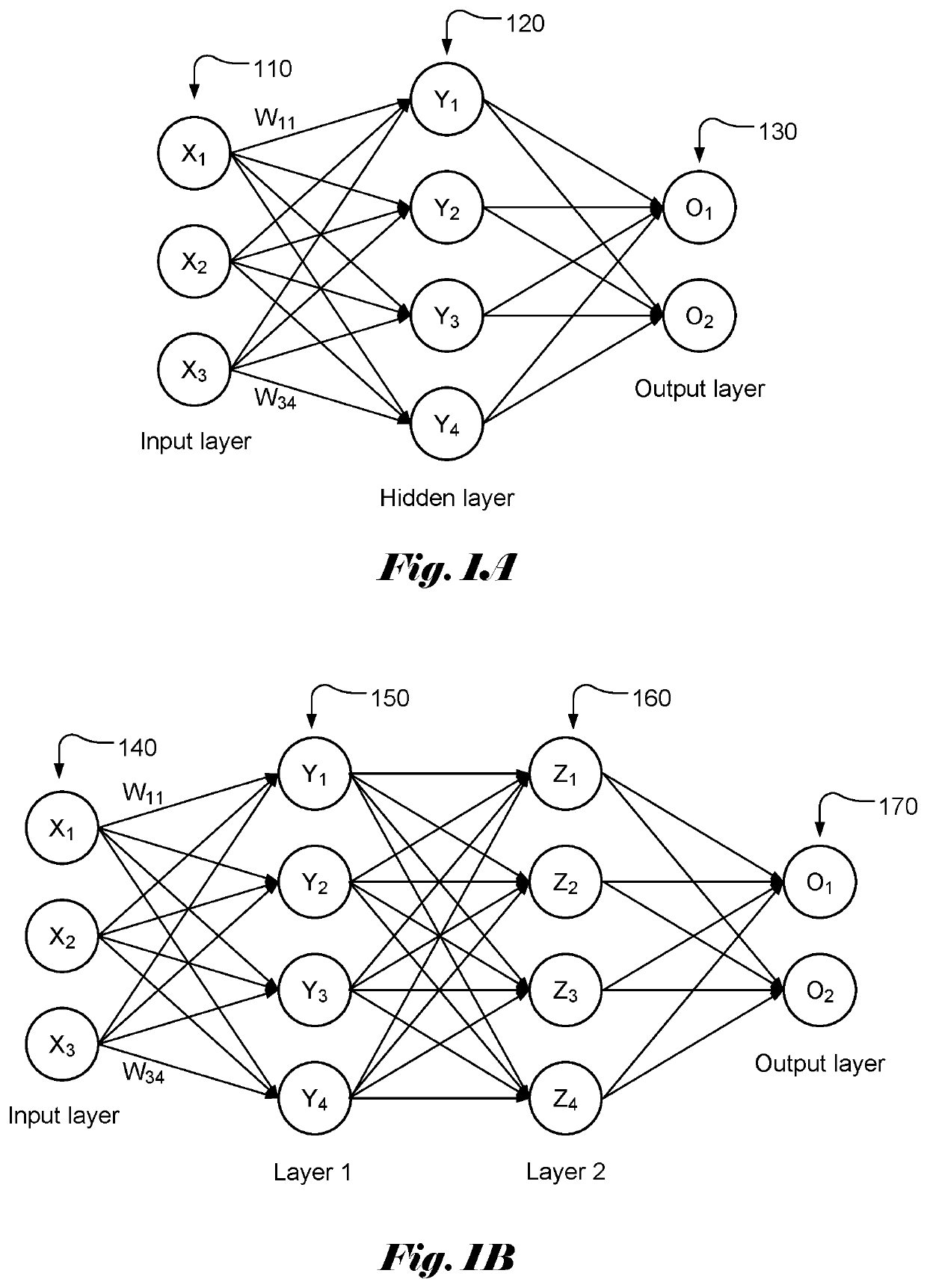

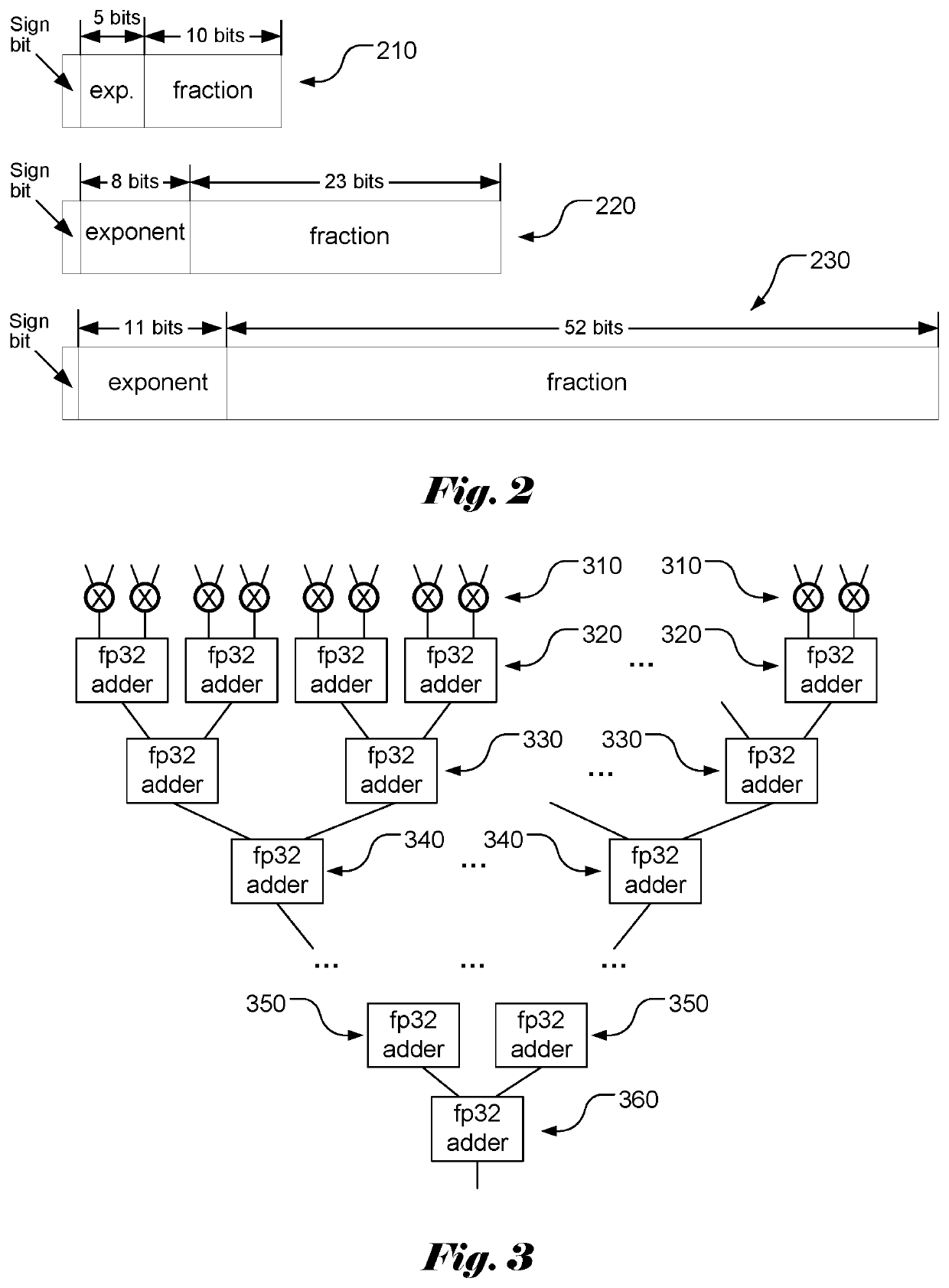

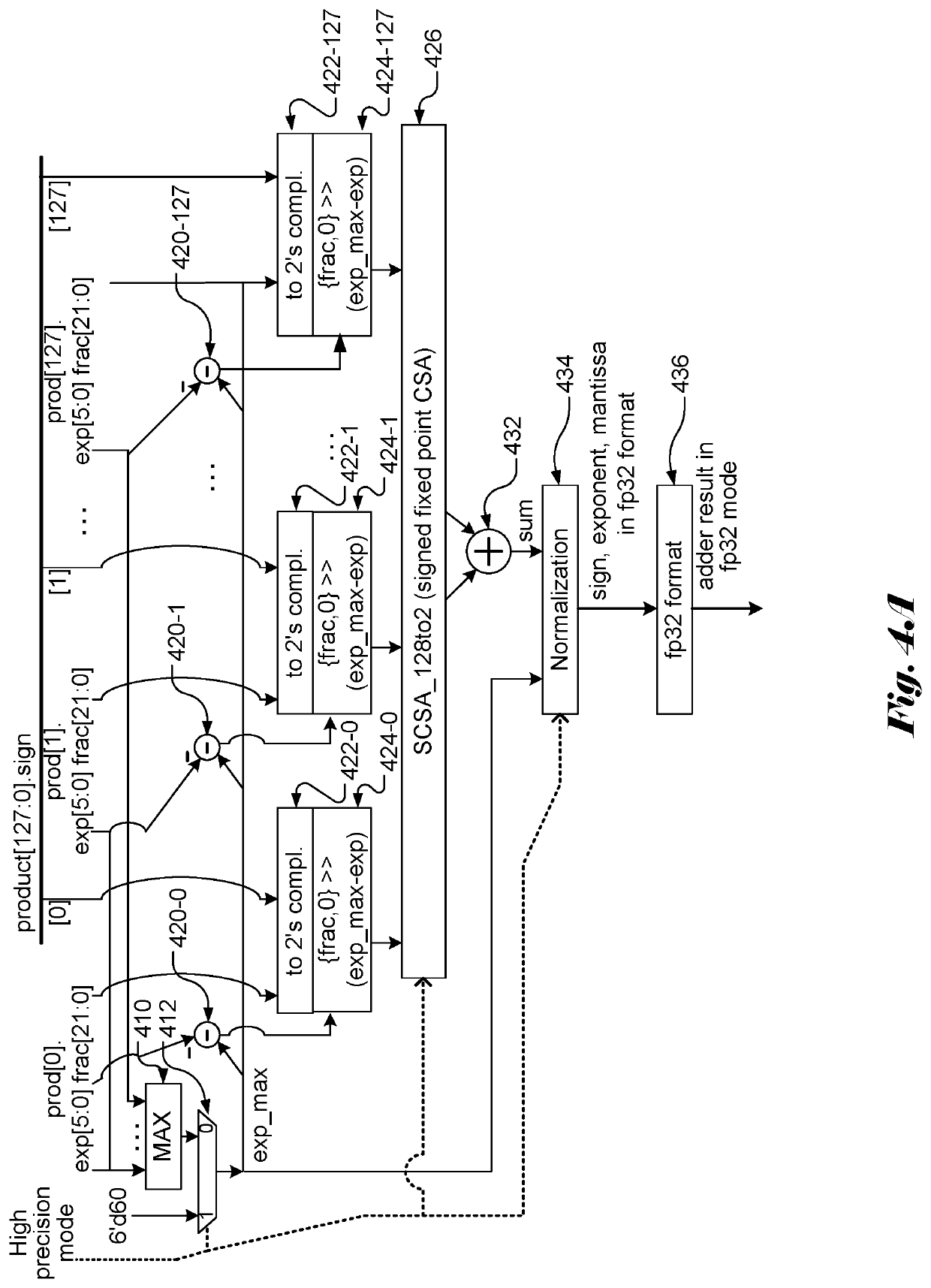

Apparatus and Method of Fast Floating-Point Adder Tree for Neural Networks

Active | US20200272417A1 | Computation using non-contact making devices | Physical realisation | Data pack | Sign bit

A computing device to implement a fast floating-point adder tree for neural network applications is disclosed. The fast floating-point adder tree comprises a data preparation module, a fast fixed-point Carry-Save Adder (CSA) tree, and a normalization module. The floating-point input data comprises a sign bit, an exponent part and a fraction part. The data preparation module aligns the fraction part of the input data and prepares the input data for subsequent processing. The fast adder uses a signed fixed-point CSA tree to quickly add a large number of fixed-point data into 2 output values and then uses a normal adder to add the 2 output values into one output value. The fast adder for a large number of operands is built from multiple levels of fast adders for a small number of operands. The output from the signed fixed-point Carry-Save Adder tree is converted to a selected floating-point format.
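The align, carry-save reduce, then final-add flow can be sketched on integers. This is an illustrative model only: operands are given as hypothetical `(signed_mantissa, exponent)` pairs rather than packed sign/exponent/fraction fields, alignment to the largest exponent truncates low bits, and the normalization step is omitted.

```python
def csa(a, b, c):
    """3:2 carry-save compressor: returns (sum, carry) with a+b+c == sum+carry."""
    s = a ^ b ^ c
    carry = ((a & b) | (a & c) | (b & c)) << 1
    return s, carry


def csa_tree_sum(values):
    """Reduce many signed integers to two with CSA levels, then add them."""
    terms = list(values)
    while len(terms) > 2:
        nxt = []
        for i in range(0, len(terms) - 2, 3):
            nxt.extend(csa(terms[i], terms[i + 1], terms[i + 2]))
        nxt.extend(terms[len(terms) - len(terms) % 3:])   # leftovers pass through
        terms = nxt
    return sum(terms)                 # the final "normal adder"


def fp_adder_tree(operands):
    """Sum (mantissa, exponent) pairs; returns (mantissa_sum, shared_exponent)."""
    emax = max(e for _, e in operands)
    aligned = [m >> (emax - e) for m, e in operands]      # truncating alignment
    return csa_tree_sum(aligned), emax
```

No carry propagates inside the tree, so each level costs roughly one full-adder delay regardless of word width; only the last two-operand addition needs a carry-propagate adder.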

Owner:DINOPLUSAI HLDG LTD

Apparatus for calculating absolute difference value, and motion estimation apparatus and motion picture encoding apparatus which use the apparatus for calculating the absolute difference value

Inactive | US20050131979A1 | Effective structure | Reduce in quantity | Digital video signal modification | Computation using denominational number representation | Absolute difference | Computer science

An apparatus calculates an absolute difference value, which facilitates an efficient structure of an SAD calculating unit having a tree-like structure, and a motion estimation apparatus and a motion picture encoding apparatus that use the apparatus that calculates the absolute difference value. By performing calculations after inputting carry-outs output from a plurality of pseudo absolute difference calculating units to adders in an adder tree, the number of adders necessary for each absolute difference value calculating unit may be reduced.

Owner:SAMSUNG ELECTRONICS CO LTD

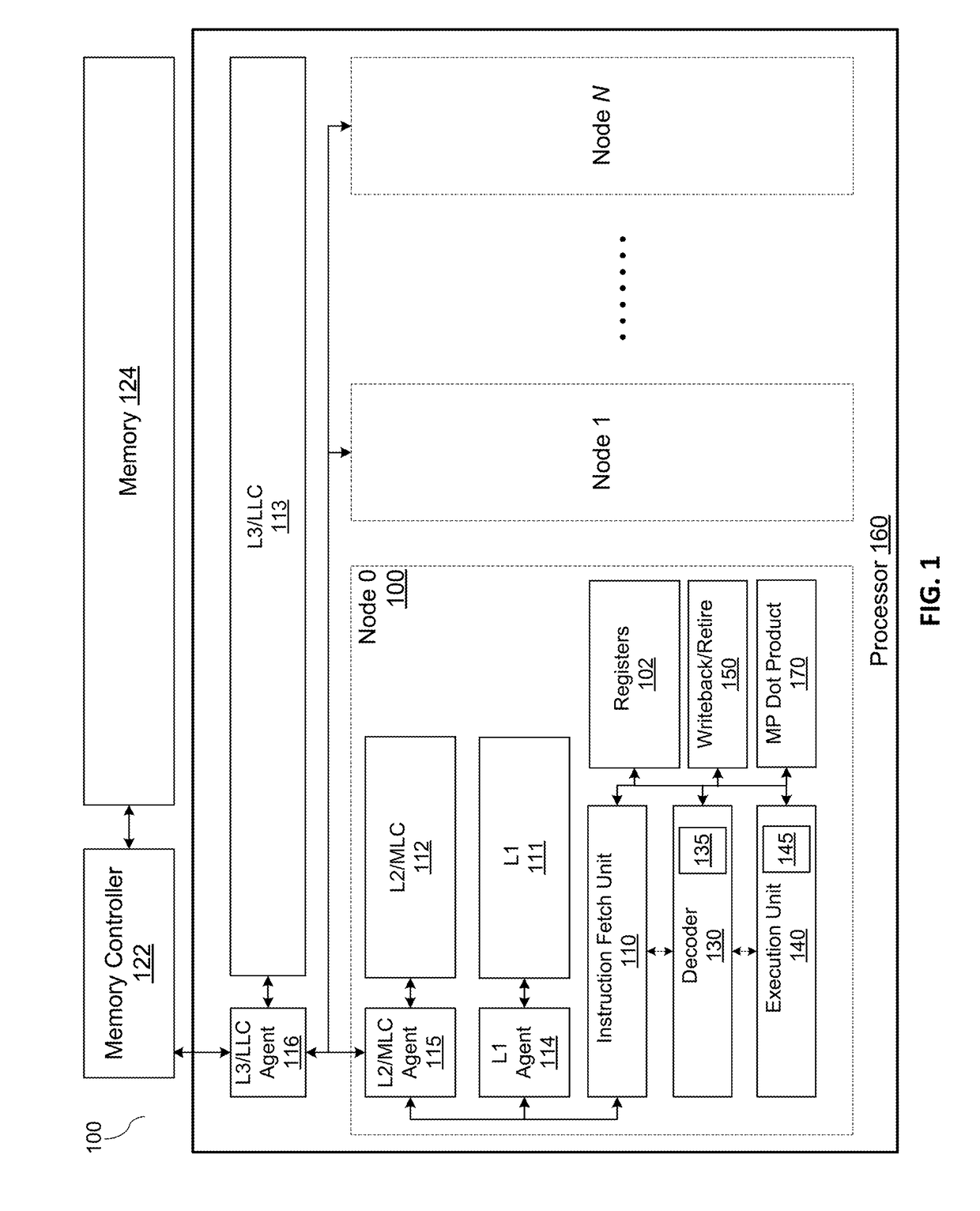

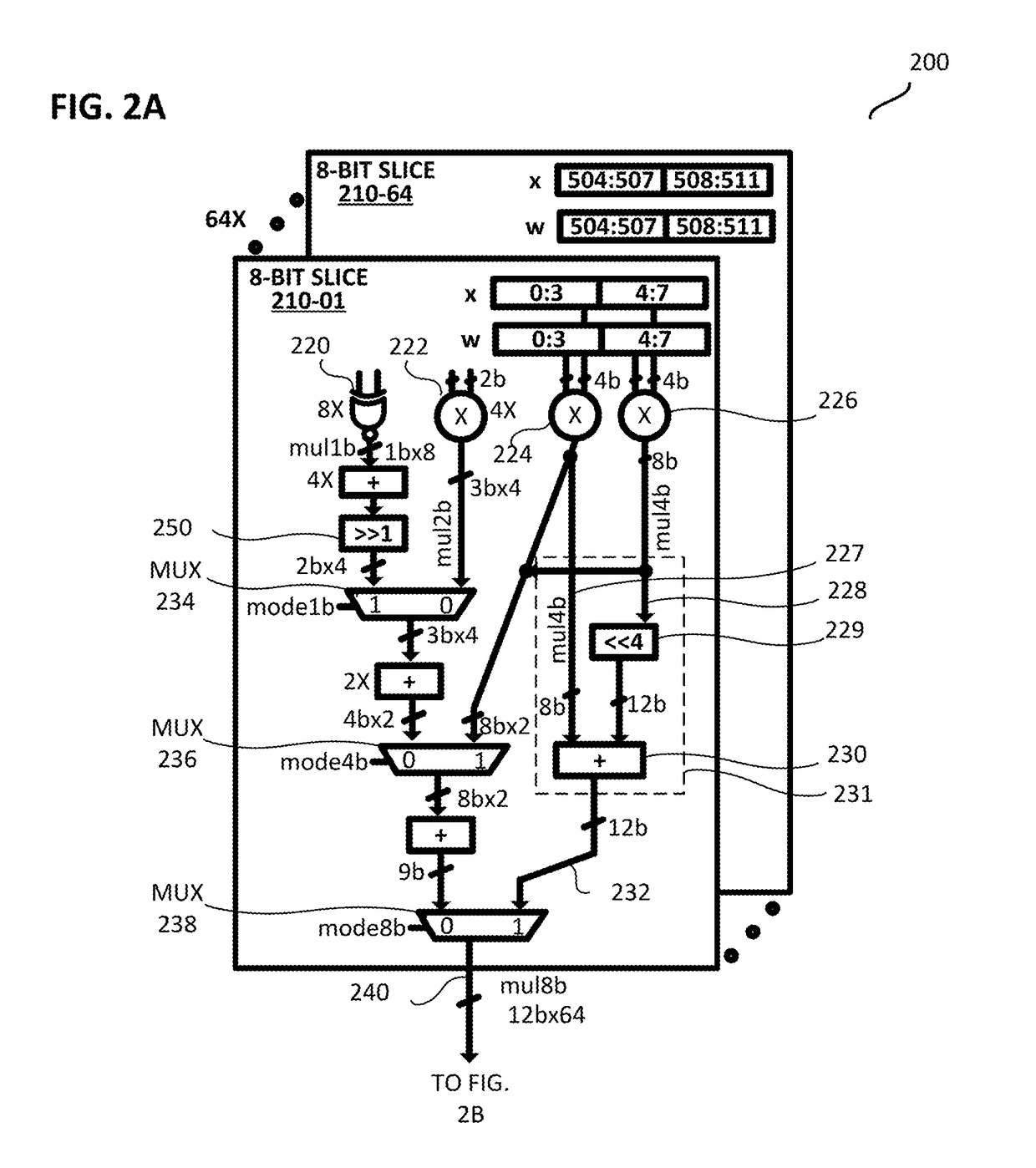

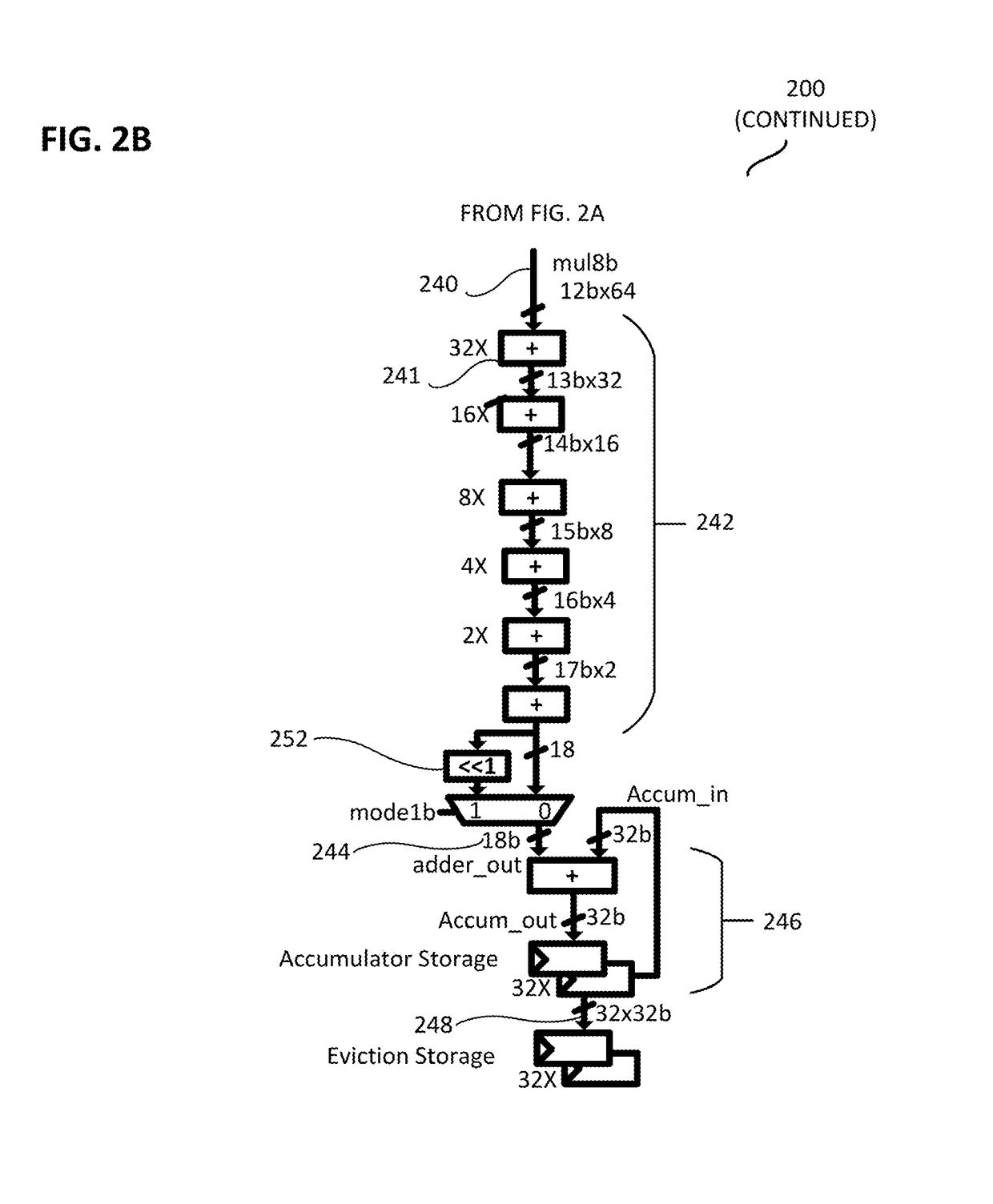

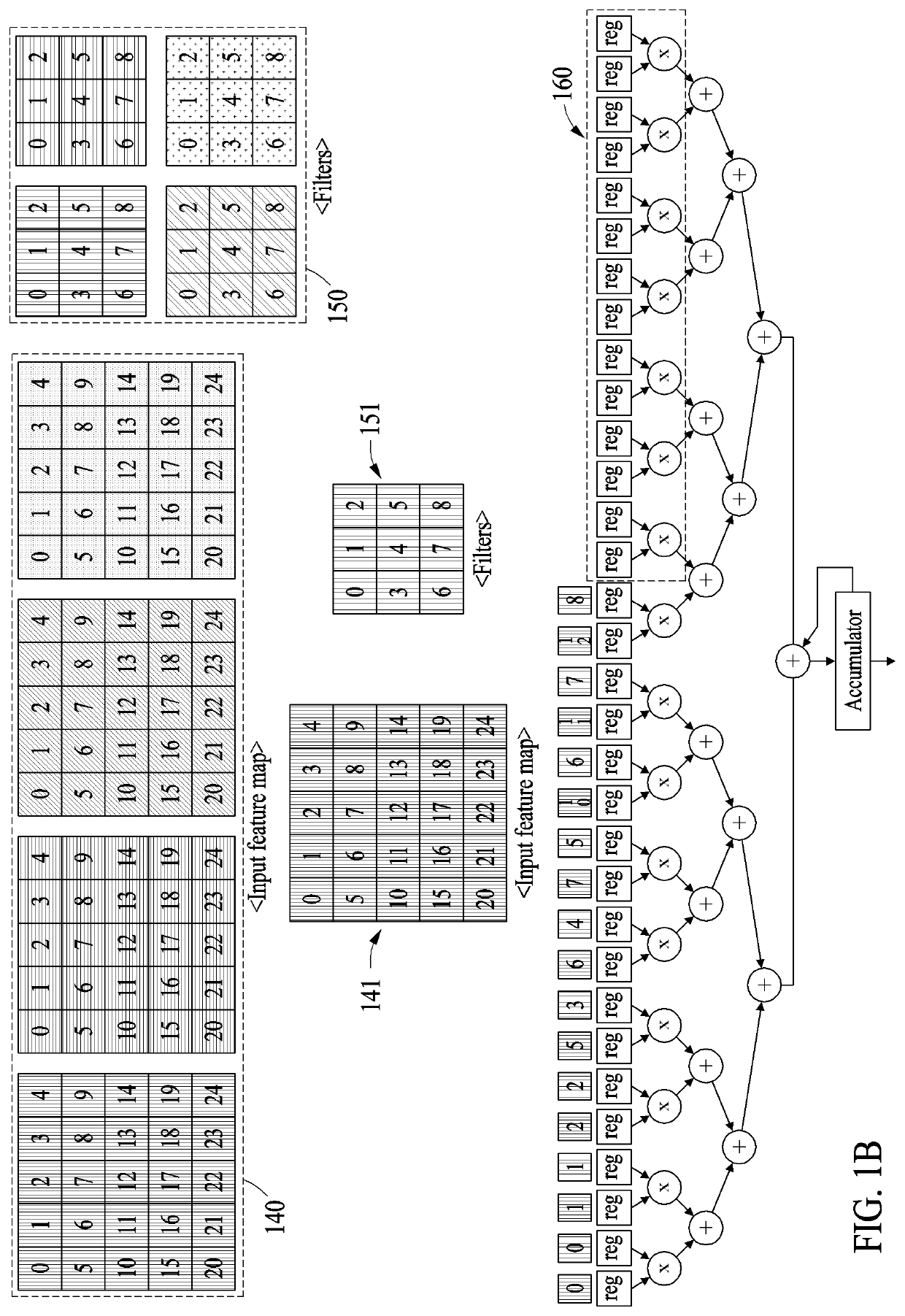

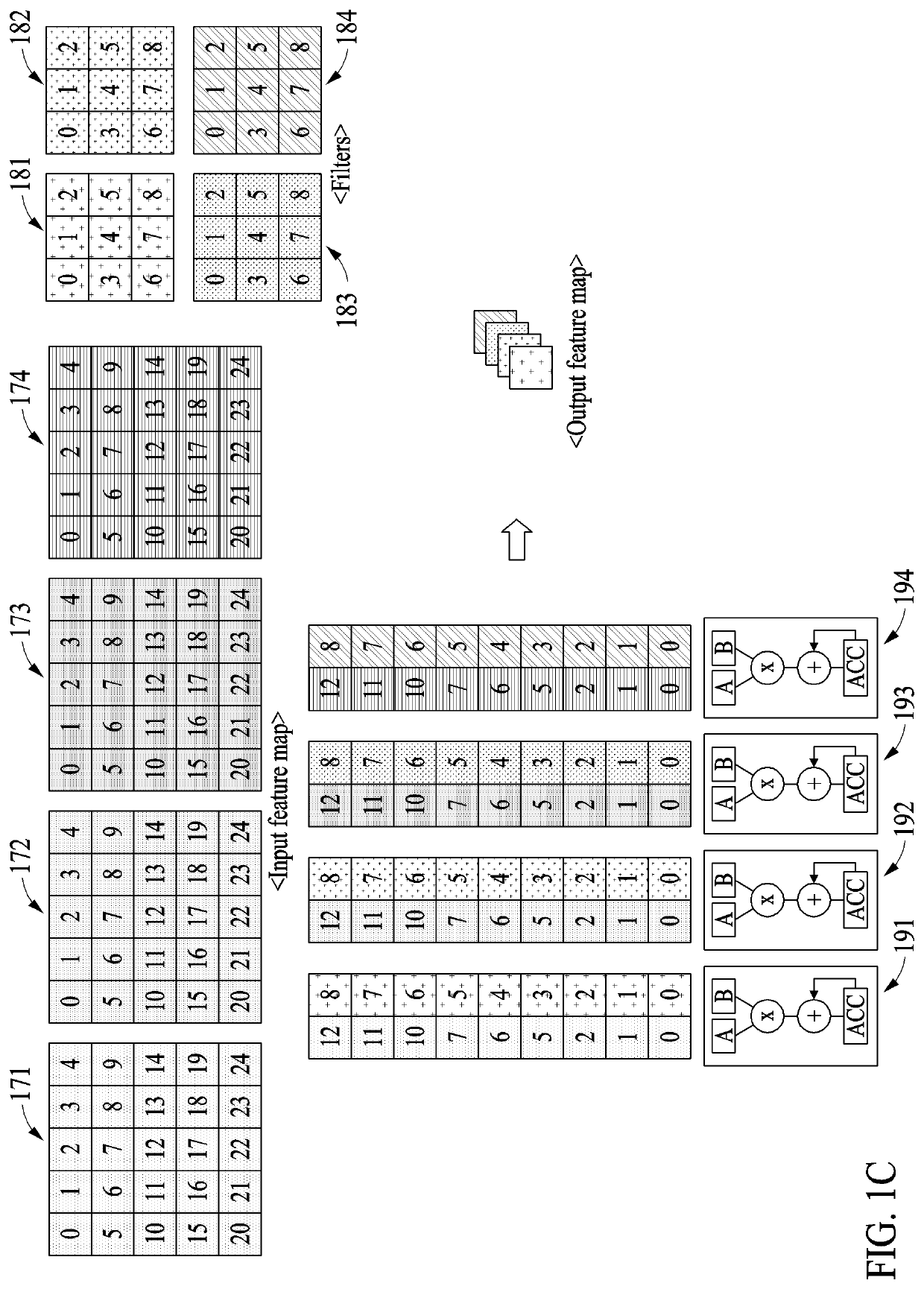

Reconfigurable multi-precision integer dot-product hardware accelerator for machine-learning applications

Active | US20190042252A1 | Exclusive-OR circuits | Digital data processing details | Algorithm | Hardware acceleration

A configurable integrated circuit to compute vector dot products between a first N-bit vector and a second N-bit vector in a plurality of precision modes. An embodiment includes M slices, each of which calculates the vector dot products between a corresponding segment of the first and the second N-bit vectors. Each of the slices outputs intermediate multiplier results for the lower precision modes, but not for the highest precision mode. A plurality of adder trees sum the intermediate multiplier results, with each adder tree producing a respective adder out result. An accumulator merges the adder out result from a first adder tree with the adder out result from a second adder tree to produce the vector dot product of the first and the second N-bit vectors in the highest precision mode.

Owner:INTEL CORP

Reconfigurable arithmetic engine circuit

Pending | US20210072954A1 | Improve scalability | Lower latency | Exclusive-OR circuits | Register arrangements | Computer architecture | Binary multiplier

A representative reconfigurable processing circuit and a reconfigurable arithmetic circuit are disclosed, each of which may include input reordering queues; a multiplier shifter and combiner network coupled to the input reordering queues; an accumulator circuit; and a control logic circuit, along with a processor and various interconnection networks. A representative reconfigurable arithmetic circuit has a plurality of operating modes, such as floating point and integer arithmetic modes, logical manipulation modes, Boolean logic, shift, rotate, conditional operations, and format conversion, and is configurable for a wide variety of multiplication modes. Dedicated routing connecting multiplier adder trees allows multiple reconfigurable arithmetic circuits to be reconfigurably combined, in pair or quad configurations, for larger adders, complex multiplies and general sum of products use, for example.

Owner:CORNAMI INC

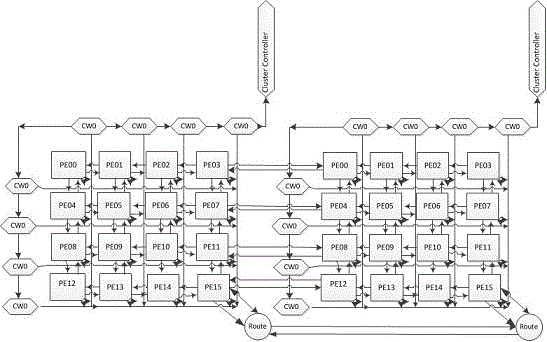

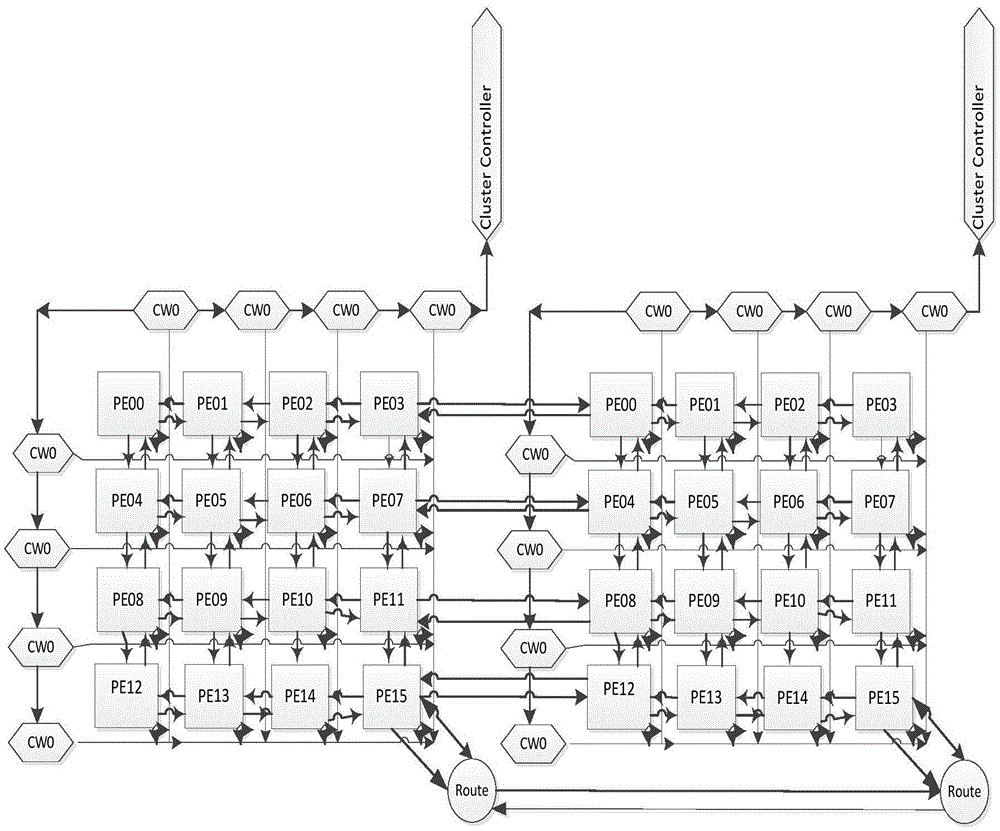

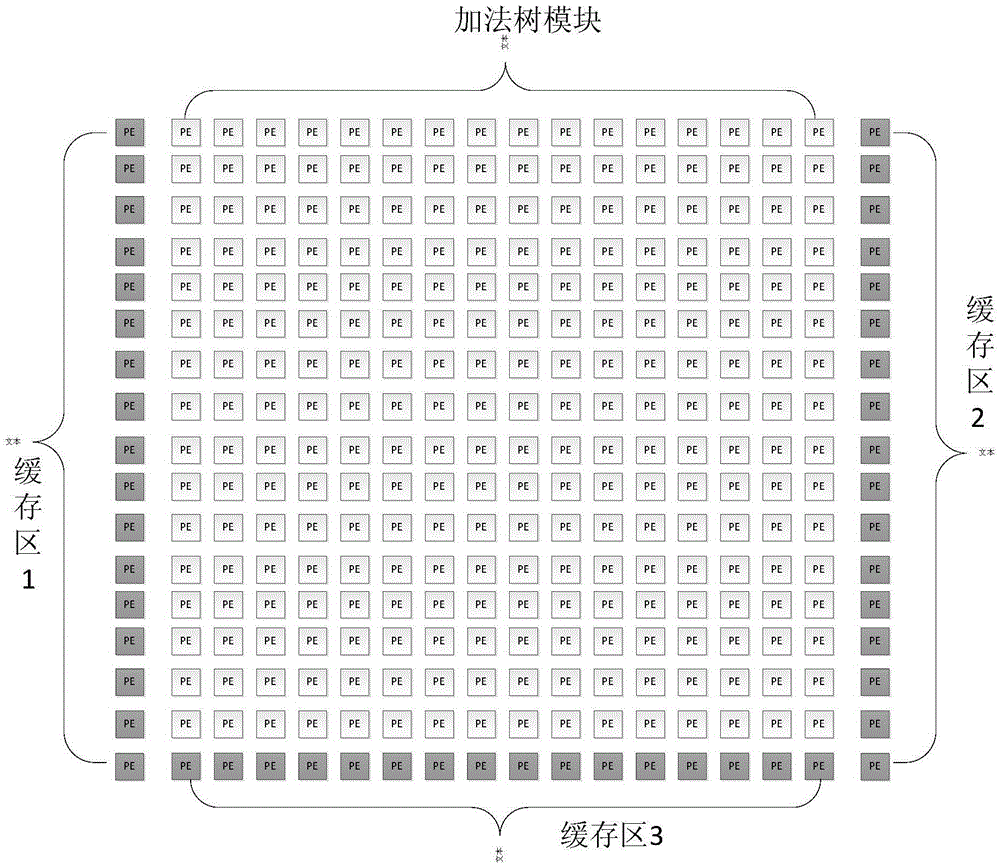

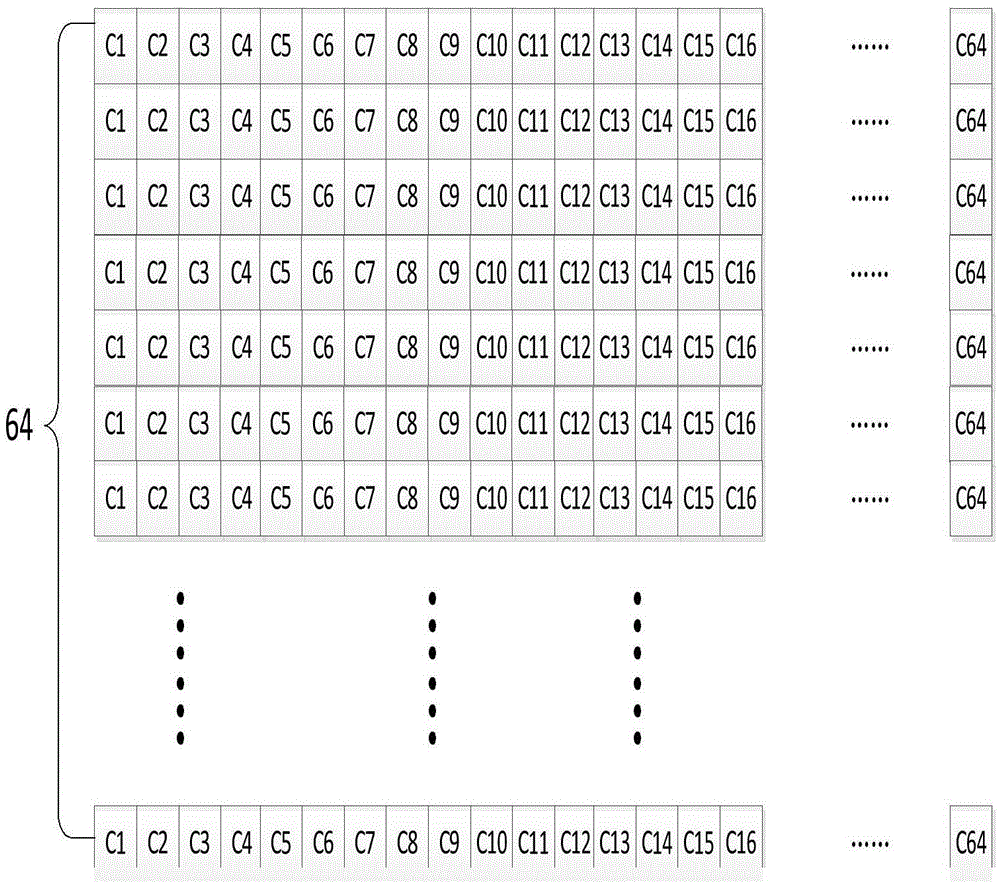

High efficiency video coding adder tree parallel implementation method

Active | CN105847810A | Calculation speed | Improve the efficiency of motion estimation calculation | Digital video signal modification | Digital video | Computation process

The invention provides a high efficiency video coding (HEVC) adder tree parallel implementation method, and relates to the technical field of digital video coding and decoding. By utilizing a two-dimensional processing element array structure, the SAD values of the luminance block division modes are computed in parallel, so that motion estimation computation efficiency is effectively improved; and by selecting the processing element (PE) that stores each SAD value according to the type of block division mode, the computation speed of the adder tree is increased and computation efficiency is improved. Compared with the traditional pixel block storage manner (a single PE stores a single pixel), storing a 4*4 pixel block in a single PE reduces the number of PEs used to 1/16 of the original number; compared with an adder tree serial structure implementation method, the parallel implementation method is nearly 100 times faster; and the SAD values of the twelve block division modes are obtained by combining the SAD values of the 4*4 block division mode, so that redundant computation can be reduced and computation efficiency is improved.
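The reuse of 4*4 SAD values for larger partitions can be sketched as follows. This is only a software illustration of the combination step, not the PE array itself; the function names `sad_4x4_grid` and `merge_sad`, and the representation of blocks as square lists of pixel rows, are assumptions.

```python
def sad_4x4_grid(cur, ref):
    """Return the grid of 4x4-block SADs for two equally sized square blocks.

    `cur` and `ref` are 2-D lists whose side length is a multiple of 4.
    """
    n = len(cur) // 4
    return [[sum(abs(cur[4 * by + y][4 * bx + x] - ref[4 * by + y][4 * bx + x])
                 for y in range(4) for x in range(4))
             for bx in range(n)]
            for by in range(n)]


def merge_sad(grid, top, left, height, width):
    """SAD of a partition spanning height x width 4x4 blocks, by summing the grid."""
    return sum(grid[r][c]
               for r in range(top, top + height)
               for c in range(left, left + width))
```

Each pixel difference is computed once; every larger partition is then just an addition over already available 4*4 results, which is the work an adder tree does in hardware.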

Owner:XIAN UNIV OF POSTS & TELECOMM

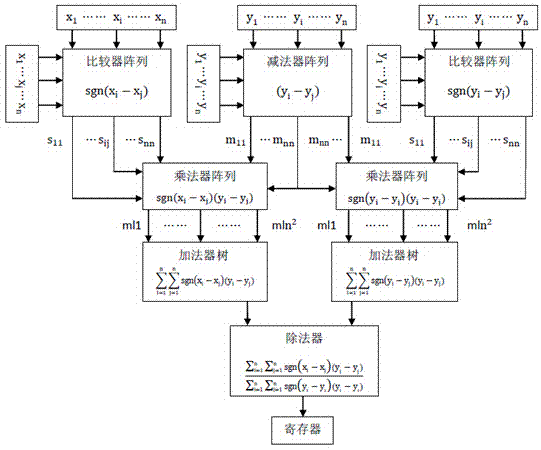

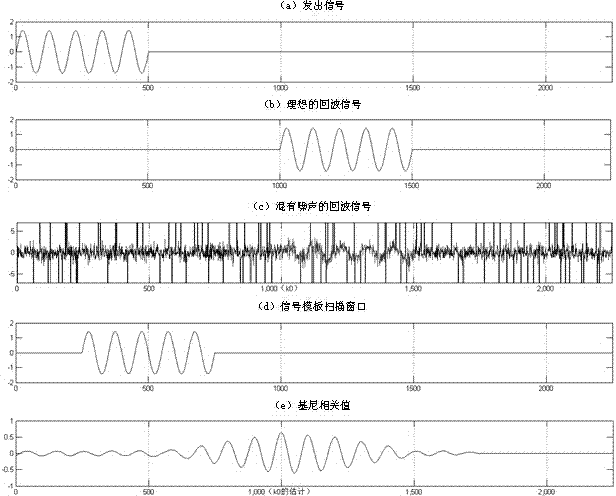

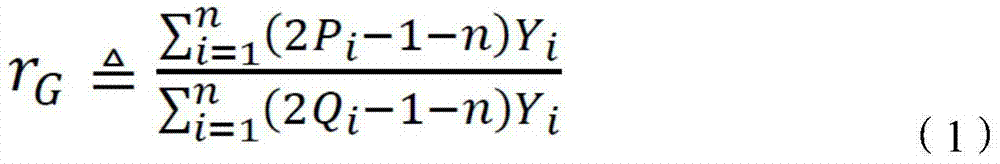

Signal detection circuit and method based on Gini Correlation

Active | CN104333423A | Improve robustness | Good performance | Receivers monitoring | Correlation coefficient | Environmental noise

The invention relates to a signal detection circuit and method based on Gini Correlation. The circuit comprises two comparator arrays, a subtracter array, two multiplier arrays, two adder trees, a divider and a register, wherein the comparator arrays and the subtracter array are n*n matrices (n is the signal length); each element of the matrices performs a comparison or subtraction computation respectively; the multiplier arrays are n^2 2-input multipliers; the adder trees are n^2-input adders; the divider performs a 2-input division; and the register stores the results of the correlation computations. When environmental noise comprises a pulse noise component, matching of a filter with a Pearson's Product Moment Correlation Coefficient basically fails. The Gini Correlation shows excellent robustness in a noise environment comprising pulse components, including a mathematical expectation very close to the true value and a small standard deviation.

Owner:中山易美杰智能科技有限公司

Asymmetrical partition mode based high efficiency video coding adder tree parallel realization method

Active | CN105578189A | Calculation speed | Improve the efficiency of motion estimation calculation | Digital video signal modification | Digital video | Processing element

The invention discloses an asymmetrical partition mode based high efficiency video coding adder tree parallel realization method, relating to the technical field of digital video coding and decoding. According to the method, a two-dimensional processing element array structure is adopted, and the SAD values of the luminance block partition modes are calculated in parallel, so that the motion estimation efficiency is improved effectively. The PE that stores each SAD value is selected according to whether the SAD value is used in subsequent processing, so that the calculation speed of an adder tree is improved and the calculation efficiency is improved. In the conventional pixel block storage manner, a single PE stores a single pixel, while in the method, a single PE stores a 4*4 pixel block, so that the number of processing units is reduced to 1/16 of that in the prior art. Compared with the adder tree serial structure realization method, the parallel structure is nearly 92 times faster. The SAD values of 36 partition modes are obtained by merging the SAD values of the 4*4 partition mode, so that redundant calculation steps are reduced and the calculation efficiency is improved.

Owner:XIAN UNIV OF POSTS & TELECOMM

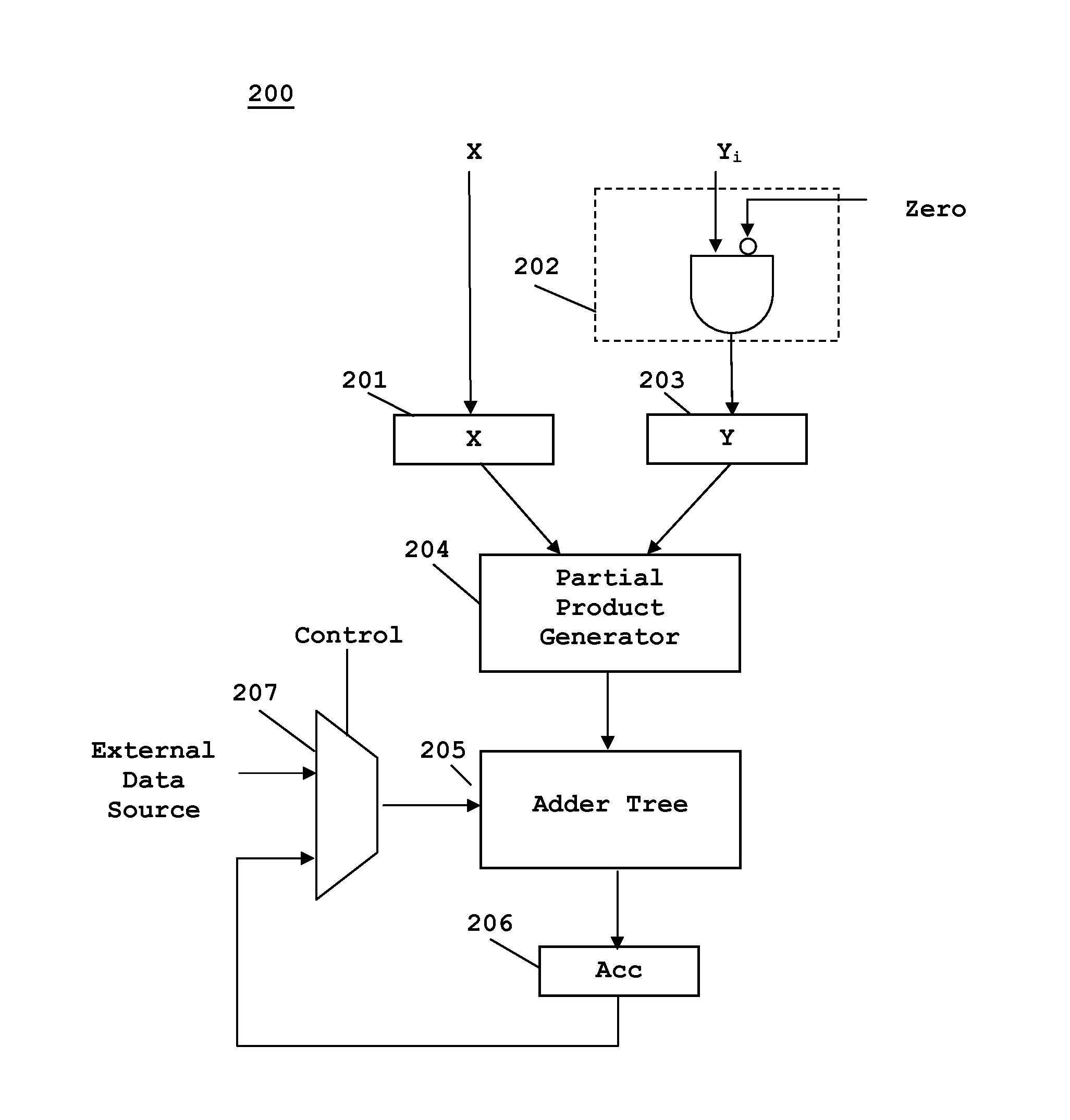

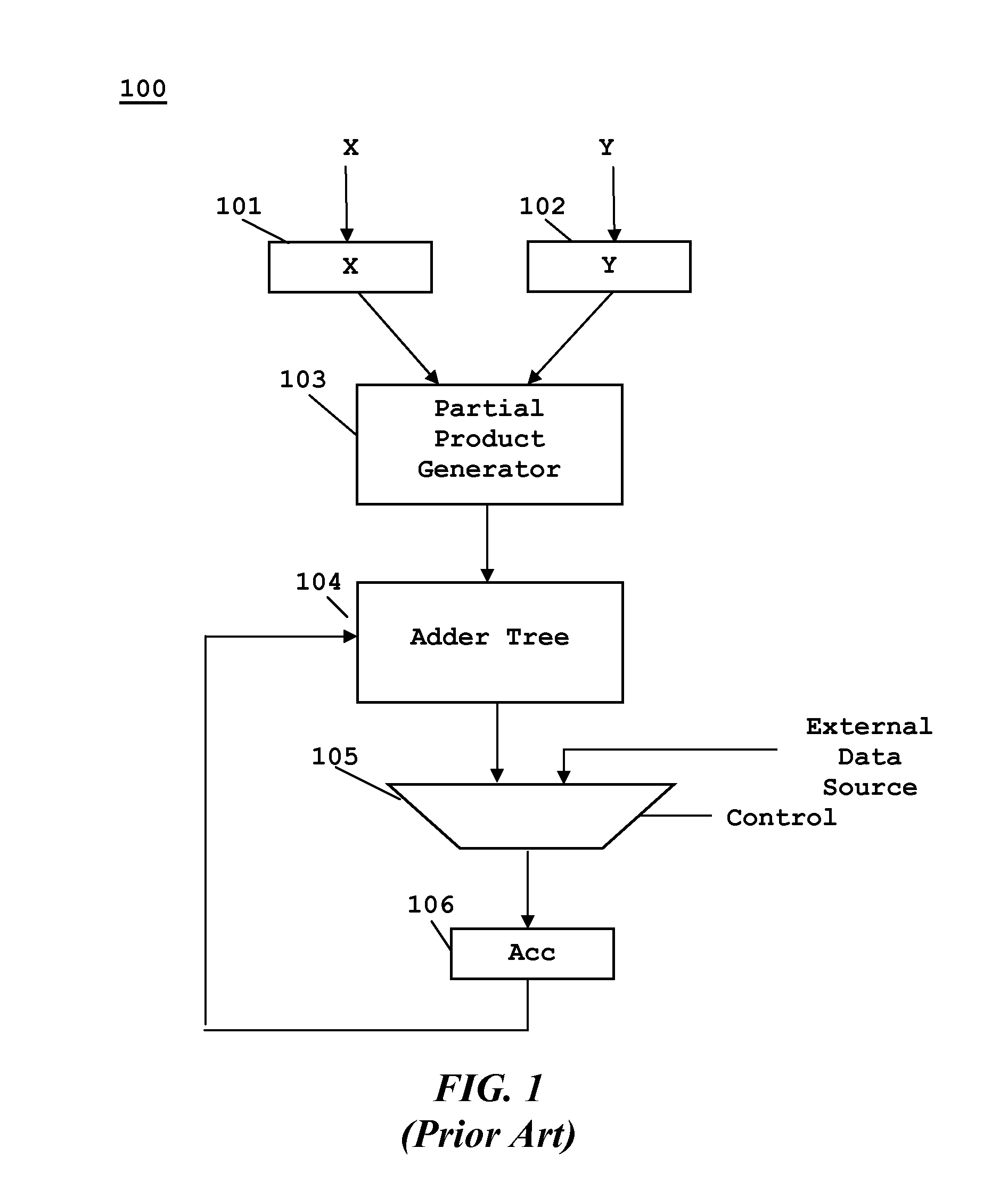

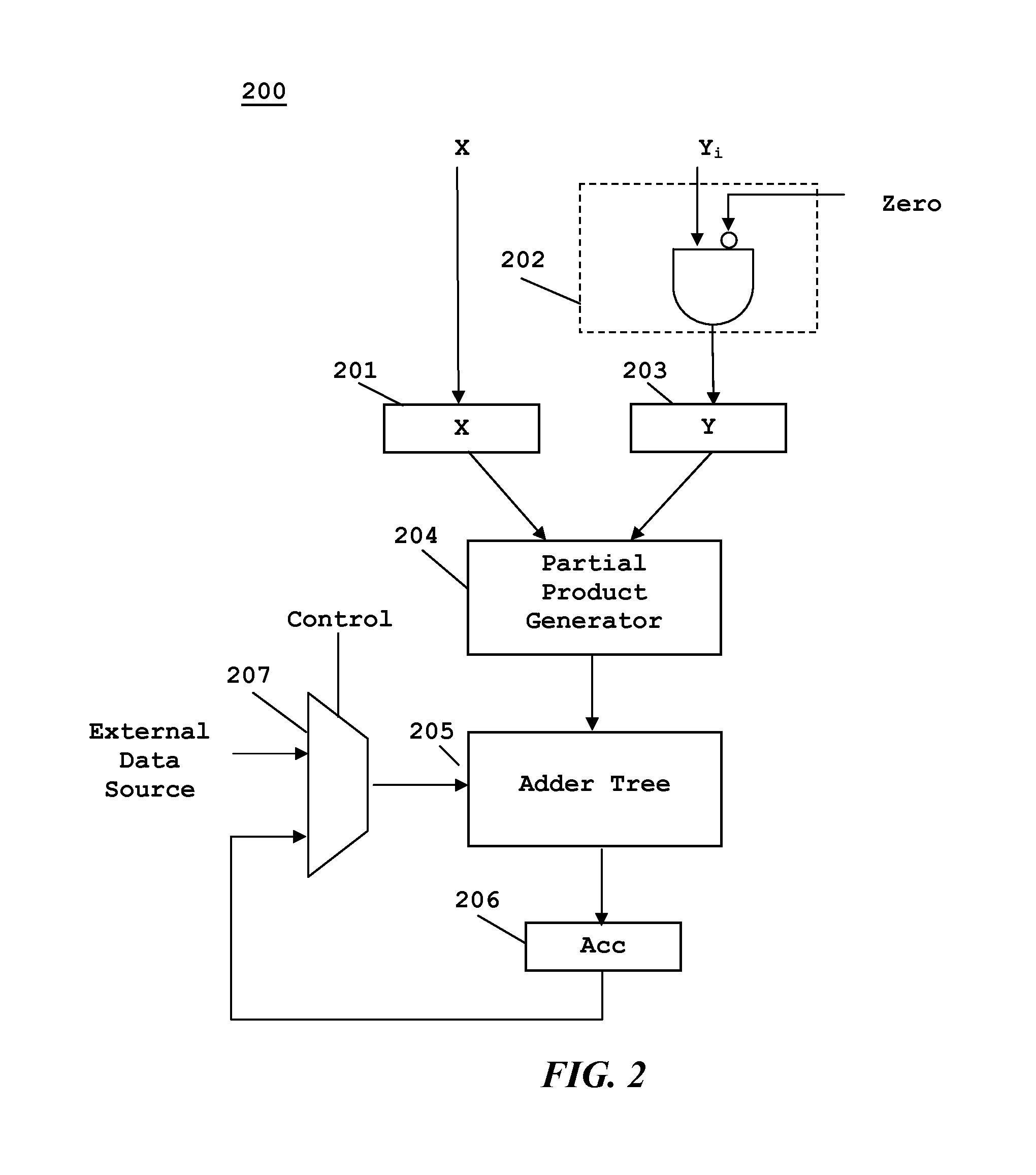

Efficient implementation of a multiplier/accumulator with load

Active | US20160132295A1 | Computation using non-contact making devices | Multiply–accumulate operation | Multiplexer

This invention is a multiply-accumulate circuit supporting a load of the accumulator. During multiply-accumulate operation a partial product generator forms partial products from the product inputs. An adder tree sums the partial products and the accumulator value. The sum is stored back in the accumulator, overwriting the prior value. During load operation an input gate forces one of the product inputs to all 0's. Thus the partial product generator generates partial products corresponding to a zero product. The adder tree adds this zero product to the external load value. The sum, which corresponds to the external load value, is stored back in the accumulator, overwriting the prior value. A multiplexer at the side input of the adder tree selects the accumulator value for normal operation or the external load value for load operation.
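The load trick, zeroing one product input so the same adder tree passes the load value through, can be modelled in a few lines. This is a behavioural sketch, not the circuit: the names `partial_products` and `mac_step`, the 16-bit width, and the restriction to a non-negative multiplier `b` are assumptions for the example.

```python
def partial_products(a, b, width=16):
    """Shift-and-select partial products of a * b for 0 <= b < 2**width."""
    return [(a << i) if (b >> i) & 1 else 0 for i in range(width)]


def mac_step(acc, a, b, load=False, load_value=0, width=16):
    """Return the next accumulator value for one multiply-accumulate or load cycle."""
    if load:
        a = 0                                  # input gate forces a zero product
    side = load_value if load else acc         # multiplexer on the side input
    return sum(partial_products(a, b, width)) + side   # adder tree
```

In this model, calling `mac_step(10, 3, 4)` accumulates the product onto 10, while `mac_step(10, 3, 4, load=True, load_value=99)` ignores both the product and the old accumulator and yields the load value.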

Owner:TEXAS INSTR INC

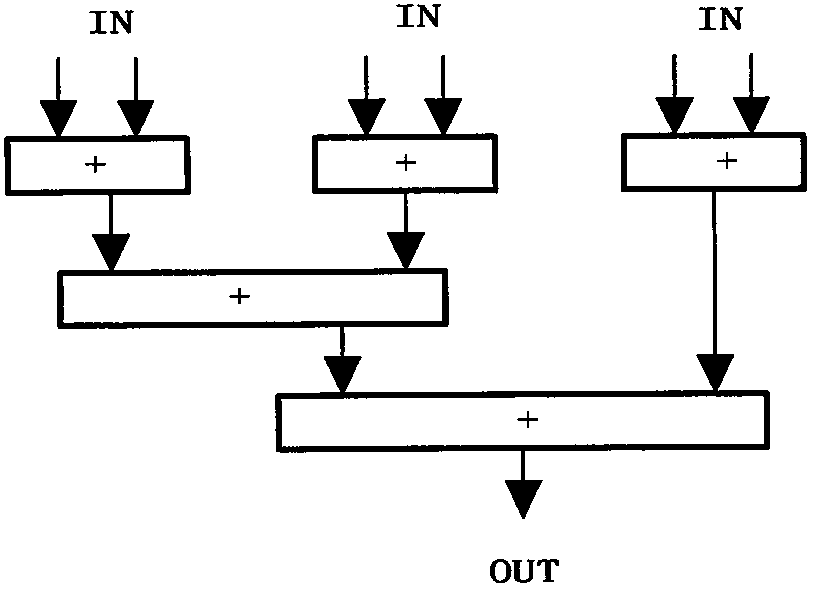

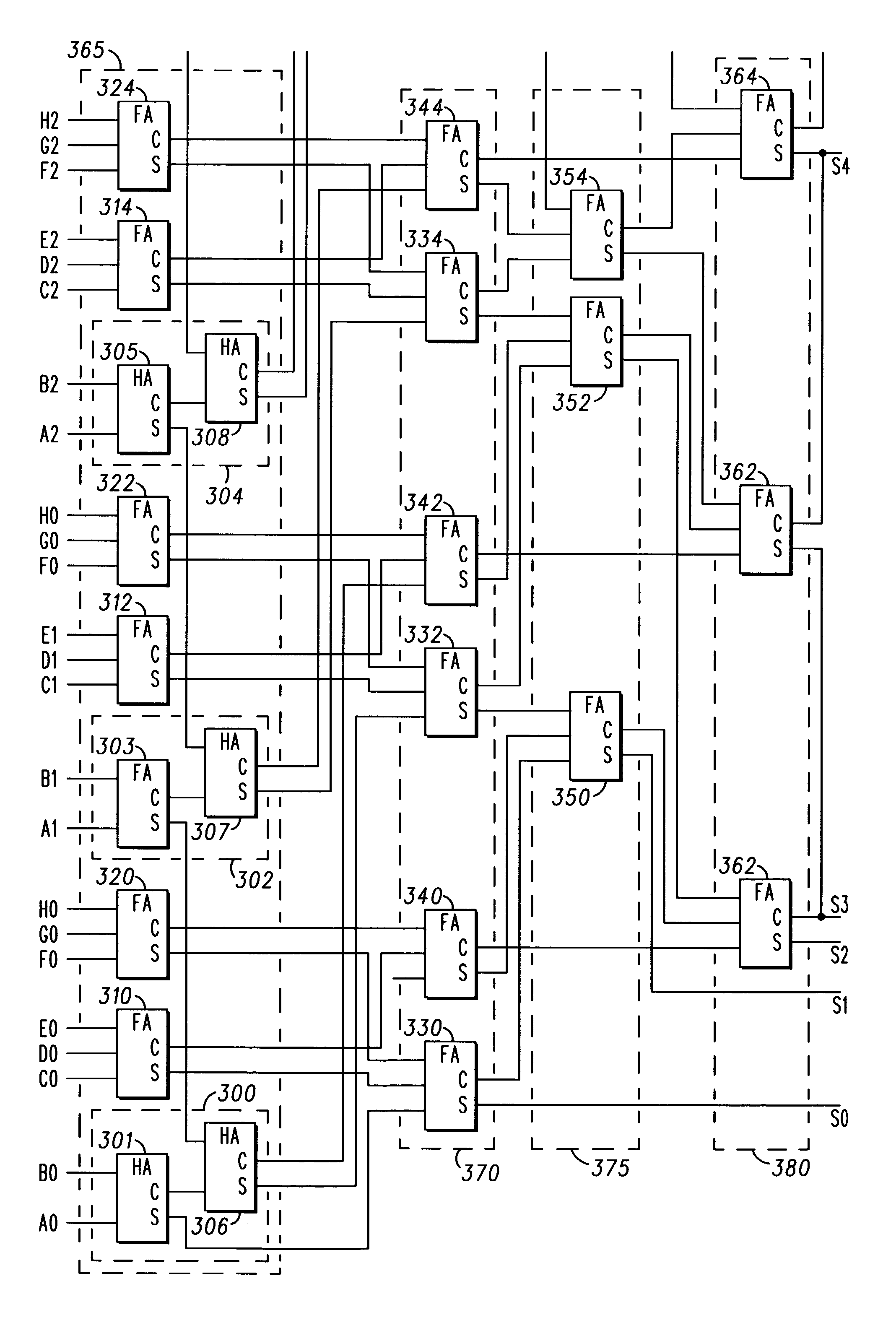

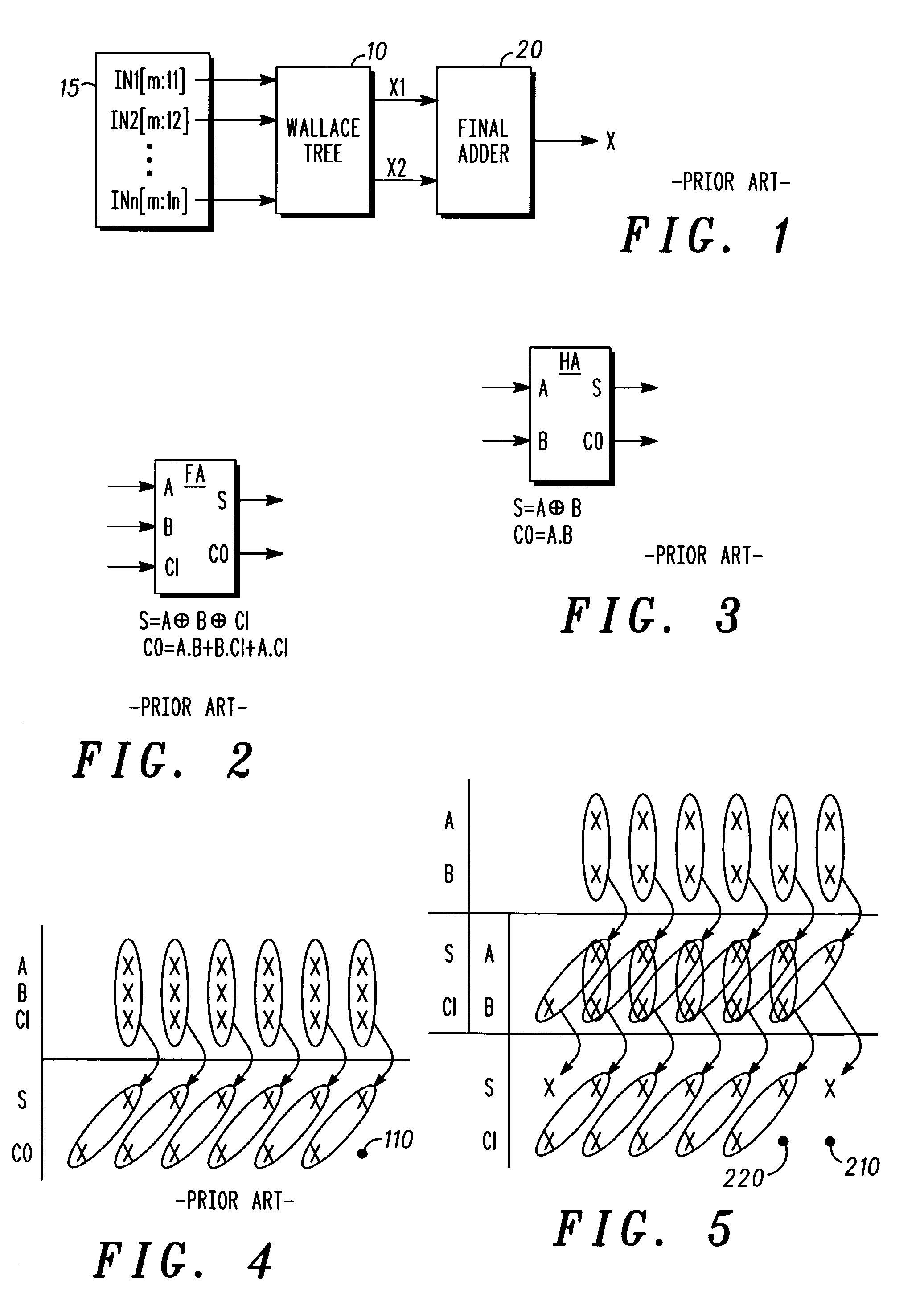

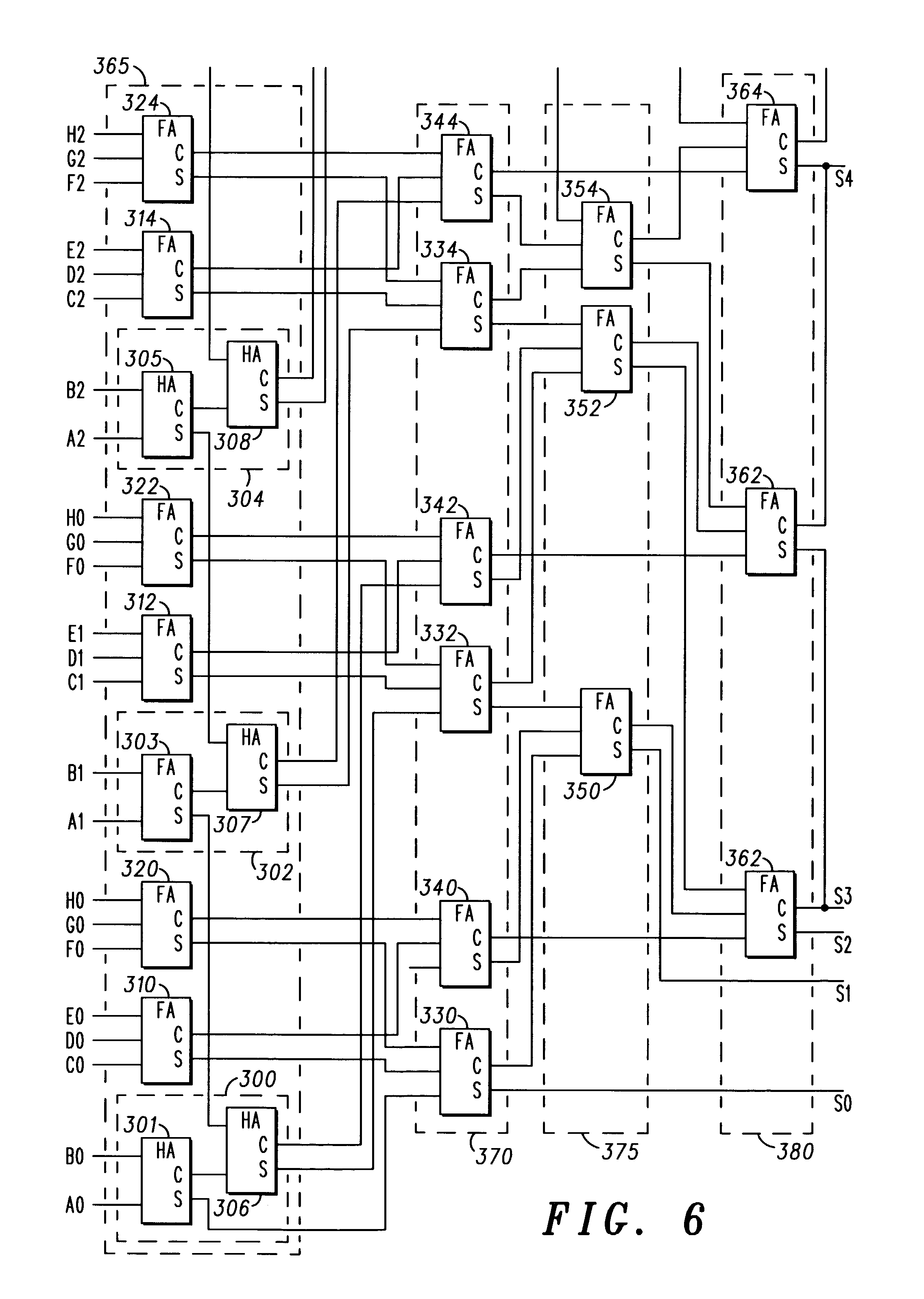

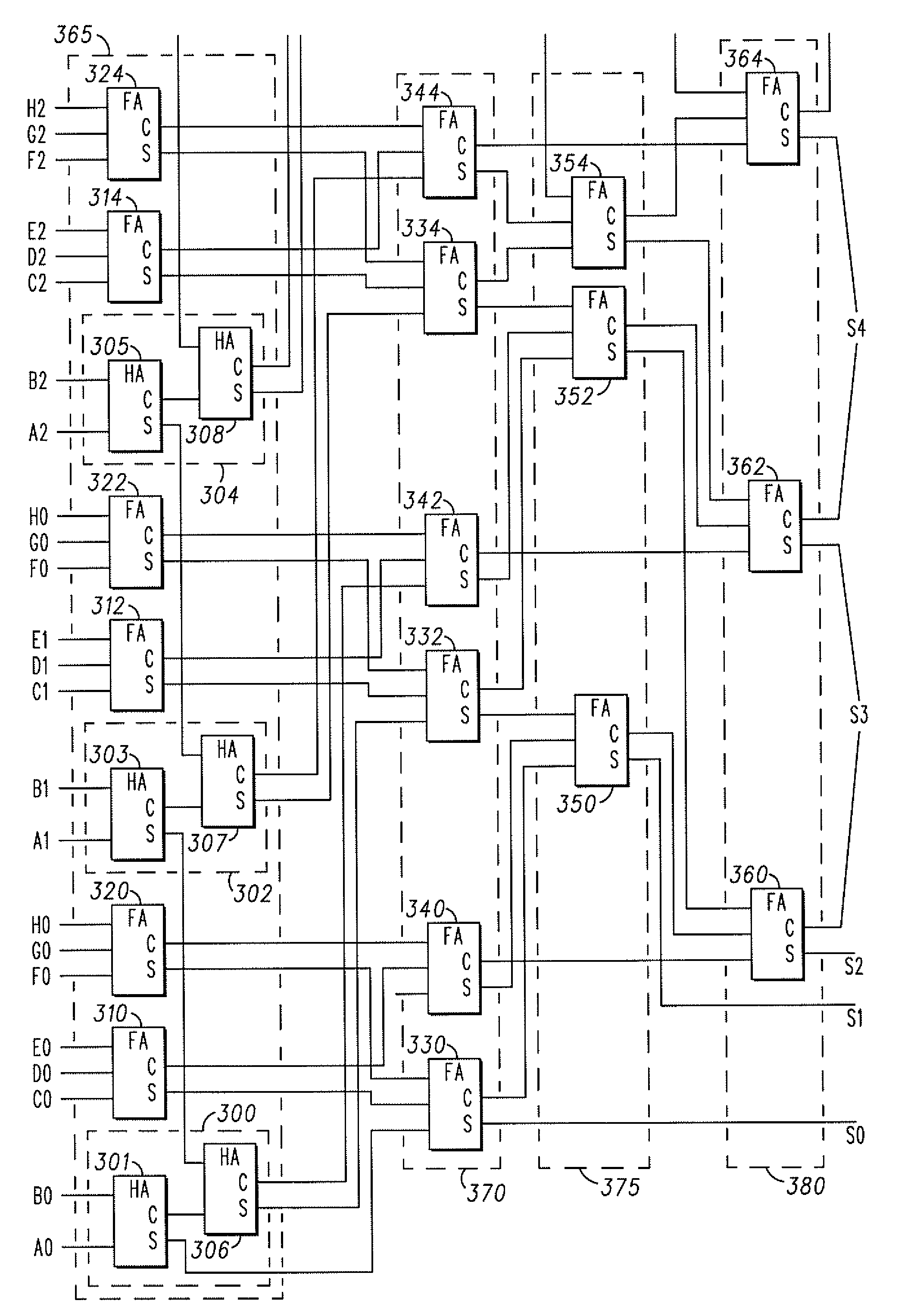

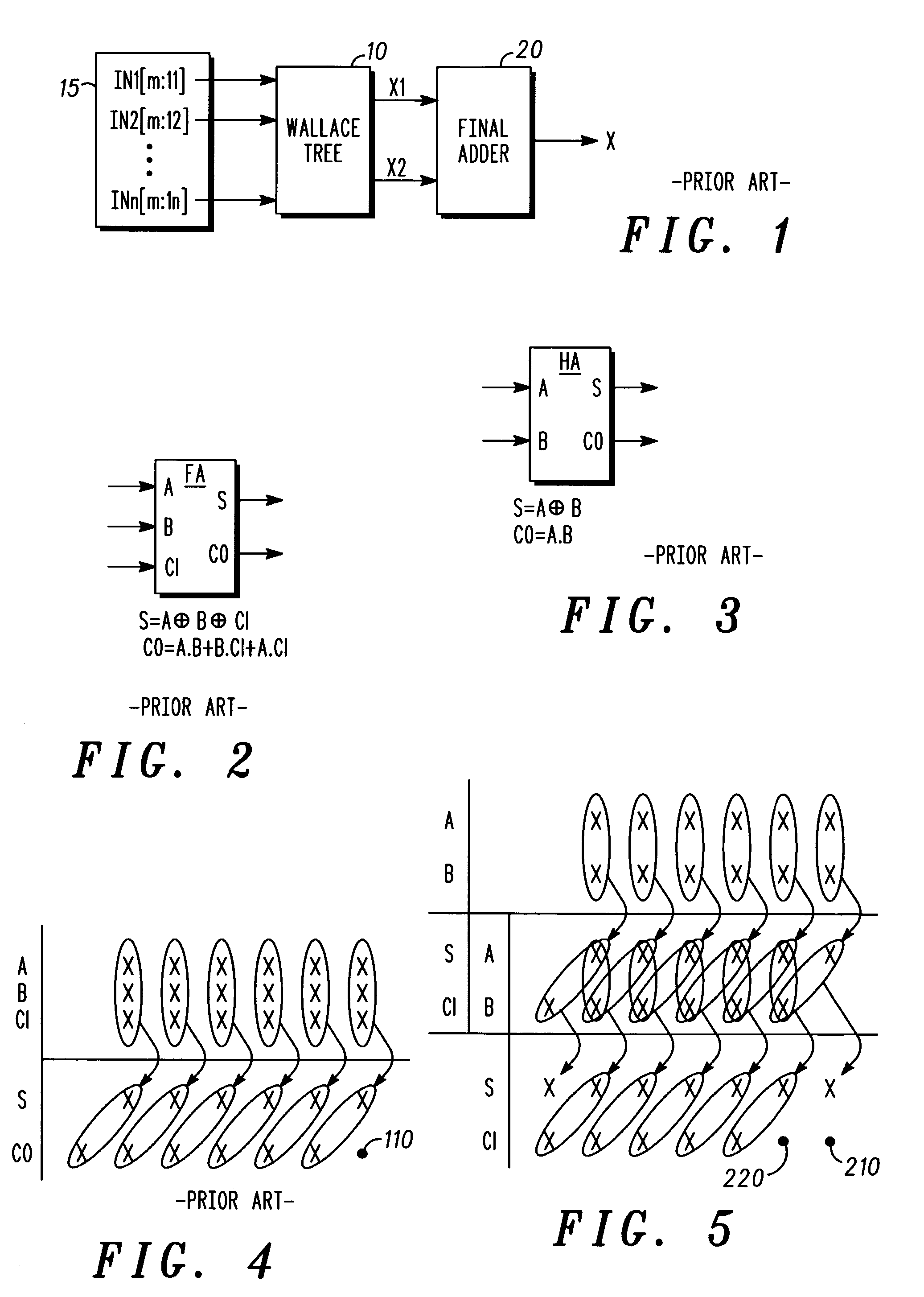

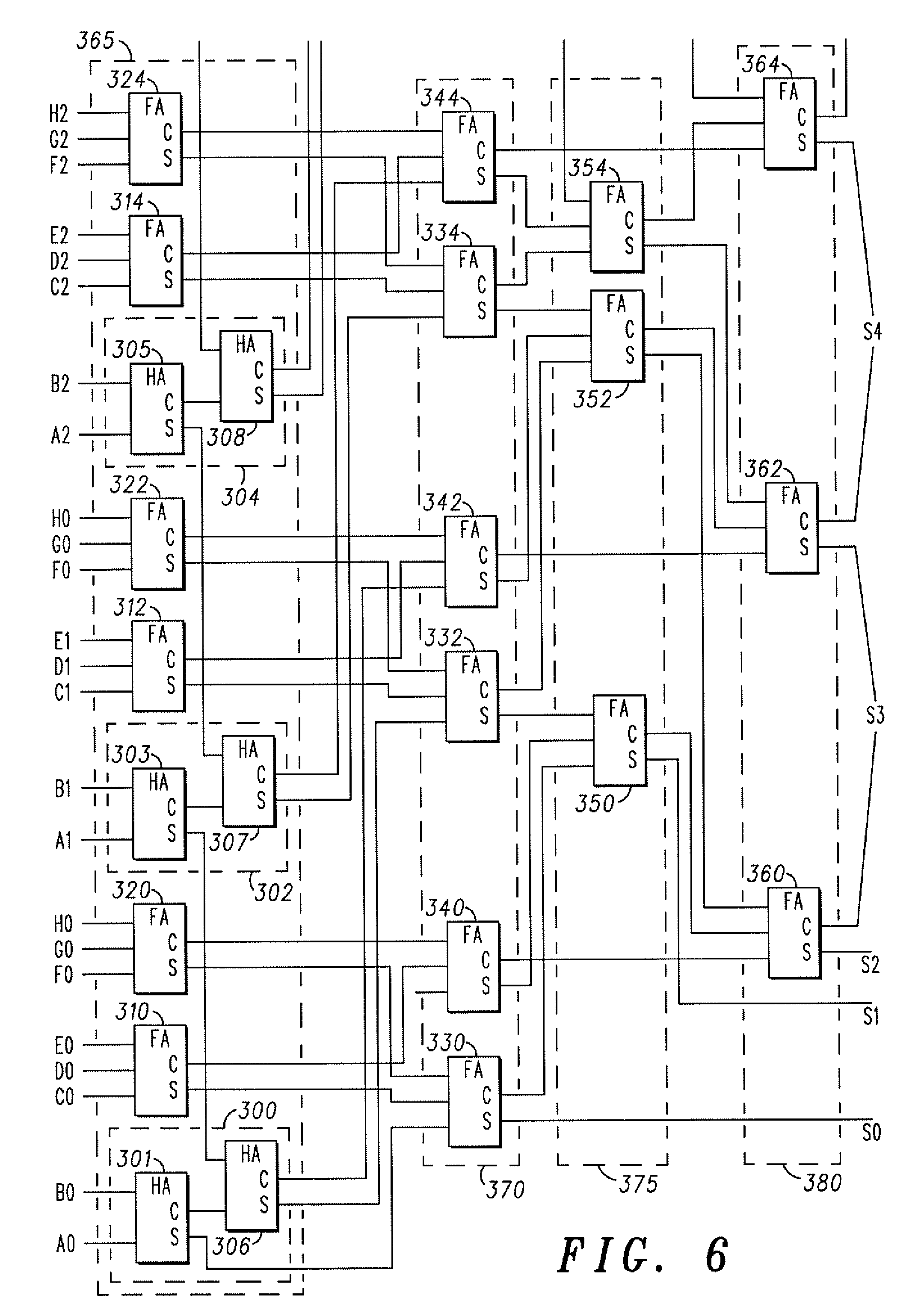

Adder tree structure DSP system and method

Active | US20030093454A1 | Reduce Propagation Delay | Addressing slow performance | Computation using non-contact making devices | Propagation delay | Wallace tree

A Wallace tree structure such as that used in a DSP is arranged to sum vectors. The structure has a number of adder stages (365, 370, 375), each of which may have half adders (300) with two input nodes, and full adders (310) with three input nodes. The structure is designed with reference to the vectors to be summed. The number of full- and half-adders in each stage and the arrangement of vector inputs depends upon their characteristics. An algorithm calculates the possible tree structures and input arrangements, and selects an optimum design having a small final stage ripple adder (380), the design being based upon the characteristics of the vector inputs. This leads to reduced propagation delay and a reduced amount of semiconductor material for implementation of the DSP.

Owner:NXP USA INC

Adder tree structure digital signal processor system and method

Active | US7124162B2 | Reduce degradation | Addressing slow performance | Computation using non-contact making devices | Propagation delay | Wallace tree

A Wallace tree structure such as that used in a digital signal processor (DSP) is arranged to sum vectors. The structure has a number of adder stages, each of which may have half adders with two input nodes, and full adders with three input nodes. The structure is designed with reference to the vectors to be summed. The number of full- and half-adders in each stage and the arrangement of vector inputs depends upon their characteristics. An algorithm calculates the possible tree structures and input arrangements, and selects an optimum design having a small final stage ripple adder after the last stage of the Wallace tree structure, the design being based upon the characteristics of the vector inputs. This leads to reduced propagation delay and a reduced amount of semiconductor material for implementation of the DSP.

Owner:NXP USA INC

Method and apparatus with deep learning operations

Pending | US20220164164A1 | Well formed | Computation using non-contact making devices | Physical realisation | Multiplexing | Multiplexer

An apparatus with deep learning includes: a systolic adder tree including adder trees connected in row and column directions; and an input multiplexer connected to an input register of at least one of the adder trees and configured to determine column directional data movement between the adder trees based on operation modes.

Owner:SAMSUNG ELECTRONICS CO LTD

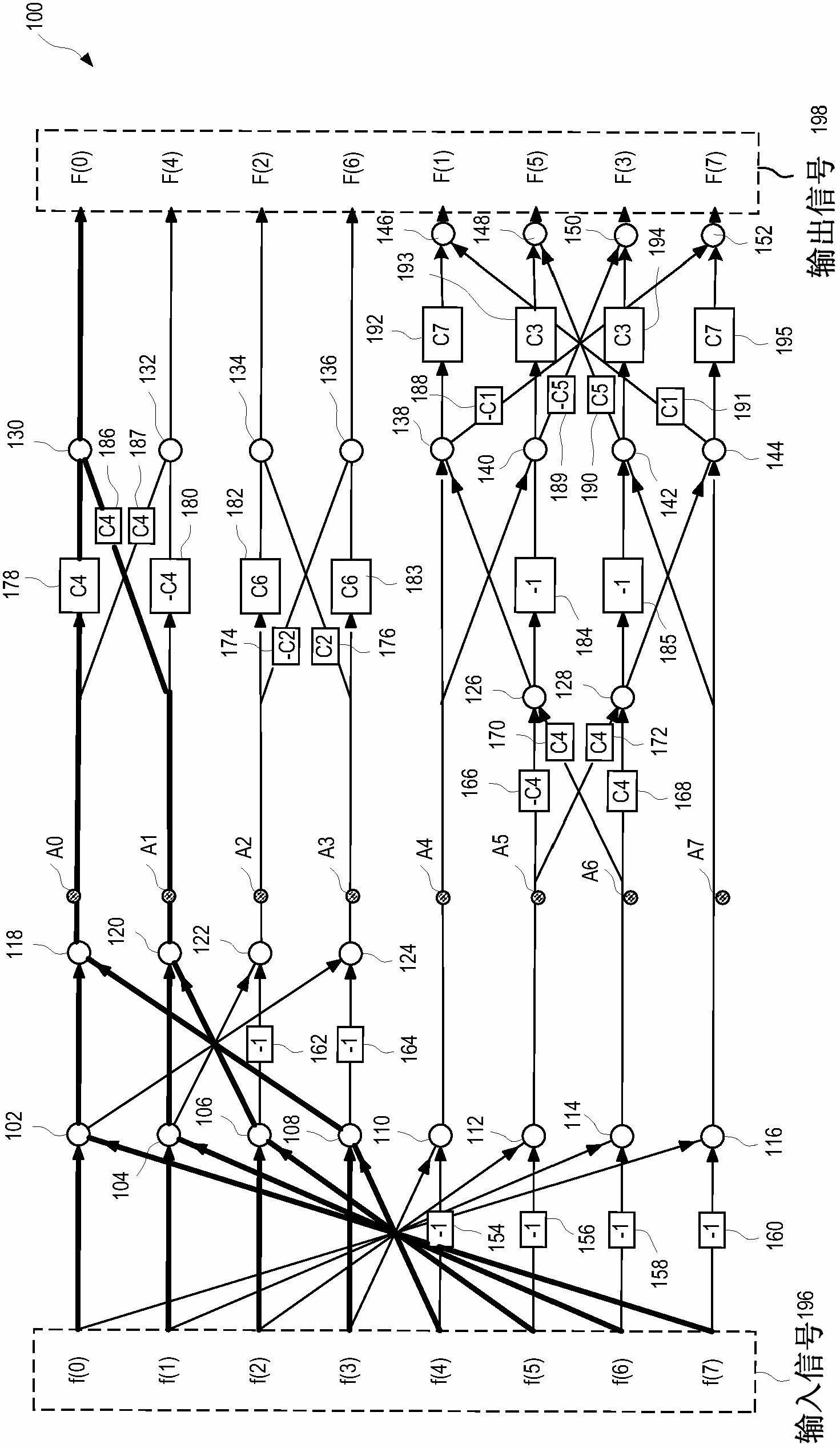

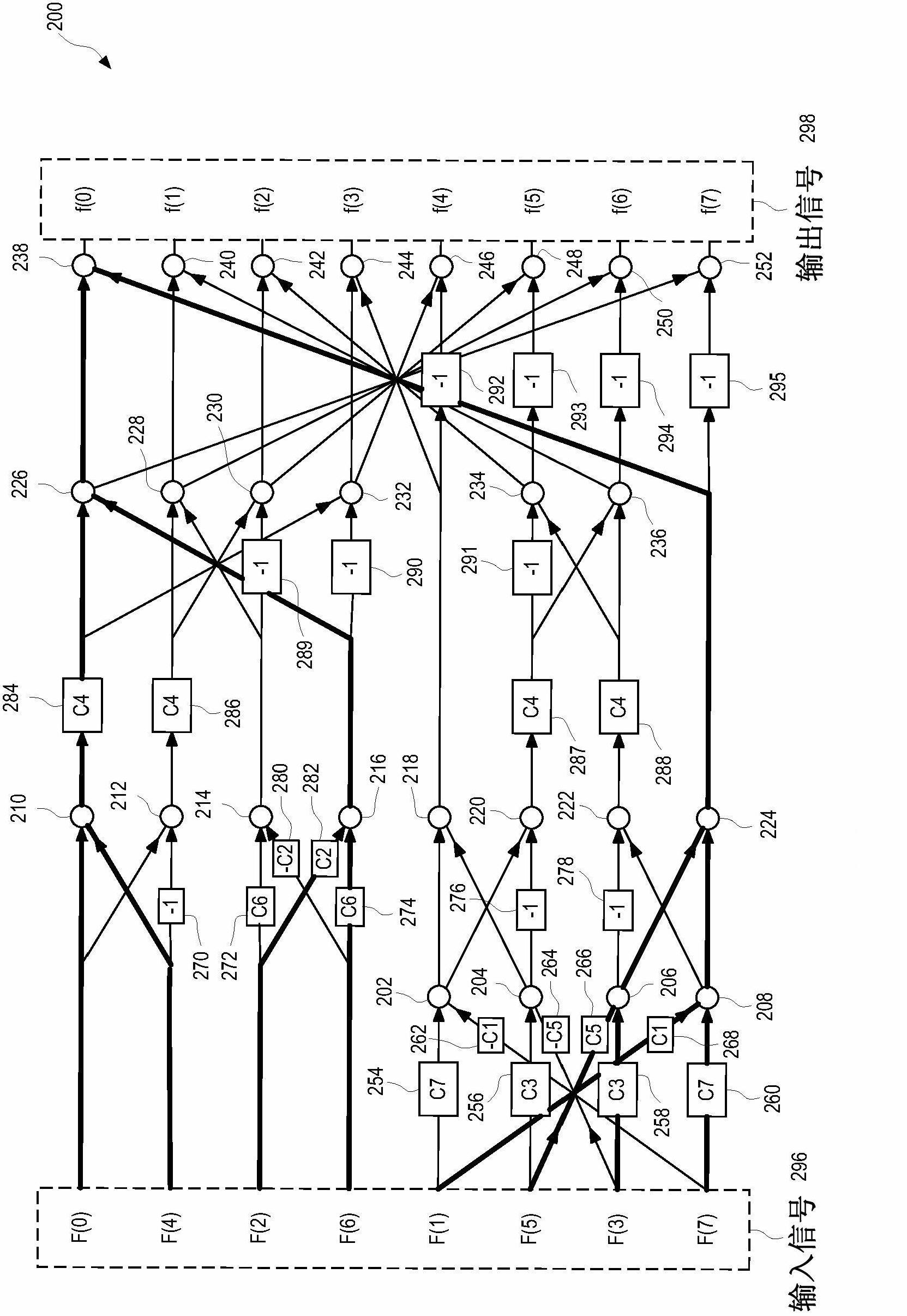

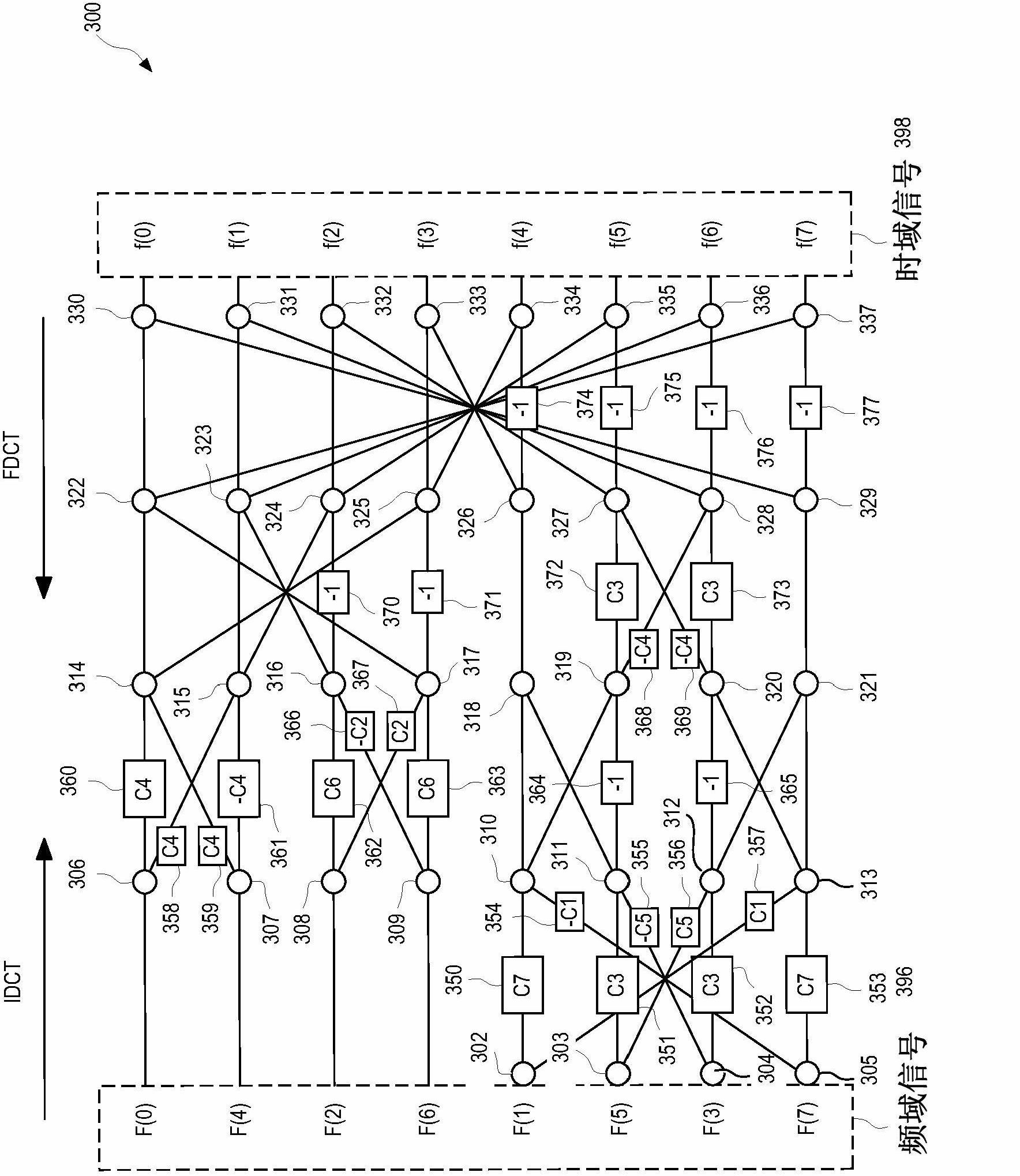

Circuits for shared flow graph based discrete cosine transform

Inactive | CN102652314A | Special data processing applications | Specific program execution arrangements | Multiplexer | Discrete cosine transform

A circuit for performing a discrete cosine transformation (DCT) of input signals (416) includes a forward adder-tree module (402), a first set of multiplexers (404), a shared flow-graph module (406), an inverse adder-tree module (408), and a second set of multiplexers (410) coupled in series. In operation, the multiplexers (404) are configured to process input signals via the forward adder-tree module (402) and the shared flow-graph module (406) to perform a forward DCT of the input signals (416) or via the shared flow-graph module (406) and the inverse adder-tree module (408) to perform an inverse DCT of the input signals (416).

Owner:TEXAS INSTR INC

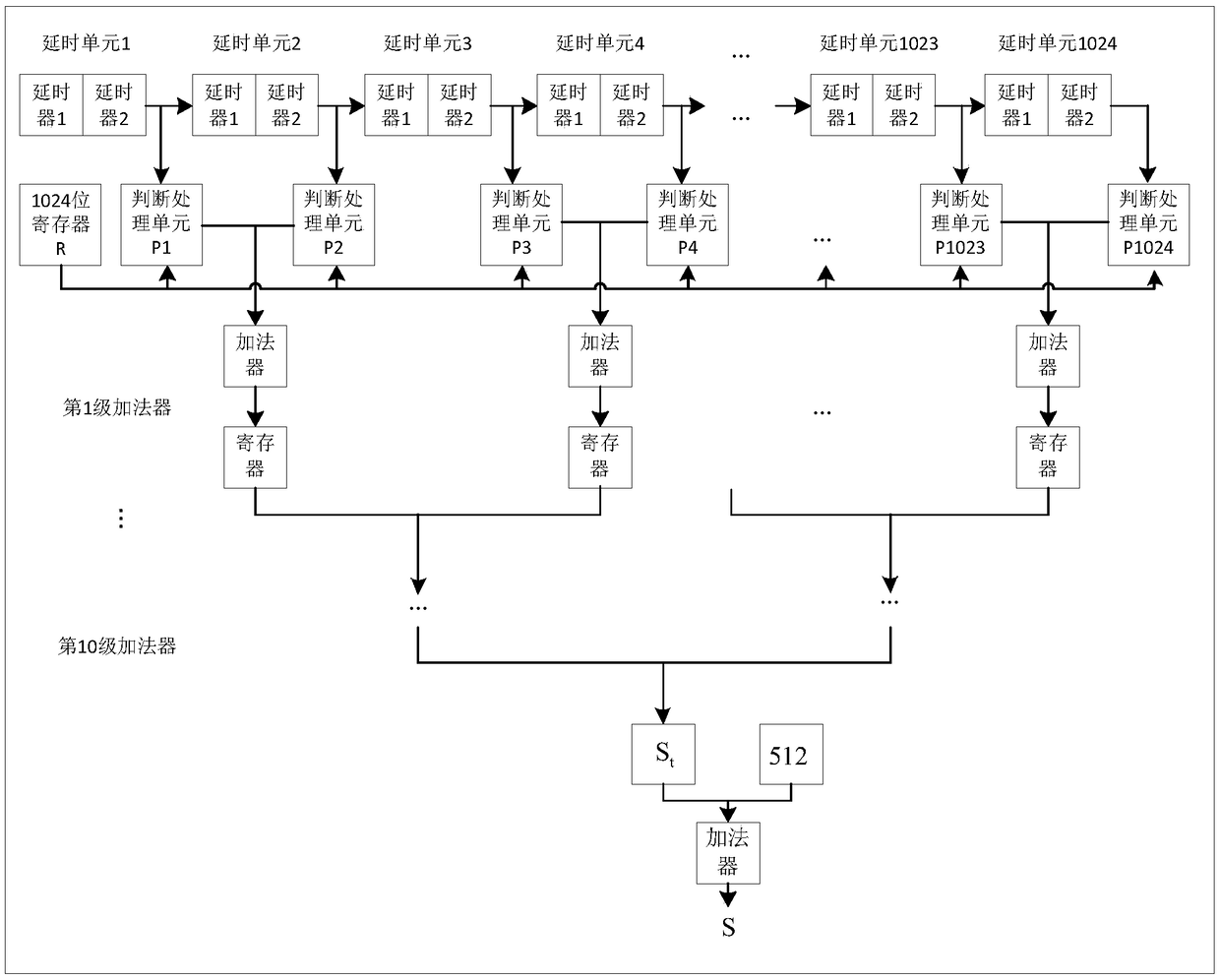

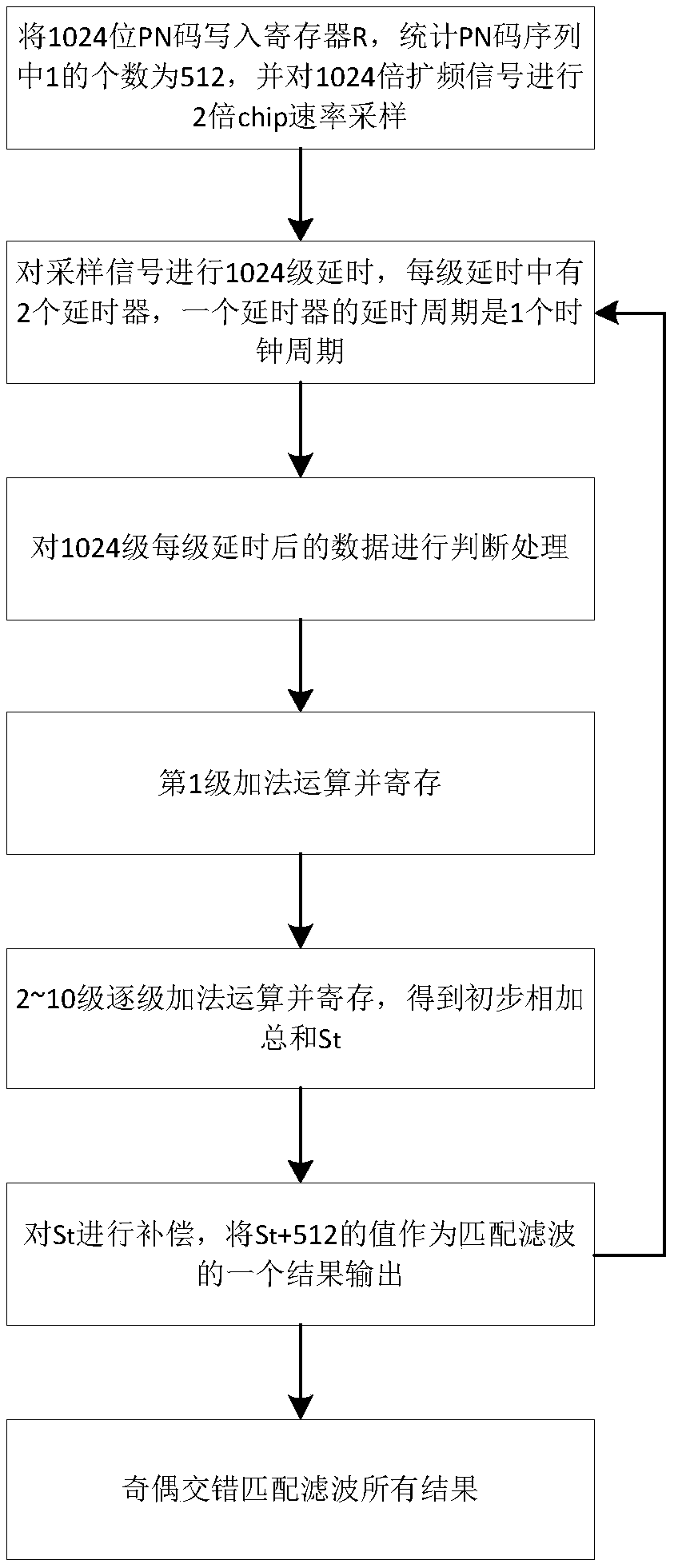

An interleaved matching filtering method suitable for specific integrated circuit design

Active | CN109388882A | Reduce consumption | Reduce Design Complexity | CAD circuit design | Special data processing applications | Application specific | Matched filter

The invention discloses an interleaved matched filtering method suitable for application-specific integrated circuit design, and belongs to the technical field of application-specific integrated circuits. First, a delay unit is used to delay the spread-spectrum oversampled signal. Then the delayed data is judged and processed: each delayed sample is either output directly or inverted according to the value at the corresponding position of the PN code, and the inversion is accomplished by bit-by-bit inversion plus compensation. Finally, a pipelined adder tree adds and registers the judged data stage by stage, and the result of the last addition plus the compensation value is the output of the odd-even interleaved matched filter. The invention has the following advantages: (1) the design structure of the digital matched filter is simplified; (2) the logic sequence is optimized and logic resources are saved; (3) the timing path is shortened to meet higher timing requirements; (4) the output is still the sequential matched filter result for each sampling value, so subsequent signal processing is straightforward.
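The inversion-with-compensation step relies on the two's-complement identity −x = ~x + 1: each negated tap is replaced by a bitwise inversion, and the missing +1 terms are added once at the end. The sketch below assumes a PN chip of 1 means "pass" and 0 means "invert", and it does not model the odd-even interleaving or the pipeline registers; the function name `matched_filter_output` is hypothetical.

```python
def matched_filter_output(window, pn):
    """Correlate delayed samples with a PN code using invert-and-compensate.

    `window` holds the delayed integer samples, `pn` the chips (1 or 0),
    both non-empty and of equal length.
    """
    # Judge each tap: pass it through, or invert it bit by bit.
    terms = [x if chip else ~x for x, chip in zip(window, pn)]
    # One +1 of compensation is owed for every inverted tap.
    compensation = sum(1 for chip in pn if not chip)
    # Adder tree: add neighbouring terms level by level.
    while len(terms) > 1:
        terms = [terms[i] + terms[i + 1] if i + 1 < len(terms) else terms[i]
                 for i in range(0, len(terms), 2)]
    return terms[0] + compensation
```

Deferring the +1 corrections to a single constant keeps a full negation (and its carry chain) out of every tap, which is where the logic savings claimed in the abstract would come from.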

Owner:BEIJING INSTITUTE OF TECHNOLOGY

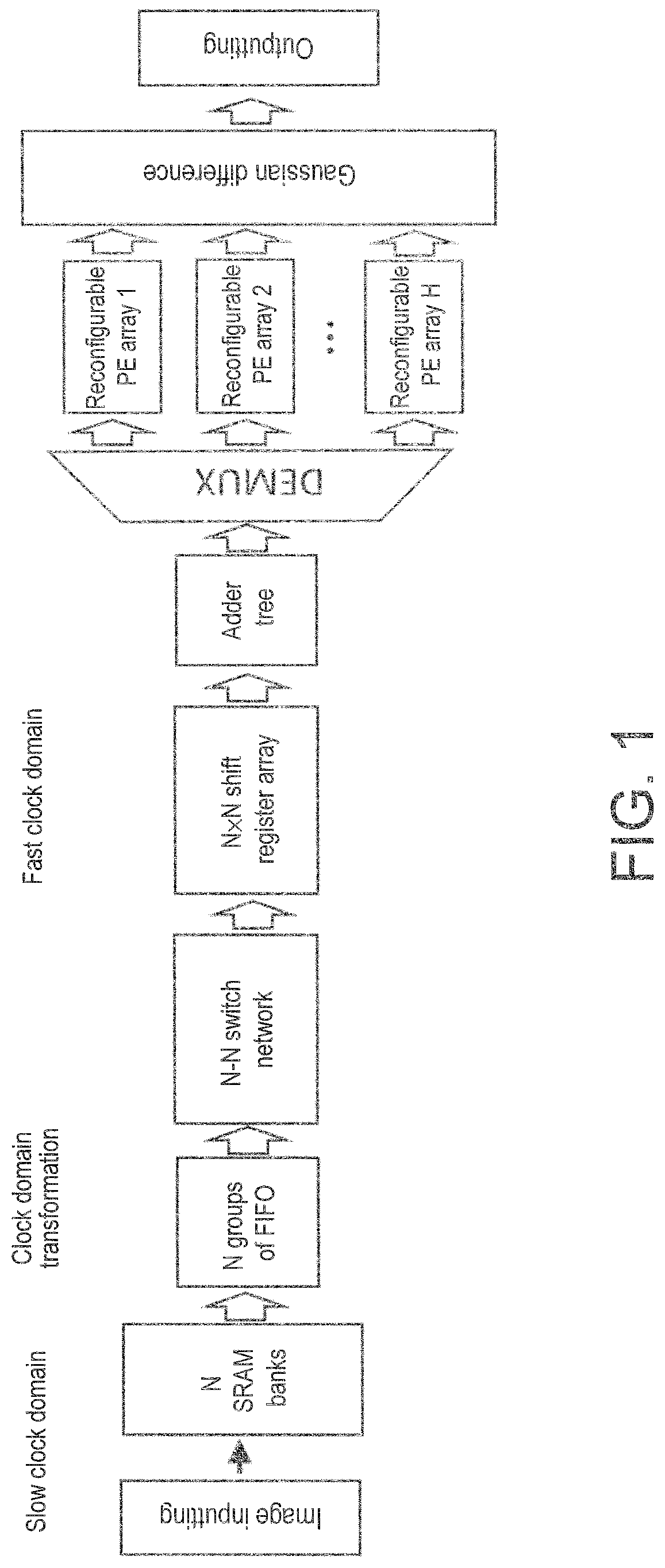

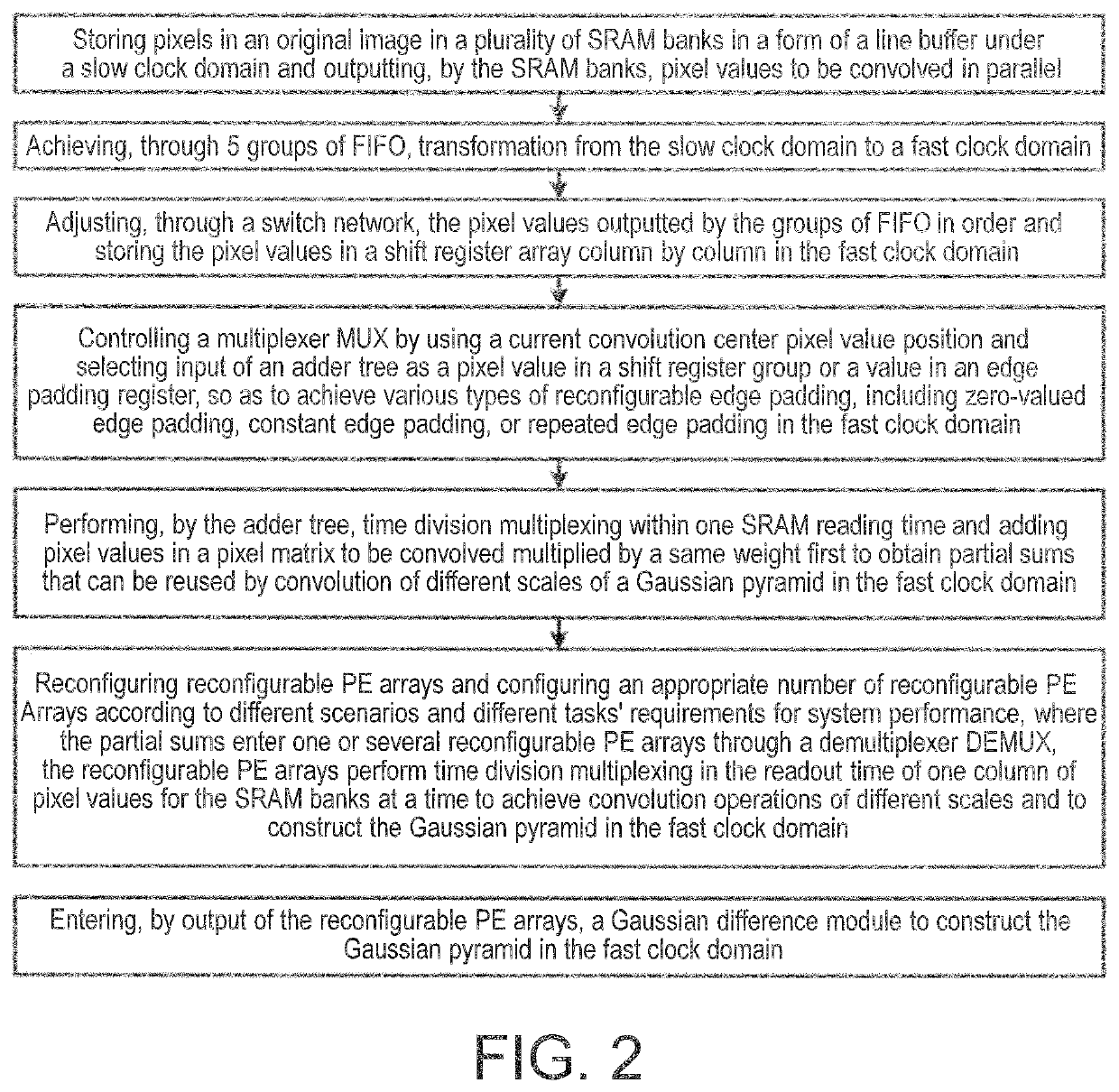

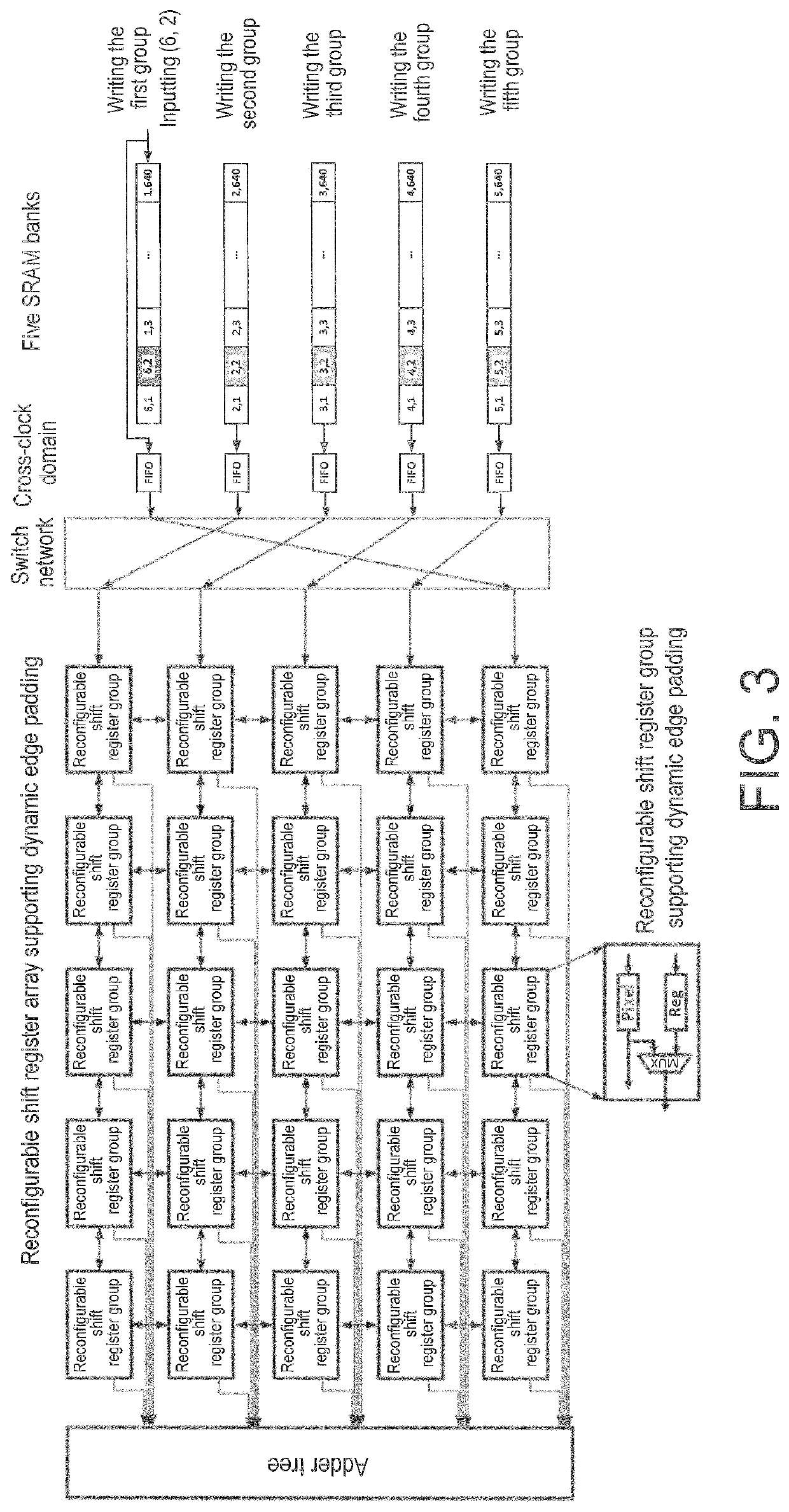

Reconfigurable hardware acceleration method and system for gaussian pyramid construction

Pending | US20220351432A1 | Operational speed enhancement | Geometric image transformation | Shift register | Difference of Gaussians

The disclosure discloses a reconfigurable hardware acceleration method and system for Gaussian pyramid construction and belongs to the field of hardware accelerator design. The system provided by the disclosure includes a static random access memory (SRAM) bank, a first in first out (FIFO) group, a switch network, a shift register array, an adder tree module, a demultiplexer, a reconfigurable PE array, and a Gaussian difference module. In the disclosure, according to the requirements of different scenarios and different tasks for the system, reconfigurable PE array resources can be configured to realize convolution calculations of different scales. The disclosure includes methods of fast and slow dual clock domain design, dynamic edge padding design, and input image partial sum reusing design.

Owner:HUAZHONG UNIV OF SCI & TECH

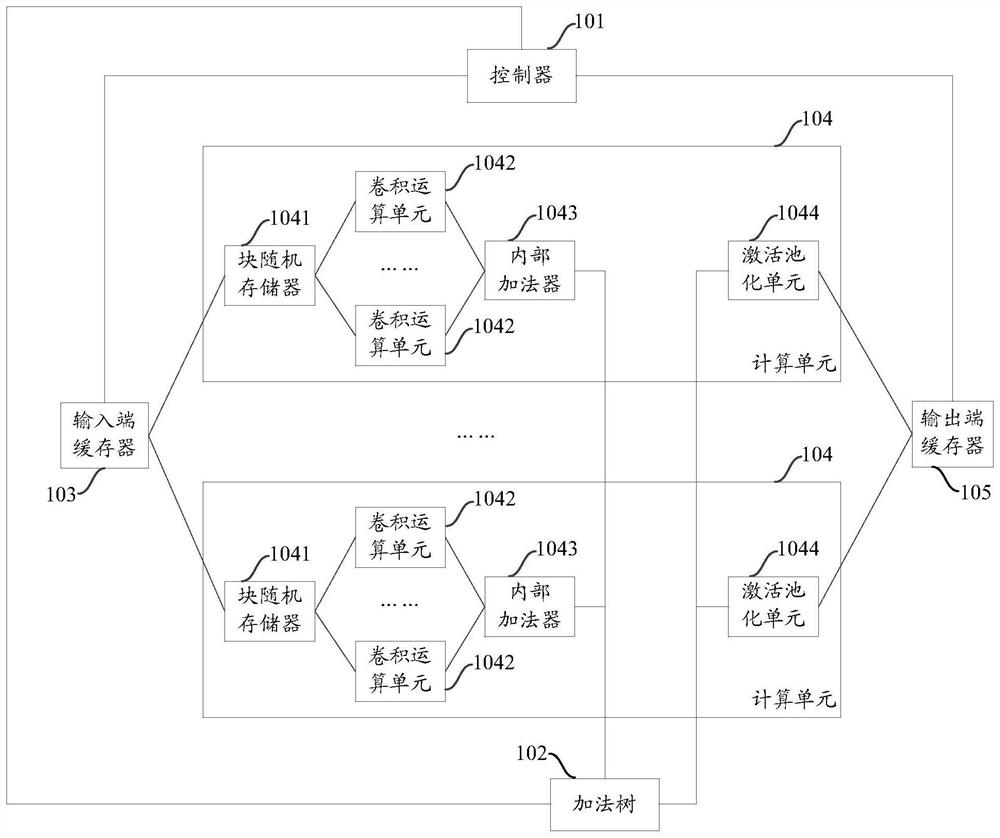

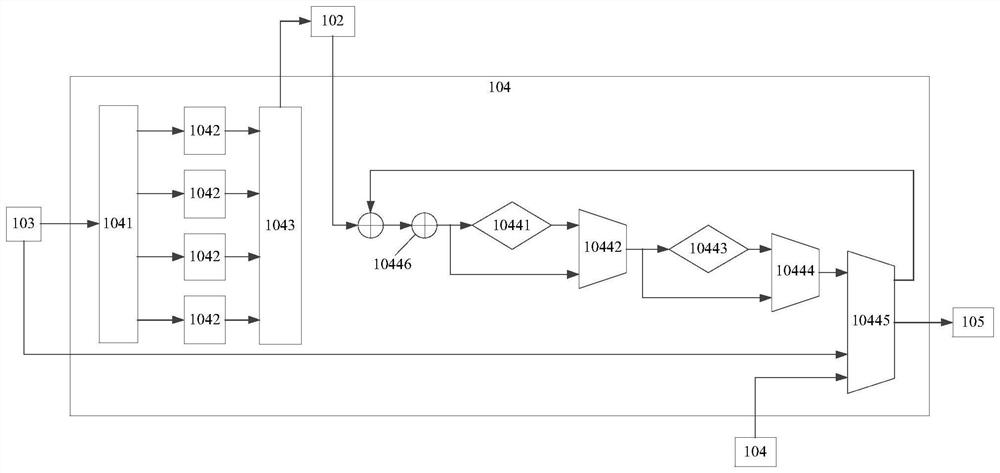

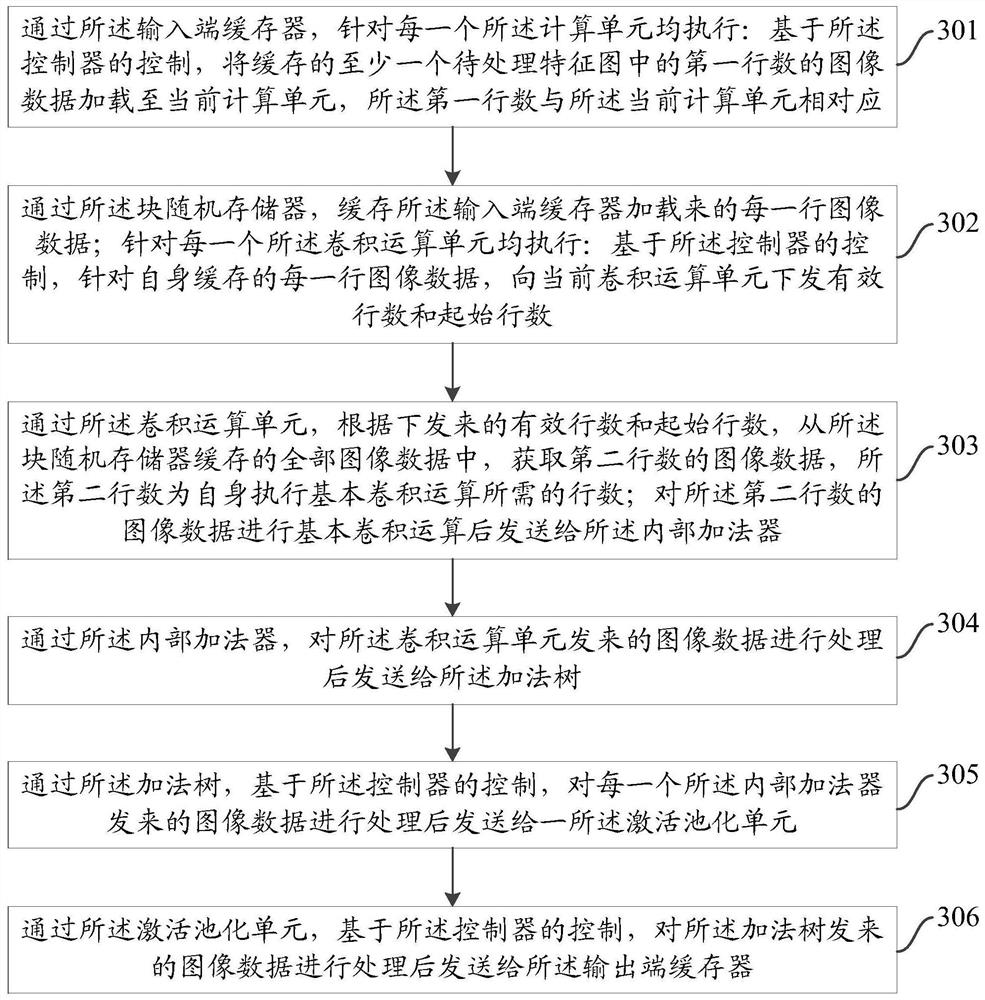

A basic computing unit and computing method of a convolutional neural network

Active | CN109165728B | Completion time controllable | Neural architectures | Physical realisation | Theoretical computer science | Controller (computing)

The invention provides a basic computing unit and a computing method of a convolutional neural network. The basic computing unit includes a controller, an addition tree, an input buffer, several computing units, and an output buffer; each computing unit includes a block random access memory, several convolution operation units, an internal adder, and an activation pooling unit. Under the control of the controller, the input buffer loads the image data of the corresponding number of lines to each computing unit, and the block random access memory sends the effective number of lines and the initial line number to each convolution operation unit so that it can obtain the image data of the corresponding lines; the convolution operation unit processes the image data and sends it to the addition tree through the internal adder; the addition tree processes the image data sent by each internal adder and sends it to an activation pooling unit; the activation pooling unit processes the image data and sends it to the output buffer. The solution realizes the algorithm in hardware, so that the completion time of the algorithm can be controlled.

Owner:INSPUR GROUP CO LTD

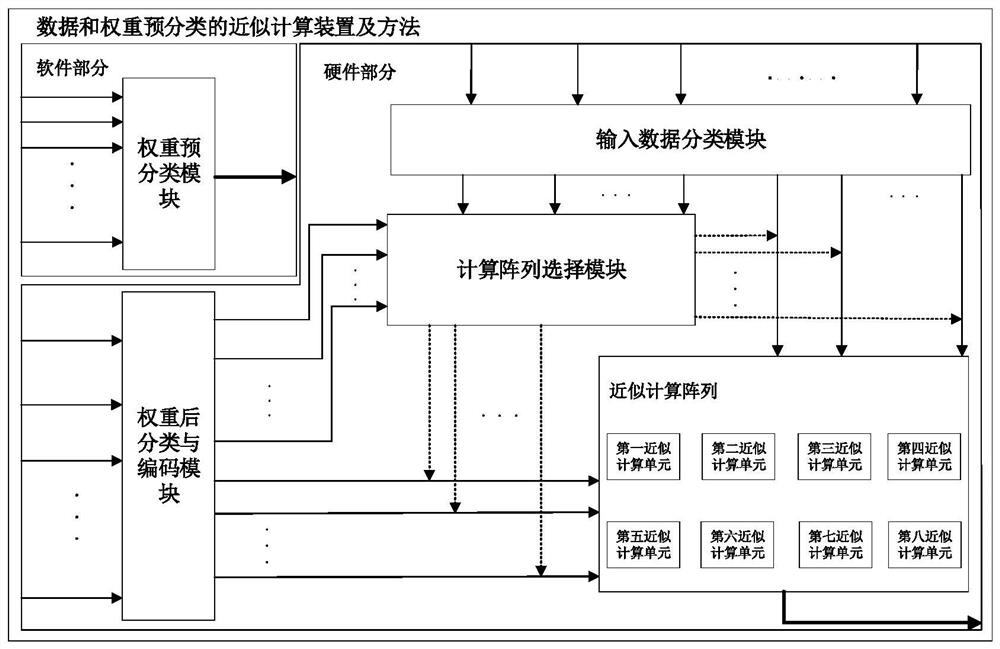

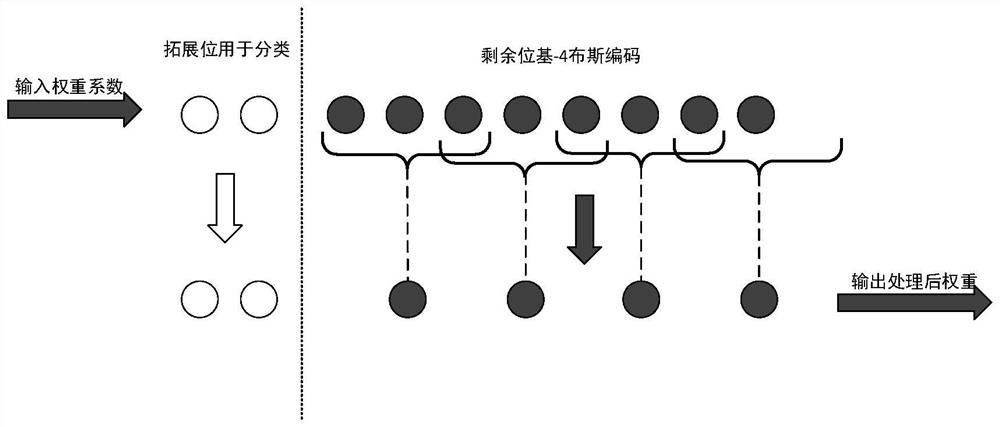

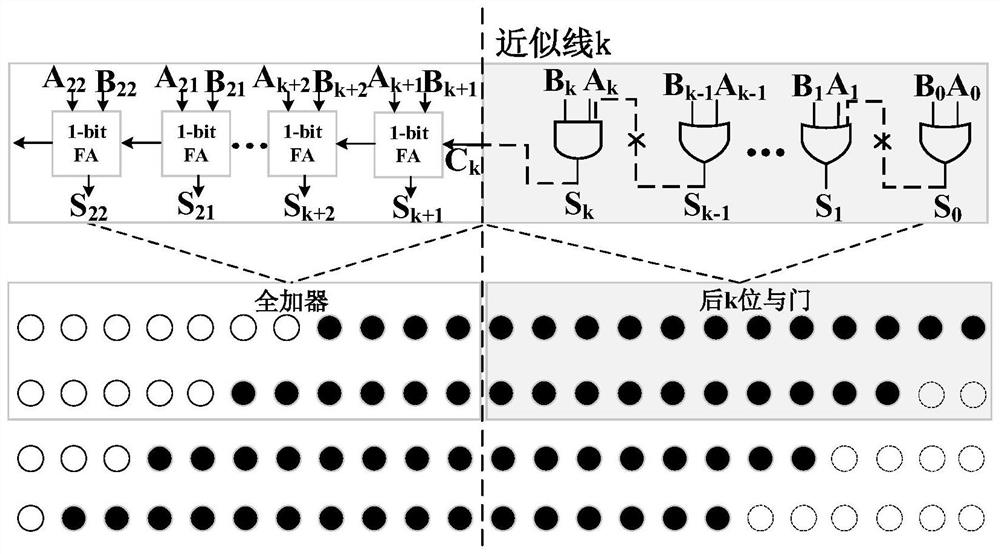

Approximate calculation device and method suitable for neural network data and weight pre-classification

Pending | CN113253973A | Avoid blindness | Overcoming hysteresis | Digital data processing details | Character and pattern recognition | Engineering | Network model

According to the approximate calculation device and method suitable for neural network data and weight pre-classification, the weight parameters of a neural network are pre-processed and finely classified according to the number of consecutive '0' bits counted from the lowest bit, the input data are simply classified according to the same feature, and the two classifications are combined as the basis for configuring an approximation scheme; approximate calculation arrays with different approximation degrees are configured by controlling the approximate line positions of the full-adder adder tree accumulation circuit and the low-order or approximate adder accumulation circuit. Because the weight parameters of a neural network model are usually known and fixed, fine pre-classification is performed on the weight parameters, simple dichotomy processing is performed according to the characteristics of the input data, and approximate multiplication arrays with different configurations are dynamically selected by combining the two. The selection of the approximation scheme is accurate, the characteristics of the corresponding data and weights are matched in real time, and the blindness, hysteresis and complexity of existing dynamic configuration schemes for approximate multiplication are overcome.

Owner:NANJING PROCHIP ELECTRONIC TECH CO LTD

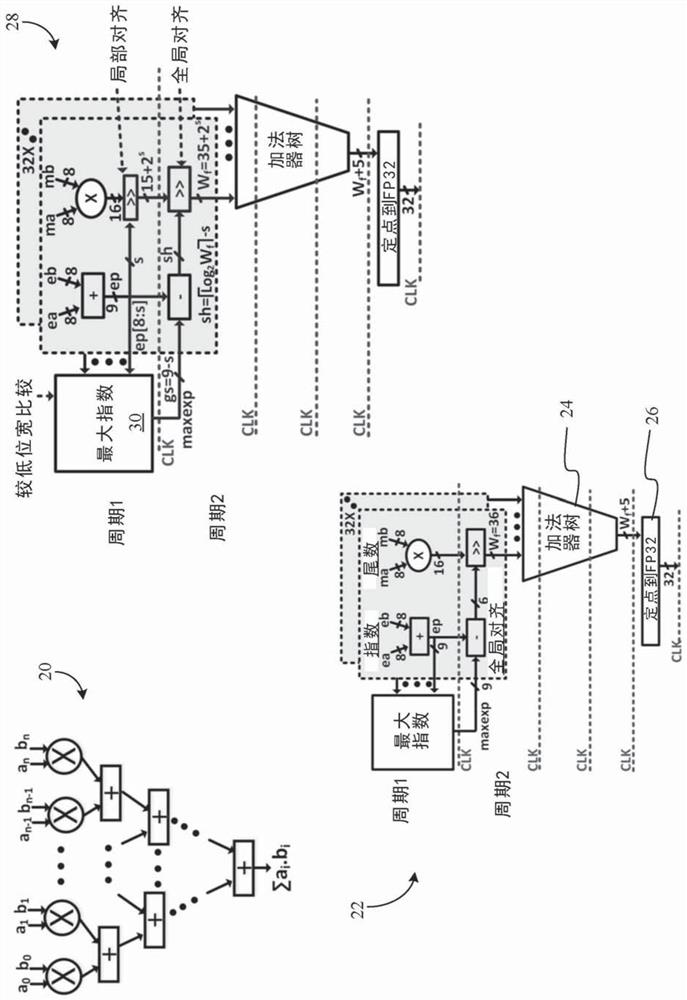

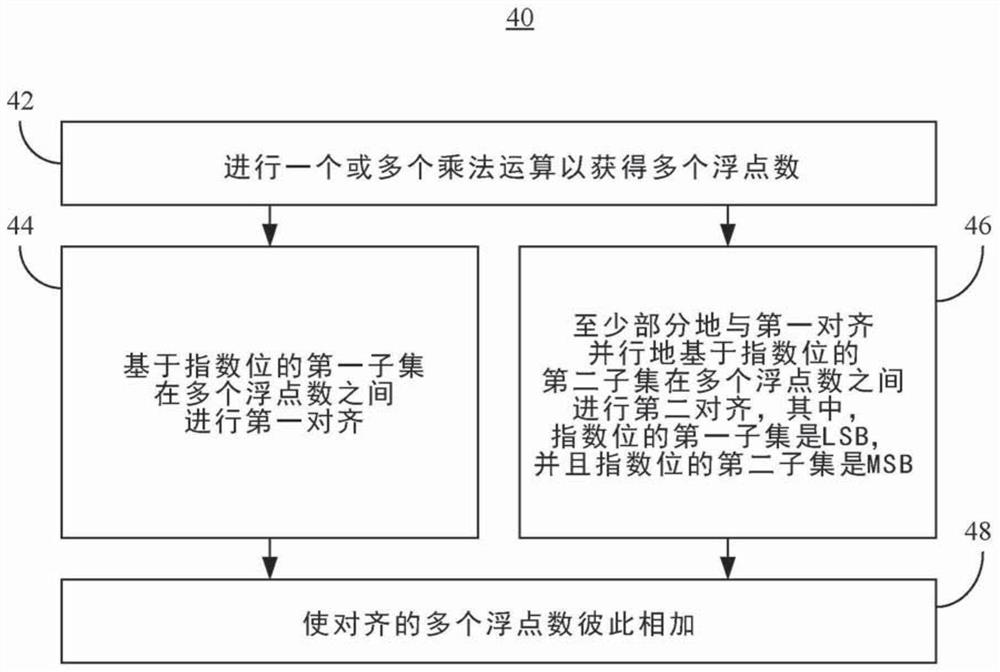

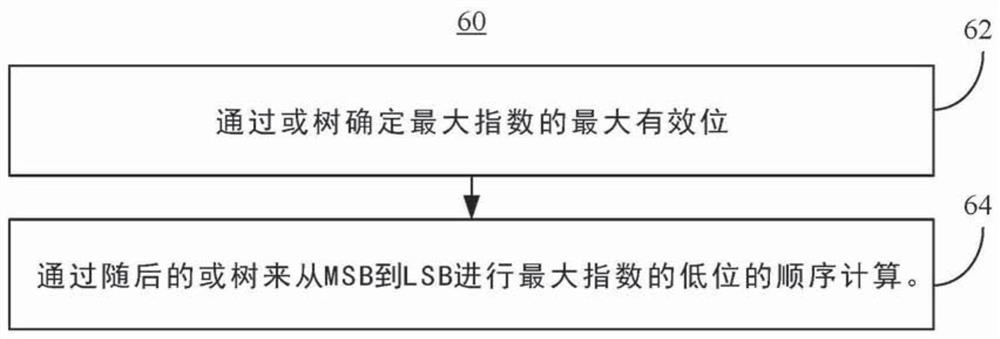

Floating-point dot-product hardware with wide multiply-adder tree for machine learning accelerators

The invention relates to floating-point dot-product hardware with a wide multiply-adder tree for machine learning accelerators. Systems, apparatuses and methods may provide for technology that conducts a first alignment between a plurality of floating-point numbers based on a first subset of exponent bits. The technology may also conduct, at least partially in parallel with the first alignment, a second alignment between the plurality of floating-point numbers based on a second subset of exponent bits, where the first subset of exponent bits are LSBs and the second subset of exponent bits are MSBs. In one example, technology adds the aligned plurality of floating-point numbers to one another. With regard to the second alignment, the technology may also identify individual exponents of a plurality of floating-point numbers, identify a maximum exponent across the individual exponents, and conduct a subtraction of the individual exponents from the maximum exponent, where the subtraction is conducted from MSB to LSB.

Owner:INTEL CORP


© 2025 PatSnap. All rights reserved.