48 results for "Improve memory usage efficiency" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

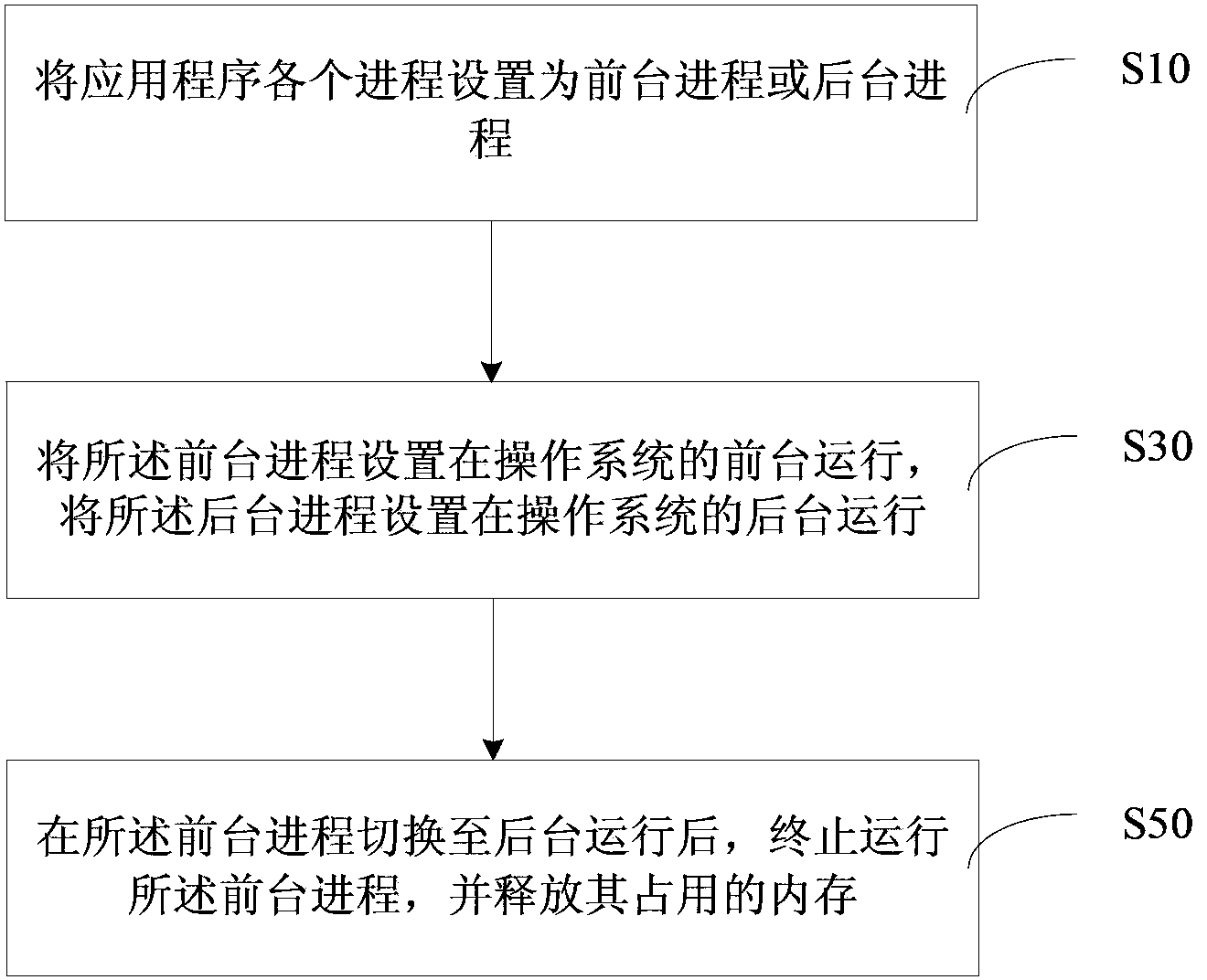

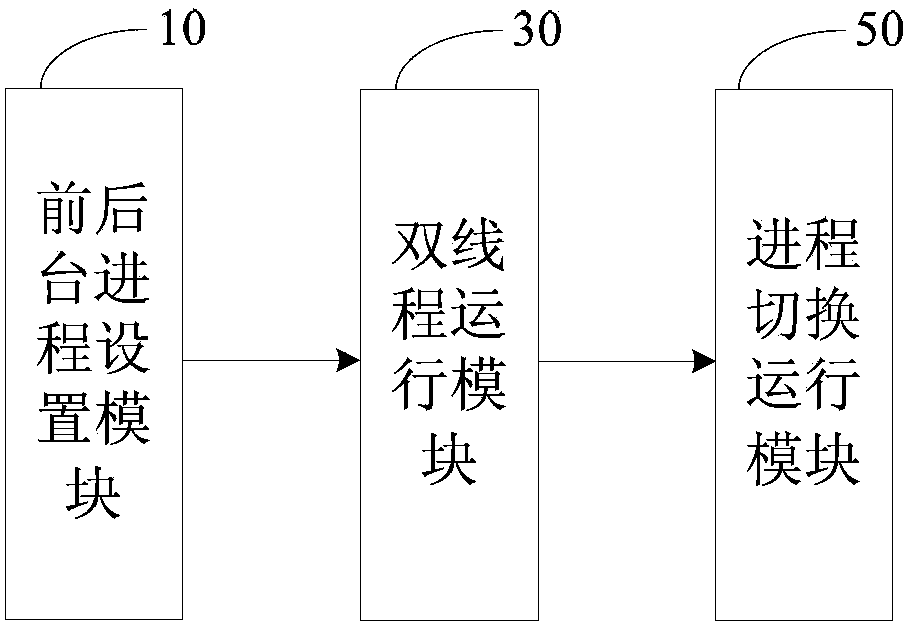

Application operation method, system and application

Active | CN104252389A | Save memory | Improve memory usage efficiency | Program initiation/switching | Background process

The invention provides a method for running an application. The method comprises the following steps: designating each process of an application as either a foreground process or a background process; running the foreground process in the foreground of the operating system and the background process in the background of the operating system; and, after the foreground process is switched to the background process, stopping the foreground process and releasing the memory it occupies. The invention also provides an application running system and an application. The technique saves memory, improves memory usage efficiency, and improves the running efficiency of the application.

Owner:TENCENT TECH (SHENZHEN) CO LTD +1

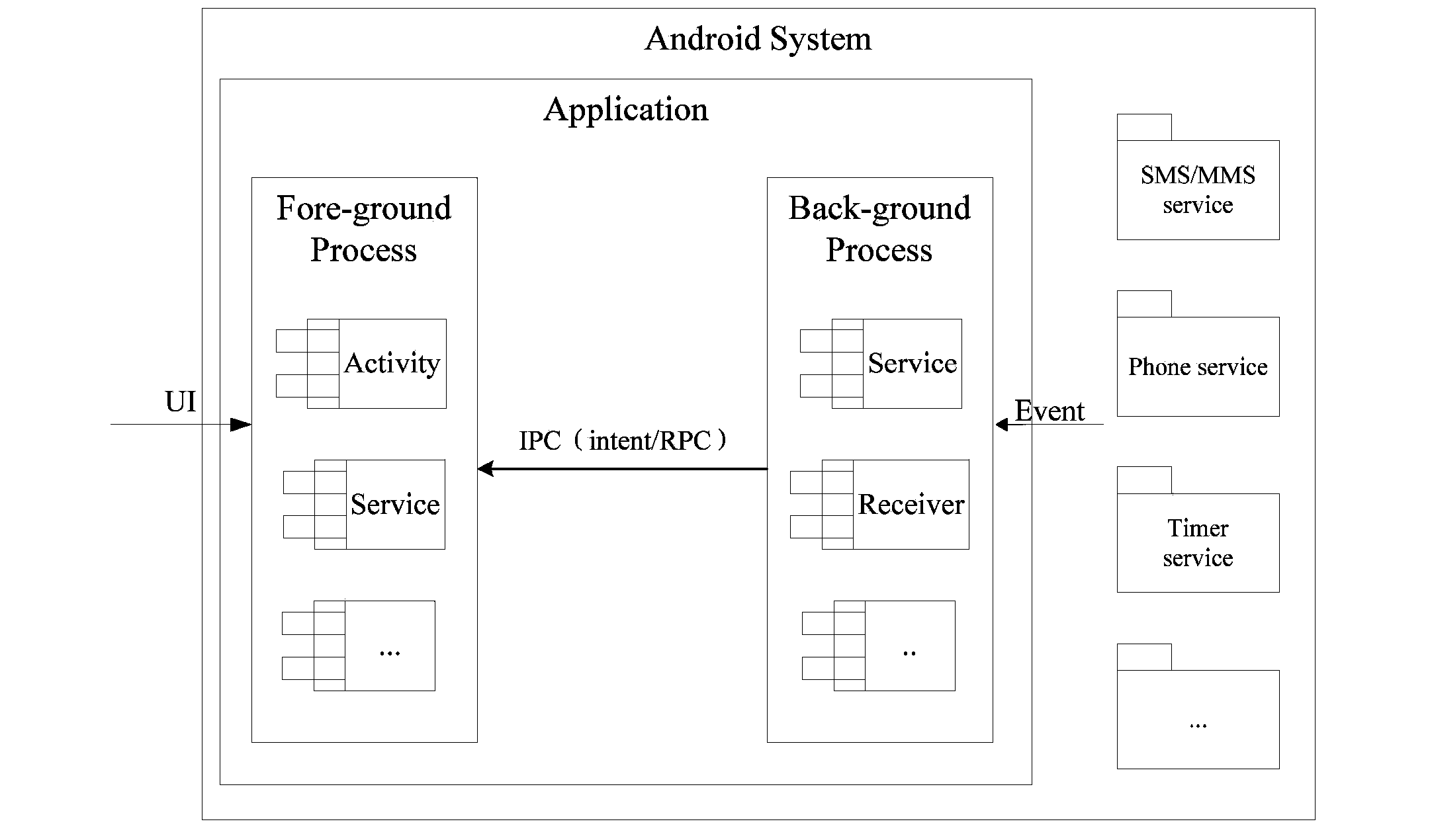

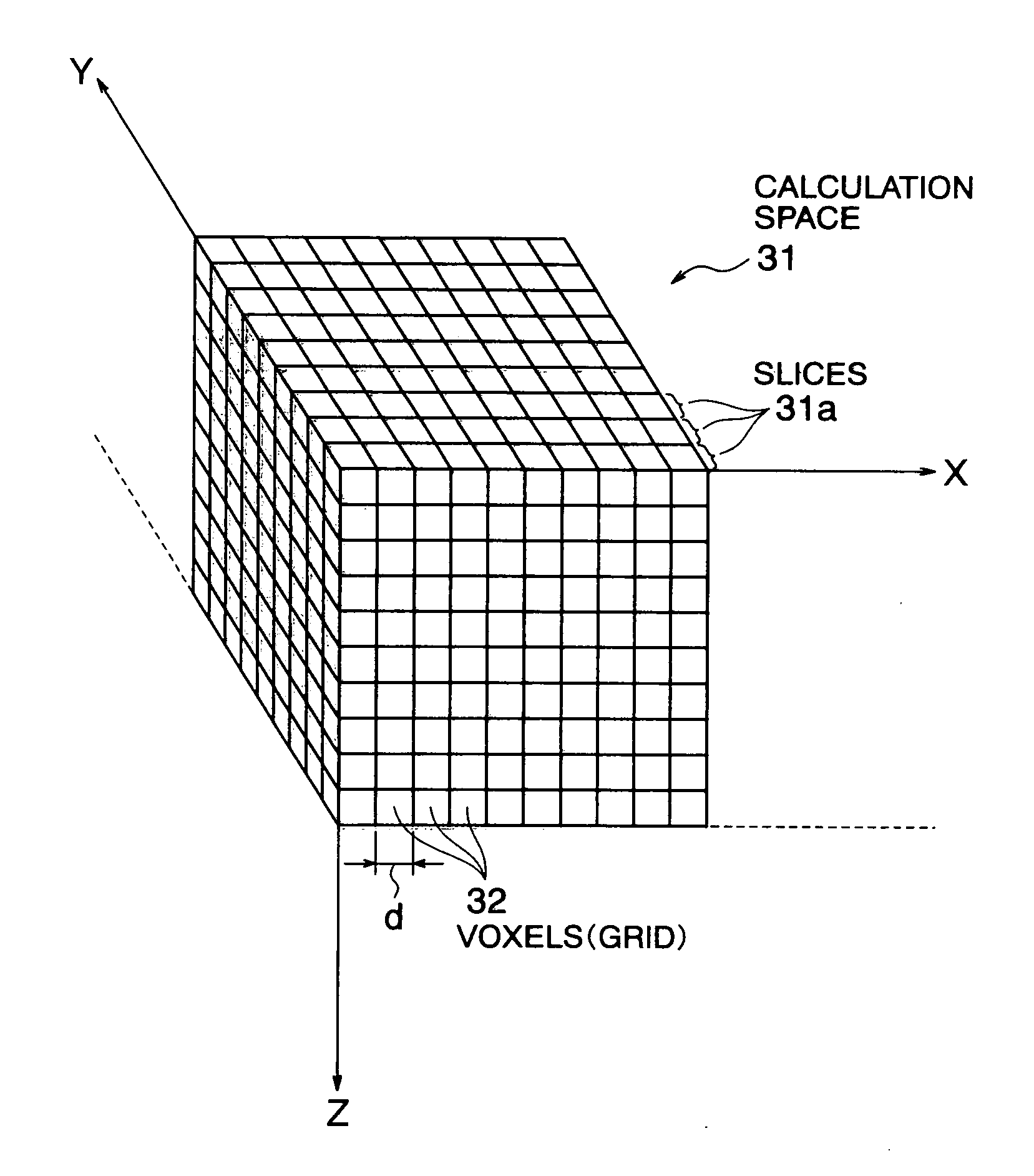

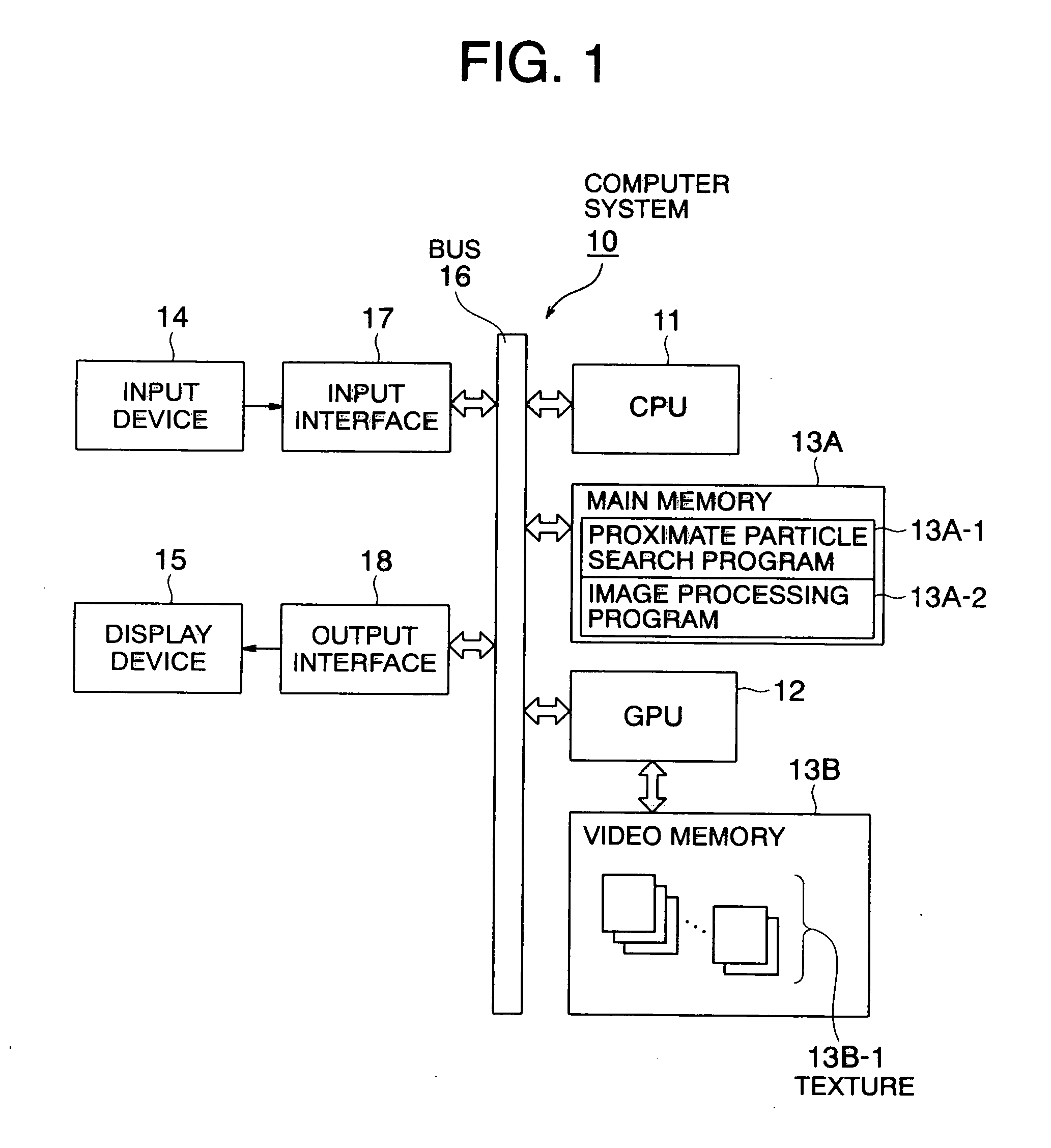

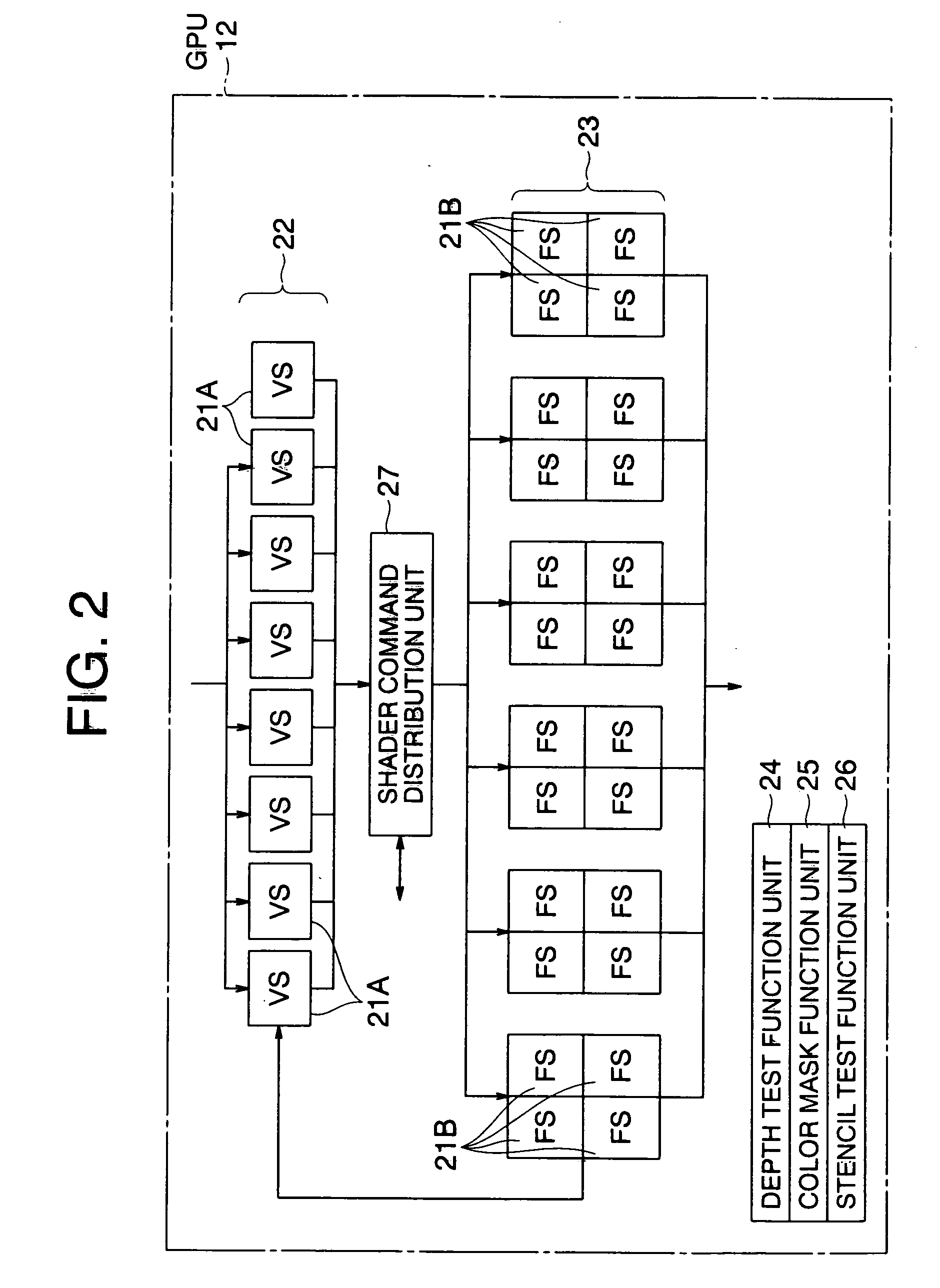

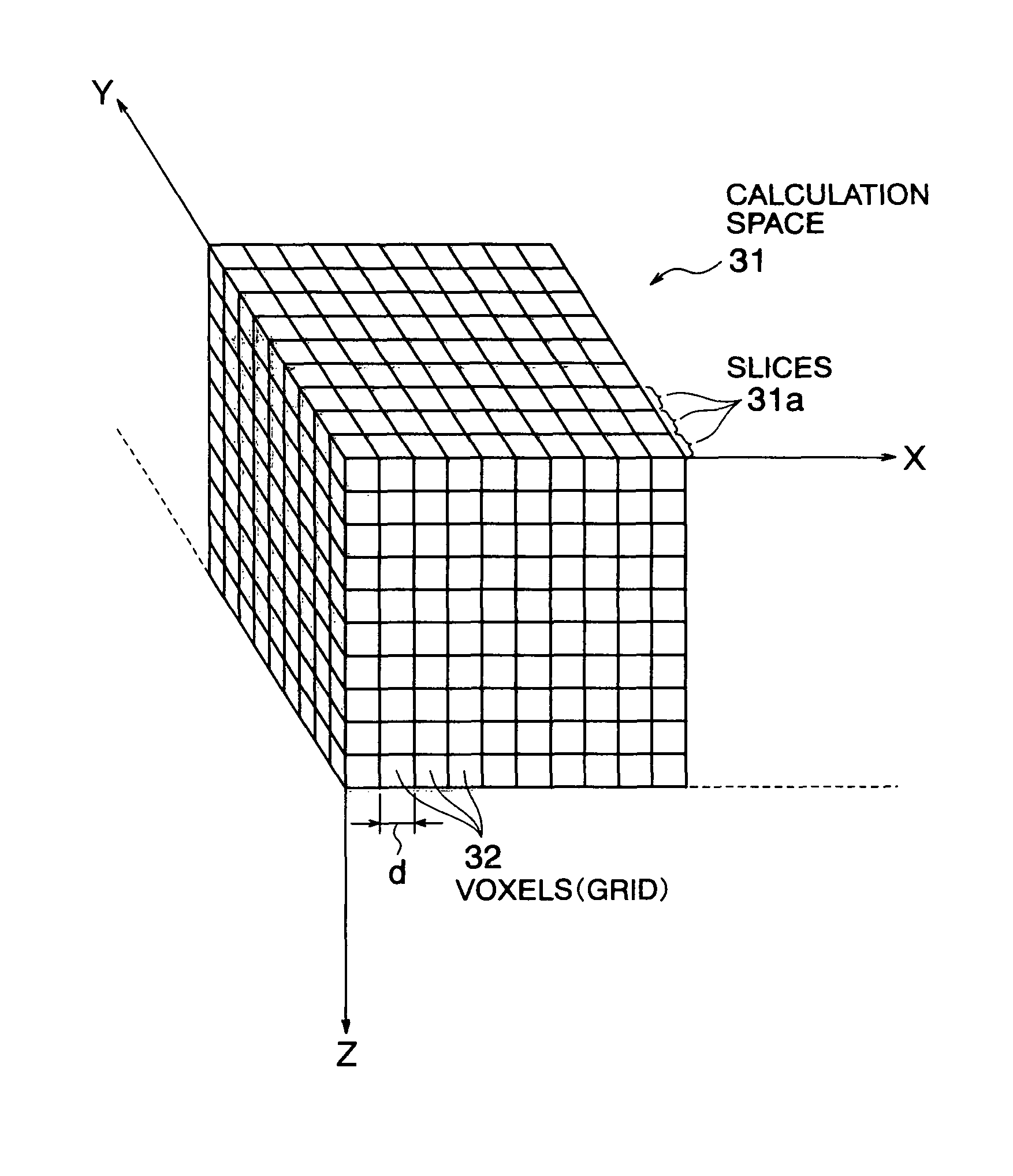

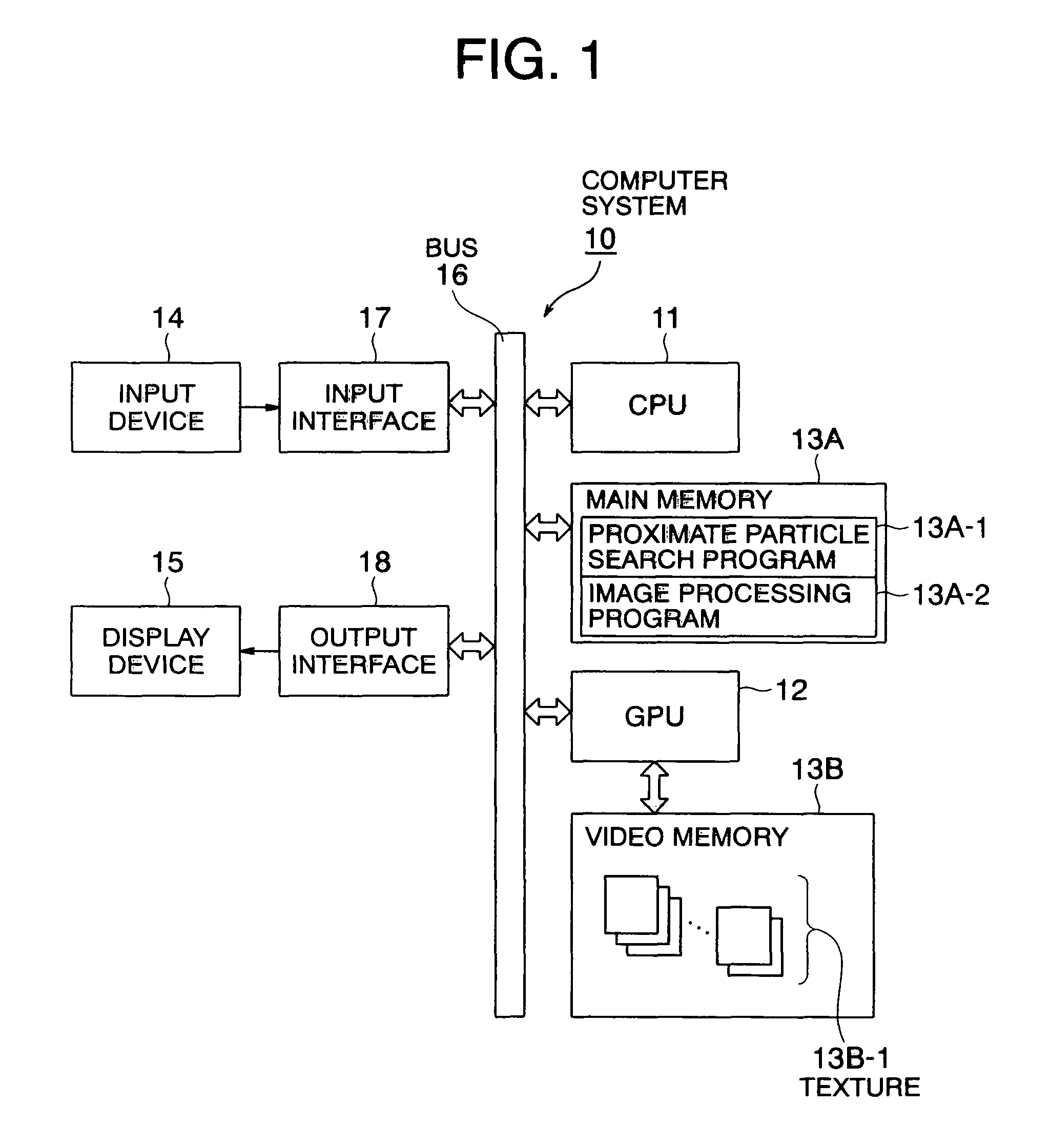

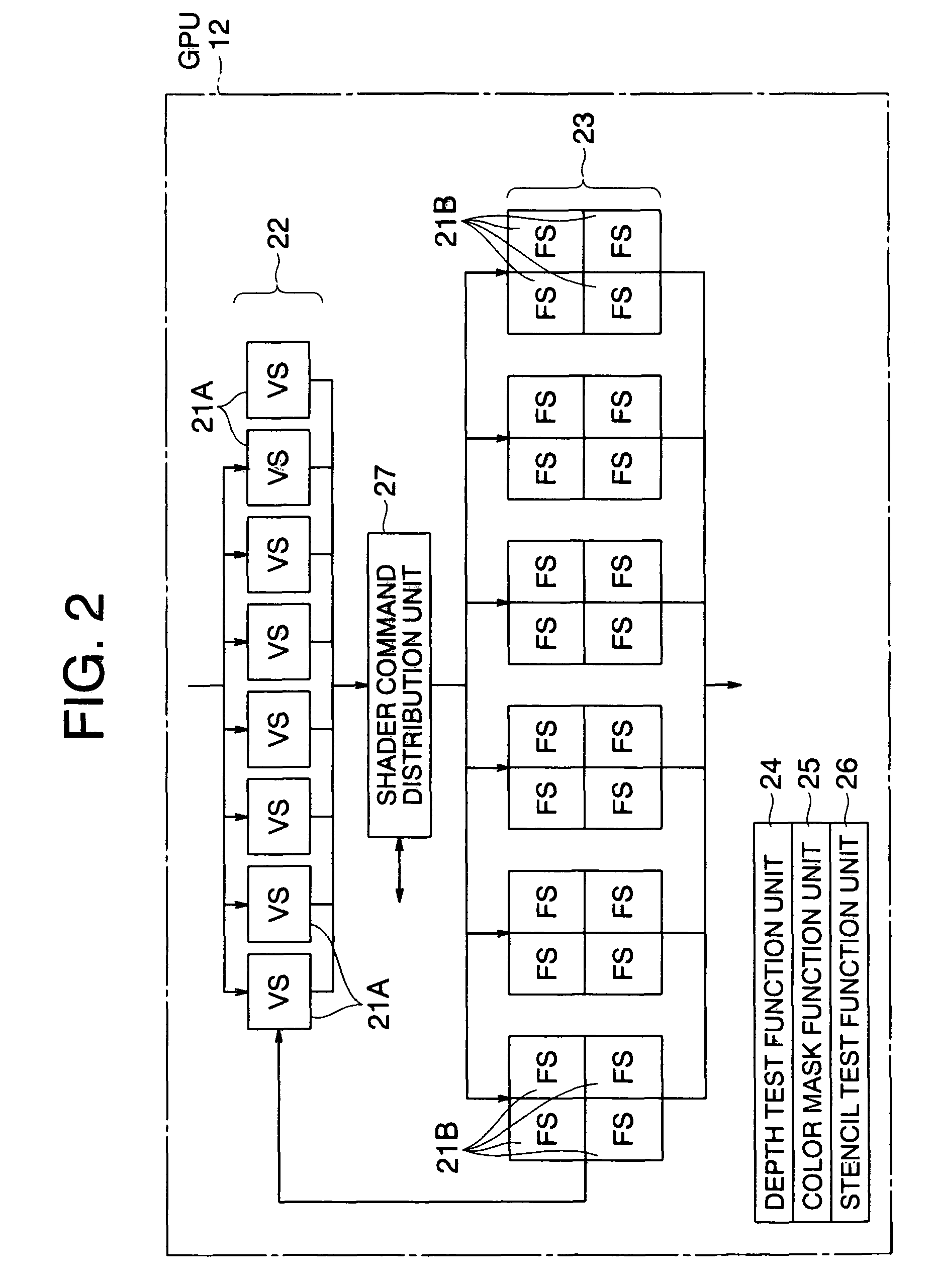

Sliced data structure for particle-based simulation, and method for loading particle-based simulation using sliced data structure into GPU

Active | US20090070079A1 | Good efficiency | Limited efficiency | Computation using non-denominational number representation | Design optimisation/simulation | Voxel | Theoretical computer science

The sliced data structure used for a particle-based simulation using a CPU or GPU is a data structure for a calculation space. The space is a three-dimensional calculation space constructed from numerous voxels; a plurality of slices perpendicular to the Y axis is formed; numerous voxels are divided by a plurality of two-dimensional slices; the respective starting coordinates of the maximum and minimum voxels are calculated for a range of voxels in which particles are present in each of a plurality of two-dimensional slices; the voxel range is determined as a bounding box surrounded by a rectangular shape; and memory is provided for the voxels contained in the bounding boxes of each of the plurality of two-dimensional slices.

Owner:PROMETECH SOFTWARE
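The per-slice bounding boxes described in the abstract above can be illustrated with a minimal Python sketch. It is not the patented implementation: the function names, the integer voxel coordinates, and the comparison against a single dense bounding box are all assumptions made for illustration.

```python
from typing import Dict, Iterable, Tuple

Voxel = Tuple[int, int, int]      # (x, y, z) integer voxel coordinates (assumed layout)
Box = Tuple[int, int, int, int]   # (x_min, z_min, x_max, z_max), inclusive


def slice_bounding_boxes(occupied: Iterable[Voxel]) -> Dict[int, Box]:
    """For each Y slice that holds particles, return the 2D bounding box of its occupied voxels."""
    boxes: Dict[int, Box] = {}
    for x, y, z in occupied:
        if y in boxes:
            x0, z0, x1, z1 = boxes[y]
            boxes[y] = (min(x0, x), min(z0, z), max(x1, x), max(z1, z))
        else:
            boxes[y] = (x, z, x, z)
    return boxes


def sliced_voxel_count(boxes: Dict[int, Box]) -> int:
    """Number of voxels that need memory when only the per-slice boxes are stored."""
    return sum((x1 - x0 + 1) * (z1 - z0 + 1) for x0, z0, x1, z1 in boxes.values())


def dense_voxel_count(occupied: Iterable[Voxel]) -> int:
    """Number of voxels in one 3D bounding box around all occupied voxels (baseline)."""
    pts = list(occupied)
    count = 1
    for axis in range(3):
        values = [p[axis] for p in pts]
        count *= max(values) - min(values) + 1
    return count


occupied = [(0, 0, 0), (3, 0, 2), (1, 1, 1), (1, 5, 1), (2, 5, 1)]
boxes = slice_bounding_boxes(occupied)
print(sliced_voxel_count(boxes), "voxels with slices vs", dense_voxel_count(occupied), "dense")
```

Slices with no particles get no box at all, so they consume no voxel memory in this sketch.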

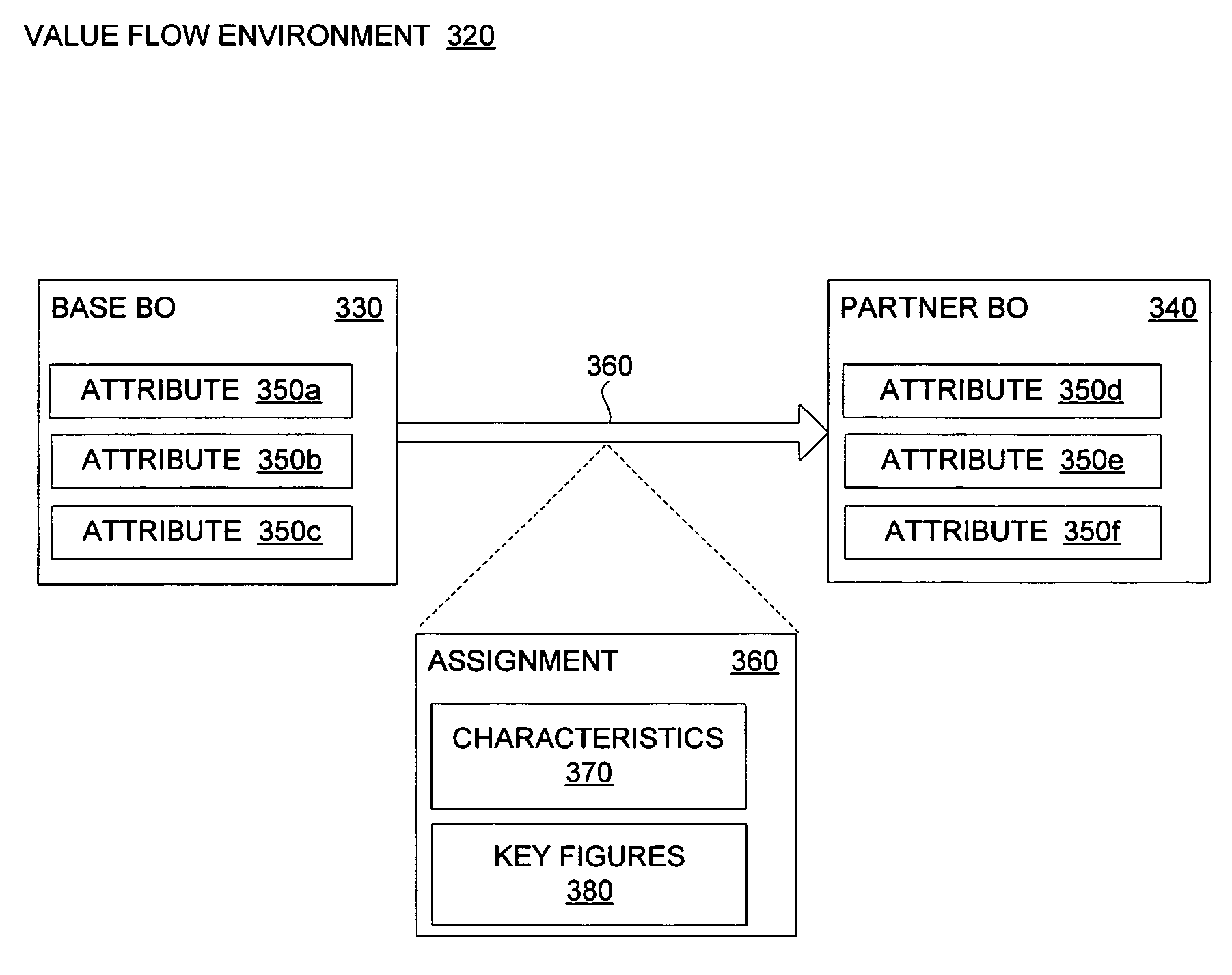

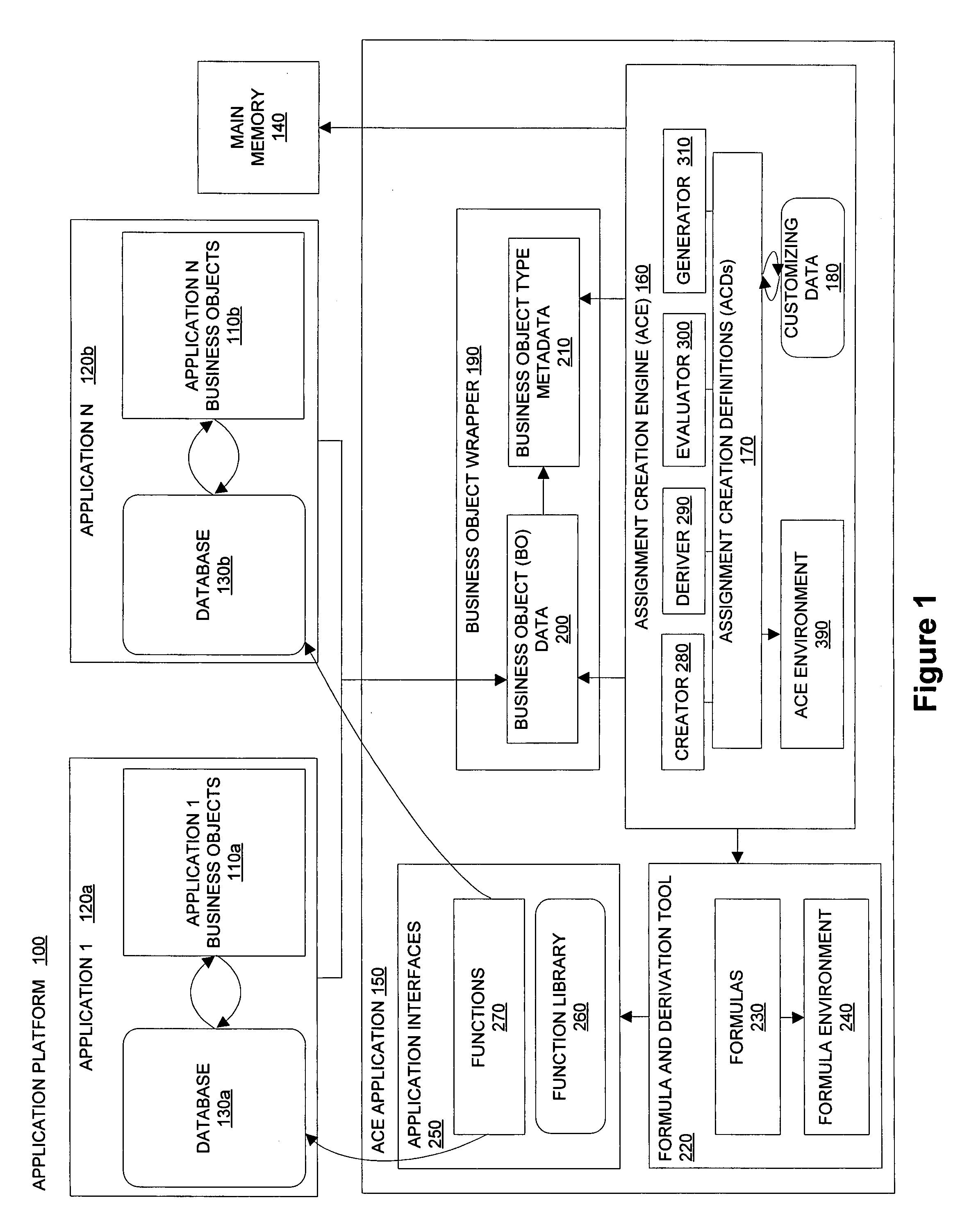

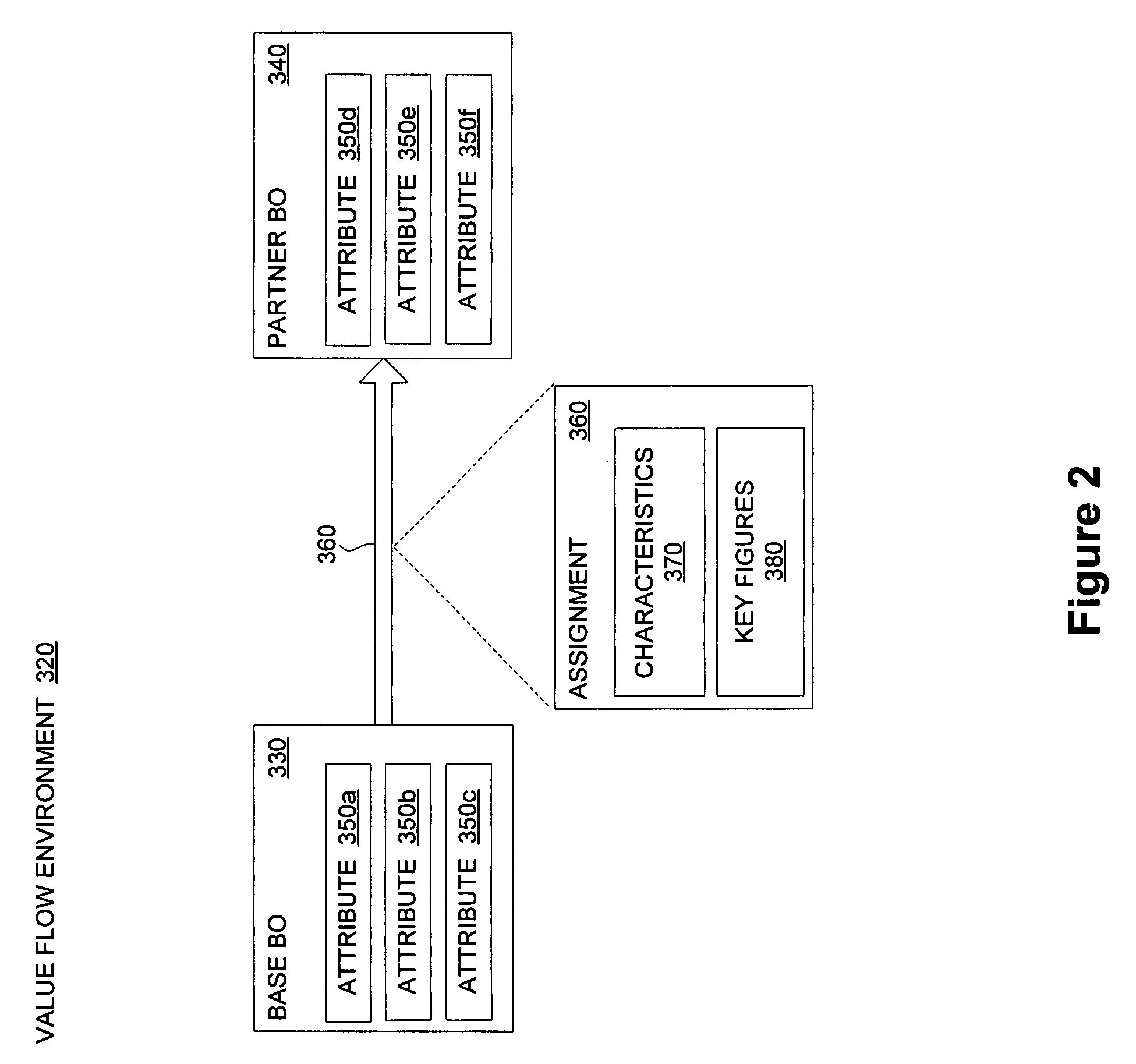

Systems and methods for handling attributes used for assignment generation in a value flow environment

Inactive | US20080147457A1 | Increase speed | Improve efficiency | Software design | Specific program execution arrangements | Evaluation function | Software engineering

Systems and methods are provided for handling data to generate an assignment between a base business object and a partner business object in a value flow environment. In one implementation, a function of an assignment creation definition is read to identify an attribute of the base business object, a value of the attribute being required for evaluation of the function. Further, it is determined whether the value of the attribute is stored in a main memory and, if the value of the attribute is stored in the main memory, then the value is retrieved from the main memory. If the value of the attribute is not stored in the main memory, then the value is retrieved from a database. Thereafter, the function is evaluated based on the retrieved value of the attribute to generate the assignment.

Owner:SAP AG

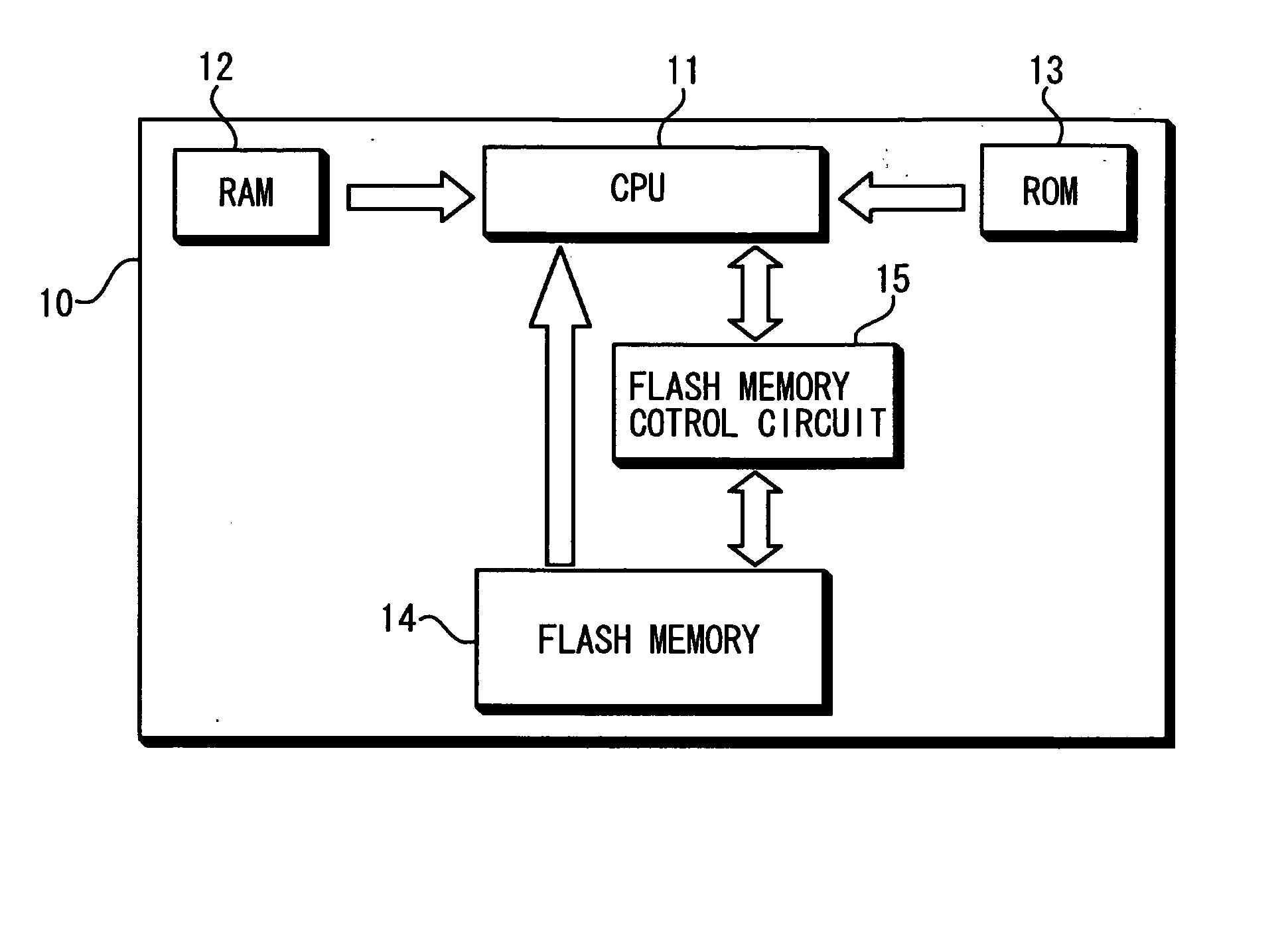

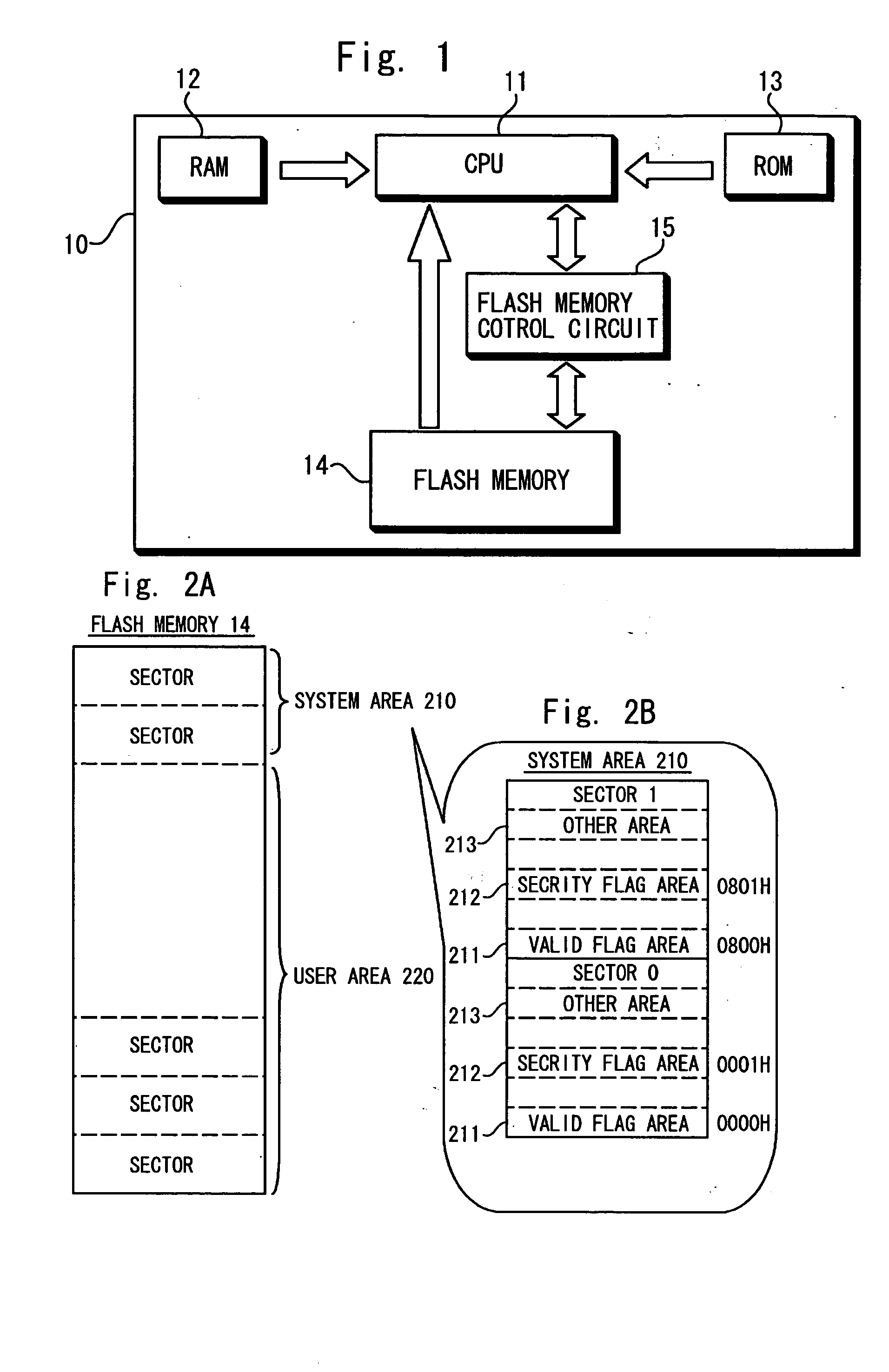

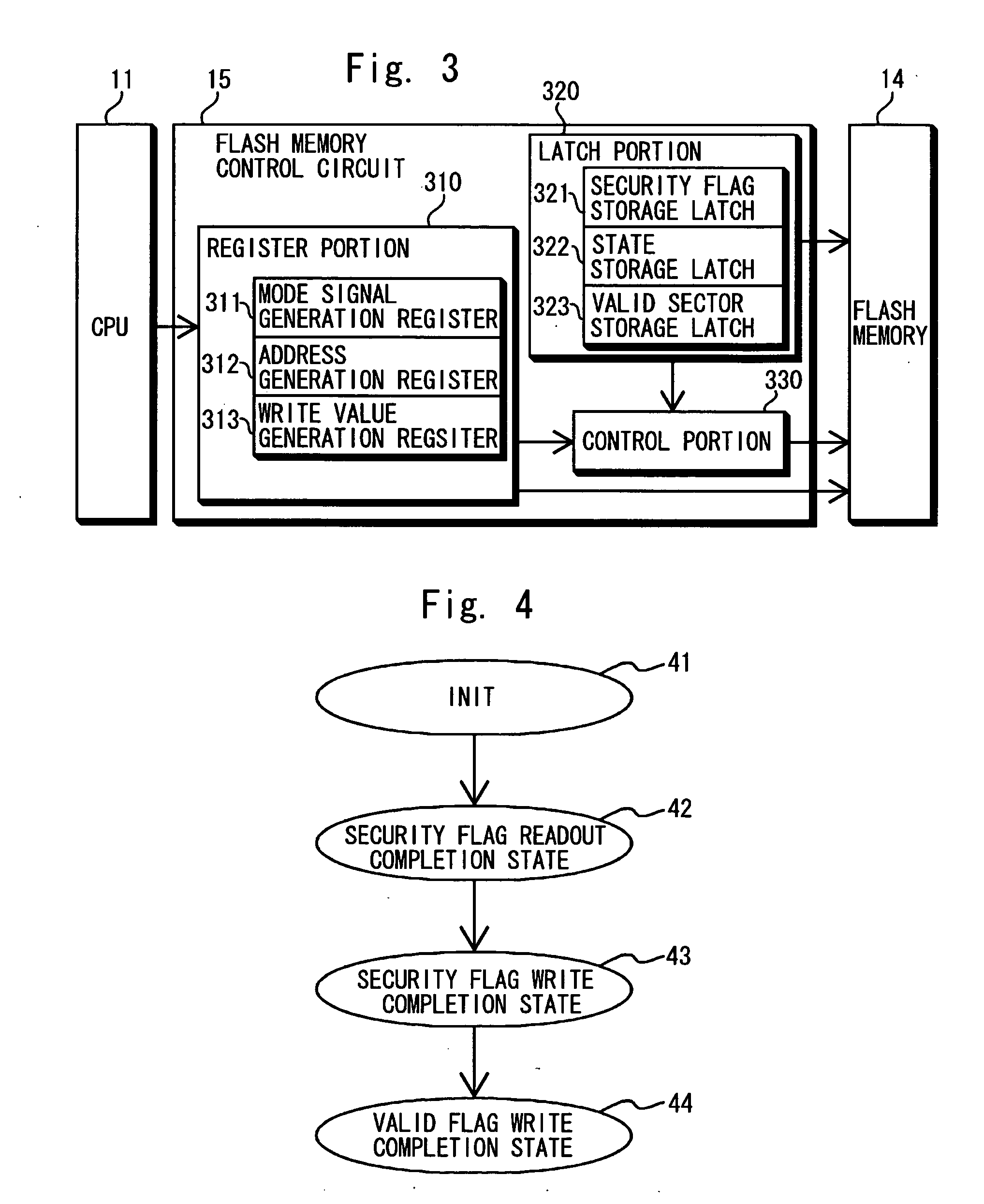

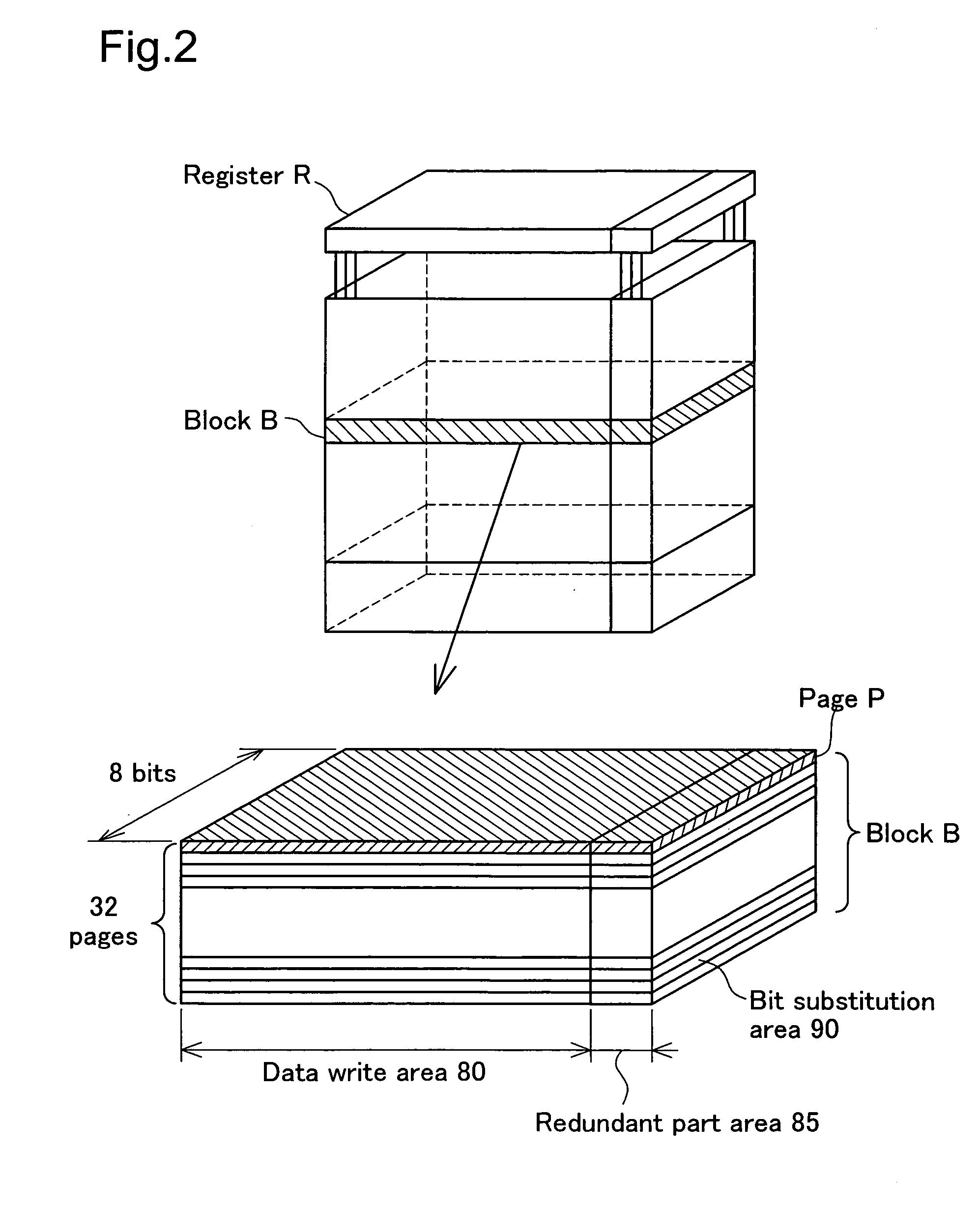

Electronic device, nonvolatile memory and method of overwriting data in nonvolatile memory

Inactive | US20050068842A1 | Improve memory usage efficiency | Faster overwriting operation | Memory loss protection | Error detection/correction | Data store | Non-volatile memory

Owner:NEC ELECTRONICS CORP

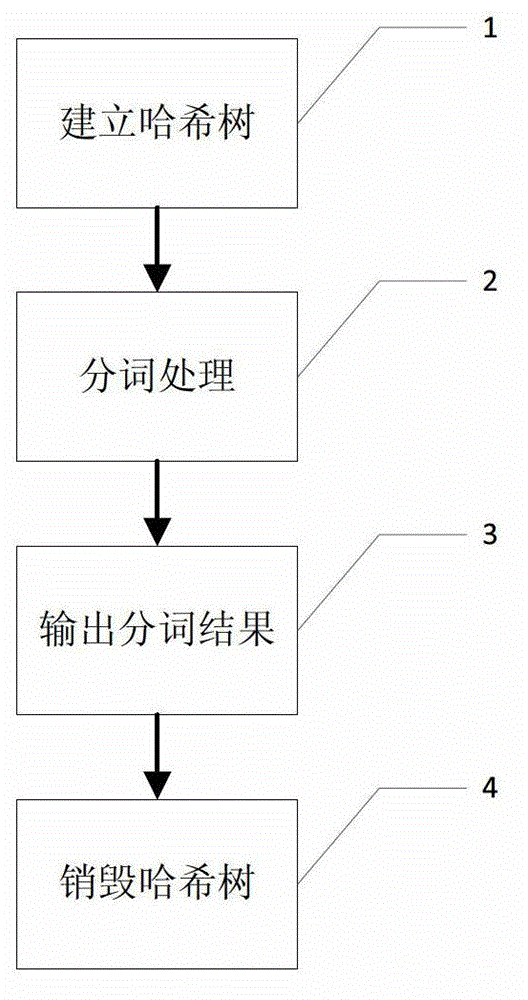

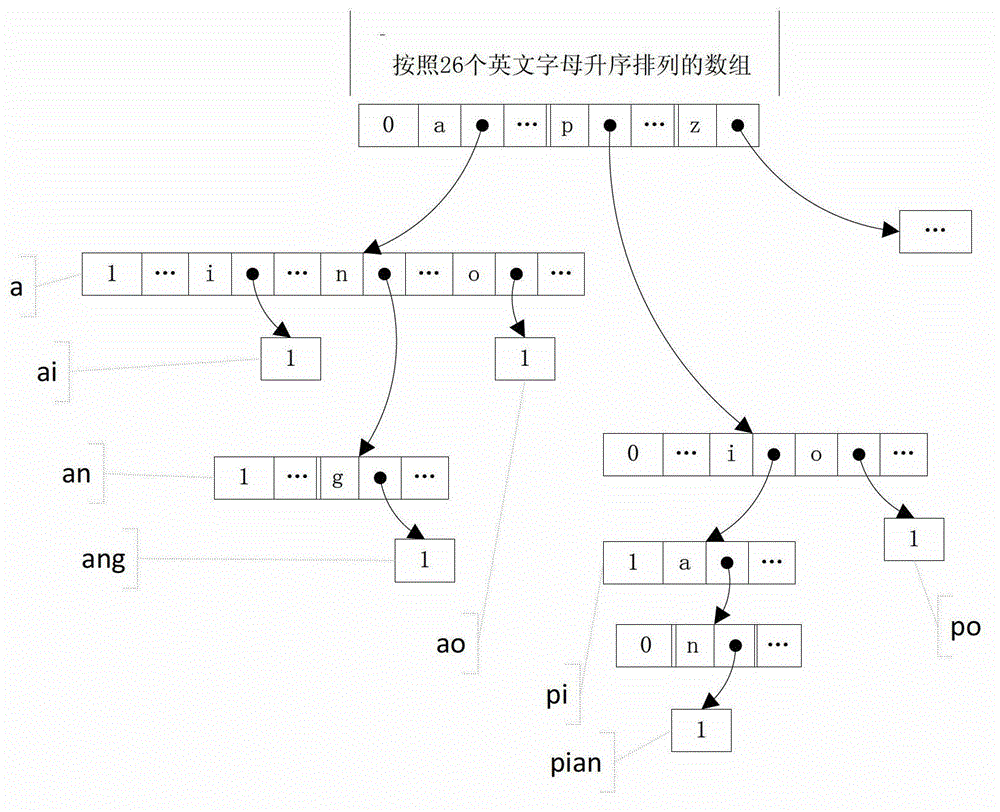

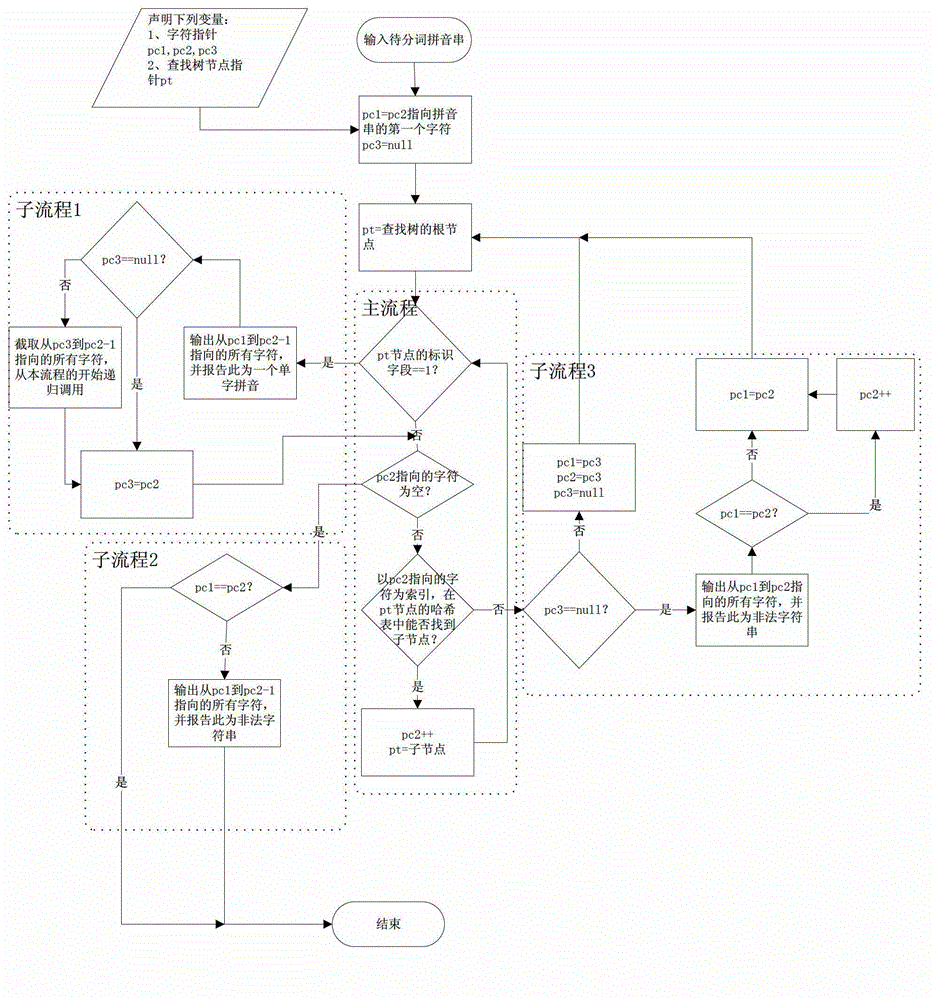

Chinese PINYIN quick word segmentation method based on word search tree

Active | CN102867049A | Reduce comparison | Improve search efficiency | Special data processing applications | Word search | Time complexity

The invention discloses a fast Chinese PINYIN word segmentation method based on a word search tree. The method is implemented by a computer or embedded mobile equipment and comprises the following steps: 1, building a Chinese character PINYIN search tree from the list of all known Chinese character PINYINs; 2, combining the built search tree with a hash table and segmenting a given string of Chinese PINYINs; 3, working out the word segmentation result; and 4, destroying the search tree and releasing resources. Because character strings share common prefixes, construction space is saved and unnecessary string comparisons are greatly reduced; the indexed redundant hash table improves search efficiency; and the time complexity of the algorithm is minimized.

Owner:康威通信技术股份有限公司
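As a rough illustration of the search-tree segmentation summarized above, the following Python sketch builds a prefix tree of syllables and splits a PINYIN string against it. It assumes dictionary-based nodes (Python dicts are hash tables), a longest-match-first strategy with backtracking, and a tiny hypothetical syllable list; the patent's actual tree layout and hash-table index are not given in the abstract.

```python
from functools import lru_cache
from typing import Any, Dict, Iterable, List, Optional

END = ""  # marker key: a complete syllable ends at this node (never equals a character)


def build_trie(syllables: Iterable[str]) -> Dict[str, Any]:
    """Build a prefix tree in which shared prefixes are stored only once."""
    root: Dict[str, Any] = {}
    for syllable in syllables:
        node = root
        for ch in syllable:
            node = node.setdefault(ch, {})
        node[END] = True
    return root


def segment(text: str, trie: Dict[str, Any]) -> Optional[List[str]]:
    """Split text into syllables, trying longer syllables first; return None if impossible."""

    @lru_cache(maxsize=None)
    def walk(start: int) -> Optional[List[str]]:
        if start == len(text):
            return []
        node = trie
        ends = []  # every position where a syllable starting at `start` can end
        for i in range(start, len(text)):
            node = node.get(text[i])
            if node is None:
                break
            if END in node:
                ends.append(i + 1)
        for end in reversed(ends):  # longest candidate first, backtrack on failure
            rest = walk(end)
            if rest is not None:
                return [text[start:end]] + rest
        return None

    return walk(0)


# Hypothetical syllable list, for illustration only.
trie = build_trie(["zhong", "guo", "ni", "hao", "han", "hang", "ge", "xi", "an", "xian"])
print(segment("zhongguo", trie))
print(segment("hange", trie))
```

In the second call the longest first match, `hang`, leaves an unmatched `e`, so the sketch backtracks to `han` followed by `ge`.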

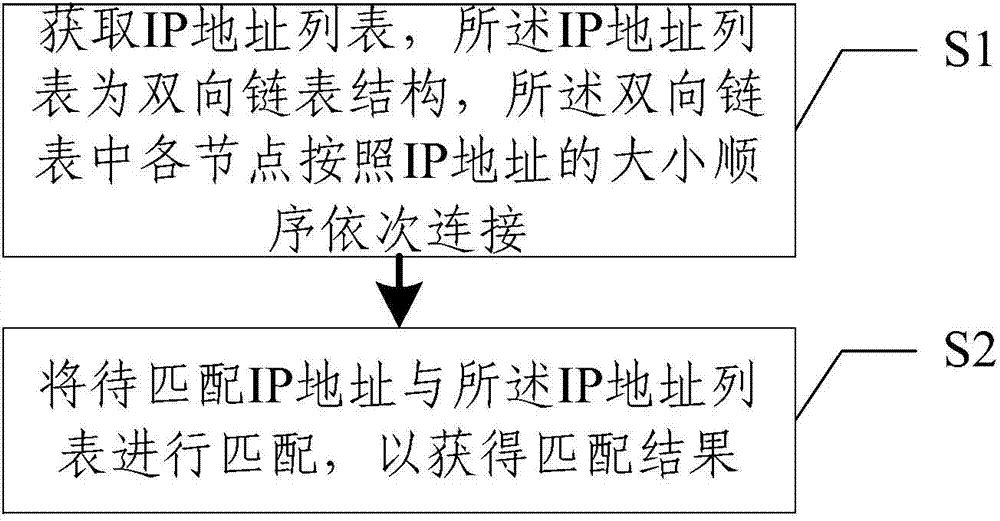

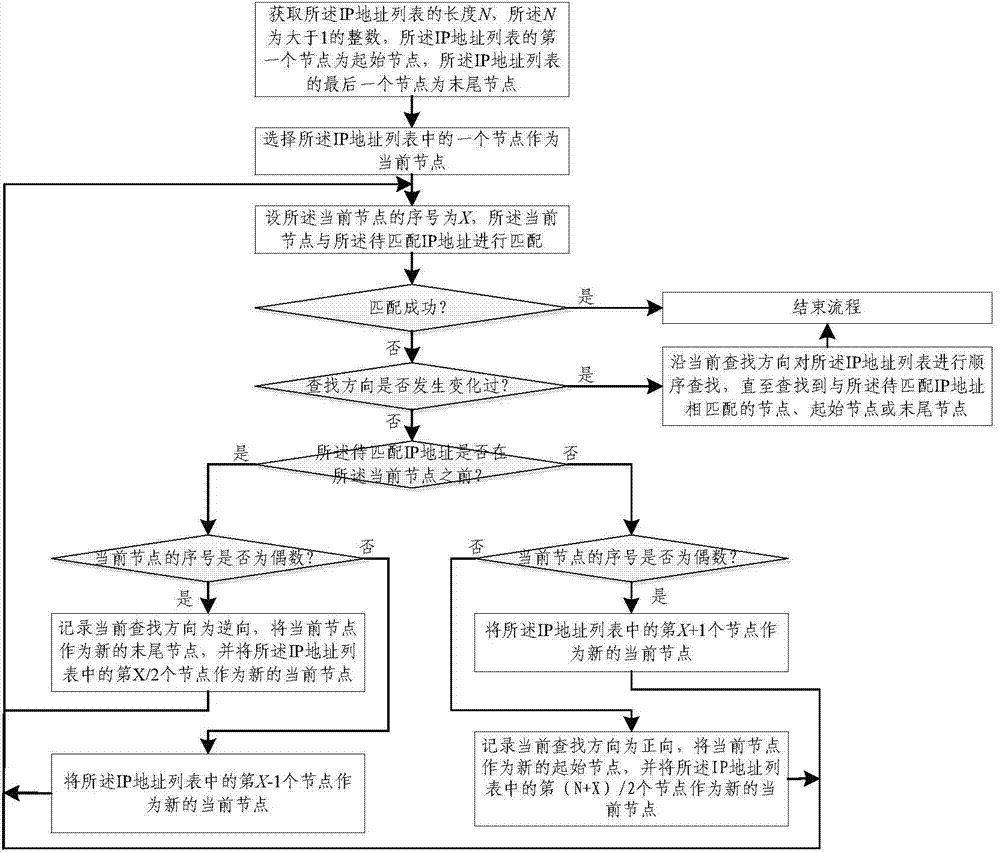

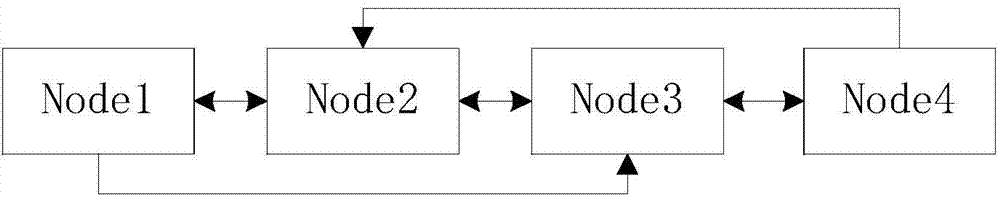

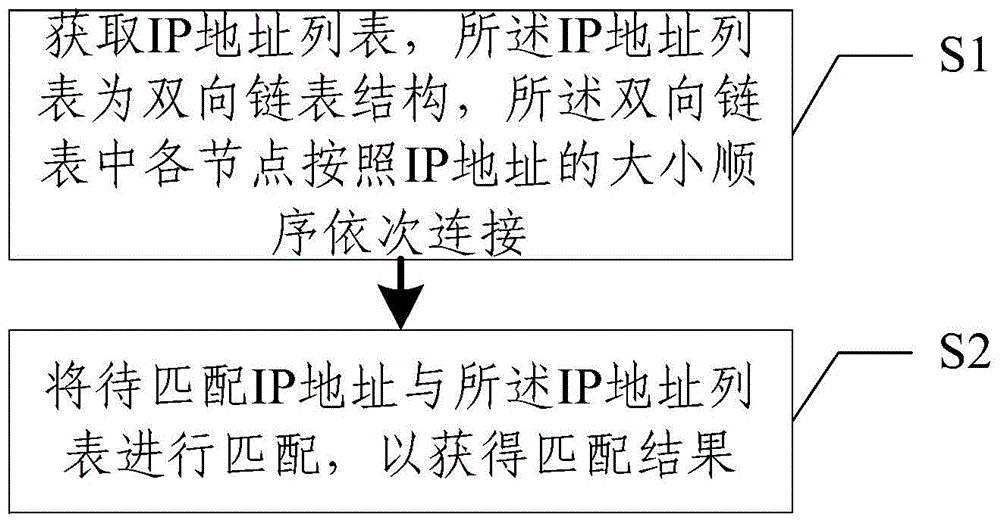

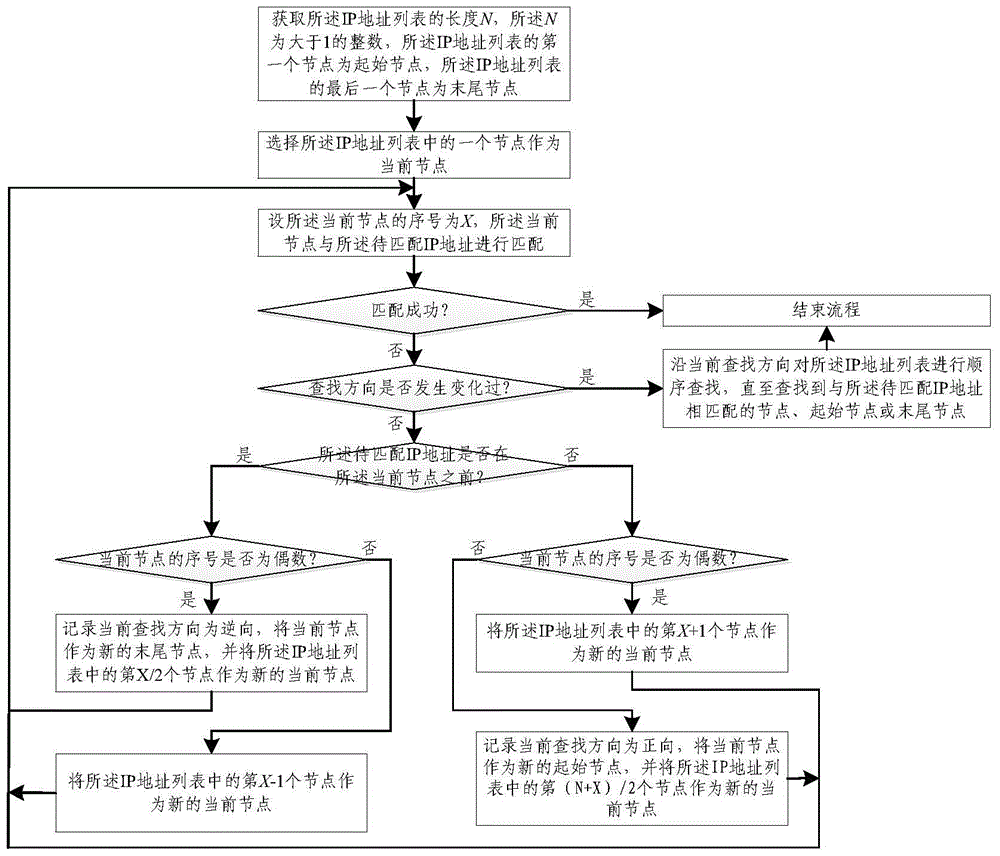

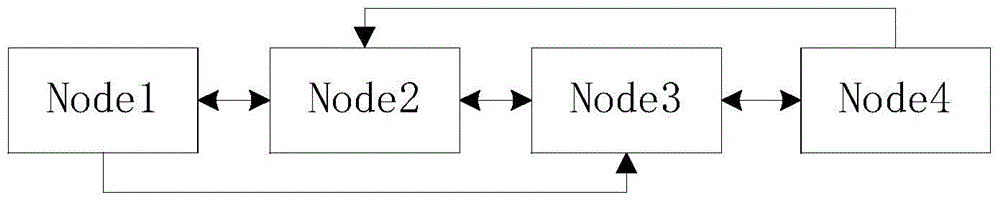

IP address list matching method and device

Inactive | CN103581358A | Improve memory usage efficiency | Eliminate the Risk of Storage Spaces Failure | Transmission | IP address | Network communication

The invention discloses an IP address list matching method and device, and relates to the technical field of network communication. The method comprises the following steps: S1, obtaining an IP address list, wherein the IP address list is structured as a doubly linked list whose nodes are linked in order of IP address value; S2, matching the IP address to be matched against the IP address list to obtain the matching result. Because the nodes of the doubly linked list reside in non-contiguous memory, the memory usage efficiency of network equipment is improved, and the risk of failing to allocate a large contiguous block of memory is eliminated.

Owner:OPZOON TECH
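The sorted doubly linked list in the abstract above can be sketched in Python as follows. This is only an illustration under assumptions: the class and method names are hypothetical, addresses are compared as integers via the standard `ipaddress` module, and matching is a simple linear scan that stops early thanks to the ordering.

```python
import ipaddress
from typing import List, Optional


class Node:
    """One list node; nodes are allocated individually, not as one contiguous array."""

    def __init__(self, value: int) -> None:
        self.value = value
        self.prev: Optional["Node"] = None
        self.next: Optional["Node"] = None


class SortedIPList:
    def __init__(self) -> None:
        self.head: Optional[Node] = None
        self.tail: Optional[Node] = None

    def insert(self, ip: str) -> None:
        """Insert an address so that the list stays ordered by numeric value."""
        value = int(ipaddress.ip_address(ip))
        cur = self.head
        while cur is not None and cur.value < value:
            cur = cur.next
        if cur is not None and cur.value == value:
            return  # already present
        node = Node(value)
        if cur is None:  # append at the tail (also covers the empty list)
            node.prev = self.tail
            if self.tail is not None:
                self.tail.next = node
            else:
                self.head = node
            self.tail = node
        else:  # link in just before `cur`
            node.next = cur
            node.prev = cur.prev
            if cur.prev is not None:
                cur.prev.next = node
            else:
                self.head = node
            cur.prev = node

    def matches(self, ip: str) -> bool:
        """Scan from the head and stop as soon as a value >= the target is reached."""
        value = int(ipaddress.ip_address(ip))
        cur = self.head
        while cur is not None and cur.value < value:
            cur = cur.next
        return cur is not None and cur.value == value

    def to_list(self) -> List[str]:
        out, cur = [], self.head
        while cur is not None:
            out.append(str(ipaddress.ip_address(cur.value)))
            cur = cur.next
        return out


ips = SortedIPList()
for addr in ["10.0.0.2", "192.168.1.1", "10.0.0.1", "10.0.0.2"]:
    ips.insert(addr)
print(ips.to_list(), ips.matches("10.0.0.2"), ips.matches("10.0.0.3"))
```

The `prev` links allow the same list to be walked from the tail, which the sketch keeps available but does not use for matching.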

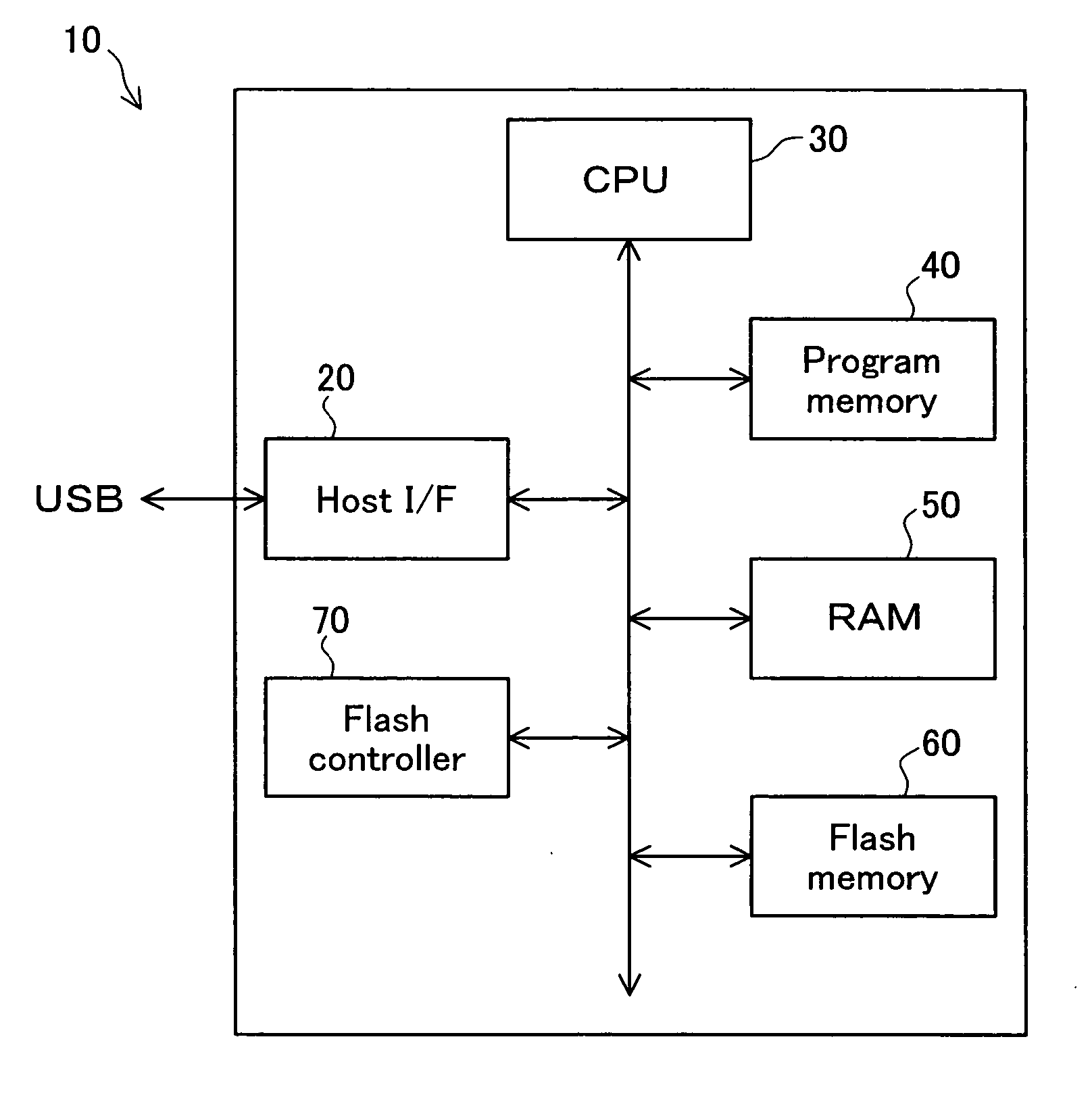

External storage device

Inactive | US20050283647A1 | Easy to process | Easy to integrate | Memory loss protection | Error detection/correction | External storage | Management unit

This external storage device uses flash memory that is erased in units of blocks, each comprising a plurality of pages, with the page as the minimum unit for reading and writing data. The flash memory comprises a redundant area for storing codes that perform error correction of a specified number of bits for each page, and a bit substitute area at specified locations within the flash memory. The external storage device includes a bit management unit, existing within the page, that allocates addresses in the bit substitute area to defective bits that are beyond the scope of the per-page error correction, and a block control unit that replaces each defective bit with a bit in the bit substitute area and performs reading and writing of data for the block containing the defective bits. With this external storage device, it is possible to improve the usage efficiency of the flash memory.

Owner:BUFFALO CORP LTD

Sliced data structure for particle-based simulation, and method for loading particle-based simulation using sliced data structure into GPU

Active | US7920996B2 | Improve memory usage efficiency | Improve efficiency | Computation using non-denominational number representation | Design optimisation/simulation | Voxel | Computational physics

The sliced data structure used for a particle-based simulation using a CPU or GPU is a data structure for a calculation space. The space is a three-dimensional calculation space constructed from numerous voxels; a plurality of slices perpendicular to the Y axis is formed; numerous voxels are divided by a plurality of two-dimensional slices; the respective starting coordinates of the maximum and minimum voxels are calculated for a range of voxels in which particles are present in each of a plurality of two-dimensional slices; the voxel range is determined as a bounding box surrounded by a rectangular shape; and memory is provided for the voxels contained in the bounding boxes of each of the plurality of two-dimensional slices.

Owner:PROMETECH SOFTWARE

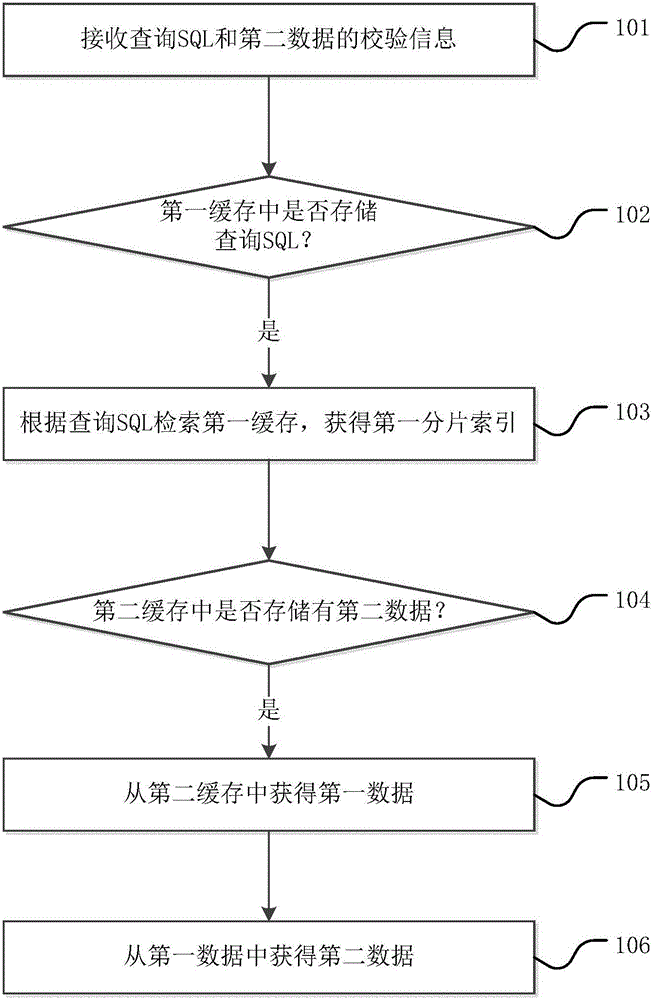

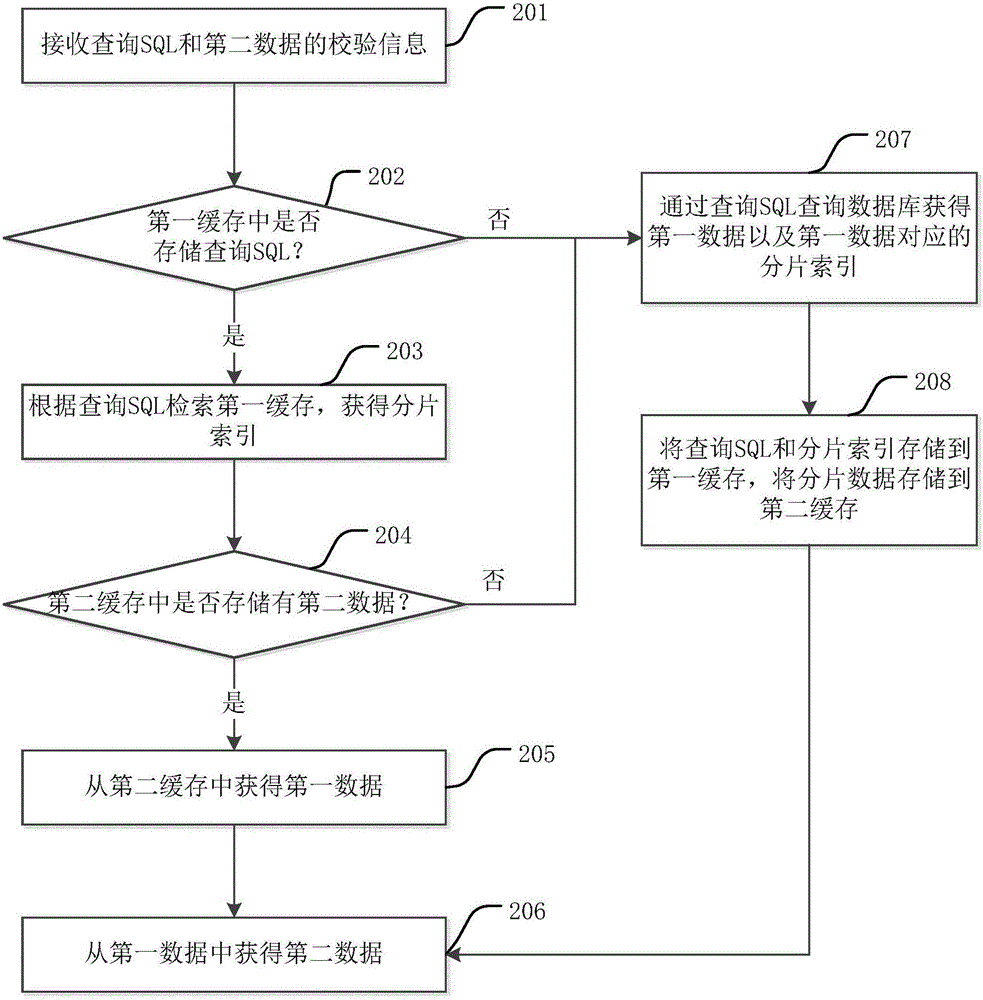

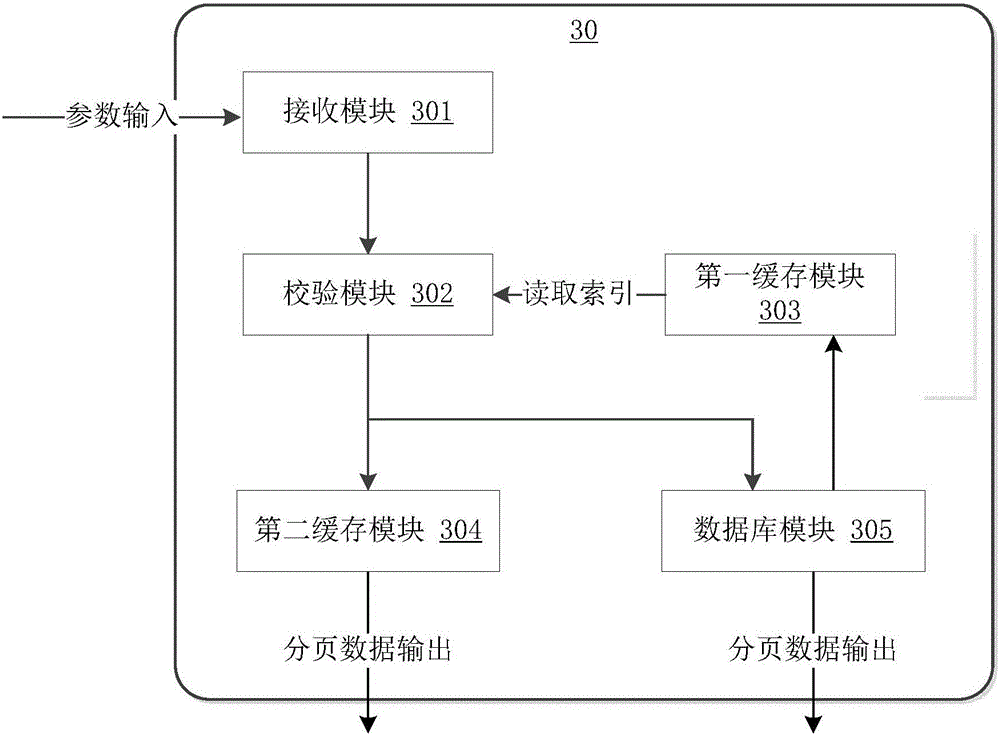

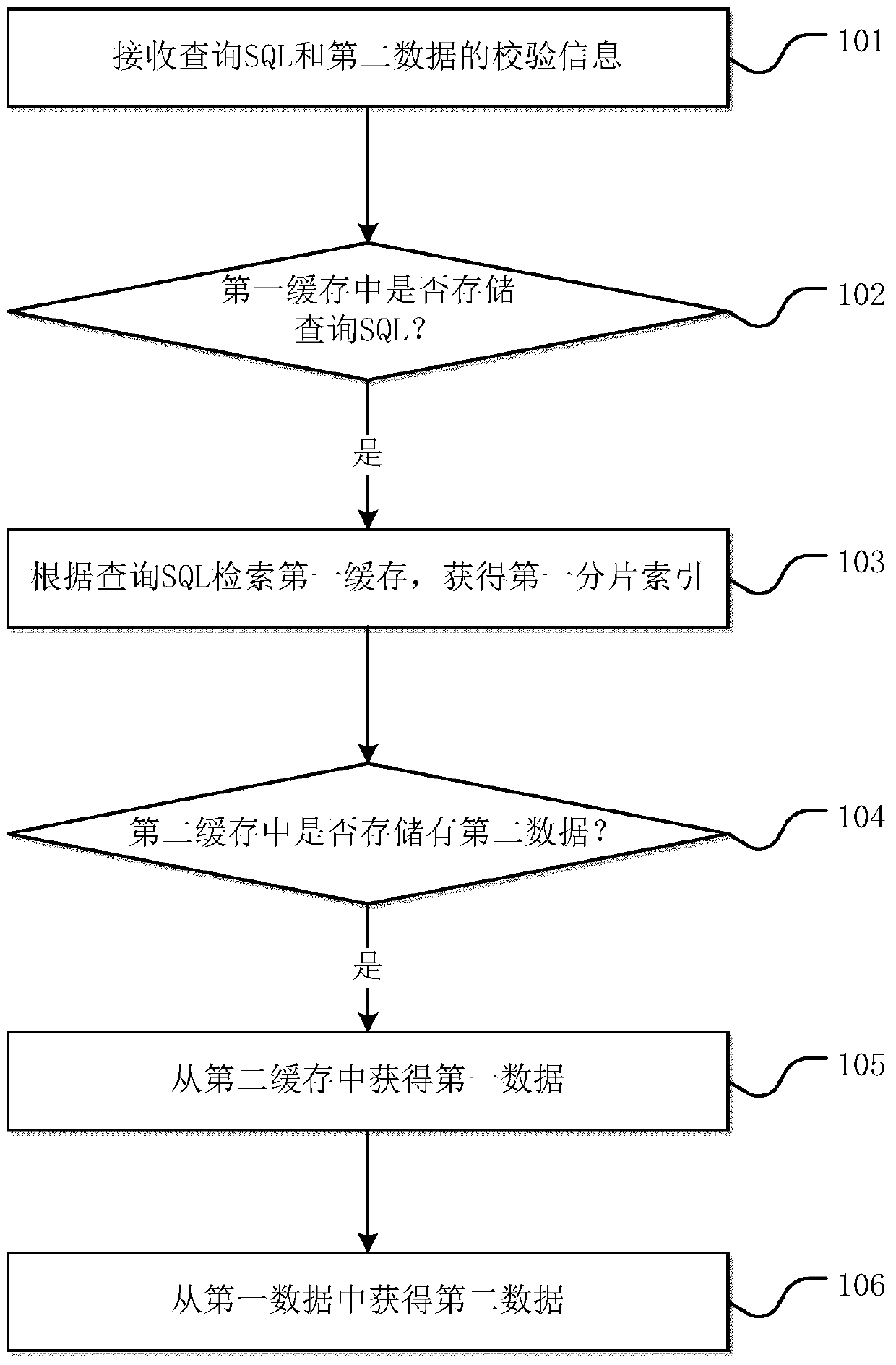

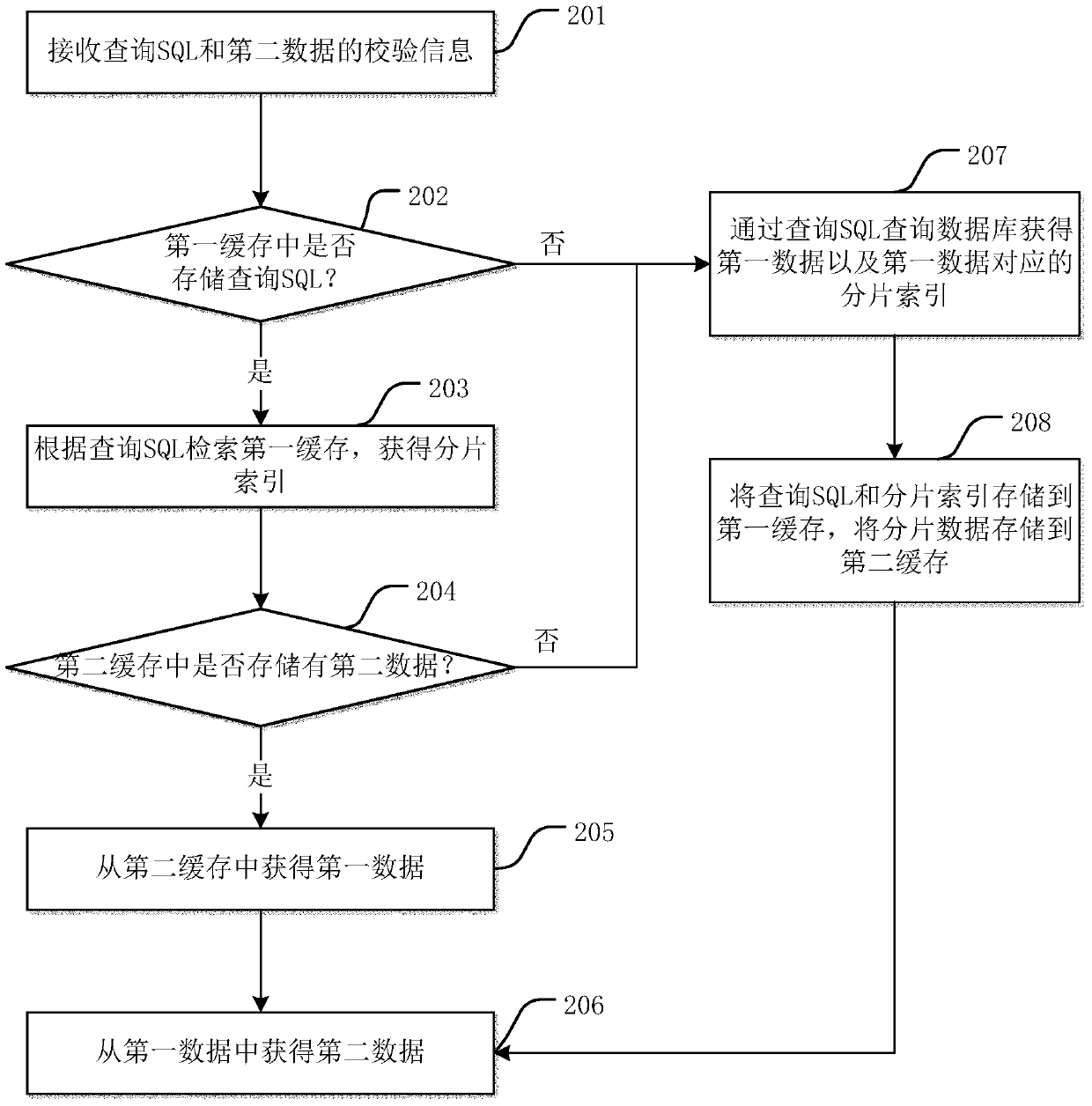

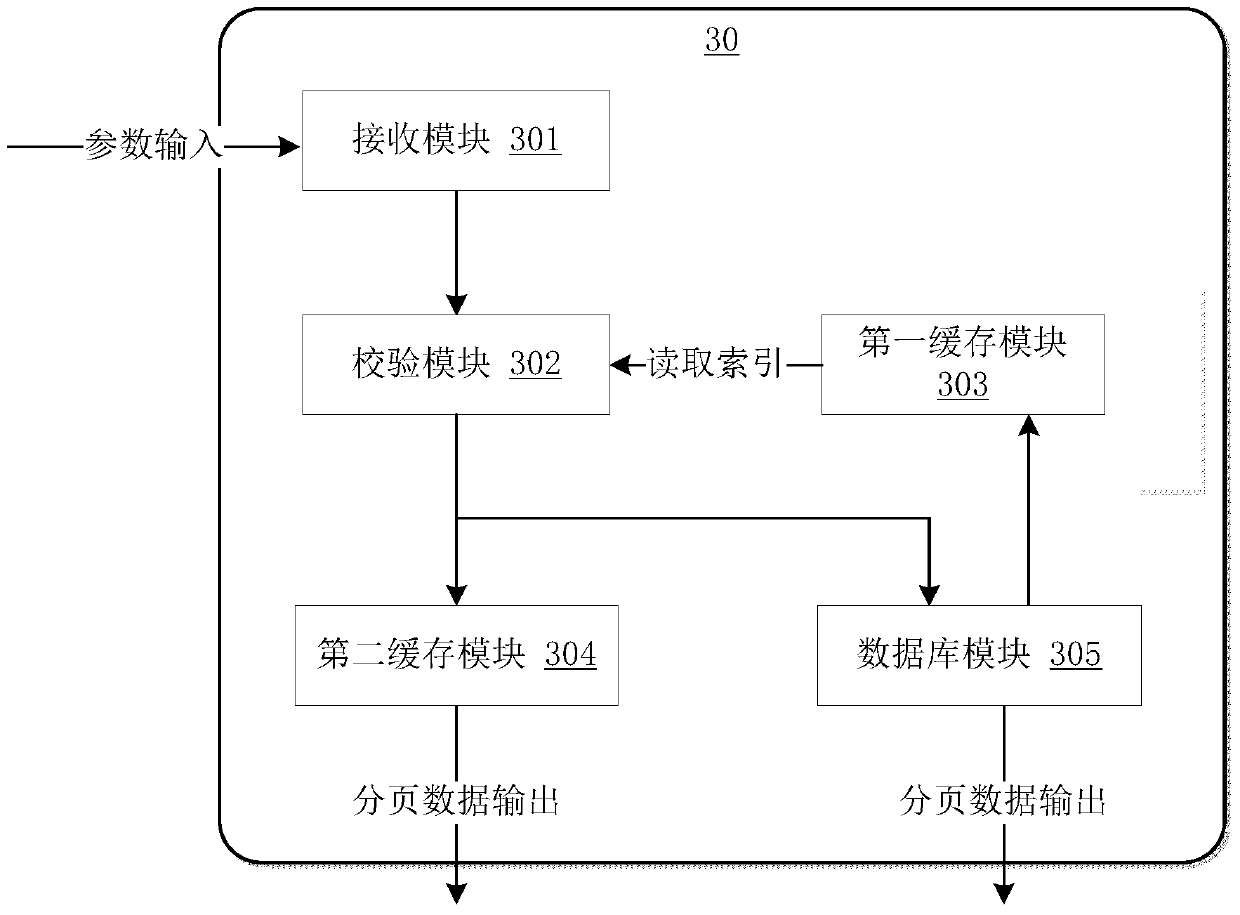

Paging realization method and paging system

Active | CN107180043A | Avoid disadvantages | Improve memory usage efficiency | Special data processing applications | Combined use | Parallel computing

An embodiment of the invention provides a paging realization method. The method comprises the steps of: receiving paging request information, wherein the paging request information comprises a query SQL and check information of second data; judging whether a first cache stores the query SQL; retrieving the first cache according to the query SQL to obtain a first fragmentation index; judging, according to the check information, whether a second cache stores the second data; obtaining first data from the second cache; and obtaining the second data according to the first data, wherein the first fragmentation index includes at least one fragmentation index, the first cache stores the correspondence between the query SQL and the first fragmentation index, the second cache stores the first data, and the first data is marked with one fragmentation index. Through combined use of the first cache and the second cache, the shortcomings of database paging and memory paging are overcome, memory usage efficiency is improved, and highly concurrent paging requests can be served.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

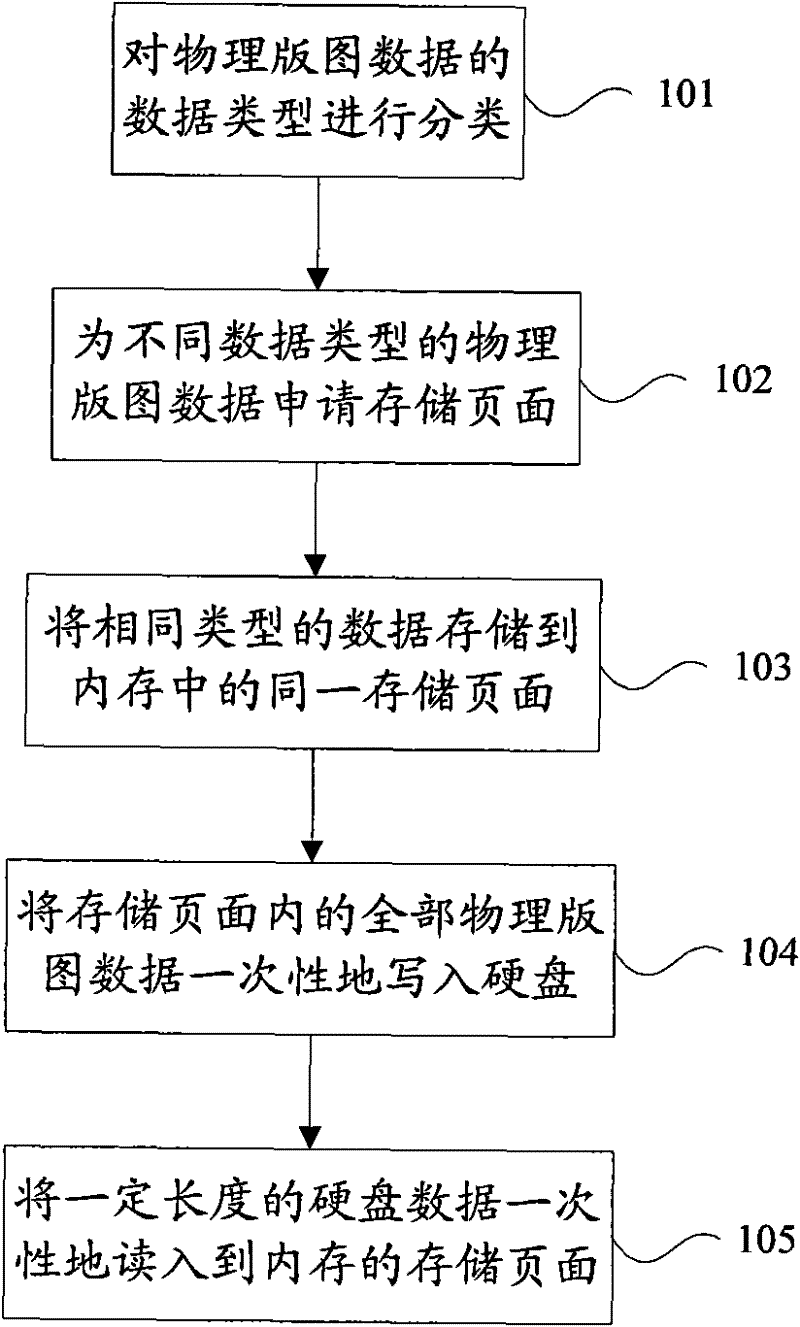

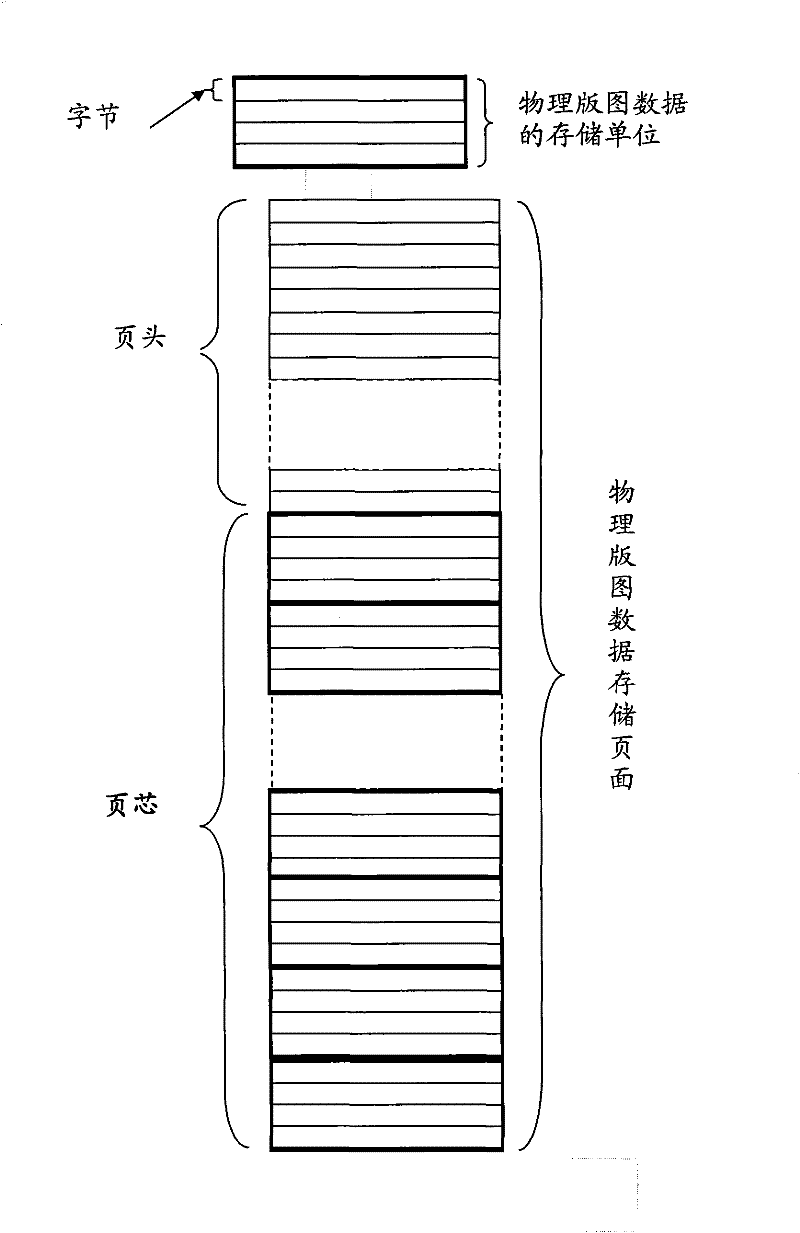

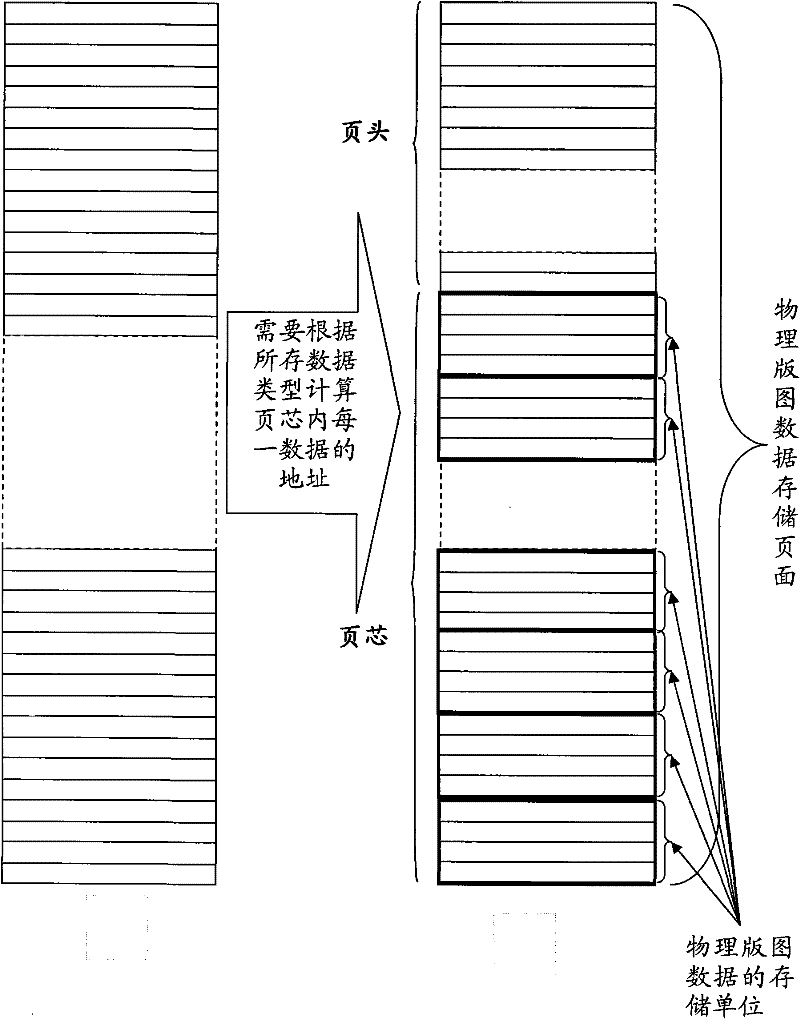

Method of quickly reading and writing mass data file

Active | CN102193873A | Reduce memory fragmentation | Improve memory usage efficiency | Input/output to record carriers | Memory addressing/allocation/relocation | Data file | Computer memory

The invention discloses a method of quickly reading and writing physical layout data, comprising the steps of: classifying the physical layout data by data type; requesting storage pages for the different data types; storing data of the same type in the same storage page in memory; and writing all the data in a storage page to the hard disk in one pass using a fast page-to-disk write procedure. With this method, there is no need to be concerned with the concrete data type, the concrete data unit, or the reconstruction of the concrete data unit; one-by-one reconstruction of all data is avoided; reading and writing speeds are improved; and fast reading and writing of massive integrated-circuit physical layout data between computer memory and the hard disk is achieved.

Owner:INST OF MICROELECTRONICS CHINESE ACAD OF SCI

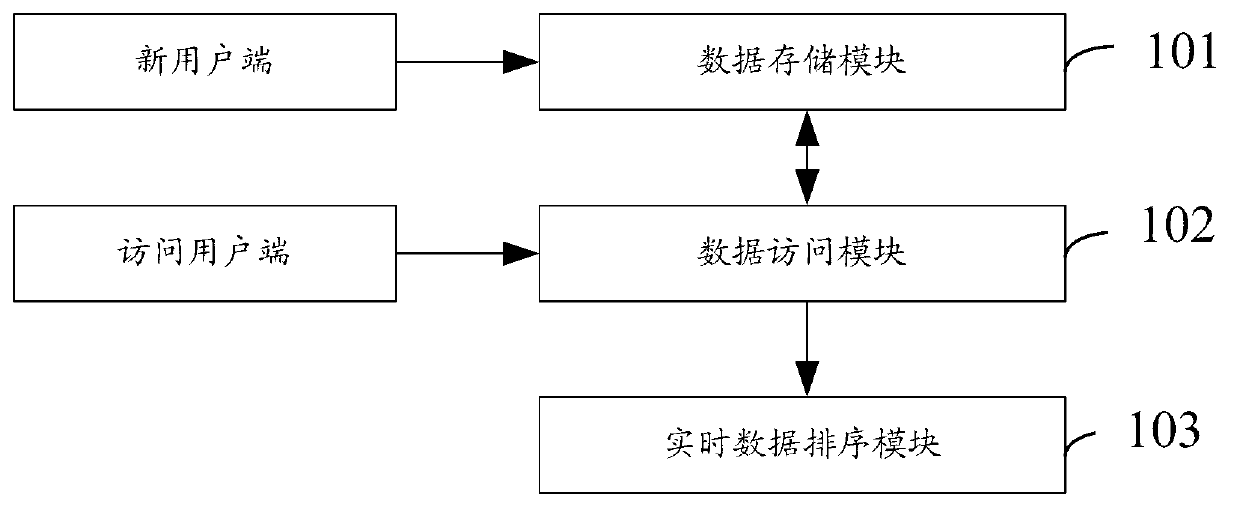

Large-user-amount data access and real-time ordering system applied to service

Inactive | CN102999581A | Easy access | Processing speed | Special data processing applications | Real-time data | Data access

The invention relates to a large-user-amount data access and real-time ordering system applied to services. The system is connected with a new user and an access user and comprises a data storage module, a data access module and a real-time data ordering module. By utilizing the system, the problem of efficiency in large-user-amount data access and real-time ordering in value added services is solved.

Owner:BEIJING BEWINNER DIANYI INFORMATION TECH CO LTD

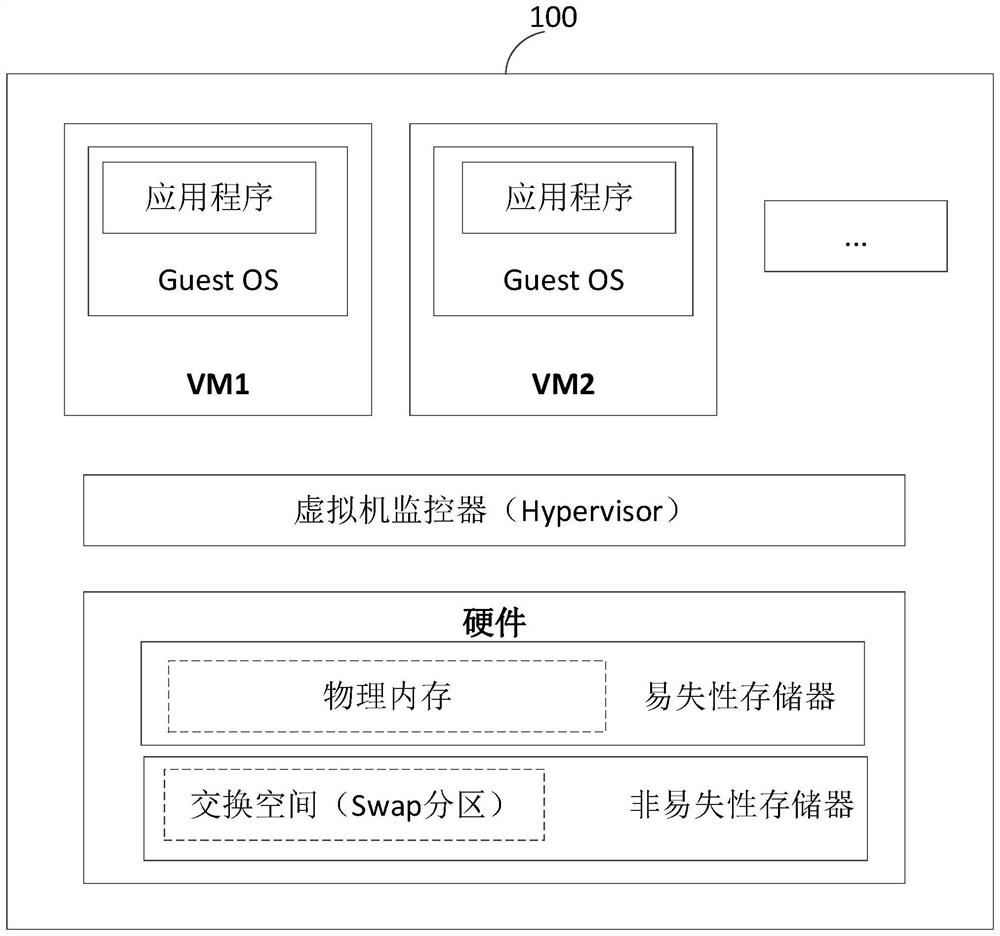

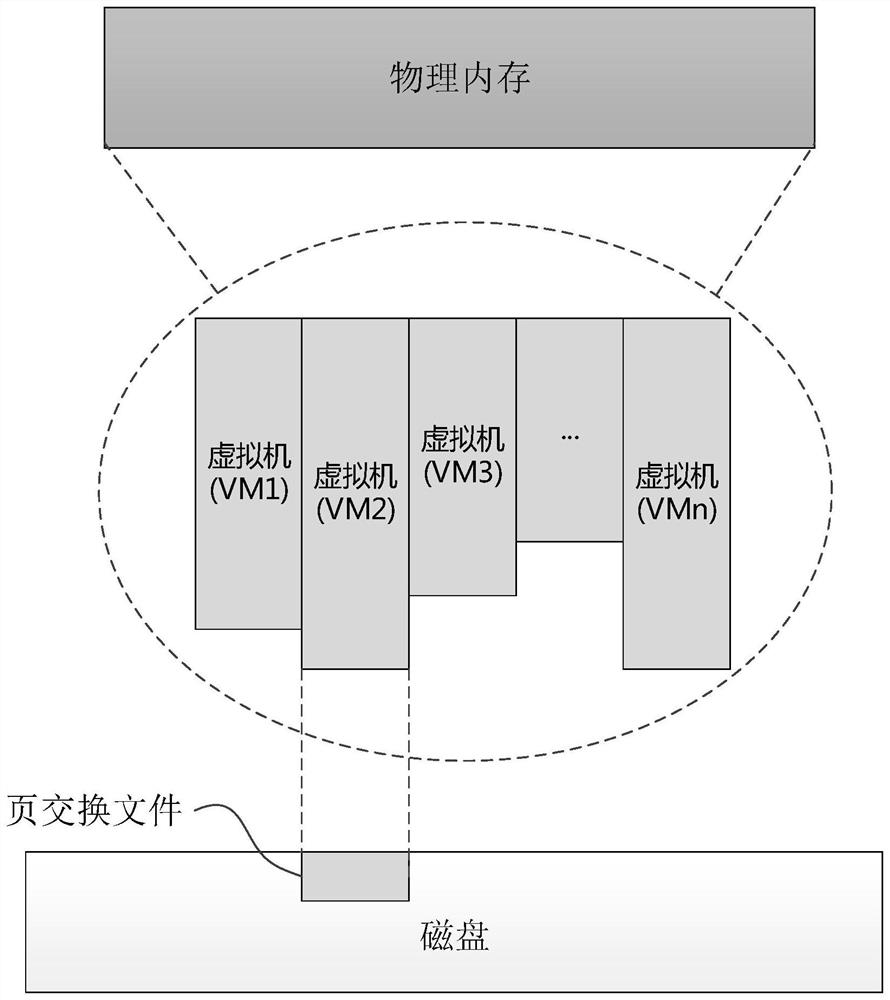

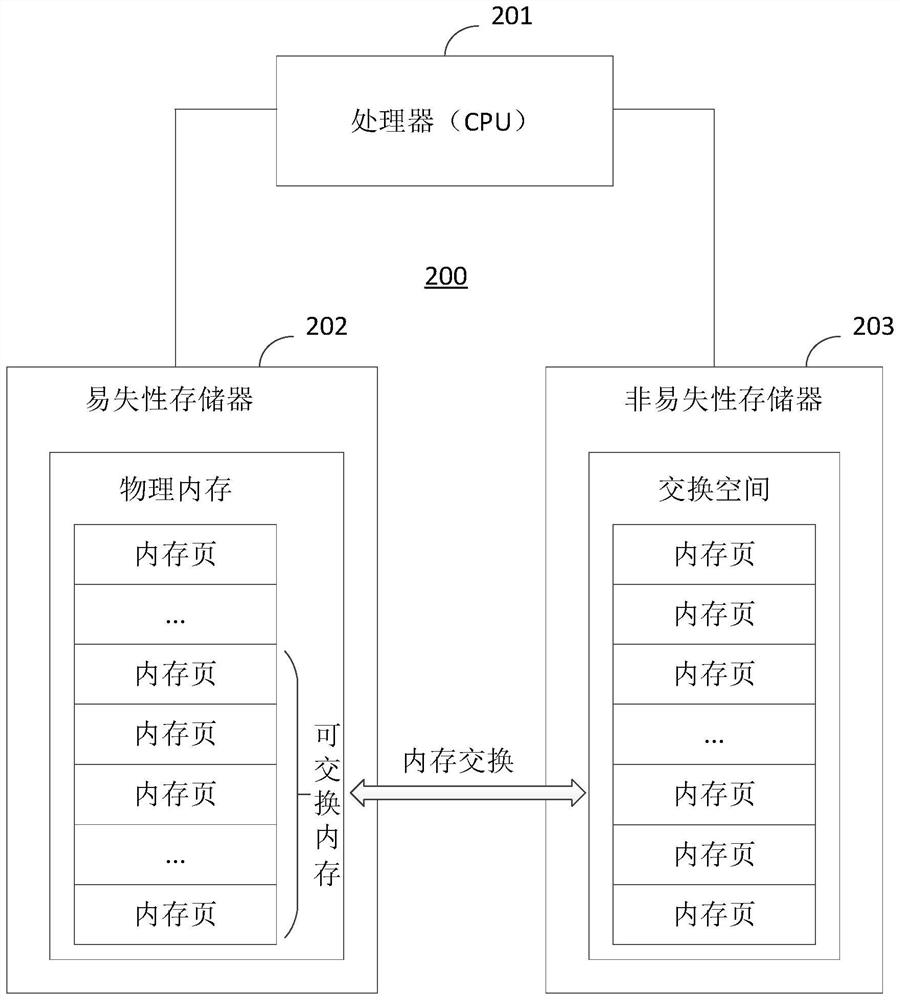

Virtual machine memory management method and equipment

Pending | CN112579251A | Improve stability | Avoid ability to decline | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Operational system | Term memory

The invention provides a virtual machine memory management method and equipment. The method comprises the following steps: identifying the non-operating-system memory of a virtual machine within the total memory allocated to the virtual machine, wherein the total memory comprises the memory of the virtual machine and the management memory of the virtual machine monitor, and the memory of the virtual machine comprises the memory of the virtual machine's operating system and the non-operating-system memory of the virtual machine; taking the non-operating-system memory of the virtual machine as swappable memory; and storing the data in the swappable memory into a nonvolatile memory. This avoids a marked drop in computer performance in virtual machine overcommitment scenarios, improves the running stability of the virtual machine, and improves the user experience.

Owner:HUAWEI TECH CO LTD

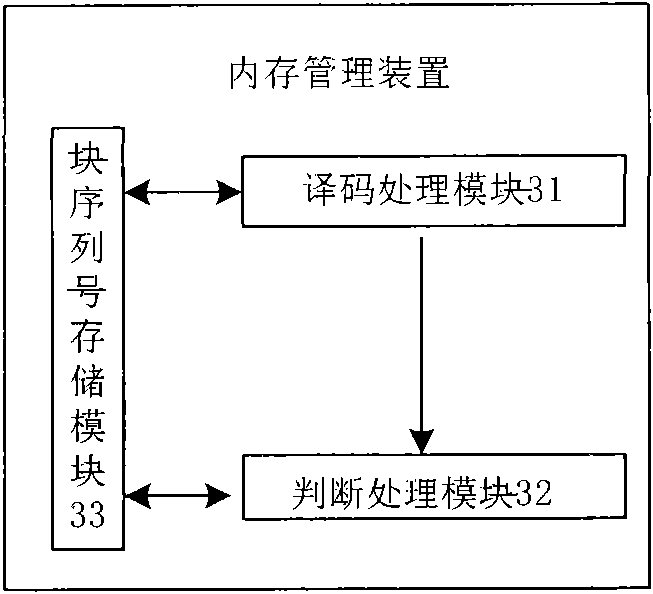

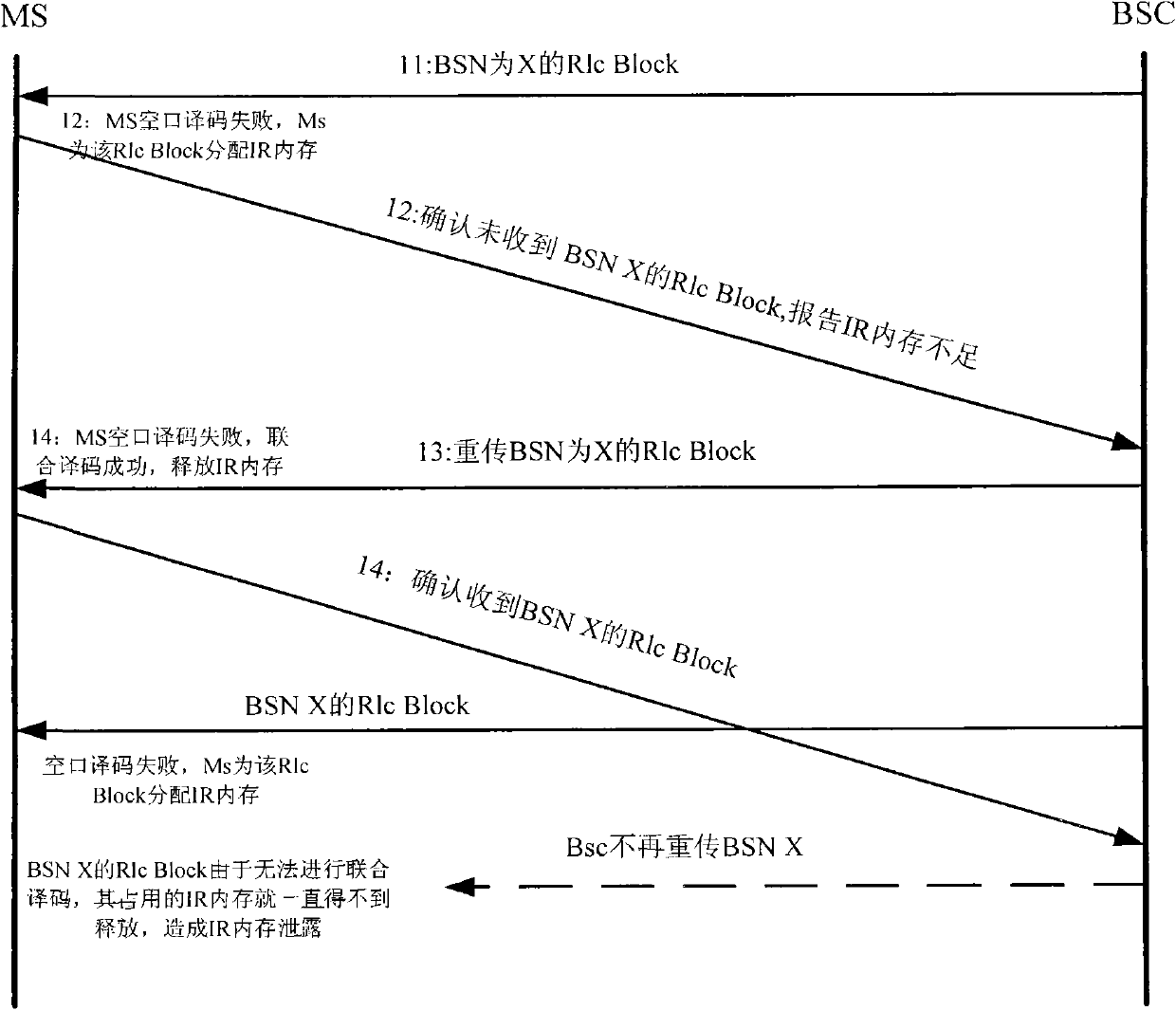

Memory management method and device

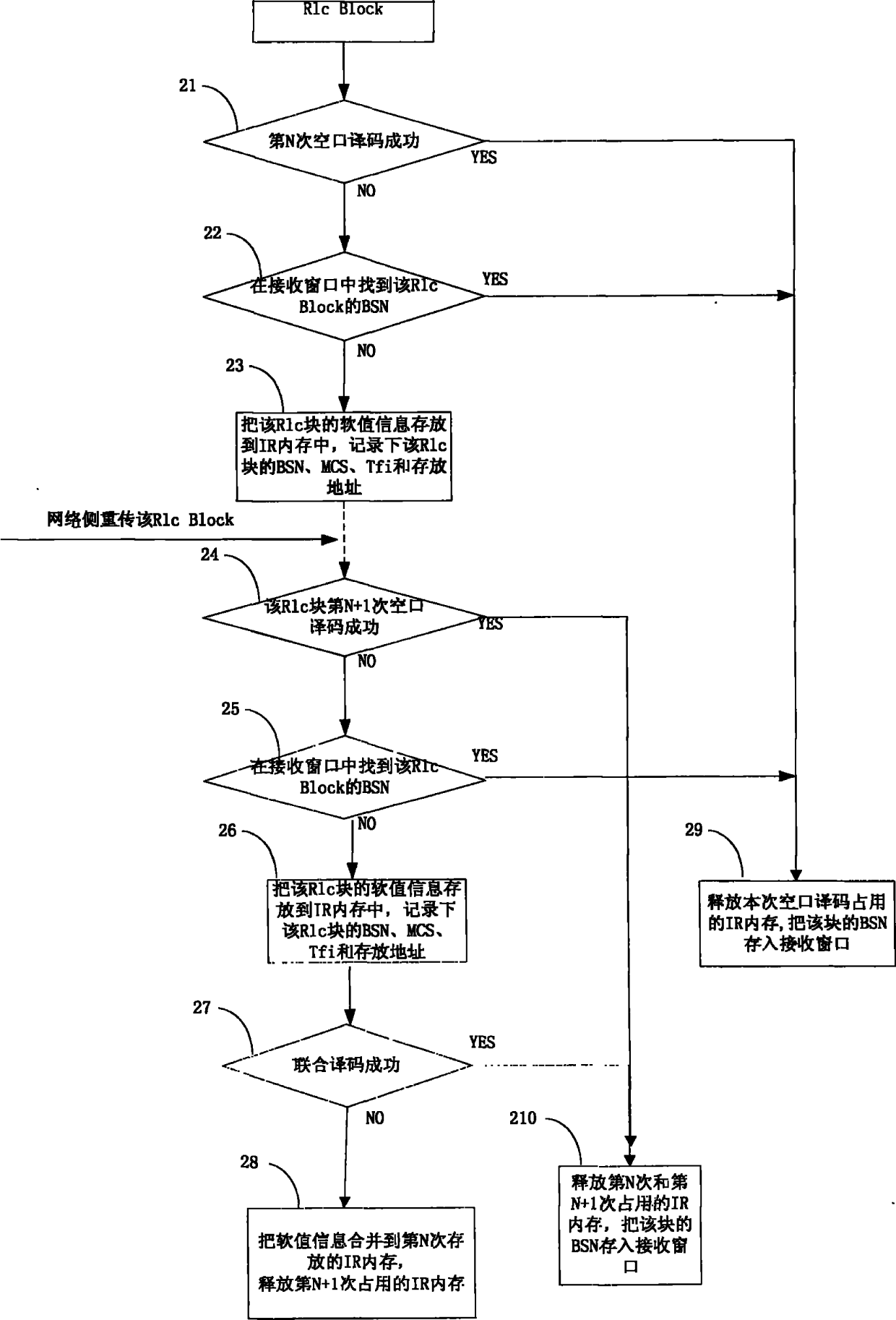

Inactive | CN101795184A | Fix memory leaks | Improve memory usage efficiency | Error prevention/detection by using return channel | Time difference | Memory management unit

The embodiment of the invention provides a memory management method and a memory management device. The method mainly comprises the following steps: receiving an RLC (radio link control) block sent by a network side; allocating memory for the RLC block; performing idle decoding on the RLC block; after the idle decoding fails, judging whether the block sequence number information of the RLC blocks subjected to the idle decoding contains the block sequence number of this RLC block; if so, releasing the memory allocated to the RLC block; otherwise, storing soft value information of the RLC block in the memory allocated to the RLC block. The method and the device of the invention can solve the problem of memory leaks in a mobile station caused by uplink interactive time difference, and can improve the usage efficiency of the memory of the mobile station.

Owner:HUAWEI DEVICE CO LTD

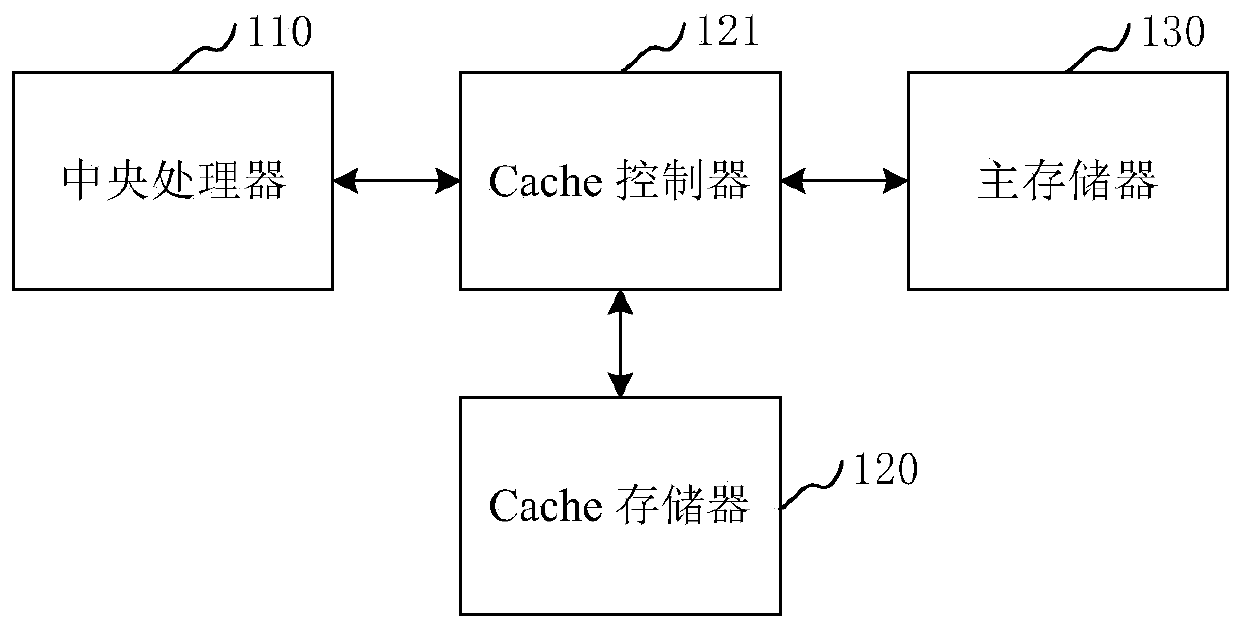

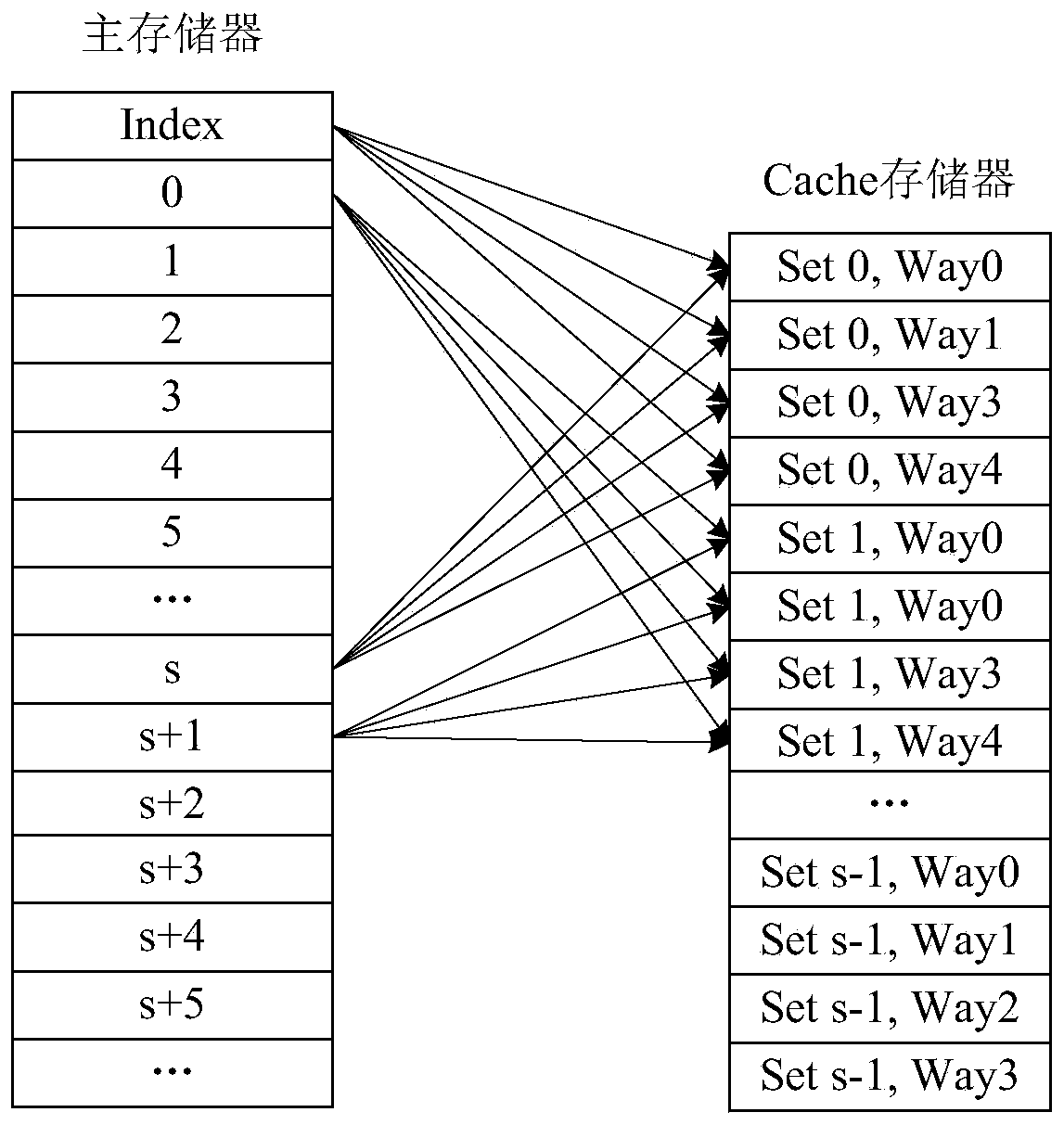

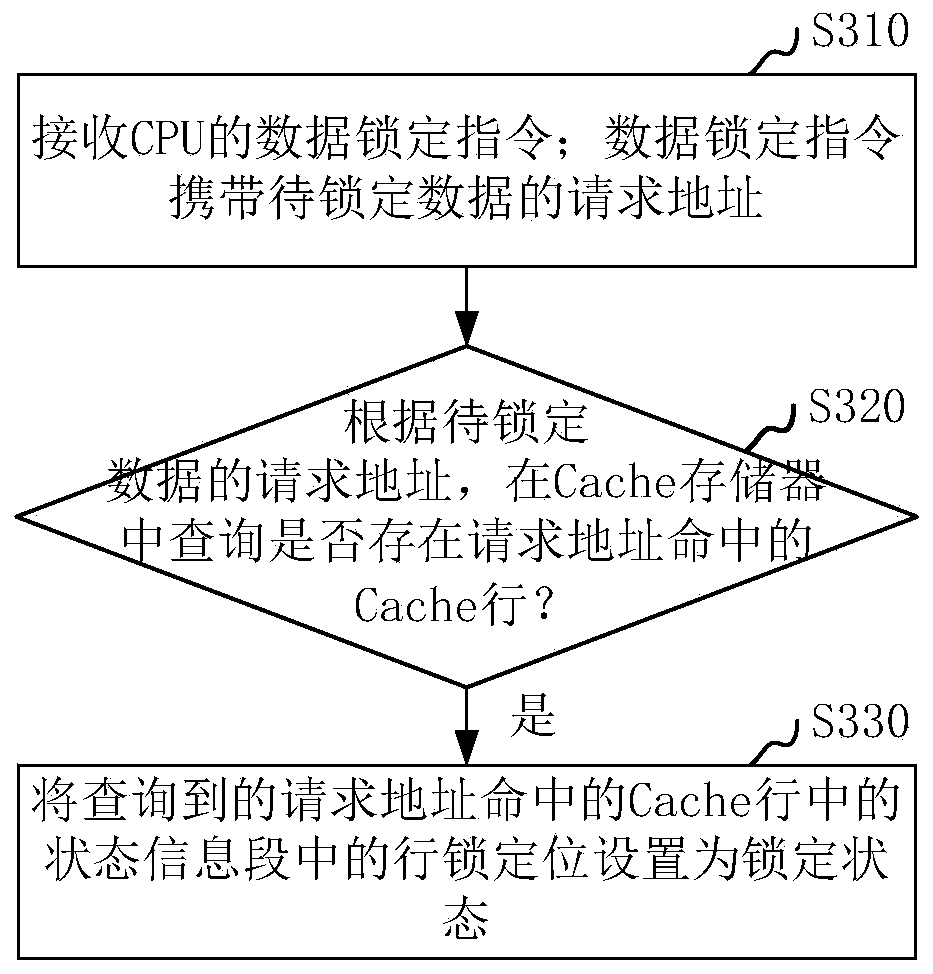

Cache data locking method and device and computer device

Active | CN109933543A | Implement individual locking | Take advantage of | Memory systems | Parallel computing | Computer device

The invention relates to a Cache data locking method and device, a computer device and a storage medium. The method comprises the following steps: receiving a data locking instruction from a CPU, wherein the data locking instruction carries a request address of the data to be locked; querying, according to the request address of the data to be locked, the Cache line hit by the request address in a Cache memory; and setting the line lock bit in the state information field of the Cache line hit by the request address to the locked state. With this method, the storage space of each Cache line of each way can be fully utilized, and the memory usage efficiency of the Cache memory is effectively improved.

Owner:ZHUHAI JIELI TECH

Electronic device for controlling file system and operating method thereof

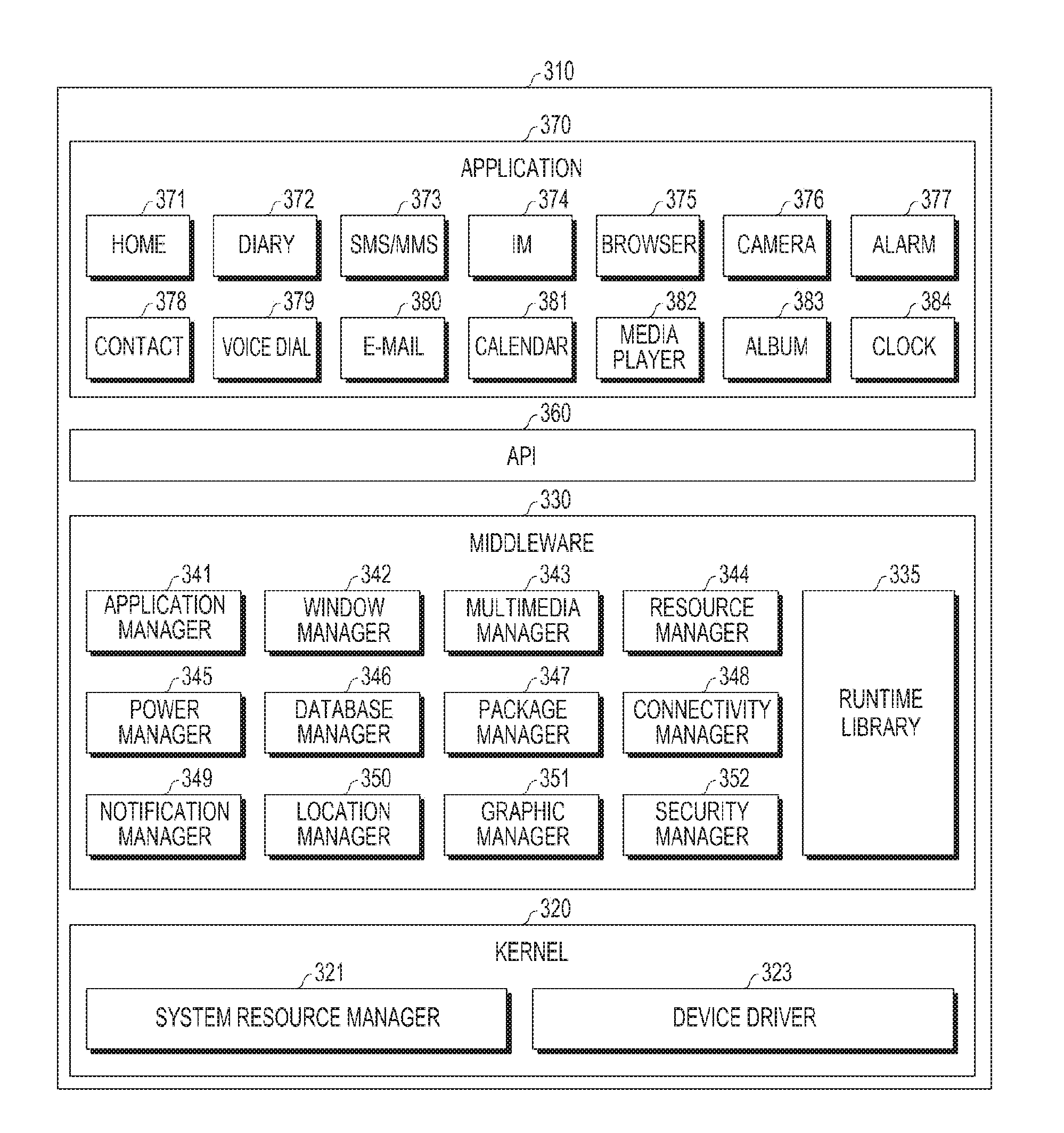

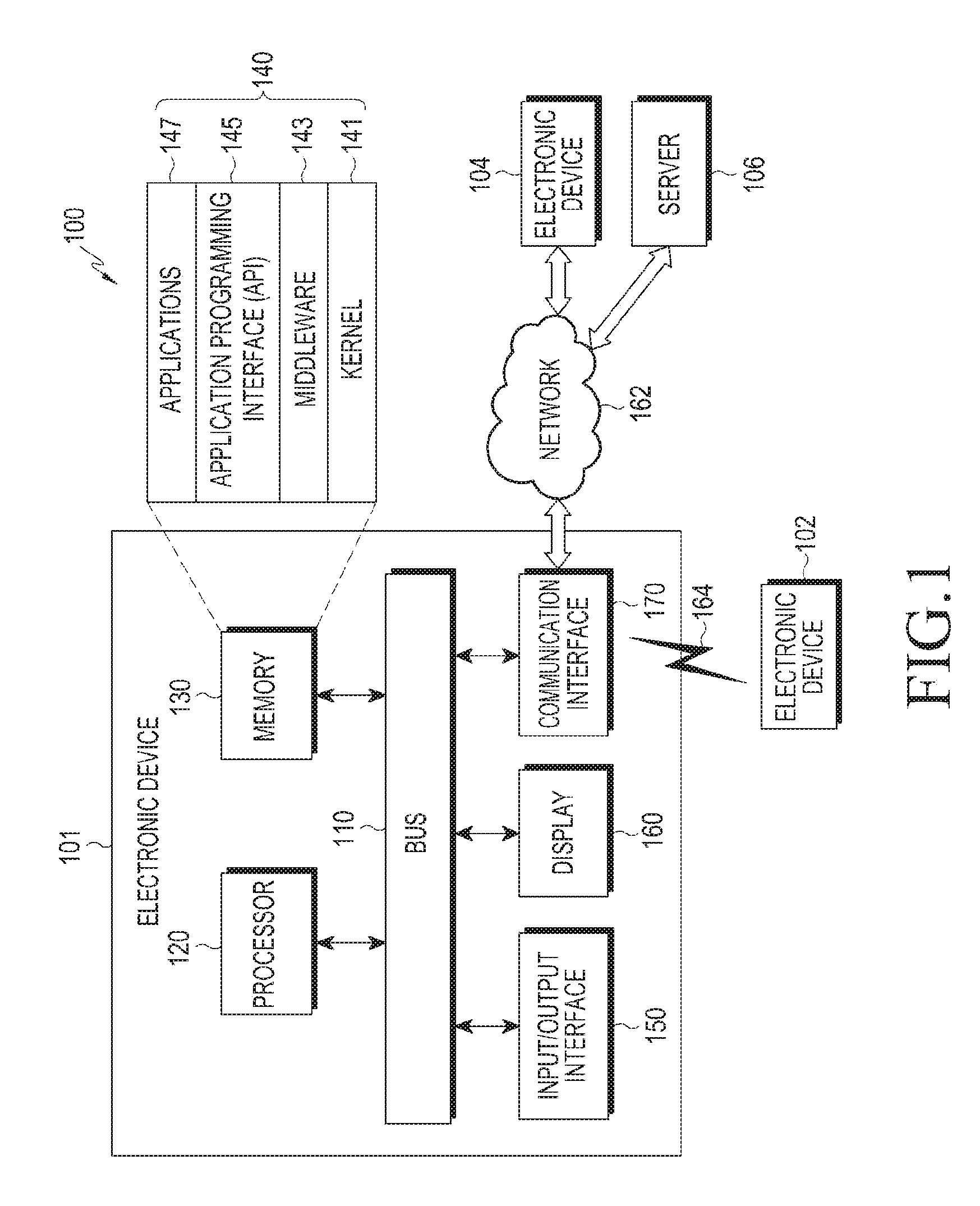

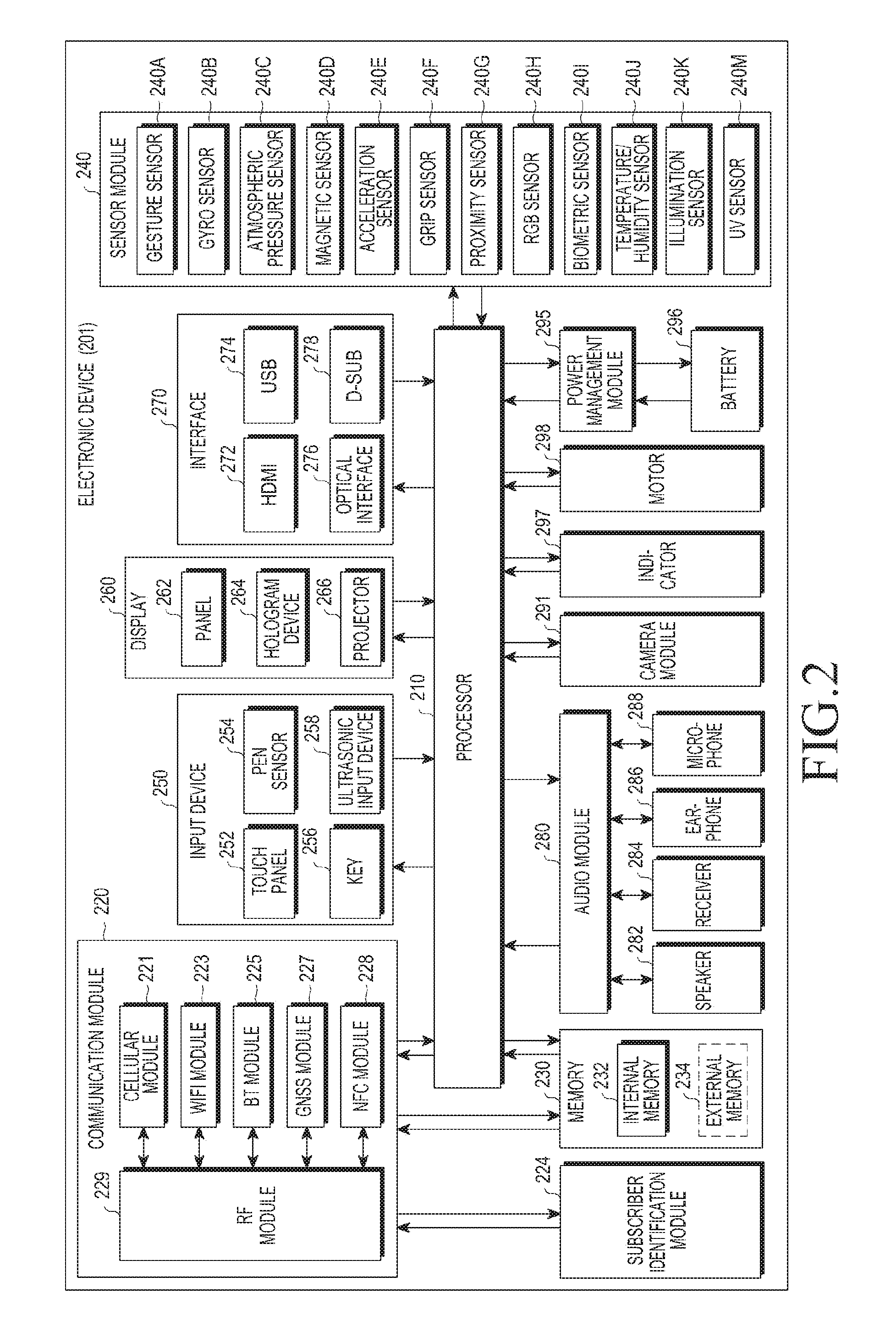

Active | US20170046358A1 | Efficient management | Stable management | Memory architecture accessing/allocation | Digital data information retrieval | Virtual memory | Virtual file system

A method of operating an electronic device and the electronic device are provided. The method includes mounting at least one lower file system, which is configured to generate a file object managing a page cache, and mounting a highest file system, to which a virtual file system directly accesses, at a higher layer of a layer corresponding to the lower file system; in response to a file mapping request of a software program, generating a virtual memory area including a virtual address for a file corresponding to the file mapping request; and generating a first virtual address link between a file object of at least one lower file system having a page cache of a file corresponding to the file mapping request and the virtual memory area.

Owner:SAMSUNG ELECTRONICS CO LTD

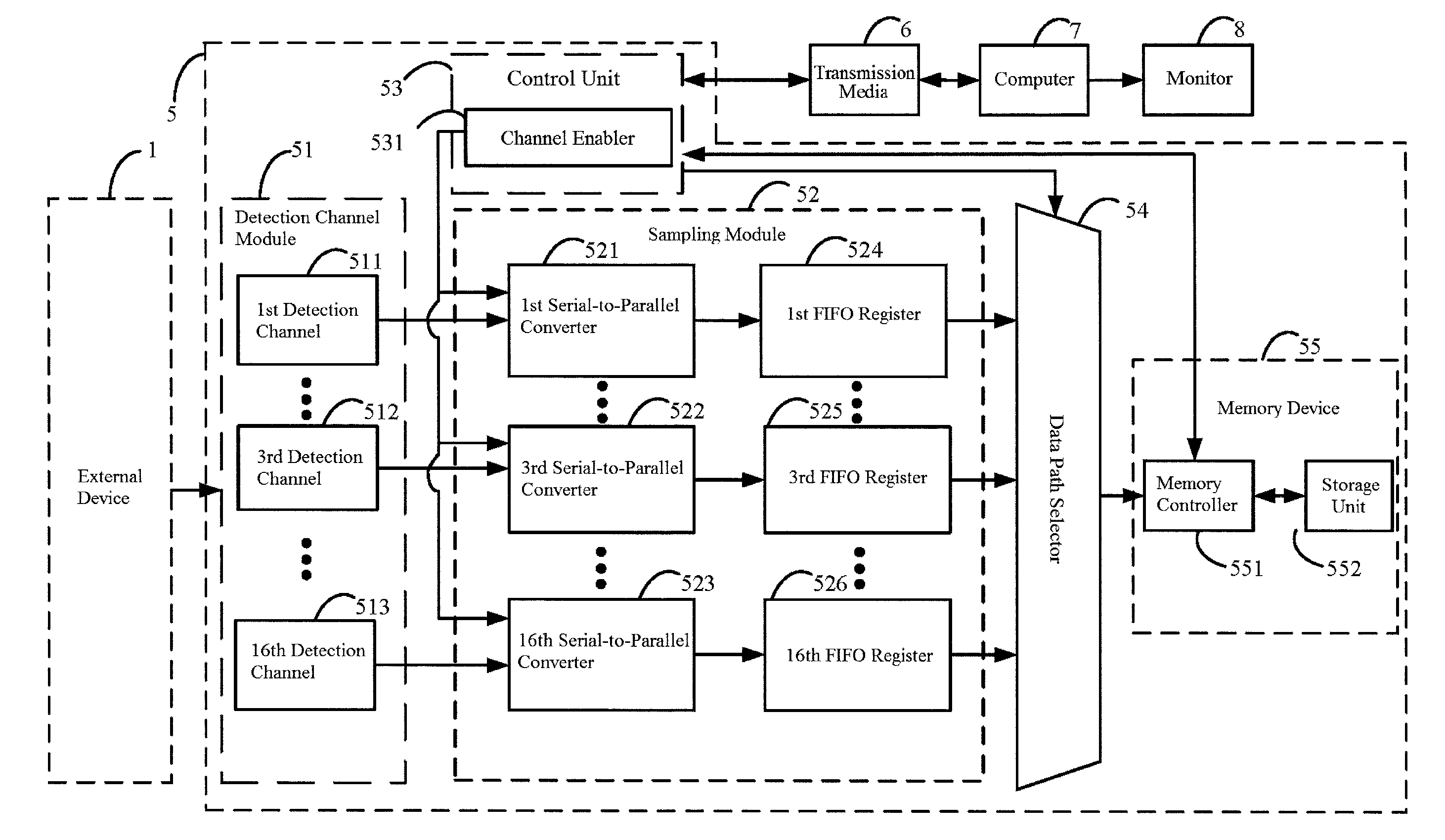

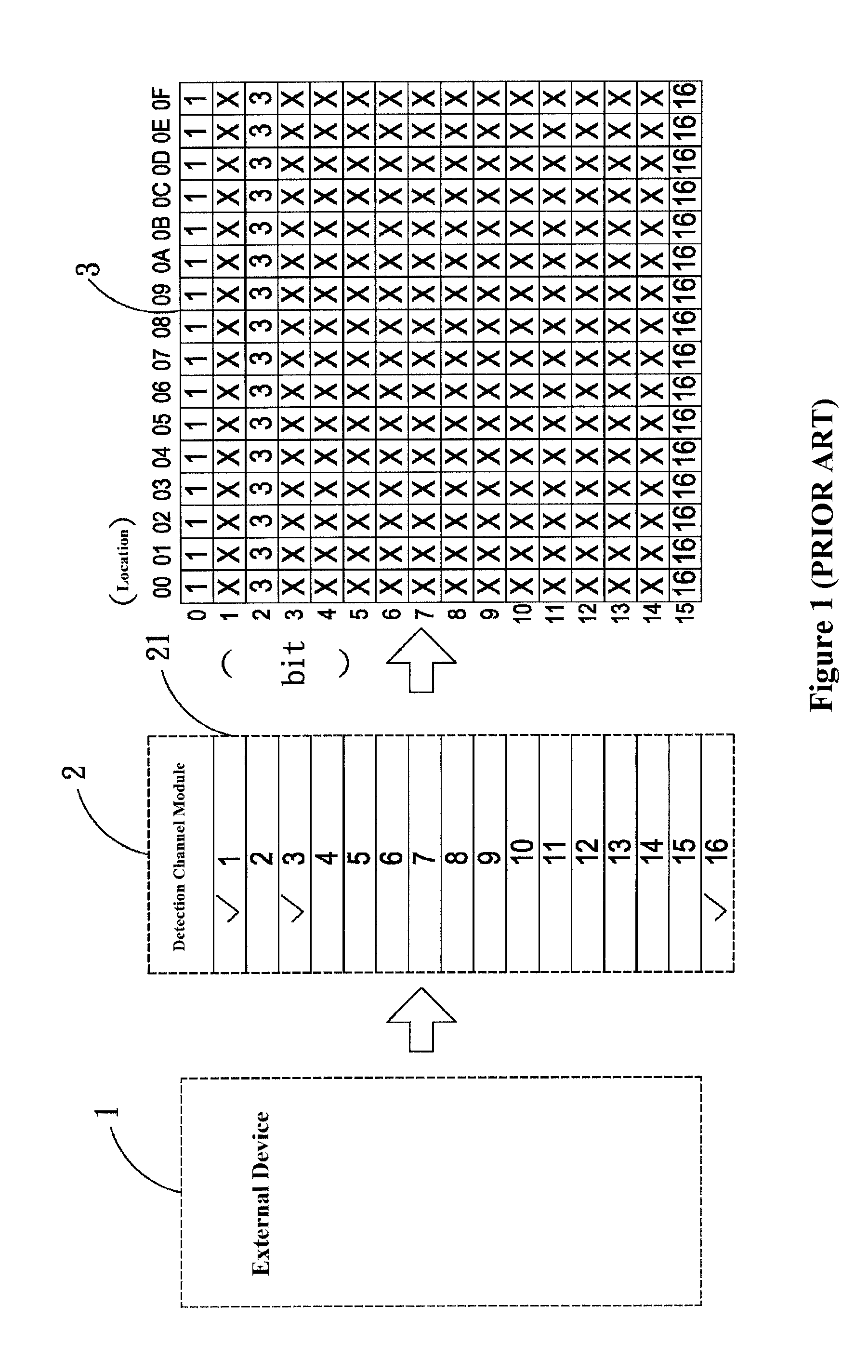

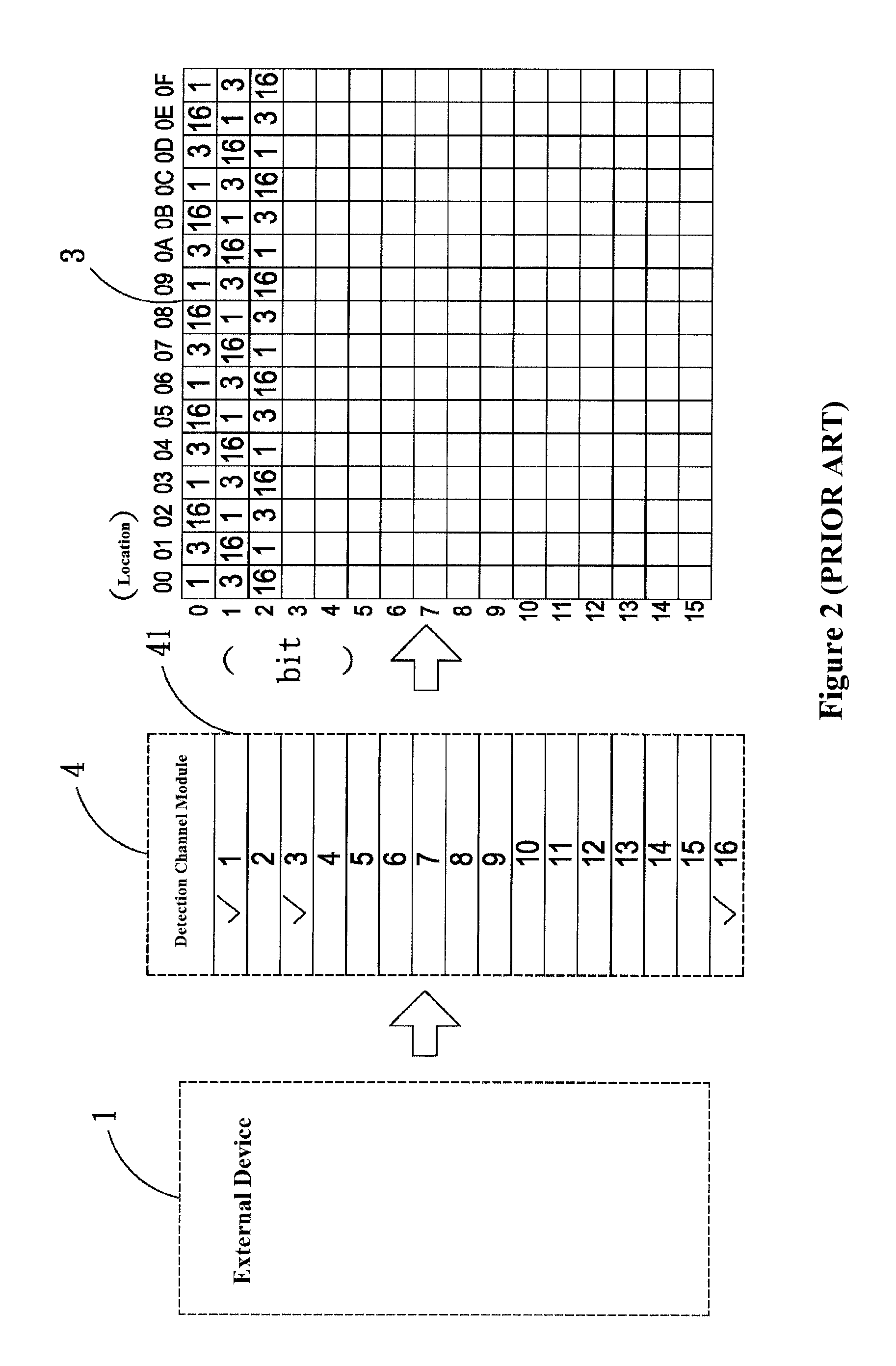

Electronic measuring device and method of converting serial data to parallel data for storage using the same

Inactive | US8806095B2 | Accurate and effective data structure | Improve memory usage efficiency | Digital variable/waveform display | Static storage | Computer module | Datapath

Owner:ZEROPLUS TECH CO LTD

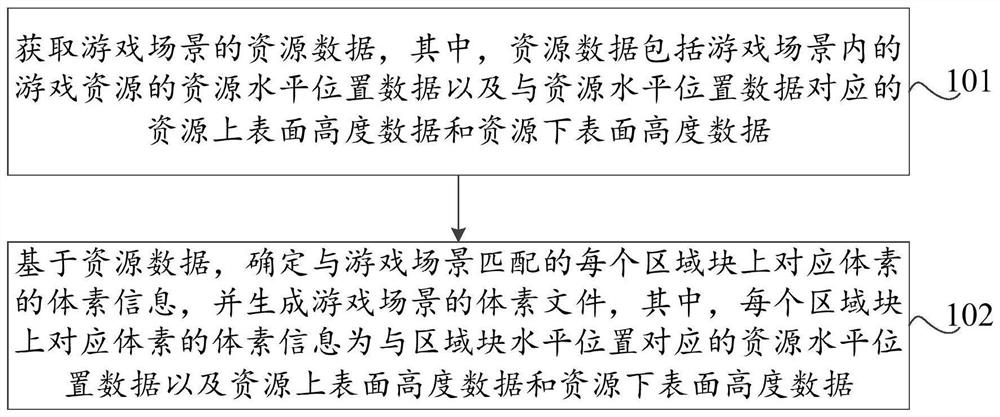

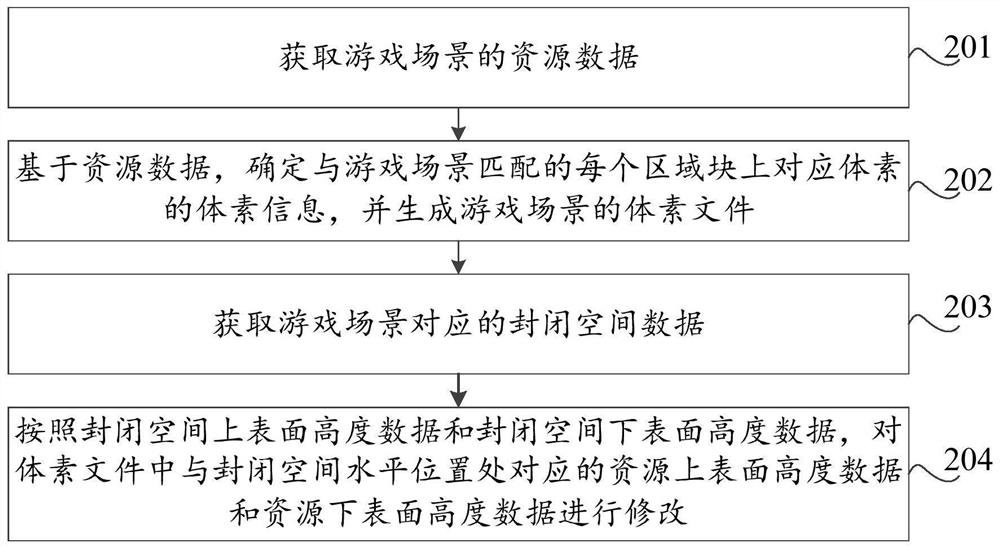

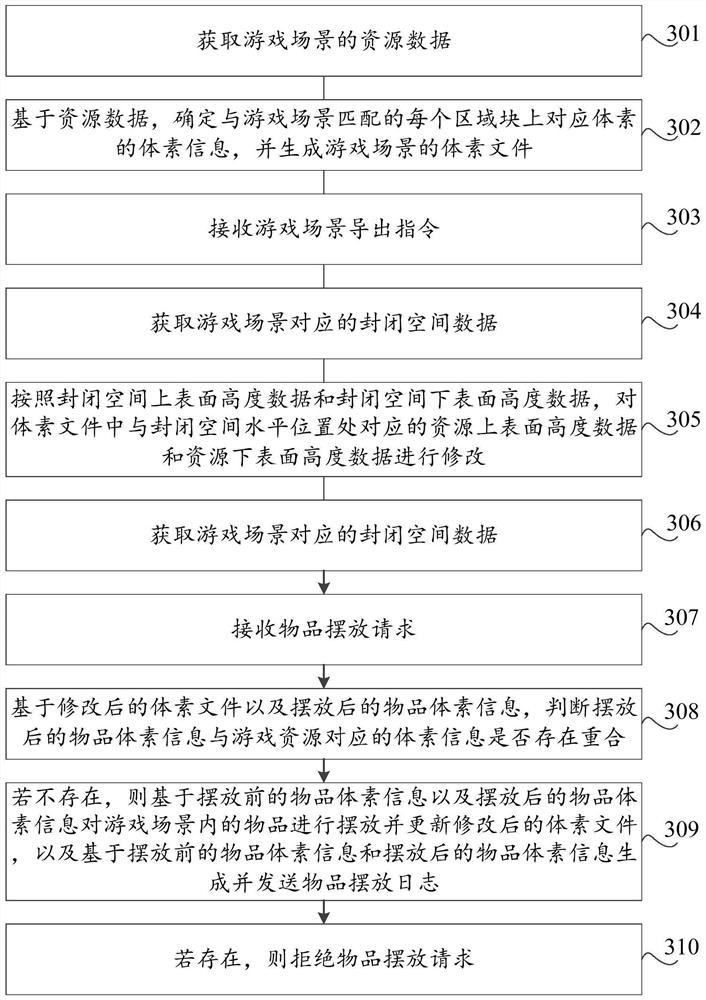

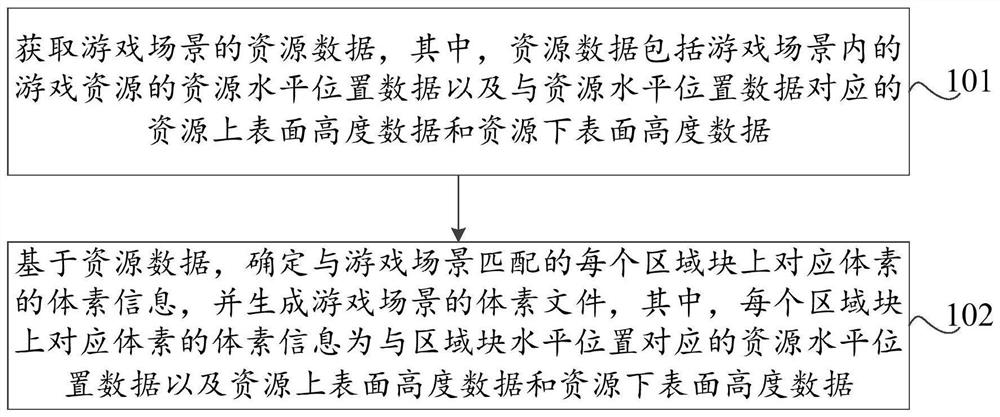

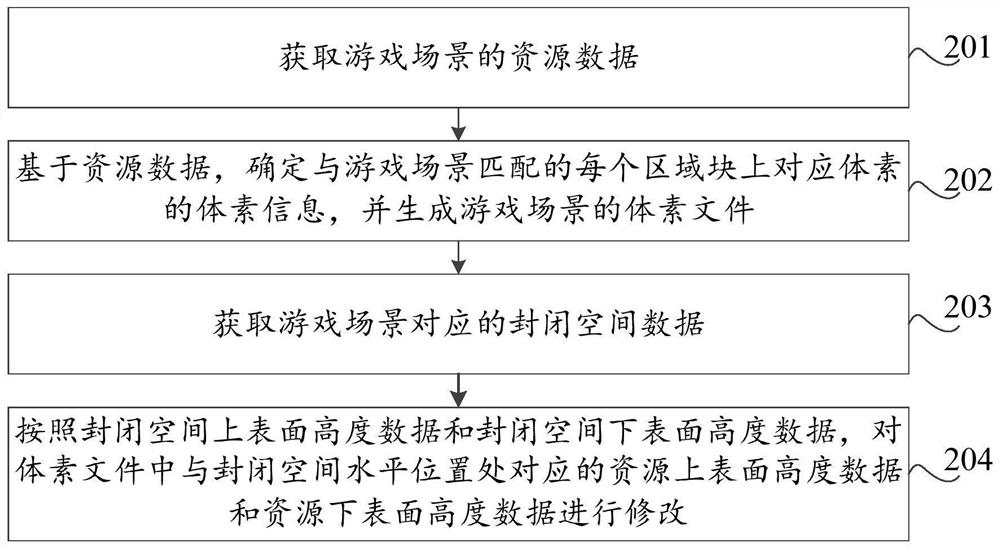

Game data processing method and device, storage medium and computer equipment

The invention discloses a game data processing method and device, a storage medium and computer equipment. The method comprises the steps of obtaining resource data of a game scene, wherein the resource data comprises resource horizontal position data of game resources in the game scene and resource upper surface height data and resource lower surface height data corresponding to the resource horizontal position data; based on the resource data, determining voxel information of corresponding voxels on each region block matched with the game scene, and generating a voxel file of the game scene, wherein the voxel information of the corresponding voxel on each region block is the resource horizontal position data corresponding to the horizontal position of the region block, the resource upper surface height data and the resource lower surface height data. By use of the method, the memory occupation size of the voxel file is reduced, the memory use efficiency is improved, in addition, high-precision floating-point number representation can be adopted in the height direction, and the resource representation precision can be improved.

Owner:ARC GAMES CO LTD

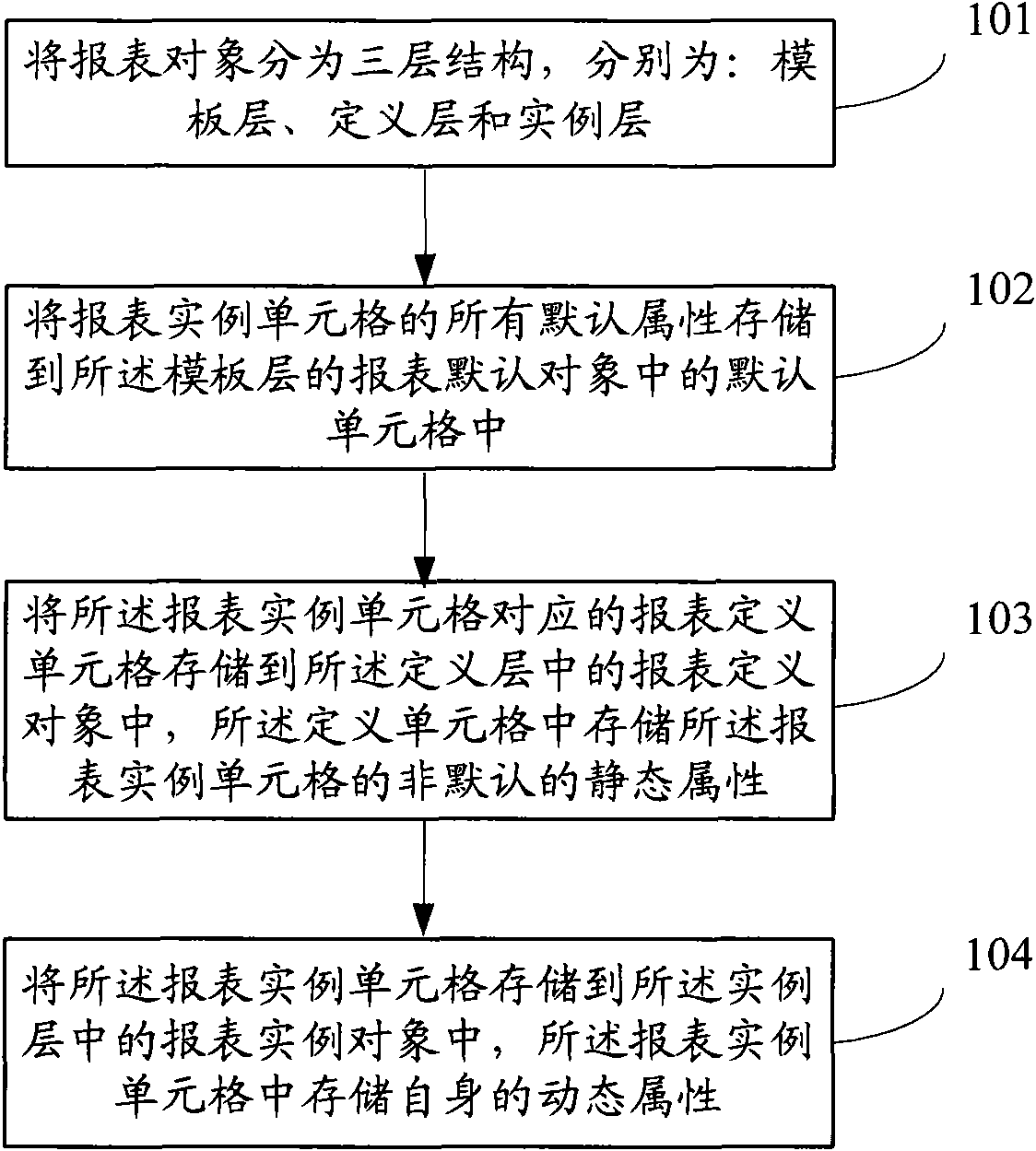

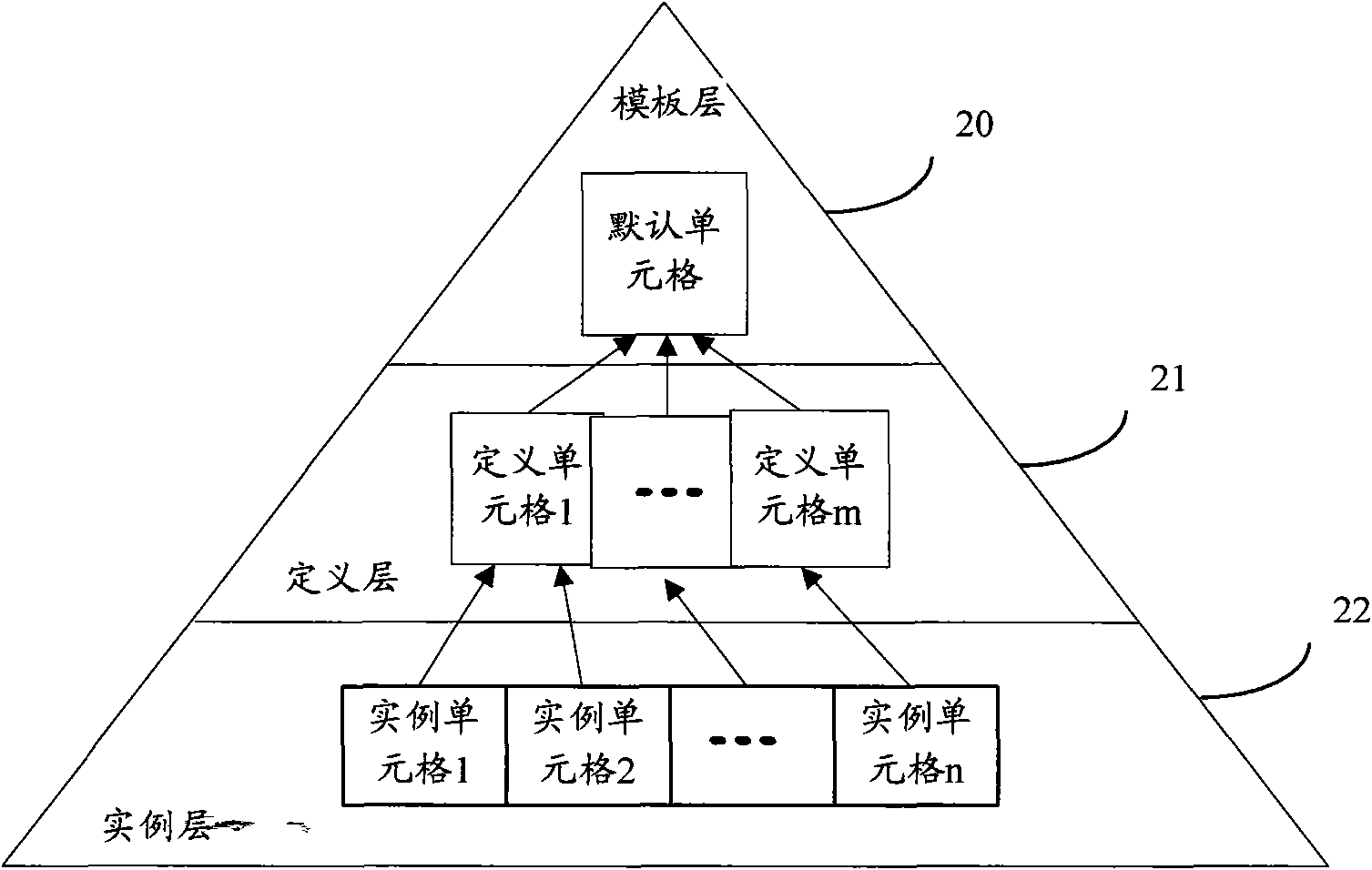

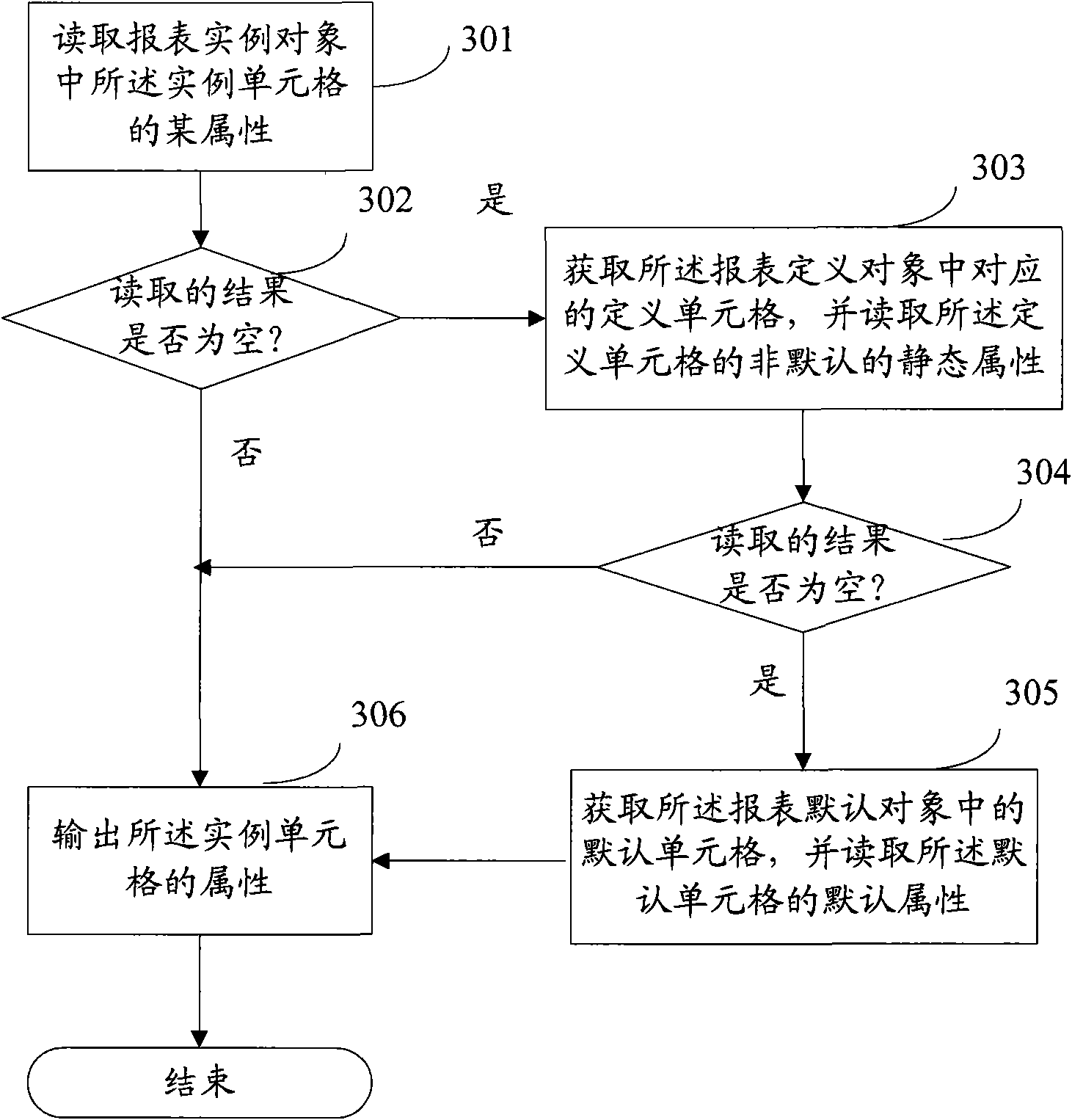

Method and apparatus for storing report form embodiment, and method for reading report form

Inactive | CN101615187A | Easy access to relevant attributes | Save storage space | Special data processing applications | Database

The invention discloses a method and an apparatus for storing a report form embodiment. The method comprises the following steps: dividing the report form subject into a three-layer structure including a template layer, a definition layer and an embodiment layer; storing all default attributes of report form embodiment table cell into a default table cell of the report form default object of the template layer; storing the report form definition table cell corresponding to the report form embodiment table cell into the report form definition object of the definition layer, wherein the definition table cell stores the non-default static attribute of the report form embodiment table cell; and storing the report form embodiment table cell into the report form embodiment subject in the embodiment layer, wherein the report form embodiment table cell stores the automatic attribute thereof. The invention also discloses a method for reading the report form embodiment. By utilizing the invention, the memory resource can be saved, and the memory usage efficiency is improved.

Owner:NEUSOFT CORP

Dual laser beam dual side simultaneous welding wire filling droplet transfer monitoring system and method based on high-speed photography

Active | CN111203639A | Detailed analysis | Reduce memory requirements | Laser beam welding apparatus | Imaging processing | Control system

The invention discloses a dual laser beam dual side simultaneous welding wire filling droplet transfer monitoring system and method based on high-speed photography. In the process of dual laser beam dual side simultaneous welding wire filling, a droplet transfer mode and frequency are monitored in real time by a high-speed camera system, so that the purpose of monitoring dual laser beam dual side synchronous welding wire filling droplet transfer at different wire feeding speeds in the whole process is achieved. The dual laser beam dual side simultaneous welding wire filling droplet transfer monitoring system mainly includes four parts: a dual laser beam dual side simultaneous welding wire filling system, a high-speed video acquisition and processing system, a full digital wire feeding control system and an image processing system. It is characterized in that the droplet transfer on both sides of a stringer is monitored simultaneously by two high-speed cameras, and the acquired images are processed quickly by the digital image processing software, so that the droplet transfer frequency is calculated quantitatively. According to the dual laser beam dual side simultaneous welding wire filling droplet transfer monitoring system and method, the droplet transfer mode and frequency can be monitored in real time, and thus the weld quality of laser welding is improved.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

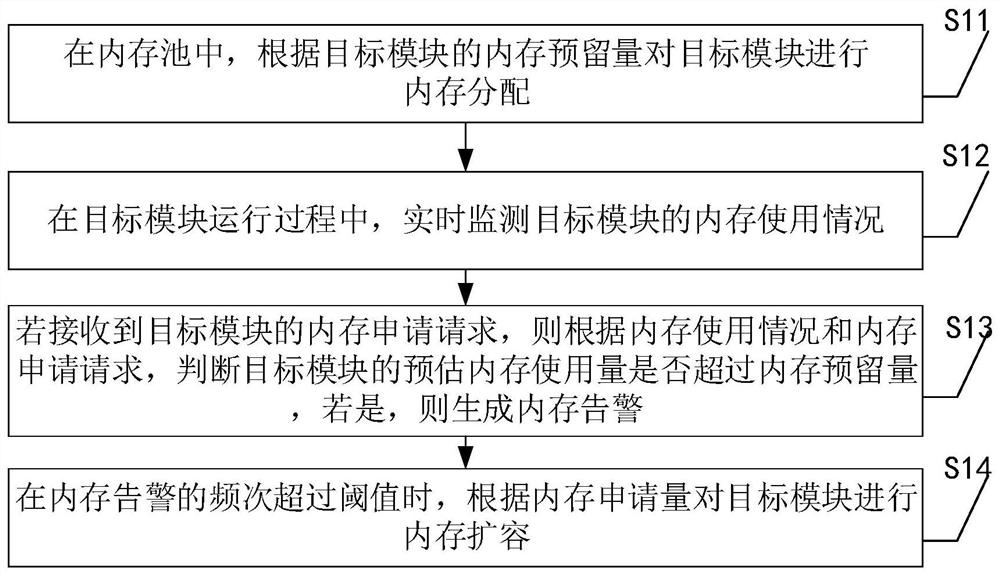

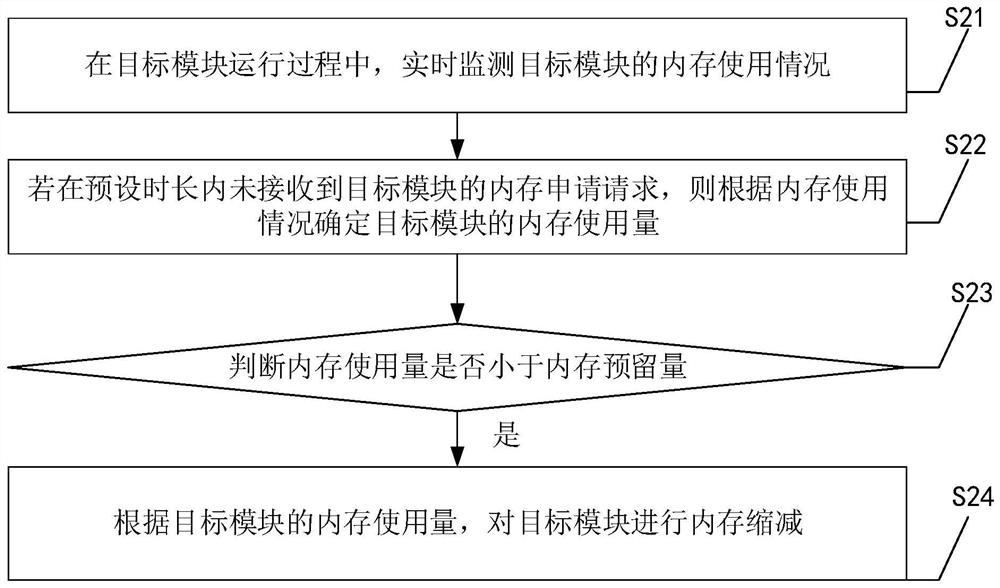

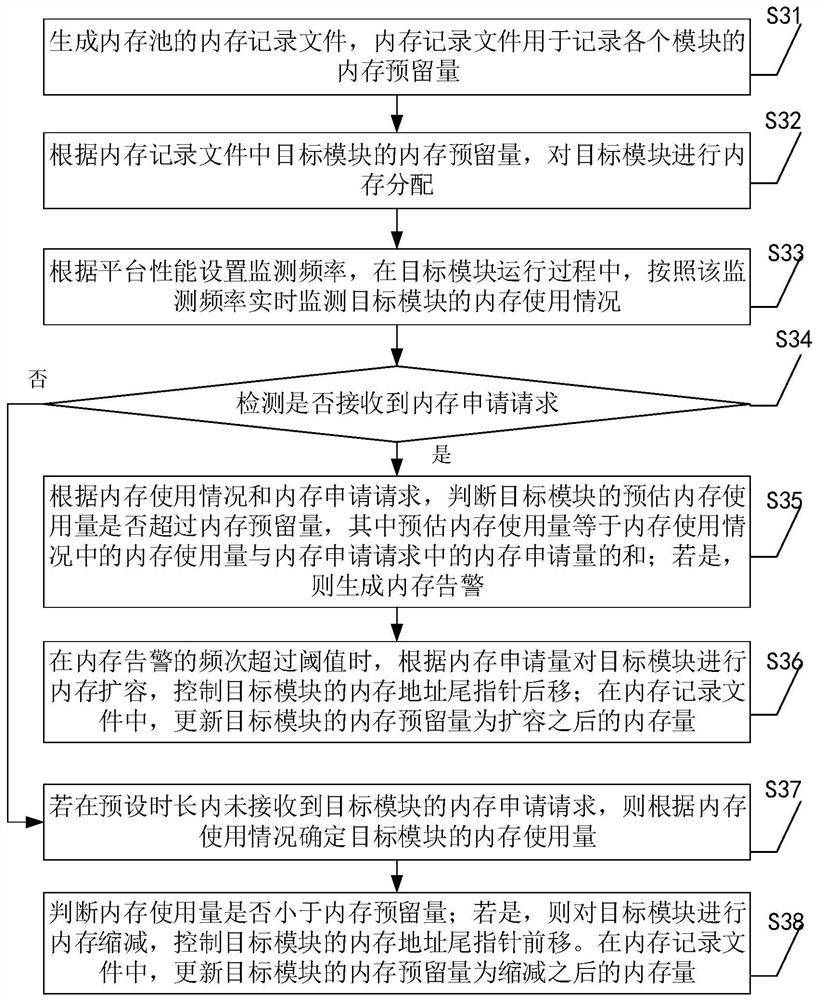

Memory management method, device and equipment and readable storage medium

Inactive | CN113608862A | Monitor memory usage in real time | Accurate assessment of memory requirements | Resource allocation | Hardware monitoring | Engineering | Term memory

The invention discloses a memory management method, which can allocate a memory for a module in advance, avoid the defects caused by dynamic memory application, can monitor the memory use condition and the memory application request of the module in real time after the memory is allocated for the module, and can generate a memory alarm when the memory demand of the module exceeds the memory amount allocated in advance, and perform memory expansion on the module when the frequency of the memory alarm is relatively high. The purpose of accurately evaluating the memory requirement of the module is achieved, the problem that the software performance cannot be fully exerted due to the fact that too little memory is distributed to the module is avoided, and the memory use efficiency is improved. In addition, the invention further provides a memory management device and equipment and a readable storage medium, and the technical effects of the memory management device and equipment correspond to the technical effects of the method.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
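The pre-allocate, monitor, alarm, and expand cycle in the abstract above might look roughly like the following Python sketch. Everything specific here is an assumption: the class name, counting alarms against a fixed threshold as a stand-in for "alarm frequency is relatively high", and the growth factor used for expansion.

```python
class ModuleMemoryBudget:
    """Tracks one module's memory against a pre-allocated budget (illustrative only)."""

    def __init__(self, preallocated: int, alarm_threshold: int = 3, growth: float = 2.0) -> None:
        self.capacity = preallocated            # memory reserved for the module in advance
        self.in_use = 0                         # memory the module currently holds
        self.alarms = 0                         # alarms raised since the last expansion
        self.alarm_threshold = alarm_threshold  # assumed proxy for "high alarm frequency"
        self.growth = growth                    # assumed expansion factor

    def request(self, size: int) -> bool:
        """Grant the request if it fits; otherwise raise an alarm and maybe expand."""
        if self.in_use + size <= self.capacity:
            self.in_use += size
            return True
        self.alarms += 1  # demand exceeded the pre-allocated amount
        if self.alarms >= self.alarm_threshold:
            # Expand far enough that this request is guaranteed to fit.
            self.capacity = max(int(self.capacity * self.growth), self.in_use + size)
            self.alarms = 0
            self.in_use += size
            return True
        return False

    def release(self, size: int) -> None:
        self.in_use = max(0, self.in_use - size)


budget = ModuleMemoryBudget(preallocated=100, alarm_threshold=2)
results = [budget.request(80), budget.request(50), budget.request(50)]
print(results, budget.capacity, budget.in_use)
```

The second request only raises an alarm; the third reaches the threshold, so the budget is expanded and the request is granted.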

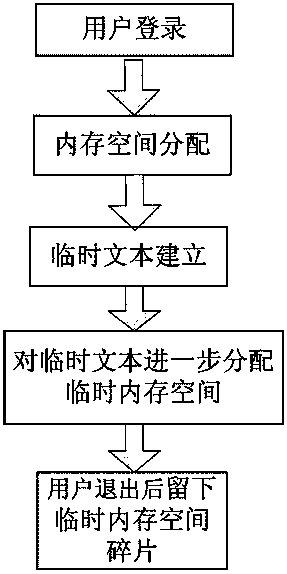

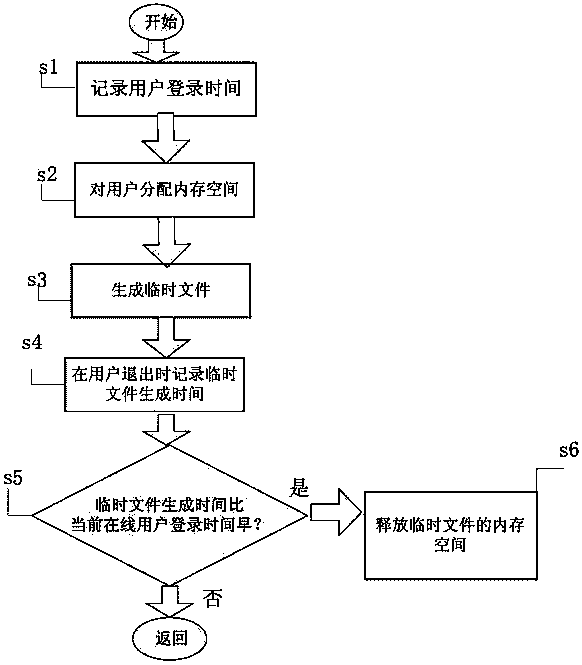

Dynamic memory releasing method

Inactive | CN103744797A | Solve the problem of generating memory fragmentation | Improve memory usage efficiency | Resource allocation | Memory addressing/allocation/relocation | Space allocation | Temporary file

The invention provides a dynamic memory releasing method for dynamically releasing the memory used by temporary files generated when a user logs in to a server. The method comprises: a step of recording the user log-in time, in which the time when the user logs in to the server is recorded; a step of allocating memory space, in which memory space is allocated for the logged-in user; a step of generating the temporary file, in which the corresponding temporary file is generated when the user operates on a file; a step of recording the temporary file generation time, in which the generation time of the temporary file is recorded when the user logs out of the server; a step of comparing the generation time of the temporary file, in which the generation time of the temporary file is compared with the log-in times of all users currently online; and a step of releasing the memory space of the temporary file, in which the memory space of the temporary file is released when its generation time is earlier than the log-in time of every user currently online. By means of the method, the memory space of temporary files left behind when users log out of the server can be released dynamically and accurately, and the memory usage rate is greatly improved.

Owner:OPZOON TECH
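The comparison rule in the abstract above (release a logged-out user's temporary file once its generation time is earlier than the log-in time of every user still online) can be sketched in Python. The class, its method names, and the use of plain numbers as timestamps are assumptions for illustration; with no users online the rule is treated as satisfied.

```python
from typing import Dict, List


class TempFileTracker:
    def __init__(self) -> None:
        self.online: Dict[str, float] = {}             # user -> log-in time
        self.active: Dict[str, Dict[str, float]] = {}  # user -> {file name: generation time}
        self.orphaned: Dict[str, float] = {}           # files left behind after log-out

    def login(self, user: str, now: float) -> None:
        self.online[user] = now
        self.active[user] = {}

    def create_temp(self, user: str, name: str, now: float) -> None:
        self.active[user][name] = now

    def logout(self, user: str) -> None:
        # Record the generation times of the user's temporary files at log-out.
        self.orphaned.update(self.active.pop(user))
        del self.online[user]

    def release_stale(self) -> List[str]:
        """Release files generated before every currently online user logged in."""
        logins = list(self.online.values())
        released = [name for name, created in self.orphaned.items()
                    if all(created < t for t in logins)]
        for name in released:
            del self.orphaned[name]  # stands in for freeing the file's memory
        return released


tracker = TempFileTracker()
tracker.login("b", 1)
tracker.login("a", 2)
tracker.create_temp("a", "f1", 3)
tracker.logout("a")
first = tracker.release_stale()   # "b" logged in before f1 was generated: keep f1
tracker.login("c", 5)
tracker.logout("b")
second = tracker.release_stale()  # only "c" is online and logged in after f1: release
print(first, second)
```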

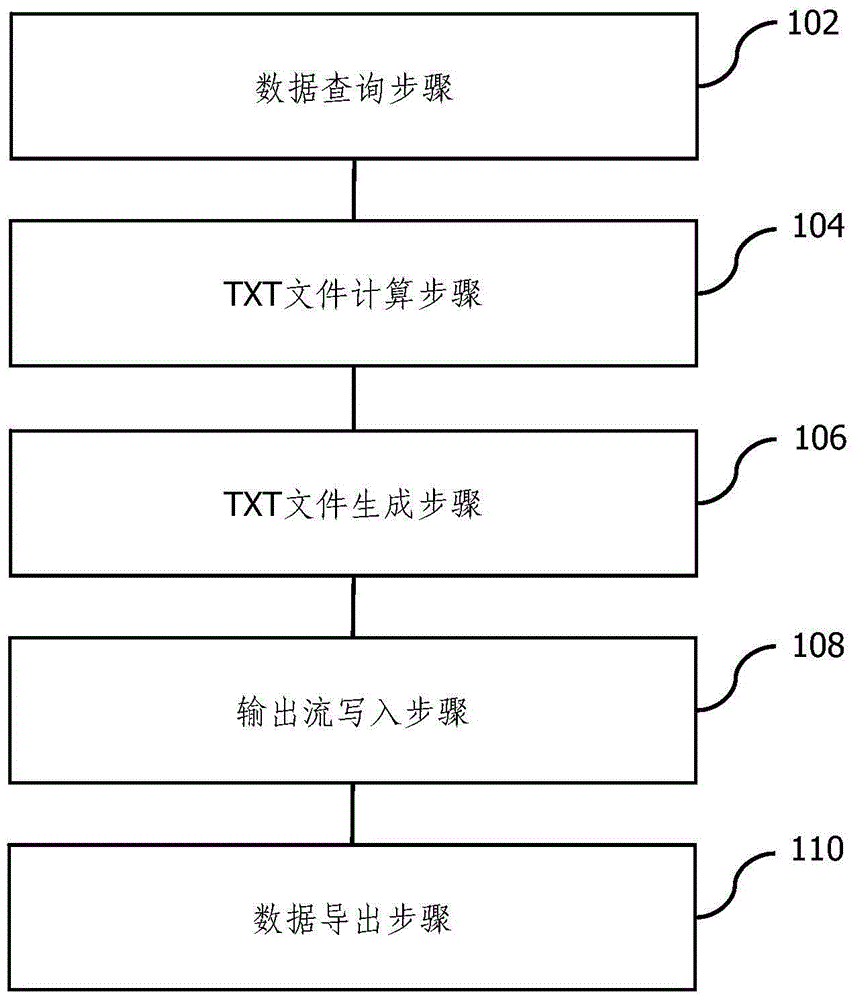

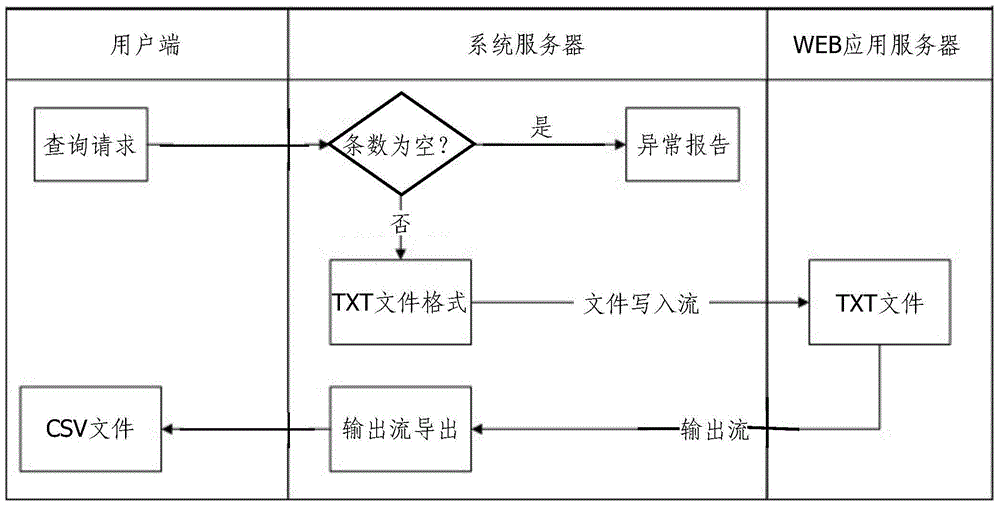

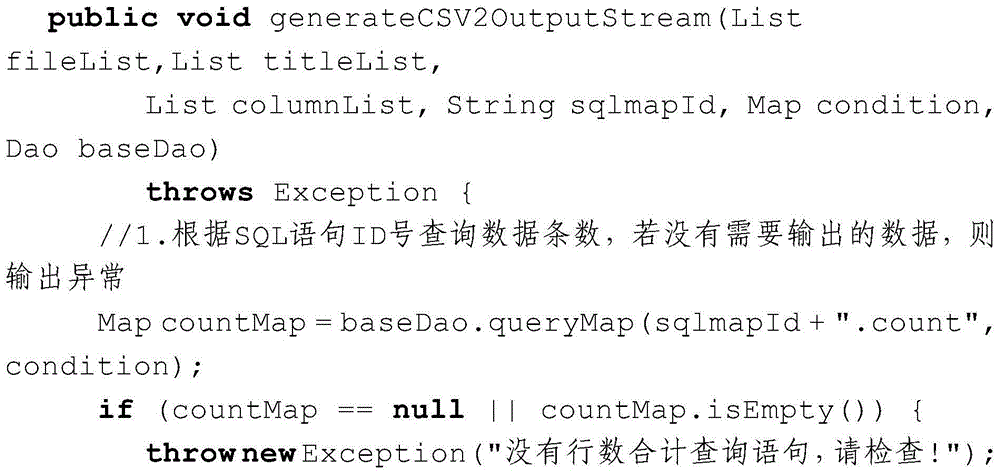

Method for downloading mass data on data platform

Inactive | CN104866485A | Reduce occupancy | Improve memory usage efficiency | Special data processing applications | Data query | Data export

The invention discloses a method for downloading mass data on a data platform, which is suitable for downloading the mass data. The method comprises the following steps of a data query step that a system server queries a number of data items which conform to the conditions in a database; a TXT file computing step that the system server pages the data items and determines a file format of TXT files according to the total number of the data items and the number of the TXT files corresponding to each page; a TXT file generation step that the TXT files are generated on a local WEB application server according to the file format of TXT files, the system server writes the data items into the TXT files and releases the memory resources of the system server after writing; an output flow writing step that an output stream is formed in an internal memory of the system server, the output stream is data flow, the data items in the TXT files are circularly read from the local WEB application server and the output stream is written; and a data export step that the data items are exported from the output stream, and the internal memory resources of the system server are released after exporting.

Owner:上海宝钢国际经济贸易有限公司

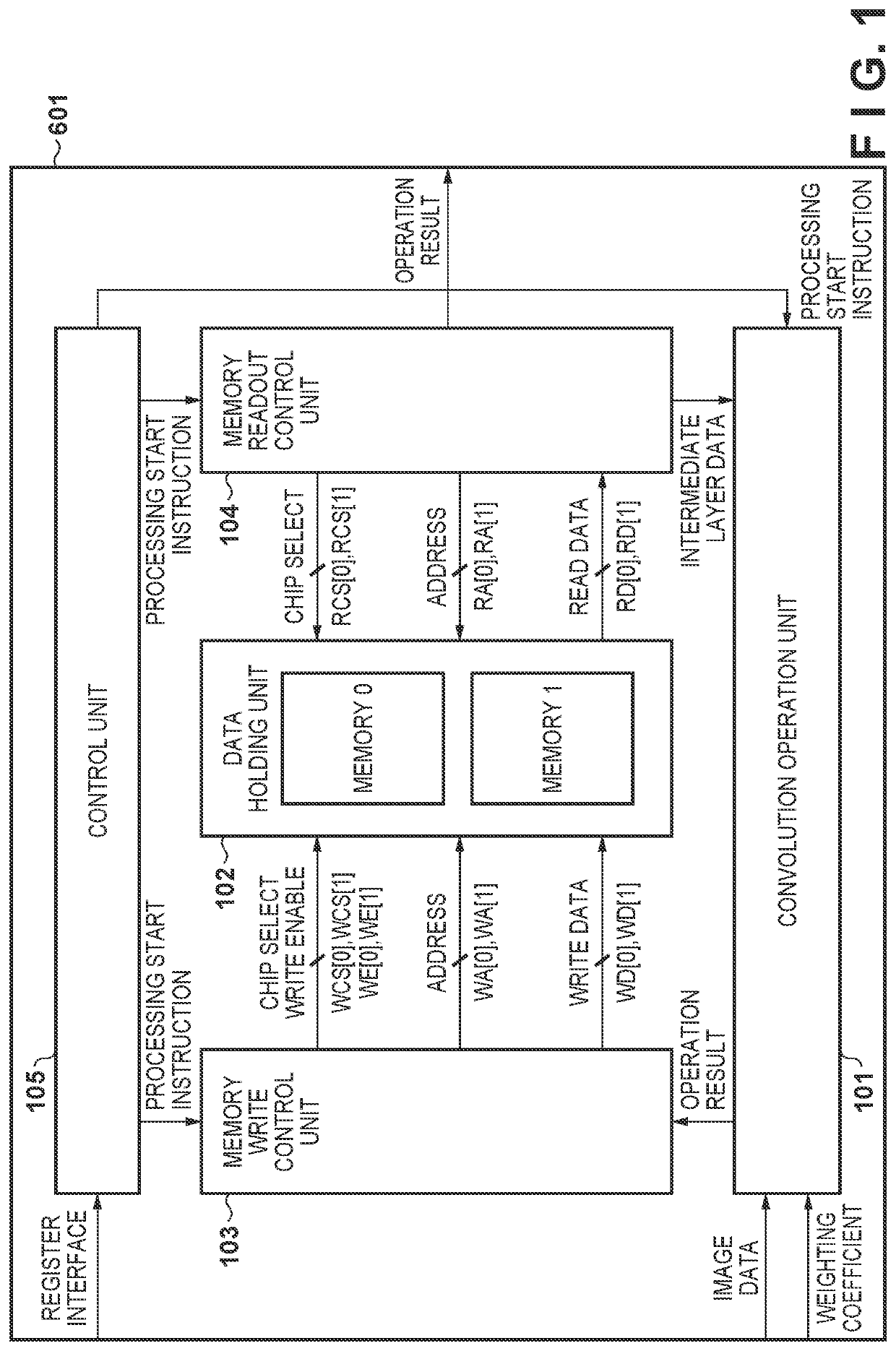

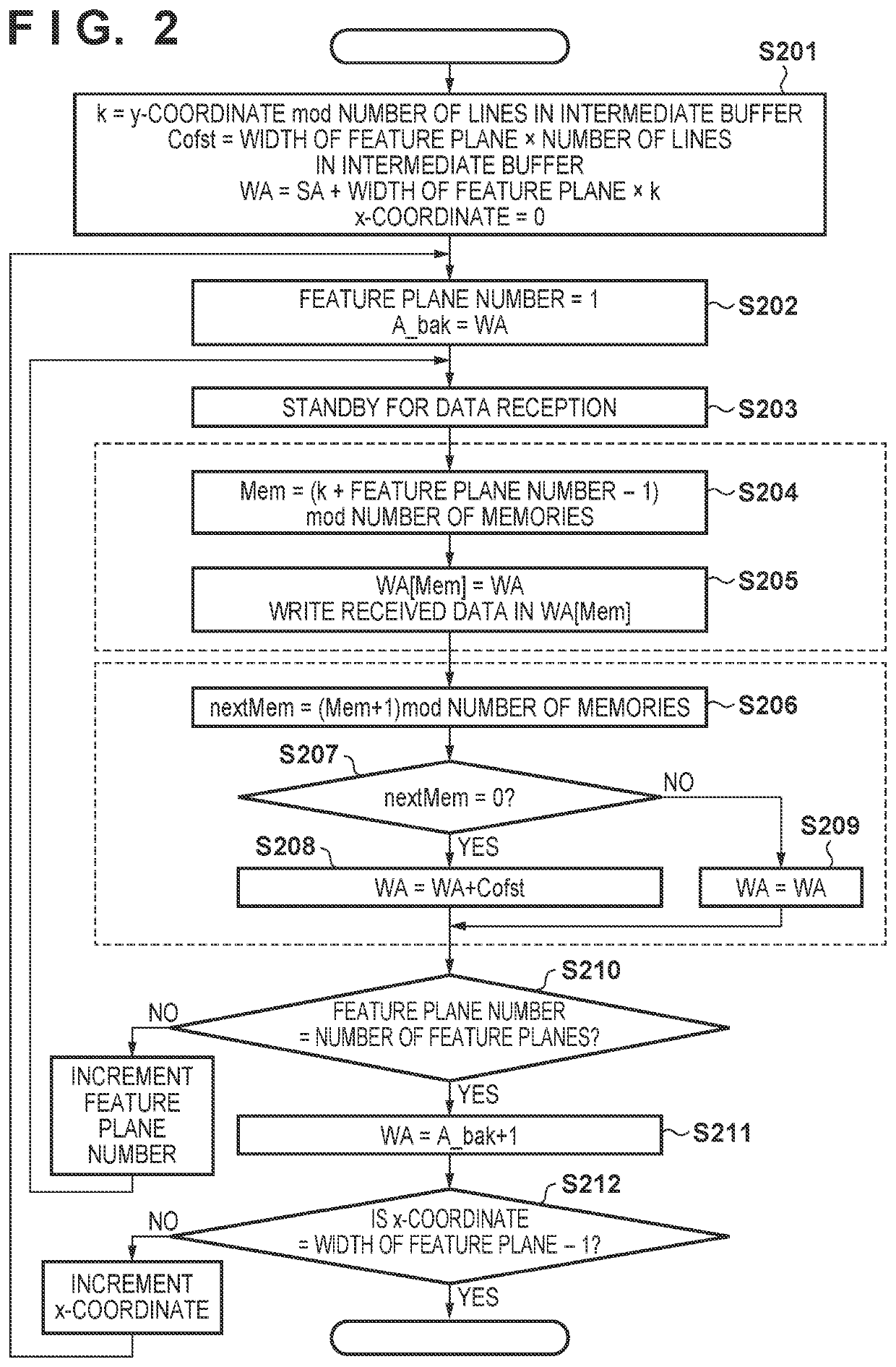

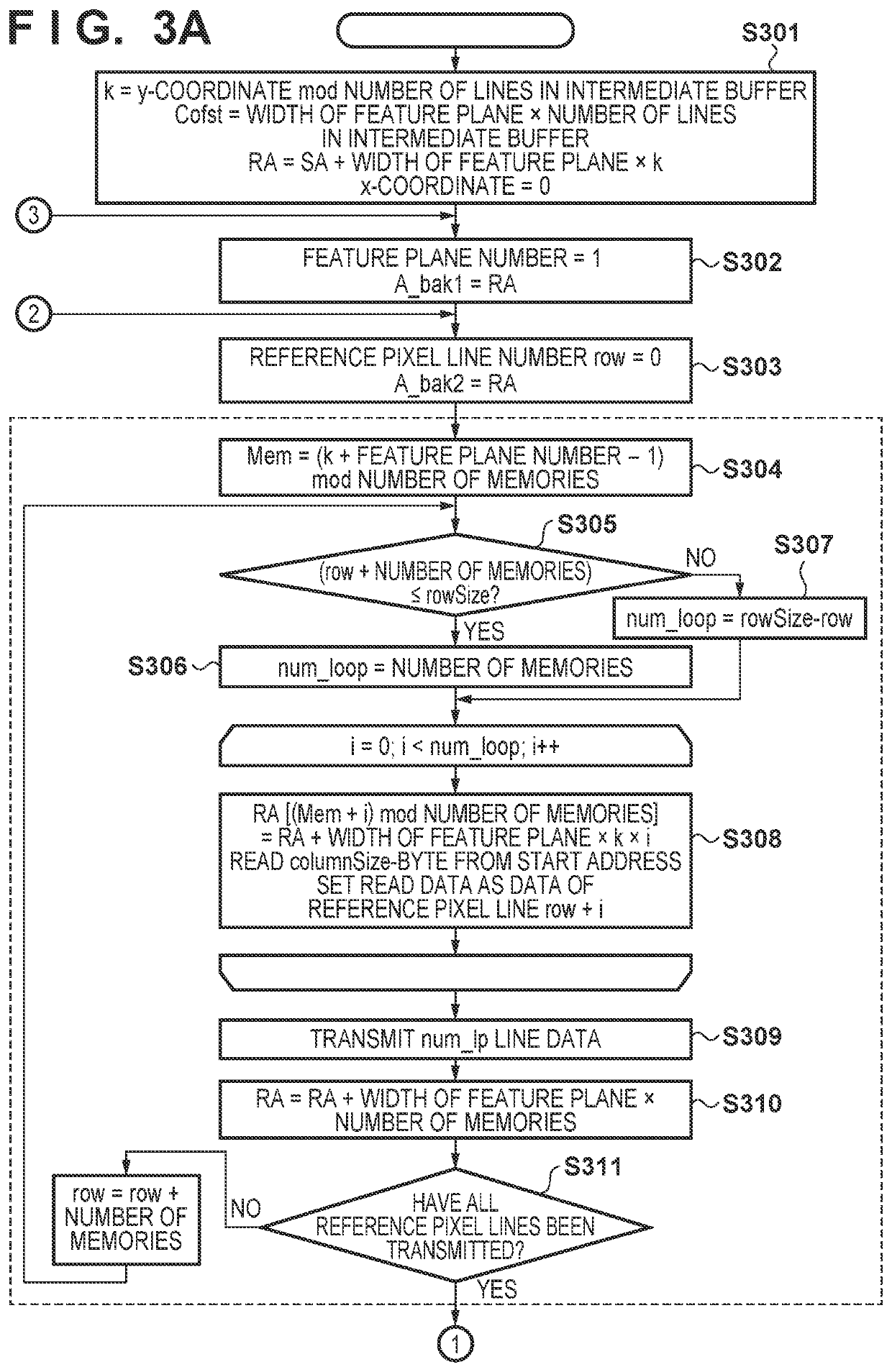

Operation processing apparatus, operation processing method, and non-transitory computer-readable storage medium

Active | US20210011653A1 | Improve memory usage efficiency | Increase speed | Input/output to record carriers | Image memory management | Computer hardware | Data transport

An apparatus for calculating feature planes by hierarchically performing filter operation processing for input image data, comprises an operation unit configured to perform a convolution operation, a holding unit including memories configured to store image data and an operation result of the operation unit, a unit configured to receive the operation result, and write, out of the operation result, data of successive lines of the same feature plane in different memories of the memories and write data at the same coordinates of feature planes in the same layer in different memories of the memories, and a unit configured to read out the data of the successive lines from the different memories, read out the data at the same coordinates of the different feature planes in the same layer, and transmit the data to the operation unit.

Owner:CANON KK

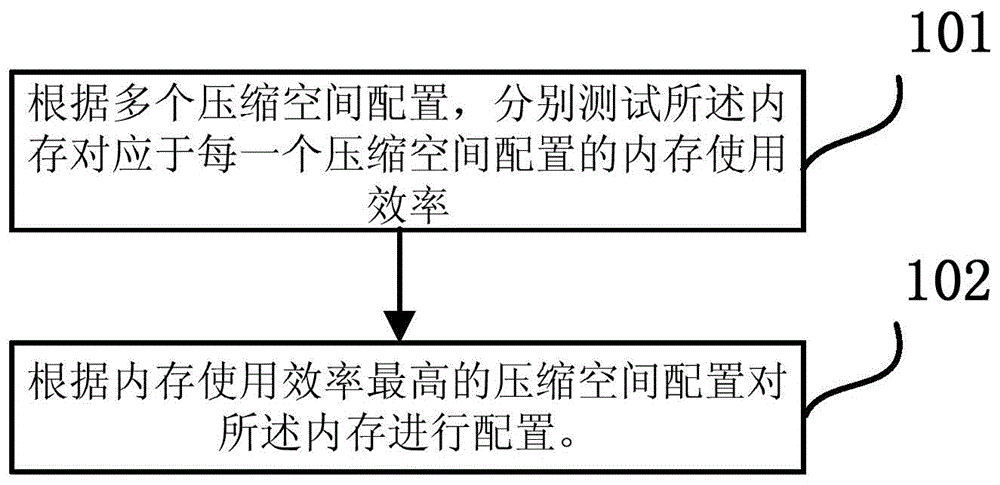

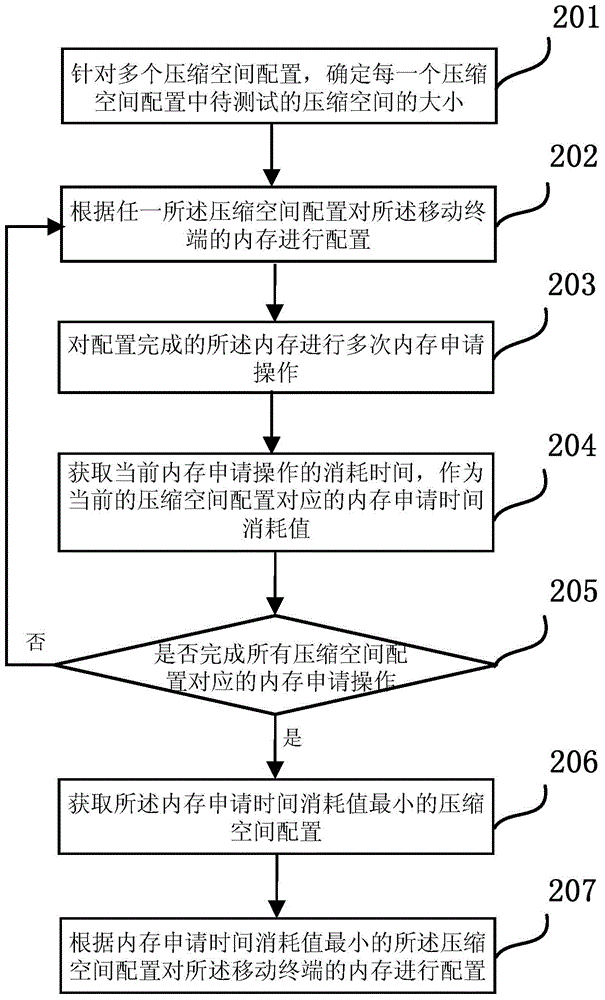

Memory allocation method and device

Inactive | CN105204940A | Avoid efficiency | Avoid the situation | Resource allocation | Input/output processes for data processing | Parallel computing | High memory

The invention provides a memory allocation method and device which are used for a terminal provided with a memory. The memory allocation method specifically includes the test step and the allocation step, wherein in the test step, according to multiple compression space allocations, memory use efficiency of each compression space allocation corresponding to the memory is tested; in the allocation step, the memory is allocated according to the compression space allocation with the highest memory use efficiency. By means of the memory allocation method and device, the size of compression space in the memory compression technology can be dynamically adjusted according to different running programs of users, the aim of improving the memory use efficiency of a system to the largest extent is achieved, and the situation that in the prior art, after the memory compression technology is adopted, memory use efficiency of part of user terminals is reduced is avoided.

Owner:ZTE CORP

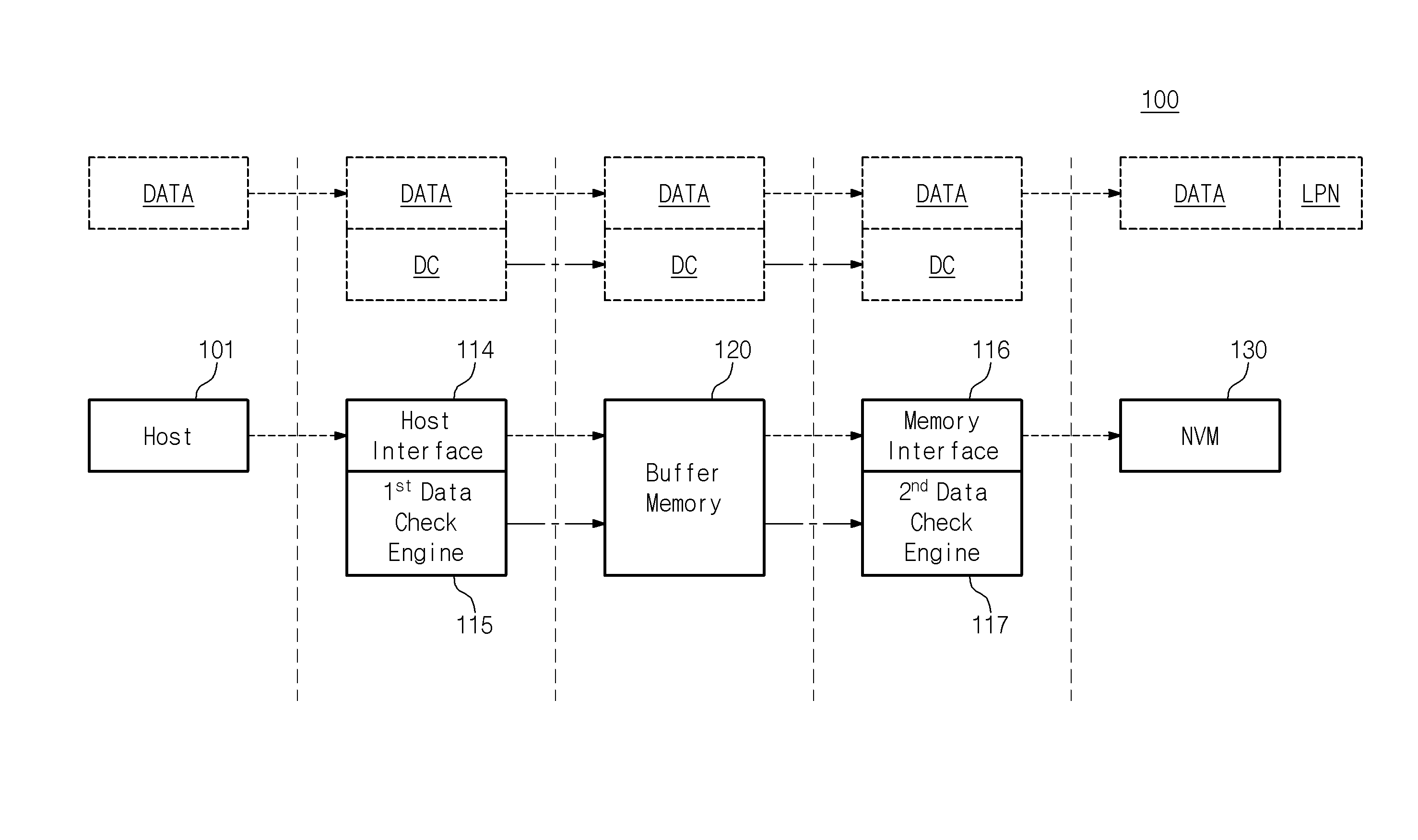

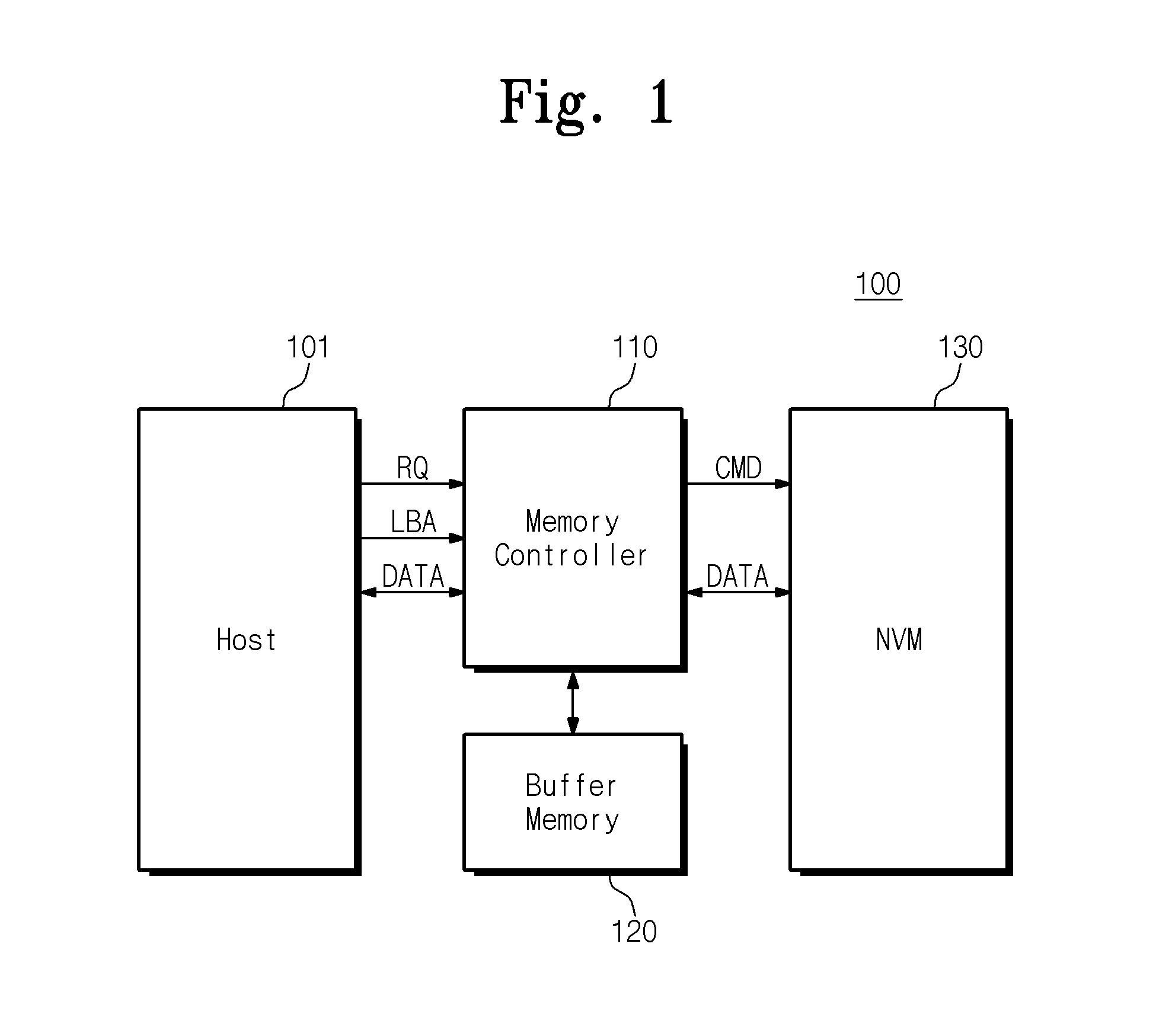

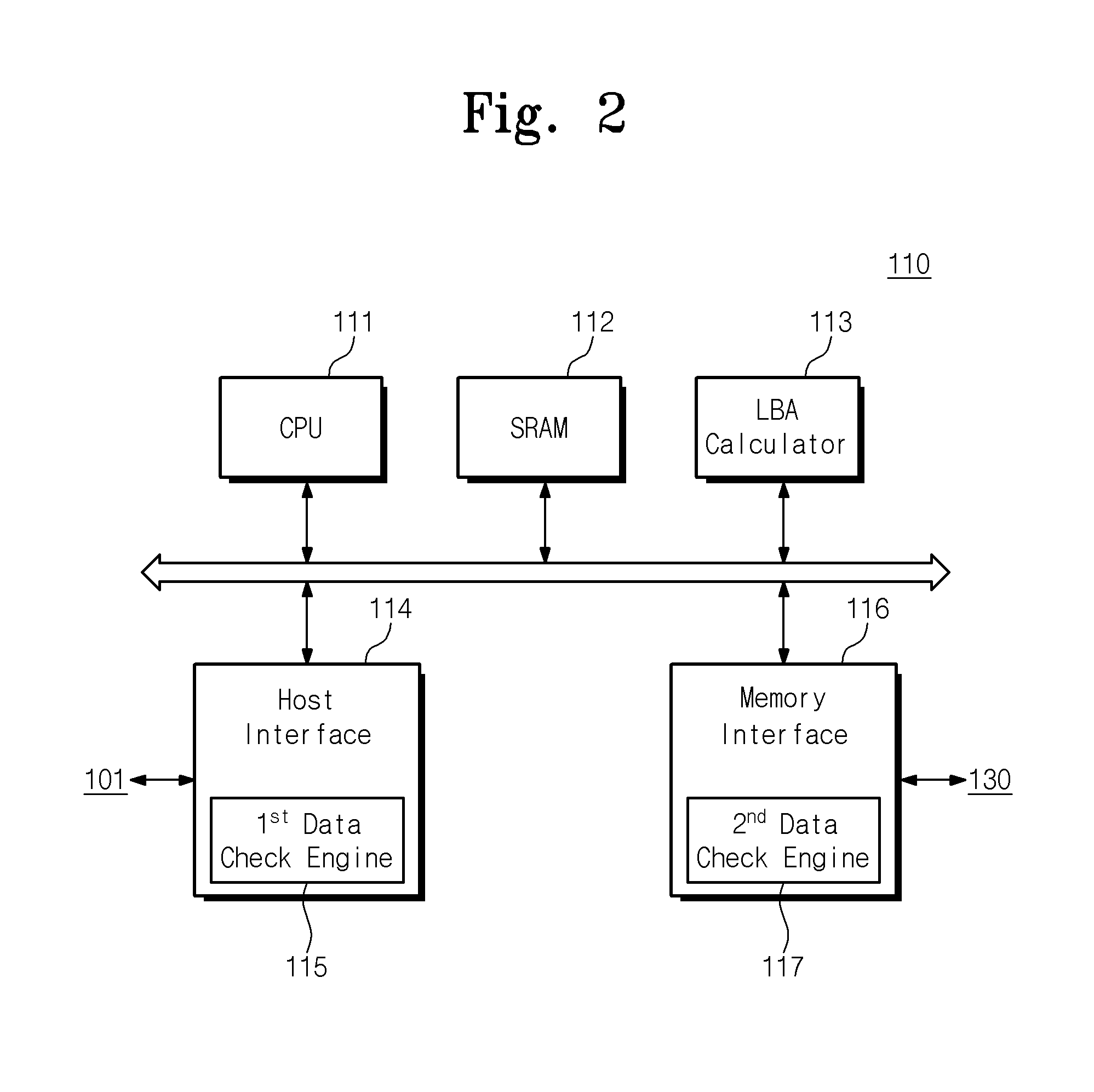

Memory system monitoring data integrity and related method of operation

Active | US9542264B2 | Improve memory usage efficiency | Error detection only | Static storage | Data integrity | Internet privacy

A memory controller comprises at least one interface configured to receive a request, user data, and an address from an external source, a first data check engine configured to generate data check information based on the received address and the user data in response to the received request, and a second data check engine configured to check the integrity of the user data based on the generated data check information where the user data is transmitted to the nonvolatile memory. The memory controller is configured to transmit the user data received from the external source to an external destination where the integrity of the user data is verified according to a check result, and is further configured to transmit an interrupt signal to the external source and the external destination where the check result indicates that the user data comprises an error.

Owner:SAMSUNG ELECTRONICS CO LTD

Paging implementation method and paging system

Active | CN107180043B | Avoid disadvantages | Improve memory usage efficiency | Special data processing applications | Database indexing | Parallel computing | Paging

An embodiment of the invention provides a paging implementation method. The method comprises the steps of: receiving paging request information, wherein the paging request information comprises a query SQL and check information of second data; judging whether a first cache stores the query SQL; retrieving the first cache according to the query SQL to obtain a first fragmentation index; judging, according to the check information, whether a second cache stores the second data; obtaining first data from the second cache; and obtaining the second data according to the first data, wherein the first fragmentation index includes at least one fragmentation index, the first cache stores the correspondence between the query SQL and the first fragmentation index, the second cache stores the first data, and the first data is marked with one fragmentation index. Through combined use of the first cache and the second cache, the shortcomings of database paging and memory paging are overcome, memory usage efficiency is improved, and highly concurrent paging requests can be served.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

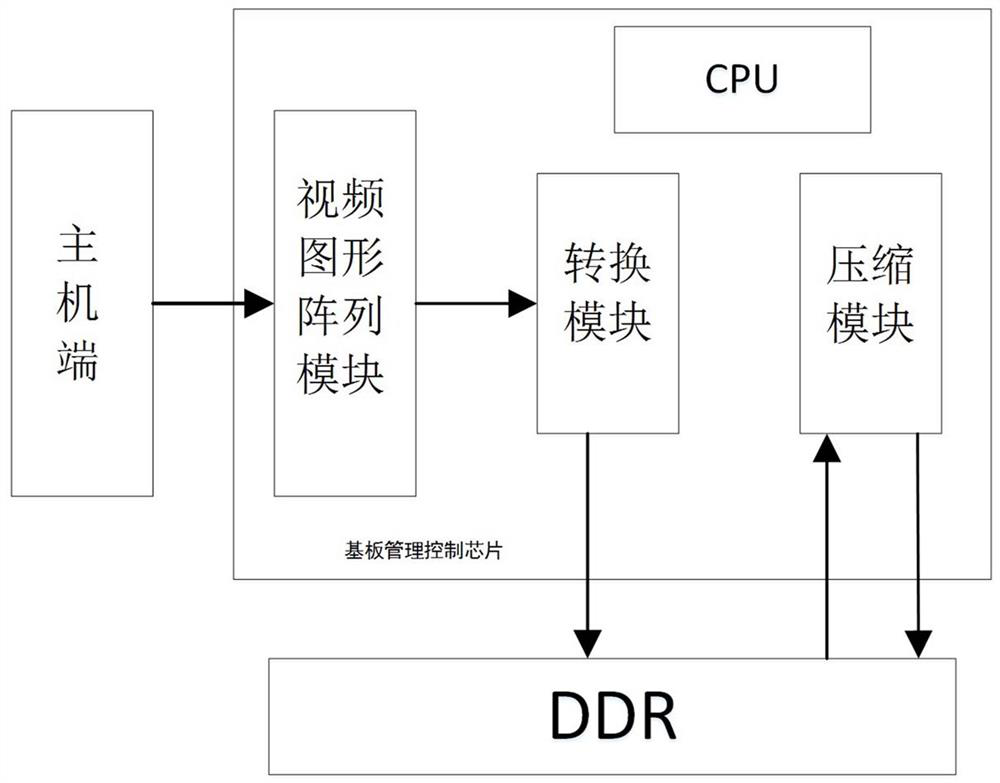

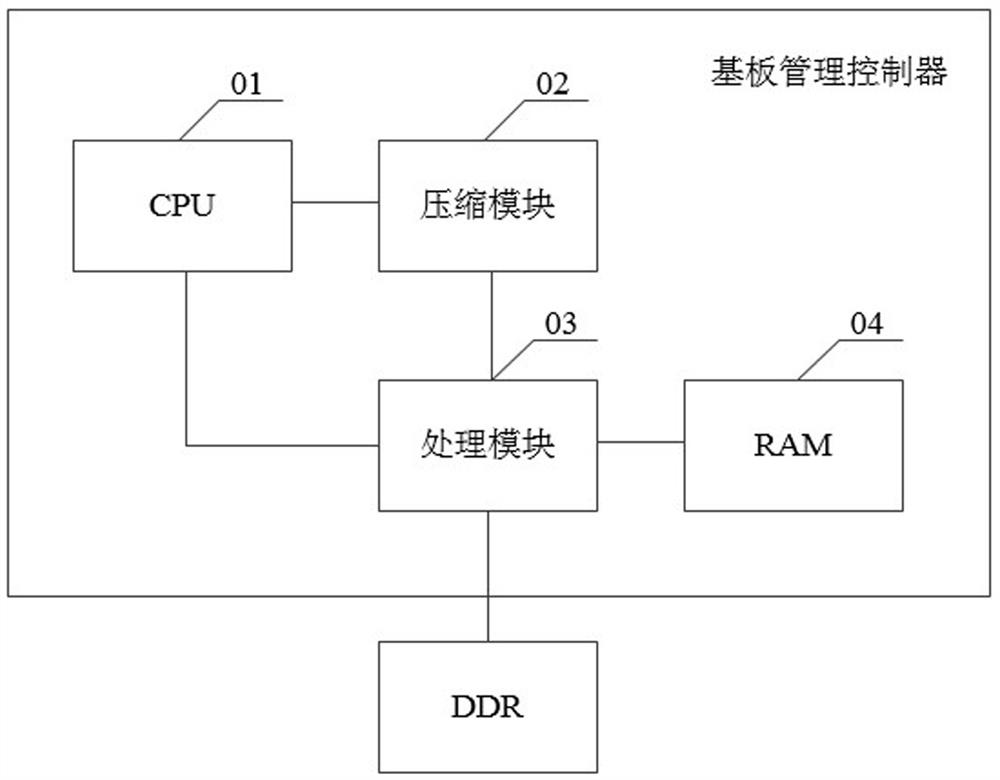

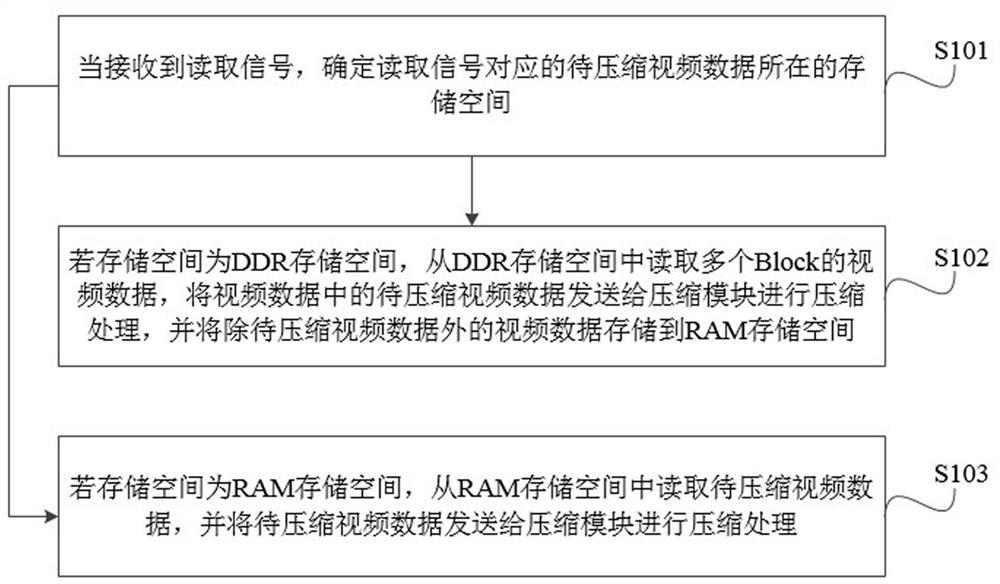

Video data processing method, system and device and computer readable storage medium

Active | CN114466196A | Increase the amount of data | Reduce occupancy | Digital video signal modification | Computer hardware | Video transmission

The invention discloses a video data processing method, system and device and a computer readable storage medium, and relates to the field of video transmission, and the video data processing method comprises the steps: determining a storage space where to-be-compressed video data corresponding to a reading signal is located when the reading signal is received; if the storage space is a DDR storage space, reading video data of a plurality of Blocks from the DDR storage space, sending to-be-compressed video data in the video data to a compression module for compression processing, and storing the video data except the to-be-compressed video data into an RAM storage space; and if the storage space is the RAM storage space, reading the to-be-compressed video data from the RAM storage space, and sending the to-be-compressed video data to a compression module for compression processing. According to the method and the device, the memory use efficiency can be improved, the occupation of the DDR bus bandwidth by the video compression function is reduced, and the purpose of improving the system performance is achieved.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
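
The read path in the abstract above (serve from RAM when possible, otherwise read several Blocks from DDR at once and keep the extras in RAM) can be illustrated with a small software model. This is a sketch under assumptions, not the patented hardware design: the class `BlockReader`, the `burst` parameter, the dictionary standing in for the RAM storage space, and the use of `zlib.compress` as the compression module are all hypothetical.

```python
import zlib
from typing import Dict, List


class BlockReader:
    """Hypothetical model of the DDR/RAM read path for video Blocks."""

    def __init__(self, ddr: List[bytes], burst: int = 4):
        self.ddr = ddr                    # stand-in for the DDR storage space
        self.burst = burst                # Blocks fetched per DDR access (assumed)
        self.ram: Dict[int, bytes] = {}   # stand-in for the RAM storage space
        self.ddr_reads = 0                # counts accesses to the DDR bus

    def handle_read(self, block_id: int) -> bytes:
        if block_id in self.ram:
            # The Block was prefetched earlier: no DDR access is needed.
            data = self.ram.pop(block_id)
        else:
            # Read several Blocks from DDR in one access.
            self.ddr_reads += 1
            end = min(block_id + self.burst, len(self.ddr))
            fetched = {i: self.ddr[i] for i in range(block_id, end)}
            data = fetched.pop(block_id)
            # Blocks not being compressed now are parked in RAM.
            self.ram.update(fetched)
        # zlib stands in for the compression module.
        return zlib.compress(data)
```

With eight Blocks and a burst of four, reading the Blocks in order touches the modeled DDR only twice; the remaining six reads are served from RAM.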

IP address list matching method and device

Inactive | CN103581358B | Improve memory usage efficiency | Eliminate the Risk of Storage Spaces Failure | Transmission | Ip address | Network communication

The invention discloses an IP address list matching method and device, and relates to the technical field of network communication. The method comprises the following steps: S1, obtaining an IP address list, wherein the IP address list is structured as a doubly linked list whose nodes are connected in order of IP address value; S2, matching the IP addresses to be matched against the IP address list to obtain the matching result. Because the nodes of a doubly linked list do not have to occupy contiguous memory, the memory usage efficiency of the network equipment is improved, and the risk of failing to allocate a large contiguous block of memory is eliminated.

Owner:OPZOON TECH
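
A sorted doubly linked list of IP addresses, as described in the abstract above, might look like the following minimal sketch. The class names `Node` and `SortedIPList`, the restriction to IPv4, and the linear early-exit search are assumptions for illustration; the source does not specify how matching in step S2 is carried out.

```python
import ipaddress
from typing import List, Optional


class Node:
    __slots__ = ("value", "prev", "next")

    def __init__(self, value: int):
        self.value = value
        self.prev: Optional["Node"] = None
        self.next: Optional["Node"] = None


class SortedIPList:
    """Hypothetical doubly linked list kept in ascending IPv4 order."""

    def __init__(self) -> None:
        self.head: Optional[Node] = None
        self.tail: Optional[Node] = None

    def insert(self, ip: str) -> None:
        node = Node(int(ipaddress.IPv4Address(ip)))
        cur = self.head
        # Find the first node whose address is not smaller than the new one.
        while cur is not None and cur.value < node.value:
            cur = cur.next
        if cur is None:
            # Append at the tail (also covers the empty list).
            node.prev = self.tail
            if self.tail is not None:
                self.tail.next = node
            else:
                self.head = node
            self.tail = node
        else:
            # Link the new node in front of cur.
            node.prev, node.next = cur.prev, cur
            if cur.prev is not None:
                cur.prev.next = node
            else:
                self.head = node
            cur.prev = node

    def contains(self, ip: str) -> bool:
        value = int(ipaddress.IPv4Address(ip))
        cur = self.head
        # Sorted order lets the walk stop once values exceed the target.
        while cur is not None and cur.value <= value:
            if cur.value == value:
                return True
            cur = cur.next
        return False

    def to_list(self) -> List[str]:
        out, cur = [], self.head
        while cur is not None:
            out.append(str(ipaddress.IPv4Address(cur.value)))
            cur = cur.next
        return out
```

Each node is allocated separately, so growing the list never requires one large contiguous allocation, which is the property the abstract emphasizes.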

Game data processing method and device, storage medium, and computer equipment

The present application discloses a game data processing method and device, a storage medium, and computer equipment. The method includes: acquiring resource data of a game scene, the resource data including resource horizontal position data of game resources in the game scene, together with resource upper-surface height data and resource lower-surface height data corresponding to the resource horizontal position data; and, based on the resource data, determining the voxel information corresponding to the voxels on each area block matching the game scene and generating a voxel file of the game scene, where the voxel information for the voxels on each area block consists of the resource horizontal position data corresponding to the horizontal position of the area block, the resource upper-surface height data, and the resource lower-surface height data. The application reduces the memory footprint of the voxel file, improves memory usage efficiency, and allows high-precision floating-point representation in the height direction, which helps improve the accuracy of resource representation.

Owner:ARC GAMES CO LTD
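
The idea in the abstract above, storing a lower and upper surface height per horizontal area block instead of a dense 3D voxel grid, can be sketched as below. This is an in-memory illustration only: the functions `build_voxel_columns` and `is_solid`, the constant `BLOCK_SIZE`, and the `(x, z, lower, upper)` tuple layout are assumptions, and the actual voxel file format is not described in the source.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

BLOCK_SIZE = 1.0  # horizontal edge length of one area block (assumed)

Span = Tuple[float, float]  # (lower surface height, upper surface height)
Columns = Dict[Tuple[int, int], List[Span]]


def block_key(x: float, z: float) -> Tuple[int, int]:
    # Map a horizontal position to the area block that contains it.
    return (int(x // BLOCK_SIZE), int(z // BLOCK_SIZE))


def build_voxel_columns(
    resources: Iterable[Tuple[float, float, float, float]]
) -> Columns:
    """Each resource is (x, z, lower_height, upper_height)."""
    columns: Columns = defaultdict(list)
    for x, z, lower, upper in resources:
        # Heights stay floating point; only the horizontal plane is gridded.
        columns[block_key(x, z)].append((lower, upper))
    return dict(columns)


def is_solid(columns: Columns, x: float, y: float, z: float) -> bool:
    # A point is occupied if its height lies inside any span of its block.
    return any(lower <= y <= upper
               for lower, upper in columns.get(block_key(x, z), []))
```

Memory in this sketch grows with the number of occupied area blocks rather than with the scene's full 3D volume, and heights keep their floating-point precision.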

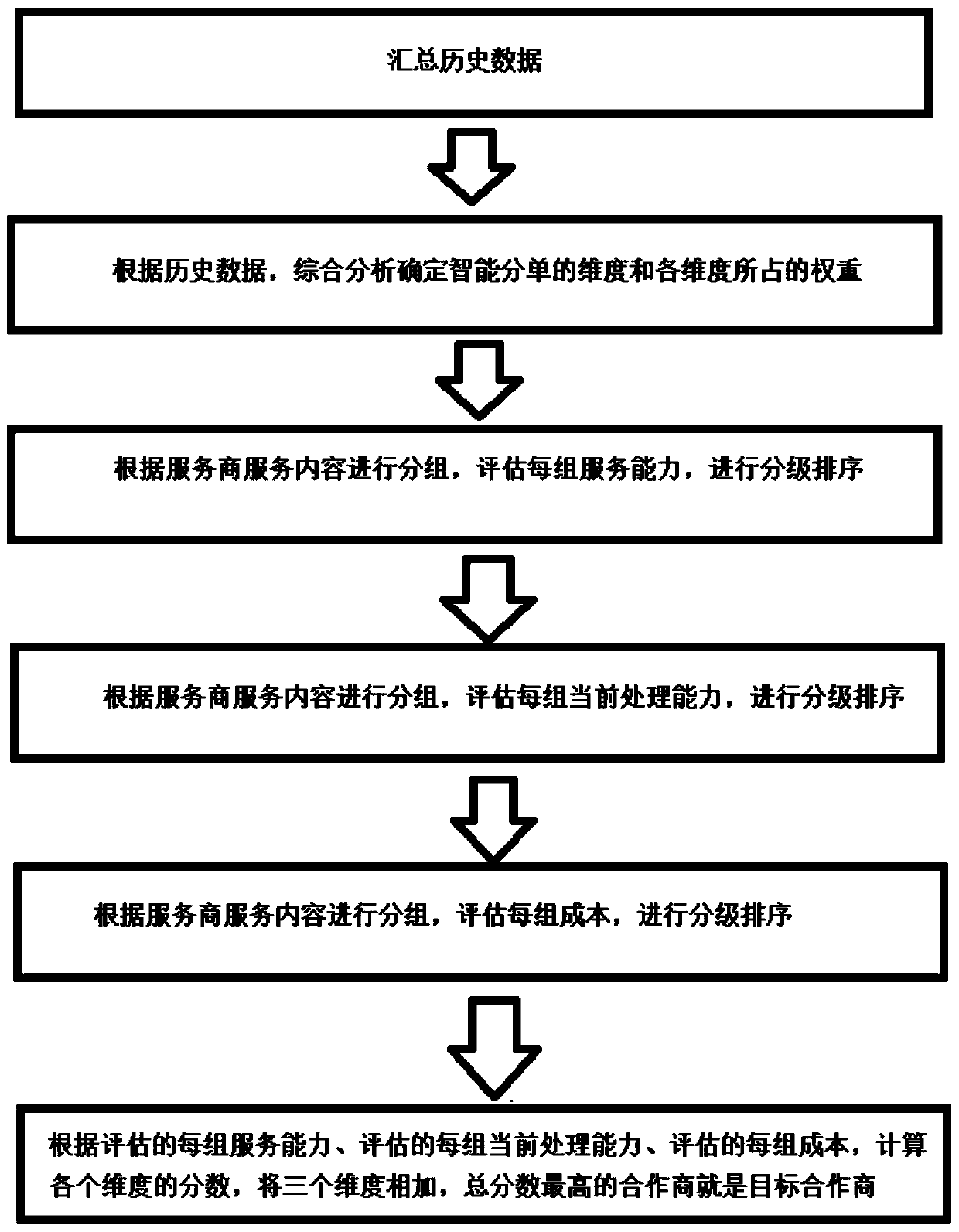

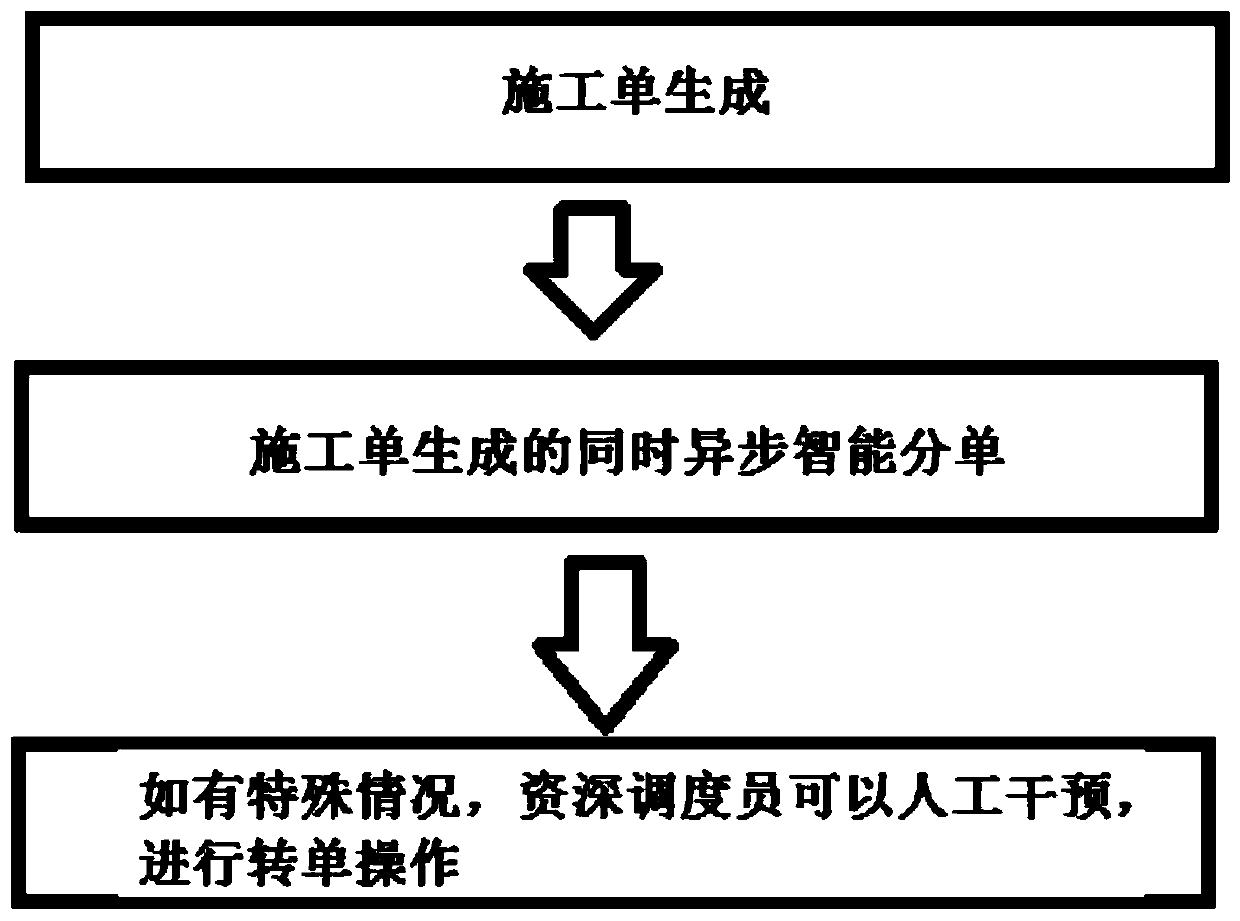

Intelligent order distribution rule dimension and statistical method for home decoration construction orders

Inactive | CN111275286A | Improve memory usage efficiency | Reduce startup time | Resources | Time delays | Service provider

The invention discloses an intelligent order distribution rule dimension and statistical method for home decoration construction orders, and relates to the field of artificial intelligence. The method includes: collecting historical data; analyzing it to determine the dimensions of the intelligent order and the weight of each dimension; grouping according to the service provider service content, evaluating the service capability of each group, and carrying out hierarchical sorting; grouping according to the service provider service content, evaluating the current processing capacity of each group, and carrying out hierarchical sorting; and calculating the score of each dimension and adding the three dimensions, so that the partner with the highest total score is the target partner. By computing, in real time, the request traffic as it is reported, the cache is preheated as required, the memory of the server is used reasonably, and the memory usage efficiency is improved; according to the method, cache preheating is carried out after the system is started, so that the system startup time is shortened; cache preheating is carried out automatically, the degree of automation is high, and no external manual participation is needed; and because it is based on real-time calculation, cache preheating can be carried out in time, without the delay of a scheduled cache preheating scheme.

Owner:江苏艾佳家居用品有限公司

