55 results for patented technology on "Improving Semantic Segmentation Accuracy"

Three-dimensional building fine geometric reconstruction method integrating airborne and vehicle-mounted three-dimensional laser point clouds and streetscape images

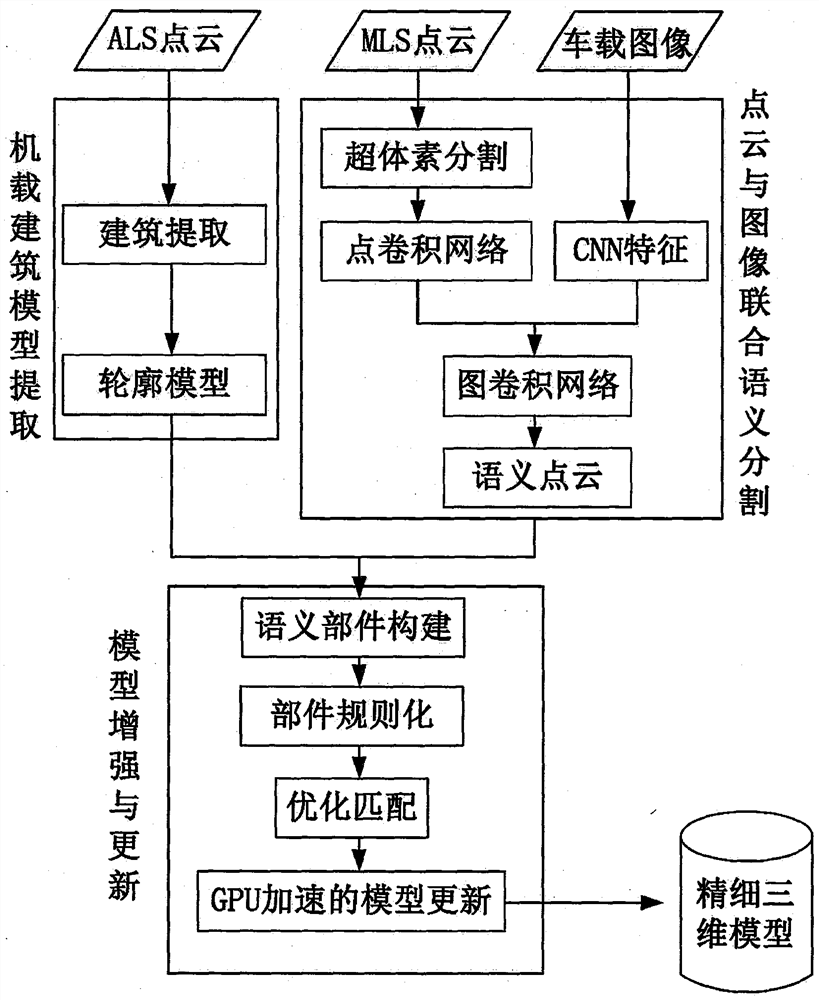

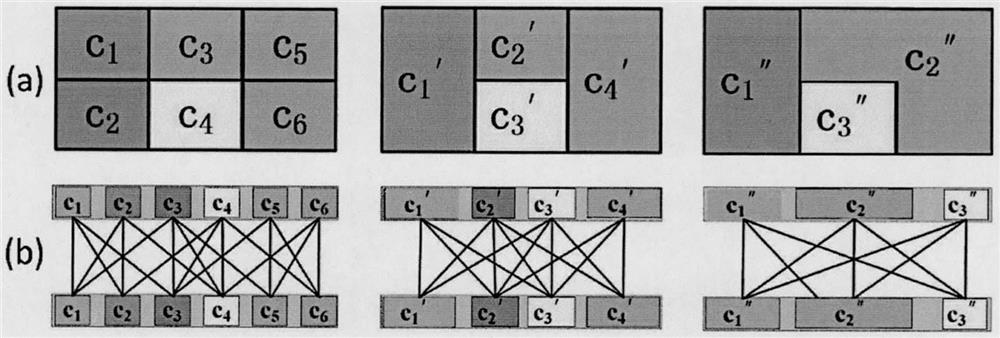

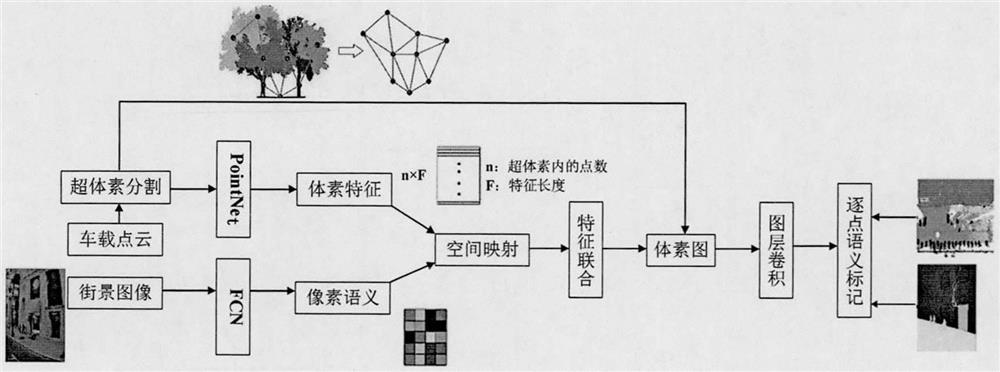

Status: Pending · Publication: CN111815776A · Tags: Guaranteed geometric accuracy; Efficient extraction; Internal combustion piston engines; 3D modelling; Point cloud; Model reconstruction

The invention provides a method for fine geometric reconstruction of three-dimensional buildings that integrates airborne and vehicle-mounted 3D laser point clouds with streetscape images. The method comprises the following steps: (1) rapid modeling based on airborne laser data; (2) a semantic segmentation framework that combines the vehicle-mounted point cloud with the images; and (3) an automatic model enhancement algorithm that fuses multi-source data. The method takes airborne laser point clouds, vehicle-mounted laser point clouds and streetscape images as its research objects, with model reconstruction, model enhancement and model updating as its goals; it realizes joint processing of point cloud and image data from different platforms and fully exploits the fusion potential of the various data sources. The final research results are intended to improve the framework for fusing vehicle-mounted and airborne data and for fine modeling, to advance point cloud semantic segmentation technology, and to serve emerging application fields such as autonomous driving.

Owner: Shandong Water Conservancy Technician College (山东水利技师学院)

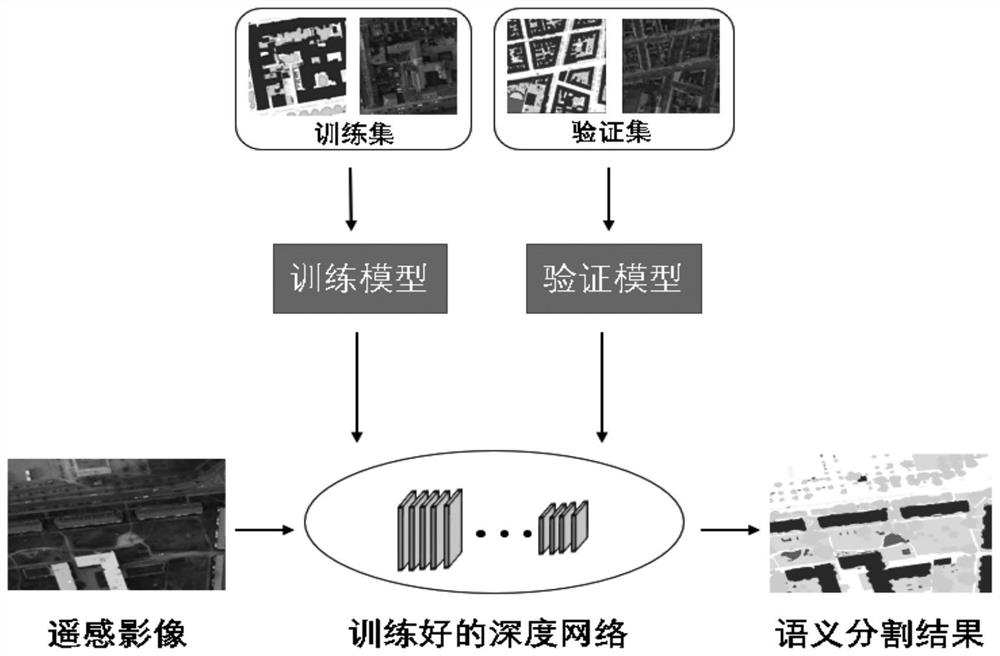

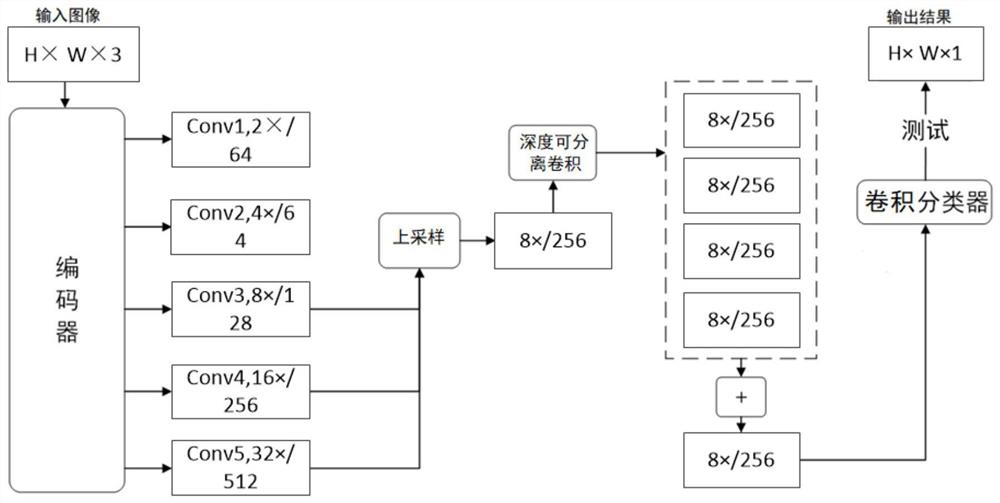

Lightweight semantic segmentation method for high-resolution remote sensing image

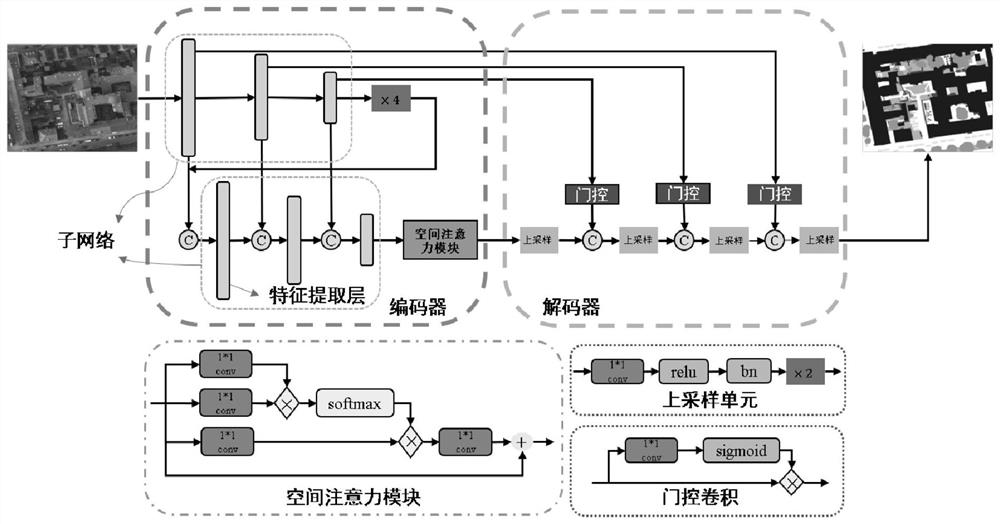

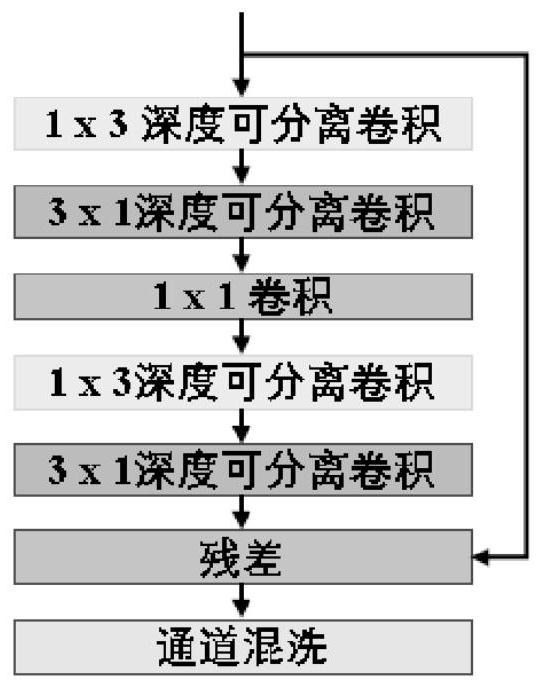

Status: Active · Publication: CN112183360A · Tags: Solve the inefficiency of operation; Run fast; Scene recognition; Computation complexity; Encoder decoder

A lightweight semantic segmentation method for high-resolution remote sensing images comprises three stages: network construction, training and testing. Specifically, a deep semantic segmentation network with an encoder-decoder structure is built in the PyTorch deep learning framework; after the network is trained on a sample set of remote sensing images, a remote sensing image to be tested is fed to the network as input and its segmentation result is obtained. On the one hand, the method reduces model parameters and computational complexity by factorizing convolutions into depthwise separable convolutions, which shortens segmentation time and improves segmentation efficiency for high-resolution remote sensing images; on the other hand, it improves segmentation precision through multi-scale feature aggregation, a spatial attention module and gated convolution, so that the proposed lightweight deep semantic segmentation network segments high-resolution remote sensing images both accurately and efficiently.

Owner: SHANGHAI JIAO TONG UNIV
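
The parameter saving claimed for depthwise separable convolution can be checked with simple arithmetic. The sketch below counts weights (biases omitted) for a standard k×k convolution and for its factorization into a k×k depthwise convolution plus a 1×1 pointwise convolution; the 3×3, 256-to-256-channel layer is an illustrative assumption, not a size taken from the patent.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k standard convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv (bias omitted)."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Illustrative layer: 3 x 3 kernel, 256 input channels, 256 output channels.
std = standard_conv_params(3, 256, 256)
sep = depthwise_separable_params(3, 256, 256)
print(std, sep, round(std / sep, 2))  # 589824 67840 8.69
```

In general the separable-to-standard ratio is 1/c_out + 1/k², so for a 3×3 layer the saving approaches a factor of nine as the number of output channels grows.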

Semantic segmentation method and system for RGB-D image

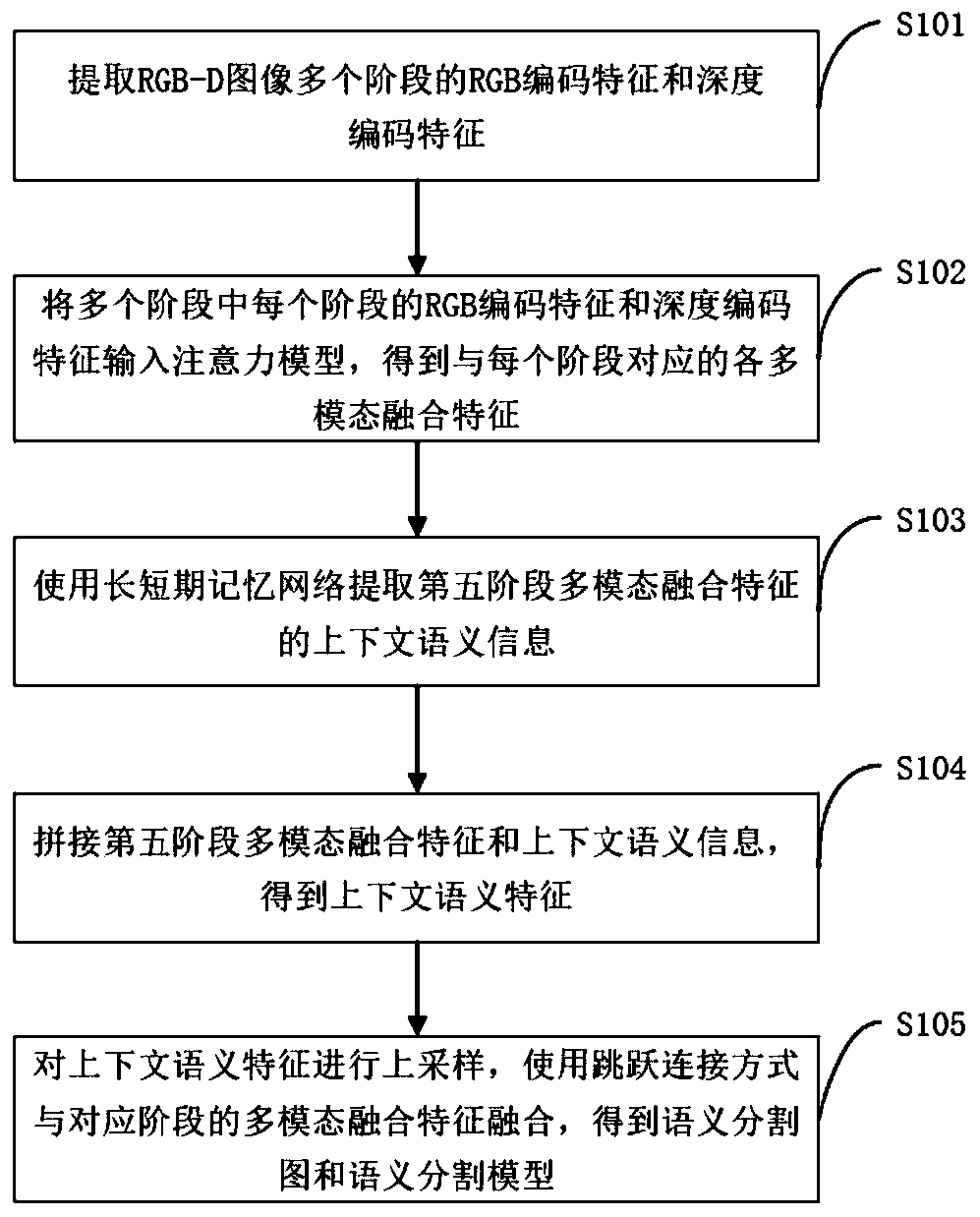

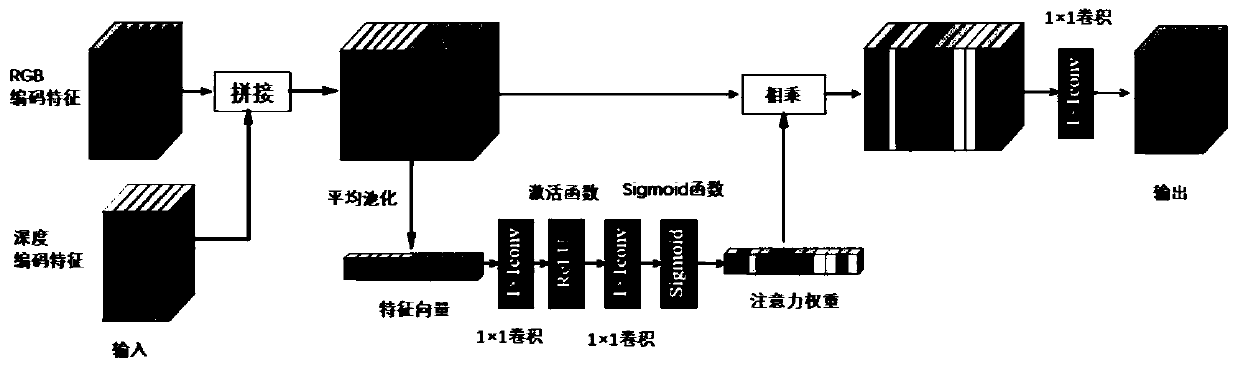

Status: Active · Publication: CN110298361A · Tags: Improving Semantic Segmentation Accuracy; Efficient use of; Character and pattern recognition; Neural architectures; Attention model; Computer vision

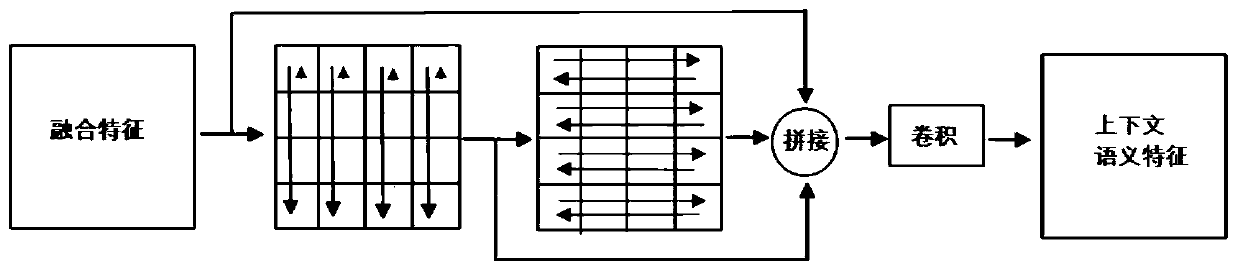

The invention discloses a semantic segmentation method and system for RGB-D images. The method comprises the following steps: extracting RGB encoding features and depth encoding features of an RGB-D image in multiple stages; inputting the RGB and depth encoding features of each stage into an attention model to obtain a multi-modal fusion feature for that stage; extracting contextual semantic information from the fifth-stage multi-modal fusion features with a long short-term memory (LSTM) network; concatenating the fifth-stage multi-modal fusion features with this contextual semantic information to obtain contextual semantic features; and upsampling the contextual semantic features and fusing them with the multi-modal fusion features of the corresponding stages through skip connections, yielding a semantic segmentation map and a semantic segmentation model. By extracting RGB and depth encoding features in multiple stages, the method makes effective use of both the color and the depth information of the RGB-D image, and by using an LSTM network it effectively mines the contextual semantic information of the image, thereby improving semantic segmentation accuracy for RGB-D images.

Owner: HANGZHOU WEIMING XINKE TECH CO LTD +1

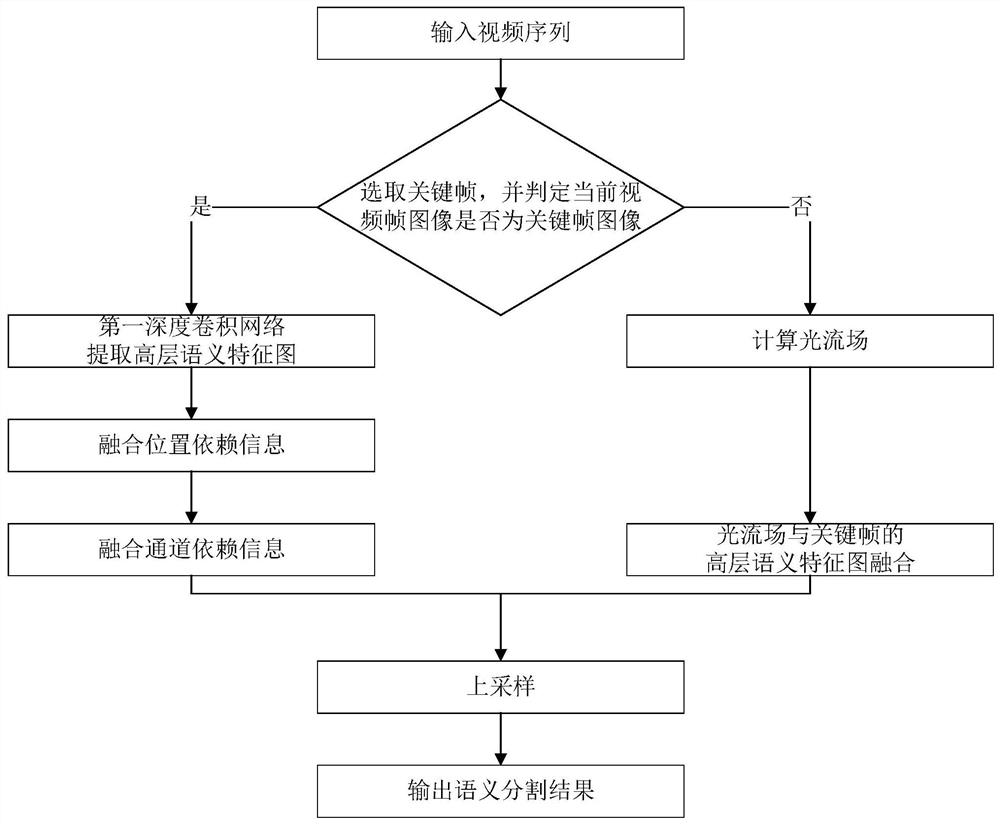

Adaptive adversarial learning-based urban traffic scene semantic segmentation method and system

Status: Active · Publication: CN110111335A · Tags: Improve generalization ability; Improving Semantic Segmentation Accuracy; Image enhancement; Image analysis; Training data sets; Generative adversarial network

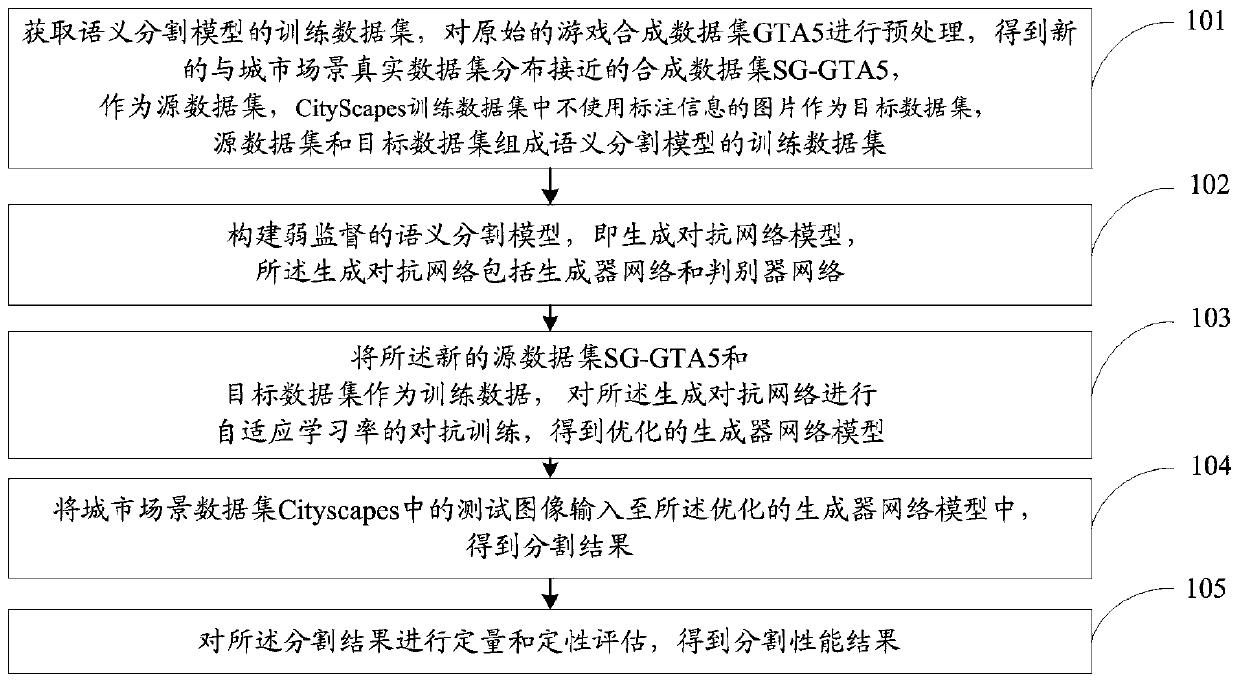

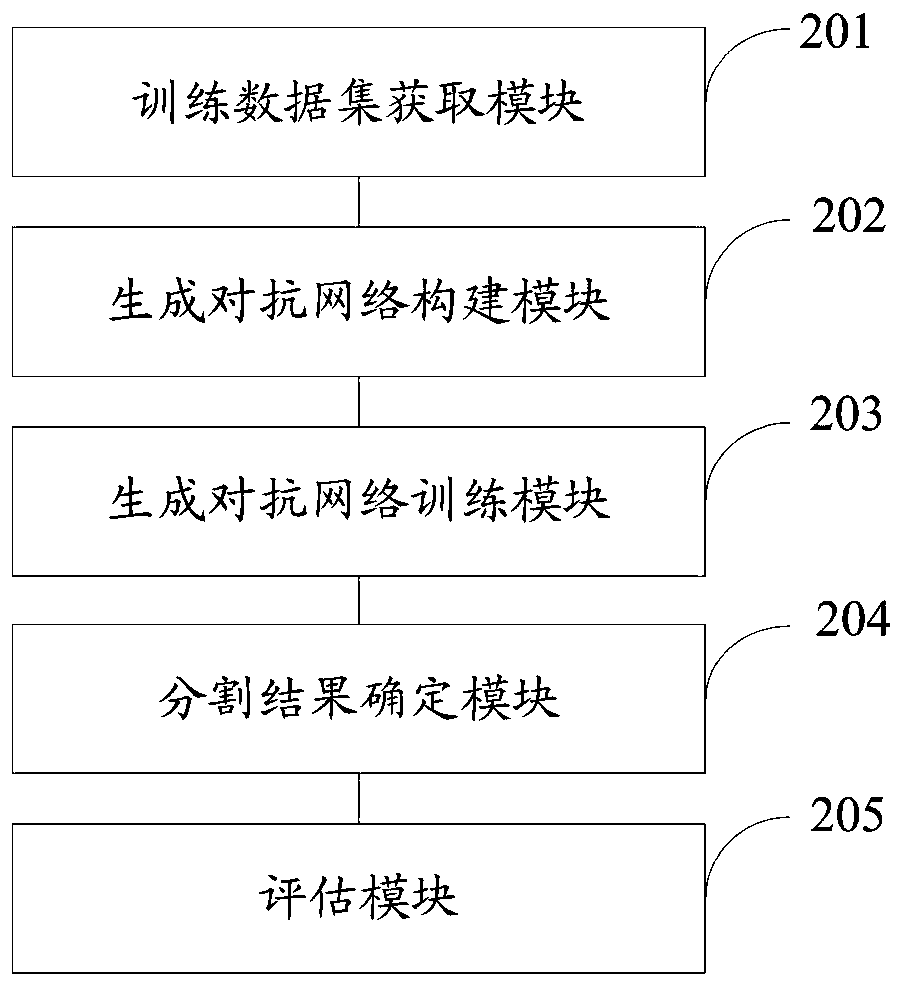

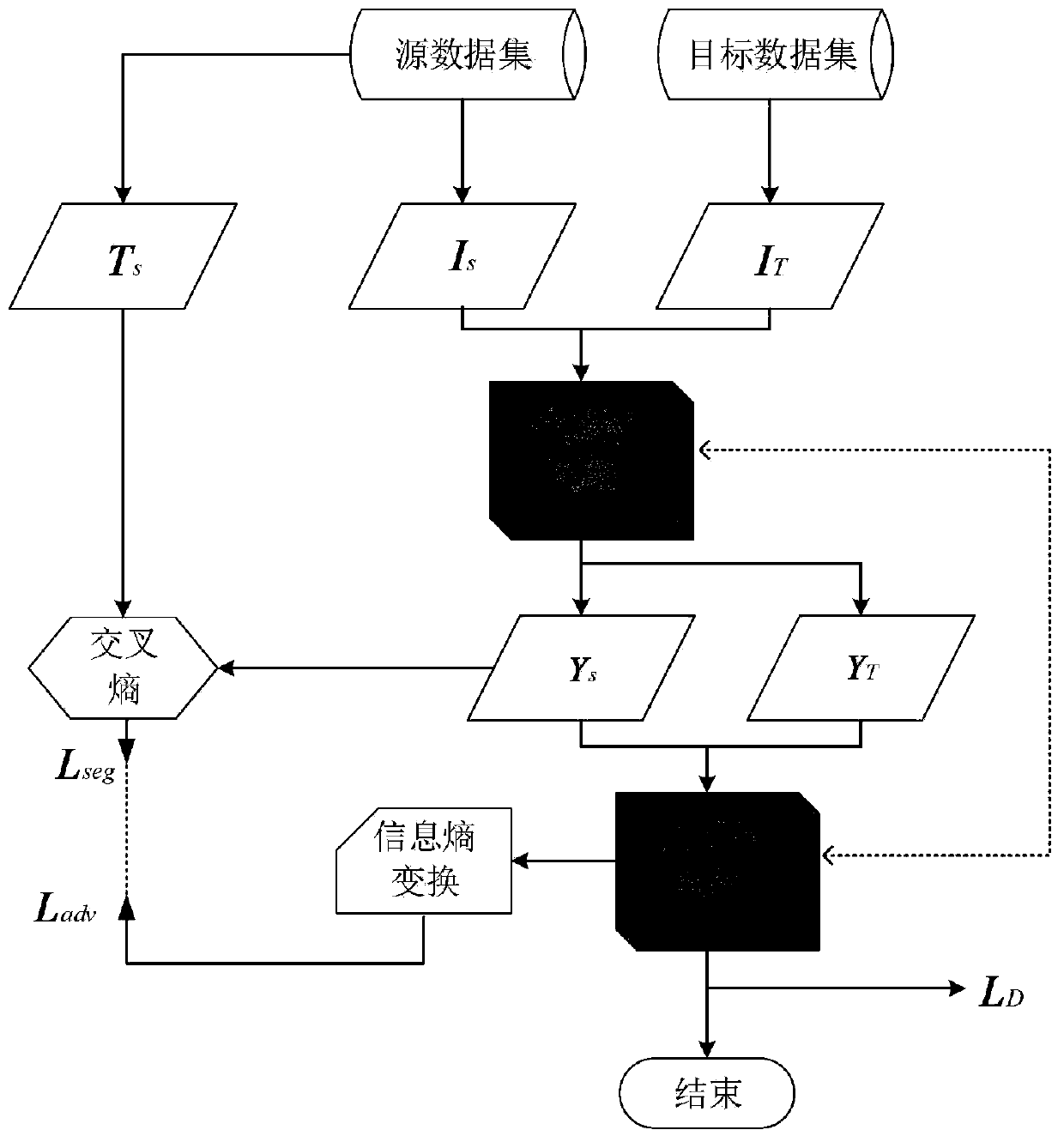

The invention discloses an urban traffic scene semantic segmentation method and system based on adaptive adversarial learning. The method comprises the following steps: obtaining training data for the semantic segmentation model by preprocessing the game-based synthetic dataset GTA5 into a new synthetic dataset, SG-GTA5, whose distribution is closer to that of the real urban scene dataset Cityscapes; constructing a generative adversarial network model for semantic segmentation; performing adaptive adversarial learning on this model with the training dataset, using an adaptive learning rate for the adversarial learning at each feature layer, adjusting the loss value of each feature layer through its learning rate, and dynamically updating the network parameters to obtain an optimized generative adversarial network model; and validating the model on the real urban scene dataset Cityscapes. The method improves semantic segmentation precision for complex urban traffic scenes that lack annotation information and contain targets at many scales, and it enhances the generalization ability of the semantic segmentation model.

Owner: NANCHANG HANGKONG UNIVERSITY

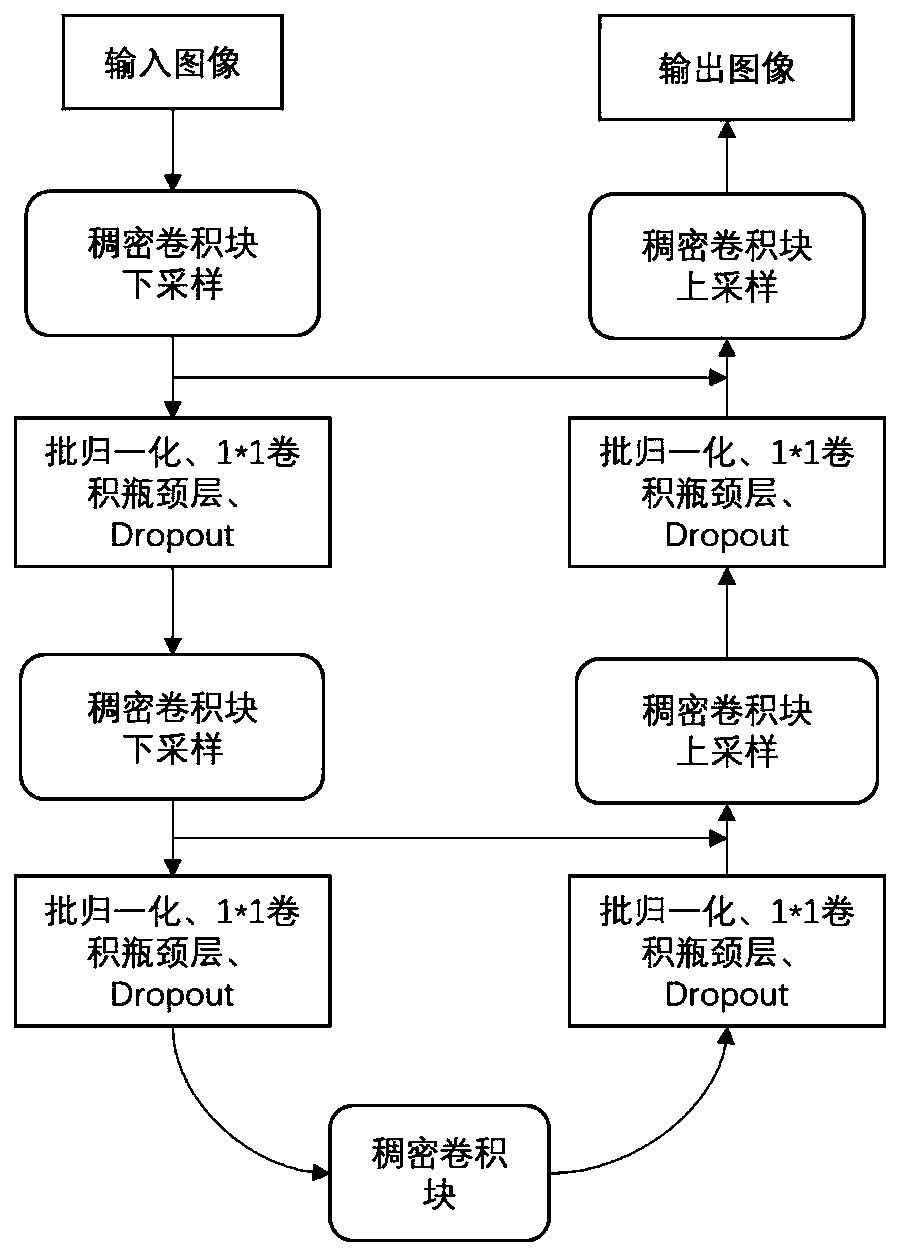

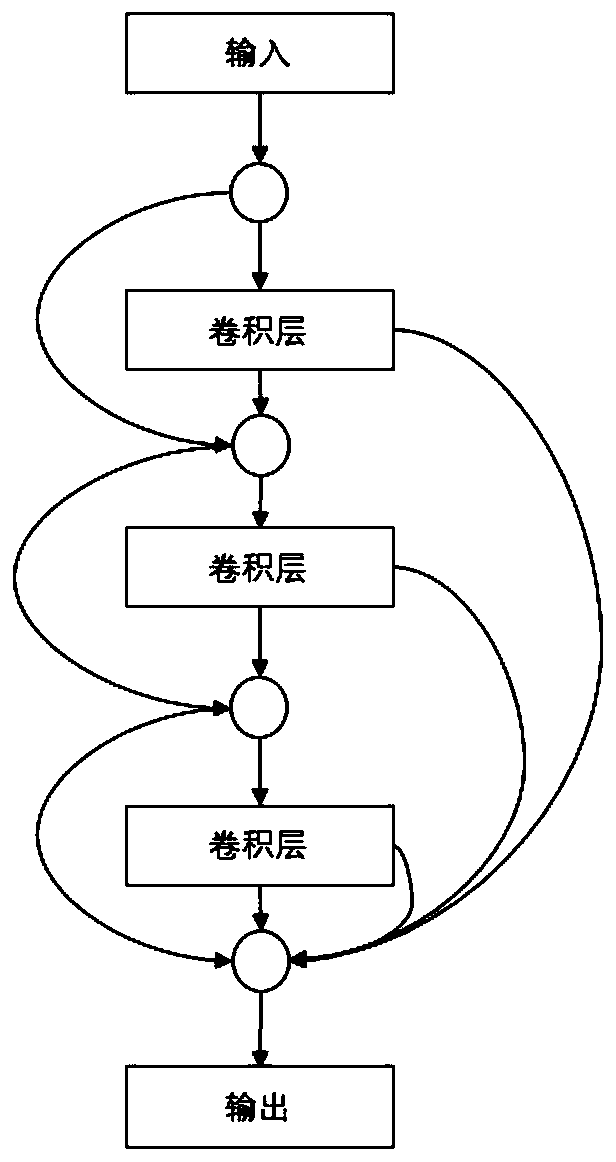

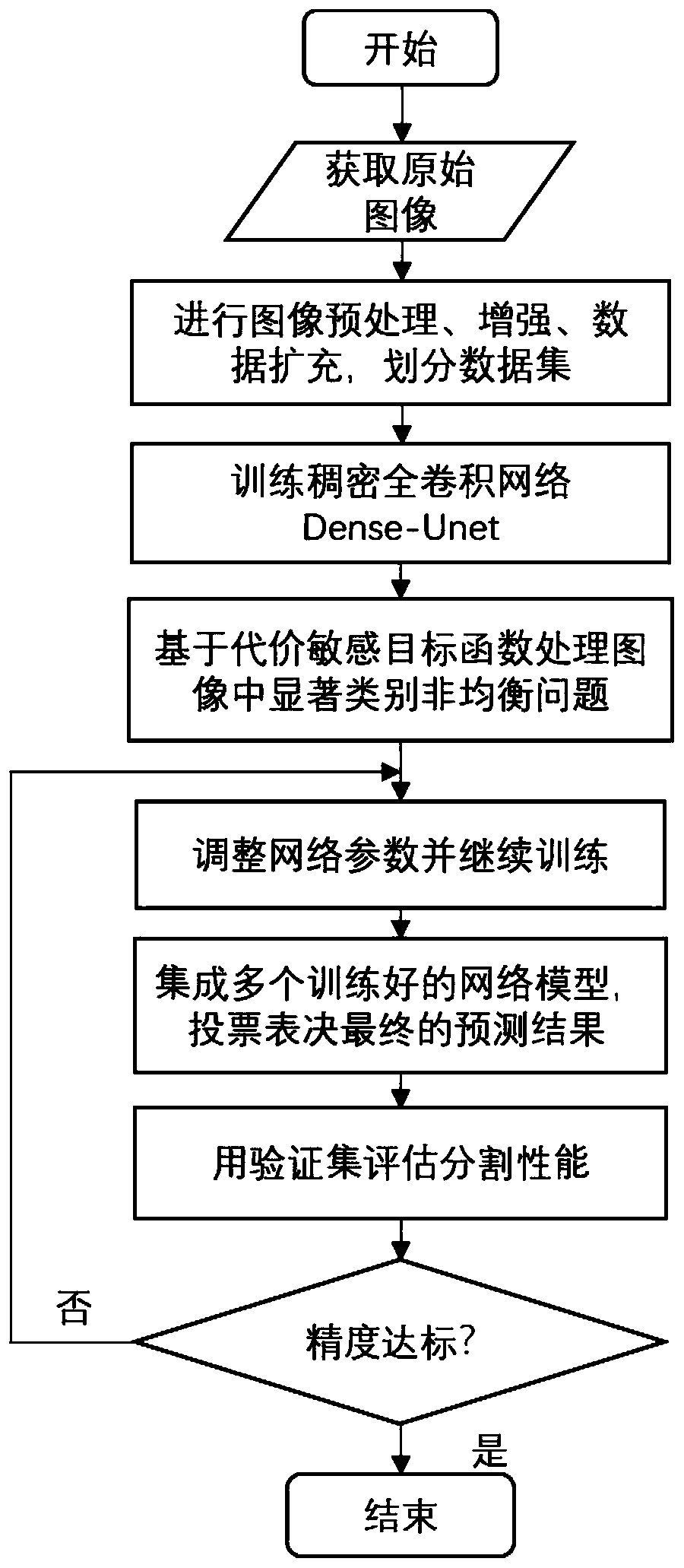

Remote sensing image thin and weak target segmentation method

Status: Inactive · Publication: CN110689544A · Tags: Solve the problem of poor segmentation accuracy; Precise Segmentation Effect; Image enhancement; Image analysis; Network structure; Engineering

The invention provides a segmentation method for thin and weak targets in remote sensing images. First, data augmentation and corresponding preprocessing are applied to the original remote sensing images, and U-Net is improved with the dense connectivity idea of DenseNet, yielding a Dense-UNet network structure. Dense convolution is used in the network to strengthen the concatenation relationships between convolution channels, and the symmetric structure and skip connections further tighten the links between features at all layers, so that features of thin and weak targets are learned more effectively. To ensure real-time recognition by the final network and to reduce the number of parameters, a bottleneck layer and a batch normalization layer are introduced after each dense block. The objective function is adjusted with cost-sensitive class weight vectors, which addresses the imbalance between segmentation target categories and further improves segmentation precision. Finally, several independent models are trained with an ensemble learning method and combined to jointly predict the target category information in the image.

Owner: HARBIN ENG UNIV
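
The abstract does not state how the cost-sensitive weights or the ensemble combination are computed. A minimal sketch, assuming inverse-frequency class weights, a weighted cross-entropy, and plain averaging of per-pixel class probabilities across models (all three are assumptions, not the patent's stated formulas):

```python
import math
from collections import Counter


def inverse_frequency_weights(labels, num_classes):
    """Assumed cost-sensitive weights: proportional to 1/frequency, averaging 1 over present classes."""
    counts = Counter(labels)
    raw = [1.0 / counts[c] if counts[c] else 0.0 for c in range(num_classes)]
    present = [w for w in raw if w > 0]
    scale = len(present) / sum(present)
    return [w * scale for w in raw]


def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean of -log p[y] over pixels; probs[i] is pixel i's class distribution."""
    total = sum(-weights[y] * math.log(p[y]) for p, y in zip(probs, labels))
    return total / sum(weights[y] for y in labels)


def ensemble_average(model_probs):
    """Average per-pixel class probabilities from independently trained models.

    model_probs[m][i][c] is model m's probability that pixel i belongs to class c.
    """
    n = len(model_probs)
    return [[sum(vals) / n for vals in zip(*pixel)] for pixel in zip(*model_probs)]


# Three background pixels (class 0) and one thin-target pixel (class 1).
weights = inverse_frequency_weights([0, 0, 0, 1], num_classes=2)  # class 1 weighted 3x class 0
```

Normalizing by the summed weights keeps the loss on the same scale as an unweighted mean, so the weights shift emphasis between classes without changing the overall learning rate.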

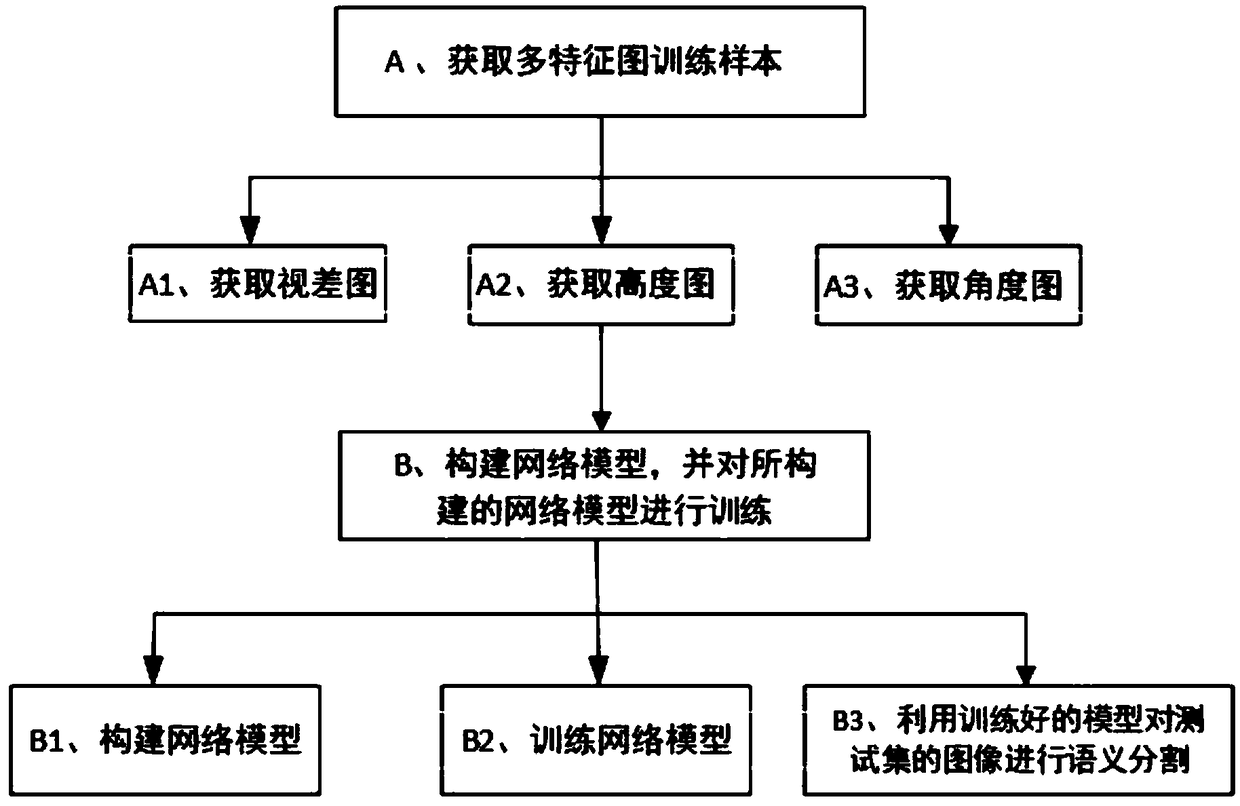

Traffic image semantic segmentation method based on multi-feature map

Status: Inactive · Publication: CN108734713A · Tags: Achieve integration; Easy to understand; Image analysis; Neural architectures; Color image; Height map

The invention discloses a traffic image semantic segmentation method based on multiple feature maps. The method comprises the following steps: obtaining multi-feature-map training samples, namely a disparity map, a height map and an angle map; constructing and training a network model; feeding a six-channel test image into the trained network model, which outputs, through a multi-class softmax classifier layer, the probability that each pixel of the six-channel image belongs to each object category; predicting the object category of each pixel; and finally outputting the semantic segmentation map. By fusing the color image with the depth map, the height map and the angle map, the method obtains more feature information about the image, which helps in understanding road traffic scenes and improves semantic segmentation accuracy. Using the learned effective features, the method predicts the object category of each pixel in the image and outputs the semantic segmentation map.

Owner: DALIAN UNIV OF TECH
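
The last stage described above (a softmax over class scores at every pixel, then picking the most probable class) can be sketched in plain Python. The network itself is omitted: `logits` is a hypothetical stand-in for its output, and `stack_channels` only illustrates how a six-channel input could be assembled from RGB plus the disparity, height and angle maps.

```python
import math


def stack_channels(rgb, disparity, height, angle):
    """Six-channel input: R, G, B plus disparity, height and angle for every pixel."""
    return [
        [list(rgb[r][c]) + [disparity[r][c], height[r][c], angle[r][c]] for c in range(len(rgb[r]))]
        for r in range(len(rgb))
    ]


def softmax(scores):
    """Numerically stable softmax over one pixel's class scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def segment(logits):
    """logits[row][col] holds per-class scores; return the most probable class per pixel."""
    label_map = []
    for row in logits:
        labels = []
        for scores in row:
            probs = softmax(scores)
            labels.append(max(range(len(probs)), key=probs.__getitem__))
        label_map.append(labels)
    return label_map


print(segment([[[1.0, 3.0, 0.0], [2.0, 0.0, 0.0]]]))  # [[1, 0]]
```

Because softmax is monotonic, taking the arg-max of the raw scores gives the same labels; the probabilities matter for training and for confidence estimates.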

Road blocking information extraction based on deep learning image semantic segmentation

Status: Active · Publication: CN110287932A · Tags: Reduce misjudgment; Guaranteed correspondence; Image enhancement; Image analysis; Post disaster; Algorithm

The invention discloses a method for constructing a road-blocking image semantic segmentation sample library for training fully convolutional neural networks. The method comprises the following steps: first, vectorizing, augmenting and standardizing the samples; second, introducing classical convolutional neural network types and methods for improving the network structure, and describing how the network is implemented and trained; then, using the trained fully convolutional network to perform semantic segmentation of road surfaces in remote sensing images and, once the road surface left undamaged after the disaster has been extracted, judging road integrity from the ratio between the undamaged road length after the disaster and the road length before the disaster. The precision evaluation metrics of the improved fully convolutional network model are better than those of the original model. The improved model is better suited to the specific problems of detecting undamaged post-disaster pavement and judging road integrity, and it effectively overcomes the adverse effects of occlusion by trees and shadows on the extraction of road-blocking information.

Owner: AEROSPACE INFORMATION RES INST CAS
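
The integrity criterion reduces to a length ratio. A minimal sketch, assuming road lengths have already been measured from the pre- and post-disaster segmentation results; the 0.5 cut-off in `is_blocked` is a hypothetical value, since the abstract gives no threshold.

```python
def road_integrity(pre_disaster_length, post_disaster_intact_length):
    """Fraction of the pre-disaster road length that is still undamaged after the disaster."""
    if pre_disaster_length <= 0:
        raise ValueError("pre-disaster road length must be positive")
    return post_disaster_intact_length / pre_disaster_length


def is_blocked(integrity, threshold=0.5):
    """Hypothetical decision rule: flag the road as blocked when integrity falls below threshold."""
    return integrity < threshold


ratio = road_integrity(1200.0, 300.0)
print(ratio, is_blocked(ratio))  # 0.25 True
```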

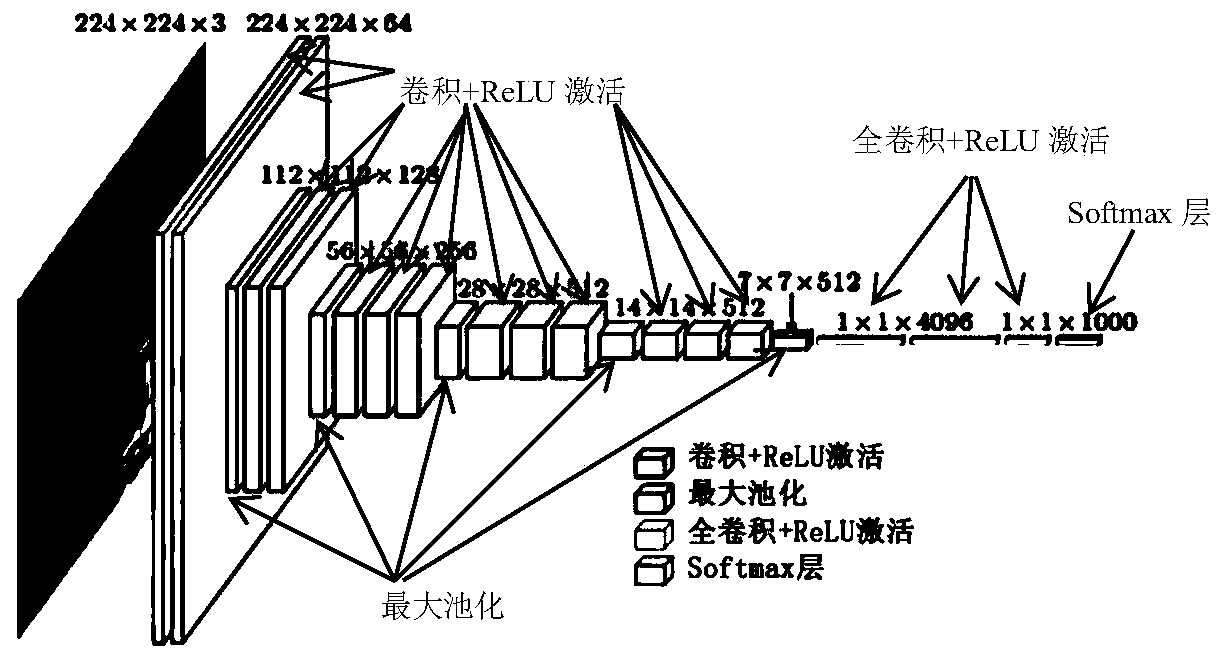

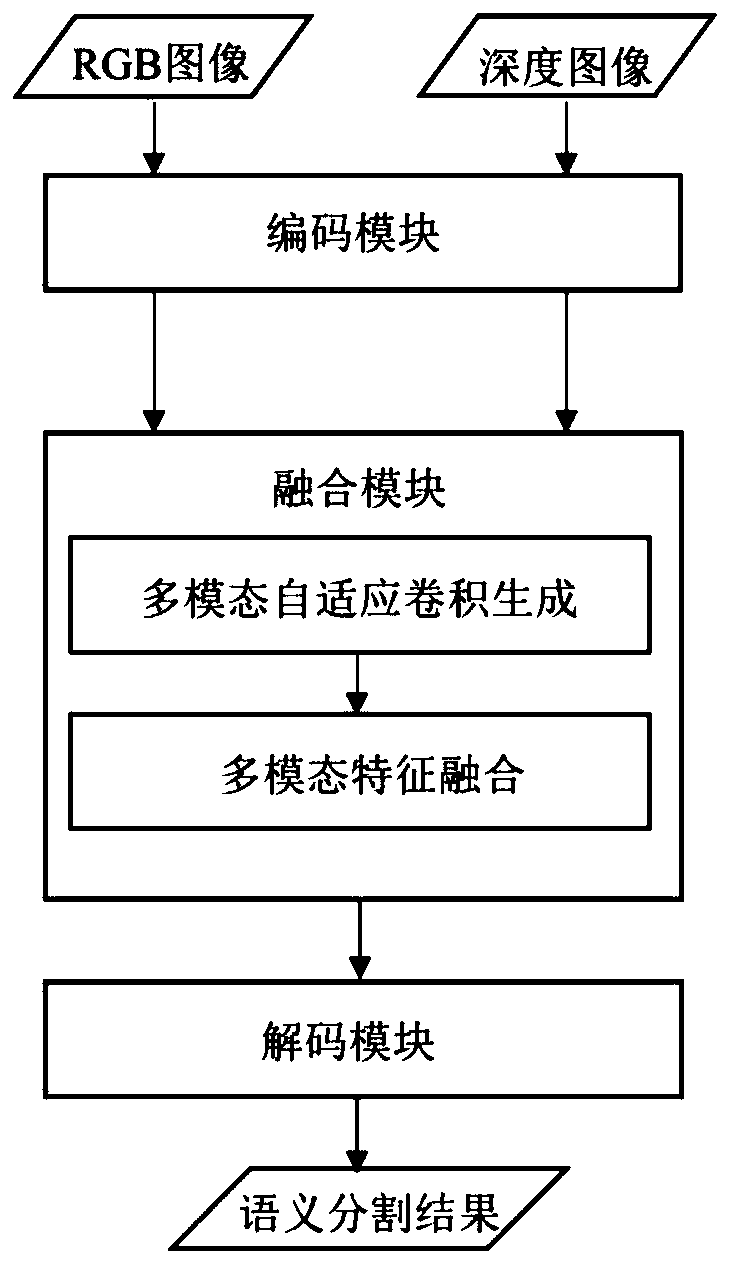

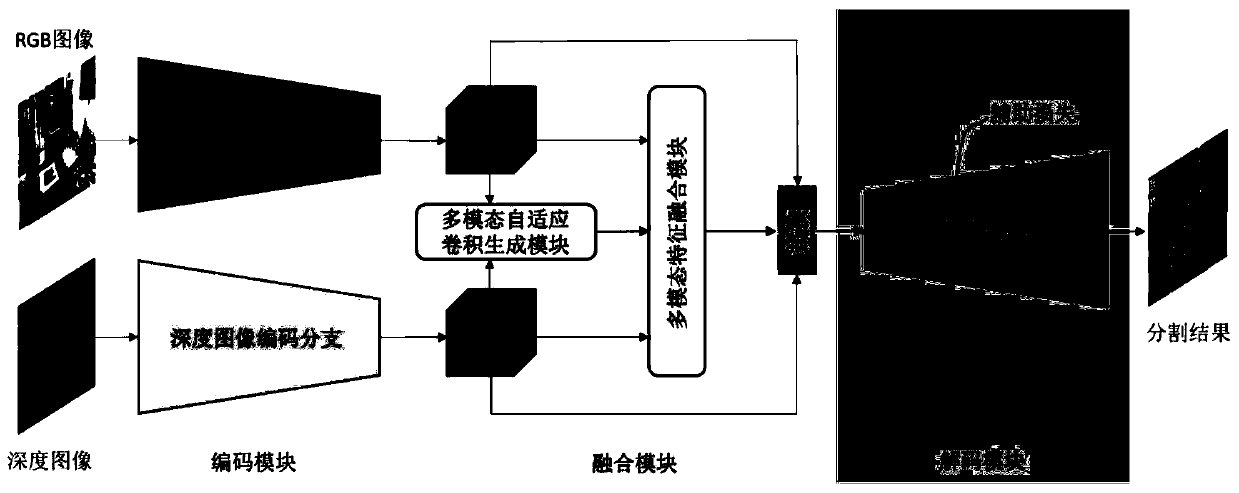

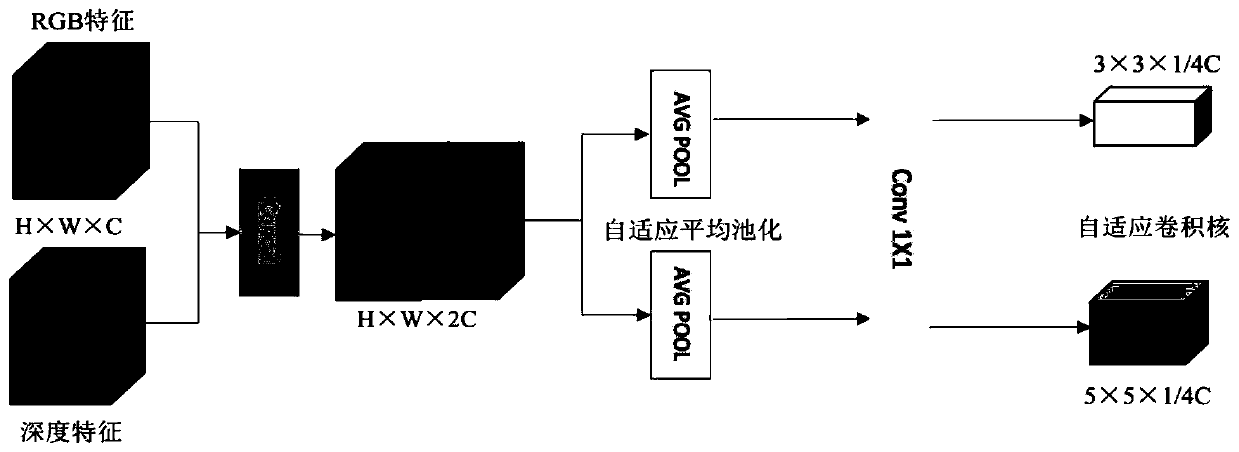

RGB-D image semantic segmentation method based on multi-modal adaptive convolution

Status: Pending · Publication: CN111340814A · Tags: Fine Semantic Segmentation Results; Improving Semantic Segmentation Accuracy; Image enhancement; Image analysis; Pattern recognition; RGB image

The invention relates to an RGB-D image semantic segmentation method based on multi-modal adaptive convolution. An encoding module extracts RGB image features and depth image features, and these are sent to a fusion module. In the fusion module, the multi-modal features are first fed into a multi-modal adaptive convolution generation module, which computes two multi-modal adaptive convolution kernels of different scales; a multi-modal feature fusion module then applies depthwise separable convolution to the RGB and depth features using the adaptive kernels, producing adaptive convolution fusion features, which are concatenated with the RGB and depth features to obtain the final fusion features. A decoding module upsamples the final fusion features step by step and obtains the semantic segmentation result through a convolution operation. Because the multi-modal features interact cooperatively through adaptive convolution, and the kernel parameters are adjusted dynamically according to the input multi-modal image, the method is more flexible than traditional convolution with fixed kernel parameters.

Owner: BEIJING UNIV OF TECH

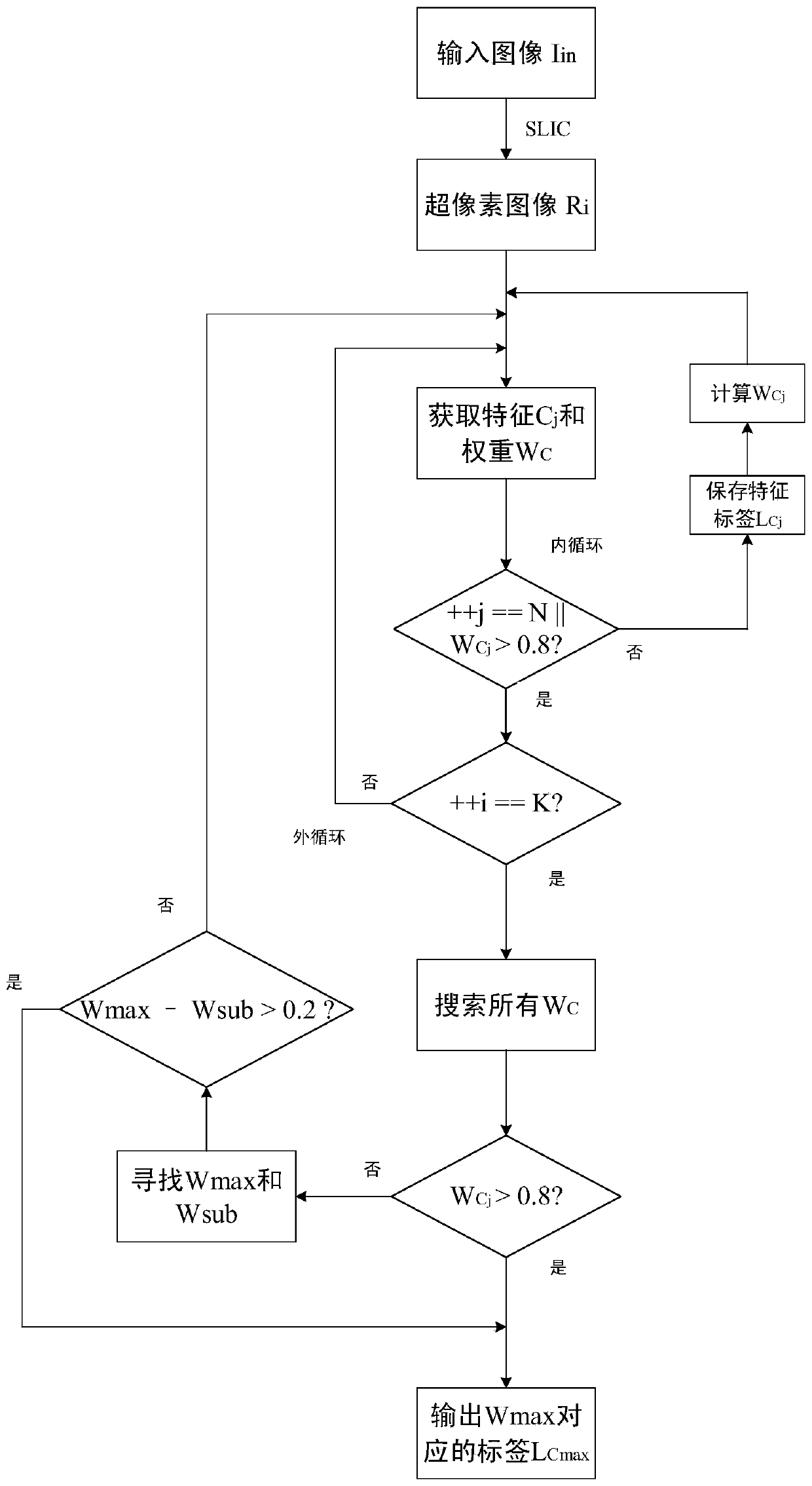

A semantic segmentation optimization method and device for an edge image

Status: Inactive · Publication: CN109919159A · Tags: Efficient extraction; Accurate segmentation; Character and pattern recognition; Computer vision; Image edge

The invention relates to a semantic segmentation optimization method for image edges. The method comprises the following steps: selecting image data; using the image data to train and validate an image semantic segmentation model and a fully connected conditional random field (CRF) model; obtaining a semantic segmentation result of the image with the trained segmentation model; obtaining a superpixel segmentation result that captures the image's edge information with a superpixel segmentation algorithm; refining the semantic segmentation result with the superpixel segmentation result to form a first optimization result; and refining the first optimization result with the trained fully connected CRF model. The method effectively extracts high-level semantic information from the image, preserves edge information through the superpixel segmentation algorithm, and improves the accuracy of existing segmentation models at image edges through a local edge optimization algorithm; it is flexible to implement, highly compatible and relatively robust.

Owner: XIDIAN UNIV
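
The abstract does not say exactly how the superpixel result refines the network output. One common approach, assumed here, is a majority vote inside each superpixel, so that label boundaries snap to superpixel boundaries, which tend to follow image edges. The fully connected CRF stage is not reproduced.

```python
from collections import Counter, defaultdict


def refine_with_superpixels(labels, superpixels):
    """Relabel every pixel with the majority class of the superpixel it belongs to.

    labels[r][c] is the predicted class; superpixels[r][c] is the superpixel id.
    """
    votes = defaultdict(Counter)
    for label_row, sp_row in zip(labels, superpixels):
        for label, sp in zip(label_row, sp_row):
            votes[sp][label] += 1
    majority = {sp: counts.most_common(1)[0][0] for sp, counts in votes.items()}
    return [[majority[sp] for sp in sp_row] for sp_row in superpixels]


labels = [[0, 0, 1],
          [0, 1, 1]]
superpixels = [[0, 0, 1],
               [0, 0, 1]]
print(refine_with_superpixels(labels, superpixels))  # [[0, 0, 1], [0, 0, 1]]
```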

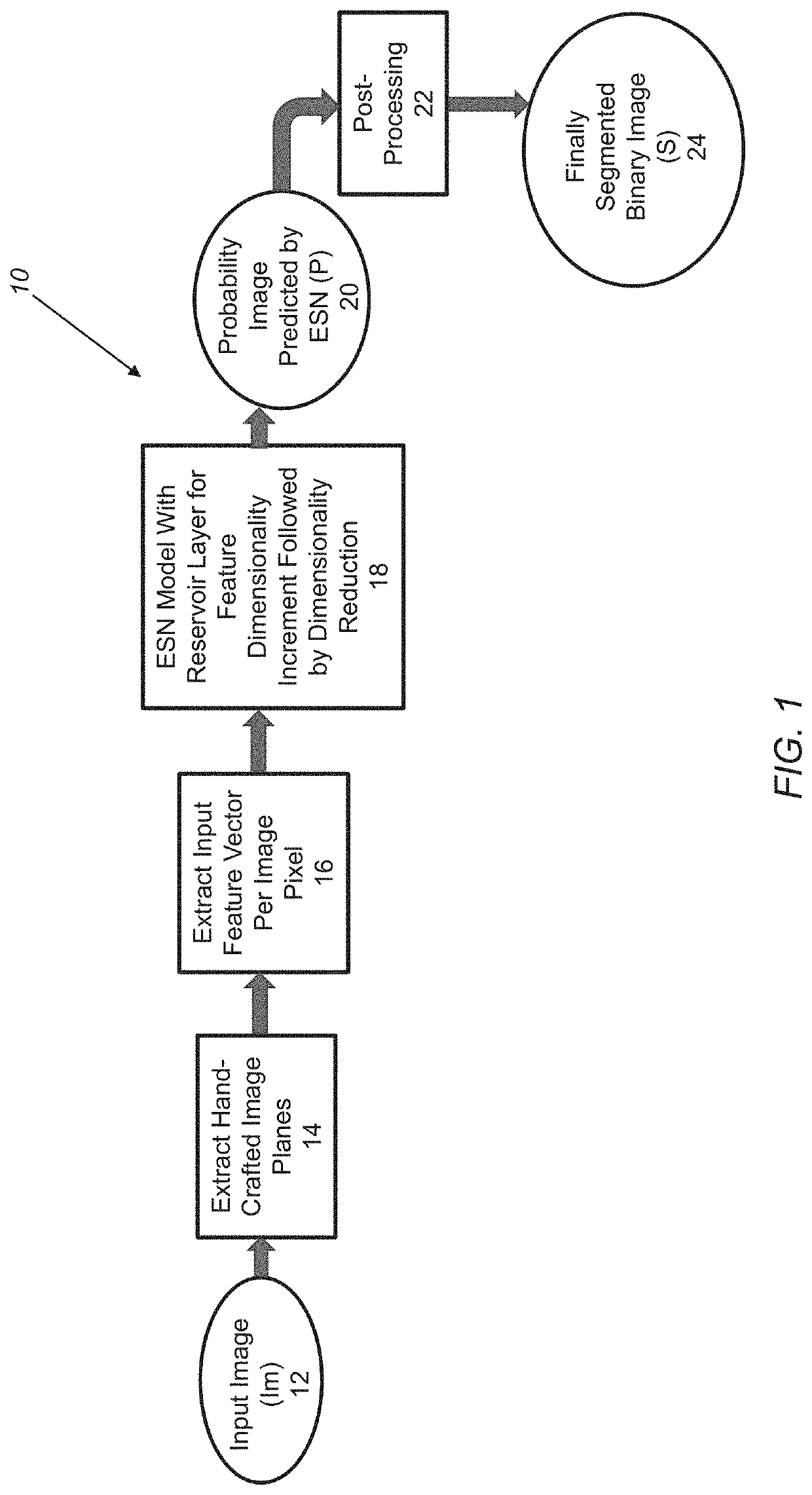

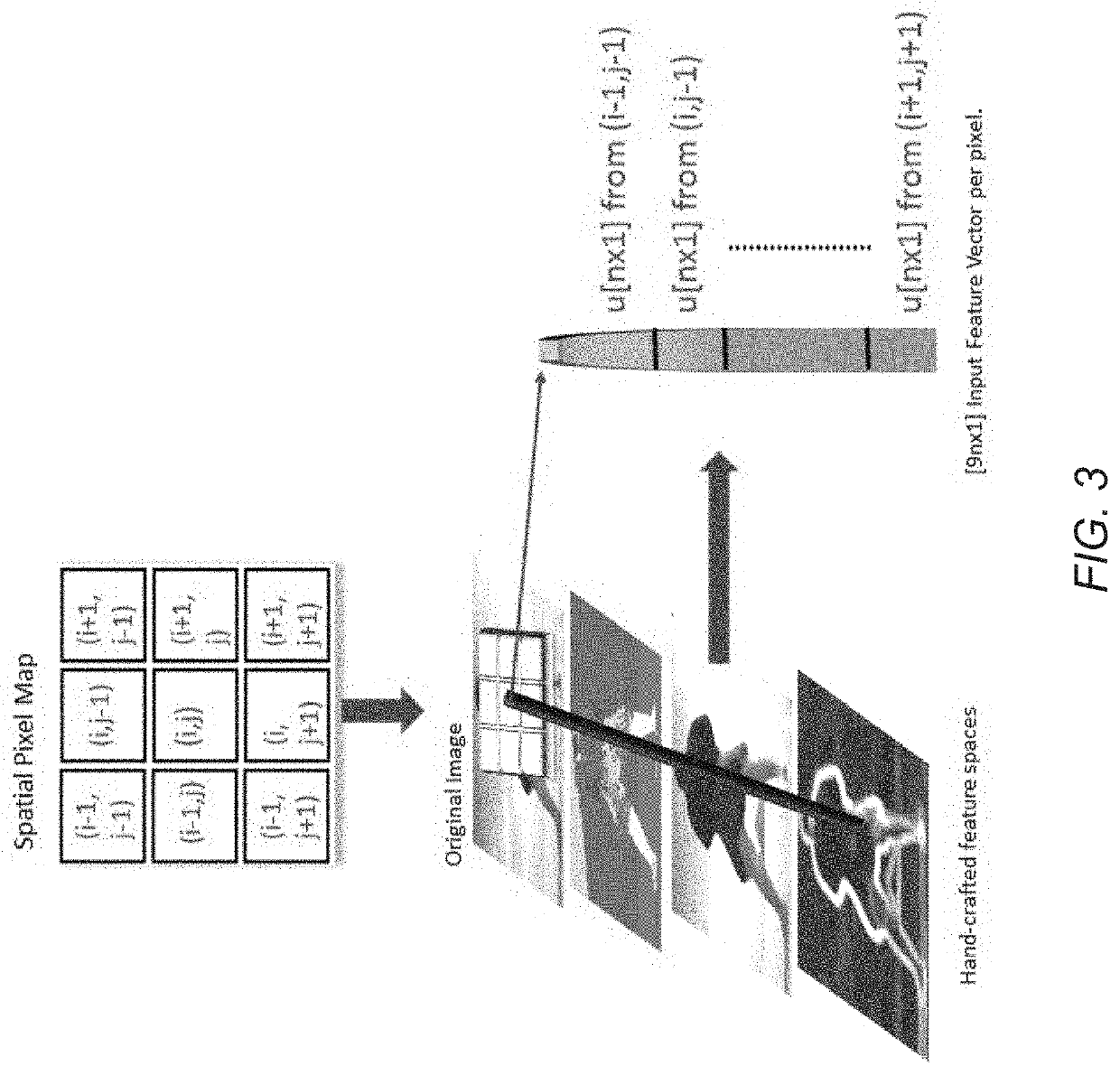

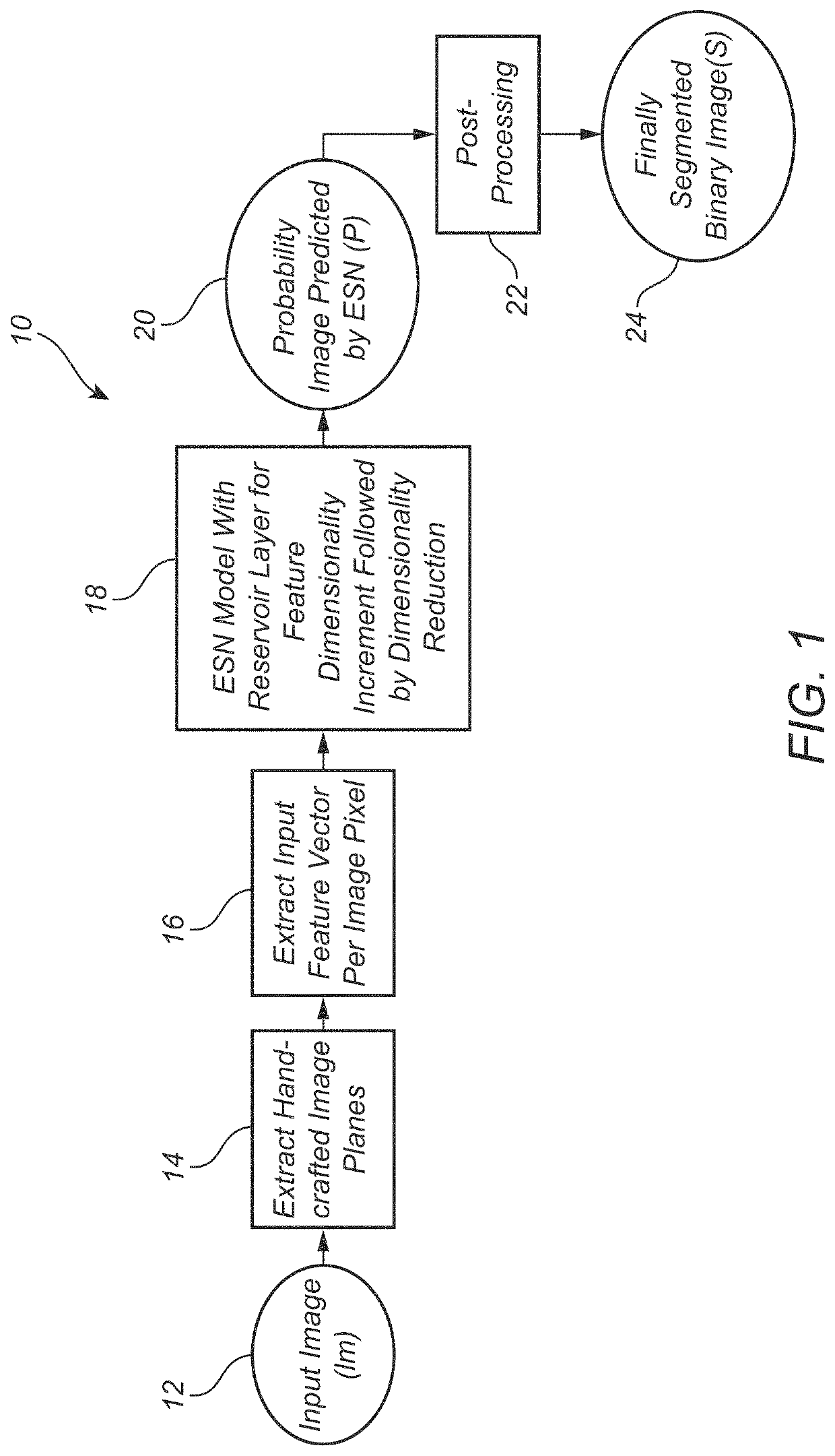

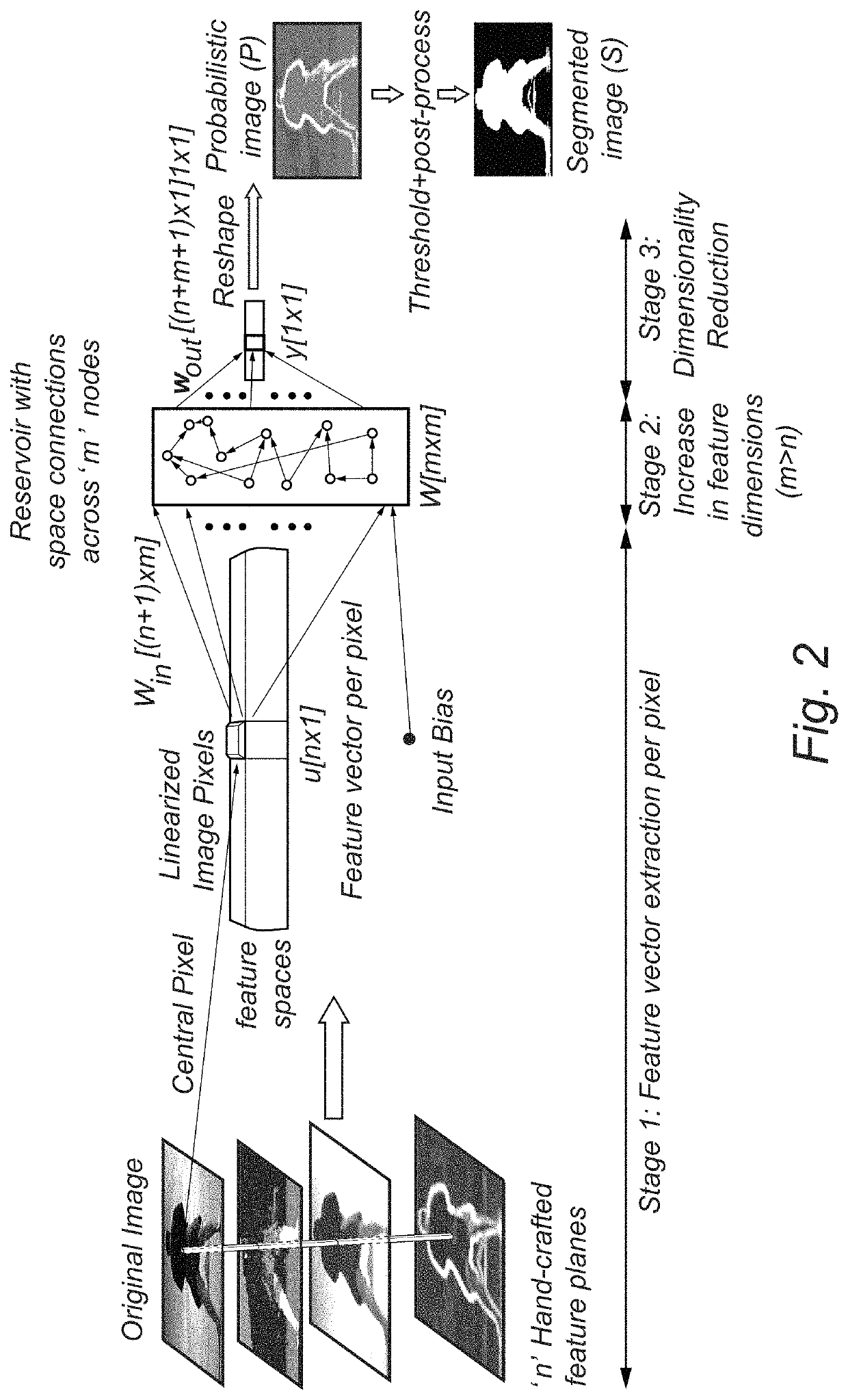

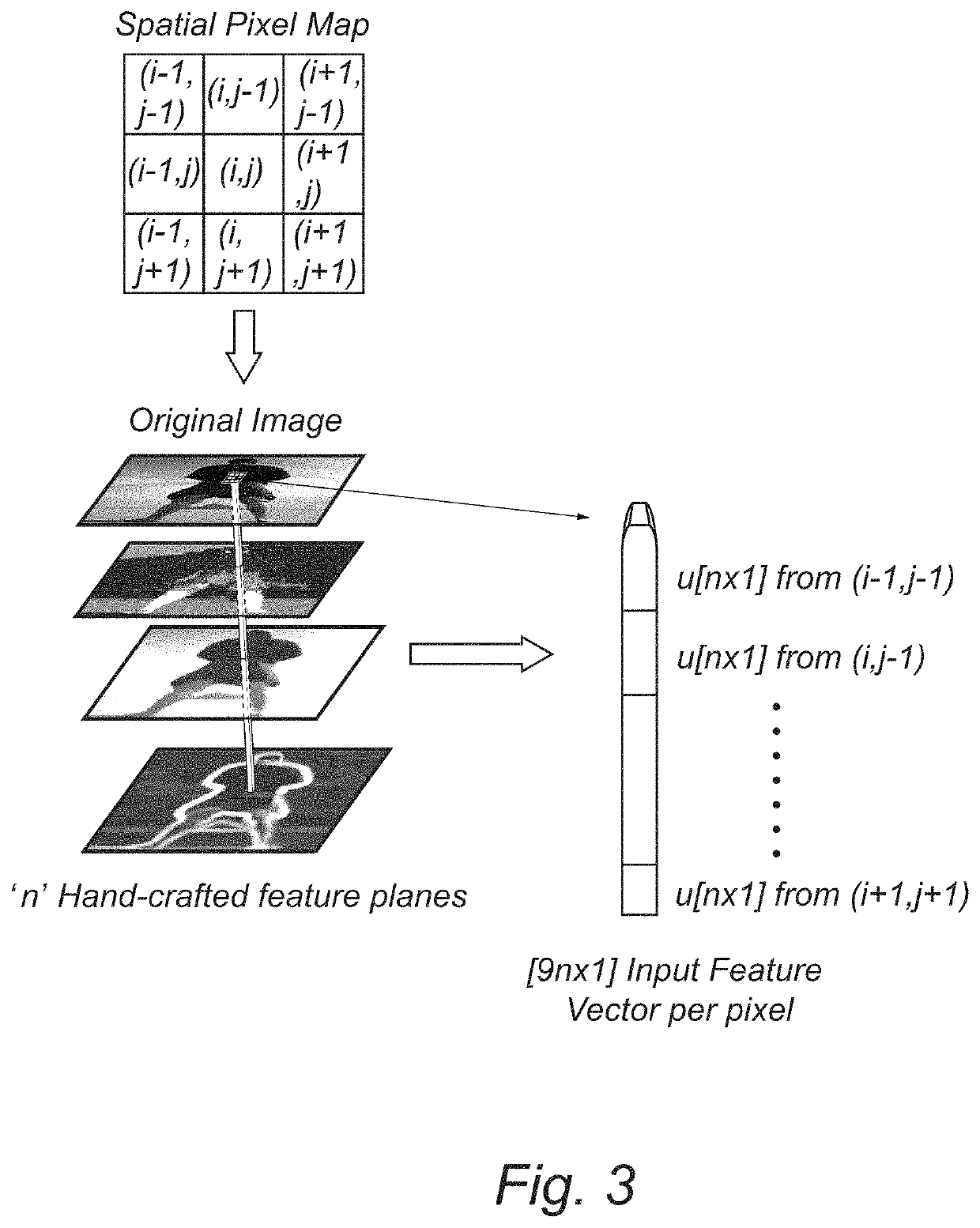

Methods and systems for providing fast semantic proposals for image and video annotation

Status: Active · Publication: US20200082540A1 · Tags: Shorten the time; Fast and accurate pre-proposals; Image enhancement; Autonomous decision making process; Feature vector; Feature Dimension

Methods and systems for providing fast semantic proposals for image and video annotation including: extracting image planes from an input image; linearizing each of the image planes to generate a one-dimensional array to extract an input feature vector per image pixel for the image planes; abstracting features for a region of interest using a modified echo state network model, wherein a reservoir increases feature dimensions per pixel location to multiple dimensions followed by feature reduction to one dimension per pixel location, wherein the echo state network model includes both spatial and temporal state factors for reservoir nodes associated with each pixel vector, and wherein the echo state network model outputs a probability image; post-processing the probability image to form a segmented binary image mask; and applying the segmented binary image mask to the input image to segment the region of interest and form a semantic proposal image.

Owner: VOLVO CAR CORP
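
The final two steps (post-processing the probability image into a binary mask and applying that mask to the input image) can be sketched as follows. The fixed 0.5 threshold is an assumption, since the abstract does not describe the post-processing, and the echo state network that produces the probability image is not reproduced.

```python
def binary_mask(prob_image, threshold=0.5):
    """Threshold a probability image into a 0/1 mask of the region of interest."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob_image]


def apply_mask(image, mask):
    """Keep pixels inside the mask and zero out everything else (single-channel image)."""
    return [[px * m for px, m in zip(img_row, mask_row)] for img_row, mask_row in zip(image, mask)]


probs = [[0.2, 0.7],
         [0.5, 0.1]]
image = [[10, 20],
         [30, 40]]
mask = binary_mask(probs)
print(mask, apply_mask(image, mask))  # [[0, 1], [1, 0]] [[0, 20], [30, 0]]
```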

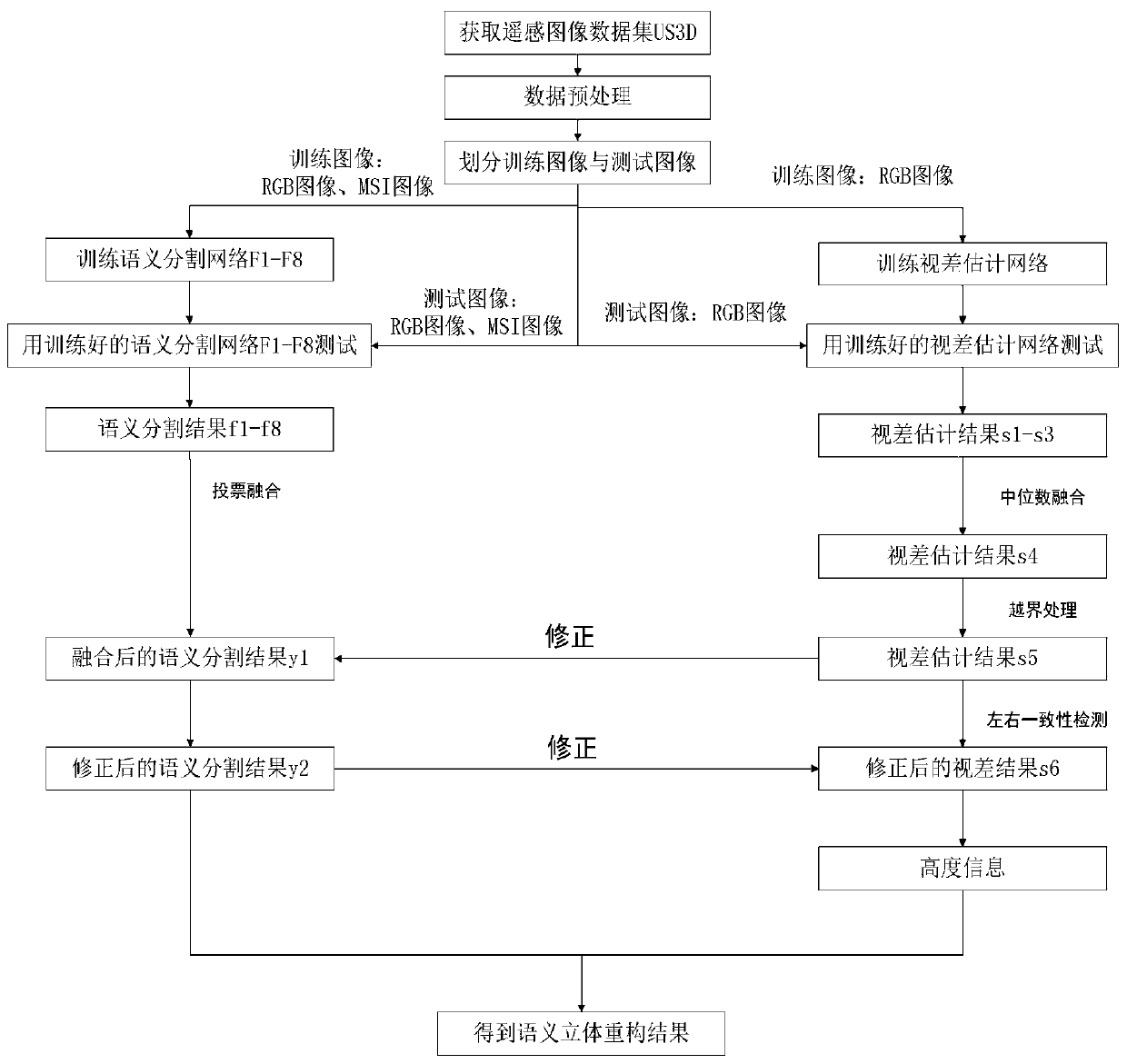

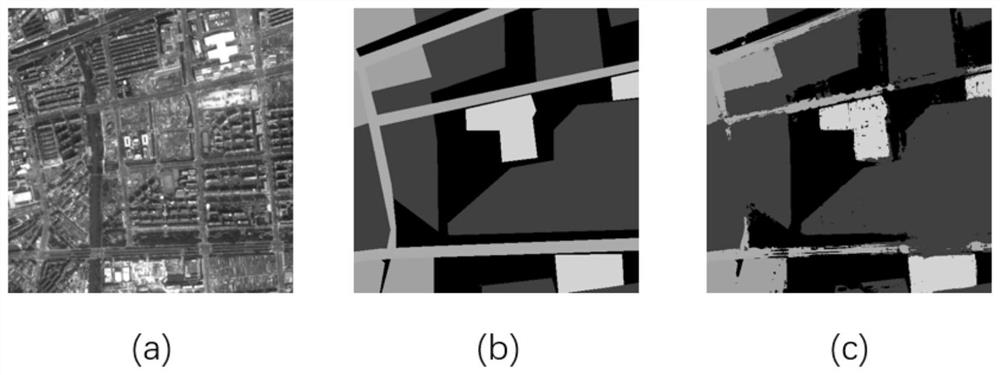

Semantic stereo reconstruction method of remote sensing image

Status: Active · Publication: CN110110682A · Tags: Increase the proportion; Improving Semantic Segmentation Accuracy; Scene recognition; Parallax; Relevant information

The invention discloses a semantic stereo reconstruction method for remote sensing images, which mainly addresses the low precision of semantic 3D reconstruction in the prior art caused by ignoring the correlation between semantic segmentation and disparity estimation. In the implementation, the experimental data are first preprocessed; a semantic segmentation network and a disparity estimation network are trained on the training data; the trained networks are tested on test images, and the test results from different frequency-band information are fused to obtain a fused semantic segmentation result and a fused disparity result; an error correction module lets the two results help each other correct their erroneous parts; and height information is computed from the disparity, after which the semantic segmentation result is combined with the height information to obtain the semantic 3D reconstruction of the image. The method increases the proportion of small samples, balancing the influence of the data on the network, and fuses semantic information with disparity results, which improves the accuracy of semantic 3D reconstruction of remote sensing images; it can be used for 3D reconstruction of urban scenes.

Owner: XIDIAN UNIV
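
For orientation only, the textbook relation between disparity and depth for a rectified pinhole stereo pair is shown below, with focal length f in pixels, baseline B and disparity d; for a nadir-looking sensor at altitude H above a flat reference surface, height above that surface follows by subtraction. The patent does not state its own conversion, and satellite stereo pipelines may use a different base-to-height formulation, so this is an assumed model rather than the method's formula.

```latex
Z = \frac{f\,B}{d}, \qquad h = H - Z = H - \frac{f\,B}{d}
```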

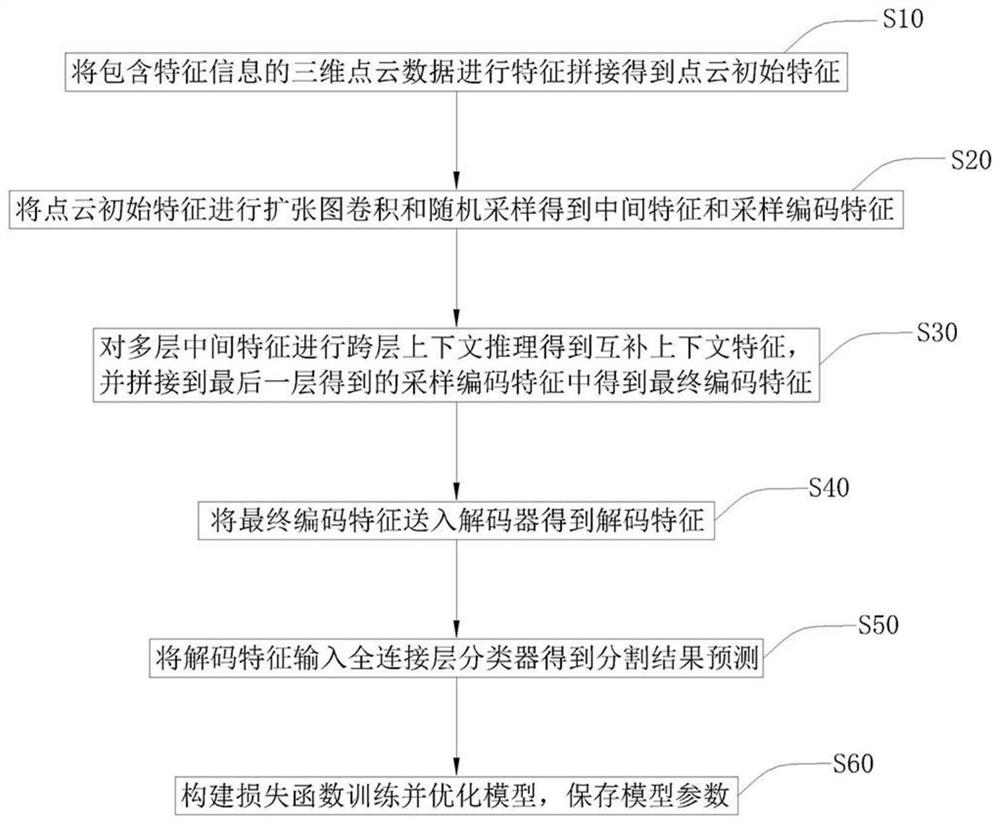

Large-scene point cloud semantic segmentation method

Status: Active · Publication: CN112819833A · Tags: Improve applicability; Easy to handle; Image enhancement; Image analysis; Point cloud; Contextual reasoning

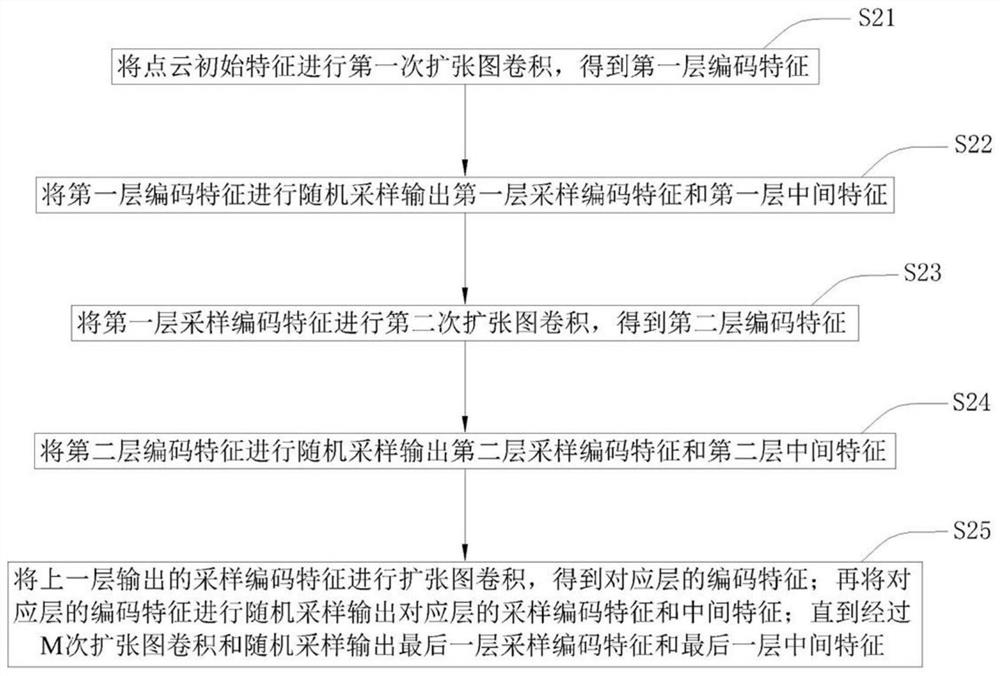

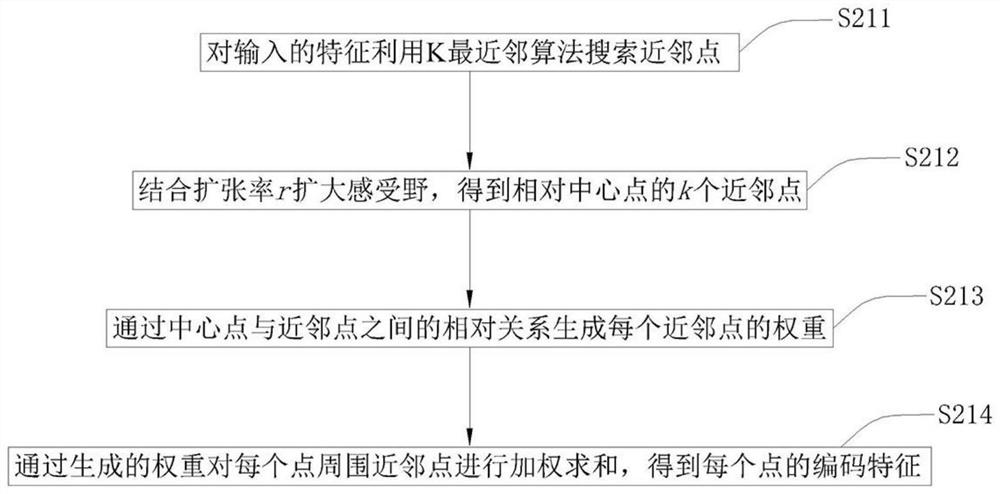

The invention discloses a semantic segmentation method for large-scene point clouds. The method comprises the following steps: concatenating the features of 3D point cloud data that carry feature information to obtain initial point cloud features; applying dilated graph convolution and random sampling to the initial features to obtain multi-layer intermediate features and sampled encoding features; performing cross-layer context reasoning on the multi-layer intermediate features to obtain complementary context features, and concatenating these into the sampled encoding features of the last layer to obtain the final encoding features; decoding the final encoding features to obtain decoding features; feeding the decoding features into a fully connected classifier to predict the segmentation result; and constructing a loss function, training and optimizing the model, and saving the model parameters. The method aggregates multi-layer context through cross-layer context reasoning in the encoding stage and selects features through attention fusion in the decoding stage, which compensates for information loss and reduces feature redundancy while maintaining efficiency, thereby improving accuracy.

Owner: SICHUAN UNIV
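
Two building blocks named above, random sampling and the neighbourhoods that a dilated graph convolution aggregates over, can be sketched in plain Python. Reading the graph convolution as operating on a dilated k-nearest-neighbour neighbourhood (every d-th of the k·d nearest points) is an assumption about the patent's design; a real implementation would use a spatial index rather than brute-force sorting.

```python
import math
import random


def random_sample(points, ratio, seed=0):
    """Keep a random subset of the points, e.g. ratio=0.25 keeps a quarter of them."""
    k = max(1, int(len(points) * ratio))
    return random.Random(seed).sample(points, k)


def knn(points, query, k):
    """Indices of the k points closest to `query` (brute force, Euclidean distance)."""
    order = sorted(range(len(points)), key=lambda i: math.dist(points[i], query))
    return order[:k]


def dilated_knn(points, query, k, dilation):
    """Dilated neighbourhood: every `dilation`-th point among the k * dilation nearest."""
    return knn(points, query, k * dilation)[::dilation]


pts = [(float(i), 0.0, 0.0) for i in range(10)]
print(knn(pts, (0.0, 0.0, 0.0), 3))             # [0, 1, 2]
print(dilated_knn(pts, (0.0, 0.0, 0.0), 3, 2))  # [0, 2, 4]
print(len(random_sample(pts, 0.5)))             # 5
```

Dilation widens the neighbourhood's spatial reach without increasing the number of neighbours each point aggregates, which is why it pairs naturally with aggressive random downsampling.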

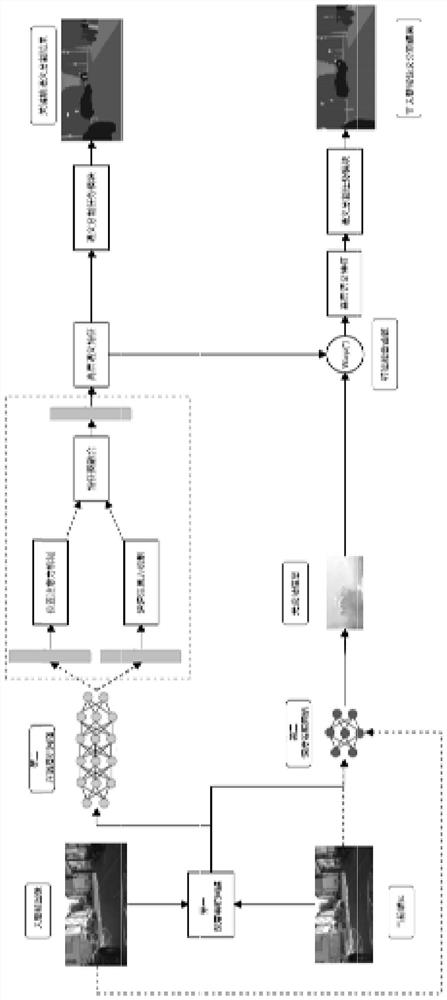

Road scene semantic segmentation method based on convolutional neural network

Status: Active · Publication: CN109635662A · Tags: Improve learning effect; Reduce redundancy; Character and pattern recognition; Neural architectures; Hidden layer; Optimal weight

The invention discloses a road scene semantic segmentation method based on a convolutional neural network. The method first builds a convolutional neural network comprising an input layer, a hidden layer and an output layer, where the hidden layer consists of 13 neural network blocks, 7 upsampling layers and 8 concatenation layers. Each original road scene image in the training set is fed into the network for training, producing 12 semantic segmentation prediction maps per image; the loss function value is computed between the set of 12 prediction maps for each original image and the set of 12 one-hot encoded maps derived from the corresponding ground-truth semantic segmentation image; and the optimal weight vector and bias term of the convolutional neural network classification training model are obtained. A road scene image to be segmented is then fed into the trained model for prediction to obtain the corresponding predicted semantic segmentation image. The method achieves high semantic segmentation precision.

Owner: ZHEJIANG UNIVERSITY OF SCIENCE AND TECHNOLOGY
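
The loss compares 12 prediction maps with 12 one-hot maps built from the ground-truth label image. The abstract does not name the loss function; the sketch below assumes pixel-wise cross-entropy and shows how the one-hot target maps are built, using 3 classes instead of 12 to keep the example small.

```python
import math


def one_hot(label_map, num_classes):
    """Turn an H x W label map into num_classes binary maps, one per class."""
    return [
        [[1 if label == c else 0 for label in row] for row in label_map]
        for c in range(num_classes)
    ]


def cross_entropy(pred_maps, target_maps):
    """Mean over pixels of -sum_c y_c * log(p_c); pred_maps[c] is the probability map of class c."""
    eps = 1e-12  # guards against log(0)
    num_classes = len(pred_maps)
    height, width = len(pred_maps[0]), len(pred_maps[0][0])
    total = 0.0
    for r in range(height):
        for col in range(width):
            total -= sum(
                target_maps[c][r][col] * math.log(pred_maps[c][r][col] + eps)
                for c in range(num_classes)
            )
    return total / (height * width)


labels = [[0, 2],
          [1, 0]]
targets = one_hot(labels, num_classes=3)
print(targets[0])  # [[1, 0], [0, 1]]
uniform = [[[1 / 3, 1 / 3], [1 / 3, 1 / 3]] for _ in range(3)]
print(round(cross_entropy(uniform, targets), 4))  # 1.0986
```

A uniform prediction over C classes gives a loss of log C, which is a handy sanity check when the real network is first wired up.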

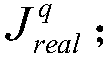

Video semantic segmentation method based on optical flow feature fusion

Status: Active · Publication: CN111652081A · Tags: Improve semantic segmentation speed; Rich semantic information; Character and pattern recognition; Pattern recognition; Radiology

The invention discloses a video semantic segmentation method based on optical flow feature fusion. The method comprises the following steps: step 1, determining whether the current frame of a video sequence is a key frame or a non-key frame, and executing step 2 for a key frame or step 3 for a non-key frame; step 2, extracting a high-level semantic feature map of the current frame that fuses position-dependency and channel-dependency information; step 3, computing an optical flow field to obtain the high-level semantic feature map of the current frame; and step 4, upsampling the high-level semantic feature map obtained in step 2 or step 3 to obtain the semantic segmentation map. By combining the ideas of optical flow fields and attention mechanisms, the method improves both the speed and the accuracy of video semantic segmentation.

Owner: UNIV OF ELECTRONIC SCI & TECH OF CHINA
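
Feature propagation for non-key frames can be sketched as backward warping of the key frame's feature map along the optical flow. The fixed key-frame interval and the nearest-neighbour sampling are assumptions made for brevity (the abstract does not describe the key-frame test, and practical systems typically use bilinear sampling); the flow field itself is taken as given.

```python
def is_key_frame(frame_index, interval=5):
    """Hypothetical fixed schedule: every `interval`-th frame is a key frame."""
    return frame_index % interval == 0


def warp_features(key_features, flow):
    """Backward-warp a key-frame feature map to the current frame.

    flow[r][c] = (dy, dx) points from the current pixel back to its location in the key frame.
    Nearest-neighbour sampling; positions outside the map are clamped to the border.
    """
    height, width = len(key_features), len(key_features[0])
    warped = []
    for r in range(height):
        row = []
        for c in range(width):
            dy, dx = flow[r][c]
            src_r = min(max(round(r + dy), 0), height - 1)
            src_c = min(max(round(c + dx), 0), width - 1)
            row.append(key_features[src_r][src_c])
        warped.append(row)
    return warped


key_features = [[1, 2],
                [3, 4]]
shift_right = [[(0.0, 1.0), (0.0, 1.0)],
               [(0.0, 1.0), (0.0, 1.0)]]
print([is_key_frame(i) for i in range(6)])       # [True, False, False, False, False, True]
print(warp_features(key_features, shift_right))  # [[2, 2], [4, 4]]
```

Warping is far cheaper than running the full feature extractor, which is where the speed gain for non-key frames comes from.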

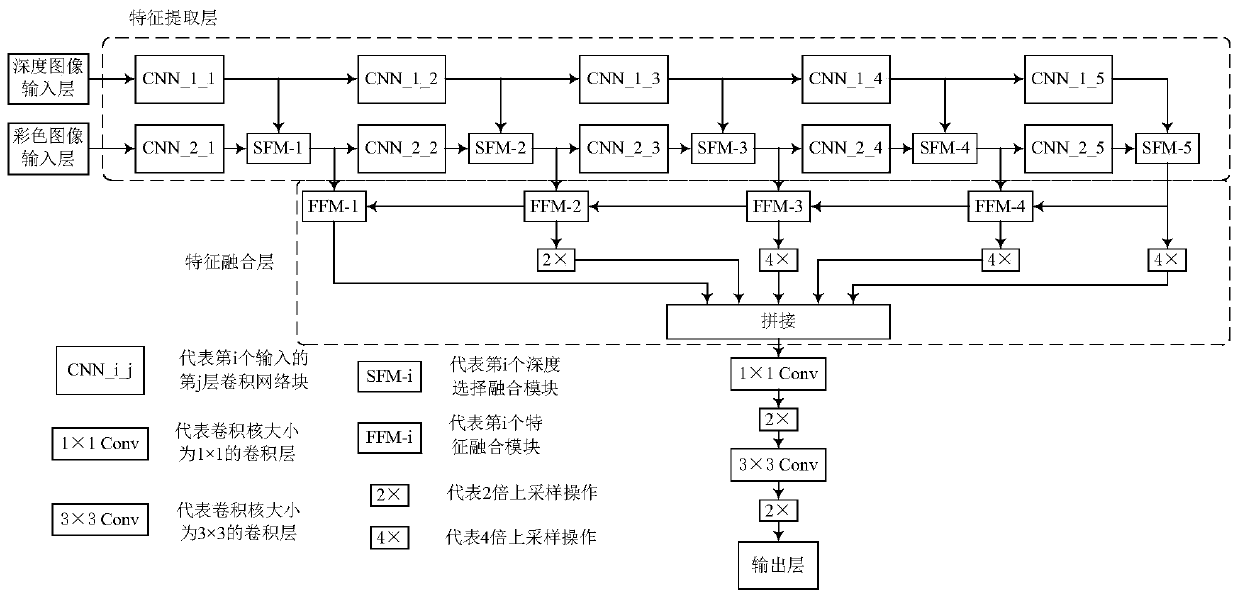

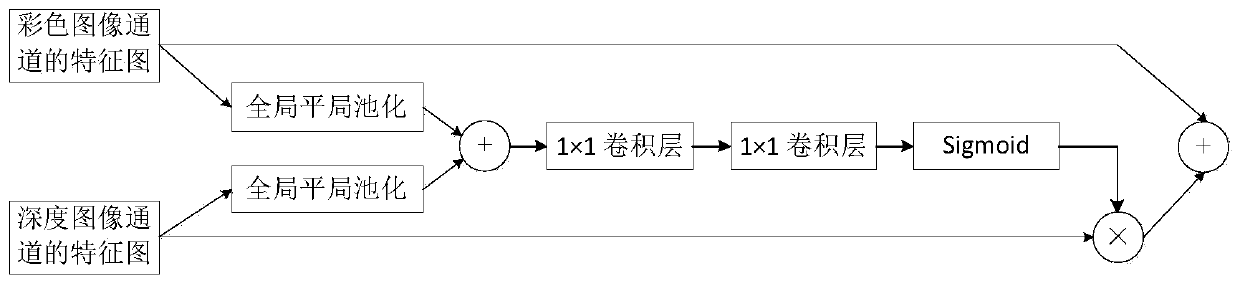

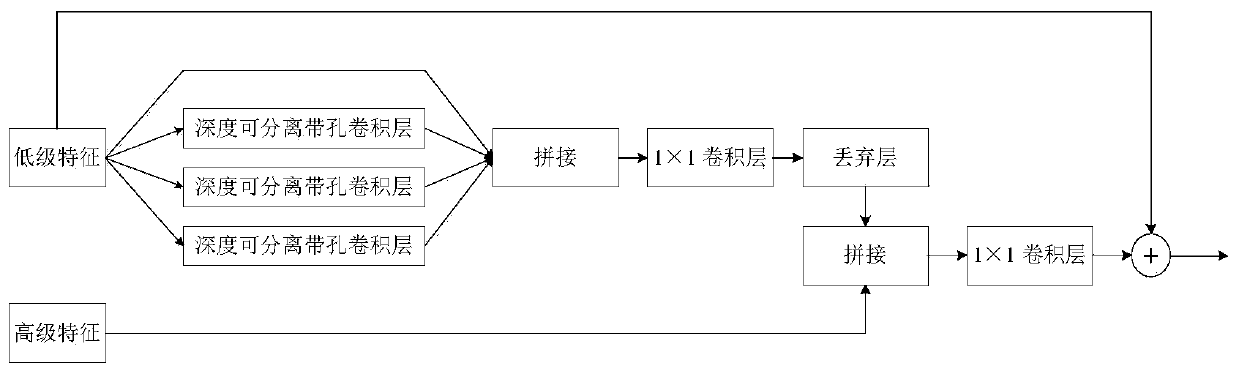

Indoor scene semantic segmentation method based on convolutional neural network

Status: Pending · Publication: CN111563507A · Tags: Accurate description; Improving Semantic Segmentation Accuracy; Image enhancement; Image analysis; Feature fusion; Neural network nn

The invention discloses an indoor scene semantic segmentation method based on a convolutional neural network. In the training stage, a convolutional neural network comprising an input layer, a feature extraction layer, a feature fusion layer and an output layer is constructed; original indoor scene images are fed into the network for training to obtain the corresponding semantic segmentation prediction maps; the loss function value is computed between the set of prediction maps for the original indoor scene images and the set of one-hot encoded maps derived from the corresponding ground-truth semantic segmentation images; and the final weight vector and bias term of the convolutional neural network classification training model are obtained. In the test stage, an indoor scene image to be segmented is fed into the trained model to obtain a predicted semantic segmentation image. The method improves both the efficiency and the accuracy of semantic segmentation of indoor scene images.

Owner: ZHEJIANG UNIVERSITY OF SCIENCE AND TECHNOLOGY

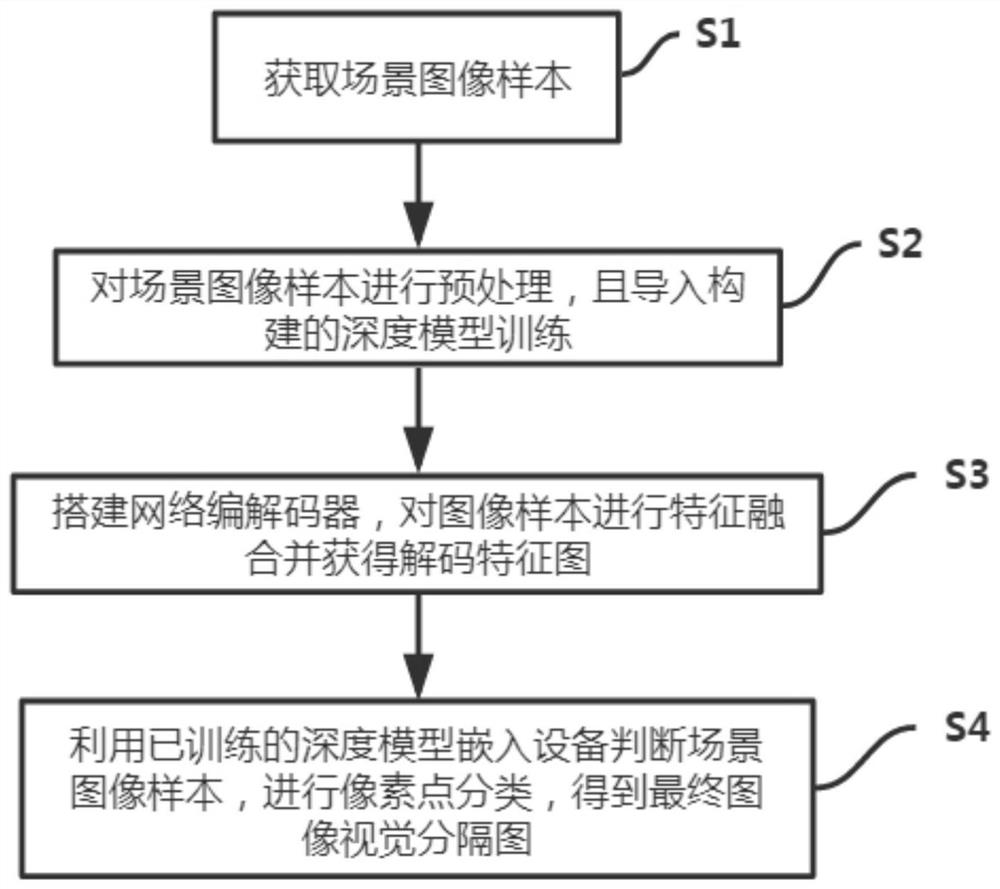

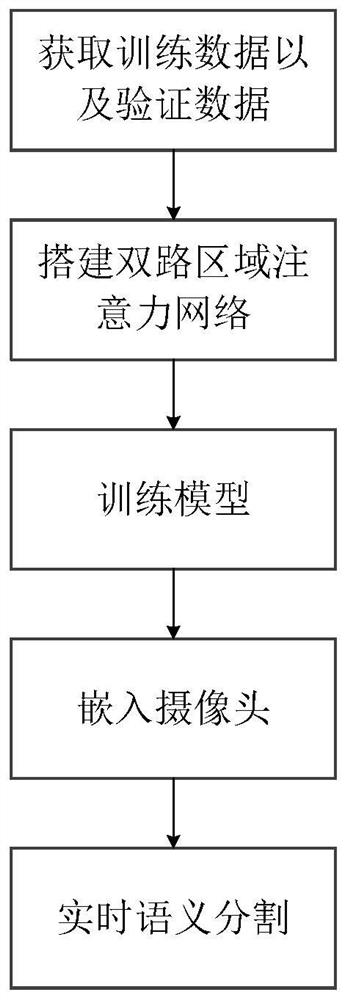

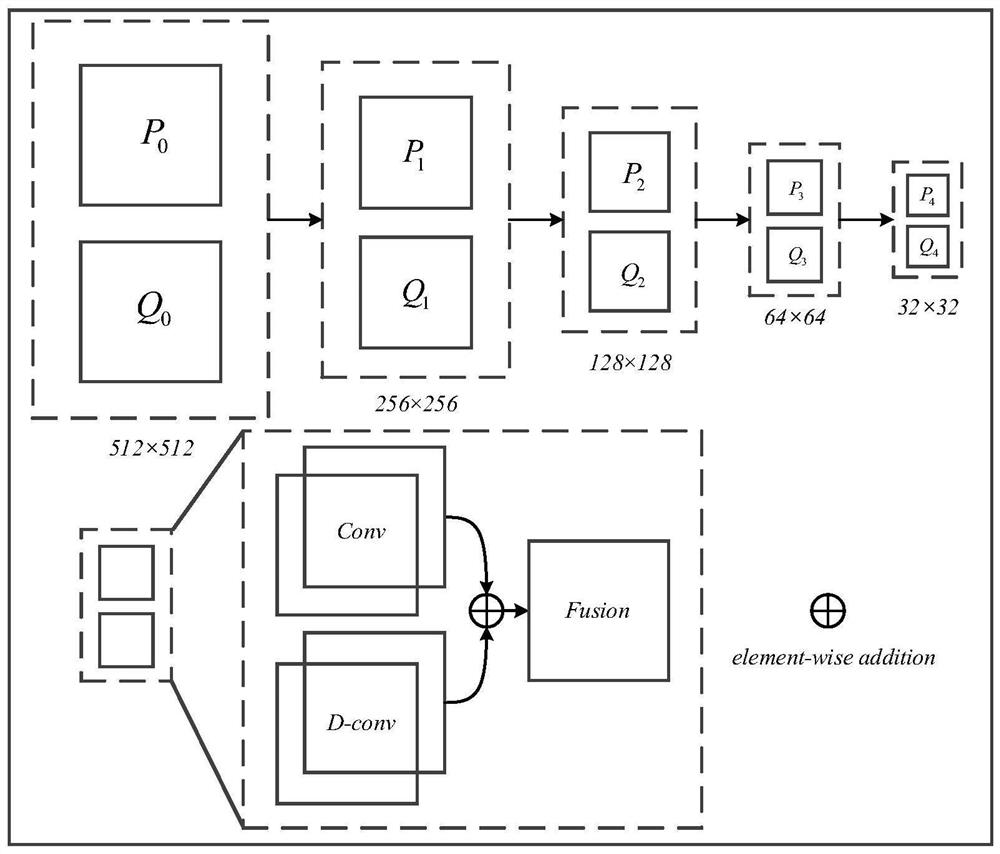

Image visual semantic segmentation method based on two-way region attention coding and decoding

Status: Active · Publication: CN113065578A · Tags: Real-time Semantic Segmentation; Improving Semantic Segmentation Accuracy; Image enhancement; Image analysis; Radiology; Sample image

The invention discloses an image visual semantic segmentation method based on two-way regional attention encoding and decoding. The method comprises the following steps: acquiring image samples of a specific scene in advance; normalizing the RGB channels of the sample images in preparation for training a deep model; encoding the image with a two-way encoder to obtain multi-scale, refined deep image features; using a decoder based on regional attention to apply adaptive channel feature enhancement to differently distributed targets through regional information; fusing shallow encoder features and deep decoder features from different extraction stages through skip connections, reusing the deep features to the greatest extent; and finally mapping the output of the final convolutional layer of the deep neural network back to the original image and classifying each pixel to obtain the final visual segmentation image. The invention can be embedded in devices such as surveillance cameras, and it uses regional information to guide the segmentation of images with complex distributions, achieving accurate visual semantic segmentation.

Owner: Hefei Zhengmao Technology Co., Ltd. (合肥市正茂科技有限公司)
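
Per-channel normalization of the RGB samples can be sketched as subtracting each channel's mean and dividing by its standard deviation. Computing the statistics from the training samples themselves is an assumption; many pipelines instead reuse fixed, published dataset statistics.

```python
import statistics


def channel_stats(images):
    """Per-channel mean and population standard deviation over all pixels of all images."""
    channels = [[px[ch] for image in images for row in image for px in row] for ch in range(3)]
    means = [statistics.fmean(values) for values in channels]
    stds = [statistics.pstdev(values) for values in channels]
    return means, stds


def normalize_rgb(image, means, stds):
    """(value - mean) / std for each of R, G, B; a constant channel (std 0) is only centred."""
    return [
        [tuple((px[ch] - means[ch]) / (stds[ch] or 1.0) for ch in range(3)) for px in row]
        for row in image
    ]


samples = [[[(0.0, 0.2, 1.0), (1.0, 0.6, 0.0)]]]  # one image, one row, two pixels
means, stds = channel_stats(samples)
normalized = normalize_rgb(samples[0], means, stds)  # each channel now has mean 0 and std 1
```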

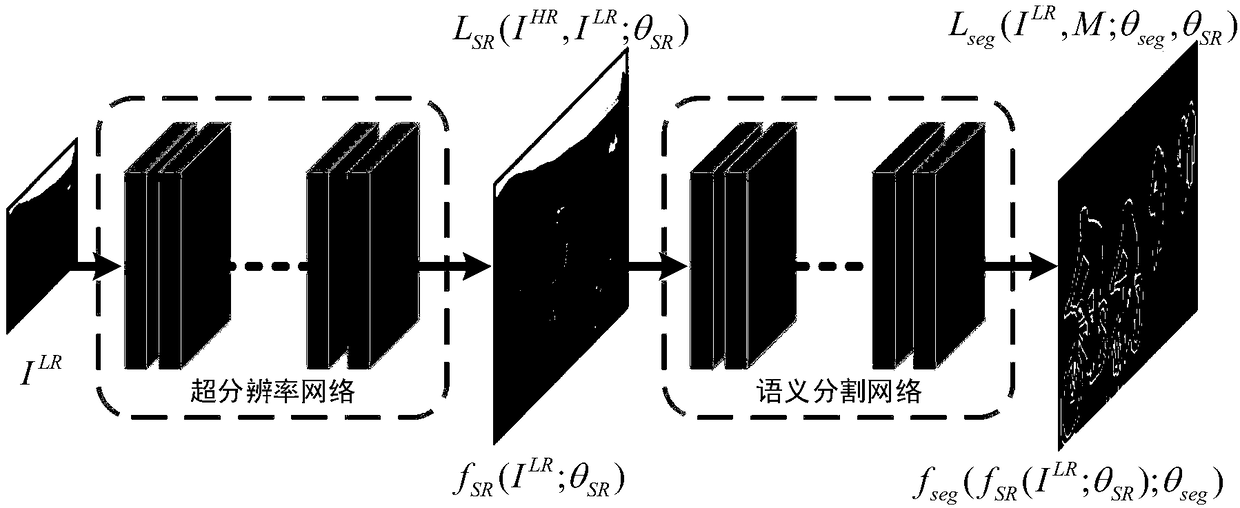

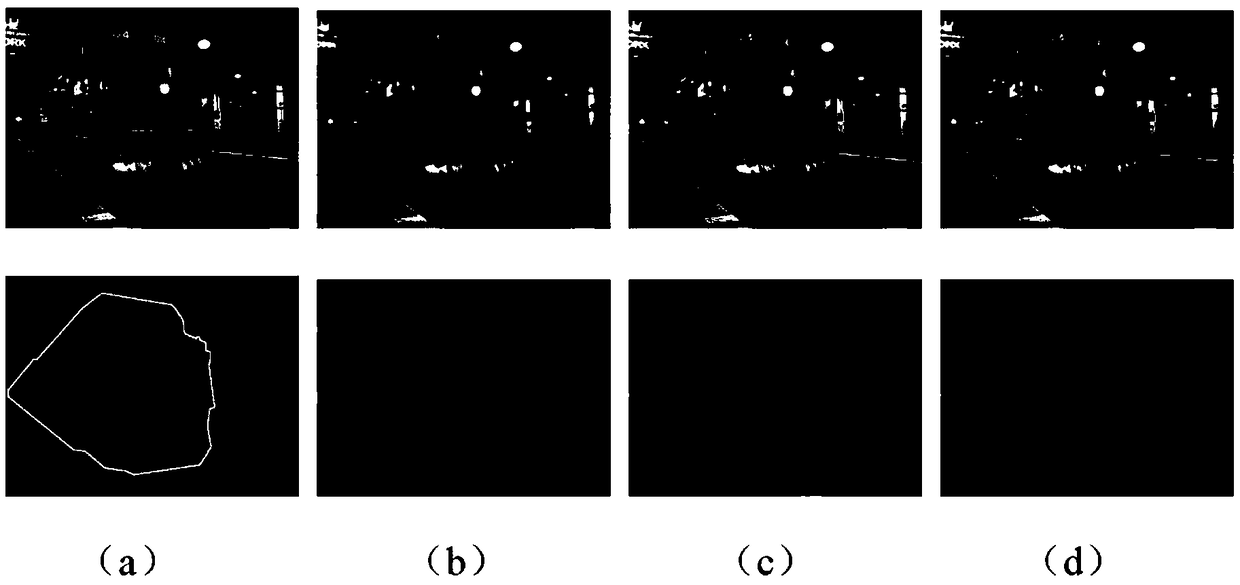

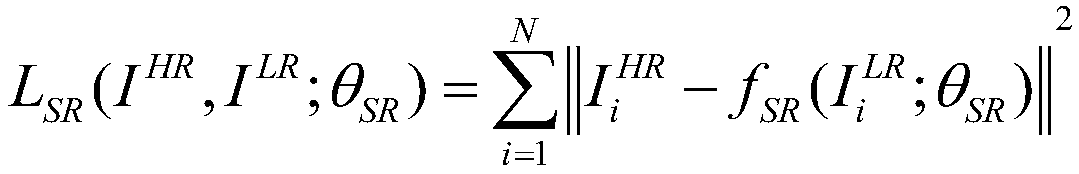

An image super-resolution reconstruction method driven by semantic segmentation

Status: Active · Publication: CN109191392A · Tags: Improve visual quality; Rich in details; Image enhancement; Image analysis; Pattern recognition; Image resolution

The invention belongs to the technical field of digital image processing and relates in particular to an image super-resolution reconstruction method driven by semantic segmentation. The method comprises the following steps: training a super-resolution network model and a semantic segmentation network model separately; cascading the independently trained super-resolution and semantic segmentation networks; and training the super-resolution network driven by the semantic segmentation task. After a low-resolution image is processed by this task-driven network, accurate semantic segmentation results are obtained. Experimental results show that the invention adapts the super-resolution network better to the segmentation task, provides clear, high-resolution input images to the semantic segmentation network, and effectively improves segmentation accuracy for low-resolution images.

Owner: FUDAN UNIV

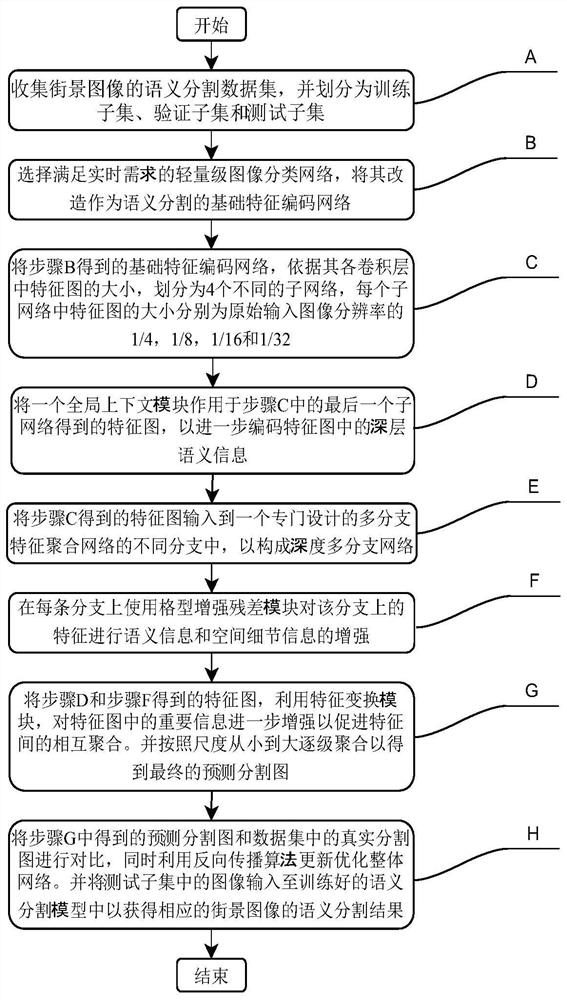

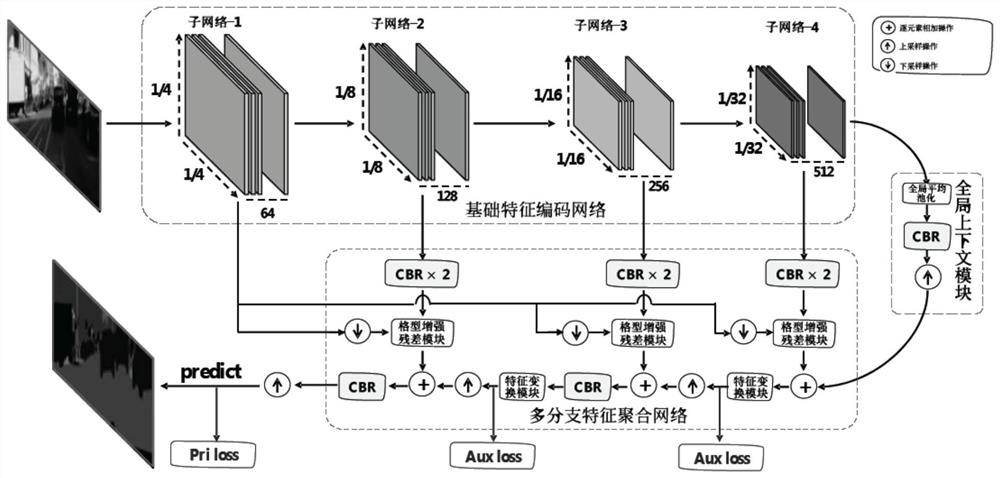

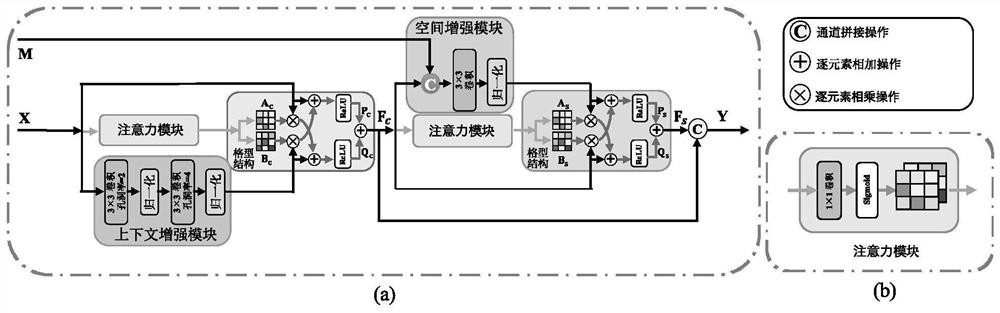

Real-time street view image semantic segmentation method based on deep multi-branch aggregation

Status: Active · Publication: CN113011336A · Tags: Improving Semantic Segmentation Accuracy; Relief speed; Character and pattern recognition; Neural architectures; Visual technology; Encoder decoder

The invention discloses a real-time street view image semantic segmentation method based on deep multi-branch aggregation, relating to computer vision technology. The method adopts the popular encoder-decoder structure and comprises the following steps: first, adapting a lightweight image classification network to serve as the encoder; dividing the encoder into sub-networks and sending the features of each sub-network into a purpose-designed multi-branch feature aggregation network and a global context module; within the multi-branch feature aggregation network, enhancing the spatial details and semantic information of the features to be aggregated with a lattice-type enhanced residual module and a feature transformation module; and finally, aggregating the output feature maps of the global context module and of the multi-branch feature aggregation network step by step, in order of feature-map size from small to large, to obtain the final semantic segmentation result map. The method maintains high semantic segmentation precision and real-time prediction speed while processing high-resolution street view images.

Owner: XIAMEN UNIV
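
The step-by-step, small-to-large aggregation can be sketched as repeatedly upsampling the running result and adding the next larger map. Element-wise addition, nearest-neighbour upsampling and an exact factor of 2 between successive maps are assumptions; the abstract does not say how the maps are combined.

```python
def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling of a single-channel 2-D feature map."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def add_maps(a, b):
    """Element-wise sum of two equally sized 2-D maps."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def aggregate_small_to_large(maps):
    """Fuse maps ordered smallest first: upsample the running result, then add the next map."""
    fused = maps[0]
    for larger in maps[1:]:
        fused = add_maps(upsample2x(fused), larger)
    return fused


coarse = [[1]]
fine = [[1, 2],
        [3, 4]]
print(aggregate_small_to_large([coarse, fine]))  # [[2, 3], [4, 5]]
```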

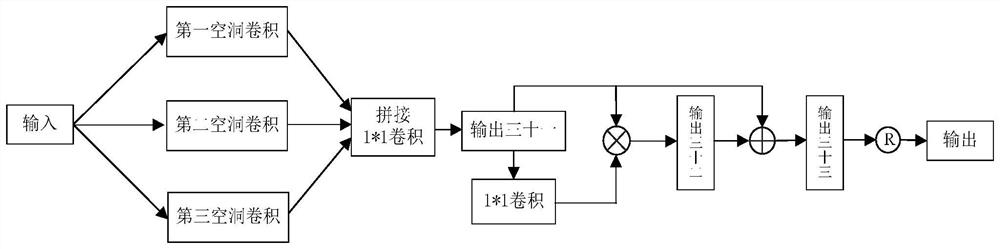

Semantic segmentation of road scene based on multi-scale perforated convolutional neural network

Status: Active · Publication: CN109508639A · Tags: Improving Semantic Segmentation Accuracy; Improve efficiency; Character and pattern recognition; Neural architectures; Hidden layer; Optimal weight

The invention discloses a road scene semantic segmentation method based on a multi-scale perforated convolutional neural network. In the training stage, a multi-scale perforated convolutional neural network is constructed whose hidden layer comprises nine neural network blocks, five concatenation layers and six upsampling blocks. Original road scene images are fed into the network for training to obtain 12 corresponding semantic segmentation prediction maps. The loss function value is computed between the set of 12 prediction maps for each original road scene image and the set of 12 one-hot encoded maps derived from the corresponding ground-truth semantic segmentation image, and the optimal weight vector and bias term of the multi-scale perforated convolutional neural network classification training model are obtained. In the testing stage, road scene images to be segmented are fed into the trained model to obtain predicted semantic segmentation images. The invention improves both the efficiency and the accuracy of semantic segmentation of road scene images.

Owner: ZHEJIANG UNIVERSITY OF SCIENCE AND TECHNOLOGY
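
"Perforated convolution" here is most likely a literal translation of dilated (atrous) convolution. A 1-D sketch of what dilation does: the kernel taps are spread `dilation` samples apart, so a 3-tap kernel covers (3 - 1)·d + 1 input samples without extra weights. Running several dilation rates in parallel is what makes such a network multi-scale; the rates used here are illustrative, not taken from the patent.

```python
def effective_kernel_size(k, dilation):
    """Number of input samples spanned by a k-tap kernel with the given dilation."""
    return (k - 1) * dilation + 1


def dilated_conv1d(signal, kernel, dilation):
    """'Valid' 1-D dilated convolution (cross-correlation, as deep-learning frameworks compute it)."""
    span = effective_kernel_size(len(kernel), dilation)
    return [
        sum(w * signal[i + j * dilation] for j, w in enumerate(kernel))
        for i in range(len(signal) - span + 1)
    ]


signal = [1, 2, 3, 4, 5]
kernel = [1, 1, 1]
for d in (1, 2):
    print(d, effective_kernel_size(3, d), dilated_conv1d(signal, kernel, d))
# 1 3 [6, 9, 12]
# 2 5 [9]
```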

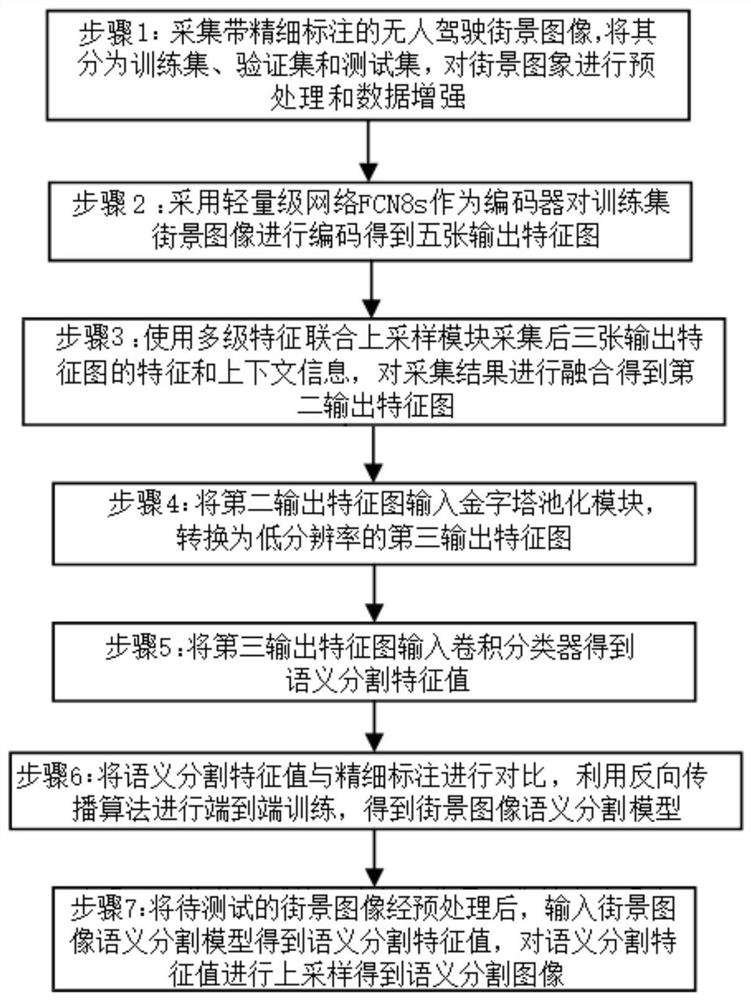

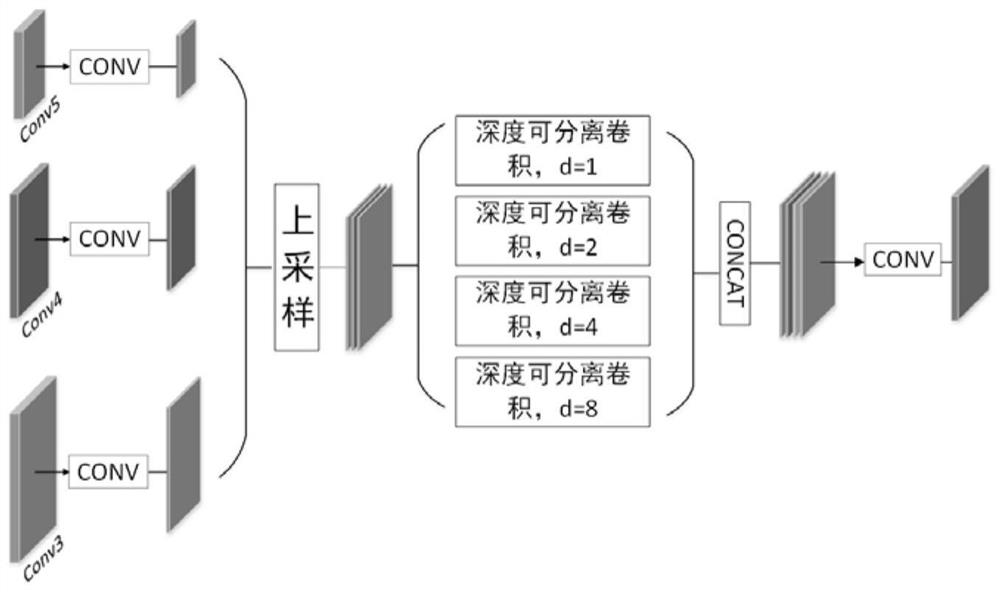

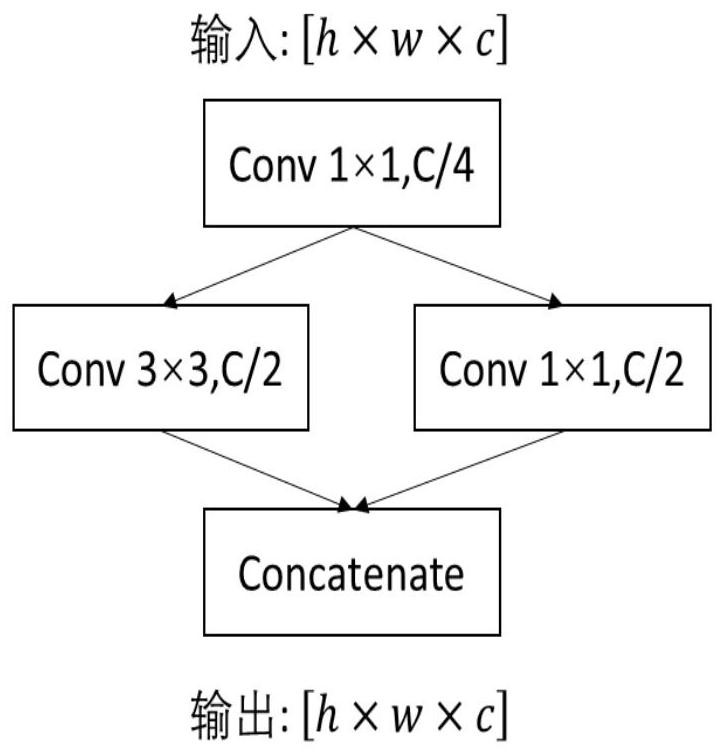

Streetscape image semantic segmentation system and segmentation method, electronic equipment and computer readable medium

Status: Pending · Publication: CN112819000A · Tags: Reduce computation; Splitting speed is fast; Character and pattern recognition; Neural learning methods; Pattern recognition; Engineering

The invention discloses a streetscape image semantic segmentation system and method, electronic equipment and a computer-readable medium. The segmentation method comprises the following steps: step 1, acquiring a streetscape image and applying preprocessing and data augmentation to it; step 2, encoding the streetscape image into output feature maps with an encoder; step 3, collecting the last three output feature maps with a multi-level feature joint upsampling module and fusing them to obtain a second output feature map; step 4, converting the second output feature map into a third output feature map; step 5, feeding the third output feature map into a convolutional classifier to obtain semantic segmentation feature values; step 6, training end to end with the backpropagation algorithm to obtain a streetscape image semantic segmentation model; and step 7, performing semantic segmentation of streetscape images with this model. The method increases network segmentation speed without reducing semantic segmentation precision, which enhances its real-time responsiveness in applications.

Owner: CHANGCHUN UNIV OF TECH

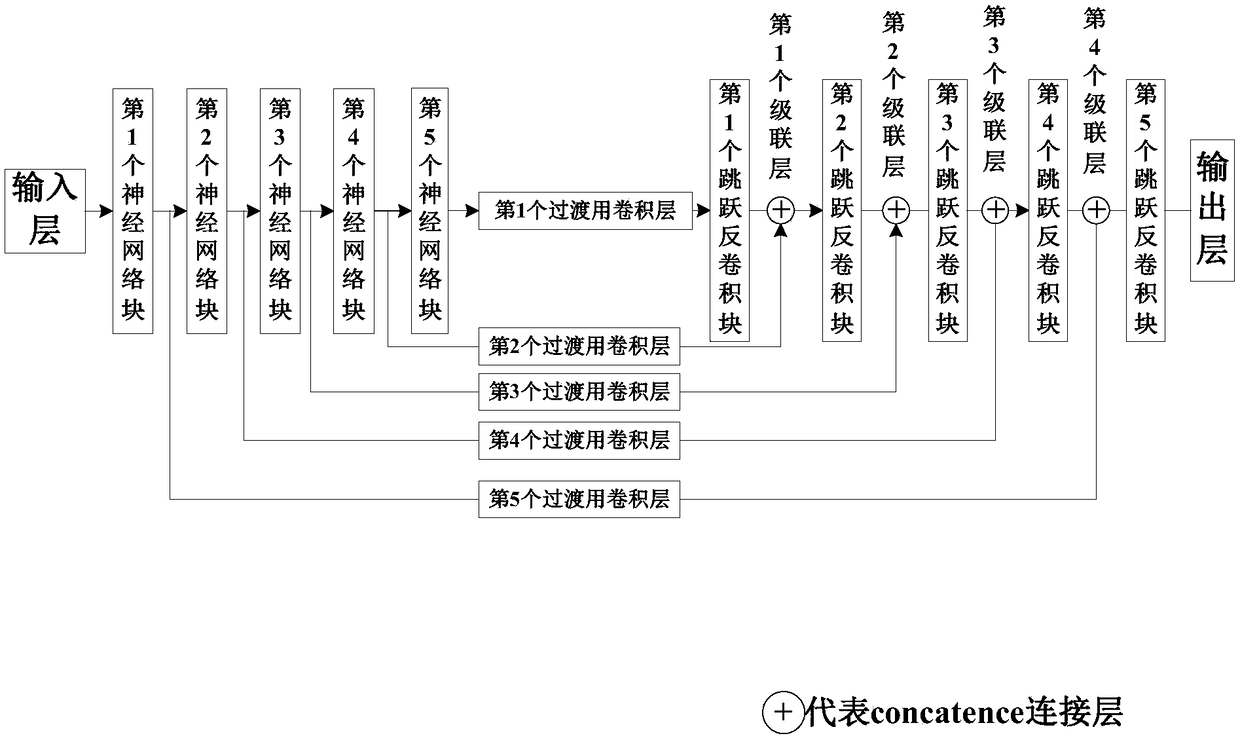

A road scene semantic segmentation method based on a convolution neural network

ActiveCN109446933AImproving Semantic Segmentation AccuracyAccurate descriptionCharacter and pattern recognitionNeural architecturesComputer visionOptimal weight

The invention discloses a road scene semantic segmentation method based on a convolution neural network. In a training stage, a convolution neural network is constructed. The hidden layer comprises five neural network blocks, five transition convolution layers, five skip deconvolution blocks and four cascade layers. The original road scene images are inputted into the convolution neural network for training, and 12 corresponding semantic segmentation prediction maps are obtained. Secondly, by calculating the loss function value between the set of 12 semantic segmentation prediction images corresponding to the original road scene images and the set of 12 heat-coded images corresponding to the real semantic segmentation images, the optimal weight vector and bias term of the classification training model of the convolution neural network are obtained. In the testing phase, the road scene images to be semantically segmented are inputted into the convolution neural network classification training model to obtain the predictive semantic segmentation images. The invention has the advantages of improving the efficiency and accuracy of the semantic segmentation of the road scene images.

Owner:ZHEJIANG UNIVERSITY OF SCIENCE AND TECHNOLOGY
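
The loss in the CN109446933A training stage pairs each set of prediction maps with one-hot encoded ground truth. The minimal sketch below makes two assumptions that the abstract does not state: the 12 maps are one probability map per class, and the loss is mean per-pixel cross-entropy. `one_hot_maps` and `cross_entropy` are hypothetical names.

```python
import math
from typing import List

Grid = List[List[float]]


def one_hot_maps(labels: List[List[int]], num_classes: int) -> List[Grid]:
    """Split an H x W label map into num_classes binary (one-hot) maps."""
    return [
        [[1.0 if v == c else 0.0 for v in row] for row in labels]
        for c in range(num_classes)
    ]


def cross_entropy(pred_maps: List[Grid], target_maps: List[Grid], eps: float = 1e-12) -> float:
    """Mean per-pixel cross-entropy between per-class probability maps and one-hot maps."""
    h, w = len(target_maps[0]), len(target_maps[0][0])
    total = 0.0
    for p_map, t_map in zip(pred_maps, target_maps):
        for i in range(h):
            for j in range(w):
                total -= t_map[i][j] * math.log(p_map[i][j] + eps)
    return total / (h * w)


labels = [[0, 1], [1, 0]]                        # toy 2x2 ground truth, 2 classes
targets = one_hot_maps(labels, num_classes=2)
uniform = [[[0.5, 0.5], [0.5, 0.5]] for _ in range(2)]
print(round(cross_entropy(uniform, targets), 4))  # 0.6931 (= ln 2)
```

With 12 classes, `one_hot_maps(labels, 12)` would produce the 12 target maps that pair with the network's 12 prediction maps.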

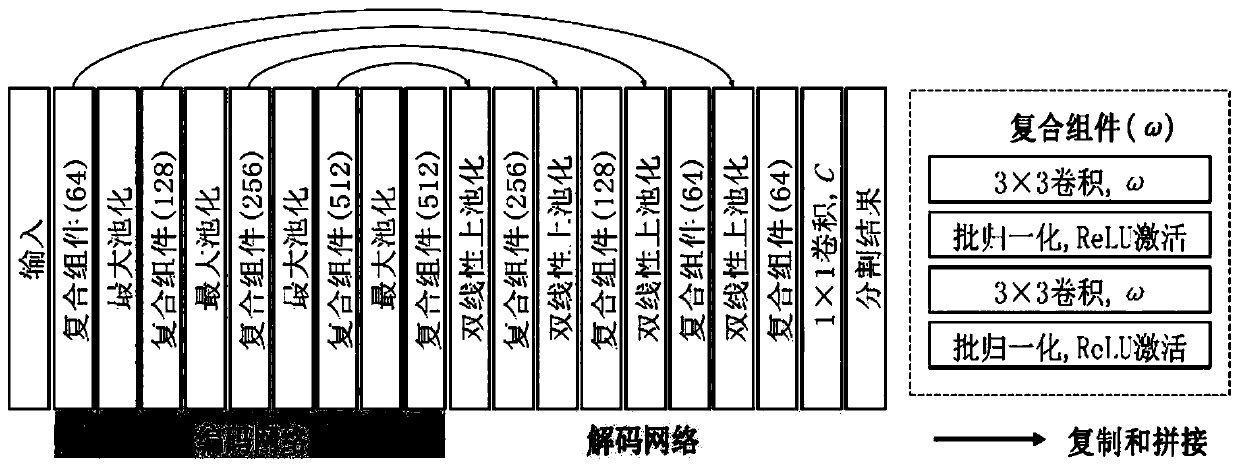

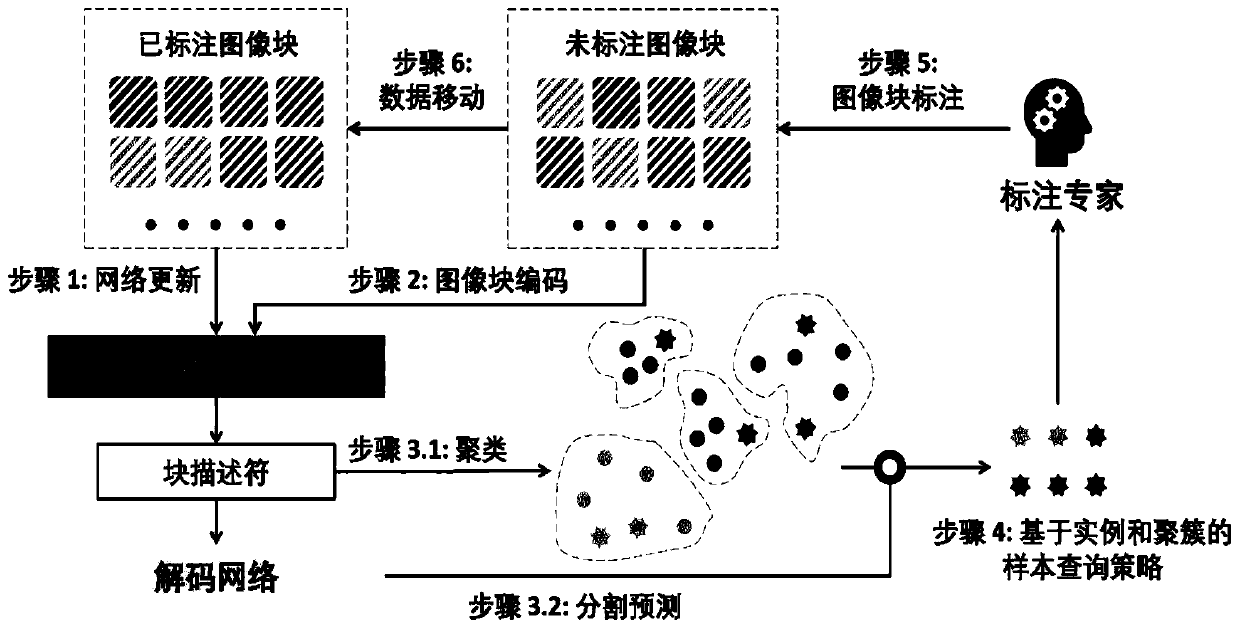

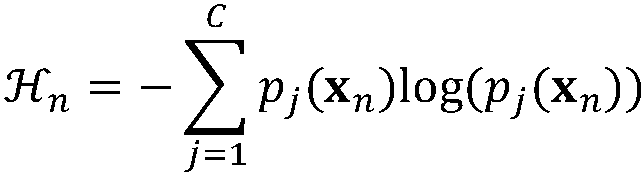

Medical image annotation recommendation method based on block level active learning

Active | CN110222772A | Avoid wasting | Reduce labeling costs | Image analysis | Character and pattern recognition | Pattern recognition | Block level

The invention discloses a medical image annotation recommendation method based on block-level active learning. It comprises the following steps. First, the whole image is divided into regions, and the types of objects each region contains are identified. Then image blocks and object types are recommended for annotation, which enables fine-grained evaluation of the annotation value of each region of the image. By locating the regions that are worth annotating, the method solves the problem of repeated recommendation of the same medical image in existing annotation recommendation methods. Reducing the basic unit of annotation recommendation to the image-block level avoids wasting resources on repeatedly annotating similar objects within an image and further reduces annotation cost. Compared with the current best medical image annotation recommendation method, the method reduces annotation cost by up to 15% at the same semantic segmentation accuracy. Alternatively, at the same annotation cost, it improves semantic segmentation accuracy by 2%.

Owner:ZHEJIANG UNIV
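
The block-level idea in CN110222772A treats an image block, rather than a whole image, as the unit of recommendation. It can be sketched as ranking blocks by how uncertain the current model is about them. The abstract does not define its annotation-value measure, so mean pixel entropy is a hypothetical stand-in. The majority predicted class stands in for the identified object type, and `recommend_blocks` is an illustrative name.

```python
import math
from collections import Counter
from typing import List, Tuple

ProbMap = List[List[List[float]]]  # H x W x C per-pixel class probabilities


def entropy(p: List[float]) -> float:
    """Shannon entropy (nats) of one pixel's class distribution."""
    return -sum(x * math.log(x) for x in p if x > 0)


def recommend_blocks(probs: ProbMap, block: int, k: int) -> List[Tuple[int, int, int, float]]:
    """Rank image blocks by mean pixel entropy and return the top k.

    Each entry is (top_row, left_col, majority_class, score). The entropy
    score is a hypothetical stand-in for the patent's annotation value.
    """
    h, w = len(probs), len(probs[0])
    ranked = []
    for r in range(0, h, block):
        for c in range(0, w, block):
            ents: List[float] = []
            votes: Counter = Counter()
            for i in range(r, min(r + block, h)):
                for j in range(c, min(c + block, w)):
                    p = probs[i][j]
                    ents.append(entropy(p))
                    votes[max(range(len(p)), key=p.__getitem__)] += 1  # argmax class
            majority = votes.most_common(1)[0][0]
            ranked.append((r, c, majority, sum(ents) / len(ents)))
    ranked.sort(key=lambda t: t[3], reverse=True)
    return ranked[:k]


# Toy 4x4 map: the top-left 2x2 block is uncertain, the rest is confident.
probs = [[[0.5, 0.5] if i < 2 and j < 2 else [1.0, 0.0] for j in range(4)] for i in range(4)]
print([(r, c, cls, round(s, 4)) for r, c, cls, s in recommend_blocks(probs, block=2, k=1)])
# [(0, 0, 0, 0.6931)]
```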

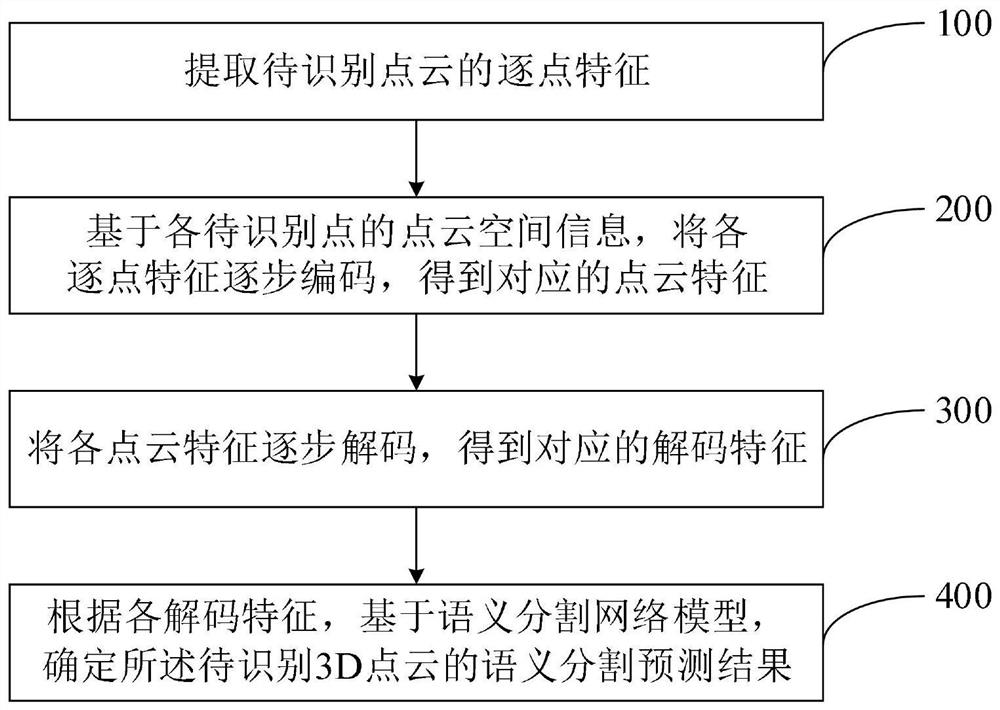

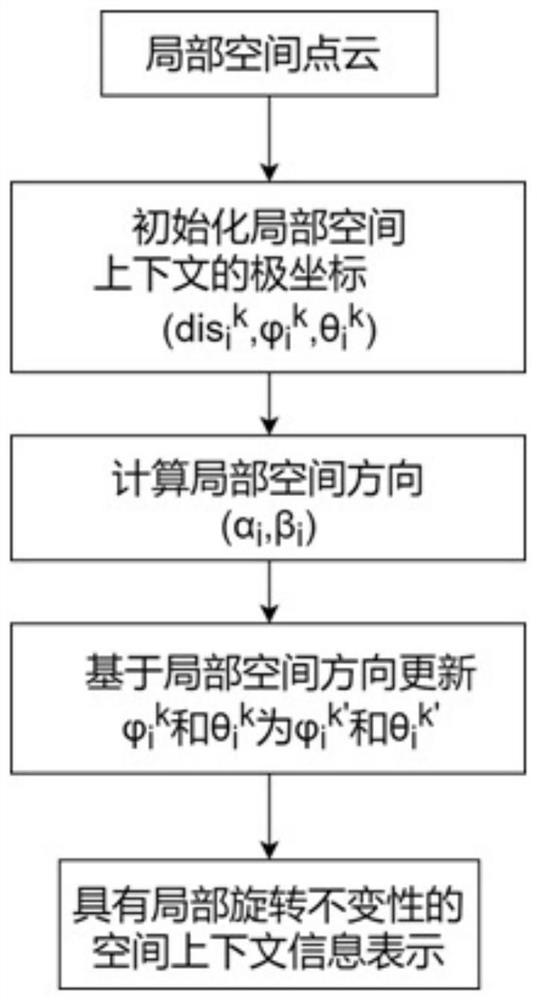

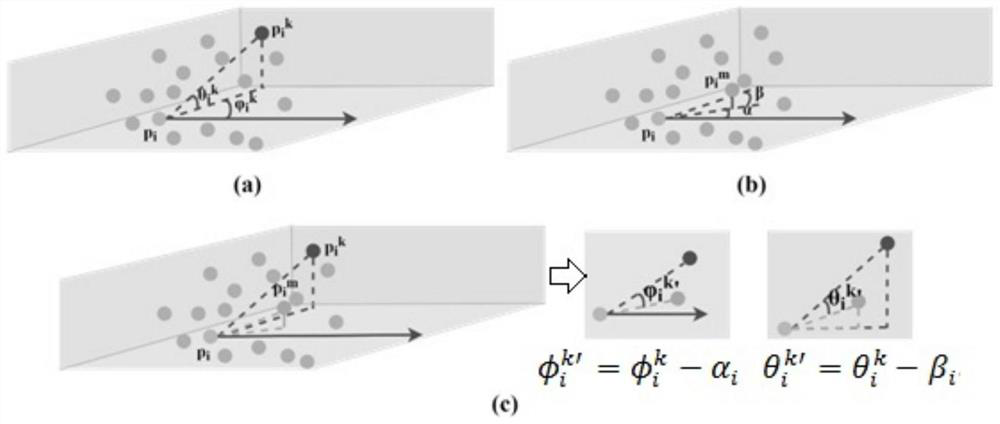

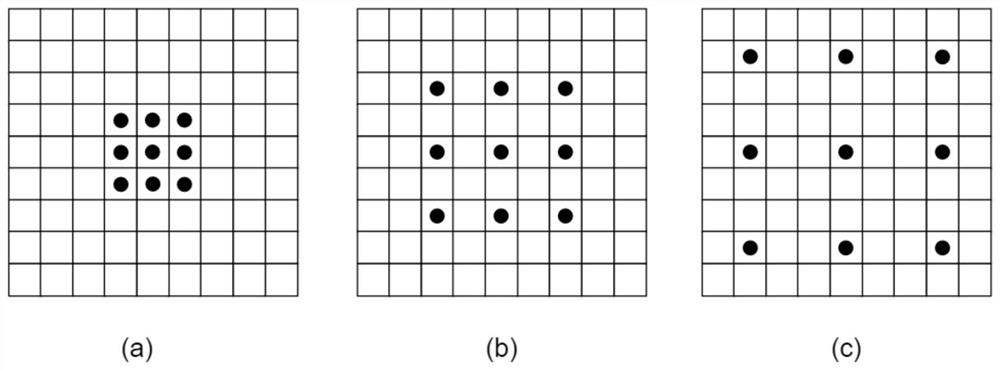

Large-scale point cloud semantic segmentation method and system

Active | CN113011430A | Effective space features | Improving Semantic Segmentation Accuracy | Character and pattern recognition | Point cloud | Algorithm

The invention relates to a large-scale point cloud semantic segmentation method and system. The method comprises the following steps: extracting per-point features of a point cloud to be recognized, which consists of a plurality of points to be recognized; progressively encoding each per-point feature on the basis of the spatial information of each point to obtain corresponding point cloud features; progressively decoding the point cloud features to obtain corresponding decoded features; and determining a semantic segmentation prediction for the 3D point cloud from the decoded features with a semantic segmentation network model. Extracting per-point features lets the invention obtain more effective spatial features from large-scale point clouds. The features are then encoded with each point's spatial information and decoded. This captures semantic information about the surrounding spatial environment and improves semantic segmentation accuracy.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

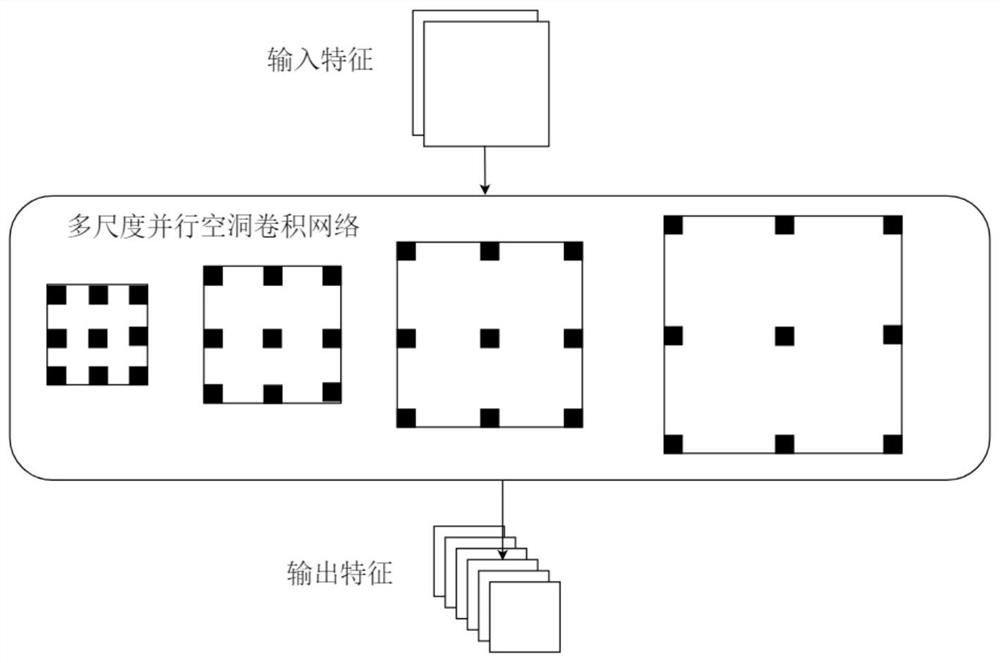

Remote sensing image semantic segmentation method based on parallel cavity convolution

Pending | CN112837320A | Easy layout | Not easy to miss | Image enhancement | Image analysis | Video memory | Conditional random field

The invention discloses a remote sensing image semantic segmentation method based on parallel cavity (that is, dilated or atrous) convolution, in the technical field of remote sensing images. The method comprises the following steps:

1. Acquire a high-resolution remote sensing image in advance, then slice, normalize and standardize it to obtain a source high-resolution remote sensing image.
2. Initialize the low-level layers of a ResNet-101 feature extraction network with ResNet-101 parameters pre-trained on ImageNet, construct a parallel dilated convolutional network, and extract shallow features of the source image.
3. Feed the shallow features into the parallel dilated convolutional network to obtain multi-scale information, and fuse that information.
4. Fuse the result with the shallow features again, then refine image-level information with a fully connected conditional random field to obtain the semantic segmentation result.

The method enlarges the receptive field of the convolutions without adding parameters. Compared with a standard convolution that achieves the same receptive field, it also uses less GPU memory.

Owner:HUAZHONG UNIV OF SCI & TECH
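
The receptive-field claim in CN112837320A follows from how a dilated convolution spaces its taps. A k-tap kernel with dilation d spans k + (k - 1)(d - 1) inputs while still holding only k weights. The one-dimensional Python sketch below shows this and fuses parallel branches by summation. The abstract does not give the dilation rates or the fusion rule, so the rates, the 1-D setting and summation as the fusion step are all assumptions.

```python
from typing import List, Sequence


def effective_kernel_size(k: int, dilation: int) -> int:
    """Span of a k-tap kernel with the given dilation (its weight count stays k)."""
    return k + (k - 1) * (dilation - 1)


def dilated_conv1d(x: Sequence[float], w: Sequence[float], dilation: int) -> List[float]:
    """Zero-padded 'same' 1-D dilated convolution (cross-correlation) for odd-length w."""
    centre = len(w) // 2
    out = []
    for i in range(len(x)):
        acc = 0.0
        for t, wt in enumerate(w):
            idx = i + (t - centre) * dilation  # taps are spaced `dilation` apart
            if 0 <= idx < len(x):
                acc += wt * x[idx]
        out.append(acc)
    return out


def parallel_dilated(x: Sequence[float], weights: Sequence[Sequence[float]],
                     rates: Sequence[int]) -> List[float]:
    """Apply one branch per dilation rate to the same input and fuse by summation."""
    branches = [dilated_conv1d(x, w, d) for w, d in zip(weights, rates)]
    return [sum(vals) for vals in zip(*branches)]


impulse = [0.0] * 9
impulse[4] = 1.0
print(dilated_conv1d(impulse, [1.0, 1.0, 1.0], dilation=2))
# [0.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 0.0]
print([effective_kernel_size(3, d) for d in (1, 2, 4)])  # [3, 5, 9]
```

Each branch keeps three weights while its span grows from 3 to 5 to 9 inputs. A 2-D version applies the same spacing along both image axes.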

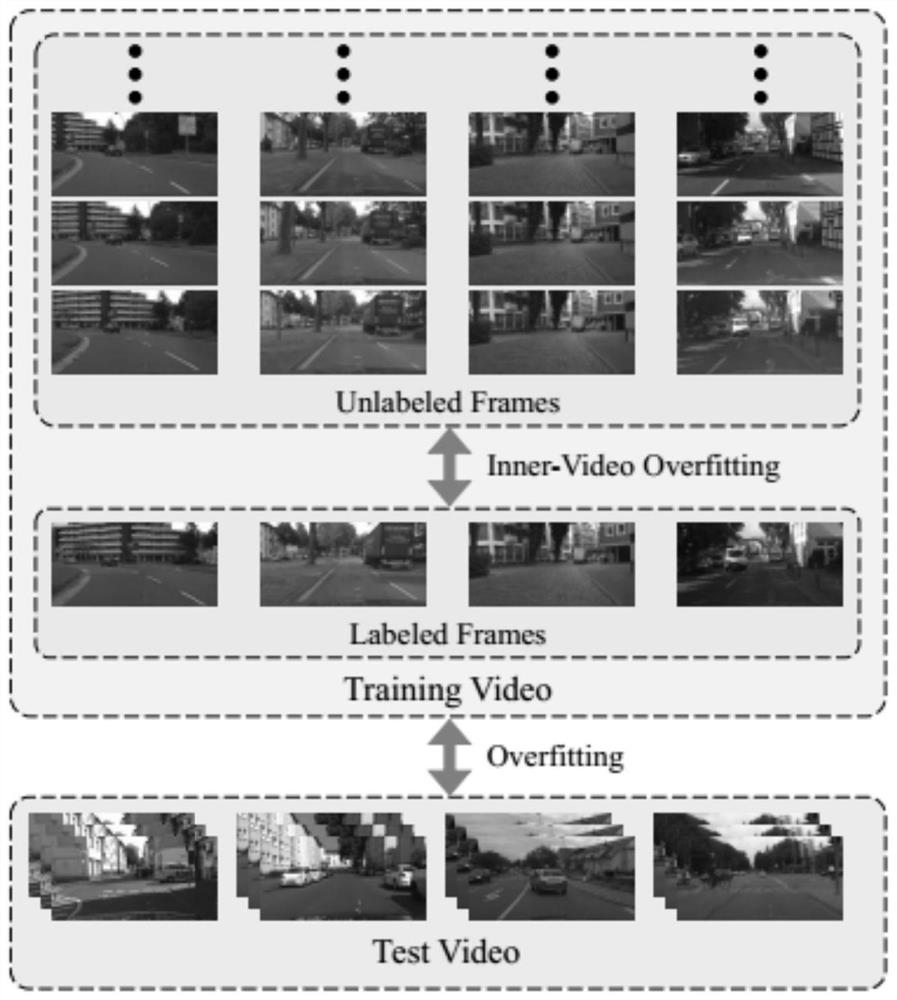

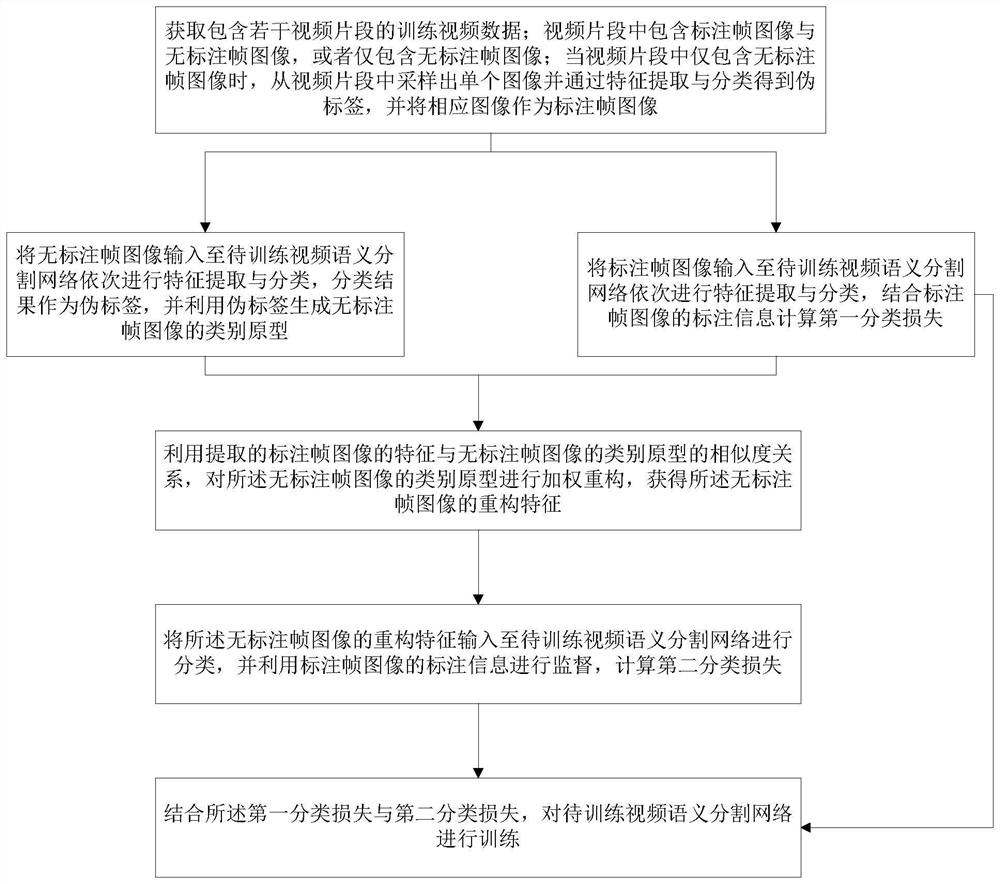

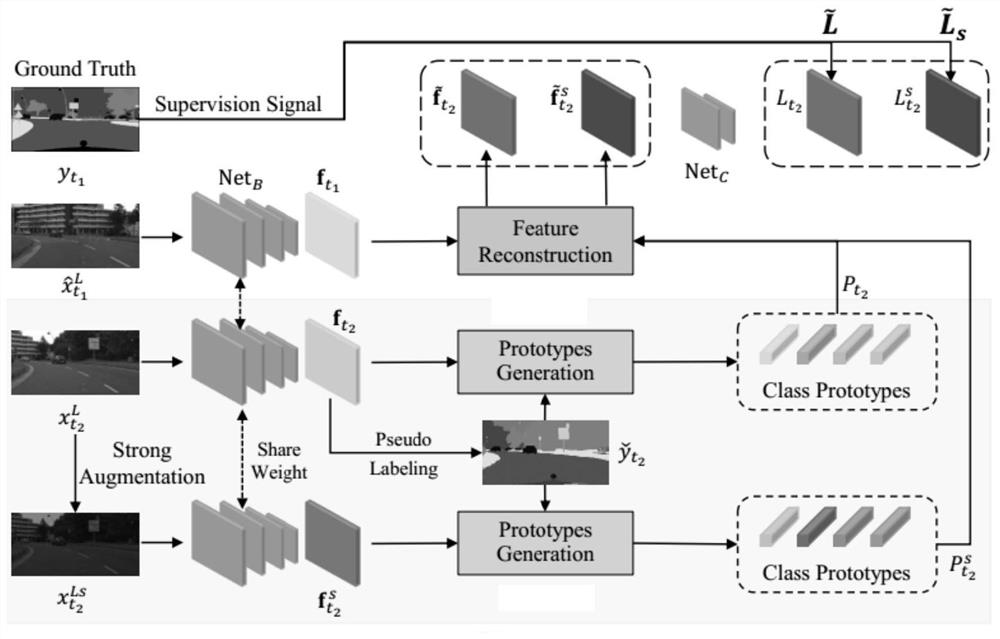

Video semantic segmentation network training method, system and device and storage medium

Pending | CN114494973A | Reduce overfitting | Improve generalization ability | Character and pattern recognition | Pattern recognition | Test set

The invention discloses a video semantic segmentation network training method, system, device and storage medium. It designs an inter-frame feature reconstruction scheme that exploits the inherent correlation of video data. Labeled-frame features are reconstructed from category prototypes extracted from unlabeled-frame features, which improves video semantic segmentation. The reconstructed features are then supervised with the annotation information. In this way, the single-frame annotation of video data provides accurate supervision signals for the unannotated frames. Different frames of the training video are supervised by the same signals, so their feature distributions are pulled closer together and robustness on video data improves. This effectively alleviates inter-frame overfitting and thereby improves the model's generalization. Tests on a test set show that the video semantic segmentation network trained with this method achieves higher segmentation accuracy.

Owner:UNIV OF SCI & TECH OF CHINA
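
One plausible reading of the CN114494973A reconstruction scheme is sketched below. Class prototypes are computed from unlabeled-frame features by soft masked average pooling over predicted class probabilities. Each labeled-frame feature is then rebuilt as a mix of prototypes, weighted by a softmax over cosine similarities. The pooling rule, the similarity measure, the temperature `tau` and all function names are assumptions. In the patent, the reconstructed features are then supervised with the labeled frame's annotation; this sketch omits that step.

```python
import math
from typing import List

Vec = List[float]


def class_prototypes(feats: List[Vec], probs: List[Vec]) -> List[Vec]:
    """Soft masked average pooling: one prototype per class from unlabeled-frame features."""
    num_classes, dim = len(probs[0]), len(feats[0])
    protos = []
    for c in range(num_classes):
        weight = sum(p[c] for p in probs)
        protos.append([
            sum(p[c] * f[d] for f, p in zip(feats, probs)) / max(weight, 1e-12)
            for d in range(dim)
        ])
    return protos


def cosine(a: Vec, b: Vec) -> float:
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / max(na * nb, 1e-12)


def reconstruct(feat: Vec, protos: List[Vec], tau: float = 0.1) -> Vec:
    """Rebuild a labeled-frame pixel feature as a softmax(cos / tau) mix of prototypes."""
    logits = [cosine(feat, p) / tau for p in protos]
    top = max(logits)
    exps = [math.exp(s - top) for s in logits]  # subtract max for numerical stability
    z = sum(exps)
    weights = [e / z for e in exps]
    return [sum(wt * p[d] for wt, p in zip(weights, protos)) for d in range(len(feat))]


protos = class_prototypes([[2.0, 0.0], [0.0, 2.0]], [[1.0, 0.0], [0.0, 1.0]])
print(protos)  # [[2.0, 0.0], [0.0, 2.0]]
recon = reconstruct([1.0, 0.0], protos, tau=0.01)
print([round(v, 4) for v in recon])  # [2.0, 0.0]
```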

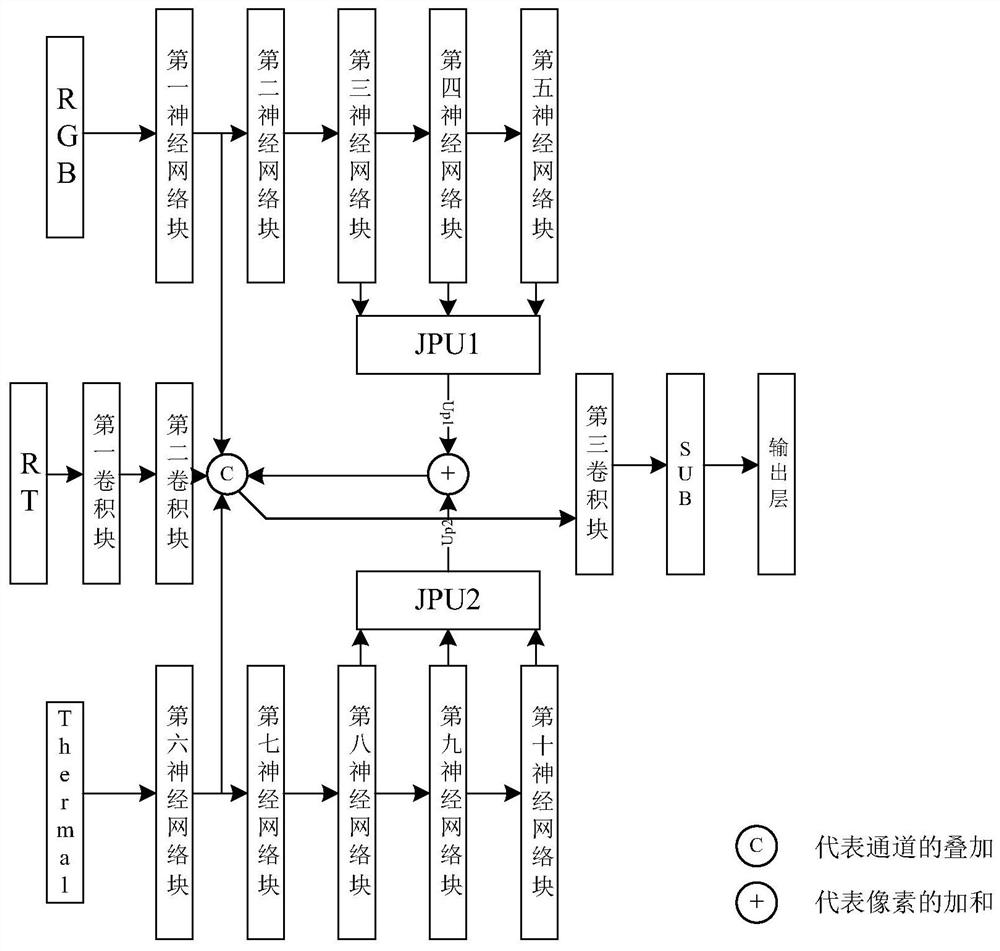

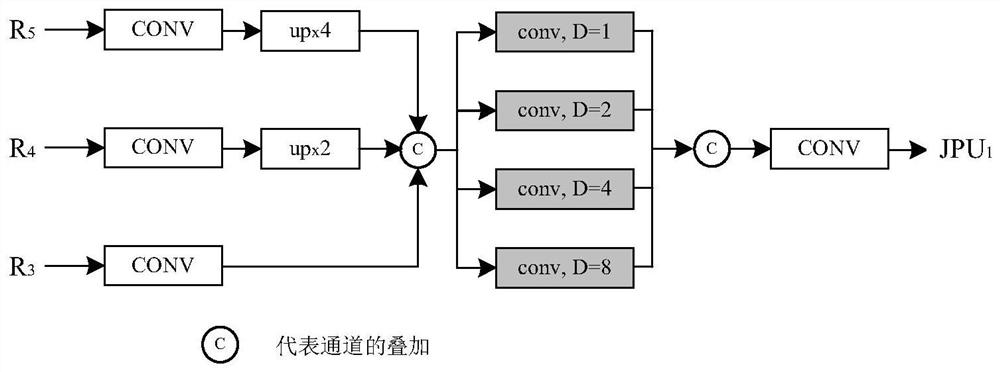

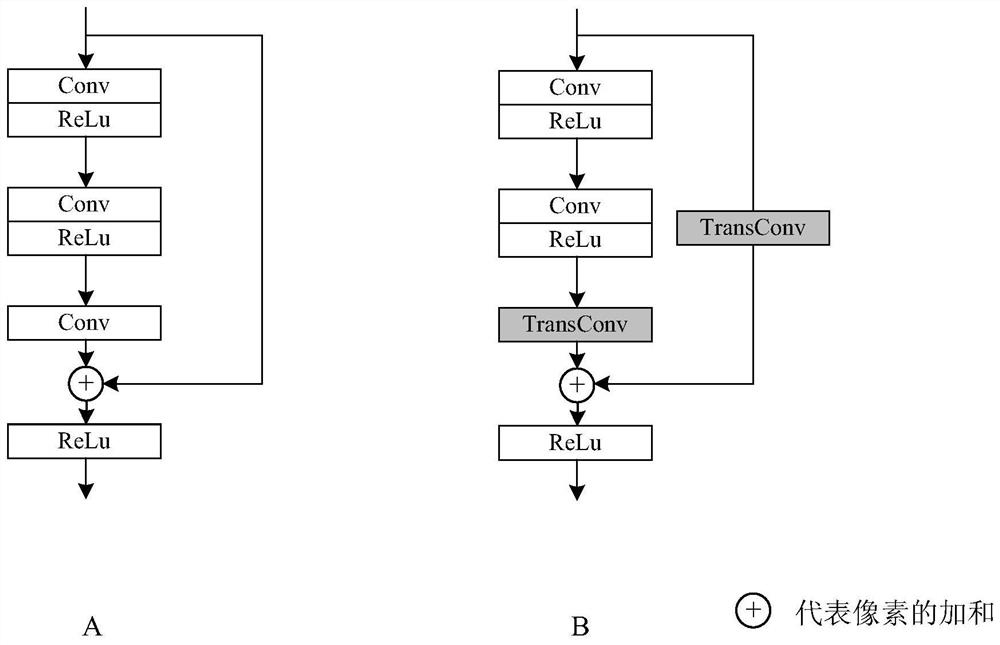

Road scene semantic segmentation method based on convolutional neural network

Inactive | CN112508956A | Improving Semantic Segmentation Accuracy | Accurate description | Image enhancement | Image analysis | Computer vision | Convolution

The invention discloses a road scene semantic segmentation method based on a convolutional neural network. In the training stage, a convolutional neural network is built whose hidden layer comprises ten neural network blocks, three convolutional blocks, two joint pyramid up-sampling modules and separable up-sampling blocks. Original road scene images are input into the network for training to obtain nine corresponding semantic segmentation prediction images. The loss function value is calculated between the set of nine prediction images for each original road scene image and the corresponding set of semantic segmentation label images. This gives the optimal weight vector and bias term of the convolutional neural network classification training model. In the test stage, a road scene image to be semantically segmented is input into the trained model to obtain a predicted semantic segmentation image. The invention improves both the efficiency and the accuracy of semantic segmentation of road scene images.

Owner:ZHEJIANG UNIVERSITY OF SCIENCE AND TECHNOLOGY

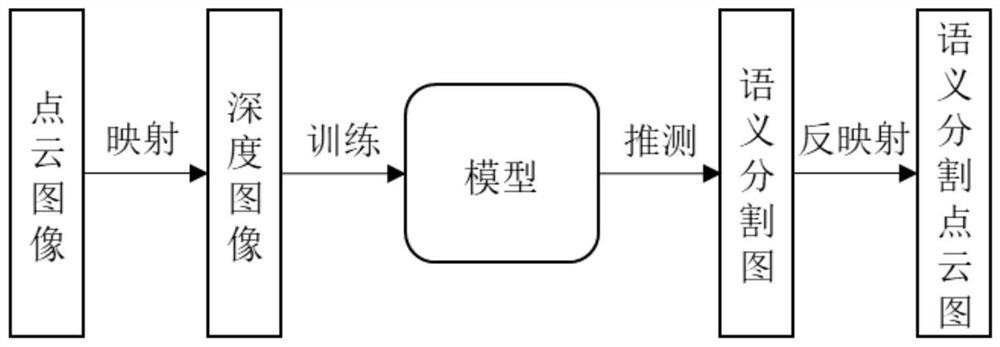

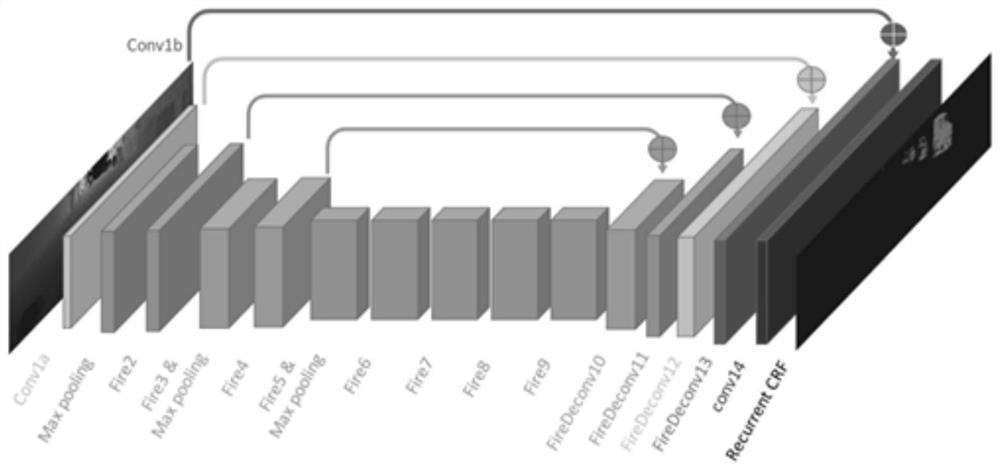

Laser radar environment sensing method and system based on deep learning

Inactive | CN112001272A | Reduce algorithm difficulty | Reduce development and maintenance costs | Image enhancement | Image analysis | Point cloud | Lidar point cloud

The invention discloses a LiDAR (laser radar) environment sensing method and system based on deep learning. The method comprises the following steps: step 1, mapping the LiDAR point cloud into a depth map by converting each LiDAR point from a spherical coordinate system to an image coordinate system; step 2, performing semantic segmentation on the depth map; and step 3, mapping the semantic segmentation map back into a semantically segmented point cloud. The method uses spherical projection of the LiDAR data with a stable mapping formula, which keeps the algorithm simple and development and maintenance costs low. The semantic segmentation is highly accurate and covers many classes. The resulting environment recognition data suits autonomous vehicles and helps them identify surrounding objects.

Owner:苏州富洁智能科技有限公司
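
Steps 1 and 3 of CN112001272A can be sketched with the widely used range-image projection, in which azimuth selects the image column and elevation selects the row. The abstract does not publish its mapping formula, so the following are all assumptions: this projection, the field-of-view defaults (3° up, -25° down, values commonly used for 64-beam sensors), the rule that keeps the nearest point when several share a pixel, and the function names. The segmentation network of step 2 is left out.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]
RangeImage = List[List[Optional[float]]]


def spherical_projection(points: List[Point], width: int, height: int,
                         fov_up_deg: float = 3.0, fov_down_deg: float = -25.0
                         ) -> Tuple[RangeImage, List[Tuple[int, int]]]:
    """Step 1: project (x, y, z) points into an H x W depth (range) image.

    Returns the image plus each point's (row, col) so labels can be mapped back.
    If several points hit one pixel, the closest range is kept.
    """
    fov_up, fov_down = math.radians(fov_up_deg), math.radians(fov_down_deg)
    fov = fov_up - fov_down
    image: RangeImage = [[None] * width for _ in range(height)]
    indices: List[Tuple[int, int]] = []
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        yaw = math.atan2(y, x)                                          # azimuth in [-pi, pi]
        pitch = math.asin(max(-1.0, min(1.0, z / r))) if r > 0 else 0.0  # elevation
        u = 0.5 * (1.0 - yaw / math.pi) * width          # column coordinate
        v = (1.0 - (pitch - fov_down) / fov) * height    # row coordinate
        col = min(width - 1, max(0, math.floor(u)))
        row = min(height - 1, max(0, math.floor(v)))
        indices.append((row, col))
        current = image[row][col]
        if current is None or r < current:
            image[row][col] = r
    return image, indices


def back_project(label_image: List[List[int]], indices: List[Tuple[int, int]]) -> List[int]:
    """Step 3: give every point the label predicted at its pixel."""
    return [label_image[row][col] for row, col in indices]


# Two points straight ahead on the same ray: both land in one pixel, the nearer wins.
depth, idx = spherical_projection([(10.0, 0.0, 0.0), (5.0, 0.0, 0.0)], width=1024, height=64)
print(idx, depth[6][512])  # [(6, 512), (6, 512)] 5.0
```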

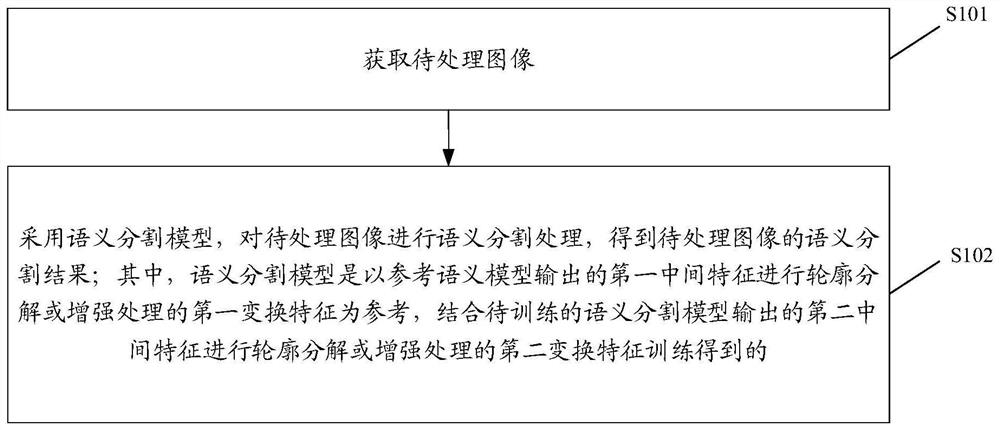

Semantic segmentation method and device, electronic equipment and computer readable storage medium

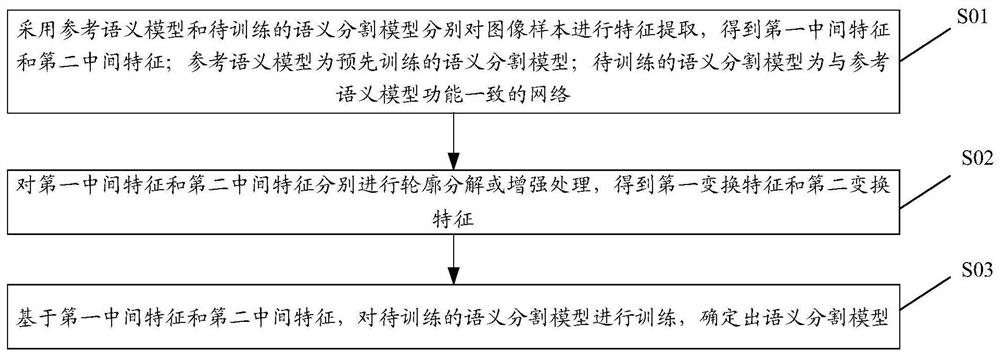

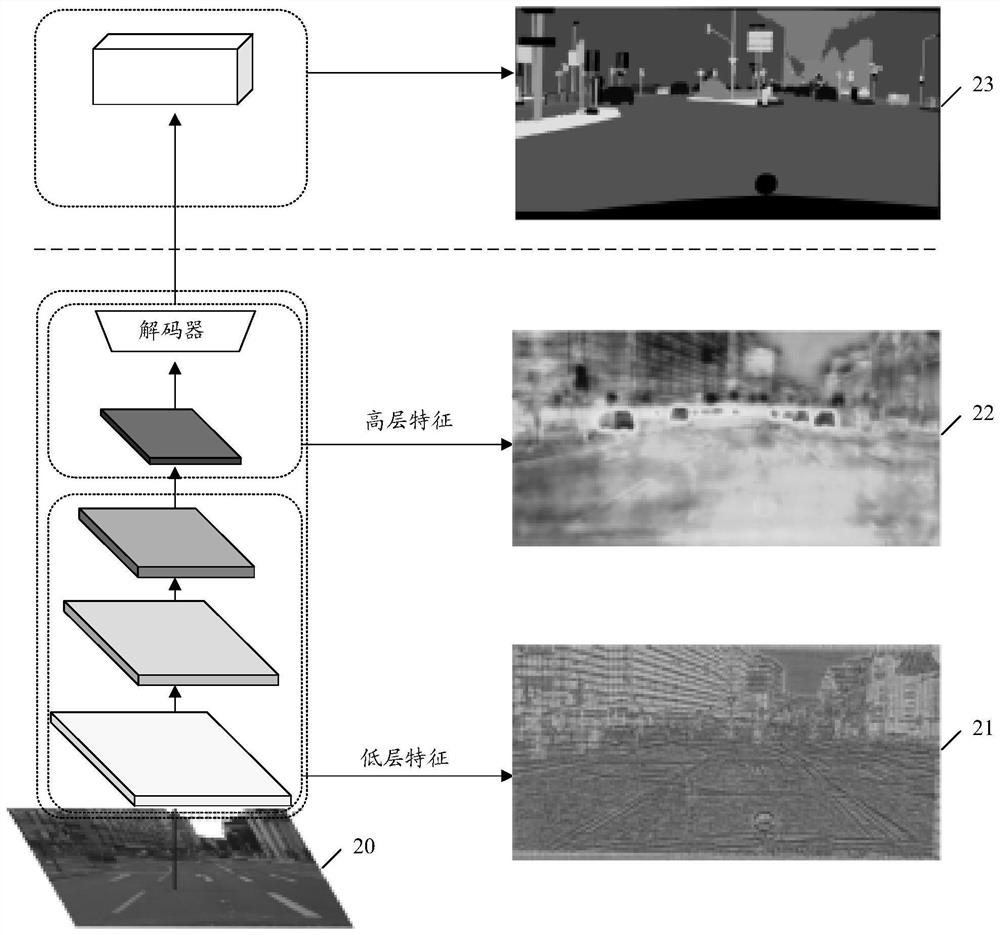

Pending | CN113470057A | Extensive knowledge | Improving Semantic Segmentation Accuracy | Image enhancement | Image analysis | Engineering | Semantic feature

The embodiments of the invention provide a semantic segmentation method and device, electronic equipment, and a computer-readable storage medium. The method comprises obtaining an image to be processed and performing semantic segmentation on it with a semantic segmentation model to obtain a segmentation result. The model is trained against two transformed features. The first transformed feature serves as the reference: it is obtained by applying contour decomposition or enhancement processing to a first intermediate feature output by a reference semantic model. It is combined with a second transformed feature, obtained by applying contour decomposition or enhancement processing to a second intermediate feature output by the semantic segmentation model being trained. The first and second intermediate features comprise at least one of the following pairs: a first and a second texture feature; a first and a second semantic feature. The first and second transformed features comprise at least one of the following pairs: a first and a second contour feature; a first and a second enhancement feature.

Owner:SHANGHAI SENSETIME INTELLIGENT TECH CO LTD

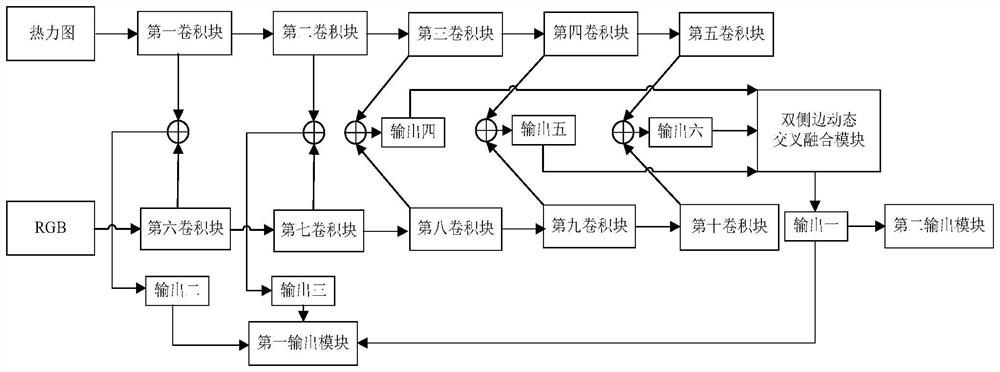

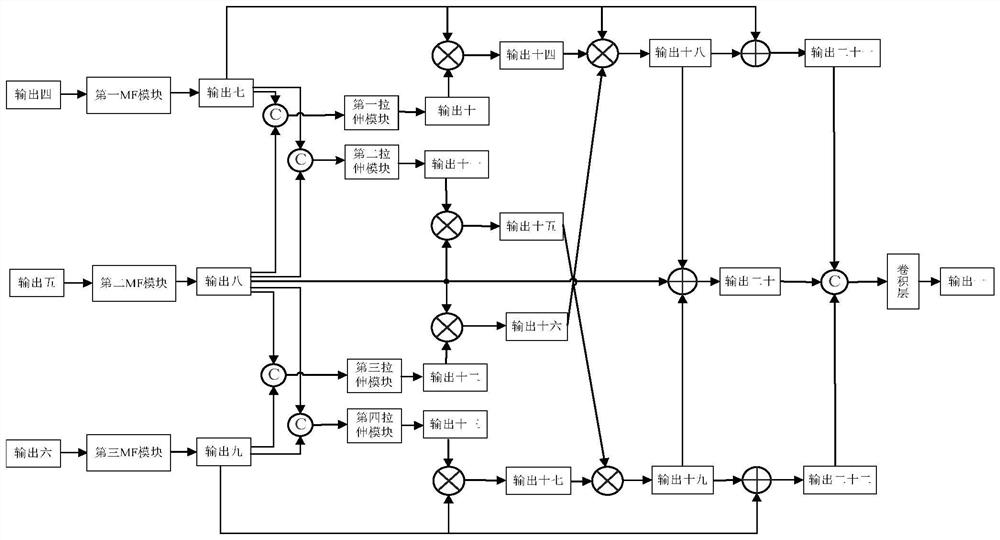

Road scene image processing method based on double-side dynamic cross fusion

Pending | CN113470033A | Improving Semantic Segmentation Accuracy | Accurate description | Image enhancement | Image analysis | Imaging processing | Image manipulation

The invention discloses a road scene image processing method based on double-side dynamic cross fusion, comprising a training stage and a test stage. Road scene images, the corresponding thermal images and ground-truth semantic segmentation images are selected to form a training set, and a convolutional neural network is constructed. Data augmentation is applied to the training set to obtain initial input image pairs, which are input into the network to obtain corresponding road scene prediction maps. The loss function value between each prediction map and the corresponding ground-truth semantic segmentation image is calculated. These steps are repeated to obtain a convolutional neural network classification training model. Finally, a road scene image to be semantically segmented and its corresponding thermal image are input into the trained model to obtain a predicted semantic segmentation image. The invention effectively improves the semantic segmentation accuracy of road scene images, reduces the loss of detail features and better restores object edges.

Owner:ZHEJIANG UNIVERSITY OF SCIENCE AND TECHNOLOGY

Methods and systems for providing fast semantic proposals for image and video annotation

Active | US10706557B2 | Shorten the time | Fast and accurate pre-proposals | Image enhancement | Image analysis | Feature vector | Feature Dimension

Methods and systems for providing fast semantic proposals for image and video annotation including: extracting image planes from an input image; linearizing each of the image planes to generate a one-dimensional array to extract an input feature vector per image pixel for the image planes; abstracting features for a region of interest using a modified echo state network model, wherein a reservoir increases feature dimensions per pixel location to multiple dimensions followed by feature reduction to one dimension per pixel location, wherein the echo state network model includes both spatial and temporal state factors for reservoir nodes associated with each pixel vector, and wherein the echo state network model outputs a probability image; post-processing the probability image to form a segmented binary image mask; and applying the segmented binary image mask to the input image to segment the region of interest and form a semantic proposal image.

Owner:VOLVO CAR CORP
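
The pixel linearization and mask post-processing steps of US10706557B2 are simple enough to sketch in plain Python. The echo state network that produces the probability image is not reproduced here. The row-major ordering, the fixed 0.5 threshold and the function names are assumptions rather than details taken from the patent.

```python
from typing import List

Plane = List[List[float]]


def pixel_feature_vectors(planes: List[Plane]) -> List[List[float]]:
    """Linearize each H x W plane row-major, then stack values into one vector per pixel."""
    flat = [[v for row in plane for v in row] for plane in planes]  # one 1-D array per plane
    return [list(vals) for vals in zip(*flat)]                    # pixel-wise vectors


def binary_mask(prob: Plane, threshold: float = 0.5) -> List[List[int]]:
    """Post-process a probability image into a binary mask (threshold is an assumption)."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob]


def apply_mask(planes: List[Plane], mask: List[List[int]]) -> List[Plane]:
    """Keep only the region of interest in every plane to form the proposal image."""
    return [
        [[v * m for v, m in zip(prow, mrow)] for prow, mrow in zip(plane, mask)]
        for plane in planes
    ]


planes = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]  # two 2x2 planes
print(pixel_feature_vectors(planes))  # [[1.0, 5.0], [2.0, 6.0], [3.0, 7.0], [4.0, 8.0]]
mask = binary_mask([[0.9, 0.1], [0.4, 0.6]])
print(apply_mask(planes, mask))  # [[[1.0, 0.0], [0.0, 4.0]], [[5.0, 0.0], [0.0, 8.0]]]
```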

© 2025 PatSnap. All rights reserved.