Road scene image processing method based on double-side dynamic cross fusion

A scene-image cross-fusion technique in the field of deep-learning image processing. It addresses low segmentation accuracy, loss of image feature information, and unrepresentative features. Its effects are higher semantic segmentation accuracy, less loss of detailed features, and reduced information loss.

Embodiment Construction

[0048] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

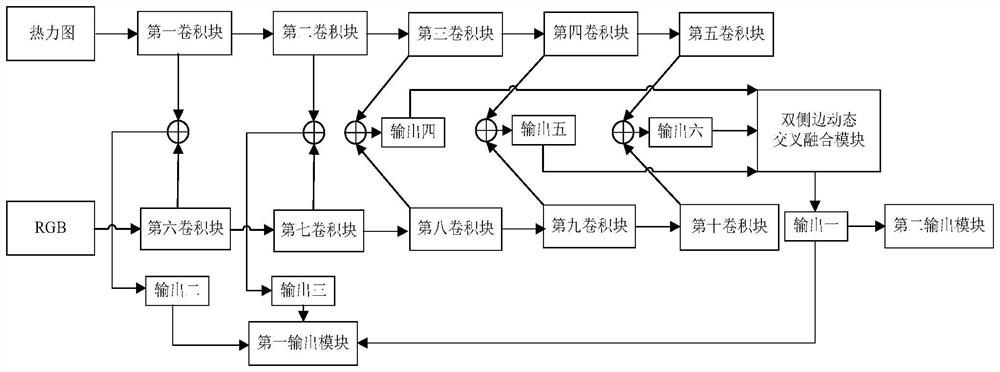

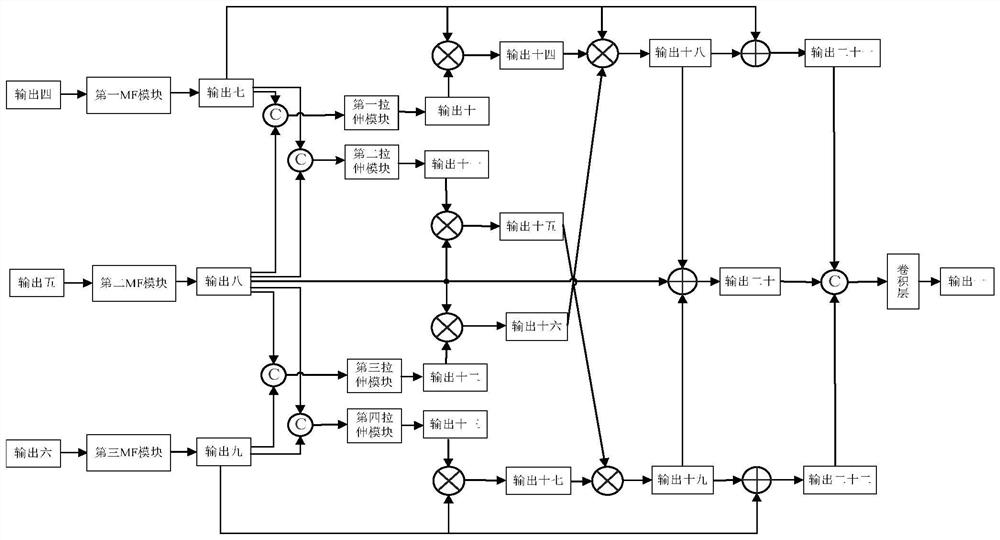

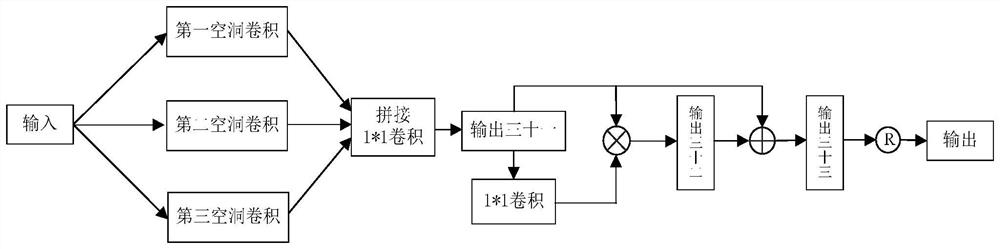

[0049] The present invention proposes a road scene understanding method for unmanned (autonomous) driving based on bilateral dynamic cross-fusion. Its overall implementation block diagram is shown in Figure 1. The method comprises two processes: a training phase and a testing phase.

[0050] The specific steps of the training phase are as follows:

[0051] Step 1_1: Select Q original road scene images, together with the thermal image (Thermal) and the real semantic segmentation image corresponding to each original road scene image, to form a training set. Denote the q-th original road scene image in the training set as $\{I_q(i,j)\}$, and denote the real semantic segmentation image in the training set corresponding to $\{I_q(i,j)\}$ as … . Then, the existing one-hot encoding technique is used to process the real semantic segmentation images...
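The excerpt stops before describing how the one-hot encoding is applied. As a minimal sketch, assuming each real semantic segmentation image stores one integer class index per pixel (a common convention that the excerpt does not confirm), one-hot encoding turns it into one binary map per class. The names `TrainingSample`, `one_hot_encode`, and `build_training_set`, and the toy class count of 3, are hypothetical; the excerpt does not state how many classes are used.

```python
from dataclasses import dataclass

import numpy as np


def one_hot_encode(label_map: np.ndarray, num_classes: int) -> np.ndarray:
    """Hypothetical helper: turn an H x W map of integer class indices
    into num_classes binary H x W maps, stacked as num_classes x H x W."""
    label_map = np.asarray(label_map)
    if label_map.ndim != 2 or not np.issubdtype(label_map.dtype, np.integer):
        raise ValueError("label_map must be a 2-D array of integer class indices")
    if label_map.min() < 0 or label_map.max() >= num_classes:
        raise ValueError("class indices must lie in [0, num_classes)")
    # Indexing the identity matrix with the label map gives H x W x num_classes;
    # move the class axis to the front so there is one binary map per class.
    encoded = np.eye(num_classes, dtype=np.uint8)[label_map]
    return np.transpose(encoded, (2, 0, 1))


@dataclass
class TrainingSample:
    """Hypothetical record for one element of the training set."""
    rgb: np.ndarray      # original road scene image {I_q(i, j)}, H x W x 3
    thermal: np.ndarray  # thermal image aligned with the road scene image
    label: np.ndarray    # real semantic segmentation image, H x W class indices
    one_hot: np.ndarray  # one-hot encoded label, num_classes x H x W


def build_training_set(rgb_images, thermal_images, label_images, num_classes):
    """Pair each of the Q road scene images with its thermal image and label,
    and attach the one-hot encoded label (assumed workflow, not the patent's code)."""
    if not (len(rgb_images) == len(thermal_images) == len(label_images)):
        raise ValueError("every road scene image needs a thermal image and a label")
    training_set = []
    for rgb, thermal, label in zip(rgb_images, thermal_images, label_images):
        training_set.append(
            TrainingSample(rgb=rgb, thermal=thermal, label=label,
                           one_hot=one_hot_encode(label, num_classes))
        )
    return training_set


if __name__ == "__main__":
    label = np.array([[0, 1], [2, 1]])
    maps = one_hot_encode(label, num_classes=3)
    print(maps.shape)         # (3, 2, 2)
    print(maps[1].tolist())   # [[0, 1], [0, 1]]
```

Placing the class axis first (num_classes x H x W) matches the channel-first layout many training frameworks expect; that layout is a choice made for this sketch rather than something the excerpt specifies.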
