81 patent results for "Variable bitrate"

Variable bitrate (VBR) is a term used in telecommunications and computing that relates to the bitrate used in sound or video encoding. As opposed to constant bitrate (CBR), VBR files vary the amount of output data per time segment. VBR allows a higher bitrate (and therefore more storage space) to be allocated to the more complex segments of media files while less space is allocated to less complex segments. The average of these rates can be calculated to produce an average bitrate for the file.
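As a minimal illustration of the last sentence, the sketch below computes a file's average bitrate from per-segment bit counts. It assumes segments may differ in duration, so it weights by time (total bits divided by total duration). The function names and numbers are hypothetical.

```python
def average_bitrate(segment_bits, segment_seconds):
    """Duration-weighted average bitrate in bit/s for a VBR file."""
    if len(segment_bits) != len(segment_seconds) or not segment_bits:
        raise ValueError("need one duration per segment")
    return sum(segment_bits) / sum(segment_seconds)


def naive_mean_rate(segment_bits, segment_seconds):
    """Unweighted mean of per-segment rates; equals the above only for equal durations."""
    rates = [b / t for b, t in zip(segment_bits, segment_seconds)]
    return sum(rates) / len(rates)


# Three one-second segments: simple, complex and medium content.
print(average_bitrate([96_000, 320_000, 160_000], [1.0, 1.0, 1.0]))  # 192000.0
# Unequal durations: the weighted and unweighted averages differ.
print(average_bitrate([100_000, 300_000], [1.0, 2.0]))
print(naive_mean_rate([100_000, 300_000], [1.0, 2.0]))               # 125000.0
```

A CBR encoder would need the peak rate (320 kbit/s here) for every segment to give the complex segment the same quality, which is the storage saving VBR exploits.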

Bitrate constrained variable bitrate audio encoding

Status: Active · Publication: US7634413B1 · Tags: Excessively high bitrates; Improve sound quality; Speech analysis; Coding block; Sound quality

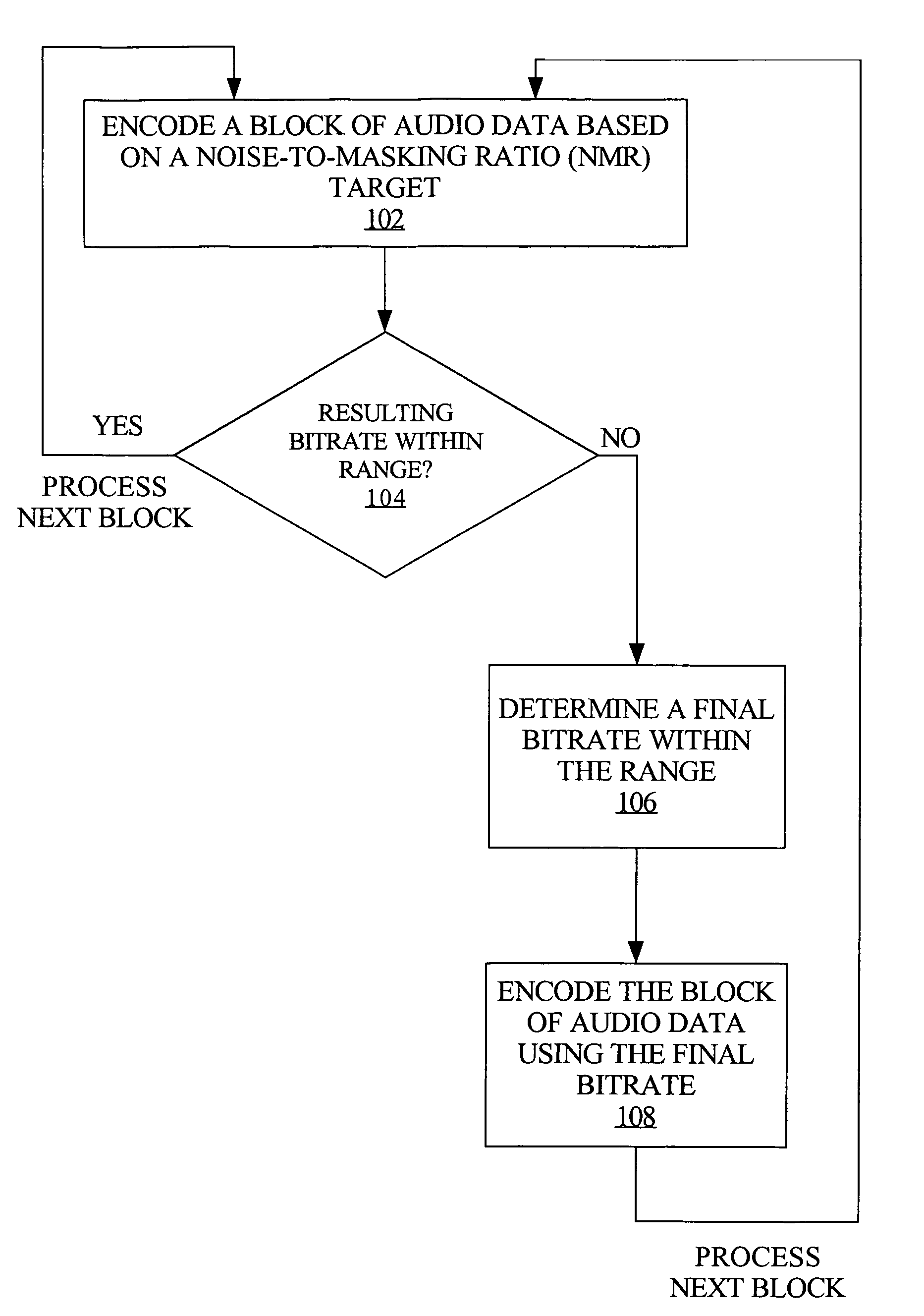

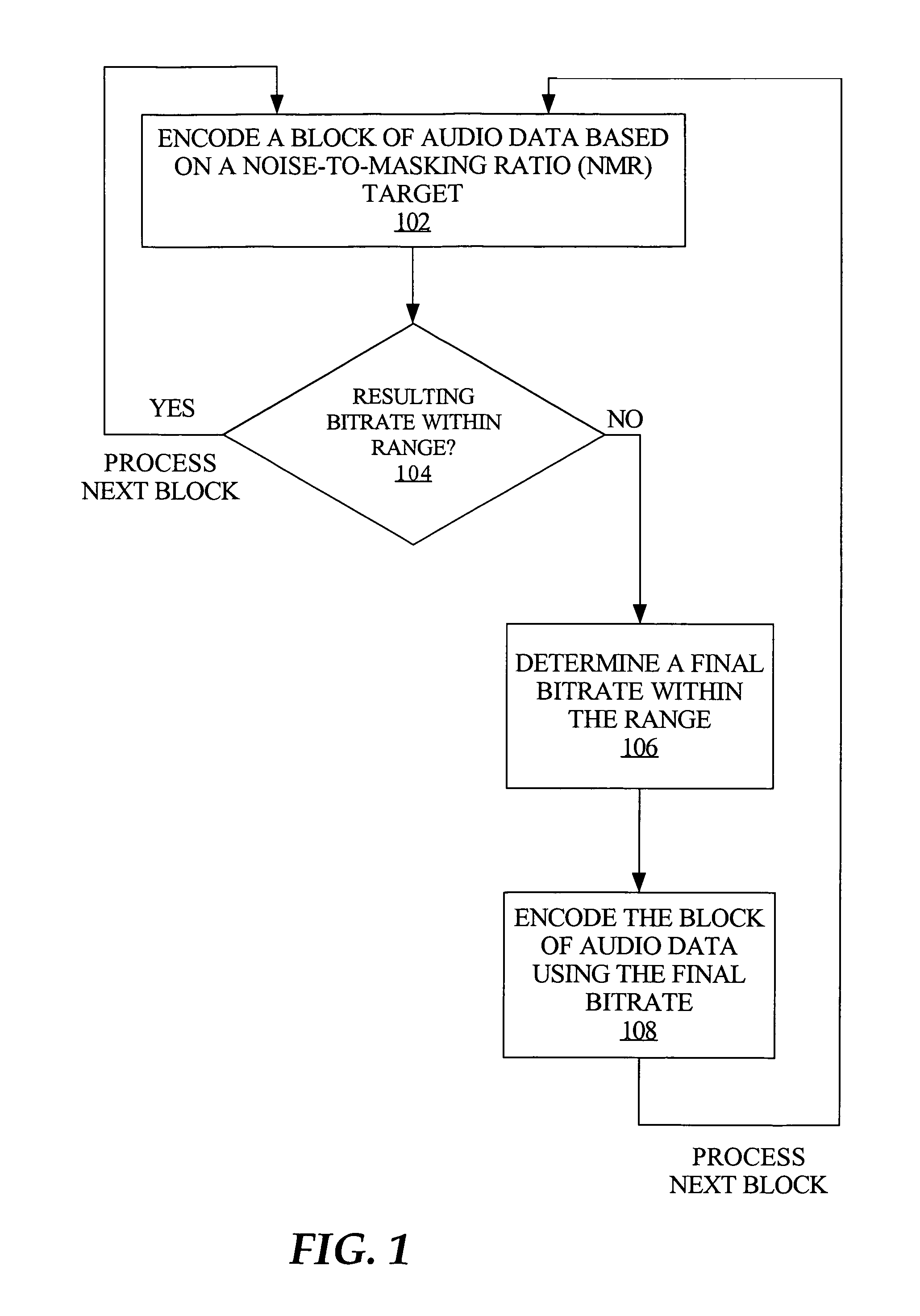

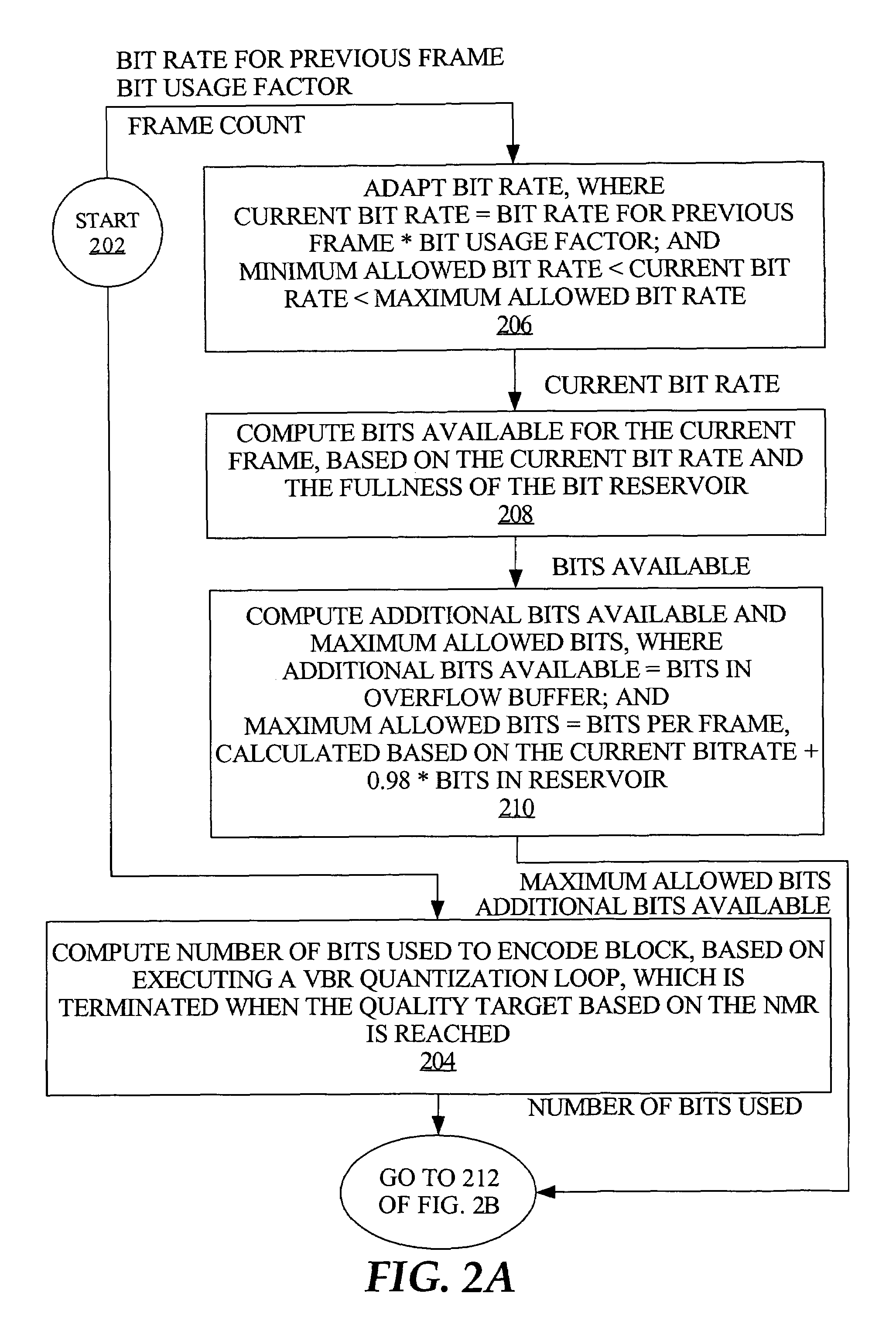

A hybrid audio encoding technique combines a VBR encoding mode with an ABR (average bitrate) or CBR encoding mode. For each audio coding block, after a VBR quantization loop meets the NMR (noise-to-mask ratio) target, a second quantization loop may be invoked to adaptively control the final bitrate. That is, if the NMR-based quantization loop yields a bitrate outside a specified range, a bitrate-based CBR or ABR quantization loop determines a final bitrate that lies within the range and is adaptively determined by the encoding difficulty of the audio data. This eliminates the excessive bitrates of conventional VBR mode while still providing much more consistent perceptual sound quality than conventional CBR mode can achieve.

Owner:APPLE INC
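The two-loop control flow described above can be sketched as follows. This is not Apple's encoder: `bits_for` and `nmr_for` are hypothetical stand-ins for a real quantize-and-count step and a psychoacoustic noise-to-mask measurement, the step list is assumed to run from finest to coarsest with NMR (in dB) rising monotonically, and the allowed bit range is passed in rather than derived from encoding difficulty.

```python
from typing import Callable, Sequence, Tuple


def hybrid_quantize(
    steps: Sequence[int],
    bits_for: Callable[[int], int],
    nmr_for: Callable[[int], float],
    nmr_target: float,
    min_bits: int,
    max_bits: int,
) -> Tuple[int, int]:
    """Return (step, bits) for one coding block; steps run finest -> coarsest."""
    # Loop 1 (VBR): coarsest step whose NMR still meets the target.
    vbr_step = steps[0]
    for s in steps:
        if nmr_for(s) <= nmr_target:
            vbr_step = s
        else:
            break
    bits = bits_for(vbr_step)
    if min_bits <= bits <= max_bits:
        return vbr_step, bits

    # Loop 2 (bitrate-based): pull the block back inside [min_bits, max_bits].
    if bits > max_bits:
        # Too expensive: finest step that fits under the cap.
        for s in steps:
            if bits_for(s) <= max_bits:
                return s, bits_for(s)
        return steps[-1], bits_for(steps[-1])
    # Too cheap: coarsest step that still spends at least min_bits.
    for s in reversed(steps):
        if bits_for(s) >= min_bits:
            return s, bits_for(s)
    return steps[0], bits_for(steps[0])


# Hypothetical toy models: each coarser step saves 200 bits and adds 1.5 dB of NMR.
def bits_model(s: int) -> int:
    return 4000 - 200 * s


def nmr_model(s: int) -> float:
    return -10.0 + 1.5 * s


steps = list(range(16))
print(hybrid_quantize(steps, bits_model, nmr_model, 0.0, 1000, 3000))  # (6, 2800)
print(hybrid_quantize(steps, bits_model, nmr_model, 0.0, 1000, 2500))  # (8, 2400)
```

In the first call the VBR result already lies in range, so the second loop never runs; in the second call it exceeds the cap and the bitrate loop trims it.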

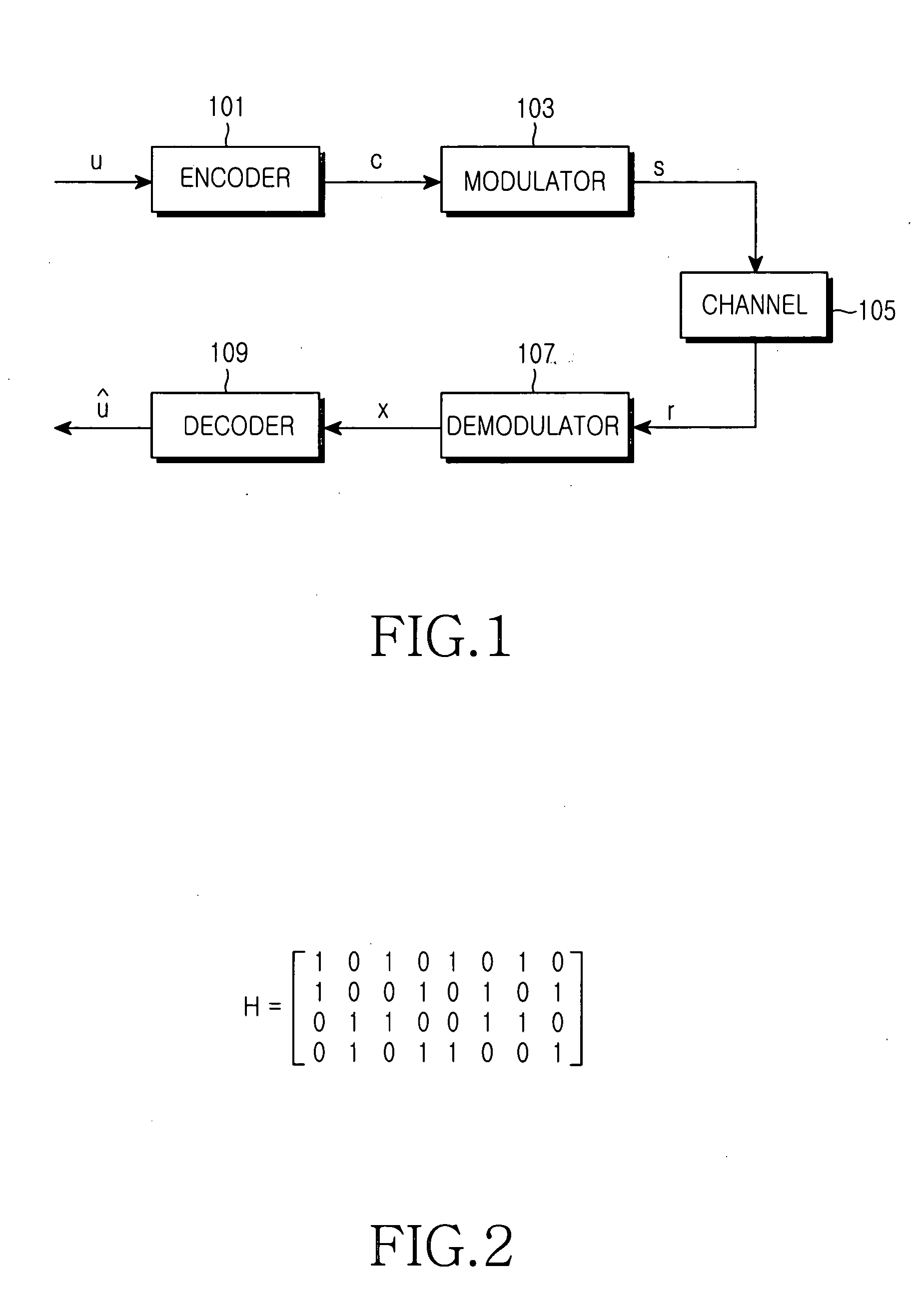

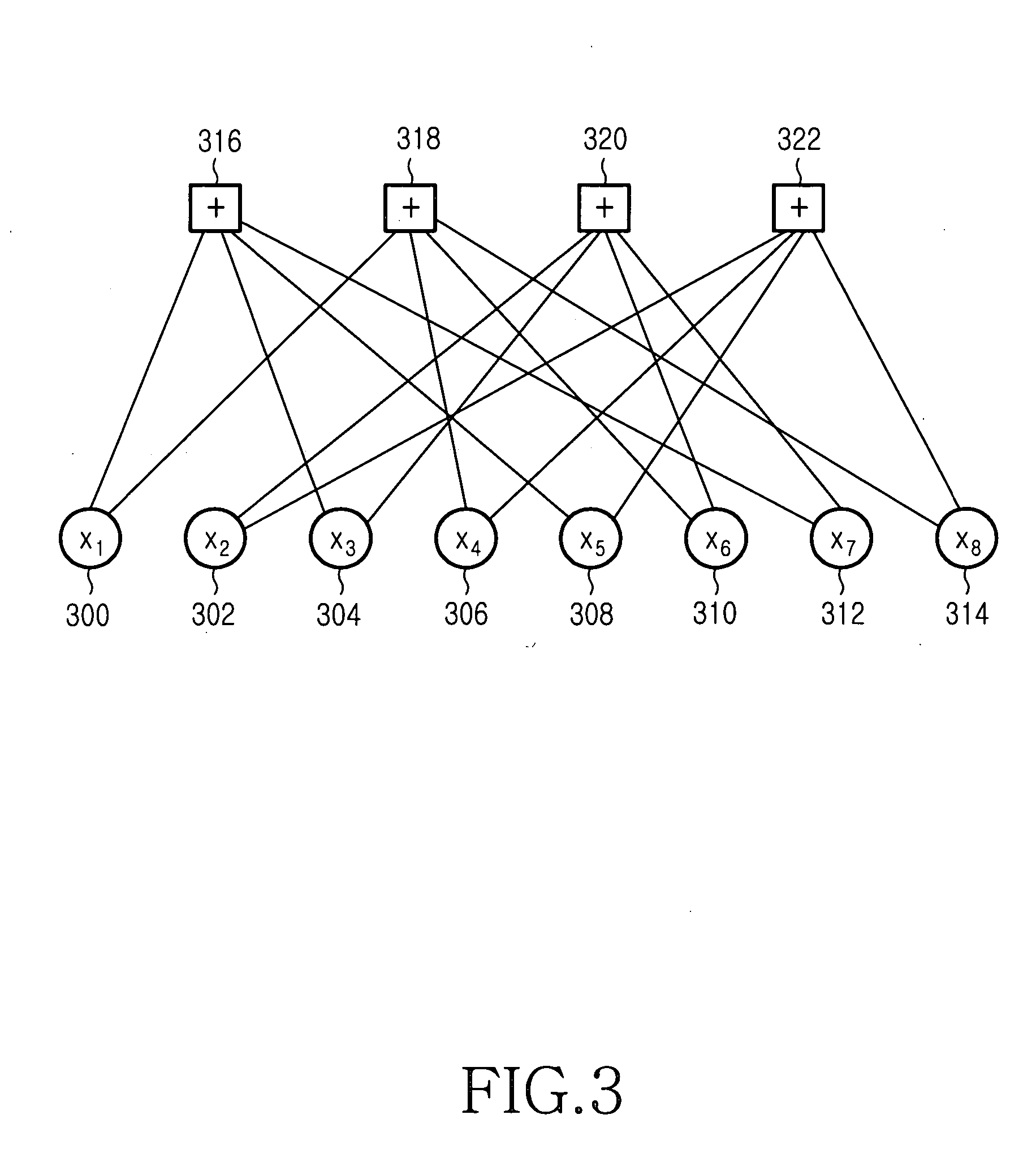

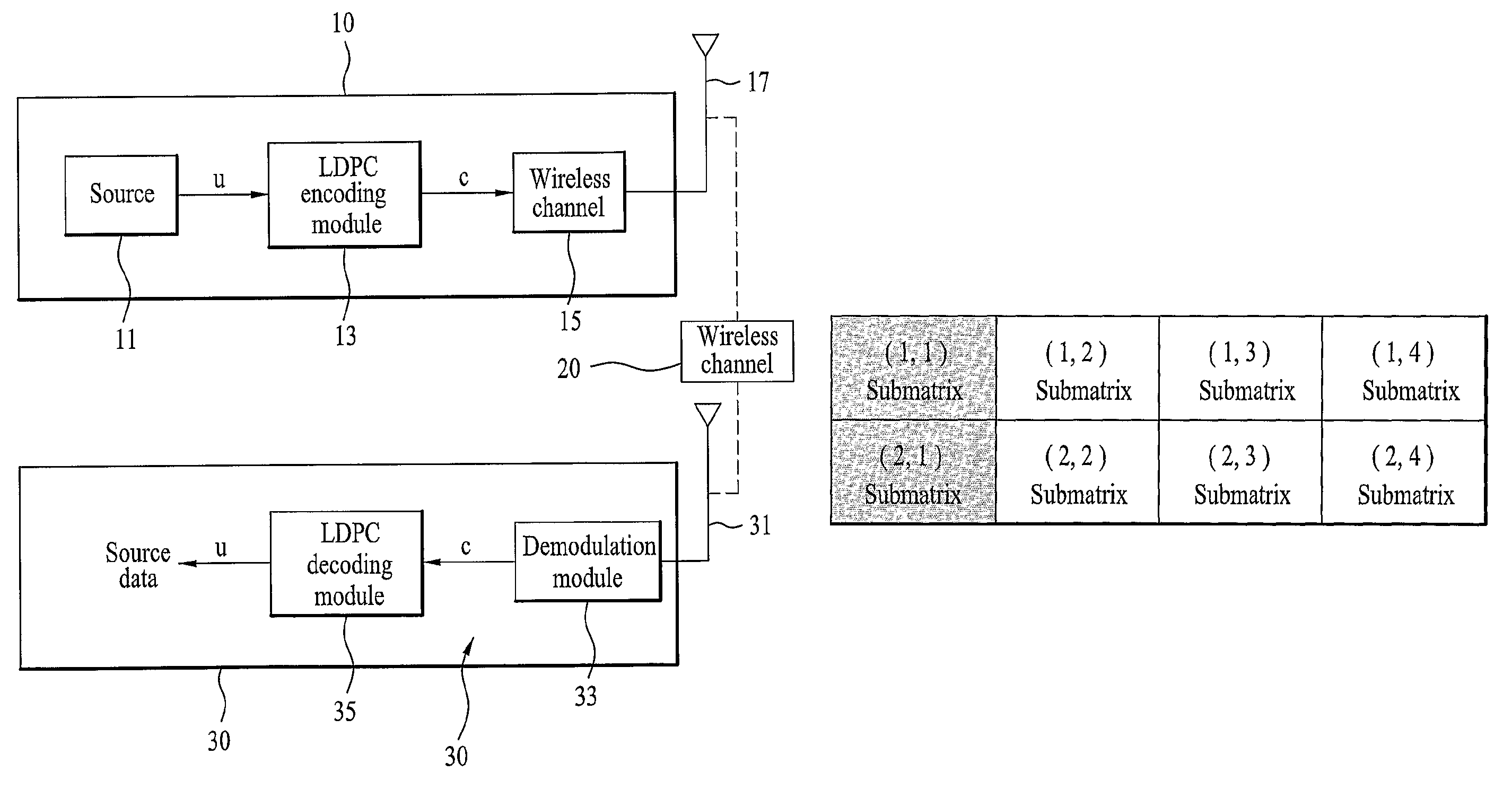

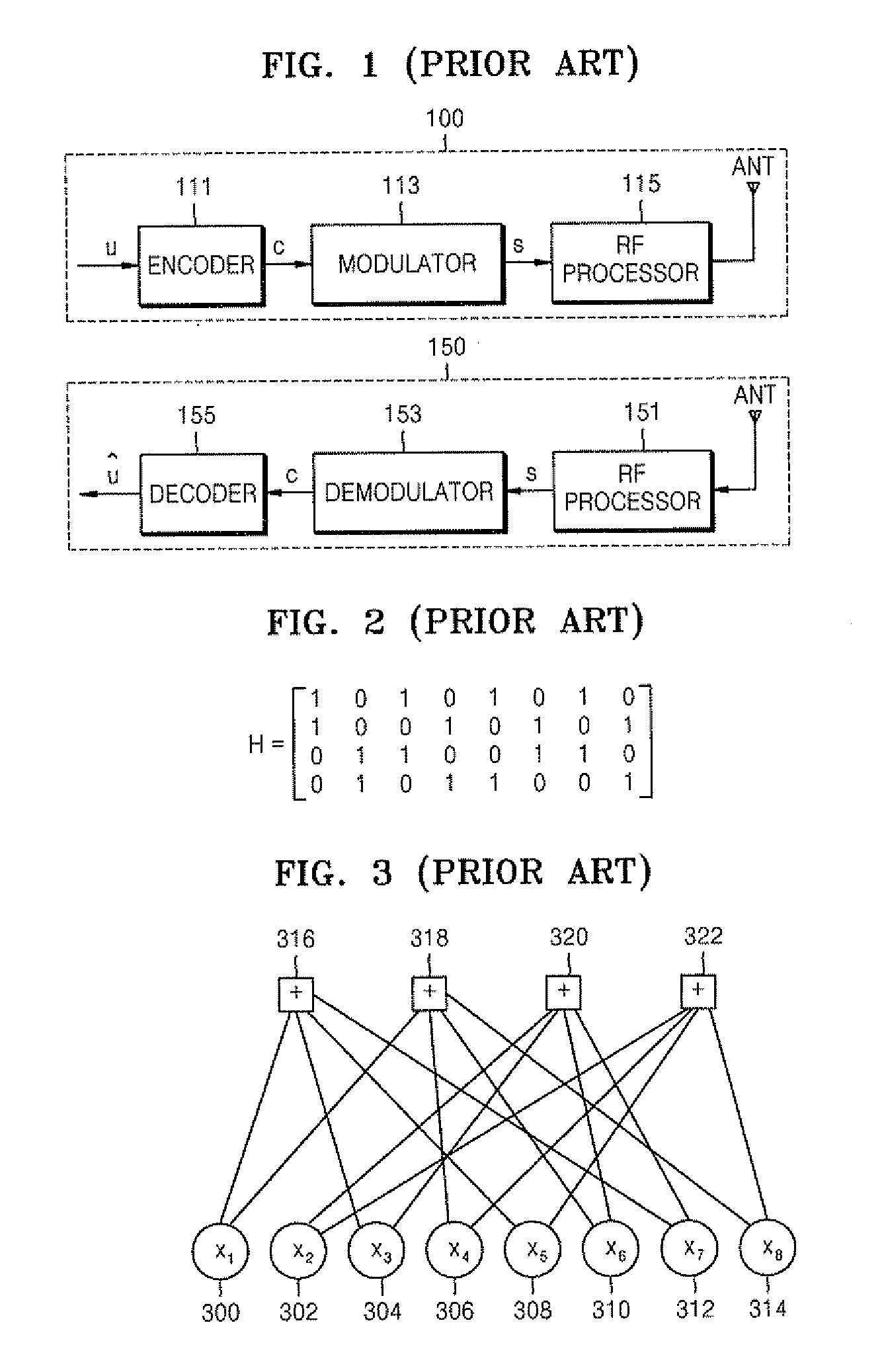

Channel coding/decoding apparatus and method using a parallel concatenated low density parity check code

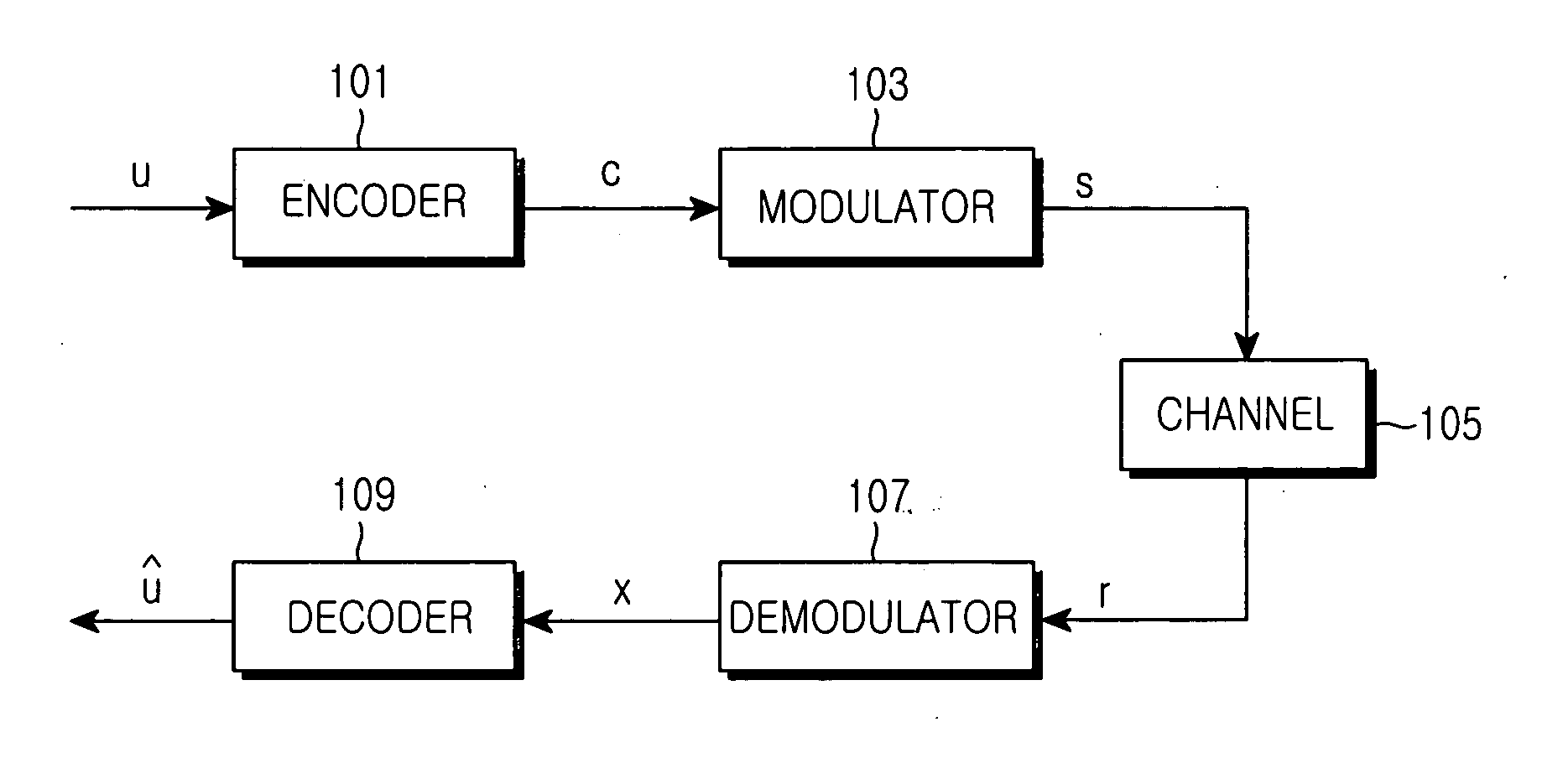

Status: Inactive · Publication: US20050149841A1 · Tags: Reduce complexity; Error prevention/detection by using return channel; Error detection/correction; Computer hardware; Low-density parity-check code

A parallel concatenated low density parity check (LDPC) code having a variable code rate is provided by generating, upon receiving information bits, a first component LDPC code according to the information bits, interleaving the information bits according to a predetermined interleaving rule, and generating a second component LDPC code according to the interleaved information bits. With use of the parallel concatenated LDPC code, a mobile communication system can use a Hybrid Automatic Retransmission Request (HARQ) scheme and an Adaptive Modulation and Coding (AMC) scheme without restriction.

Owner:SAMSUNG ELECTRONICS CO LTD
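The parallel concatenation can be sketched with a toy systematic encoder. Assumptions: each component code is represented by a small hypothetical binary parity-generation matrix `P` (parity = info · P over GF(2)) rather than a real sparse LDPC construction, and the interleaver is an arbitrary fixed permutation. Sending or withholding the second parity block changes the code rate, which is one simple way a variable rate (for example, extra parity for a HARQ retransmission) could be obtained.

```python
from fractions import Fraction
from typing import List, Sequence


def parity_bits(info: Sequence[int], P: Sequence[Sequence[int]]) -> List[int]:
    """Systematic parity over GF(2): p_j = XOR_i (info_i AND P[i][j])."""
    return [sum(info[i] & P[i][j] for i in range(len(info))) % 2
            for j in range(len(P[0]))]


def interleave(bits: Sequence[int], perm: Sequence[int]) -> List[int]:
    """Output position k carries input bit perm[k]."""
    return [bits[p] for p in perm]


def pc_encode(info, P1, P2, perm, send_second=True):
    """Codeword = info | parity of code 1 | (optionally) parity of code 2 on interleaved info."""
    p1 = parity_bits(info, P1)
    p2 = parity_bits(interleave(info, perm), P2)
    return list(info) + p1 + (p2 if send_second else [])


info = [1, 0, 1, 1]
P1 = [[1, 0], [1, 1], [0, 1], [1, 0]]   # hypothetical component code 1
P2 = [[0, 1], [1, 0], [1, 1], [0, 1]]   # hypothetical component code 2
perm = [3, 2, 1, 0]                     # hypothetical interleaving rule

full = pc_encode(info, P1, P2, perm)
short = pc_encode(info, P1, P2, perm, send_second=False)
print(full, Fraction(len(info), len(full)))    # [1, 0, 1, 1, 0, 1, 1, 0] 1/2
print(short, Fraction(len(info), len(short)))  # [1, 0, 1, 1, 0, 1] 2/3
```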

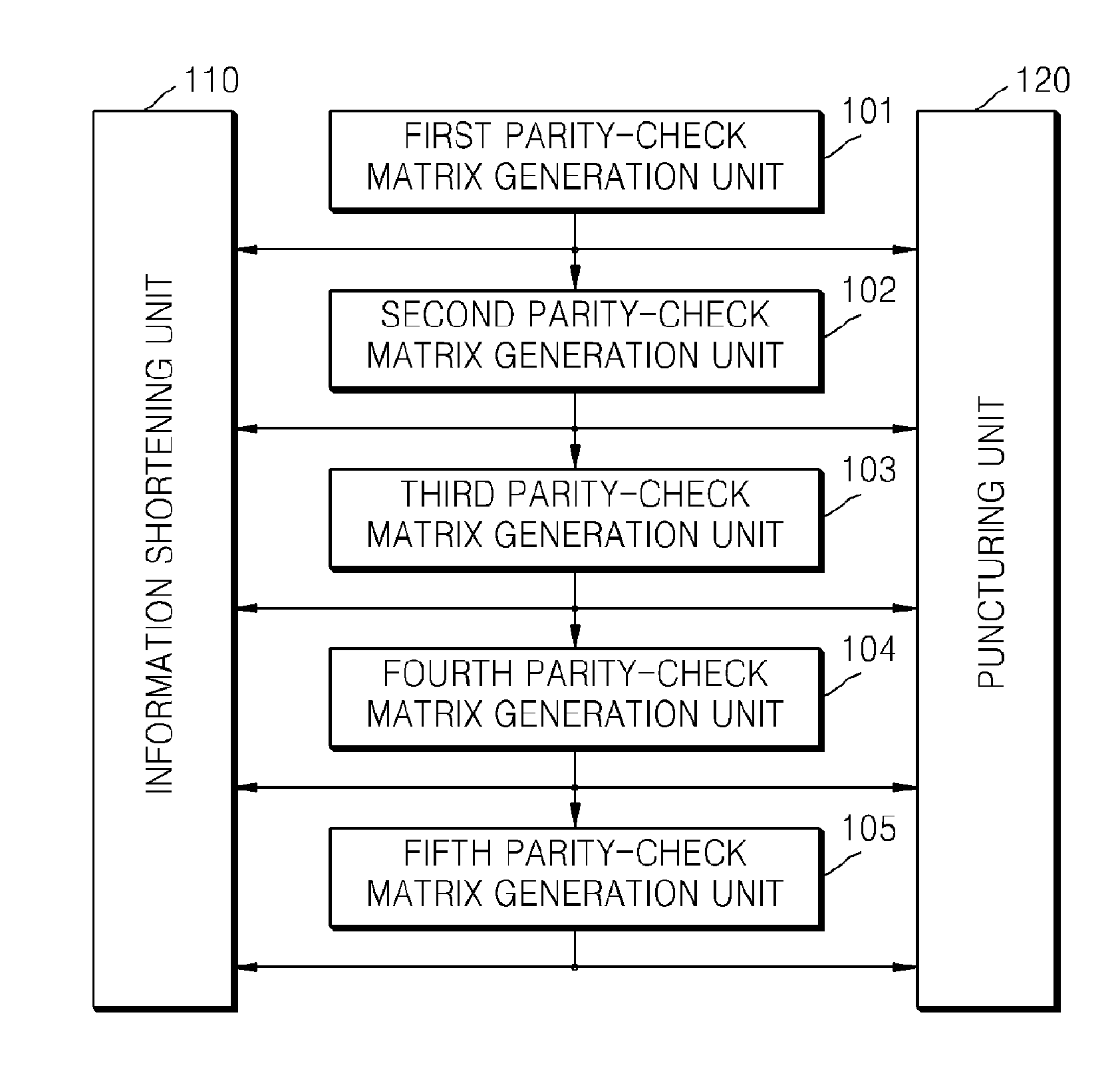

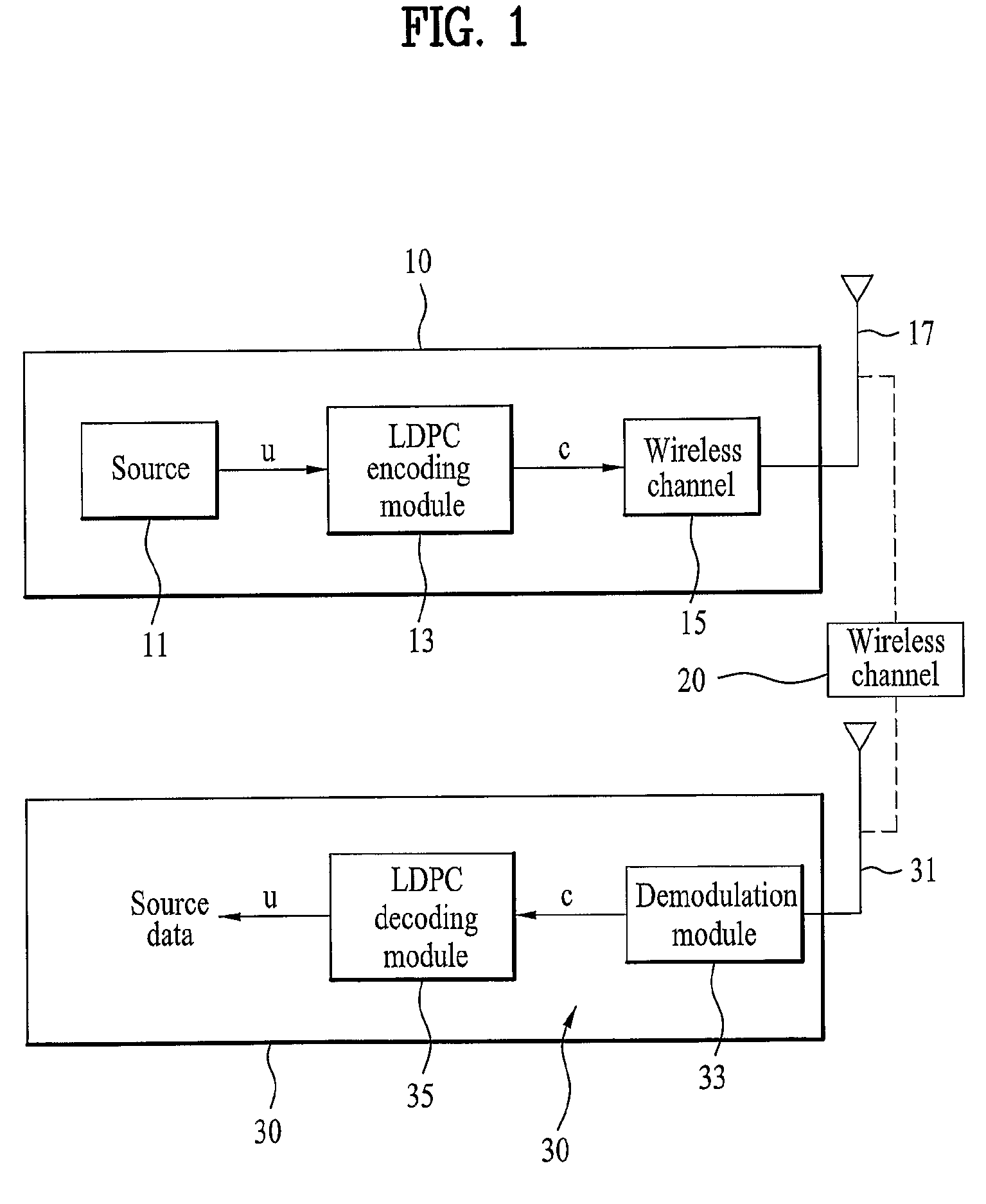

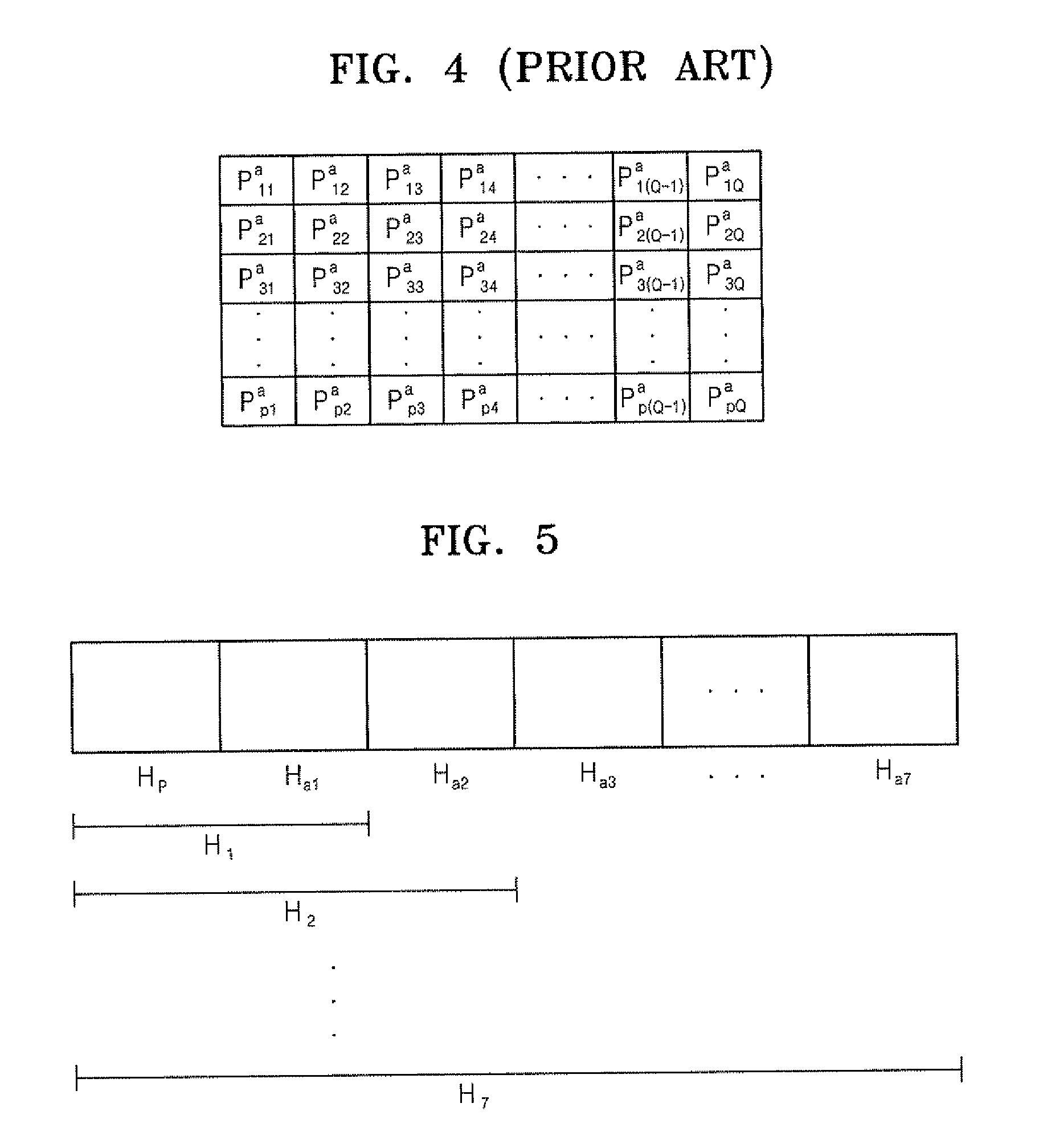

Method of generating parity-check matrix, encoding/decoding method for low density parity-check code with variable information length and variable code rate and apparatus using the same

Status: Inactive · Publication: US20100325511A1 · Tags: Easily embodied; Increase speed; Error correction/detection using multiple parity bits; Code conversion; Generation process; Parity-check matrix

A method of generating a parity-check matrix for a low density parity-check (LDPC) code with a variable information length and a variable code rate, an encoding/decoding method, and an apparatus using the same are provided. The parity-check matrix generation method includes: a first generation process that constructs a first parity-check matrix from a first information block and a parity block; and an m-th generation process that generates an m-th parity-check matrix by adding an m-th information block to the previously generated (m−1)-th parity-check matrix (1 < m ≤ M, where M is a natural number greater than or equal to two). The resulting LDPC code supports variable information lengths and code rates with low encoding/decoding complexity and strong error correction and detection.

Owner:ELECTRONICS & TELECOMM RES INST
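A minimal sketch of the m-th generation step, assuming the new information block is prepended as extra columns with the same number of rows (so the parity part, and hence the number of parity checks, stays fixed) and that H has full row rank. The matrices are tiny hypothetical examples, not the patent's construction; the point is only that each added information block lengthens the code and raises its rate.

```python
from fractions import Fraction
from typing import List

Matrix = List[List[int]]


def add_information_block(H_prev: Matrix, info_block: Matrix) -> Matrix:
    """H_m = [info_block | H_{m-1}]: same rows, more columns."""
    if len(H_prev) != len(info_block):
        raise ValueError("information block must have the same number of rows")
    return [blk + row for blk, row in zip(info_block, H_prev)]


def code_rate(H: Matrix) -> Fraction:
    """(n - r) / n, assuming the r rows of H are linearly independent."""
    r, n = len(H), len(H[0])
    return Fraction(n - r, n)


H_parity = [[1, 0], [1, 1]]            # invertible lower-triangular parity block
H1 = add_information_block(H_parity, [[1, 1], [0, 1]])
H2 = add_information_block(H1, [[1, 0], [0, 1]])
H3 = add_information_block(H2, [[0, 1], [1, 1]])
for H in (H1, H2, H3):
    print(len(H[0]), code_rate(H))      # 4 1/2, then 6 2/3, then 8 3/4
```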

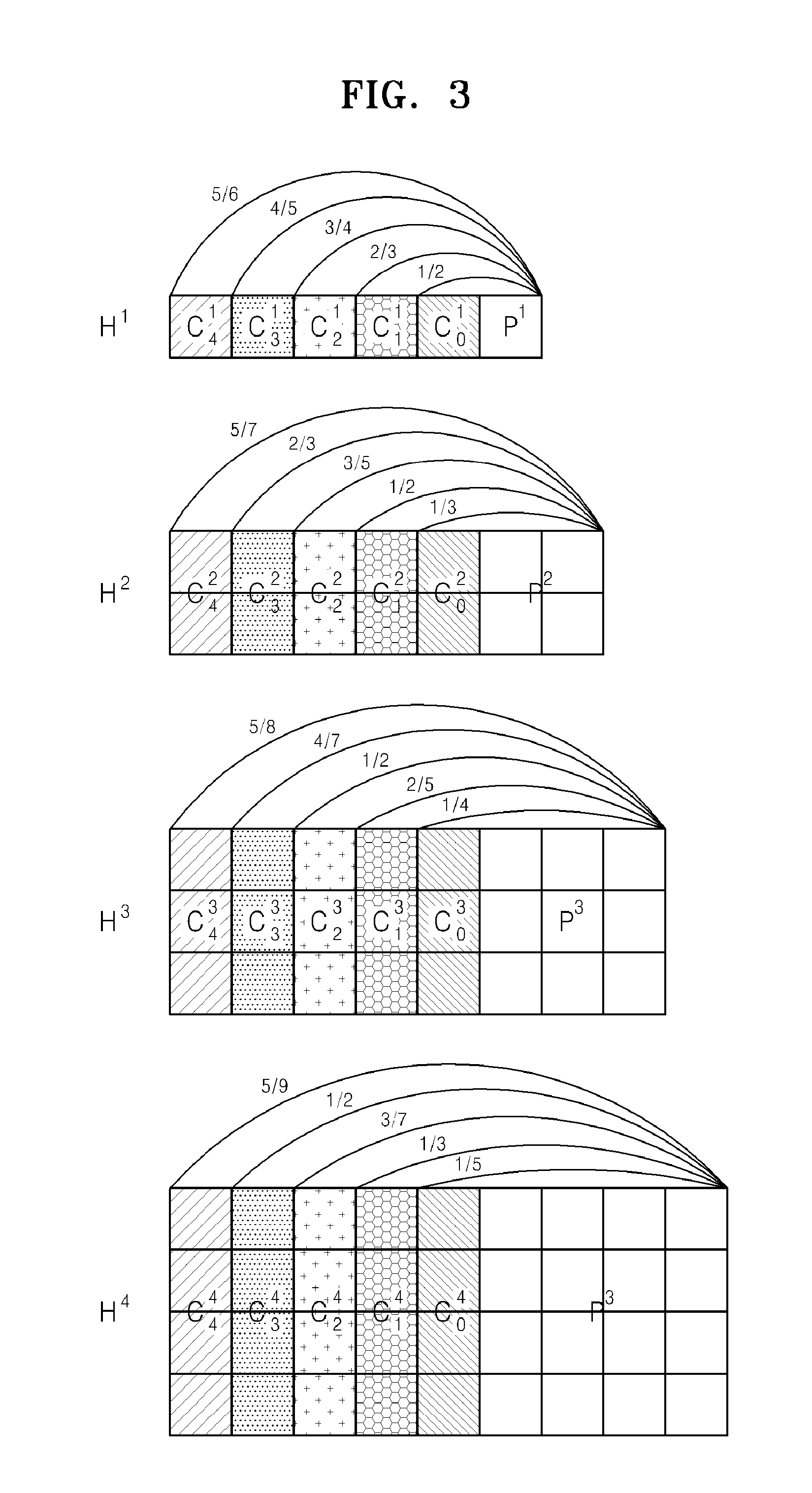

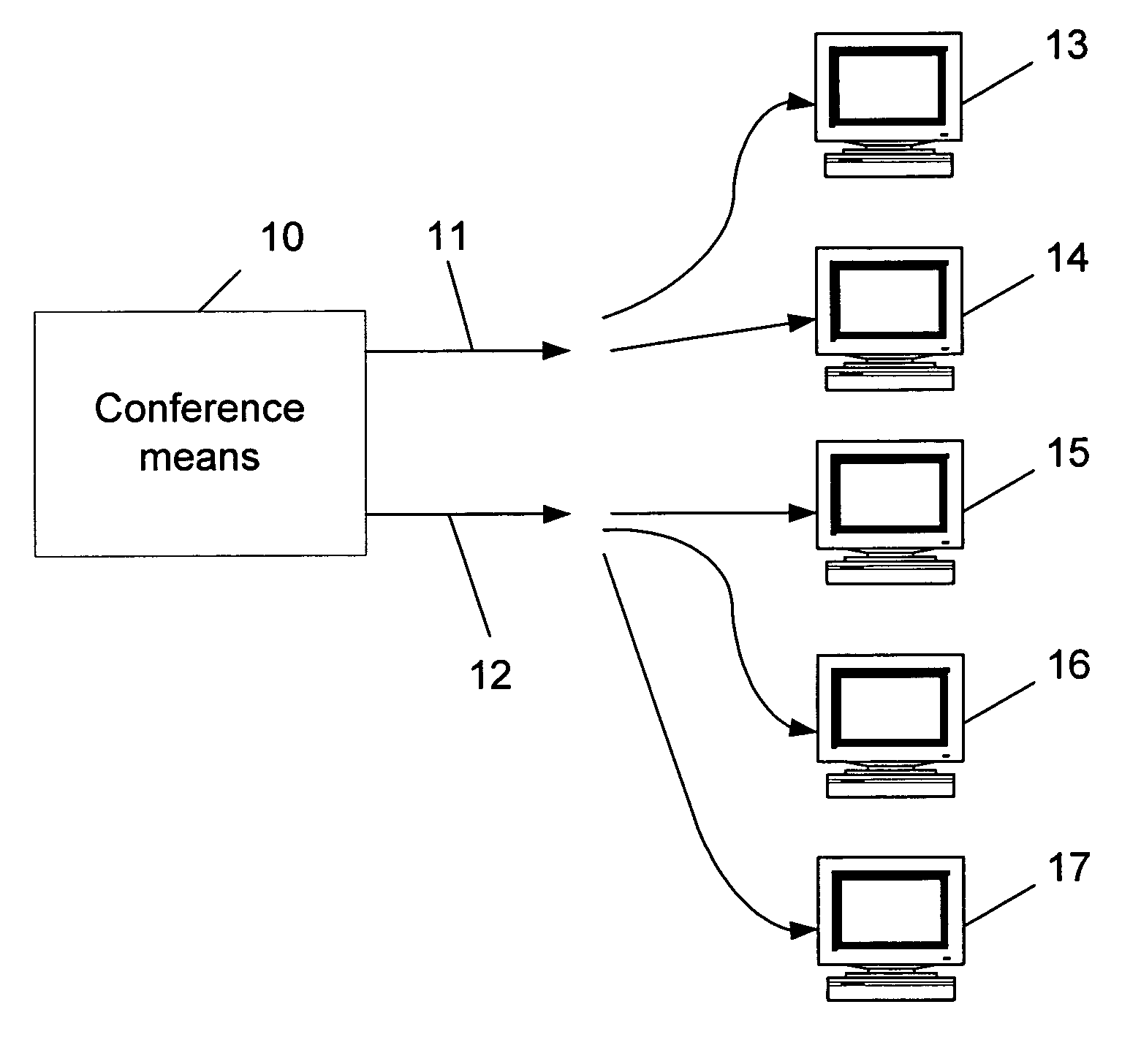

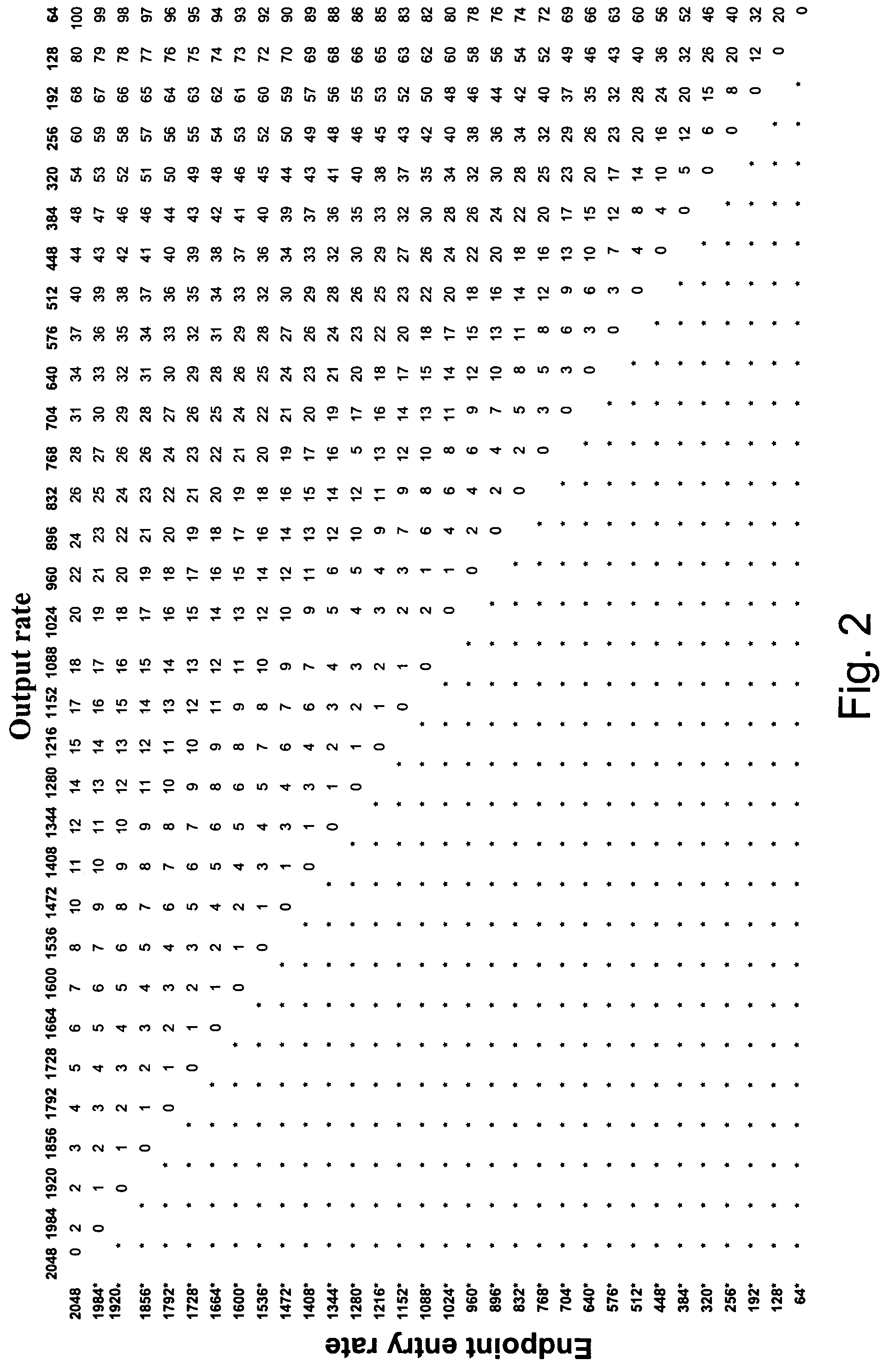

Method for dynamically optimizing bandwidth allocation in variable bitrate (multi-rate) conferences

Status: Active · Publication: US7492731B2 · Tags: Reduce rate; Multiplex system selection arrangements; Special service provision for substation; High rate; Video rate

Method for dynamically optimizing bandwidth allocation in a variable bitrate conference environment. Conference means with two or more outputs, each able to output data at a different rate, are provided to support two or more endpoints that may have different media rates, and two or more endpoints connect to these conference means to participate in the conference. Whenever participants use more than one video rate, candidate sets of output rates are selected from all possible combinations of output rates in the conference means, where the lowest rate in each selected set is the entry rate of the endpoint that joined at the lowest rate. For each selected set, a Quality Drop Coefficient (QDC) is determined for every endpoint joining the conference; the QDC is computed from the endpoint's entry rate and the highest output rate in the set that is lower than or equal to that entry rate. A Quality Drop Value (QDV) is then calculated for each selected set, and preferably the set with the lowest QDV is chosen as the optimal video rate set. If required, endpoints whose video rate exceeds the highest optimal rate are reduced to that rate, and endpoints whose video rate lies between two consecutive optimal rate levels are reduced to the lower of those levels. Whenever the number of participating endpoints or their declared bitrate capabilities change, the video rates of all outputs are recalculated.

Owner:AVAYA INC
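The selection step can be sketched as a brute-force search. The abstract does not give the QDC or QDV formulas, so this sketch assumes a hypothetical QDC equal to the fractional rate loss, (entry − served) / entry, and a QDV equal to the sum of the QDCs; it also assumes candidate output rates are drawn from the endpoints' own entry rates.

```python
from itertools import combinations
from typing import Sequence, Tuple


def qdc(entry_rate: int, rate_set: Sequence[int]) -> float:
    """Hypothetical QDC: fraction of the entry rate lost when the endpoint is
    served by the highest output rate that does not exceed its entry rate."""
    served = max(r for r in rate_set if r <= entry_rate)
    return (entry_rate - served) / entry_rate


def best_rate_set(entry_rates: Sequence[int],
                  num_outputs: int) -> Tuple[Tuple[int, ...], float]:
    lowest = min(entry_rates)  # every candidate set contains the lowest entry rate
    candidates = sorted({r for r in entry_rates if r > lowest})
    extra = min(num_outputs - 1, len(candidates))
    best = None
    for combo in combinations(candidates, extra):
        rate_set = (lowest,) + combo
        qdv = sum(qdc(e, rate_set) for e in entry_rates)  # hypothetical QDV
        if best is None or qdv < best[1]:
            best = (rate_set, qdv)
    return best


# Endpoints joined at 384, 768, 768 and 1536 kbit/s; the unit has two outputs.
print(best_rate_set([384, 768, 768, 1536], num_outputs=2))  # ((384, 768), 0.5)
```

With the chosen set, the 1536 kbit/s endpoint would be reduced to 768 kbit/s, while the other endpoints keep their entry rates.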

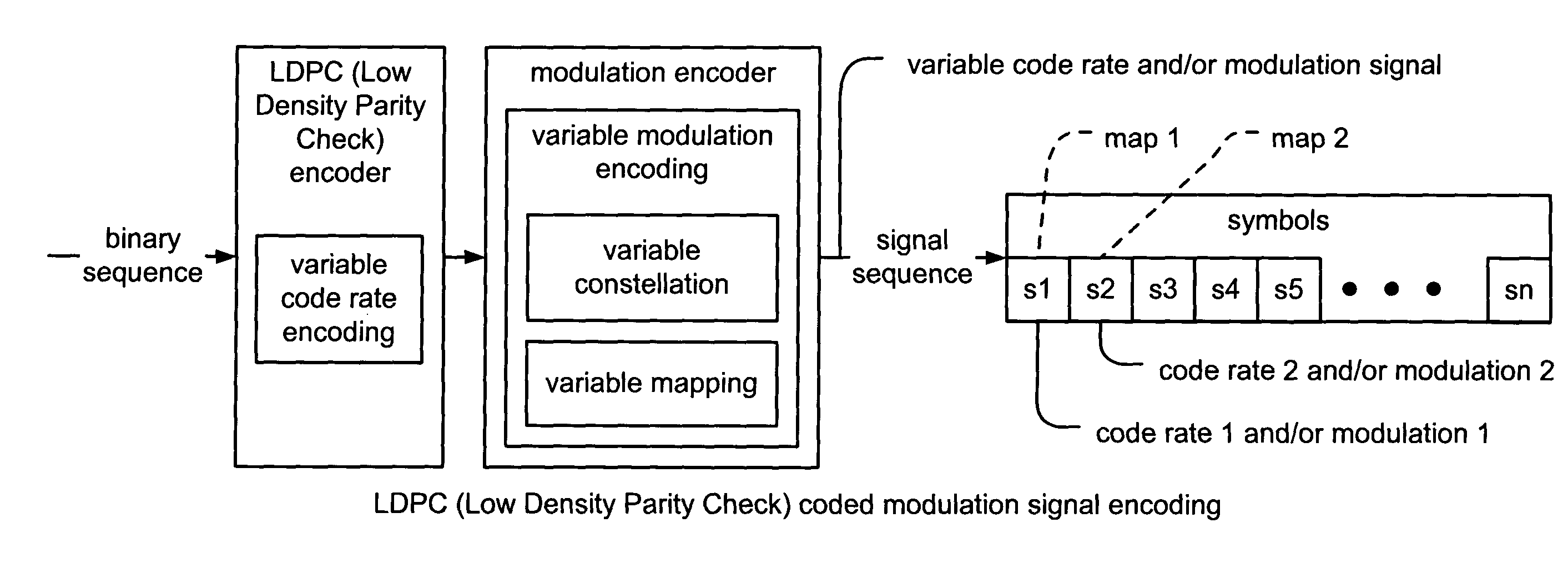

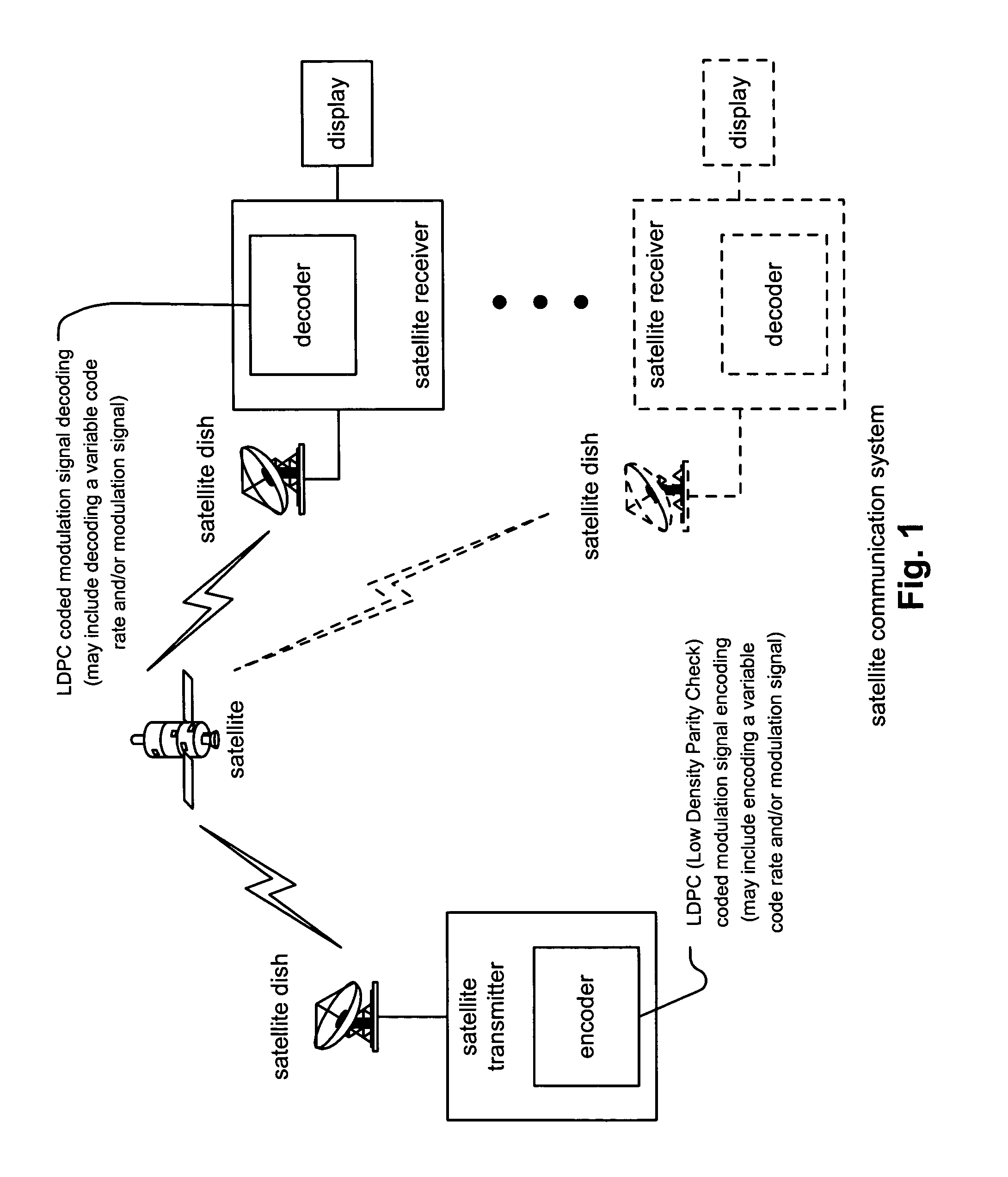

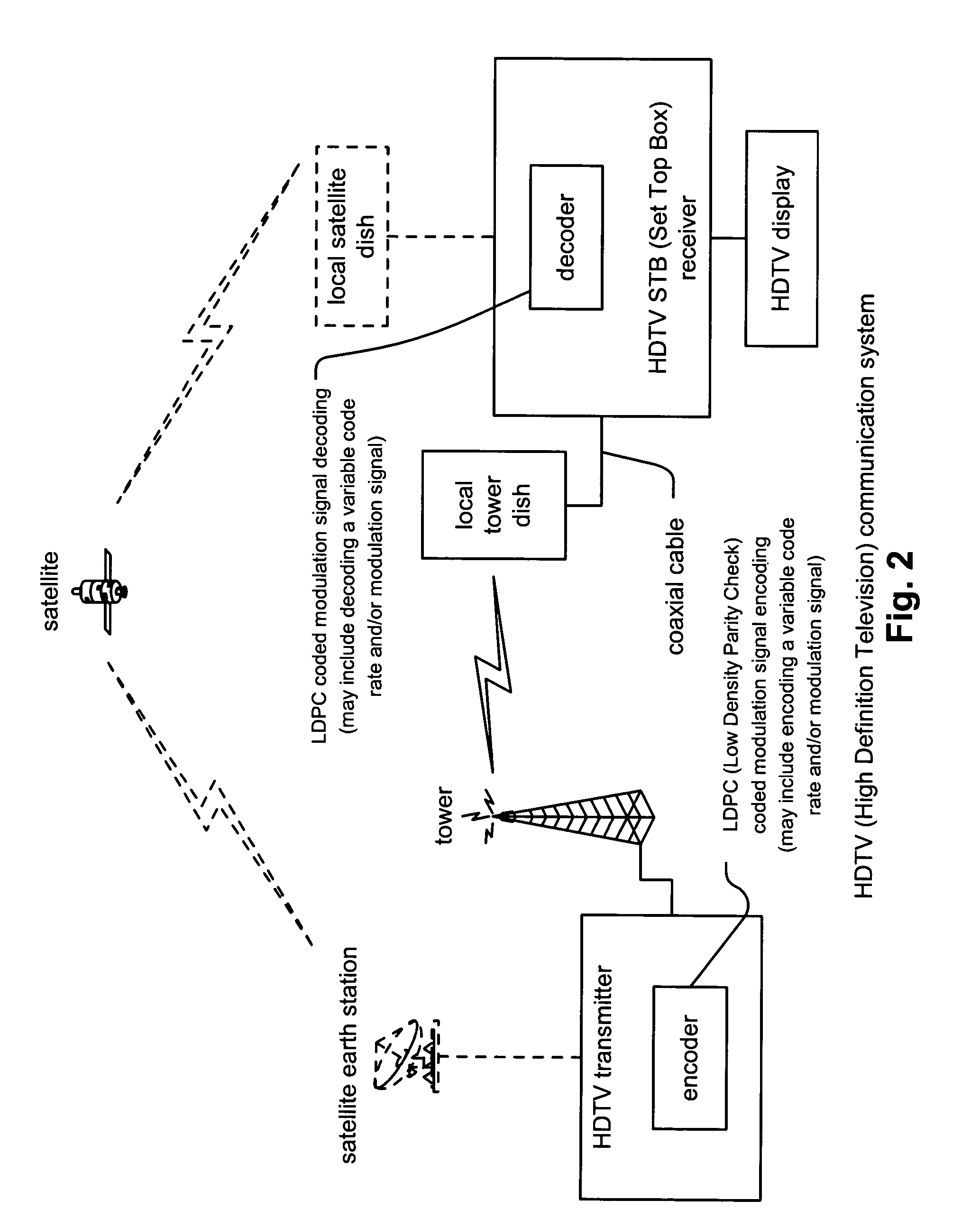

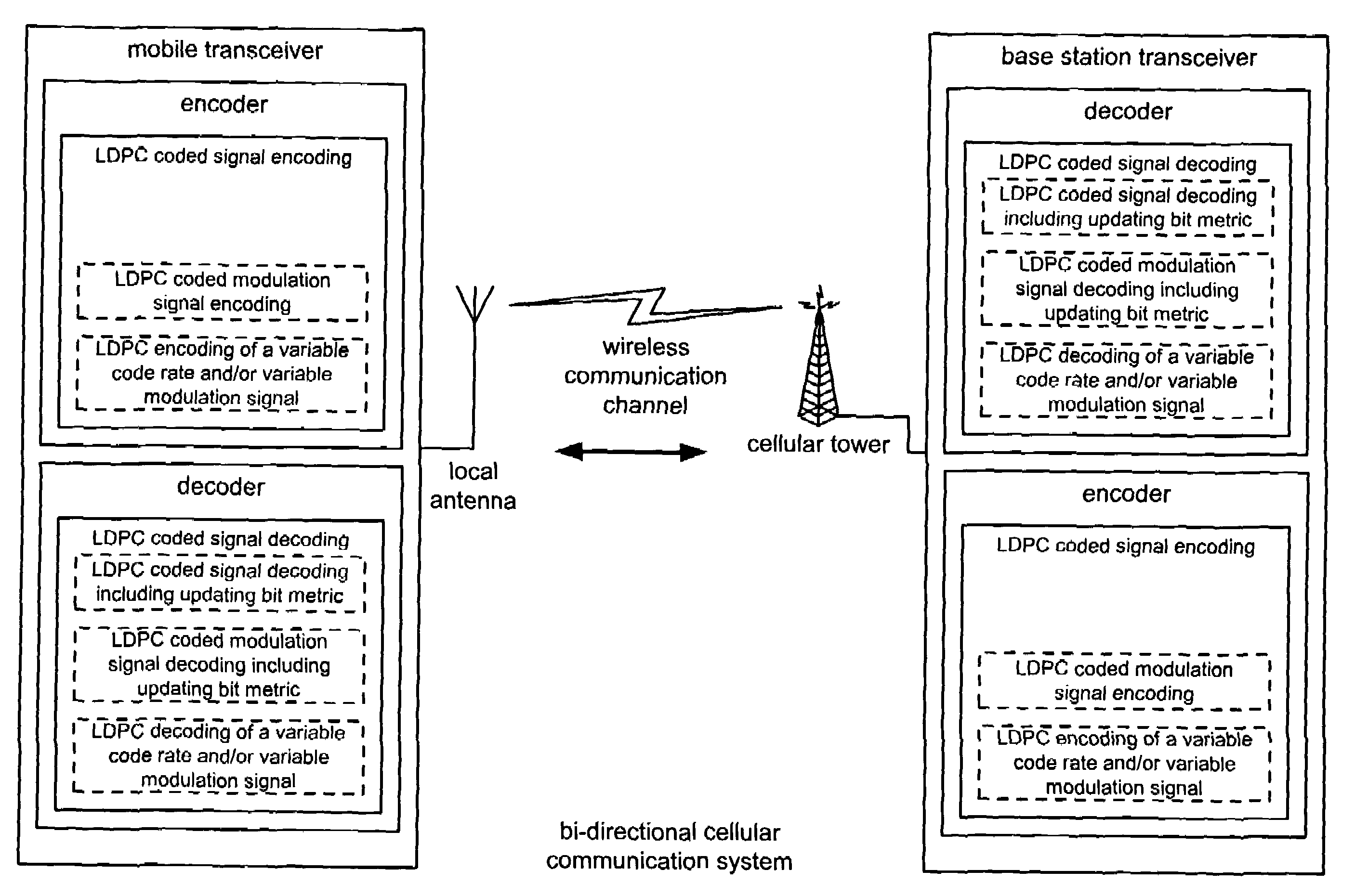

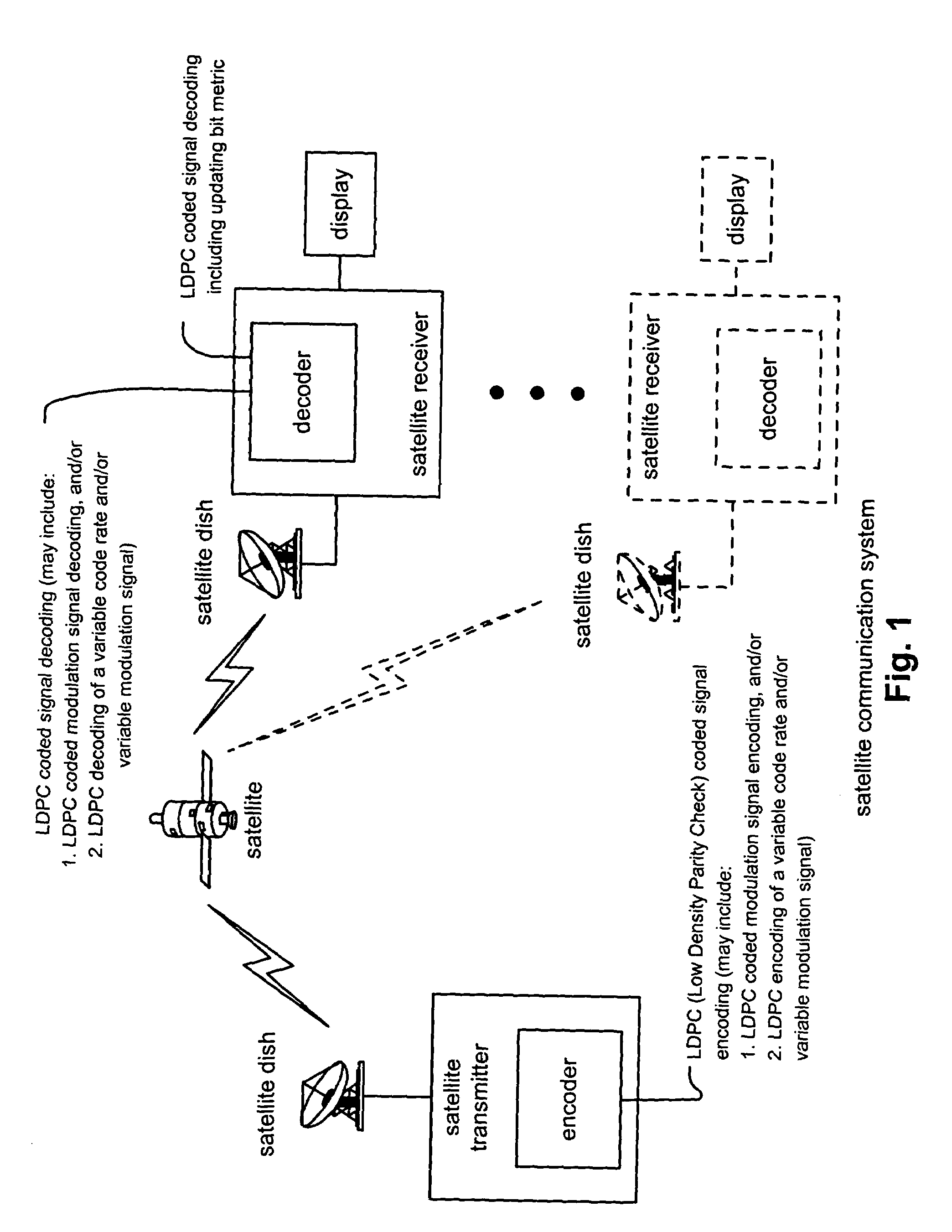

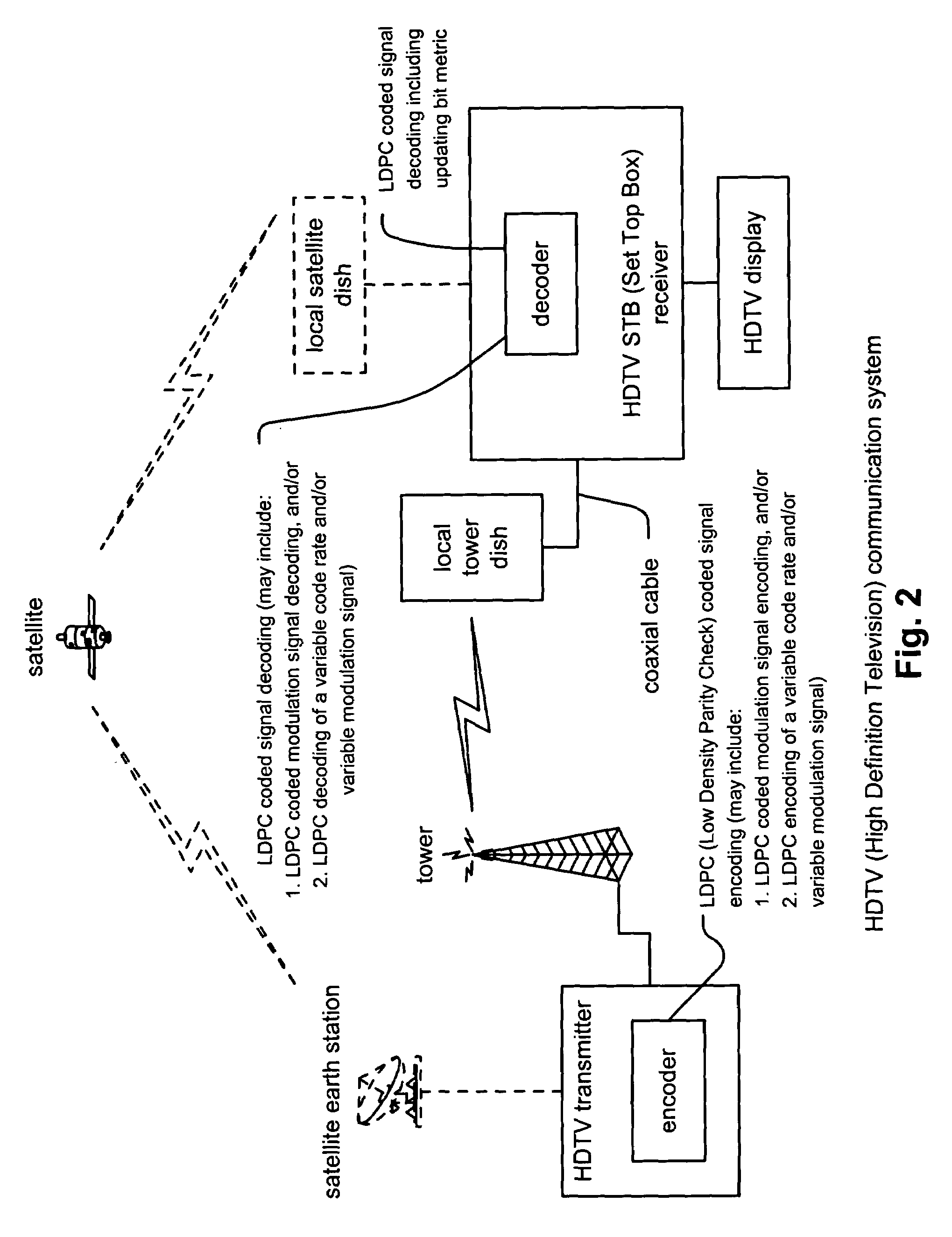

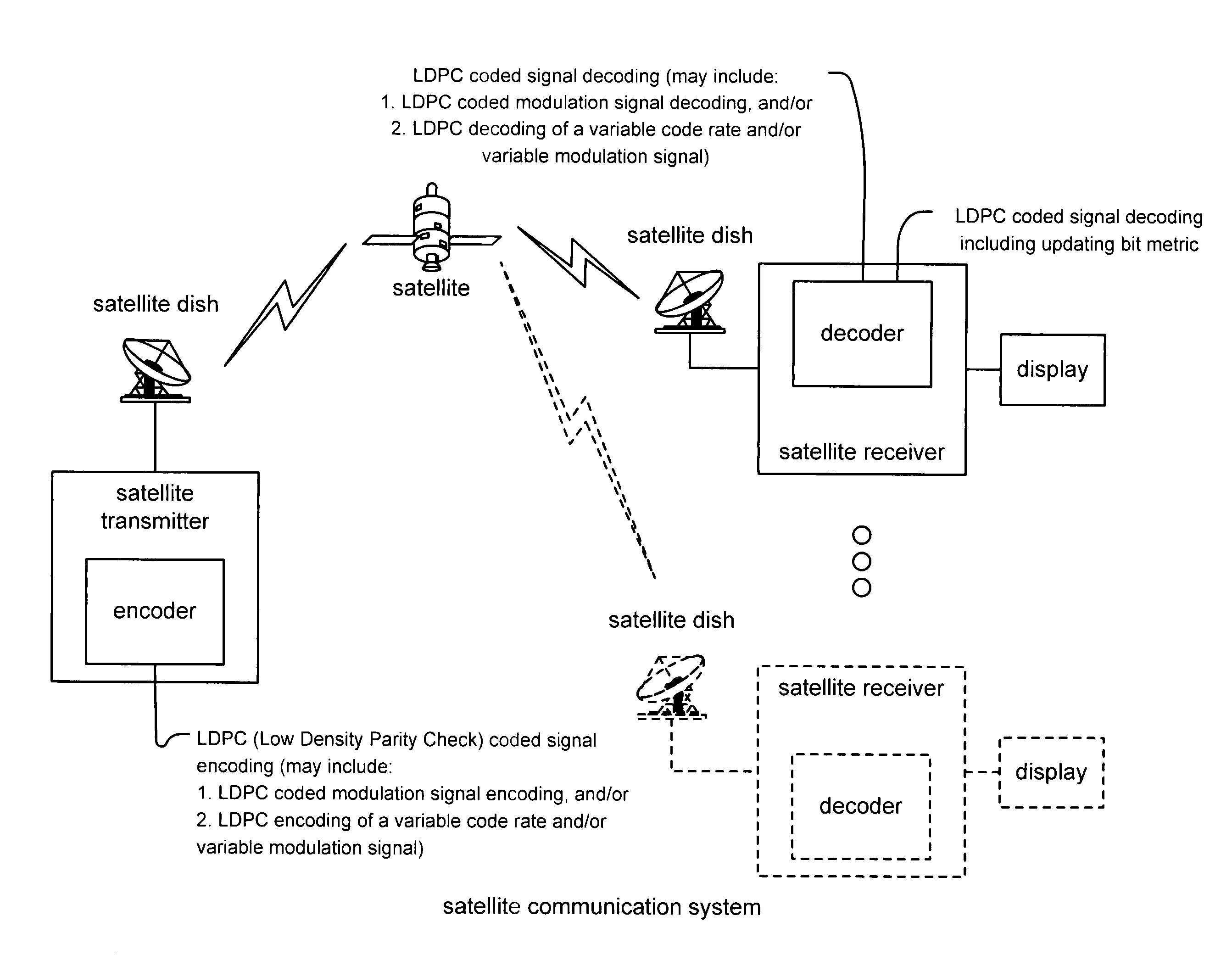

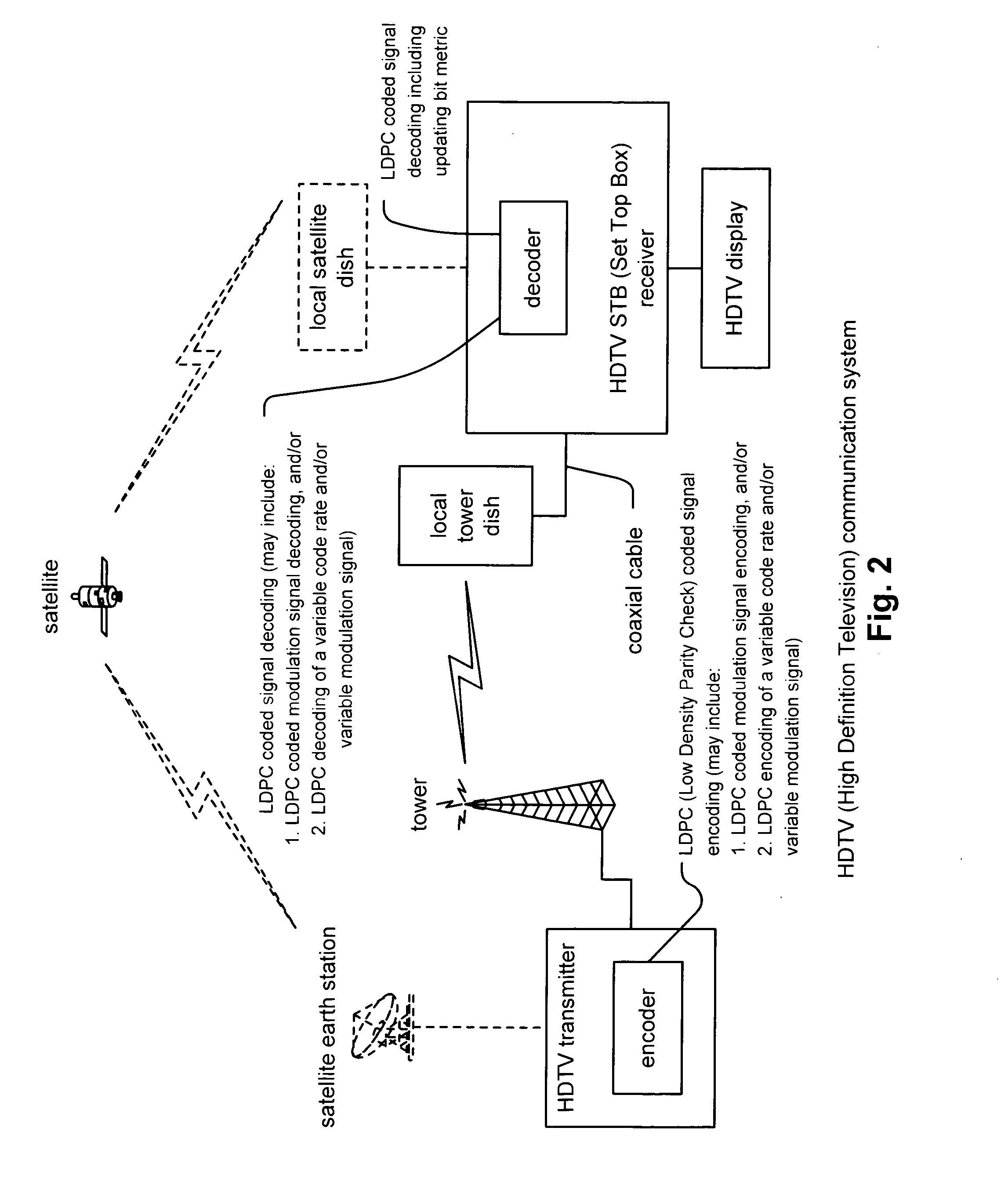

Variable modulation with LDPC (low density parity check) coding

Status: Inactive · Publication: US7139964B2 · Tags: Error prevention/detection by using return channel; Other decoding techniques; Theoretical computer science; Low-density parity-check code

Variable modulation within combined LDPC (Low Density Parity Check) coding and modulation coding systems. A novel approach is presented for variable modulation encoding of LDPC coded symbols. LDPC encoding that generates an LDPC variable code rate signal may also be performed. The encoding can generate an LDPC variable code rate and/or variable modulation signal whose code rate and/or modulation may vary as frequently as on a symbol-by-symbol basis. Some embodiments employ a common constellation shape for all symbols of the signal sequence, yet individual symbols may be mapped according to different mappings of that commonly shaped constellation; such an embodiment may be viewed as generating an LDPC variable mapped signal. In general, any one or more of the code rate, constellation shape, or mapping of the individual symbols of a signal sequence may vary as frequently as on a symbol-by-symbol basis.

Owner:AVAGO TECH INT SALES PTE LTD
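To make "same constellation shape, different mapping per symbol" concrete, the sketch below maps 3-bit labels onto one 8-PSK constellation using either a natural or a Gray mapping, chosen independently for each symbol. 8-PSK, the two mappings and the per-symbol schedule are illustrative assumptions, not details taken from the patent.

```python
import cmath
import math
from typing import List, Sequence

M = 8  # 8-PSK: 3 bits per symbol, one common constellation shape

# mapping[label] = index of the constellation point that carries that label
NATURAL = list(range(M))
GRAY = [0] * M
for k in range(M):
    GRAY[k ^ (k >> 1)] = k   # point k carries the Gray label k ^ (k >> 1)


def map_indices(bits: Sequence[int], schedule: Sequence[List[int]]) -> List[int]:
    """Select a (possibly different) mapping for every symbol."""
    indices = []
    for n, mapping in enumerate(schedule):
        b0, b1, b2 = bits[3 * n: 3 * n + 3]
        label = (b0 << 2) | (b1 << 1) | b2
        indices.append(mapping[label])
    return indices


def to_points(indices: Sequence[int]) -> List[complex]:
    """Unit-energy 8-PSK points exp(j*2*pi*k/M)."""
    return [cmath.exp(2j * math.pi * k / M) for k in indices]


bits = [0, 1, 1, 0, 1, 1]                 # the same label (3) twice
idx = map_indices(bits, [NATURAL, GRAY])
print(idx)                                 # [3, 2]
print(to_points(idx))
```

The label is identical in both symbols, yet it lands on different points because the mapping changes from one symbol to the next while the constellation shape does not.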

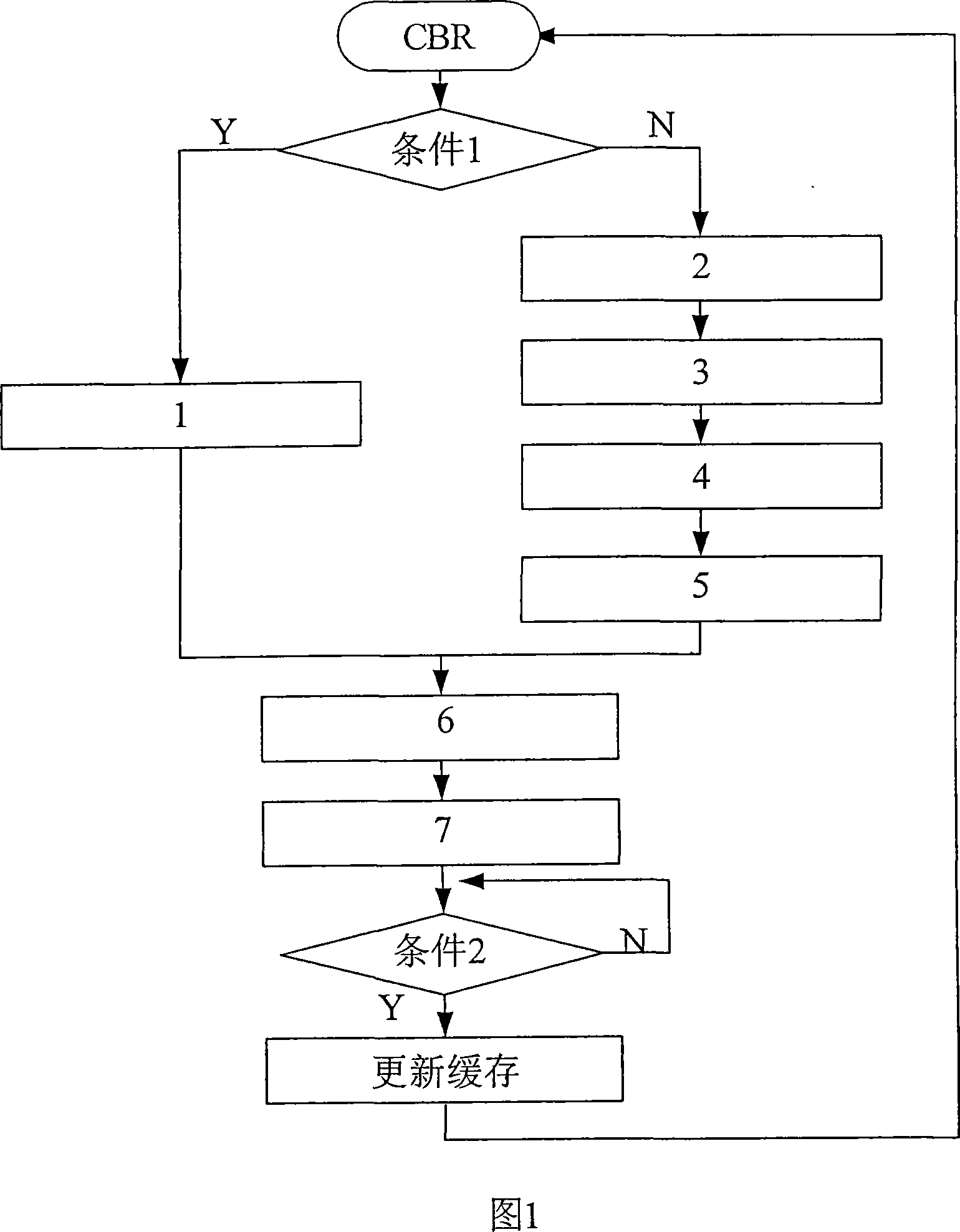

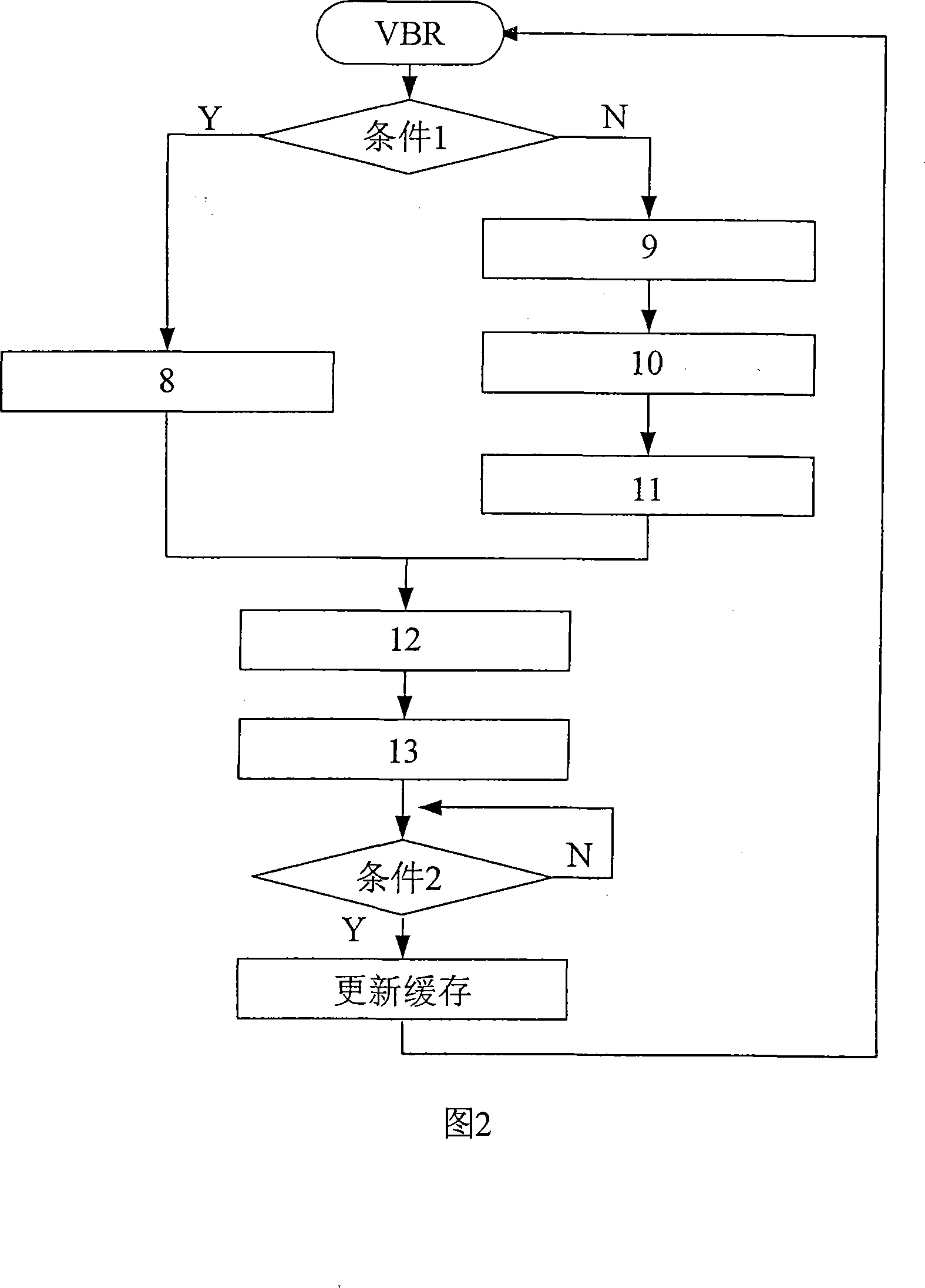

Self-adapting code rate control method

Status: Inactive · Publication: CN101252689A · Tags: Suitable design; Simple calculation; Television systems; Digital video signal modification; Imaging quality; Self adaptive

The invention relates to a low-complexity adaptive bitrate control method. The method has two operating modes, variable bitrate and constant bitrate: variable bitrate is typically used in non-real-time applications that require constant image quality, and constant bitrate in real-time applications that require constant bandwidth. The method adaptively adjusts the quantization step size according to the application and the characteristics of the video source so that bits are distributed sensibly. In addition, a limit is placed on how much the quantization step size may vary, which effectively prevents quality jumps between consecutive pictures, ensures smooth transitions, and makes hardware implementation straightforward.

Owner:ZHEJIANG HUATU MICROCHIP TECH CO LTD
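The step-size limit can be sketched as a clamp applied after each mode proposes a new quantization parameter. Everything here is a hypothetical simplification: the constant-bitrate mode reacts to buffer fullness, the variable-bitrate mode steers toward a quality target QP, and the 0 to 51 QP range is borrowed from H.264 only as an example.

```python
from typing import Optional


def next_qp(prev_qp: int, mode: str, *, buffer_fullness: float = 0.5,
            target_qp: Optional[int] = None, max_delta: int = 2,
            qp_min: int = 0, qp_max: int = 51) -> int:
    """Propose a QP for the next picture, then limit how far it may move."""
    if mode == "cbr":
        # Constant bandwidth: a fuller buffer pushes toward coarser quantization.
        proposed = prev_qp + round((buffer_fullness - 0.5) * 10)
    elif mode == "vbr":
        # Constant quality: steer toward a fixed target QP.
        proposed = prev_qp if target_qp is None else target_qp
    else:
        raise ValueError("mode must be 'cbr' or 'vbr'")
    # Variation-range limit: prevents visible quality jumps between pictures.
    proposed = max(prev_qp - max_delta, min(prev_qp + max_delta, proposed))
    return max(qp_min, min(qp_max, proposed))


print(next_qp(30, "cbr", buffer_fullness=0.9))   # 32 (wanted 34, clamped)
print(next_qp(30, "vbr", target_qp=24))          # 28 (wanted 24, clamped)
```

Because every update is a compare-and-clamp on small integers, this kind of rule maps easily onto hardware.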

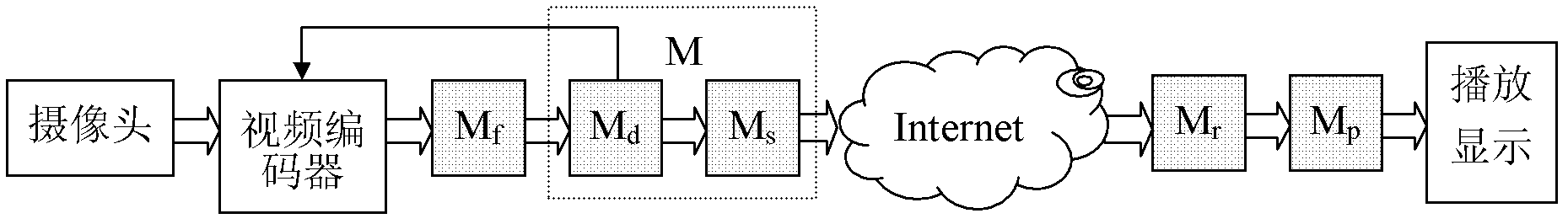

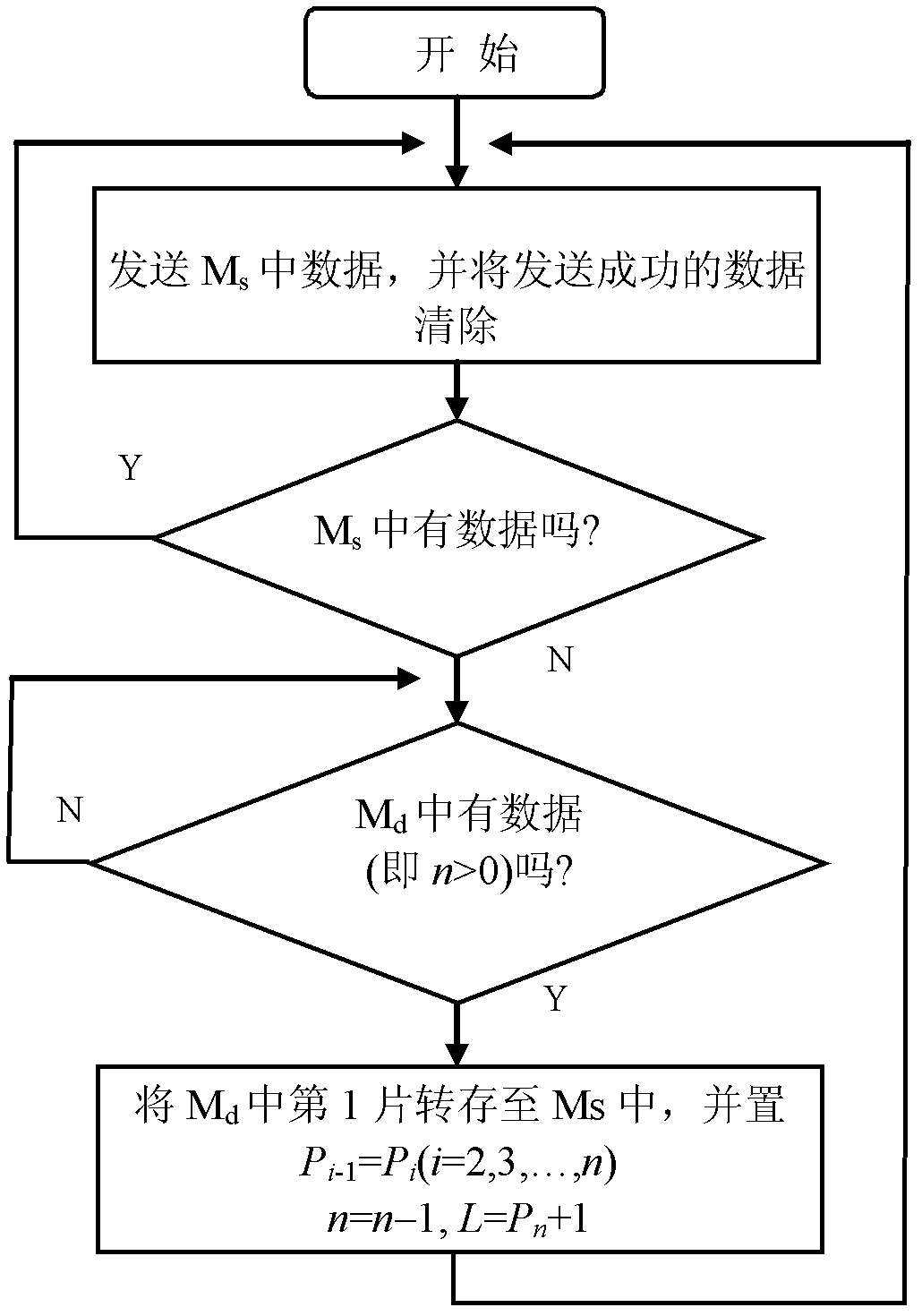

Network bandwidth-adaptive video stream transmission control method

Status: Active · Publication: CN102325274A · Tags: Guaranteed Adaptive Adjustment; Effective real-time video surveillance; Selective content distribution; Array data structure; Video transmission

The invention relates to a network bandwidth-adaptive video stream transmission control method. The transmitter has a video data buffer and a data transmission buffer, and runs a video data input thread and a video data transmission thread concurrently. Three main control methods are established for the transmission process according to variations in network bandwidth: video frame dropping, bitrate up-regulation, and bitrate down-regulation. A frame address array is used so that video data is transmitted and discarded frame by frame, avoiding "broken video frames" during transmission, and a time-weighted accumulation method is used to judge the trend of video data accumulation, ensuring that the bitrate is regulated accurately. With this method, the video bitrate adapts to fluctuations in network bandwidth, or to changes in video scenes under variable-bitrate encoding, and the method can be applied effectively to real-time video surveillance in networks with dynamic bandwidth.

Owner:ZHEJIANG WANLI UNIV
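The trend judgment and the three control actions can be sketched as below. The weighting (later buffer-occupancy samples count more), the thresholds and the action names are hypothetical; the abstract only states that a time-parameterized weight accumulation decides the trend.

```python
from typing import Sequence


def weighted_trend(occupancy: Sequence[float]) -> float:
    """Time-weighted mean of successive changes in buffered data; the most
    recent change gets the largest weight."""
    if len(occupancy) < 2:
        raise ValueError("need at least two samples")
    diffs = [b - a for a, b in zip(occupancy, occupancy[1:])]
    weights = range(1, len(diffs) + 1)
    return sum(w * d for w, d in zip(weights, diffs)) / sum(weights)


def control_action(occupancy: Sequence[float], capacity: float,
                   drop_level: float = 0.9, dead_band: float = 0.0) -> str:
    if occupancy[-1] >= drop_level * capacity:
        return "drop_frames"   # discard whole frames, never partial ones
    trend = weighted_trend(occupancy)
    if trend > dead_band:
        return "rate_down"     # data is piling up: bandwidth is short
    if trend < -dead_band:
        return "rate_up"       # buffer is draining: spare bandwidth
    return "hold"


print(control_action([10, 20, 35], capacity=100))   # rate_down
print(control_action([50, 40, 30], capacity=100))   # rate_up
print(control_action([80, 90, 95], capacity=100))   # drop_frames
```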

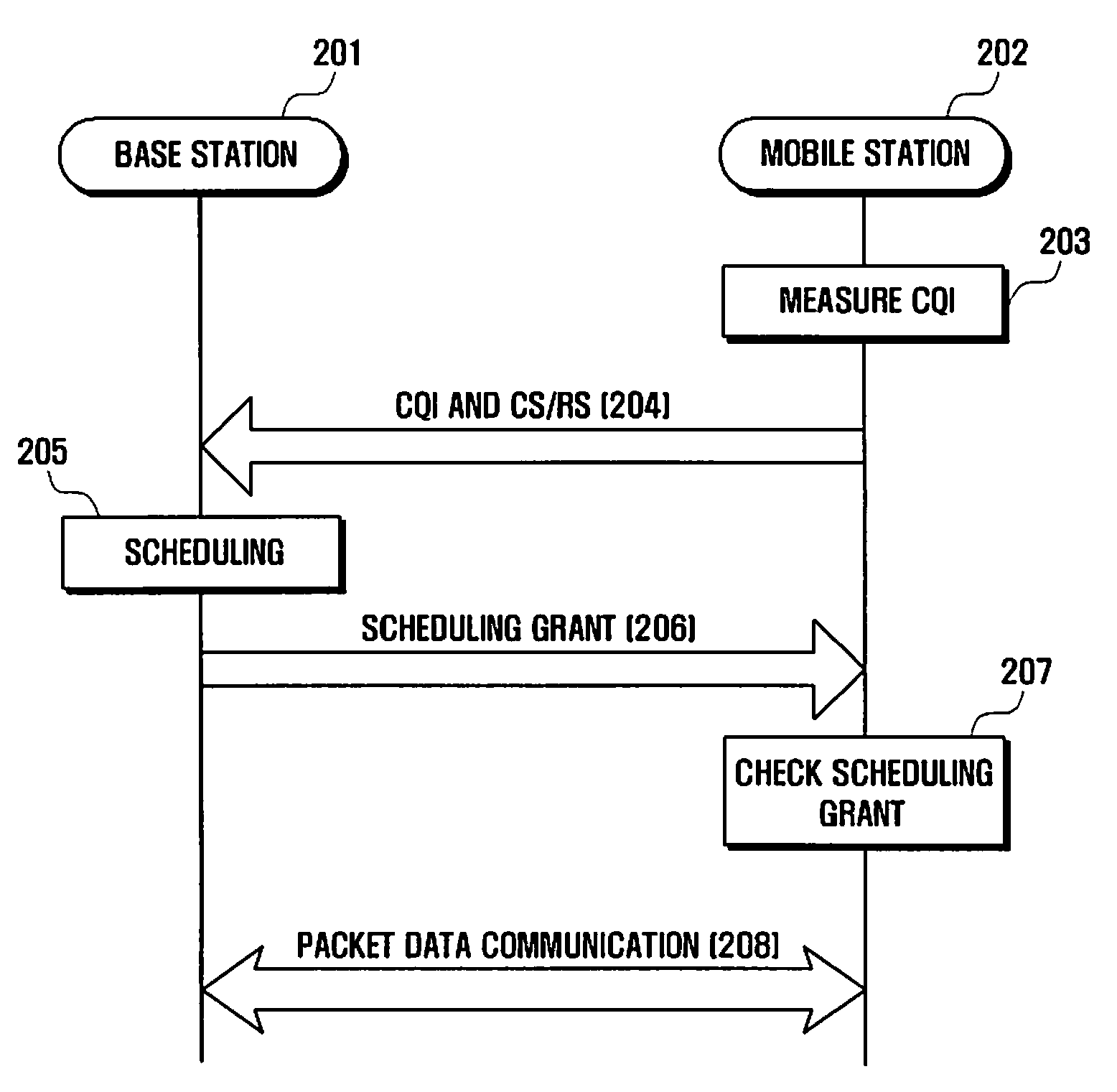

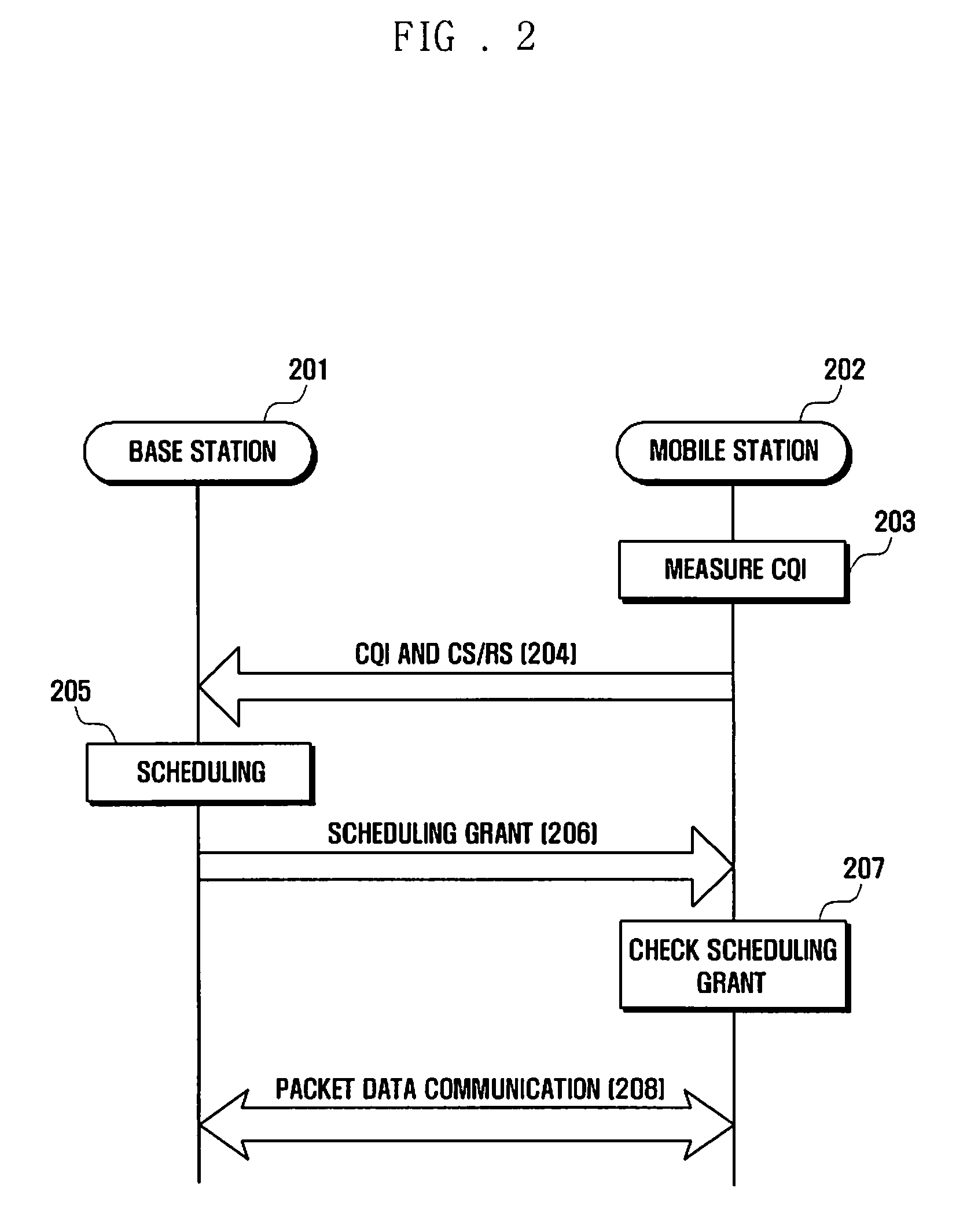

Control channel element detection method using CQI in a wireless communication system

Status: Active · Publication: US20100150090A1 · Tags: Reduce power consumption; Reduce degradation; Power management; Energy efficient ICT; Communications system; Control channel

A control channel element detection method and apparatus are provided for detecting, using a Channel Quality Indicator (CQI), the Control Channel Elements (CCEs) that carry control information for a mobile station. The CCE detection method includes searching a current subframe for CCEs and locating the CCEs carrying control information for the mobile station by decoding the current subframe with variable code rates. During the search, the size type of the CCEs is changed according to the result of comparing the CQI of the current subframe with the CQI of the previous subframe.

Owner:SAMSUNG ELECTRONICS CO LTD
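One way to read "changing a size type of the CCEs according to a CQI comparison" is as a reordering of blind-decoding attempts over aggregation levels. The sketch assumes LTE-style aggregation levels of 1, 2, 4 and 8 CCEs and a hypothetical rule: start one level smaller when CQI improves, one level larger when it degrades, and search outward from there, so the right size is likely found with fewer decoding attempts.

```python
from typing import List, Tuple

AGGREGATION_LEVELS: Tuple[int, ...] = (1, 2, 4, 8)  # CCEs per candidate (assumed)


def search_order(cqi_now: int, cqi_prev: int, prev_level: int) -> List[int]:
    """Order in which CCE aggregation levels are tried in the current subframe."""
    i = AGGREGATION_LEVELS.index(prev_level)
    if cqi_now > cqi_prev:        # better channel: fewer CCEs likely suffice
        i = max(i - 1, 0)
    elif cqi_now < cqi_prev:      # worse channel: more CCEs (lower code rate) likely
        i = min(i + 1, len(AGGREGATION_LEVELS) - 1)
    return sorted(AGGREGATION_LEVELS,
                  key=lambda lvl: (abs(AGGREGATION_LEVELS.index(lvl) - i), lvl))


print(search_order(10, 7, prev_level=4))  # [2, 1, 4, 8]
print(search_order(5, 9, prev_level=4))   # [8, 4, 2, 1]
```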

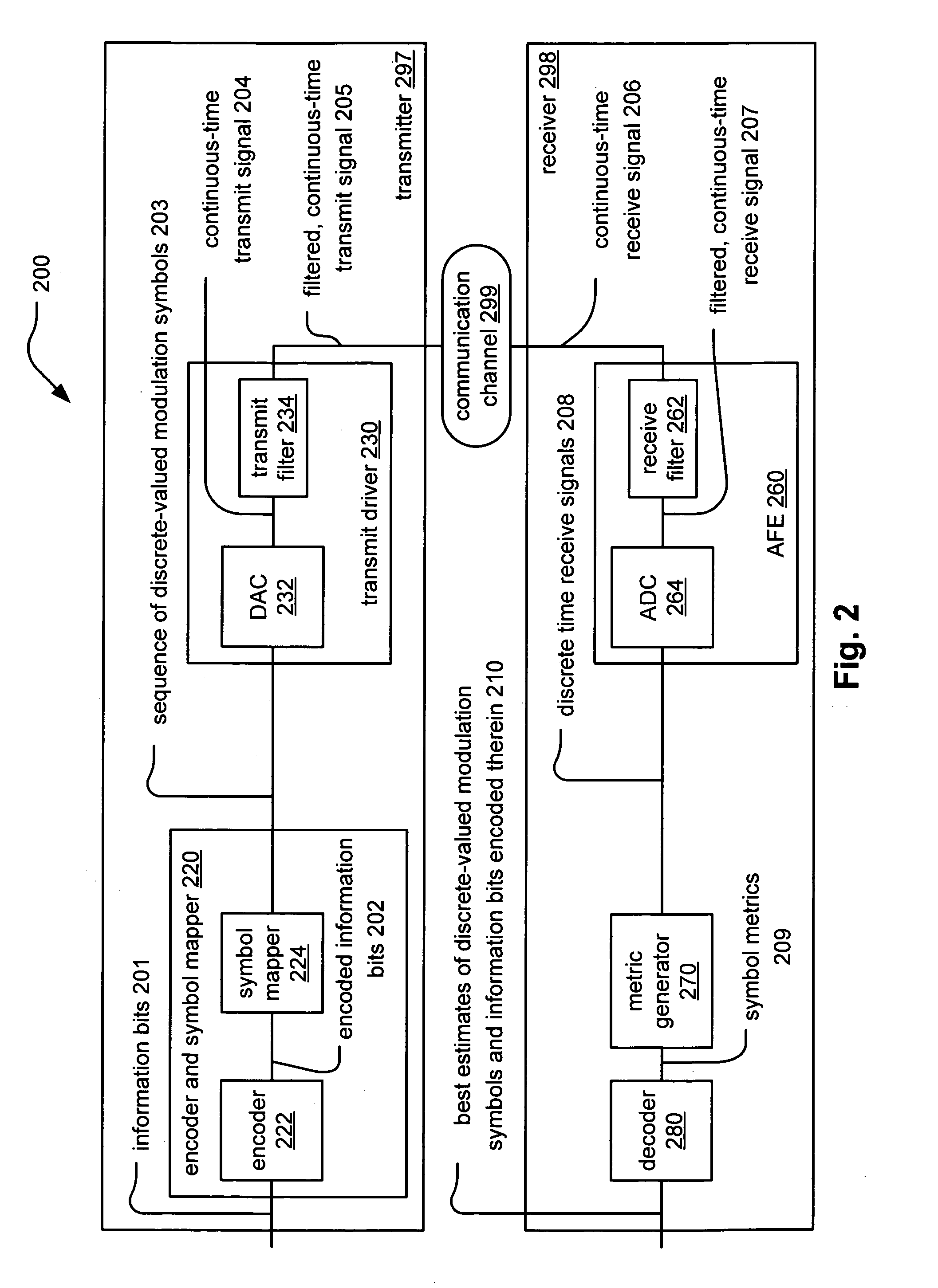

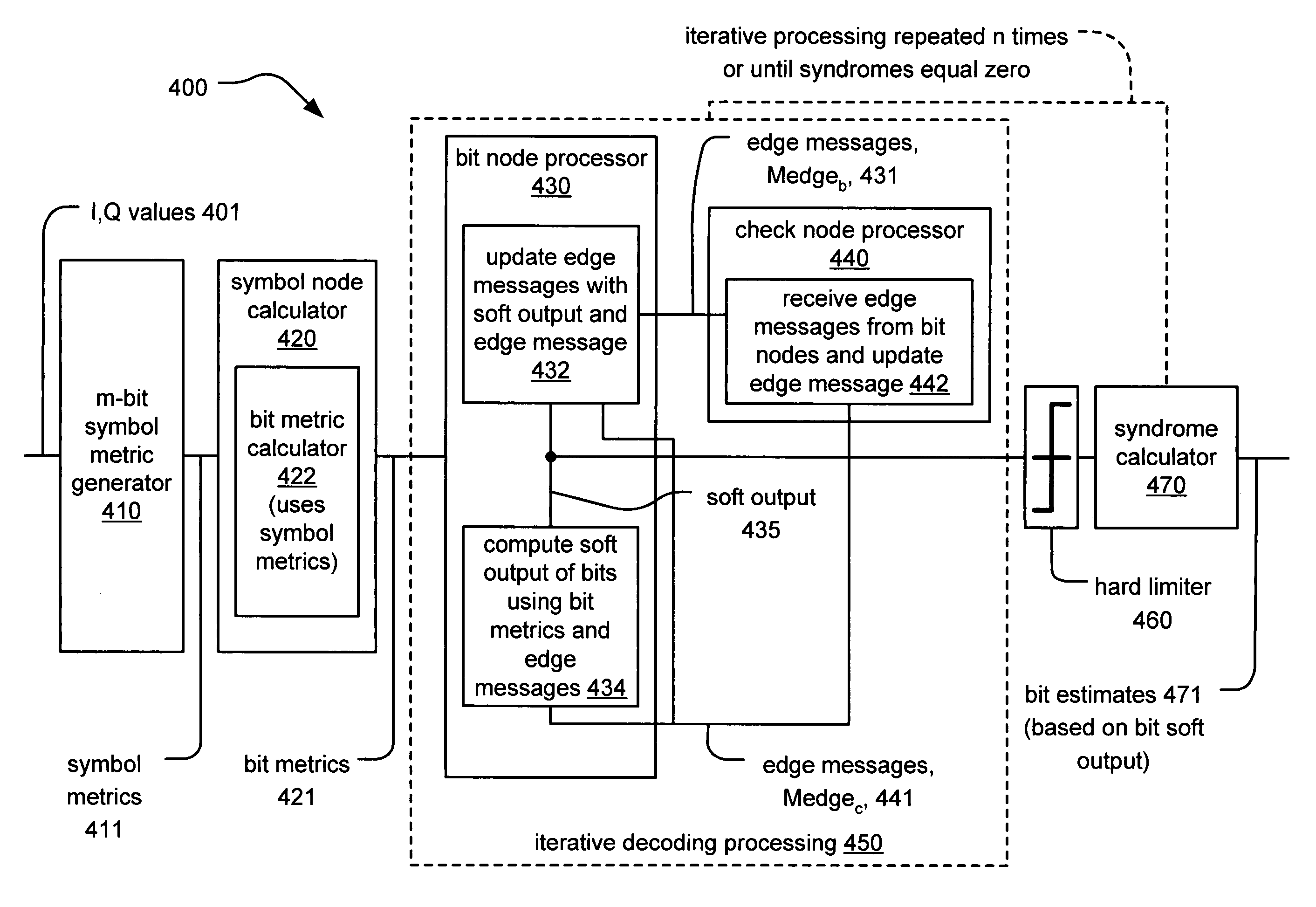

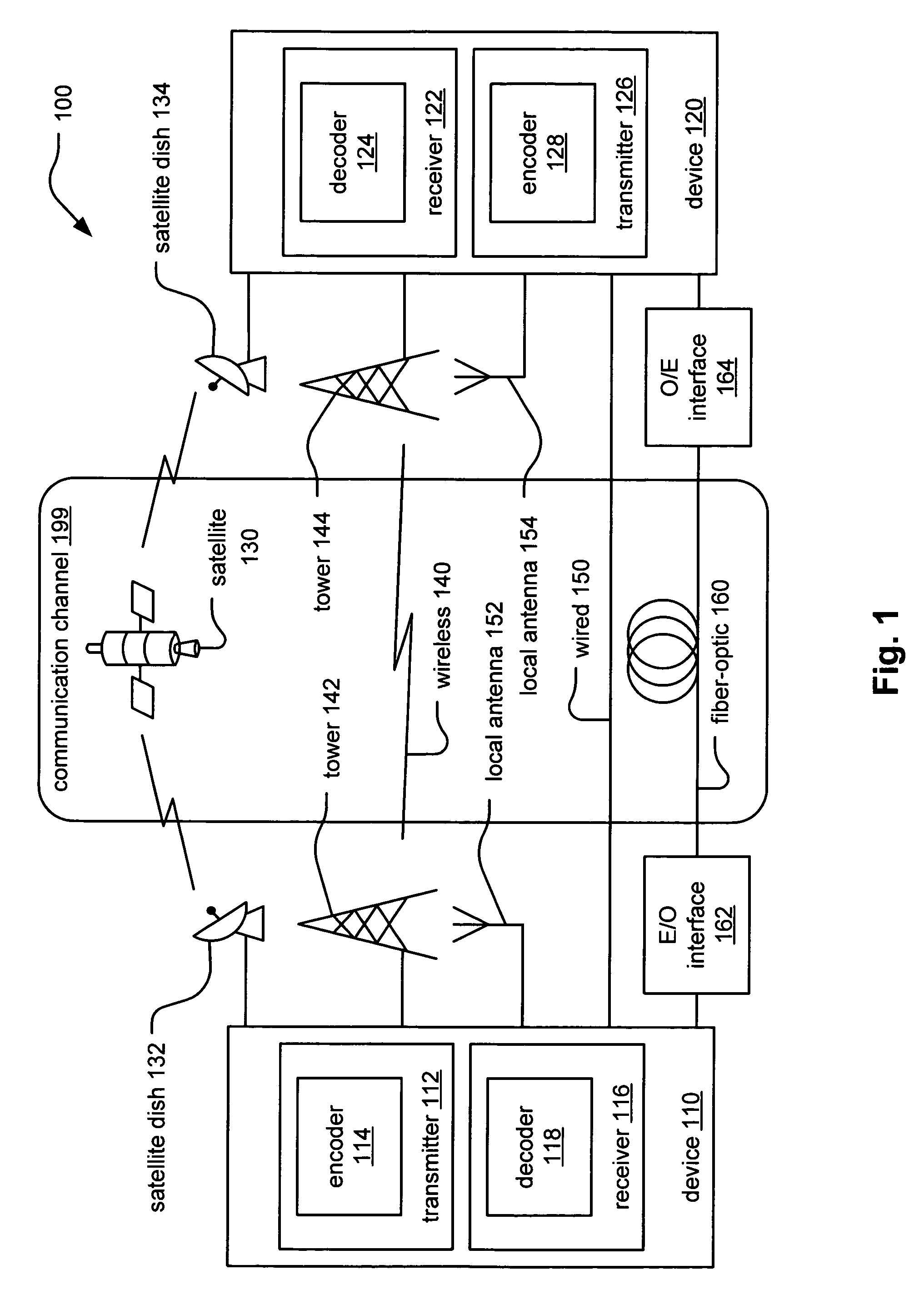

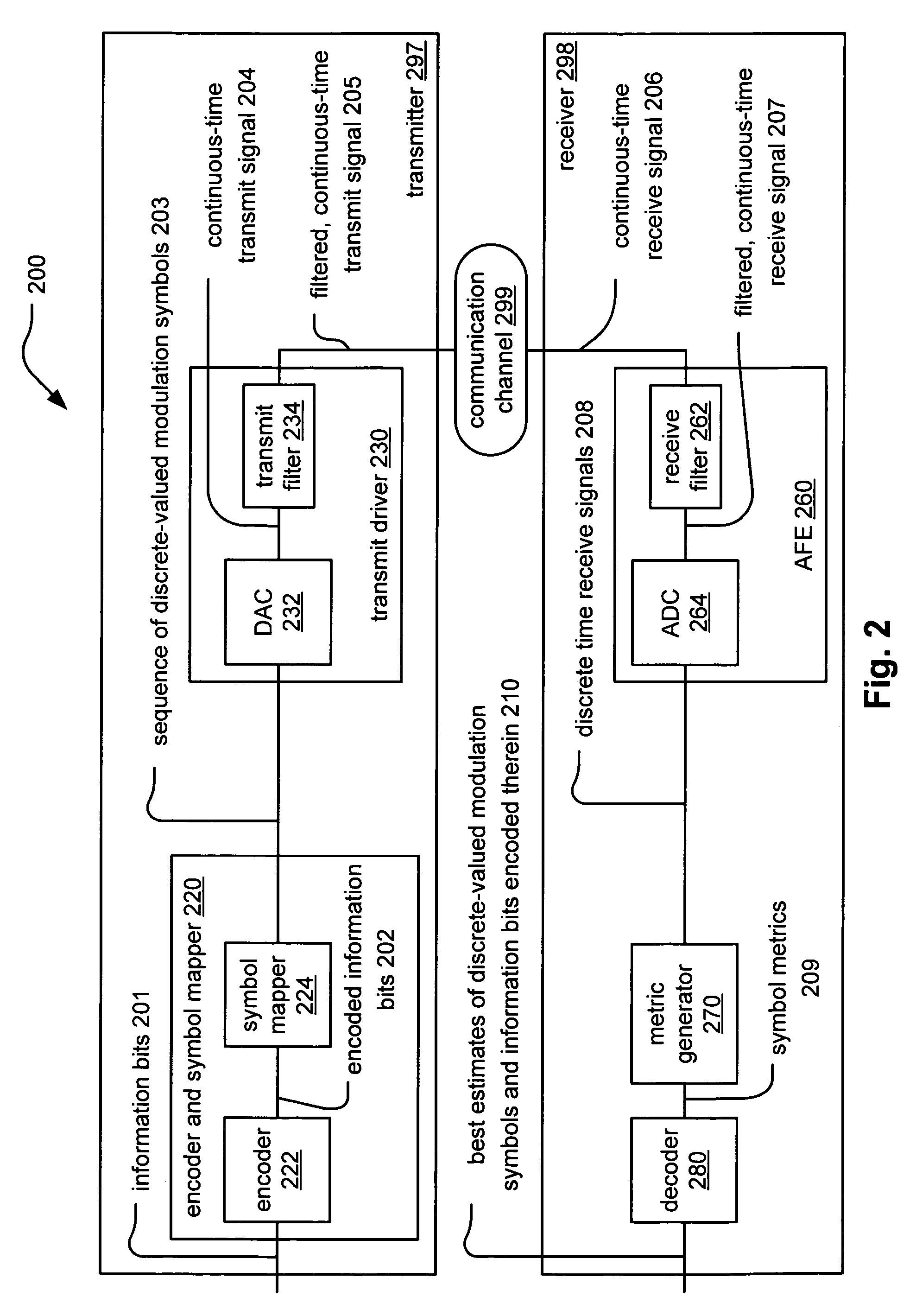

Iterative metric updating when decoding LDPC (low density parity check) coded signals and LDPC coded modulation signals

Status: Inactive · Publication: US7216283B2 · Tags: Other decoding techniques; Error correction/detection using LDPC codes; Low-density parity-check code; Low density

Iterative metric updating when decoding LDPC (Low Density Parity Check) coded signals and LDPC coded modulation signals. A novel approach is presented for updating the bit metrics used during iterative decoding of LDPC coded signals. This bit metric updating also applies to decoding signals generated by combined LDPC coding and modulation encoding (LDPC coded modulation signals), and it extends to decoding LDPC variable code rate and/or variable modulation signals whose code rate and/or modulation may vary as frequently as on a symbol-by-symbol basis. Updating the bit metrics during the iterations of the decoding process achieves higher performance than keeping them fixed throughout.

Owner:AVAGO TECH INT SALES PTE LTD
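The benefit of updating bit metrics between iterations can be seen in a generic min-sum LDPC decoder, where each bit's posterior metric is recomputed from the channel LLR plus the latest check-node messages on every iteration. This is a swapped-in textbook technique, not the patent's coded-modulation metric update; the LLR convention (positive favors 0) and the small parity-check matrix are assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def min_sum_decode(H: Sequence[Sequence[int]], llr: Sequence[float],
                   max_iter: int = 10) -> Tuple[List[int], List[float]]:
    """Min-sum decoding; returns (hard decisions, final bit metrics)."""
    m, n = len(H), len(H[0])
    checks = [[j for j in range(n) if H[i][j]] for i in range(m)]
    msg_cv = {(i, j): 0.0 for i in range(m) for j in checks[i]}
    posterior = list(llr)
    hard = [1 if p < 0 else 0 for p in posterior]
    for _ in range(max_iter):
        # Bit -> check: current metric minus what this check contributed last time.
        msg_vc = {(i, j): posterior[j] - msg_cv[(i, j)] for (i, j) in msg_cv}
        # Check -> bit: product of signs, minimum magnitude of the other inputs.
        for i in range(m):
            for j in checks[i]:
                others = [msg_vc[(i, k)] for k in checks[i] if k != j]
                sign = -1 if sum(v < 0 for v in others) % 2 else 1
                msg_cv[(i, j)] = sign * min(abs(v) for v in others)
        # Updated bit metrics: channel LLR plus all incoming check messages.
        posterior = [llr[j] + sum(msg_cv[(i, j)] for i in range(m) if H[i][j])
                     for j in range(n)]
        hard = [1 if p < 0 else 0 for p in posterior]
        if all(sum(hard[j] for j in row) % 2 == 0 for row in checks):
            break  # all parity checks satisfied
    return hard, posterior


H = [[1, 1, 0, 1, 0, 0],
     [0, 1, 1, 0, 1, 0],
     [1, 0, 0, 0, 1, 1],
     [0, 0, 1, 1, 0, 1]]
# All-zero codeword sent; bit 2 arrives with the wrong sign.
bits, metrics = min_sum_decode(H, [2.0, 2.0, -0.5, 2.0, 2.0, 2.0])
print(bits)      # [0, 0, 0, 0, 0, 0]
print(metrics)
```

If the posterior were frozen at the channel LLRs, bit 2 would stay wrong; the updated metric flips it after one iteration.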

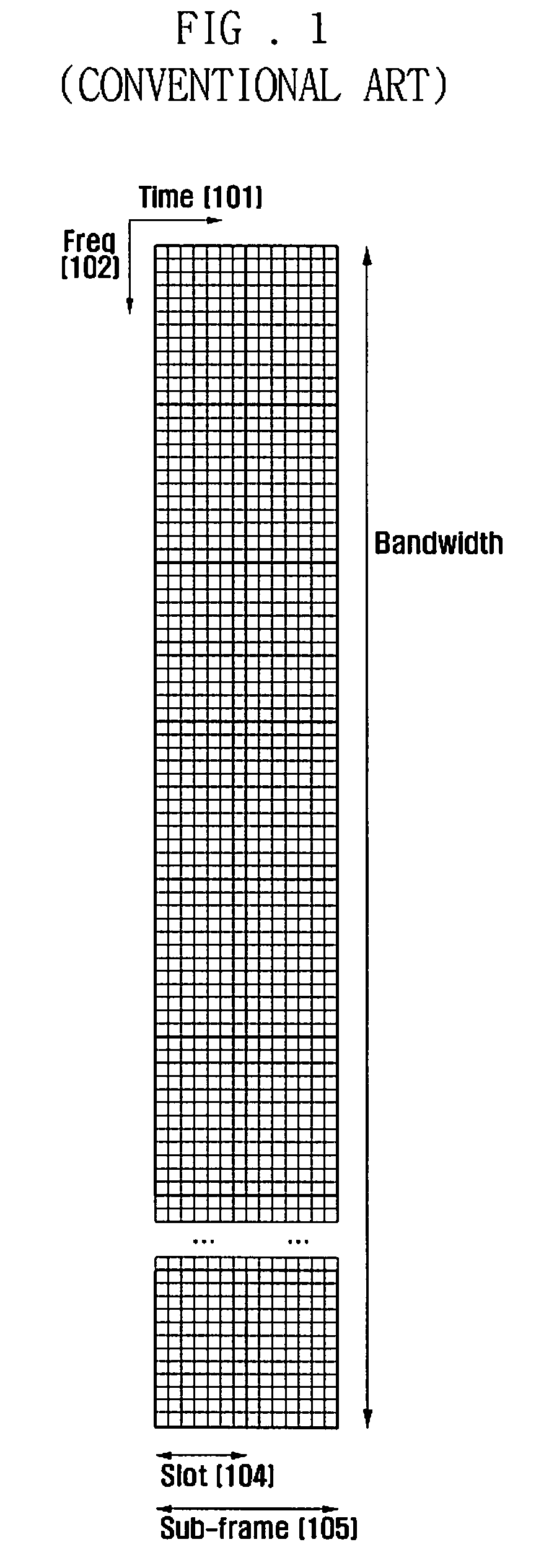

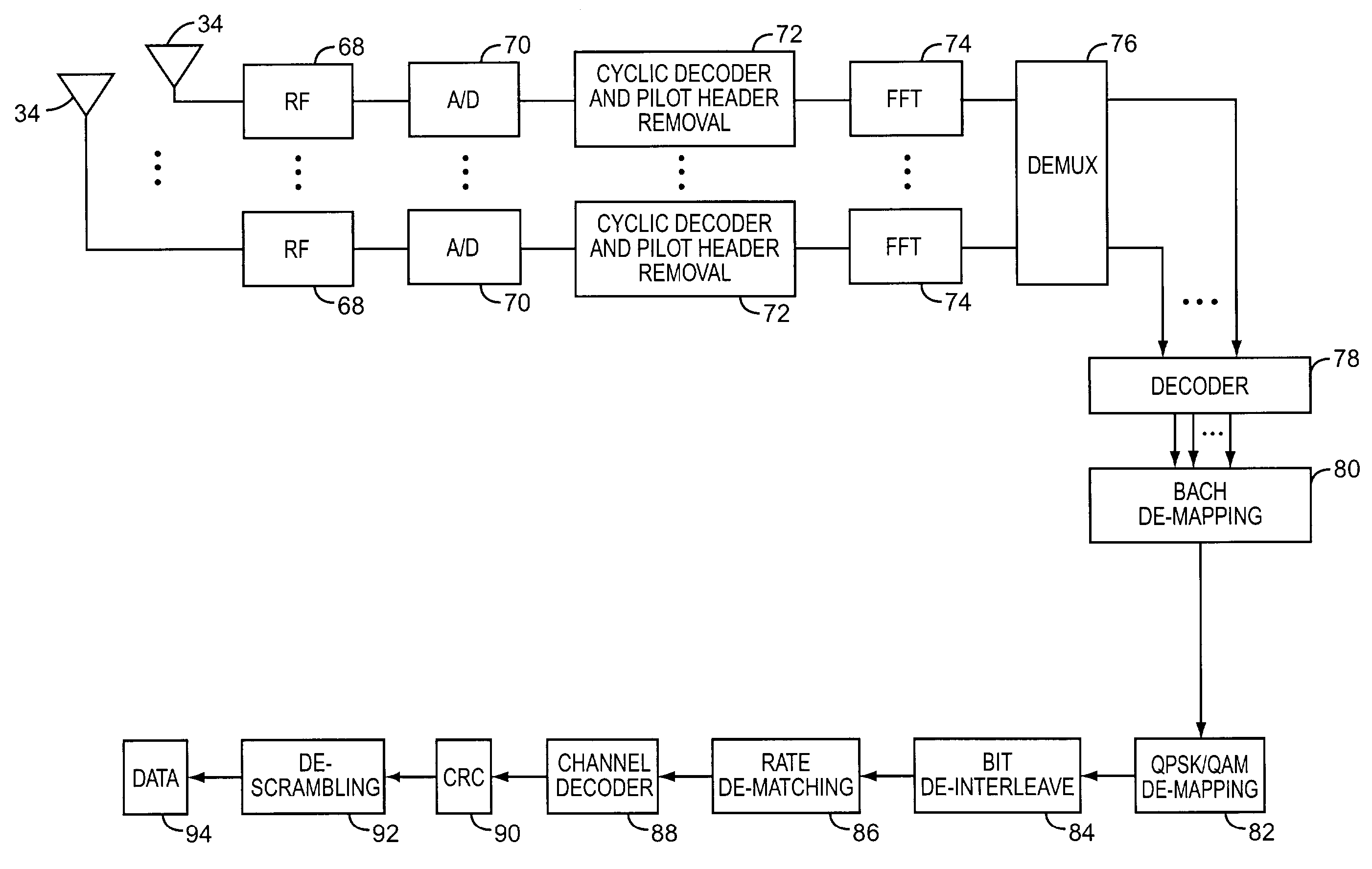

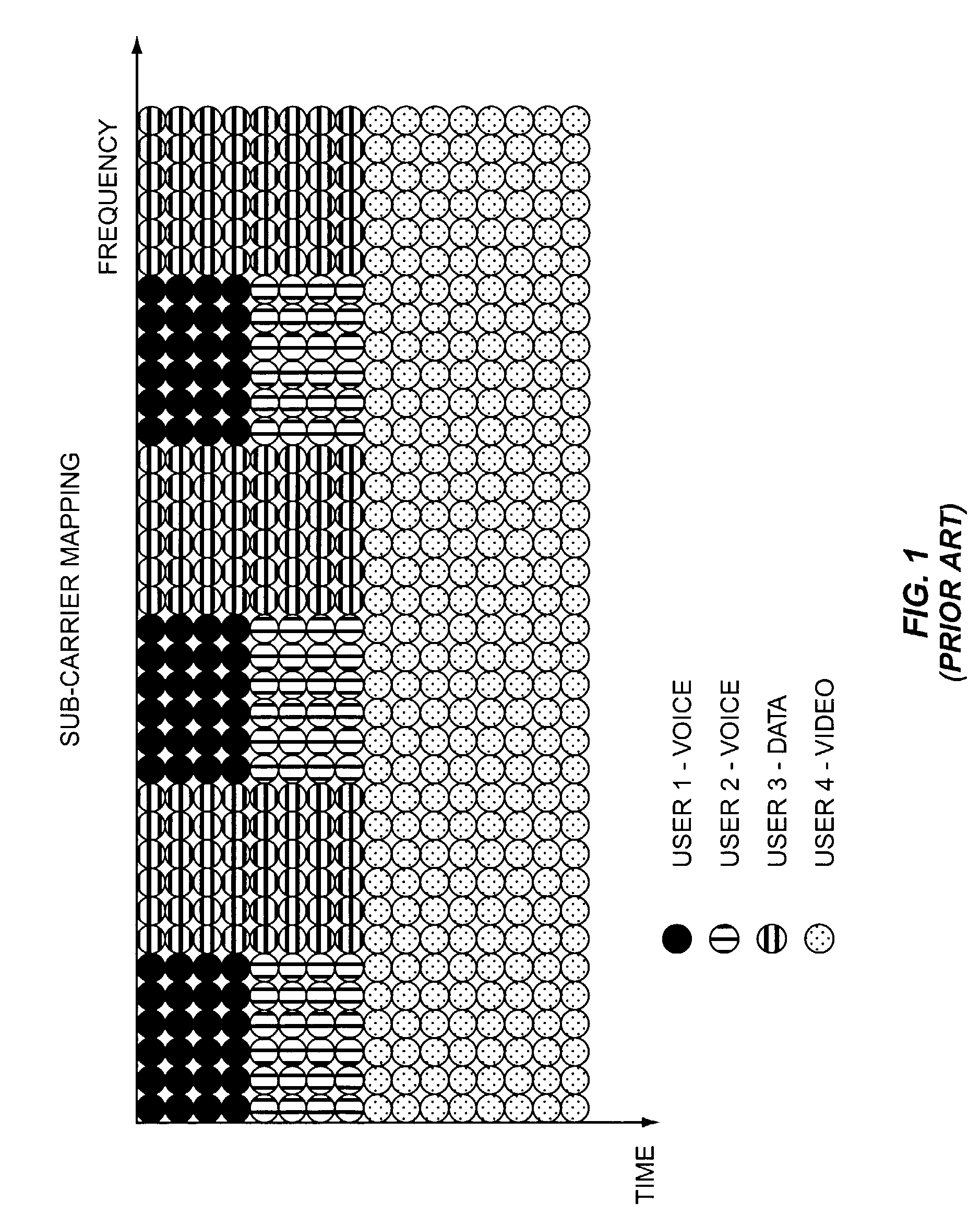

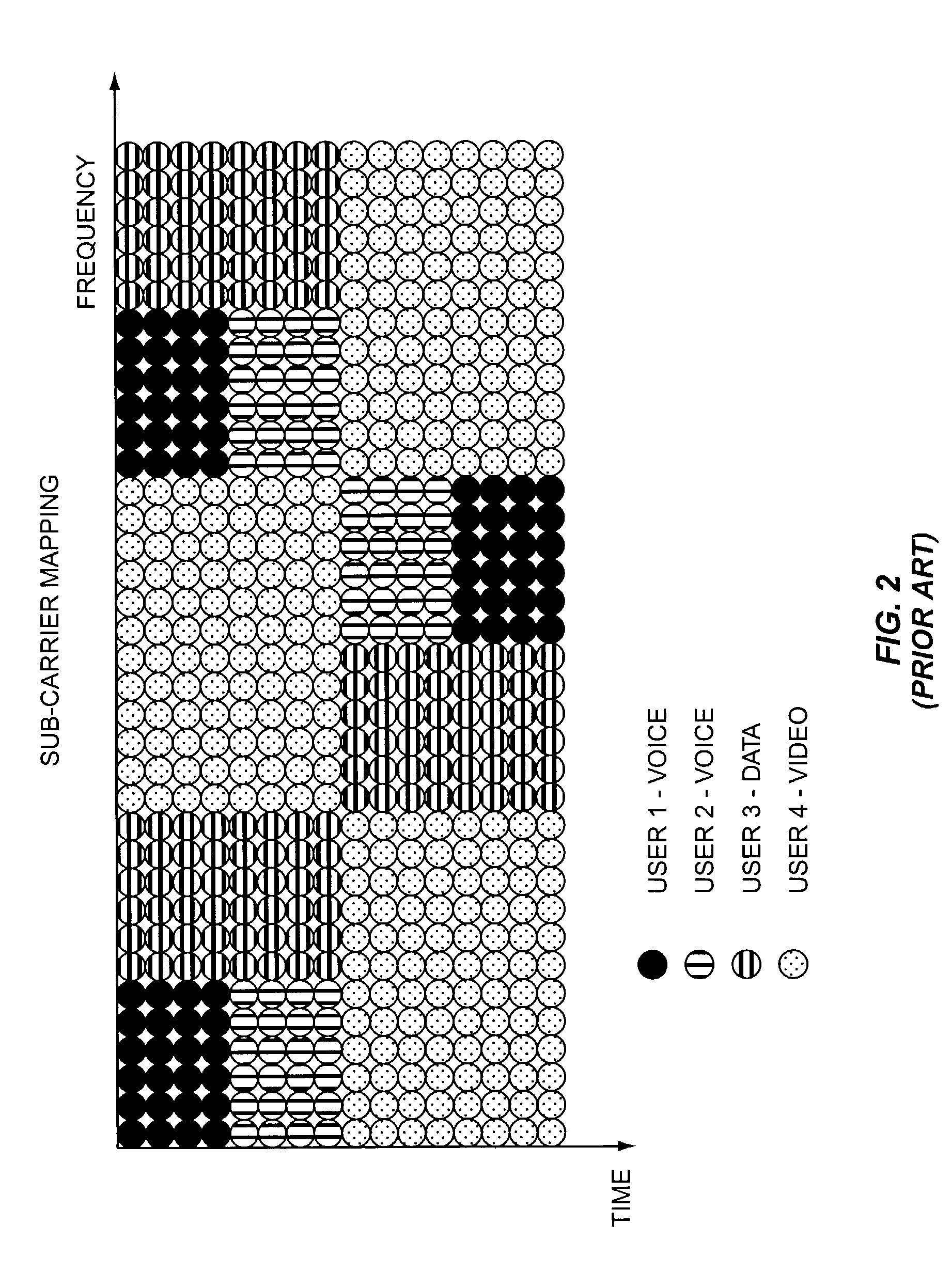

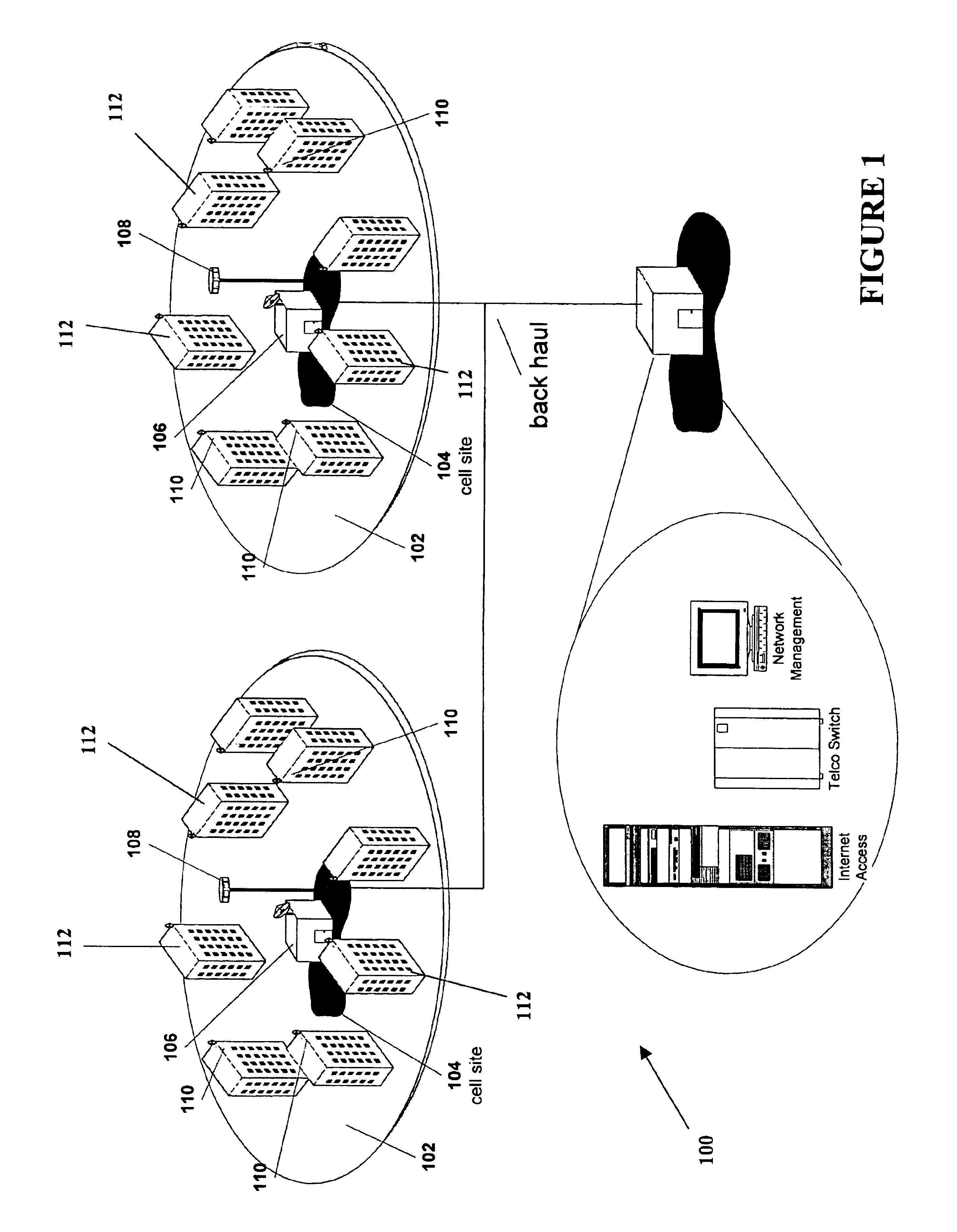

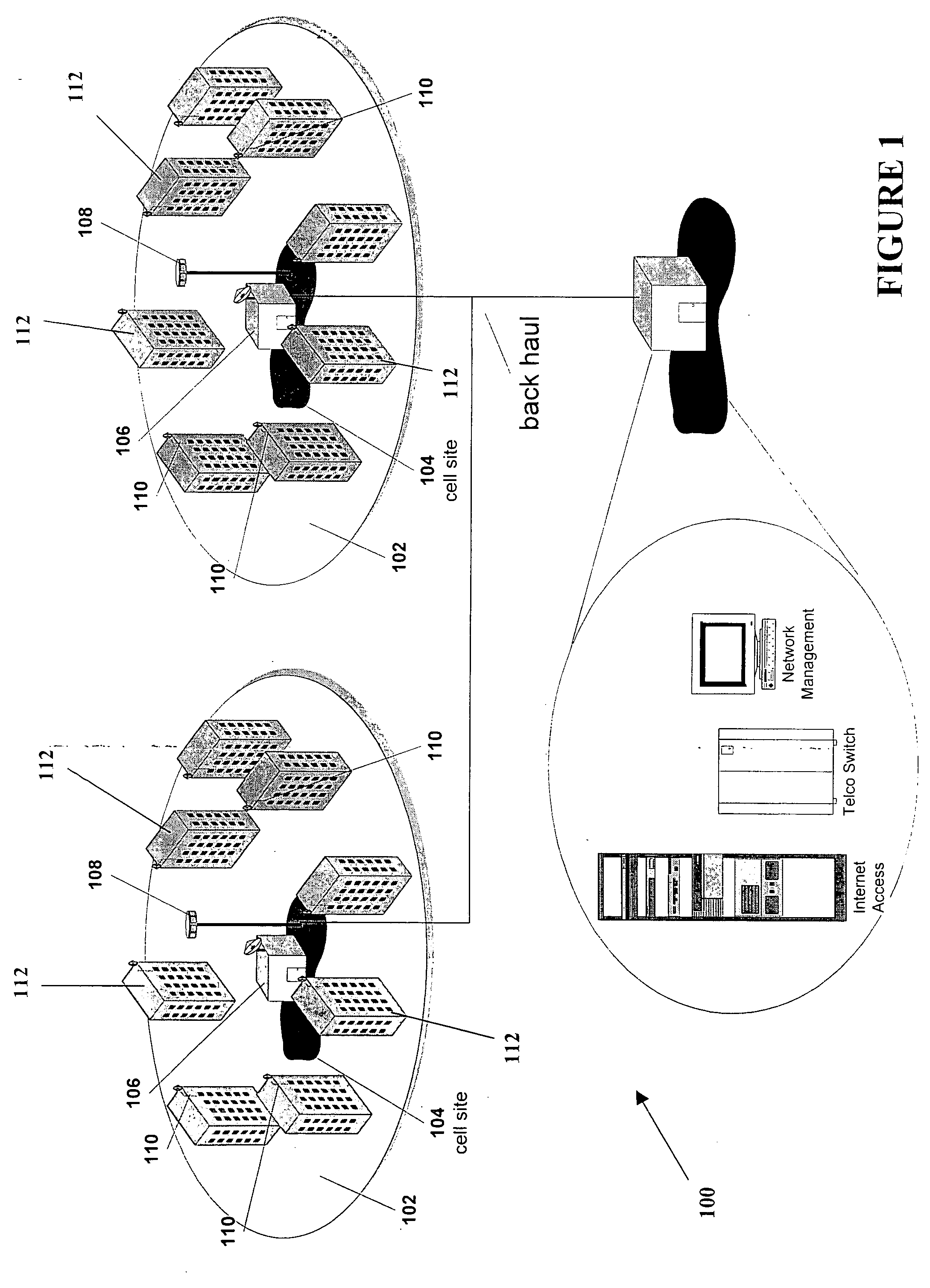

Channel mapping for OFDM

Status: Active · Publication: US7317680B2 · Tags: Minimize impact; Reduce computational complexity; Transmission path division; Inter user/terminal allocation; Engineering; Subcarrier

The present invention provides a technique for supporting variable bitrate services in an OFDM environment while minimizing the impact of the variations of fading channels and interference. In general, a basic access channel (BACH) is defined by a set number of sub-carriers over multiple OFDM symbols. While the number of sub-carriers remains fixed for the BACH, the sub-carriers for any given BACH will hop from one symbol to another. Thus, the BACH is defined by a hopping pattern for a select number of sub-carriers over a sequence of symbols.

Owner:MICROSOFT TECH LICENSING LLC
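A basic access channel's hopping pattern can be sketched as a fixed-size set of sub-carriers that moves from symbol to symbol. The parameters (64 sub-carriers, 8 per BACH, a common cyclic hop of 5 per symbol) and the interleaved base pattern are hypothetical; they are chosen so that every BACH keeps the same number of sub-carriers and no two BACHs collide in the same symbol.

```python
from typing import List


def bach_subcarriers(bach_id: int, symbol: int, num_subcarriers: int = 64,
                     per_bach: int = 8, hop_step: int = 5) -> List[int]:
    """Sub-carriers used by one BACH in one OFDM symbol (hypothetical pattern)."""
    num_bach = num_subcarriers // per_bach
    if not 0 <= bach_id < num_bach:
        raise ValueError("bach_id out of range")
    base = [bach_id + k * num_bach for k in range(per_bach)]  # interleaved comb
    shift = (symbol * hop_step) % num_subcarriers             # hops every symbol
    return sorted((c + shift) % num_subcarriers for c in base)


for t in range(3):
    print(t, bach_subcarriers(0, t))
```

Because all BACHs hop by the same shift, their sub-carrier sets stay disjoint, while each BACH still sees different parts of the band (and hence different fading) over time.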

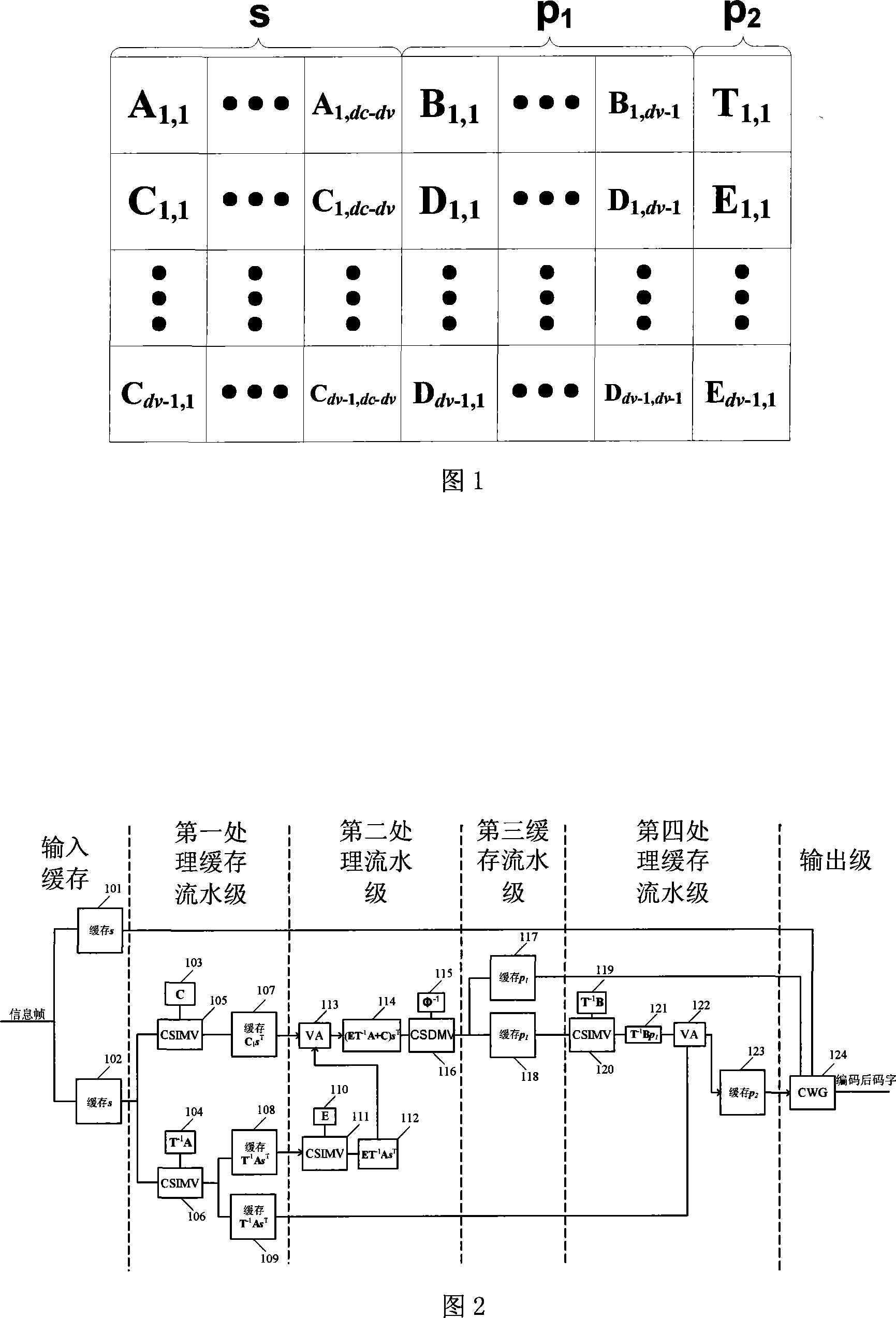

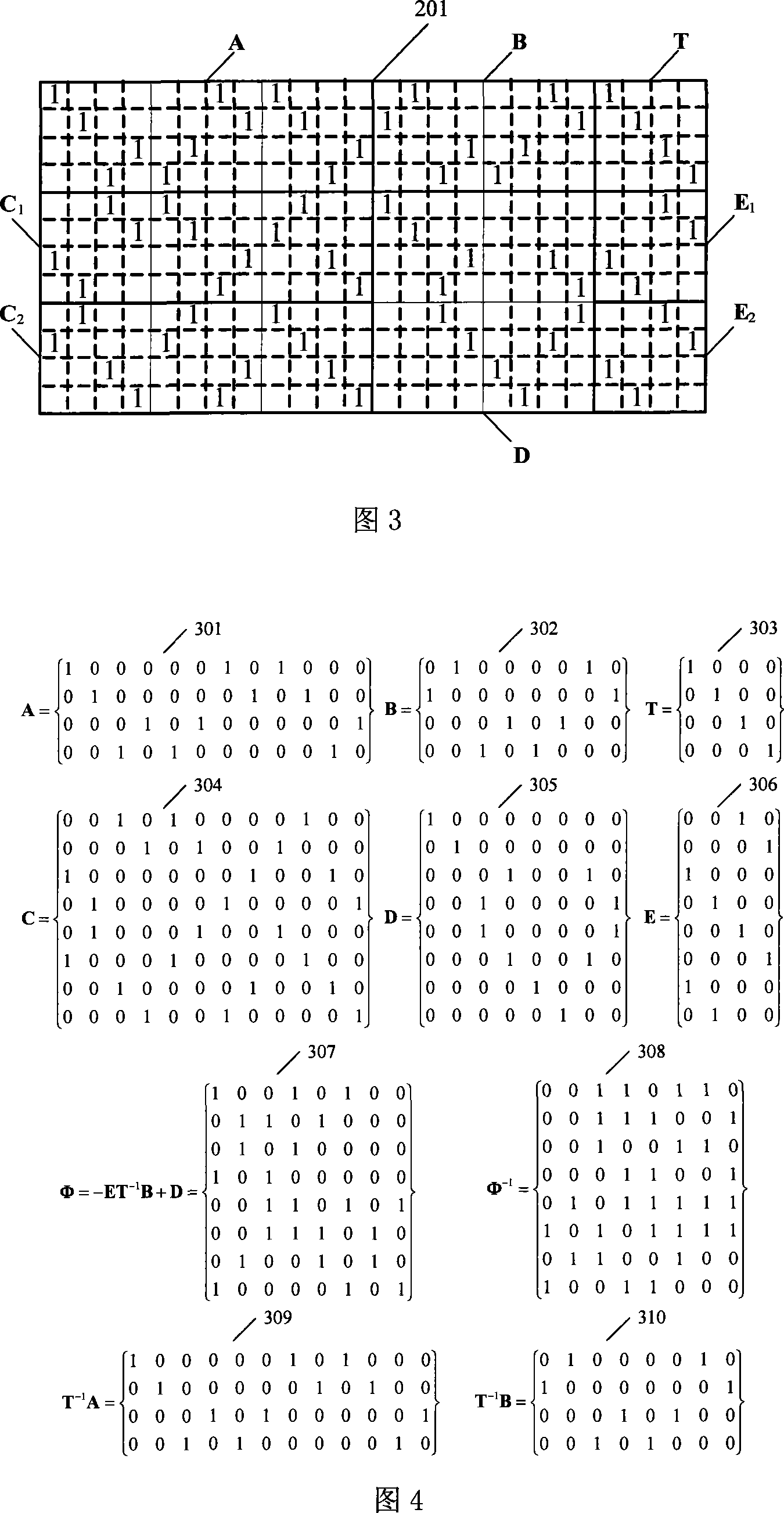

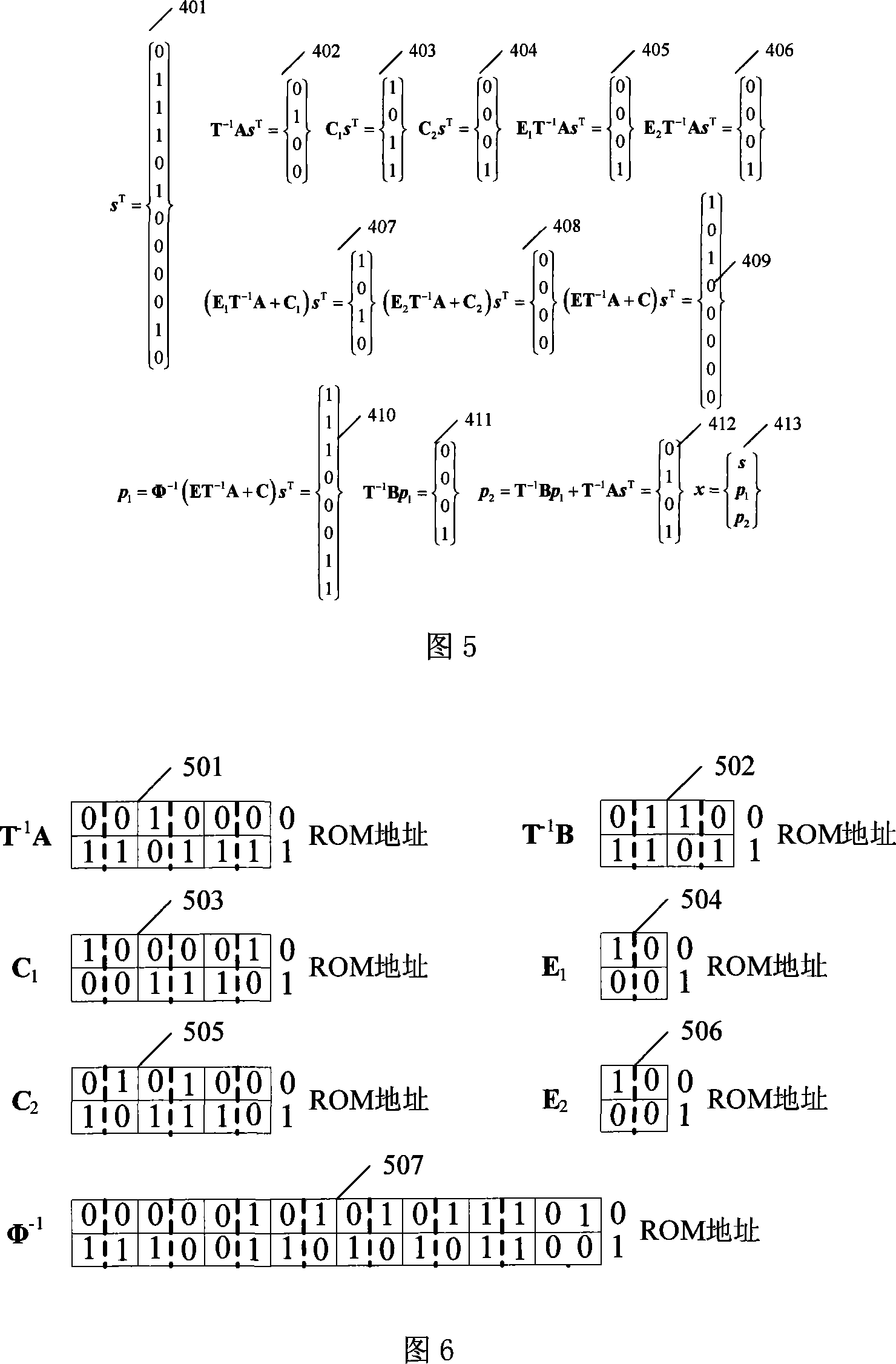

Encoder for LDPC codes with a hierarchical quasi-cyclic expansion structure

Status: Inactive · Publication: CN101119118A · Tags: Save resources; Improve throughput; Error correction/detection using multiple parity bits; Variable-length code; Euclidean vector

The present invention discloses an encoder for LDPC codes with a hierarchical quasi-cyclic expansion structure, comprising an input buffer, a first register-and-buffer pipeline stage, a second register pipeline stage, a third buffer pipeline stage, a fourth register-and-buffer pipeline stage, and an output stage. Exploiting the fact that the check matrix H is composed of quasi-cyclic shift matrices, the invention simplifies the pipeline structure of the RU (Richardson-Urbanke) encoding method, reducing the number of pipeline stages from six to four and shortening the encoding delay. Based on how the main functional modules are implemented, it also reduces the longest pipeline-stage delay and increases encoding throughput. Exploiting the arithmetic properties of quasi-cyclic shift matrices, it further reduces the energy consumption of the encoder ROM, replacing the sparse matrix-vector multiplication of the RU method with quasi-cyclic shifted identity matrix-vector multiplication, and replacing the non-sparse matrix-vector multiplication of the RU method with quasi-cyclic shift matrix-vector multiplication. More storage space is left in the ping-pong RAM between stages to accommodate variable-length codes and variable bitrate (VBR).

Owner:SHANGHAI JIAO TONG UNIV
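The saving comes from the structure of a quasi-cyclic shift matrix: multiplying a z-by-z identity matrix cyclically shifted by s with a vector over GF(2) is just a rotation of that vector, so the encoder needs no general sparse matrix multiplier. The sketch below checks this equivalence; the shift convention (row r has its 1 in column (r + s) mod z) is an assumption, and the pipeline itself is not modeled.

```python
from typing import List, Sequence


def shifted_identity(z: int, s: int) -> List[List[int]]:
    """z-by-z identity cyclically shifted by s: row r has its 1 in column (r + s) % z."""
    return [[1 if c == (r + s) % z else 0 for c in range(z)] for r in range(z)]


def matvec_gf2(M: Sequence[Sequence[int]], v: Sequence[int]) -> List[int]:
    """Generic matrix-vector product over GF(2)."""
    return [sum(a & b for a, b in zip(row, v)) % 2 for row in M]


def qc_matvec(s: int, v: Sequence[int]) -> List[int]:
    """Same product without the matrix: a cyclic rotation of v by s positions."""
    z = len(v)
    return [v[(r + s) % z] for r in range(z)]


v = [1, 0, 1, 1, 0]
print(qc_matvec(2, v))  # [1, 1, 0, 1, 0]
print(all(qc_matvec(s, v) == matvec_gf2(shifted_identity(5, s), v) for s in range(5)))  # True
```

In hardware a rotation is a barrel shifter or simply an address offset, which is why the quasi-cyclic form needs far less logic and ROM than a general sparse multiply.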

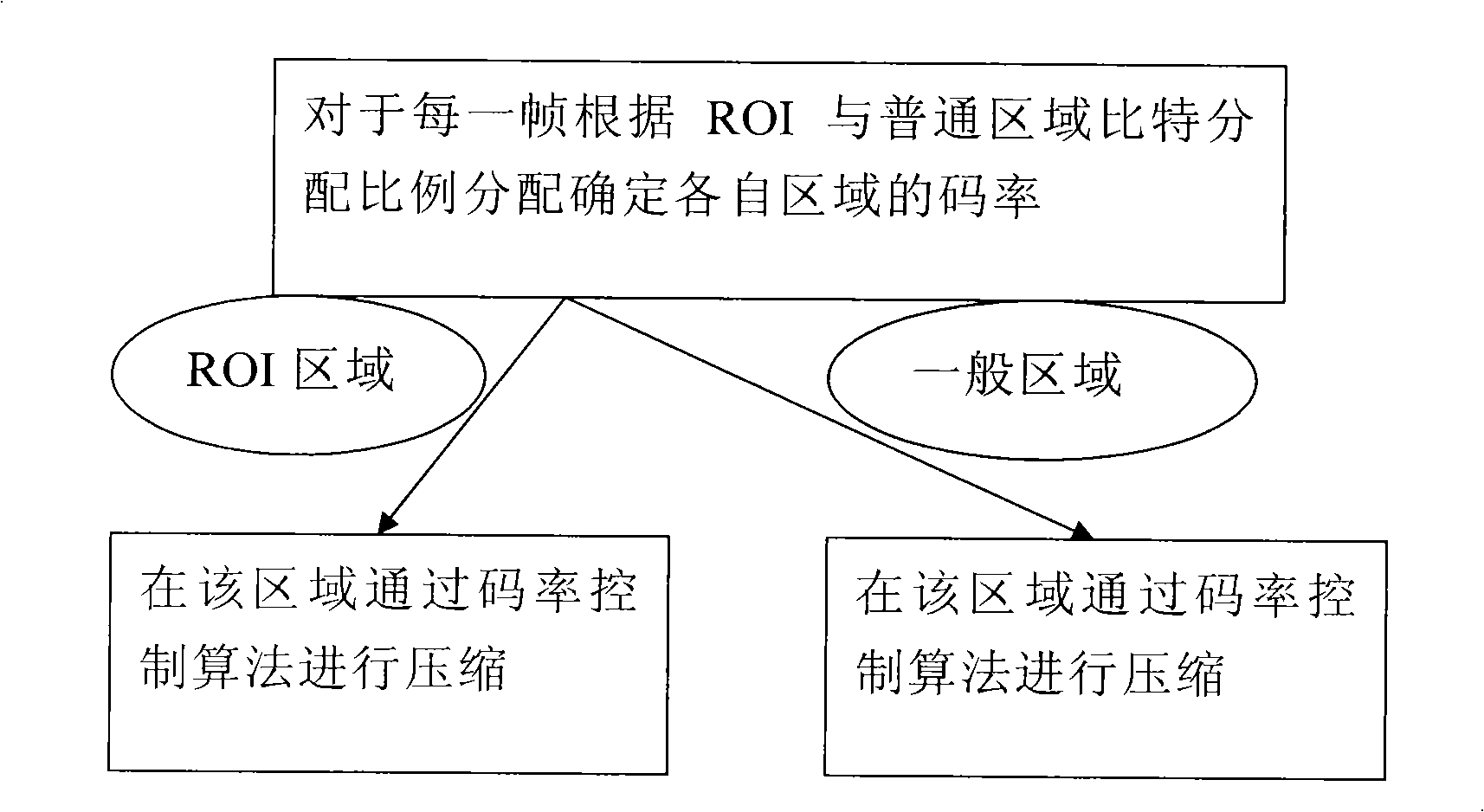

Method for H.264 region-of-interest coding

Status: Inactive · Publication: CN101494785A · Tags: Reduce complexity; Television systems; Digital video signal modification; Video monitoring; Three level

The present invention relates to an H.264 region-of-interest (ROI) coding method, specifically a method that uses rate control to code regions of interest, comprising coding methods at three levels: group-of-pictures level, picture level, and basic-unit level. The invention uses the rate control algorithm to code regions of interest without adding extra information to the bitstream, without limiting the number or shape of the regions of interest, and without increasing the complexity of the coding algorithm. It is a more flexible ROI coding method that can be applied in all H.264 profiles, and it has significant practical value in fields such as video surveillance that place high demands on the overall compression ratio. Experiments show that it is an effective H.264 ROI coding method suitable for both constant-bitrate and variable-bitrate rate control algorithms.

Owner:Jiangsu Rulingtong Culture Industry Group Co., Ltd. (江苏儒灵童文化产业集团有限公司)

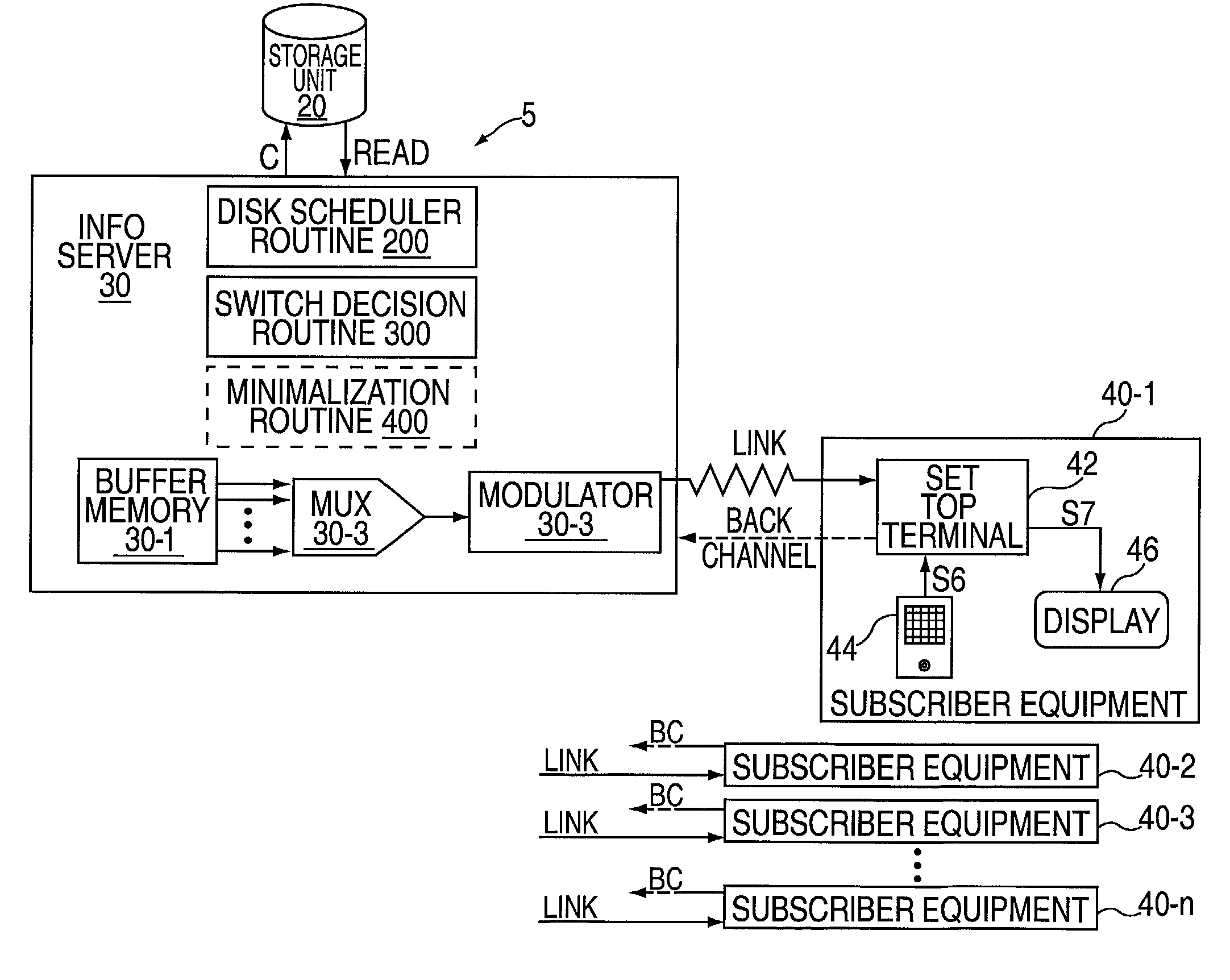

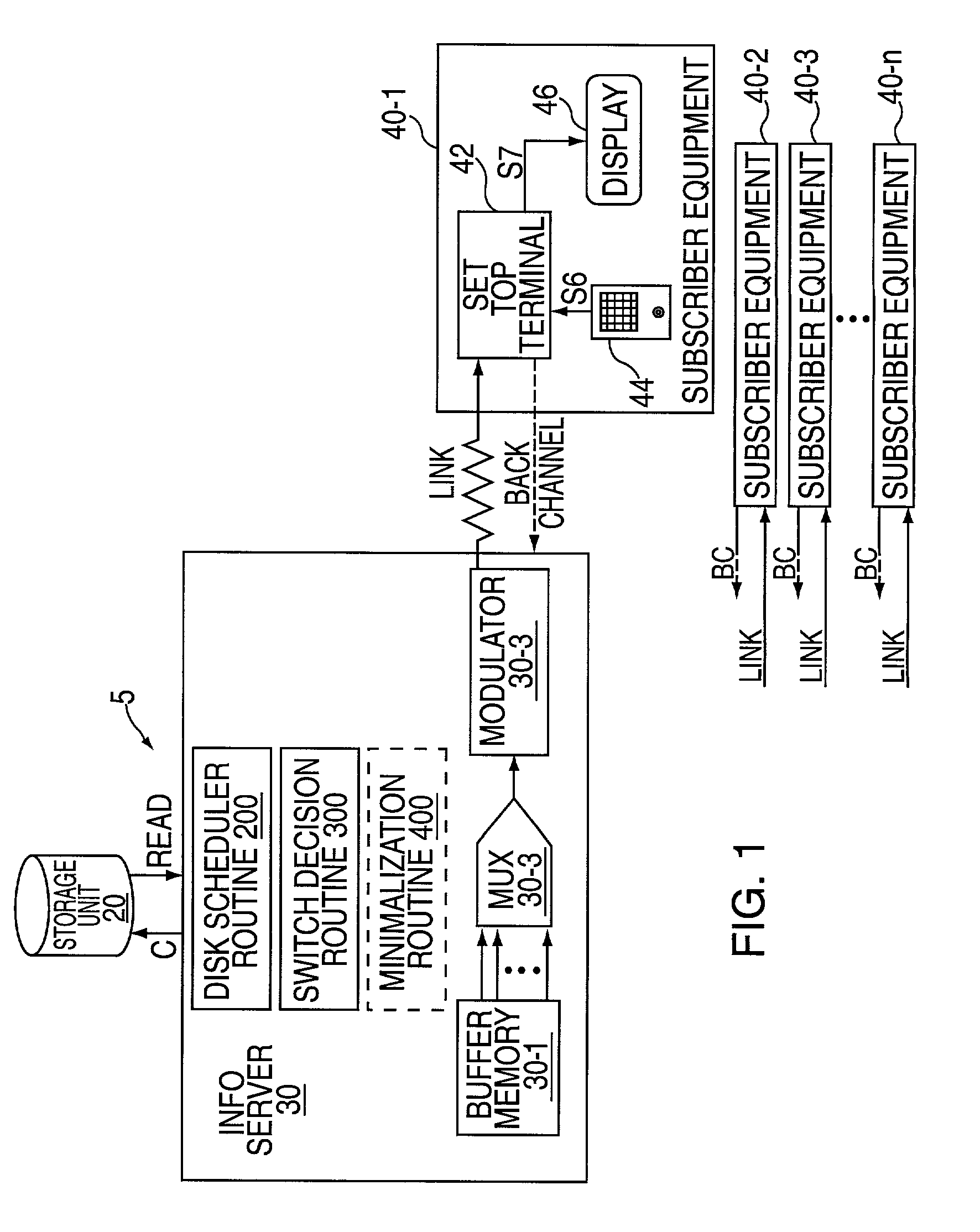

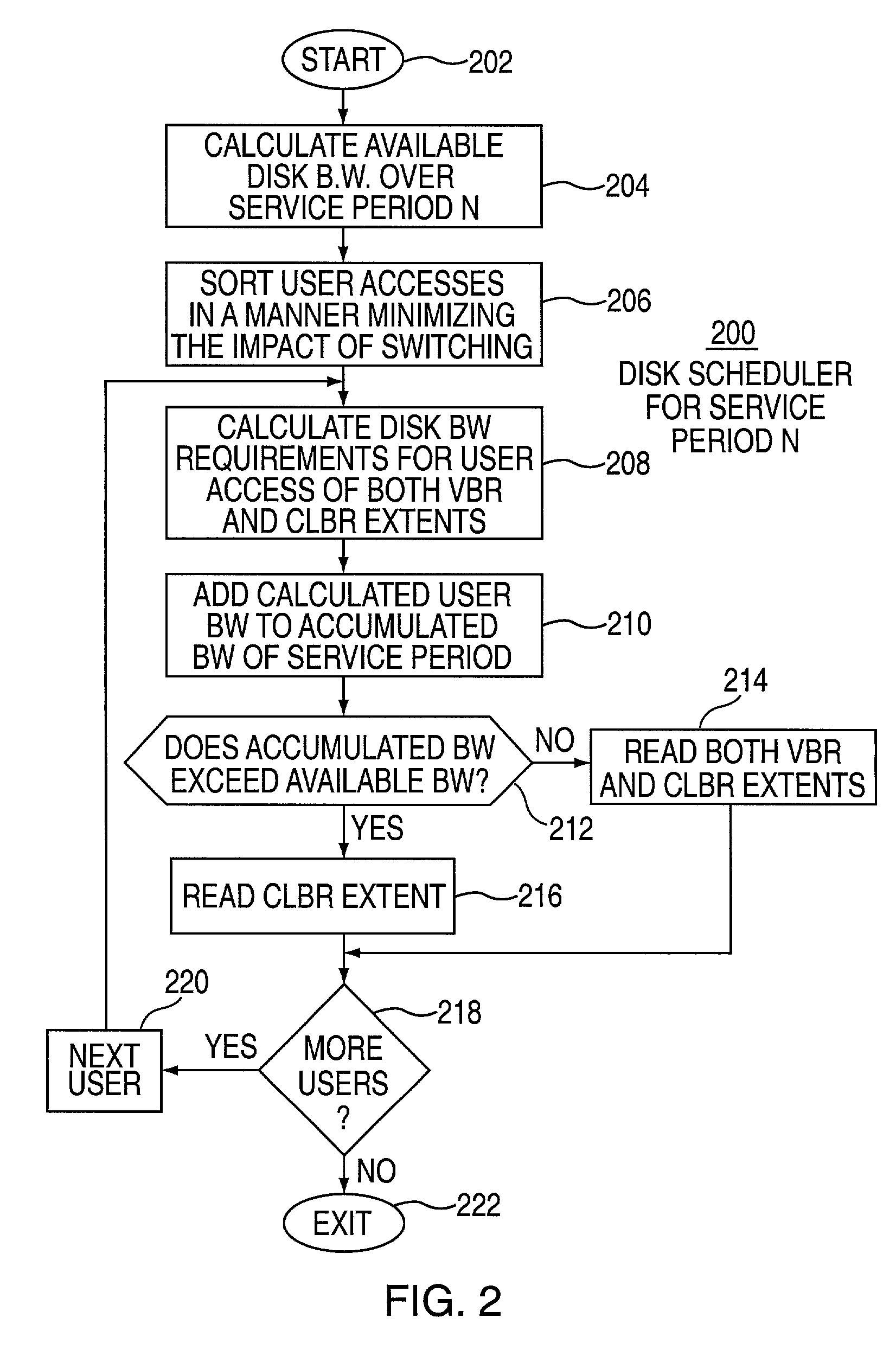

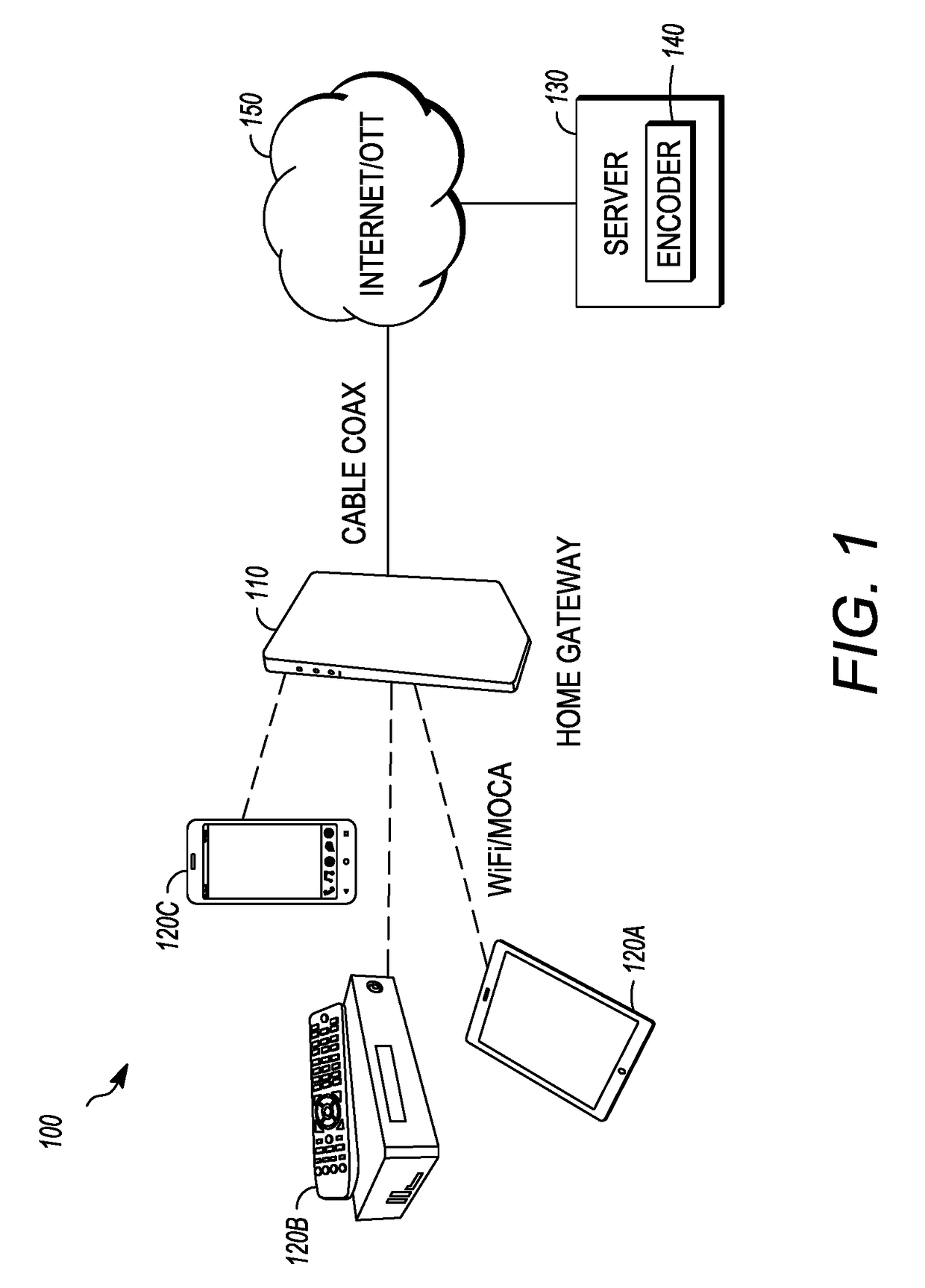

Method and apparatus for processing variable bit rate information in an information distribution system

Status: Inactive · Publication: US7167488B2 · Tags: Low constant; Multiplex system selection arrangements; Broadcast transmission systems; Distribution system; Variable bitrate

A method and apparatus for managing both link and disk bandwidth utilization within the context of a multiple subscriber or user information distribution system by selectively providing variable bitrate and constant low bitrate information streams to one or more subscribers.

Owner:COMCAST IP HLDG I

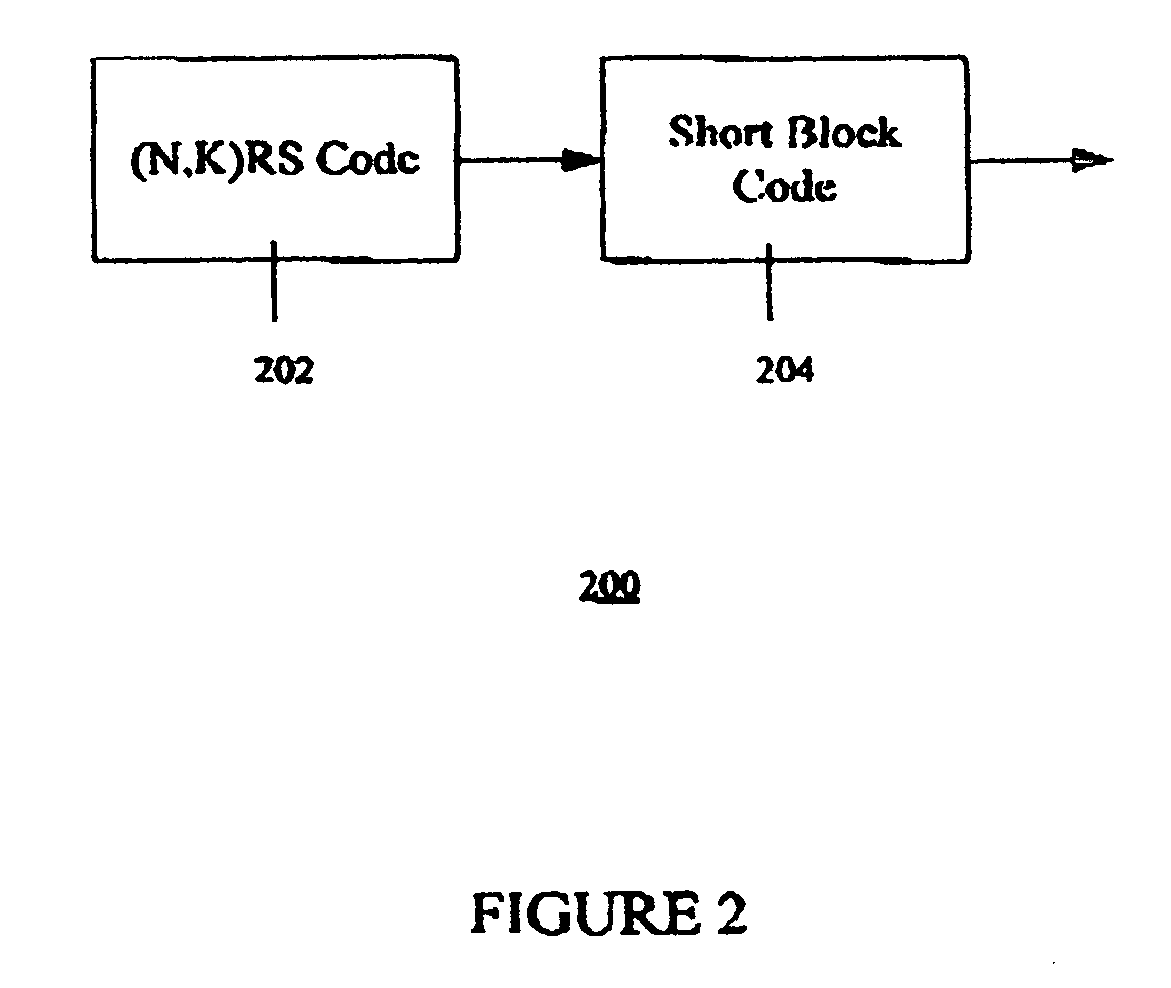

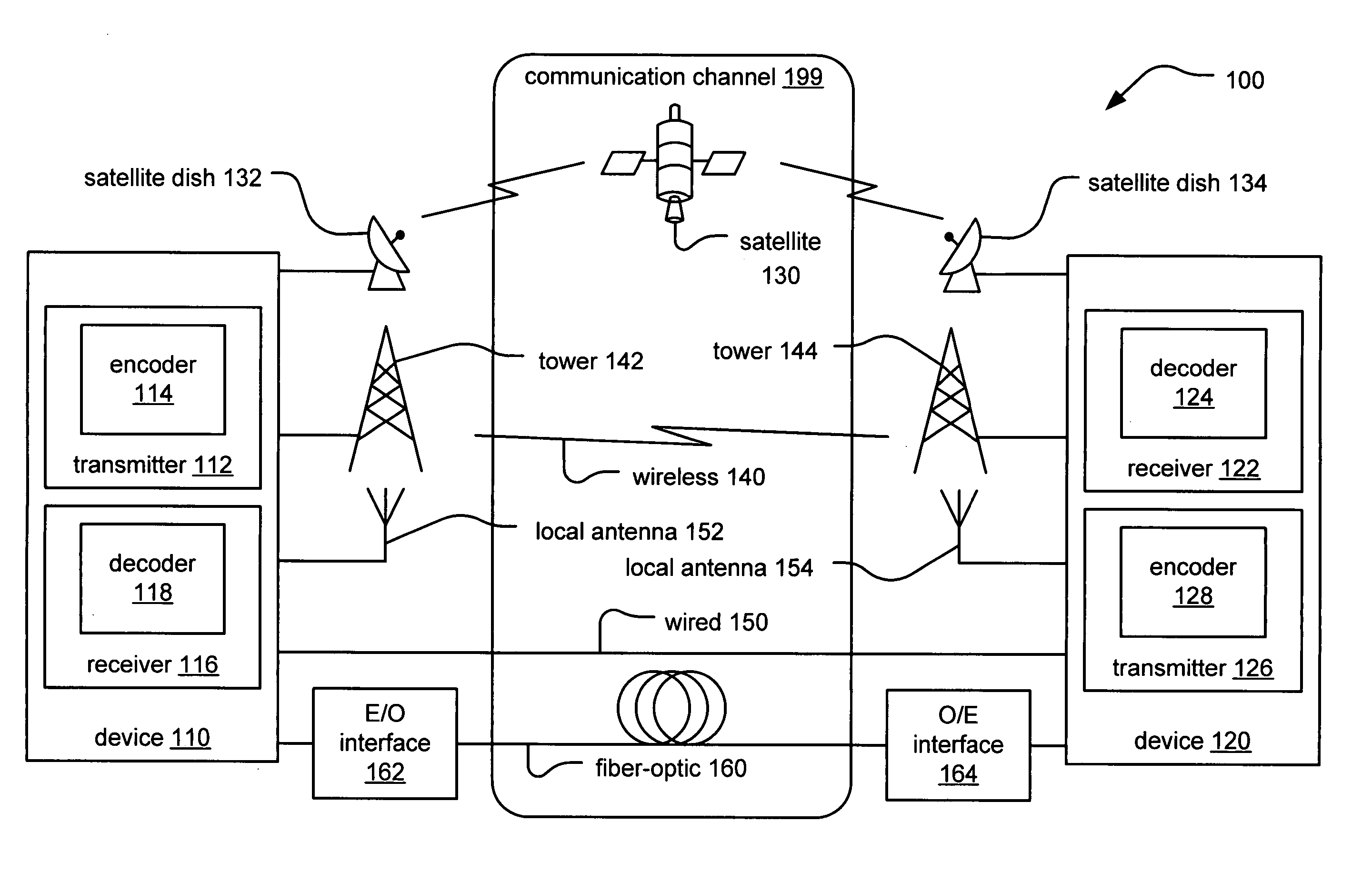

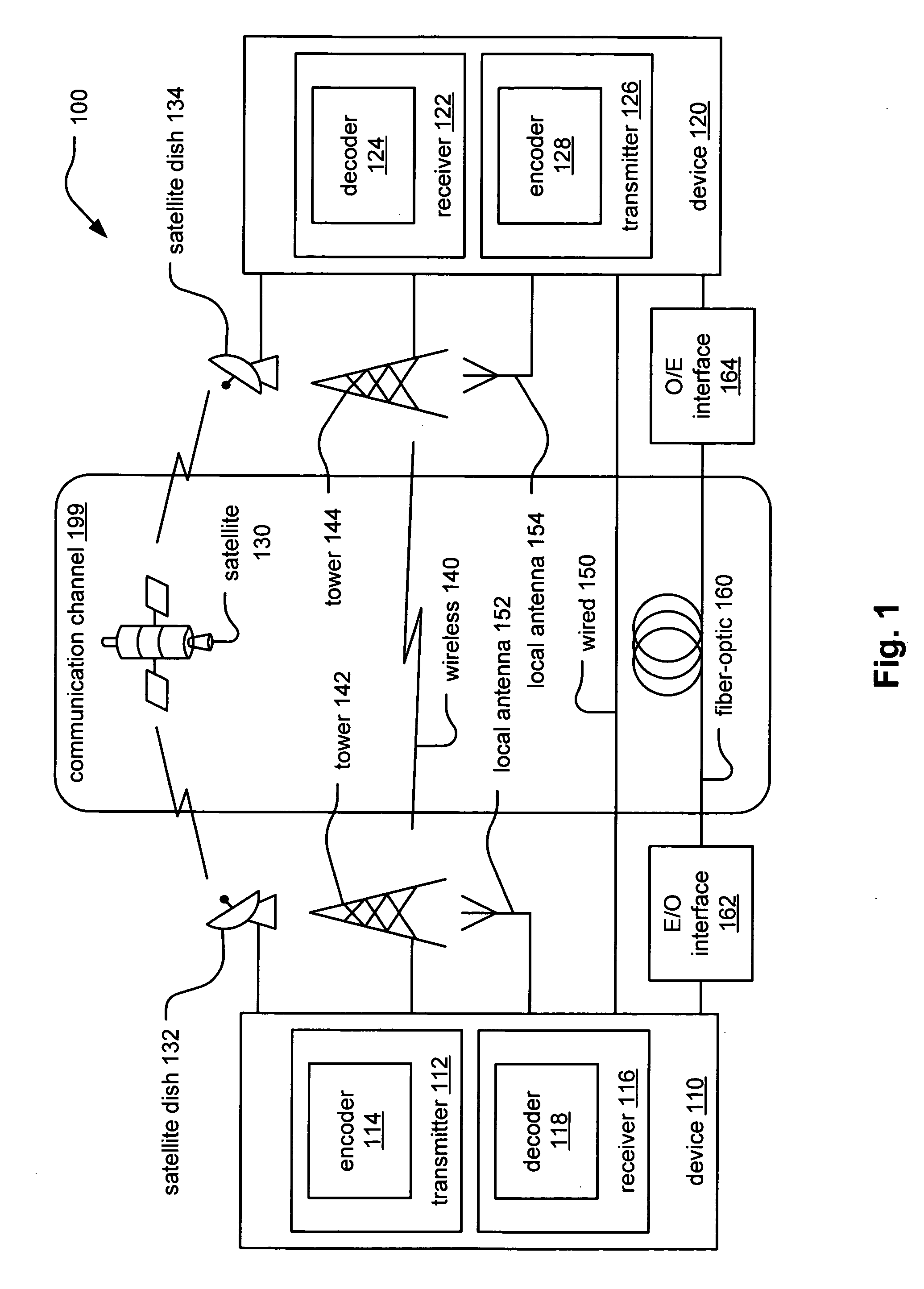

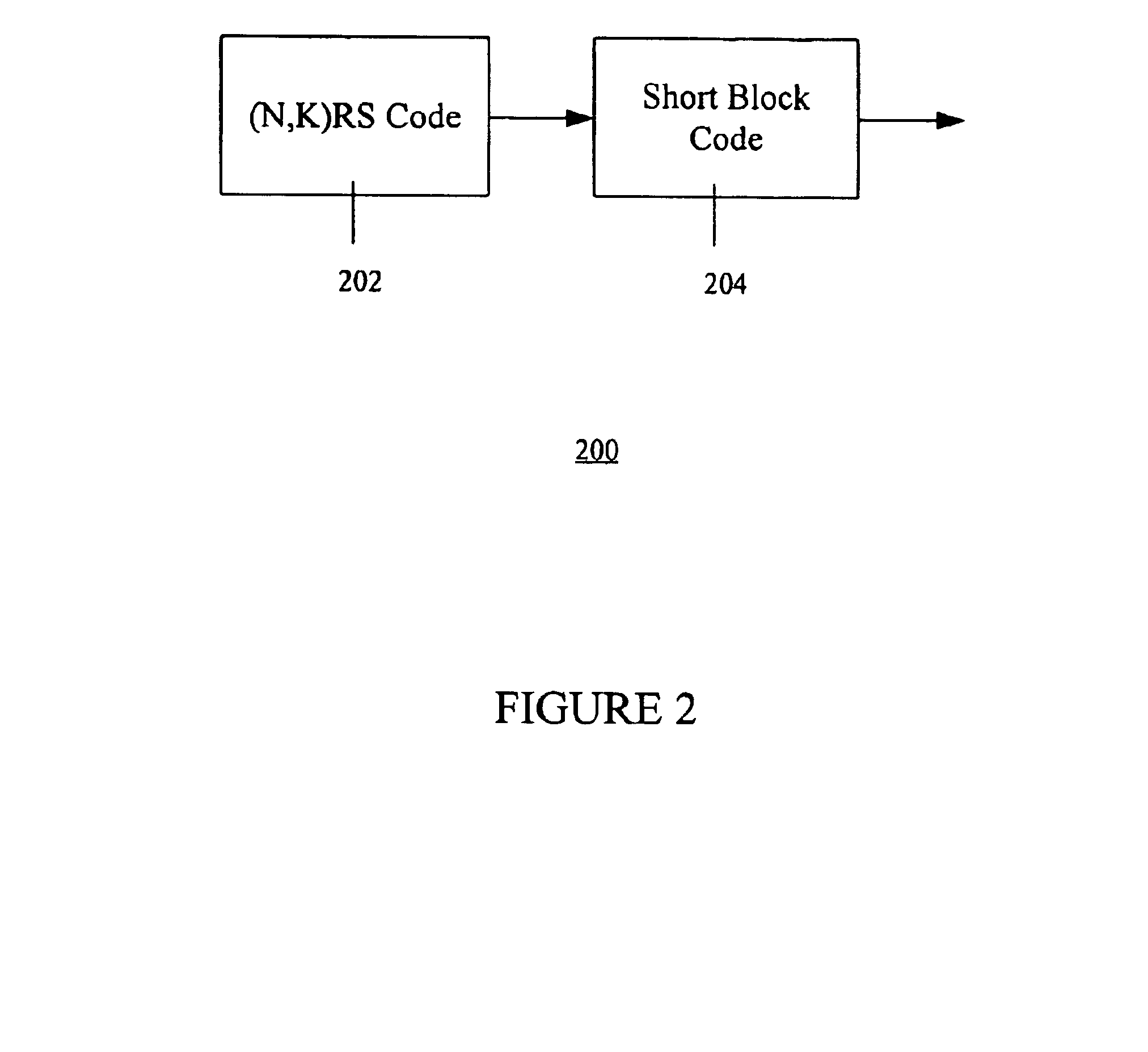

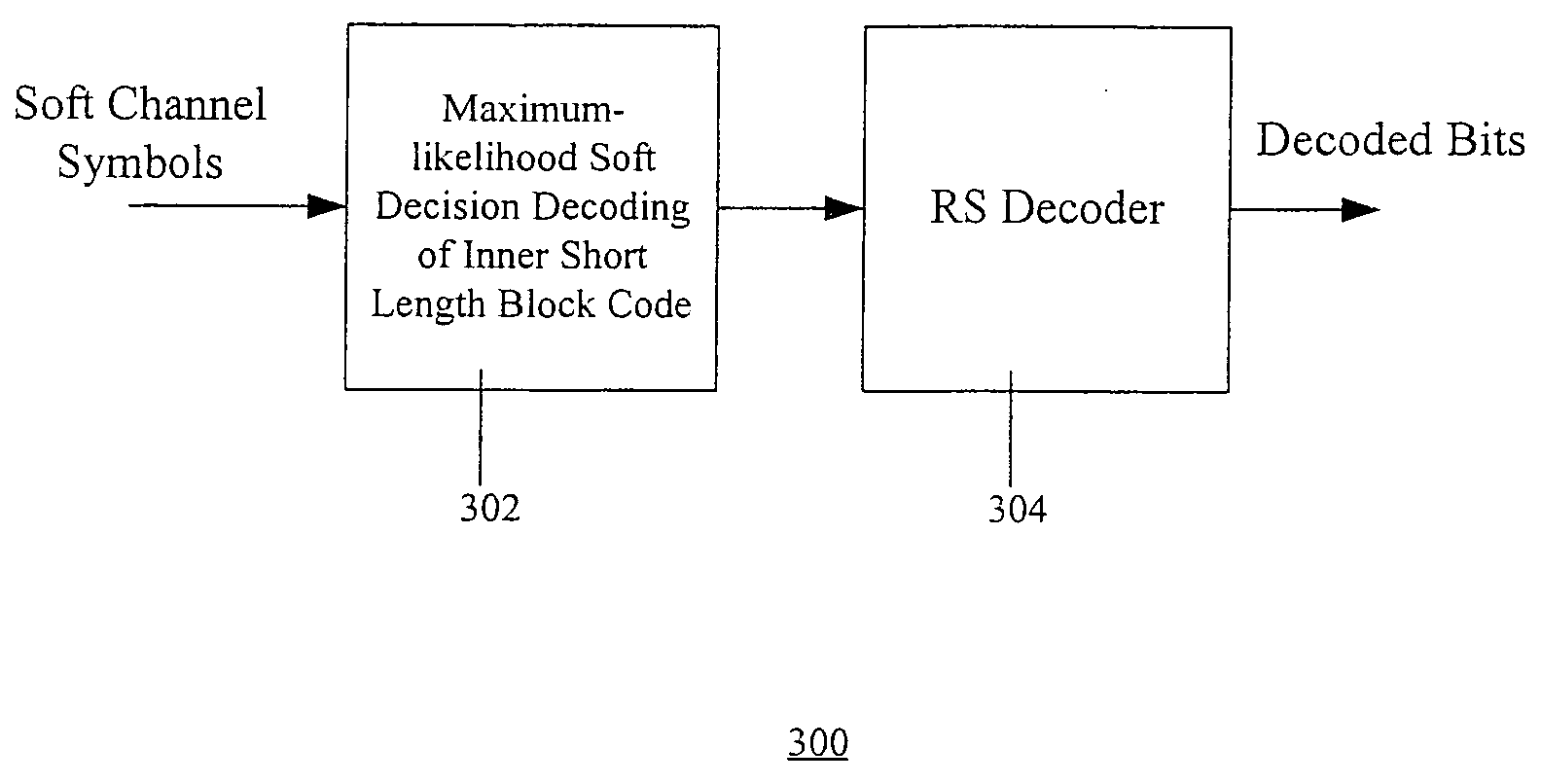

Method and apparatus for concatenated channel coding with variable code rate and coding gain in a data transmission system

Status: Inactive · Publication: US20050102605A1 · Tags: Valid encoding; Reduce complexity; Error correction/detection using convolutional codes; Error prevention; Computer hardware; Block code

A novel method and apparatus for efficiently coding and decoding data in a data transmission system is described. A concatenated coding scheme is presented that is easily implemented and provides acceptable coding performance for use in data transmission systems. The inventive concatenated channel coding technique is well suited to small or variable-size packet data transmission systems, and may also be adapted for use in a continuous-mode data transmission system. The method and apparatus reduce the complexity, cost, size, and power consumption typically associated with prior-art channel coding methods and apparatuses while still achieving acceptable coding performance. The present invention advantageously performs concatenated channel coding without a symbol interleaver. In addition, it is simple to implement and therefore consumes much less space and power than prior-art approaches do. The present invention not only eliminates the need for a symbol interleaver between the outer and inner codes, but also drastically reduces the implementation complexity of the inner-code Viterbi decoder. The preferred embodiment comprises an inner code having short block codes derived from short-constraint-length convolutional codes using trellis tail-biting, and a decoder comprising four four-state Viterbi decoders having a short corresponding maximum length. The inner code preferably comprises short block codes derived from four-state (i.e., constraint length 3), nonsystematic, punctured and unpunctured convolutional codes. One significant advantage of the preferred embodiment is that packet data transmission systems can be designed with variable coding gains and coding rates.

Owner:TUMBLEWEED HLDG LLC +1
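The inner-code idea of deriving a short block code from a four-state (constraint length 3) convolutional code by tail-biting can be sketched as follows. The (7, 5) octal generator pair is a common textbook choice assumed here, not taken from the patent. The key property is that the encoder register is preloaded with the last two input bits, so the trellis starts and ends in the same state and no tail bits are needed.

```python
from typing import List, Sequence, Tuple


def tailbiting_encode(bits: Sequence[int], generators: Tuple[int, ...] = (0b111, 0b101),
                      k: int = 3) -> List[int]:
    """Rate-1/len(generators) tail-biting convolutional encoding of one short block."""
    m = k - 1                      # memory: 2 bits -> 4 states
    if len(bits) < m:
        raise ValueError("block shorter than encoder memory")
    state = 0
    for b in bits[-m:]:            # preload with the last m input bits
        state = (b << (m - 1)) | (state >> 1)
    start_state = state
    out: List[int] = []
    for b in bits:
        reg = (b << m) | state     # newest bit in the most significant position
        out.extend(bin(reg & g).count("1") % 2 for g in generators)
        state = reg >> 1
    assert state == start_state    # tail-biting: start state equals end state
    return out


print(tailbiting_encode([1, 0, 1, 1]))  # [1, 0, 0, 1, 0, 0, 0, 1]
```

Four input bits give exactly eight coded bits (rate 1/2), whereas zero-tail termination would add two extra input bits per block, a significant overhead for short packets.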

Efficient front end memory arrangement to support parallel bit node and check node processing in LDPC (Low Density Parity Check) decoders

Status: Inactive · Publication: US20050262421A1 · Tags: Error correction/detection using multiple parity bits; Code conversion; Parallel computing; Low density

Efficient front end memory arrangement to support parallel bit node and check node processing in LDPC (Low Density Parity Check) decoders. A novel approach is presented in which the front end design of a device capable of decoding LDPC coded signals facilitates parallel decoding of the LDPC coded signal. The front end memory management and the metric generator operate cooperatively, which lends itself to very efficient parallel decoding of LDPC coded signals. There are several embodiments by which the front end memory management and the metric generator may be implemented to facilitate this parallel decoding. The arrangement also allows decoding of variable code rate and/or variable modulation signals whose code rate and/or modulation varies as frequently as on a block-by-block basis (e.g., a block may include a group of symbols within a frame).

Owner:AVAGO TECH INT SALES PTE LTD

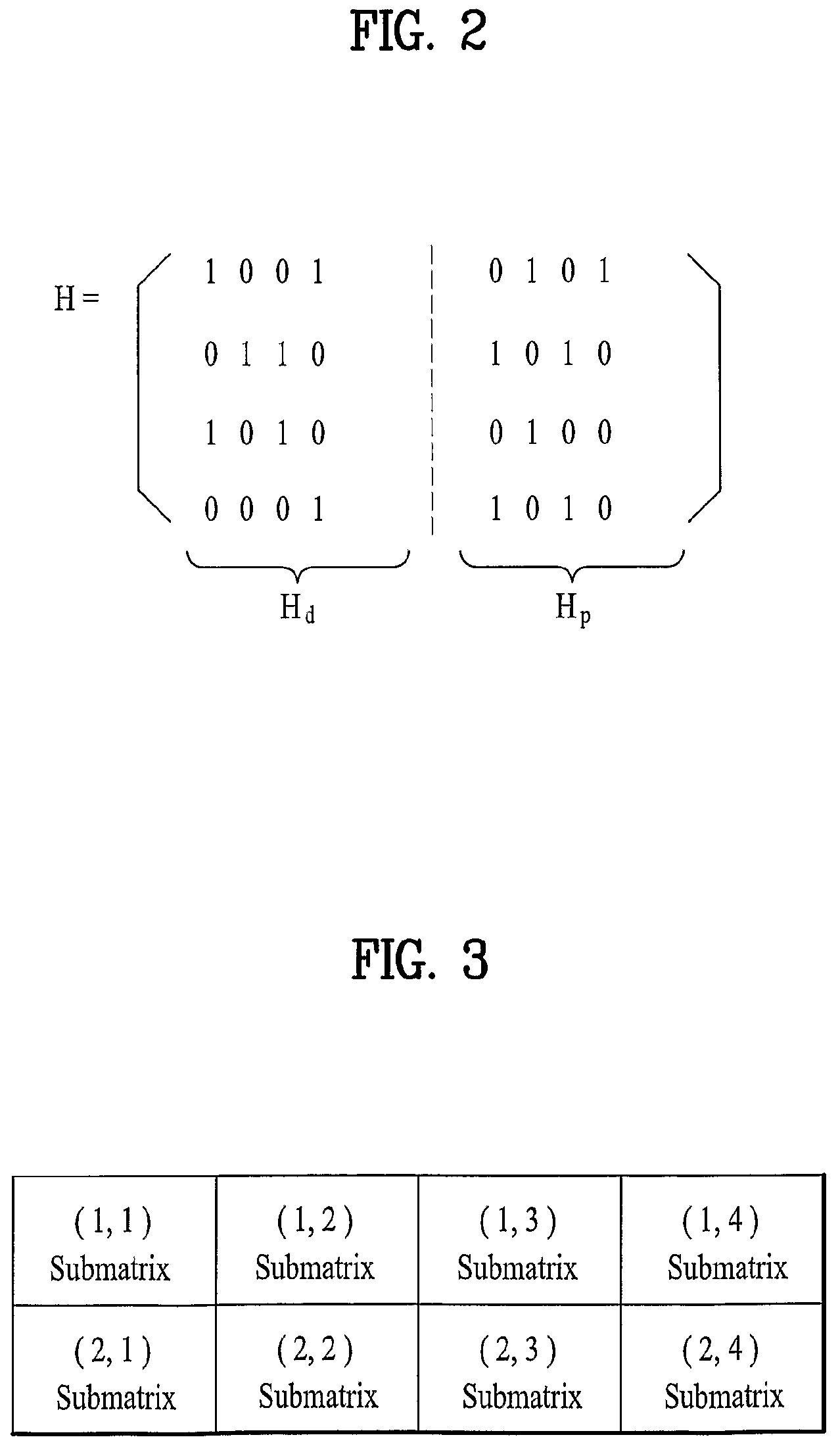

Method of encoding and decoding adaptive to variable code rate using LDPC code

Status: Inactive · Publication: US7930622B2 · Tags: Error detection/correction; Error correction/detection using multiple parity bits; Adaptive encoding; Parity-check matrix

A variable code rate adaptive encoding/decoding method using an LDPC code is disclosed, in which input source data is encoded using an LDPC (low density parity check) code defined by a first parity check matrix composed of a plurality of submatrices. The method includes generating a second parity check matrix corresponding to the code rate to be applied when encoding the input source data, by reducing a portion of the submatrices of the first parity check matrix according to that code rate, and encoding the input source data using the second parity check matrix.

Owner:LG ELECTRONICS INC

Audio communication system with bitrate indication

Status: Inactive · Publication: CN101828411A · Tags: Docking-station type assemblies; Transducers for sound channels plurality; Communications system; Transducer

An audio producing device includes a receiver for wirelessly receiving a digital audio signal from an audio source device. The audio source device may transmit the digital audio signal at a variable bitrate. A logic device determines whether the bitrate at which the audio signal is received is below a predetermined threshold. A first indicator provides an indication to a user of the system when the bitrate is below the threshold. An electro-acoustic transducer utilizes information in the digital audio signal to produce audio out loud.

Owner:BOSE CORP

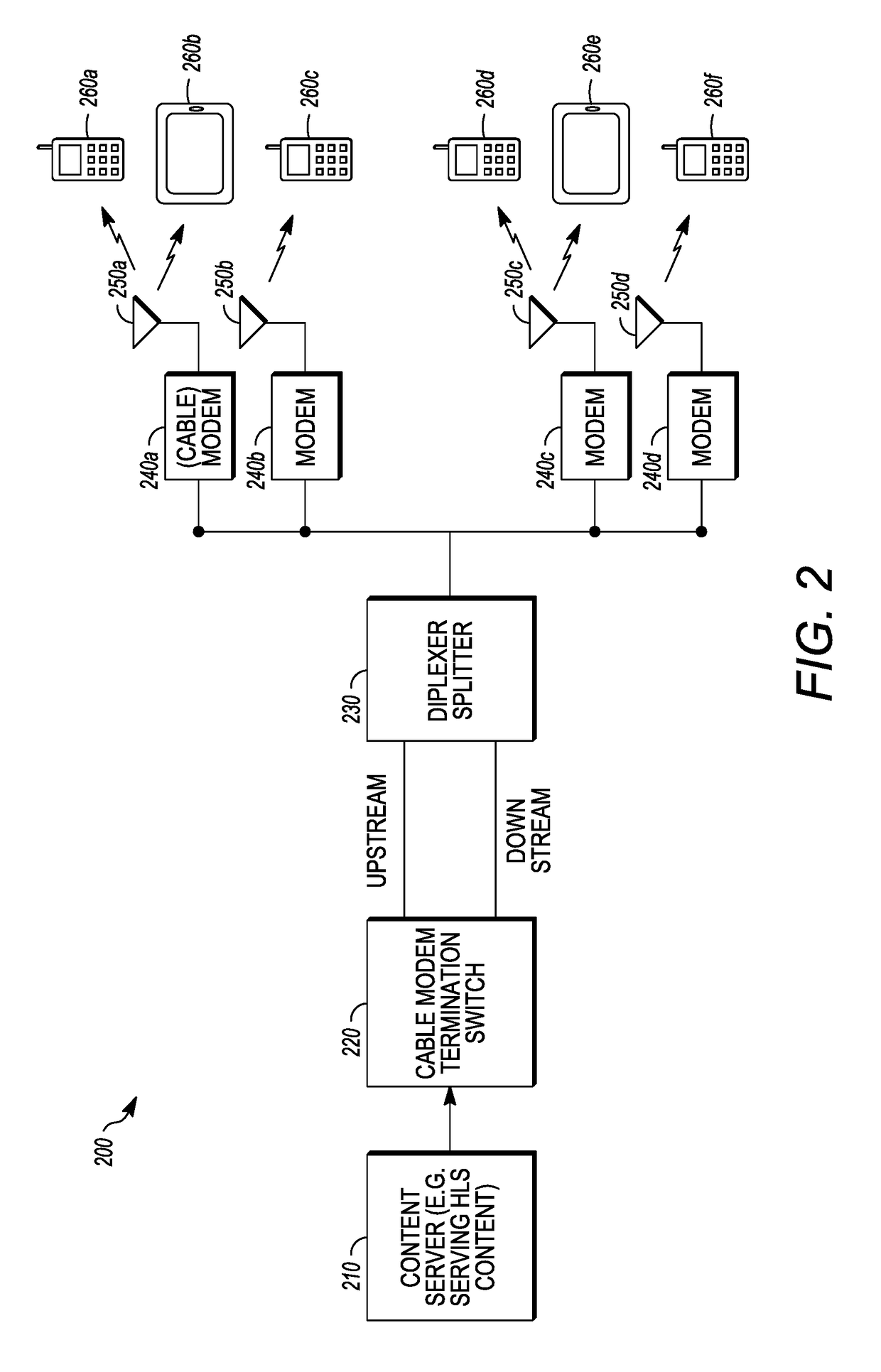

Quality tagging in adaptive bitrate technologies

A method is provided for tagging a quality metric in adaptive bitrate (ABR) streaming, which allows a client to intelligently select a variant bitrate stream using the tagged quality metric. The method includes encoding multiple streams of video data at variant bitrates, each bitrate stream having a plurality of chunks, computing a quality metric for each chunk of each stream, and tagging the quality metric with each chunk of each stream.

Owner:ARRIS ENTERPRISES LLC
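On the client side, a tagged quality metric lets a player pick the cheapest variant whose quality is close to the best it can afford, instead of always taking the highest affordable bitrate. The selection rule, the tolerance parameter and the quality numbers (which could be any per-chunk metric) are hypothetical.

```python
from typing import Sequence, Tuple

Variant = Tuple[int, float]  # (bitrate in bit/s, tagged quality of this chunk)


def select_variant(variants: Sequence[Variant], bandwidth_bps: int,
                   tolerance: float = 3.0) -> Variant:
    affordable = [v for v in variants if v[0] <= bandwidth_bps]
    if not affordable:
        return min(variants, key=lambda v: v[0])   # fall back to the lowest bitrate
    best_quality = max(q for _, q in affordable)
    good_enough = [v for v in affordable if v[1] >= best_quality - tolerance]
    return min(good_enough, key=lambda v: v[0])


chunk = [(1_000_000, 70.0), (2_500_000, 88.0), (5_000_000, 90.0)]
print(select_variant(chunk, 6_000_000))  # (2500000, 88.0): near-best quality, half the bits
print(select_variant(chunk, 2_000_000))  # (1000000, 70.0)
```

Without the tag, a bitrate-only client on a 6 Mbit/s link would fetch the 5 Mbit/s chunk for a two-point quality gain.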

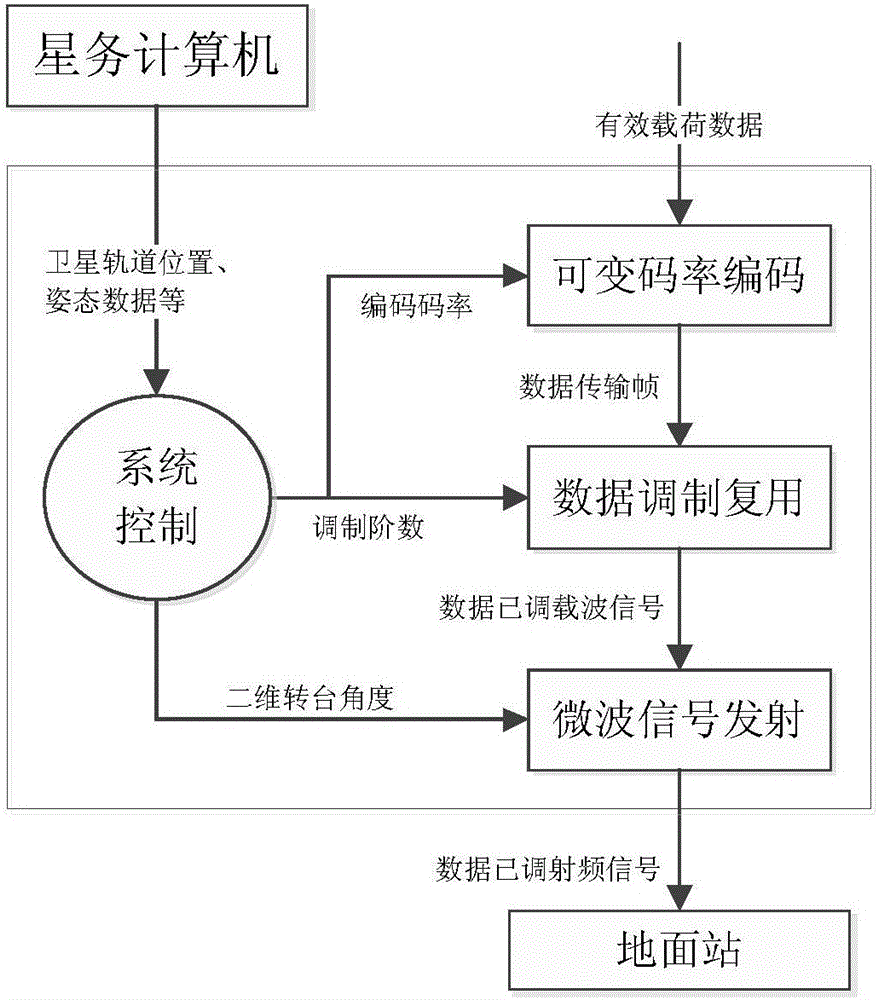

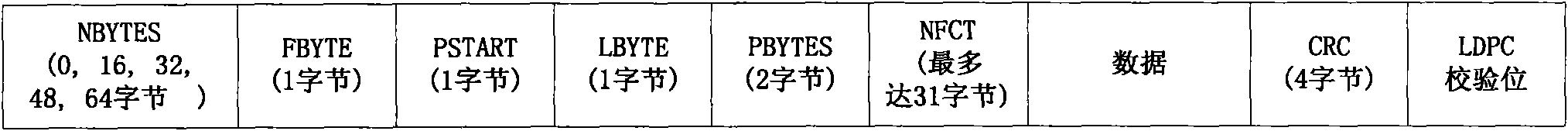

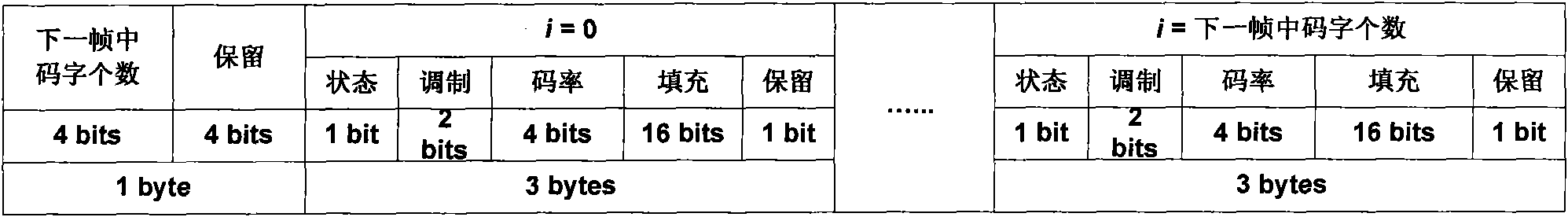

Efficient ultra-high-speed payload data transmission system for remote sensing satellites

Status: Active · Publication: CN105978664A · Tags: The frequency band does not change; Constant Receiving Capability; Error prevention; Radio transmission; Frequency spectrum; Signal on

The invention provides an efficient ultra-high-speed payload data transmission system for a remote sensing satellite, comprising a variable bit rate coding module, a data modulation and multiplexing module, a microwave signal transmitting module, and a system control module. The system control module calculates the code rate of a low-density parity-check (LDPC) code, the modulation order of amplitude phase shift keying (APSK), and the turntable angle of the antenna according to the relative positions of the satellite and the ground station. The variable bit rate coding module performs channel coding of the payload data and forms data transmission frames. The data modulation and multiplexing module performs APSK modulation of the data transmission frames and applies orthogonal frequency division multiplexing (OFDM) to the transmitted symbol signals over a plurality of sub-channels, producing a data-modulated carrier signal. The microwave signal transmitting module converts the data-modulated carrier signal into a data-modulated radio frequency signal by spectrum shifting, radiates it as an electromagnetic wave at a given power, and keeps the antenna beam automatically tracking the ground station. As a result, transmission bit rates on the order of Gbit/s can be achieved.

Owner:NAT SPACE SCI CENT CAS
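The link adaptation performed by the system control module can be sketched as a lookup from geometry (summarized here as elevation angle) to an LDPC code rate and APSK order, with a fixed symbol rate so the occupied band does not change. The elevation thresholds, modulation/code-rate pairs, symbol rate and single sub-channel are hypothetical values chosen only to show how a Gbit/s-class rate arises from symbol rate × bits per symbol × code rate.

```python
from fractions import Fraction
from typing import Optional, Tuple

# (minimum elevation in degrees, modulation order M, LDPC code rate): hypothetical
MODCOD_TABLE = [
    (5, 4, Fraction(1, 2)),
    (20, 8, Fraction(2, 3)),
    (45, 16, Fraction(3, 4)),
    (70, 32, Fraction(4, 5)),
]


def pick_modcod(elevation_deg: float) -> Optional[Tuple[int, Fraction]]:
    """Highest-throughput entry whose elevation threshold is met; None below the mask."""
    chosen = None
    for min_el, order, rate in MODCOD_TABLE:
        if elevation_deg >= min_el:
            chosen = (order, rate)
    return chosen


def info_bitrate(symbol_rate: int, order: int, rate: Fraction,
                 subchannels: int = 1) -> Fraction:
    bits_per_symbol = order.bit_length() - 1  # log2(M) for power-of-two M
    return symbol_rate * subchannels * bits_per_symbol * rate


order, rate = pick_modcod(75.0)
print(order, rate, info_bitrate(300_000_000, order, rate))  # 32 4/5 1200000000
```

Near the horizon the longer slant range favors a robust low-order, low-rate mode; overhead passes can afford denser constellations, all within the same bandwidth.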

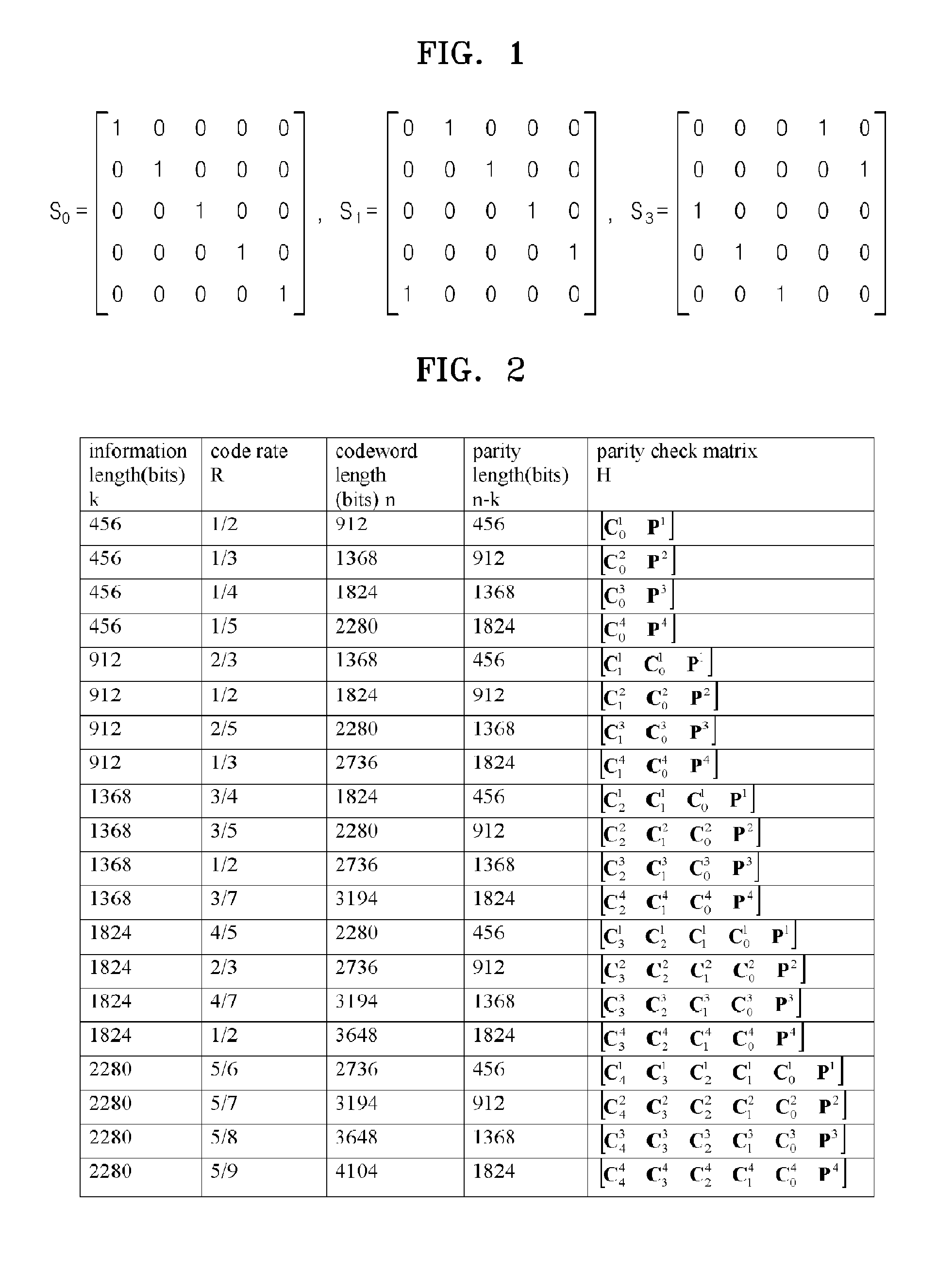

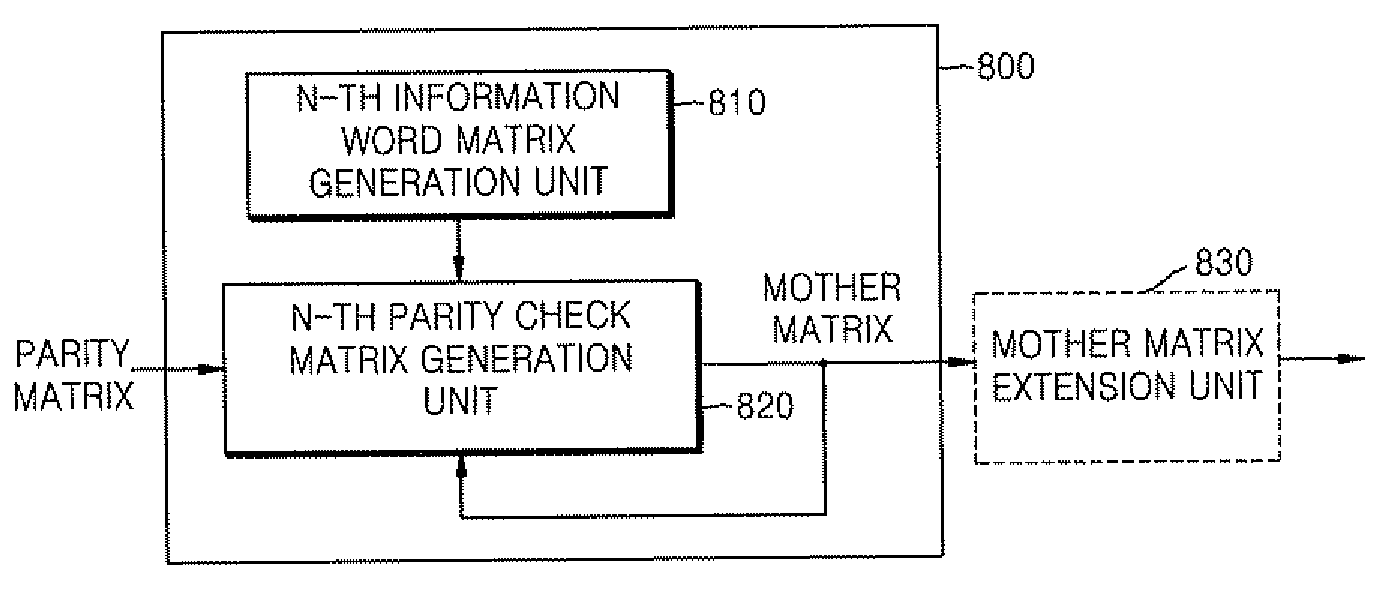

Method and apparatus for designing low density parity check code with multiple code rates, and information storage medium thereof

Status: Inactive · Publication: US20080294963A1 · Tags: Improve error correction performance; Error correction/detection using multiple parity bits; Code conversion; Theoretical computer science; Parity-check matrix

A method and apparatus for generating a low density parity check (LDPC) code having a variable code rate, the method of generating the LDPC code having a variable code rate including: generating a first parity check matrix by combining a parity matrix or a parity check matrix and a first information word matrix; and generating a second parity check matrix by combining the first parity check matrix and a second information word matrix. According to the method and apparatus, error correction performance is enhanced.

Owner:SAMSUNG ELECTRONICS CO LTD

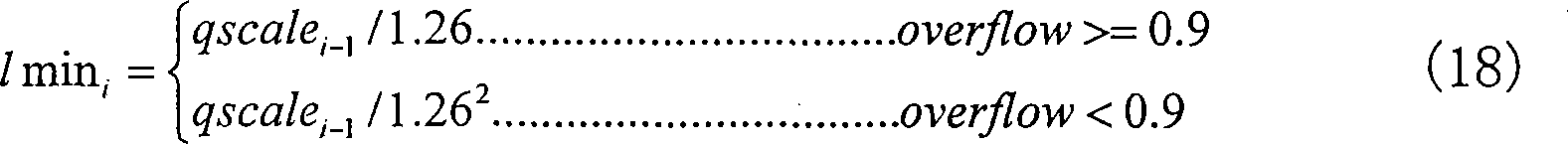

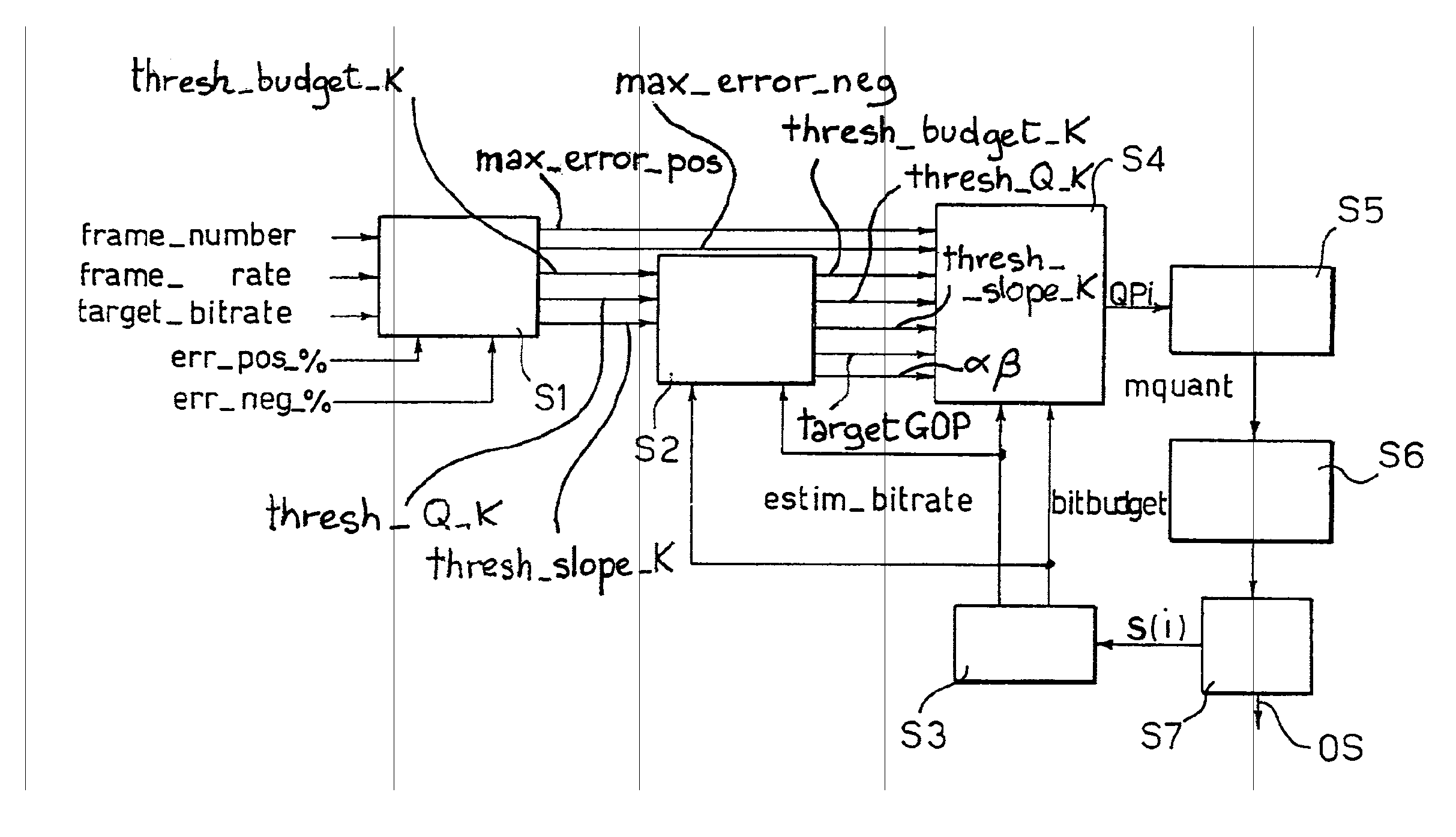

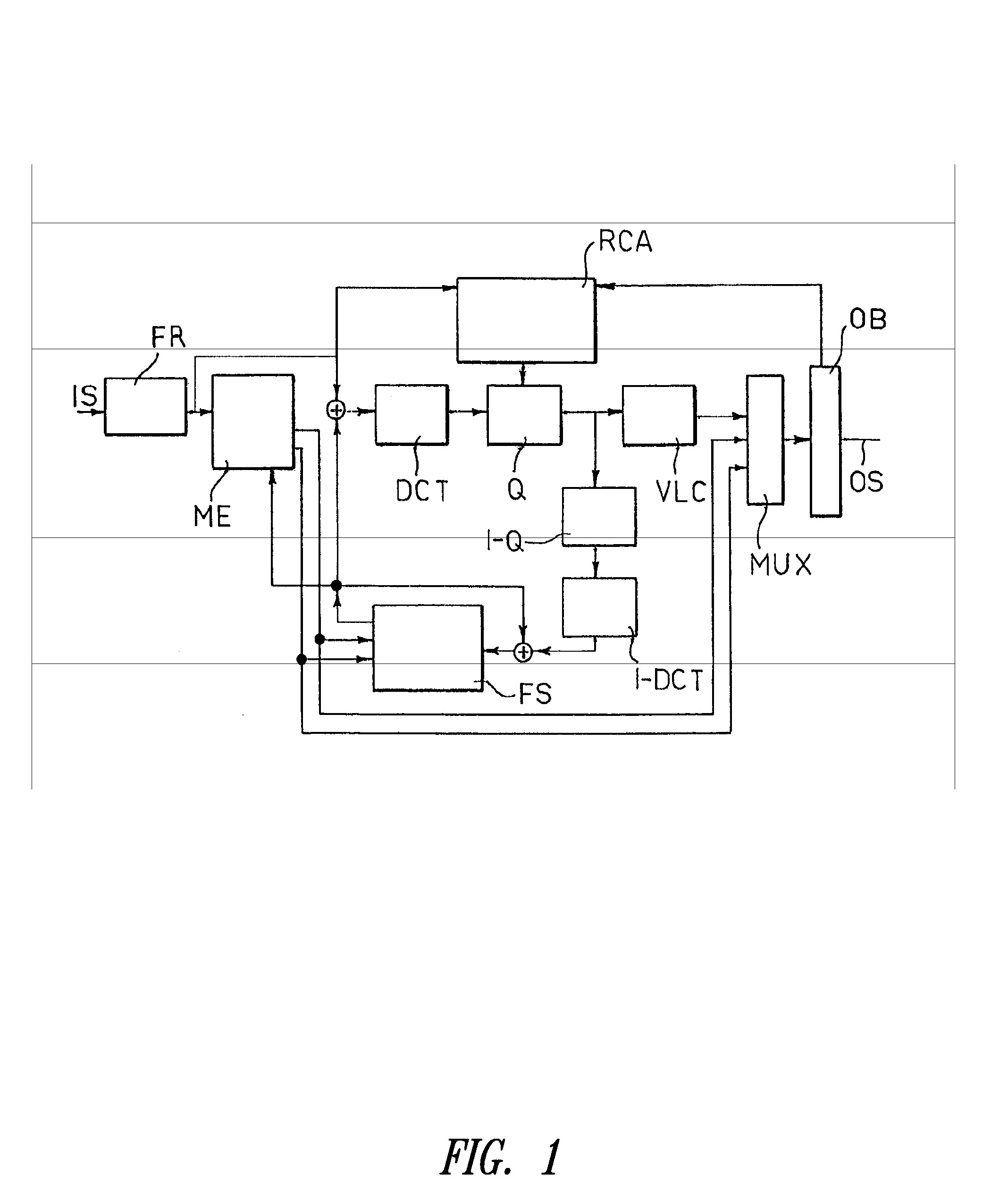

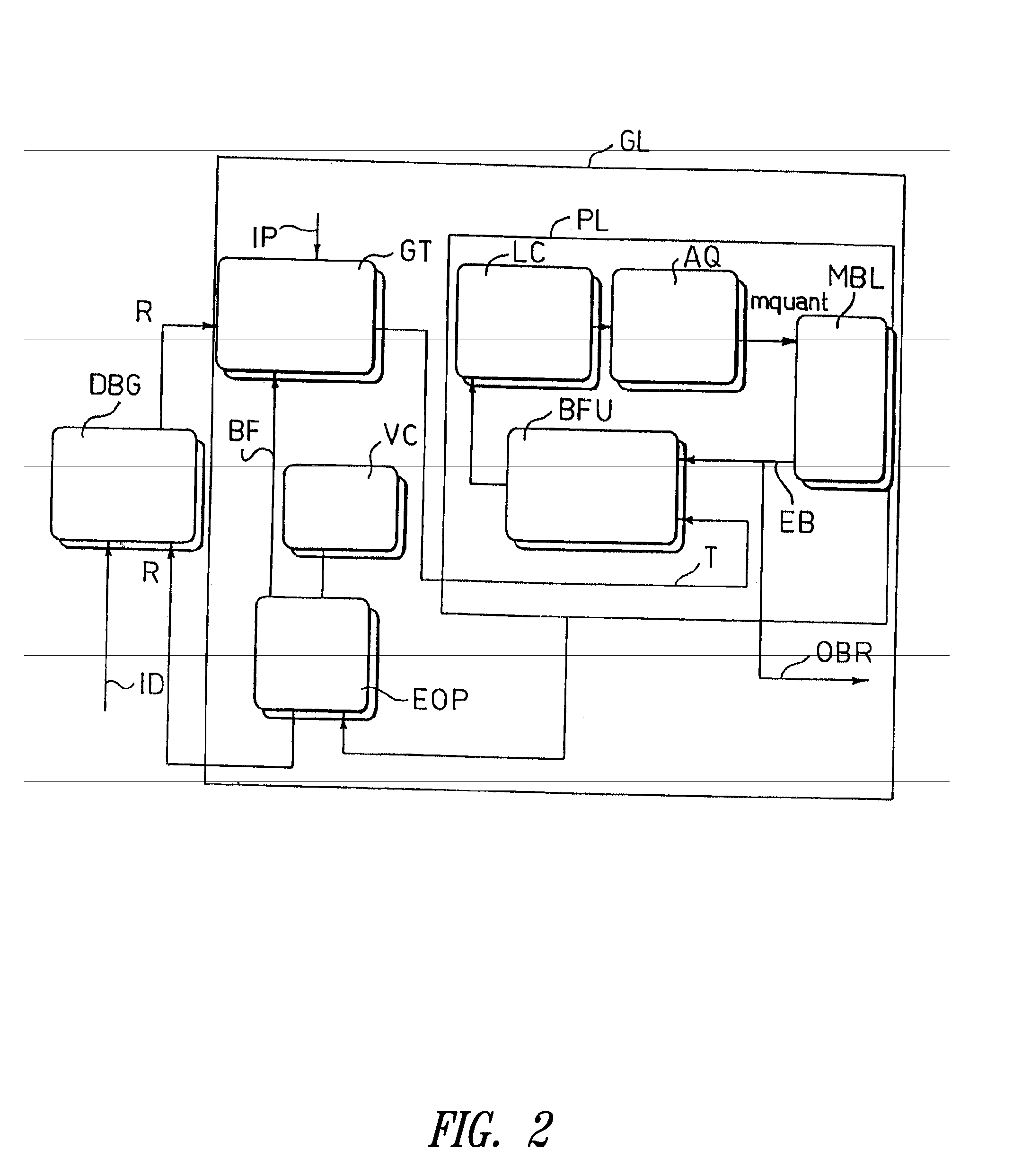

Method and apparatus for variable bit-rate control in video encoding systems and computer program product therefor

Status: Active · Publication: US7349474B2 · Tags: Easy to solve; Color television with pulse code modulation; Color television with bandwidth reduction; Algorithm; Video sequence

A method for controlling the bit-rate of a bitstream of video signals encoded at a variable bitrate, the bitstream being generated by compressing a video sequence of moving pictures, wherein each picture comprises a plurality of macroblocks of pixels compressed by transform coding, temporal prediction, two-dimensional motion-compensated interpolation, or combinations thereof, to produce I, P, and/or B frames, and wherein the macroblocks are quantized as a function of a quantization parameter. The method includes: defining a target bit-rate together with maximum positive and negative error values between the target bit-rate and the average value of the current bit-rate of the bitstream; controlling the current bit-rate so that its deviation from the target stays within those maximum positive and negative error values; and defining an allowed range of variation for updating at least one reference parameter representing the average value of the quantization parameter over each picture, where the allowed range is determined as a function of the target bit-rate and the maximum positive and negative error values.

Owner:STMICROELECTRONICS SRL
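The bounded update of the reference quantization parameter can be sketched as follows. The piecewise rule (only upward moves when the average bitrate exceeds the positive error bound, only downward moves below the negative bound, ±1 inside the band), the step limit and the 1 to 31 QP range are hypothetical simplifications of "a function of the target bit-rate and the maximum positive and negative error values".

```python
def update_reference_qp(ref_qp: int, proposed_qp: int, avg_bitrate: int,
                        target: int, e_pos: int, e_neg: int, max_step: int = 4,
                        qp_min: int = 1, qp_max: int = 31) -> int:
    """Clamp a proposed per-picture reference QP to an allowed range that depends
    on where the average bitrate sits relative to target + e_pos and target - e_neg."""
    error = avg_bitrate - target
    if error > e_pos:            # over budget: QP may only rise
        lo, hi = ref_qp, ref_qp + max_step
    elif error < -e_neg:         # under budget: QP may only fall
        lo, hi = ref_qp - max_step, ref_qp
    else:                        # inside the tolerance band: small moves only
        lo, hi = ref_qp - 1, ref_qp + 1
    lo, hi = max(lo, qp_min), min(hi, qp_max)
    return min(max(proposed_qp, lo), hi)


print(update_reference_qp(10, 20, 5_500_000, 5_000_000, 250_000, 250_000))  # 14
print(update_reference_qp(10, 5, 5_000_000, 5_000_000, 250_000, 250_000))   # 9
```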

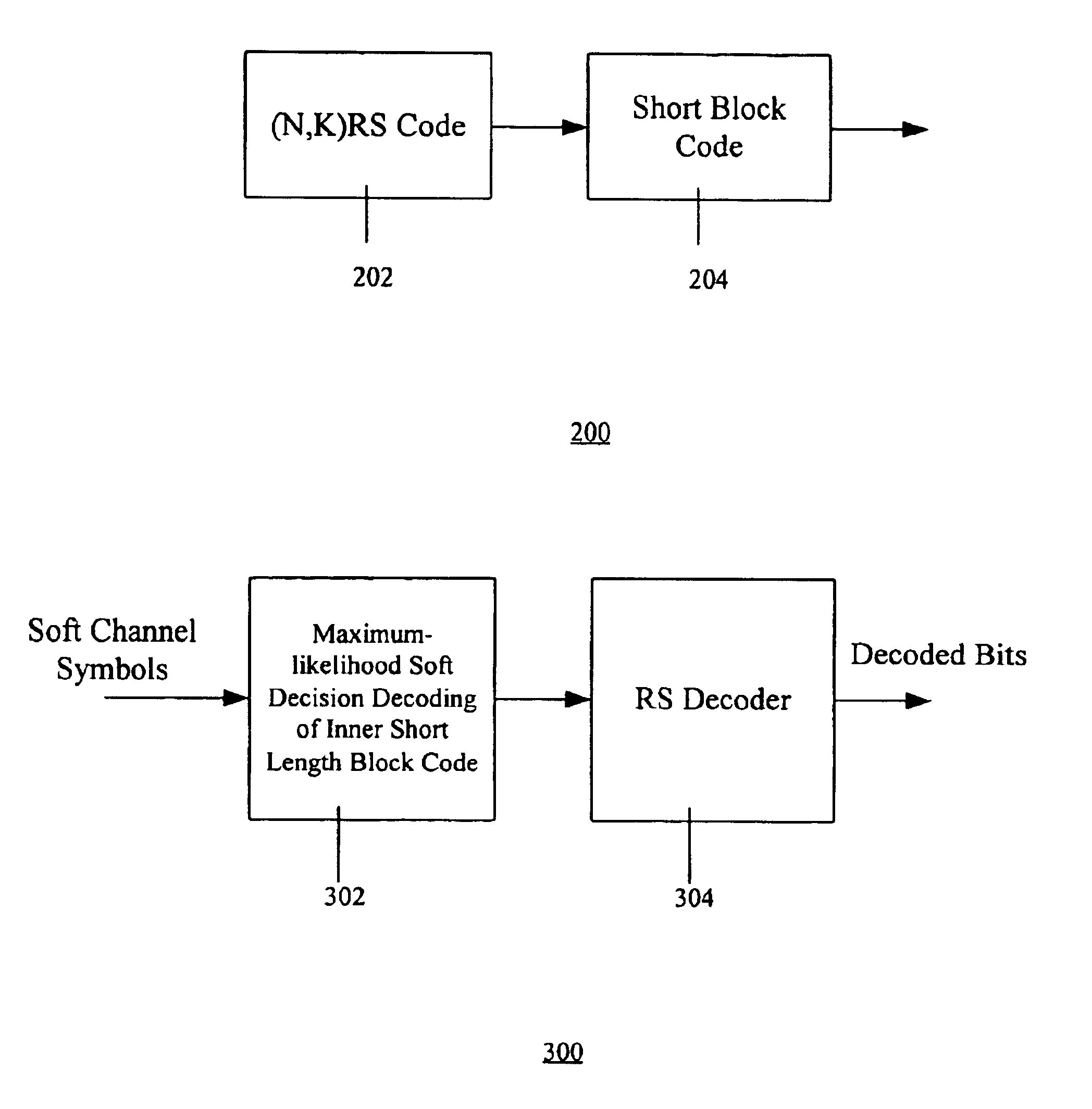

Method and apparatus for concatenated channel coding with variable code rate and coding gain in a data transmission system

Status: Inactive · Publication: US6883133B1 · Tags: Valid encoding; Reduce complexity; Error correction/detection using convolutional codes; Other decoding techniques; Computer hardware; Block code

Abstract identical to that of US20050102605A1 above.

Owner:TUMBLEWEED HLDG LLC +1

Efficient front end memory arrangement to support parallel bit node and check node processing in LDPC (Low Density Parity Check) decoders

Status: Inactive · Publication: US7587659B2 · Tags: Error correction/detection using multiple parity bits; Code conversion; Parallel computing; Low density

Abstract identical to that of US20050262421A1 above.

Owner:AVAGO TECH INT SALES PTE LTD

Method and apparatus for concatenated channel coding with variable code rate and coding gain in a data transmission system

Status: Inactive · Publication: US20070033509A1 · Tags: Valid encoding; Reduce complexity; Error correction/detection using convolutional codes; Error prevention; Computer hardware; Transport system

Abstract identical to that of US20050102605A1 above.

Owner:TUMBLEWEED HLDG LLC +1

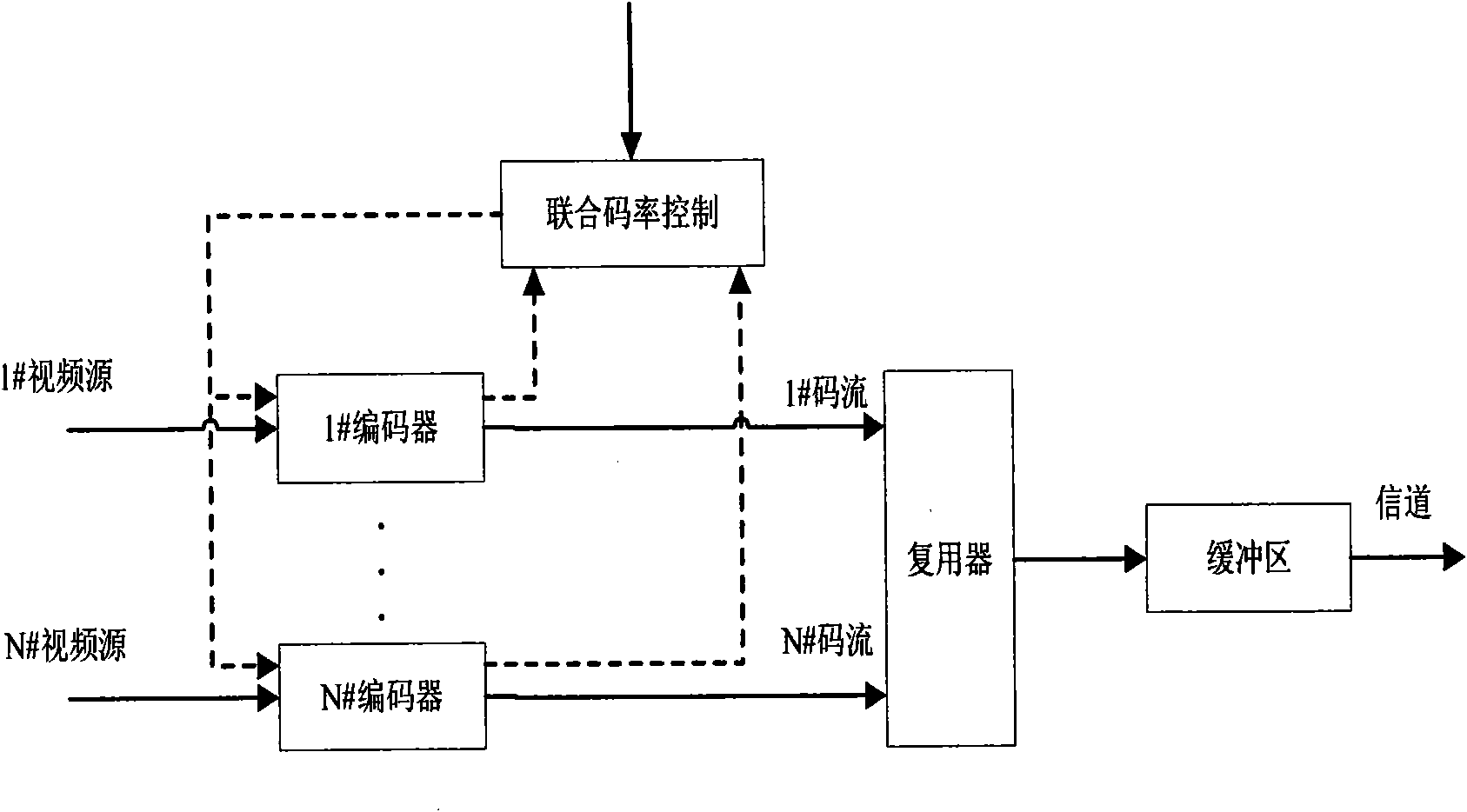

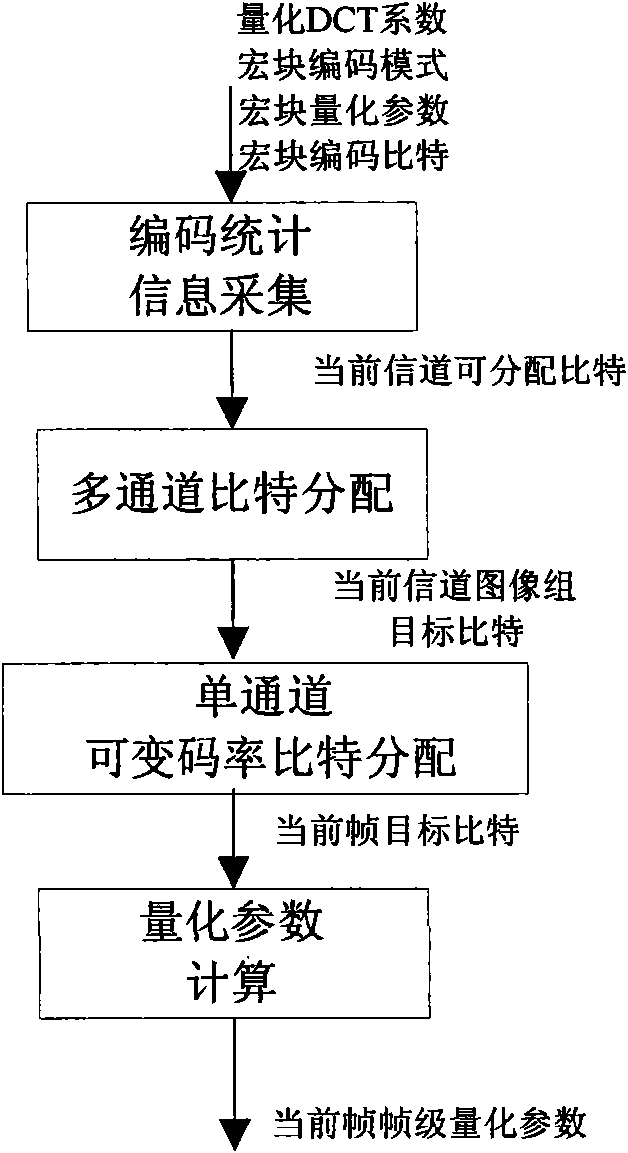
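
The tail-biting inner code described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented encoder: the generator polynomials (octal 7 and 5), the puncturing pattern, and the names `tailbiting_encode` and `puncture` are hypothetical. Tail-biting preloads the shift register with the last K-1 information bits, so the encoder starts and ends in the same state and no flush bits are transmitted, which is what makes very short block codes practical.

```python
from typing import List, Sequence, Tuple

# Hypothetical generator polynomials (octal 7 and 5), a common choice for a
# four-state, constraint-length-3 code; the abstract does not name them.
GENERATORS: Tuple[int, ...] = (0b111, 0b101)
K = 3  # constraint length -> 2**(K - 1) = 4 trellis states


def _parity(x: int) -> int:
    """Modulo-2 sum of the bits of x."""
    return bin(x).count("1") & 1


def tailbiting_encode(bits: Sequence[int],
                      gens: Tuple[int, ...] = GENERATORS,
                      k: int = K) -> List[int]:
    """Nonsystematic rate-1/len(gens) convolutional encoding with tail-biting."""
    m = k - 1
    if len(bits) < m:
        raise ValueError("block must contain at least K-1 bits")
    # Preload the register with the last K-1 information bits so the encoder
    # ends in the state it started in; no tail (flush) bits are appended.
    state = 0
    for b in bits[-m:]:
        state = (b << (m - 1)) | (state >> 1)
    out: List[int] = []
    for b in bits:
        reg = (b << m) | state          # newest bit in the MSB, oldest in the LSB
        out.extend(_parity(reg & g) for g in gens)
        state = reg >> 1                # shift: drop the oldest bit
    return out


def puncture(coded: Sequence[int], pattern: Sequence[int]) -> List[int]:
    """Keep coded bit i only where pattern[i % len(pattern)] == 1."""
    return [c for i, c in enumerate(coded) if pattern[i % len(pattern)]]
```

For example, `puncture(tailbiting_encode(block), (1, 1, 1, 0))` keeps three of every four coded bits, turning the rate-1/2 mother code into a rate-2/3 code; switching patterns per packet is one way to obtain the variable coding rates the abstract mentions.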

Multi-channel video unicode rate control method

Inactive | CN101854531A | Reduce the difference in video quality | Meet bandwidth requirements | Pulse modulation television signal transmission | Digital video signal modification | Source image | Bandwidth requirement

The invention relates to a rate control method for multi-channel video, comprising the following steps: collecting coding statistics, allocating bits among the channels, allocating variable-bitrate bits within each channel, and calculating the quantization parameter. The method allocates bits to each channel according to the complexity of its source image content, ensures that the final multiplexed bit stream of all channels satisfies the bandwidth requirement, and reduces the difference in video quality between channels; because each channel uses variable-bitrate coding, image quality within each channel also remains stable. In addition, the method is entirely feedback-based: image complexity is analyzed from statistics produced during the coding process, so no additional image complexity analysis module is required, which reduces cost.

Owner:ZHENJIANG TANGQIAO MICROELECTRONICS
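
The feedback loop described above (statistics, then inter-channel allocation, then quantizer) can be sketched as below. The complexity model X = S * Q (bits spent times quantizer step, as in the MPEG-2 TM5 rate control) is an assumption; the abstract only says complexity comes from statistics gathered during coding. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def complexity_from_stats(bits_used: float, qstep: float) -> float:
    """Assumed TM5-style complexity estimate X = S * Q from the last coding pass."""
    return bits_used * qstep


def allocate_channels(total_bits: float, complexities: Sequence[float]) -> List[float]:
    """Split the shared multiplex budget in proportion to each channel's complexity."""
    s = sum(complexities)
    if s <= 0:  # no statistics yet: fall back to an equal split
        return [total_bits / len(complexities)] * len(complexities)
    return [total_bits * x / s for x in complexities]


def rate_control_step(stats: Sequence[Tuple[float, float]],
                      total_bits: float) -> List[Tuple[float, float]]:
    """One feedback step: (bits_used, qstep) per channel -> (target_bits, new_qstep).

    Assumes total_bits > 0.
    """
    xs = [complexity_from_stats(b, q) for b, q in stats]
    targets = allocate_channels(total_bits, xs)
    # Invert X = S * Q to get the step size expected to spend exactly the target;
    # keep the previous step if a channel has no usable statistics.
    return [(t, x / t if x > 0 else q)
            for t, x, (_, q) in zip(targets, xs, stats)]
```

Because the targets always sum to `total_bits`, the multiplexed stream stays within the bandwidth budget, while a more complex channel receives proportionally more bits instead of a coarser quantizer than its neighbors.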

Iterative metric updating when decoding LDPC (low density parity check) coded signals and LDPC coded modulation signals

Inactive | US20070124644A1 | Other decoding techniques | Error detection/correction | Low-density parity-check code | Modulation coding

Iterative metric updating when decoding LDPC (Low Density Parity Check) coded signals and LDPC coded modulation signals. A novel approach is presented for updating the bit metrics employed when performing iterative decoding of LDPC coded signals. This bit metric updating is also applicable to the decoding of signals generated by combining LDPC coding with modulation encoding, i.e., LDPC coded modulation signals. In addition, the bit metric updating extends to the decoding of LDPC signals with variable code rate and/or variable modulation, whose code rate and/or modulation may change as often as symbol-by-symbol. By ensuring that the bit metrics are updated during the iterations of the decoding process, higher performance can be achieved than when the bit metrics remain fixed throughout iterative decoding.

Owner:AVAGO TECH INT SALES PTE LTD

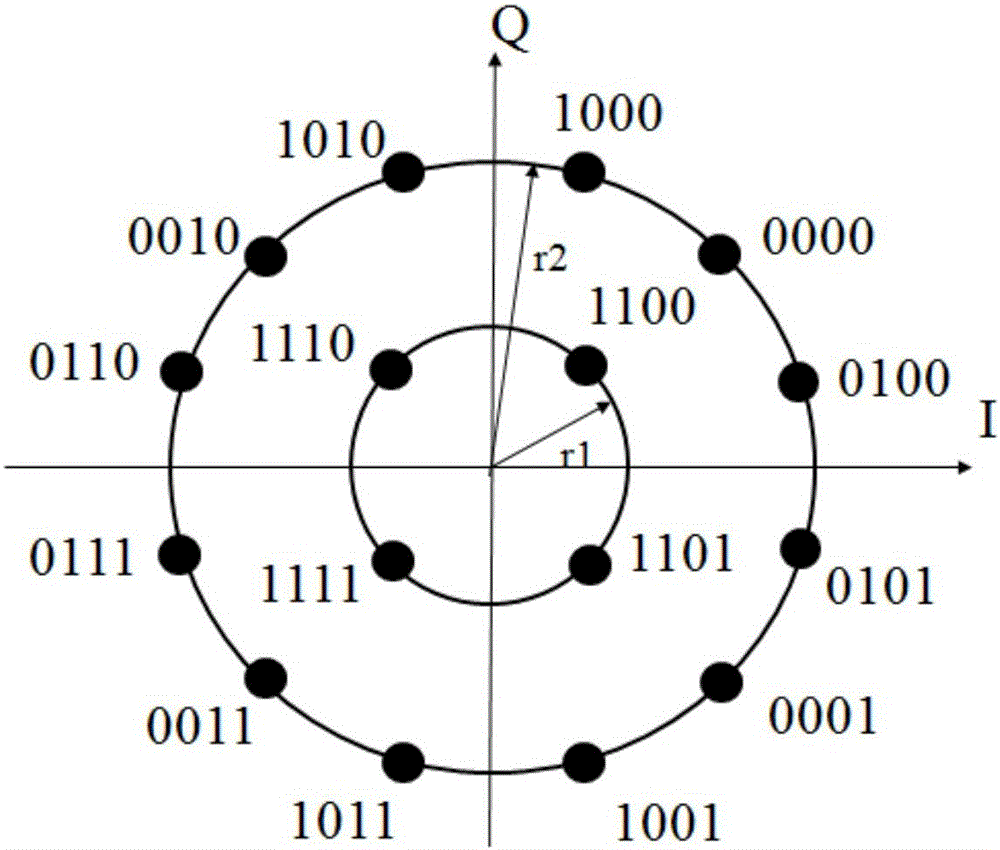

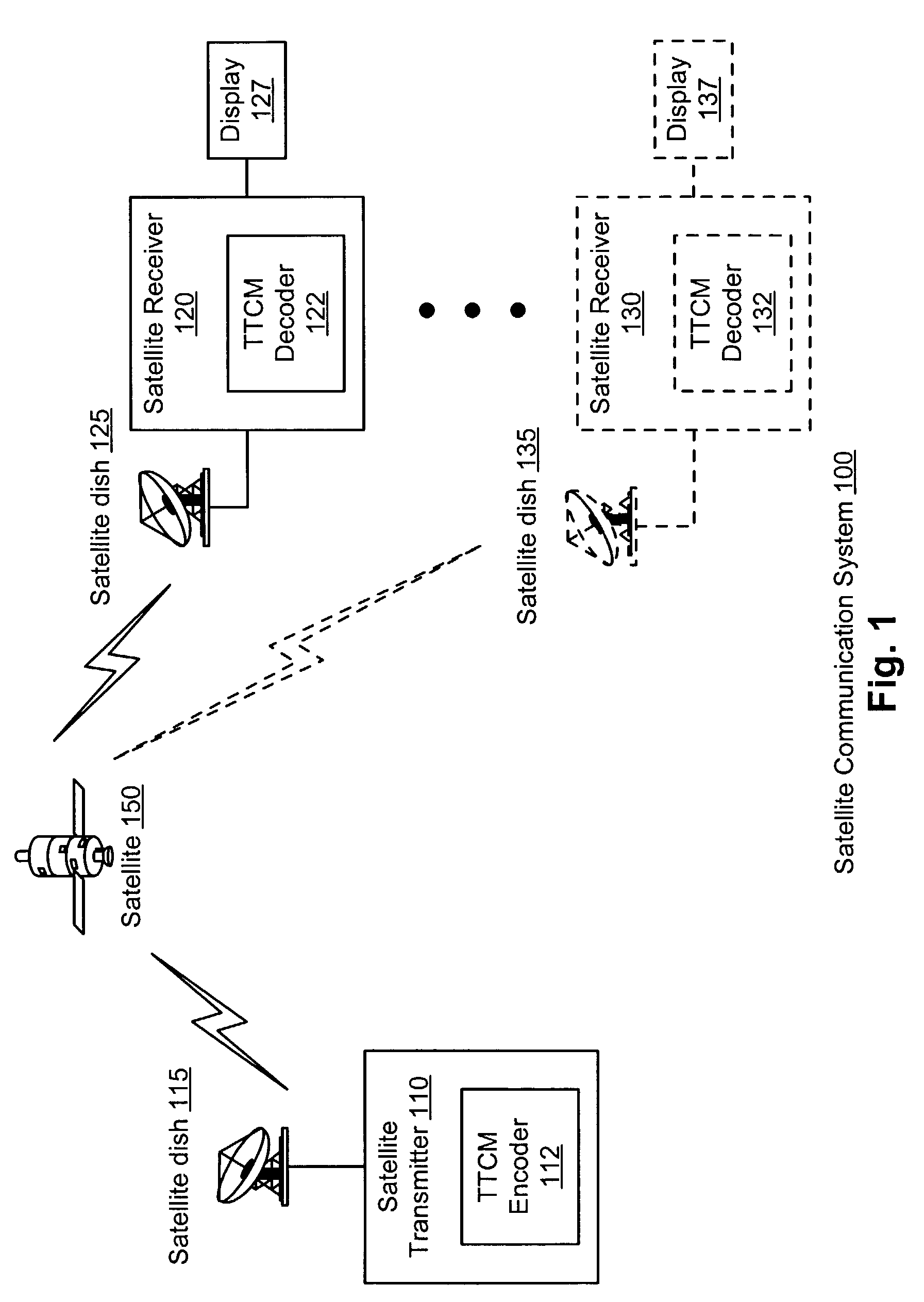

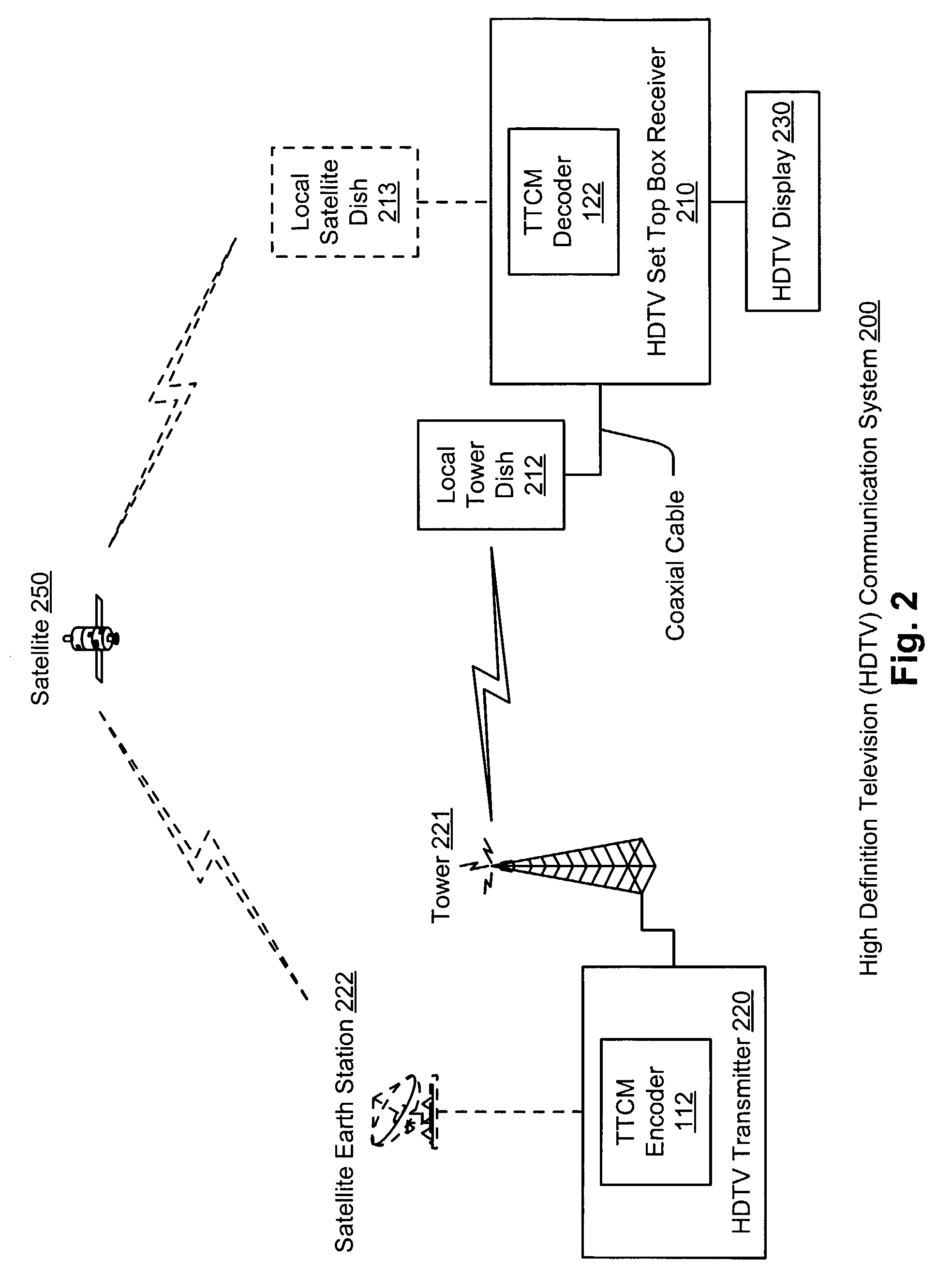
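
The metric-update step can be illustrated with a small sketch. It assumes the standard log-domain conversion from symbol metrics to bit metrics, with the decoder's current LLRs of the *other* label bits fed back as a priori information; it is not the patent's specific procedure, and the LDPC check-node and variable-node processing is omitted. The name `update_bit_metrics` and the LLR convention log(P(b=0)/P(b=1)) are assumptions.

```python
import math
from typing import List, Sequence


def _logsumexp(xs: Sequence[float]) -> float:
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def update_bit_metrics(symbol_loglik: Sequence[float],
                       labels: Sequence[Sequence[int]],
                       apriori: Sequence[float]) -> List[float]:
    """Bit LLRs log(P(b_i=0)/P(b_i=1)) for one received symbol.

    symbol_loglik[s]: log p(y | constellation point s), e.g. -|y - x_s|**2 / N0
    labels[s]:        bit label of point s
    apriori[j]:       LLR of label bit j fed back by the LDPC decoder
                      (all zeros on the first pass)
    """
    nbits = len(labels[0])
    llrs: List[float] = []
    for i in range(nbits):
        num: List[float] = []  # points whose bit i is 0
        den: List[float] = []  # points whose bit i is 1
        for s, lab in enumerate(labels):
            # Prior of the other bits only (extrinsic): log P(b_j) = +/-L_j/2 + const.
            prior = sum(apriori[j] / 2 if lab[j] == 0 else -apriori[j] / 2
                        for j in range(nbits) if j != i)
            (num if lab[i] == 0 else den).append(symbol_loglik[s] + prior)
        llrs.append(_logsumexp(num) - _logsumexp(den))
    return llrs
```

In an iterative decoder this would be called once per received symbol on every decoder iteration, passing the decoder's latest bit LLRs as `apriori`, instead of computing the bit metrics once and holding them fixed. With a Gray-labeled QPSK constellation the bits are independent and the update changes nothing; the gain appears with higher-order or non-Gray labelings, where bits within a symbol are coupled.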

Metric calculation design for variable code rate decoding of broadband trellis, TCM, or TTCM

Inactive | US7065695B2 | Data representation error detection/correction | Other decoding techniques | Computer architecture | Adaptive design

Metric calculation design for variable code rate decoding of broadband trellis, TCM (trellis coded modulation), or TTCM (turbo trellis coded modulation) signals. A single design can accommodate a large number of code rates by multiplexing the appropriate paths within the design. By controlling where the noise scaling of a received symbol within a received signal is applied, this adaptable design can be implemented very efficiently in terms of performance, processing requirements (such as multipliers and gates), and chip area. When multiple code rates are supported, appropriate selection of the coefficients of the various constellations, exploiting their inherent redundancy and symmetry along the I and Q axes, can yield large savings in gates; in addition, when this symmetry is exploited, no subtraction (only summing) needs to be performed.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
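
A small sketch of how I/Q symmetry reduces multiplier count in metric calculation, assuming a unit-energy 8-PSK constellation (the patent's actual constellations and coefficients are not given here). Because every 8-PSK point has the same energy, maximizing the correlation with the received sample is equivalent to minimizing Euclidean distance, and each correlation is a signed sum of a few precomputed products. Applying the noise-dependent factor (for example 2/N0) once to the received sample rather than to all eight metrics is one reading of "controlling where to scale"; it is an interpretation, not the patent's stated design. `correlation_metrics` and `POINTS` are hypothetical names.

```python
import math
from typing import List, Tuple

A = math.sqrt(0.5)  # |cos 45 deg|: the only non-trivial coordinate magnitude

# Each 8-PSK point as ((sign_I, magnitude_index_I), (sign_Q, magnitude_index_Q)),
# counter-clockwise from 0 degrees; magnitude index 0 -> 0, 1 -> A, 2 -> 1.
POINTS: Tuple[Tuple[Tuple[int, int], Tuple[int, int]], ...] = (
    ((+1, 2), (+1, 0)),
    ((+1, 1), (+1, 1)),
    ((+1, 0), (+1, 2)),
    ((-1, 1), (+1, 1)),
    ((-1, 2), (+1, 0)),
    ((-1, 1), (-1, 1)),
    ((+1, 0), (-1, 2)),
    ((+1, 1), (-1, 1)),
)


def correlation_metrics(yi: float, yq: float, scale: float = 1.0) -> List[float]:
    """scale * <y, x_k> for every point; with scale = 2/N0 this equals the
    log-likelihood up to a constant shared by all points."""
    # Scale the received sample once (2 multiplies) instead of all 8 metrics.
    yi_s, yq_s = yi * scale, yq * scale
    # One real multiply per axis; magnitudes 0 and 1 need none.
    pi = (0.0, yi_s * A, yi_s)
    pq = (0.0, yq_s * A, yq_s)
    # Symmetric points reuse the same product; the +/-1 is only a sign flip.
    return [si * pi[mi] + sq * pq[mq] for (si, mi), (sq, mq) in POINTS]
```

A direct implementation would compute two products per point (16 in total) plus the scaling; the sketch needs four real multiplies per received symbol, with everything else reduced to sign selection and addition.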

Direct broadcast satellite variable coding modulation and self-adaptive coding modulation hybrid working method

Inactive | CN101860735A | Improve transmission efficiency | Optimize resource usage | Pulse modulation television signal transmission | GHz frequency transmission | Rate modulation | Signal-to-quantization-noise ratio

The invention discloses a hybrid working method for direct broadcast satellite systems that combines variable coding and modulation (VCM) with self-adaptive coding and modulation (ACM). It comprises step A, in which a front-end system dynamically changes the modulation mode and code rate of each data frame during program transmission according to the needs of the program content, thereby realizing a variable code rate modulation working mode; and step B, in which the front-end system, while in the variable code rate modulation working mode, switches to the self-adaptive coding modulation working mode once it acquires a user's current system signal-to-noise ratio. Building on the real-time variability of the transmission frame structure in direct broadcast satellite systems and combining the respective advantages of the two working modes, the invention further optimizes the use of satellite frequency resources, refines the coverage requirements of satellite broadcast systems, and improves the transmission efficiency of the direct broadcast satellite system.

Owner:ACAD OF BROADCASTING SCI SARFT

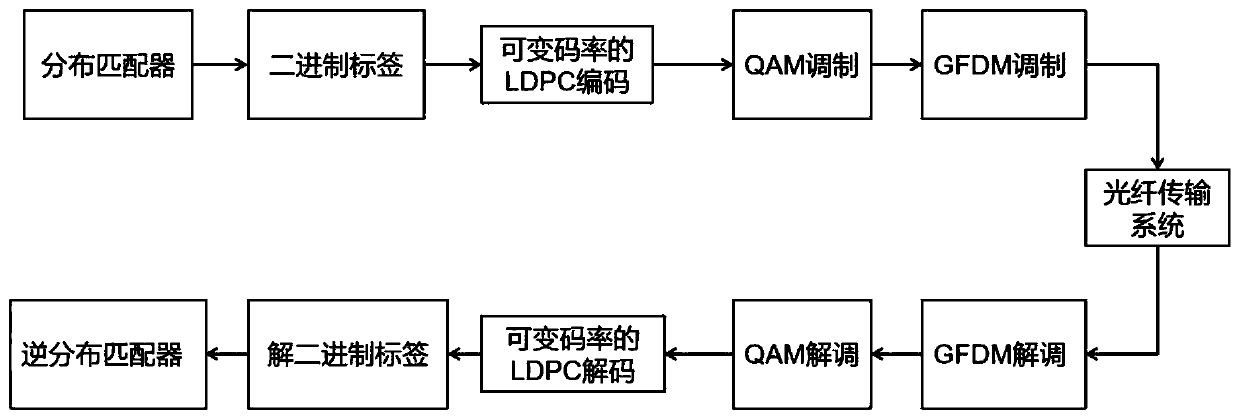

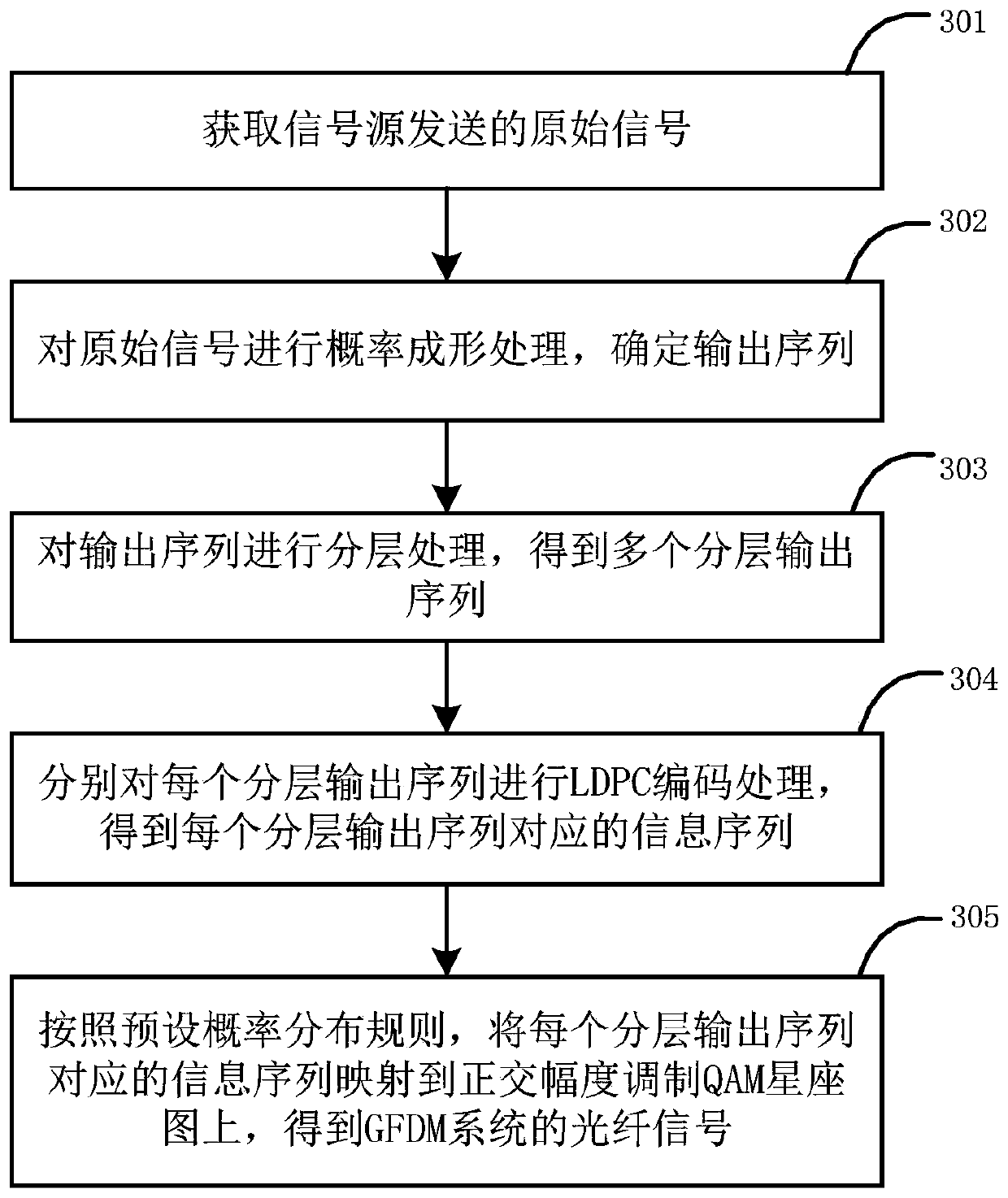
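
Steps A and B can be sketched as a MODCOD selection rule: while no user signal-to-noise ratio is known, the mode follows the program content (VCM); once an SNR report arrives, the most spectrally efficient MODCOD that the link supports is chosen (ACM). The MODCOD table, its SNR thresholds, the 1 dB margin, and the names `ModCod` and `select_modcod` are illustrative assumptions, not values from the patent or any standard.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ModCod:
    name: str
    bits_per_symbol: int
    code_rate: float
    min_snr_db: float  # hypothetical decoding threshold, not from any standard

    @property
    def efficiency(self) -> float:
        """Nominal information bits per symbol (framing overhead ignored)."""
        return self.bits_per_symbol * self.code_rate


# Illustrative table only.
MODCODS: Tuple[ModCod, ...] = (
    ModCod("QPSK 1/2", 2, 1 / 2, 1.0),
    ModCod("QPSK 3/4", 2, 3 / 4, 4.0),
    ModCod("8PSK 2/3", 3, 2 / 3, 6.6),
    ModCod("16APSK 3/4", 4, 3 / 4, 10.2),
)


def select_modcod(content_choice: ModCod, user_snr_db: Optional[float],
                  margin_db: float = 1.0) -> ModCod:
    """Step A (VCM) until a user's SNR is known, then step B (ACM)."""
    if user_snr_db is None:
        # VCM: modulation and code rate follow the program content only.
        return content_choice
    # ACM: most efficient MODCOD whose threshold plus margin the link supports.
    usable = [m for m in MODCODS if m.min_snr_db + margin_db <= user_snr_db]
    if not usable:
        return min(MODCODS, key=lambda m: m.min_snr_db)  # most robust fallback
    return max(usable, key=lambda m: m.efficiency)
```

Because the MODCOD can be chosen per frame, the same front end can carry content-driven VCM frames and receiver-driven ACM frames in one multiplex; that per-frame flexibility is what the hybrid method relies on.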

Generalized frequency division multiplexing system and optical fiber signal generation method and device

Active | CN110418220A | Reduce energy loss | Improve distribution | Frequency-division multiplexing selection | Time-division multiplexing | Signal source

The embodiments of the invention provide a generalized frequency division multiplexing (GFDM) system, an optical fiber signal generation method, and an optical fiber signal generation device. The method comprises the following steps: acquiring an original signal sent by a signal source; performing probabilistic shaping on the original signal to determine an output sequence; layering the output sequence to obtain a plurality of layered output sequences; performing LDPC coding on each layered output sequence to obtain the corresponding information sequence; and mapping the information sequence of each layered output sequence onto a constellation diagram according to a preset probability distribution rule to obtain an optical fiber signal of the GFDM system. With this technical scheme, the GFDM optical fiber signal is obtained through probabilistic shaping and variable code rate LDPC coding, which reduces the energy loss of signal transmission, improves coding efficiency, and improves bit error performance and channel capacity.

Owner:BEIJING UNIV OF POSTS & TELECOMM
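
The abstract refers only to "a preset probability distribution rule". A Maxwell-Boltzmann distribution is a common choice in probabilistic shaping and is used here purely as an assumption to show the trade-off: lowering the probability of high-amplitude points reduces average transmit energy while also reducing the information bits per symbol, which a variable code rate LDPC code can then be matched to. The layering and LDPC coding steps are omitted; `maxwell_boltzmann`, `entropy_bits`, `mean_energy`, and the shaping parameter `nu` are hypothetical names.

```python
import math
from typing import Dict, Sequence

PAM4 = (-3, -1, 1, 3)  # per-axis amplitudes of a square 16-QAM constellation


def maxwell_boltzmann(points: Sequence[float], nu: float) -> Dict[float, float]:
    """P(x) proportional to exp(-nu * x**2); nu = 0 gives the uniform distribution."""
    weights = [math.exp(-nu * x * x) for x in points]
    z = sum(weights)
    return {x: w / z for x, w in zip(points, weights)}


def entropy_bits(p: Dict[float, float]) -> float:
    """Information bits carried per symbol under distribution p."""
    return -sum(q * math.log2(q) for q in p.values() if q > 0)


def mean_energy(p: Dict[float, float]) -> float:
    """Average symbol energy E[x**2] under distribution p."""
    return sum(q * x * x for x, q in p.items())
```

For the uniform case (`nu = 0`) each axis carries 2 bits at an average energy of 5; any `nu > 0` moves probability toward the inner amplitudes, lowering both the entropy and the average energy while keeping the same constellation points.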

Variable bitrate equipment

The present invention relates to a signal concentrator comprising: a parallel-to-serial conversion device having a plurality of parallel inputs and a serial output; and a control unit comprising detection means adapted to detect the activity of the parallel inputs of the parallel-to-serial conversion device, indication means adapted to indicate the active parallel inputs to the parallel-to-serial conversion device, and controlling means adapted to set an operating bitrate of the serial output as a function of the activity of the parallel inputs.

Owner:RPX CORP
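
The concentrator's control loop can be sketched as follows, assuming each active input contributes a fixed lane rate so that the serial output bitrate scales with the number of active inputs, and that the serializer interleaves only the active inputs in round-robin order. The activity test (an input is active when it currently carries data) and the names `detect_active` and `concentrate` are hypothetical.

```python
from typing import List, Sequence, Tuple


def detect_active(inputs: Sequence[Sequence[int]]) -> List[int]:
    """Hypothetical detection means: an input is active if it currently carries data."""
    return [i for i, words in enumerate(inputs) if len(words) > 0]


def concentrate(inputs: Sequence[Sequence[int]],
                lane_rate_bps: int) -> Tuple[List[int], int, List[int]]:
    """Return (active inputs, serial output bitrate, serialized stream)."""
    active = detect_active(inputs)              # detection means
    serial_rate = len(active) * lane_rate_bps   # controlling means: rate follows activity
    # Indication means: the serializer interleaves only the active inputs, round-robin.
    depth = max((len(inputs[i]) for i in active), default=0)
    stream = [inputs[i][t] for t in range(depth) for i in active if t < len(inputs[i])]
    return active, serial_rate, stream
```

Idle inputs then consume neither serial time slots nor link bitrate, so the serial link can run slower (and use less power) when few inputs are active.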

© 2025 PatSnap. All rights reserved.