72 results about "Relationship learning" patented technology

The Learning Relationship represents the central engine of a one-to-one enterprise strategy. A Learning Relationship is a one-to-one relationship. It is the single unique and distinct characteristic of any CRM program. Now think about some of the implications:

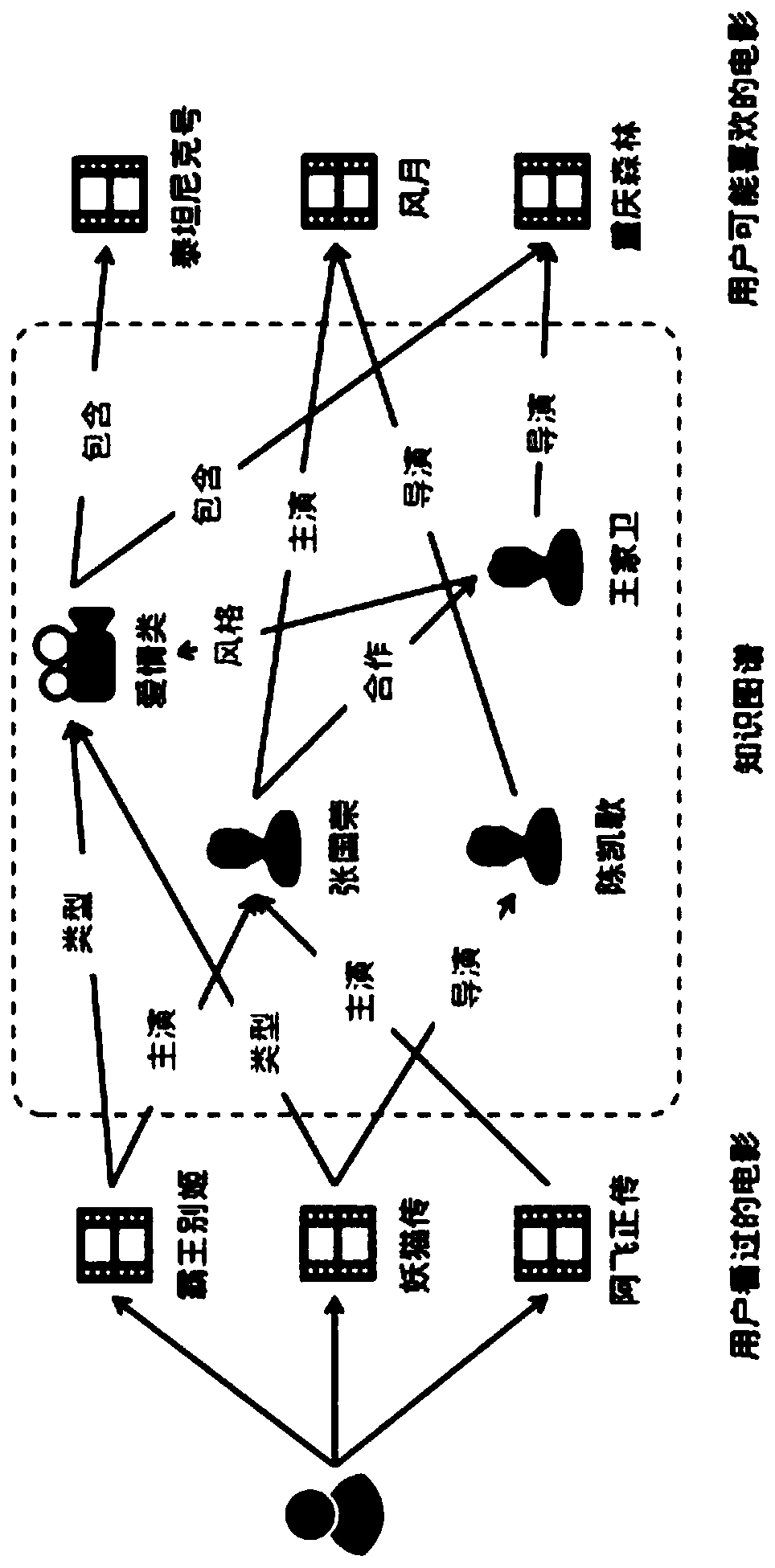

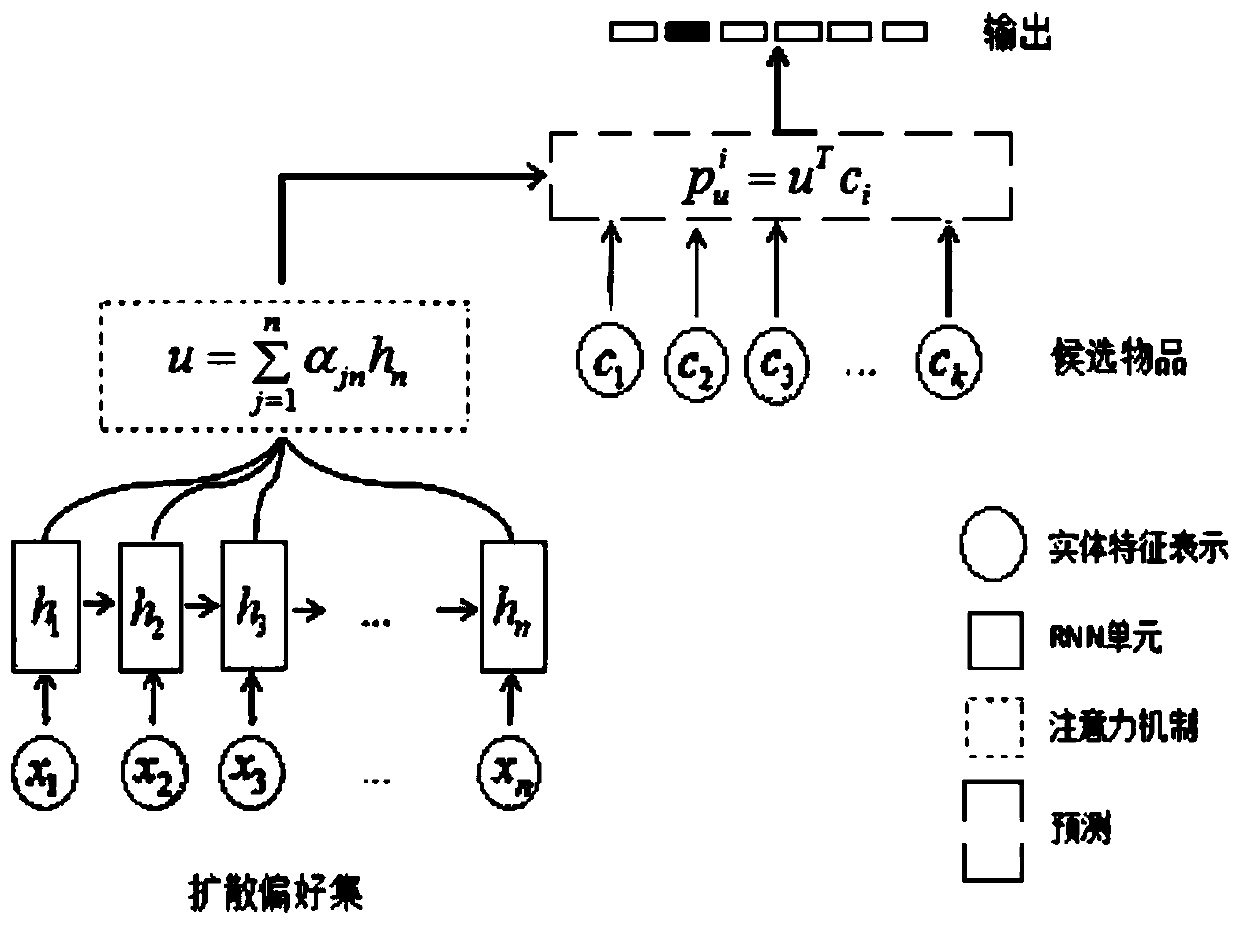

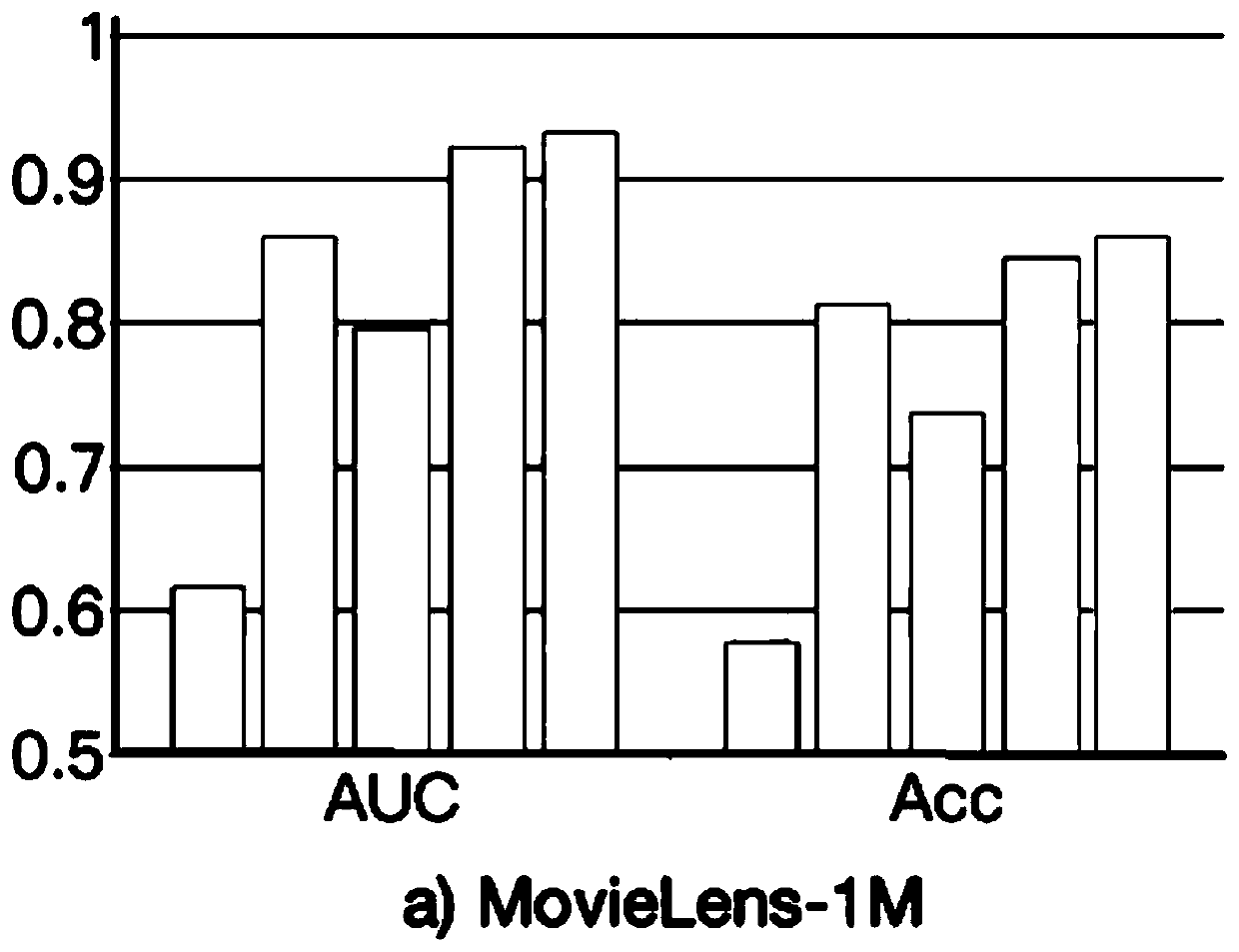

Recommendation model based on knowledge graph and recurrent neural network

Active | CN110275964A | Rich preference information | Enrich historical preference data | Energy efficient computing | Neural learning methods | Recommendation model | Feature learning

The invention discloses a recommendation model based on a knowledge graph and a recurrent neural network. The model comprises a knowledge graph feature learning module, a diffusion preference set and a recurrent neural network recommendation module. The knowledge graph feature learning module learns a low-dimensional vector for each entity and relationship in the knowledge graph. The diffusion preference set comprises h + 1 layers, where h is the number of diffusion layers, and adjacent layers are connected through the knowledge graph. The recurrent neural network recommendation module takes the user's diffusion preference set, obtained by using the knowledge graph and the preference diffusion idea, as input and learns a deeper user preference representation containing more useful information, which is then used to predict the probability that the user likes a given item.

Owner:程淑玉
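
The layered diffusion preference set in the abstract above can be illustrated with a small sketch. This is not the patented implementation: the triple format, the function name `diffusion_preference_sets` and the toy graph are assumptions made for illustration, and the sketch covers only the set construction, not the embedding or recurrent network stages.

```python
from collections import defaultdict


def diffusion_preference_sets(triples, history, h):
    """Return h + 1 layers of entity sets spreading out from a user's history.

    Layer 0 is the user's historical items; layer k holds the tail entities
    reachable from layer k - 1 through one knowledge-graph triple.
    """
    # Index tail entities by head entity for quick neighbour lookup.
    tails_of = defaultdict(set)
    for head, _relation, tail in triples:
        tails_of[head].add(tail)

    layers = [set(history)]
    for _ in range(h):
        frontier = set()
        for entity in layers[-1]:
            frontier |= tails_of[entity]
        layers.append(frontier)
    return layers


# Hypothetical toy knowledge graph of (head, relation, tail) triples.
triples = [
    ("Inception", "directed_by", "Nolan"),
    ("Nolan", "directed", "Interstellar"),
    ("Interstellar", "genre", "SciFi"),
]
layers = diffusion_preference_sets(triples, history=["Inception"], h=2)
```

With h = 2 the result has three layers, and each layer could then be fed in order to a sequence model, which is the role the abstract assigns to the recurrent neural network.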

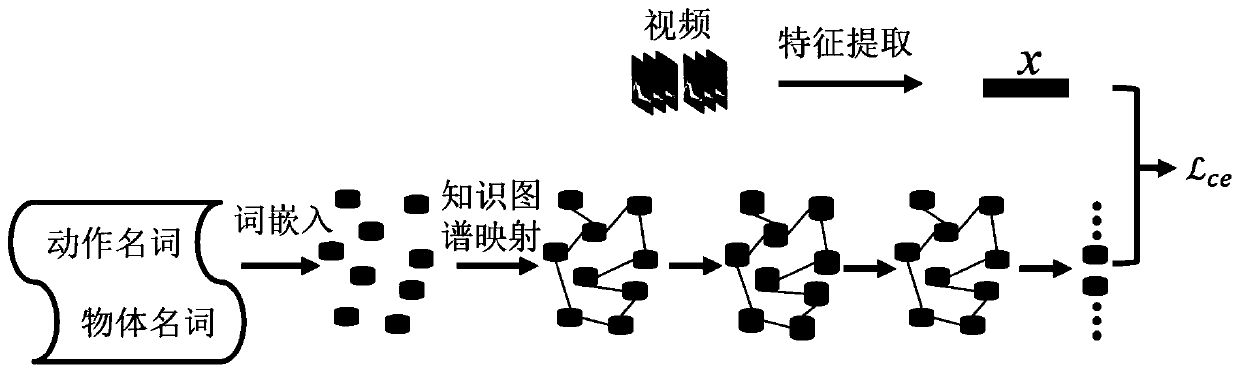

Human body behavior recognition method based on zero sample learning

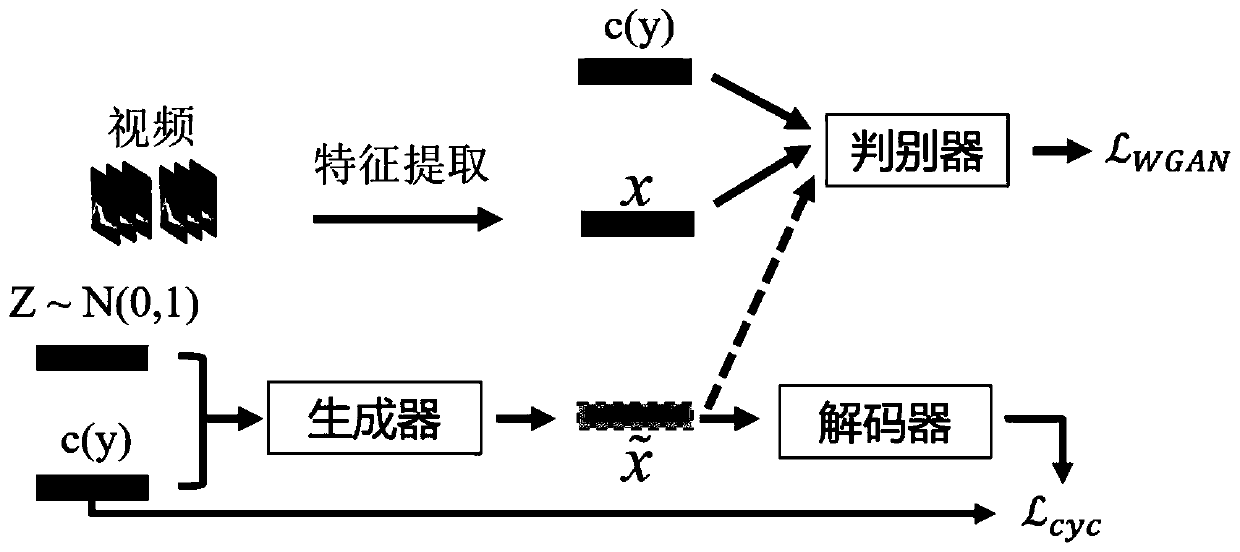

Active | CN111126218A | Improve classification performance | Improve accuracy | Biometric pattern recognition | Neural architectures | Human body | Generative adversarial network

The invention discloses a human body behavior recognition method based on zero sample learning, which improves the classification performance and accuracy of a trained classifier and promotes the realization of automatic labeling of human body behavior categories. The method comprises the following steps: (1) constructing a knowledge graph based on action classes and action associated objects, and dynamically updating the relationship of the knowledge graph through a graph convolutional network AMGCN based on an attention mechanism so as to better describe the relationship of nodes in the graph; (2) learning a generative adversarial network WGAN-GCC based on gradient penalty and cyclic consistency constraint, so that a learned generator can better generate unknown class features; and (3) combining the graph convolution network and the generative adversarial network into a double-flow deep neural network, so that the trained classifier is more discriminative.

Owner:BEIJING UNIV OF TECH

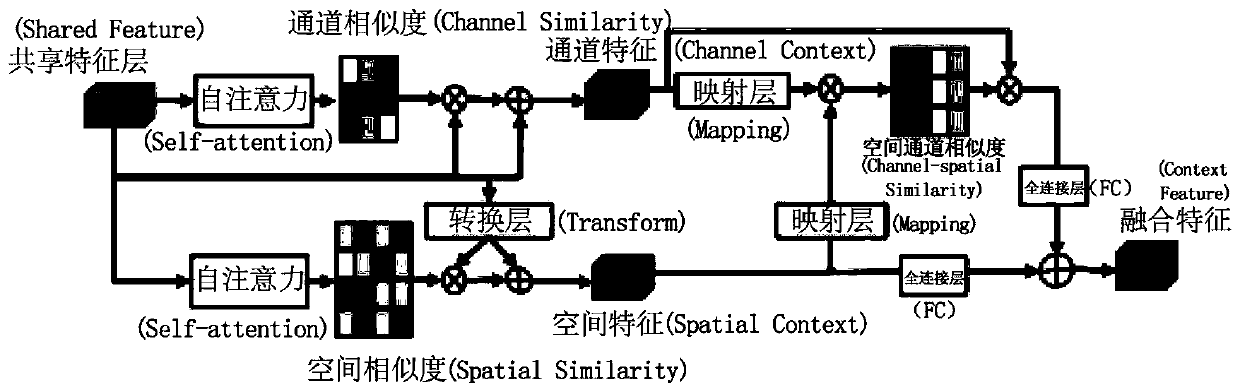

Brain tumor segmentation method based on multi-level structure relation learning network

Active | CN111402259A | Improve effectiveness | Reduce semantic differences | Image enhancement | Image analysis | Information mining | Brain tumor

The invention provides an advanced multi-level structure relationship learning network for segmenting brain tumor data. In each subnet, an environmental information mining module is introduced between an encoder and a decoder, and environmental information in a single domain and environmental information between different domains are respectively mined by adopting a dual self-attention mechanism and spatial interaction learning.

Owner:杭州健培科技有限公司

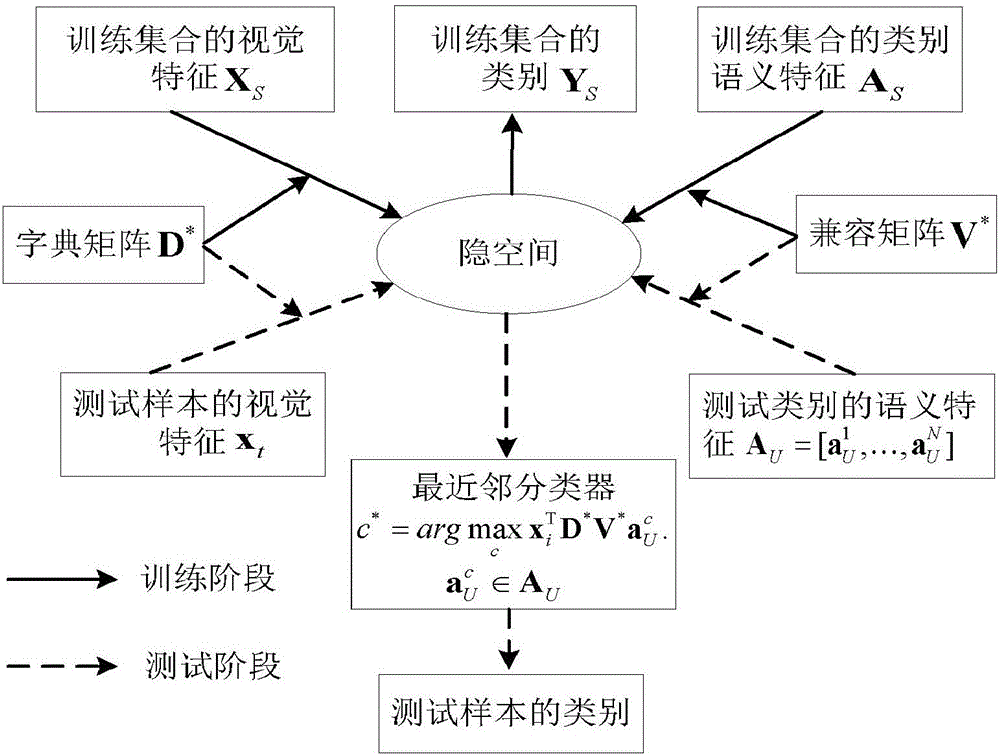

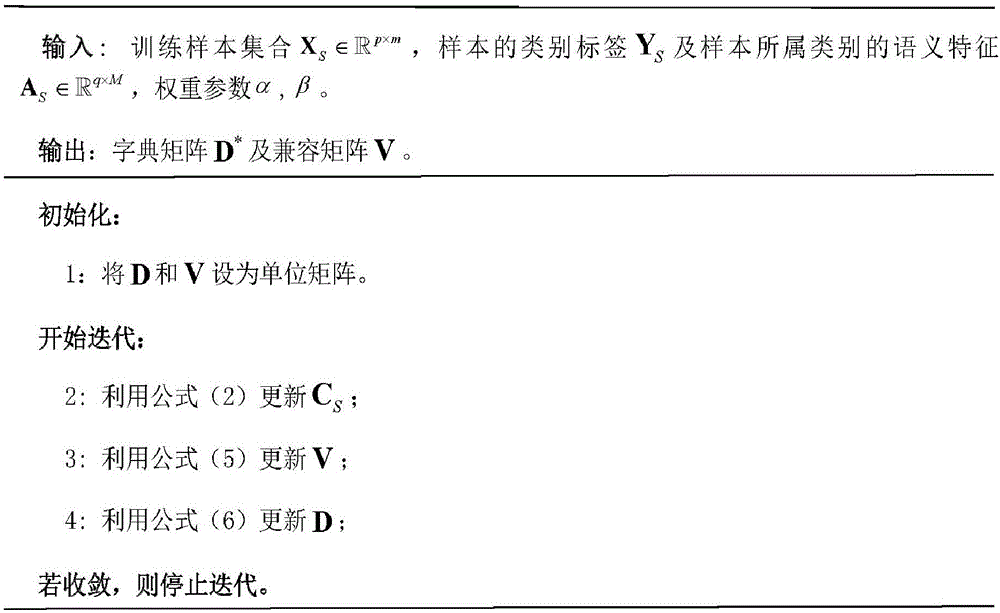

Zero sample classification method based on multi-mode dictionary learning

Active | CN106485271A | Achieve transfer | Improve training efficiency | Character and pattern recognition | Semantic vector | Dictionary learning

Owner:TIANJIN UNIV

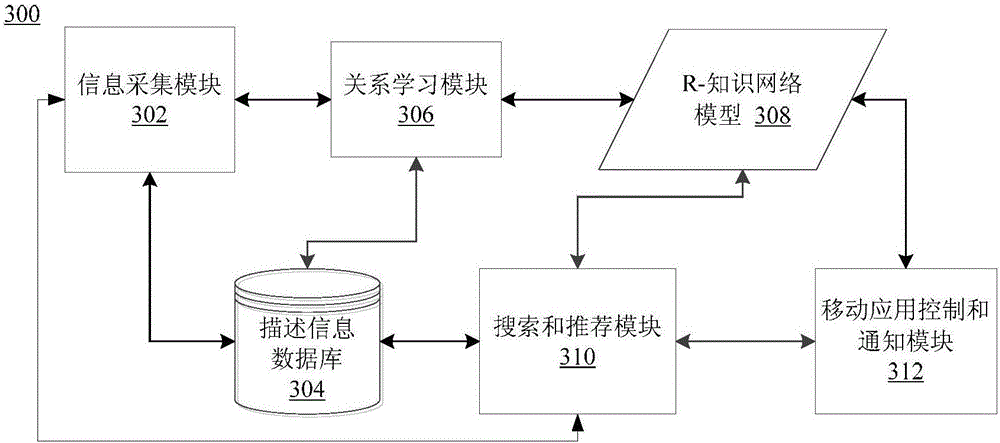

Heterogenous network (r-knowledge) for bridging users and apps via relationship learning

Inactive | US20170169351A1 | Relational databases | Biological neural network models | Heterogeneous network | Network model

The present invention provides a method for learning and using relationships among users and apps on a mobile device. The method includes collecting user profile information and app profile information on the mobile device and obtaining ontology knowledge on contexts and relationships. The user profile information is associated with users including one or more owners of the mobile device and one or more people whose information has been accessed on the mobile device. The method further includes generating a network model based on the user profile information, the app profile information and the ontology knowledge. The network model is a heterogeneous information network model that links the users and the apps. Further, information based on the generated network model is outputted, which includes at least one of a role of a user, a relationship between two apps or app functions, and a recommended apps list.

Owner:TCL CORPORATION

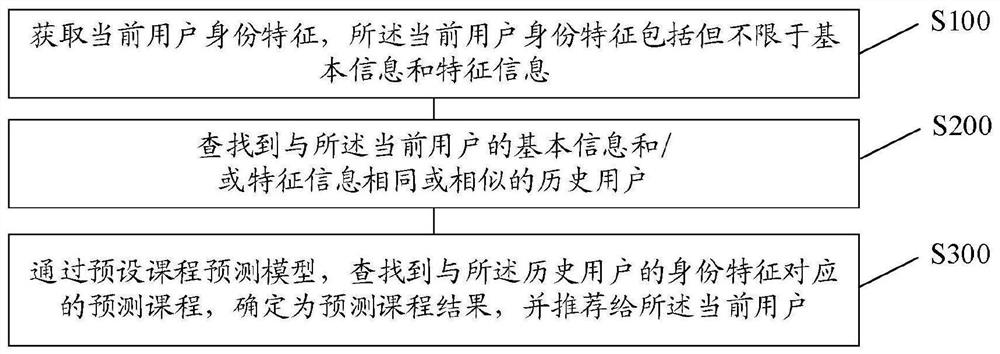

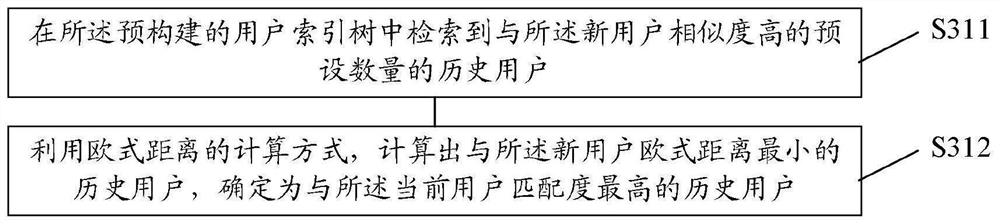

Online course recommendation method and device, electronic equipment and storage medium

Pending | CN112214670A | Achieve accuracy | Improve experience | Digital data information retrieval | Special data processing applications | Information finding | Engineering

The invention belongs to the field of smart cities and can be applied to the technical field of smart education. The invention provides an online course recommendation method and device, electronic equipment and a storage medium. The method comprises the steps of: obtaining the identity features of a current user, wherein the identity features include but are not limited to basic information and feature information; searching for a historical user whose basic information and/or feature information is the same as or similar to that of the current user; and searching for a prediction course corresponding to the identity features of the historical user through a preset course prediction model, determining the prediction course as a prediction course result, and recommending the prediction course result to the current user. Relational learning between user identity features and predicted courses is achieved through the learning ability of artificial intelligence, so that courses are recommended accurately. The cold start problem for new users is solved by using a pre-constructed user index tree, and user satisfaction is improved through the analysis of real-time user behavior data.

Owner:PINGAN INT SMART CITY TECH CO LTD

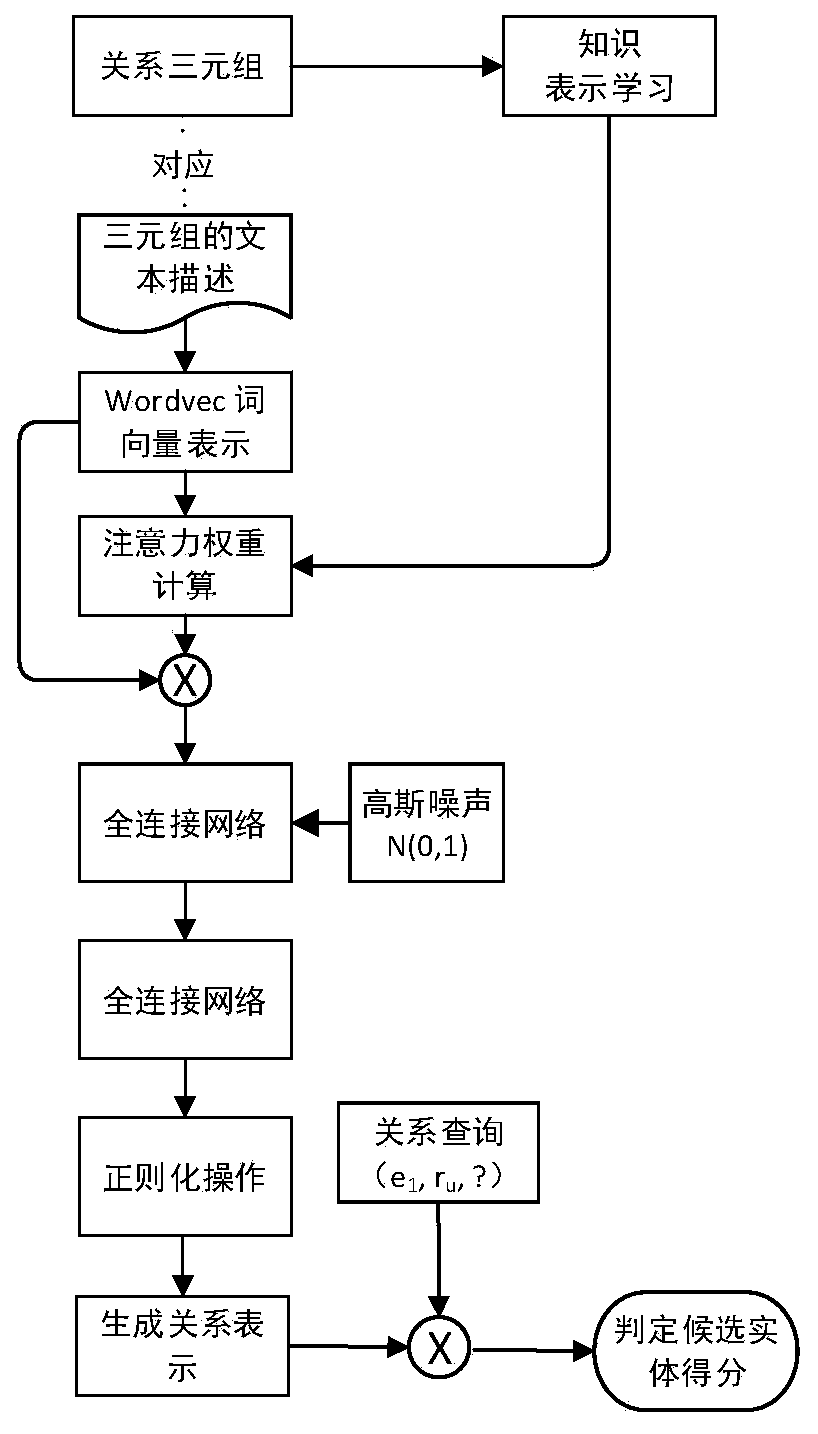

Sample knowledge graph relationship learning method and system based on adversarial attention mechanism

Active | CN111046187A | Small scale | Achieve learning | Neural architectures | Energy efficient computing | Pattern recognition | Confusion

The invention discloses a sample knowledge graph relation learning method and system based on an adversarial attention mechanism. The method comprises: obtaining relation triples in a target knowledge graph and natural text description corresponding to the relation triples; performing representation learning on the target knowledge graph to obtain vector representation of a triple; performing line drawing representation learning on the text corresponding to the triple to obtain word vector representation in the text; constructing a conditional adversarial generation network with a denoising attention module and a confusion attention module; and performing optimization training on the conditional adversarial generation network, and predicting a target entity corresponding to the relationship query without the relationship type ru based on the trained conditional adversarial generation network. The relationship category of traditional relationship prediction is expanded from a visible relationship to an unseen relationship category, so that the range of predicting the relationship category is enlarged. The scale of the training data is also reduced from the traditional big-data scale to learning and predicting unseen relationships from only a small number of samples, or even one sample.

Owner:SHANDONG UNIV OF FINANCE & ECONOMICS

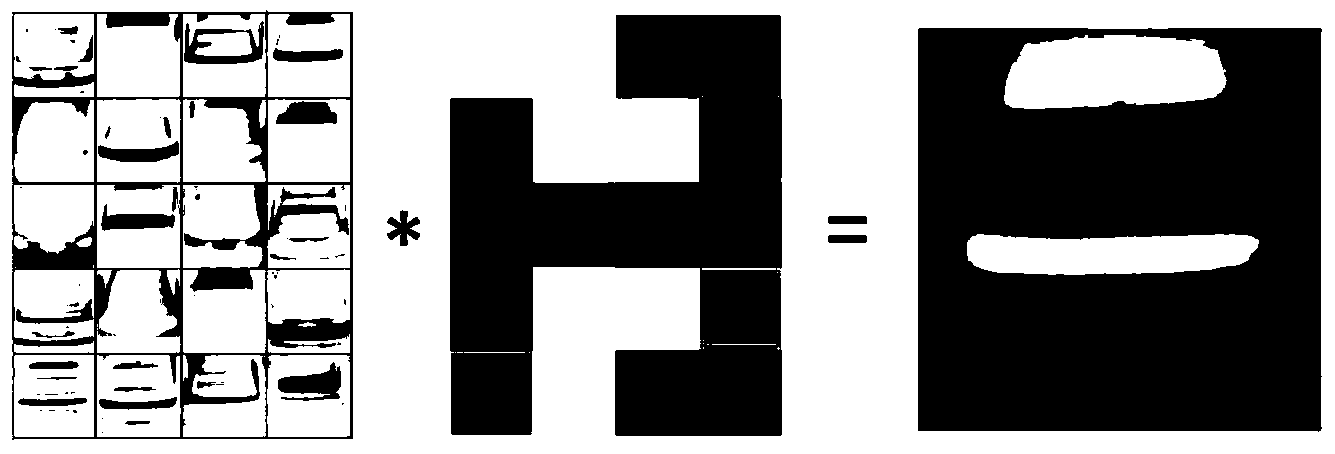

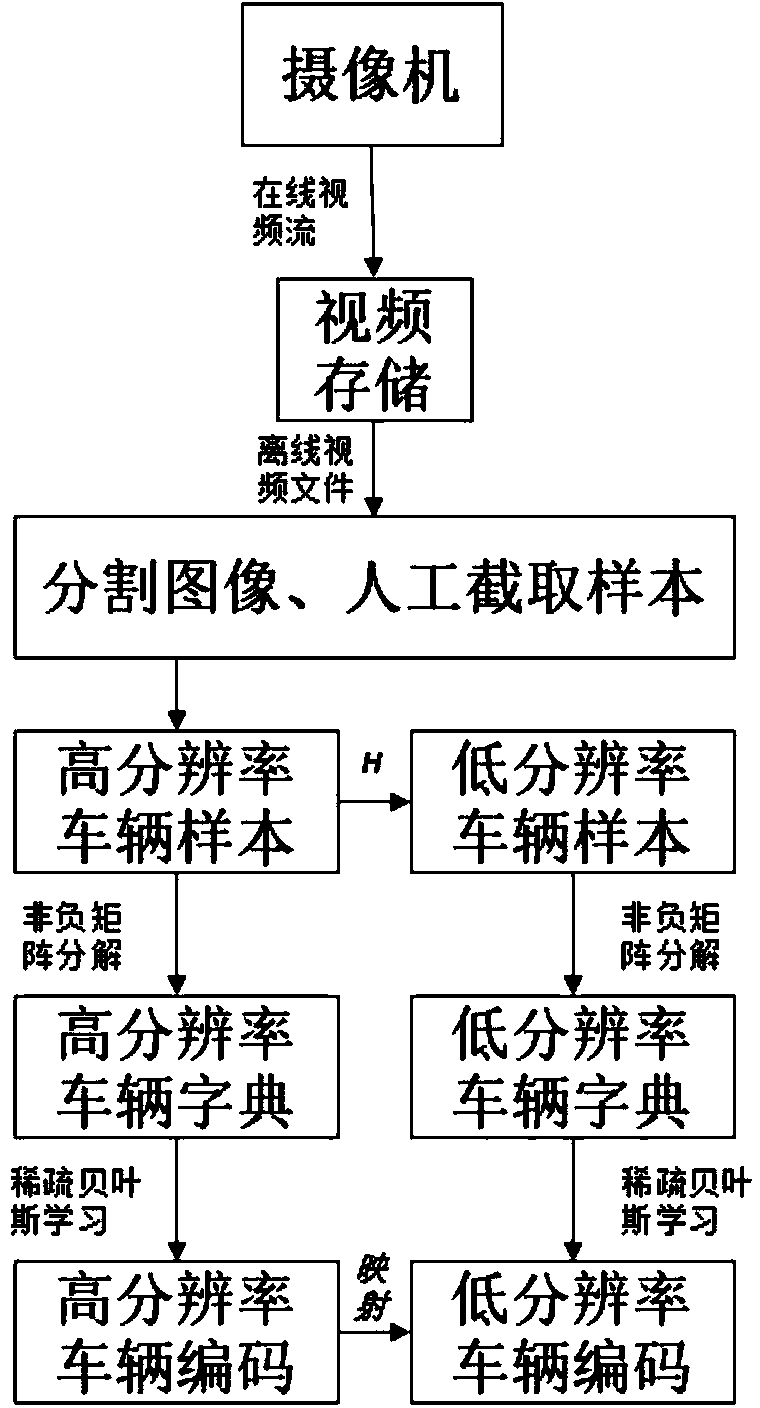

Vehicle low-resolution imaging method

Inactive | CN104376303A | Resolve detection | Television system details | Character and pattern recognition | Decomposition | Image resolution

The invention relates to the field of computer vision, in particular to a vehicle low-resolution imaging method. The method comprises the steps of equipment installation, video collection, learning of mapping relations, learning of classifiers, vehicle detection and the like. According to the method, a vehicle global texture information training template is adopted to solve the problems existing in vehicle detection at a low resolution; sample codes and distribution of the sample codes are obtained through a nonnegative matrix decomposition training model dictionary and sparse Bayesian learning with a low-resolution vehicle image as a sample, and the model dictionary and the sample codes can reconstruct a sample image; the mapping relation between the vehicle sample codes at a high resolution and the mapping relation between the vehicle sample codes at a low resolution are learned, a video source is segmented into image sequences, a vehicle is detected frame by frame according to a vehicle model obtained through learning and training, and detection results are recorded in a database.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

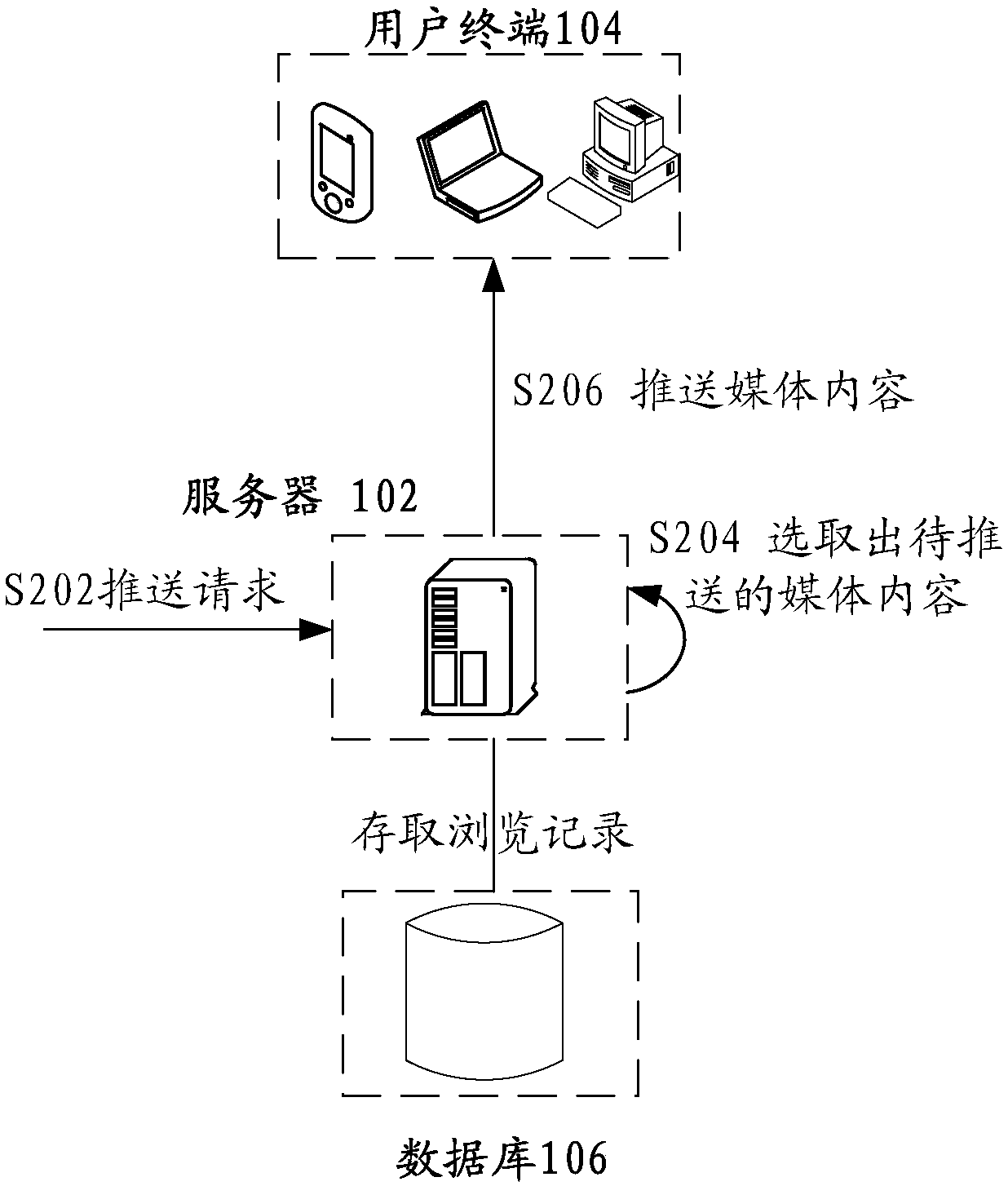

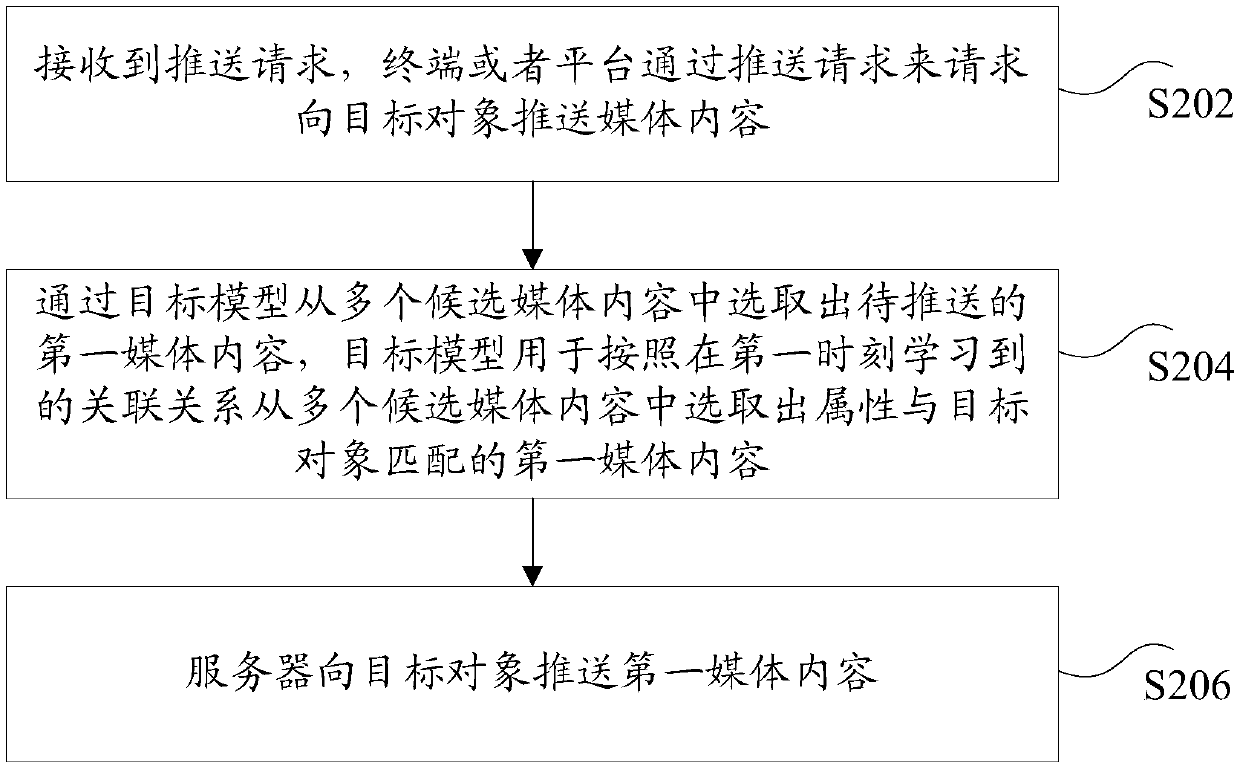

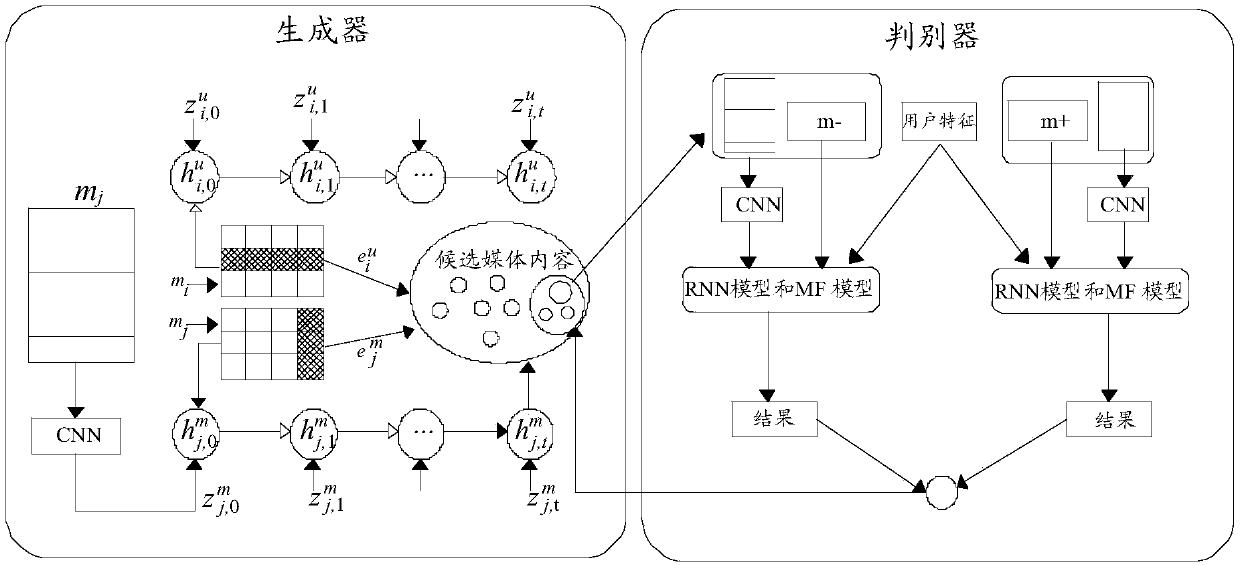

Pushing method and device of media contents, storage medium and electronic device

Active | CN108595493A | Fix technical issues with lower accuracy | Improve accuracy | Special data processing applications | Objective model | World Wide Web

Owner:TENCENT TECH (SHENZHEN) CO LTD

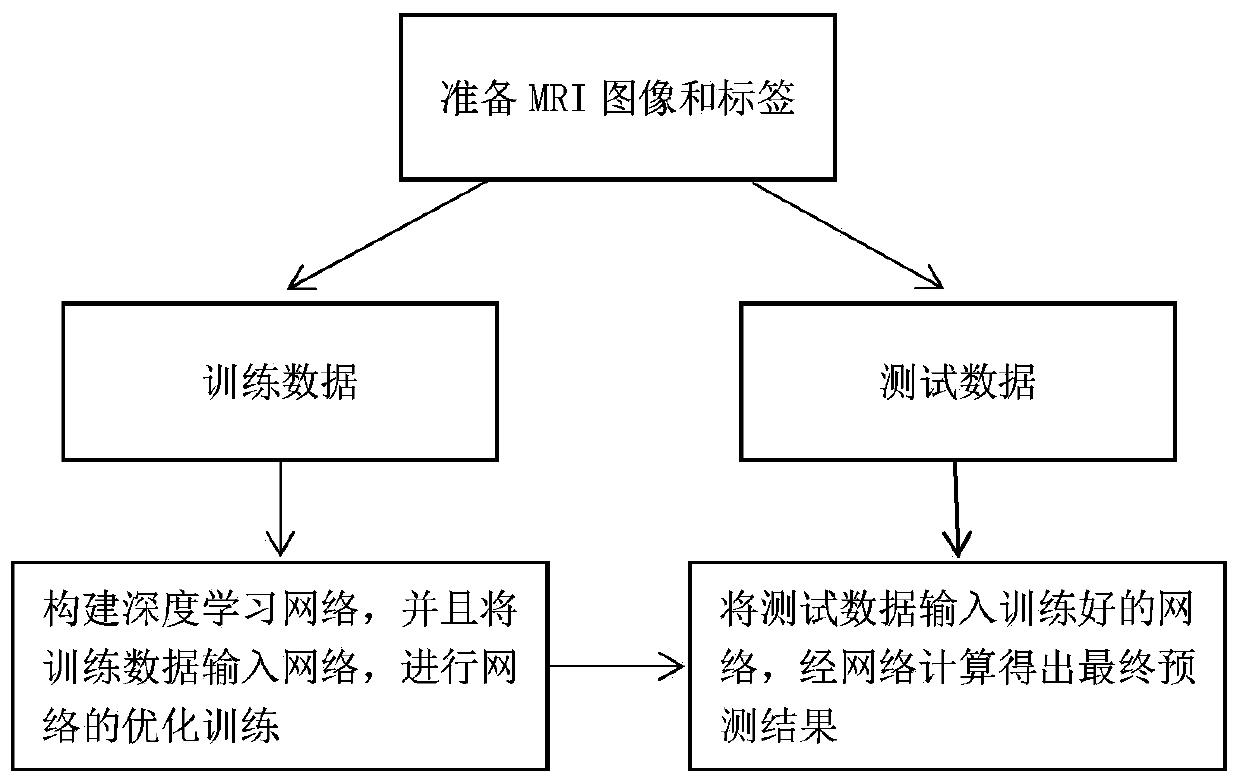

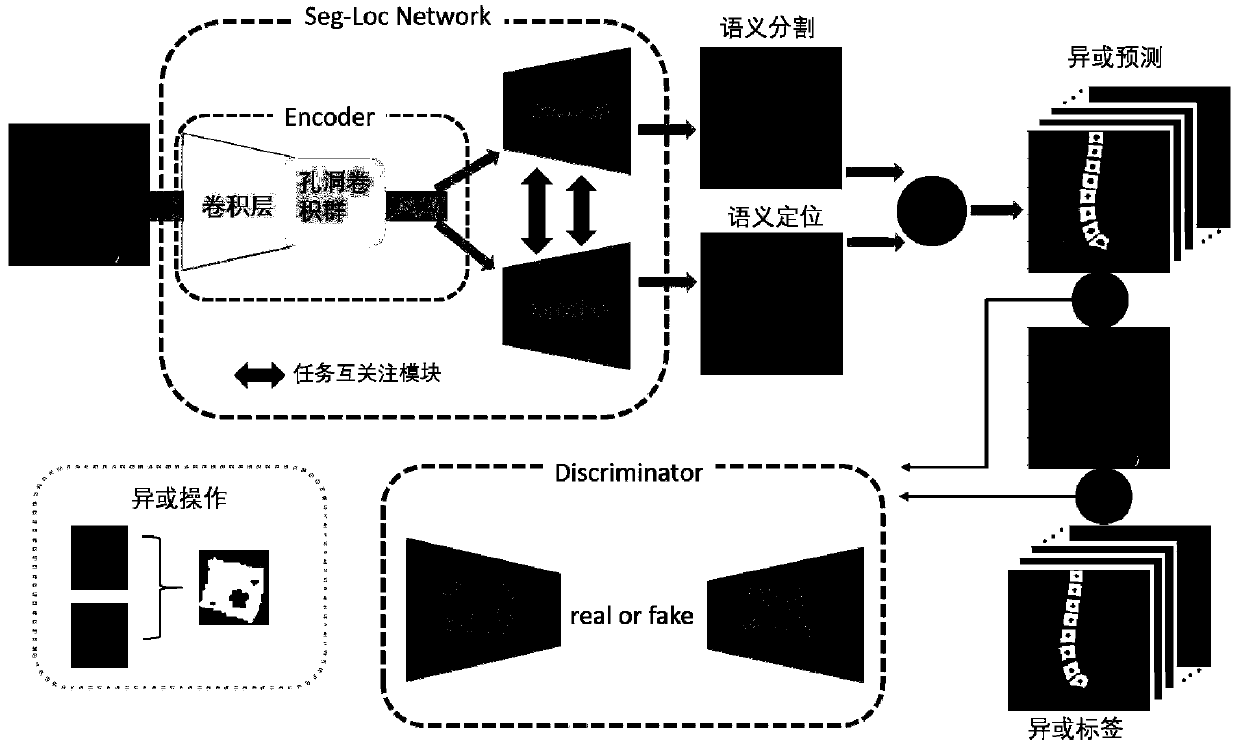

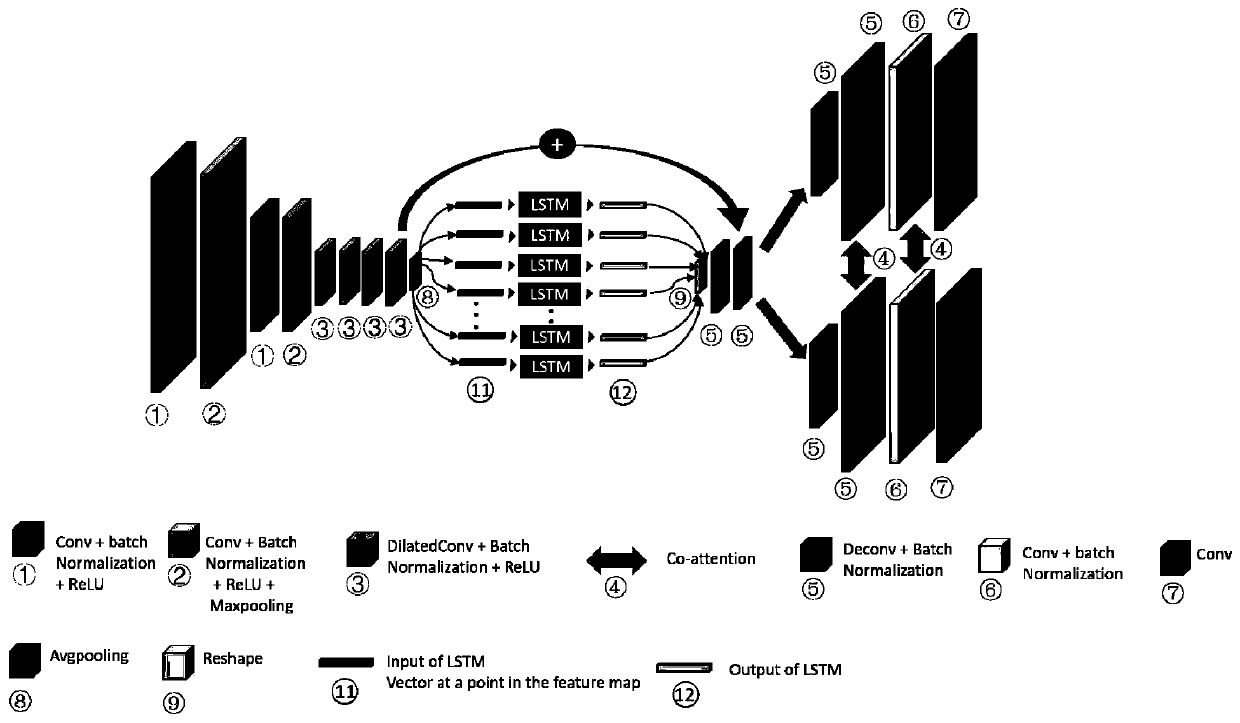

Multi-task relationship learning method for centrum positioning, identification and segmentation in nuclear magnetic resonance imaging

Active | CN111192248A | Promote results | Image enhancement | Image analysis | Spinal column | NMR - Nuclear magnetic resonance

The invention relates to a multi-task relationship learning method for centrum positioning, identification and segmentation in nuclear magnetic resonance imaging. Based on deep learning, the method makes full use of the relationship among multiple tasks and greatly mitigates the challenges caused by intervertebral similarity and image quality. An effective multi-task learning framework is provided for automatic analysis of the spine. The framework can easily be extended to other kinds of images and offers a general approach to the three tasks of image positioning, identification and segmentation.

Owner:SHANDONG UNIV

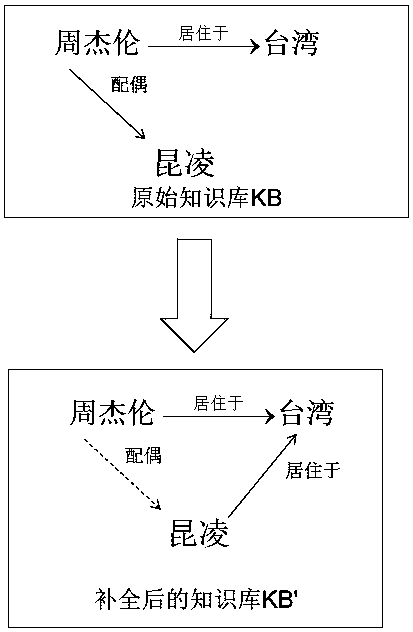

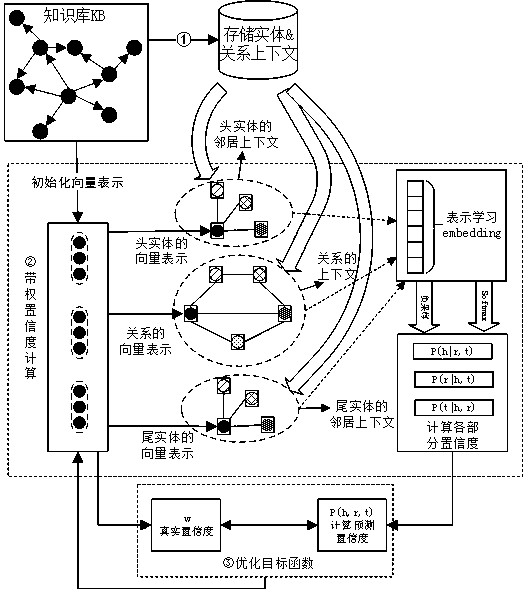

Knowledge base completion method based on WCUR algorithm

Pending | CN111027700A | Low-dimensional vector representation is good | Efficient completion | Knowledge representation | Algorithm | Descent algorithm

The invention relates to a knowledge base completion method based on a WCUR algorithm, and the method comprises the following steps: S1, traversing the whole knowledge base and obtaining the context information of each entity and relation; S2, on the basis of considering a single triple, combining the context information of the entity and the relation from the previous stage, and respectively calculating the three parts P(h | Networbar(h)), P(t | Networbar(t)) and P(r | Path(r)) of the confidence of the triple; S3, optimizing the target function through a gradient descent algorithm, and reversely updating the vector of each entity and relation to obtain an optimal representation; and S4, obtaining new knowledge according to the optimal representation, and adding the new knowledge to the original knowledge base to realize knowledge base completion. The confidence of each triple can be measured in combination with a probability embedding model, a better low-dimensional vector representation is learned for each entity and relation, and the knowledge base is completed more efficiently.

Owner:FUZHOU UNIV

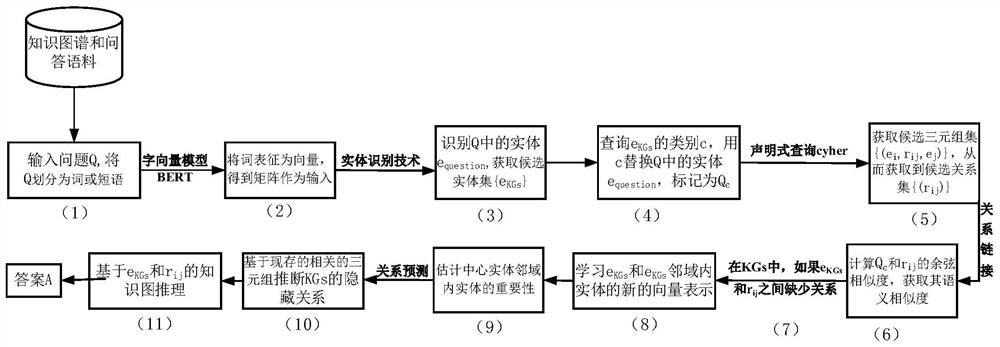

Question-answering method based on knowledge graph completion

Active | CN112015868A | Ability to understand literal meaning | Have logical reasoning | Semantic analysis | Neural architectures | Graph inference | Theoretical computer science

The invention relates to a question-answering method based on knowledge graph completion, and belongs to the field of natural language processing. The method comprises the following steps: S1, dividing input Q into words or phrases; S2, representing the words as vectors by using a word vector model BERT to obtain a matrix as model input; S3, identifying entities in the Q by using an entity identification technology to obtain a candidate entity set; S4, querying the category of the eKGs, and replacing the entities in the Q with c; S5, constructing a declarative query cyber, and obtaining a candidate triple set so as to obtain a candidate relationship set; S6, linking based on the relationship between Qc and rij; S7, in the KGs, if the relationship between the eKGs and the rij is absent; S8, learning new vector representation of entities eKGs and entities in neighborhoods of the eKGs; S9, estimating the importance of entities in the neighborhood of the central entity; S10, executing relation prediction on the basis of existing related triples; and S11, obtaining an answer A based on knowledge graph reasoning of entities and relationships.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

From-bottom-to-top high-dimension-data causal network learning method

The invention discloses a from-bottom-to-top high-dimension-data causal network learning method. The method includes: a causal relationship local structure discovery algorithm, in which a local causal relation learning method and a causal relationship intensity communication strategy are adopted to learn the local causal relationship intensity among variables; and a global variable causal sorting algorithm, in which, on the basis of the biggest loop-free directed subgraph model, global causal sorting of high-dimension variables is achieved through local structure strength measurement and a redundant causal relationship elimination strategy. On the basis of the global causal sorting, reliable causal relationship discovery on high-dimension observation data is finally achieved.

Owner:GUANGDONG UNIV OF TECH

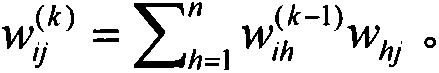

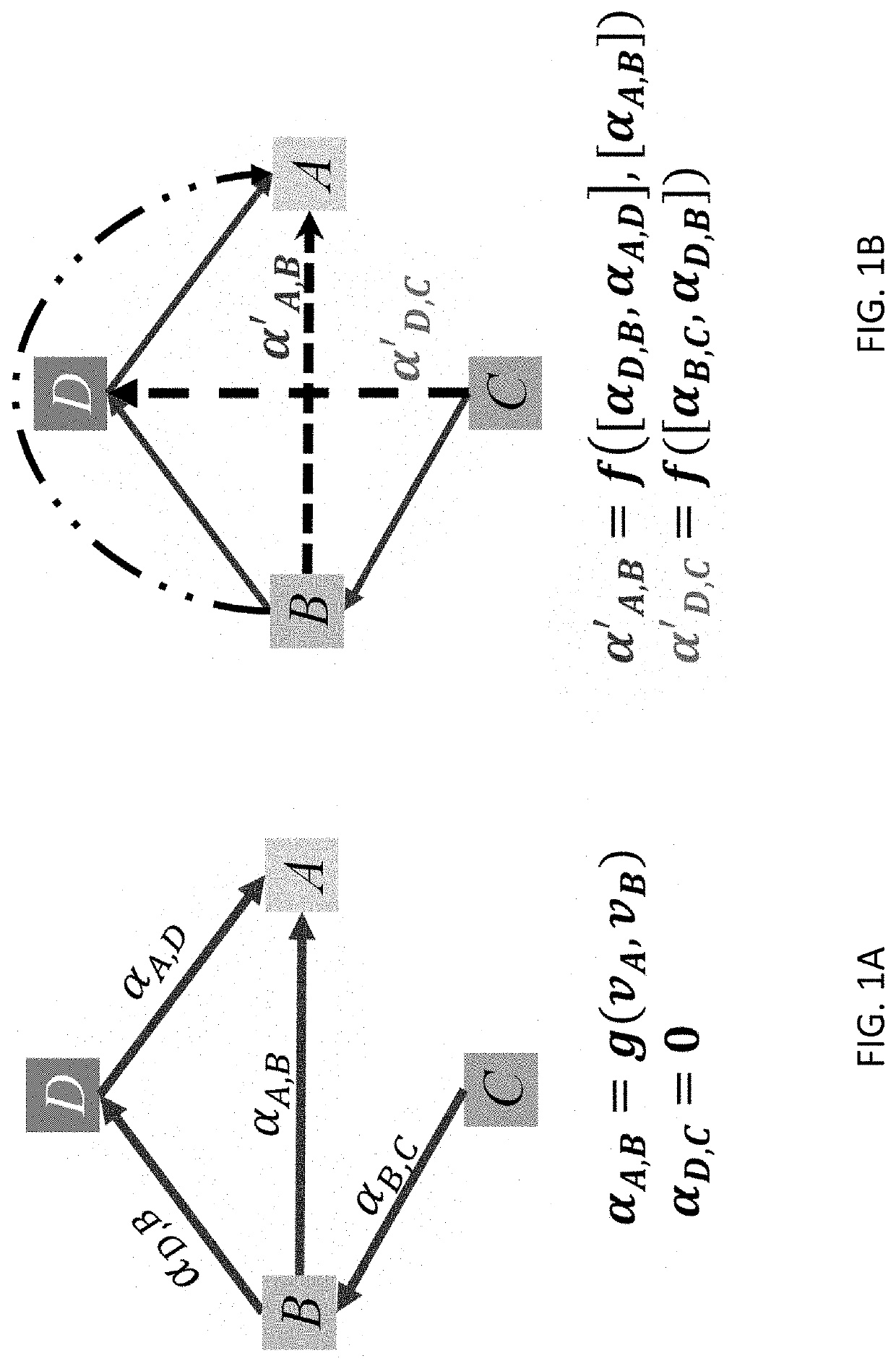

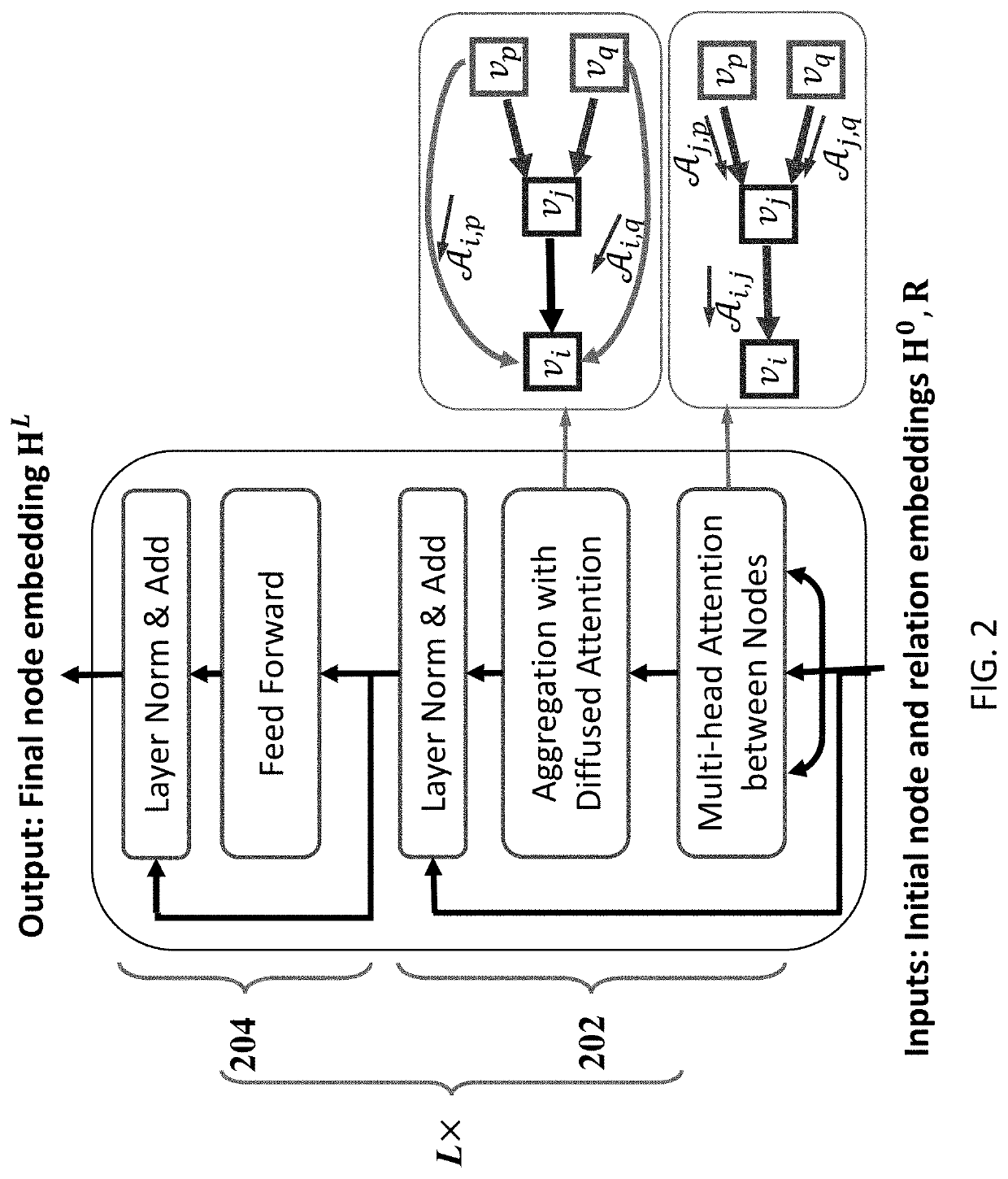

Method and system for relation learning by multi-hop attention graph neural network

Pending | US20220092413A1 | Knowledge representation | Neural architectures | Algorithm | Theoretical computer science

A system and method for completing a knowledge graph. The system includes a computing device, and the computing device has a processor and a storage device storing computer executable code. The computer executable code is configured to: provide an incomplete knowledge graph comprising a plurality of nodes and a plurality of edges, each of the edges connecting two of the plurality of nodes; calculate an attention matrix of the incomplete knowledge graph based on one-hop attention between any two of the plurality of nodes that are connected by one of the plurality of edges; calculate multi-head diffusion attention for any two of the plurality of nodes from the attention matrix; obtain an updated embedding of the incomplete knowledge graph using the multi-head diffusion attention; and update the incomplete knowledge graph to obtain an updated knowledge graph based on the updated embedding.

Owner:BEIJING WODONG TIANJUN INFORMATION TECH CO LTD +2
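
The step from a one-hop attention matrix to diffusion attention over several hops can be sketched as a weighted sum of matrix powers. The sketch below is an illustration under stated assumptions, not the claimed method: it uses a single head, replaces learned attention with simple row normalization of hypothetical edge scores, and assumes geometric hop weights `alpha * (1 - alpha) ** k`, none of which is specified in the abstract.

```python
def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def one_hop_attention(edge_scores):
    """Row-normalize non-negative edge scores; 0.0 marks a missing edge."""
    attention = []
    for row in edge_scores:
        total = sum(row)
        attention.append([s / total if total else 0.0 for s in row])
    return attention


def diffusion_attention(attention, hops, alpha=0.5):
    """Return sum over k = 0..hops of alpha * (1 - alpha)**k * attention**k."""
    n = len(attention)
    power = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    diffused = [[0.0] * n for _ in range(n)]
    for k in range(hops + 1):
        weight = alpha * (1 - alpha) ** k  # assumed geometric hop weighting
        for i in range(n):
            for j in range(n):
                diffused[i][j] += weight * power[i][j]
        power = matmul(power, attention)
    return diffused


# Hypothetical chain graph 0 -> 1 -> 2 with unit edge scores.
edge_scores = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
attention = one_hop_attention(edge_scores)
diffused = diffusion_attention(attention, hops=2)
```

In this toy graph node 0 has no edge to node 2, so its one-hop attention to node 2 is zero, while the diffused matrix gives that pair a non-zero weight through the two-hop path.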

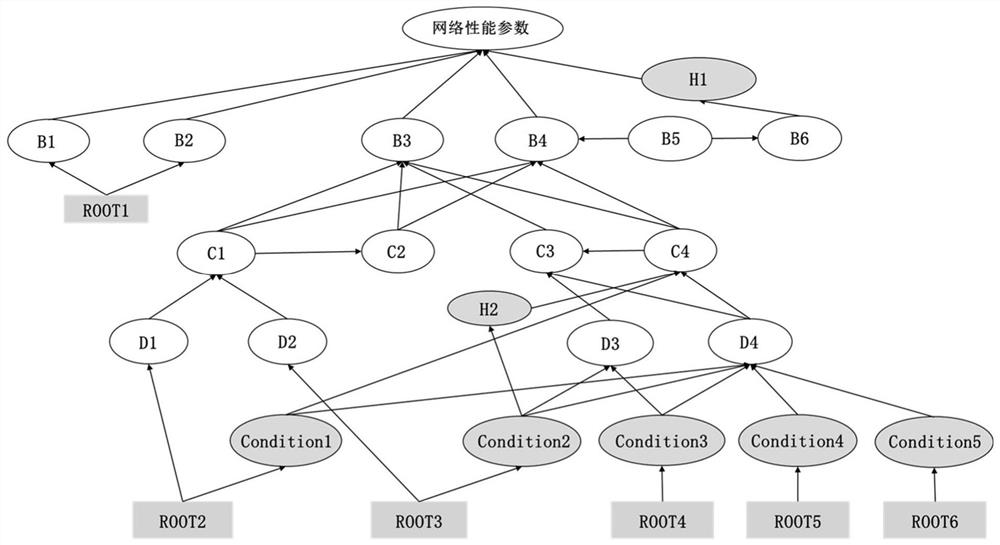

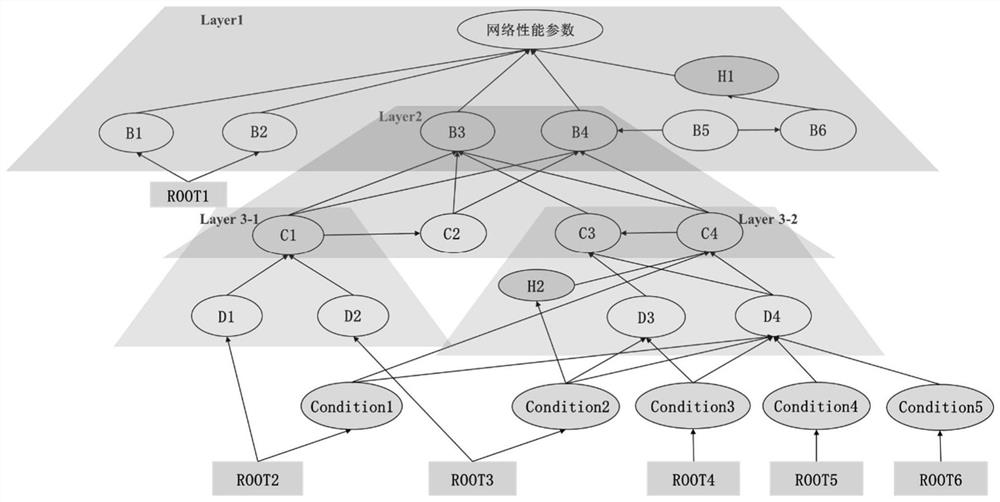

Mechanism data dual-drive combined performance degradation fault root cause positioning method

Active | CN113746663A | Improve accuracy | Improve versatility | Ensemble learning | Neural architectures | Data set | Root cause

The invention discloses a mechanism data dual-drive combined performance degradation positioning method. The problem of root cause positioning of communication drive test performance degradation in different scenes is solved. The method comprises two modules, the causal relationship learning module designs a causal relationship learning model, considers the isomerism of node relationships, and clarifies the equation representation of the node relationships in a causal relationship graph; the causal inference module carries out causal inference based on the intervention index and the distribution index, and carries out inference of a final fault root cause based on the intervention deviation and the distribution abnormity condition. According to the method, an efficient algorithm with interpretability is adopted, the root cause positioning accuracy of a traditional method is greatly improved under a current network test environment data set test, meanwhile, the recall rate is high, and generalizability is achieved. In addition, practical application of enterprise maintenance engineers is facilitated, scheme analysis and conclusions can be issued to an operation and maintenance base layer, the operation and maintenance efficiency is improved, and the operation and maintenance cost is reduced.

Owner:XI AN JIAOTONG UNIV +1

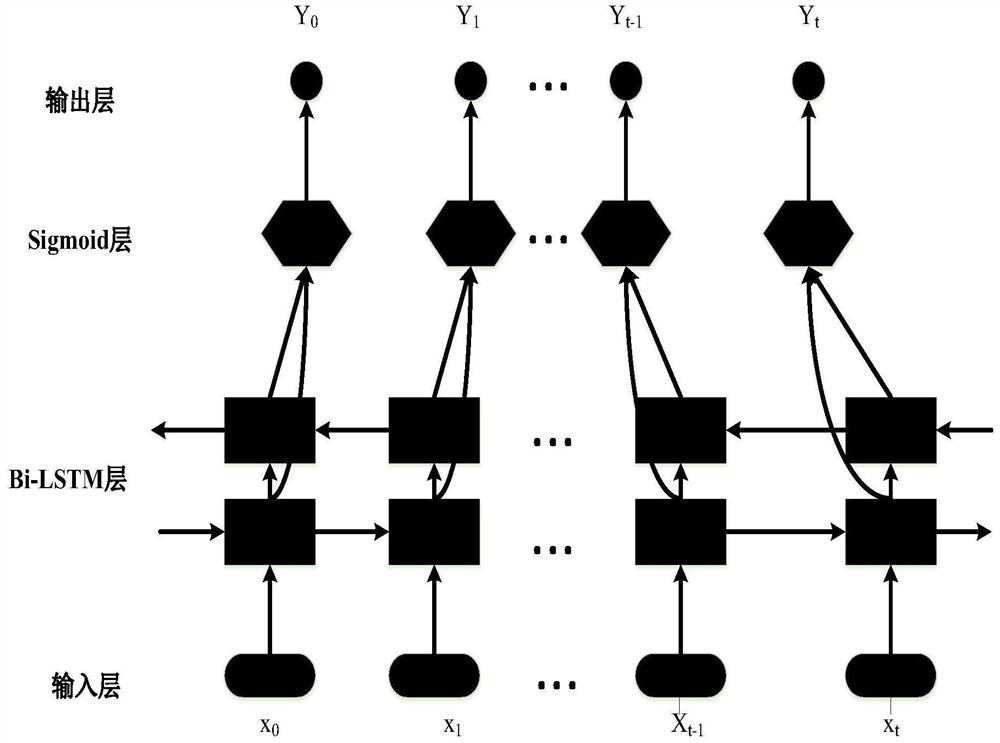

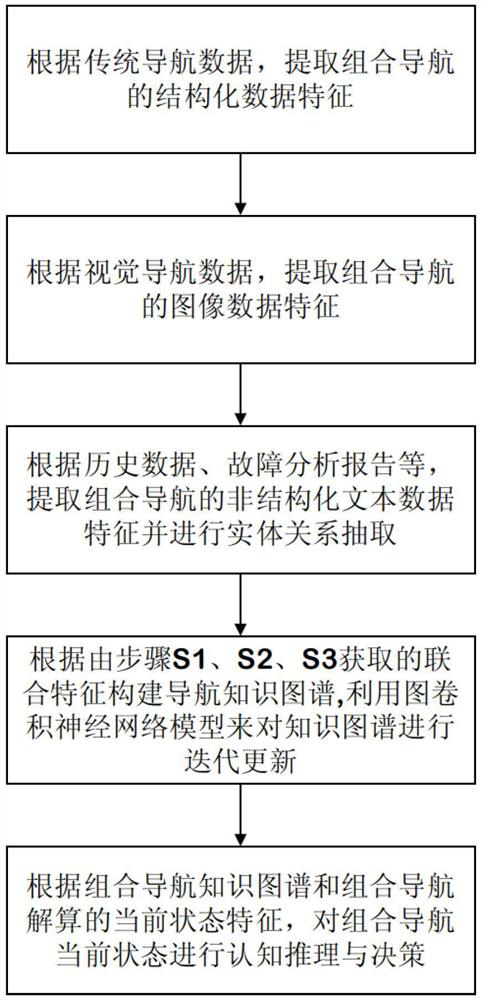

Navigation knowledge graph construction and reasoning application method

Pending | CN114111764A | Make full use of | Improve accuracy | Mathematical models | Navigation by speed/acceleration measurements | Conditional random field | Time domain

The invention provides a navigation knowledge graph construction and reasoning application method, which comprises the following steps of: performing preprocessing, time domain-to-frequency domain conversion, bandwidth acquisition by amplitude integral value segmentation and bandwidth information conversion on structured navigation data to obtain a characteristic value required by anomaly detection; extracting environmental information characteristics in the visual navigation data by adopting a neural network model; target entities in historical and text data are identified by adopting a bidirectional long-short-term memory recurrent neural network model and a conditional random field model, and a relation between the target entities is extracted by adopting a text-based convolutional neural network model. After the combined navigation data is extracted, constructing a navigation knowledge graph according to the extracted combined features and entity relationship information; the knowledge graph is subjected to relation learning and iterative updating by means of a graph convolutional neural network model, so that the knowledge graph obtains more comprehensive feature representation. And according to the integrated navigation knowledge graph and the current state characteristics calculated by the integrated navigation, carrying out cognitive reasoning and decision making on the current state of the integrated navigation.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

Heterogenous network (r-knowledge) for bridging users and apps via relationship learning

Active | CN106845644A | Mathematical models | Biological neural network models | Information networks | Engineering

The present invention provides a method for learning and using relationships among users and apps on a mobile device. The method includes collecting user profile information and app profile information on the mobile device and obtaining ontology knowledge on contexts and relationships. The user profile information is associated with users including one or more owners of the mobile device and one or more people whose information has been accessed on the mobile device. The method further includes generating a network model based on the user profile information, the app profile information and the ontology knowledge. The network model is a heterogeneous information network model that links the users and the apps. Further, information based on the generated network model is outputted, which includes at least one of a role of a user, a relationship between two apps or app functions, and a recommended apps list.

Owner:TCL CORPORATION

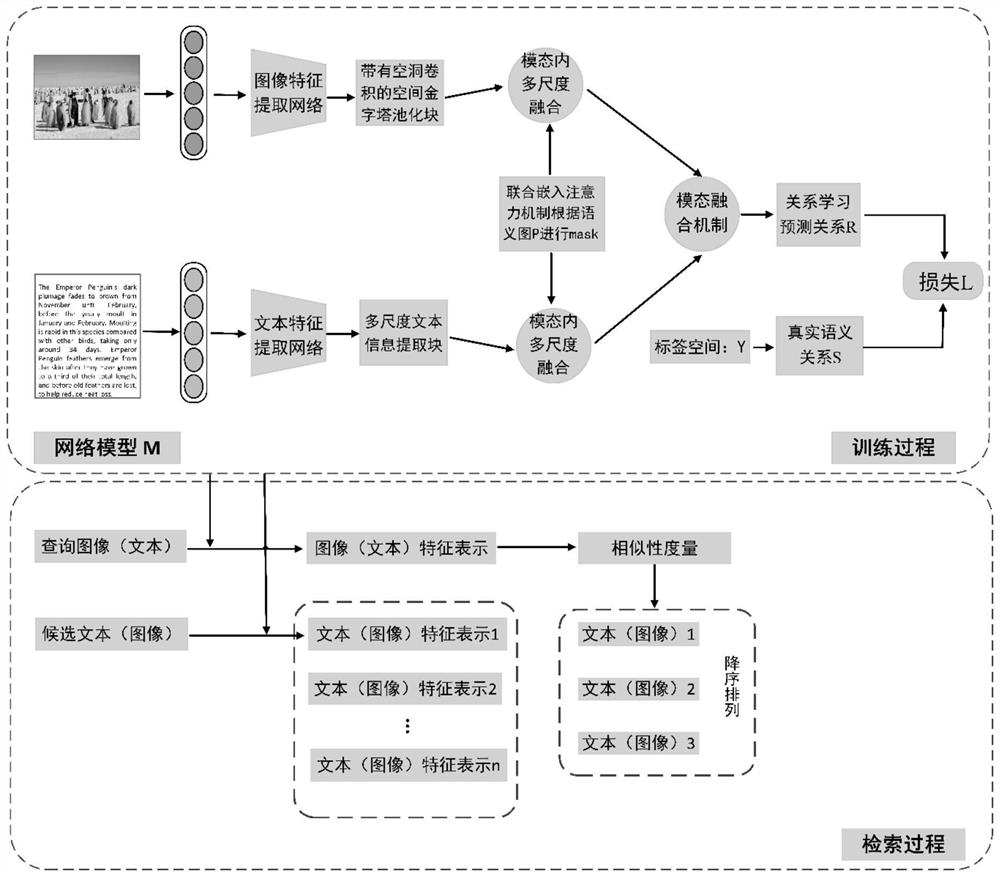

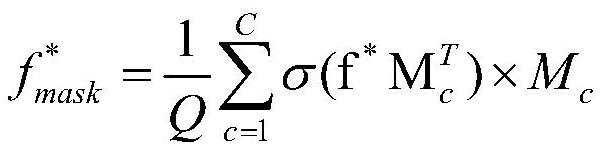

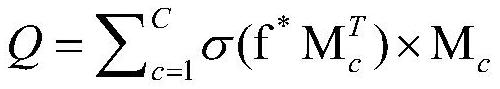

Cross-modal retrieval method based on modal relation learning

Inactive | CN114817673A | Good image-text mutual retrieval performance | Retain similarity | Other databases clustering/classification | Metadata based other databases retrieval | Feature vector | Data set

The invention provides a cross-modal retrieval method based on modal relation learning, which comprises the following steps: S1, inputting image-text pairs with the same semantics in a data set, together with the class labels to which the image-text pairs belong, into a cross-modal retrieval network model based on modal relation learning, and training until the model converges, thereby obtaining a network model M; S2, extracting feature vectors of an image / text to be queried and of each text / image in the candidate library by using the network model M obtained in S1, calculating the similarity between the image / text to be queried and each text / image in the candidate library, sorting in descending order of similarity, and returning the retrieval result with the highest similarity. An inter-modal and intra-modal dual fusion mechanism is established for inter-modal relation learning: multi-scale features are fused within each modality, complementary relation learning is performed directly on the fused features by using label relation information between the modalities, and an inter-modal attention mechanism is added for joint feature embedding, so that multi-scale feature fusion is realized and the cross-modal retrieval performance is further improved.

Owner:HUAQIAO UNIVERSITY
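
Step S2 of the abstract above (score candidates against a query, sort by similarity in descending order, return the best match) can be sketched as follows. The abstract does not name a similarity measure, so cosine similarity is an assumption here, and the feature vectors are hypothetical stand-ins for what a trained network model M would produce.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_candidates(query_vec, candidates):
    """Return (name, similarity) pairs sorted from most to least similar."""
    scored = [(name, cosine(query_vec, vec)) for name, vec in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical text features in the candidate library and one image query.
candidates = {
    "text_a": [1.0, 0.0],
    "text_b": [0.6, 0.8],
    "text_c": [0.0, 1.0],
}
ranking = rank_candidates([1.0, 0.1], candidates)
best_match = ranking[0][0]
```

The same function serves both retrieval directions, since only the roles of the query and the candidate library change.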

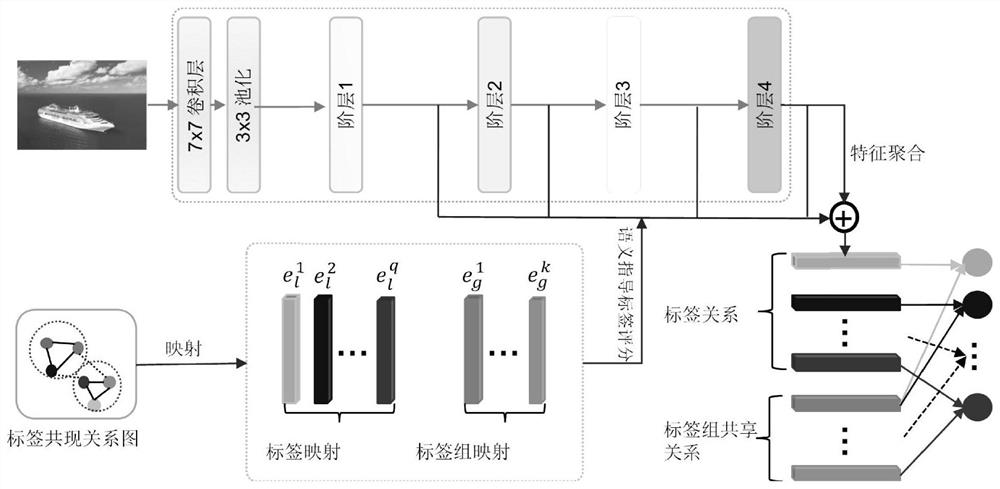

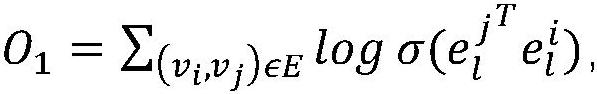

Multi-label image deep learning classification method and equipment

Active | CN112308115A | Effective guidance | Improve scalability | Internal combustion piston engines | Character and pattern recognition | Relation graph | Feature extraction

The invention relates to a multi-label learning technology in the field of machine learning, and relates to a multi-label image deep learning classification method and equipment. The method comprises the following steps: acquiring a label relation graph; acquiring mapping of all types of labels and mapping of all label groups according to the label relation graph; constructing a deep convolutional neural network and carrying out image general feature extraction; selecting feature maps of different layers of the convolutional neural network, and mapping the feature maps to a label and label group mapping dimension through a mapping function; calculating a conformity score and a normalization score of the label and the label group at the current pixel point position for all the pixel points in the selected feature maps; acquiring a final label related semantic feature and a final label group related semantic feature; and performing label prediction. According to the method, the label relationship is effectively utilized, richer image general features and label relationship features are learned, and a multi-label classification task is better carried out.

Owner:ANHUI UNIVERSITY OF TECHNOLOGY

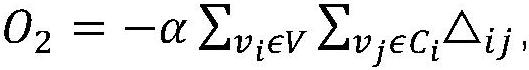

Multi-task neural network framework for remote sensing scene classification and classification method

Active | CN111291651A | Improve discrimination ability | Easy to distinguish | Scene recognition | Neural architectures | Feature vector | Feature extraction

The invention relates to a neural network framework for remote sensing scene classification and a classification method, in particular to a multi-task neural network framework for remote sensing scene classification and a classification method, and solves the problems of limited information, inaccurate scene recognition and low classification precision in existing network frameworks and classification methods. The network framework comprises a convolution feature extraction layer, a classification task full-connection feature extraction layer, a classification task discrimination layer and a classification task loss layer; the network framework is characterized by further comprising an auxiliary task full-connection feature extraction layer, an auxiliary task discrimination layer, an auxiliary task loss layer, a classification task feature mapping layer, an auxiliary task feature mapping layer and a relationship learning loss layer. The two feature mapping layers respectively carry out dimensionality reduction on the full-connection feature vectors adapted to the two tasks; the relation learning loss layer subtracts the vectors after dimensionality reduction and takes the norm of the difference vector as the relation learning loss; and the relation learning loss and the discrimination losses of the two tasks are added into optimization training together.

Owner:XI'AN INST OF OPTICS & FINE MECHANICS - CHINESE ACAD OF SCI
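
The relation learning loss described above (reduce both task features to a common dimension, subtract, take the norm of the difference, then add it to the two discrimination losses) can be sketched in a few lines. This is only an illustration: the abstract does not say which norm is used, so the L2 norm is assumed, and the mapping matrices, feature values, loss values and the `weight` parameter are hypothetical.

```python
import math


def project(matrix, vector):
    """Apply a linear feature mapping layer (one matrix row per output unit)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relation_learning_loss(cls_feat, aux_feat, cls_map, aux_map):
    """L2 norm of the difference between the two dimension-reduced features."""
    reduced_cls = project(cls_map, cls_feat)
    reduced_aux = project(aux_map, aux_feat)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(reduced_cls, reduced_aux)))


def total_loss(cls_loss, aux_loss, rel_loss, weight=1.0):
    """Combine both discrimination losses with the relation learning loss."""
    return cls_loss + aux_loss + weight * rel_loss


# Hypothetical 4-d full-connection features reduced to 2-d by each mapping layer.
cls_feat = [1.0, 2.0, 3.0, 4.0]
aux_feat = [4.0, 3.0, 2.0, 1.0]
cls_map = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
aux_map = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]

rel_loss = relation_learning_loss(cls_feat, aux_feat, cls_map, aux_map)
loss = total_loss(cls_loss=0.7, aux_loss=0.4, rel_loss=rel_loss)
```

Minimizing the relation term pulls the two reduced representations toward each other, which is how the auxiliary task can inform the classification branch during joint training.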

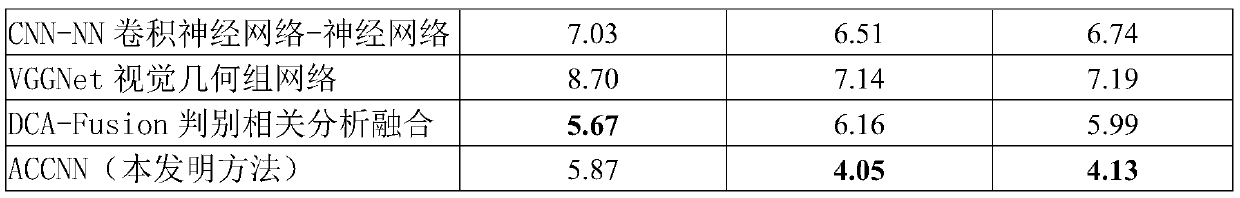

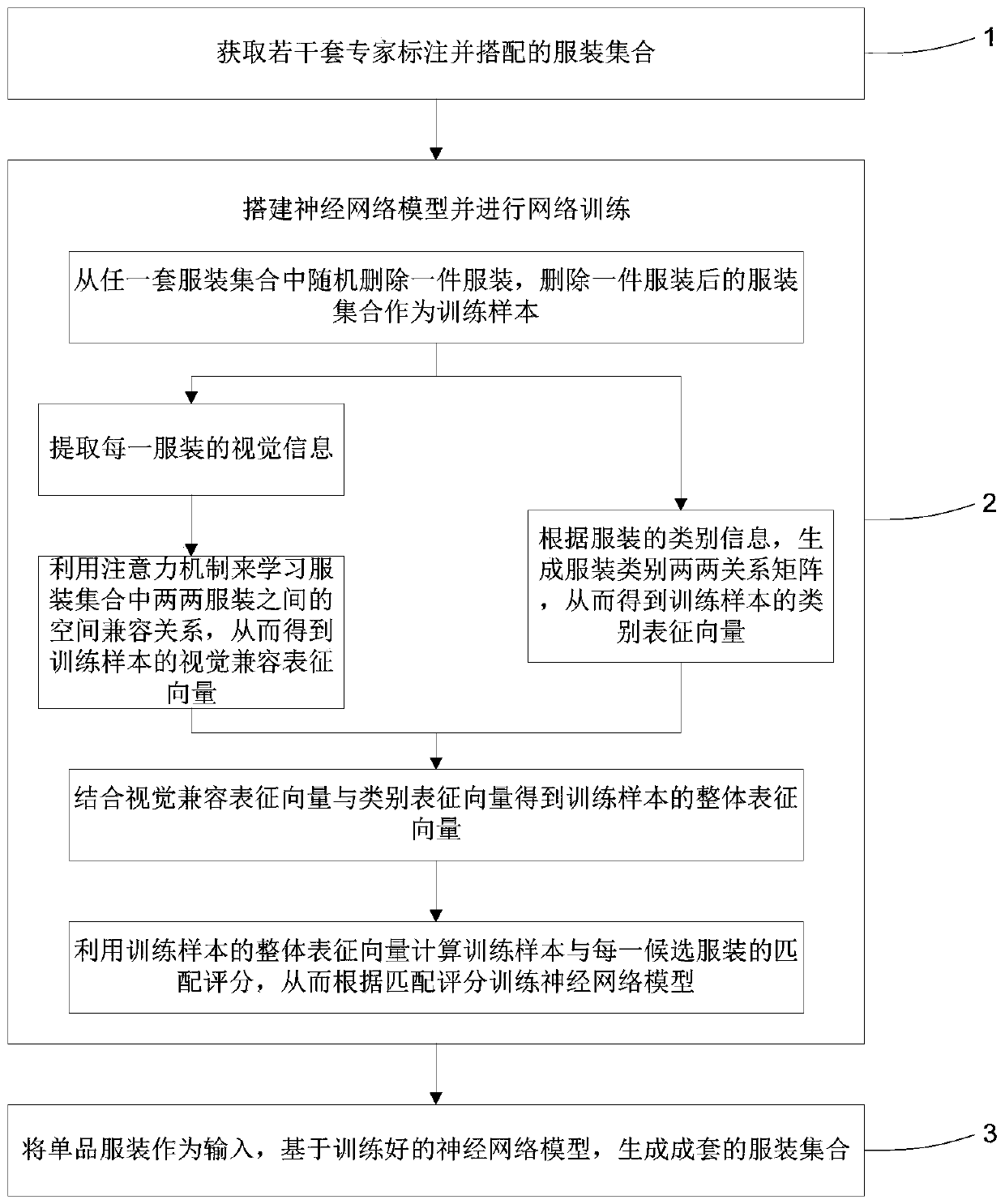

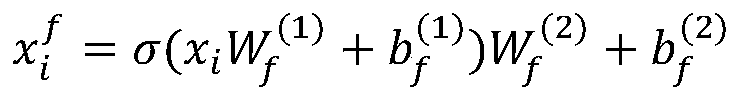

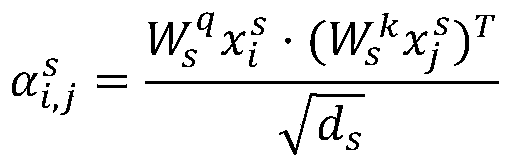

Fashionable clothing intelligent matching and recommending method based on visual combination relation learning

The invention discloses a fashionable garment intelligent matching and recommending method based on visual combination relation learning. According to the method, aiming at extraction of clothing visual information and modeling of multi-clothing visual compatibility and a mutual influence relationship, clothing can be intelligently matched, matching scores among the clothing are obtained, matching category analysis is further assisted, missing parts in current matching can be intelligently identified, and missing single items are subjected to targeted prediction; through a model training and optimization strategy, the model can learn expert experience in a self-adaptive manner, and can intelligently generate beautiful clothes matching for a user.

Owner:UNIV OF SCI & TECH OF CHINA

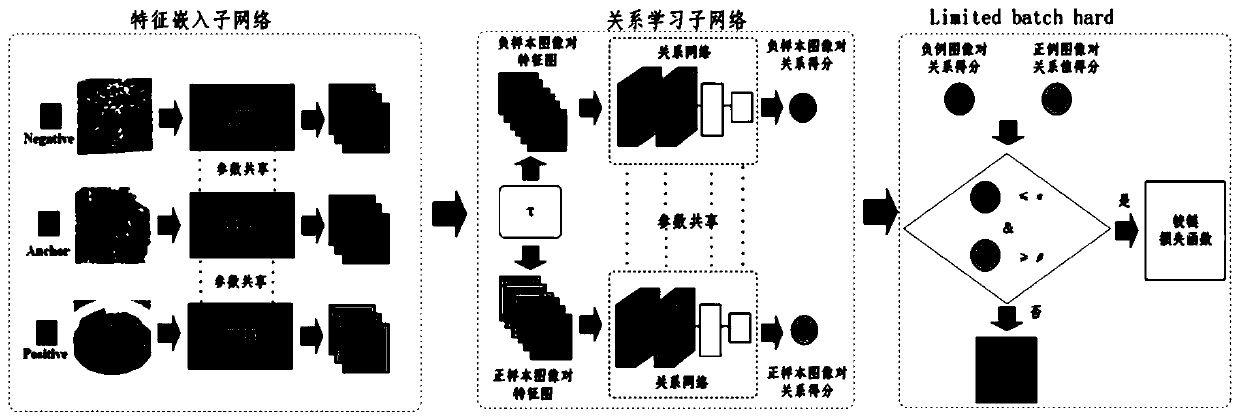

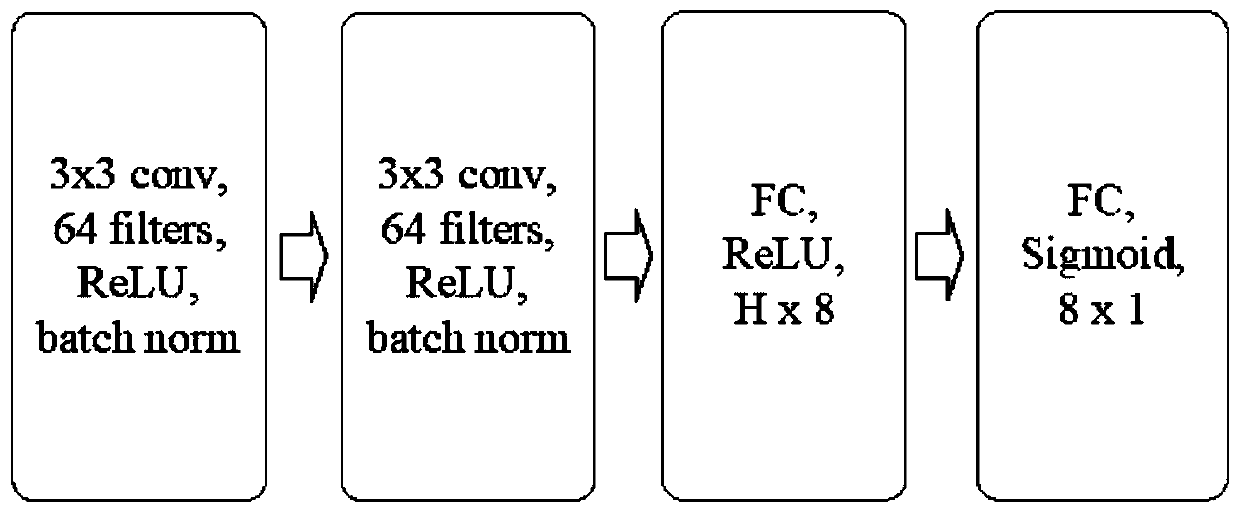

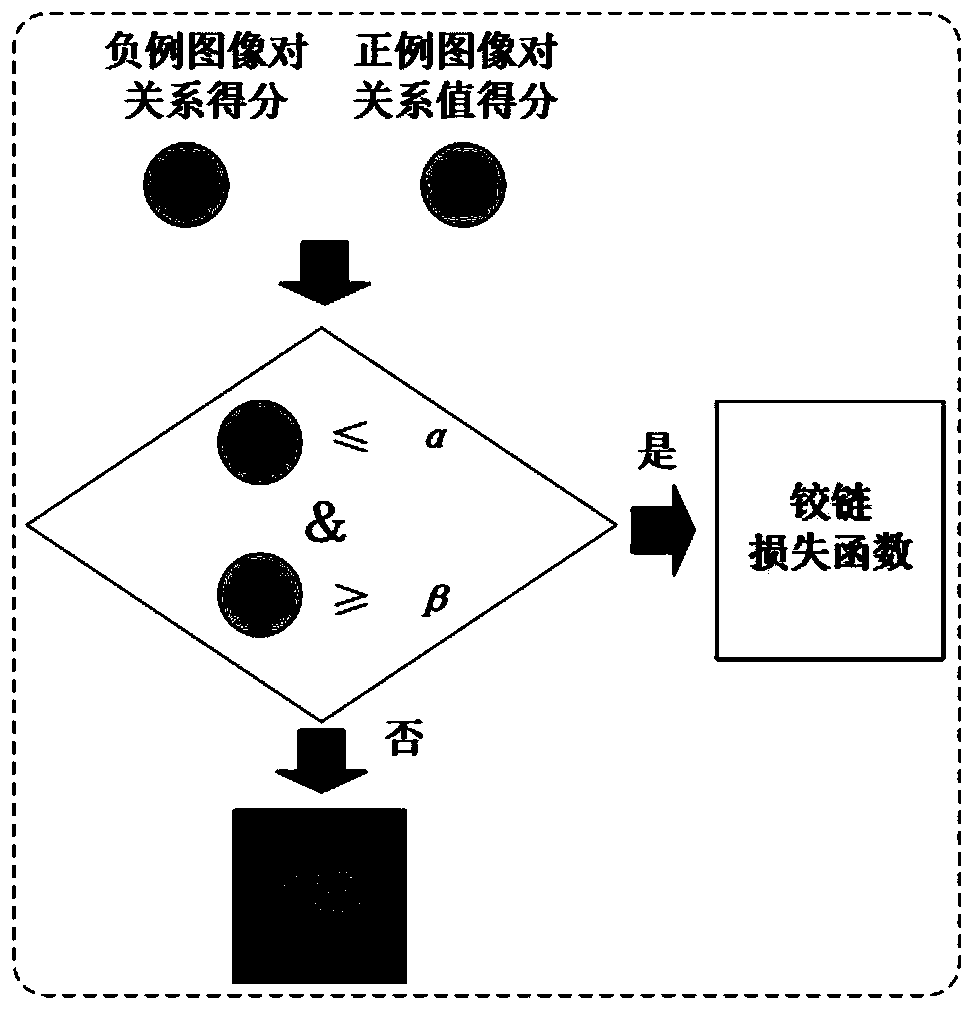

Small sample food image recognition model training method and food image recognition method

Pending | CN111062424A | Improve classification performance | Improve classification effect | Character and pattern recognition | Neural architectures | Data set | Image pair

The invention provides a small sample food image recognition model training method and a food image recognition method. The model training method comprises the following steps: constructing a triple comprising positive and negative samples and an anchor image by using a training data set, and inputting the triple into a ternary convolutional neural network to extract feature representation of the triple; carrying out feature map fusion to obtain a positive and negative sample image pair feature map; and screening the positive and negative sample images based on the relationship value, and training the feature embedding network and the relationship learning network by using the screened positive and negative sample images.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
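
The first step of the training method above, building triples of an anchor image, a positive sample and a negative sample from a labeled data set, can be sketched as follows. The sampling strategy (one random positive and one random negative per anchor), the function name and the image identifiers are assumptions for illustration; the feature extraction, fusion and screening stages are not covered.

```python
import random


def build_triplets(samples, rng):
    """Build one (anchor, positive, negative) triple per usable anchor.

    samples is a list of (image_id, label) pairs. The positive shares the
    anchor's label; the negative comes from any other label.
    """
    by_label = {}
    for image_id, label in samples:
        by_label.setdefault(label, []).append(image_id)

    triplets = []
    for anchor, label in samples:
        positives = [i for i in by_label[label] if i != anchor]
        negatives = [i for other, ids in by_label.items() if other != label
                     for i in ids]
        if positives and negatives:  # skip anchors that cannot form a triple
            triplets.append((anchor, rng.choice(positives), rng.choice(negatives)))
    return triplets


# Hypothetical small-sample food data set.
samples = [
    ("img1", "ramen"), ("img2", "ramen"),
    ("img3", "pizza"), ("img4", "pizza"),
]
label_of = dict(samples)
triplets = build_triplets(samples, random.Random(0))
```

Passing in a seeded `random.Random` keeps the sampling reproducible, which helps when comparing screening thresholds across training runs.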

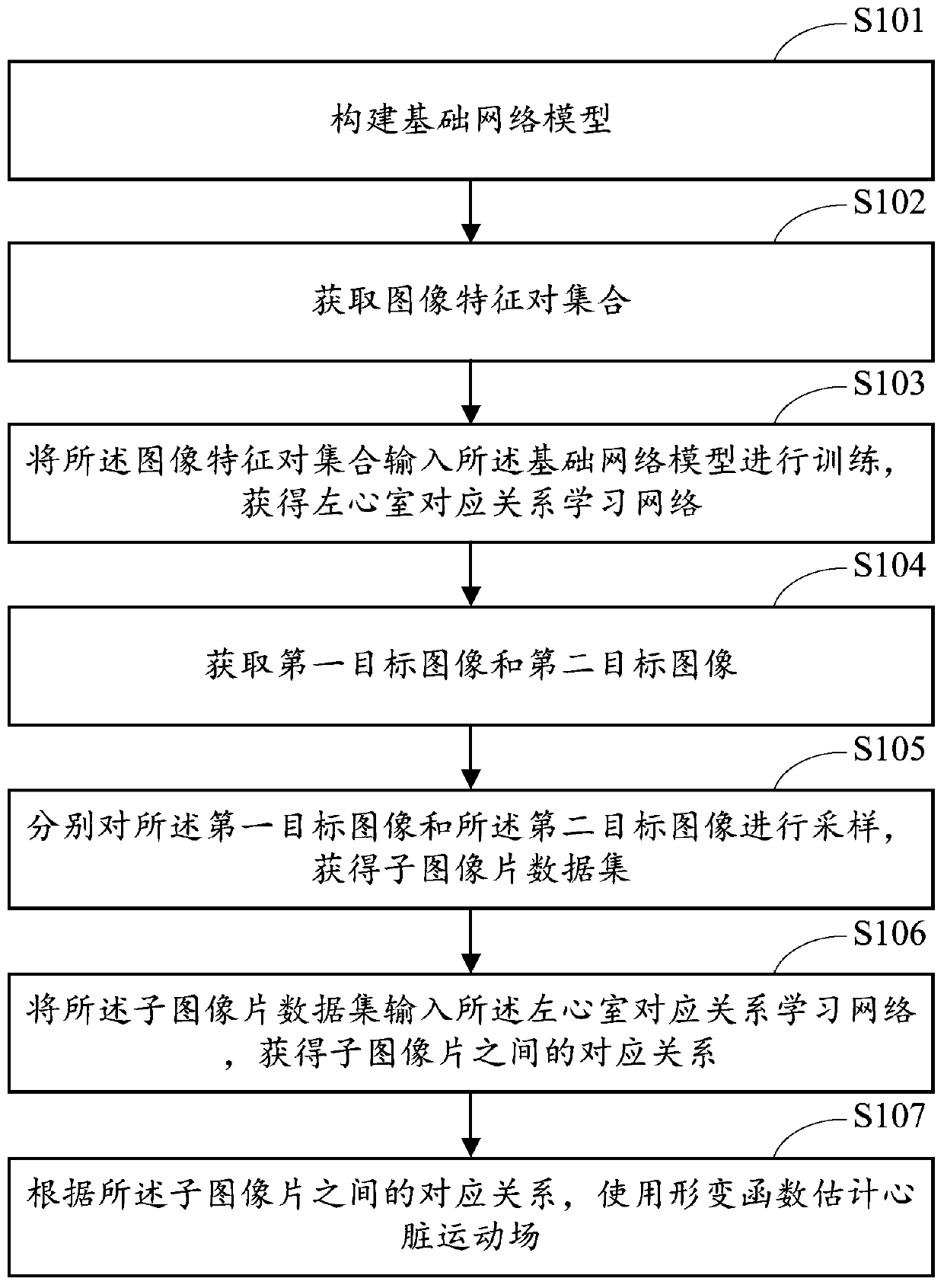

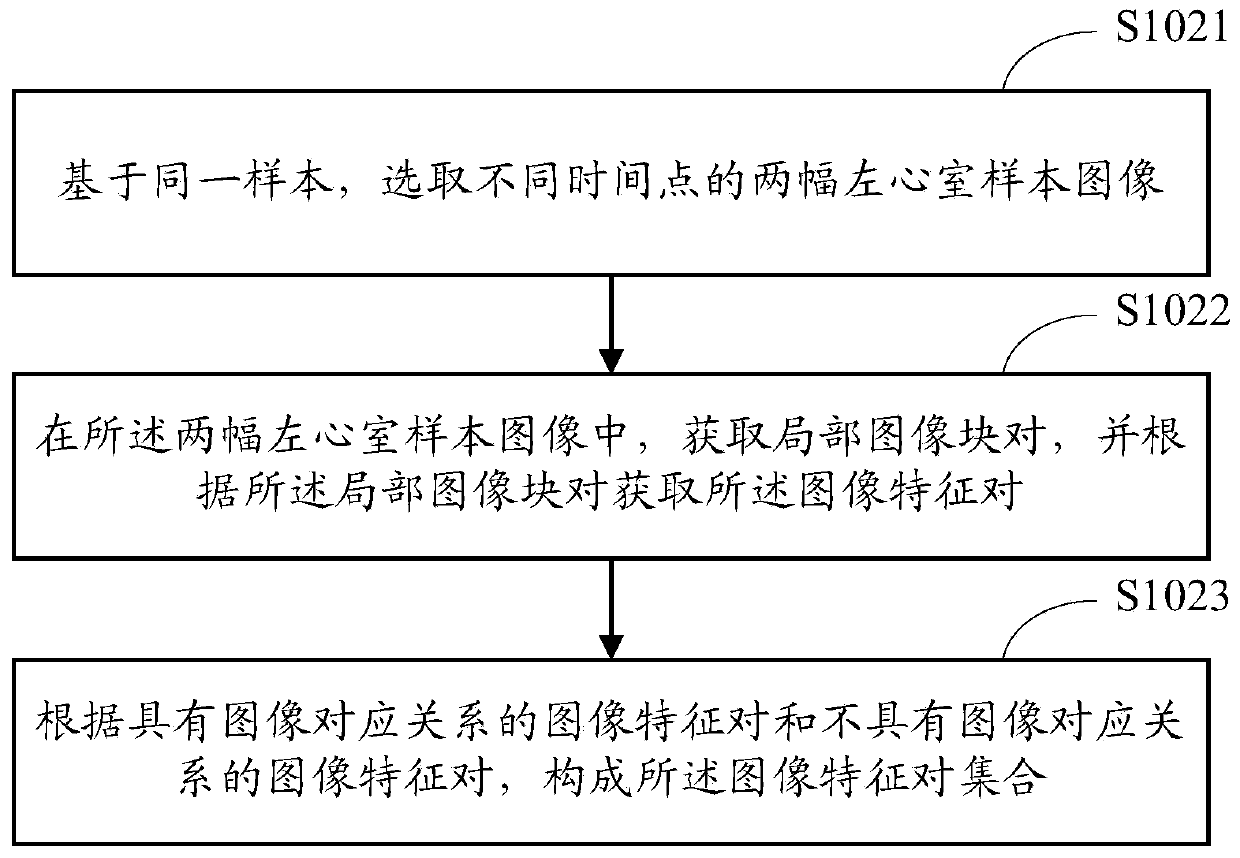

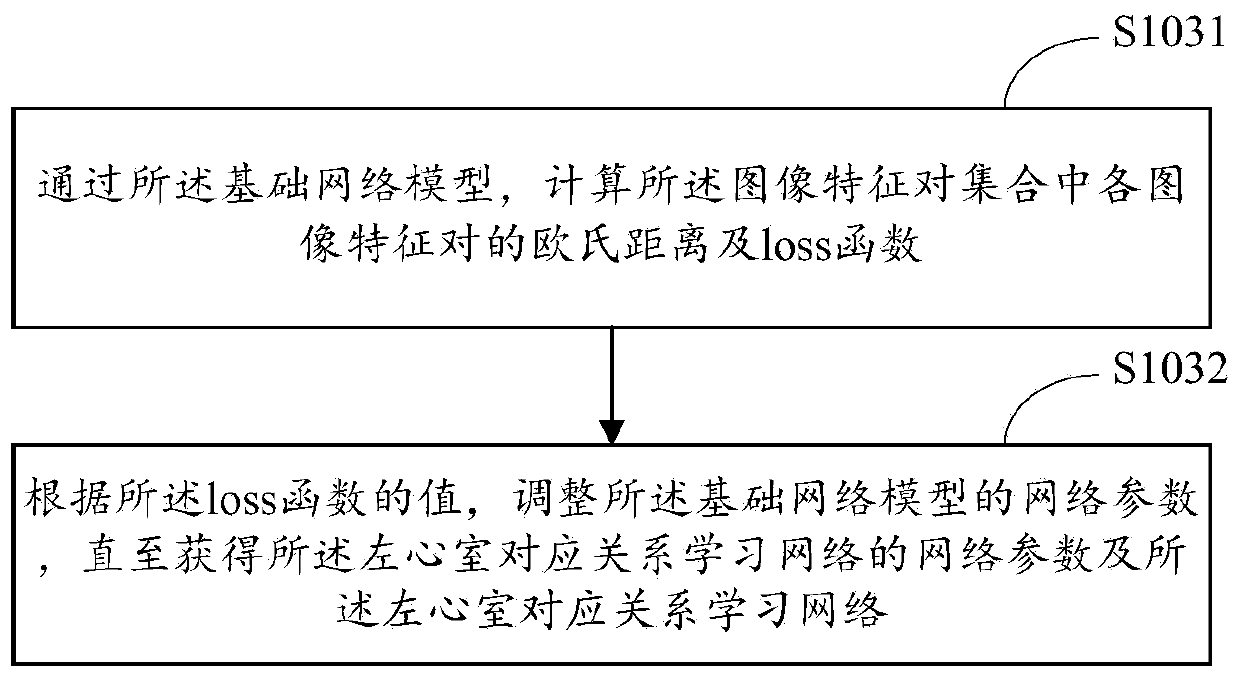

Heart motion estimation method and system and terminal equipment

Active | CN110136111A | Accurate analysis | Overcome the mismatch problem | Image enhancement | Image analysis | Data set | Sample image

The invention is applicable to the technical field of image processing, and provides a heart motion estimation method and system and terminal equipment, and the method comprises the steps: building a basic network model which is a deep learning network of dense connection mixed hole convolution; obtaining an image feature pair set according to the left ventricle sample image; inputting the image feature pair set into a basic network model for training to obtain a left ventricle corresponding relation learning network; obtaining a first target image and a second target image; respectively sampling the first target image and the second target image to obtain a sub-image slice data set; inputting the sub-image slice data set into a left ventricle corresponding relation learning network to obtain a corresponding relation between sub-image slices; and estimating the heart motion field by using a deformation function according to the corresponding relationship between the sub-image patches. By means of the method, a stable and reasonable heart motion field can be obtained on the premise that the cardiac muscle layer is not segmented.

Owner:SHENZHEN UNIV

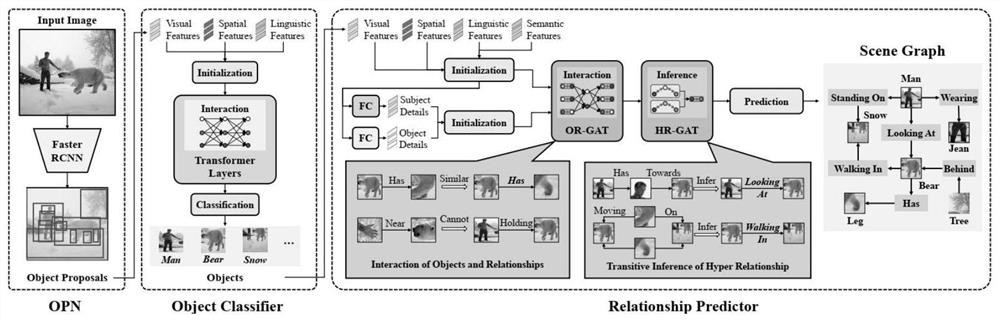

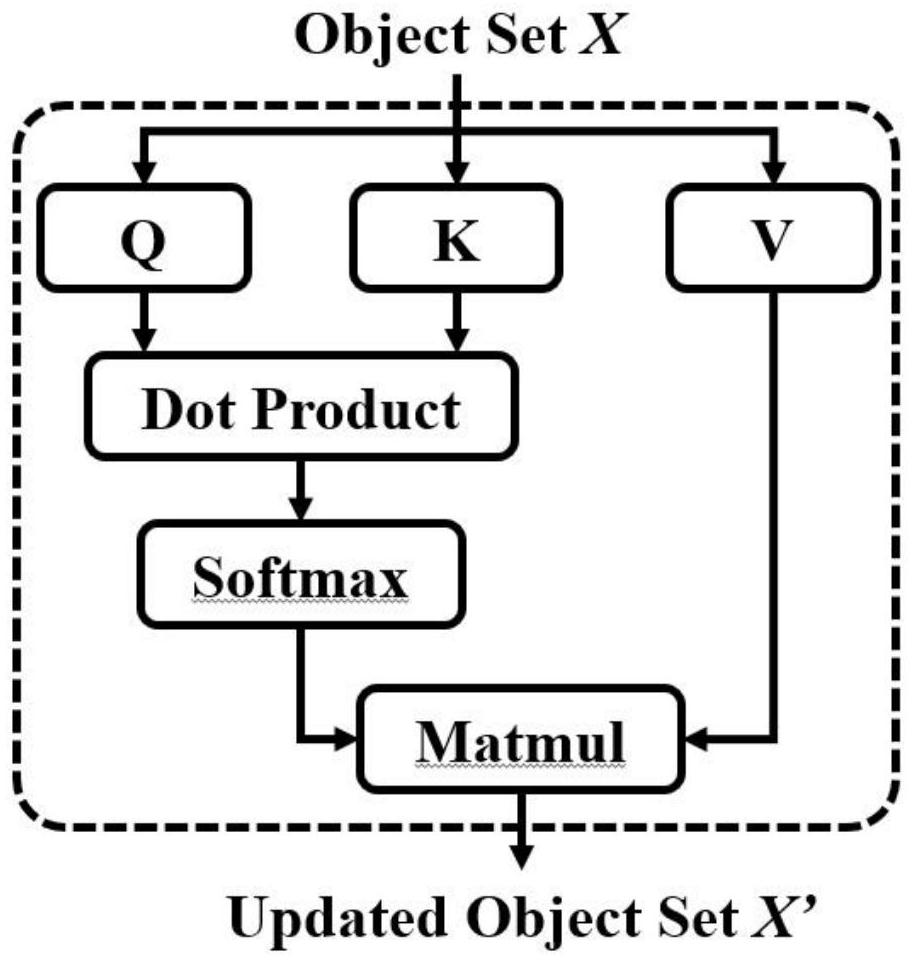

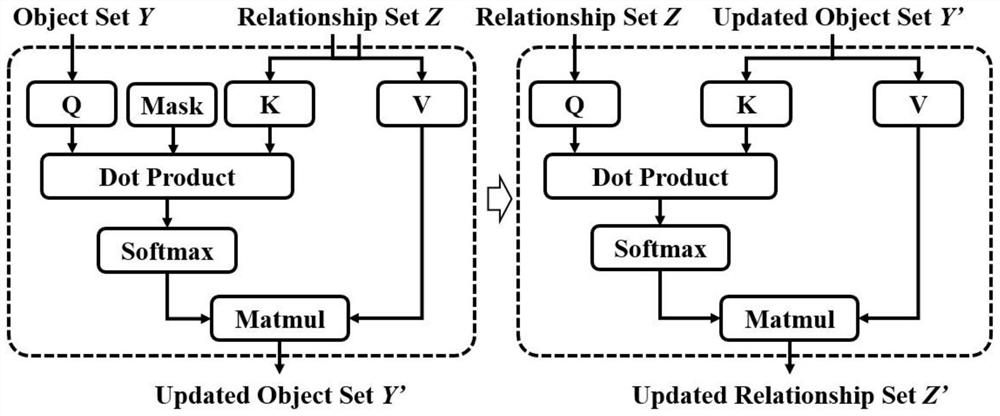

Scene graph generation method based on super relation learning network

Active | CN113065587A | Reasoning high | Easy to integrate | Character and pattern recognition | Neural architectures | Pattern recognition | Back propagation algorithm

The invention discloses a scene graph generation method based on super relation learning. The method comprises the following steps: 1, target interaction is enhanced through a target self-attention network, and the features of targets are fused; 2, interaction between the target and the relation is enhanced through the target-relation attention network, and features between the target and the relation are fused; 3, transmission reasoning of the super relation is integrated through the super relation attention network; and 4, model training is performed, in which a target loss function and a relation loss function are put into an optimizer, and the network parameters are updated by gradient back-propagation. The invention provides a deep neural network for scene graph generation, and in particular a super relation learning network in which interaction and transmission reasoning between targets and relations are fully utilized, so that the reasoning capability for relations in scene graph generation is improved and the performance in the field of scene graph generation is greatly improved.

Owner:HANGZHOU DIANZI UNIV +1

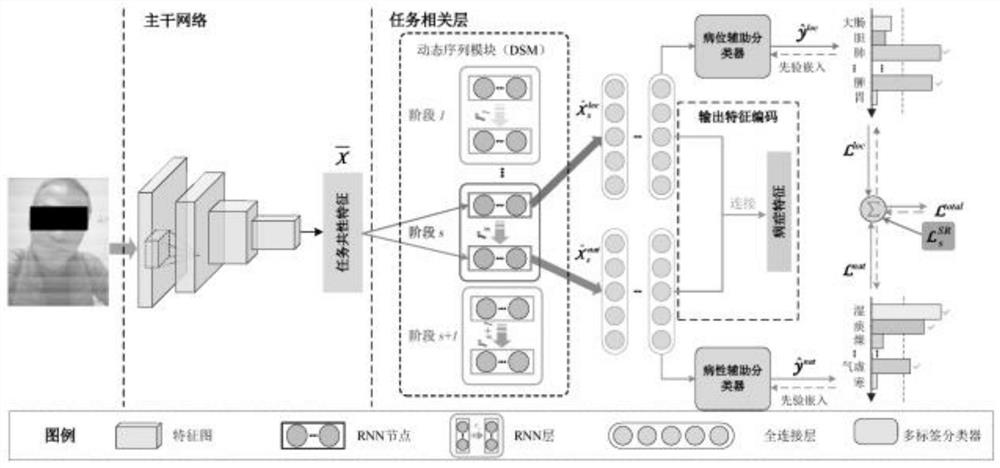

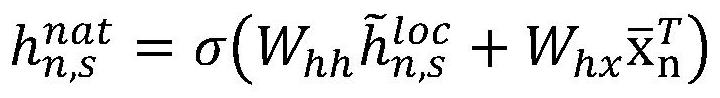

Visceral organ feature coding method based on face image multi-stage relationship learning

Active | CN111612133A | Improve targeting | Character and pattern recognition | Neural architectures | Human body | Feature coding

The invention discloses a visceral organ feature coding method based on face image multi-stage relation learning. The method comprises the steps of: collecting face images and obtaining the labels marked for each face image, wherein the labels comprise visceral organ labels and organ feature labels associated with each visceral organ; performing data augmentation on the face images and then normalizing and standardizing the three RGB channels to obtain a training set; and simultaneously performing supervised learning of two subtask branches on the face image training set by using the visceral organ labels and the organ feature labels, so as to embed prior guidance knowledge of visceral features and finally obtain a visceral feature coding model embedded with the prior knowledge. According to the method, the relevance among the face image, the visceral organ labels and the organ feature labels can be fully considered, modeling and analysis are carried out on the multi-stage relation learning model, and a visual and objective basic support is provided for human body health care and health preservation through the coding result of the human body visceral organ features.

Owner:广州华见智能科技有限公司

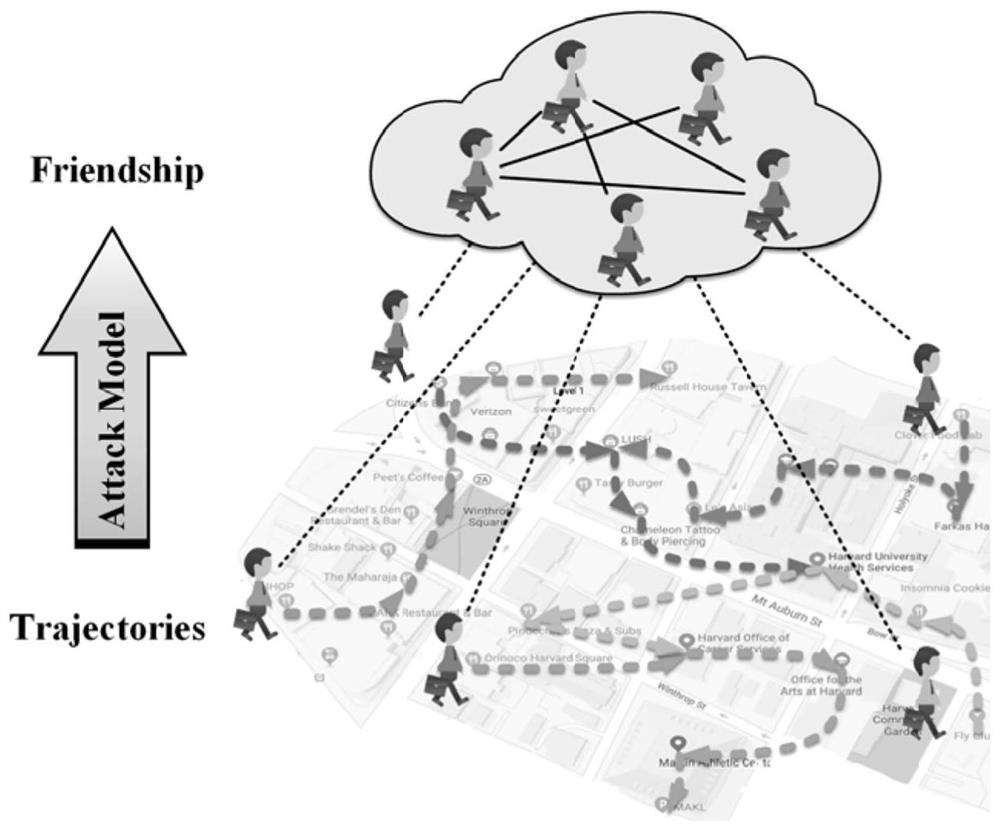

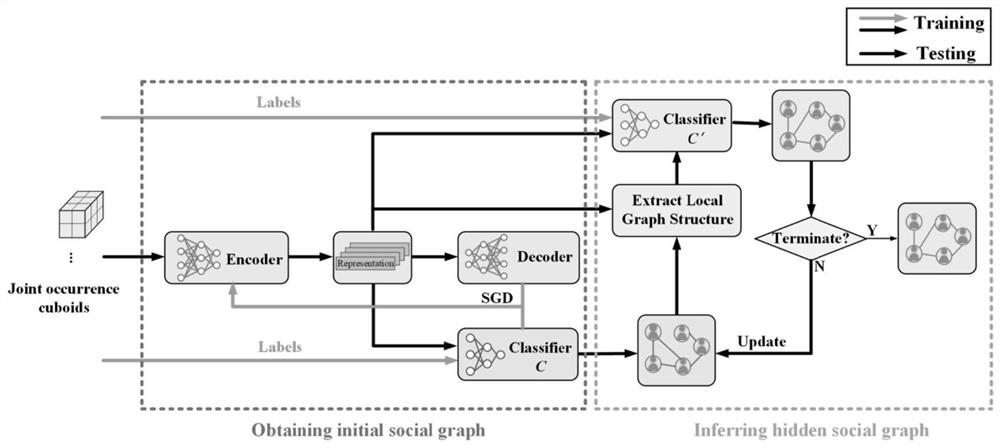

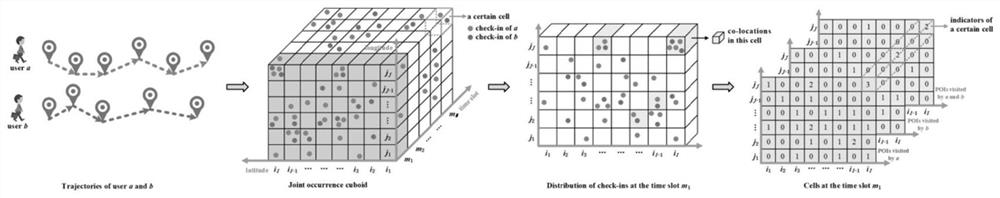

Mobile social network user relationship inference method based on space-time relationship learning

Active | CN111738447A | Data processing applications | Digital data information retrieval | Data set | Social graph

The invention provides a method for inferring user relationships in mobile social networks based on spatio-temporal relationship learning. The method considers both the mobility and the sociality of individuals, as well as the usefulness of social network structure for predicting social connections. It first constructs a preliminary social graph from user mobility, then extracts social network structure features for each user pair from that graph, and finally infers friend relationships by combining the mobility and sociality features. Once trained, the model transfers well to different scenarios for predicting friend relationships between users. Experiments on two real-world data sets show that the method consistently outperforms existing methods. The model also remains effective for user pairs with few check-in records and no meeting events.
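The first two stages can be pictured with a small sketch. It assumes, hypothetically, that check-ins are `(user, place, time_slot)` tuples, that two users are linked when they share a place and time slot, and that the number of common neighbors serves as the structural feature. The abstract does not commit to any of these choices.

```python
from collections import defaultdict
from itertools import combinations

def build_social_graph(checkins):
    """Link two users whenever they check in at the same place in the same time slot."""
    visitors = defaultdict(set)
    for user, place, slot in checkins:
        visitors[(place, slot)].add(user)
    graph = defaultdict(set)
    for users in visitors.values():
        for a, b in combinations(sorted(users), 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph

def common_neighbors(graph, a, b):
    """A simple structural feature for the pair (a, b): number of shared neighbors."""
    return len(graph[a] & graph[b])

# Made-up check-in records.
checkins = [
    ("ann", "cafe", 1), ("bob", "cafe", 1),
    ("bob", "gym", 2), ("cat", "gym", 2),
    ("ann", "park", 3), ("cat", "park", 3), ("dan", "park", 3),
]
graph = build_social_graph(checkins)
shared = common_neighbors(graph, "bob", "dan")
```

Here `bob` and `dan` never meet, yet they share two neighbors in the preliminary graph. This is the kind of structural signal that can support inference for pairs without meeting events.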

Owner:DONGHUA UNIV

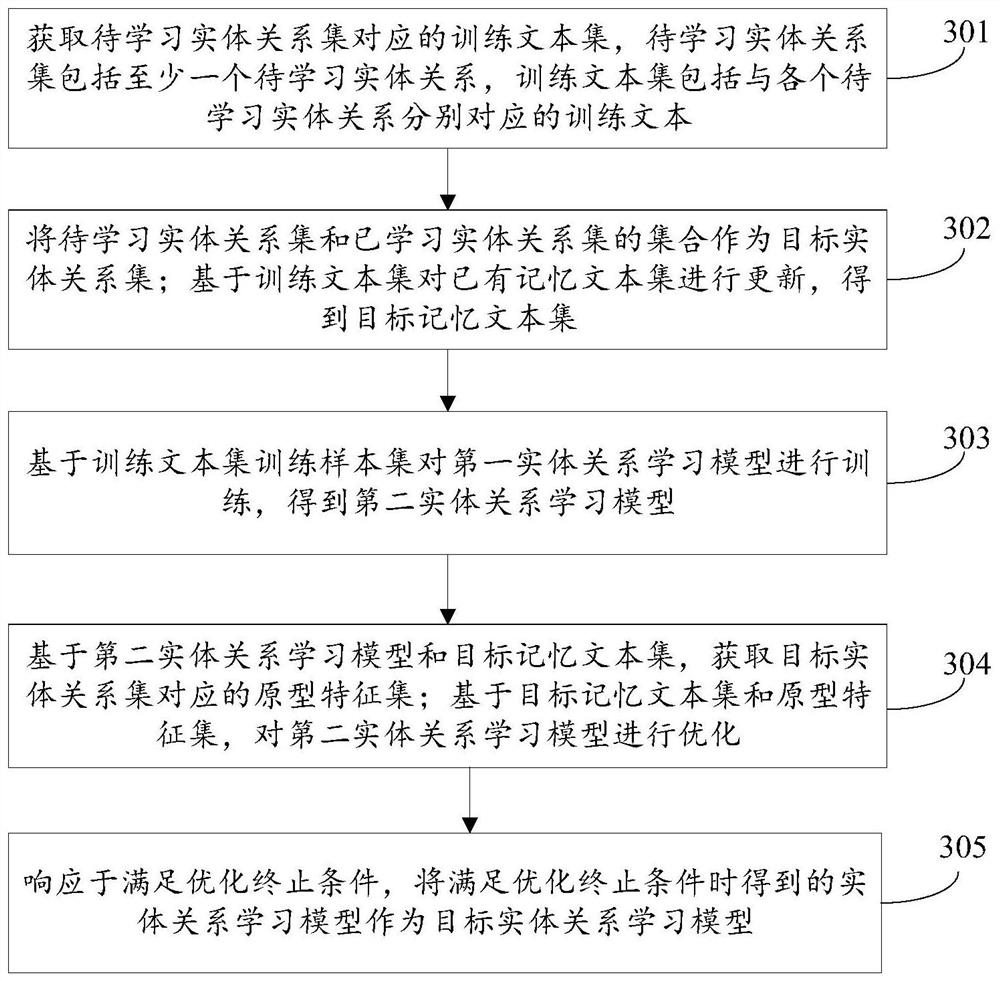

Entity relationship extraction method, entity relationship learning model acquisition method and equipment

Pending | CN111737415A | Improve accuracy | Improve learning effect | Semantic analysis | Character and pattern recognition | Pattern recognition | Feature set

The invention discloses an entity relationship extraction method, an entity relationship learning model acquisition method and equipment. The method comprises: obtaining a target text and a target entity relationship learning model, where the model is obtained from the prototype feature set corresponding to a target entity relationship set; calling the target entity relationship learning model to obtain the text feature of the target text and the target prototype feature corresponding to each target entity relationship; determining the matching degree between the target text and each target entity relationship from the text feature and that relationship's target prototype feature; and determining the entity relationship corresponding to the target text from these matching degrees. Because the prototype features in the prototype feature set represent the entity relationships more comprehensively, the target entity relationship learning model built on them learns entity relationships well, and entity relationship extraction with this model is highly accurate.
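The matching step can be sketched as a nearest-prototype lookup. Using cosine similarity as the matching degree is an assumption, since the abstract does not name the measure. The relation names and feature vectors below are made up, and a real system would obtain them from the trained model.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def extract_relation(text_feature, prototypes):
    """Score the text against every relation prototype and return the best match.

    Returns (best_relation, scores), where scores maps each relation name
    to its matching degree with the text feature.
    """
    scores = {rel: cosine(text_feature, proto) for rel, proto in prototypes.items()}
    return max(scores, key=scores.get), scores

# Hypothetical prototype features, one per target entity relationship.
prototypes = {"founder_of": [0.9, 0.1, 0.0], "born_in": [0.0, 0.2, 0.9]}
text_feature = [0.8, 0.3, 0.1]  # hypothetical feature of the target text
relation, scores = extract_relation(text_feature, prototypes)
```

In this toy case the text feature lies much closer to the `founder_of` prototype, so that relation is selected.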

Owner:TSINGHUA UNIV +1

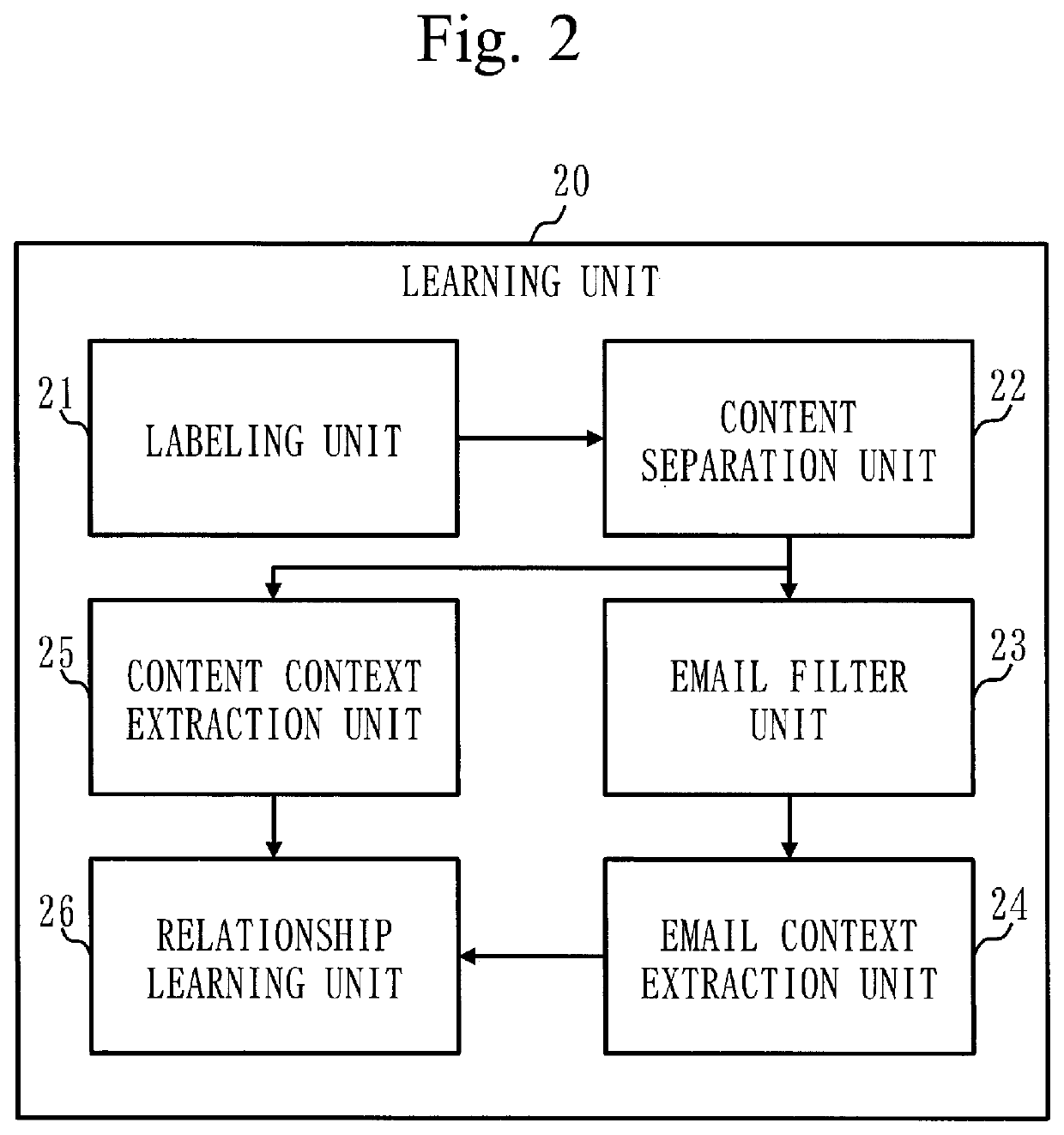

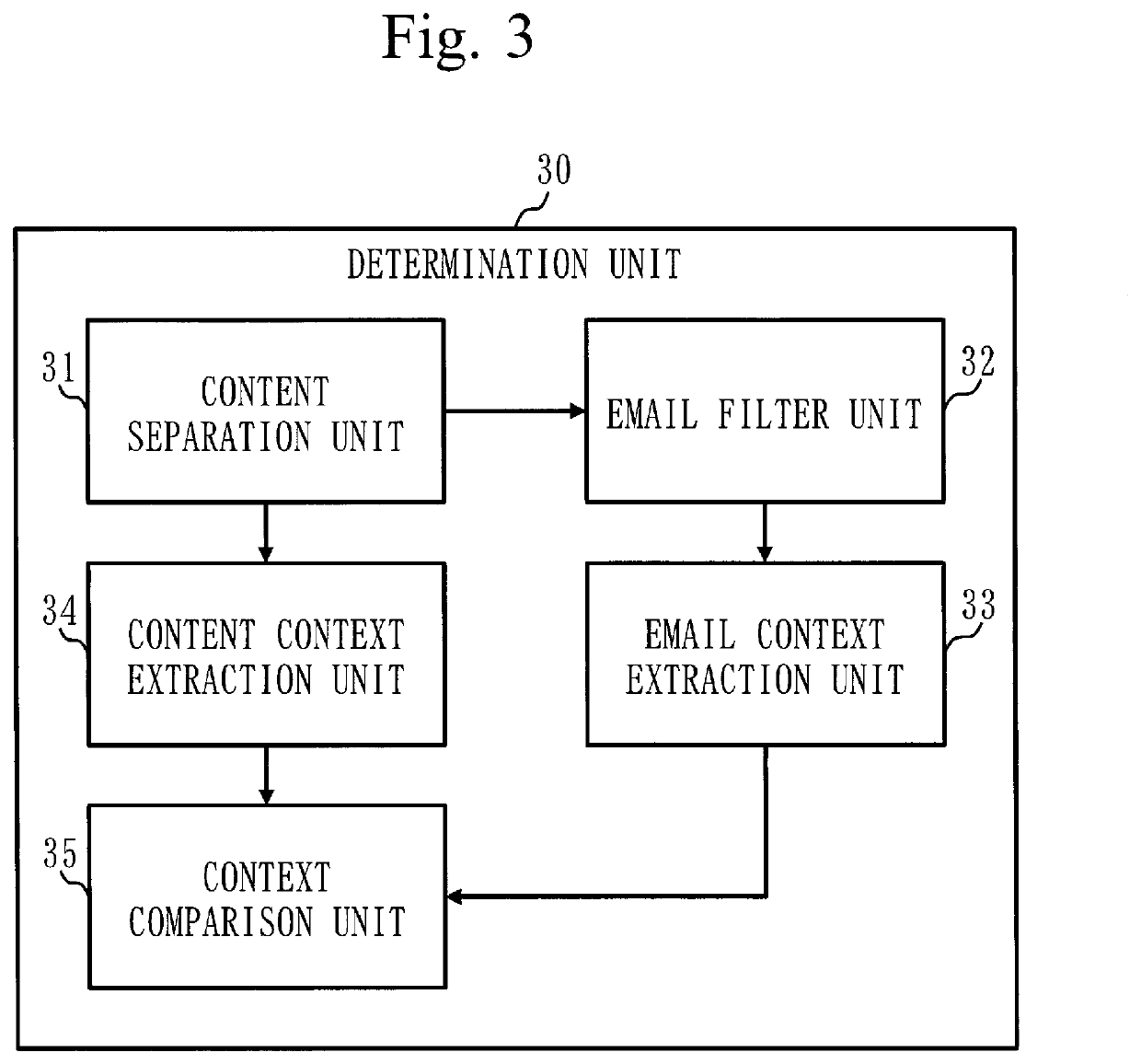

Email inspection device, email inspection method, and computer readable medium

Inactive | US20210092139A1 | Possible to detect | Relational databases | Machine learning | Learning unit | Engineering

In an email inspection device (10), a learning unit (20) learns a relationship between a feature of each email included in a plurality of emails and a feature of a resource accompanying each email. The resource accompanying each email includes at least either one of a file attached to each email and a resource specified by a URL in a message body of each email. A determination unit (30) extracts a feature of an inspection-target email and a feature of a resource accompanying the inspection-target email, and determines whether or not the inspection-target email is a suspicious email depending on whether or not the relationship learned by the learning unit (20) exists between the extracted features.
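A toy version of the learning unit and determination unit might look like the sketch below. The chosen features (sender domain and attachment extension) and the exact-pair lookup are assumptions made for illustration. The abstract leaves both the features and the learning method open.

```python
def learn_relationships(emails):
    """Learning unit: collect the (email feature, resource feature) pairs seen in training."""
    return {(e["sender_domain"], e["attachment_ext"]) for e in emails}

def is_suspicious(email, learned):
    """Determination unit: flag the email if its feature pair was never learned."""
    return (email["sender_domain"], email["attachment_ext"]) not in learned

# Made-up training emails with hypothetical feature names.
training = [
    {"sender_domain": "partner.example", "attachment_ext": ".pdf"},
    {"sender_domain": "partner.example", "attachment_ext": ".xlsx"},
    {"sender_domain": "hr.example", "attachment_ext": ".docx"},
]
learned = learn_relationships(training)

usual = {"sender_domain": "partner.example", "attachment_ext": ".pdf"}
unusual = {"sender_domain": "hr.example", "attachment_ext": ".exe"}
results = (is_suspicious(usual, learned), is_suspicious(unusual, learned))
```

A practical system would likely learn a statistical relationship rather than an exact set of pairs, but the decision rule is the same: an email is suspicious when the learned relationship does not hold between its features.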

Owner:MITSUBISHI ELECTRIC CORP

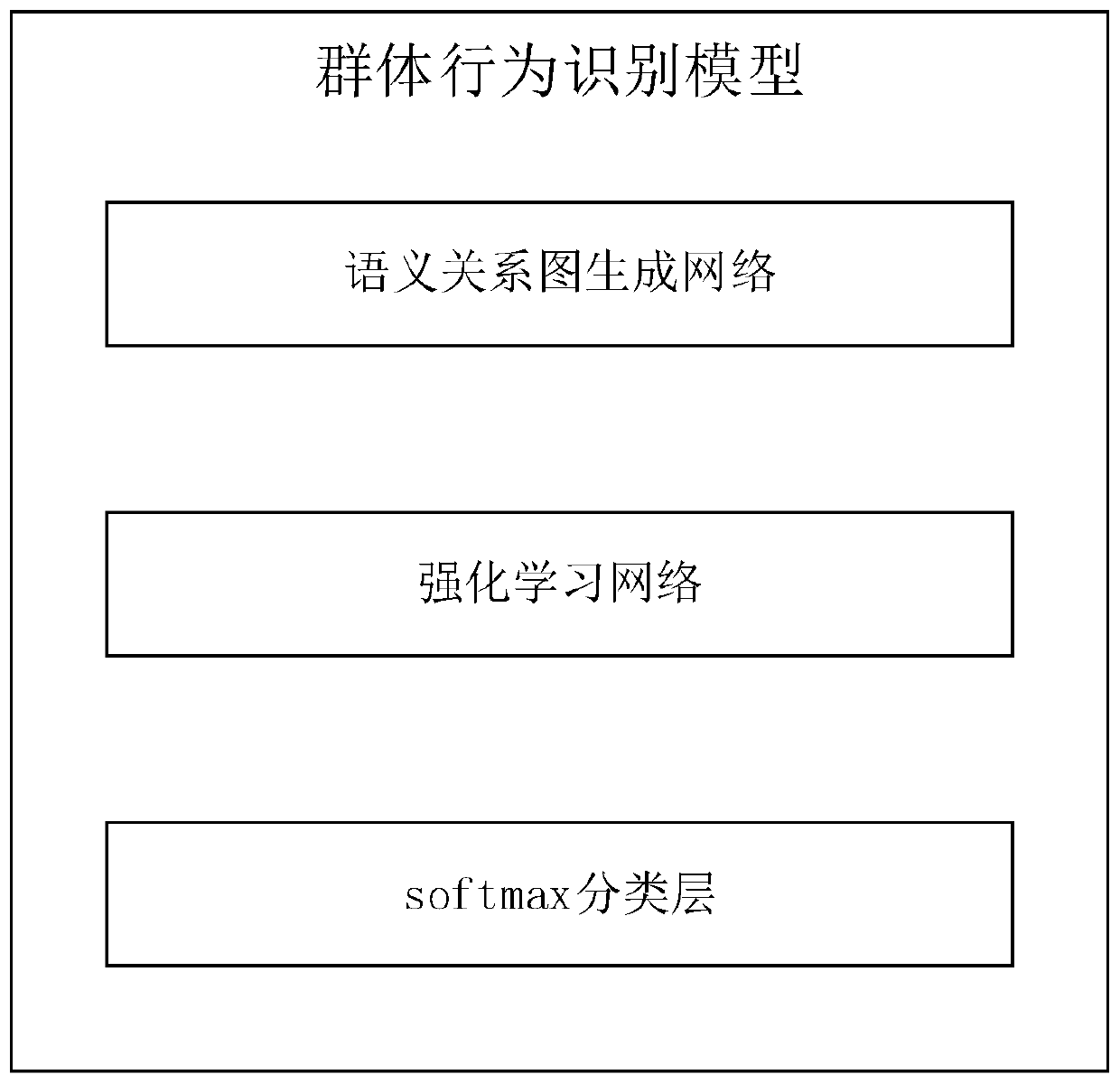

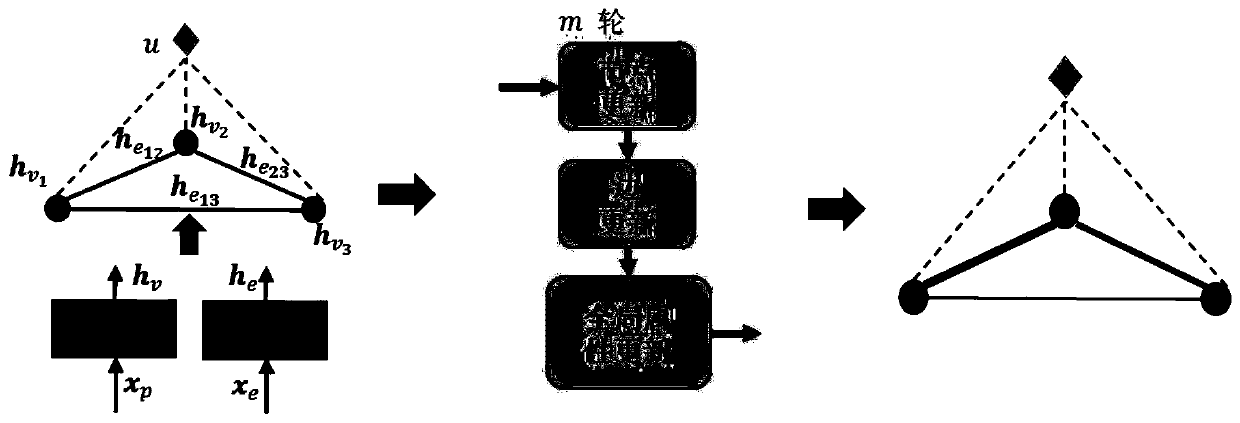

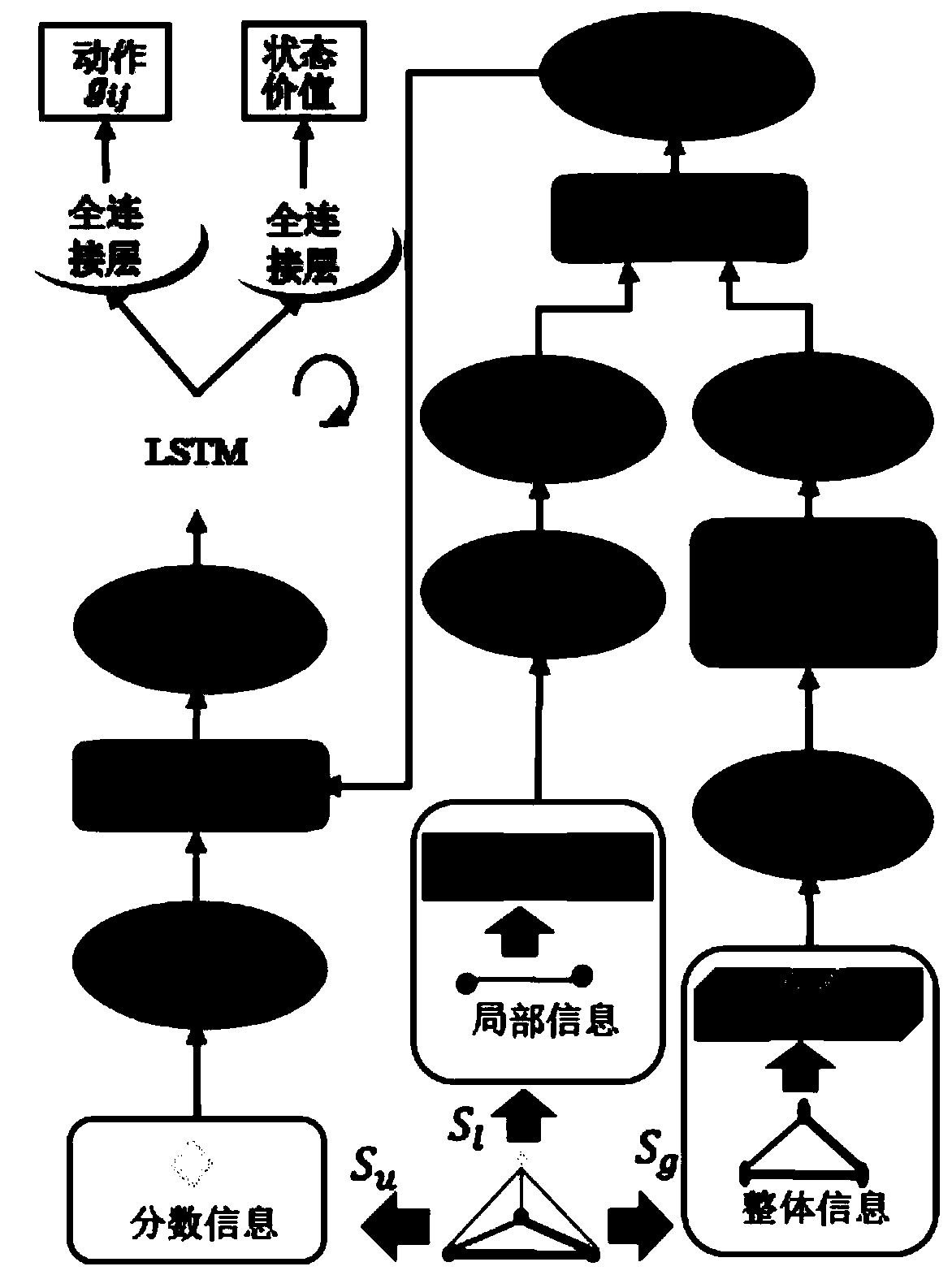

Group behavior recognition model based on progressive relationship learning and training method thereof

Pending | CN110516599A | Improve accuracy | Suppress invalid relationship | Character and pattern recognition | Graph generation | Relationship learning

The invention belongs to the field of behavior recognition, and in particular relates to a group behavior recognition model based on progressive relation learning and a training method thereof. It aims to solve the problem of low group behavior recognition accuracy in the prior art by mining the key relations in group behaviors. The model comprises a semantic relation graph generation network, a reinforcement learning network and a softmax classification layer. The semantic relation graph network and the reinforcement learning network are trained alternately: the parameters of one network are kept unchanged (or that network is removed) while the parameters of the other are trained, until a preset training end condition is met, yielding the trained group behavior recognition model. The model obtained in this way has higher recognition accuracy.
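The alternating schedule can be illustrated on a toy problem. In the sketch below, two scalar parameters stand in for the two networks and a simple quadratic stands in for the training loss. These stand-ins, the learning rate and the fixed round count used as the end condition are all assumptions. The sketch shows only the schedule, not the patent's networks.

```python
def loss(g, r):
    """Toy joint loss with its minimum at g = 1, r = 2."""
    return (g + r - 3) ** 2 + (g - 1) ** 2

def alternating_training(rounds=100, inner_steps=5, lr=0.1):
    """Alternately update one parameter while the other is held fixed."""
    g, r = 0.0, 0.0  # stand-ins for the graph network and the RL network
    for _ in range(rounds):  # assumed end condition: a fixed number of rounds
        # Phase 1: r is frozen, only g is updated.
        for _ in range(inner_steps):
            grad_g = 2 * (g + r - 3) + 2 * (g - 1)
            g -= lr * grad_g
        # Phase 2: g is frozen, only r is updated.
        for _ in range(inner_steps):
            grad_r = 2 * (g + r - 3)
            r -= lr * grad_r
    return g, r

g, r = alternating_training()
final_loss = loss(g, r)
```

Each phase only ever moves one parameter, yet the pair converges to the joint minimum. This is the property the alternating scheme relies on.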

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

Electronic credential security event fusion analysis method

Inactive | CN110275942A | The result is accurate | Semantic analysis | Character and pattern recognition | Cosine similarity | Third party

The invention relates to the field of data analysis and discloses an electronic credential security event fusion analysis method, which addresses the technical problem of how to better accomplish fusion analysis tasks in a third-party supervision system for electronic credentials. The method comprises the following steps: S101, data acquisition; S102, data preprocessing; S103, feature extraction; S104, fusion calculation; and S105, result output. S104 includes security event study and judgment and association relationship learning. Security event study and judgment uses a K-means clustering algorithm to obtain a warning threshold for the security events of an enterprise or a user. Association relationship learning uses a Skip-gram model to train word vectors for the encoded abnormal behaviors; cosine similarity between those word vectors then gives the association similarity between enterprises and between users. When an enterprise's security events are concentrated in time or its abnormal behaviors are few, the early-warning threshold algorithm can still determine the threshold dynamically, with relatively accurate results.
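One way to picture the threshold step is a two-cluster, one-dimensional K-means over event counts, taking the midpoint between the two centroids as the warning threshold. The midpoint rule, the choice of two clusters and the sample counts are assumptions. The abstract states only that K-means is used to obtain the threshold.

```python
def kmeans_1d_threshold(values, iterations=20):
    """Two-cluster 1-D K-means; returns the midpoint between the two centroids."""
    low, high = min(values), max(values)  # initial centroids
    for _ in range(iterations):
        # Assign each value to its nearest centroid (ties go to the low cluster).
        low_cluster = [v for v in values if abs(v - low) <= abs(v - high)]
        high_cluster = [v for v in values if abs(v - low) > abs(v - high)]
        low = sum(low_cluster) / len(low_cluster)
        if high_cluster:  # stays empty only when all values are identical
            high = sum(high_cluster) / len(high_cluster)
    return (low + high) / 2

# Made-up daily security event counts for one enterprise.
daily_event_counts = [1, 2, 2, 3, 2, 20, 22, 21]
threshold = kmeans_1d_threshold(daily_event_counts)
```

With these counts the centroids settle at 2 and 21, so days with more than 11.5 events would exceed the threshold. Because the threshold is recomputed from the data, it adapts when the event distribution changes.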

Owner:SHANGHAI JIAO TONG UNIV


© 2025 PatSnap. All rights reserved.