43 results about "Queuing network model" patented technology

Method of incorporating DBMS wizards with analytical models for DBMS servers performance optimization

Status: Active | Publication: US20070083500A1 | Tags: Enhancing autonomic computing; Improve balance; Digital data information retrieval; Digital data processing details; Analytic model; Resource utilization

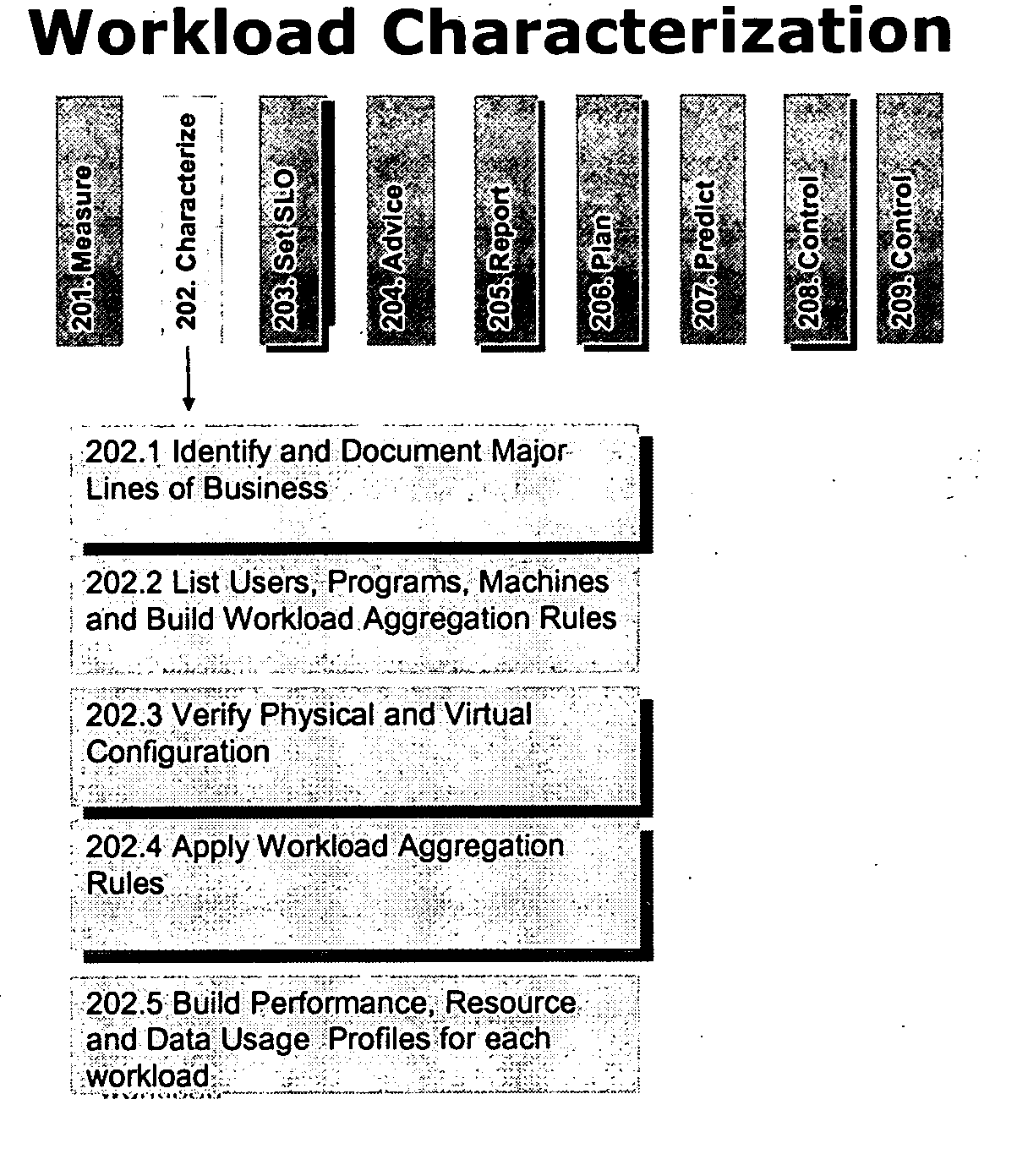

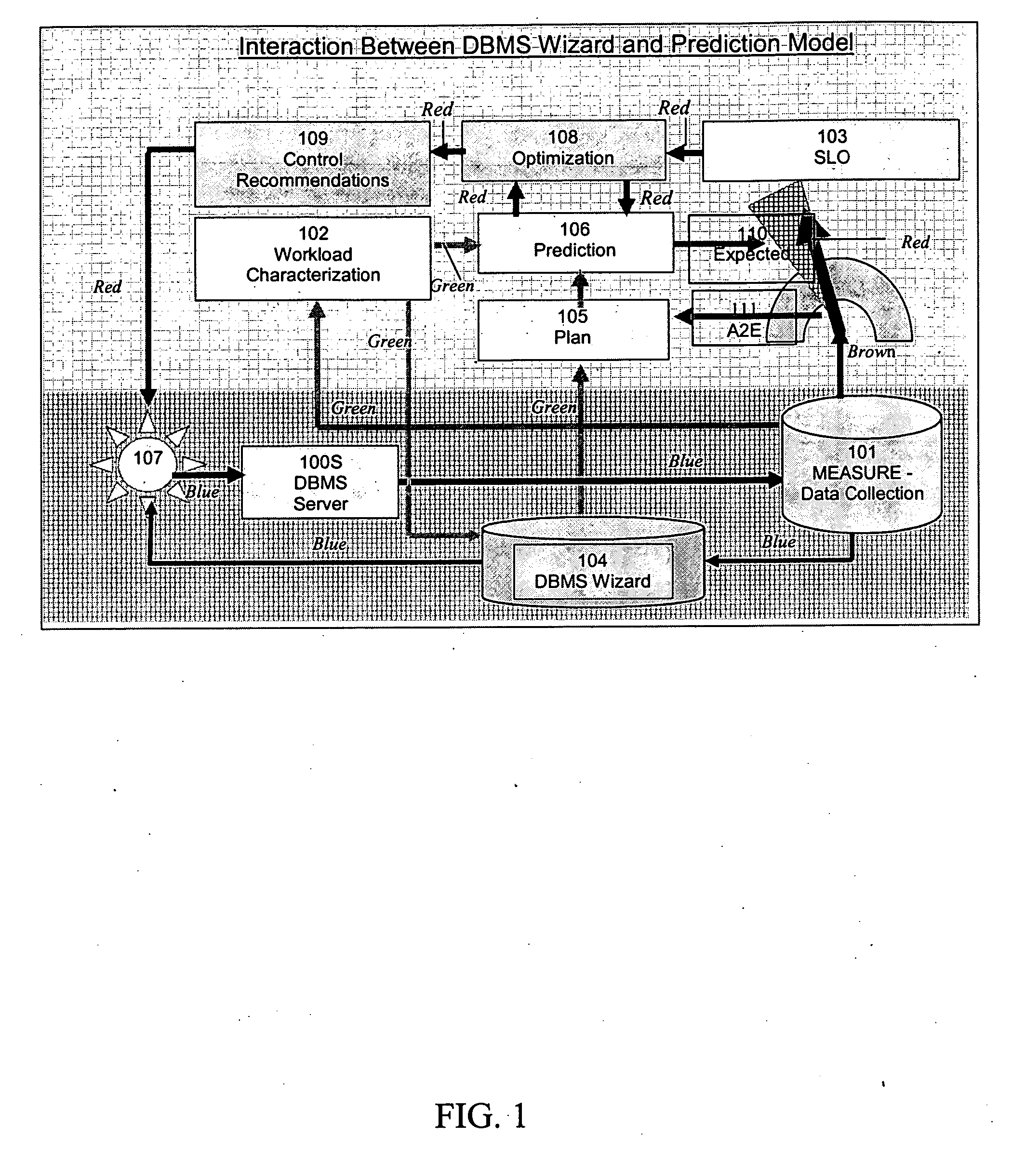

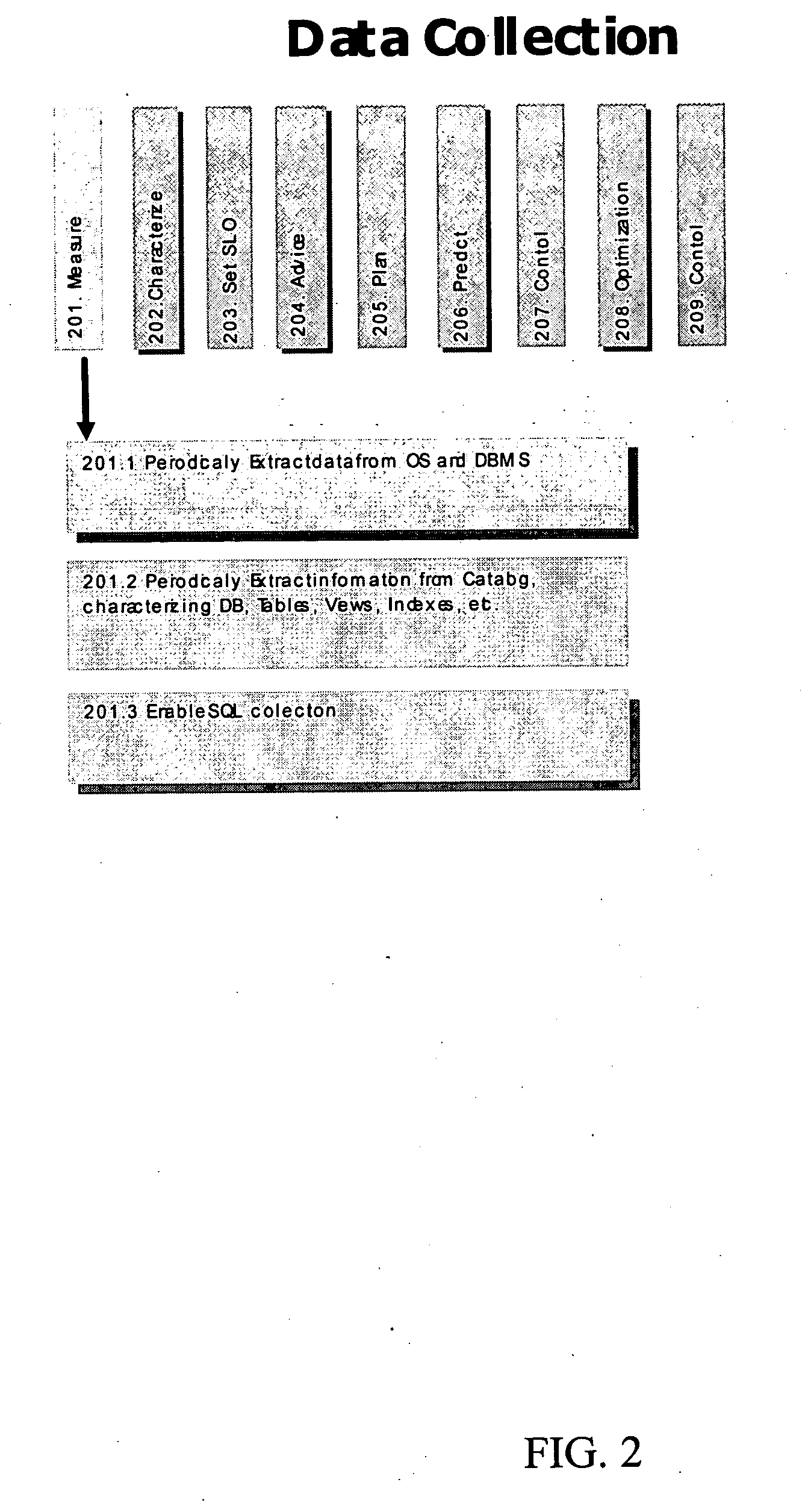

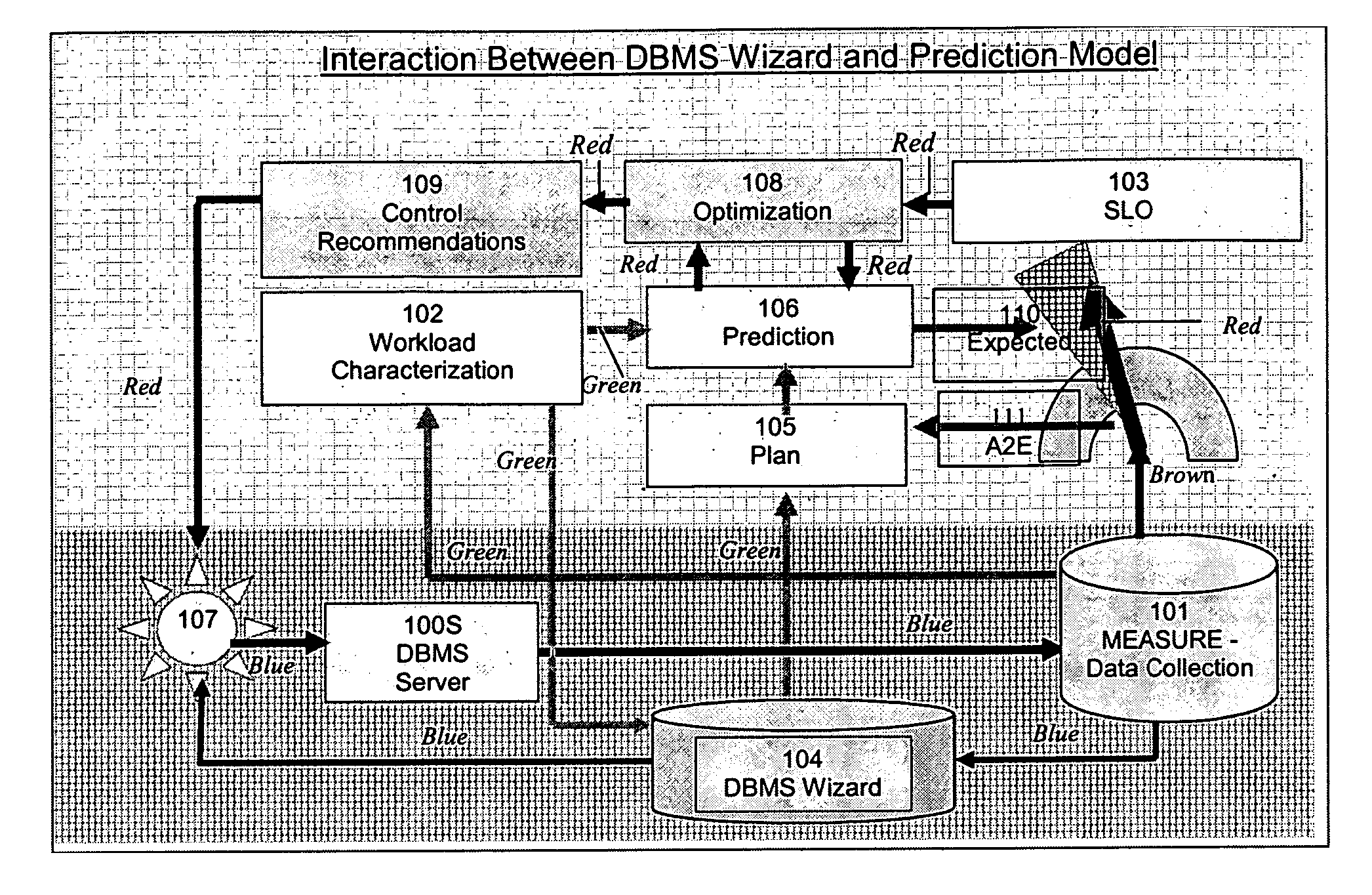

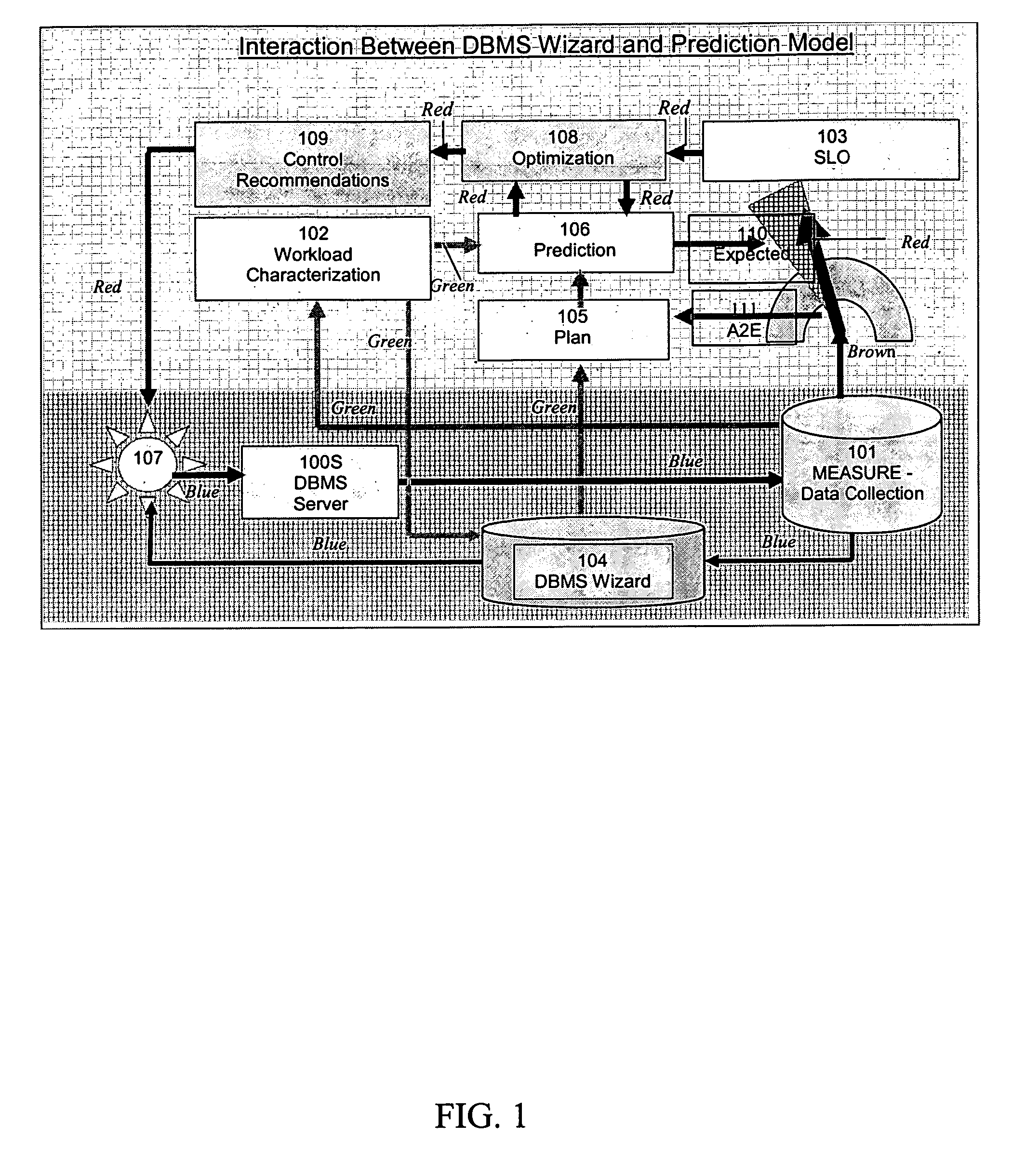

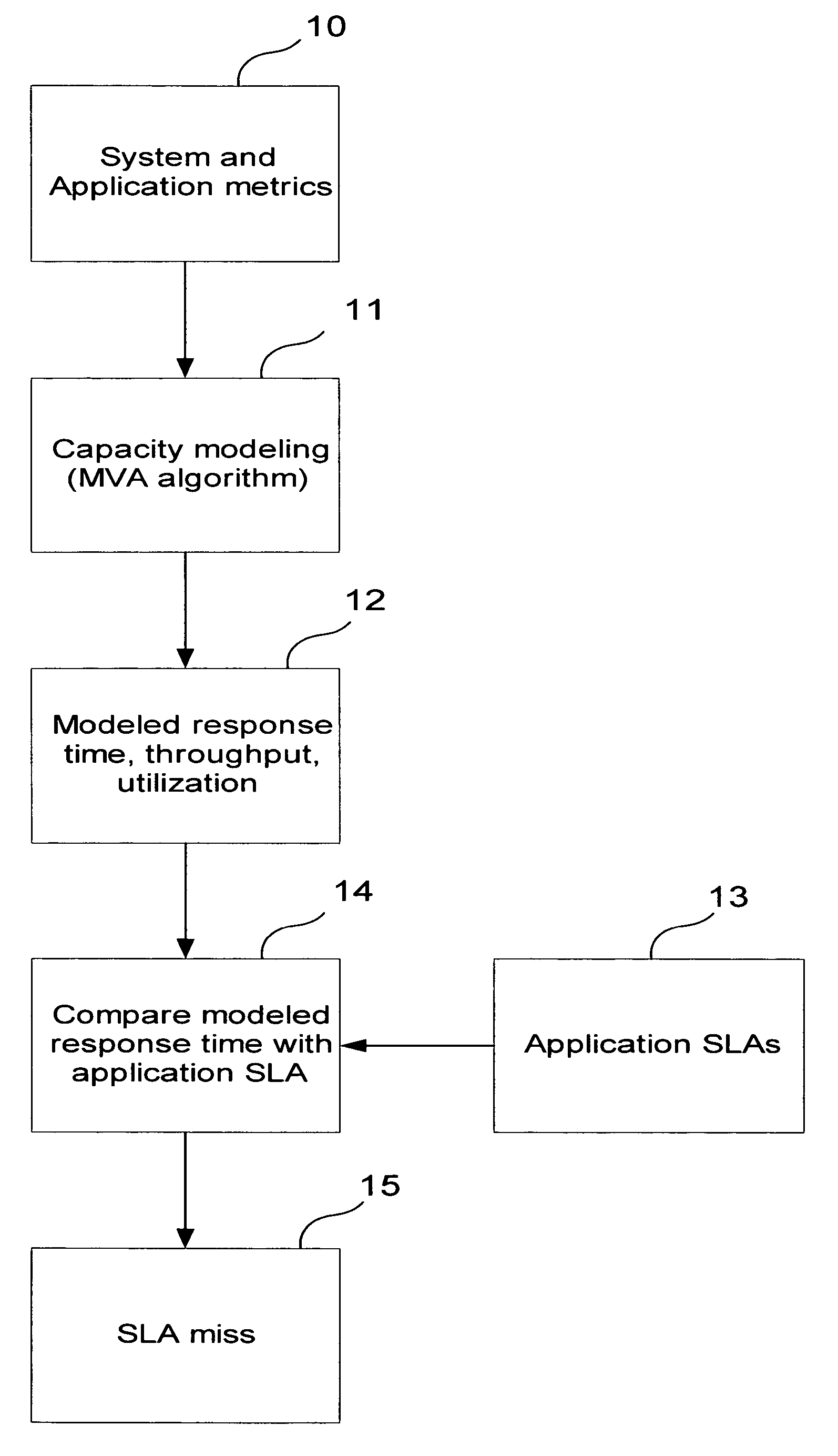

Disclosed is an improved method and system for optimizing DBMS server performance. In some approaches, the method and system combine DBMS wizard recommendations with analytical queuing network models to evaluate alternative actions and select the optimal performance management solution together with a set of expected outcomes. This supports autonomic computing by generating periodic control measures, such as recommendations to add or remove indexes and materialized views or to change the concurrency level and workload priorities, that improve the balance of resource utilization. The result is a framework for continuous workload management: measuring the difference between actual and expected results, understanding the cause of the difference, finding a new corrective solution, and setting new expectations.

Owner:DYNATRACE

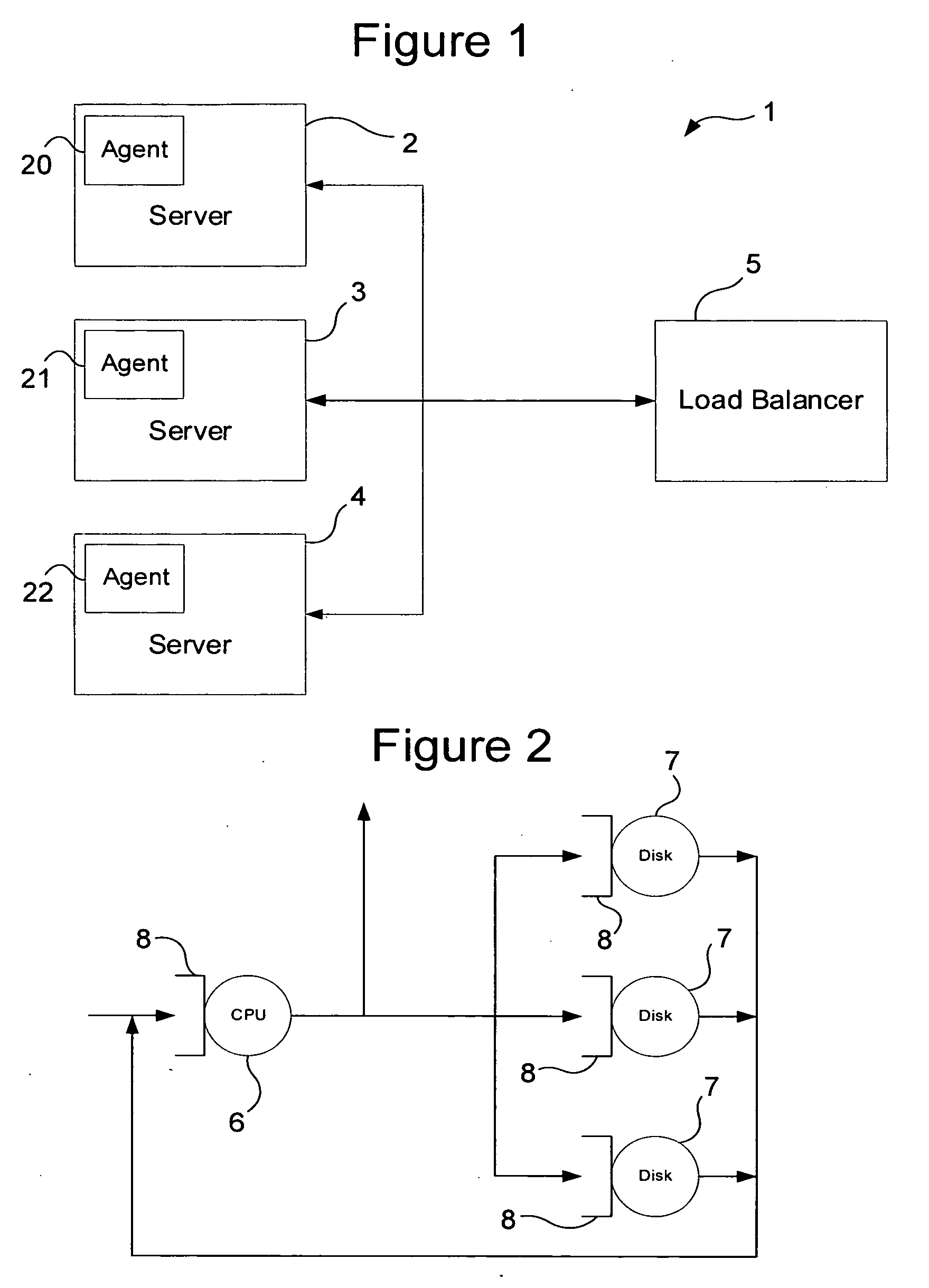

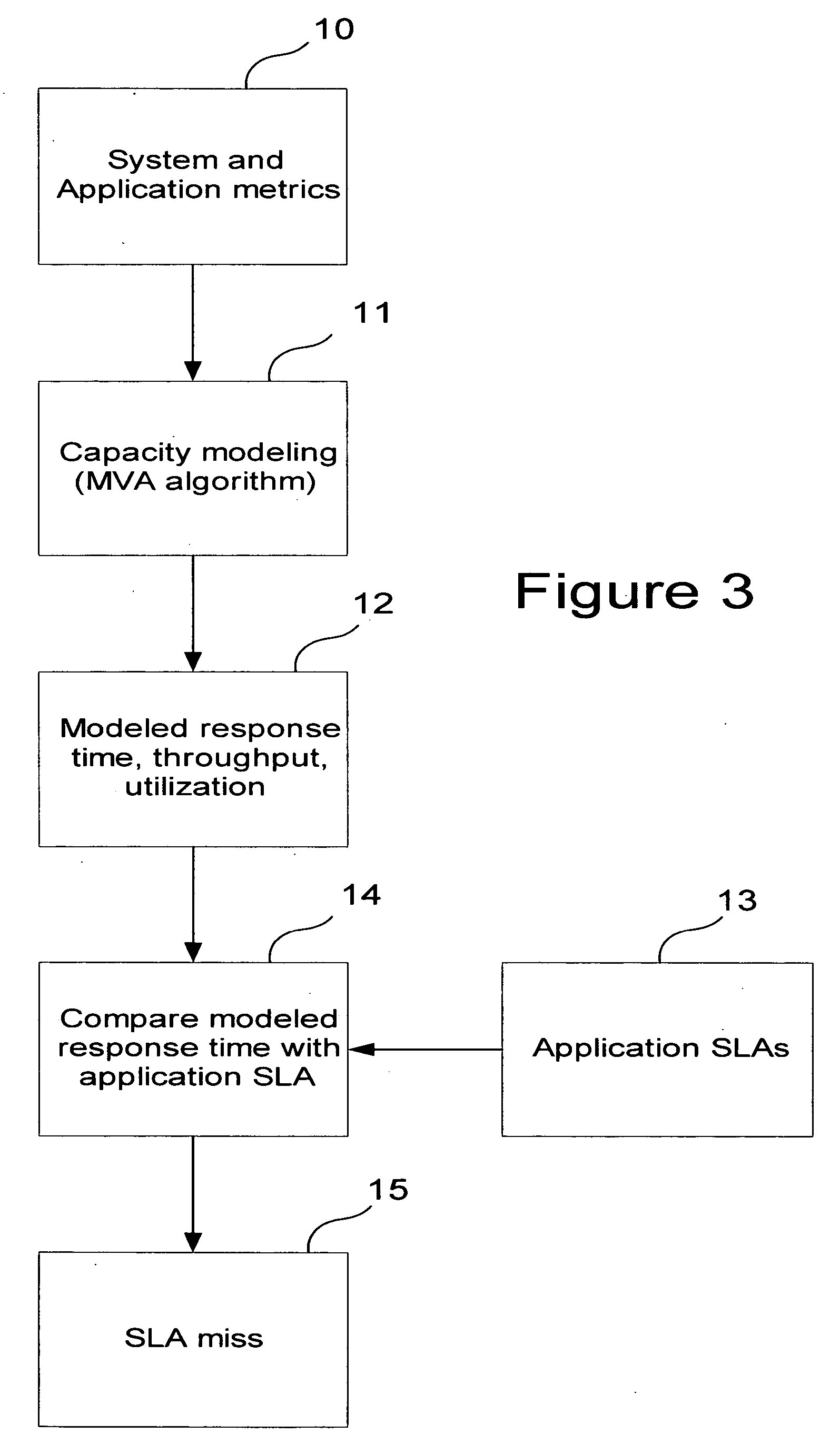

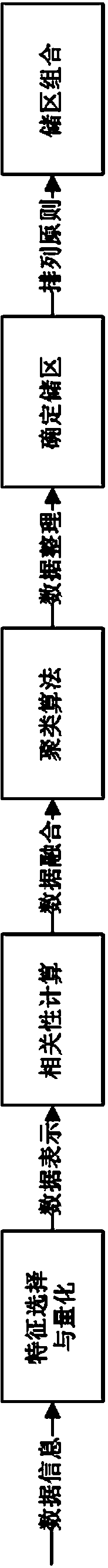

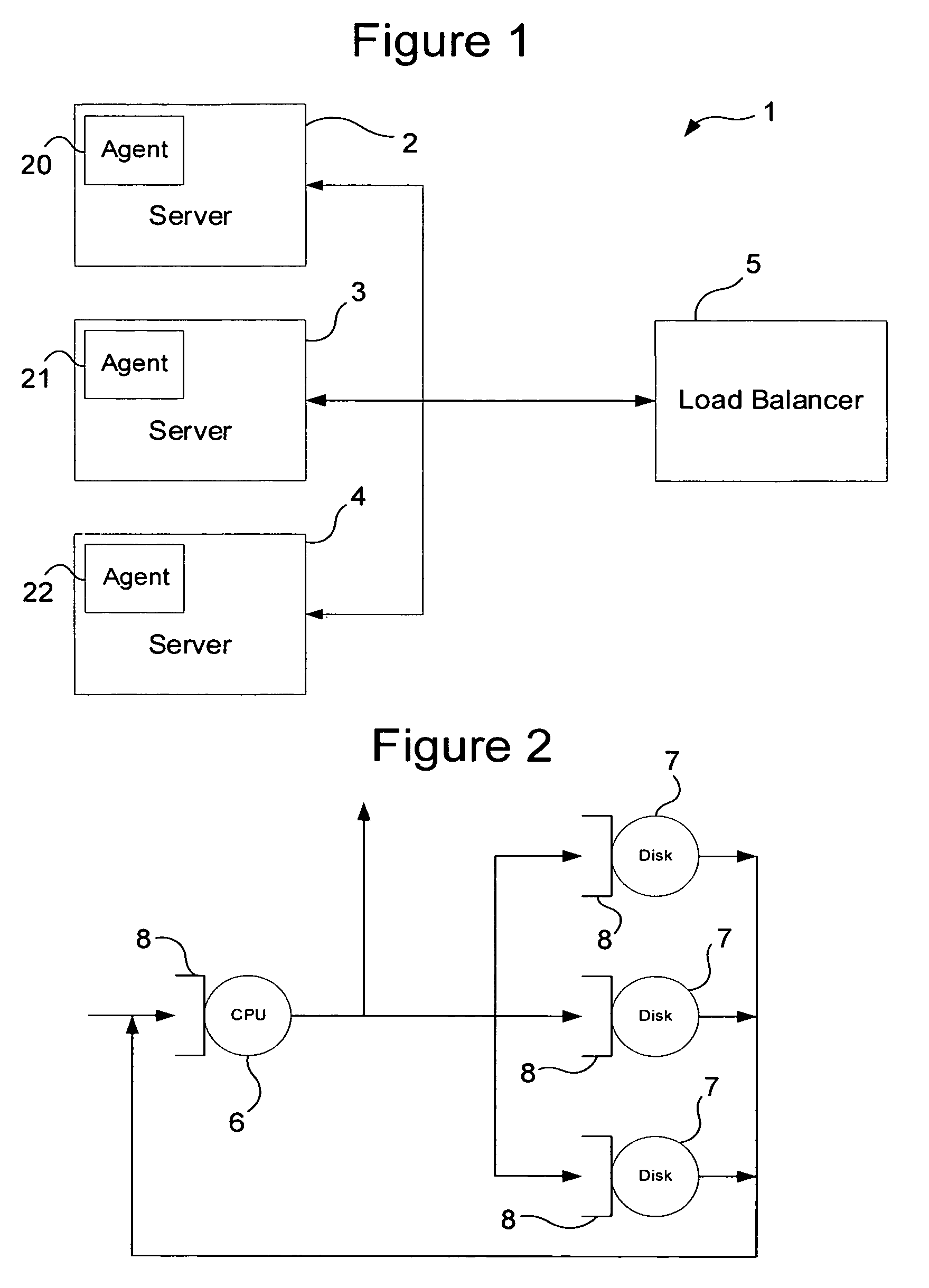

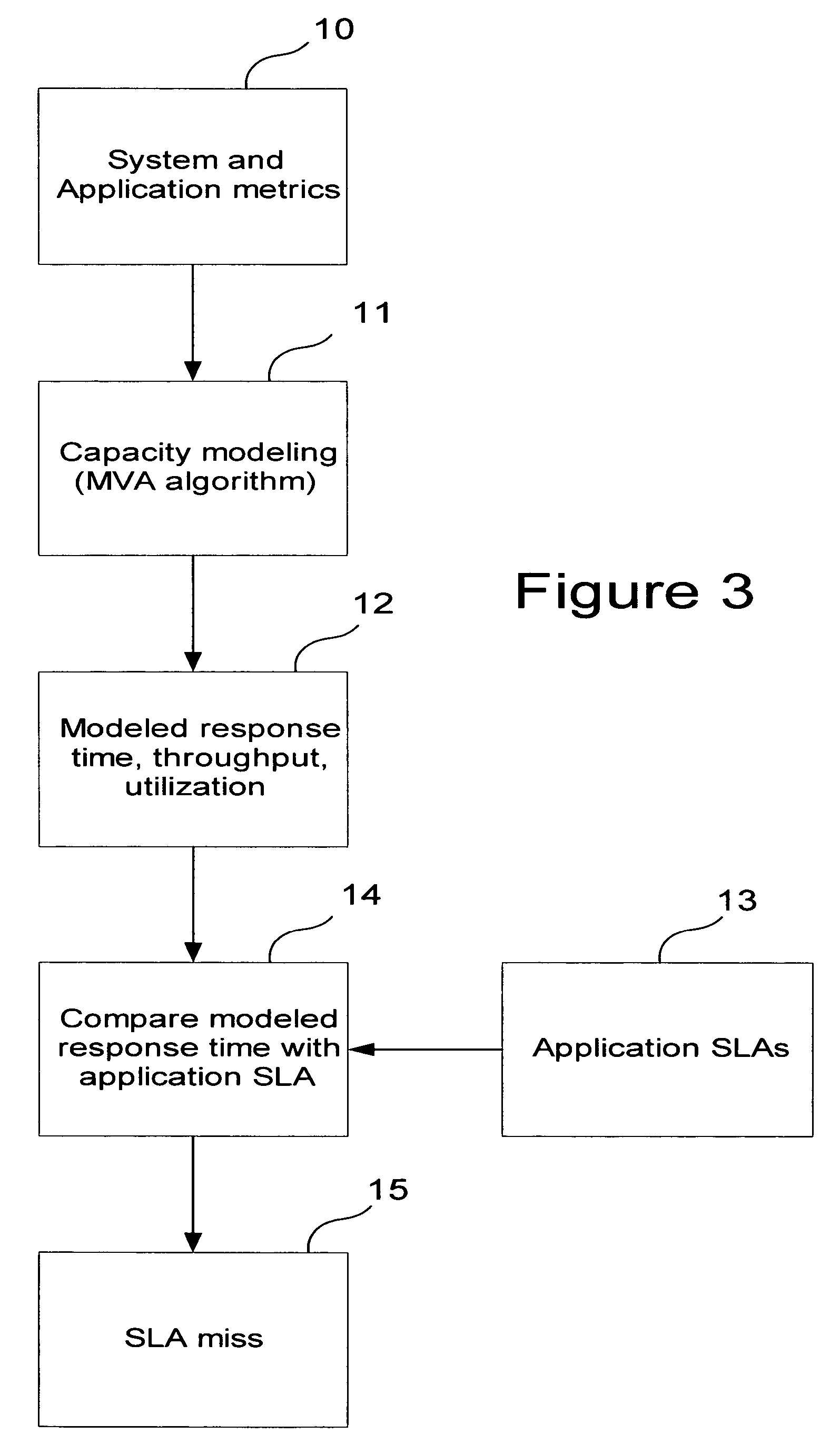

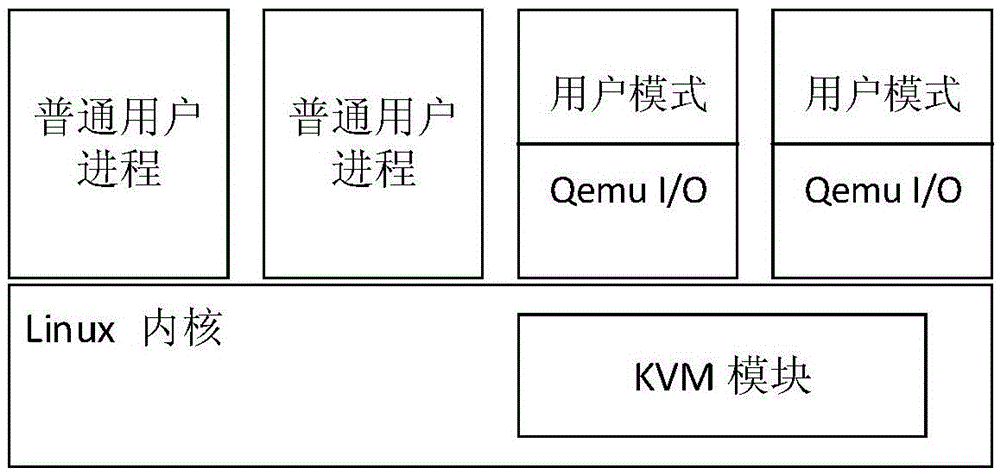

Method of distributing load amongst two or more computer system resources

Status: Inactive | Publication: US20050240935A1 | Tags: Resource allocation; Hardware monitoring; Service-level agreement; Queuing network model

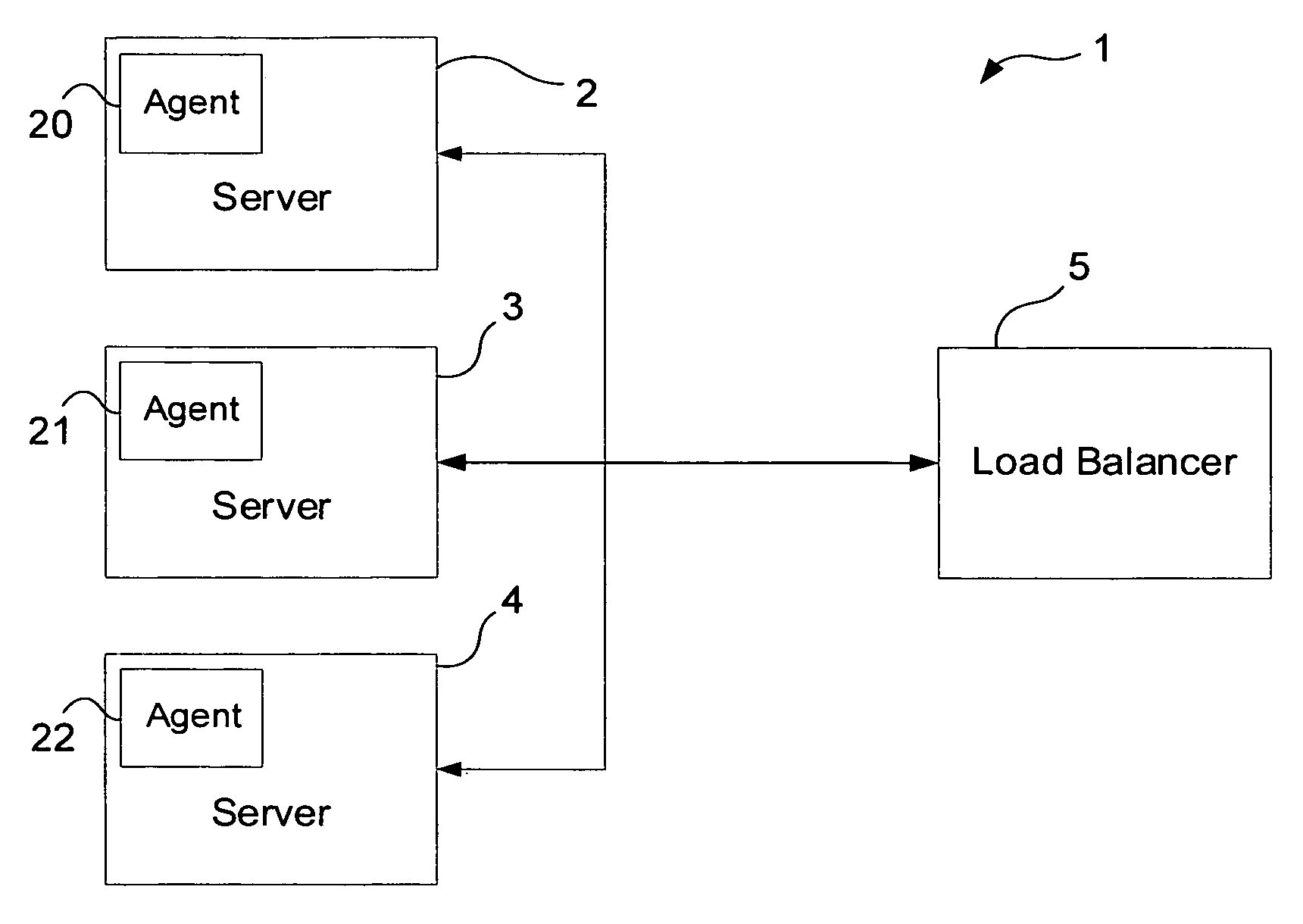

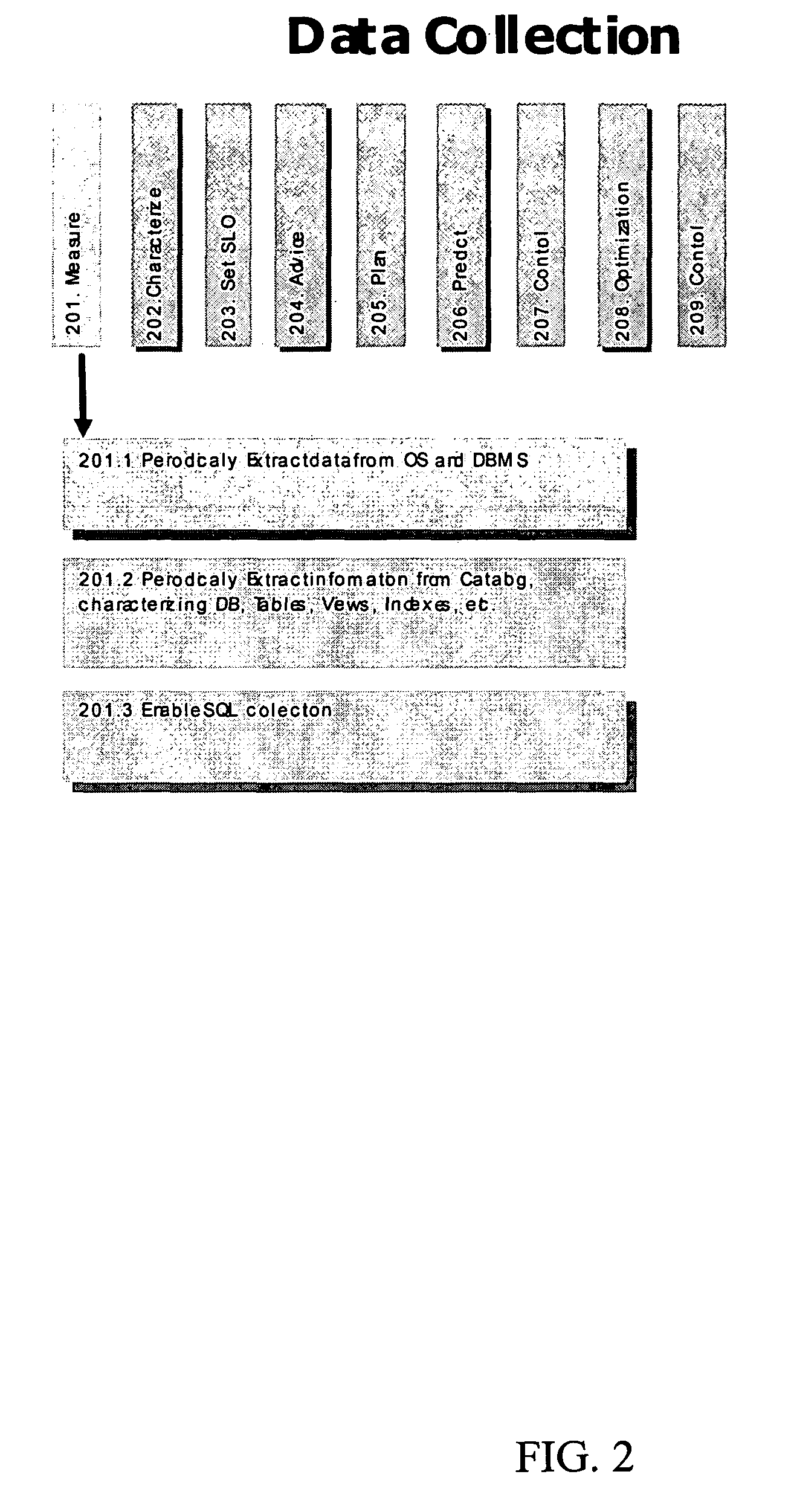

A method of distributing load amongst two or more computer system resources. The method includes distributing load to the system resources in accordance with their modeled response times and a predetermined Service Level Agreement (SLA) associated with each system resource. By modeling the response time of each resource, load can be distributed so as to keep response times within the predetermined SLA. The response time may be modeled analytically, using a queuing network model to predict the response time, typically along with other parameters such as utilization, throughput and queue length.

Owner:MICRO FOCUS LLC
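
The routing idea in this abstract (predict each resource's response time with a queuing model, then send load where the SLA still holds) can be illustrated with a minimal Python sketch. It assumes every resource behaves as an independent M/M/1 queue with mean response time 1/(mu - lambda) and picks the resource with the most SLA headroom; the `Resource` fields, the M/M/1 assumption and the headroom rule are illustrative choices, not the patented method.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


# Hypothetical resource description: each resource is modeled as an M/M/1 queue.
@dataclass
class Resource:
    name: str
    service_rate: float  # mu: requests/s the resource can complete
    arrival_rate: float  # lambda: requests/s currently routed to it
    sla_seconds: float   # maximum acceptable mean response time


def mm1_response_time(arrival_rate: float, service_rate: float) -> float:
    """Mean M/M/1 response time R = 1 / (mu - lambda); infinite when unstable."""
    if arrival_rate >= service_rate:
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


def choose_resource(resources: list[Resource], extra_rate: float) -> Optional[Resource]:
    """Return the resource with the most SLA headroom after adding extra_rate, or None."""
    best: Optional[Resource] = None
    best_slack = 0.0
    for r in resources:
        predicted = mm1_response_time(r.arrival_rate + extra_rate, r.service_rate)
        slack = r.sla_seconds - predicted
        if slack > best_slack:  # only strictly SLA-compliant candidates qualify
            best, best_slack = r, slack
    return best
```

For instance, with `Resource("a", 100.0, 80.0, 0.1)` and `Resource("b", 50.0, 10.0, 0.05)`, adding 5 requests/s leaves more SLA headroom on `a` (about 33 ms versus 21 ms), so `choose_resource` returns `a`; if no resource can absorb the load within its SLA, it returns `None`.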

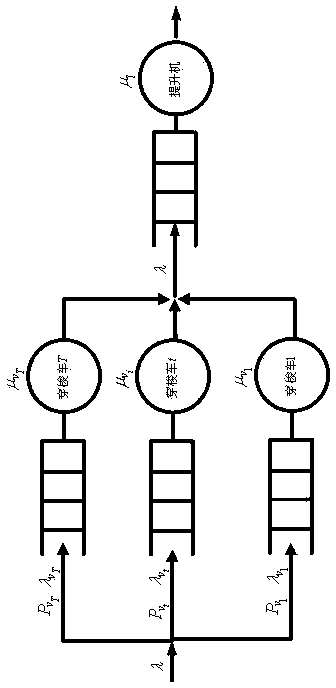

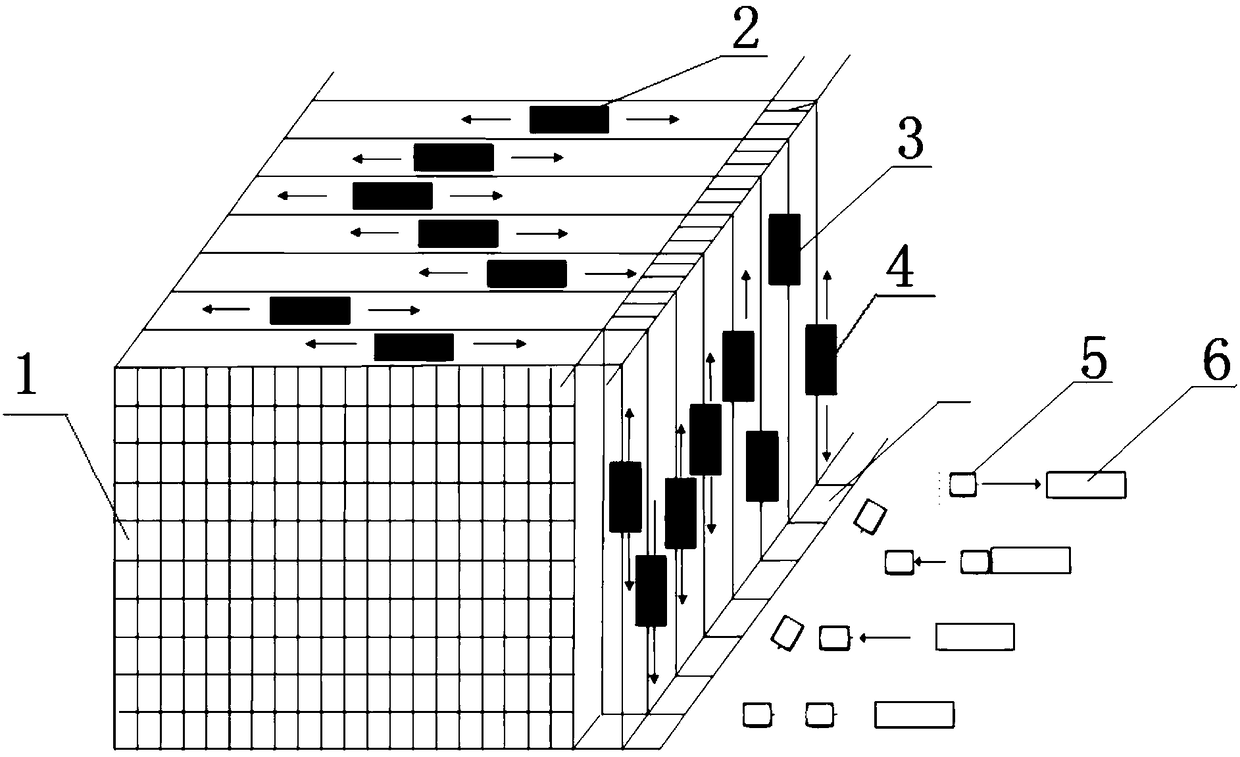

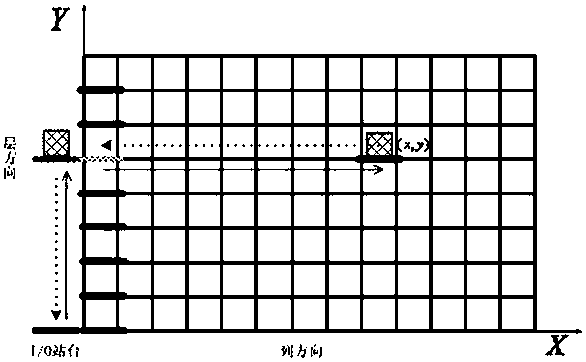

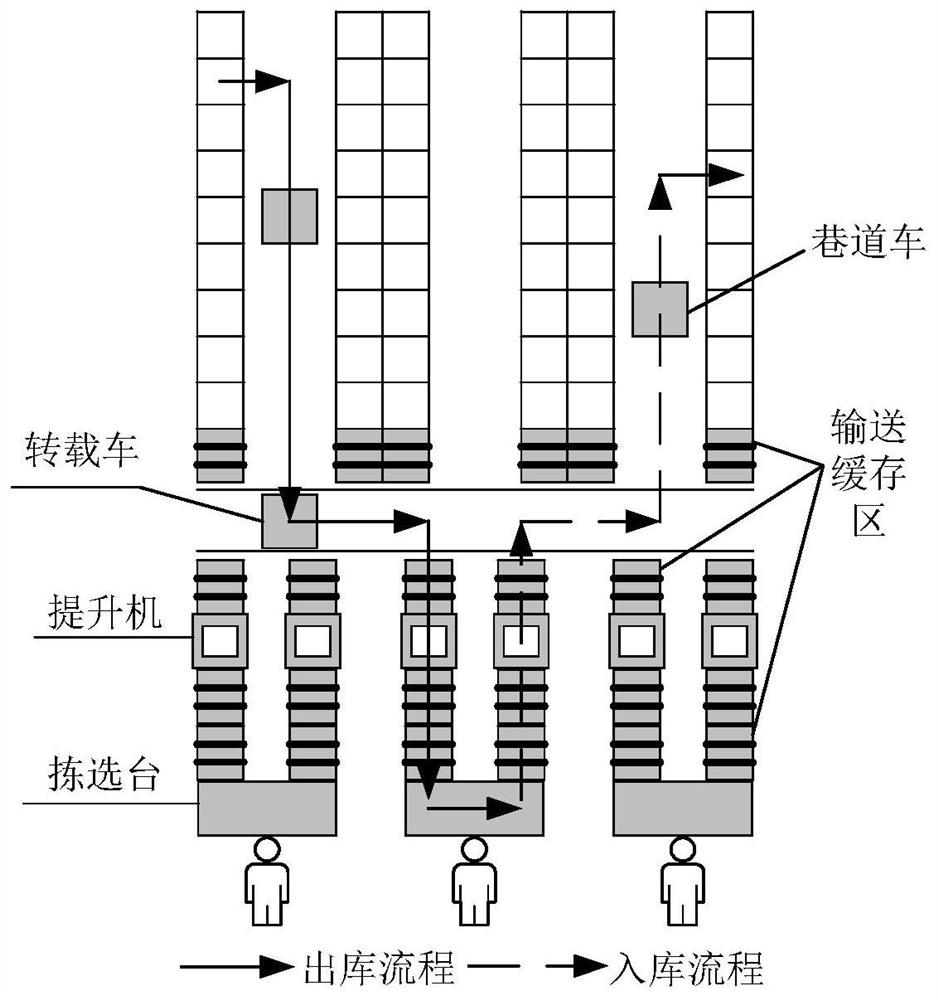

Goods location allocating method applied to automatic warehousing system of multi-layer shuttle vehicle

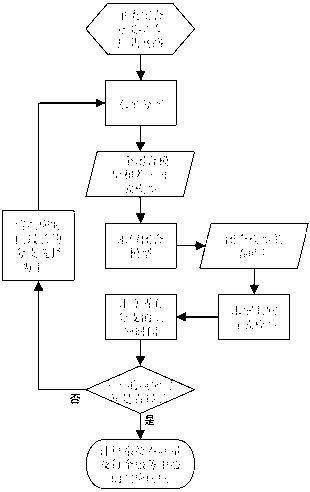

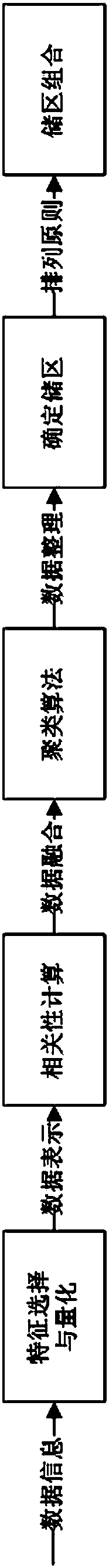

The invention discloses a goods location allocation method for an automated warehousing system using multi-layer shuttle vehicles. The method comprises the following steps: first, generating plane layout structure data of the system from the numbers of shelves and aisles; then analyzing the waiting time of a shuttle vehicle executing an outbound task and the idle time of the hoist; establishing an open queuing network model to describe the system; and analyzing, by a decomposition process, the relationship among the shuttle vehicle waiting time, the hoist idle time and the inbound and outbound operation times. From this analysis it is determined that the higher the arrival rate of tasks for an item served by the shuttle vehicle, the lower the tier at which that item should be stored, and that the most highly correlated goods should be allocated to different tiers so that multiple shuttle vehicles can serve them simultaneously. Finally, a principle for dividing storage zones by item correlation is proposed: a correlation matrix of outbound items is established, the items are clustered with an ant colony algorithm, and the storage zones are combined and arranged in the two-dimensional plane according to the results of the queuing network analysis, thereby completing the goods location allocation. The method can effectively shorten the shuttle vehicle waiting time and the hoist idle time, increasing equipment utilization and the throughput capacity of the distribution center.

Owner:SHANDONG UNIV

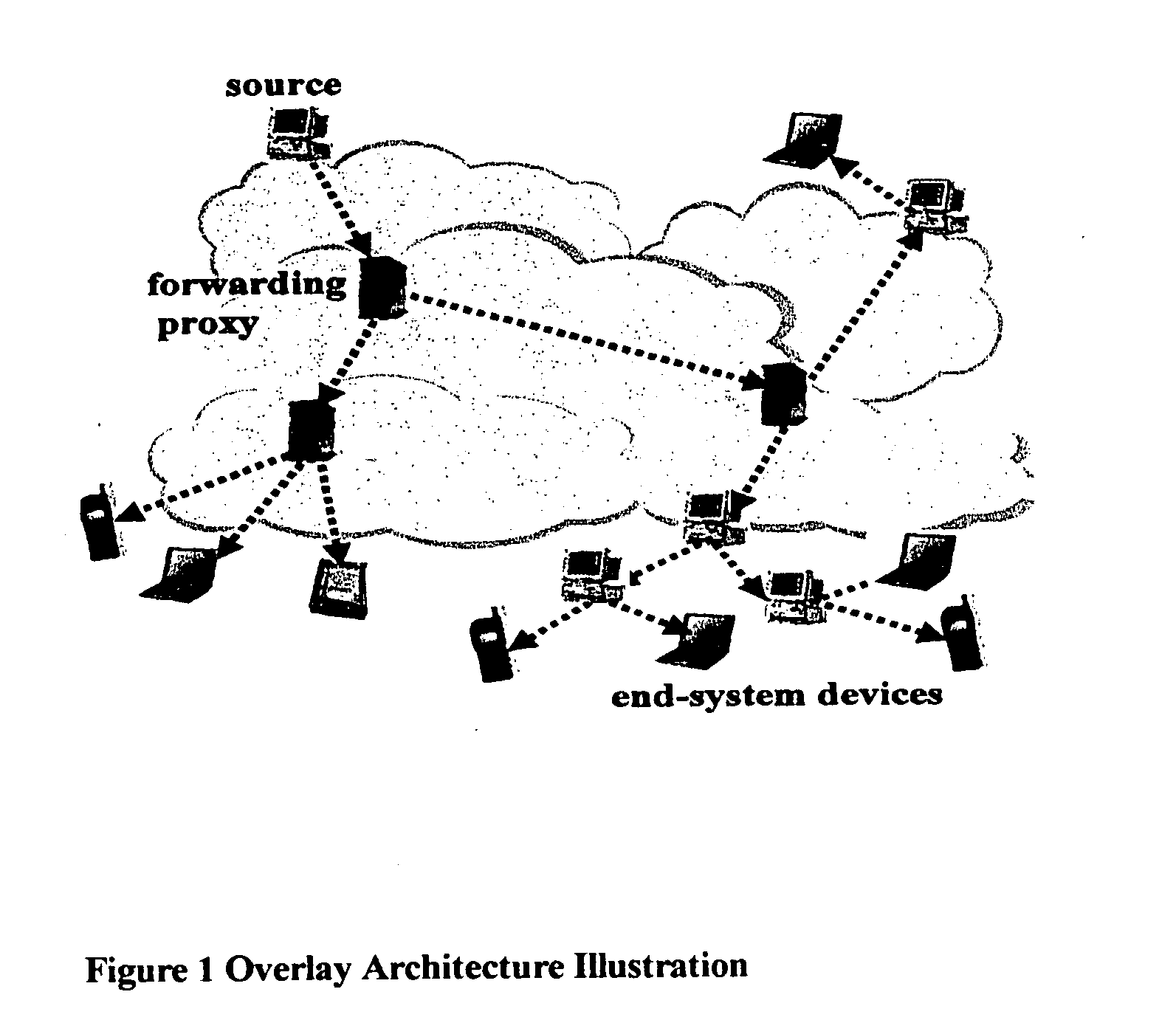

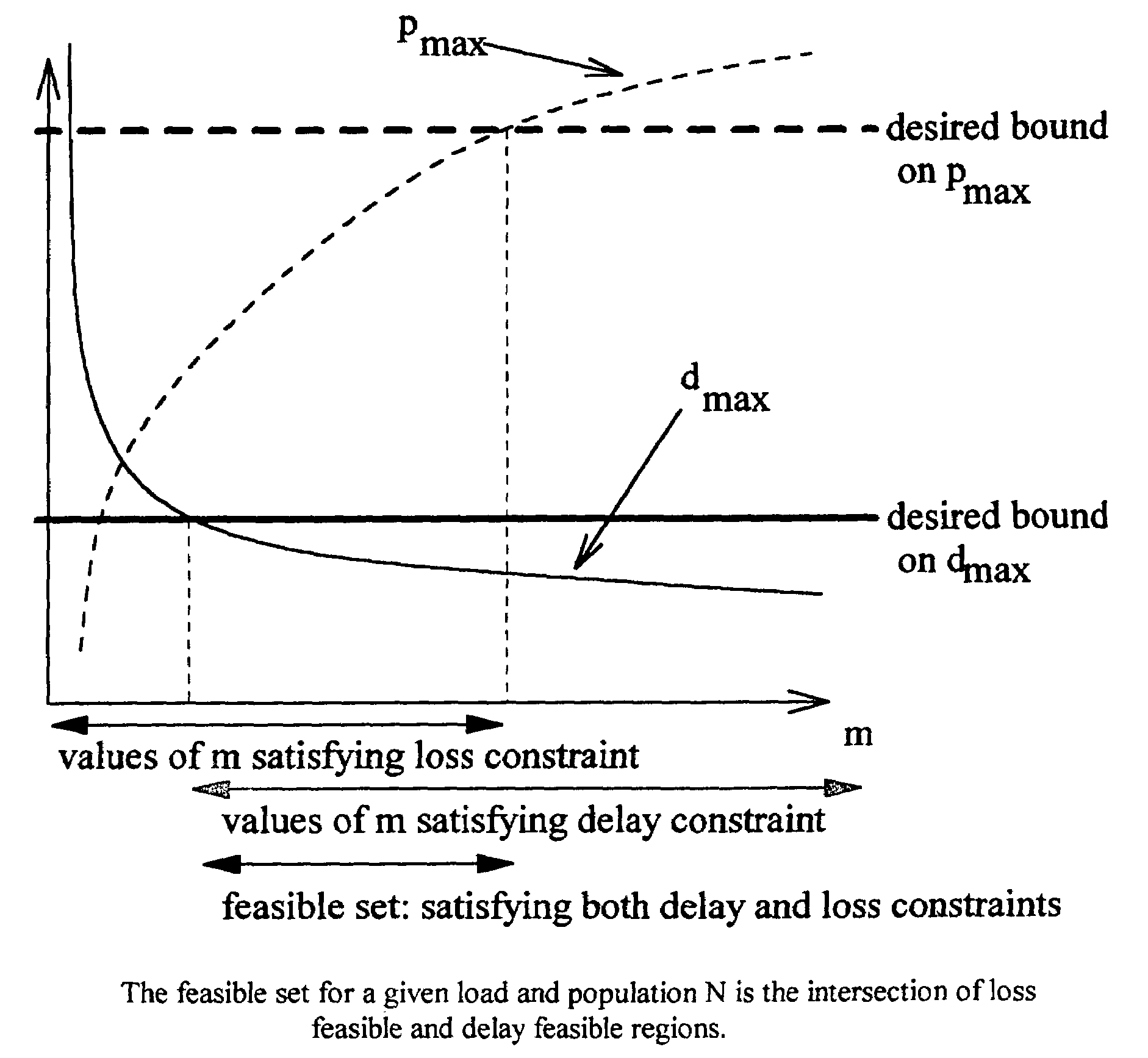

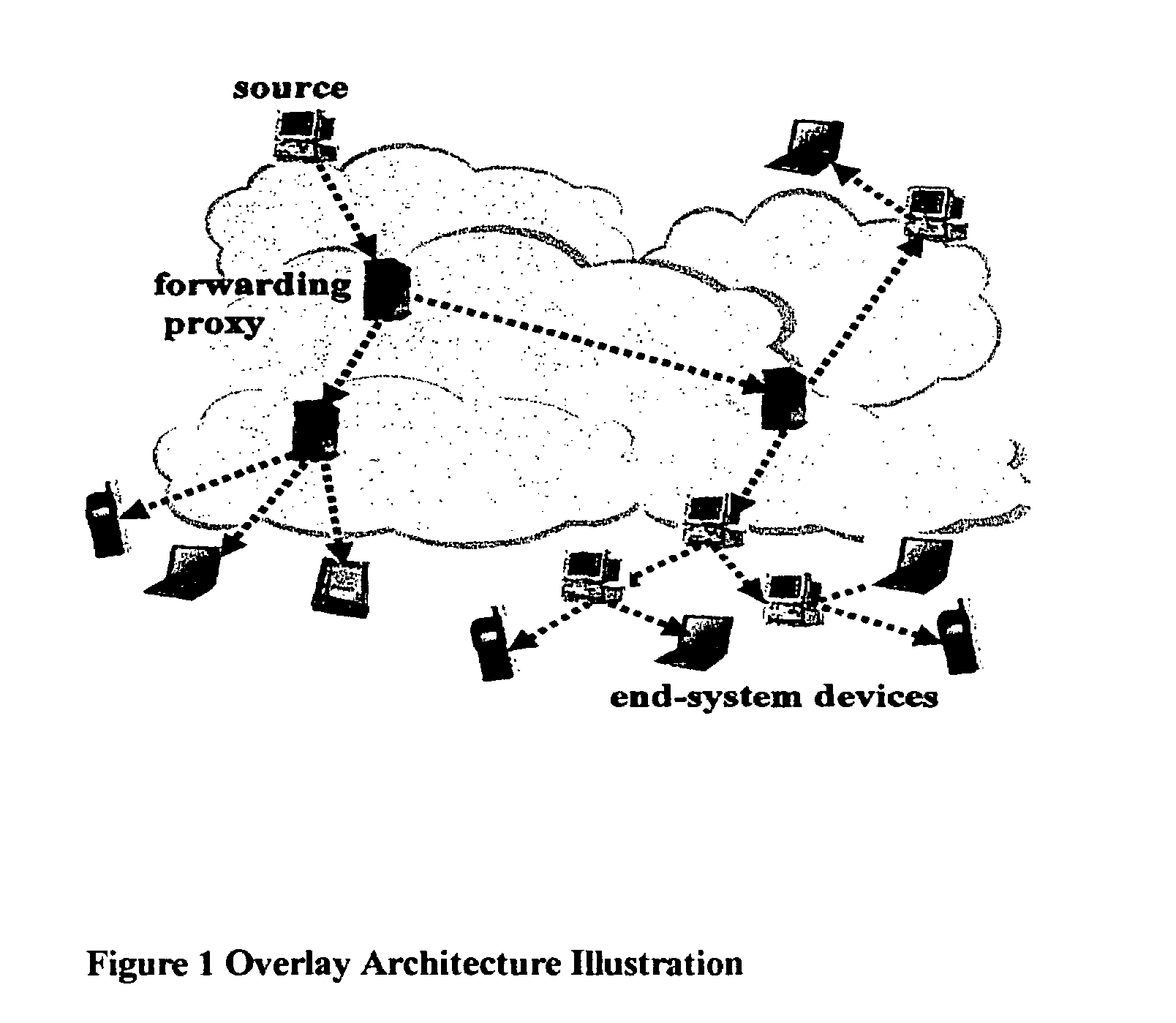

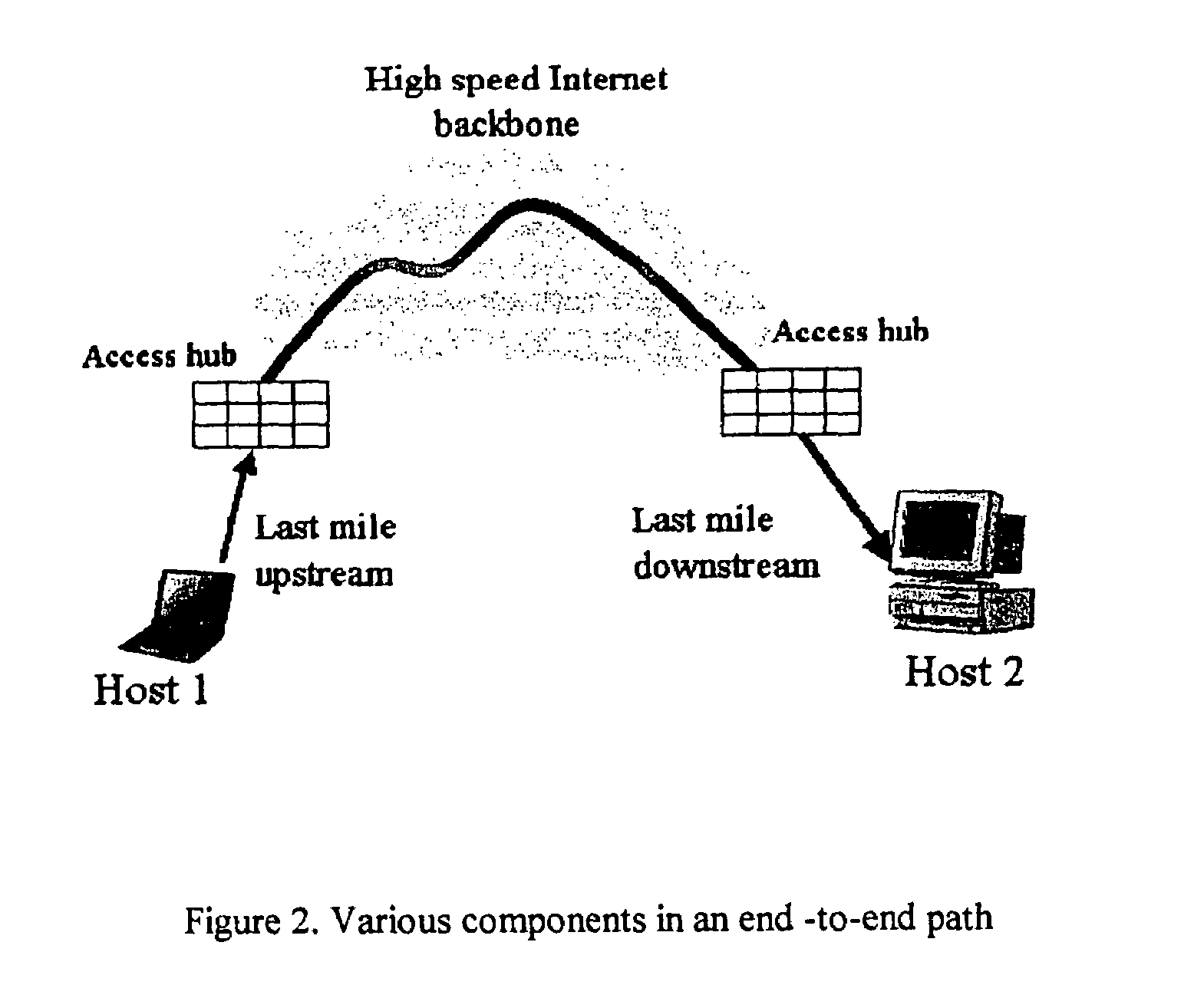

On-demand group communication services with quality of service (QoS) guarantees

Status: Active | Publication: US20060153100A1 | Tags: Data switching by path configuration; Network connections; Quality of service; Packet arrival

The present invention broadly contemplates addressing QoS concerns in overlay design to account for the last-mile problem. In accordance with the present invention, a simple queuing network model of bandwidth usage at the last-mile bottlenecks is used to capture the effects of the asymmetry and of the contention for bandwidth on the outgoing link, and to characterize network throughput and latency. Using this characterization, computationally inexpensive heuristics are preferably used to organize end-systems into a multicast overlay that meets specified latency and packet loss bounds for a given packet arrival process.

Owner:HULU

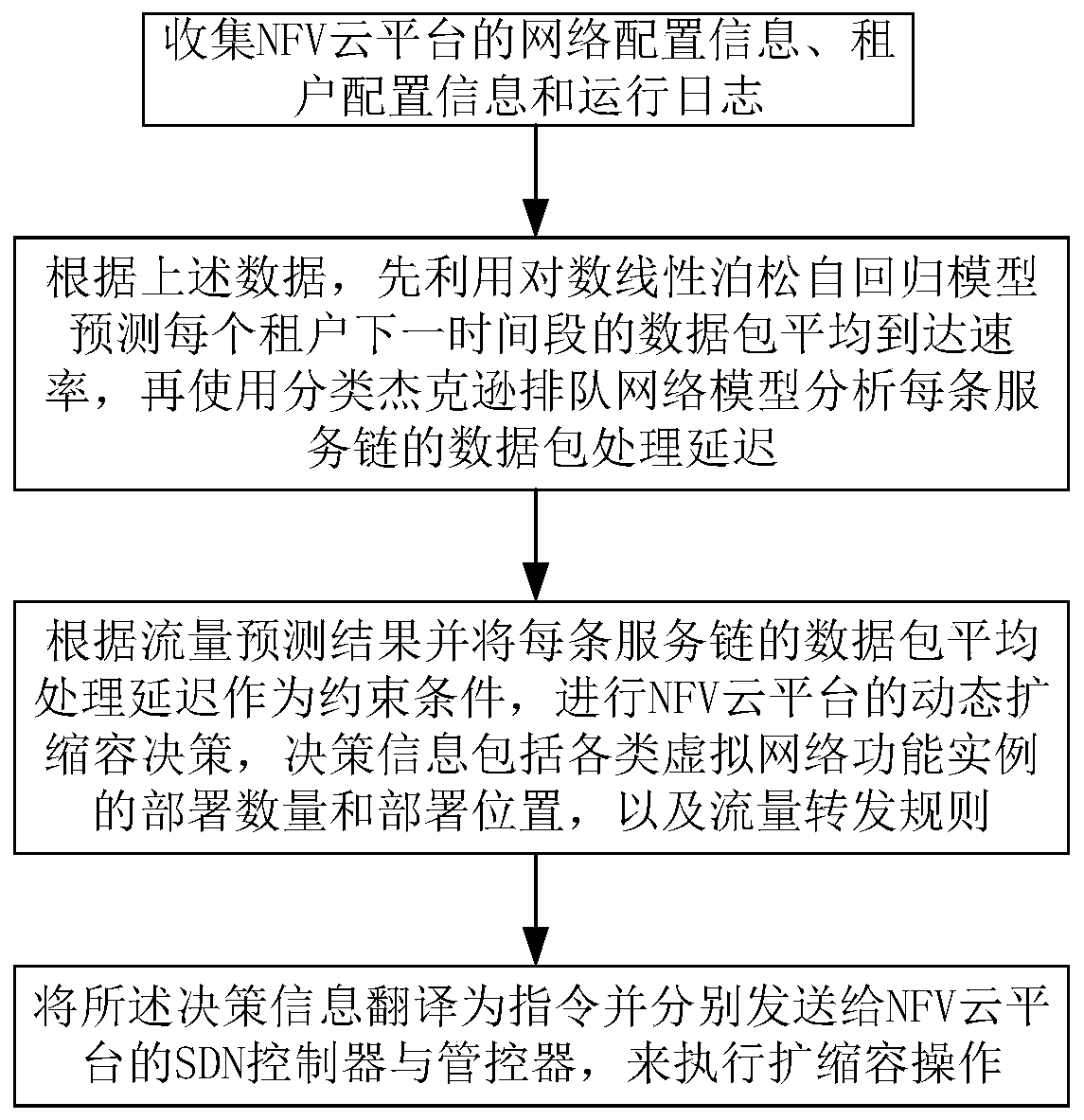

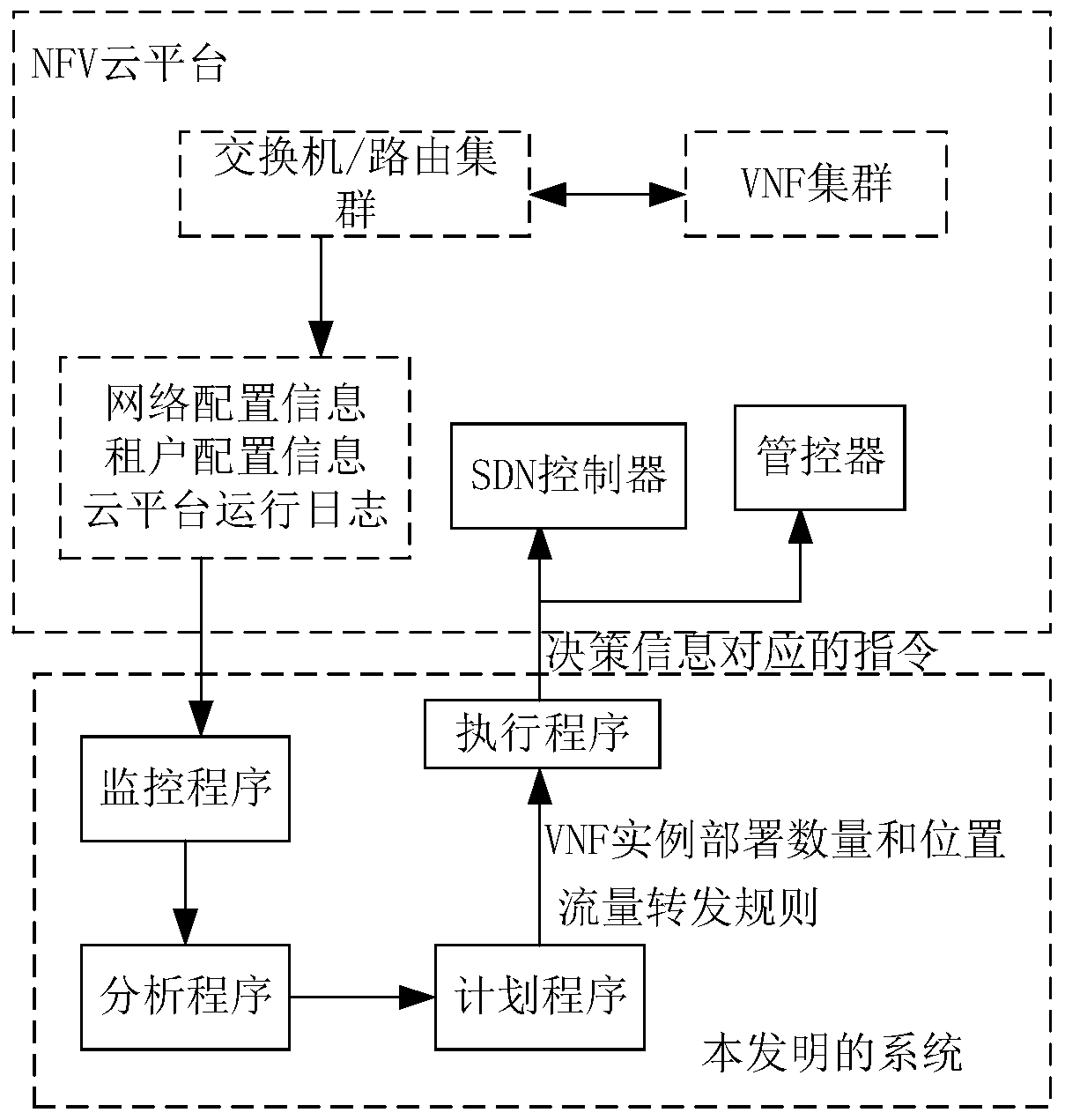

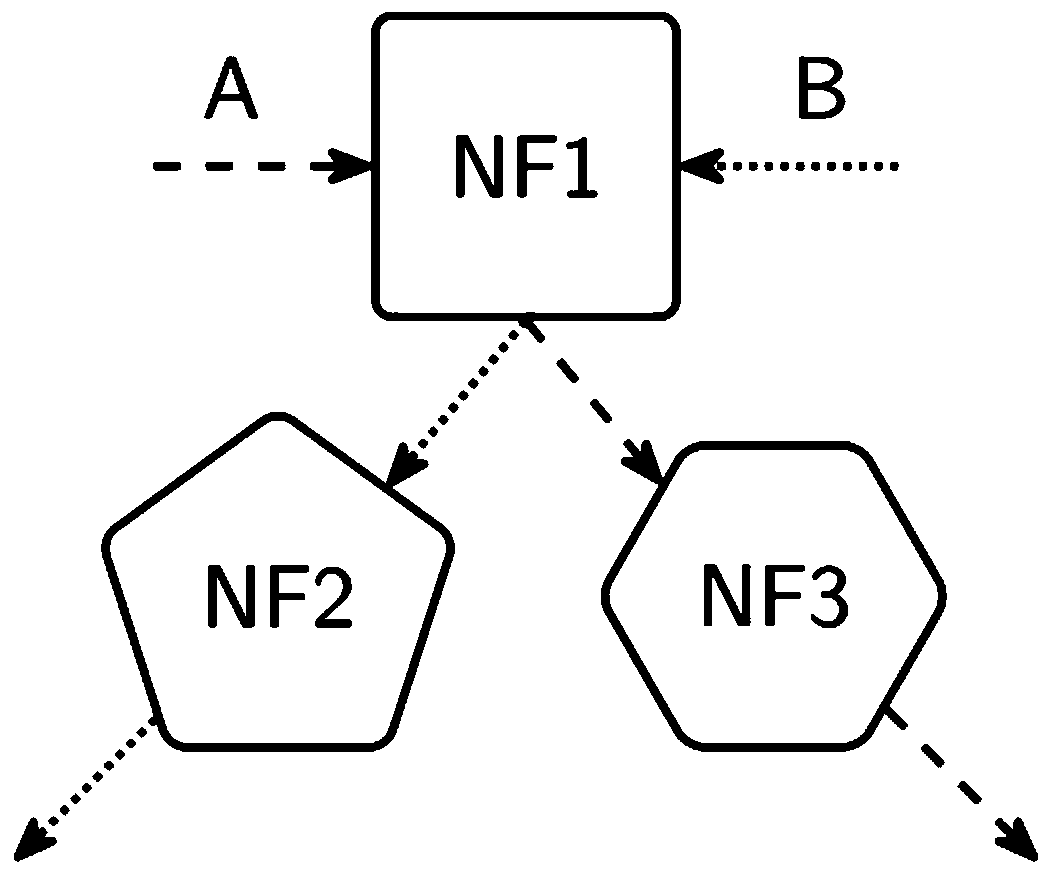

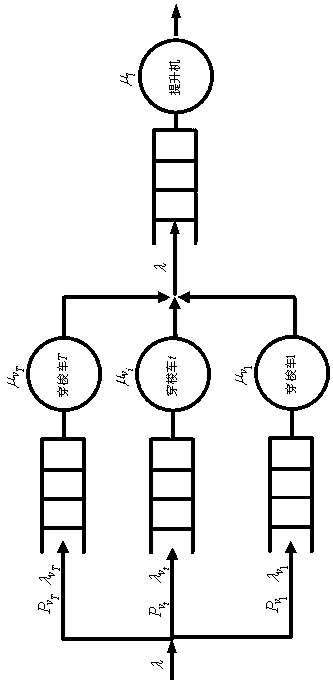

Delay-guaranteed NFV cloud platform dynamic capacity expanding and shrinking method and system

Status: Active | Publication: CN109995583A | Tags: Minimize overhead; Improve resource utilization; Data switching networks; Traffic capacity; Traffic prediction

The invention discloses a delay-guaranteed method and system for dynamically expanding and shrinking the capacity of an NFV cloud platform. The method comprises: collecting the network configuration information, tenant configuration information and operation logs of the NFV cloud platform; based on this collected information, predicting the average packet arrival rate of each tenant in the next time period with a log-linear Poisson autoregressive model (traffic prediction), and analyzing the average packet processing delay of each service chain with a classified Boxson queuing network model based on those predicted arrival rates; making dynamic capacity expansion and shrinkage decisions for the NFV cloud platform from the traffic prediction and the per-chain average processing delays, where the decision information comprises the number and placement of the various virtual network function instances and the traffic forwarding rules; and translating the decision information into instructions that are sent to an SDN controller and to the controller of the NFV cloud platform, respectively, to carry out the expansion or shrinkage.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
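
The delay analysis and scaling decision described above can be sketched roughly as follows. This is not the patent's classified queuing model: it assumes each VNF instance is an M/M/1 queue, that a chain's traffic is split evenly across a VNF's instances, and that end-to-end delay is the sum of per-VNF delays. The greedy scale-out rule and all function names are hypothetical.

```python
from __future__ import annotations

import math


def vnf_delay(arrival_rate: float, service_rate: float, instances: int) -> float:
    """Mean delay at one VNF: traffic split evenly, each instance an M/M/1 queue."""
    per_instance = arrival_rate / instances
    if per_instance >= service_rate:
        return math.inf
    return 1.0 / (service_rate - per_instance)


def chain_delay(arrival_rate: float, service_rates: list[float], instances: list[int]) -> float:
    """Packets traverse every VNF in order, so mean per-VNF delays add up."""
    return sum(vnf_delay(arrival_rate, mu, k) for mu, k in zip(service_rates, instances))


def scale_chain(arrival_rate: float, service_rates: list[float],
                delay_bound: float, max_instances: int = 64) -> list[int]:
    """Greedy scale-out: add one instance where it cuts delay most until the bound holds."""
    # Start from the fewest instances that keep every VNF stable (k > lambda / mu).
    instances = [math.floor(arrival_rate / mu) + 1 for mu in service_rates]
    while chain_delay(arrival_rate, service_rates, instances) > delay_bound:
        if sum(instances) >= max_instances:
            raise ValueError("delay bound not reachable within max_instances")
        gains = [vnf_delay(arrival_rate, mu, k) - vnf_delay(arrival_rate, mu, k + 1)
                 for mu, k in zip(service_rates, instances)]
        instances[gains.index(max(gains))] += 1
    return instances
```

For a predicted 150 packets/s through two VNFs with service rates of 100 and 200 packets/s and a 50 ms bound, the sketch starts from [2, 1] instances (60 ms) and adds one instance to the first VNF, giving [3, 1] (40 ms). Greedy selection is a simple heuristic and need not find the cheapest placement in general.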

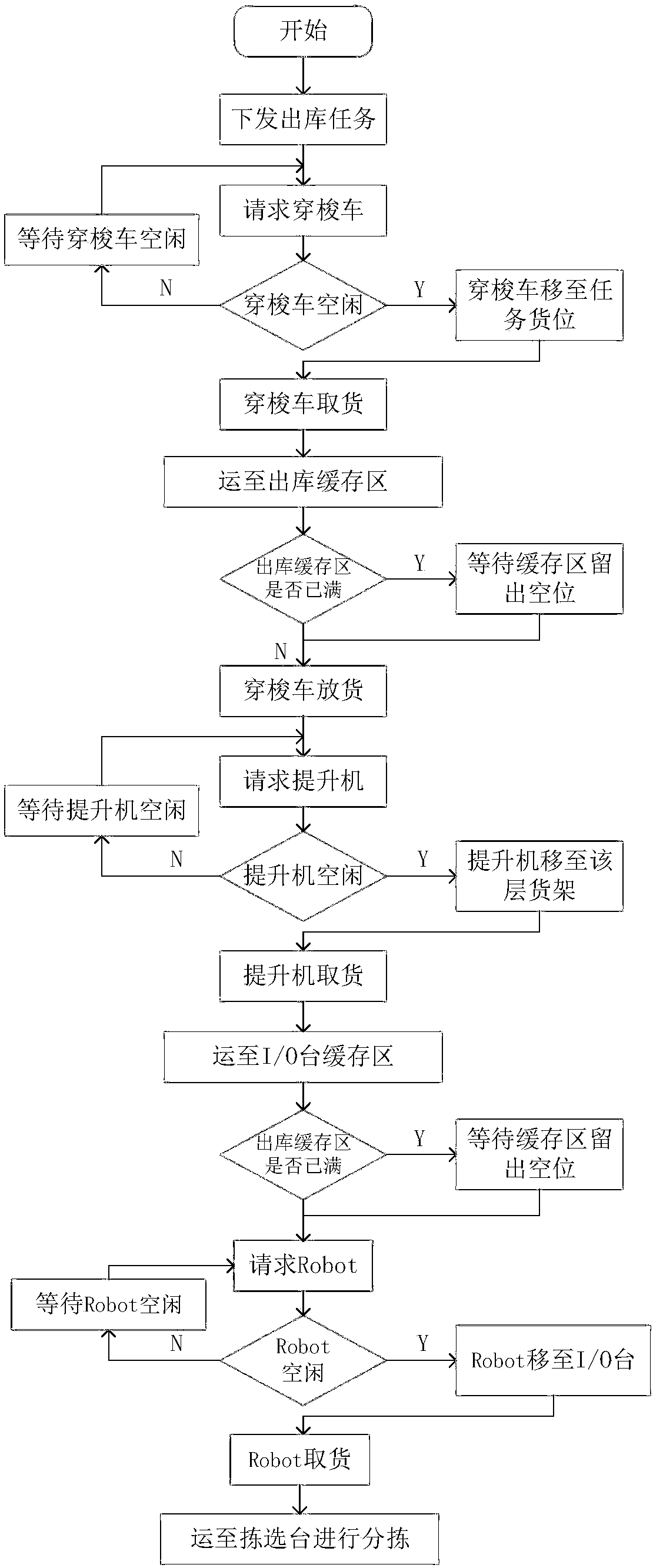

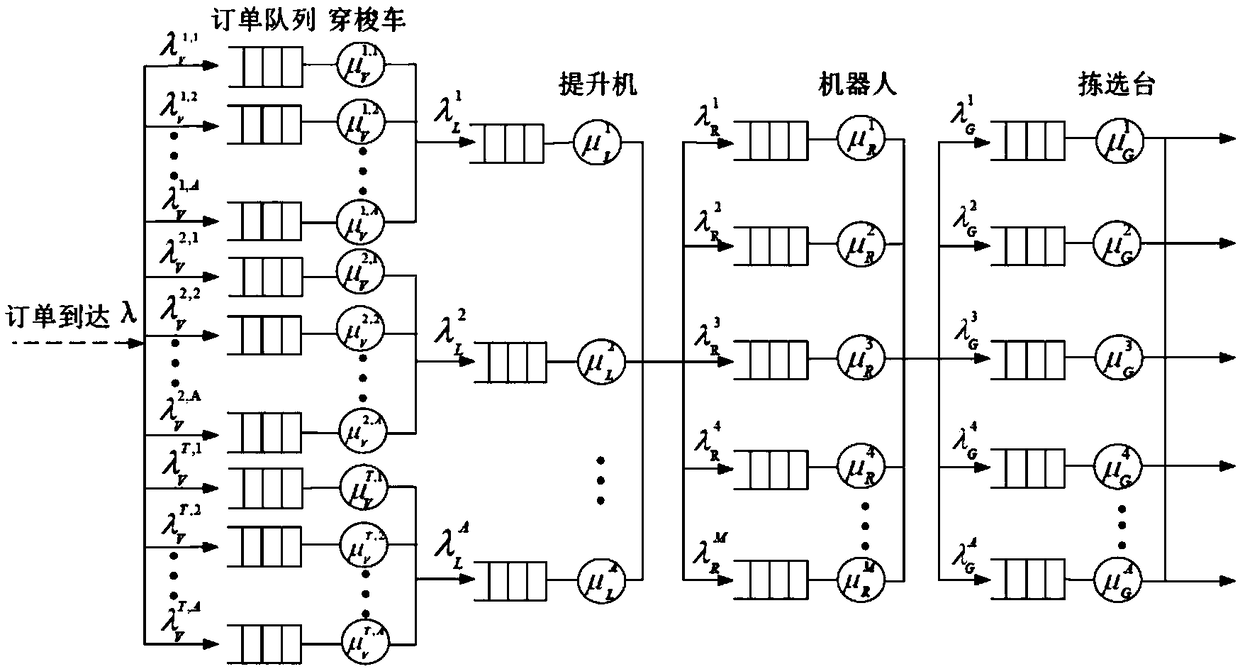

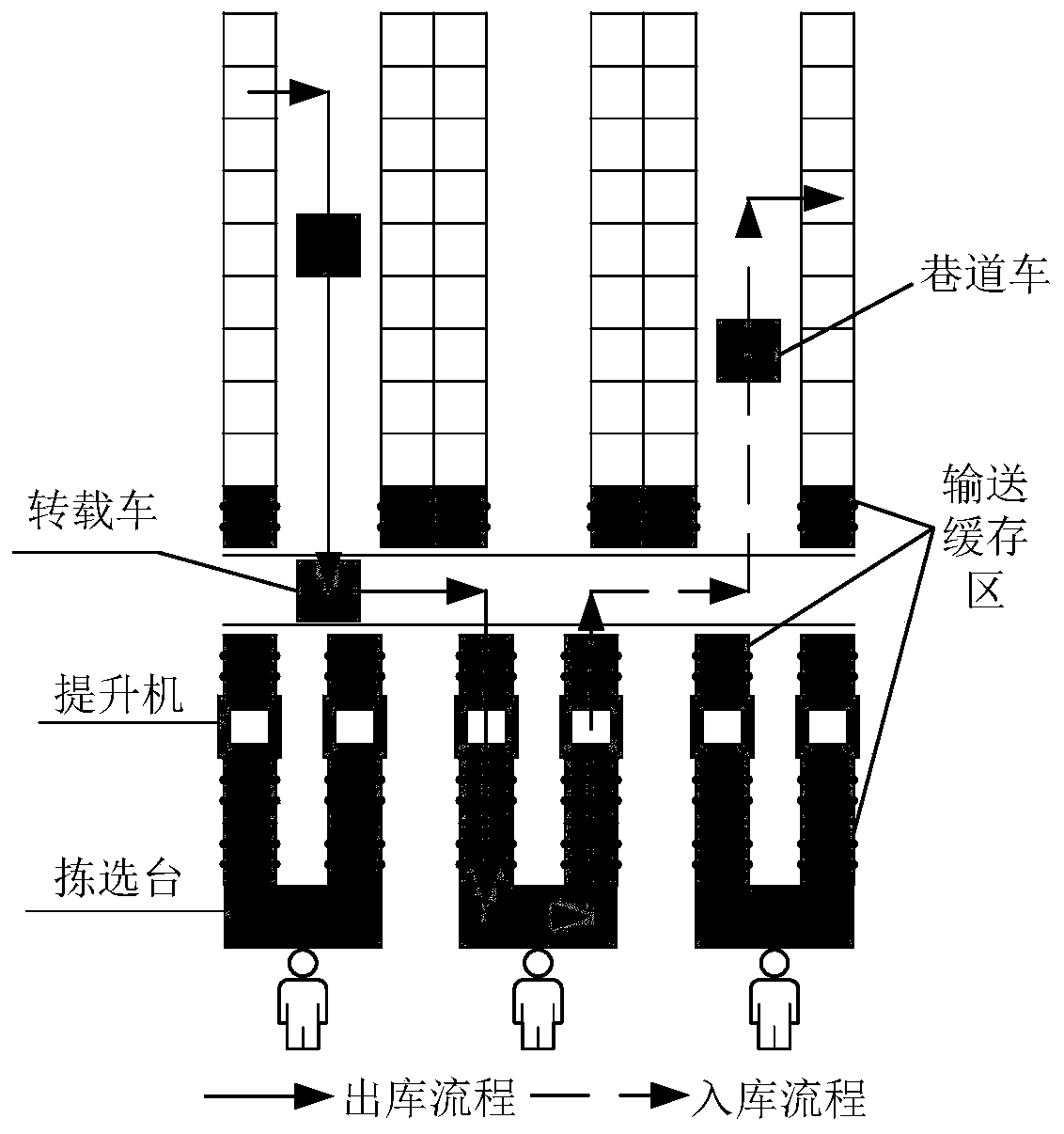

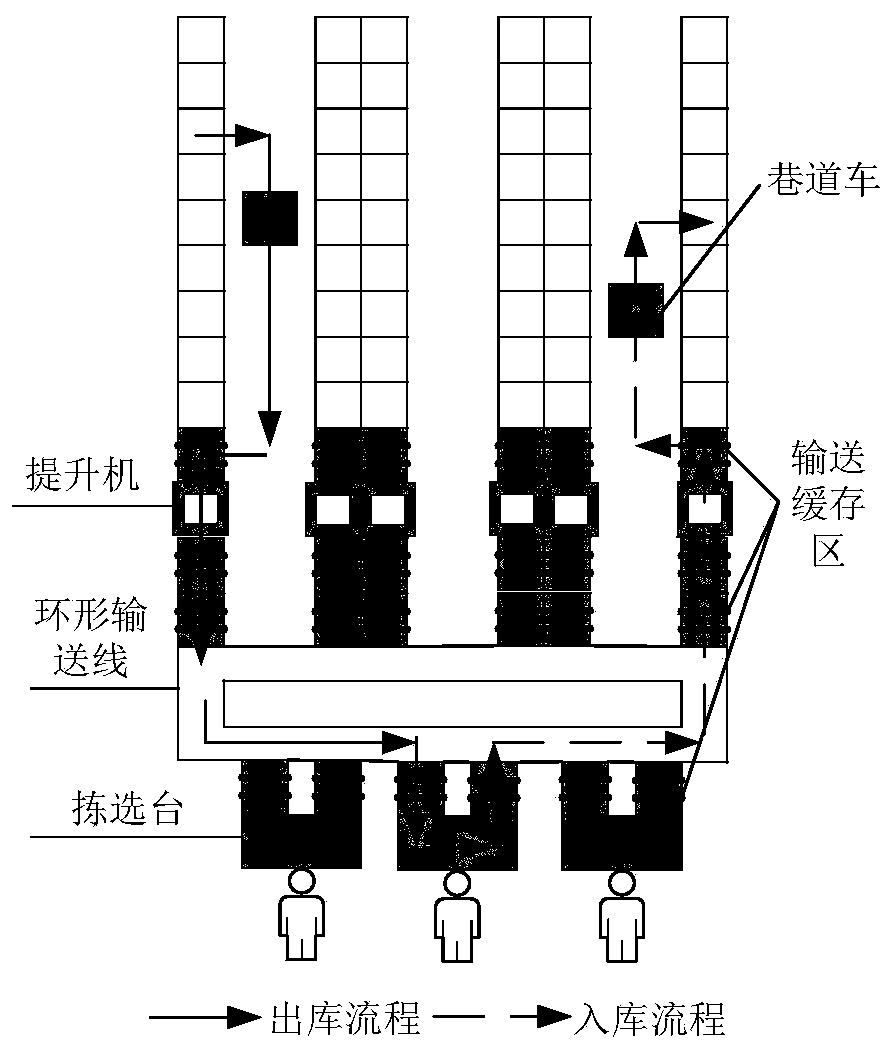

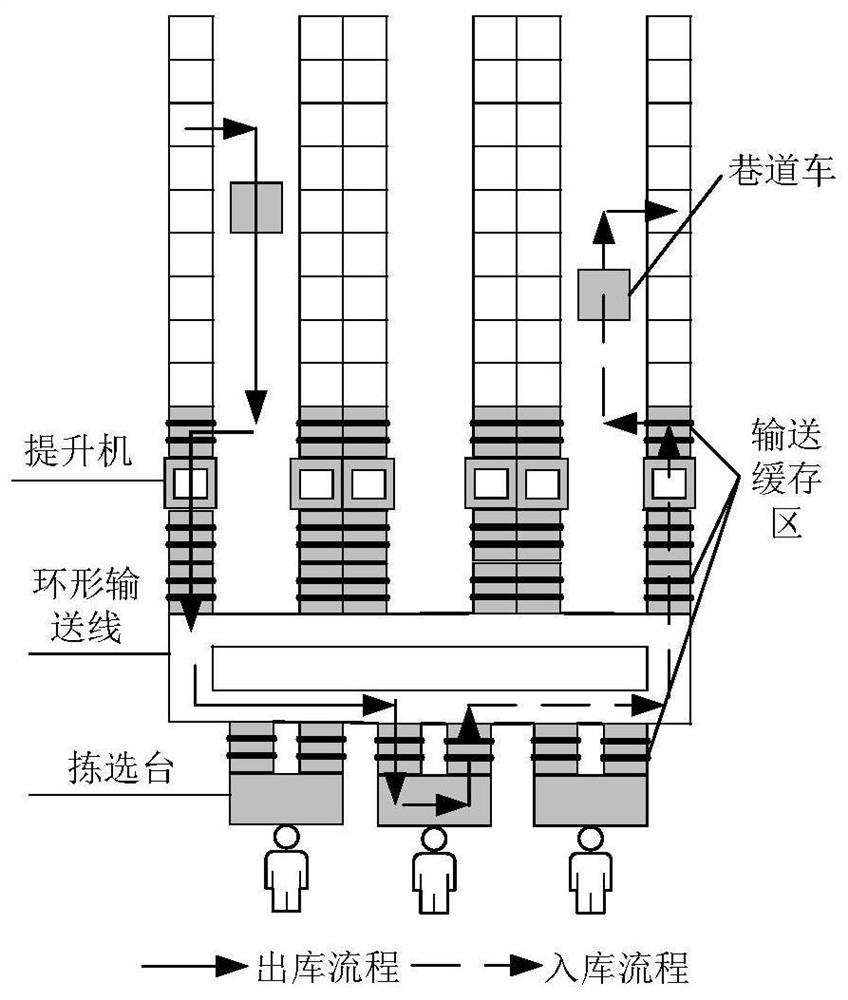

Multilayer shuttling car warehousing conveying system based on warehousing robot and conveying method

Status: Inactive | Publication: CN109292343A | Tags: Increase the number of; Increase flexibility; Storage devices; Simulation; Network model

The invention discloses a multi-layer shuttle car warehousing and conveying system based on warehousing robots, and a corresponding conveying method. The system comprises a stereoscopic warehouse for storing goods, warehousing robots for transporting the goods, and an intelligent warehousing control system that controls goods storage. An open-loop queuing network model is built to analyze the system's performance: to identify the equipment factors that affect order timeliness, to calculate the order service time, the waiting time of the warehousing robots and their utilization rate, and to analyze the sensitivity of performance to the order arrival rate and to the number of warehousing robots. The higher the storage capacity, the order arrival rate, or the amplitude of its variation, the more pronounced the advantages of this robot-based multi-layer shuttle car system.

Owner:SHANDONG UNIV
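
The open-loop queuing network analysis described here can be illustrated with a classic Jackson-network calculation: solve the traffic equations for each station's arrival rate, then derive per-station utilization and mean queue content, and the mean time in system via Little's law. The sketch assumes Poisson order arrivals, single exponential servers and probabilistic routing, which is a simplification; how stations map to this patent's robots and equipment is left hypothetical.

```python
from __future__ import annotations


def solve_traffic(external: list[float], routing: list[list[float]],
                  tol: float = 1e-12, max_iter: int = 10_000) -> list[float]:
    """Solve lambda_j = gamma_j + sum_i lambda_i * P[i][j] by fixed-point iteration."""
    n = len(external)
    lam = list(external)
    for _ in range(max_iter):
        new = [external[j] + sum(lam[i] * routing[i][j] for i in range(n))
               for j in range(n)]
        if max(abs(a - b) for a, b in zip(new, lam)) < tol:
            return new
        lam = new
    raise RuntimeError("traffic equations did not converge; is the network open?")


def analyze_open_network(external: list[float], routing: list[list[float]],
                         service_rates: list[float]):
    """Per-station M/M/1 metrics and mean time in system for a Jackson network."""
    lam = solve_traffic(external, routing)
    stations = []
    for rate, mu in zip(lam, service_rates):
        rho = rate / mu  # utilization
        if rho >= 1.0:
            raise ValueError("a station is saturated (utilization >= 1)")
        stations.append({"arrival_rate": rate,
                         "utilization": rho,
                         "mean_jobs": rho / (1.0 - rho)})  # M/M/1 mean number in station
    # Little's law over the whole network: time in system = jobs / external throughput.
    time_in_system = sum(s["mean_jobs"] for s in stations) / sum(external)
    return stations, time_in_system
```

For example, orders arriving at 1 per second that visit a station with service rate 2/s and then one with rate 4/s give utilizations of 0.5 and 0.25 and a mean time in system of 4/3 s.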

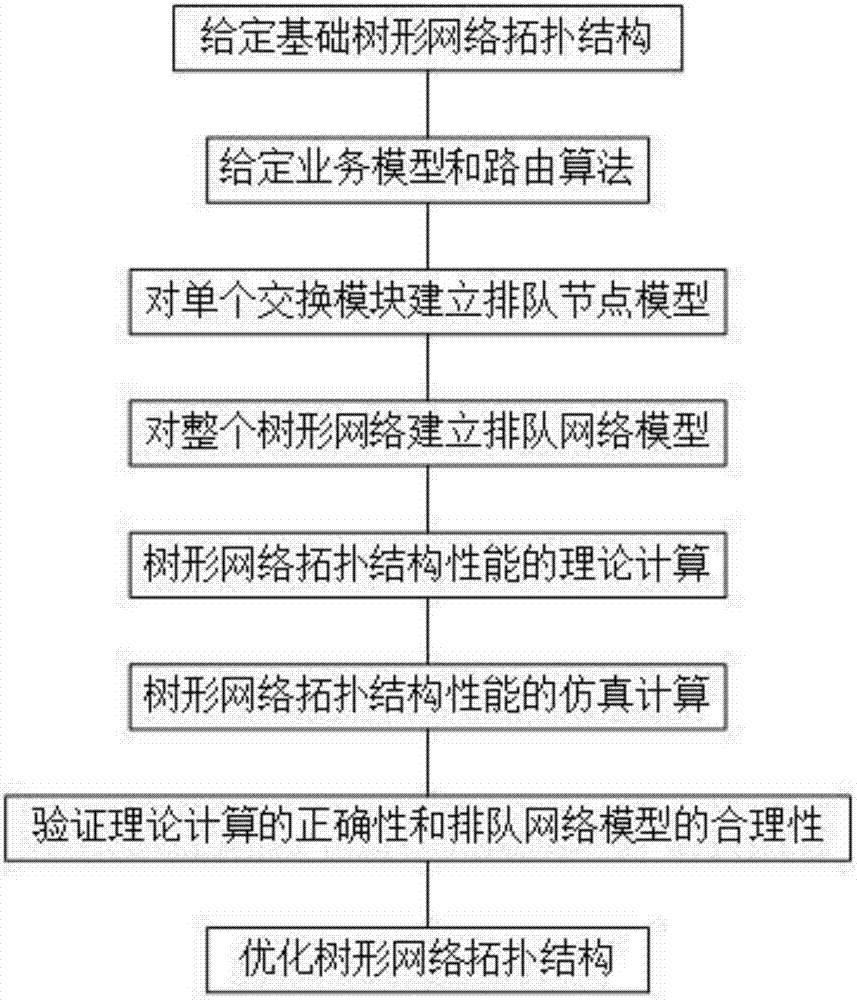

Optimization method for tree network topology structure based on queuing theory

Status: Active | Publication: CN106936645A | Tags: Realize quantitative analysis; Reflect performance; Data switching networks; Service model; Limited resources

The invention provides an optimization method for a tree network topology based on queuing theory, used to solve the design problem of interconnecting large numbers of user nodes under limited resources and a preset service. The method comprises the following steps: presetting a basic tree network topology, a service model and a routing algorithm; establishing a queuing node model of a single basic switching module and a queuing network model of the whole tree network; performing theoretical and simulation-based calculations of the tree topology's performance; verifying the accuracy of the theoretical calculation and the soundness of the queuing network model; and optimizing the tree network topology and its parameters. By establishing the queuing network model, the method analyzes the tree network quantitatively, taking into account the effects of service intensity, buffering, switching modules and network topology on network performance, and can be used to determine the optimal tree network topology for given service demands.

Owner:XIDIAN UNIV

Method of incorporating DBMS wizards with analytical models for DBMS servers performance optimization

Status: Active | Publication: US8200659B2 | Tags: Improve balance; Enhancing autonomic computing; Digital data information retrieval; Digital data processing details; Analytic model; Resource utilization

Owner:DYNATRACE

Method of distributing load amongst two or more computer system resources

Status: Inactive | Publication: US7962916B2 | Tags: Resource allocation; Hardware monitoring; Service-level agreement; Service level requirement

Owner:MICRO FOCUS LLC

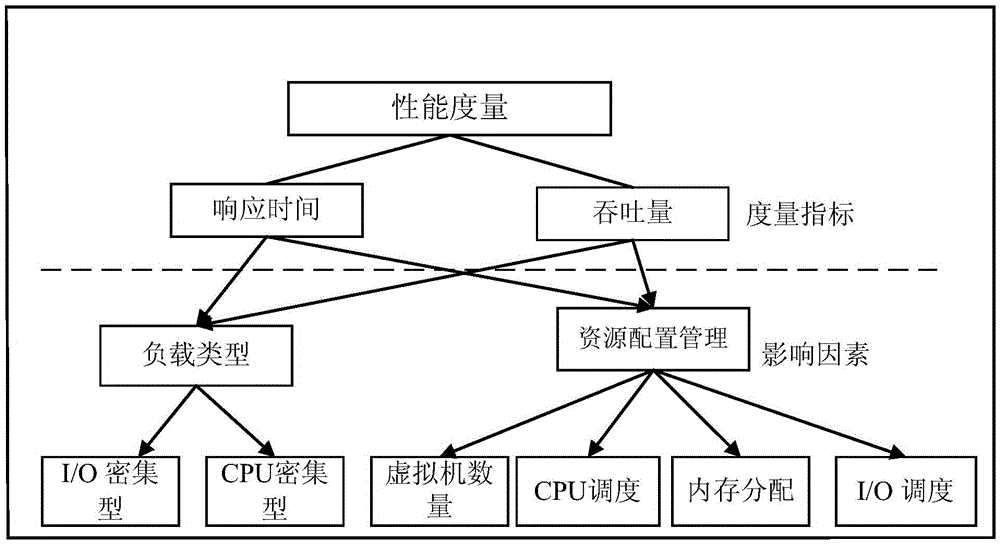

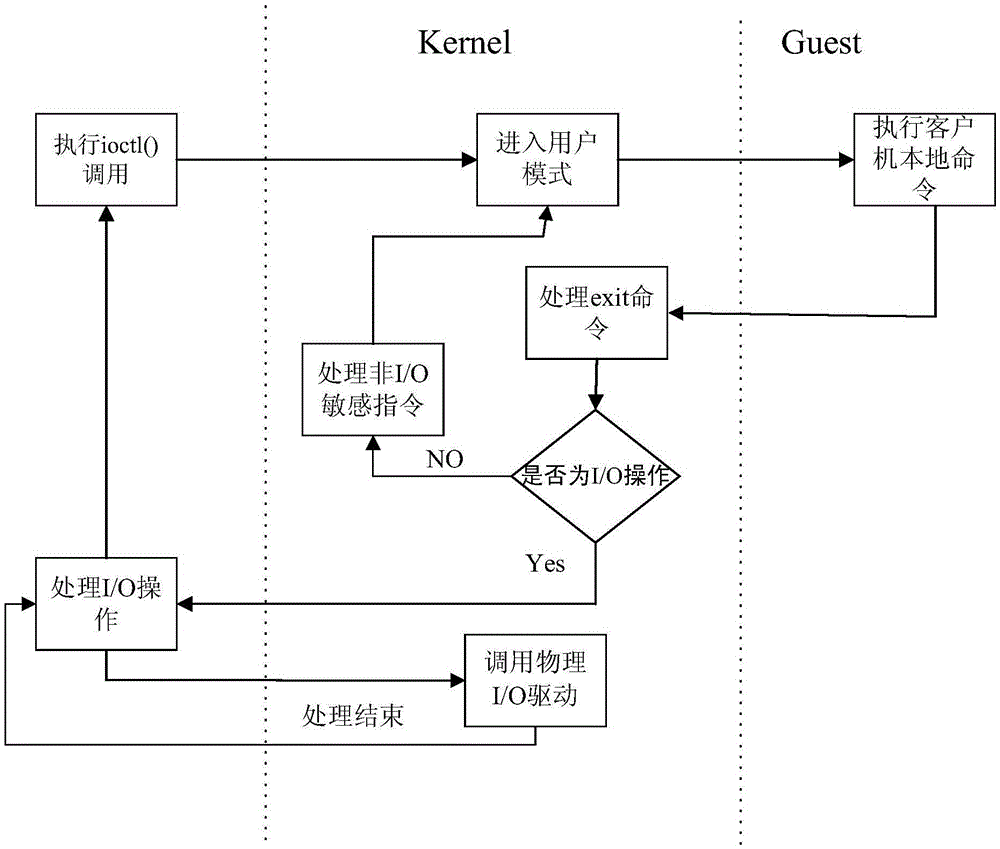

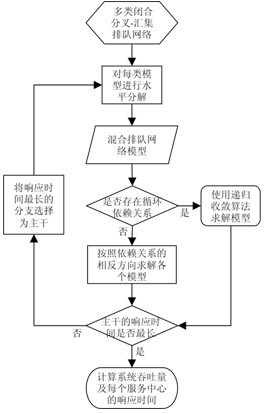

Performance evaluation method aiming at KVM virtualization server

Status: Active | Publication: CN105630575A | Tags: Verify correctness; Improve the efficiency of performance evaluation; Hardware monitoring; Software simulation/interpretation/emulation; Service-level agreement; Operational system

The invention relates to a performance evaluation method for a KVM virtualization server. First, at the application level, response time and throughput are selected as the performance evaluation metrics of the KVM virtualization server, based on the quality of service (QoS) parameters in the service level agreement and on performance measures commonly used in current real-world deployments. Next, using an open queuing network model combined with the load characteristics of the virtualization server, a performance evaluation model is established that reflects how KVM implements resource scheduling and resource virtualization. Finally, based on this performance evaluation model, the method shows how to compute the performance metrics and evaluate the performance of a virtual machine running a Linux operating system. The method addresses the problem of evaluating virtualization server performance under KVM: it improves evaluation efficiency, avoids building a complex performance testing environment, reduces evaluation cost, and yields performance measures for the main components of the virtualization server, helping users locate system performance bottlenecks.

Owner:兰雨晴 (Lan Yuqing)

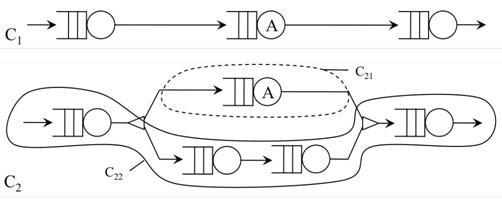

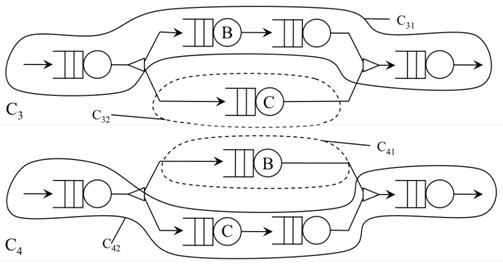

Method for analyzing performance of multi-class closed fork-join queuing network based on horizontal decomposition

Status: Active | Publication: CN102123053A | Tags: Improve the efficiency of performance analysis; Efficient solution; Data switching networks; Decomposition; Queuing network

The invention discloses a method for analyzing the performance of a multi-class closed fork-join queuing network based on horizontal decomposition. In this method, each class of the model that contains fork-join operations is horizontally decomposed, so that a computer can analyze the performance of the queuing network model quickly and accurately and obtain analyzable parameters of the actual system's performance, improving the efficiency of computer-based system performance analysis.

Owner:ZHEJIANG UNIV

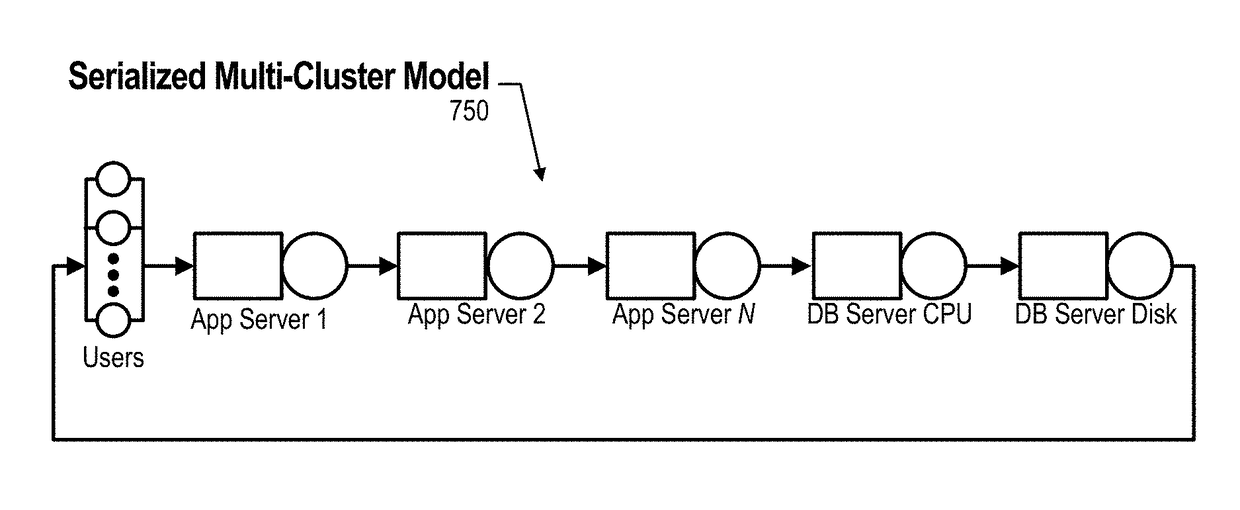

Predicting Performance Regression of a Computer System with a Complex Queuing Network Model

Status: Inactive | Publication: US20160140262A1 | Tags: Reliability/availability analysis; Functional testing; Predictive systems; Resource utilization

An approach is provided for predicting system performance. The approach predicts system performance by identifying a Queuing Network Model (QNM) corresponding to a clustered system that handles a plurality of service demands using a plurality of parallel server nodes that process a workload for a quantity of users. A workload description is received that includes server demand data. Performance of the clustered system is predicted by transforming the QNM to a linear model by serializing the parallel services as sequential services, identifying transaction groups corresponding to each of the server nodes, and distributing the workload among the transaction groups across the plurality of nodes. The approach further solves analytically the linear model with the result being a predicted resource utilization (RU) and a predicted response time (RT).

Owner:IBM CORP

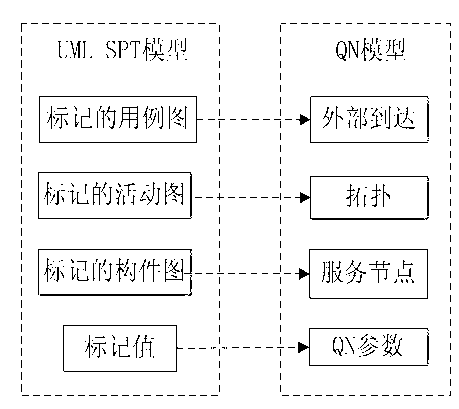

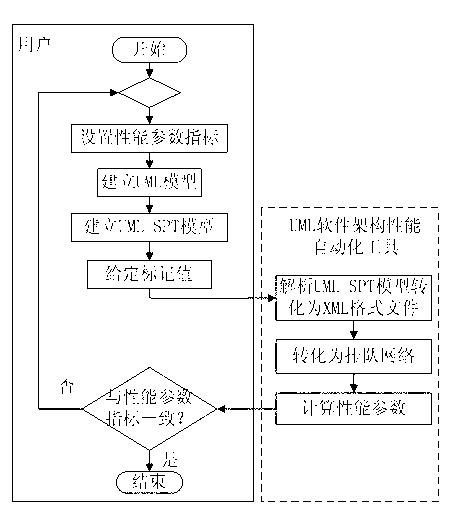

Performance predicting method for software system based on UML (Unified Modeling Language) architecture

Status: Inactive | Publication: CN102799530A | Tags: Reduce complexity; Improve development efficiency; Software testing/debugging; Software system; Software engineering

The invention discloses a performance prediction method for a software system based on a UML (Unified Modeling Language) architecture. The method comprises the following steps: first, establishing a UML model of the software system; adding stereotypes and tagged values to the UML diagrams to obtain annotated UML diagrams, which form a UML SPT (UML Profile for Schedulability, Performance and Time) model; defining a queuing network model generation algorithm and using it to generate a queuing network model from the UML model; and finally, calculating the software performance parameter values with a solution method for the queuing network model's performance parameters, thereby predicting software performance. With this method, a user can obtain the software's performance indices simply by building a UML model of the software system and annotating it with stereotypes and tagged values to form a UML SPT model. This greatly reduces the complexity of software performance prediction and improves software development efficiency.

Owner:南通壹选智能科技有限公司 (Nantong Yixuan Intelligent Technology Co., Ltd.)

Predicting performance regression of a computer system with a complex queuing network model

Owner:INT BUSINESS MASCH CORP

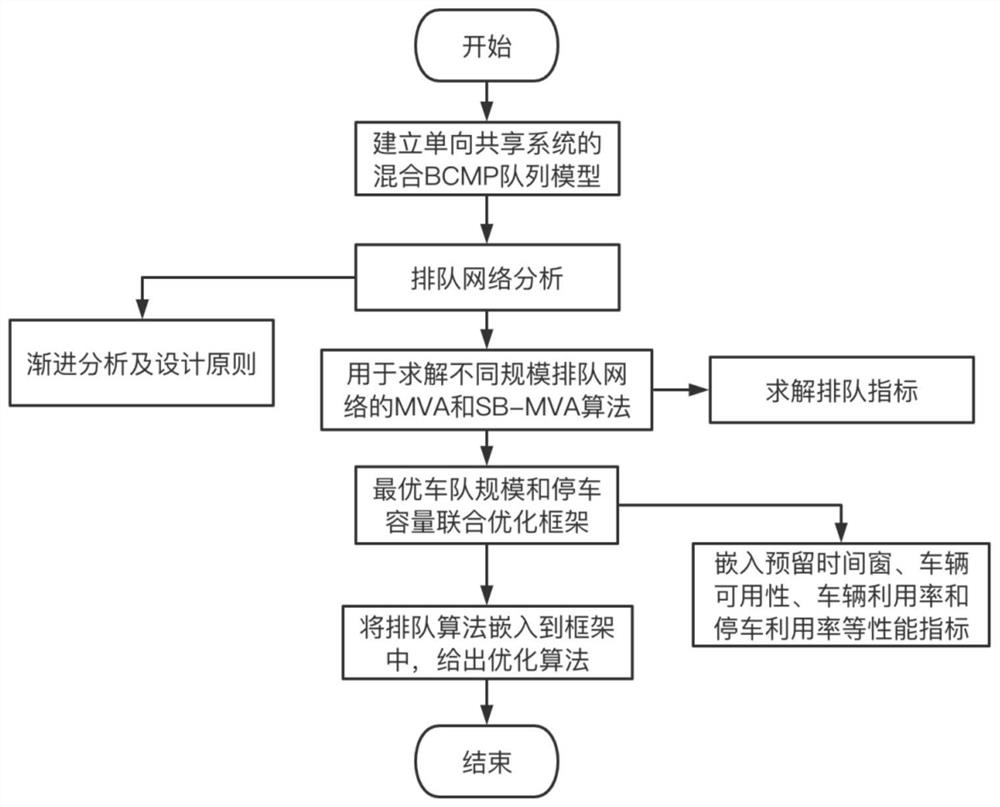

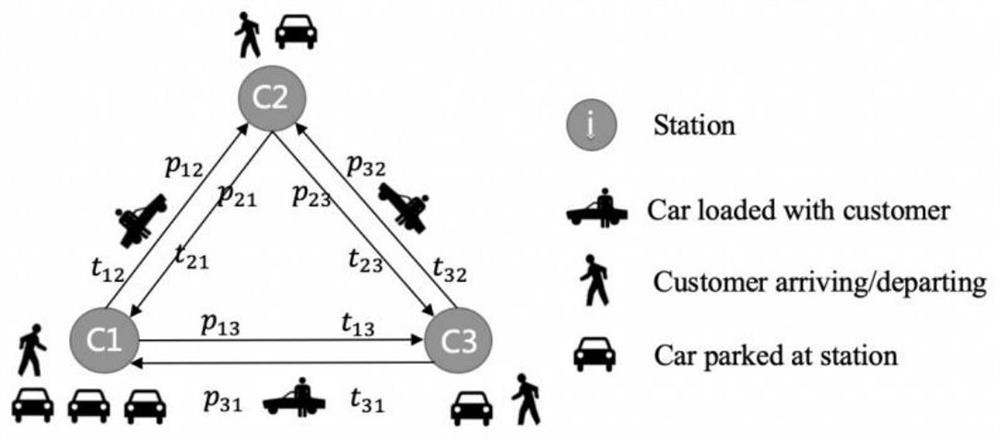

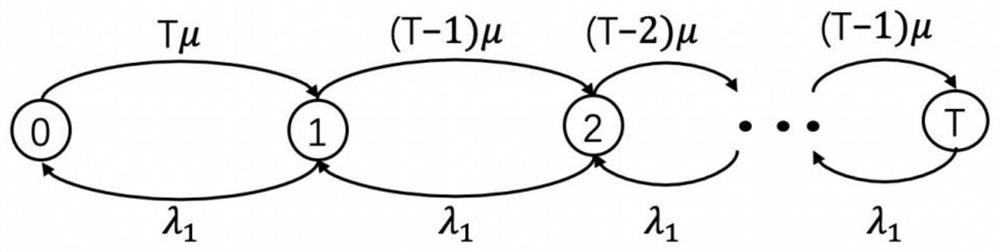

One-way vehicle sharing system scale optimization method based on queuing theory

Status: Active | Publication: CN112419601A | Tags: Proof of uniqueness; Indication of parking free spaces; Checking apparatus; Occupancy rate; Parking space

The invention provides a scale optimization method for one-way vehicle sharing systems based on queuing theory, relating to the planning and design of one-way vehicle sharing systems. A hybrid BCMP queuing network model describes vehicle circulation in the one-way sharing system and explicitly incorporates realistic factors such as reservation policies, road congestion and limited parking spaces; the asymptotic relationship between the system scale and the vehicle availability Vi and parking occupancy rate Ui is made explicit and incorporated into a joint optimization framework. A joint optimization problem for the system scale is then formulated under a profit maximization principle, and the optimal fleet size and parking station capacities meeting a given performance index are determined. Road congestion is introduced explicitly by mapping the remaining capacity of the physical road to the number of servers in the queuing network, and the parking stations are modeled as an interdependent open network to enforce the capacity limits of the parking lots.

Owner:东北大学秦皇岛分校 (Northeastern University at Qinhuangdao)

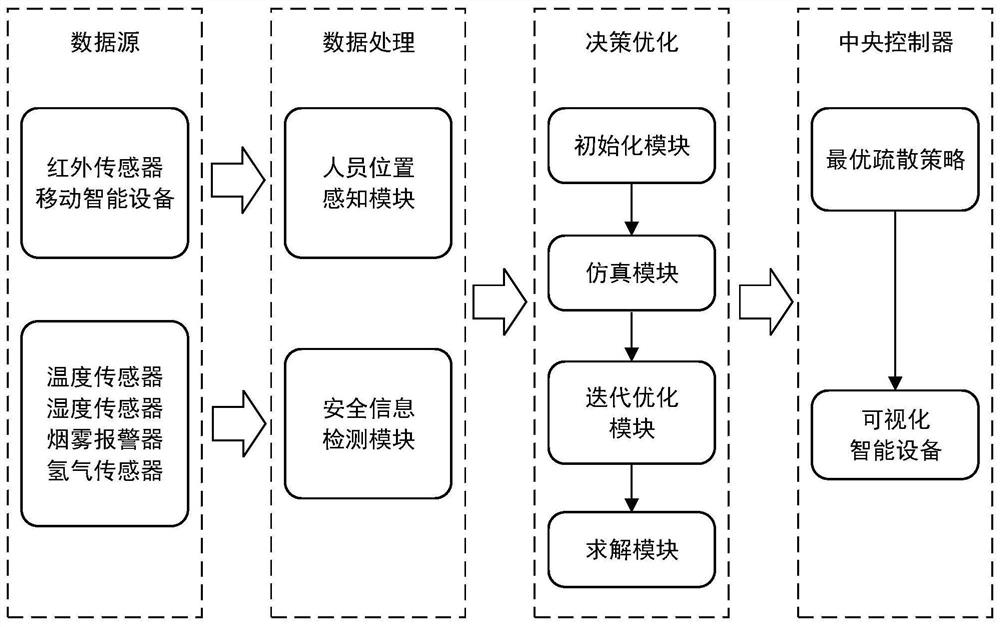

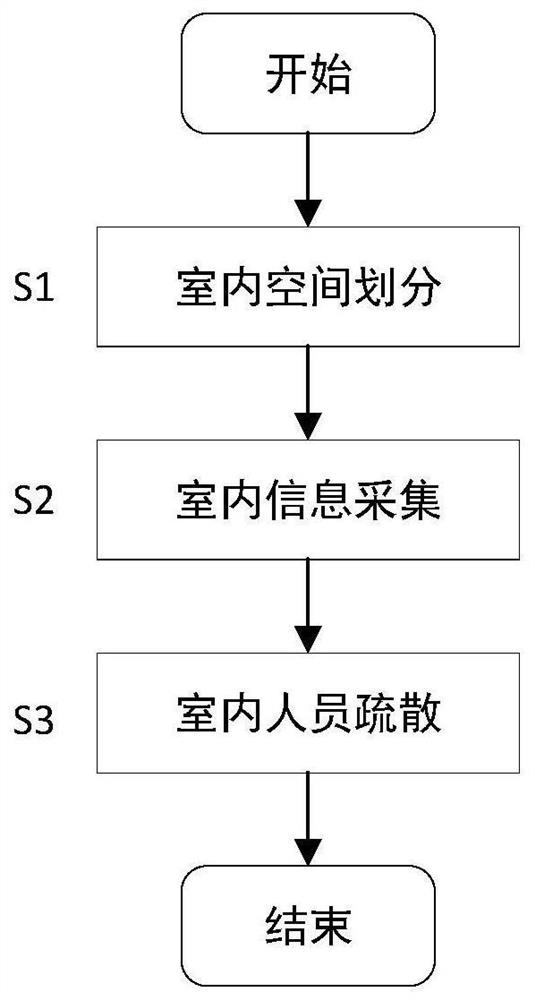

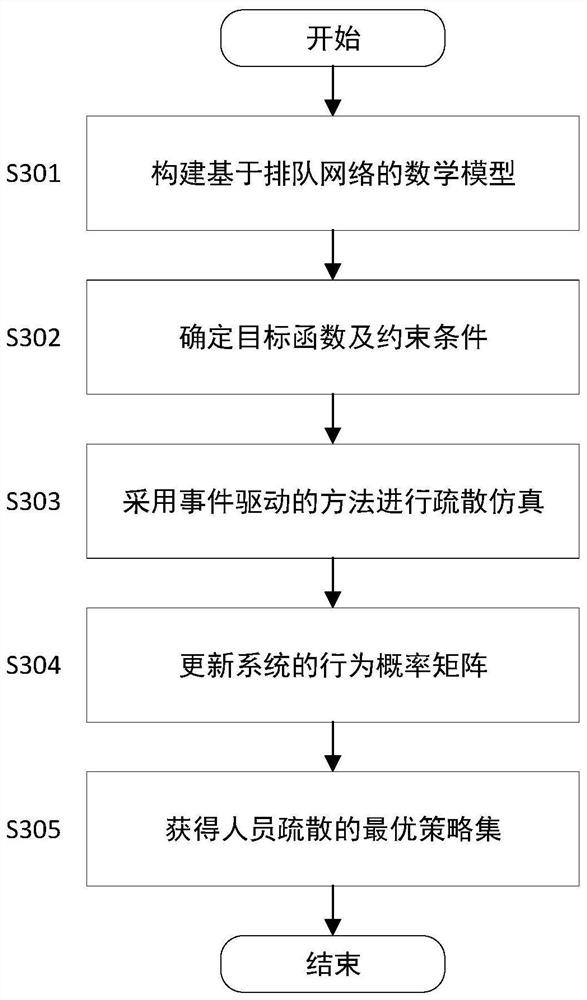

Indoor personnel evacuation method and evacuation system based on queuing network

Status: Pending | Publication: CN112199747A | Tags: The physical mechanism is clearly described; Clear description; Geometric CAD; Design optimisation/simulation; Local optimum; Computation complexity

The invention discloses an indoor personnel evacuation method and system based on a queuing network. The system comprises a personnel position sensing module, a safety information detection module, a decision optimization module and a central controller module. The method models a complex evacuation network as a queuing network model, ignoring the interference of redundant information and considering only the interactions among people and between people and the environment, so it is easy to implement and close to the actual evacuation process. An event-driven approach addresses the large computational load of a complex network and greatly reduces the computational complexity of the system. During simulation, both the current and future states of the system are considered and the evacuation strategy is updated by value iteration, which keeps the strategy from stopping at a local optimum, so that the resulting evacuation strategy is globally optimal.

Owner:XI AN JIAOTONG UNIV

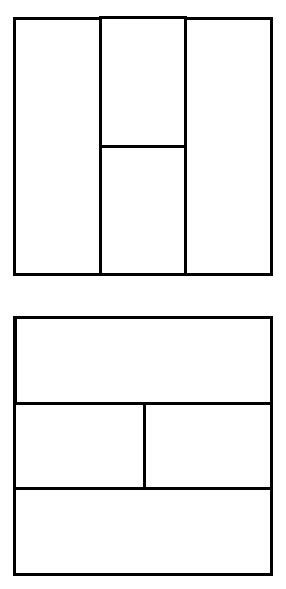

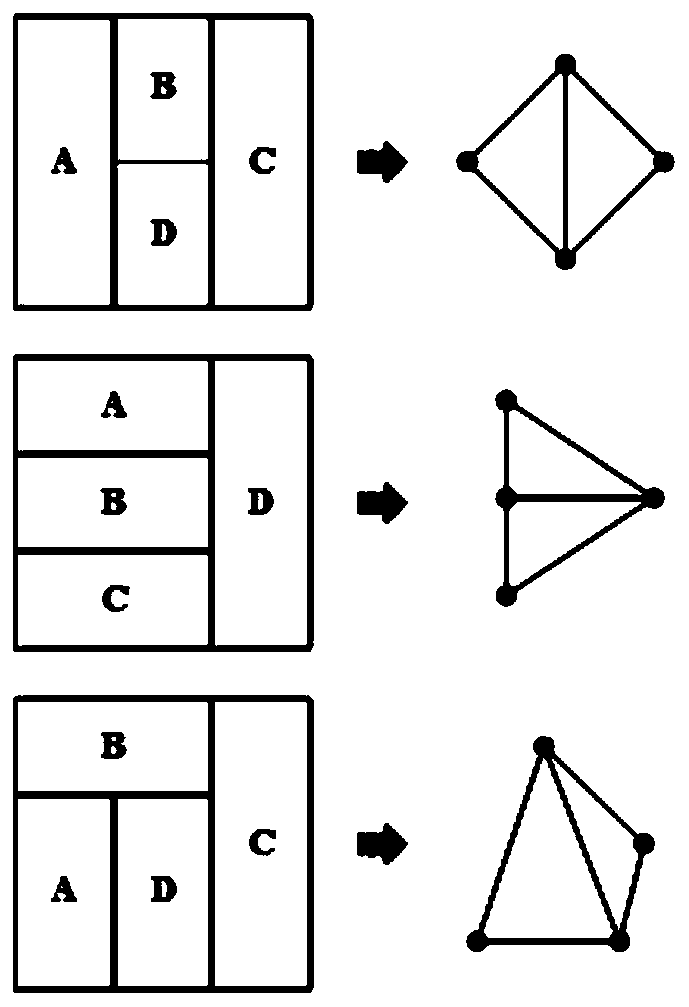

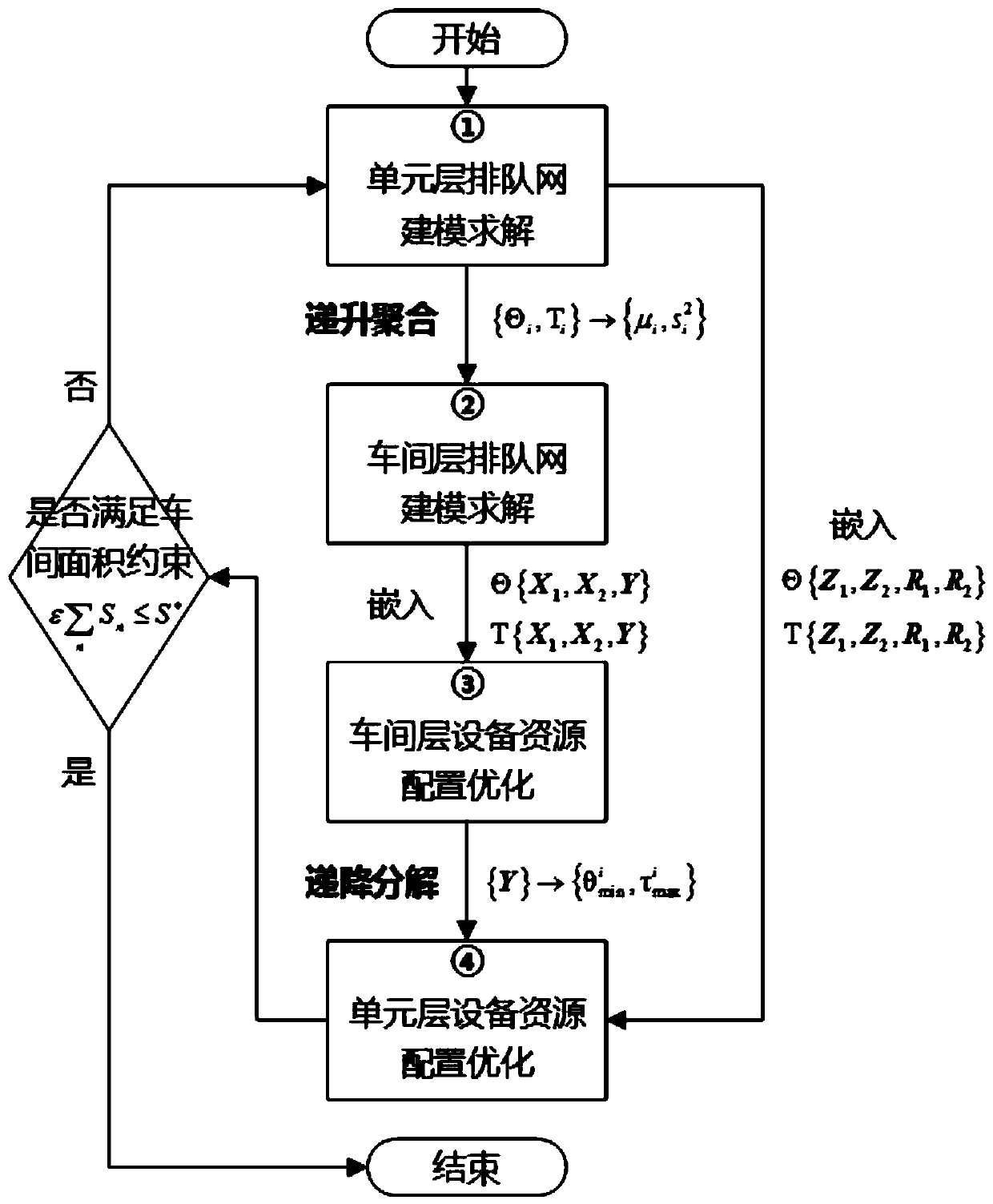

Planning and designing method for customized production workshop

Status: Active | Publication: CN110570018A | Tags: Solving Joint Optimization Problems; Optimize investment efficiency; Forecasting; Buying/selling/leasing transactions; Binary space partitioning; Trust region

The invention discloses a planning and design method for a customized production workshop. The workshop floor is divided with a binary space partitioning tree according to the key process routes of the customized products, and an initial production unit layout scheme is derived using isomorphism theory. Under constraints on the workshop's expected productivity and order lead time, a trust region SQP algorithm with an embedded queuing network model is used to solve, respectively, the equipment resource planning model for each production unit layer (the number of machine tools, the number of industrial robots and the robots' operating rate) and the equipment resource planning model for the workshop layer (the number of AGVs and the AGVs' operating rate). The overall workshop layout and the equipment resource configuration are then obtained with a hierarchical optimization algorithm so that investment cost is minimized.

Owner:GUANGDONG UNIV OF TECH

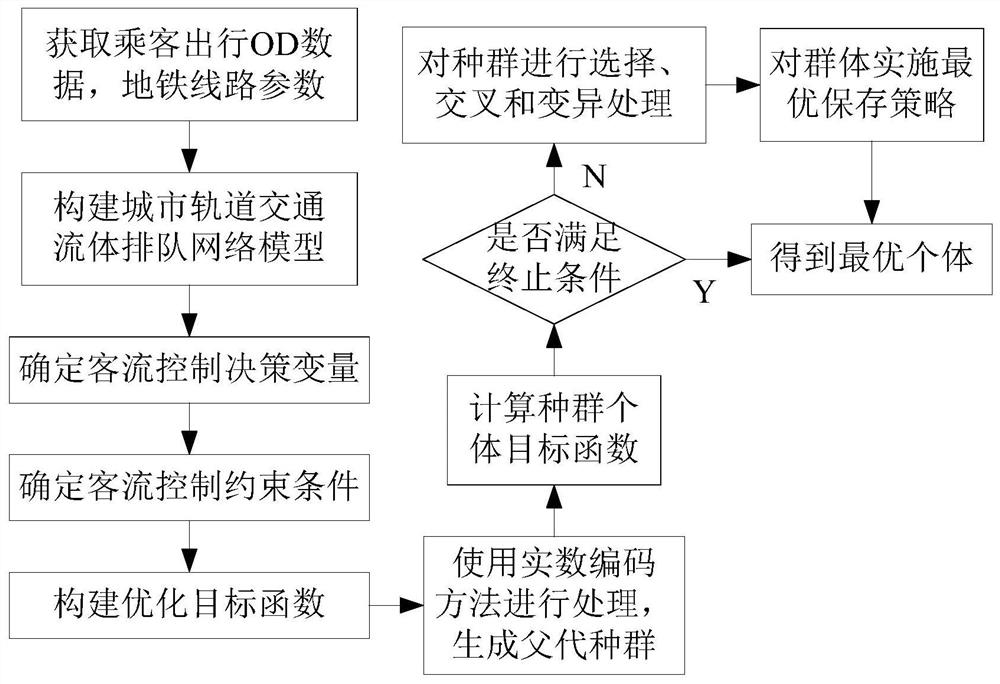

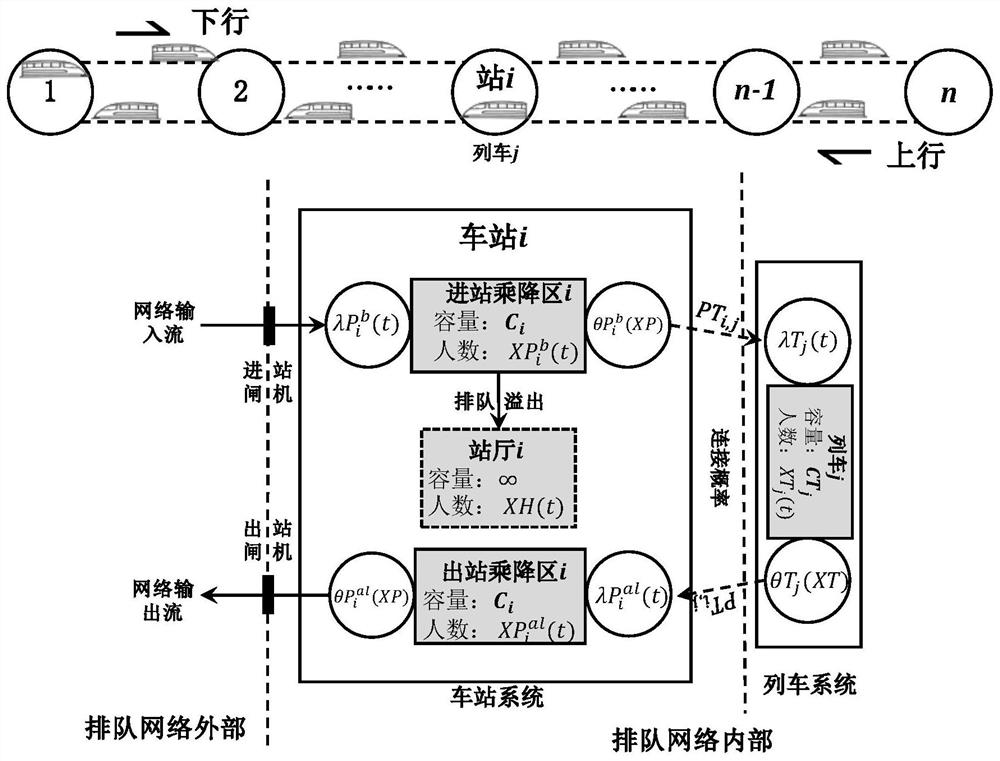

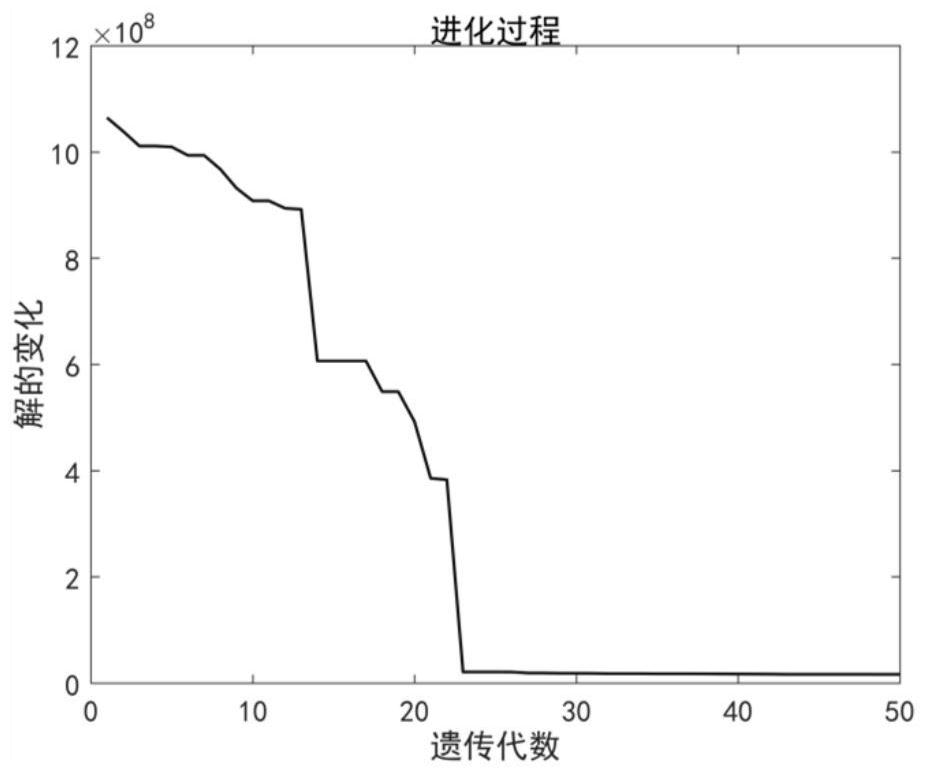

Urban rail transit passenger flow control optimization method based on fluid queuing network

Status: Active | Publication: CN112906179A | Tags: Reduced travel time costs; Reduce queuing overflow; Forecasting; Design optimisation/simulation; Simulation; Queuing network model

The invention discloses an urban rail transit passenger flow control optimization method based on a fluid queuing network. The method comprises the following steps: S1, building an urban rail transit passenger flow control optimization model based on the fluid queuing network, including: S11, obtaining passenger travel OD data and subway line parameters; S12, constructing an urban rail transit fluid queuing network model; S13, determining the passenger flow control decision variables; S14, determining the passenger flow control constraints; S15, constructing the optimization objective function; S2, solving the optimization model, including: S21, calculating the objective function value of each individual in the population; S22, judging whether the individual fitness meets a termination condition, and if so, ending, otherwise proceeding to the next step; and S23, performing selection, crossover and mutation. Building on an urban rail transit passenger flow control scheme, a passenger flow control optimization model is established in combination with the fluid queuing network model, reducing the likelihood of platform queue overflow and queue explosion.

Owner:SOUTHWEST JIAOTONG UNIV +1

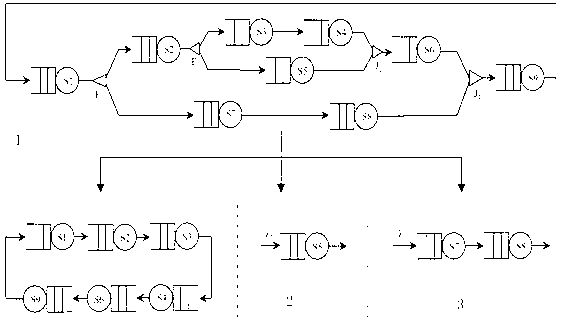

Method for analyzing performance of single-class closed fork-join queuing network based on horizontal decomposition

Status: Active | Publication: CN102158357B | Tags: Promote decomposition; Reduce computational complexity; Data switching networks; Computation complexity; Decomposition

The invention discloses a method for analyzing the performance of a single-class closed fork-join queuing network based on horizontal decomposition. A horizontal decomposition method is used to decompose a single-class closed fork-join queuing network model into several product-form queuing network models, which can then be solved quickly with mean value analysis (MVA). Horizontal decomposition is applied only once, even to a queuing network containing nested fork-join operations, with no recursive decomposition as in layered decomposition methods. This greatly reduces computational complexity and improves the efficiency with which a computer solves and analyzes single-class closed fork-join queuing network models.

Owner:ZHEJIANG UNIV
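
The abstract relies on mean value analysis (MVA) to solve the product-form networks produced by the decomposition. A minimal sketch of exact single-class MVA follows; it assumes load-independent queueing stations described by their service demands plus an optional think time, and it does not implement the horizontal decomposition itself.

```python
from __future__ import annotations


def mva(demands: list[float], population: int, think_time: float = 0.0):
    """Exact single-class MVA; returns (throughput, response_time, queue_lengths)."""
    queue = [0.0] * len(demands)
    throughput, response = 0.0, 0.0
    for n in range(1, population + 1):
        # Arrival theorem: a job arriving at population n sees the mean queues at n - 1.
        residence = [d * (1.0 + q) for d, q in zip(demands, queue)]
        response = sum(residence)
        throughput = n / (response + think_time)
        # Little's law applied to each station.
        queue = [throughput * r for r in residence]
    return throughput, response, queue
```

With two stations of demand 1 s each and two customers, the recursion gives a throughput of 2/3 jobs/s and a response time of 3 s. The cost grows linearly with the population, which is what makes MVA attractive once a model is in product form.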

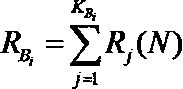

Storage space allocation method for multi-storey shuttle car automatic storage system

Status: Inactive | Publication: CN103971222B | Tags: Increase profit; Improve efficiency; Logistics; Special data processing applications; Idle time; Decomposition

Owner:SHANDONG UNIV

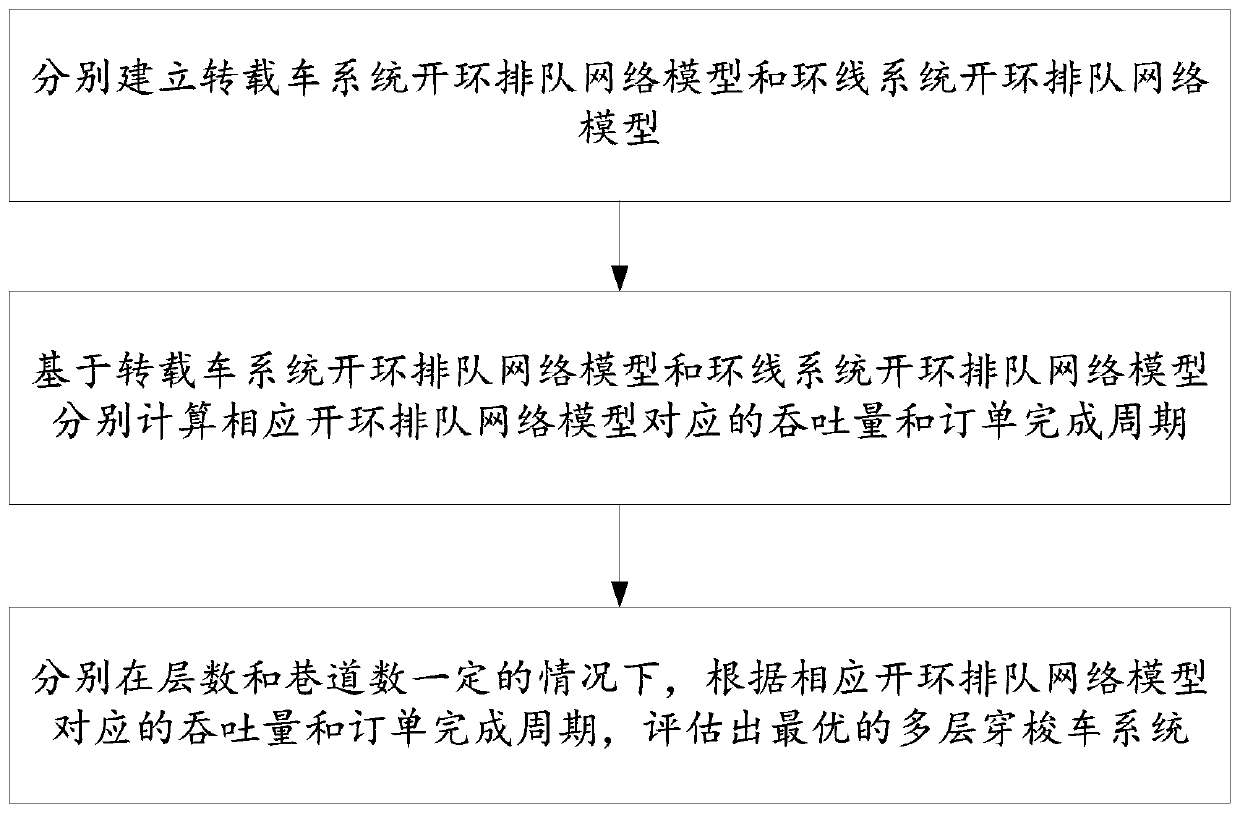

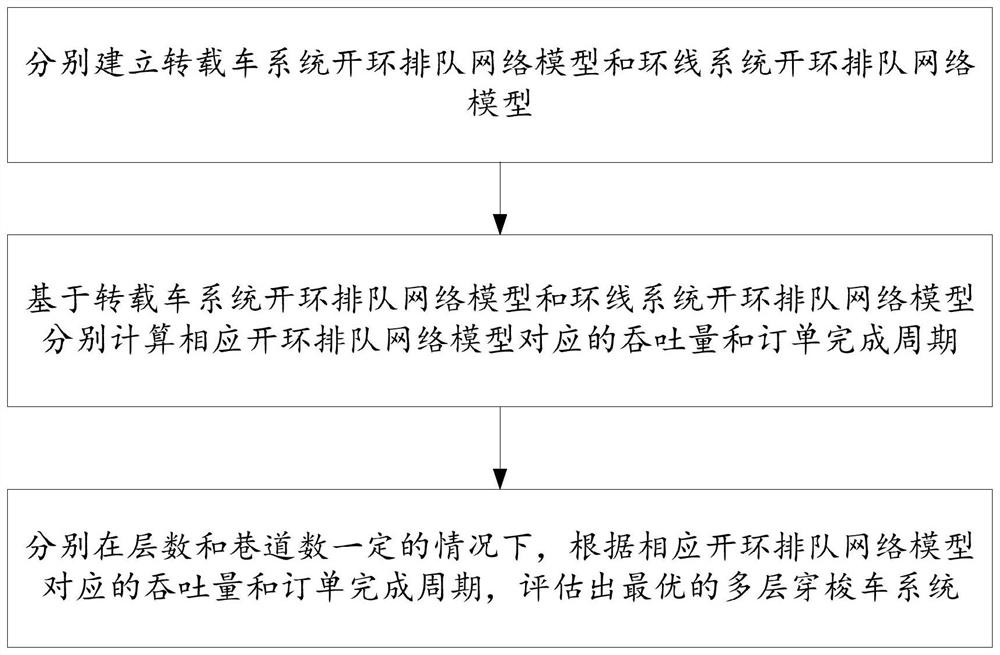

Performance evaluation method and device for multi-layer shuttle vehicle system

Status: Active | Publication: CN111160698A | Tags: Improve accuracy; Reduce initial decision cost; Resources; Logistics; Queuing network model; Queuing network

The invention provides a performance evaluation method and device for a multi-layer shuttle vehicle system. The method comprises: establishing an open-loop queuing network model of the transfer vehicle system and an open-loop queuing network model of the loop line system; calculating, from each of these models, the corresponding throughput and order completion time; and, with the number of layers and the number of aisles fixed, evaluating which multi-layer shuttle vehicle system is optimal according to the throughput and order completion time obtained from each model.

Owner:SHANDONG UNIV

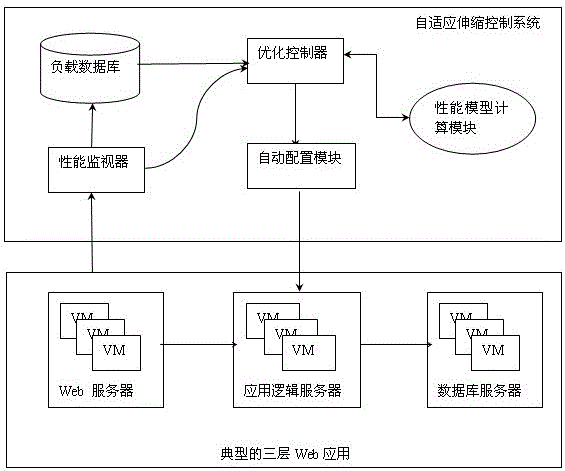

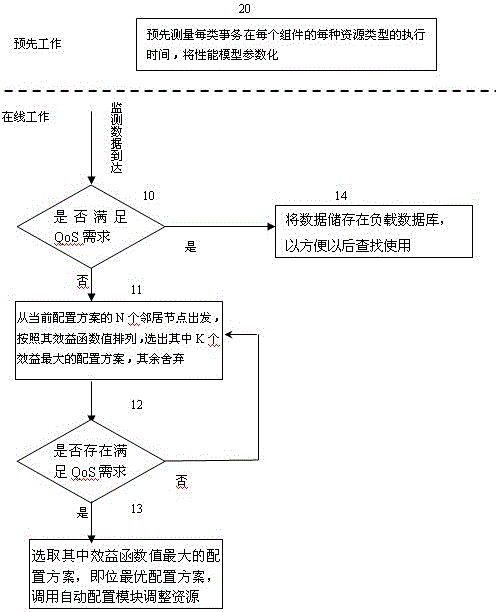

Adaptive scaling control system and method for web application in cloud computing platform

The invention discloses a self-adaptive scaling control system for Web applications in a cloud computing platform, and a corresponding method, which dynamically adjust computing resources as the load changes. The system comprises a performance monitor, a load database, a performance model computing module, an optimization controller and an automatic configuration module. In the method, the performance monitor first constructs a layered queuing network model according to the structure of the Web application and the way it processes requests. The Web application is deployed on a real cloud computing platform and a logging probe is inserted into each layer's components to record the actual execution time of every request at each resource of each component, which yields the parameters needed by the Web application performance model in the performance model computing module. When the application load changes, the optimization controller uses a heuristic search algorithm to compute the application's performance under each resource configuration scheme and selects, as the optimal configuration, the lowest-cost scheme that still meets the QoS (quality of service) requirement. Finally, the automatic configuration module readjusts the resources allocated to each component of the application.

Owner:南京大学镇江高新技术研究院 (Nanjing University Zhenjiang High-Tech Research Institute)
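
The optimization controller's search for the cheapest configuration that still meets QoS can be illustrated, for a single tier only, with the Erlang C formula for an M/M/c queue: increase the server count c until the predicted mean response time meets the target. This is a simplified stand-in for the patent's layered queuing network and heuristic search; the Poisson/exponential assumptions and the function names are illustrative.

```python
from __future__ import annotations

import math
from typing import Optional


def erlang_c(servers: int, offered_load: float) -> float:
    """Probability that an arrival waits in an M/M/c queue; offered_load = lambda / mu."""
    if offered_load >= servers:
        return 1.0
    term, partial = 1.0, 1.0  # a^0/0! and running sum of a^k/k! for k < c
    for k in range(1, servers):
        term *= offered_load / k
        partial += term
    top = term * offered_load / servers        # a^c / c!
    top *= servers / (servers - offered_load)  # times c / (c - a)
    return top / (partial + top)


def mmc_response_time(arrival_rate: float, service_rate: float, servers: int) -> float:
    """Mean response time of an M/M/c queue: Erlang C wait plus one service time."""
    if arrival_rate >= servers * service_rate:
        return math.inf
    wait = erlang_c(servers, arrival_rate / service_rate) / (servers * service_rate - arrival_rate)
    return wait + 1.0 / service_rate


def min_servers(arrival_rate: float, service_rate: float, target: float,
                max_servers: int = 1000) -> Optional[int]:
    """Smallest server count whose predicted mean response time meets the target."""
    for c in range(1, max_servers + 1):
        if mmc_response_time(arrival_rate, service_rate, c) <= target:
            return c
    return None
```

For 10 requests/s, a per-server rate of 4 requests/s and a 0.3 s target, four servers give about 0.303 s and five give about 0.263 s, so `min_servers` returns 5. Computing the Erlang C terms iteratively avoids evaluating large factorials directly.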

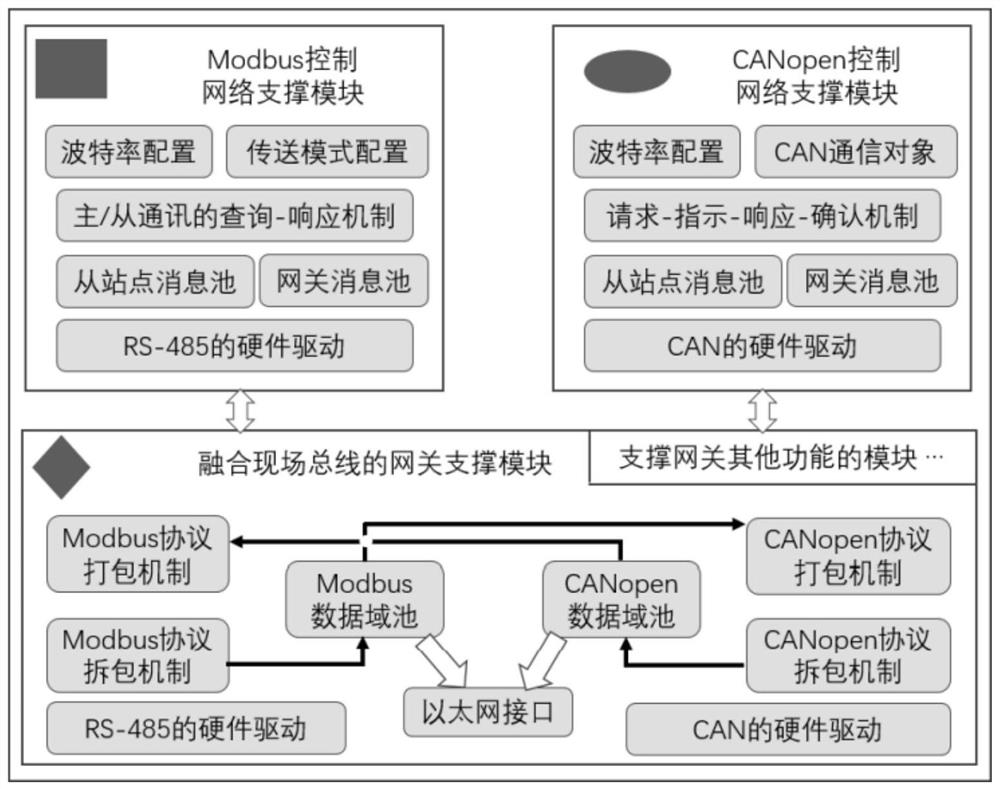

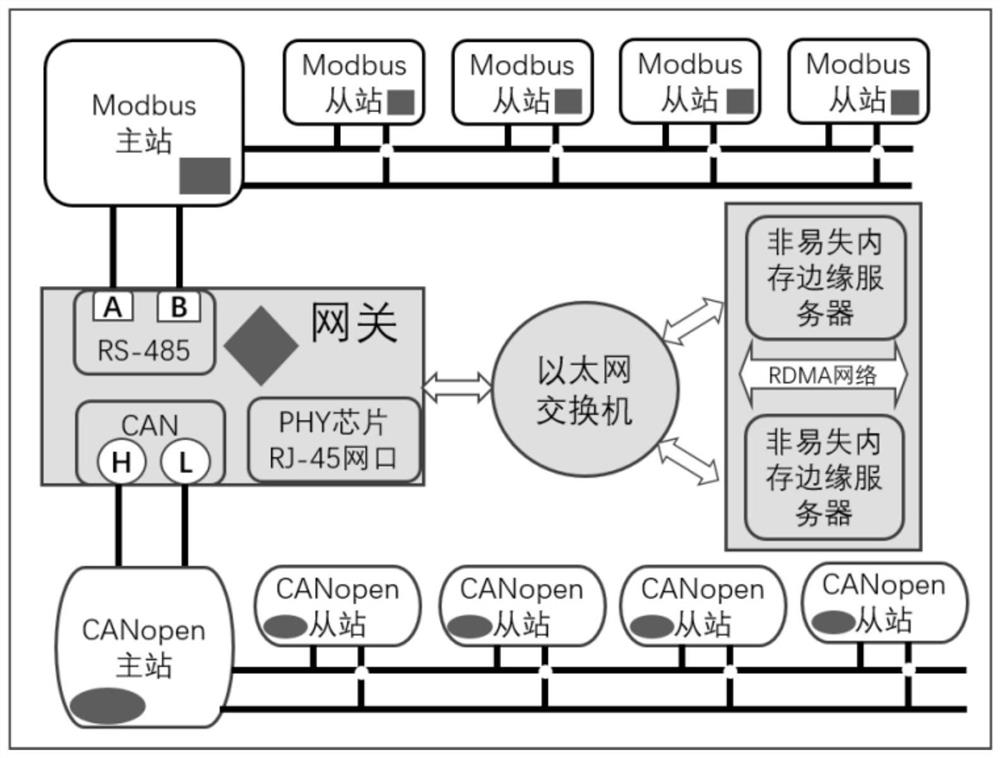

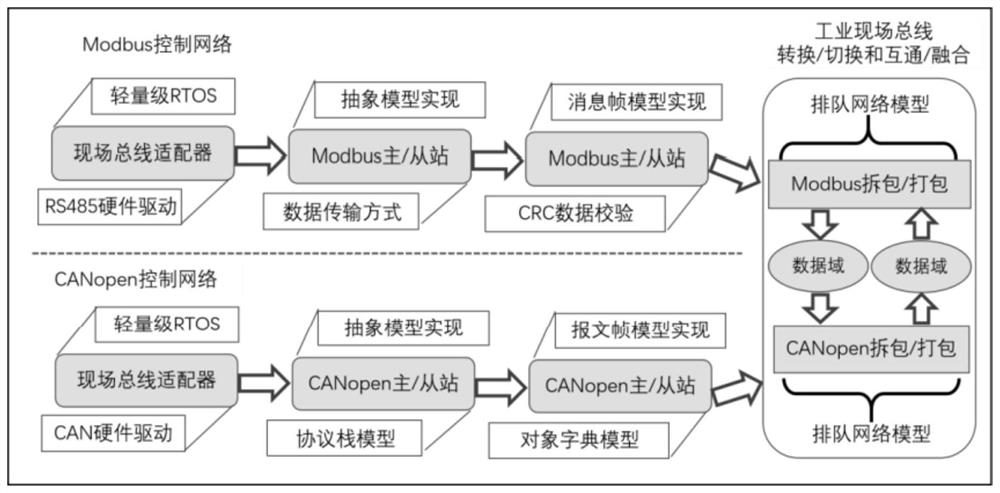

Heterogeneous industrial field bus fusion method and system

Status: Active | Publication: CN114338274A | Tags: Easy to integrate; Achieve integration; Total factory control; Electric digital data processing; Engineering; Queuing network

The invention discloses a heterogeneous industrial fieldbus fusion method and system that address the poor interoperability, software maintainability and reliability caused by the coexistence of multiple fieldbus standards. For heterogeneous industrial fieldbus protocols, a master/slave control network is constructed and message models are established based on the Modbus RTU (Remote Terminal Unit) and CANopen message formats. Using the data structures and processing functions provided by a specified RTOS, parameters are obtained and a packing and unpacking mechanism for Modbus RTU and CANopen frames is built on a queuing network model; when data are exchanged continuously between the two protocols, the management of interaction tasks and of the buffer is abstracted into a multi-task, multi-queue, multi-service pattern. With this method and system, equipment attached to various industrial fieldbuses can be conveniently integrated into an IT network, achieving interconnection and fusion of industrial fieldbus networks.

Owner:SHANGHAI JIAO TONG UNIV

A performance evaluation method and device for a multi-storey shuttle vehicle system

Status: Active | Publication: CN111160698B | Tags: Improve accuracy; Reduce initial decision cost; Resources; Logistics; Simulation; Queuing network

The disclosure provides a method and device for evaluating the performance of a multi-storey shuttle vehicle system. The method includes establishing an open-loop queuing network model of the transfer vehicle system and an open-loop queuing network model of the loop system; calculating the throughput and order completion cycle corresponding to each open-loop queuing network model; and, for a fixed number of floors and lanes, evaluating the optimal multi-storey shuttle system according to the throughput and order completion cycle of each open-loop queuing network model.

Owner:SHANDONG UNIV

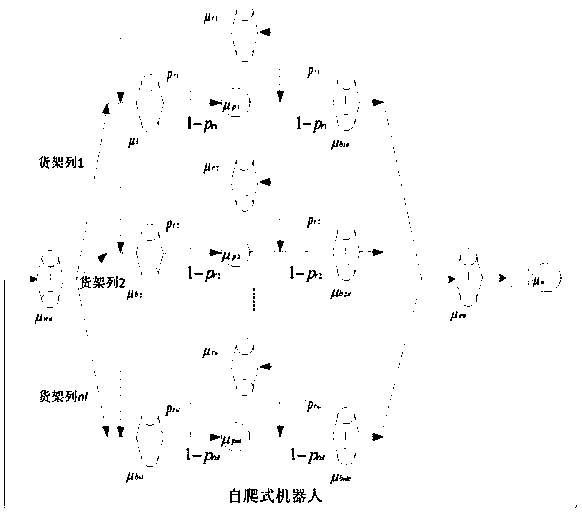

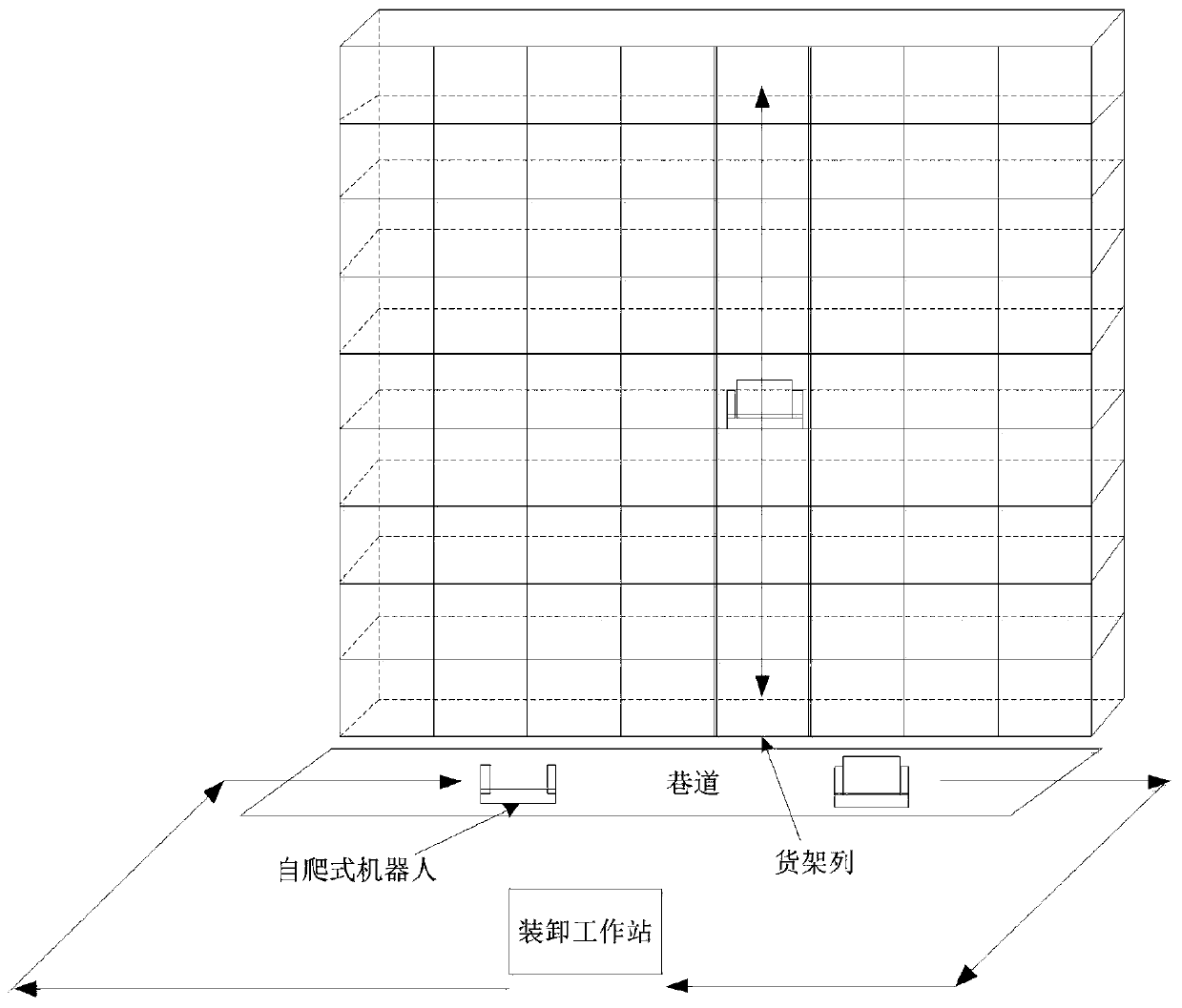

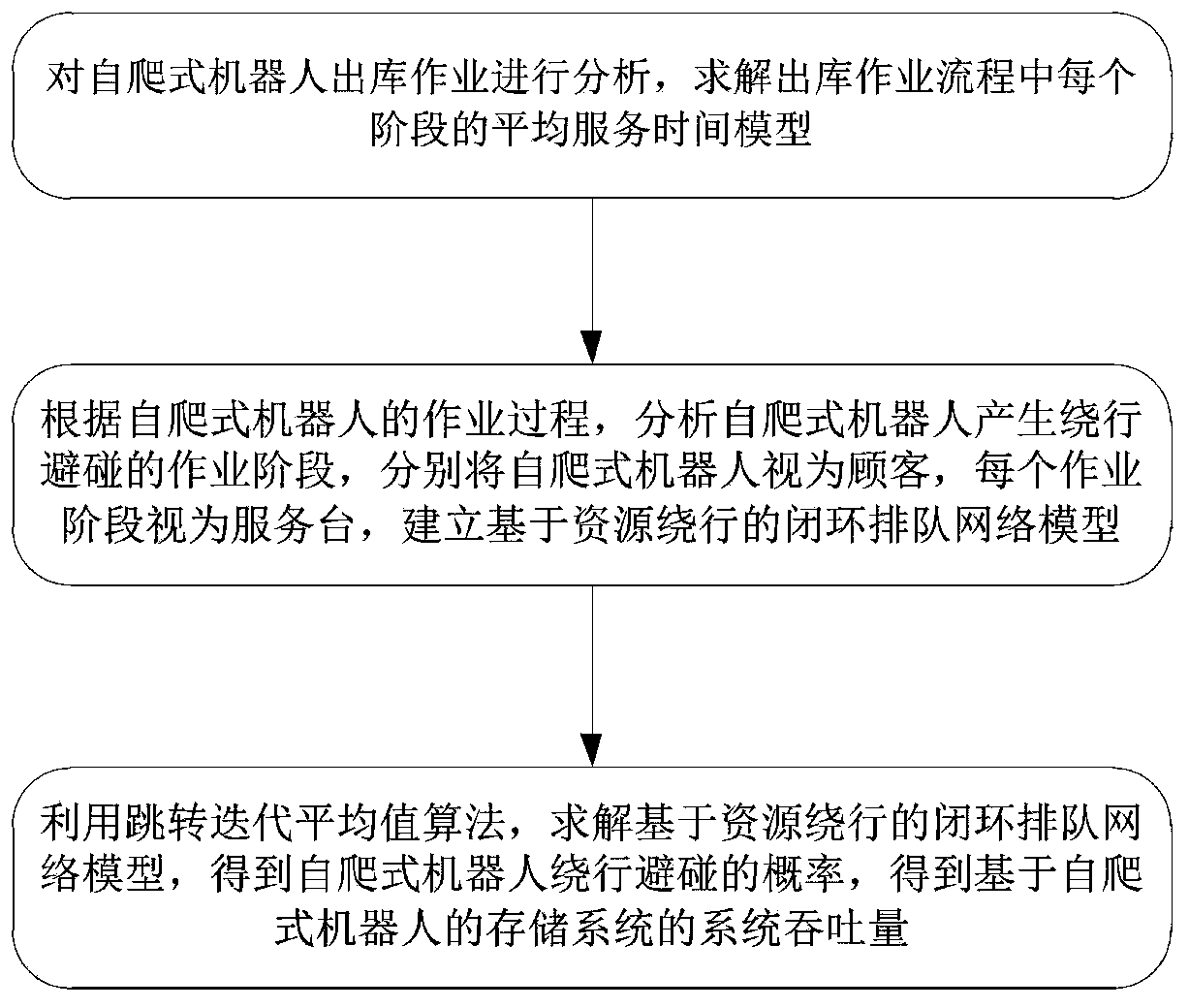

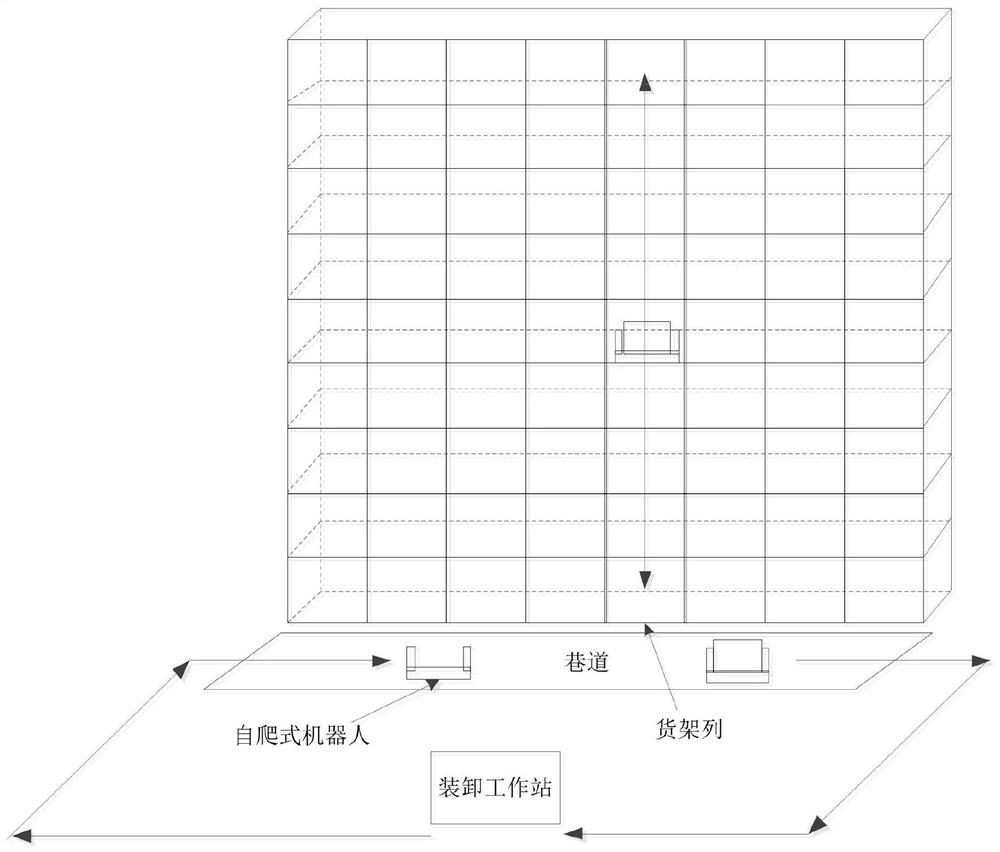

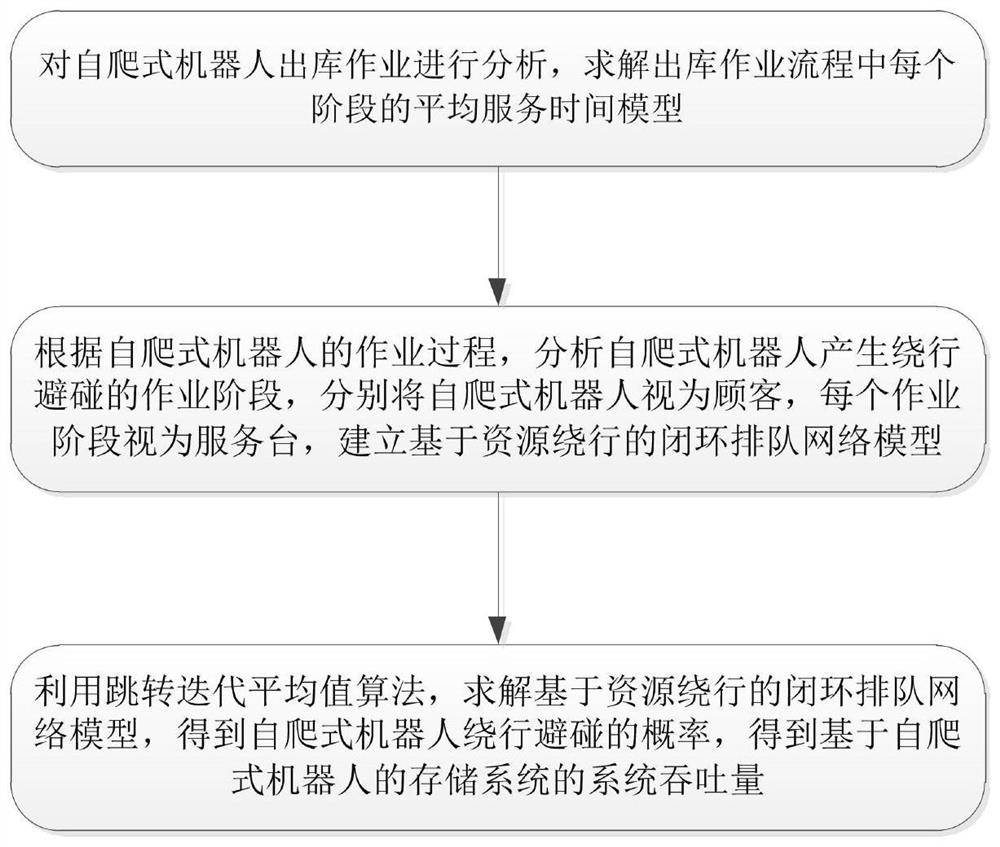

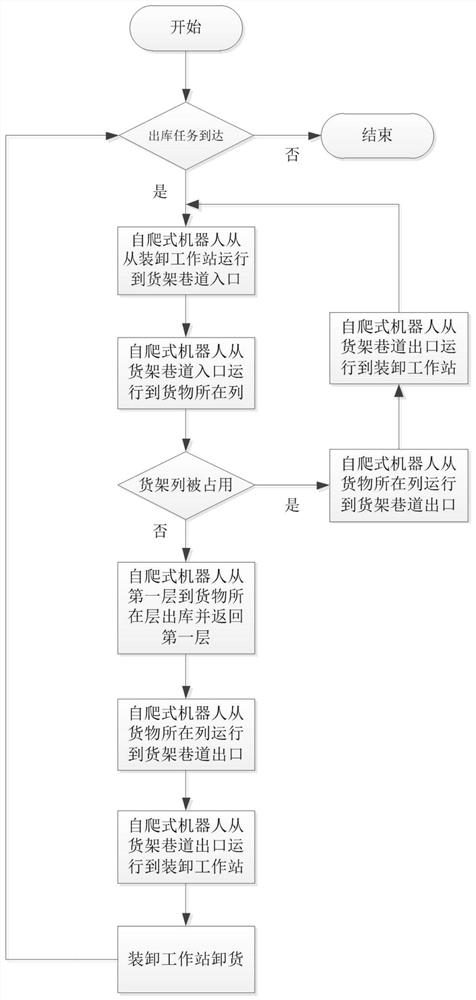

Bypassing collision prevention optimization method of self-crawling type robot based storing system

Status: Inactive | Publication: CN111573095A | Tags: Reduce congestion; Estimated Throughput; Storage devices; Simulation; Queuing network model

The invention relates to warehousing design and discloses a detour and collision avoidance optimization method for a storage system based on self-crawling robots. The method comprises the following steps: analyzing the outbound operation of the self-crawling robot to derive an average service time model for each stage of the outbound process; analyzing, according to the robot's operating procedure, the operating stages in which detours for collision avoidance occur; establishing a closed-loop queuing network model with resource bypassing, in which each self-crawling robot is a customer and each operating stage is a service desk; and solving this closed-loop queuing network model with a skip-iteration mean value algorithm. The resource-bypassing closed-loop queuing network model accurately simulates the actual outbound task process of the self-crawling robot storage system and the robots' detours to avoid collisions.

Owner:龚业明
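The abstract names a "skip-iteration mean value algorithm" for the resource-bypassing model but gives no details. For orientation only, the sketch below is standard exact Mean Value Analysis (MVA) for a single-class closed queuing network, with robots as customers and operating stages as stations, as the abstract describes. It is not the patent's variant and does not model resource bypassing; the per-stage service demands are hypothetical inputs (visit ratio times mean service time).

```python
from typing import List, Sequence, Tuple


def mva(service_demands: Sequence[float], n_customers: int,
        think_time: float = 0.0) -> Tuple[float, List[float], List[float]]:
    """Exact single-class MVA for a closed network of FCFS exponential stations.

    service_demands[k] : visit ratio * mean service time at station k
    n_customers        : number of circulating customers (e.g. robots)
    think_time         : optional pure-delay time per cycle
    Returns (throughput, per-station residence times, per-station mean queue lengths).
    """
    queue = [0.0] * len(service_demands)
    throughput = 0.0
    residence: List[float] = [0.0] * len(service_demands)
    for n in range(1, n_customers + 1):
        # Arrival theorem: an arriving customer sees the queue of the (n-1) network
        residence = [d * (1.0 + q) for d, q in zip(service_demands, queue)]
        throughput = n / (think_time + sum(residence))
        # Little's law applied per station
        queue = [throughput * r for r in residence]
    return throughput, residence, queue
```

With two stages of equal demand 1.0 and two robots, the recursion gives a throughput of 2/3 and a cycle time of 3.0 (that is, N divided by the throughput). The recursion costs O(N·K) for N customers and K stations, which is why MVA-style algorithms are popular for sizing robot fleets.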

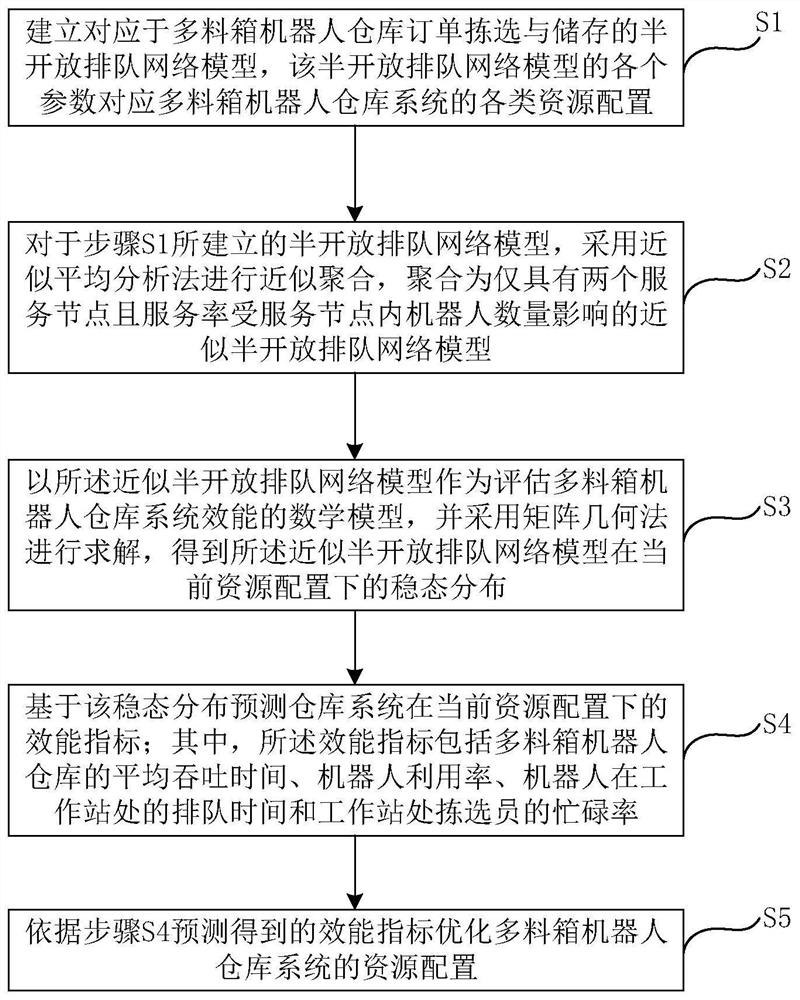

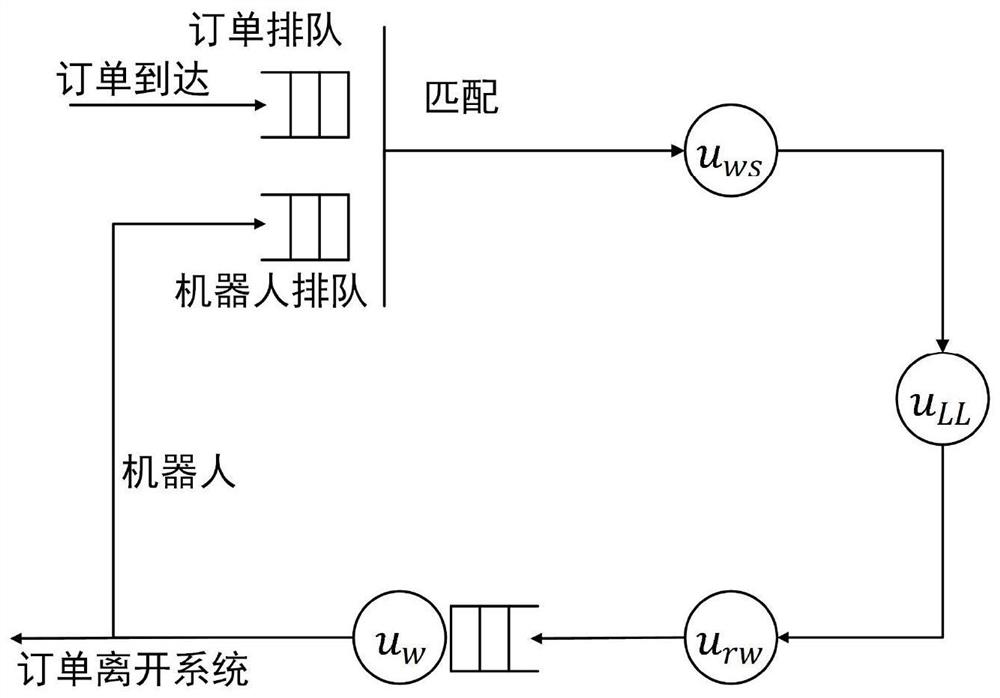

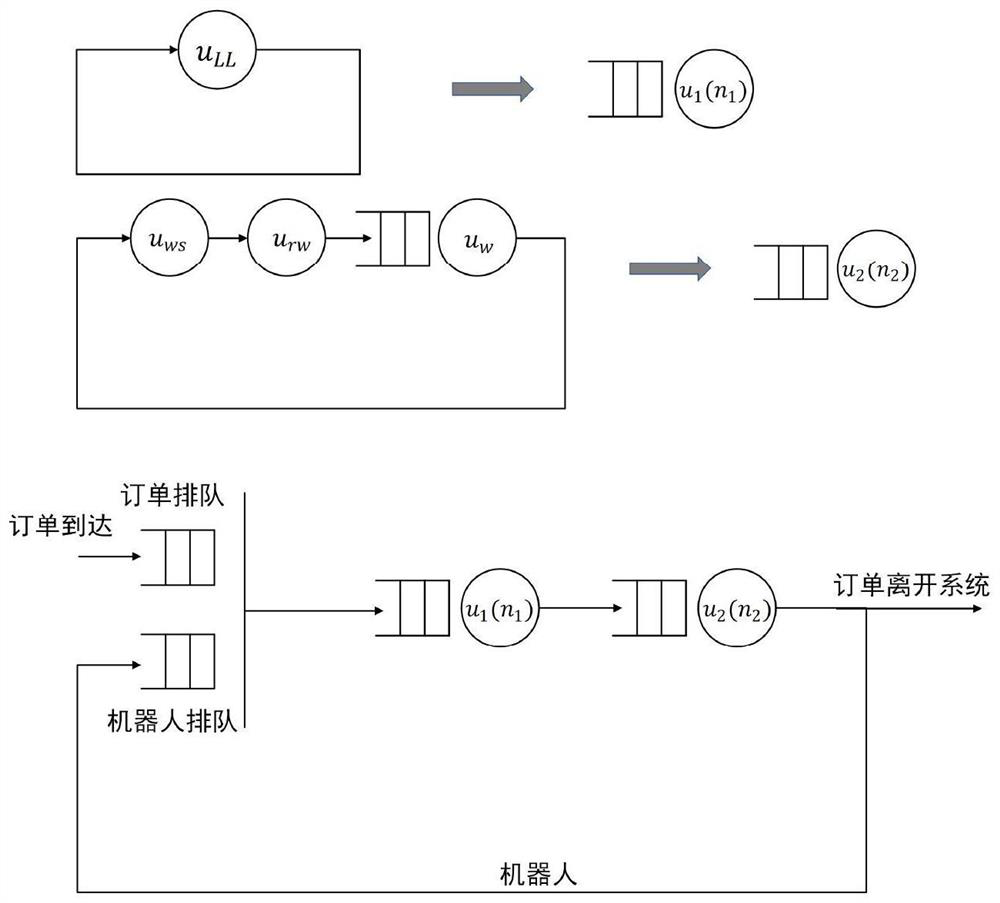

Method for predicting and optimizing efficiency of multi-workbin robot warehouse system

Pending | CN114202270A | Improve performance | Resource allocation conforms to | Forecasting | Resources | Semi open | Algorithm

The invention provides a method for predicting the efficiency of a multi-workbin robot warehouse system. The method comprises: building a semi-open queuing network model of order picking and storage in the multi-workbin robot warehouse, with each model parameter corresponding to a type of resource configuration in the warehouse system; aggregating the semi-open queuing network model by approximate mean value analysis into an approximate semi-open queuing network model that has only two service nodes and whose service rates depend on the number of robots at those nodes; using this approximate model as the mathematical model for evaluating the efficiency of the warehouse system and solving it with the matrix-geometric method to obtain its steady-state distribution under the current resource configuration; and, from that steady-state distribution, predicting the system's efficiency indicators under the current resource configuration: the average throughput time of the warehouse, the robot utilization, the robots' queuing time at the workstations, and the pickers' busy rate at the workstations.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
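The matrix-geometric step mentioned above can be illustrated generically. For a level-independent quasi-birth-death (QBD) process with blocks A0 (one level up), A1 (within a level) and A2 (one level down), the stationary probabilities above the boundary satisfy π(k+1) = π(k)·R, where R is the minimal non-negative solution of A0 + R·A1 + R²·A2 = 0. The sketch below computes R by the classical fixed-point iteration. How the patent's two-node aggregated model is encoded into these blocks is not given in the abstract, so the example matrices are hypothetical.

```python
import numpy as np


def solve_rate_matrix(a0, a1, a2, tol: float = 1e-12,
                      max_iter: int = 100_000) -> np.ndarray:
    """Minimal non-negative solution R of A0 + R A1 + R^2 A2 = 0.

    a0 : transition rates from level k to level k+1
    a1 : transition rates within a level (diagonal holds minus the total outflow)
    a2 : transition rates from level k to level k-1
    """
    a0, a1, a2 = (np.asarray(m, dtype=float) for m in (a0, a1, a2))
    a1_inv = np.linalg.inv(a1)
    r = np.zeros_like(a0)
    for _ in range(max_iter):
        # Rearranged equation: R = -(A0 + R^2 A2) A1^{-1}, iterated from R = 0
        r_next = -(a0 + r @ r @ a2) @ a1_inv
        if np.max(np.abs(r_next - r)) < tol:
            return r_next
        r = r_next
    raise RuntimeError("R iteration did not converge (is the QBD stable?)")
```

As a sanity check, an M/M/1 queue is the scalar QBD A0 = λ, A1 = -(λ+μ), A2 = μ, for which R = λ/μ; with λ = 1 and μ = 2 the iteration returns 0.5. A spectral radius of R below 1 indicates a stable (positive recurrent) model.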

An optimization method for detour and collision avoidance of storage system based on self-climbing robot

Inactive | CN111573095B | Reduce congestion | Estimated Throughput | Storage devices | Simulation | Queuing network model

The invention relates to the technical field of storage design and discloses a detour and collision-avoidance optimization method for a storage system based on self-climbing robots. The method comprises the following steps: analyzing the outbound operation of the self-climbing robot and solving for the average service time model of each stage of the outbound process; based on the robot's operating process, analyzing the operation stages in which the self-climbing robot makes detours to avoid collisions; establishing a closed-loop queuing network model based on resource bypass, in which the self-climbing robot is regarded as a customer and each operation stage as a service desk; and solving this model with a jump-iteration mean value algorithm. The method accurately simulates the actual outbound task process of the self-climbing robot-based storage system and the detours the robots make to avoid collisions.

Owner:龚业明

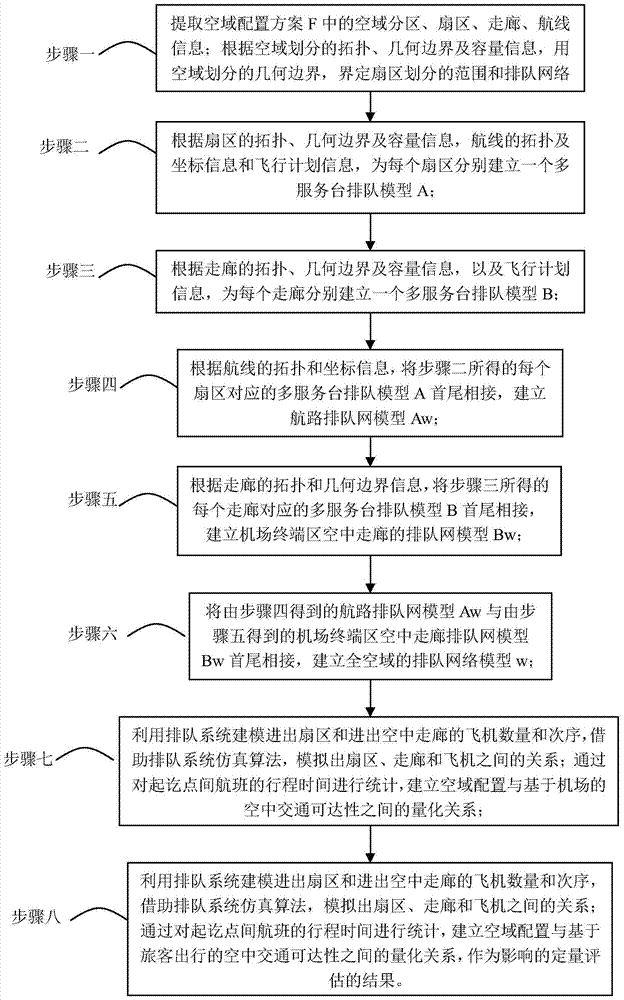

A method for quantitatively assessing the impact of airspace configuration on air traffic accessibility

Active | CN104616106B | Solve problems that cannot be quantified | Improve punctuality | Resources | Reachability | Queuing network model

The invention relates to a method for quantitatively evaluating the influence of airspace configuration on air traffic accessibility. Air traffic accessibility significantly affects airline operating efficiency, so evaluating accessibility provides an indirect measure of how airspace configuration affects that efficiency. The method establishes a quantitative relationship between airspace configuration and air traffic accessibility. Taking the traffic demand and the airspace configuration scheme as inputs and working within a queuing network framework bounded by the airspace sectors, it abstracts air traffic demand into a statistical flow, represents each sector and air corridor as a multi-server queuing model, and connects these models end to end to form a queuing network model of the entire airspace. Queuing system simulation is then used to compute statistics of airport-based accessibility and passenger-travel-based accessibility. The invention is suitable for evaluating the impact of airspace configuration on air traffic accessibility.

Owner:HARBIN INST OF TECH
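The abstract chains multi-server queues (one per sector or corridor) end to end and simulates the result. A minimal sketch of that idea, assuming FCFS service at every stage and a purely serial route for all flights, propagates each flight's exit time through the stages: at each stage, flights are taken in arrival order and assigned to whichever server frees up first. Function and parameter names are hypothetical, and the patent's accessibility statistics are not reproduced; the sketch only yields exit times, from which delays can be derived.

```python
import heapq
import random
from typing import List, Sequence


def simulate_tandem(arrivals: Sequence[float],
                    service: Sequence[Sequence[float]],
                    servers: Sequence[int]) -> List[float]:
    """Exit times of flights passing through FCFS multi-server stages in series.

    arrivals[f]   : time flight f enters the first stage
    service[s][f] : time flight f occupies a server in stage s
    servers[s]    : number of parallel servers (capacity) of stage s
    """
    times = list(arrivals)
    for stage, capacity in enumerate(servers):
        free_at = [0.0] * capacity  # min-heap of times at which servers become idle
        exits = [0.0] * len(times)
        # FCFS: serve flights in the order they reach this stage
        for f in sorted(range(len(times)), key=lambda i: times[i]):
            start = max(times[f], heapq.heappop(free_at))
            exits[f] = start + service[stage][f]
            heapq.heappush(free_at, exits[f])
        times = exits  # departures from this stage are arrivals to the next
    return times


if __name__ == "__main__":
    # Hypothetical demand: Poisson arrivals, exponential occupancy, 3 sectors
    rng = random.Random(42)
    n, t = 1000, 0.0
    arrivals = []
    for _ in range(n):
        t += rng.expovariate(1.0)
        arrivals.append(t)
    service = [[rng.expovariate(0.6) for _ in range(n)] for _ in range(3)]
    exits = simulate_tandem(arrivals, service, servers=[2, 3, 2])
    delays = [e - a - sum(service[s][f] for s in range(3))
              for f, (a, e) in enumerate(zip(arrivals, exits))]
    print("mean queuing delay:", sum(delays) / n)
```

Because each stage keeps a heap of server release times, the simulation is O(n log c) per stage and needs no explicit event list. Routing flights along different paths through the sector network would require per-flight routes, which this sketch does not model.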

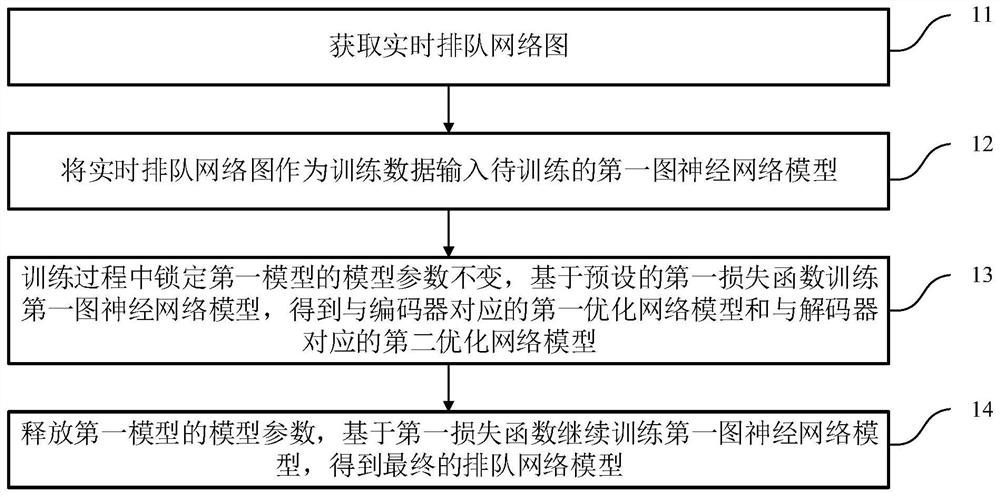

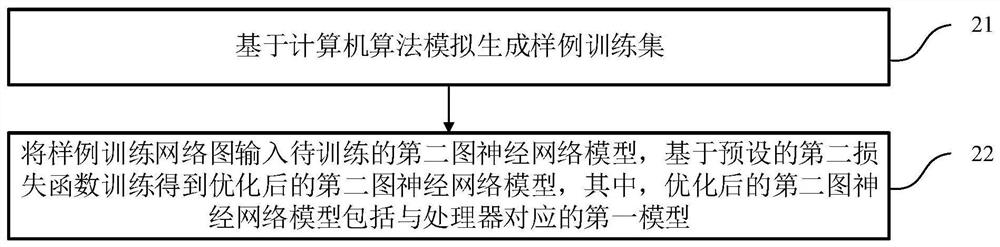

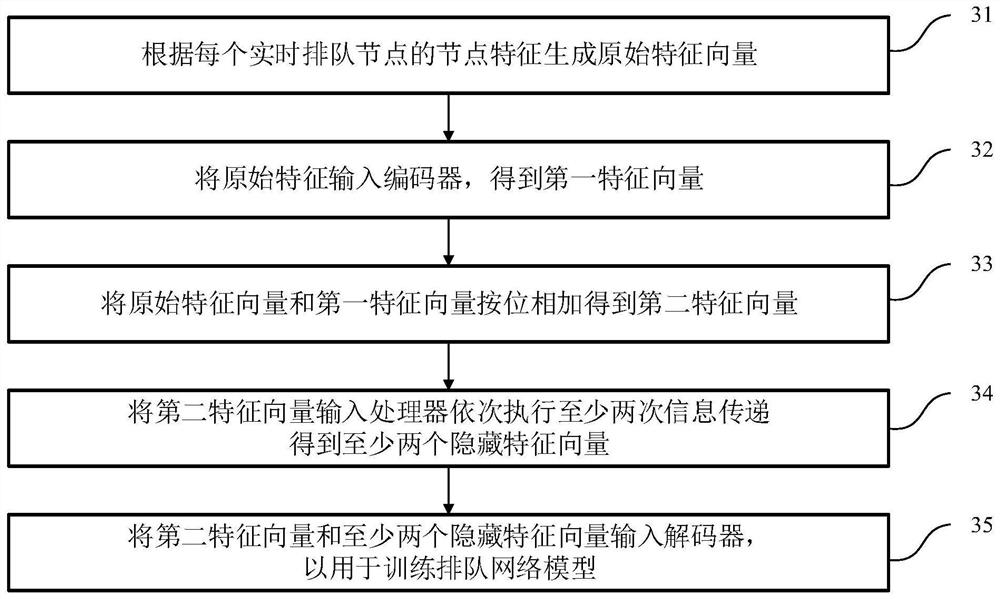

Queuing network model training method, queuing optimization method, equipment and medium

Pending | CN114298275A | Solve the problem of selectivity | Solve the problem of duration prediction | Character and pattern recognition | Neural architectures | Engineering | Queuing network

The invention discloses a training method for a queuing network model, a queuing optimization method, a device, and a medium. The training method comprises the following steps: acquiring a real-time queuing network graph; inputting the real-time queuing network graph as training data into a first graph neural network model to be trained, where the first graph neural network model contains an already-trained first model that serves as the processor; during training, locking the parameters of the first model and training the first graph neural network model with a preset first loss function, which yields a first optimized network model corresponding to the encoder and a second optimized network model corresponding to the decoder; then releasing the parameters of the first model and continuing to train the first graph neural network model with the first loss function to obtain the final queuing network model. Because the processor is already trained, the encoder and decoder are first trained on field data and the whole model is then retrained on field data, so relatively high prediction accuracy can be achieved with less training data.

Owner:SHANGHAI CLEARTV CORP LTD
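The two-phase schedule in the abstract (lock the pre-trained processor, train encoder and decoder, then release everything and keep training with the same loss) can be shown without any graph machinery. The toy below is not a graph neural network: it replaces the encoder, processor and decoder with three hypothetical scalar parameters in a chain ŷ = dec · proc · enc · x and runs plain gradient descent on mean-squared error, skipping updates for any parameter in the frozen set.

```python
from typing import Dict, List, Set


def train_chain(params: Dict[str, float], xs: List[float], ys: List[float],
                frozen: Set[str], lr: float, steps: int) -> float:
    """Gradient descent on y_hat = dec * proc * enc * x, skipping frozen parameters.

    params is updated in place; returns the final mean-squared error.
    """
    n = len(xs)
    for _ in range(steps):
        e, p, d = params["enc"], params["proc"], params["dec"]
        # dL/dy_hat for L = mean((y_hat - y)^2)
        g = [2.0 * (d * p * e * x - y) / n for x, y in zip(xs, ys)]
        grads = {
            "enc": sum(gi * d * p * x for gi, x in zip(g, xs)),
            "proc": sum(gi * d * e * x for gi, x in zip(g, xs)),
            "dec": sum(gi * p * e * x for gi, x in zip(g, xs)),
        }
        for name, grad in grads.items():
            if name not in frozen:  # locked parameters keep their current value
                params[name] -= lr * grad
    e, p, d = params["enc"], params["proc"], params["dec"]
    return sum((d * p * e * x - y) ** 2 for x, y in zip(xs, ys)) / n


# "proc" stands in for the pre-trained processor; the data are made up
params = {"enc": 0.5, "proc": 1.0, "dec": 0.5}
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [3.0 * x for x in xs]
loss_phase1 = train_chain(params, xs, ys, frozen={"proc"}, lr=0.01, steps=500)
loss_phase2 = train_chain(params, xs, ys, frozen=set(), lr=0.01, steps=500)
```

In a real framework the same effect is usually obtained by excluding the processor's parameters from the optimizer (or disabling their gradients) in the first phase and re-enabling them in the second.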


© 2025 PatSnap. All rights reserved.