436 results about "Execution cycle" patented technology

Execution Cycle. The execution cycle comprises the machine cycles that remain in the execution of an instruction after it has been fetched, and it may consist of more than one machine cycle. The time required to complete the execution cycle is called the execution time. Throughout this cycle, operations are controlled by the instruction register.

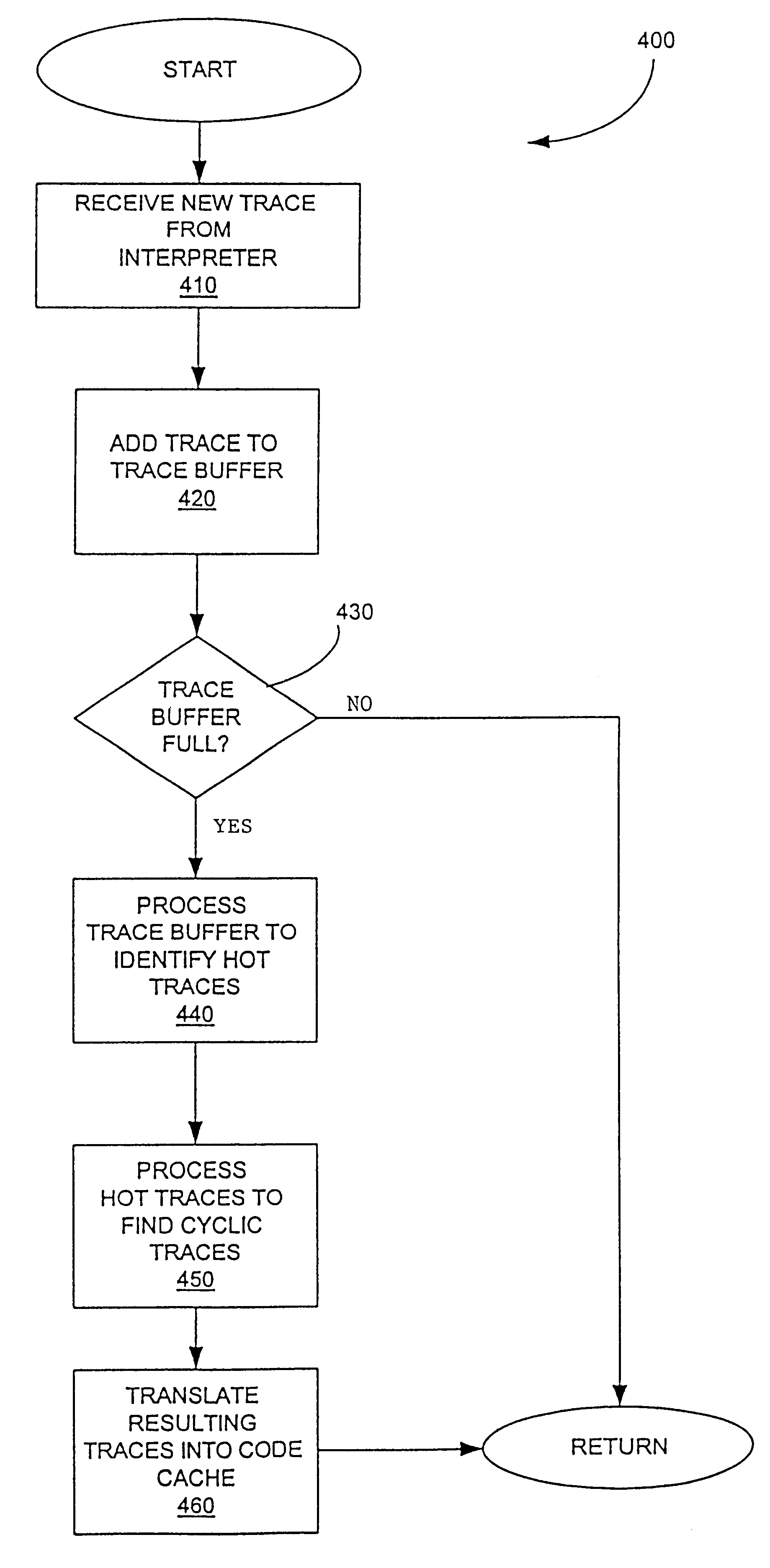

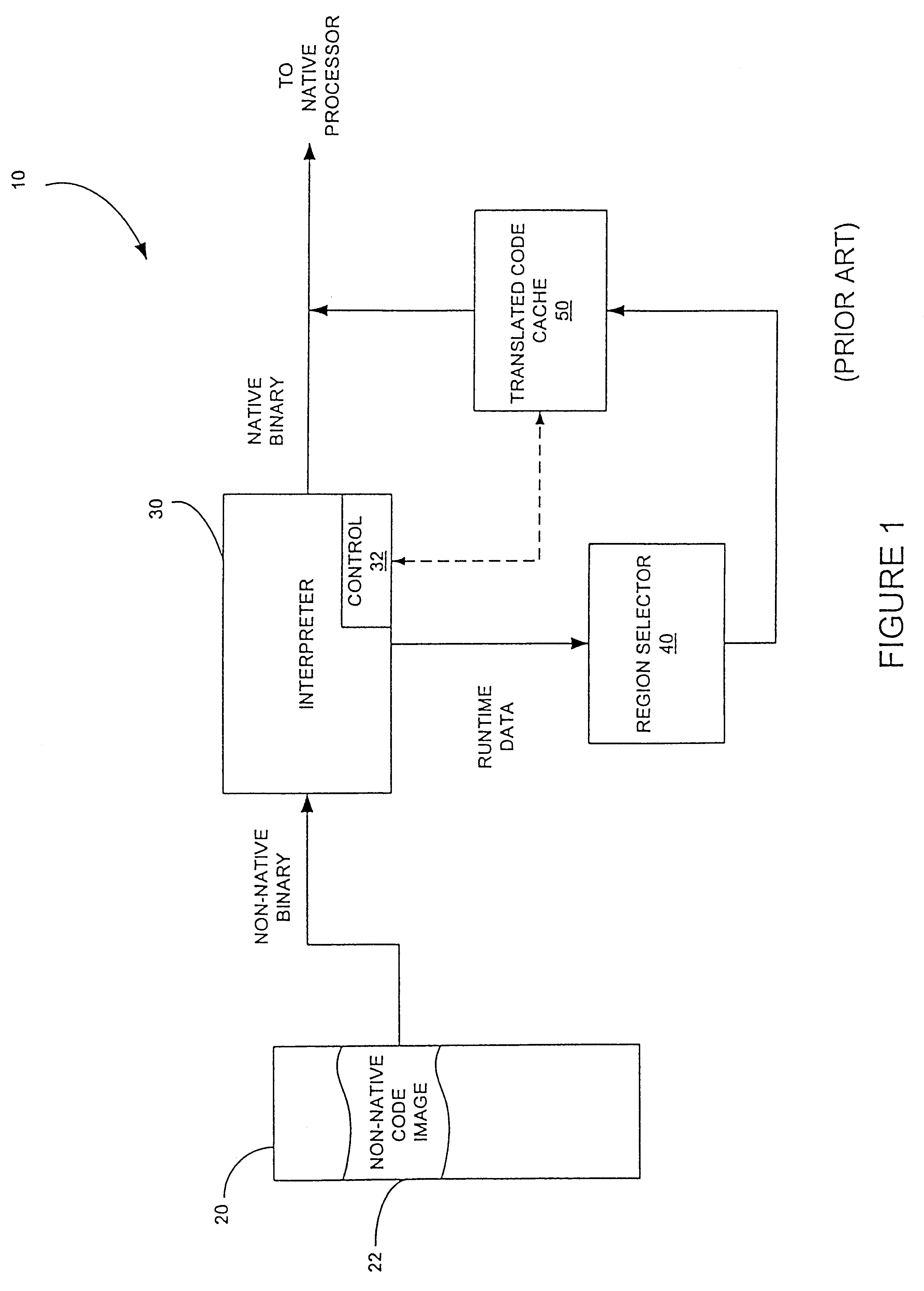

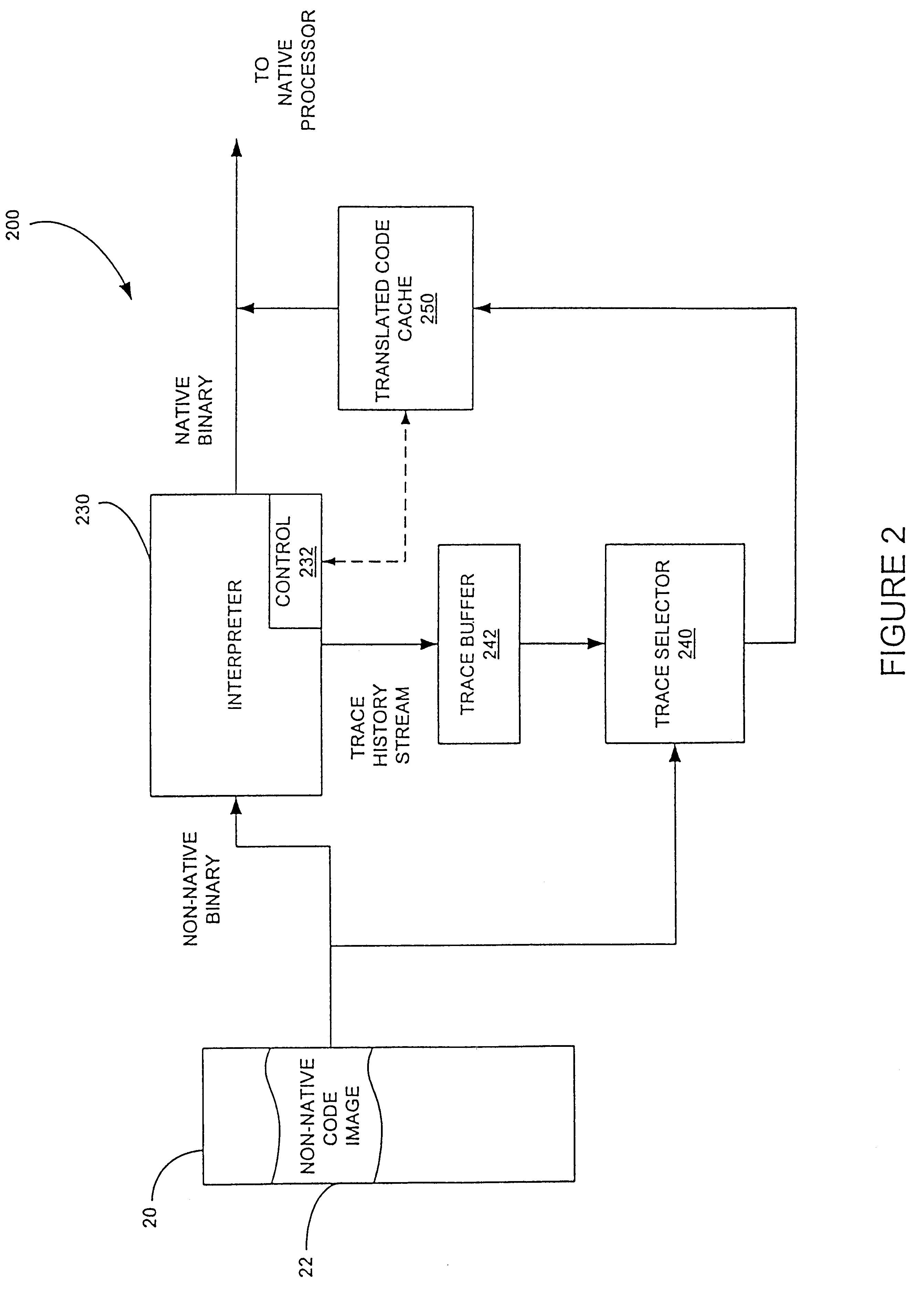

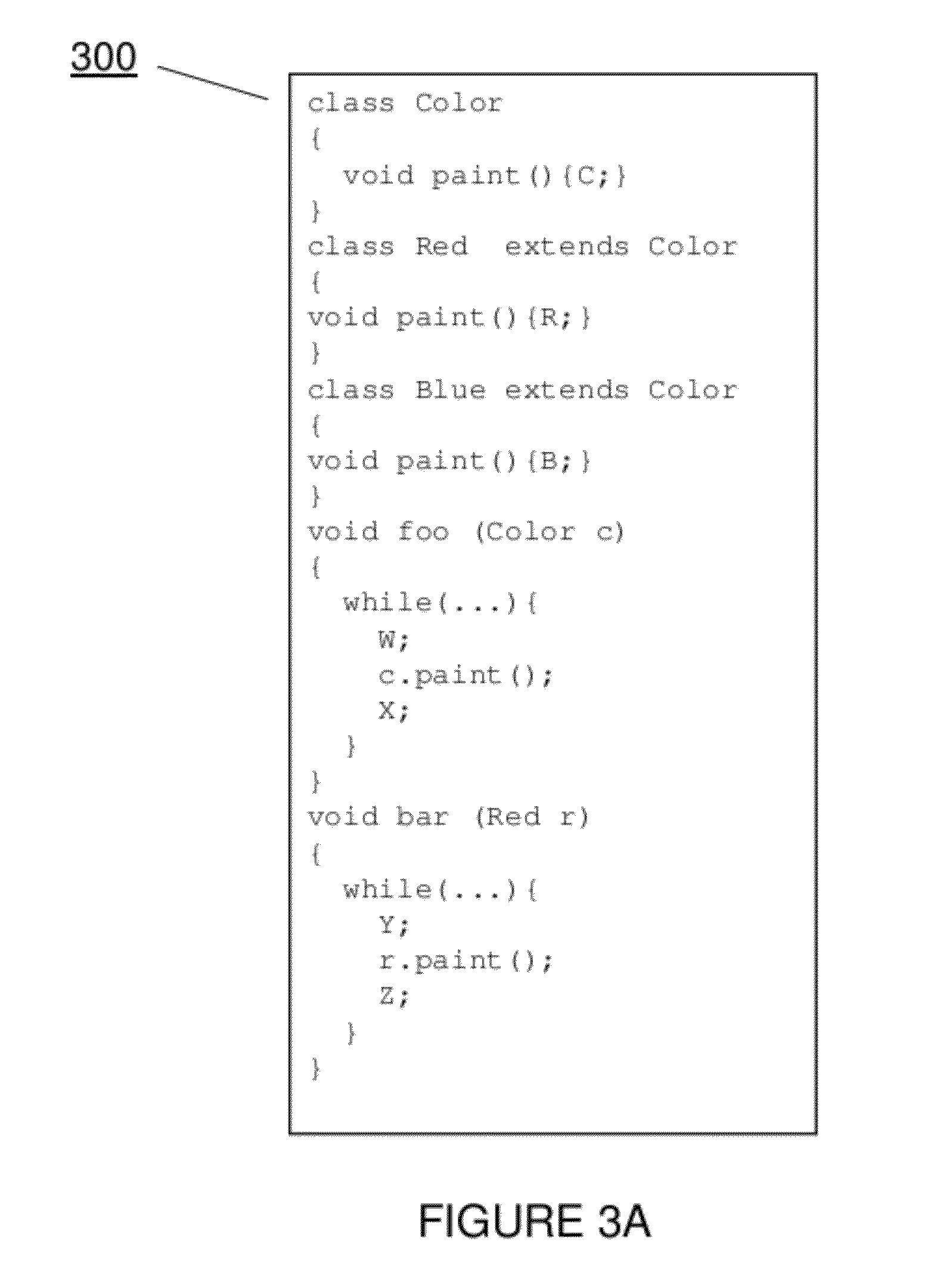

Method for selecting active code traces for translation in a caching dynamic translator

A method is shown for selecting active, or hot, code traces in an executing program for storage in a code cache. A trace is a sequence of dynamic instructions characterized by a start address and a branch history, which allows the trace to be dynamically disassembled. Each trace is terminated by a trace-terminating condition: a backward taken branch, an indirect branch, or a branch whose execution causes the trace's branch history to reach a predetermined limit. As the executing program generates each trace, the trace is loaded into a buffer for processing. When the buffer is full, a counter corresponding to the start address of each trace is incremented. When the count for a start address exceeds a threshold, that start address is marked as hot. Each hot trace is then checked to see whether the next trace in the buffer shares its start address, in which case the hot trace is cyclic. If the next trace does not share the hot trace's start address, the traces in the buffer are checked to see whether they form a larger cycle of execution. If the traces following the hot trace are not themselves hot and are followed by a trace with the same start address as the hot trace, their branch histories are combined with the branch history of the hot trace to form a cyclic trace. The cyclic traces are then disassembled, and the instructions executed in each trace are stored in the code cache.

Owner:HEWLETT PACKARD DEV CO LP
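
The buffer-processing steps above can be sketched in Python. This is a minimal illustration under assumptions, not HP's implementation: the `Trace` type, the string encoding of branch histories, and the threshold value are hypothetical, and "combining" branch histories is modeled as simple concatenation.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Trace:
    start: int     # start address of the trace
    history: str   # hypothetical branch-history encoding, e.g. "TNT"


def select_cyclic_traces(buffer, counts, threshold):
    """Process one full buffer of traces and return the cyclic traces found."""
    # When the buffer is full, increment the counter for each trace's start address.
    for trace in buffer:
        counts[trace.start] += 1
    # A start address whose count exceeds the threshold is marked hot.
    hot = {addr for addr, n in counts.items() if n > threshold}

    cyclic = []
    for i, trace in enumerate(buffer):
        if trace.start not in hot:
            continue
        # Case 1: the next trace starts at the same address, so the hot trace is cyclic.
        if i + 1 < len(buffer) and buffer[i + 1].start == trace.start:
            cyclic.append(trace)
            continue
        # Case 2: look for a larger cycle of non-hot traces that
        # returns to the hot trace's start address.
        history = trace.history
        for nxt in buffer[i + 1:]:
            if nxt.start == trace.start:
                cyclic.append(Trace(trace.start, history))
                break
            if nxt.start in hot:
                break  # an intervening hot trace ends the search
            history += nxt.history  # combine branch histories
    return cyclic


counts = Counter()
buffer = [Trace(0x10, "TN"), Trace(0x10, "TN"), Trace(0x10, "TT"),
          Trace(0x40, "N"), Trace(0x10, "T")]
result = select_cyclic_traces(buffer, counts, threshold=1)
print(result[-1])  # Trace(start=16, history='TTN')
```

The counter table is passed in rather than stored globally so that counts persist across successive buffers, as the abstract implies.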

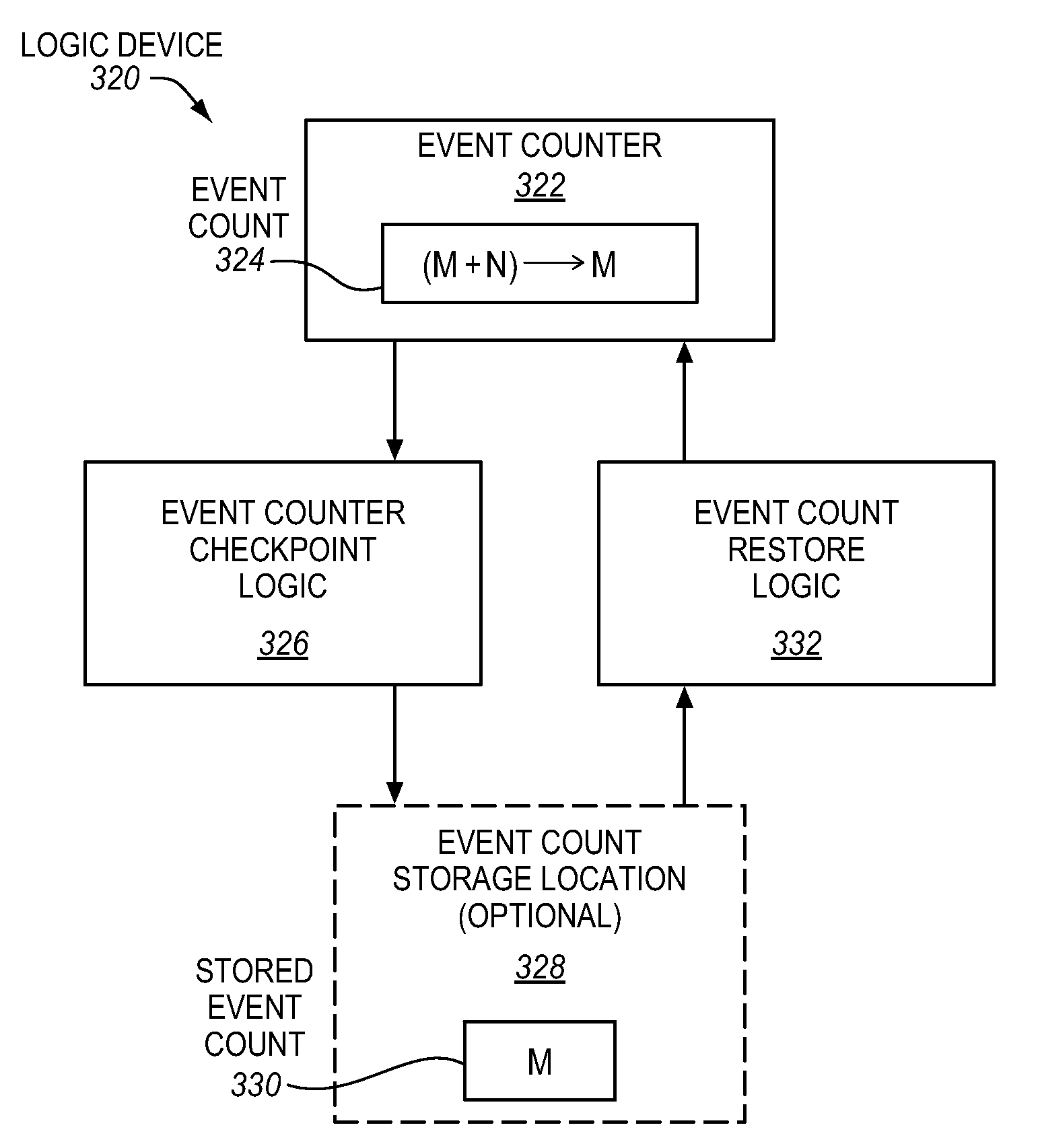

Method, apparatus, and system for speculative execution event counter checkpointing and restoring

Inactive | US20120227045A1 | Memory architecture accessing/allocation, Error detection/correction, Speculative execution, Execution cycle

An apparatus, method, and system are described herein for providing programmable control of performance/event counters. An event counter can be programmed to track different events and to be checkpointed when speculative code regions are encountered, so that when a speculative code region is aborted, the event counter can be restored to its pre-speculation value. Moreover, the difference between the cumulative event count for committed and uncommitted execution and the event count for committed execution alone represents the event count, or contribution, of uncommitted execution. Using this information about uncommitted execution, hardware and/or software may be tuned so that future execution avoids wasted execution cycles.

Owner:INTEL CORP
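
A minimal Python sketch of the checkpoint/restore behavior and the cumulative-minus-committed calculation follows. The class and method names are hypothetical; real event counters are hardware registers, and this models them only as two integers.

```python
class CheckpointedEventCounter:
    """Hypothetical software model of a checkpointable event counter."""

    def __init__(self):
        self.cumulative = 0      # every event, committed or not; never rolled back
        self.committed = 0       # checkpointed and restored on abort
        self._checkpoint = None  # value saved on entry to a speculative region

    def record(self, n=1):
        self.cumulative += n
        self.committed += n

    def begin_speculation(self):
        self._checkpoint = self.committed

    def commit(self):
        self._checkpoint = None  # speculative events become committed

    def abort(self):
        if self._checkpoint is None:
            raise RuntimeError("no speculative region is active")
        self.committed = self._checkpoint  # restore the pre-speculation value
        self._checkpoint = None

    @property
    def uncommitted(self):
        # Event contribution of aborted (uncommitted) execution.
        return self.cumulative - self.committed


c = CheckpointedEventCounter()
c.record(5)
c.begin_speculation()
c.record(3)
c.abort()
print(c.committed, c.cumulative, c.uncommitted)  # 5 8 3
```

Keeping a cumulative count that is never restored is what makes the wasted work measurable after an abort.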

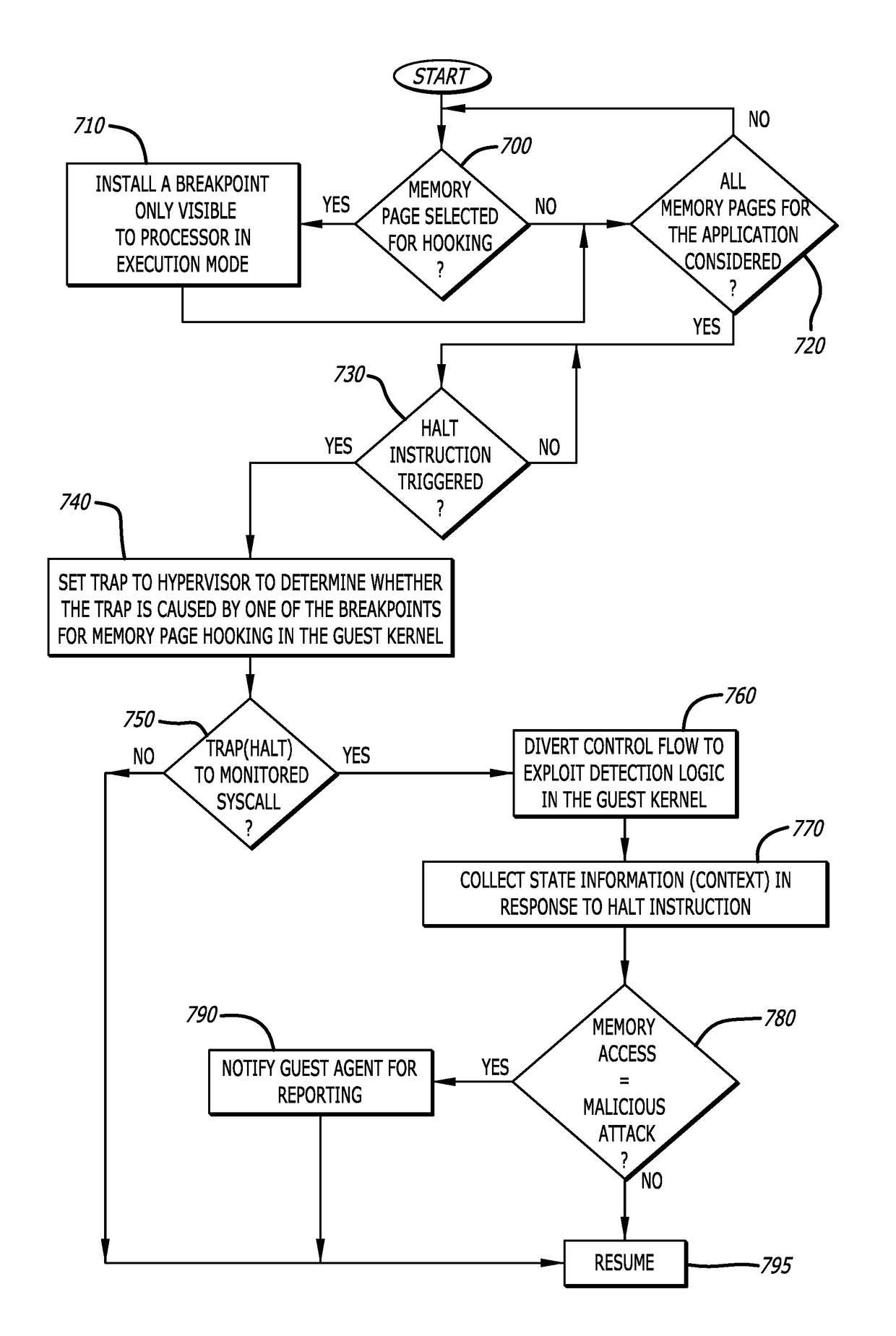

System and method of threat detection under hypervisor control

Active | US10033759B1 | Input/output to record carriers, Platform integrity maintenance, System call, Application software

A computing device is described that comprises one or more hardware processors and a memory communicatively coupled to the one or more hardware processors. The memory comprises software that, when executed by the processors, operates as (i) a virtual machine and (ii) a hypervisor. The virtual machine includes a guest kernel that facilitates communications between a guest application being processed within the virtual machine and one or more virtual resources. The hypervisor configures a portion of the guest kernel to intercept a system call from the guest application and redirect information associated with the system call to the hypervisor. When the system call is initiated during a memory page execution cycle, the hypervisor enables logic within the guest kernel to analyze information associated with the system call to determine whether it is associated with a malicious attack. Alternatively, when the system call is initiated during a memory page read cycle, the hypervisor operates to obfuscate the interception of the system call.

Owner:FIREEYE SECURITY HLDG US LLC

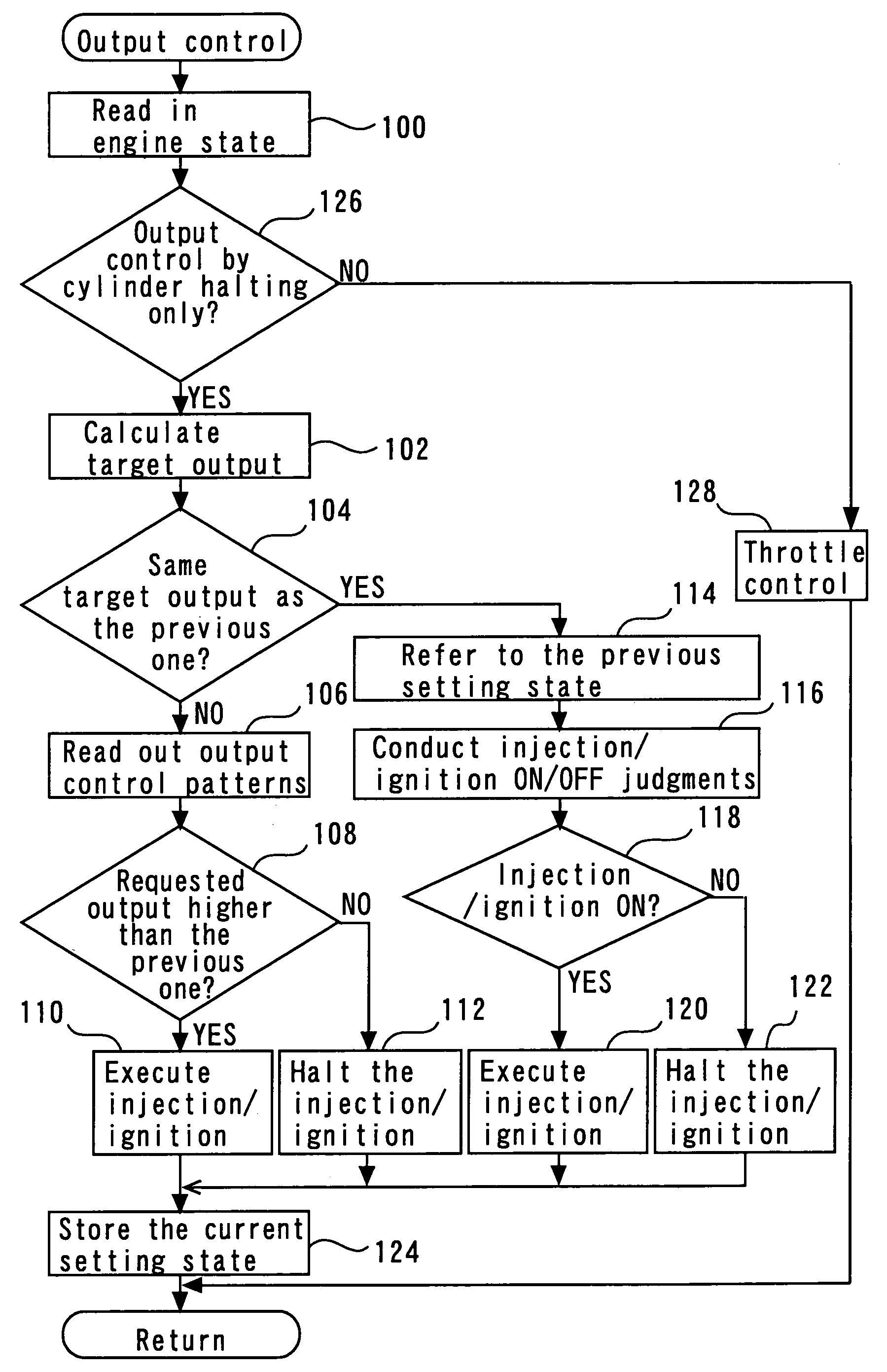

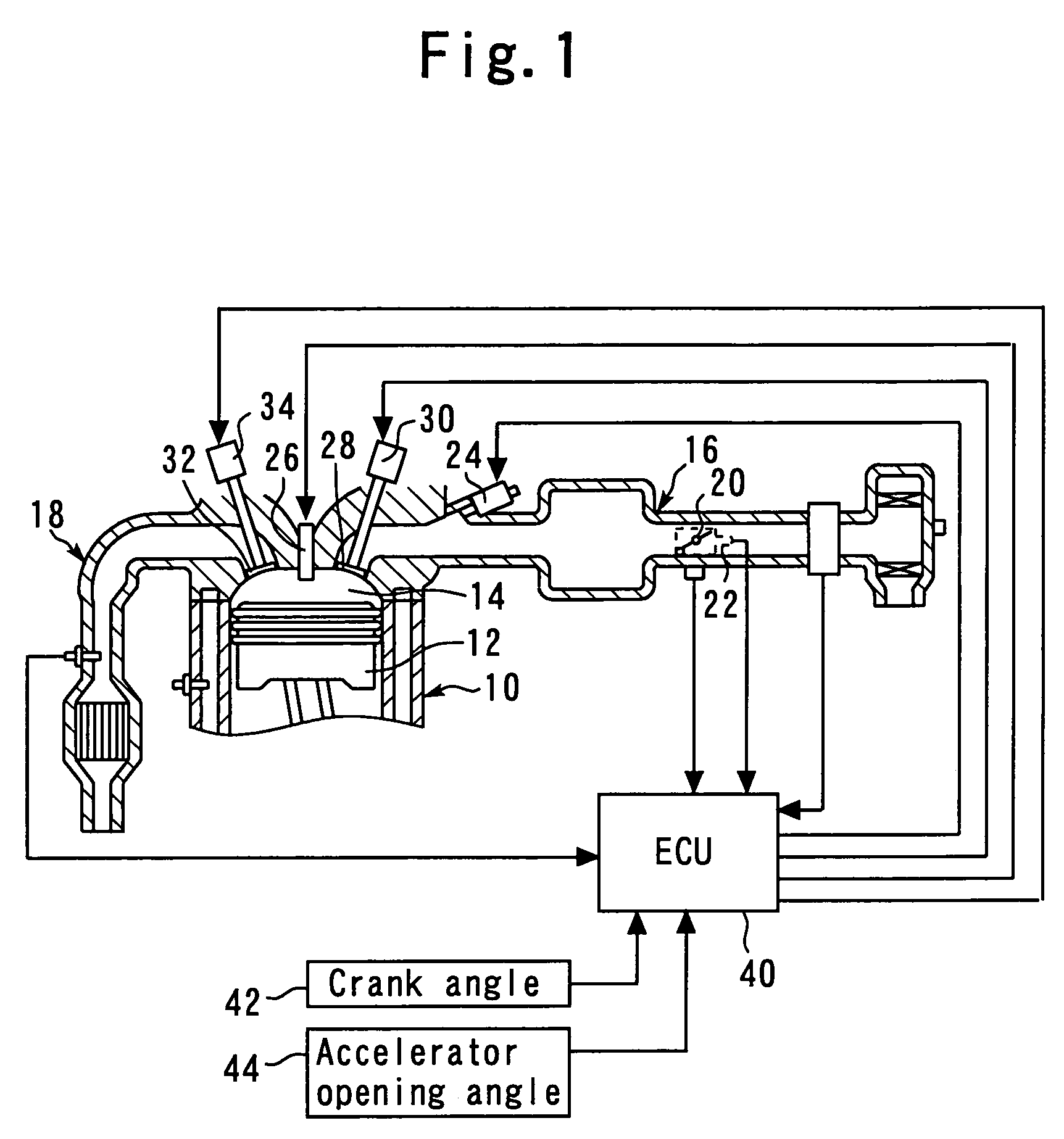

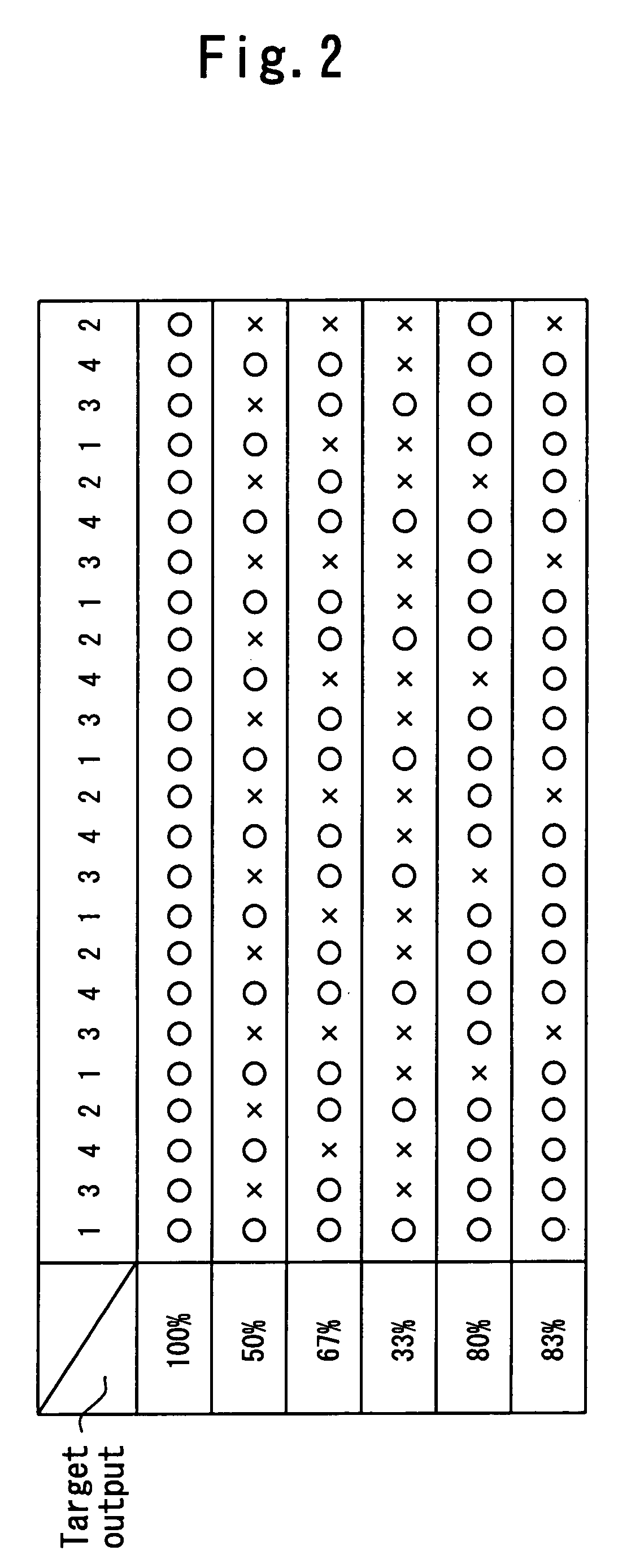

Output control system for internal combustion engine

Inactive | US7066136B2 | Easy to control, Electrical control, Internal combustion piston engines, Combustion, Exhaust valve

An intake electromagnetic driving valve and an exhaust electromagnetic driving valve are provided, which use electromagnetic force to drive an intake valve and an exhaust valve, respectively. In step 102, the ratio between the number of combustion execution cycles and the number of combustion halts is set to obtain a desired target output value. In step 106 or 114, output control patterns are set, each consisting of combustion execution timings equal in number to the required combustion execution cycles and combustion halt timings equal in number to the required combustion halts. In steps 108 to 112, or 118 to 122, whether combustion is executed is determined, in accordance with the output control patterns, for each explosion timing as it arrives at each cylinder in turn.

Owner:TOYOTA JIDOSHA KK
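
One way to turn an execution-to-halt ratio into a concrete output control pattern is sketched below in Python. It is an assumption-laden illustration, not Toyota's method: it assumes engine output scales with the fraction of explosion timings that fire, and it spreads the firings evenly with an error-accumulation (Bresenham-style) scheme. `target_fraction` and `window` are hypothetical parameters.

```python
def output_pattern(target_fraction, window):
    """Return `window` booleans: True = execute combustion, False = halt.

    Assumes output is proportional to the fraction of timings that fire.
    """
    if not 0.0 <= target_fraction <= 1.0:
        raise ValueError("target_fraction must be between 0 and 1")
    executions = round(target_fraction * window)  # halts = window - executions
    pattern, acc = [], 0
    for _ in range(window):
        # Accumulate the firing ratio and fire whenever a whole firing is owed,
        # which spreads the executions evenly across the window.
        acc += executions
        if acc >= window:
            acc -= window
            pattern.append(True)
        else:
            pattern.append(False)
    return pattern


print(output_pattern(0.75, 4))  # [False, True, True, True]
```

Each entry would then be applied, in order, to the next explosion timing that arrives in the firing sequence.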

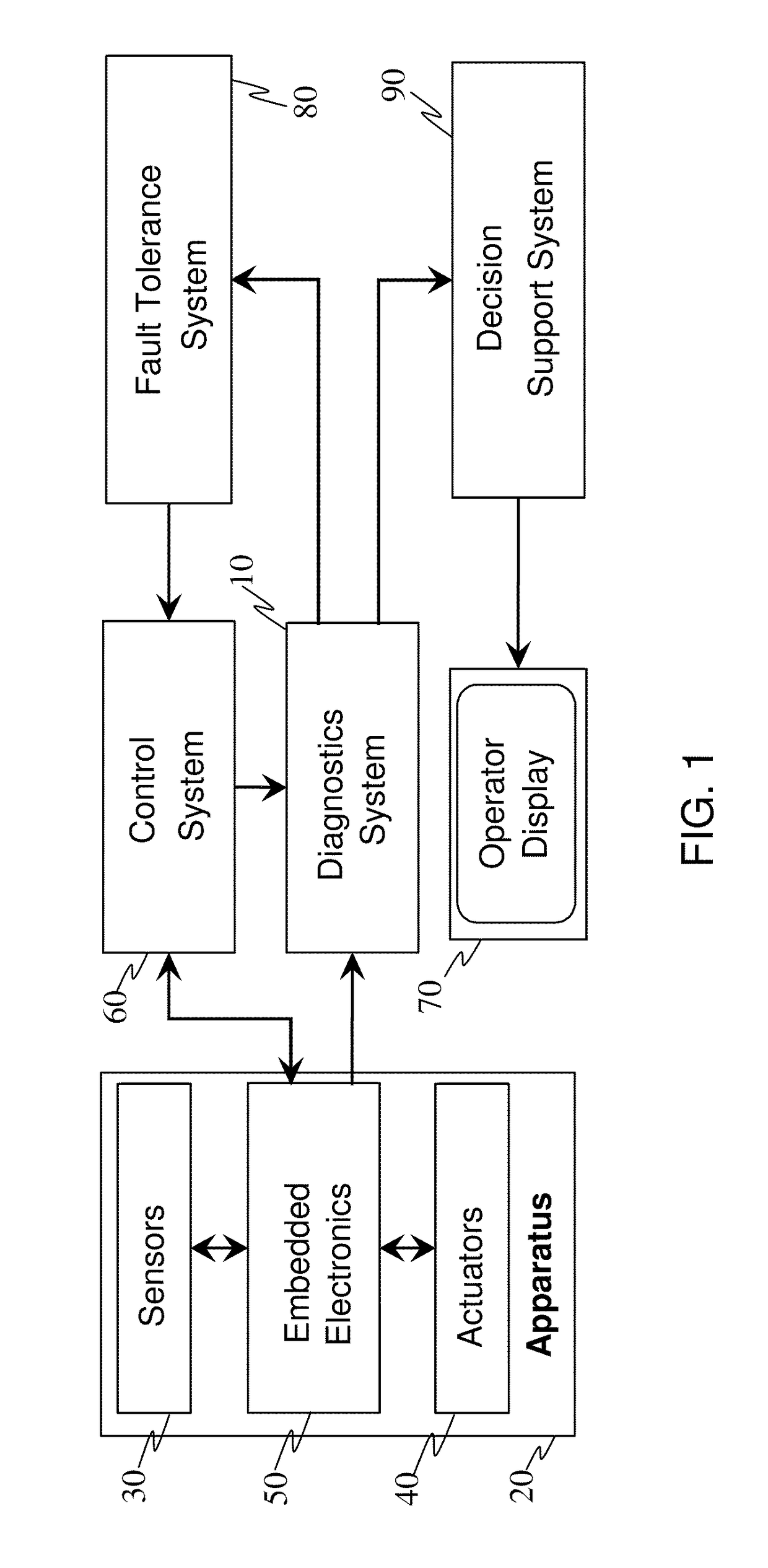

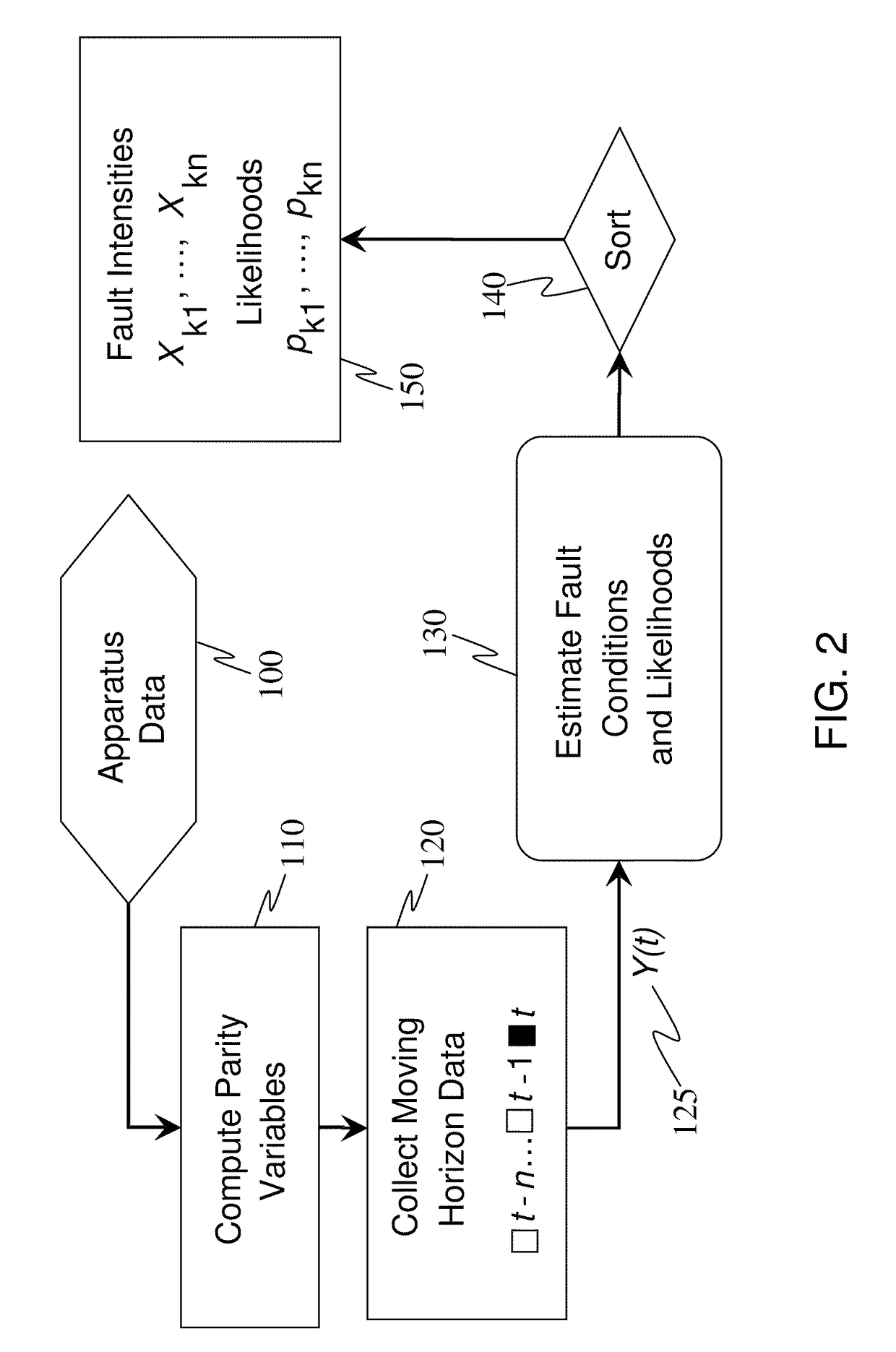

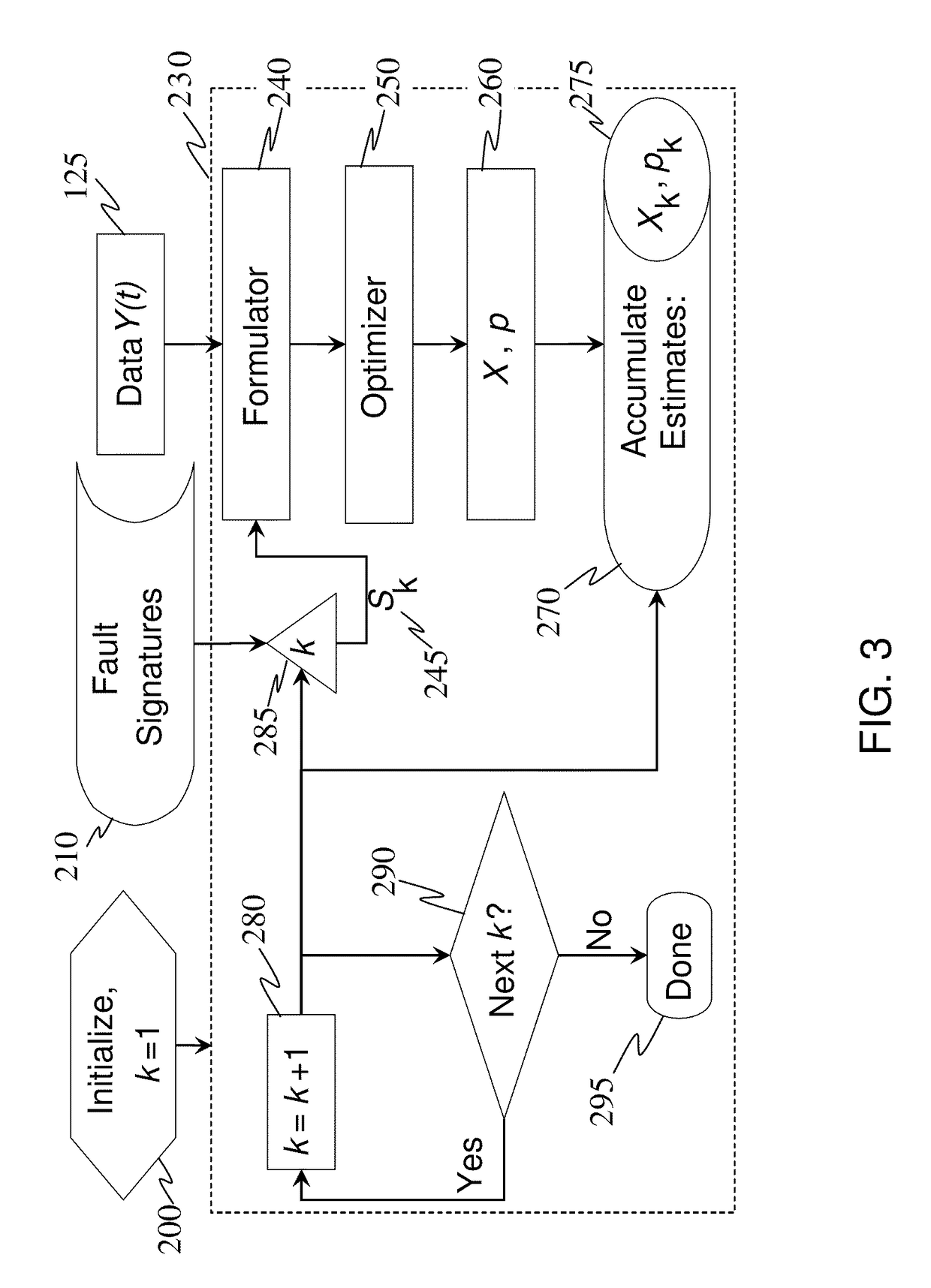

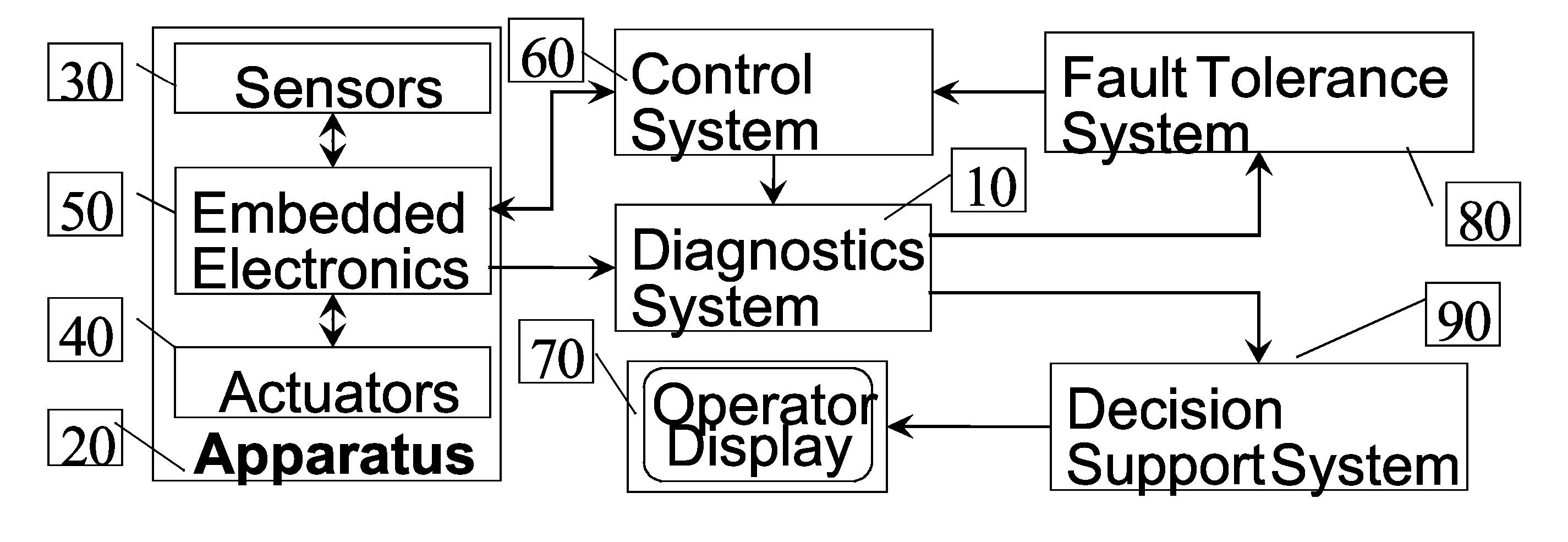

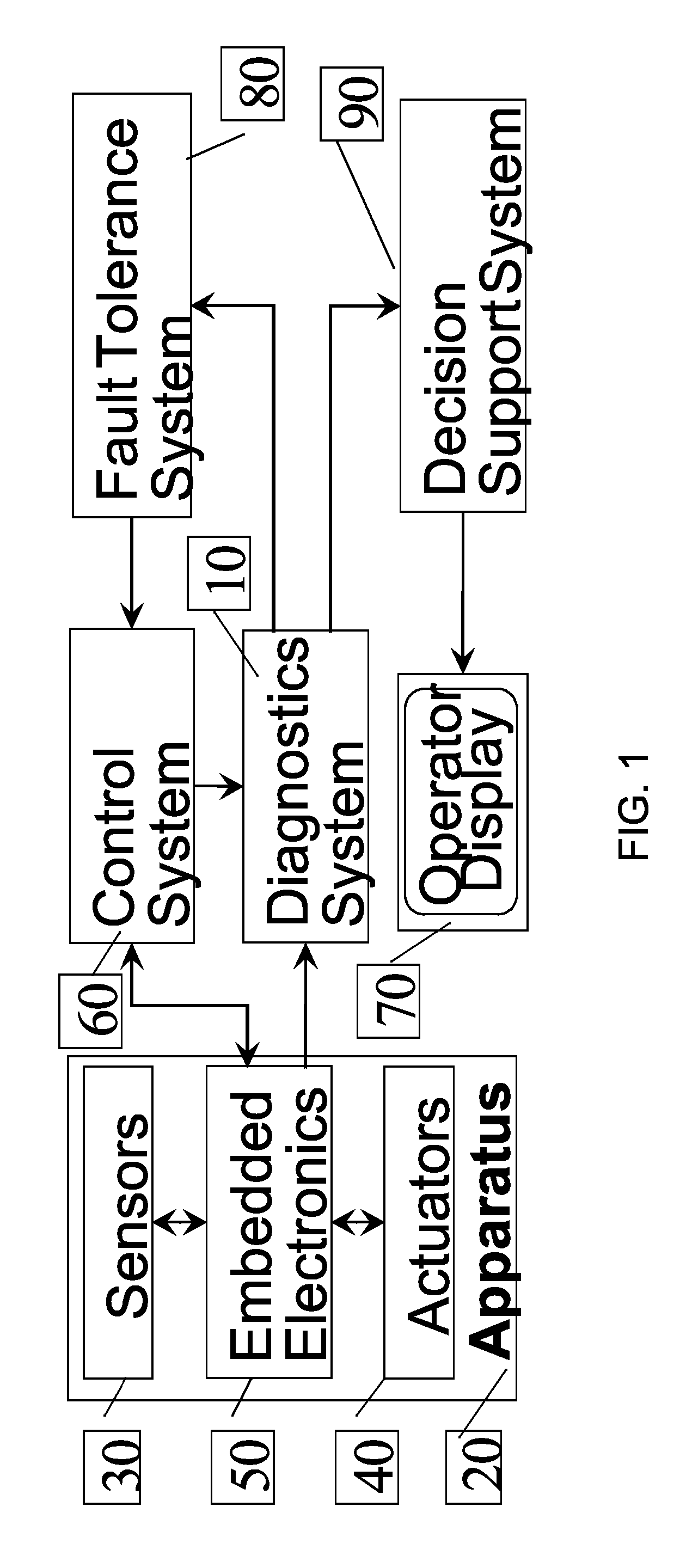

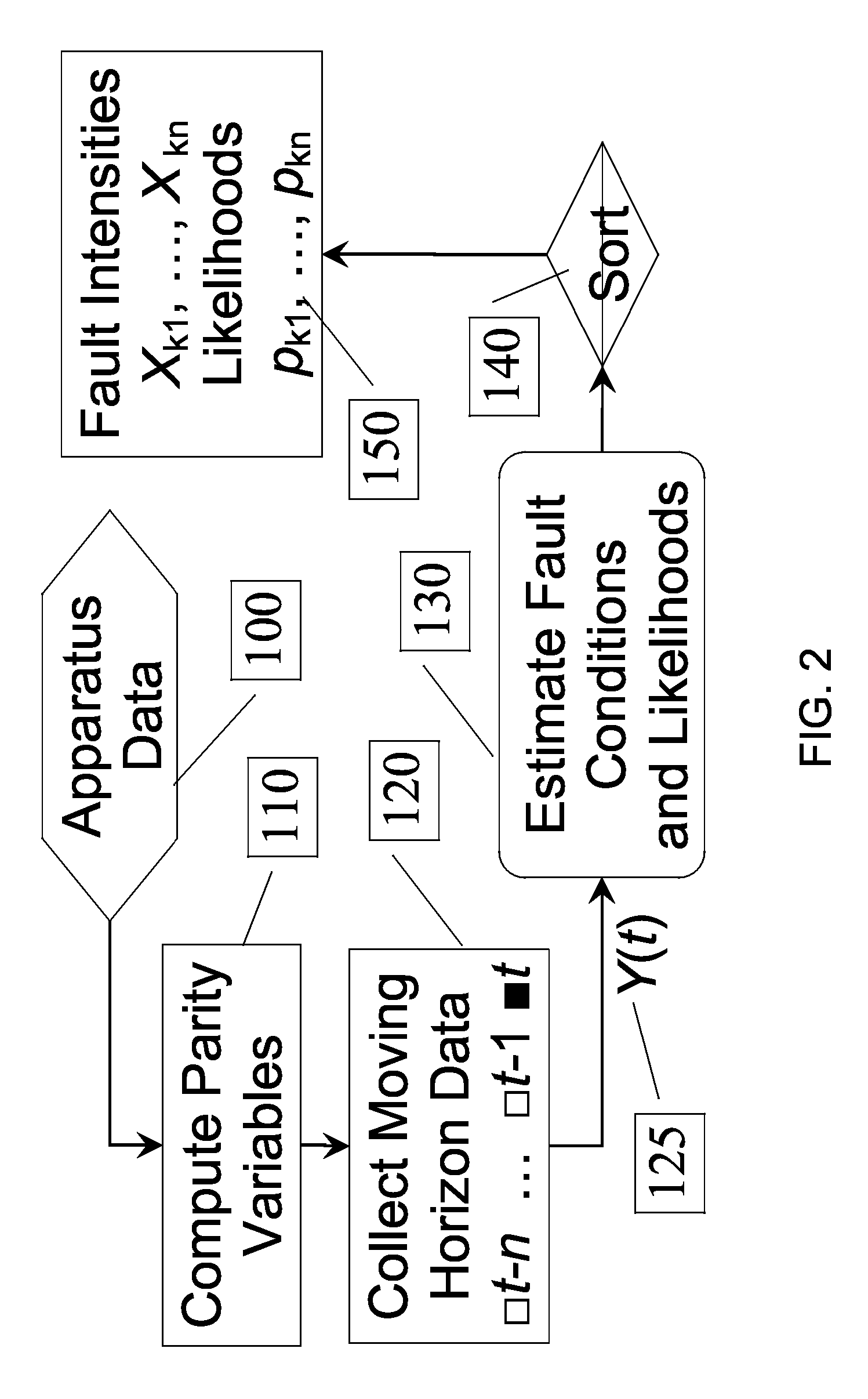

Method and system for diagnostics of apparatus

Active | US8121818B2 | Improve accuracy, Improve performance, Plug gauges, Amplifier modifications to reduce noise influence, Control system, Estimation methods

Proposed is a method, implemented in software, for estimating fault state of an apparatus outfitted with sensors. At each execution period the method processes sensor data from the apparatus to obtain a set of parity parameters, which are further used for estimating fault state. The estimation method formulates a convex optimization problem for each fault hypothesis and employs a convex solver to compute fault parameter estimates and fault likelihoods for each fault hypothesis. The highest likelihoods and corresponding parameter estimates are transmitted to a display device or an automated decision and control system. The obtained accurate estimate of fault state can be used to improve safety, performance, or maintenance processes for the apparatus.

Owner:MITEK ANALYTICS

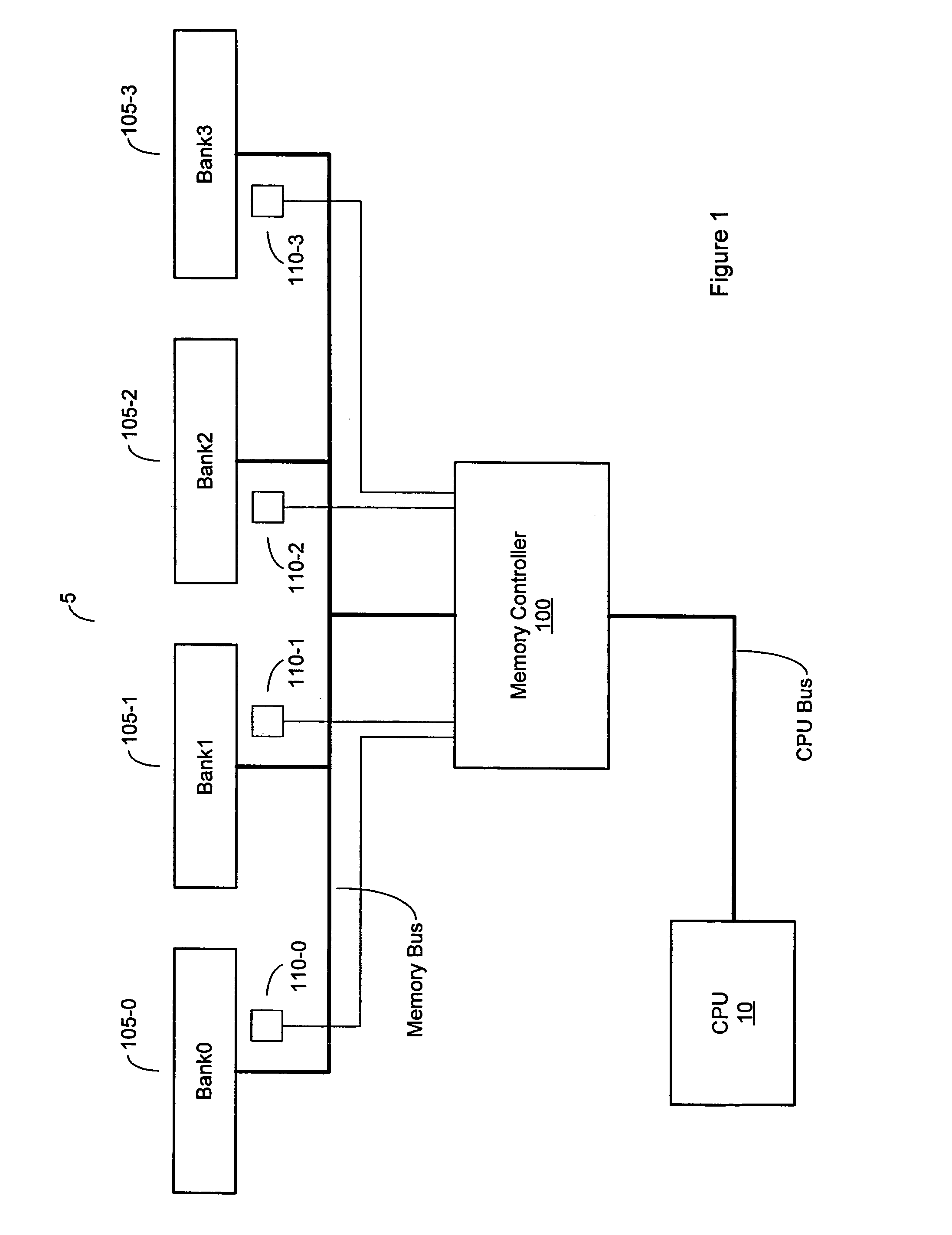

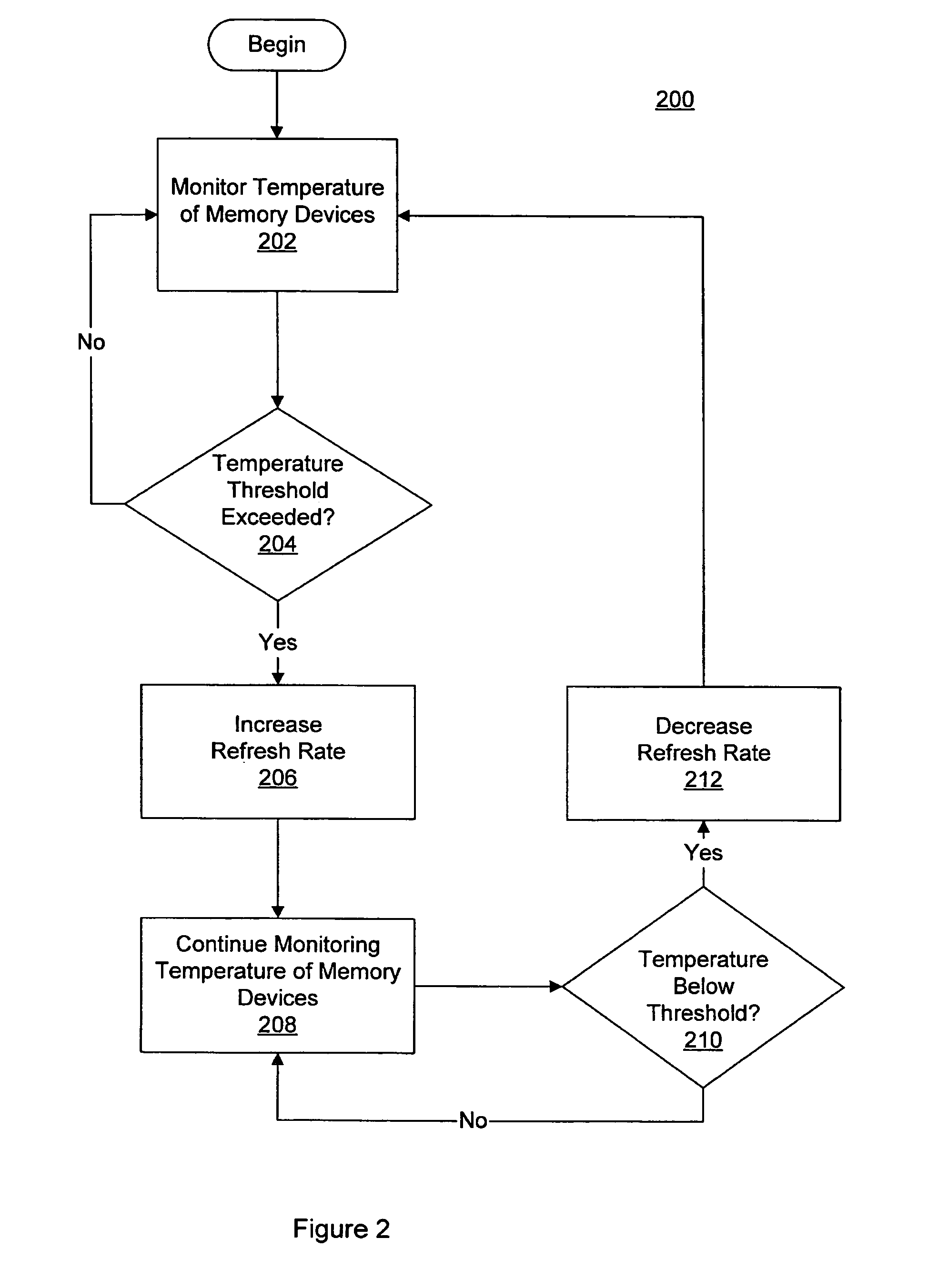

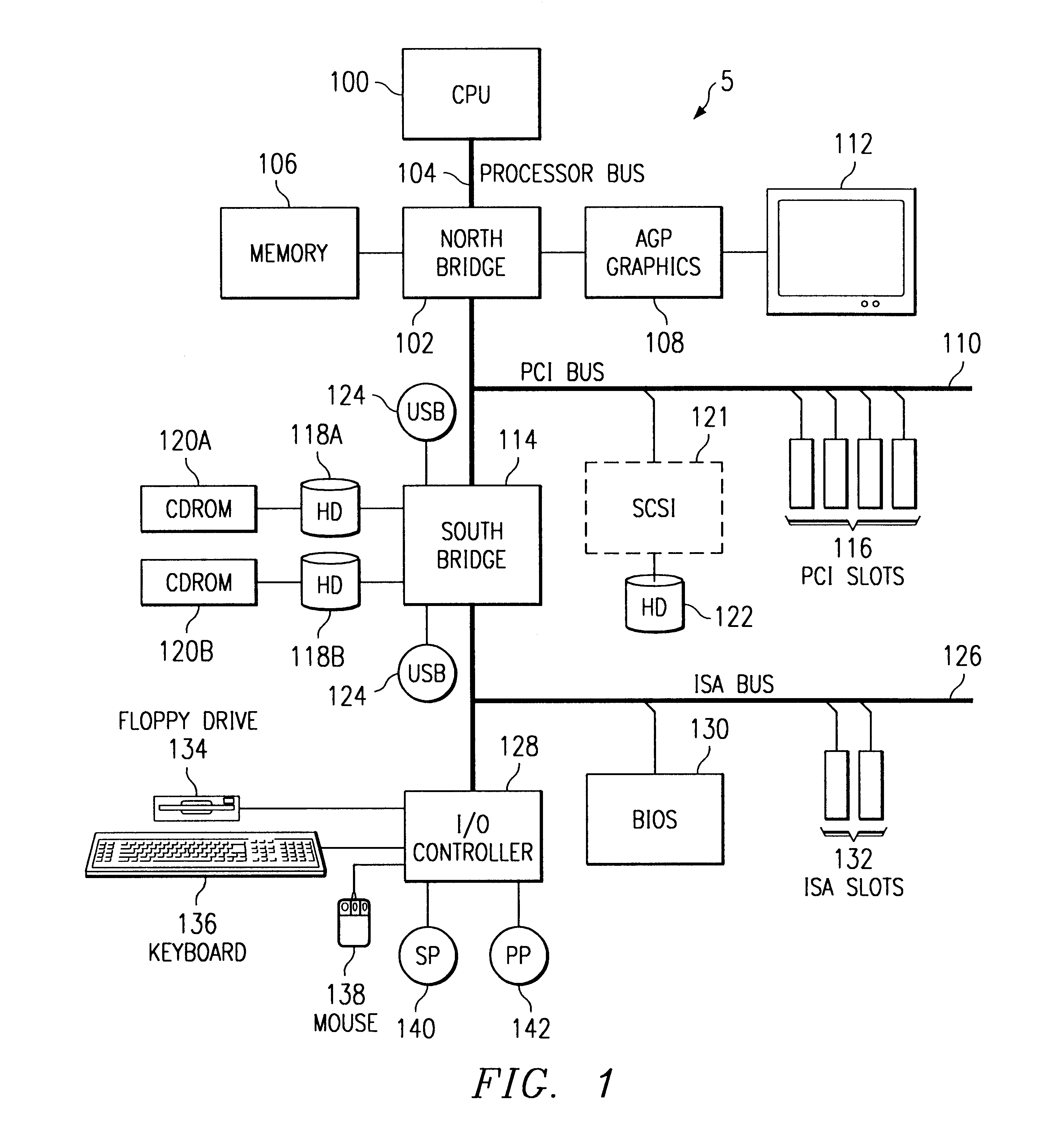

Variable memory refresh rate for DRAM

A method and apparatus for controlling a DRAM refresh rate. In one embodiment, a computer system includes a memory subsystem having a memory controller and one or more DRAM (dynamic random access memory) devices. The memory controller is configured to periodically initiate a refresh cycle to the one or more DRAM devices. The memory controller is also configured to monitor the temperature of the one or more DRAM devices. If the temperature exceeds a preset threshold, the memory controller is configured to increase the rate at which the periodic refresh cycle is performed.

Owner:ORACLE INT CORP
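
The temperature-dependent refresh policy can be sketched in Python. The 64 ms base period, the 85 °C threshold, and the doubling factor are assumptions chosen to resemble common DRAM practice, not values taken from this patent; `refresh_period_ms` is a hypothetical name.

```python
BASE_PERIOD_MS = 64.0   # assumed nominal refresh period
THRESHOLD_C = 85.0      # assumed preset temperature threshold
HOT_FACTOR = 2          # assumed rate increase once the threshold is exceeded


def refresh_period_ms(temp_c, base=BASE_PERIOD_MS,
                      threshold=THRESHOLD_C, factor=HOT_FACTOR):
    """Return the period between refresh cycles for the monitored temperature."""
    if temp_c > threshold:
        # Hotter DRAM cells leak charge faster, so refresh more often.
        return base / factor
    return base


for temp in (60.0, 85.0, 90.0):
    print(temp, refresh_period_ms(temp))
```

A memory controller would call such a function each time it samples the device temperature and reprogram its refresh timer with the result.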

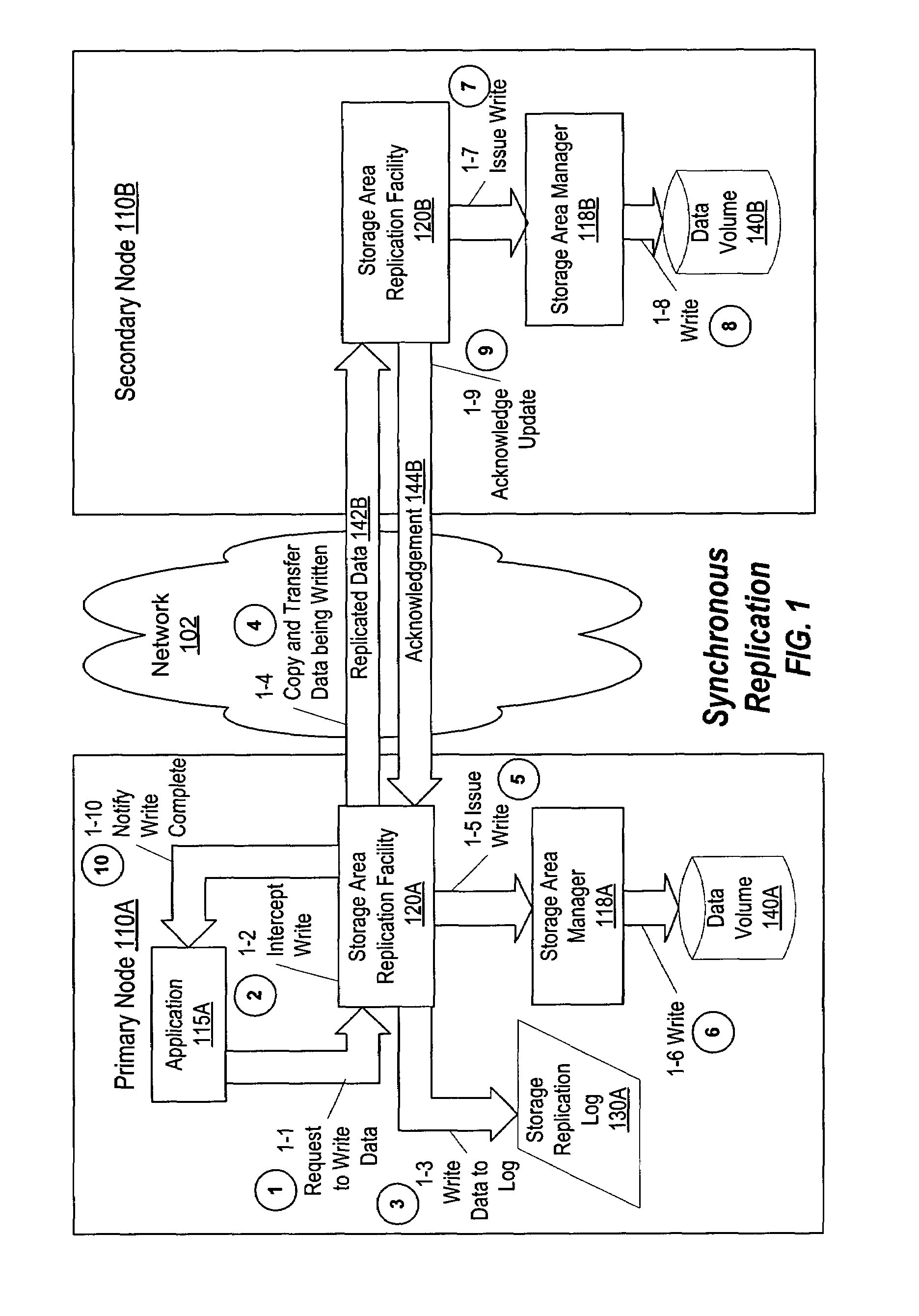

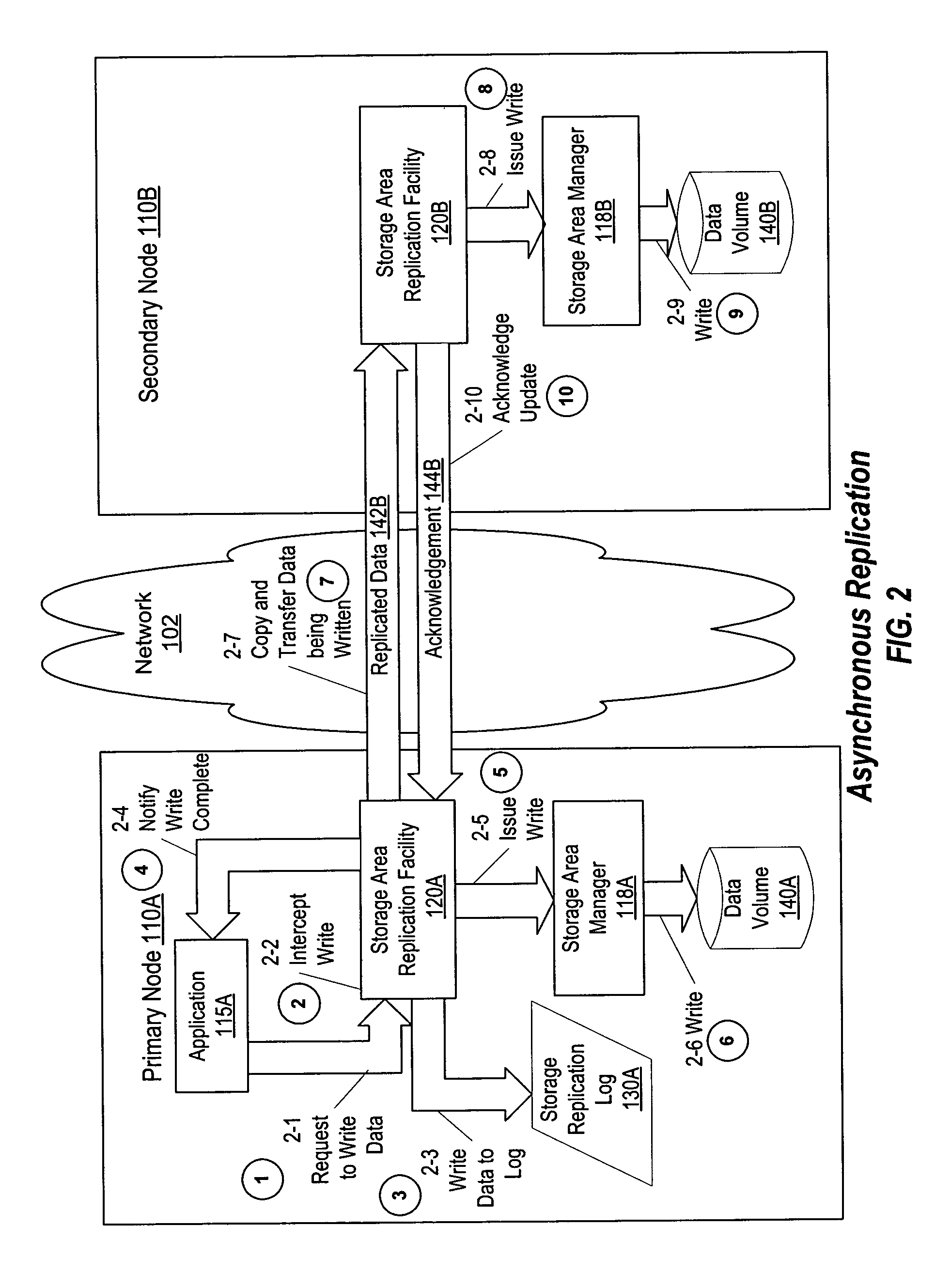

Method and system for performing periodic replication using a log

Active | US7406487B1 | Reduce data volume, Reduce the amount required, Data processing applications, Database distribution/replication, Data shipping, Execution cycle

Disclosed is a method and system for performing periodic replication using a write-ordered log. According to one embodiment, a plurality of write operations to a primary data volume are tracked using a write operation log, and the data associated with those write operations is then replicated to a secondary data volume by coalescing the write operations using the write operation log and transferring the associated data to the secondary data volume. In another embodiment, the tracking includes storing metadata associated with the write operations within the write operation log. In another embodiment, the coalescing includes using the metadata to identify a non-overlapping portion of a first write operation and a second write operation among the plurality of write operations.

Owner:SYMANTEC OPERATING CORP
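
Coalescing a write-ordered log into non-overlapping extents can be sketched in Python as follows. The byte-granular map is a simplifying assumption chosen for clarity (a real implementation would work on extent metadata rather than individual bytes), and `coalesce` and `replicate` are hypothetical names.

```python
def coalesce(log):
    """Coalesce (offset, data) writes, given in write order, into non-overlapping extents."""
    image = {}
    for offset, data in log:
        for i, byte in enumerate(data):
            image[offset + i] = byte  # a later write overwrites the overlapping bytes
    extents = []
    for addr in sorted(image):
        last = extents[-1] if extents else None
        if last is not None and last[0] + len(last[1]) == addr:
            last[1].append(image[addr])  # contiguous: extend the current extent
        else:
            extents.append((addr, bytearray([image[addr]])))
    return [(offset, bytes(buf)) for offset, buf in extents]


def replicate(extents, secondary):
    """Transfer each coalesced extent to the secondary volume (a bytearray here)."""
    for offset, data in extents:
        secondary[offset:offset + len(data)] = data


log = [(0, b"aaaa"), (2, b"bbbb"), (10, b"cc")]
extents = coalesce(log)
print(extents)  # [(0, b'aabbbb'), (10, b'cc')]
```

Only the surviving bytes of overlapping writes are shipped, which is where the reduction in replicated data volume comes from.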

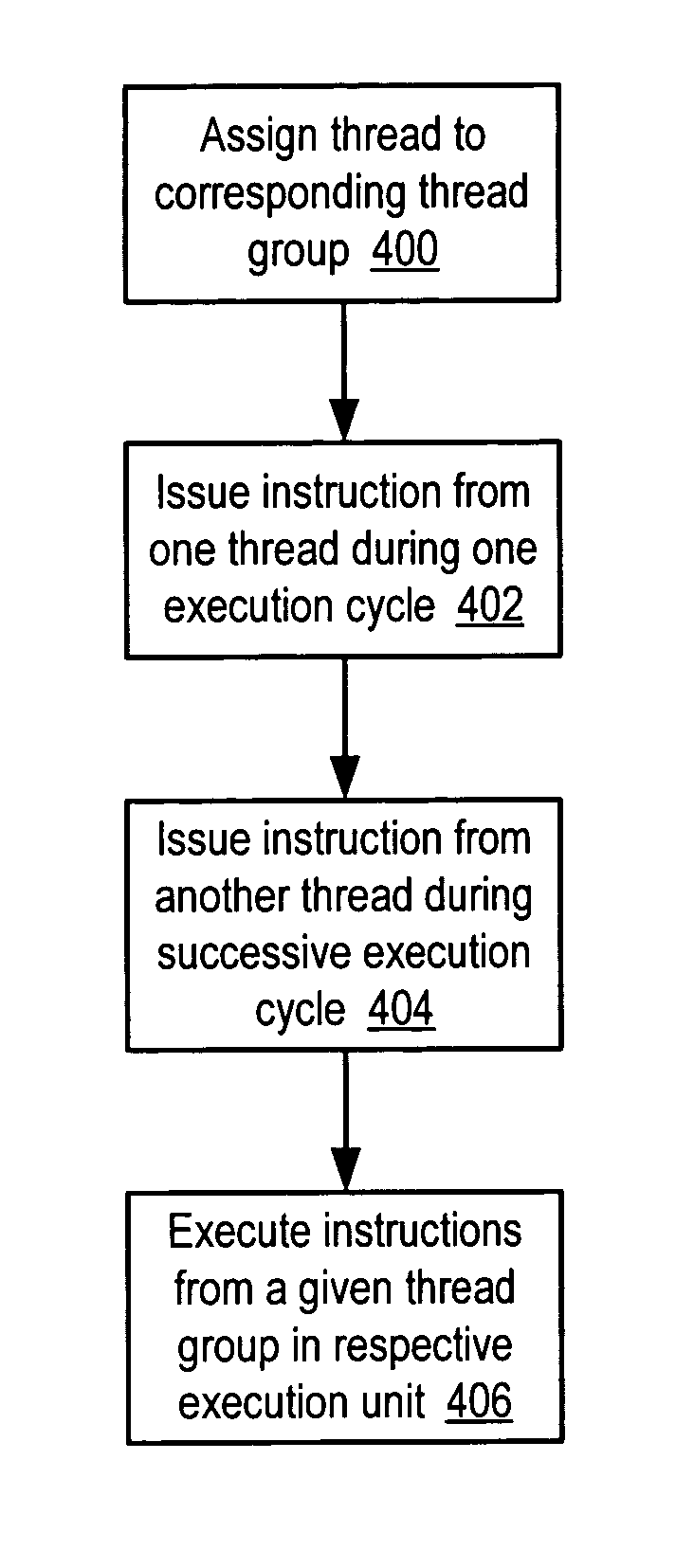

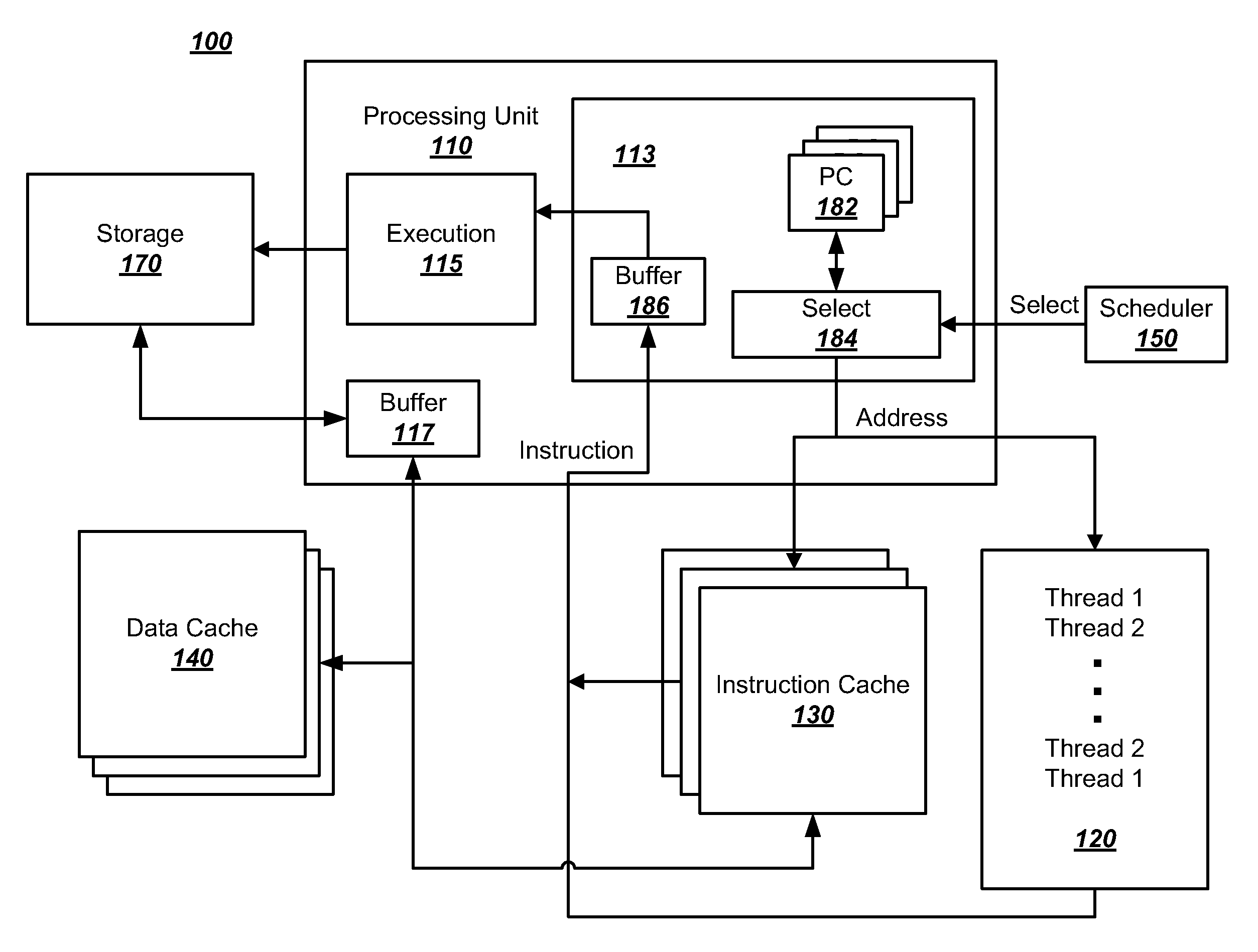

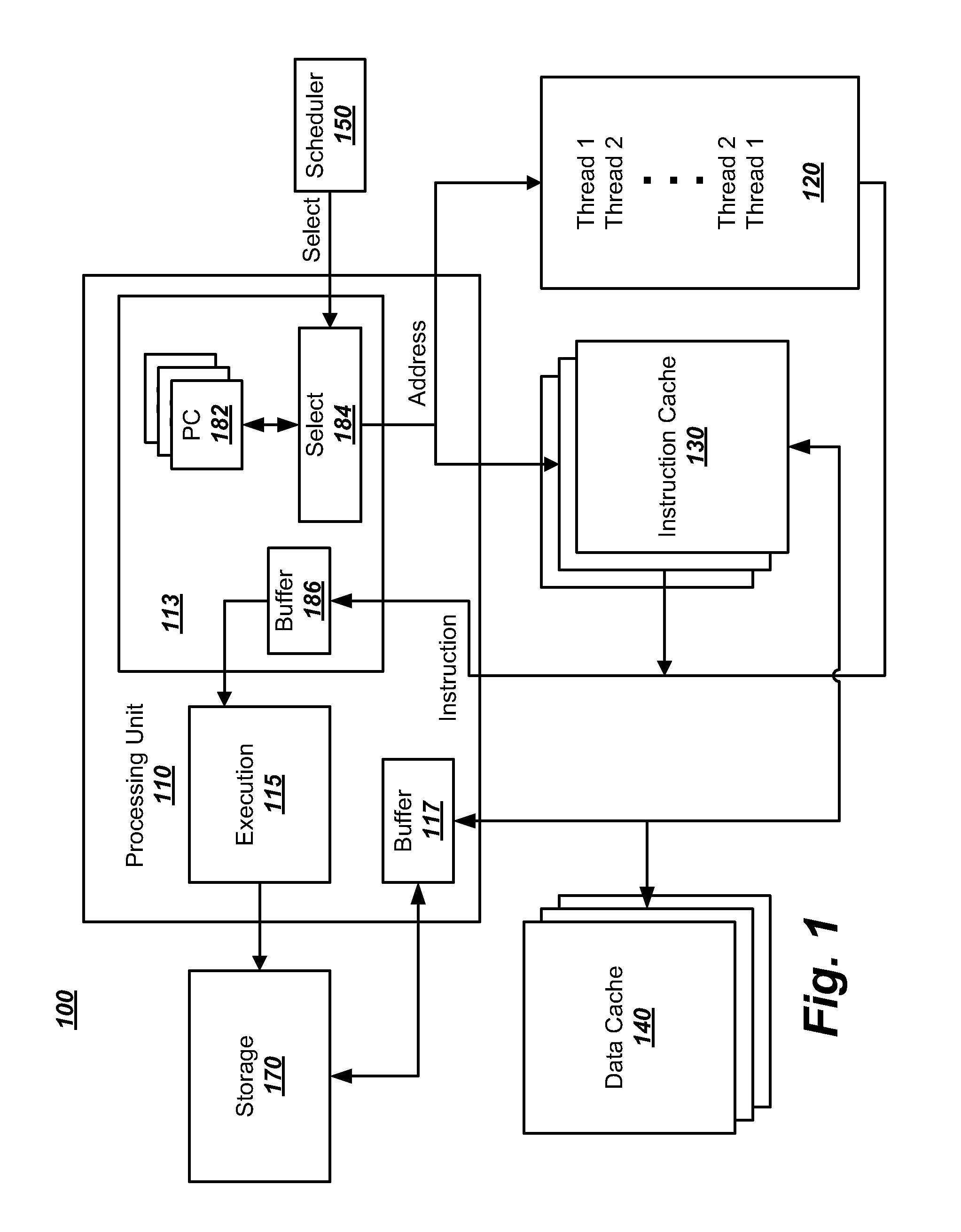

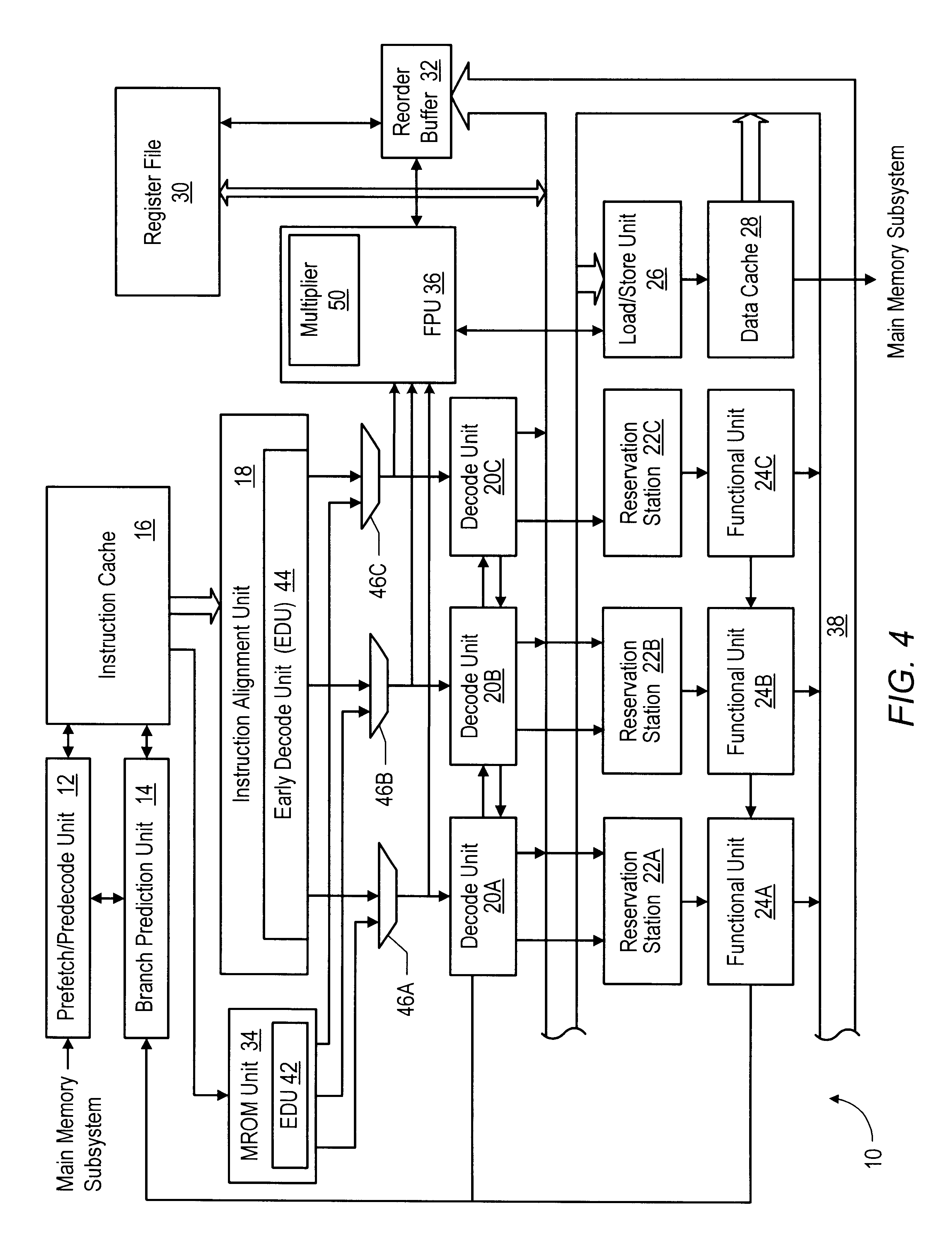

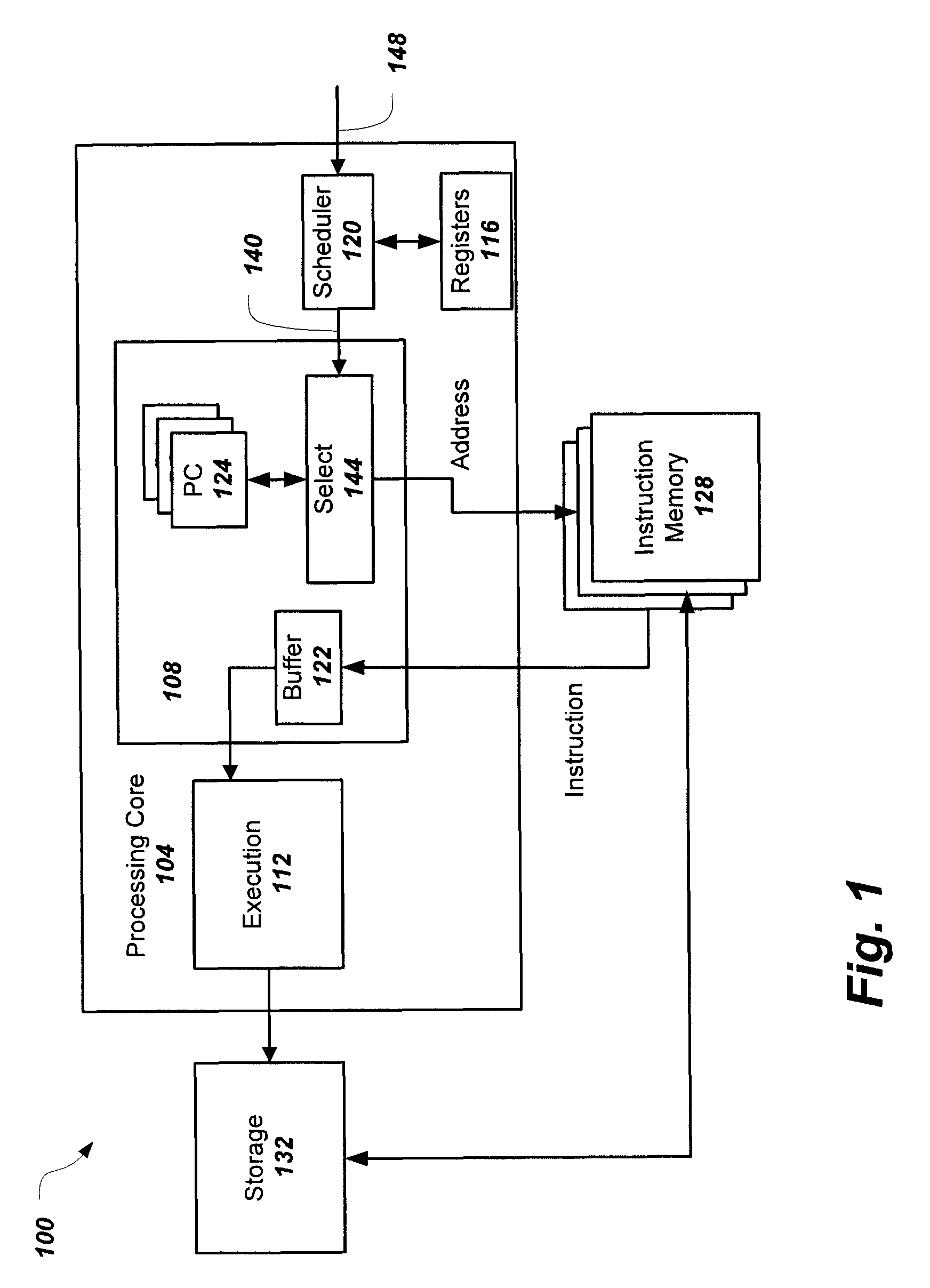

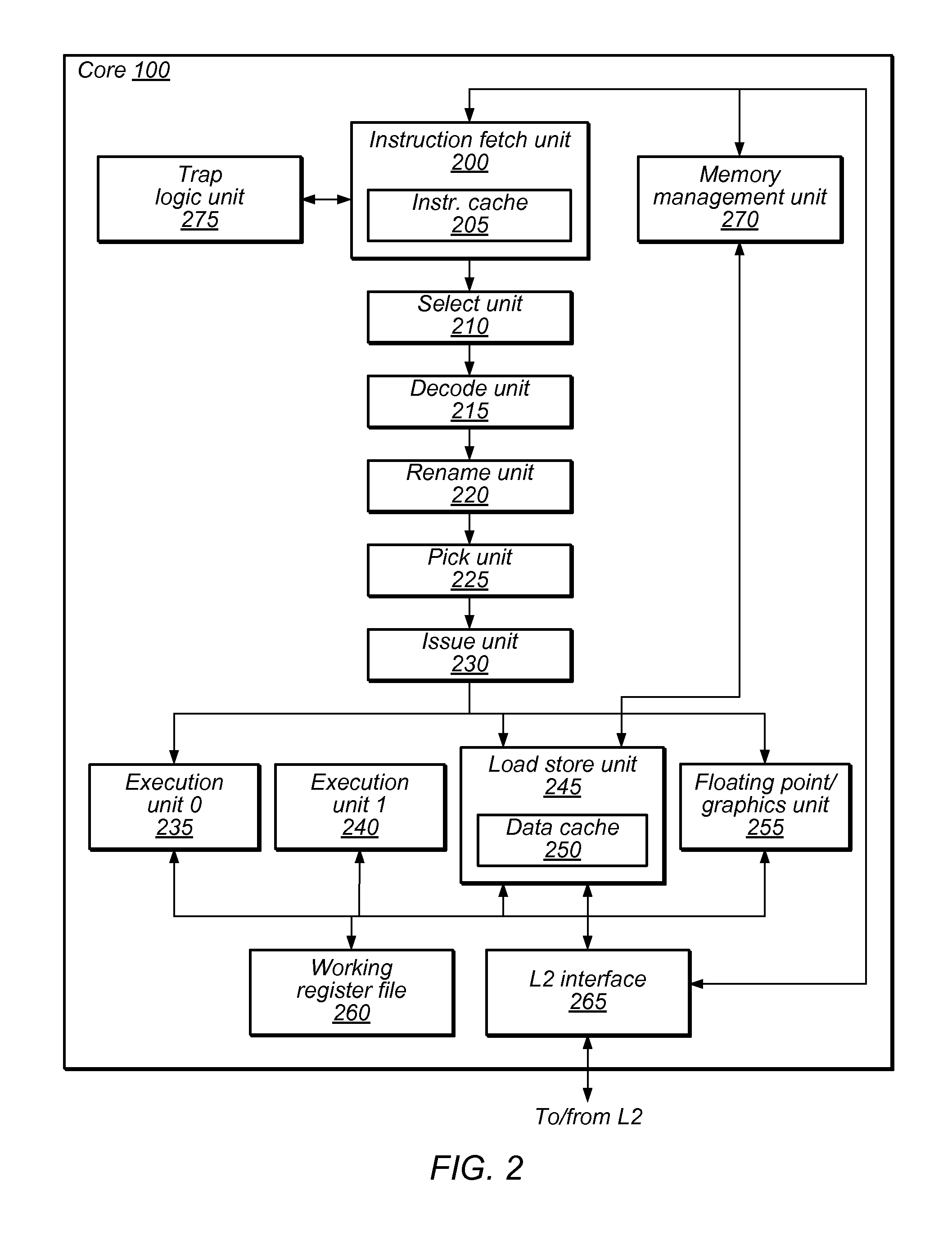

Apparatus and method for fine-grained multithreading in a multipipelined processor core

Active | US20060004995A1 | Digital computer details, Specific program execution arrangements, Granularity, Parallel computing

An apparatus and method for fine-grained multithreading in a multipipelined processor core. According to one embodiment, a processor may include instruction fetch logic configured to assign a given one of a plurality of threads to a corresponding one of a plurality of thread groups, where each of the plurality of thread groups may comprise a subset of the plurality of threads, to issue a first instruction from one of the plurality of threads during one execution cycle, and to issue a second instruction from another one of the plurality of threads during a successive execution cycle. The processor may further include a plurality of execution units, each configured to execute instructions issued from a respective thread group.

Owner:ORACLE INT CORP
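
The thread-group issue pattern can be sketched in Python. Round-robin assignment of threads to groups and round-robin selection within a group are assumptions made for illustration; the sketch also ignores stalled or unready threads, and the function names are hypothetical.

```python
from itertools import cycle


def assign_thread_groups(threads, n_groups):
    """Assign each thread to one of n_groups groups (round-robin, an assumption)."""
    groups = [[] for _ in range(n_groups)]
    for i, thread in enumerate(threads):
        groups[i % n_groups].append(thread)
    return groups


def issue_schedule(groups, n_cycles):
    """For each execution cycle, list the thread each group's execution unit issues from.

    Successive cycles issue from a different thread of the same group.
    Every group must be non-empty.
    """
    pickers = [cycle(group) for group in groups]
    return [[next(p) for p in pickers] for _ in range(n_cycles)]


groups = assign_thread_groups(["T0", "T1", "T2", "T3"], 2)
print(groups)                     # [['T0', 'T2'], ['T1', 'T3']]
print(issue_schedule(groups, 3))  # [['T0', 'T1'], ['T2', 'T3'], ['T0', 'T1']]
```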

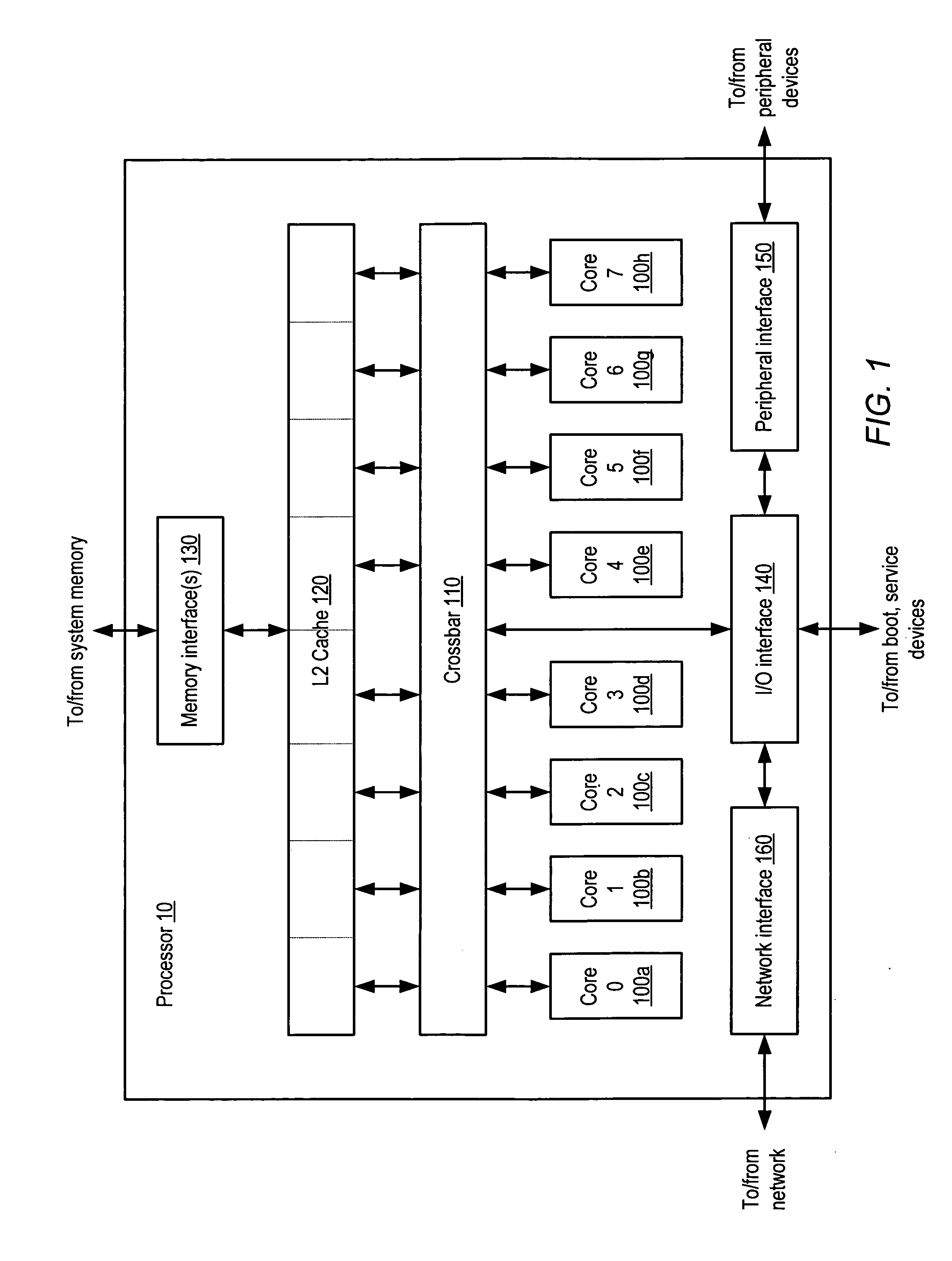

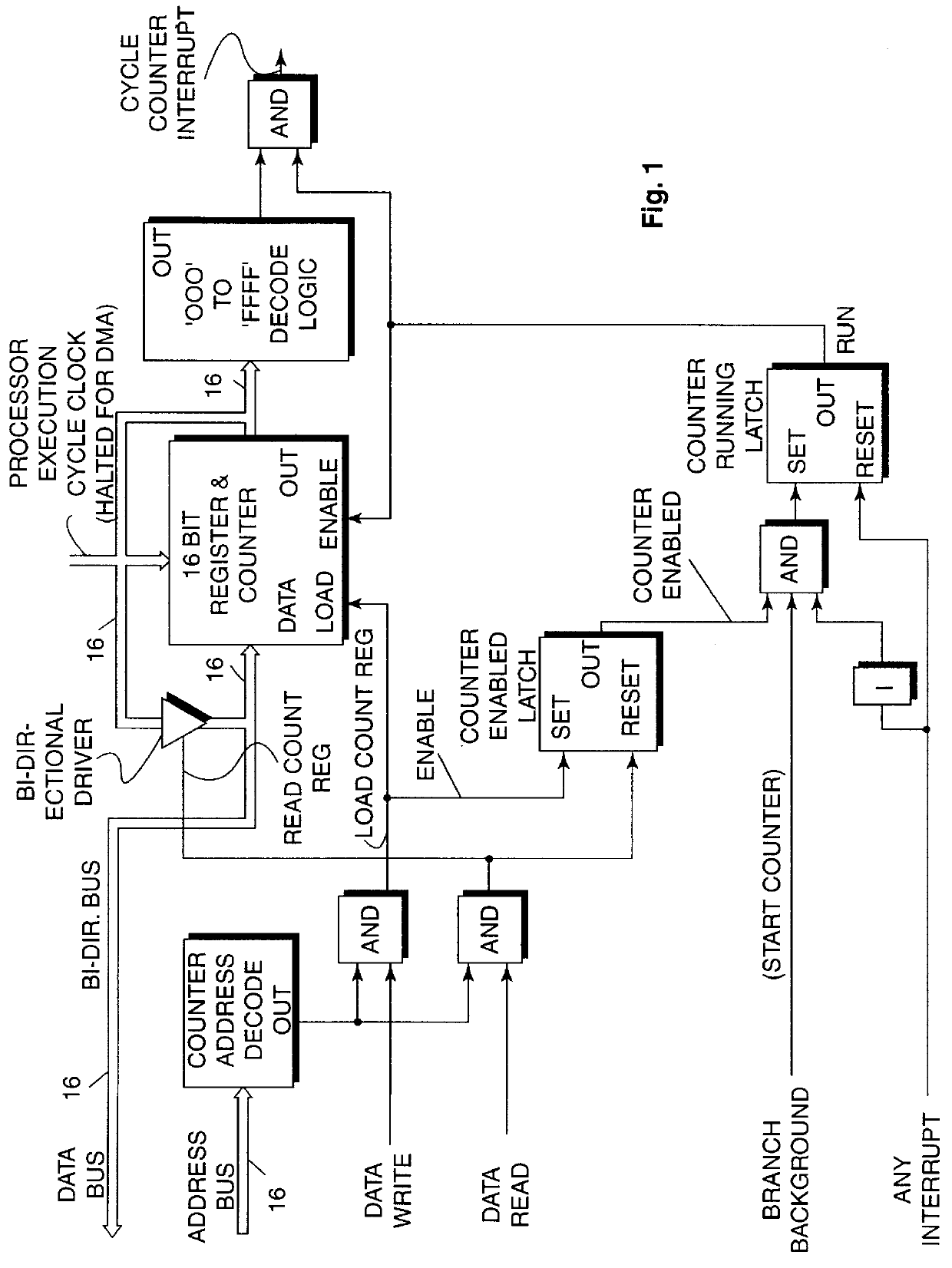

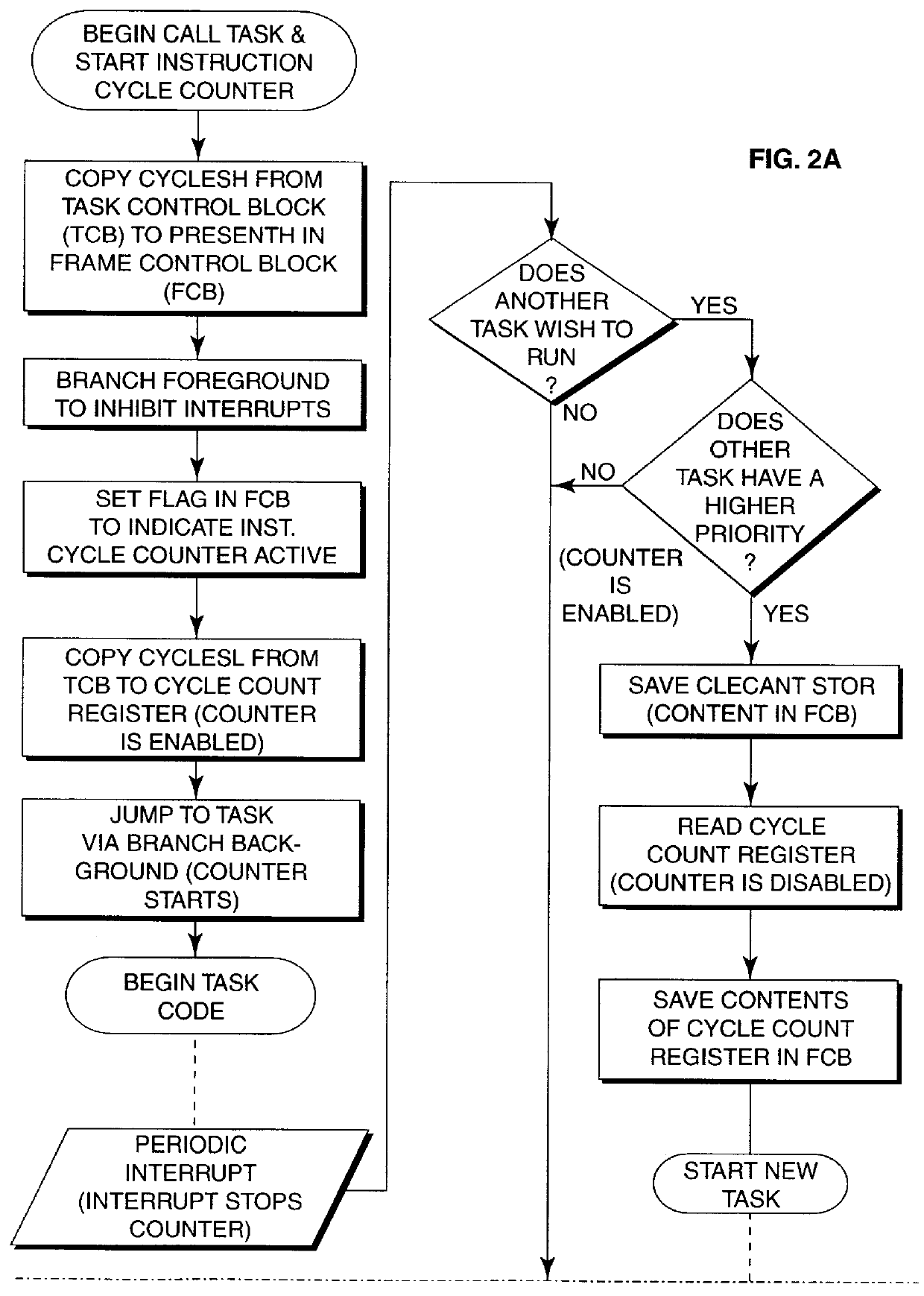

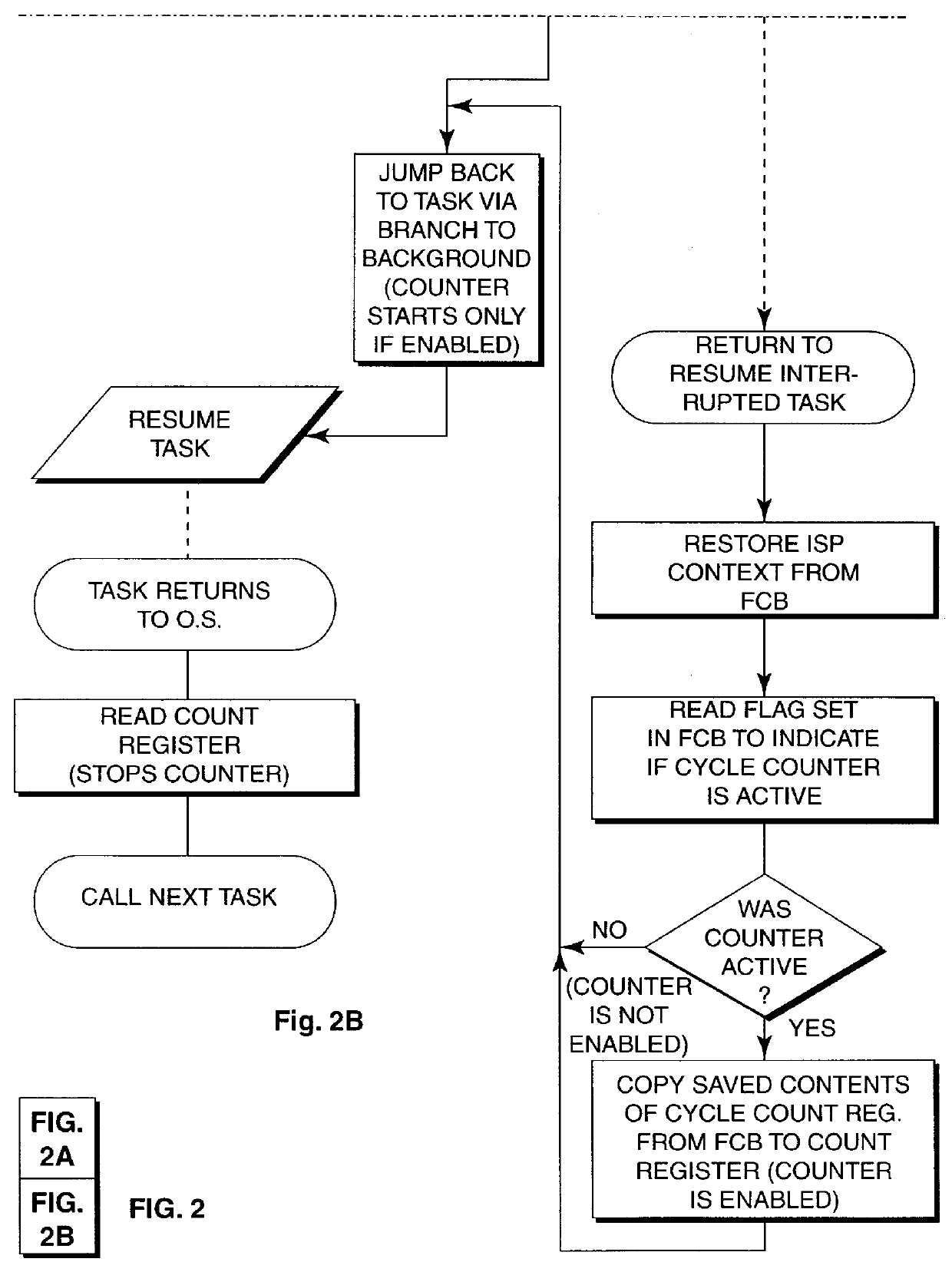

Monitoring processor execution cycles to prevent task overrun in multi-task, hard, real-time system

Inactive | US6085218A | Minimal overhead, Integrity guaranteed, Digital data processing details, Hardware monitoring, Embedded system, Time system

A hard real-time, multitasking system is monitored by a combination of hardware, software, and logic to detect the overrun of any task beyond the maximum processor cycle limit declared for that task. Processor execution cycles consumed by DMA or interrupt processing that are unrelated to the task being executed are not counted. The counter hardware and control logic reduce the software overhead of monitoring a task's execution-cycle utilization, and they support not only overrun detection but also programmed cycle-usage alarms, consumed-cycle counts, and measurements of overall processor loading or utilization.

Owner:IBM CORP
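
The per-task accounting described above can be modeled in Python as a rough sketch. The class, its parameters, and the `source` labels are hypothetical; the point is only that DMA and interrupt cycles are excluded before the alarm and overrun checks.

```python
class TaskCycleMonitor:
    """Hypothetical model of per-task cycle accounting with alarm and overrun flags."""

    def __init__(self, limit, alarm_at):
        self.limit = limit        # declared maximum processor cycles for the task
        self.alarm_at = alarm_at  # programmed cycle-usage alarm level
        self.consumed = 0         # cycles charged to the task so far
        self.alarm = False
        self.overrun = False

    def tick(self, cycles, source="task"):
        if source != "task":
            return  # DMA and interrupt cycles are not charged to the task
        self.consumed += cycles
        if self.consumed >= self.alarm_at:
            self.alarm = True
        if self.consumed > self.limit:
            self.overrun = True


m = TaskCycleMonitor(limit=100, alarm_at=80)
m.tick(50)
m.tick(40, source="interrupt")
m.tick(35)
print(m.consumed, m.alarm, m.overrun)  # 85 True False
```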

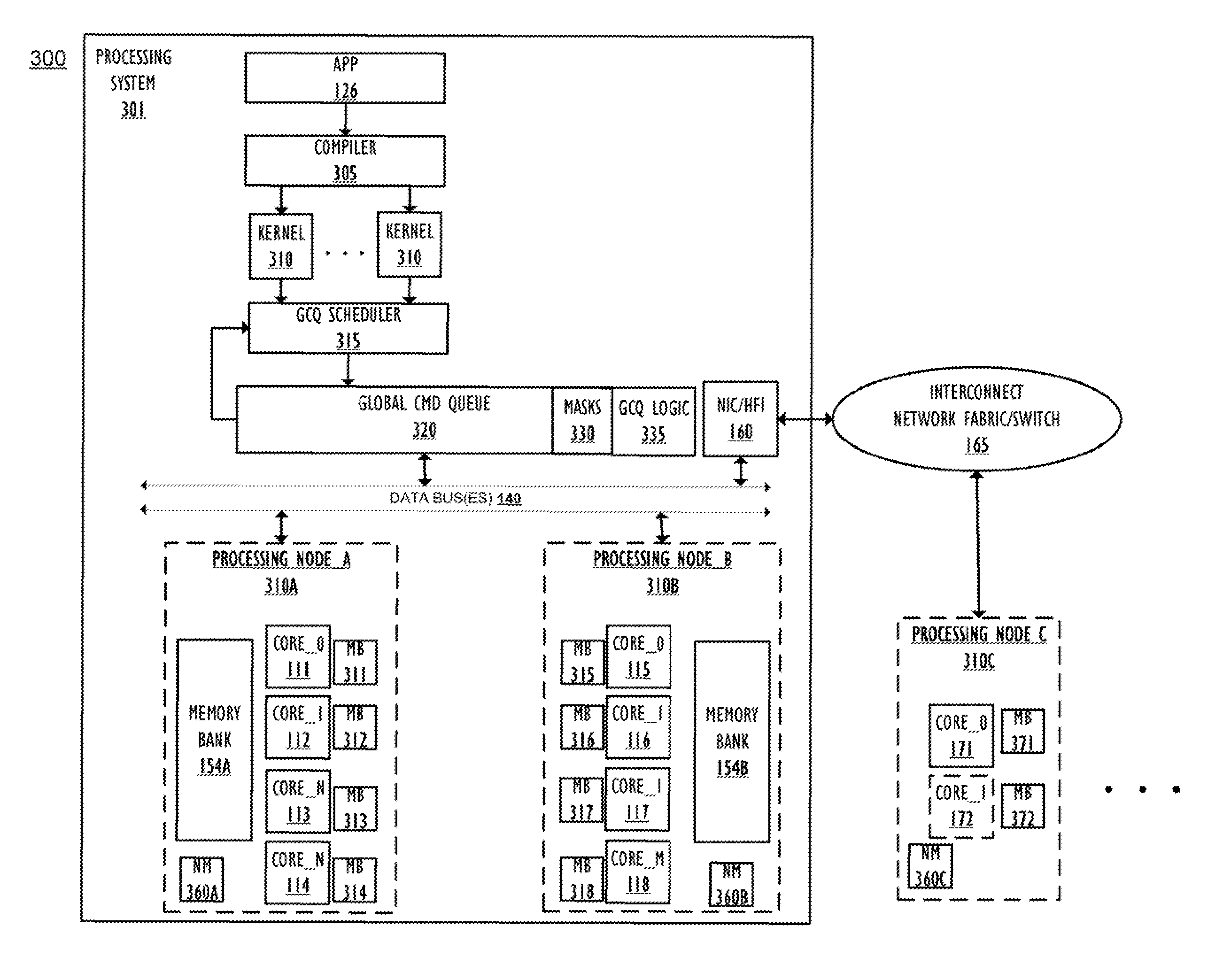

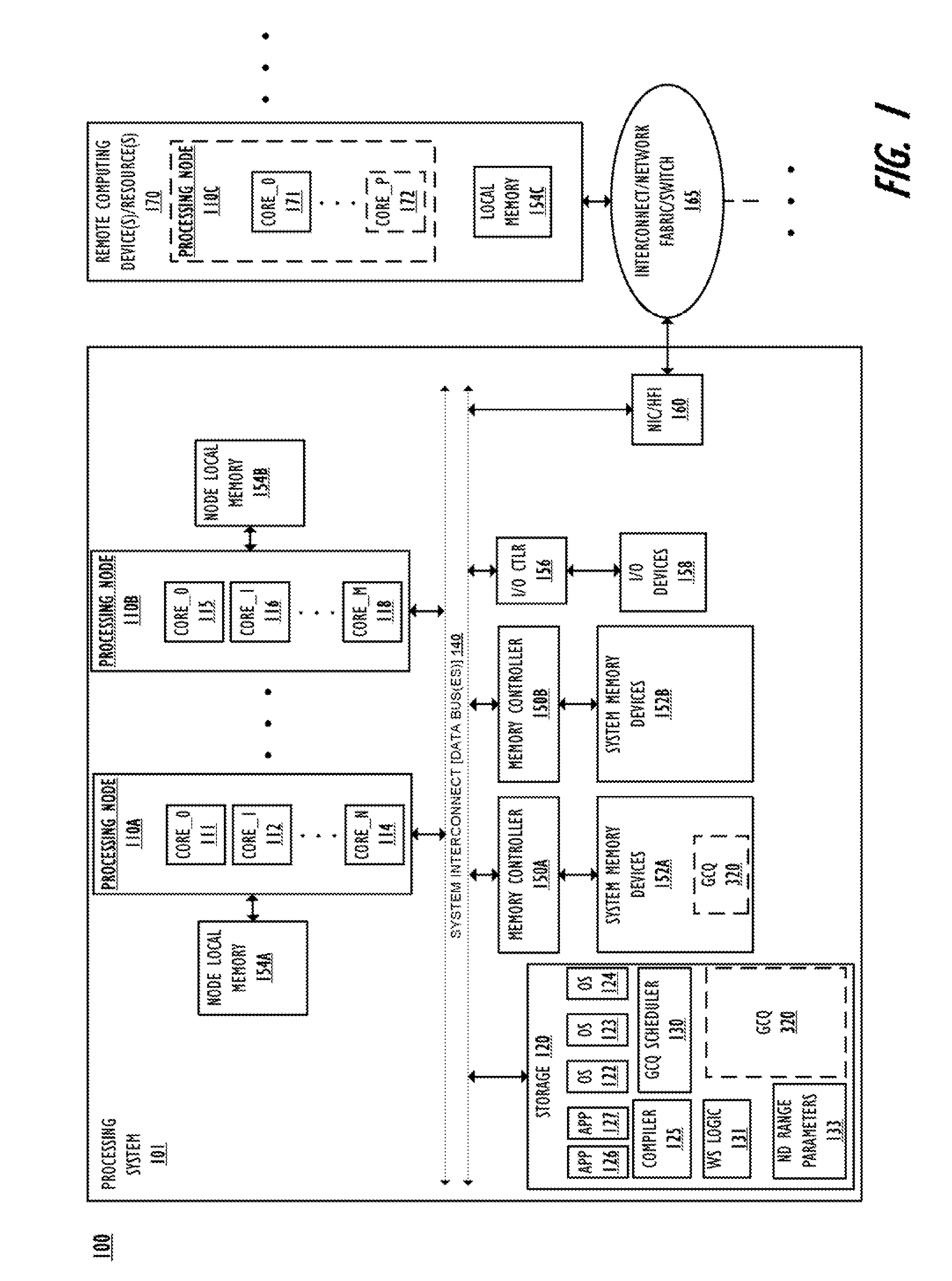

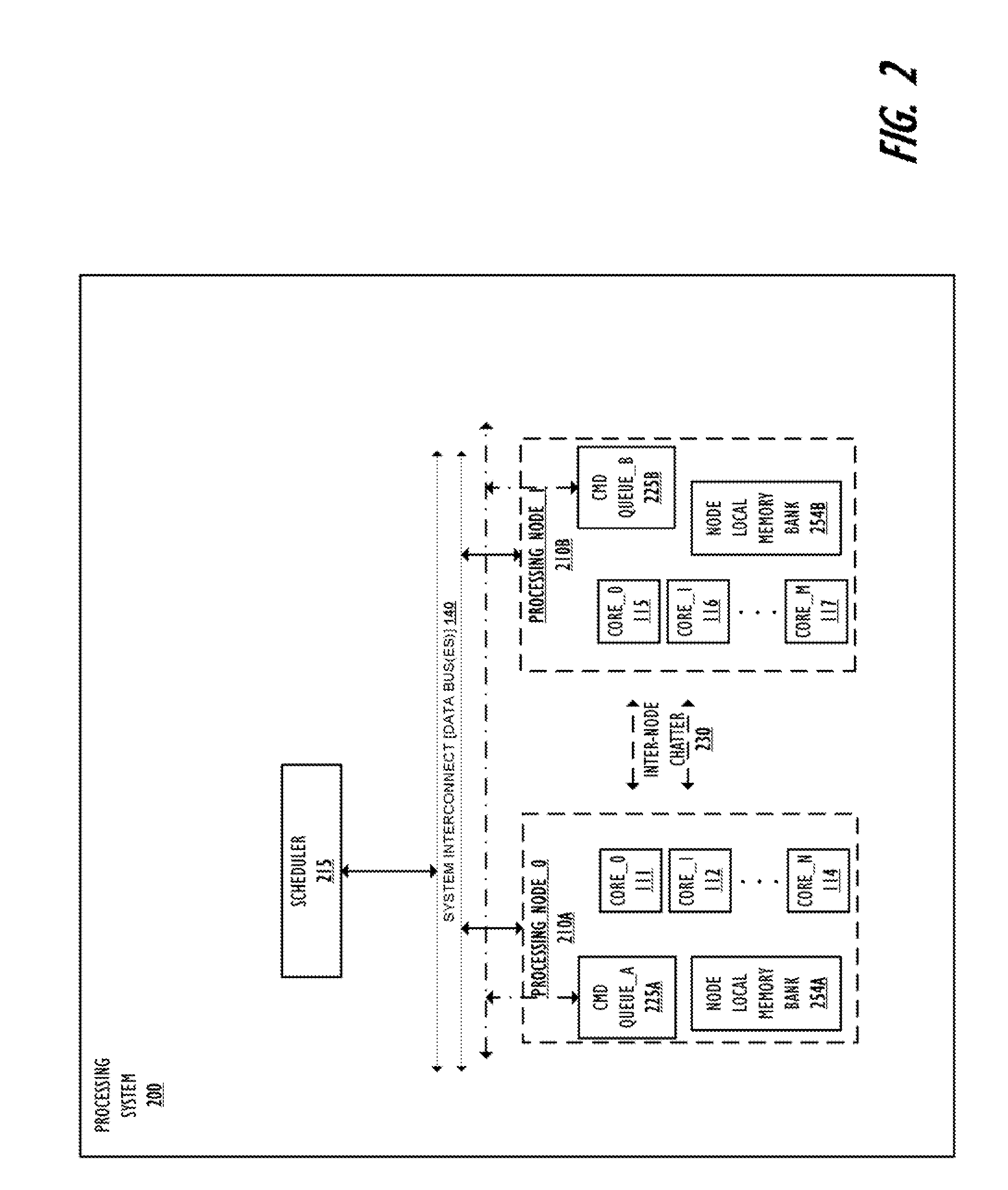

Method to reduce queue synchronization of multiple work items in a system with high memory latency between processing nodes

Inactive | US20110161976A1 | High access latency, Efficient dispatch/completion, Resource allocation, Program synchronisation, Parallel computing, Engineering

A method efficiently dispatches / completes a work element within a multi-node, data processing system that has a global command queue (GCQ) and at least one high latency node. The method comprises: at the high latency processor node, work scheduling logic establishing a local command / work queue (LCQ) in which multiple work items for execution by local processing units can be staged prior to execution; a first local processing unit retrieving via a work request a larger chunk size of work than can be completed in a normal work completion / execution cycle by the local processing unit; storing the larger chunk size of work retrieved in a local command / work queue (LCQ); enabling the first local processing unit to locally schedule and complete portions of the work stored within the LCQ; and transmitting a next work request to the GCQ only when all the work within the LCQ has been dispatched by the local processing units.

Owner:IBM CORP
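
The effect of staging a larger chunk in a local queue can be simulated in Python. This sketch assumes each local unit completes one item per execution cycle and does not model the remote latency itself; it only counts round trips to the global queue. The function name is hypothetical, and `deque` stands in for both queues.

```python
from collections import deque


def drain_via_local_queue(gcq, chunk, local_units):
    """Simulate a high-latency node pulling work from a global queue (GCQ).

    Returns (items completed in order, number of requests sent to the GCQ).
    """
    lcq = deque()
    completed, requests = [], 0
    while gcq or lcq:
        if not lcq:
            # Contact the GCQ only when all local work has been dispatched,
            # and fetch a larger chunk than one cycle can complete.
            requests += 1
            for _ in range(min(chunk, len(gcq))):
                lcq.append(gcq.popleft())
        # One execution cycle: each local unit completes one staged item.
        for _ in range(local_units):
            if lcq:
                completed.append(lcq.popleft())
    return completed, requests


done, reqs = drain_via_local_queue(deque(range(10)), chunk=4, local_units=2)
print(reqs)  # 3
```

With `chunk=2` (one cycle's worth of work for two units) the same ten items take five GCQ requests, which illustrates why fetching larger chunks reduces synchronization traffic.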

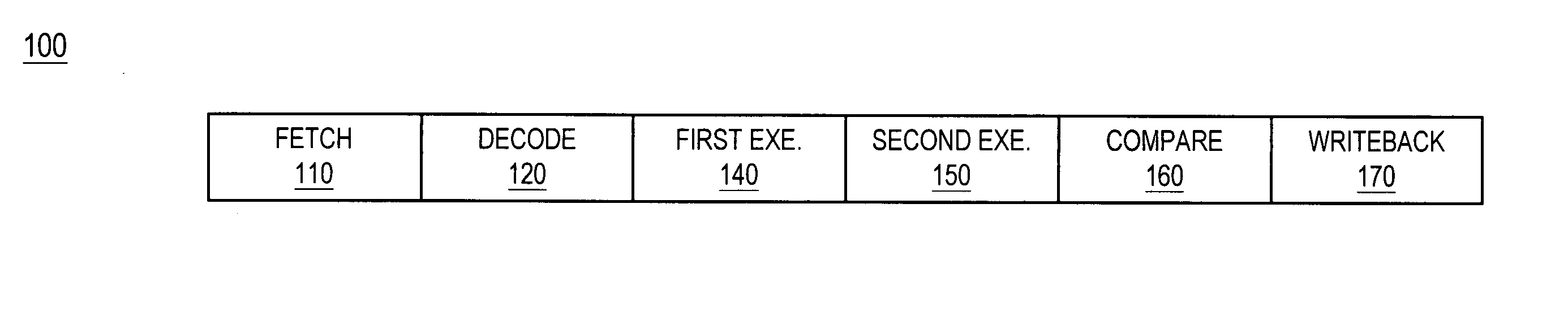

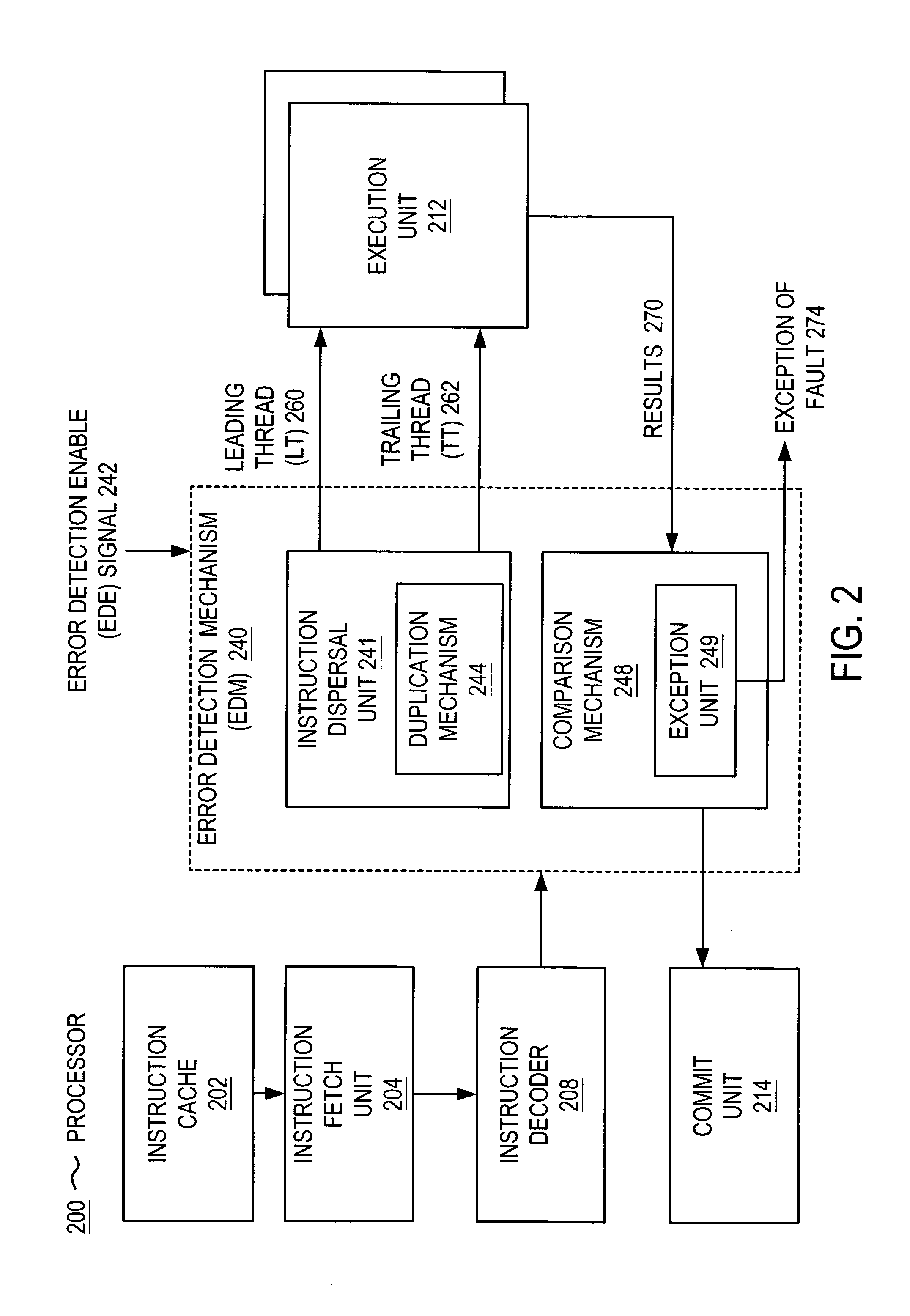

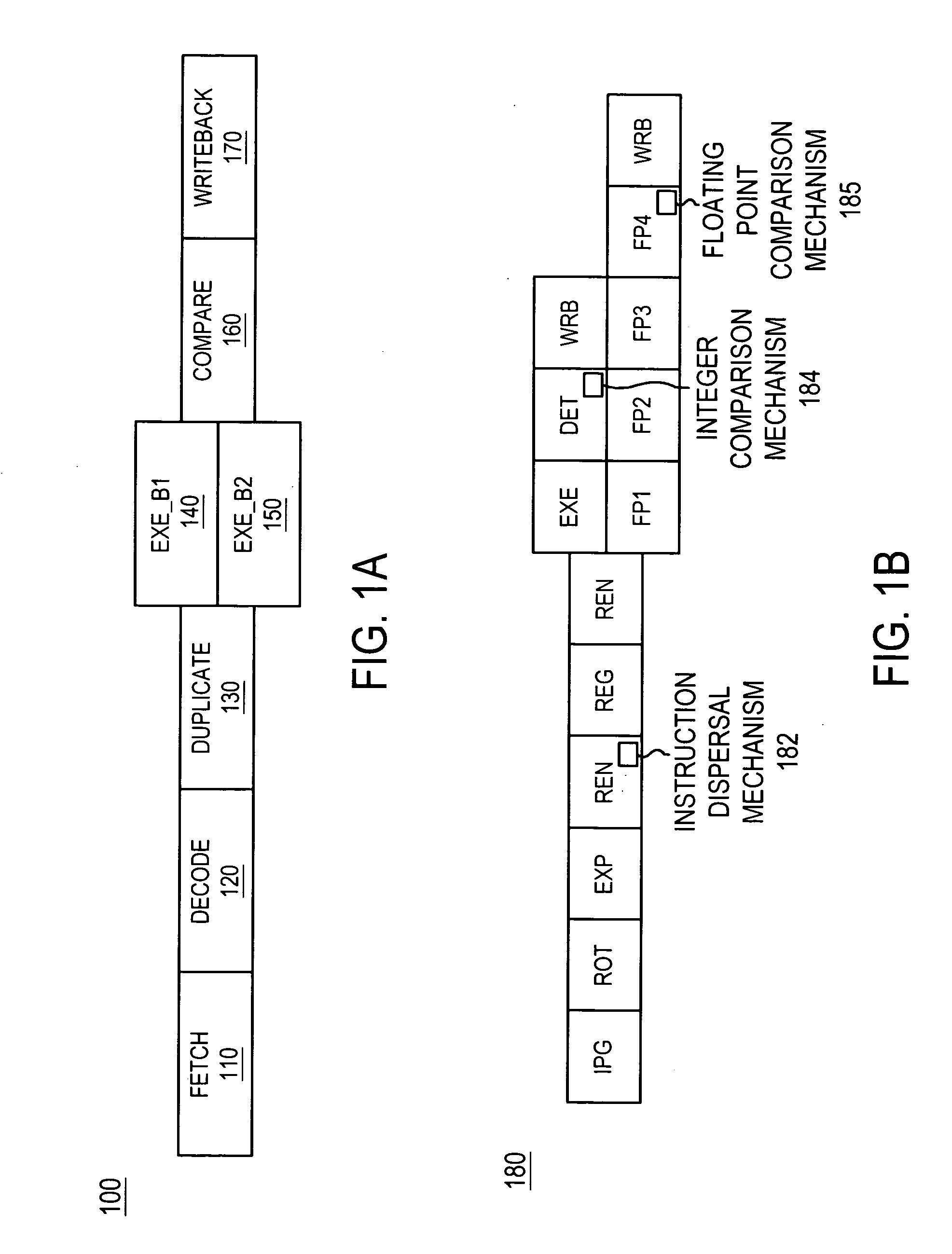

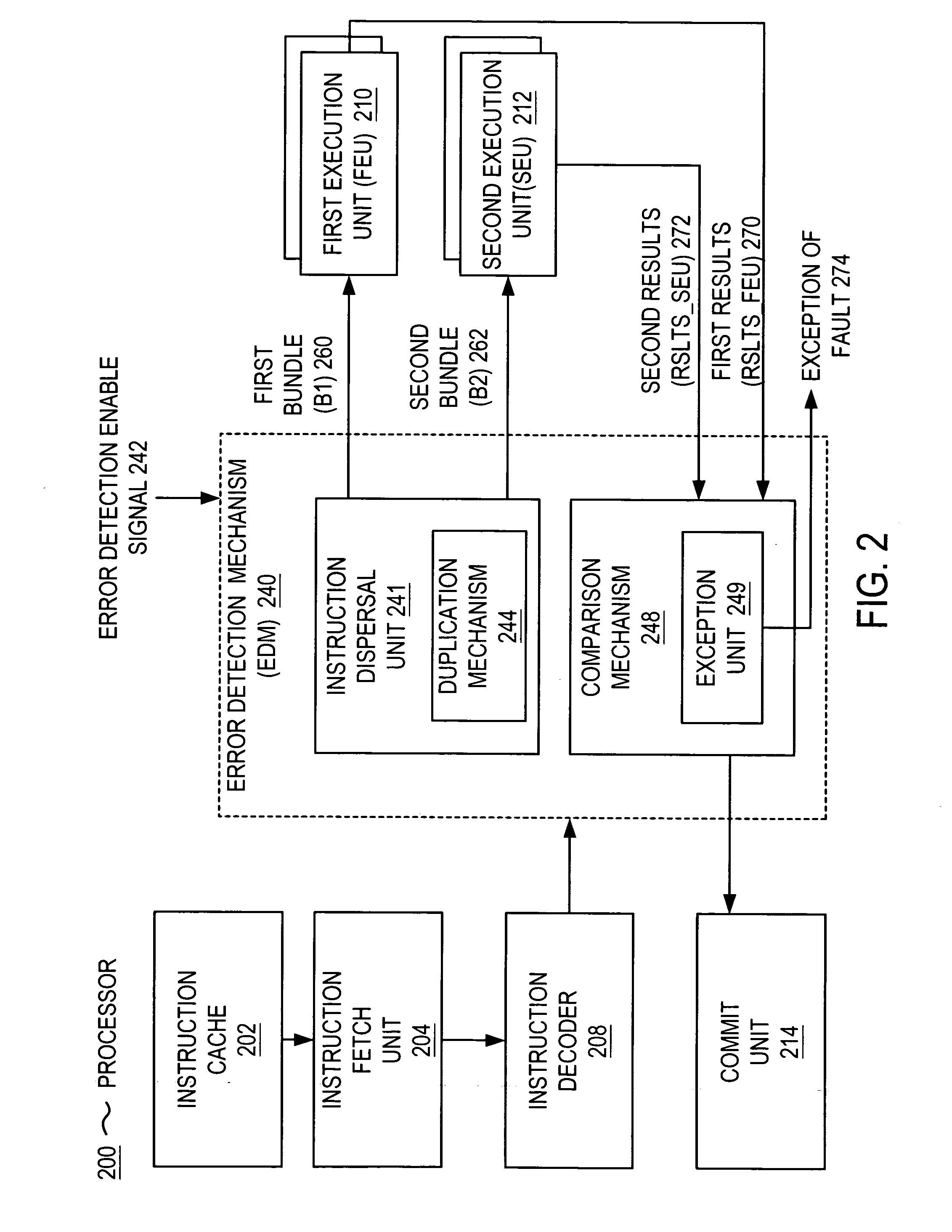

Error detection method and system for processors that employ alternating threads

Inactive | US20050138478A1 | Program control, Redundant operation error correction, Parallel computing, Execution unit

A microprocessor that includes a mechanism for detecting soft errors. The processor includes an instruction fetch unit for fetching an instruction and an instruction decoder for decoding the instruction. The mechanism for detecting soft errors includes duplication hardware for duplicating the instruction and comparison hardware. The processor further includes a first execution unit for executing the instruction in a first execution cycle and the duplicated instruction in a second execution cycle. The comparison hardware compares the results of the first execution cycle and the results of the second execution cycle. The comparison hardware can include an exception unit for generating an exception (e.g., raising a fault) when the results are not the same. The processor also includes a commit unit for committing one of the results when the results are the same.

Owner:HEWLETT PACKARD DEV CO LP
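
The duplicate-execute-compare-commit flow can be illustrated with a small Python analogy. It is only a software model: real soft errors arise in hardware, so the `inject_fault` hook is a hypothetical way to simulate one, and the function and exception names are likewise hypothetical.

```python
class SoftErrorDetected(Exception):
    """Raised when the two executions of an instruction disagree."""


def execute_with_duplicate(op, a, b, inject_fault=None):
    """Execute op twice, as in two execution cycles, and commit only if the results match.

    `inject_fault` is a hypothetical test hook that corrupts the second result.
    """
    first = op(a, b)    # first execution cycle
    second = op(a, b)   # second execution cycle (duplicated instruction)
    if inject_fault is not None:
        second = inject_fault(second)
    if first != second:
        raise SoftErrorDetected(f"results differ: {first!r} != {second!r}")
    return first        # commit one of the identical results


print(execute_with_duplicate(lambda x, y: x + y, 2, 3))  # 5
```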

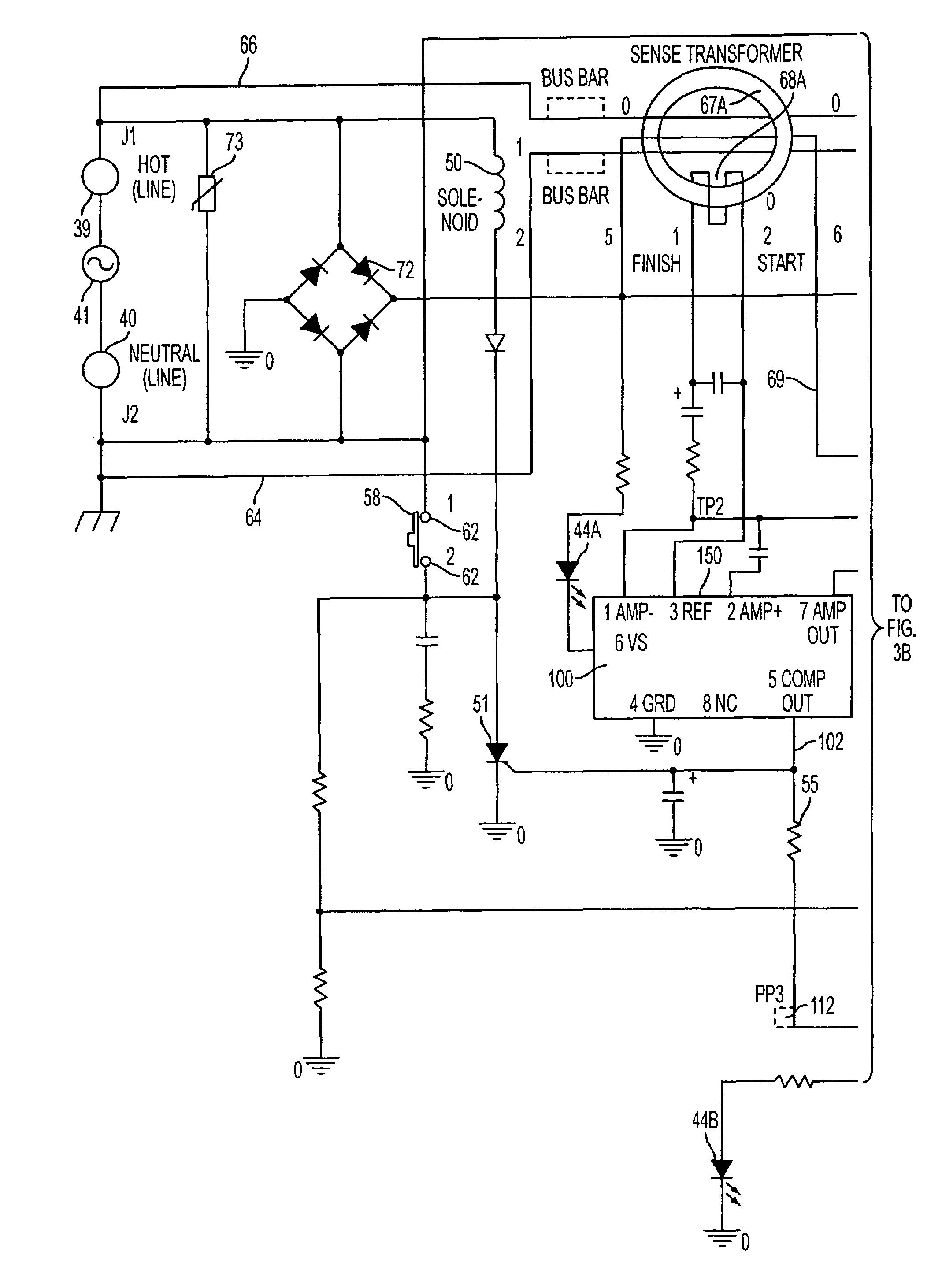

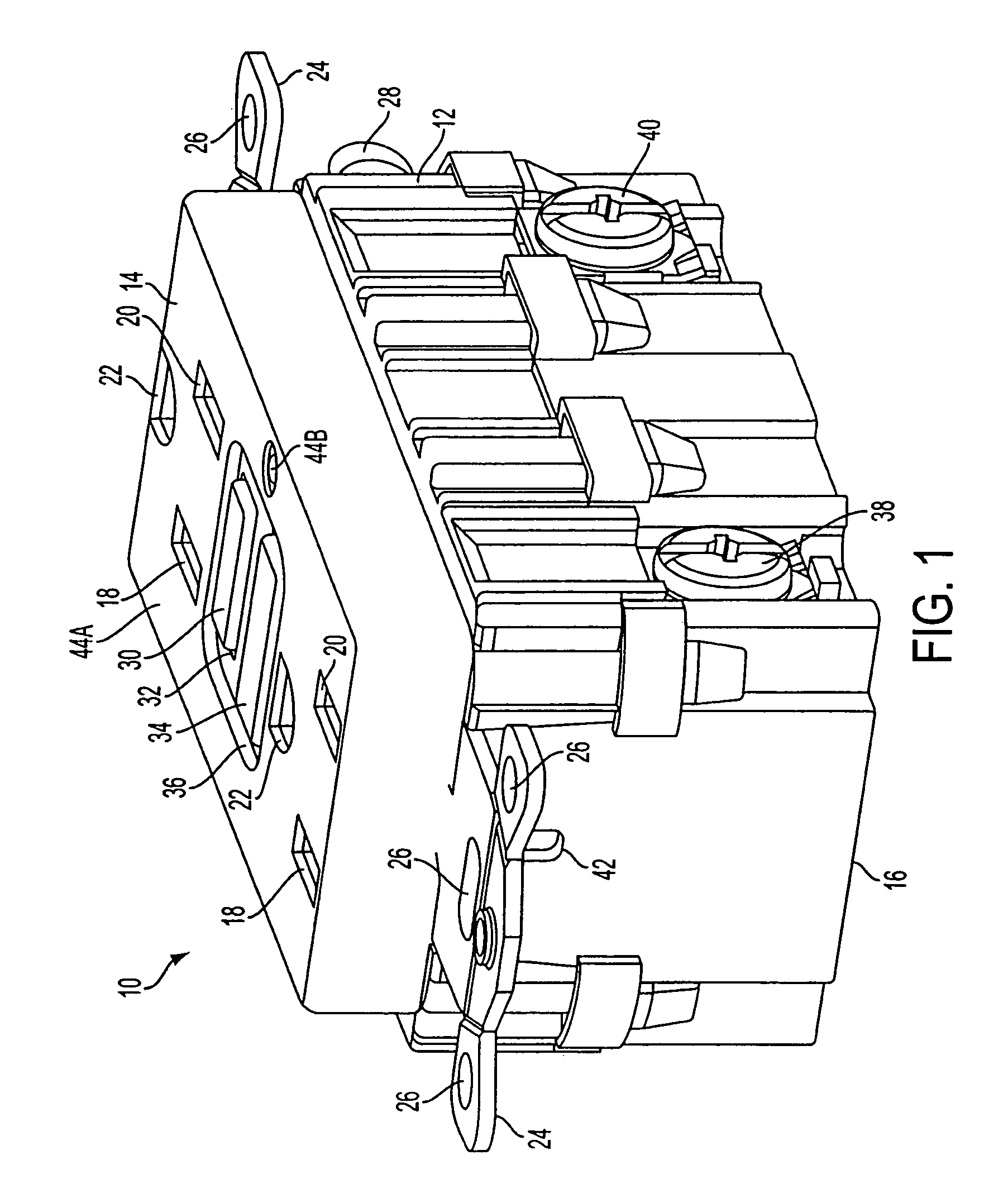

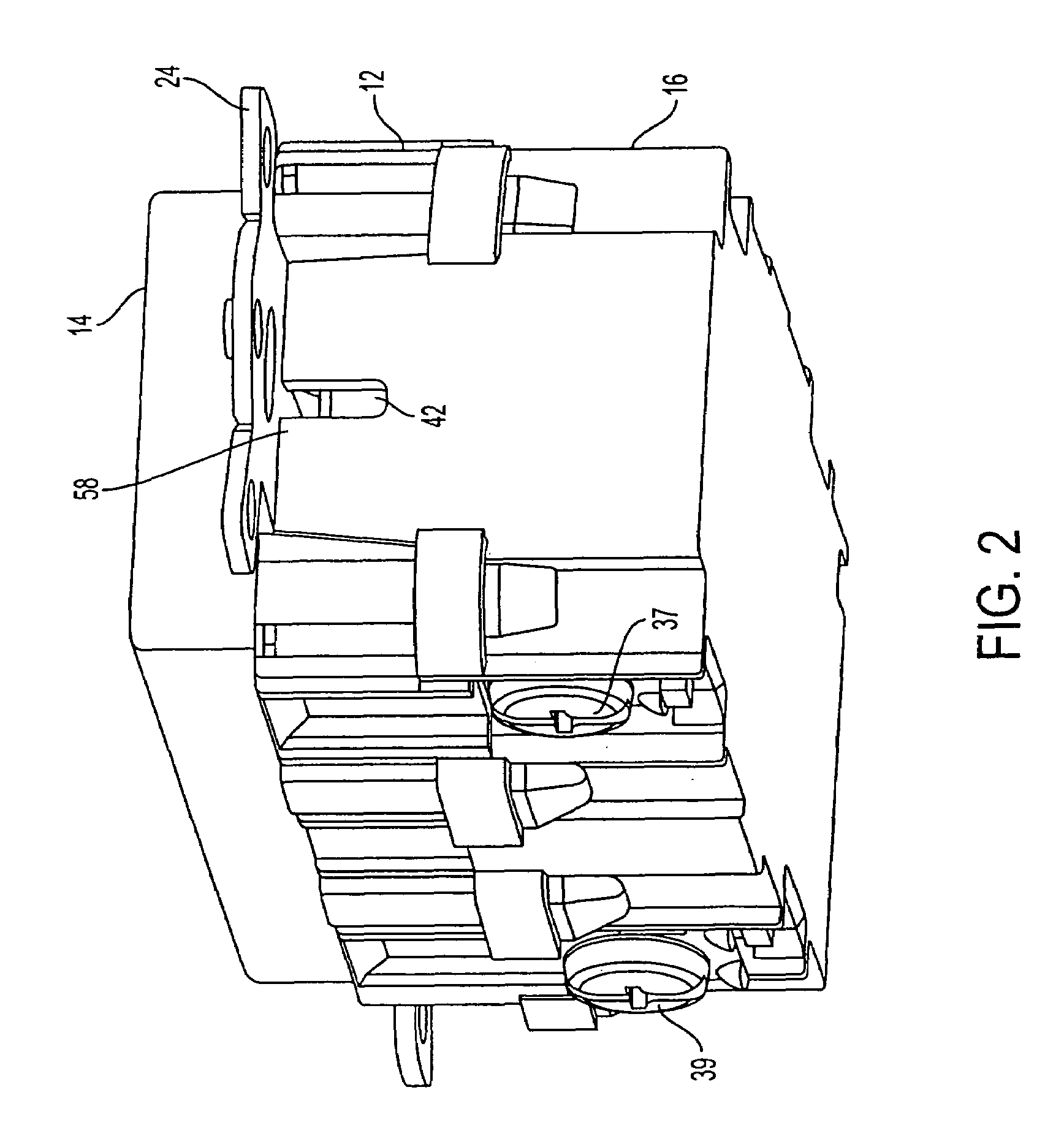

Self testing ground fault circuit interrupter (GFCI)

Active | US7443309B2 | Determines the operability of the switching device, Protective switch details, Electronic circuit testing, Status test, Ground failure

A self-testing fault detector is provided having a line side, a load side, and a conductive path therebetween. The self-testing fault detector includes a controller adapted to perform periodic status tests on a protection circuit of the fault detector without interrupting power to the load.

Owner:HUBBELL INC

Method and system for diagnostics of apparatus

Active | US20100121609A1 | Efficient solution, Improve security, Plug gauges, Amplifier modifications to reduce noise influence, Hypothesis, Estimation methods

Proposed is a method, implemented in software, for estimating fault state of an apparatus outfitted with sensors. At each execution period the method processes sensor data from the apparatus to obtain a set of parity parameters, which are further used for estimating fault state. The estimation method formulates a convex optimization problem for each fault hypothesis and employs a convex solver to compute fault parameter estimates and fault likelihoods for each fault hypothesis. The highest likelihoods and corresponding parameter estimates are transmitted to a display device or an automated decision and control system. The obtained accurate estimate of fault state can be used to improve safety, performance, or maintenance processes for the apparatus.

Owner:MITEK ANALYTICS

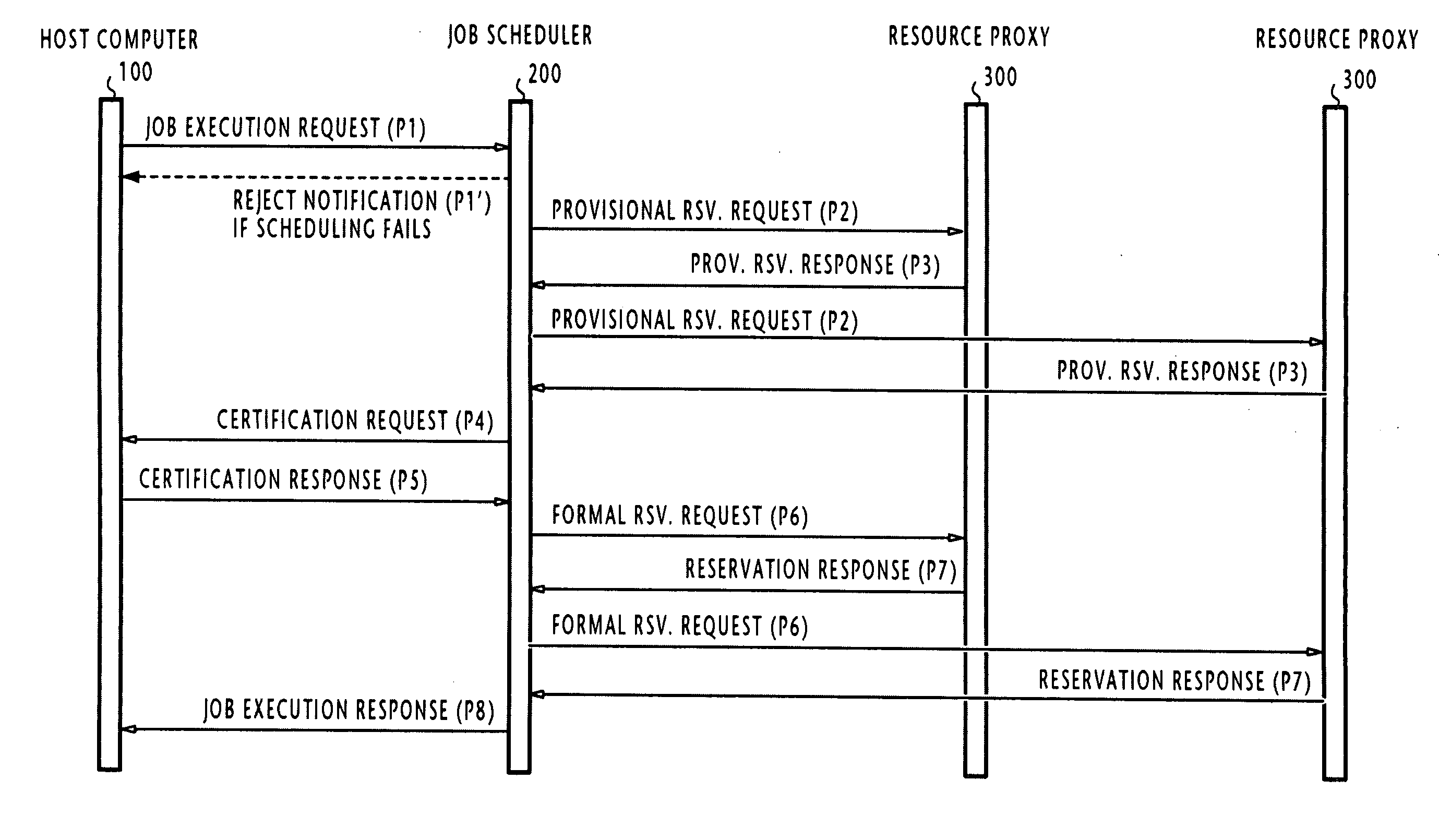

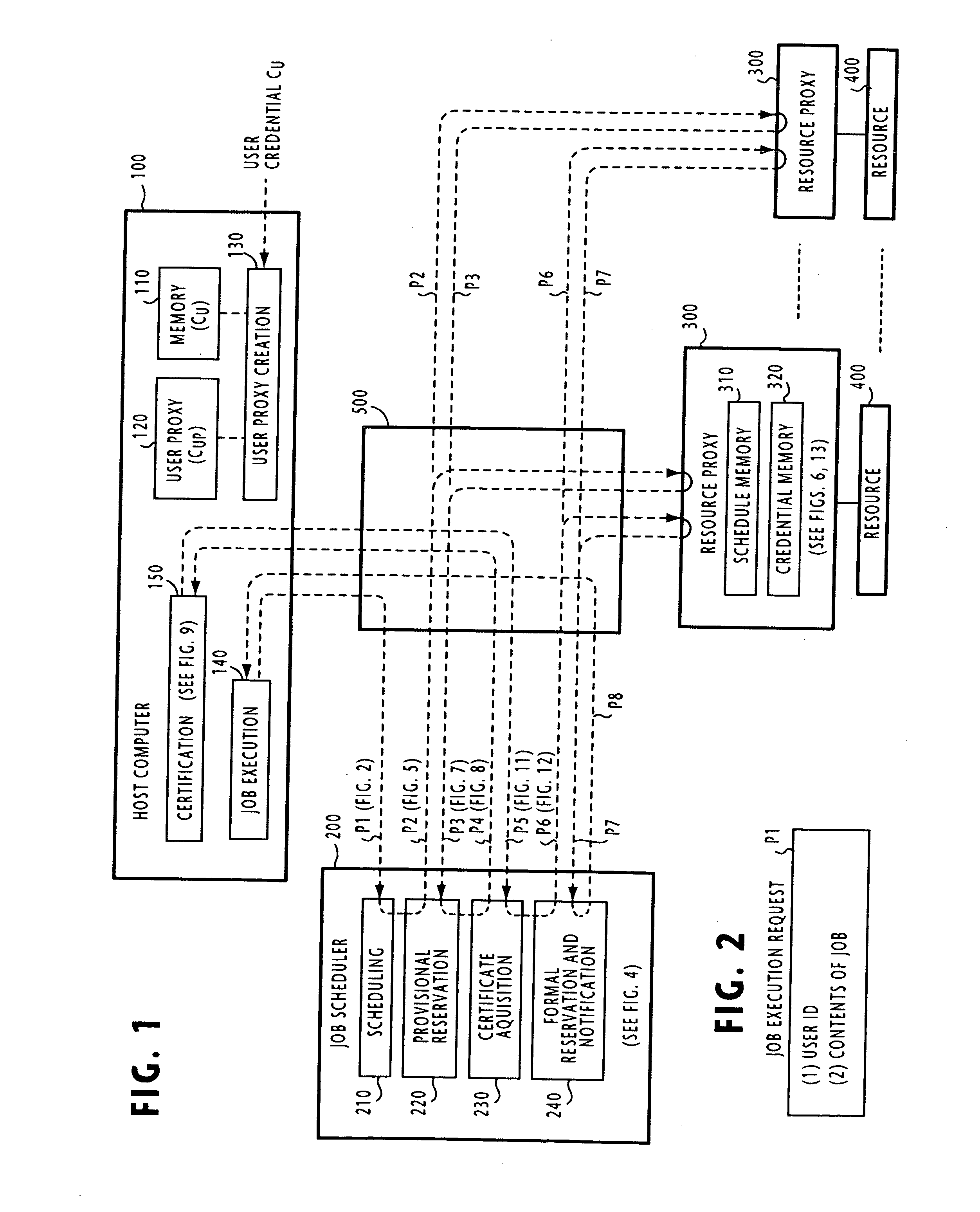

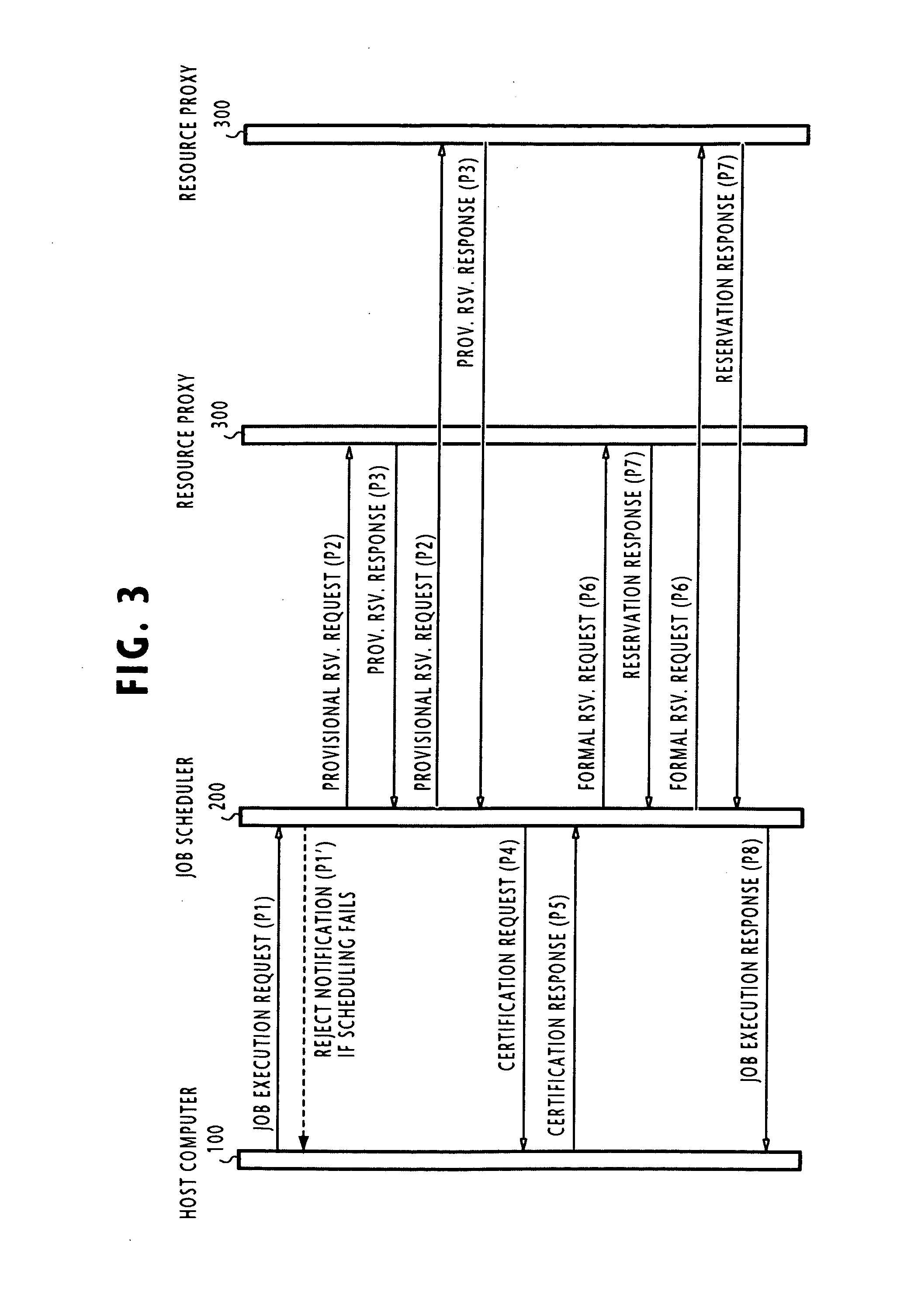

Distributed computing system for resource reservation and user verification

Inactive | US20050117587A1 | Reduce the burden on, Resource allocation, User identity/authority verification, User verification, Computer terminal

In a distributed computing system, an end terminal transmits a job execution request to a job scheduler, which in response determines a resource and a job execution period necessary for executing a job on the resource based on contents of the job indicated in the message. A reservation request message containing the job execution period and the resource identity is sent from the job scheduler to a target resource proxy. The resource proxy determines a scheduled period of the resource based on the job execution period by checking its schedule memory, and transmits a reservation response to the job scheduler. The job scheduler acquires a certificate signed by the user from the end terminal and transmits the acquired certificate to the resource proxy. In response to the certificate of the user, the resource proxy makes a reservation of the identified resource according to the scheduled period.

Owner:NEC CORP

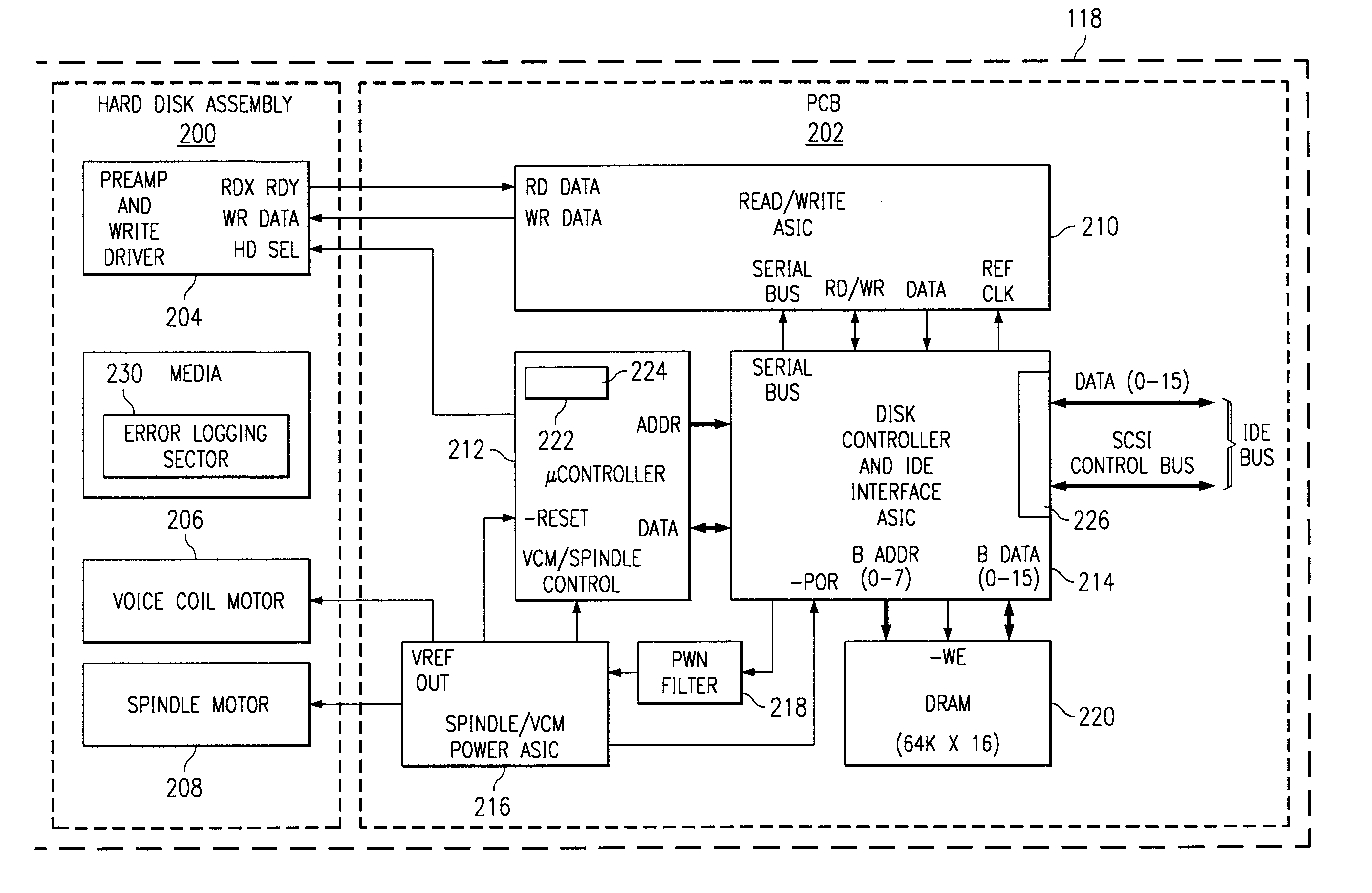

Background read scanning with defect reallocation

Inactive | US6412089B1 | Disc-shaped record carriers, Record information storage, Computerized system, Execution cycle

A method, apparatus and computer system for correcting errors and defects in a storage device. The storage device includes media for storing data. A periodic read scan is performed to test the data. If a repeatable error is found, the data is moved to a new sector from a pool of available sectors. Defects are counted and identified in a defect list for reporting to the host. The storage device can be scanned in small segments to minimize the impact on performance.

Owner:HEWLETT PACKARD DEV CO LP

Mechanism for tracking age of common resource requests within a resource management subsystem

One embodiment of the present disclosure sets forth an effective way to maintain fairness and order in the scheduling of common resource access requests related to replay operations. Specifically, a streaming multiprocessor (SM) includes a total order queue (TOQ) configured to schedule the access requests over one or more execution cycles. Access requests are allowed to make forward progress when needed common resources have been allocated to the request. Where multiple access requests require the same common resource, priority is given to the older access request. Access requests may be placed in a sleep state pending availability of certain common resources. Deadlock may be avoided by allowing an older access request to steal resources from a younger resource request. One advantage of the disclosed technique is that older common resource access requests are not repeatedly blocked from making forward progress by newer access requests.

Owner:NVIDIA CORP
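
The oldest-first allocation and resource-stealing rules can be sketched in Python for a single execution cycle. This is one simplified policy under assumptions, not NVIDIA's TOQ design: request age stands in for queue order, resources are named by strings, and requests that cannot proceed simply stay "asleep" until a later call. All names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AccessRequest:
    age: int                                 # arrival order: lower means older
    needs: set                               # common resources the request requires
    held: set = field(default_factory=set)   # resources allocated so far


def schedule_cycle(requests, free):
    """Allocate common resources for one execution cycle, oldest request first.

    `free` is the set of unallocated resources and is updated in place.
    Returns the requests that hold everything they need and may proceed.
    """
    ready = []
    for req in sorted(requests, key=lambda r: r.age):
        for res in sorted(req.needs - req.held):
            if res in free:
                free.discard(res)
                req.held.add(res)
                continue
            # Avoid deadlock: an older request may steal from a younger one.
            for other in requests:
                if other.age > req.age and res in other.held:
                    other.held.discard(res)
                    req.held.add(res)
                    break
        if req.needs <= req.held:
            ready.append(req)
    return ready


young = AccessRequest(age=1, needs={"A"}, held={"A"})
old = AccessRequest(age=0, needs={"A", "B"})
ready = schedule_cycle([young, old], free={"B"})
print([r.age for r in ready], young.held)  # [0] set()
```

Because steals only flow from younger to older requests and older requests are processed first, a request marked ready in a cycle cannot lose a resource later in the same cycle.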

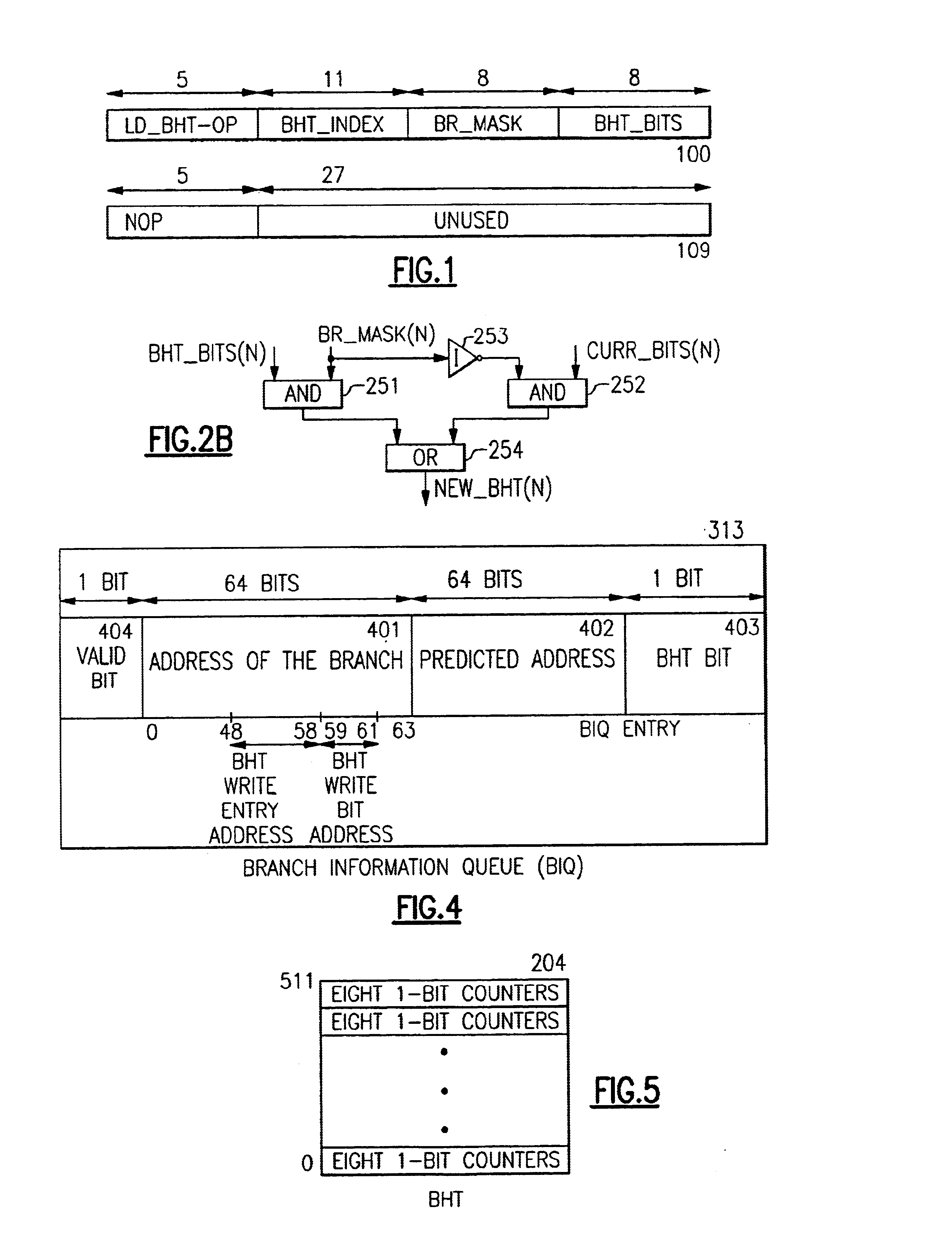

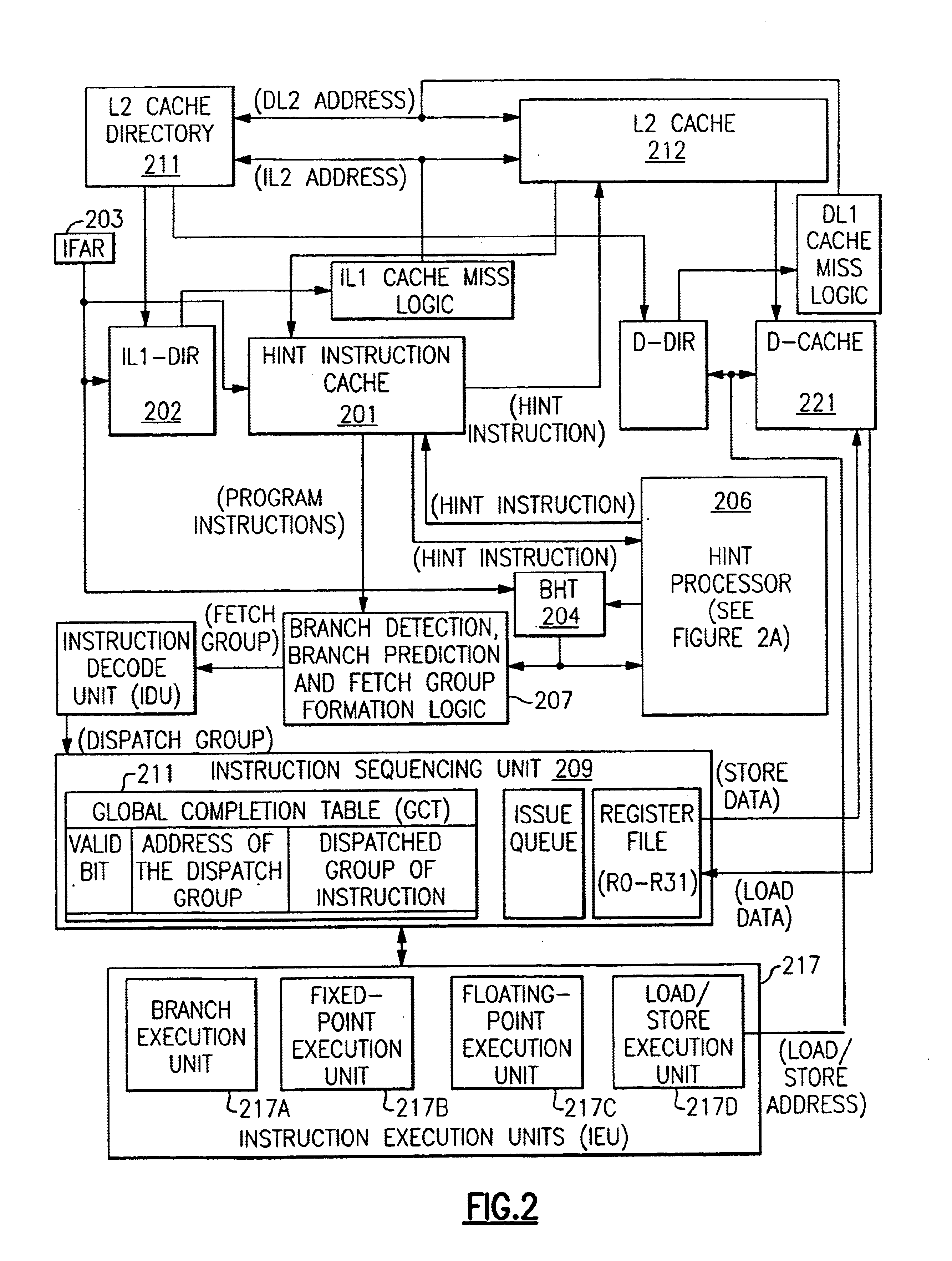

Branch prediction apparatus and process for restoring replaced branch history for use in future branch predictions for an executing program

Inactive | US6877089B2 | Saving and fast utilization, Improve programming speed, Digital computer details, Concurrent instruction execution, Parallel computing, Semiconductor

Apparatus and methods implemented in a processor semiconductor logic chip for providing novel “hint instructions” that uniquely preserve and reuse branch predictions replaced in a branch history table (BHT). A branch prediction is lost from the BHT after its associated instruction is replaced in an instruction cache. The unique “hint instructions” are generated and stored in a unique instruction cache, which associates each hint instruction with a line of instructions. The hint instructions contain the latest branch history for all branch instructions executed in an associated line of instructions, and they are stored in the instruction cache during instruction cache hits in the associated line. During an instruction cache miss in an instruction line, the associated hint instruction is stored in a second-level cache with a copy of the associated instruction line being replaced in the instruction cache. In the second-level cache, the copy of the line is located through the instruction cache directory entry associated with the line being replaced in the instruction cache. Later, when the associated instruction line is fetched from the second-level cache, its hint instruction is also retrieved into the instruction cache and used to restore the latest branch predictions for that instruction line. In the prior art, this branch prediction would have been lost. It is estimated that this invention improves program performance for each replaced branch prediction by about 80%, by increasing the probability that the BHT bits correctly predict the branch paths in the program from about 50% to over 90%. Each incorrect BHT branch prediction may result in the loss of many execution cycles, adding instruction re-execution overhead when incorrect branch paths are belatedly discovered.

Owner:IBM CORP

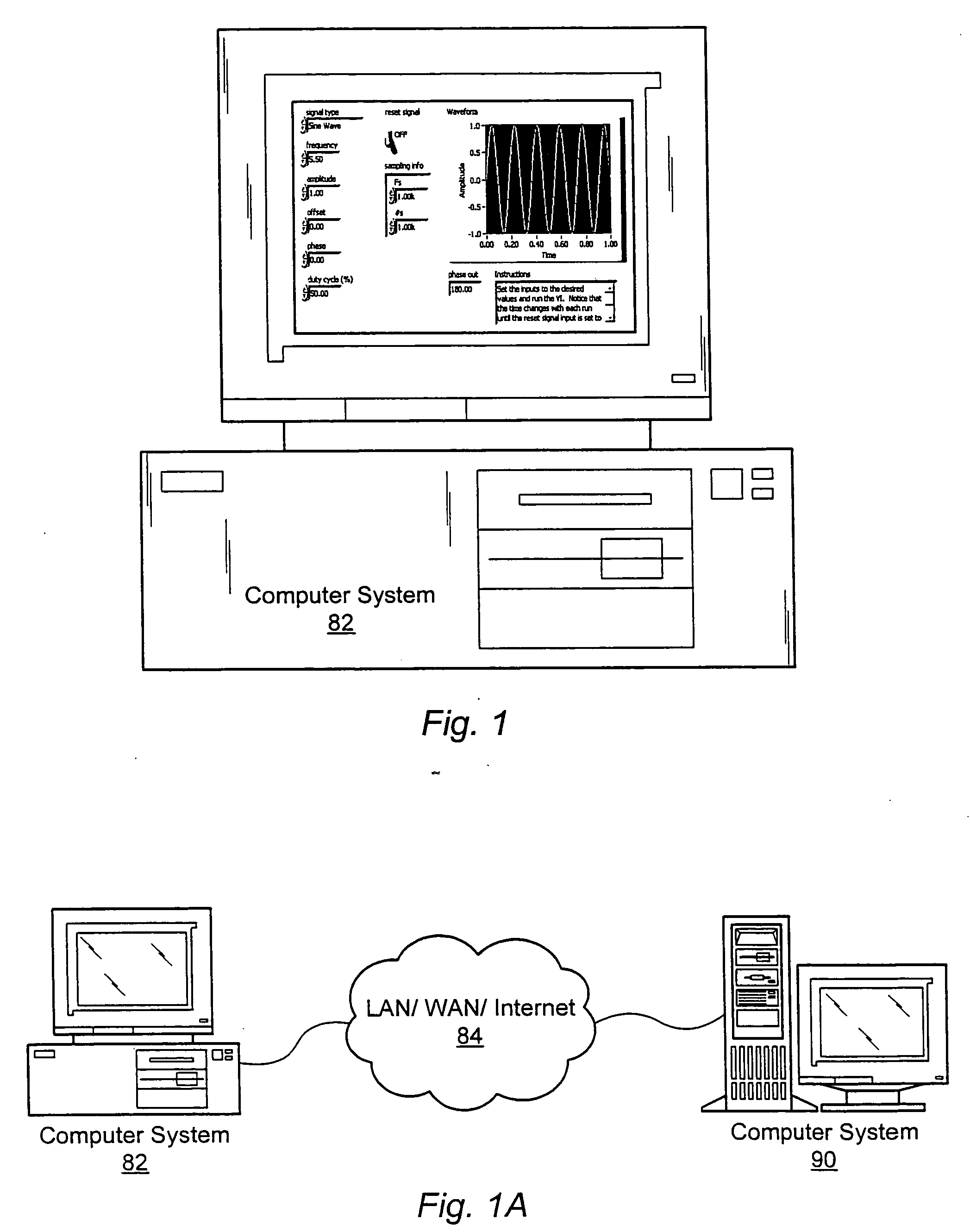

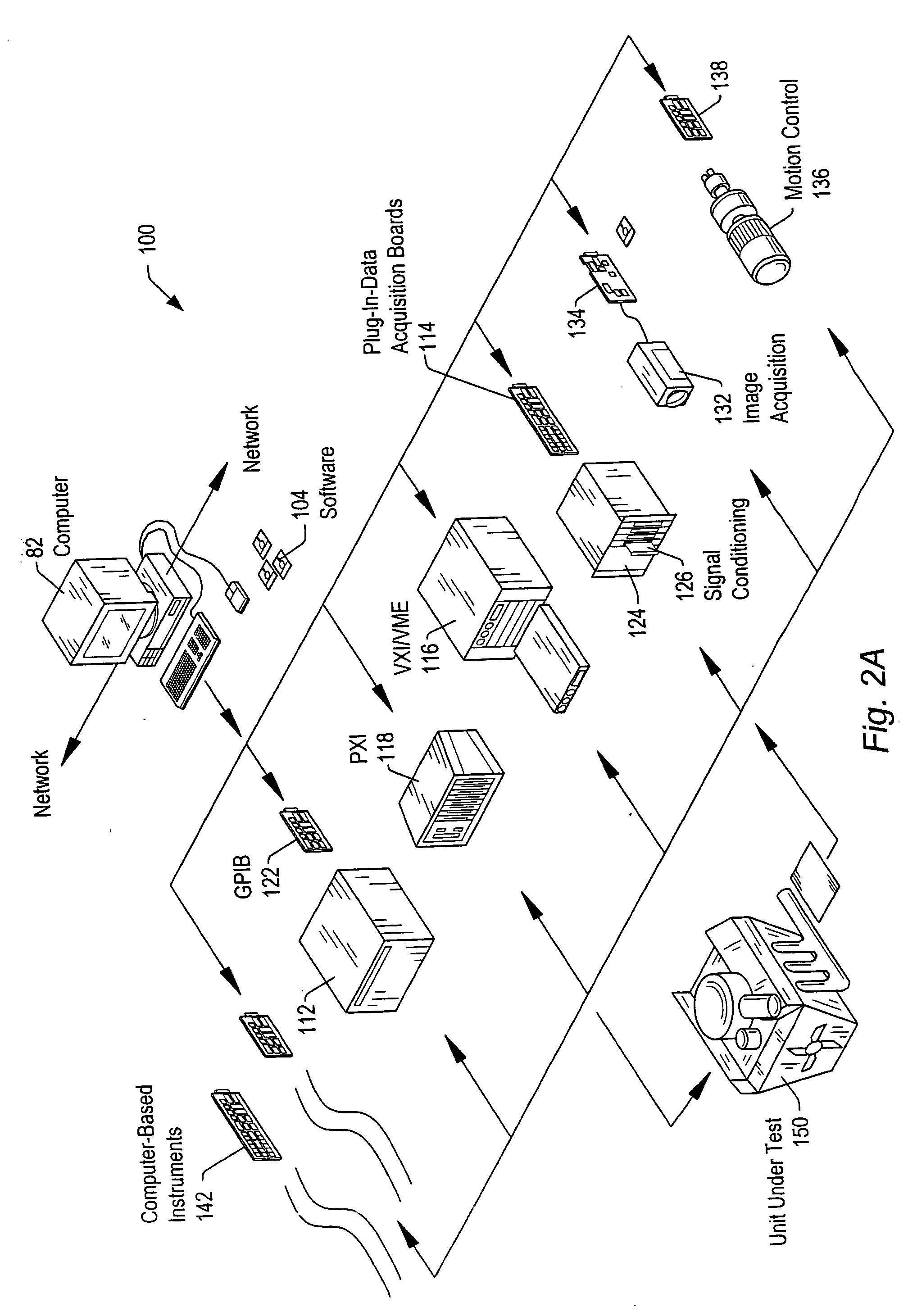

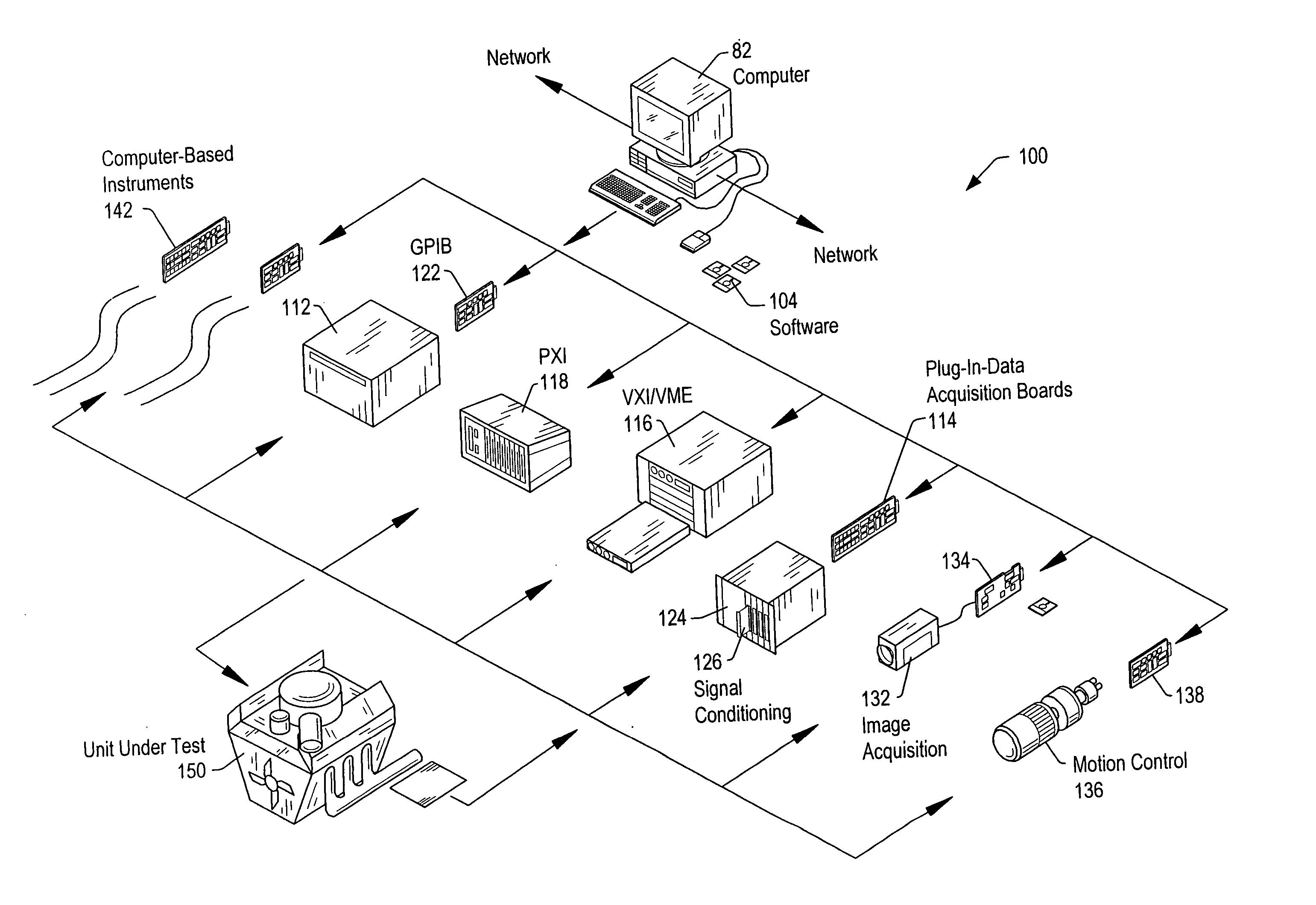

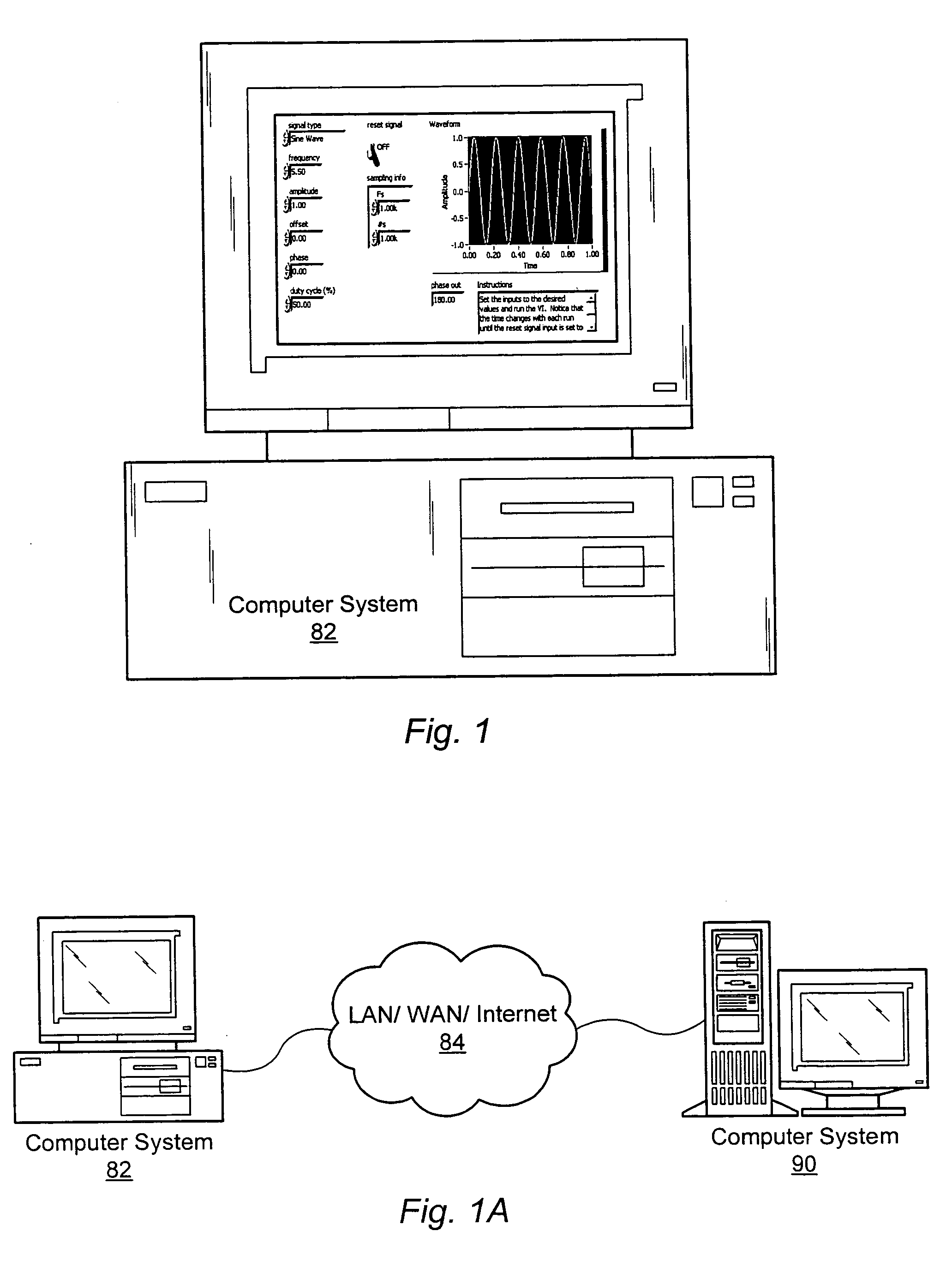

Graphical program which executes a timed loop

A system and method for creating a graphical program operable to execute a timed loop. A loop may be displayed in the graphical program and configured with timing information in response to user input. The timing information may include an execution period which specifies a desired period at which the loop should execute during execution of the graphical program. The timing information may also include information such as a timing source, offset, and priority. During execution of the graphical program, the execution period of the loop may control the rate at which the loop executes.

Owner:NATIONAL INSTRUMENTS

Instruction dispatching method and apparatus

Inactive | US20080040724A1 | Avoid delay, General purpose stored program computer, Multiprogramming arrangements, Scheduling instructions, Parallel computing

A system, apparatus and method for instruction dispatch on a multi-thread processing device are described herein. The instruction dispatching method includes, in an instruction execution period having a plurality of execution cycles, successively fetching and issuing an instruction for each of a plurality of instruction execution threads according to an allocation of execution cycles of the instruction execution period among the plurality of instruction execution threads. Remaining execution cycles are subsequently used to successively fetch and issue another instruction for each of the plurality of instruction execution threads having at least one remaining allocated execution cycle of the instruction execution period. Other embodiments may be described and claimed.

Owner:MARVELL WORLD TRADE LTD
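
The dispatch order within one instruction execution period can be sketched in Python. The dictionary-based allocation and the function name are assumptions for illustration; each pass issues one instruction per thread that still has allocated cycles, which matches the successive fetch-and-issue rounds described above.

```python
def dispatch_order(allocation):
    """Return the thread issued in each execution cycle of one execution period.

    `allocation` maps thread -> execution cycles allocated in the period;
    its insertion order is taken as the thread order.
    """
    remaining = dict(allocation)
    order = []
    while any(n > 0 for n in remaining.values()):
        # One pass: fetch and issue one instruction for every thread that
        # still has at least one allocated cycle left in this period.
        for thread in remaining:
            if remaining[thread] > 0:
                order.append(thread)
                remaining[thread] -= 1
    return order


print(dispatch_order({"T0": 3, "T1": 1, "T2": 2}))
# ['T0', 'T1', 'T2', 'T0', 'T2', 'T0']
```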

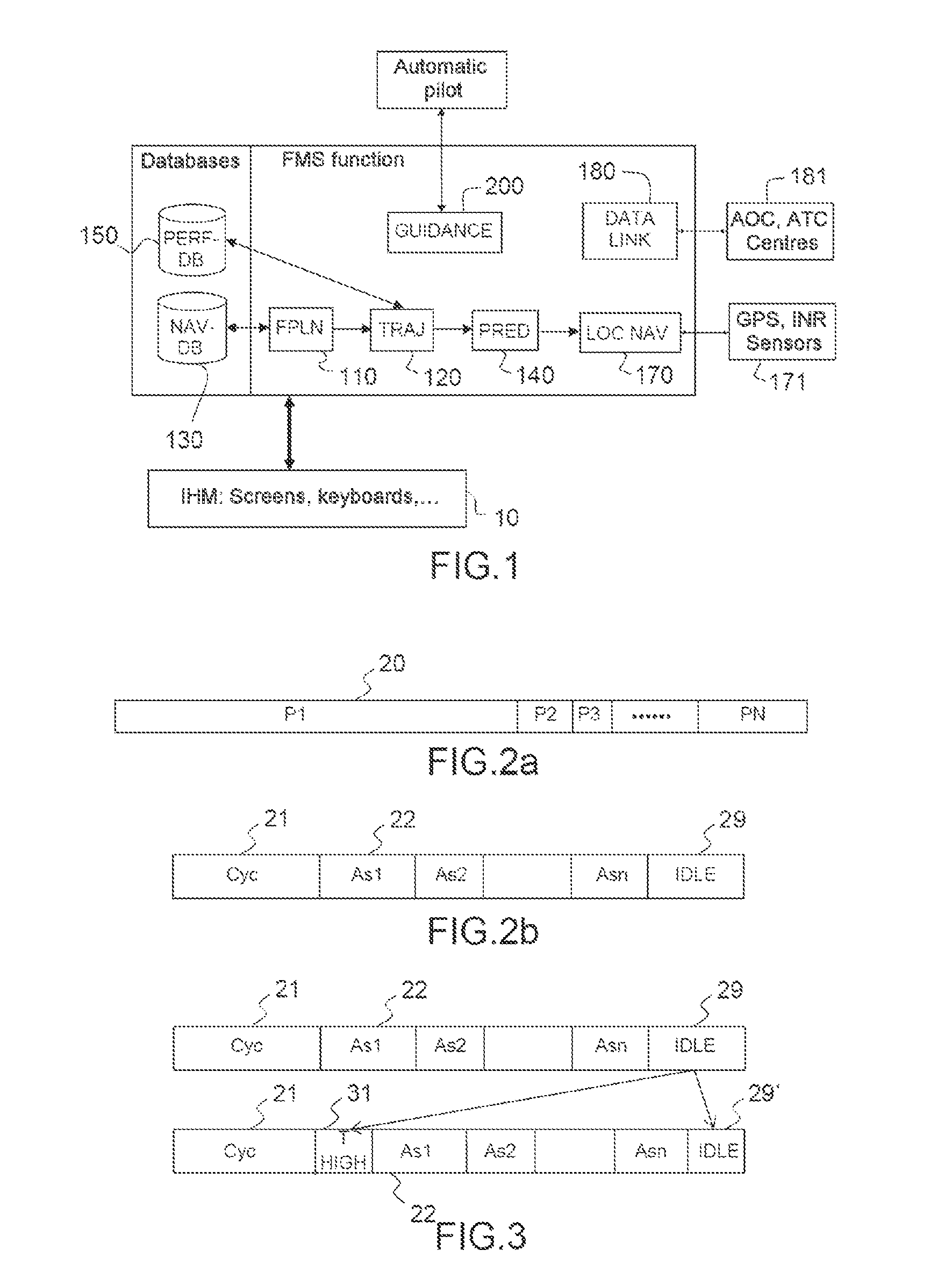

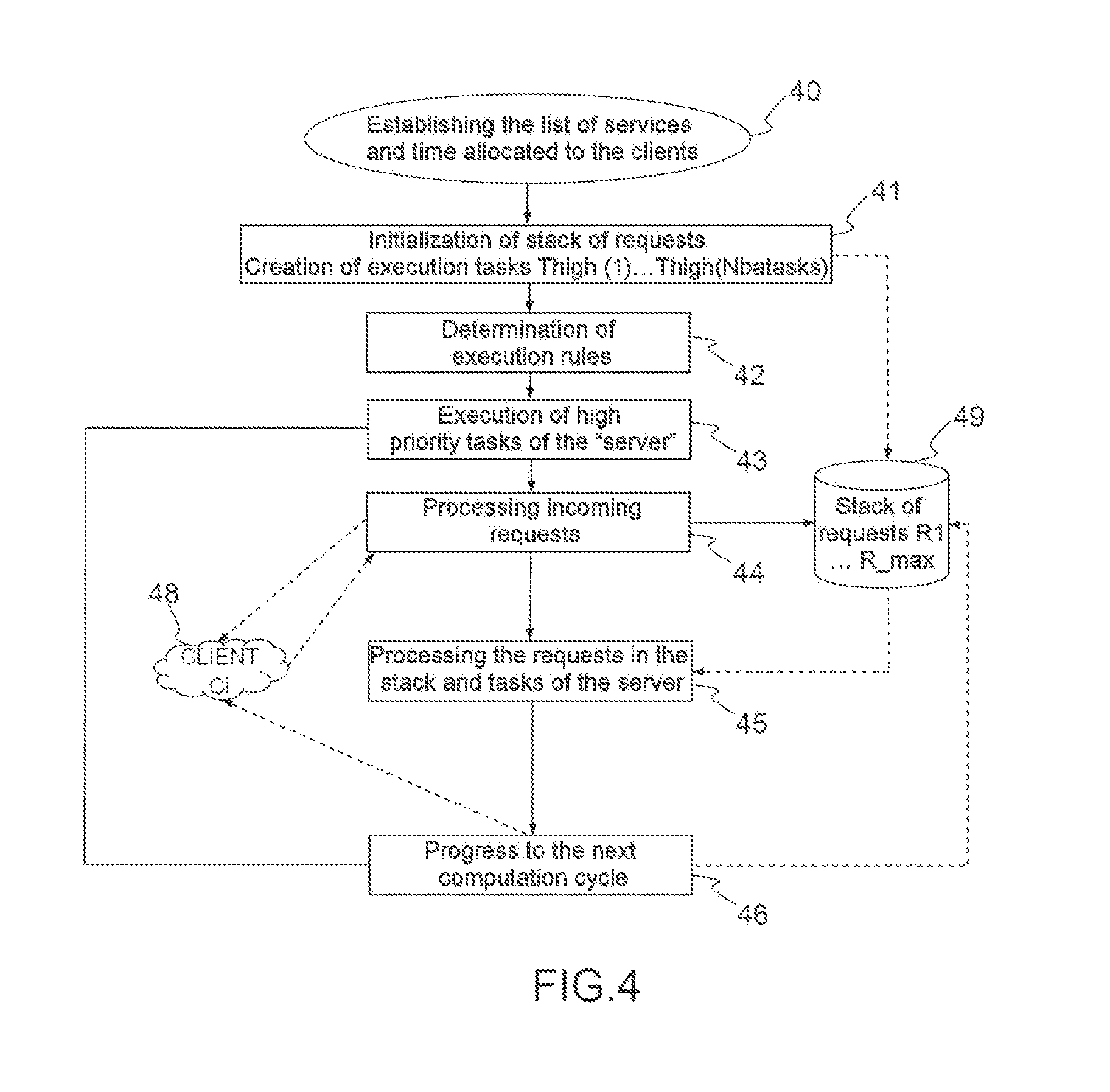

Method for the execution of services in real time, notably of flight management and real time system using such a method

A system executes services provided by a “server” for a “client”. A preliminary step establishes a list of the server's available services set up for the client, in which the processing time that the server can release to the client per code execution cycle (MIF) is determined. On start-up, the system creates NT execution tasks for the client (NT being at least 1), each having an execution priority level and an allocated average execution duration, with the sum of the task durations being at most the releasable processing time; it also creates execution rules associating each task with at least one service in the list. Then, during each MIF cycle, the system executes the services on their associated tasks, each task being executed in priority order for at most its allocated average execution time; any part of a service not yet executed is executed on its associated task in the next cycle.

Owner:THALES SA
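
The per-cycle budget and carry-over rules can be sketched in Python. This is an illustration under assumptions, not Thales's implementation: time is measured in abstract integer units, a lower `priority` value runs first, and the task and service names in the usage example are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    priority: int   # lower value runs first (an assumption)
    budget: int     # allocated average execution time per MIF cycle, in time units
    backlog: list = field(default_factory=list)  # [service, remaining_time] pairs


def run_mif_cycle(tasks, releasable):
    """Execute one MIF cycle; return (task, service, time_used) slices."""
    if sum(t.budget for t in tasks) > releasable:
        raise ValueError("task budgets exceed the releasable processing time")
    slices = []
    for task in sorted(tasks, key=lambda t: t.priority):
        left = task.budget
        while task.backlog and left > 0:
            service = task.backlog[0]
            used = min(left, service[1])
            service[1] -= used
            left -= used
            slices.append((task.name, service[0], used))
            if service[1] == 0:
                task.backlog.pop(0)  # finished; unfinished services resume next cycle
    return slices


nav = Task("nav", priority=0, budget=5, backlog=[["route", 8]])
disp = Task("disp", priority=1, budget=3, backlog=[["page", 2], ["map", 4]])
print(run_mif_cycle([disp, nav], releasable=10))
# [('nav', 'route', 5), ('disp', 'page', 2), ('disp', 'map', 1)]
print(run_mif_cycle([disp, nav], releasable=10))
# [('nav', 'route', 3), ('disp', 'map', 3)]
```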

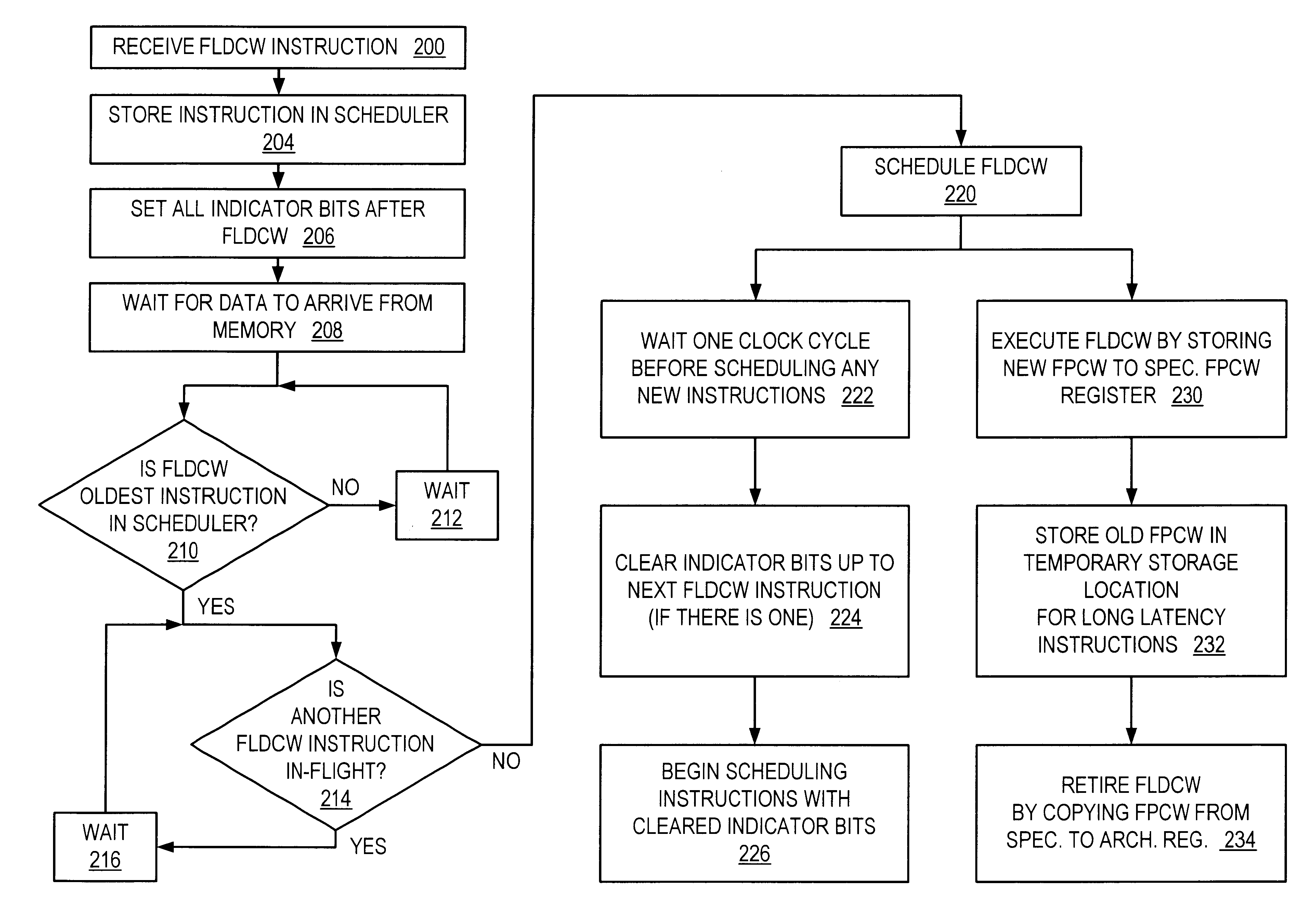

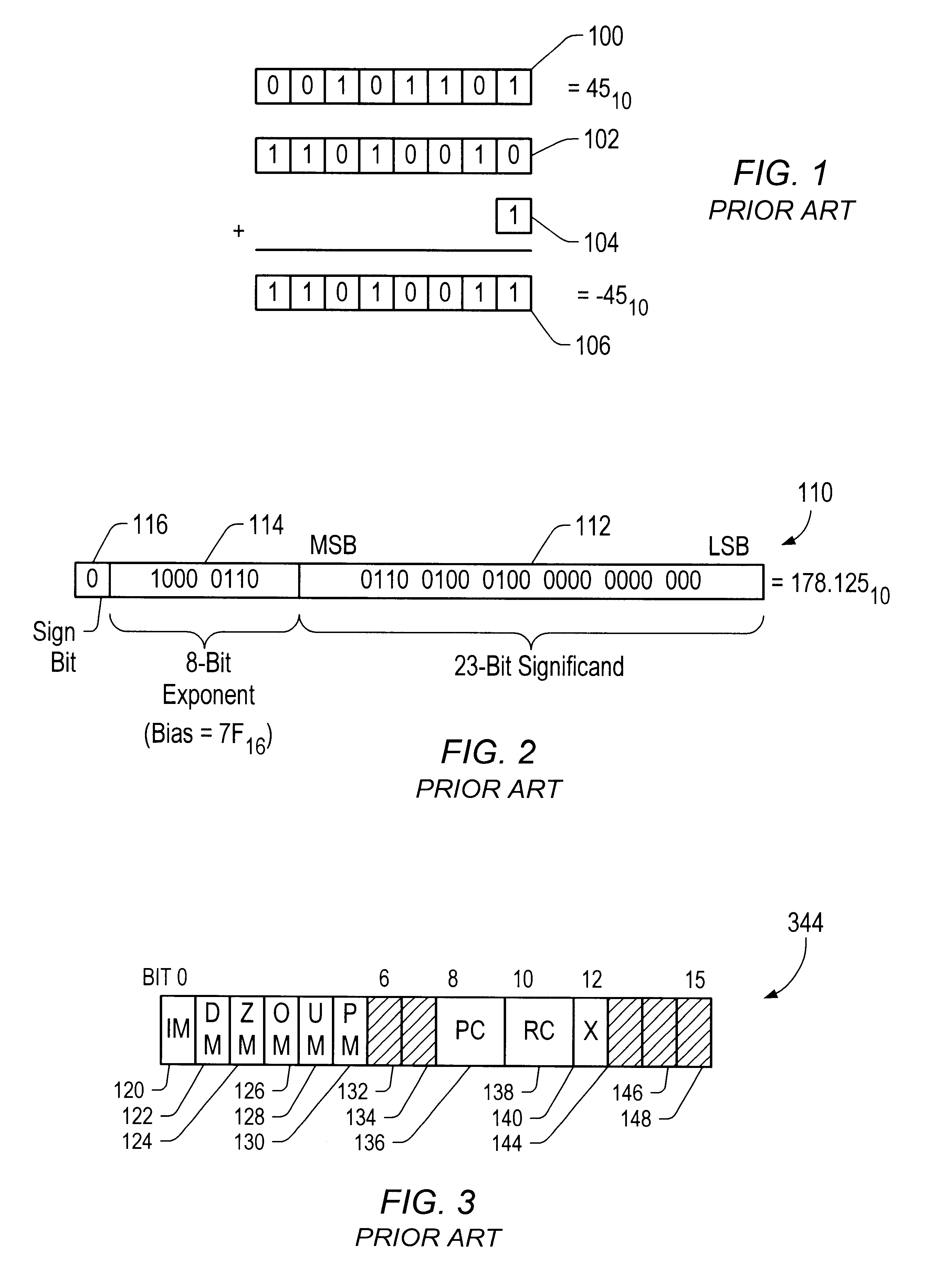

Rapid execution of floating point load control word instructions

Inactive | US6405305B1 | Conditional code generation, Digital computer details, Scheduling instructions, Parallel computing

A microprocessor with a floating point unit configured to rapidly execute floating point load control word (FLDCW) type instructions in an out-of-program-order context is disclosed. The floating point unit is configured to schedule instructions older than the FLDCW-type instruction before the FLDCW-type instruction is scheduled. The FLDCW-type instruction acts as a barrier that prevents instructions occurring after it in program order from executing before it. Indicator bits may be used to simplify instruction scheduling, and copies of the floating point control word may be stored for instructions that have long execution cycles. A method and computer configured to rapidly execute FLDCW-type instructions in an out-of-program-order context are also disclosed.

Owner:ADVANCED MICRO DEVICES INC

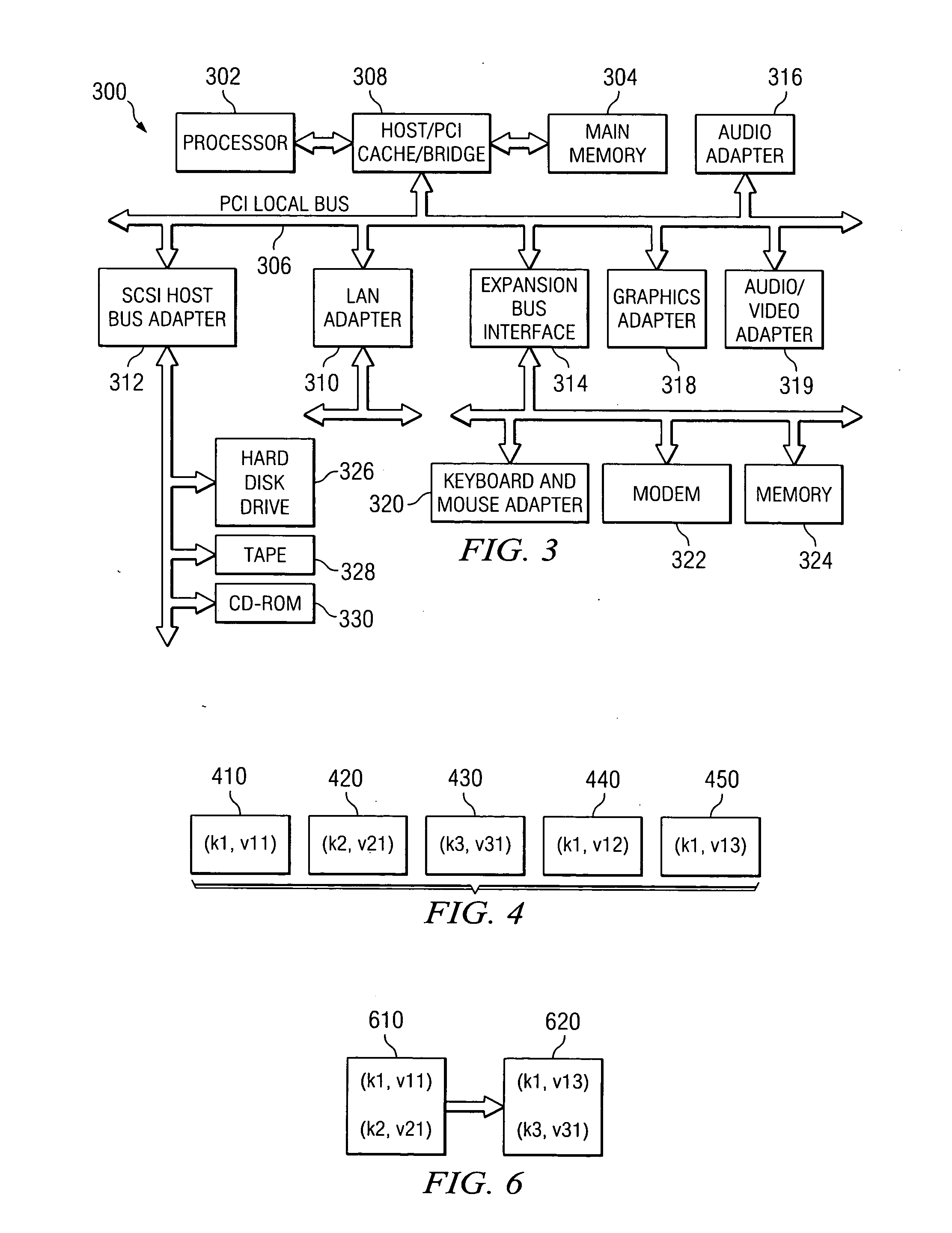

System and method for maintaining checkpoints of a keyed data structure using a sequential log

A system and method for maintaining checkpoints of a keyed data structure using a sequential log are provided. The system and method are built upon the idea of writing all updates to a keyed data structure in a physically sequential location. The system and method make use of a two-stage operation. In a first stage, various values of the same key are combined such that only the latest value in a given checkpoint interval is maintained for writing to persistent storage. In a second stage of the operation, a periodic write operation is performed to actually store the latest values for the key-value pairs to persistent storage. All such updates to key-value pairs are written to the end of a sequential log. This minimizes the physical storage input/output (I/O) overhead for the write operations. Data structures are provided for identifying the most current entries in the sequential log for each key-value pair.

Owner:IBM CORP
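
The two-stage operation can be modeled in Python as a rough sketch. A Python list stands in for the physically sequential log on persistent storage and a dictionary for the index of most current entries; the class and method names are hypothetical.

```python
class SequentialCheckpointLog:
    """Hypothetical model: a Python list stands in for the sequential log on disk."""

    def __init__(self):
        self.log = []       # append-only (key, value) records
        self.index = {}     # key -> position of the most current record for the key
        self._pending = {}  # stage 1: latest value per key in this checkpoint interval

    def put(self, key, value):
        # Stage 1: repeated updates to a key within an interval collapse to the latest one.
        self._pending[key] = value

    def checkpoint(self):
        # Stage 2: periodic write that appends the latest values to the end of the log.
        for key, value in self._pending.items():
            self.index[key] = len(self.log)
            self.log.append((key, value))
        self._pending.clear()

    def get(self, key):
        """Return the most recently checkpointed value for key."""
        return self.log[self.index[key]][1]


store = SequentialCheckpointLog()
store.put("a", 1)
store.put("a", 2)
store.put("b", 3)
store.checkpoint()
print(len(store.log), store.get("a"))  # 2 2
```

Three updates produce only two log records, and every write lands at the end of the log, which is the source of the reduced I/O overhead.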

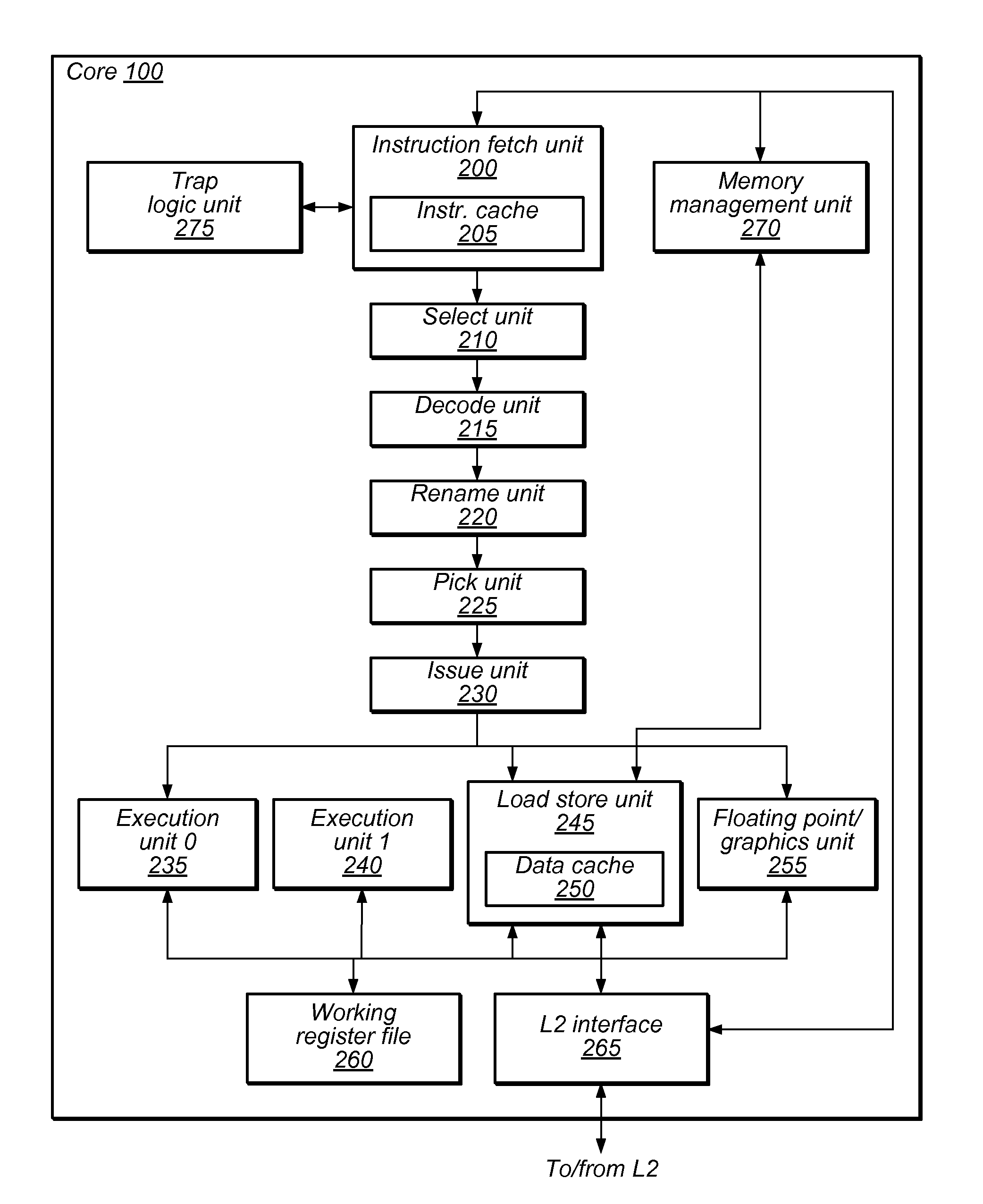

Error detection method and system for processors that employs lockstepped concurrent threads

Inactive | US20050108509A1 | Digital computer details, Concurrent instruction execution, Execution unit, Execution cycle

A processor that includes an in-order execution architecture for executing at least two instructions per cycle (e.g., 2n instructions are processed per cycle, where n is an integer greater than or equal to one) and at least two symmetric execution units. The processor includes an instruction fetch unit for fetching n instructions (where n is an integer greater than or equal to one) and an instruction decoder for decoding the n instructions. The error detection mechanism includes duplication hardware for duplicating the n instructions into a first bundle of n instructions and a second bundle of n instructions. A first execution unit executes the first bundle of instructions in a first execution cycle, and a second, symmetric execution unit executes the second bundle of instructions in the same execution cycle. The error detection mechanism also includes comparison hardware for comparing the results of the first execution unit and the results of the second execution unit. The comparison hardware can have an exception unit for generating an exception (e.g., raising a fault) when the results are not the same. A commit unit is provided for committing one of the results when the results are the same.

Owner:HEWLETT PACKARD DEV CO LP

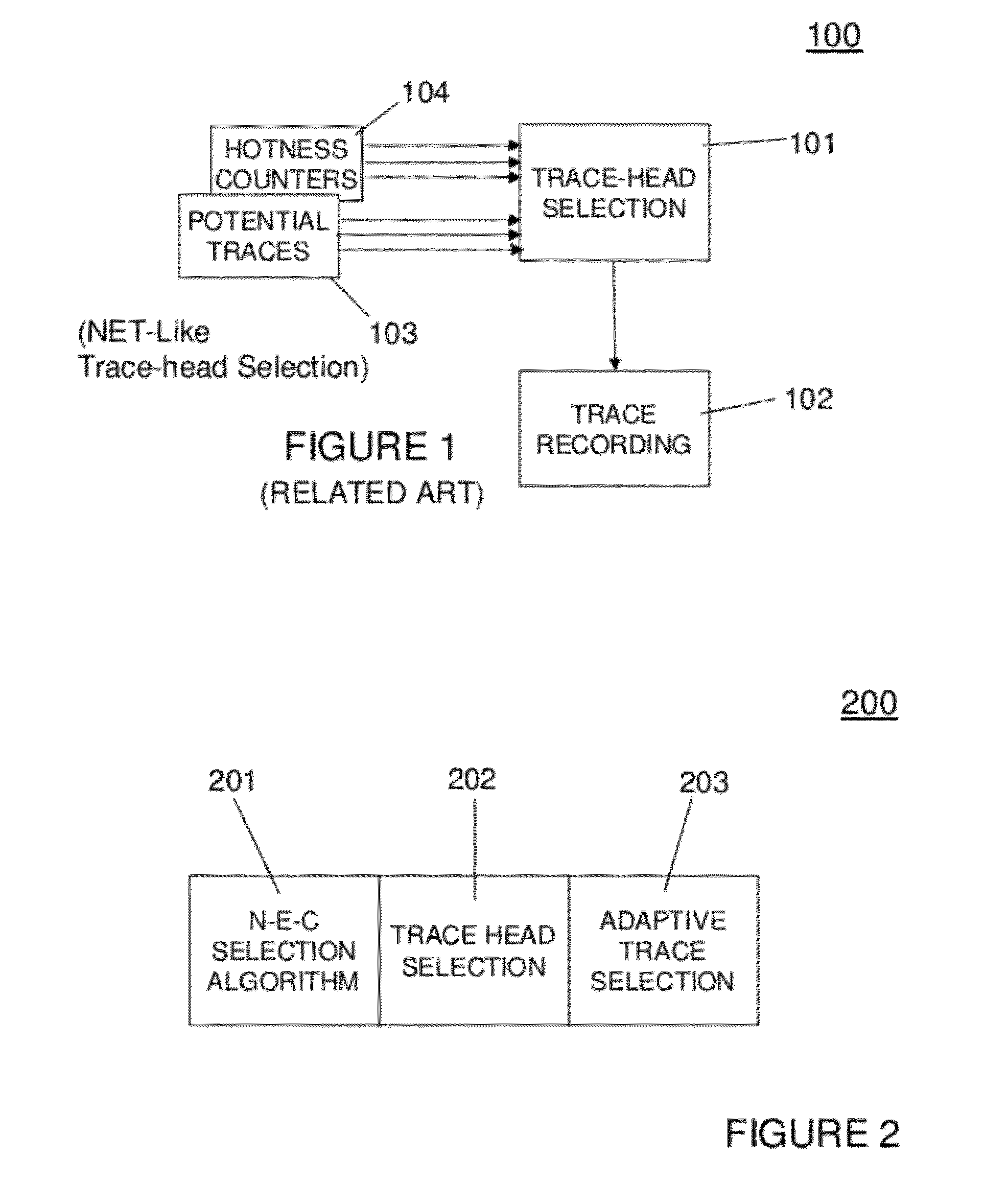

Adaptive next-executing-cycle trace selection for trace-driven code optimizers

Active | US20120204164A1 | Compilation is improved, Reduce the amount of solution, Software engineering, Program control, Dynamic compilation, Parallel computing

An apparatus includes a processor for executing instructions at runtime and instructions for dynamically compiling the set of instructions executing at runtime. A memory device stores the instructions to be executed and the dynamic compiling instructions. A memory device serves as a trace buffer that stores traces as they are formed during dynamic compilation. The dynamic compiling instructions include a next-executing-cycle (N-E-C) trace selection process for forming traces for the instructions executing at runtime. When forming traces, the N-E-C trace selection process continues through an existing trace-head, without terminating the recording of the current trace, if an existing trace-head is encountered.

Owner:GLOBALFOUNDRIES US INC

Visualization tool for viewing timing information for a graphical program

Active | US20050034106A1 | Easy to switch, Precise alignment, Hardware monitoring, Visual/graphical programming, Graphics, Visual presentation

A system and method for viewing timing of one or more loops in a graphical program. A graphical program having one or more loops may be created. In one embodiment the one or more loops may include one or more timed loops, i.e., the loops may be configured to execute according to particular execution periods. The graphical program may be executed, and timing analysis data regarding timing of the one or more loops during execution of the graphical program may be stored. A graphical user interface (GUI) for viewing timing of the one or more loops during execution of the graphical program may be displayed. In various embodiments the GUI may display any of various kinds of information regarding timing of the one or more loops, and any kind of visual presentation may be used in displaying the information.

Owner:NATIONAL INSTRUMENTS

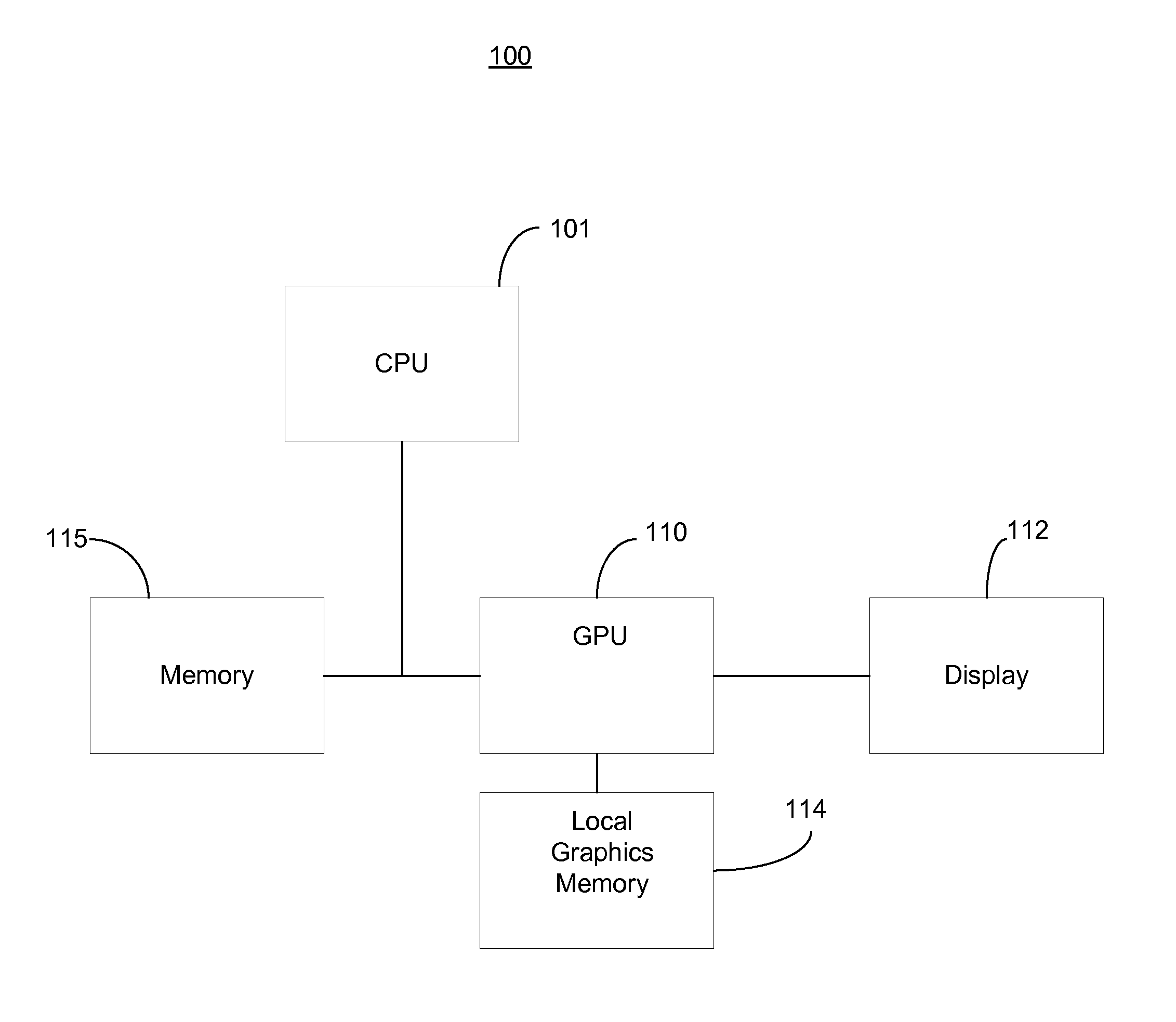

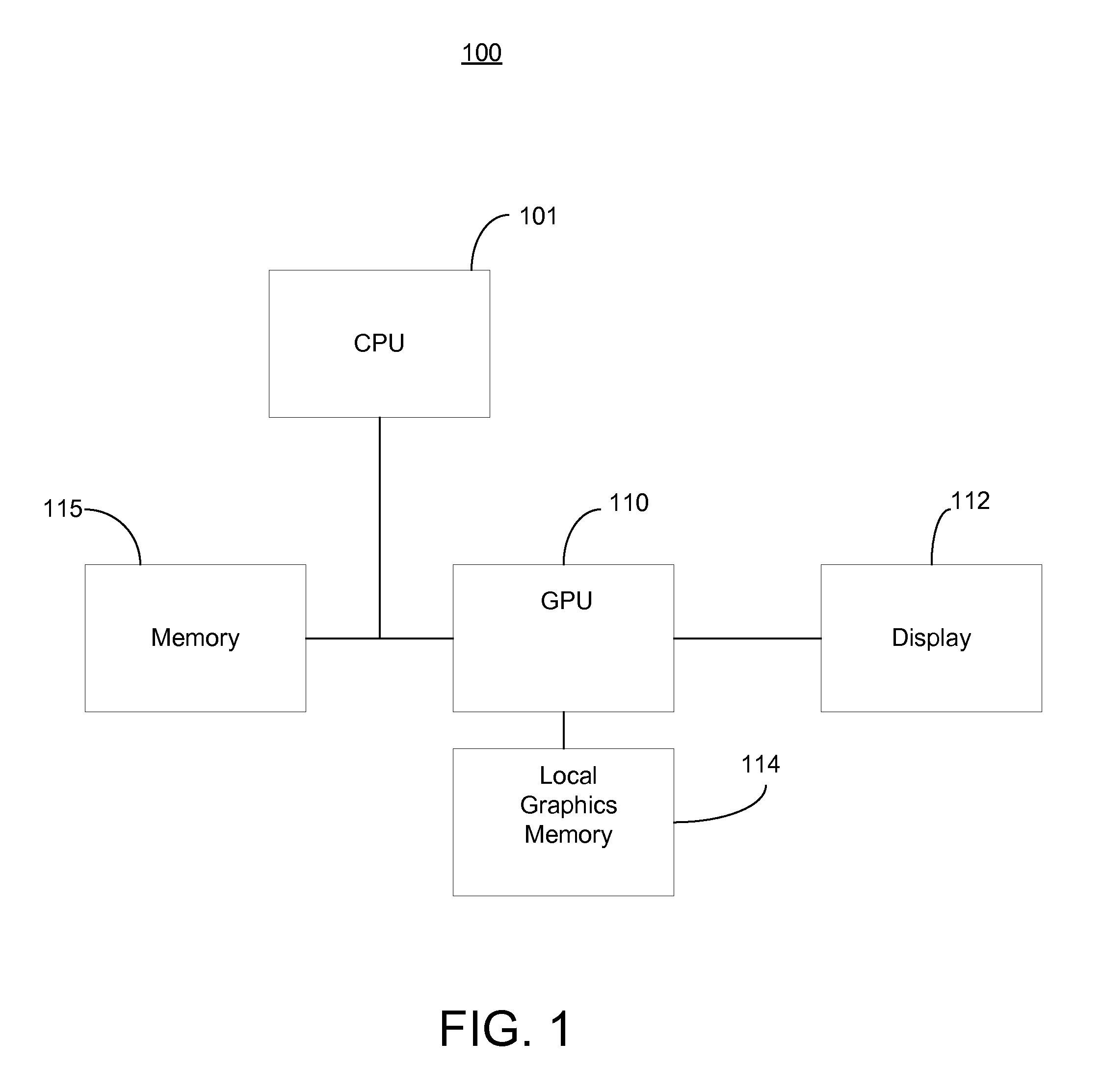

System and method for long running compute using buffers as timeslices

Inactive | US20130162661A1 | Many times, Long run, Processor architectures/configuration, Program control, Graphics, Graphics processing unit

A system and method for using command buffers as timeslices or periods of execution for a long running compute task on a graphics processor. Embodiments of the present invention allow execution of long running compute applications with operating systems that manage and schedule graphics processing unit (GPU) resources and that may have a predetermined execution time limit for each command buffer. The method includes receiving a request from an application and determining a plurality of command buffers required to execute the request. Each of the plurality of command buffers may correspond to some portion of execution time or timeslice. The method further includes sending the plurality of command buffers to an operating system operable for scheduling the plurality of command buffers for execution on a graphics processor. The command buffers from a different request are time multiplexed within the execution of the plurality of command buffers on the graphics processor.

Owner:NVIDIA CORP

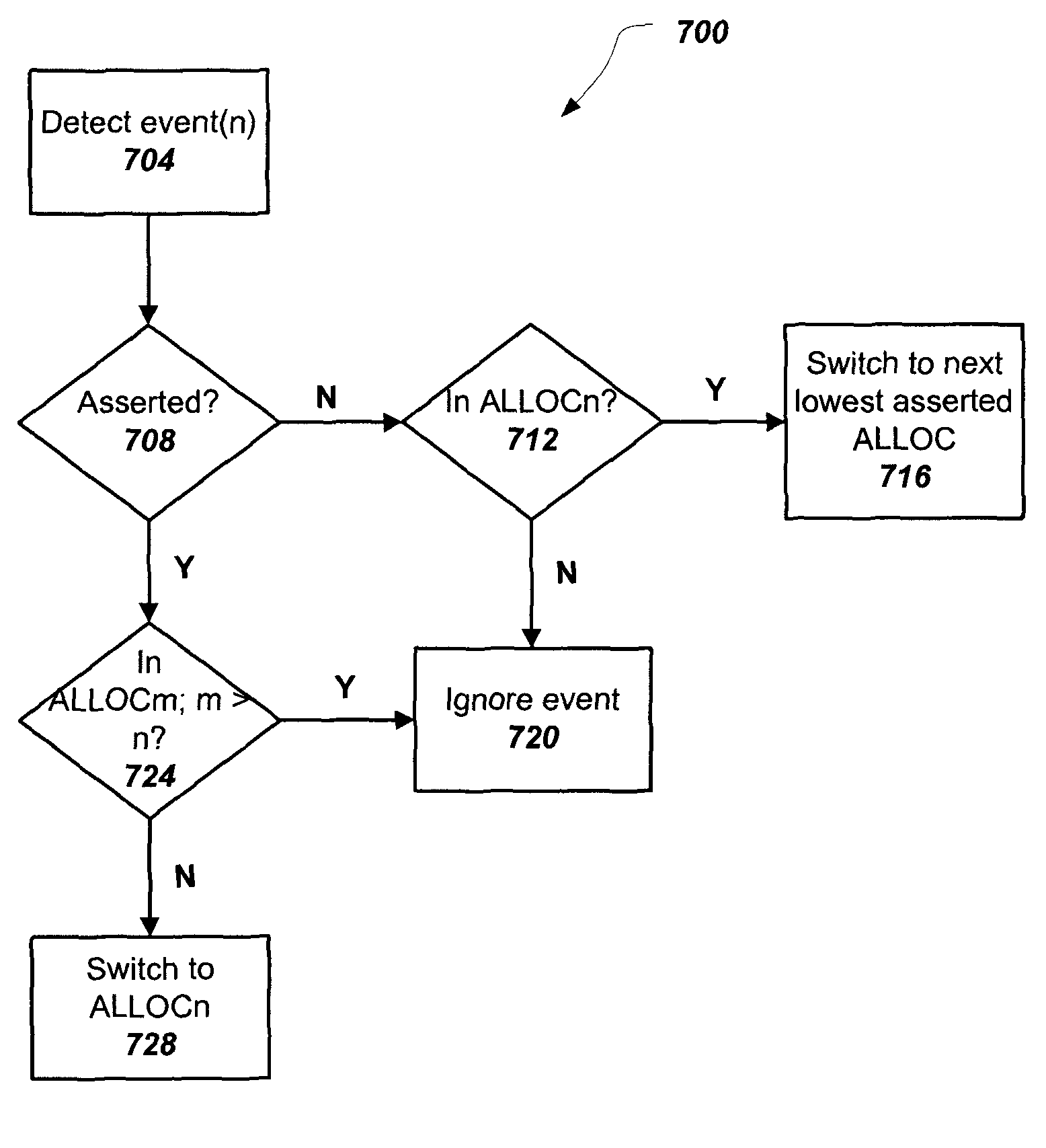

Event-based bandwidth allocation mode switching method and apparatus

Owner:MARVELL ASIA PTE LTD

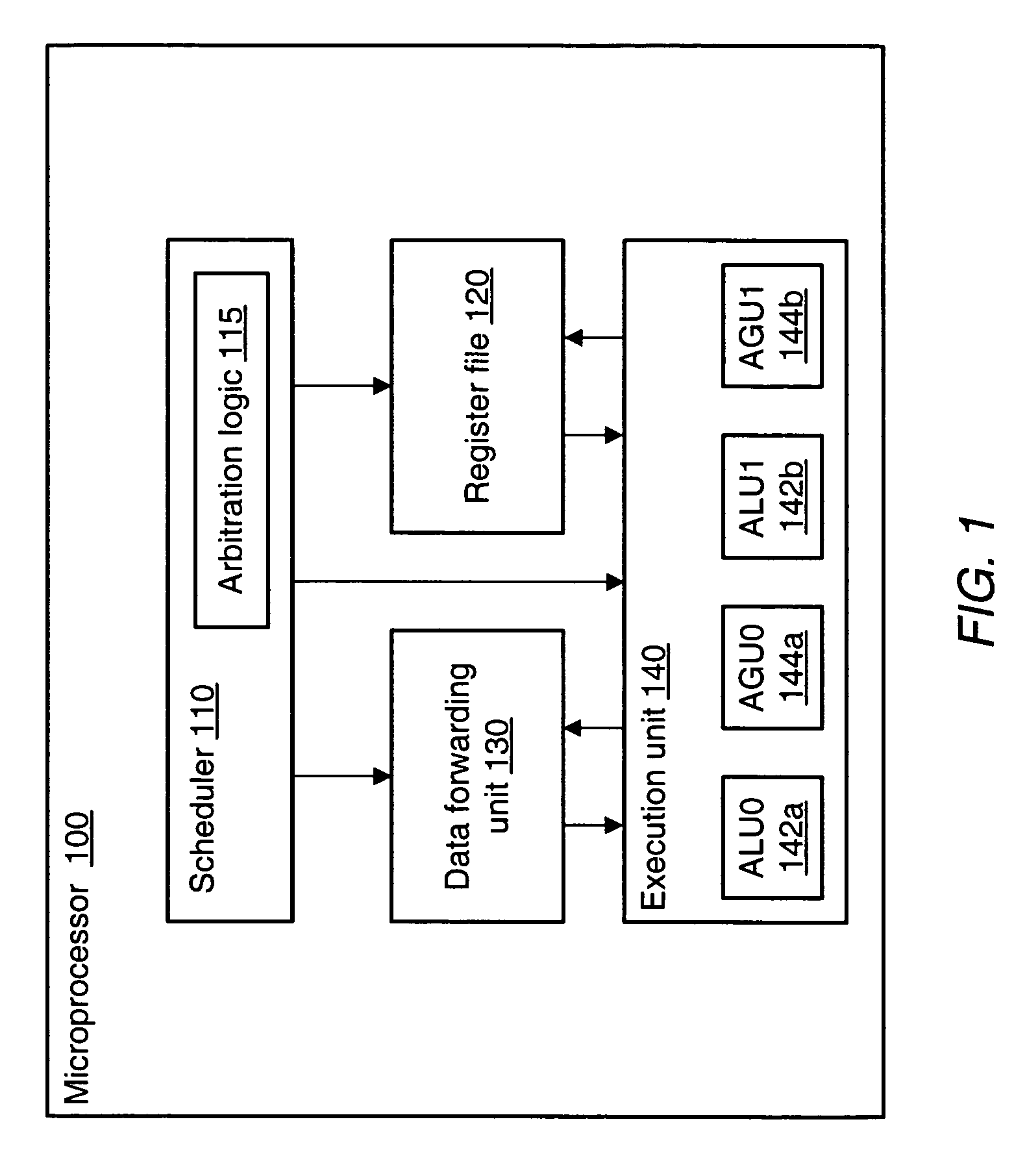

Apparatus and method for port arbitration in a register file on the basis of functional unit issue slots

A microprocessor is configured to provide port arbitration in a register file. The microprocessor includes a plurality of functional units configured to collectively operate on a maximum number of operands in a given execution cycle, and a register file providing a number of read ports that is insufficient to provide the maximum number of operands to the plurality of functional units in the given execution cycle. The microprocessor also includes an arbitration logic coupled to allocate the read ports of the register file for use by selected functional units during the given execution cycle.

Owner:ADVANCED MICRO DEVICES INC

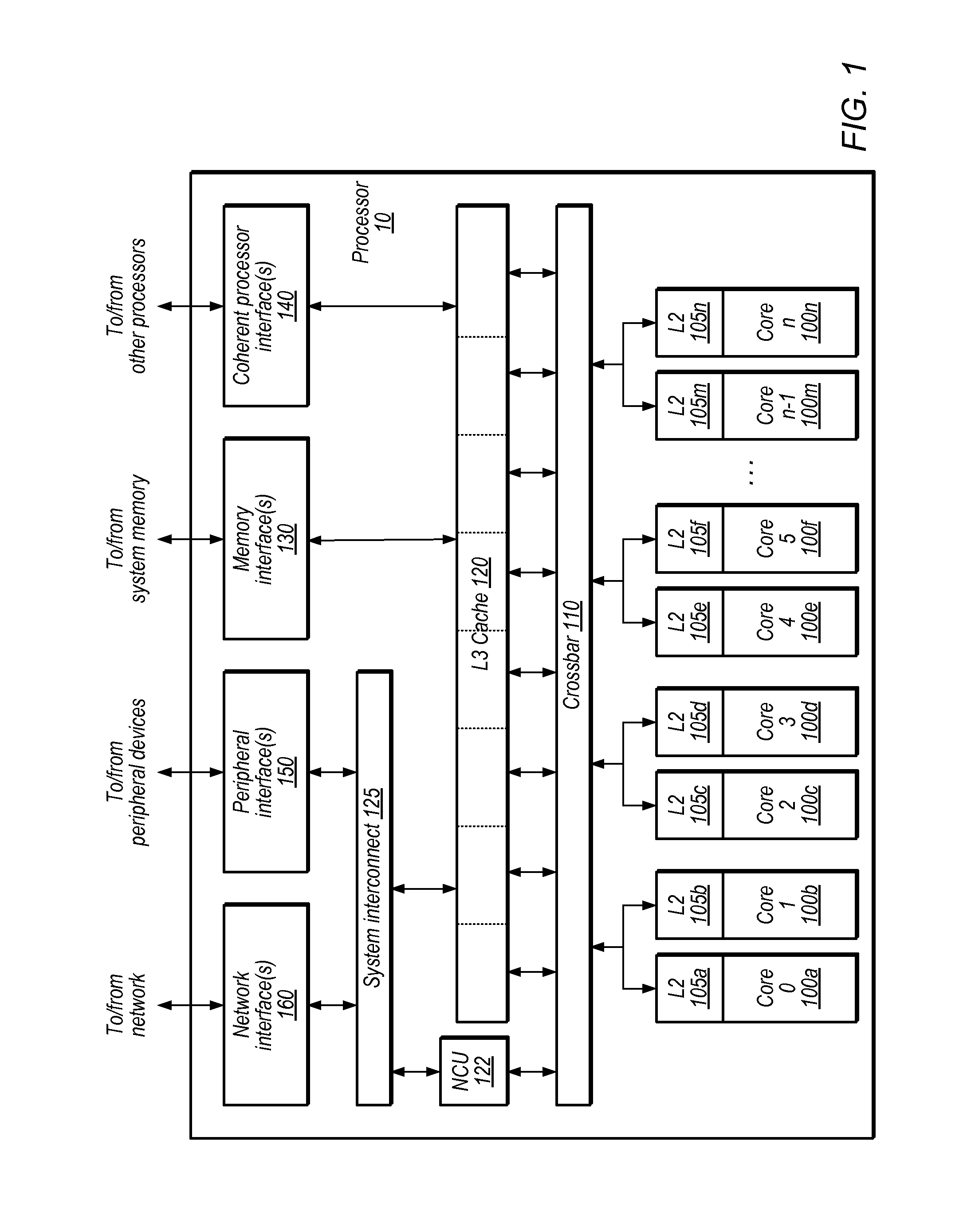

System and Method for Out-of-Order Resource Allocation and Deallocation in a Threaded Machine

Active | US20100318998A1 | Reduce waiting time, Faster search result, Multiprogramming arrangements, Memory systems, Resource allocation, Operating system

A system and method for managing the dynamic sharing of processor resources between threads in a multi-threaded processor are disclosed. Out-of-order allocation and deallocation may be employed to efficiently use the various resources of the processor. Each element of an allocate vector may indicate whether a corresponding resource is available for allocation. A search of the allocate vector may be performed to identify resources available for allocation. Upon allocation of a resource, a thread identifier associated with the thread to which the resource is allocated may be associated with the allocate vector entry corresponding to the allocated resource. Multiple instances of a particular resource type may be allocated or deallocated in a single processor execution cycle. Each element of a deallocate vector may indicate whether a corresponding resource is ready for deallocation. Examples of resources that may be dynamically shared between threads are reorder buffers, load buffers and store buffers.

Owner:SUN MICROSYSTEMS INC
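
The allocate and deallocate vectors can be modeled in Python for a single resource type, such as load-buffer entries. This is a minimal sketch under assumptions: Python lists stand in for hardware bit vectors, "one execution cycle" is modeled as one method call, and the class and method names are hypothetical.

```python
class SharedResourcePool:
    """Hypothetical model of allocate/deallocate vectors for one resource type."""

    def __init__(self, size):
        self.allocate = [True] * size     # allocate vector: True = entry available
        self.deallocate = [False] * size  # deallocate vector: True = ready to free
        self.owner = [None] * size        # thread identifier tied to each entry

    def alloc(self, thread_id, count):
        """Search the allocate vector and grant `count` entries in one step, or none."""
        free = [i for i, ok in enumerate(self.allocate) if ok][:count]
        if len(free) < count:
            return []
        for i in free:
            self.allocate[i] = False
            self.owner[i] = thread_id
        return free

    def mark_ready(self, entry):
        self.deallocate[entry] = True

    def release_ready(self):
        """Free every entry flagged in the deallocate vector, in any order."""
        freed = [i for i, ready in enumerate(self.deallocate) if ready]
        for i in freed:
            self.deallocate[i] = False
            self.allocate[i] = True
            self.owner[i] = None
        return freed


pool = SharedResourcePool(4)
pool.alloc(thread_id=0, count=2)   # entries 0 and 1
pool.alloc(thread_id=1, count=1)   # entry 2
pool.mark_ready(0)                 # entry 0 finishes before entry 1 (out of order)
pool.release_ready()
print(pool.alloc(thread_id=1, count=2), pool.owner)  # [0, 3] [1, 0, 1, 1]
```

Because freed entries are found by searching the vector rather than by advancing a head pointer, entry 0 can be reused even though entry 1, allocated earlier, is still held.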

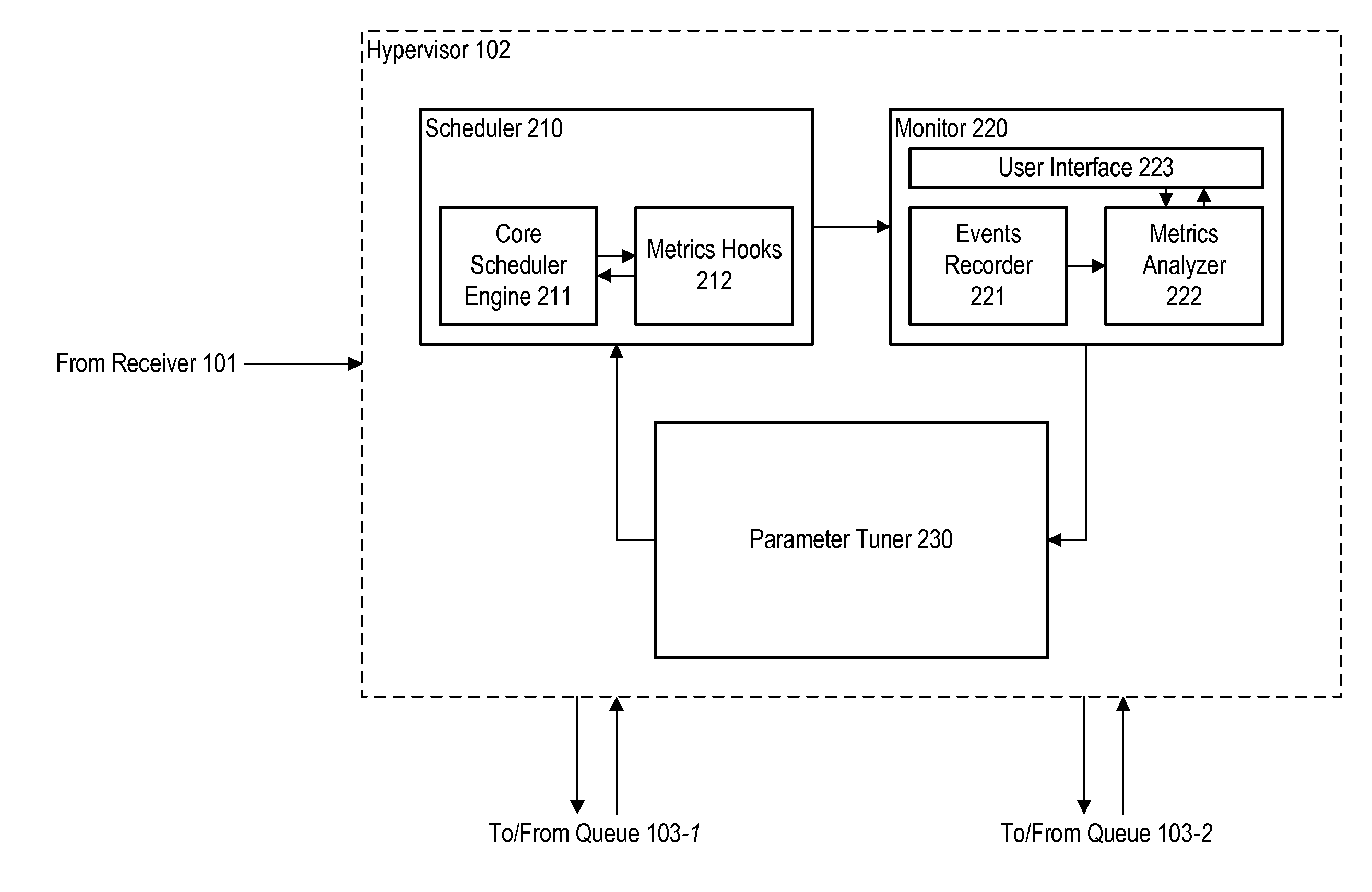

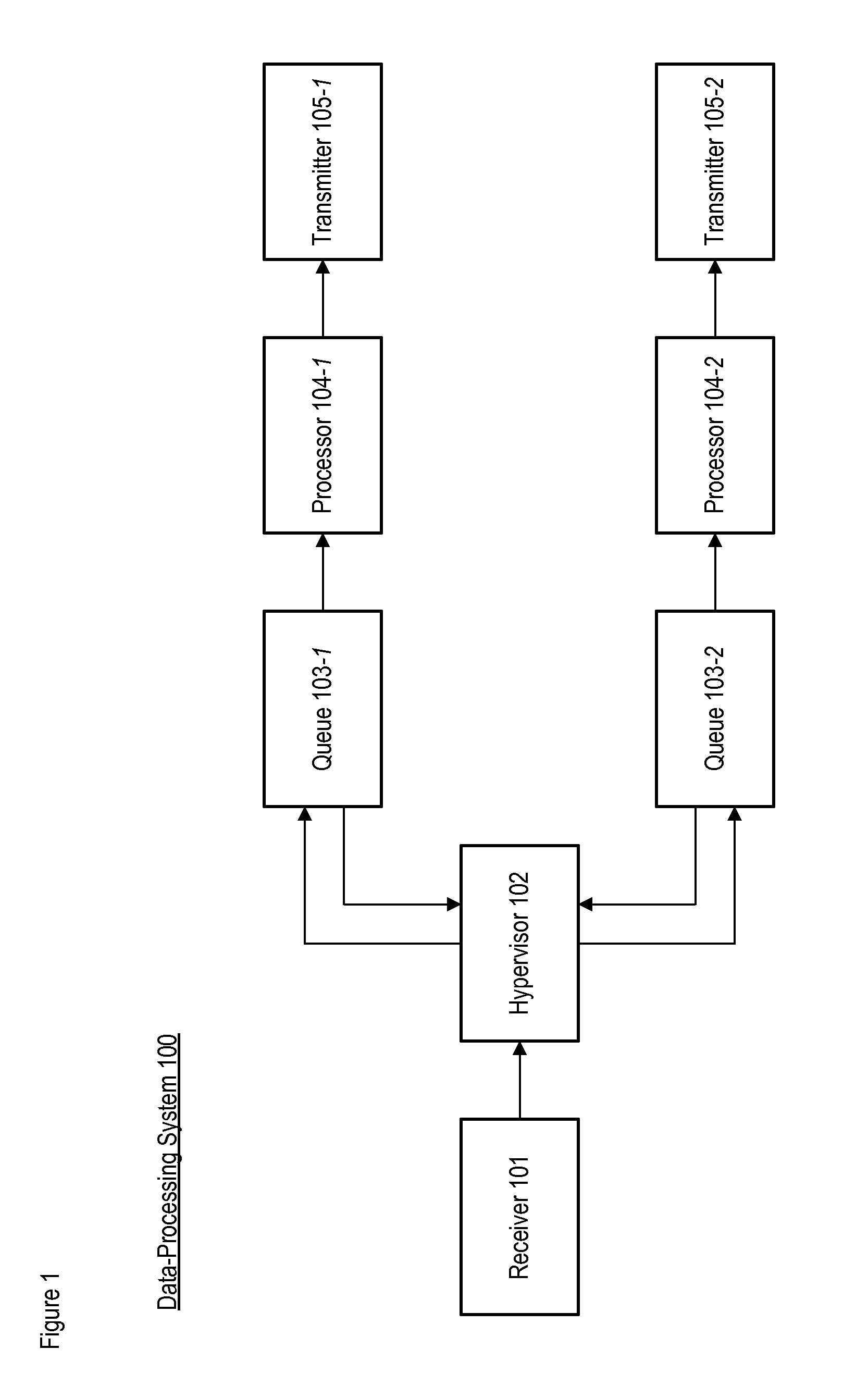

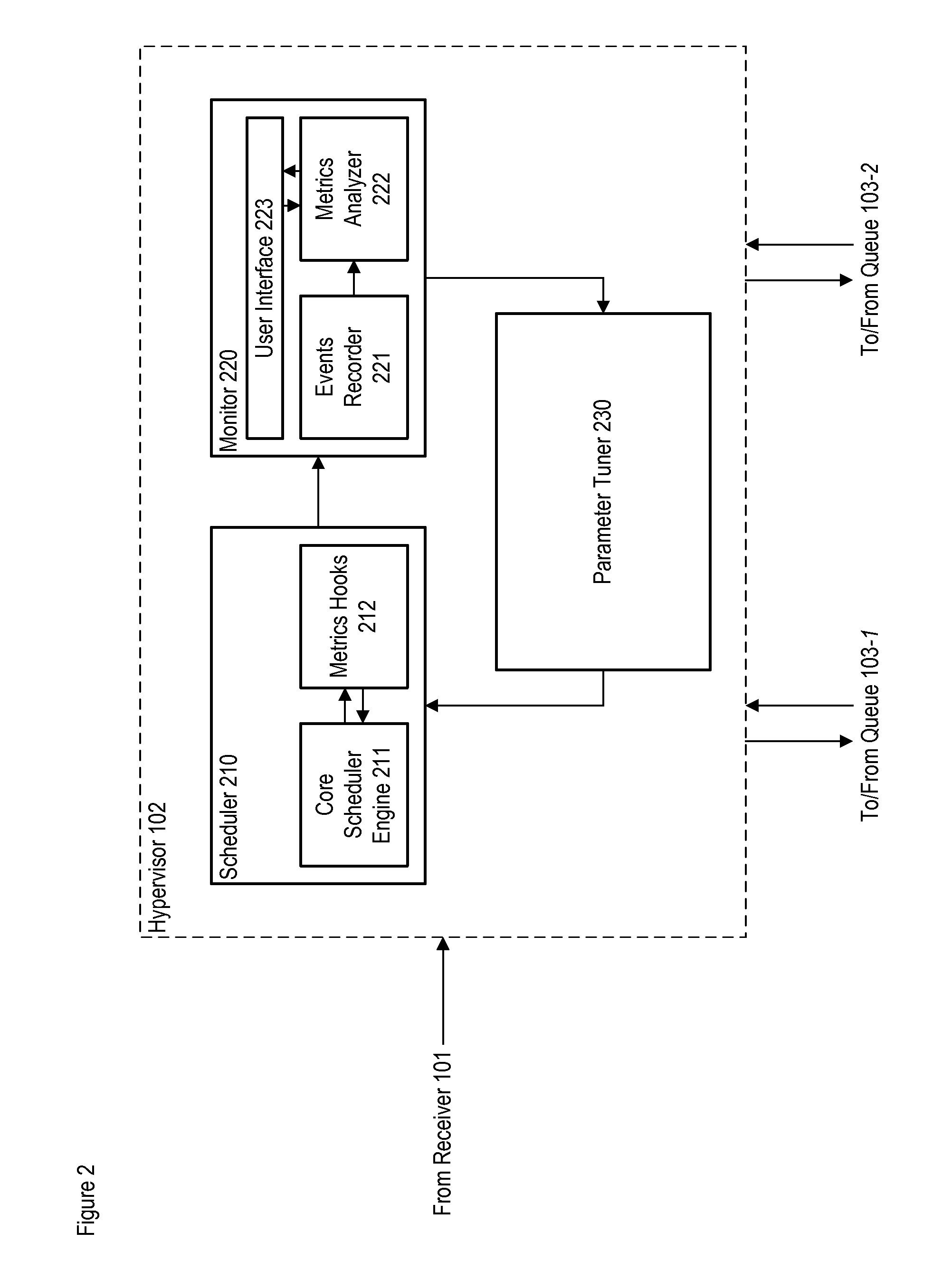

Dynamic Techniques for Optimizing Soft Real-Time Task Performance in Virtual Machines

Active | US20110035752A1 | Improve soft real-time task performance, Improve task performance, Digital computer details, Multiprogramming arrangements, Data processing system, Data treatment

Methods are disclosed that dynamically improve soft real-time task performance in virtualized computing environments under the management of an enhanced hypervisor comprising a credit scheduler. The enhanced hypervisor analyzes the ongoing performance of the domains of interest and of the virtualized data-processing system. Based on the performance metrics disclosed herein, some of the governing parameters of the credit scheduler are adjusted. Adjustments are typically performed cyclically: the performance metrics of one execution cycle are analyzed and, if needed, adjustments are applied in a later execution cycle. In alternative embodiments, some of the analysis and tuning functions reside in a separate application outside the hypervisor. The performance metrics disclosed herein include: a “total-time” metric; a “timeslice” metric; a number of “latency” metrics; and a “count” metric. In contrast to the prior art, the present invention enables ongoing monitoring of a virtualized data-processing system accompanied by dynamic adjustments based on objective metrics.

Owner:AVAYA INC

© 2025 PatSnap. All rights reserved.