142 results about "Cache page" patented technology

Computing systems and methods for managing flash memory device

Inactive · US20100287327A1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Random access memory · Data field

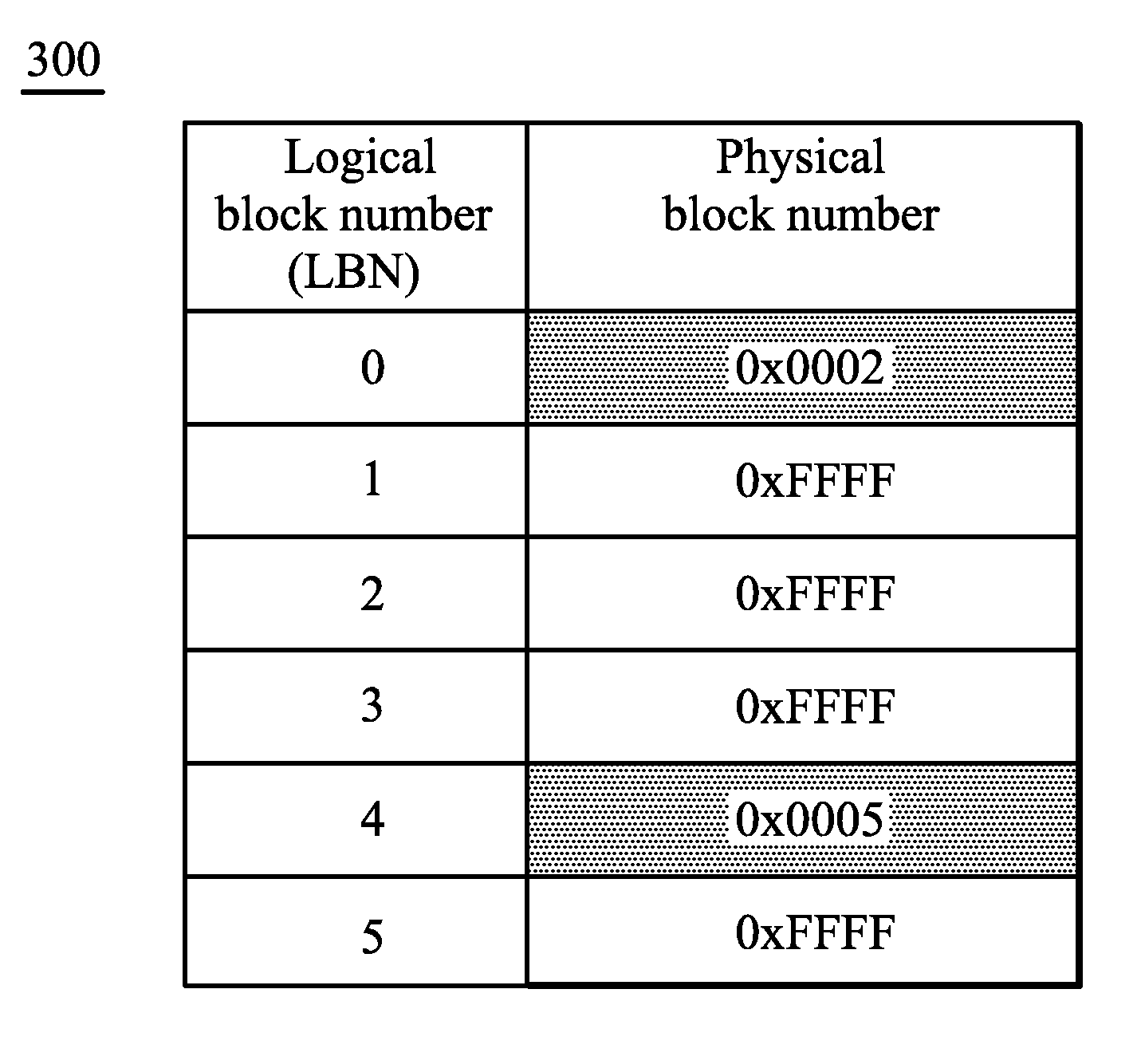

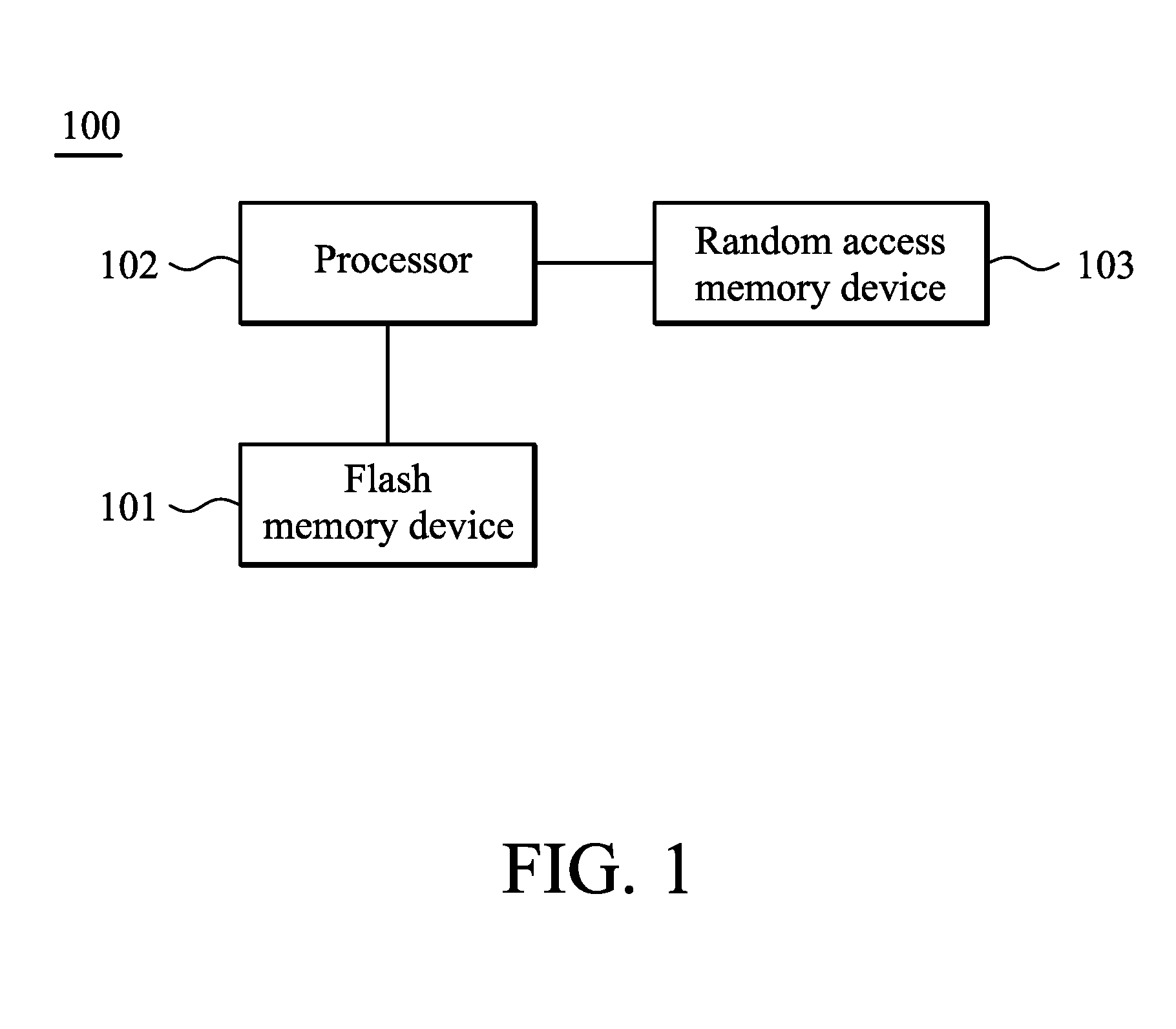

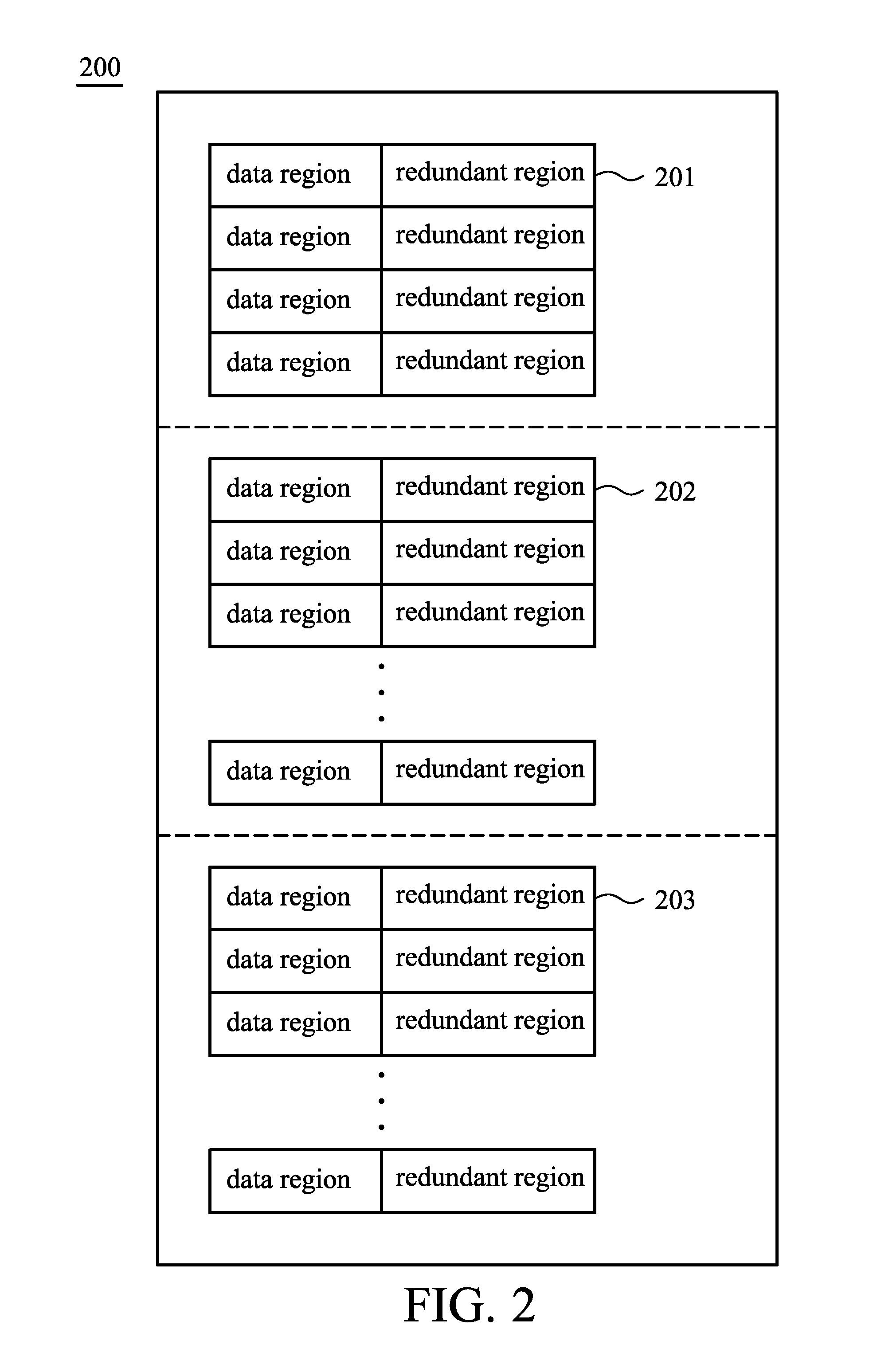

A computing system is provided. A flash memory device includes at least one mapping block, at least one modification block and at least one cache block. A processor is configured to: receive a write command with a write logical address and predetermined data; when a page of the mapping block corresponding to the write logical address has already been used, load the content of a cache page from the cache block corresponding to the modification block, according to the write logical address, into a random access memory device; read, in order, the content of the cache page stored in the random access memory device to obtain location information of an empty page of the modification block; and write the predetermined data to the empty page according to the location information. Each cache page includes data fields that store, in order, location information corresponding to the data that has been written in the pages of the modification block.

Owner:INTEL CORP

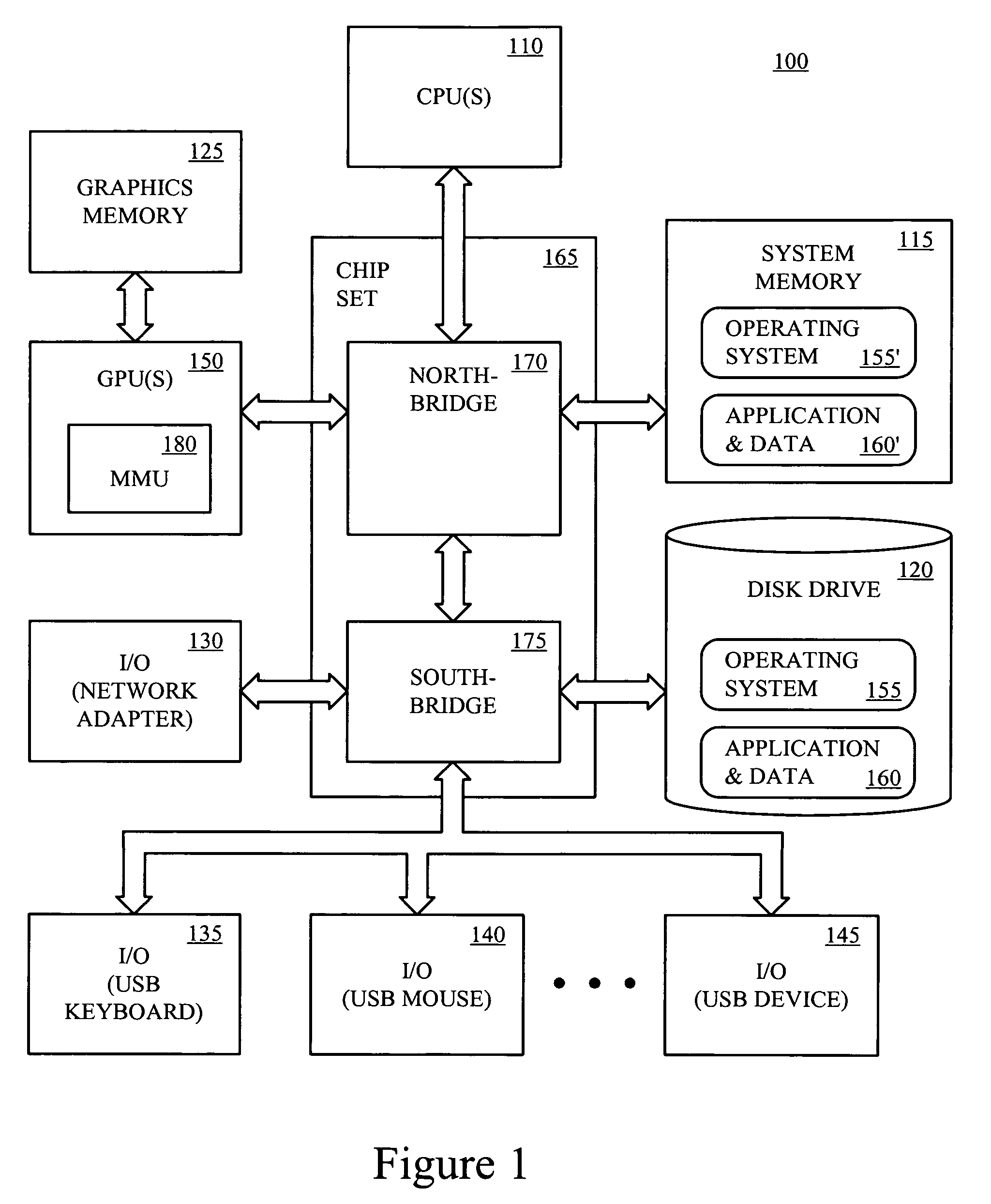

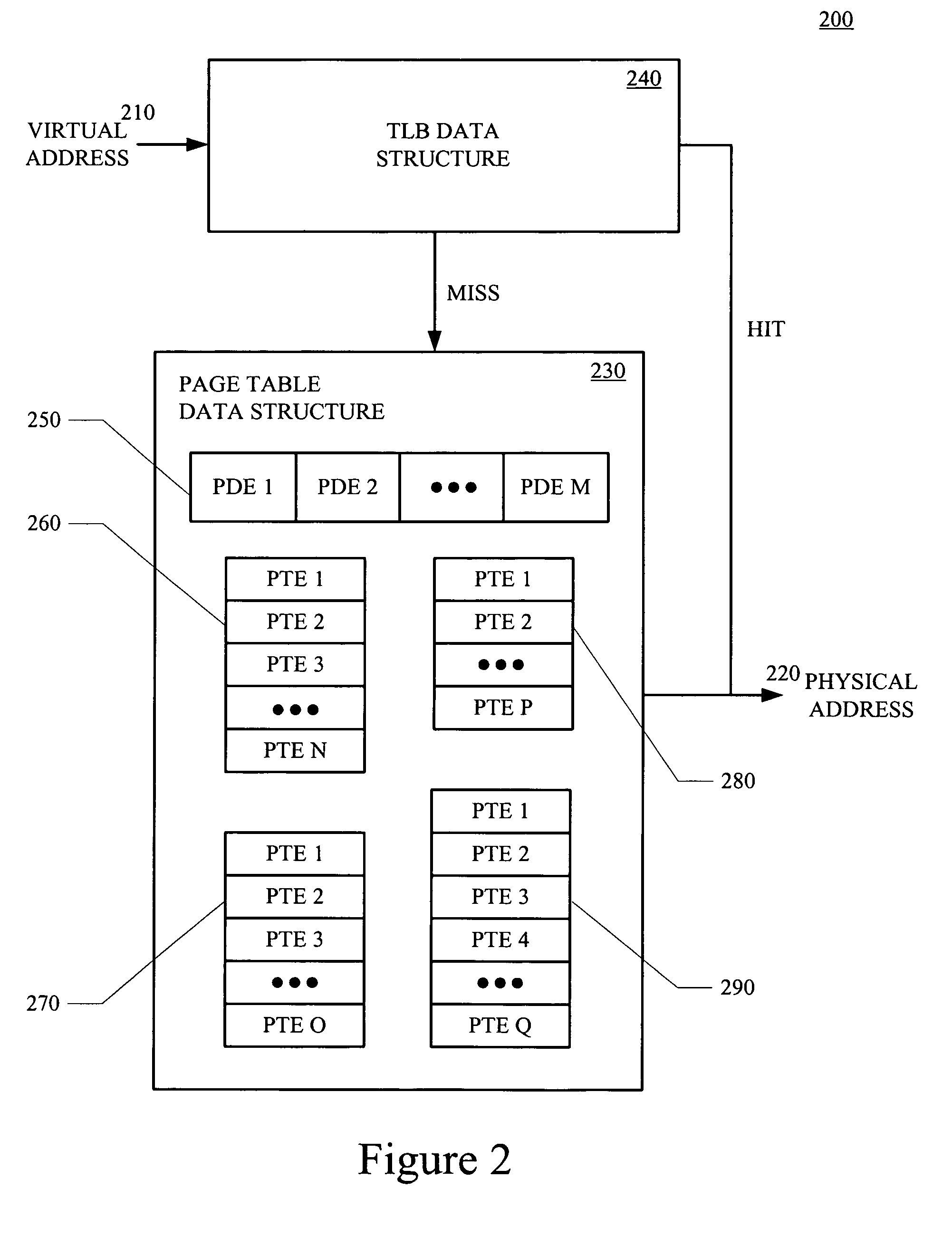

Memory access techniques utilizing a set-associative translation lookaside buffer

Active · US8707011B1 · Memory architecture accessing/allocation · Memory systems · Cache page · Translation lookaside buffer

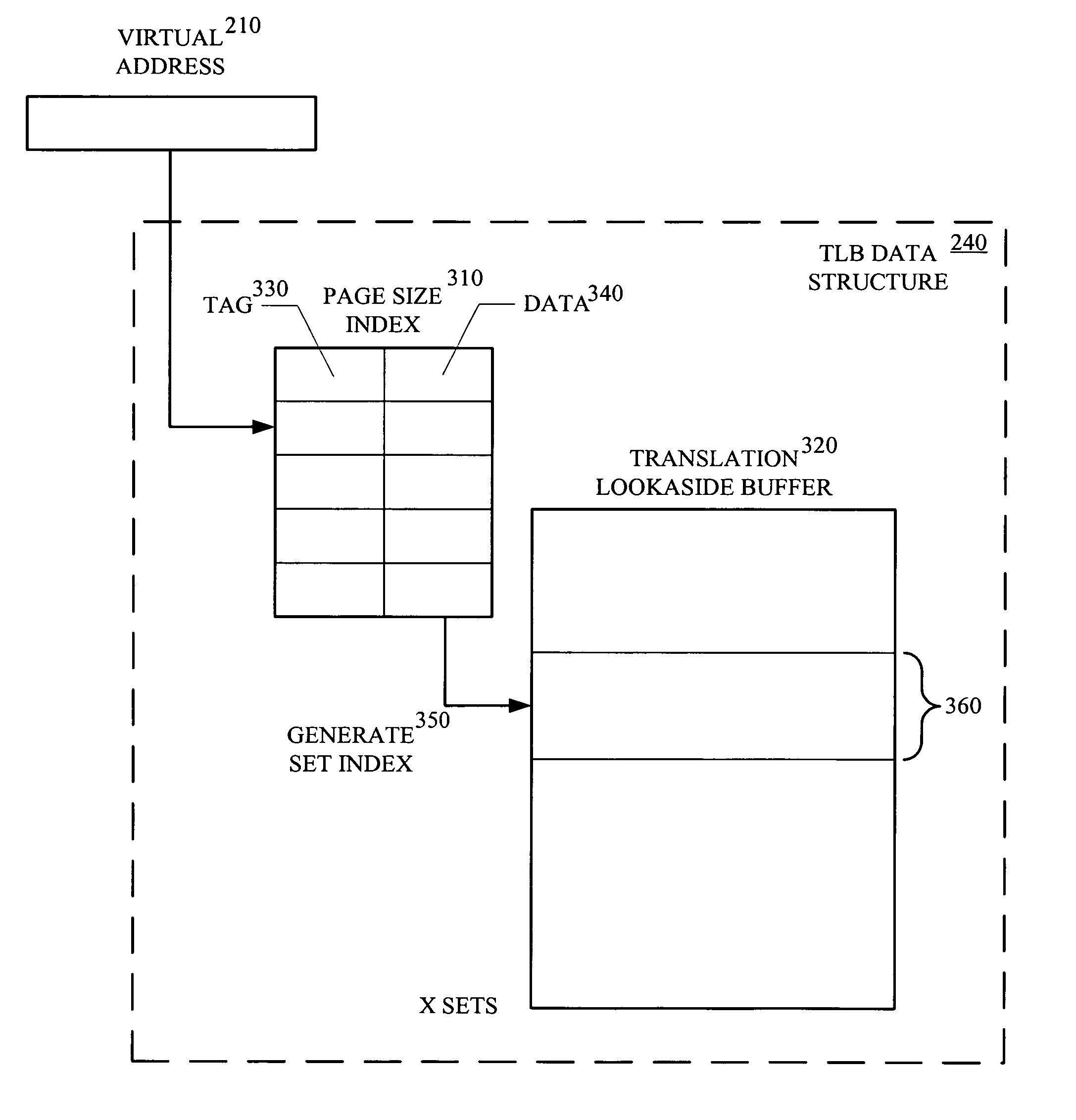

A memory access technique, in accordance with one embodiment of the present invention, includes caching page size data for use in accessing a set-associative translation lookaside buffer (TLB). The technique utilizes a translation lookaside buffer data structure that includes a page size table and a translation lookaside buffer. Upon receipt of a memory access request a page size is looked-up in the page size table utilizing the page directory index in the virtual address. A set index is calculated utilizing the page size. A given set of entries is then looked-up in the translation lookaside buffer utilizing the set index. The virtual address is compared to each TLB entry in the given set. If the comparison results in a TLB hit, the physical address is received from the matching TLB entry.

Owner:NVIDIA CORP
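
The lookup sequence in the abstract above (page size table, then set index, then tag comparison) can be illustrated with a minimal Python sketch. Everything concrete here is an assumption made for illustration and is not taken from the patent: the 32-bit address split (`PDE_SHIFT`), the number of sets and ways, the FIFO eviction, and all class and method names.

```python
from dataclasses import dataclass

PDE_SHIFT = 22   # assumed: top 10 bits of a 32-bit address index the page directory
NUM_SETS = 16    # assumed number of TLB sets
WAYS = 4         # assumed associativity


@dataclass
class TlbEntry:
    vpn: int     # virtual page number, used as the tag
    pfn: int     # physical frame number


class SetAssociativeTlb:
    def __init__(self):
        # page directory index -> log2(page size) for that region
        self.page_size_table = {}
        self.sets = [[] for _ in range(NUM_SETS)]

    def _set_index(self, vaddr, page_shift):
        # The set index depends on the page size: drop the in-page offset first.
        return (vaddr >> page_shift) % NUM_SETS

    def insert(self, vaddr, paddr, page_shift):
        self.page_size_table[vaddr >> PDE_SHIFT] = page_shift
        entries = self.sets[self._set_index(vaddr, page_shift)]
        if len(entries) >= WAYS:
            entries.pop(0)  # FIFO eviction, chosen only for brevity
        entries.append(TlbEntry(vaddr >> page_shift, paddr >> page_shift))

    def translate(self, vaddr):
        # Step 1: look up the page size using the page directory index.
        page_shift = self.page_size_table.get(vaddr >> PDE_SHIFT)
        if page_shift is None:
            return None  # page size unknown: treated as a miss here
        # Steps 2-3: compute the set index and compare against each entry in the set.
        for entry in self.sets[self._set_index(vaddr, page_shift)]:
            if entry.vpn == vaddr >> page_shift:
                offset = vaddr & ((1 << page_shift) - 1)
                return (entry.pfn << page_shift) | offset
        return None  # TLB miss


tlb = SetAssociativeTlb()
tlb.insert(0x00403000, 0x12345000, page_shift=12)   # a 4 KiB page
tlb.insert(0x00800000, 0x40000000, page_shift=22)   # a 4 MiB page
```

Because the page size is known before the set is selected, 4 KiB and 4 MiB mappings can share one set-associative structure without probing once per possible page size.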

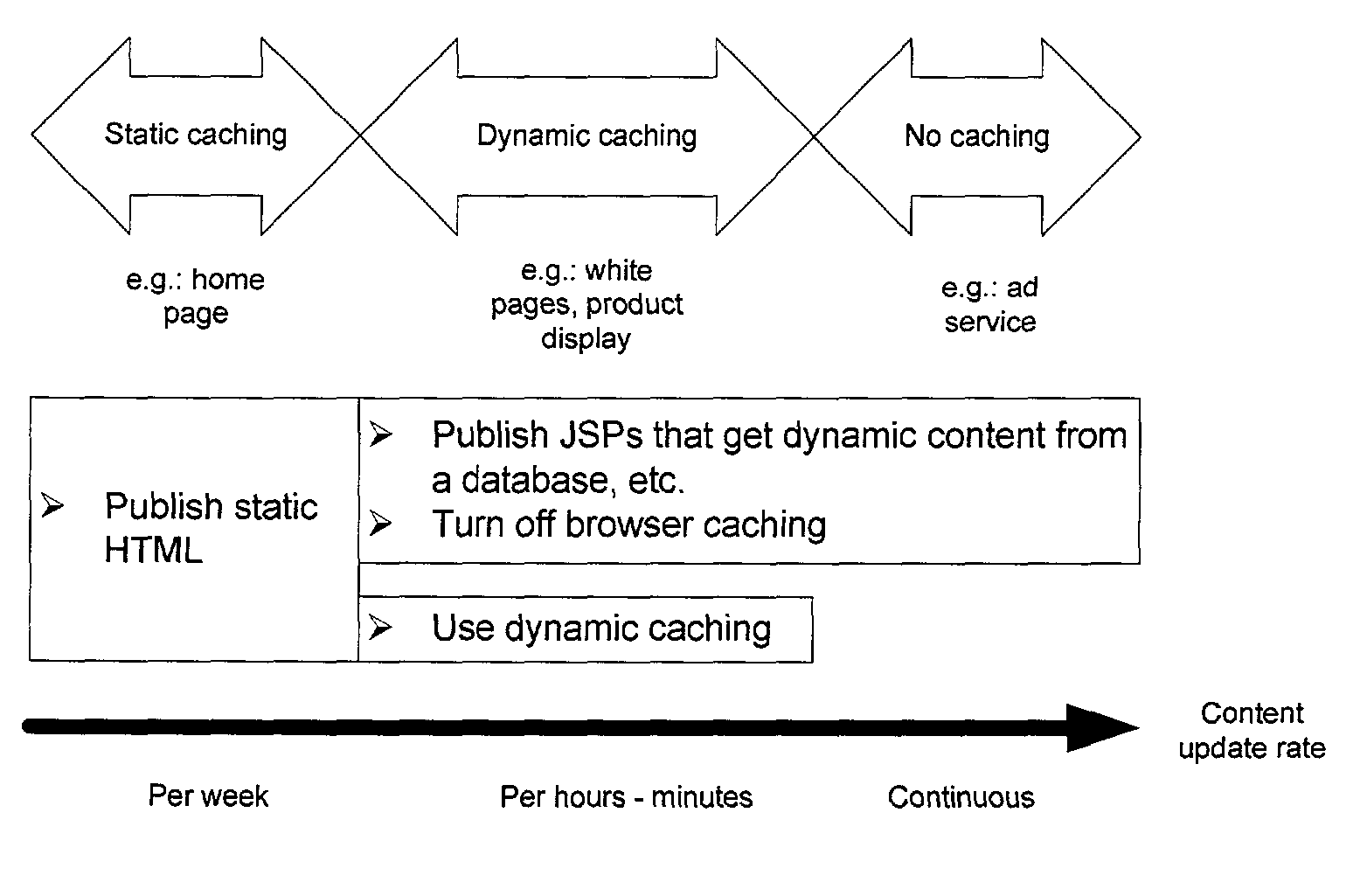

Method and system for specifying a cache policy for caching web pages which include dynamic content

Inactive · US6988135B2 · Digital data information retrieval · Multiple digital computer combinations · Data processing system · Data treatment

A data processing system and method are described for specifying a cache policy for caching pages which include dynamic content. A user is permitted to request a page to be displayed. The page includes multiple fragments. An application is executed which generates those fragments. The generation of fragments is unchanged by the caching policy. Each one of the servlets is executed to present a different one of the fragments. Caching of the page fragments can now be processed separately from the execution of the application.

Owner:TWITTER INC

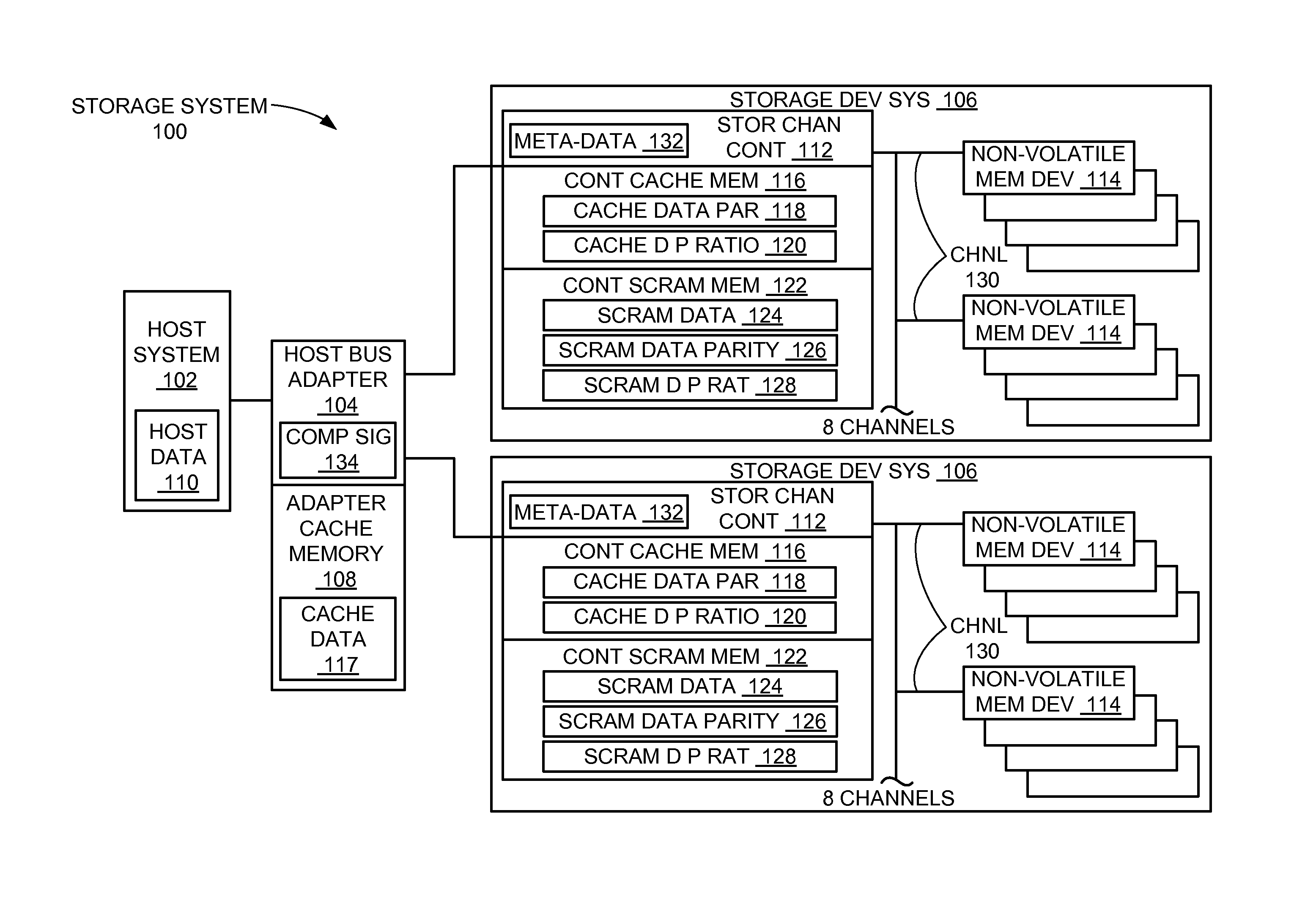

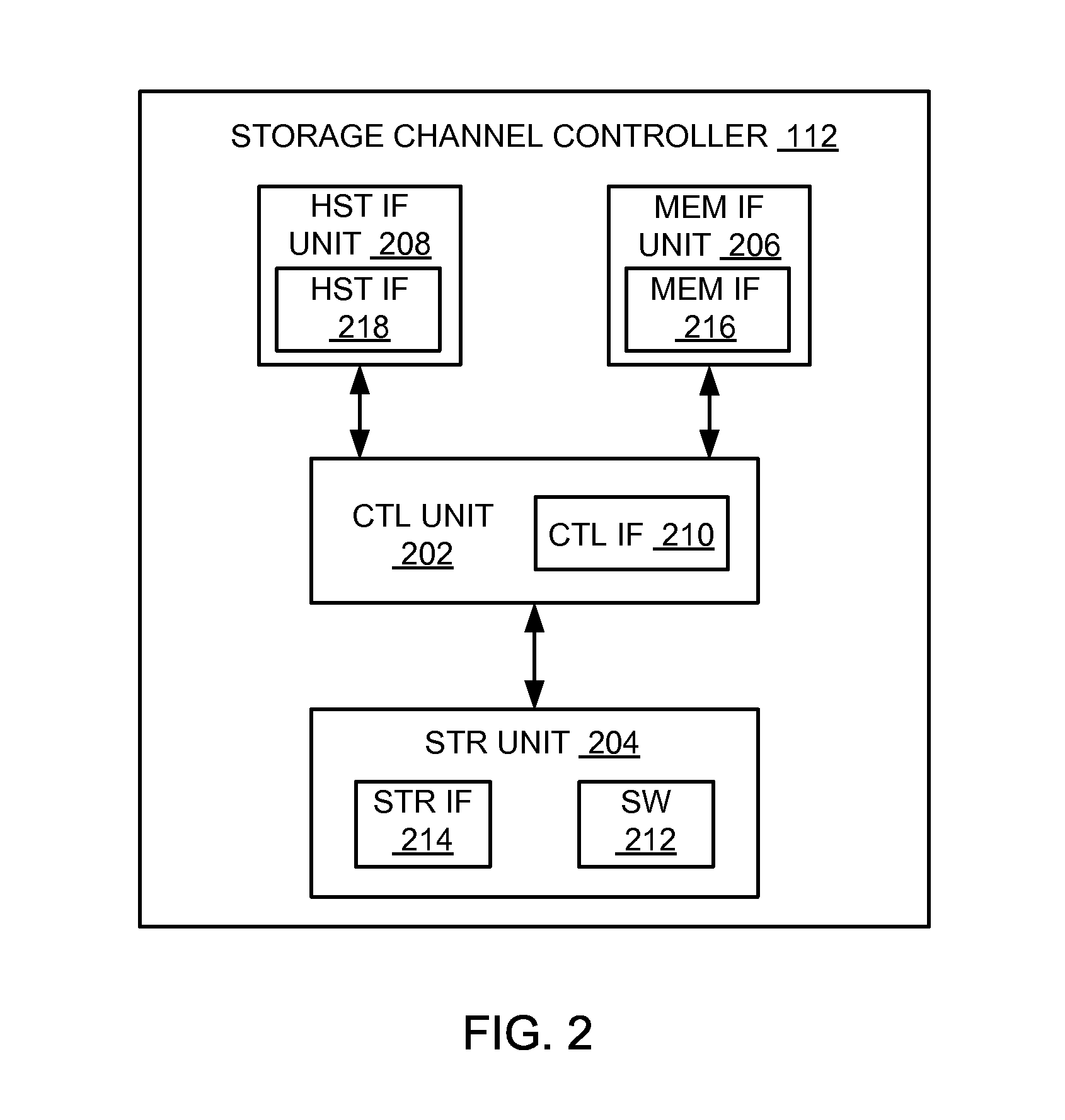

Data hardening in a storage system

Active · US20140304454A1 · Memory architecture accessing/allocation · Error detection/correction · Electricity · Computer module

A storage system, and a method of data hardening in the storage system, including: a de-glitch module configured for a detection of a power failure event; a write page module, coupled to the de-glitch module, the write page module configured for an execution of a cache write command based on the power failure event to send a cache page from a cache memory to a storage channel controller, wherein the cache memory is a volatile memory; and a signal empty module, coupled to the write page module, the signal empty module configured for a generation of a sleep signal to shut down a host bus adapter, wherein the host bus adapter interfaces with the storage channel controller to write the cache page back to the cache memory upon a power up of the host bus adapter and the storage channel controller.

Owner:SANDISK TECH LLC

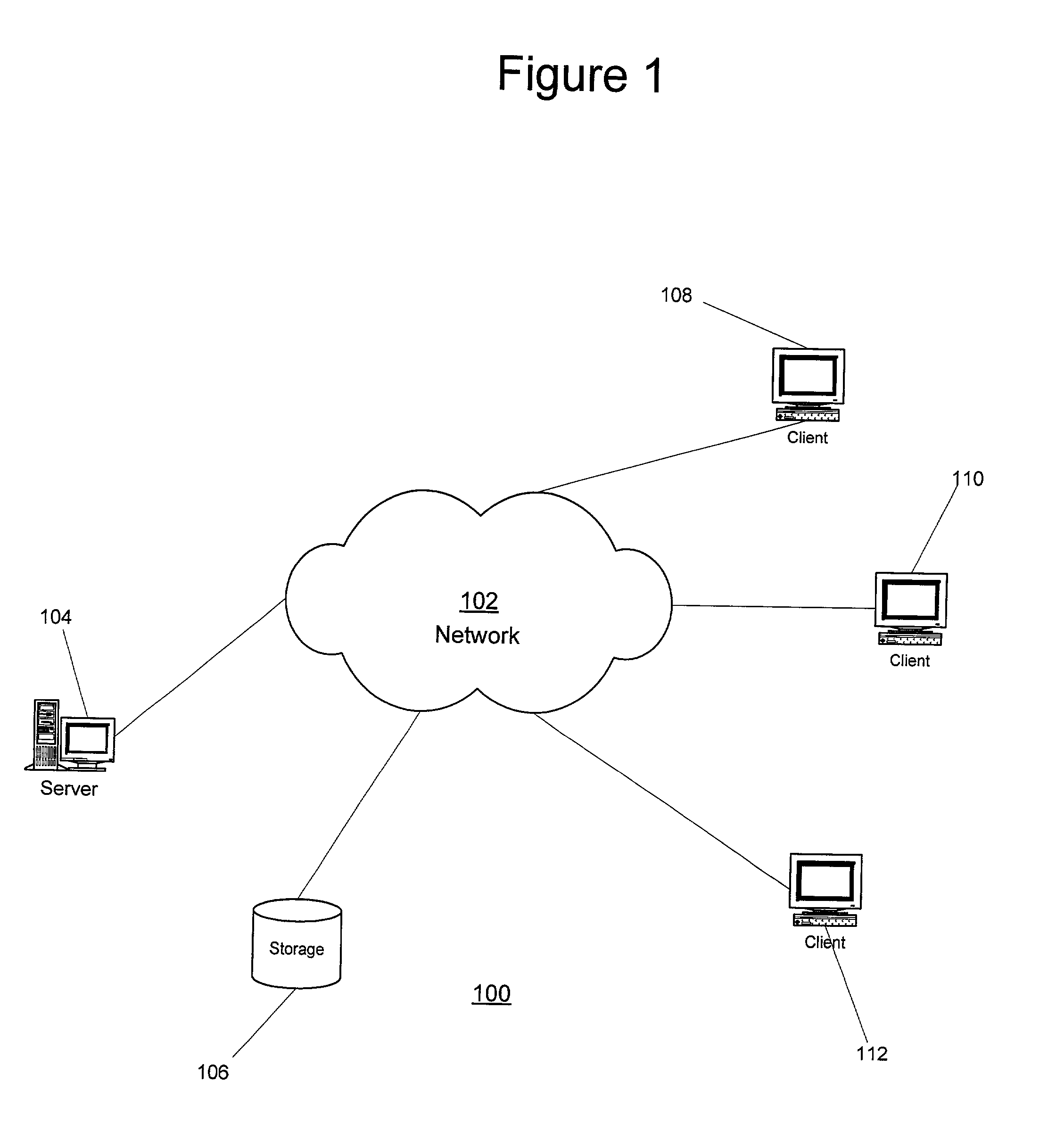

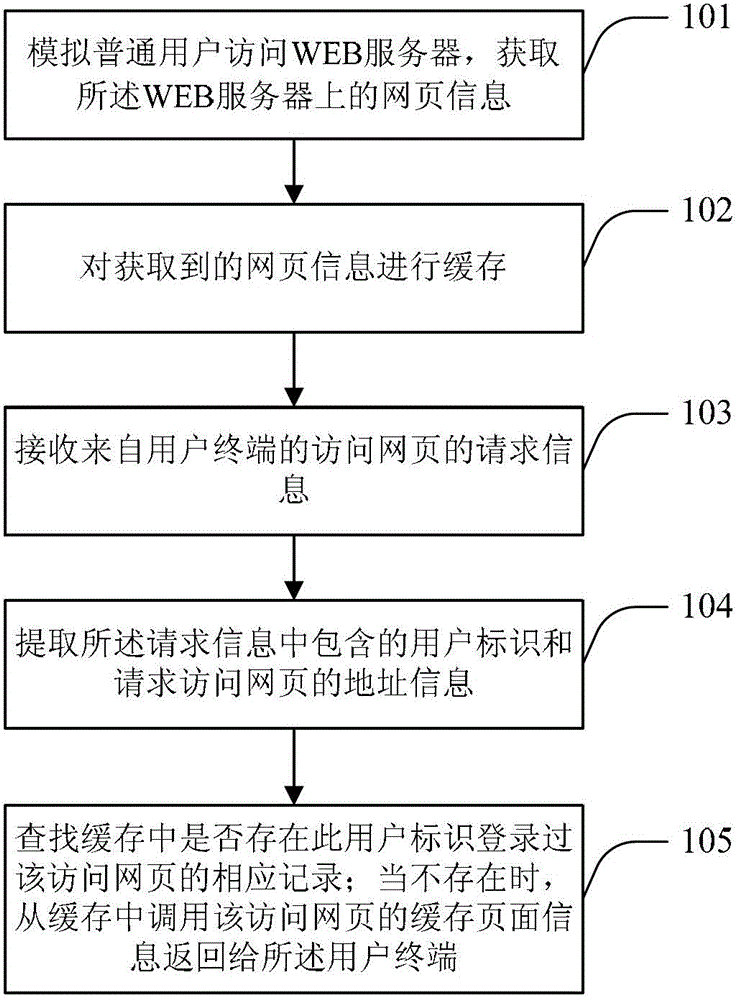

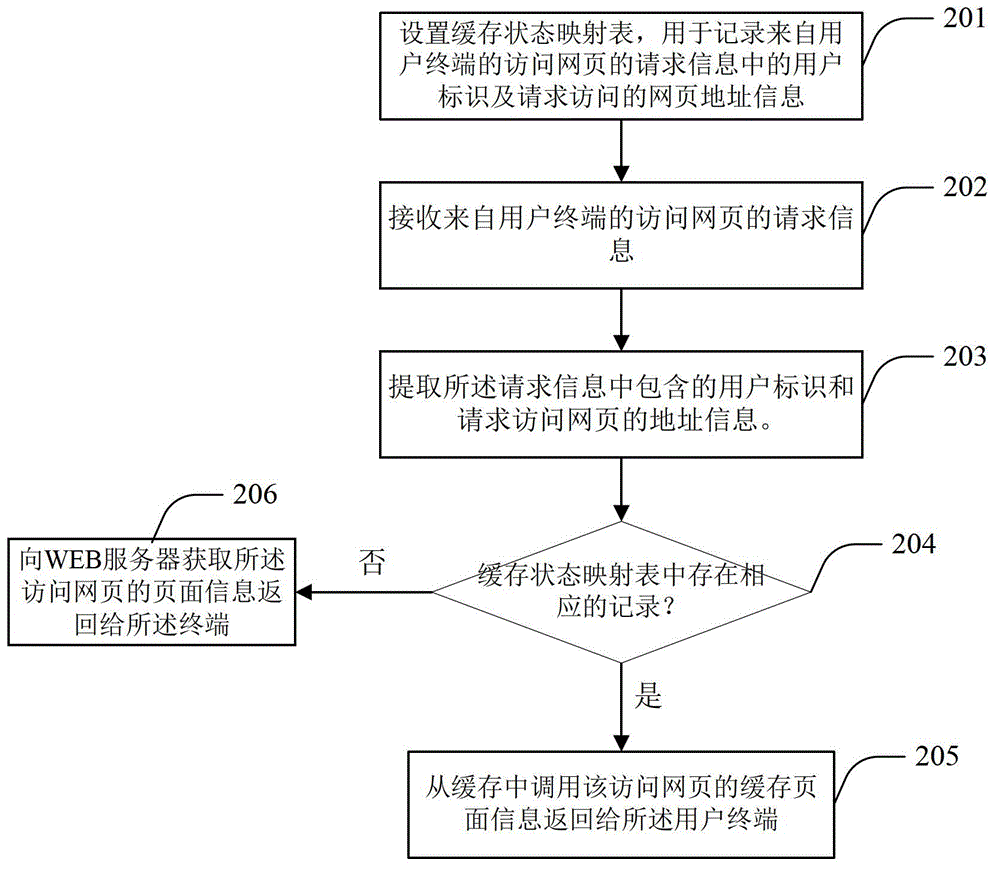

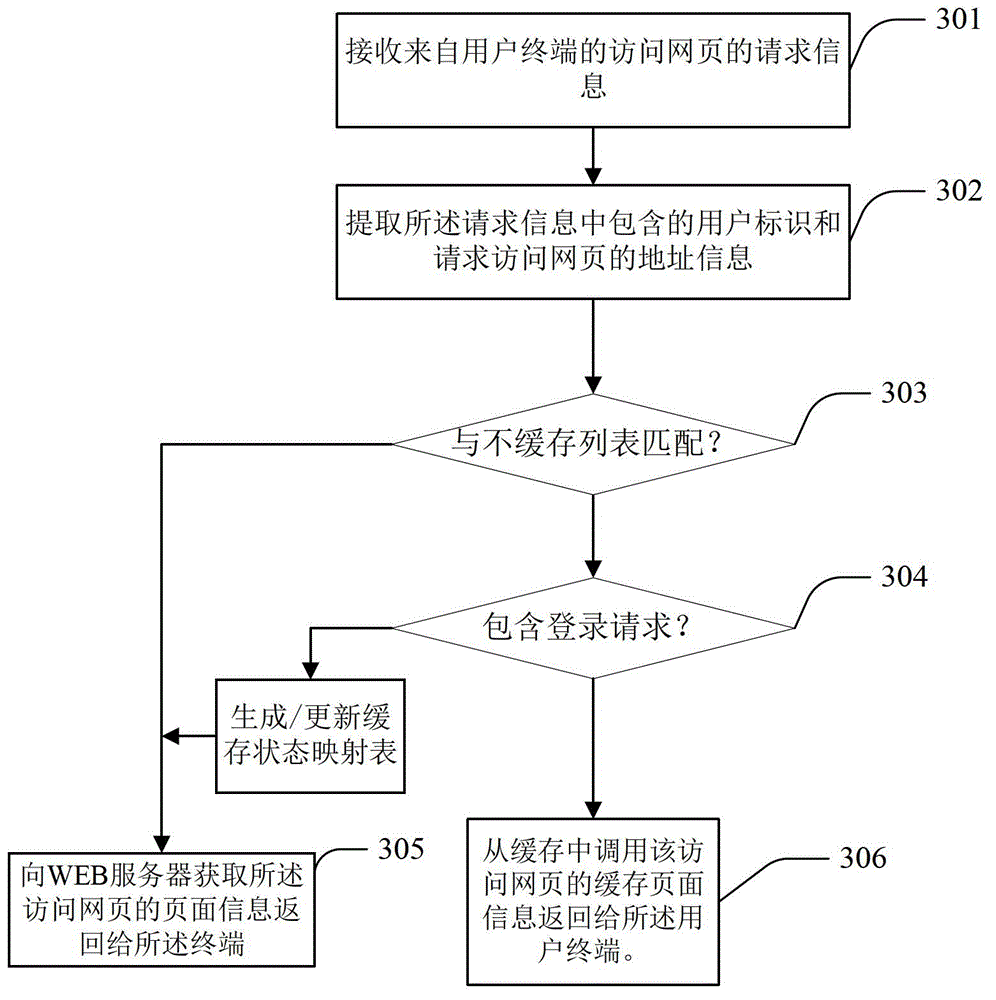

Network access method and server based on cache

Active · CN102868719A · Improve performance · Improve hit rate · Digital data information retrieval · Transmission · Access method · Web service

The invention provides a cache-based network access method and server. The method comprises the following steps: simulating an ordinary user accessing a web server to obtain the information of a web page on the web server; caching the obtained web page information; receiving, from a user terminal, request information for accessing the web page; extracting the user identification contained in the request information and the address of the web page to be accessed; searching the cache for a record indicating that the user identification has logged on to the requested web page; and, when no such record exists, retrieving the cached page information for the requested page from the cache and returning it to the user terminal. Because the server sends the cached page to the terminal only when the requesting user has never logged on to the web page, leakage of sensitive user information is prevented, the hit ratio of the page cache is increased, and the performance of a proxy server in responding to network access requests is improved.

Owner:360 TECH GRP CO LTD
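
The decision described in the abstract above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `serve_request`, the shape of `login_records` (a set of `(user_id, url)` pairs), and the fallback to the origin server when a login record exists are all hypothetical and are not specified in the abstract.

```python
def serve_request(user_id, url, page_cache, login_records, fetch_from_origin):
    """Return the anonymous cached copy only for users with no login record."""
    has_logged_on = (user_id, url) in login_records
    if not has_logged_on and url in page_cache:
        # The cached copy was fetched as an ordinary (anonymous) user,
        # so it carries no user-specific content.
        return page_cache[url]
    # Assumption: logged-on users, and pages not in the cache, go to the origin.
    return fetch_from_origin(user_id, url)


page_cache = {"/news": "cached anonymous page"}
login_records = {("alice", "/news")}
origin = lambda user_id, url: f"origin:{user_id}:{url}"
```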

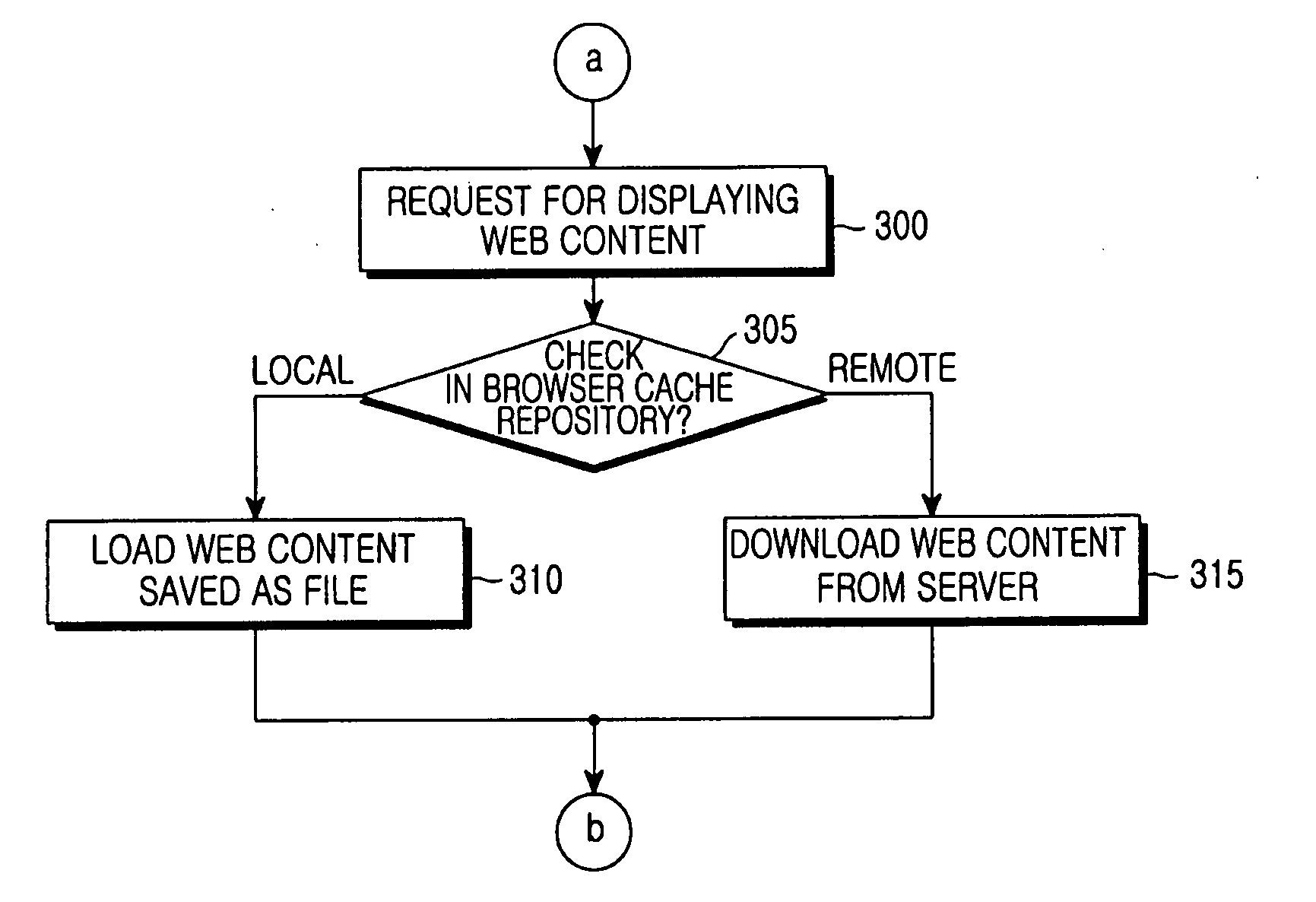

System for displaying cached webpages, a server therefor, a terminal therefor, a method therefor and a computer-readable recording medium on which the method is recorded

Inactive · US20120311419A1 · Increase probability · Improve caching efficiency · Digital data information retrieval · Transmission · Cache server · Web service

The present invention relates to a system, a server, a terminal and a method for displaying cached webpages, and to a computer-readable recording medium on which the method is recorded. The system comprises: a web service server which stores at least one webpage; a caching server which collects links to webpages that match preset conditions in the webpage(s) and creates a caching page list comprising at least one of the collected links; and a terminal which refers to the link(s) in the caching page list to cache the webpage(s), and which, in response to a user call-up of a specific webpage, simultaneously displays the input link and the link to the cached webpage. The terminal receives the caching page list, displays the link(s) to webpage(s) in the received list, and provides a display such that links to cached webpages are shown separately from links to non-cached webpages.

Owner:SK PLANET CO LTD

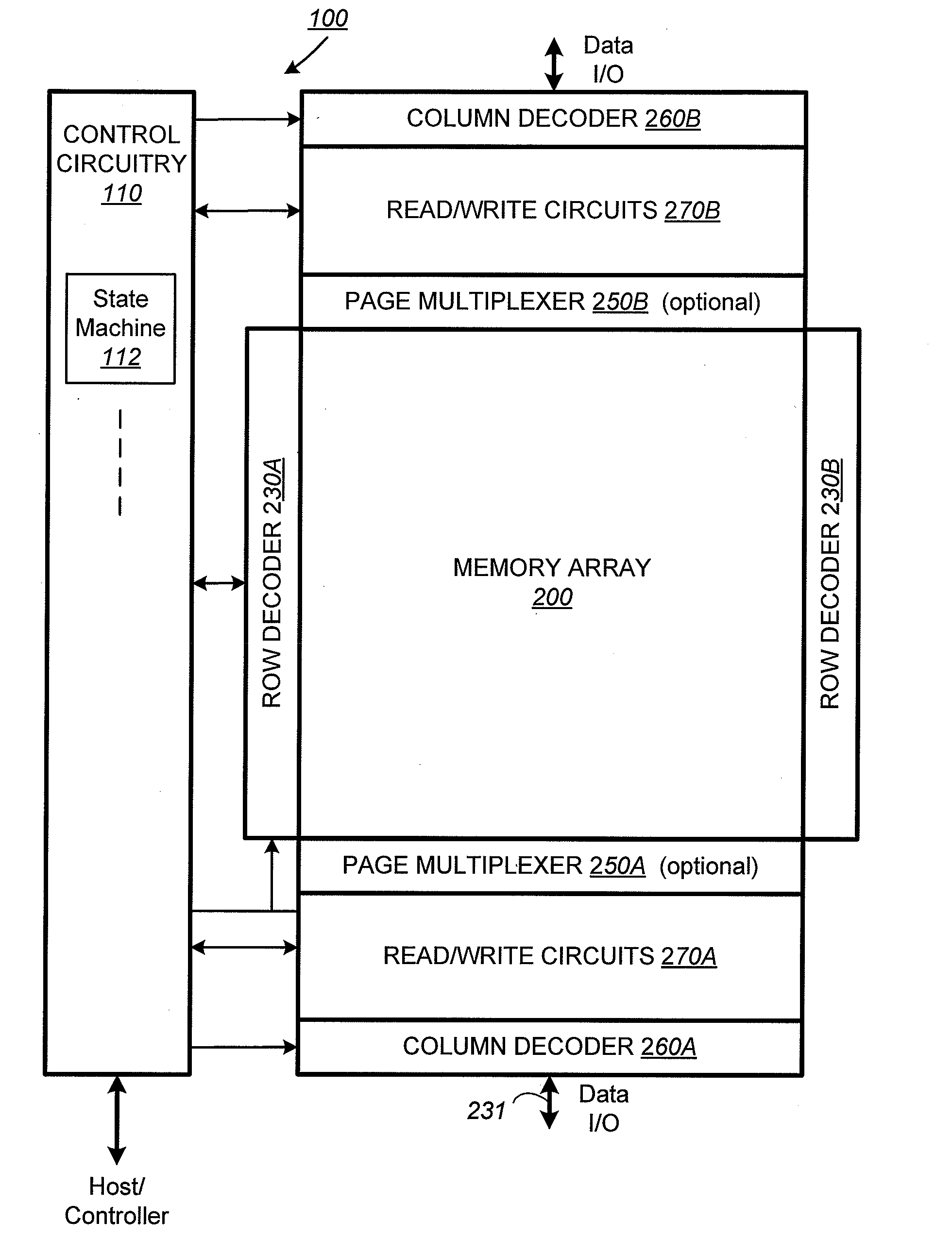

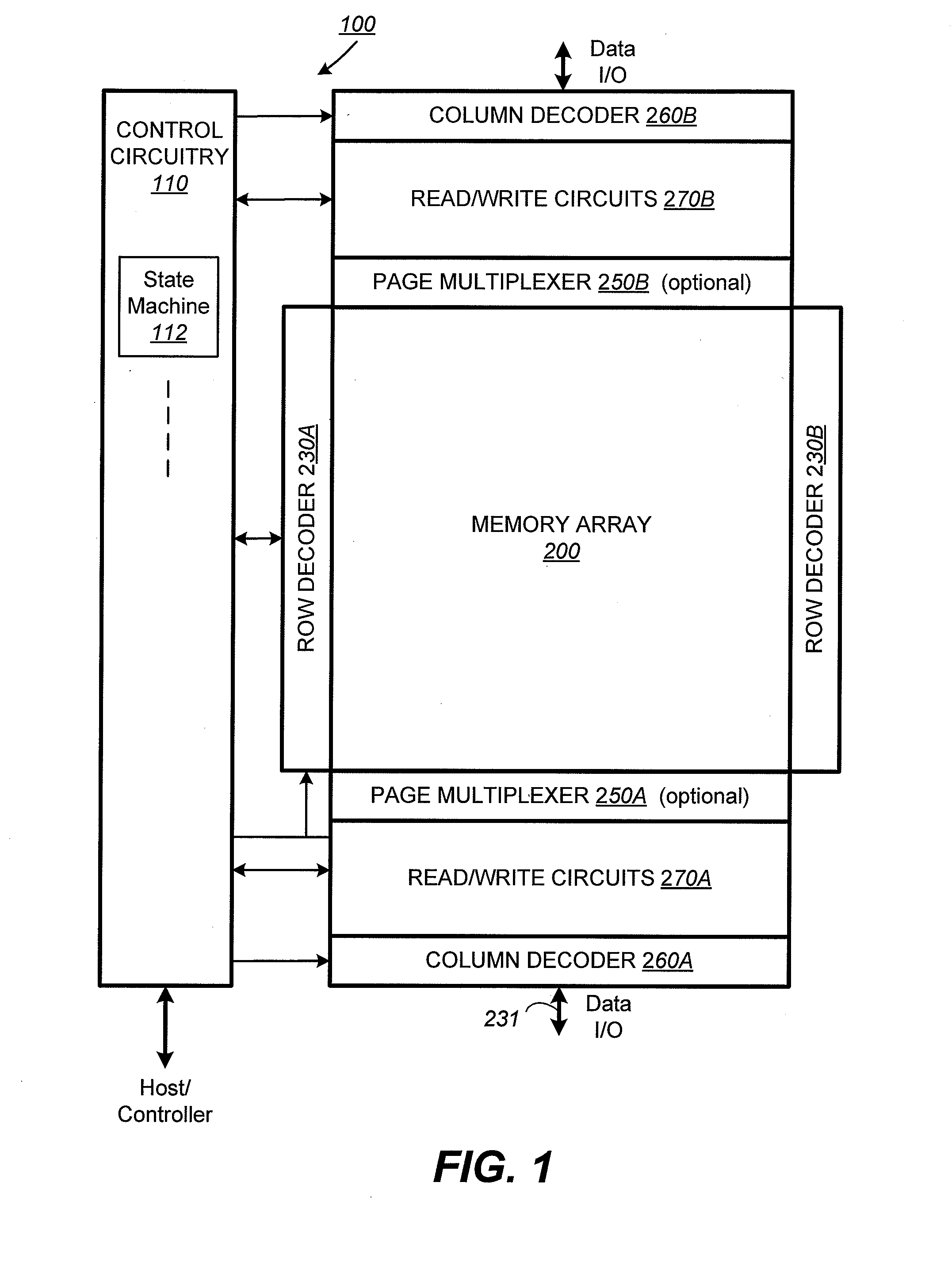

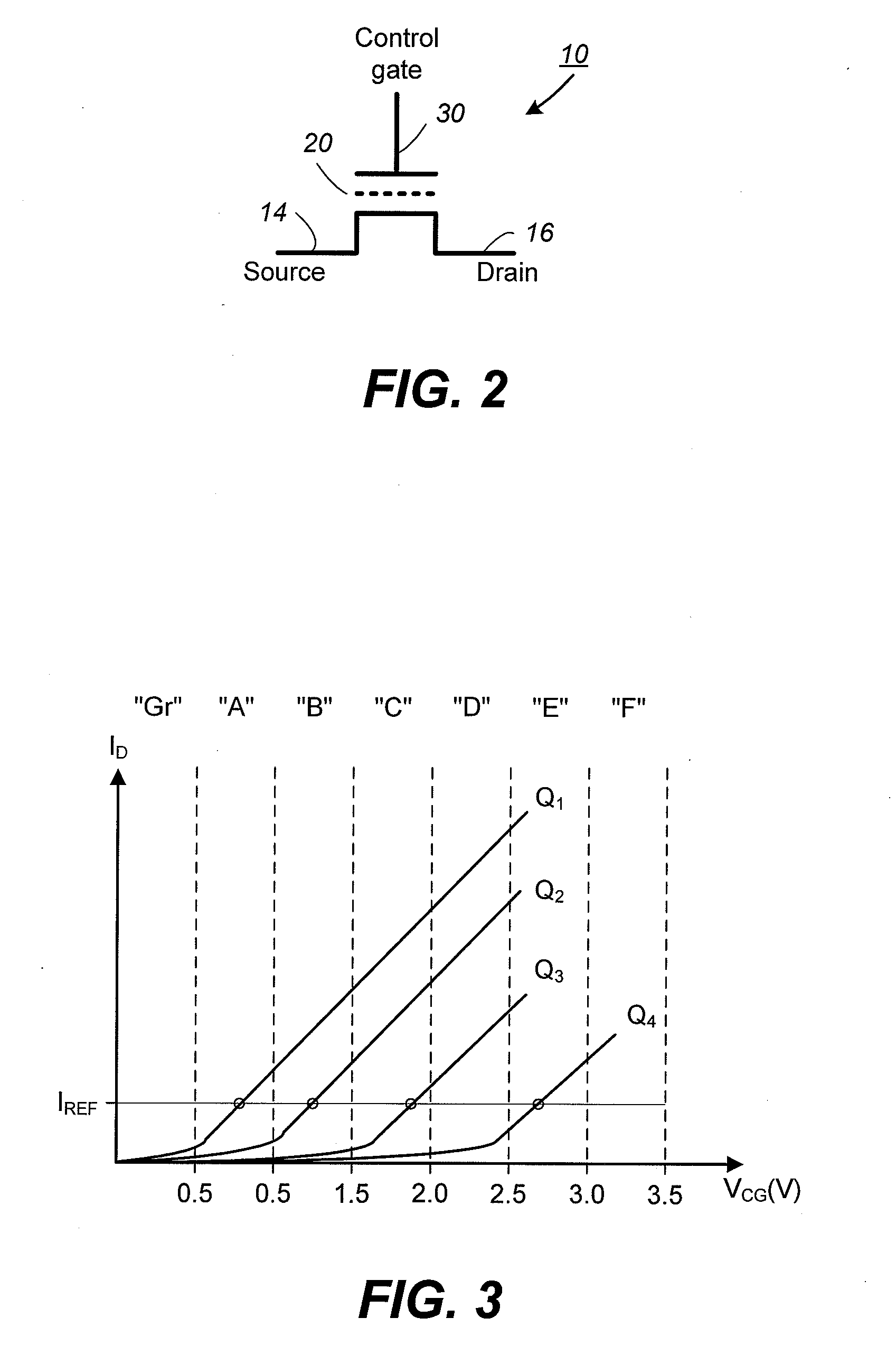

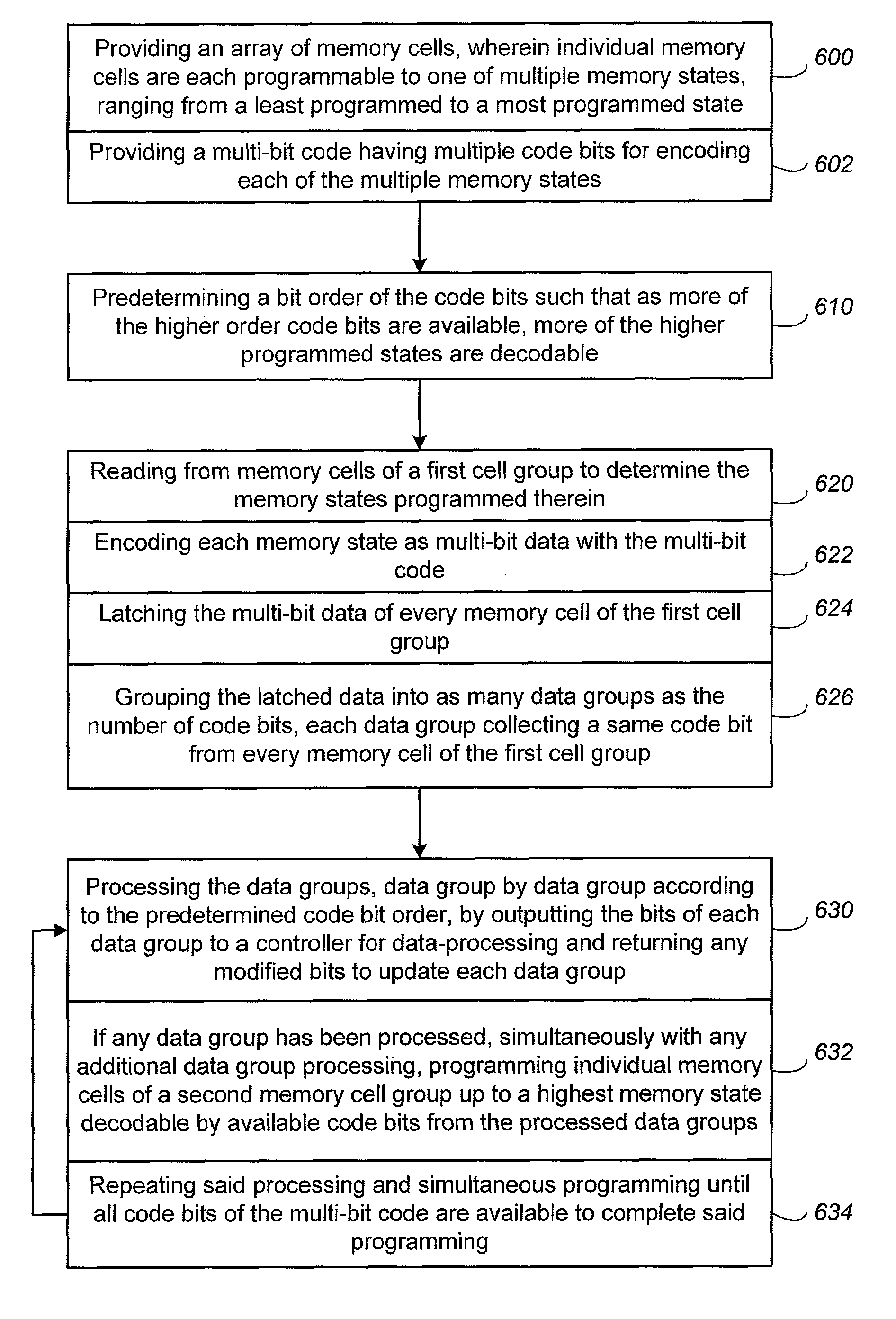

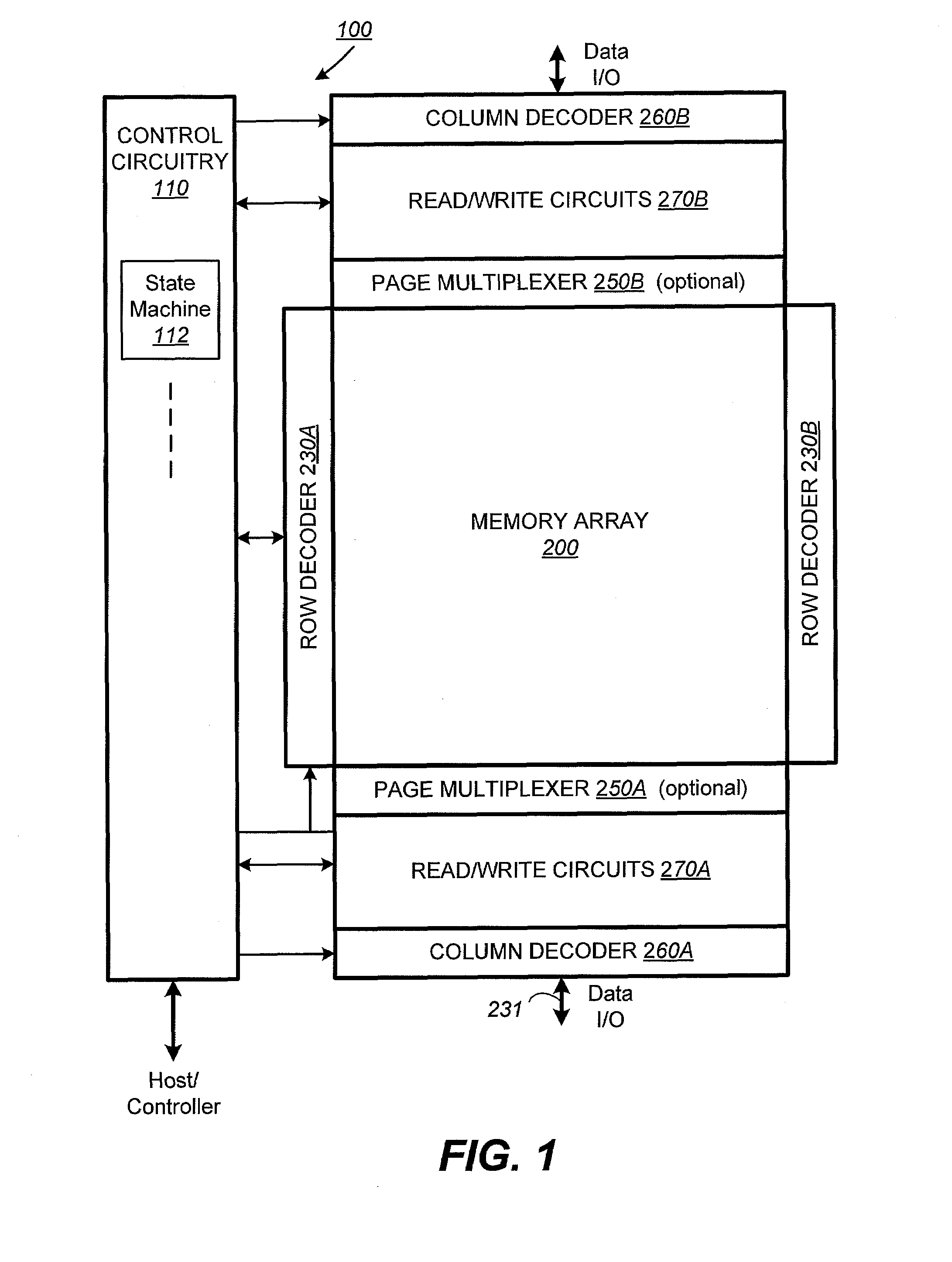

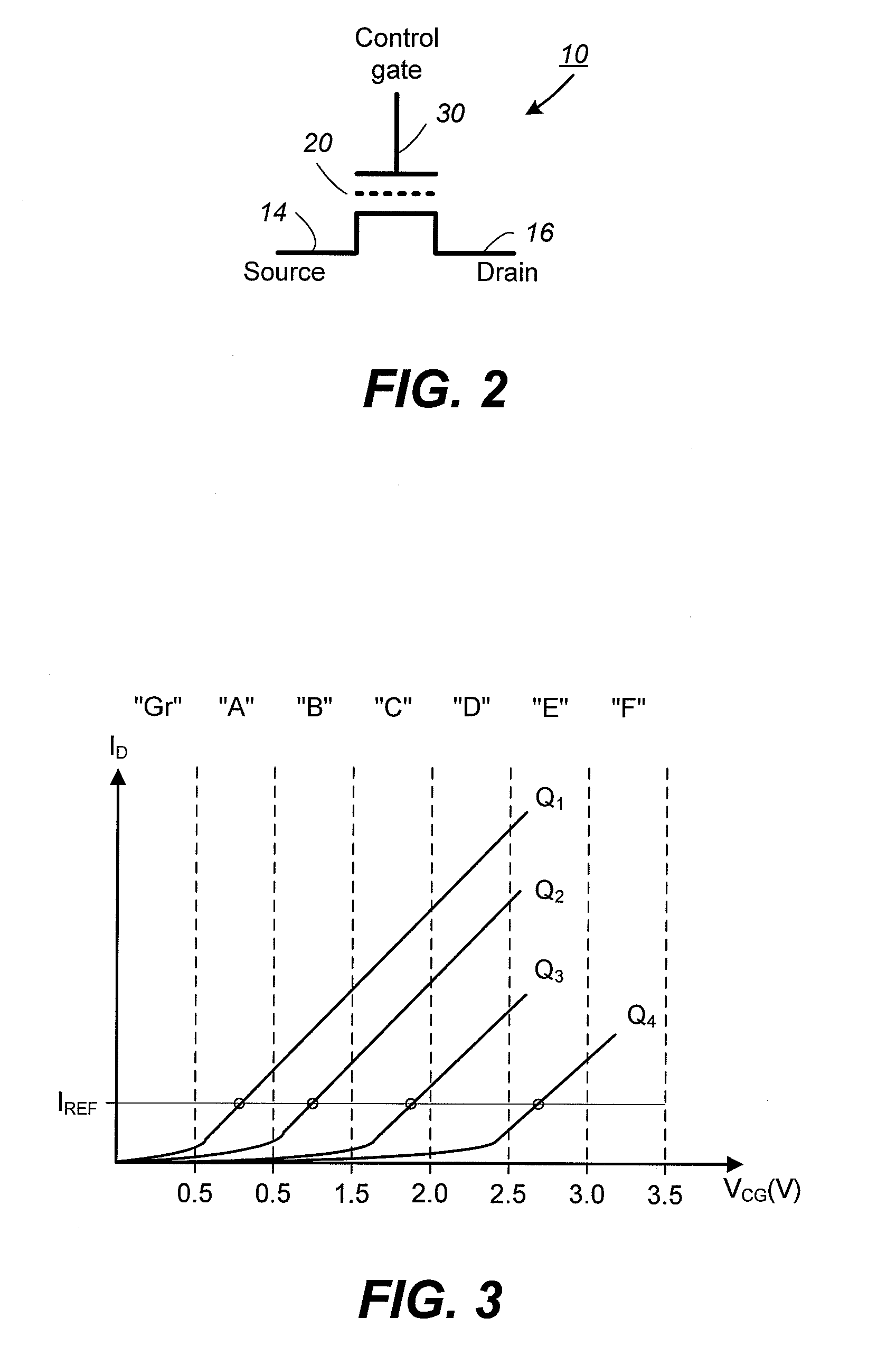

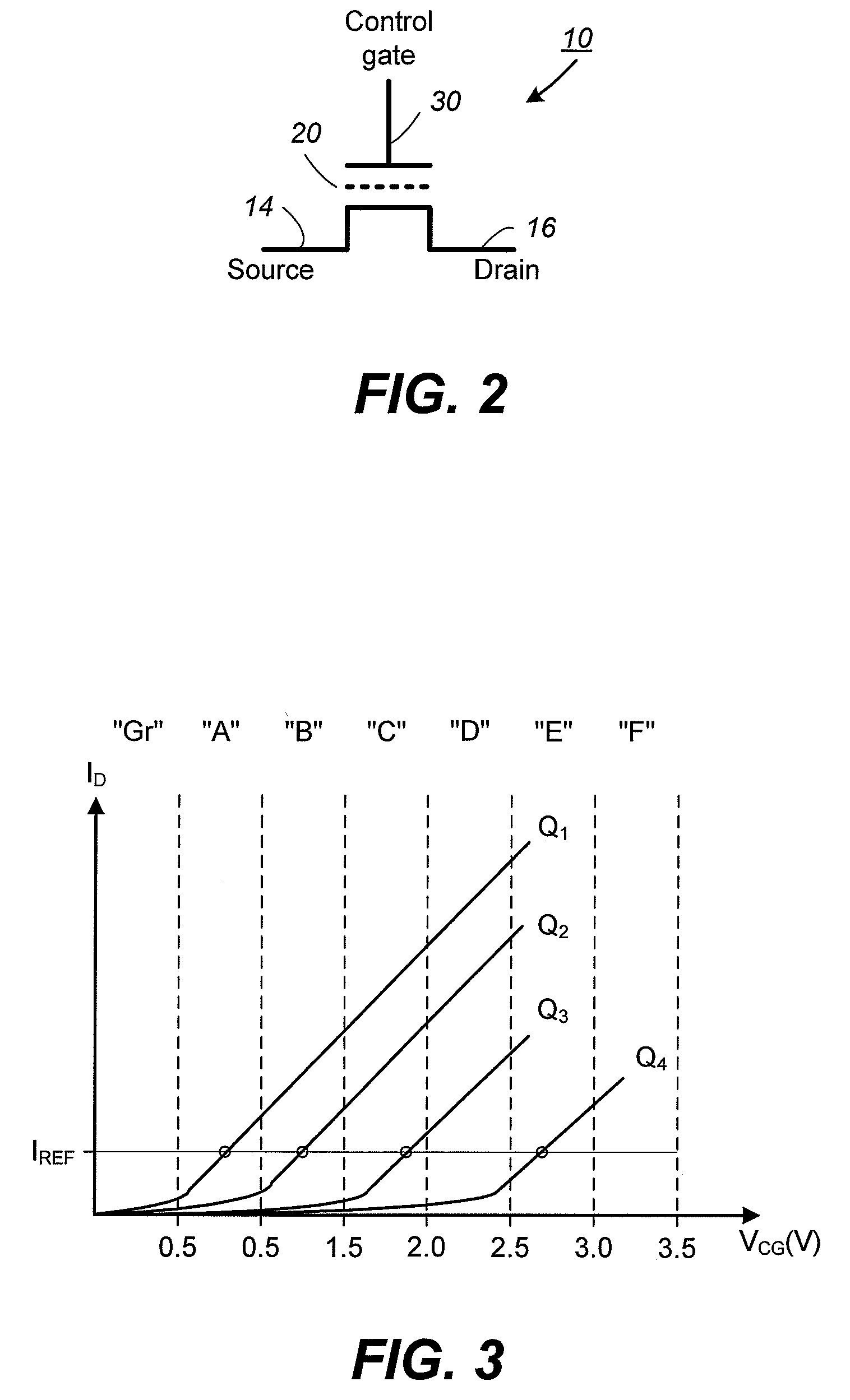

Method for Cache Page Copy in a Non-Volatile Memory

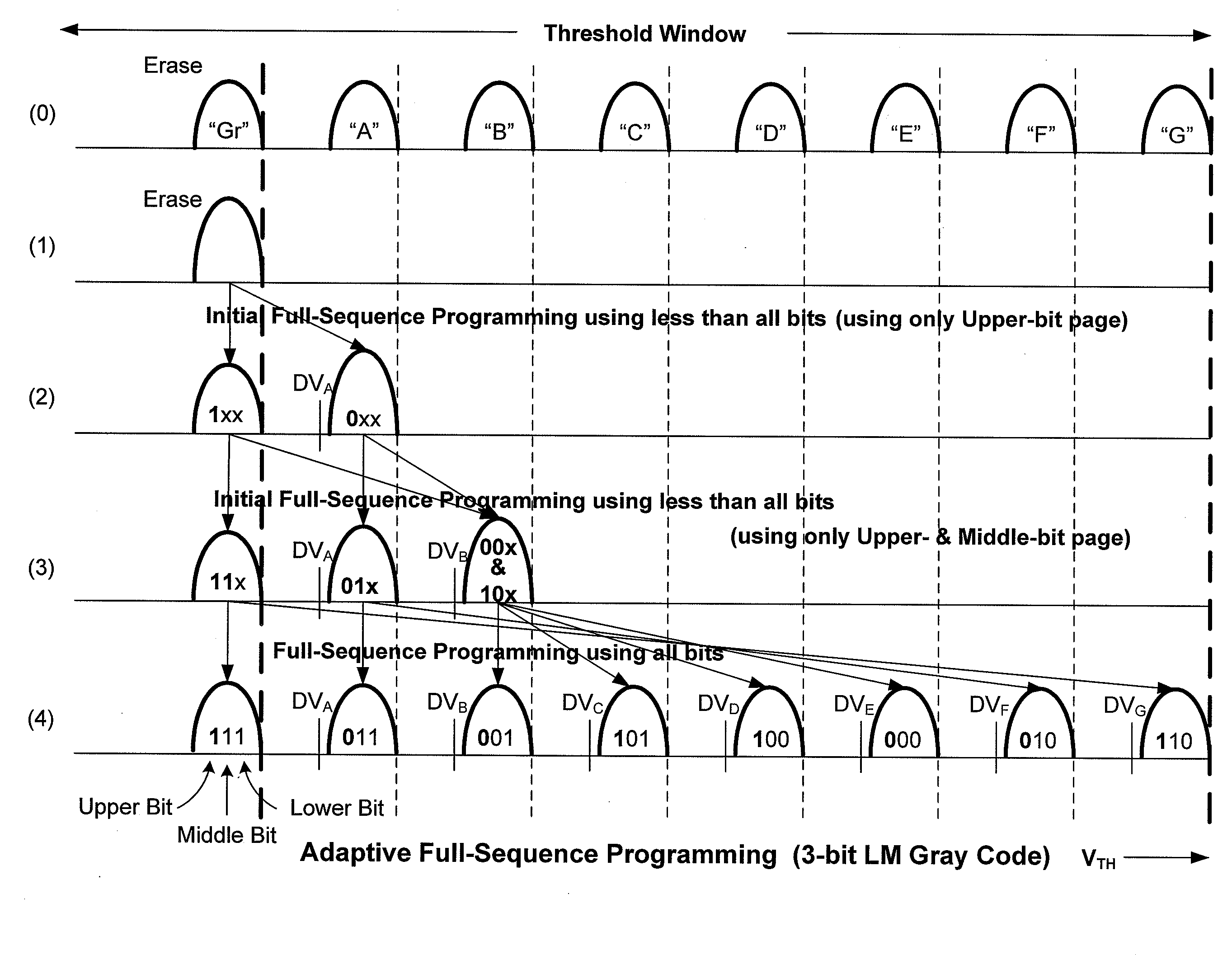

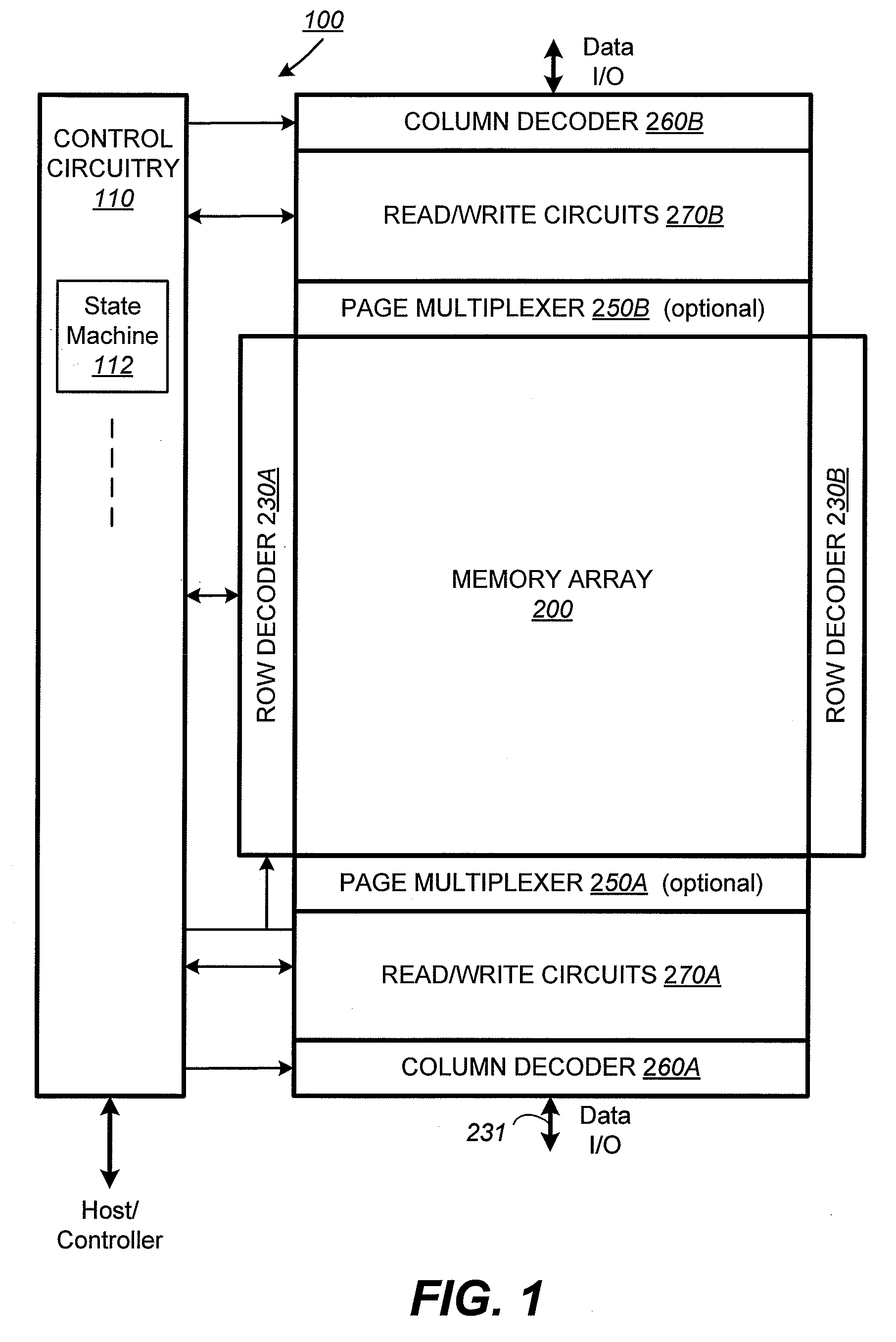

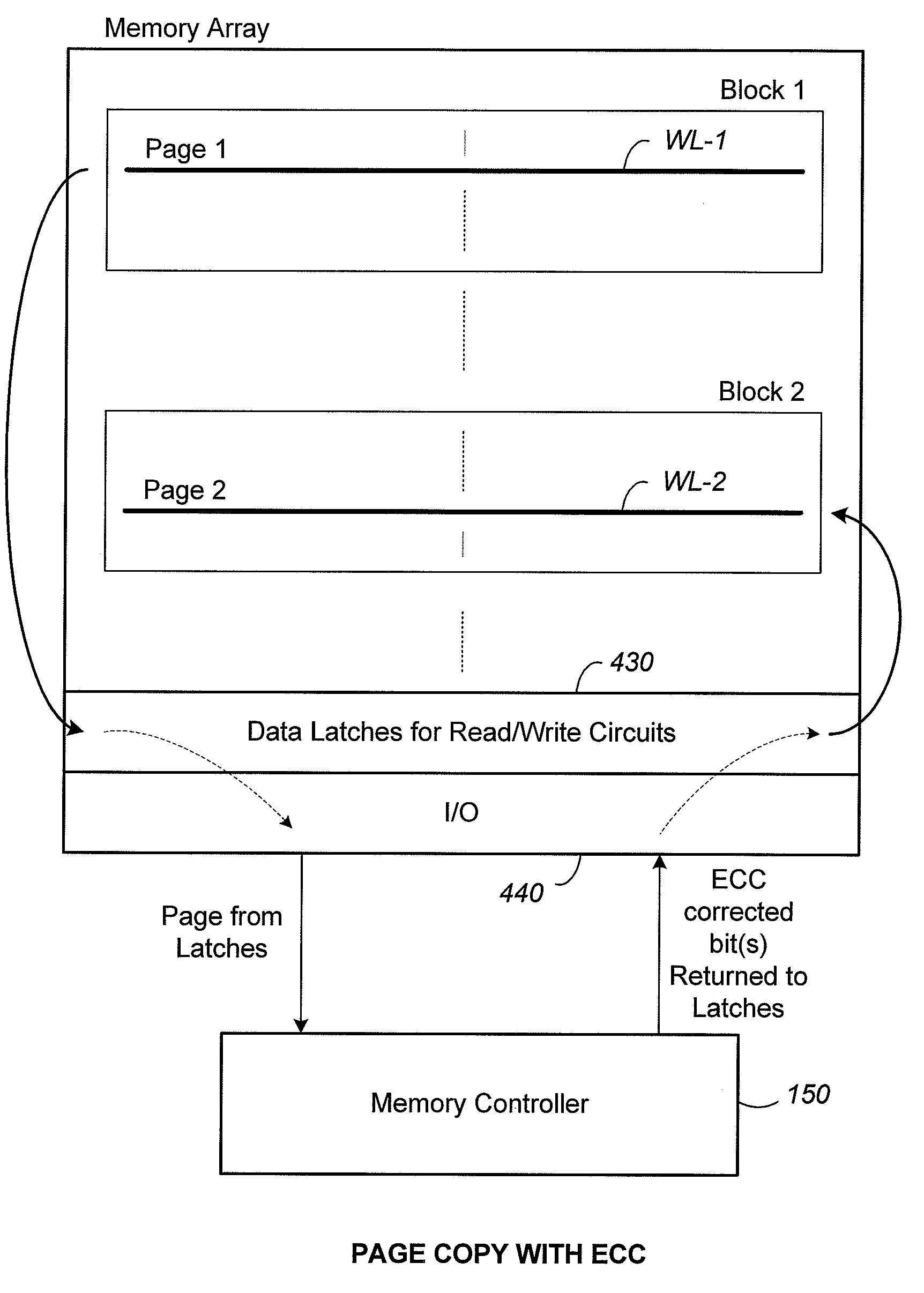

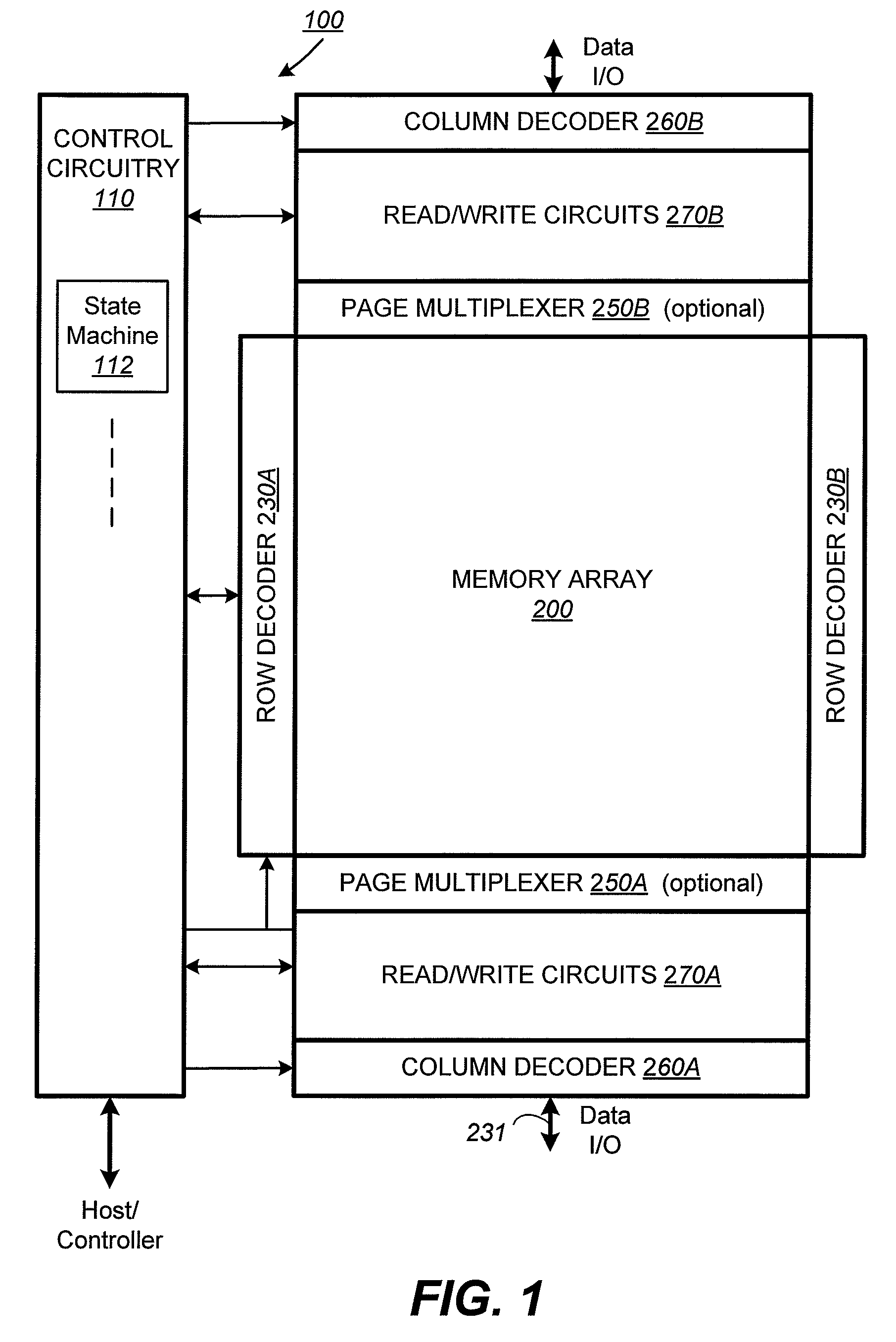

Active · US20080219059A1 · Efficiently relocated · Data efficient · Read-only memories · Digital storage · Cache page · Self adaptive

A non-volatile memory and methods include cached page copying using a minimum number of data latches for each memory cell. Multi-bit data is read in parallel from each memory cell of a group associated with a first word line. The read data is organized into multiple data-groups for shuttling out of the memory group-by-group according to a predetermined order for data-processing. Modified data are returned for updating the respective data group. The predetermined order is such that as more of the data groups are processed and available for programming, more of the higher programmed states are decodable. An adaptive full-sequence programming is performed concurrently with the processing. The programming copies the read data to another group of memory cells associated with a second word line, typically in a different erase block and preferably compensated for perturbative effects due to a word line adjacent the first word line.

Owner:SANDISK TECH LLC

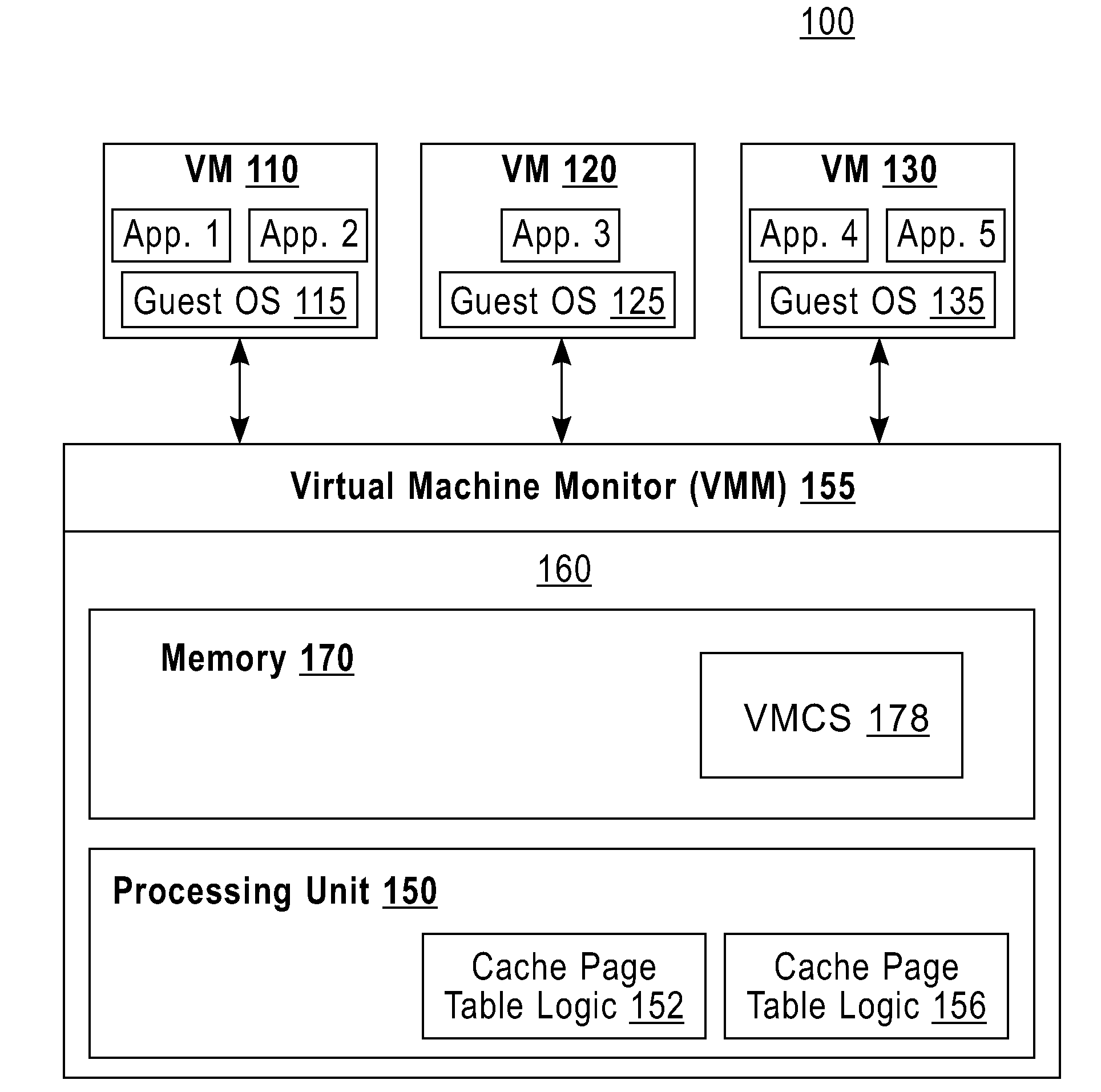

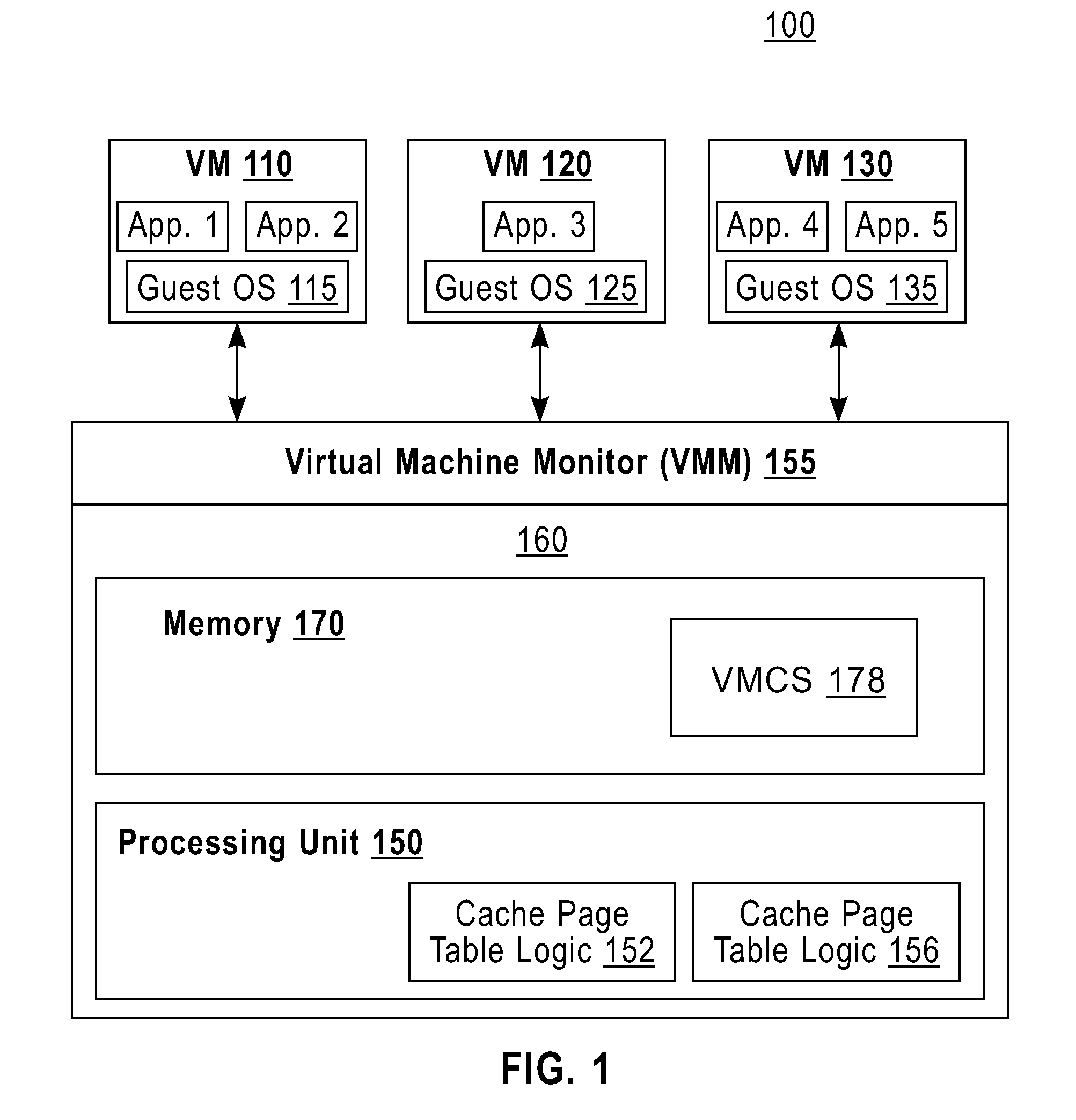

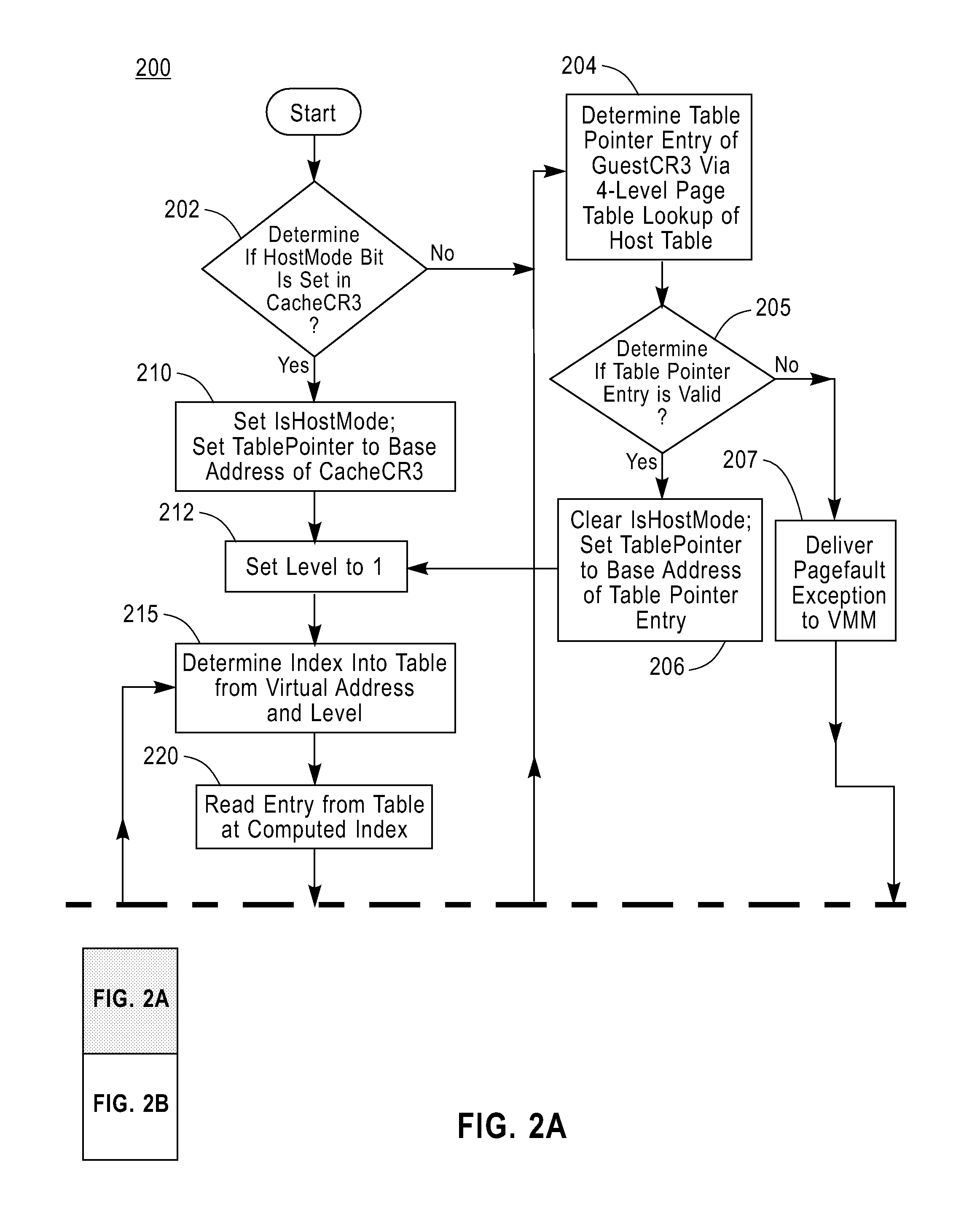

Method and apparatus for caching of page translations for virtual machines

Active · US8078827B2 · Memory architecture accessing/allocation · Computer security arrangements · Virtual memory · Memory address

A method for caching of page translations for virtual machines includes managing a number of virtual machines using a guest page table of a guest operating system, which provides a first translation from a guest-virtual memory address to a first guest-physical memory address or an invalid entry, and a host page table of a host operating system, which provides a second translation from the first guest-physical memory address to a host-physical memory address or an invalid entry, and managing a cache page table, wherein the cache page table selectively provides a third translation from the guest-virtual memory address to the host-physical memory address, a second guest-physical memory address or an invalid entry.

Owner:DAEDALUS BLUE LLC
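
As a rough illustration of the three tables in the abstract above, the following Python sketch models each page table as a dictionary from page number to page number. It covers only the case in which the cache page table holds a guest-virtual to host-physical translation; the second guest-physical case mentioned in the abstract is not modeled. The page size, the function name `translate`, and the fill-on-miss policy are assumptions for illustration.

```python
PAGE = 4096  # assumed page size in bytes


def translate(gva, guest_pt, host_pt, cache_pt):
    """Return the host-physical address for a guest-virtual address, or None."""
    vpn, offset = divmod(gva, PAGE)
    if vpn in cache_pt:
        # Third translation: guest-virtual directly to host-physical.
        return cache_pt[vpn] * PAGE + offset
    gpn = guest_pt.get(vpn)        # first translation: guest-virtual to guest-physical
    if gpn is None:
        return None                # invalid entry in the guest page table
    hpn = host_pt.get(gpn)         # second translation: guest-physical to host-physical
    if hpn is None:
        return None                # invalid entry in the host page table
    cache_pt[vpn] = hpn            # assumed policy: fill the cache on a successful walk
    return hpn * PAGE + offset


guest_pt = {1: 7}
host_pt = {7: 42}
cache_pt = {}
first = translate(1 * PAGE + 5, guest_pt, host_pt, cache_pt)
```

A real implementation would also have to invalidate cached entries when either underlying table changes; that is omitted here.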

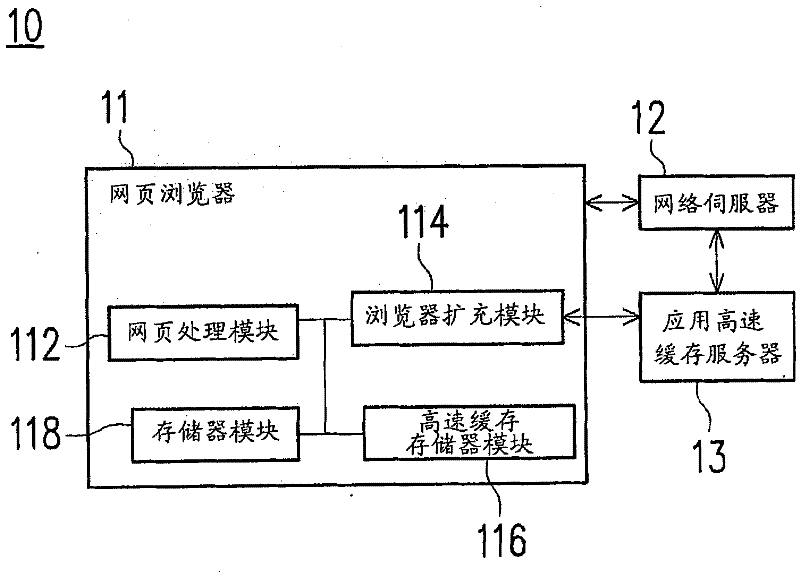

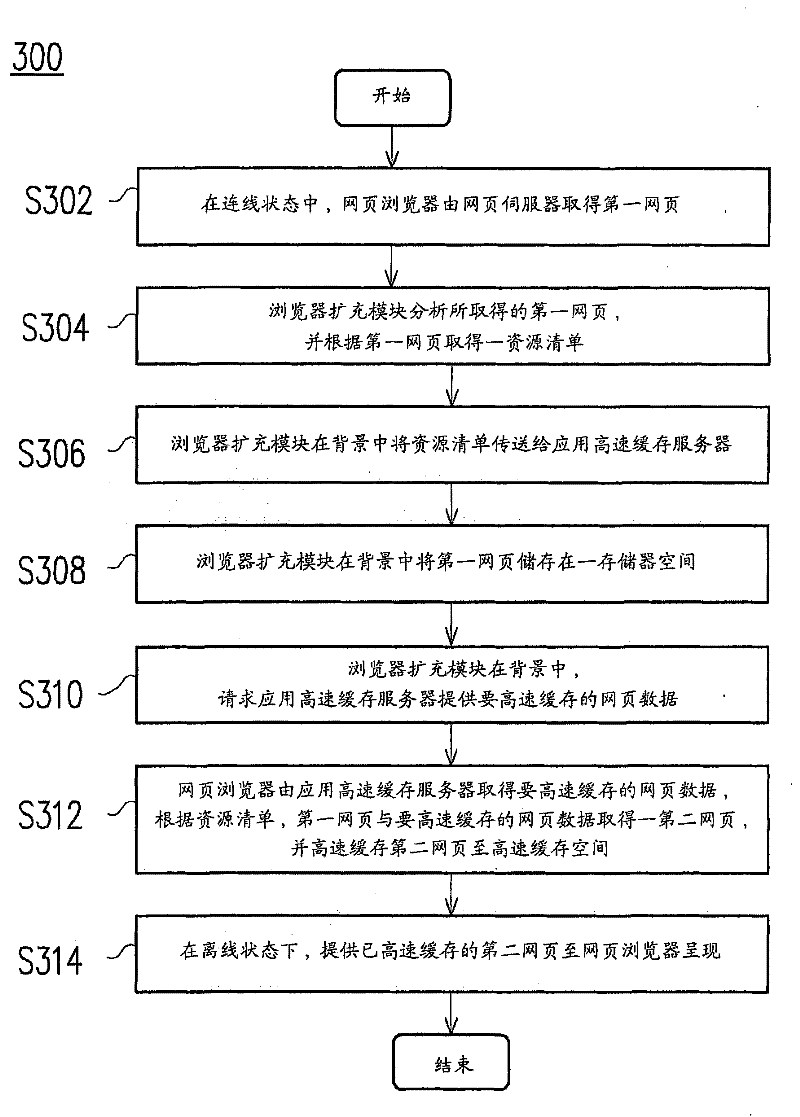

Offline Webpage Browsing Method and System

A method and system for offline web browsing. The offline web browsing method is suitable for a web browser and includes the following steps. The web browser obtains a first web page from a web server in the online state. By means of a browser extension module plugged into the web browser and an application caching server coupled to the web browser, the first web page is converted into a second web page conforming to the fifth-generation hypertext markup language (HTML5) specification, and the second web page is cached in a cache space. The cached second web page is provided to the web browser, and the second web page is rendered by the web browser.

Owner:ACER INC

Relocating Page Tables And Data Amongst Memory Modules In A Virtualized Environment

Inactive · US20110173370A1 · Memory addressing/allocation/relocation · Multiprogramming arrangements · Logical Memory I · Page table

Relocating data in a virtualized environment maintained by a hypervisor administering access to memory with a Cache Page Table (‘CPT’) and a Physical Page Table (‘PPT’), the CPT and PPT including virtual to physical mappings. Relocating data includes converting the virtual to physical mappings of the CPT to virtual to logical mappings; establishing a Logical Memory Block (‘LMB’) relocation tracker that includes logical addresses of an LMB, source physical addresses of the LMB, target physical addresses of the LMB, a translation block indicator for each relocation granule, and a pin count associated with each relocation granule; establishing a PPT entry tracker including PPT entries corresponding to the LMB to be relocated; relocating the LMB in a number of relocation granules including blocking translations to the relocation granules during relocation; and removing the logical addresses from the LMB relocation tracker.

Owner:IBM CORP

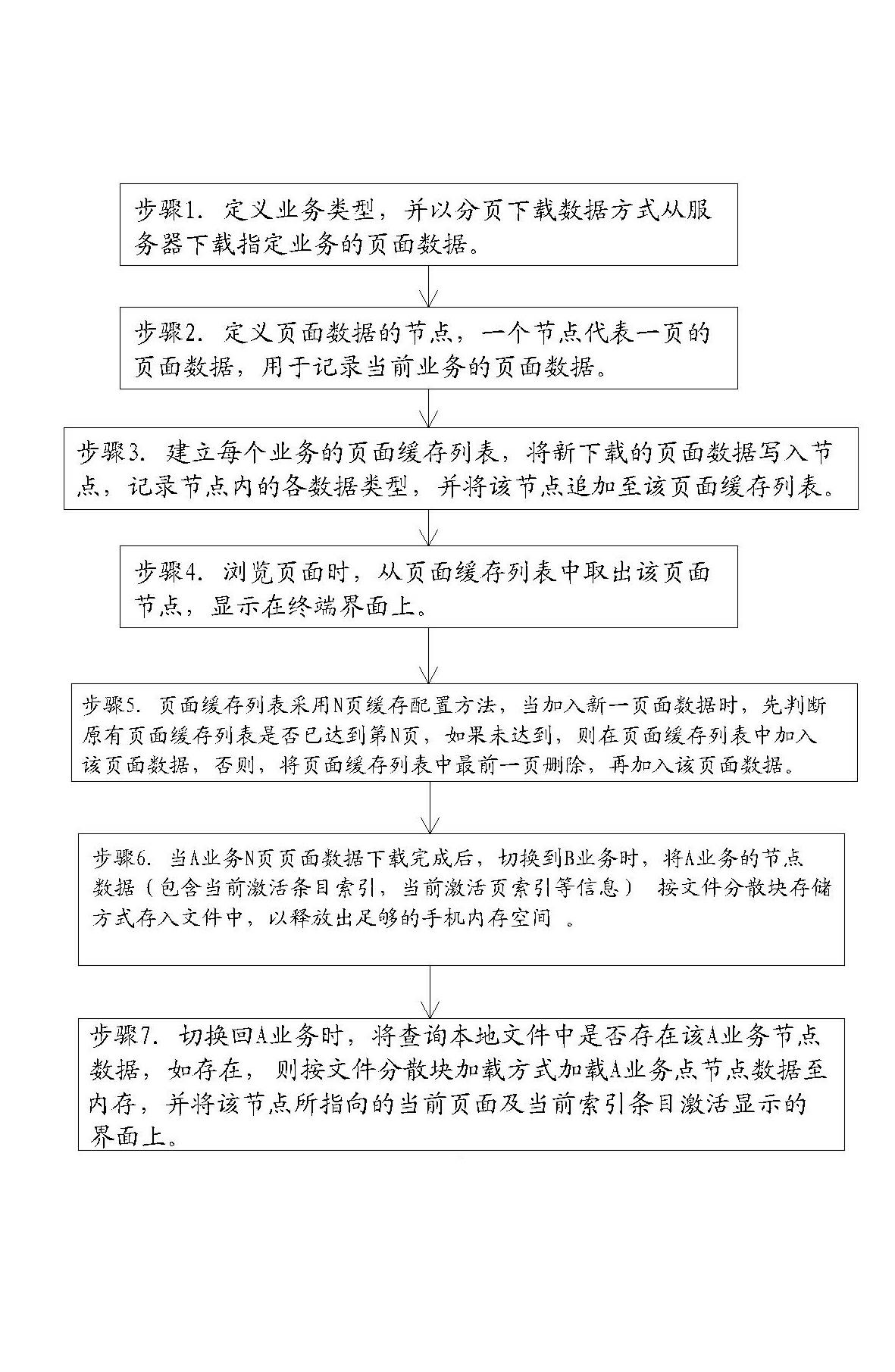

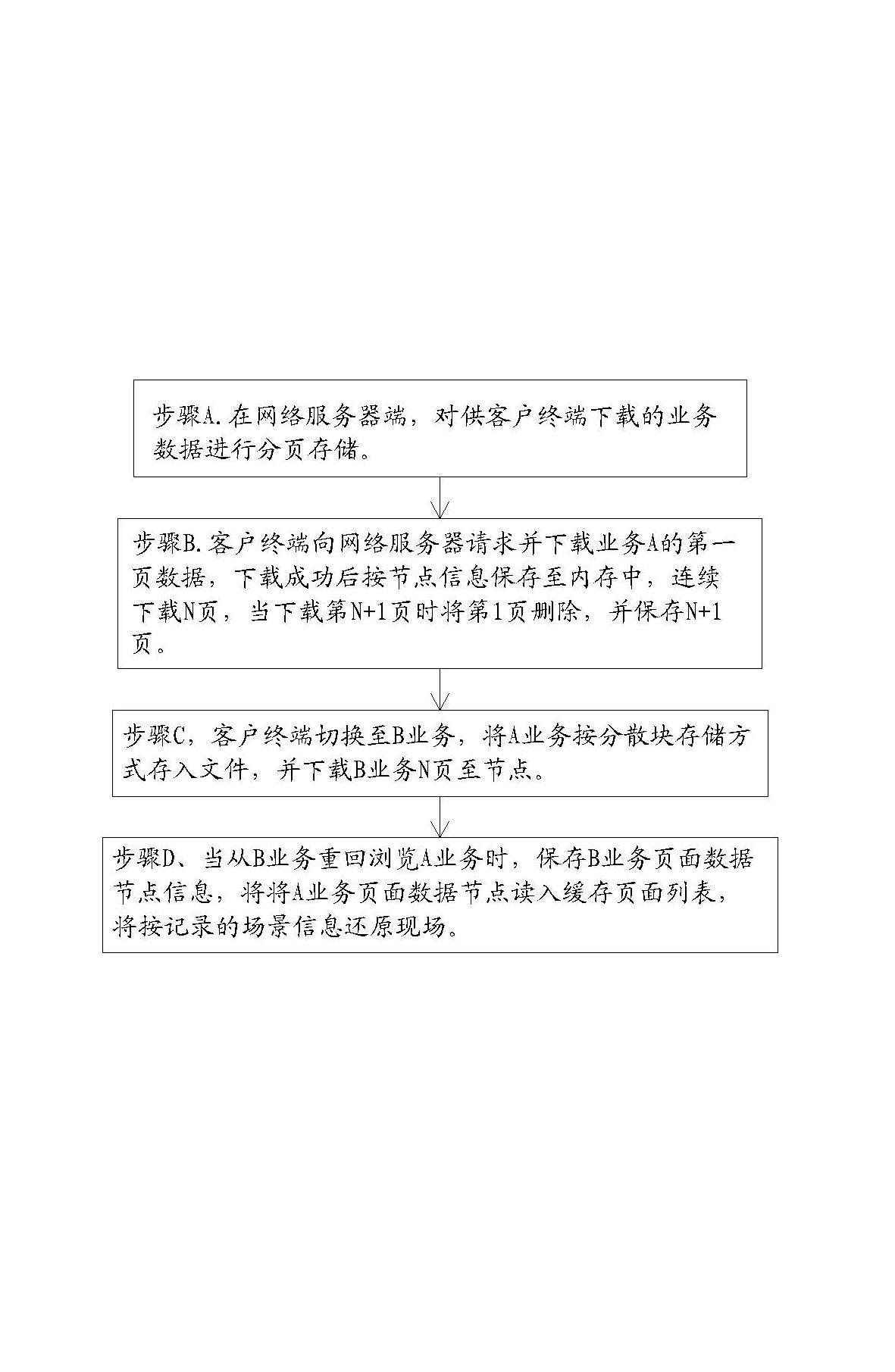

Page data processing method for mobile terminal

Active · CN102591943A · Reduce data download pressure · Reduce data traffic · Special data processing applications · Traffic capacity · Data information

The invention relates to the technical field of communications, in particular to a page data processing method for a mobile terminal, which includes the steps: defining business types, downloading page data of designated business from a server in a data paging download manner, and recording the page data into node data information; utilizing a terminal cache page management module to store the page data, and quantitatively deleting early-stage page data according to browsing time; and storing inactive business data into a file, and recovering an original browsing scene according to business node information when an original inactive business page is browsed again. The page data processing method has the advantages that data download pressure of a server is greatly relieved; a user only needs to download once when browsing the data, so that browsing speed is increased while data traffic is saved; and memory use space is greatly saved so as to play an important role in software with powerful operation functions and numerous businesses of medium-end and low-end mobile phones.

Owner:XIAMEN YAXON NETWORKS CO LTD

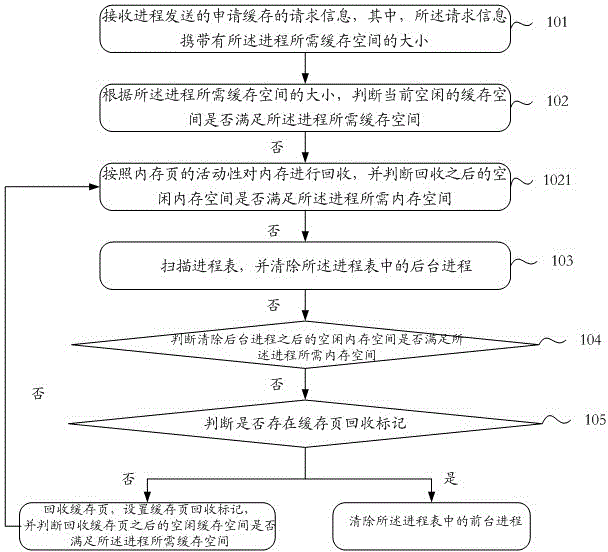

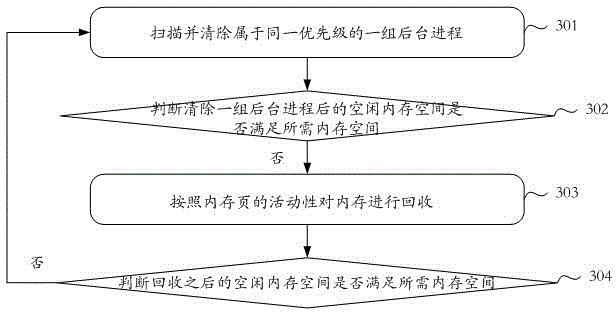

Cache recovery method and device

Inactive · CN105446814A · Does not affect experience · Efficient recycling · Resource allocation · Internal memory · Recovery method

Embodiments of the invention disclose a cache recovery method. The cache recovery method mainly comprises the following steps: receiving request information of applying for an internal memory sent by a process, wherein the request information carries the size of an internal memory space necessary for the process; judging whether a current idle internal memory space satisfies the internal memory space necessary for the process according to the size of the internal memory space necessary for the process; if not, scanning a process table, and clearing background processes in the process table; judging whether the idle internal memory space with the background processes cleared satisfies the internal memory space necessary for the process; if not, judging whether a cache page recovery marker exists; if the cache page recovery marker does not exist, recovering a cache page, setting the cache page recovery marker, judging whether the idle internal memory space satisfies the internal memory space necessary for the process after the cache page is recovered, and if the cache page recovery marker exists, clearing foreground processes in the process table. The cache recovery method disclosed by the embodiments of the invention is used for improving the user experience to a certain extent.

Owner:QINGDAO HISENSE MOBILE COMM TECH CO LTD
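
The escalation order in the abstract above (background processes first, then cache pages, then foreground processes) can be traced with a small Python sketch. The `MemoryState` fields, the function name `handle_memory_request`, and the returned step names are hypothetical. The allocation itself is not modeled, and what happens when cache recovery still leaves too little memory is not stated in the abstract, so the sketch simply reports whether the request can be satisfied.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryState:
    free: int                                        # currently idle memory
    cache_pages: int                                 # memory held by reclaimable cache pages
    background: dict = field(default_factory=dict)   # process name -> memory used
    foreground: dict = field(default_factory=dict)
    cache_recovered: bool = False                    # the "cache page recovery marker"


def handle_memory_request(state, needed):
    """Return (steps taken, whether the idle memory now satisfies the request)."""
    steps = []
    if state.free >= needed:
        return steps, True
    # Step 1: scan the process table and clear background processes.
    state.free += sum(state.background.values())
    state.background.clear()
    steps.append("clear_background")
    if state.free >= needed:
        return steps, True
    if not state.cache_recovered:
        # Step 2: no marker yet, so recover cache pages and set the marker.
        state.free += state.cache_pages
        state.cache_pages = 0
        state.cache_recovered = True
        steps.append("recover_cache")
    else:
        # Step 3: cache was already recovered, so clear foreground processes.
        state.free += sum(state.foreground.values())
        state.foreground.clear()
        steps.append("clear_foreground")
    return steps, state.free >= needed


state = MemoryState(free=10, cache_pages=30,
                    background={"sync": 5}, foreground={"ui": 50})
first = handle_memory_request(state, 40)
second = handle_memory_request(state, 100)
```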

Method for cache page copy in a non-volatile memory

A non-volatile memory and methods include cached page copying using a minimum number of data latches for each memory cell. Multi-bit data is read in parallel from each memory cell of a group associated with a first word line. The read data is organized into multiple data-groups for shuttling out of the memory group-by-group according to a predetermined order for data-processing. Modified data are returned for updating the respective data group. The predetermined order is such that as more of the data groups are processed and available for programming, more of the higher programmed states are decodable. An adaptive full-sequence programming is performed concurrently with the processing. The programming copies the read data to another group of memory cells associated with a second word line, typically in a different erase block and preferably compensated for perturbative effects due to a word line adjacent the first word line.

Owner:SANDISK TECH LLC

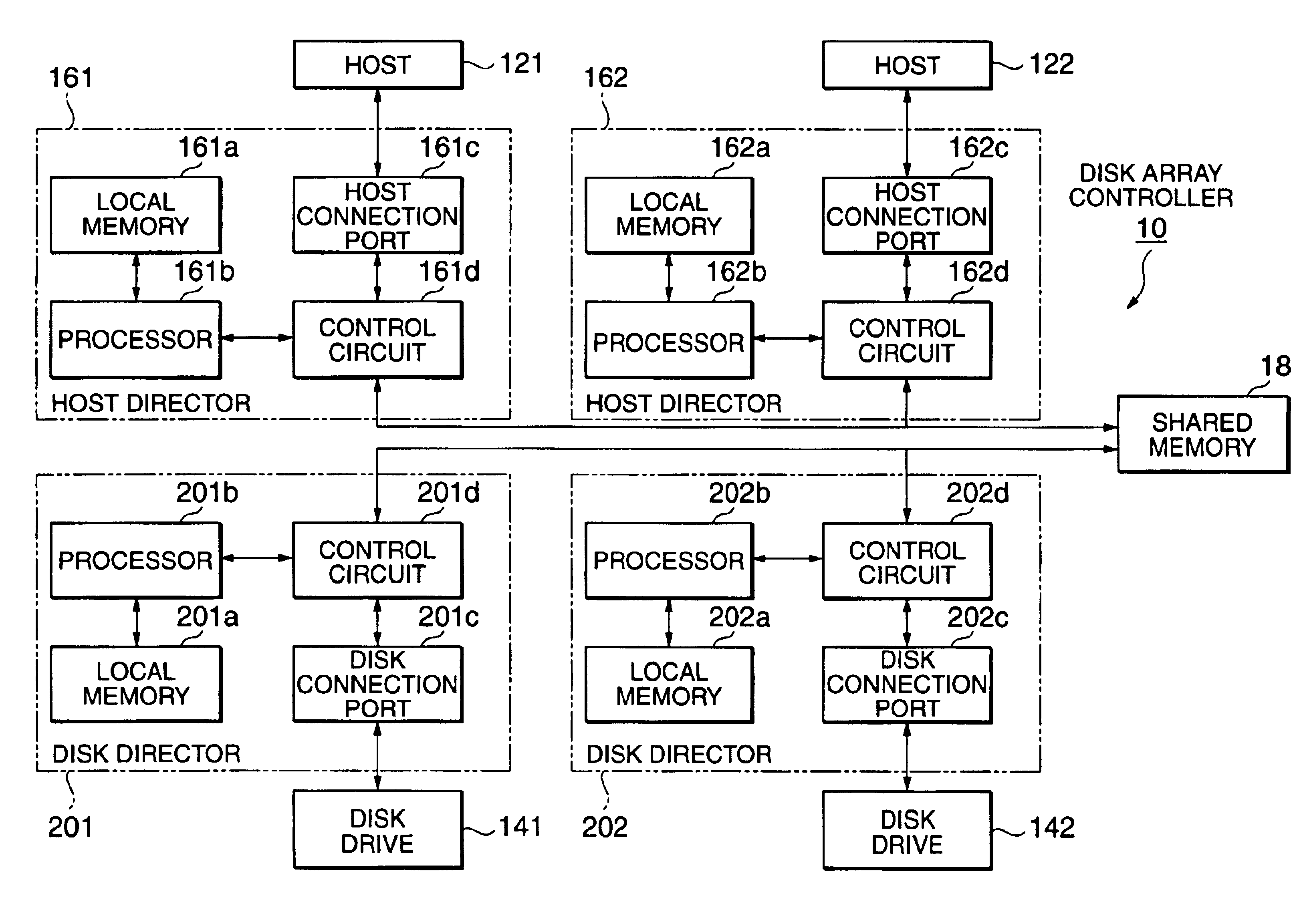

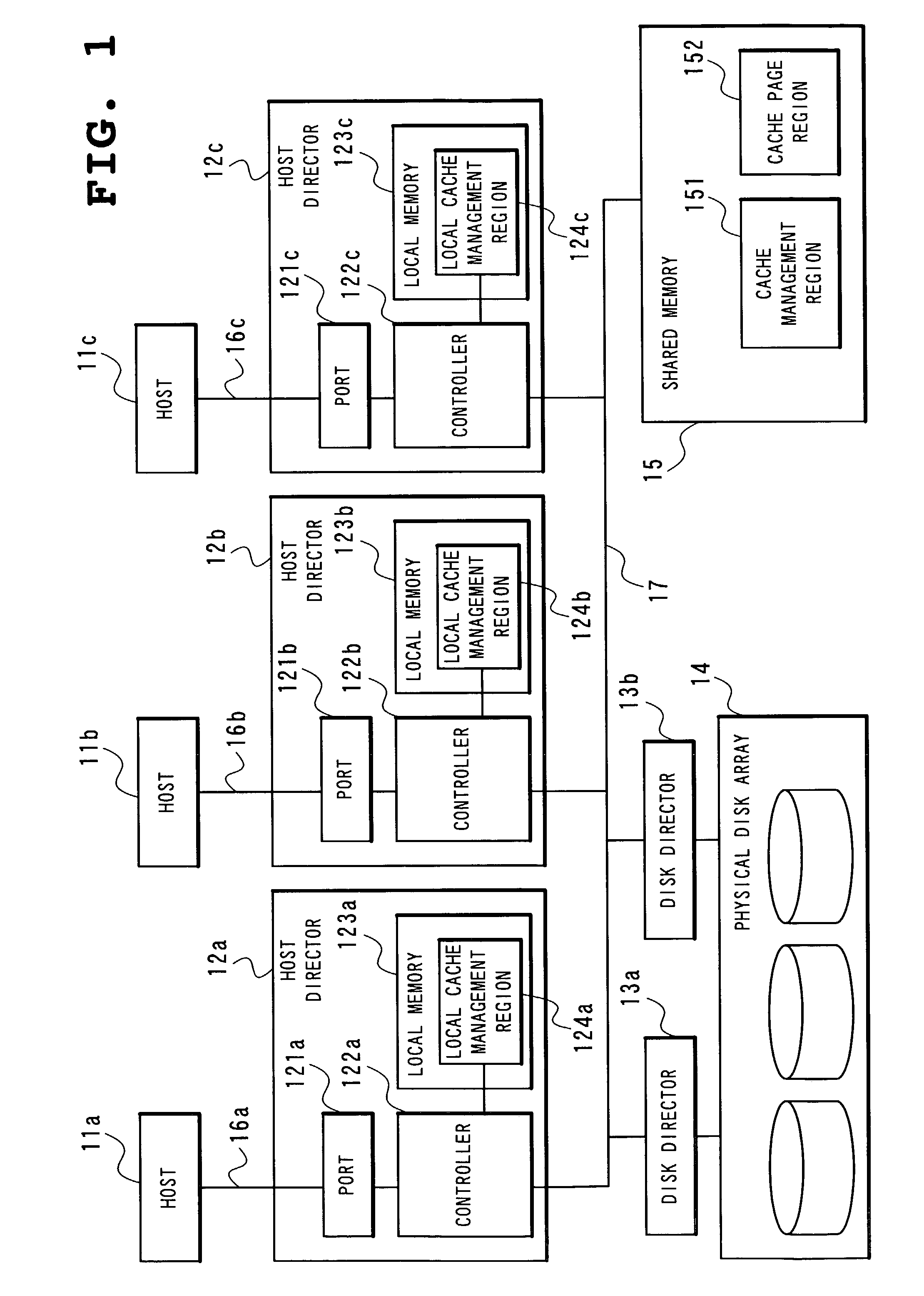

Disk cache control for servicing a plurality of hosts

Inactive · US6845426B2 · Improve performance · Saving information · Input/output to record carriers · Memory addressing/allocation/relocation · Cache page · Disk array

A disk array controller prevents cache page conflicts between a plurality of commands issued from the same host. A disk array controller 10 includes host directors 161 and 162, which are provided one for each of hosts 121 and 122 and which control I/O requests from the hosts 121 and 122 to execute input/output to or from disk drives 141 and 142, and a shared memory 18 shared by the host directors 161 and 162 and forming a disk cache. When the host 121 issues a plurality of read commands to the same cache page, the host director 161 starts a plurality of data transfers while occupying the cache page during processing of said plurality of read commands.

Owner:NEC CORP

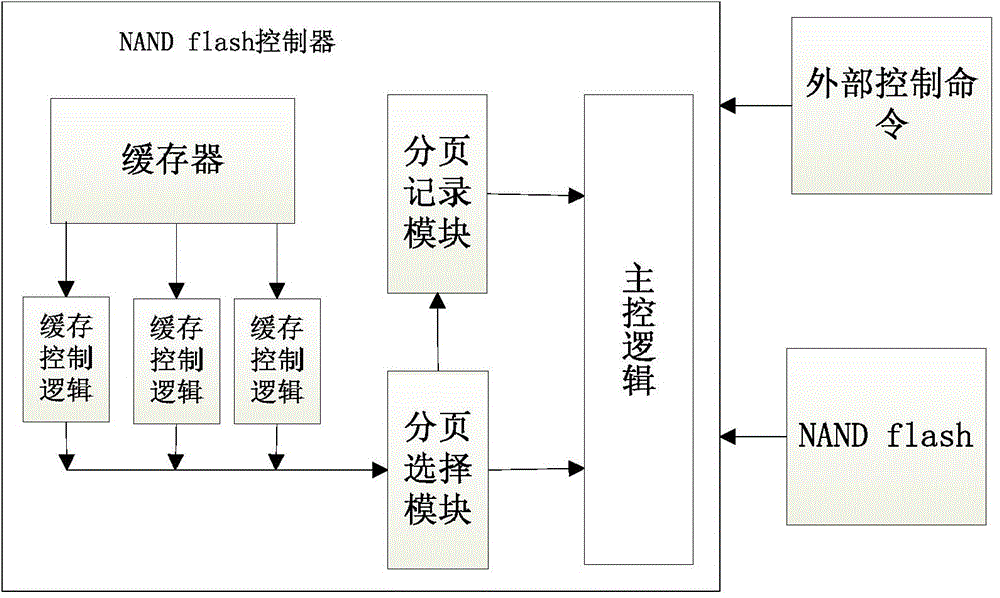

Method for increasing reading and writing speeds of NAND flash controller

Active · CN103559146A · Improve read and write speed · Flexible cache operation mode · Memory addressing/allocation/relocation · Cache page · Paging

The invention discloses a method for increasing the reading and writing speeds of an NAND flash controller, and belongs to the technical field of integrated circuit design. One end of the NAND flash controller is connected to a system bus, and the other end of the NAND flash controller is directly connected with a NAND flash. The method comprises the following steps: reading the parameter of a page size from the NAND flash; dynamically allocating cache inside the NAND flash controller to generate a plurality of cache sub-pages adapting to the page size of the NAND flash; selecting a current cache sub-page via an external control command; selecting a current cache page to allow the external control command to directly operate the current cache page. The method has the advantages that the controller can well adapt to different models of NAND flash, the own limited cache resources are utilized to the maximum extent, a more flexible cache operating way is provided for the external control command, and the reading and writing speeds of the NAND flash are increased.

Owner:SHANDONG UNIV

Cache employing multiple page replacement algorithms

Inactive · US20130219125A1 · Well formed · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Parallel computing · Least recently frequently used

The present invention extends to methods, systems, and computer program products for implementing a cache using multiple page replacement algorithms. An exemplary cache can include two logical portions where the first portion implements the least recently used (LRU) algorithm and the second portion implements the least recently used two (LRU2) algorithm to perform page replacement within the respective portion. By implementing multiple algorithms, a more efficient cache can be implemented where the pages most likely to be accessed again are retained in the cache. Multiple page replacement algorithms can be used in any cache including an operating system cache for caching pages accessed via buffered I/O, as well as a cache for caching pages accessed via unbuffered I/O such as accesses to virtual disks made by virtual machines.

Owner:MICROSOFT TECH LICENSING LLC

Non-Volatile Memory With Cache Page Copy

Active · US20080219057A1 · Efficiently relocated · Data efficient · Memory architecture accessing/allocation · Read-only memories · Parallel computing · Cache page

A non-volatile memory and methods include cached page copying using a minimum number of data latches for each memory cell. Multi-bit data is read in parallel from each memory cell of a group associated with a first word line. The read data is organized into multiple data-groups for shuttling out of the memory group-by-group according to a predetermined order for data-processing. Modified data are returned for updating the respective data group. The predetermined order is such that as more of the data groups are processed and available for programming, more of the higher programmed states are decodable. An adaptive full-sequence programming is performed concurrently with the processing. The programming copies the read data to another group of memory cells associated with a second word line, typically in a different erase block and preferably compensated for perturbative effects due to a word line adjacent the first word line.

Owner:SANDISK TECH LLC

Non-volatile memory with cache page copy

Active · US7499320B2 · Improve performance · Memory architecture accessing/allocation · Read-only memories · Parallel computing · Cache page

A non-volatile memory and methods include cached page copying using a minimum number of data latches for each memory cell. Multi-bit data is read in parallel from each memory cell of a group associated with a first word line. The read data is organized into multiple data-groups for shuttling out of the memory group-by-group according to a predetermined order for data-processing. Modified data are returned for updating the respective data group. The predetermined order is such that as more of the data groups are processed and available for programming, more of the higher programmed states are decodable. An adaptive full-sequence programming is performed concurrently with the processing. The programming copies the read data to another group of memory cells associated with a second word line, typically in a different erase block and preferably compensated for perturbative effects due to a word line adjacent the first word line.

Owner:SANDISK TECH LLC

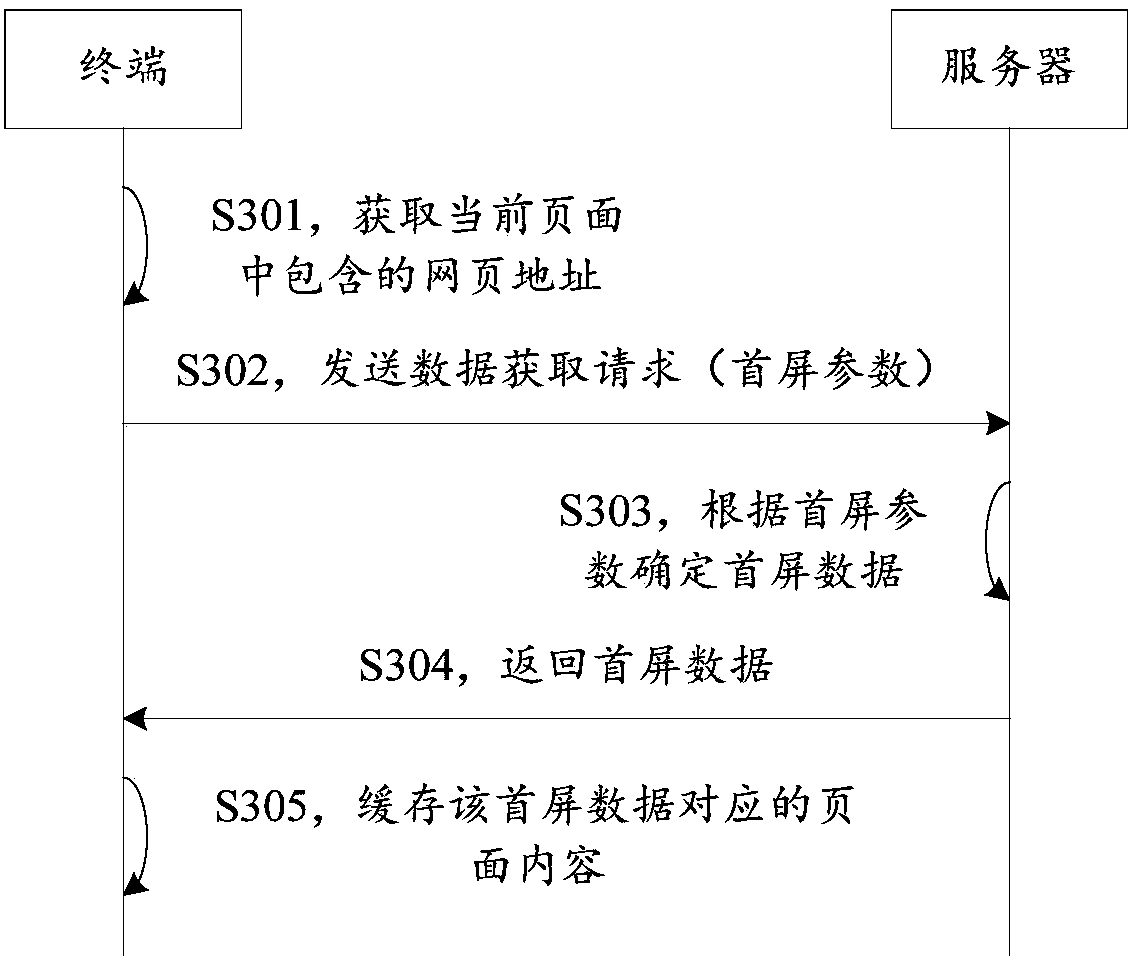

Page content caching method and device

Active · CN108984548A · Improve performance · Reduce consumption · Special data processing applications · Cache page · Location area

The invention relates to a page content caching method and device. The method comprises: a step of obtaining a webpage address included in a current page displayed on a screen of a terminal; a step of obtaining a first screen parameter which indicates the location area of a first screen area in the web page corresponding to the webpage address, wherein the first screen area is the page area that is visible, without scrolling, when the terminal displays the web page; a step of obtaining first screen data according to the first screen parameter, wherein the first screen data is the web page data corresponding to the first screen area; and a step of caching the page content corresponding to the first screen data. According to the method of the present invention, when the terminal preloads the web page corresponding to the webpage address, the page content of the entire web page is not cached; only the page content of the first screen area shown when the terminal displays the web page is cached. The amount of data cached during preloading is reduced, so the consumption of cache resources by preloading is reduced and the cache resources of the terminal are saved.

Owner:深圳市雅阅科技有限公司

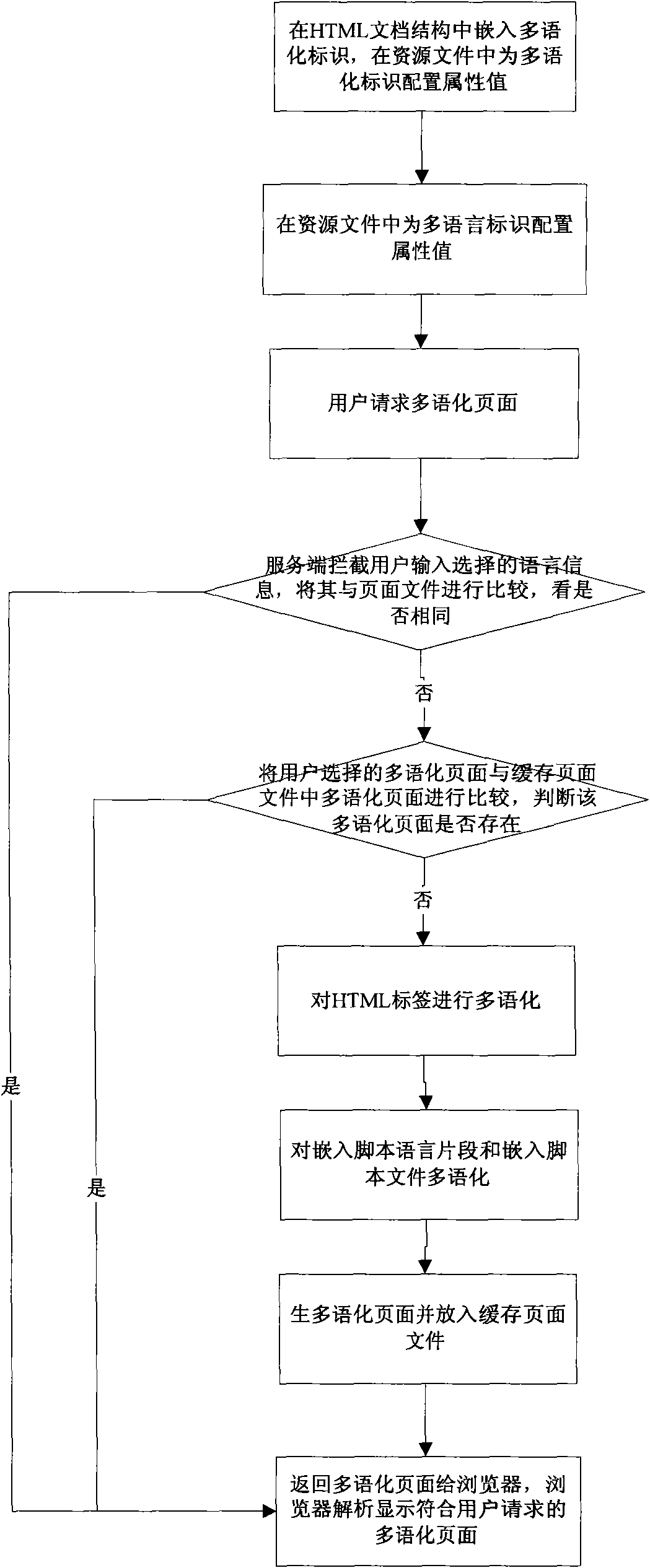

Multilingual method of Web application system and device

Inactive · CN101676904A · Quick response · Easy to embed · Special data processing applications · Web application · More language

The invention discloses a multilingual method of a Web application system, which comprises the following steps: 1) embedding multilingual marks in an HTML file structure, and configuring attribute values for the multilingual marks in resource files; 2) intercepting multilingual pages requested by users, and comparing the multilingual pages with page files first, if the multilingual pages and the page files are the same, directly displaying the default page language by a browser, and if the multilingual pages and the page files are different, comparing the multilingual pages with cache page files, and judging whether the multilingual pages exist or not; and 3) analyzing the HTML file structure, carrying out multilingualism for HTML tags by using the resource files and carrying out multilingualism for embedded script language fragments and embedded script files, and then, generating cache files. The method and the device of the invention greatly save service resources, improve the response speed of the multilingualism of the Web application system, and can be modified and maintained easily.

Owner:ZTE CORP

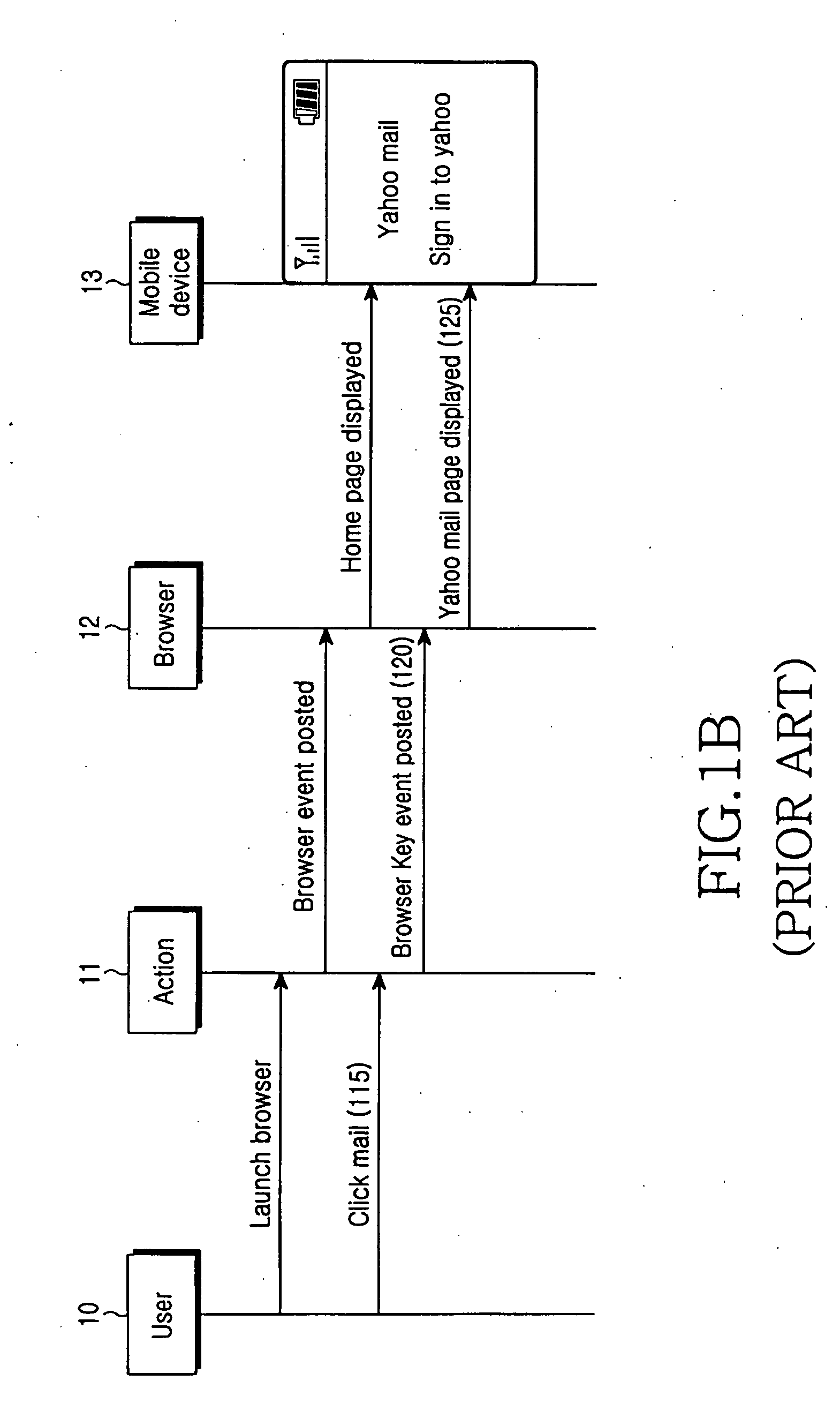

Method and system of browsing using smart browsing cache

Inactive · US20070282945A1 · Effective display · Digital data information retrieval · Multiple digital computer combinations · Cache page · Mobile device

Provided are a method and a system of browsing using a browser cache, involving interacting with a browser and performing a cache-associated action initiated by a user on a mobile device. The method involves mapping an action performed by the user to a browser event and passing the browser event to the browser for processing and to perform the respective action. The method also involves the browser receiving a user action as a browser event or a browser key event and processing the events, and the mobile device displaying the browser cache page to the user.

Owner:SAMSUNG ELECTRONICS CO LTD

System for displaying cached webpages, a server therefor, a terminal therefor, a method therefor and a computer-readable recording medium on which the method is recorded

Inactive · CN102812452A · Raise the possibility · Improve caching efficiency · Multiple digital computer combinations · Wireless communication services · Cache server · User input

Owner:SK PLANET CO LTD

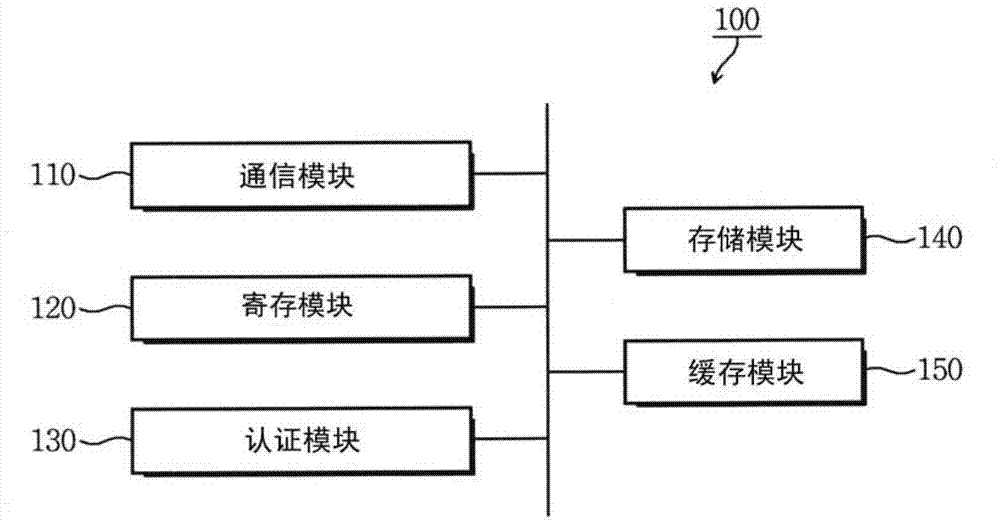

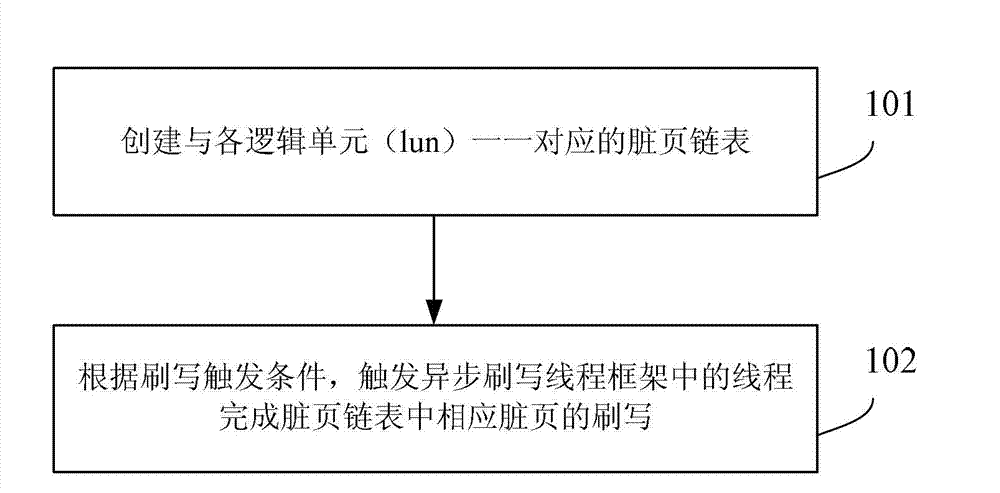

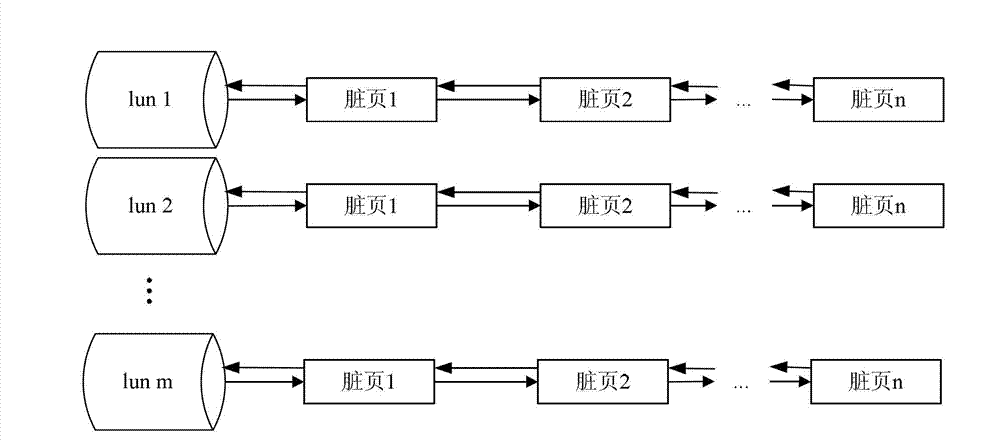

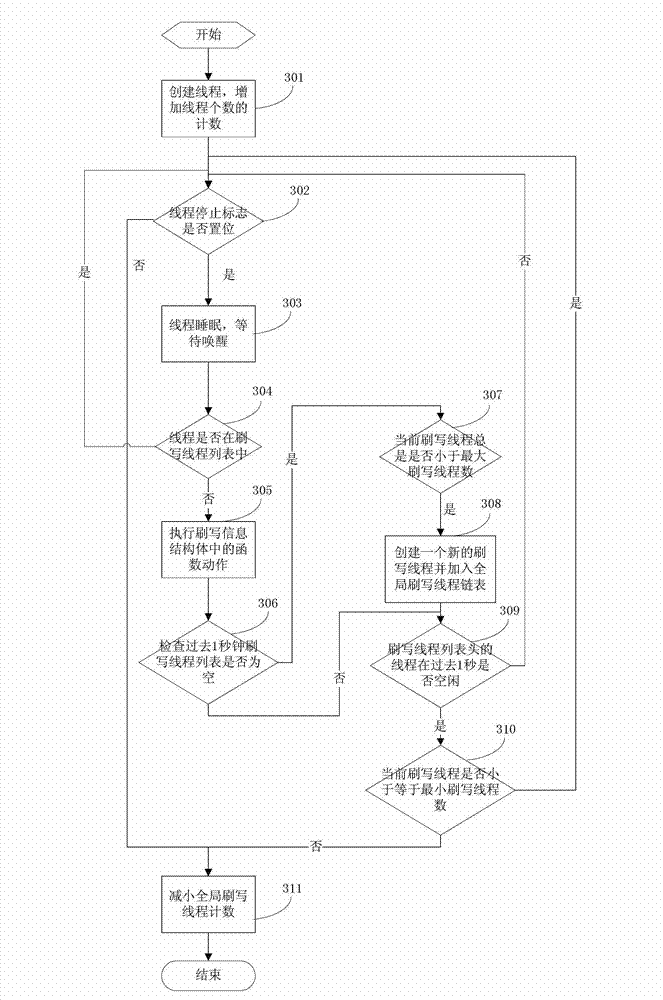

Method and device for flushing data

Active · CN103049396A · Write quickly · Increase usage · Input/output to record carriers · Memory addressing/allocation/relocation · Dirty page · Cache page

The invention provides a method and a device for flushing data. The method comprises triggering threads in an asynchronous flush thread framework to complete the flushing of corresponding dirty pages in a dirty page linked list, wherein the dirty pages are cache pages in which data is saved. With the method and the device, cached data can be written to disk rapidly and cache utilization is improved, and accordingly read-write efficiency is improved.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

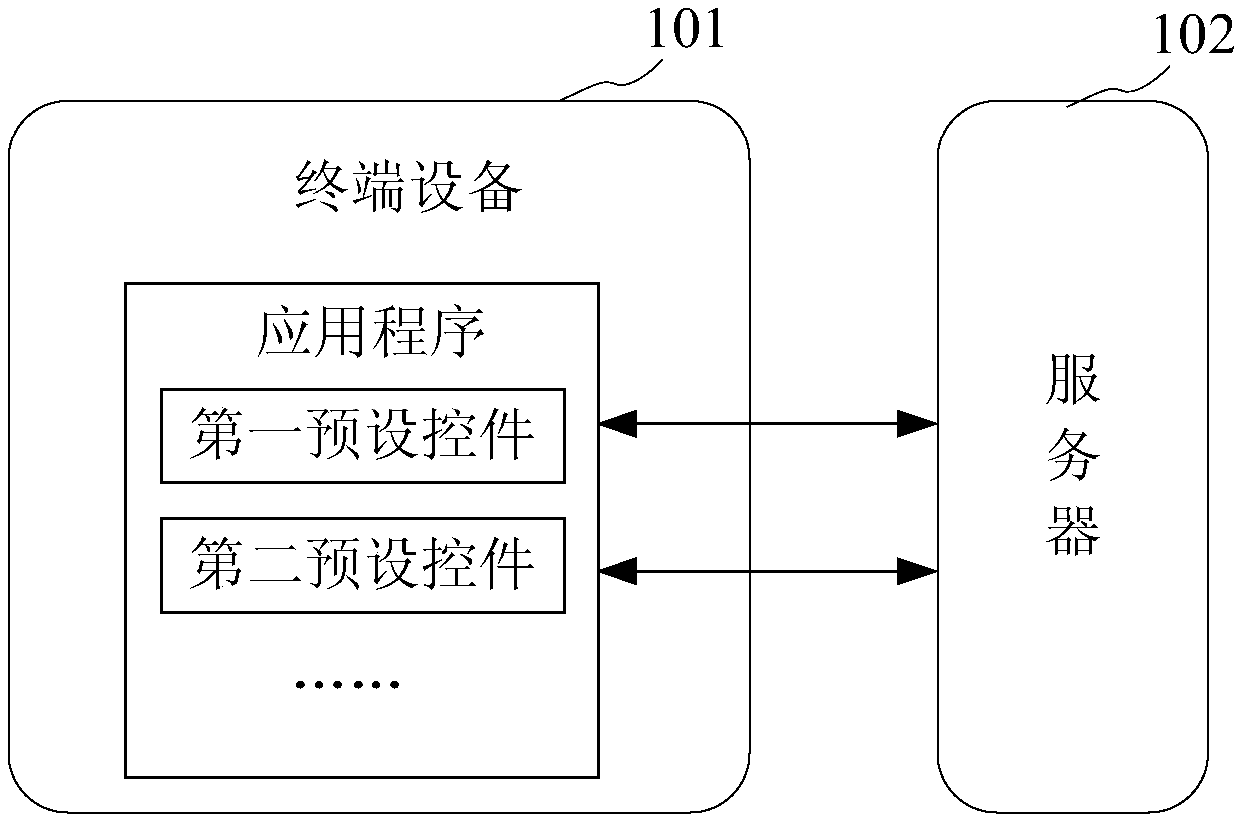

Method and equipment for realizing quick loading of webpage on the basis of big data

The embodiment of the invention provides a method and equipment for realizing the quick loading of a webpage on the basis of big data. The method comprises the following steps that: through a first preset control of which the current state is a visible state, parsing a current page, and determining a target jumping link of which the popular degree is greater than a preset popular degree in the current page; through a second preset control of which the current state is an invisible state, loading and caching page information corresponding to the target jumping link to enable a jumping page corresponding to the page information to be under a hidden state; and when the target jumping link is triggered, according to the cached page information, displaying the jumping page. The method is used for improving page loading efficiency.

Owner:OCEAN UNIV OF CHINA

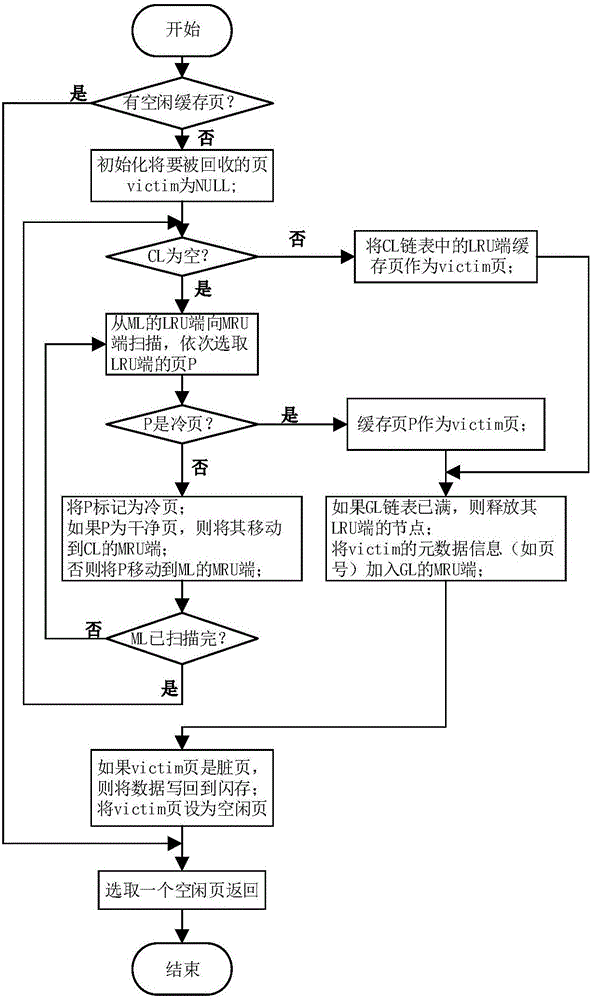

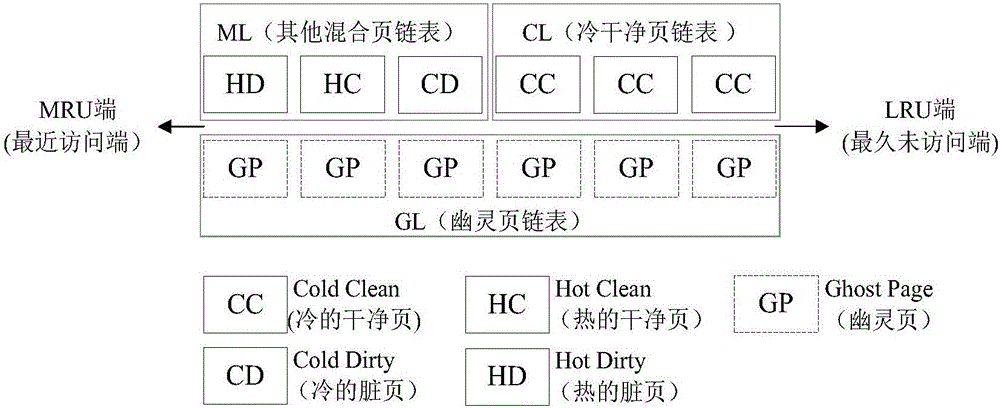

NAND flash memory-oriented page replacement method

Active · CN106294197A · Reduce overhead operations · Reduce the number of writes · Memory addressing/allocation/relocation · Cache page · Cache hit rate

The present invention discloses a NAND flash memory-oriented page replacement method, belonging to the field of data storage. According to the method, a cold clean page linked list manages cold clean pages and a separate hybrid page linked list manages all other cache pages, while a reserved ghost page linked list records metadata of recently evicted cache pages. Before page accesses are processed, initialization is performed. If the requested page is hit in the hybrid page linked list or the cold clean page linked list, the page is marked as a hot page and moved to the most-recently-accessed end of the hybrid page linked list. If the page is hit in the ghost page linked list, its record in the ghost page linked list is deleted, a new cache page is allocated to it and marked as a hot page, and the page is moved to the most-recently-accessed end of the hybrid page linked list. Finally, depending on whether the request is a read or a write, the data is read from or written to the cache page and the result is returned. With this method, flash memory write operations are reduced while a high cache hit ratio is maintained as far as possible.

Owner:HUAZHONG UNIV OF SCI & TECH
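
The three lists in the abstract above can be modeled with ordered dictionaries, as in the hedged Python sketch below. The hit and ghost-hit handling follows the abstract. The rest is assumed for illustration and is not stated there: a full miss admits the page as cold, clean pages that are cold go to the cold clean list, and eviction prefers the oldest cold clean page before falling back to the oldest page of the hybrid list. The class name `FlashAwareCache` and its fields are hypothetical.

```python
from collections import OrderedDict


class FlashAwareCache:
    def __init__(self, capacity, ghost_capacity):
        self.capacity = capacity
        self.ghost_capacity = ghost_capacity
        self.cold_clean = OrderedDict()  # page -> {"hot": bool, "dirty": bool}
        self.hybrid = OrderedDict()      # all other cached pages, oldest first
        self.ghost = OrderedDict()       # metadata of recently evicted pages
        self.flash_writes = 0

    def _evict(self):
        # Assumption: cold clean victims go first, since dropping them needs no flash write.
        if self.cold_clean:
            page, _ = self.cold_clean.popitem(last=False)
        else:
            page, meta = self.hybrid.popitem(last=False)
            if meta["dirty"]:
                self.flash_writes += 1   # a dirty victim must be written back
        self.ghost[page] = None
        if len(self.ghost) > self.ghost_capacity:
            self.ghost.popitem(last=False)

    def access(self, page, write=False):
        if page in self.hybrid or page in self.cold_clean:
            source = self.hybrid if page in self.hybrid else self.cold_clean
            meta = source.pop(page)
            meta["hot"] = True           # a cache hit marks the page hot
            result = "hit"
        else:
            in_ghost = page in self.ghost
            if in_ghost:
                del self.ghost[page]     # drop the ghost record
            if len(self.hybrid) + len(self.cold_clean) >= self.capacity:
                self._evict()
            meta = {"hot": in_ghost, "dirty": False}  # ghost hits come back hot
            result = "ghost_hit" if in_ghost else "miss"
        meta["dirty"] = meta["dirty"] or write
        if meta["hot"] or meta["dirty"]:
            self.hybrid[page] = meta     # appended at the most-recently-accessed end
        else:
            self.cold_clean[page] = meta
        return result


cache = FlashAwareCache(capacity=2, ghost_capacity=2)
trace = [cache.access(1), cache.access(2, write=True), cache.access(3),
         cache.access(1), cache.access(3), cache.access(1)]
```

In this trace the only flash write occurs when the dirty page 2 is finally evicted, after both cold clean candidates have already been dropped at no write cost.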

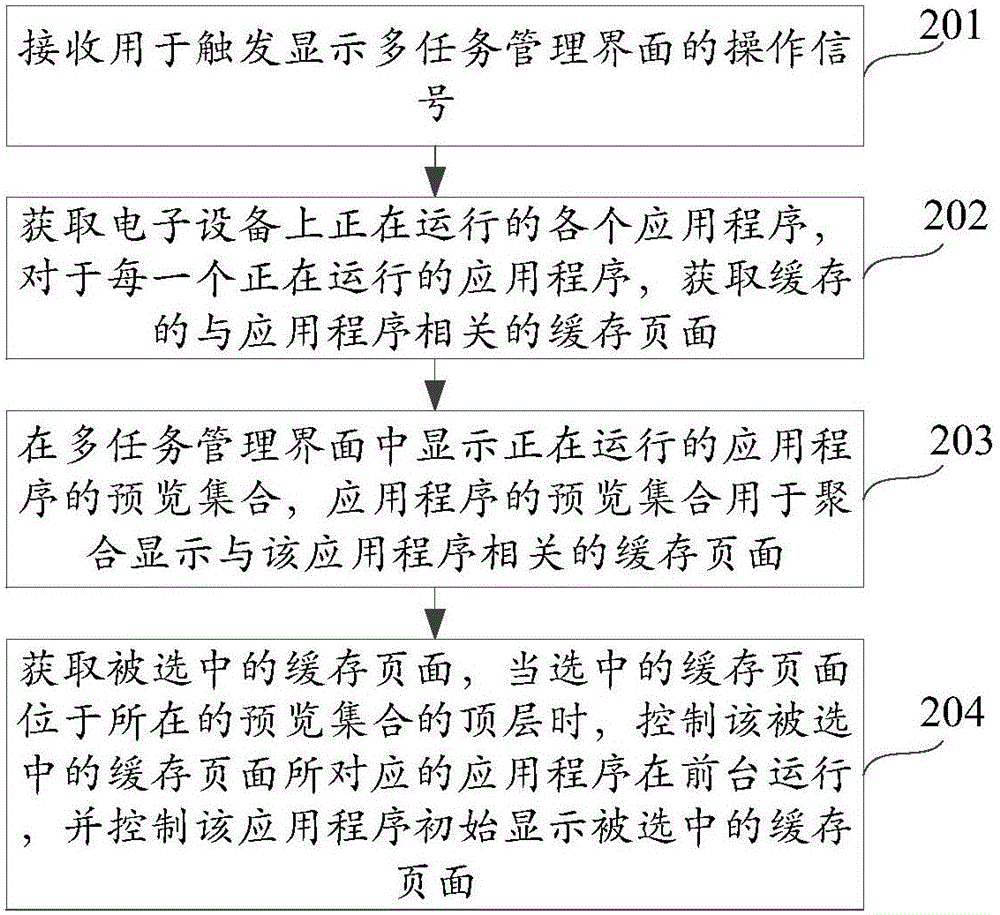

Multi-task management method and device

The invention discloses a multi-task management method and device, and belongs to the field of electronic devices. The multi-task management method includes: receiving an operation signal for triggering and displaying a multi-task management interface; acquiring all the operating application programs of an electronic device; acquiring a cache page associated with the application program of a cache for each operating application program; displaying a preview set of the operating application programs in the multi-task management interface, wherein the preview set of the application programs is used for aggregating and displaying the cache pages associated with the application programs; acquiring a selected cache page, and controlling an application program corresponding to the selected cache page to operate on the foreground, and controlling the application program to initially display the selected cache page. The multi-task management method and device can solve the technical problem of a complex operation of changing to a target page of the application program in the prior art, and can simplify the operation of changing to the target page of the application program.

Owner:BEIJING XIAOMI MOBILE SOFTWARE CO LTD

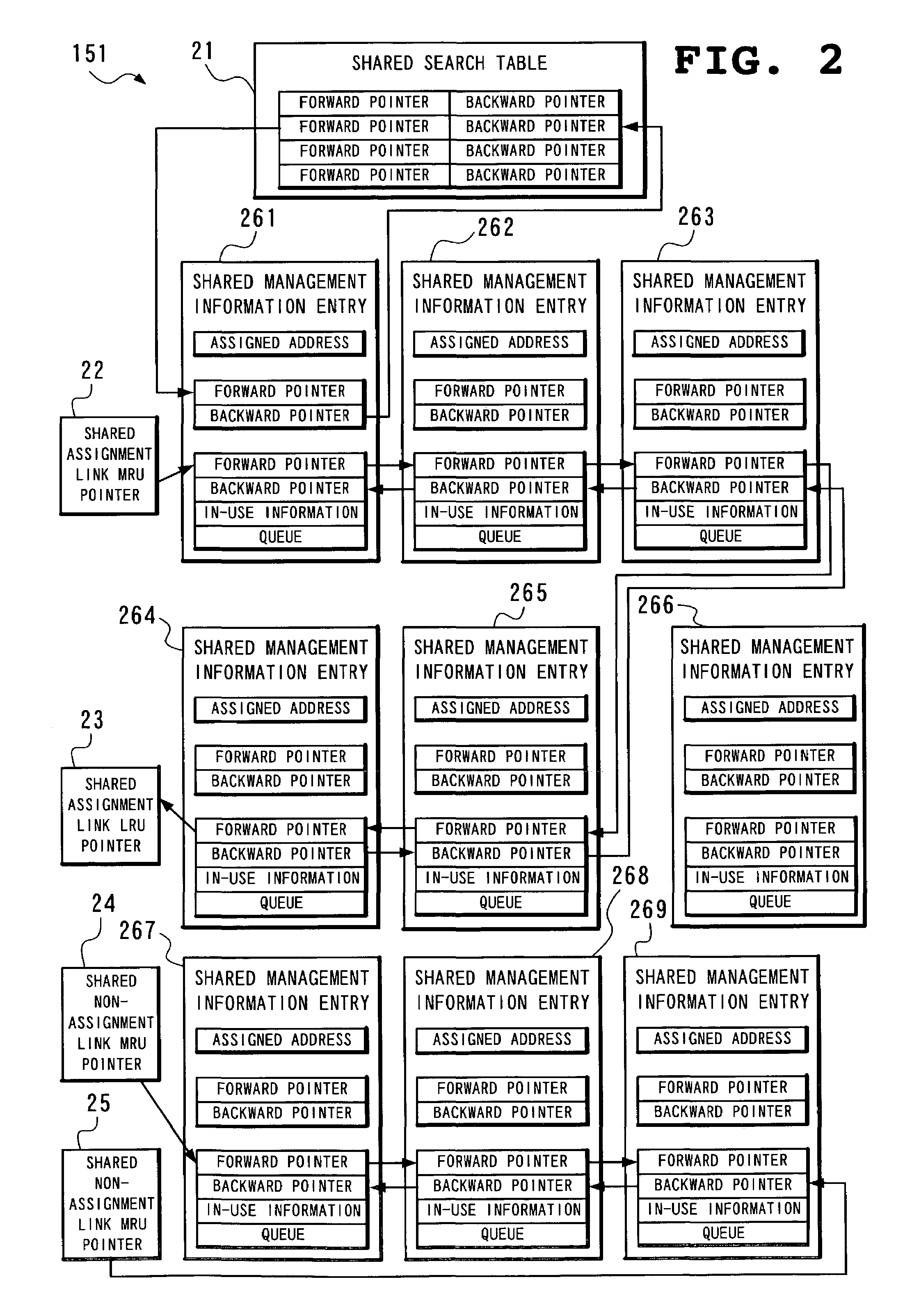

Disk cache management method of disk array device

Inactive · US7032068B2 · Reduce performance · Improve responsiveness · Memory architecture accessing/allocation · Input/output to record carriers · Cache page · Disk array

A local memory mounted on a host director is provided with a local cache management region for managing a cache page at a steady open state in a cache management region on a shared memory, so that even after data transfer is completed after the use of a cache page on the shared memory subjected to opening processing is finished, the cache page in question is kept open at the steady open state without closing the cache page in question and when an access to the cache page in question is made from the same host director, processing of opening the cache page in question is omitted to improve response performance.

Owner:NEC CORP

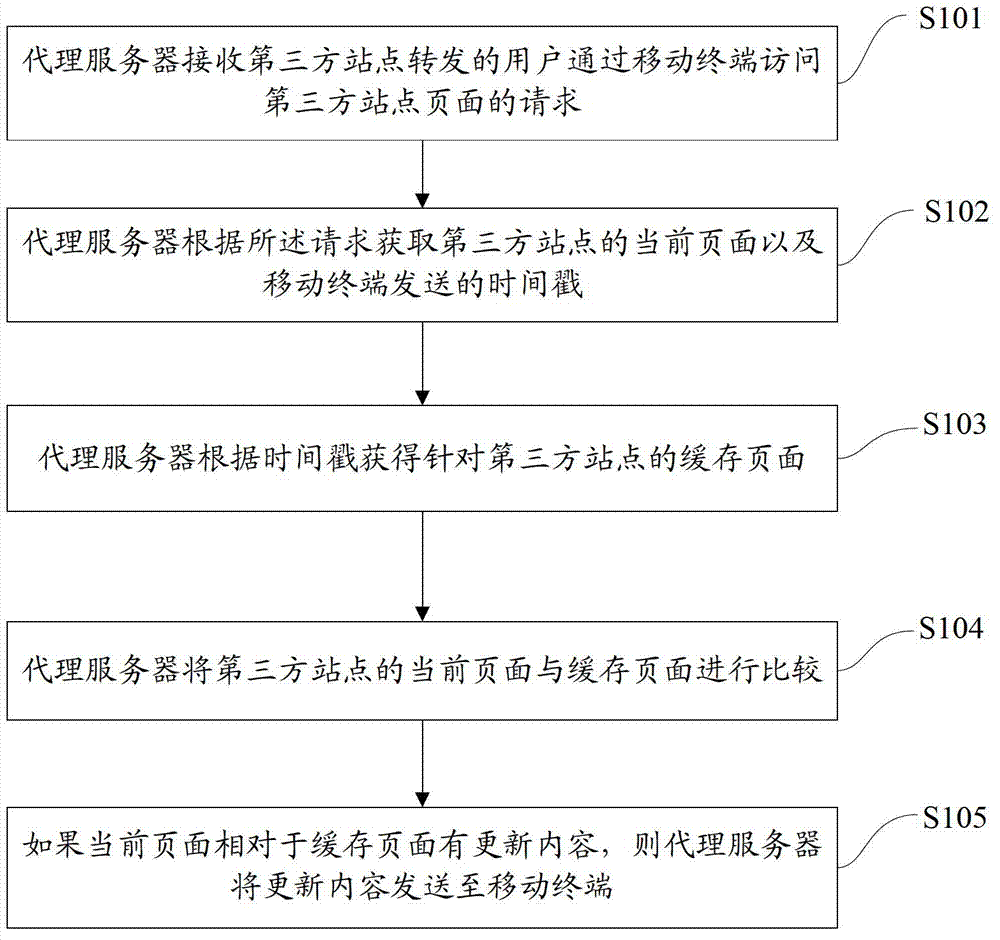

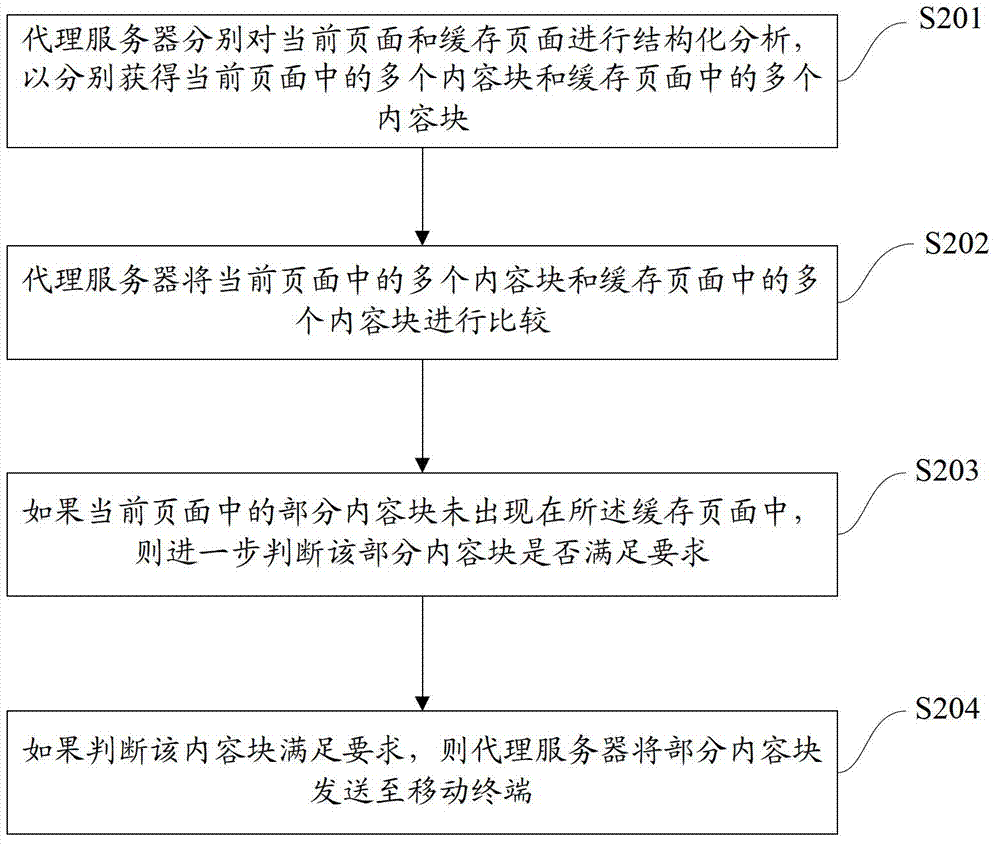

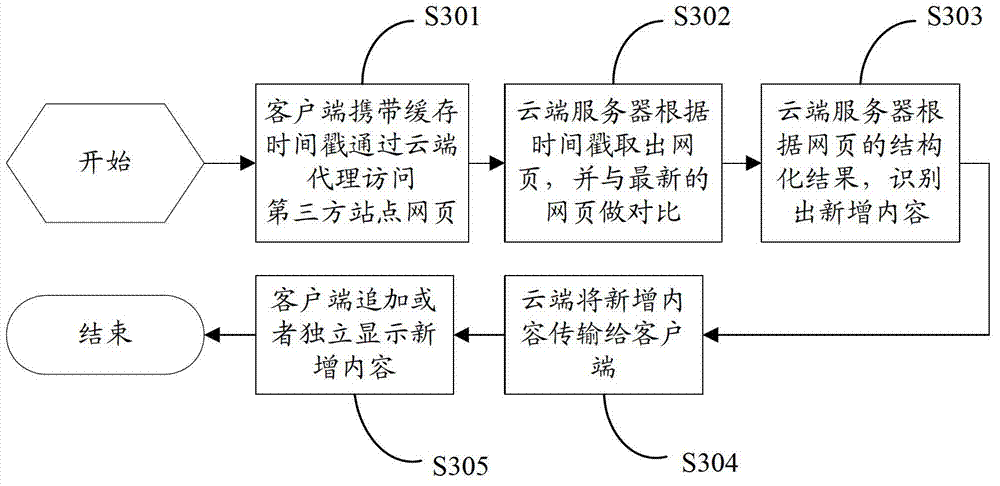

Method, system and device for providing update contents of web pages

The invention provides a method for providing update contents of web pages. The method includes that a proxy server receives a request of a user for accessing pages of a third-party website via a mobile terminal, and the request is forwarded via the third-party website; the proxy server acquires a current page of the third-party website and a time stamp according to the request, and the time stamp is transmitted by the mobile terminal; the proxy server acquires a cache page for the third-party website according to the time stamp; the proxy server compares the current page of the third-party website to the cache page; the proxy server transmits update contents to the mobile terminal if the current page has the update contents relative to the cache page. The invention further provides a system, the proxy server and the mobile terminal for providing the update contents of the web pages. The method, the system, the proxy server and the mobile terminal have the advantages that the page, which is accessed by the user in the past, of the third-party website can be recorded, and the current page and the cache page are compared to each other when the user accesses the page again, so that the update contents can be provided for the user, and network traffic can be saved for the user.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

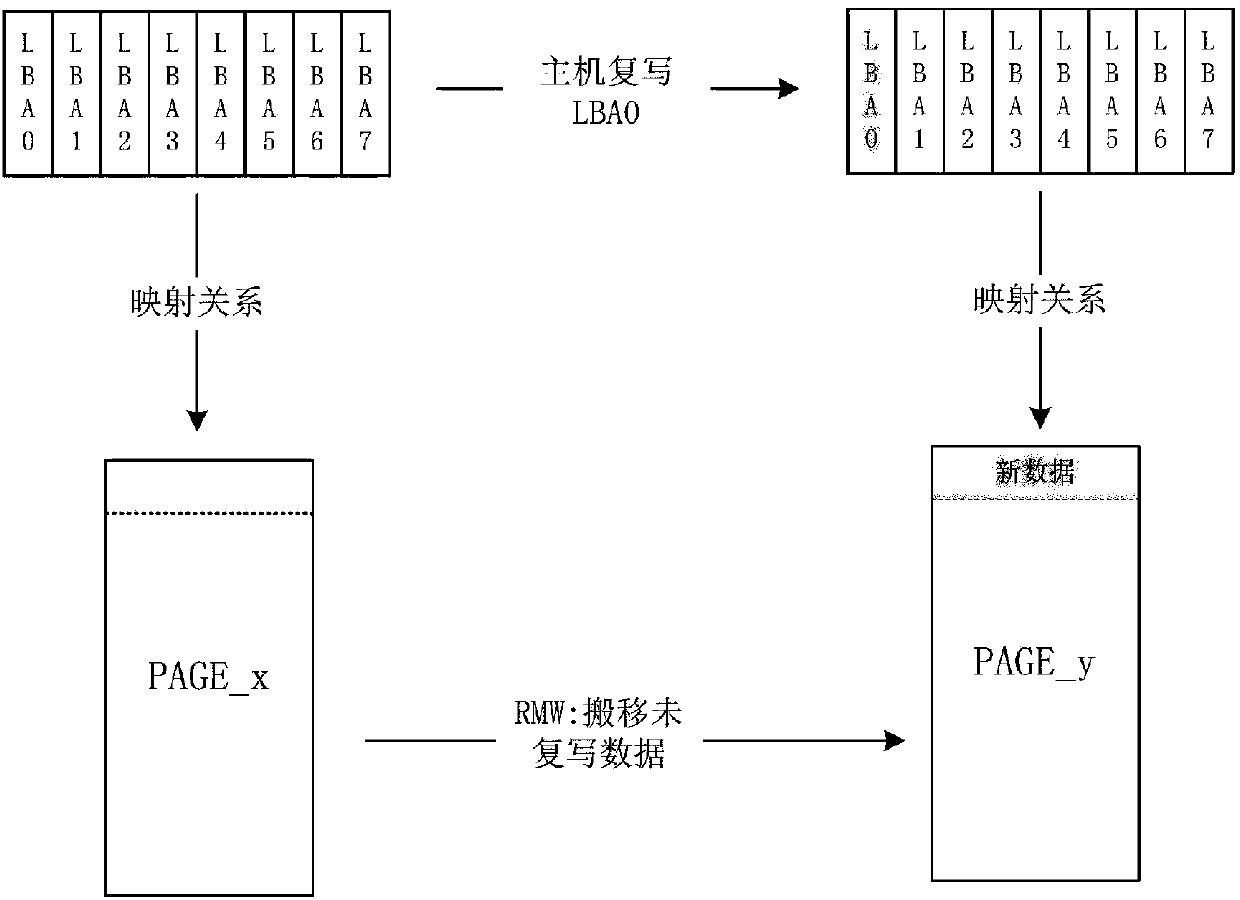

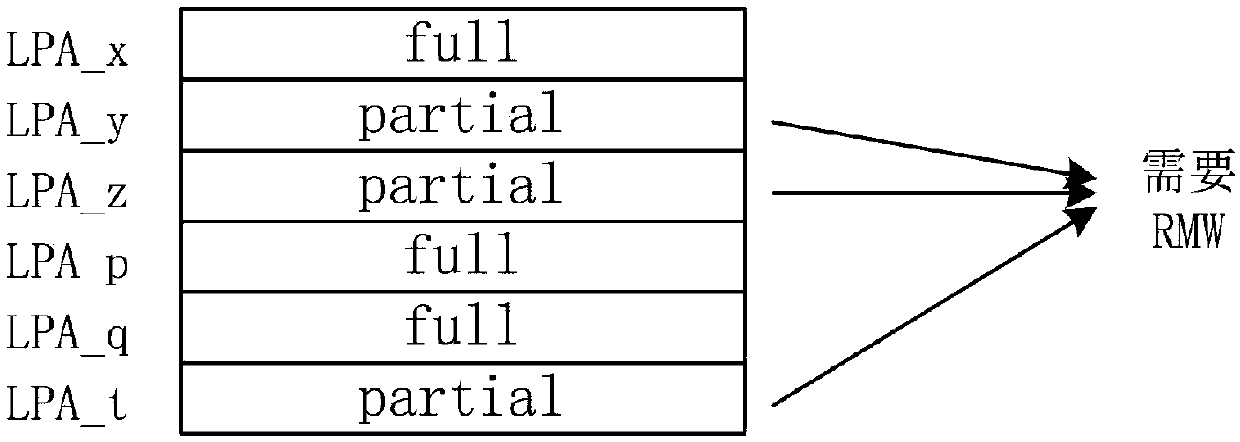

Method for improving SSD comprehensive performance

Inactive · CN107832007A · Improve performance · Reduced trigger probability · Input/output to record carriers · Memory systems · Disk controller · Parallel computing

The invention discloses a method for improving the SSD comprehensive performance. The method is characterized in that two stages of caches are adopted as writing caches of a solid state disk controller, the first stage is a partial-write cache, the second stage is a full-write cache, the partial-write cache and the full-write cache are managed with the size of physical pages as the cache page unit, one physical page comprises logic data blocks of N hosts, a writing command received by the solid state disk controller from the hosts includes the steps of writing data in the partial-write cache first, transferring the data of cache pages in the partial-write cache into the full-write cache when the data of a certain cache page in the partial-write cache is fully written, a background of the solid state disk controller only executes to write the data cached in the full-write cache in an actual FLASH storage space, and the partial-write cache temporarily does not write data in the actual FLASH storage space. By arranging the two stages of caches, it is ensured that full data is actually written in each page of the FLASH storage space, the triggering probability of read-modify write (RMW) is greatly reduced, and the SSD comprehensive performance is remarkably improved.

Owner:RAMAXEL TECH SHENZHEN
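
A minimal Python sketch of the two-stage write cache described in the abstract above follows. The mapping from a logical block address to a physical page by integer division, the value of `BLOCKS_PER_PAGE` (the abstract's N), and the class and method names are assumptions made for illustration only.

```python
BLOCKS_PER_PAGE = 4  # assumed value of N: host logical blocks per physical page


class TwoStageWriteCache:
    def __init__(self):
        self.partial = {}  # page number -> {slot: data}, pages not yet full
        self.full = {}     # page number -> list of N blocks, ready to program
        self.flash = {}    # stands in for the actual FLASH storage space

    def write(self, lba, data):
        # Host writes always land in the partial-write cache first.
        page, slot = divmod(lba, BLOCKS_PER_PAGE)
        slots = self.partial.setdefault(page, {})
        slots[slot] = data
        if len(slots) == BLOCKS_PER_PAGE:
            # The cache page is fully written: move it to the full-write cache.
            self.full[page] = [slots[i] for i in range(BLOCKS_PER_PAGE)]
            del self.partial[page]

    def flush(self):
        # Background task: only full pages are ever programmed to flash.
        flushed = sorted(self.full)
        for page in flushed:
            self.flash[page] = self.full.pop(page)
        return flushed


cache = TwoStageWriteCache()
for lba, data in enumerate(["a", "b", "c", "d", "e"]):
    cache.write(lba, data)
flushed = cache.flush()
```

Since a page reaches flash only once all of its blocks are present, no programmed page needs to be read back and merged later, which is the read-modify-write case the abstract aims to avoid.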

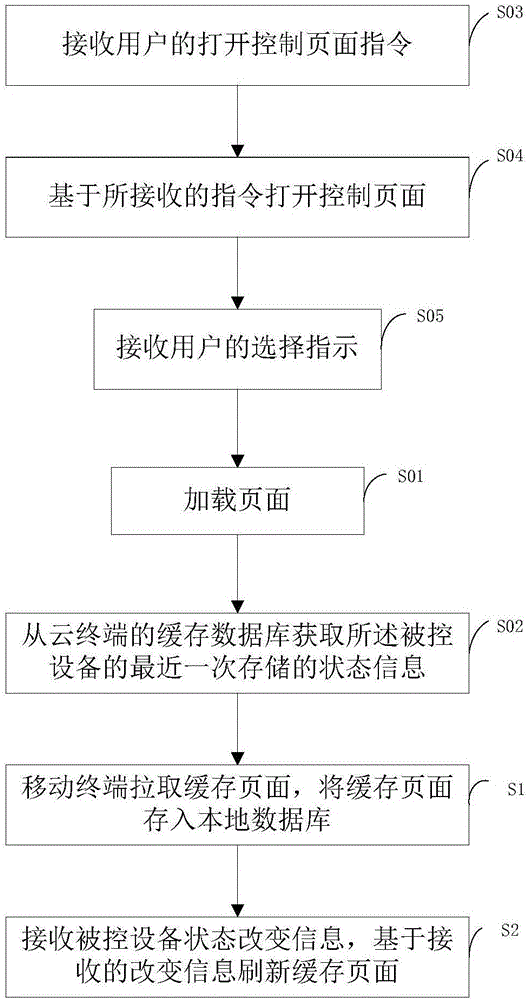

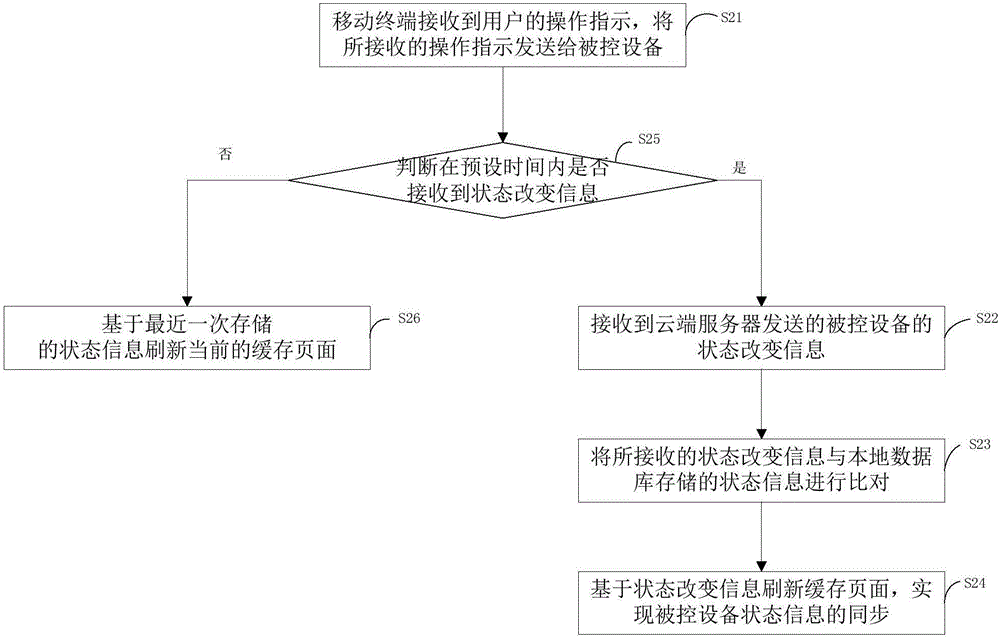

Synchronization method and synchronization system of controlled equipment state information, and mobile terminal

The invention is suitable for the field of communication technology, and provides a synchronization method and a synchronization system of controlled equipment state information, and a mobile terminal. The synchronization method comprises the steps of calling a caching page, storing the called caching page into a local database, wherein the caching page comprises latest state information of the controlled equipment; receiving state change information of the controlled equipment, updating the caching page based on the received state information, performing feedback of the change information to a cloud server by the controlled equipment, transmitting the change information to the mobile terminal by the cloud server, and realizing state information synchronization of the controlled equipment. The synchronization method, the synchronization system and the mobile terminal have advantages of realizing state information synchronization among the cloud server, the controlled equipment and the mobile terminal, realizing benefit to a user and improving user experience.

Owner:TCL CORPORATION


© 2025 PatSnap. All rights reserved.