32 results about "High performance parallel computing" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

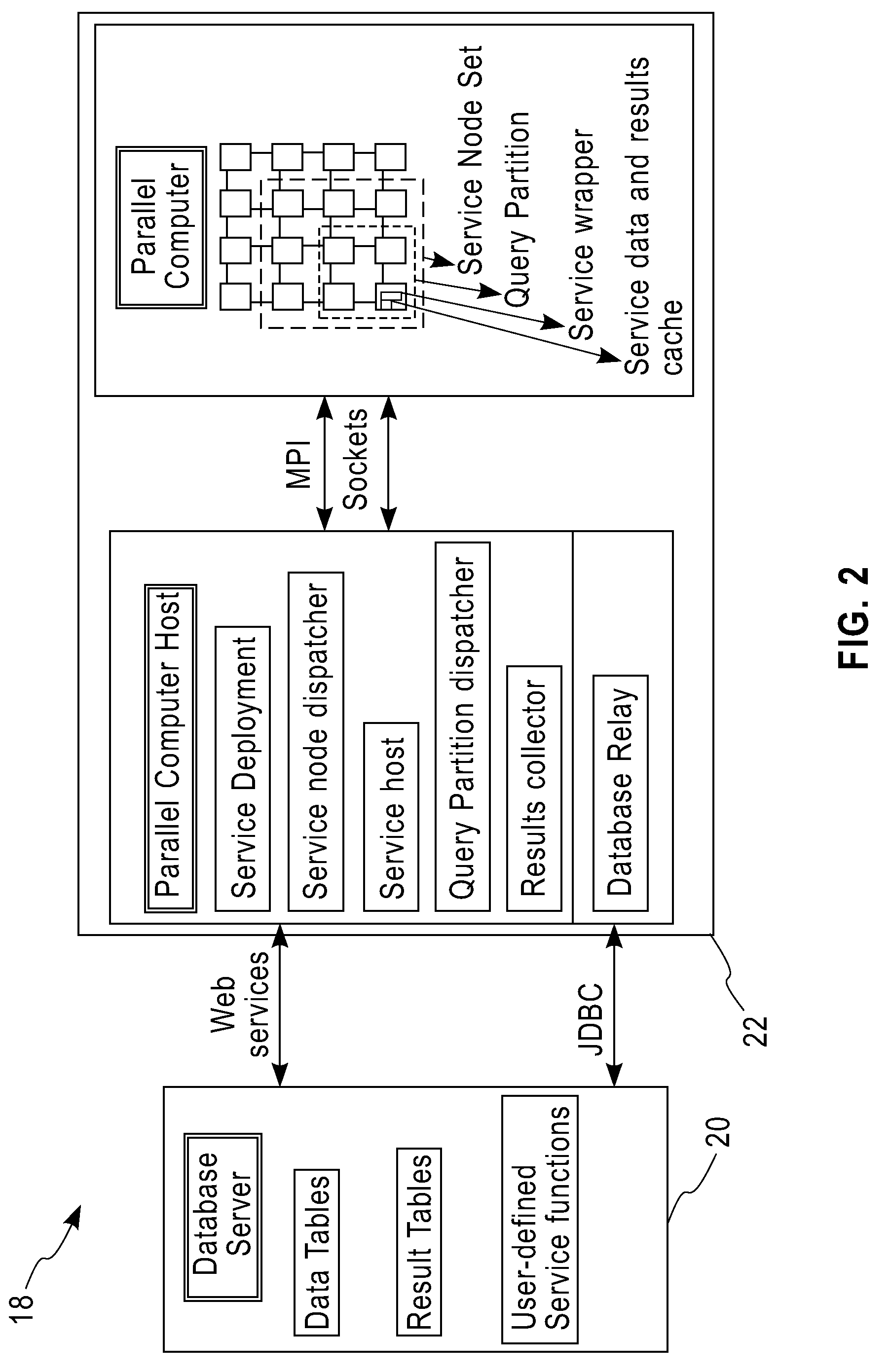

System and method for executing compute-intensive database user-defined programs on an attached high-performance parallel computer

Inactive · US20090077011A1 · Improve query performance · Easy to guarantee · Digital data information retrieval · Digital data processing details · Database query · Data set

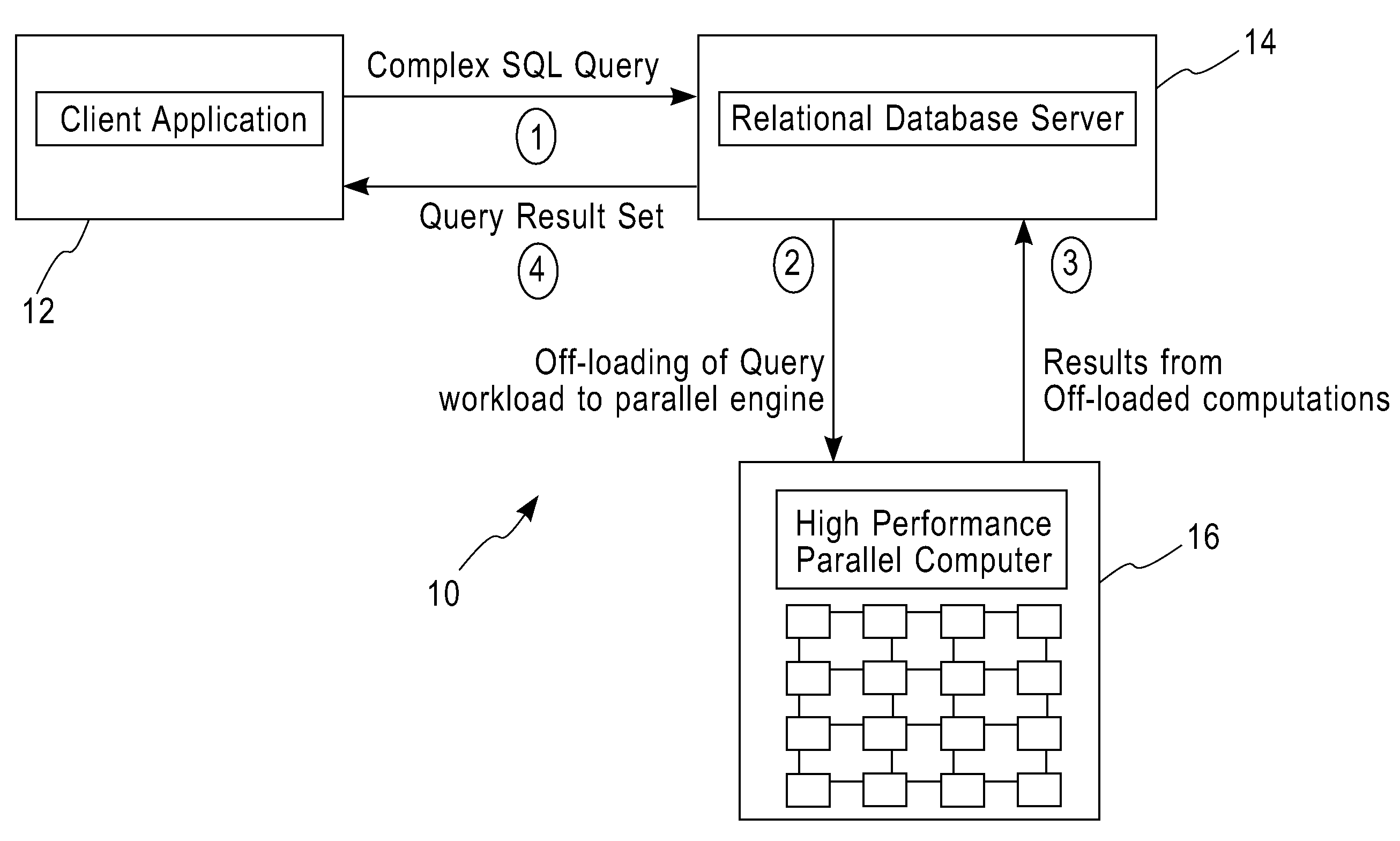

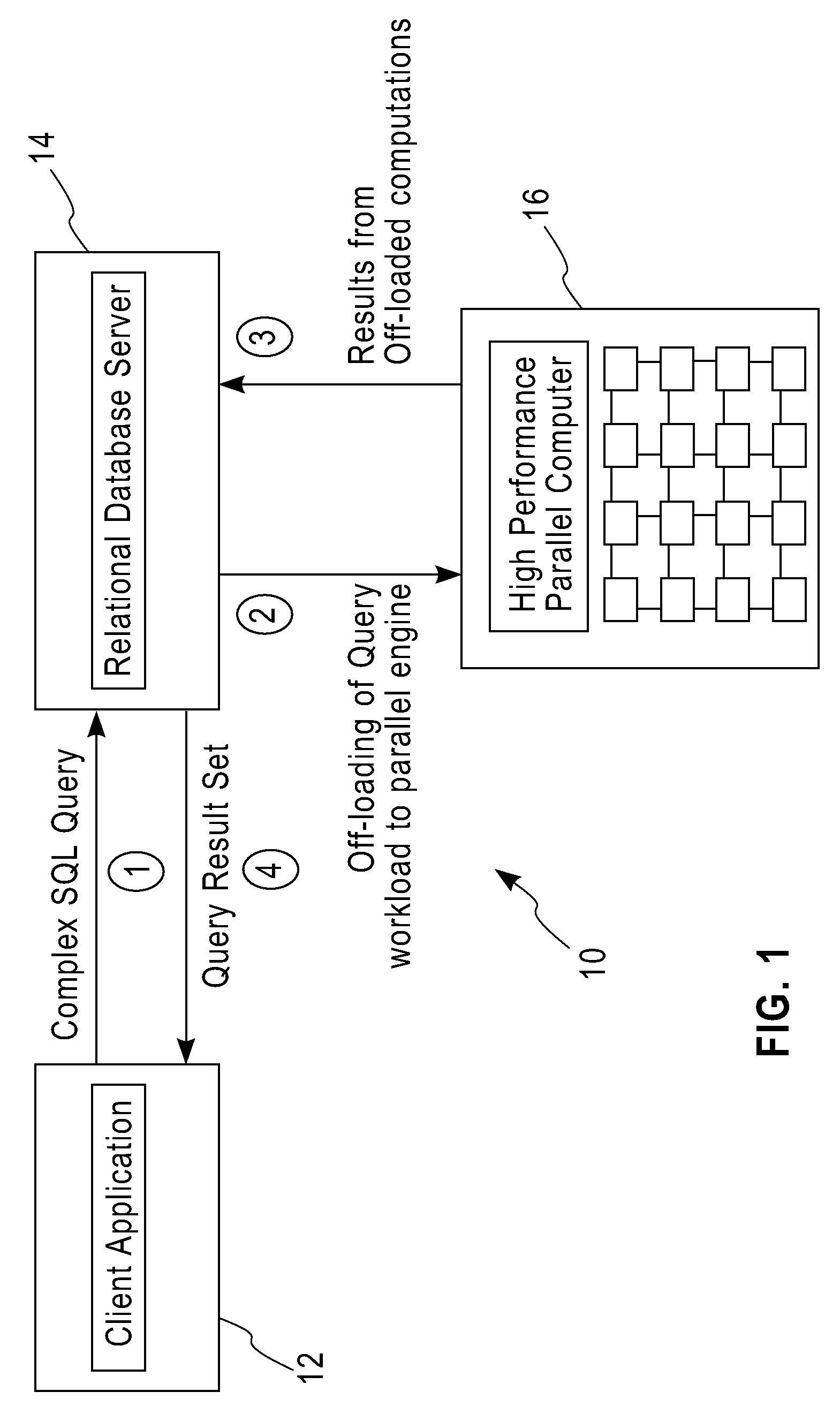

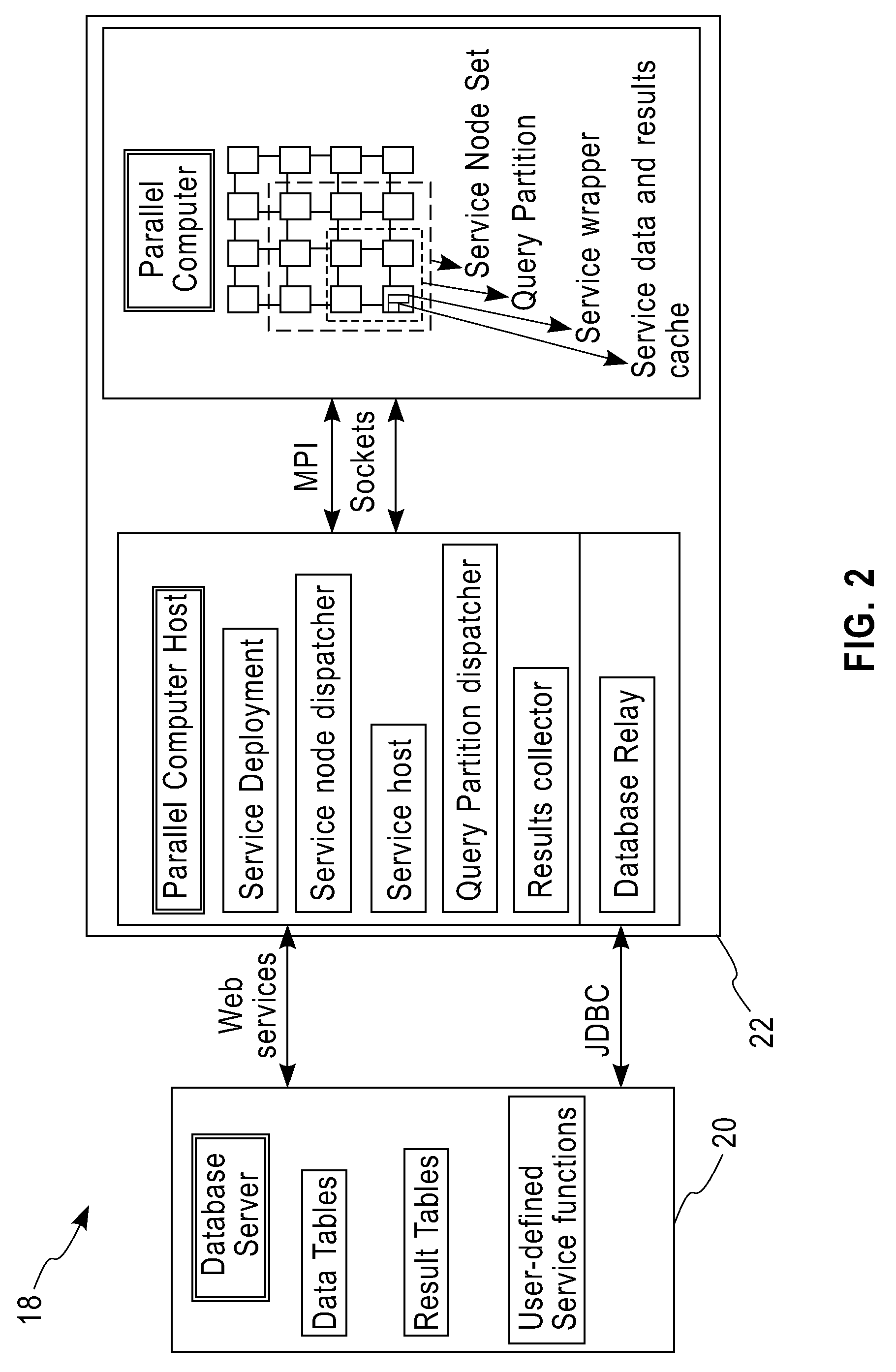

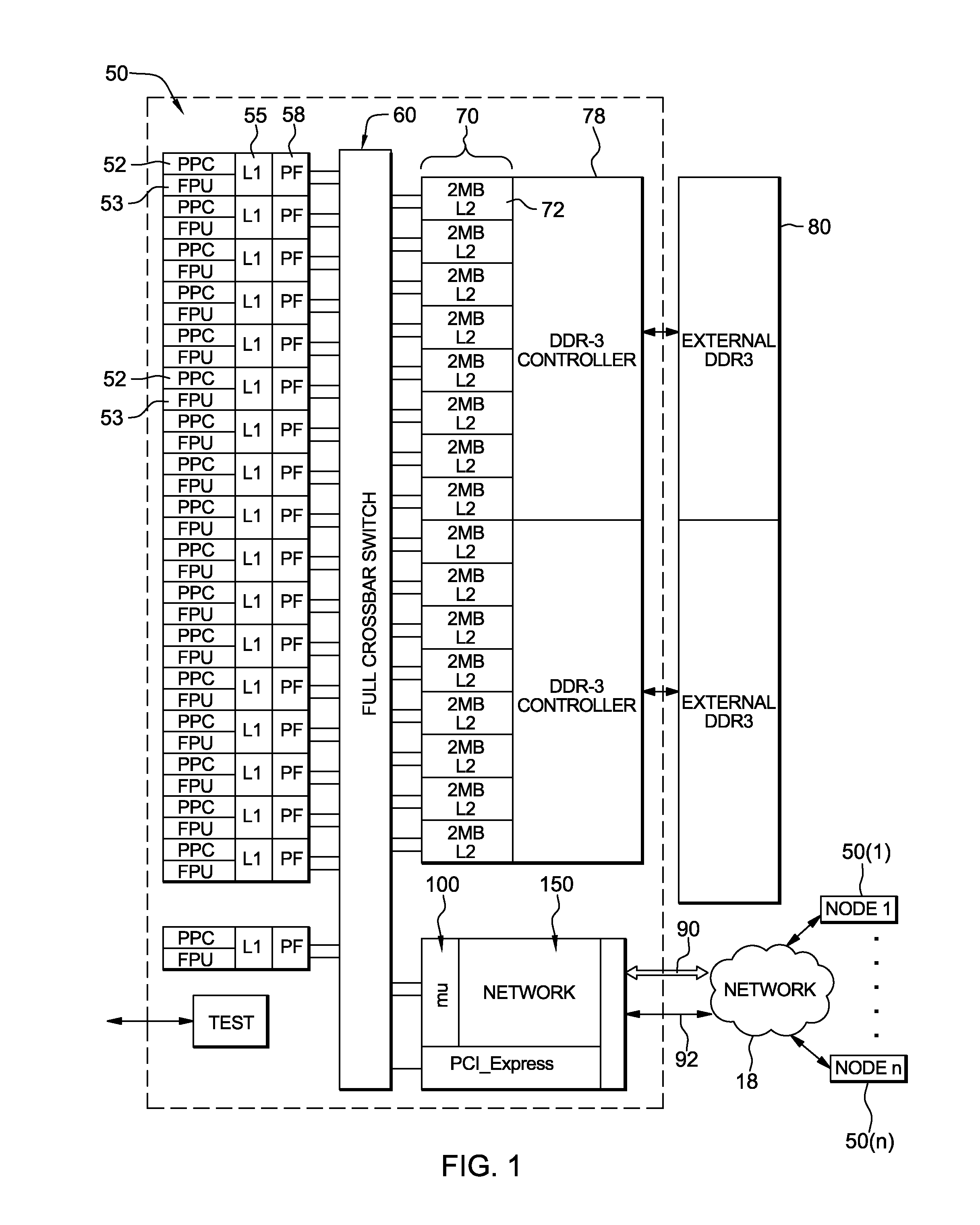

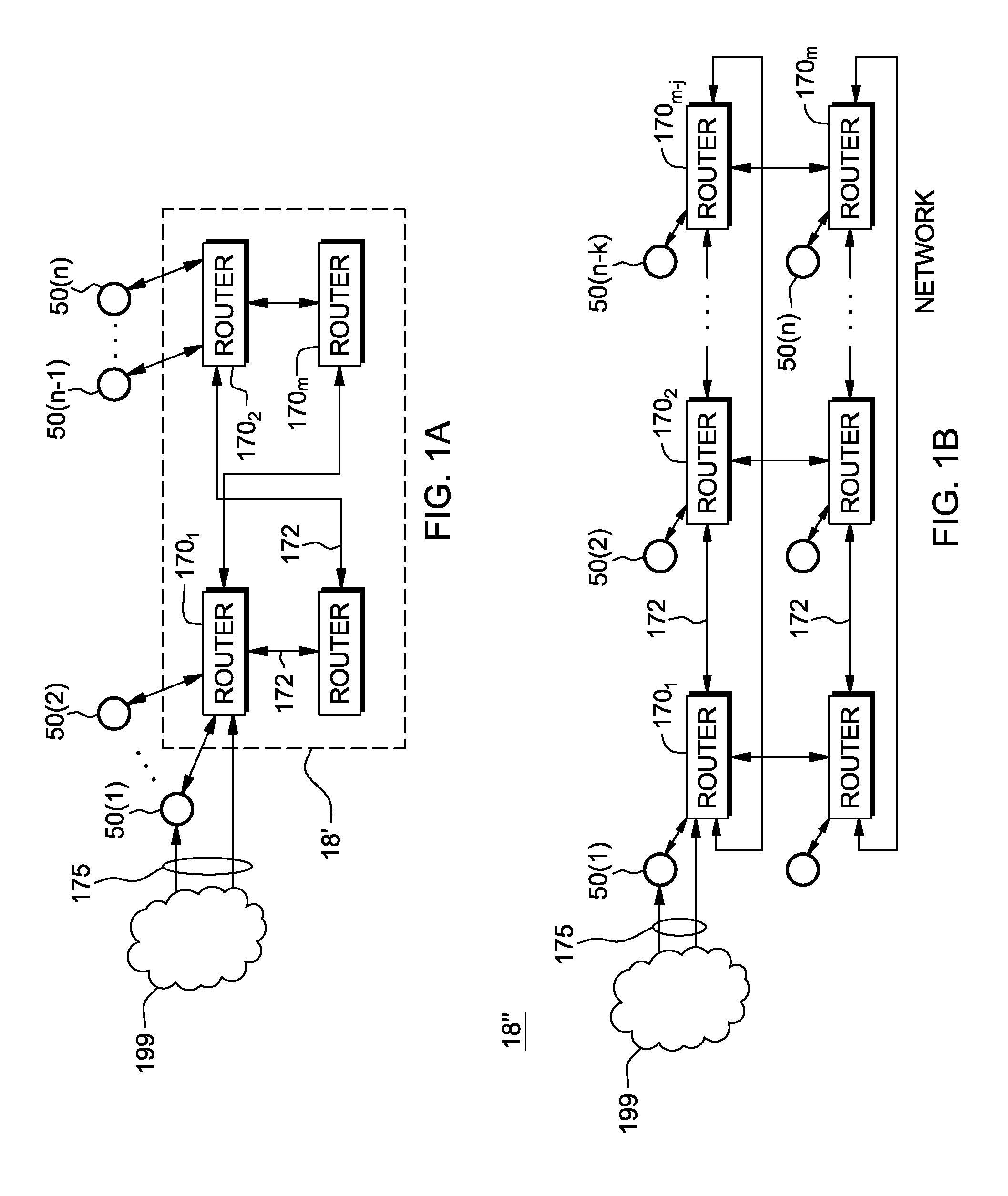

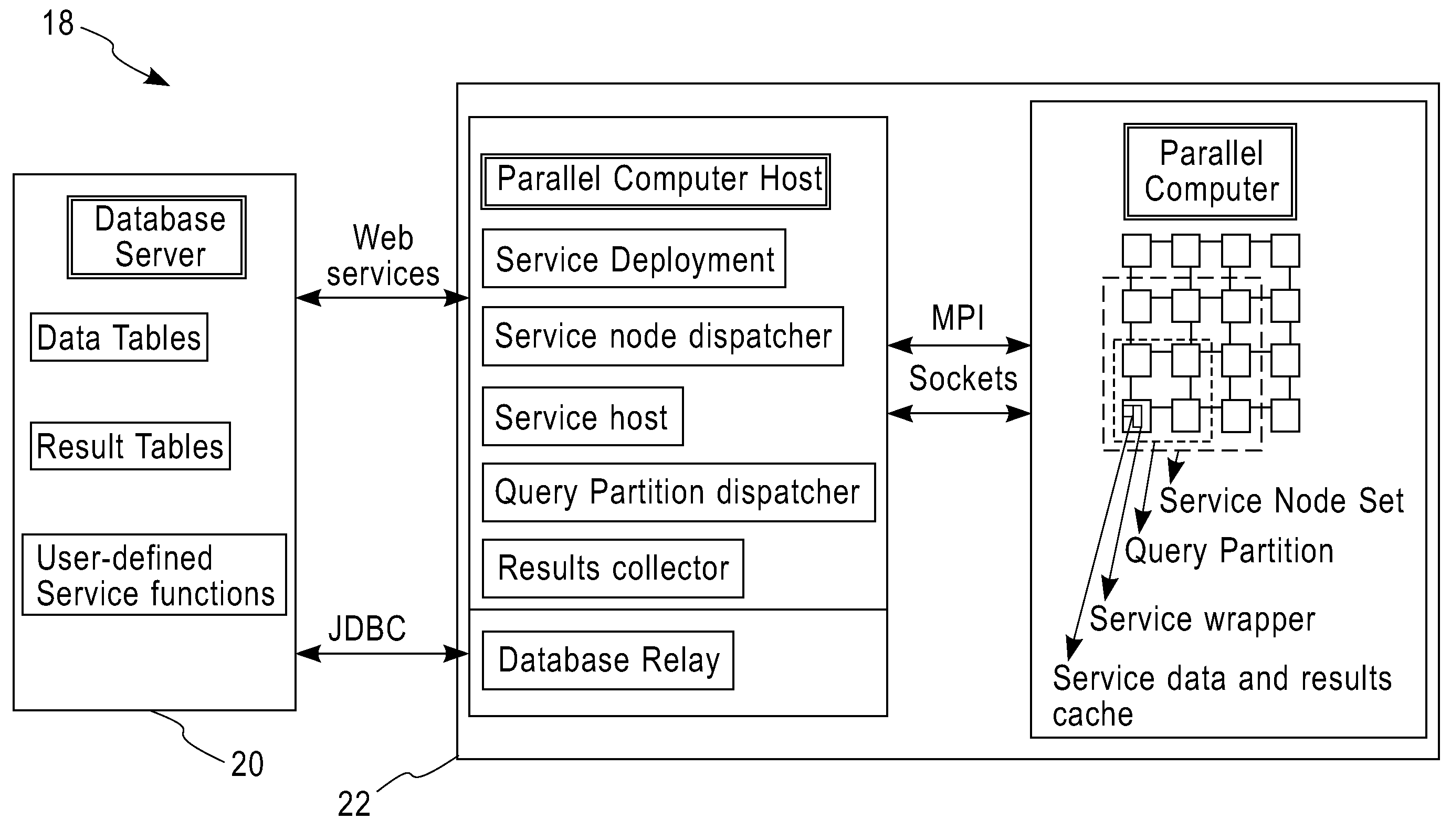

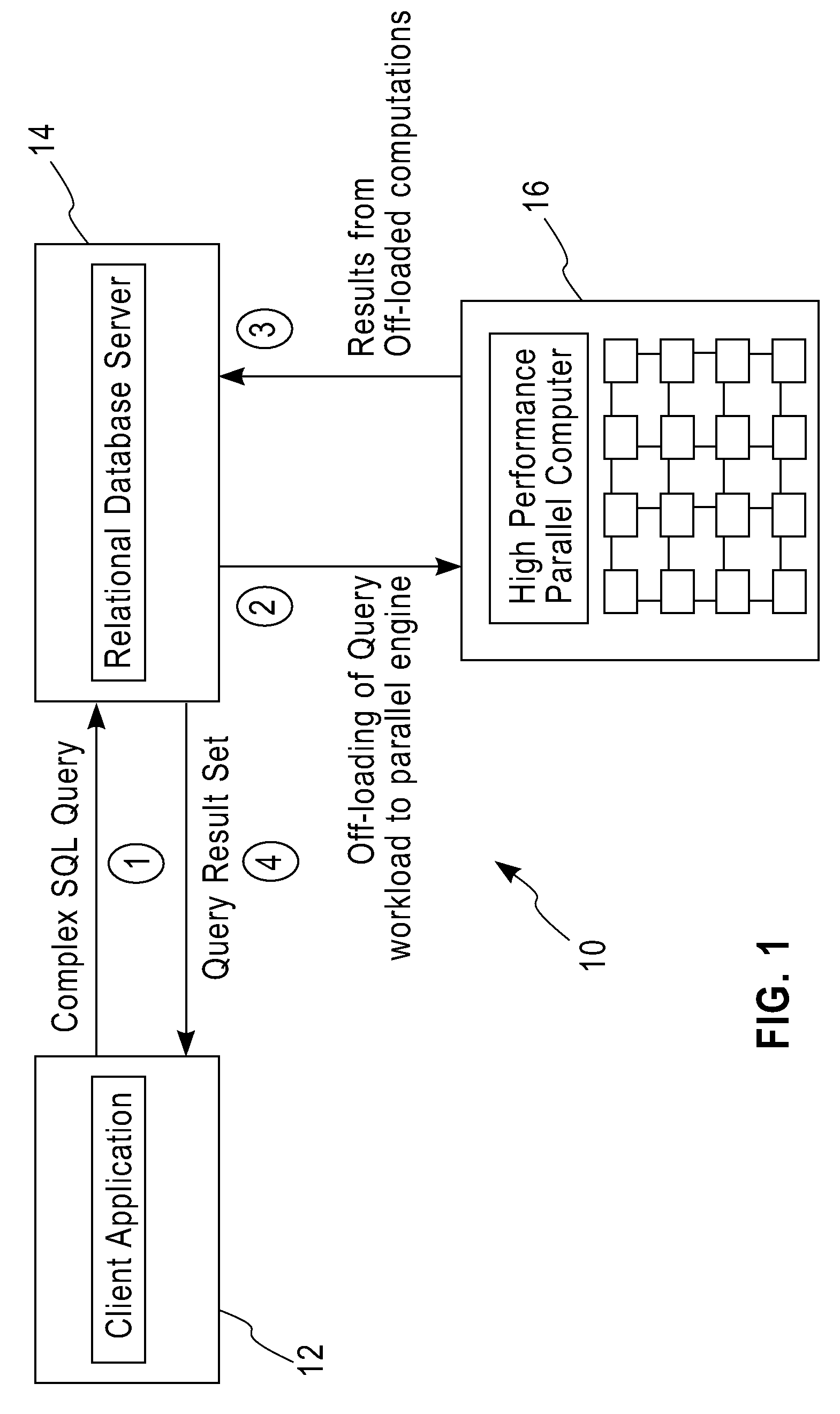

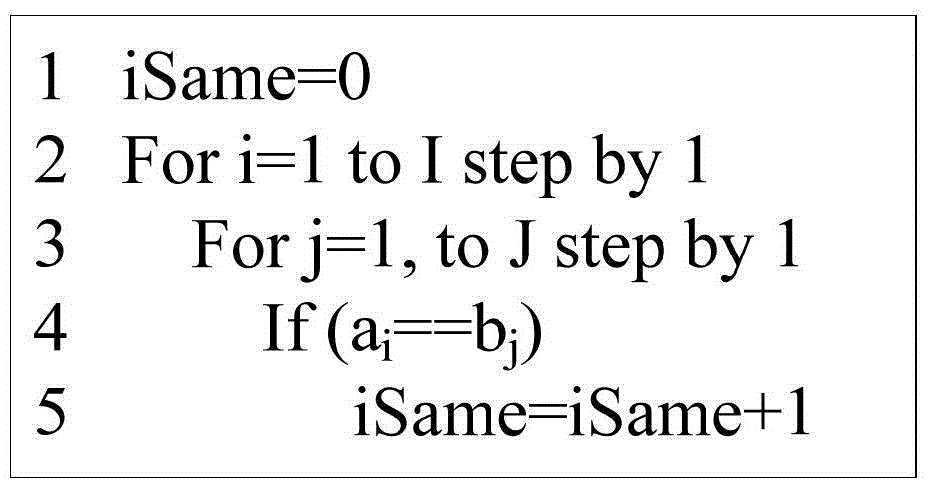

The invention pertains to a system and method for dispatching and executing the compute-intensive parts of the workflow for database queries on an attached high-performance parallel computing platform. The performance overhead of moving the required data and results between the database platform and the high-performance computing platform where the workload is executed is amortized in several ways, for example:

- by exploiting the fine-grained parallelism and superior hardware performance of the parallel computing platform to speed up compute-intensive calculations;
- by using in-memory data structures on the parallel computing platform to cache data sets between a sequence of time-lagged queries on the same data, so that these queries can be processed without further data transfer overhead;
- by replicating data within the parallel computing platform so that multiple independent queries on the same target data set can be processed simultaneously using independent parallel partitions of the high-performance computing platform.

A specific embodiment of this invention was used to deploy a bioinformatics application involving gene and protein sequence matching using the Smith-Waterman algorithm on a database system connected via an Ethernet local area network to a parallel supercomputer.

Owner:IBM CORP
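The abstract names the Smith-Waterman algorithm as the compute-intensive kernel that was offloaded. As a rough illustration of why that kernel is expensive, here is a minimal single-threaded sketch of Smith-Waterman local alignment scoring. The function name and the scoring parameters (`match`, `mismatch`, `gap`, with a linear gap penalty) are assumptions chosen for the example. They are not taken from the patent, and the patent's parallel partitioning and caching are not reproduced.

```python
def smith_waterman_score(a: str, b: str, match: int = 2,
                         mismatch: int = -1, gap: int = -1) -> int:
    """Return the best local alignment score of a and b (linear gap penalty).

    Hypothetical helper for illustration; scoring values are assumptions.
    """
    prev = [0] * (len(b) + 1)  # previous row of the dynamic-programming table
    best = 0
    for ca in a:
        curr = [0]  # column 0 is always 0 for local alignment
        for j, cb in enumerate(b, start=1):
            diag = prev[j - 1] + (match if ca == cb else mismatch)
            # A local alignment may restart anywhere, hence the floor at 0.
            score = max(0, diag, prev[j] + gap, curr[j - 1] + gap)
            curr.append(score)
            best = max(best, score)
        prev = curr
    return best


if __name__ == "__main__":
    query = "TTACGTAA"
    database = ["GGACGTCC", "AAAAAAAA"]
    print([smith_waterman_score(query, seq) for seq in database])
```

Each query costs time proportional to the product of the two sequence lengths, and every database sequence can be scored independently, which is the kind of work that benefits from an attached parallel machine.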

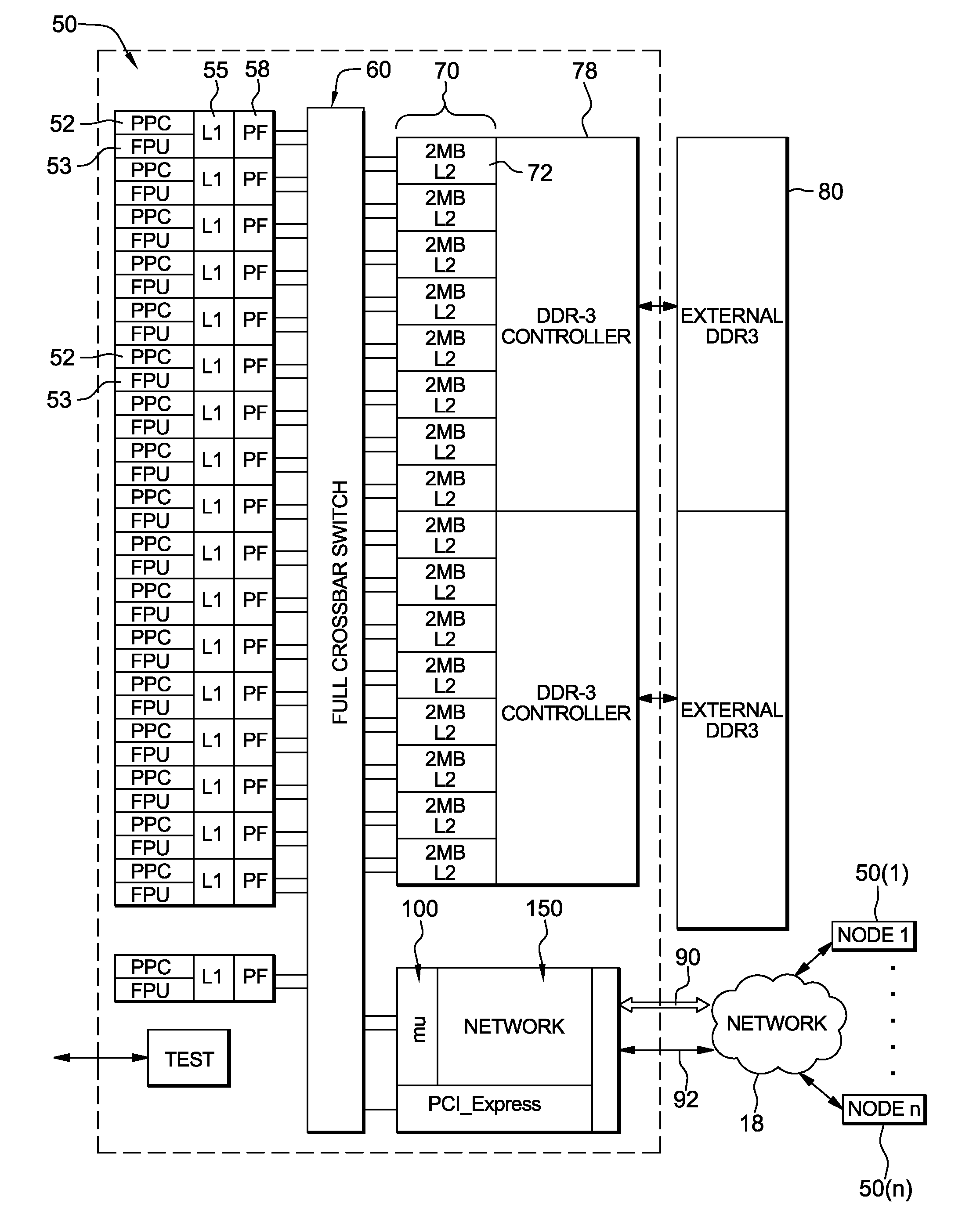

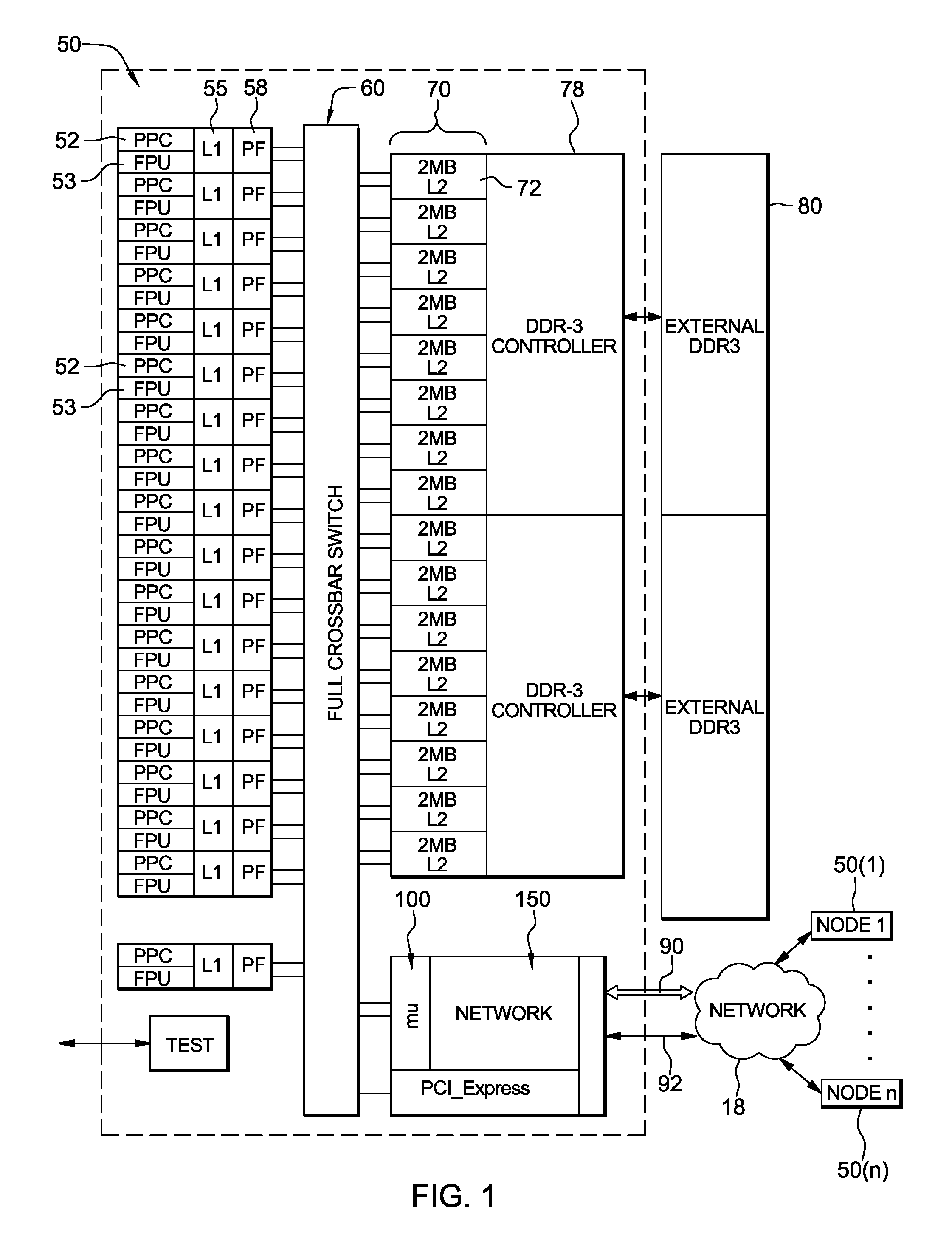

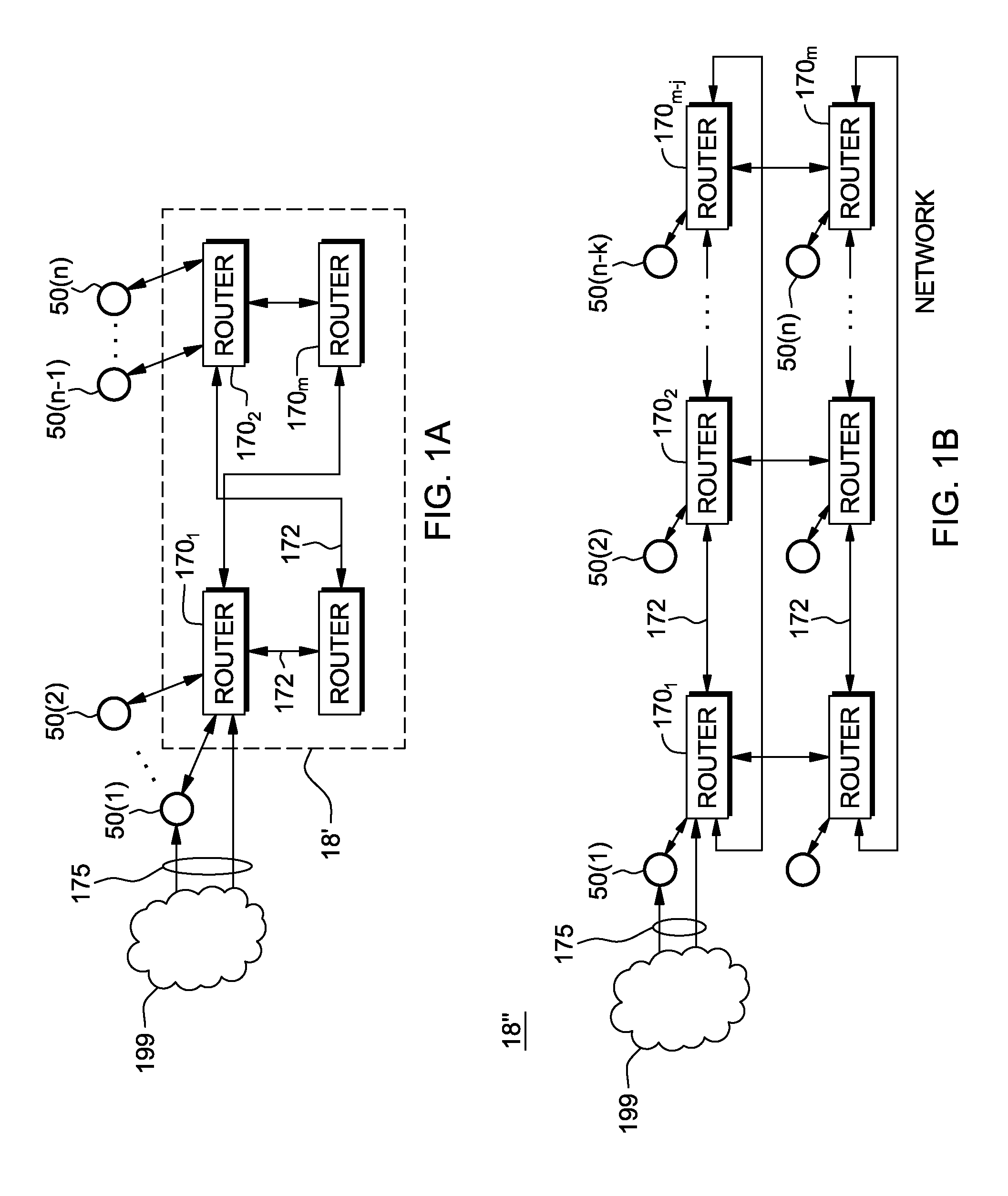

Non-volatile memory for checkpoint storage

Inactive · US20110173488A1 · Increase speed · Improve efficiency · Redundant operation error correction · Supporting system · Application software

A system, method and computer program product for supporting system initiated checkpoints in high performance parallel computing systems and storing of checkpoint data to a non-volatile memory storage device. The system and method generates selective control signals to perform checkpointing of system related data in presence of messaging activity associated with a user application running at the node. The checkpointing is initiated by the system such that checkpoint data of a plurality of network nodes may be obtained even in the presence of user applications running on highly parallel computers that include ongoing user messaging activity. In one embodiment, the non-volatile memory is a pluggable flash memory card.

Owner:IBM CORP

System and method for executing compute-intensive database user-defined programs on an attached high-performance parallel computer

Inactive · US7885969B2 · Easy to guarantee · Reduce overhead · Digital data information retrieval · Digital data processing details · Database query · Data set

Owner:INT BUSINESS MASCH CORP

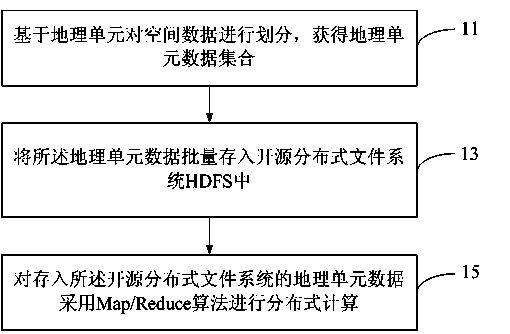

Spatial data processing method and system

Active · CN103793442A · Improve scalability · Improve acceleration performance · Geographical information databases · Special data processing applications · Data set · File system

The invention provides a spatial data processing method and system. The method includes: dividing spatial data by geographic unit to obtain a geographic unit data set; storing the geographic unit data set in batches in an open-source distributed file system; and performing distributed computing on the stored geographic unit data set using the MapReduce algorithm. With this method, one task can be split into multiple tasks that are processed simultaneously, achieving high-performance parallel computing. Because an open-source distributed file system and the MapReduce algorithm are adopted, high-performance computing of geographic information can be supported using ordinary computers and an ordinary computing model, and the method has the advantages of high adaptability and low cost.

Owner:BEIJING SUPERMAP SOFTWARE CO LTD
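The map, group and reduce flow described in the abstract can be sketched in plain Python. This is only an in-process illustration of the programming model, not the patented system or a Hadoop job. The record layout `(unit_id, area)` and all function names are hypothetical.

```python
from collections import defaultdict


def map_phase(records):
    """Emit one (geographic unit, value) pair per input record."""
    for unit_id, area in records:
        yield unit_id, area


def shuffle(pairs):
    """Group emitted values by key, as the framework would between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Aggregate each geographic unit independently (here: a sum)."""
    return {key: sum(values) for key, values in groups.items()}


def total_area_by_unit(records):
    return reduce_phase(shuffle(map_phase(records)))


if __name__ == "__main__":
    records = [("unit-A", 1.0), ("unit-B", 2.0), ("unit-A", 3.0)]
    print(total_area_by_unit(records))
```

Because each geographic unit is reduced independently, the per-unit work can be spread over many ordinary machines, which is the property the abstract relies on.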

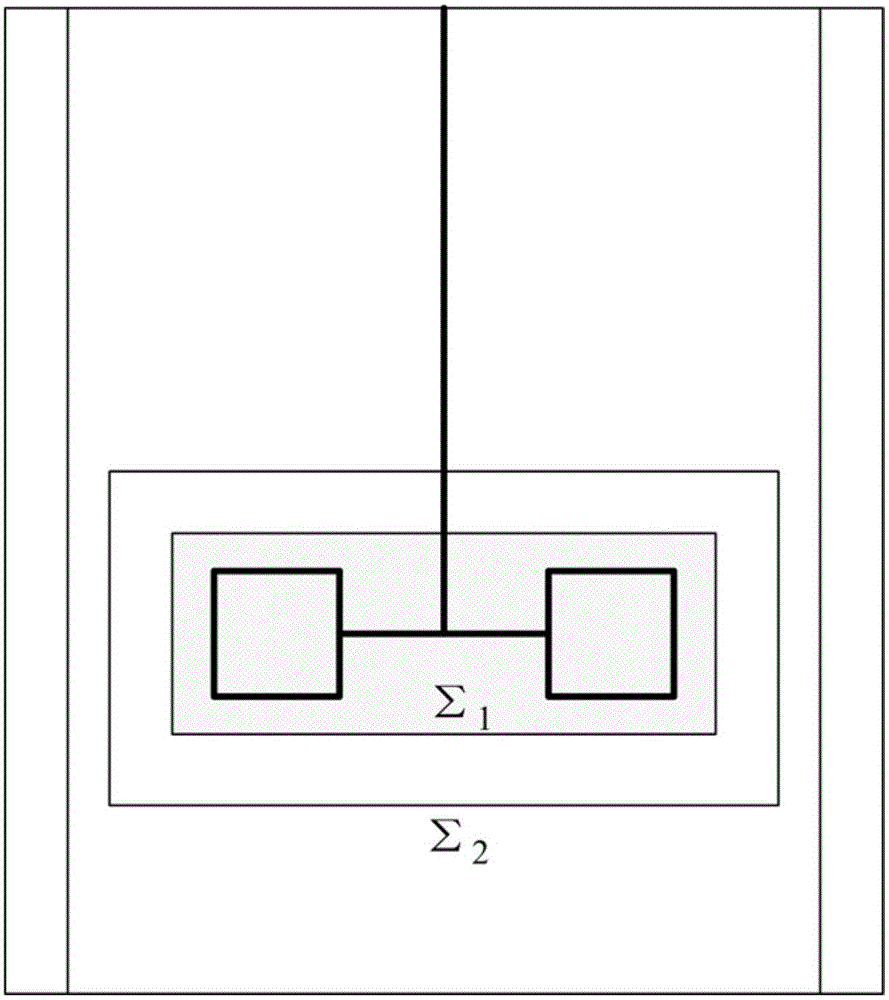

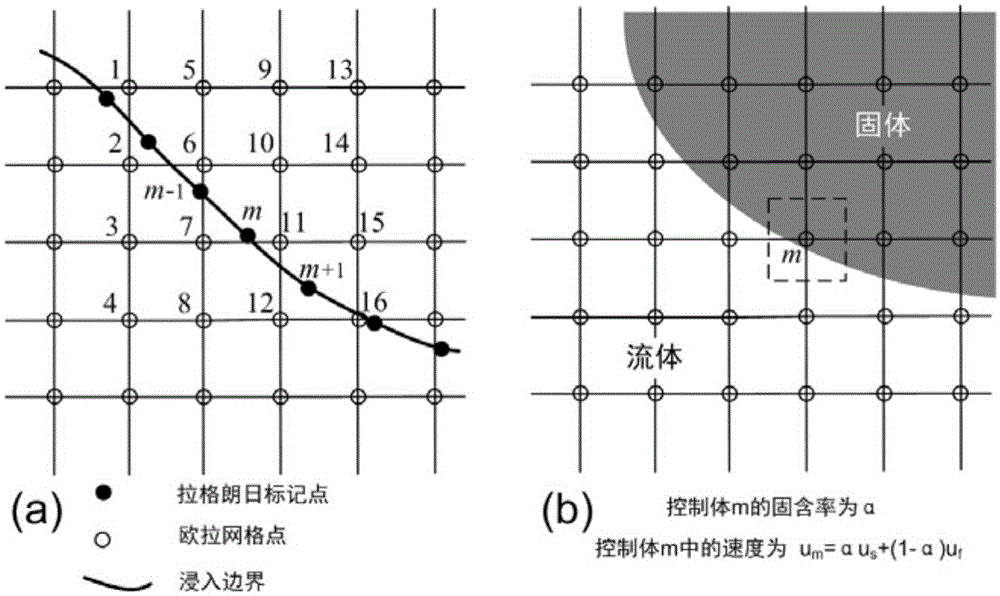

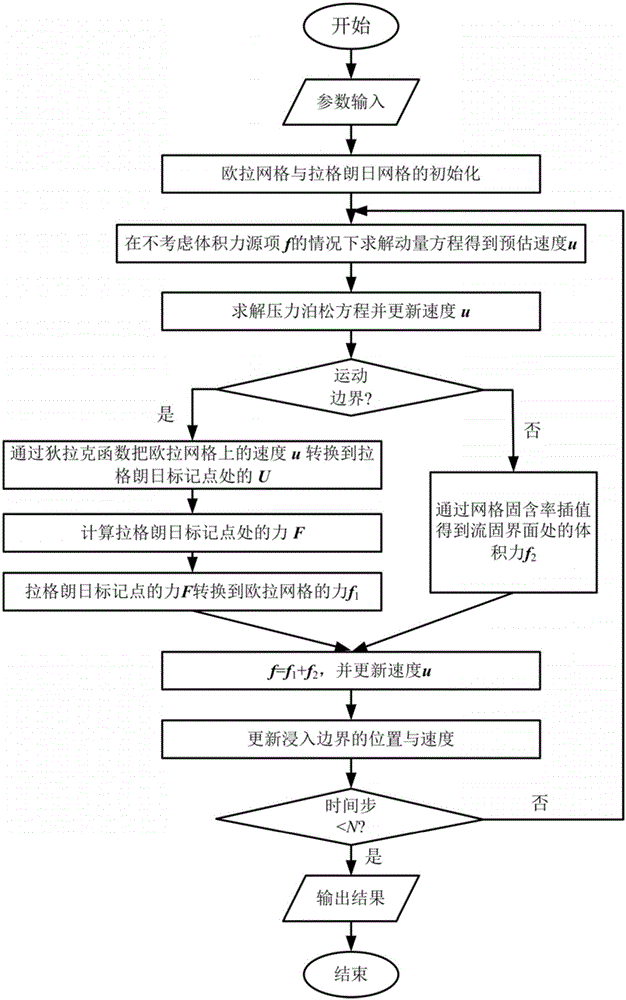

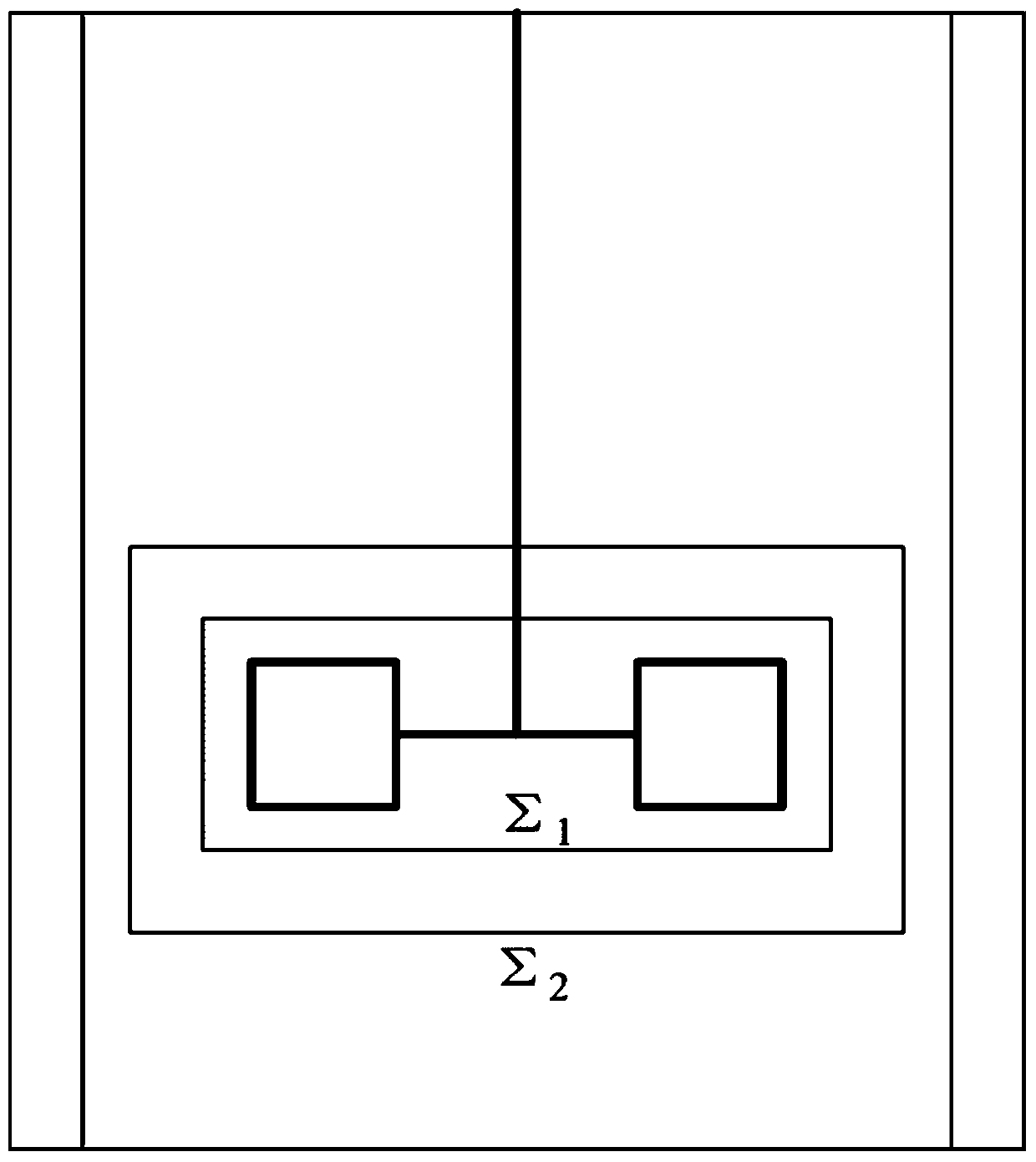

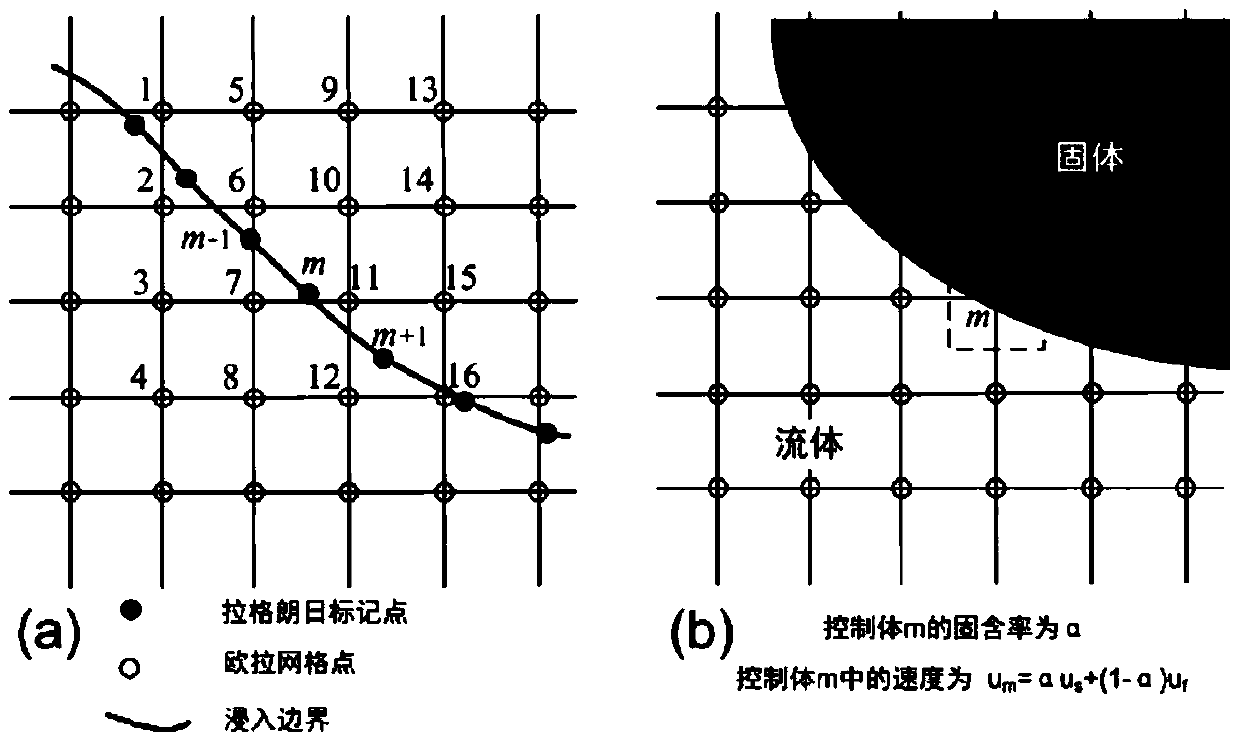

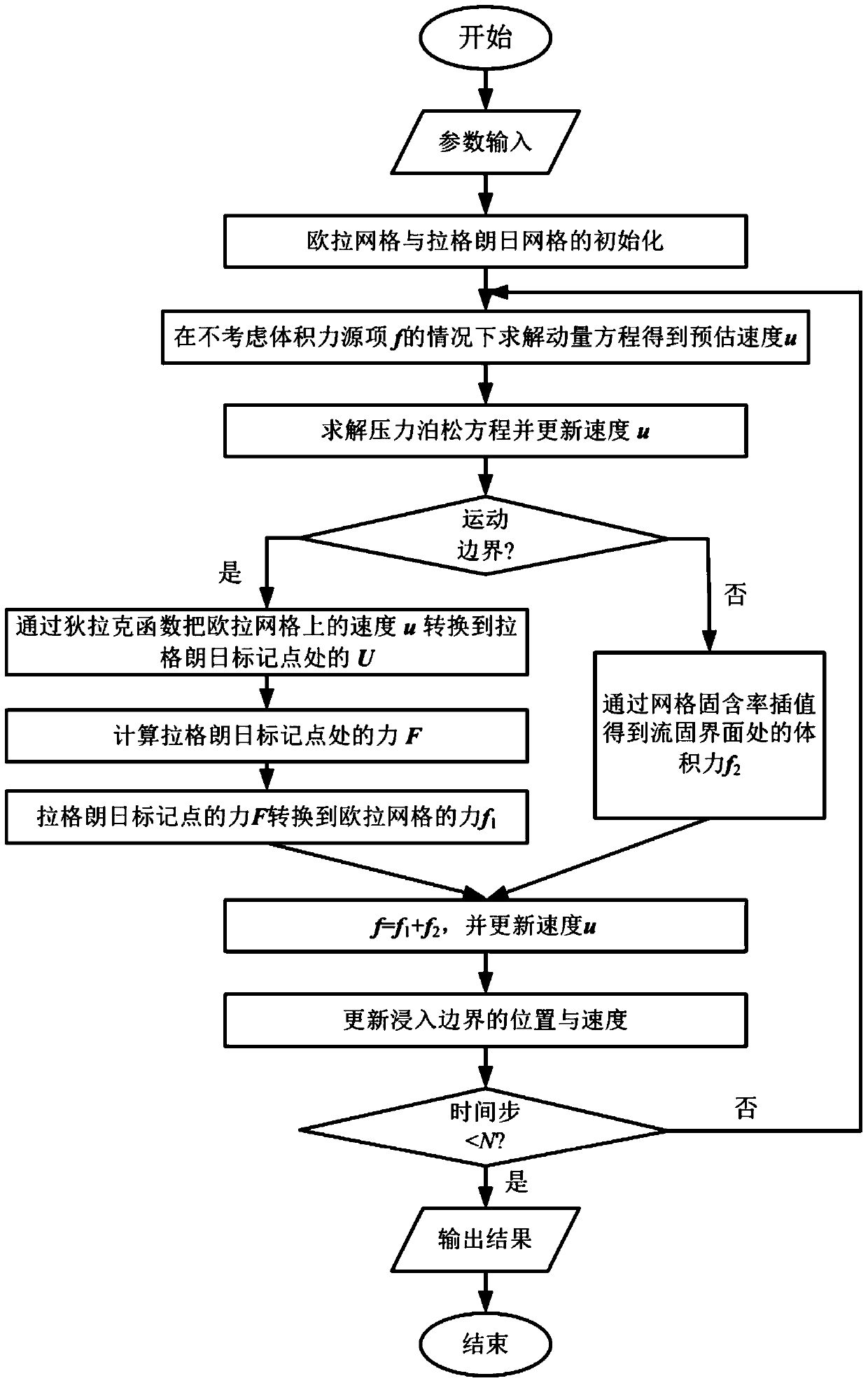

Stirred tank reactor simulation method based on immersed boundary method

Active · CN105069184A · Promote generation · Reduce workload · Special data processing applications · Boundary knot method · Solid particle

The present invention provides a stirred tank reactor simulation method based on an immersed boundary method. The method includes: a preparation phase including determination and setting of calculating parameters, generation of fluid grid files, generation of Lagrange mark point information, and preparation of calculating resources; numerical calculation: setting boundary conditions of computational domains and solving velocity fields and temperature fields based on fluid grids; combining with a discrete element method to achieve an interface analytic simulation of two-phase flow of particle flow, wherein the immersed boundary method is directly employed to apply non-slip boundary conditions to particle surfaces; a post-processing phase, and outputting information of each Euler mesh and information of each particle after a simulation is completed. According to the characteristics of different boundaries, in the calculation, the mixed immersed boundary method is employed to process, and the mass numerical simulation of turbulent flow in the stirred tank reactor is achieved through combination of large eddy simulation and high performance parallel computing technology. The simulation method may be applied to simulation of turbulent flow and simulation of solid particle suspension in the stirred tank reactor and the like.

Owner:INST OF PROCESS ENG CHINESE ACAD OF SCI

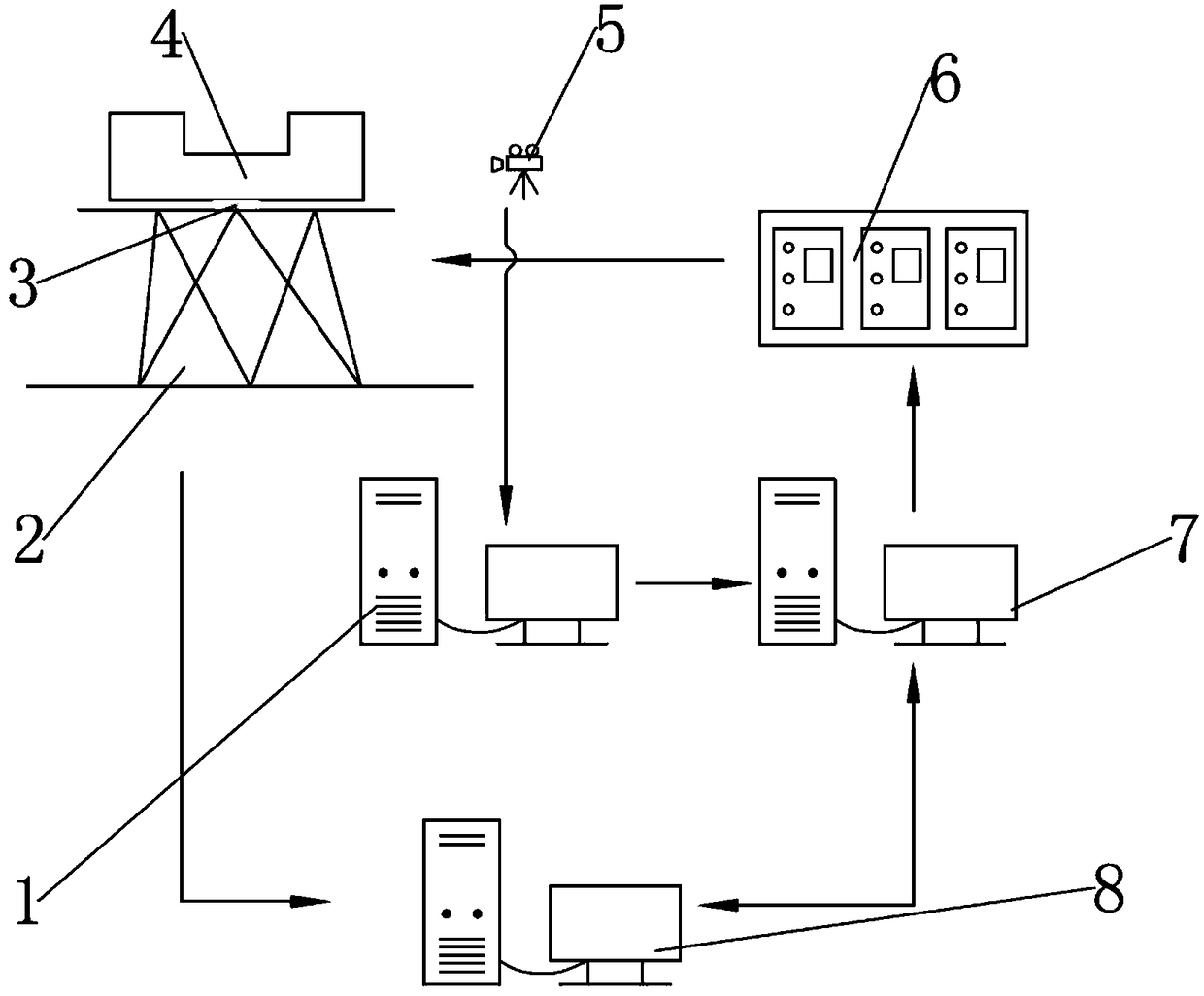

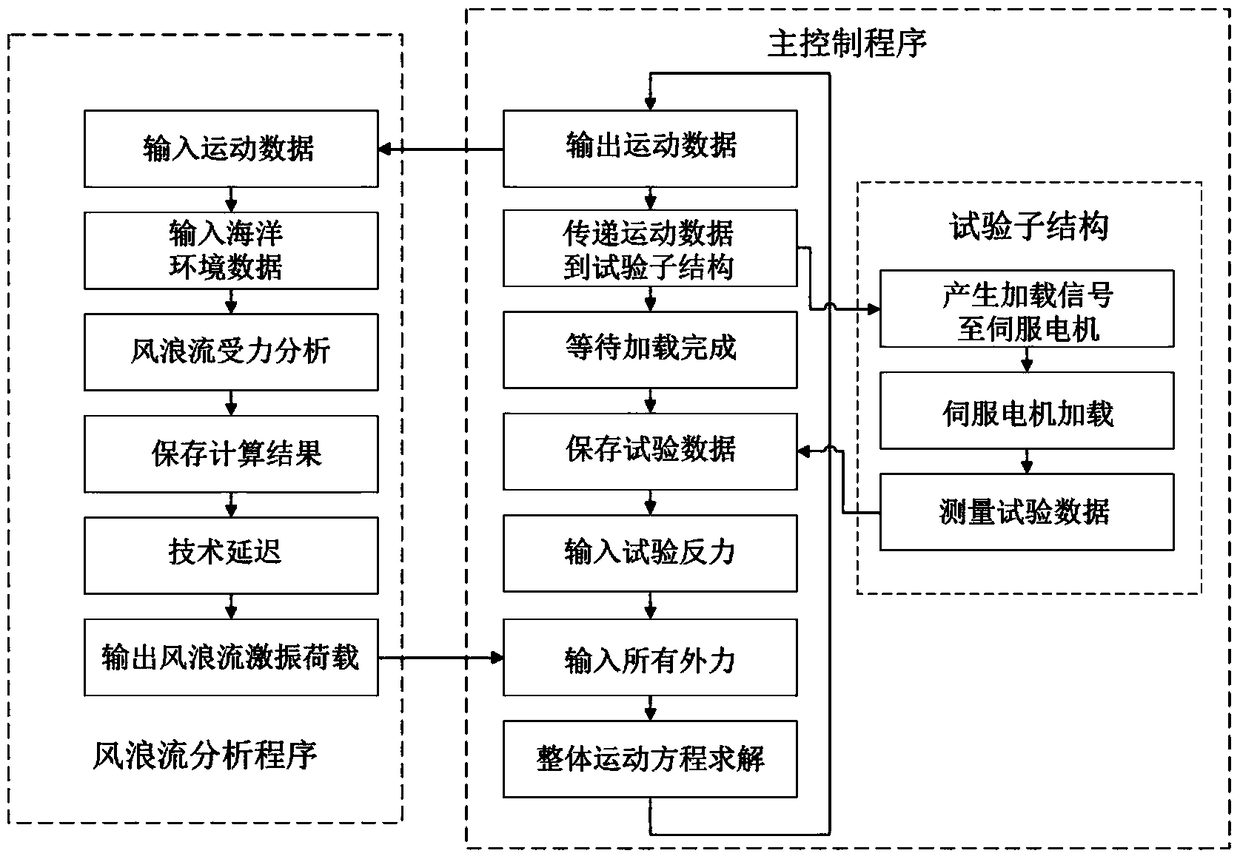

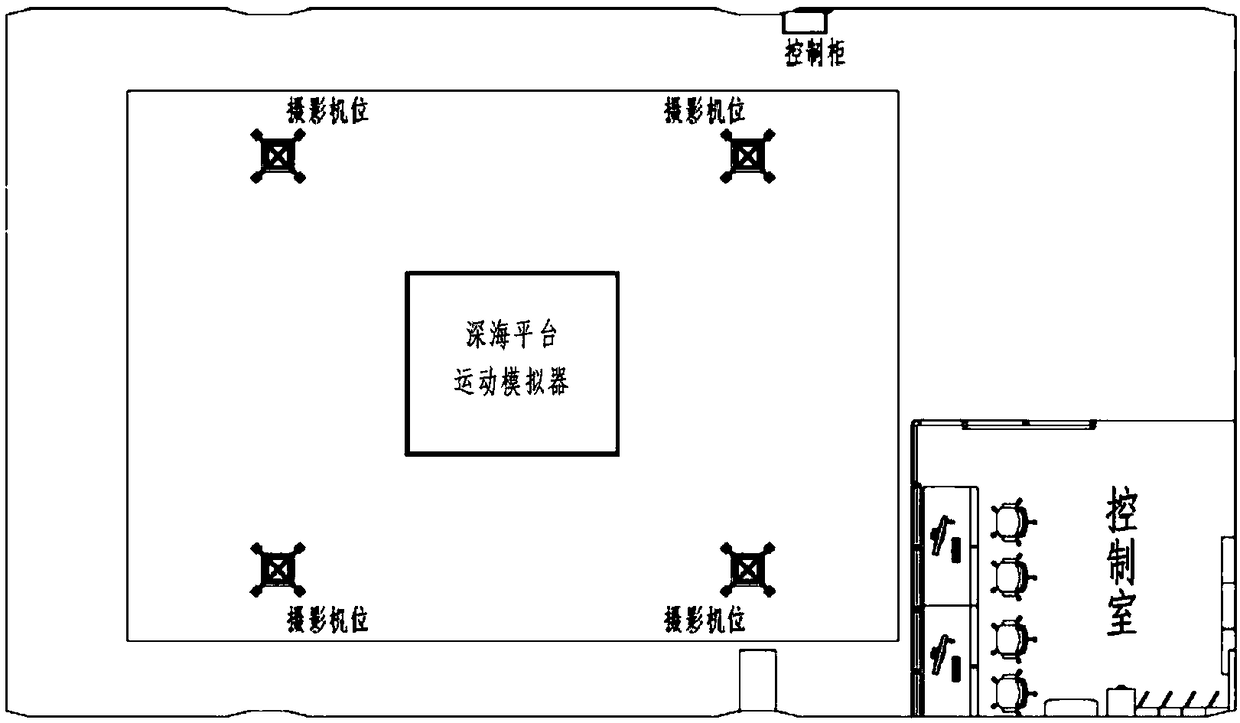

Hybrid model test platform for vibration control of deep sea platform

Pending · CN109060296A · Avoid layout · Realize contactless data collection · Hydrodynamic testing · Data acquisition · Multi platform

The invention relates to a hybrid model test platform for vibration control of a deep sea platform. The test platform comprises a deep sea platform motion simulation system, a test data acquisition and analysis system, a high-performance parallel computation and control system, and a structure vibration control device test model. The deep sea platform motion simulation system comprises a deep sea platform motion simulator with a fixed lower platform and an upper platform; the upper platform is a six-degree-of-freedom motion platform that is driven by a servo motor and is used for simulating the motion of the deep sea platform. The test data acquisition and analysis system comprises a three-axis force transducer, a high-speed camera and an image processing computer. The high-performance parallel computation and control system comprises a main control computer and a high-performance parallel workstation. The structure vibration control device test model is placed on the upper platform of the deep sea platform motion simulator, and the simulator reproduces the six-degree-of-freedom motion of the deep sea platform under the control of the main control computer.

Owner:TIANJIN UNIV

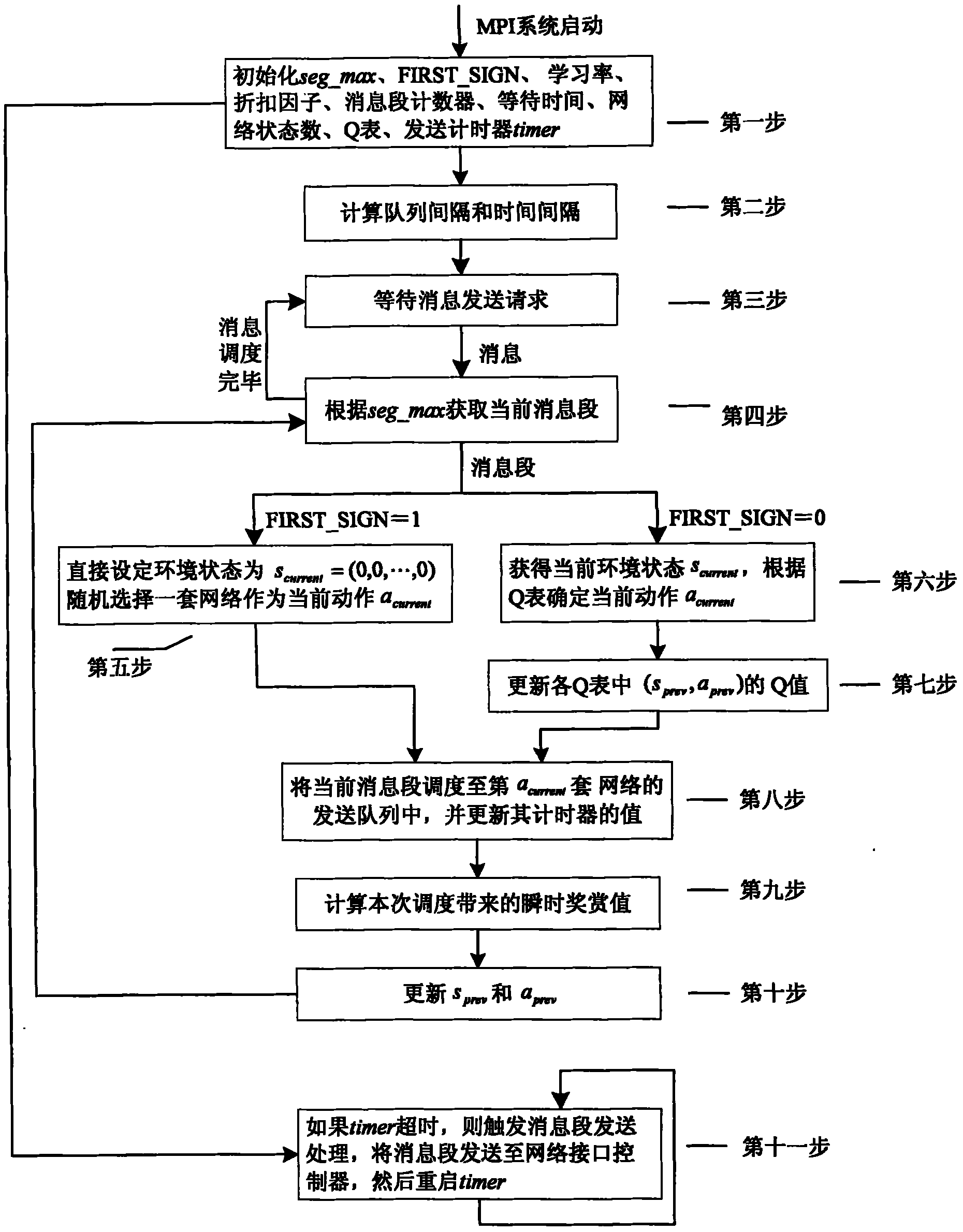

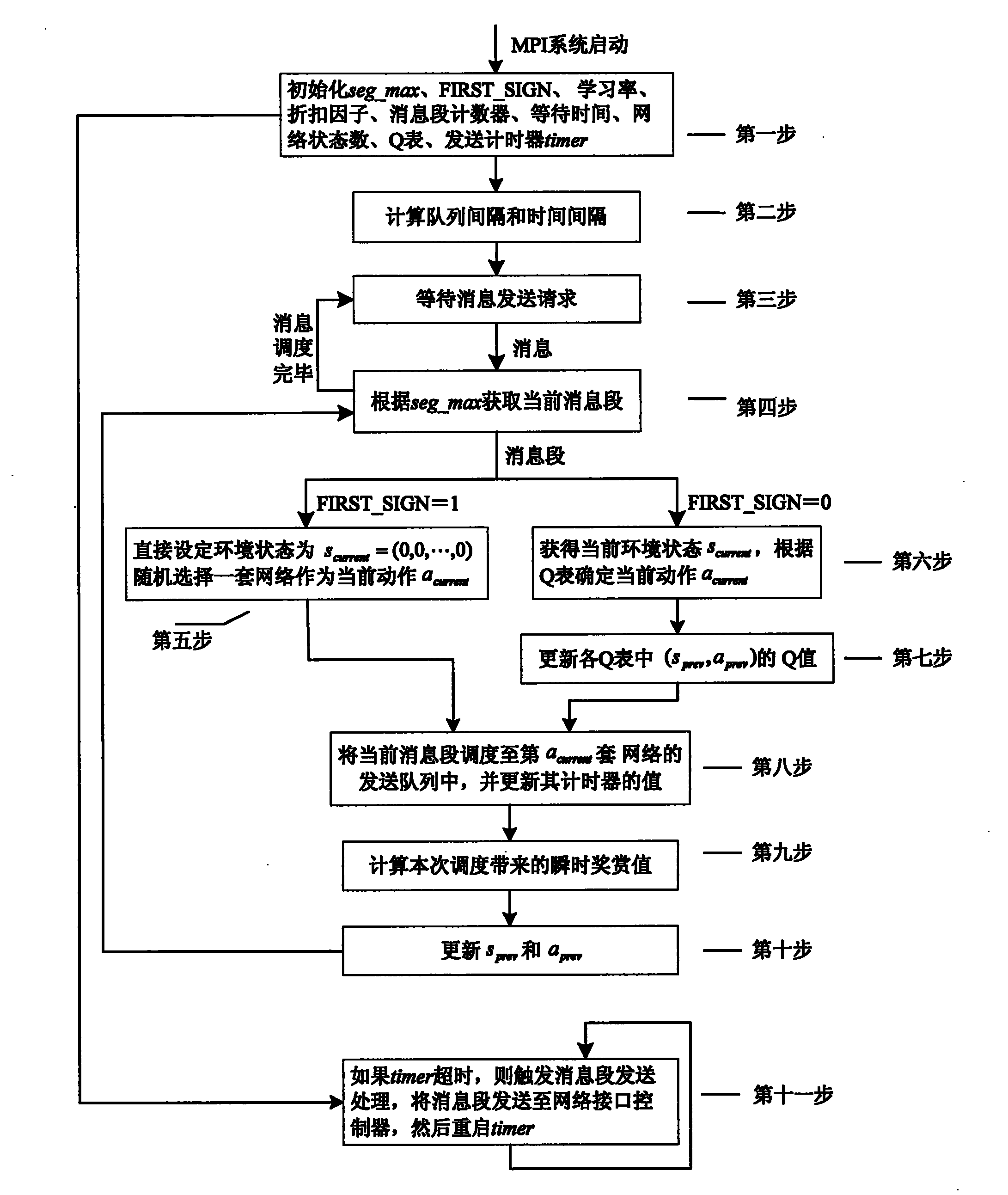

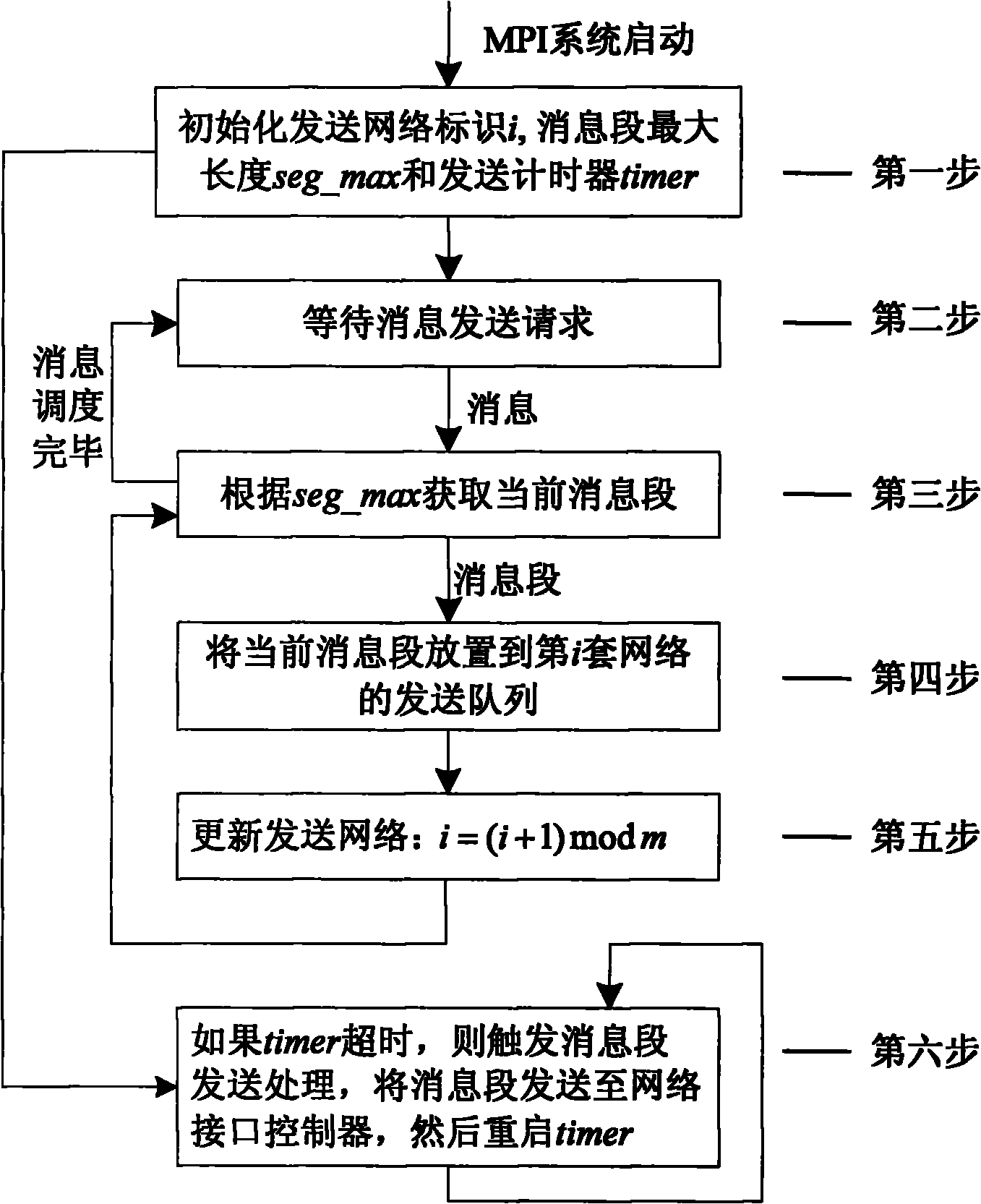

MPI (Message Passing Interface) message scheduling method based on reinforcement learning in a multi-network environment

Inactive · CN101833479A · Get the latest status in real time · Good application effect · Interprogram communication · Combined method · Reward value

The invention discloses an MPI (Message Passing Interface) message scheduling method based on reinforcement learning in a multi-network environment, aimed at overcoming the low practical application performance of high-performance parallel computers caused by the traditional round-robin scheduling method. The method comprises the steps of: initializing parameters in the process of starting the MPI system and, for a computing environment equipped with m networks, creating $C_m^2$ Q tables according to a multiple-Q-table combination method; continuously receiving, in the process of starting the MPI system, MPI message sending requests sent by the application, determining the current message segment, obtaining the current environment state, and scheduling the current message segment to the optimal network according to the historical experience values stored in the Q tables for that state; and finally, computing the instant reward obtained by the scheduling decision and updating the Q values in the Q tables. The invention addresses the problems of unevenly distributed communication load, inability to adapt to dynamic changes in network state, and poor adaptability to the computing environment, and improves the practical application performance of the high-performance parallel computer.

Owner:NAT UNIV OF DEFENSE TECH
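The choose, observe-reward and update loop in the abstract follows the general shape of tabular Q-learning. The sketch below uses a single Q table and the standard Q-learning update; the patent's multiple-Q-table combination method is not reproduced. The class name, the state labels, and the `alpha` and `gamma` values are all assumptions made for illustration.

```python
from collections import defaultdict


class NetworkScheduler:
    """Hypothetical single-table Q-learning scheduler over num_networks links."""

    def __init__(self, num_networks: int, alpha: float = 0.5, gamma: float = 0.9):
        self.num_networks = num_networks
        self.alpha = alpha  # learning rate (assumed value)
        self.gamma = gamma  # discount factor (assumed value)
        # One row of Q values per observed state, one column per network.
        self.q = defaultdict(lambda: [0.0] * num_networks)

    def choose(self, state) -> int:
        """Greedy choice: the network with the highest Q value in this state."""
        values = self.q[state]
        return max(range(self.num_networks), key=values.__getitem__)

    def update(self, state, action: int, reward: float, next_state) -> None:
        """Standard Q-learning update after observing the instant reward."""
        td_target = reward + self.gamma * max(self.q[next_state])
        self.q[state][action] += self.alpha * (td_target - self.q[state][action])


if __name__ == "__main__":
    scheduler = NetworkScheduler(num_networks=2)
    # Pretend that sending on network 1 in state "light-load" earned reward 1.0.
    scheduler.update("light-load", action=1, reward=1.0, next_state="light-load")
    print(scheduler.choose("light-load"))
```

A real scheduler would also need exploration (for example an epsilon-greedy choice) and a reward definition tied to measured transfer time; both are left out here.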

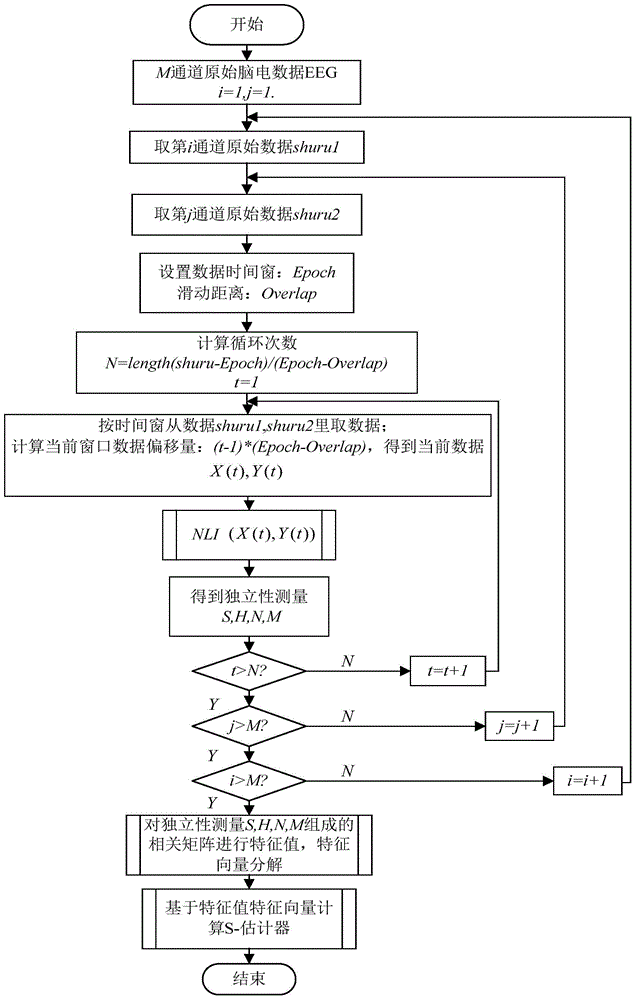

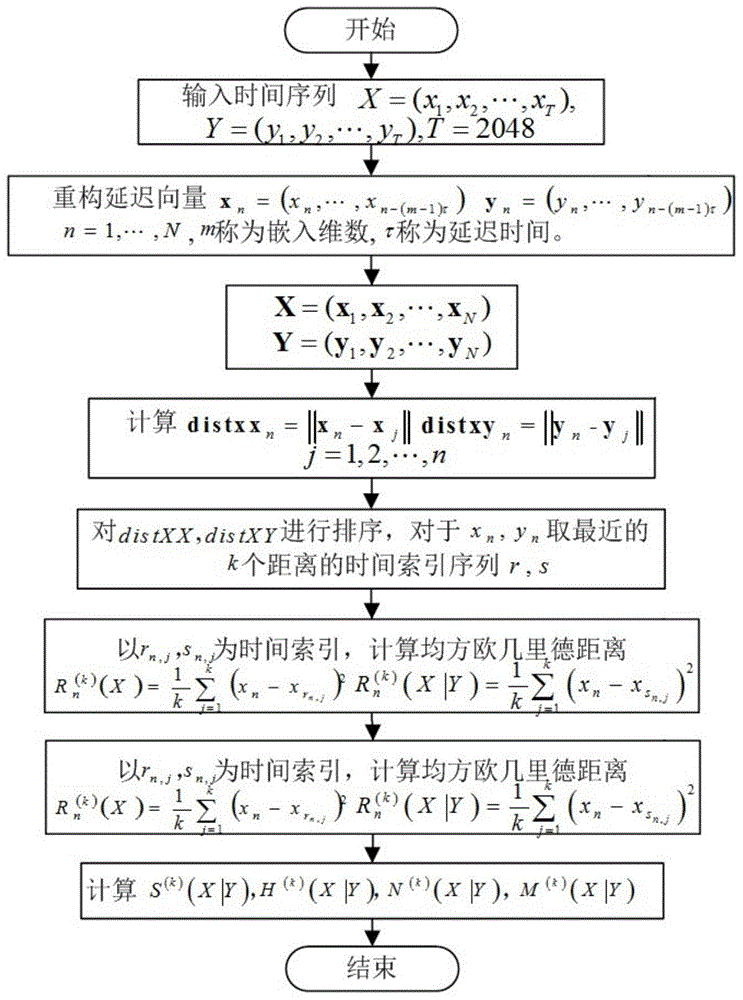

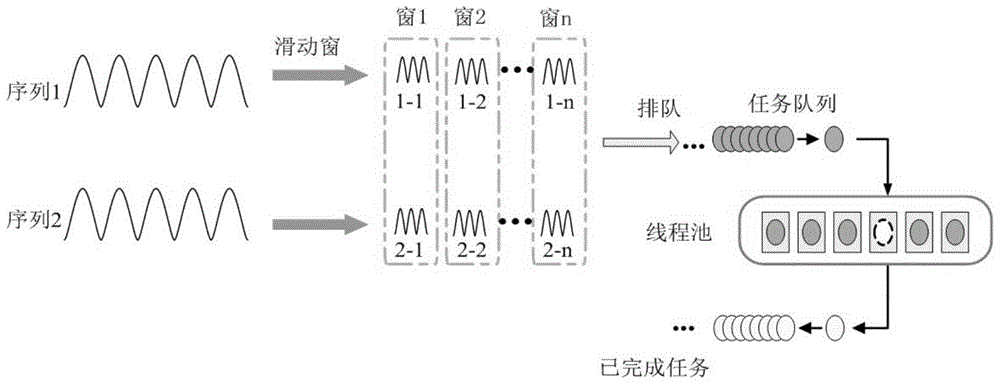

Parallel quantification computation method for global cross correlation of non-linear data

Inactive · CN105718425A · Easy to handle · Strong noise resistance · Complex mathematical operations · Cross correlation analysis · Original data

The present invention discloses a parallel quantification computation method for global cross correlation of non-linear data. A parallel NLI algorithm is developed using CPU multi-threading and CUDA-based GPGPU multi-threading from high-performance parallel computation; introducing parallel computation improves the performance of the algorithm and assists the analysis of multi-channel signals. Data processing is extended from two channels to multiple channels, and by combining a process in which an S estimator analyzes the data from local to global and back to local, the synchronization strength of a signal within a given region is quantified. More information, and more accurate and useful information, is mined from the original data to analyze the synchronization of multi-channel signals, so that the execution efficiency of the algorithm is greatly improved while its effectiveness is preserved. Experiments show that the method has higher efficiency and usability in practical cross-correlation analysis of multi-channel non-linear data.

Owner:WUHAN UNIV

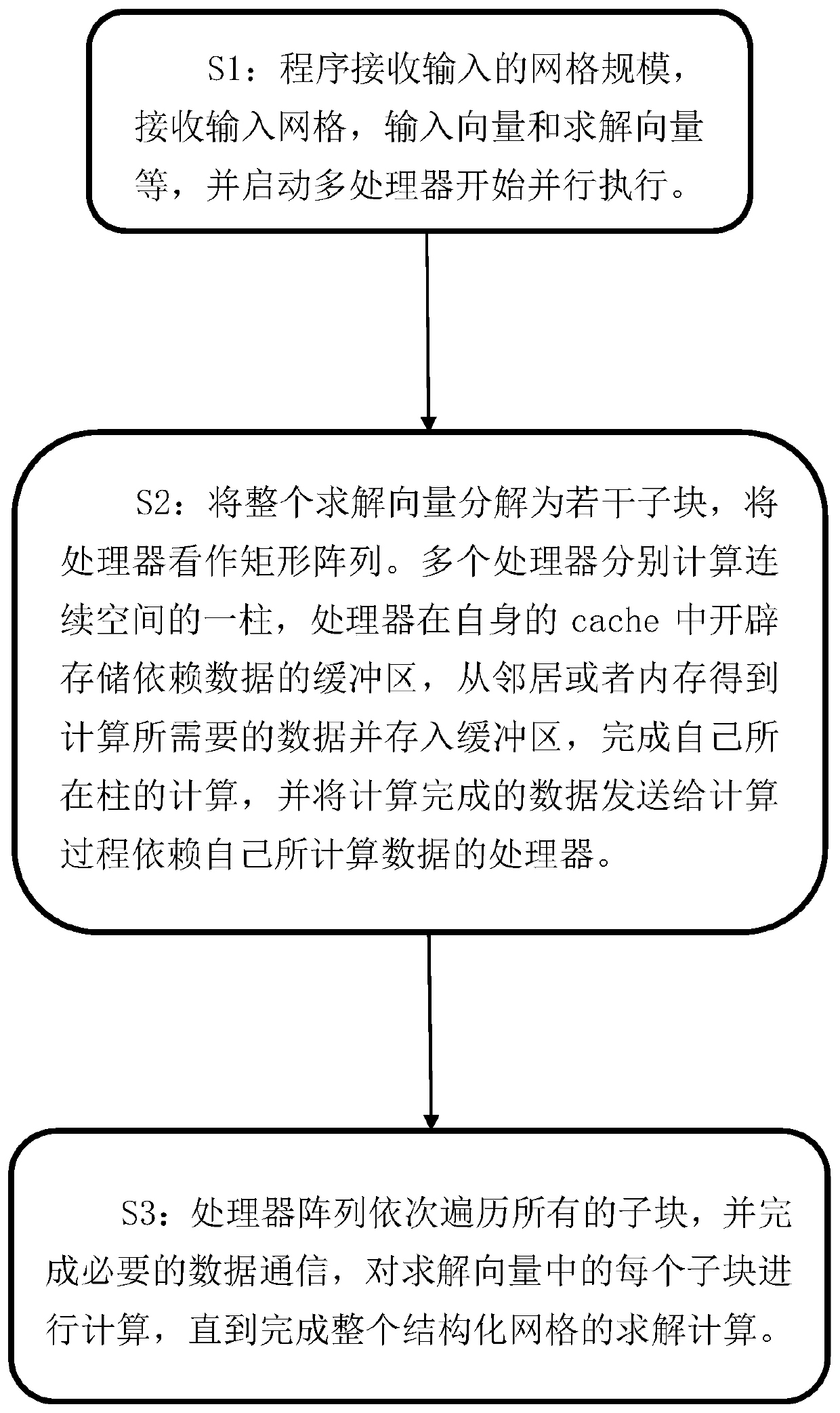

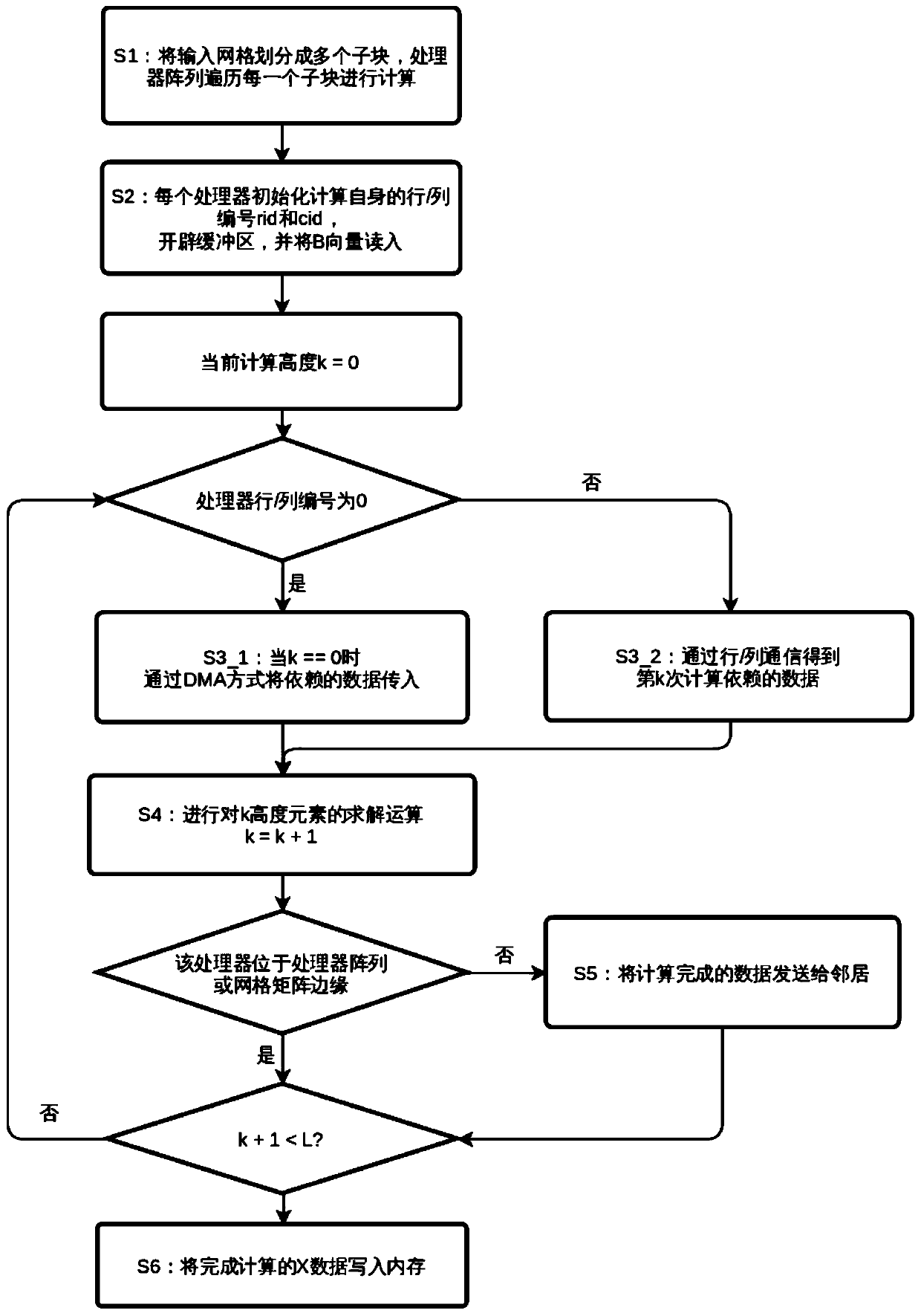

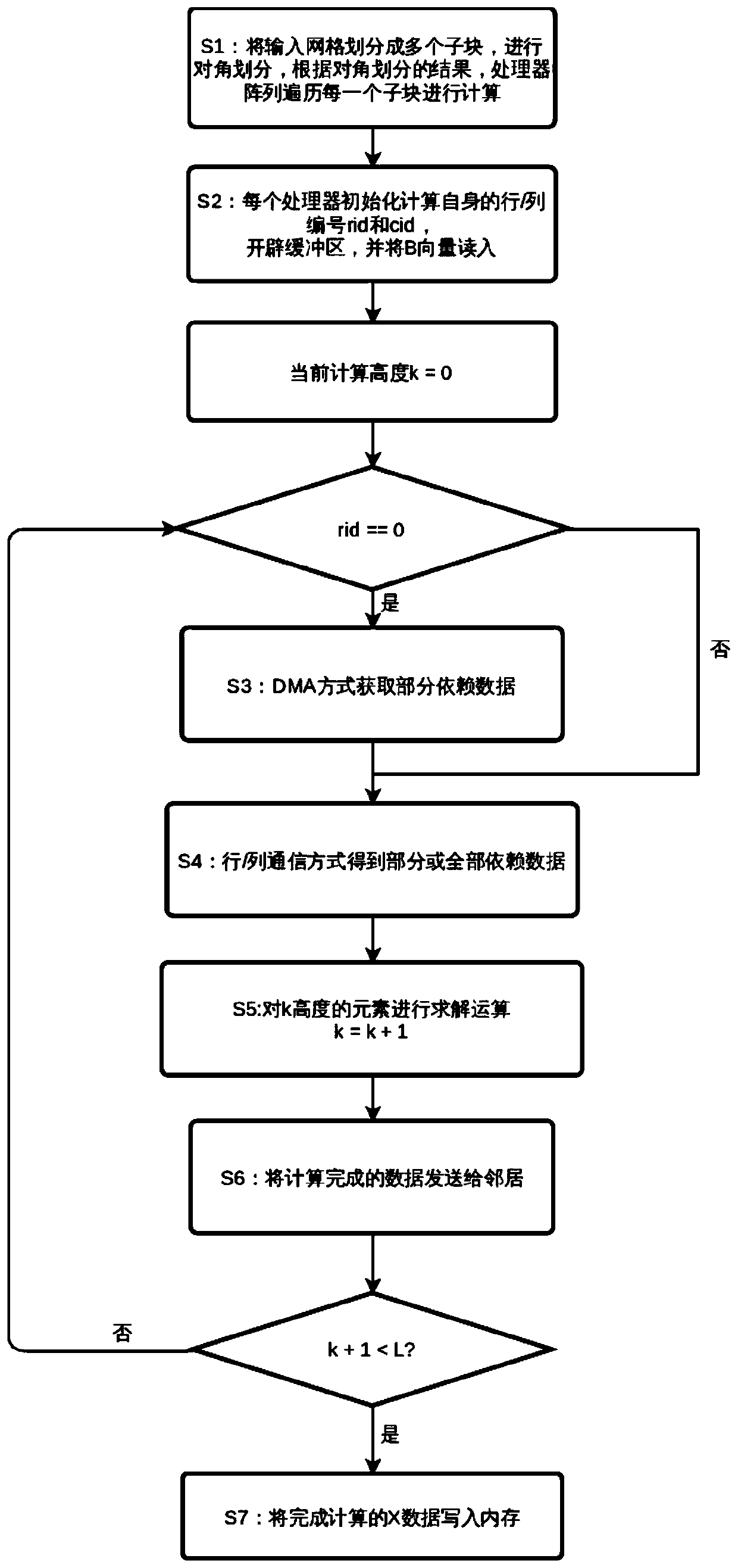

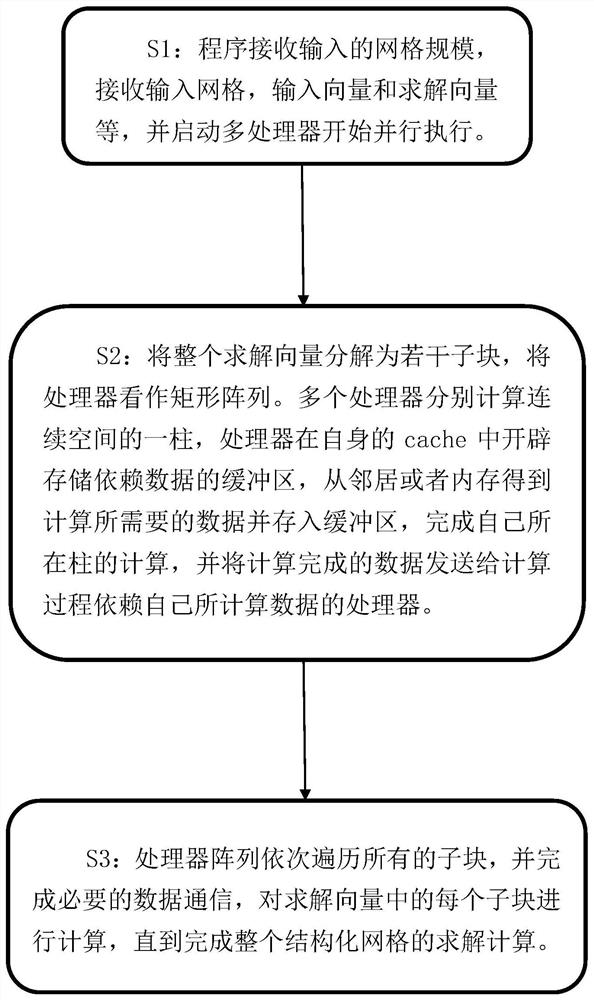

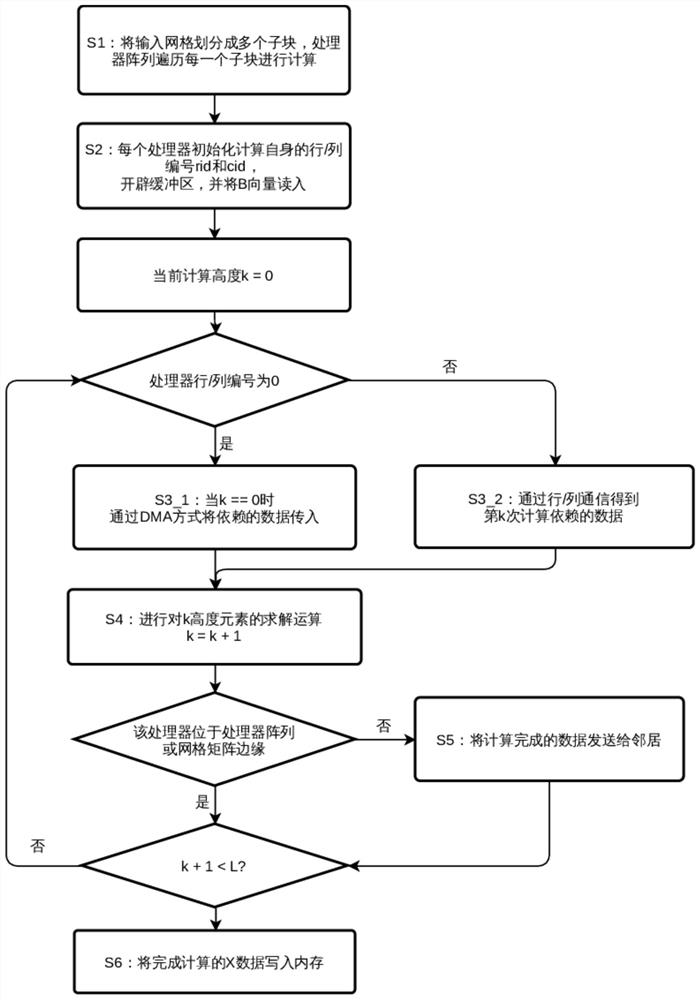

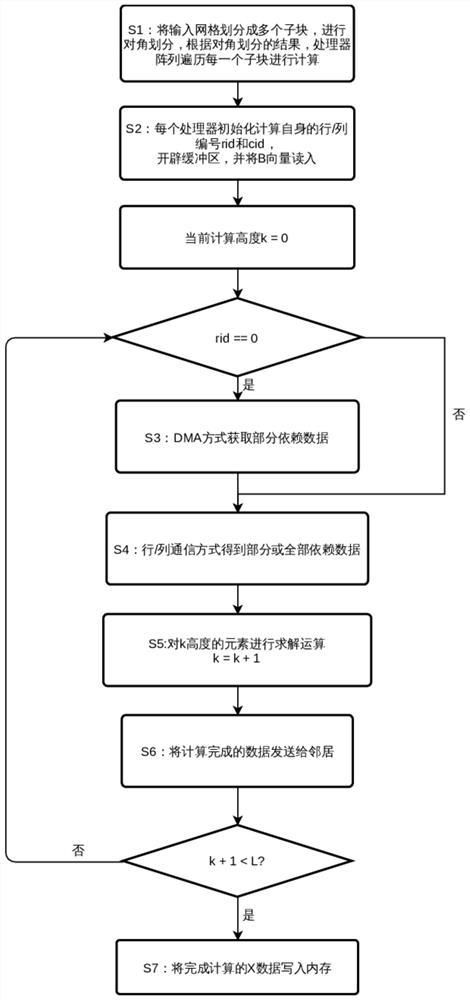

Parallel method for solving lower triangular systems of structured-grid sparse matrices

Active · CN111079078A · Efficient solution · Minimize memory access overhead · Complex mathematical operations · Computational science · Complete data

A method for optimizing inter-processor communication and cache space utilization when solving a structured-grid sparse lower triangular system in high-performance parallel computing comprises: receiving the solution vector and related data of a structured grid, and starting multiple processors to execute in parallel; decomposing the solution vector into a plurality of sub-blocks; dividing each sub-block into a plurality of columns and treating the processors as a rectangular array in which each processor is responsible for computing one column, wherein the processor allocates a buffer in its cache for storing dependent data, obtains the data required for the computation from its neighbors or from its own memory, stores the data in the buffer, completes the computation of its column, and sends the computed data to the other processors that depend on it; and traversing all the sub-blocks with the rectangular processor array, completing the data communication required for the computation, and computing each sub-block until the whole solve is complete. The dependent data of each operation is transmitted through fast communication between processors, so that memory access overhead is minimized and resource utilization is maximized.

Owner:TSINGHUA UNIV +2
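The data dependency that the abstract works around is easiest to see in a sequential forward substitution. The sketch below is a plain single-processor solve of a sparse lower triangular system and does not reproduce the patent's sub-block decomposition or processor array. The row-as-dictionary storage format and the function name are assumptions for the example.

```python
def solve_lower_triangular(rows, b):
    """Solve L x = b by forward substitution.

    rows[i] maps a column index j <= i to the nonzero entry L[i][j];
    every row must contain a nonzero diagonal entry rows[i][i].
    """
    x = [0.0] * len(b)
    for i, row in enumerate(rows):
        acc = b[i]
        for j, value in row.items():
            if j < i:
                # x[j] must already be known: this is the dependency that
                # forces communication between processors in a parallel solve.
                acc -= value * x[j]
        x[i] = acc / row[i]
    return x


if __name__ == "__main__":
    rows = [{0: 2.0}, {0: 1.0, 1: 1.0}, {1: 3.0, 2: 4.0}]
    print(solve_lower_triangular(rows, [2.0, 3.0, 14.0]))
```

On a structured grid the nonzero columns of each row correspond to a fixed set of neighboring grid points, so the values a processor waits for always come from the same neighbors, which is what makes a fixed processor-to-column assignment workable.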

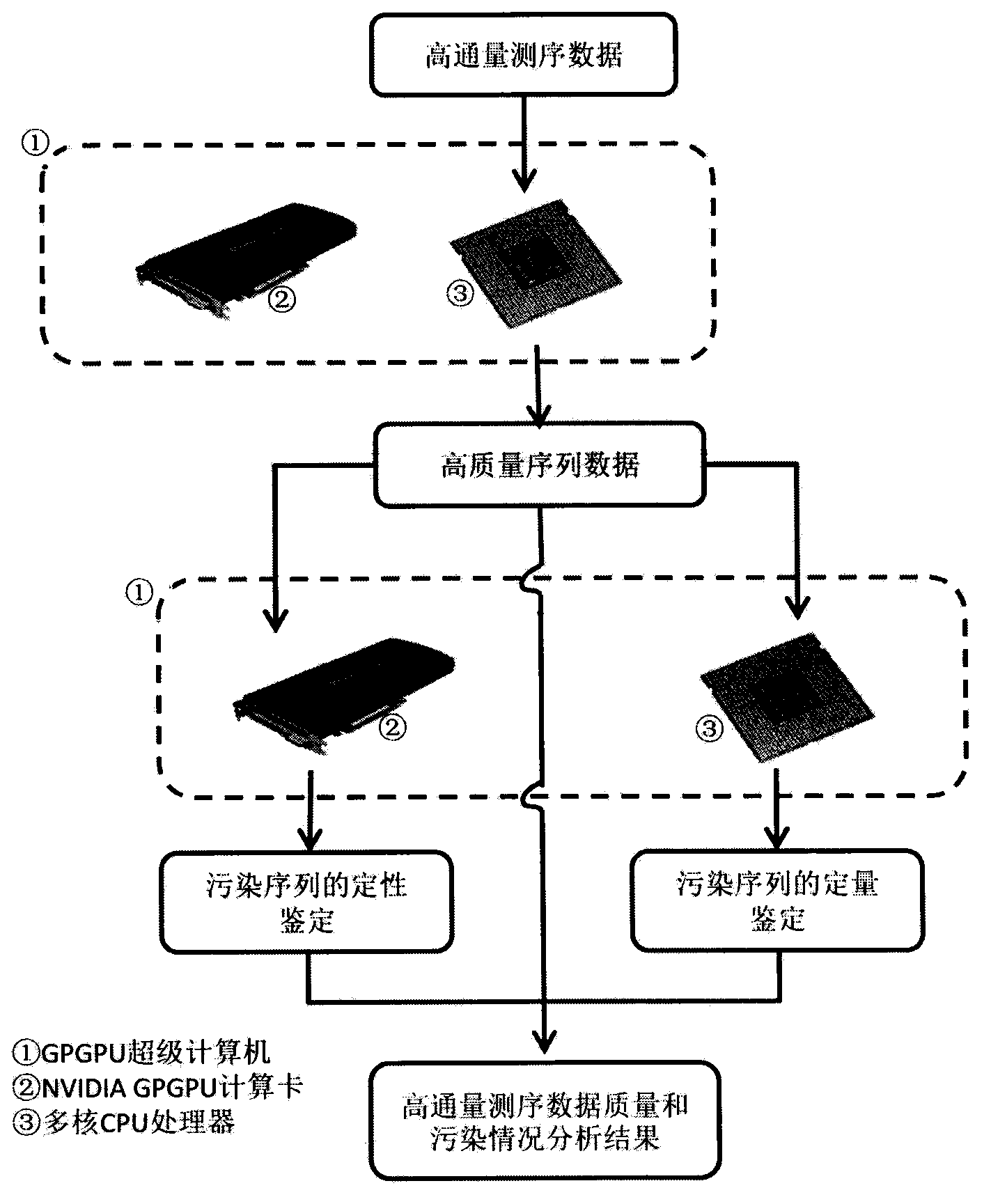

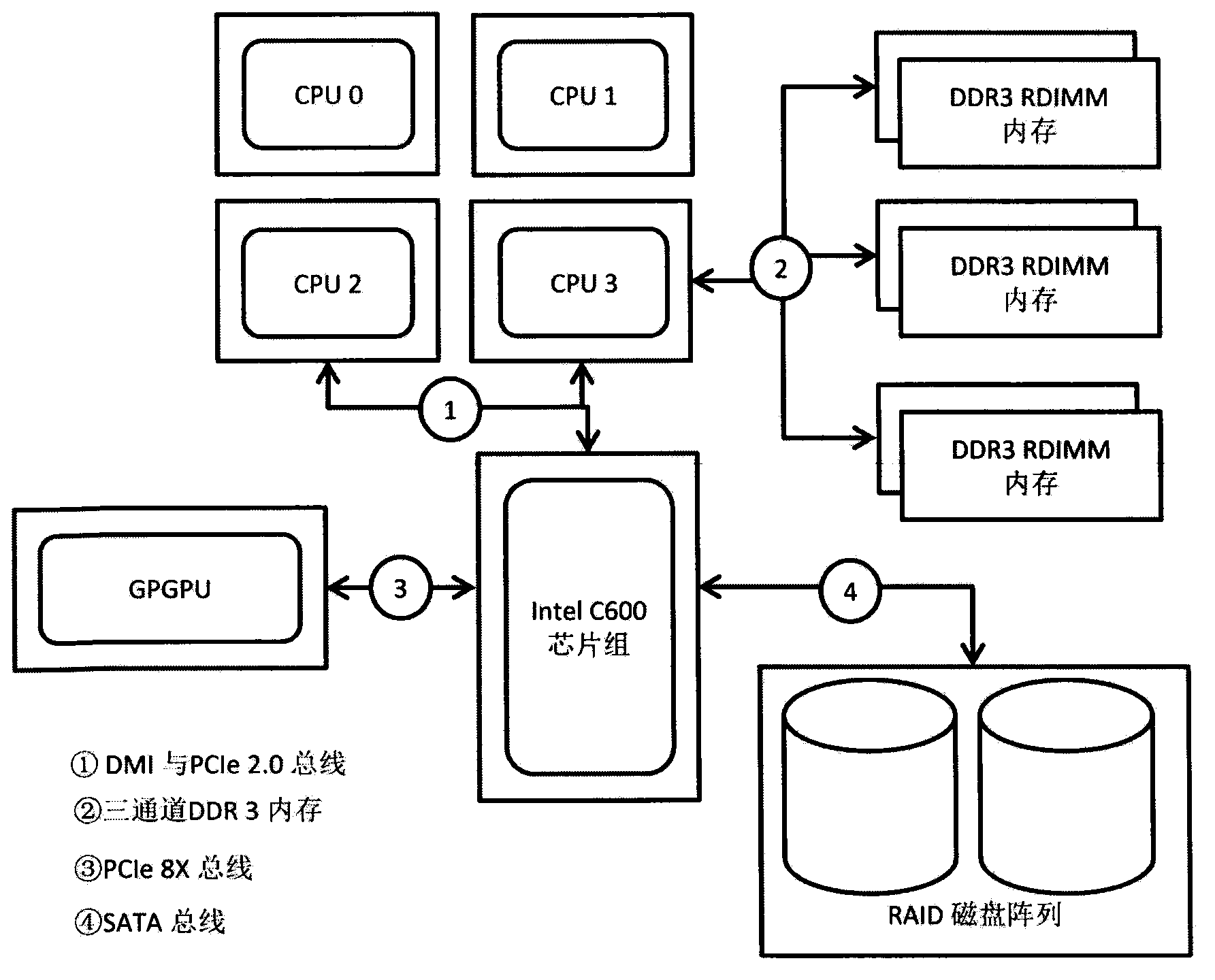

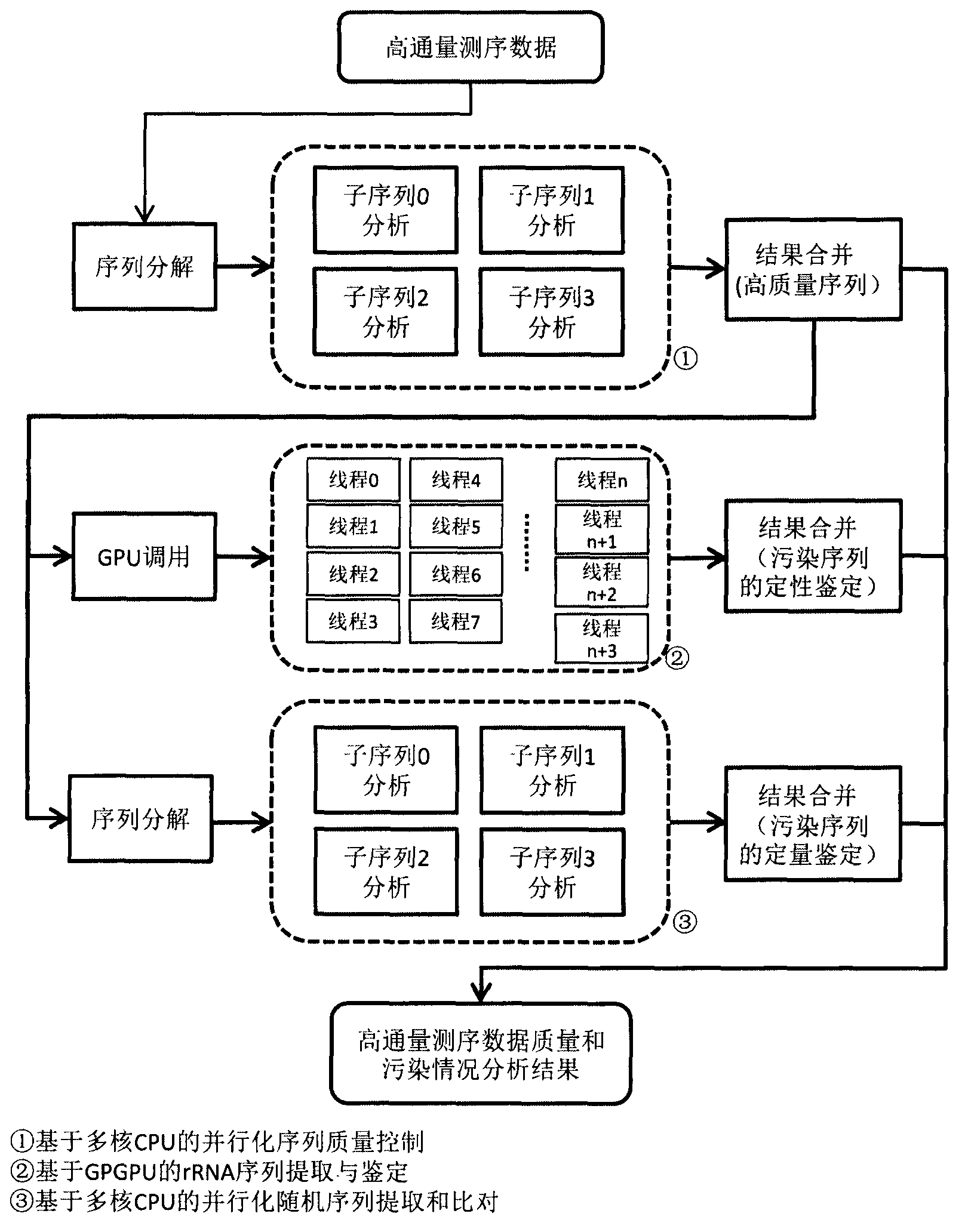

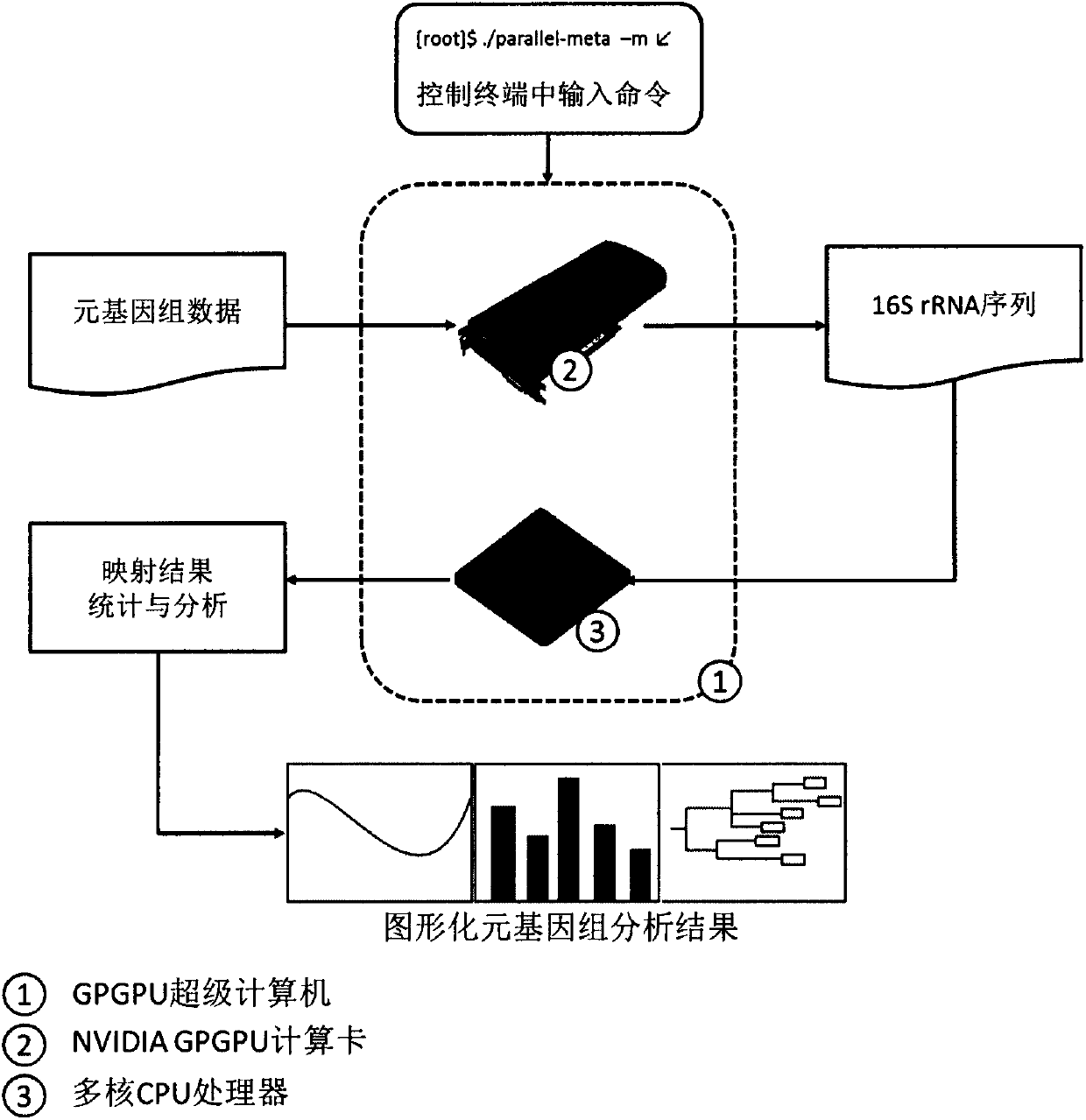

High-throughput sequencing data quality control system based on multi-core CPU and GPGPU hardware

Inactive · CN103838985A · Speed up · Improve quality control efficiency · Special data processing applications · Quality control system · Data quality

The invention provides a computing analysis system which is based on multi-core CPU and GPGPU hardware and combines software and hardware, and particularly relates to a high-throughput sequencing data quality control system based on multi-core CPU and GPGPU hardware. According to the characteristic that massive data can be processed in parallel in the high-throughput sequencing data processing process, the system is provided to solve the problem that a traditional computer cannot meet the analysis requirement for carrying out quality control over massive high-throughput sequencing data. A main module of the high-throughput sequencing data quality control system based on the multi-core CPU and GPGPU hardware comprises a multi-core CPU and GPGPU computer and a unified software platform. The system is characterized in that a high-performance parallel computing and storage hardware system and the high-performance, unified and configurable software platform are included. According to high-throughput sequencing data quality control processing based on the multi-core CPU and GPGPU hardware, analysis efficiency of high-throughput sequencing data quality control can be obviously improved.

Owner:YELLOW SEA FISHERIES RES INST CHINESE ACAD OF FISHERIES SCI +1

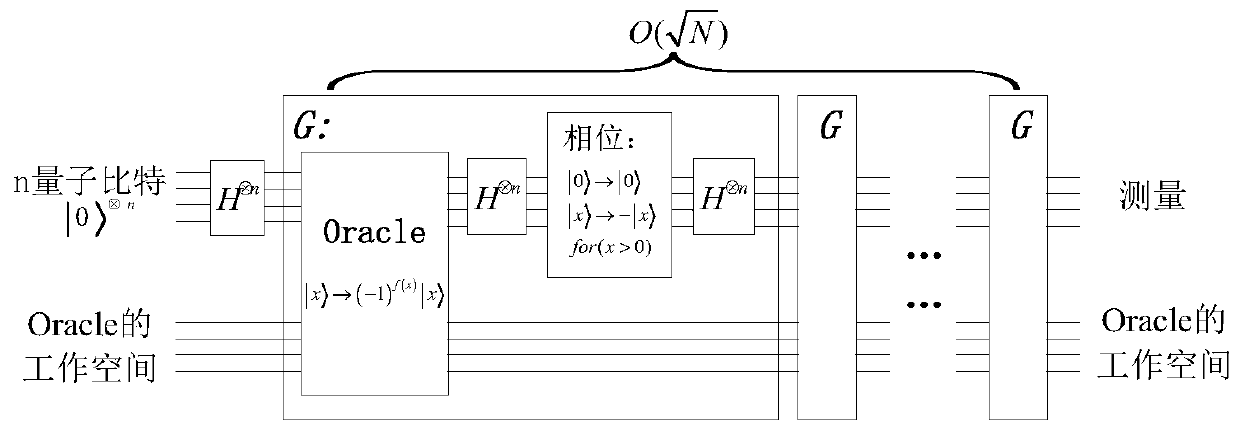

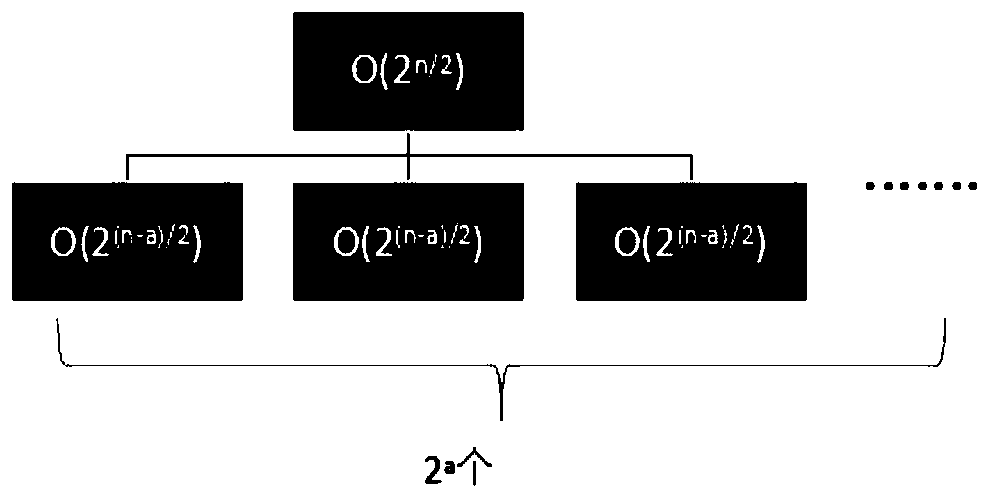

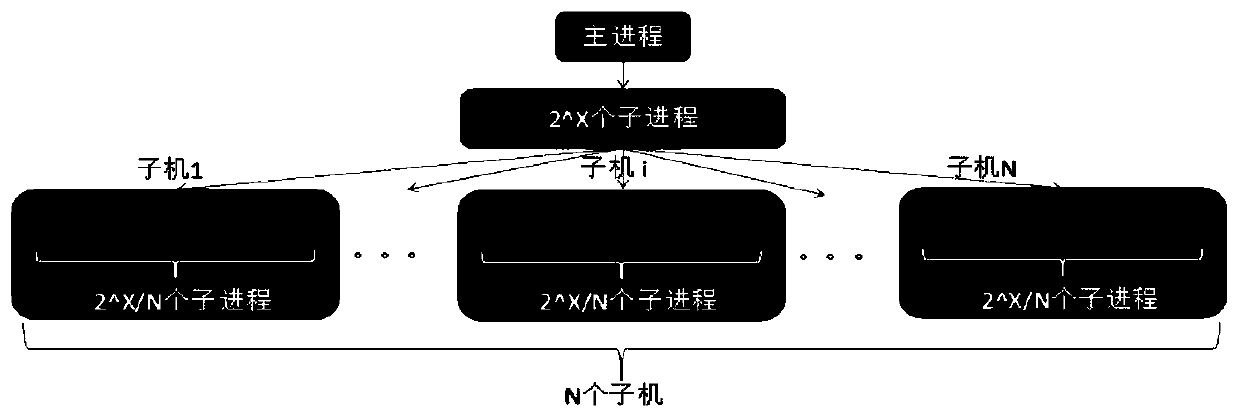

Grover quantum simulation algorithm optimization method based on cloud computing

Pending · CN109978171A · Save storage space · Shorten operation time · Quantum computers · Program initiation/switching · Computational model · Quantum search

The invention discloses a Grover quantum simulation algorithm optimization method based on cloud computing, which mainly addresses the small number of quantum bits and low simulation efficiency of existing quantum algorithm simulations. The single-iteration unitary operation of Grover quantum search is optimized, yielding an algorithm that greatly saves storage space and markedly improves the efficiency of the unitary operation, and the large-matrix unitary operation is processed in a distributed manner on a cloud computing cluster using the Hadoop-based MapReduce parallel computing model. The high-performance parallel computing capability of the cloud computing platform is fully utilized, the cluster architecture is used to construct a multi-bit quantum parallel computing model, and the efficiency of the simulation algorithm is improved.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
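One well-known way to save storage when simulating a Grover iteration is to apply the oracle and the diffusion step directly to the amplitude vector instead of building the full unitary matrix. The sketch below shows that textbook approach on a single machine. It is not claimed to be the optimization in the patent, and the MapReduce distribution is not reproduced. The function name is hypothetical.

```python
import math


def grover_probabilities(num_qubits: int, marked: int):
    """Simulate Grover search for one marked index; return final probabilities."""
    n = 2 ** num_qubits
    amps = [1.0 / math.sqrt(n)] * n  # uniform superposition
    iterations = int(math.floor(math.pi / 4 * math.sqrt(n)))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(amps) / n
        amps = [2.0 * mean - a for a in amps]
    return [a * a for a in amps]


if __name__ == "__main__":
    probs = grover_probabilities(num_qubits=3, marked=5)
    print(max(range(len(probs)), key=probs.__getitem__))
```

The state vector needs memory proportional to 2^n for n qubits, whereas an explicit unitary matrix would need memory proportional to 4^n, which is why matrix-free updates matter as the qubit count grows.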

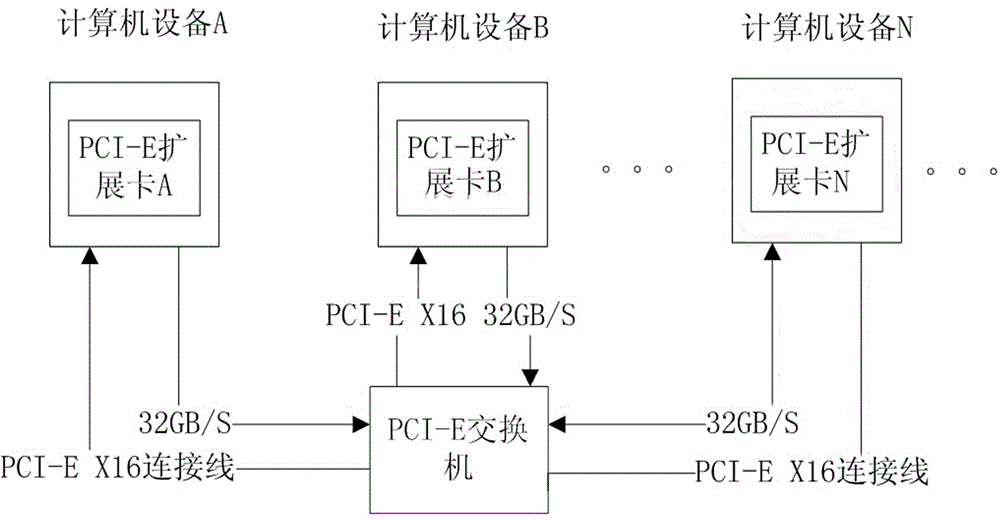

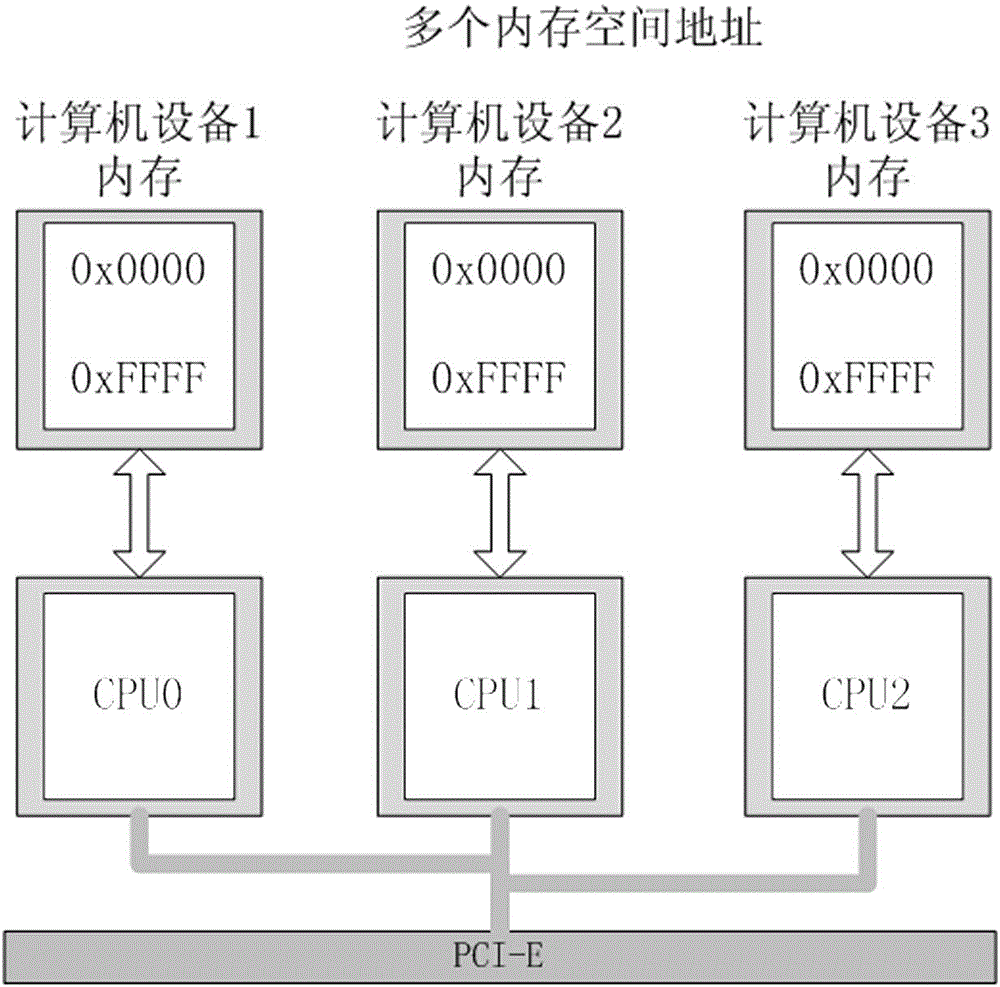

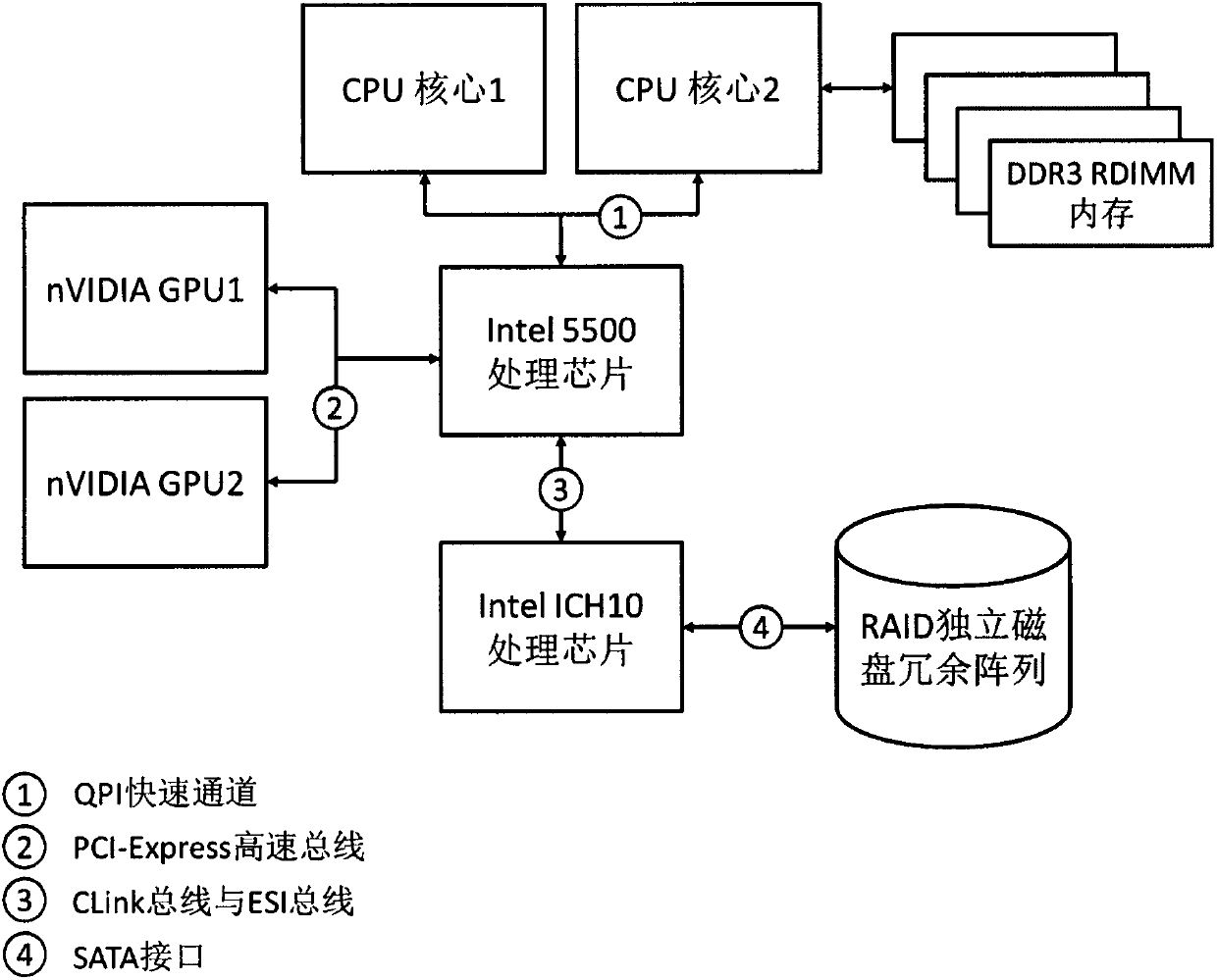

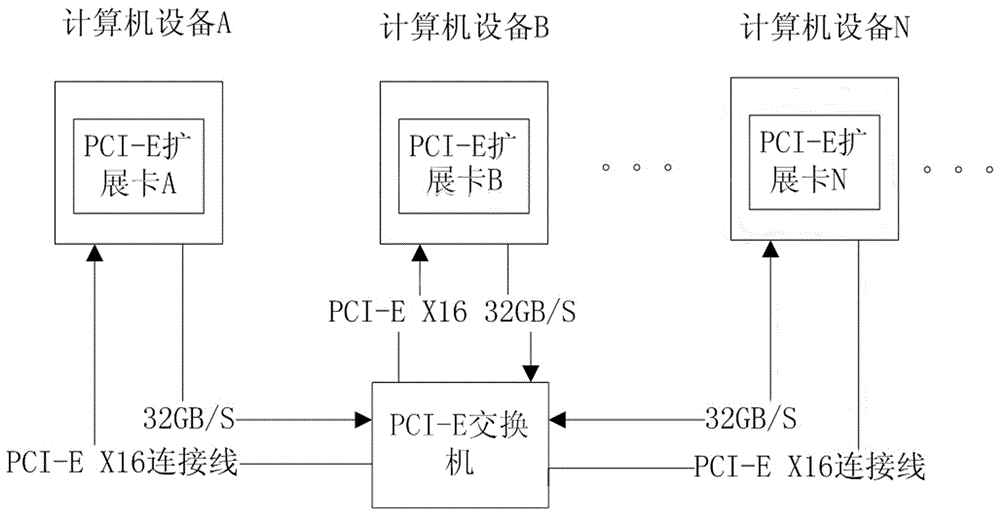

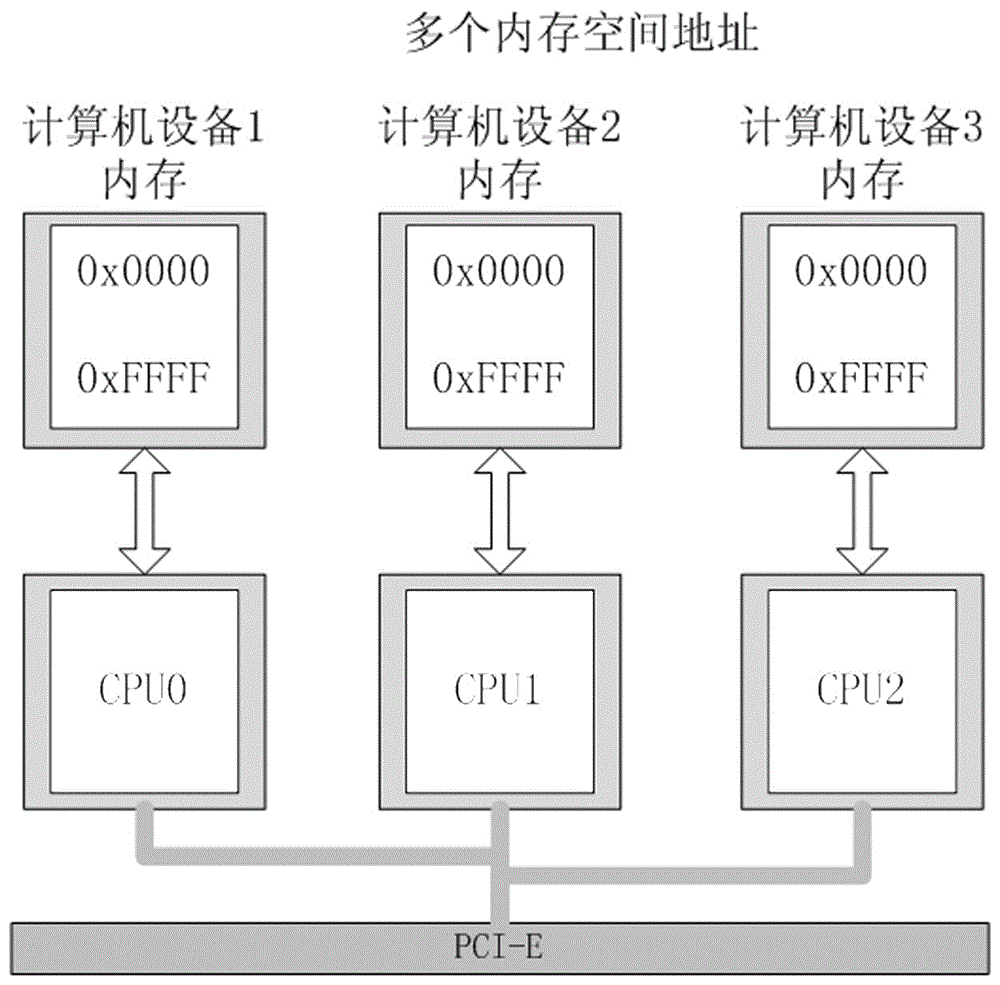

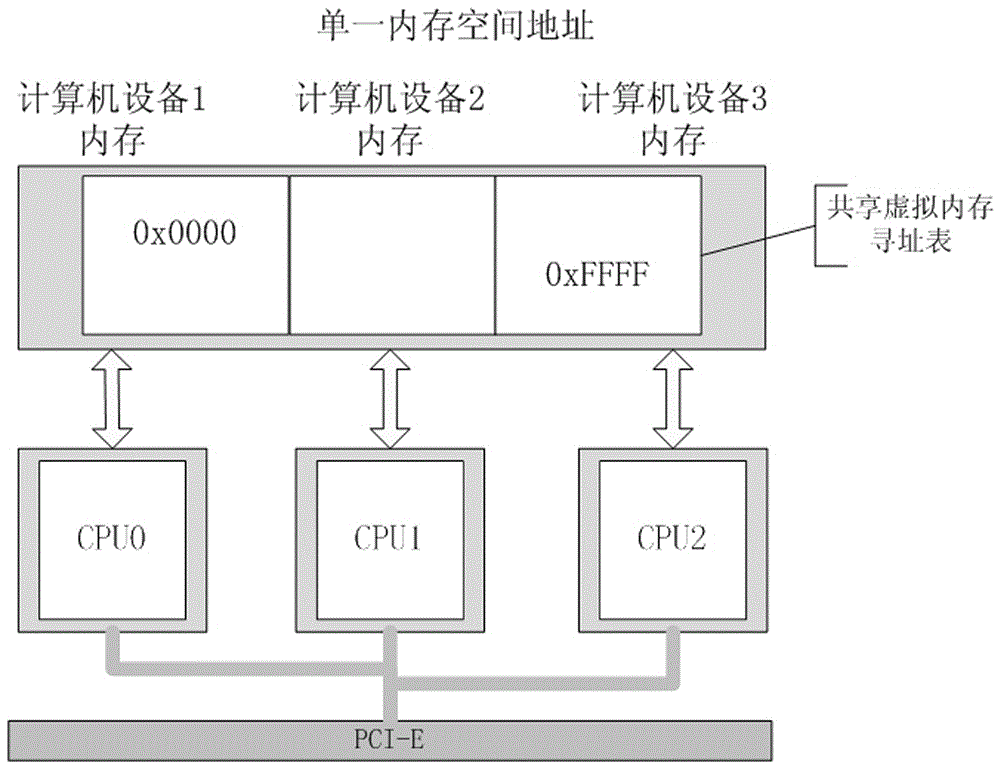

High-performance parallel computing method based on external PCI-E connection

Active · CN104156332A · Improve Parallel Computing Efficiency · Easy to take out · Electric digital data processing · Virtual memory · Computation process

The invention provides a high-performance parallel computing method based on external PCI-E connection. The method includes the steps of (a) connecting all pieces of computer equipment through a PCI-E bus, (b) running a parallel computing program, (c) constructing a virtual memory addressing table, (d) sending virtual memory information, (e) receiving the virtual memory information, (f) judging correctness of the received information, (g) judging whether a virtual memory address is constructed, (h) allocating computing tasks, (i) executing the computing tasks, and (j) obtaining results and providing the results for a user. The data transmission speed between processors is increased in the parallel computing process through the parallel computing method; the parallel computing method is improved, so that the copy data size of a system CPU is reduced, efficiency of parallel computing operation is greatly improved, multiple parallel computing resources are effectively connected for communication, and data are transmitted at a high speed.

Owner:韩林

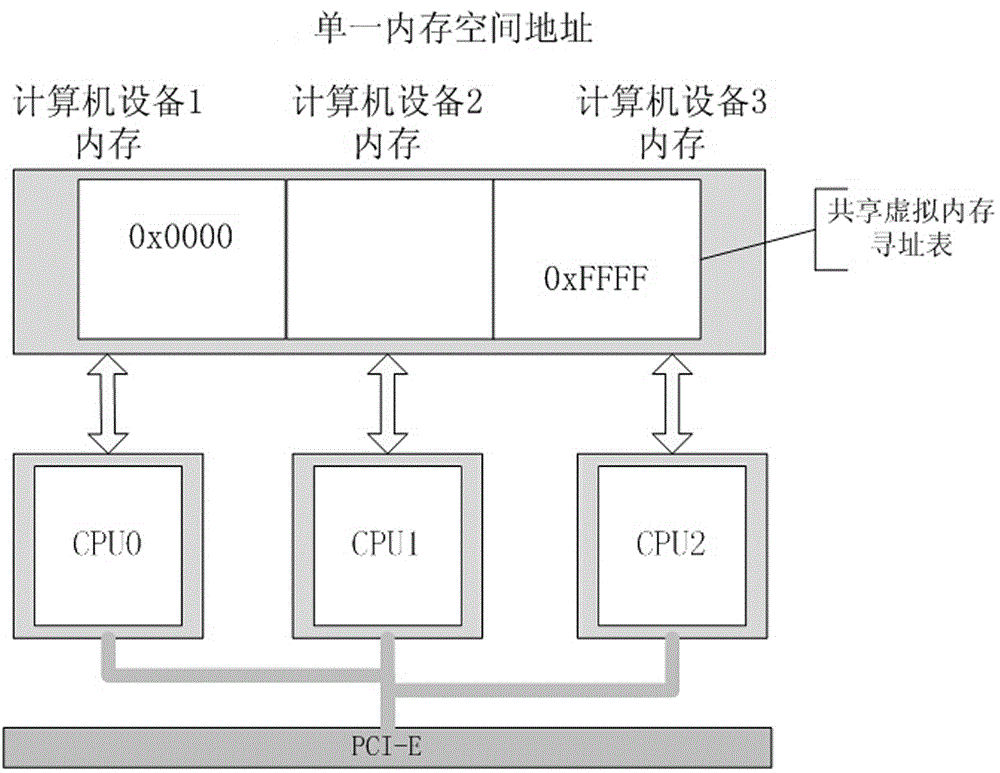

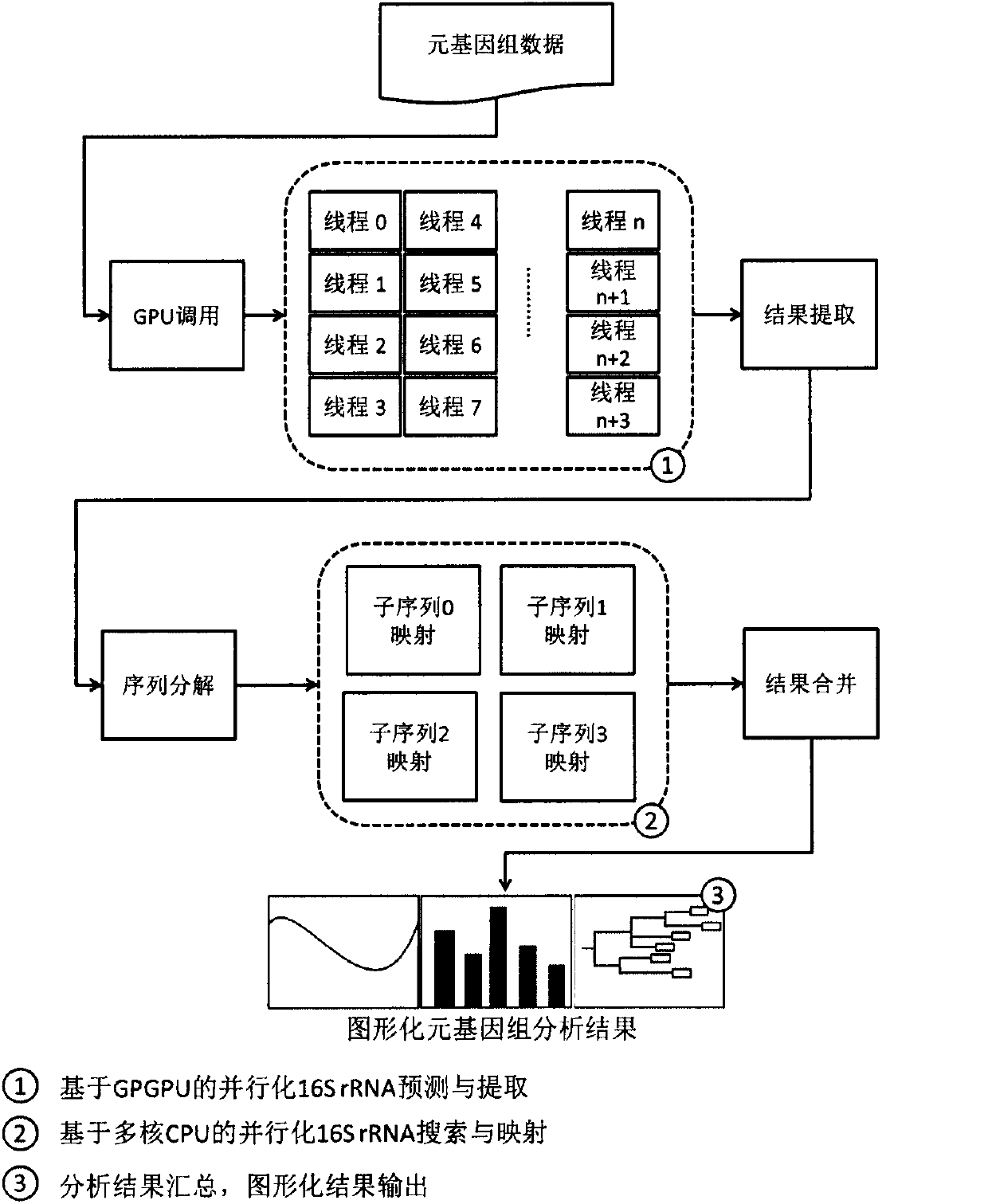

High-performance metagenomic data analysis system on basis of GPGPU (General Purpose Graphics Processing Units) and multi-core CPU (Central Processing Unit) hardware

Inactive · CN103310125A · Solve Computational Bottlenecks · Speed up · Special data processing applications · Data analysis system · Genomic data

The invention relates to a high-performance metagenomic data analysis system on the basis of GPGPU (General Purpose Graphics Processing Units) and multi-core CPU (Central Processing Unit) hardware. Aiming at the condition that a conventional computer cannot meet the requirement on analysis of mass metagenomic data and according to the characteristic that the mass data in metagenomic data processing can be processed in parallel, the invention discloses a calculation analysis system which is on the basis of the GPGPU and the multi-core CPU hardware and combines software and hardware methods. A main module of the metagenomic calculation and analysis system on the basis of a GPGPU super computer comprises a GPGPU and multi-core CPU computer and a uniform software platform. The high-performance metagenomic data analysis system on the basis of the GPGPU and multi-core CPU hardware has the characteristics of (1) a high-performance parallel calculation and storage hardware system and (2) the high-performance uniform configurable software platform. Metagenomic sequence processing on the basis of the GPGPU hardware can obviously improve analysis efficiency of the metagenomic data.

Owner:QINGDAO INST OF BIOENERGY & BIOPROCESS TECH CHINESE ACADEMY OF SCI

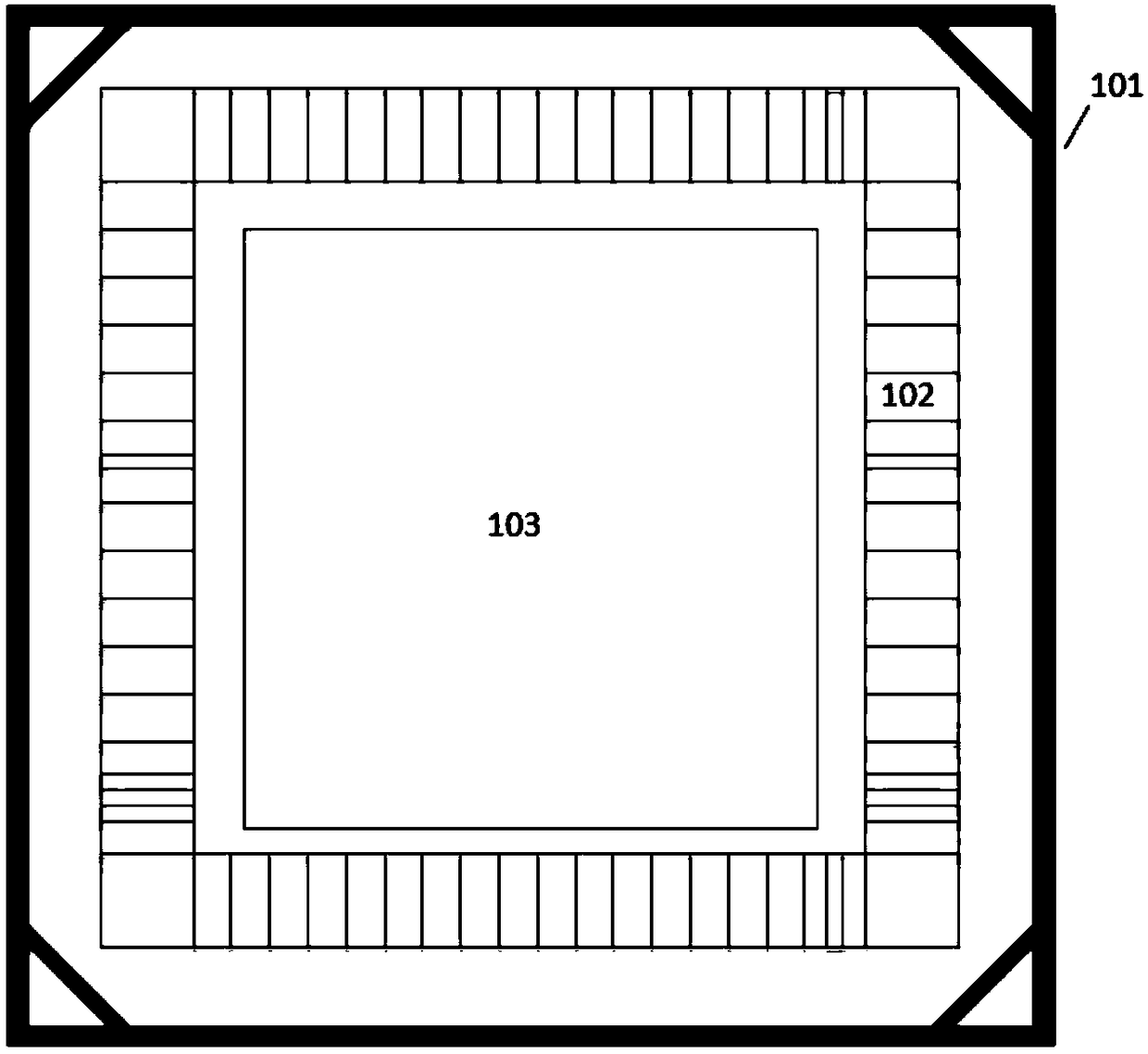

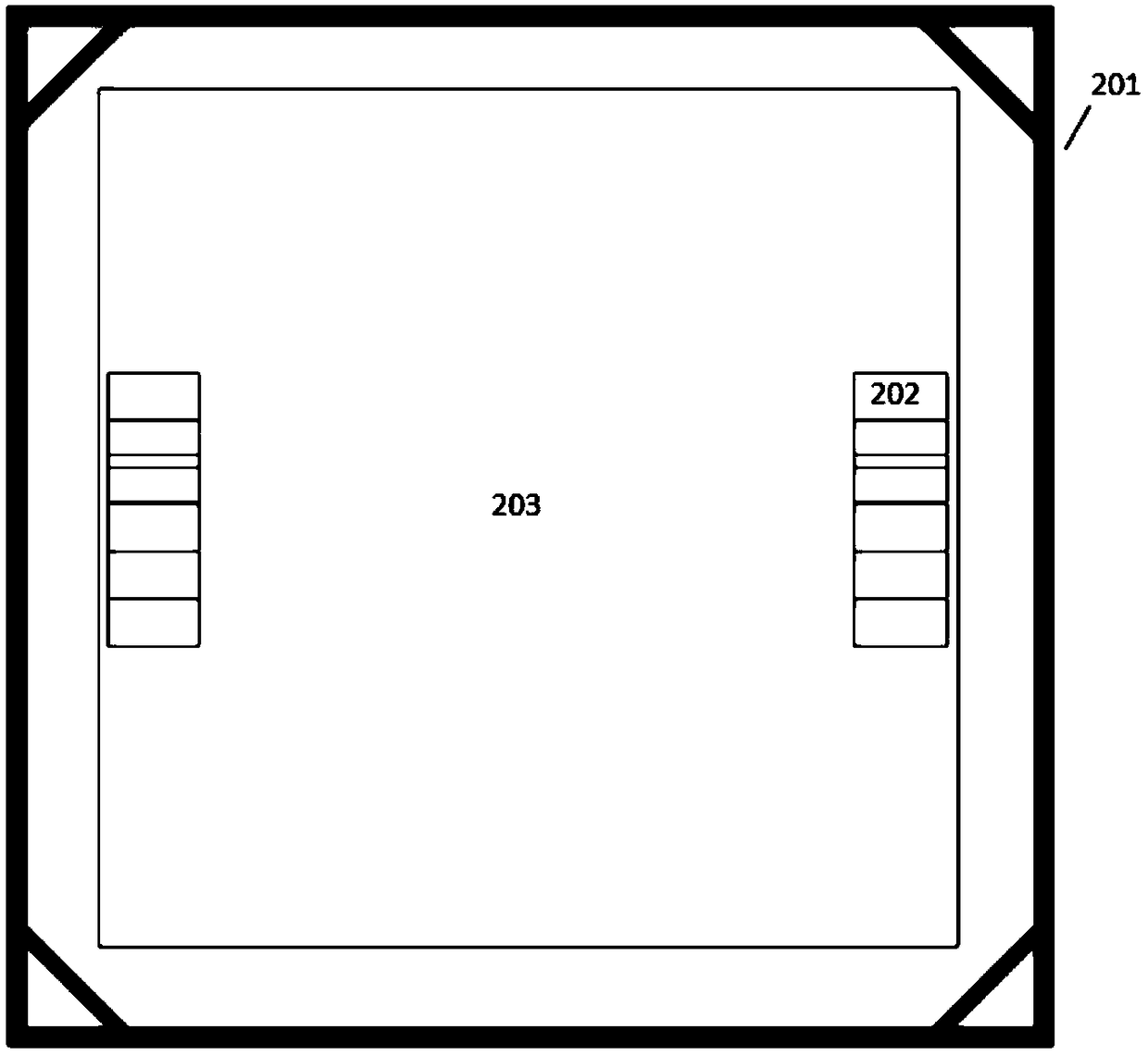

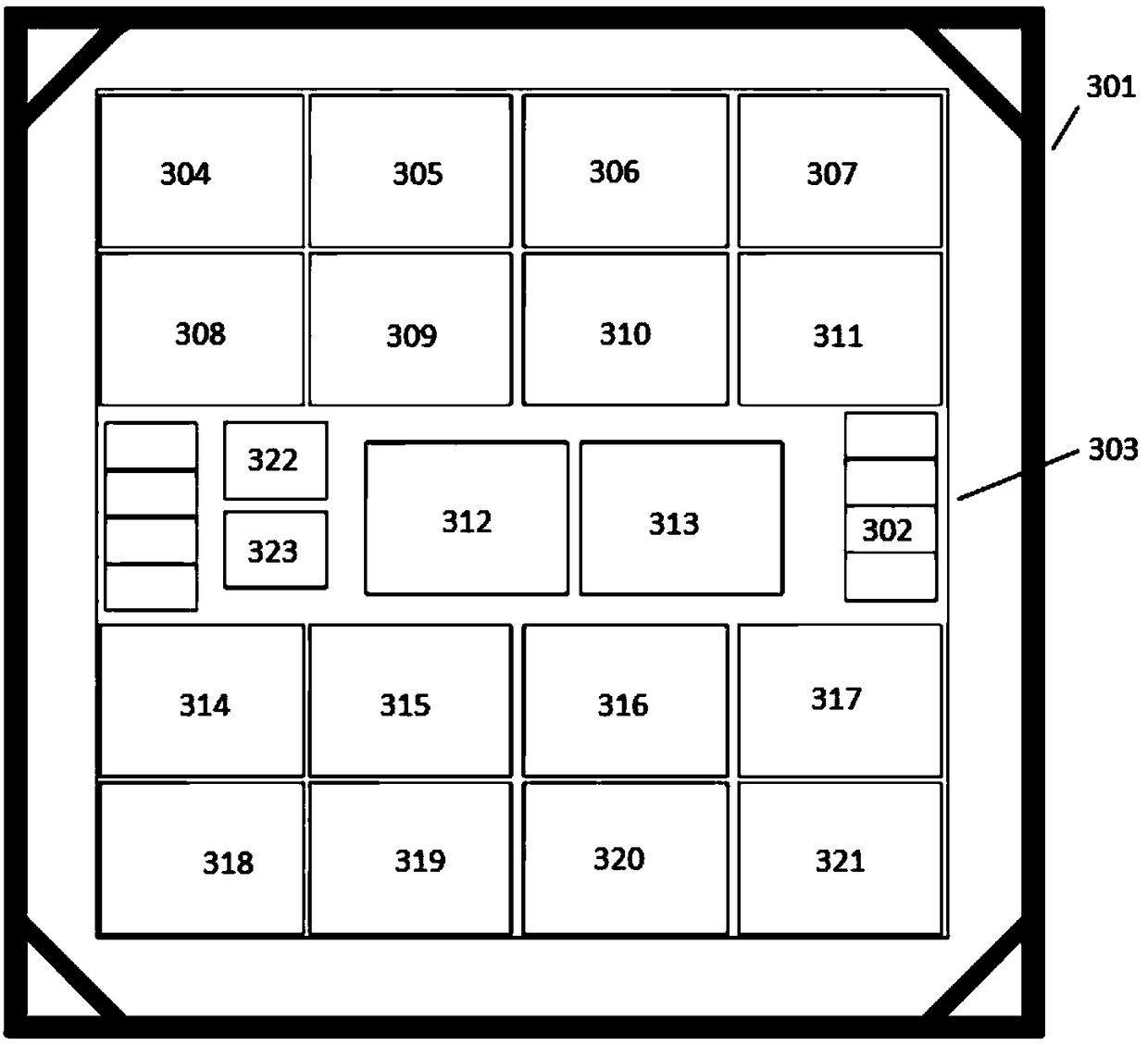

Layout structure for saving chip area and a preparation method thereof

Inactive · CN109344507A · Reduce area waste · Low cost · Special data processing applications · Computer module · Engineering

The invention belongs to the technical field of chip layout design, and in particular relates to a layout structure for saving chip area and a preparation method thereof. It mainly concerns a chip layout structure, for a digital currency mining machine chip or a high-performance parallel computing chip, in which the design rules are used judiciously and the module shape is changed to minimize the wasted area at the chip corners in the AIO flow. The right-angle corner of a core module is cut off, the core module with the cut corner is placed at the chip corner, and the cut edge is placed facing the chip seal-ring corner. The area of the core module with the cut corner is kept constant by increasing its width or length. The chip area is thereby reduced.

Owner:广芯微电子(广州)股份有限公司

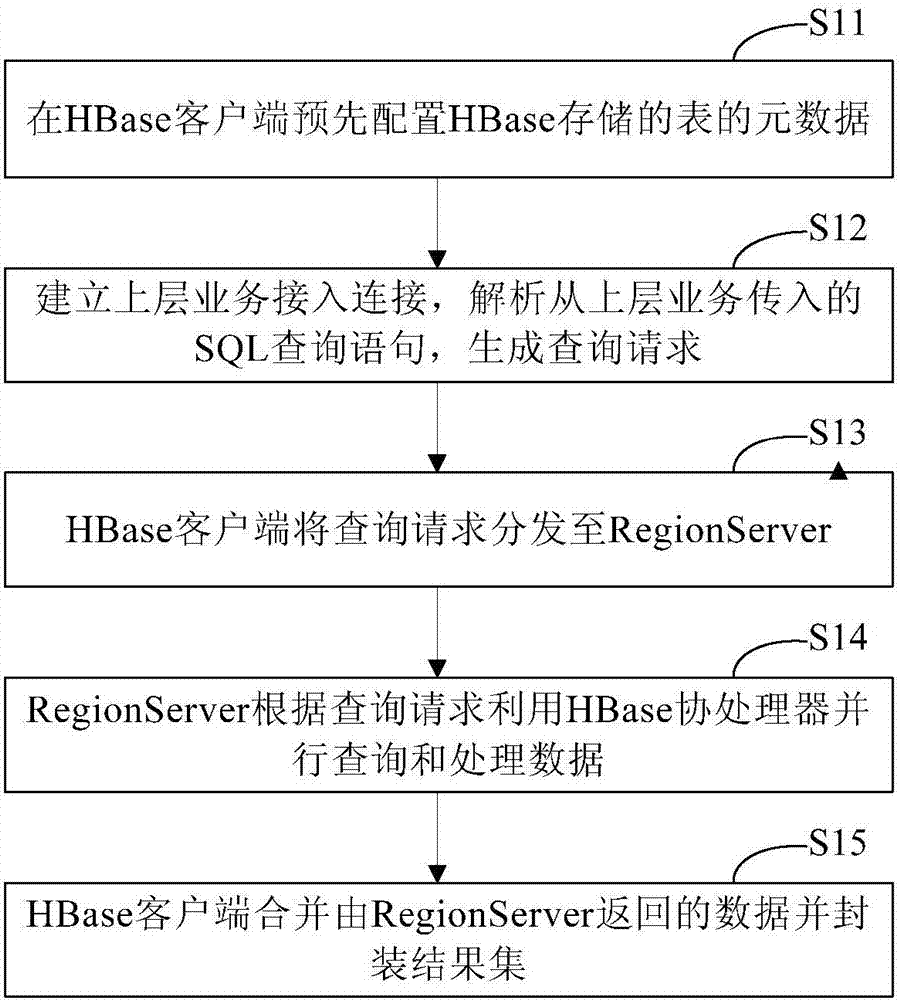

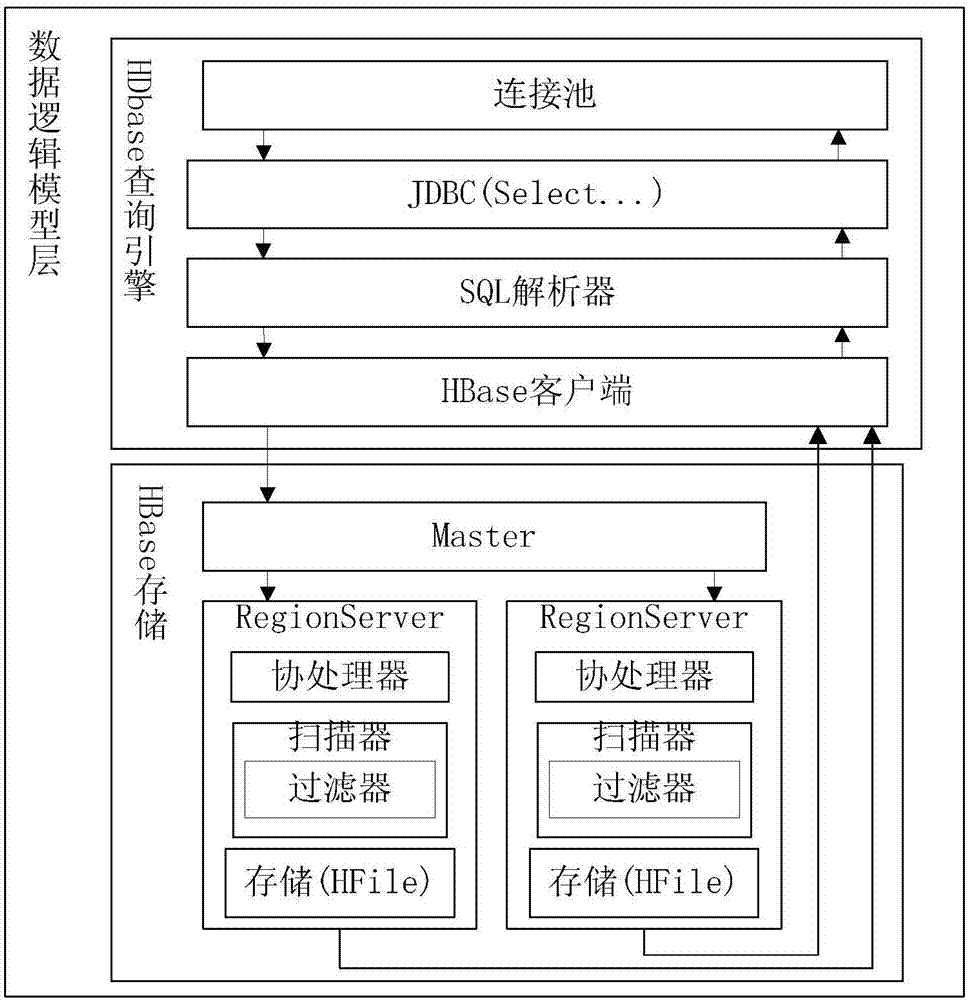

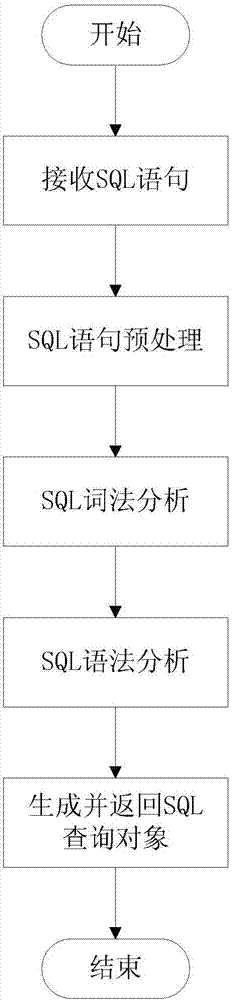

Method and system of SQL-like queries based on HBase coprocessor

Active · CN107368477A · Improve filtration efficiency · Improve computing efficiency · Special data processing applications · Real-time data · Coprocessor

The invention provides a method and a system of SQL-like queries based on an HBase coprocessor. The method and the system can standardize and unify query conditions and returned results, and can execute high-performance parallel computing of real-time data while intrusion into an upper-layer service is avoided. The method includes: preconfiguring the metadata of tables, which are stored by HBase, on an HBase client; establishing an upper-layer service access connection, parsing SQL query statements imported from the upper-layer service, and generating query requests; distributing the query requests to RegionServers by the HBase client; utilizing the HBase coprocessor by the RegionServers to carry out the parallel queries and processing on the data according to the query requests; and merging data, which is returned by the RegionServers, by the HBase client, and encapsulating a result set.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

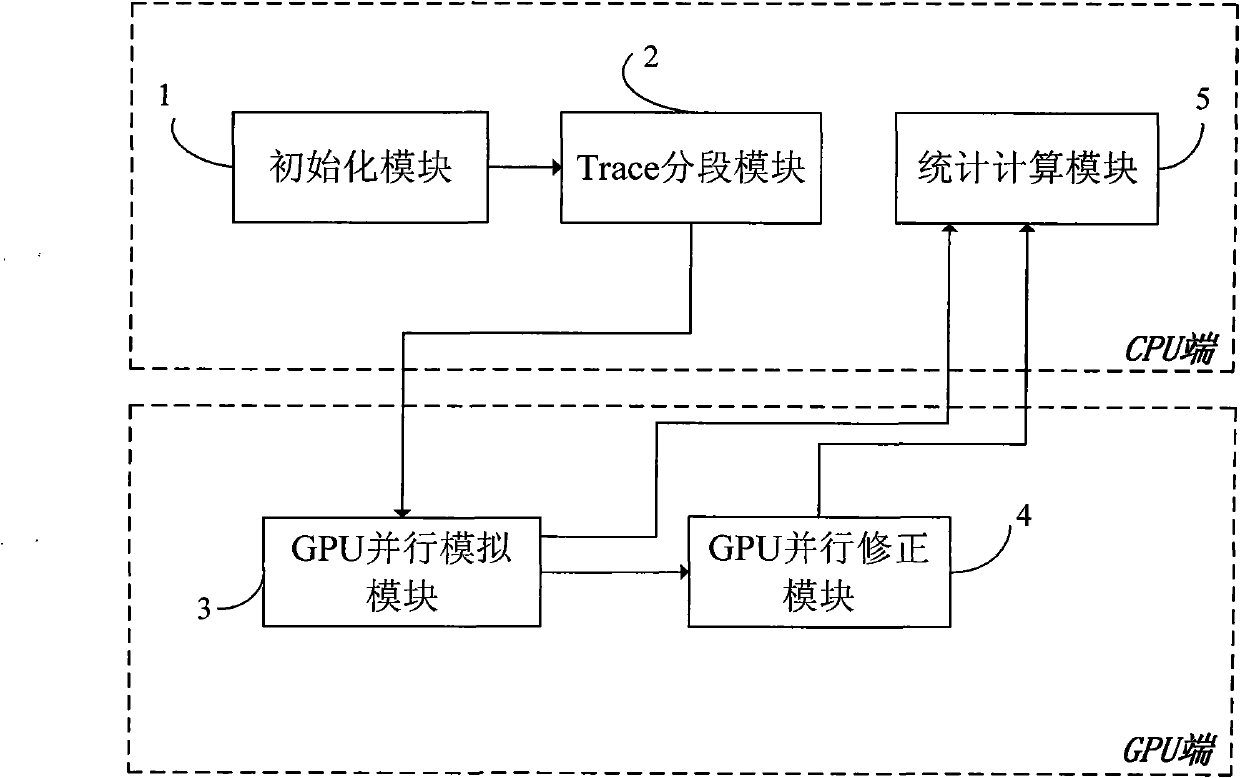

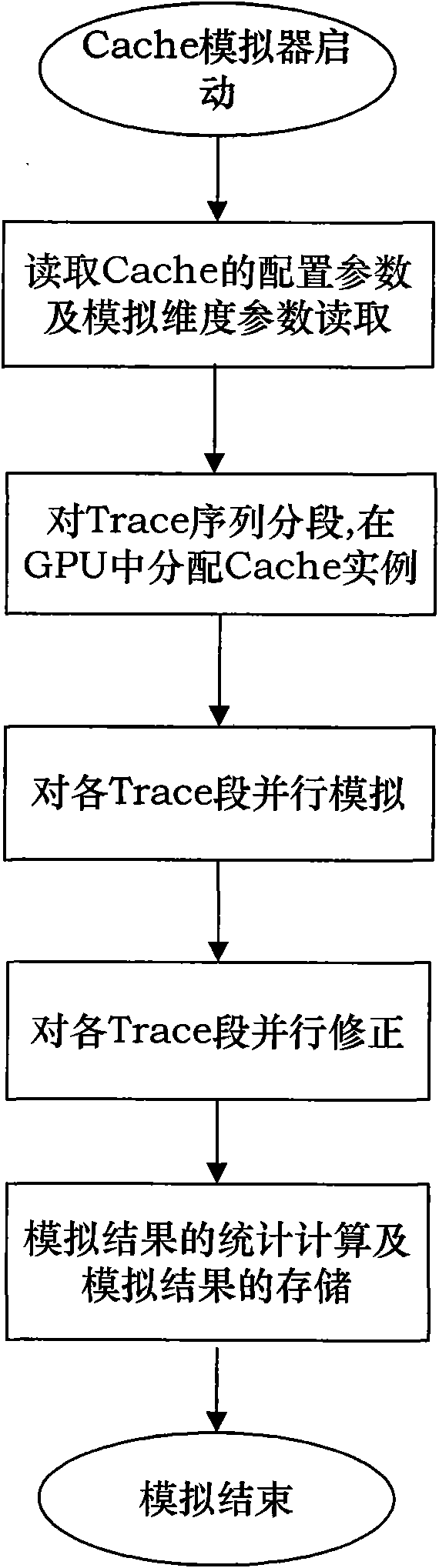

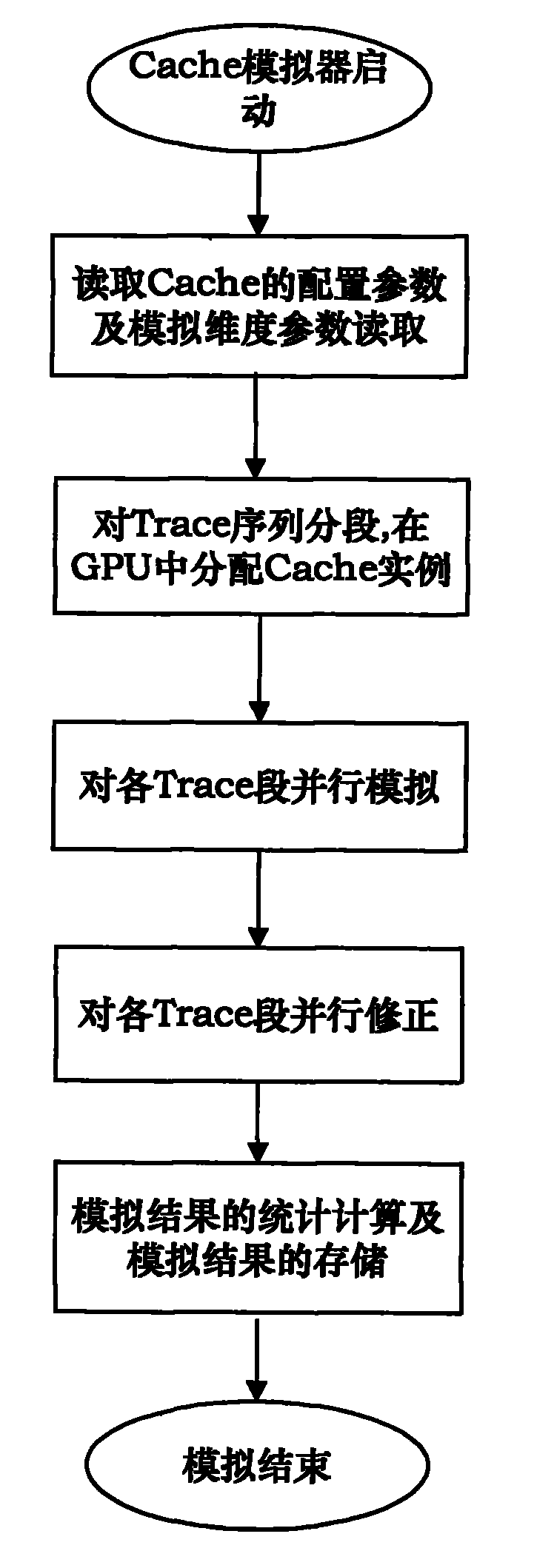

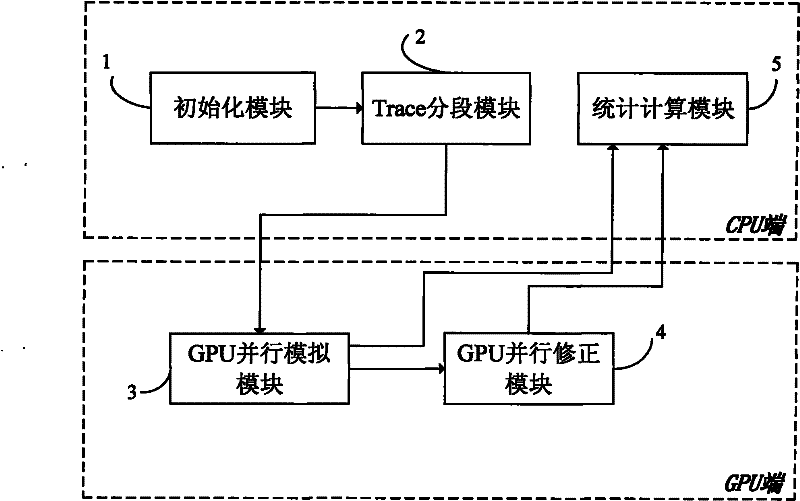

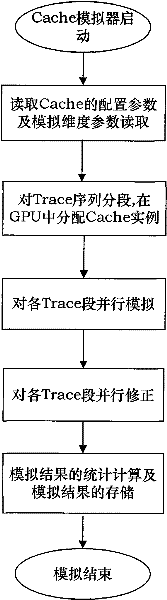

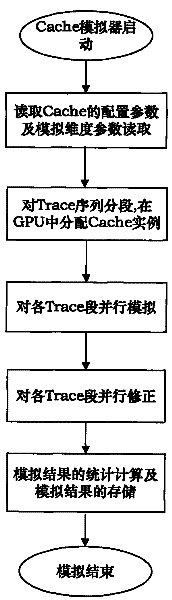

Cache simulator based on GPU and time parallel speedup simulating method thereof

Inactive · CN101770391A · Improve Simulation Efficiency · Improve performance · Memory addressing/allocation/relocation · Image memory management · Granularity · Parallel simulation

The invention provides a Cache simulator based on a GPU and a time parallel speedup simulating method thereof. The Cache simulator comprises an initializing module, a Trace segmentation module, a GPU parallel simulating module, a GPU parallel modifying module and a statistical calculation module. The cache simulator is based on the GPU with strong high-performance parallel computing capacity, has multi granularity and multi configuration abilities and parallel simulation characteristic. The invention adopts a time parallel speedup method to segment longer Trace arrays, realizes the parallel simulation of multi Trace segments on the GPU, and utilizes the GPU parallel modifying module to modify the error caused by the modifying and simulating processes in the simulating process. The invention has the advantages that the Cache simulating efficiency is improved, computing resources are better utilized and simultaneously higher cost-performance ratio is achieved.

Owner:BEIHANG UNIV

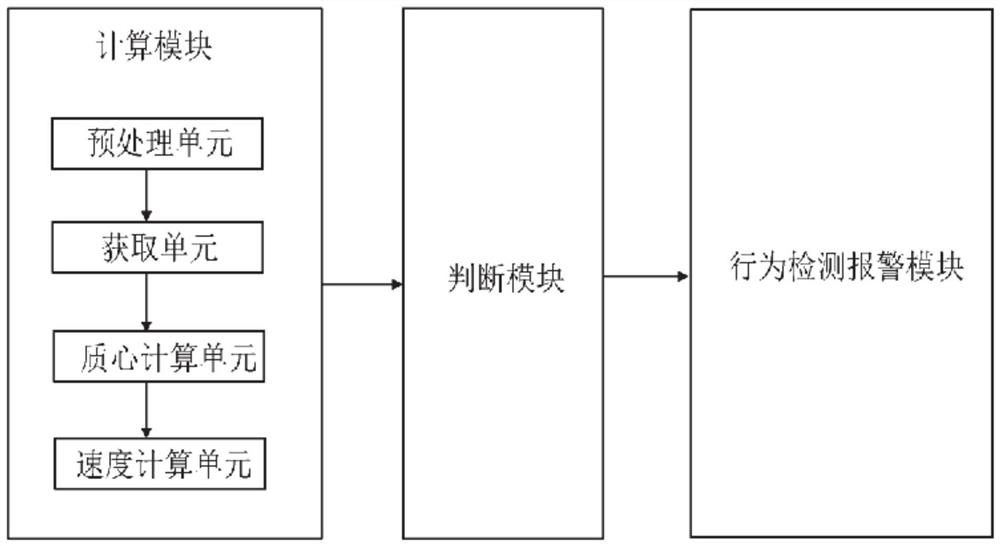

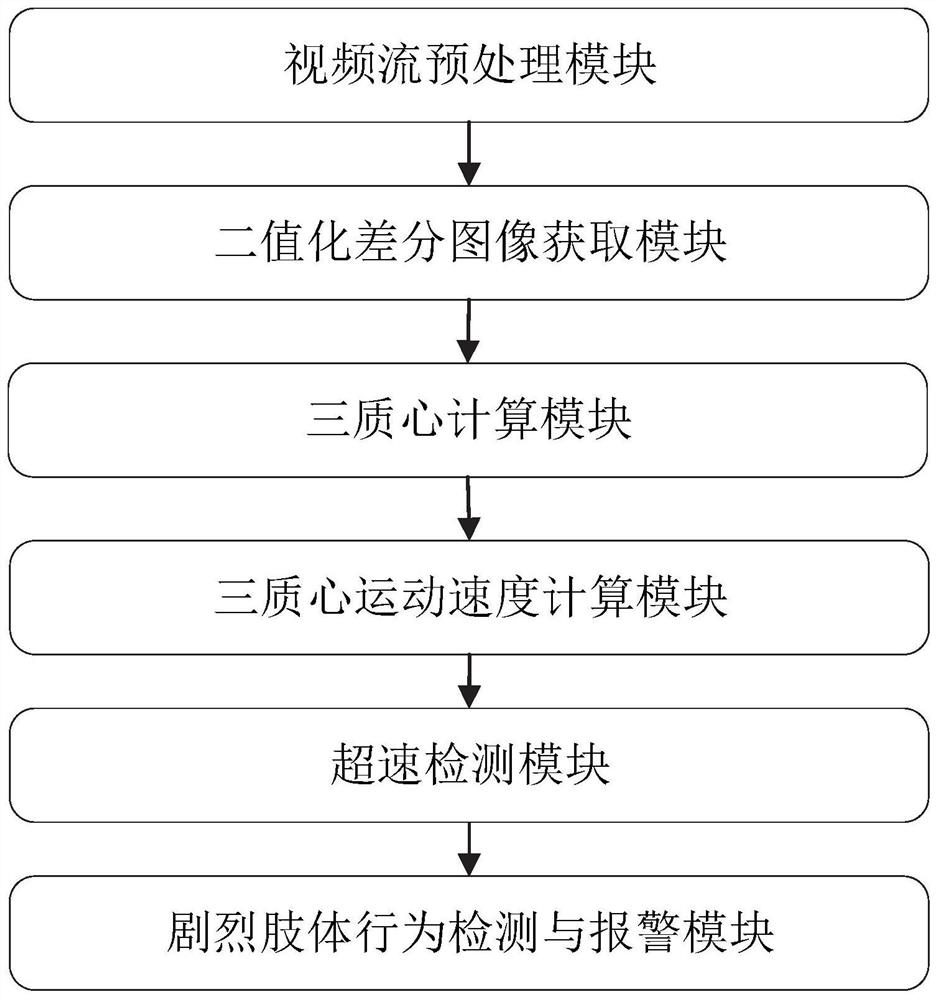

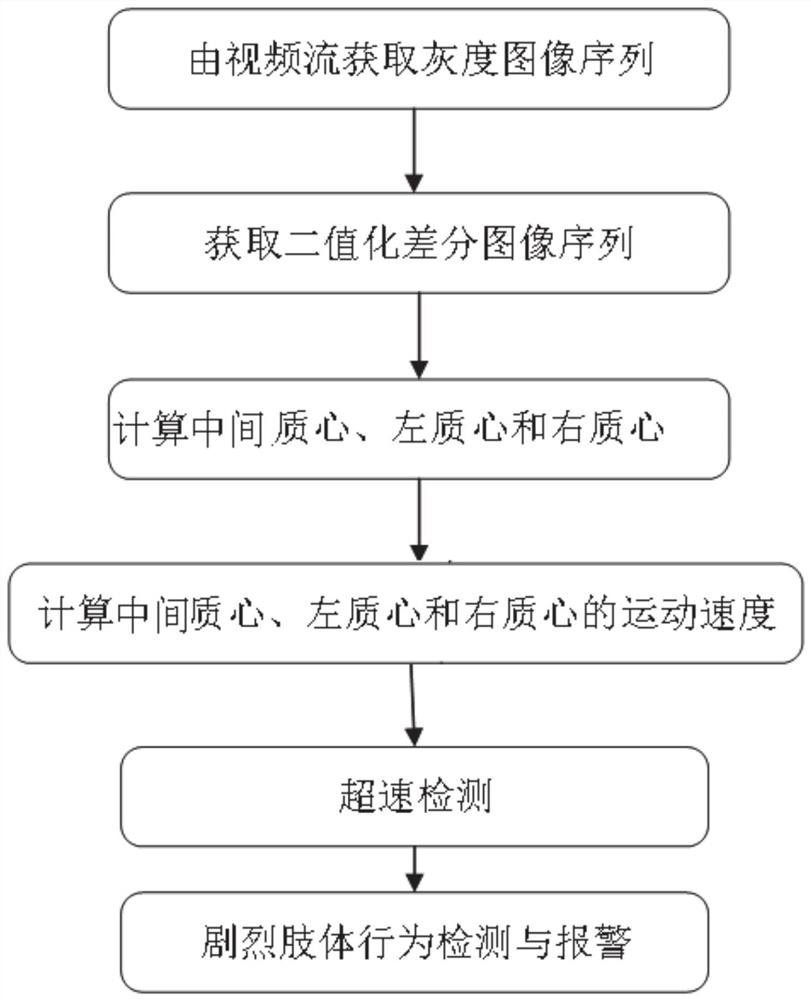

Behavior detection and identification method and system

Active · CN113297926A · Strong complementarity · Simple algorithm · Image analysis · Character and pattern recognition · Concurrent computation · Visual technology

The invention provides a behavior detection and identification method and system, and belongs to the technical field of computer vision. The method comprises the steps: obtaining a binary difference image sequence of a behavior video stream, calculating a middle mass center movement speed, a left mass center movement speed and a right mass center movement speed of each frame of image, comparing with a preset speed threshold value; if one or more of the three mass center movement speeds are greater than a speed threshold value, judging that the behavior in the image is overspeed, otherwise, judging that the behavior is not overspeed; counting the behavior overspeed times in unit time, if the overspeed times are larger than a preset overspeed times threshold value, judging that a violent limb behavior is detected, and giving an alarm, and otherwise, clearing away the recorded overspeed times, and starting a new statistical period. According to the invention, the movement speeds of a middle mass center, a left mass center and a right mass center are calculated, the algorithm is simple, the three mass centers have good complementarity, severe limb behaviors can be detected, missing detection and false detection are prevented, the detection reliability is improved, high-performance parallel computing equipment does not need to be arranged, and the cost performance is high.

Owner:SHANDONG UNIV
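The decision rule in the abstract (flag a frame as overspeed when any of the three centroid speeds exceeds a threshold, count overspeed frames per period, then alarm and reset) can be sketched as follows. The function names, the fixed-length period, and the input layout (one tuple of three speeds per frame) are assumptions made for illustration. How the patent computes the three centroids from the binary difference image is only approximated by the simple `centroid` helper.

```python
def centroid(mask):
    """Centroid (row, col) of the nonzero pixels of a binary image, or None."""
    points = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not points:
        return None
    n = len(points)
    return (sum(r for r, _ in points) / n, sum(c for _, c in points) / n)


def is_overspeed(speeds, speed_threshold):
    """A frame is overspeed if any of its centroid speeds exceeds the threshold."""
    return any(s > speed_threshold for s in speeds)


def detect_violent_behavior(frame_speeds, speed_threshold, count_threshold, period):
    """Return one alarm flag per statistical period of `period` frames.

    frame_speeds holds (middle, left, right) centroid speeds per frame.
    The overspeed count starts from zero in every new period.
    """
    alarms = []
    for start in range(0, len(frame_speeds), period):
        window = frame_speeds[start:start + period]
        count = sum(is_overspeed(s, speed_threshold) for s in window)
        alarms.append(count > count_threshold)
    return alarms


if __name__ == "__main__":
    speeds = [(1, 1, 1), (5, 1, 1), (1, 6, 1), (1, 1, 7),
              (1, 1, 1), (1, 1, 1), (9, 1, 1), (1, 1, 1)]
    print(detect_violent_behavior(speeds, speed_threshold=4,
                                  count_threshold=2, period=4))
```

The per-frame work is a few sums and comparisons, which is consistent with the abstract's claim that no high-performance parallel computing equipment is needed.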

Non-volatile memory for checkpoint storage

Inactive · US8788879B2 · Increase speed · Improve efficiency · Error detection/correction · Supporting system · Application software

A system, method and computer program product for supporting system initiated checkpoints in high performance parallel computing systems and storing of checkpoint data to a non-volatile memory storage device. The system and method generates selective control signals to perform checkpointing of system related data in presence of messaging activity associated with a user application running at the node. The checkpointing is initiated by the system such that checkpoint data of a plurality of network nodes may be obtained even in the presence of user applications running on highly parallel computers that include ongoing user messaging activity. In one embodiment, the non-volatile memory is a pluggable flash memory card.

Owner:INT BUSINESS MASCH CORP

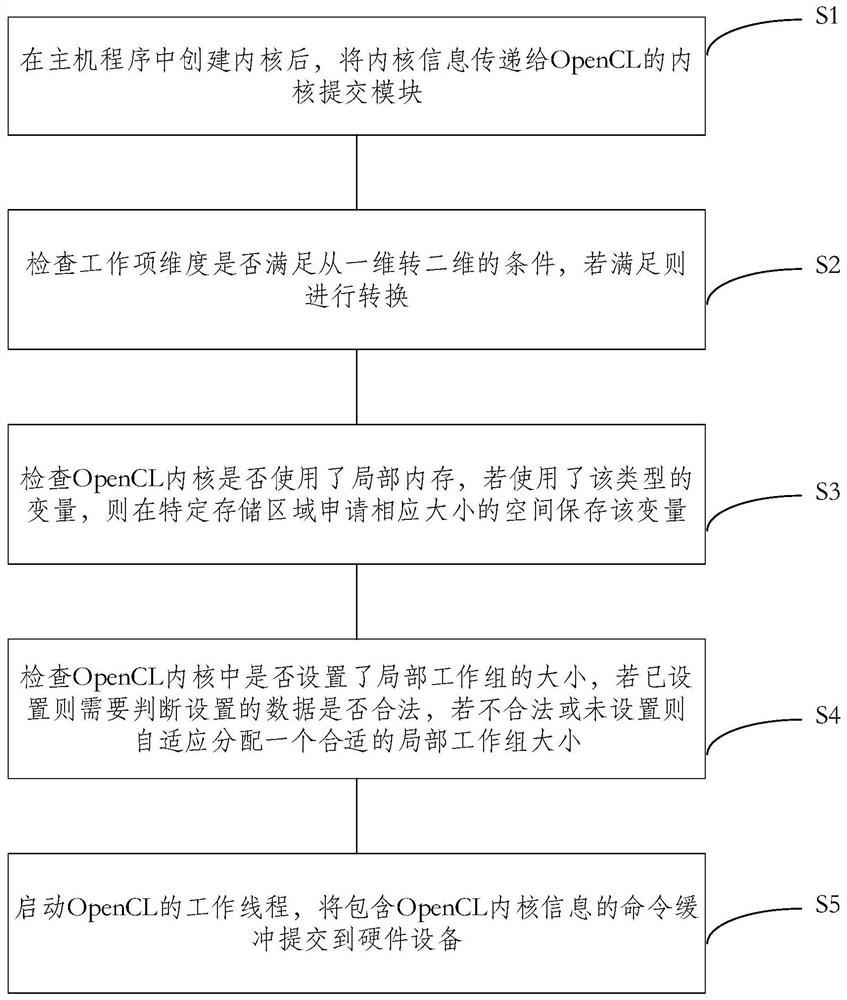

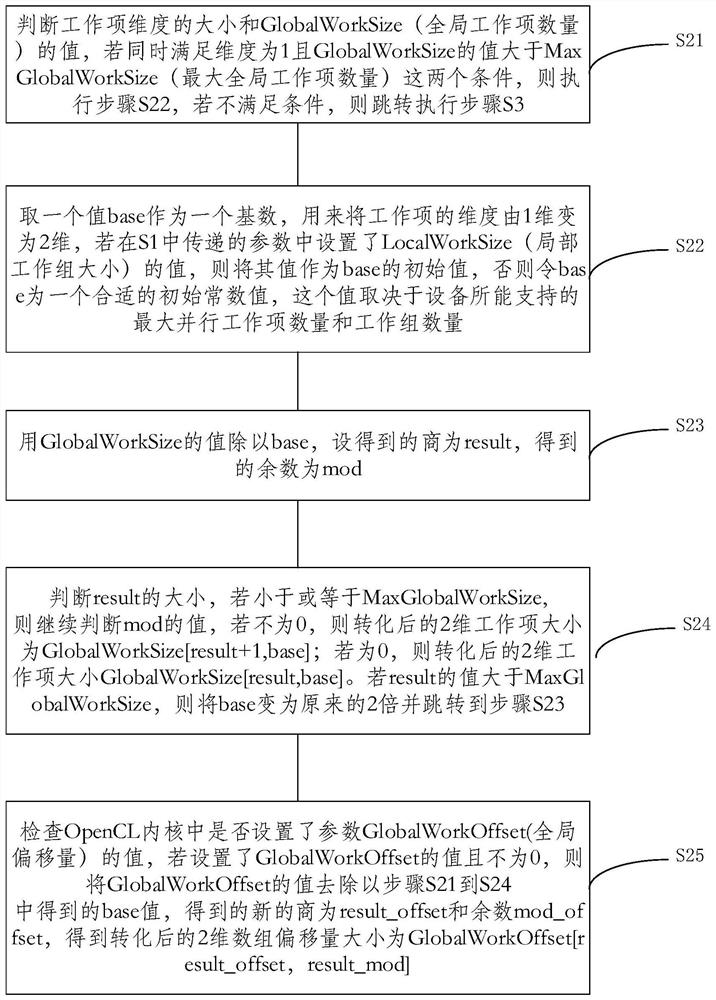

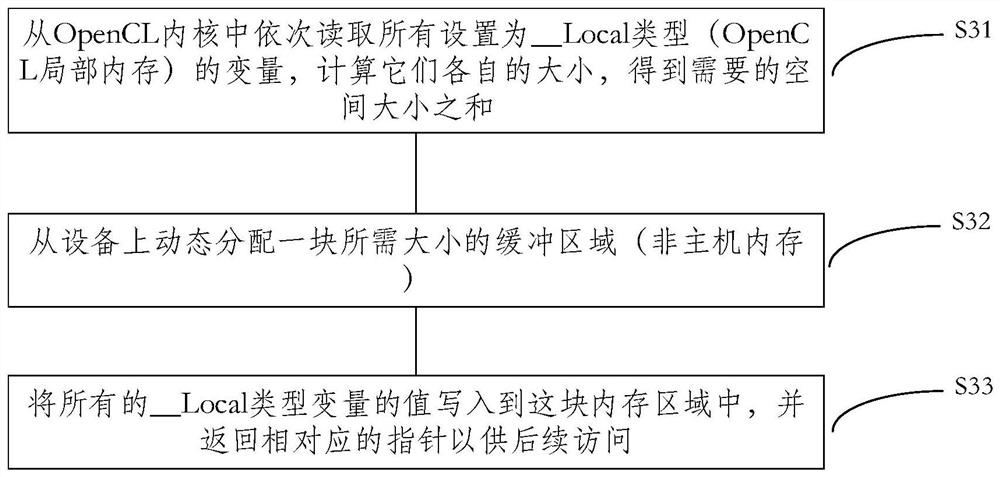

Method and device for submitting OpenCL kernel

Pending · CN112433847A · Improve the efficiency of parallel computing · Resource allocation · Concurrent computation · Engineering

Owner:中国船舶集团有限公司 +1

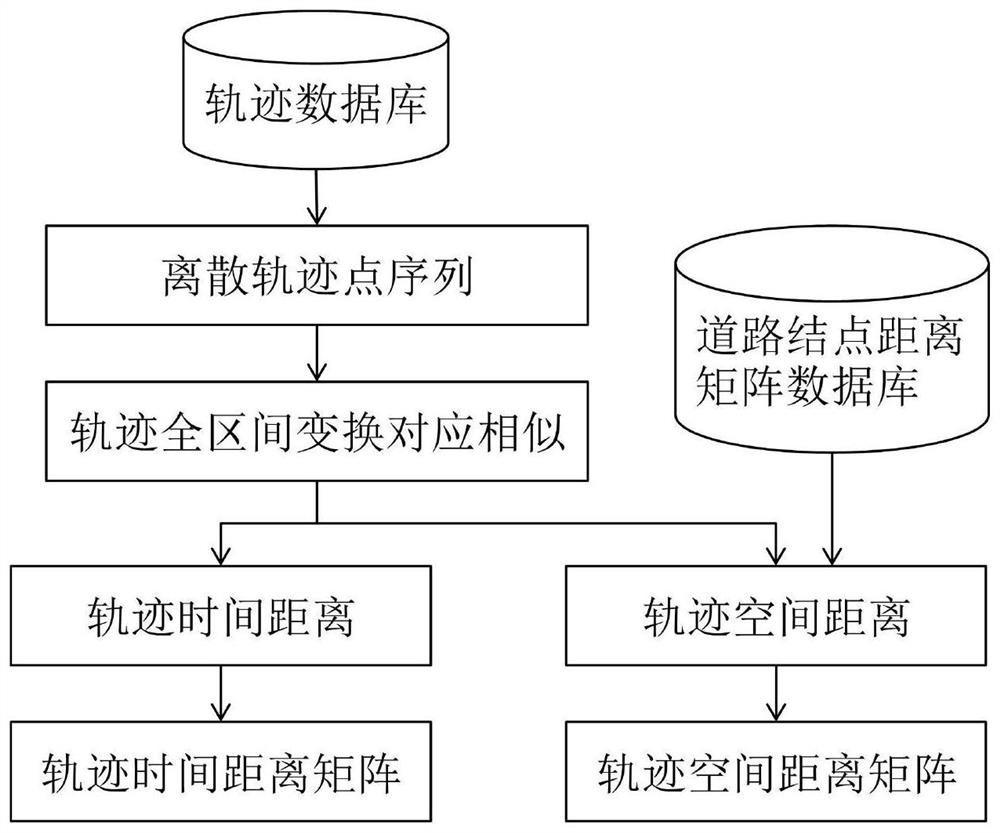

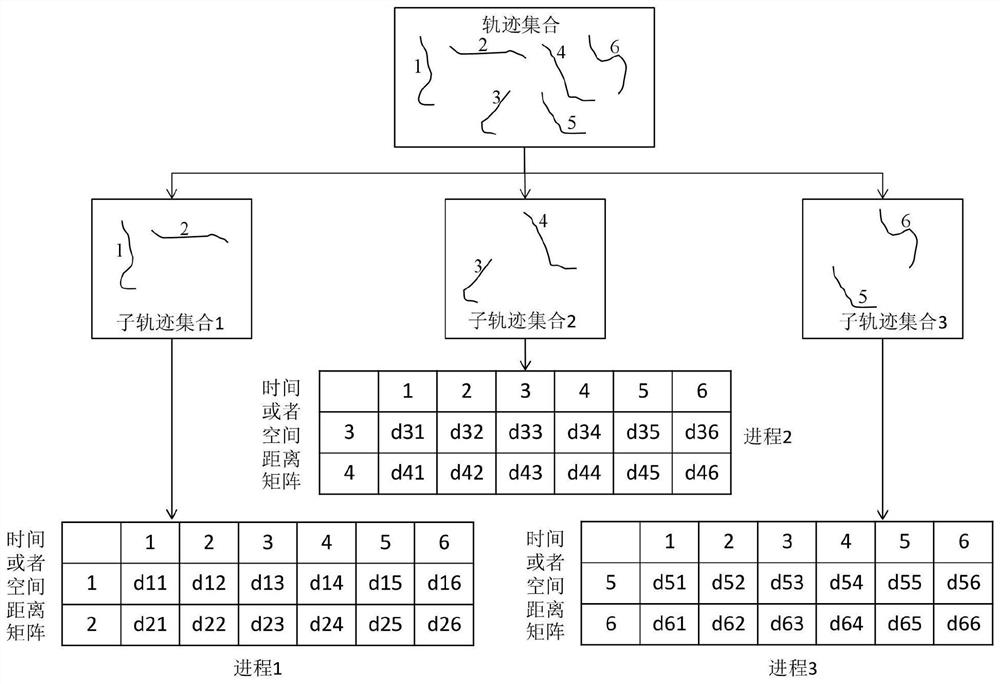

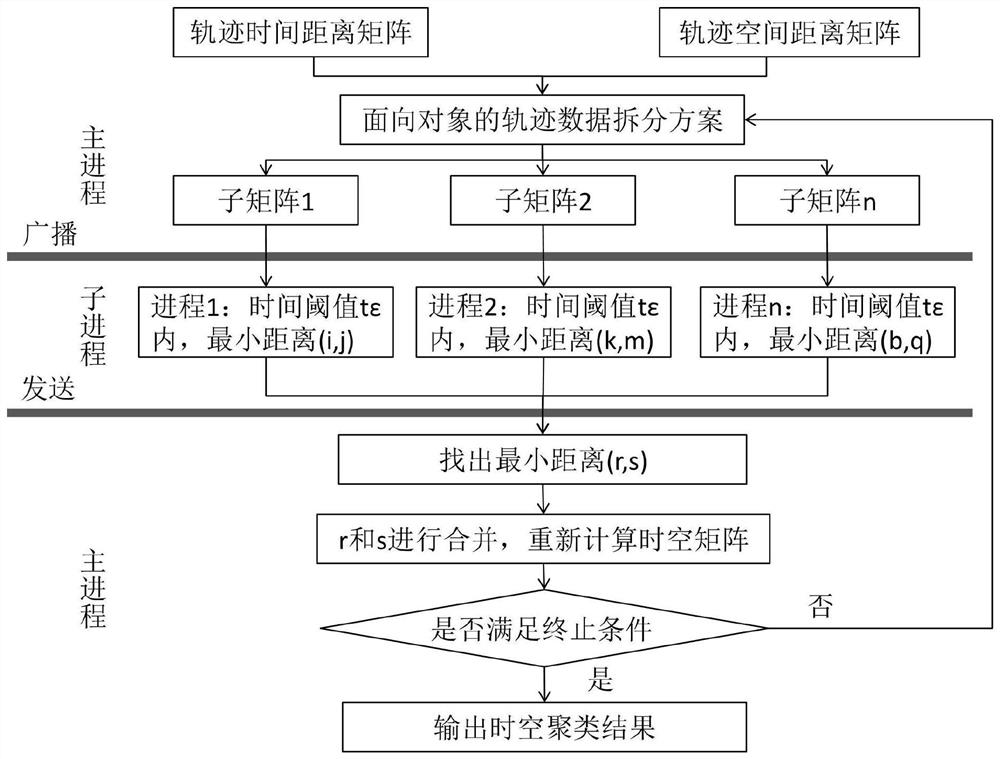

Vehicle trajectory clustering method based on parallel ST-AGNES algorithm

Active · CN112307286A · Increase computing speed · Easy to handle · Internal combustion piston engines · Geographical information databases · Concurrent computation · Algorithm

The invention discloses a vehicle trajectory clustering method based on a parallel ST-AGNES algorithm, in which multiple processing units work together and execute a task at the same time to achieve higher performance. Parallel computing is an effective means of improving the computing speed and processing capacity of a computer system. The invention therefore provides the vehicle trajectory clustering method based on the parallel ST-AGNES algorithm from a new perspective, and its time and space similarity measure for vehicle trajectories, based on dynamic time warping, better handles differing trajectory sampling rates and inconsistent time scales. The method comprises the steps of: constructing a time and space similarity matrix of the vehicle trajectories; studying a splitting scheme for irregular trajectory data and a communication mechanism for parallel computing, wherein one of the trajectory data splitting schemes is an object-oriented trajectory data splitting scheme; and carrying out clustering connection based on the minimum distance, and designing a parallel ST-AGNES algorithm oriented to vehicle trajectory data.

Owner:SOUTHWEST UNIVERSITY
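Dynamic time warping, which the abstract uses as the basis of its similarity measure, can be sketched in a few lines. This version compares only the spatial coordinates of two trajectories with Euclidean point distance; the patent's combined time and space measure and its parallel clustering are not reproduced. The function name and the `(x, y)` point layout are assumptions.

```python
import math


def dtw_distance(a, b):
    """Dynamic time warping distance between two sequences of (x, y) points."""
    inf = float("inf")
    # prev[j] is the best cost of aligning the points seen so far in a
    # with the first j points of b.
    prev = [0.0] + [inf] * len(b)
    for p in a:
        curr = [inf]
        for j, q in enumerate(b, start=1):
            cost = math.dist(p, q)
            # Extend the cheapest of: skip in a, skip in b, or advance both.
            curr.append(cost + min(prev[j], curr[j - 1], prev[j - 1]))
        prev = curr
    return prev[-1]


if __name__ == "__main__":
    sparse = [(0, 0), (1, 0), (2, 0)]
    dense = [(0, 0), (0, 0), (1, 0), (2, 0)]  # same path, extra sample
    print(dtw_distance(sparse, dense))
```

Because one point may be matched to several points of the other trajectory, two recordings of the same route at different sampling rates still come out as close, which is the property the abstract highlights. A pairwise distance matrix built this way is what an agglomerative method such as AGNES would then merge on.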

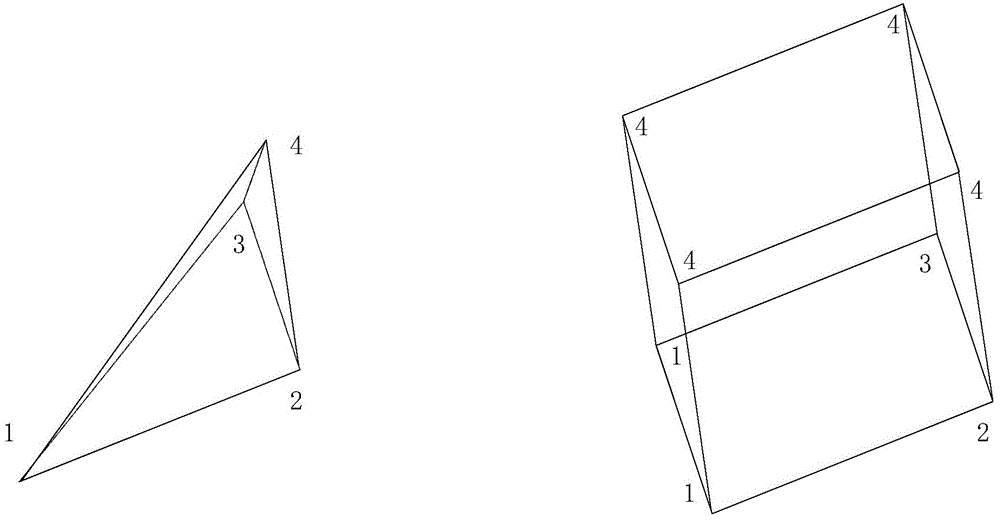

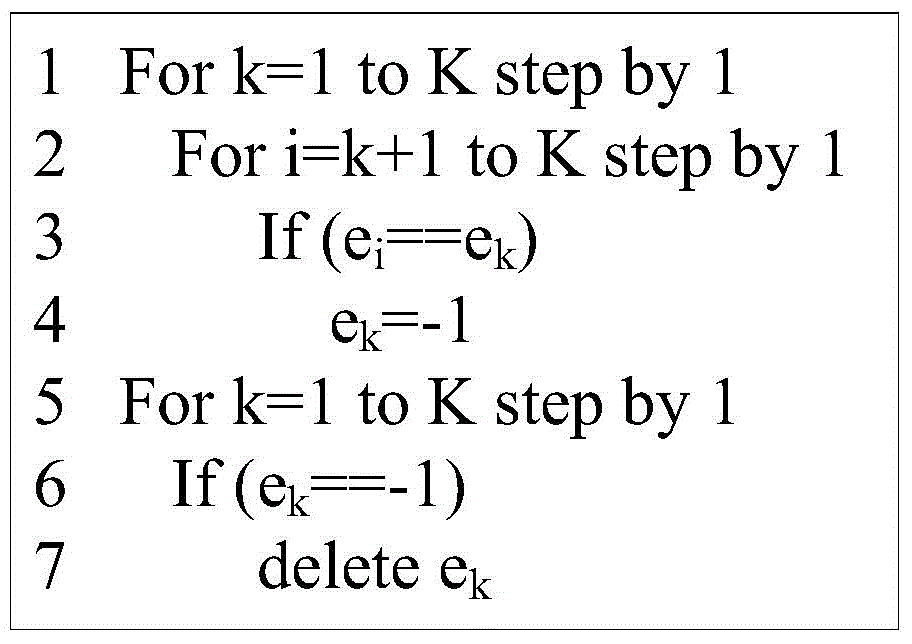

Low-computation complexity construction method of pneumatic flow field grid undirected graph

Inactive · CN105631067A · High speed · Good lifting effect · Special data processing applications · Computation complexity · Undirected graph

The invention belongs to the technical field of high-performance parallel computation, and specifically relates to a low-computation complexity construction method of a pneumatic flow field grid undirected graph. The method mainly comprises the following three steps: deleting the redundant grid point data in each grid unit; generating a unit set of the grid points, wherein the unit set S of the grid points comprises M subsets (S1, S2 to SM), each grid point corresponds to one subset and each subset comprises the numbers of all the grid units which comprise the grid point; and comparing the grid units in each subset according to the unit set of the grid points. According to the method, the generation speed of the pneumatic flow field grid undirected graph can be effectively improved; and the larger the grid quantity is, the more obvious the enhancing effect is.

Owner:BEIJING LINJIN SPACE AIRCRAFT SYST ENG INST +1
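The three steps in the abstract can be sketched as below: remove repeated point ids in each cell, build for every grid point the set of cells that contain it, and then compare cells only within each of those sets. The adjacency criterion used here (two cells are linked when they share at least `min_shared` grid points) is an assumption for the example, as are the function name and input format. The abstract does not state the exact comparison rule.

```python
from collections import defaultdict
from itertools import combinations


def build_cell_graph(cells, min_shared=2):
    """Return the undirected edges (a, b), a < b, between adjacent grid cells.

    cells[i] is the list of grid point ids of cell i (duplicates allowed).
    """
    # Step 1: delete redundant grid point data in each cell.
    unique = [set(points) for points in cells]

    # Step 2: for each grid point, collect the cells that contain it.
    point_to_cells = defaultdict(list)
    for cell_id, points in enumerate(unique):
        for p in points:
            point_to_cells[p].append(cell_id)

    # Step 3: compare cells only inside each per-point subset, which avoids
    # testing every pair of cells in the whole grid.
    edges = set()
    for cell_ids in point_to_cells.values():
        for a, b in combinations(cell_ids, 2):
            if len(unique[a] & unique[b]) >= min_shared:
                edges.add((a, b))
    return edges


if __name__ == "__main__":
    # Three quadrilateral cells; the third repeats point 3.
    cells = [[0, 1, 4, 3], [1, 2, 5, 4], [3, 4, 7, 6, 3]]
    print(sorted(build_cell_graph(cells)))
```

Each grid point belongs to only a handful of cells, so the number of comparisons grows roughly linearly with the number of cells rather than quadratically, which matches the abstract's remark that the benefit increases with grid size.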

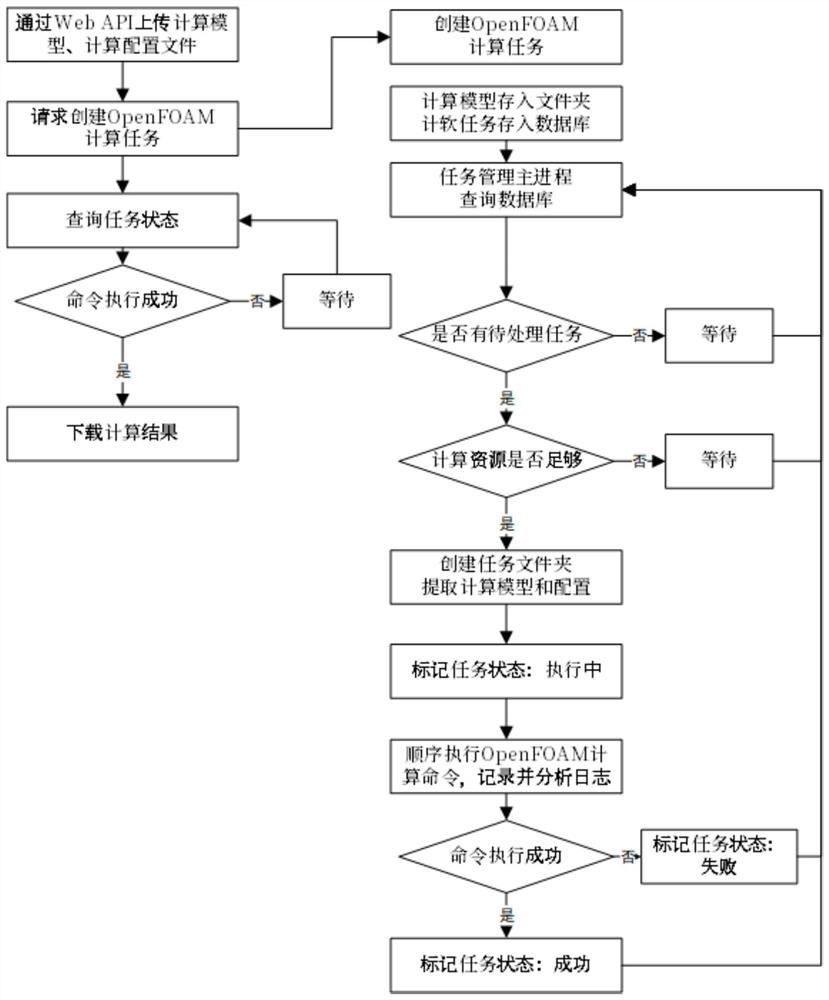

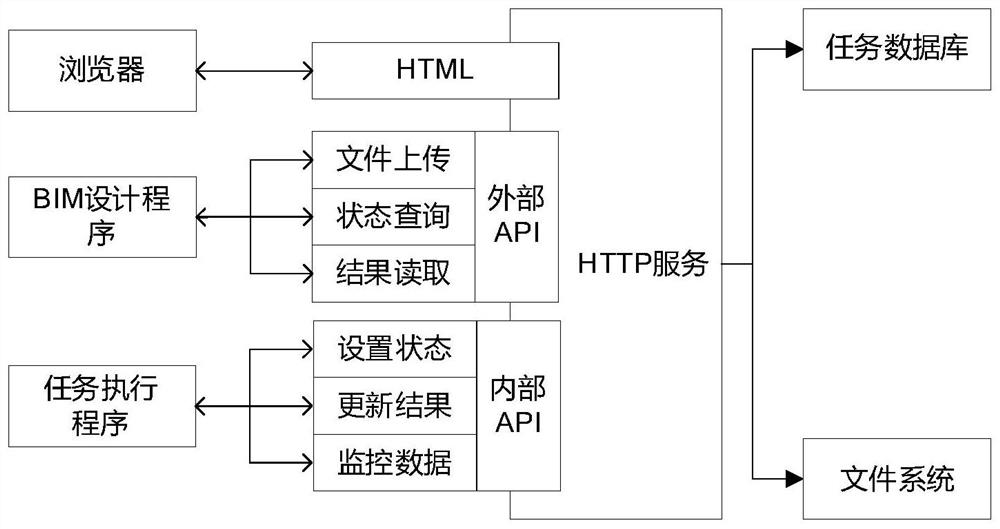

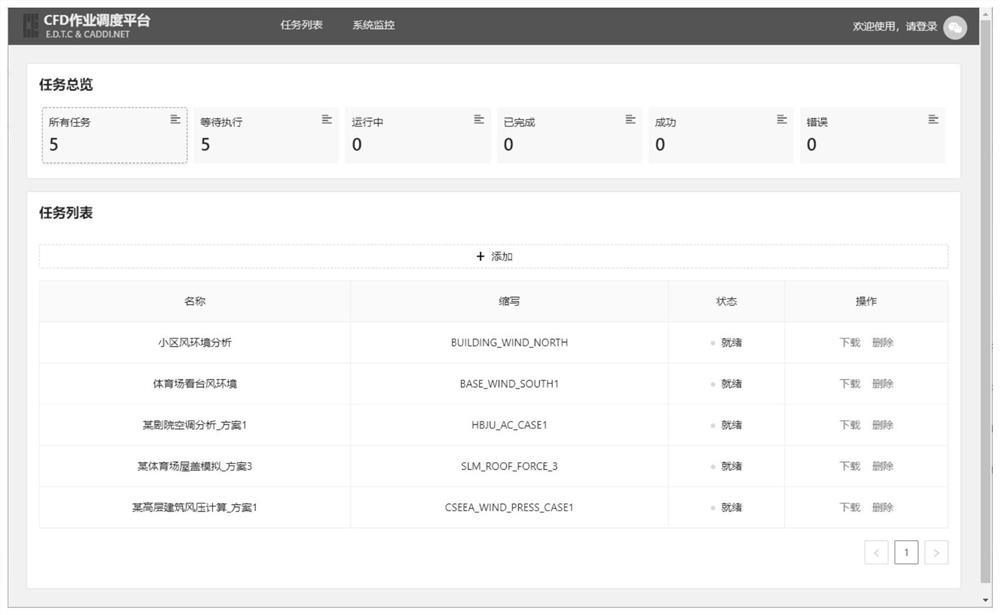

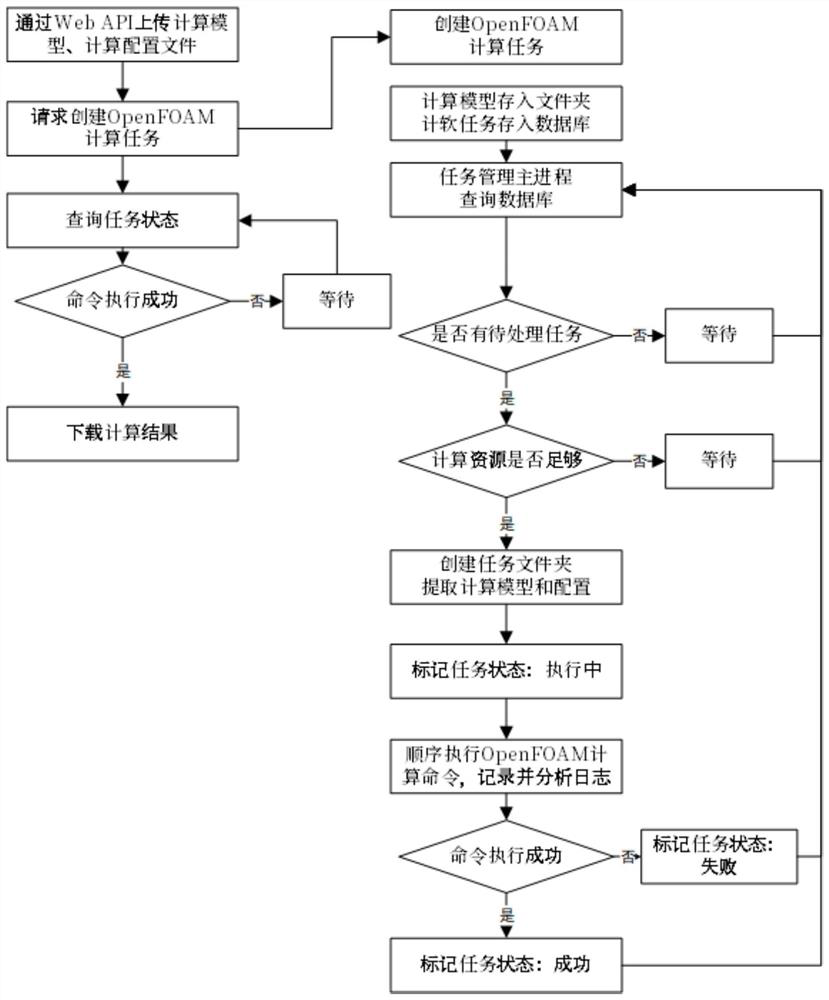

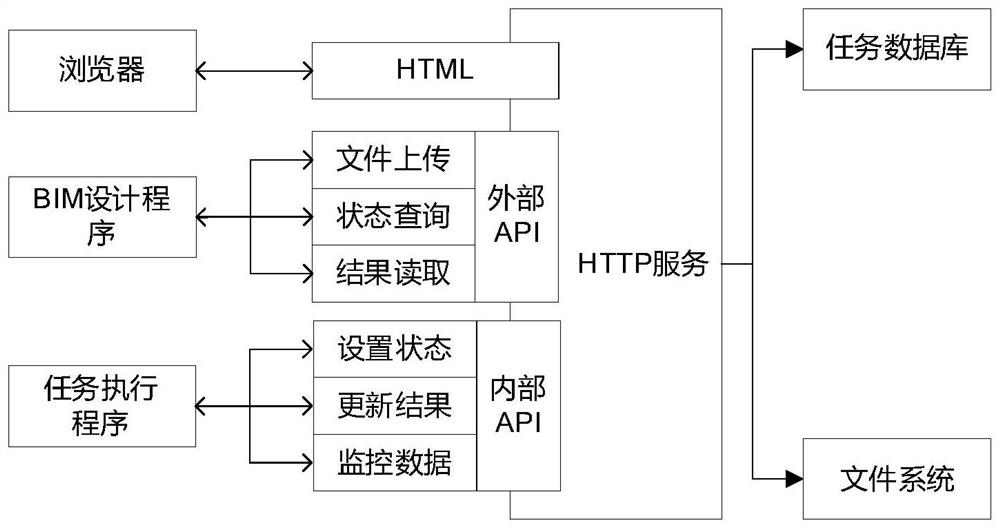

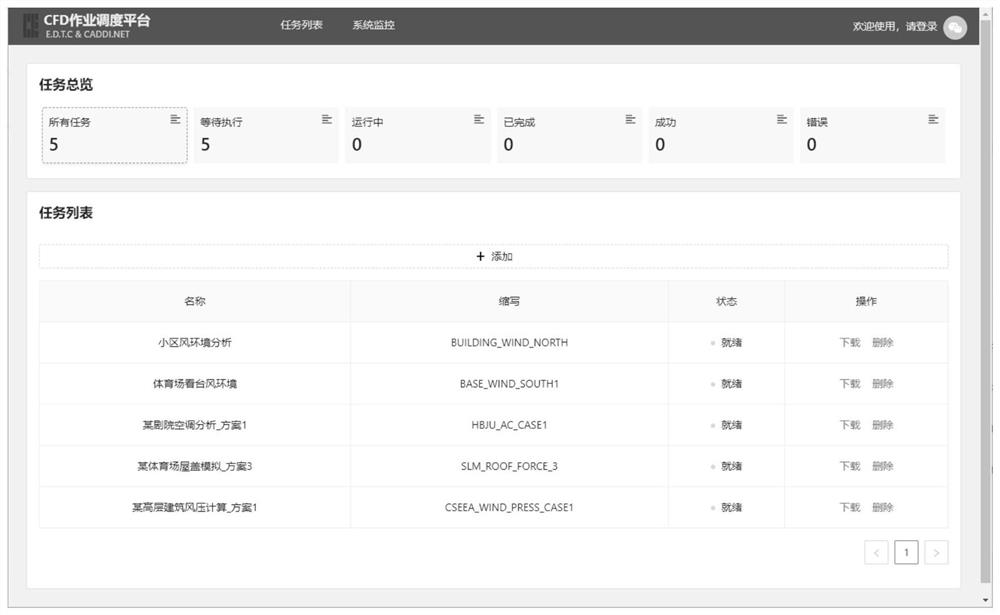

OpenFOAM computing task management method based on web technology

Active · CN113656114B · Reduce the difficulty of operation · Avoid wasting · Design optimisation/simulation · Program loading/initiating · Concurrent computation · Interface (computing)

The present invention provides an OpenFOAM computing task management method based on Web technology. The method provides a unified, convenient, visual interface for OpenFOAM cloud computing task management and post-processing, together with an API communication interface, and provides a mechanism for creating and managing CFD computing tasks in multi-user scenarios. Users do not need to master remote login and server operation skills, and do not need to understand OpenFOAM computation details, which significantly reduces the difficulty of operating OpenFOAM in CFD-aided building design. The method also uses a CFD multi-task management program in place of on-duty personnel, avoiding the waste of resources caused by manual monitoring and untimely error handling. Automatic allocation of computing resources avoids the loss of efficiency caused by resource competition among multiple users. The multi-core high-performance parallel computing capability of the computing servers is fully utilized so that the servers can carry out CFD analysis of construction projects continuously and efficiently, providing a new technical means for automated batch CFD computation.

Owner:中南建筑设计院股份有限公司

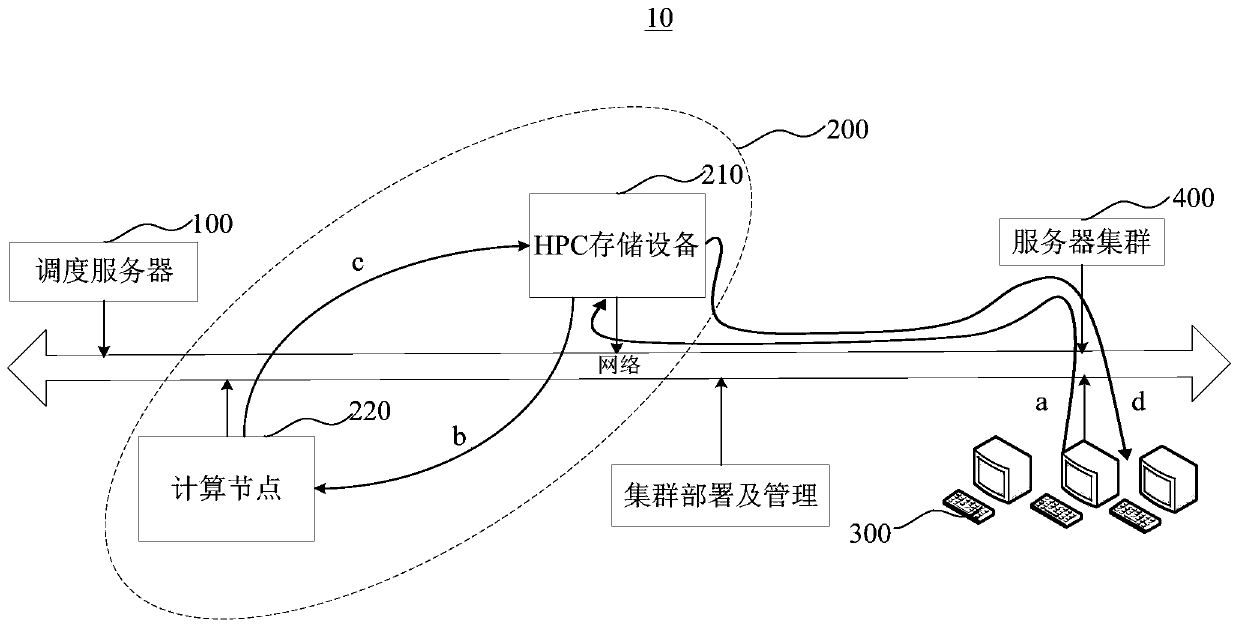

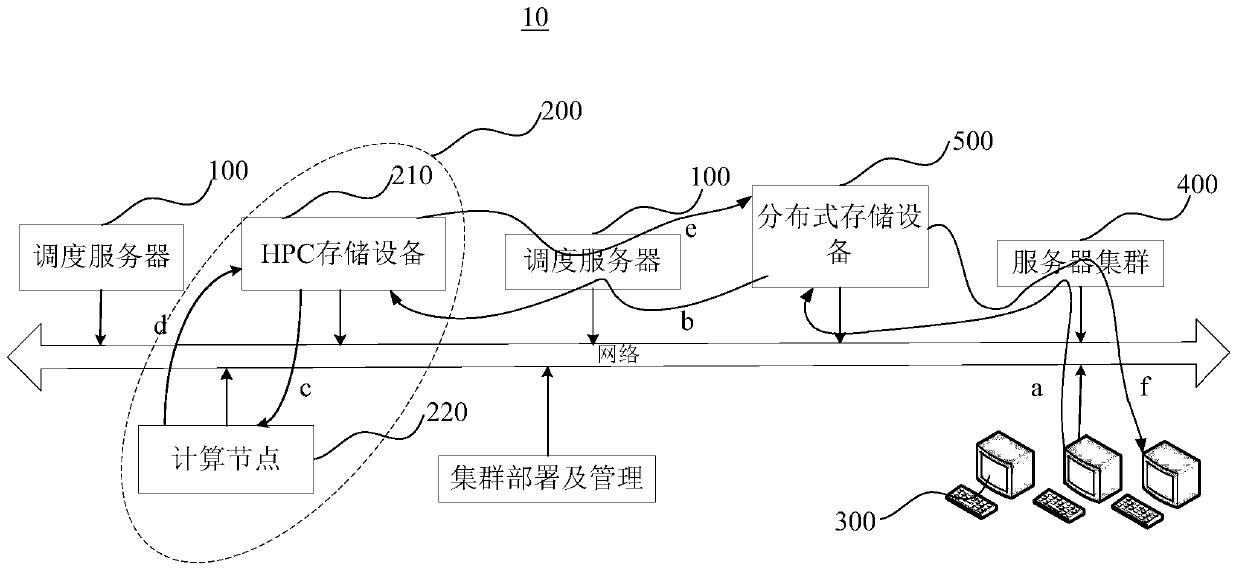

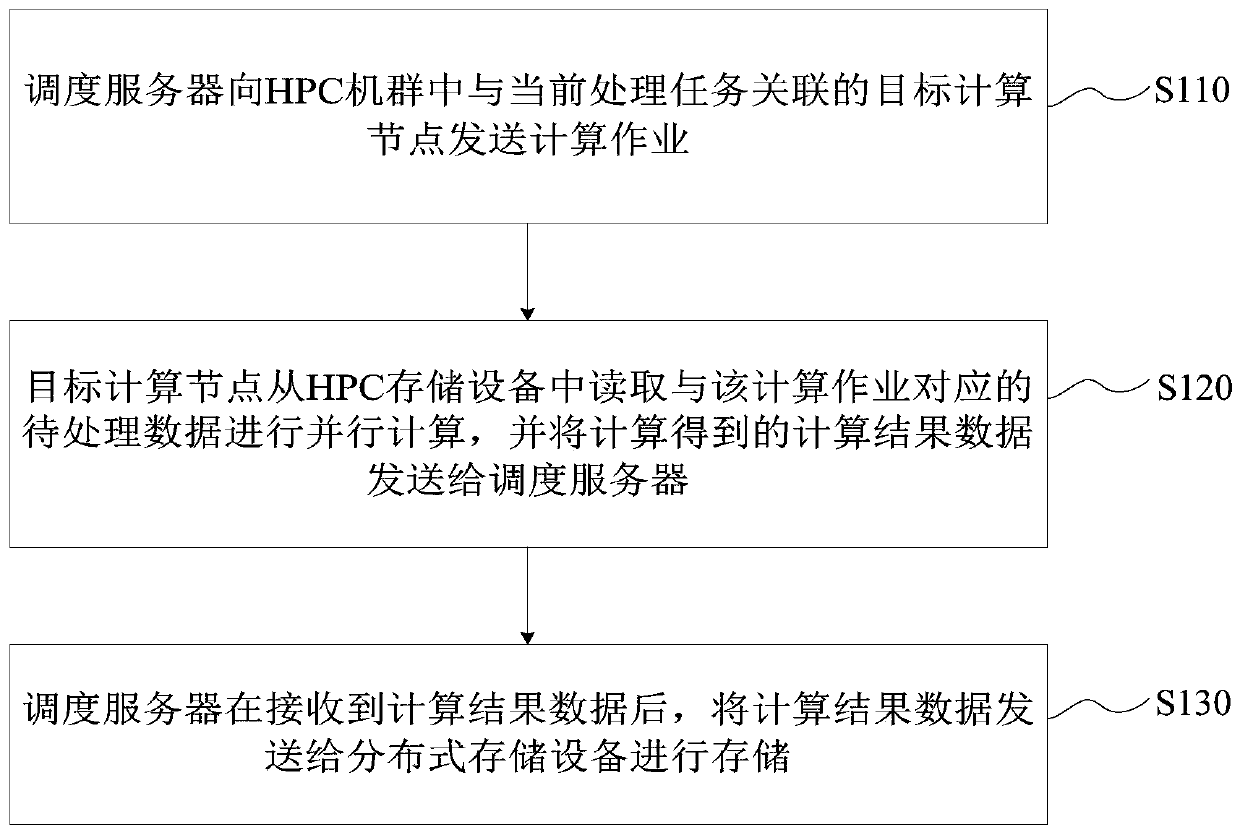

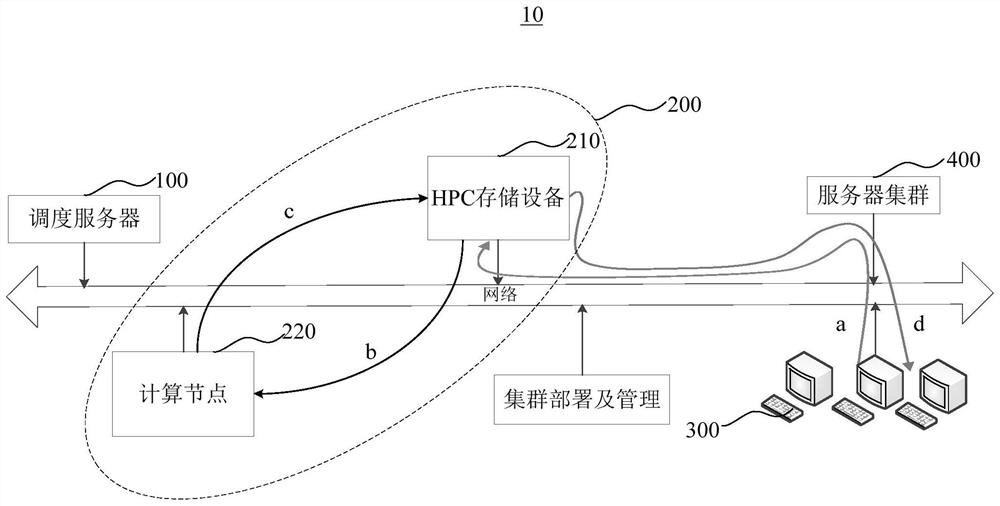

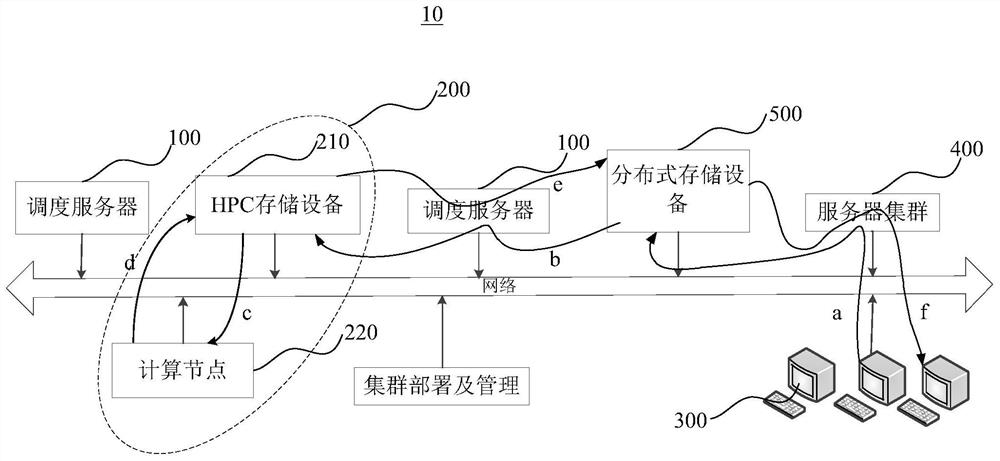

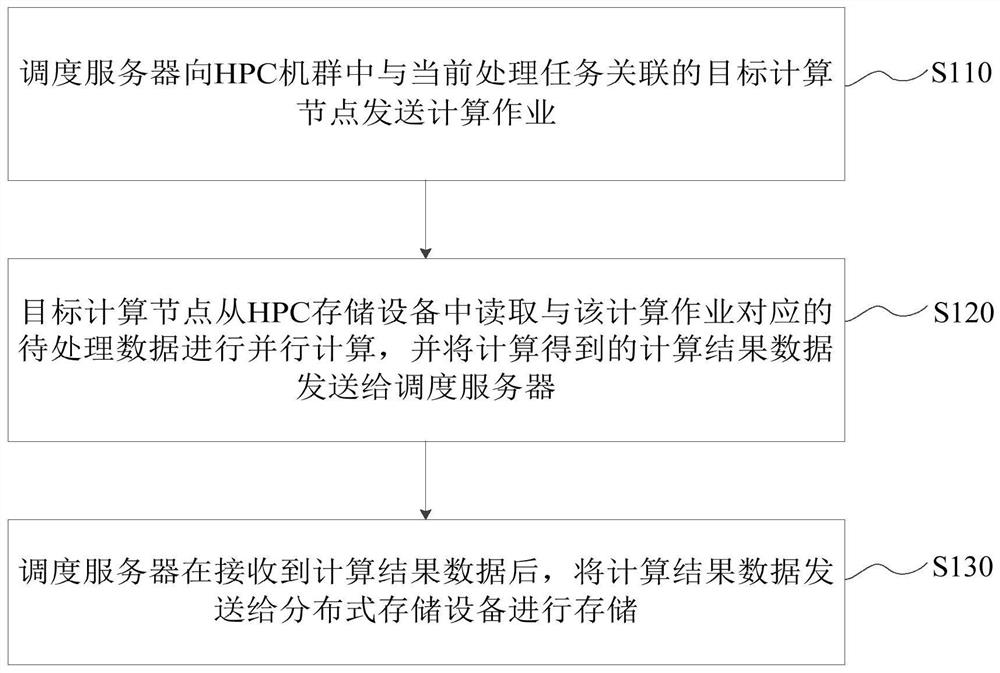

Data processing method

Active · CN110012080A · Save operating time · Reduce capacity requirements · Transmission · Real-time data · Data operations

The invention provides a data processing method in which an HPC storage device and a distributed storage device are used separately. When high-performance parallel computing is required, the HPC storage device stores to-be-processed data pre-copied from the distributed storage device and provides real-time data for parallel computing to the computing nodes of the HPC cluster; the computing result data of the computing nodes are transferred to the distributed storage device. The distributed storage device stores data uploaded by a terminal, the computing result data, and data that the terminal subsequently needs to process. The access load on the HPC storage device and the network bandwidth burden during parallel computing are thereby reduced, as are the capacity requirement and the total cost of ownership of the HPC storage device. In addition, when high-performance parallel computing is needed, a terminal does not need to upload data or download computing result data separately, which saves data operation time and facilitates sharing of the computing result data.

Owner:NEW H3C TECH CO LTD

Cache simulator based on GPU and time parallel speedup simulating method thereof

Inactive · CN101770391B · Improve Simulation Efficiency · Efficient use of · Memory addressing/allocation/relocation · Image memory management · Granularity · Parallel simulation

The invention provides a Cache simulator based on a GPU and a time parallel speedup simulating method thereof. The Cache simulator comprises an initializing module, a Trace segmentation module, a GPU parallel simulating module, a GPU parallel modifying module and a statistical calculation module. The cache simulator is based on the GPU with strong high-performance parallel computing capacity, has multi granularity and multi configuration abilities and parallel simulation characteristic. The invention adopts a time parallel speedup method to segment longer Trace arrays, realizes the parallel simulation of multi Trace segments on the GPU, and utilizes the GPU parallel modifying module to modify the error caused by the modifying and simulating processes in the simulating process. The invention has the advantages that the Cache simulating efficiency is improved, computing resources are better utilized and simultaneously higher cost-performance ratio is achieved.

Owner:BEIHANG UNIV
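The segment, simulate, then correct idea in this abstract can be sketched in Python under strong simplifying assumptions: a direct-mapped cache, a CPU thread pool standing in for the GPU, and hypothetical function names. Each segment is simulated from an empty cache, so the first access to every set is counted as a miss; a sequential correction pass then turns those cold misses back into hits where the state carried over from earlier segments already held the tag.

```python
from concurrent.futures import ThreadPoolExecutor


def simulate_segment(segment, num_sets):
    """Simulate one trace segment on a direct-mapped cache that starts empty.

    Returns (misses, first tag seen per set, final tag per set).
    """
    cache, first, misses = {}, {}, 0
    for addr in segment:
        s, tag = addr % num_sets, addr // num_sets
        first.setdefault(s, tag)
        if cache.get(s) != tag:
            misses += 1
            cache[s] = tag
    return misses, first, cache


def time_parallel_misses(trace, num_sets, num_segments):
    """Count misses by simulating segments independently, then correcting."""
    size = max(1, -(-len(trace) // num_segments))  # ceiling division
    segments = [trace[i:i + size] for i in range(0, len(trace), size)]
    with ThreadPoolExecutor() as pool:
        partial = list(pool.map(lambda seg: simulate_segment(seg, num_sets), segments))

    total, state = 0, {}
    for misses, first, last in partial:
        # Correction: a cold miss was really a hit if the incoming state held the tag.
        false_misses = sum(1 for s, tag in first.items() if state.get(s) == tag)
        total += misses - false_misses
        state.update(last)  # sets untouched by this segment keep their old tag
    return total


def sequential_misses(trace, num_sets):
    """Reference result: simulate the whole trace in order."""
    return simulate_segment(trace, num_sets)[0]
```

For a direct-mapped cache this correction is exact, because only the first access to each set in a segment depends on the state left by earlier segments. Set-associative caches with replacement policies would need a more elaborate correction step.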

High performance parallel computing method based on external PCI-E connection

Active | CN104156332B | Improve Parallel Computing Efficiency | Easy to take out | Electric digital data processing | Virtual memory | Computation process

The invention provides a high-performance parallel computing method based on external PCI-E connection. The method includes the steps of (a) connecting all pieces of computer equipment through a PCI-E bus, (b) running a parallel computing program, (c) constructing a virtual memory addressing table, (d) sending virtual memory information, (e) receiving the virtual memory information, (f) judging correctness of the received information, (g) judging whether a virtual memory address is constructed, (h) allocating computing tasks, (i) executing the computing tasks, and (j) obtaining results and providing the results for a user. The method increases the data transmission speed between processors during parallel computing and reduces the amount of data the system CPU has to copy, so that the efficiency of parallel computing is greatly improved, multiple parallel computing resources are effectively connected for communication, and data are transmitted at high speed.

Owner:韩林

Data processing method

Active | CN110012080B | Save operating time | Reduce capacity requirements | Transmission | Computer hardware | Concurrent computation

Owner:NEW H3C TECH CO LTD

A Simulation Method of Stirred Reactor Based on Immersed Boundary Method

Active | CN105069184B | Promote generation | Reduce workload | Special data processing applications | Particle flow | Discrete element method

The present invention provides a stirred tank reactor simulation method based on an immersed boundary method. The method includes: a preparation phase, comprising determination and setting of the calculation parameters, generation of fluid grid files, generation of Lagrangian marker point information, and preparation of computing resources; a numerical calculation phase, comprising setting the boundary conditions of the computational domain, solving the velocity and temperature fields on the fluid grid, and combining with a discrete element method to achieve an interface-resolved simulation of the two-phase particle flow, wherein the immersed boundary method is applied directly to impose no-slip boundary conditions on the particle surfaces; and a post-processing phase, in which the information of each Eulerian mesh cell and of each particle is output after the simulation is completed. Depending on the characteristics of the different boundaries, a mixed immersed boundary method is used in the calculation, and large-scale numerical simulation of turbulent flow in the stirred tank reactor is achieved by combining large eddy simulation with high performance parallel computing technology. The simulation method may be applied to the simulation of turbulent flow, of solid particle suspension, and of similar processes in the stirred tank reactor.

Owner:INST OF PROCESS ENG CHINESE ACAD OF SCI

OpenFOAM computing task management method based on Web technology

Active | CN113656114A | Reduce the difficulty of operation | Avoid wasting | Design optimisation/simulation | Program loading/initiating | Concurrent computation | Interface (computing)

The invention provides an OpenFOAM computing task management method based on a Web technology. The method comprises the steps of providing a unified, convenient and visual operation interface for OpenFOAM cloud computing task management and post-processing visualization, together with an API communication interface, based on a Web technology; and providing a CFD calculation task creation and management mechanism for multi-user scenarios. A user does not need to master remote login and server operation skills and does not need to know OpenFOAM calculation details, so that the operation difficulty of an OpenFOAM application in a building CFD aided design process is remarkably reduced. Unattended operation is achieved through a CFD multi-task management program, which avoids the resource waste caused by manual monitoring and untimely error handling. Automatic allocation of computing resources avoids the efficiency loss caused by resource competition among multiple users. The multi-core high-performance parallel computing capability of the computing server is fully exploited, the server can continuously and efficiently carry out CFD analysis work for construction engineering, and a new technical means is provided for automatic batch CFD computing.

Owner:中南建筑设计院股份有限公司

MPI (Message Passing Interface) message scheduling method based on reinforcement learning under multi-network environment

Inactive | CN101833479B | Get the latest status in real time | Good application effect | Interprogram communication | Distributed computing | Reinforcement learning

The invention discloses an MPI (Message Passing Interface) message scheduling method based on reinforcement learning for multi-network environments, aiming to overcome the low practical application performance of high-performance parallel computers caused by the traditional round-robin scheduling method. The method comprises the steps of: initializing parameters while the MPI system starts, and creating $C_m^2$ Q tables, according to a multiple-Q-table combination method, for a computing environment equipped with m networks; continuously receiving MPI message sending requests from applications while the MPI system runs, determining the current message segment, obtaining the current environment state, and scheduling the current message segment to the optimal network according to the historical experience values stored in the Q tables for that state; and finally computing the instant reward value obtained by the scheduling and updating the Q values in the Q tables. The invention addresses the problems that communication loads are distributed unevenly, that scheduling cannot adapt to dynamic changes in network state, and that adaptability to the computing environment is poor, and it improves the practical application performance of high-performance parallel computers.

Owner:NAT UNIV OF DEFENSE TECH
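The schedule, observe reward, update Q loop described in this abstract can be sketched with ordinary tabular Q-learning in Python. This sketch uses a single Q table rather than the patent's multiple-Q-table combination, and the state definition (the network used for the previous segment), the reward (negative transfer cost) and the `latency` parameter are assumptions made for illustration only.

```python
import random


def q_schedule(segments, latency, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Assign each message segment to a network with tabular Q-learning.

    segments: sizes of the message segments, in arrival order.
    latency:  hypothetical per-unit transfer cost of each network.
    """
    rng = random.Random(seed)
    n = len(latency)
    q = [[0.0] * n for _ in range(n)]  # q[state][action]
    state, choices = 0, []
    for size in segments:
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore a random network
        else:
            action = max(range(n), key=lambda a: q[state][a])  # exploit best known
        reward = -size * latency[action]  # instant reward: cheaper transfer is better
        next_state = action
        # Standard Q-learning update toward reward plus discounted best next value.
        target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state
        choices.append(action)
    return choices, q


choices, _ = q_schedule([1] * 50, [1.0, 0.1])
print(choices.count(1), "of", len(choices), "segments sent over network 1")
```

A real scheduler would derive the state from measured network conditions instead of the previous choice; the update rule is the part that carries over.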

A Parallel Solution Method for Lower Triangular Equations Oriented to Structured Mesh Sparse Matrix

Active | CN111079078B | Efficient solution | Minimize memory access overhead | Complex mathematical operations | Computational science | Concurrent computation

A method for optimizing inter-processor communication and cache space utilization when solving structured-grid sparse lower triangular equations in high-performance parallel computing. The method includes: receiving the solution vector of the structured grid and related inputs, and starting multiple processors for parallel execution; decomposing the solution vector into multiple sub-blocks and dividing each sub-block into multiple columns, where the multiprocessors are treated as a rectangular array and each processor is responsible for computing one column; each processor allocates a buffer in its own cache for dependent data, obtains the data required for its calculation from neighboring processors or from its own memory and stores it in the buffer, completes the calculation of its own column, and sends the calculated data to the other processors that depend on it; the rectangular array of processors traverses all sub-blocks, completing the required data communication and the calculation for each sub-block, until the entire solution is computed. The dependent data of each operation are transmitted through fast inter-processor communication, minimizing memory access overhead and maximizing resource utilization.

Owner:TSINGHUA UNIV +2
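The data dependencies that make this problem hard to parallelize can be seen in a small Python sketch. It does not reproduce the patent's processor array or cache buffers; it uses plain wavefront ordering instead. It assumes a 2-D grid with constant coefficients, in which each unknown depends only on its west and south neighbors, and the function name and parameters are hypothetical.

```python
def solve_lower_wavefront(b, diag, west, south):
    """Solve L x = b on a 2-D structured grid by sweeping anti-diagonals.

    Row (i, j) of L reads: diag * x[i][j] + west * x[i][j-1] + south * x[i-1][j] = b[i][j].
    Cells with the same i + j do not depend on each other, so each inner loop
    could be distributed across processors.
    """
    rows, cols = len(b), len(b[0])
    x = [[0.0] * cols for _ in range(rows)]
    for k in range(rows + cols - 1):  # anti-diagonal i + j == k
        for i in range(max(0, k - cols + 1), min(rows, k + 1)):
            j = k - i
            acc = b[i][j]
            if j > 0:
                acc -= west * x[i][j - 1]   # value produced on the previous diagonal
            if i > 0:
                acc -= south * x[i - 1][j]  # value produced on the previous diagonal
            x[i][j] = acc / diag
    return x


print(solve_lower_wavefront([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], 2.0, 1.0, 1.0))
```

Every value a cell needs comes from the immediately preceding anti-diagonal, which is why passing results directly between neighboring processors, as the abstract describes, can avoid round trips through main memory.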


© 2025 PatSnap. All rights reserved.