
925 results about "High performance computation" patented technology


High-performance computing (HPC) is the IT practice of aggregating computing power to deliver more performance than a typical computer can provide.

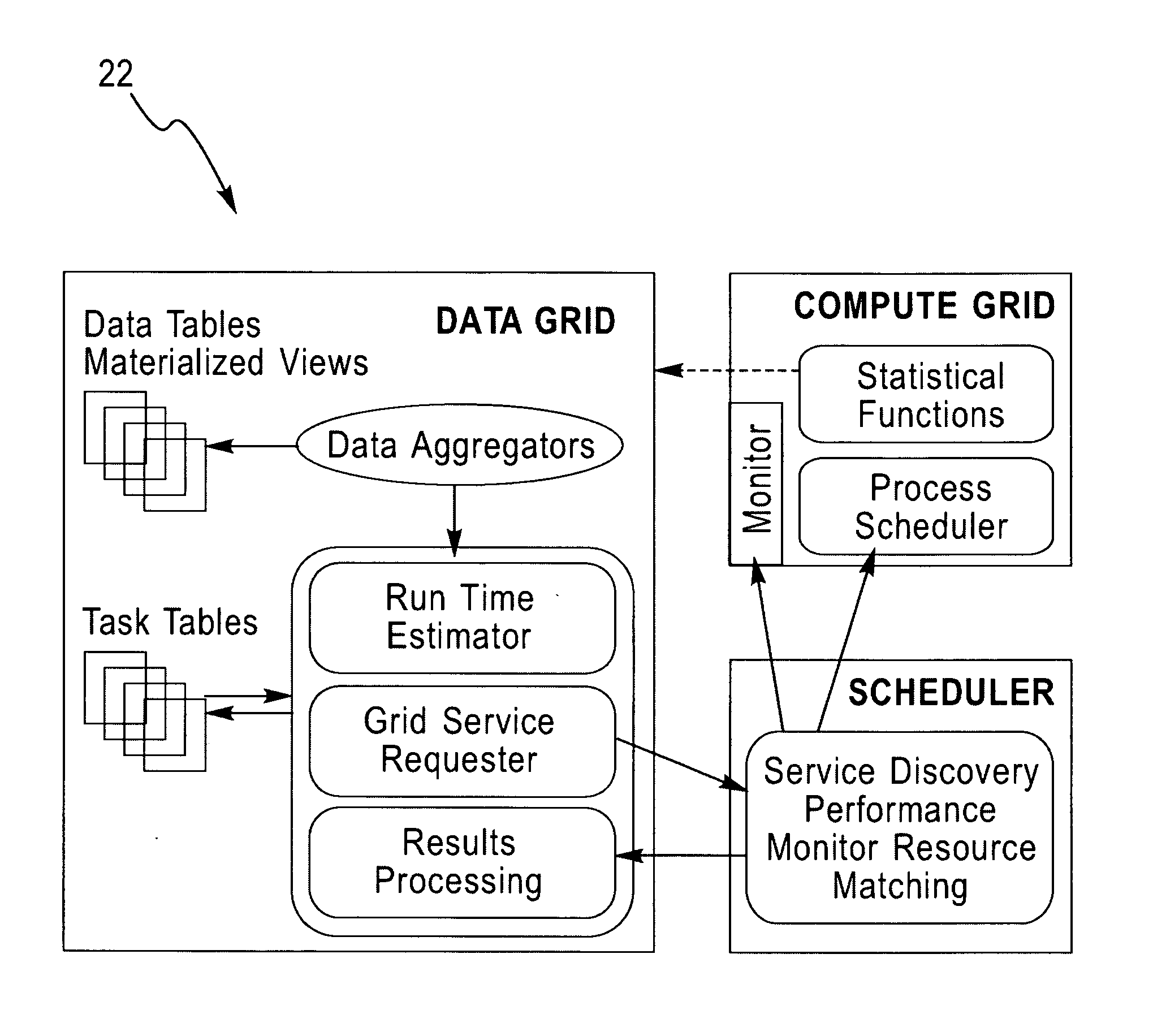

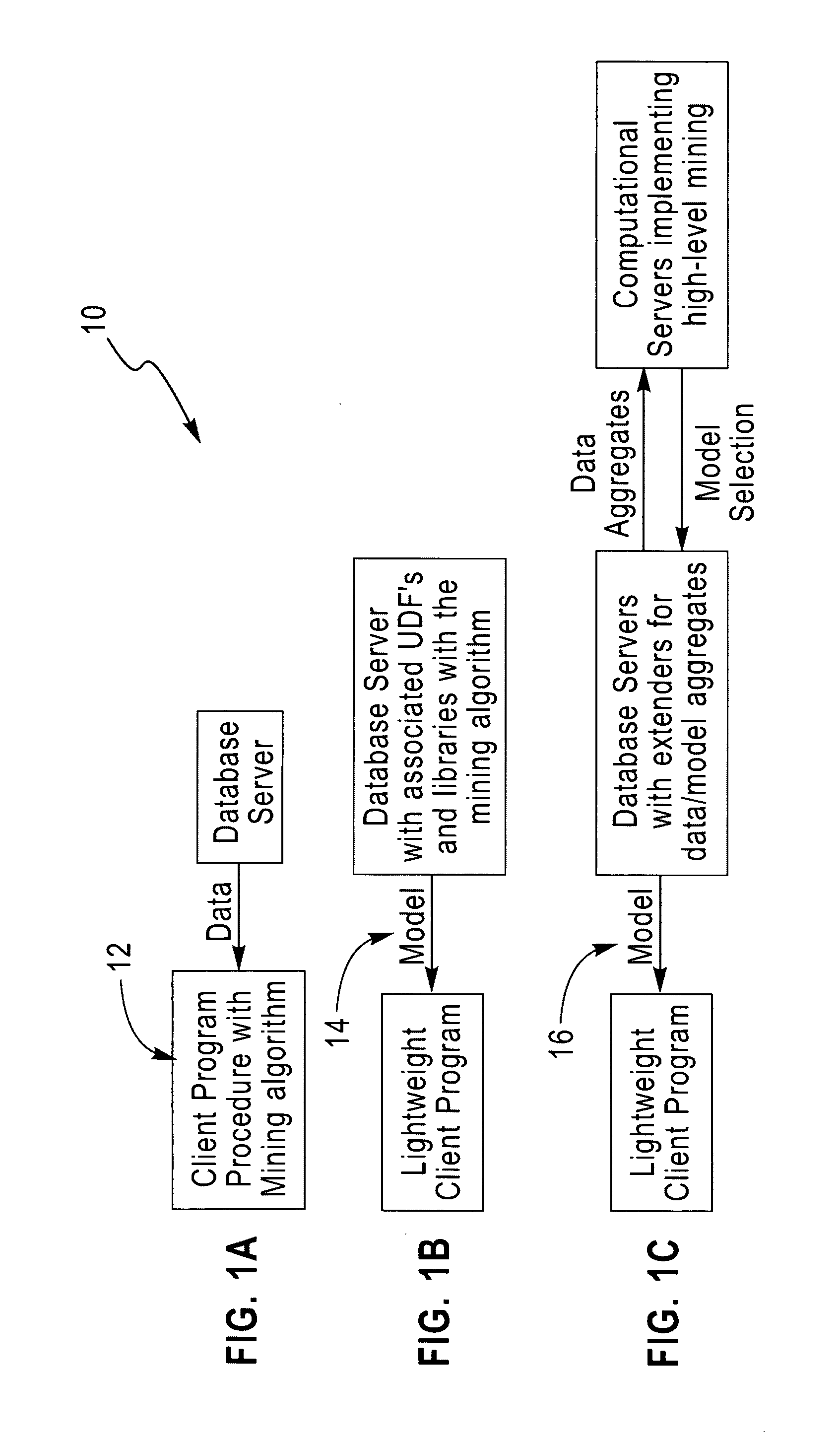

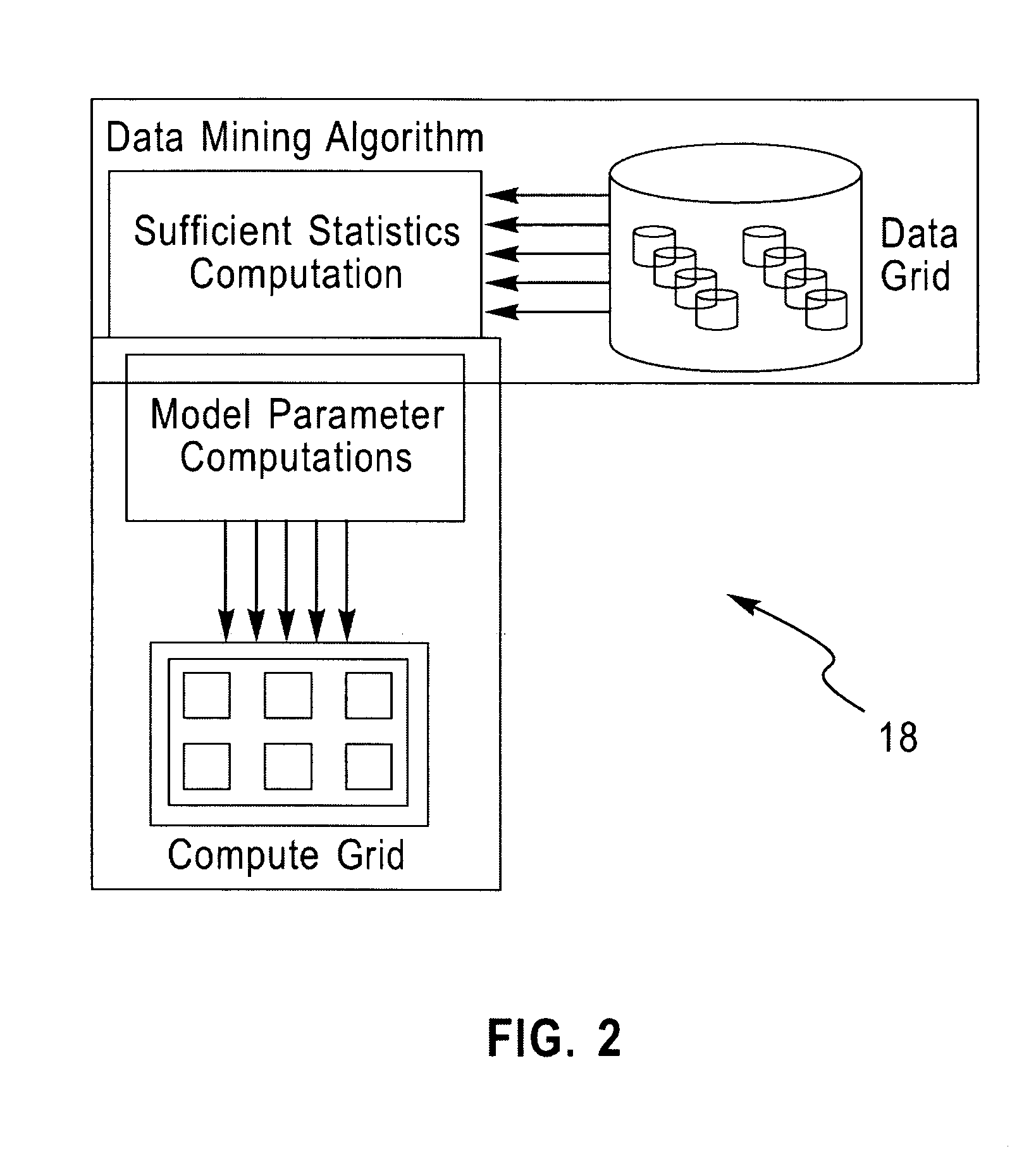

System and architecture for enterprise-scale, parallel data mining

Status: Inactive | US20070174290A1 | Tags: Scalable storage and query performance, Improve robustness, Digital data information retrieval, Office automation, Quality data, Relational database

A grid-based approach to enterprise-scale data mining is described that leverages database technology for I/O parallelism and on-demand compute servers for compute parallelism in the statistical computations. By enterprise-scale, we mean the highly automated use of data mining in vertical business applications, where the data is stored on one or more relational database systems and where a distributed architecture comprising high-performance compute servers or a network of low-cost commodity processors is used to improve application performance, provide better-quality data mining models, and support overall workload management. The approach relies on an algorithmic decomposition of the data mining kernel across the data and compute grids, which provides a simple way to exploit the parallelism on each grid while minimizing data transfer between them. The overall approach is compatible with existing standards for data mining task specification and results reporting in databases, so applications using these standards-based interfaces require no modification to realize the benefits of the grid-based approach.

Owner:IBM CORP
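The key idea in this abstract is decomposing the data mining kernel so that each database partition reduces its rows to a small summary and only those summaries travel to the compute grid. A minimal sketch of that pattern, assuming the kernel is a mean/variance computation over one numeric column; the function names are hypothetical, and a thread pool merely stands in for partition-parallel work on the data grid.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, List, Tuple

Stats = Tuple[int, float, float]  # (count, sum, sum of squares)


def partition_stats(rows: Iterable[float]) -> Stats:
    """Runs next to the data: reduces one partition to three numbers."""
    n, s, ss = 0, 0.0, 0.0
    for x in rows:
        n += 1
        s += x
        ss += x * x
    return n, s, ss


def merge_stats(parts: Iterable[Stats]) -> Stats:
    """Runs on the compute grid: combines the per-partition summaries."""
    n, s, ss = 0, 0.0, 0.0
    for pn, ps, pss in parts:
        n, s, ss = n + pn, s + ps, ss + pss
    return n, s, ss


def mean_and_variance(partitions: List[List[float]]) -> Tuple[float, float]:
    # Hypothetical stand-in for partition-parallel evaluation inside the database.
    with ThreadPoolExecutor() as pool:
        summaries = list(pool.map(partition_stats, partitions))
    n, s, ss = merge_stats(summaries)
    if n == 0:
        raise ValueError("no rows")
    mean = s / n
    return mean, ss / n - mean * mean  # population variance
```

Each partition ships three numbers instead of its rows, which is the kind of data-transfer saving the abstract describes. The sum-of-squares formula is chosen for brevity and can lose precision on large values.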

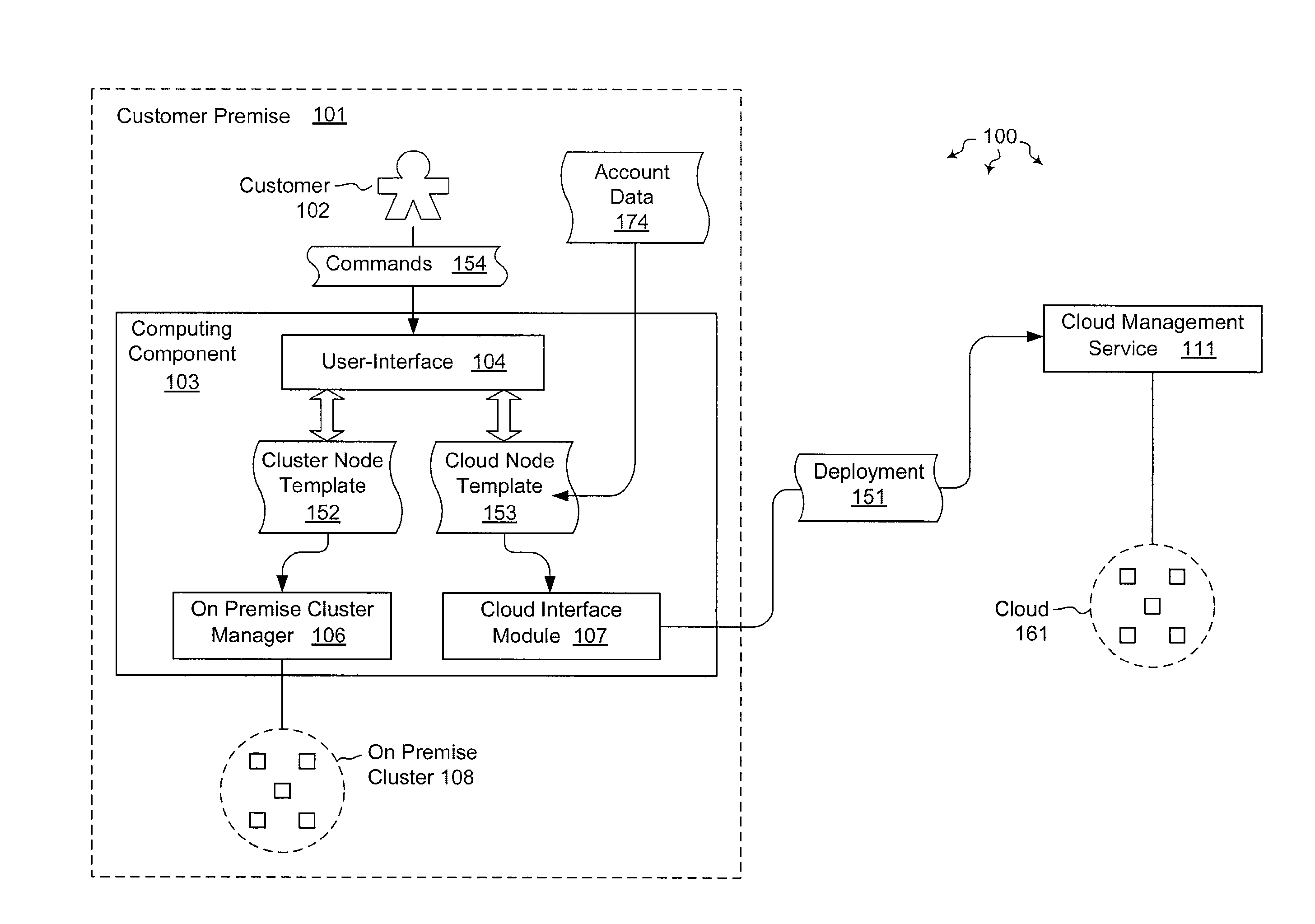

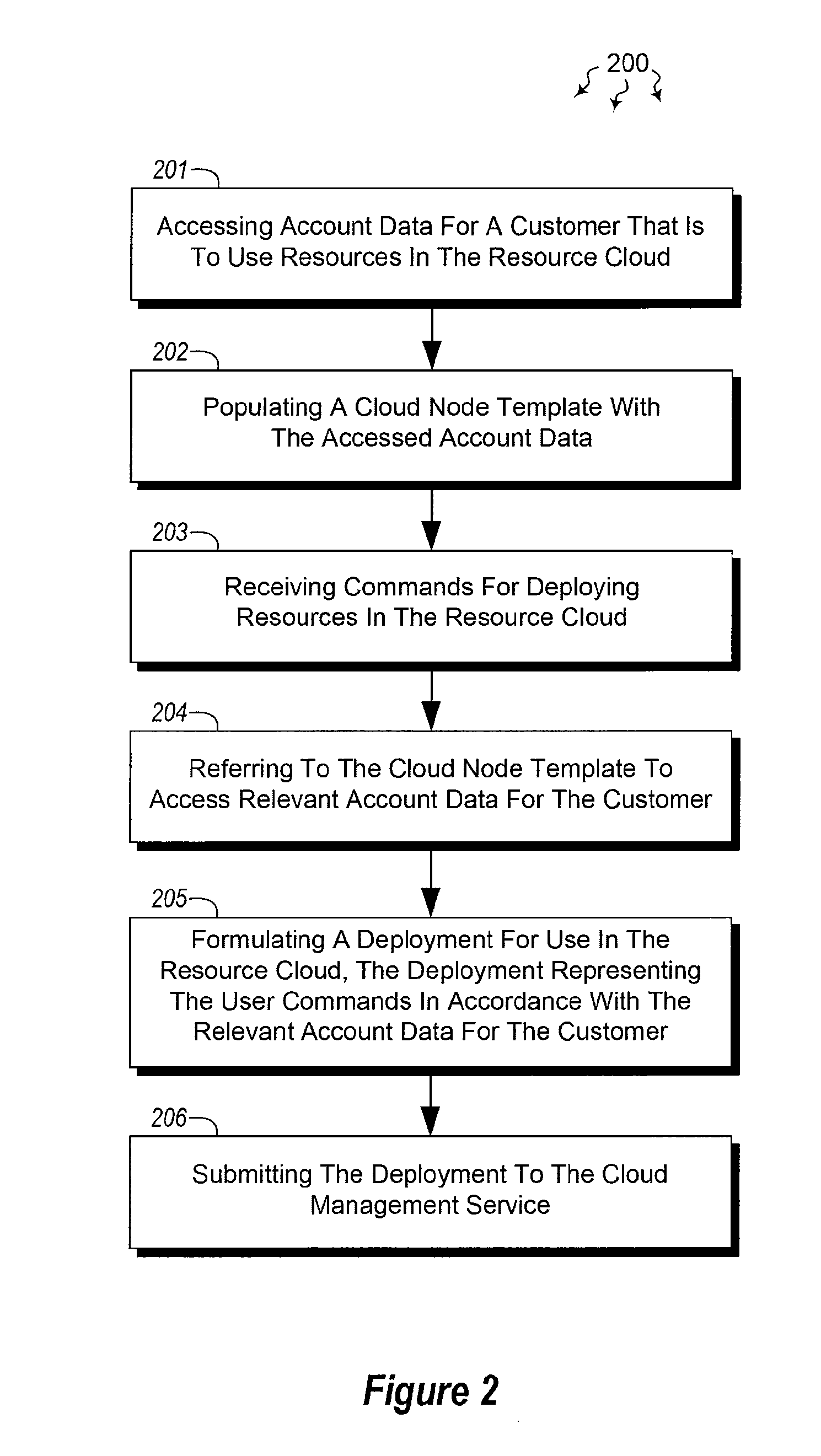

Using templates to configure cloud resources

Status: Active | US20120072597A1 | Tags: Well formed, Digital computer details, Program control, Theoretical computer science, Cloud resources

The present invention extends to methods, systems, and computer program products for using templates to configure cloud resources. Embodiments of the invention include encapsulating cloud configuration information in an importable/exportable node template. Node templates can also be used to bind groups of nodes to different cloud subscriptions and cloud service accounts. Accordingly, the configuration of cloud-based resources can be managed through an interface at a computing component (e.g., a high-performance computing component). Templates can also specify a schedule for starting and stopping instances running within a resource cloud.

Owner:MICROSOFT TECH LICENSING LLC
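The abstract describes node templates that encapsulate cloud configuration, can be exported and imported, bind a node group to a subscription and service account, and may carry a start/stop schedule. A minimal sketch, assuming a JSON representation; every field name here (`subscription_id`, `service_account`, `node_group`, `start`, `stop`) is hypothetical and not taken from any Microsoft product or API.

```python
import json
from dataclasses import asdict, dataclass
from datetime import time
from typing import List


@dataclass
class Schedule:
    start: str  # "HH:MM" (hypothetical format)
    stop: str

    def is_running(self, now: time) -> bool:
        """True if instances should be running at `now`."""
        begin, end = time.fromisoformat(self.start), time.fromisoformat(self.stop)
        if begin <= end:
            return begin <= now < end
        return now >= begin or now < end  # window wraps past midnight


@dataclass
class NodeTemplate:
    name: str
    subscription_id: str  # cloud subscription the node group is bound to
    service_account: str  # cloud service account used by the nodes
    node_group: List[str]
    schedule: Schedule

    def export_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def import_json(cls, text: str) -> "NodeTemplate":
        data = json.loads(text)
        data["schedule"] = Schedule(**data["schedule"])
        return cls(**data)
```

A template exported on one head node can be imported on another and yields an equal object, which is the portability the abstract emphasizes.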

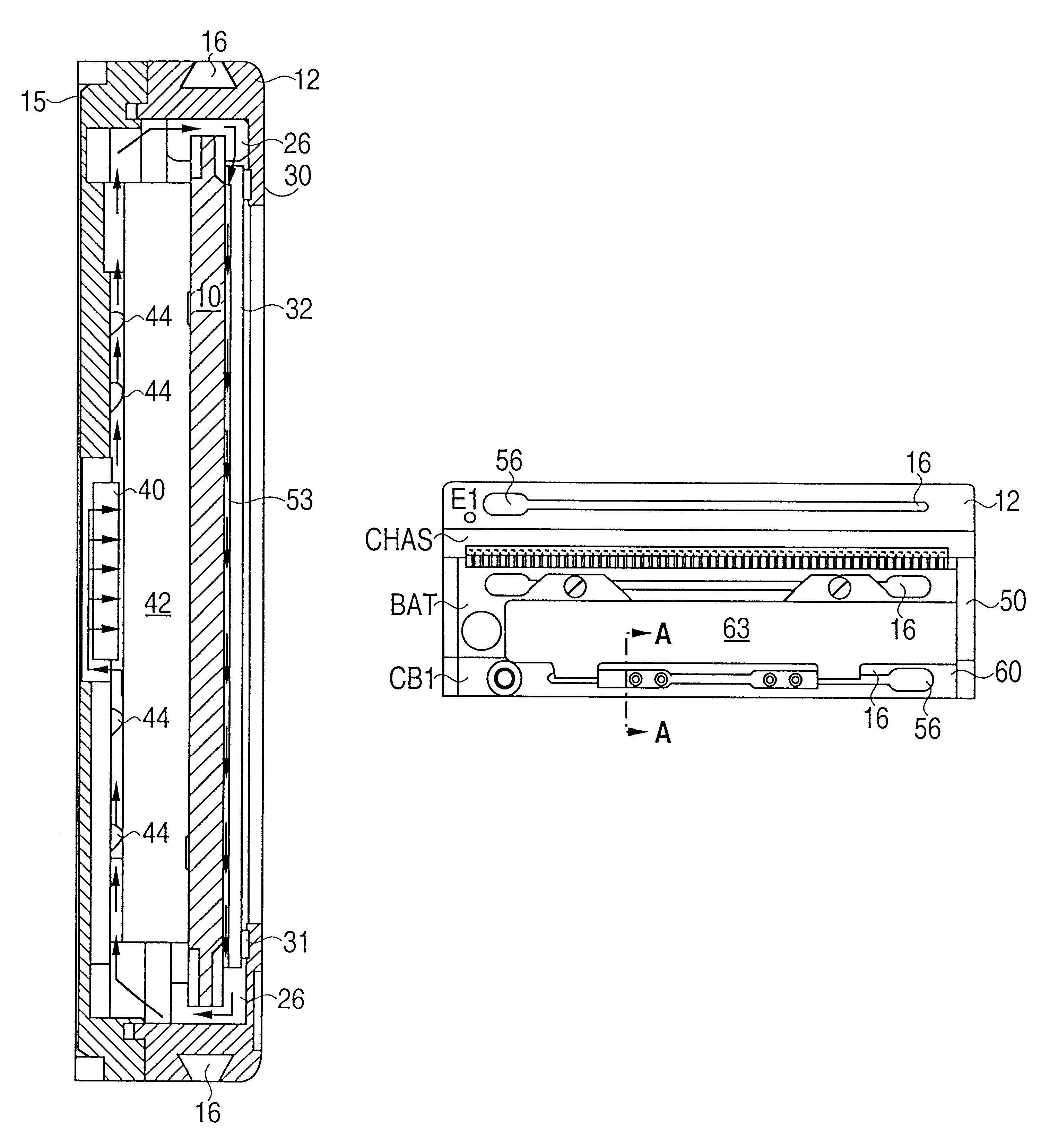

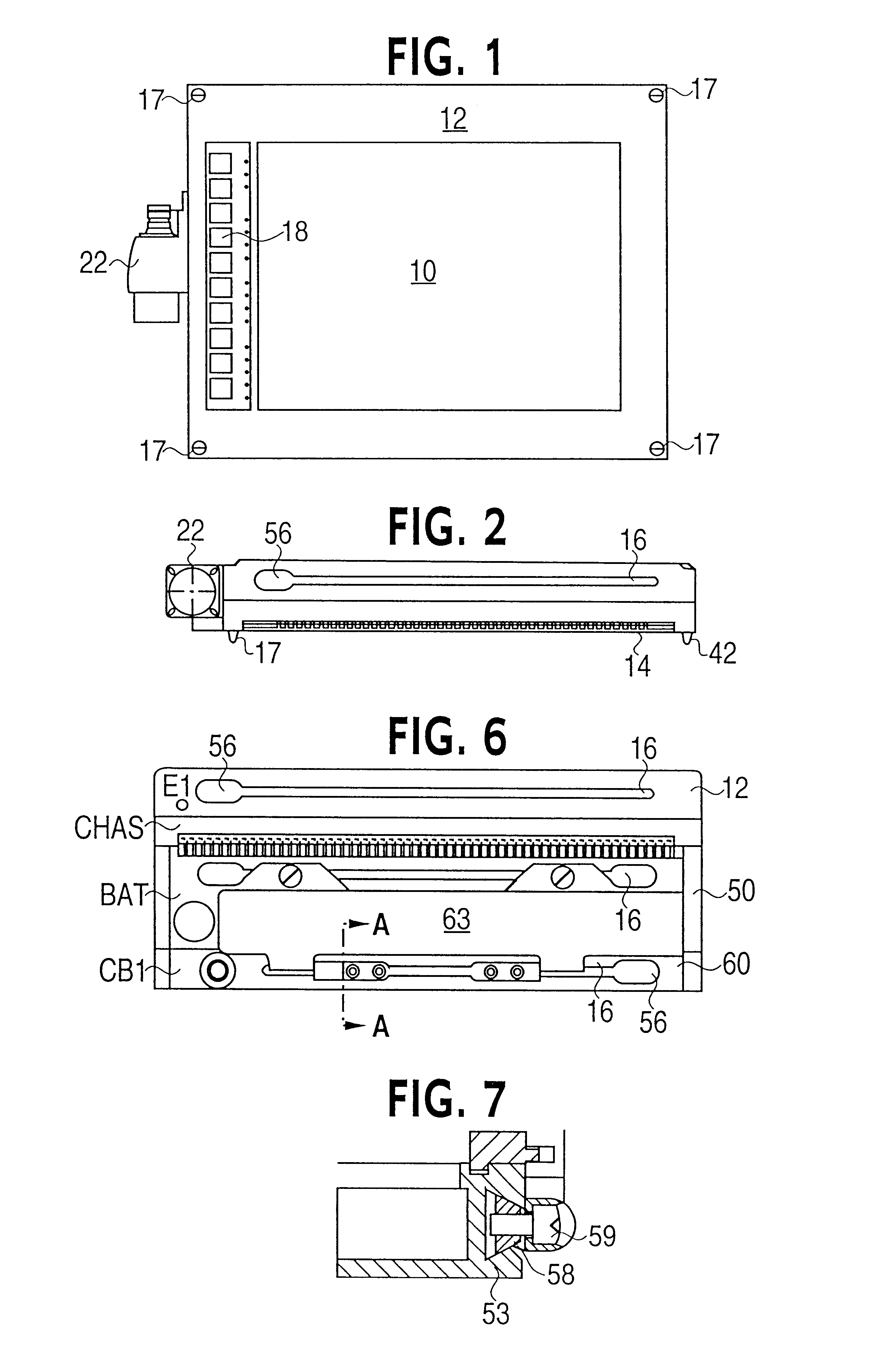

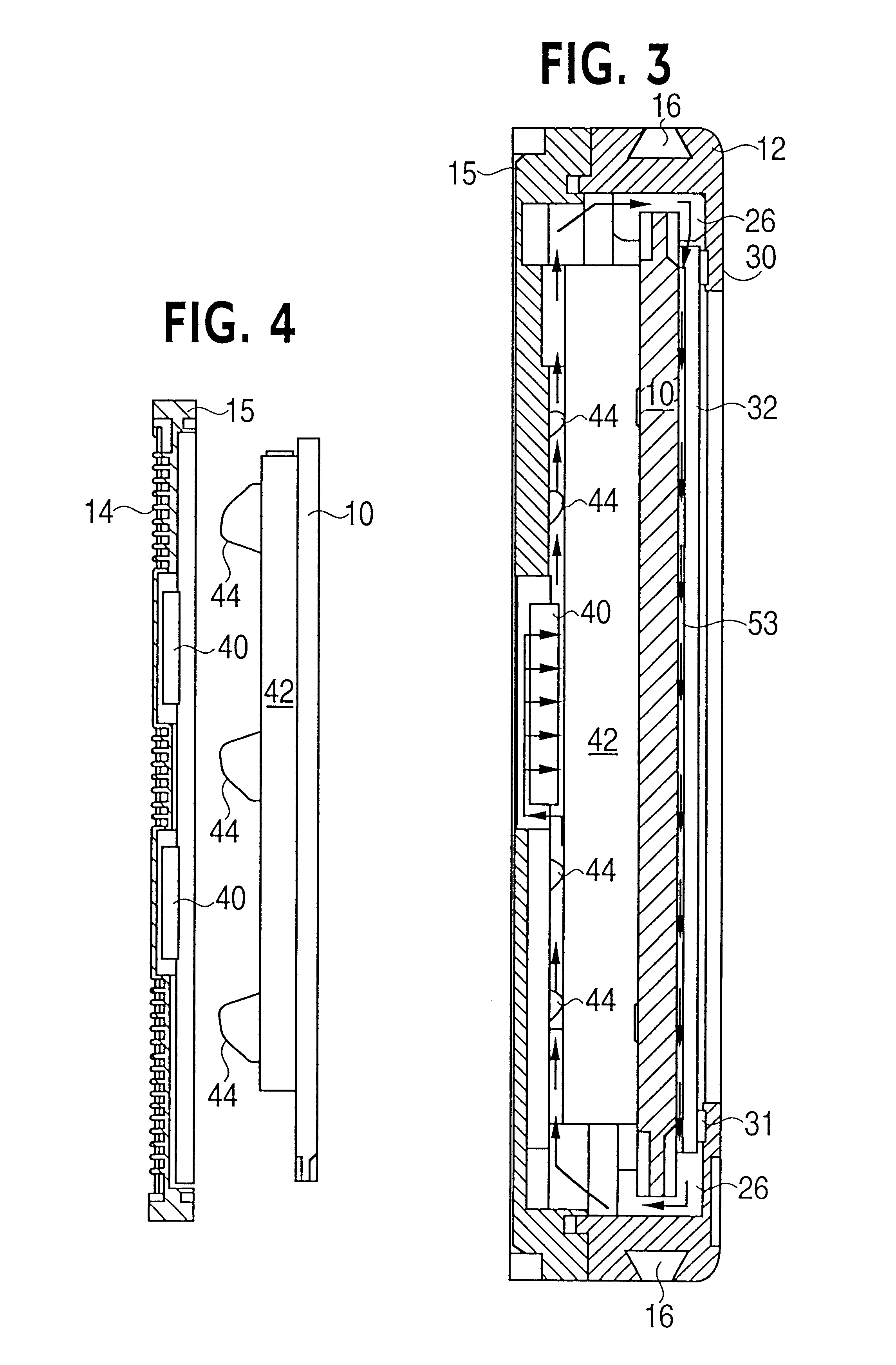

Ultra-rugged, high-performance computer system

Status: Inactive | US6504713B1 | Tags: Static indicating devices, Digital data processing details, Engineering, High performance computation

A display module includes a sealed, heat conductive housing, a display screen secured to the housing by spaced, resilient mounting members, a touch responsive screen separated from the display screen by a narrow air gap, an airtight seal between a peripheral lip of the housing and the touch responsive screen, and a fan in the housing to circulate a fluid through the gap.

Owner:IV PHOENIX GROUP

Virtual supercomputer

Status: Active | US7774191B2 | Tags: Improve efficiency, Finance, Resource allocation, Information processing, Operational system

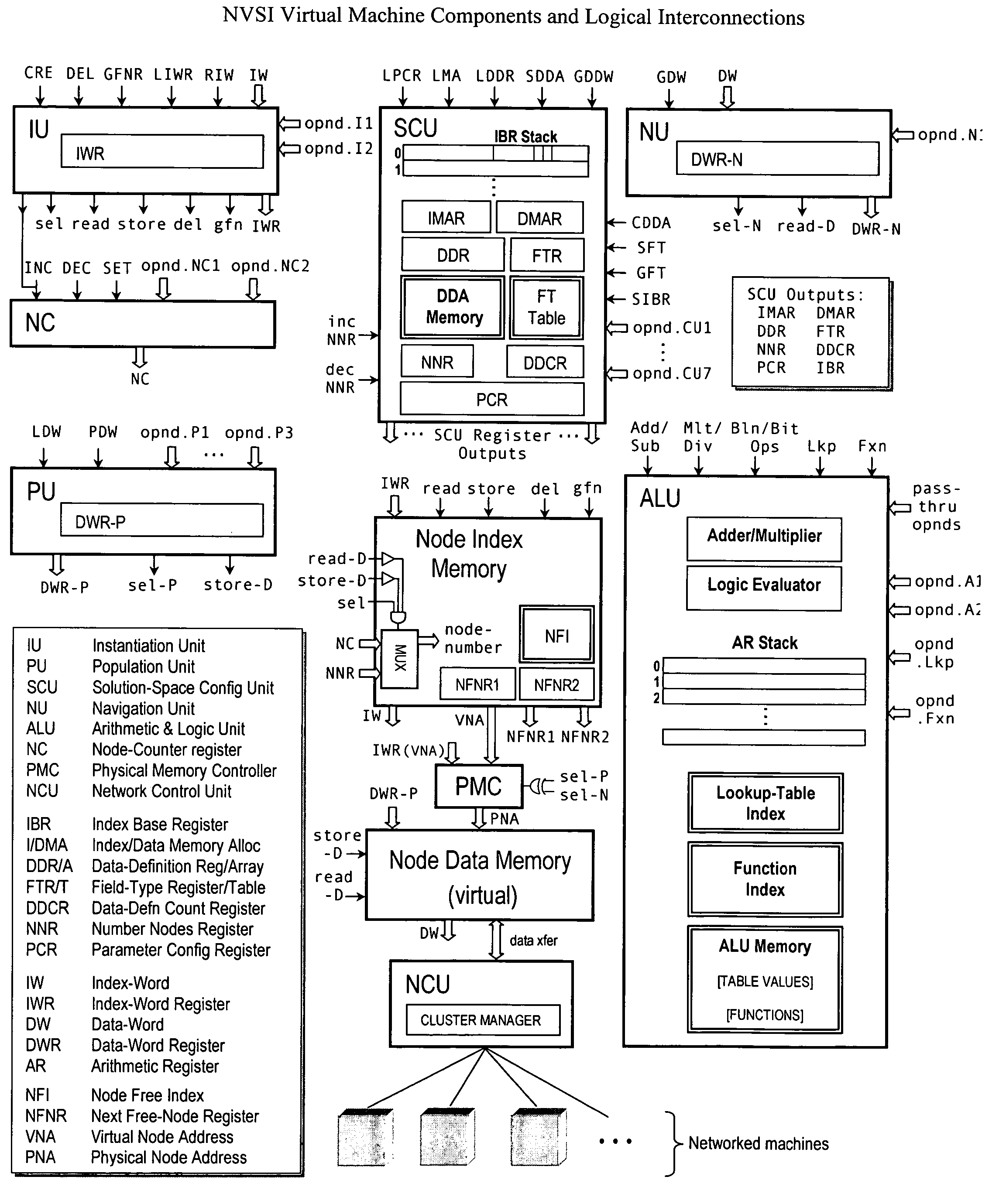

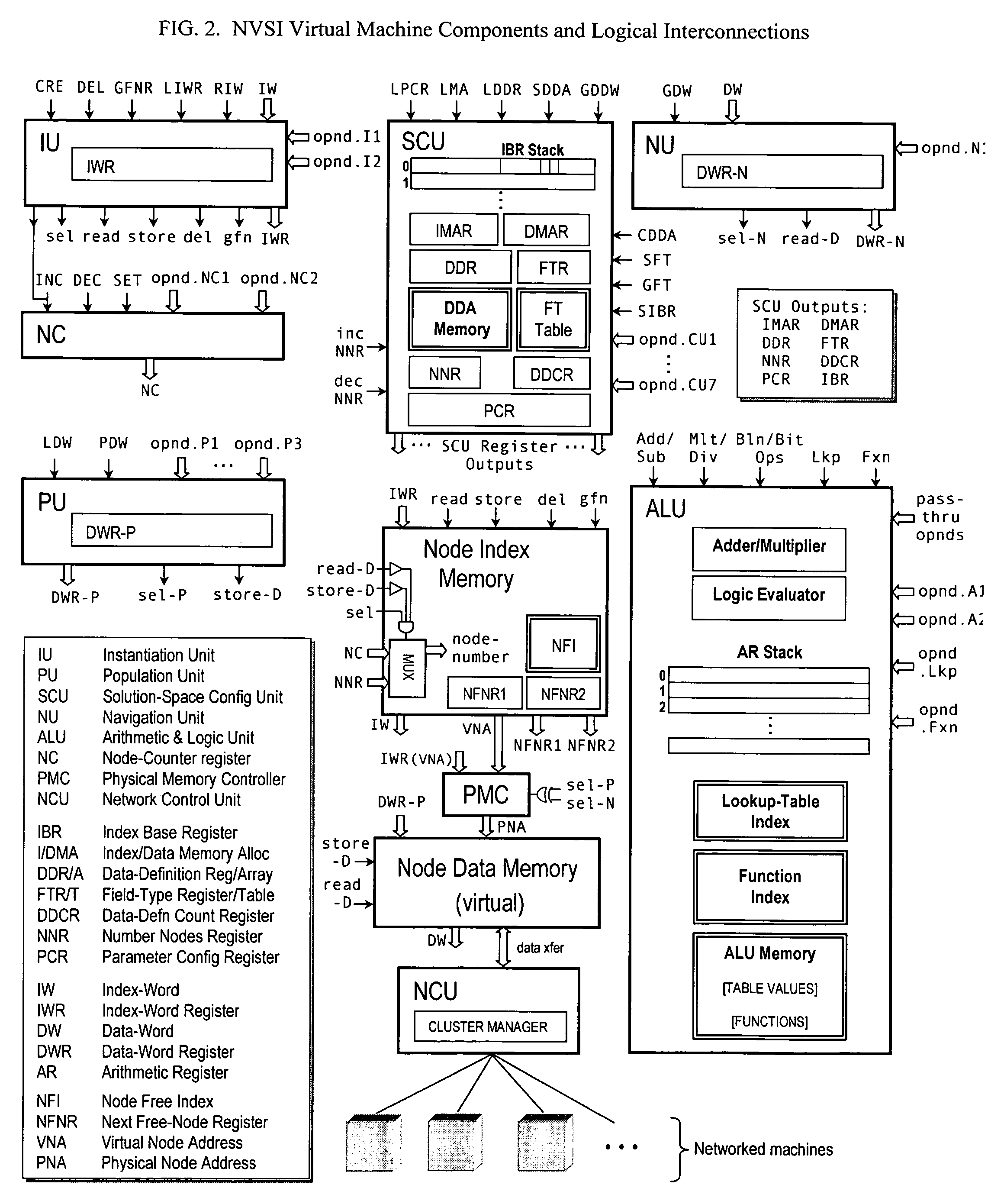

The virtual supercomputer is an apparatus, system, and method for generating information processing solutions to complex and/or high-demand, high-performance computing problems without the need for costly, dedicated hardware supercomputers, and far more efficiently than simple grid or multiprocessor network approaches. It consists of a reconfigurable virtual hardware processor, an associated operating system, and a set of operations and procedures that allow the system architecture to be easily tailored to specific problems or classes of problems. Such tailored solutions run on a variety of hardware architectures while retaining the benefits of a design that exploits the specific, and often changing, information processing features and demands of the problem at hand.

Owner:VERISCAPE

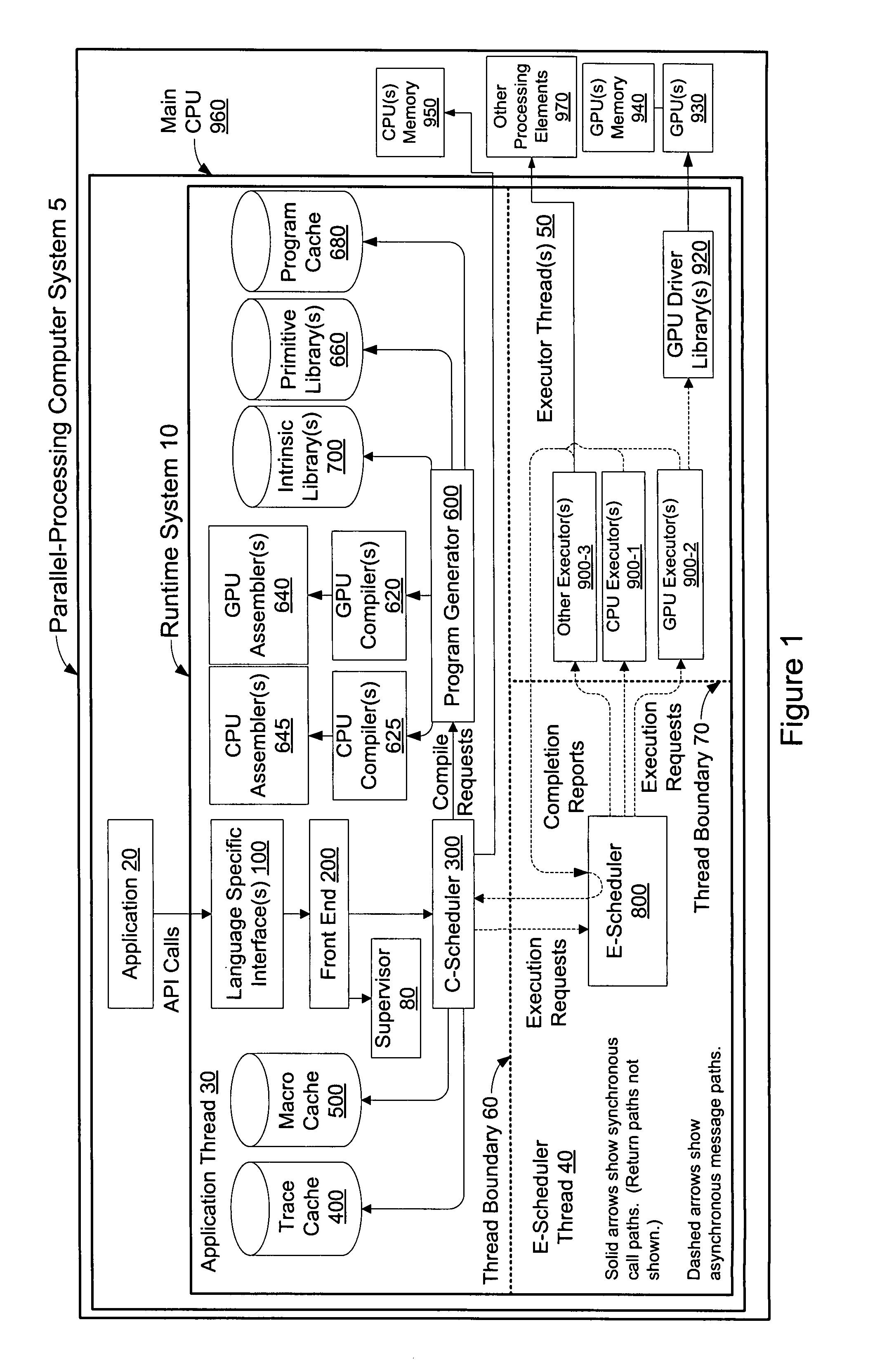

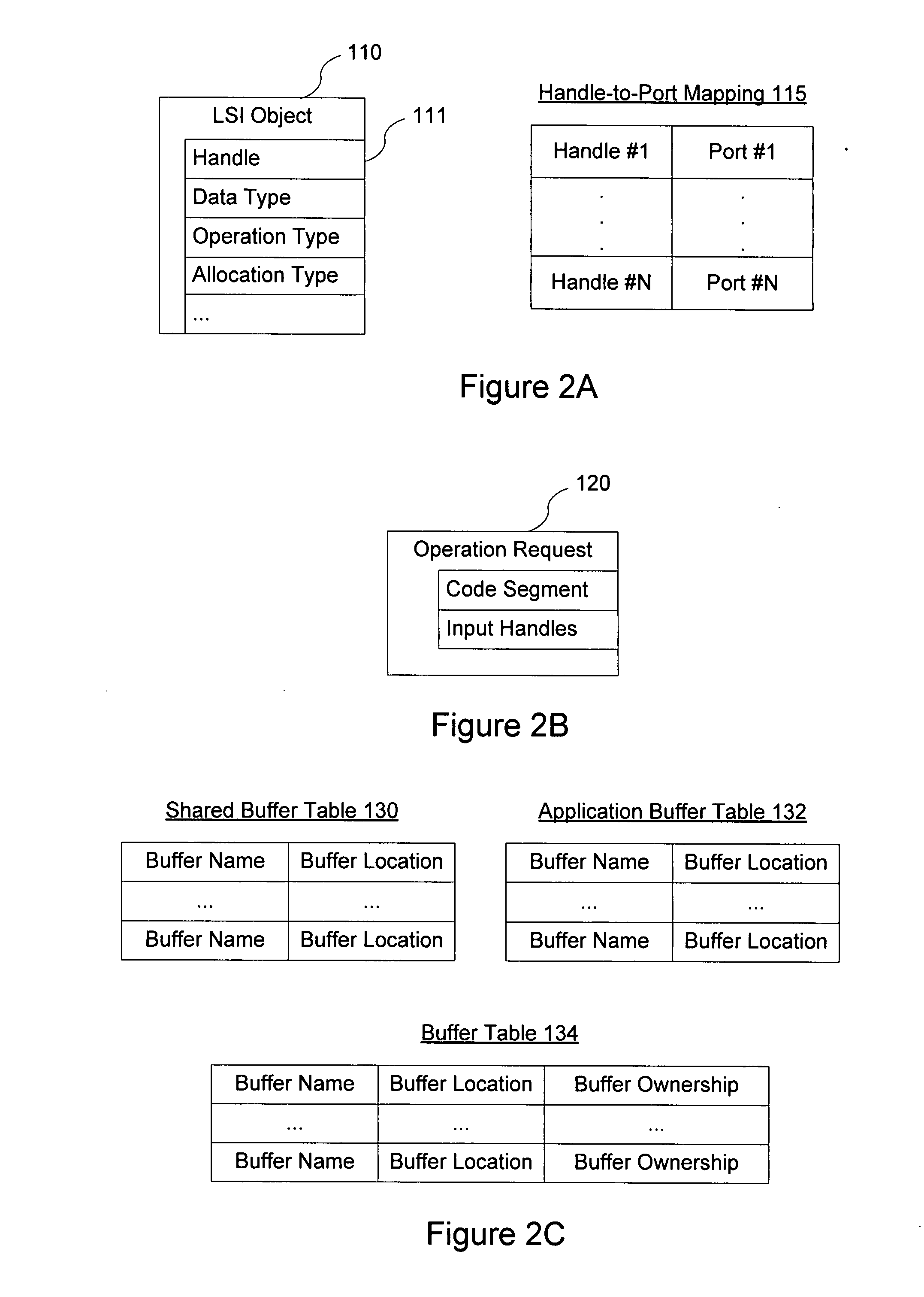

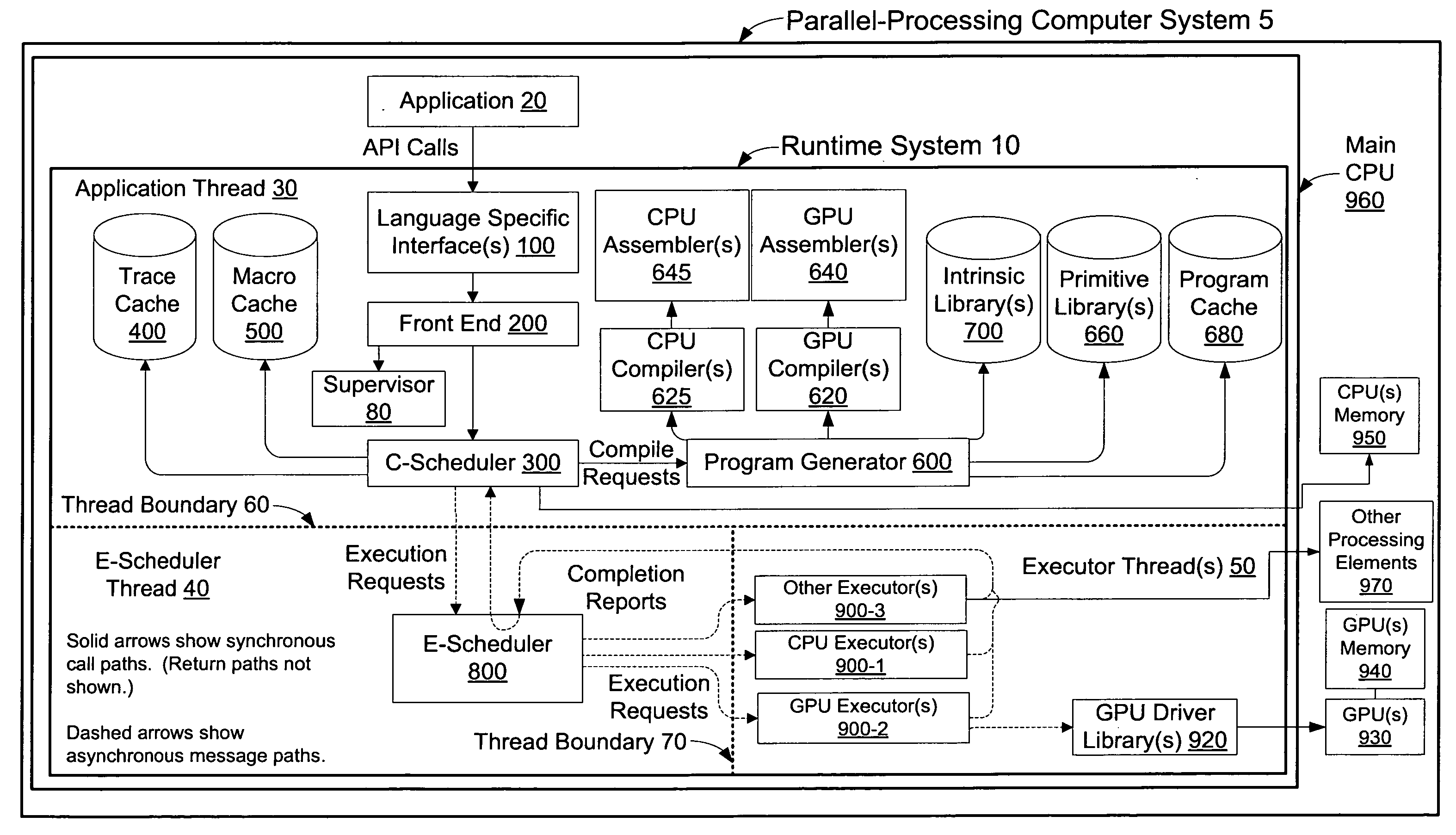

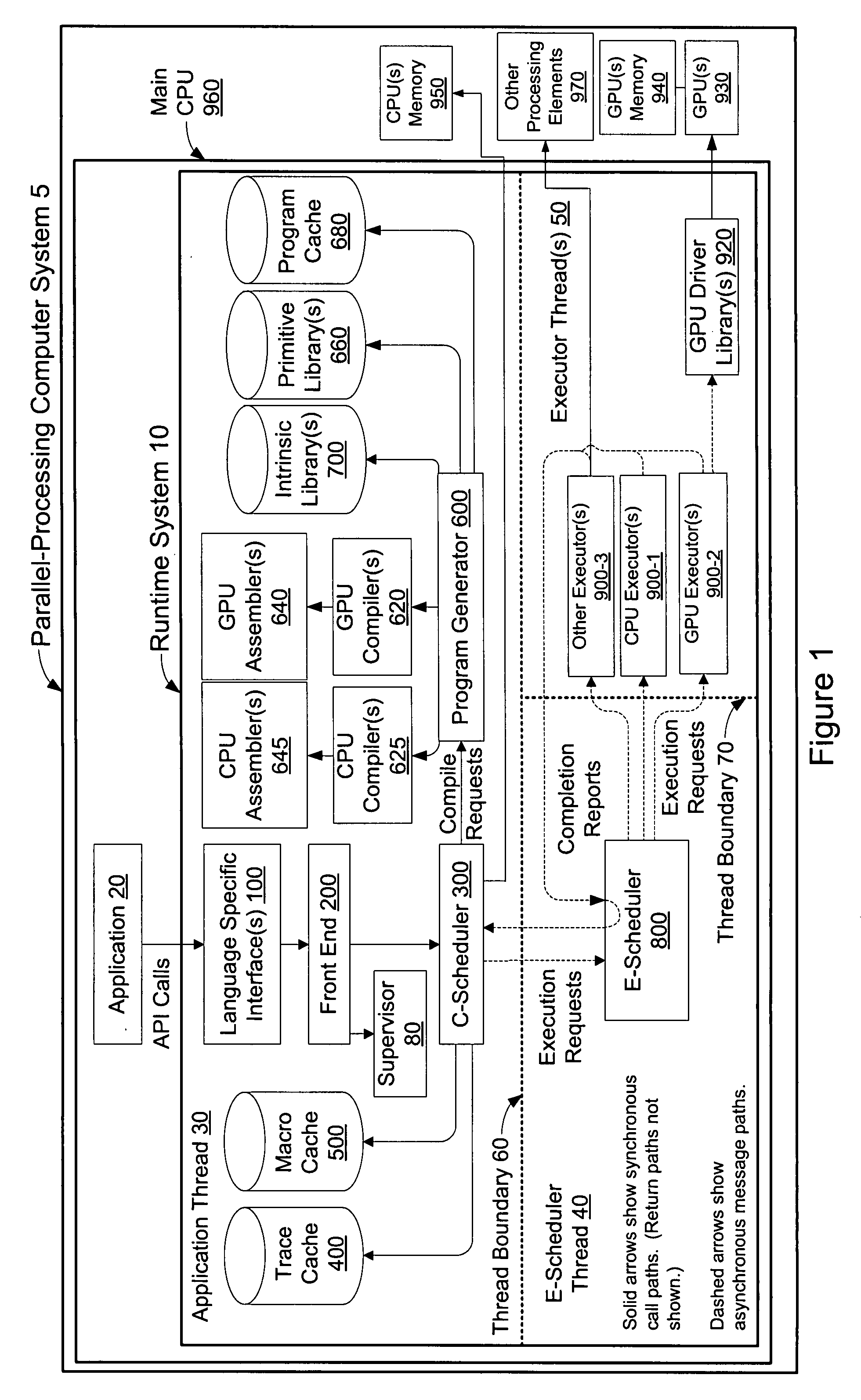

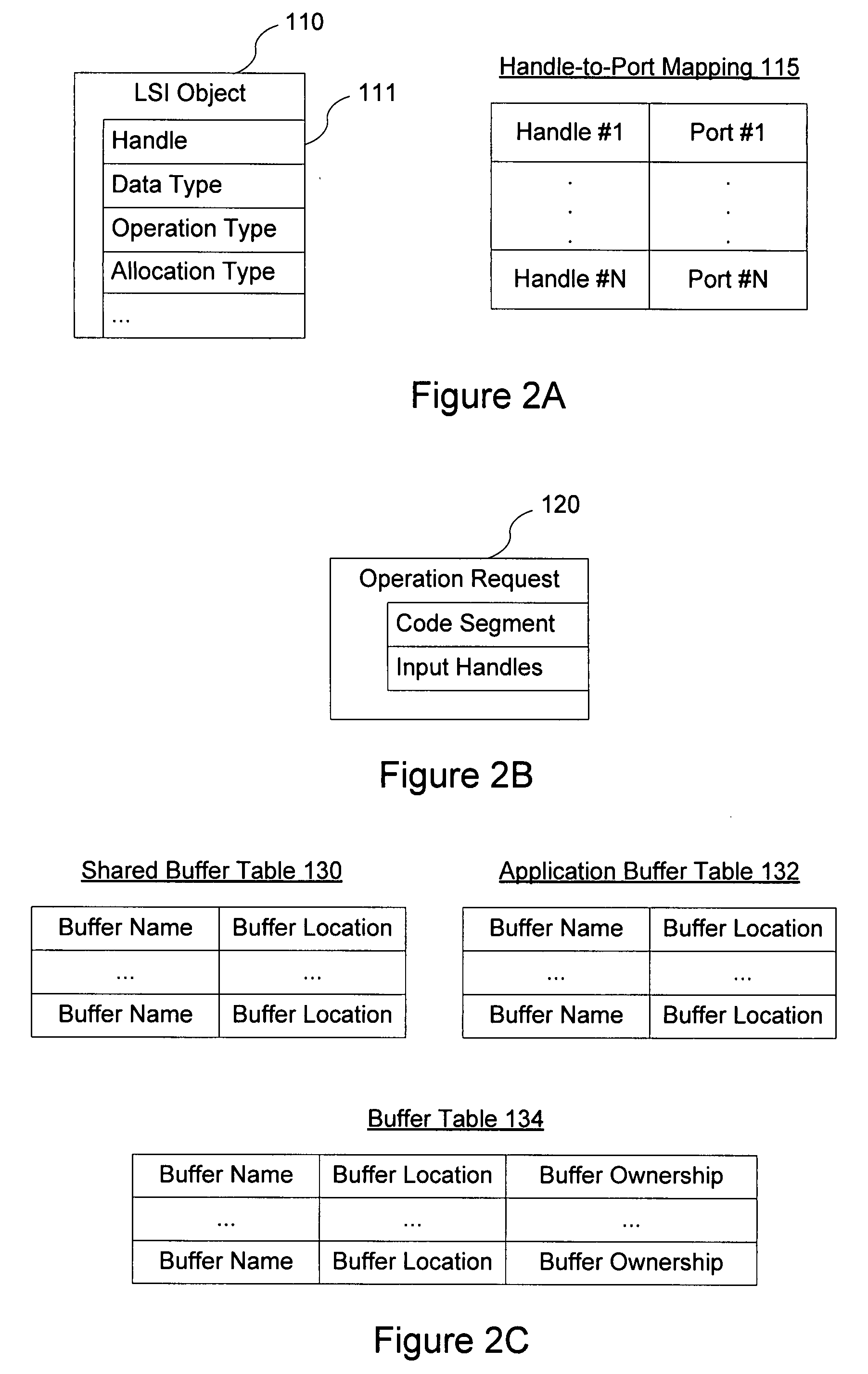

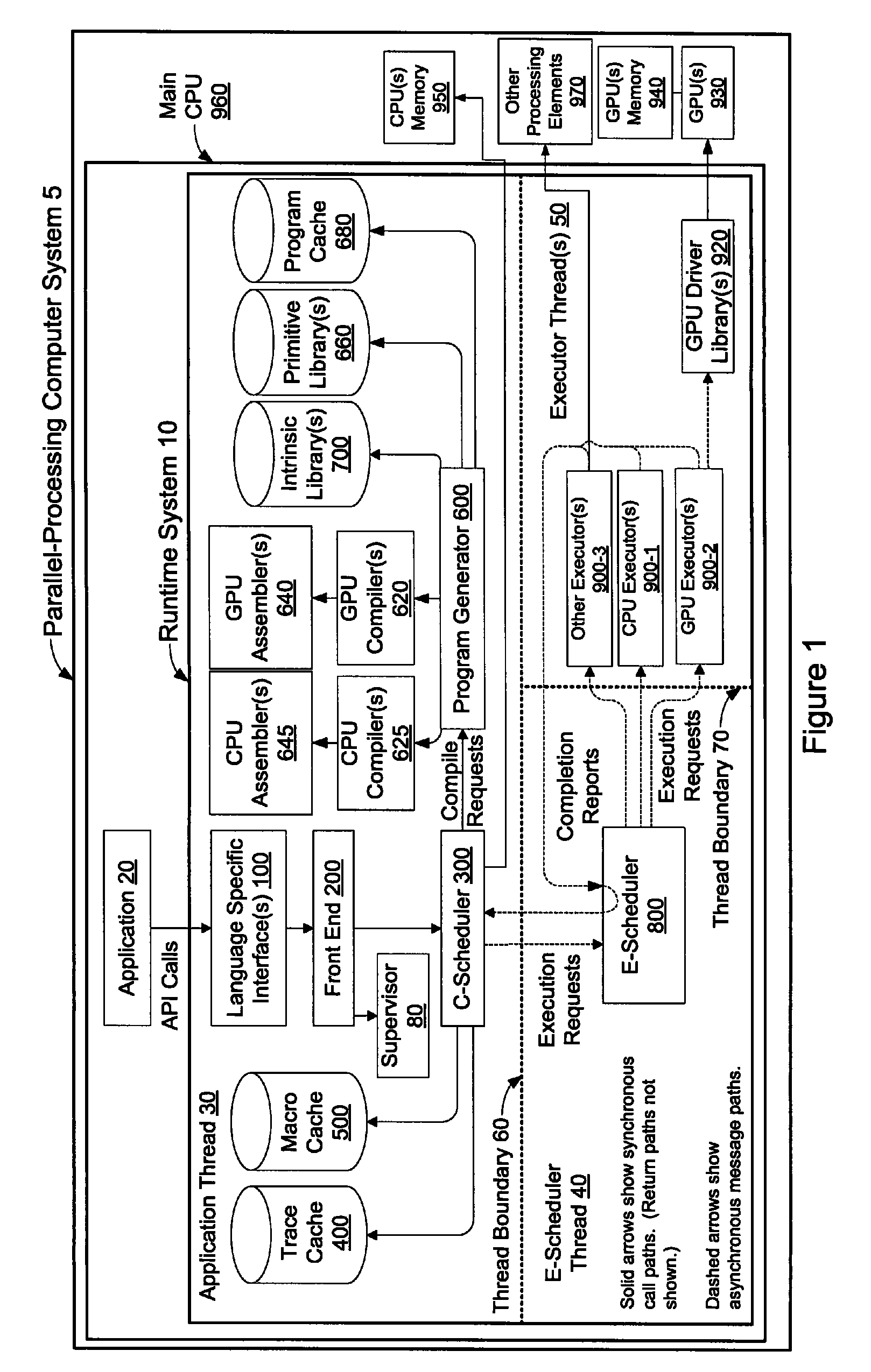

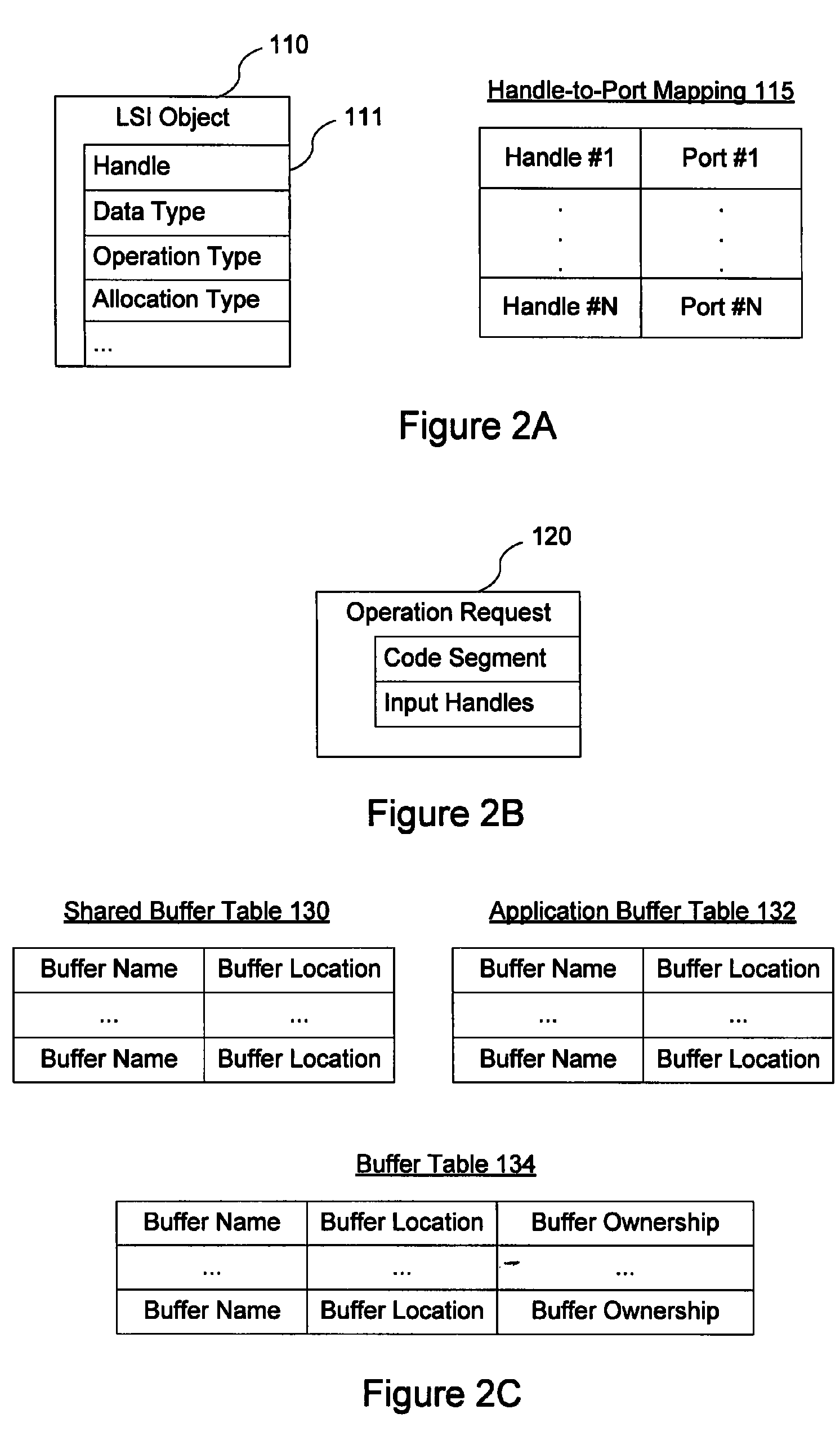

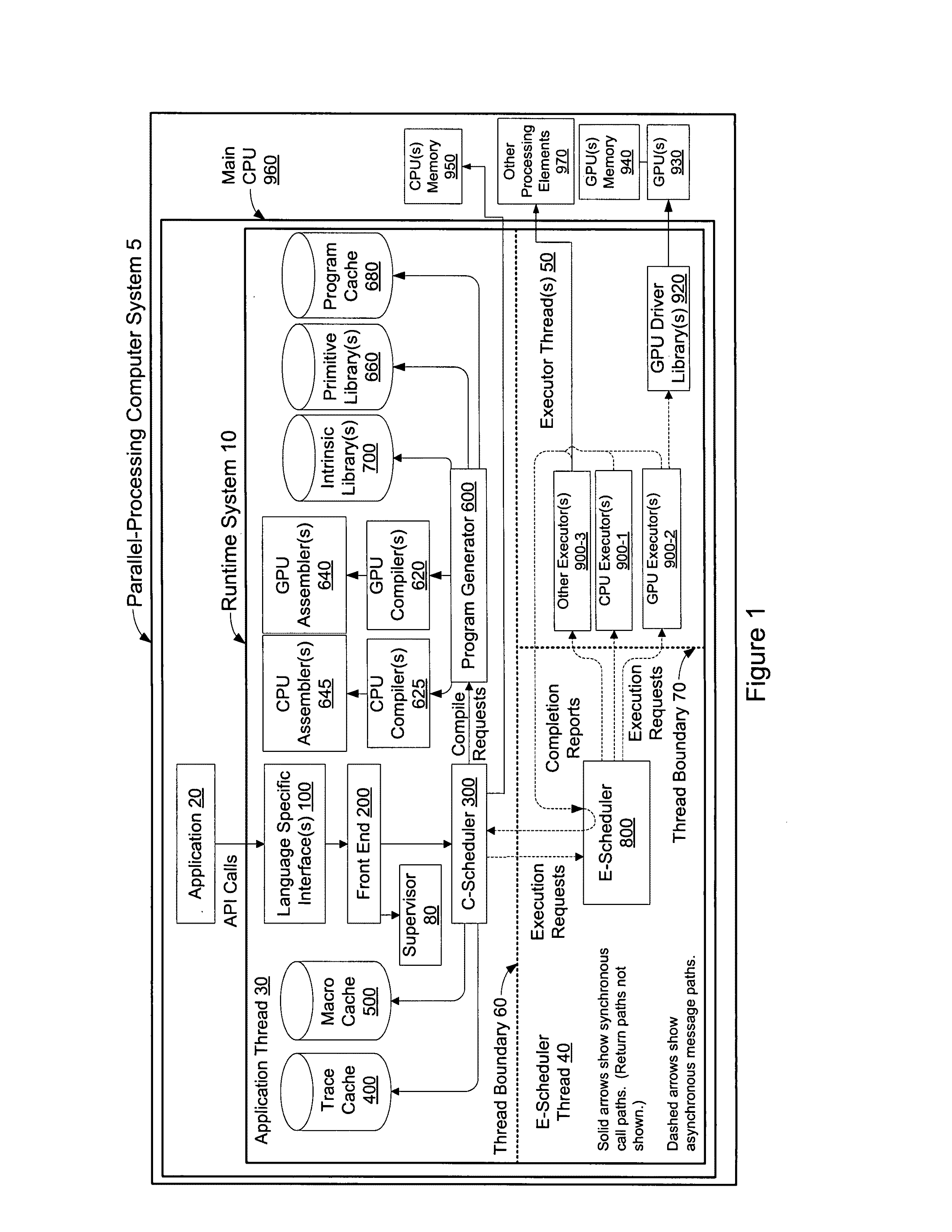

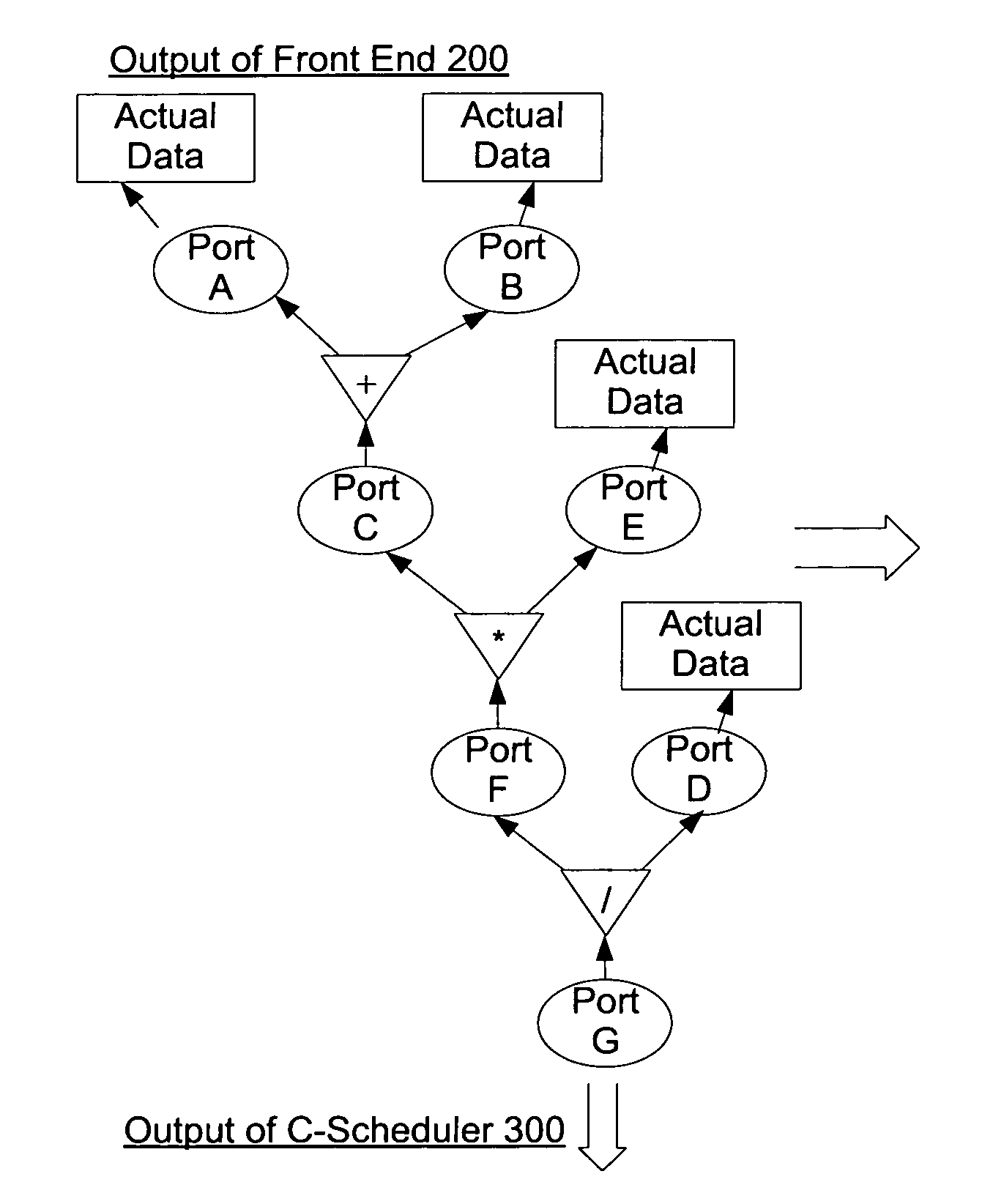

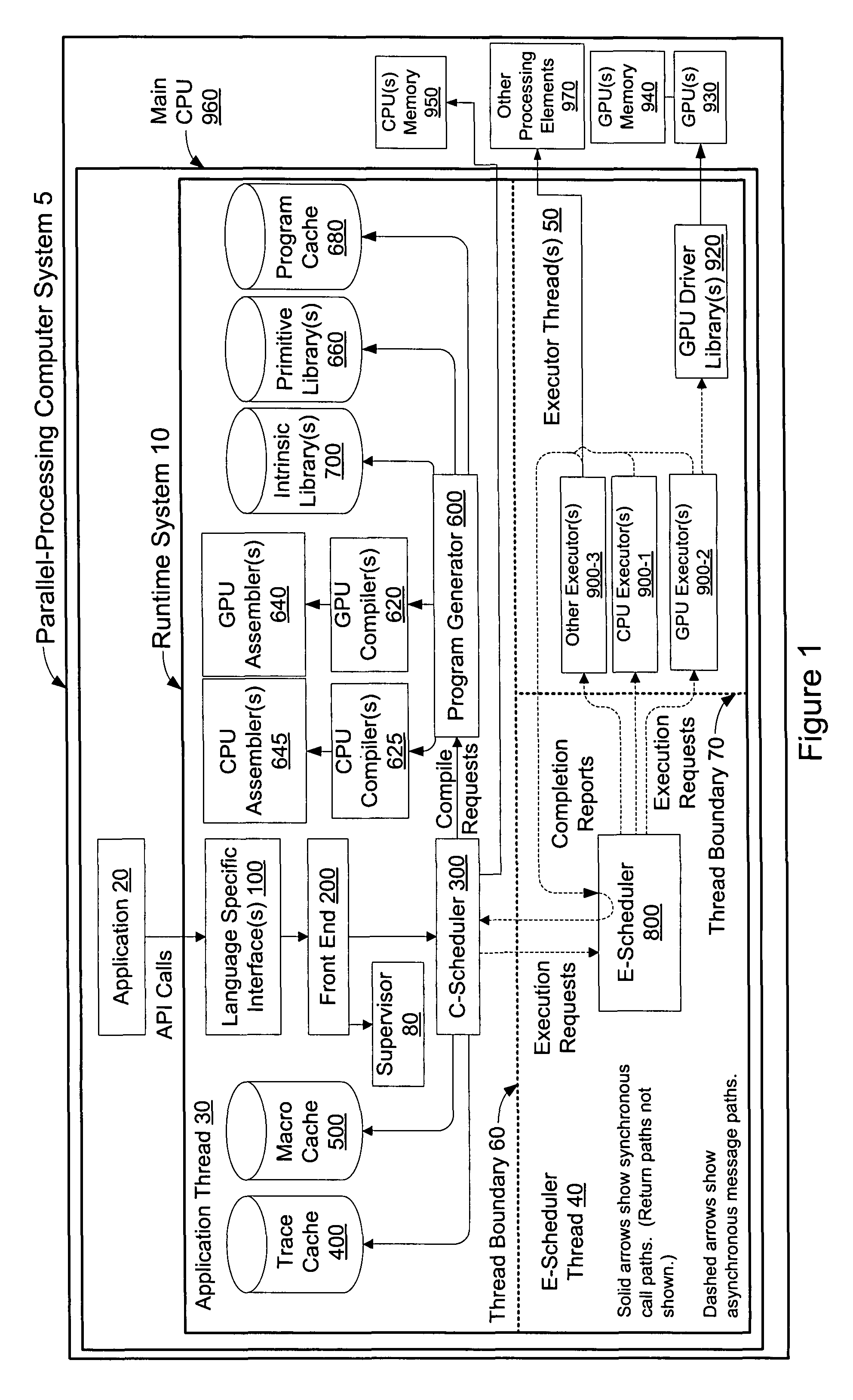

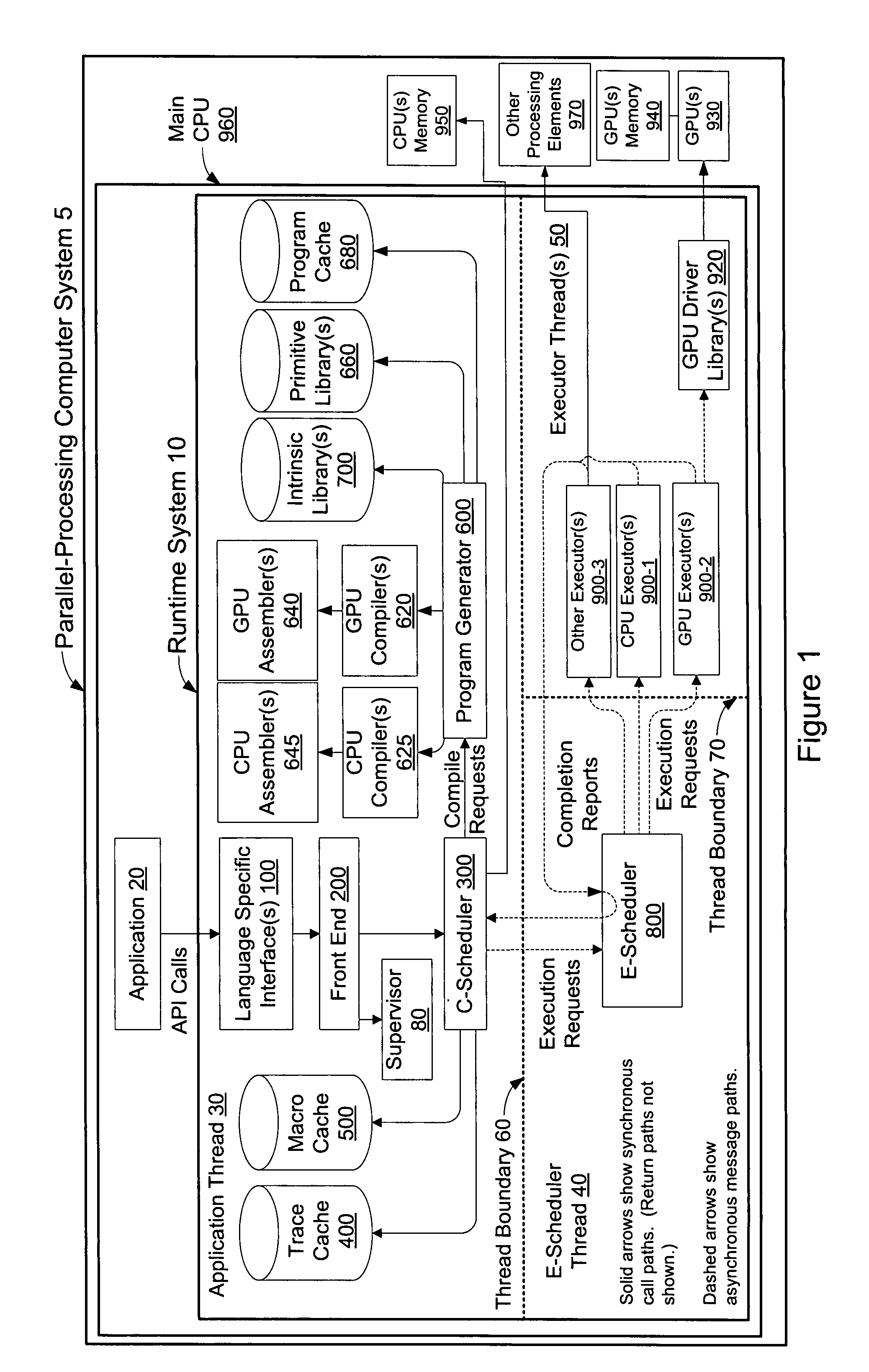

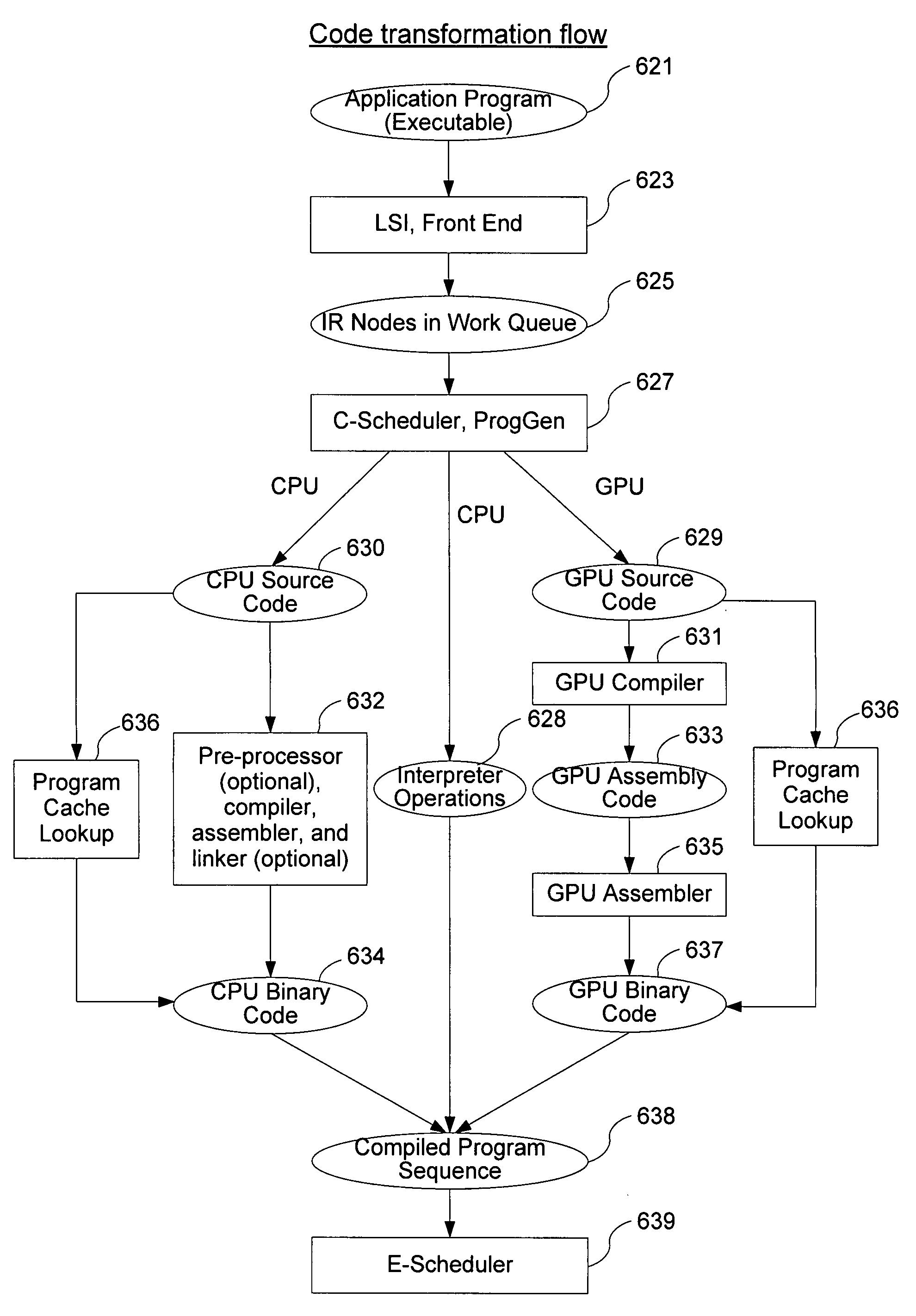

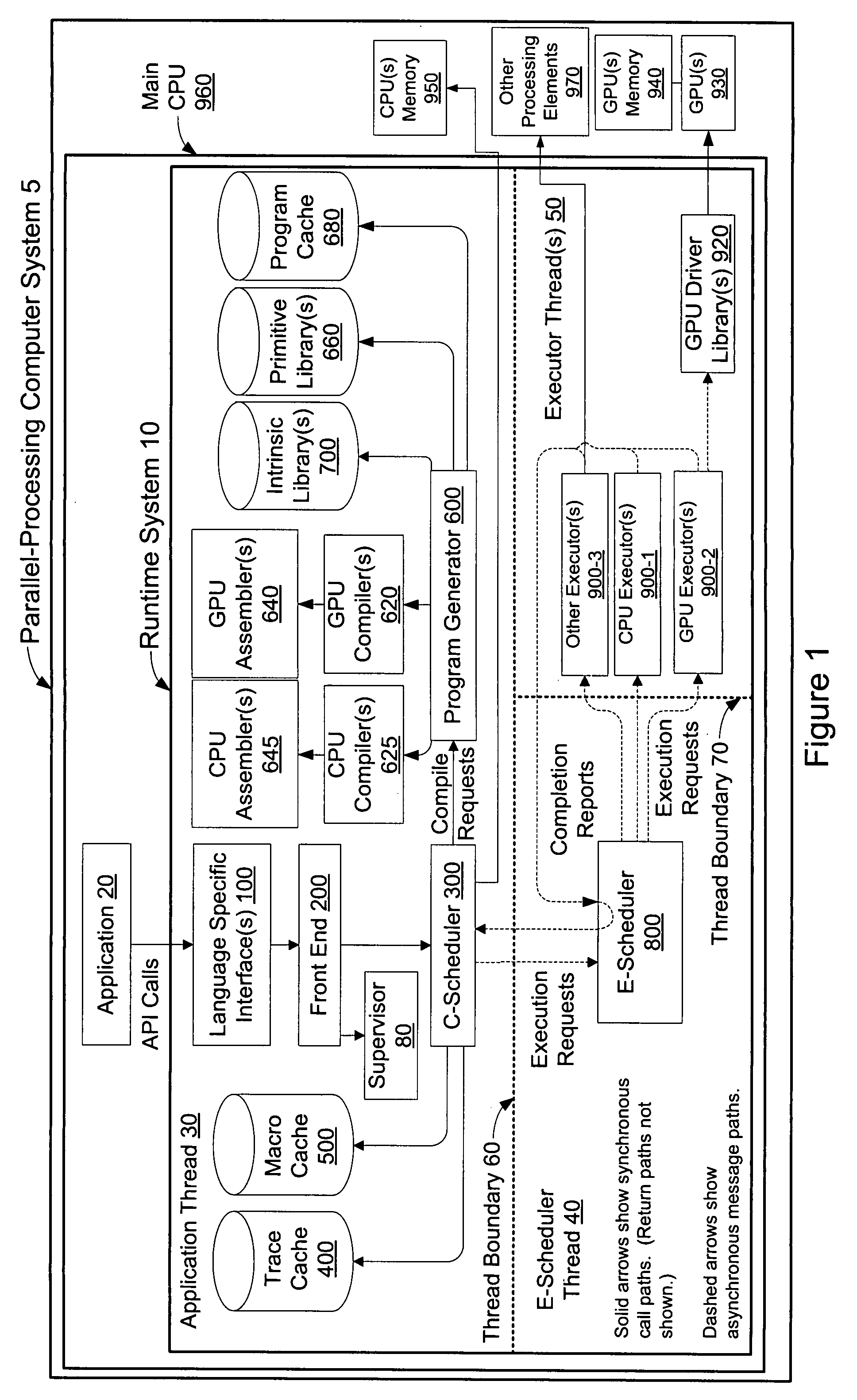

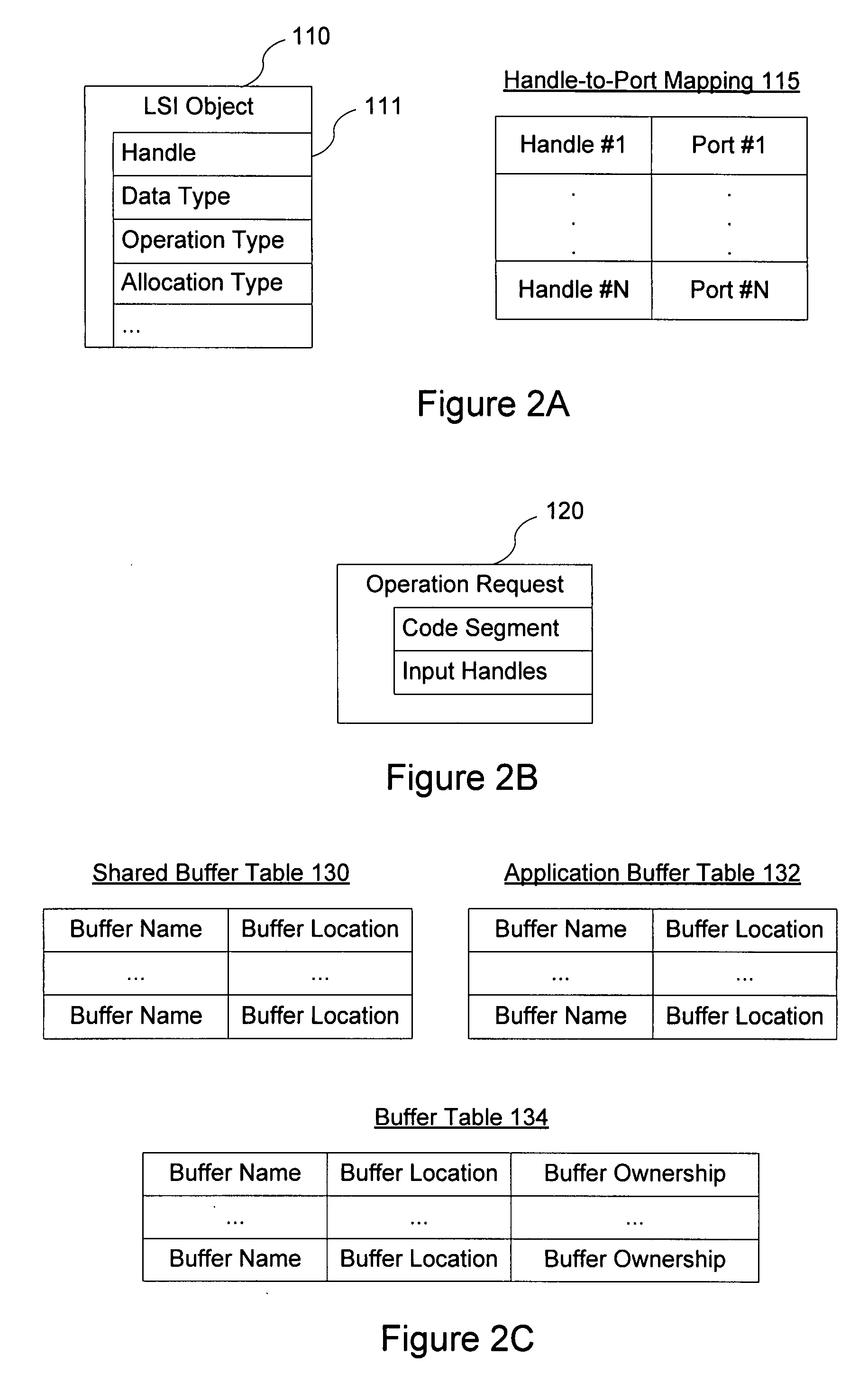

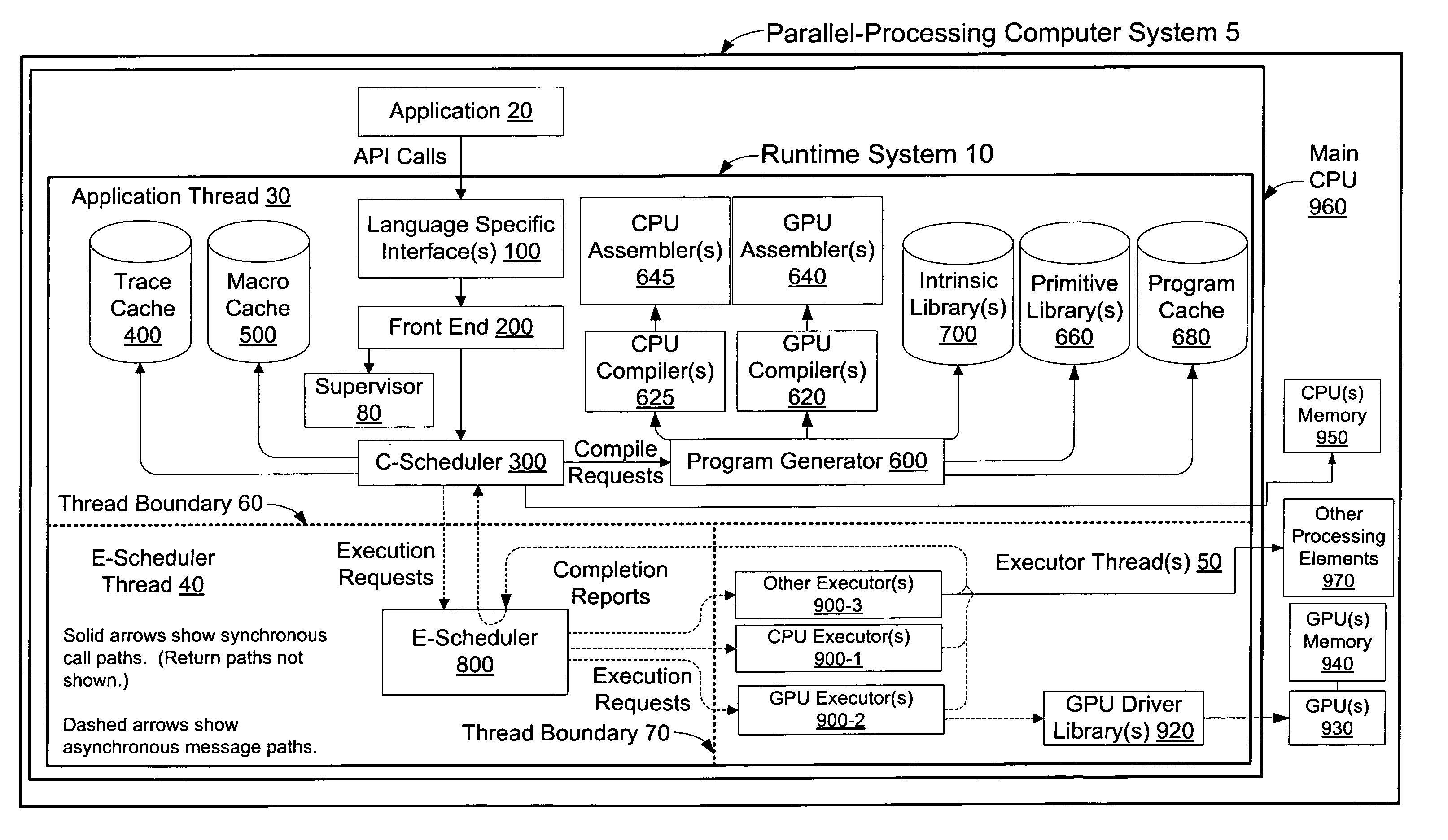

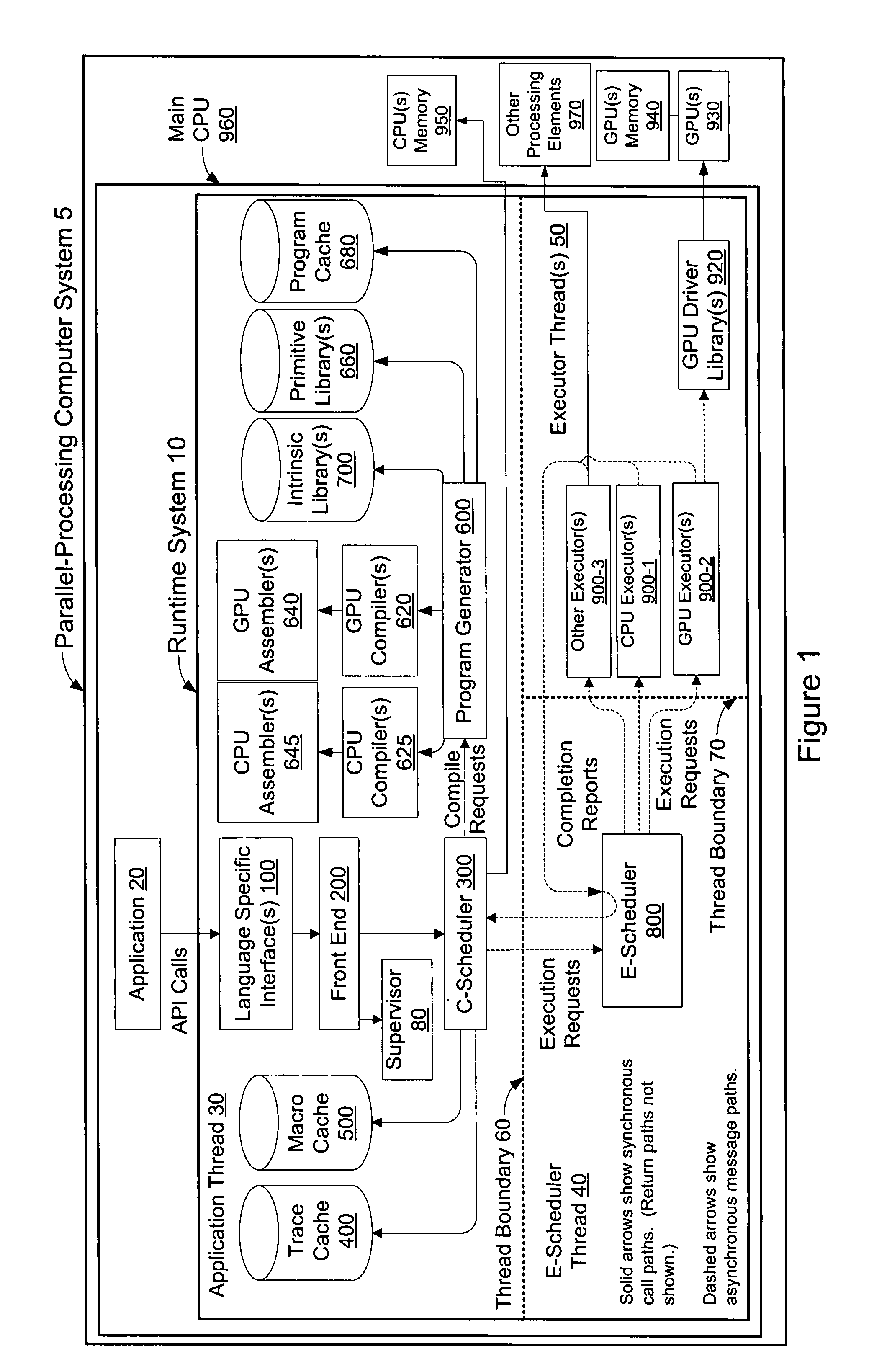

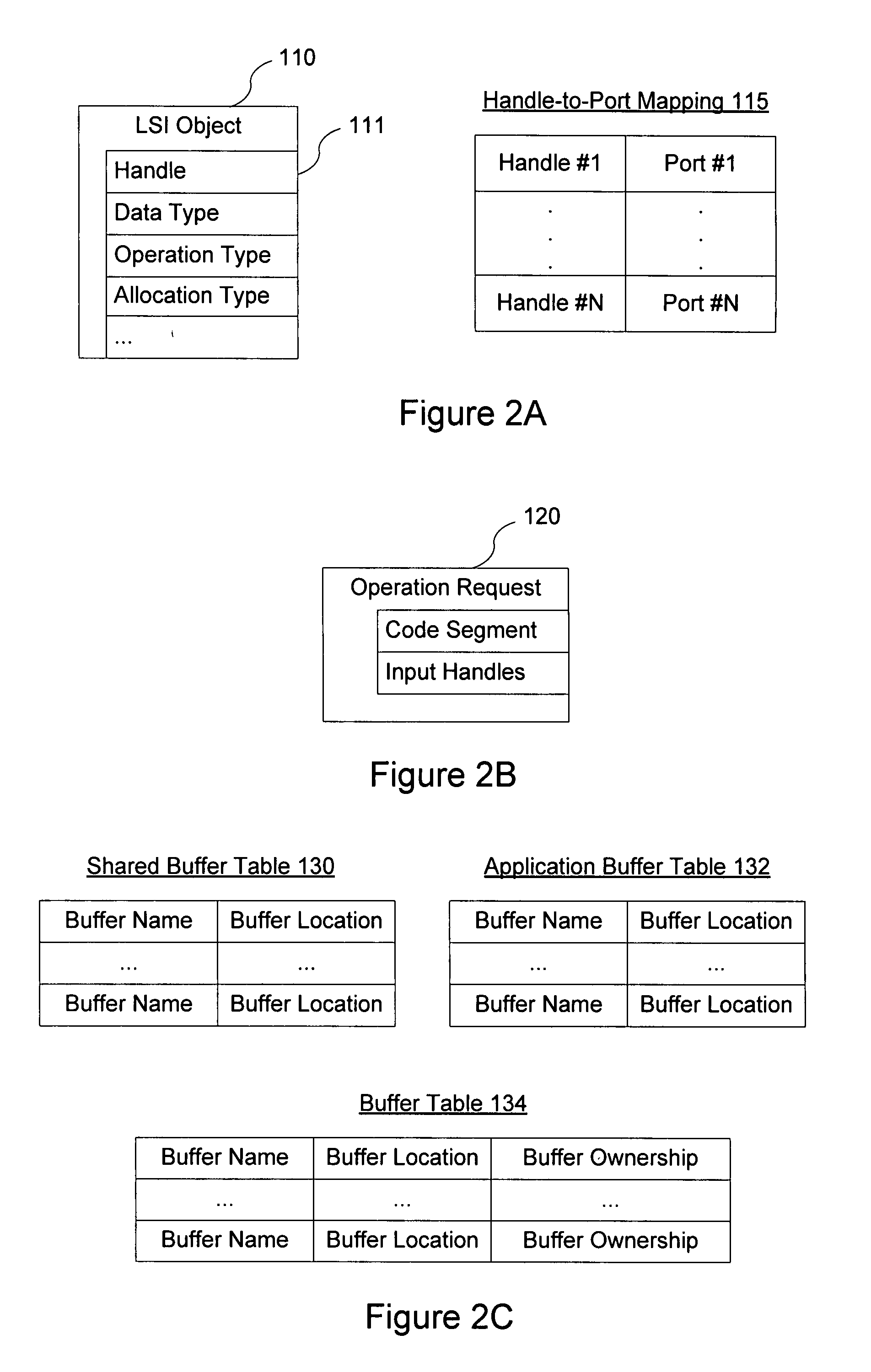

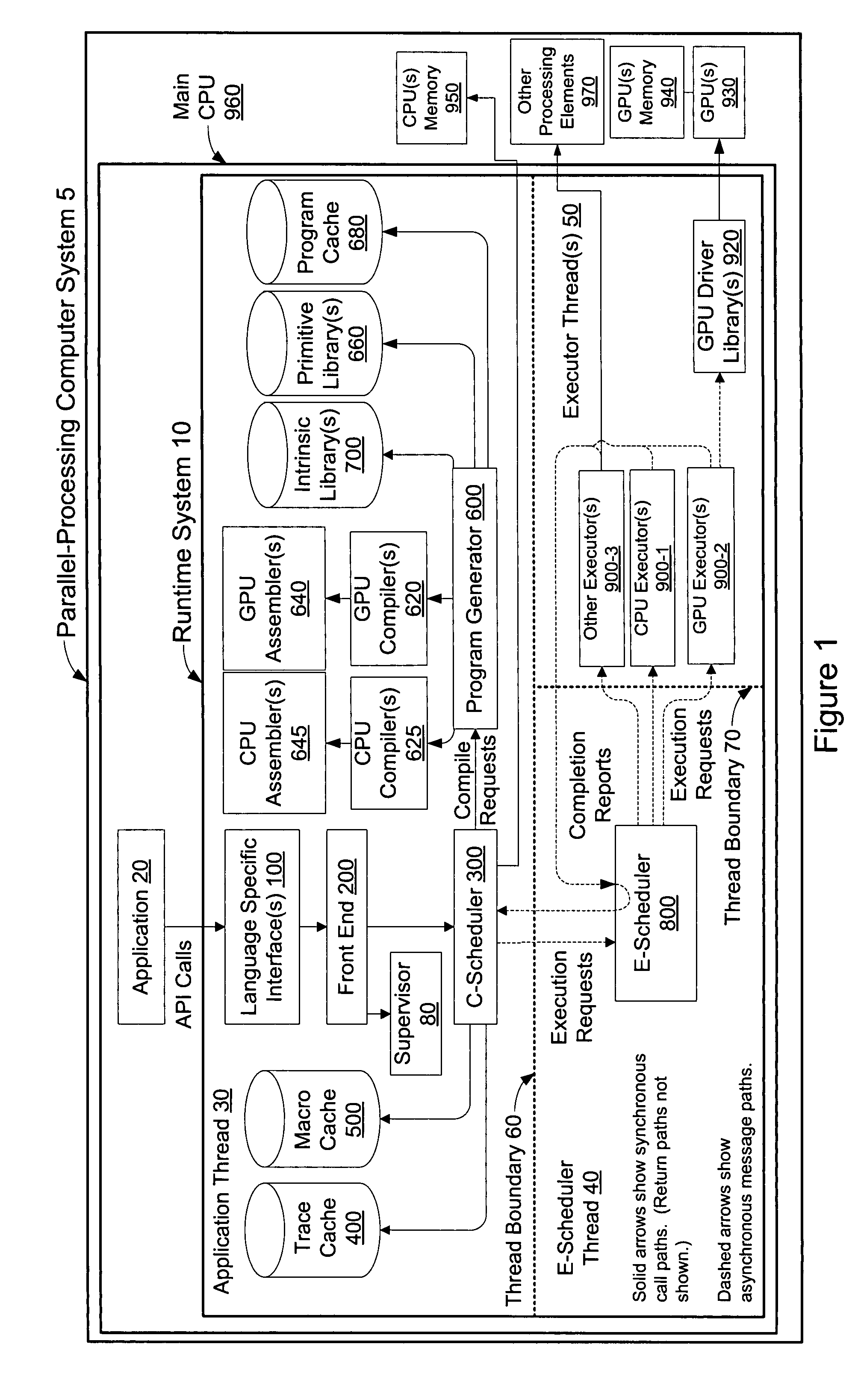

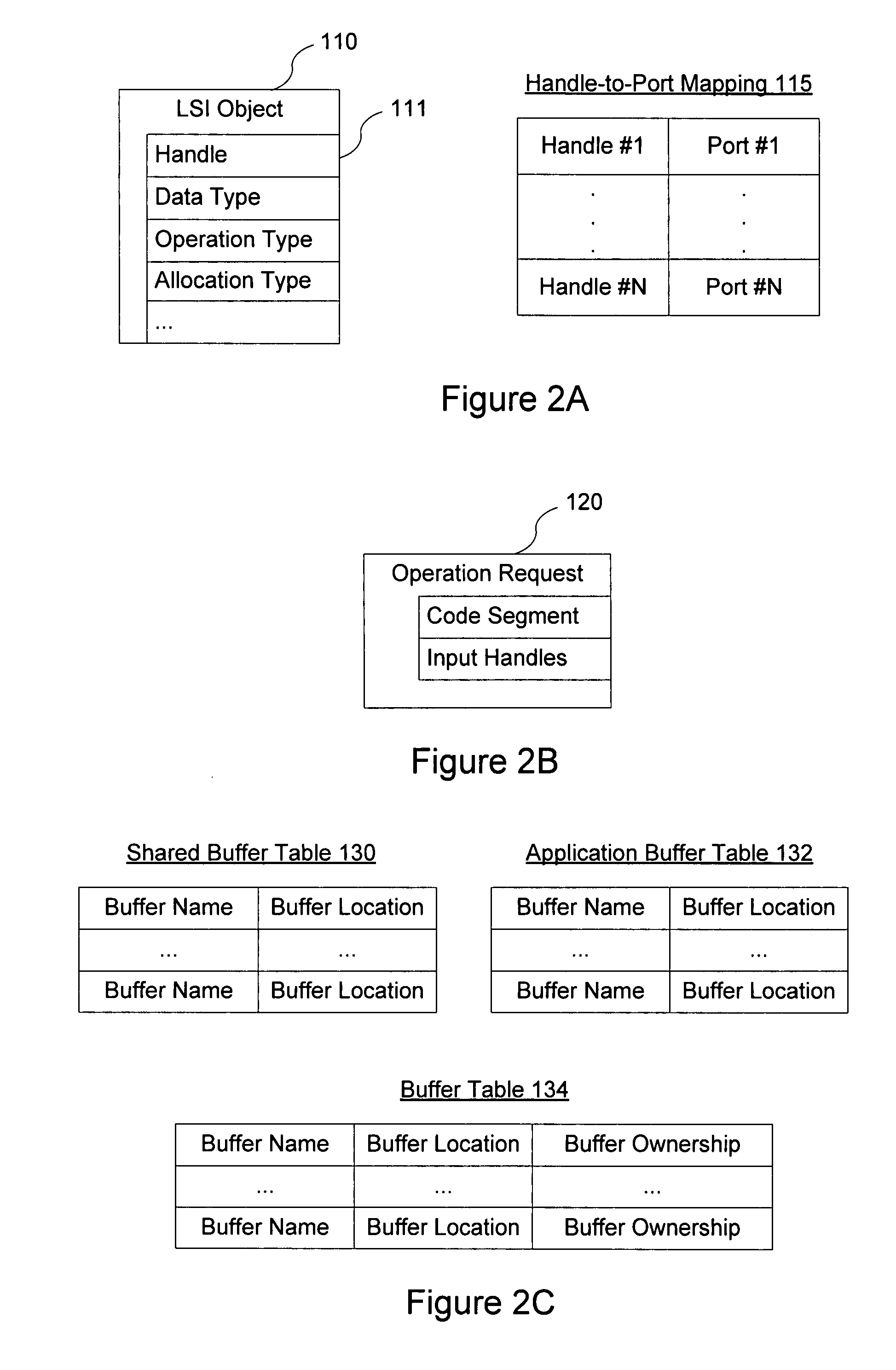

Systems and methods for dynamically choosing a processing element for a compute kernel

Status: Active | US20070294512A1 | Tags: Error detection/correction, Software engineering, Performance computing, Processing element

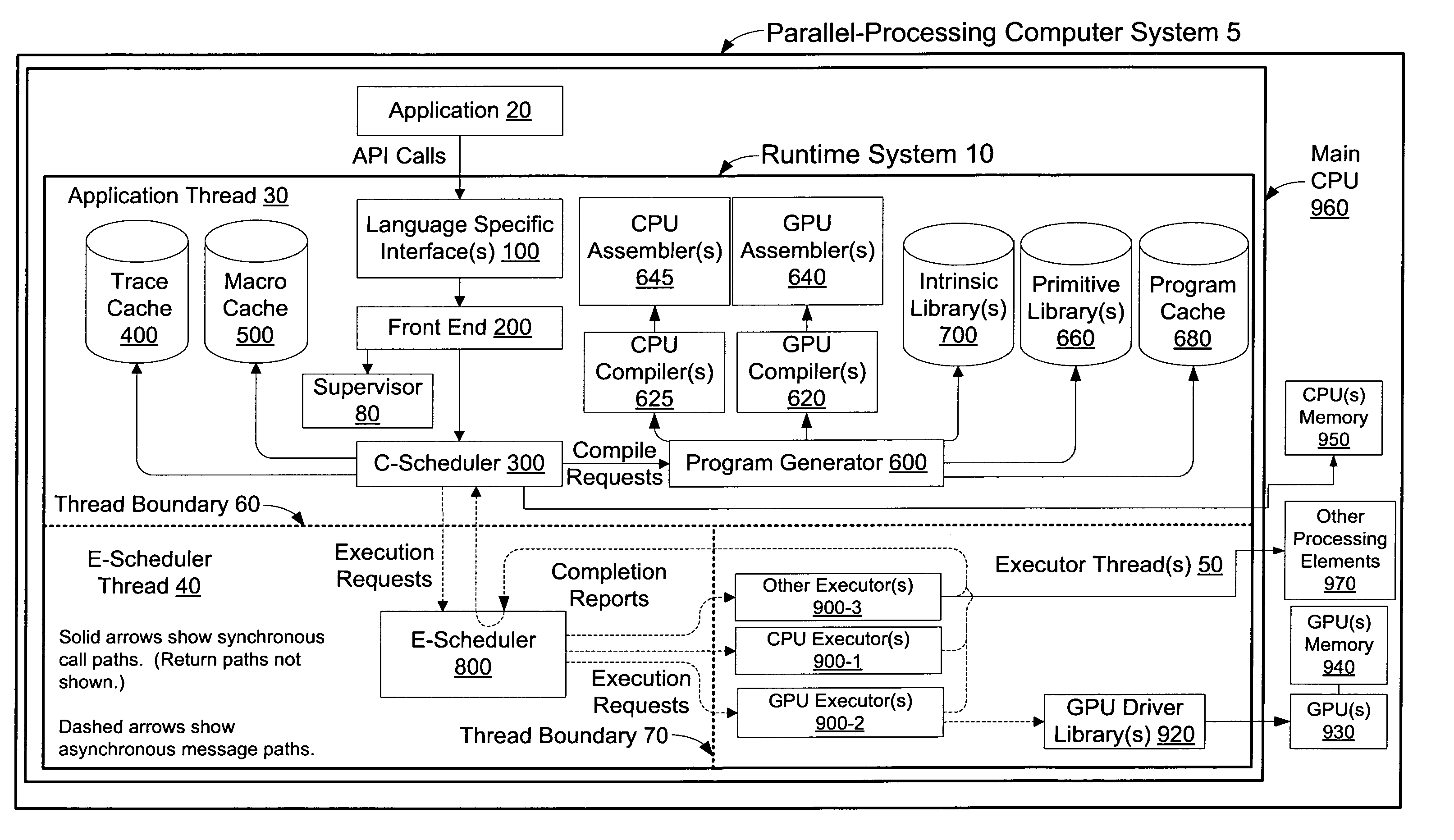

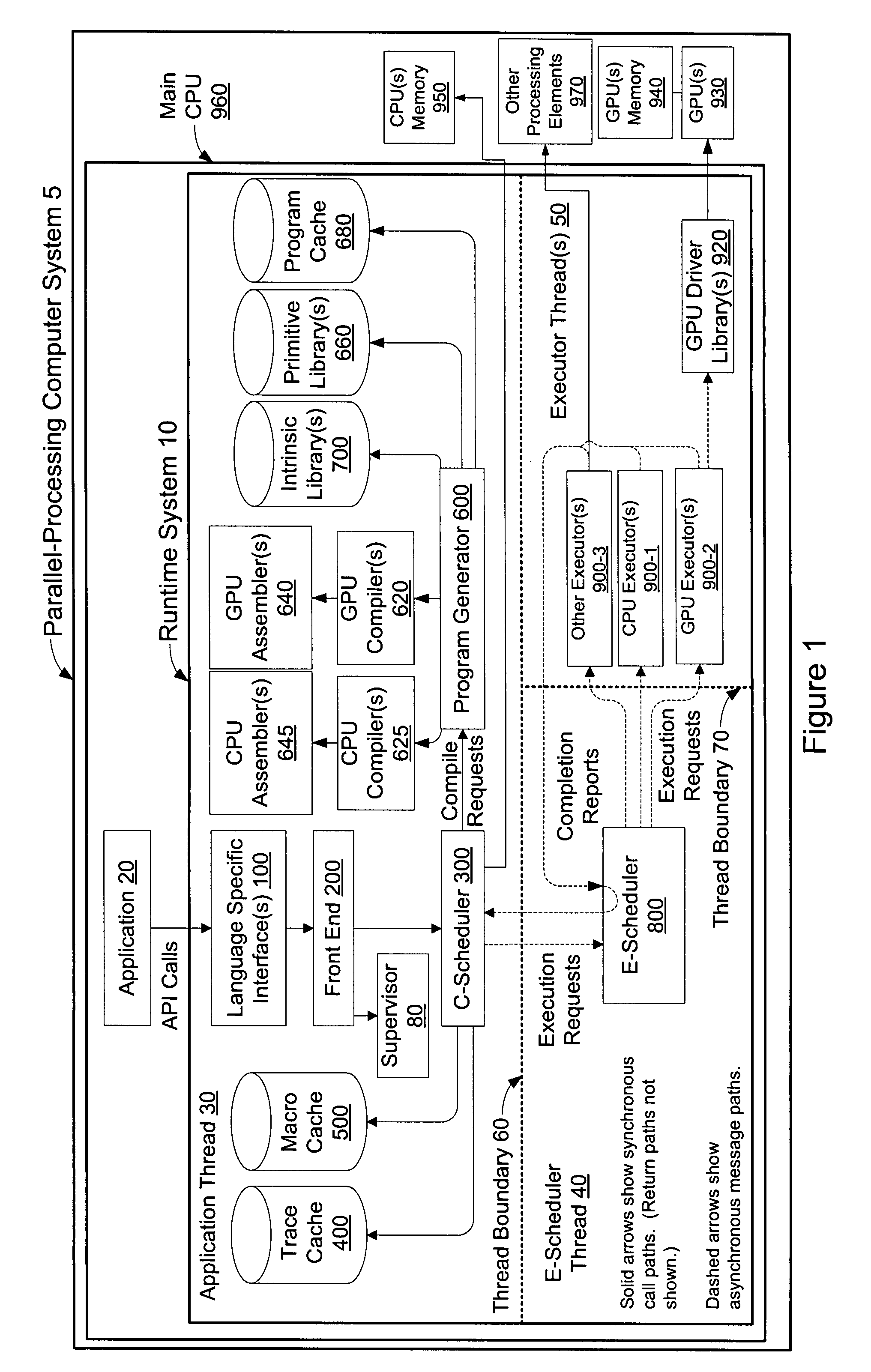

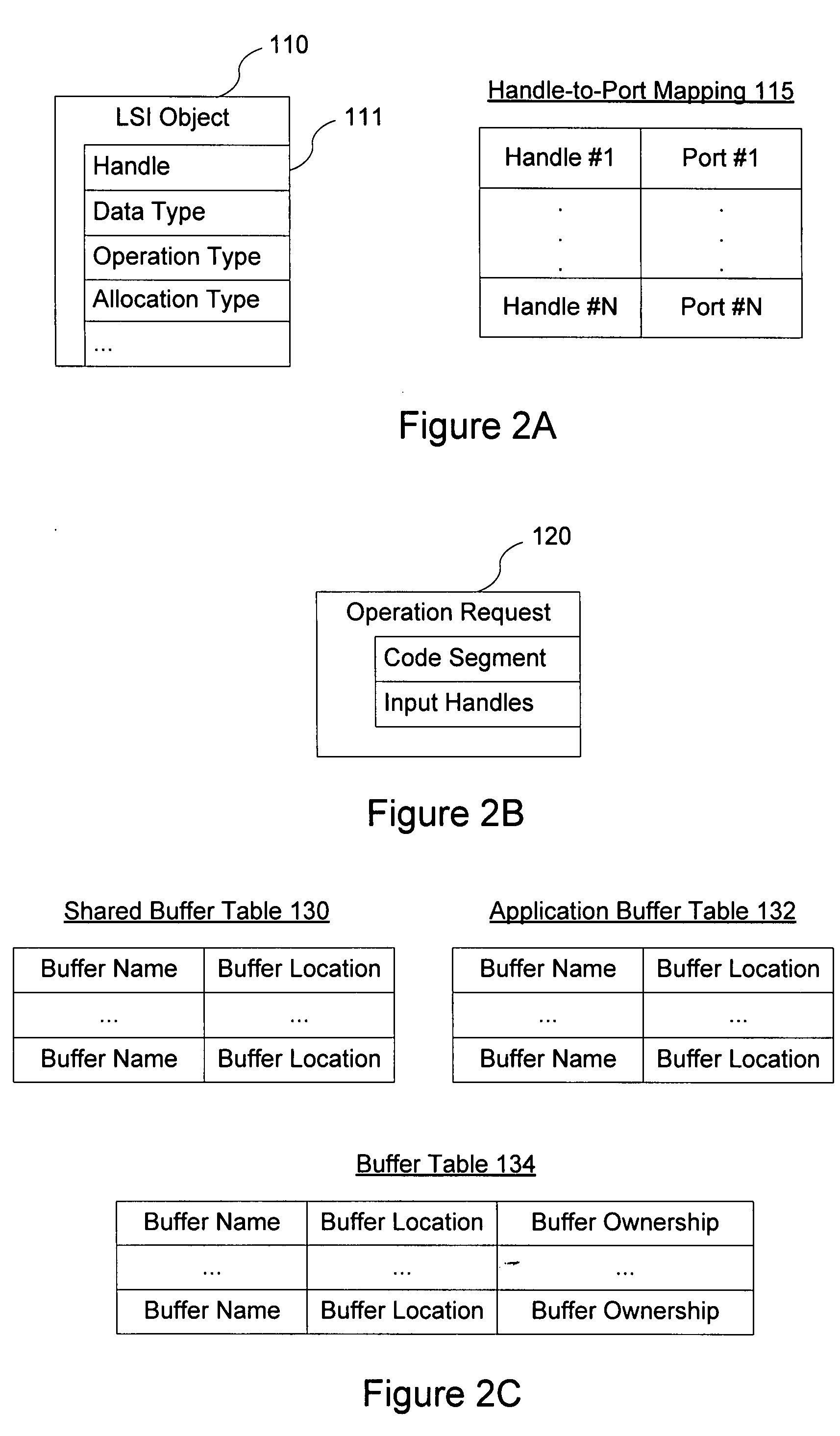

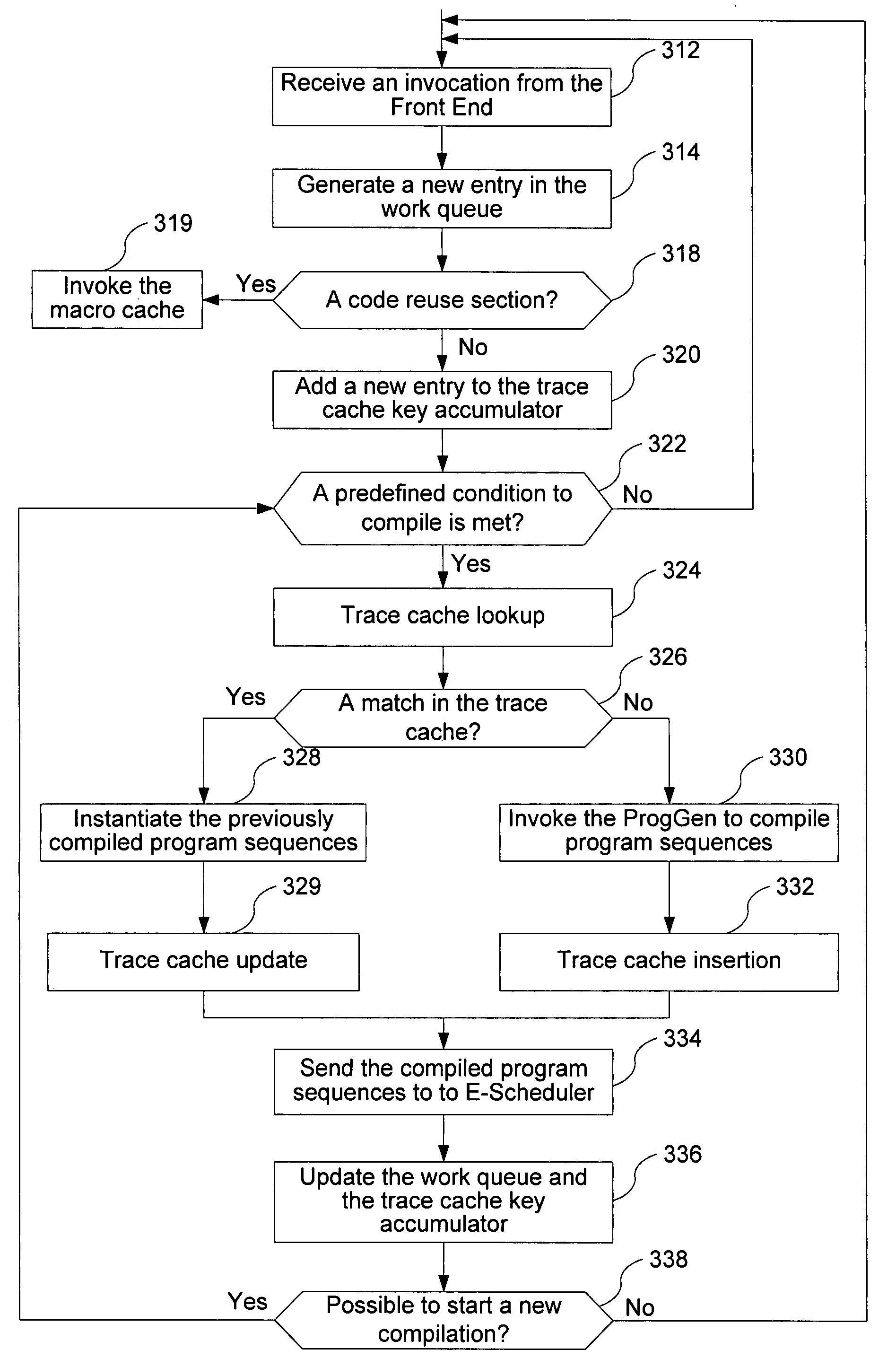

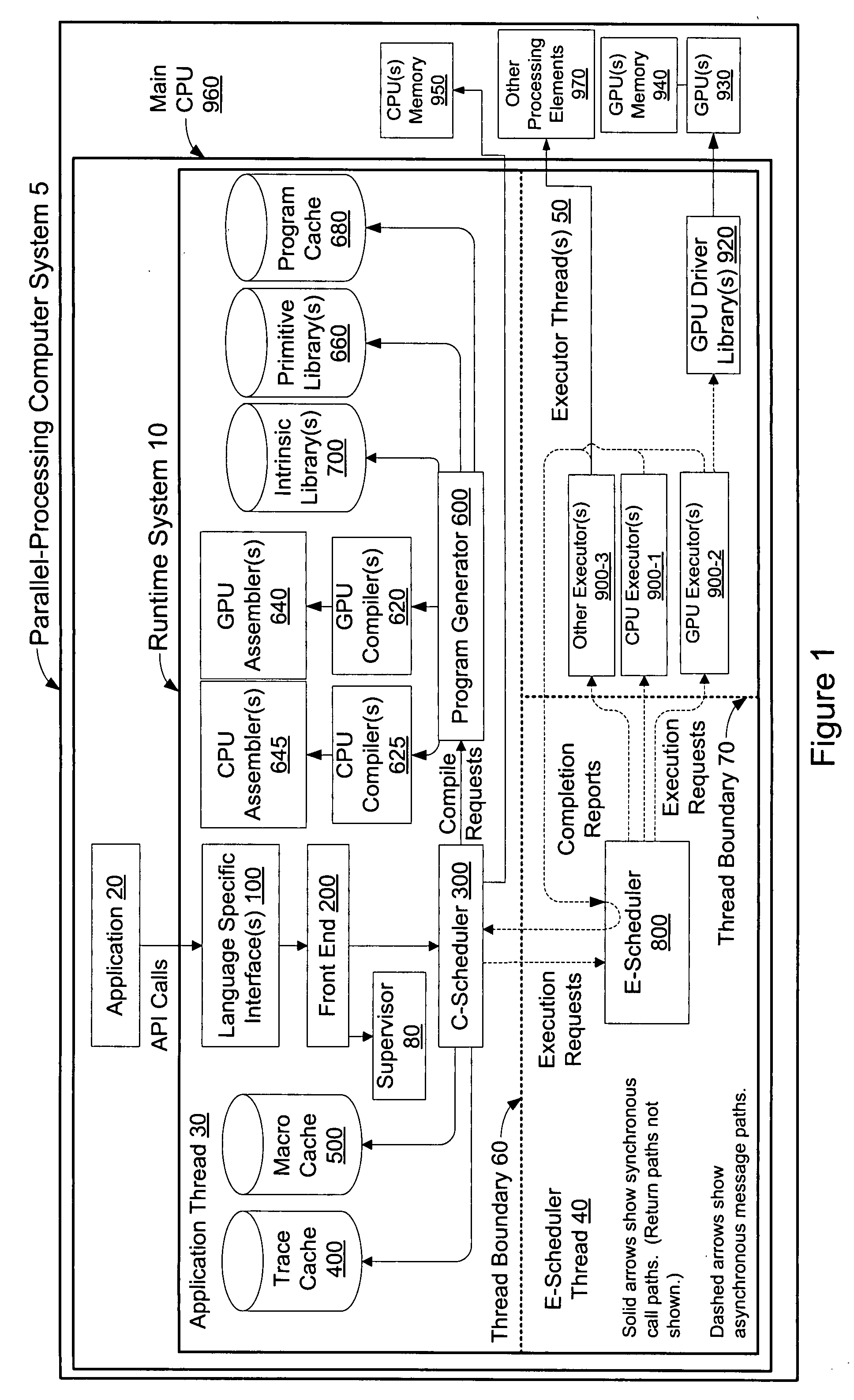

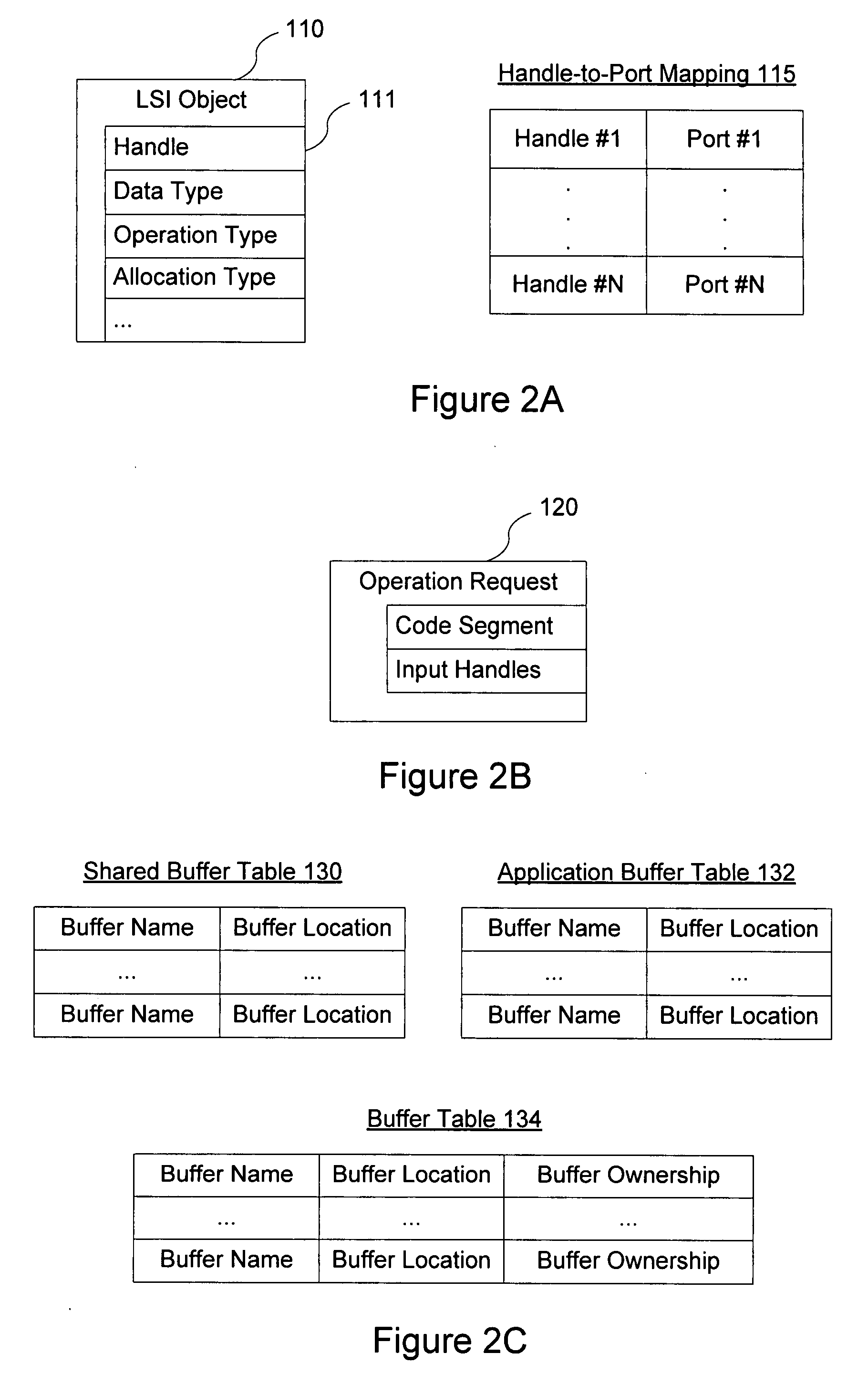

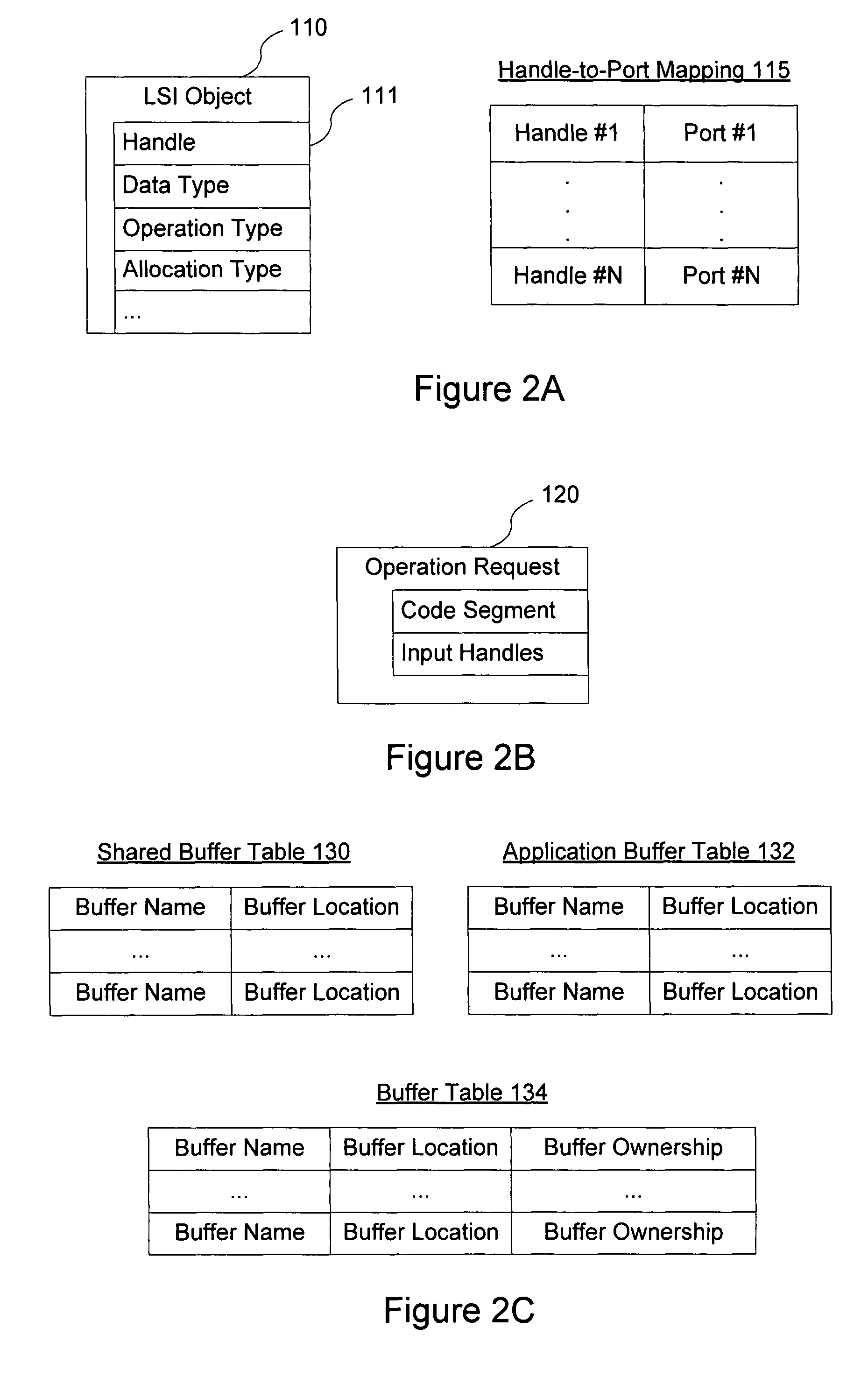

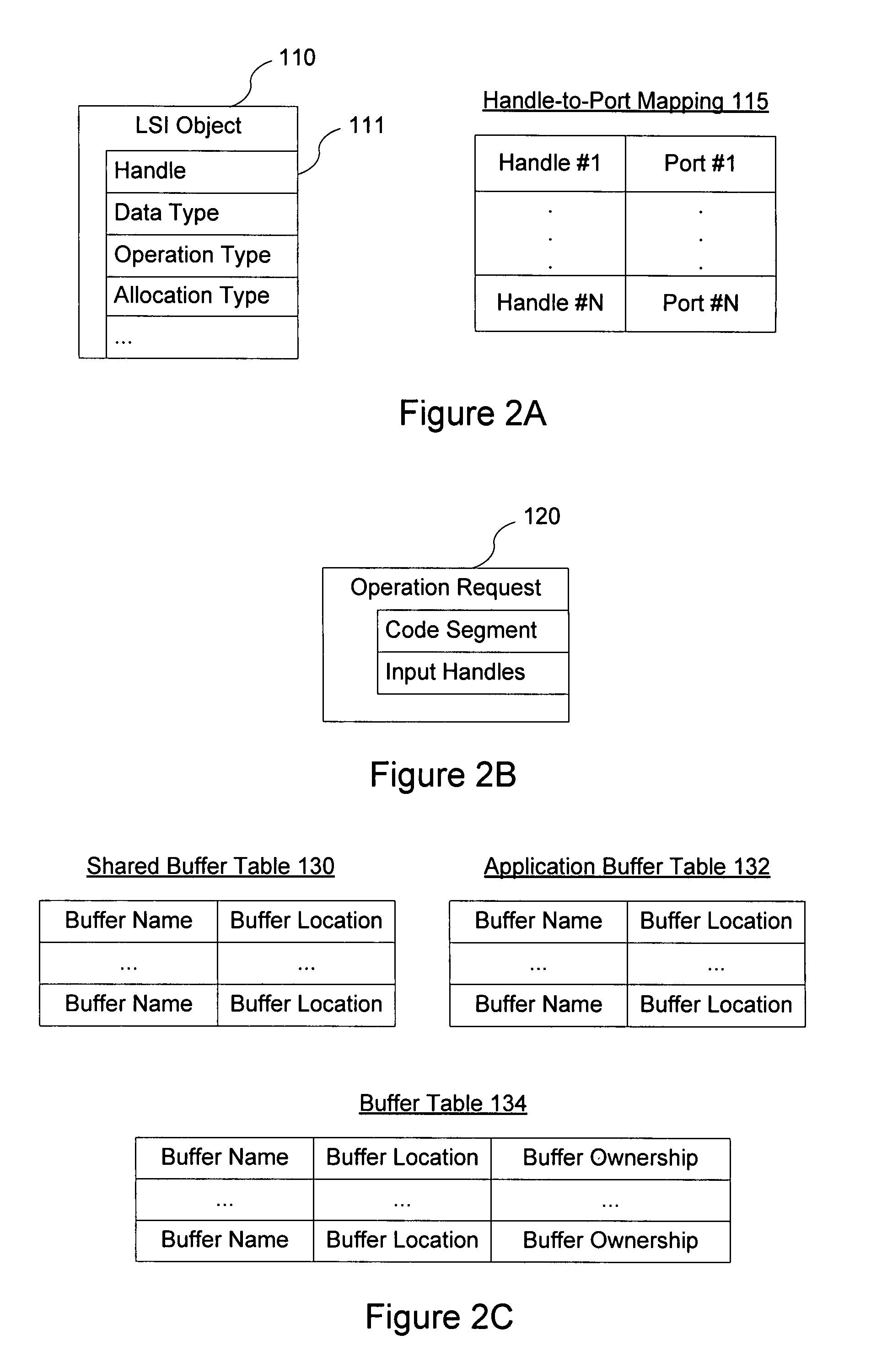

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate and optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

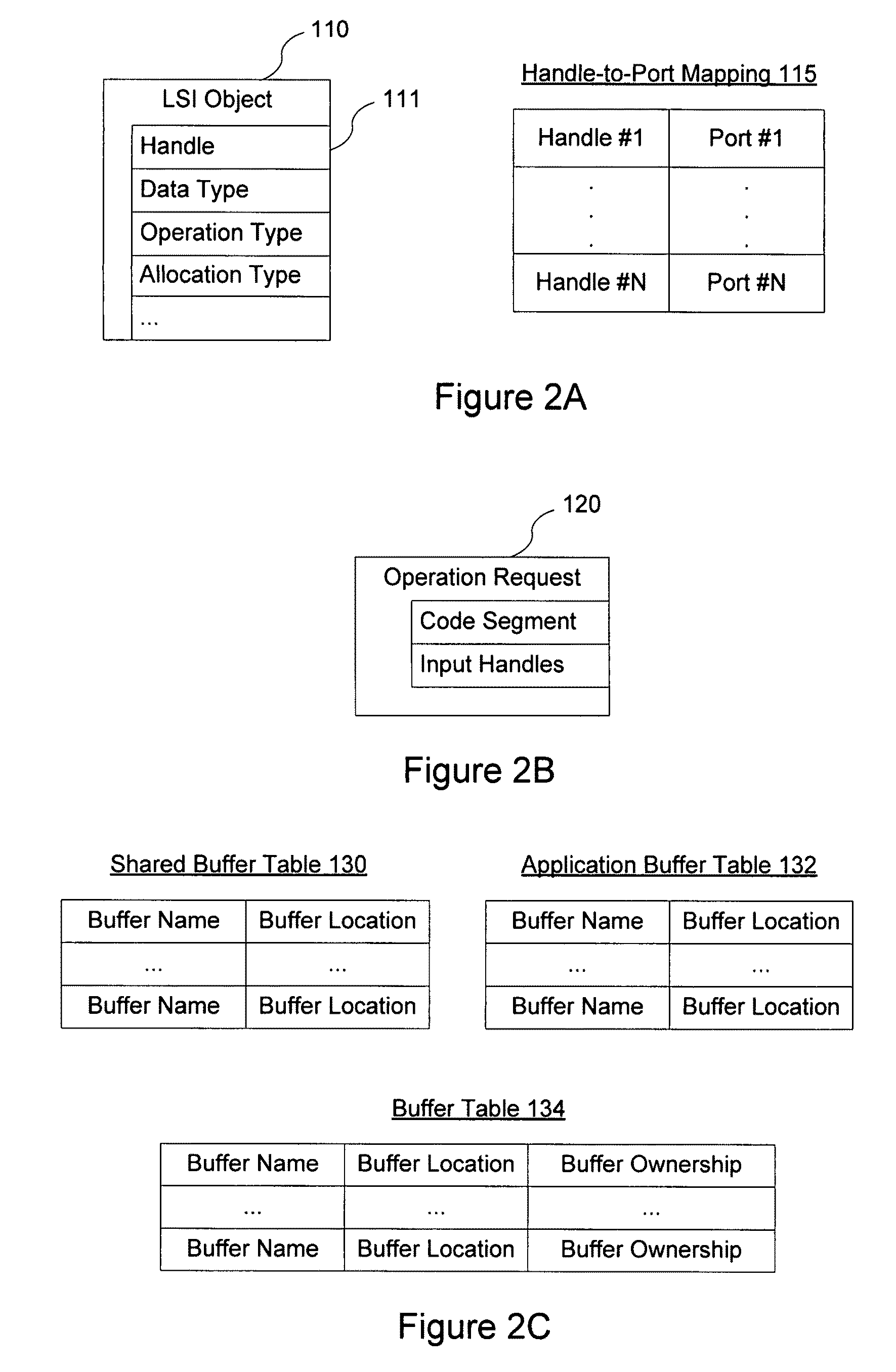

Application program interface of a parallel-processing computer system that supports multiple programming languages

Status: Active | US20070294663A1 | Tags: Interprogram communication, Kitchen cabinets, Application programming interface, Performance computing

Abstract: identical to the runtime-system abstract of US20070294512A1 above.

Owner:GOOGLE LLC

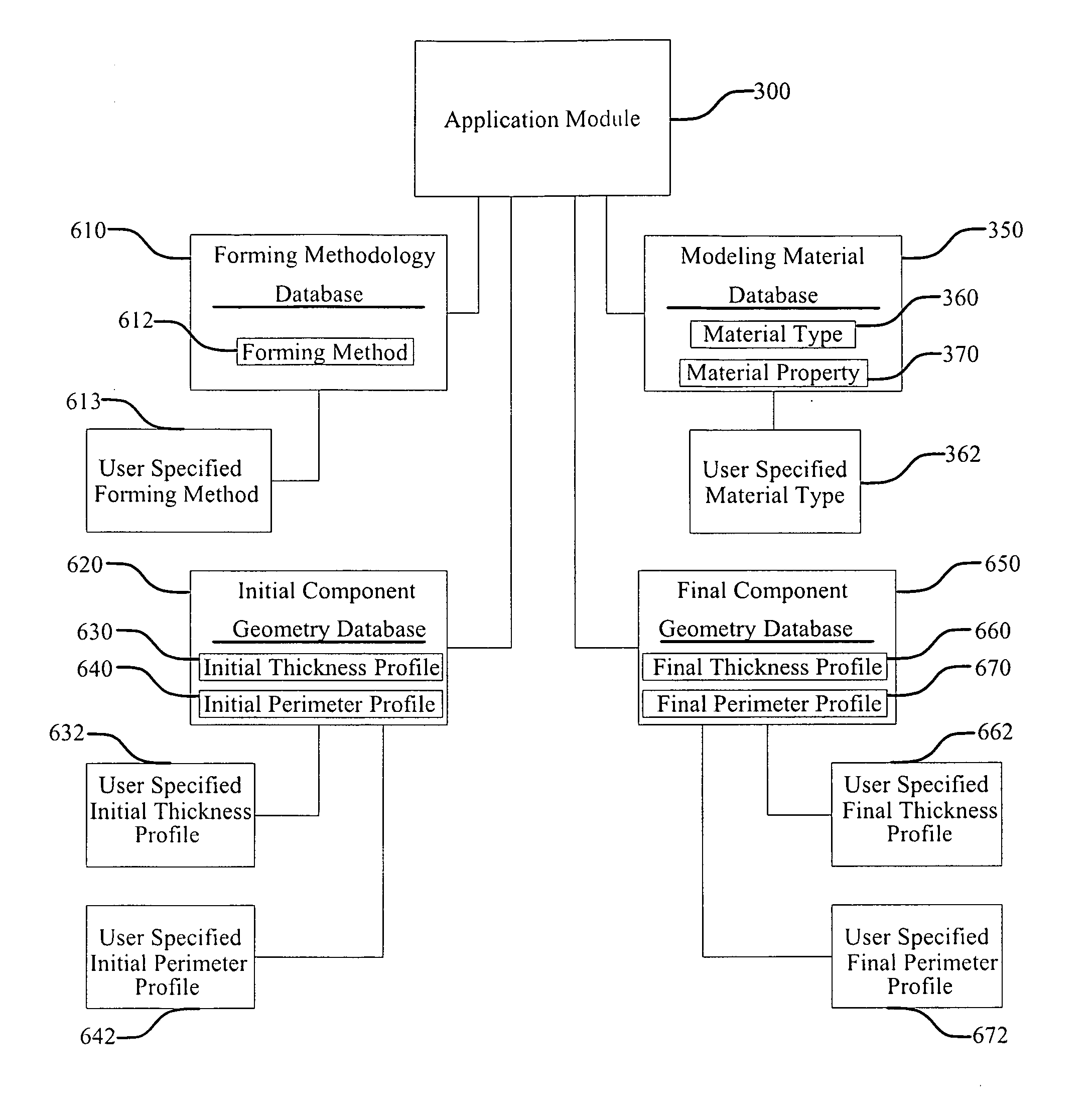

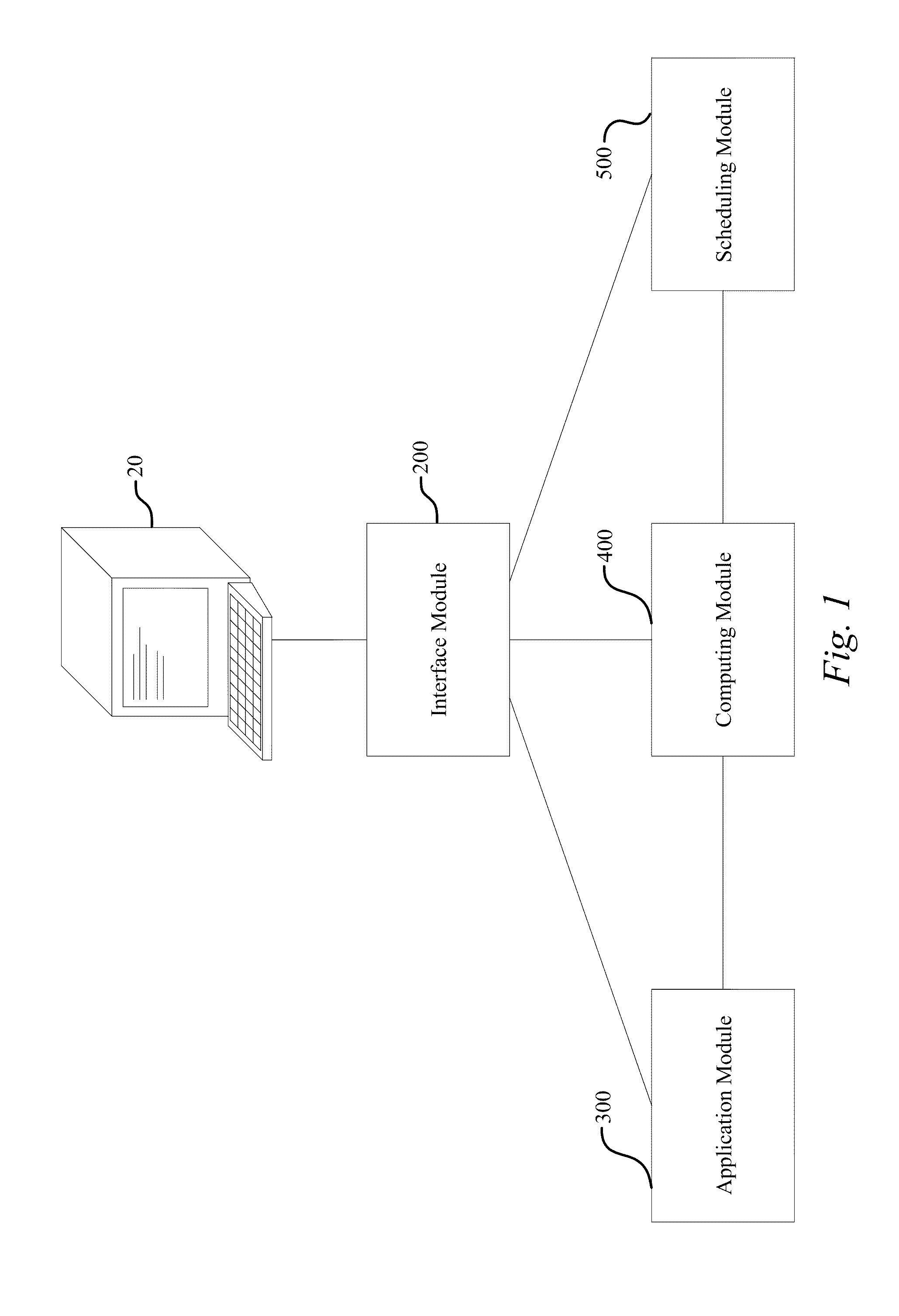

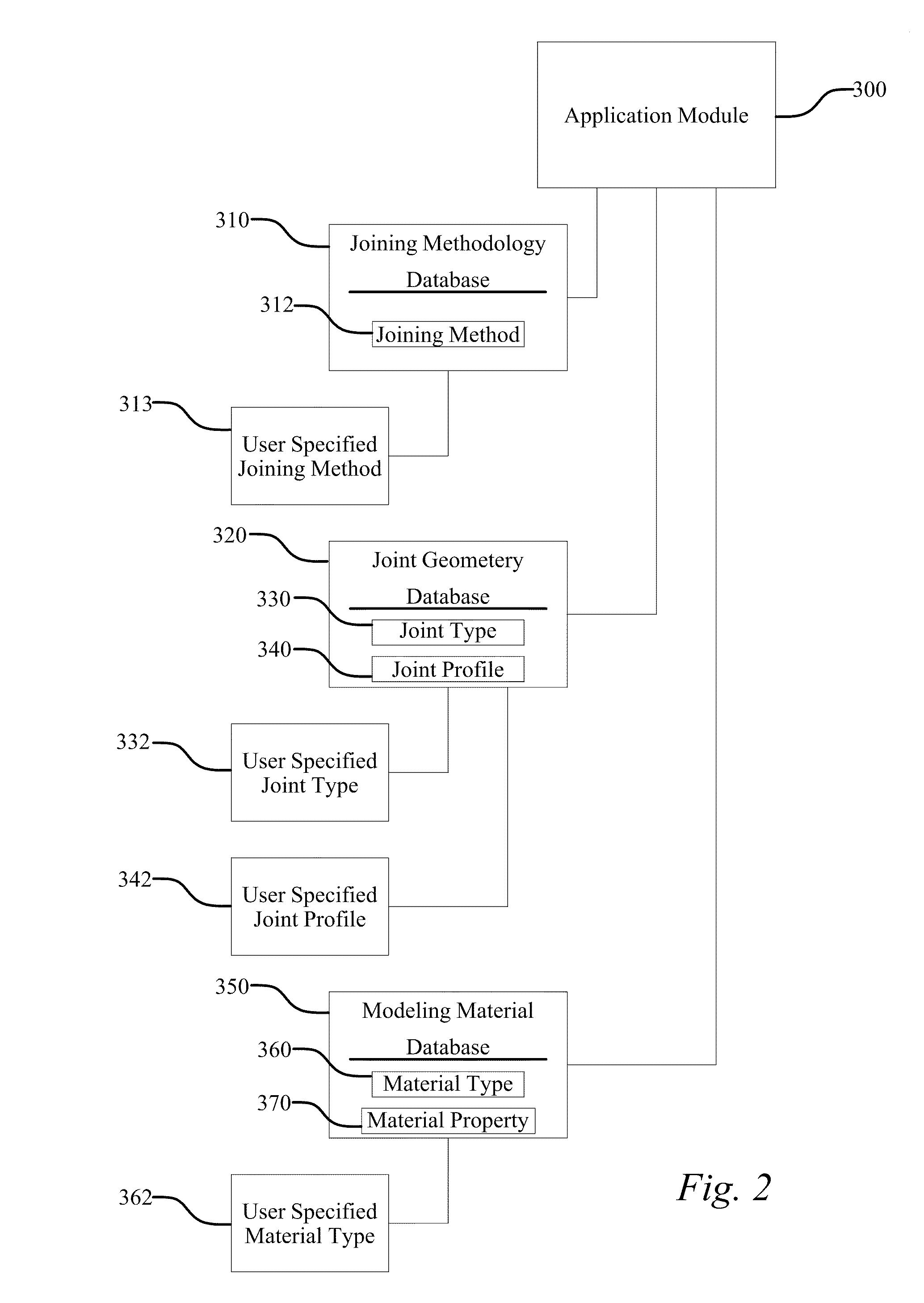

Remote High-Performance Computing Material Joining and Material Forming Modeling System and Method

Status: Inactive | US20100121472A1 | Tags: Arc welding apparatus, Analogue computers for control systems, Performance computing, ModelSim

A remote high-performance computing material joining and material forming modeling system (100) enables a remote user (10) using a user input device (20) to use high speed computing capabilities to model materials joining and material forming processes. The modeling system (100) includes an interface module (200), an application module (300), a computing module (400), and a scheduling module (500). The interface module (200) is in operative communication with the user input device (20), as well as the application module (300), the computing module (400), and the scheduling module (500). The application module (300) is in operative communication with the interface module (200) and the computing module (400). The scheduling module (500) is in operative communication with the interface module (200) and the computing module (400). Lastly, the computing module (400) is in operative communication with the interface module (200), the application module (300), and the scheduling module (500).

Owner:EDISON WELDING INSTITUTE INC

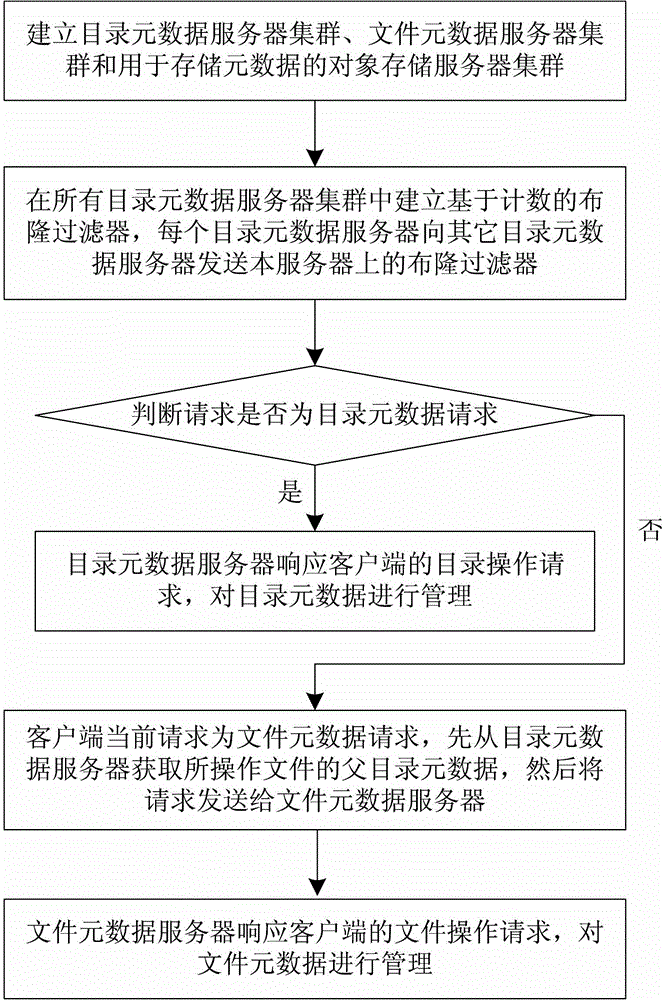

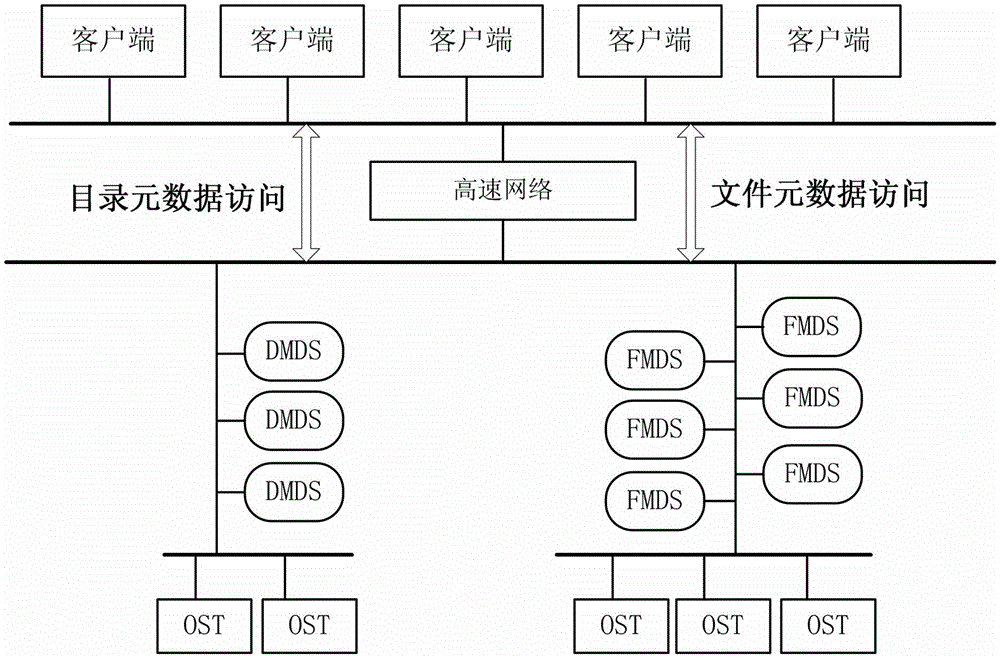

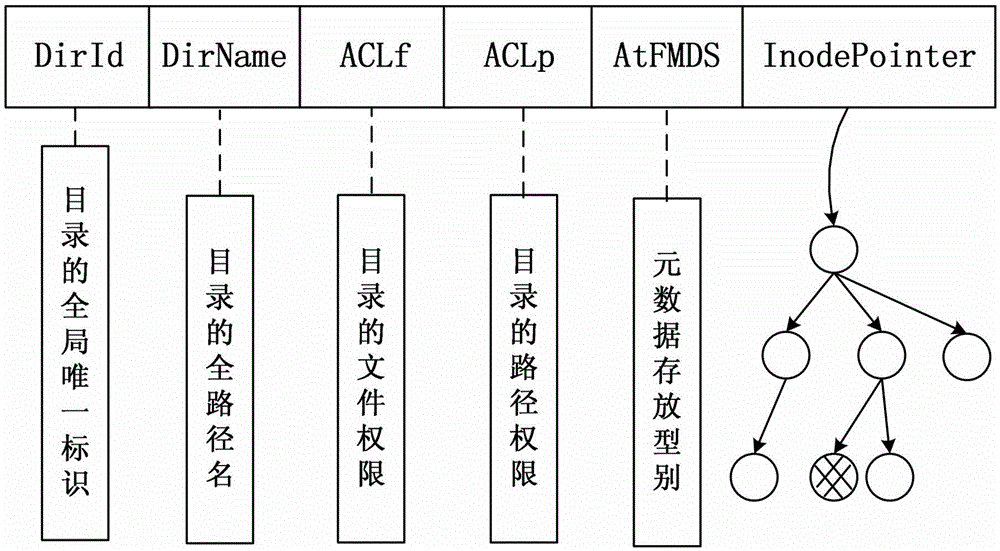

Distributed file system metadata management method for high-performance computing

Status: Active | CN103150394A | Tags: Inhibit migration, Load balancing, Transmission, Special data processing applications, Distributed File System, Metadata management

The invention discloses a distributed file system metadata management method for high-performance computing. The method comprises the following steps: 1) establishing a directory metadata server cluster, a file metadata server cluster, and an object storage server cluster; 2) establishing a global counting Bloom filter in the directory metadata server cluster; 3) when a client operation request arrives, proceeding to step 4) or step 5) according to the request type; 4) the directory metadata server cluster responds to the client's directory operation requests and manages the directory metadata; and 5) the file metadata server cluster responds to the client's file operation requests and manages the file metadata. The method effectively solves the metadata migration problem caused by directory renaming, and offers high storage performance, low maintenance overhead, high load capacity, no bottlenecks, good scalability, and balanced load.

Owner:NAT UNIV OF DEFENSE TECH
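Step 2) places a global counting Bloom filter in the directory metadata server cluster. Unlike a plain Bloom filter, a counting Bloom filter keeps a small counter per slot, so entries can be deleted as well as added, which matters when directories are renamed. A minimal sketch, assuming the filter stores directory paths and derives its k positions from salted SHA-256 digests; the sizes are illustrative, and the abstract does not say exactly what the patented method stores in the filter.

```python
import hashlib
from typing import Iterator


class CountingBloomFilter:
    def __init__(self, size: int = 1024, num_hashes: int = 4) -> None:
        self.size = size
        self.num_hashes = num_hashes
        self.counters = [0] * size

    def _positions(self, key: str) -> Iterator[int]:
        # Derive k slot indices by salting one hash function with the index i.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, key: str) -> None:
        for p in self._positions(key):
            self.counters[p] += 1

    def remove(self, key: str) -> None:
        # Removing a key that was never added would corrupt other entries.
        if key not in self:
            raise KeyError(key)
        for p in self._positions(key):
            self.counters[p] -= 1

    def __contains__(self, key: str) -> bool:
        # May report false positives, never false negatives.
        return all(self.counters[p] > 0 for p in self._positions(key))
```

Under this sketch, renaming `/a` to `/b` becomes `remove("/a")` followed by `add("/b")`, with no need to rebuild the filter.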

Systems and methods for generating reference results using a parallel-processing computer system

Status: Active | US20080005547A1 | Tags: Multiprogramming arrangements, Multiple digital computer combinations, Application software, Parallel processing

Abstract: identical to the runtime-system abstract of US20070294512A1 above.

Owner:GOOGLE LLC

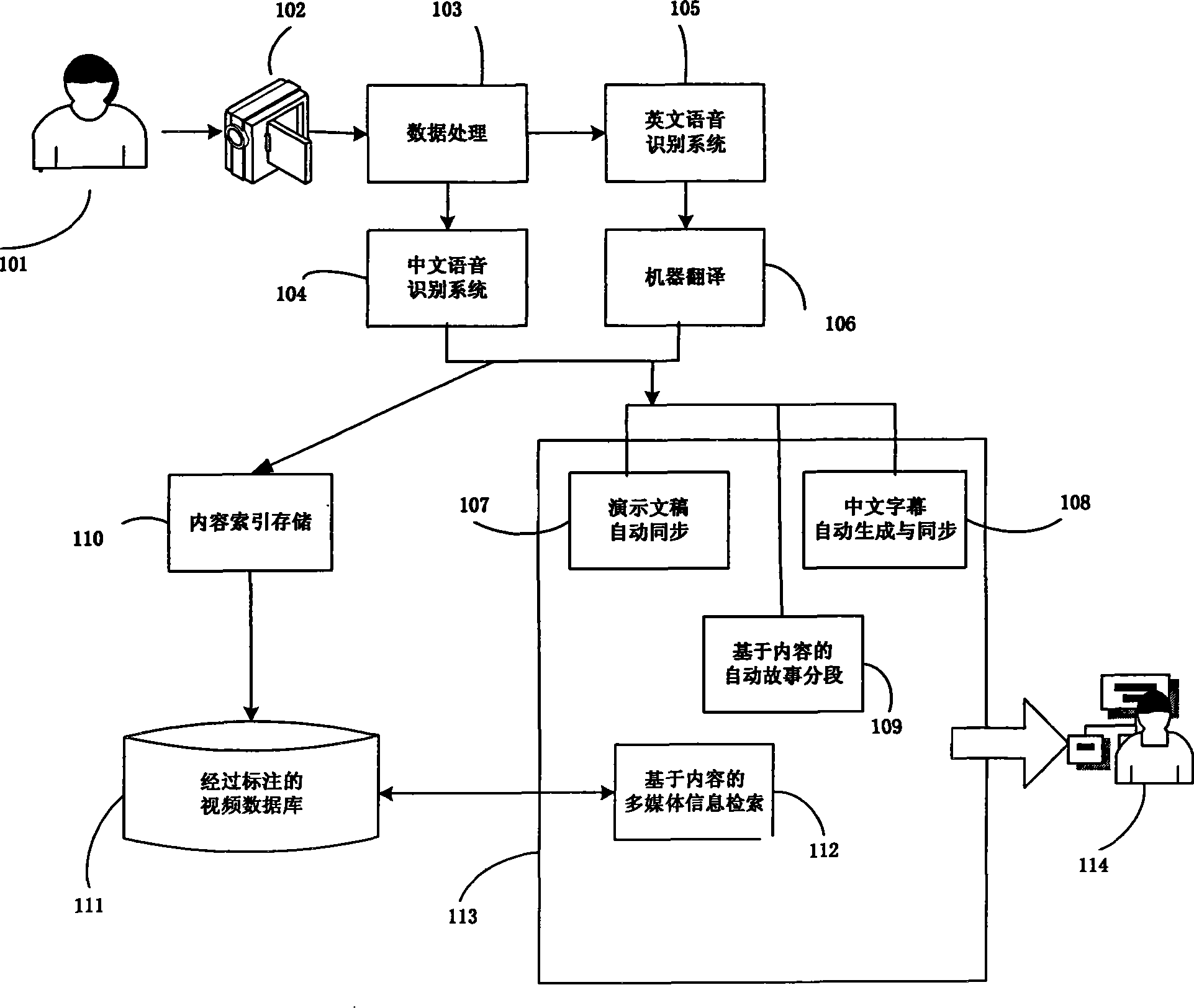

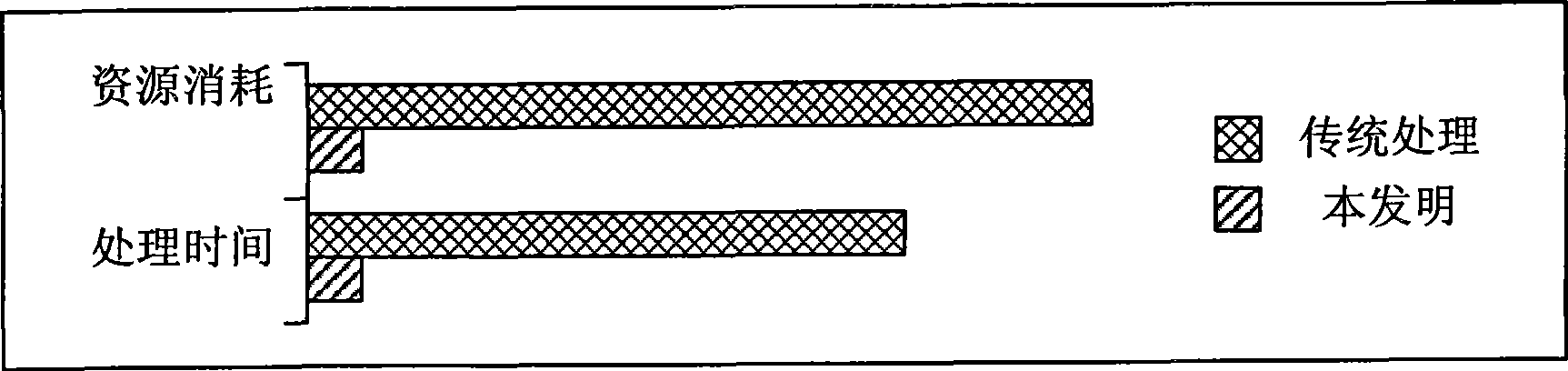

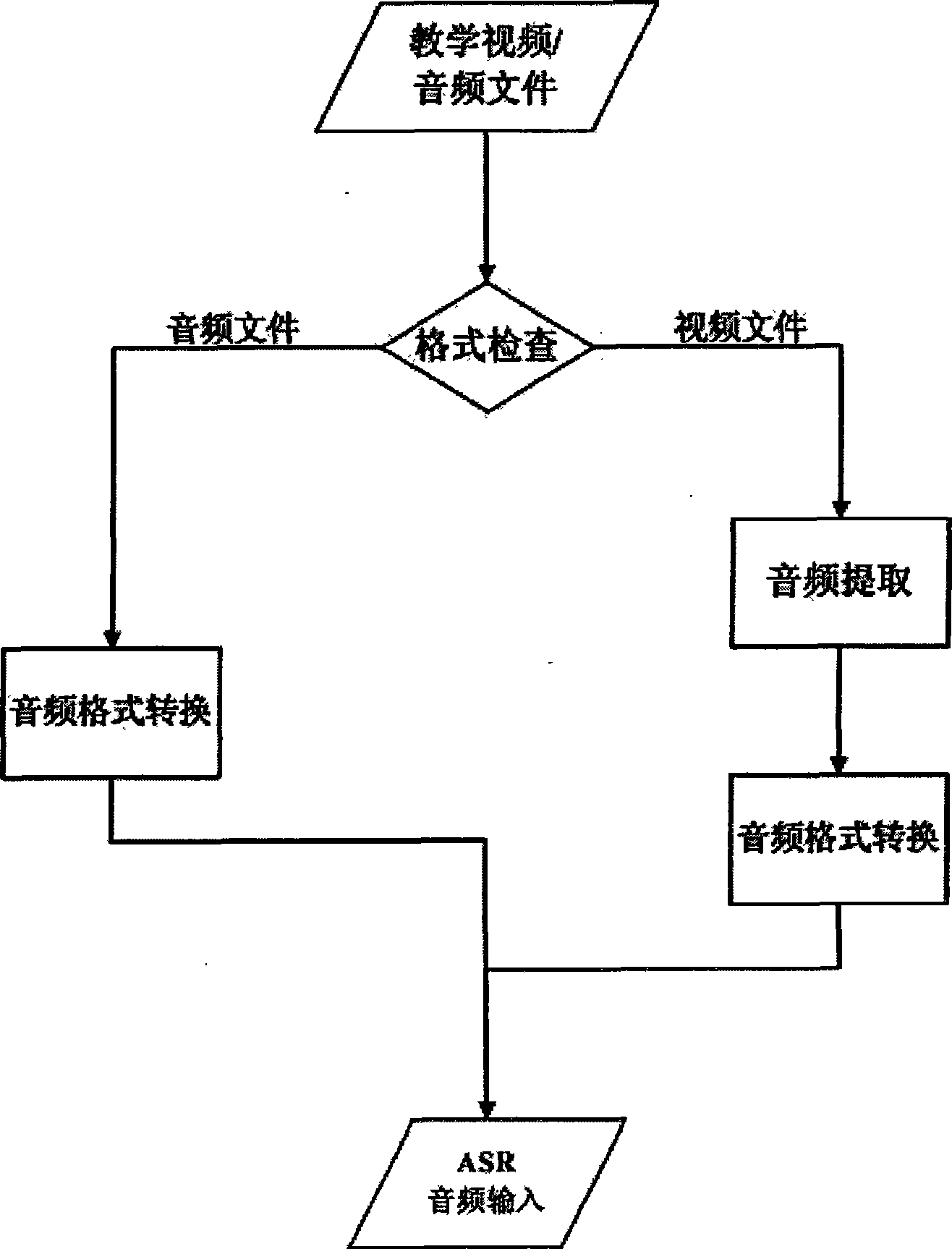

Multimedia resource processing method based on speech recognition and online teaching system thereof

Status: Active | CN101382937A | Tags: Processing speed, Speed up preparation, Speech recognition, Electrical appliances, Content retrieval, Speech identification

The invention discloses a multimedia resource processing method based on speech recognition and an online teaching system using it. In the method, audio and video are captured simultaneously and the audio files are converted into a format suitable for speech recognition; speech recognition is applied to the audio files to generate transcript files, and Chinese captions are automatically generated and synchronized with the video files; content retrieval is performed on the video files, and content-based automatic story segmentation is applied to the transcripts, which are matched, labeled, and stored in the video database. By applying automatic multimedia processing together with the computer's high-performance computation, the method and system greatly increase the speed of processing captions and similar information, reduce the need for manual processing, speed up video production, and improve work efficiency.

Owner:SHENZHEN INST OF ADVANCED TECH
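The method generates captions from speech-recognition output and synchronizes them with the video. A minimal sketch of that last step, assuming the recognizer returns `(start_seconds, end_seconds, text)` segments and that captions are written in the SubRip (SRT) format; both the segment structure and the choice of SRT are assumptions, since the abstract names no caption format.

```python
from typing import List, Tuple

Segment = Tuple[float, float, str]  # (start seconds, end seconds, recognized text)


def srt_timestamp(seconds: float) -> str:
    """Formats seconds as an SRT timestamp, HH:MM:SS,mmm."""
    ms = int(round(seconds * 1000))
    hours, ms = divmod(ms, 3_600_000)
    minutes, ms = divmod(ms, 60_000)
    secs, ms = divmod(ms, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: List[Segment]) -> str:
    """Renders recognizer segments as numbered SRT cues ordered by start time."""
    cues = []
    for index, (start, end, text) in enumerate(sorted(segments), start=1):
        cues.append(f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n")
    return "\n".join(cues)
```

Because the cue times come straight from the recognizer's timestamps, the captions stay aligned with the original video without manual timing.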

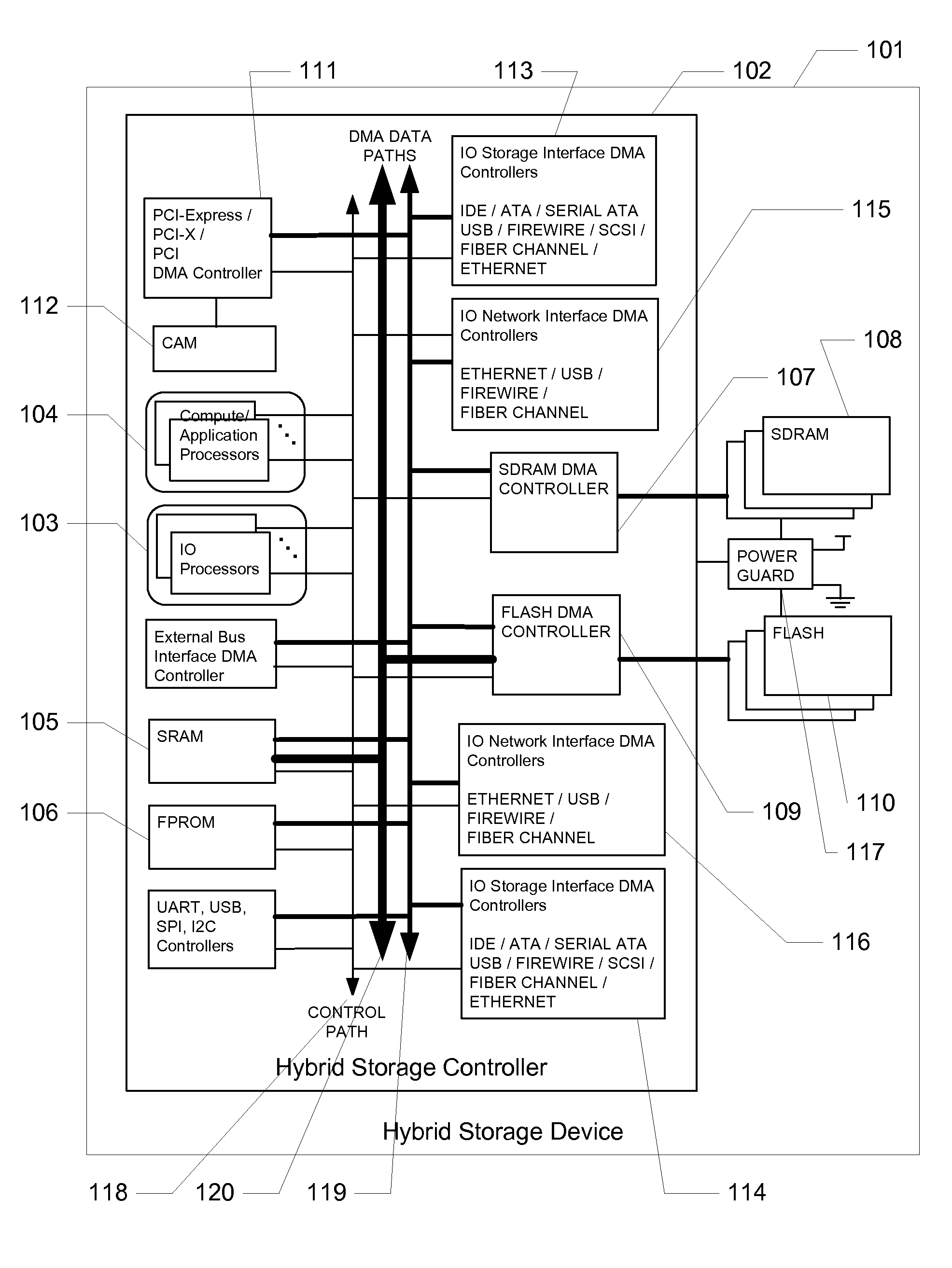

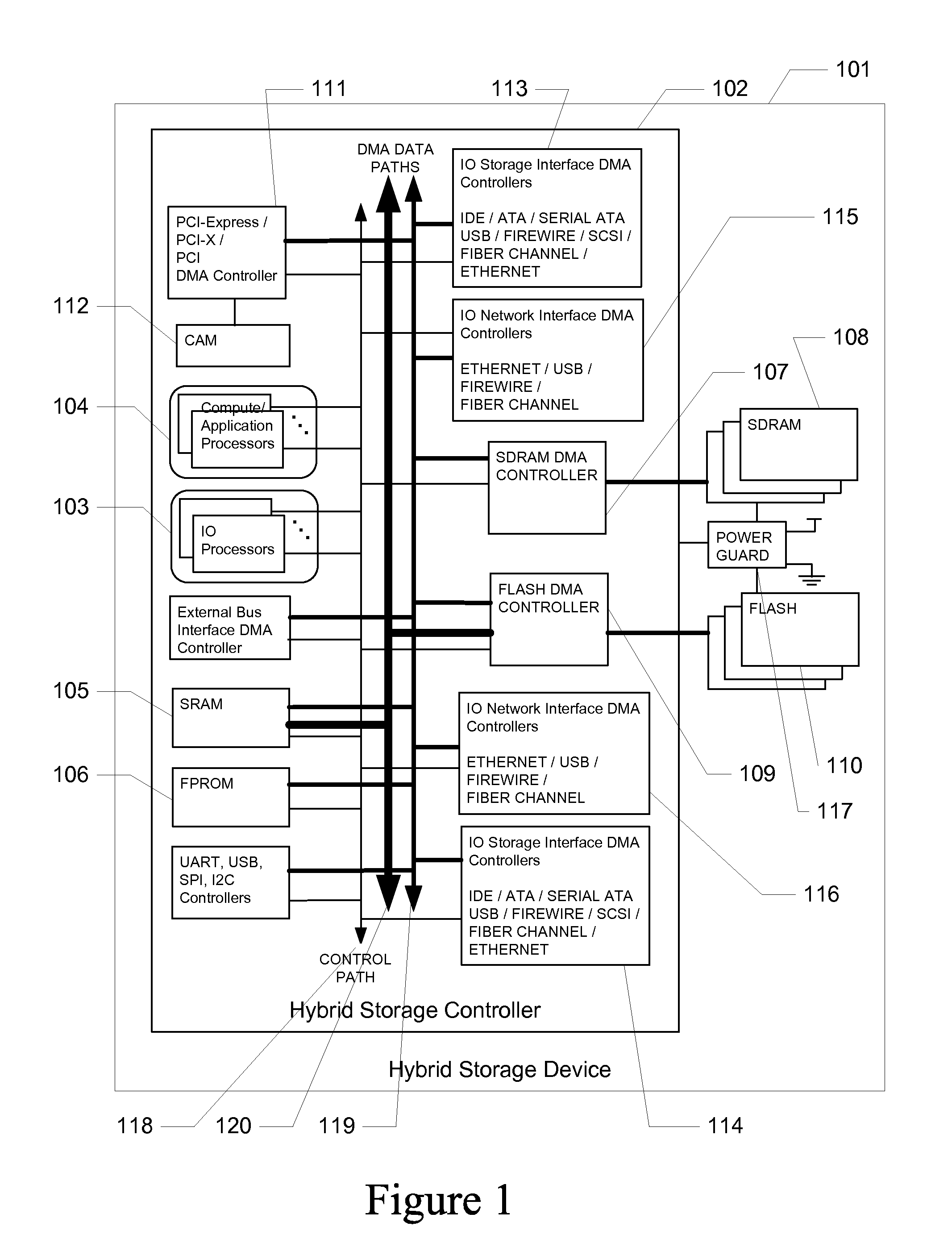

Hybrid multi-tiered caching storage system

Status: Active | US7613876B2 | Tags: High performance computer data storage solution, Memory architecture accessing/allocation, Memory systems, Hybrid storage system, Data store

A hybrid storage system comprising mechanical disk drive means, flash memory means, SDRAM memory means, and SRAM memory means is described. IO processor means and DMA controller means are provided to eliminate host intervention. A multi-tiered caching system and a novel data structure for mapping logical addresses to physical addresses result in a configurable and scalable high-performance computer data storage solution.

Owner:BITMICRO LLC
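The abstract stacks SRAM, SDRAM, and flash as cache tiers in front of mechanical disks. A minimal sketch of the read path of such a multi-tier cache, assuming each tier is an LRU cache of fixed capacity keyed by logical block address, that a hit is promoted to the fastest tier, and that each tier's LRU victim is demoted to the next tier; tier names and capacities are illustrative, not BiTMICRO's design.

```python
from collections import OrderedDict
from typing import Dict, List, Tuple


class TieredCache:
    """Read path of a multi-tier block cache keyed by logical block address (LBA)."""

    def __init__(self, tiers: List[Tuple[str, int]], disk: Dict[int, bytes]) -> None:
        self.names = [name for name, _ in tiers]  # fastest tier first
        self.capacities = [cap for _, cap in tiers]
        self.levels: List[OrderedDict] = [OrderedDict() for _ in tiers]
        self.disk = disk  # stands in for the mechanical drives

    def _insert(self, level: int, lba: int, data: bytes) -> None:
        """Inserts at `level`; each tier's LRU victim is demoted to the next tier."""
        while level < len(self.levels):
            cache = self.levels[level]
            cache[lba] = data
            cache.move_to_end(lba)
            if len(cache) <= self.capacities[level]:
                return
            lba, data = cache.popitem(last=False)  # evict least recently used
            level += 1
        self.disk[lba] = data  # evicted from the last tier: back to disk

    def read(self, lba: int) -> Tuple[str, bytes]:
        """Returns (tier that served the read, data) and promotes the block."""
        for name, cache in zip(self.names, self.levels):
            if lba in cache:
                data = cache.pop(lba)
                self._insert(0, lba, data)
                return name, data
        data = self.disk[lba]
        self._insert(0, lba, data)
        return "disk", data
```

For example, with tiers `[("SRAM", 1), ("SDRAM", 2)]`, a block read twice in a row is served first from disk and then from SRAM.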

Multi-thread runtime system

Status: Active | US20070294696A1 | Tags: Error detection/correction, Software engineering, Performance computing, Application software

Abstract: identical to the runtime-system abstract of US20070294512A1 above.

Owner:GOOGLE LLC

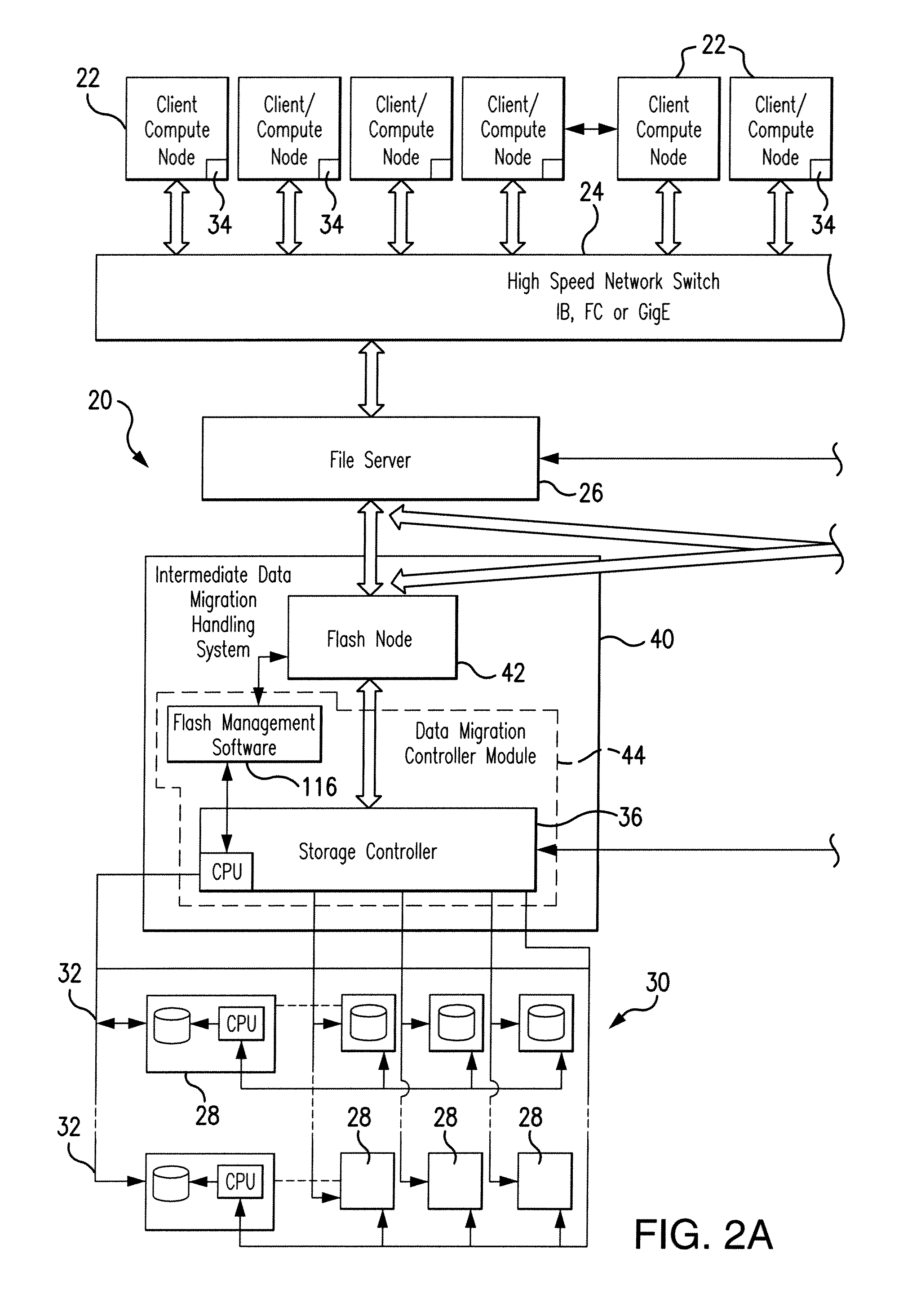

System and method for data migration between high-performance computing architectures and data storage devices with increased data reliability and integrity

Status: Active | US8719520B1 | Tags: Increase malfunction protection, Reduce operating costs, Error detection/correction, Memory addressing/allocation/relocation, High performance computation, Data reliability

A system for data migration between high-performance computing architectures and data storage disks includes an intermediate data migration handling system. This system has an intermediate data storage module, coupled to the computer architecture to store received data, and a data controller module whose data management software moves data between the intermediate data storage module and the disk drives in an orderly manner, independent of the random I/O activity of the computer architecture. RAID calculations are performed on the data before it is stored in the intermediate storage module, and again when it is read back, to assure data integrity and to reconstruct corrupted data. Data is transferred to the disk drives in a sequence determined by the data management software based on seek-time minimization, tier usage, a predetermined time since the previous I/O cycle, or the fullness of the intermediate data storage module. The storage controller deactivates disk drives that are not needed for the data transfer.

Owner:DATADIRECT NETWORKS
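The abstract says RAID calculations are applied both before data enters the intermediate storage module and when it is read back, so corrupted data can be rebuilt. A minimal sketch, assuming single XOR parity across equal-sized blocks as in RAID 5; the abstract does not state which RAID level is used, and the function names are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_blocks(blocks: List[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    if not blocks or len({len(b) for b in blocks}) != 1:
        raise ValueError("need one or more blocks of equal length")
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def make_stripe(data_blocks: List[bytes]) -> List[bytes]:
    """Appends a parity block before the stripe is written to intermediate storage."""
    return data_blocks + [xor_blocks(data_blocks)]


def stripe_is_consistent(stripe: List[bytes]) -> bool:
    """On read-back, the XOR of all blocks (data plus parity) must be all zeros."""
    return not any(xor_blocks(stripe))


def rebuild(stripe: List[Optional[bytes]]) -> List[bytes]:
    """Reconstructs at most one missing block (None) from the surviving blocks."""
    missing = [i for i, block in enumerate(stripe) if block is None]
    if len(missing) > 1:
        raise ValueError("single parity can rebuild only one block")
    survivors = [block for block in stripe if block is not None]
    repaired = list(survivors)
    if missing:
        repaired.insert(missing[0], xor_blocks(survivors))
    return repaired
```

Single parity detects a corrupted stripe but cannot say which block is wrong; rebuilding works once the failed block is known (for example, a failed drive).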

Systems and methods for dynamically choosing a processing element for a compute kernel

Status: Active | US8108844B2 | Tags: Error detection/correction, Software engineering, Performance computing, Processing element

Abstract: identical to the runtime-system abstract of US20070294512A1 above.

Owner:GOOGLE LLC

Multi-thread runtime system

Status: Active | US7814486B2 | Tags: Software engineering, Error detection/correction, Performance computing, Application software

Abstract: identical to the runtime-system abstract of US20070294512A1 above.

Owner:GOOGLE LLC

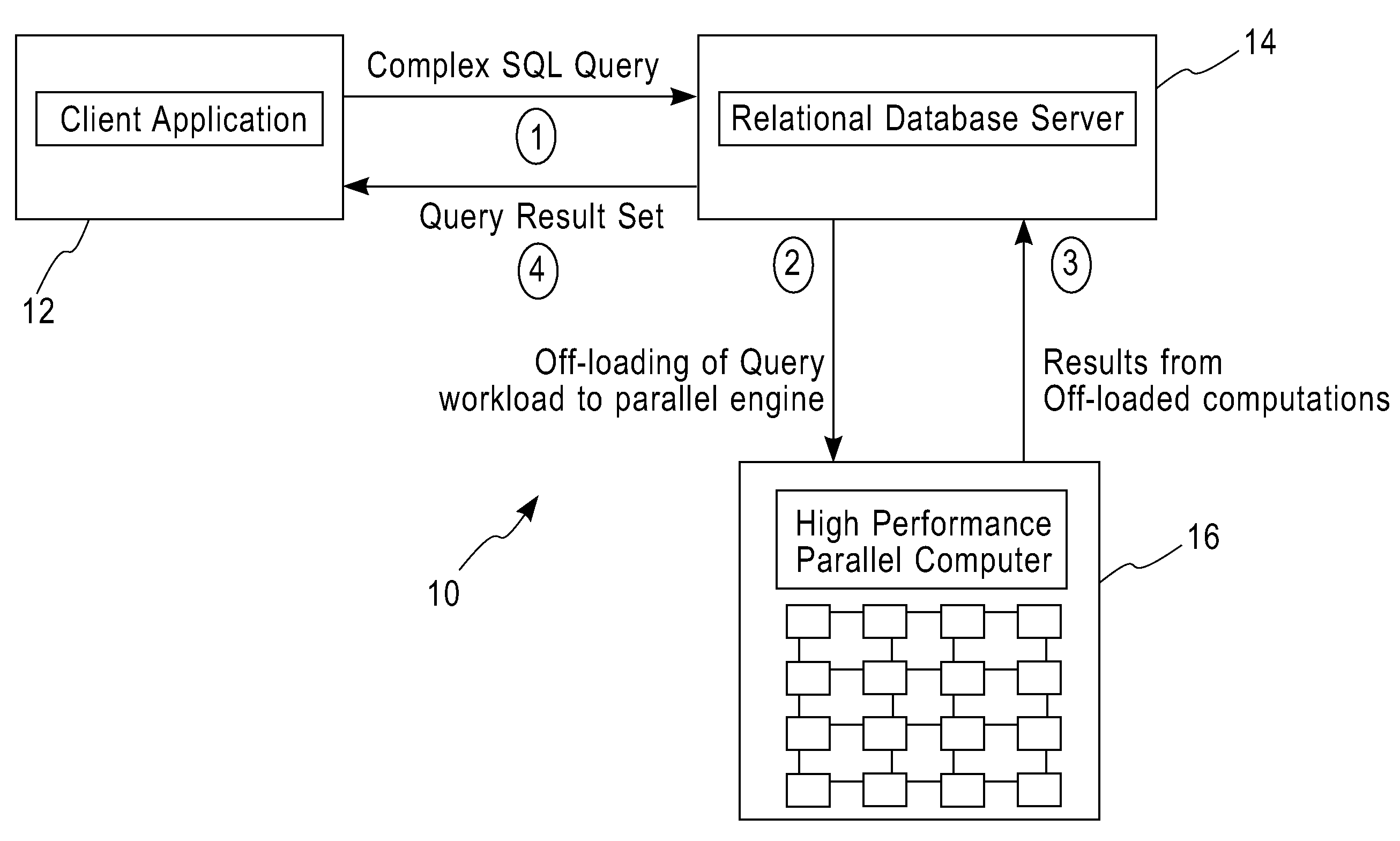

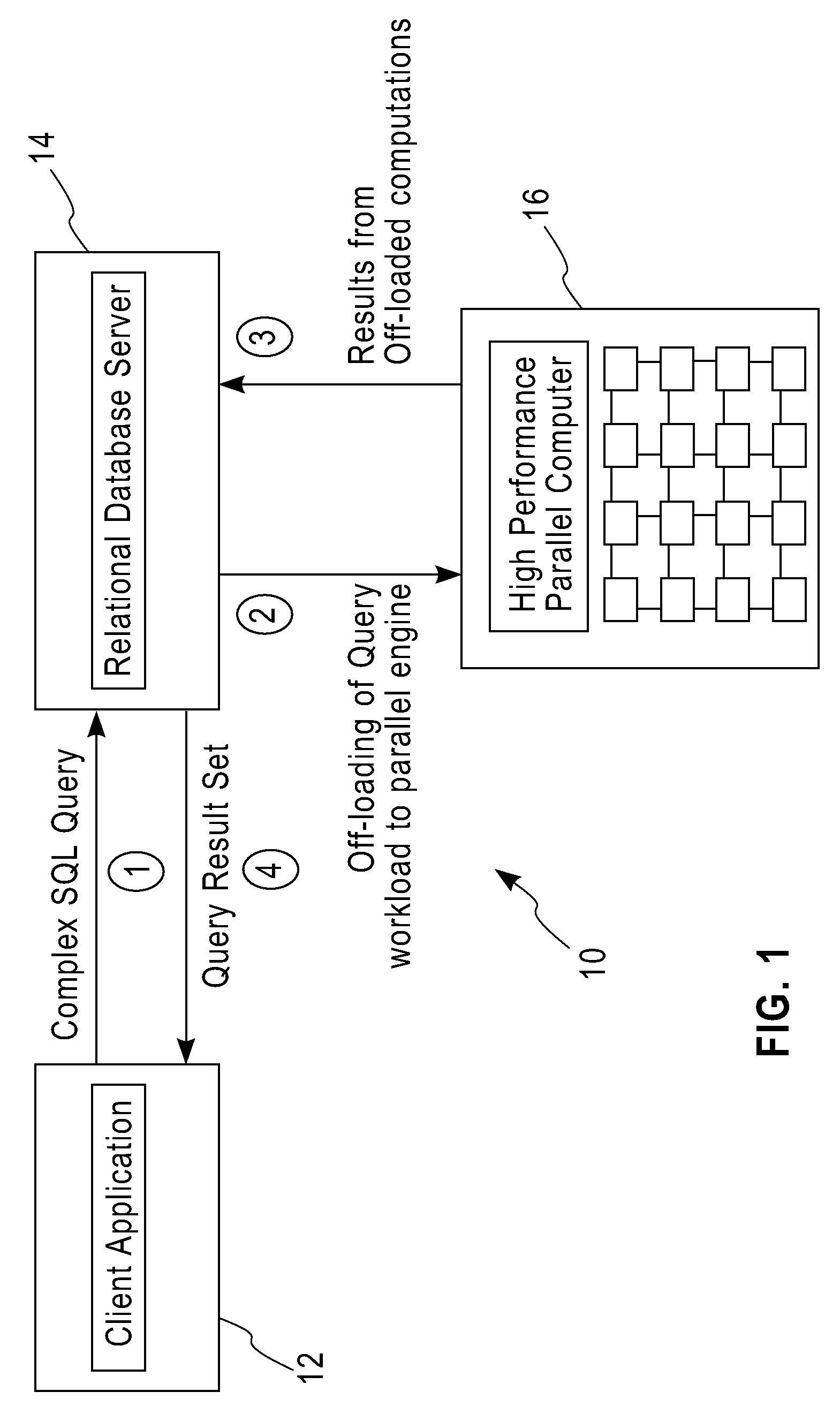

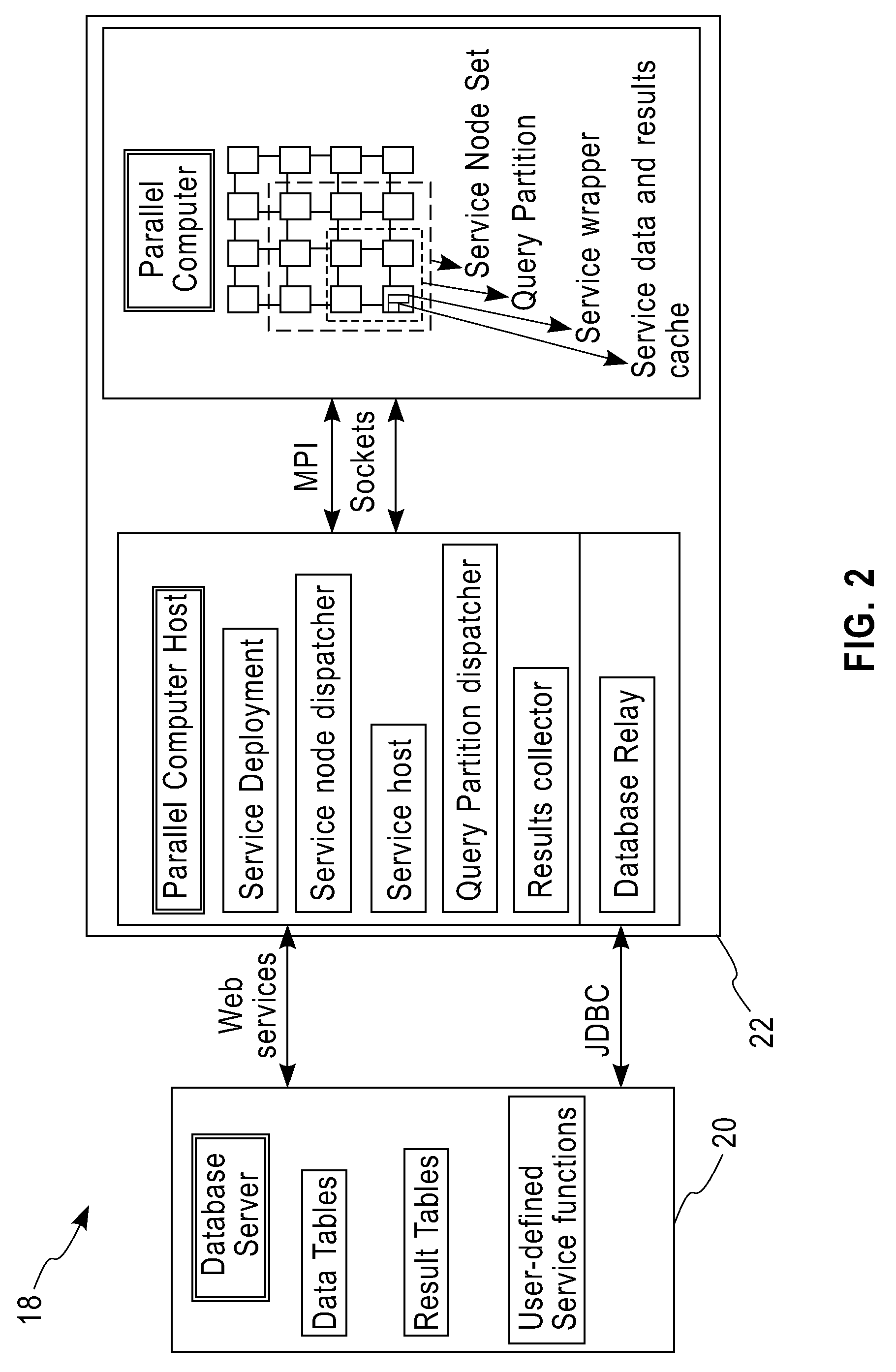

System and method for executing compute-intensive database user-defined programs on an attached high-performance parallel computer

Status: Inactive | US20090077011A1 | Tags: Improve query performance, Easy to guarantee, Digital data information retrieval, Digital data processing details, Database query, Data set

The invention pertains to a system and method for dispatching and executing the compute-intensive parts of the workflow for database queries on an attached high-performance parallel computing platform. The performance overhead of moving the required data and results between the database platform and the high-performance computing platform where the workload is executed is amortized in several ways: for example, by exploiting the fine-grained parallelism and superior hardware performance of the parallel computing platform to speed up compute-intensive calculations; by using in-memory data structures on the parallel computing platform to cache data sets across a sequence of time-lagged queries on the same data, so that these queries can be processed without further data transfer overhead; and by replicating data within the parallel computing platform so that multiple independent queries on the same target data set can be processed simultaneously using independent parallel partitions of the high-performance computing platform. A specific embodiment of this invention was used to deploy a bioinformatics application involving gene and protein sequence matching with the Smith-Waterman algorithm on a database system connected via an Ethernet local area network to a parallel supercomputer.

Owner:IBM CORP
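The embodiment above offloads Smith-Waterman sequence matching to the parallel computer. A minimal sketch of the kernel each parallel partition would evaluate: the score-only Smith-Waterman local-alignment recurrence with a linear gap penalty. The scoring values (match +3, mismatch -3, gap 2) are illustrative assumptions, and the dispatching, caching, and replication that the patent is about are not shown.

```python
def smith_waterman_score(a: str, b: str, match: int = 3,
                         mismatch: int = -3, gap: int = 2) -> int:
    """Best local-alignment score of a and b (linear gap penalty, score only)."""
    prev = [0] * (len(b) + 1)  # row i-1 of the DP matrix
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            s = match if a[i - 1] == b[j - 1] else mismatch
            curr[j] = max(
                0,                   # local alignment: restart instead of going negative
                prev[j - 1] + s,     # align a[i-1] with b[j-1]
                prev[j] - gap,       # gap in b
                curr[j - 1] - gap,   # gap in a
            )
            best = max(best, curr[j])
        prev = curr
    return best
```

Keeping only two rows makes memory linear in the length of `b`, which suits scoring many database sequences against one query in independent partitions.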

Systems and methods for determining compute kernels for an application in a parallel-processing computer system

Status: Active | US8136104B2 | Tags: Error detection/correction, Multiprogramming arrangements, Performance computing, Parallel processing

Abstract: identical to the runtime-system abstract of US20070294512A1 above.

Owner:GOOGLE LLC

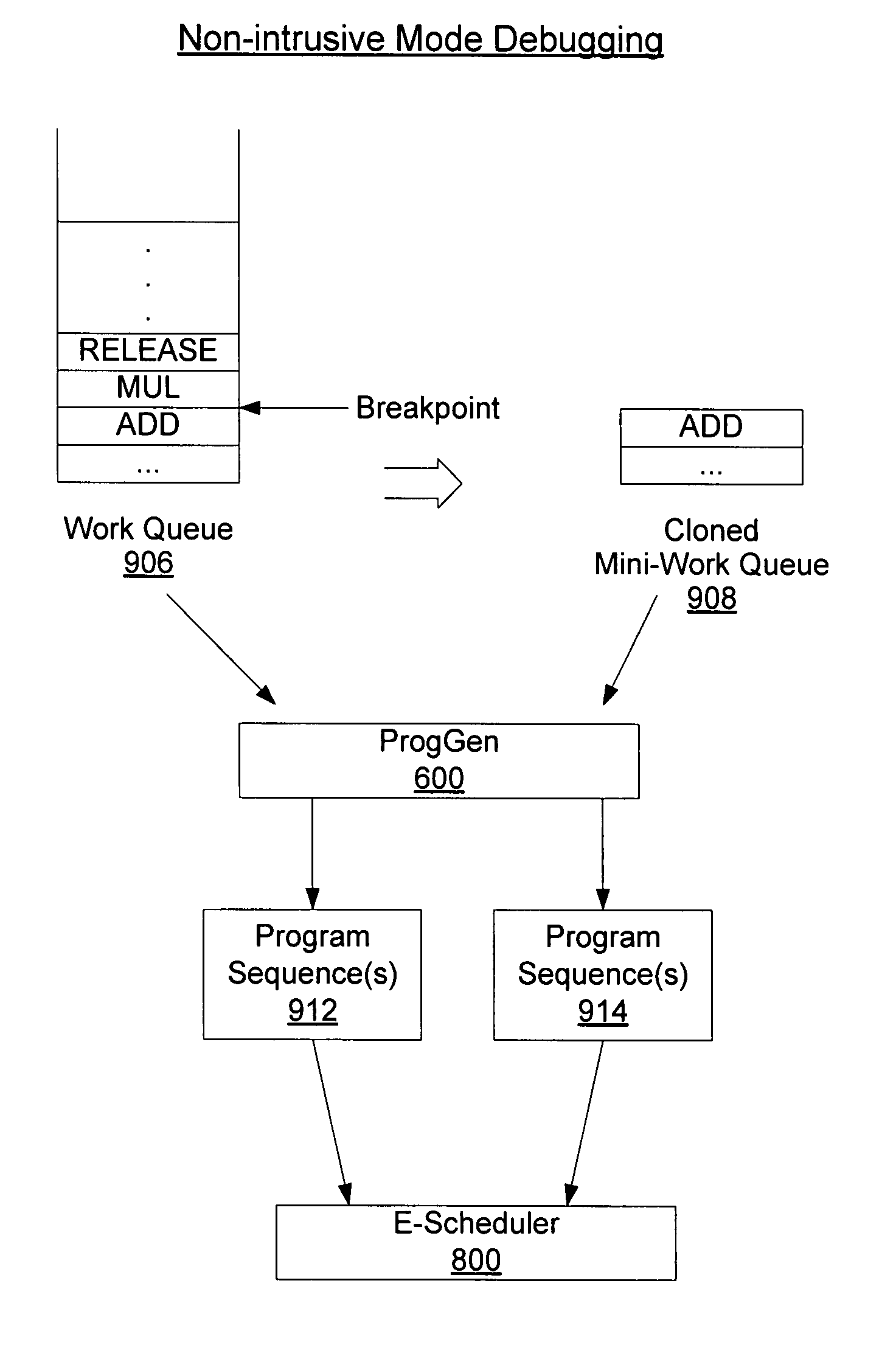

Systems and methods for debugging an application running on a parallel-processing computer system

Status: Active | US8024708B2 | Tags: Error detection/correction, Specific program execution arrangements, Performance computing, Application software

Abstract: identical to the runtime-system abstract of US20070294512A1 above.

Owner:GOOGLE LLC

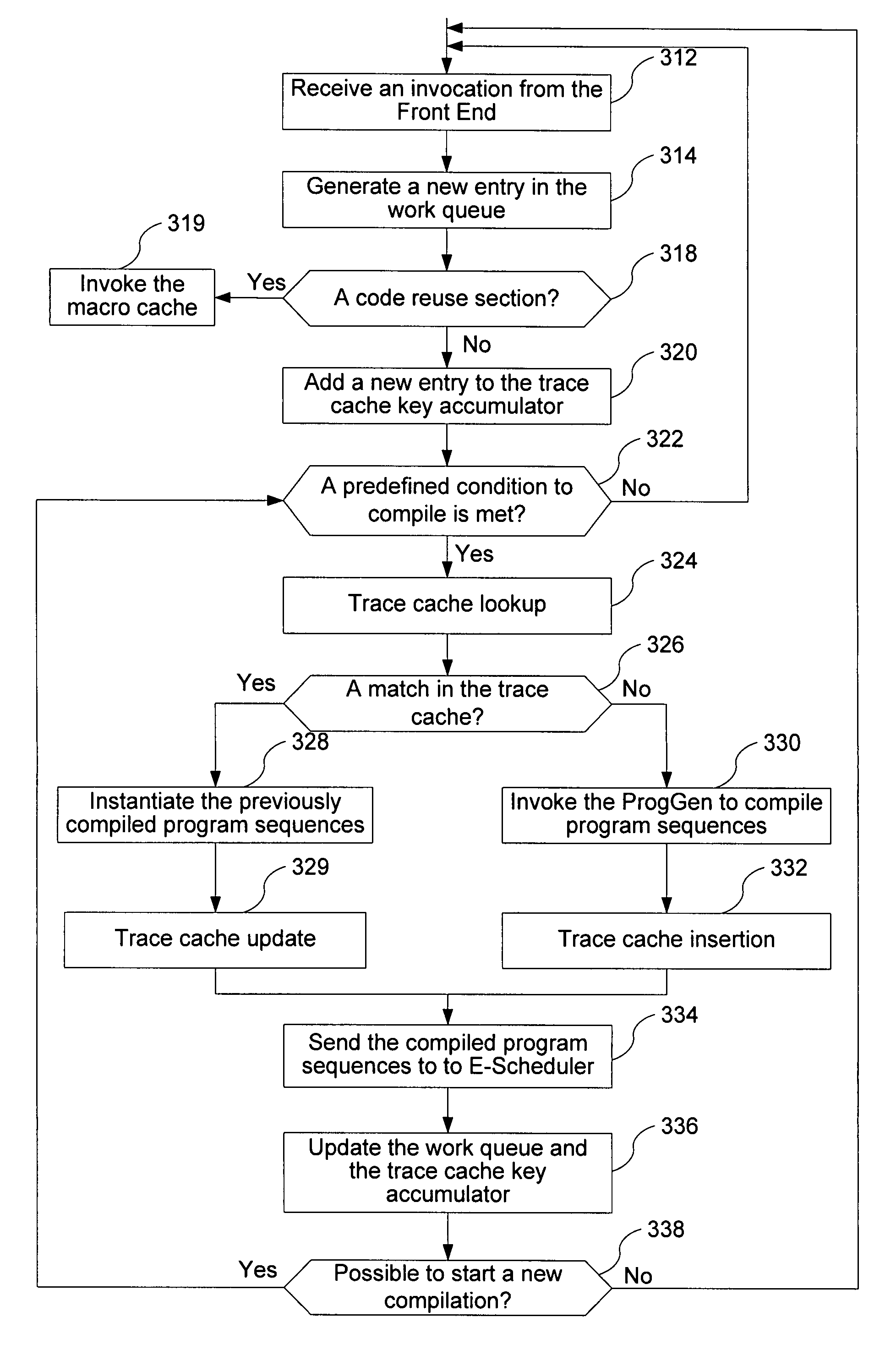

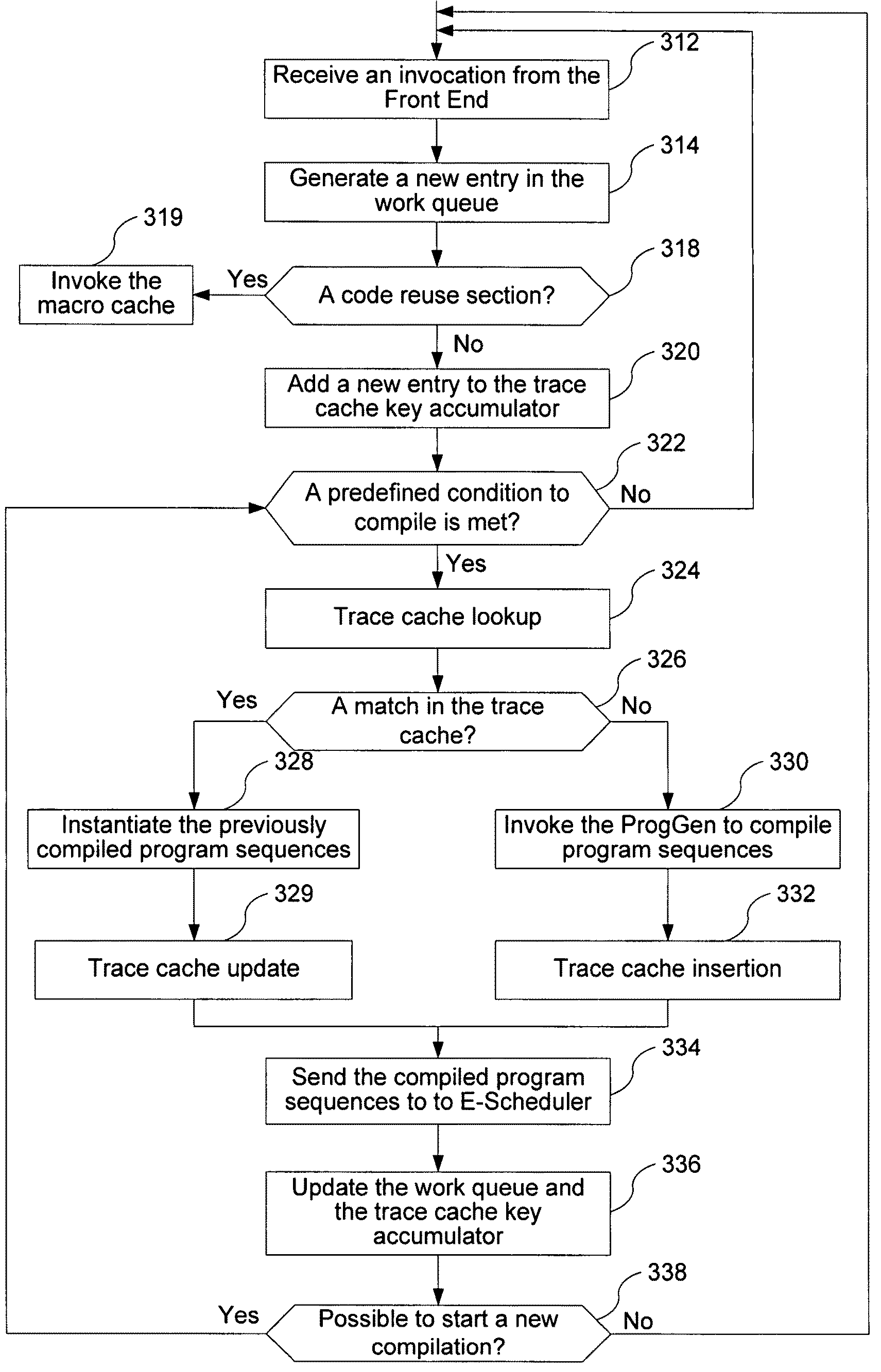

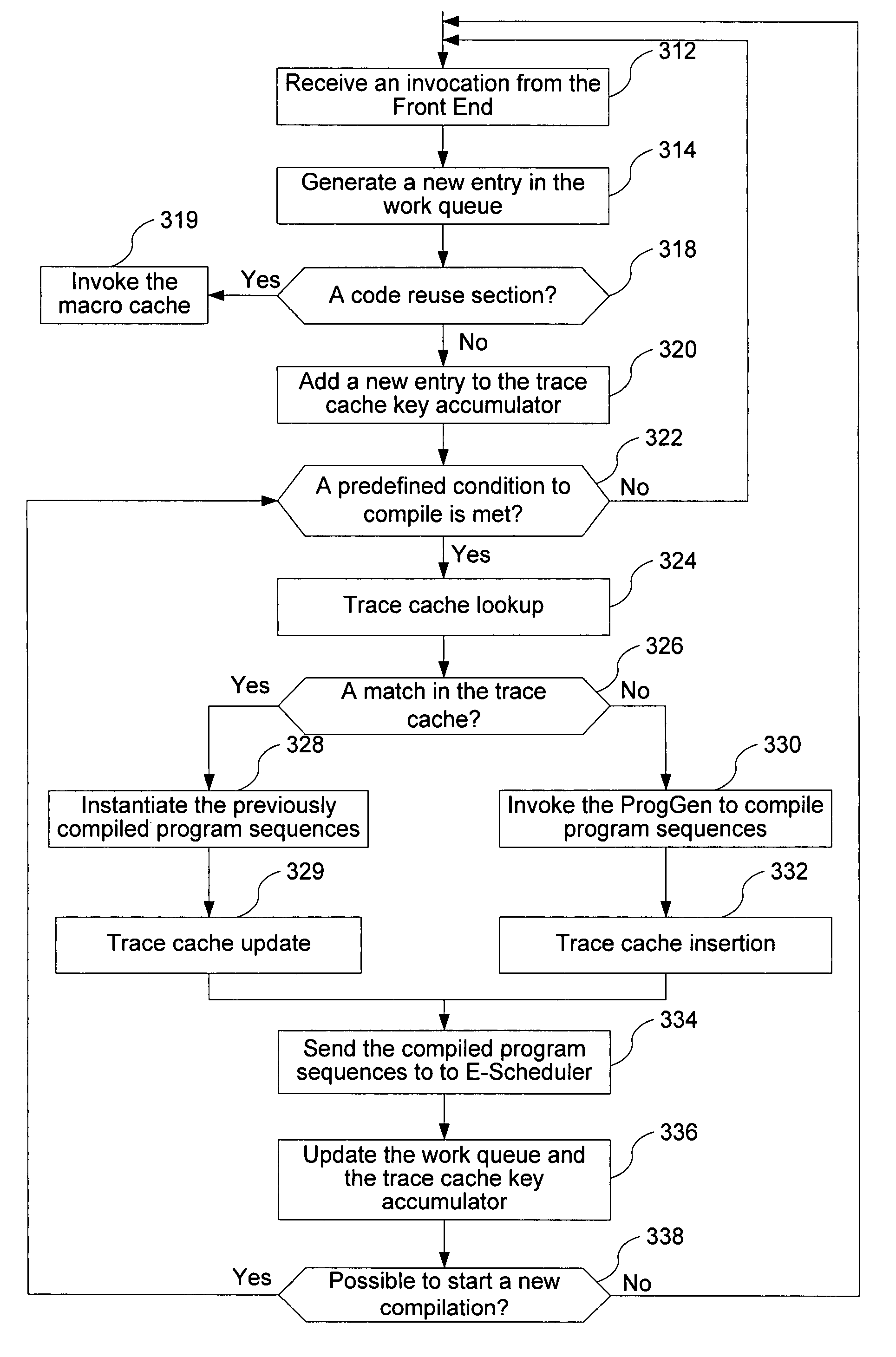

Systems and methods for caching compute kernels for an application running on a parallel-processing computer system

Status: Active | US20070294682A1 | Tags: Multiprogramming arrangements, Memory systems, Performance computing, Application software

Abstract: identical to the runtime-system abstract of US20070294512A1 above.

Owner:GOOGLE LLC

Systems and methods for debugging an application running on a parallel-processing computer system

Status: Active | US20070294671A1 | Tags: Error detection/correction, Specific program execution arrangements, Performance computing, Application software

Abstract: identical to the runtime-system abstract of US20070294512A1 above.

Owner:GOOGLE LLC

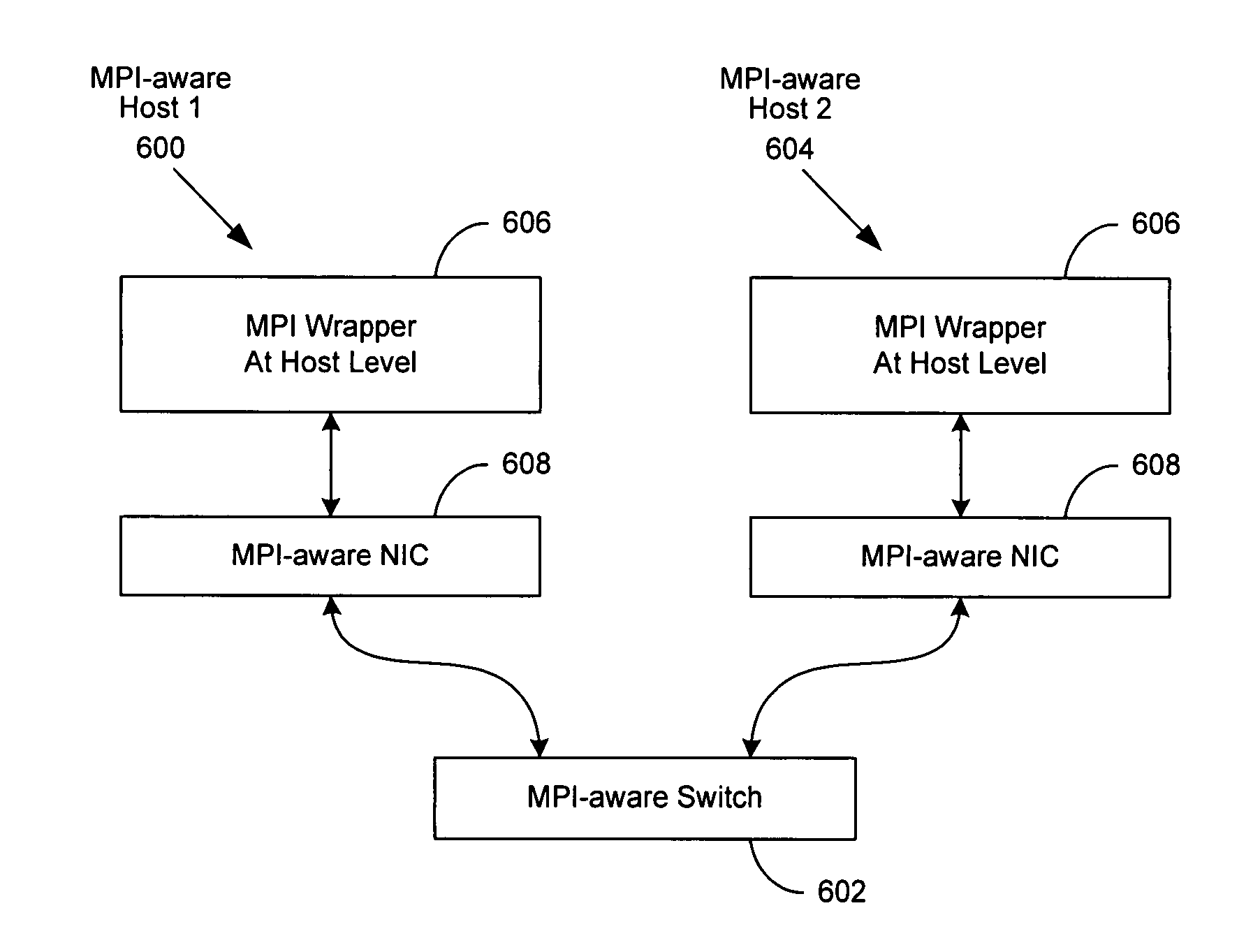

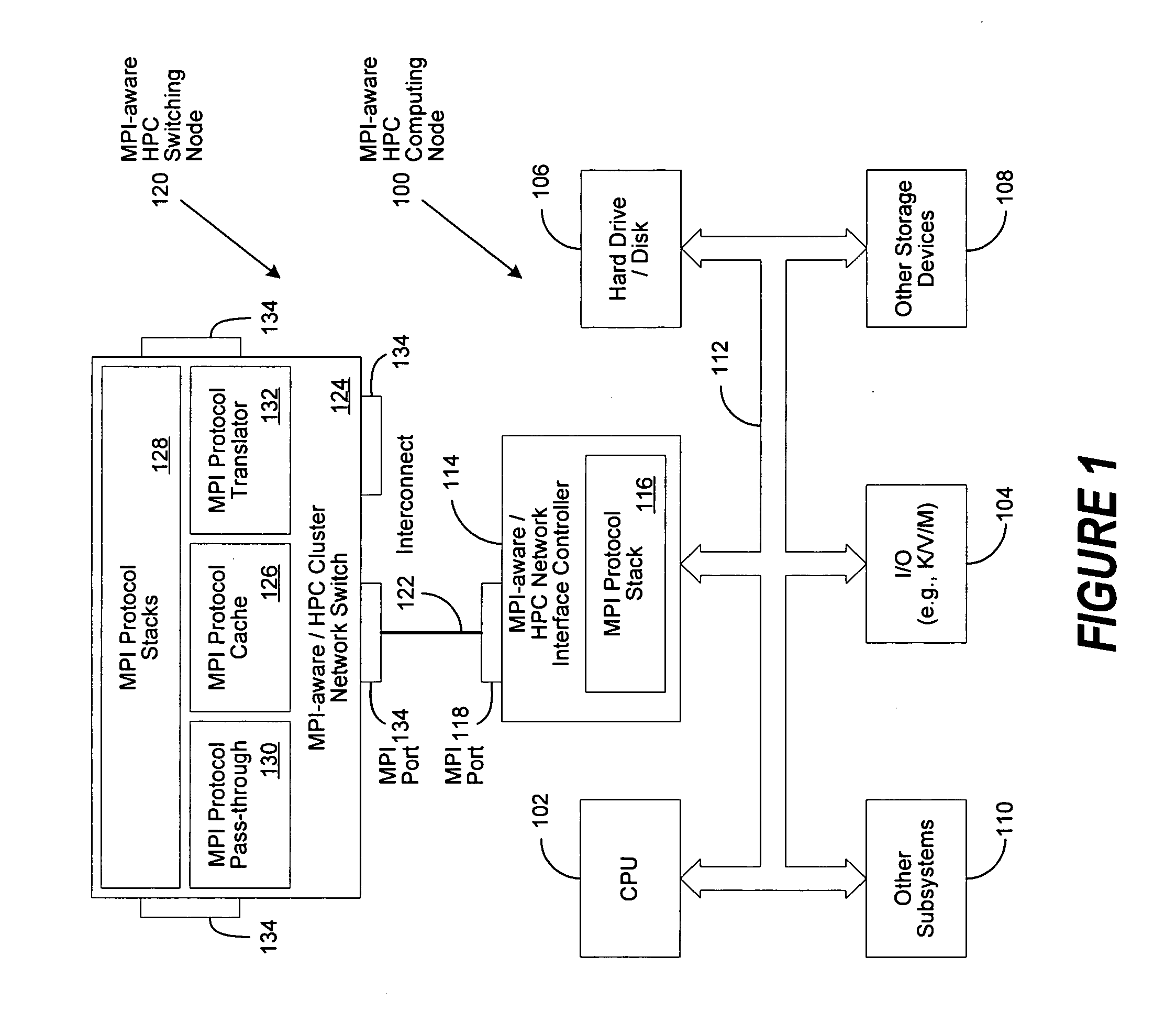

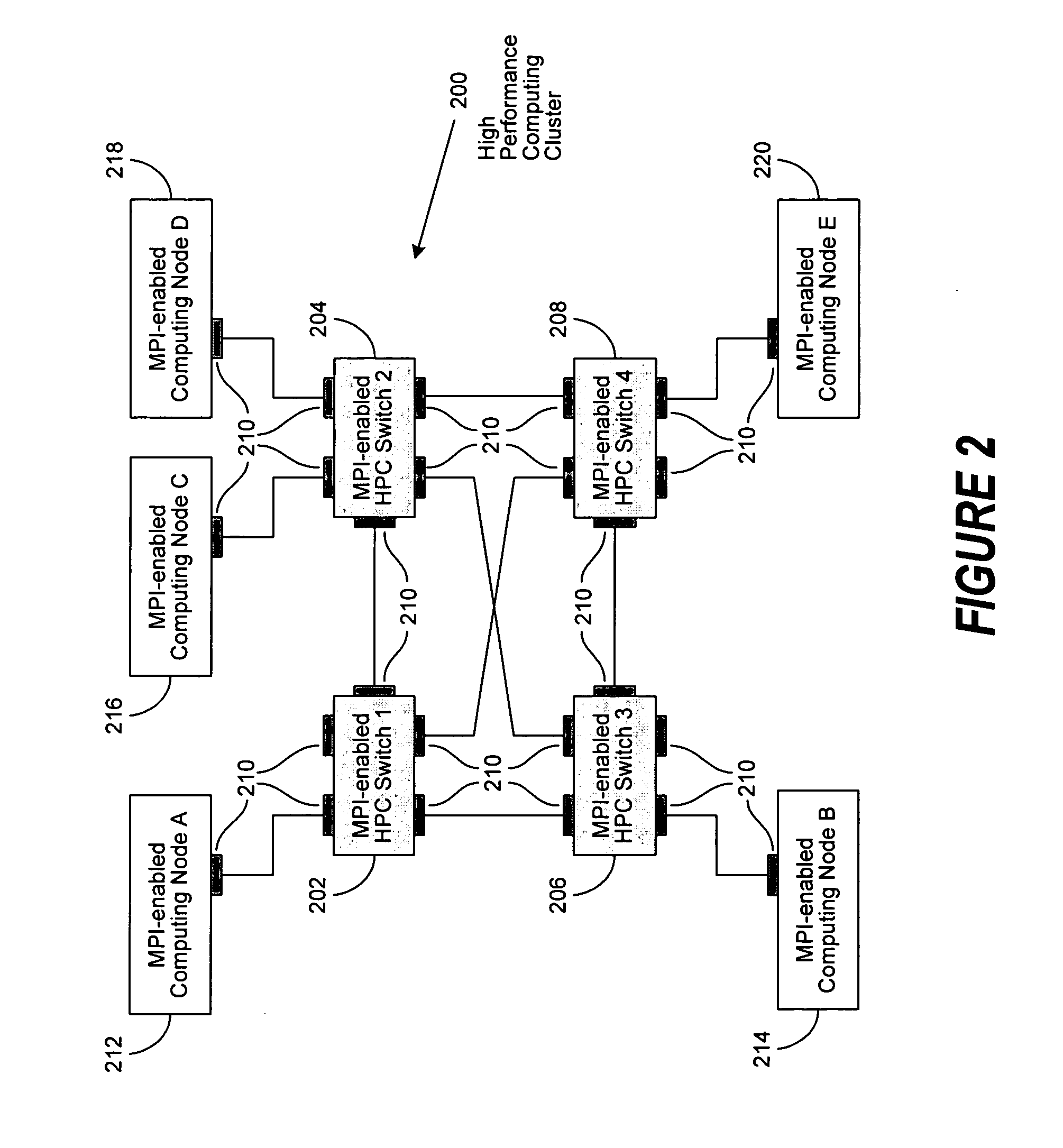

MPI-aware networking infrastructure

Status: Inactive | US20060282838A1 | Tags: Reduced message-passing protocol communication overhead, Improve performance, Multiprogramming arrangements, Transmission, Extensibility, Information processing

The present invention reduces message-passing protocol communication overhead between a plurality of high-performance computing (HPC) cluster nodes. In particular, HPC cluster performance and MPI host-to-host functionality, performance, scalability, security, and reliability can be improved. A first information handling system host and its associated network interface controller (NIC) are enabled with a lightweight implementation of a message-passing protocol that does not require intermediate protocols. A second information handling system host and its associated NIC are enabled with the same lightweight implementation. A high-speed network switch, likewise enabled with the same implementation and interconnected with the first and second hosts (and potentially with a plurality of similar hosts and switches), can create an HPC cluster network capable of higher performance and greater scalability.

Owner:DELL PROD LP

Method and apparatus for improving responsiveness of a power management system in a computing device

Status: Inactive | US20070283176A1 | Tags: Reduce perception, Energy efficient ICT, Volume/mass flow measurement, Engineering, Electric power

A computer system has multiple performance states. The computer system periodically determines its utilization and adjusts the performance state accordingly. If a performance increase is required, the computer system always goes to the maximum performance state. If a performance decrease is required, the computer system steps down to the next lower performance state.

Owner:ADVANCED SILICON TECH
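The policy described above is deliberately asymmetric: jump straight to the maximum performance state on any required increase, but step down one state at a time. A minimal sketch, assuming states are numbered 0 (lowest) to `max_state` and that "increase required" and "decrease required" are decided by utilization thresholds; the threshold values are hypothetical, as the abstract does not give them.

```python
def next_performance_state(current: int, utilization: float, max_state: int,
                           up_threshold: float = 0.8,
                           down_threshold: float = 0.3) -> int:
    """Returns the performance state to use for the next interval."""
    if not 0 <= current <= max_state:
        raise ValueError("current state out of range")
    if utilization > up_threshold:
        return max_state             # increase: go straight to the top state
    if utilization < down_threshold:
        return max(current - 1, 0)   # decrease: one step at a time
    return current                   # within the band: stay put
```

The fast ramp-up is what gives the responsiveness named in the title, while the gradual step-down avoids dropping too far on a brief lull.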

Cooling high performance computer systems

Status: Inactive | US7813121B2 | Tags: Improve cooling effect, Digital data processing details, Cooling/ventilation/heating modifications, Computerized system, Engineering

A computer system may include a chassis defining a front and a rear. The chassis may include a vertically oriented midplane disposed therein, the midplane including a plurality of front module slots for receiving front electronic modules from the front of the chassis, and a plurality of rear module slots for receiving rear electronic modules from the rear of the chassis. A cooling system may be provided within the chassis and may generate an upwardly-directed front air flow within the chassis directed at selected ones of the front electronic modules and an upwardly-directed rear air flow within the chassis directed at selected ones of the rear electronic modules. The front air flow is separate from and independent of the rear air flow. The selected front and rear electronic modules may be disposed in the chassis so as to separate the front air flow into a plurality of substantially equal front air streams and the rear air flow into a plurality of substantially equal rear air streams, respectively.

Owner:III HLDG 1

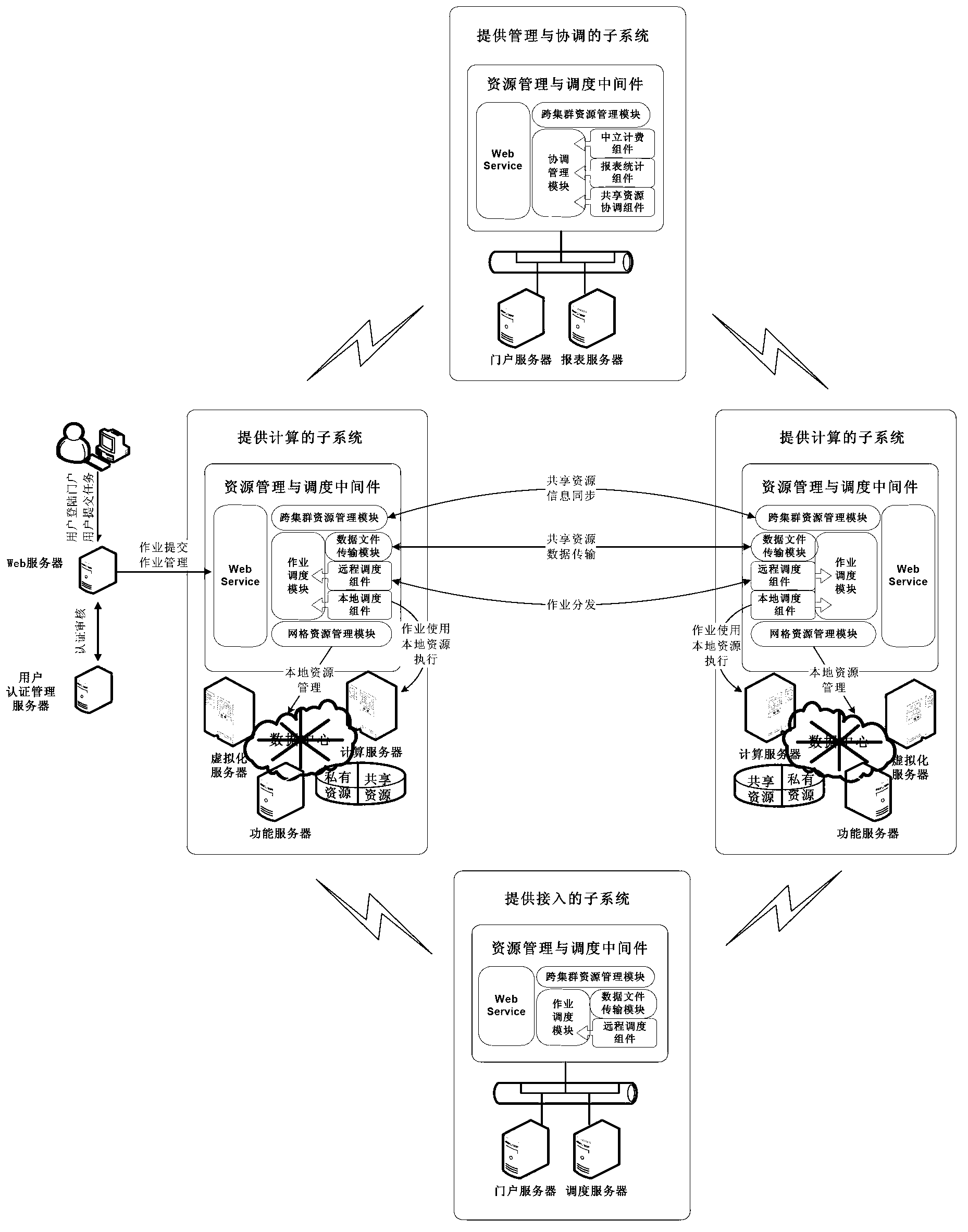

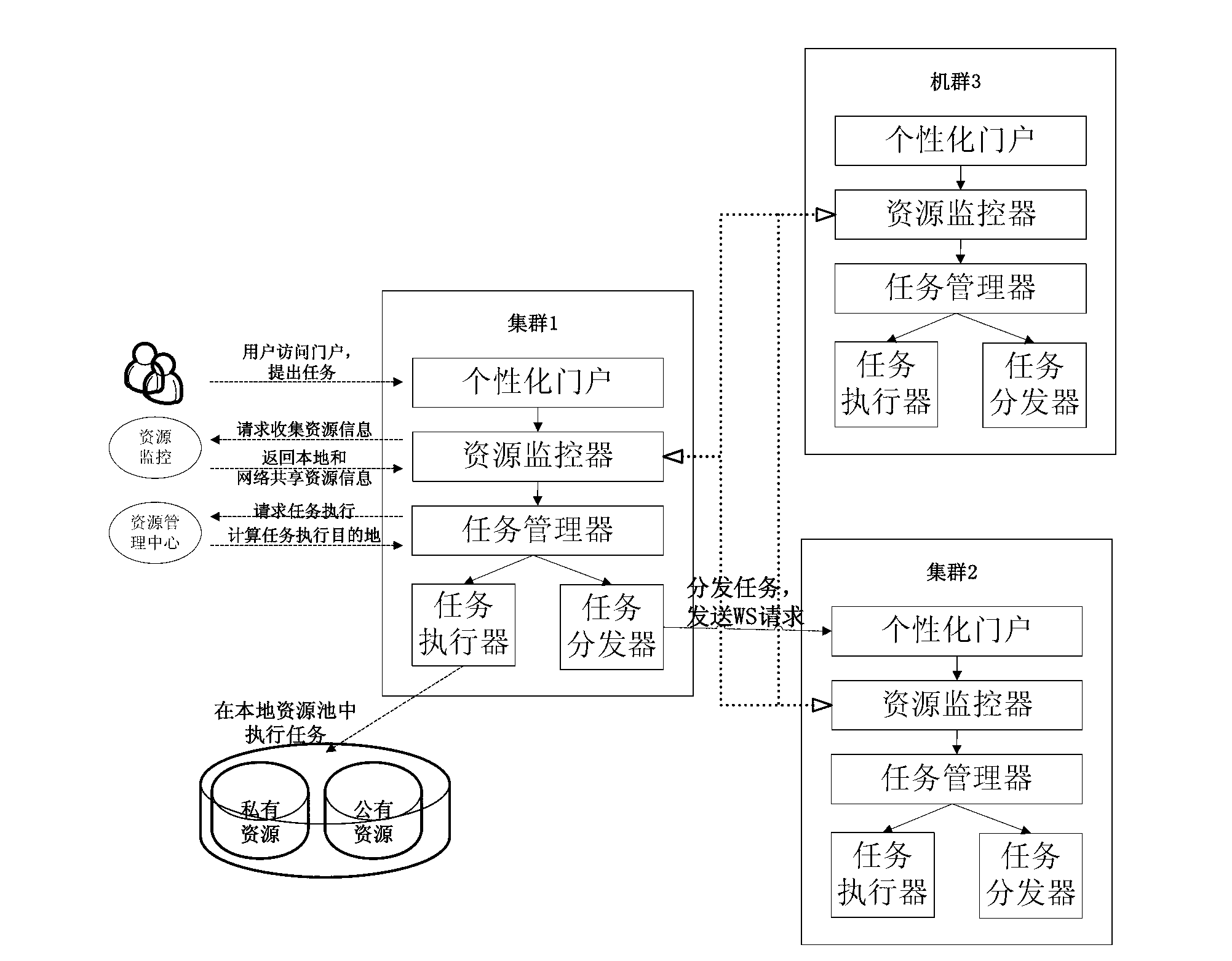

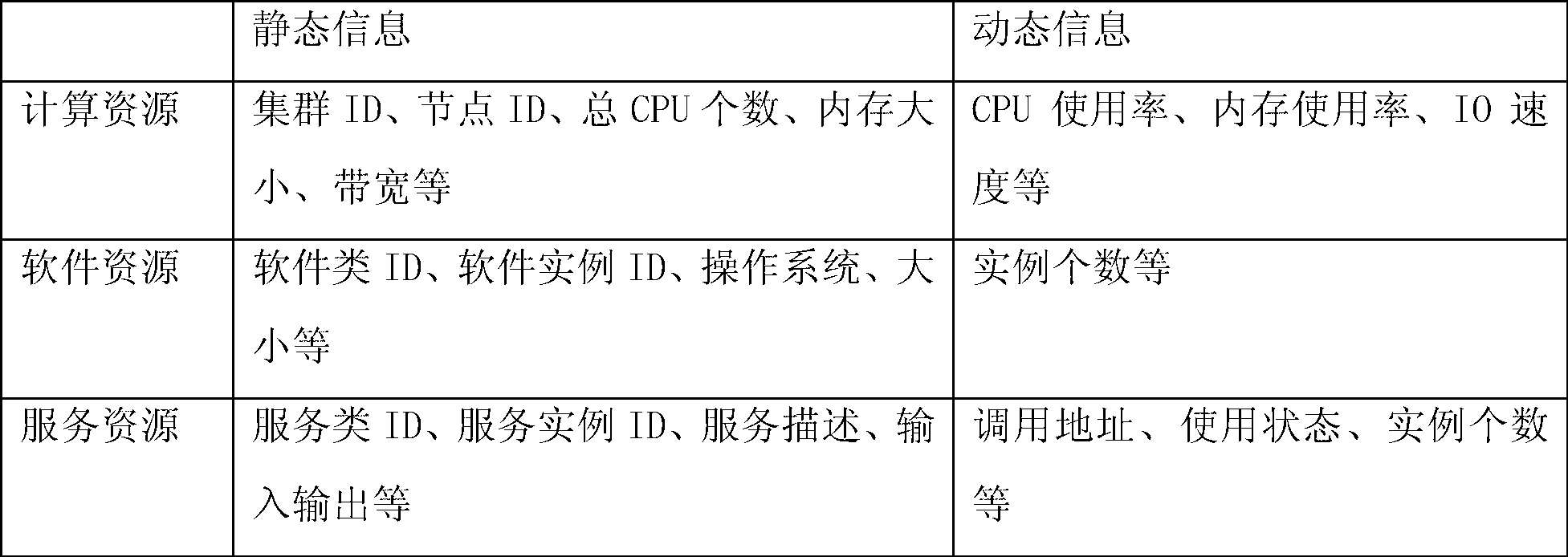

Decentralized cross-cluster resource management and task scheduling system and scheduling method

Status: Active | CN103207814A | Tags: Improve execution efficiency, Improve experience, Resource allocation, Energy efficient computing, Data center, Performance computing

The invention relates to a decentralized cross-cluster resource management and task scheduling system and a scheduling method. The scheduling system comprises three subsystems: one providing management and coordination services, one providing computing services, and one providing access services. The management and coordination subsystem collects information from the other subsystems, provides monitoring, reporting, billing, and resource-sharing coordination, and supplies decision support for the management and planning of the high-performance computing system. The computing subsystem includes a data center of high-performance computing nodes and gathers information on local and remote resources, so that jobs are scheduled across both. The access subsystem provides users with localized job submission and access management. By integrating the resources of individual clusters, the method improves job execution efficiency and user experience on the one hand, and on the other makes effective, maximal use of existing resources, saving the cost of purchasing hardware to expand computing power.

Owner:BEIJING SIMULATION CENT
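The computing subsystem schedules jobs using information about both local and remote resources. A minimal sketch of one such placement decision, assuming each cluster reports only its free cores and assuming a simple policy of preferring the local cluster, otherwise the remote cluster with the most free cores; the `Cluster` type and this policy are illustrative and not taken from the patent.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cluster:
    name: str
    free_cores: int
    local: bool = False  # True for the cluster this scheduler instance runs on


def pick_cluster(clusters: List[Cluster], cores: int) -> Optional[Cluster]:
    """Prefers the local cluster; otherwise the remote cluster with the most free cores."""
    fits = [c for c in clusters if c.free_cores >= cores]
    local = [c for c in fits if c.local]
    if local:
        return local[0]
    return max(fits, key=lambda c: c.free_cores, default=None)


def submit(clusters: List[Cluster], cores: int) -> Optional[Cluster]:
    """Places a job and reserves its cores; returns None if no cluster fits."""
    target = pick_cluster(clusters, cores)
    if target is not None:
        target.free_cores -= cores
    return target
```

In a decentralized deployment each site would run its own copy of this logic over the resource information shared through the coordination subsystem, so no central scheduler is required.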

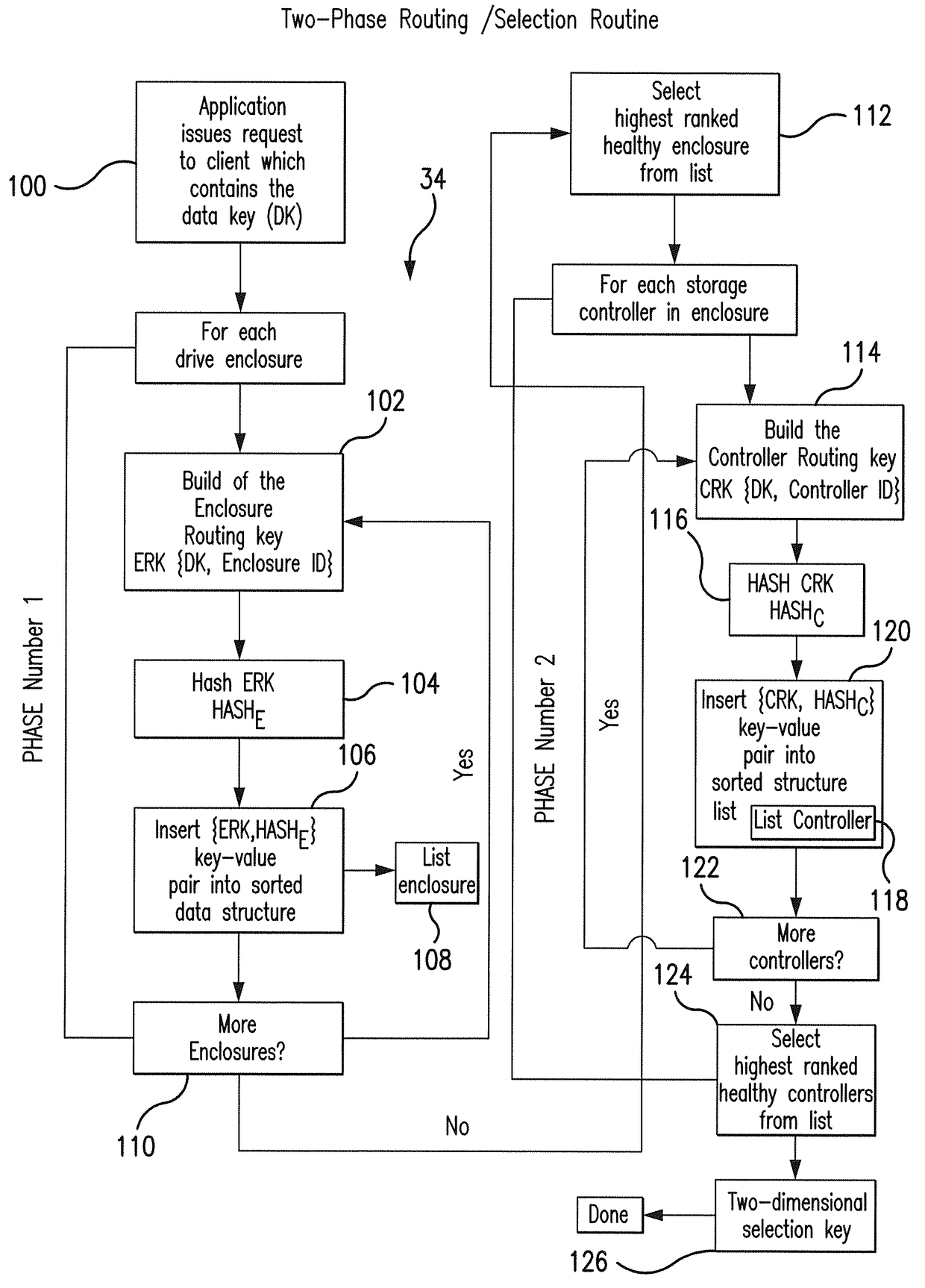

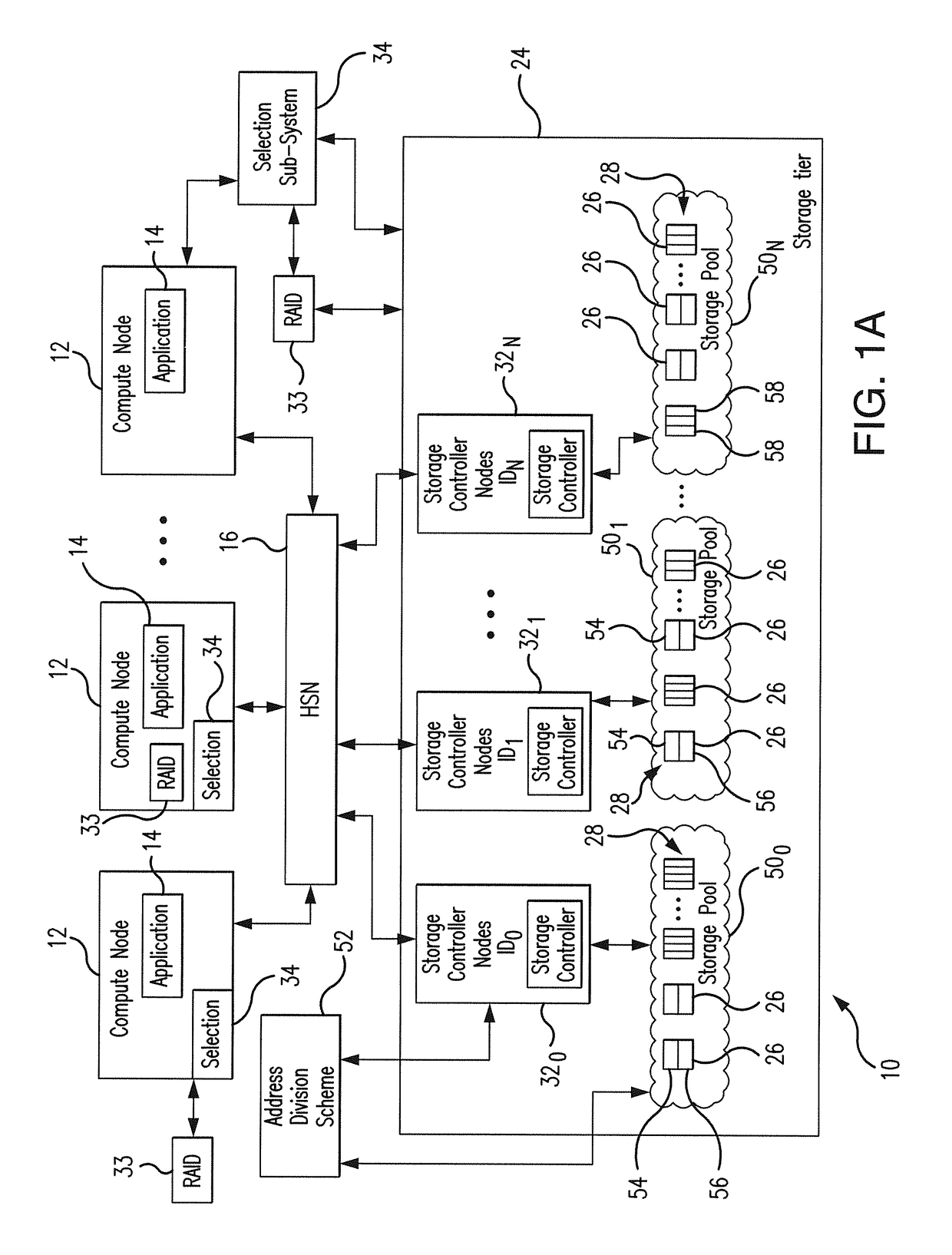

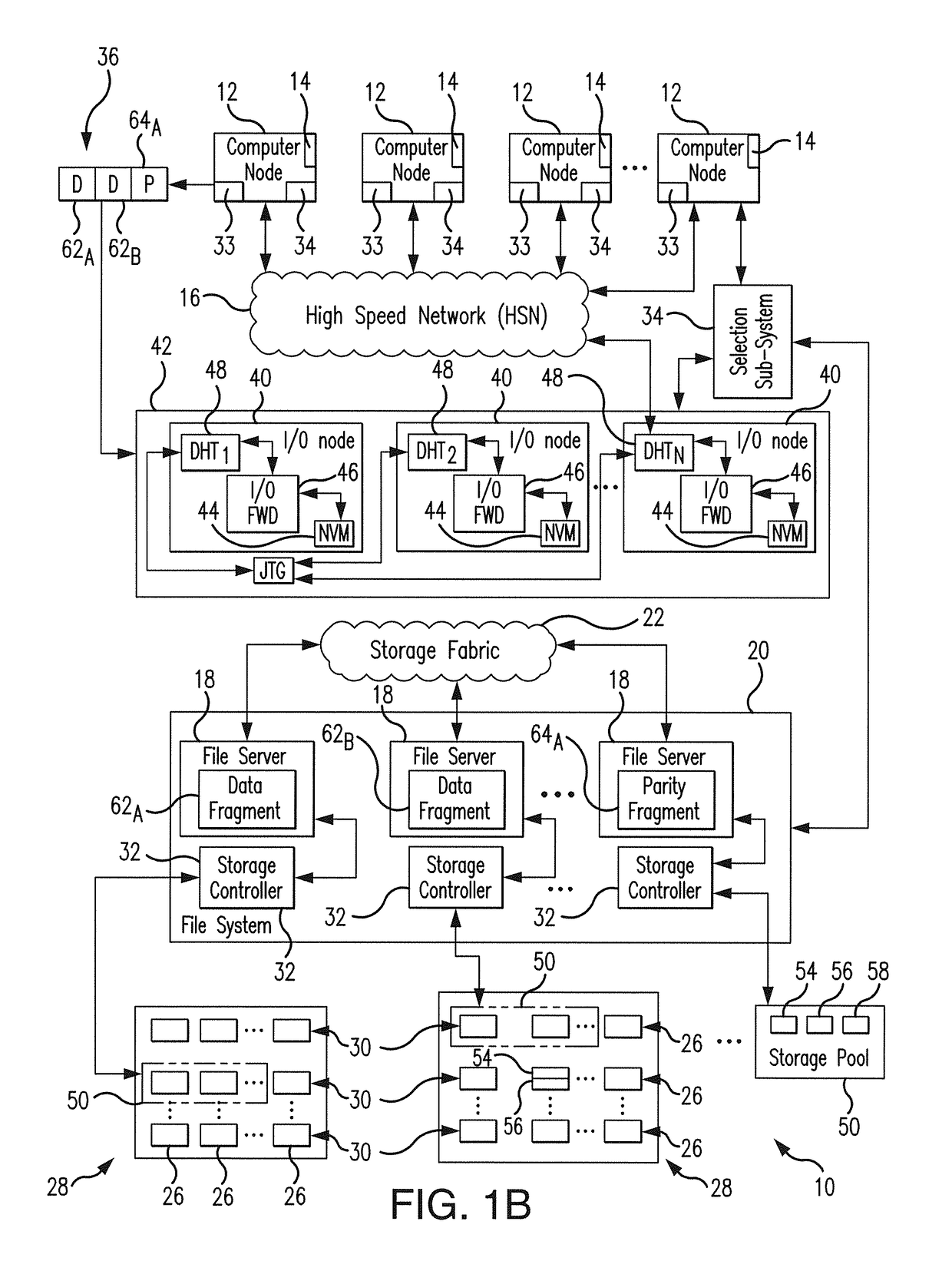

Low latency and reduced overhead data storage system and method for sharing multiple storage devices by high performance computing architectures

Status: Active | US9959062B1 | Tags: Improve the level of, Reliable rebuild, Input/output to record carriers, Redundant hardware error correction, Data set, Performance computing

A data migration system supports a low-latency, reduced-overhead data storage protocol for sharing data storage in a collision-free fashion, without requiring inter-communication or permanent arbitration between data storage controllers to decide on data placement and routing. The data fragments of a data set are prevented from being routed to the same storage devices by a multi-step selection protocol, which selects (in a first phase) a healthy, highest-ranked drive enclosure and then (in a second phase) a healthy, highest-ranked data storage controller residing in the selected enclosure, and routes the data fragments to different storage pools assigned to the selected data storage devices for exclusive “writing” and data modification. The selection protocol also handles various failure scenarios without data-placement collisions.

Owner:DATADIRECT NETWORKS
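The abstract describes a two-phase choice: the healthy, highest-ranked drive enclosure first, then the healthy, highest-ranked controller inside it, with no negotiation between controllers. The abstract does not say how ranks are computed. A minimal sketch, assuming ranks come from rendezvous (highest-random-weight) hashing of the fragment ID, so every controller computes the same placement independently; it also assumes an enclosure counts as healthy if it has at least one healthy controller and that fragments of one data set must use distinct enclosures.

```python
import hashlib
from typing import Dict, List, Set, Tuple

Enclosures = Dict[str, Dict[str, bool]]  # enclosure -> {controller: healthy?}


def rank(fragment_id: str, target: str) -> int:
    """Rendezvous-hash weight; any controller computes the same value on its own."""
    digest = hashlib.sha256(f"{fragment_id}|{target}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place_fragments(dataset_id: str, count: int,
                    enclosures: Enclosures) -> List[Tuple[str, str]]:
    placements: List[Tuple[str, str]] = []
    used: Set[str] = set()
    for k in range(count):
        fragment = f"{dataset_id}#{k}"
        # Phase 1: highest-ranked healthy enclosure not yet used by this data set.
        candidates = [e for e, ctrls in enclosures.items()
                      if e not in used and any(ctrls.values())]
        if not candidates:
            raise RuntimeError("not enough healthy enclosures for distinct placement")
        enclosure = max(candidates, key=lambda e: rank(fragment, e))
        # Phase 2: highest-ranked healthy controller inside that enclosure.
        healthy = [c for c, ok in enclosures[enclosure].items() if ok]
        controller = max(healthy, key=lambda c: rank(fragment, c))
        used.add(enclosure)
        placements.append((enclosure, controller))
    return placements
```

Because the ranking is a pure function of names, two controllers that see the same health map arrive at the same placement without exchanging messages.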

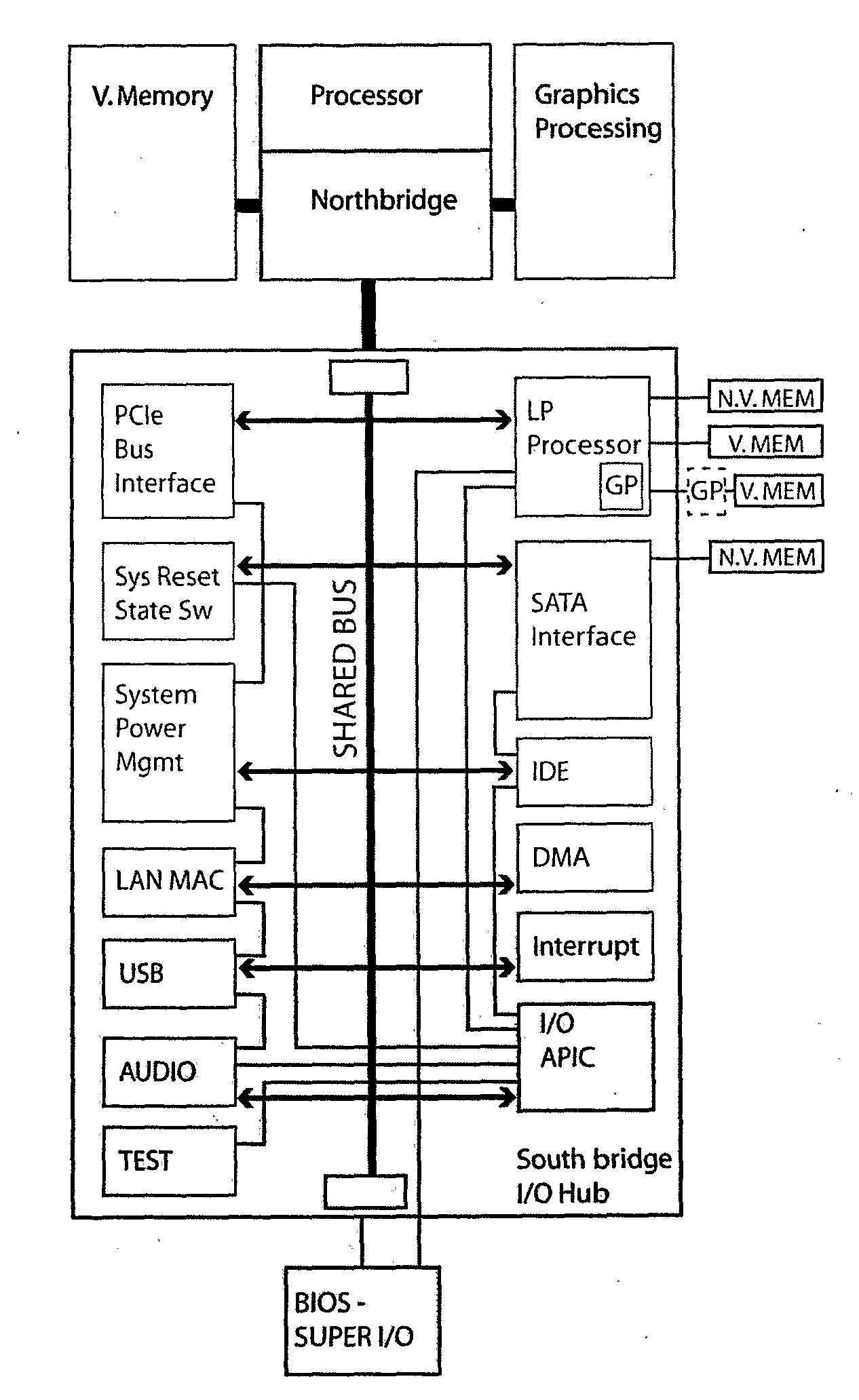

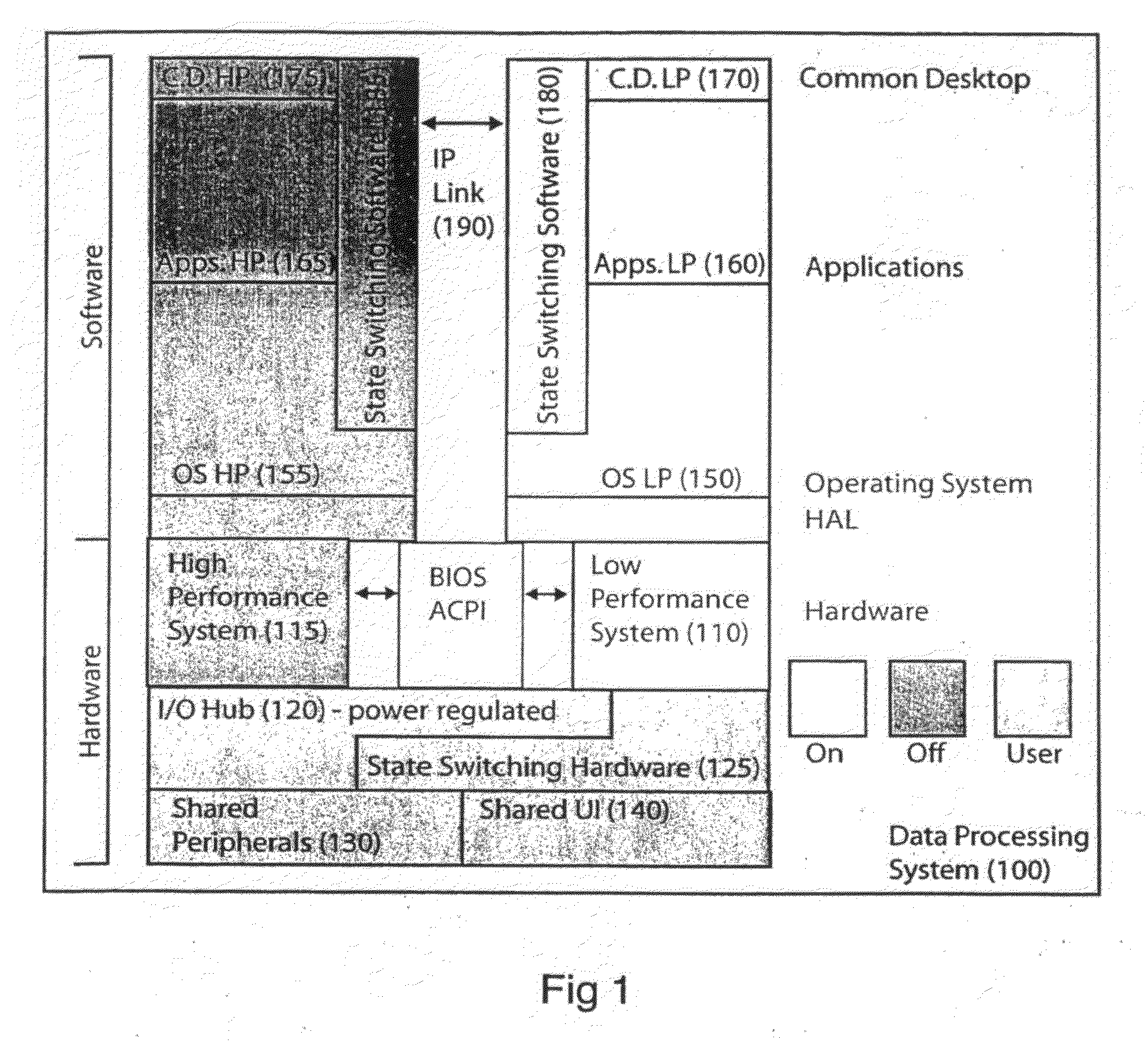

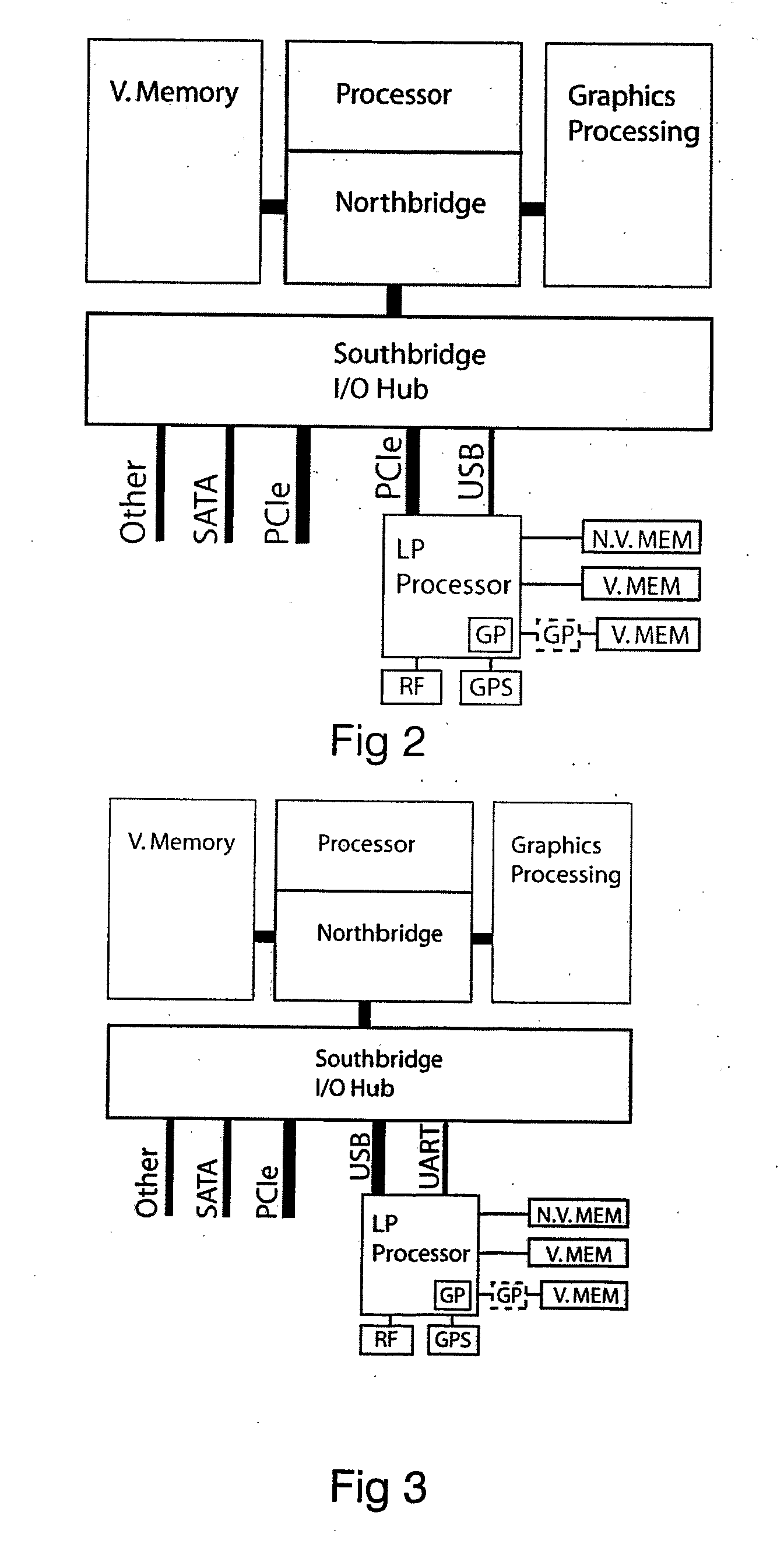

Dual Mode Power-Saving Computing System

Status: Active | US20090193243A1 | Tags: Increase power consumption, Reduce power consumption, Energy efficient ICT, Volume/mass flow measurement, Data processing system, Performance computing

The present invention relates to a data processing system comprising both a high-performance computing subsystem with typically high power consumption and a low-performance subsystem requiring less power. The data processing system acts as a single computing device, moving software execution from the low-performance subsystem to the high-performance subsystem when high computing power is needed, and back when low computing performance is sufficient; in the latter case, the high-performance subsystem can be put into a power-saving state. The invention also relates to associated algorithms.

Owner:CUPP COMPUTING
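The switching behavior in this abstract can be sketched as a small state machine. A minimal sketch, assuming demand is summarized as a load value between 0 and 1 and that separate up and down thresholds (hysteresis) keep execution from bouncing between subsystems; the thresholds and class names are hypothetical, since the abstract does not describe its algorithms in detail.

```python
from enum import Enum


class Subsystem(Enum):
    LOW_POWER = "low-performance subsystem"
    HIGH_PERFORMANCE = "high-performance subsystem"


class DualModeController:
    """Decides which subsystem runs the software, with hysteresis between thresholds."""

    def __init__(self, up: float = 0.75, down: float = 0.25) -> None:
        if not 0.0 <= down < up <= 1.0:
            raise ValueError("need 0 <= down < up <= 1")
        self.up, self.down = up, down
        self.active = Subsystem.LOW_POWER
        self.high_perf_powered = False  # fast side starts in a power-saving state

    def update(self, load: float) -> Subsystem:
        if self.active is Subsystem.LOW_POWER and load > self.up:
            self.high_perf_powered = True      # wake it before migrating execution
            self.active = Subsystem.HIGH_PERFORMANCE
        elif self.active is Subsystem.HIGH_PERFORMANCE and load < self.down:
            self.active = Subsystem.LOW_POWER  # migrate execution back first
            self.high_perf_powered = False     # then power the fast side down
        return self.active
```

A load between the two thresholds leaves the current subsystem in place, which is the point of using two thresholds instead of one.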

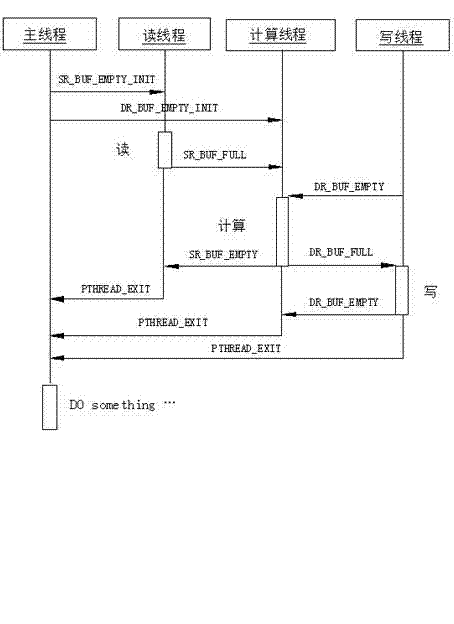

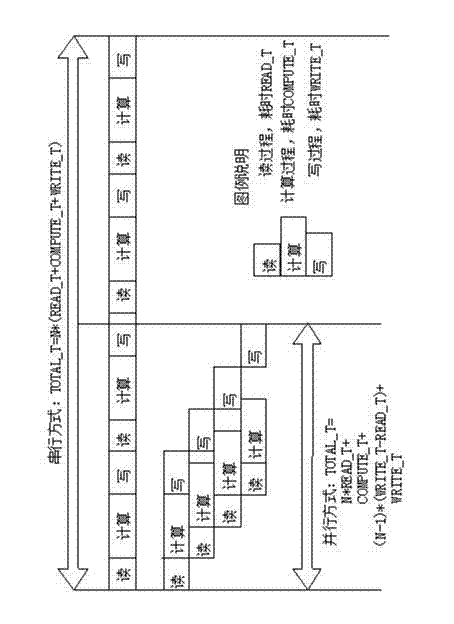

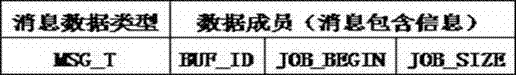

Multi-thread parallel processing method based on multi-thread programming and message queue

Status: Active | CN102902512A | Tags: Fast and efficient multi-threaded transformation, Reduce running time, Concurrent instruction execution, Computer architecture, Concurrent computation

The invention provides a multi-thread parallel processing method based on multi-thread programming and message queues, in the field of high-performance computing. Traditional single-threaded serial software is parallelized using modern multi-core CPU hardware, pthread multi-thread parallel computing, and message queues for inter-thread communication. The method comprises the following steps: on a single node, creating three types of pthread threads (reading threads, computing threads, and writing threads), where the number of threads of each type is flexibly configurable; using multi-buffering and establishing four queues for inter-thread communication; and allocating computing tasks and managing buffer space. The method is widely applicable wherever multi-threaded parallel processing is required; it guides software developers in converting existing software to multiple threads so as to optimize the use of system resources, significantly improving hardware utilization, computational efficiency, and overall software performance.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
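The method combines reading, computing, and writing threads with multi-buffering and four inter-thread queues. A minimal sketch of that structure using Python's `threading` and `queue` modules in place of pthreads, assuming the four queues are free and filled input buffers plus free and filled output buffers, which is one plausible reading since the abstract does not name them. In CPython the GIL serializes pure-Python computation, so this shows the structure rather than the speedup a native pthreads version would get.

```python
import queue
import threading
from typing import Callable, Dict, List, Optional

STOP = None  # sentinel telling a consumer that no more work will arrive


def run_pipeline(items: List[int], work: Callable[[int], int],
                 n_compute: int = 2, n_buffers: int = 4) -> List[Optional[int]]:
    if n_compute < 1 or n_buffers < 1:
        raise ValueError("need at least one compute thread and one buffer")
    free_in: queue.Queue = queue.Queue()   # empty input buffers for the reader
    full_in: queue.Queue = queue.Queue()   # filled input buffers for compute threads
    free_out: queue.Queue = queue.Queue()  # empty output buffers for compute threads
    full_out: queue.Queue = queue.Queue()  # filled output buffers for the writer
    for _ in range(n_buffers):             # multi-buffering: fixed pool per side
        free_in.put({})
        free_out.put({})
    results: List[Optional[int]] = [None] * len(items)

    def reader() -> None:
        for idx, item in enumerate(items):
            buf: Dict = free_in.get()      # blocks until a buffer is recycled
            buf["idx"], buf["val"] = idx, item
            full_in.put(buf)
        for _ in range(n_compute):         # one stop signal per compute thread
            full_in.put(STOP)

    def compute() -> None:
        while True:
            buf = full_in.get()
            if buf is STOP:
                full_out.put(STOP)         # tell the writer this thread is done
                return
            out: Dict = free_out.get()
            out["idx"], out["val"] = buf["idx"], work(buf["val"])
            free_in.put(buf)               # recycle the input buffer
            full_out.put(out)

    def writer() -> None:
        finished = 0
        while finished < n_compute:
            out = full_out.get()
            if out is STOP:
                finished += 1
                continue
            results[out["idx"]] = out["val"]
            free_out.put(out)              # recycle the output buffer

    threads = [threading.Thread(target=reader), threading.Thread(target=writer)]
    threads += [threading.Thread(target=compute) for _ in range(n_compute)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

The number of compute threads and buffers are parameters, matching the abstract's point that the thread counts are configurable; the fixed buffer pool bounds memory use no matter how large the input is.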

Systems and methods for compiling an application for a parallel-processing computer system

Owner:GOOGLE LLC

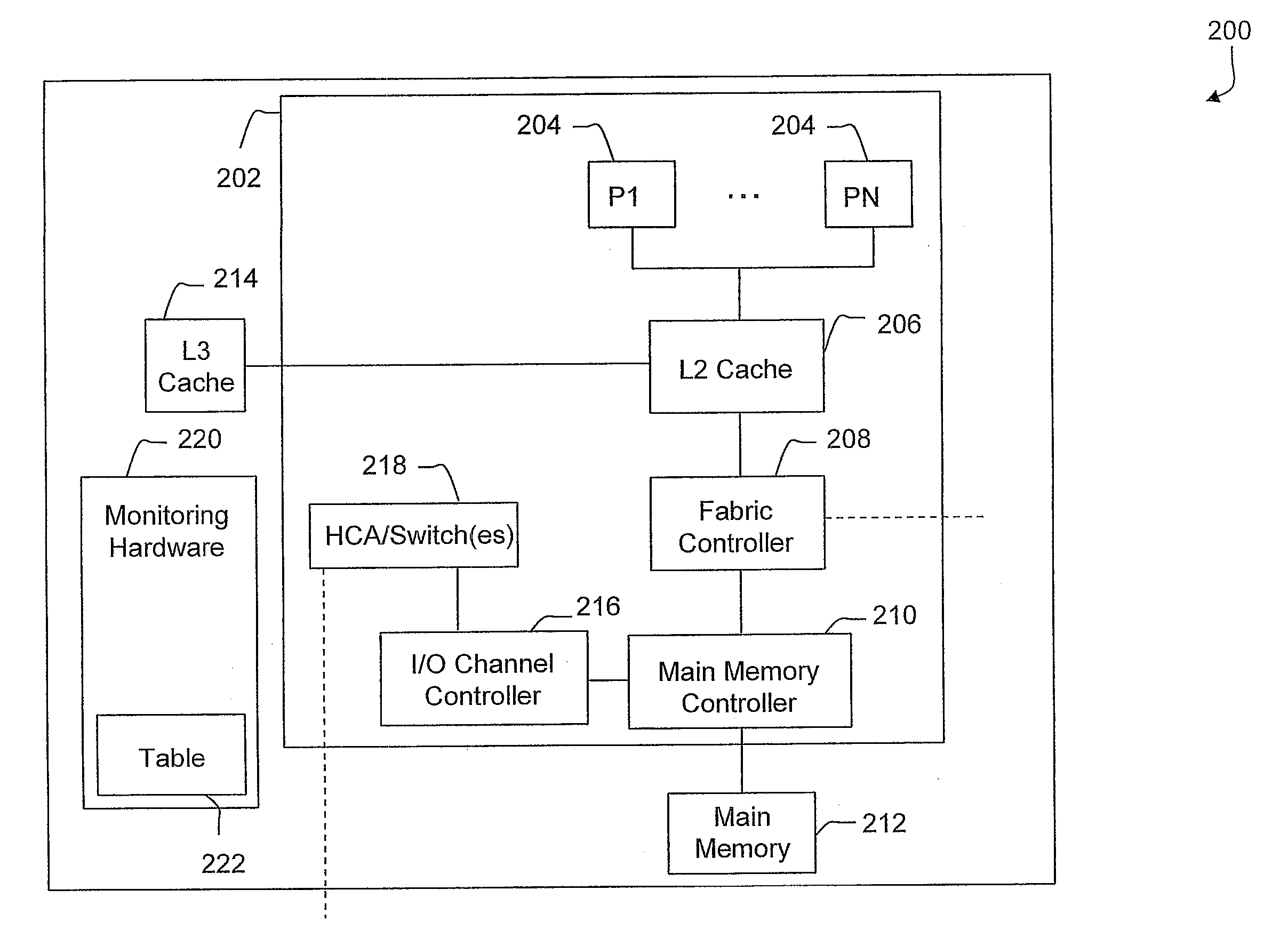

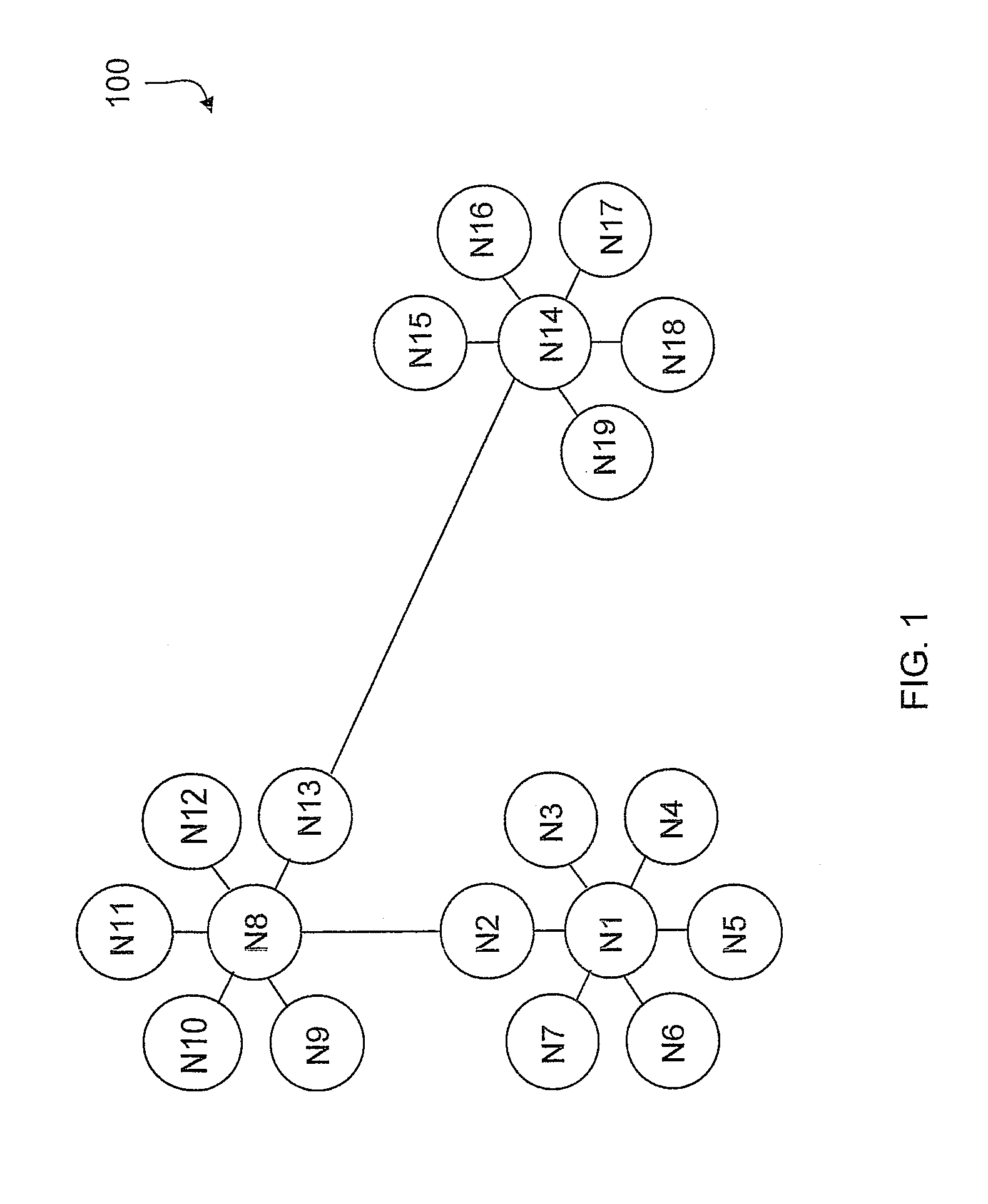

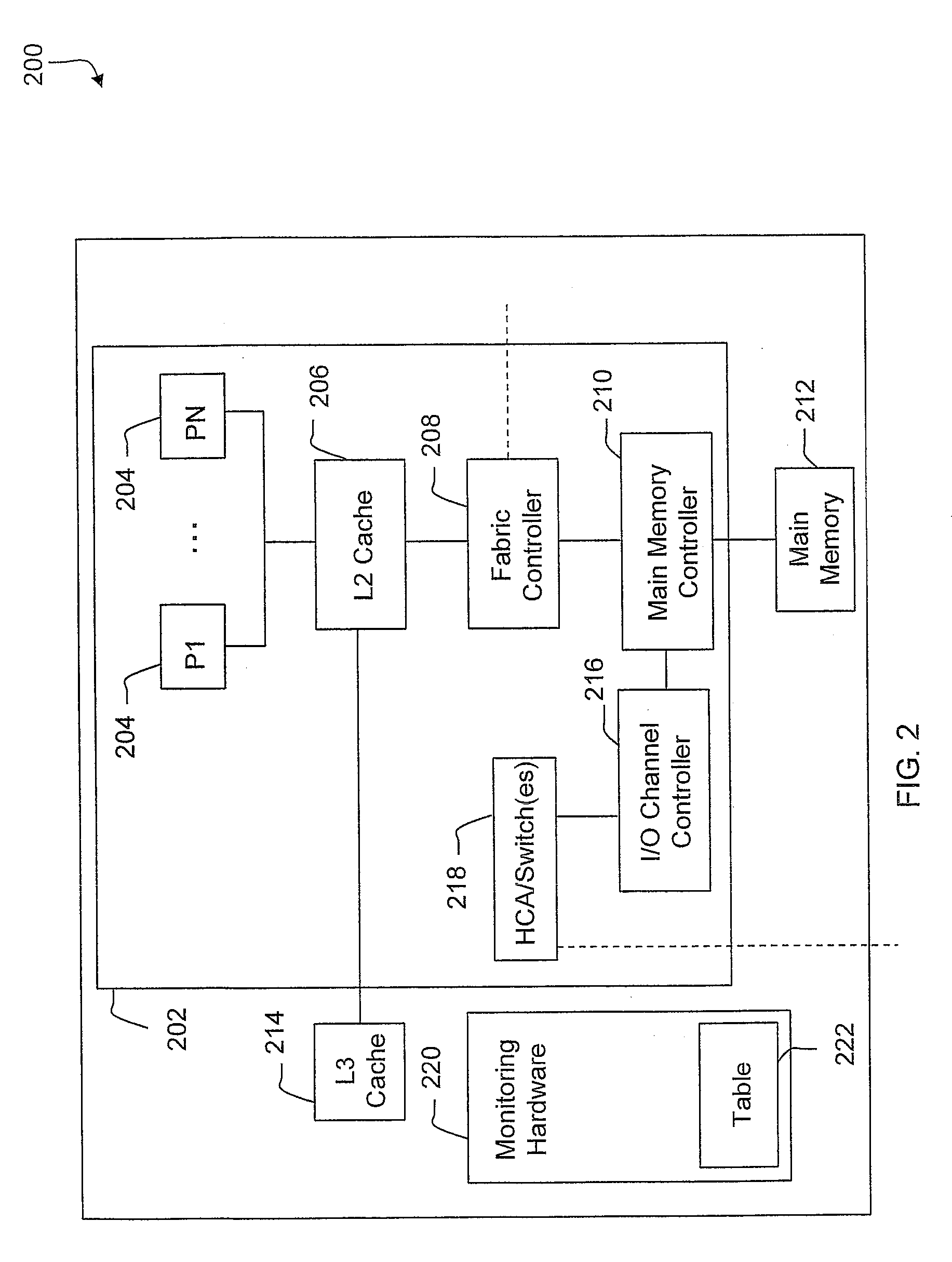

Techniques for dynamically assigning jobs to processors in a cluster based on processor workload

Status: Inactive | US20100153541A1 | Tags: Digital computer details, Program control, Performance computing, Parallel computing

A technique for operating a high performance computing (HPC) cluster includes monitoring workloads of multiple processors included in the HPC cluster. The HPC cluster includes multiple nodes that each include two or more of the multiple processors. One or more threads assigned to one or more of the multiple processors are moved to a different one of the multiple processors based on the workloads of the multiple processors.

Owner:IBM CORP
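The technique monitors processor workloads across the nodes of an HPC cluster and moves threads to a different processor based on those workloads. A minimal sketch of one rebalancing pass, assuming a processor's workload is the sum of per-thread load estimates and assuming a rule of moving one thread from the busiest to the idlest processor only when that narrows the gap between them; the data layout (keys like `"n0/cpu0"` for node and processor) and the rule are illustrative, not IBM's.

```python
from typing import Dict, Optional, Tuple

Assignments = Dict[str, Dict[str, float]]  # processor -> {thread: estimated load}


def rebalance_once(assign: Assignments) -> Optional[Tuple[str, str, str]]:
    """Moves one thread from the busiest to the idlest processor if that narrows the gap.

    Returns (thread, source, destination), or None when no single move helps.
    """
    loads = {proc: sum(threads.values()) for proc, threads in assign.items()}
    busiest = max(loads, key=loads.get)
    idlest = min(loads, key=loads.get)
    gap = loads[busiest] - loads[idlest]
    # Moving a thread of load w changes the gap between the two to |gap - 2w|.
    options = [(abs(gap - 2 * w), t) for t, w in assign[busiest].items()]
    options = [opt for opt in options if opt[0] < gap]
    if not options:
        return None
    _, thread = min(options)
    assign[idlest][thread] = assign[busiest].pop(thread)
    return thread, busiest, idlest
```

Calling this periodically, as the abstracts describe, converges toward balanced processors; the "only if it narrows the gap" test prevents a large thread from ping-ponging between two processors.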

Techniques for dynamically assigning jobs to processors in a cluster using local job tables

A technique for operating a high performance computing cluster includes monitoring workloads of multiple processors. The high performance computing cluster includes multiple nodes that each include two or more of the multiple processors. Workload information for the multiple processors is periodically updated in respective local job tables maintained in each of the multiple nodes. Based on the workload information in the respective local job tables, one or more threads are periodically moved to a different one of the multiple processors.

Owner:IBM CORP

© 2025 PatSnap. All rights reserved.