619 results about "Head posture" patented technology

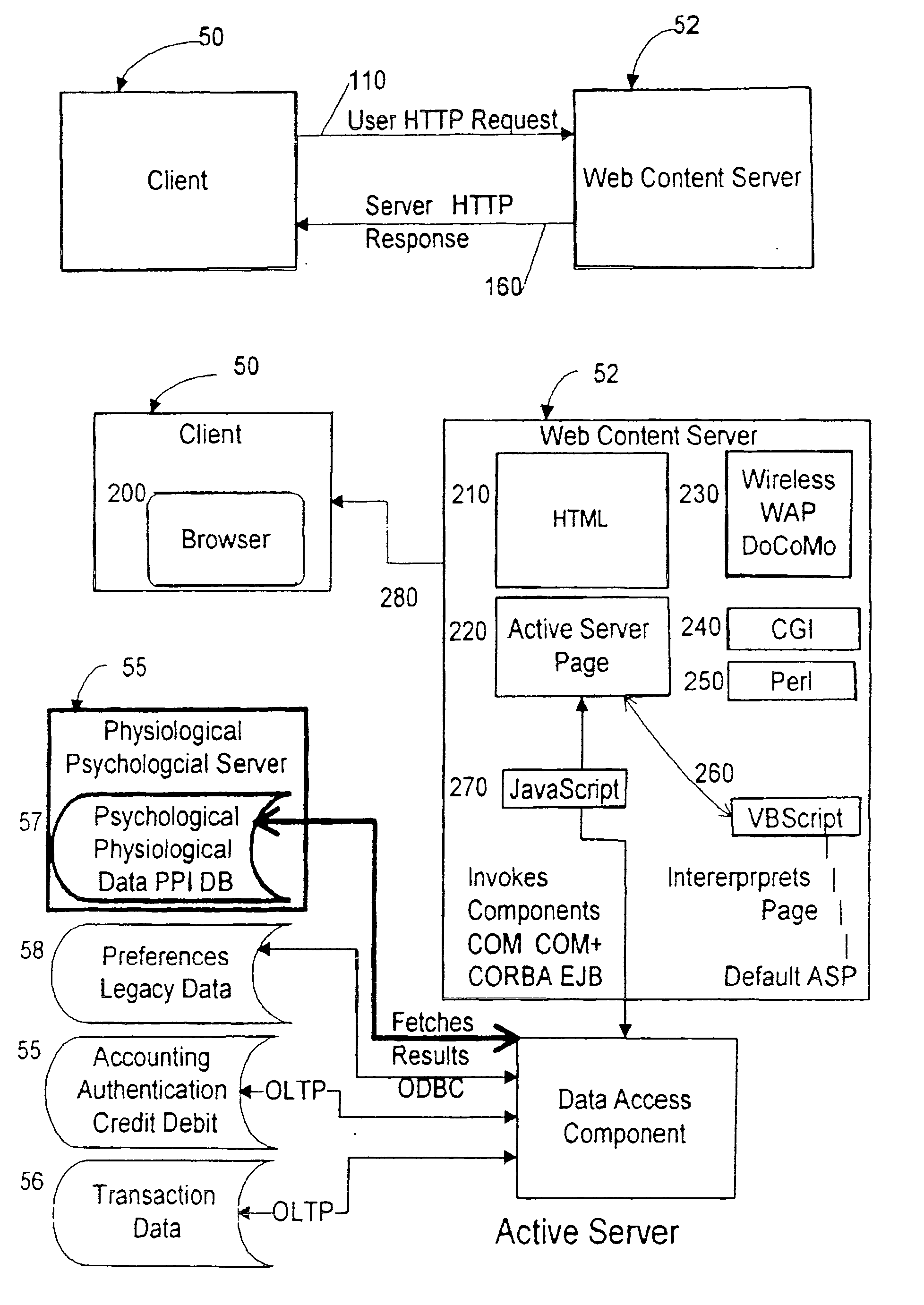

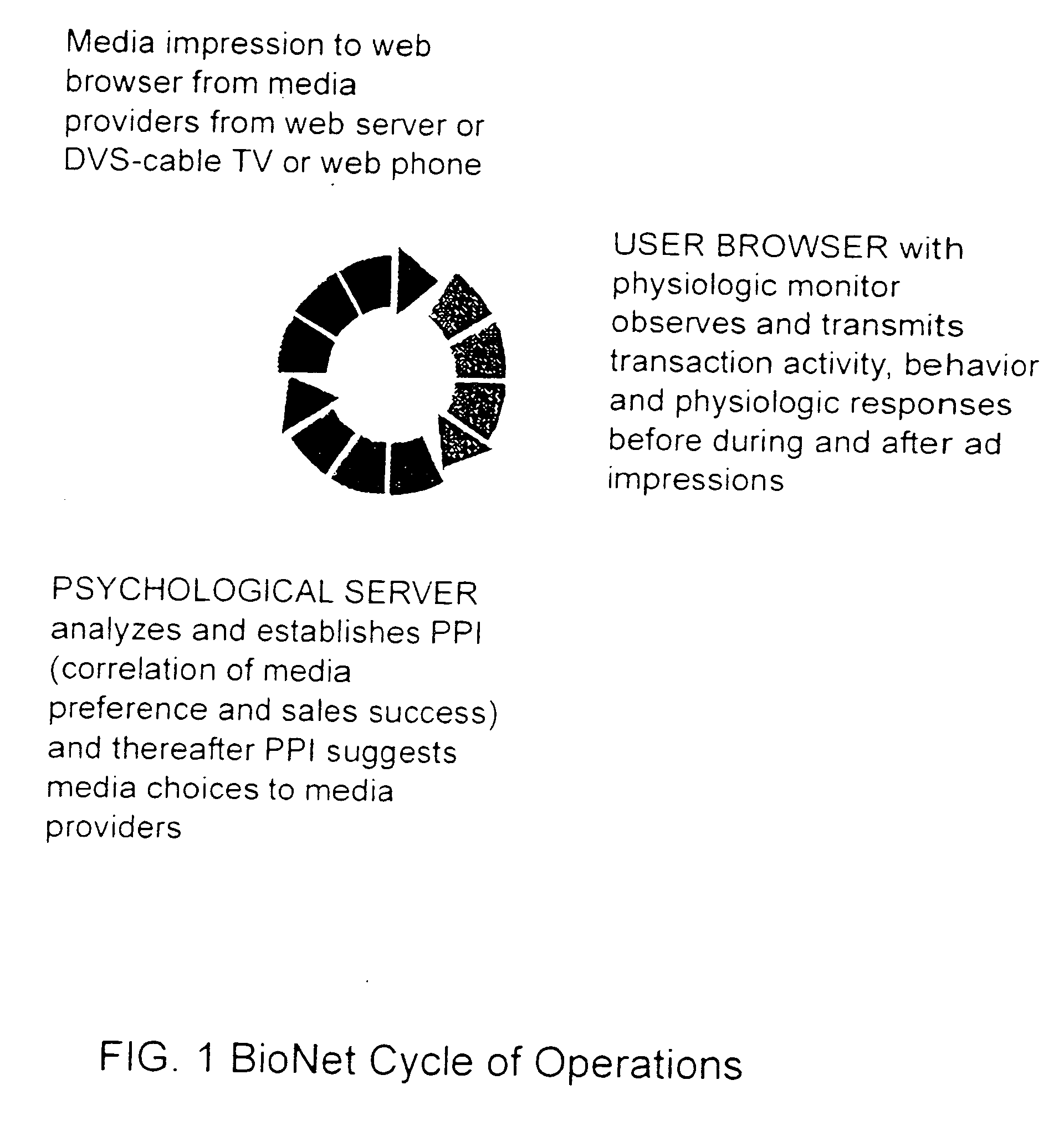

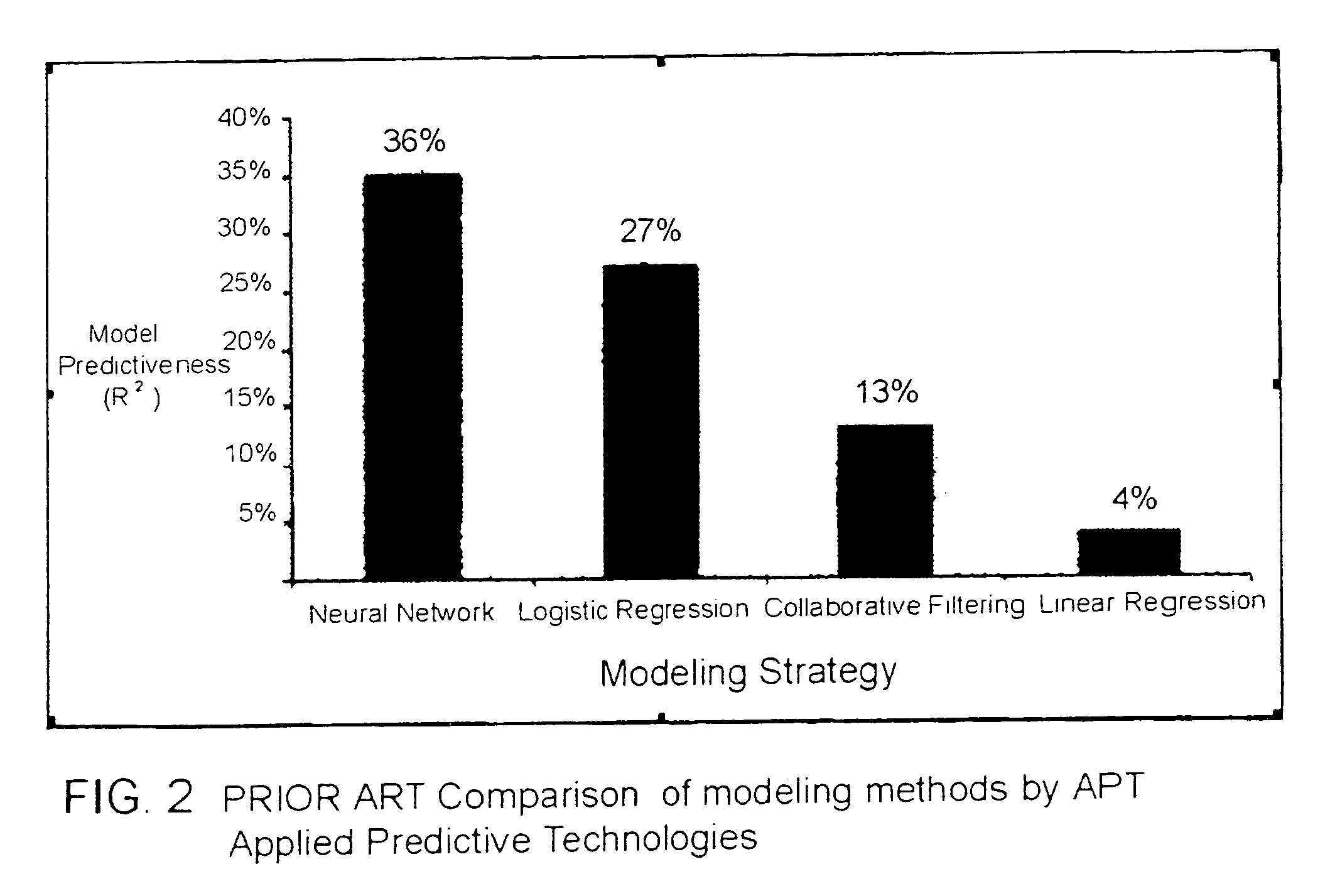

Input device for web content manager responsive to browser viewers' psychological preferences, behavioral responses and physiological stress indicators

Inactive | US20060293921A1 | Easy and efficient to manufacture | Low cost | Diagnostic recording/measuring | Sensors | Pulse oximeters | Behavioral response

Owner:MCCARTHY JOHN +1

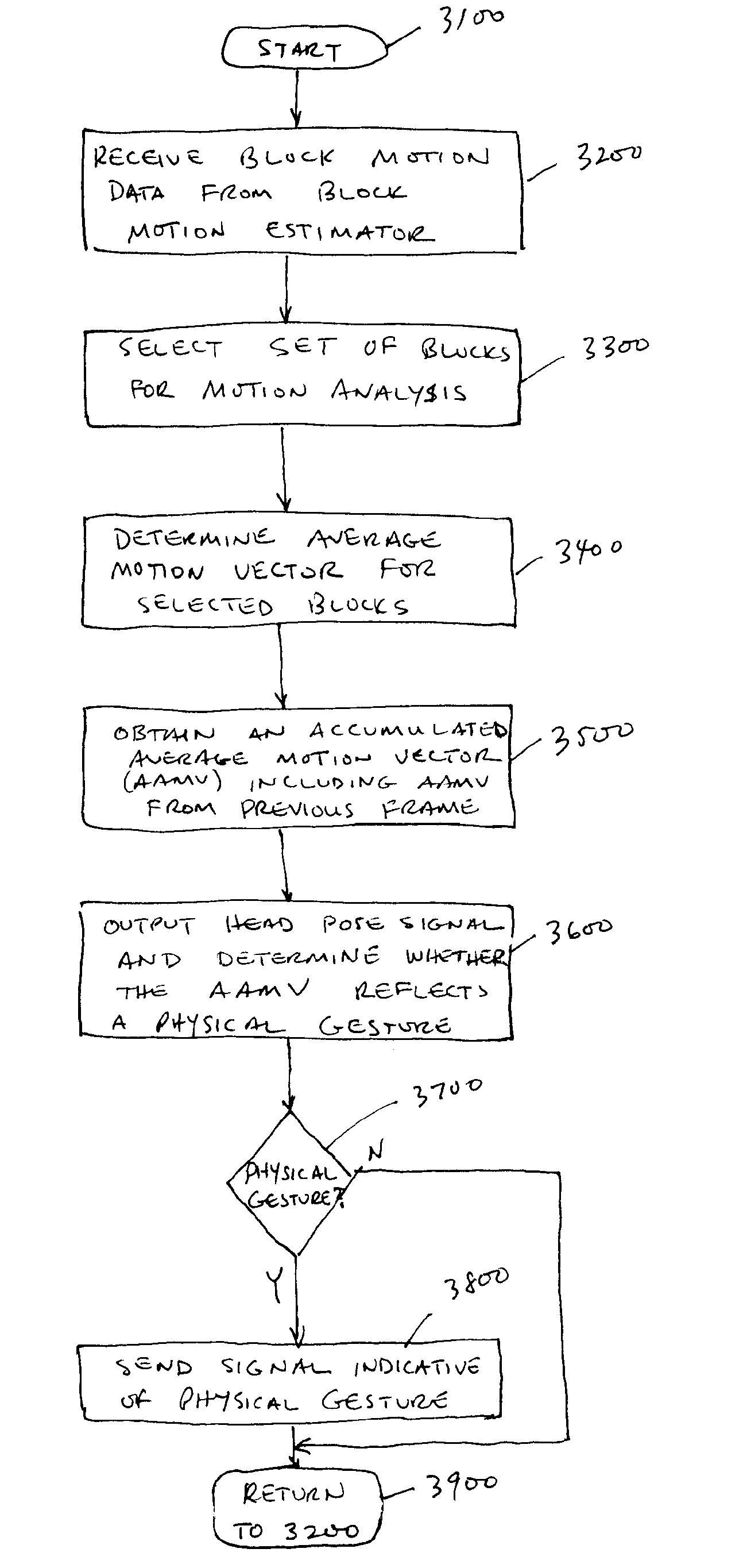

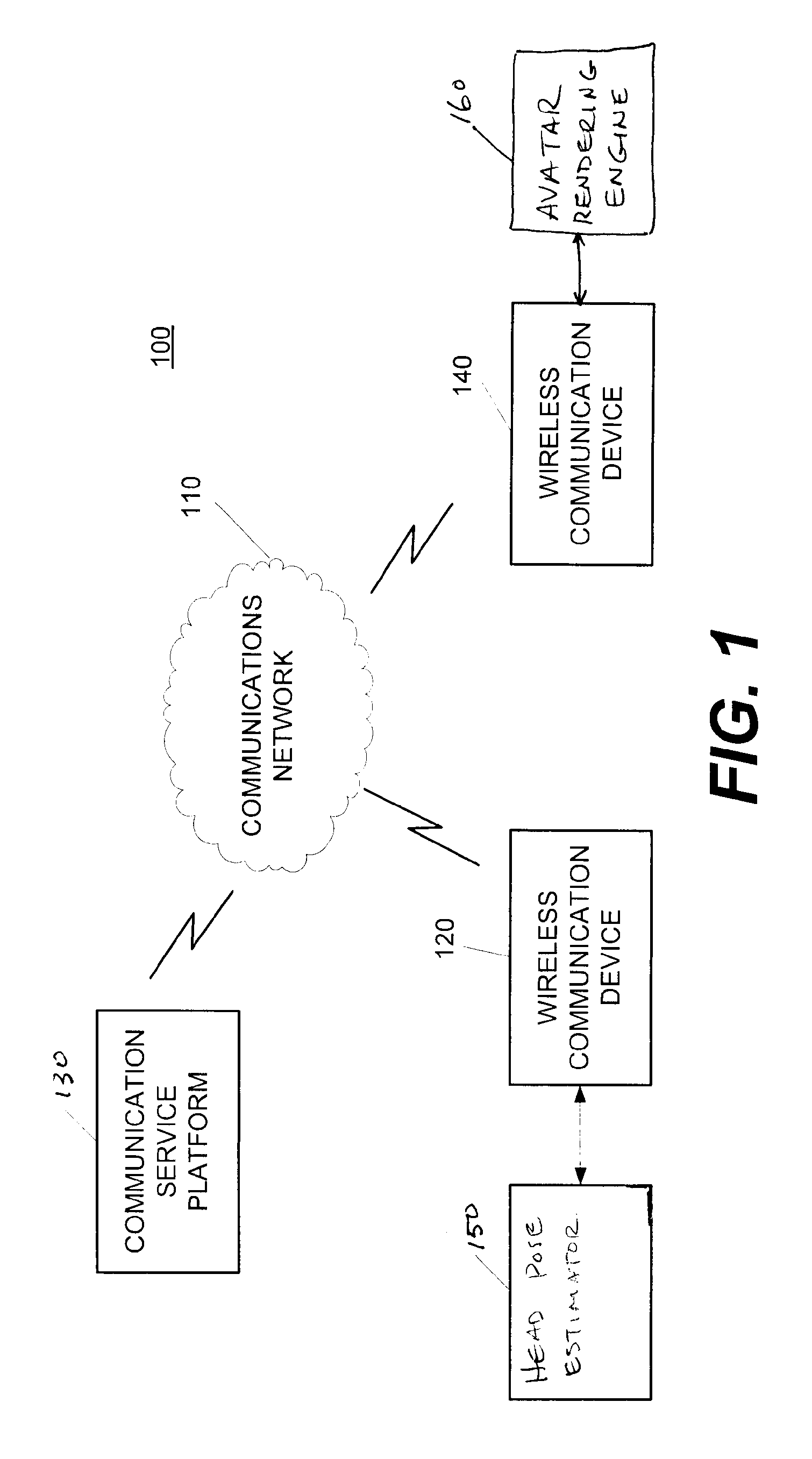

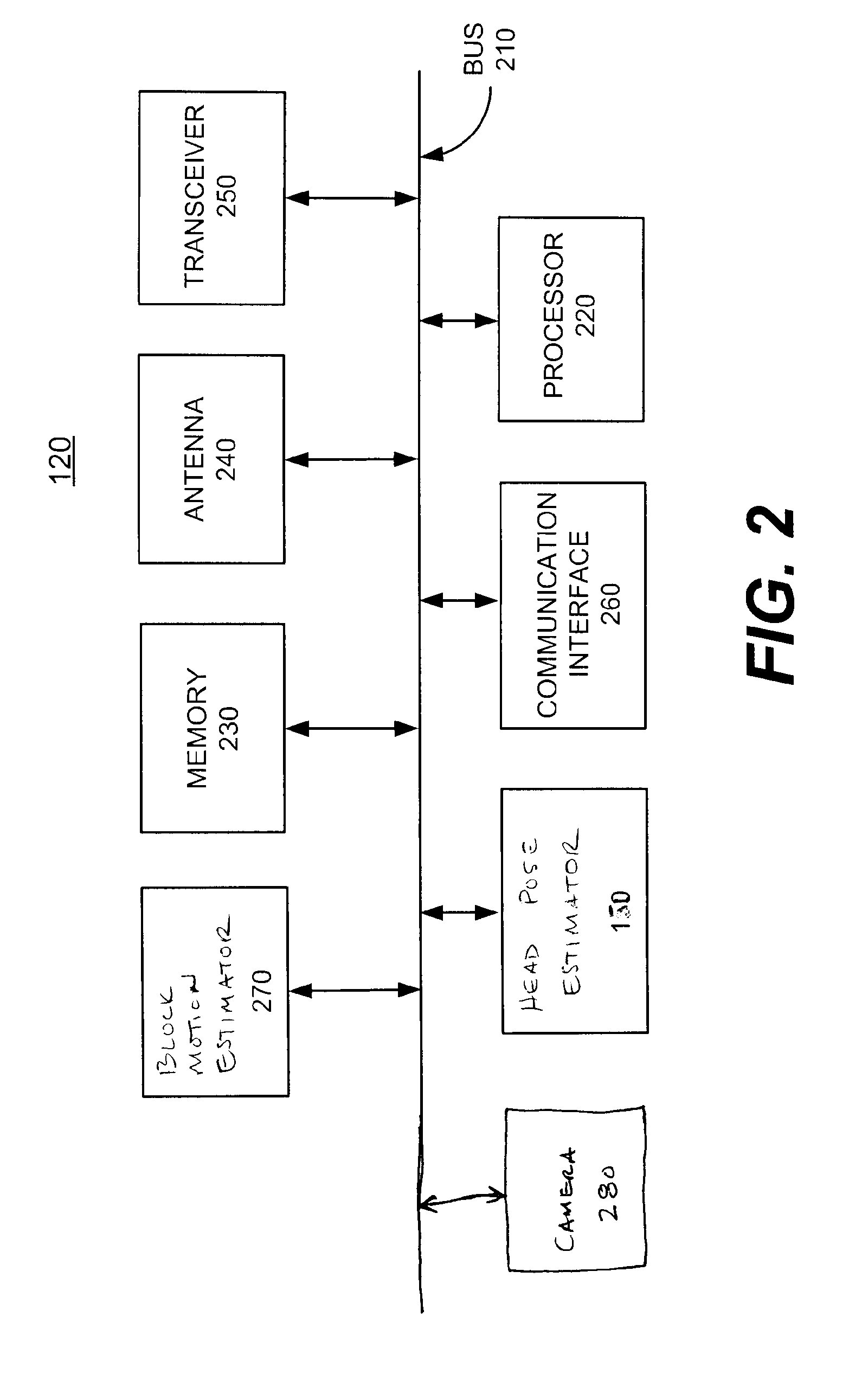

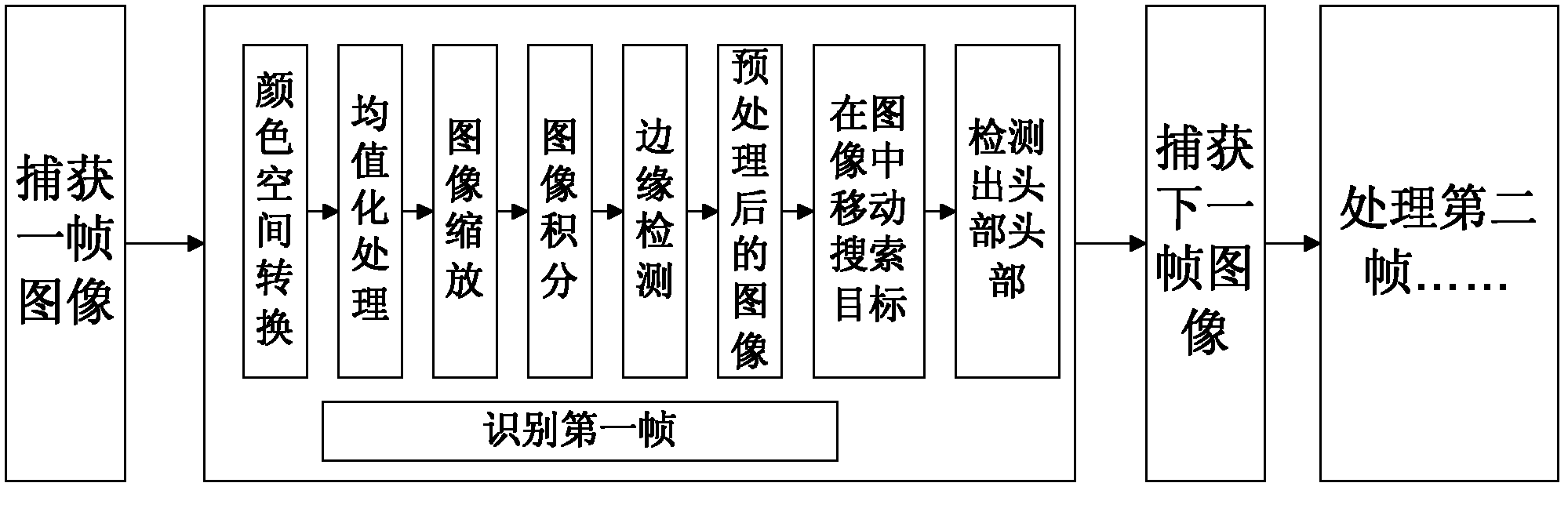

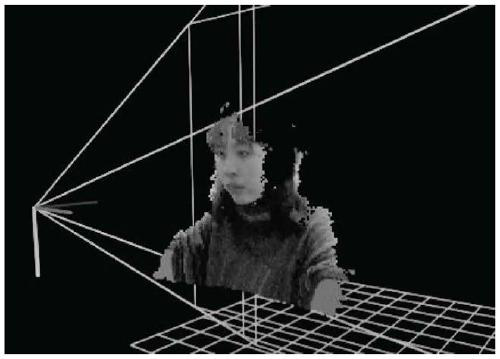

Apparatus and methods for head pose estimation and head gesture detection

Inactive | US7412077B2 | Color television with pulse code modulation | Color television with bandwidth reduction | Frame based | Motion vector

A method for head pose estimation may include receiving block motion vectors for a frame of video from a block motion estimator, selecting at least one block for analysis, determining an average motion vector for the at least one selected block, combining the average motion vectors over time (all past frames of video) to determine an accumulated average motion vector, estimating the orientation of a user's head in the video frame based on the accumulated average motion vector, and outputting at least one parameter indicative of the estimated orientation.

Owner:GOOGLE TECHNOLOGY HOLDINGS LLC
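
The accumulation step in the abstract above can be illustrated with a minimal sketch. It assumes that "combining over time" means summing the per-frame average vectors and that orientation is a linear function of the accumulated vector; the function names and the `gain` parameter are hypothetical and are not taken from the patent.

```python
from typing import List, Tuple

Vector = Tuple[float, float]


def average_vector(vectors: List[Vector]) -> Vector:
    """Average the block motion vectors selected in one frame."""
    n = len(vectors)
    return (sum(v[0] for v in vectors) / n, sum(v[1] for v in vectors) / n)


def accumulate_head_motion(frames: List[List[Vector]]) -> Vector:
    """Sum the per-frame averages over all past frames (assumed combination rule)."""
    acc_x, acc_y = 0.0, 0.0
    for block_vectors in frames:
        avg_x, avg_y = average_vector(block_vectors)
        acc_x += avg_x
        acc_y += avg_y
    return (acc_x, acc_y)


def estimate_orientation(accumulated: Vector, gain: float = 1.0) -> Vector:
    """Hypothetical linear mapping from accumulated pixels to (yaw, pitch)."""
    return (gain * accumulated[0], gain * accumulated[1])


# Two frames, each with two selected blocks.
frames = [[(1.0, 0.0), (3.0, 0.0)], [(2.0, 2.0), (2.0, -2.0)]]
accumulated = accumulate_head_motion(frames)
orientation = estimate_orientation(accumulated, gain=0.5)
```

A real system would likely need calibration to turn pixel displacement into angles; the linear gain here only stands in for that step.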

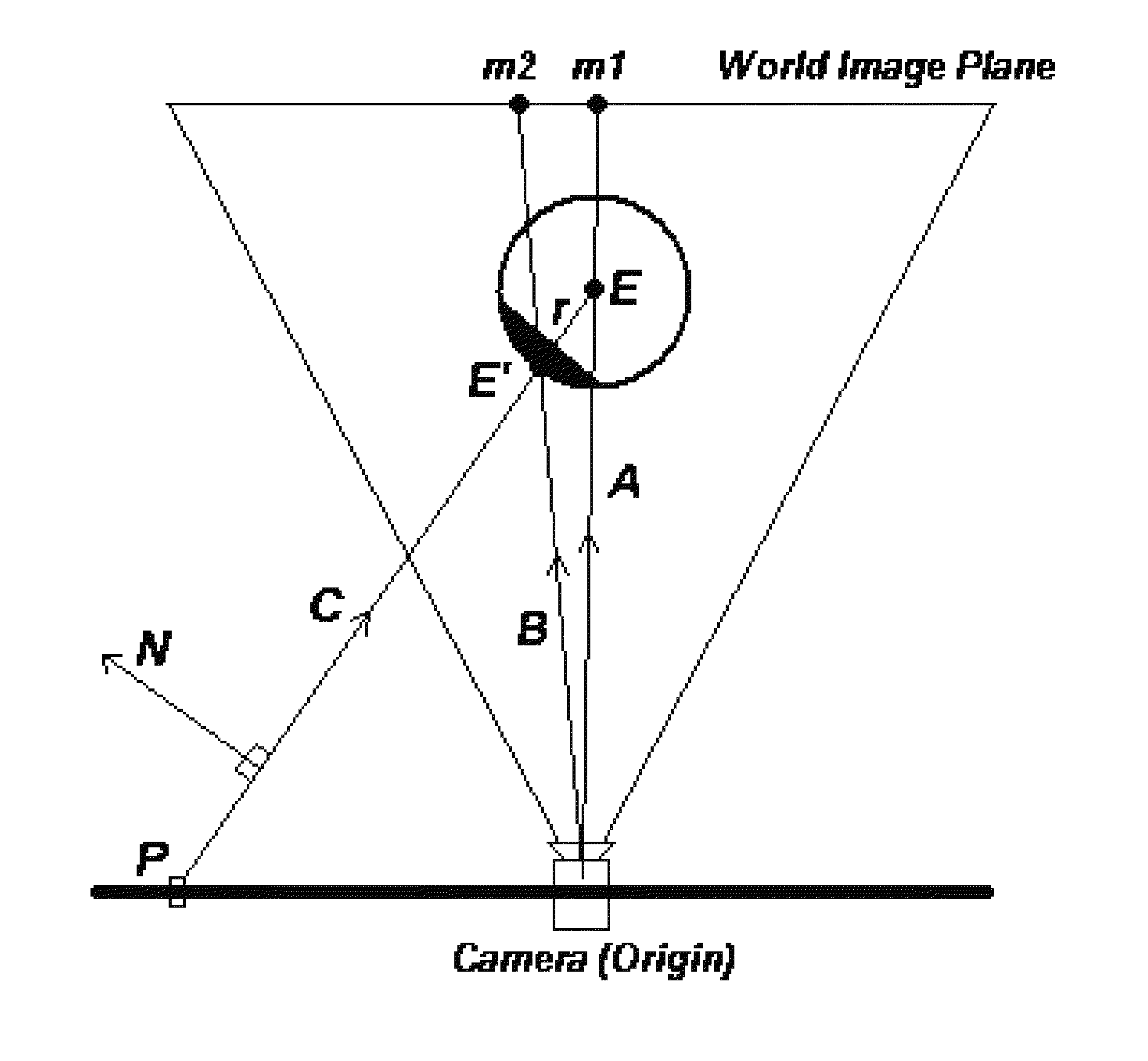

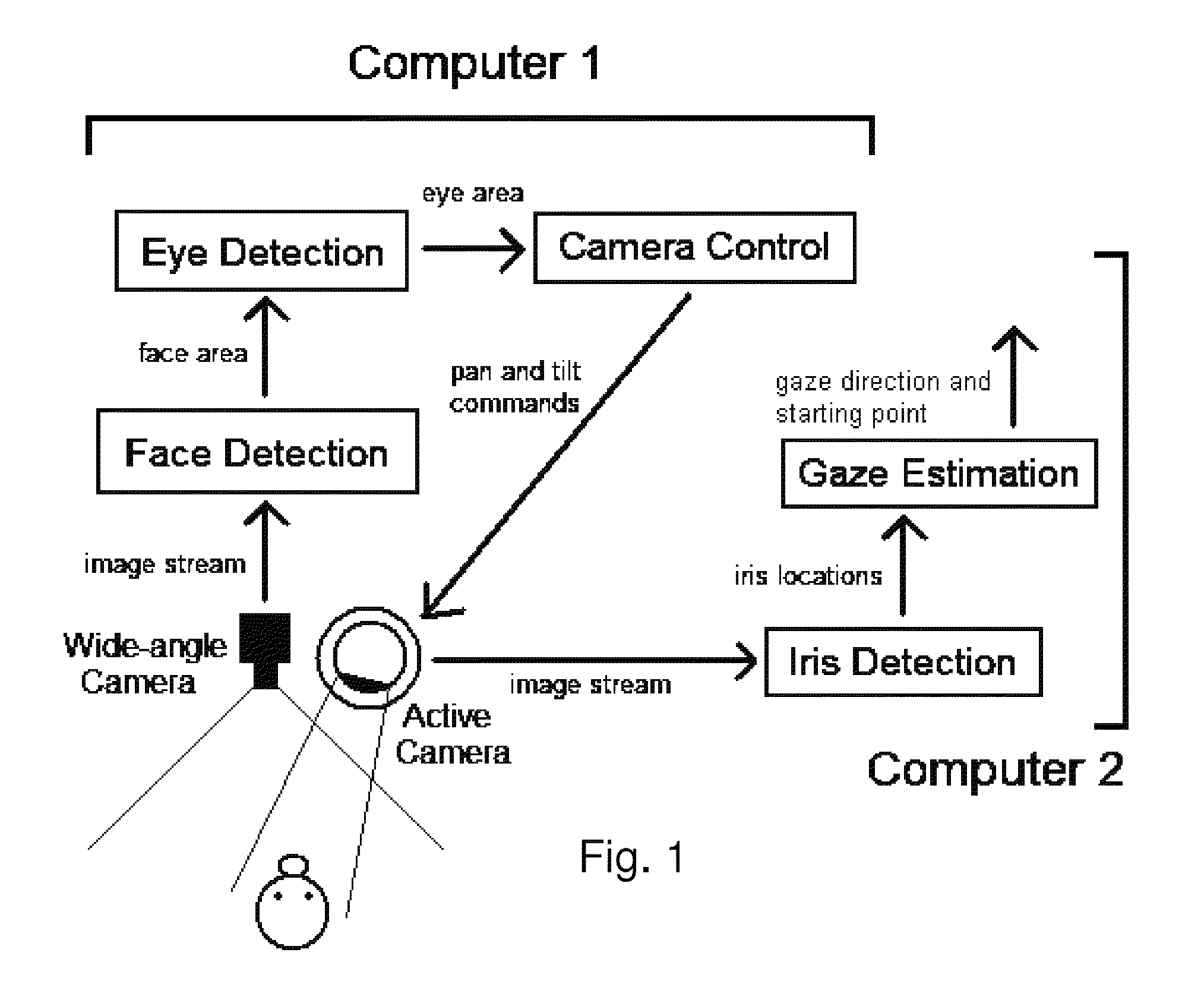

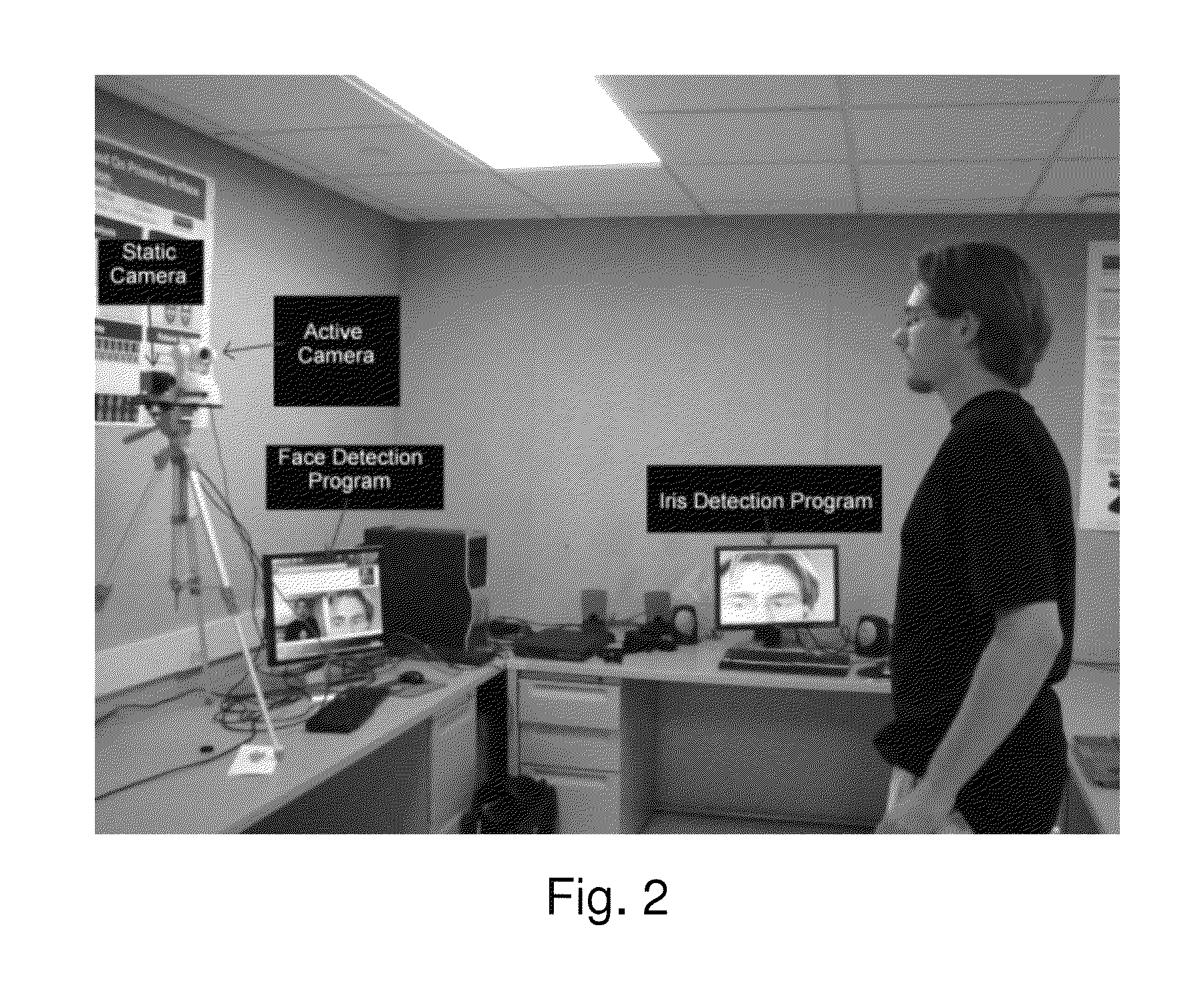

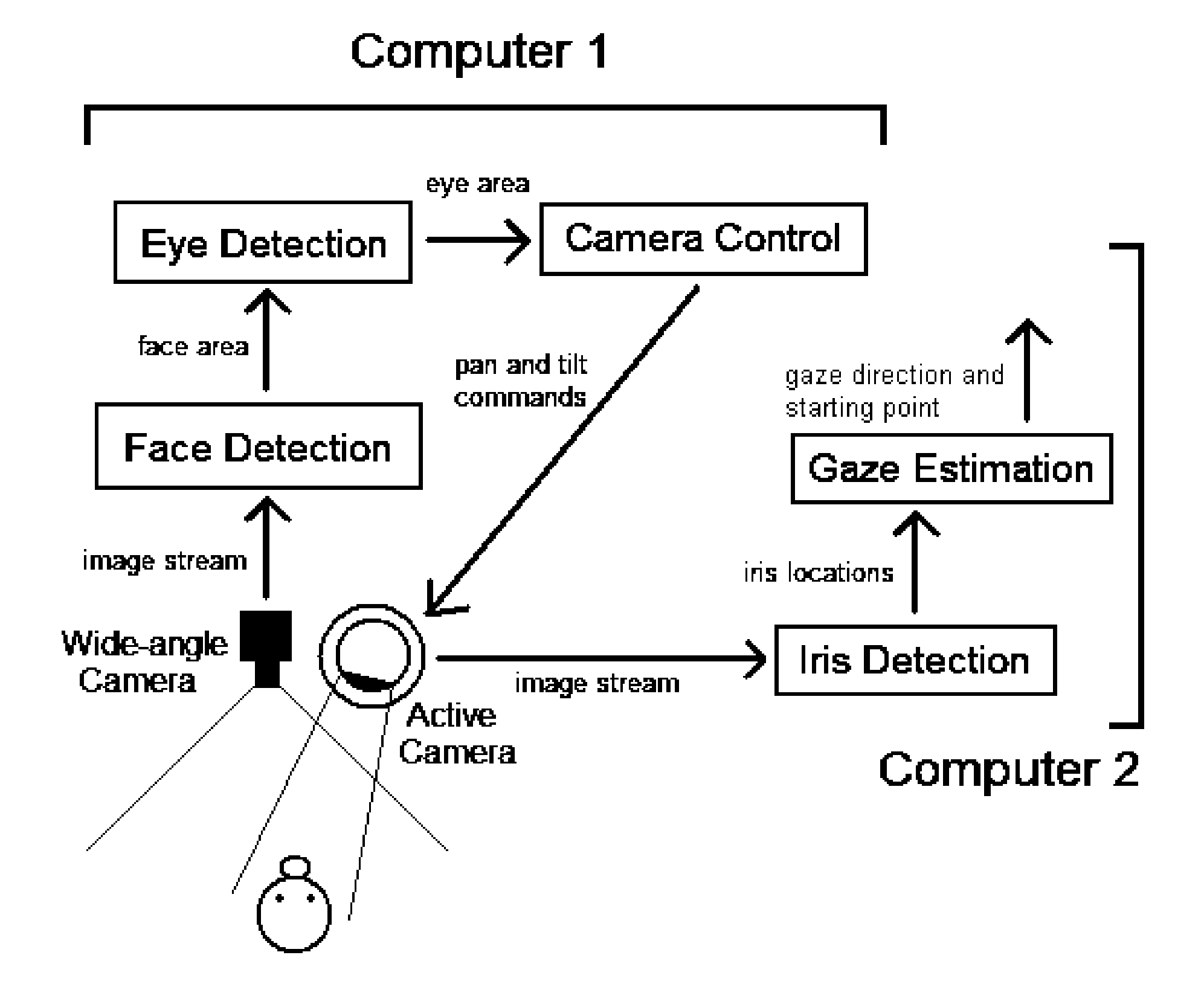

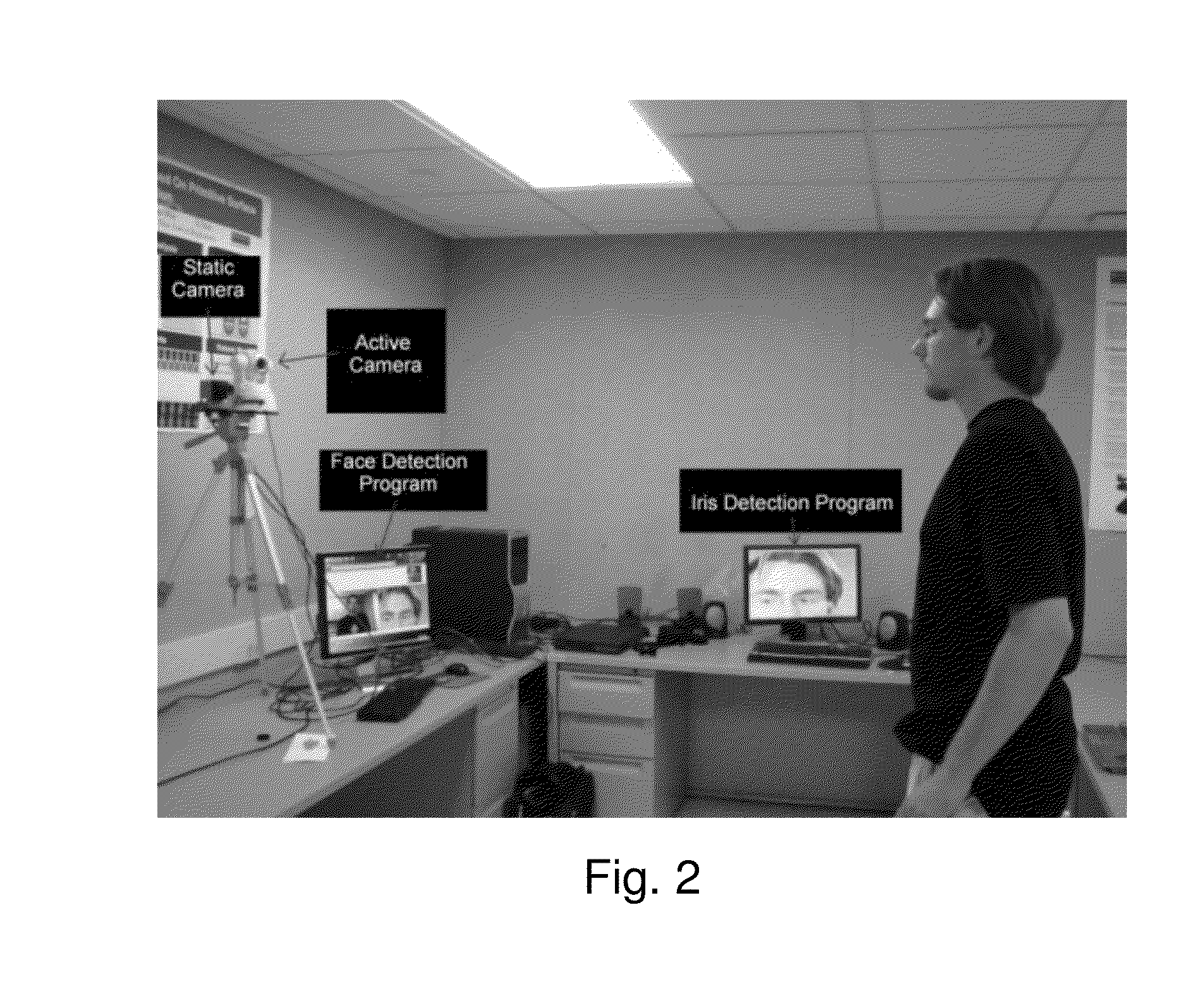

Real time eye tracking for human computer interaction

Active | US8885882B1 | Improve interactivity | More intelligent behavior | Image enhancement | Image analysis | Optical axis | Gaze directions

A gaze direction determining system and method is provided. A two-camera system may detect the face from a fixed, wide-angle camera, estimate a rough location for the eye region using an eye detector based on topographic features, and direct another active pan-tilt-zoom camera to focus in on this eye region. An eye gaze estimation approach employs point-of-regard (PoG) tracking on a large viewing screen. To allow for greater head pose freedom, a calibration approach is provided to find the 3D eyeball location, eyeball radius, and fovea position. Both the iris center and iris contour points are mapped to the eyeball sphere (creating a 3D iris disk) to get the optical axis; the fovea is then rotated accordingly and the final visual-axis gaze direction is computed.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

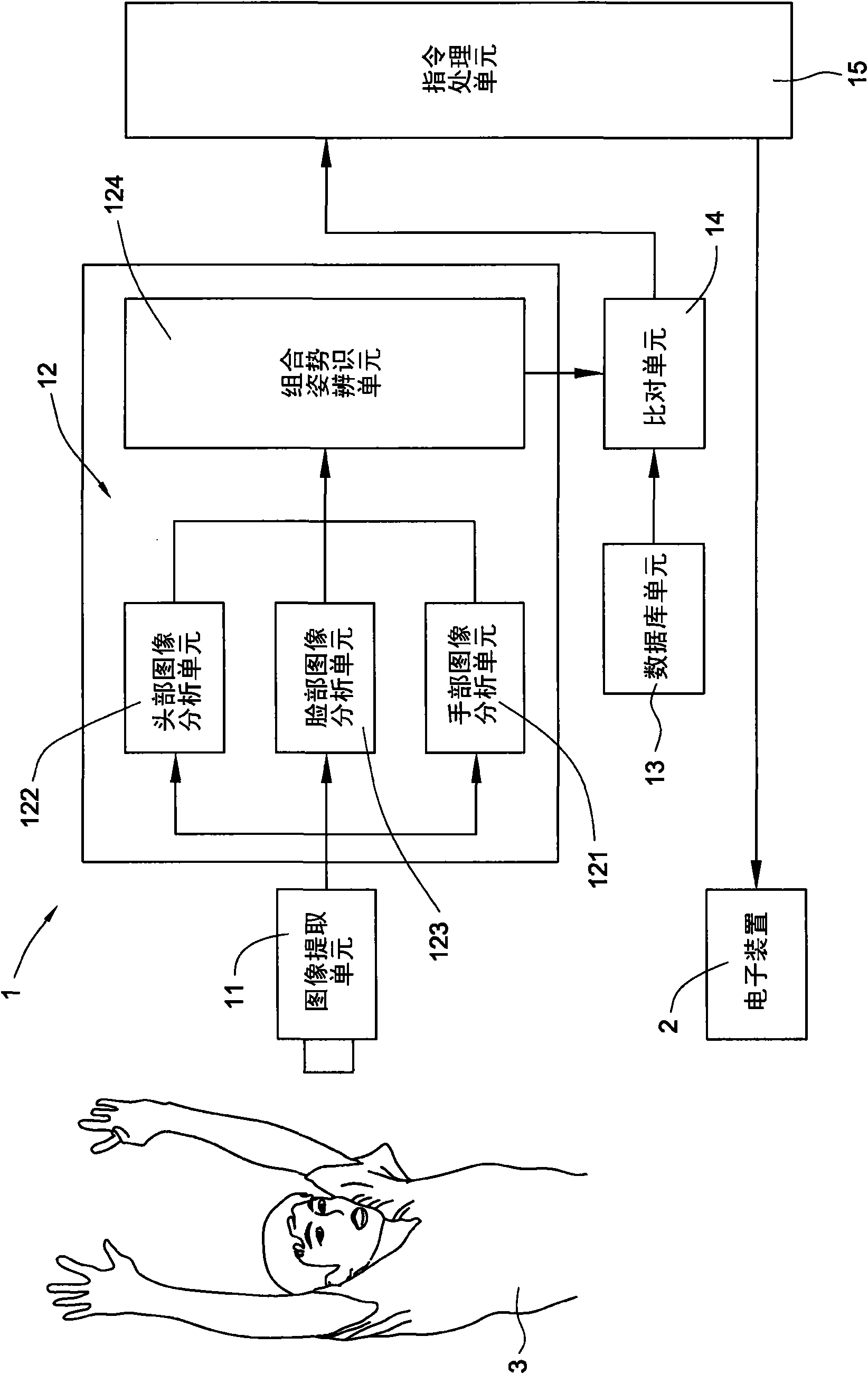

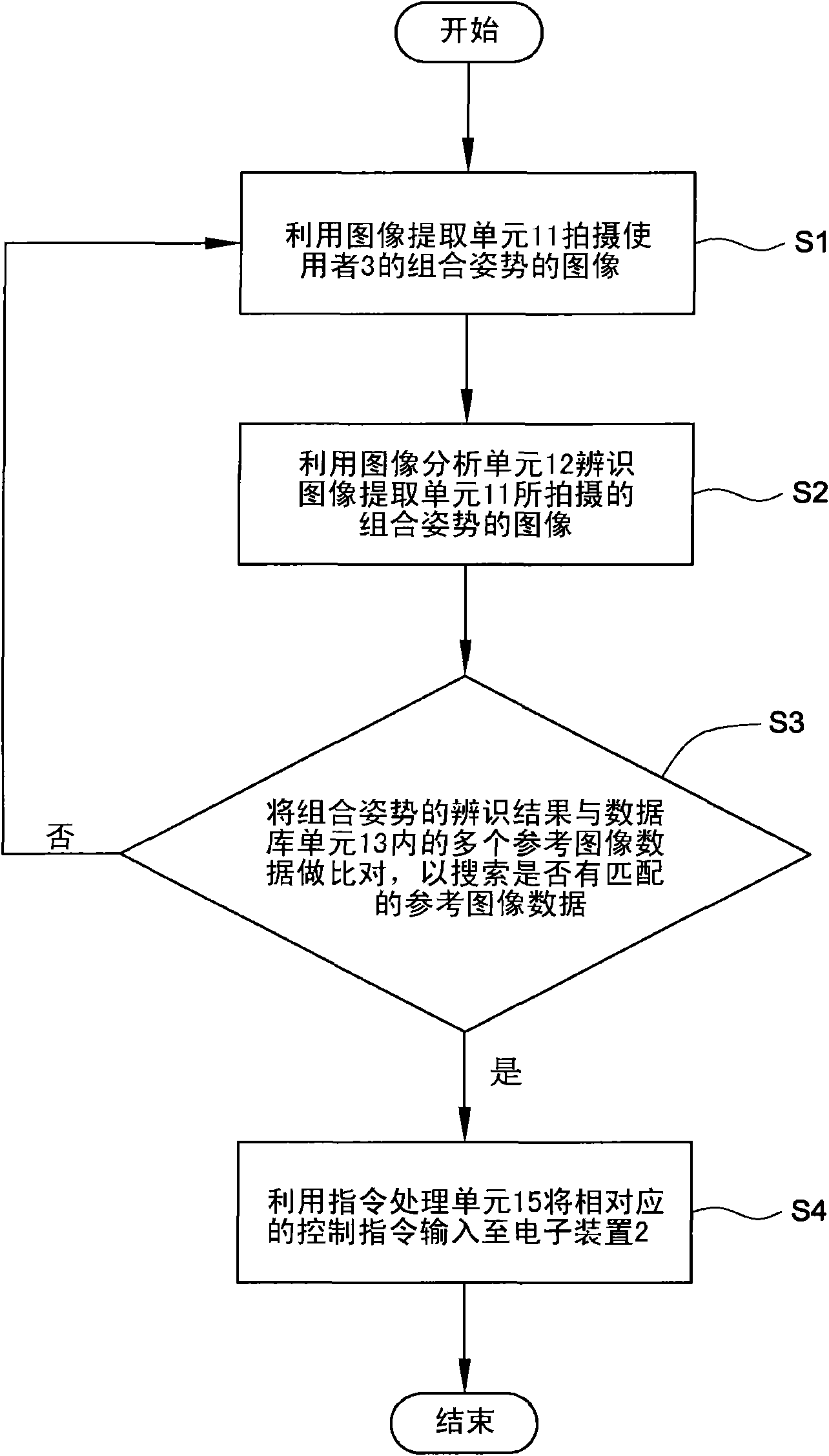

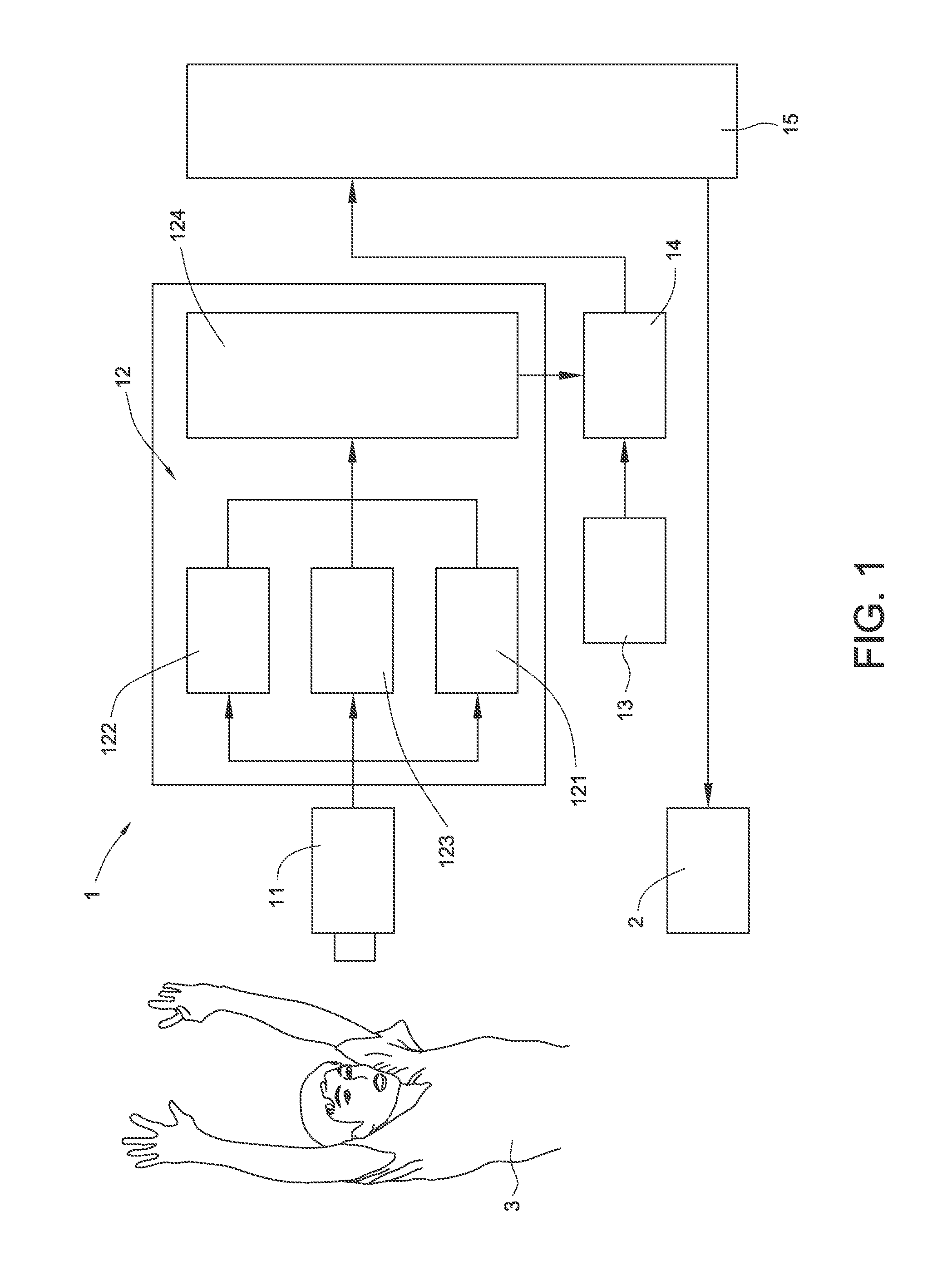

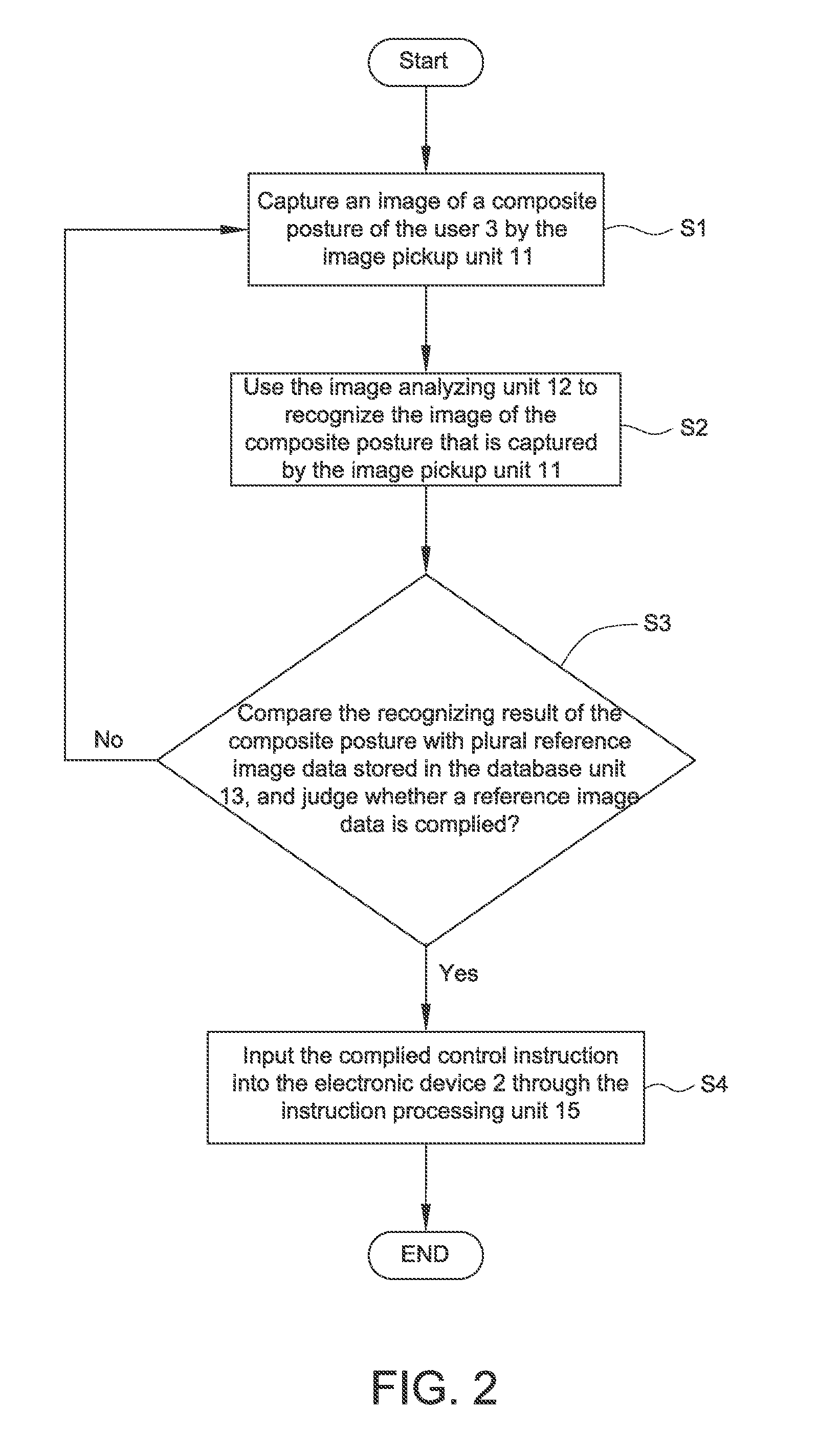

System and method for control through identifying user posture by image extraction device

Inactive | CN102117117A | Prevent stray entry | Input/output for user-computer interaction | Character and pattern recognition | Image extraction | Physical medicine and rehabilitation

The invention provides a system and a method for controlling an electronic device by identifying user posture with an image extraction device. A hand posture, a head posture and a change in facial expression of the user are matched synchronously to form different combined postures, and each combined posture transmits a corresponding control instruction. Because the combined postures are more complex than people's habitual actions, the system and method avoid unnecessary control instructions generated by users' unconscious habitual actions.

Owner:PRIMAX ELECTRONICS LTD
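
As a rough illustration of the combined-posture idea, the sketch below maps a (hand, head, expression) triple to a control instruction and returns nothing when only part of a combination matches. The posture labels, the command names and the `command_for` helper are hypothetical; the patent does not specify them.

```python
from typing import Dict, Optional, Tuple

# (hand posture, head posture, facial expression change)
CompositePosture = Tuple[str, str, str]

# Hypothetical command table: every entry requires all three cues at once.
COMMANDS: Dict[CompositePosture, str] = {
    ("open_palm", "nod", "smile"): "play",
    ("fist", "shake", "neutral"): "stop",
}


def command_for(hand: str, head: str, expression: str) -> Optional[str]:
    """Return the control instruction for a combined posture, or None."""
    return COMMANDS.get((hand, head, expression))


matched = command_for("open_palm", "nod", "smile")
# A nod alone, with no matching hand posture or expression, issues no command.
unmatched = command_for("none", "nod", "neutral")
```

Requiring the full triple is what filters out a single habitual action such as an unintentional nod.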

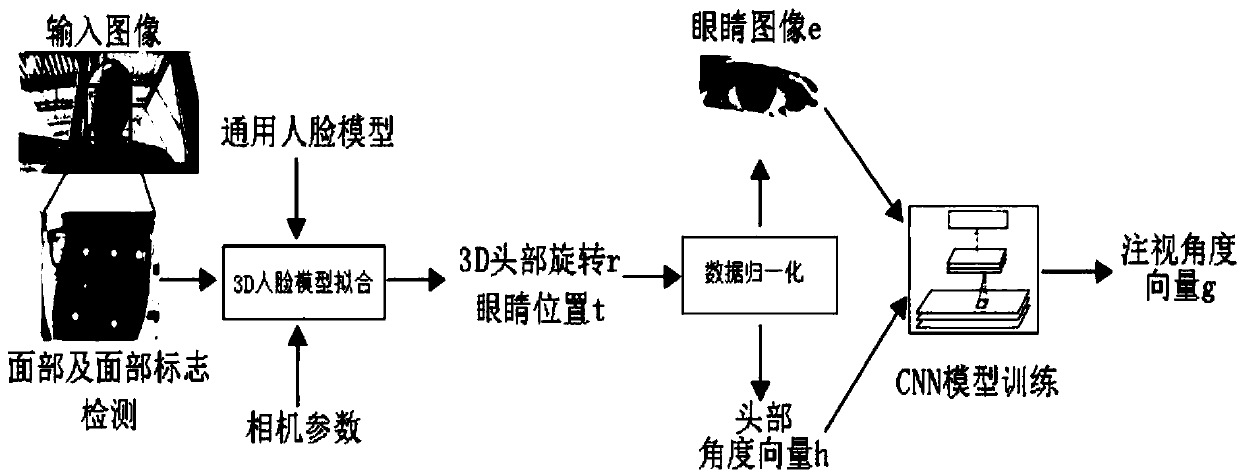

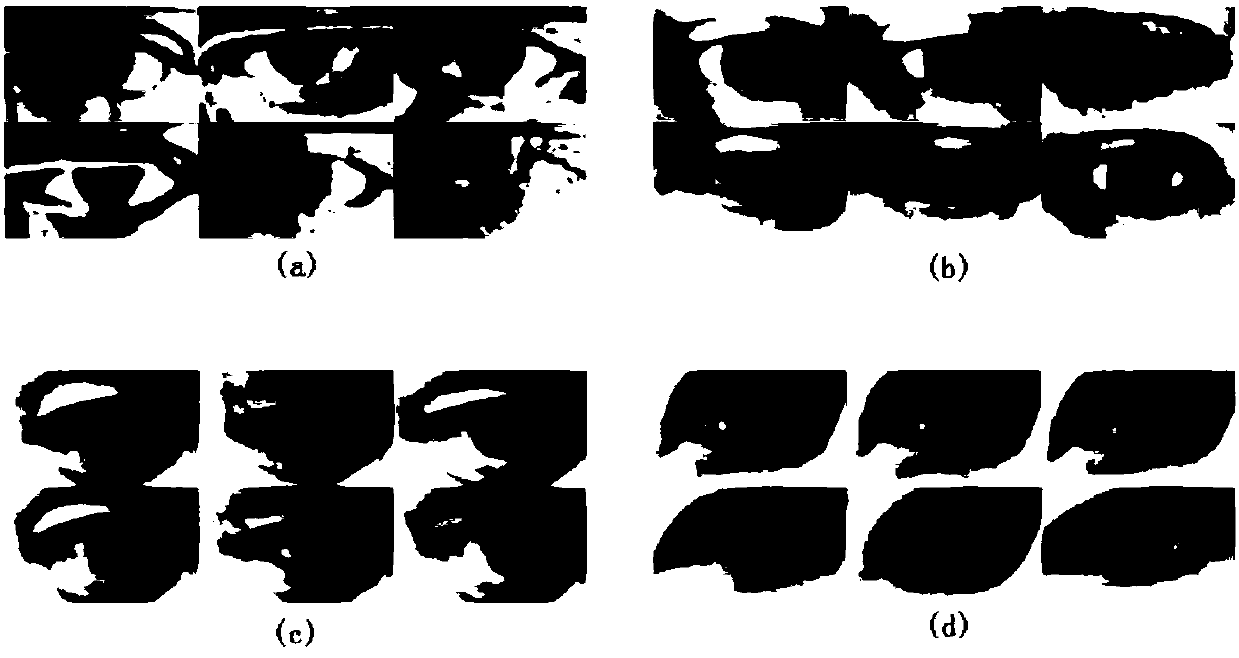

Line-of-sight estimation method based on depth appearance gaze network

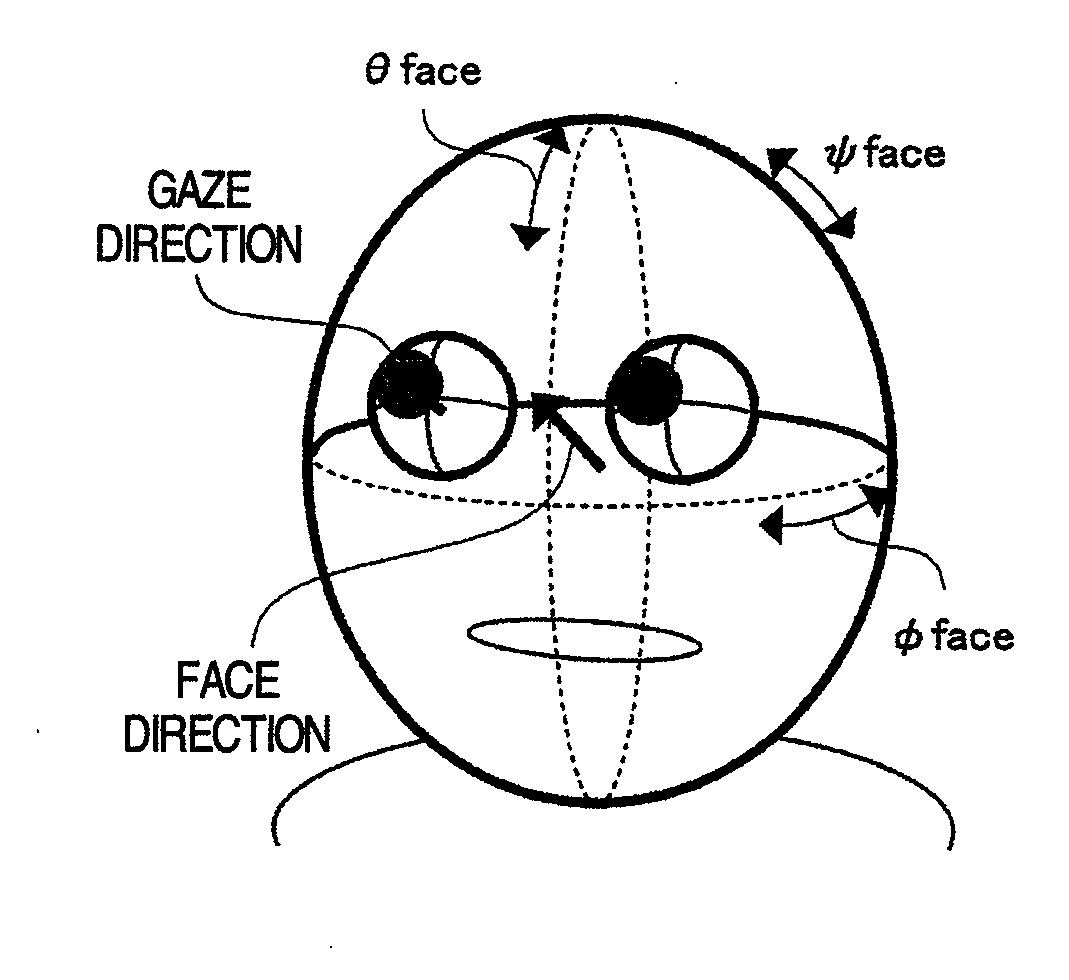

The invention provides a line-of-sight estimation method based on a depth appearance gaze network. The main content of the method includes a gaze data set, a gaze network, and cross-data-set evaluation. The method comprises the following steps: a large number of images from different participants are collected as a gaze data set; facial landmarks are manually annotated on subsets of the data set; face calibration is performed on input images obtained via a monocular RGB camera; a face detection method and a facial landmark detection method are used to locate the landmarks; a general three-dimensional face shape model is fitted to estimate the three-dimensional pose of the detected face; a spatial normalization technique is applied, warping the head posture and eye images to a normalized training space; and a convolutional neural network is used to learn the mapping from the head posture and the eye images to three-dimensional gaze in the camera coordinate system. The method employs a continuous conditional neural network model to detect the facial landmarks and an average face shape to perform three-dimensional posture estimation. It is suitable for line-of-sight estimation in different environments and improves the accuracy of the estimation results.

Owner:SHENZHEN WEITESHI TECH

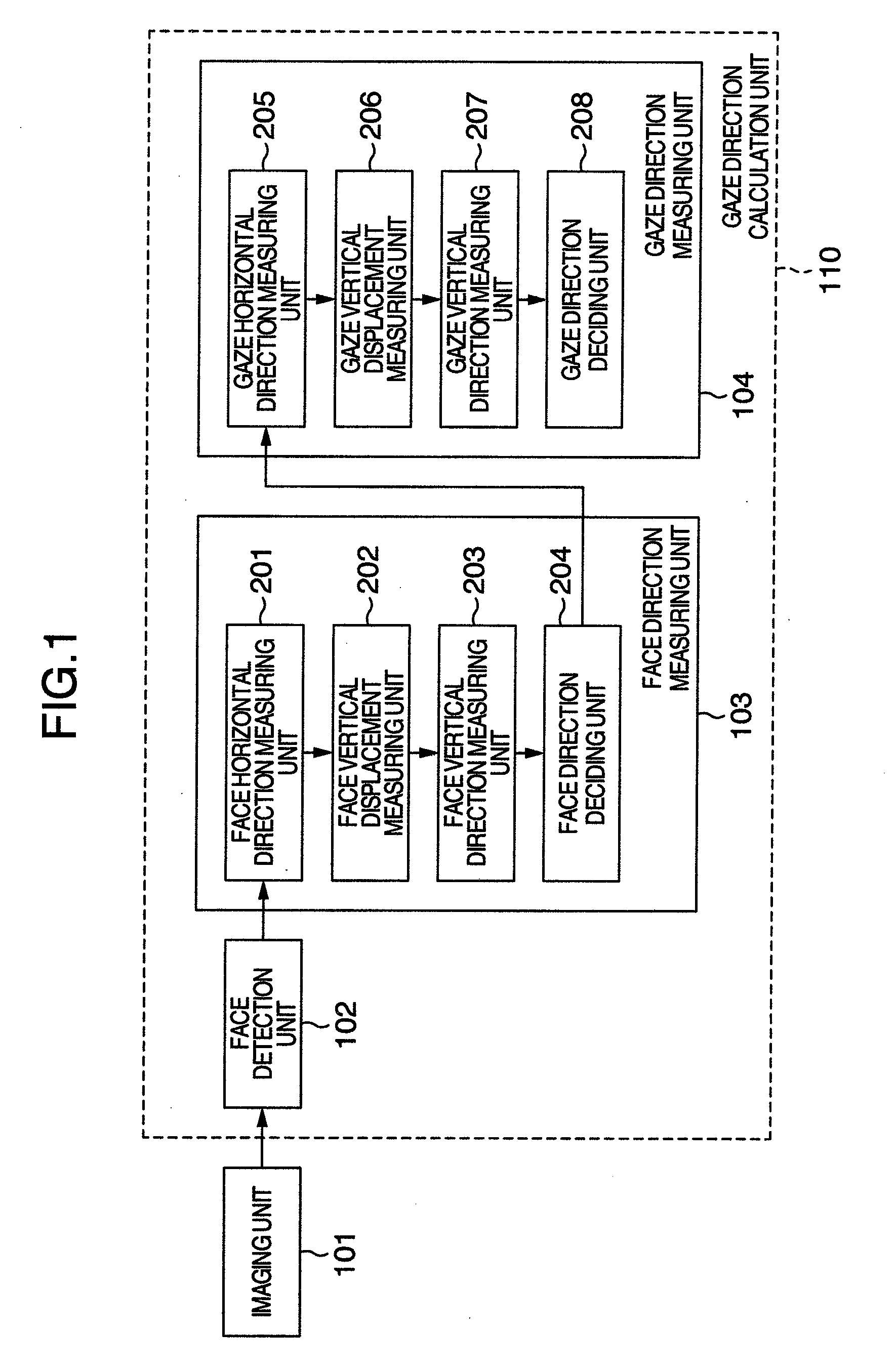

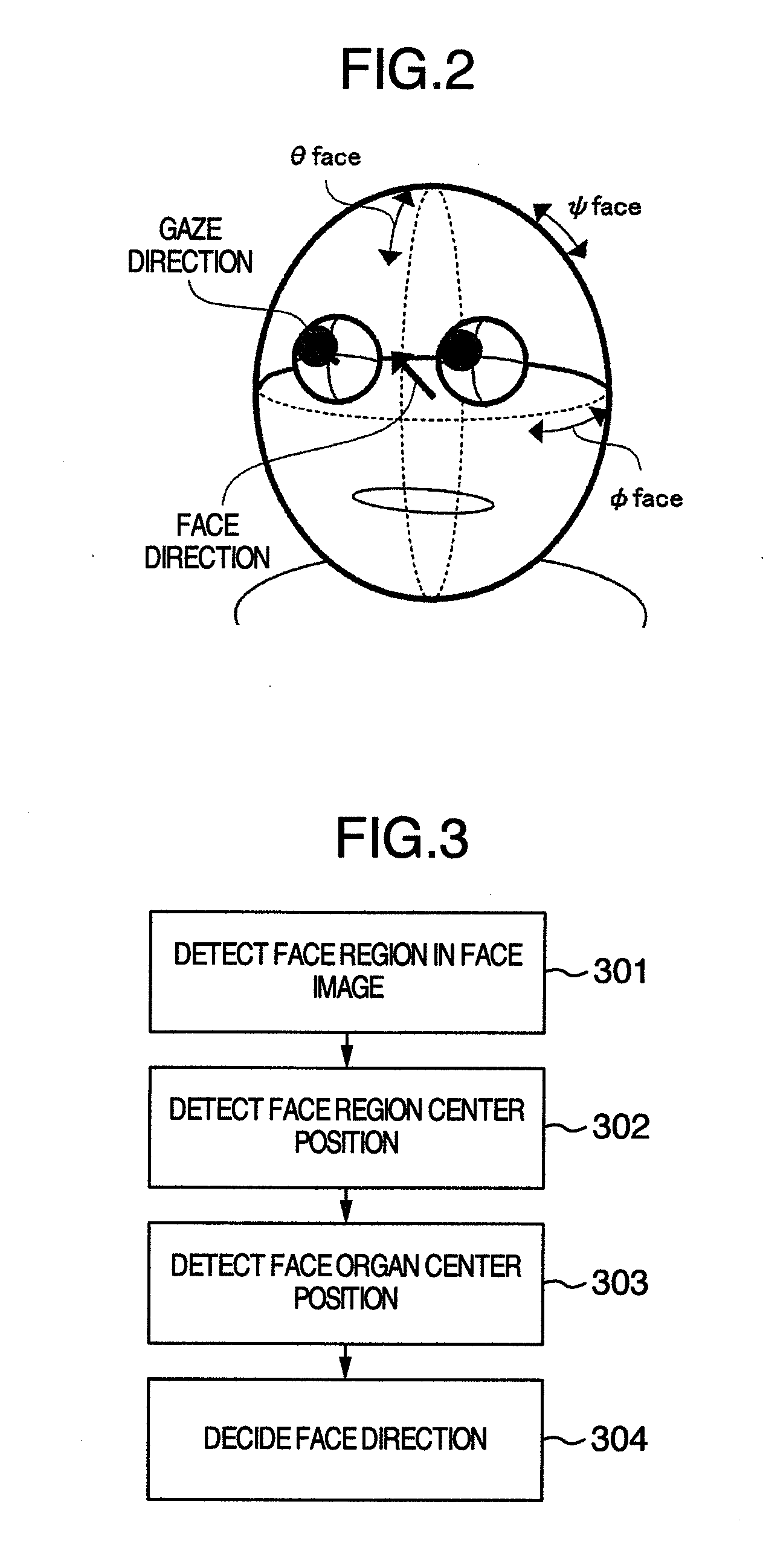

Gaze direction measuring method and gaze direction measuring device

Inactive | US20090109400A1 | Reduce the impact | Accurate measurement | Image enhancement | Image analysis | Pupil | Vertical displacement

A face horizontal direction measuring unit measures the angle of the face in the horizontal direction from a face image obtained by an imaging unit. Using the head radius and the shoulder position of the person obtained by that measurement, a face vertical displacement measuring unit measures a face displacement in the vertical direction that is not affected by head posture. The face angle in the vertical direction is determined from the obtained displacement. Using the obtained face direction, the angle of the gaze in the horizontal direction and the eyeball radius are measured. Further, a gaze vertical displacement measuring unit measures the position of the pupil center relative to the eyeball center as the gaze displacement in the vertical direction. This displacement is used to measure the gaze angle in the vertical direction.

Owner:HITACHI LTD

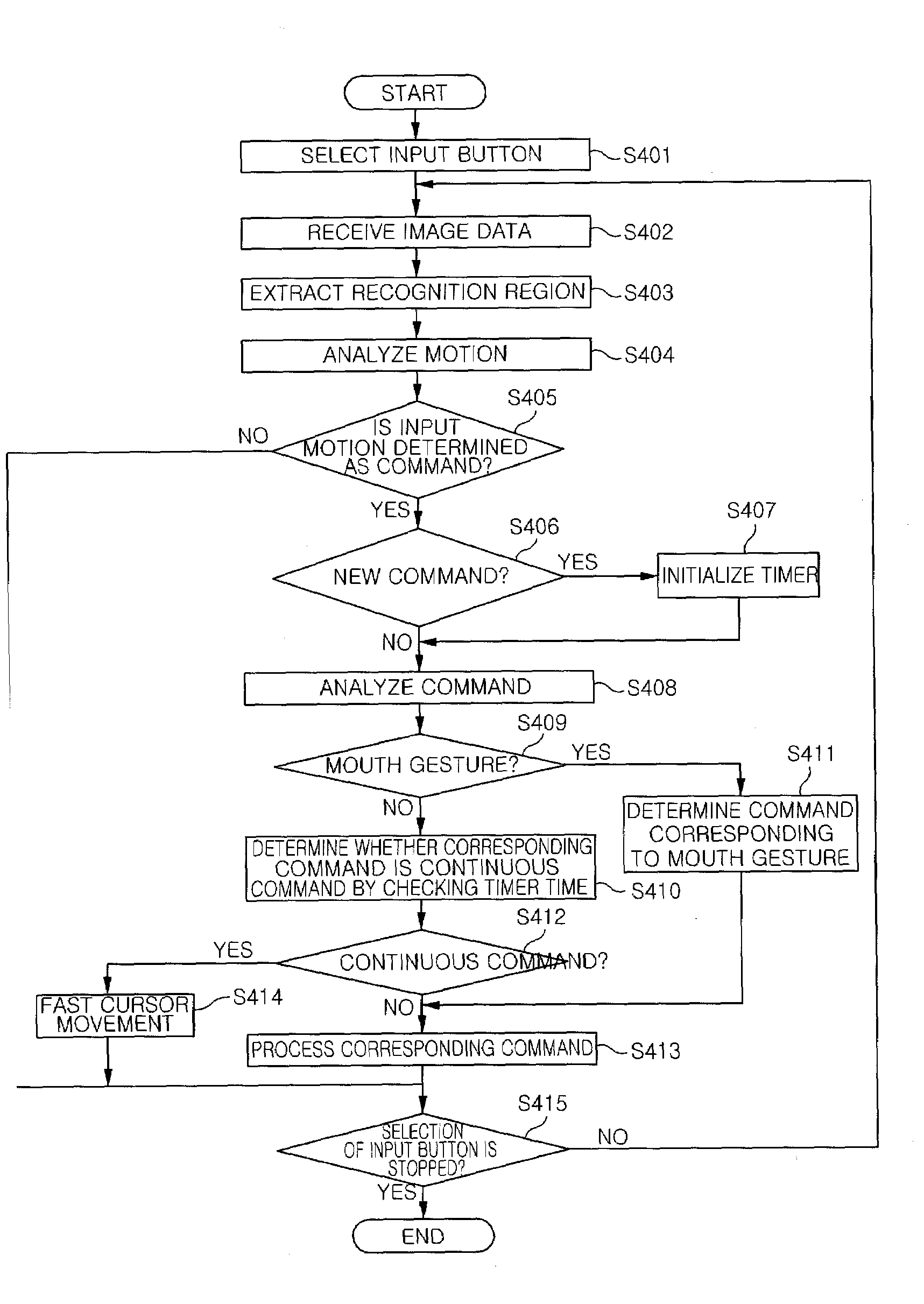

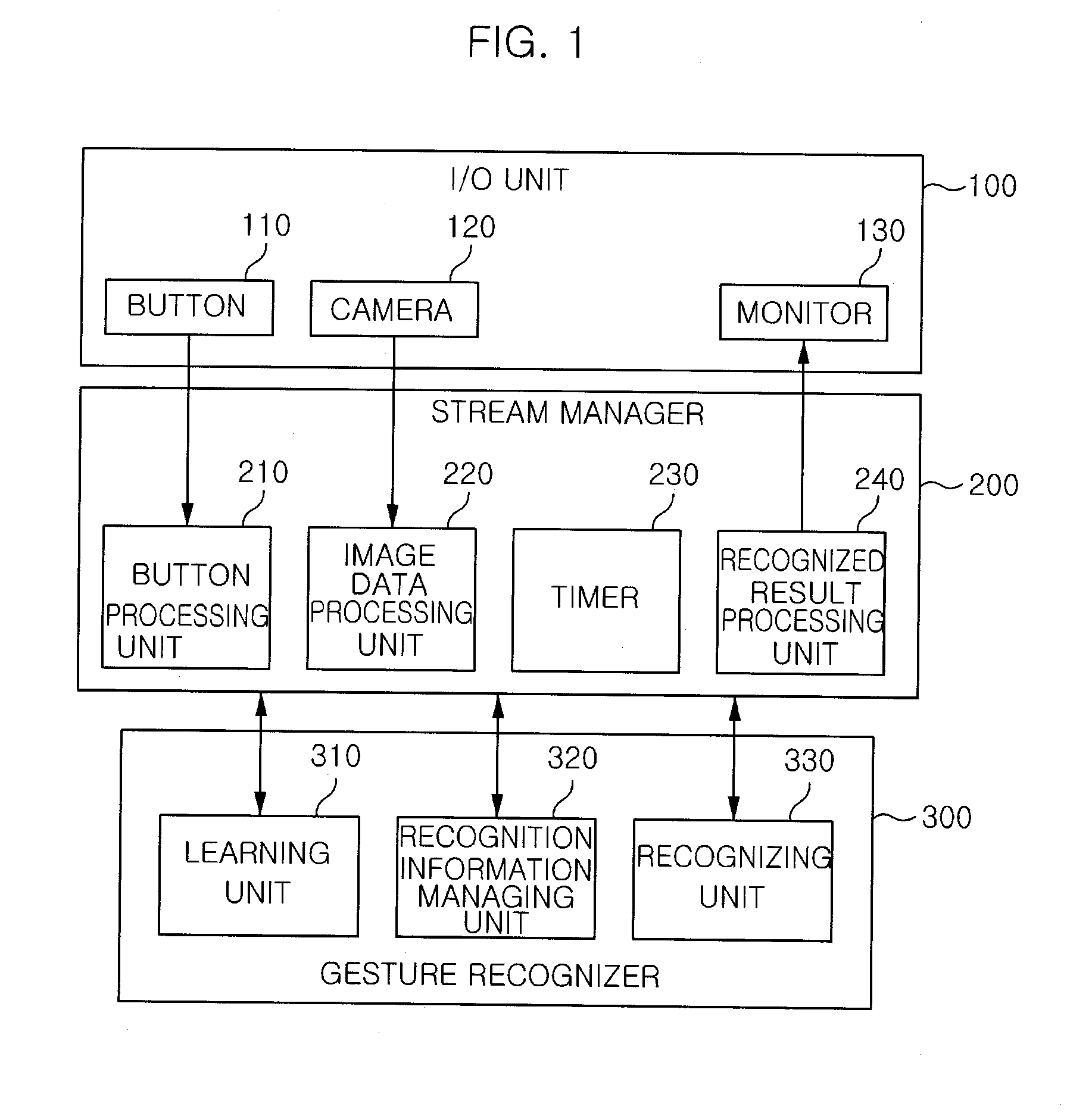

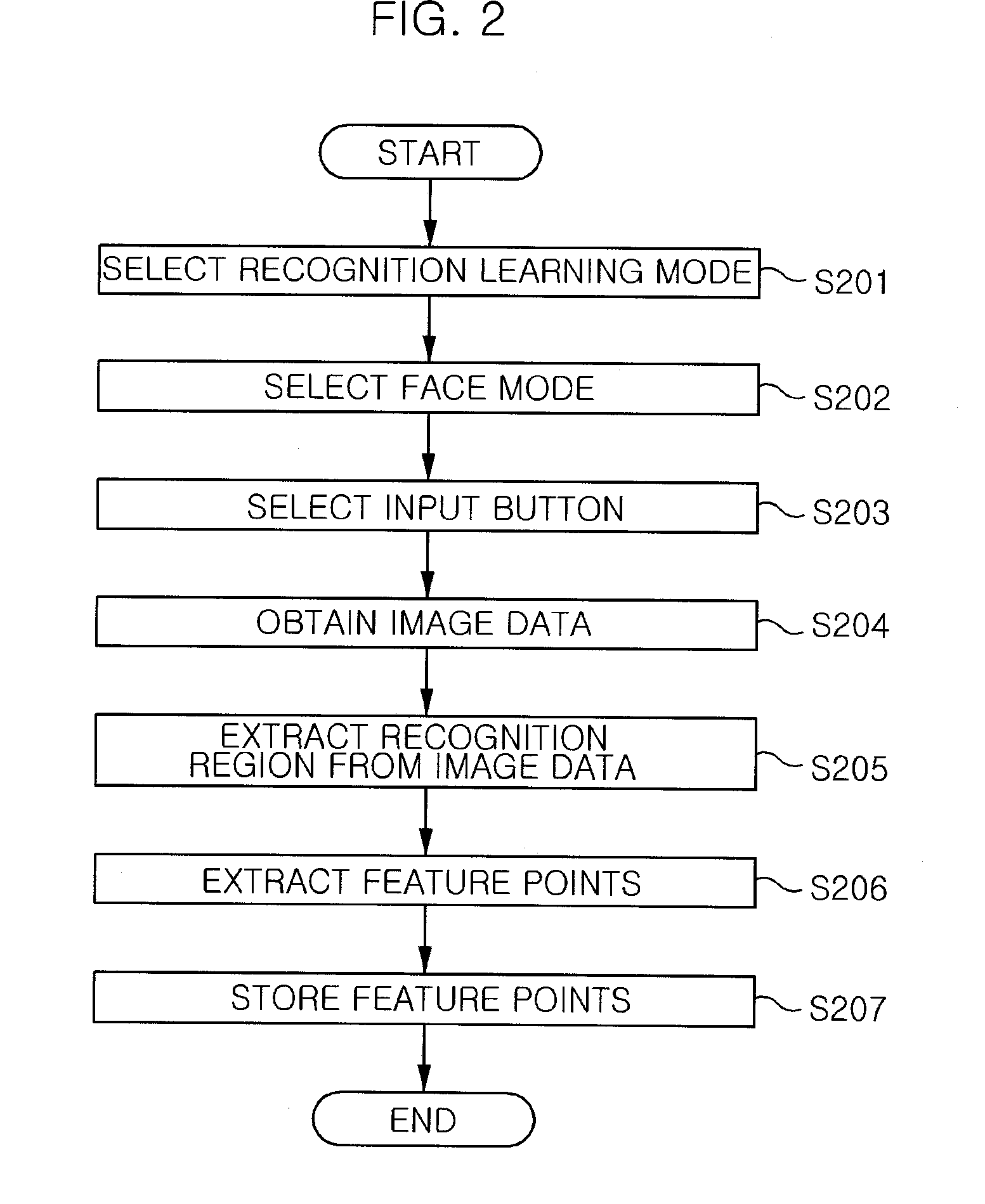

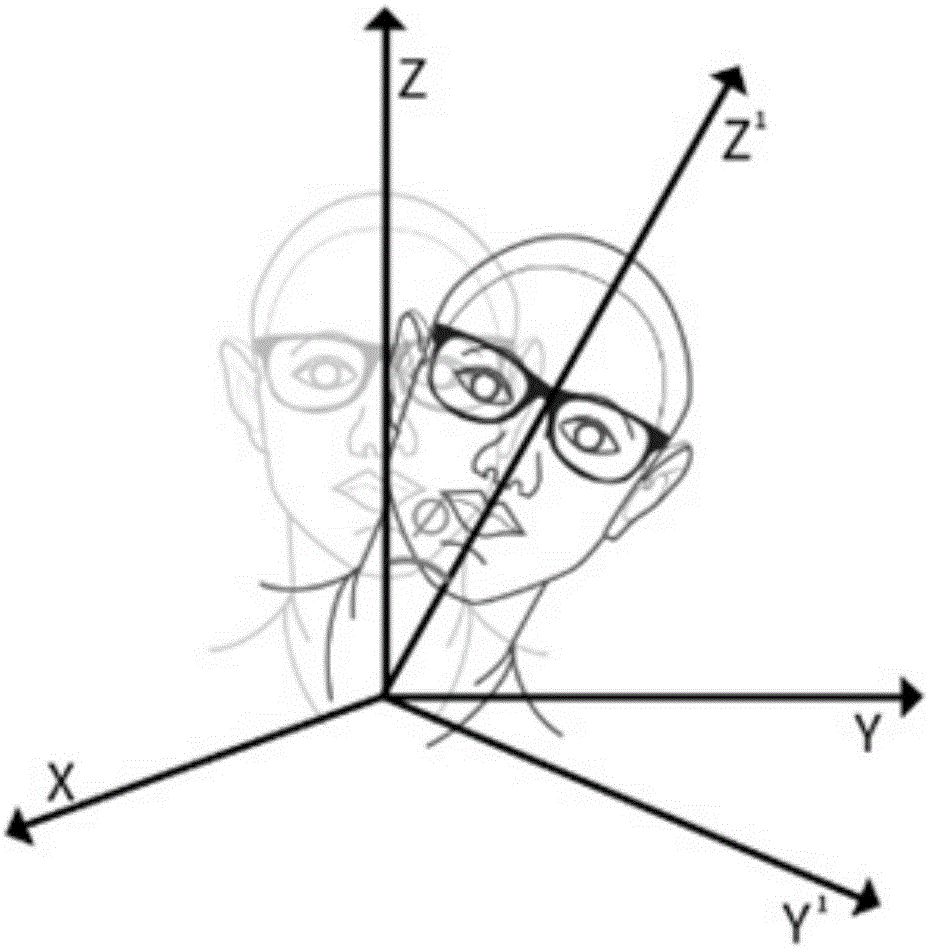

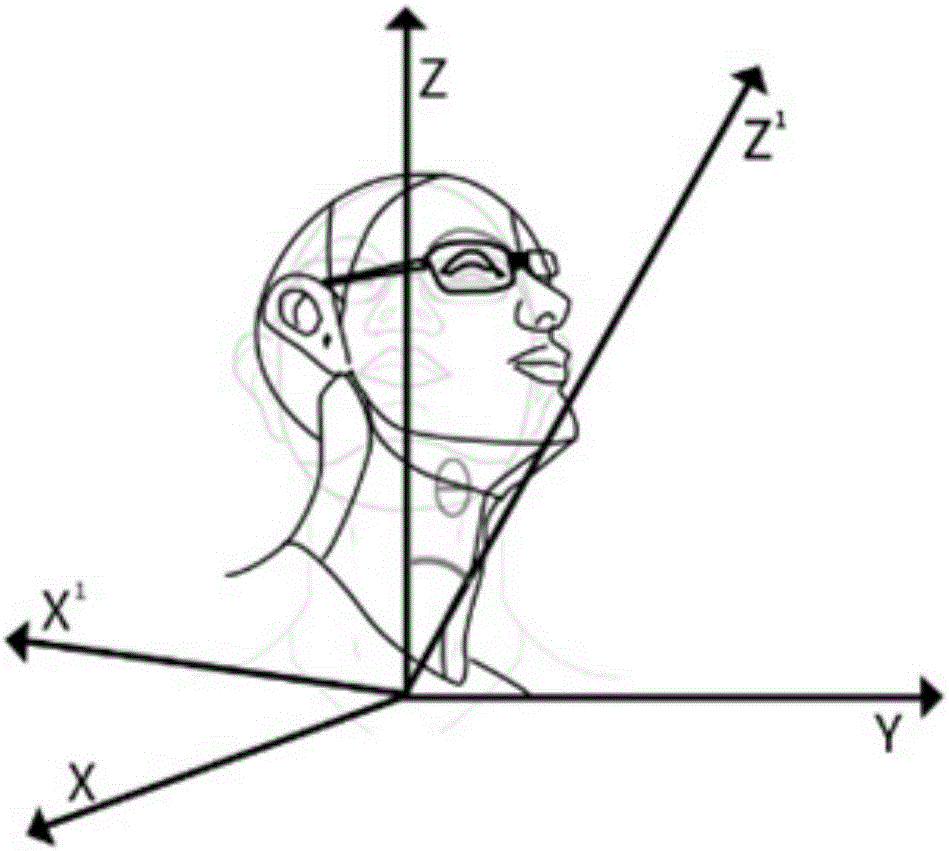

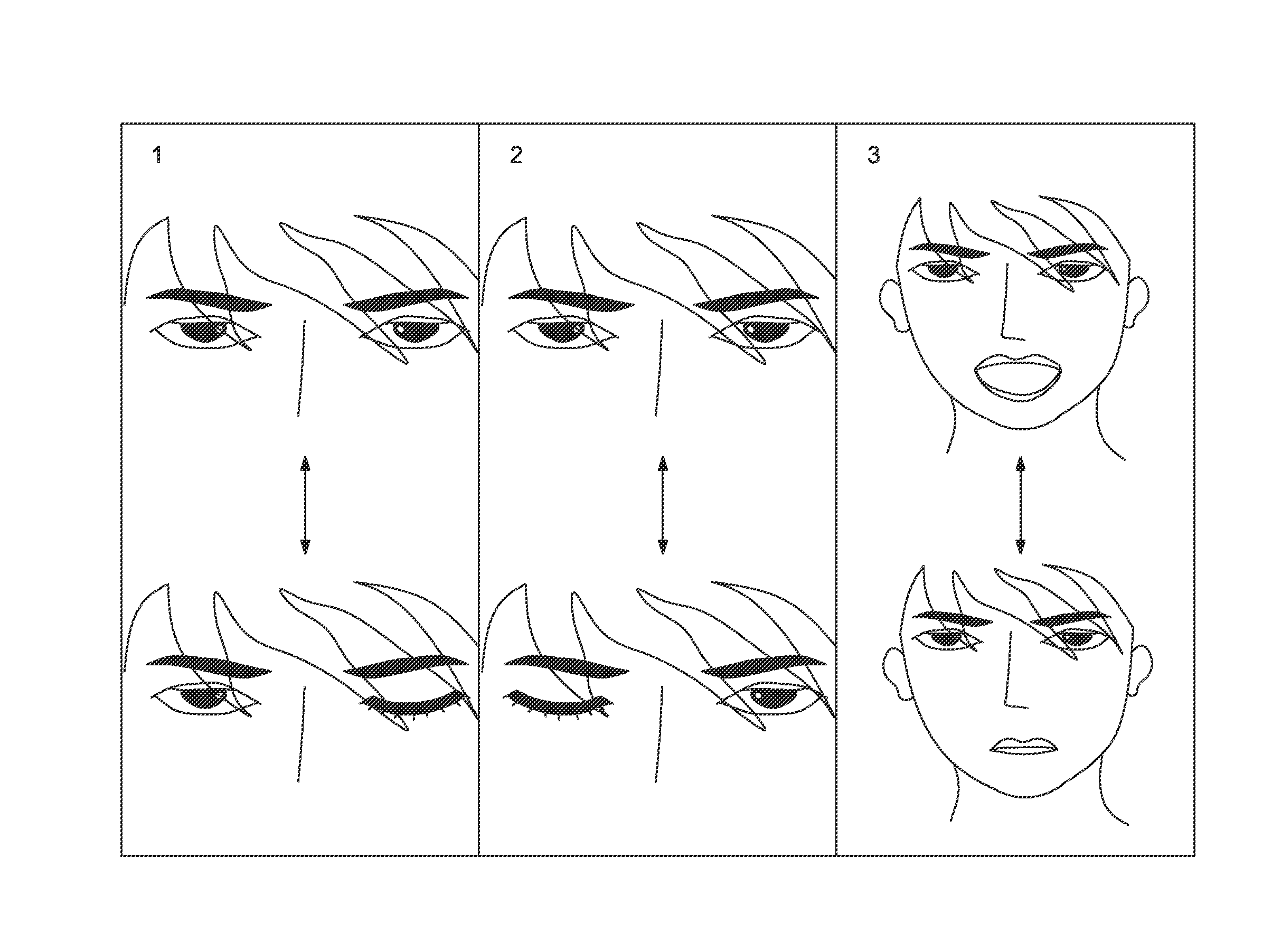

User interface apparatus and method using head gesture

Inactive | US20090153366A1 | Easy to use | Low cost | Character and pattern recognition | Electronic switching | Human–computer interaction | User interface

Disclosed is a user interface apparatus and method using a head gesture. A user interface apparatus and method according to an embodiment of the invention matches a specific head gesture of a user with a specific command and stores the matched result, receives image data of a head gesture of the user and determines whether the received image data corresponds to the specific command, and provides the determined command to a terminal body. As a result, the interface is not affected by ambient noise and does not disturb people around the terminal with noise, and the terminal remains convenient to use even when the user can use only one hand.

Owner:ELECTRONICS & TELECOMM RES INST

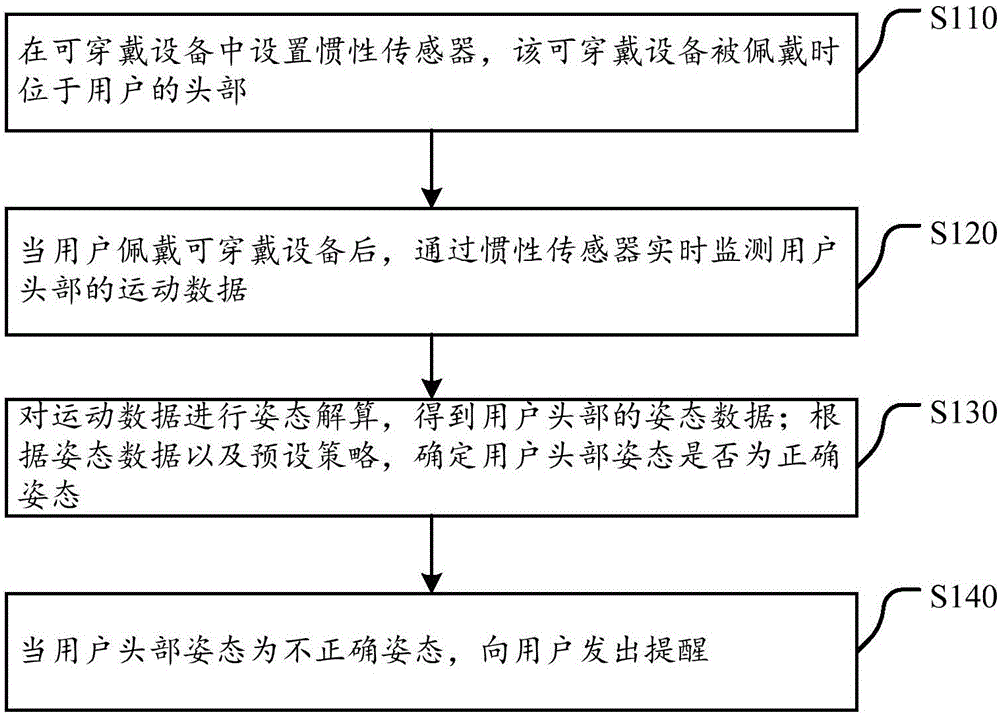

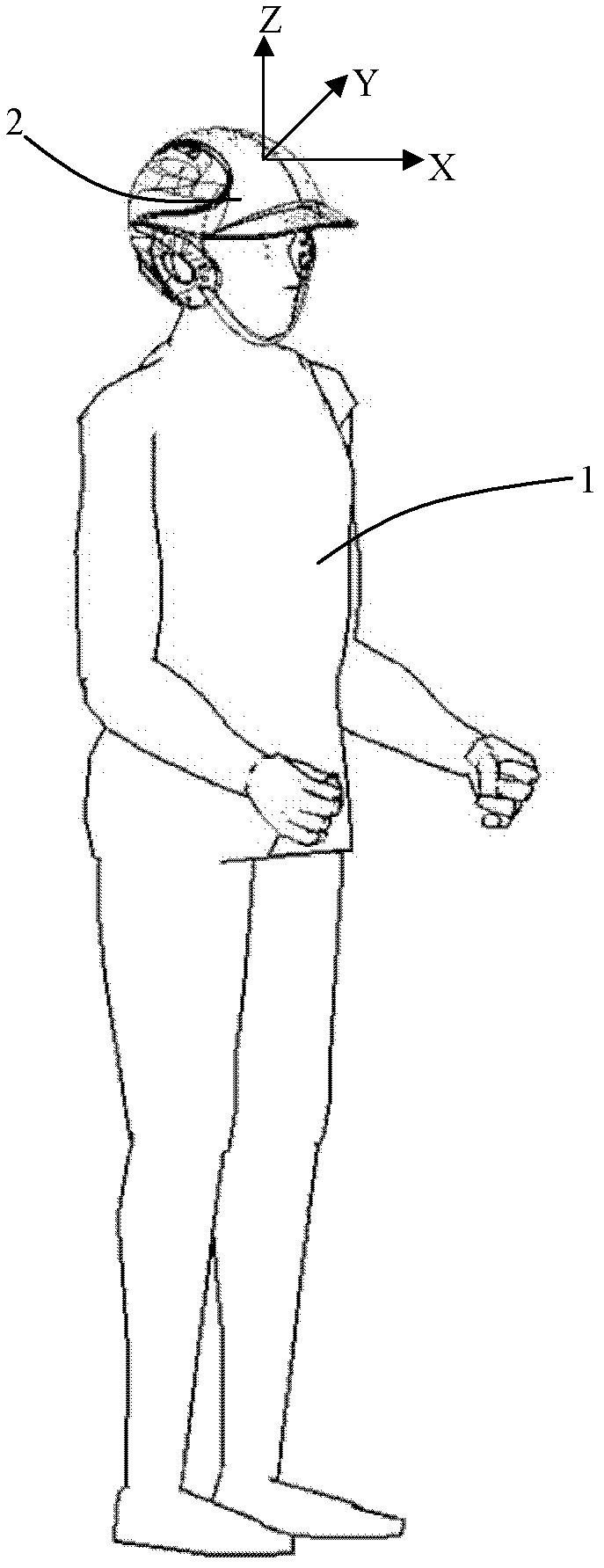

User posture monitoring method and wearable equipment

Pending | CN106510719A | Monitor exercise data | Sports data is reliable | Physical therapies and activities | Inertial sensors | Head movements | Computer science

The invention discloses a user posture monitoring method and wearable equipment. The method comprises the following steps: setting an inertia sensor in the wearable equipment which is located at a user head when worn; when a user wears the wearable equipment, monitoring motion data of the user head through the inertia sensor in real time; performing posture calculation on the motion data to obtain posture data of the user head; determining whether a user head posture is correct according to the posture data and a preset strategy; and when the user head posture is incorrect, reminding the user. According to the scheme, the wearable equipment is located at the user head; the inertia sensor arranged in the wearable equipment and the user head can be kept relatively static, so that the motion data of the user head can be accurately monitored, and credible posture data of the user head can be acquired; by analysis of the credible posture data, effective monitoring of the user head posture is realized; and when the posture is incorrect, a warning can be sent to remind the user to pay attention to the health of the cervical vertebra.

Owner:GOERTEK INC
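
The monitoring loop described above (sensor data, posture calculation, check against a preset strategy, reminder) might look roughly like the sketch below. It assumes an accelerometer-only tilt estimate with the x axis pointing forward and the z axis pointing up when the head is level, and a simple angle threshold as the "preset strategy"; the function names and the 20 degree default are hypothetical.

```python
import math


def pitch_from_accel(ax: float, ay: float, az: float) -> float:
    """Tilt of the assumed forward (x) axis relative to horizontal, in degrees.

    Only valid while the head is roughly static, so that the accelerometer
    reading is dominated by gravity.
    """
    return math.degrees(math.atan2(ax, math.sqrt(ay * ay + az * az)))


def posture_is_correct(pitch_deg: float, max_tilt_deg: float = 20.0) -> bool:
    """Hypothetical preset strategy: tilt magnitude must stay under a limit."""
    return abs(pitch_deg) <= max_tilt_deg


def should_remind(ax: float, ay: float, az: float) -> bool:
    """True when the wearer should be reminded to correct the head posture."""
    return not posture_is_correct(pitch_from_accel(ax, ay, az))


level = should_remind(0.0, 0.0, 9.81)    # head level: no reminder
tilted = should_remind(9.81, 0.0, 9.81)  # about 45 degrees of tilt: reminder
```

A production device would more likely fuse gyroscope and accelerometer data and require the bad posture to persist for some time before warning.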

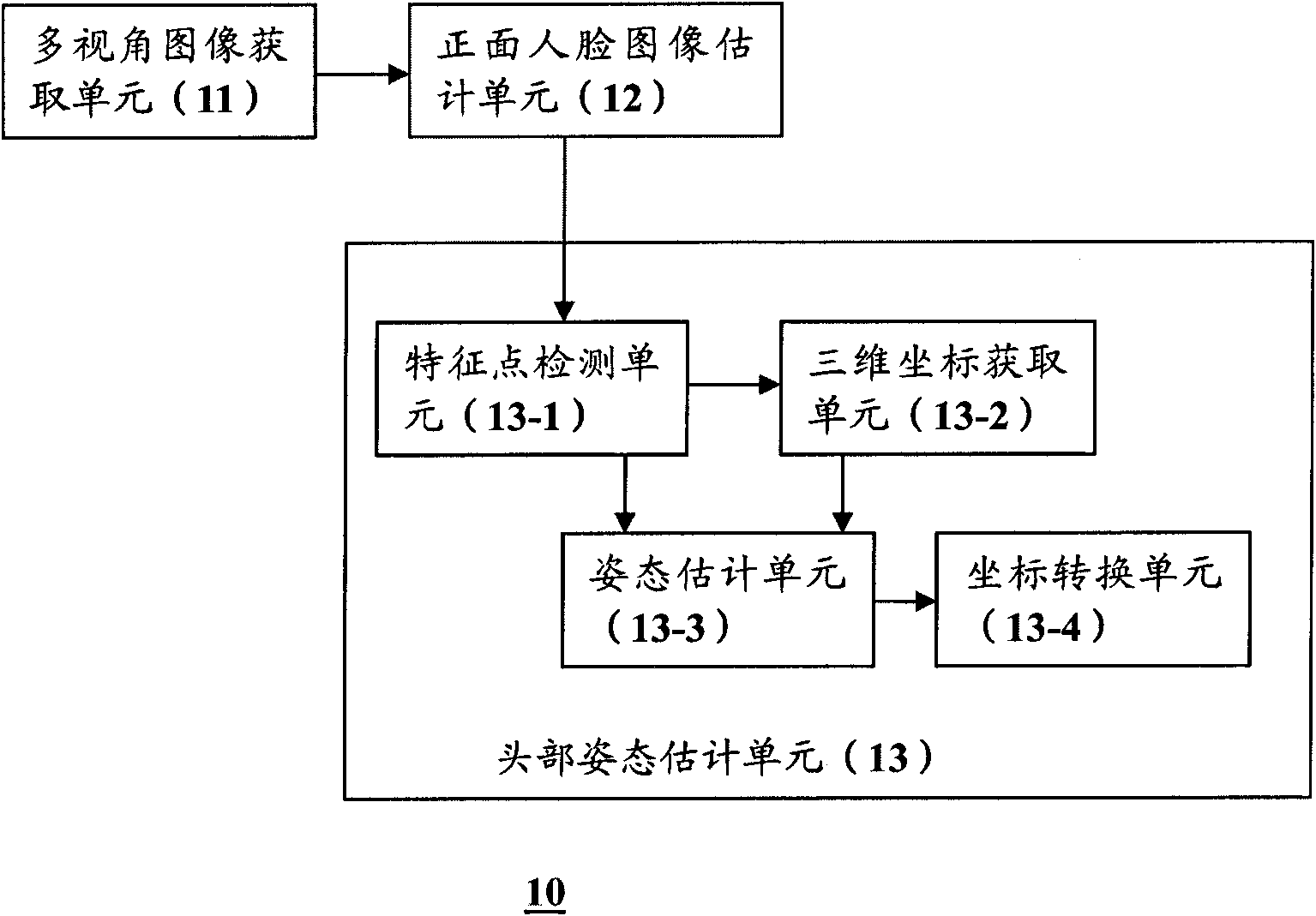

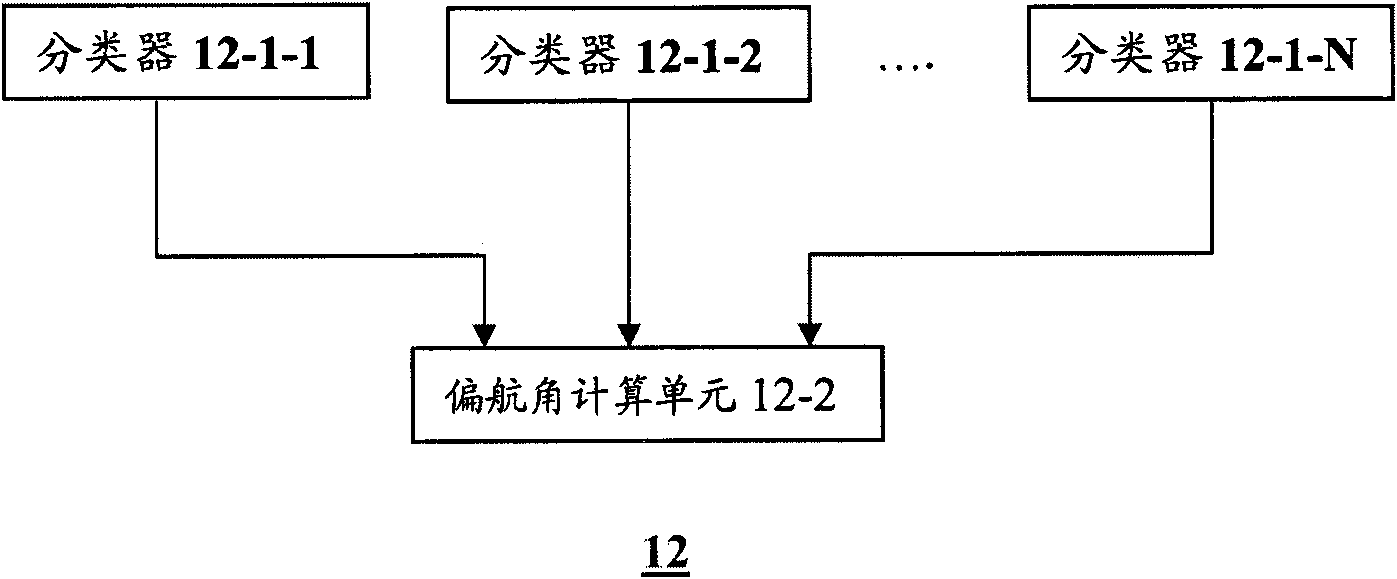

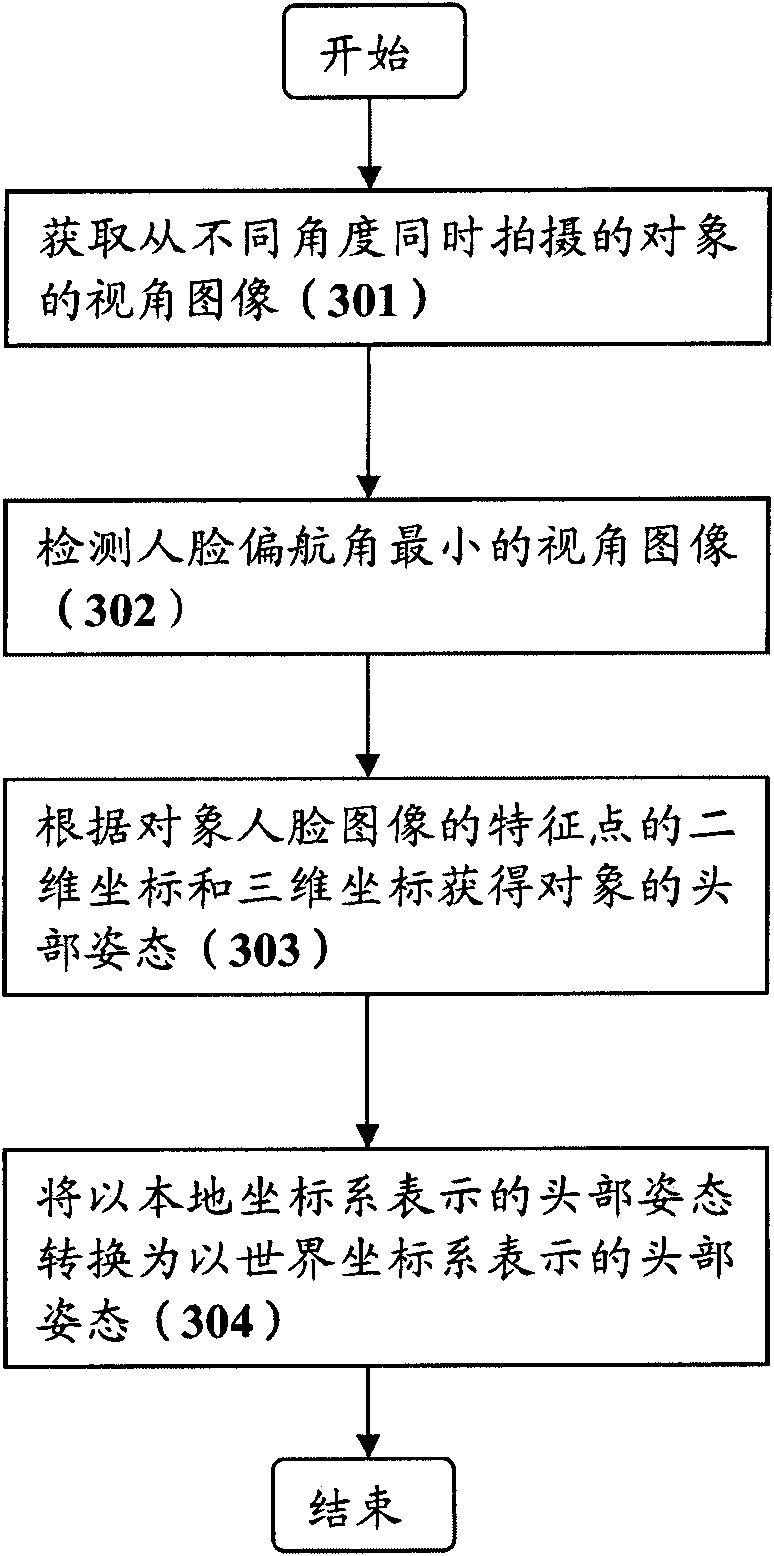

Equipment and method for detecting head posture

Inactive | CN102156537A | Accurate detection | Easy to use | Input/output for user-computer interaction | Graph reading | Image estimation | Angular degrees

The invention provides equipment and a method for detecting a head posture. The equipment for detecting the head posture comprises a multi-visual-angle image acquiring unit, a front human face image estimation unit, a head posture estimation unit and a coordinate conversion unit, wherein the multi-visual-angle image acquiring unit is used for acquiring visual angle images, which are shot from different angles, of an object; the front human face image estimation unit detects a visual angle image of a human face having a minimum yaw angle from the acquired visual angle images; the head posture estimation unit acquires a three-dimensional coordinate of a predetermined human face characteristic point from a human face three-dimensional model, detects the predetermined human face characteristic point and a two-dimensional coordinate of the predetermined human face characteristic point from the detected visual angle image, and calculates a first head posture relative to image capturing equipment for shooting the visual angle image of the human face having the minimum yaw angle according to the two-dimensional coordinate and the three-dimensional coordinate of the predetermined human face characteristic point; and the coordinate conversion unit converts the first head posture into a second head posture represented by a world coordinate system according to the world coordinate system coordinates of the image capturing equipment.

Owner:SAMSUNG ELECTRONICS CO LTD +1
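
The final coordinate conversion step can be sketched as a composition of rotations. This is a minimal illustration that handles orientation only and assumes both poses are given as 3x3 rotation matrices; the helper names are hypothetical and the patent does not prescribe this representation.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul3(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def yaw_rotation(deg: float) -> Matrix:
    """Rotation about the vertical (y) axis by the given angle in degrees."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def head_pose_in_world(r_world_from_camera: Matrix,
                       r_camera_from_head: Matrix) -> Matrix:
    """Convert a head pose relative to the camera into the world frame."""
    return matmul3(r_world_from_camera, r_camera_from_head)


# Camera mounted at 30 degrees of yaw in the world; head at 10 degrees of
# yaw relative to that camera. The two yaw rotations compose to 40 degrees.
world_pose = head_pose_in_world(yaw_rotation(30.0), yaw_rotation(10.0))
```

Choosing the view with the smallest yaw first, as the abstract describes, keeps the camera-relative estimate near frontal, where landmark-based pose estimation tends to be most reliable.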

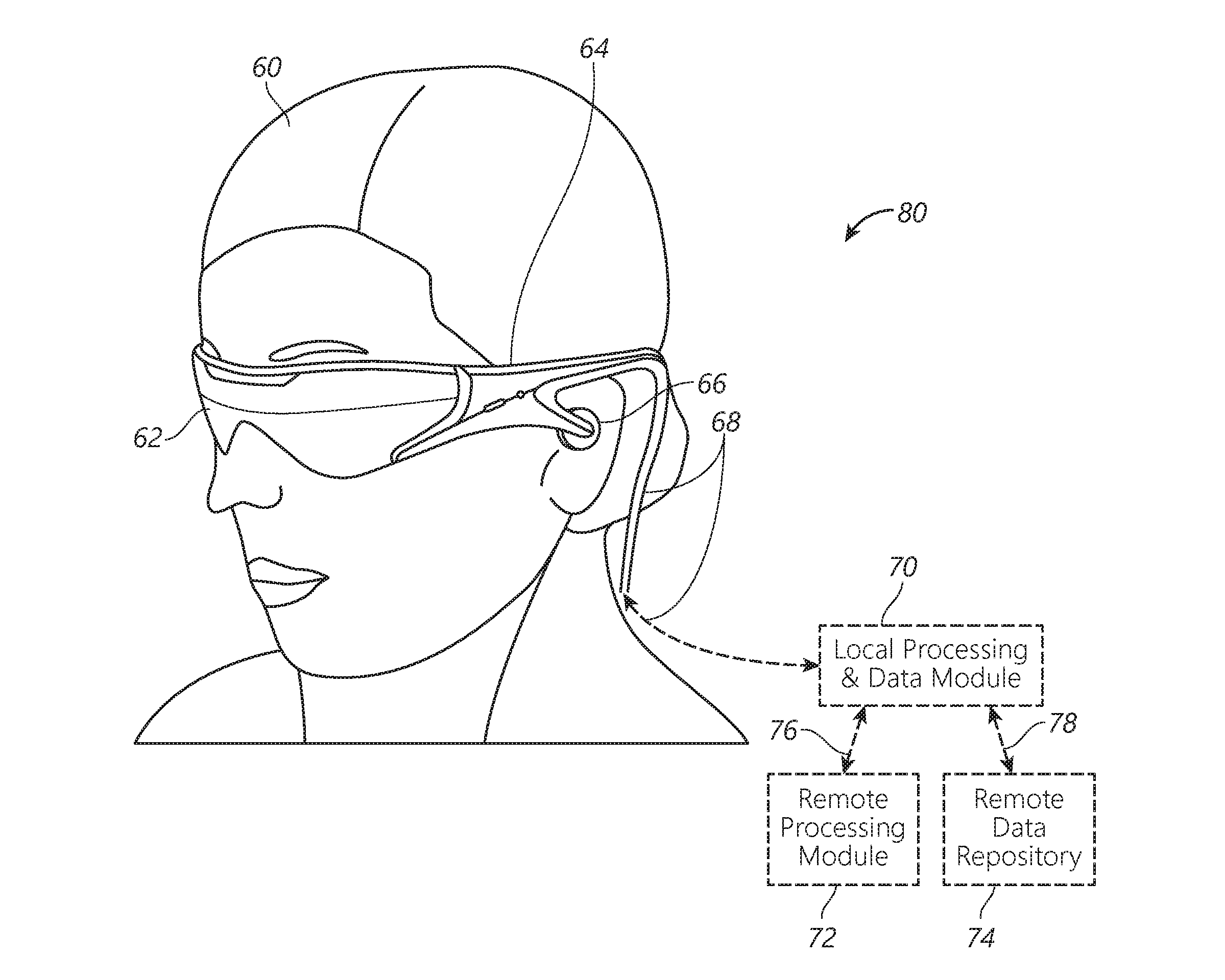

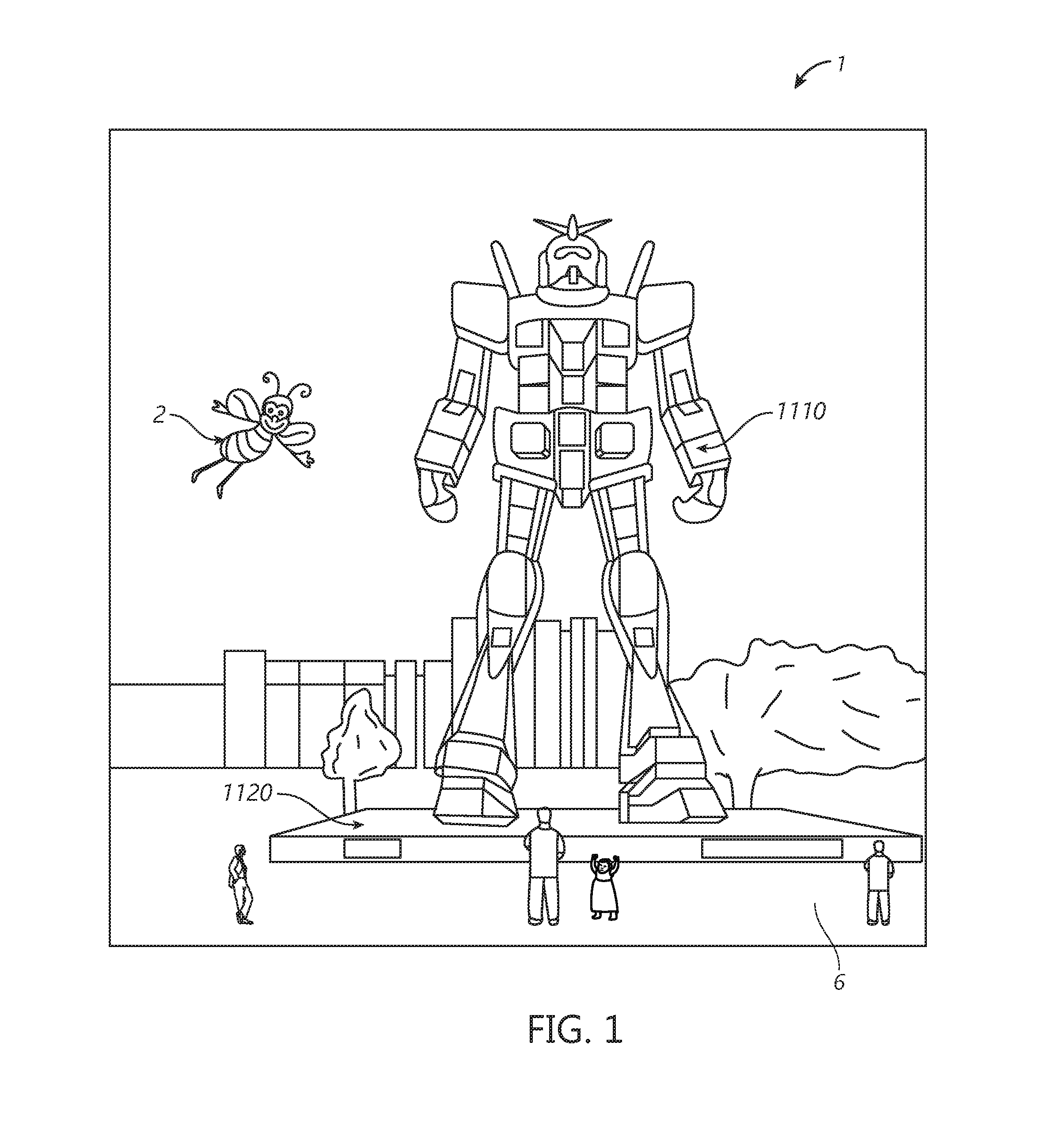

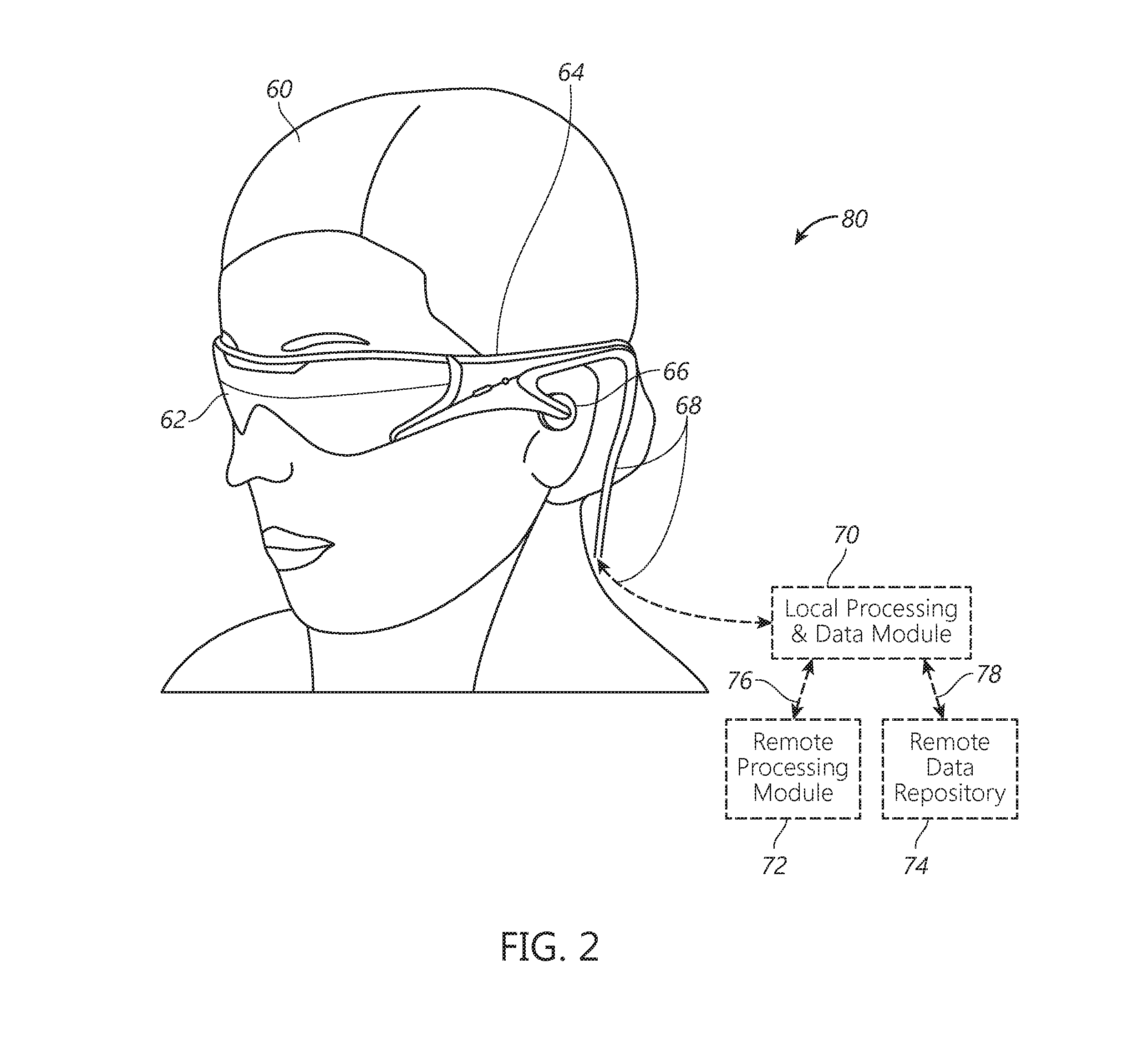

Virtual and augmented reality systems and methods

Active | US20170053450A1 | Reduce power consumption | Reduce redundant information | Image analysis | Character and pattern recognition | Display device | Depth plane

A virtual or augmented reality display system that controls a display using control information included with the virtual or augmented reality imagery that is intended to be shown on the display. The control information can be used to specify one of multiple possible display depth planes. The control information can also specify pixel shifts within a given depth plane or between depth planes. The system can also enhance head pose measurements from a sensor by using gain factors which vary based upon the user's head pose position within a physiological range of movement.

Owner:MAGIC LEAP

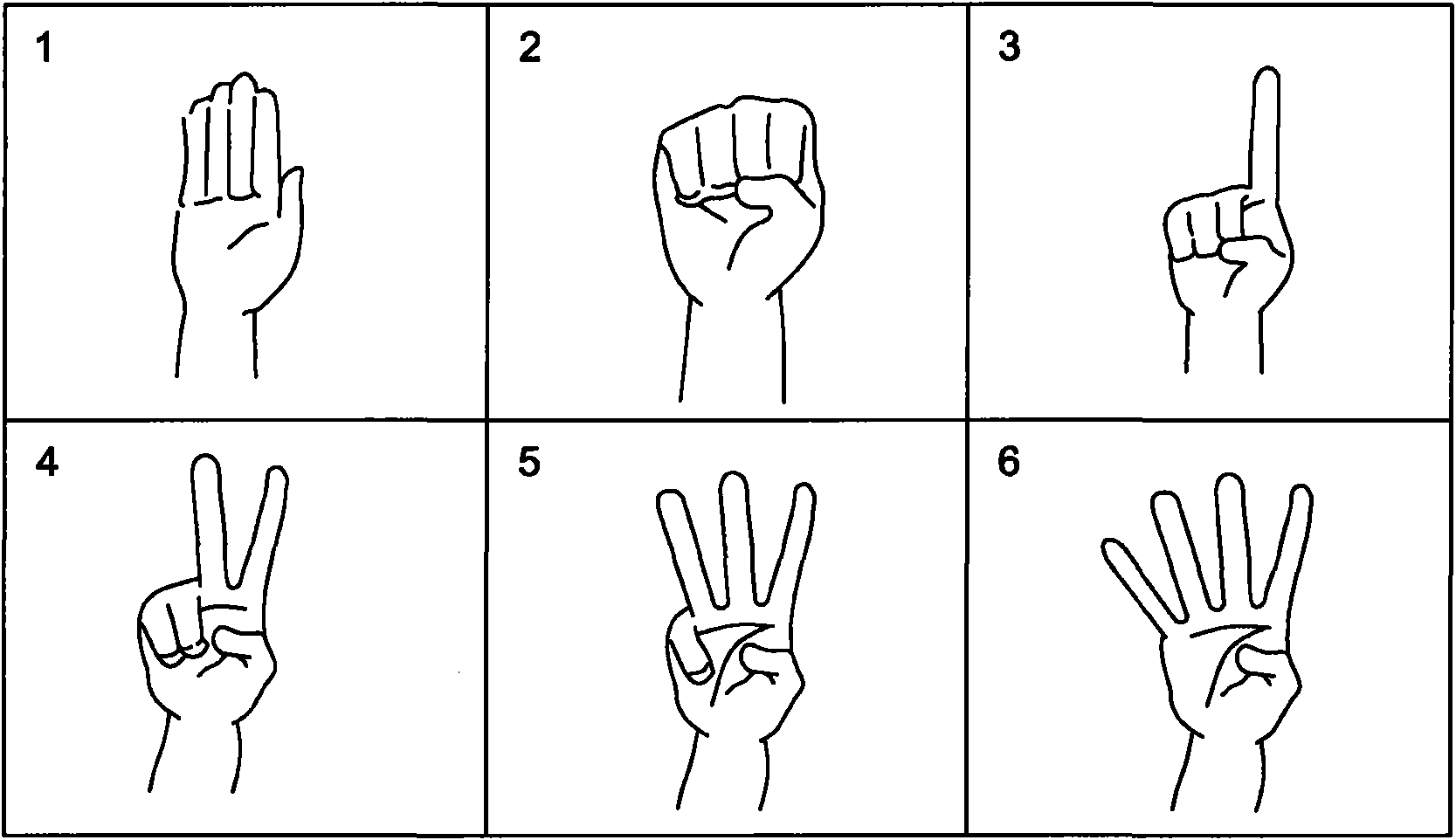

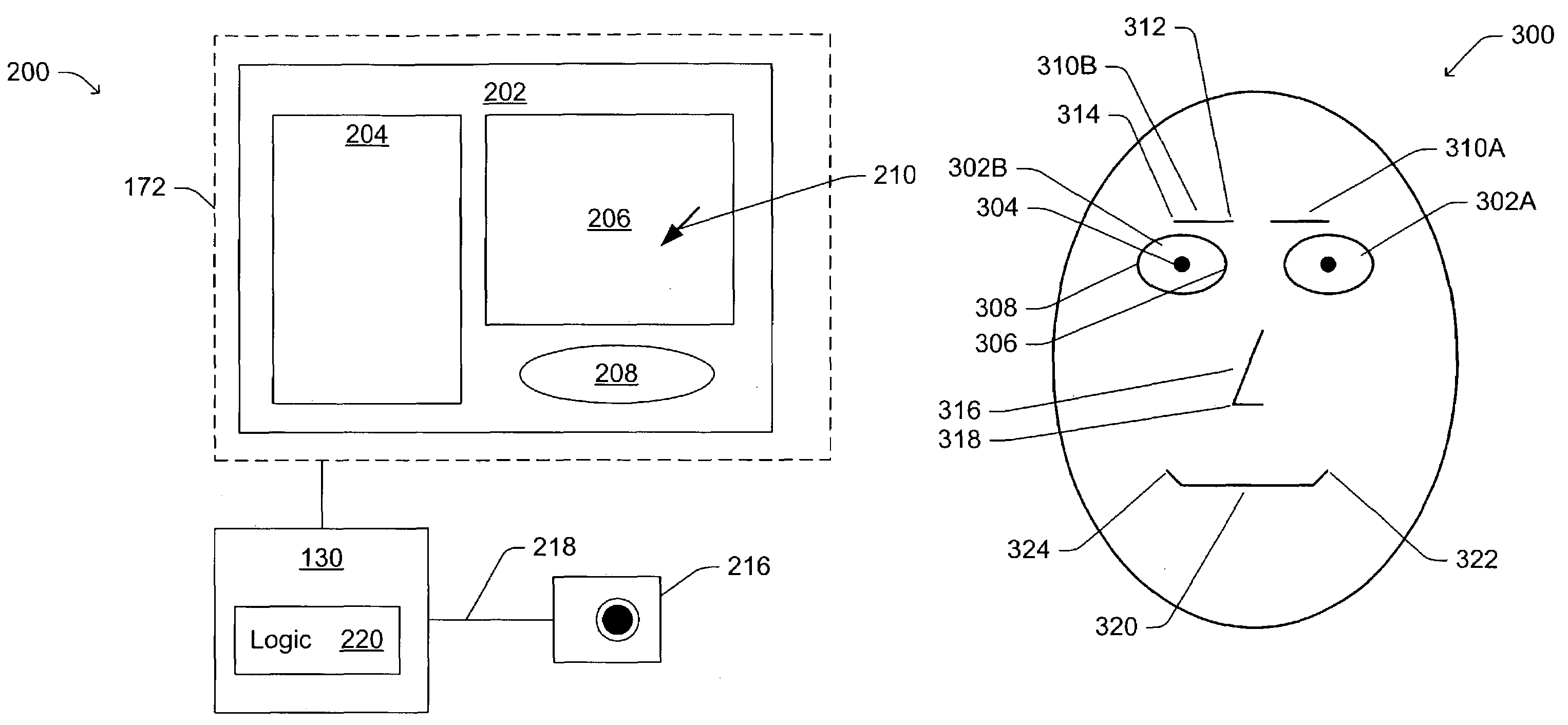

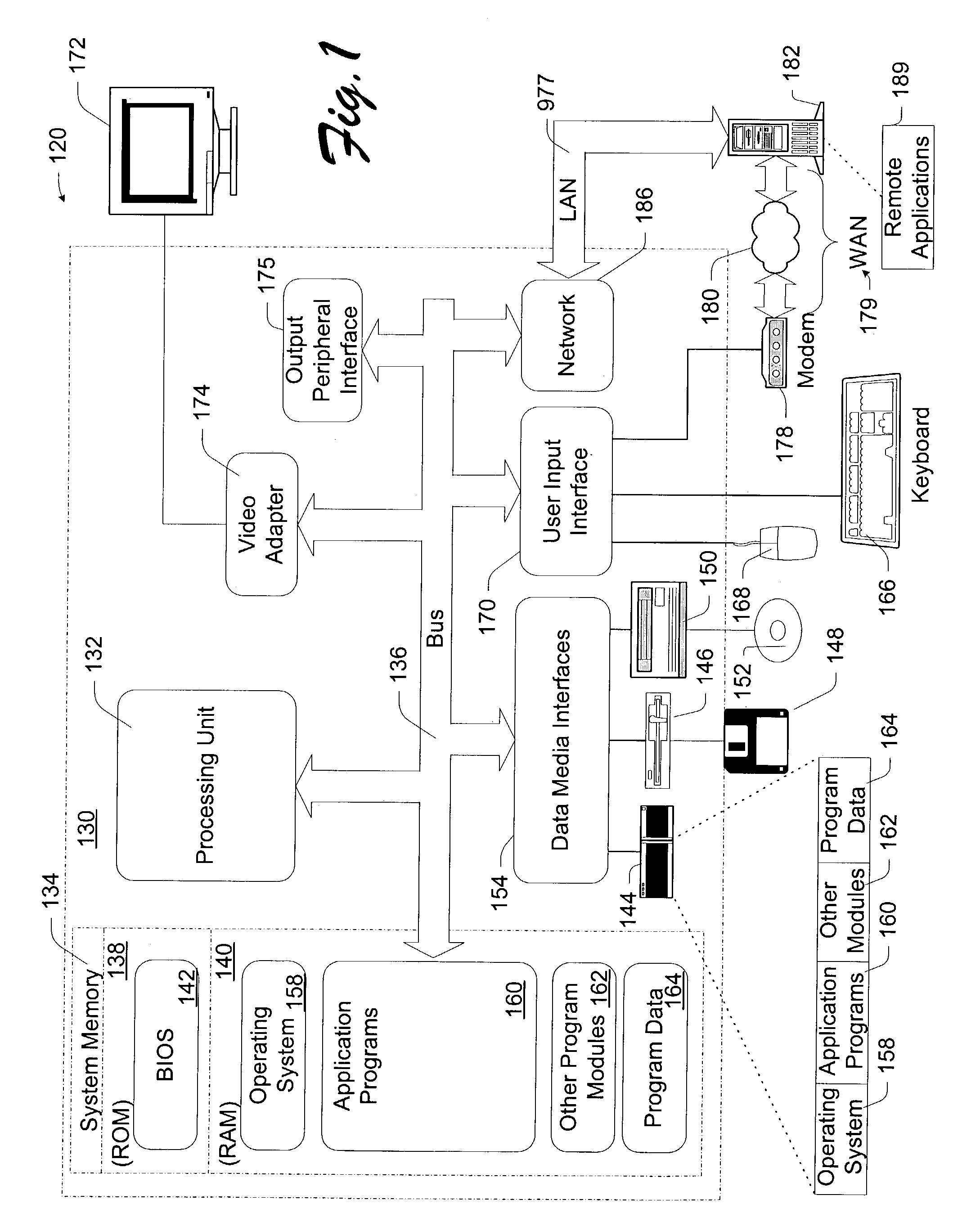

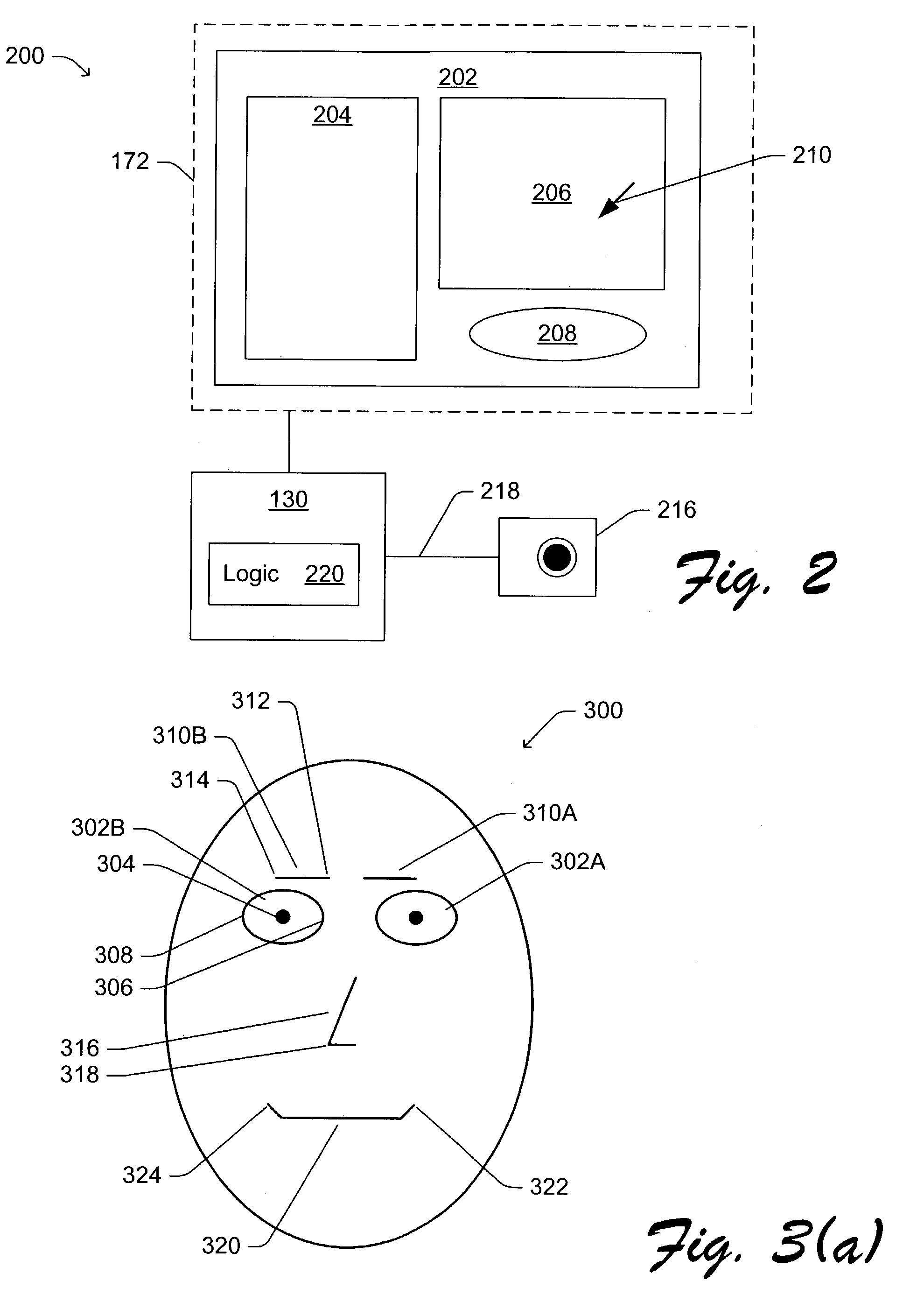

System and method for generating control instruction by using image pickup device to recognize users posture

Inactive | US20110158546A1 | Character and pattern recognition | Input/output processes for data processing | Control electronics | Facial expression

A system and a method are provided for generating a control instruction by using an image pickup device to recognize a user's posture. An electronic device is controlled according to different composite postures. Each composite posture is a combination of the hand posture, the head posture and the facial expression change of the user. Each composite posture indicates a corresponding control instruction. Since the composite posture is more complex than people's habitual actions, the possibility of causing an erroneous control instruction from unintentional habitual actions of the user will be minimized or eliminated.

Owner:PRIMAX ELECTRONICS LTD

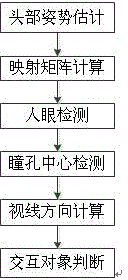

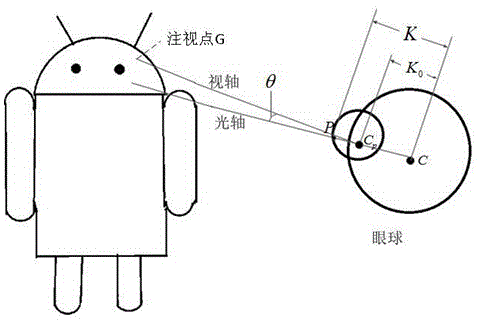

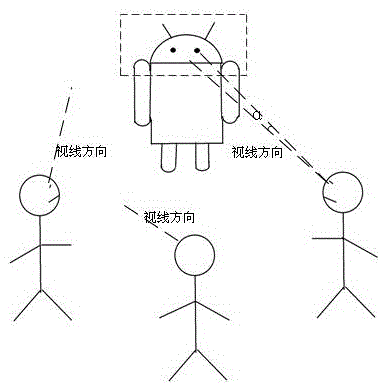

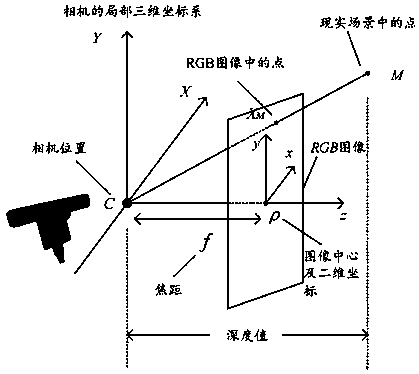

3D (three-dimensional) sight direction estimation method for robot interaction object detection

Active | CN104951808A | Simple hardware | Easy to implement | Acquisition of 3D object measurements | Hough transform | Estimation methods

The invention discloses a 3D (three-dimensional) sight direction estimation method for robot interaction object detection. The method comprises steps as follows: S1, head posture estimation; S2, mapping matrix calculation; S3, human eye detection; S4, pupil center detection; S5, sight direction calculation; S6, interaction object judgment. According to the 3D (three-dimensional) sight direction estimation method for robot interaction object detection, an RGBD (red, green, blue and depth) sensor is used for head posture estimation and applied to a robot, and a system only adopts the RGBD sensor, does not require other sensors and has the characteristics of simple hardware and easiness in use. A training strong classifier is used for human eye detection and is simple to use and good in detection and tracking effect; a projecting integral method, a Hough transformation method and perspective correction are adopted when the pupil center is detected, and the obtained pupil center can be more accurate.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

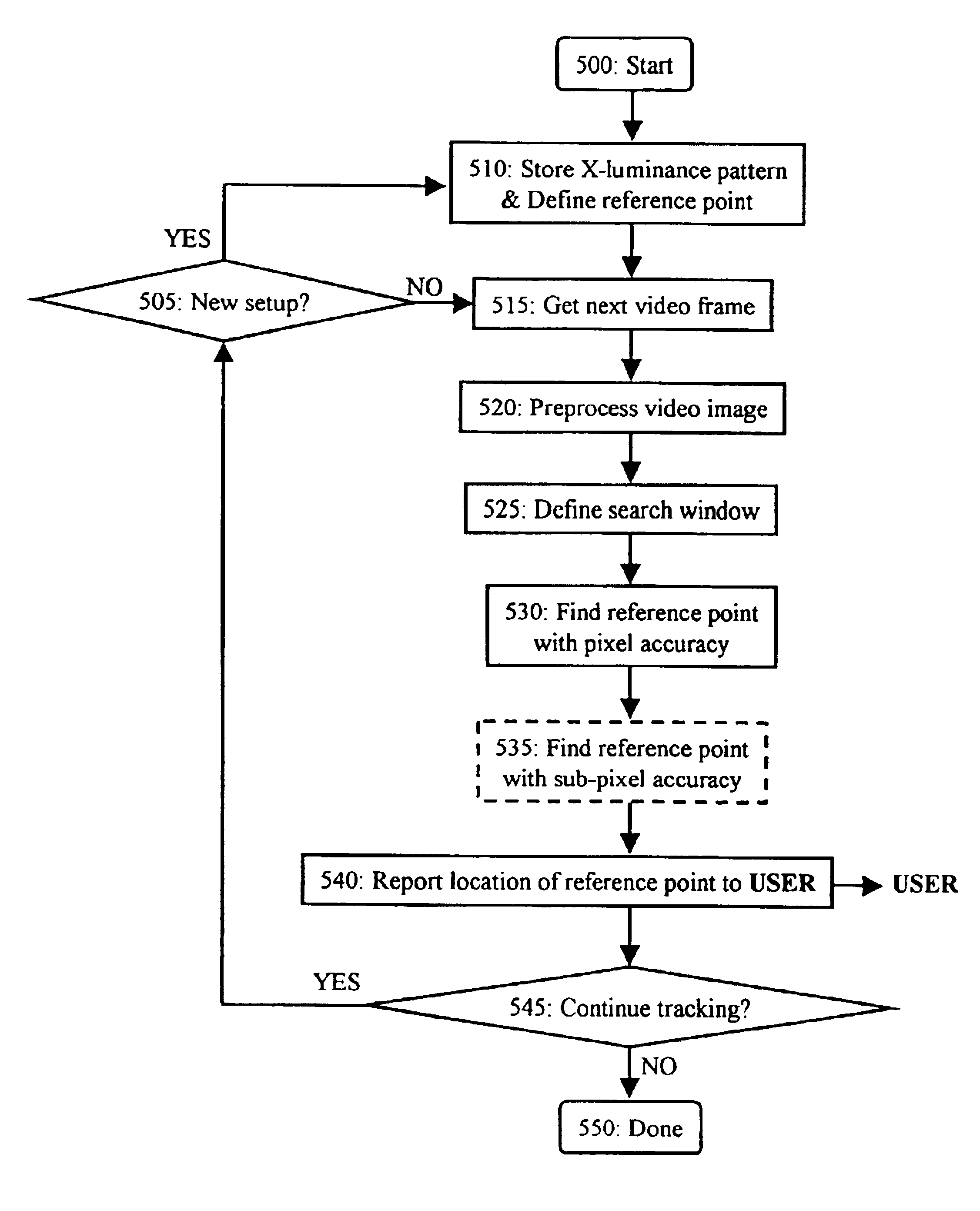

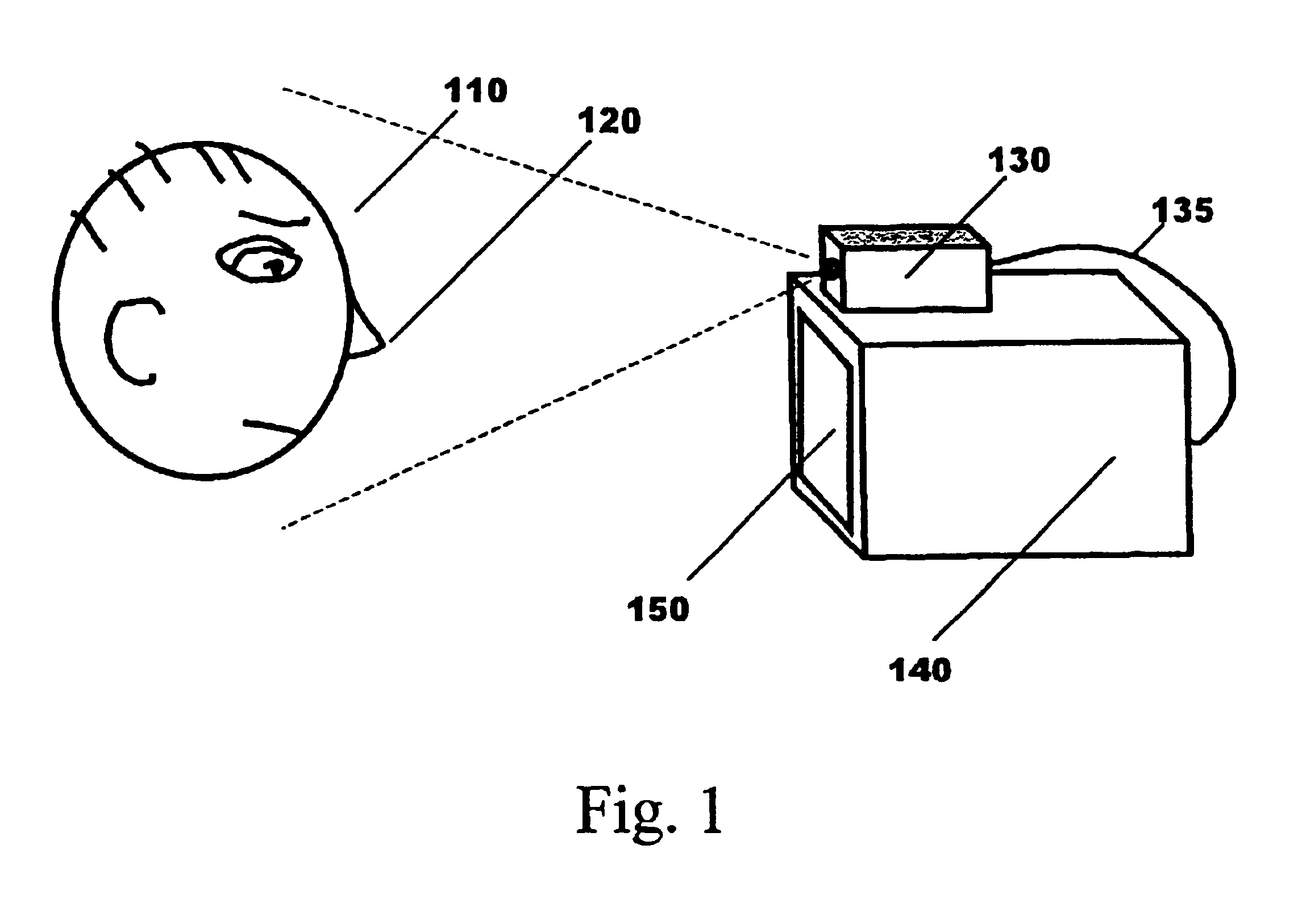

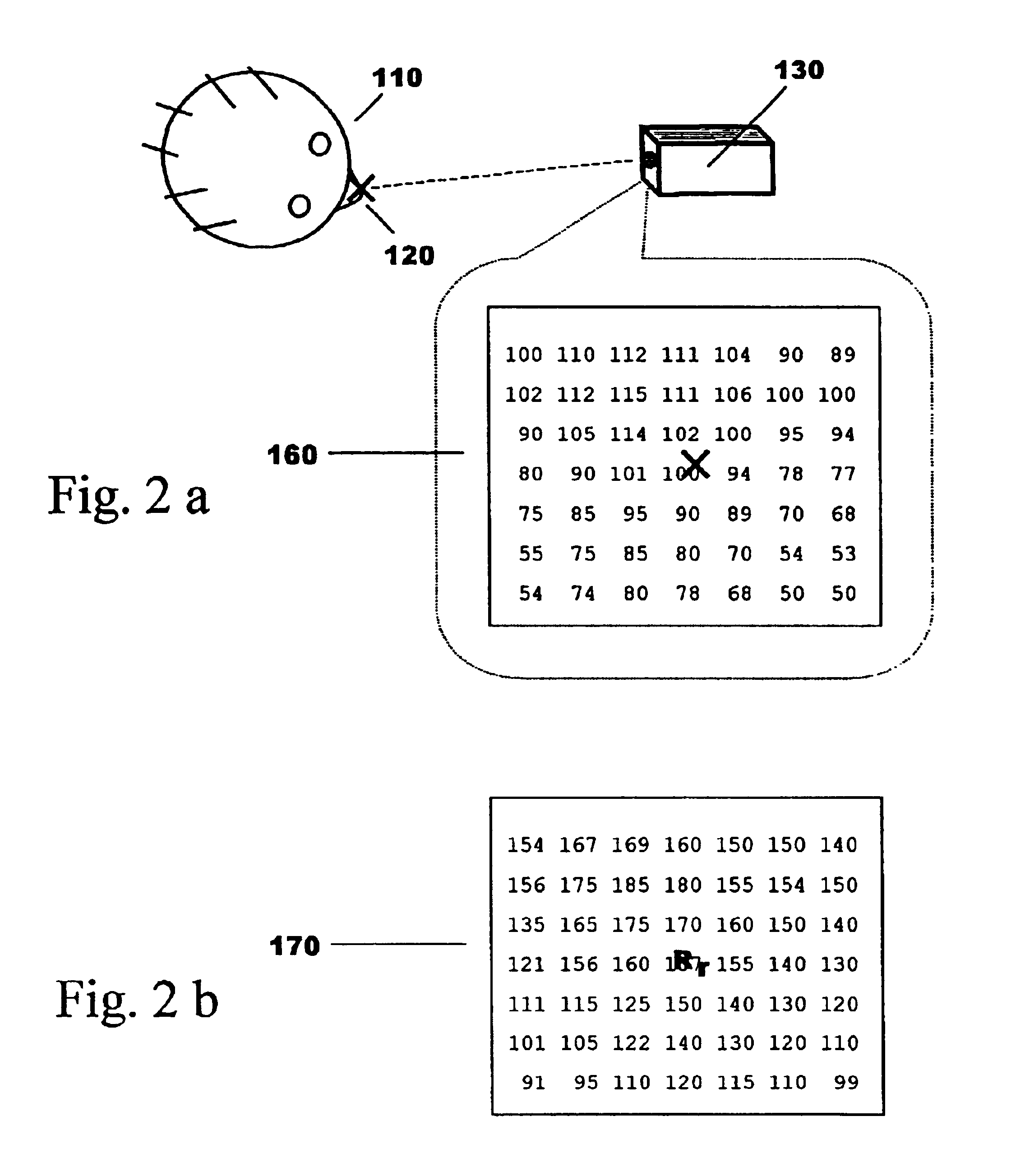

Method for video-based nose location tracking and hands-free computer input devices based thereon

Inactive | US6925122B2 | Precise and smooth location tracking | Input/output for user-computer interaction | Television system details | Hands-free computing | Nose

A method for tracking the location of the tip of the nose with a video camera, and a hands-free computer input device based thereon have been described. According to this invention, a convex shape such as the shape of the tip of the nose, is a robust object suitable for precise and smooth location tracking purposes. The disclosed method and apparatus are substantially invariant to changes in head pose, user preferred seating distances and brightness of the lighting conditions. The location of the nose can be tracked with pixel and sub-pixel accuracy.

Owner:NAT RES COUNCIL OF CANADA

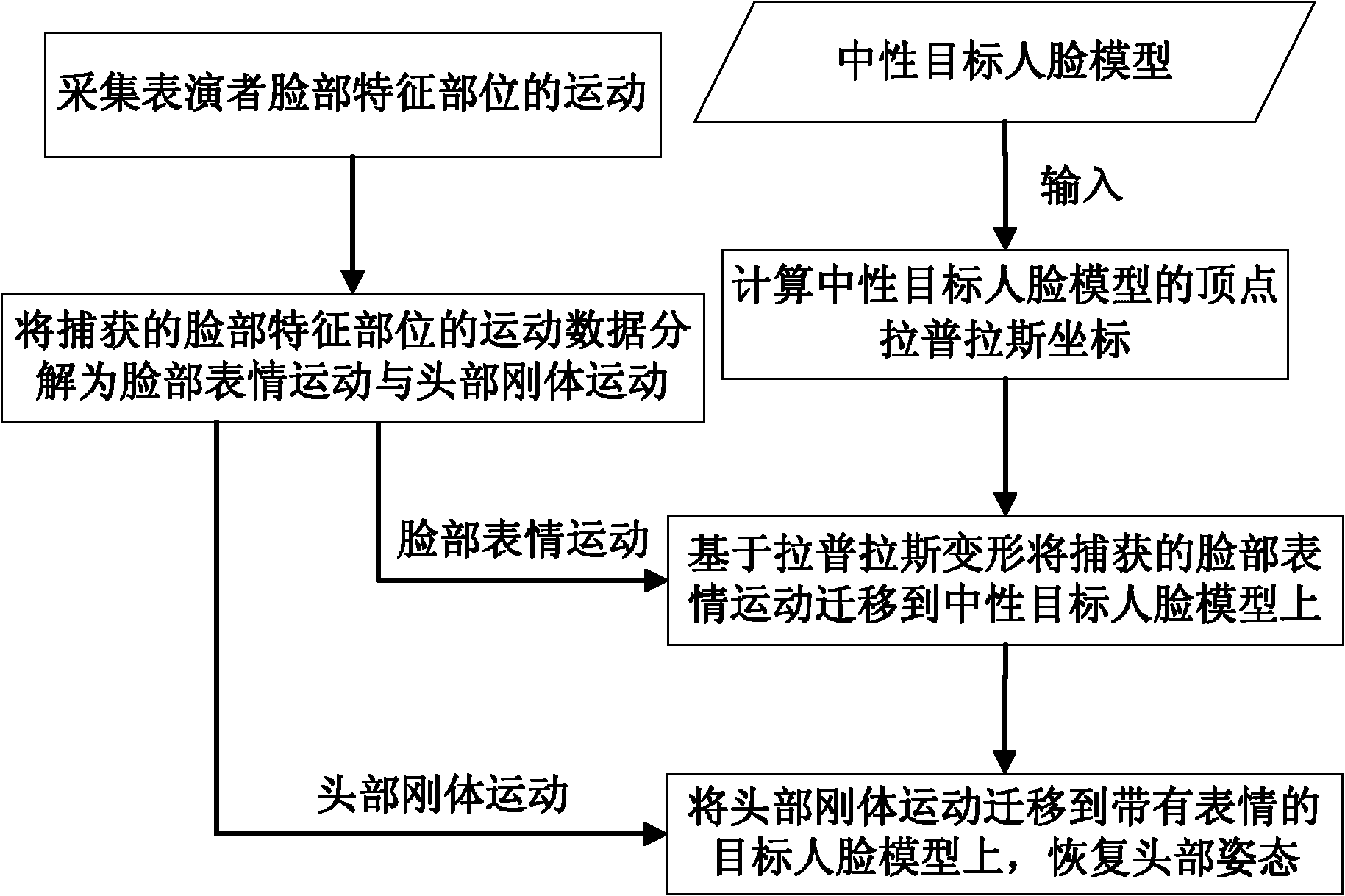

Data driving face expression synthesis method based on Laplace transformation

Inactive | CN101944238A | Achieve migration | Clear algorithm | Animation | Laplacian coordinates | Pattern recognition

The invention discloses a data driving face expression synthesis method based on Laplace transformation, comprising the following steps of: resolving face motion data captured after the face motion of a performer is acquired into a face expression motion and a head rigid motion; calculating the Laplace coordinates of each vertex in a loaded neutral target face model; transferring the face expression motion to the neutral target face model so that the neutral target face model has the same expression as the performer; and transferring the head rigid motion to the target face model that now shares the performer's expression, so that the final target face model has the same face expression and head posture as the performer. Based on a Laplace transformation technology capable of keeping the original detail features of the model, the invention keeps the existing detail features on the target face model while transferring the face expressions, has the advantages of a well-defined algorithm, a friendly interface and robust results, and can be conveniently applied to the computer game field, the online chat field and other man-machine interaction fields.

Owner:ZHEJIANG UNIV

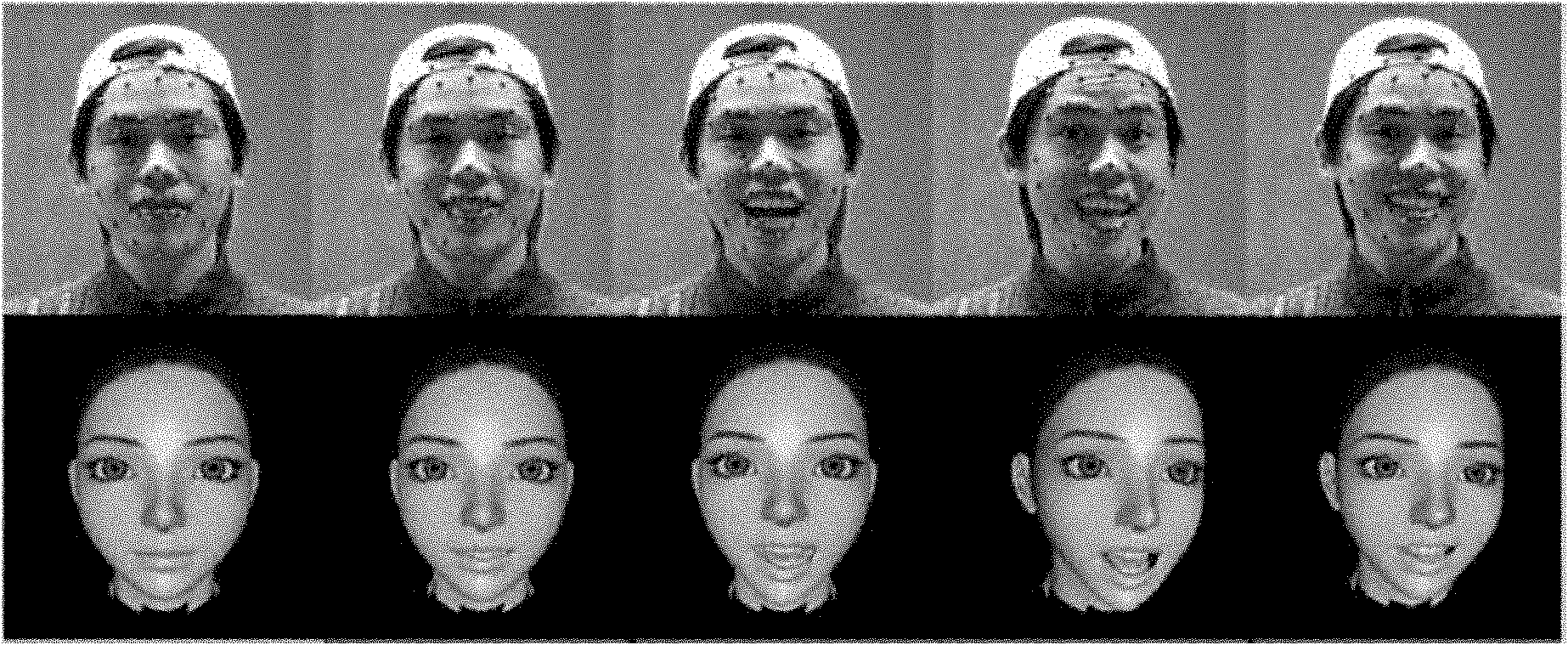

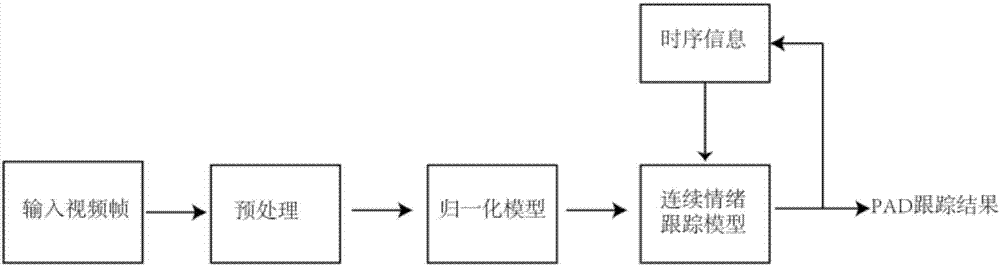

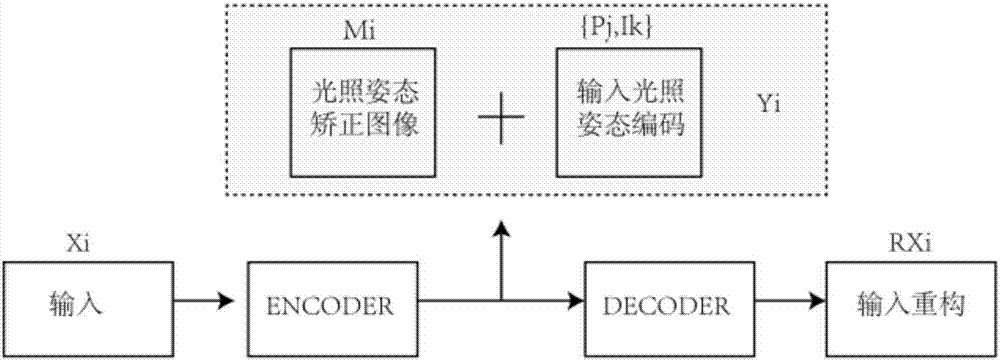

Robust continuous emotion tracking method based on deep learning

Active | CN106919903A | Improve accuracy | Improve stability | Neural architectures | Acquiring/recognising facial features | Pattern recognition | Normalization model

The invention relates to a robust continuous emotion tracking method based on deep learning. The method comprises the steps that (1) a training sample is constructed, and a normalization model and a continuous emotion tracking model are trained; (2) an expression image is acquired and preprocessed, the expression image obtained after being preprocessed is sent to the trained normalization model, and an expression picture with standard illumination and a standard head posture is obtained; (3) a standard image obtained after normalization is used as input of the continuous emotion tracking model, expression-related features are automatically extracted and input through the continuous emotion tracking model, and a tracking result of a current frame is generated according to time sequence information; and the steps (2) and (3) are repeated till a whole continuous emotion tracking process is completed. The method based on deep learning is adopted to construct an emotion recognition model so as to realize continuous emotion tracking and prediction, the method has robustness on illumination and posture changes, and the time sequence information of expressions can be fully utilized to track the emotion of a current user more stably based on historical emotion features.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI

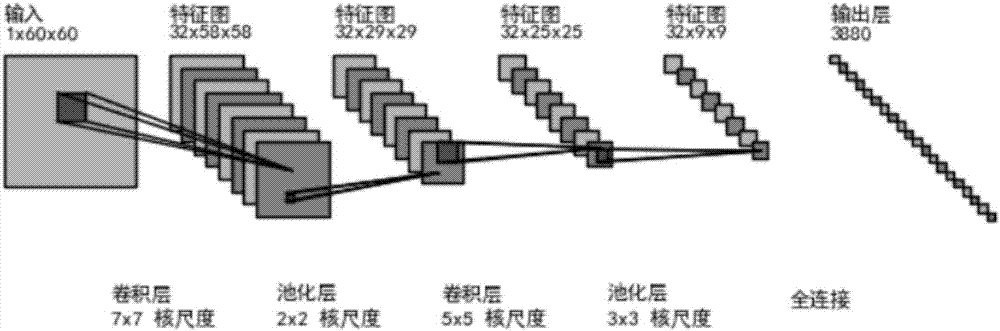

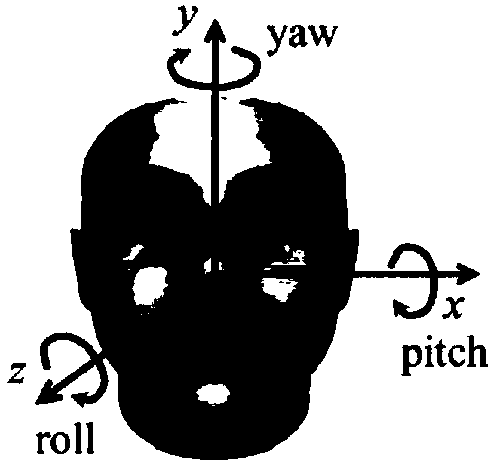

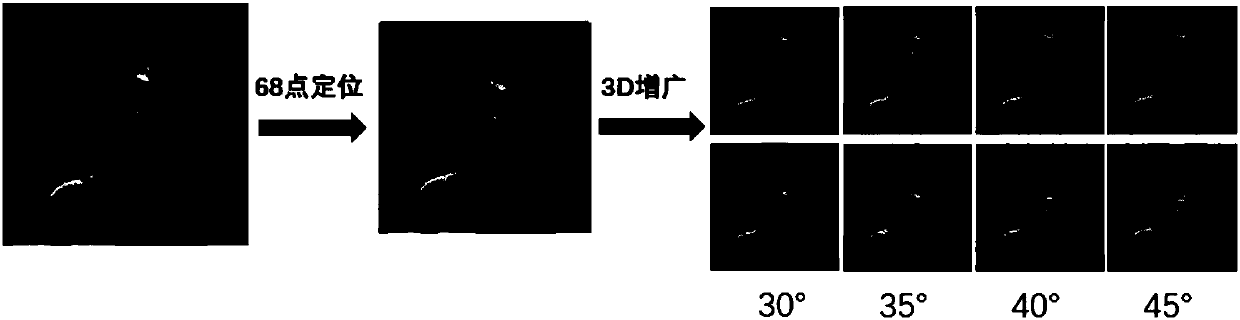

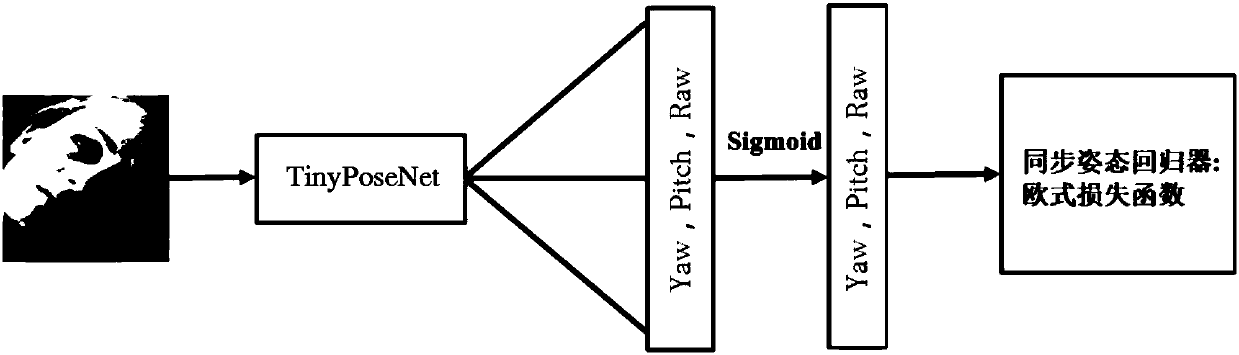

Head attitude estimation method based on depth learning

The invention discloses a head attitude estimation method based on depth learning. The method comprises a step of acquiring an image data set for training and carrying out information labeling of a face head deflection angle on image data, a step of carrying out sample expanding and preprocessing on the data set and cutting out a face part, a step of zooming all face images which are subjected to preprocessing into resolutions of 90*90 pixels, a step of taking the above data set as a training sample and carrying out network training by using a depth network TinyPoseNet, and a step of extracting a trained TinyPoseNet network model, obtaining a cut face image of an image which needs to be tested according to the above steps, cutting an area of 80*80 pixels in the middle of the image, carrying out forward calculation of the TinyPoseNet network model and thus estimating an angle of face head posture deflection in the tested image. The method has the advantages of an ultra-small calculation amount, strong robustness, high accuracy, fast calculation, simple operation and strong universality.

Owner:SEETATECH BEIJING TECH CO LTD
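
The test-time cropping step in the abstract above (taking the central 80*80 area of a 90*90 face image) can be sketched as follows. The image is represented as a plain list of pixel rows, and `center_crop` is a hypothetical helper; the network itself is not reproduced here.

```python
from typing import List

Image = List[List[int]]


def center_crop(image: Image, size: int) -> Image:
    """Return the central size x size region of a 2D image."""
    height, width = len(image), len(image[0])
    if size > height or size > width:
        raise ValueError("crop size exceeds image dimensions")
    top = (height - size) // 2
    left = (width - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]


# A synthetic 90x90 image whose pixel value encodes its position.
face = [[r * 90 + c for c in range(90)] for r in range(90)]
crop = center_crop(face, 80)
```

With a 90*90 input and an 80*80 crop, the window starts 5 pixels in from the top and left edges.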

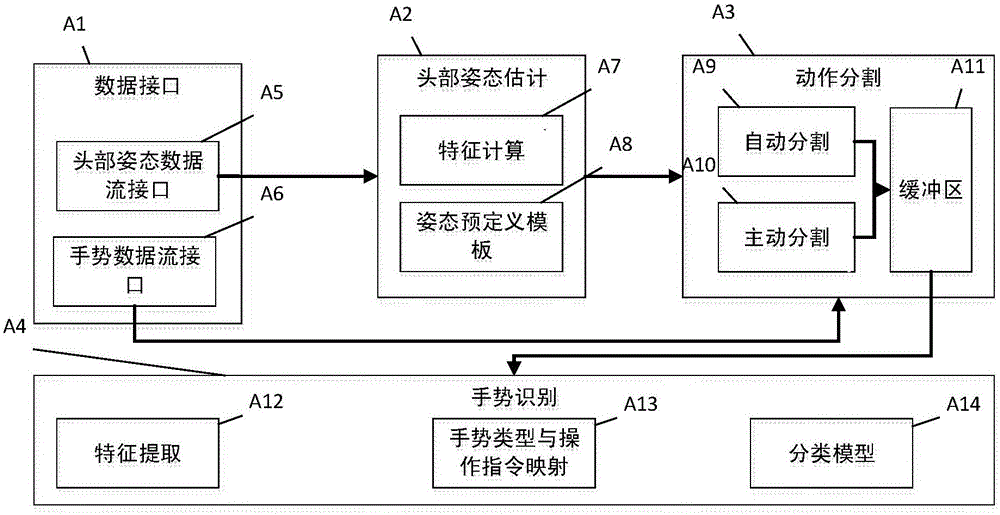

Gesture recognition system and method adopting action segmentation

Active | CN105809144A | High precision | Improve efficiency | Input/output for user-computer interaction | Character and pattern recognition | Head movements | Frame sequence

The invention provides a gesture recognition system and method adopting action segmentation and relates to the field of machine vision and man-machine interaction. The gesture recognition method comprises the following steps that firstly, head movements are detected, and head posture changes are calculated; then, a segmentation signal is sent according to posture estimation information, gesture segmentation beginning and end points are judged, if the signal indicates initiative gesture action segmentation, gesture video frame sequences are captured within a time interval of gesture execution, and preprocessing and characteristic extraction are conducted on gesture frame images; if the signal indicates automatic action segmentation, the video frame sequences are acquired in real time, segmentation points are automatically analyzed by analyzing the movement change rule of adjacent gestures for action segmentation, then vision-unrelated characteristics are extracted from segmented effective element gesture sequences, and a type result is obtained by adopting a gesture recognition algorithm for eliminating spatial and temporal disparities. The gesture recognition method greatly reduces redundant information of continuous gestures and the calculation expenditures of the recognition algorithm and improves the gesture recognition accuracy and real-timeliness.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

Real time eye tracking for human computer interaction

Active | US9311527B1 | Improve interactivity | More intelligent behavior | Input/output for user-computer interaction | Image enhancement | Large screen | Visual axis

A gaze direction determining system and method is provided. A two-camera system may detect the face from a fixed, wide-angle camera, estimate a rough location for the eye region using an eye detector based on topographic features, and direct another active pan-tilt-zoom camera to focus in on this eye region. An eye gaze estimation approach employs point-of-regard (PoG) tracking on a large viewing screen. To allow for greater head pose freedom, a calibration approach is provided to find the 3D eyeball location, eyeball radius, and fovea position. Both the iris center and iris contour points are mapped to the eyeball sphere (creating a 3D iris disk) to get the optical axis; the fovea is then rotated accordingly and the final visual-axis gaze direction is computed.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

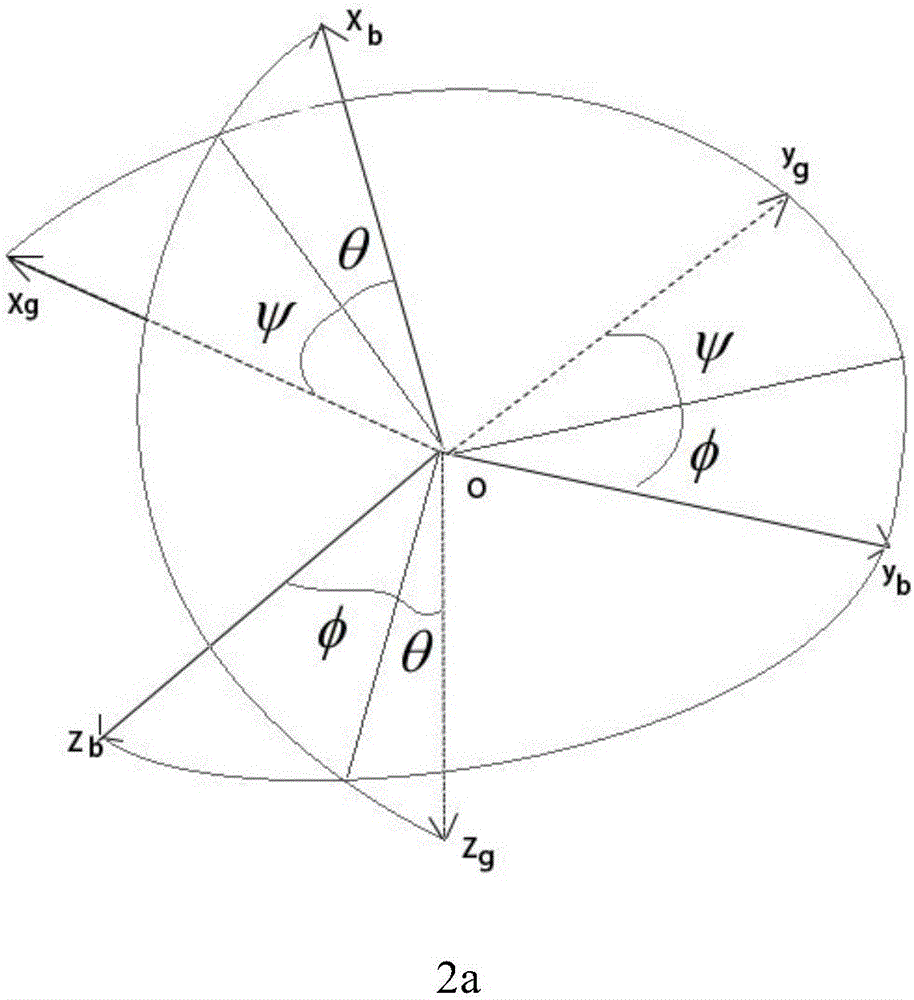

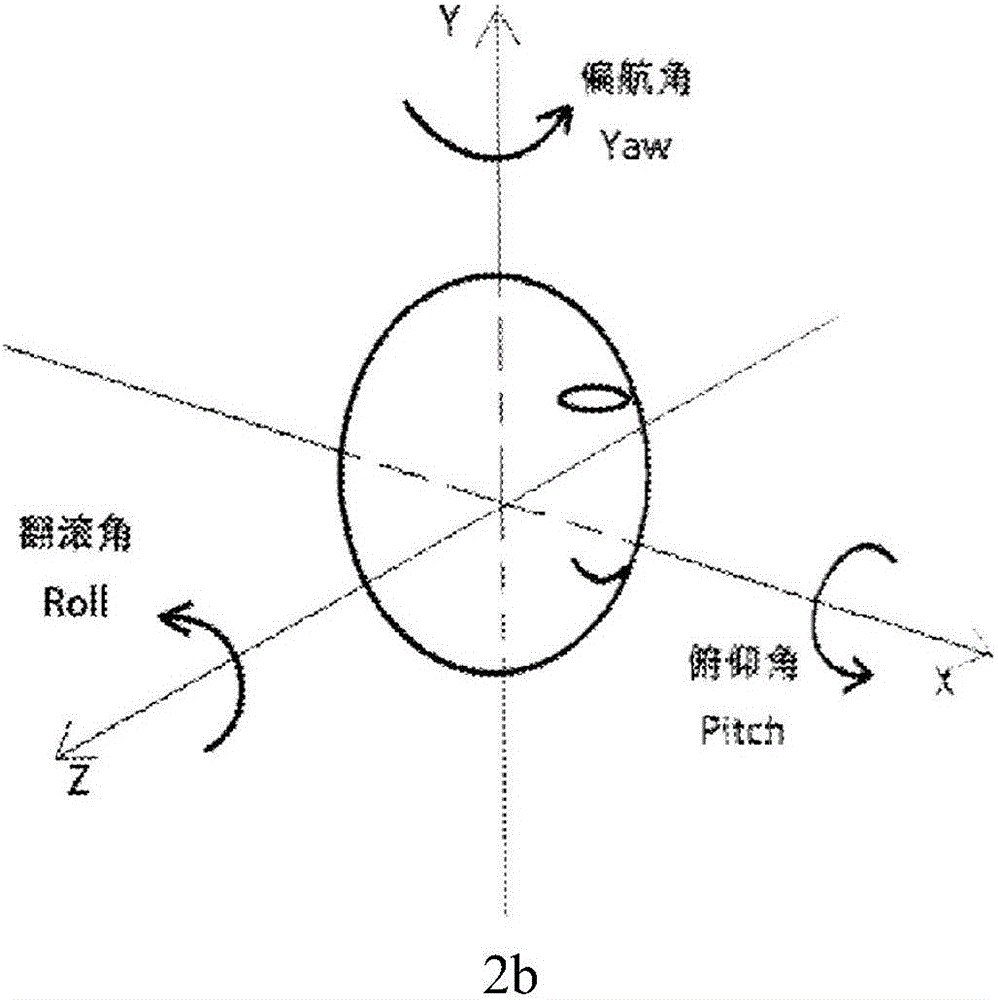

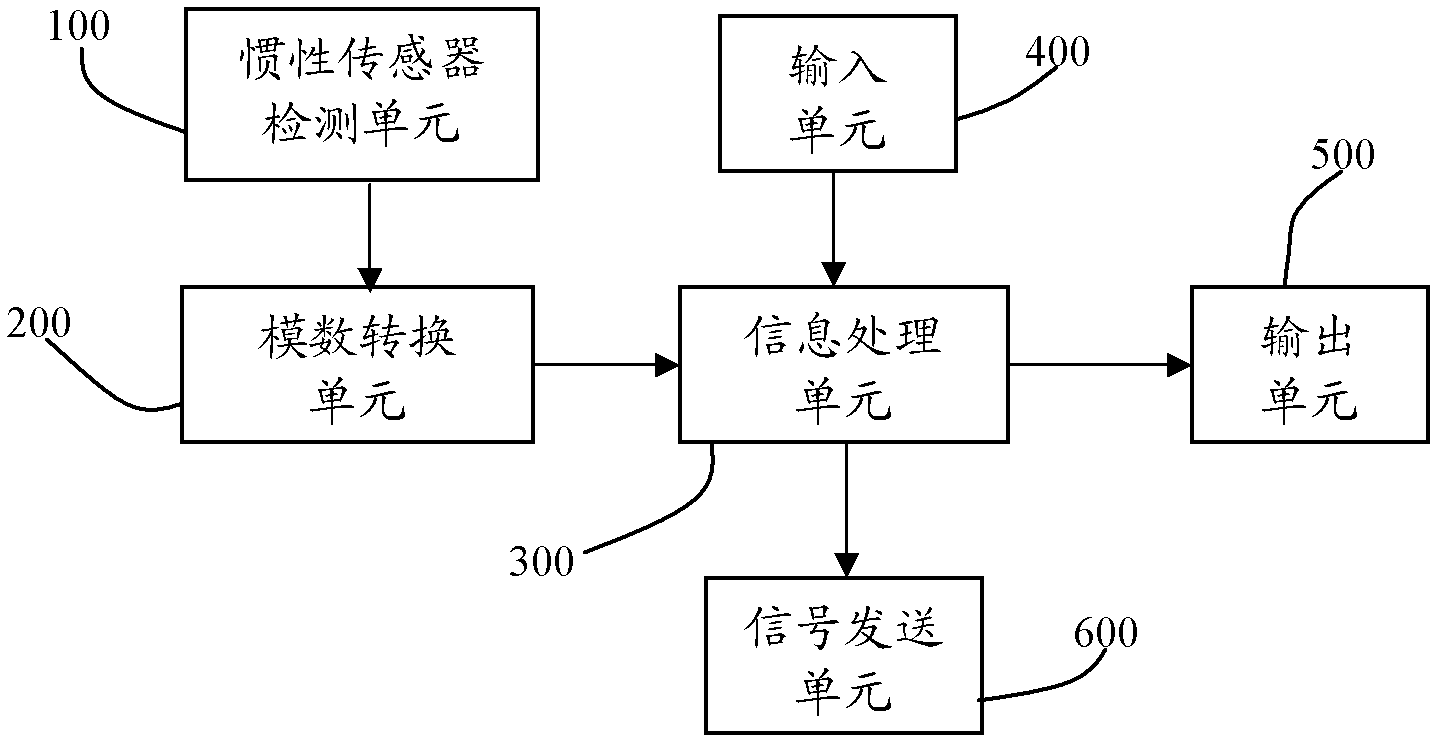

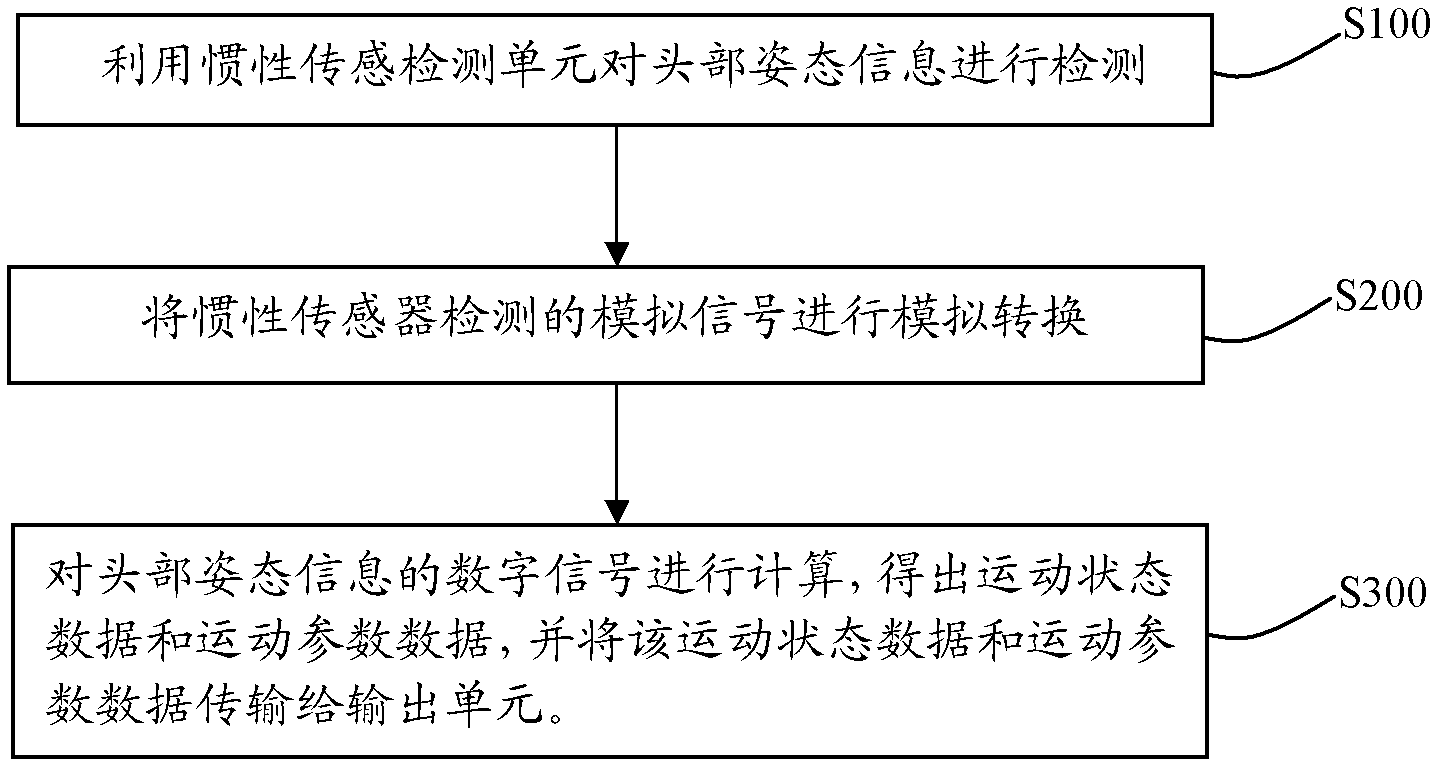

Head posture sensing device and method

Active | CN103076045A | Stable and efficient judgment | Get motion parameters | Measurement devices | Information processing | Head movements

The invention provides a head posture sensing device and a head posture sensing method. The device comprises an inertia sensor detection unit, an analogue-to-digital conversion unit connected with the inertia sensor detection unit and an information processing unit connected with the analogue-to-digital conversion unit, wherein the information processing unit is connected with an input unit and an output unit; the inertia sensor detection unit comprises an inertia sensor, and is used for detecting head posture information, and outputting an analogue signal of the head posture information; and the analogue-to-digital conversion unit comprises an analogue-to-digital converter, and is used for converting the analogue signal detected by the inertia sensor into a digital signal of the head posture information. According to the head posture sensing device and the head posture sensing method, various motion statuses of a head can be detected stably and efficiently, and motion parameters of the head can be acquired.

Owner:NINGBO CHIKEWEI ELECTRONICS CO LTD

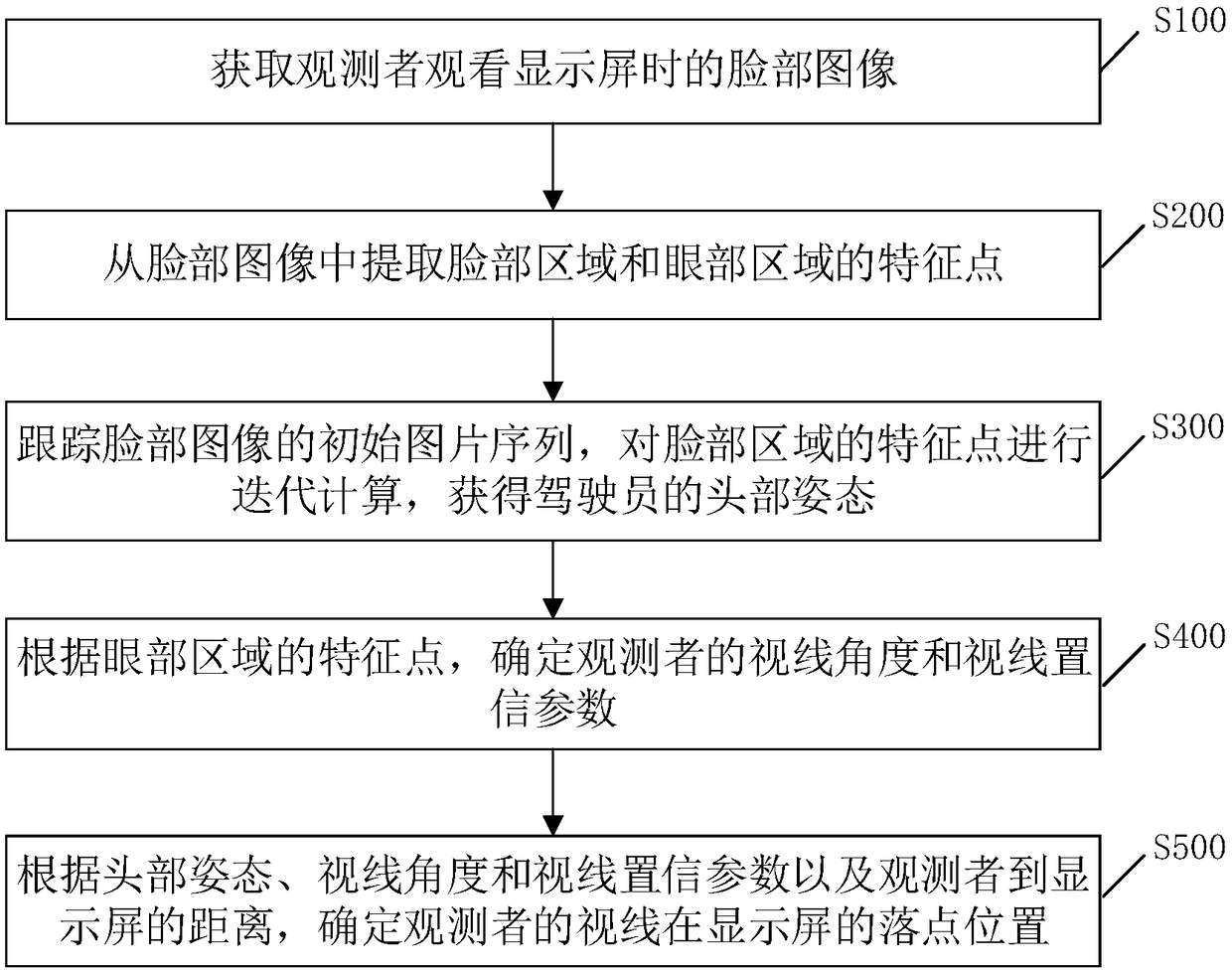

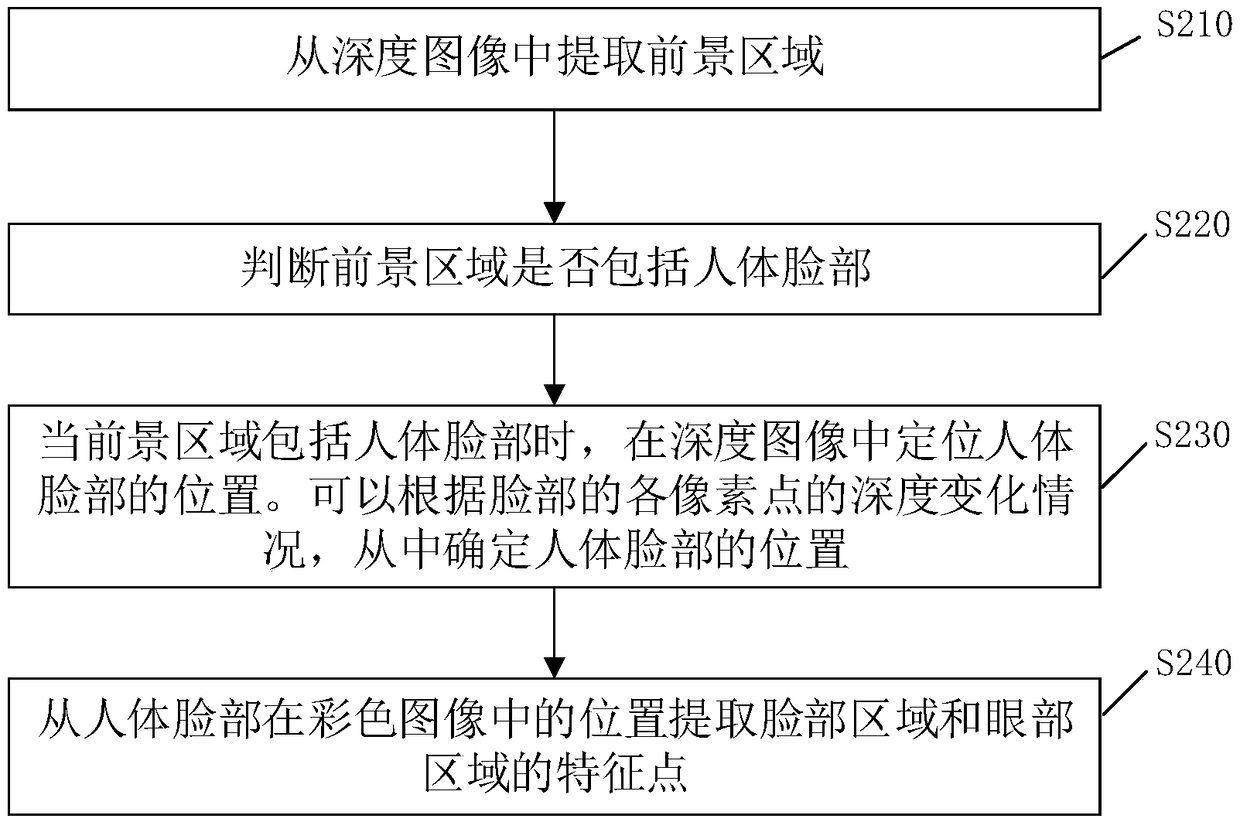

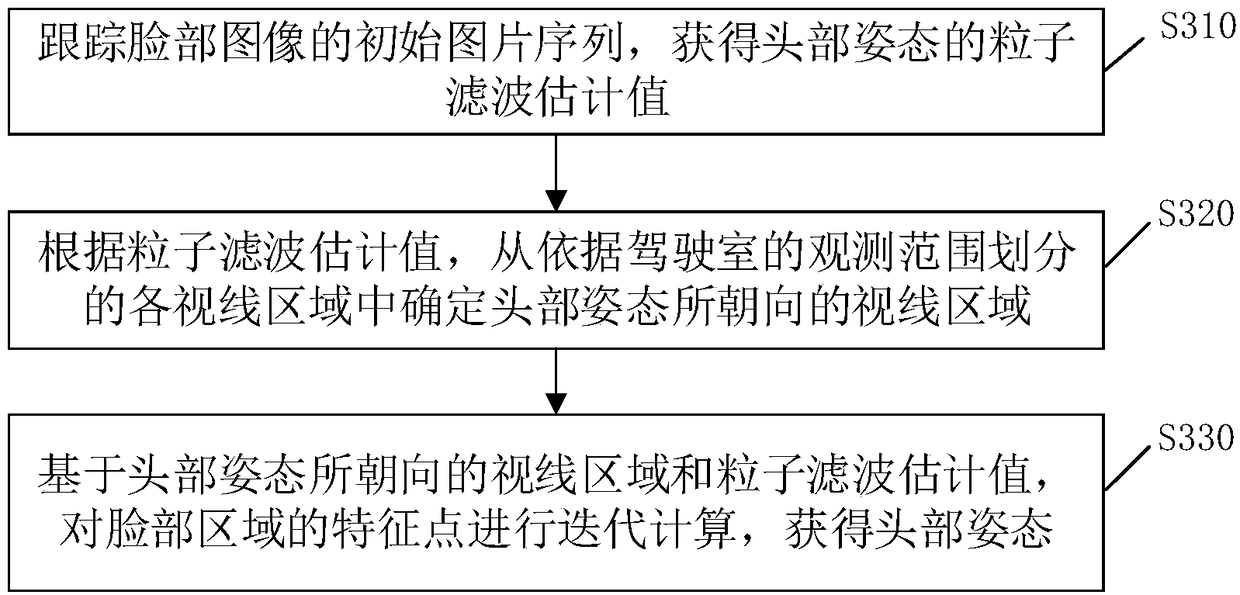

Method, device, storage medium and terminal device for detecting line-of-sight point

Active | CN109271914A | Easy to detect | Improve accuracy | Input/output for user-computer interaction | Acquiring/recognising eyes | Terminal equipment | Computer terminal

The invention provides a method, a device, a storage medium and a terminal device for detecting a line-of-sight point, wherein, the method comprises the following steps: obtaining a face image when an observer looks at a display screen; extracting feature points of a face region and an eye region from the face image; tracking an initial picture sequence of the face image, iteratively calculating a feature point of the face region to obtain a head posture of the observer; determining a line-of-sight angle and a line-of-sight confidence parameter of the observer according to a characteristic point of the eye region; according to the head posture, the line-of-sight angle and the line-of-sight confidence parameter, and the distance of the observer to the display screen, determining the line-of-sight of the observer to be at a point-of-arrival position of the display screen. By adopting the invention, the position of the line of sight falling point of the observer can be conveniently, quickly and accurately determined.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

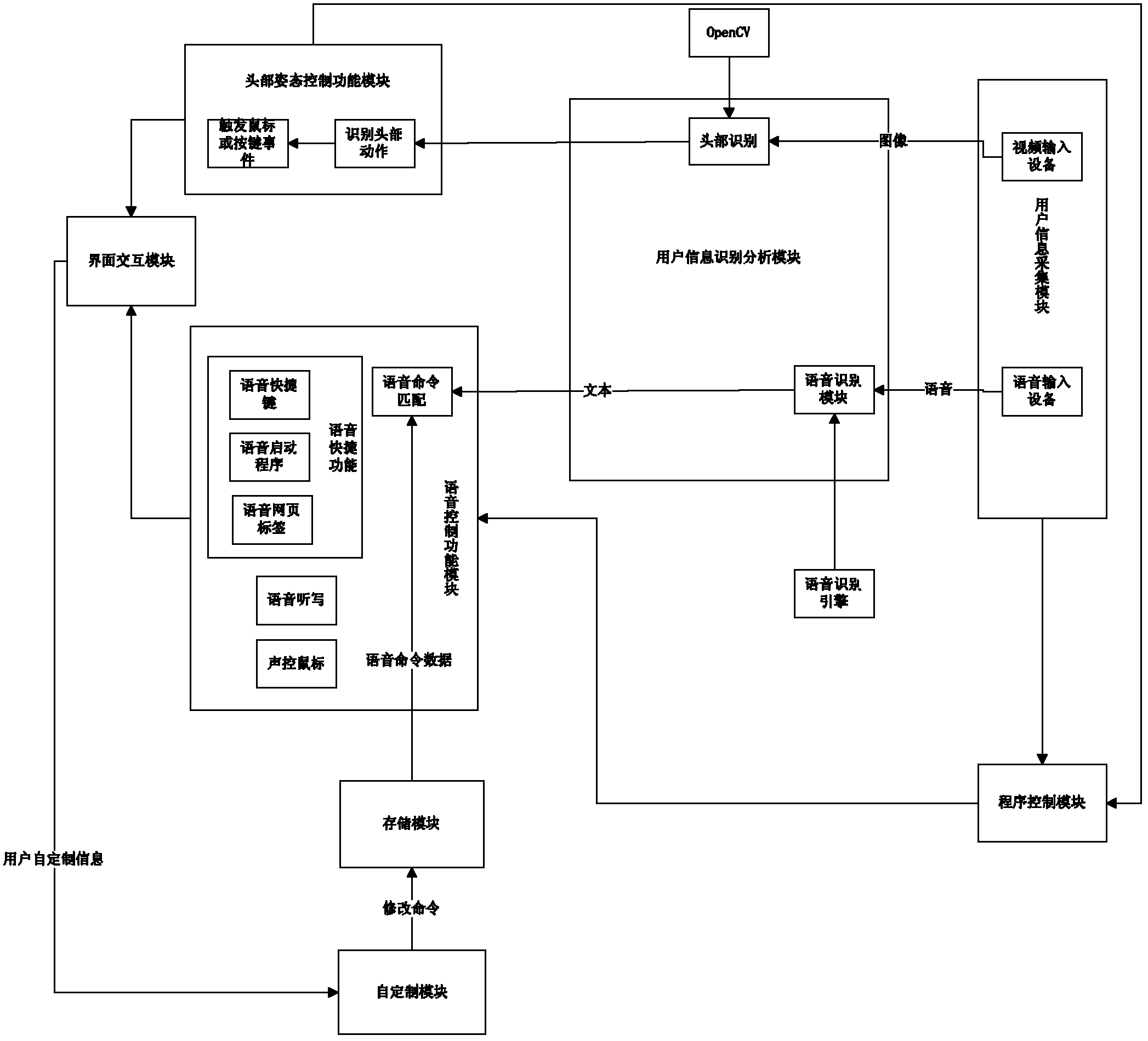

Multidimensional sense man-machine interaction system and method

Inactive | CN102622085A | Rich, simple and fast interactive operations | Improve office efficiency | Input/output for user-computer interaction | Graph reading | Computer users | Multidimensional scaling

The invention discloses a multidimensional sense man-machine interaction system and a multidimensional sense man-machine interaction method, which are based on combine interaction modes of head posture control, voice control, keyboard and mouse operation, etc. The system is composed of the following 8 modules: a user information collecting module, a user information identifying and analyzing module, a head posture control functional module, a voice control functional module, a storage module, an interface interaction module, a self-customizing module and a program control module. The method is realized by the following four processes: collecting user information, identifying and analyzing the user information, realizing head posture control or voice control and outputting a user interface. The system and the method disclosed by the invention have the advantages of large application range, good maintainability, good expandability and the like. In addition, the system provides a novel man-machine interaction process method for a computer user, so that the operation efficiency of a common user can be improved, and great help on neck fitness and learning of old people on computers can be realized.

Owner:BEIHANG UNIV

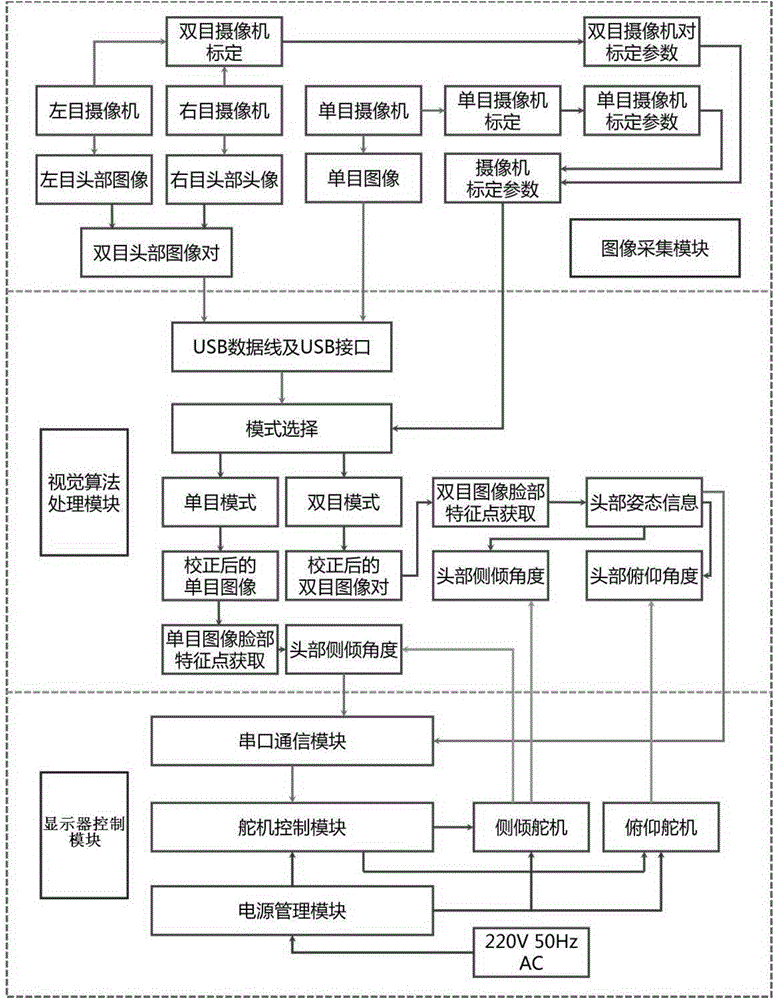

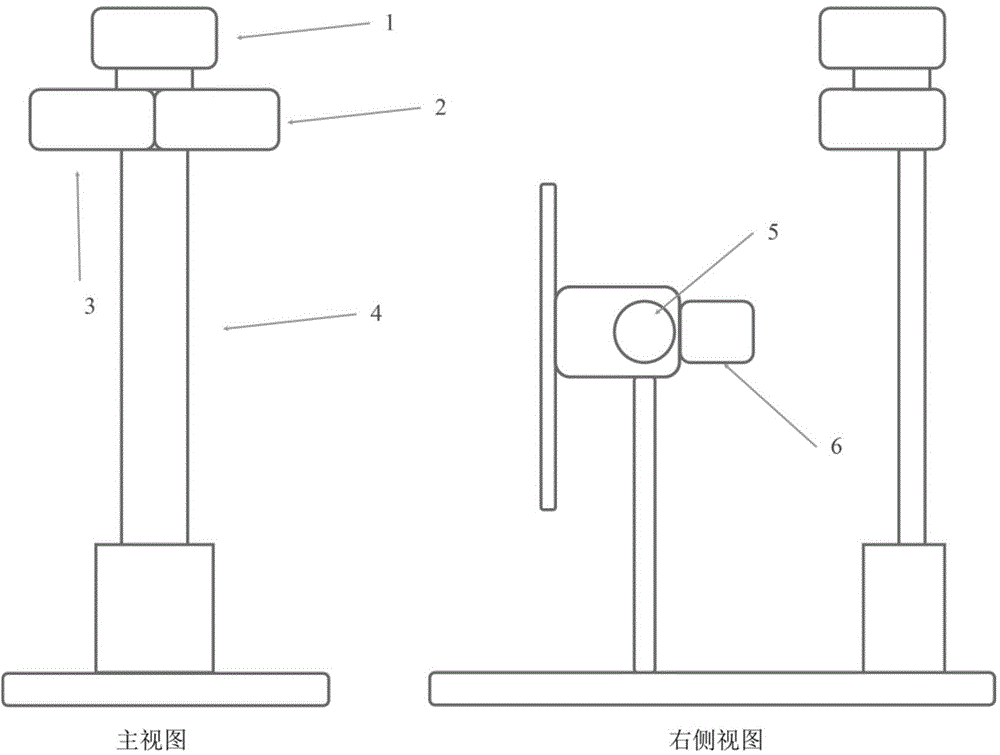

Intelligent display system automatically tracking head posture

Active | CN103558910A | Best sight position | Protect eyesight | Input/output for user-computer interaction | Graph reading | Microcontroller | Vision algorithms

The invention relates to an intelligent display system automatically tracking a head posture. The system can capture the head posture of a human body in real time, a display follows the head of the human body, the display and the head are constantly kept at optimum positions, eye fatigue can be relieved, and myopia can be prevented. The system comprises an image acquisition module, a vision algorithm processing module and a display control module. The image acquisition module is used for acquiring the image of the head of the human body in real time; the vision algorithm processing module is used for firstly preprocessing the image, constantly keeping the head at a vertical position in the image, secondly extracting feature points of a human face by the aid of an ASM (active shape model) algorithm, and finally acquiring the spatial posture of the head according to the triangular surveying principle of elevation angles and side-tipping angles. Head posture information is transmitted to a steering gear control module and then calculated by a single chip microcomputer to form a PWM (pulse width modulation) signal for controlling a steering gear, so that the display follows the head posture at two degrees of freedom of elevation and side-tipping.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
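
The last stage of the pipeline above, turning a head angle into a PWM command for the steering gear (servo), could be sketched as below. The angle range and the 1000 to 2000 microsecond pulse range are assumptions typical of hobby servos, not values from the patent, and `angle_to_pulse_us` is a hypothetical helper.

```python
def angle_to_pulse_us(angle_deg: float,
                      min_angle: float = -90.0,
                      max_angle: float = 90.0,
                      min_pulse_us: float = 1000.0,
                      max_pulse_us: float = 2000.0) -> float:
    """Map a target angle to a servo pulse width, clamping out-of-range input."""
    clamped = max(min_angle, min(max_angle, angle_deg))
    fraction = (clamped - min_angle) / (max_angle - min_angle)
    return min_pulse_us + fraction * (max_pulse_us - min_pulse_us)


# One pulse width per degree of freedom: elevation and side-tipping.
elevation_pulse = angle_to_pulse_us(0.0)
side_tip_pulse = angle_to_pulse_us(120.0)  # clamped to the 90 degree limit
```

Clamping keeps a noisy or extreme pose estimate from driving the servo past its mechanical limits.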

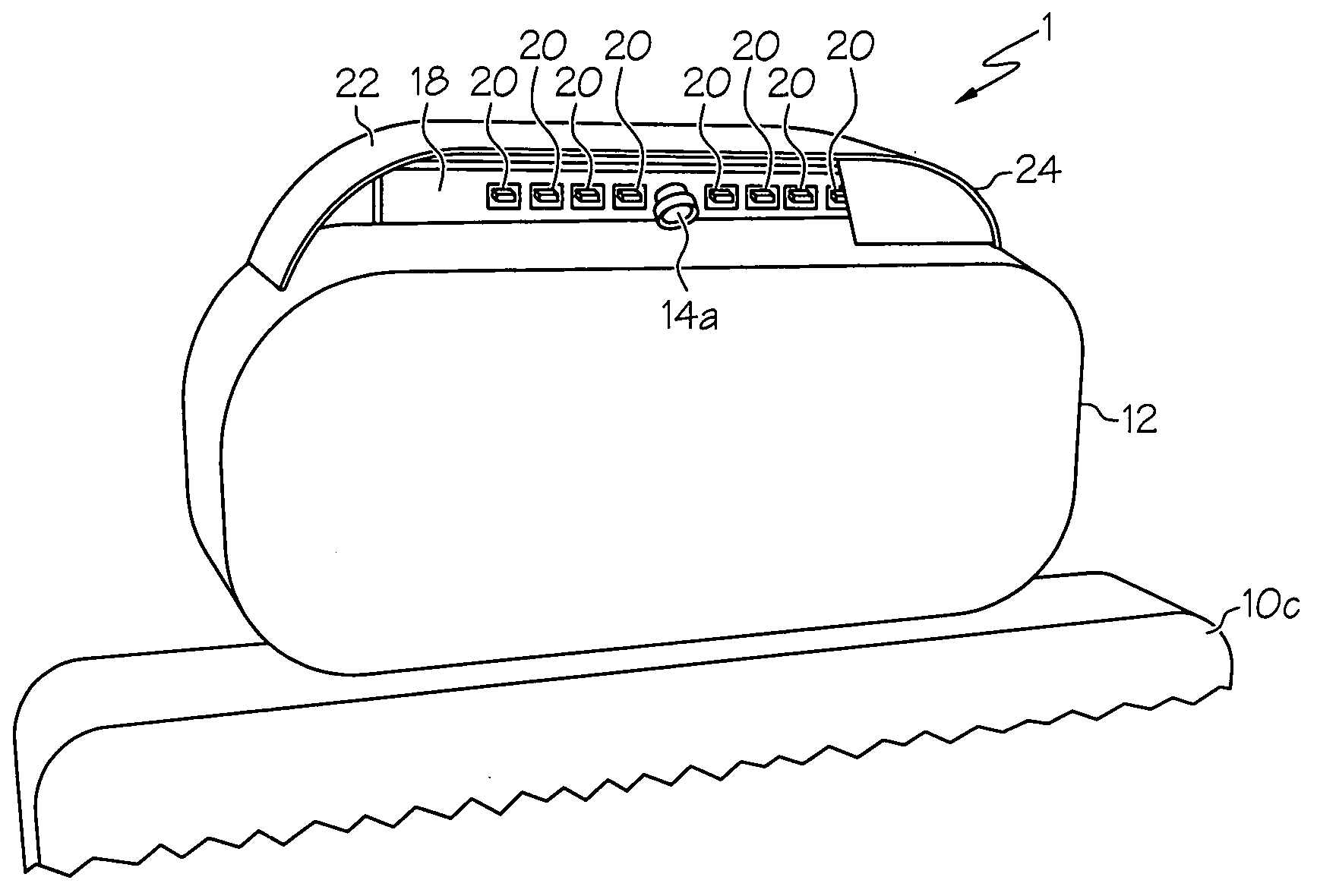

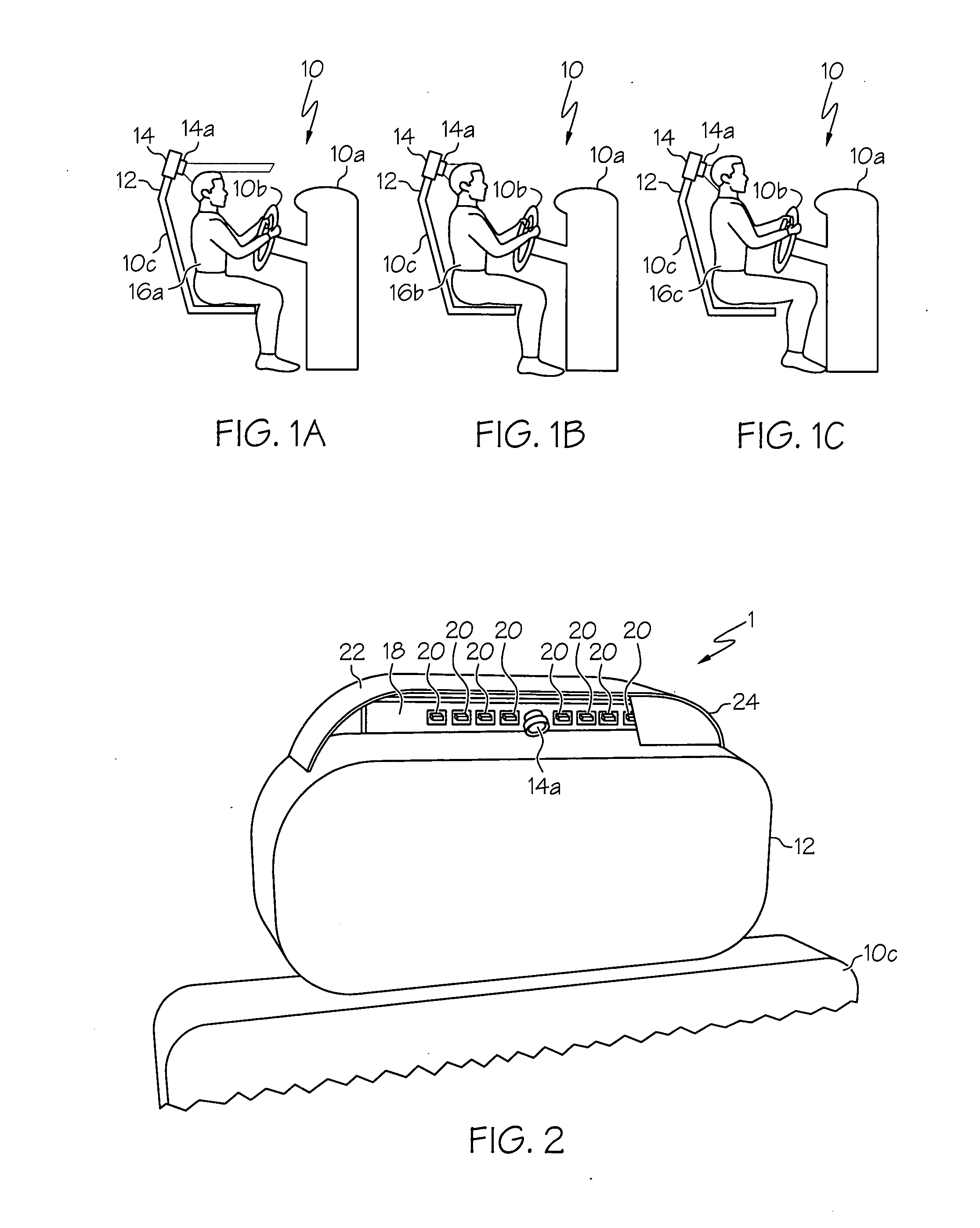

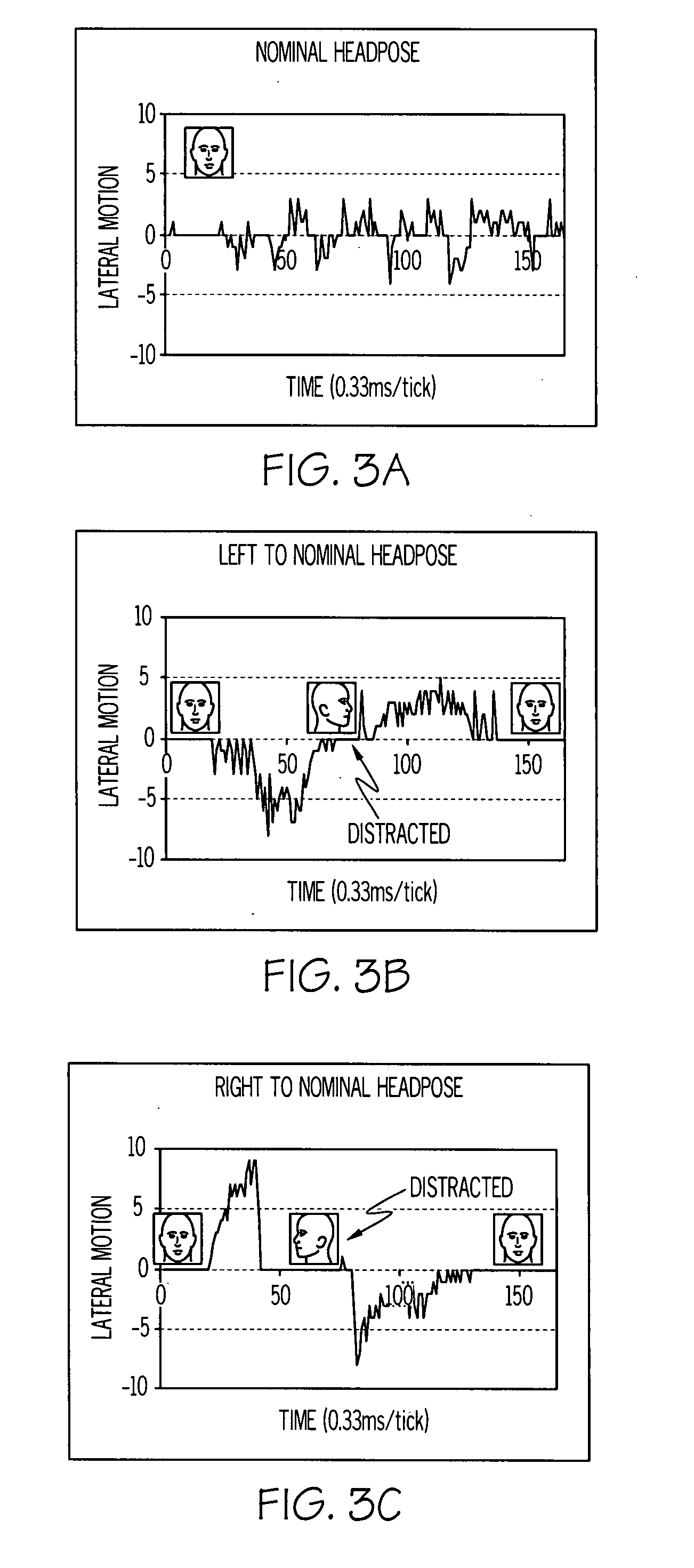

Method and apparatus for assessing head pose of a vehicle driver

The head pose of a motor vehicle driver with respect to a vehicle frame of reference is assessed with a relative motion sensor positioned rearward of the driver's head, such as in or on the headrest of the driver's seat. The relative motion sensor detects changes in the position of the driver's head, and the detected changes are used to determine the driver's head pose, and specifically, whether the head pose is forward-looking (i.e., with the driver paying attention to the forward field-of-view) or non-forward-looking. The determined head pose is assumed to be initially forward-looking, and is thereafter biased toward forward-looking whenever driver behavior characteristic of a forward-looking head pose is recognized.

Owner:APTIV TECH LTD

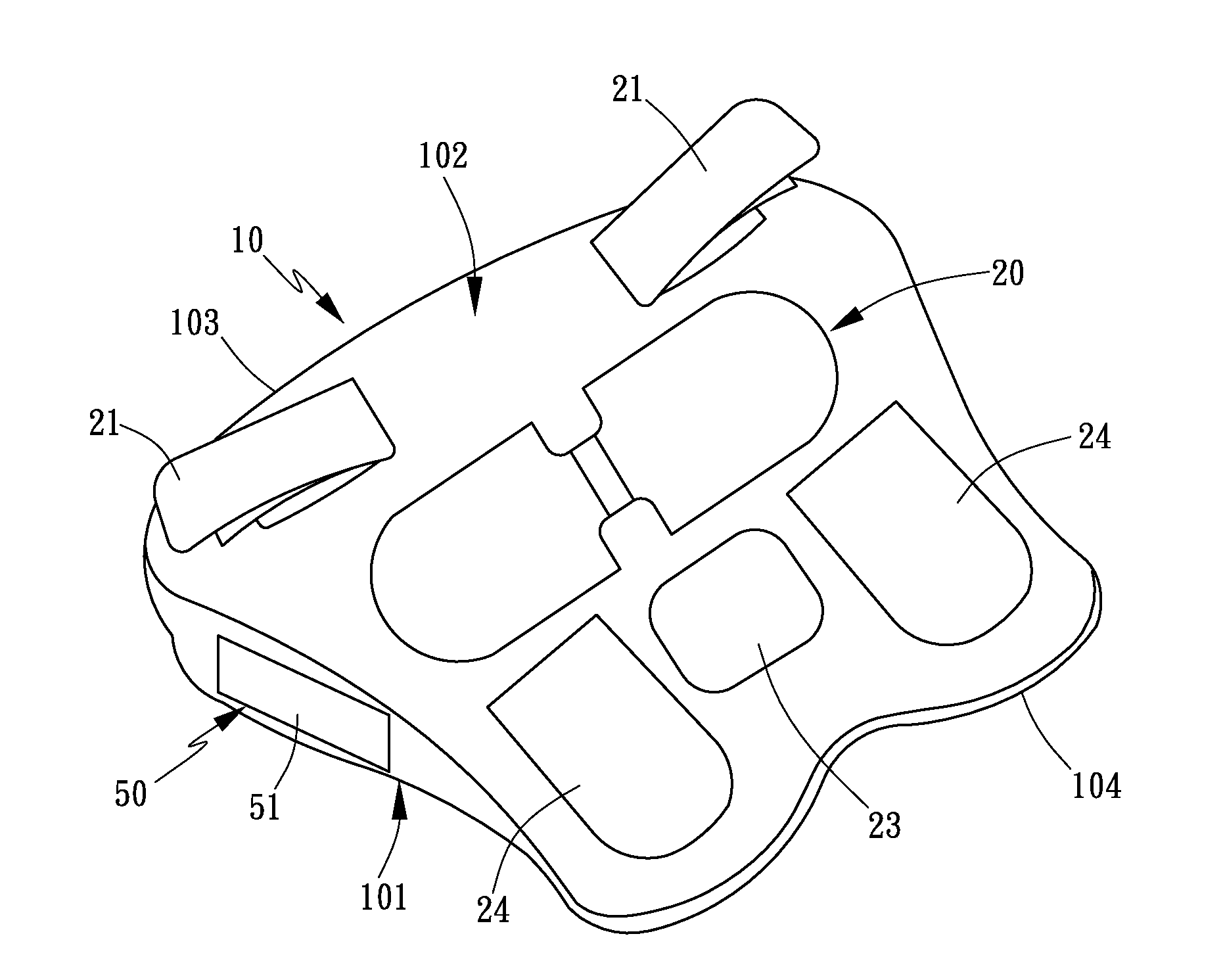

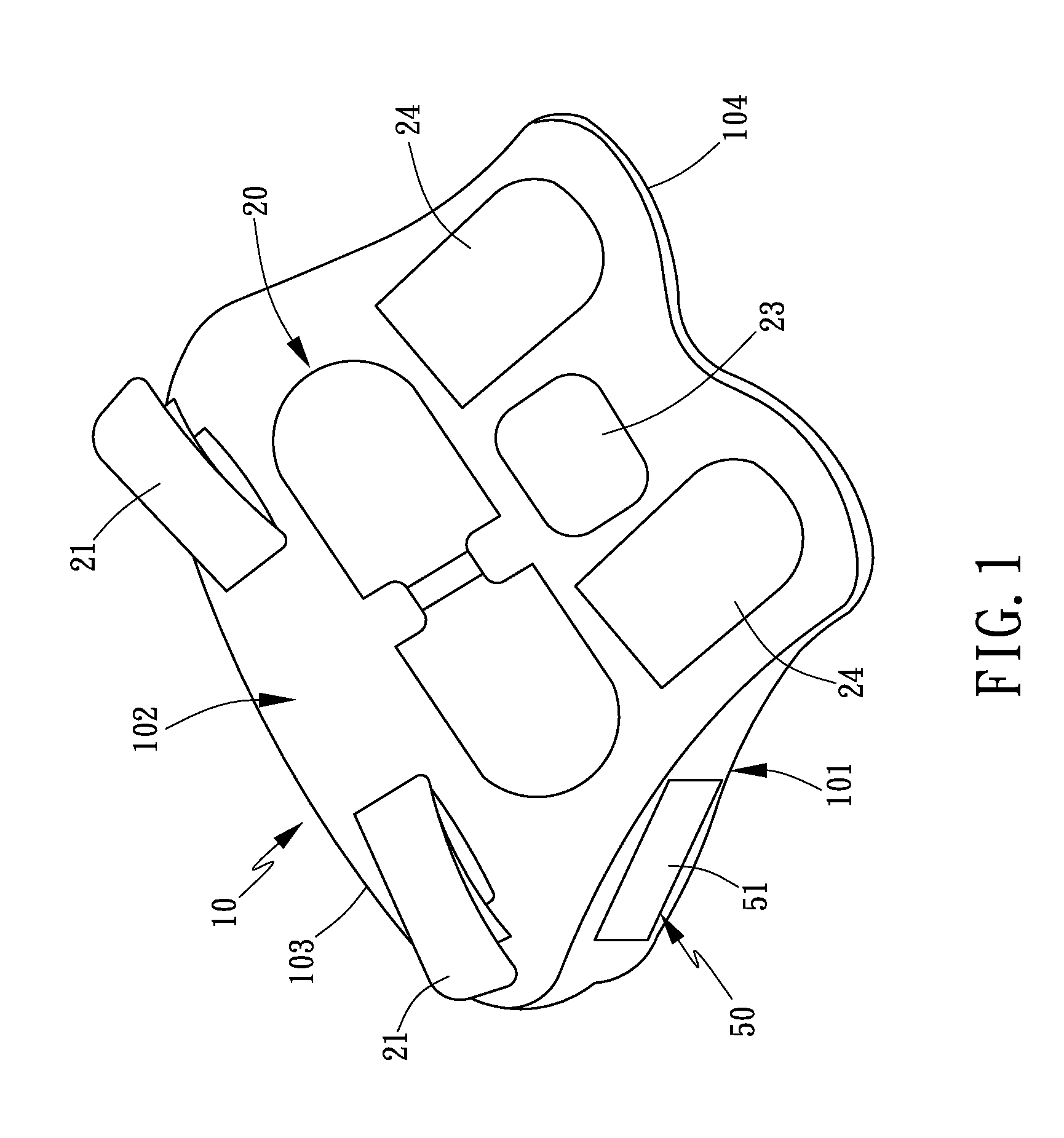

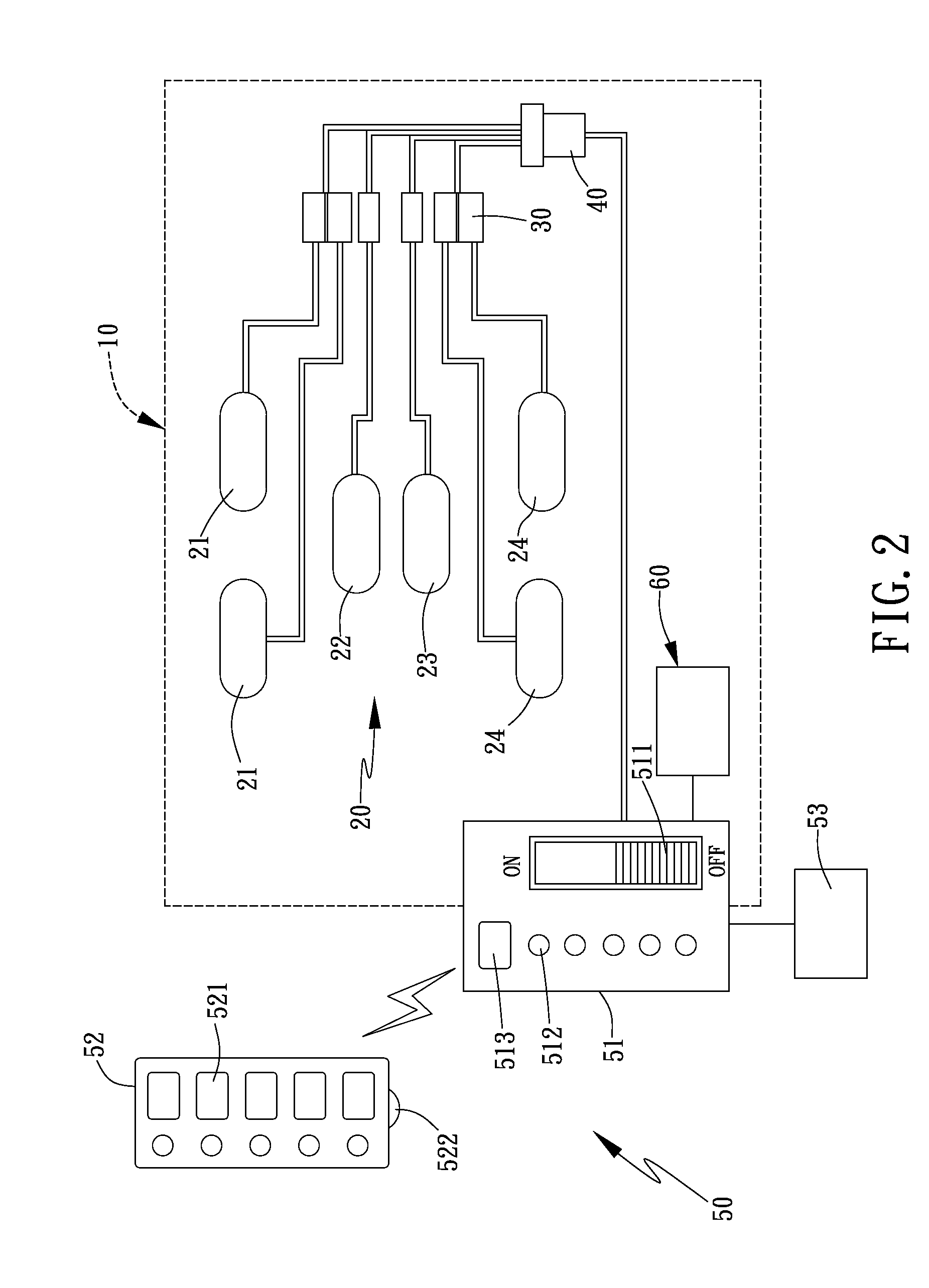

Anti-snoring massage pillow

An anti-snoring massage pillow comprises a pillow body provided with a sound sensing unit, a control unit, an inflator unit, at least one exhaust unit and an air bag system including at least one air bag. When the user who lies on the pillow body snores, the sound of snoring will be sensed by the sound sensing unit, and then the control unit will drive the inflator unit to inflate the air bag system. After the air bag system has been inflated for a certain period, the control unit will stop driving the inflator unit, and the air in the air bag system will be exhausted through the exhaust unit. By such arrangements, the head posture and position of the user can be adjusted by inflation of the air bag system, and the air bag system can further offer a massage to the head of the user during the inflation process.

Owner:XIANG TIAN ELECTRONICS CO LTD

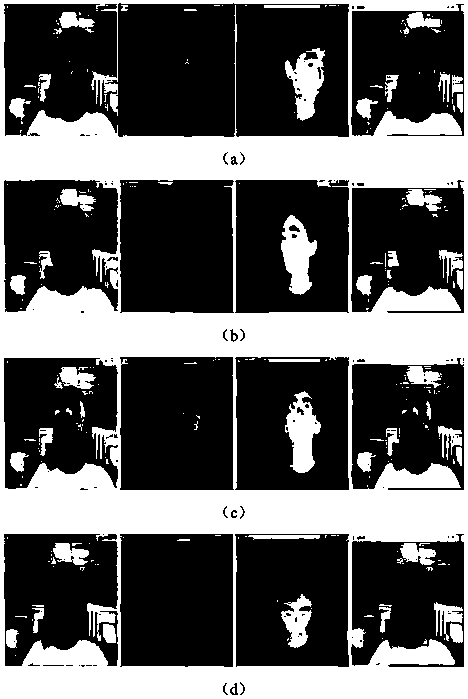

Head posture detection method and system based on RGB-D image

Active | CN111414798A | Accurate detection | Precise registration | Image enhancement | Image analysis | Point cloud | Rgb image

The invention discloses a head posture detection method based on an RGB-D image, and the method comprises the following steps: (1) collecting a head posture depth image and an RGB image, and aligning the head posture depth image and the RGB image to obtain a head posture image; (2) carrying out point cloud calculation on the head posture images aligned in the step (1) to obtain to-be-detected head point cloud data; and (3) substituting the point cloud data in the step (2) into the three-dimensional standard head model to be registered with the point cloud of the three-dimensional standard head model to complete head posture detection. According to the algorithm, the human head posture can be accurately detected in a diagnosis and treatment room environment with a uniform and sufficient light source, and the posture estimation robustness when the head posture angle is large is improved.

Owner:SHENYANG POLYTECHNIC UNIV

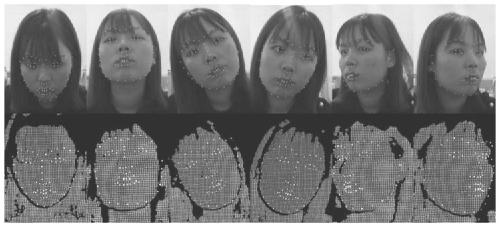

Human face positioning and head gesture identifying method based on multiple features harmonization

Inactive | CN1601549A | Improve robustness | Fast operation | Image enhancement | Character and pattern recognition | Head movements | Pattern recognition

The method includes the following steps: determining the motion posture of the head; initially locating the human face; pre-estimating the head posture; and determining the position and posture of the head. The invention discloses an effective algorithm for locating the human face and estimating its posture, suitable for analyzing face motion in moving scenes under variable illumination. Its advantages are strong robustness, fast calculation, and independence from the specific person. The algorithm was validated on an intelligent wheelchair platform and showed good results. Its high versatility allows the invention to be applied to many face-related systems. The invention is a key technology for realizing intelligent man-machine interaction.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

Multi-index fusion-based driver fatigue detection method

Inactive | CN108875642A | Fatigue state detection | Overcoming distractions | Acquiring/recognising eyes | Pattern recognition | Normal state

The invention discloses a multi-index fusion-based driver fatigue detection method. The method comprises the steps of: first, acquiring a face image of the driver in a normal state, locating the face, selecting a first face feature point, and determining the driver's eye fatigue judgment threshold from that feature point; next, acquiring a face image of the driver while driving, locating the face, selecting a second face feature point, and calculating the driver's eye fatigue index, mouth fatigue index and head posture fatigue index; and finally, combining the eye fatigue index, the mouth fatigue index and the head posture fatigue index to judge whether the driver is driving while fatigued. The method jointly considers several physiological characteristics of a person in a fatigued state and detects the driver's fatigue through multi-index fusion, so the fatigue state can be detected more accurately, and the susceptibility to disturbance and low identification accuracy of traditional single-index detection methods are effectively addressed.

Owner:CHANGAN UNIV
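
The fusion step described in the abstract above can be illustrated with a simple weighted sum. The abstract does not state how the three indices are combined, so the weighted-sum rule, the weights, the decision threshold, and the name `fuse_fatigue_indices` are all assumptions made for this sketch.

```python
def fuse_fatigue_indices(eye, mouth, head,
                         weights=(0.5, 0.3, 0.2), threshold=0.5):
    """Combine three fatigue indices (each assumed to lie in [0, 1]).

    weights   : hypothetical relative importance of eye, mouth and head
    threshold : hypothetical score at or above which fatigue is reported
    Returns (score, is_fatigued).
    """
    w_eye, w_mouth, w_head = weights
    score = w_eye * eye + w_mouth * mouth + w_head * head
    return score, score >= threshold
```

A single saturated index can already trip the threshold when its weight is large enough, while a weakly weighted index alone cannot. This is one way a fused score can be less sensitive to disturbance in any one cue.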

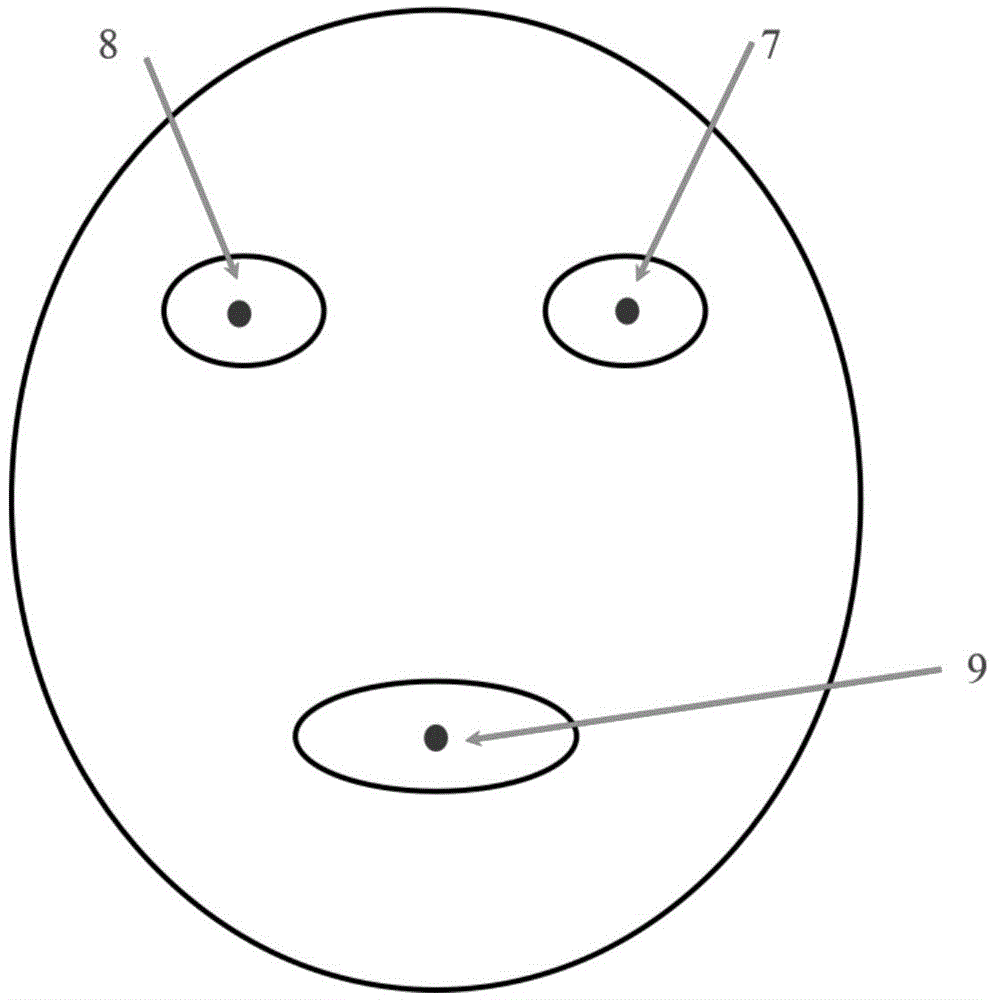

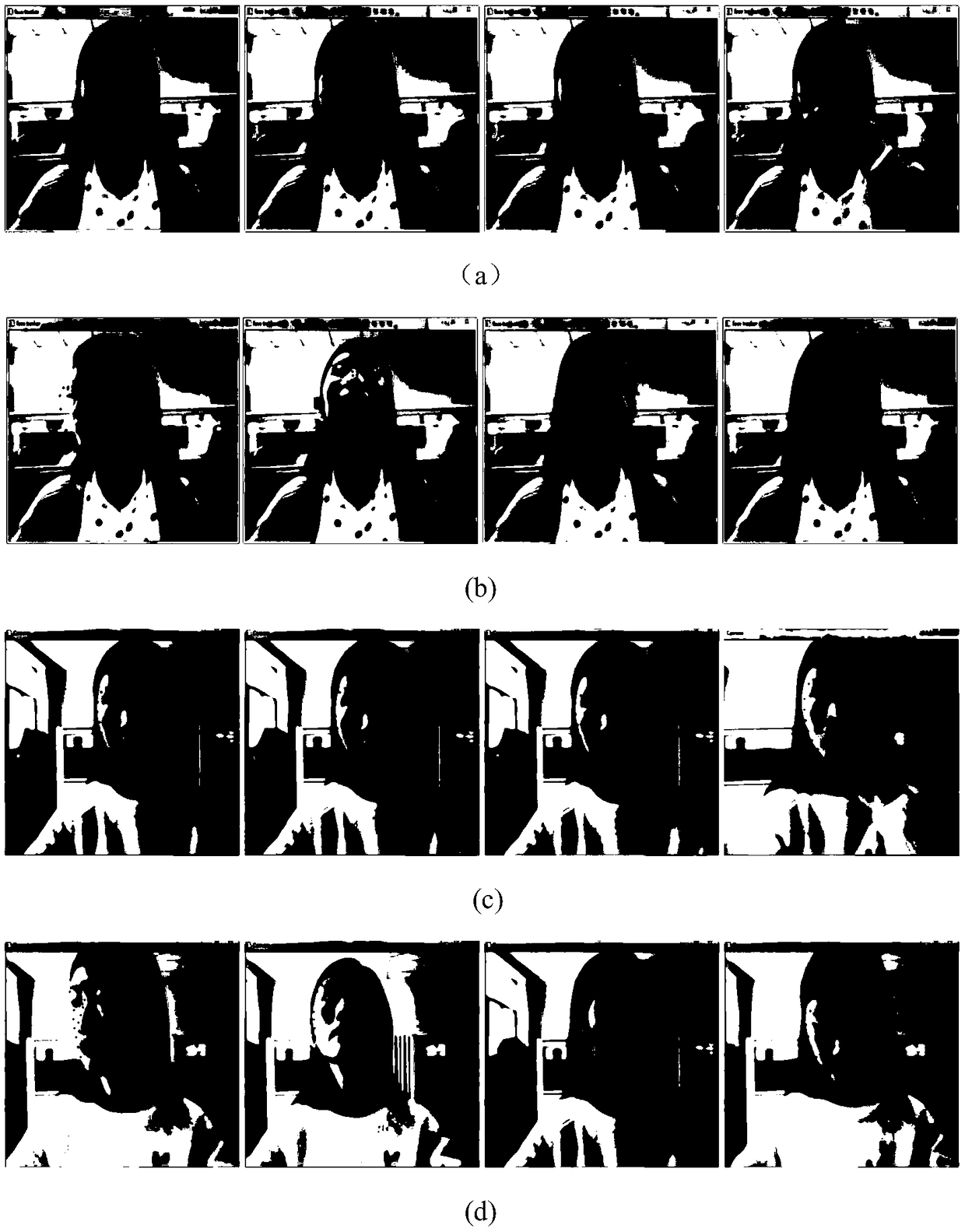

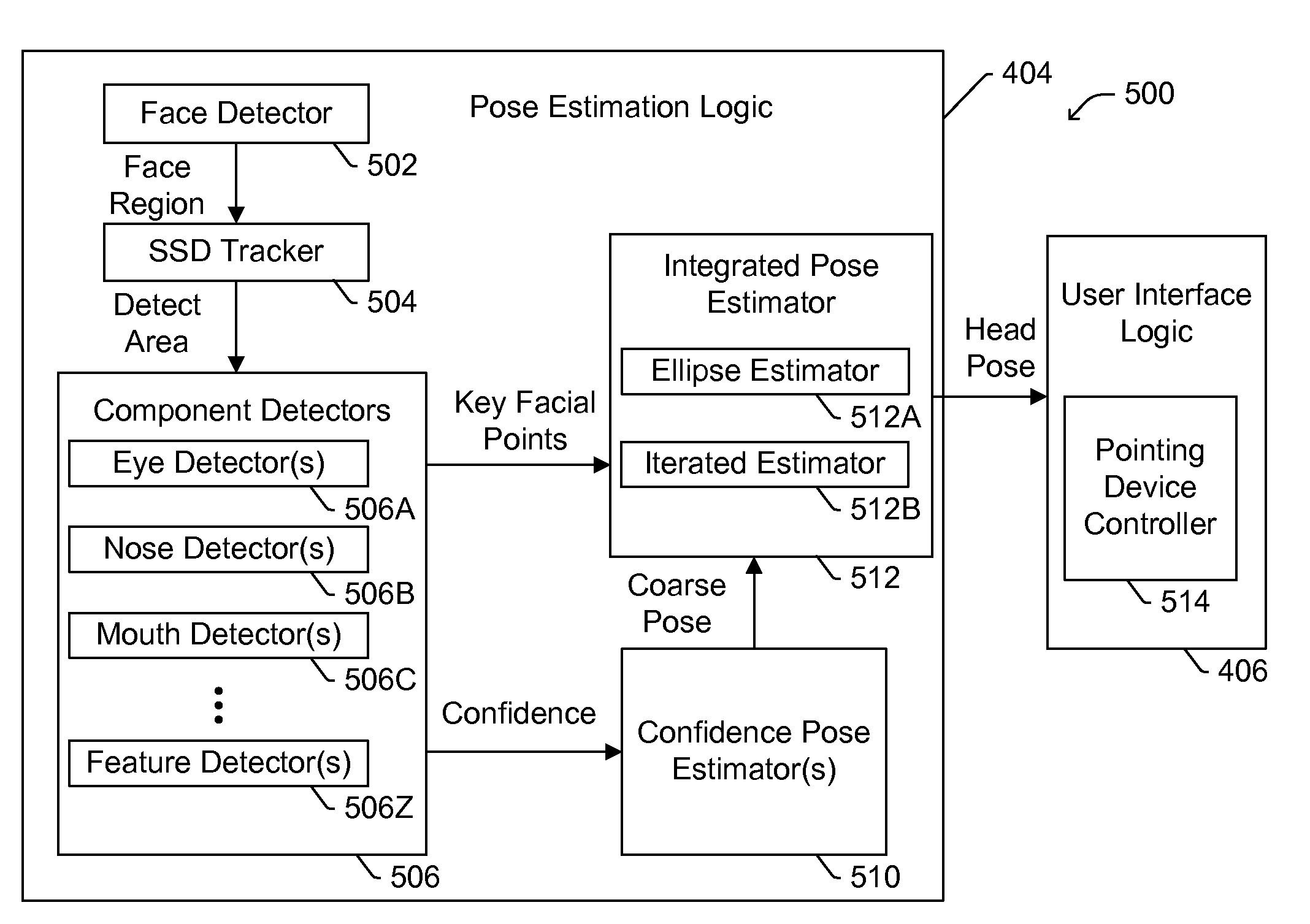

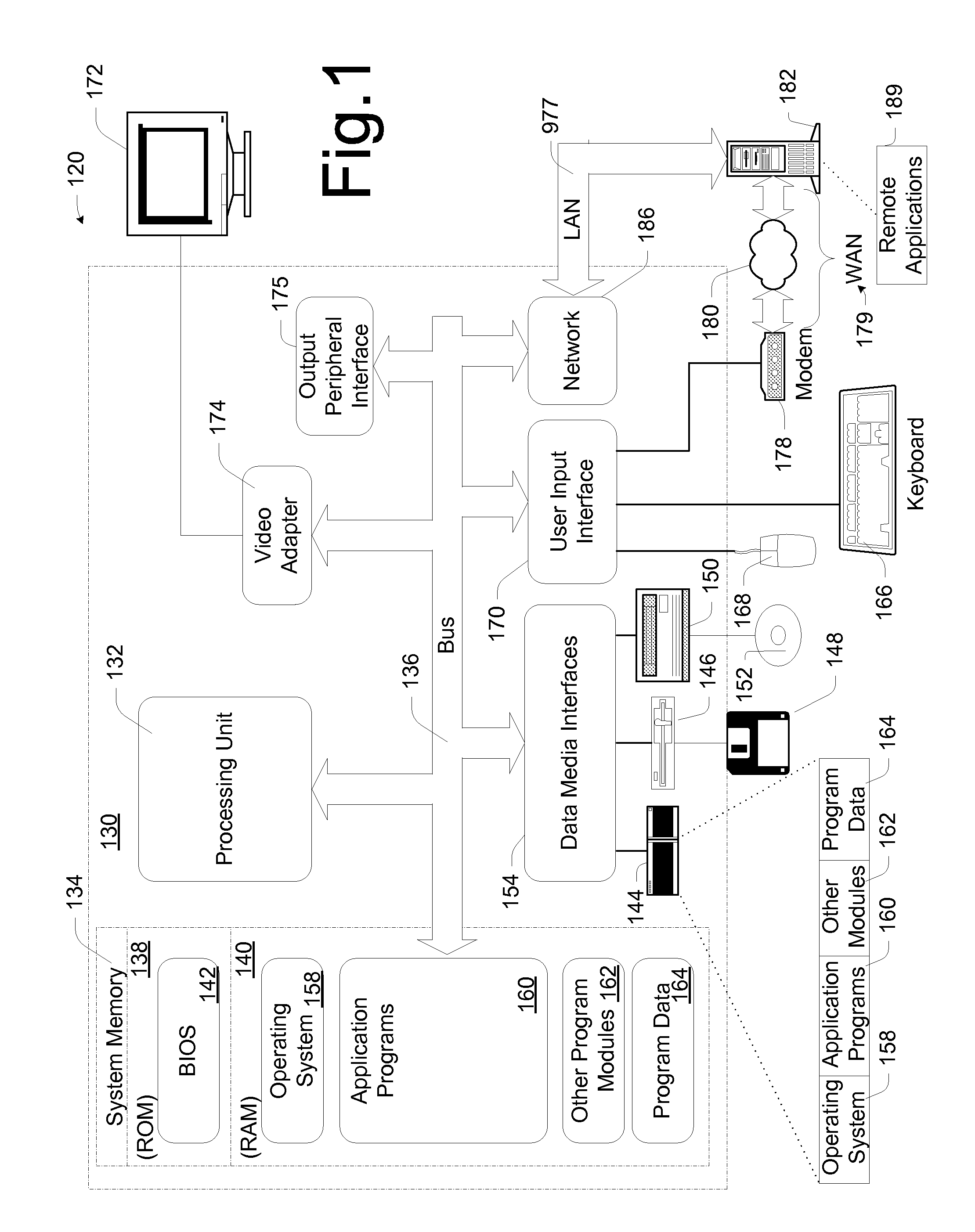

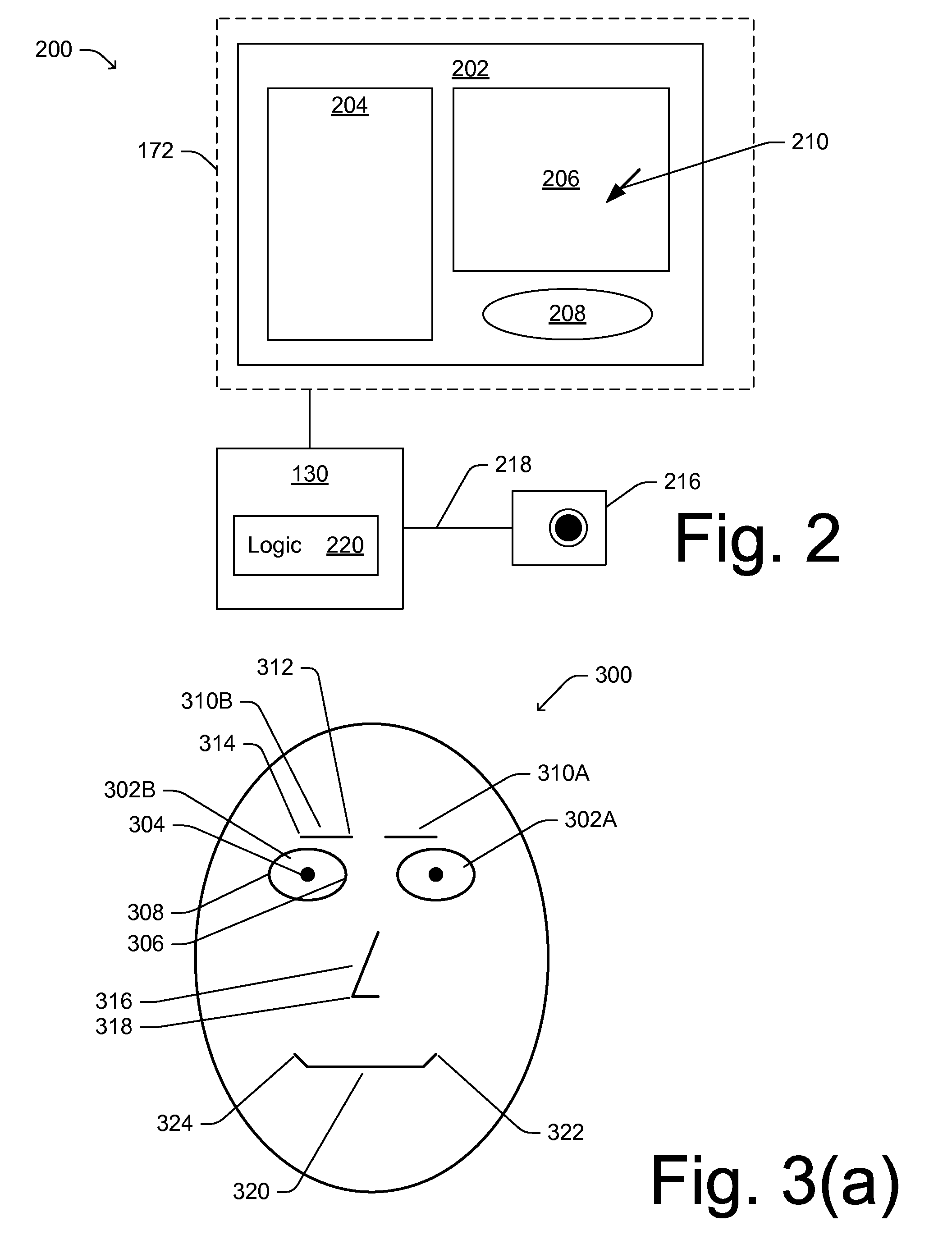

Head Pose Assessment Methods and Systems

Inactive | US20080298637A1 | Effective assessment | Input/output for user-computer interaction | Image enhancement | Graphics | Graphical user interface

Improvements are provided to effectively assess a user's face and head pose such that a computer or like device can track the user's attention towards a display device(s). Then the region of the display or graphical user interface that the user is turned towards can be automatically selected without requiring the user to provide further inputs. A frontal face detector is applied to detect the user's frontal face, and then key facial points such as the left/right eye center, left/right mouth corner, nose tip, etc., are detected by component detectors. The system then tracks the user's head with an image tracker and determines the yaw, tilt and roll angles and other pose information of the user's head through a coarse-to-fine process according to key facial points and/or confidence outputs by a pose estimator.

Owner:MICROSOFT TECH LICENSING LLC

Head pose assessment methods and systems

Inactive | US7391888B2 | Effective assessment | Input/output for user-computer interaction | Image enhancement | Graphics | Graphical user interface

Improvements are provided to effectively assess a user's face and head pose such that a computer or like device can track the user's attention towards a display device(s). Then the region of the display or graphical user interface that the user is turned towards can be automatically selected without requiring the user to provide further inputs. A frontal face detector is applied to detect the user's frontal face, and then key facial points such as the left/right eye center, left/right mouth corner, nose tip, etc., are detected by component detectors. The system then tracks the user's head with an image tracker and determines the yaw, tilt and roll angles and other pose information of the user's head through a coarse-to-fine process according to key facial points and/or confidence outputs by a pose estimator.

Owner:MICROSOFT TECH LICENSING LLC
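
As a rough illustration of how key facial points relate to pose angles, the in-plane roll angle can be estimated from the two detected eye centers. This is a common geometric shortcut, not the coarse-to-fine process claimed in the patent; the function name `roll_from_eyes` and the image coordinate convention (x to the right, y downward) are assumptions.

```python
import math

def roll_from_eyes(left_eye, right_eye):
    """Estimate head roll in degrees from two eye-center pixel coordinates.

    left_eye, right_eye : (x, y) positions of the eye that appears on the
    left and on the right of the image, respectively (assumed convention).
    A level head gives 0; with y pointing down, a positive angle means the
    right-hand eye sits lower in the image.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))
```

Yaw and tilt cannot be recovered from the eye line alone. They would need additional points such as the nose tip and mouth corners, or a learned pose estimator as the abstract describes.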

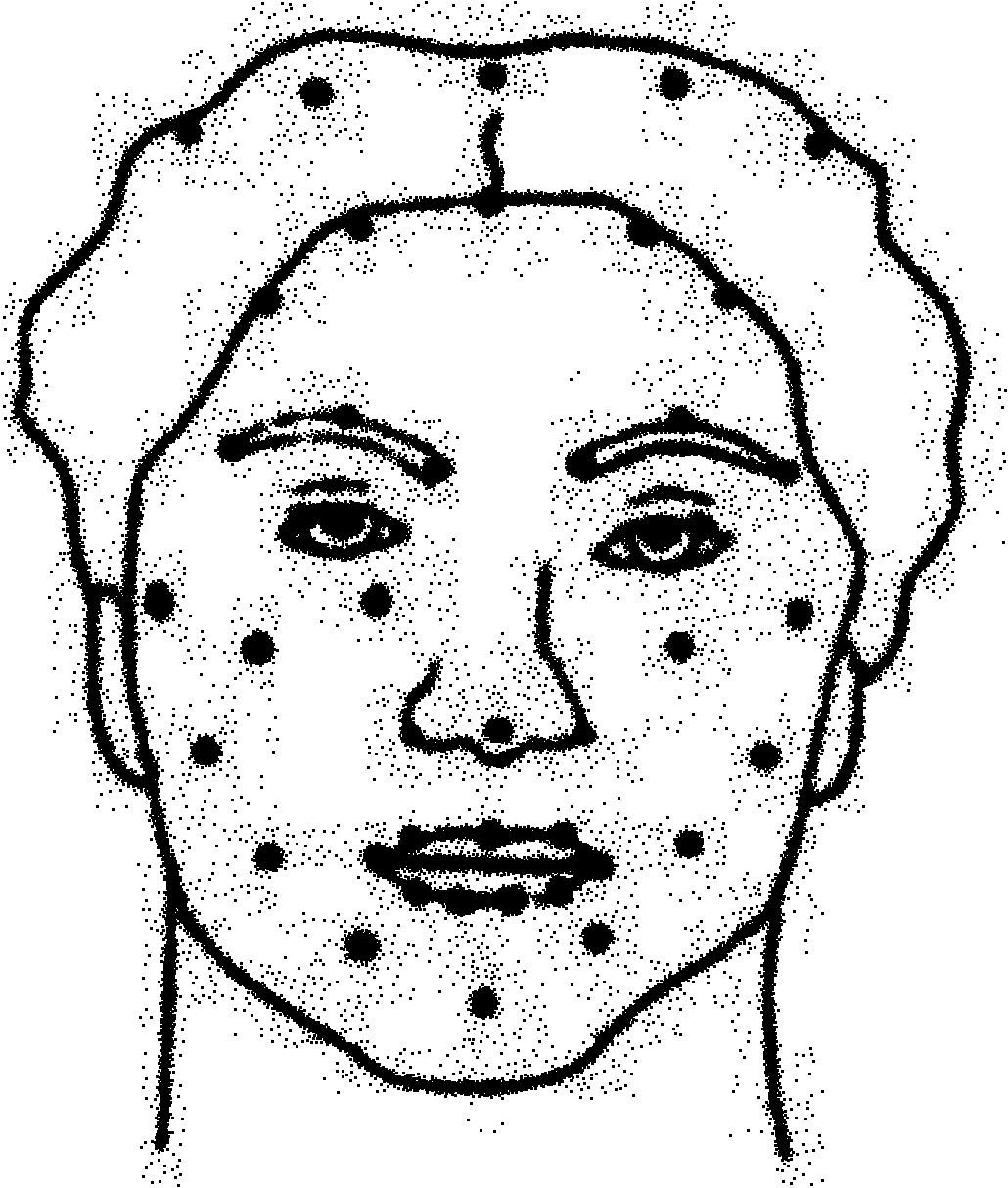

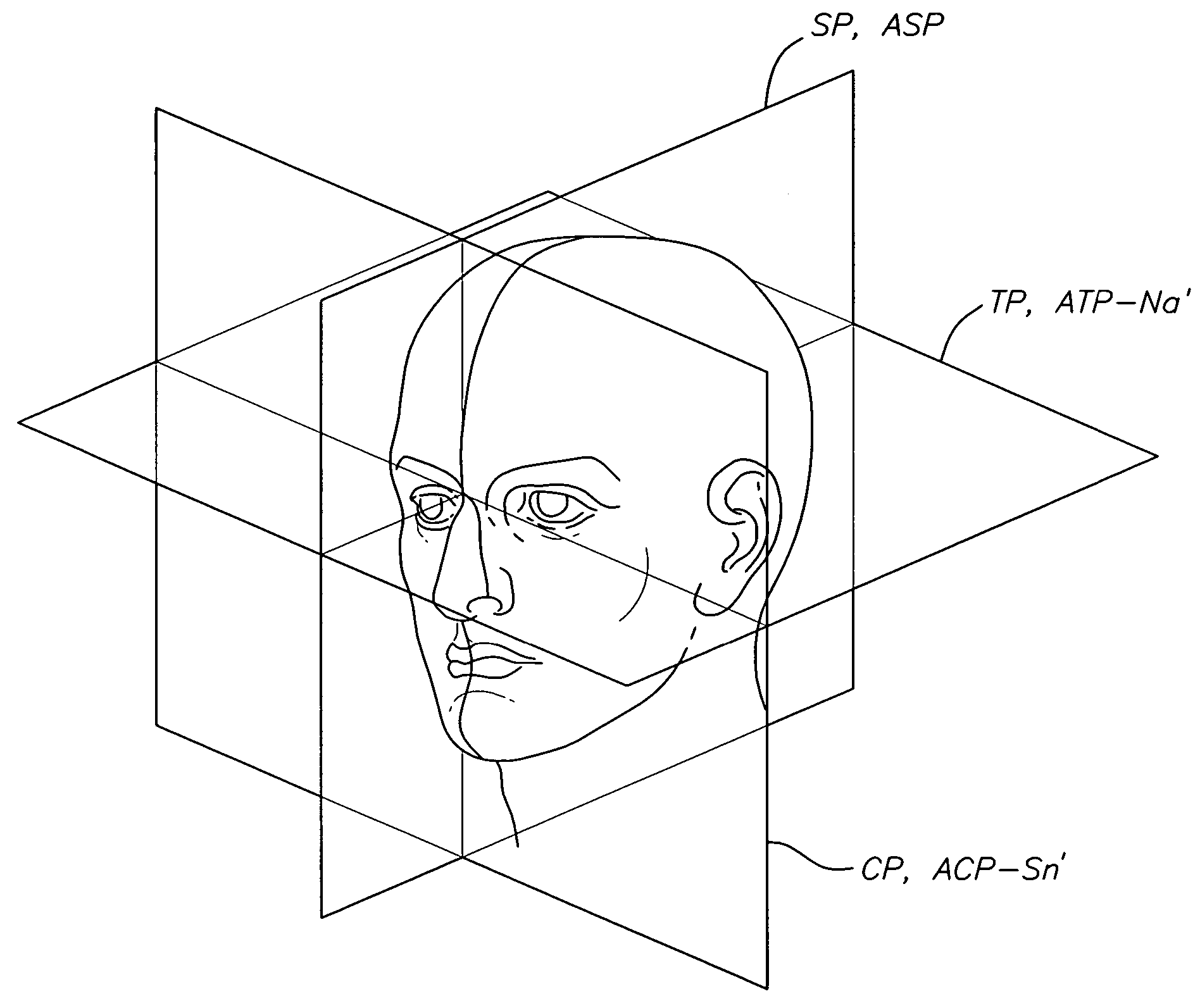

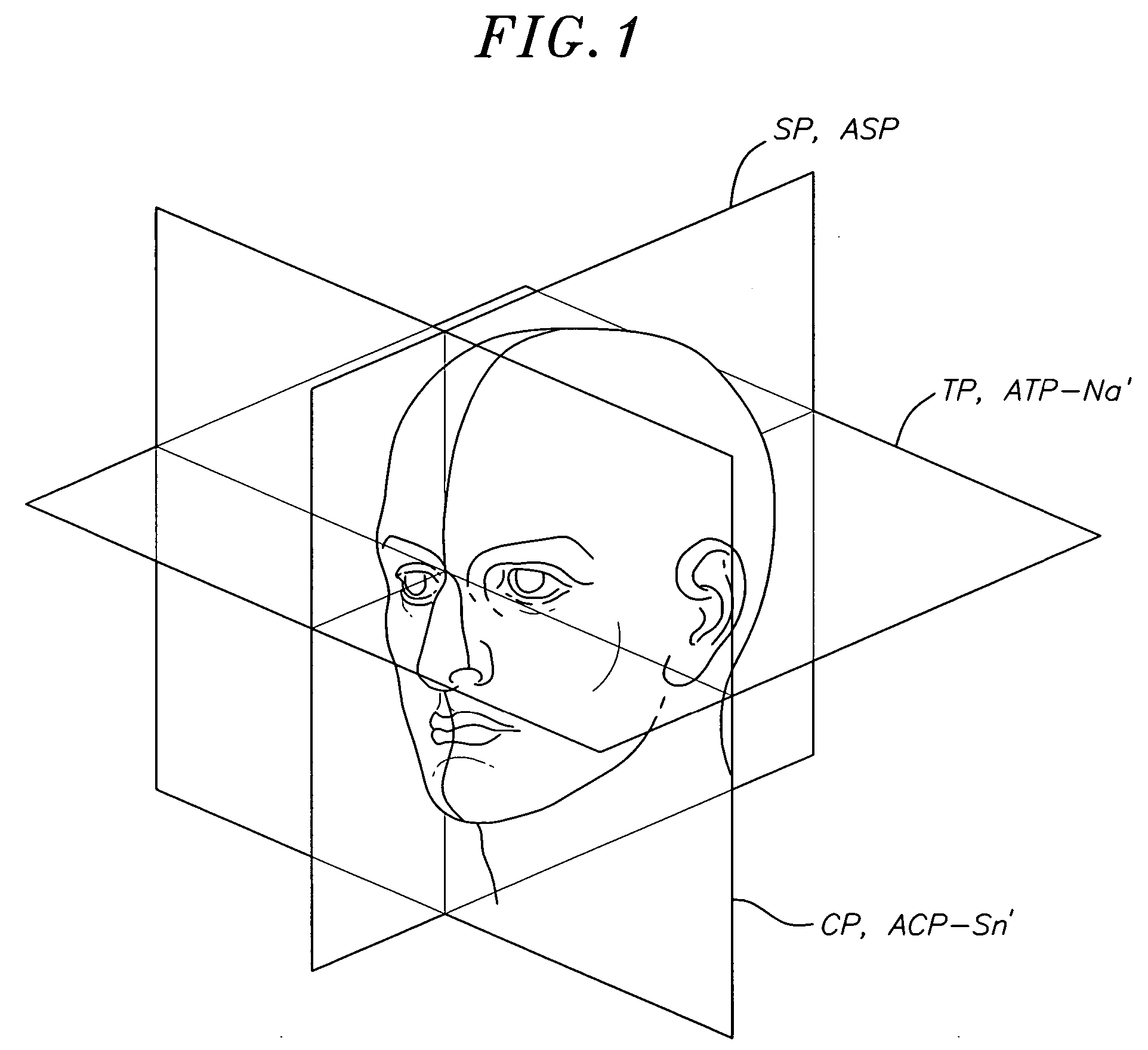

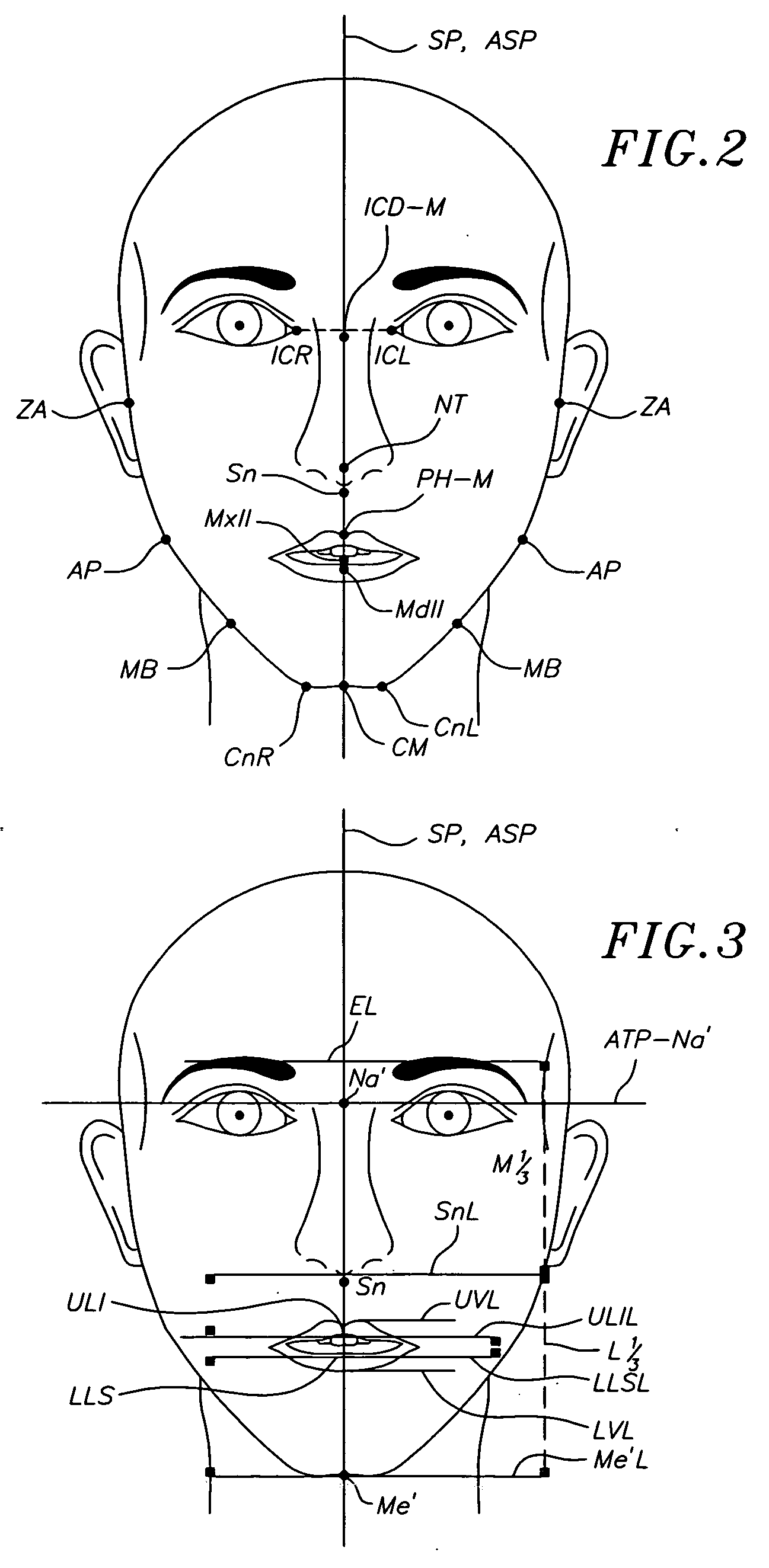

Method for determining and measuring frontal head posture and frontal view thereof

Inactive | US20070106182A1 | Improve balance | Address bad outcomes | Person identification | Character and pattern recognition | Coronal plane | Nose

A method for determining and measuring profile and frontal head posture and front views thereof to establish a 3-D model of a patient's head and face. In the method, a patient places his or her head in a natural head position and establishes a postural sagittal plane. Additionally, the practitioner may move the patient's head to a corrected sagittal plane. If still necessary, an anatomic sagittal plane that comprises the anatomical midline of the patient's face, as seen in a frontal view, is constructed. A profile anatomical coronal plane is located and aligned with the frontal anatomical sagittal plane. Next, an anatomical transverse plane is established through the soft tissue nasion and aligned perpendicularly to the anatomical sagittal and coronal planes. By developing the three planes, a 3-D reference frame is established from which soft tissue and hard tissue landmarks are identified and measured.

Owner:G WILLIAM ARNETT & SALLY A WARNER ARNETT TRUSTEES OF THE WARNER ARNETT TRUST +1
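
The three mutually perpendicular planes in the abstract above define an orthogonal reference frame, which can be sketched with basic vector arithmetic. This is an illustrative construction only: the helper names, the use of Gram-Schmidt to square up the coronal direction, and the choice of the nasion as origin for measurements are assumptions, not details taken from the patent.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(x / n for x in v)

def reference_frame(sagittal_normal, coronal_hint):
    """Build unit normals of the sagittal, coronal and transverse planes.

    coronal_hint is an approximate coronal normal; its component along the
    sagittal normal is removed so the two planes are exactly perpendicular.
    The transverse normal is perpendicular to both by construction.
    """
    s = normalize(sagittal_normal)
    proj = dot(coronal_hint, s)
    c = normalize(tuple(h - proj * si for h, si in zip(coronal_hint, s)))
    t = cross(s, c)
    return s, c, t

def landmark_coords(landmark, nasion, frame):
    """Signed distances of a landmark from the three planes through the nasion."""
    d = tuple(l - n for l, n in zip(landmark, nasion))
    return tuple(dot(d, axis) for axis in frame)
```

Each returned coordinate is the signed distance of the landmark from one plane, which is the kind of measurement a shared reference frame makes comparable across patients.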
