102 results about "Active shape model" patented technology

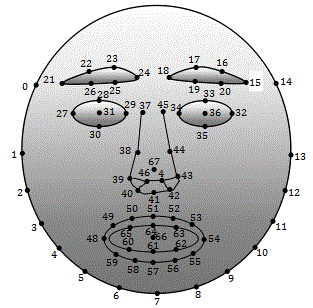

Active shape models (ASMs), developed by Tim Cootes and Chris Taylor in 1995, are statistical models of object shape that iteratively deform to fit an example of the object in a new image. The shape of an object is represented by a set of points controlled by the shape model. A point distribution model (PDM), which is a statistical shape model, constrains the shapes to vary only in ways seen in a training set of labelled examples. The ASM algorithm aims to match the model to a new image.
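As a minimal sketch of how a point distribution model can constrain a candidate shape, assume an already trained model in which a shape is written as the mean shape plus a weighted sum of orthonormal modes, and each weight is clamped to a few standard deviations of the training data. The function name, the limit `k=3.0` (a commonly used choice) and the toy numbers are assumptions made for illustration, not details from this page.

```python
import math

def constrain_shape(shape, mean, modes, eigenvalues, k=3.0):
    """Project a shape onto the model modes and clamp each parameter.

    shape, mean: flat lists [x0, y0, x1, y1, ...]
    modes: list of orthonormal mode vectors (same length as mean)
    eigenvalues: variance of the training set along each mode
    k: limit in standard deviations (3 is assumed here)
    """
    diff = [s - m for s, m in zip(shape, mean)]
    params = []
    for mode, lam in zip(modes, eigenvalues):
        b = sum(d * p for d, p in zip(diff, mode))   # b_i = p_i . (x - mean)
        limit = k * math.sqrt(lam)
        params.append(max(-limit, min(limit, b)))    # clamp to the plausible range
    # Rebuild the shape from the clamped parameters: x = mean + sum_i b_i * p_i
    fitted = list(mean)
    for b, mode in zip(params, modes):
        fitted = [f + b * p for f, p in zip(fitted, mode)]
    return params, fitted

# Toy model: two points, one mode that moves the second point along x.
mean = [0.0, 0.0, 1.0, 0.0]
modes = [[0.0, 0.0, 1.0, 0.0]]
eigenvalues = [0.04]   # standard deviation 0.2, so |b| is limited to 0.6
params, fitted = constrain_shape([0.0, 0.0, 3.0, 0.5], mean, modes, eigenvalues)
```

In this toy case the candidate shape lies far outside the training variation, so the clamped result stays close to the mean shape instead of following the outlier.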

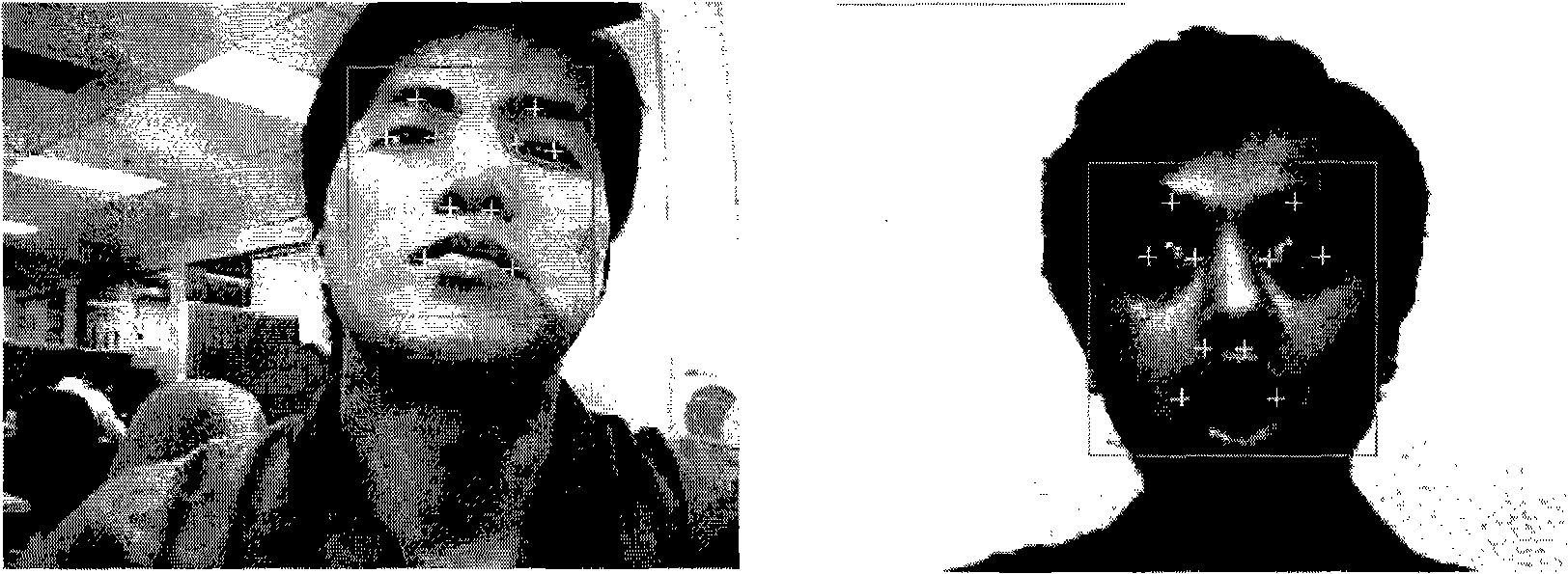

Video-based 3D human face expression cartoon driving method

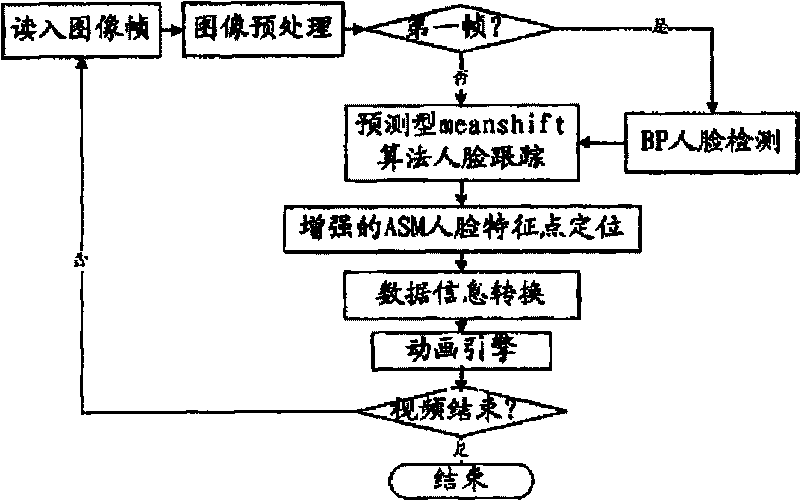

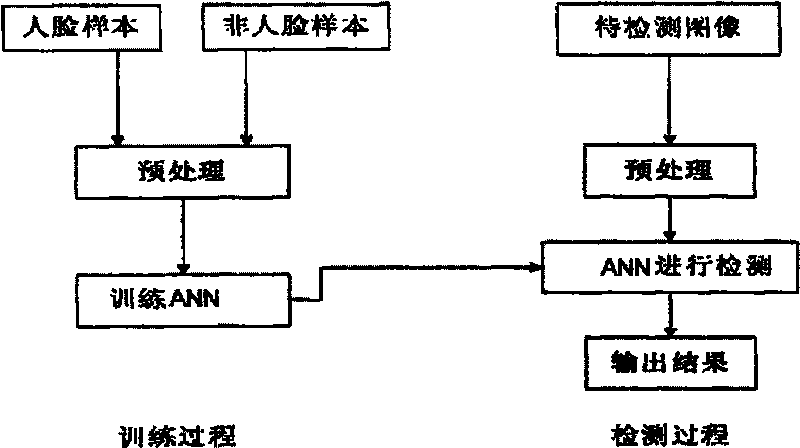

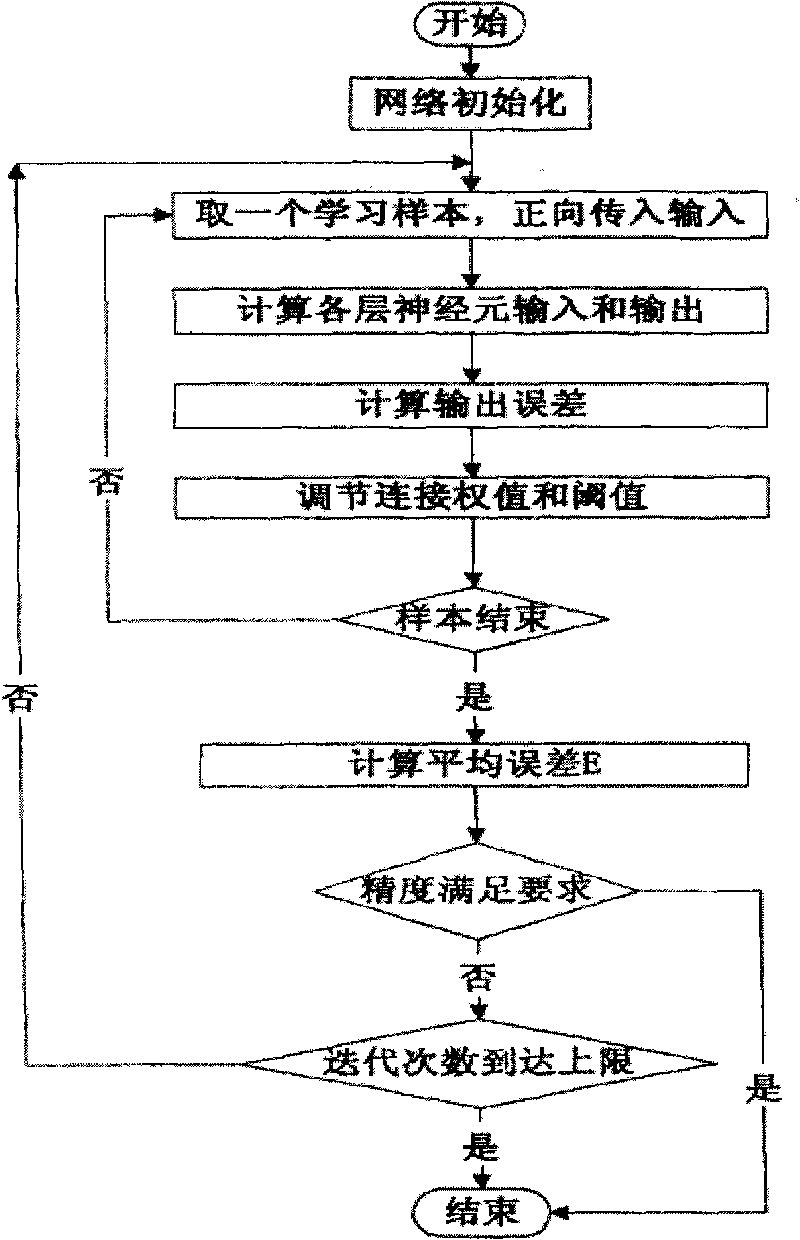

Inactive | CN101739712A | Sufficient prior knowledge | Good tracking result | Character and pattern recognition | 3D-image rendering | Face detection | Pattern recognition

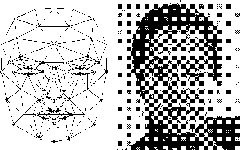

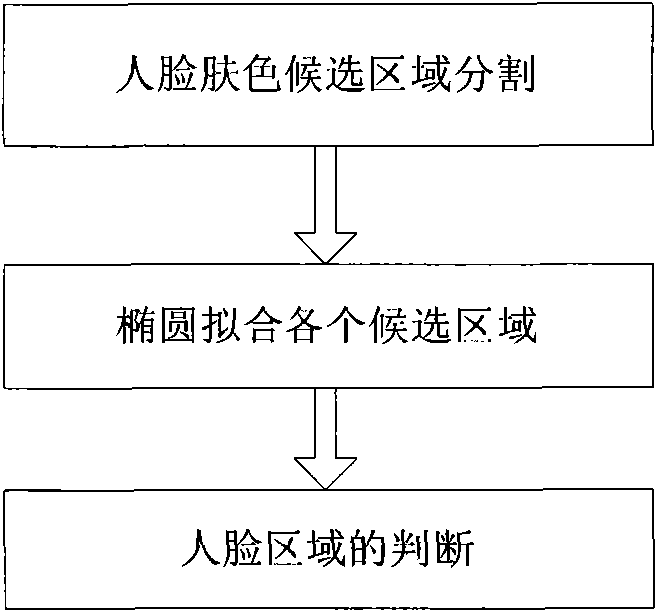

The invention discloses a video-based 3D human face expression cartoon driving method, which comprises the following steps: (1) image preprocessing, namely improving the image quality through light compensation, Gaussian smoothing and morphological operations on a gray-level image; (2) BP human face detection, namely detecting a human face in a video through a BP neural network algorithm and returning the size and position of the face, which narrows the search range for the facial feature point positioning of the next step and so ensures real-time performance; (3) ASM facial feature point positioning and tracking, namely precisely extracting feature point information for the face shape, eyes, eyebrows, mouth and nose through an enhanced active shape model algorithm and a predictive mean-shift algorithm, and returning the exact positions; and (4) data information conversion, namely converting the data acquired in the feature point positioning and tracking step into the motion information of the human face. The method can overcome the defects in the prior art and can achieve a live human face cartoon driving effect.

Owner:SICHUAN UNIV

Rapid generation method for facial animation

Inactive | CN101826217A | Improve descriptive power | Quick change | Image analysis | 3D-image rendering | Pattern recognition | Imaging processing

The invention relates to a rapid generation method for facial animation, belonging to the image processing technical field. The method comprises the following steps: firstly detecting coordinates of a plurality of feature points matched with a grid model face in the original face pictures by virtue of an improved active shape model algorithm; completing fast matching between a grid model and a facial photo according to information of the feature points; performing refinement treatment on the mouth area of the matched grid model; and describing a basic facial mouth shape and expression change via facial animation parameters and driving the grid model by a parameter flow, and deforming the refined grid model by a grid deformation method based on a thin plate spline interpolation so as to generate animation. The method can quickly realize replacement of animated characters to generate vivid and natural facial animation.

Owner:SHANGHAI JIAO TONG UNIV

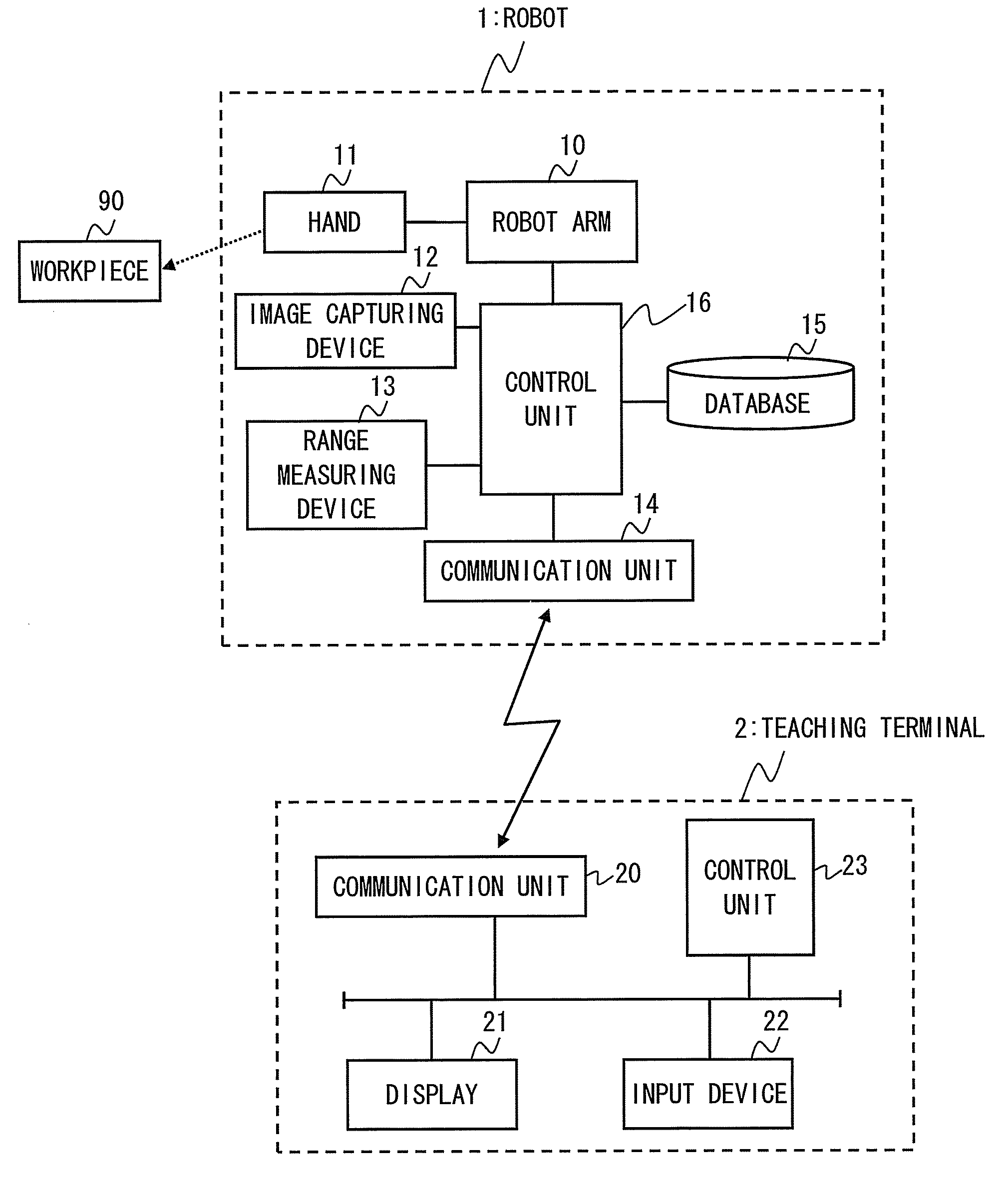

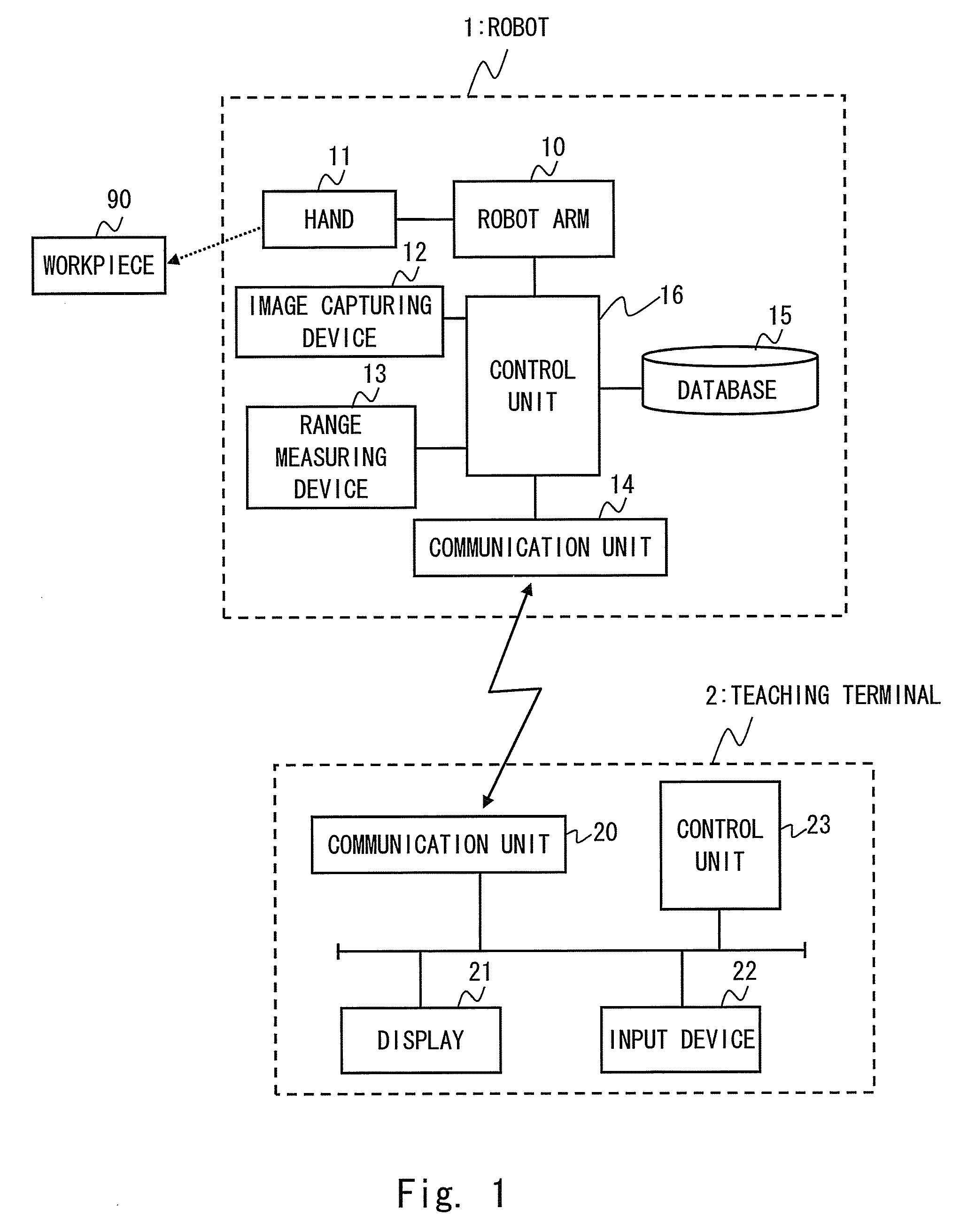

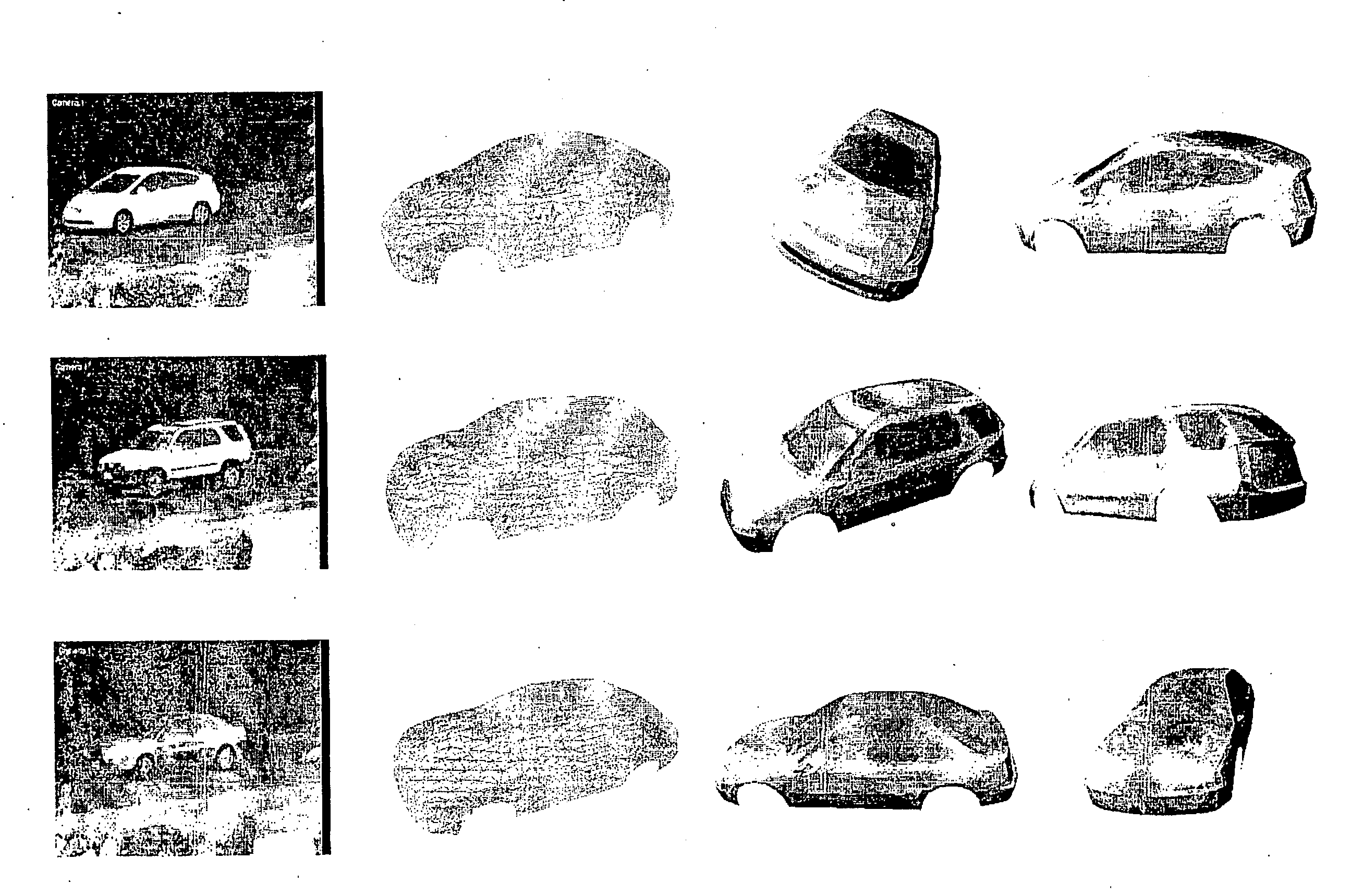

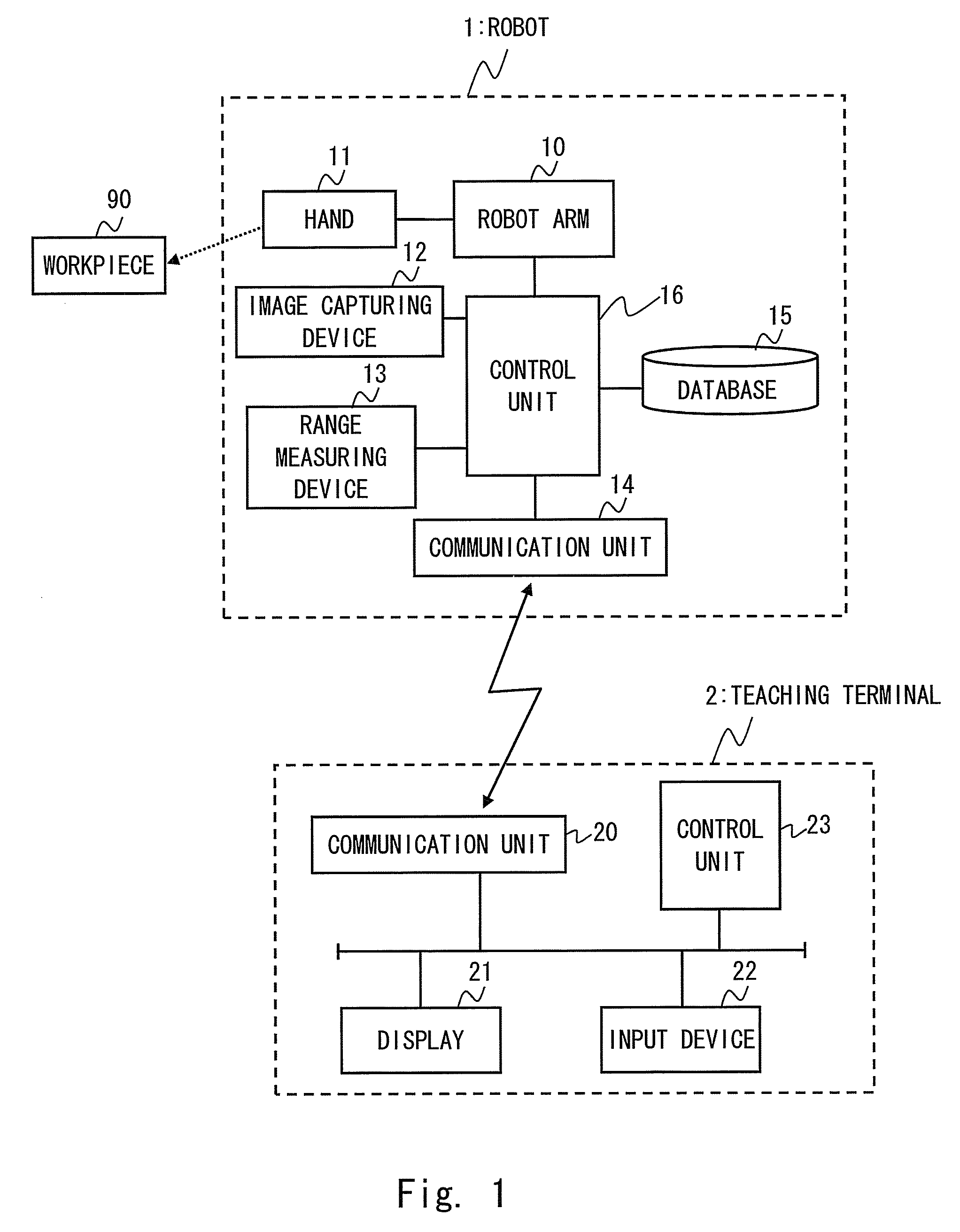

Action teaching system and action teaching method

The aim is to make it possible for an operator to teach a robot a grasping action for a work object whose shape and 3D position are unknown, using an intuitive and simple input operation. (a) A captured image of the working space is displayed on a display device; (b) an operation is received in which a recognition area including a part of a work object to be grasped by a hand is specified in two dimensions on the image of the work object displayed on the display device; (c) an operation is received in which a primitive shape model to be applied to the part to be grasped is specified from among a plurality of primitive shape models; (d) a parameter group to specify the shape, position, and posture of the primitive shape model is determined by fitting the specified primitive shape model onto 3D position data of a space corresponding to the recognition area; (e) a grasping pattern applicable to grasping of the work object is selected by searching a database in which grasping patterns applicable by a hand to primitive shape models are stored.

Owner:TOYOTA JIDOSHA KK

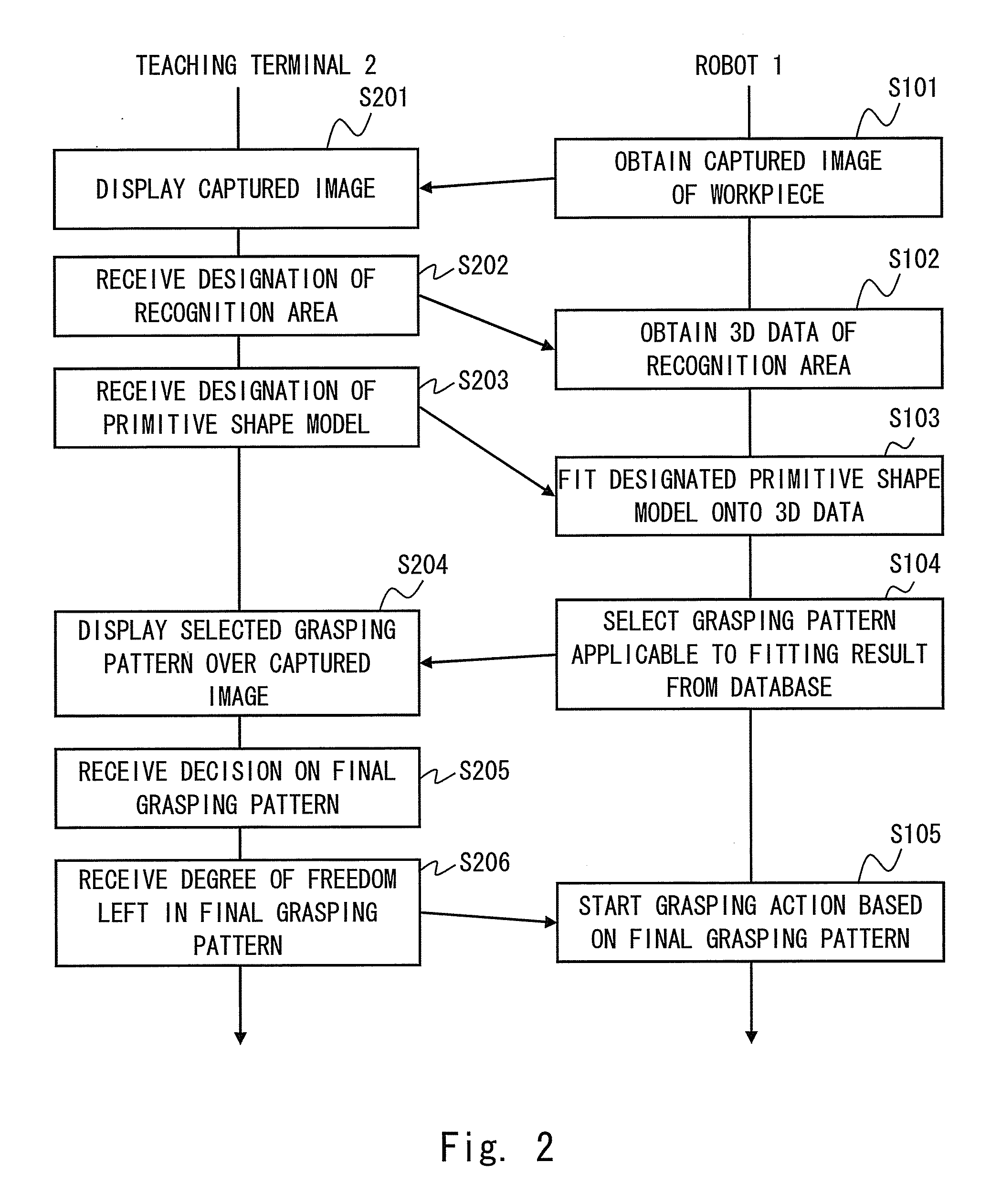

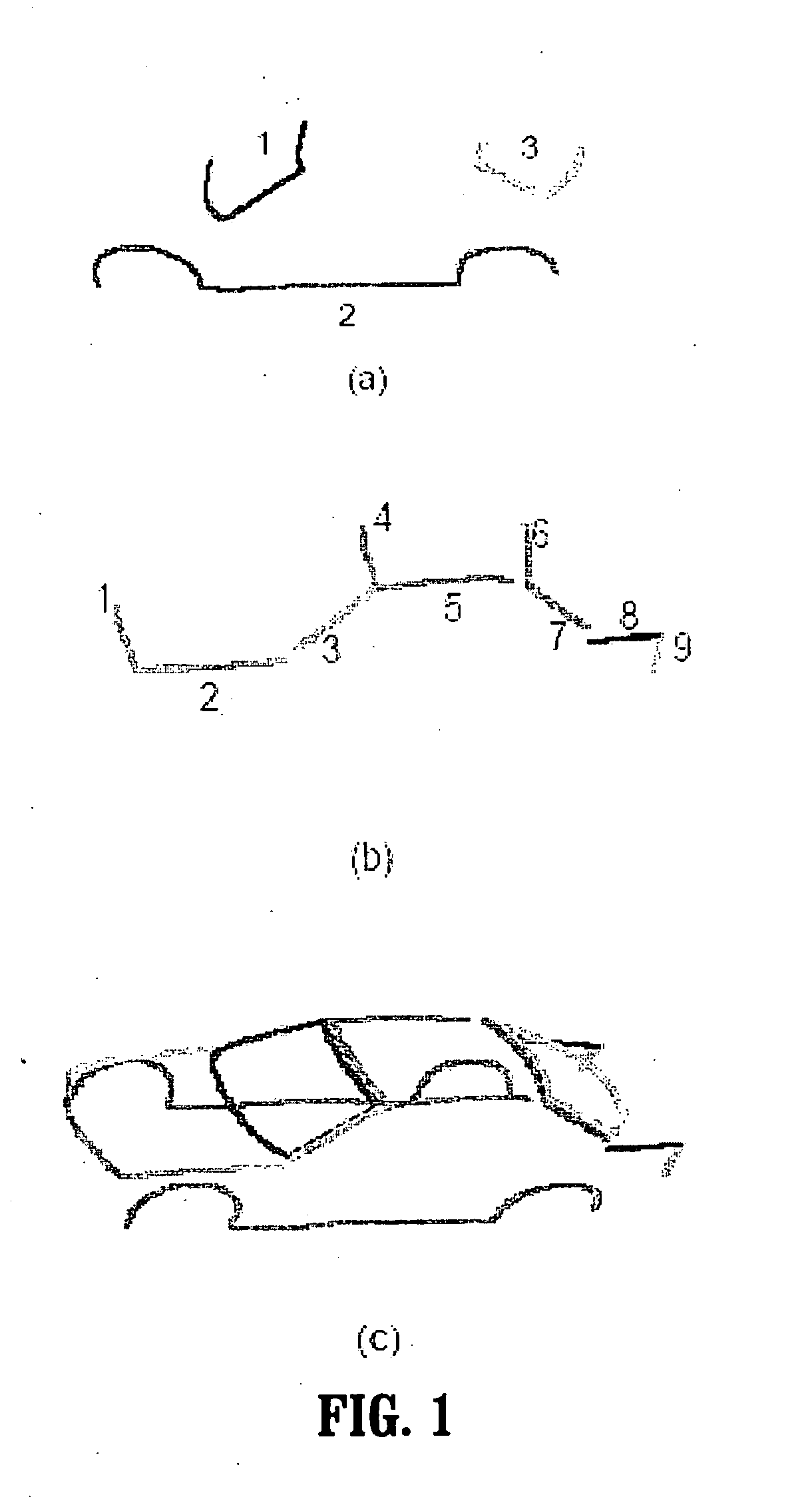

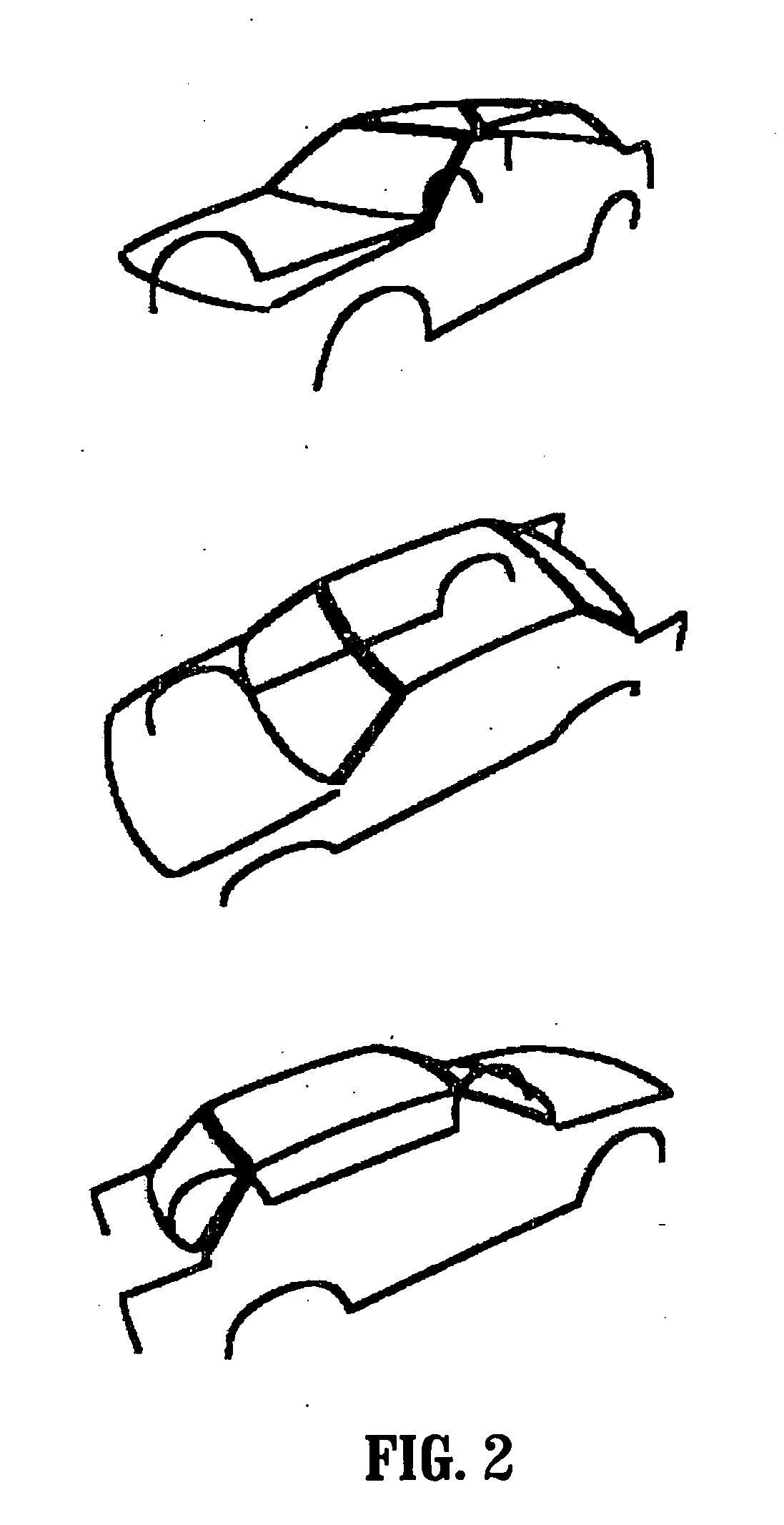

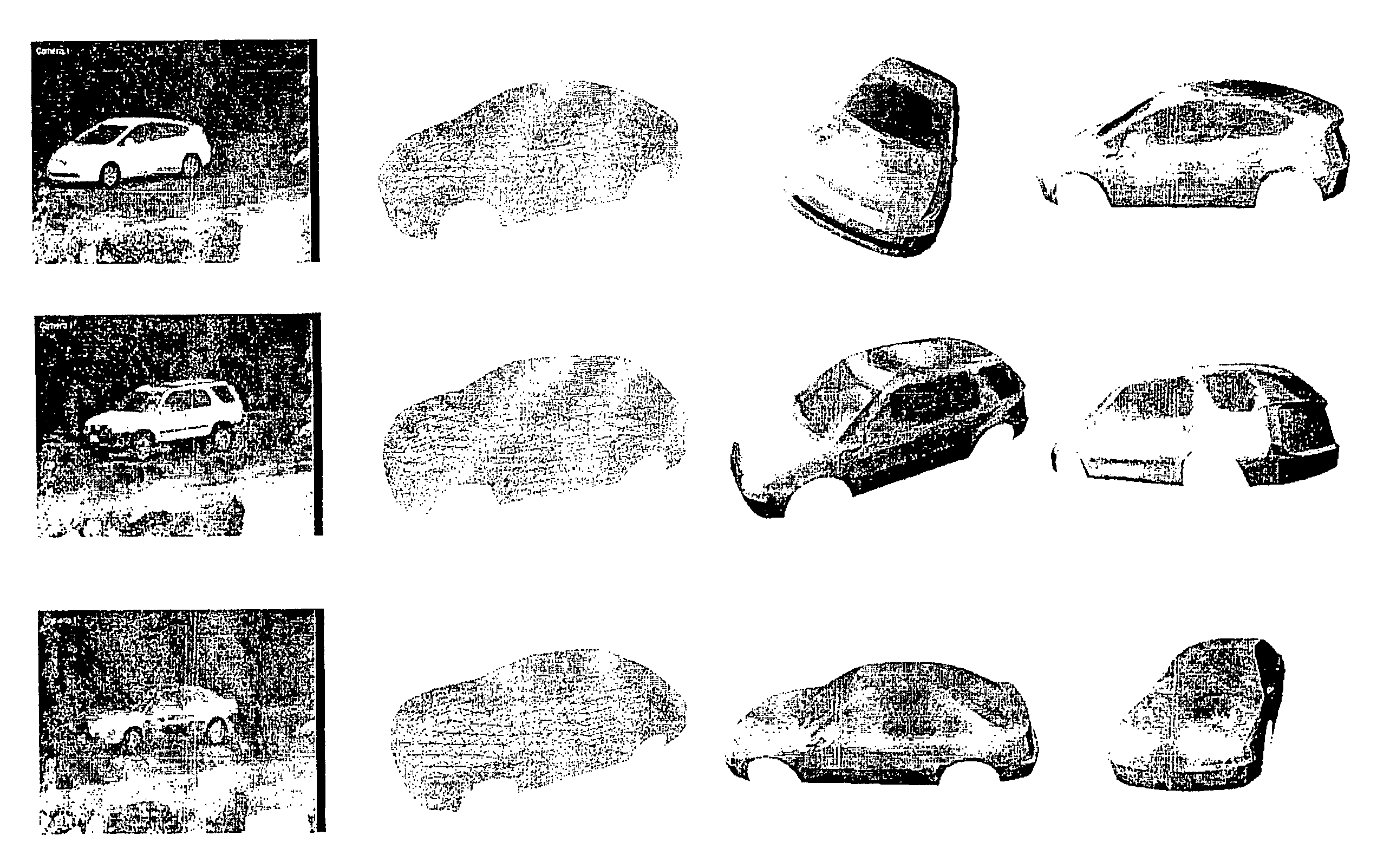

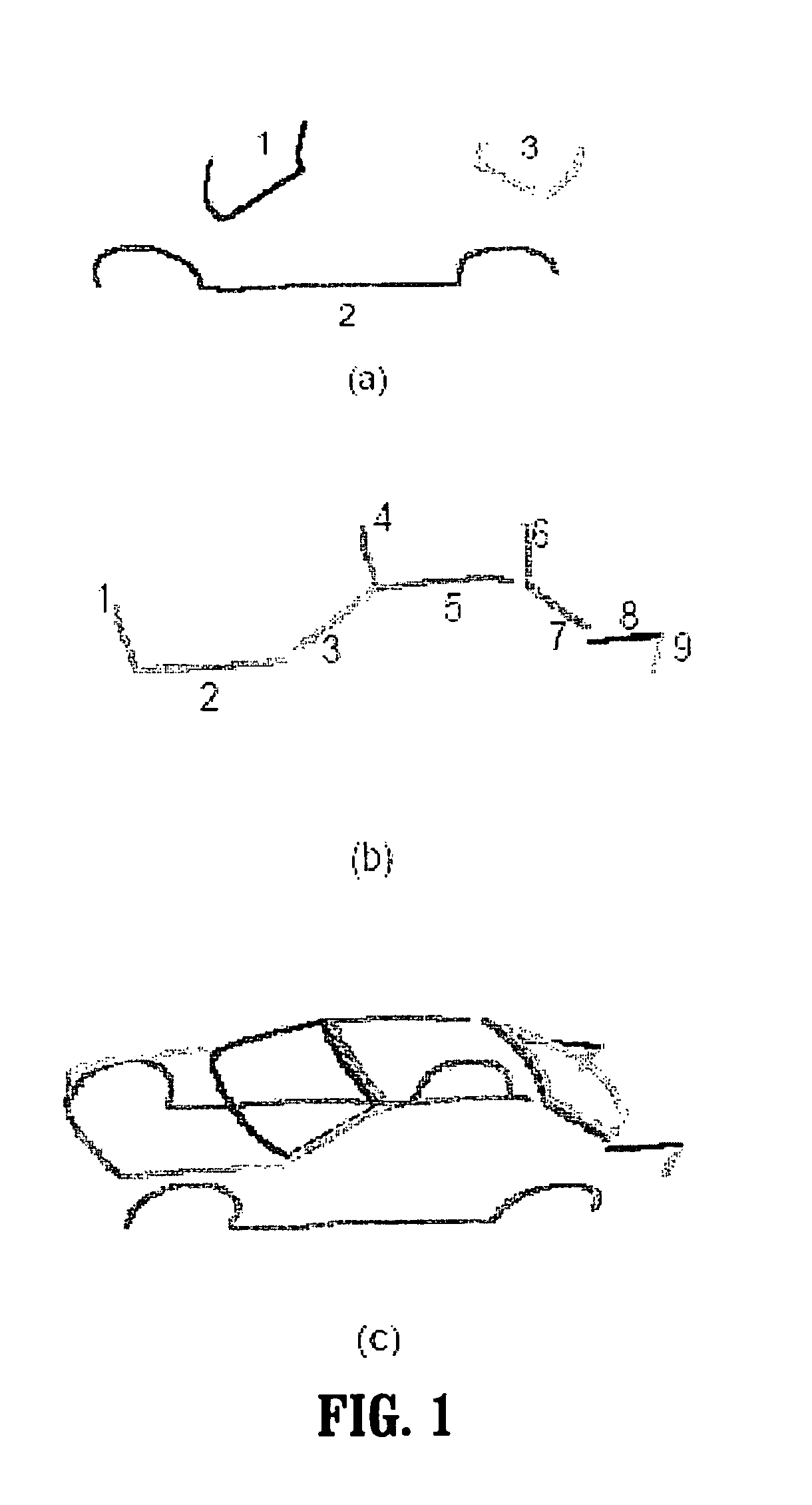

Active Shape Model for Vehicle Modeling and Re-Identification

A method for modeling a vehicle, includes: receiving an image that includes a vehicle; and constructing a three-dimensional (3D) model of the vehicle, wherein the 3D model is constructed by: (a) taking a predetermined set of base shapes that are extracted from a subset of vehicles; (b) multiplying each of the base shapes by a parameter; (c) adding the resultant of each multiplication to form a vector that represents the vehicle's shape; (d) fitting the vector to the vehicle in the image; and (e) repeating steps (a)-(d) by modifying the parameters until a difference between a fit vector and the vehicle in the image is minimized.

Owner:SIEMENS HEALTHCARE GMBH
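Steps (b) through (e) of the vehicle modeling abstract can be illustrated with a small sketch. It assumes the vehicle in the image has already been reduced to a vector of observed coordinates, and it uses plain gradient descent on the squared difference as the parameter update. Both are assumptions chosen for illustration; the abstract does not say how the parameters are modified.

```python
def combine(base_shapes, params):
    """Steps (b)-(c): weight each base shape and sum into one shape vector."""
    n = len(base_shapes[0])
    return [sum(p * shape[i] for p, shape in zip(params, base_shapes)) for i in range(n)]

def fit_params(base_shapes, observed, lr=0.1, tol=1e-10, max_iter=10000):
    """Steps (d)-(e): adjust parameters until the squared difference stops shrinking."""
    params = [0.0] * len(base_shapes)
    prev_err = float("inf")
    for _ in range(max_iter):
        shape = combine(base_shapes, params)
        residual = [s - o for s, o in zip(shape, observed)]
        err = sum(r * r for r in residual)
        if prev_err - err < tol:
            break
        prev_err = err
        # Gradient of the squared error with respect to each parameter
        grads = [2.0 * sum(r * b for r, b in zip(residual, base)) for base in base_shapes]
        params = [p - lr * g for p, g in zip(params, grads)]
    return params

# Hypothetical base shapes and an observation built from known parameters.
base_shapes = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
observed = combine(base_shapes, [2.0, -0.5])
params = fit_params(base_shapes, observed)
```

Because the model is linear in its parameters, a closed-form least-squares solve would work equally well here; the loop is kept only to mirror the repeat-until-minimized wording of step (e).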

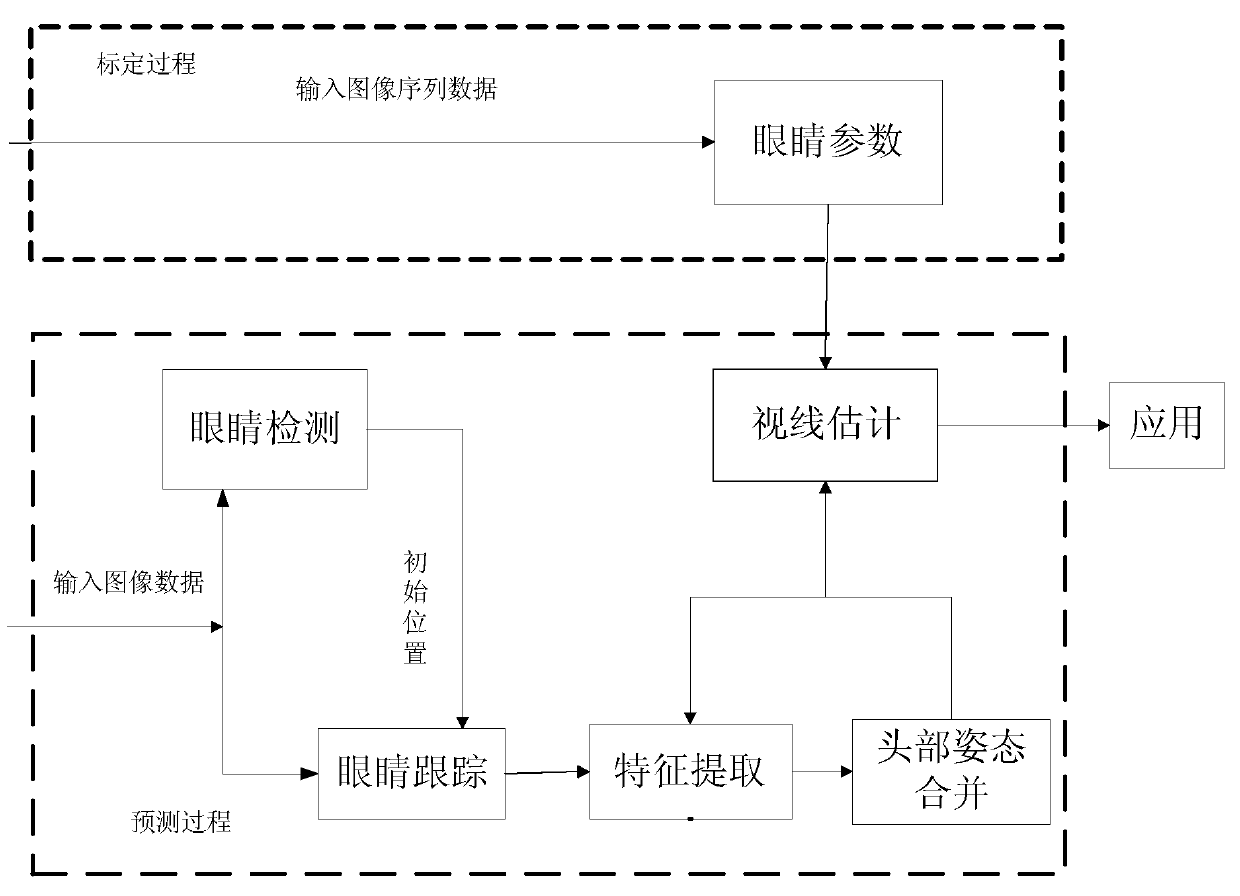

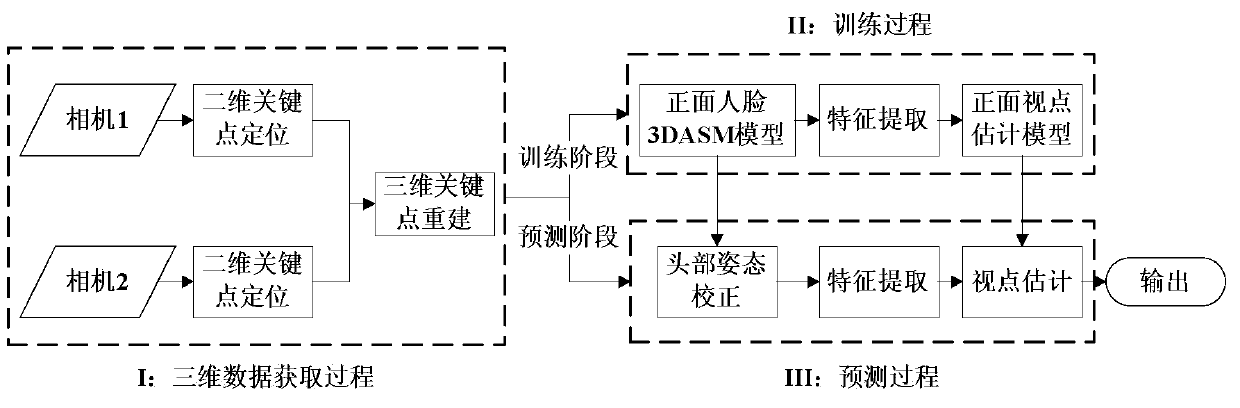

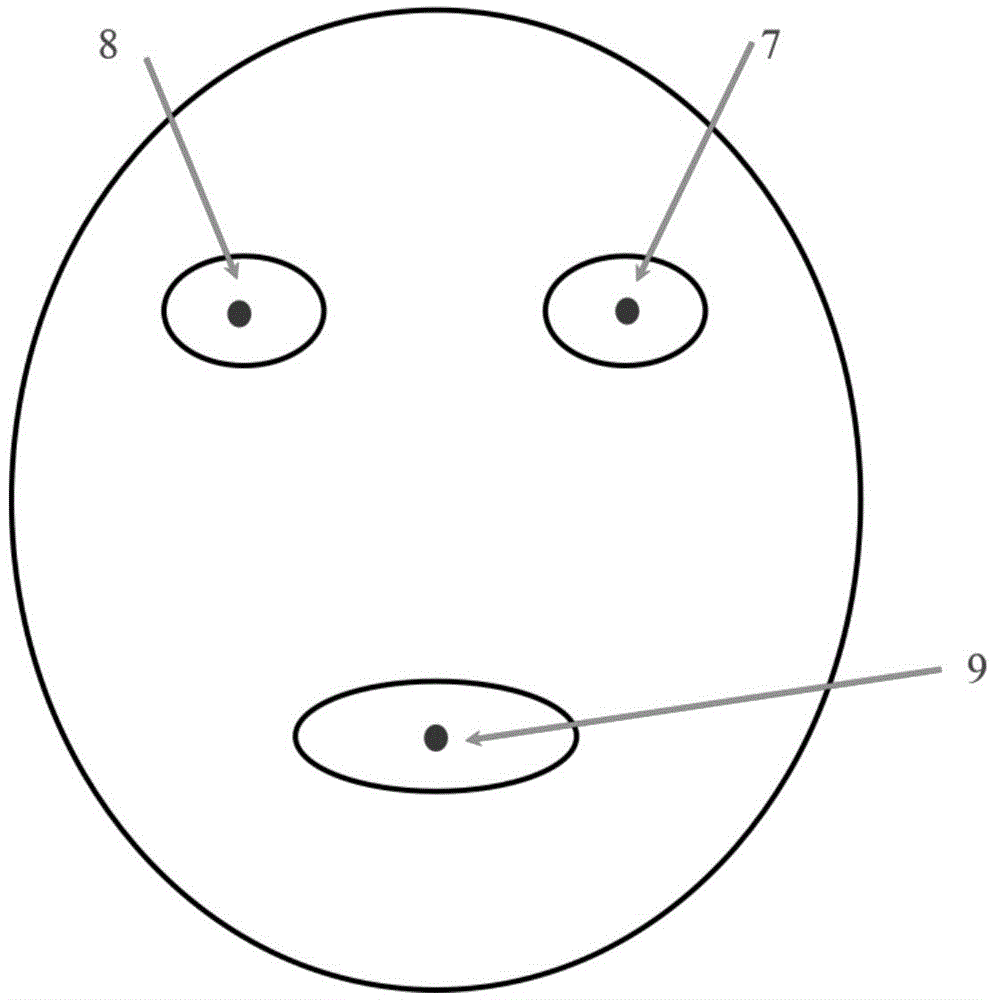

Visual line estimation method and visual line estimation device based on three-dimensional active shape model

Active | CN104978548A | High precision | Improve stability | Image analysis | Character and pattern recognition | Head movements | Viewpoints

The invention discloses a visual line estimation method based on a three-dimensional active shape model. The method comprises: a first step of using frontal face images of the user, acquired by two cameras, as training data; a second step of performing two-dimensional key point positioning on the acquired images, which comprises pupil positioning and active shape model (ASM) positioning of the user's face in the image; a third step of reconstructing three-dimensional coordinates from the two-dimensional key points, obtaining the three-dimensional coordinates of the pupil centers of the left and right eyes in a world coordinate system and a three-dimensional ASM shape of the face; a fourth step of representing the visual line features of the left and right eyes by the relative position between the contours of the two eyes and the pupil centers; and a fifth step of establishing a frontal viewpoint estimation model from the obtained visual line features, the model being used to estimate the visual line for a prediction sample. By establishing a three-dimensional ASM of the user's face, the method and device estimate and represent the user's head pose, thereby improving the adaptability of visual line estimation to head motion.

Owner:HANVON CORP

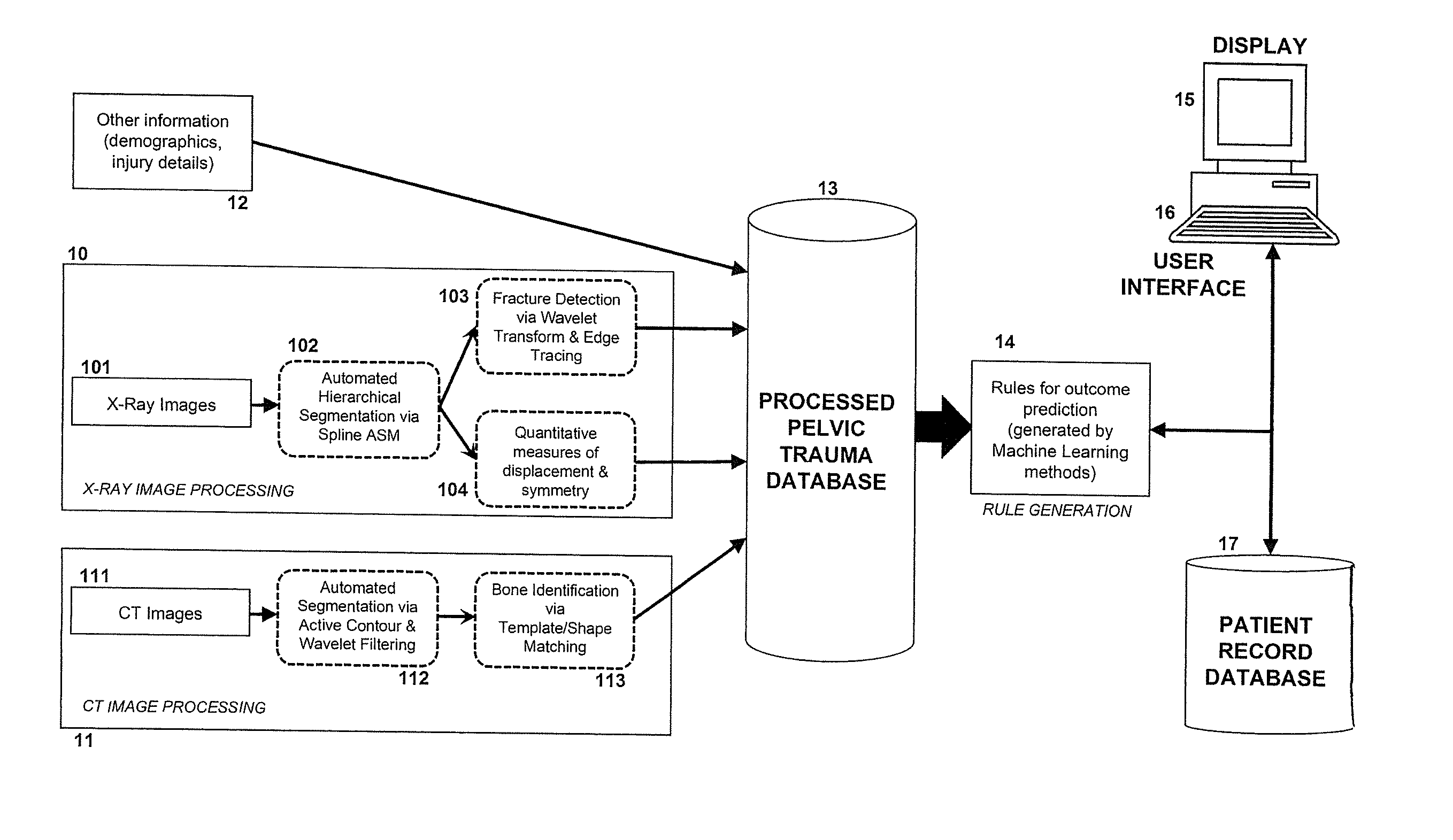

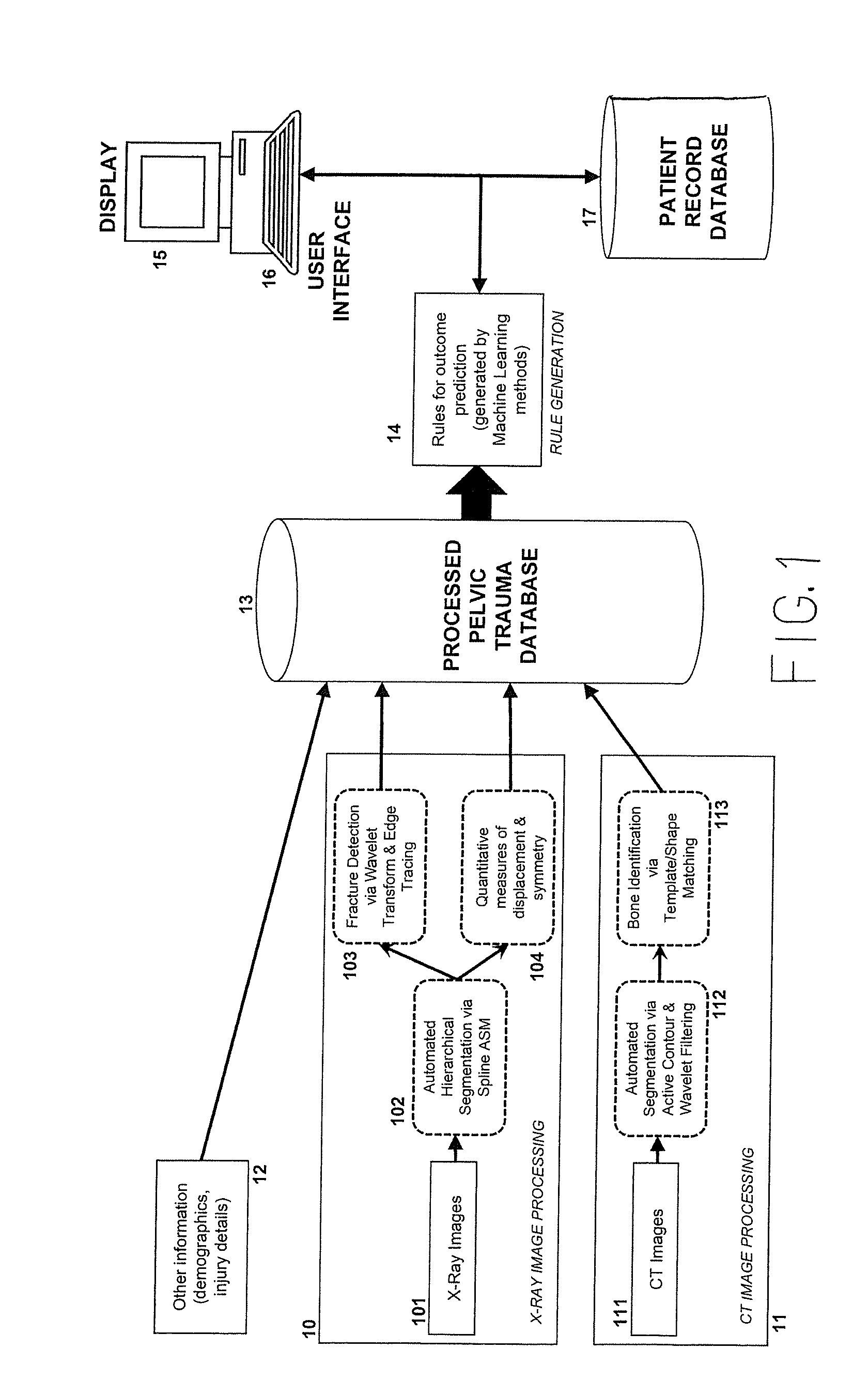

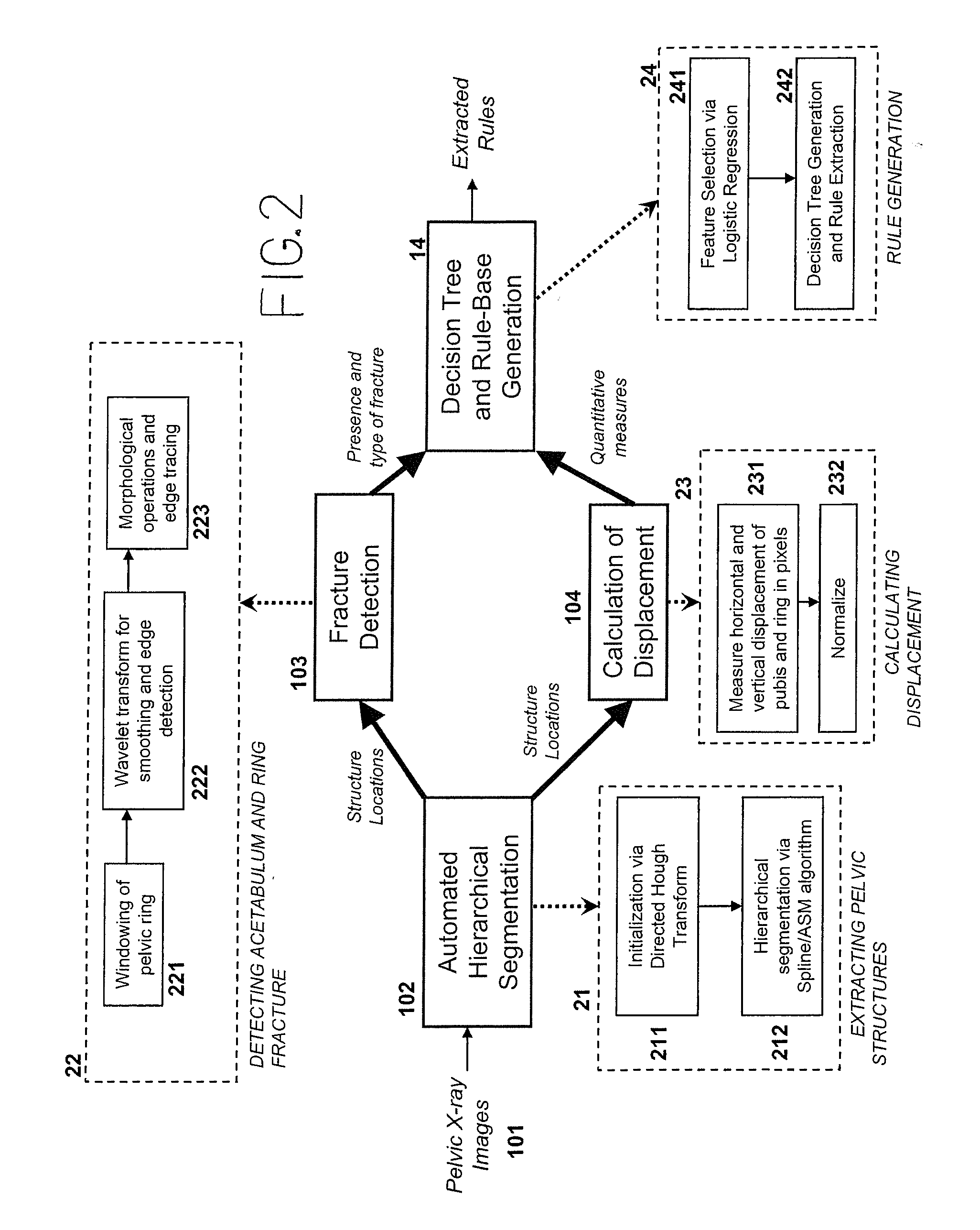

Accurate Pelvic Fracture Detection for X-Ray and CT Images

Active | US20120143037A1 | Accurate segmentation | Rapid and accurate treatment choice | Image enhancement | Image analysis | Diagnostic Radiology Modality | X-ray

Accurate pelvic fracture detection is accomplished through automated processing of X-ray and Computed Tomography (CT) images for diagnosis and recommended therapy. The system combines computational methods to process images from two different modalities, using Active Shape Model (ASM), spline interpolation, active contours, and wavelet transform. By processing both X-ray and CT images, the system extracts features which may be visible under one modality and not under the other, and validates and confirms information visible in both. The X-ray component uses a hierarchical approach based on directed Hough Transform to detect pelvic structures, removing the need for manual initialization. The X-ray component uses cubic spline interpolation to regulate ASM deformation during X-ray image segmentation. Key regions of the pelvis are first segmented and identified, allowing detection methods to be specialized to each structure using anatomical knowledge. The CT processing component is able to distinguish bone from other non-bone objects with similar visual characteristics, such as blood and contrast fluid, permitting detection and quantification of soft tissue hemorrhage. The CT processing component draws attention to slices where irregularities are detected, reducing the time to fully examine a pelvic CT scan. The quantitative measurements of bone displacement and hemorrhage area are used as input for a trauma decision-support system, along with physiological signals, injury details and demographic information.

Owner:VIRGINIA COMMONWEALTH UNIV

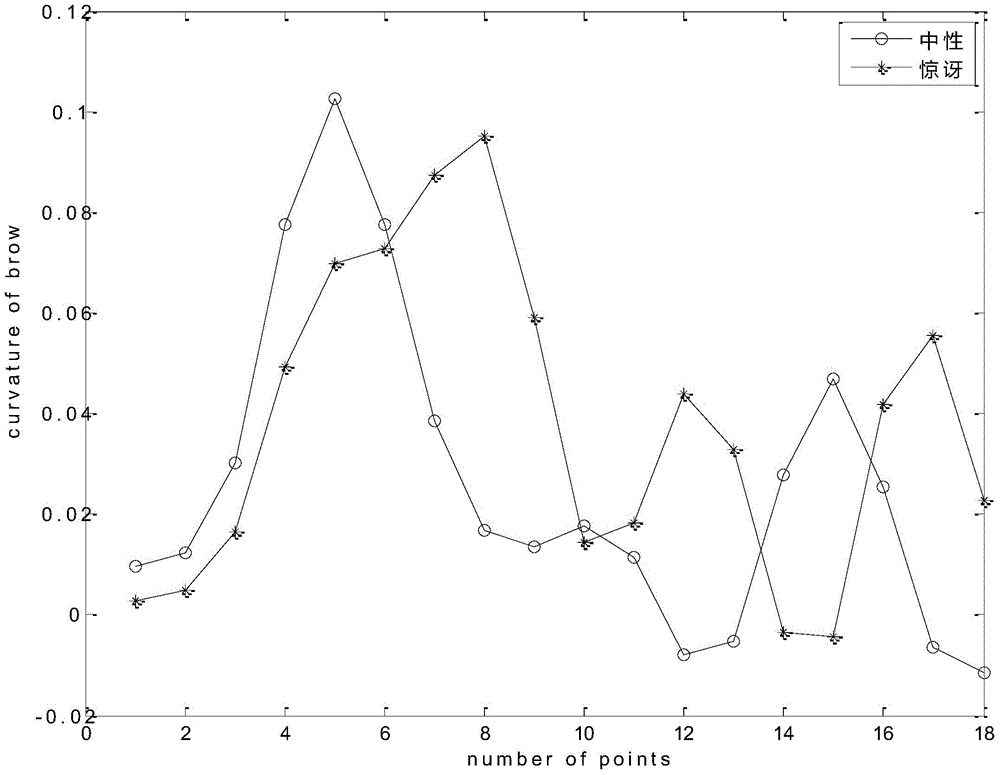

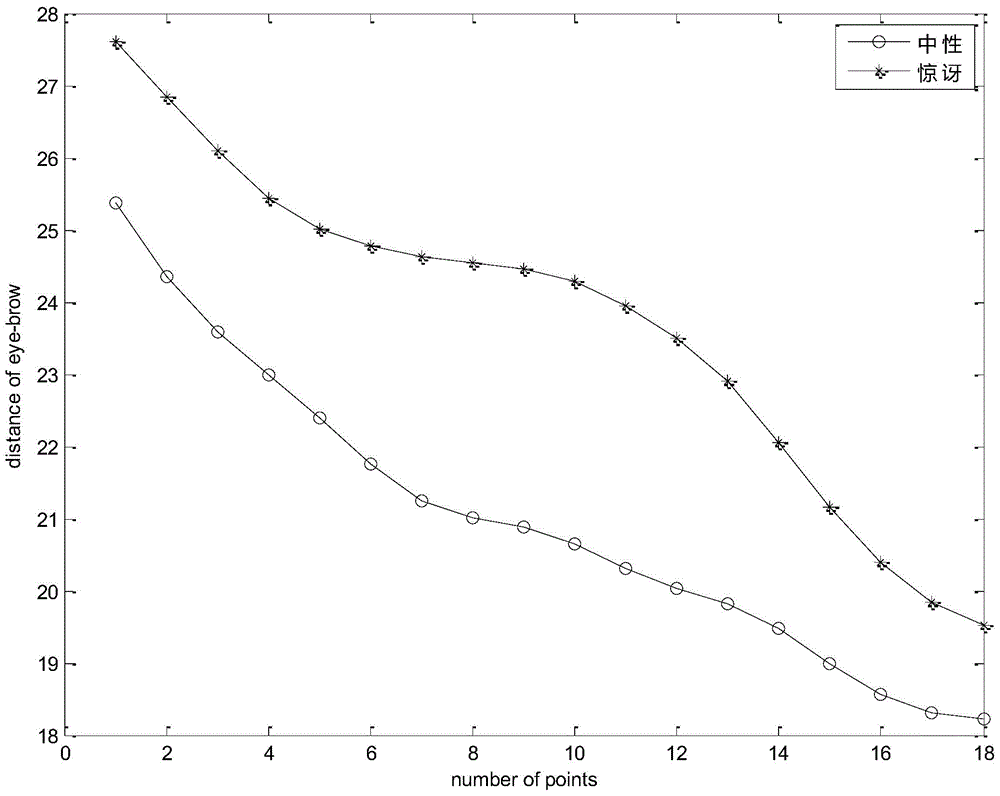

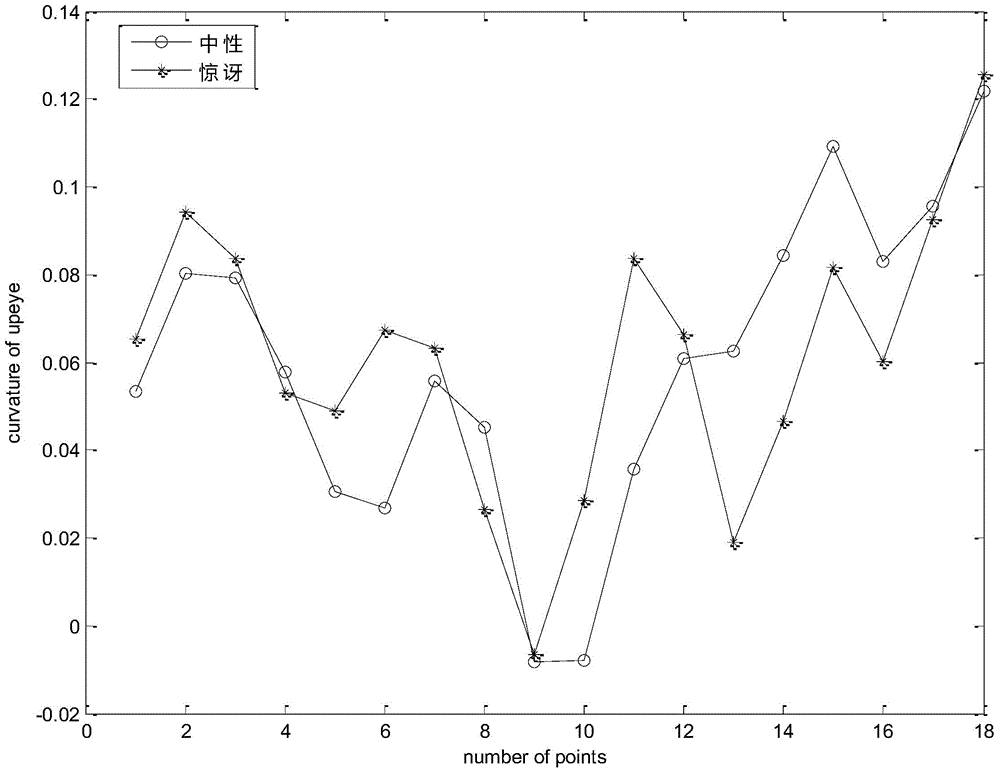

Active-shape-model-algorithm-based method for analyzing face expression

Inactive | CN104951743A | Realize expression recognition | Verify validity | Character and pattern recognition | Pattern recognition | Nose

The invention relates to an active-shape-model-algorithm-based method for analyzing a face expression. The method comprises: a face expression database is stored or selected and parts of or all face expressions in the face expression database are selected as training images; on the basis of the active shape model algorithm, feature point localization is carried out on the training images, wherein the feature points are ones localized based on the eyebrows, eyes, noses, and mouths of the training images and form contour data of the eyebrows, eyes, noses, and mouths; data training is carried out to obtain numerical constraint conditions of all expressions; and according to the numerical constraint conditions of all expressions, a mathematical model of the face expressions is established, and then face identification is carried out based on the mathematical model.

Owner:SUZHOU UNIV

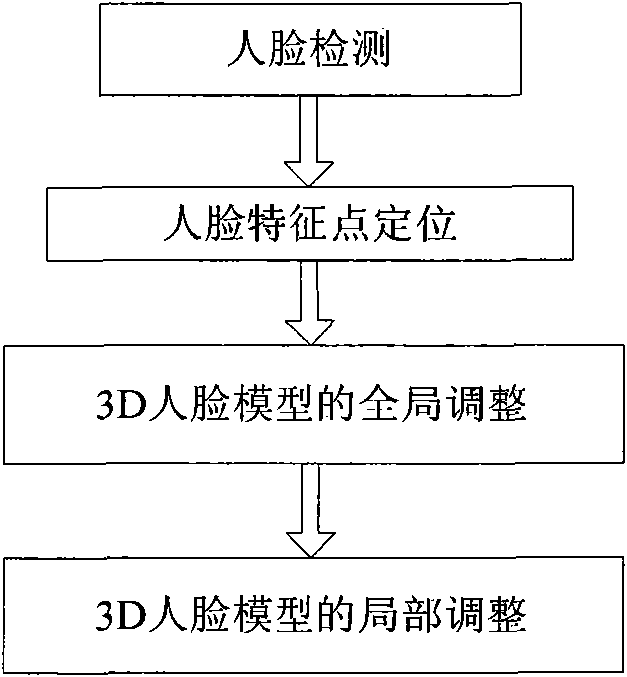

Method for adjusting universal three-dimensional human face model

Inactive | CN101593365A | Improve accuracy | High speed | 3D-image rendering | 3D modelling | Computer image | Face model

The invention discloses a method for adjusting a universal three-dimensional human face model. It belongs to the technical field of computer image processing and mainly relates to three-dimensional face reconstruction within three-dimensional face recognition for biometric identification. Starting from the universal three-dimensional face model, the method adjusts the model by estimating the three-dimensional coordinates of facial feature points from two orthogonal two-dimensional face images, and finally obtains a specific three-dimensional face model. The method applies an active shape model, Procrustes analysis and the symmetry of the human face to the adjustment process: the facial feature points are estimated with the active shape model, and the three-dimensional face model is adjusted through Procrustes analysis and the structural symmetry of the face. Compared with common image-based methods, the method not only improves the accuracy of model adjustment but also improves its speed to a certain degree, and it is broadly applicable to image-based three-dimensional face reconstruction.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
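The Procrustes analysis mentioned in this abstract aligns one point set to another by removing differences in translation, rotation and scale. The sketch below shows the idea for two 2D point sets with known correspondence, using complex numbers so that rotation and scale become a single multiplication. It is a simplified illustration with hypothetical data; the patent applies the analysis to a three-dimensional face model, which is not reproduced here.

```python
def procrustes_align(source, target):
    """Align 2D `source` points to `target` with translation, rotation and scale.

    Points are (x, y) tuples in corresponding order. A similarity transform
    is written as z -> a * z + t on complex numbers.
    """
    src = [complex(x, y) for x, y in source]
    tgt = [complex(x, y) for x, y in target]
    src_mean = sum(src) / len(src)
    tgt_mean = sum(tgt) / len(tgt)
    src_c = [z - src_mean for z in src]          # remove translation
    tgt_c = [z - tgt_mean for z in tgt]
    # Least-squares rotation and scale: a = sum(conj(s) * t) / sum(|s|^2)
    a = sum(s.conjugate() * t for s, t in zip(src_c, tgt_c)) / sum(abs(s) ** 2 for s in src_c)
    aligned = [a * s + tgt_mean for s in src_c]
    return [(z.real, z.imag) for z in aligned]

source = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
# Target is the source rotated by 90 degrees, scaled by 2 and shifted by (5, 5).
target = [(5.0, 5.0), (5.0, 7.0), (3.0, 5.0)]
aligned = procrustes_align(source, target)
```

When building a shape model from many training shapes, this pairwise alignment is typically repeated against an evolving mean shape until the mean stabilizes.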

Active shape model for vehicle modeling and re-identification

A method for modeling a vehicle, includes: receiving an image that includes a vehicle; and constructing a three-dimensional (3D) model of the vehicle, wherein the 3D model is constructed by: (a) taking a predetermined set of base shapes that are extracted from a subset of vehicles; (b) multiplying each of the base shapes by a parameter; (c) adding the resultant of each multiplication to form a vector that represents the vehicle's shape; (d) fitting the vector to the vehicle in the image; and (e) repeating steps (a)-(d) by modifying the parameters until a difference between a fit vector and the vehicle in the image is minimized.

Owner:SIEMENS HEALTHCARE GMBH

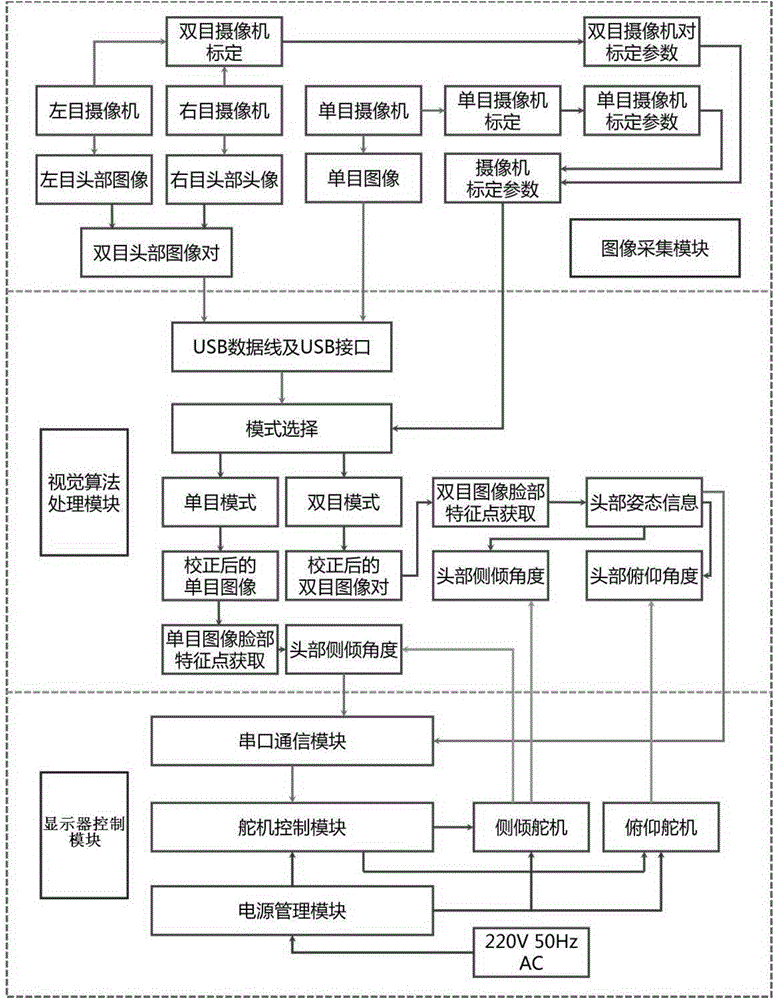

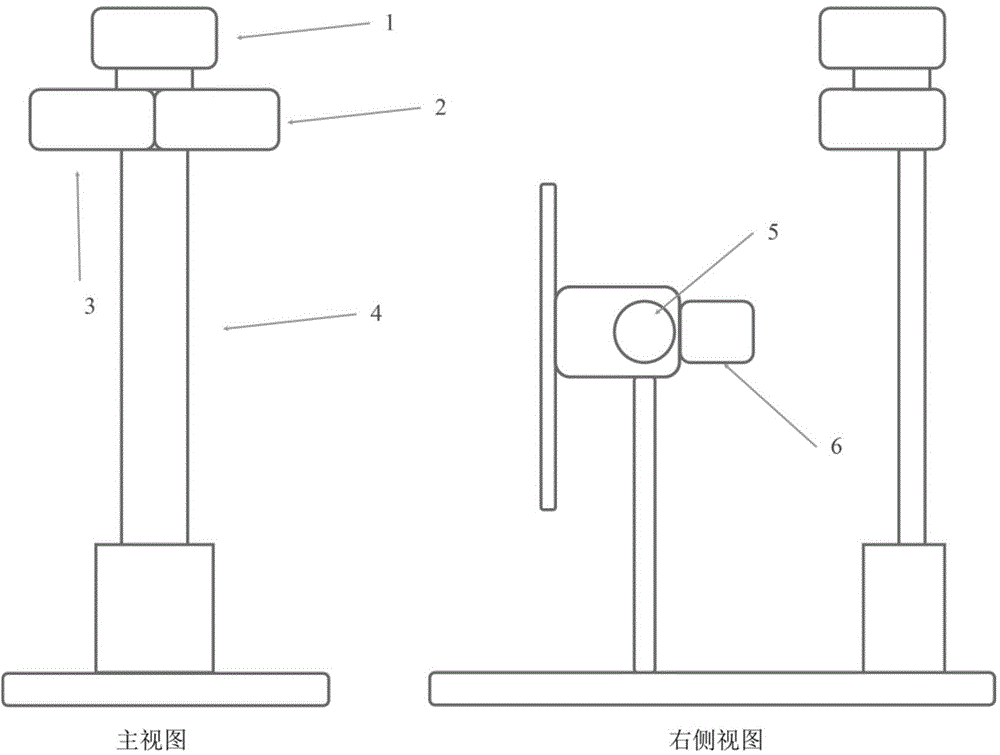

Intelligent display system automatically tracking head posture

Active | CN103558910A | Best sight position | Protect eyesight | Input/output for user-computer interaction | Graph reading | Microcontroller | Vision algorithms

The invention relates to an intelligent display system that automatically tracks head posture. The system captures the head posture of a person in real time so that the display follows the head and the two are constantly kept at optimum relative positions, which can relieve eye fatigue and prevent myopia. The system comprises an image acquisition module, a vision algorithm processing module and a display control module. The image acquisition module acquires images of the head in real time; the vision algorithm processing module first preprocesses the image so that the head is constantly kept upright in the image, then extracts feature points of the face by means of an ASM (active shape model) algorithm, and finally obtains the spatial posture of the head, in terms of elevation and side-tilt angles, by triangulation. Head posture information is transmitted to a servo control module, where a microcontroller computes a PWM (pulse width modulation) signal for controlling a servo, so that the display follows the head posture in two degrees of freedom, elevation and side-tilt.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
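The final stage of this system turns an estimated head angle into a PWM signal for the actuator that moves the display. A minimal sketch of that mapping follows. The angle range, the 500 to 2500 microsecond pulse range and the 20 ms period are assumed values typical of hobby servos, not figures taken from the patent, and the function names are hypothetical.

```python
def angle_to_pulse_us(angle_deg, min_angle=-90.0, max_angle=90.0,
                      min_pulse_us=500.0, max_pulse_us=2500.0):
    """Map a head angle in degrees to a pulse width in microseconds."""
    clamped = max(min_angle, min(max_angle, angle_deg))   # stay inside the travel range
    fraction = (clamped - min_angle) / (max_angle - min_angle)
    return min_pulse_us + fraction * (max_pulse_us - min_pulse_us)

def duty_cycle(pulse_us, period_us=20000.0):
    """Fraction of the PWM period (20 ms assumed) during which the signal is high."""
    return pulse_us / period_us

pitch_pulse = angle_to_pulse_us(0.0)     # centered display
roll_pulse = angle_to_pulse_us(120.0)    # out of range, clamped to the maximum
```

One such mapping would be evaluated per degree of freedom, one for elevation and one for side-tilt.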

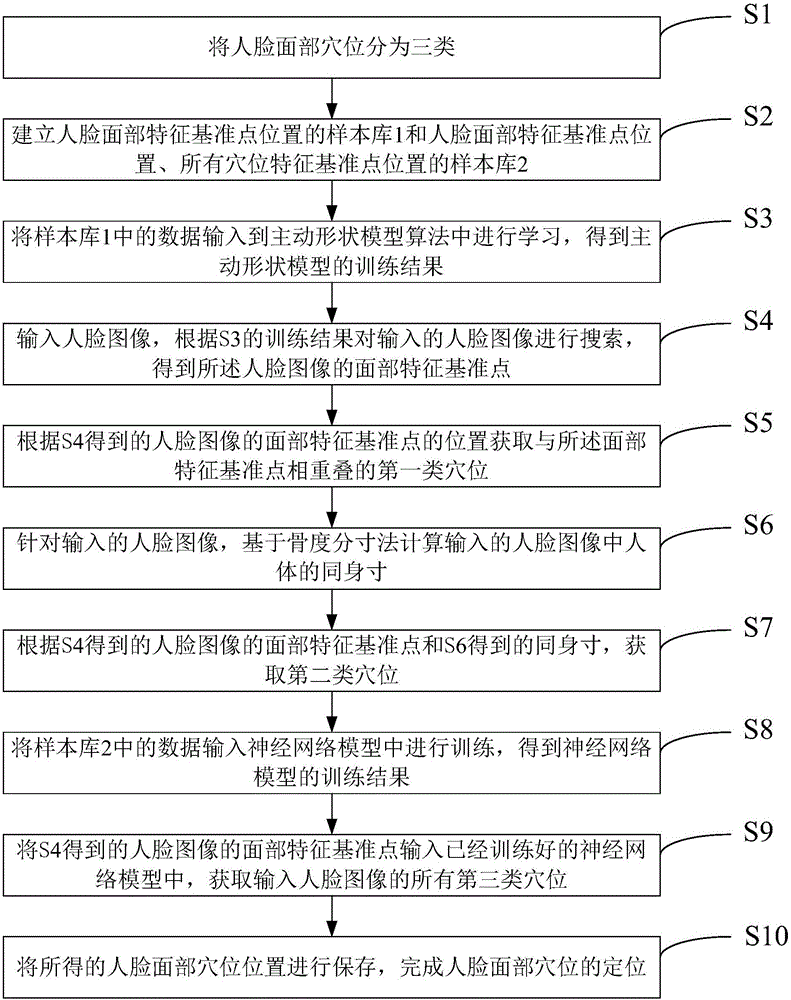

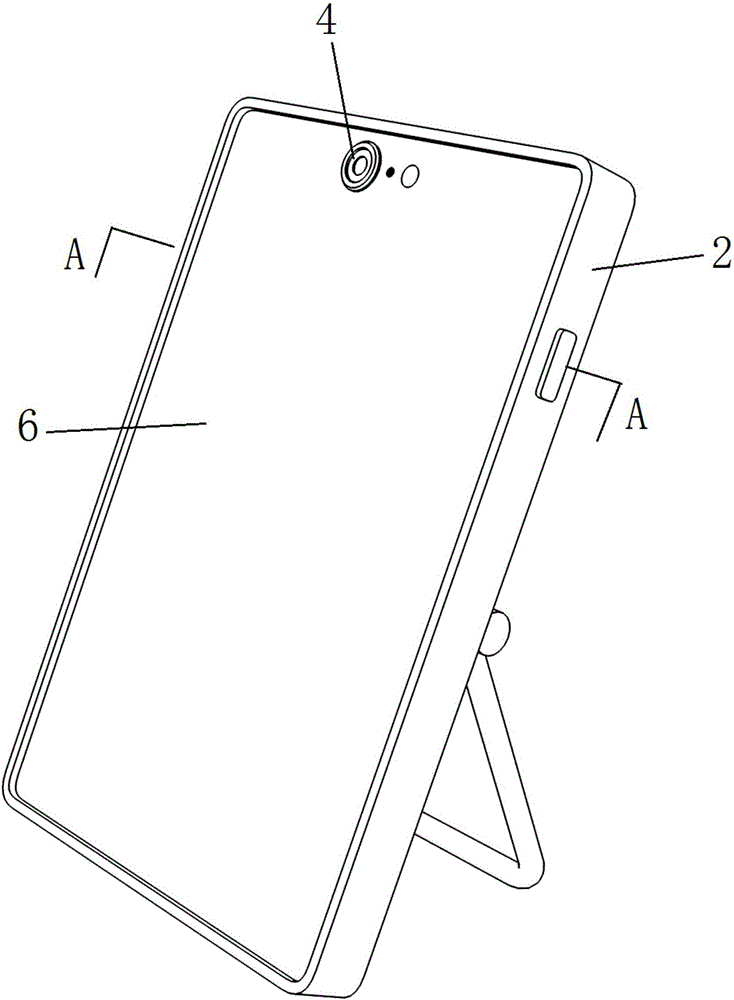

Facial acupoint positioning method and positioning device based on feature point positioning algorithm

Inactive | CN105930810A | Low cost | Easy to use | Biological neural network models | Character and pattern recognition | Bones length | Network model

The invention discloses a facial acupoint positioning method and positioning device based on a feature point positioning algorithm. The method divides facial acupoints into three types; inputs a facial image and searches it according to the training results of a facial active shape model to obtain facial feature reference points of the facial image and determine the first type of acupoints; calculates individualeum length through a bone-length measurement method and obtains the second type of acupoints according to the facial feature reference points and the individualeum length; and inputs the facial feature reference points to a trained neural network model to obtain the third type of acupoints of the input facial image. The positioning method can calculate the position of a specified acupoint on the face through face identification and analysis, and has the advantages of low cost, simple use, individualized acupoint positioning and contact-free operation.

Owner:BEIJING UNIV OF TECH

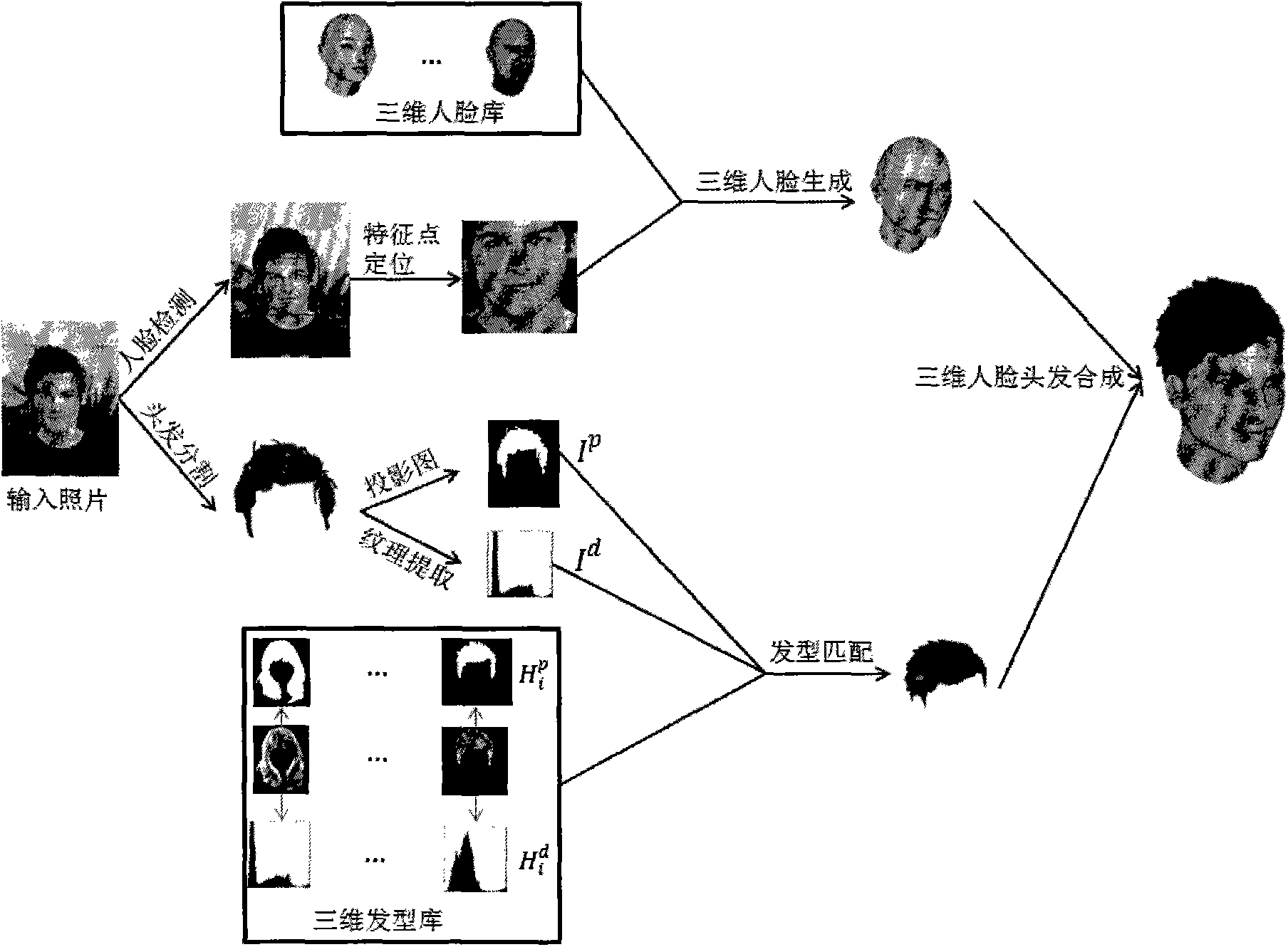

Method for automatically generating three-dimensional head portrait

Active | CN103366400A | Avoid manual addition | Good repeatability | 3D modelling | Pattern recognition | Face detection

The invention relates to a method for automatically generating a three-dimensional head portrait. The method comprises the following steps: forming a three-dimensional face database; collecting a three-dimensional hair style database; detecting the face in the input frontal face picture with a face detection algorithm, and positioning the feature points of the frontal face with an active shape model; generating a three-dimensional face model from the three-dimensional face database, the input face picture and the coordinates of the facial feature points using a deformable model method; segmenting the hair in the input frontal face picture using a hair segmentation method based on a Markov random field; extracting the hair texture according to the hair segmentation result; obtaining a final matched hair model; and combining the face model and the hair model. With this scheme, the generated head portrait model comprises both a face area and a hair area, so the hair style does not need to be added manually. For modeling the hair, direct three-dimensional reconstruction is replaced by a search technique, which improves efficiency. Because human hair styles are highly repetitive, high fidelity can be guaranteed as long as the hair style database is rich enough.

Owner:SHENZHEN HUACHUANG ZHENXIN SCI & TECH DEV

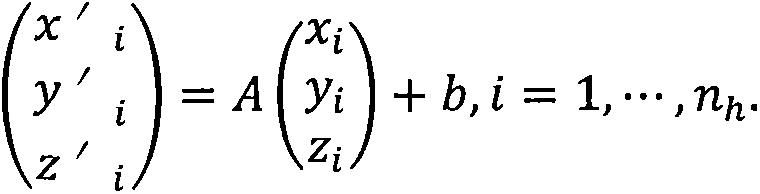

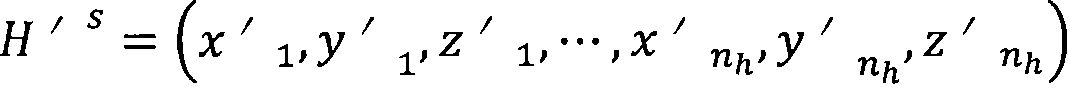

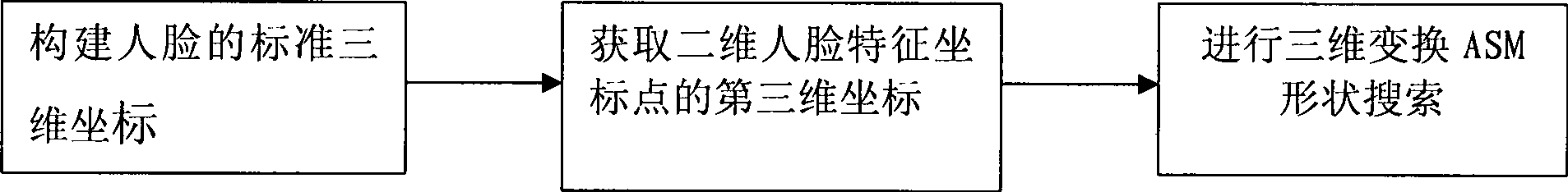

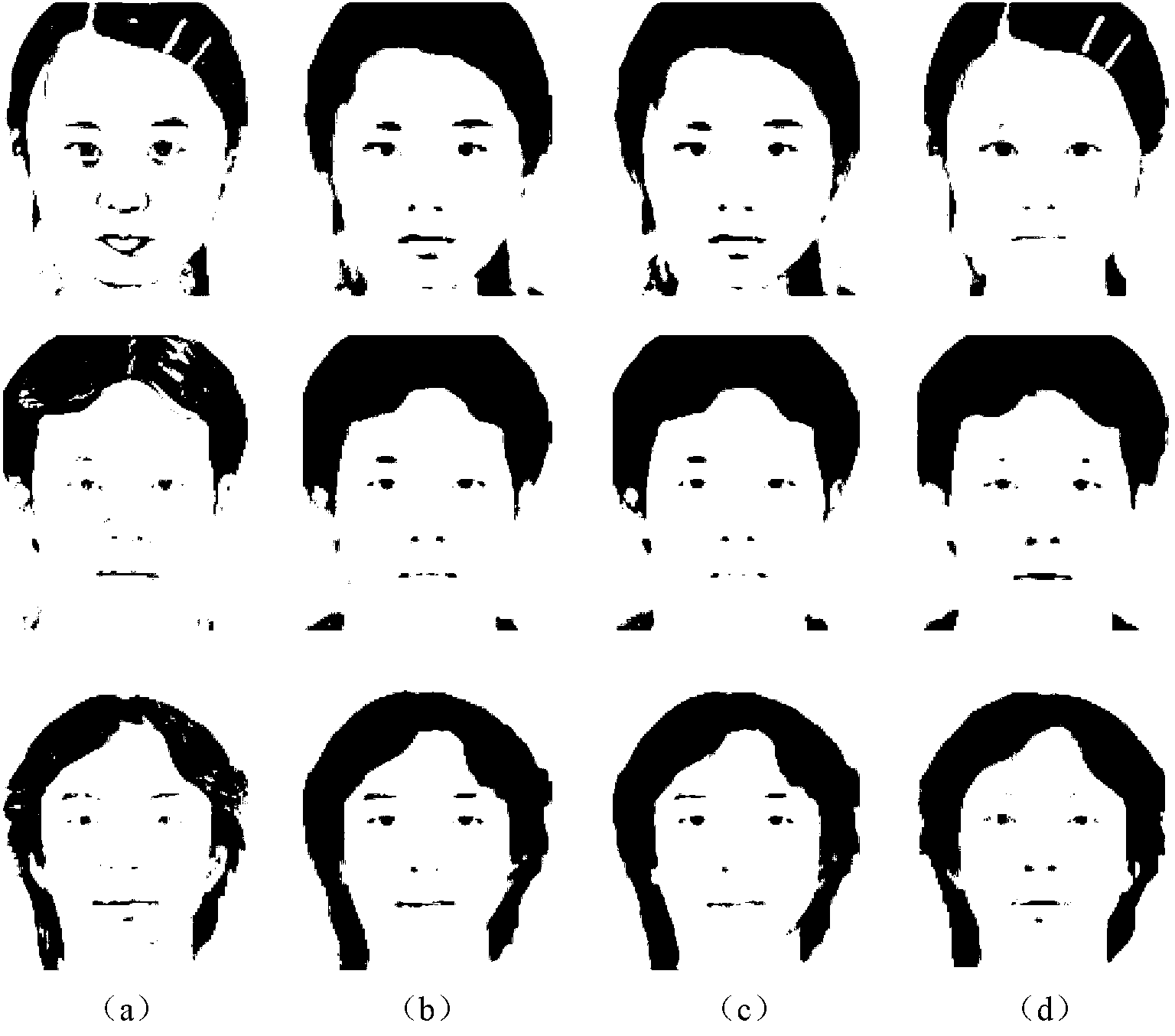

Three-dimensional transformation search method for extracting characteristic points in human face image

Inactive | CN101499132A | Good feature point search and approximation effect | Character and pattern recognition | Two step | Characteristic point

The invention discloses a three-dimensional transform search method for extracting feature points in a face image. Building on face localization with the ASM (Active Shape Model) method, it replaces the two-dimensional transform shape search used in existing ASM with a three-dimensional transform shape search. The method comprises the following steps: first, constructing a standard three-dimensional face model; second, assigning three-dimensional coordinates to the two-dimensional statistical model (base shape) of the facial feature points in the ASM training set according to the standard three-dimensional face model; finally, applying a three-dimensional transform to the two-dimensional statistical model with three-dimensional coordinates and projecting it onto the two-dimensional plane to approximate the given facial feature point shape found by the search, where the search uses a two-step transformation with iterative approximation. The method can reflect real changes in face pose and has higher search precision; test results show that it approaches the true feature points more closely than the existing two-dimensional transform search method.

Owner:广州衡必康信息科技有限公司

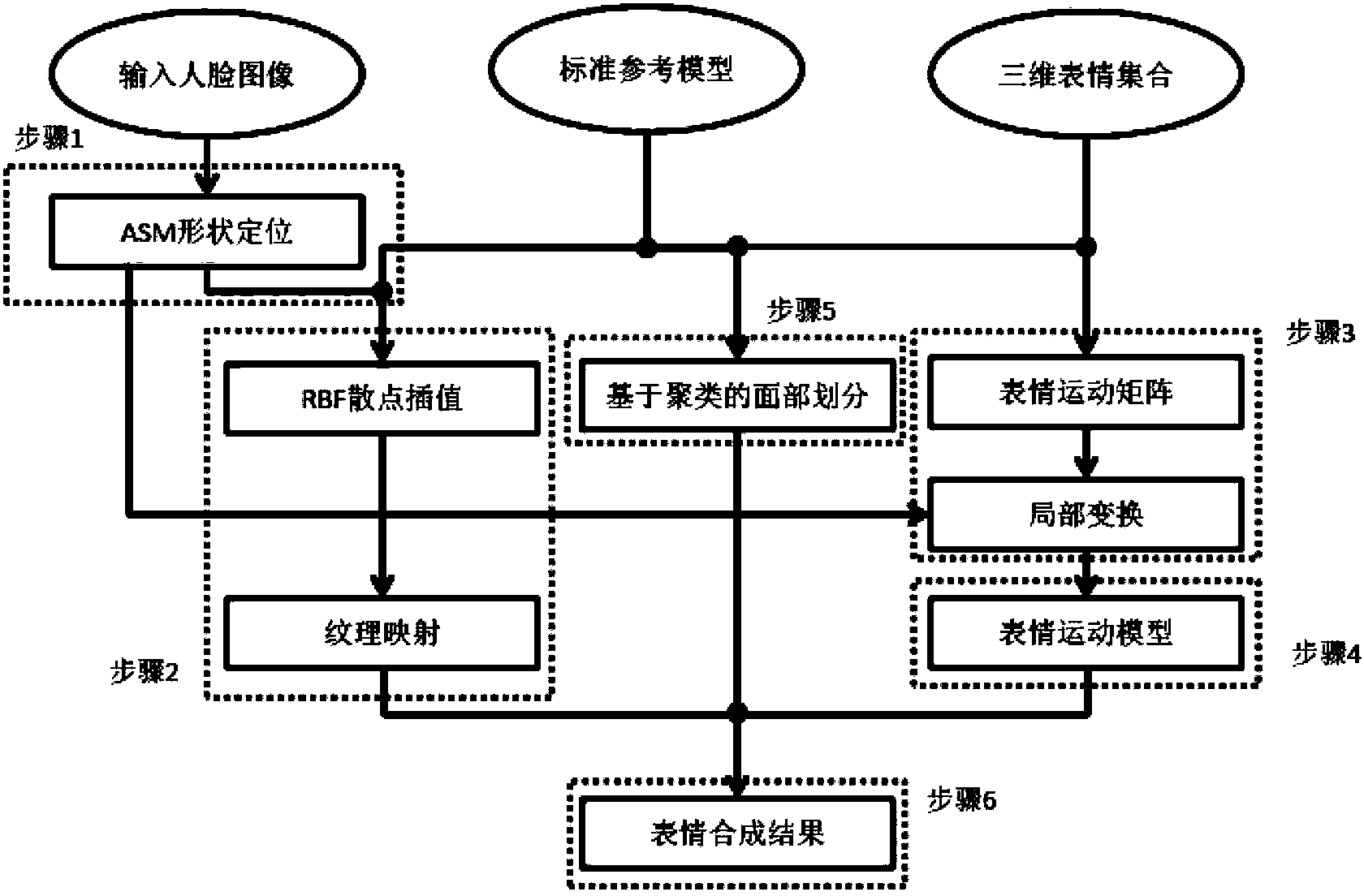

Method and device for automatically synthesizing three-dimensional expression based on single facial image

Inactive | CN104346824A | Synthetic real-time | Shape fits | Image analysis | 3D-image rendering | Pattern recognition | Reference model

The invention discloses a method for automatically synthesizing a three-dimensional expression based on a single facial image. The method comprises the following steps of 1, performing facial shape positioning on a single input image by using an ASM (active shape model); 2, finishing facial shape modeling in a scattered interpolation way according to a positioned facial shape by virtue of a three-dimensional facial reference model, and performing texture mapping to obtain a three-dimensional facial model of a target face in the image; 3, calculating a facial expression movement matrix of an expression set relative to the three-dimensional facial reference model and the three-dimensional facial model of the target face; 4, calculating a linear movement model of each expression in the expression set according to the facial expression movement matrix, obtained in step 3, of the three-dimensional facial model of the target face; 5, obtaining a facial area division result of the face by virtue of a clustering method; 6, performing facial expression synthesis. The method is favorable for systematically synthesizing more flexible and richer facial expressions.

Owner:HANVON CORP

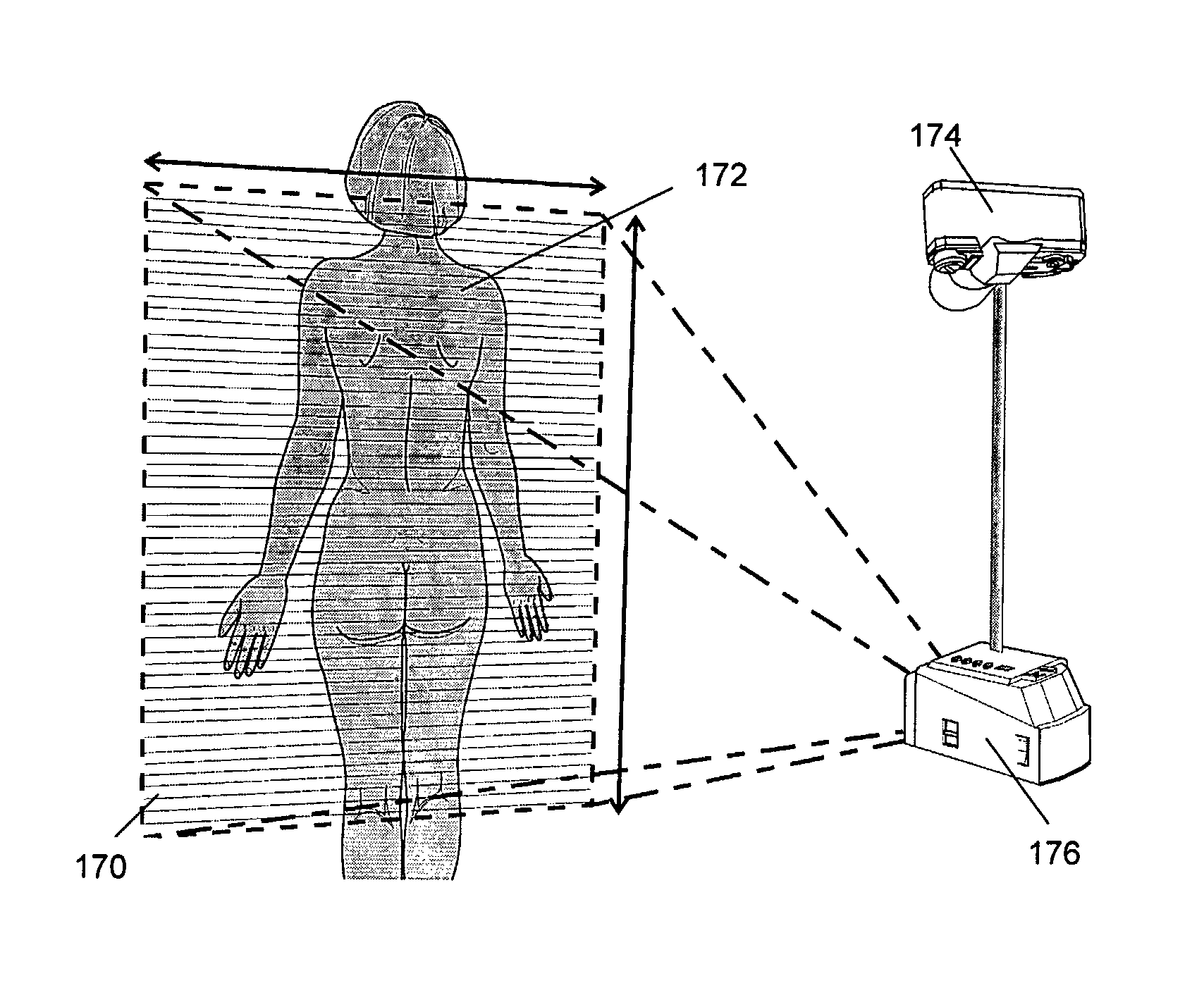

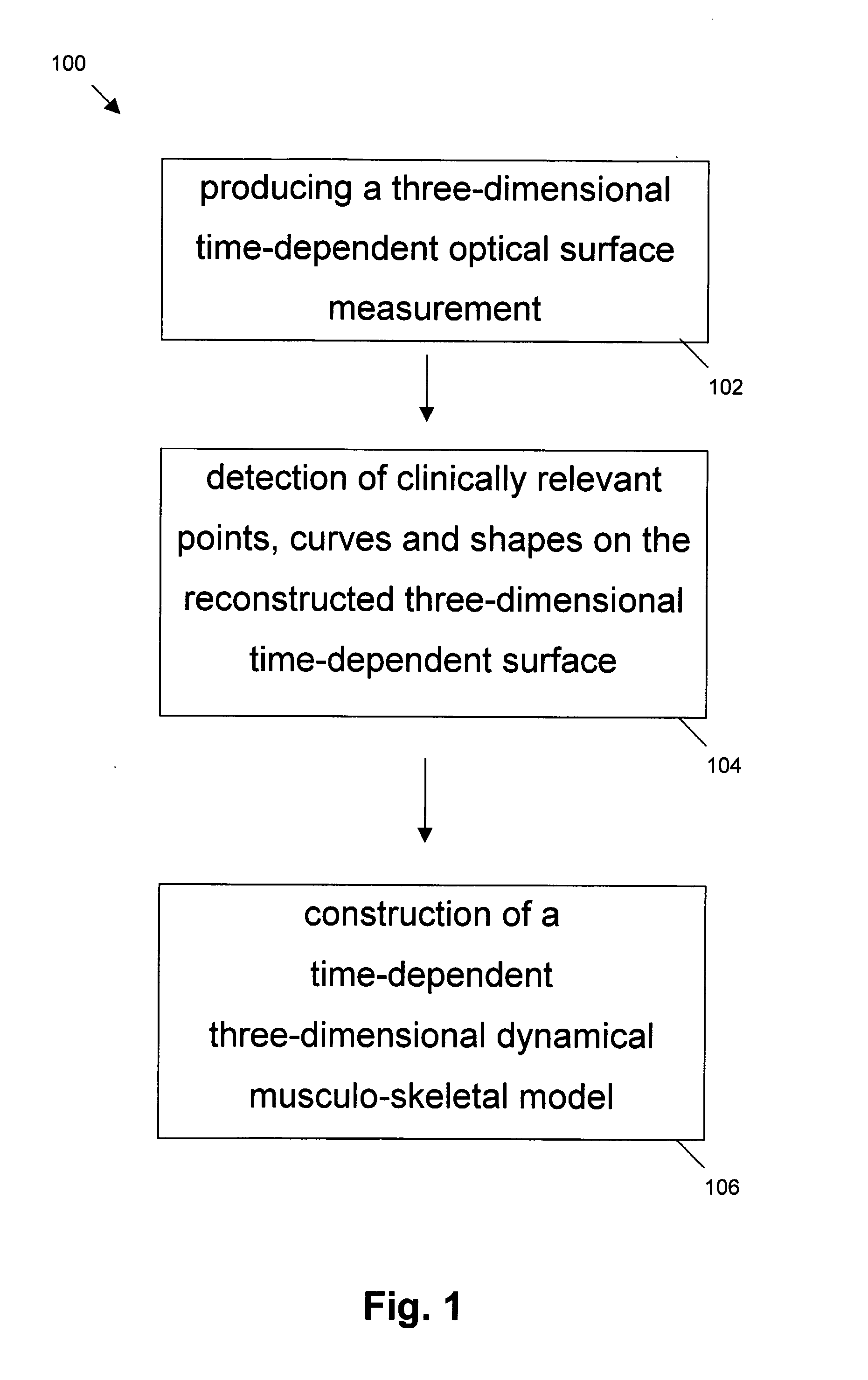

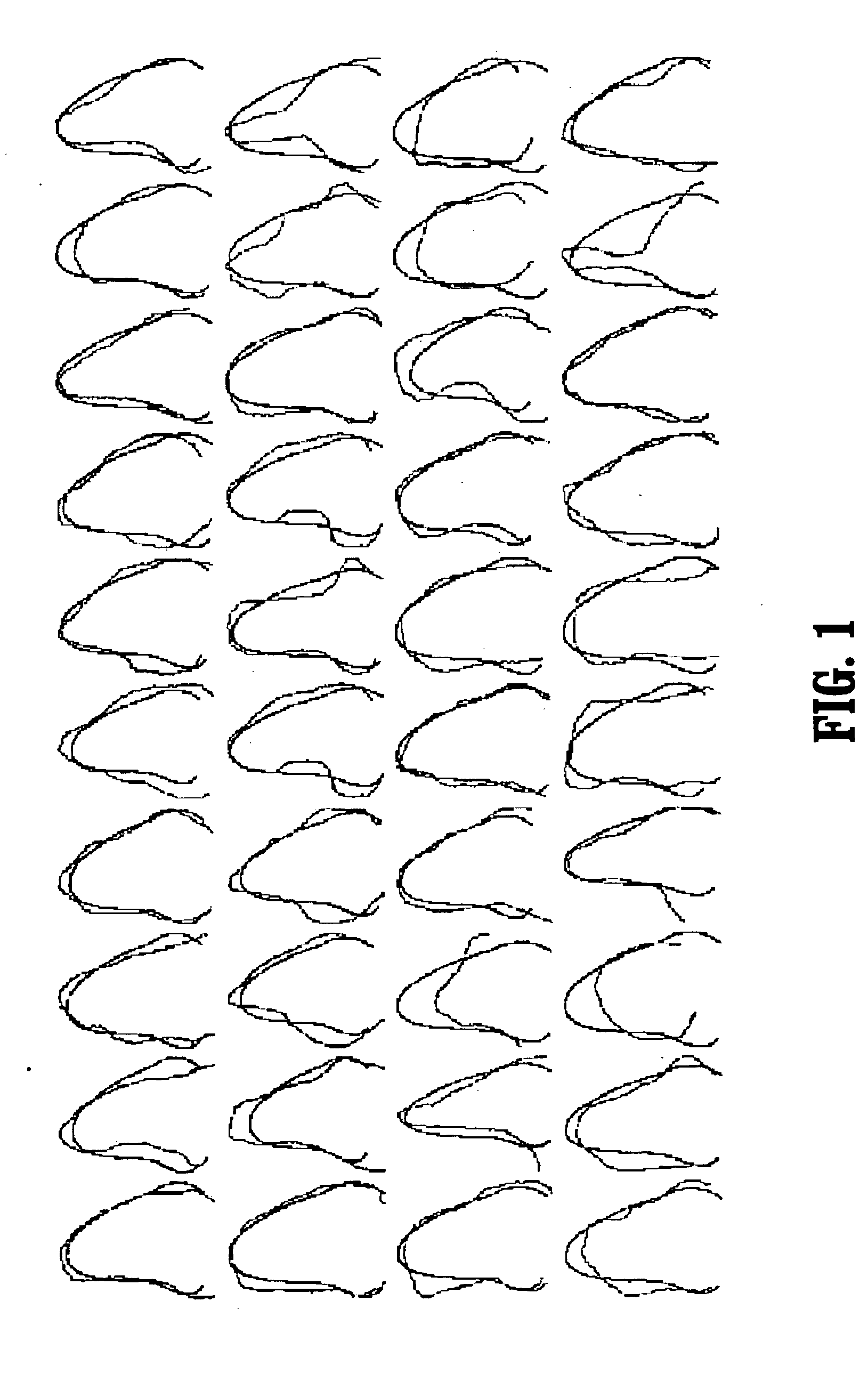

Time-dependent three-dimensional musculo-skeletal modeling based on dynamic surface measurements of bodies

Active | US20070171225A1 | No preparation time | Short recording | Image enhancement | Image analysis | Anatomical landmark | Curve shape

Active contour models (ACMs) and active shape models (ASMs) were developed for the detection of kinematic landmarks on sequential back surface measurements. The anatomical landmarks correspond to the spinous process, the dimples of the posterior superior iliac spines (PSIS), the margo mediales and the elbow. Back surface curvatures are used as a basis to guide the ACMs and ASMs towards interesting landmark features on the back surface. Geometrical bending and torsion costs, and the main modes of variation of the landmark points, are added to the models in order to avoid curve shapes that are unrealistic from a biomechanical point of view. Reconstruction of the underlying skeletal structures is performed using the surface normals as approximations for skeletal rotations (e.g. axial vertebrae rotations, pelvic torsion, etc.) and anatomical formulas to estimate skeletal dimensions.

Owner:DIERS ENG

X-ray chest radiography lung segmentation method and device

Active | CN104424629A | Accurate segmentation | Good initial lung shape | Image enhancement | Image analysis | Nuclear medicine | Radiography

The invention discloses an X-ray chest radiograph lung segmentation method for accurately segmenting the lungs in an X-ray chest radiograph. The method includes the following steps: S101: obtaining, through horizontal and vertical projection, two rectangular areas which respectively surround the left and right lung images in the chest radiograph; S102: initializing the lungs in the two rectangular areas to obtain the initial shapes of the lungs; S103: searching, according to a weighted grey local texture model, for the optimal matching points of the feature points in the lung images; S104: adjusting the pose parameters and the shape parameter b so that the current lung shape Xc approximates X + dX as closely as possible; and repeating S103 and S104 until the change between the obtained lung shape and the lung shape from the previous adjustment is smaller than a preset threshold. The method and device obtain better initial lung shapes, do not cause over-segmentation in the subsequent adjustment process, and can accurately segment the lung areas in the X-ray chest radiograph under the constraint of an active shape model.

Owner:SHENZHEN INST OF ADVANCED TECH

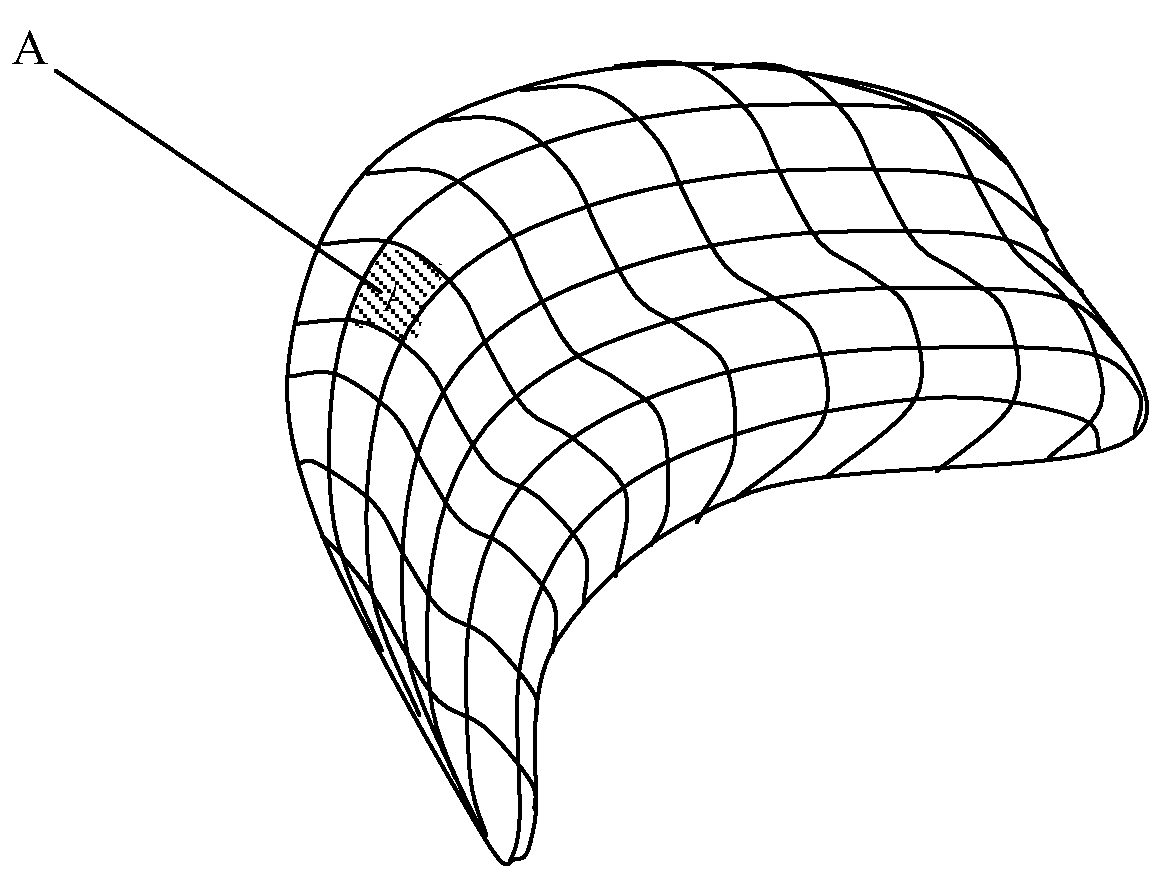

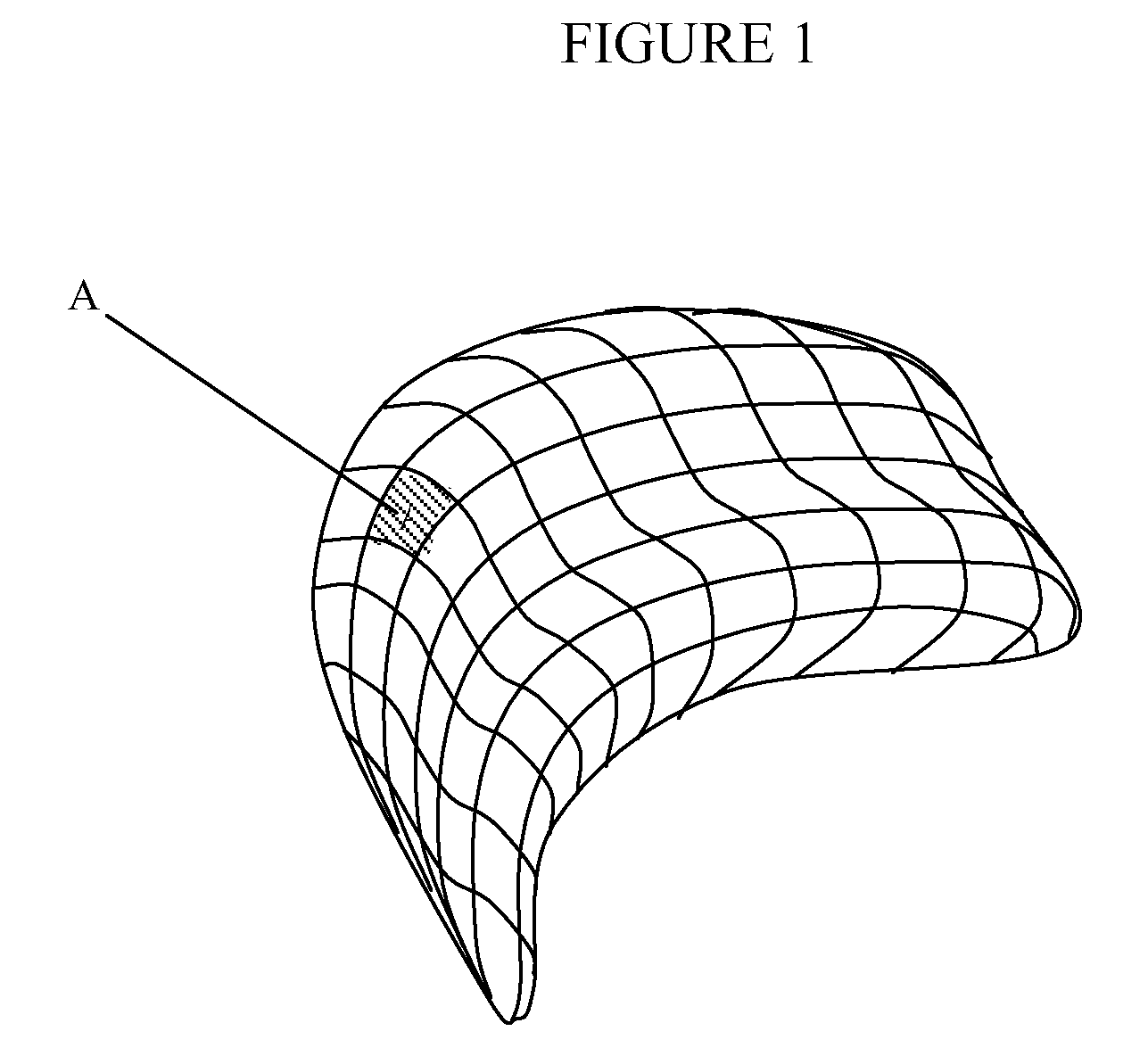

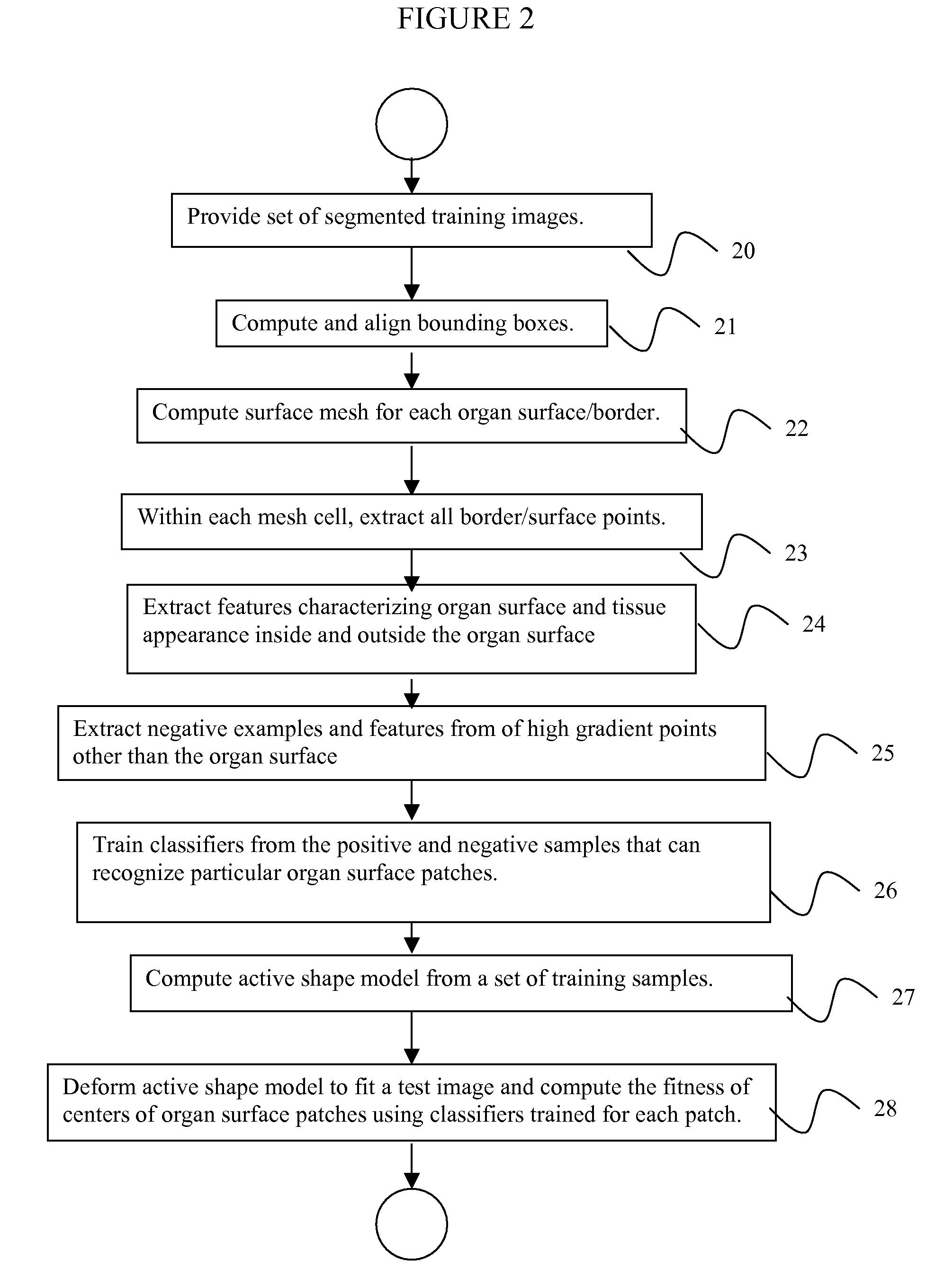

System and Method for Organ Segmentation Using Surface Patch Classification in 2D and 3D Images

A method for segmenting organs in digitized medical images includes providing a set of segmented training images of an organ, computing a surface mesh having a plurality of mesh cells that approximates a border of the organ, extracting positive examples of all mesh cells and negative examples in the neighborhood of each mesh cell which do not belong to the organ surface, training from the positive examples and negative examples a plurality of classifiers for outputting a probability of a point being a center of a particular mesh cell, computing an active shape model using a subset of center points in the mesh cells, generating a new shape by iteratively deforming the active shape model to fit a test image, and using the classifiers to calculate a probability of each center point of the new shape being a center of a mesh cell which the classifier was trained to recognize.

Owner:SIEMENS HEALTHCARE GMBH

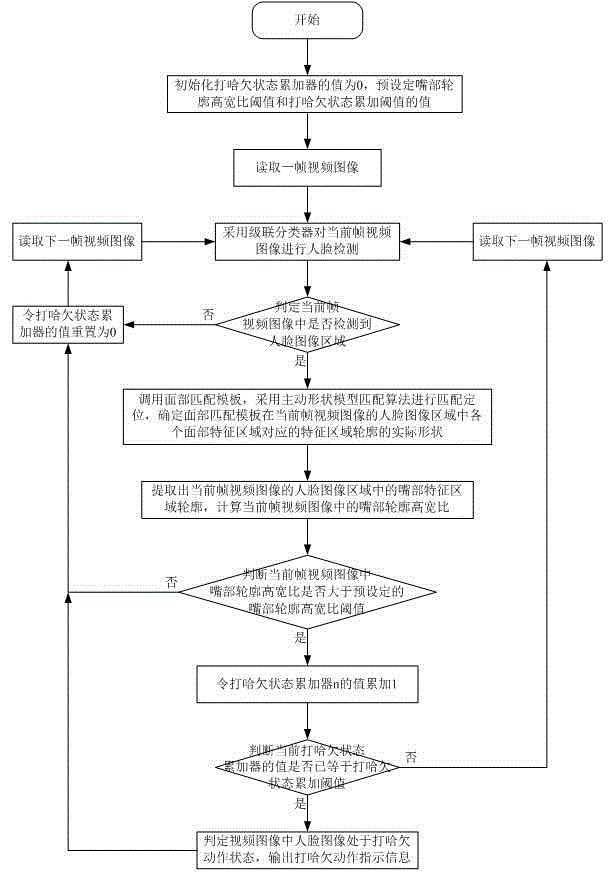

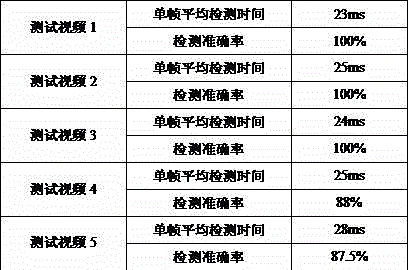

Yawning action detection method for detecting fatigue driving

Inactive | CN104616438A | Guaranteed accuracy | Guaranteed real-time | Character and pattern recognition | Alarms | Pattern recognition | Data operations

The invention provides a yawning action detection method for detecting fatigue driving. The method first determines the relative positions of the facial feature regions within the face image region of a video image by matching them against the corresponding feature region contours of a face matching template, which ensures the accuracy of mouth localization. The mouth region in the face image region is then quickly matched and located using an active shape model matching algorithm; the amount of computation is small and the processing speed is high, which ensures real-time mouth localization. Finally, the actual shape of the mouth feature region contour is determined from the matched mouth region in order to recognize whether the mouth is open or closed. The method thereby detects the yawning action with high accuracy and speed, offers an effective, real-time solution for yawning detection, and can provide a timely alert signal for fatigue driving detection.

Owner:CHONGQING ACADEMY OF SCI & TECH
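Once the mouth contour has been located, the open or closed state has to be decided from its shape. The abstract does not give the criterion, so the sketch below assumes a simple one: the height-to-width ratio of the contour's bounding box, with a yawn reported when the mouth stays open over several consecutive frames. The threshold, the frame count and the function names are all hypothetical.

```python
def mouth_open_ratio(contour):
    """Height-to-width ratio of the bounding box of the mouth contour points."""
    xs = [x for x, _ in contour]
    ys = [y for _, y in contour]
    width = max(xs) - min(xs)
    height = max(ys) - min(ys)
    return height / width if width > 0 else 0.0

def detect_yawn(contours, ratio_threshold=0.6, min_frames=3):
    """True if the mouth stays open for at least `min_frames` consecutive frames."""
    run = 0
    for contour in contours:
        run = run + 1 if mouth_open_ratio(contour) > ratio_threshold else 0
        if run >= min_frames:
            return True
    return False

closed = [(0, 0), (4, 0), (2, 0.5), (2, -0.5)]   # ratio 1 / 4
opened = [(0, 0), (4, 0), (2, 1.5), (2, -1.5)]   # ratio 3 / 4
yawning = detect_yawn([closed, opened, opened, opened])
talking = detect_yawn([opened, opened, closed, opened])
```

Requiring a run of open frames is one way to separate a sustained yawn from brief mouth openings during speech.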

Action teaching system and action teaching method

Owner:TOYOTA JIDOSHA KK

Human face fake photo automatic combining and modifying method based on portrayal

Active | CN103279936A | Reduce complexity | Good refactoring | Image enhancement | Geometric image transformation | Pattern recognition | Least squares

The invention discloses a method for automatically combining and modifying a human face fake photo based on a portrayal. The method comprises the following steps: first, automatically generating an initial estimate of the fake photo by applying a partial intrinsic transform method; then, extracting hair and facial contour information from the input human face portrayal and automatically enhancing the initial estimate; finally, having the user judge whether partial combining errors exist in the automatically combined fake photo and, if obvious errors exist, locally deforming the corresponding face of the re-input fake photo with a control-point-based deformation method and then combining automatically again, thereby correcting the partial combining errors. In the modifying process, an active shape model is used to obtain the feature points of the human face portrayal, and rigid deformation based on the control points is carried out using a least squares method. The method can combine a human face fake photo quickly and effectively from the input human face portrayal, and can assist manual and automatic identification of human face sketch portrayals in criminal investigation, anti-terrorism and other fields.

Owner:北京中昊诚科技有限公司

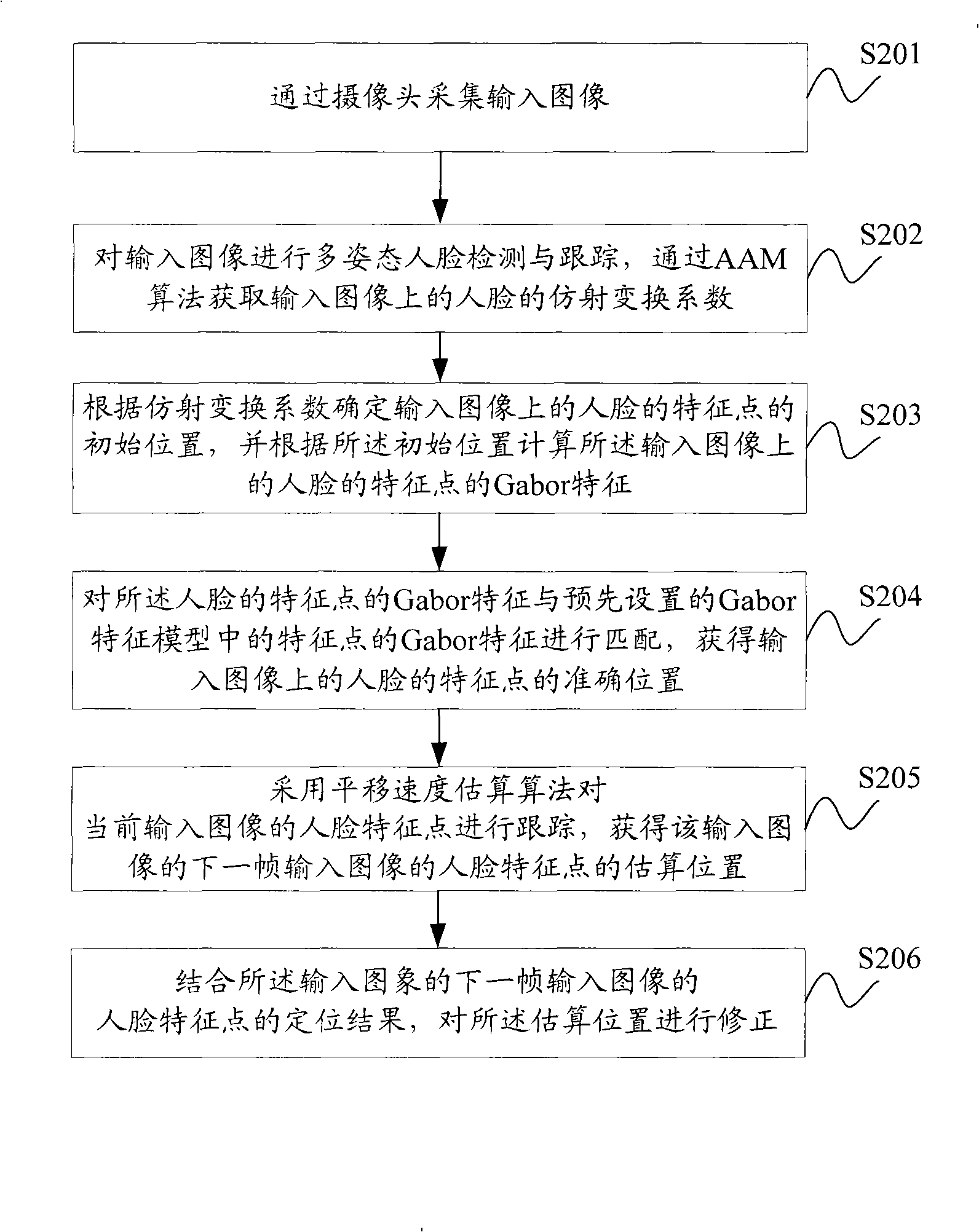

Method and device for confirming characteristic point position in image

Inactive | CN101271520A | High precision | Character and pattern recognition | Pattern recognition | Characteristic point

The invention discloses a method and a device for determining the position of a feature point in an image, addressing the low feature point positioning precision of the prior art. The method includes the following: an active shape model (ASM) of a target area in the image, consisting of a shape model and a Gabor feature model, is obtained in advance by training on image samples; an input image is detected, the initial position of the feature point in the target area of the input image is obtained, and the Gabor feature of the feature point in the target area of the input image is computed according to the initial position; then, in combination with the shape model of the target area, the Gabor feature of the feature point in the target area of the input image is matched against the Gabor feature of the feature point in the Gabor feature model to determine the position of the feature point in the target area.

Owner:VIMICRO CORP

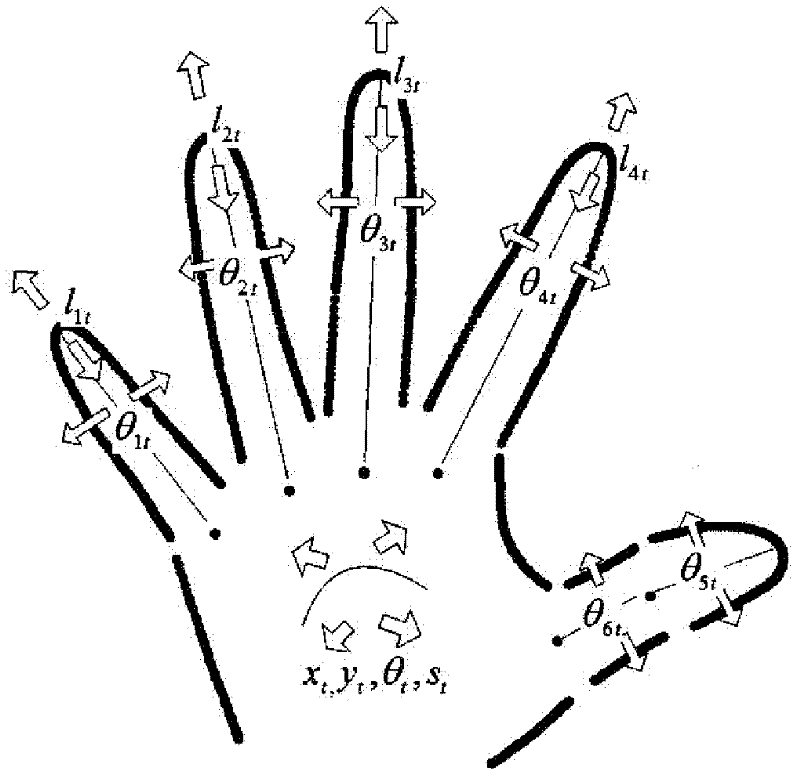

Mouse recognition method for gesture based on machine vision

Inactive | CN102402289A | Operational Freedom Restrictions | Freely switch operation rights | Input/output for user-computer interaction | Character and pattern recognition | Pattern recognition | Machine vision

The invention discloses a machine-vision-based gesture recognition method for mouse control, comprising the following steps: (1) establishing an active shape model for gestures; (2) training offline on gestures to obtain a gesture feature classifier; (3) collecting images; (4) extracting local binary pattern features from the images and searching for the target gesture in the images with the gesture feature classifier from step (2); (5) locating the fingertips; (6) mapping to mouse operations. The invention offers a natural and intuitive way of human-computer interaction, with the advantages that no auxiliary equipment needs to be carried, mouse operations are completed through natural hand and finger movements, lighting and background have little influence, and operation rights can be switched freely when multiple users operate the computer.

Owner:SOUTH CHINA UNIV OF TECH
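Step (4) of this method relies on binary pattern features, which read as the local binary pattern (LBP) descriptor commonly used in texture analysis. Assuming that reading, the sketch below computes the basic 8-neighbour LBP code for one pixel. The clockwise neighbour order starting at the top-left is one common convention and is an assumption here, as is the small test patch.

```python
def lbp_code(image, row, col):
    """8-bit local binary pattern for the pixel at (row, col).

    Neighbours are visited clockwise from the top-left; a neighbour that is
    at least as bright as the centre contributes a 1 bit.
    """
    center = image[row][col]
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for dr, dc in offsets:
        code = (code << 1) | (1 if image[row + dr][col + dc] >= center else 0)
    return code

# Hypothetical 3x3 grayscale patch: bright corners, dark edges.
patch = [
    [9, 1, 9],
    [1, 5, 1],
    [9, 1, 9],
]
code = lbp_code(patch, 1, 1)
```

A feature vector for a classifier is usually formed from a histogram of these codes over an image window; that step is omitted from the sketch.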

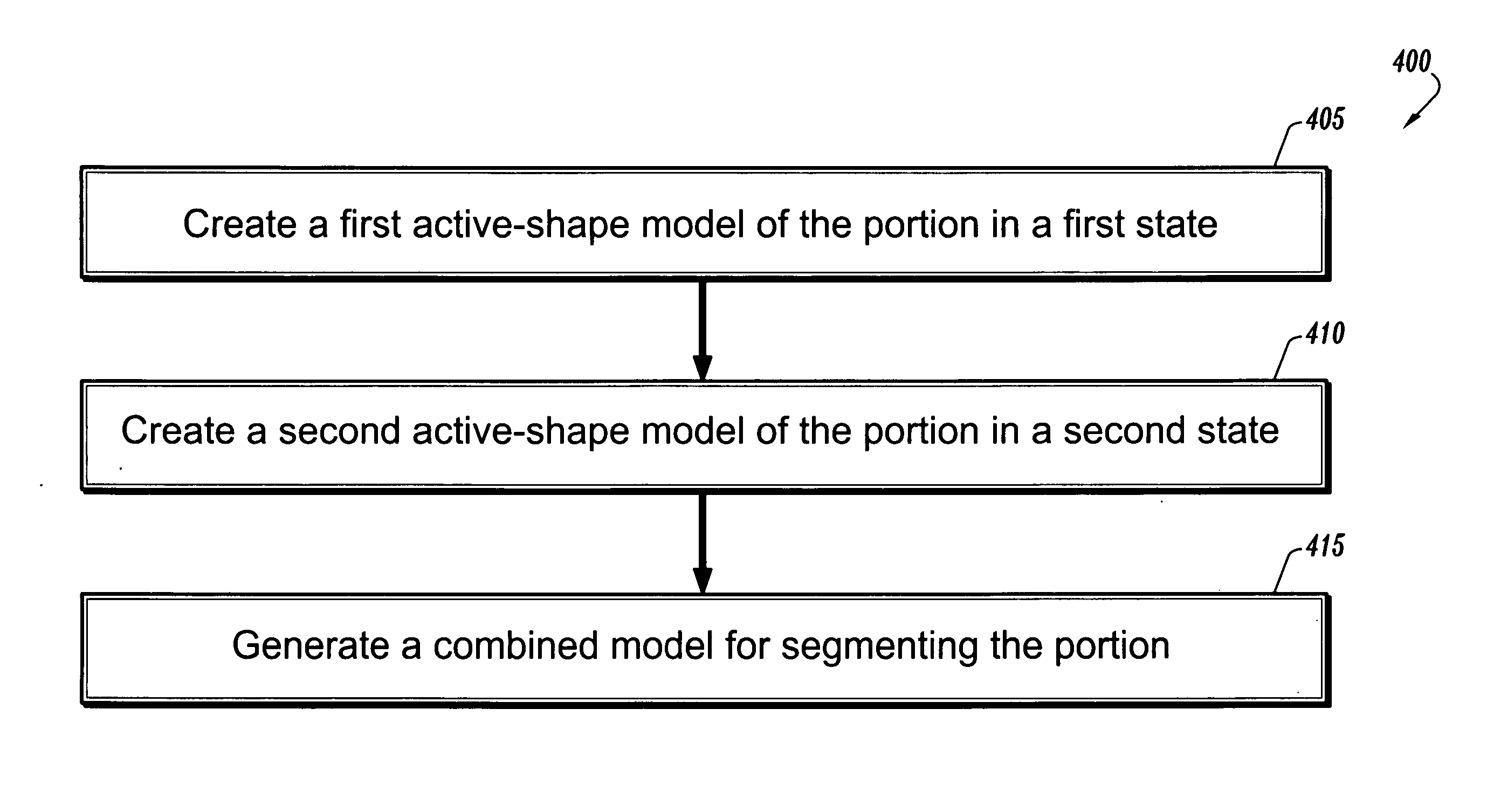

Segmentation of the left ventricle in apical echocardiographic views using a composite time-consistent active shape model

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

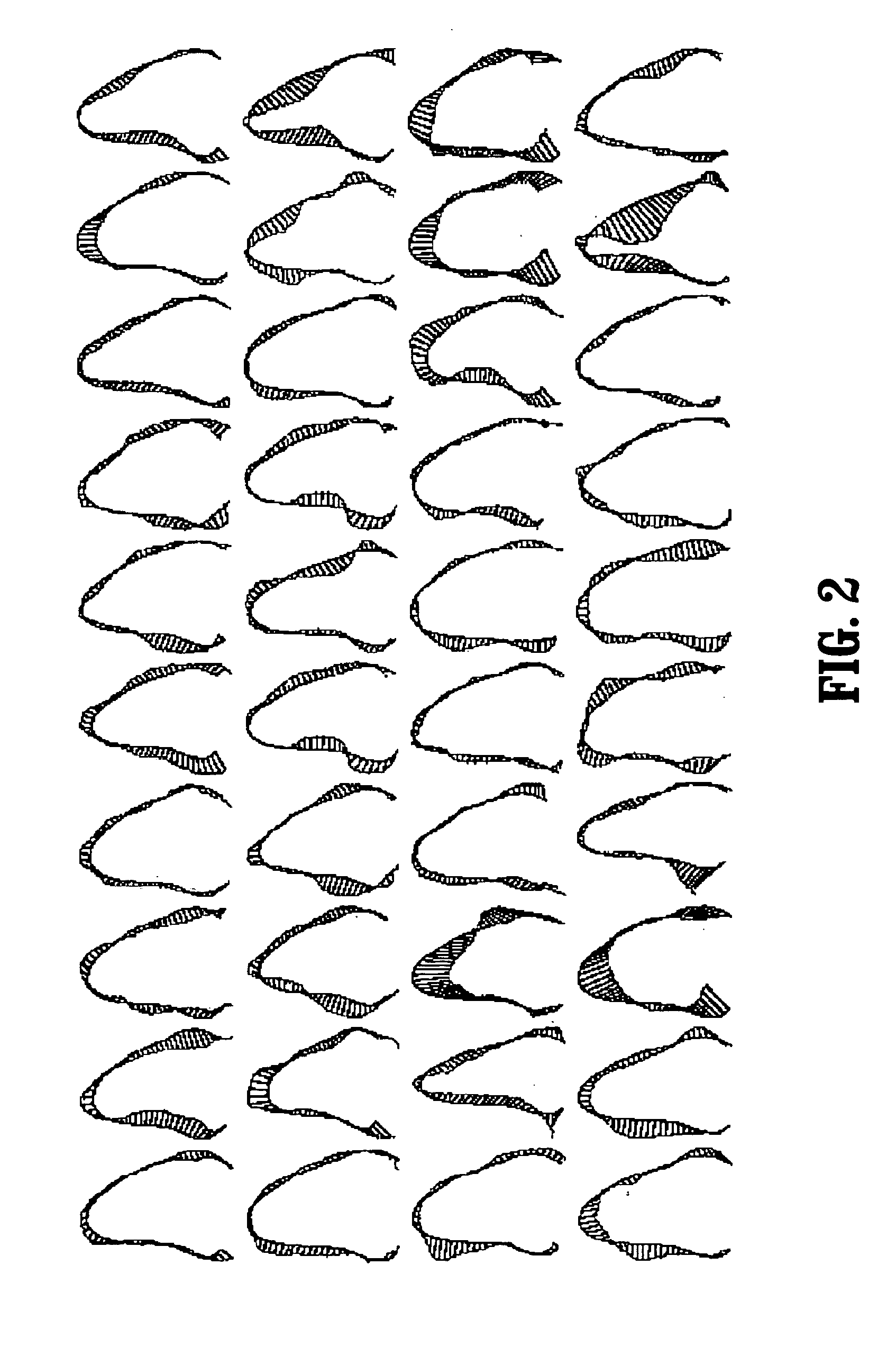

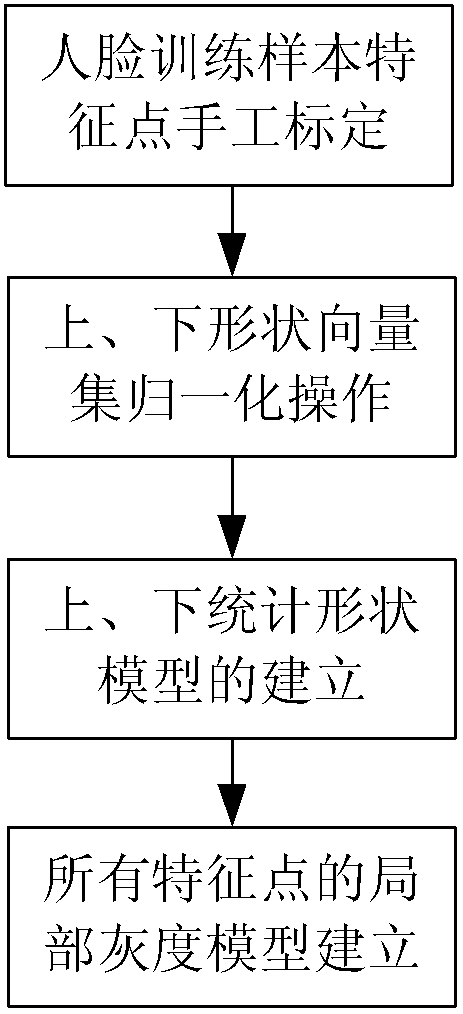

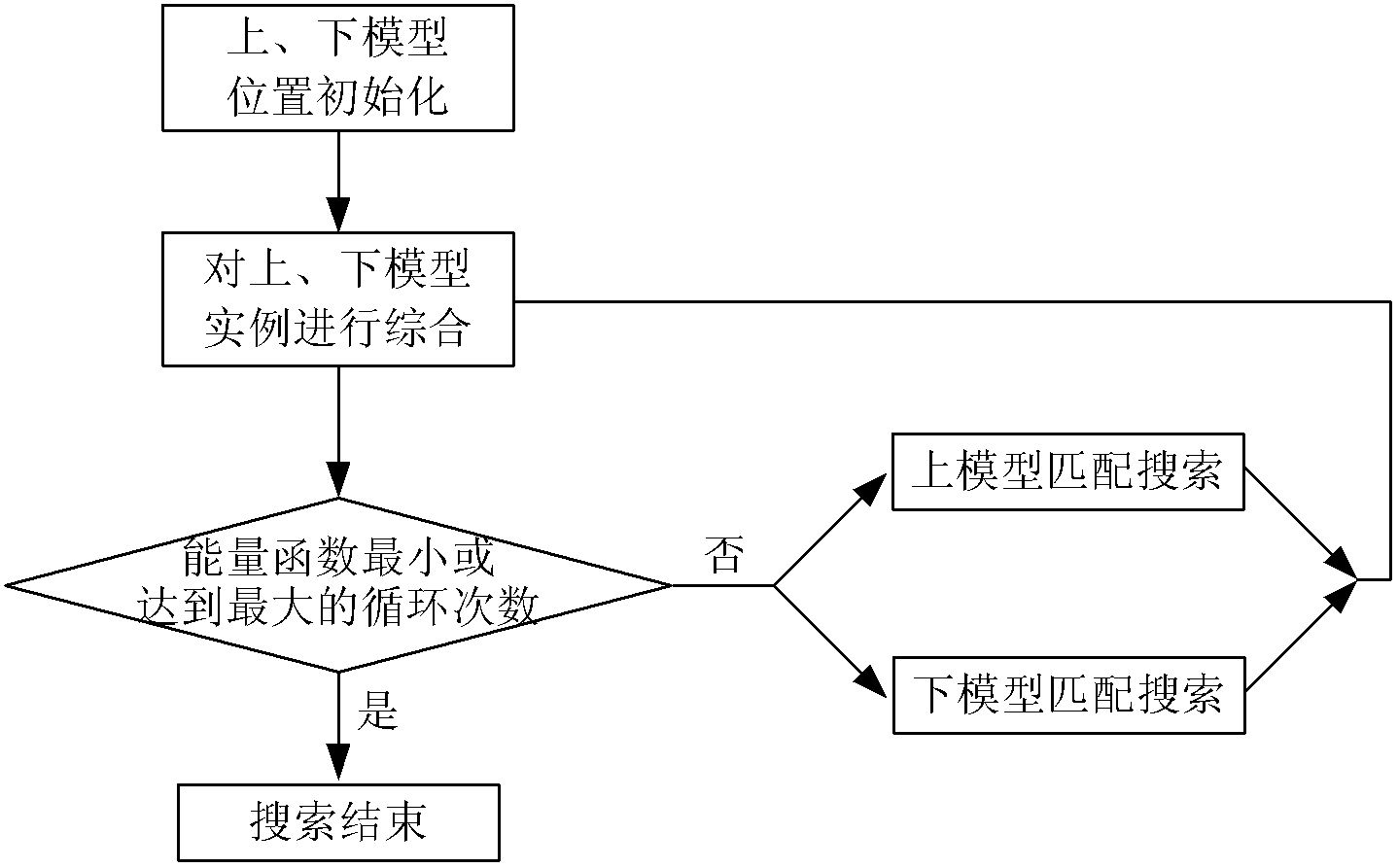

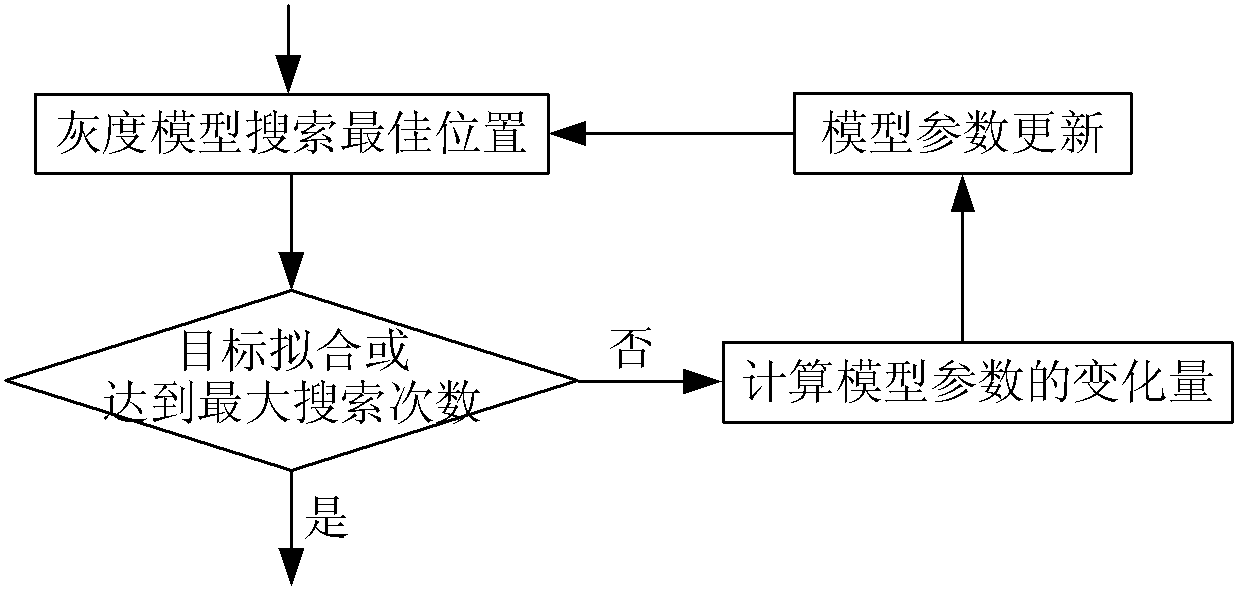

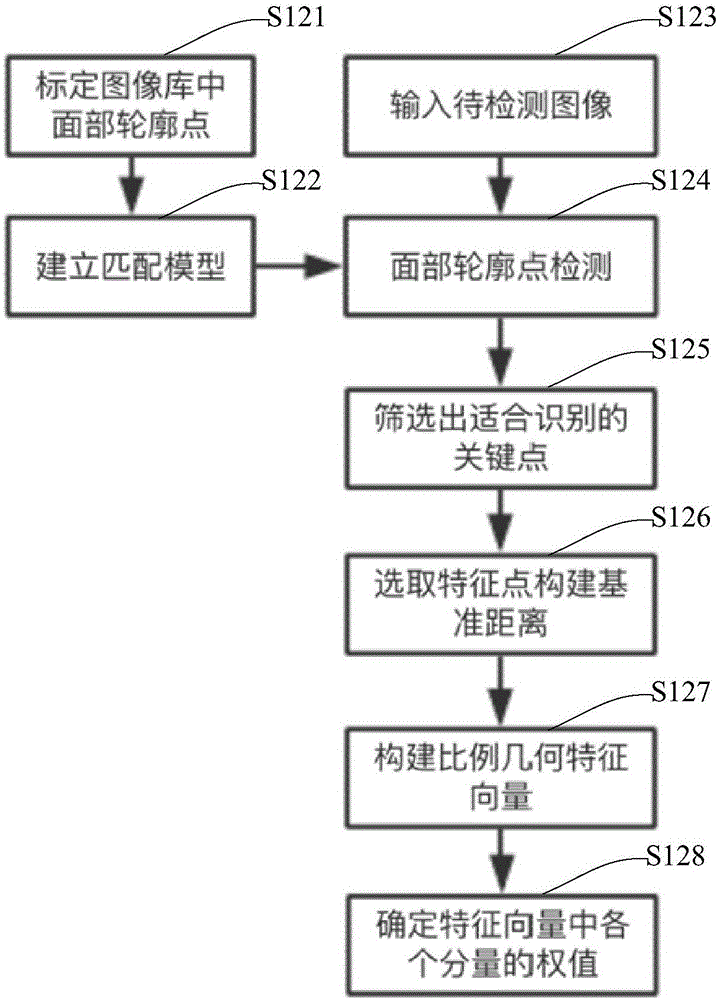

Method for positioning facial features based on improved ASM (Active Shape Model) algorithm

Inactive | CN102214299A | Improve accuracy | Accurate Feature Extraction Results | Character and pattern recognition | Energy functional | Facial expression

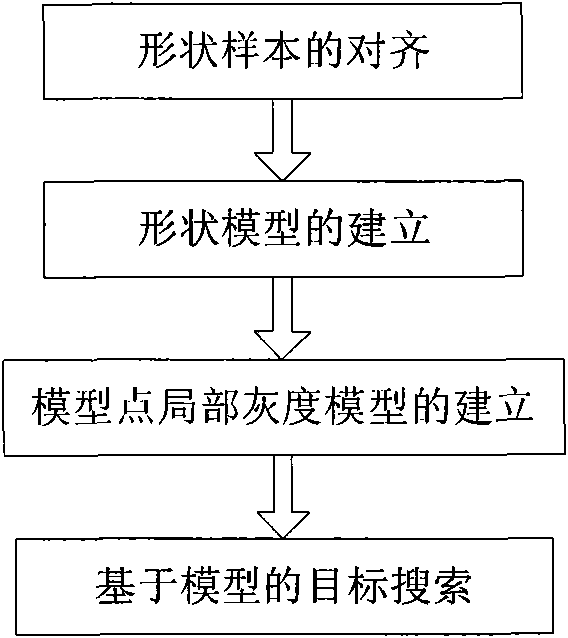

The invention discloses a method for positioning facial features based on an improved ASM (Active Shape Model) algorithm, belonging to the technical field of computer vision and image processing. The method comprises the following steps: firstly, manually calibrating feature points; secondly, establishing a statistical shape model and a local gray model for an upper model and a lower model; thirdly, separately searching and matching the feature points in the upper model and the lower model; and finally, generating an instance of a comprehensive model constrained by an energy function. To address the difficulty the traditional ASM method has in positioning features on faces showing expressions, the method divides the facial features into an upper shape region and a lower shape region according to how their changes correlate, builds the statistical shape model and the local gray model separately for each region, and introduces an energy function into the feature matching and searching process so that the comprehensive shape instance generated from the upper and lower models limits errors, finally obtaining an accurate feature positioning result. The method further improves the feature positioning accuracy of the ASM algorithm on faces showing expressions.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

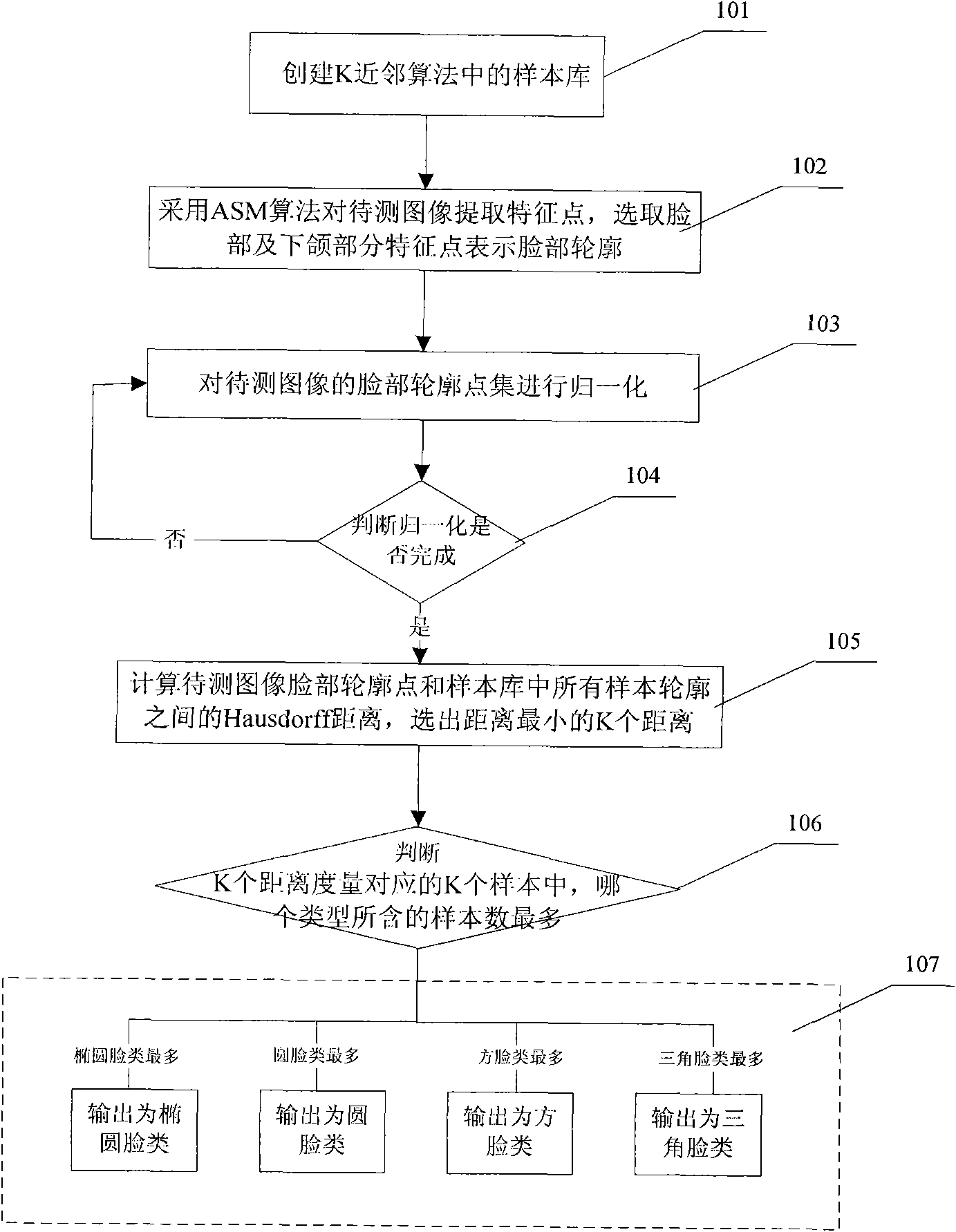

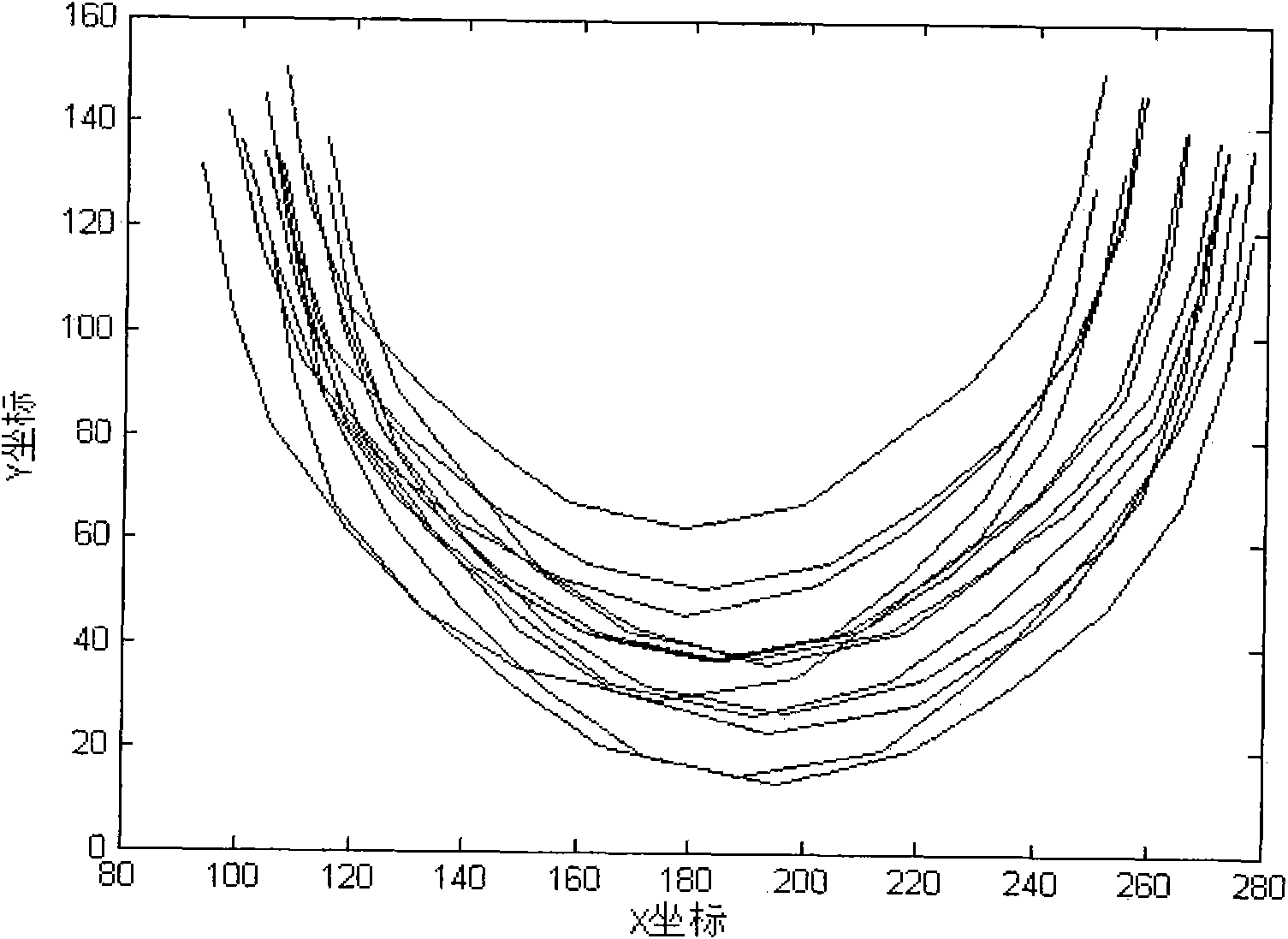

Classifying and processing method based on active shape model and K nearest neighbor algorithm for facial forms of human faces

Inactive | CN102339376A | Improve robustness | Simplify the build process | Character and pattern recognition | Transmission | Algorithm | Storage management

The invention relates to a method for classifying human face shapes based on an active shape model and a K nearest neighbor algorithm. The method comprises the following steps: (1) a sample database for the K nearest neighbor algorithm is established; (2) a user uploads an image to be tested to a server through a network multimedia terminal, and the server extracts facial feature points from the image using an ASM (Active Shape Model) algorithm and determines the facial contour by selecting the feature points of the face and lower jaw; (3) the server normalizes the point set of the image to be tested using the same normalization method applied to the samples, so that the point set of the image and the point sets of the samples lie in one coordinate system; (4) the server classifies the image to be tested based on the Hausdorff distance and the K nearest neighbor algorithm to obtain a classification result; and (5) the server automatically sends the classification result to the network multimedia terminal, which displays it. Compared with the prior art, the method has the advantages of a high recognition rate, high speed, and easy implementation.
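Steps (3) and (4) can be sketched as follows. This is only an illustration under stated assumptions: the normalization (centroid plus unit RMS radius), the labels "round" and "long", and the synthetic elliptical contours are all hypothetical, since the abstract does not specify them.

```python
import math
from collections import Counter

def normalize(points):
    """Center a point set on its centroid and scale it to unit RMS radius."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centered = [(x - cx, y - cy) for x, y in points]
    scale = math.sqrt(sum(x * x + y * y for x, y in centered) / n) or 1.0
    return [(x / scale, y / scale) for x, y in centered]

def directed_hausdorff(a, b):
    """Largest distance from a point in a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)

def hausdorff(a, b):
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))

def classify(contour, samples, k=3):
    """Majority vote among the k samples nearest in Hausdorff distance."""
    query = normalize(contour)
    ranked = sorted(samples, key=lambda s: hausdorff(query, normalize(s[0])))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

def ellipse(rx, ry, n=12):
    """Synthetic stand-in for a facial contour extracted by ASM."""
    return [(rx * math.cos(2 * math.pi * i / n), ry * math.sin(2 * math.pi * i / n))
            for i in range(n)]

samples = [
    (ellipse(1.0, 1.0), "round"),
    (ellipse(1.0, 1.1), "round"),
    (ellipse(2.0, 2.1), "round"),
    (ellipse(1.0, 1.6), "long"),
    (ellipse(1.0, 1.8), "long"),
    (ellipse(2.0, 3.4), "long"),
]
result = classify(ellipse(3.0, 3.15), samples, k=3)
```

Normalizing both the query and the samples the same way is what makes the Hausdorff distance compare shape rather than image size or position.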

Owner:SHANGHAI YEEGOL INFORMATION TECH

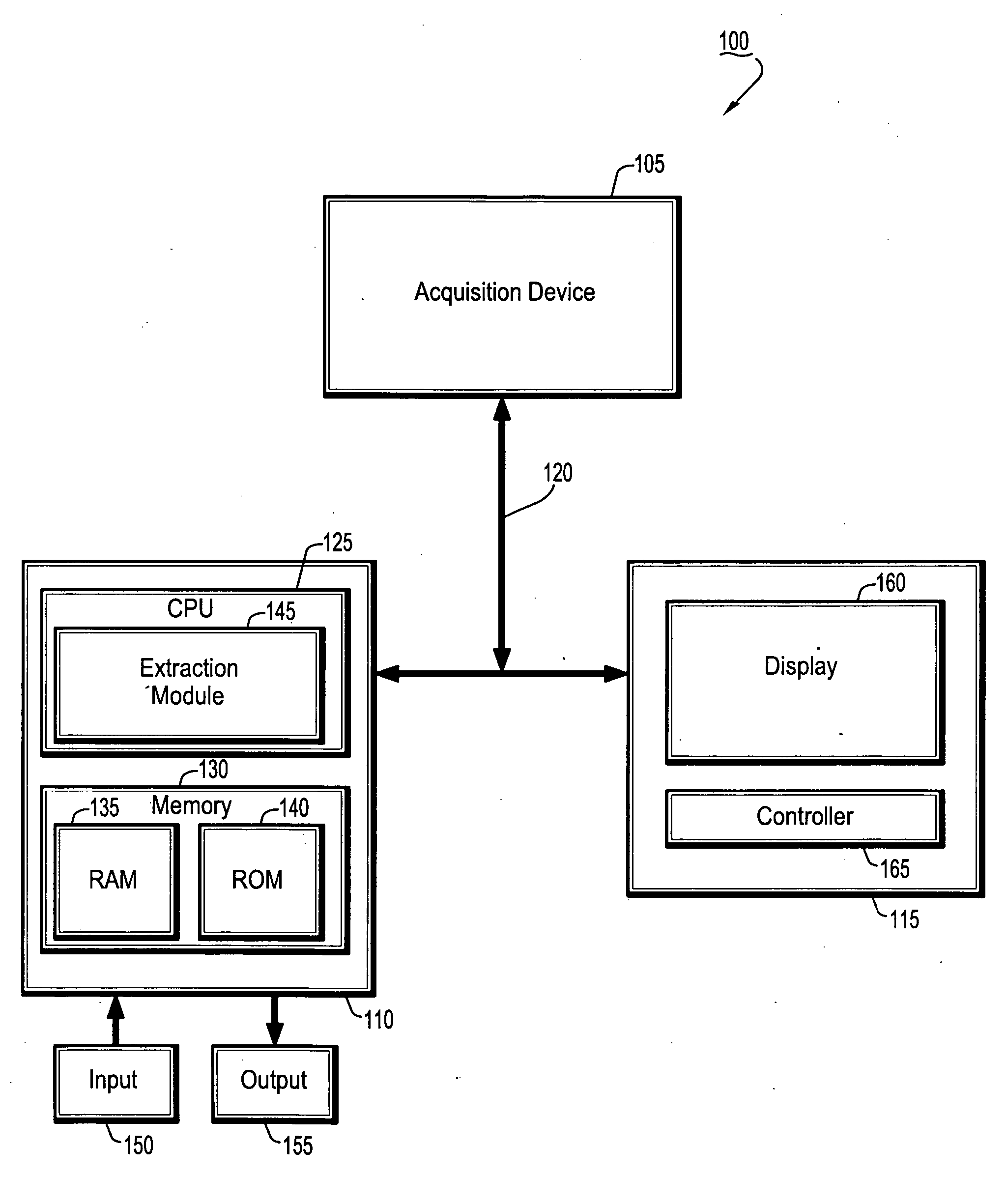

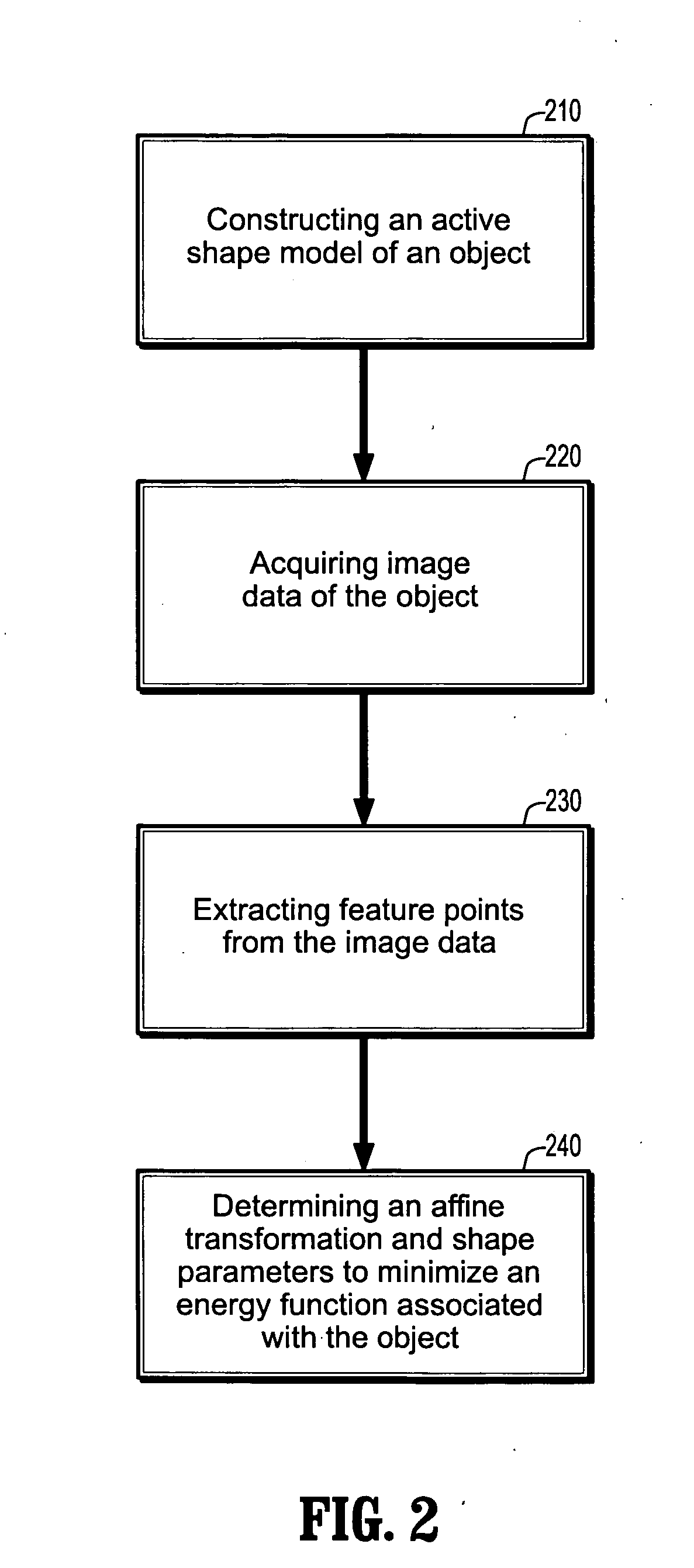

System and method for extracting an object of interest from an image using a robust active shape model

Active | US20060056698A1 | Distance minimization | Minimize energy function | Image enhancement | Image analysis | Pattern recognition | Energy functional

A system and method for extracting an object of interest from an image using a robust active shape model are provided. A method for extracting an object of interest from an image comprises: generating an active shape model of the object; extracting feature points from the image; and determining an affine transformation and shape parameters of the active shape model to minimize an energy function of a distance between a transformed and deformed model of the object and the feature points.
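To make the fitting objective concrete, here is a minimal sketch that evaluates an energy for a given affine transformation and shape parameter, then picks the best shape parameter from a coarse grid. The energy used (sum of squared distances from each model point to its nearest feature point), the single-mode square model, and the grid search are assumptions for illustration. The abstract does not state the patent's actual energy function or optimizer.

```python
def instance(mean, mode, b):
    """Deform the mean shape along one mode: x = mean + b * mode."""
    return [(mx + b * px, my + b * py) for (mx, my), (px, py) in zip(mean, mode)]

def transform(points, a, t):
    """Apply the affine map p -> A p + t, with A = ((a11, a12), (a21, a22))."""
    (a11, a12), (a21, a22) = a
    tx, ty = t
    return [(a11 * x + a12 * y + tx, a21 * x + a22 * y + ty) for x, y in points]

def energy(mean, mode, b, a, t, features):
    """Sum of squared distances from each model point to its nearest feature."""
    model = transform(instance(mean, mode, b), a, t)
    return sum(min((x - fx) ** 2 + (y - fy) ** 2 for fx, fy in features)
               for x, y in model)

# Hypothetical model: a unit square whose top edge can move up or down.
mean = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
mode = [(0.0, 0.0), (0.0, 0.0), (0.0, 1.0), (0.0, 1.0)]
A = ((2.0, 0.0), (0.0, 2.0))
t = (3.0, 1.0)

# Feature points: the object at b = 0.5 plus one clutter point.
features = transform(instance(mean, mode, 0.5), A, t) + [(10.0, 10.0)]

candidates = [0.0, 0.25, 0.5, 0.75, 1.0]
best_b = min(candidates, key=lambda b: energy(mean, mode, b, A, t, features))
```

Because each model point is matched only to its nearest feature, the clutter point at (10, 10) does not contribute to the energy in this example.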

Owner:SIEMENS HEALTHCARE GMBH

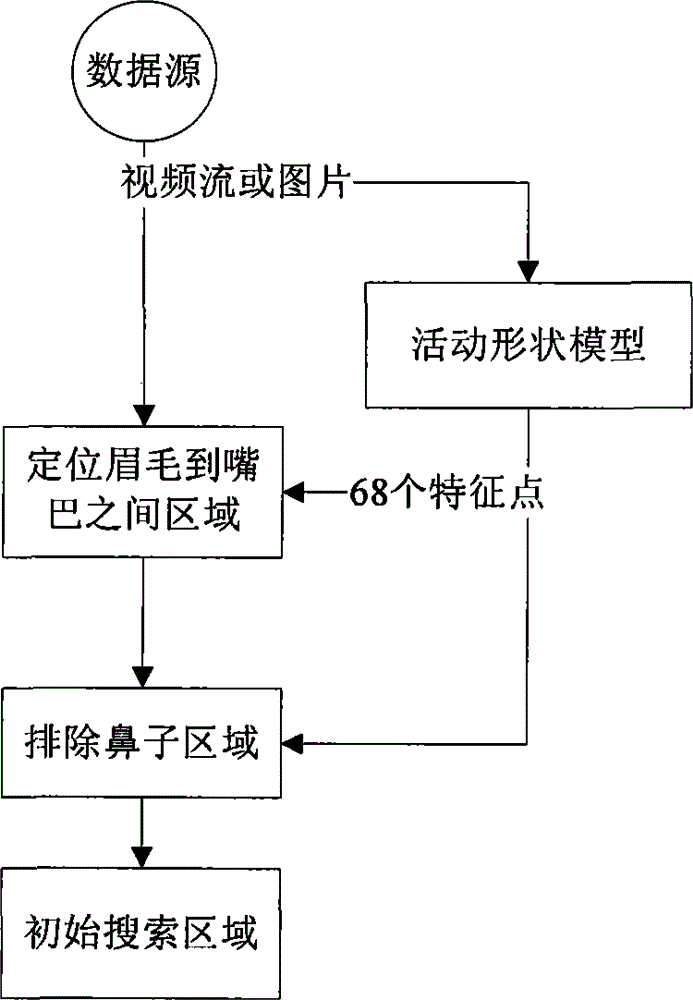

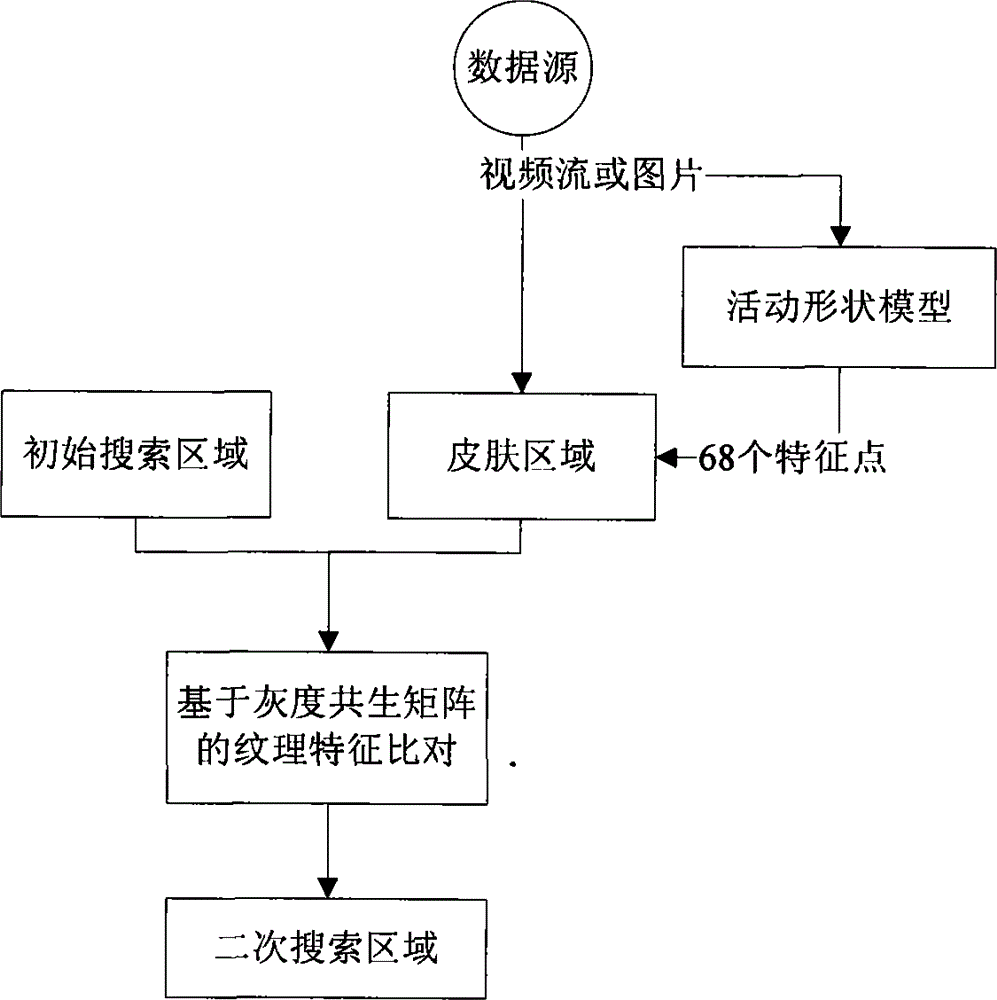

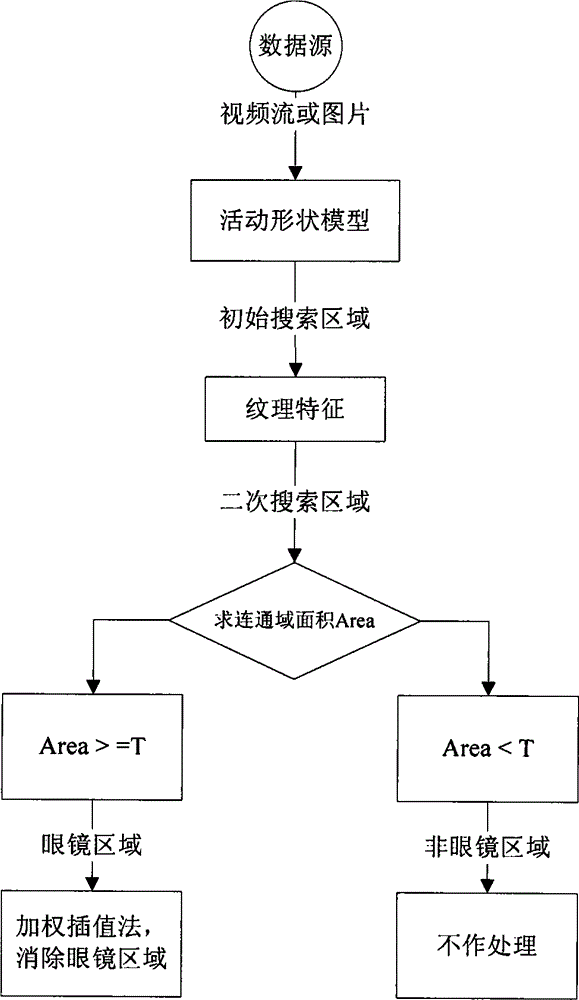

Face image eyeglasses removal method based on active shape model and weighted interpolation method

Inactive | CN104156700A | Efficient removal | Reduce training time | Character and pattern recognition | Face detection | Active shape model

The invention provides a method for removing eyeglasses from face images based on an active shape model and weighted interpolation, and the application of the method in face recognition. Eyeglasses removal is applied to face data found by face detection, and the processed data is then used for face recognition; accurate removal effectively improves recognition accuracy. The algorithm uses the active shape model to locate the eyeglasses region and removes that region by weighted interpolation, which ensures the removal effect. The method effectively solves the problem that the recognition rate drops sharply when dark, thick-framed glasses occlude the face during recognition, and thereby improves face recognition performance.
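The removal step can be illustrated with a small sketch that fills a masked region from surrounding pixels. Inverse-distance weighting, the window radius, and the function name `fill_region` are assumptions made here, since the abstract only says "weighted interpolation". In the method described, the mask would come from the shape model's eyeglasses location rather than being written by hand.

```python
def fill_region(image, mask, radius=2, power=2.0):
    """Replace masked pixels with an inverse-distance-weighted mean of
    nearby unmasked pixels. image and mask are lists of rows."""
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            num = den = 0.0
            for ny in range(max(0, y - radius), min(h, y + radius + 1)):
                for nx in range(max(0, x - radius), min(w, x + radius + 1)):
                    if mask[ny][nx]:
                        continue  # never sample from inside the glasses region
                    d2 = (ny - y) ** 2 + (nx - x) ** 2
                    weight = 1.0 / d2 ** (power / 2)
                    num += weight * image[ny][nx]
                    den += weight
            if den > 0:
                out[y][x] = num / den
    return out

# Hypothetical 3x5 grayscale patch: skin at 200 with one dark frame pixel.
image = [[200.0] * 5 for _ in range(3)]
image[1][2] = 20.0
mask = [[False] * 5 for _ in range(3)]
mask[1][2] = True

restored = fill_region(image, mask)
```

Masked pixels with no unmasked neighbor inside the window are left unchanged, so a wide frame would need a larger `radius` or repeated passes.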

Owner:PCI TECH GRP CO LTD

Method and system for identifying human facial expression

Inactive | CN102880862A | Reduce data processing | Recognition time is short | Character and pattern recognition | Internal memory | Gabor wavelet transform

The invention provides a method and a system for recognizing human facial expressions. The method uses the ASM (active shape model) algorithm to extract a shape model and feature points from the face image to be recognized, then uses the Gabor wavelet transform to extract amplitude texture features at the feature points. The amplitude texture features are projected to obtain an eigenface for the image, and the expression is recognized by comparing the similarity between this eigenface and sample eigenfaces. Because the ASM algorithm supplies the feature points, the Gabor wavelet transform only needs to compare texture features at those points. Compared with existing face recognition techniques that use the Gabor wavelet algorithm alone, the method and system process less data, take less time to recognize an expression, and use less memory.
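The saving described here comes from evaluating the Gabor response only at the feature points rather than over the whole image. The sketch below shows that idea with a hand-rolled complex Gabor filter and a cosine similarity. The filter parameters, the two orientations, and the striped test image are illustrative assumptions, and the eigenface projection step is left out.

```python
import cmath
import math

def gabor_amplitude(image, cx, cy, theta, wavelength=4.0, sigma=2.0, half=3):
    """Magnitude of a complex Gabor response centered on pixel (cx, cy)."""
    acc = 0j
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            y, x = cy + dy, cx + dx
            if 0 <= y < len(image) and 0 <= x < len(image[0]):
                # Coordinate along the filter's orientation.
                xr = dx * math.cos(theta) + dy * math.sin(theta)
                envelope = math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))
                carrier = cmath.exp(2j * math.pi * xr / wavelength)
                acc += image[y][x] * envelope * carrier
    return abs(acc)

def point_features(image, points, orientations=(0.0, math.pi / 2)):
    """Amplitude features sampled only at the given (x, y) feature points."""
    return [gabor_amplitude(image, x, y, t) for x, y in points for t in orientations]

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

# Hypothetical 9x9 image with vertical stripes of period 4 pixels.
stripes = [[1.0 + math.cos(math.pi * x / 2) for x in range(9)] for _ in range(9)]
features = point_features(stripes, [(4, 4)])
```

In this example the horizontal-frequency filter (`theta = 0`) responds much more strongly than the vertical one, which is the kind of orientation-dependent texture cue the amplitude features capture.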

Owner:TCL CORPORATION

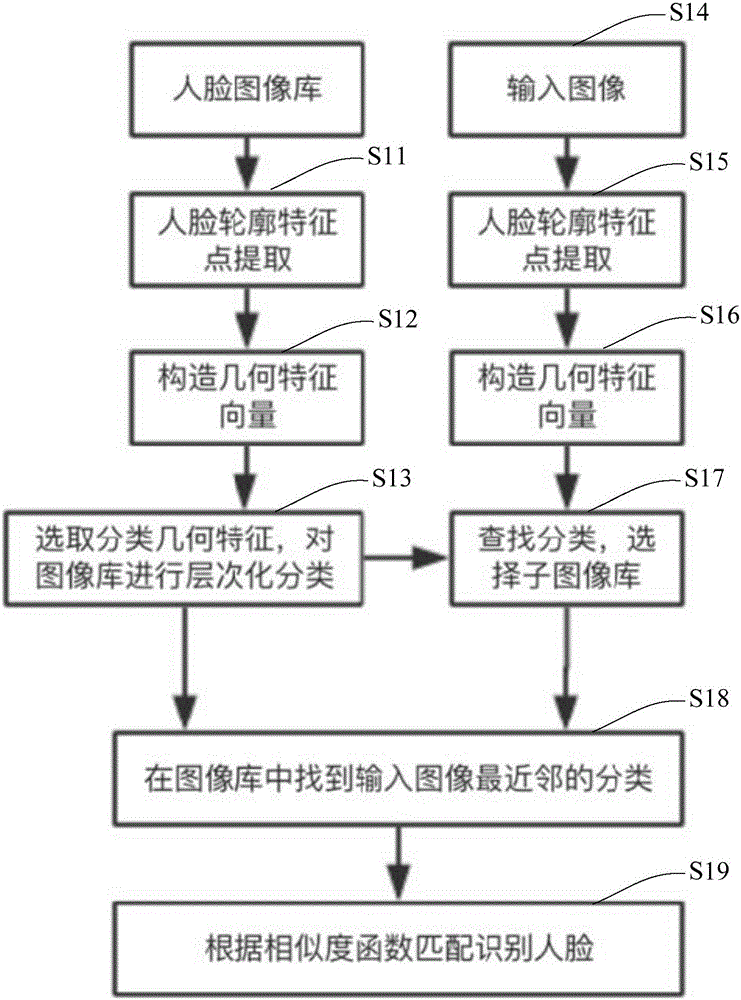

Method for human face recognition based on face geometrical features

Active | CN105868716A | Improve efficiency | Guaranteed real-time | Character and pattern recognition | Face detection | Very large database

The invention provides a method for human face recognition based on facial geometric features. The method includes face feature point detection, geometric feature construction, face database classification, and face recognition. Building on face detection and face recognition technology, the method improves the feature point detection method and the geometric features used for recognition. It ensures the real-time performance and accuracy of the face recognition system by combining feature point detection, which uses an active shape model together with the elastic bunch graph matching algorithm, with simple and accurate facial geometric features. The method decomposes the data in a large database into a plurality of sub-databases, each with a label determined by selected classification geometric features. For an input image to be tested, the amount of data to match is reduced simply by comparing the image's classification geometric features with each sub-database label, which greatly increases the efficiency of face recognition.
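The sub-database idea can be sketched as a simple partition followed by a search restricted to one partition. The classification feature used here (a width-to-height ratio bucket), the feature vectors, and the names are all hypothetical, chosen only to show how labeling shrinks the set of candidates to match.

```python
import math
from collections import defaultdict

def label_of(features, threshold=1.0):
    """Hypothetical classification feature: bucket the first geometric
    feature (say, a face width-to-height ratio) into two labels."""
    return "wide" if features[0] >= threshold else "narrow"

def build_sub_databases(gallery):
    """Split the gallery into sub-databases keyed by classification label."""
    subs = defaultdict(list)
    for name, features in gallery:
        subs[label_of(features)].append((name, features))
    return subs

def recognize(probe, subs):
    """Match the probe only against the sub-database sharing its label.
    Returns the best name and how many entries were compared."""
    candidates = subs.get(label_of(probe), [])
    if not candidates:
        return None, 0
    best = min(candidates, key=lambda item: math.dist(probe, item[1]))
    return best[0], len(candidates)

gallery = [
    ("alice", (1.10, 0.42, 0.31)),
    ("bob", (0.85, 0.40, 0.35)),
    ("carol", (1.20, 0.38, 0.30)),
    ("dave", (0.90, 0.45, 0.33)),
]
subs = build_sub_databases(gallery)
match, compared = recognize((1.18, 0.39, 0.30), subs)
```

Here only two of the four gallery entries are compared. A hard threshold can misroute probes that sit near the boundary, so a real system would need some tolerance around it.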

Owner:SHANGHAI ADVANCED RES INST CHINESE ACADEMY OF SCI

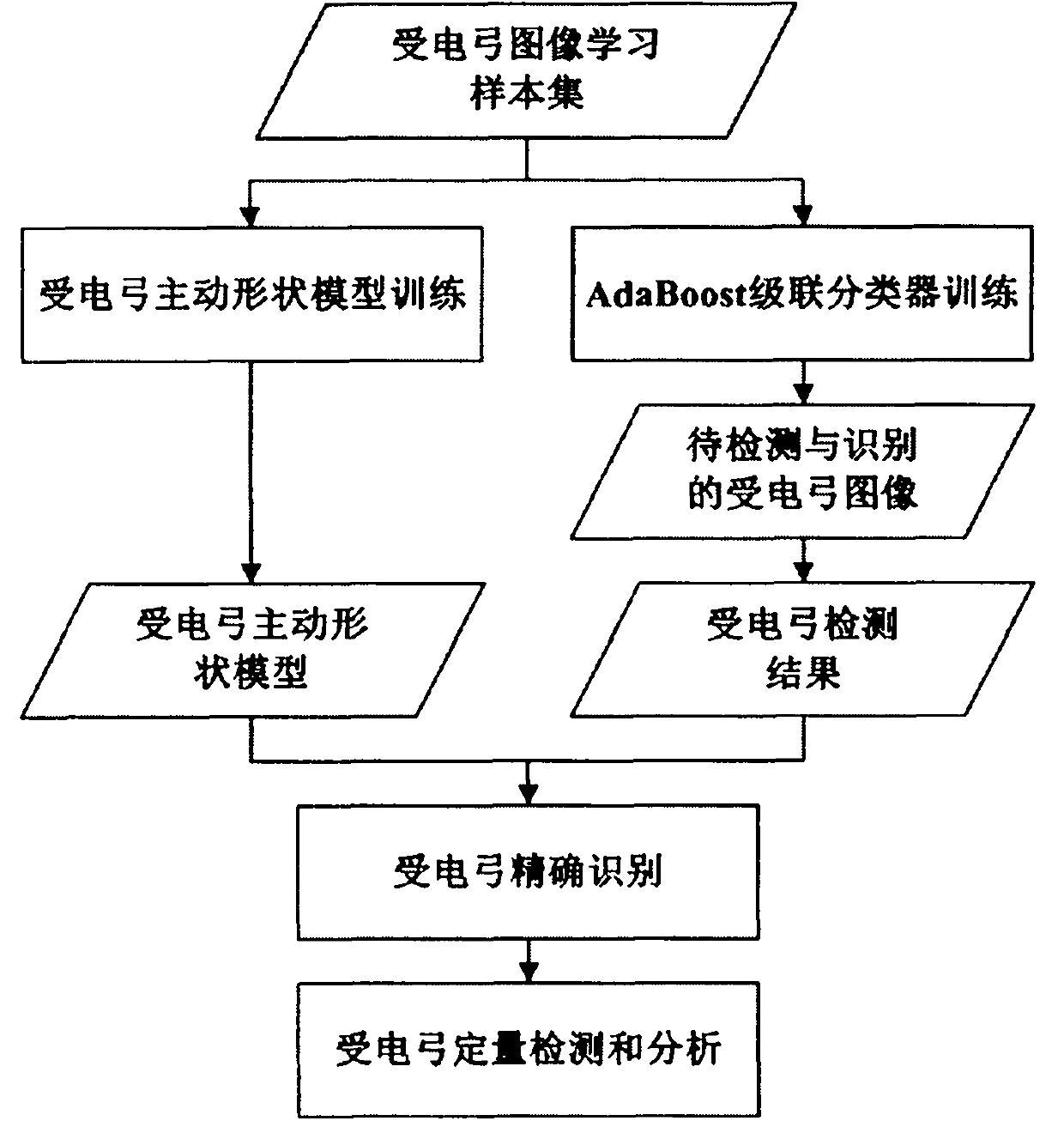

Pantograph identification method based on AdaBoost and active shape model

Active | CN103745238A | Guaranteed detection accuracy | Guaranteed accuracy | Character and pattern recognition | Electricity | Pattern recognition

The invention belongs to the technical field of digital image processing and pattern recognition. It mainly relates to an online automatic pantograph identification method for electric traction locomotives, and specifically to one based on AdaBoost and an active shape model. The basic process comprises the following steps: acquiring a plurality of pantograph images through an online pantograph imaging system to form a set of training samples; training on the samples to generate the pantograph active shape model and an AdaBoost cascade classifier; using the AdaBoost cascade classifier to detect the pantograph in newly acquired images; combining the detection result with the learned pantograph active shape model to accurately match and identify the pantograph shape; and finally, performing quantitative detection and analysis on the basis of the accurate matching result. The method can effectively perform online automatic quantitative measurement of the thickness of the pantograph carbon slide plate, supports rapid vehicle maintenance, and reduces the cost of pantograph inspection.

Owner:INST OF REMOTE SENSING & DIGITAL EARTH CHINESE ACADEMY OF SCI

