30 results about "Global vision" patented technology

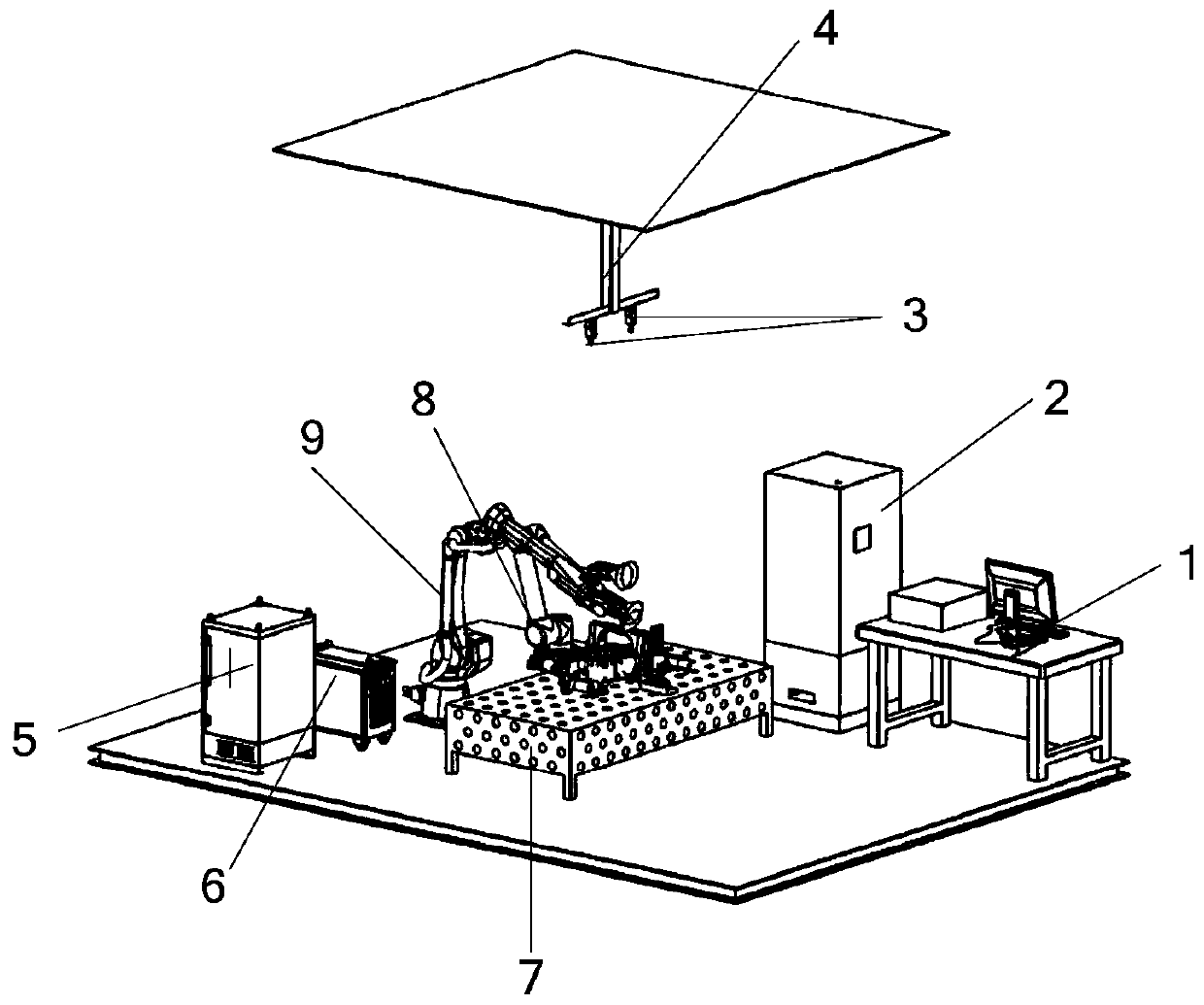

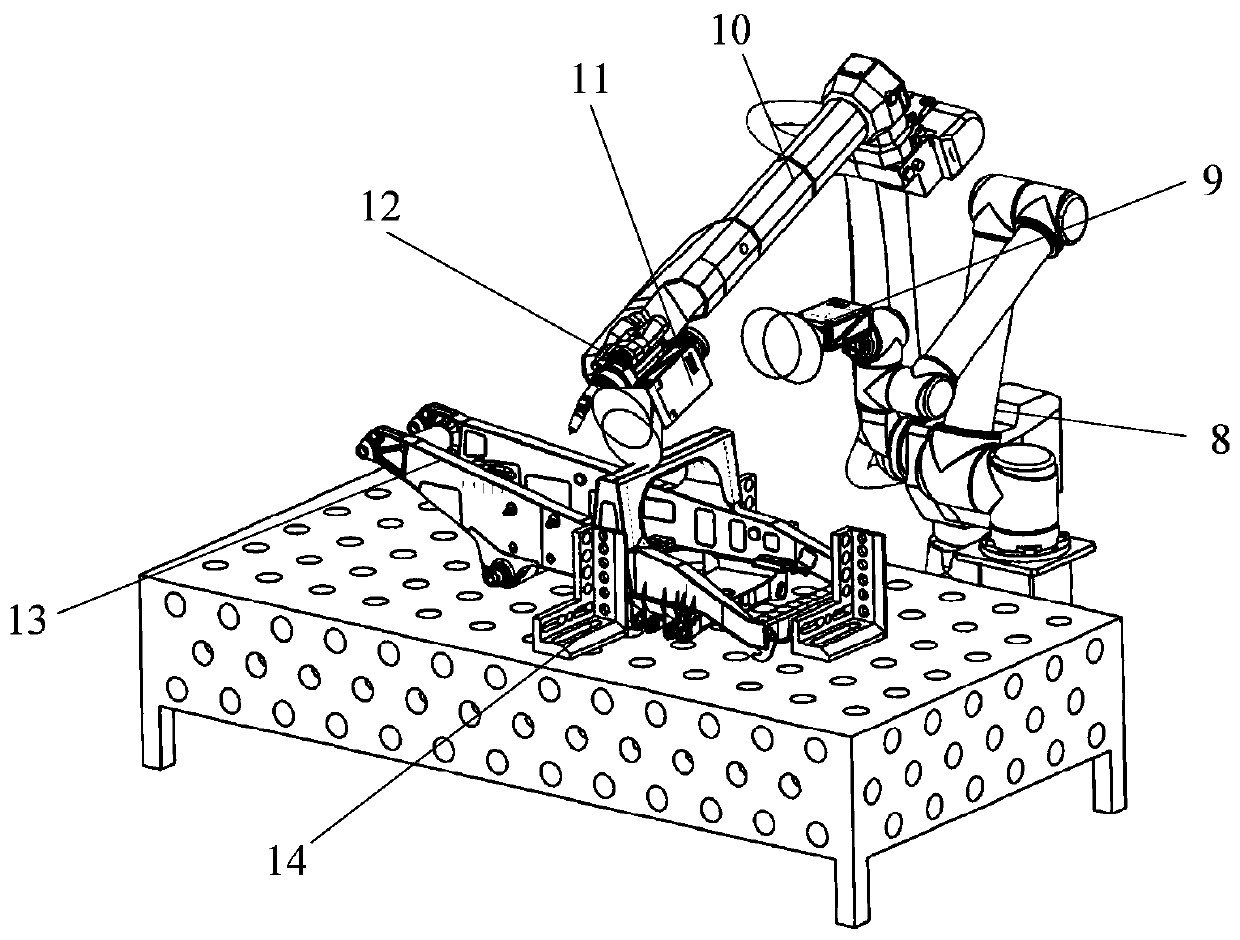

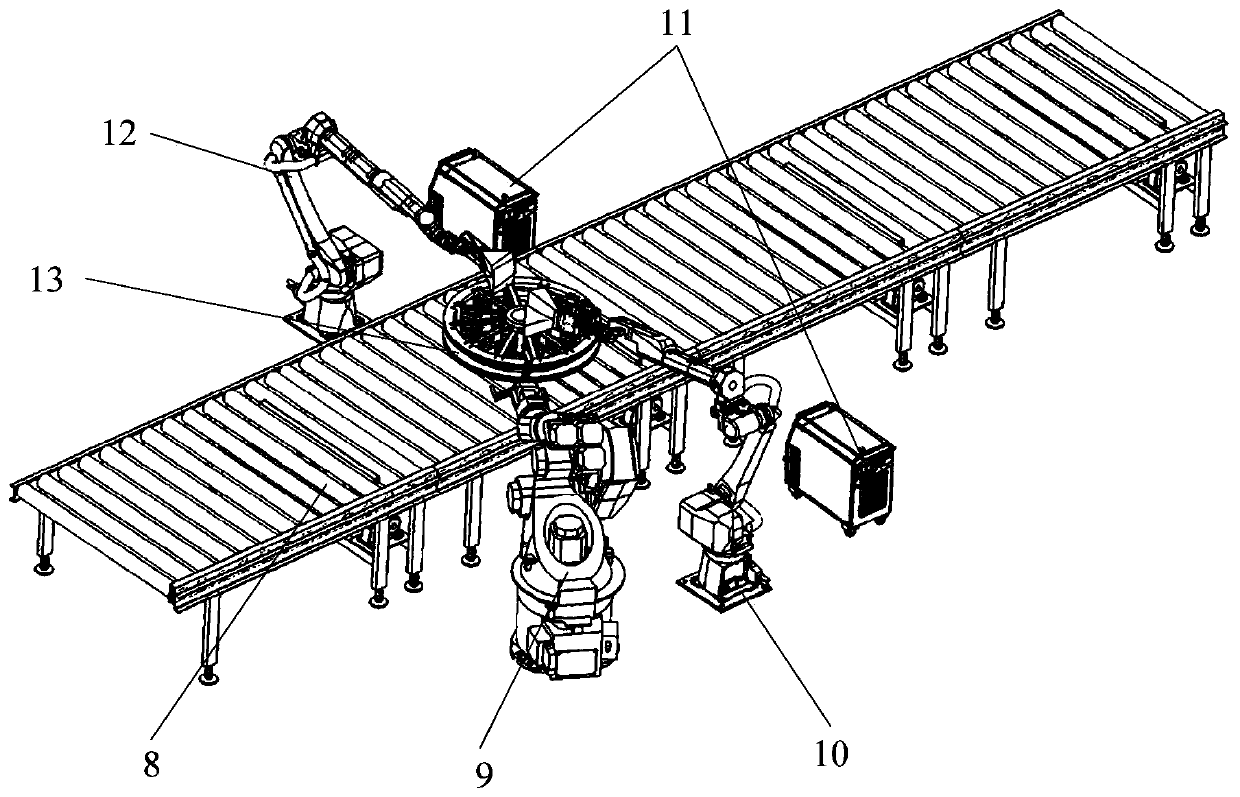

Flexible welding robot system and welding method thereof

Active | CN110524581A | Avoid harm | Achieve high flexibility | Programme-controlled manipulator | Total factory control | Imaging processing | Simulation

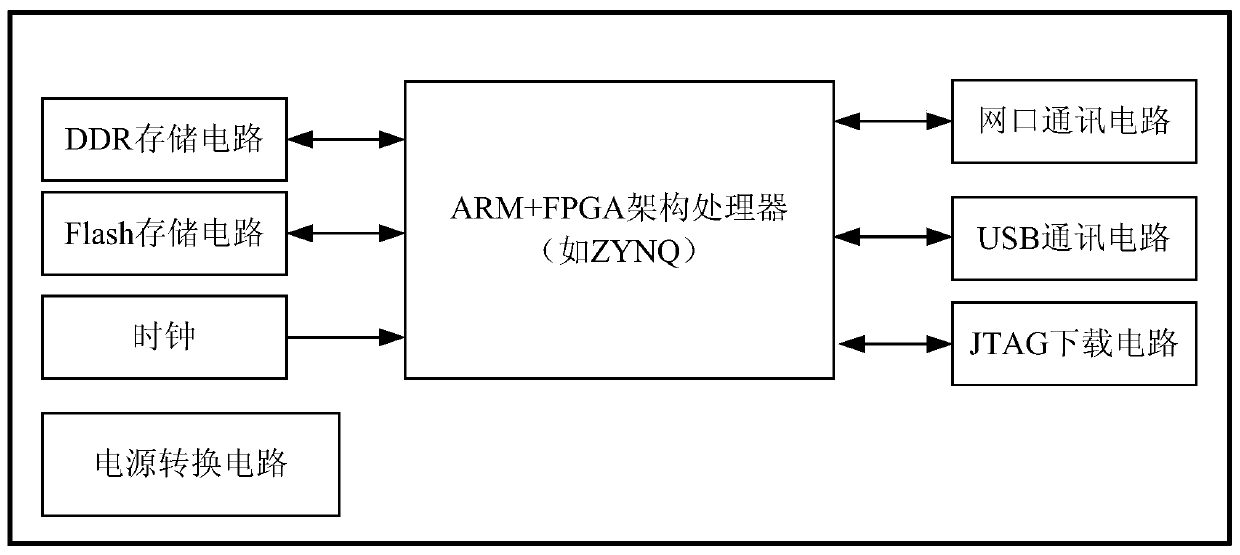

The invention discloses a flexible welding robot system and a welding method thereof. The welding method comprises the following steps: a global vision unit recognizes image information of a to-be-welded workpiece and locates the position of the to-be-welded workpiece; a flexible welding robot unit precisely recognizes the position of a to-be-welded workpiece through a precise positioning visual assembly, an image processing control machine solves a welding path, and the flexible welding robot conducts welding operation. A flexible detection robot unit recognizes the geometric dimension and quality of the appearance of a welded workpiece through a stereoscopic vision detection assembly, generates a welding quality report according to parameter information set by a user, and transmits a position with the welding deviation exceeding a threshold value and deviation value information to the flexible welding robot for repair welding. A master control unit executes image processing, data communication and motion control of the welding robot and the detection robot; and a workbench unit is used for quickly clamping different types of welding workpieces. The harm of welding operation to the body of a worker is avoided, and high flexibility and intelligence of the flexible welding robot system are achieved.

Owner:XIAN ZHONGKE PHOTOELECTRIC PRECISION ENG CO LTD

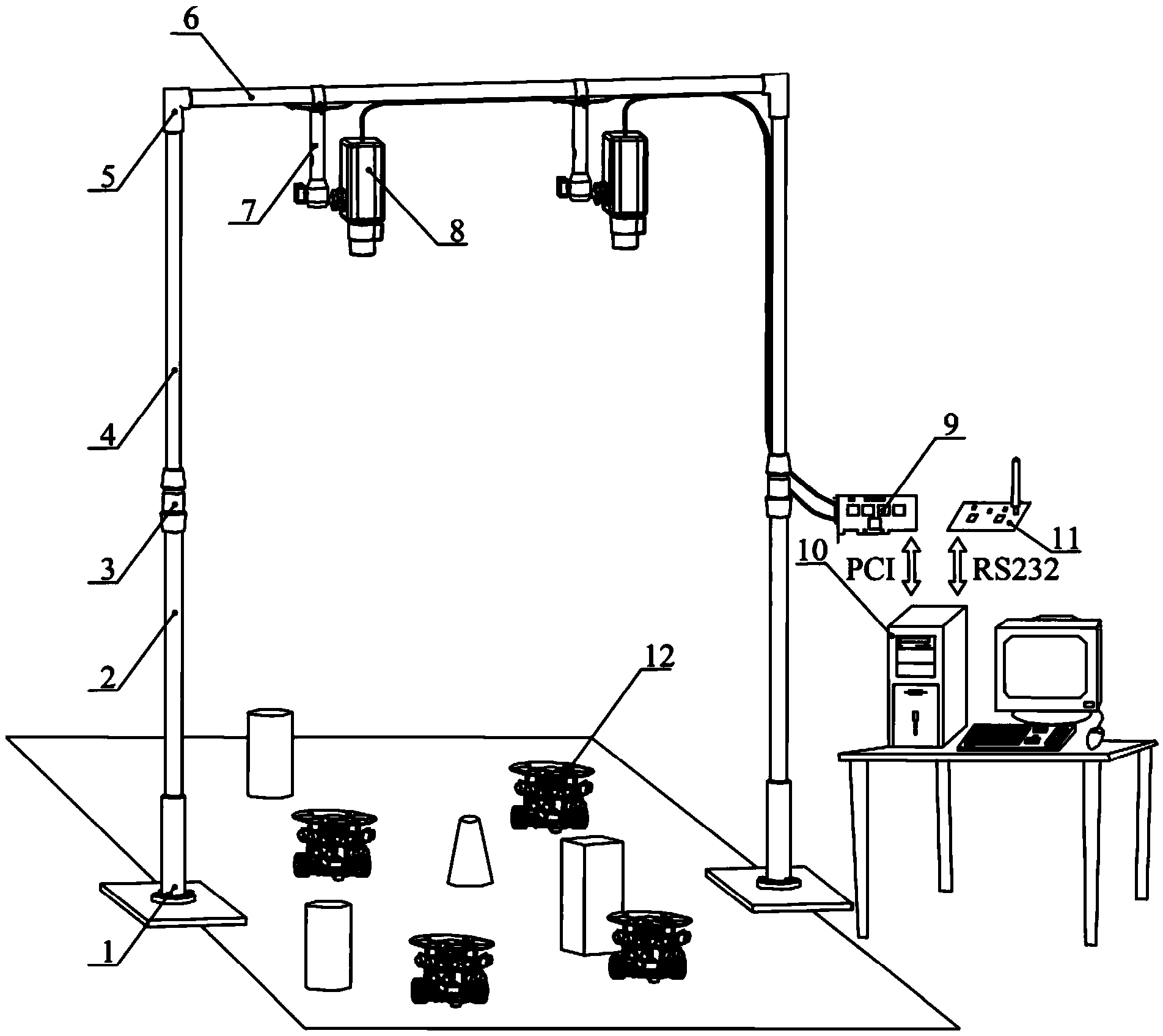

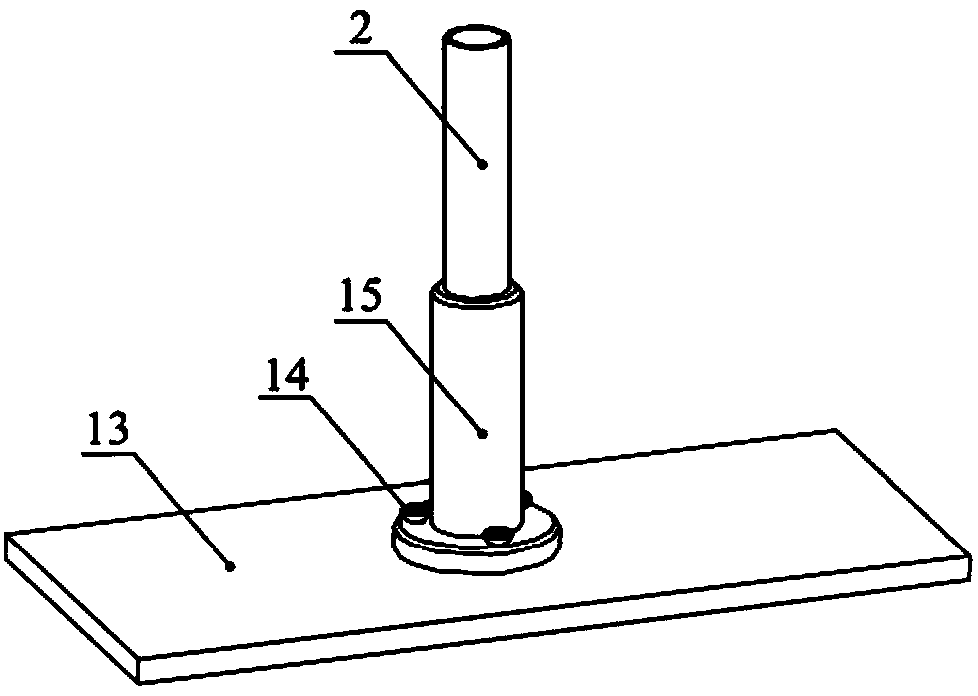

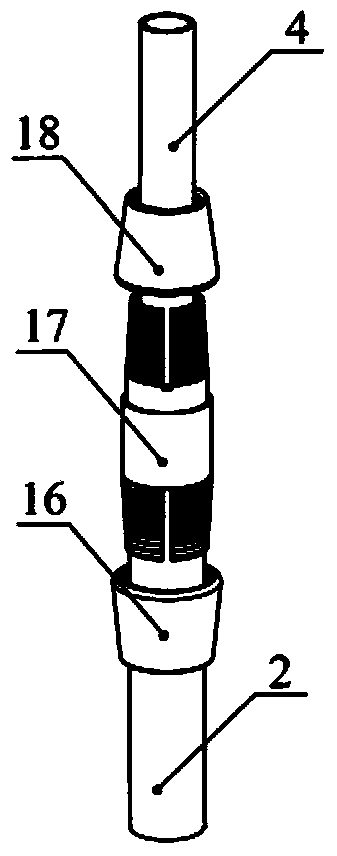

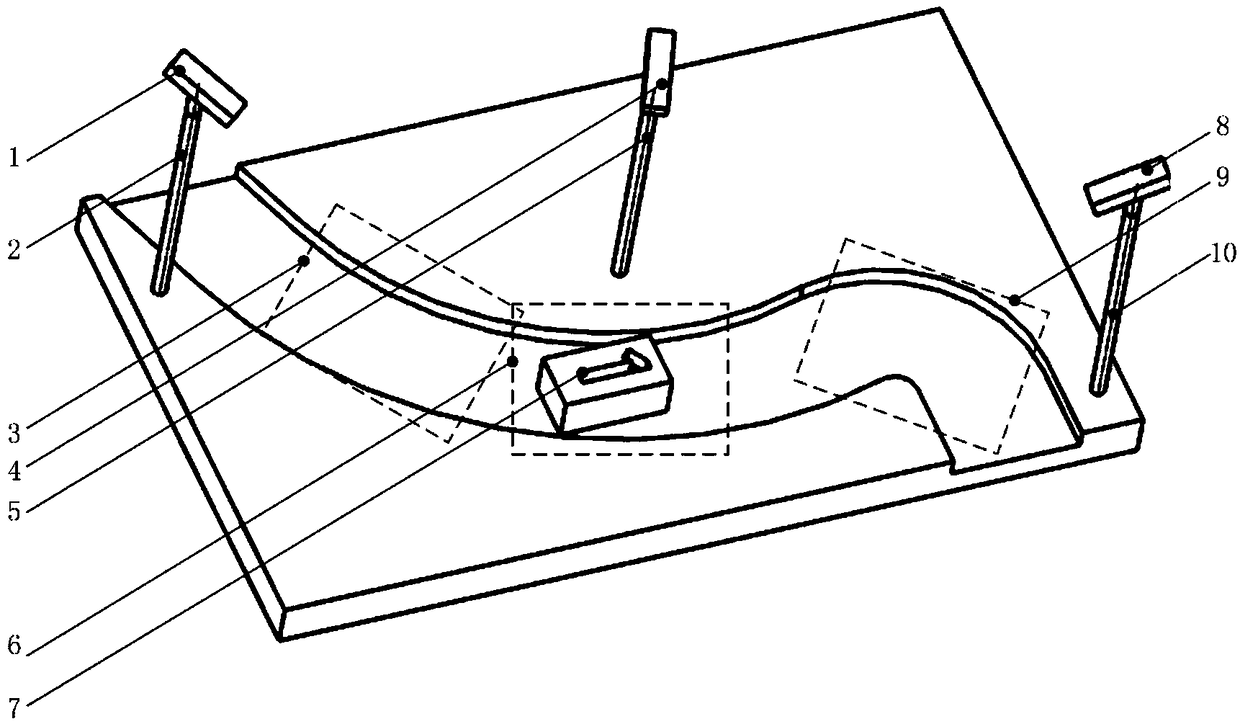

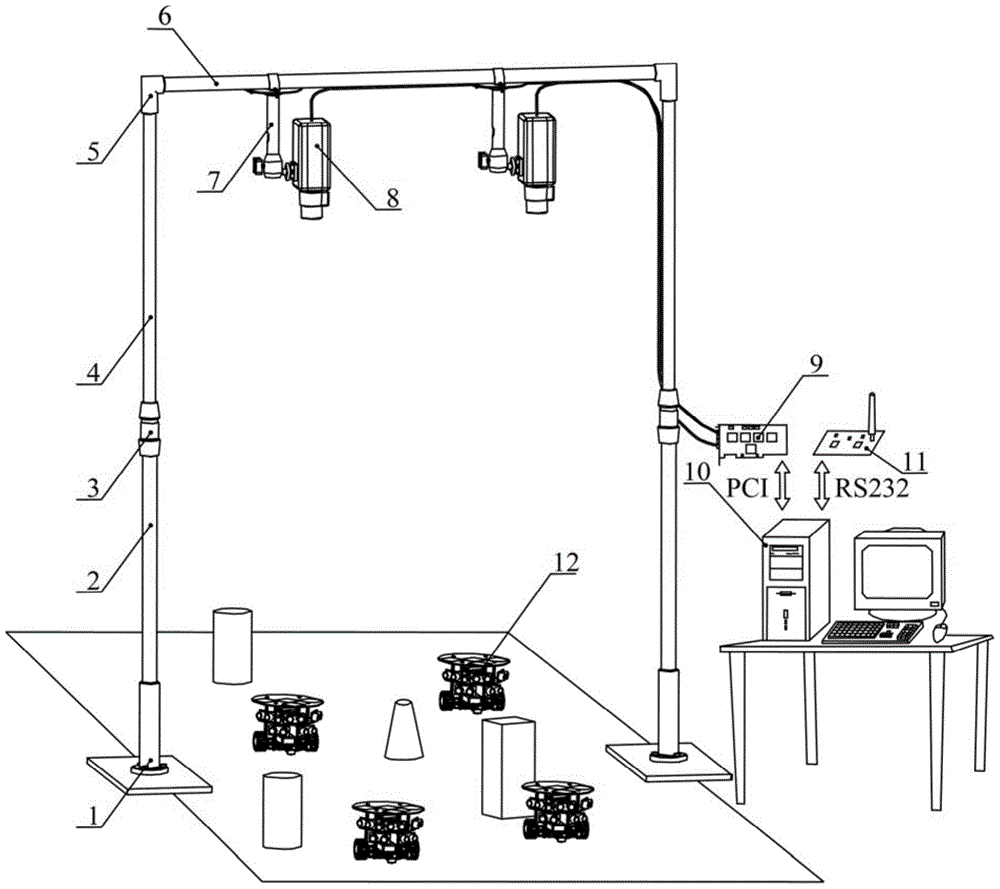

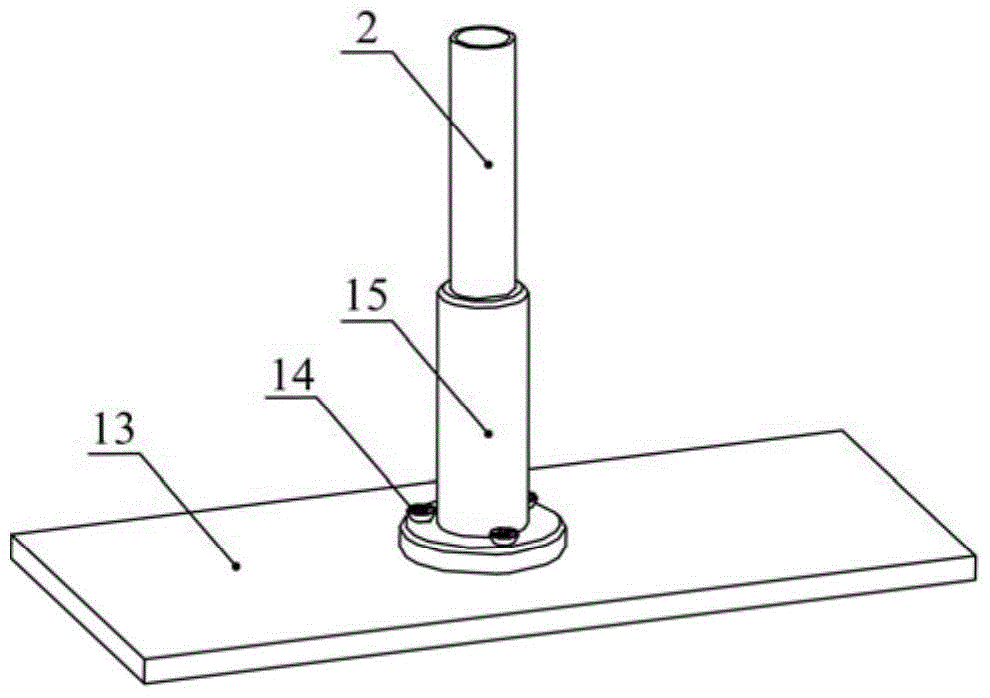

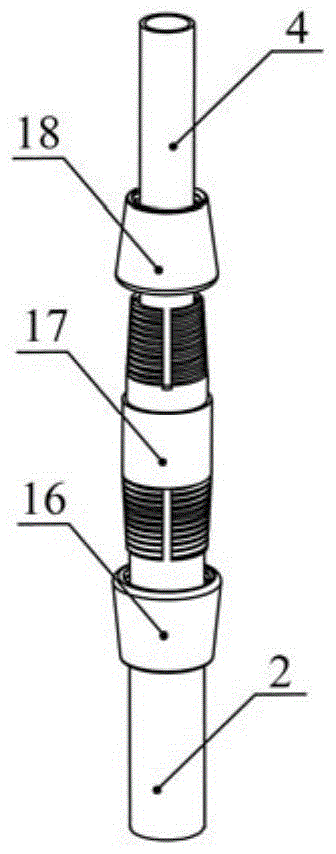

Multi-mobile-robot system collaborative experimental platform and visual segmentation and positioning method thereof

Inactive | CN103970134A | High positioning accuracy | Small amount of calculation | Position/course control in two dimensions | Optical flow | CCD camera

The invention discloses a multi-mobile-robot system collaborative experimental platform and a visual segmentation and positioning method for the platform. The platform is composed of a global vision monitoring system and a plurality of mobile robots of identical mechanical structure. The global vision monitoring system is composed of an n-shaped support, a CCD camera, an image acquisition card and a monitoring host; the CCD camera is installed on the n-shaped support facing the mobile robots and is connected through a video cable to the image acquisition card installed in a PCI slot of the monitoring host. The visual segmentation method combines a frame difference method with an optical flow method to segment obstacles. The visual positioning method applies threshold segmentation to a color-coded plate. The platform supports experimental verification of collaborative technologies such as formation control, target search, task allocation and route planning in a multi-mobile-robot system, and has the advantages of low cost and rapid construction.

Owner:JIANGSU UNIV OF SCI & TECH
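The segmentation and positioning ideas named in the abstract above can be illustrated with a minimal sketch. It covers only the frame difference step and a colour threshold on a marker; the optical flow step is omitted, and the function names, the threshold of 25 and the list-of-lists image layout are assumptions made for illustration, not details from the patent.

```python
def frame_difference_mask(prev_frame, curr_frame, threshold=25):
    """Mark pixels whose grayscale value changed by more than `threshold`.

    Frames are lists of rows of integers (0-255). The threshold value is
    an illustrative assumption.
    """
    return [
        [abs(curr - prev) > threshold for prev, curr in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_frame, curr_frame)
    ]


def color_marker_centroid(image_rgb, lower, upper):
    """Return the (row, col) centroid of pixels inside a per-channel colour range.

    `image_rgb` is a list of rows of (r, g, b) tuples; `lower` and `upper`
    are inclusive (r, g, b) bounds. Returns None when no pixel matches.
    """
    hits = [
        (r, c)
        for r, row in enumerate(image_rgb)
        for c, pixel in enumerate(row)
        if all(lo <= v <= hi for v, lo, hi in zip(pixel, lower, upper))
    ]
    if not hits:
        return None
    n = len(hits)
    return (sum(r for r, _ in hits) / n, sum(c for _, c in hits) / n)
```

In a real setup the centroid would still have to be mapped from pixel coordinates to field coordinates through camera calibration, which this sketch leaves out.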

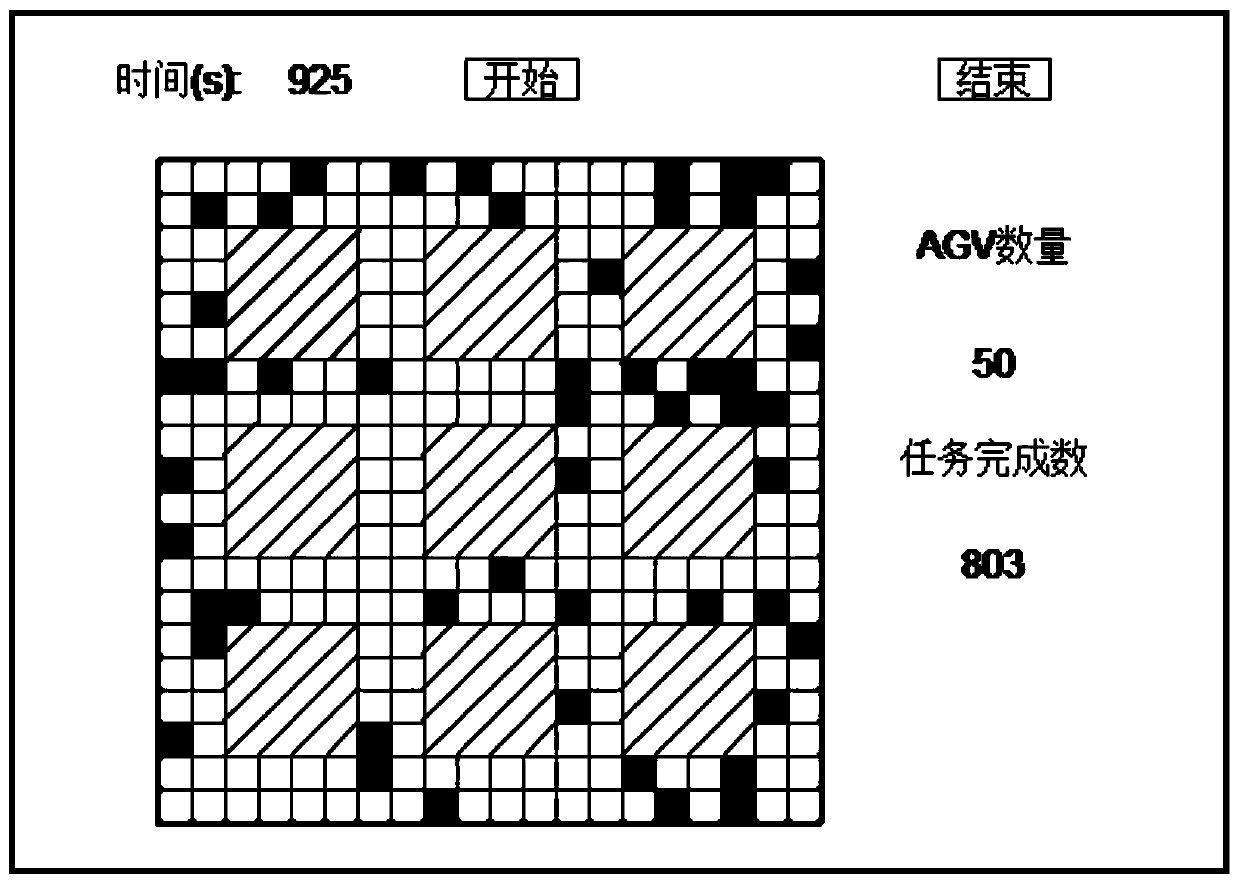

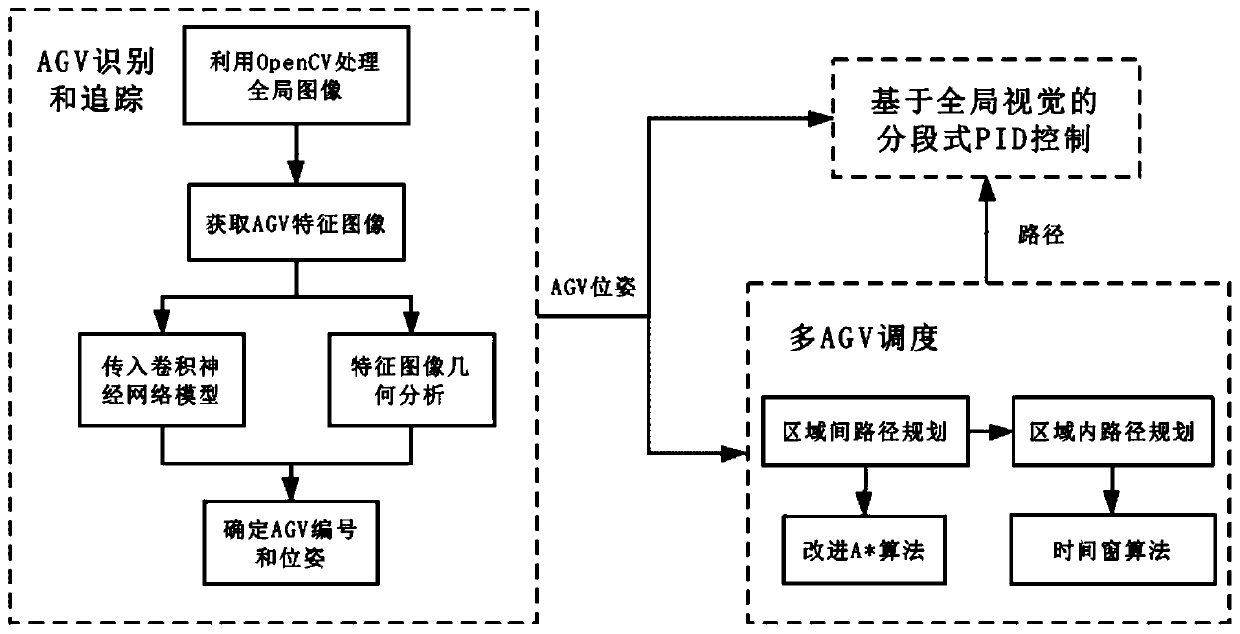

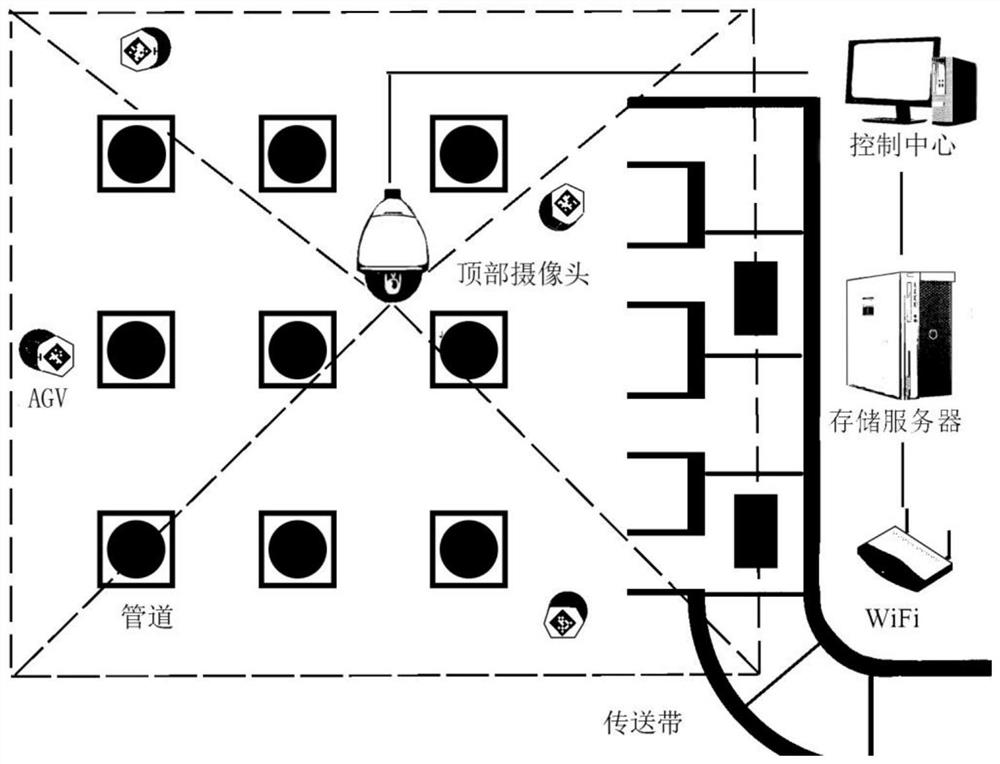

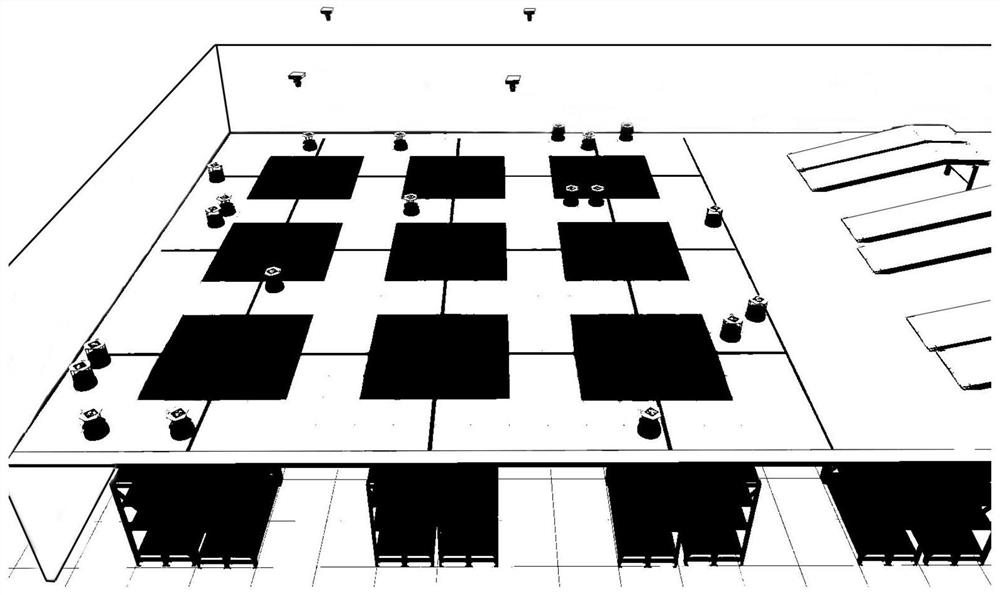

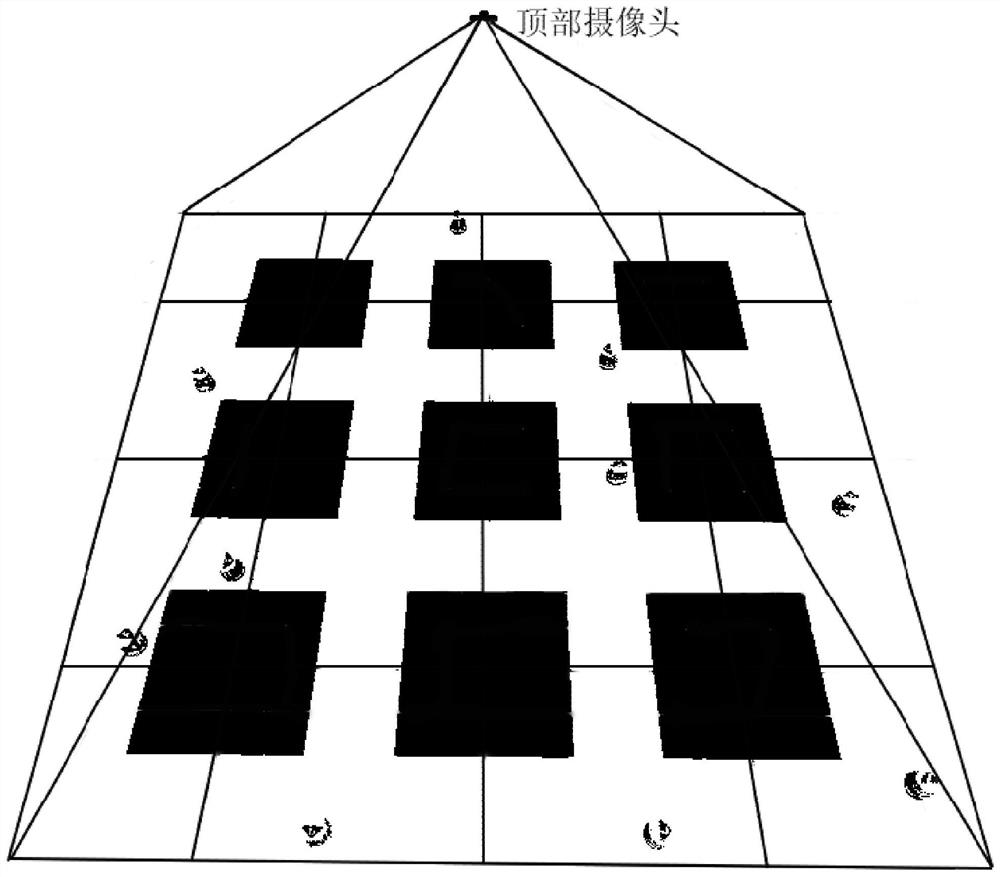

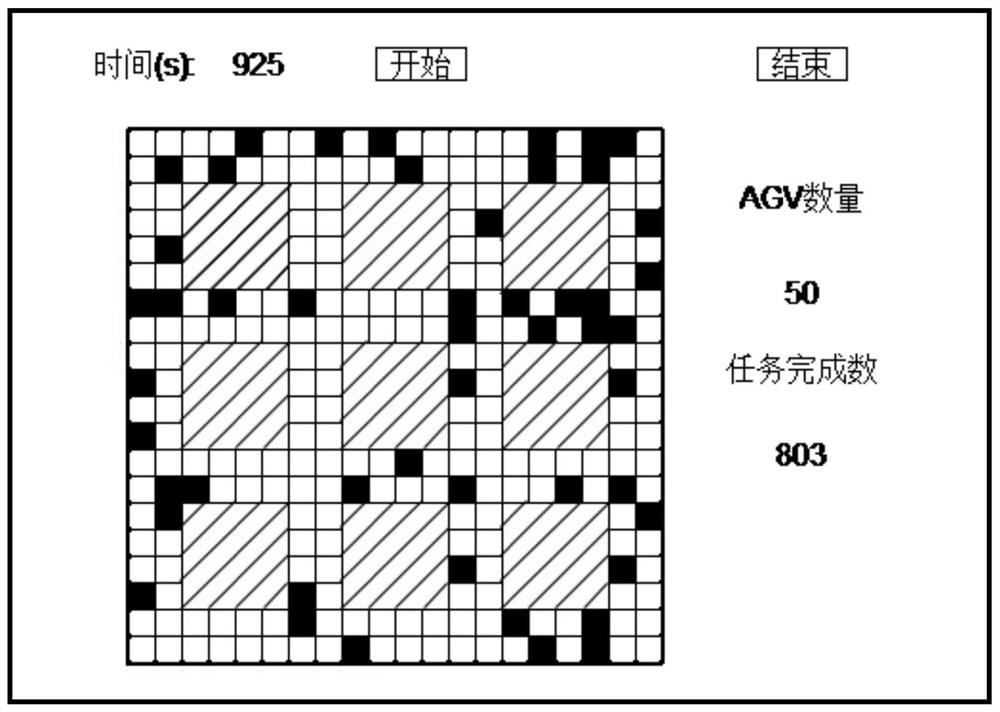

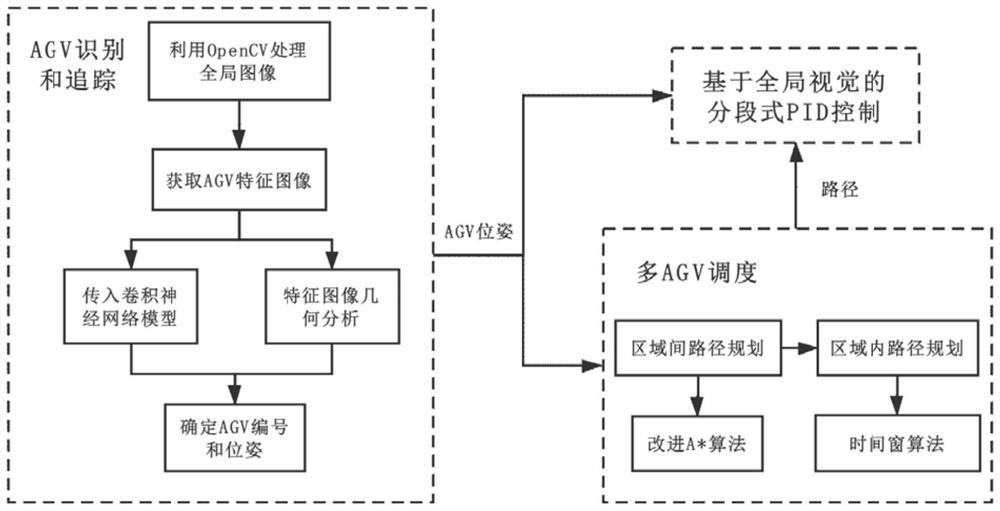

Warehouse navigation intelligent vehicle scheduling method based on global vision

Active | CN110780671A | Smooth motion | Reduce configuration costs | Position/course control in two dimensions | PID control algorithm | Simulation

The invention discloses a warehouse navigation intelligent vehicle scheduling method based on global vision. The method comprises the steps of: 1) shooting a global image by using a top camera on the ceiling of a warehouse; 2) processing the global image to track the intelligent vehicles (AGVs) in the warehouse, and identifying the number of each AGV by using a convolutional neural network; 3) dividing the warehouse into a plurality of regions, and sending instructions from a global controller so that the AGVs operate according to routes; 4) performing regional path planning by using an improved A* algorithm to obtain an optimal set of regions for each AGV to pass through from its current region to the target region; 5) utilizing a time window algorithm to plan routes within a region so that the AGV can safely exit the current region; and 6) utilizing a segmented PID control algorithm to enable the AGVs to complete scheduled transportation tasks along the planned routes. The method distributes AGV passage tasks across all the regions and improves the operation efficiency of an AGV storage system.

Owner:SOUTH CHINA UNIV OF TECH
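Step 4 of the abstract above plans at the level of warehouse regions. As a rough illustration, the sketch below runs plain A* over a 4-connected grid of regions. It is not the improved A* variant the patent refers to, whose details the abstract does not give; the grid encoding, unit step cost and Manhattan heuristic are assumptions.

```python
import heapq


def astar_regions(grid, start, goal):
    """Plain A* over a grid of regions; grid[r][c] == 1 marks a blocked region.

    Returns the list of (row, col) regions from start to goal, or None when
    no route exists. Each move between adjacent regions costs 1 (assumption).
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible on a 4-connected unit-cost grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None
```

The returned region sequence corresponds to the "passing region set" of step 4; routing inside each region (step 5) and the PID tracking (step 6) are separate problems not covered here.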

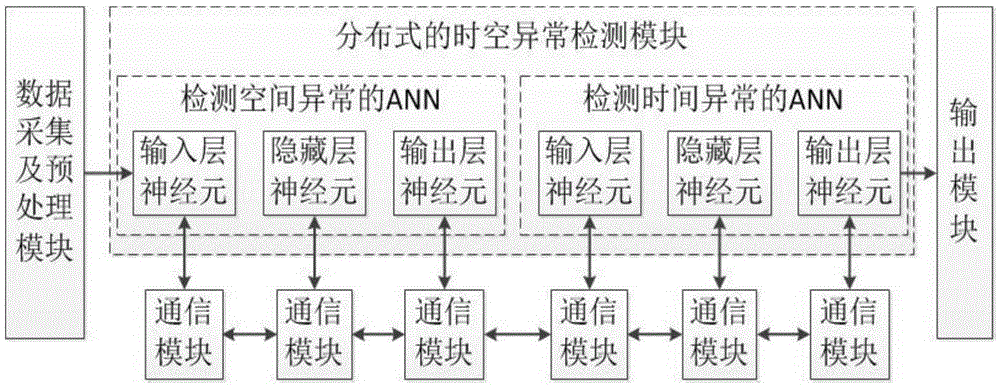

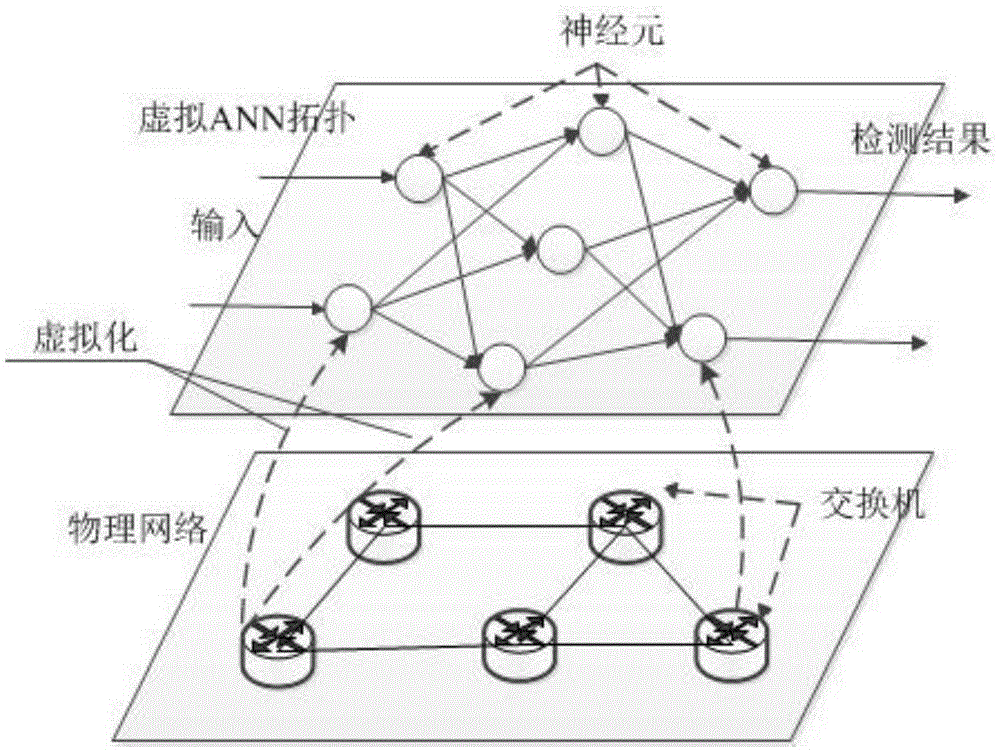

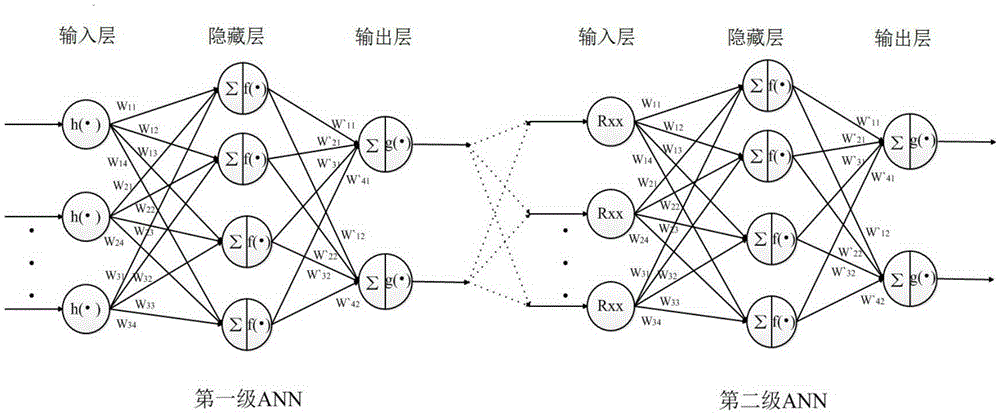

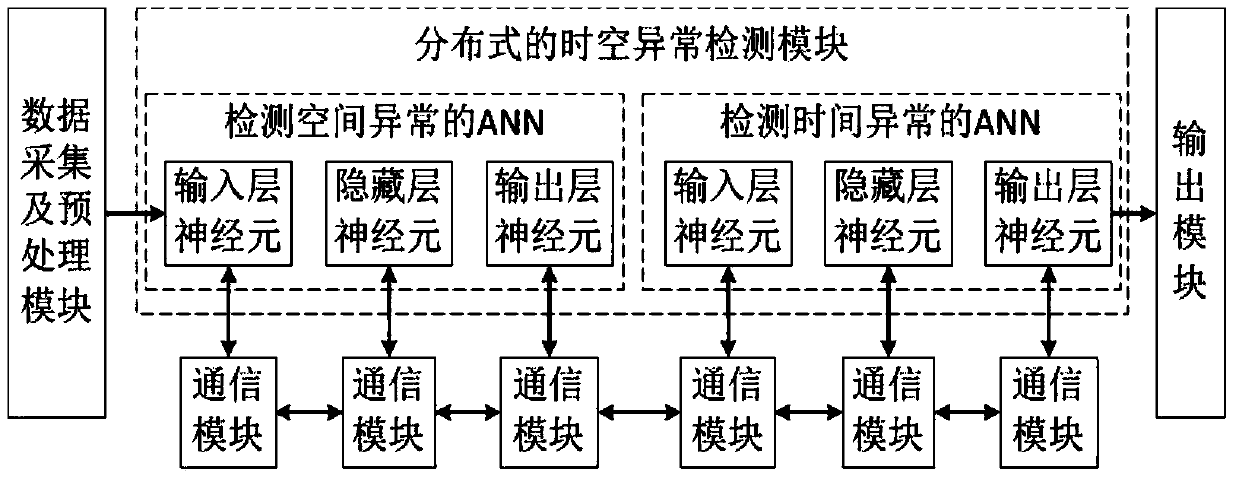

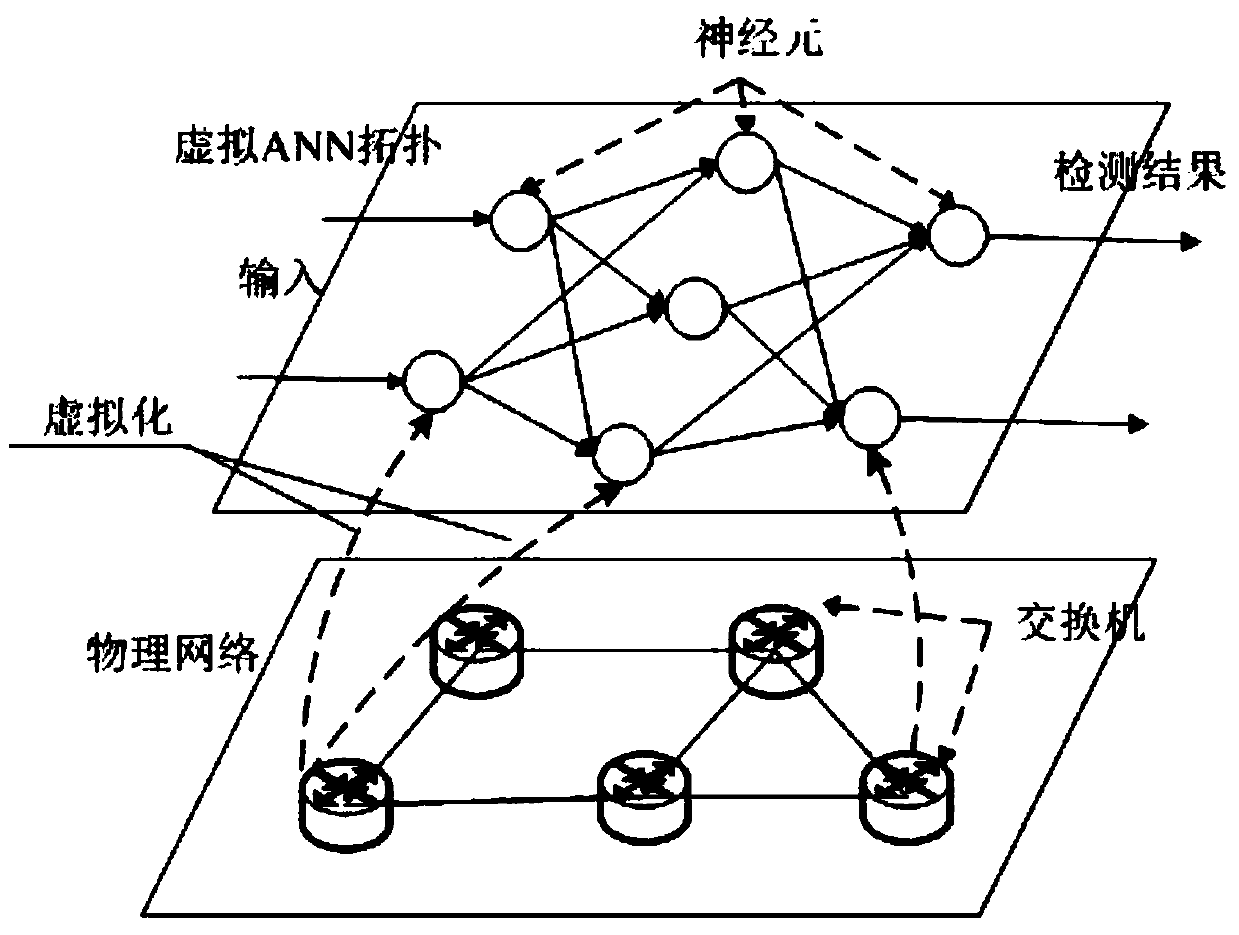

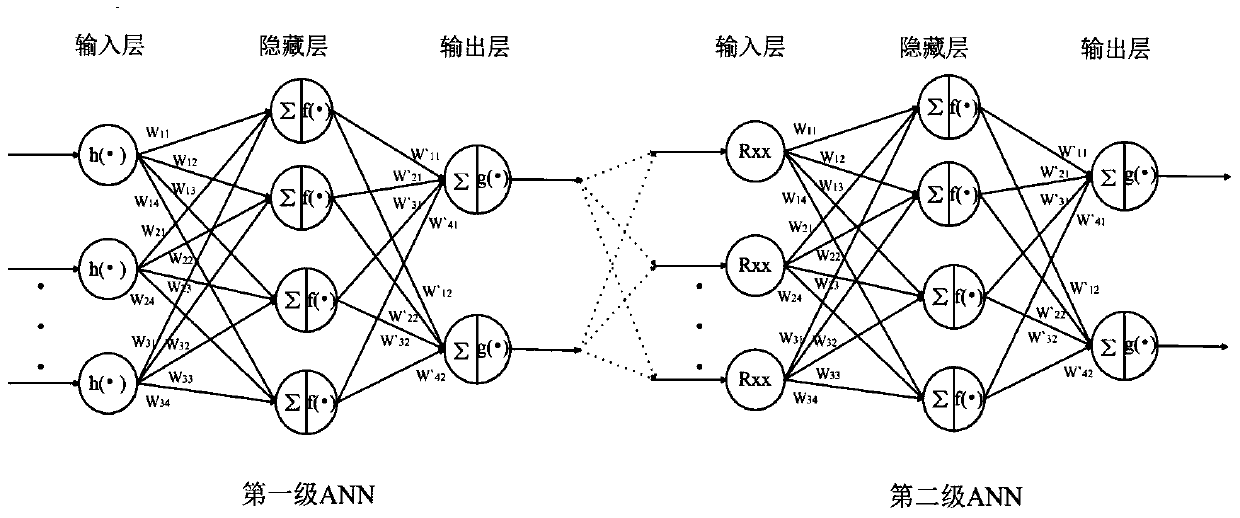

SDN network DDoS and DLDoS distributed space-time detection system

The invention relates to an SDN network DDoS and DLDoS distributed space-time detection system comprising detection nodes arranged in the SDN switches. Each detection node comprises a data acquisition module, a space-time anomaly detection module, and an output module. The data acquisition module acquires the network traffic flowing through the SDN switch. The space-time anomaly detection module examines the acquired traffic in the spatial domain to determine whether suspicious traffic exists, and then confirms in the time domain, on the basis of the spatial-domain result, whether a DDoS or DLDoS attack is present. The output module stores the detection result in a set format. In view of the characteristics of DDoS and DLDoS, the detection system detects and discriminates between the two in the spatial and time domains of the network traffic, and achieves global vision in anomaly detection by using a distributed virtual ANN overlay network.

Owner:SUN YAT SEN UNIV

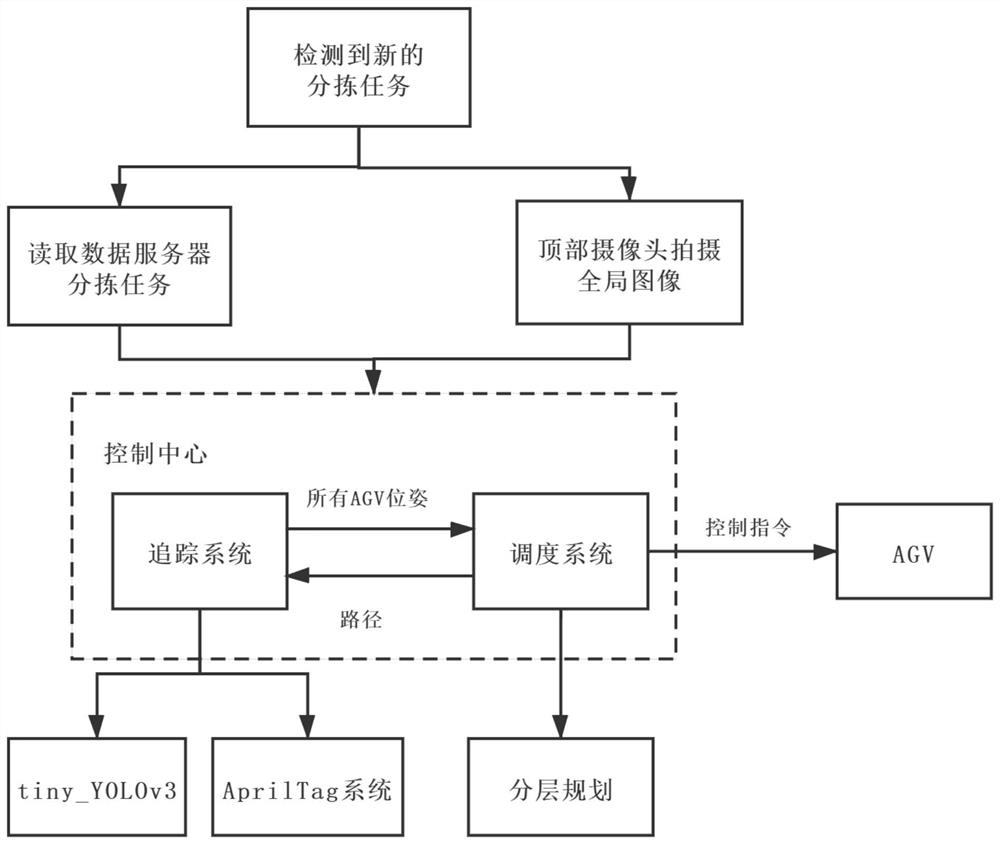

Multi-warehouse AGV tracking method based on global vision

Active | CN112149555A | Real-time accurate tracking | Real-time precise scheduling | Forecasting | Character and pattern recognition | Information control | Visual perception

The invention discloses a multi-warehouse AGV tracking method based on global vision. The method comprises the following steps: 1) photographing a global image of a warehouse and transmitting it to a control center; 2) processing the global image, with the tracking system using a target detection algorithm to identify the AGVs in the first frame and determining the ID and pose of each AGV from the AprilTag code on top of the AGV; 3) dividing the warehouse into a plurality of areas, and planning the path of each AGV with a hierarchical planning algorithm once the scheduling system has received the AGV pose information sent by the control center; 4) combining the path information of each AGV with a multi-AGV tracking algorithm, predicting the positions of the AGVs, selecting the area where each AGV is located with a bounding box, and determining the information of each AGV; and 5) having the control center send each AGV the speed instruction converted from its path information, and controlling the AGV to complete a cargo sorting task.

Owner:SOUTH CHINA UNIV OF TECH

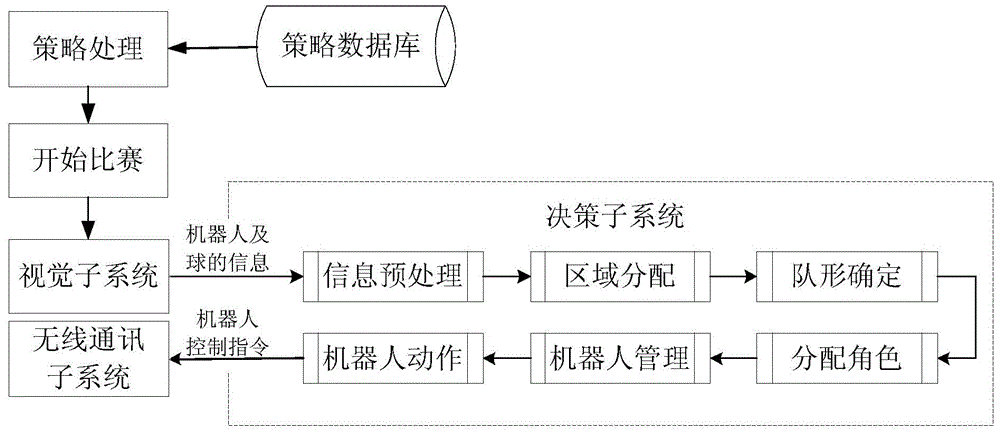

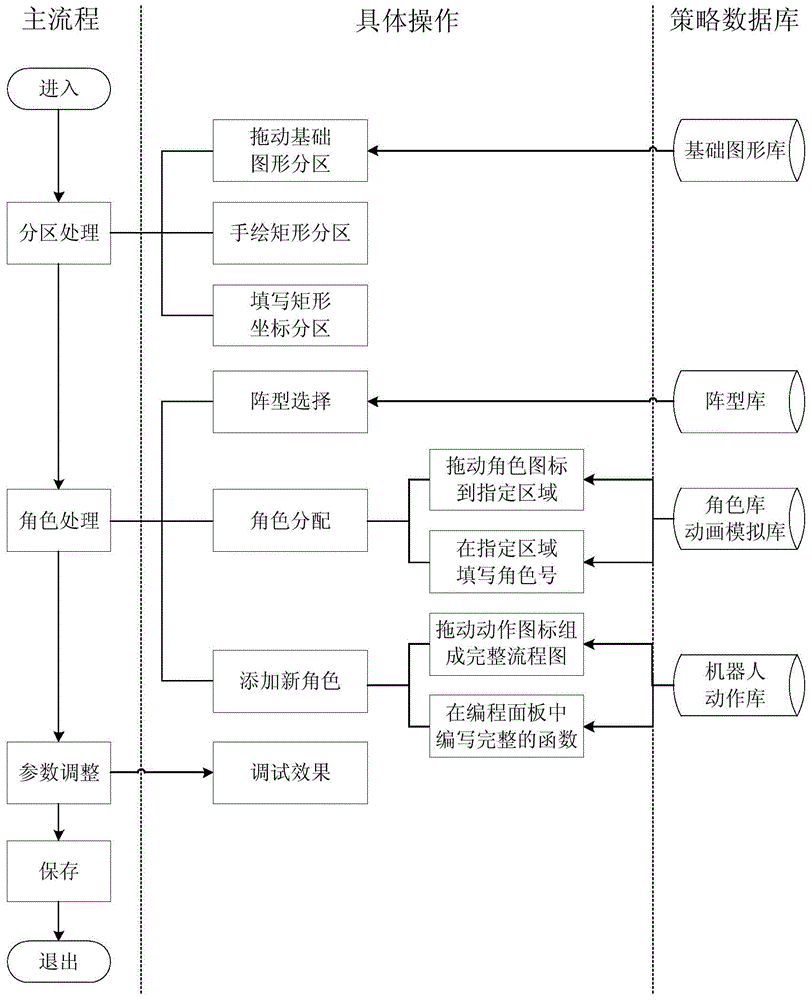

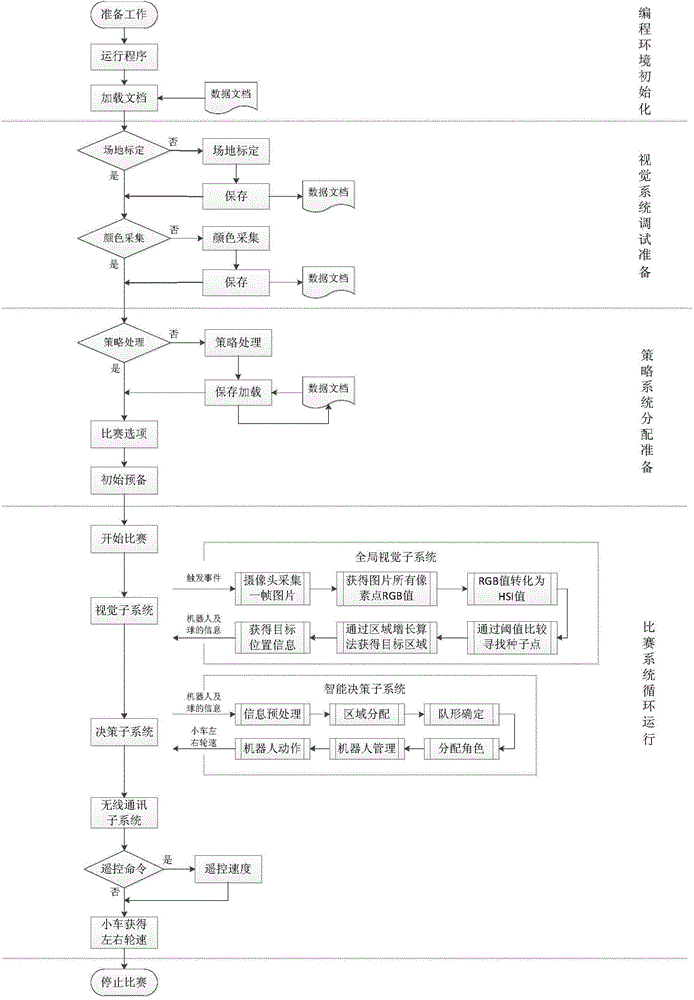

Strategy regulatory module of global vision soccer robot decision-making subsystem and method thereof

Active | CN104834516A | Modify in time | Easy to operate | Specific program execution arrangements | Soccer robot | Operability

The invention relates to a strategy regulatory module of a global vision soccer robot decision-making subsystem and a method thereof. The module comprises a plurality of resource databases, such as a role library, a formation library, a basic graphics library and a robot action library. The module and method can be used to redraw a competition area, assign roles, modify role parameters, add new role functions and so on, via graphical programming or code programming. In particular, the decision-making subsystem can be adjusted on a strategy processing panel alone, without entering a complex program, which greatly strengthens the operability of the whole system. In addition, a strategy parameter can be modified directly during a game and takes effect immediately, so that an operator can promptly adjust tactics according to the opponent's strategy and the situation on the field.

Owner:周凡

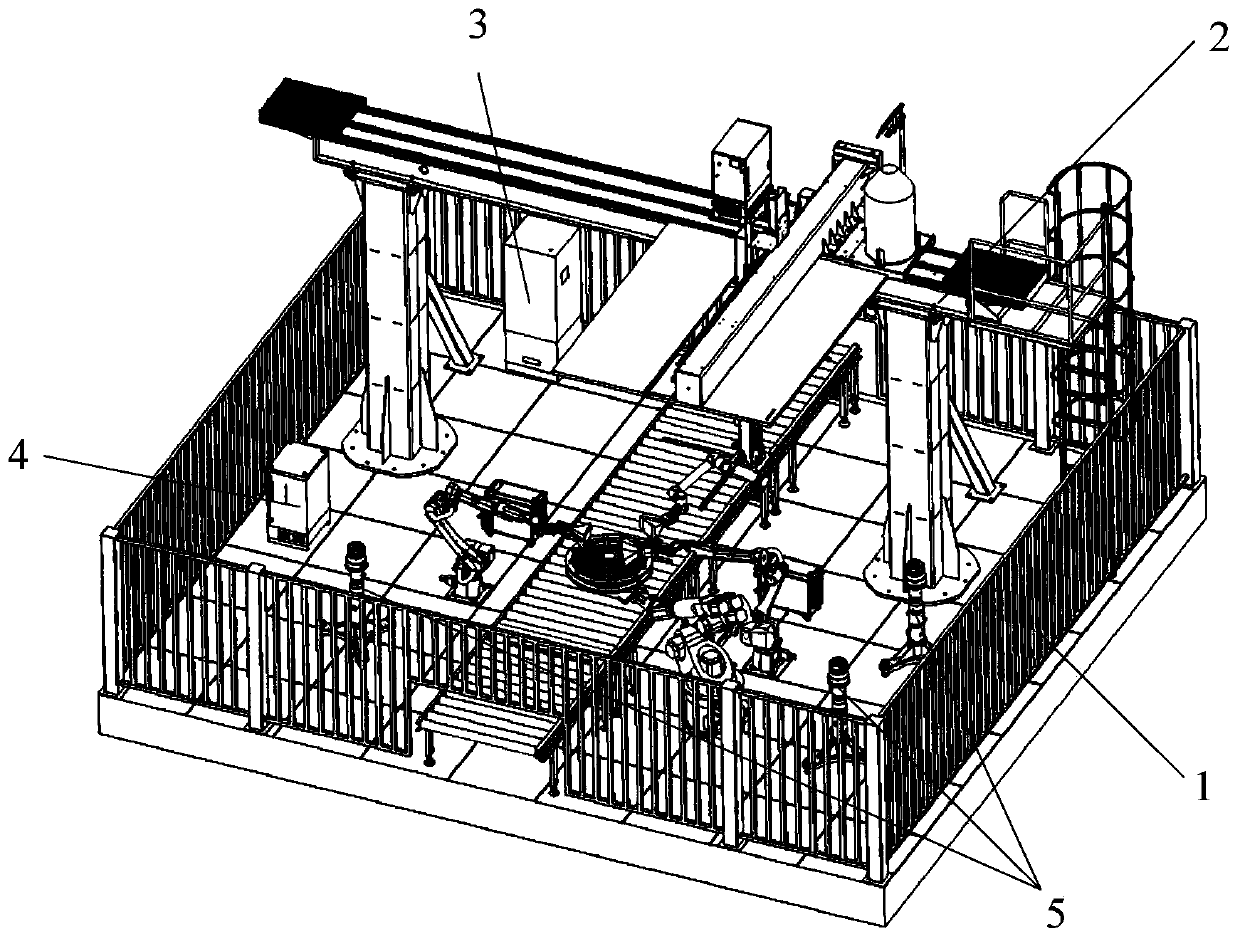

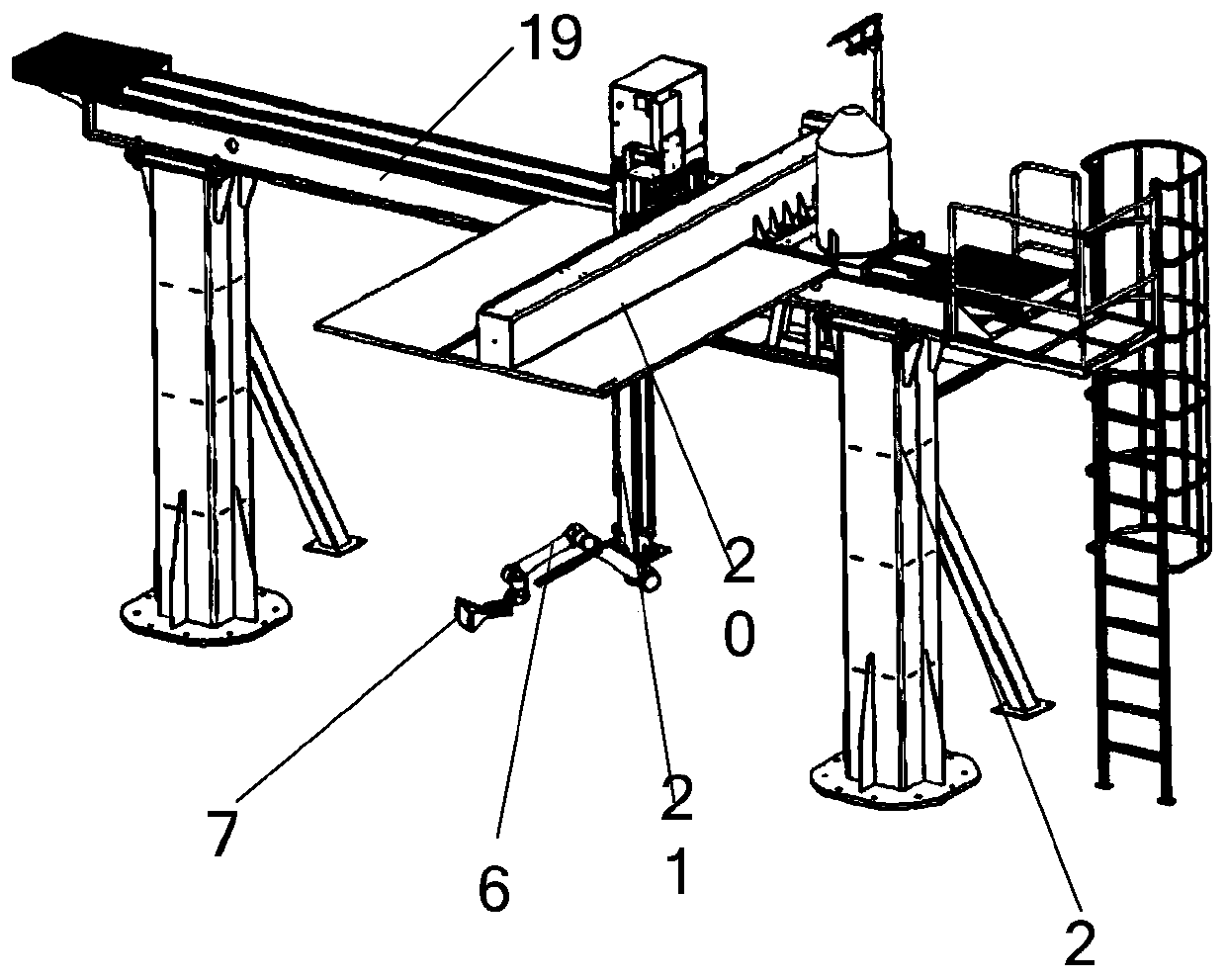

Flexible assembly welding robot workstation

Active | CN110524582A | Improve flexibility | Highly integrated | Programme-controlled manipulator | Total factory control | Engineering | Spot welding

The invention discloses a flexible assembly welding robot workstation that comprises a global vision unit, a flexible welding robot group, a gantry truss detection unit, a master control unit and a conveying platform; the flexible welding robot group is arranged on the two sides of the conveying platform, welding workpieces are arranged on the conveying platform, the global vision unit is arranged on the periphery of the flexible welding robot group, the gantry truss detection unit is arranged above the conveying platform, and the flexible welding robot group is controlled by the master control unit to conduct welding according to obtained image information. The high integration degree, high flexibility and high efficiency of the welding robot are achieved, the problems that manual assembly spot welding position deviation is large, and workpiece consistency is poor are solved, and the workpiece clamping and transferring frequency is effectively reduced. Multi-position assembly spot welding of workpieces is achieved, seamless connection of welding procedures is achieved, and the assembly precision, the welding consistency and the welding quality are improved.

Owner:XIAN ZHONGKE PHOTOELECTRIC PRECISION ENG CO LTD

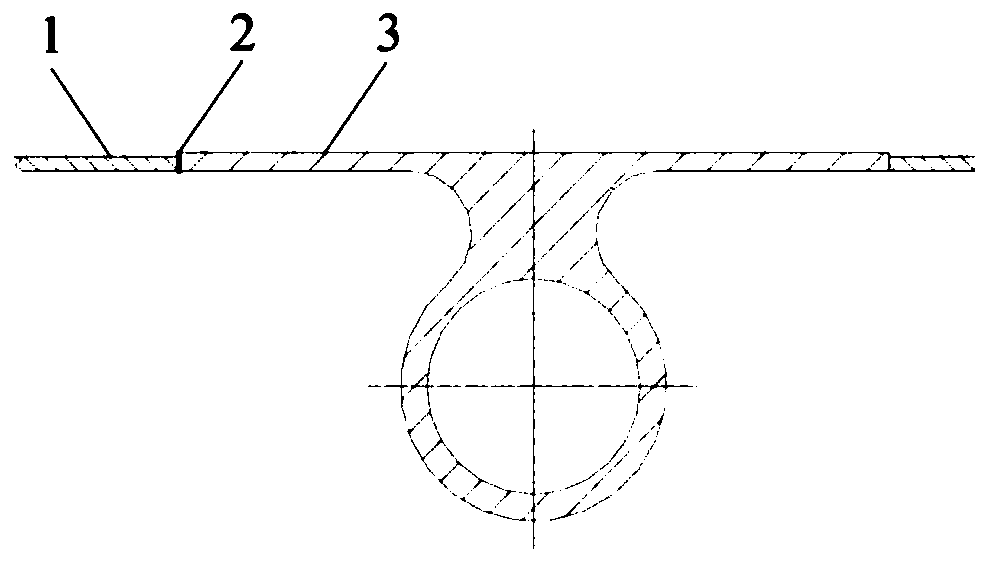

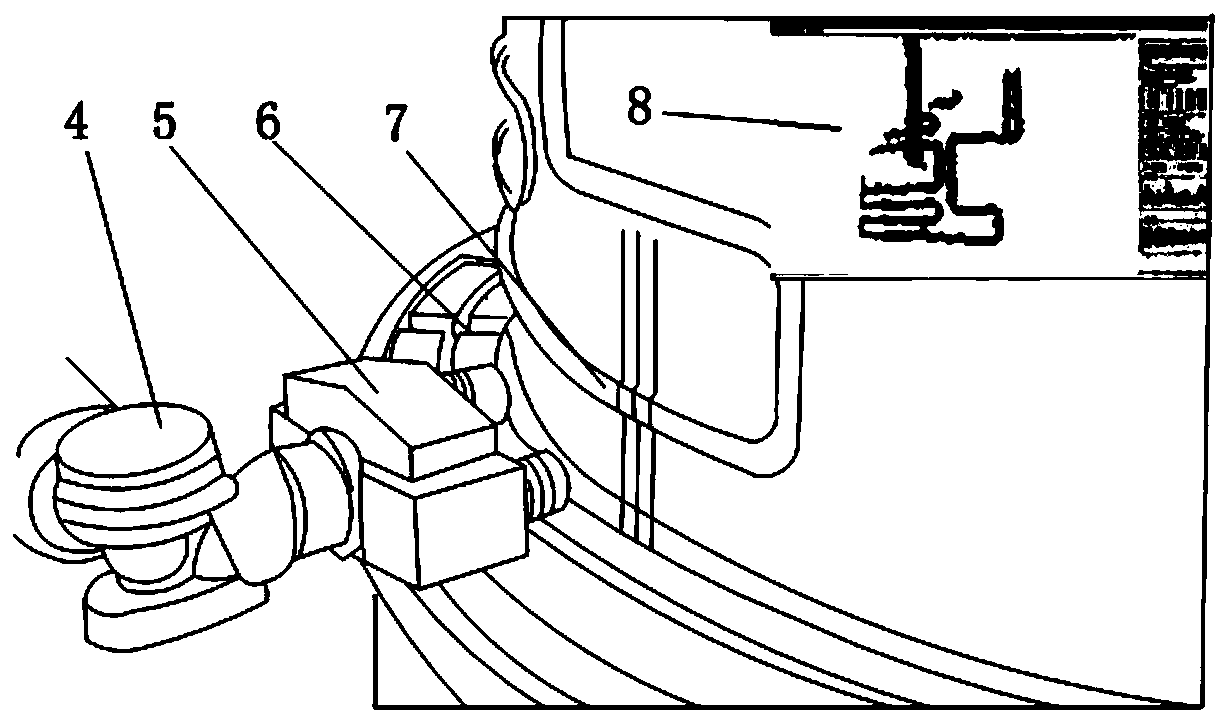

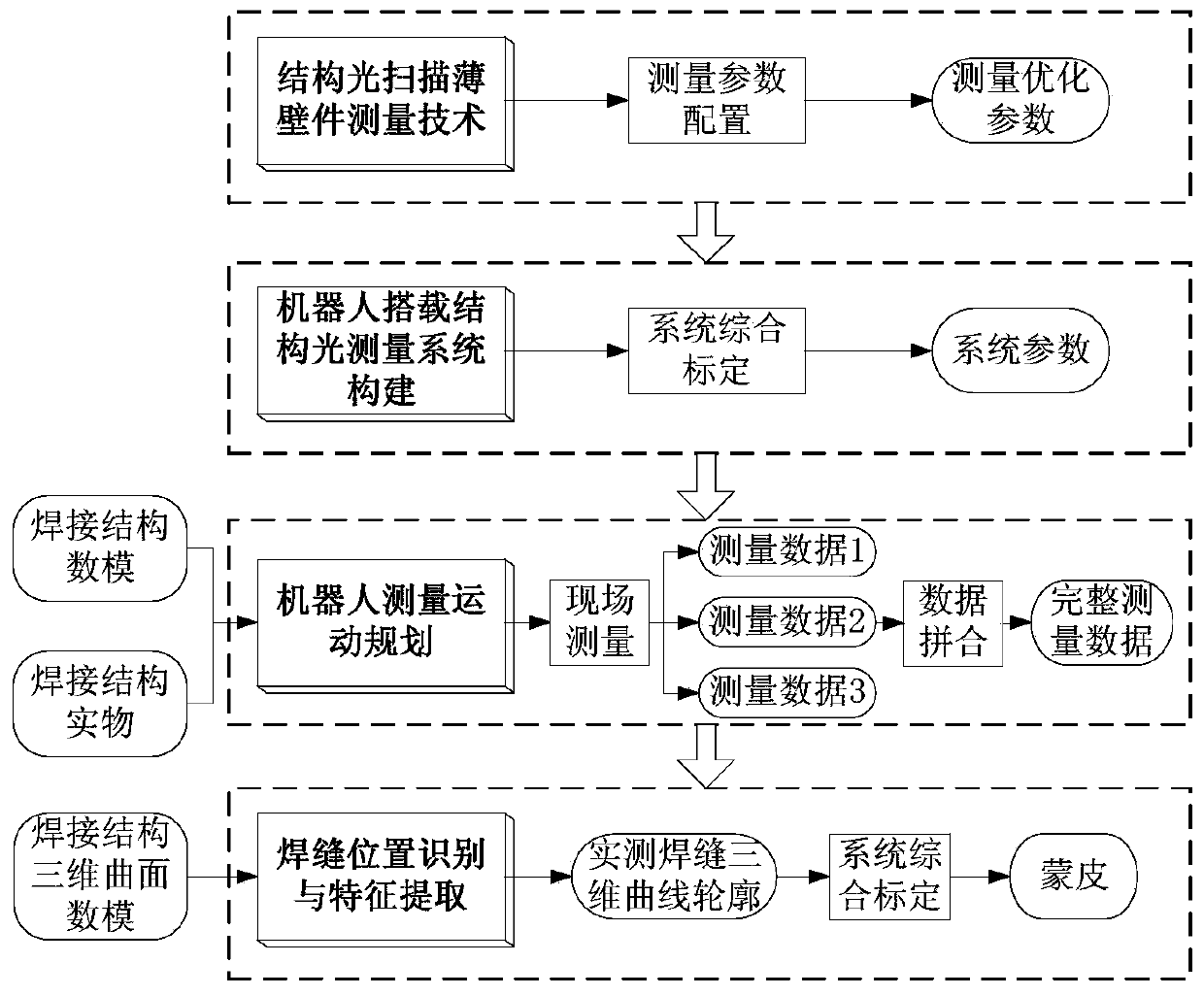

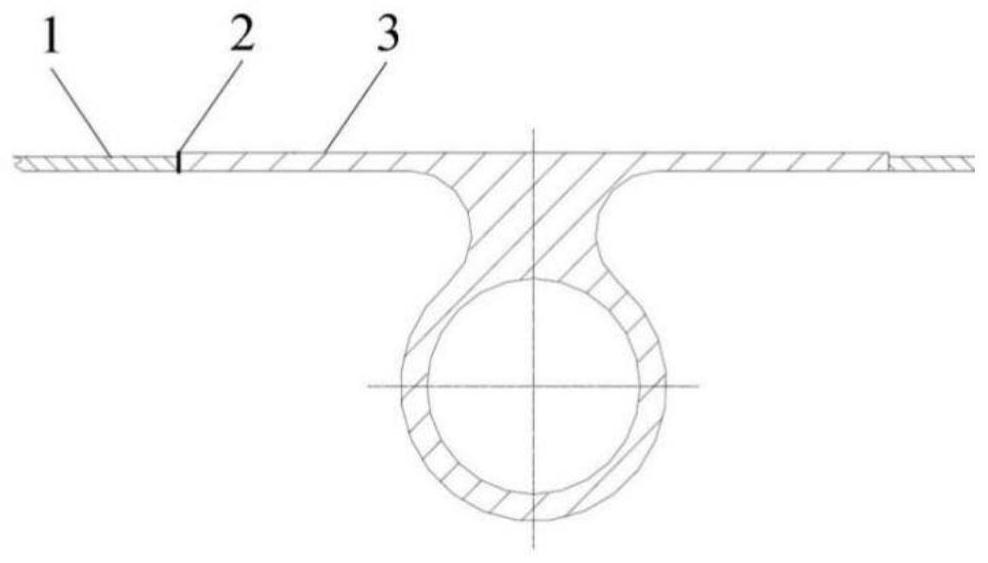

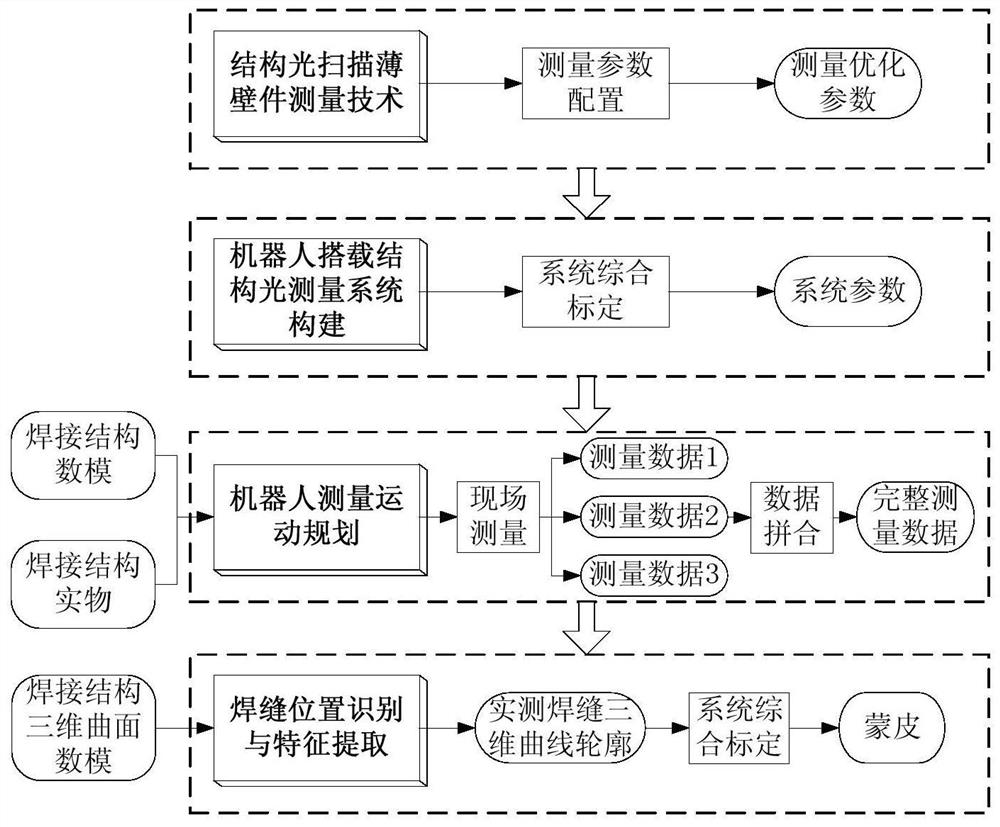

High-precision global vision measurement method for three-dimensional thin-wall structure weld joint

Active | CN111189393A | Easy to measure angle | Optimizing Light Field Strength | Programme-controlled manipulator | Using optical means | Engineering | Weld seam

The invention relates to a high-precision global vision measurement method for a three-dimensional thin-wall structure weld joint. The method comprises the following steps: (1) preparing a workpiece and a measurement system; (2) calibrating the measurement system and optimizing parameters; (3) measuring a space curve weld joint; (4) carrying out data splicing and welding seam extraction; (5) splicing a skin and blanking; and (6) calibrating a robot welding track. The method is innovatively applied to the field of measurement of the three-dimensional contour weld joint; on-site automatic measurement of the three-dimensional contour of the actual space curve weld joint is achieved, the weld joint position and the contour characteristics of the weld joint are recognized and extracted, on this basis, weld joint track calibration in the robot off-line programming process is achieved, and accurate discharging of the skin in the weld joint assembling process can be achieved.

Owner:BEIJING SATELLITE MFG FACTORY

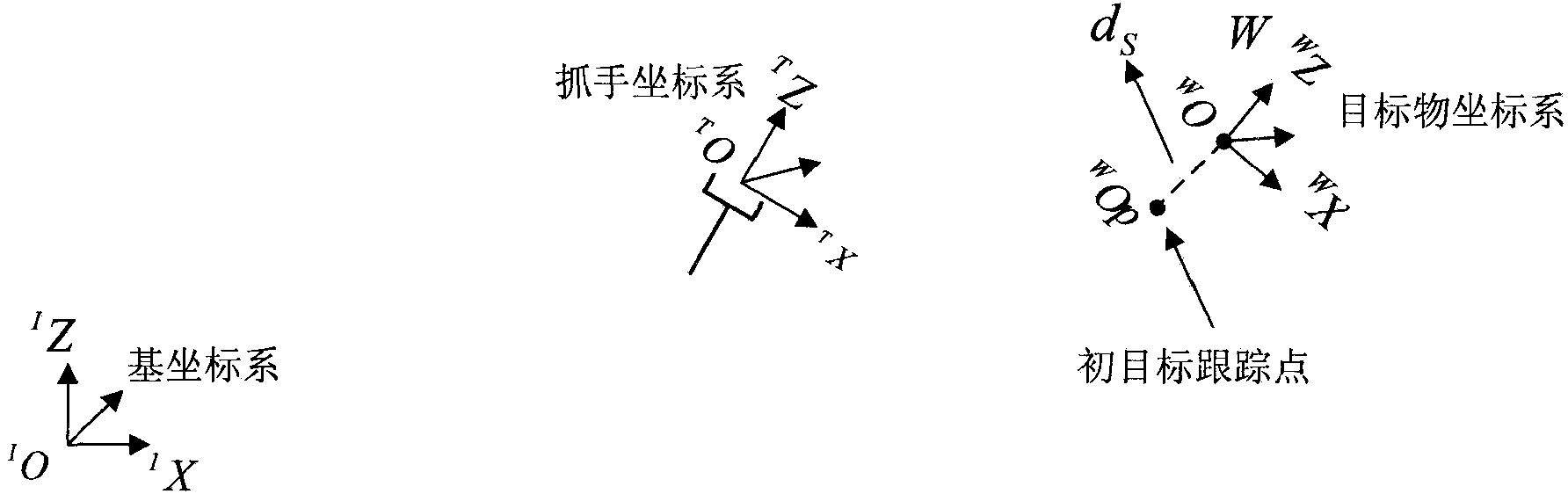

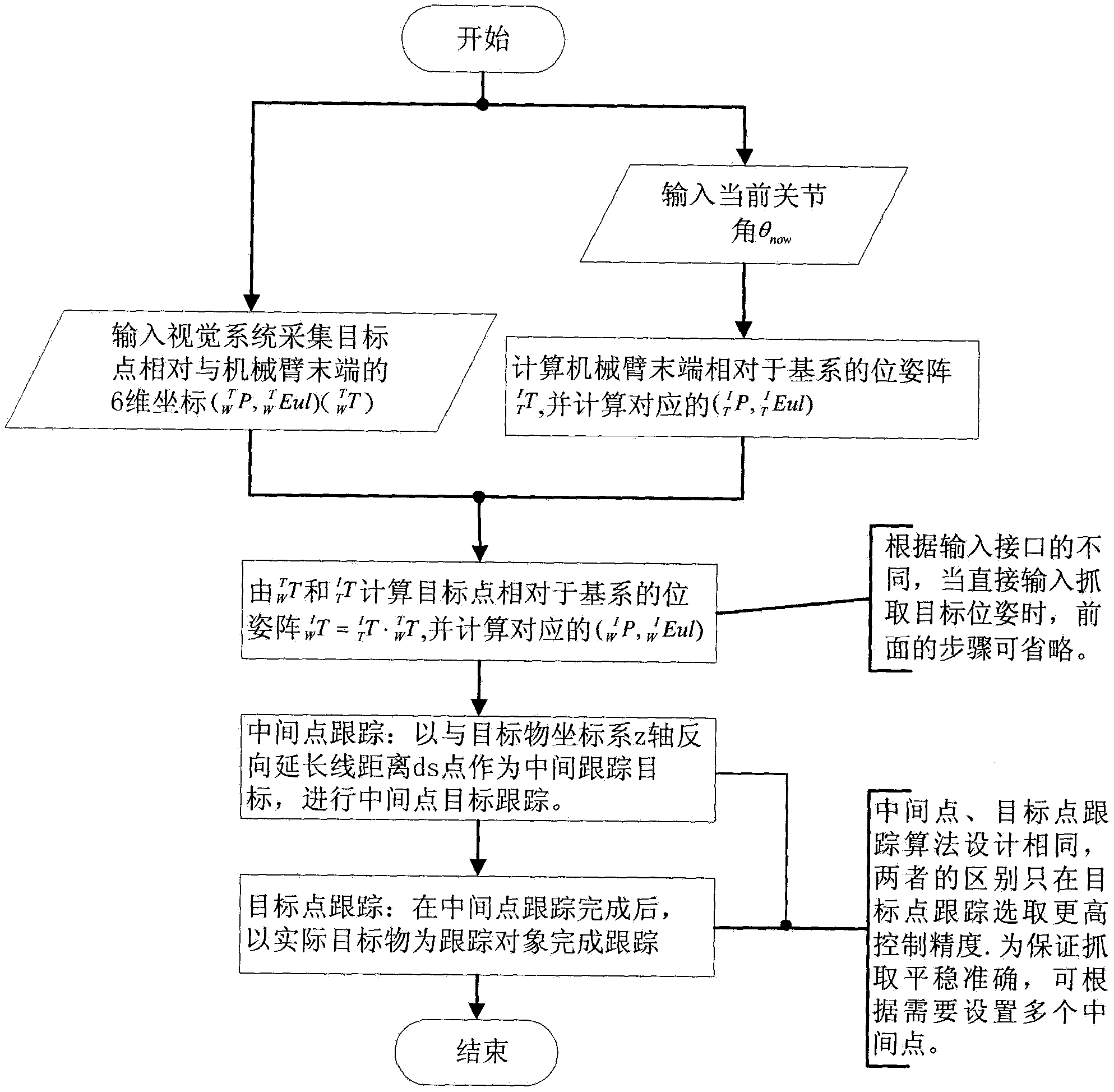

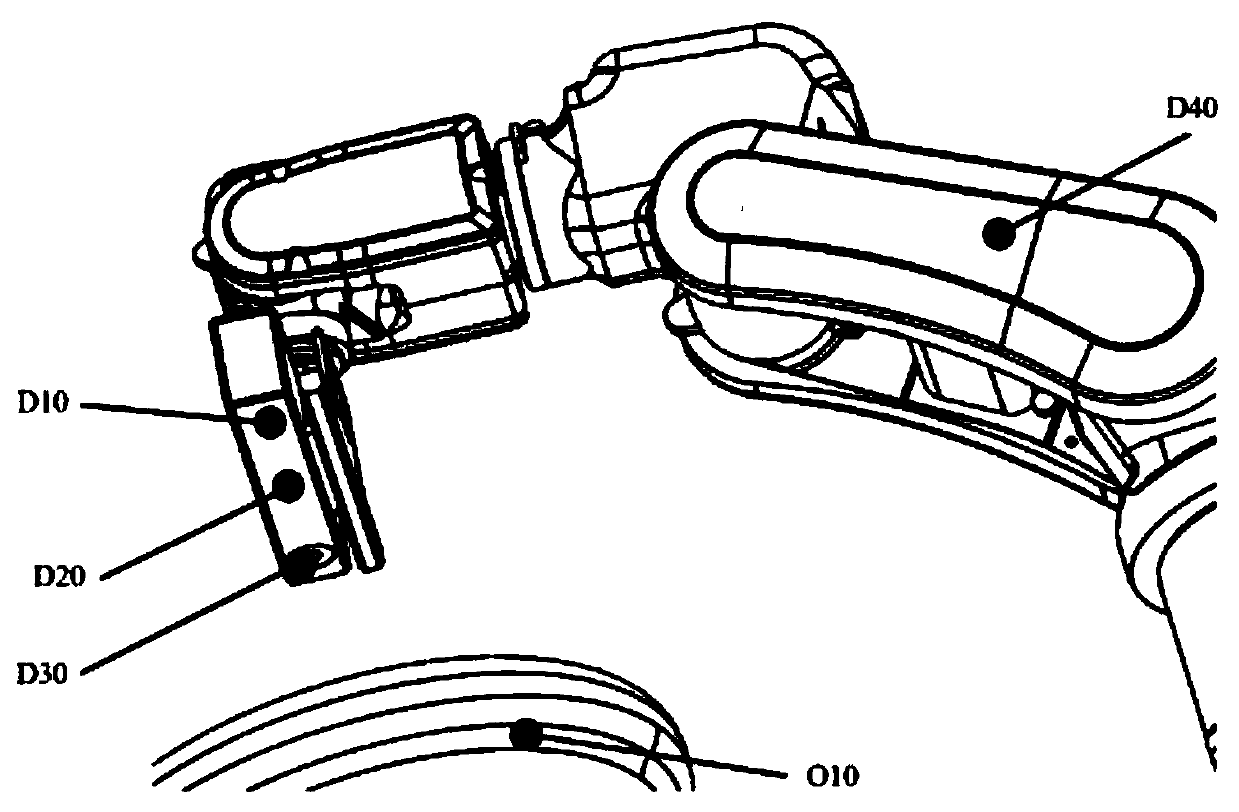

Autonomous motion planning method for large space manipulator based on multi-channel vision fusion

Active | CN105659726B | Achieve voluntary movement | Achieving autonomous tracking | Programme-controlled manipulator | Scale space | Computer vision

The invention provides an autonomous motion planning method for a large space manipulator based on multi-channel vision fusion. In the fusion, the global vision system has lower priority than the local vision system, which in turn has lower priority than the wrist vision system. When the global vision system can obtain the pose of the target object but neither the local vision system nor the wrist vision system can, motion planning uses the target pose obtained by the global vision system. As the arm body moves, if the local vision system can obtain the pose of the target object before the manipulator body reaches the tracking midpoint, motion planning uses the target pose obtained by the local vision system. Once the manipulator body reaches the tracking midpoint, where the wrist vision system is able to obtain the pose of the target object, motion planning uses the target pose obtained by the wrist vision system. The invention achieves autonomous movement, autonomous tracking and autonomous capture of target objects over a large spatial range.

Owner:BEIJING INST OF SPACECRAFT SYST ENG
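The priority rule in the abstract above (wrist over local over global) amounts to picking the highest-priority vision system that currently reports a target pose. The sketch below shows only that selection step; the dictionary keys, the `None` convention for "target not visible" and the pose representation are assumptions for illustration.

```python
# Highest priority first, following the ordering stated in the abstract.
PRIORITY = ("wrist", "local", "global")


def select_pose(measurements):
    """Pick the target pose from the highest-priority system that sees the target.

    `measurements` maps a system name to a pose, or to None when that system
    cannot currently observe the target. Returns (source, pose), or None when
    no system reports a pose.
    """
    for source in PRIORITY:
        pose = measurements.get(source)
        if pose is not None:
            return source, pose
    return None
```

A motion planner would call this once per control cycle, so the pose source switches from global to local to wrist as the arm approaches the target and the closer cameras acquire it.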

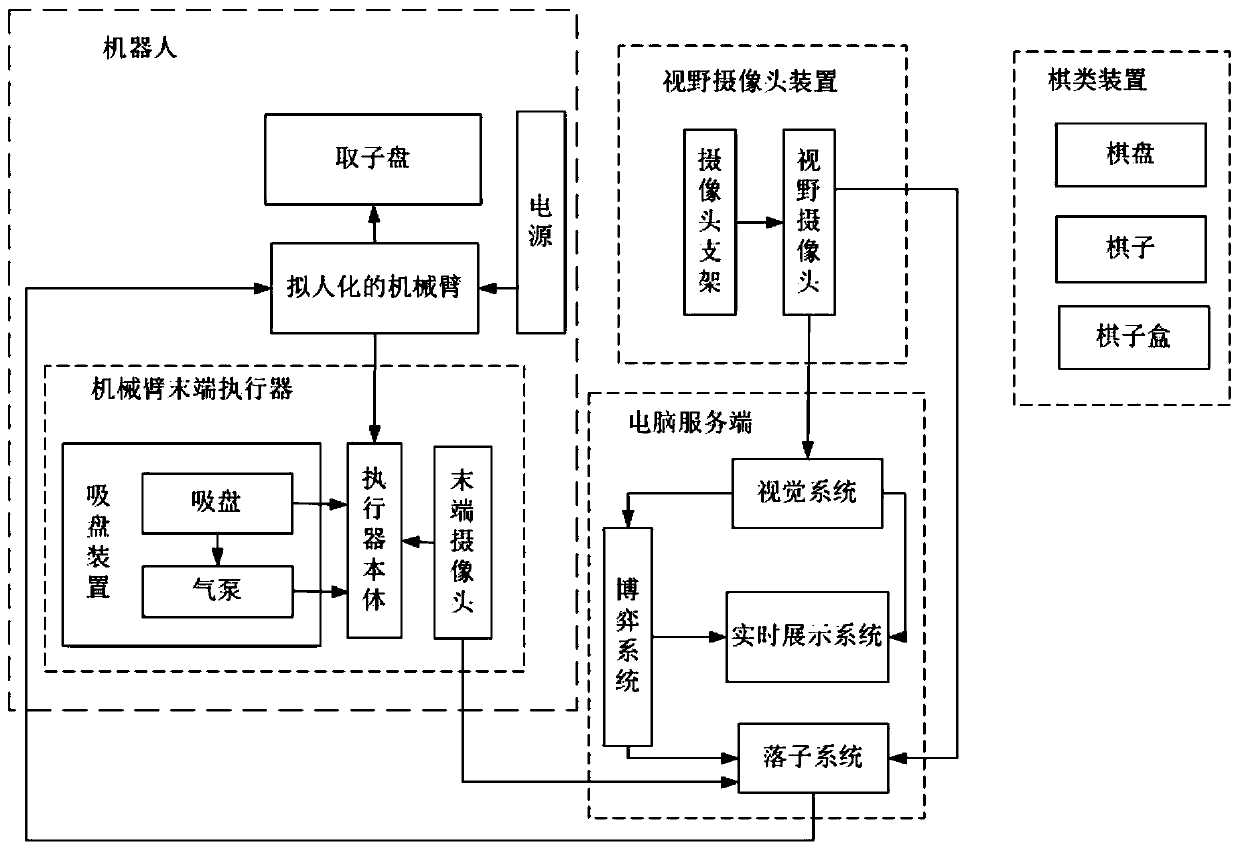

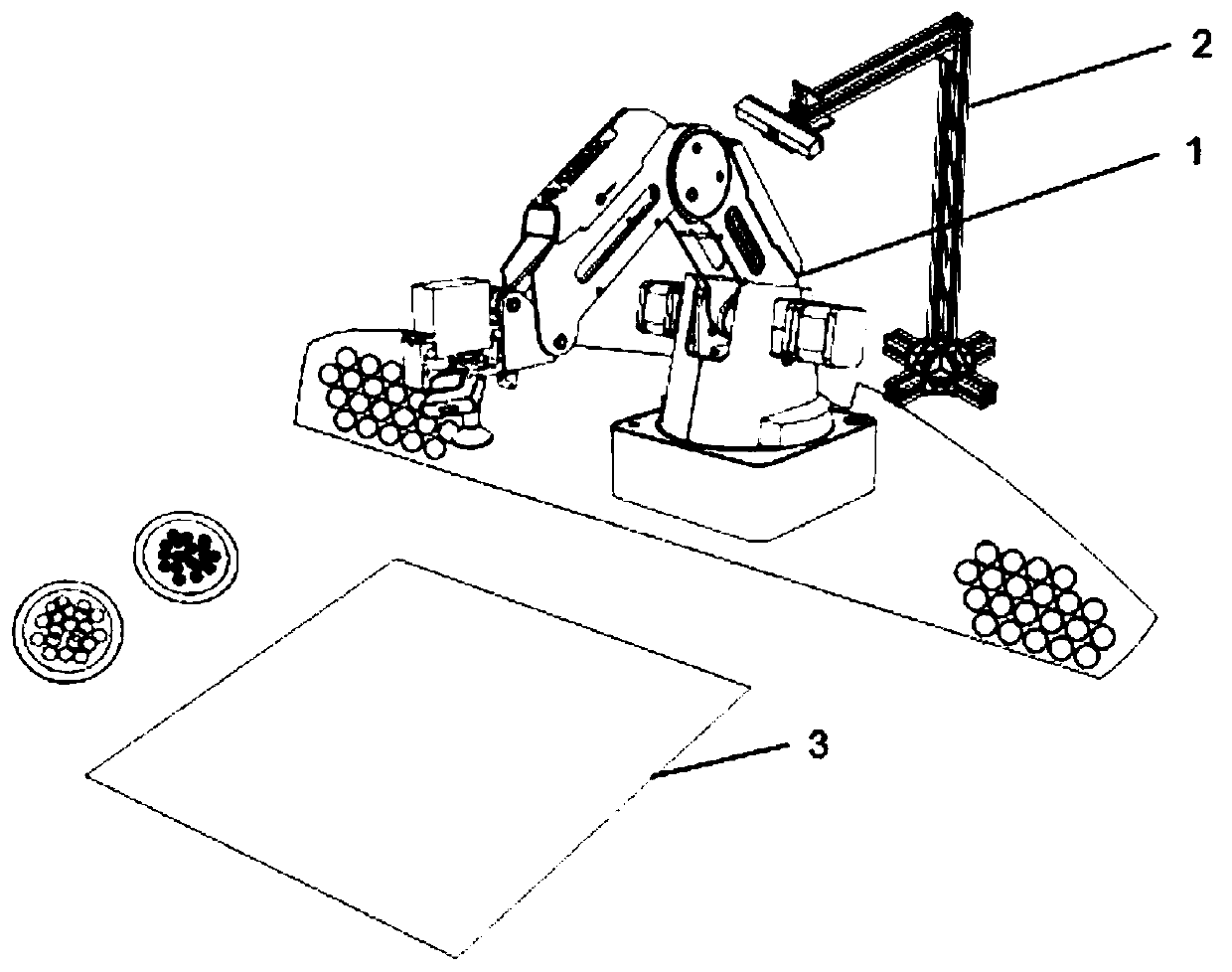

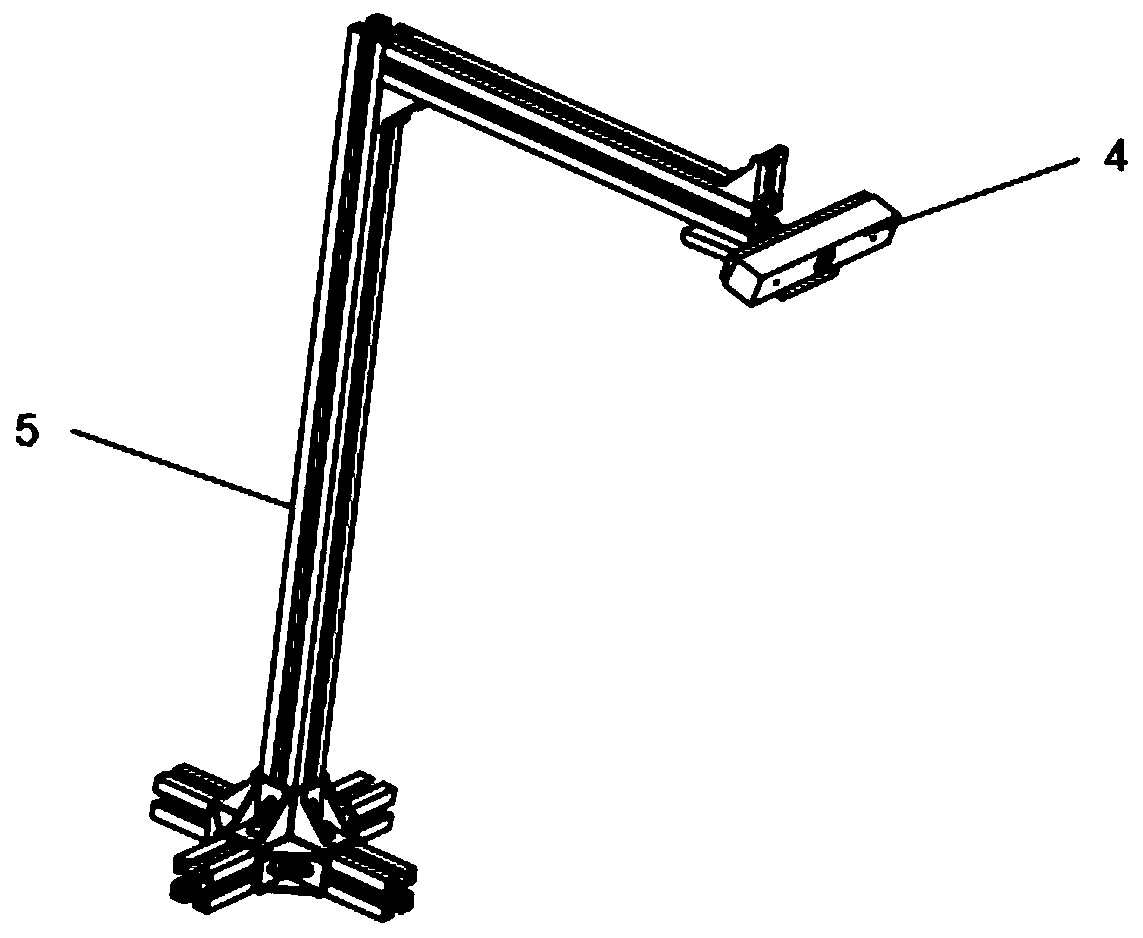

Global vision-based chess playing robot and control method thereof

Active | CN111136669A | Accurate exclusion | Precise positioning operation | Programme-controlled manipulator | Board games | View camera | Visual technology

The invention provides a global vision-based chess playing robot and a control method thereof, and relates to the technical field of computer vision. According to the global vision-based chess playing robot and the control method thereof, a sucker is connected with an air pump and an actuator body correspondingly, and a tail end camera is connected with the actuator body and a computer server correspondingly; an anthropomorphic mechanical arm is connected with the computer server and the actuator body correspondingly, and the mechanical arm is located at the blank position in the middle of a chess piece taking disc; a view camera is connected with the computer server and is arranged on a camera support, and the computer server comprises a visual system, a game system, a chess piece placing system and a real-time display system; and the visual system is connected with the game system and the real-time display system correspondingly, the game system is connected with the chess piece placing system and the real-time display system correspondingly, and the output end of the chess piece placing system is connected with the mechanical arm. The global vision-based chess playing robot and the control method thereof overcome the interference of moving objects, enhance the accuracy of chess piece positioning under uneven illumination, and create a scientific, convenient and fast chess playing environment for a user.

Owner:SHENYANG AEROSPACE UNIVERSITY

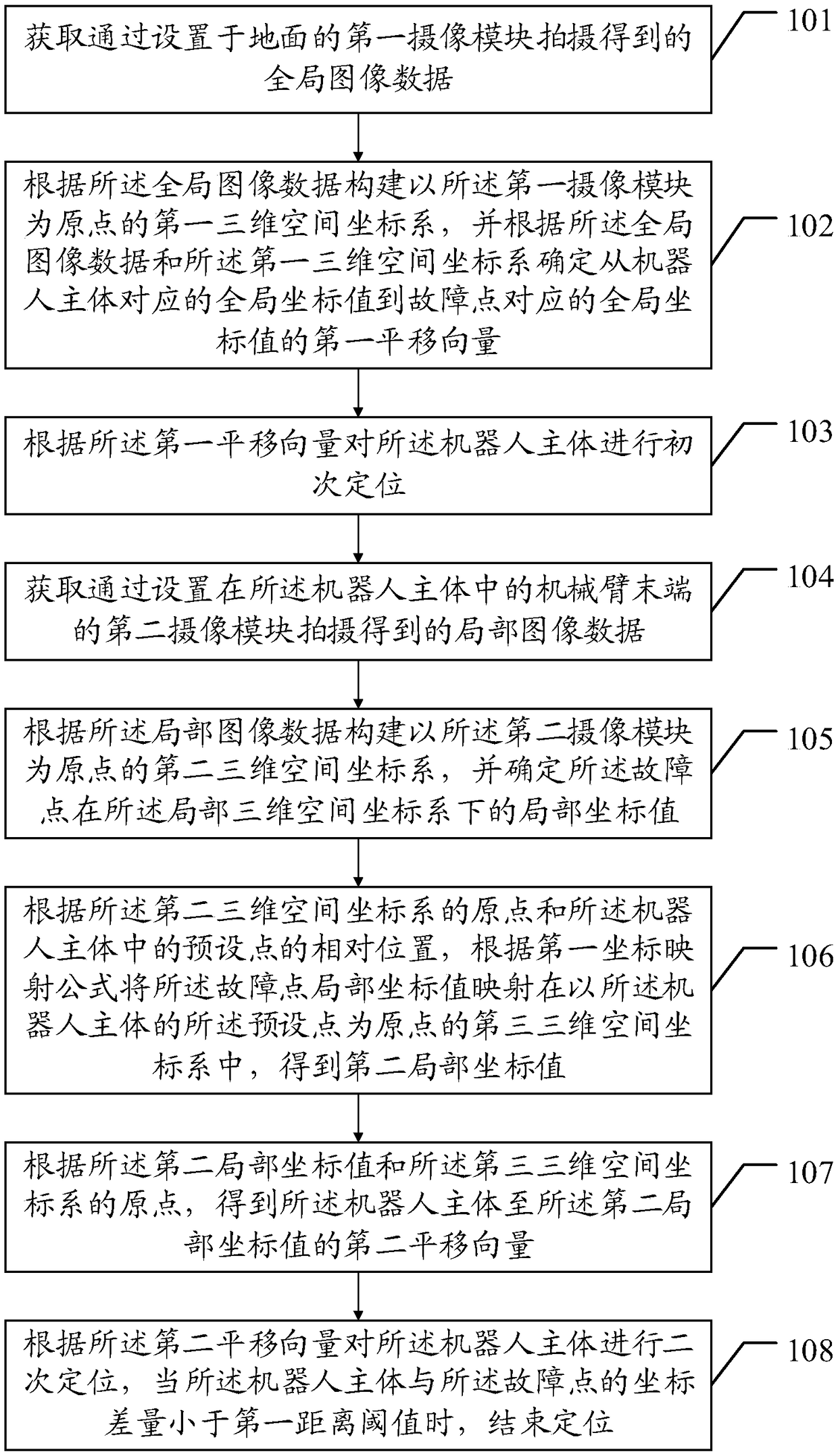

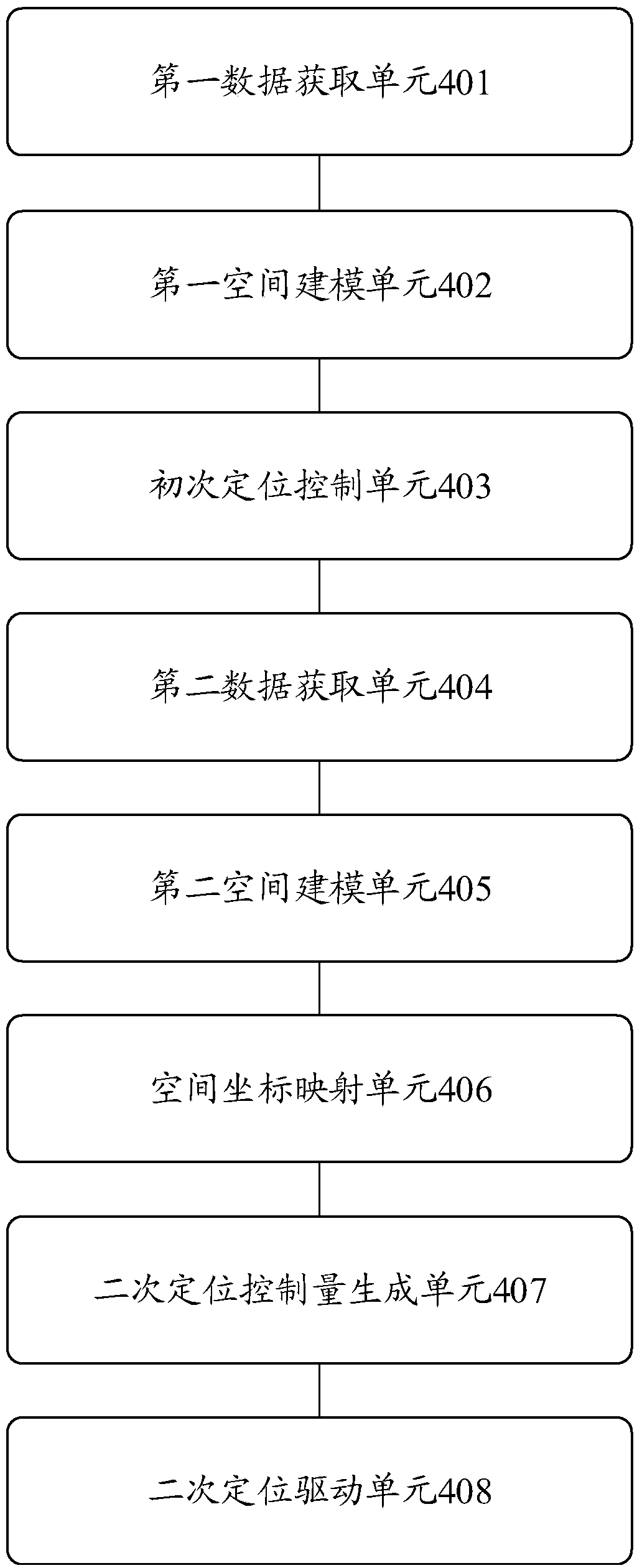

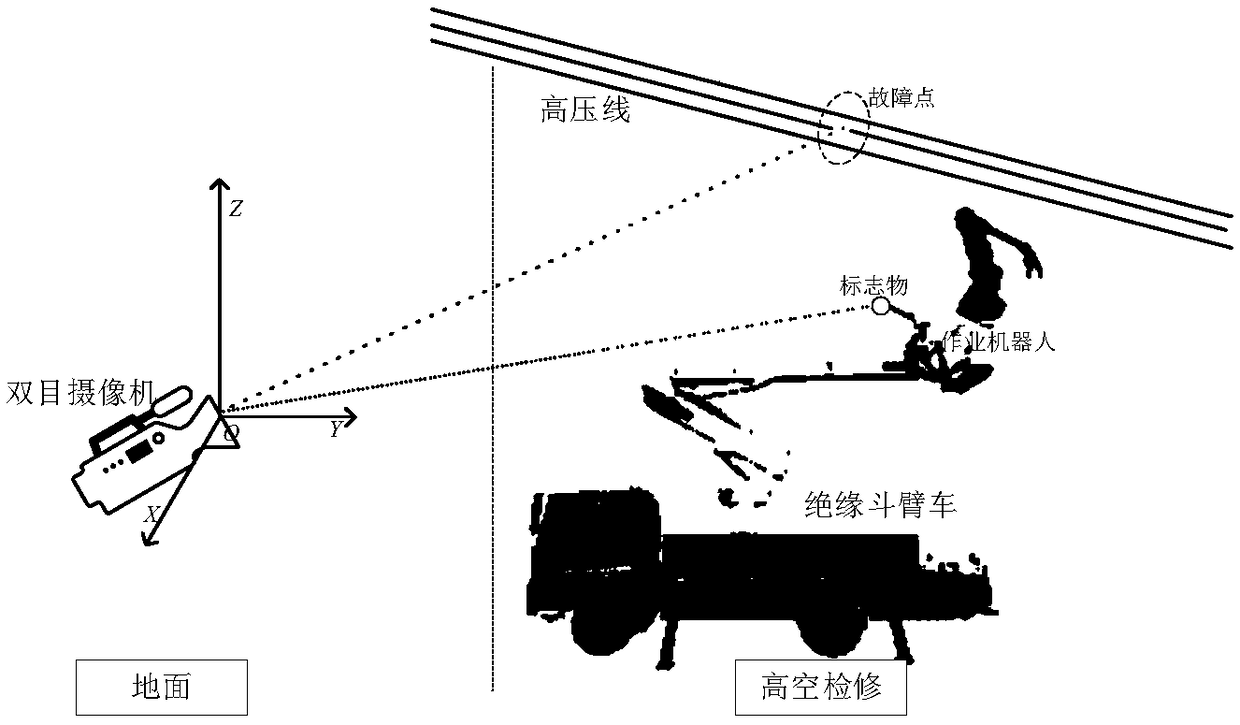

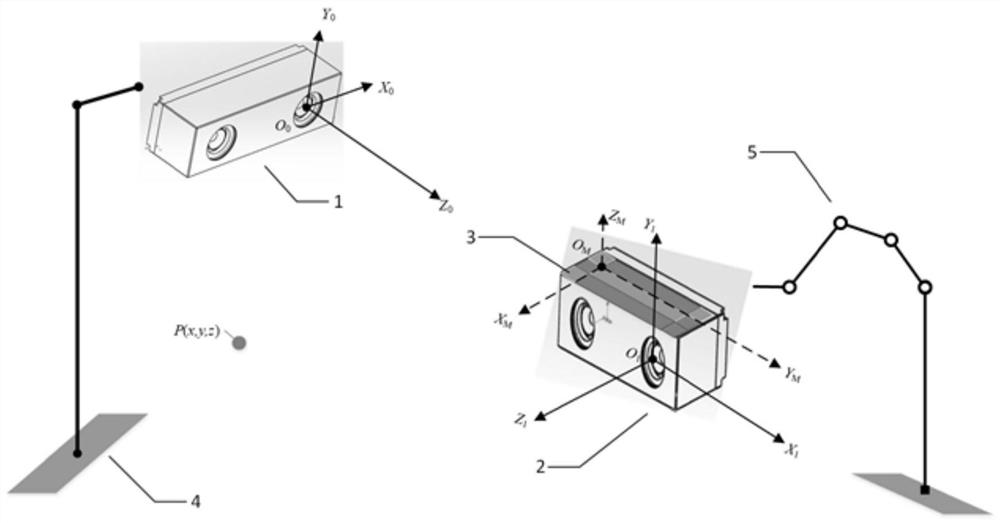

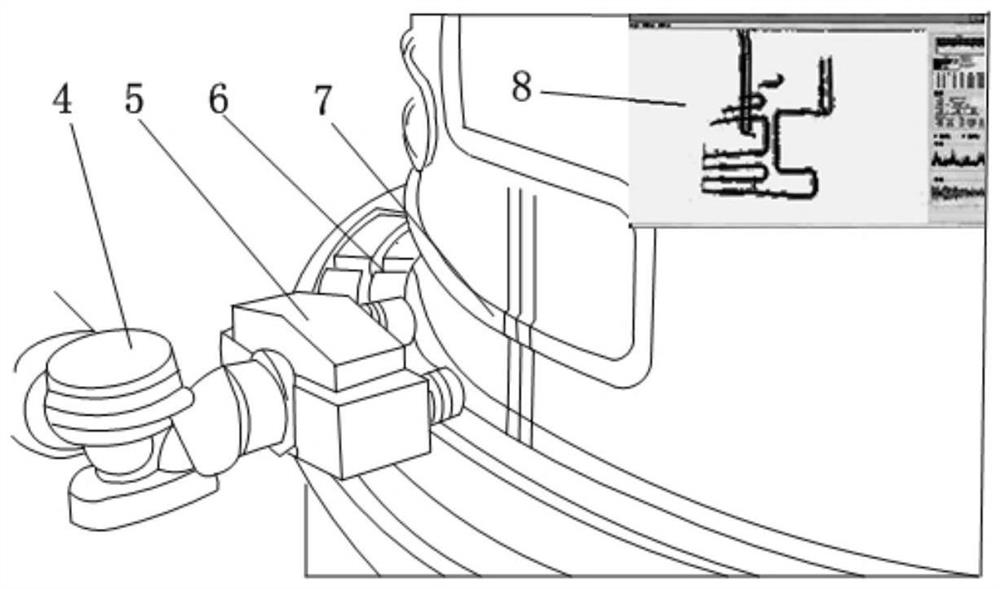

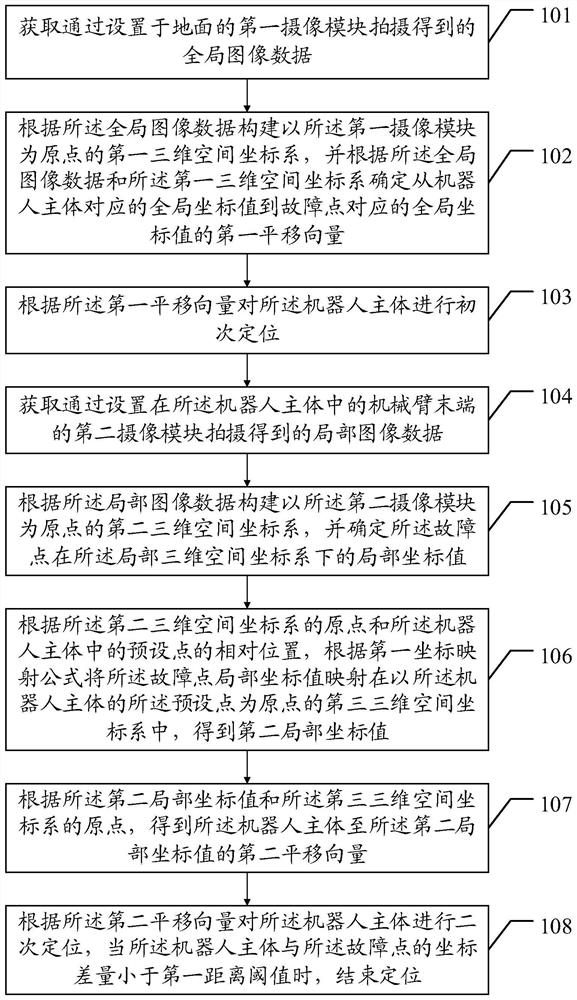

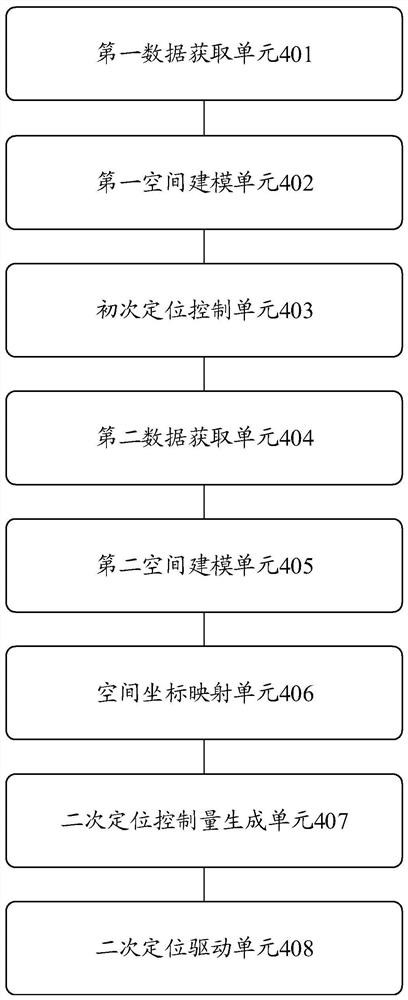

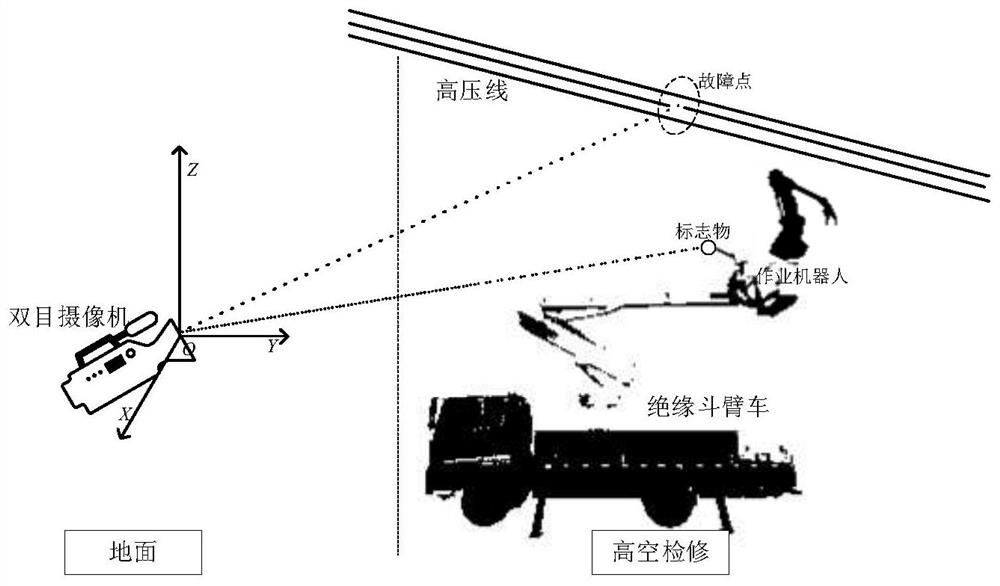

Aerial maintenance robot locating method and device based on near-earth global vision

Active | CN109129488A | Technical Issues Affecting Secondary Positioning Accuracy | Programme-controlled manipulator | High-altitude wind power | Computer vision

The invention discloses an aerial maintenance robot locating method and device based on near-earth global vision. Using a double-vision system, the global position coordinates of the robot body and the fault points are acquired through a first camera shooting module, and the robot body is controlled to carry out primary locating according to the obtained global position coordinates; the local position coordinates of the fault points are then acquired through a second camera shooting module arranged at the tail end of a mechanical arm of the robot body, and the robot body is controlled to carry out secondary precise locating. The first camera shooting module, serving as the global camera, is arranged at the near-earth end. This avoids the technical problem that, after the global camera ascends to a high altitude along with an insulation body, vibration of the mechanical arm during work and high-altitude wind disturb the camera, reducing the precision of primary locating by the global camera and in turn the precision of secondary locating of the robot body.

Owner:GUANGDONG ELECTRIC POWER SCI RES INST ENERGY TECH CO LTD

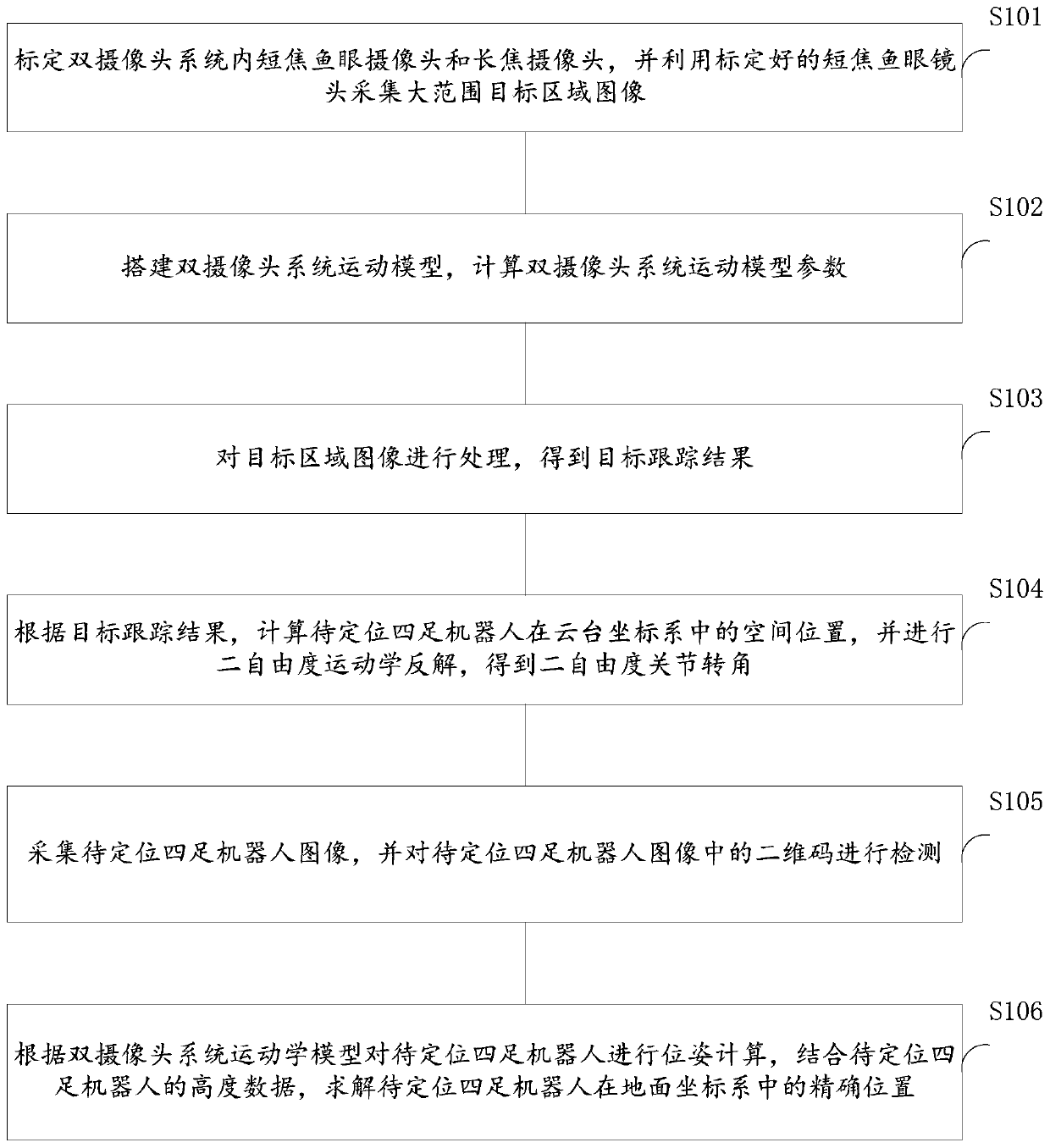

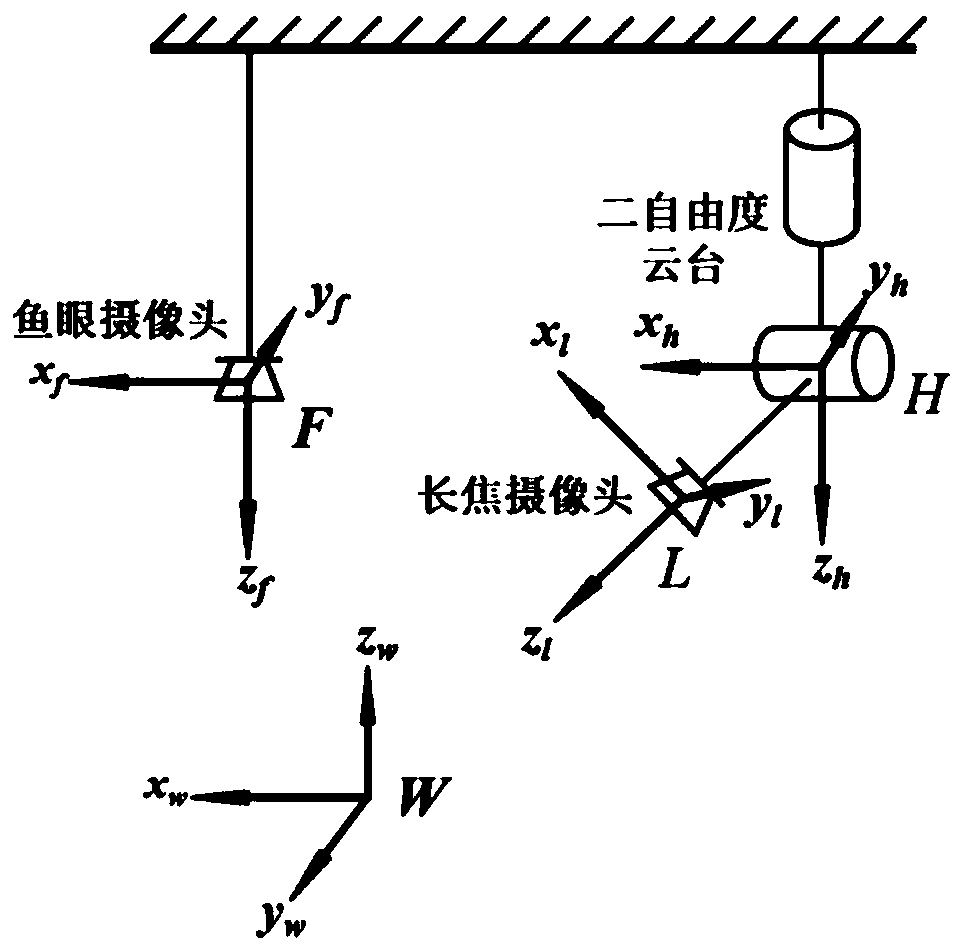

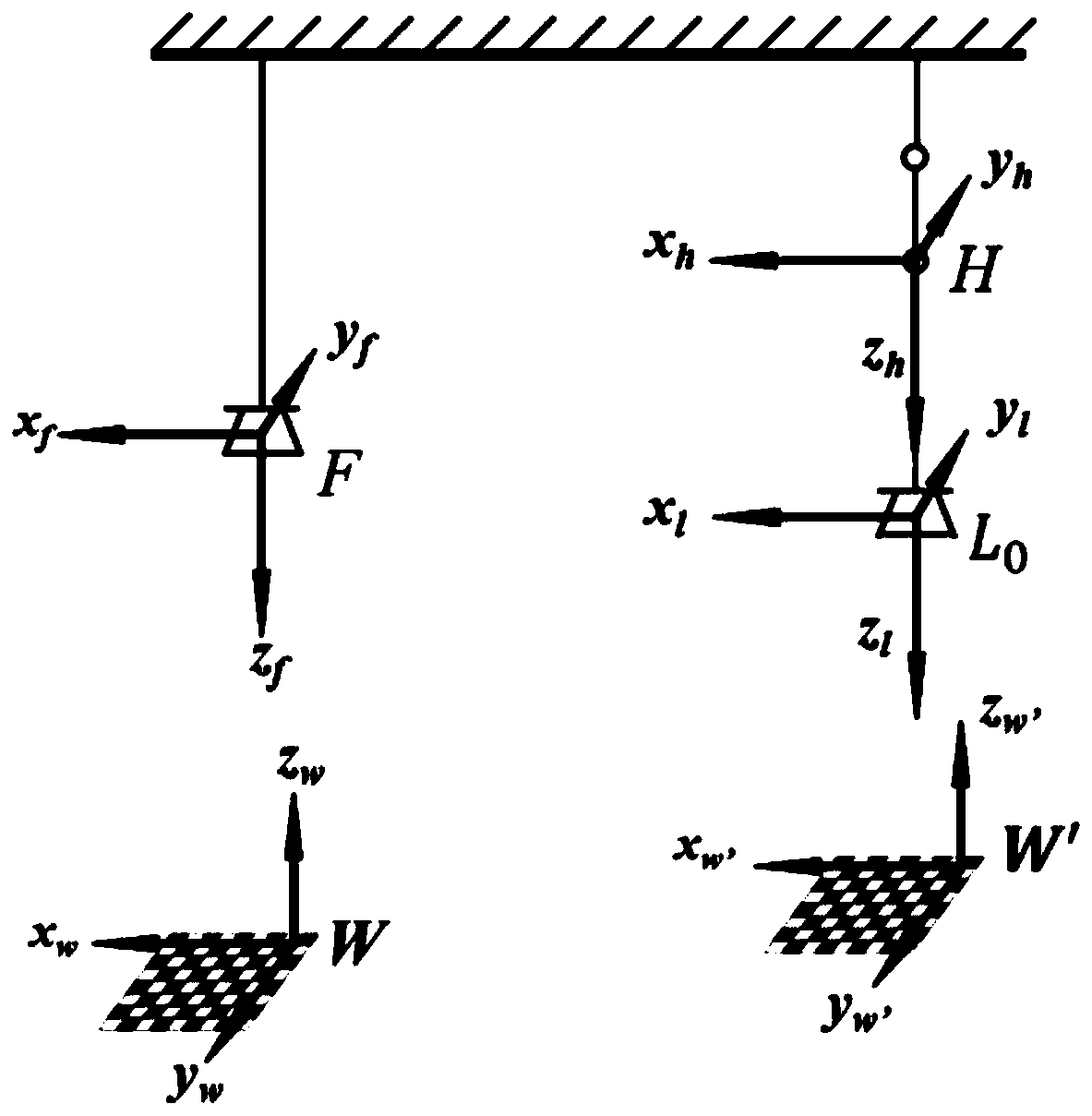

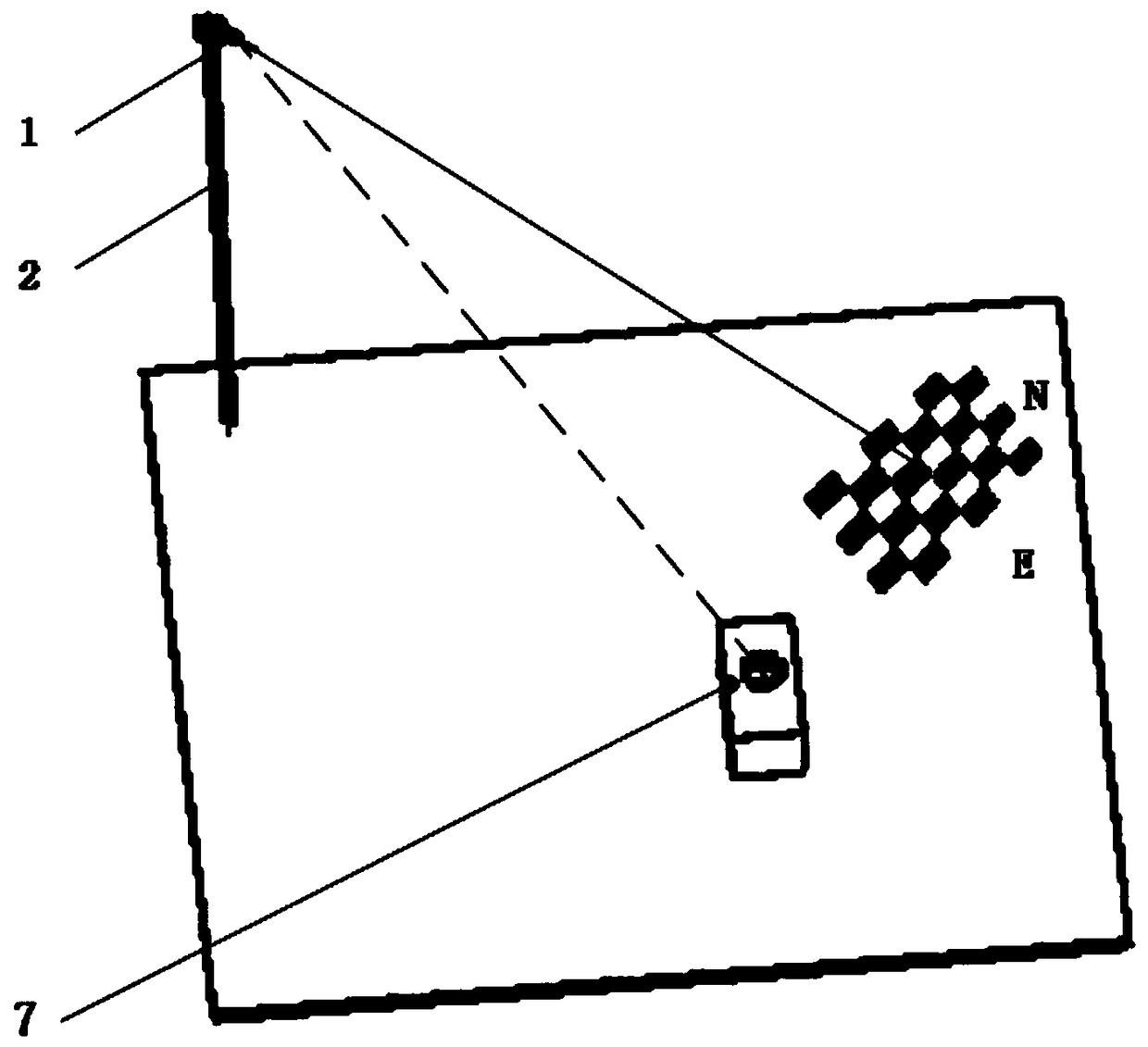

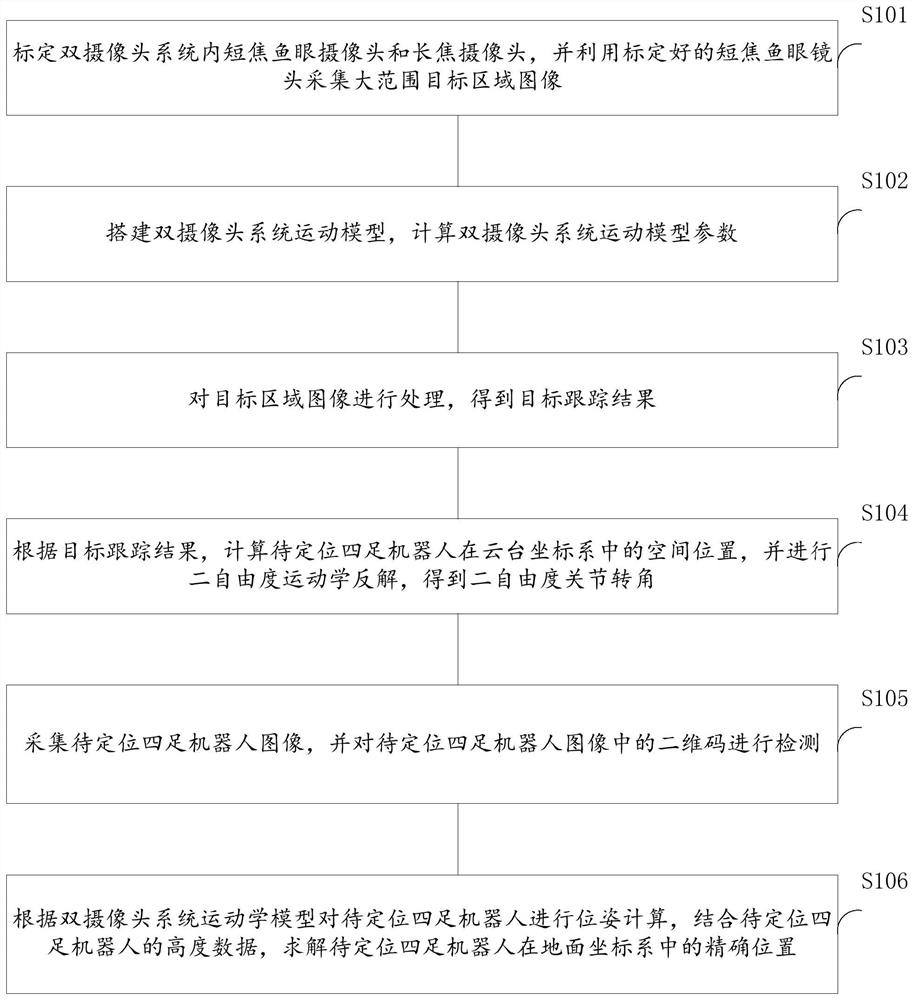

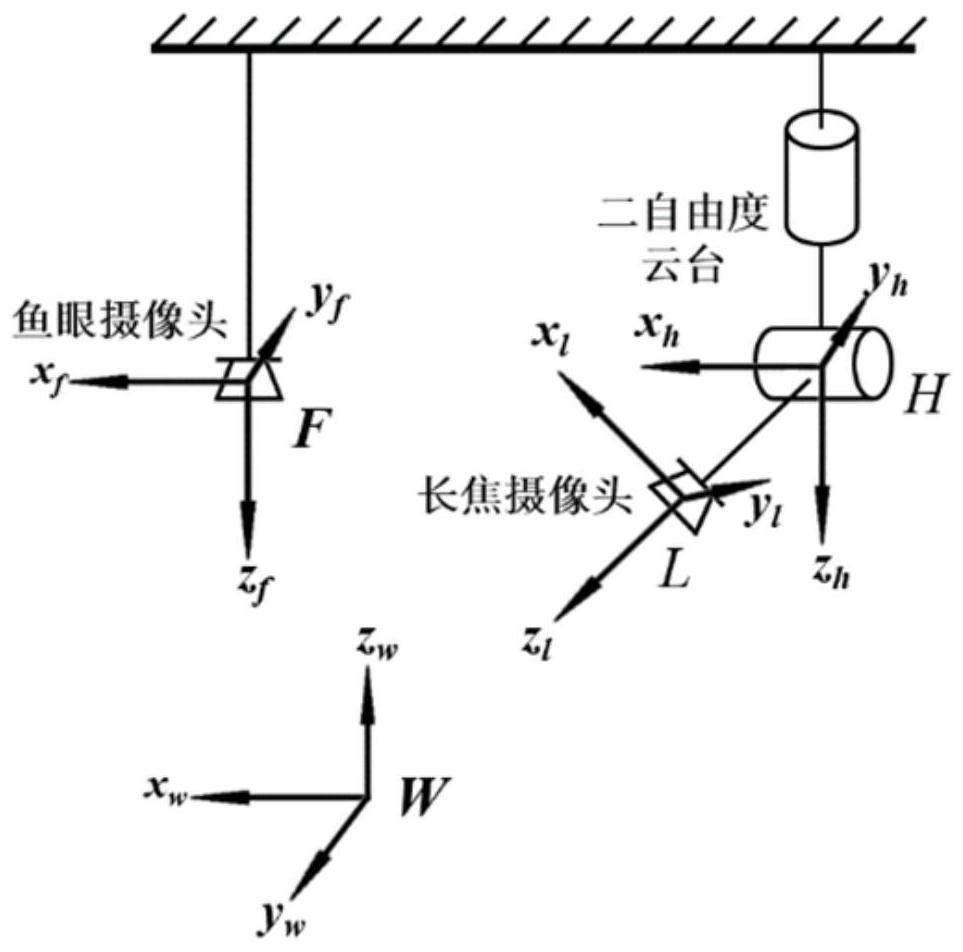

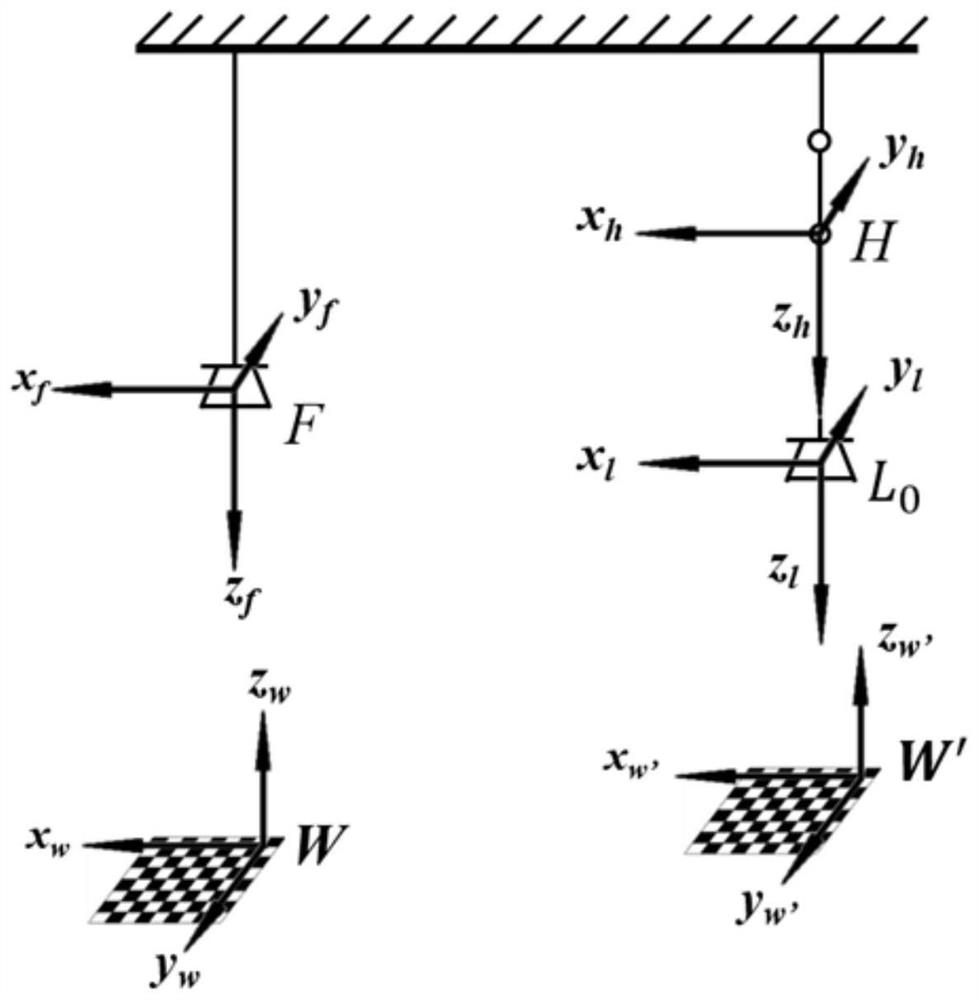

Global vision positioning method and system for a small quadruped robot

Active | CN109872372A | Solve the problem of blurred target details | High positioning accuracy | Image analysis | Using reradiation | Kinematics | Model parameters

The invention discloses a global vision positioning method and system for a small quadruped robot, and the method comprises the following steps: calibrating a dual-camera system, and calculating the motion model parameters of the dual-camera system; obtaining a calibrated target area image shot by the fisheye camera; processing the image of the target area to obtain the position of a to-be-positioned target in a fisheye camera coordinate system, calculating the spatial position of the to-be-positioned target in a holder coordinate system through a double-camera system kinematics model, and carrying out two-degree-of-freedom kinematics inverse solution to obtain a two-degree-of-freedom joint rotation angle; obtaining a to-be-positioned target image, detecting a two-dimensional code in the to-be-positioned target image to obtain a two-dimensional coordinate of a two-dimensional code center point in the to-be-positioned image, and performing transformation to obtain a two-dimensional coordinate of the two-dimensional code center point in a ground coordinate system; and correcting the two-dimensional coordinates of the two-dimensional code center point in the ground coordinate system to obtain the position and orientation of the to-be-positioned target in the ground coordinate system.

Owner:SHANDONG UNIV

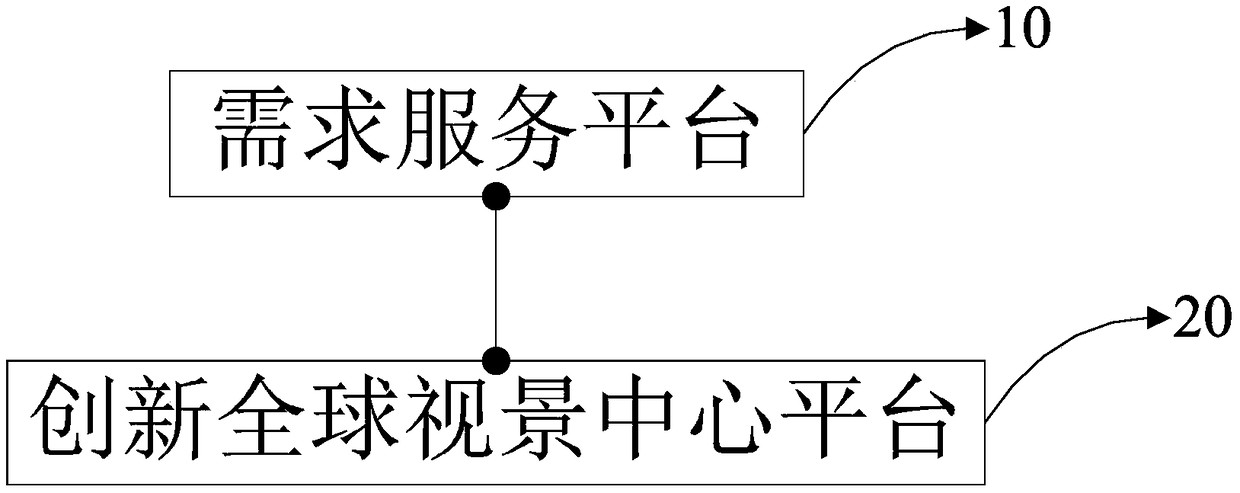

An Innovative Service Platform

Inactive | CN109214667A | Rich visual coding | Intuitively see the monthly growth rate | Digital data information retrieval | Resources | Visual presentation | Butt joint

The invention relates to the field of cloud computing, in particular to an innovation service platform. The platform comprises a demand service platform, which carries out the corresponding docking project service according to the requirements put forward by customers after they log on to the platform, and an innovation global vision center platform, which is connected with the demand service platform and presents the docking project services in a global visual view. The innovation service platform in the embodiment of the invention can thus carry out the corresponding docking project service according to customer requirements and display that service visually in a global view, thereby solving the technical problem that existing innovation service platforms do not display their service items well.

Owner:深圳量子防务在线科技有限公司

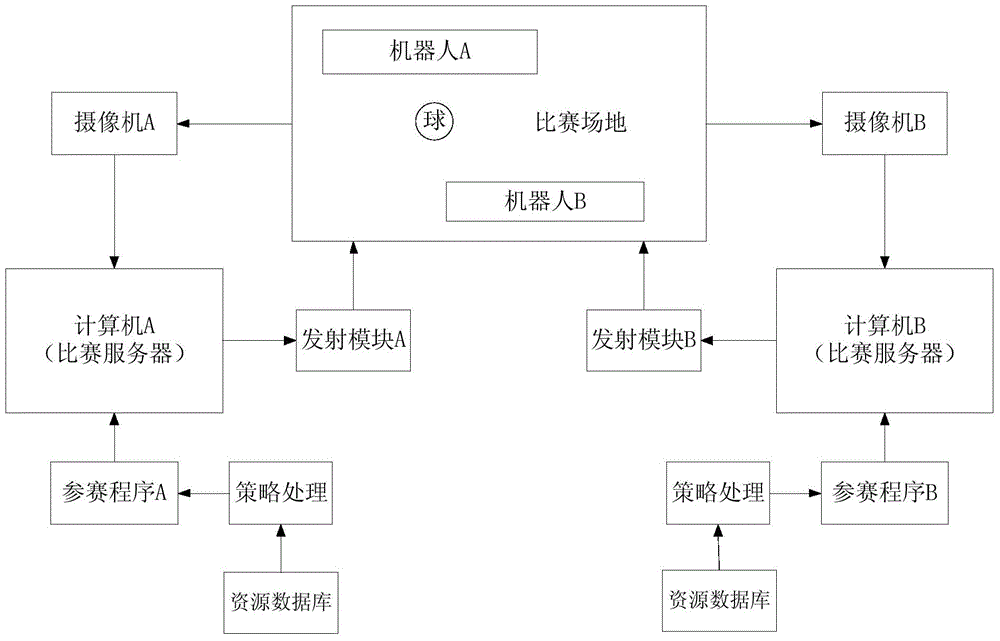

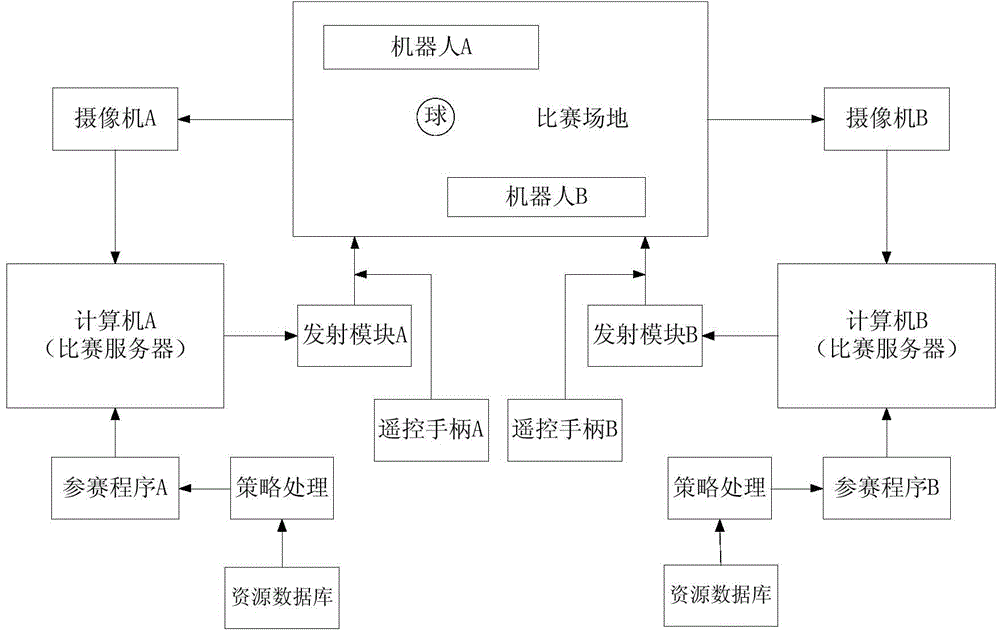

Soccer robot dual meet system based on global vision and remote collaborative control

The invention relates to a soccer robot dual meet system based on global vision and remote collaborative control. The system comprises a global vision subsystem, an intelligent decision-making subsystem, a USB wireless communication subsystem, and a robot car subsystem. When the system is running, the global vision subsystem converts image information acquired by an image acquisition device into pose information of robots and position information of a ball and transfers the information to the intelligent decision-making subsystem, the intelligent decision-making subsystem judges field attack and defense state through an expert system according to the acquired information, assigns a role to each car automatically and obtains a command value of the speed of the left and right wheels through conversion, and the USB wireless communication subsystem sends the wheel speed command value to the corresponding robots in the field through a transmitting module to control the motion of the robots. Meanwhile, a participant can also wirelessly and remotely control the motion of any car on the side thereof through an offered remote control handle (only one car can be controlled at the same time), and the priority of a remote control command is higher than that of a command issued by the expert system.

Owner:周凡
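The abstract above mentions two mechanisms that a short sketch can make concrete: converting a motion command into left and right wheel speeds, and letting a remote-control command override the expert system. The patent does not state the conversion it uses, so the sketch assumes standard differential-drive kinematics; the function names and the `None` convention for "no remote command" are likewise assumptions.

```python
def wheel_speeds(v, omega, wheel_base):
    """Convert body velocity to (left, right) wheel speeds for a differential drive.

    v: forward speed in m/s, omega: turn rate in rad/s (positive = left turn),
    wheel_base: distance between the two wheels in m.
    """
    left = v - omega * wheel_base / 2.0
    right = v + omega * wheel_base / 2.0
    return left, right


def resolve_command(expert_cmd, remote_cmd=None):
    """Apply the priority rule: a remote-control command beats the expert system."""
    return remote_cmd if remote_cmd is not None else expert_cmd
```

For example, `resolve_command(wheel_speeds(0.5, 0.0, 0.1), remote_cmd=(0.2, 0.2))` yields the remote command, matching the stated priority; with `remote_cmd` left as `None`, the expert system's command is used.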

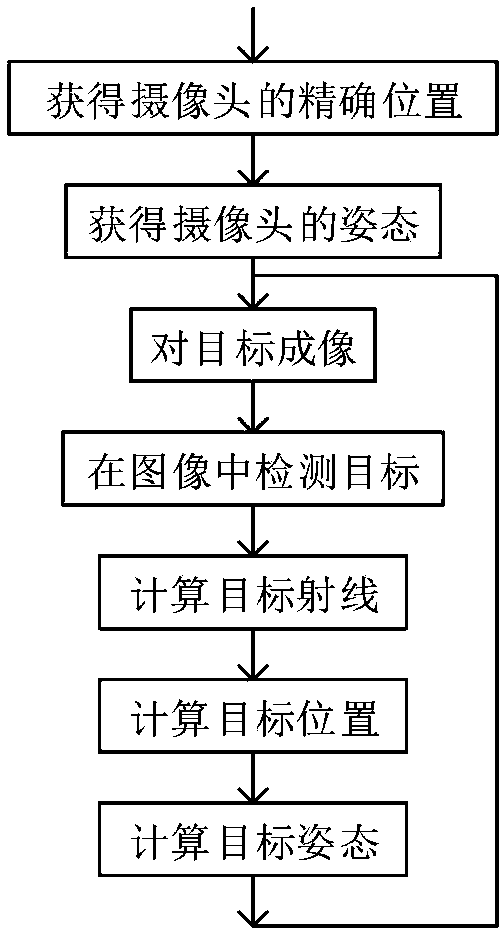

Global vision-based positioning method

Inactive | CN108955683A | Simple calculation | High precision | Navigational calculation instruments | Visual field loss | Computer graphics (images)

The invention provides a global vision-based positioning method. The method comprises the following steps: (1) obtaining the precise position of a camera; (2) obtaining the posture of the camera; (3) imaging a target: putting a whole system into operation, and imaging the target; (4) detecting the target in the obtained image; (5) calculating a target ray; (6) calculating the position of the target; and (7) calculating the posture of the target: determining the posture of the target by adopting visual and IMU, OD, geomagnetic information fusion integrated navigation according to the posture of the target in image coordinates and the posture of the camera. The method has the following advantages: the position of every target in a visual field can be easily calculated according to the position and the orientation of the camera and the model of the facing geographical environment; and high-precision navigation positioning can be obtained by cooperating the vision with GPS, IMU, OD, geomagnetism and other positioning devices.

Owner:INST OF LASER & OPTOELECTRONICS INTELLIGENT MFG WENZHOU UNIV
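Steps (5) and (6) of the abstract above compute a ray toward the target and then the target's position. One simple way to picture this is to intersect the ray with a model of the environment. The sketch below assumes the simplest such model, a flat ground plane at a fixed height, which is an illustrative assumption rather than the patent's environment model; the function name is hypothetical.

```python
def target_on_ground(camera_pos, ray_dir, ground_z=0.0):
    """Intersect the ray camera_pos + t * ray_dir (t > 0) with the plane z = ground_z.

    camera_pos and ray_dir are (x, y, z) tuples in a common world frame.
    Returns the (x, y, z) intersection, or None when the ray is parallel to
    the plane or points away from it.
    """
    cx, cy, cz = camera_pos
    dx, dy, dz = ray_dir
    if dz == 0:
        return None  # ray runs parallel to the ground plane
    t = (ground_z - cz) / dz
    if t <= 0:
        return None  # the plane lies behind the camera along this ray
    return (cx + t * dx, cy + t * dy, ground_z)
```

The ray direction itself would come from the detected pixel, the camera intrinsics and the camera posture obtained in steps (1) and (2); that back-projection is not shown here.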

Visual attribute recognition method and device and storage medium

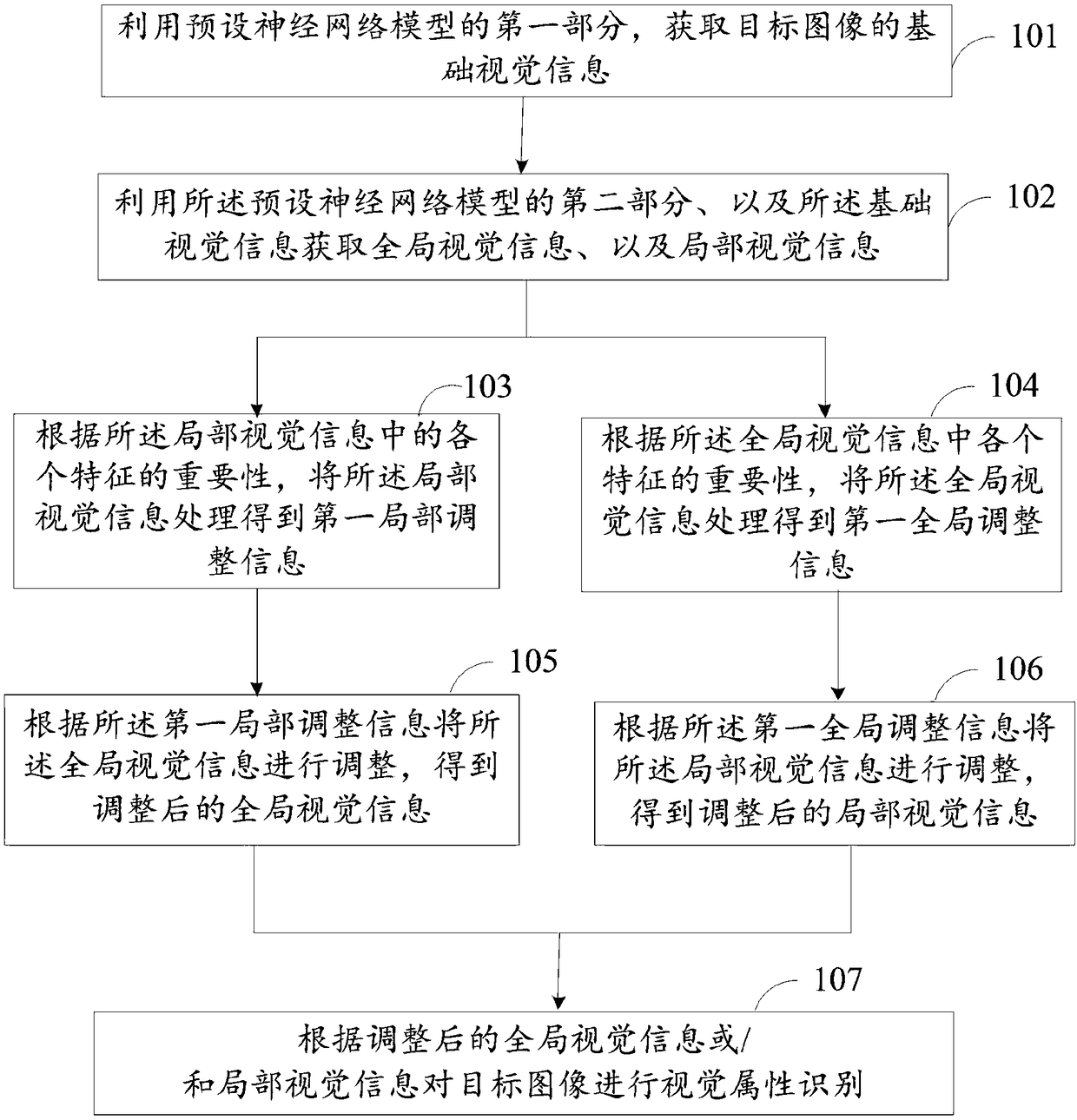

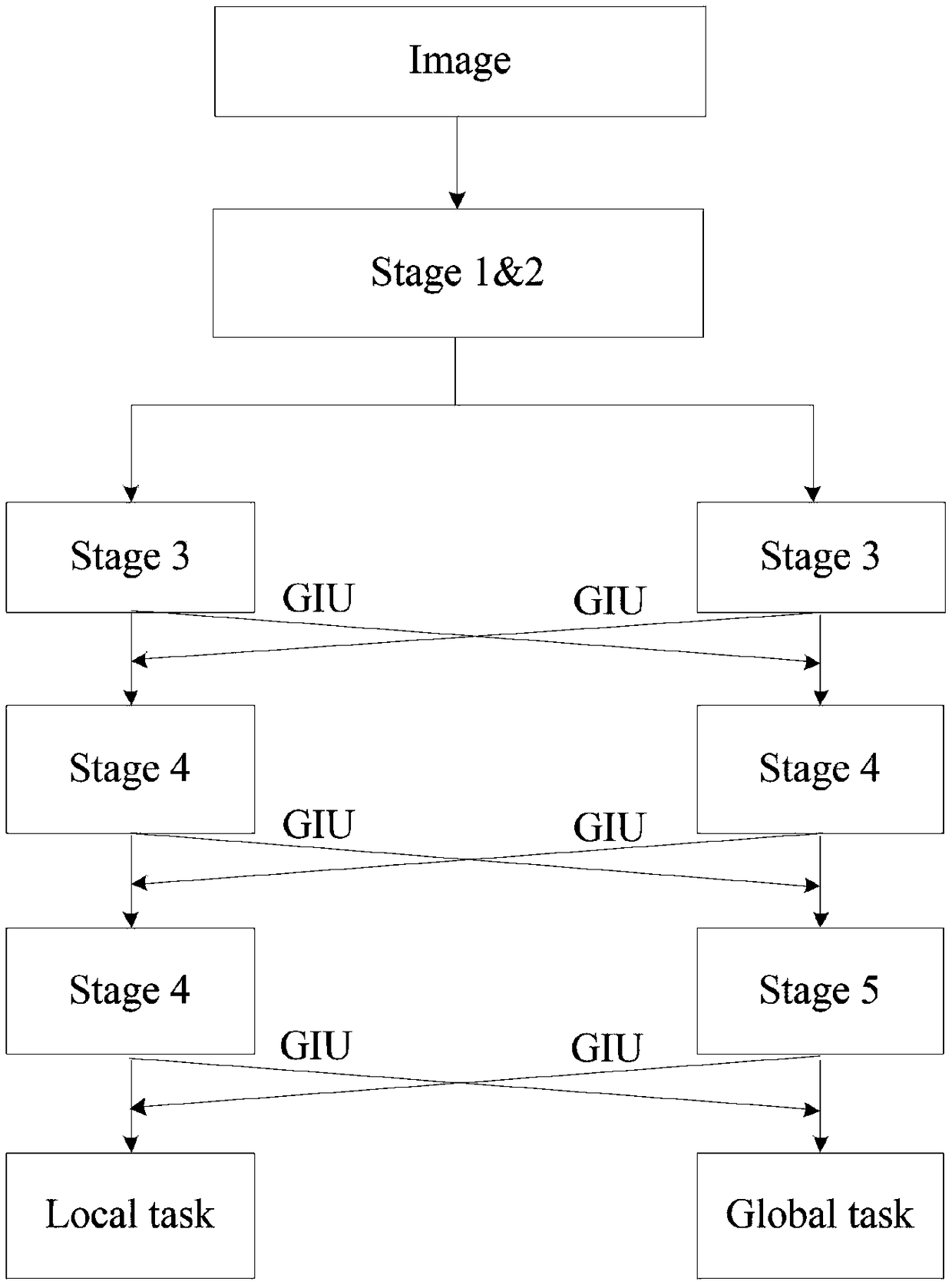

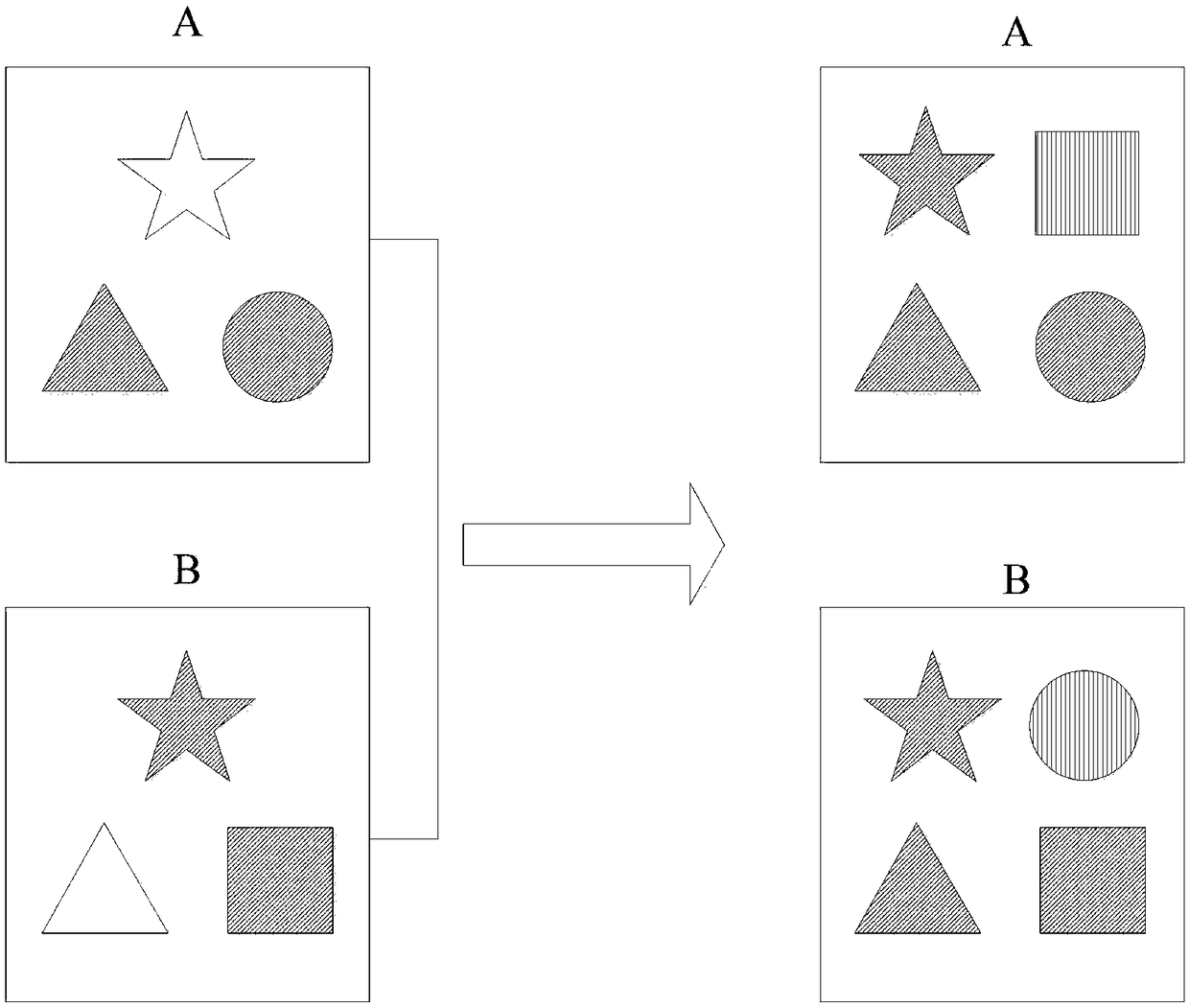

Active | CN109447095A | Improve accuracy | Character and pattern recognition | Neural architectures | Network model | Visual perception

Embodiments of the present application provide a visual attribute recognition method and device and a medium. The method includes acquiring basic visual information of a target image by using a first part of a preset neural network model; using the second part of the preset neural network model to obtain the global visual information and the local visual information; according to the importance of each feature in the local vision information, processing the local vision information to obtain the first local adjustment information; according to the importance of each feature in the global vision information, processing the global vision information to obtain the first global adjustment information; adjusting the global vision information according to the first local adjustment information to obtain the adjusted global vision information; adjusting the local vision information according to the first global adjustment information to obtain the adjusted local vision information, and performing the visual attribute recognition on the target image according to the adjusted global vision information and/or the local vision information. The accuracy of visual attribute recognition can be improved.

Owner:SHANGHAI QINIU INFORMATION TECH

Cascade expansion method for working space and working visual angle of stereoscopic vision system

Active | CN111798512A | Increase workspace | Work less | Programme-controlled manipulator | Image enhancement | Ophthalmology | Computer science

The invention discloses a cascade expansion method for the working space and working visual angle of a stereoscopic vision system. Among multiple stereoscopic vision systems, one is fixed and serves as the global vision system, while the others can be freely moved and have their view angles adjusted as required during operation, so that the working space and working view angle of the stereoscopic vision systems are effectively expanded and their flexibility is improved. When a to-be-measured target cannot be seen by the global stereoscopic vision system, it can be positioned indirectly in the global coordinate system through the other stereoscopic vision systems. This overcomes the limited visual angle and limited working space of a single stereoscopic vision system, as well as the limitation of traditional schemes in which the poses of multiple stereoscopic vision systems cannot be adjusted during operation, so the stereoscopic vision systems can be applied more widely. The method is applied to operating room instrument navigation and has the advantages of low cost, flexible working space, high positioning precision and the like.

Owner:BEIHANG UNIV
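The indirect positioning described in the abstract above can be pictured as chaining coordinate transforms: the fixed global system knows the pose of a movable system, and the movable system measures the target in its own frame. The sketch below composes 4x4 homogeneous transforms in plain Python. How the patent actually obtains the inter-system poses is not stated in the abstract, so the transforms are treated as given inputs, and all names are hypothetical.

```python
def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transform_point(t, point):
    """Apply a 4x4 homogeneous transform to an (x, y, z) point."""
    v = (point[0], point[1], point[2], 1.0)
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))


def cascade_locate(transforms, point):
    """Express a point measured in the last frame of a chain in global coordinates.

    transforms[0] maps frame 1 into the global frame, transforms[1] maps
    frame 2 into frame 1, and so on; `point` is given in the last frame.
    """
    total = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for t in transforms:
        total = matmul4(total, t)
    return transform_point(total, point)
```

With a single movable system the chain has one transform; adding further systems extends the list, which is one way to read the "cascade" in the title. Measurement error accumulates along the chain, so shorter chains are preferable when the geometry allows.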

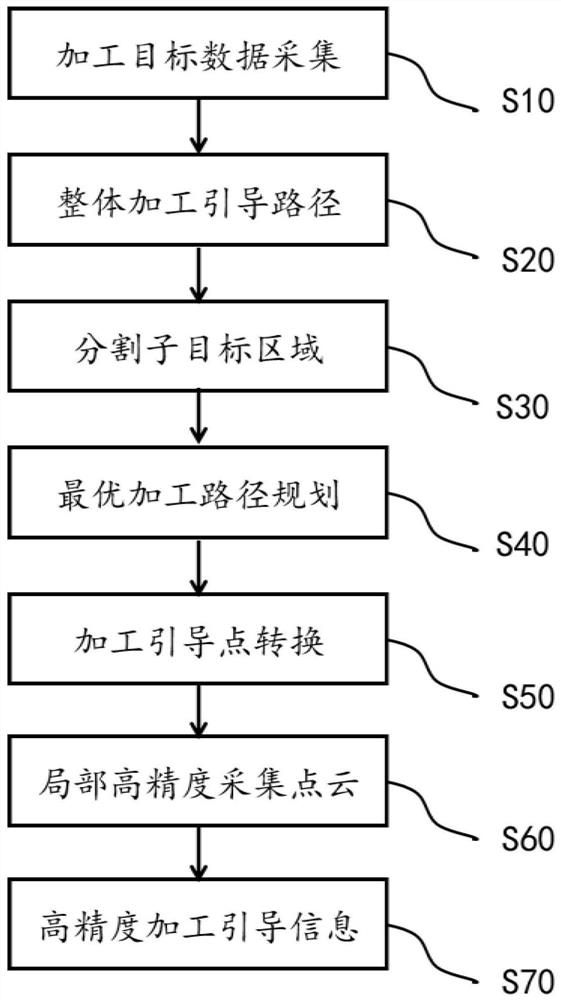

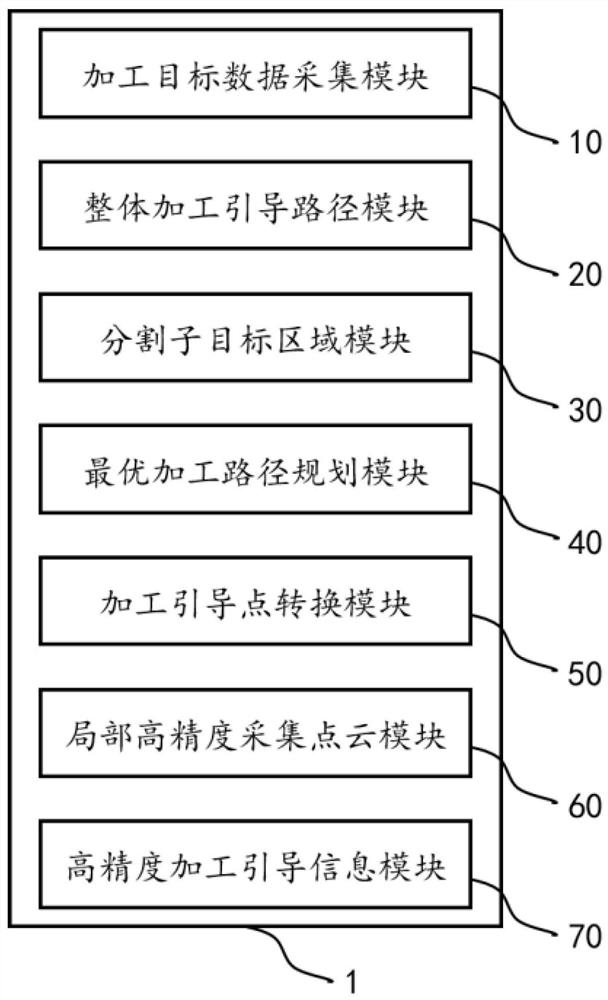

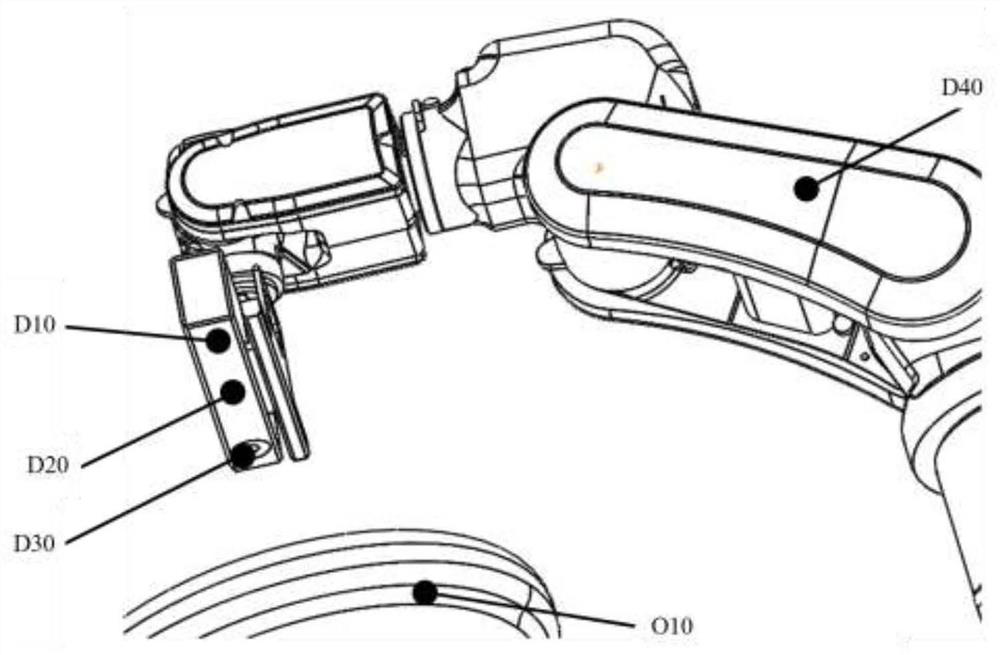

Robot vision guiding method and device based on integration of global vision and local vision

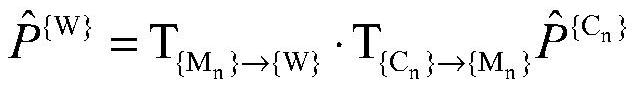

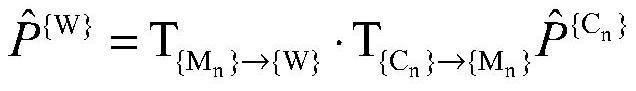

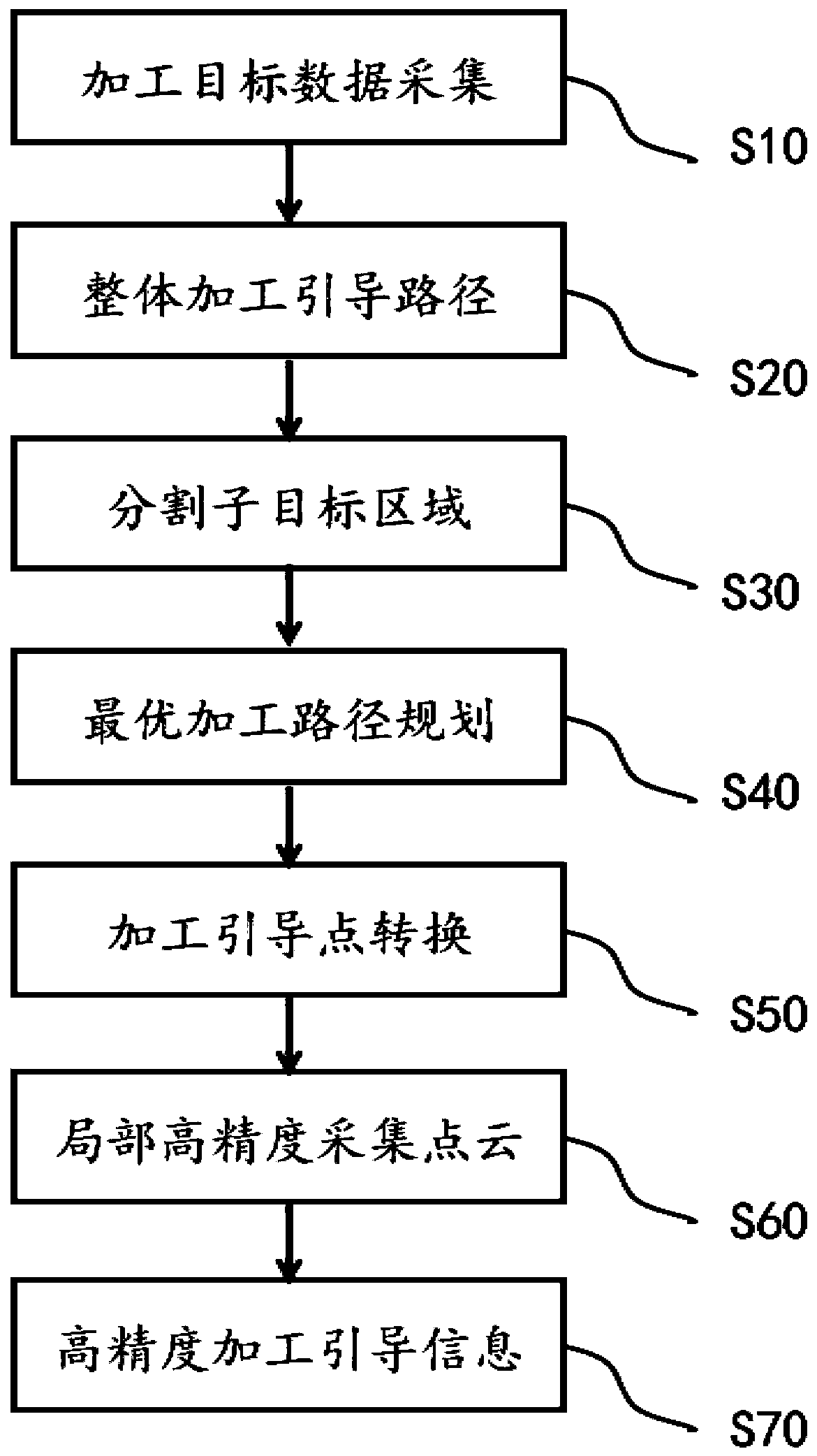

Active | CN111216124A | Lower performance requirements | Meet the precision requirements of high-efficiency machining | Programme-controlled manipulator | Engineering | Computer vision

The invention discloses a robot vision guiding method and device based on integration of global vision and local vision. The method comprises the following steps that a global vision system and a local vision system are integrated, firstly, the rough positioning of a machining target is achieved, and the partitioning of the machining target is achieved, path planning is carried out, then a high-precision visual detection system is combined to accurately detect the target, and then the robot is guided to implement high-precision and high-efficiency automatic grinding and polishing operation, so that the precision requirement for high-efficiency machining of the large-size machining target is met.

Owner:北斗长缨(北京)科技有限公司

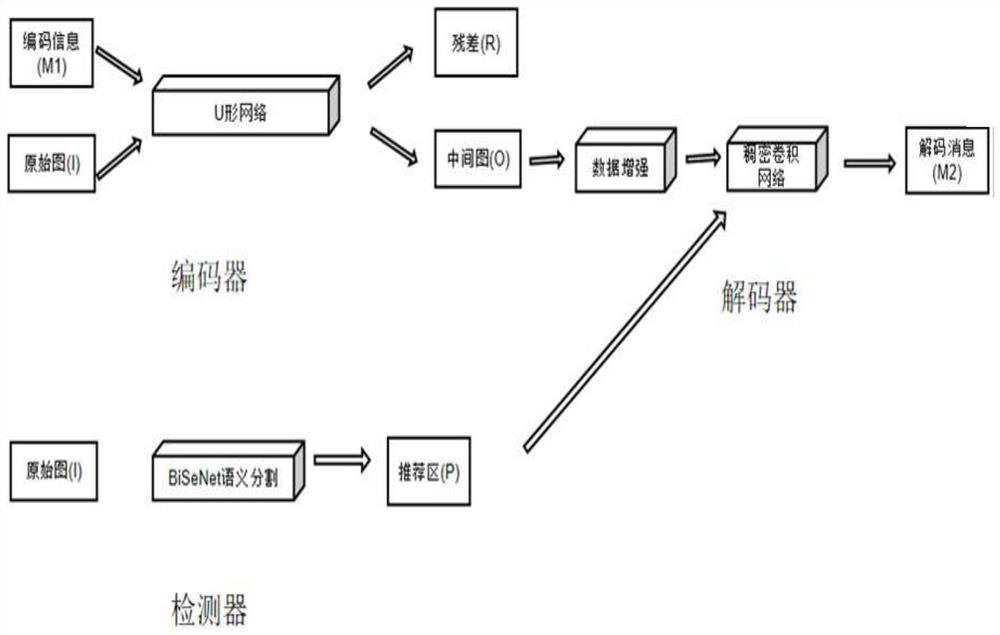

Underwater robot image secret character embedding and decoding method

The invention discloses an underwater robot image secret character embedding and decoding method. The method comprises the following steps: combining an original image I and to-be-coded information M1 into four channels as input, and outputting a coded image O and a residual image R through a U-shaped network, so as to obtain a first loss; inputting the coded image O, enhancing the robustness and generalization ability of the model, and calculating a cross entropy to obtain a second loss; weighting the first loss function and the second loss function, and analyzing and obtaining a function model with strong coding capability and low perception loss; training a BiSeNet semantic segmentation network, generating a large number of coded images O, predicting alternative image areas where the coded images possibly appear, and then putting the alternative image areas into a decoder to be detected and judged. According to the method, the deep learning steganography is applied to encoding, decoding and retrieval of the secret information of the bionic robot, global vision individual ID deep learning steganography mode identification, and encrypted and hidden watermarking of a consumer-level underwater robot.

Owner:BOYA GONGDAO BEIJING ROBOT TECH CO LTD

A high-precision global vision measurement method for three-dimensional thin-walled structural welds

Active | CN111189393B | Realize on-site automatic measurement | Precise cutting | Programme-controlled manipulator | Using optical means | Weld seam | Visual perception

The invention relates to a high-precision global visual measurement method for three-dimensional thin-walled structural welds, including (1) preparation of workpieces and measurement systems; (2) measurement system calibration and parameter optimization; (3) measurement of space curve welds; (4) data splicing and weld seam extraction; (5) splicing skin blanking; (6) robot welding trajectory calibration. The invention is innovatively applied to the measurement of three-dimensional contour welds: it realizes on-site automatic measurement of the three-dimensional contours of actual space curve welds, and identifies and extracts the position and contour features of the welds. On this basis, weld track calibration during robot off-line programming and accurate blanking of the skin during weld assembly can be realized.

Owner:BEIJING SATELLITE MFG FACTORY

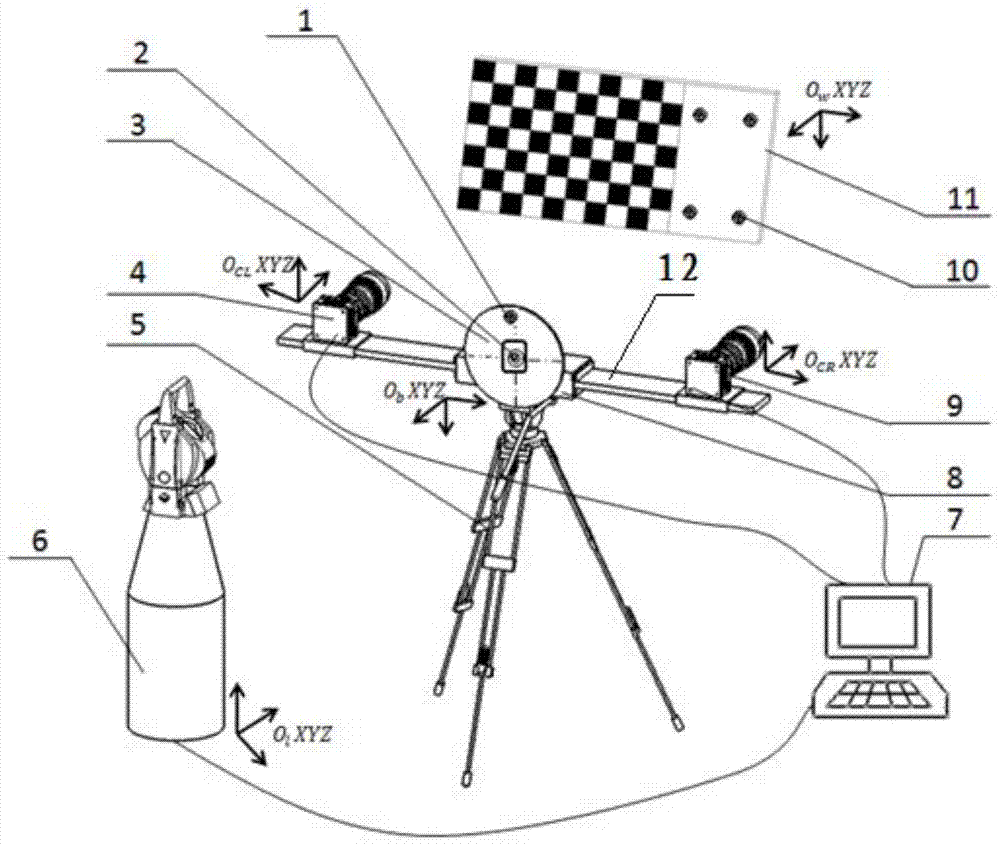

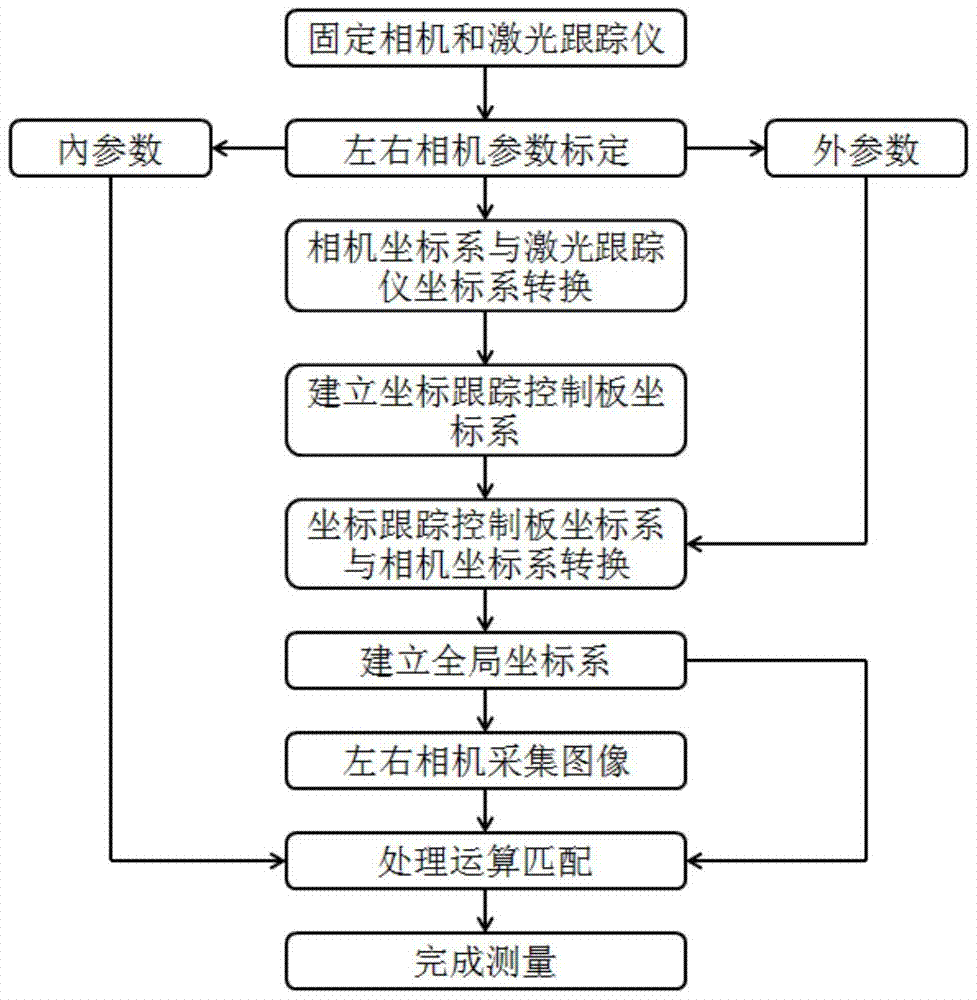

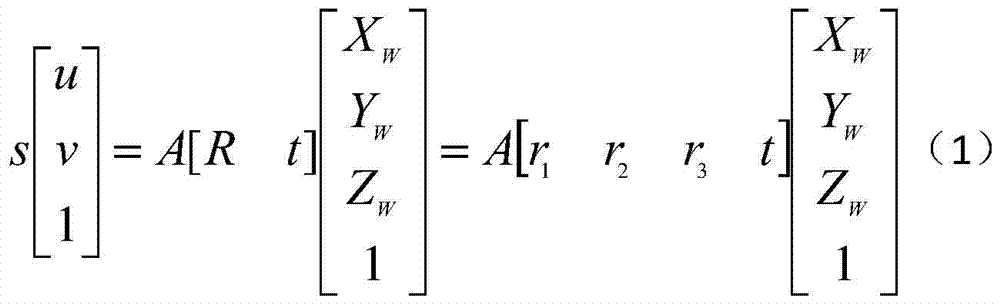

Large field of view global measurement method using coordinate tracking control board

Active | CN104897060B | Fast Global Survey Jobs | Quickly complete the global measurement work | Using optical means | Wide field | Optical measurements

The invention relates to a global measurement method for a large field of view using a coordinate tracking control board, and belongs to the field of visual measurement. The method uses the coordinate tracking control board installed on the adapter bracket to combine the visual measurement equipment and the laser tracker effectively when they share no common field of view, calibrates the internal and external parameters of the left and right cameras separately, and establishes a global coordinate system for the measurement site, so as to complete the global measurement over the large field of view. The method combines multiple optical components and achieves rapid, high-precision measurement of large parts and components under complex working conditions within a large field of view. It offers a large field of view and high efficiency, makes it convenient to measure occluded portions of the measured part, and quickly completes global large-field-of-view measurement at the industrial site.

Owner:DALIAN UNIV OF TECH

A high-altitude maintenance robot positioning method and device based on near-earth global vision

Active | CN109129488B | Technical Issues Affecting Secondary Positioning Accuracy | Programme-controlled manipulator | Engineering | Camera module

This application discloses a high-altitude maintenance robot positioning method and device based on near-earth global vision. Using a dual vision system, the application obtains the global position coordinates of the robot body and the fault point through the first camera module and controls the main body of the robot to perform initial positioning according to those coordinates; it then obtains the local position coordinates of the fault point through the second camera module, arranged at the end of the mechanical arm of the main body of the robot, and controls the main body of the robot to perform secondary precise positioning. The first camera module is set near the ground. This solves the technical problem that, after the global camera rises with the insulated bucket truck, it is disturbed by the vibration of the mechanical arm during operation and by high-altitude wind, which reduces the accuracy of the initial positioning of the global camera and in turn affects the secondary positioning accuracy of the robot main body.

Owner:GUANGDONG ELECTRIC POWER SCI RES INST ENERGY TECH CO LTD

A global vision positioning method and system for a small quadruped robot

ActiveCN109872372BSolve the problem of blurred target detailsHigh positioning accuracyImage analysisUsing reradiationComputer graphics (images)Radiology

The invention discloses a global vision positioning method and system for a small quadruped robot. The method comprises the following steps: calibrating a dual-camera system and calculating the parameters of its kinematic model; obtaining an image of the target area captured by the calibrated fisheye camera; processing the image of the target area to obtain the position of the target to be located in the fisheye camera coordinate system, calculating the spatial position of the target in the gimbal coordinate system through the kinematic model of the dual-camera system, and performing a two-degree-of-freedom inverse kinematics solution to obtain the two joint rotation angles; obtaining an image of the target to be located, detecting the two-dimensional code in that image, obtaining the two-dimensional coordinates of the center point of the two-dimensional code in the image, and transforming them into two-dimensional coordinates in the ground coordinate system; and correcting the two-dimensional coordinates of the center point in the ground coordinate system to obtain the position and orientation of the target to be located in the ground coordinate system.

Owner:SHANDONG UNIV
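
The two-degree-of-freedom inverse kinematics step in the abstract above can be illustrated with a generic pan-tilt calculation. This is a minimal sketch under an assumed axis convention (x forward, y left, z up, with both rotation axes passing through the gimbal origin); the function name `pan_tilt_to_target` is hypothetical, and the patent's actual kinematic model may differ.

```python
import math

def pan_tilt_to_target(x, y, z):
    """Return (pan, tilt) in degrees that point the gimbal's optical axis
    at a target located at (x, y, z) in the gimbal coordinate system."""
    pan = math.atan2(y, x)                    # rotation about the vertical axis
    tilt = math.atan2(z, math.hypot(x, y))    # elevation above the horizontal plane
    return math.degrees(pan), math.degrees(tilt)

# Usage: a target one unit ahead and one unit to the left, at gimbal height.
pan_deg, tilt_deg = pan_tilt_to_target(1.0, 1.0, 0.0)
```

Using `atan2` rather than `atan` keeps the angles correct in all four quadrants and avoids a division by zero when the target lies directly on an axis.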

A smart car scheduling method for warehouse navigation based on global vision

ActiveCN110780671BReduce configuration costsSmall amount of calculationPosition/course control in two dimensionsPid control algorithmSimulation

Owner:SOUTH CHINA UNIV OF TECH

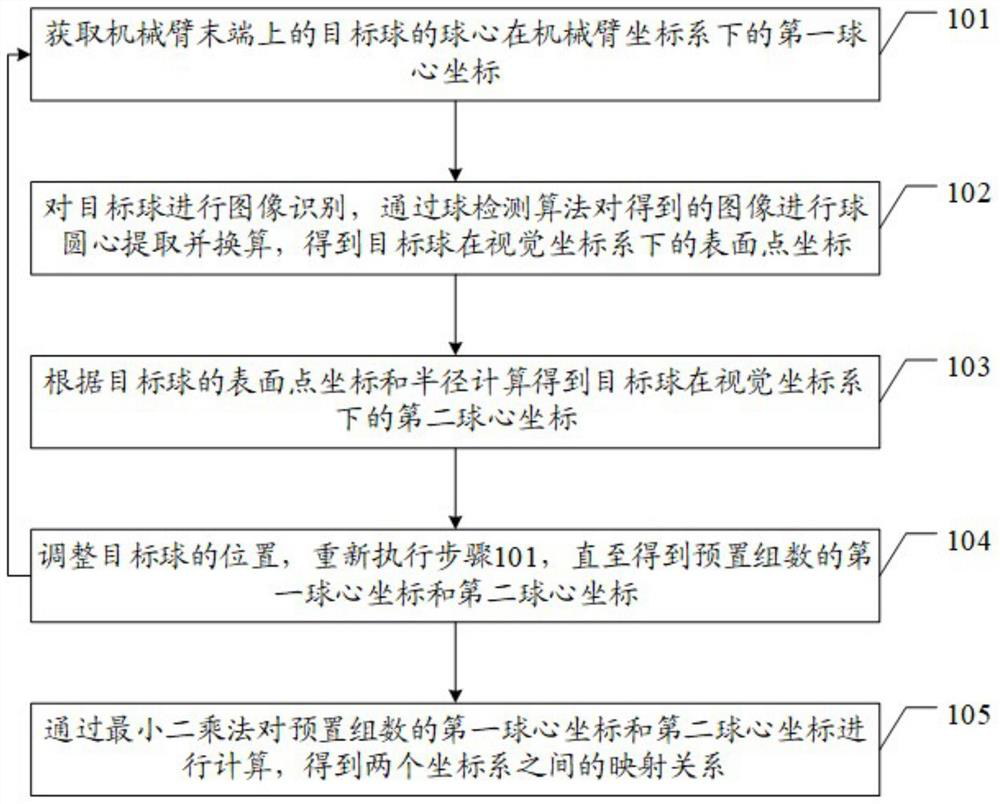

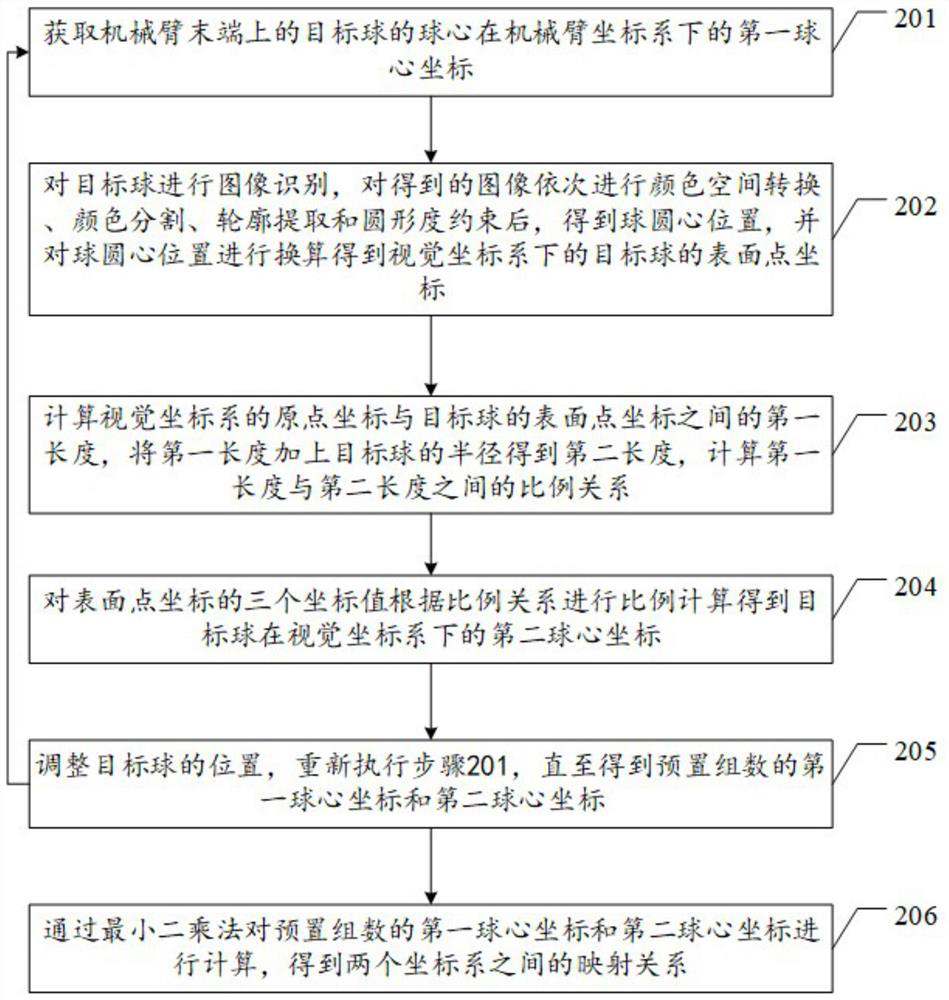

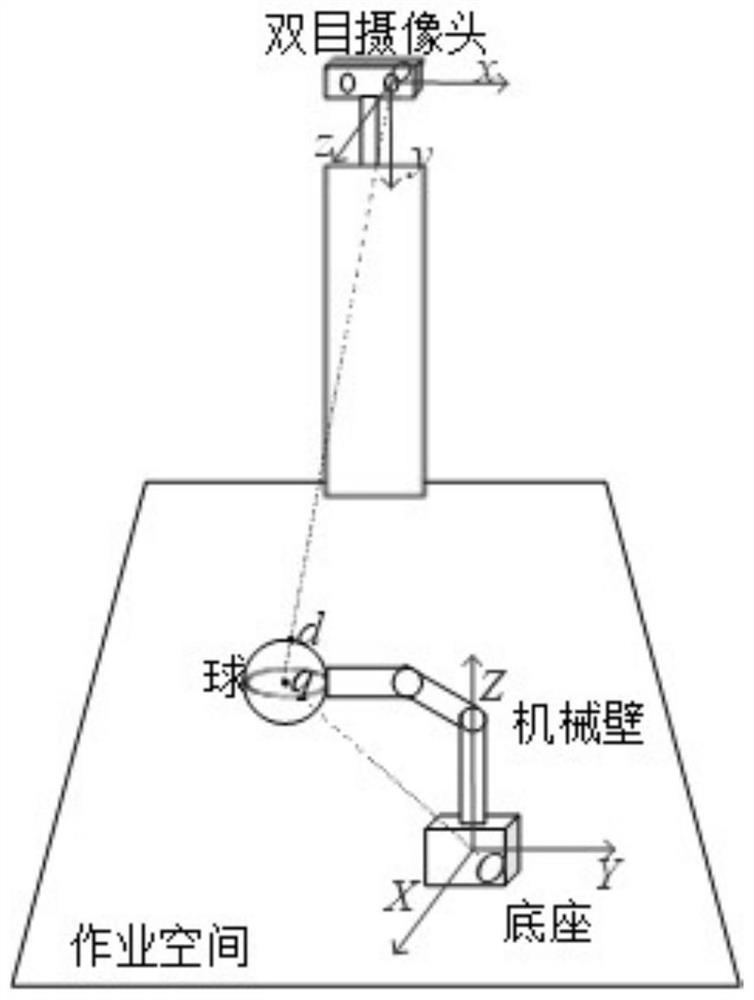

A mapping method and device for a global visual coordinate system and a robot arm coordinate system

ActiveCN109443200BHigh Mapping Transformation AccuracyRealize automatic conversion calculationImage enhancementImage analysisMedicineComputer graphics (images)

Owner:GUANGDONG ELECTRIC POWER SCI RES INST ENERGY TECH CO LTD

An SDN network DDoS and DLDoS distributed space-time detection system

The invention relates to an SDN network DDoS and DLDoS distributed space-time detection system comprising detection nodes arranged in the SDN switches. Each detection node comprises a data acquisition module, a space-time anomaly detection module, and an output module. The data acquisition module acquires the network flow passing through the SDN switch. The space-time anomaly detection module examines the acquired network flow in the spatial domain to determine whether suspicious flow exists, and then confirms in the time domain, on the basis of the spatial-domain result, whether DDoS or DLDoS is present. The output module stores the detection result in a set format. Based on the characteristics of DDoS and DLDoS, the detection system detects and discriminates them in the spatial and time domains of the network flow, and achieves a global view in anomaly detection by using a distributed virtual ANN overlay network.

Owner:SUN YAT SEN UNIV
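
The abstract above describes a two-stage decision: a spatial-domain check flags suspicious flow, and a time-domain check confirms it. The sketch below illustrates only that two-stage structure. The concrete criteria (one destination's share of flows in a window, and persistence across consecutive windows), the thresholds and the function names are assumptions made for illustration and are not the patent's detection features.

```python
from collections import Counter

def spatially_suspicious(dst_addresses, share_threshold=0.8):
    """Spatial stage (assumed criterion): flag a window when a single
    destination receives at least share_threshold of all flows."""
    if not dst_addresses:
        return False
    top_count = Counter(dst_addresses).most_common(1)[0][1]
    return top_count / len(dst_addresses) >= share_threshold

def confirmed_in_time(windows, min_consecutive=3, share_threshold=0.8):
    """Temporal stage (assumed criterion): confirm only when the spatial
    flag persists for min_consecutive consecutive windows."""
    run = 0
    for window in windows:
        run = run + 1 if spatially_suspicious(window, share_threshold) else 0
        if run >= min_consecutive:
            return True
    return False

# Usage: three consecutive windows dominated by destination "10.0.0.5".
attack_window = ["10.0.0.5"] * 9 + ["10.0.0.7"]
normal_window = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]
sustained = confirmed_in_time([attack_window] * 3)
interrupted = confirmed_in_time([attack_window, normal_window, attack_window])
```

Requiring persistence in time before raising an alert is one simple way to keep a short burst of legitimate traffic from being reported as an attack.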

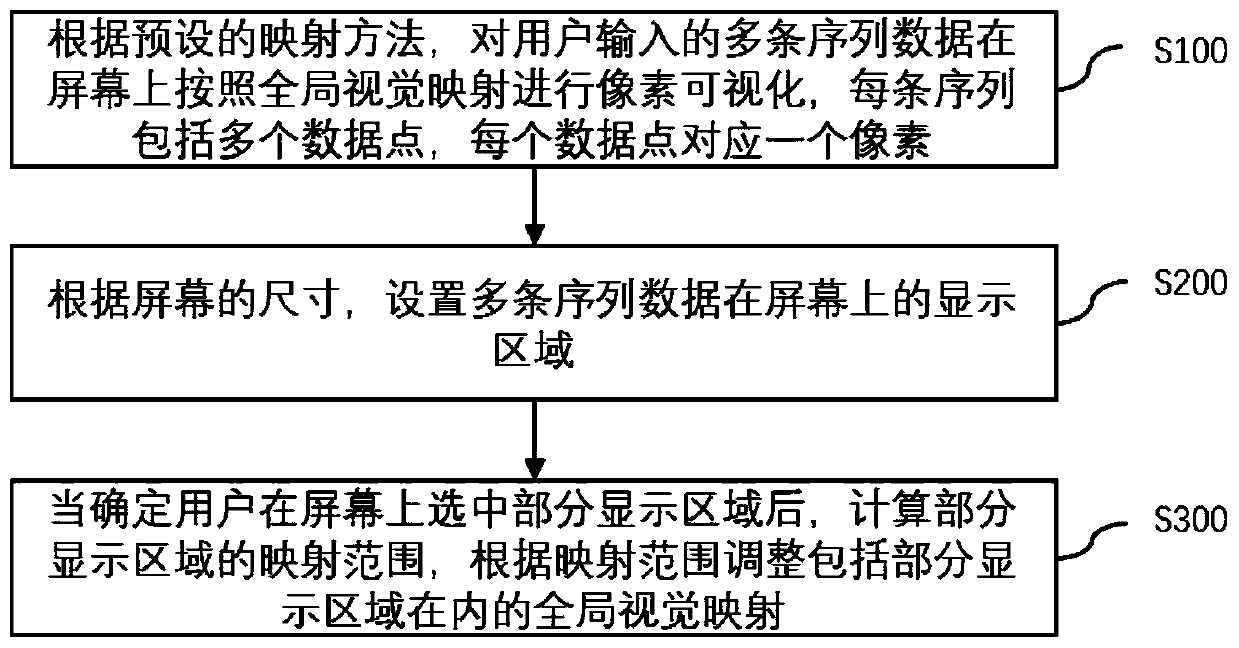

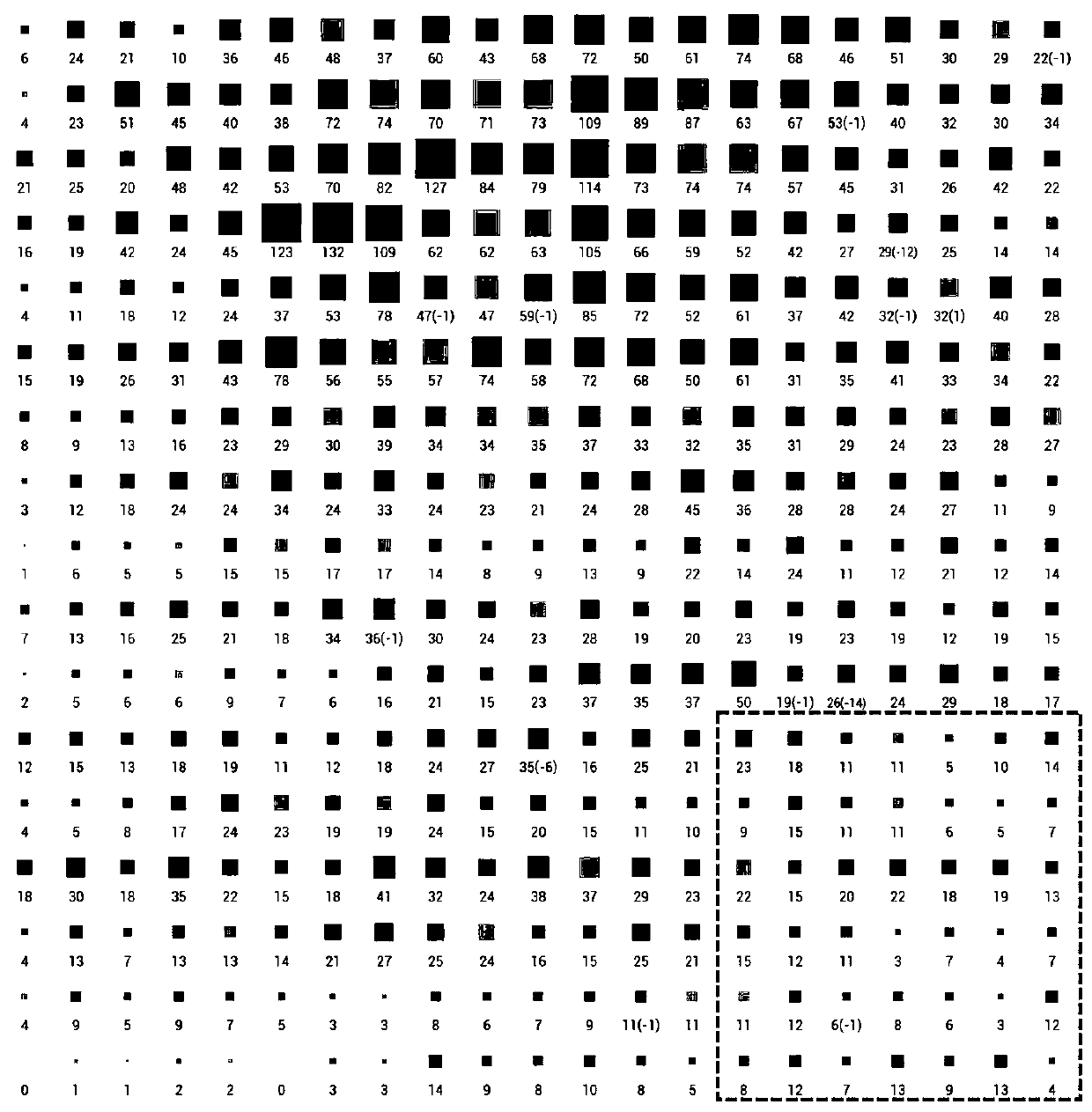

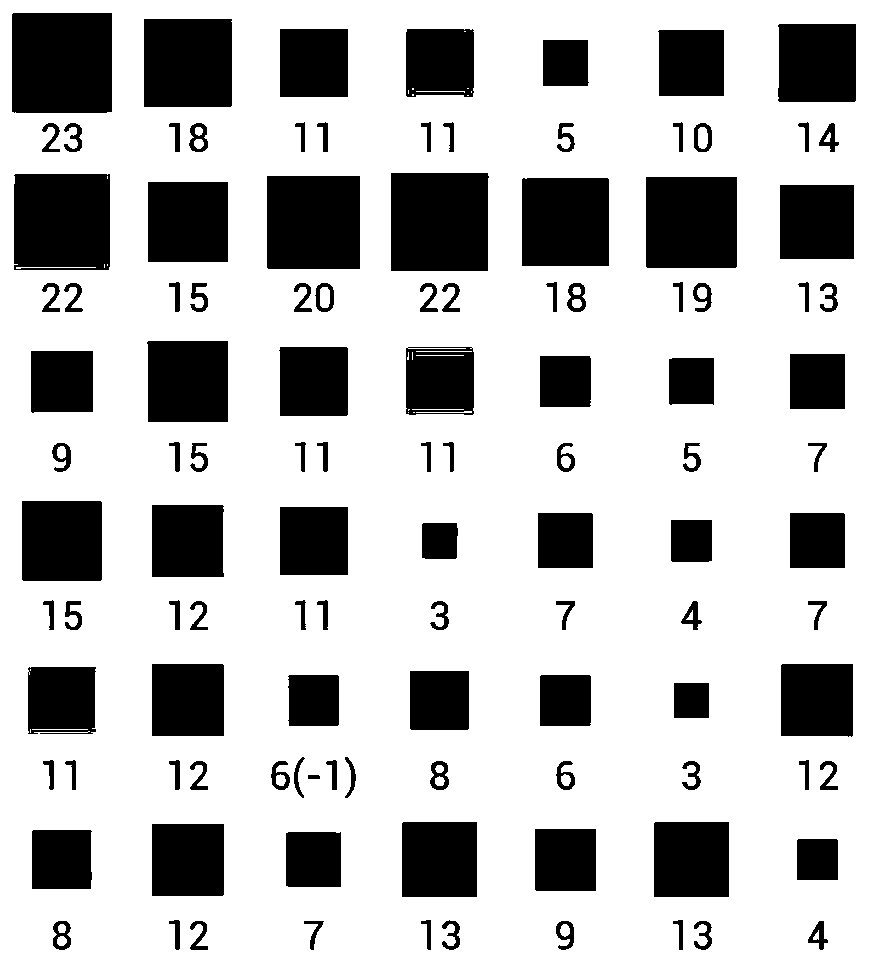

Sequence data pixel visualization adaptive visual mapping adjustment method and system

ActiveCN111596979AImprove the display effectGood local featuresExecution for user interfacesComputer graphics (images)Visual perception

The invention discloses a sequence data pixel visualization adaptive visual mapping adjustment method and system. The adjustment method comprises: S100, performing pixel visualization on a screen of multiple pieces of sequence data input by a user, according to a preset mapping method and a global visual mapping, wherein each sequence comprises multiple data points and each data point corresponds to one pixel; S200, setting a display area for the multiple pieces of sequence data on the screen according to the size of the screen; and S300, after it is determined that the user has selected a part of the display area on the screen, calculating the mapping range of that part of the display area and adjusting the global visual mapping, including the selected part, according to the mapping range. The visual mapping in the pixel visualization is thus adjusted automatically according to the mapping range of the partial display area selected by the user, so that the pixel visualization can better display the local features of high-dynamic-range data.

Owner:PEKING UNIV
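
Step S300 in the abstract above can be illustrated with a linear value-to-intensity mapping whose range is recomputed from the user's selection. This is a minimal sketch that assumes a clamped linear mapping; the function names `make_mapping` and `mapping_range` and the sample data are hypothetical, and the patent's preset mapping method may be different.

```python
def make_mapping(lo, hi):
    """Return a function that maps a data value linearly onto [0, 1],
    clamping values that fall outside the range [lo, hi]."""
    span = hi - lo
    def to_unit(value):
        if span == 0:
            return 0.0
        return min(1.0, max(0.0, (value - lo) / span))
    return to_unit

def mapping_range(sequences, rows, cols):
    """Minimum and maximum over the selected rows (sequences) and columns (data points)."""
    values = [sequences[r][c] for r in rows for c in cols]
    return min(values), max(values)

# Two sequences; one outlier (1000.0) dominates the global range.
sequences = [[1.0, 2.0, 3.0, 1000.0],
             [2.0, 4.0, 6.0, 8.0]]

# Global mapping: the range is taken over everything on screen.
global_map = make_mapping(*mapping_range(sequences, range(2), range(4)))

# Adjusted mapping: the range is taken over the user's selection (first three columns).
local_map = make_mapping(*mapping_range(sequences, range(2), range(3)))

before = global_map(3.0)  # close to 0: local detail is washed out by the outlier
after = local_map(3.0)    # 0.4: the same value now uses much more of the scale
```

Under the global range, the values 1 to 8 are squeezed into the bottom of the scale by the single outlier; recomputing the range from the selection spreads them across it, which is the effect the abstract attributes to the adjustment.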

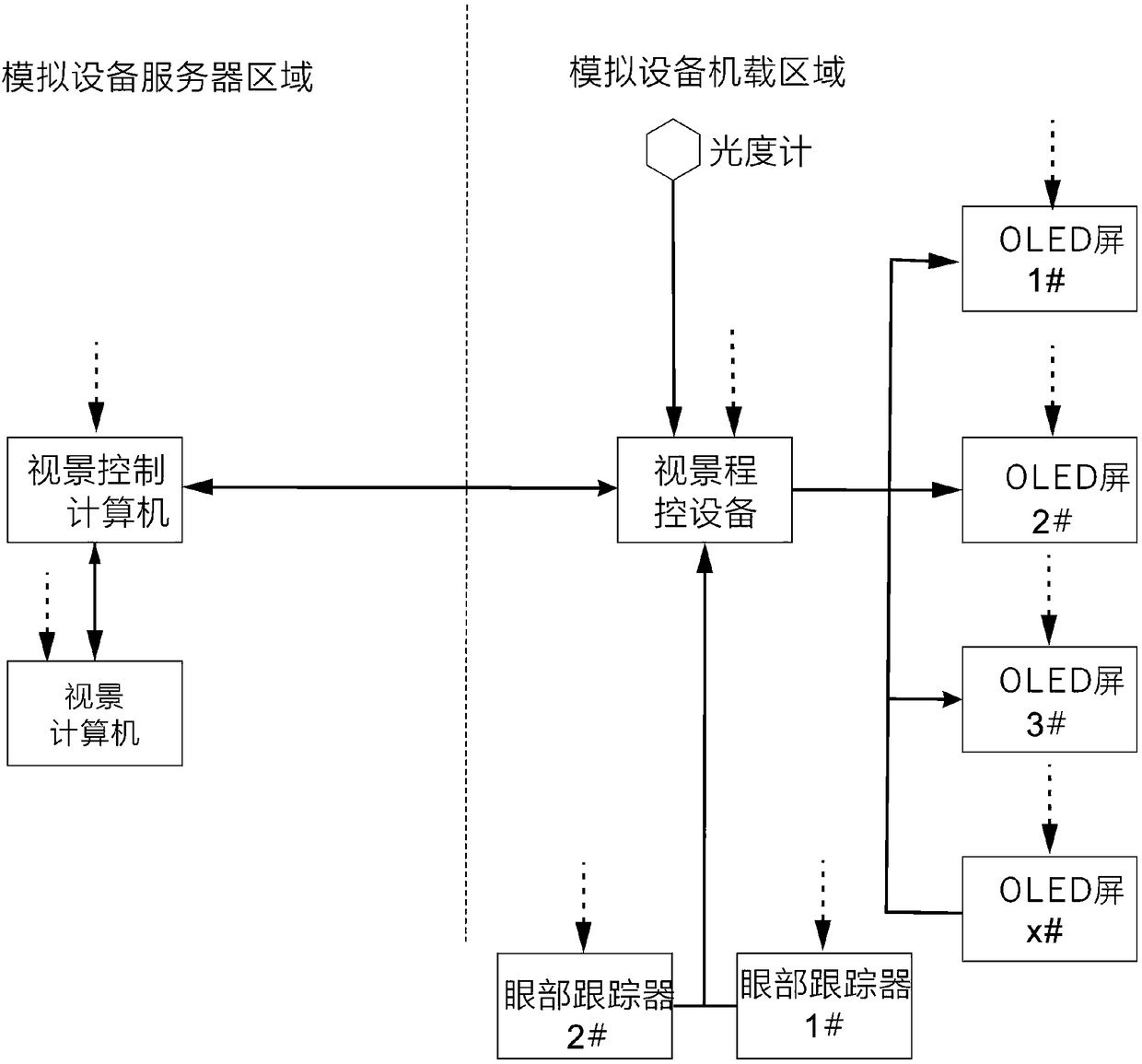

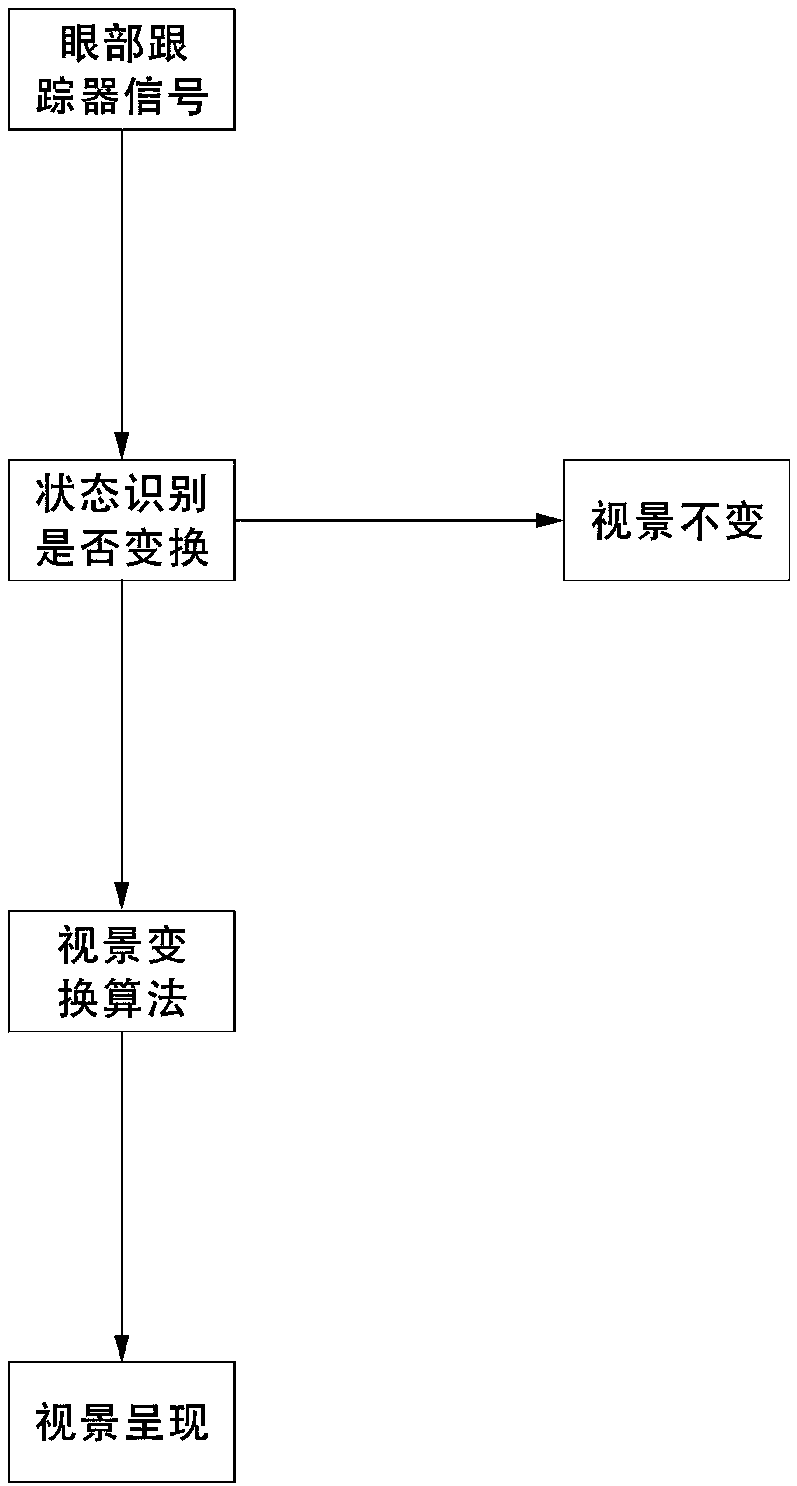

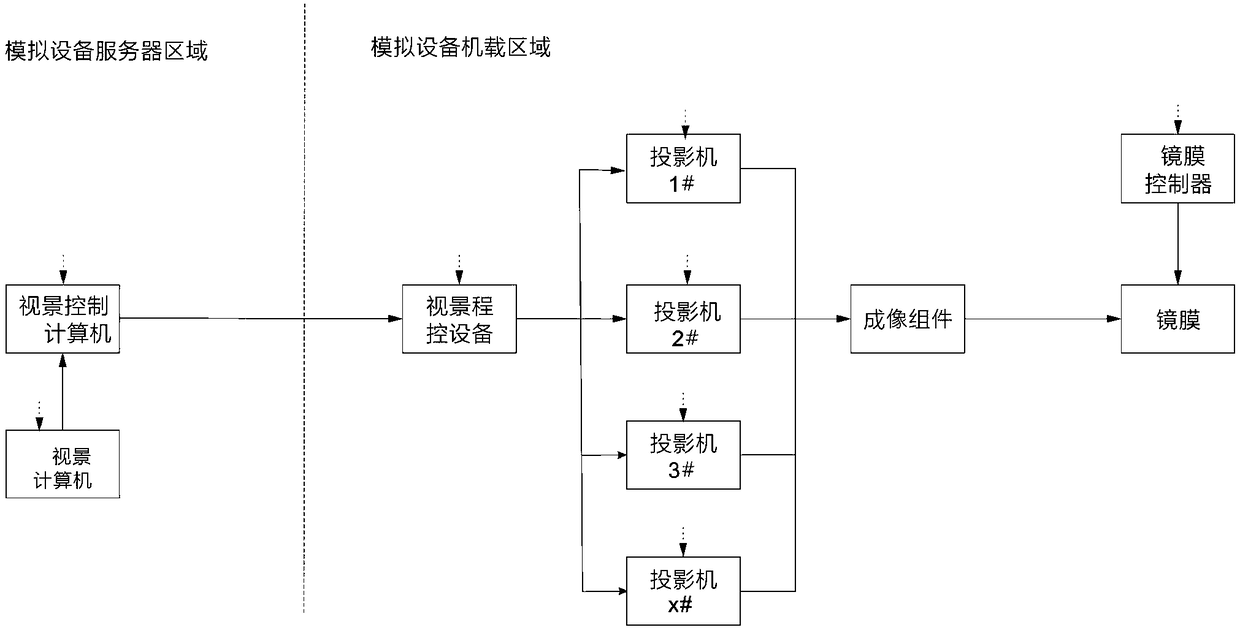

Simulated imaging system based on flexible OLED display screen

PendingCN108510939AReduce volumeReduce overall layout spaceStatic indicating devicesComputer scienceImage system

The invention relates to a simulated imaging system based on flexible OLED display screens. The simulated imaging system comprises a vision computer, a vision control computer, a vision program-controlled device and multiple OLED display screens. The vision control computer is connected with the vision computer and is used for switching the vision computer on and off and adjusting parameters. The vision computer is connected with the multiple display screens through the vision program-controlled device and is used for retrieving, from a global vision database, the vision information of the physical position where the simulator is located; vision images are output to the vision program-controlled device, which then allocates the vision information output by the vision computer to the different OLED display screens through network decoding. The simulated imaging system is simple in structure, small in size and light in weight, and can remarkably reduce production cost.

Owner:中航大(天津)模拟机工程技术有限公司

Collaborative Experiment Platform for Multi-Mobile Robot System and Its Visual Segmentation and Localization Method

InactiveCN103970134BHigh positioning accuracySmall amount of calculationPosition/course control in two dimensionsFrame differenceMonitoring system

The invention discloses a collaborative experimental platform for a multi-mobile-robot system and a visual segmentation and localization method for the platform. The platform is composed of a global vision monitoring system and a plurality of mobile robots of identical mechanical structure. The global vision monitoring system is composed of an n-shaped support, a CCD camera, an image acquisition card and a monitoring host; the CCD camera is installed on the n-shaped support facing the mobile robots and is connected through a video cable to the image acquisition card, which is installed in a PCI slot of the monitoring host. The visual segmentation method combines a frame difference method with an optical flow method to segment obstacles. The visual localization method is achieved through threshold segmentation based on a color code plate. The platform supports experimental verification of collaborative technologies such as formation control, target search, task allocation and route planning in a multi-mobile-robot system, and has the advantages of low cost and rapid construction.

Owner:JIANGSU UNIV OF SCI & TECH
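
The frame difference part of the segmentation method in the abstract above can be sketched on small grayscale frames as follows. The sketch covers only frame differencing, not the optical flow step or the color-code localization; the threshold value, the sample frames and the function names are assumptions made for illustration.

```python
def frame_difference_mask(prev_frame, curr_frame, threshold=25):
    """Mark a pixel 1 when its gray level changed by more than threshold
    between two frames of equal size, else 0."""
    return [[1 if abs(c - p) > threshold else 0
             for p, c in zip(prev_row, curr_row)]
            for prev_row, curr_row in zip(prev_frame, curr_frame)]

def mask_centroid(mask):
    """Return the (row, col) centroid of the marked pixels, or None if there are none."""
    points = [(r, c) for r, row in enumerate(mask)
              for c, v in enumerate(row) if v]
    if not points:
        return None
    n = len(points)
    return (sum(r for r, _ in points) / n, sum(c for _, c in points) / n)

# Usage: a static background, then a bright object covering two pixels.
prev_frame = [[10, 10, 10, 10],
              [10, 10, 10, 10],
              [10, 10, 10, 10]]
curr_frame = [[10, 10, 10, 10],
              [10, 200, 200, 10],
              [10, 10, 10, 10]]
mask = frame_difference_mask(prev_frame, curr_frame)
centroid = mask_centroid(mask)
```

Frame differencing only responds to pixels that change between frames, which is presumably why the abstract pairs it with a second cue rather than relying on it alone.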

Robot Vision Guidance Method and Device Based on Integrating Global Vision and Local Vision

ActiveCN111216124BLower performance requirementsMeet the precision requirements of high-efficiency machiningProgramme-controlled manipulatorComputer graphics (images)Visual inspection

The invention discloses a robot vision guidance method and device based on the integration of global vision and local vision. The global vision system and the local vision system are integrated: the processing target is first roughly positioned and divided into blocks, and a path is planned; a high-precision visual inspection system then accurately detects the target and guides the robot to perform high-precision, high-efficiency automatic grinding and polishing operations, meeting the precision requirements for high-efficiency processing of large-volume processing targets.

Owner:北斗长缨(北京)科技有限公司
