Autonomous motion planning method for large space manipulator based on multi-channel vision fusion

A motion planning and autonomous movement technology, applied in the field of manned spaceflight, that addresses the difficulty of target capture and achieves stable, accurate capture with precise positioning.

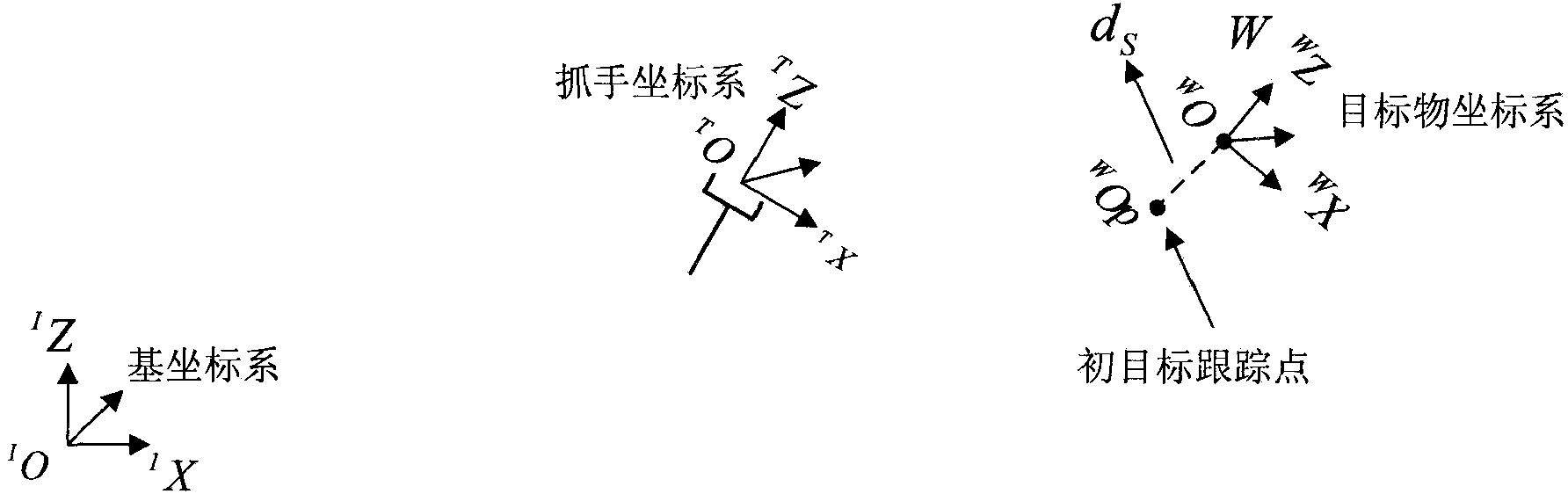

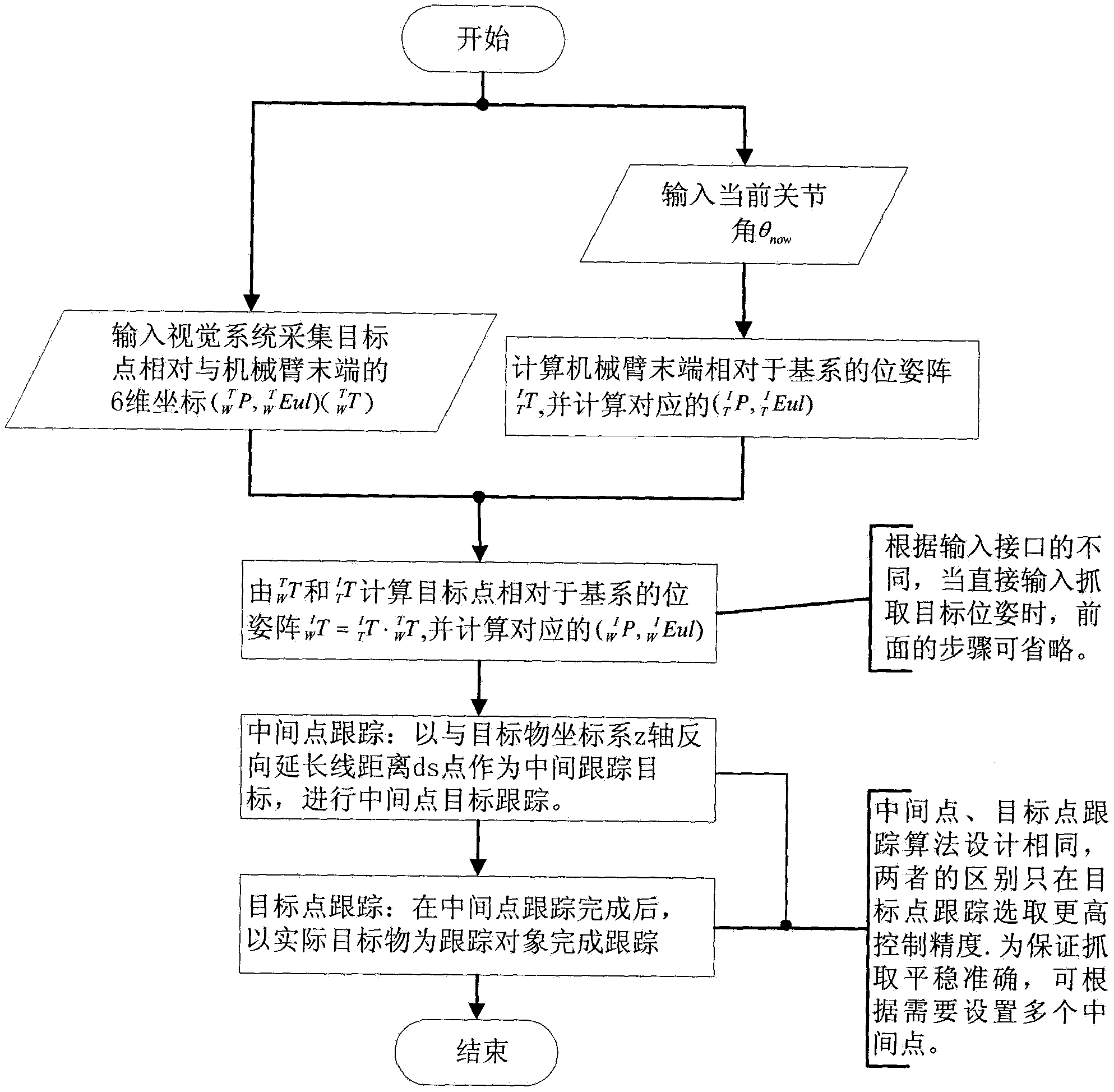

Detailed Description of the Embodiments

[0017] The multi-channel vision system of the present invention comprises a global vision system, a local vision system, and a wrist vision system. The global vision system is installed on the cabin base; the local vision system is installed on the manipulator body, for example on a component or an arm boom; and the wrist vision system is installed at the end of the manipulator. Both the local vision system and the wrist vision system therefore move with the manipulator body. Each vision system consists of a camera, an image compression module, and a pose information processing module; the systems differ in the fields of view of their cameras (global, local, and wrist). These components are mature technologies.
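The channel layout in paragraph [0017] can be summarized as a small data model. This is a minimal sketch, not the patent's implementation: the class and field names (`Mount`, `VisionChannel`, `moves_with_arm`) are hypothetical, and the per-camera fields of view are omitted because the source does not give them. The only rule it encodes is the one stated above: every channel except the base-mounted global channel moves with the manipulator.

```python
from dataclasses import dataclass
from enum import Enum


class Mount(Enum):
    """Mounting locations named in paragraph [0017] (labels are illustrative)."""
    CABIN_BASE = "cabin base"
    ARM_BODY = "manipulator body (component or arm boom)"
    ARM_END = "end of the manipulator"


@dataclass(frozen=True)
class VisionChannel:
    """Hypothetical record for one vision channel."""
    name: str
    mount: Mount
    # Every channel shares the same three-module composition; only the camera
    # field of view differs, and those values are not given in the source.
    modules: tuple = ("camera", "image compression module",
                      "pose information processing module")

    @property
    def moves_with_arm(self) -> bool:
        # Only the global channel is fixed to the cabin base.
        return self.mount is not Mount.CABIN_BASE


CHANNELS = (
    VisionChannel("global", Mount.CABIN_BASE),
    VisionChannel("local", Mount.ARM_BODY),
    VisionChannel("wrist", Mount.ARM_END),
)

for ch in CHANNELS:
    print(f"{ch.name:6s} on {ch.mount.value}; moves with arm: {ch.moves_with_arm}")
```

Keeping the mount as an enum makes the "moves with the arm" property derived rather than stored, so it cannot drift out of sync with the mounting location.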

[0018] In the present invention, the global camera in the global vision system has a focal length of 8 mm. When the target distance is in the range [5 m, 20 m], its position measurement accuracy is better than 120 mm and its attitude measurement accuracy is better than 5°. The focal length is 20 mm. ...
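The global-camera figures in paragraph [0018] amount to an acceptance check that applies only inside the stated distance interval. The sketch below encodes that check under stated assumptions: "better than" is read as a strict upper bound on error magnitude, the function and field names are hypothetical, and the function returns `None` outside [5 m, 20 m] because the source gives no accuracy figures there.

```python
from dataclasses import dataclass
from typing import Optional

# Figures stated for the global camera in paragraph [0018].
GLOBAL_RANGE_M = (5.0, 20.0)       # target distance interval where the spec applies
GLOBAL_POS_ACCURACY_MM = 120.0     # position error must be below this
GLOBAL_ATT_ACCURACY_DEG = 5.0      # attitude error must be below this


@dataclass
class PoseError:
    """Hypothetical record of one measured pose error."""
    distance_m: float    # distance from the global camera to the target
    position_mm: float   # magnitude of the position measurement error
    attitude_deg: float  # magnitude of the attitude measurement error


def within_global_spec(err: PoseError) -> Optional[bool]:
    """Return None when the distance lies outside [5 m, 20 m] (no spec stated),
    otherwise whether both errors are strictly better than the stated bounds."""
    lo, hi = GLOBAL_RANGE_M
    if not (lo <= err.distance_m <= hi):
        return None
    return (err.position_mm < GLOBAL_POS_ACCURACY_MM
            and err.attitude_deg < GLOBAL_ATT_ACCURACY_DEG)


print(within_global_spec(PoseError(12.0, 80.0, 2.5)))   # inside range, both bounds met
print(within_global_spec(PoseError(12.0, 150.0, 2.5)))  # position bound violated
print(within_global_spec(PoseError(25.0, 10.0, 1.0)))   # outside the stated range
```

Returning `None` rather than `False` outside the interval keeps "not specified" distinct from "out of specification".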
