Global vision-based positioning method

A positioning method based on global vision technology, applied in the field of positioning. It addresses problems such as high installation cost, inaccurate positioning, and susceptibility to interference.


Embodiment 1

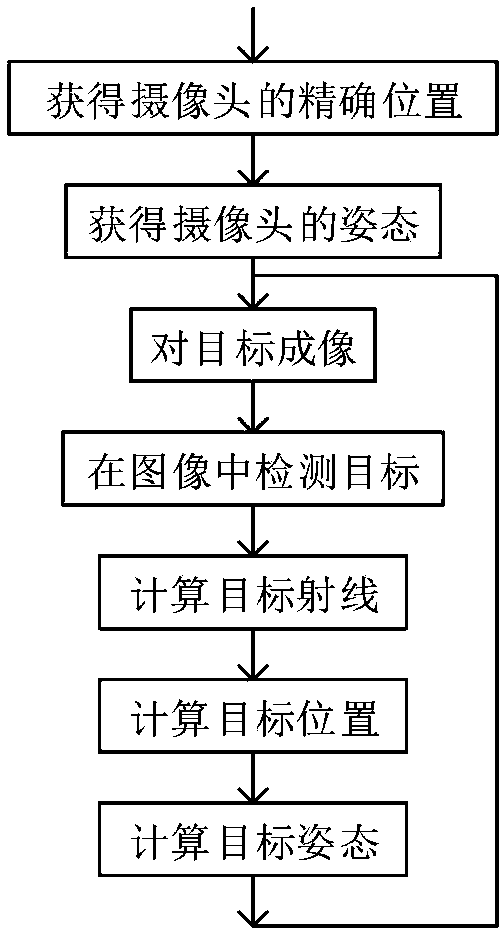

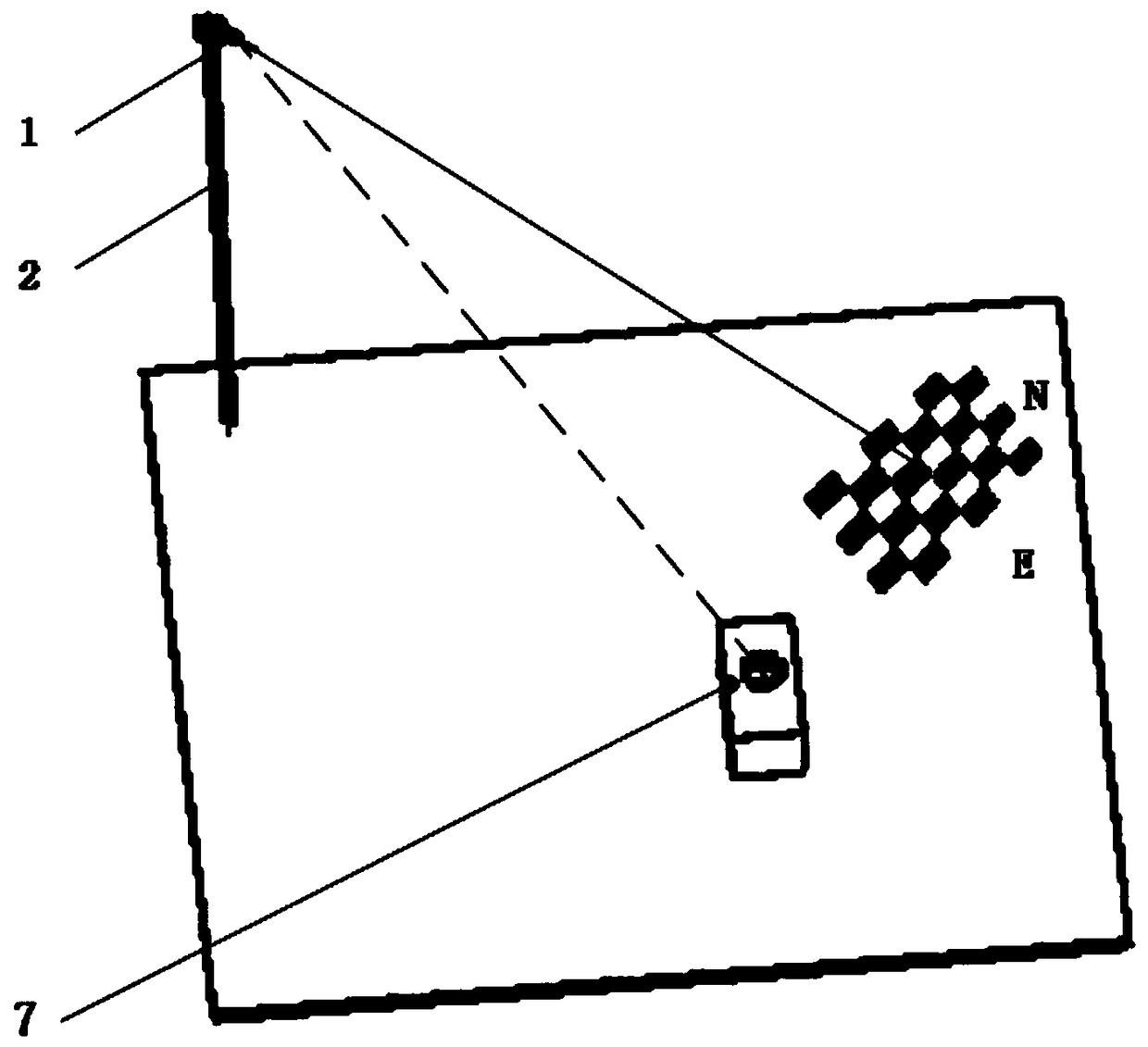

[0066] Embodiment 1: Indoor positioning technology based on global vision

[0067] The global vision positioning method of the present invention is applied to indoor positioning, as shown in Figure 9. Indoor positioning has significant value, but the current state of the technology has become a bottleneck that hinders its application. With global vision, the target sends a visual positioning request signal, and the indoor positioning system returns accurate location information to the target, solving the current indoor positioning problem.

[0068] Global vision: a downward-looking camera that can see a large area.
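As an illustration of how a downward-looking camera can turn an image position into a floor position, the sketch below applies a planar homography to a pixel coordinate. The patent text shown here does not specify the mapping; the function name `pixel_to_floor` and the matrix `H` are hypothetical, and `H` is assumed to come from a separate calibration step.

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def pixel_to_floor(H: Matrix3, u: float, v: float) -> Tuple[float, float]:
    """Map pixel (u, v) to floor coordinates (x, y) with a 3x3 homography.

    H is an assumed calibration result; it is not defined in the source.
    """
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if w == 0:
        raise ValueError("pixel maps to a point at infinity")
    # Divide by the homogeneous coordinate to get planar coordinates.
    return x / w, y / w


# Hypothetical calibration: 0.5 floor units per pixel plus an offset.
H_example = [
    [0.5, 0.0, -10.0],
    [0.0, 0.5, -20.0],
    [0.0, 0.0, 1.0],
]
floor_xy = pixel_to_floor(H_example, 100, 60)
```

A homography is only valid for points on one plane (here, the floor), so a target carried above the floor would need a height correction that this sketch leaves out.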

[0069] Visual positioning request signal: a visual signal that a camera can detect, such as a blinking light. Functions: (1) tell the camera to detect the position of the target; (2) tell the camera who the target is; (3) synchronize the time on the camera and the target.
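One way a blinking light could carry the three functions listed above is sketched below. The encoding is an assumption made for illustration only: the preamble `PREAMBLE`, the ID width `ID_BITS`, and the function `decode_blink` are hypothetical and do not come from the patent. The input is a list of per-frame on/off samples of the light as seen by the camera.

```python
from typing import List, Optional, Tuple

# Hypothetical framing, not taken from the patent text.
PREAMBLE = [1, 1, 1, 0]  # marks the start of a positioning request
ID_BITS = 4              # number of bits that identify the target


def decode_blink(samples: List[int]) -> Optional[Tuple[int, int]]:
    """Return (target_id, start_index) of the first request, or None.

    Finding the preamble covers function (1), the ID bits cover
    function (2), and start_index gives a shared time reference
    for function (3).
    """
    frame_len = len(PREAMBLE) + ID_BITS
    for start in range(len(samples) - frame_len + 1):
        if samples[start:start + len(PREAMBLE)] == PREAMBLE:
            bits = samples[start + len(PREAMBLE):start + frame_len]
            target_id = 0
            for bit in bits:
                # Most significant bit first.
                target_id = (target_id << 1) | bit
            return target_id, start
    return None


request = decode_blink([0, 0, 1, 1, 1, 0, 1, 0, 1, 1, 0])
```

In this example the preamble starts at sample 2 and the ID bits `1011` decode to target 11, so `request` is `(11, 2)`. A real system would also need to handle sampling jitter and ID bits that happen to imitate the preamble, which this sketch ignores.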

[0070] Steps:

[0071] (1) The target sends a visual po...

Embodiment 2

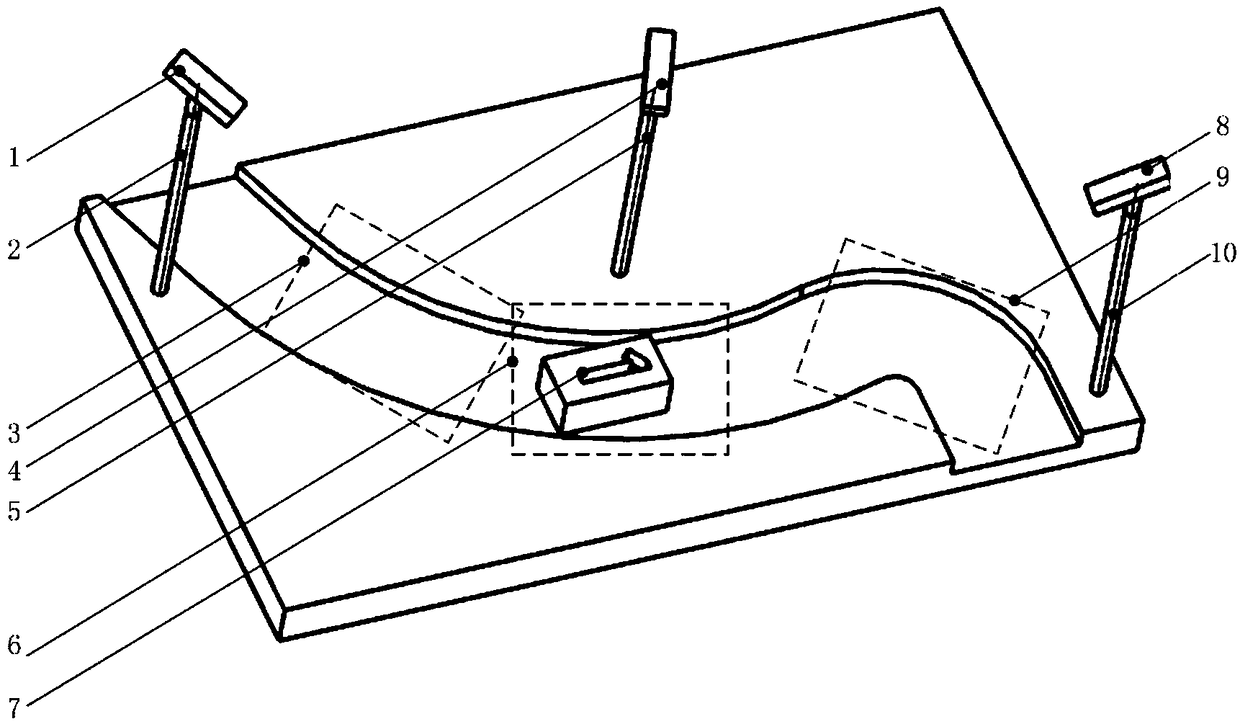

[0076] Embodiment 2: Sweeping robot based on global vision

[0077] The global vision positioning method of the present invention is applied to a sweeping robot, as shown in Figure 10. Because a sweeping robot lacks awareness of the entire environment, it cannot establish an optimized cruising strategy. More importantly, without feedback on the sweeping result, it cannot know which places need cleaning and which do not. Even a sweeping robot capable of modeling its environment cannot build an accurate model of the entire environment, especially one that changes dynamically.

[0078] Global vision refers to a downward-looking camera that can see a large area. This kind of camera has two functions: (1) establish an accurate model of the entire environment so that the sweeping robot can cruise; (2) detect which places are dirty and need cleaning, and assign cleaning tasks to the sweeping ro...
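The second camera function, finding dirty places to hand to the robot, can be sketched as a comparison against a reference image of the clean floor. This is a minimal sketch under stated assumptions, not the method claimed in the patent: the function `dirty_cells`, the grid cell size, and the threshold are all hypothetical, and images are plain grayscale grids of integers.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale pixel rows, values 0..255


def dirty_cells(reference: Image, current: Image,
                cell: int, threshold: float) -> List[Tuple[int, int]]:
    """Return grid cells whose mean absolute difference from the clean
    reference exceeds threshold, dirtiest first."""
    rows, cols = len(reference), len(reference[0])
    scored = []
    for r0 in range(0, rows, cell):
        for c0 in range(0, cols, cell):
            diffs = [abs(current[r][c] - reference[r][c])
                     for r in range(r0, min(r0 + cell, rows))
                     for c in range(c0, min(c0 + cell, cols))]
            score = sum(diffs) / len(diffs)
            if score > threshold:
                scored.append((score, (r0 // cell, c0 // cell)))
    scored.sort(reverse=True)  # highest score first
    return [index for _, index in scored]


# Hypothetical 4x4 floor image split into 2x2 cells.
clean = [[100] * 4 for _ in range(4)]
now = [row[:] for row in clean]
for r, c in [(0, 2), (0, 3), (1, 2), (1, 3)]:
    now[r][c] = 160   # a heavily soiled cell at grid position (0, 1)
now[3][0] = 140       # a lightly soiled cell at grid position (1, 0)
tasks = dirty_cells(clean, now, cell=2, threshold=5)
```

Here `tasks` lists the heavily soiled cell before the lightly soiled one, which is the order a task assigner might use. Lighting changes and moved furniture would also trigger this simple difference test, so a practical system would need a more robust detector.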
