39 results about "Co scheduling" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

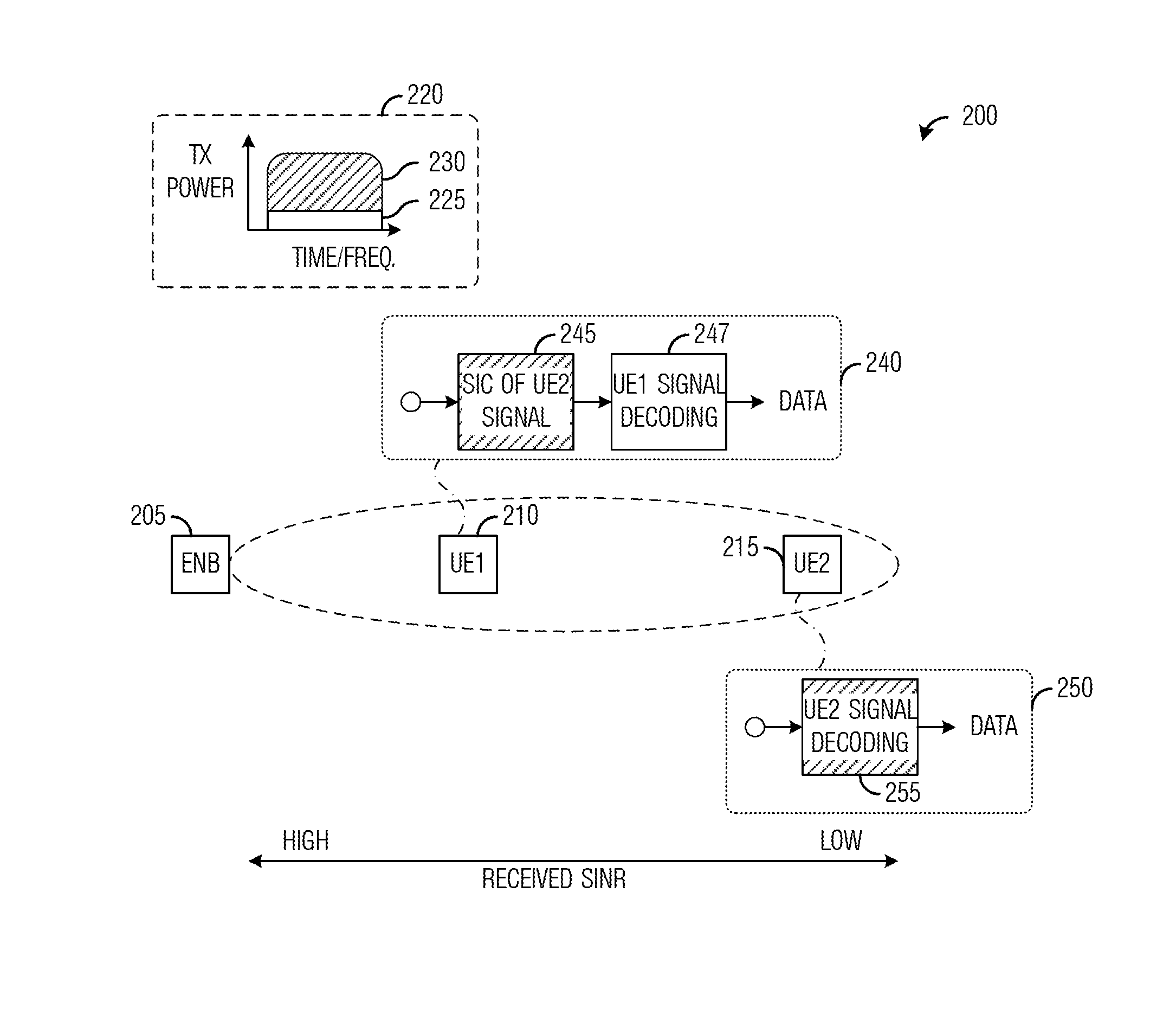

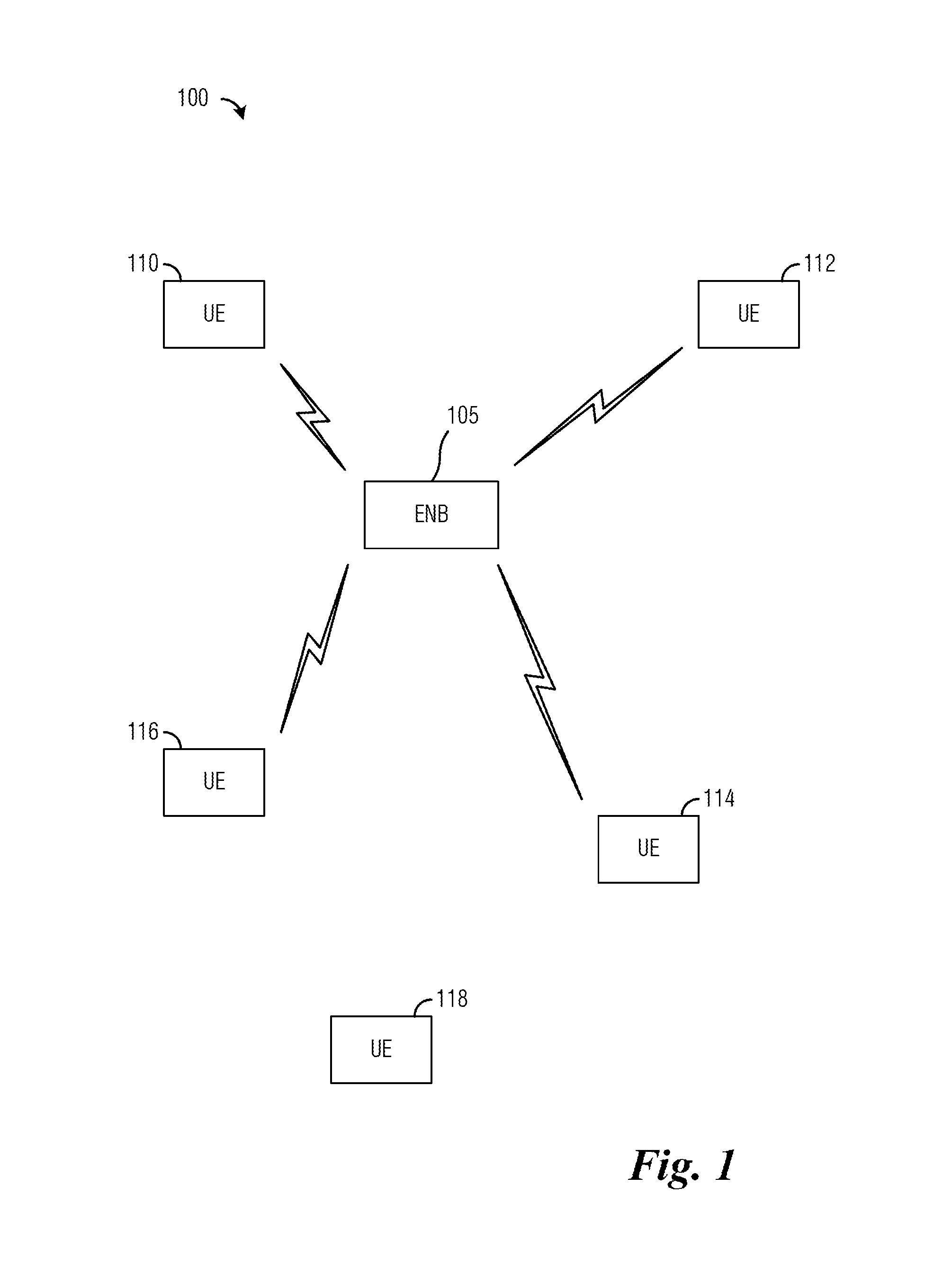

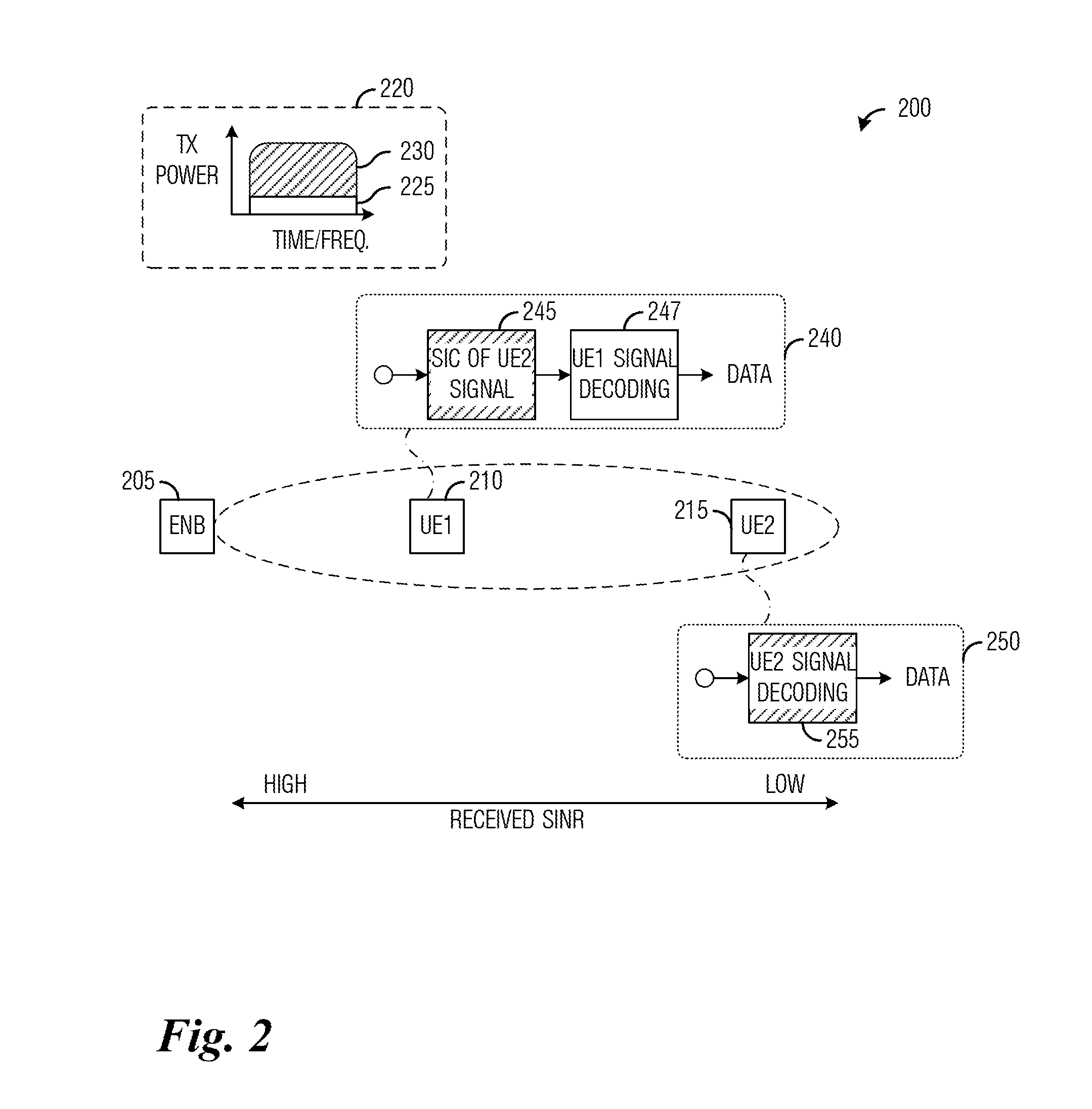

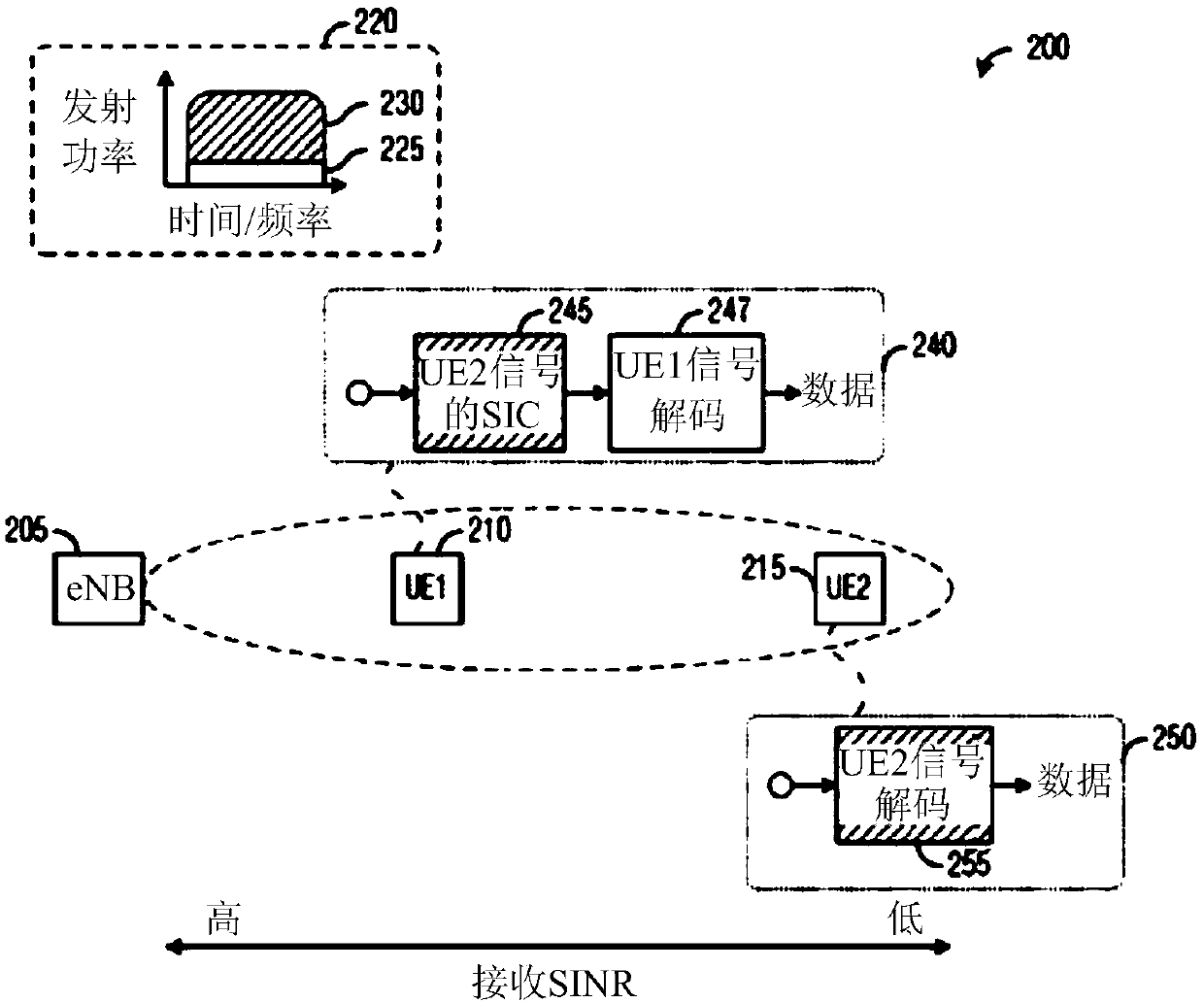

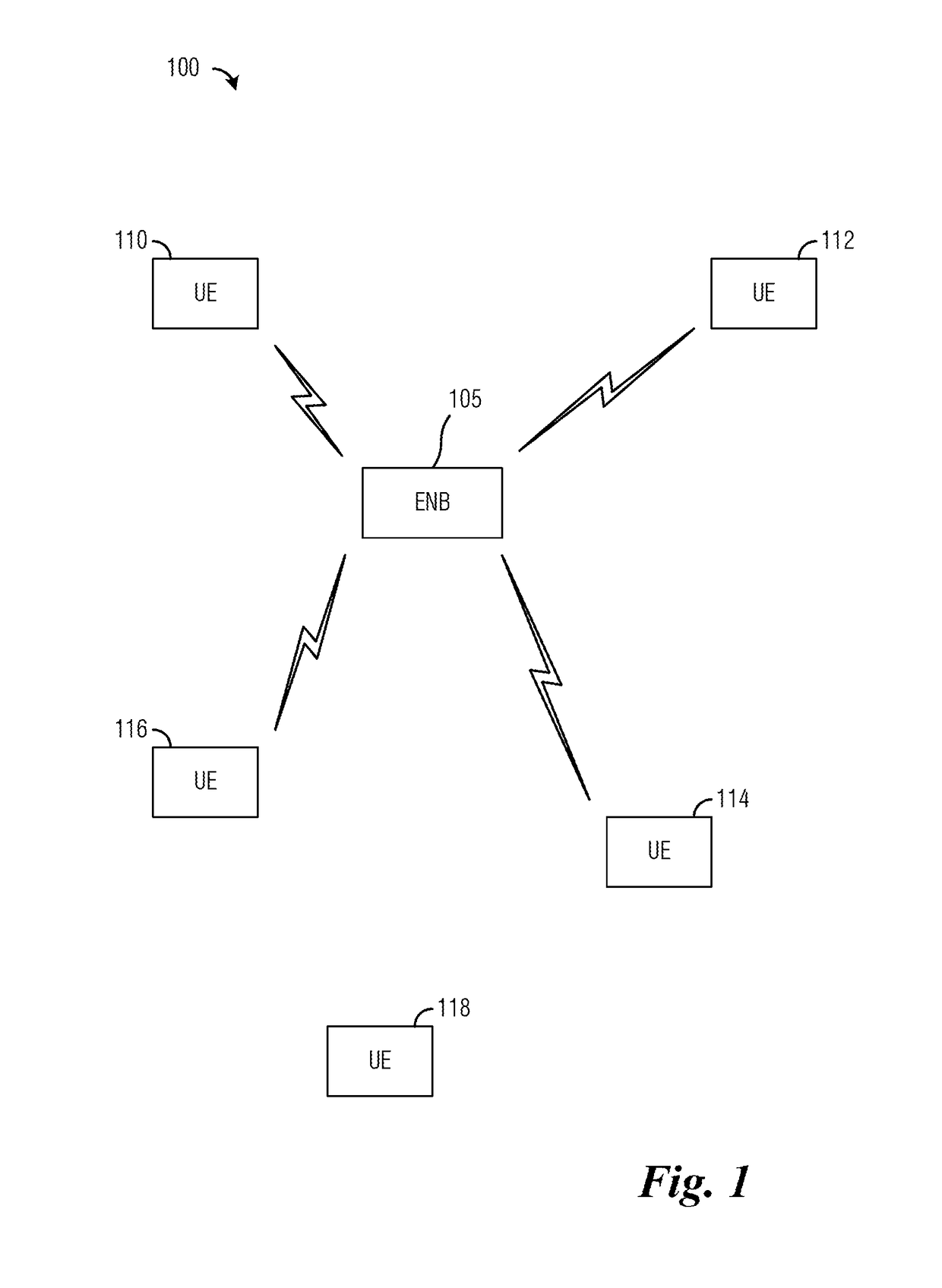

System and Method for Multi-Level Beamformed Non-Orthogonal Multiple Access Communications

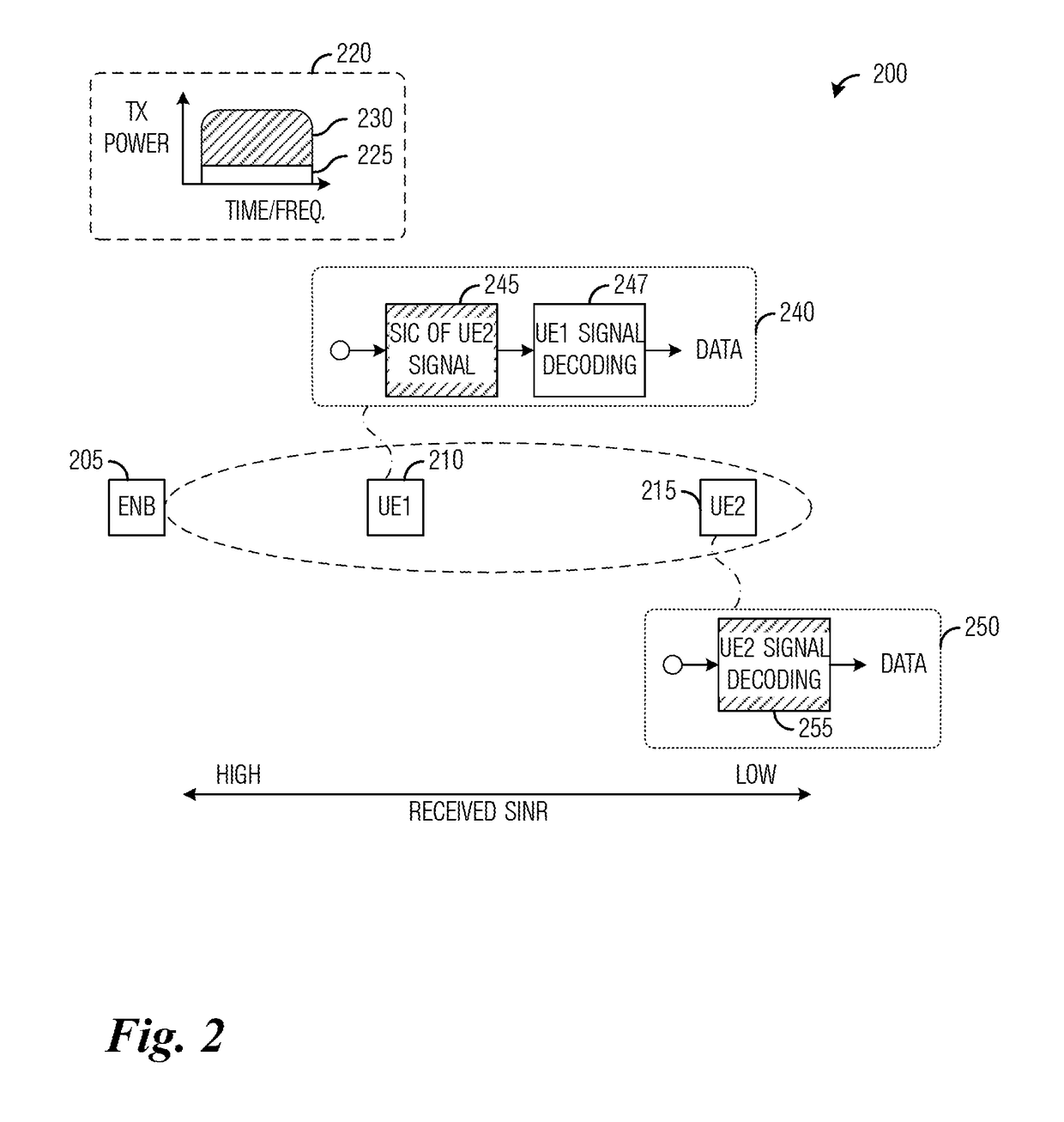

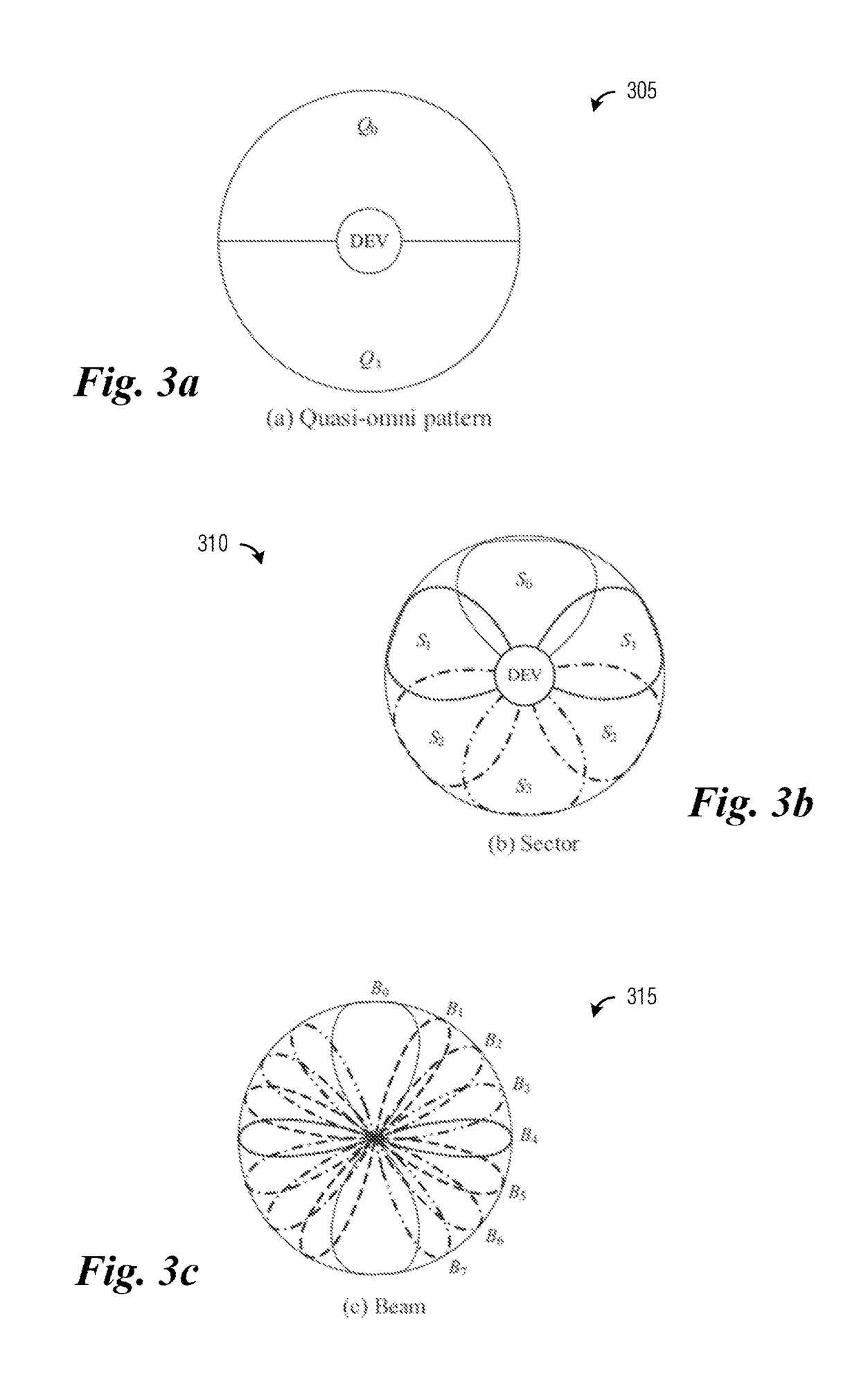

Active | US20160353424A1 | Facilitates the co-scheduling of multiple UEs | Improve spectral efficiency | Spatial transmit diversity | Wireless communication | Engineering | Broadband

Owner:FUTUREWEI TECH INC

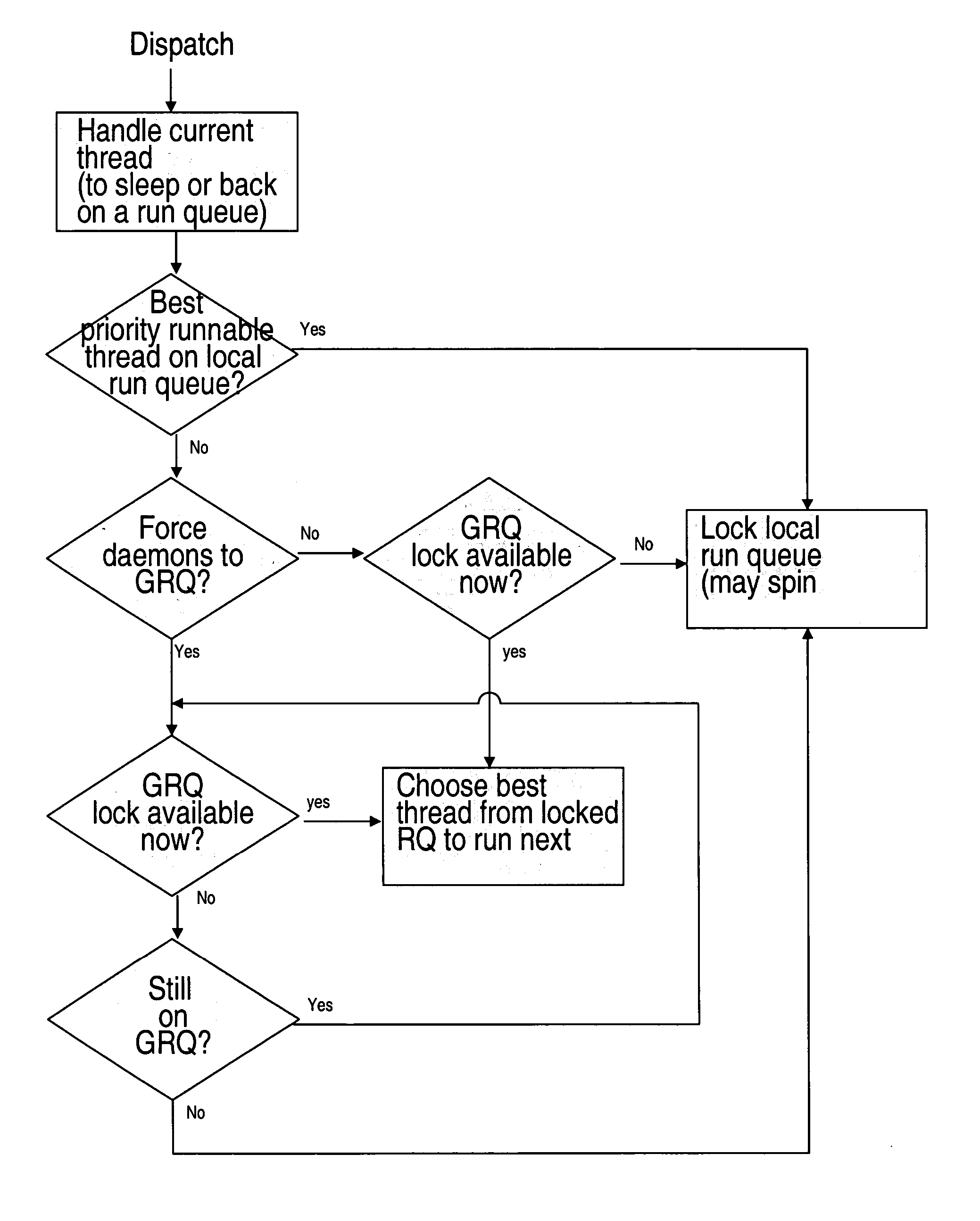

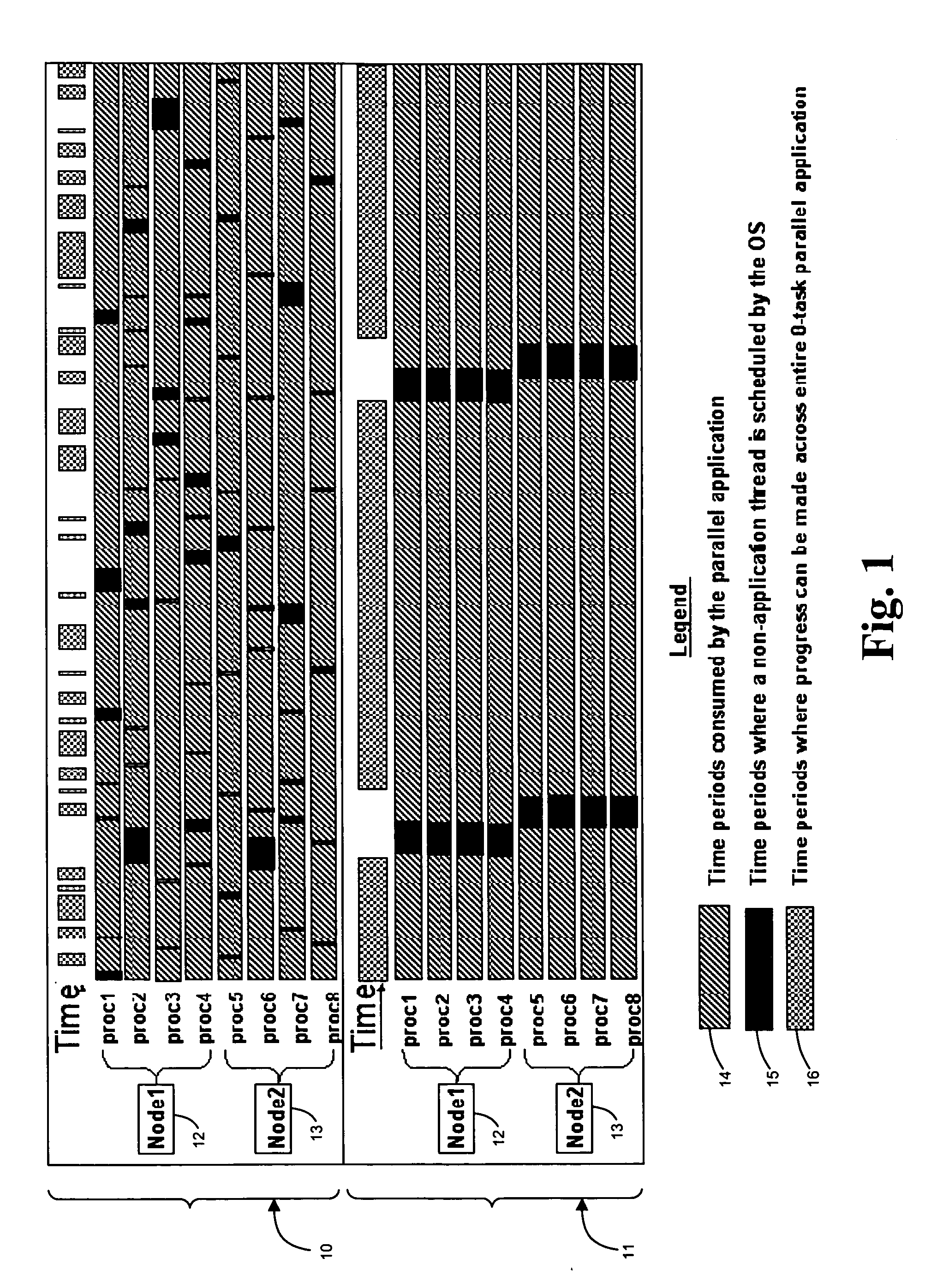

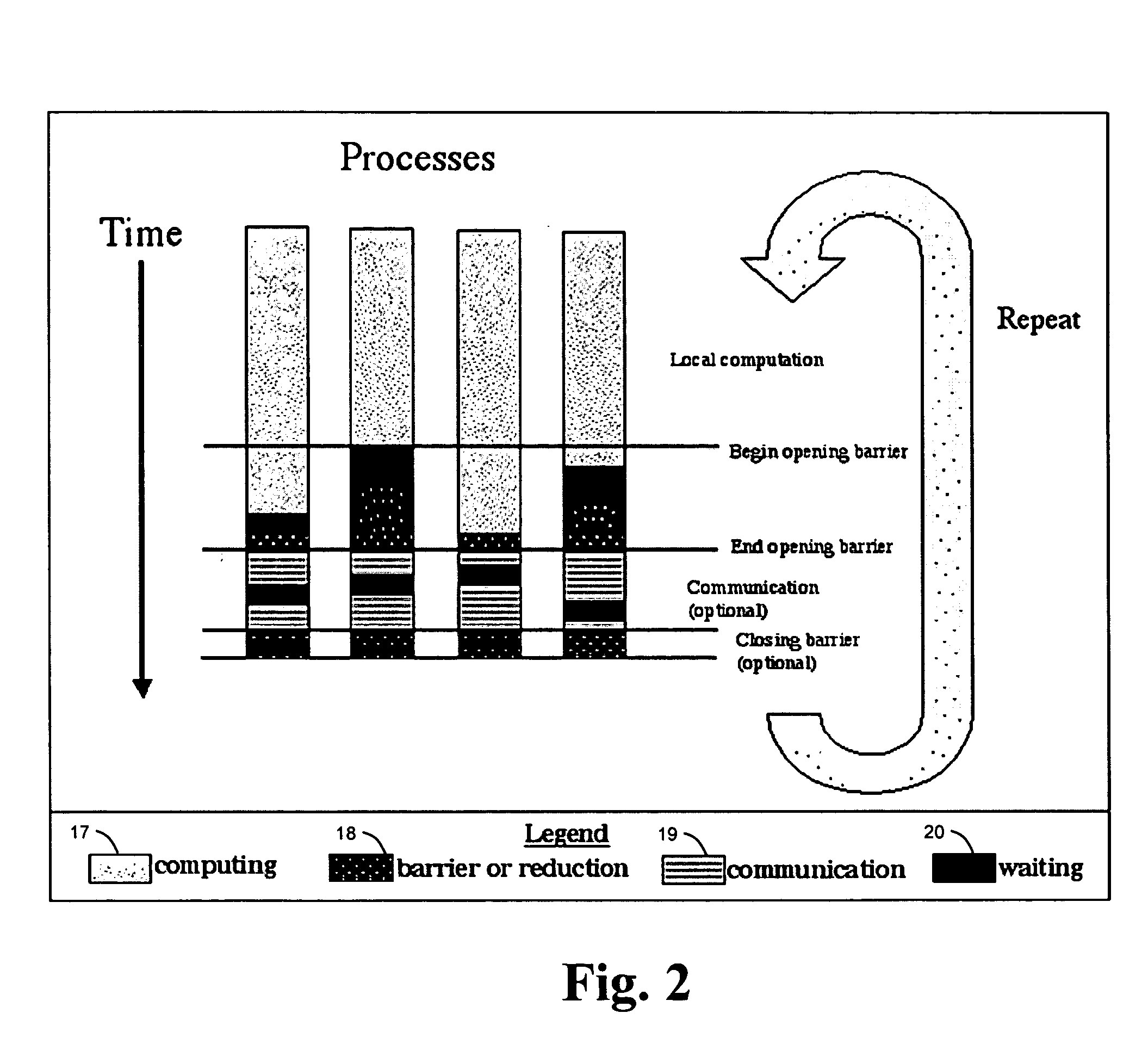

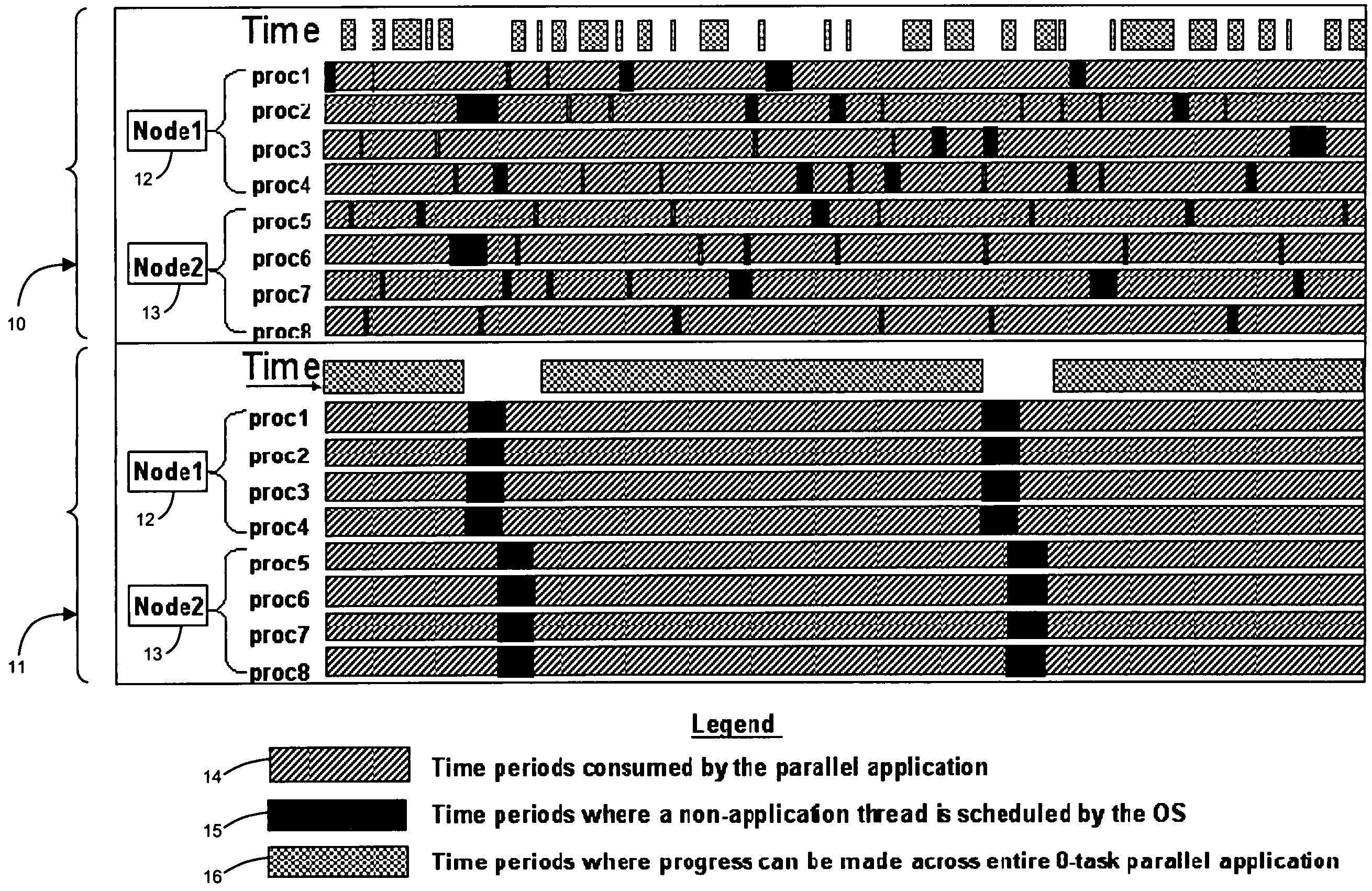

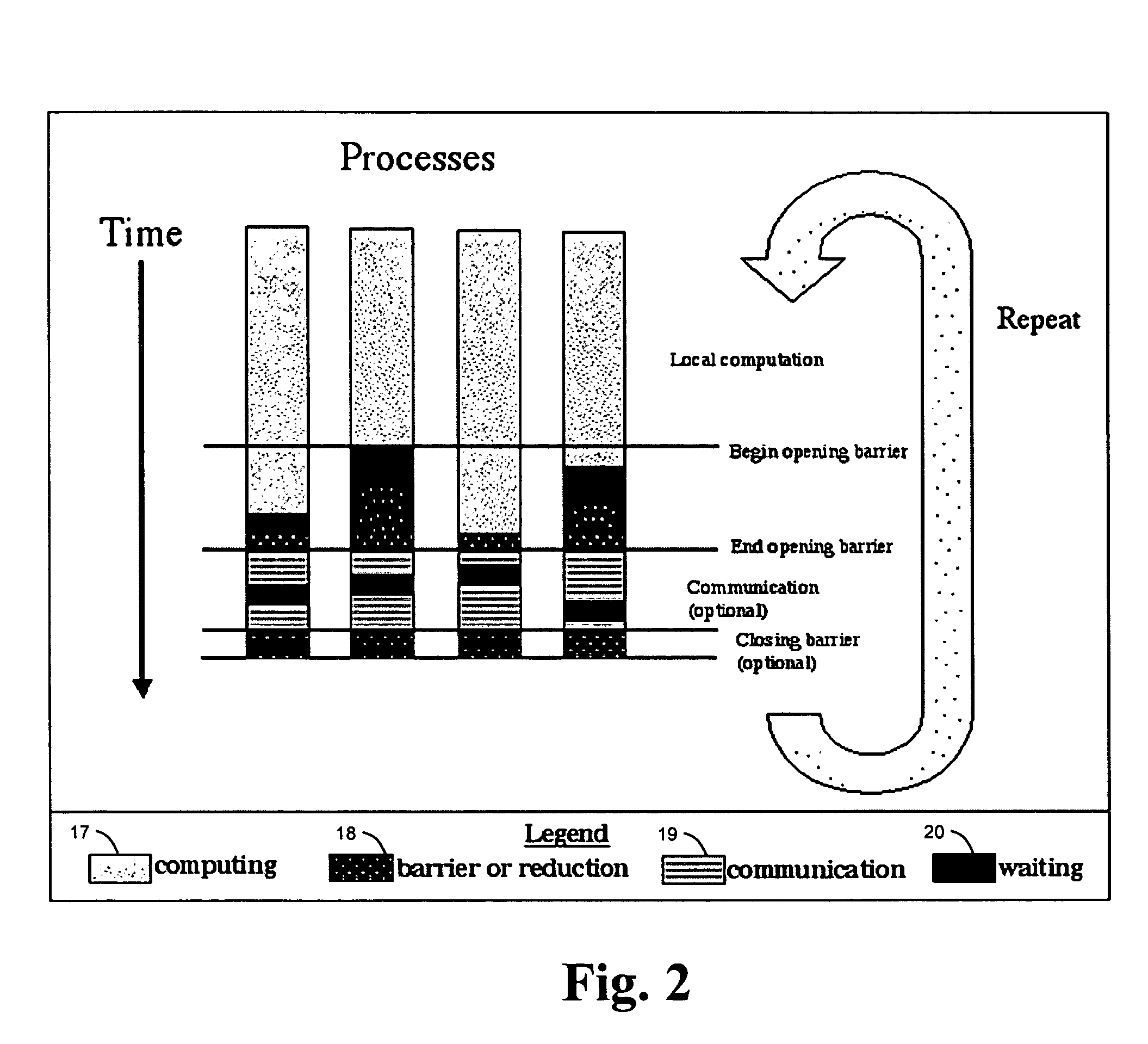

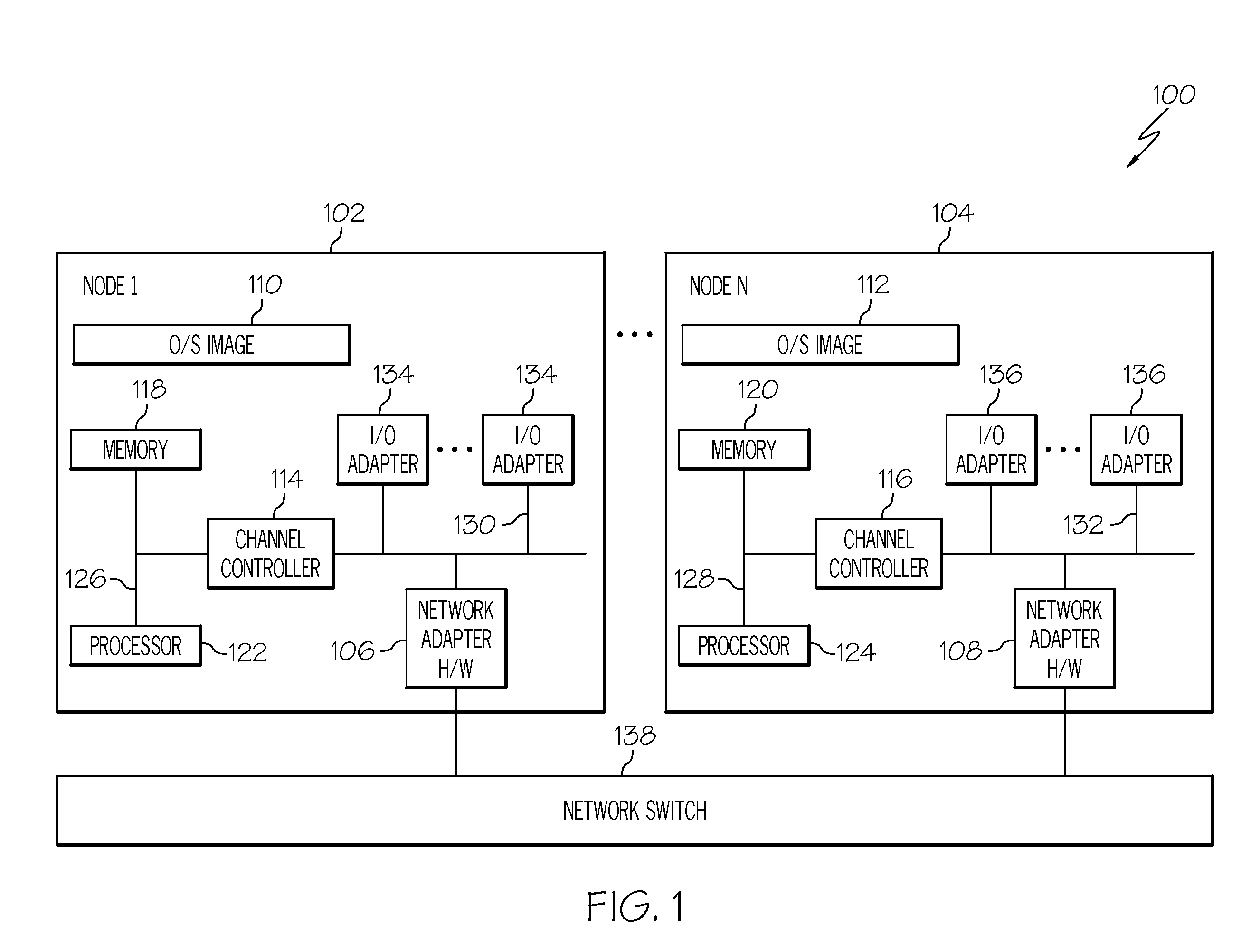

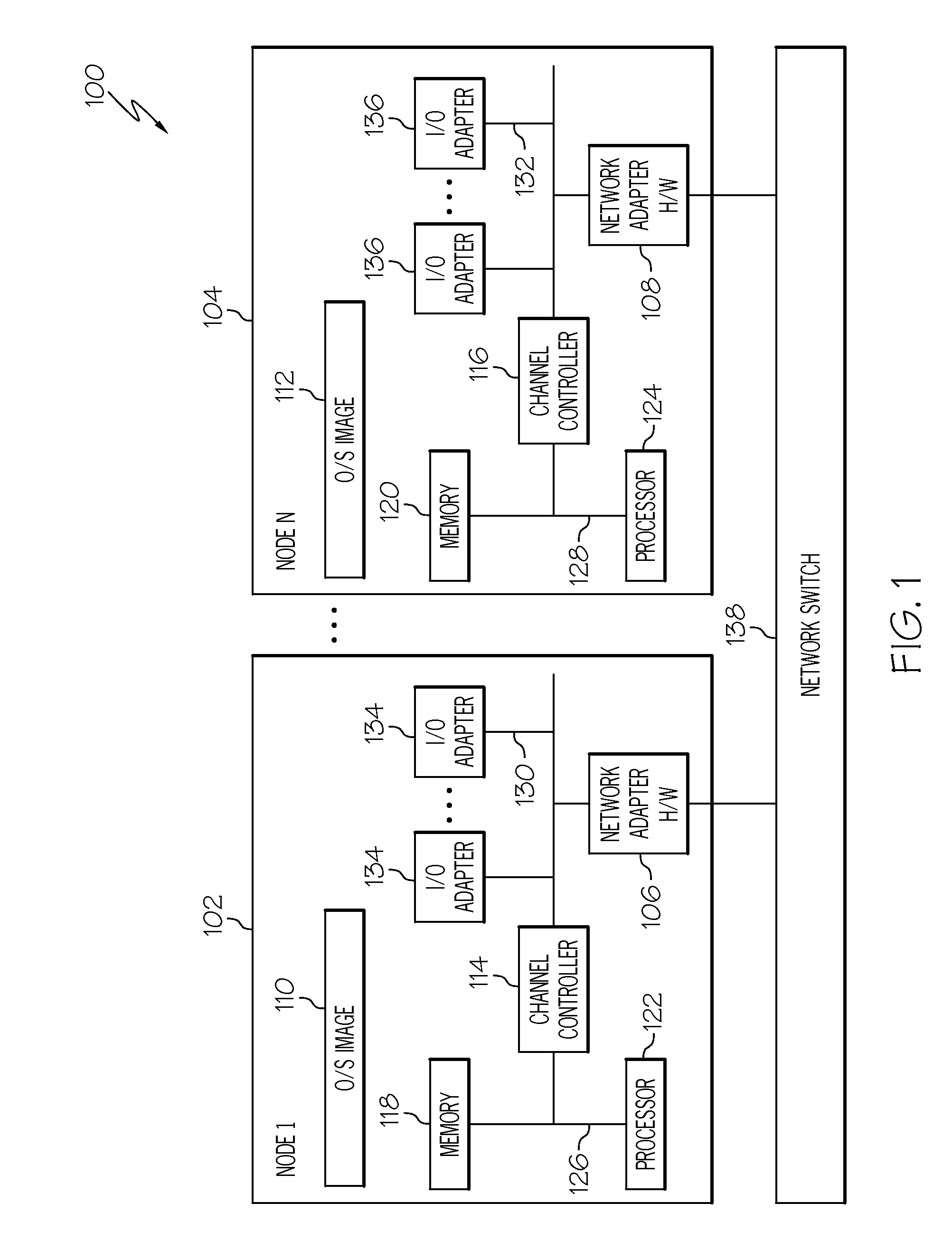

Parallel-aware, dedicated job co-scheduling method and system

Inactive | US20050131865A1 | Improve performance | Improve scalability | Program initiation/switching | Special data processing applications | Extensibility | Operational system

In a parallel computing environment comprising a network of SMP nodes each having at least one processor, a parallel-aware co-scheduling method and system for improving the performance and scalability of a dedicated parallel job having synchronizing collective operations. The method and system uses a global co-scheduler and an operating system kernel dispatcher adapted to coordinate interfering system and daemon activities on a node and across nodes to promote intra-node and inter-node overlap of said interfering system and daemon activities as well as intra-node and inter-node overlap of said synchronizing collective operations. In this manner, the impact of random short-lived interruptions, such as timer-decrement processing and periodic daemon activity, on synchronizing collective operations is minimized on large processor-count SPMD bulk-synchronous programming styles.

Owner:LAWRENCE LIVERMORE NAT SECURITY LLC

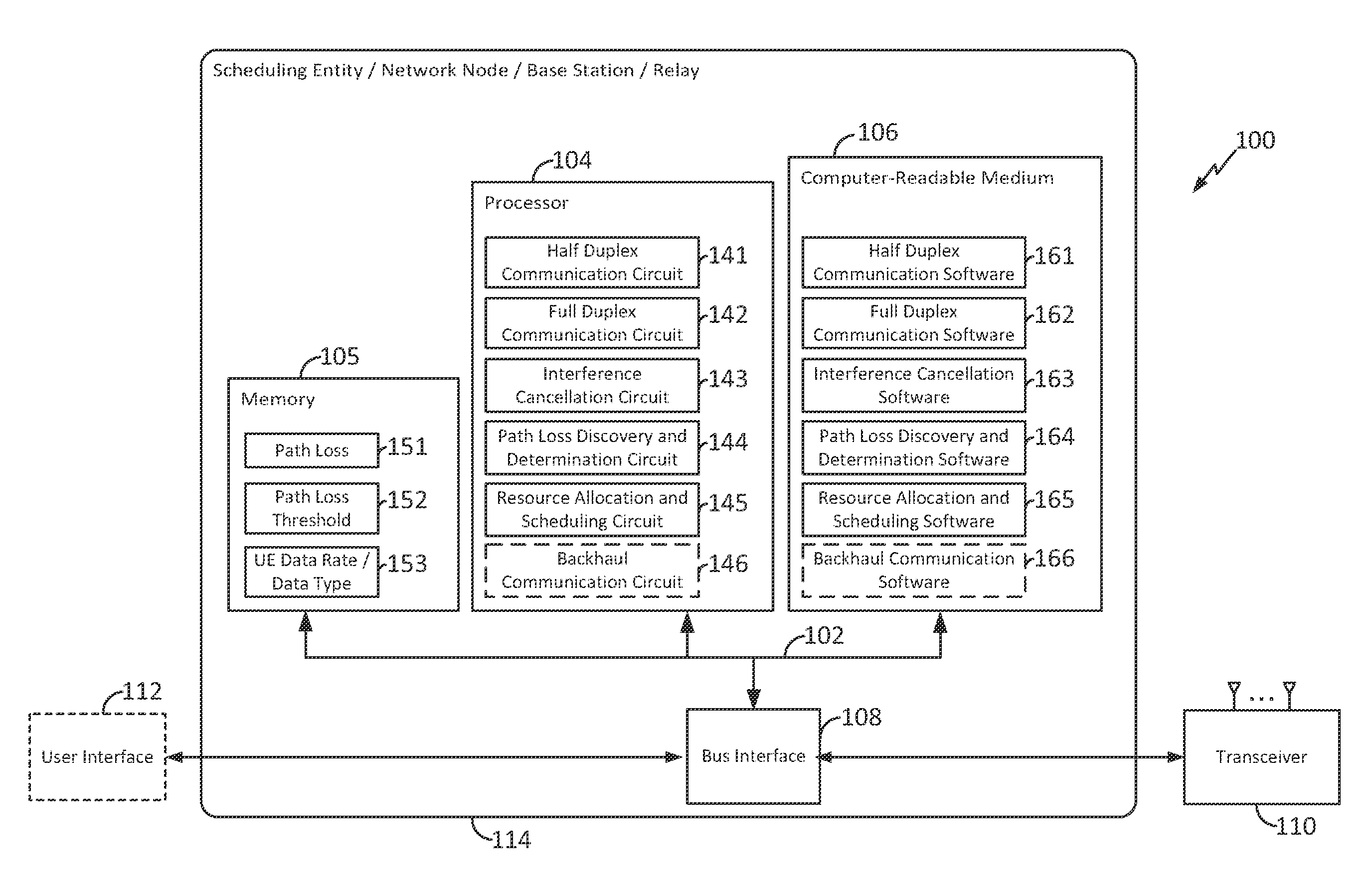

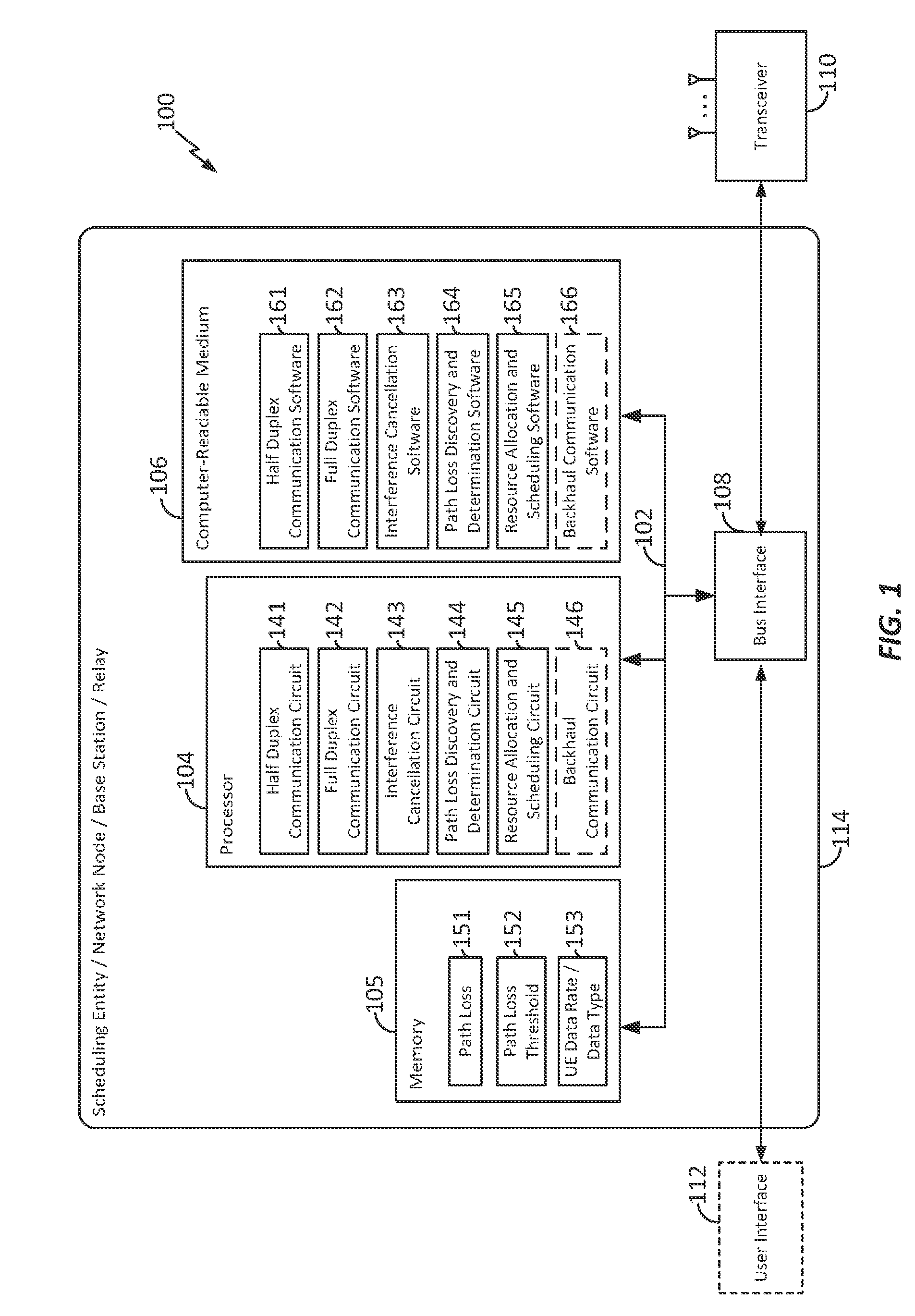

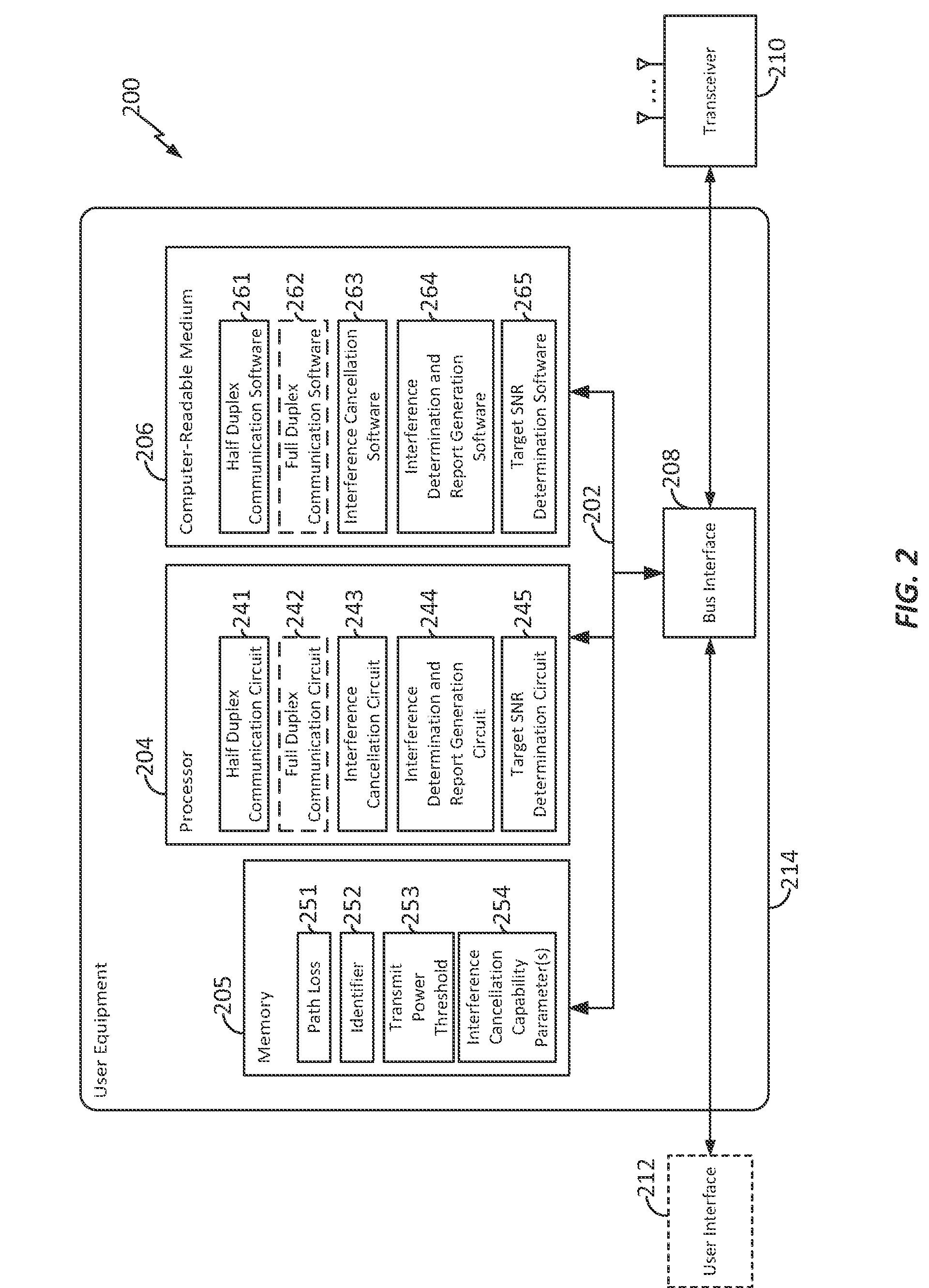

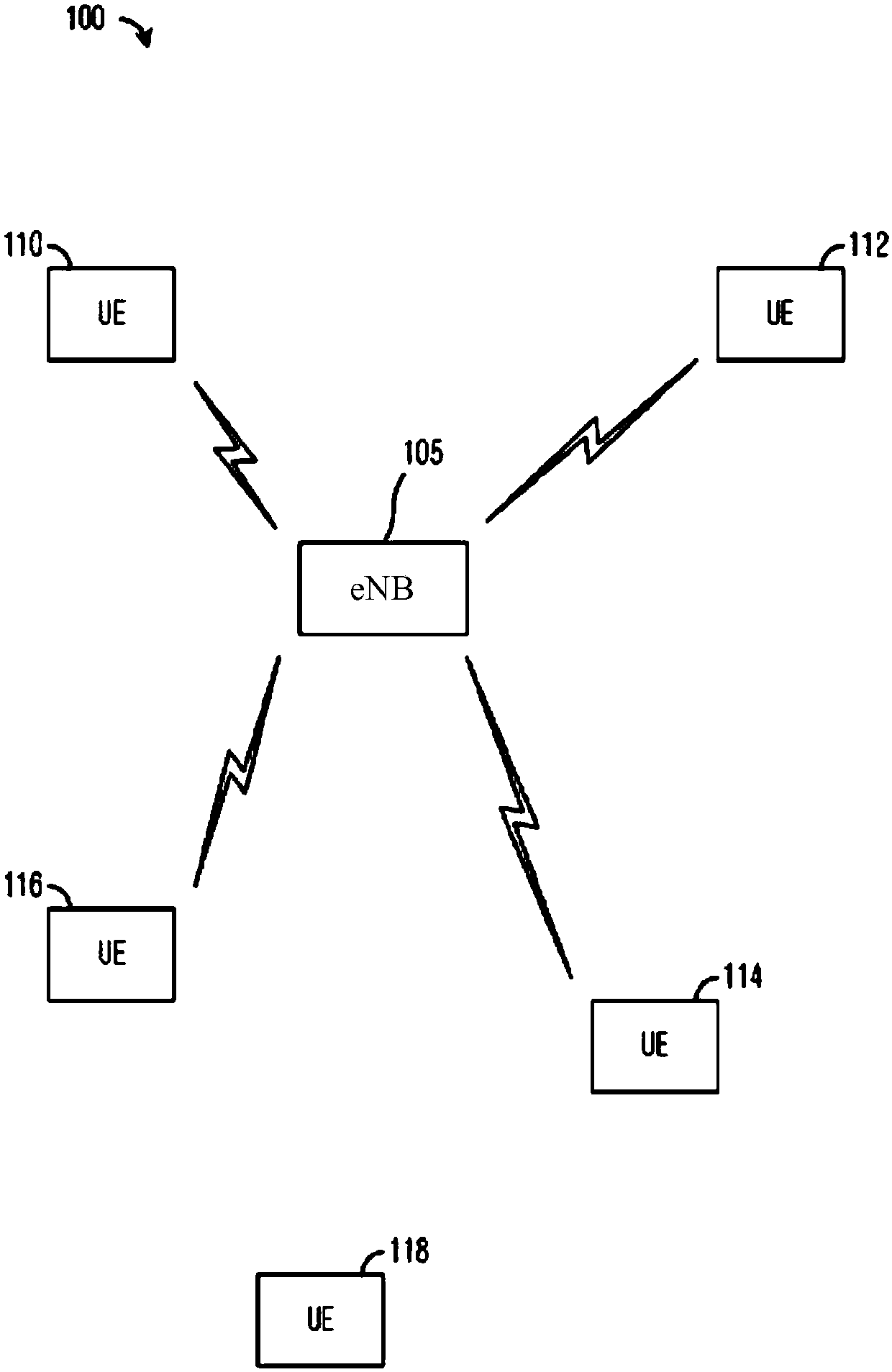

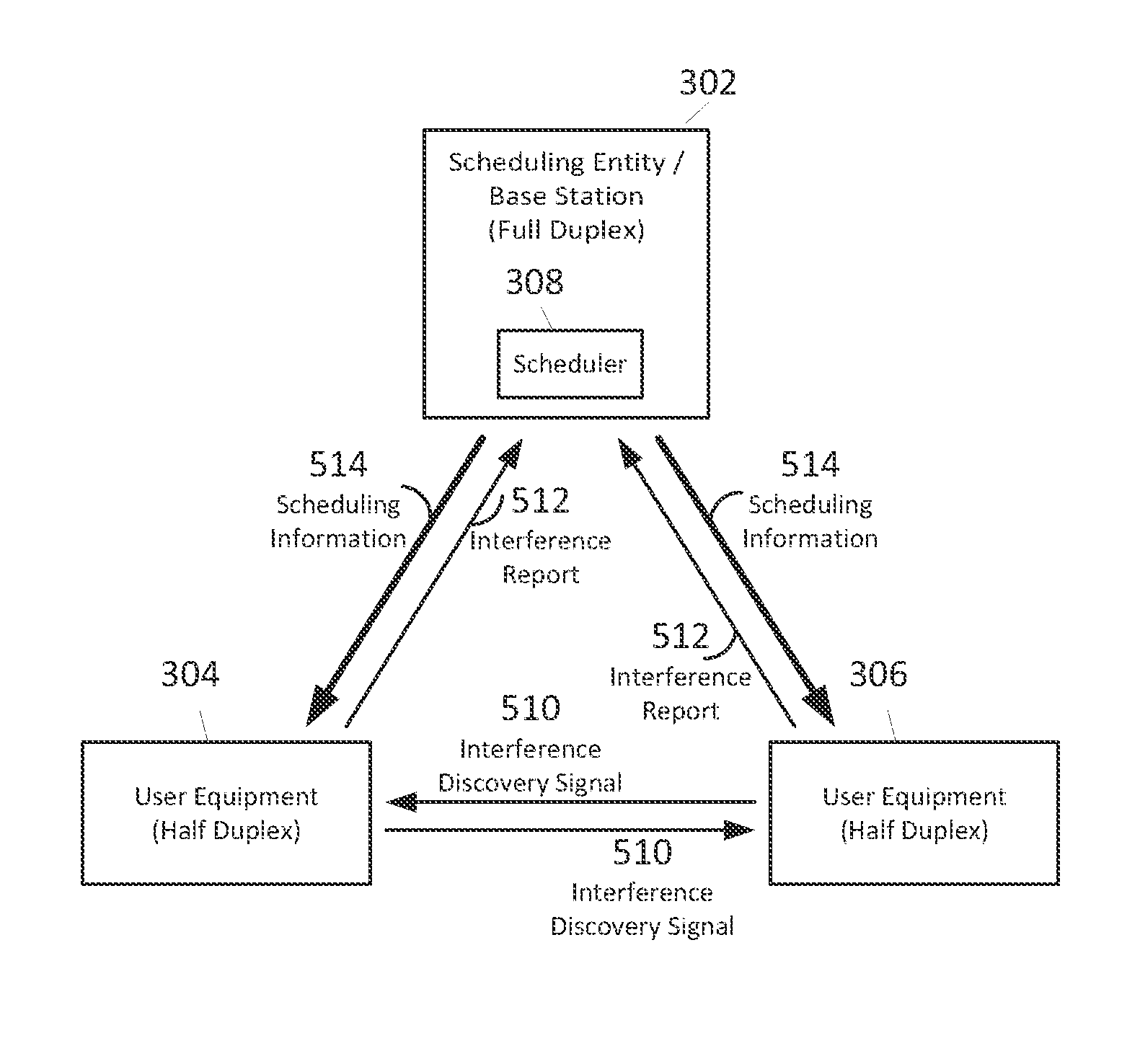

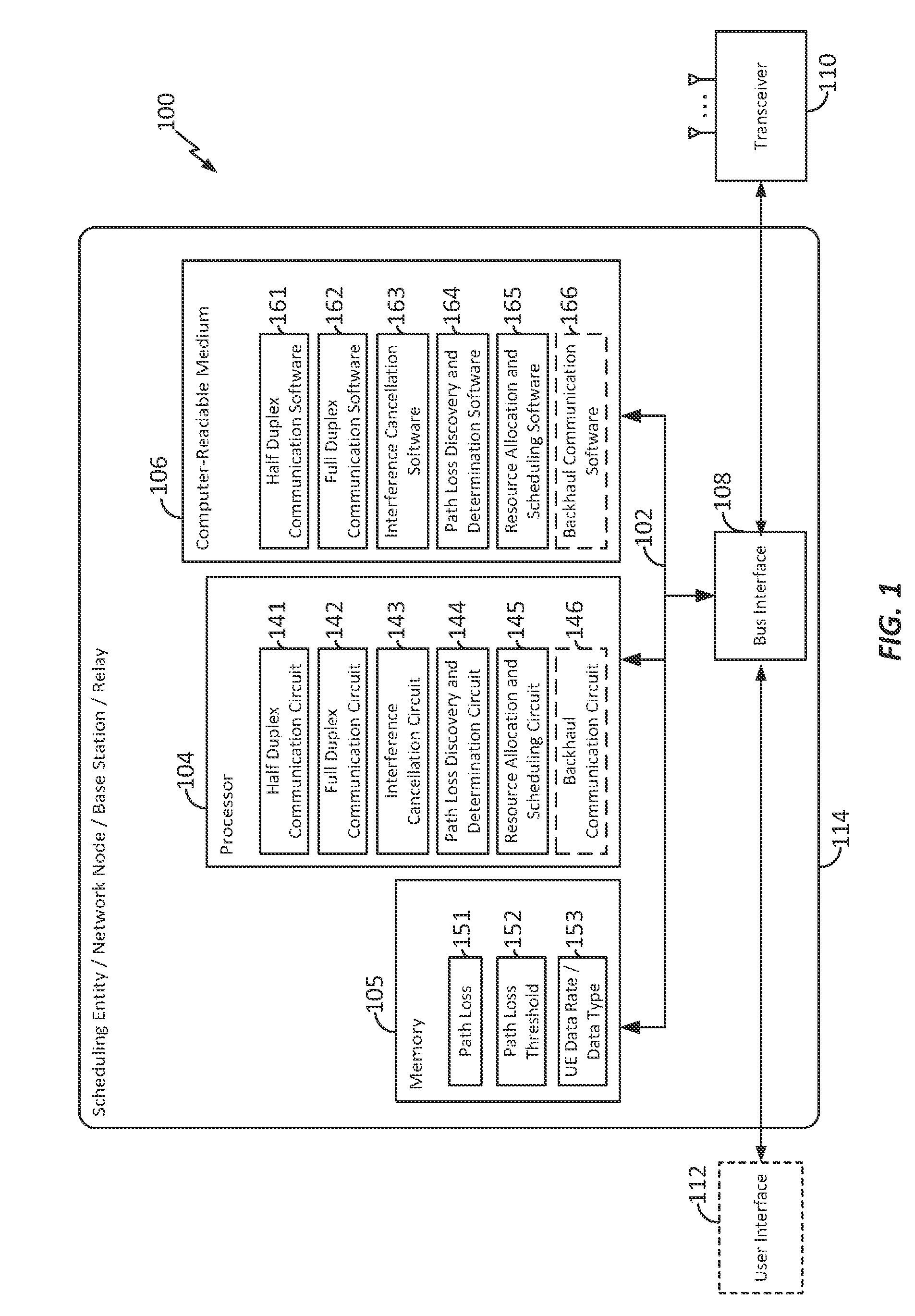

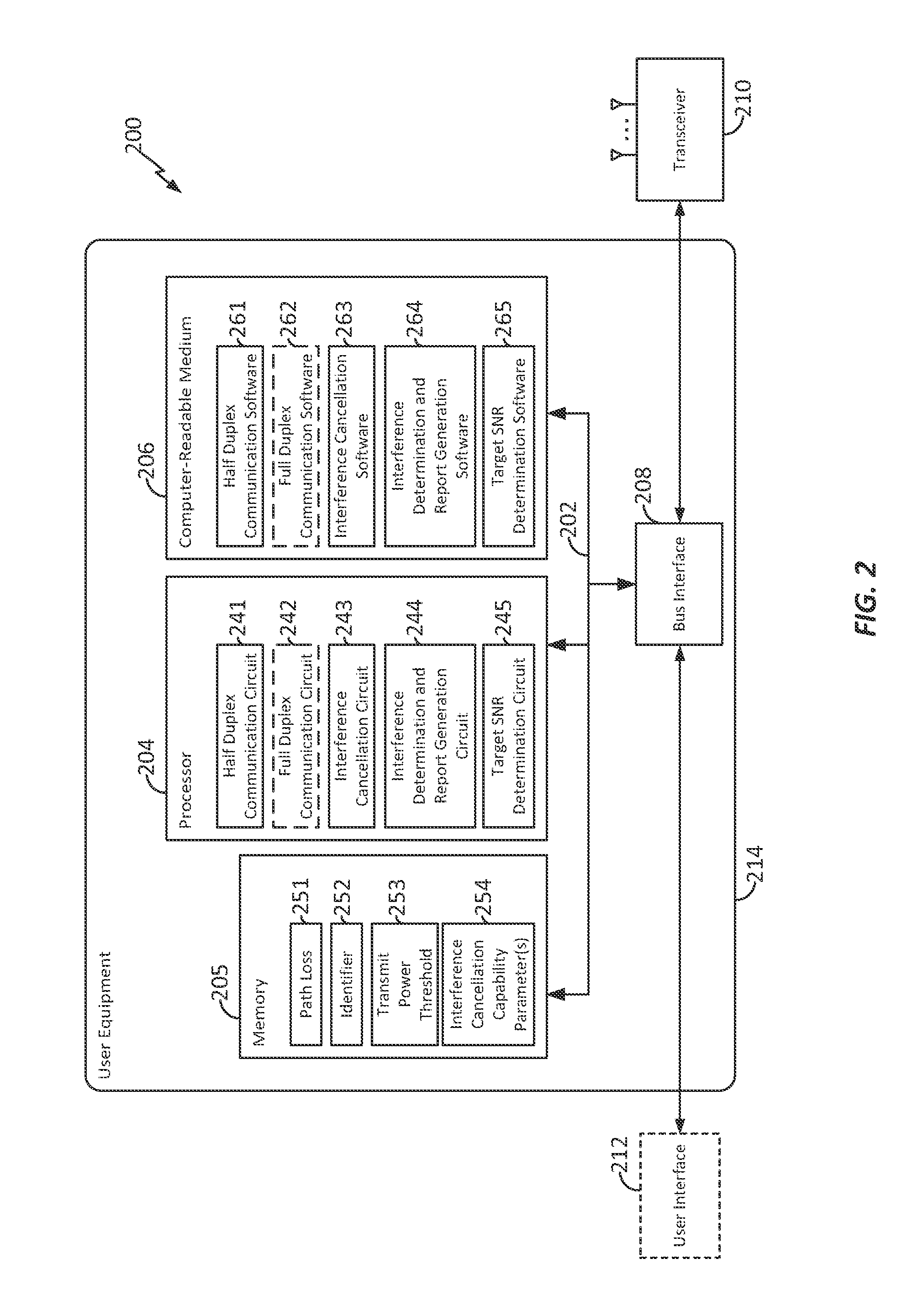

Full duplex operation in a wireless communication network

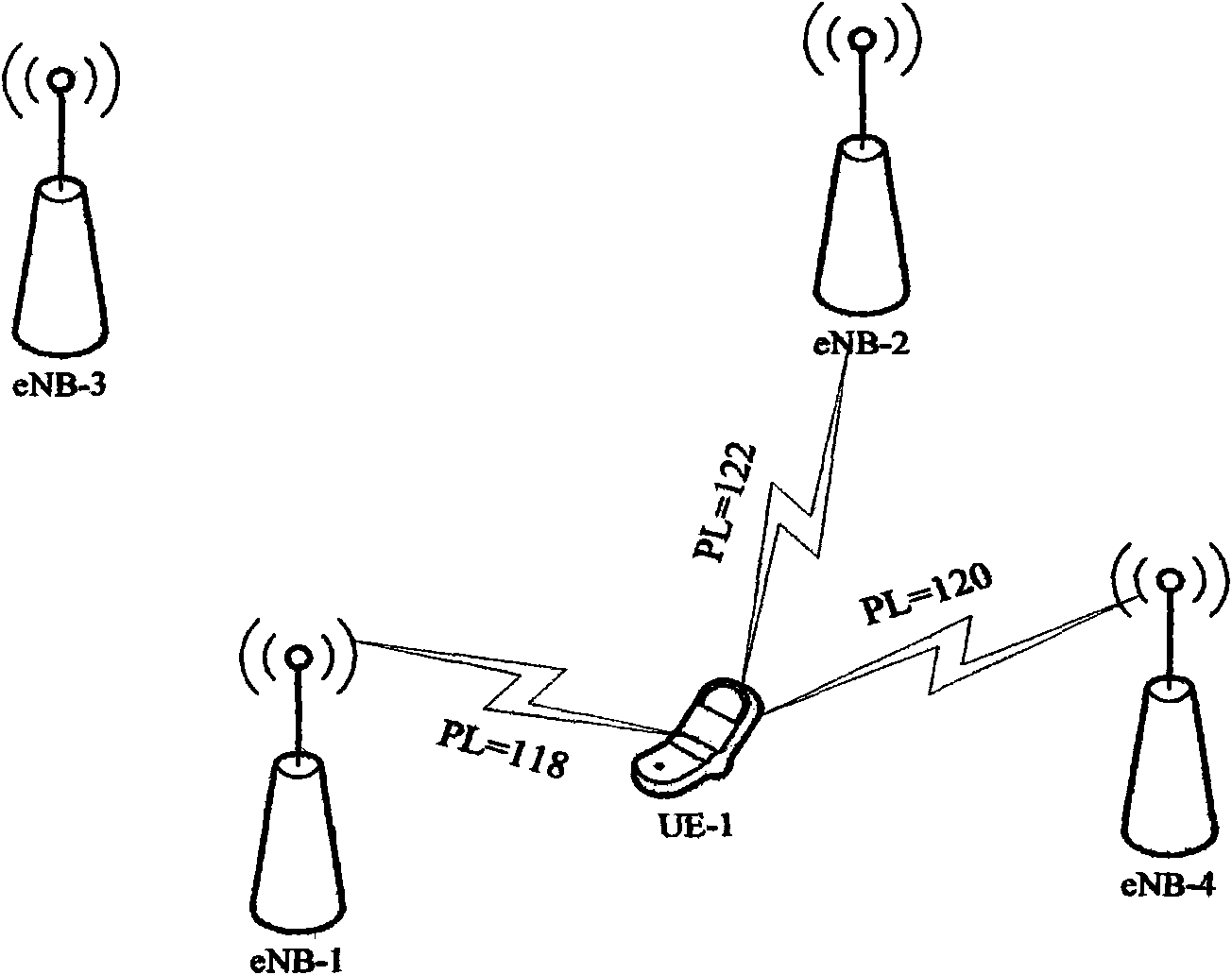

Methods, apparatus, and computer software are disclosed for communicating within a wireless communication network including a scheduling entity configured for full duplex communication, and user equipment (UE) configured for half duplex communication. In some examples, one or more UEs may be configured for limited (quasi-) full duplex communication. Some aspects relate to scheduling the UEs, including determining whether co-scheduling of the UEs to share a time-frequency resource is suitable based on one or more factors such as an inter-device path loss.

Owner:QUALCOMM INC
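
The co-scheduling decision in the full-duplex abstract above can be illustrated with a short Python sketch. It assumes a hypothetical table of inter-device path losses between uplink and downlink UEs and a hypothetical 90 dB threshold; the function name, the greedy pairing rule, and the threshold are illustrative assumptions, not details taken from the patent.

```python
from typing import Dict, List, Tuple


def pair_for_full_duplex(
    uplink_ues: List[str],
    downlink_ues: List[str],
    path_loss_db: Dict[Tuple[str, str], float],
    min_path_loss_db: float = 90.0,  # assumed threshold, not from the patent
) -> List[Tuple[str, str]]:
    """Greedily pair each uplink UE with one downlink UE on the same resource.

    A pair is allowed only if the inter-device path loss is high enough that
    the uplink transmission should not swamp the downlink reception.
    """
    pairs: List[Tuple[str, str]] = []
    free_dl = list(downlink_ues)
    for ul in uplink_ues:
        candidates = [
            (path_loss_db[(ul, dl)], dl) for dl in free_dl if (ul, dl) in path_loss_db
        ]
        if not candidates:
            continue
        loss, best_dl = max(candidates)  # most isolated downlink UE
        if loss >= min_path_loss_db:
            pairs.append((ul, best_dl))
            free_dl.remove(best_dl)
    return pairs


# Hypothetical measurements in dB between uplink UEs (A, B) and downlink UEs (C, D).
losses = {("A", "C"): 110.0, ("A", "D"): 70.0, ("B", "C"): 95.0, ("B", "D"): 60.0}
pairs = pair_for_full_duplex(["A", "B"], ["C", "D"], losses)
print(pairs)
```

UEs left unpaired would simply be scheduled on separate time-frequency resources in this model.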

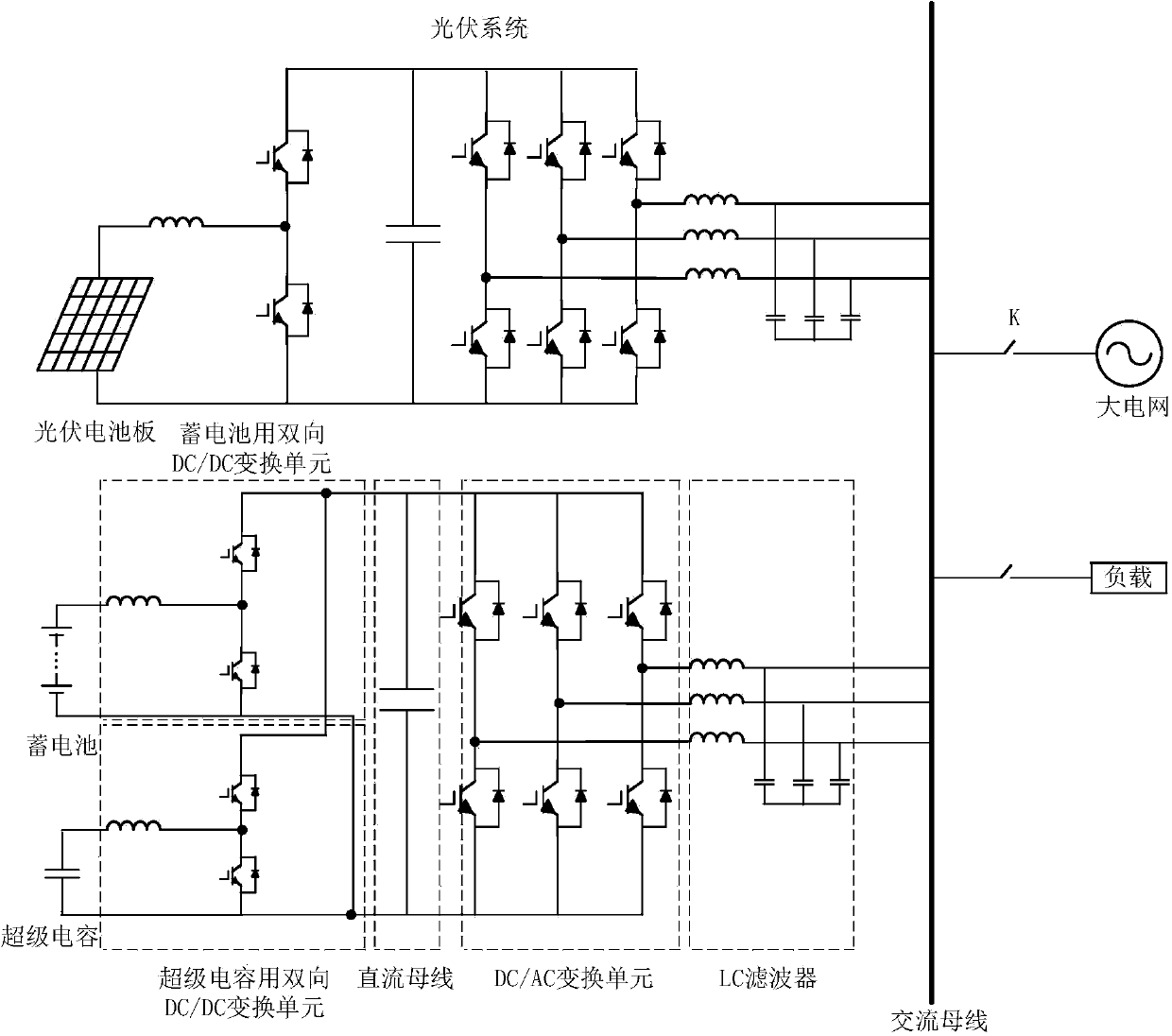

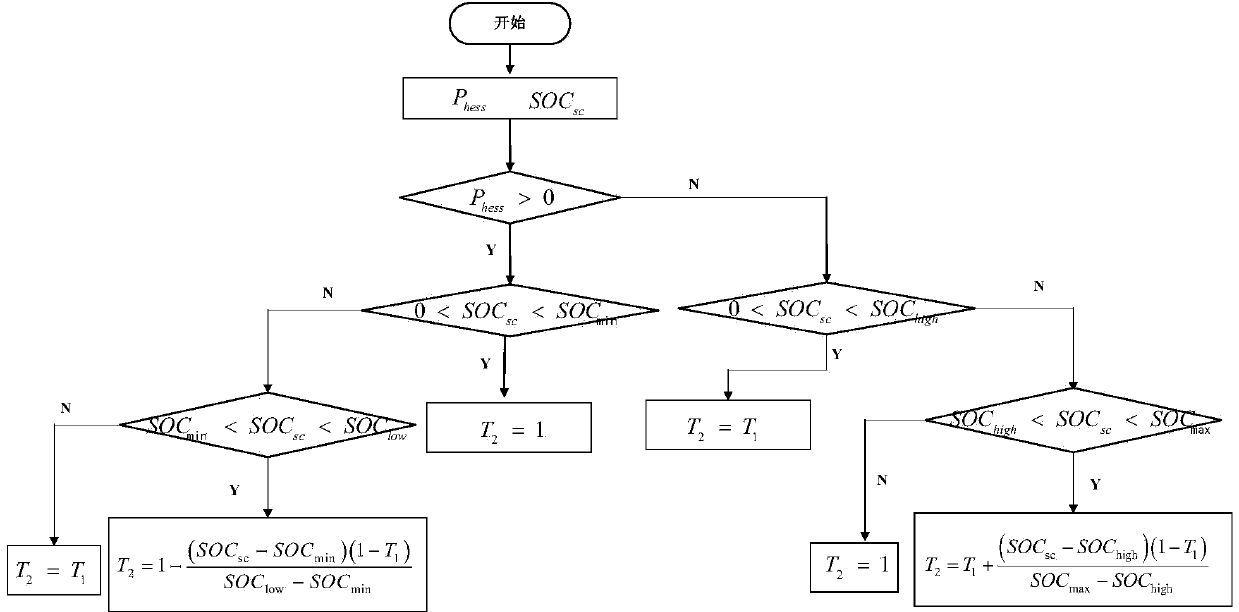

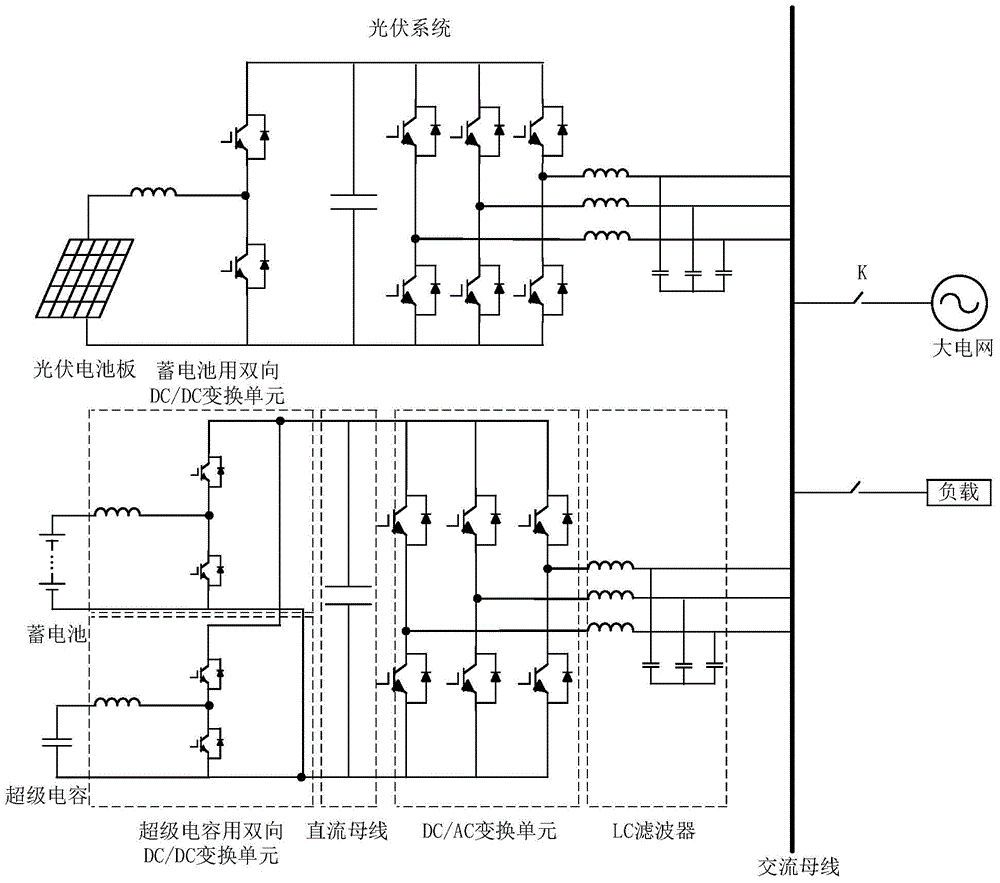

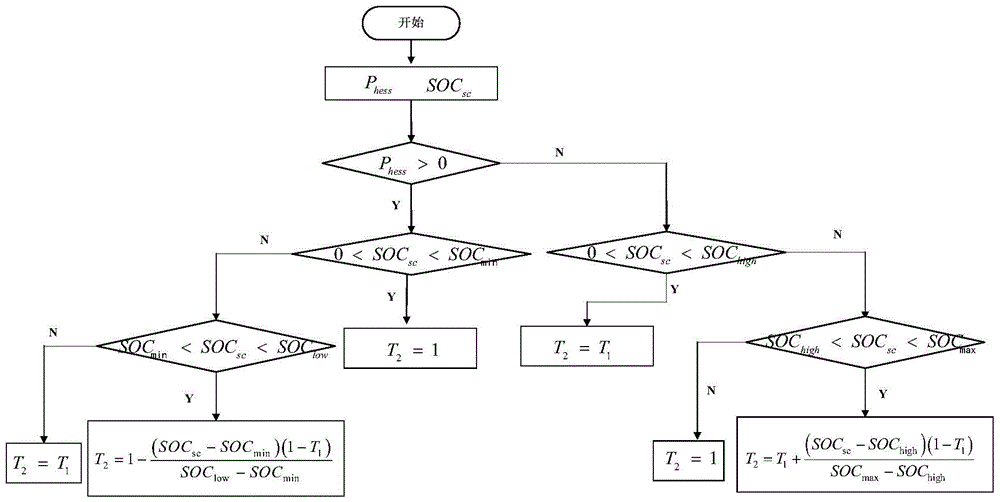

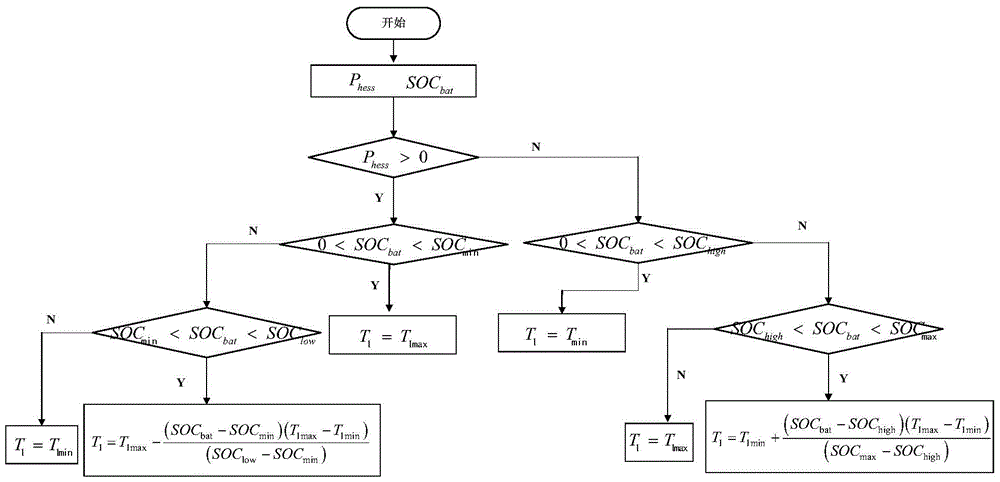

Co-scheduling strategy for multiple energy storage in distributed light storage micro-grid system

Inactive | CN104184159A | Keep constant | AC network load balancing | Power oscillations reduction/prevention | Capacitance | Power grid

Provided is a co-scheduling strategy for multiple energy storage in a distributed light storage micro-grid system. The multiple energy storage uses a two-stage converter topology comprising a preceding-stage bidirectional DC/DC conversion unit and a back-stage DC/AC conversion unit. The bidirectional DC/DC conversion unit for the storage battery and the bidirectional DC/DC conversion unit for the super capacitor share a DC bus and are connected to the load and the large power grid through the DC/AC conversion unit and an LC filter. When the micro-grid system is in grid-connected mode, the co-scheduling method applies double filter control to the two-stage converter, controls the energy-storage elements to smooth fluctuations in photovoltaic output power, and adjusts the filtering parameters according to the states of charge of the super capacitor and the storage battery. When the micro-grid system is off-grid, the method controls the energy-storage elements to provide voltage and frequency support to the micro-grid system, and the distributed light storage micro-grid system jointly supplies power to the load. The energy-storage elements are the storage battery and the super capacitor.

Owner:GUANGDONG YUANJING ENERGY
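
One way to picture the grid-connected double filter control described above is two cascaded first-order low-pass filters. The Python sketch below is an assumption-laden illustration: the time constants, the rule that the battery takes the slow part of the fluctuation and the super capacitor the fast remainder, and the state-of-charge adjustment rule are all hypothetical and are not taken from the patent.

```python
from typing import List, Tuple


def low_pass(signal: List[float], time_constant_s: float, dt_s: float) -> List[float]:
    """Discrete first-order low-pass filter, initialised at the first sample."""
    if not signal:
        return []
    alpha = dt_s / (time_constant_s + dt_s)
    y = signal[0]
    out = []
    for x in signal:
        y = y + alpha * (x - y)
        out.append(y)
    return out


def split_power(
    pv_kw: List[float],
    dt_s: float = 1.0,
    tau_grid_s: float = 60.0,     # assumed smoothing time constant
    tau_battery_s: float = 5.0,   # assumed battery time constant
) -> Tuple[List[float], List[float], List[float]]:
    """Return (smoothed grid power, battery power, super capacitor power)."""
    grid = low_pass(pv_kw, tau_grid_s, dt_s)
    # Power the storage must inject (positive) or absorb (negative).
    fluctuation = [g - p for g, p in zip(grid, pv_kw)]
    battery = low_pass(fluctuation, tau_battery_s, dt_s)       # slow component
    supercap = [f - b for f, b in zip(fluctuation, battery)]   # fast remainder
    return grid, battery, supercap


def adjust_time_constant(tau_s: float, soc: float, low: float = 0.2, high: float = 0.8) -> float:
    """Hypothetical rule: halve the time constant when the state of charge leaves [low, high]."""
    return tau_s * 0.5 if (soc < low or soc > high) else tau_s


# Hypothetical PV output with a sudden drop from 10 kW to 4 kW.
pv_kw = [10.0] * 5 + [4.0] * 5
grid_kw, battery_kw, supercap_kw = split_power(pv_kw)
print([round(g, 2) for g in grid_kw])
```

By construction, PV power plus battery power plus super capacitor power equals the smoothed grid power at every sample, which is the power balance on the shared DC bus in this simplified model.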

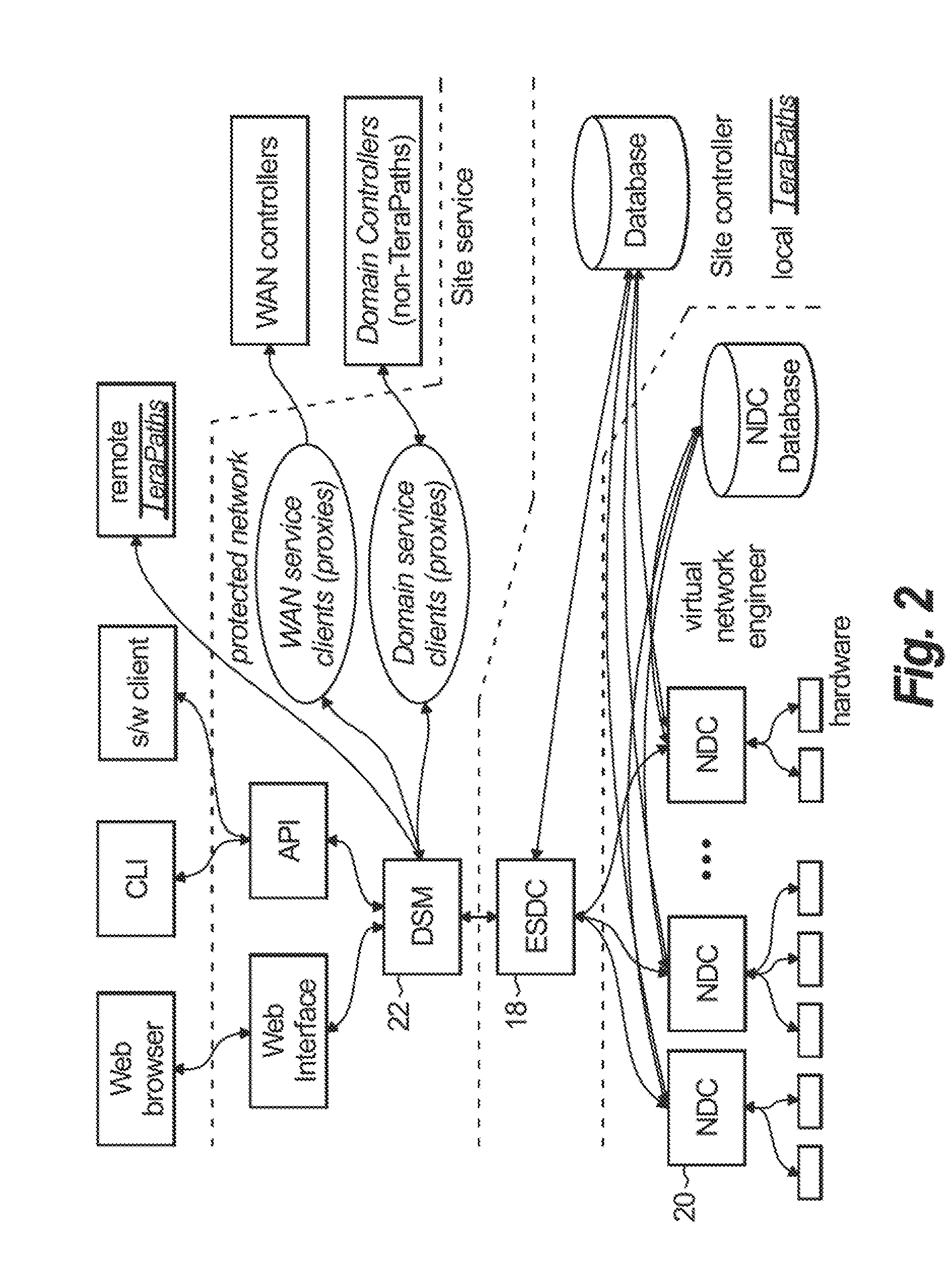

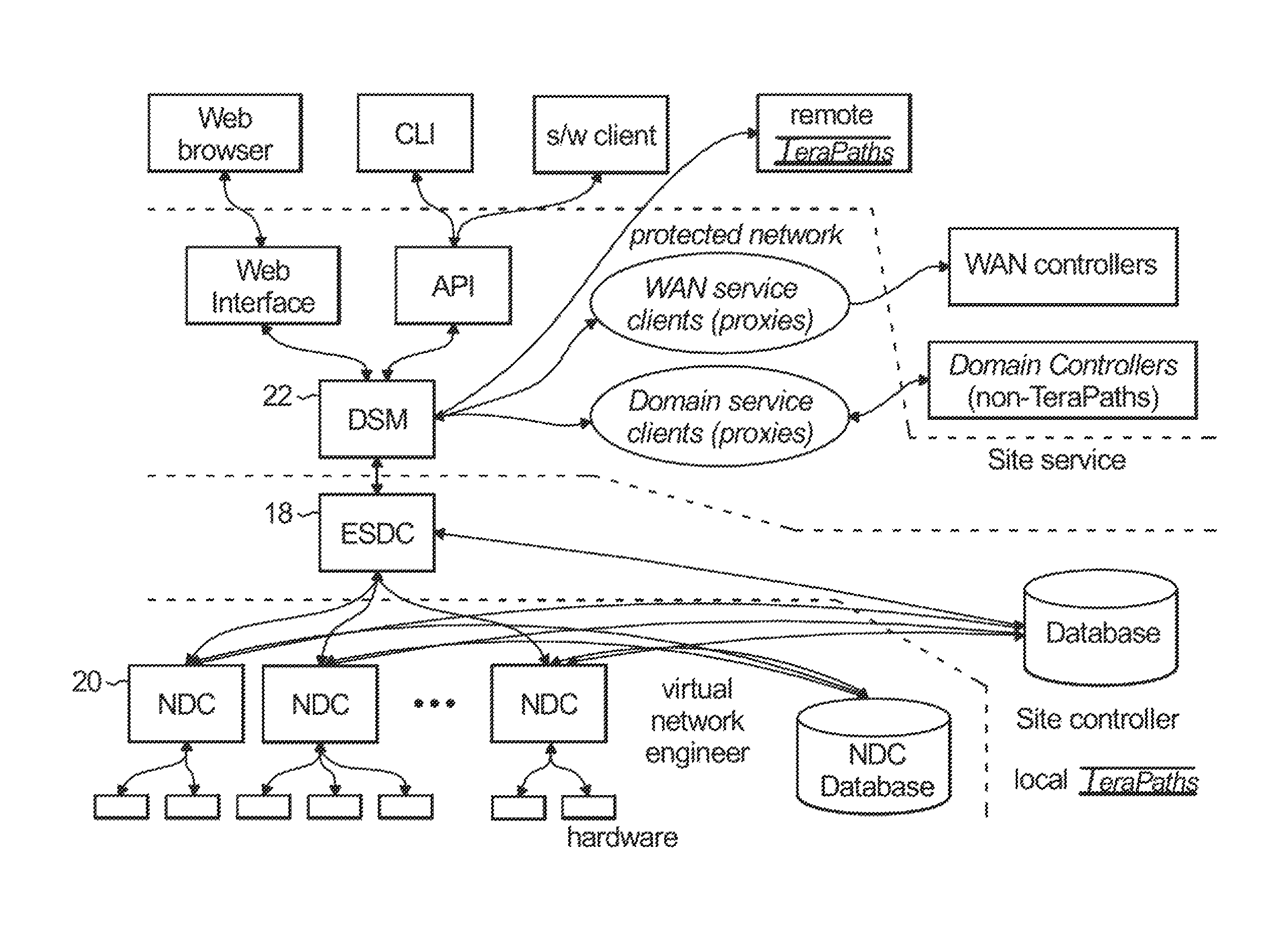

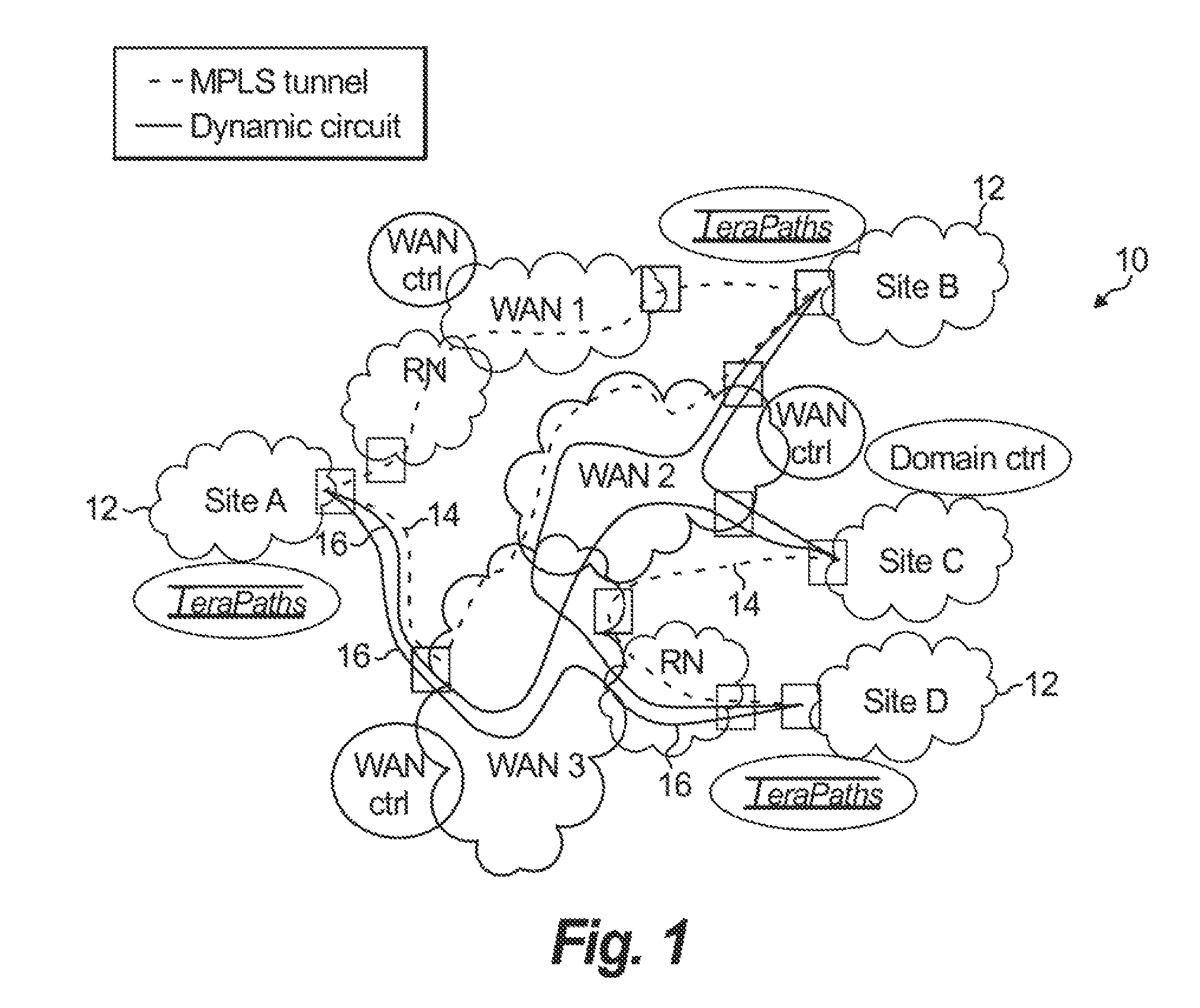

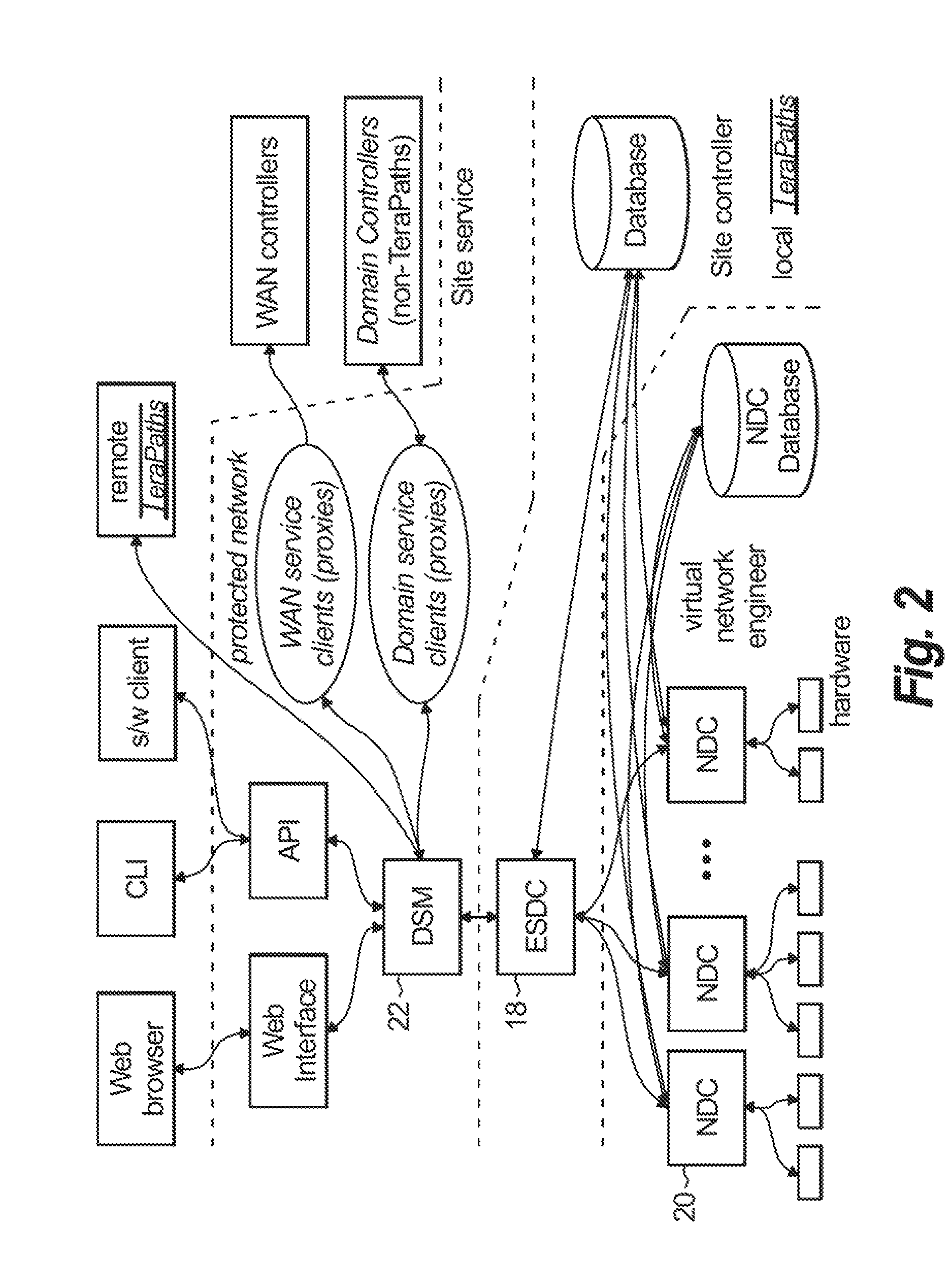

Co-Scheduling of Network Resource Provisioning and Host-to-Host Bandwidth Reservation on High-Performance Network and Storage Systems

A cross-domain network resource reservation scheduler configured to schedule a path from at least one end-site includes a management plane device configured to monitor and provide information representing at least one of functionality, performance, faults, and fault recovery associated with a network resource; a control plane device configured to at least one of schedule the network resource, provision local area network quality of service, provision local area network bandwidth, and provision wide area network bandwidth; and a service plane device configured to interface with the control plane device to reserve the network resource based on a reservation request and the information from the management plane device. Corresponding methods and computer-readable medium are also disclosed.

Owner:RGT UNIV OF CALIFORNIA THROUGH THE ERNEST ORLANDO LAWRENCE BERKELEY NAT LAB +1

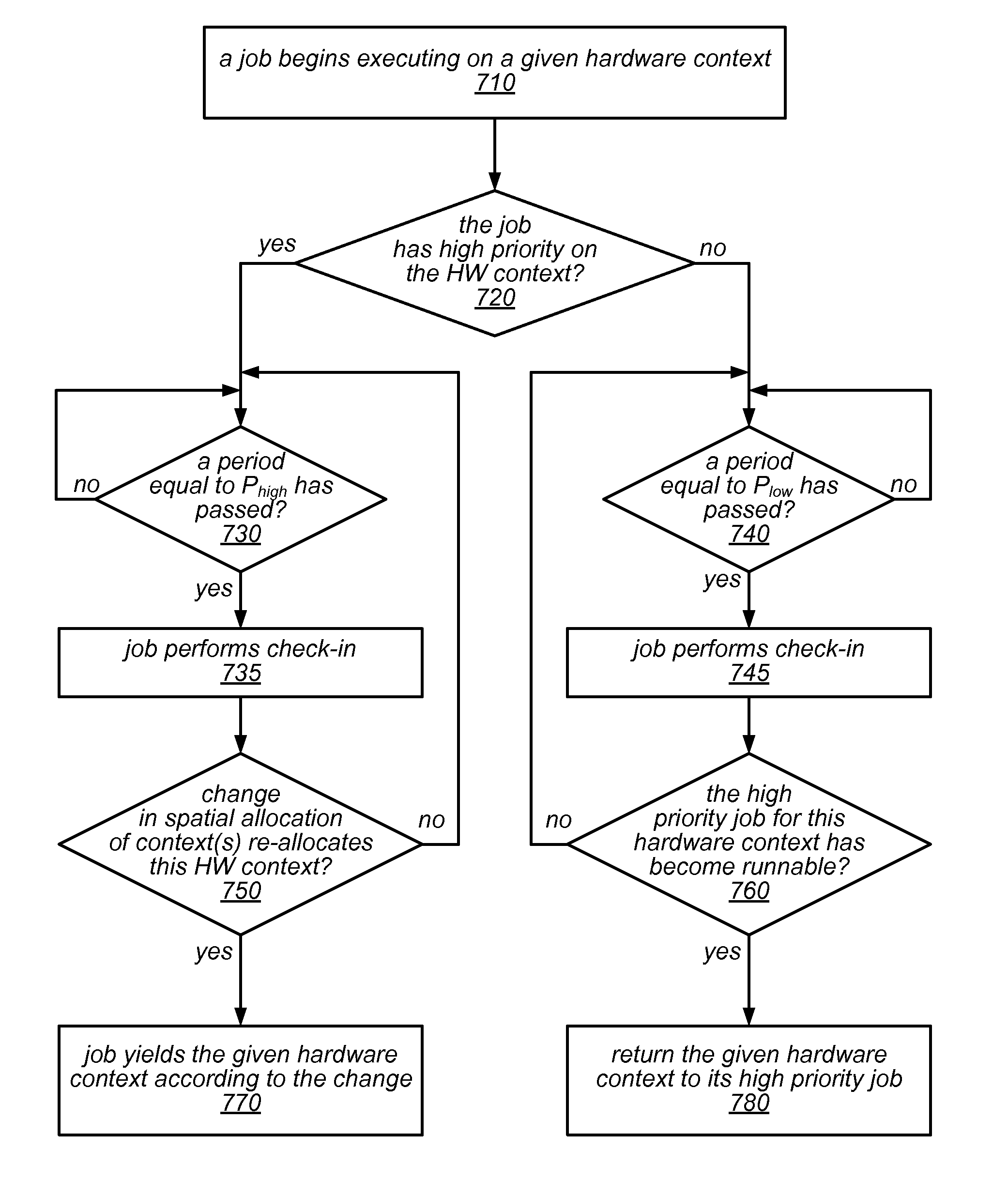

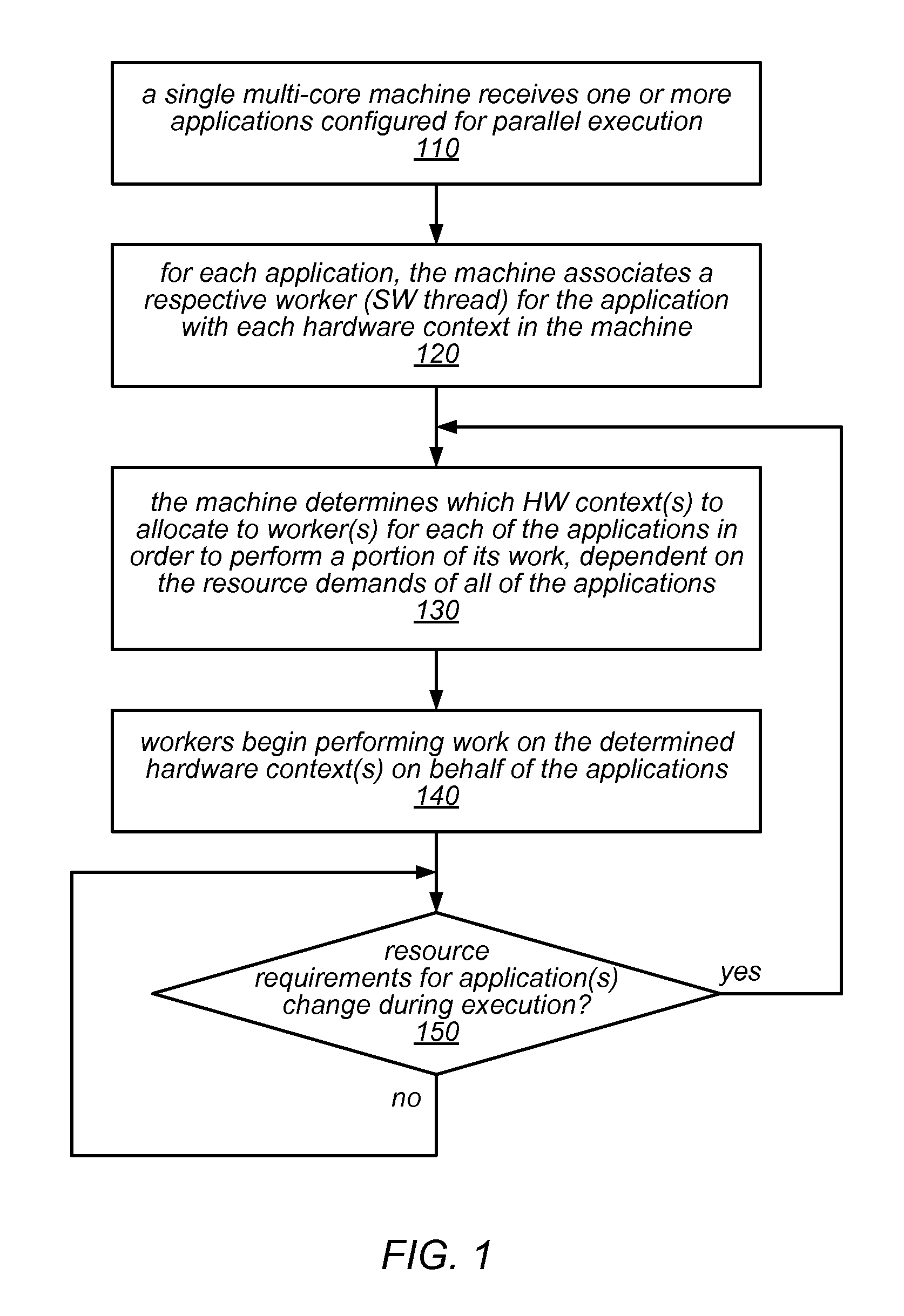

Dynamic Co-Scheduling of Hardware Contexts for Parallel Runtime Systems on Shared Machines

Active | US20150339158A1 | Reduce load imbalance | Easy to use | Program initiation/switching | Resource allocation | Operational system | Resource management

Multi-core computers may implement a resource management layer between the operating system and resource-management-enabled parallel runtime systems. The resource management components and runtime systems may collectively implement dynamic co-scheduling of hardware contexts when executing multiple parallel applications, using a spatial scheduling policy that grants high priority to one application per hardware context and a temporal scheduling policy for re-allocating unused hardware contexts. The runtime systems may receive resources on a varying number of hardware contexts as demands of the applications change over time, and the resource management components may co-ordinate to leave one runnable software thread for each hardware context. Periodic check-in operations may be used to determine (at times convenient to the applications) when hardware contexts should be re-allocated. Over-subscription of worker threads may reduce load imbalances between applications. A co-ordination table may store per-hardware-context information about resource demands and allocations.

Owner:ORACLE INT CORP

Parallel-aware, dedicated job co-scheduling within/across symmetric multiprocessing nodes

Inactive | US7810093B2 | Improve performance | Improve scalability | Program initiation/switching | Special data processing applications | Operational system | System usage

In a parallel computing environment comprising a network of SMP nodes each having at least one processor, a parallel-aware co-scheduling method and system for improving the performance and scalability of a dedicated parallel job having synchronizing collective operations. The method and system uses a global co-scheduler and an operating system kernel dispatcher adapted to coordinate interfering system and daemon activities on a node and across nodes to promote intra-node and inter-node overlap of said interfering system and daemon activities as well as intra-node and inter-node overlap of said synchronizing collective operations. In this manner, the impact of random short-lived interruptions, such as timer-decrement processing and periodic daemon activity, on synchronizing collective operations is minimized on large processor-count SPMD bulk-synchronous programming styles.

Owner:LAWRENCE LIVERMORE NAT SECURITY LLC

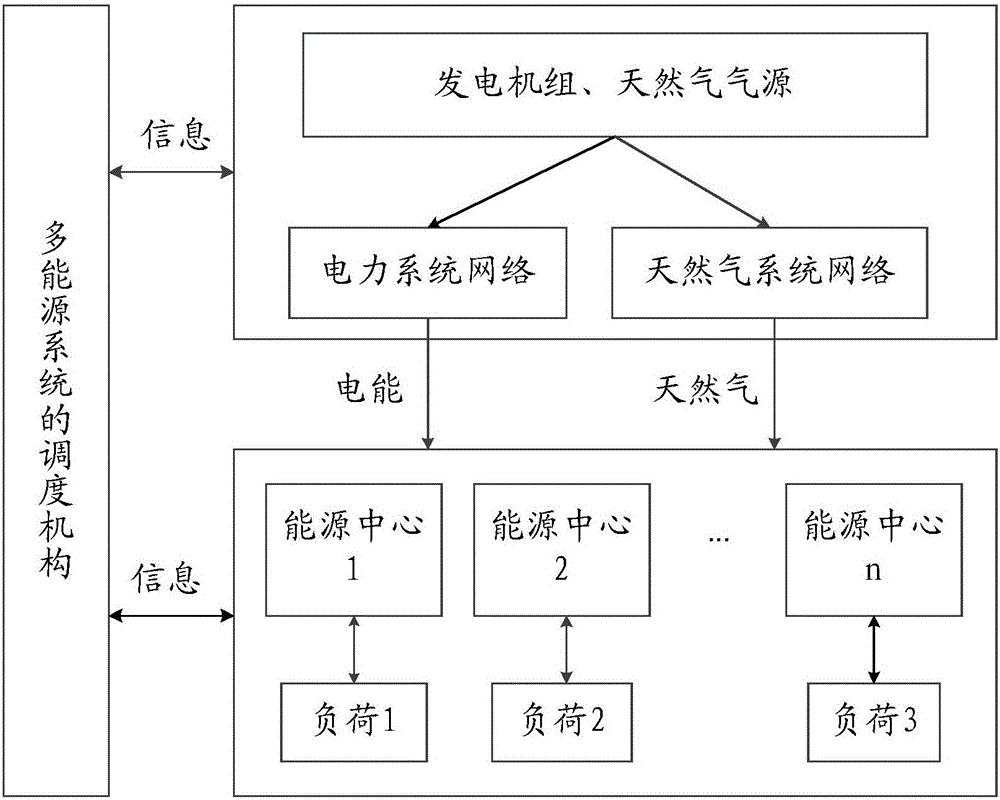

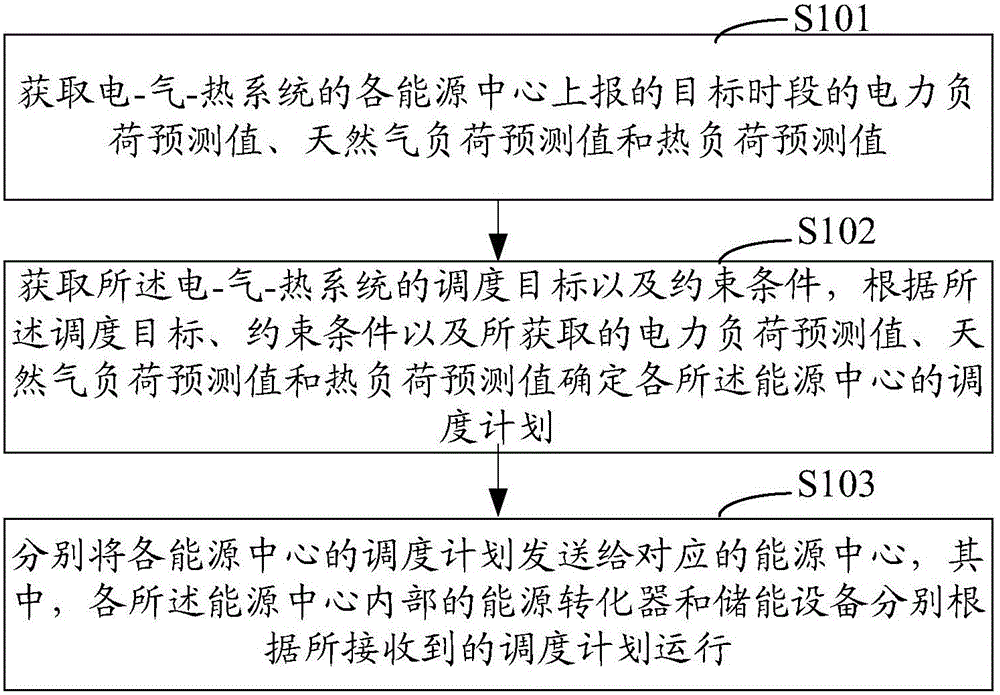

Energy center-based power-gas-heat system co-scheduling method and system

Inactive | CN106447152A | Realize collaborative scheduling | Technology management | Resources | Time segment | Heat load

The invention relates to an energy center-based power-gas-heat system co-scheduling method and system. The method comprises the steps of obtaining a power load prediction value, a natural gas load prediction value and a heat load prediction value of a target time segment, reported by energy centers of power-gas-heat systems; obtaining scheduling goals and constraint conditions of the power-gas-heat systems, and determining scheduling plans of the energy centers according to the scheduling goals, the constraint conditions and the obtained power load prediction value, natural gas load prediction value and heat load prediction value; and sending the scheduling plans of the energy centers to the corresponding energy centers, wherein energy converters and energy storage devices in the energy centers run according to the received scheduling plans. By adopting the scheme, the co-scheduling of the power-gas-heat systems can be effectively realized.

Owner:POWER GRID TECH RES CENT CHINA SOUTHERN POWER GRID +1

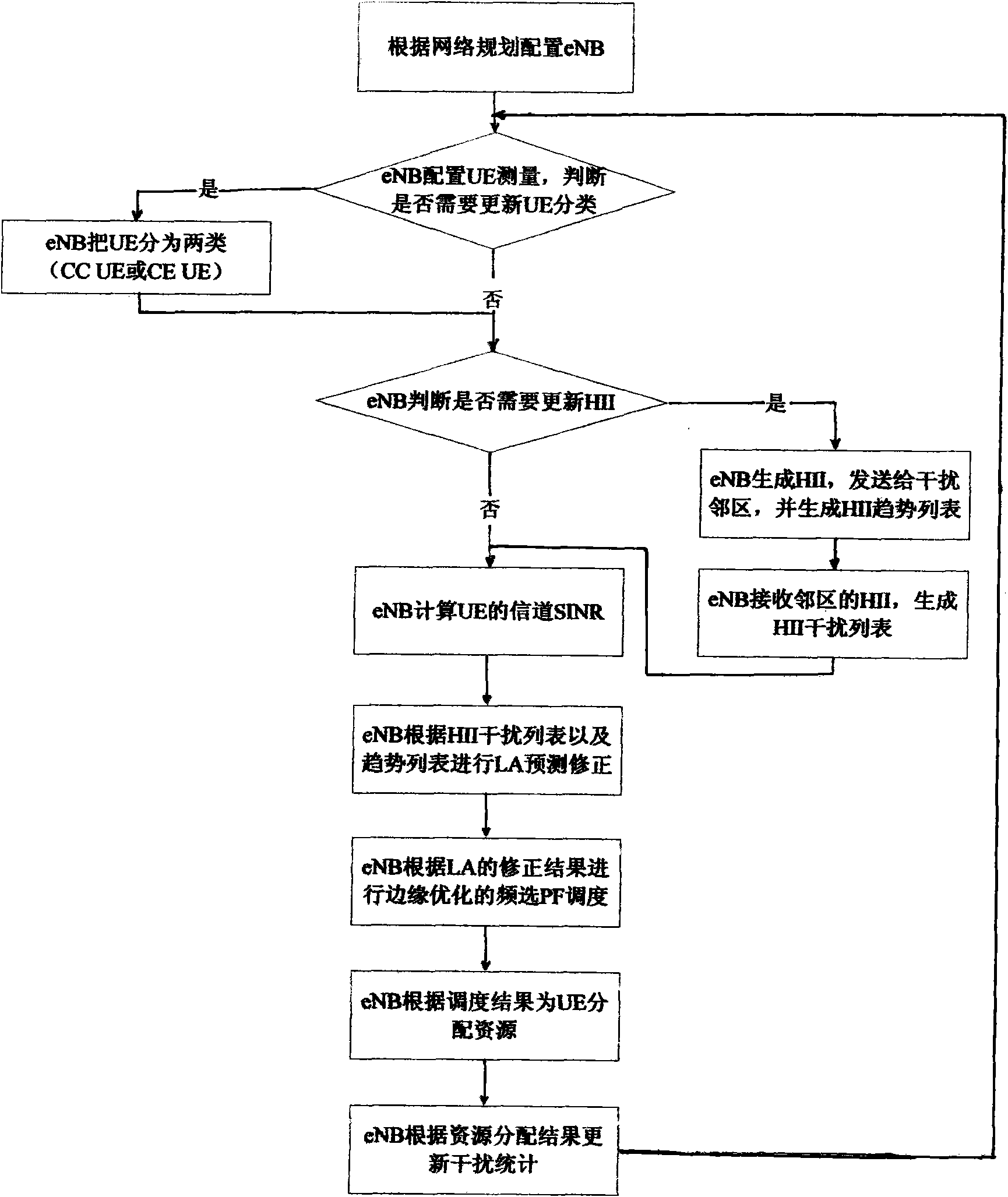

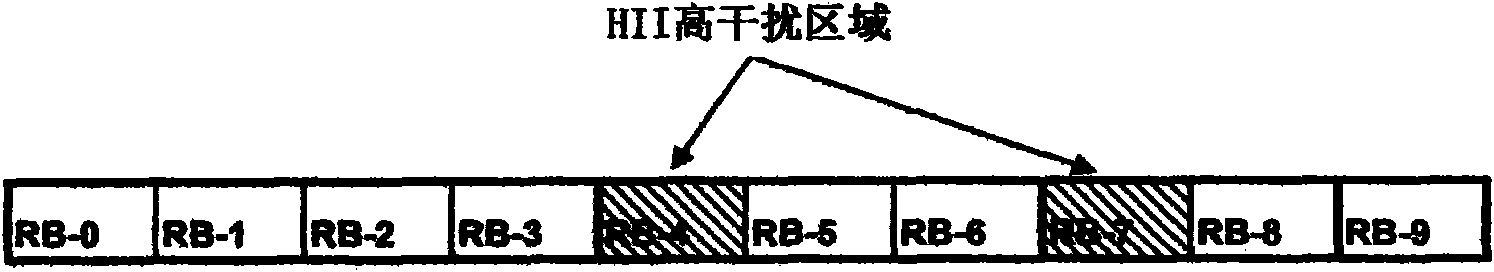

Method and device for coordinating dynamic interference between neighboring cells of wireless communication system

Inactive | CN101945409A | Improve throughput | Achieving Interference Coordination | Network traffic/resource management | Quality of service | Communications system

The invention provides a method for defining a high interference indicator (HII) and an HII-based scheme for dynamic inter-cell interference coordination with multi-point cooperation in an orthogonal frequency division multiplexing (OFDM) wireless communication system. In the scheme, neighboring cells are co-scheduled through the HII mechanism, so that inter-cell interference is suppressed and the throughput and quality of service (QoS) of cell-edge users are effectively improved. Specifically, a base station generates an HII list for each main interfering neighboring cell and periodically transmits the list to the base station of that cell. When the base station performs uplink scheduling, it updates the HII list of its own cell according to the HII lists received from the interfering neighboring cells, and schedules users according to the list and the channel condition.

Owner:NEW POSTCOM EQUIP

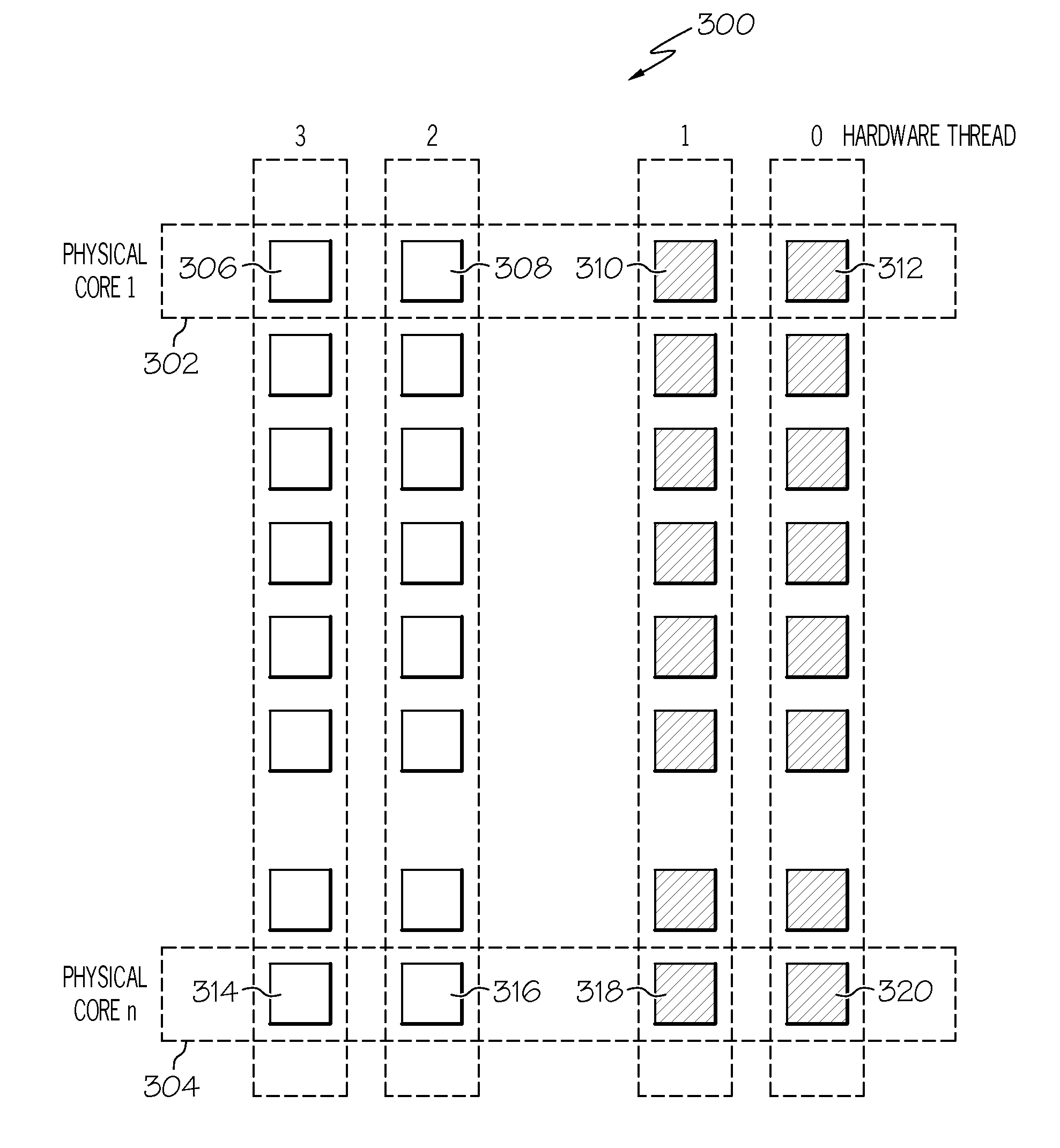

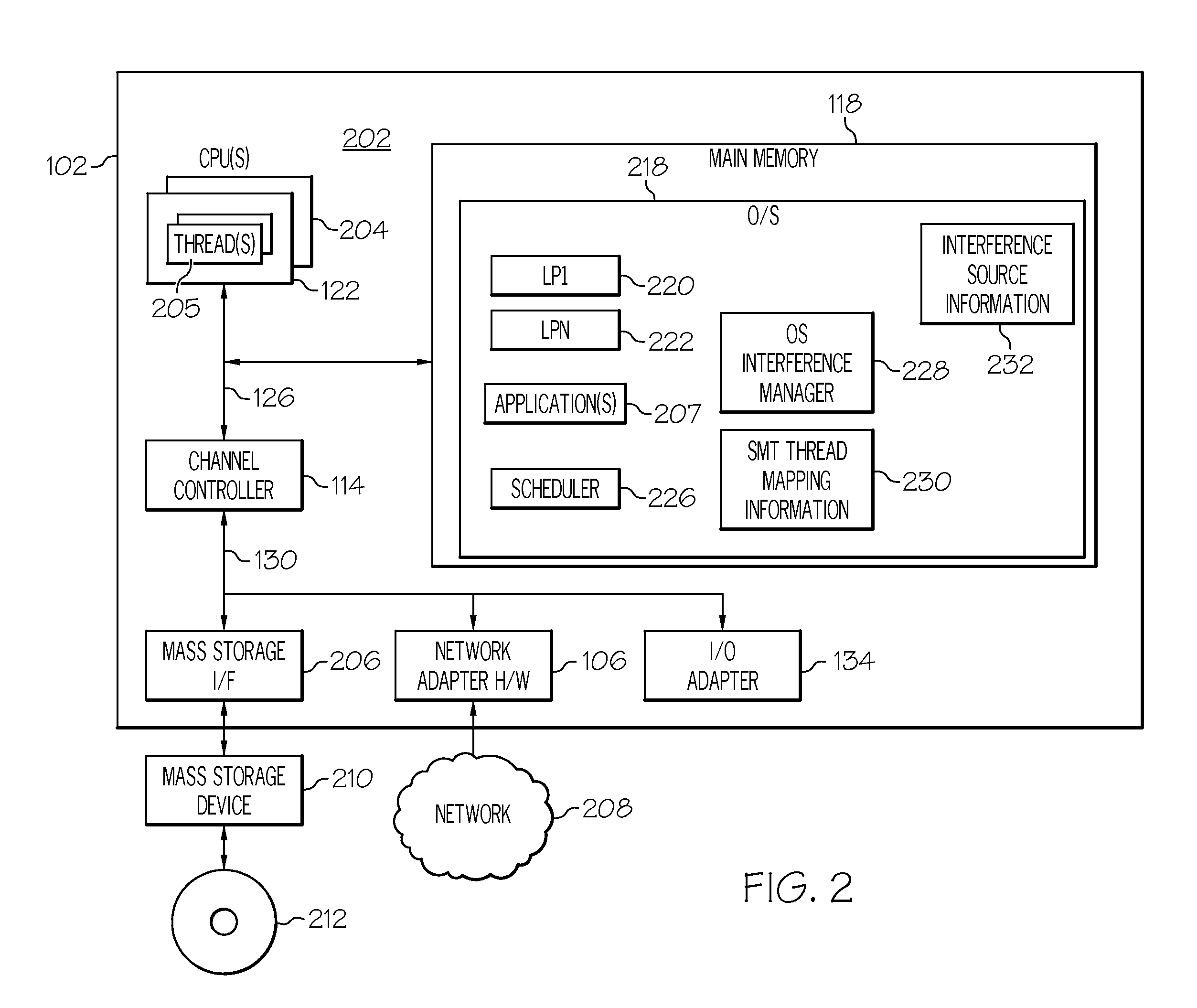

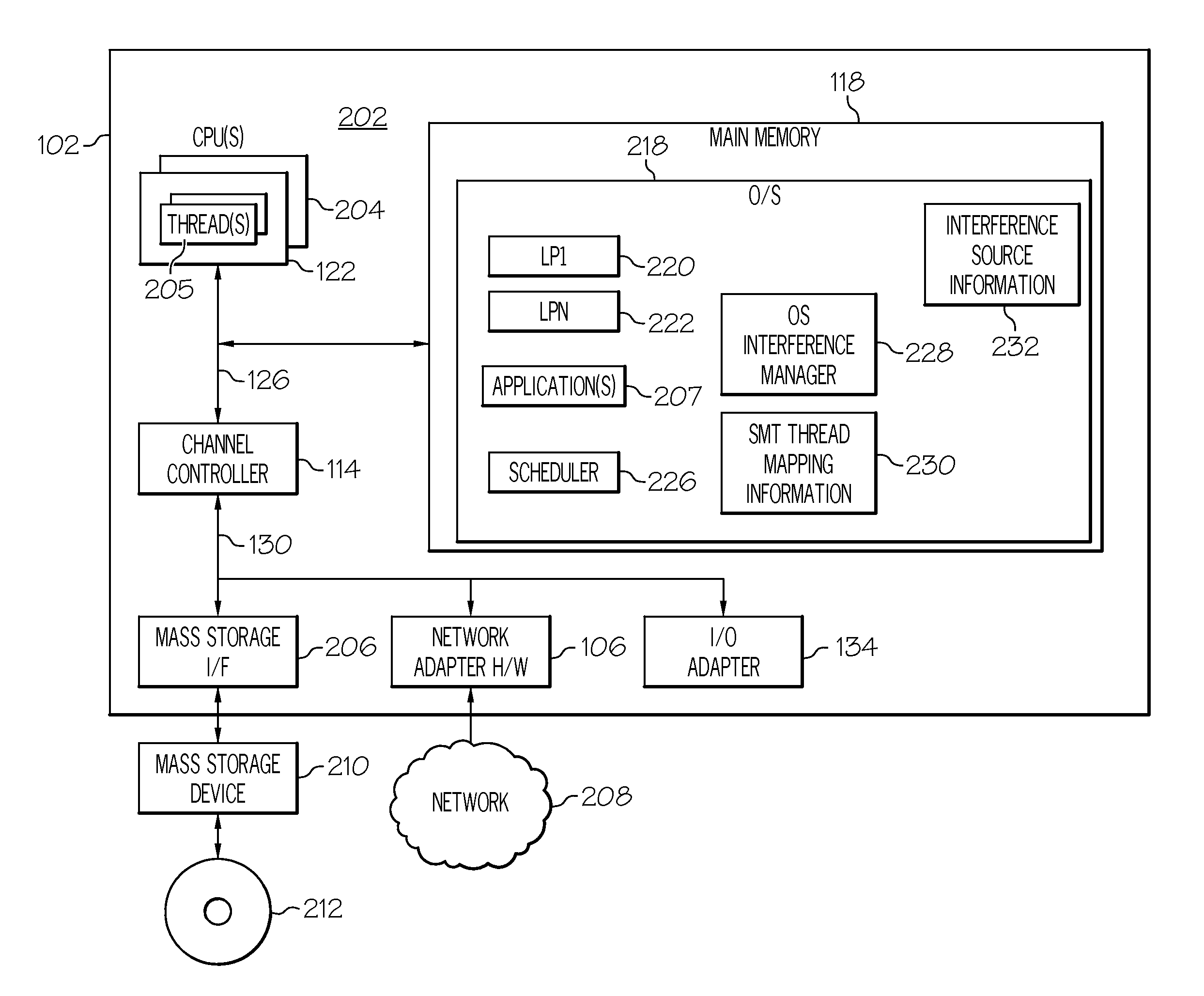

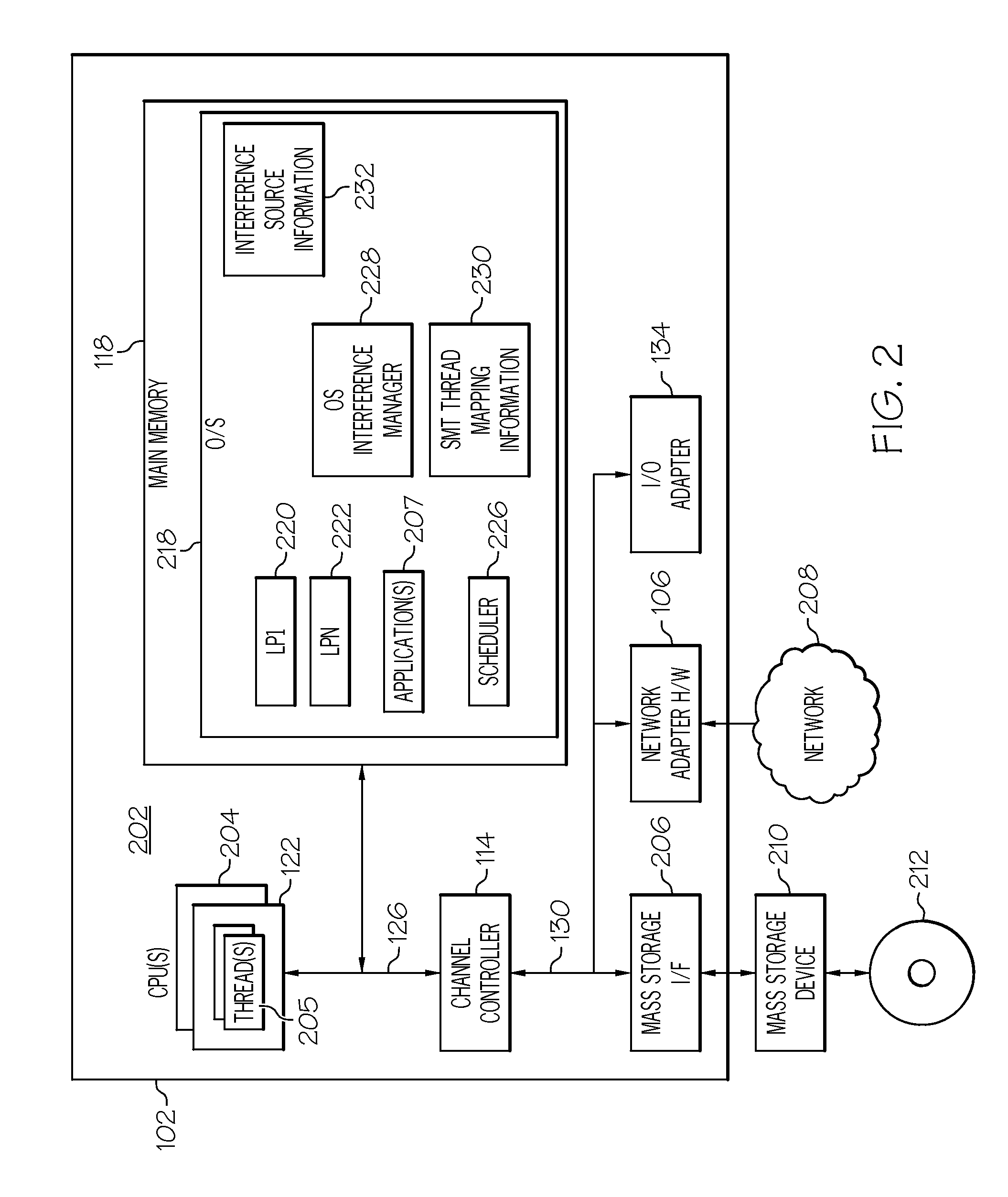

Hardware multi-threading co-scheduling for parallel processing systems

Inactive | US20110093638A1 | Program control using stored programs | General purpose stored program computer | Information processing | Data processing system

A method, information processing system, and computer program product are provided for managing operating system interference on applications in a parallel processing system. A mapping of hardware multi-threading threads to at least one processing core is determined, and first and second sets of logical processors of the at least one processing core are determined. The first set includes at least one of the logical processors of the at least one processing core, and the second set includes at least one of a remainder of the logical processors of the at least one processing core. A processor schedules application tasks only on the logical processors of the first set of logical processors of the at least one processing core. Operating system interference events are scheduled only on the logical processors of the second set of logical processors of the at least one processing core.

Owner:IBM CORP
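
The split between application and operating-system logical processors described in the abstract above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the core-to-logical-processor mapping is supplied by hand, and the function name and the `os_threads_per_core` parameter are hypothetical rather than taken from the patent.

```python
from typing import Dict, List, Tuple


def partition_logical_cpus(
    core_to_cpus: Dict[int, List[int]],
    os_threads_per_core: int = 1,  # assumed: reserve one hardware thread per core
) -> Tuple[List[int], List[int]]:
    """Split each core's logical processors into an application set and an OS set."""
    app_set: List[int] = []
    os_set: List[int] = []
    for core, cpus in sorted(core_to_cpus.items()):
        if len(cpus) <= os_threads_per_core:
            raise ValueError(f"core {core} has too few hardware threads to split")
        ordered = sorted(cpus)
        split = len(ordered) - os_threads_per_core
        app_set.extend(ordered[:split])   # application tasks run only here
        os_set.extend(ordered[split:])    # OS interference events run only here
    return app_set, os_set


# Hypothetical machine: 2 cores with 4 hardware threads each.
topology = {0: [0, 1, 2, 3], 1: [4, 5, 6, 7]}
app_cpus, os_cpus = partition_logical_cpus(topology)
print(app_cpus, os_cpus)
```

On Linux, a set like `app_cpus` could then be applied to a process with `os.sched_setaffinity`, although the patent itself does not name any particular mechanism.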

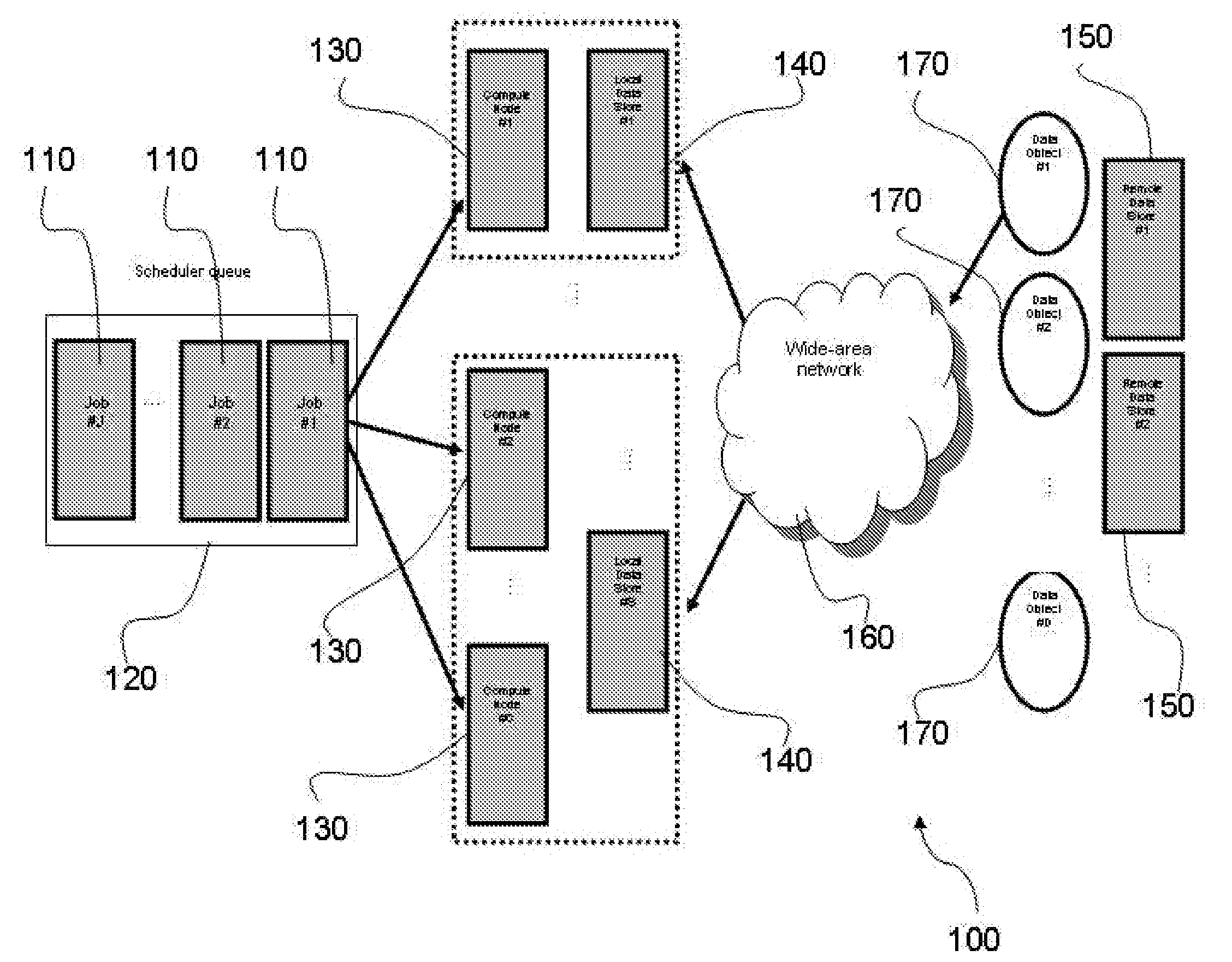

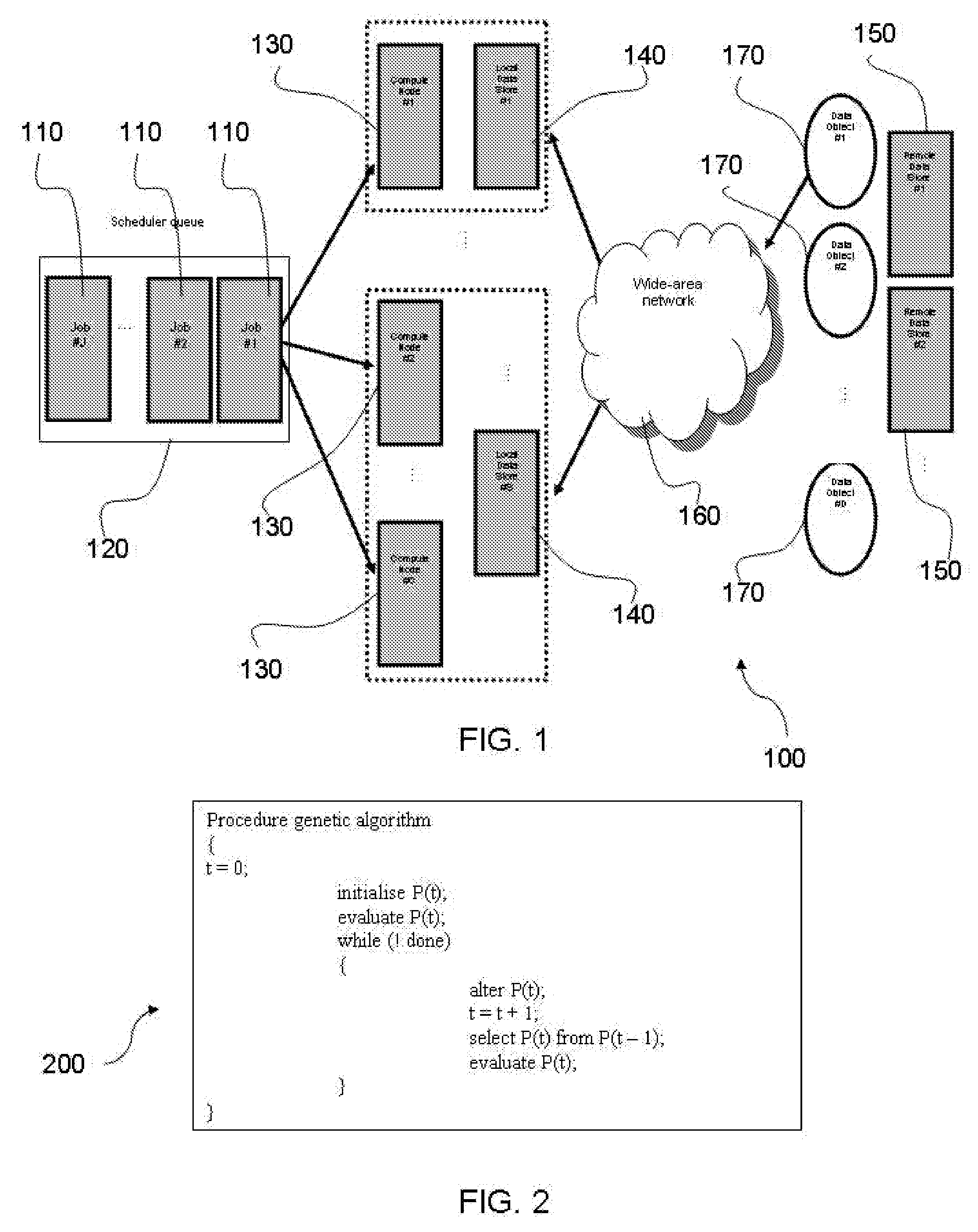

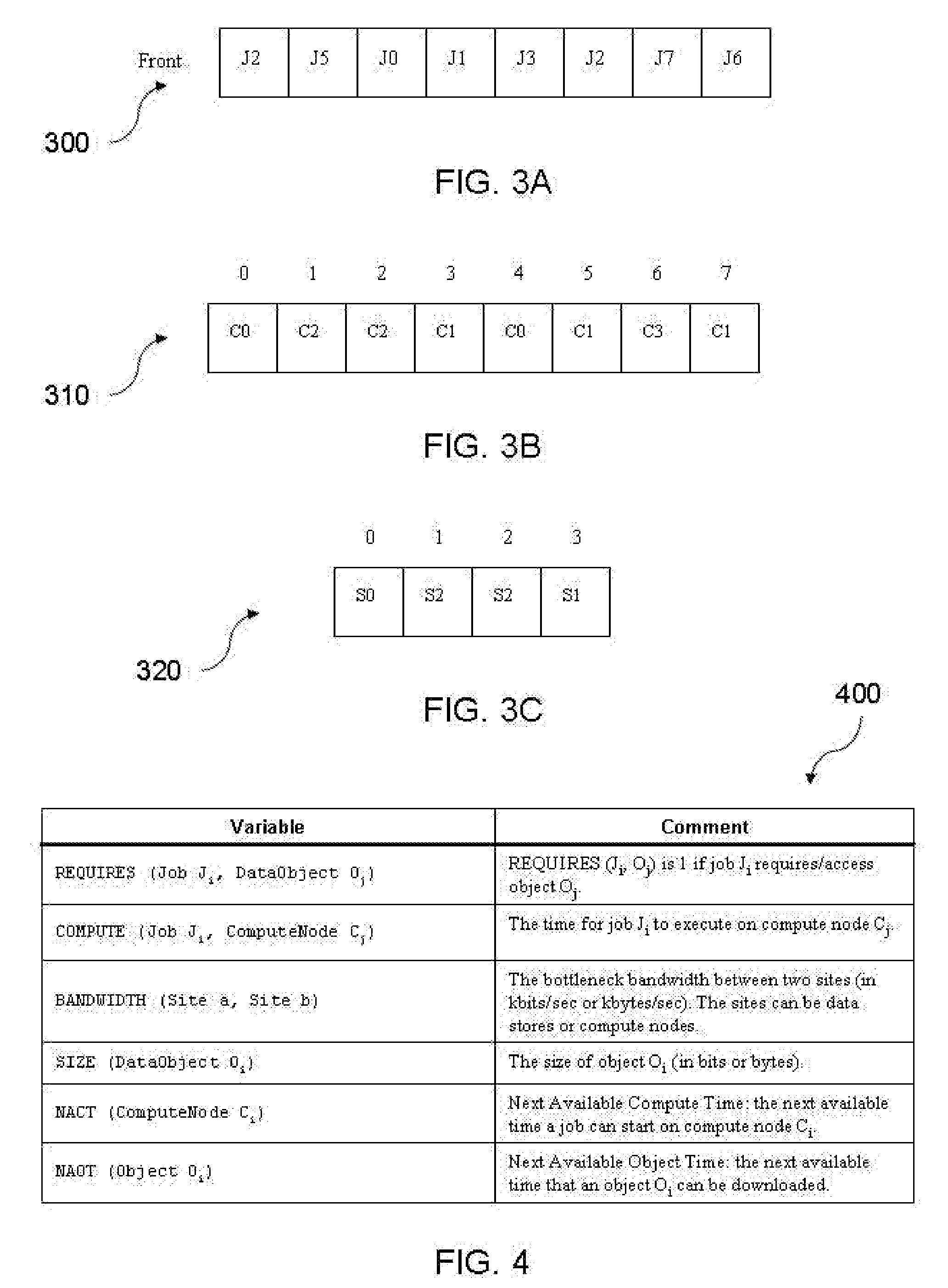

Method and means for co-scheduling job assignments and data replication in wide-area distributed systems

Inactive | US20080049254A1 | Significant speed-up result | Easy to solve | Visual presentation | Program control | Wide area | Object based

The embodiments of the invention provide a method, service, computer program product, etc. of co-scheduling job assignments and data replication in wide-area systems using a genetic method. A method begins by co-scheduling assignment of jobs and replication of data objects based on job ordering within a scheduler queue, job-to-compute node assignments, and object-to-local data store assignments. More specifically, the job ordering is determined according to an order in which the jobs are assigned from the scheduler to the compute nodes. Further, the job-to-compute node assignments are determined according to which of the jobs are assigned to which of the compute nodes; and, the object-to-local data store assignments are determined according to which of the data objects are replicated to which of the local data stores.

Owner:IBM CORP

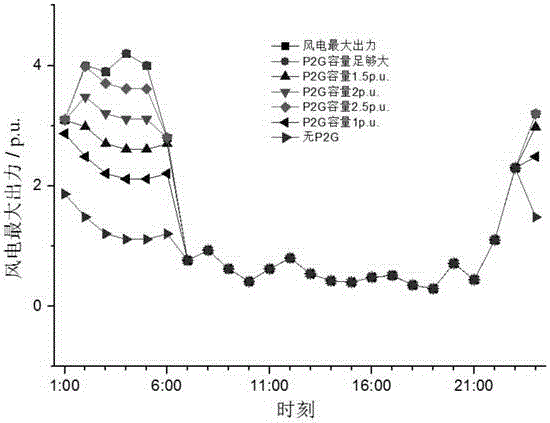

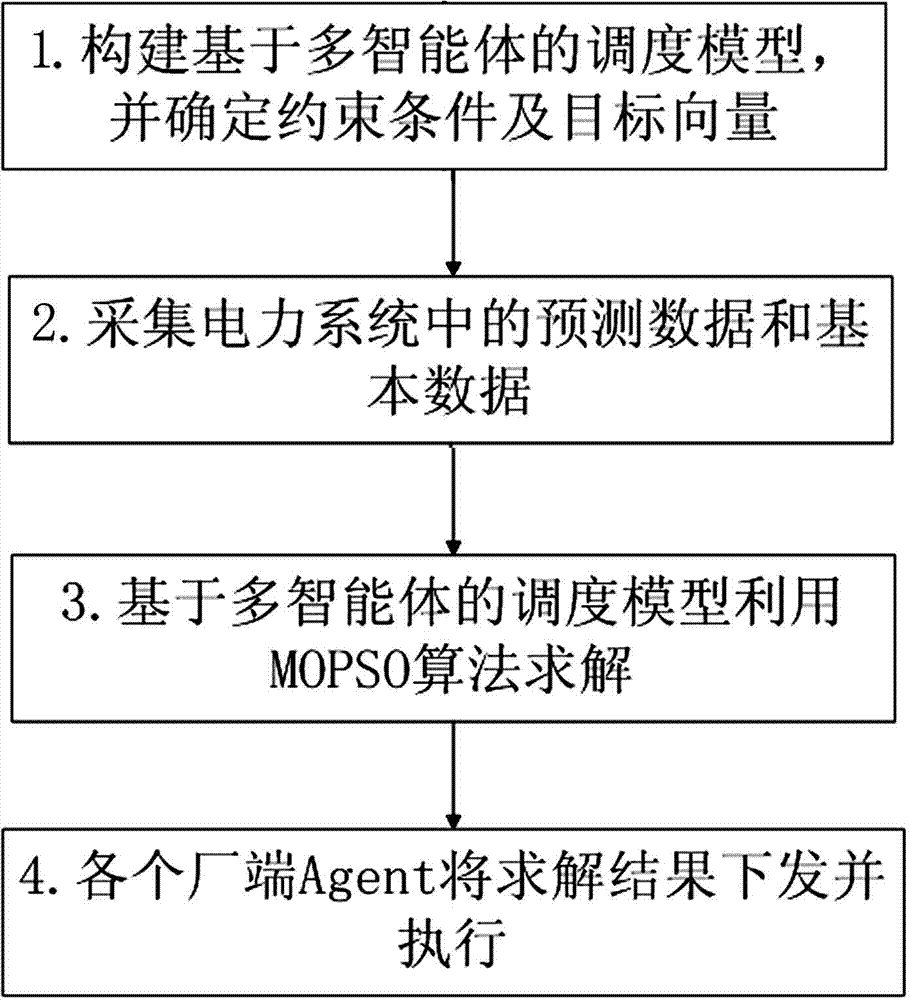

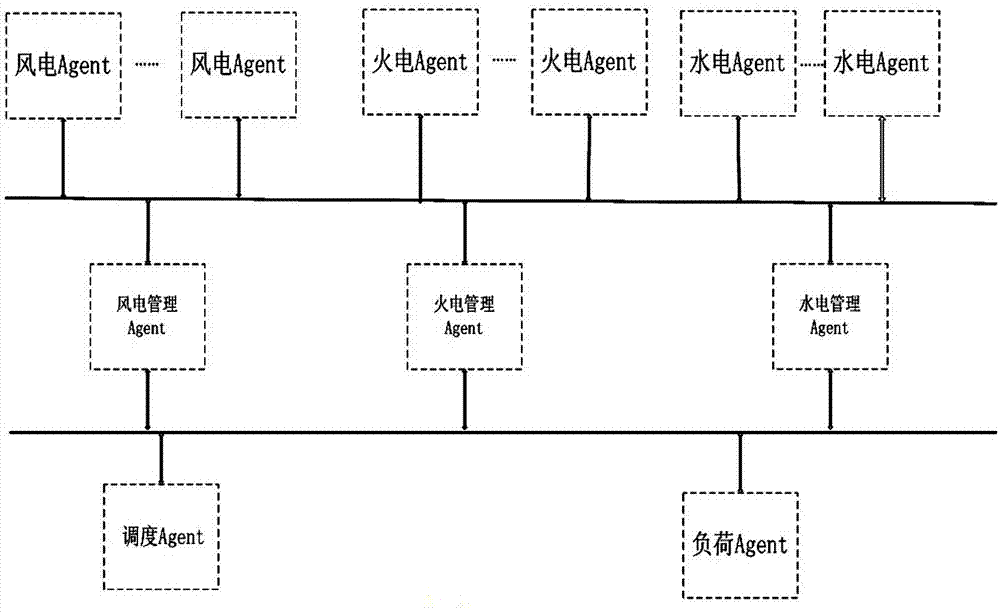

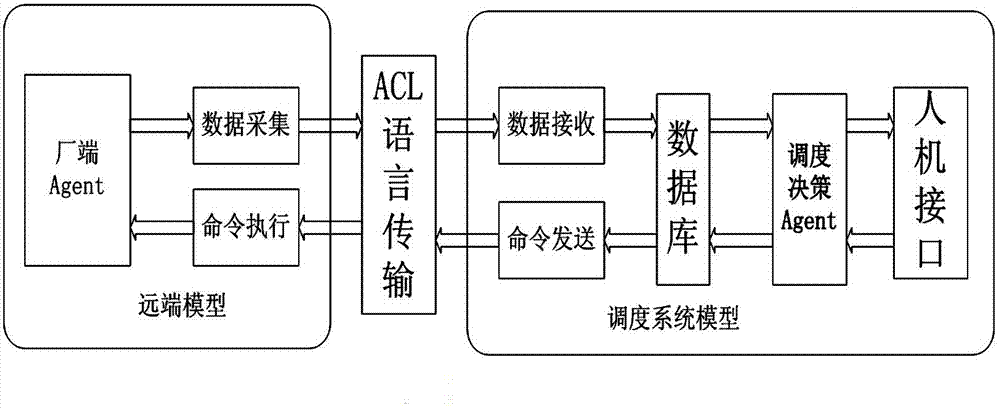

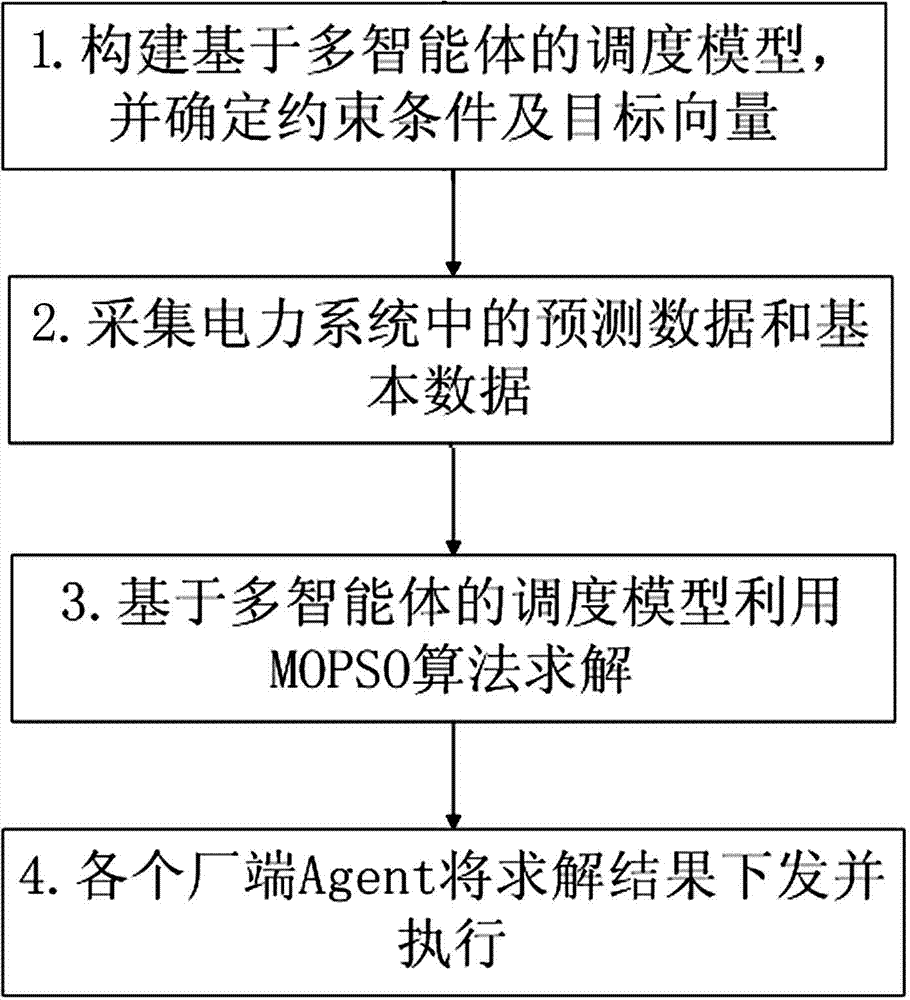

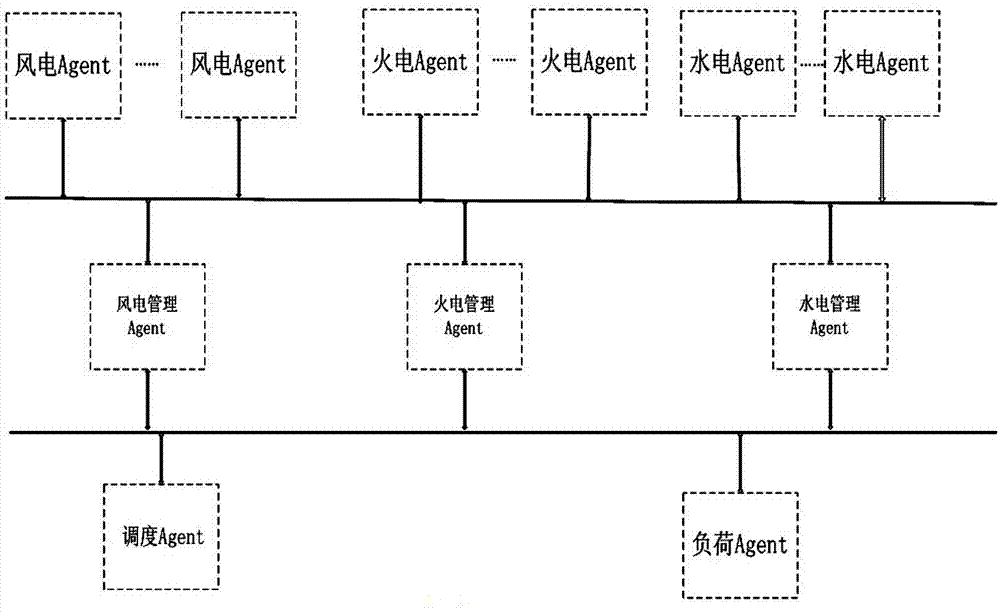

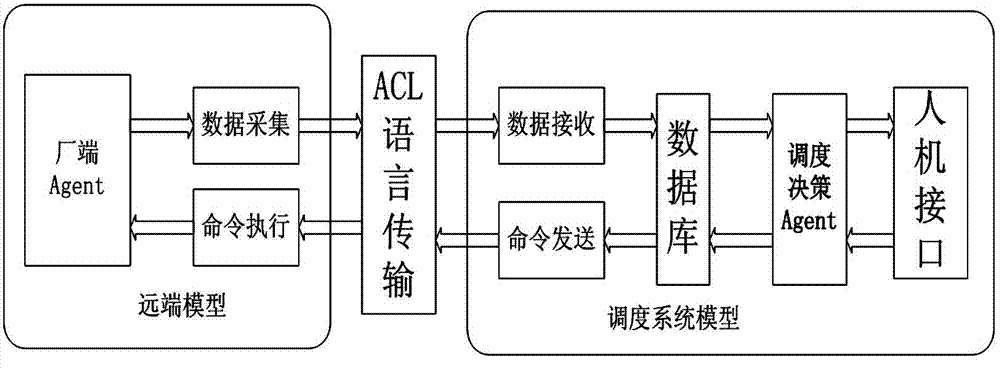

Wind-fire-water co-scheduling method on basis of multi-agent system

Active | CN102738835A | Large output adjustment range | Output adjustment range is small | Single network parallel feeding arrangements | Wind energy generation | Operation scheduling | Electricity

The invention relates to a power scheduling operation method, in particular to a wind-fire-water (wind, thermal and hydro power) co-scheduling method based on a multi-agent system. The method solves the problem that conventional power scheduling methods cannot meet the requirements of large-scale grid-connected wind power operation. It is implemented in the following steps: 1, constructing a multi-agent-based wind-fire-water co-scheduling model in a scheduling Agent; 2, acquiring predicted load demand data for the power system through a load Agent; 3, having each thermal power Agent send the acquired basic data and real-time data to the co-scheduling model; and 4, having each thermal power Agent and a hydroelectric Agent issue and execute the scheduling command. The method is suitable for scheduling large-scale grid-connected wind power operation.

Owner:SHANXI UNIV +2

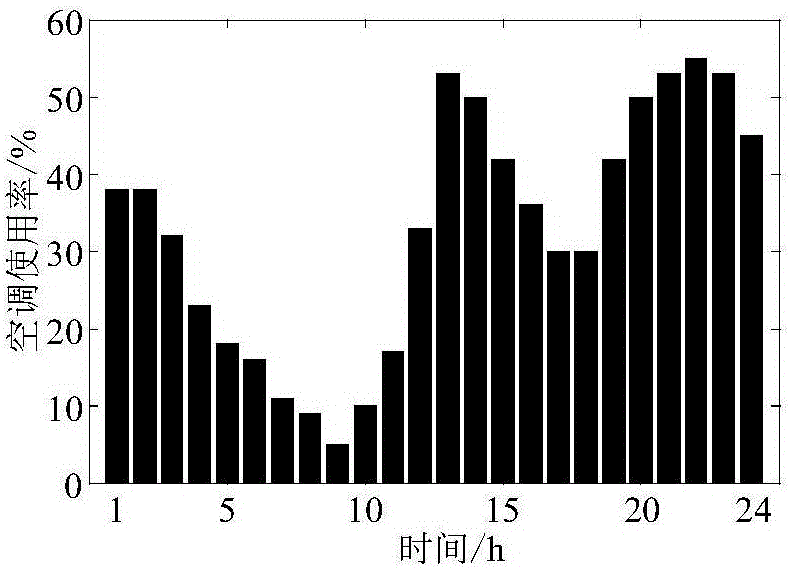

Photovoltaic intelligent community electric automobile and controllable load two-stage optimization scheduling method

The invention discloses a two-stage optimization scheduling method for electric automobiles and controllable loads in a photovoltaic intelligent community. The method comprises two stages, day-ahead scheduling and real-time scheduling. A controllable load output model is established based on residential area, controllable load use characteristics and the laws of thermodynamics; an electric automobile charging model is established based on the driving characteristics of electric automobile users; and a photovoltaic probability model is established on the assumption that photovoltaic output forecast deviations follow a normal distribution. In day-ahead scheduling, the time-of-use electricity price is set with the target of maximizing the operator's profit after orderly charging of the electric automobiles, and the price guides the electric automobiles to charge in an orderly manner so as to reduce the system peak-valley difference. Because photovoltaic and temperature prediction deviations affect the co-scheduling of electric automobiles and controllable loads, real-time scheduling is introduced to correct the supply-demand imbalance caused by day-ahead prediction. In real-time scheduling, taking the time-of-use electricity price set in day-ahead scheduling as a basis, the day-ahead charging scheme of the electric automobiles is optimized to reduce real-time scheduling cost and smooth load fluctuation.

Owner:SOUTHWEST JIAOTONG UNIV

System and method for controlling co-scheduling of processes of parallel program

Inactive | US7424712B1 | More processed | Effective control | Program synchronisation | Memory systems | Computer science | Co scheduling

A system is described for controlling co-scheduling of processes in a computer comprising at least one process and a spin daemon. The process, when it is waiting for a flag to change condition, transmits a flag monitor request to the spin daemon and enables itself to be de-scheduled. The spin daemon, after receiving a flag monitor request, monitors the flag and, after the flag changes condition, enables the process to be re-scheduled for execution by the computer. Since the spin daemon can monitor flags for a number of processes, the ones of the processes that are waiting will not need to be executed, allowing other processes that are not just waiting to be processed more often.

Owner:ORACLE INT CORP
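
The spin daemon interaction described in the abstract above can be modelled without real threads. The Python sketch below is a simplified, single-threaded illustration: the class name, method names, and the polling model are assumptions made for the example, not the patent's implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class SpinDaemon:
    """Watches flags on behalf of de-scheduled processes (illustrative model)."""

    flags: Dict[str, bool] = field(default_factory=dict)
    waiting: Dict[str, Set[int]] = field(default_factory=dict)  # flag -> waiting pids

    def request_monitor(self, pid: int, flag: str) -> None:
        """A process calls this before letting itself be de-scheduled."""
        self.flags.setdefault(flag, False)
        self.waiting.setdefault(flag, set()).add(pid)

    def set_flag(self, flag: str) -> None:
        """Some other process changes the flag's condition."""
        self.flags[flag] = True

    def poll(self) -> List[int]:
        """One monitoring pass: return the pids that may now be re-scheduled."""
        ready: List[int] = []
        for flag, pids in self.waiting.items():
            if self.flags.get(flag) and pids:
                ready.extend(sorted(pids))
                pids.clear()
        return ready


daemon = SpinDaemon()
daemon.request_monitor(1, "barrier")
daemon.request_monitor(2, "barrier")
daemon.request_monitor(3, "lock")
first = daemon.poll()      # nothing has changed yet
daemon.set_flag("barrier")
second = daemon.poll()     # processes 1 and 2 become runnable
third = daemon.poll()      # process 3 is still waiting on "lock"
print(first, second, third)
```

Only the daemon spends cycles checking flags here; the waiting processes stay off the CPU until `poll` reports them, which mirrors the benefit the abstract describes.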

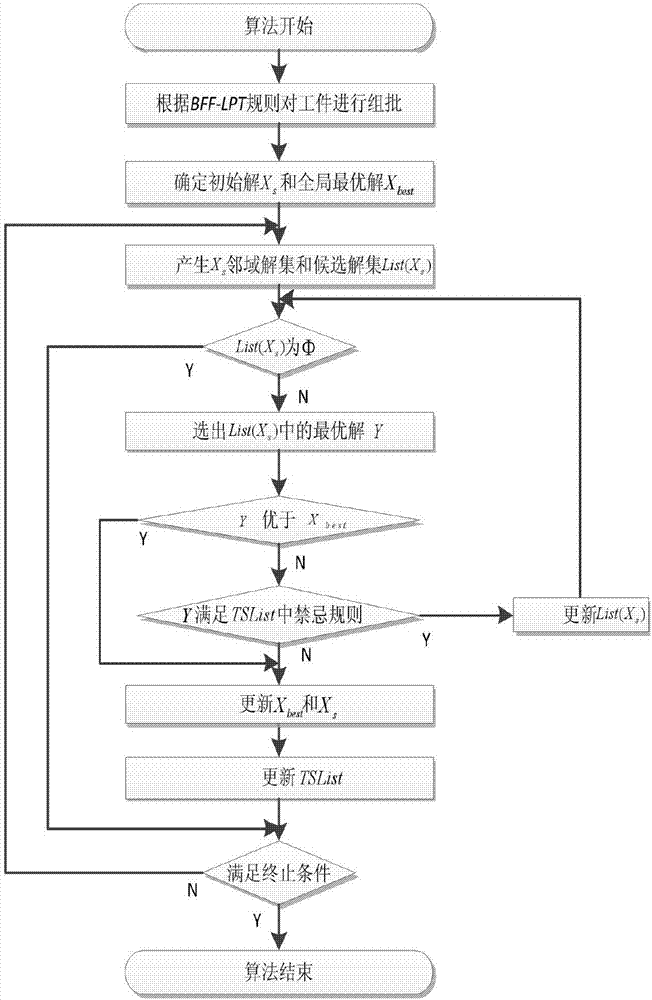

Production-transportation co-scheduling method and system based on modified taboo search algorithm

Active | CN107392402A | Benefit maximization | Good service | Random number generators | Forecasting | Tabu search | Global optimal

An embodiment of the invention relates to a production-transportation co-scheduling method and system based on a modified tabu (taboo) search algorithm. The method comprises: 1, grouping workpieces into batches; 2, initializing algorithm parameters; 3, generating initial solutions; 4, generating a neighborhood solution set; 5, applying mutation, crossover and selection to the individuals; 6, determining a candidate solution set; 7, calculating fitness values of the individuals; 8, updating the candidate solution set; 9, updating the tabu list; 10, checking whether the termination conditions are met, outputting the global optimal solution if so and returning to step 4 if not. The method obtains a near-optimal solution to the production-transportation co-scheduling problem with multiple manufacturers, that is, a sound and effective co-scheduling scheme, so that an enterprise can maximize its overall benefit in production and transportation, provide high-quality service to its customers, and improve its core competitiveness.

Owner:HEFEI UNIV OF TECH
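
For readers unfamiliar with the base technique, the Python sketch below shows a plain tabu search on a toy sequencing problem. It is not the modified algorithm of the patent: the mutation, crossover and selection steps and the batching step are omitted, and the swap neighborhood, tabu tenure, and cost function are assumptions chosen for illustration.

```python
from itertools import combinations
from typing import Callable, Dict, List, Sequence, Tuple


def tabu_search(
    initial: Sequence[int],
    cost: Callable[[Sequence[int]], float],
    iterations: int = 50,
    tenure: int = 5,
) -> Tuple[List[int], float]:
    """Minimise cost over permutations using pairwise swaps and a tabu list."""
    current = list(initial)
    best, best_cost = list(current), cost(current)
    tabu: Dict[Tuple[int, int], int] = {}  # swapped job pair -> last tabu iteration
    for it in range(iterations):
        candidates = []
        for i, j in combinations(range(len(current)), 2):
            neighbor = current[:]
            neighbor[i], neighbor[j] = neighbor[j], neighbor[i]
            c = cost(neighbor)
            move = (min(current[i], current[j]), max(current[i], current[j]))
            is_tabu = tabu.get(move, -1) >= it
            if not is_tabu or c < best_cost:  # aspiration: allow a new global best
                candidates.append((c, move, neighbor))
        if not candidates:
            break
        c, move, current = min(candidates, key=lambda t: (t[0], t[1]))
        tabu[move] = it + tenure
        if c < best_cost:
            best, best_cost = current[:], c
    return best, best_cost


# Toy instance: three jobs with (processing time, weight); the cost is the
# total weighted completion time on a single machine.
jobs = [(3, 1), (1, 3), (2, 2)]


def weighted_completion(order: Sequence[int]) -> float:
    t, total = 0, 0
    for job in order:
        t += jobs[job][0]
        total += jobs[job][1] * t
    return total


best_order, best_value = tabu_search([0, 1, 2], weighted_completion)
print(best_order, best_value)
```

The tabu list is what lets the search accept a worse neighbor without immediately undoing the move, which is the property the patent's modified algorithm builds on.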

System and method for multi-level beamformed non-orthogonal multiple access communications

Active | CN107615856A | Wide stickiness | Improve spectral efficiency | Radio transmission | Wireless communication | Telecommunications | Communication control

Owner:HUAWEI TECH CO LTD

Dynamic co-scheduling of hardware contexts for parallel runtime systems on shared machines

Active | US9542221B2 | Reduce load imbalance | Easy to use | Operational speed enhancement | Program initiation/switching | Operational system | Resource management

Multi-core computers may implement a resource management layer between the operating system and resource-management-enabled parallel runtime systems. The resource management components and runtime systems may collectively implement dynamic co-scheduling of hardware contexts when executing multiple parallel applications, using a spatial scheduling policy that grants high priority to one application per hardware context and a temporal scheduling policy for re-allocating unused hardware contexts. The runtime systems may receive resources on a varying number of hardware contexts as demands of the applications change over time, and the resource management components may co-ordinate to leave one runnable software thread for each hardware context. Periodic check-in operations may be used to determine (at times convenient to the applications) when hardware contexts should be re-allocated. Over-subscription of worker threads may reduce load imbalances between applications. A co-ordination table may store per-hardware-context information about resource demands and allocations.

Owner:ORACLE INT CORP

Co-scheduling of network resource provisioning and host-to-host bandwidth reservation on high-performance network and storage systems

Active | US8705342B2 | Error prevention | Frequency-division multiplex details | Computer terminal | Resource based

A cross-domain network resource reservation scheduler configured to schedule a path from at least one end-site includes a management plane device configured to monitor and provide information representing at least one of functionality, performance, faults, and fault recovery associated with a network resource; a control plane device configured to at least one of schedule the network resource, provision local area network quality of service, provision local area network bandwidth, and provision wide area network bandwidth; and a service plane device configured to interface with the control plane device to reserve the network resource based on a reservation request and the information from the management plane device. Corresponding methods and computer-readable medium are also disclosed.

Owner:RGT UNIV OF CALIFORNIA THROUGH THE ERNEST ORLANDO LAWRENCE BERKELEY NAT LAB +1

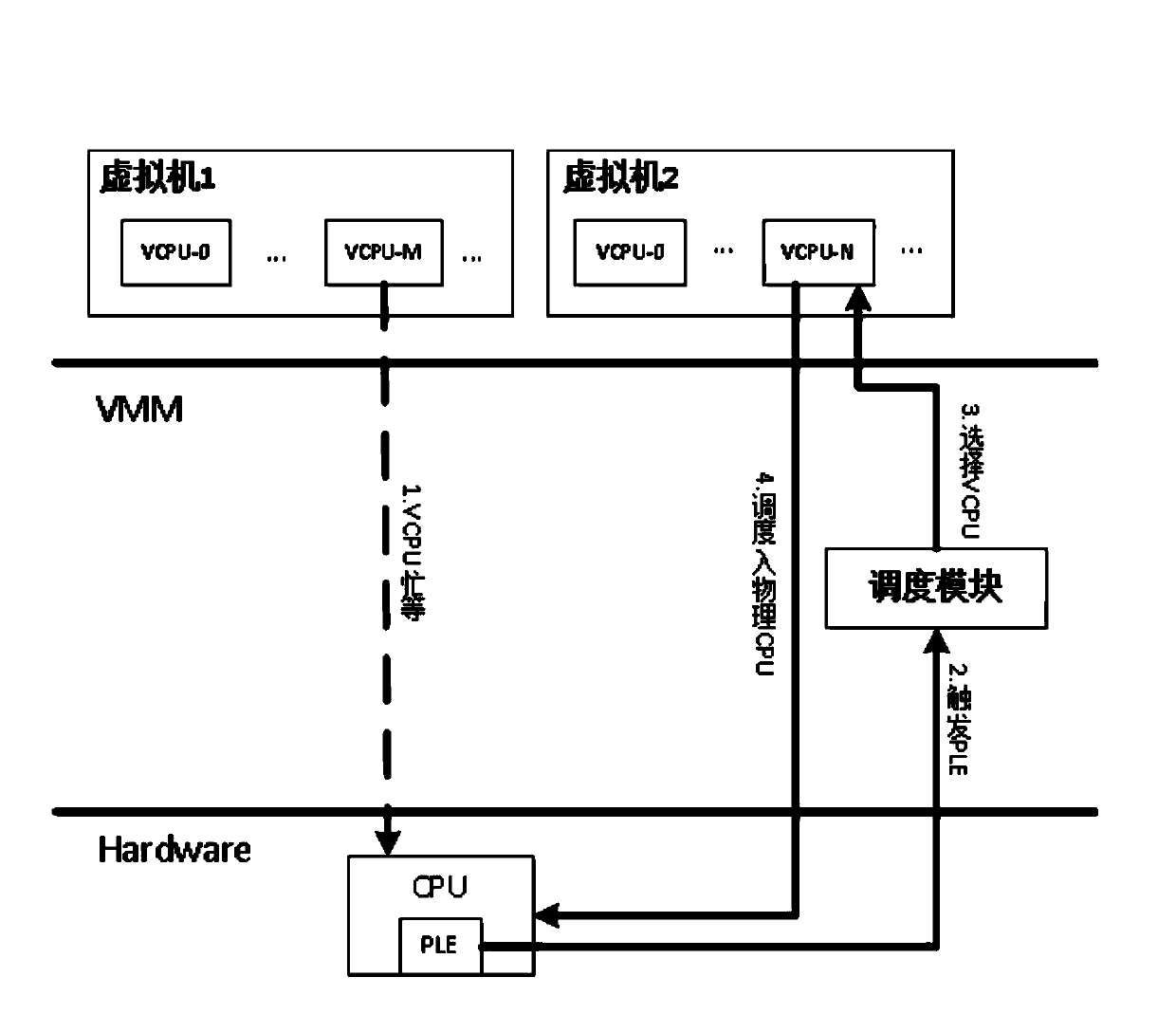

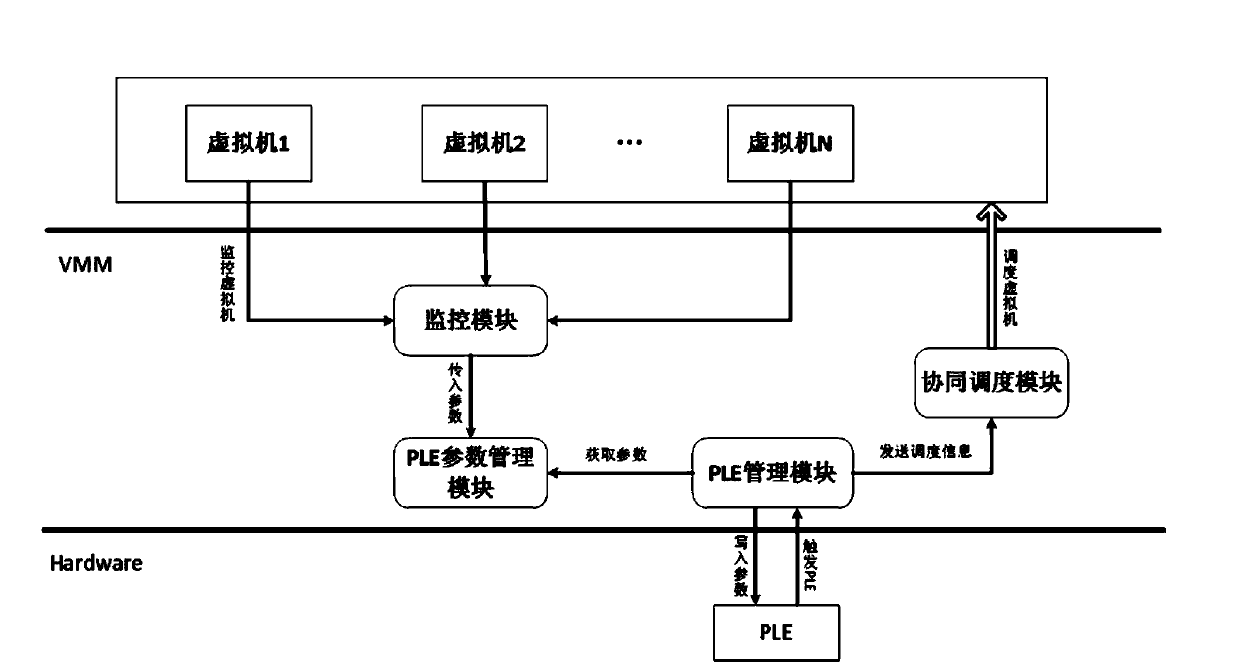

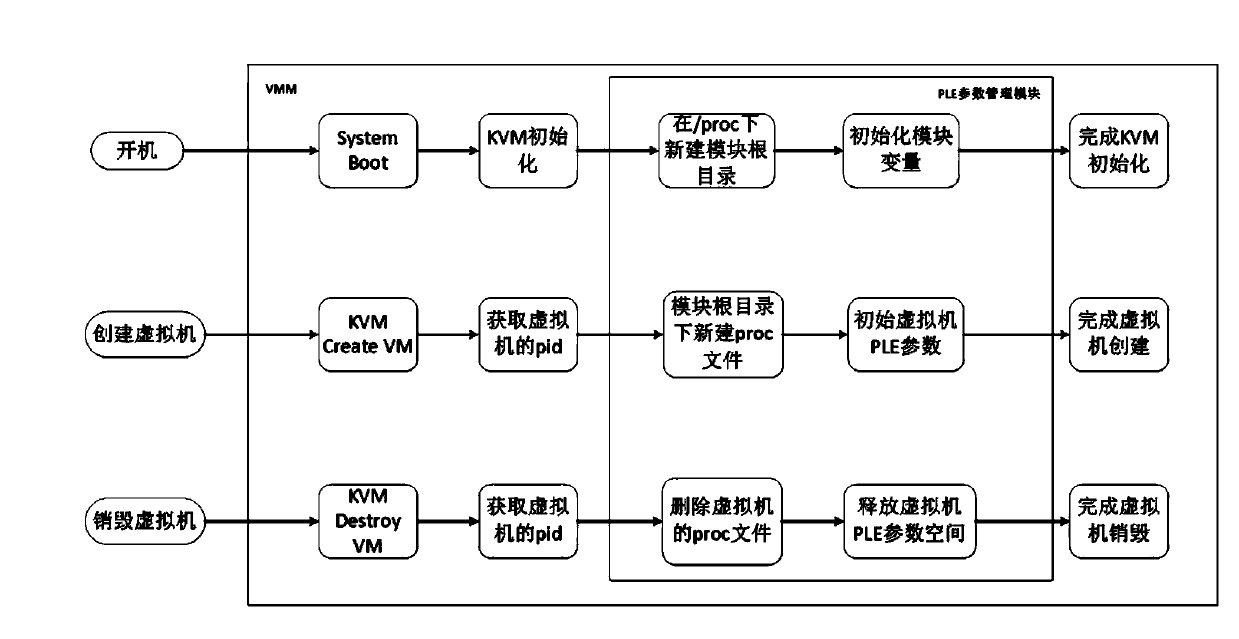

Dynamic PLE (pause loop exit) technology based virtual machine co-scheduling method

Active | CN103744728A | Improve performance | Ensure dynamic adjustment | Program initiation/switching | Software simulation/interpretation/emulation | Busy time | Spin locks

The invention discloses a virtual machine co-scheduling method based on dynamic PLE (pause loop exit) technology. The method monitors the running states of all virtual machines to obtain the average time each virtual machine spends waiting for spin-locks, and dynamically adjusts the PLE parameters according to that average wait time so that they suit the current running state of the system. In addition, when a VCPU (virtual central processing unit) triggers a PLE, all VCPUs of the corresponding virtual machine that are running in kernel mode are promoted to the heads of the run queues of their physical CPUs, so that this group of VCPUs runs synchronously in the next scheduling period. Combining dynamic PLE with virtual machine co-scheduling addresses problems such as VCPUs busy-waiting because a spin-lock holder has been preempted, and effectively improves the overall performance of the virtual machines and the system.

Owner:SHANGHAI JIAO TONG UNIV
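
The run-queue promotion step in the abstract above can be sketched as follows. This Python example covers only the promotion of kernel-mode VCPUs when a PLE fires, not the dynamic adjustment of PLE parameters; the `VCPU` record, the per-CPU deque model, and the function name are illustrative assumptions rather than the patent's data structures.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict


@dataclass(frozen=True)
class VCPU:
    vm: str
    index: int
    in_kernel: bool  # True if the VCPU is currently running in kernel mode


def promote_on_ple(run_queues: Dict[int, Deque[VCPU]], vm: str) -> None:
    """Move the VM's kernel-mode VCPUs to the head of each physical CPU's run queue."""
    for queue in run_queues.values():
        promoted = [v for v in queue if v.vm == vm and v.in_kernel]
        others = [v for v in queue if not (v.vm == vm and v.in_kernel)]
        queue.clear()
        queue.extend(promoted + others)


# Hypothetical state: two physical CPUs, VCPUs from three virtual machines.
queues = {
    0: deque([VCPU("vm2", 0, False), VCPU("vm1", 0, True)]),
    1: deque([VCPU("vm3", 0, True), VCPU("vm1", 1, True), VCPU("vm1", 2, False)]),
}
promote_on_ple(queues, "vm1")  # a VCPU of vm1 has just triggered a PLE
heads = [queues[cpu][0] for cpu in sorted(queues)]
print(heads)
```

After the call, both physical CPUs will pick a kernel-mode VCPU of `vm1` next, so the likely spin-lock holder and its waiters run in the same scheduling period.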

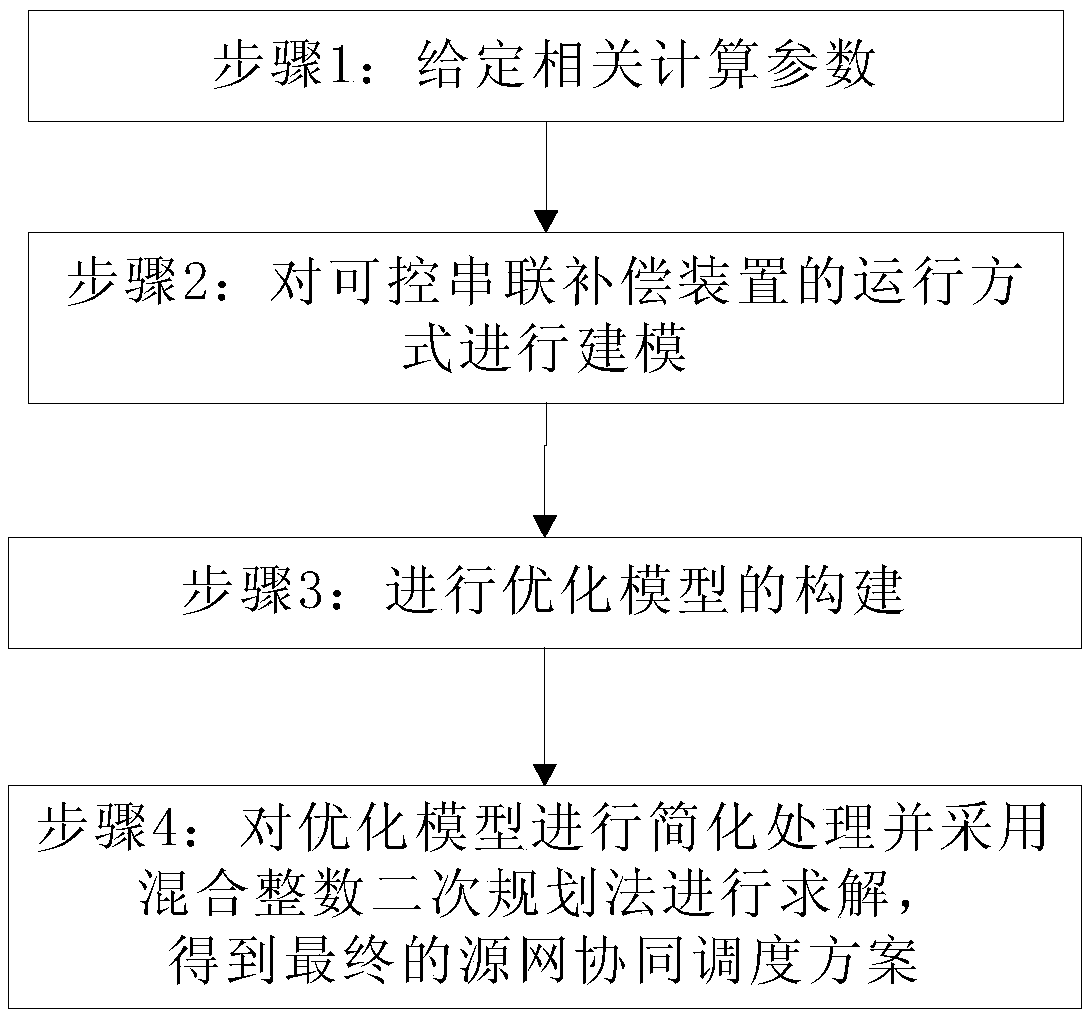

Source network co-scheduling method including controllable series connection compensation device

Active | CN107069827A | Ensure safety | Enhancing the absorption of volatility | Load forecast in AC network | Single network parallel feeding arrangements | Operation mode | Controllability

The invention discloses a source network co-scheduling method including a controllable series connection compensation device. The method comprises the following steps: step 1, the relevant calculation parameters are given; step 2, the operation mode of the controllable series connection compensation device is modeled; step 3, an optimization model is constructed whose objective is to minimize the sum of the power generating cost of the power system and the cost of abandoned wind power, subject to a number of constraints; and step 4, the optimization model is simplified and solved with a mixed-integer quadratic programming method to obtain the final source network co-scheduling scheme. The method integrates the controllability of power flow control equipment, energy storage systems and the like into the economic scheduling decisions of a power grid, and constructs a source network co-scheduling model for a grid containing wind power to determine the economic operation mode under security constraints. It can enhance the grid's ability to absorb fluctuations in wind power, load and other nodal injections while ensuring safe operation, and improves the economic benefit of power grid operation.

Owner:RES INST OF ECONOMICS & TECH STATE GRID SHANDONG ELECTRIC POWER +1

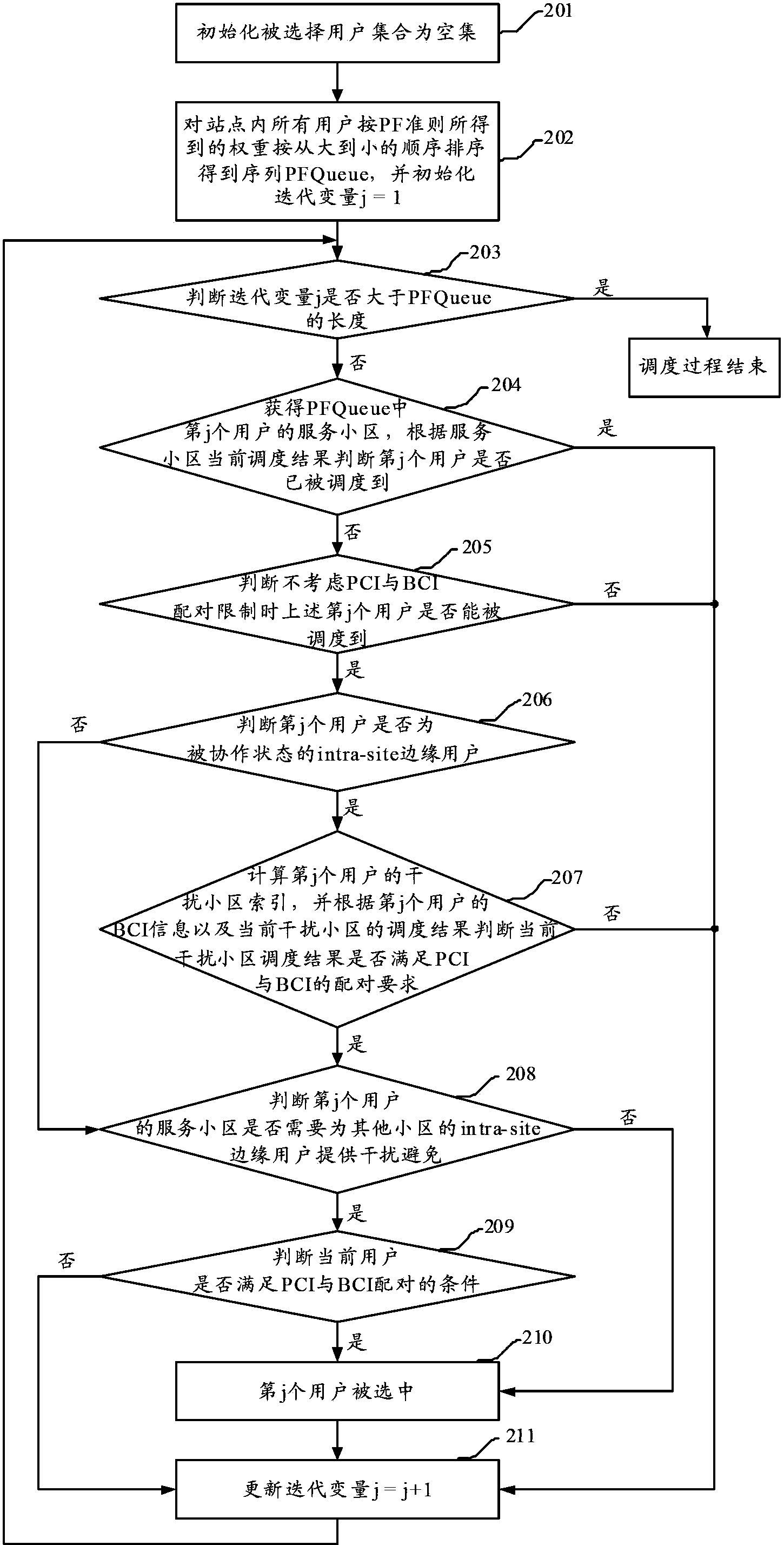

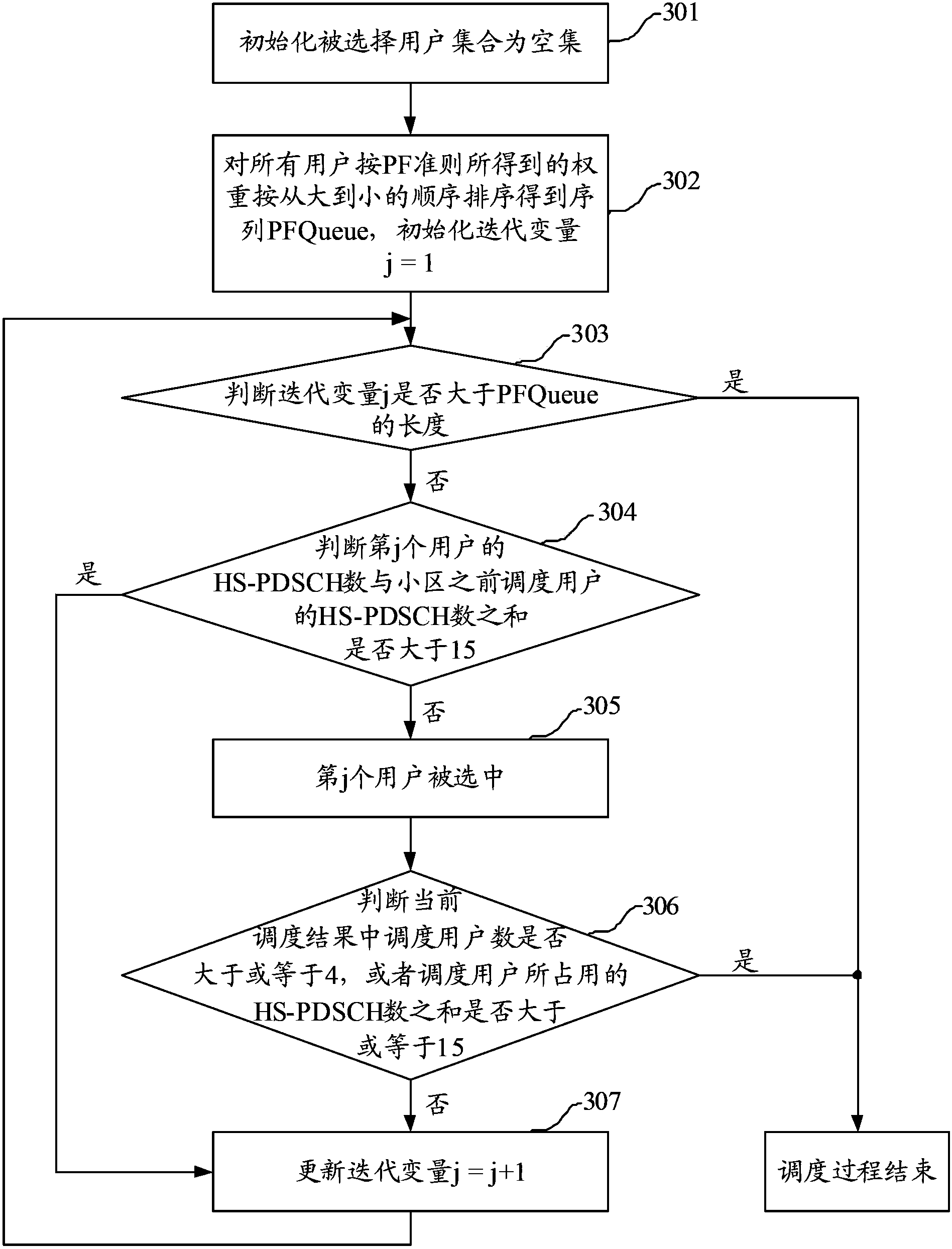

Channel information feedback method and user equipment

Active | CN103516494A | Good channel quality | High communication rate | Error prevention/detection by using return channel | Baseband system details | User equipment | On cells

The invention provides a channel information feedback method and user equipment. The method comprises the following steps: when first user equipment is in a cooperated state, PCI is calculated, and first CQI when an interference cell doesn't provide interference avoidance to the first user equipment is calculated; BCI is calculated, and second CQI when the interference cell provides interference avoidance to the first user equipment is calculated; and one difference value selected from difference values between PCI, BCI, the first CQI and the second CQI and the first CQI or a combination of the difference values are fed back to a base station where a serving cell is positioned. According to the invention, user equipment positioned on the edge of the cell can feed back both channel information from the user equipment to the serving cell and channel information from the user equipment to the interference cell to the base station such that co-scheduling can be conducted by the base station on cells of the base station. Thus, channel quality and communication speed of the user equipment positioned on the edge of the cell can be raised.

Owner:HUAWEI TECH CO LTD

Hardware multi-threading co-scheduling for parallel processing systems

Inactive | US8484648B2 | General purpose stored program computer | Multiprogramming arrangements | Information processing | Data processing system

A method, information processing system, and computer program product are provided for managing operating system interference on applications in a parallel processing system. A mapping of hardware multi-threading threads to at least one processing core is determined, and first and second sets of logical processors of the at least one processing core are determined. The first set includes at least one of the logical processors of the at least one processing core, and the second set includes at least one of a remainder of the logical processors of the at least one processing core. A processor schedules application tasks only on the logical processors of the first set of logical processors of the at least one processing core. Operating system interference events are scheduled only on the logical processors of the second set of logical processors of the at least one processing core.

Owner:INT BUSINESS MASCH CORP

Electric system network cluster task allocation method based on load balancing

Inactive | CN103176850A | Alleviate bottleneck pressure | Improve system efficiency | Data processing applications | Resource allocation | Electric power system | Coscheduling

The invention discloses an electric system network cluster task allocation method based on load balancing. The method comprises the following steps that: according to computing and communication properties of each processor of the system and requirement of a requested task, an information system defines a time table of the task; then the system performs static allocation according to the time table based on the task; and finally the system performs co-scheduling and process migration according to an actual operating situation, so as to relieve the choke point pressure and improve the efficiency of the system. The method provided by the invention is an electric scheduling method based on a load balancing allocation algorithm, which is suitable for large-scale electric network.

Owner:STATE GRID CORP OF CHINA +2

Full duplex operation in a wireless communication network

Methods, apparatus, and computer software are disclosed for communicating within a wireless communication network including a scheduling entity configured for full duplex communication, and user equipment (UE) configured for half duplex communication. In some examples, one or more UEs may be configured for limited (quasi-) full duplex communication. Some aspects relate to scheduling the UEs, including determining whether co-scheduling of the UEs to share a time-frequency resource is suitable based on one or more factors such as an inter-device path loss.

Owner:QUALCOMM INC

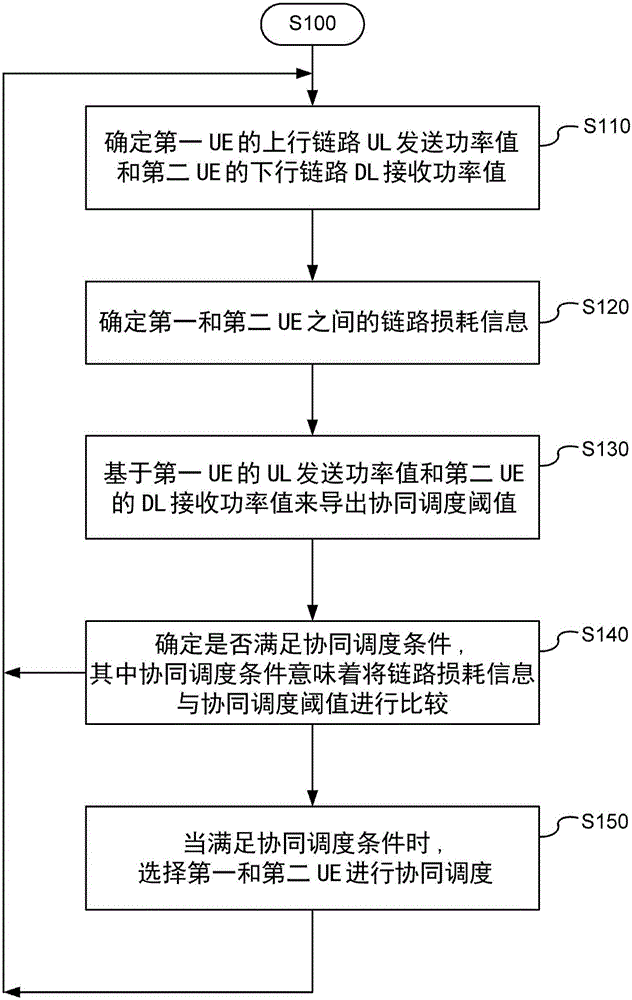

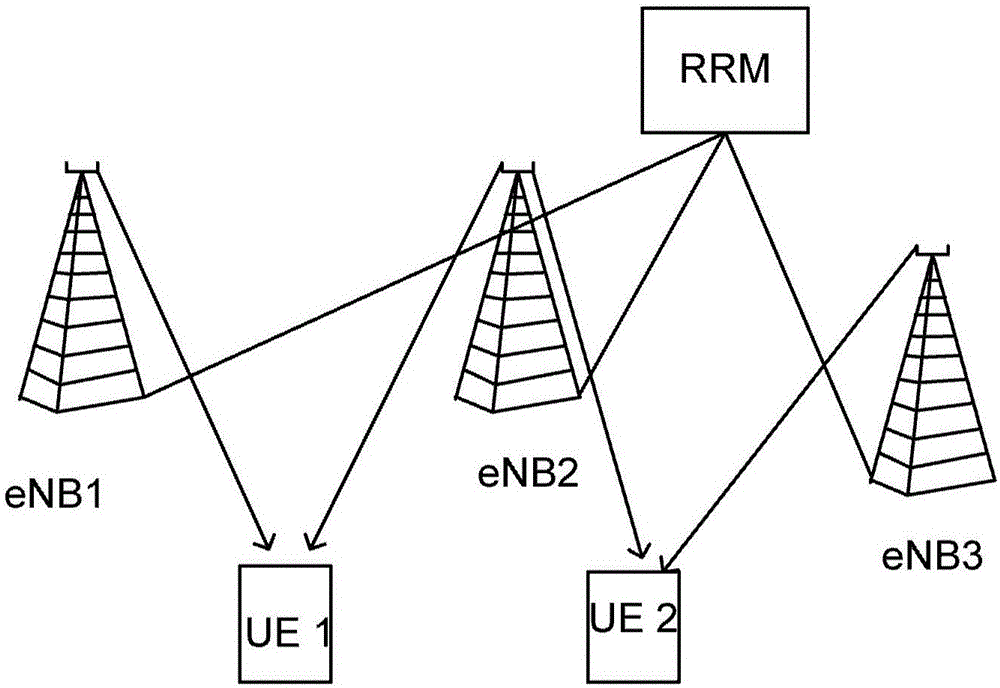

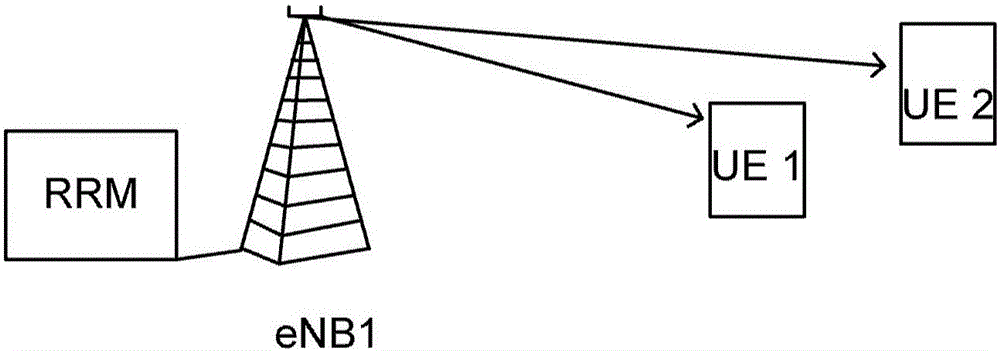

Method in network and network node for co-scheduling in network

Active | CN105794291A | Side complexity does not affect | Complexity does not affect | Wireless communication | Telecommunications | Computer science

The invention relates to a method (S100) and wireless communication network node for co-scheduling first and second user equipment (UE). The co-scheduling comprises scheduling the first UE and the second UE in the same time and frequency resource. The method comprises determining if a co-scheduling condition is fulfilled, wherein the co-scheduling condition implies comparing link loss information to a co-scheduling threshold value, and selecting the first and second UE for co-scheduling when the co-scheduling condition is fulfilled.

Owner:ERICSSON (CHINA) COMMUNICATION COMPANY LTD

System and method for multi-level beamformed non-orthogonal multiple access communications

Active | US10148332B2 | Facilitates the co-scheduling of multiple UEs | Improve spectral efficiency | Spatial transmit diversity | Telecommunications | Communication control

Owner:FUTUREWEI TECH INC

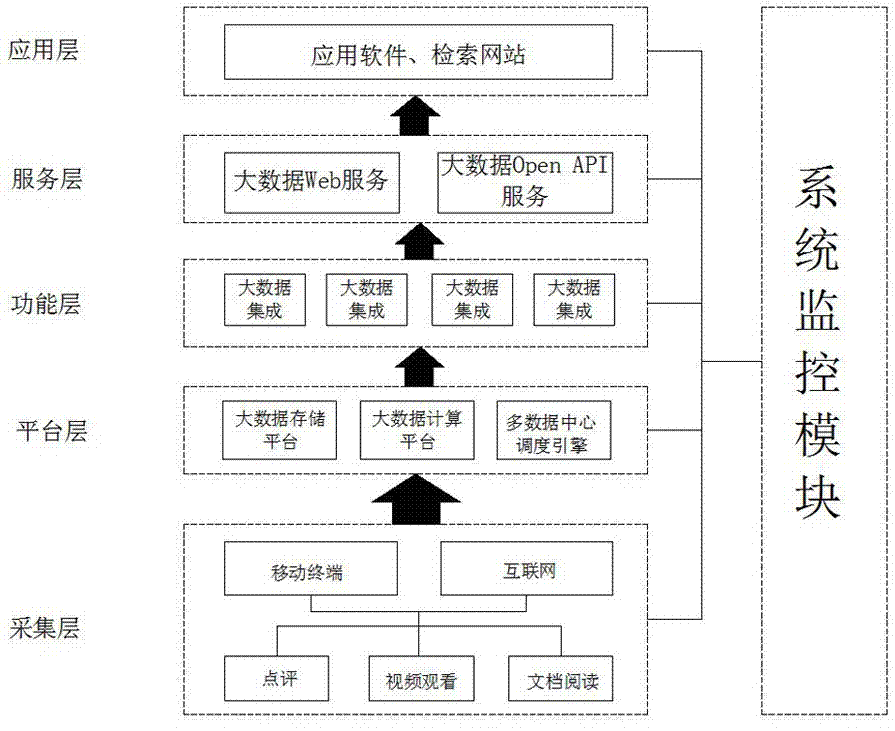

Big data platform system and operating method thereof

Inactive | CN107423417A | Efficient storage | Quick storage | Data mining | Special data processing applications | Data center | Data platform

The invention discloses a big data platform system comprising an application layer, a service layer, a function layer, a platform layer and an acquisition layer. The acquisition layer comprises mobile terminals and the internet; the big data platform system acquires data through these two channels, collecting it from user actions such as browsing records and comments left on mobile terminals and internet tools. The platform layer is composed of a big data storage platform, a big data computing center and a multi-data-center scheduling engine, and provides a big data distributed storage system. The big data platform system has a complete framework and complete functionality and covers big data resources on the internet. Through the big data distributed storage system, a distributed data mining runtime system and an intelligent data center co-scheduling technique, it provides efficient and quick storage, computing, integration and scheduling of data, which improves the processing capacity of the big data platform system and allows it to operate stably.

Owner:HEFEI QIANNU INFORMATION TECH CO LTD (合肥千奴信息科技有限公司)

Coordinated scheduling strategy for multi-element energy storage in distributed microgrid system for optical storage

Inactive | CN104184159B | Constant amplitude | Constant frequency | AC network load balancing | Power oscillations reduction/prevention | Battery state of charge | Capacitance

A coordinated scheduling strategy for multiple energy storage in an optical storage distributed microgrid system. The multiple energy storage is based on a two-stage converter topology comprising a front-stage bidirectional DC/DC conversion unit and a rear-stage DC/AC conversion unit. The bidirectional DC/DC conversion unit for the battery and the bidirectional DC/DC conversion unit for the supercapacitor share a DC bus and are connected to the load and the large power grid through the DC/AC conversion unit and the LC filter. The coordinated scheduling method for the two-stage converters is as follows. In the grid-connected mode of the distributed optical storage microgrid system, double filtering control is applied to the two-stage converters, the energy storage elements are controlled to smooth the fluctuation of photovoltaic output power, and the respective filtering parameters are adjusted according to the state of charge of the supercapacitor and the state of charge of the battery. When the distributed optical storage microgrid system is off-grid, the energy storage elements are controlled to provide voltage and frequency support to the microgrid system, and the distributed optical storage microgrid system jointly supplies power to the load. The energy storage elements are batteries and supercapacitors.

Owner:GUANGDONG YUANJING ENERGY
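
The grid-connected "double filtering" idea in the abstract can be illustrated with a minimal sketch. The abstract does not give the filter structure, so everything here is an assumption: two cascaded first-order low-pass filters, a hypothetical `adjust_tau` rule that halves a time constant when state of charge leaves a 0.2 to 0.8 band, and the names `low_pass` and `split_power`.

```python
def low_pass(prev, sample, dt, tau):
    """One step of a discrete first-order low-pass filter."""
    alpha = dt / (tau + dt)
    return prev + alpha * (sample - prev)


def adjust_tau(base_tau, soc, low=0.2, high=0.8):
    """Hypothetical rule: halve the time constant when SOC is outside [low, high],
    so the storage element near its limit is asked to do less smoothing."""
    return base_tau if low <= soc <= high else base_tau * 0.5


def split_power(pv_samples, dt, tau_grid, tau_batt, soc_batt, soc_sc):
    """Split PV power into (grid, battery, supercapacitor) shares per sample.

    Filter 1 smooths PV output into the power sent to the grid.
    Filter 2 gives the slow part of the residual to the battery;
    the supercapacitor takes the fast remainder.
    """
    tau1 = adjust_tau(tau_grid, soc_batt)  # assumed: battery SOC tunes filter 1
    tau2 = adjust_tau(tau_batt, soc_sc)    # assumed: supercapacitor SOC tunes filter 2
    grid = pv_samples[0]
    batt = 0.0
    shares = []
    for pv in pv_samples:
        grid = low_pass(grid, pv, dt, tau1)
        storage = pv - grid                # positive means storage absorbs power
        batt = low_pass(batt, storage, dt, tau2)
        sc = storage - batt
        shares.append((grid, batt, sc))
    return shares
```

By construction the three shares always sum to the PV sample, so the sketch only redistributes power between the grid and the two storage elements; it does not model converter limits or SOC dynamics.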

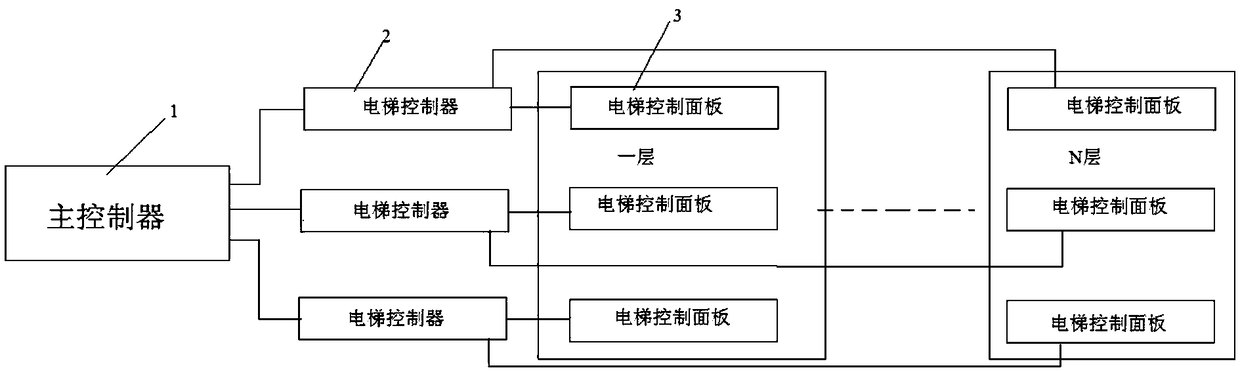

Multi-elevator co-scheduling method

Inactive | CN108609443A | Avoid situations where a lot of time is wasted | Improve operational efficiency | Elevators | Engineering | Elevator control

The invention relates to a multi-elevator co-scheduling method. The method controls the elevators separately in the peak period (no elevator is idle) and in the slack period (some elevators are idle), which makes the elevator control structure and logic more reasonable. In particular, the floors of the building are divided into areas during the peak period. This avoids the situation in which the elevators stop at almost every floor during the peak period and waste a large amount of time, substantially improves the running efficiency of the elevators, and shortens the waiting time of passengers on the other floors. The method is efficient and reliable and keeps scheduling efficiency high.

Owner:WUHAN UNIV OF TECH
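
The peak/slack distinction in the abstract above can be sketched as follows. This is an illustration under stated assumptions, not the patented method: the abstract does not say how floors are divided or how an idle elevator is chosen, so the equal contiguous zones, the nearest-idle rule, and the names `Elevator`, `divide_zones` and `assign_call` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Elevator:
    name: str
    idle: bool
    floor: int = 1  # current floor, used only in slack-period dispatch


def divide_zones(num_floors, num_elevators):
    """Split floors 1..num_floors into contiguous zones, one per elevator (assumed rule)."""
    base, extra = divmod(num_floors, num_elevators)
    zones, start = [], 1
    for i in range(num_elevators):
        size = base + (1 if i < extra else 0)
        zones.append(range(start, start + size))
        start += size
    return zones


def assign_call(call_floor, elevators, num_floors):
    """Pick the elevator that should serve a hall call."""
    idle = [e for e in elevators if e.idle]
    if idle:
        # Slack period: some elevator is idle, so send the nearest idle one (assumed rule).
        return min(idle, key=lambda e: abs(e.floor - call_floor))
    # Peak period: no elevator is idle, so each elevator serves only its own zone.
    zones = divide_zones(num_floors, len(elevators))
    for elevator, zone in zip(elevators, zones):
        if call_floor in zone:
            return elevator
    raise ValueError("call floor is outside the building")
```

With three busy elevators in a 12-floor building, the zones are floors 1 to 4, 5 to 8 and 9 to 12, so each car skips the floors outside its own zone during the peak period.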

Wind-fire-water co-scheduling method on basis of multi-agent system

Active | CN102738835B | Large output adjustment range | Output adjustment range is small | Single network parallel feeding arrangements | Wind energy generation | Operation scheduling | Electricity

The invention relates to a power scheduling operation method, in particular to a wind-fire-water co-scheduling method based on a multi-agent system. The method solves the problem that conventional power scheduling operation methods cannot meet the requirements of large-scale grid-connected wind power operation. The method is implemented in the following steps: 1, constructing a multi-agent-based wind-fire-water co-scheduling model in a scheduling Agent; 2, acquiring predicted load demand data for the power system through a load Agent in the power system; 3, having each thermal power Agent send its acquired basic data and real-time data to the multi-agent-based wind-fire-water co-scheduling model; and 4, issuing scheduling commands, which each thermal power Agent and a hydroelectric Agent then execute. The method is suitable for scheduling large-scale grid-connected wind power operation.

Owner:SHANXI UNIV +2
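
The four steps in the abstract above (build a model in a scheduling Agent, obtain a load forecast, collect plant data, issue commands) can be sketched as below. The abstract does not describe the scheduling model itself, so the allocation rule here is an assumption: every plant runs at its minimum output and the remaining demand is filled in the order wind, hydro, thermal. The class names `PlantAgent`, `LoadAgent` and `SchedulingAgent` and all figures in the usage note are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PlantAgent:
    name: str
    kind: str          # "wind", "hydro" or "thermal"
    p_min: float       # minimum output in MW
    p_max: float       # maximum output in MW
    setpoint: float = 0.0

    def report(self):
        """Step 3: send basic data (output limits) to the scheduling model."""
        return self.p_min, self.p_max

    def execute(self, mw):
        """Step 4: carry out the issued scheduling command."""
        self.setpoint = mw


class LoadAgent:
    def __init__(self, forecast_mw):
        self.forecast_mw = forecast_mw

    def forecast(self):
        """Step 2: provide the predicted load demand."""
        return self.forecast_mw


class SchedulingAgent:
    PRIORITY = ("wind", "hydro", "thermal")  # assumed order, not from the abstract

    def dispatch(self, load_agent, plants):
        demand = load_agent.forecast()
        limits = {p.name: p.report() for p in plants}
        commands = {name: lo for name, (lo, hi) in limits.items()}
        remaining = demand - sum(commands.values())
        if remaining < 0:
            raise ValueError("minimum outputs already exceed demand")
        for kind in self.PRIORITY:
            for p in plants:
                if p.kind != kind:
                    continue
                lo, hi = limits[p.name]
                extra = min(hi - lo, remaining)
                commands[p.name] += extra
                remaining -= extra
        if remaining > 1e-9:
            raise ValueError("insufficient generation capacity")
        for p in plants:
            p.execute(commands[p.name])
        return commands
```

For example, with a wind plant of 0 to 100 MW, a hydro plant of 0 to 50 MW, a thermal plant of 40 to 200 MW and a 220 MW forecast, this sketch dispatches 100 MW of wind, 50 MW of hydro and 70 MW of thermal output.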

© 2025 PatSnap. All rights reserved.