54 results about "Block floating-point" patented technology

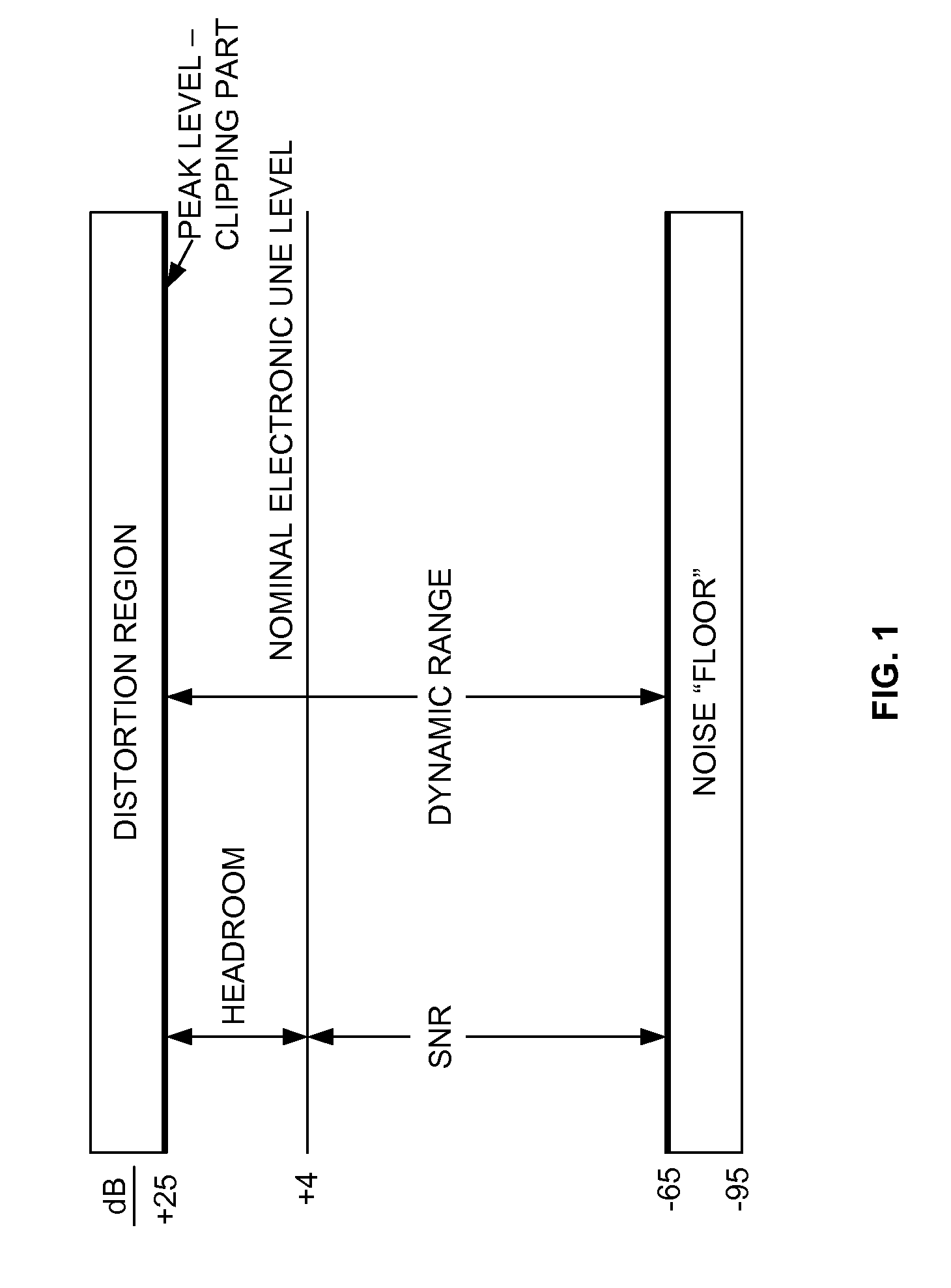

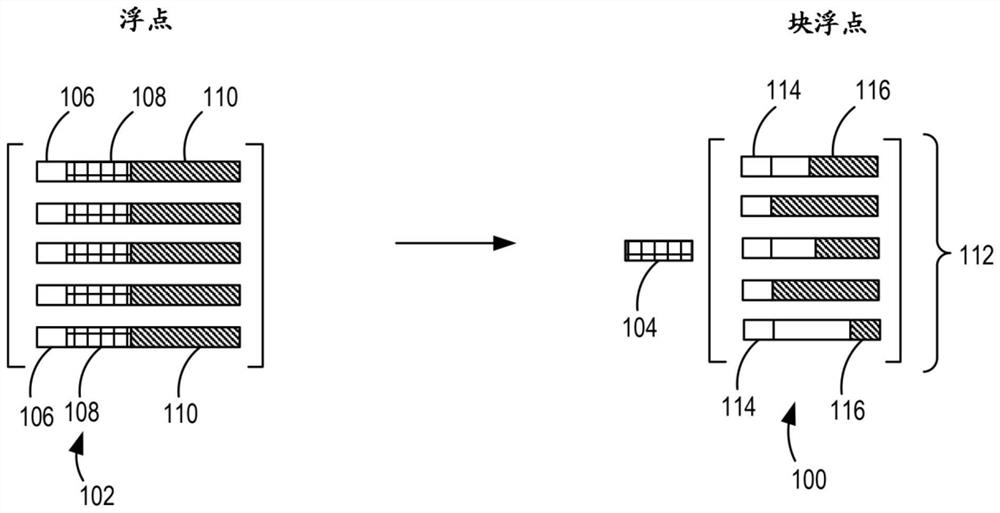

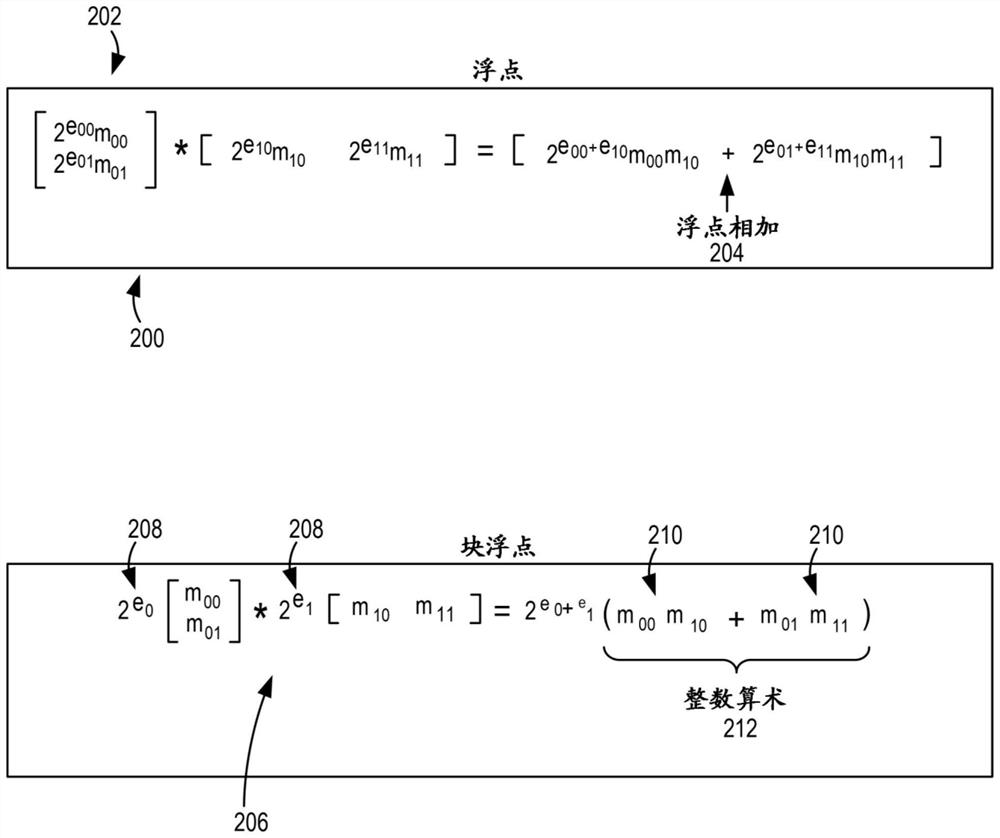

Block floating point (BFP) is a method that approximates floating-point arithmetic on a fixed-point processor. Instead of giving each value its own exponent, BFP assigns a single exponent to an entire block of data, so every value in the block shares a common exponent. Operations are then carried out on the block using that shared exponent, which can reduce the hardware needed to perform the same functions as floating-point algorithms.
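The shared-exponent idea can be illustrated with a minimal Python sketch. The function names `bfp_encode` and `bfp_decode` and the 8-bit signed mantissa width are assumptions chosen for illustration; they do not come from any of the patents listed here.

```python
import math

def bfp_encode(block, mantissa_bits=8):
    """Quantize floats to signed integer mantissas that share one exponent."""
    max_mag = max(abs(x) for x in block)
    if max_mag == 0.0:
        return [0] * len(block), 0
    # frexp returns (m, e) with max_mag == m * 2**e and 0.5 <= m < 1.
    _, e = math.frexp(max_mag)
    # Pick the exponent so the largest value uses the full mantissa range.
    shared_exp = e - (mantissa_bits - 1)
    limit = (1 << (mantissa_bits - 1)) - 1
    mantissas = [
        max(-limit, min(limit, round(x / 2.0 ** shared_exp))) for x in block
    ]
    return mantissas, shared_exp

def bfp_decode(mantissas, shared_exp):
    """Rebuild approximate floats from the mantissas and the shared exponent."""
    return [m * 2.0 ** shared_exp for m in mantissas]

mantissas, exponent = bfp_encode([0.5, -0.25, 0.125, 3.0])
print(mantissas, exponent)              # [16, -8, 4, 96] -5
print(bfp_decode(mantissas, exponent))  # [0.5, -0.25, 0.125, 3.0]
```

Small values in a block lose precision when one value is much larger, because the exponent is set by the largest magnitude.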

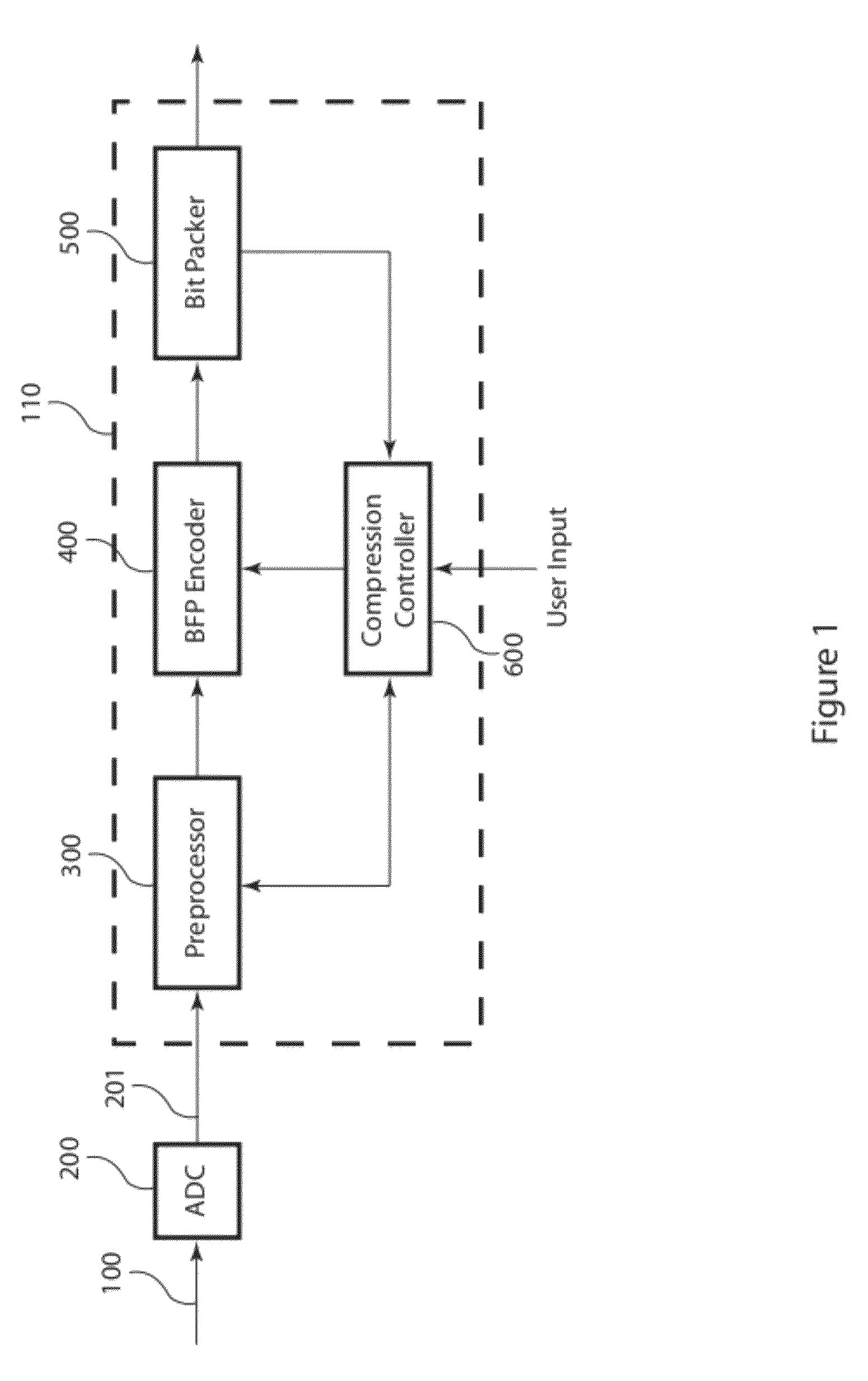

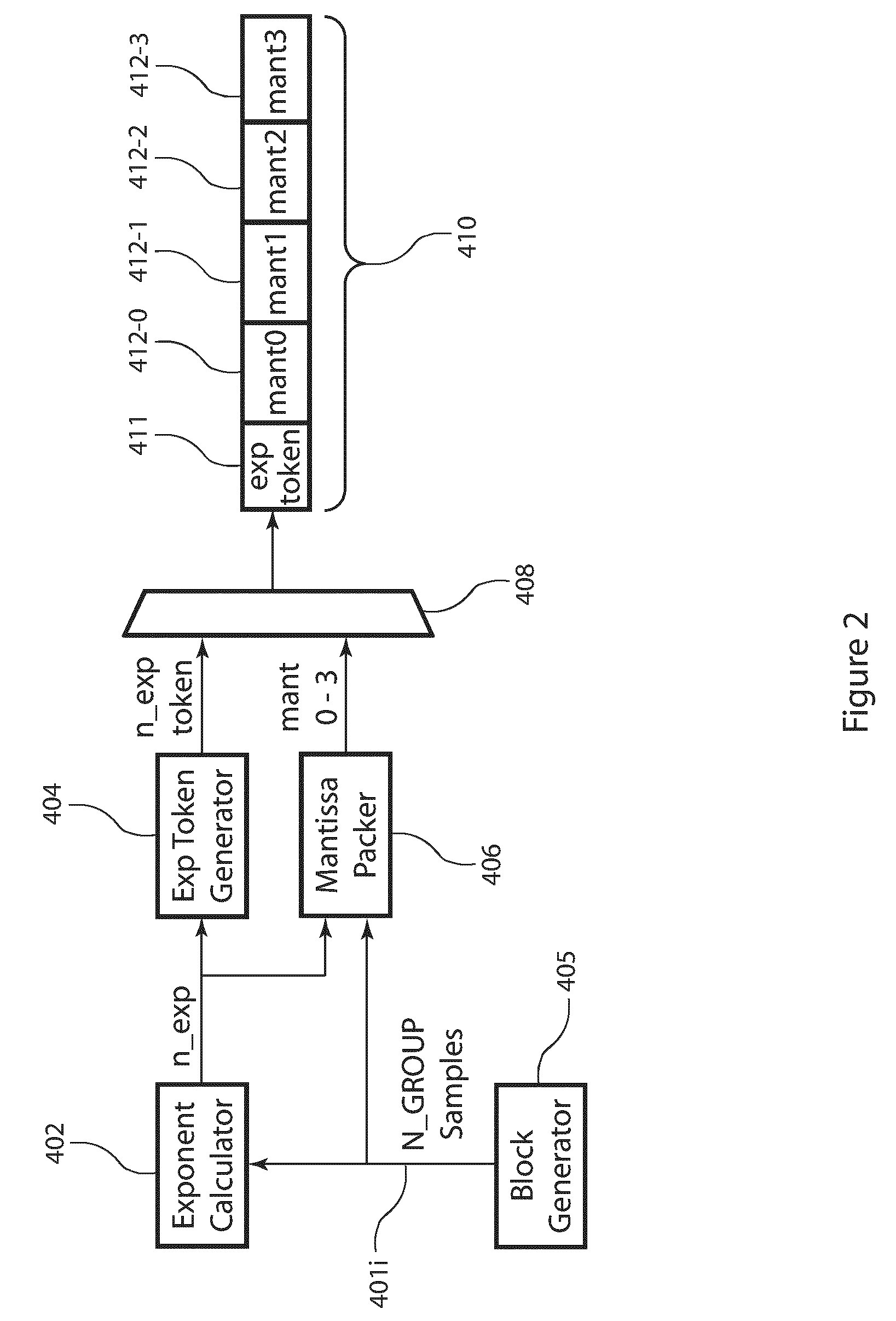

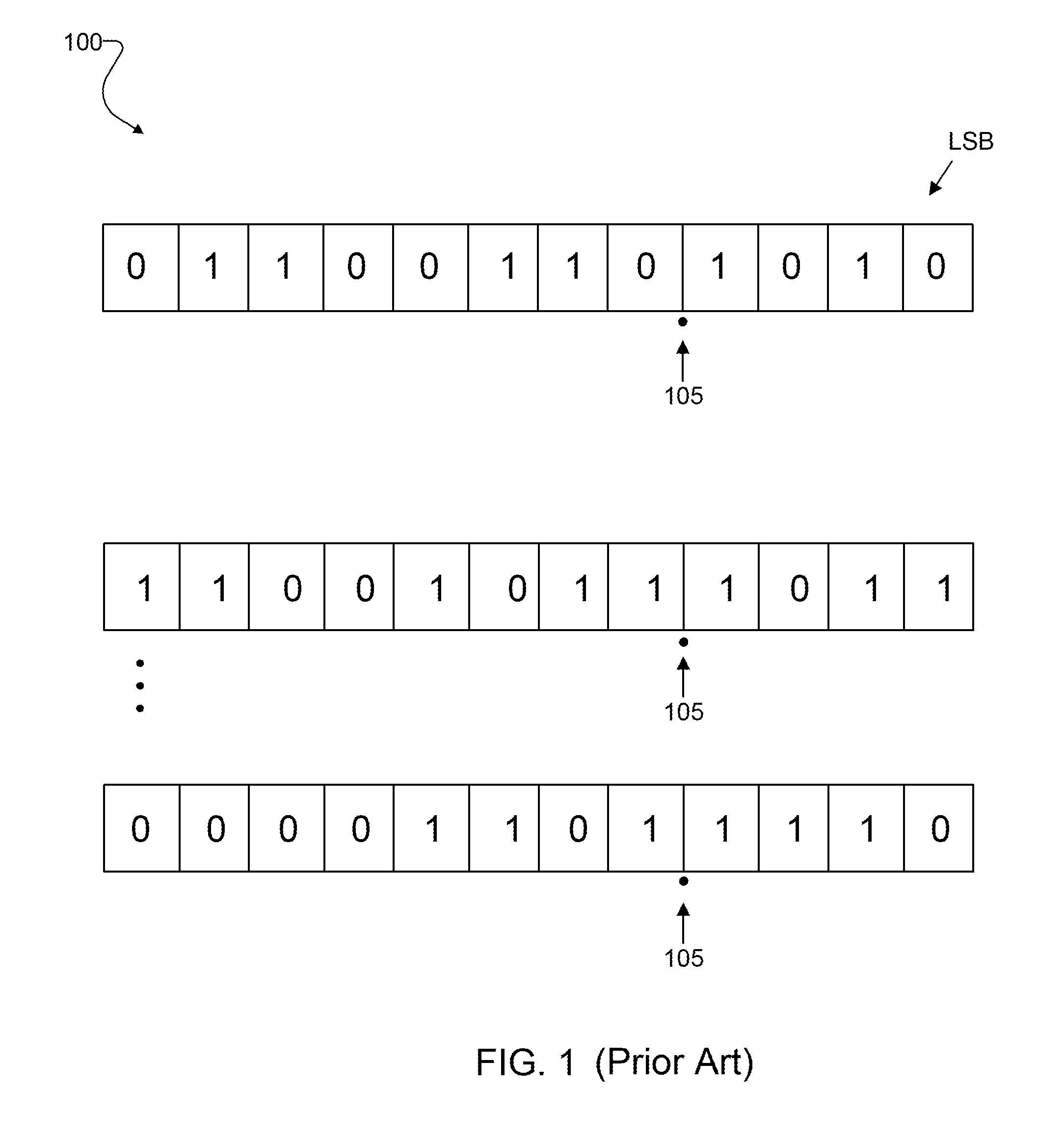

Block floating point compression of signal data

Active | US20110099295A1 | Enhanced block floating point compression | Digital data processing details | User identity/authority verification | Maximum magnitude | Algorithm

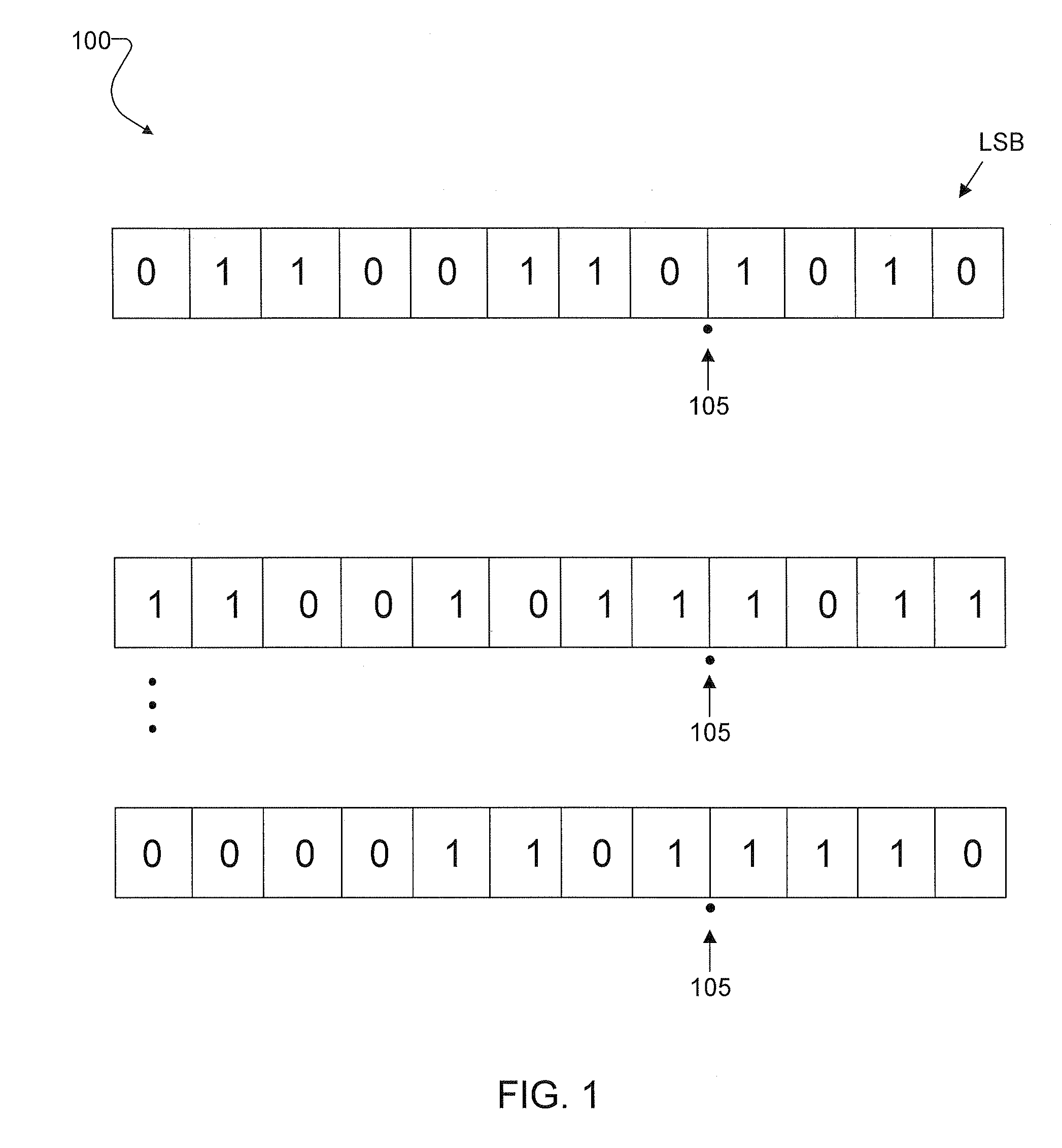

A method and apparatus for compressing signal samples uses block floating point representations where the number of bits per mantissa is determined by the maximum magnitude sample in the group. The compressor defines groups of signal samples having a fixed number of samples per group. The maximum magnitude sample in the group determines an exponent value corresponding to the number of bits for representing the maximum sample value. The exponent values are encoded to form exponent tokens. Exponent differences between consecutive exponent values may be encoded individually or jointly. The samples in the group are mapped to corresponding mantissas, each mantissa having a number of bits based on the exponent value. Removing LSBs depending on the exponent value produces mantissas having fewer bits. Feedback control monitors the compressed bit rate and / or a quality metric. This abstract does not limit the scope of the invention as described in the claims.

Owner:ALTERA CORP
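As a rough illustration of the grouping step in the abstract above, the following Python sketch derives one exponent per fixed-size group from the maximum-magnitude sample and encodes consecutive exponents as differences. The helper names, the group size of 4, and the choice to count one sign bit per mantissa are assumptions; LSB removal and feedback control are not modelled.

```python
def group_exponents(samples, group_size=4):
    """One exponent per group: bits needed for the largest magnitude plus a sign bit."""
    exps = []
    for i in range(0, len(samples), group_size):
        group = samples[i:i + group_size]
        max_mag = max(abs(s) for s in group)
        exps.append(max_mag.bit_length() + 1)
    return exps

def exponent_deltas(exps):
    """First exponent as-is, then differences between consecutive exponents."""
    return exps[:1] + [b - a for a, b in zip(exps, exps[1:])]

def mantissa_bit_total(samples, group_size=4):
    """Total mantissa bits if each sample uses its group's exponent as bit width."""
    exps = group_exponents(samples, group_size)
    total = 0
    for k, e in enumerate(exps):
        total += e * len(samples[k * group_size:(k + 1) * group_size])
    return total

samples = [3, -2, 1, 0, 100, -90, 5, 7]
exps = group_exponents(samples)
print(exps)                         # [3, 8]
print(exponent_deltas(exps))        # [3, 5]
print(mantissa_bit_total(samples))  # 44, versus 128 for eight 16-bit samples
```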

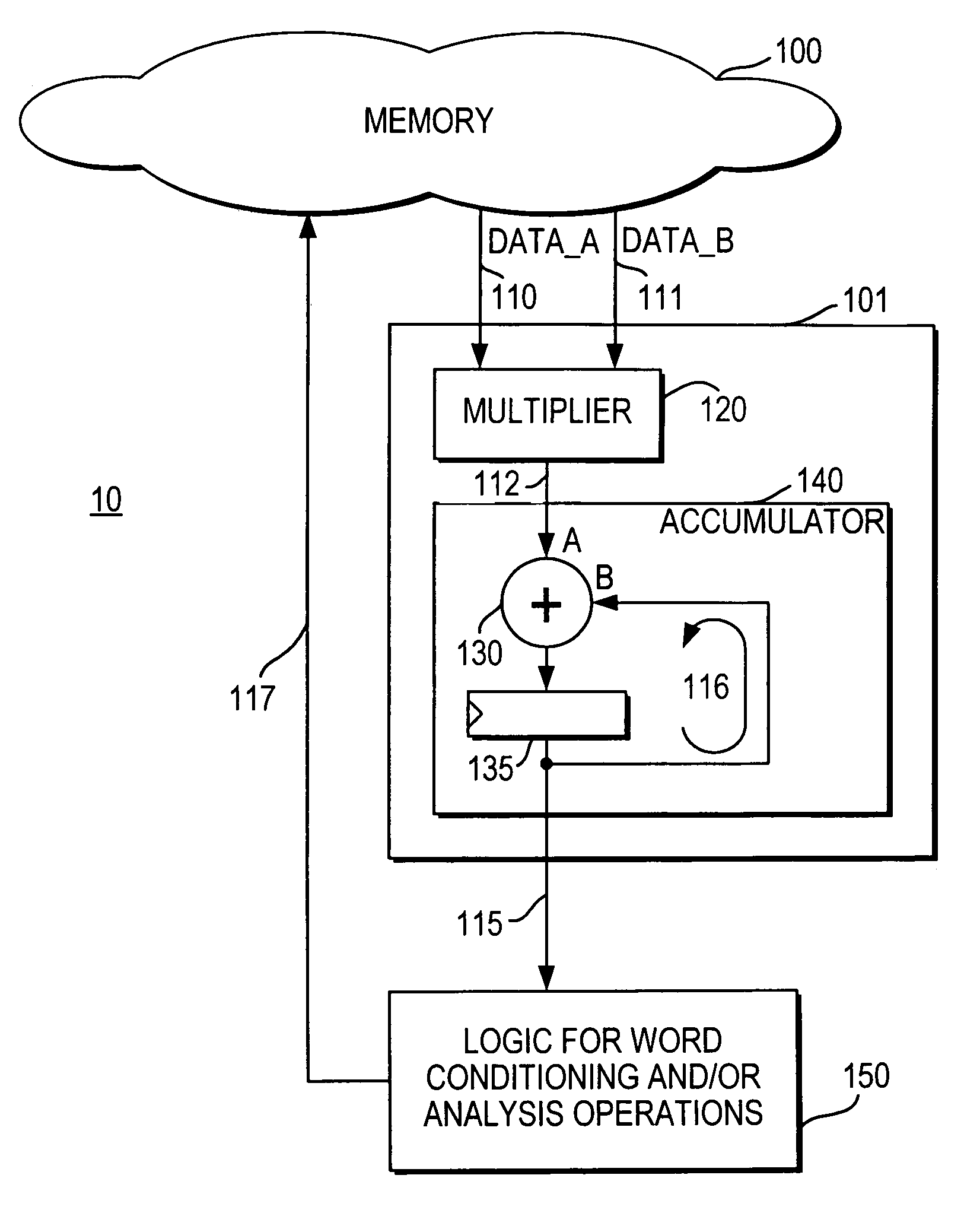

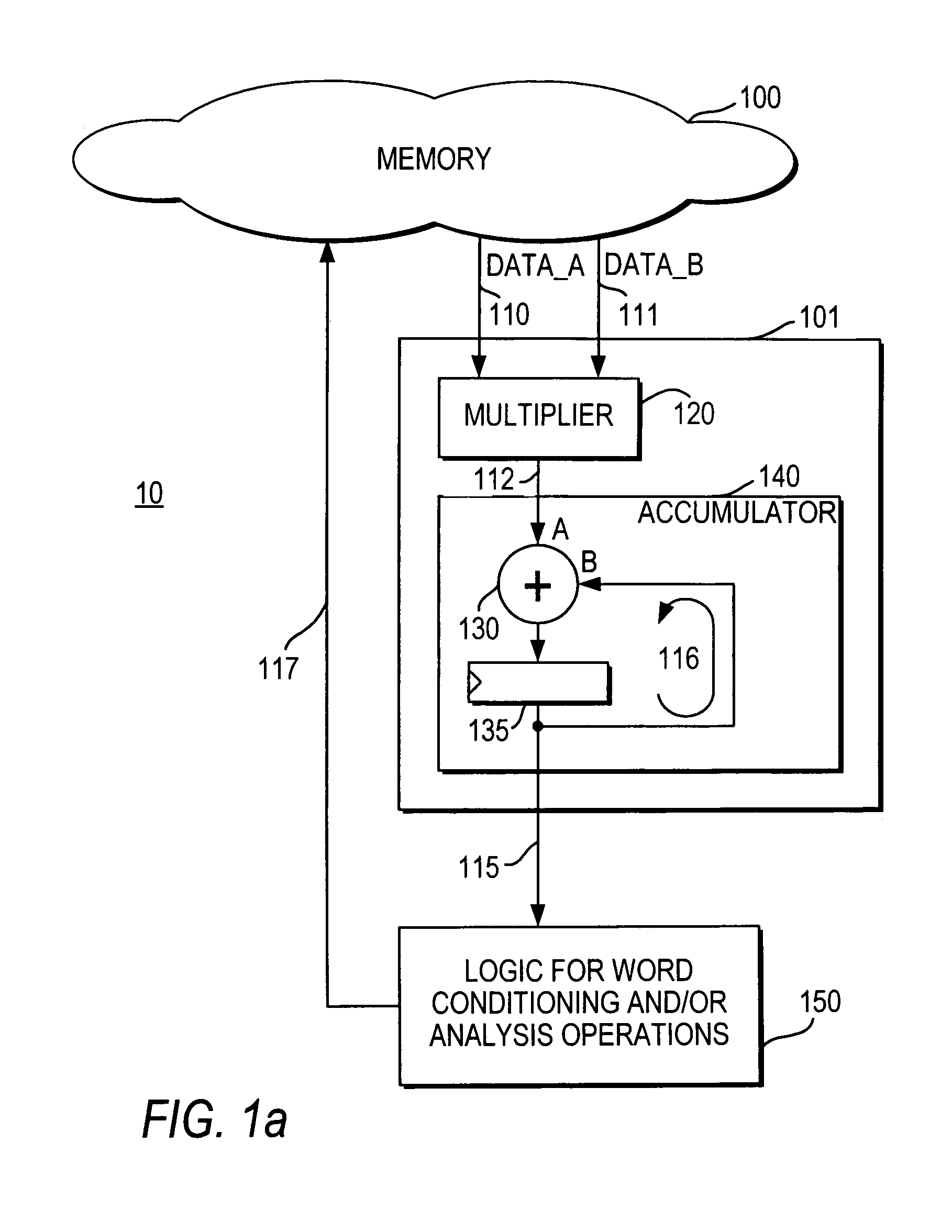

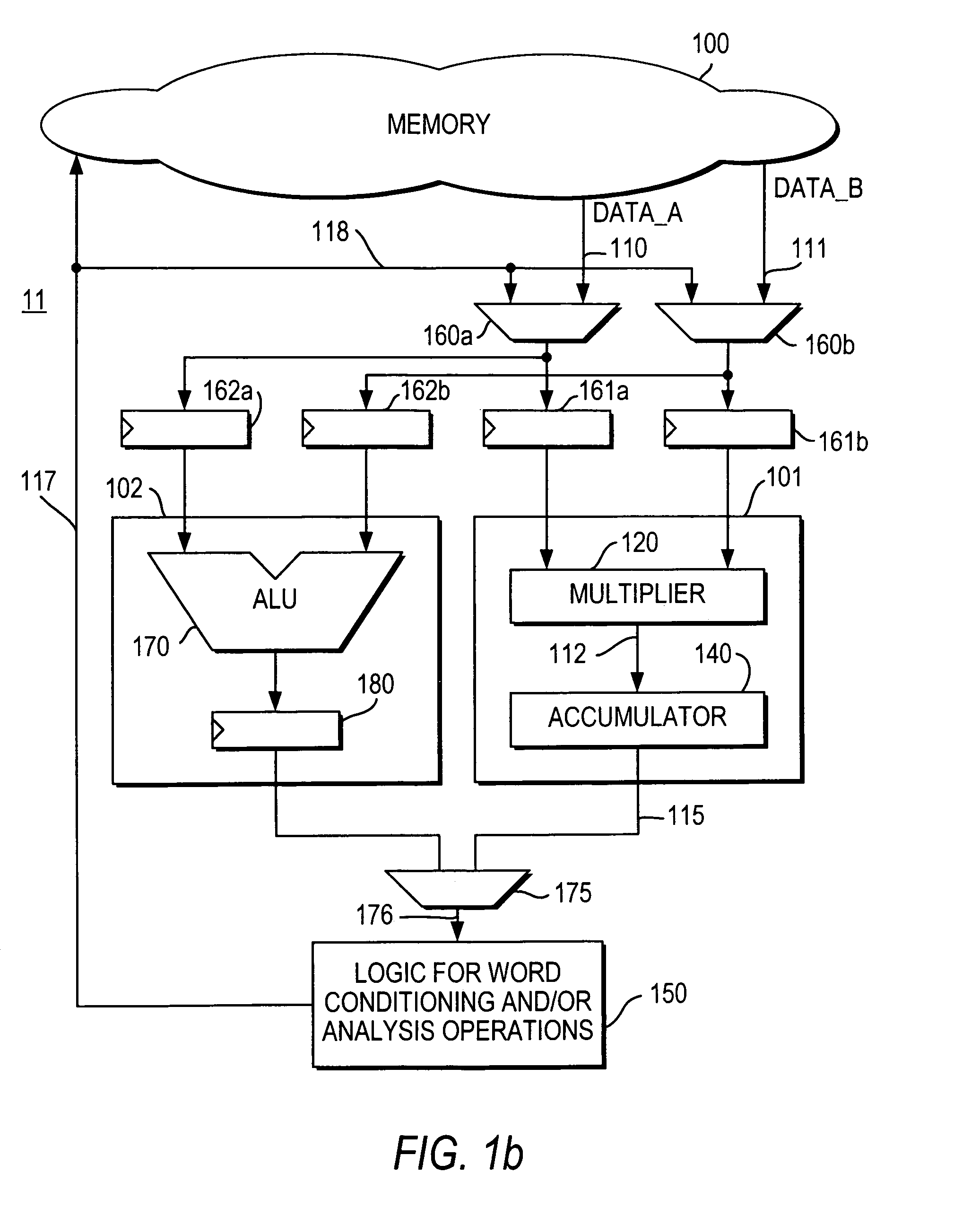

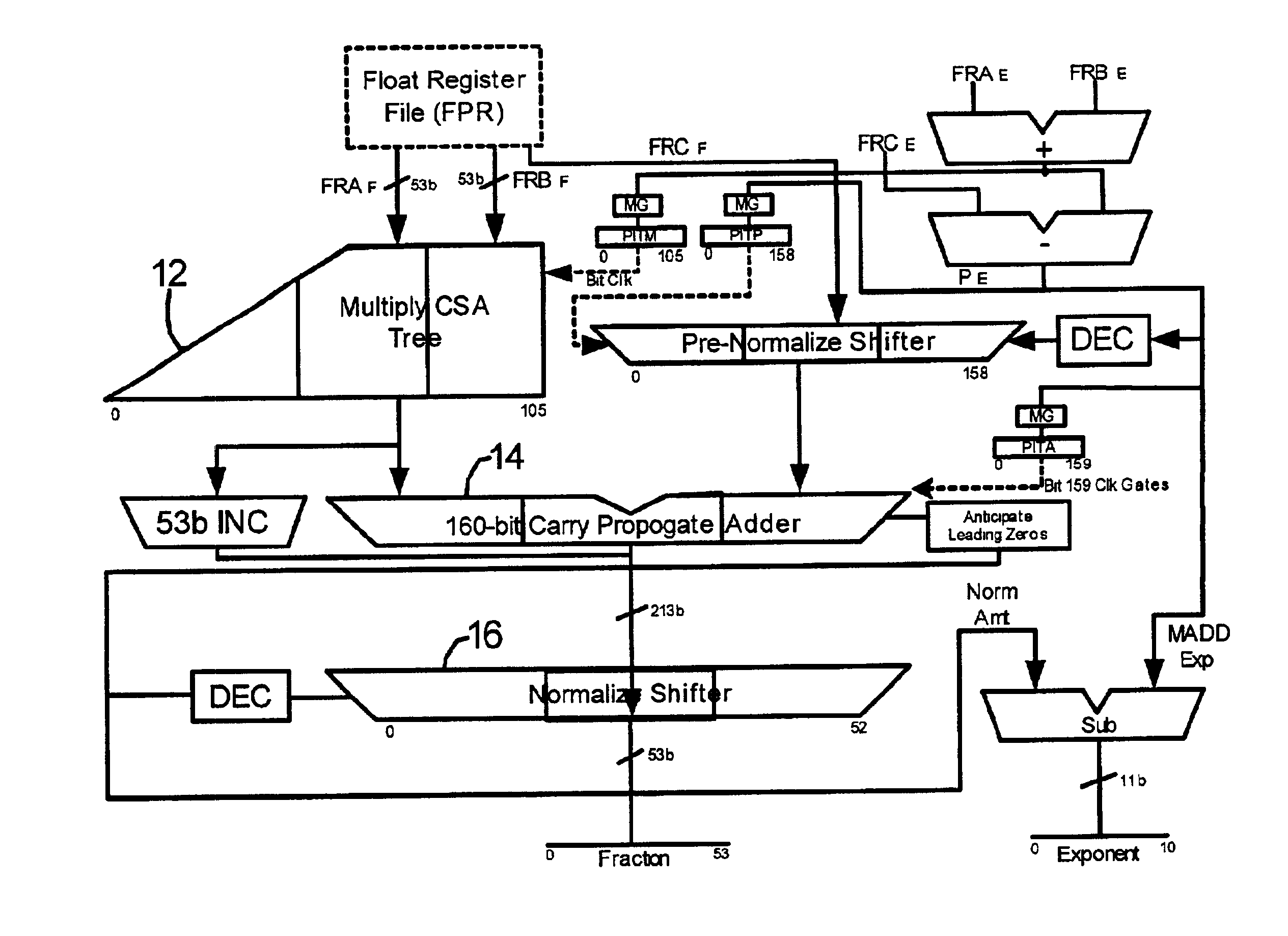

DSP processor architecture with write datapath word conditioning and analysis

Inactive | US6978287B1 | Improve throughput | Reduce delays | Digital computer details | Program control | Rounding | Block floating-point

Owner:ALTERA CORP

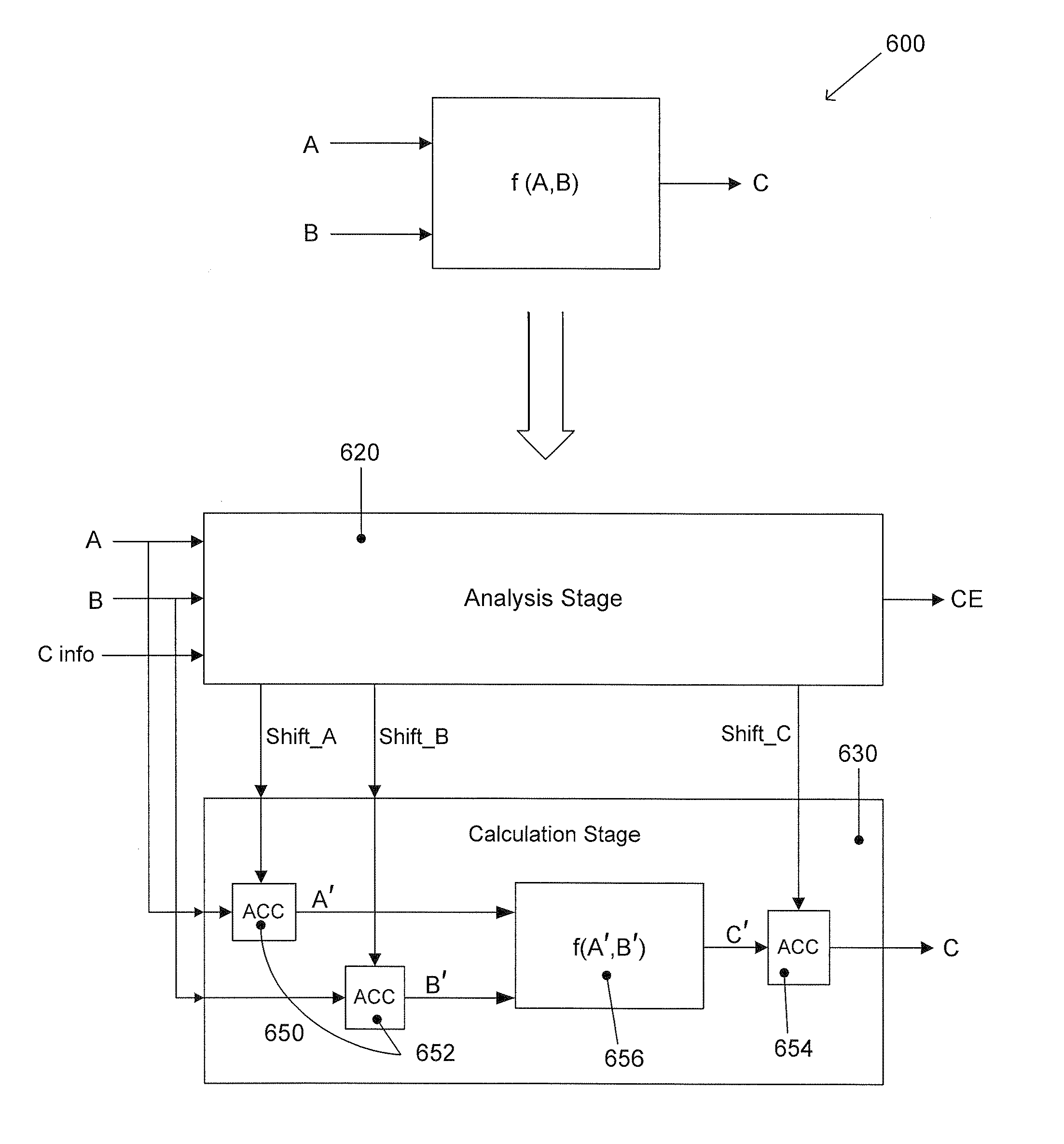

Methods and apparatus for automatic accuracy-sustaining scaling of block-floating-point operands

Inactive | US20090292750A1 | Digital computer details | Complex mathematical operations | Block floating-point | Operand

A computer-implemented method performs an operation on a set of at least one BFP operands to generate a BFP result. The method is designed to reduce the risks of overflow and loss of accuracy attributable to the operation. The method performs an analysis to determine respective shift values for each of the operands and the result. The method calculates result mantissas by shifting the stored bit patterns representing the corresponding operand mantissa values by their respective associated shift values determined in the analysis step, performing the operation on shifted operand mantissas to generate preliminary result mantissa, and shifting the preliminary result mantissas by a number of bits determined in the analysis step.

Owner:AVIGILON ANALYTICS CORP
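To make the idea of shifting operands before an operation concrete, here is a small Python sketch of adding two BFP blocks within a fixed word width. It is not the patented analysis: the hypothetical `bfp_add` function simply aligns both operands to the larger exponent and raises the result exponent until every sum fits in a signed word.

```python
def bfp_add(a_mant, a_exp, b_mant, b_exp, word_bits=16):
    """Add two BFP blocks; returns (result mantissas, result exponent)."""
    lo = -(1 << (word_bits - 1))
    hi = (1 << (word_bits - 1)) - 1
    # Start by aligning both operands to the larger of the two exponents.
    res_exp = max(a_exp, b_exp)
    while True:
        # Right-shift each operand by the gap between its exponent and res_exp.
        a = [m >> (res_exp - a_exp) for m in a_mant]
        b = [m >> (res_exp - b_exp) for m in b_mant]
        total = [x + y for x, y in zip(a, b)]
        if all(lo <= t <= hi for t in total):
            return total, res_exp
        # A sum would overflow the word: give up one more bit of precision.
        res_exp += 1

print(bfp_add([30000, -20000], 0, [10000, 5000], 0))  # ([20000, -7500], 1)
print(bfp_add([100], 2, [40], 0))                     # ([110], 2)
```

In the first call the true sums are 40000 and -15000, which the result represents as 20000 and -7500 with exponent 1.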

Block floating point compression of signal data

Active | US8301803B2 | Enhanced block floating point compression | Digital data processing details | User identity/authority verification | Maximum magnitude | Algorithm

A method and apparatus for compressing signal samples uses block floating point representations where the number of bits per mantissa is determined by the maximum magnitude sample in the group. The compressor defines groups of signal samples having a fixed number of samples per group. The maximum magnitude sample in the group determines an exponent value corresponding to the number of bits for representing the maximum sample value. The exponent values are encoded to form exponent tokens. Exponent differences between consecutive exponent values may be encoded individually or jointly. The samples in the group are mapped to corresponding mantissas, each mantissa having a number of bits based on the exponent value. Removing LSBs depending on the exponent value produces mantissas having fewer bits. Feedback control monitors the compressed bit rate and / or a quality metric. This abstract does not limit the scope of the invention as described in the claims.

Owner:ALTERA CORP

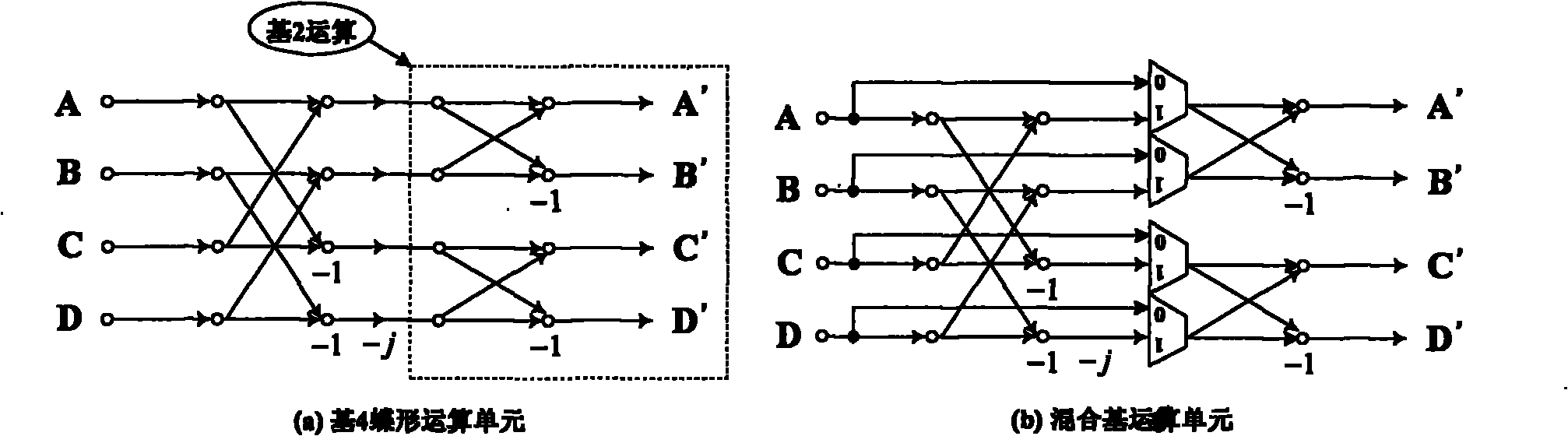

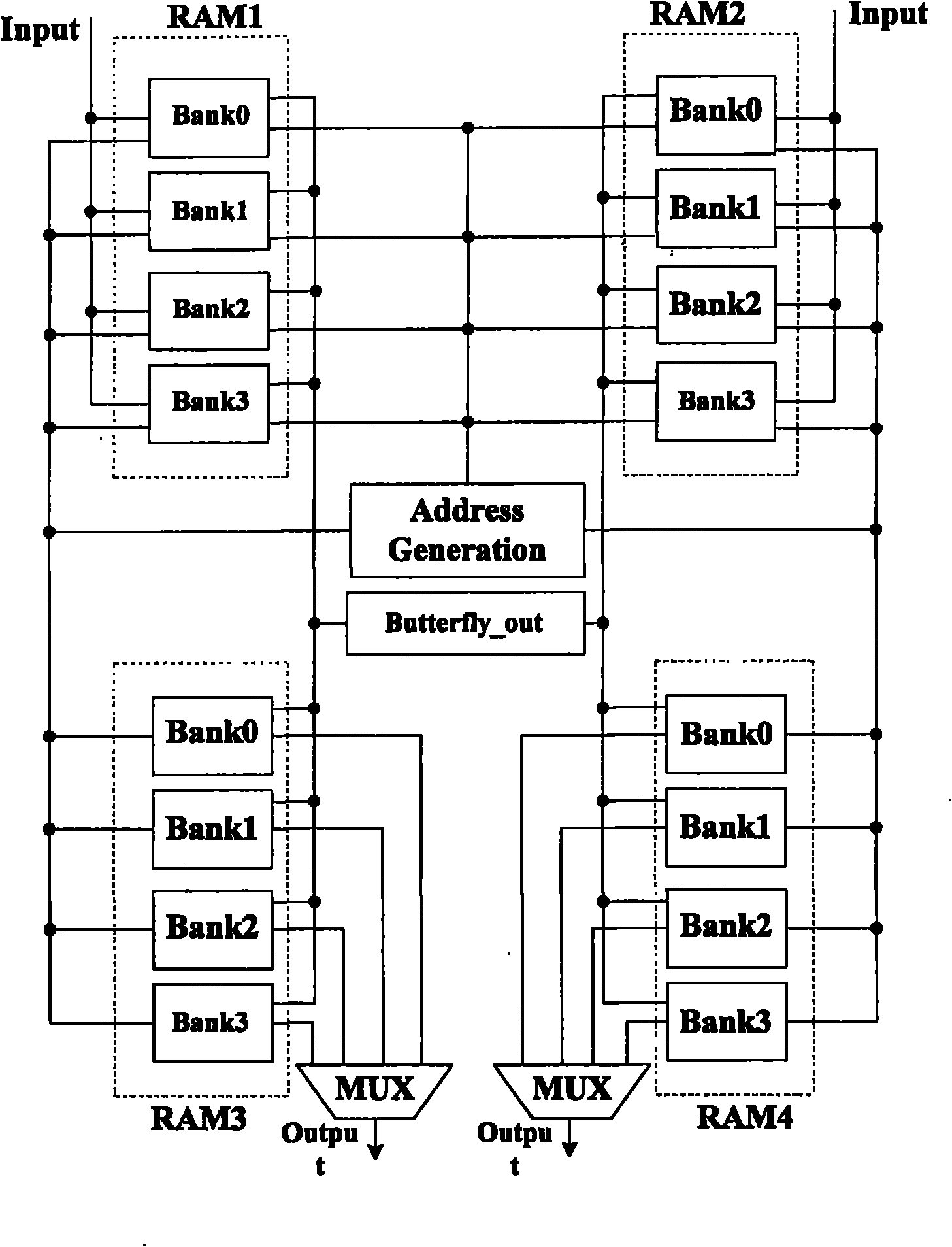

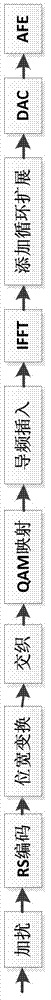

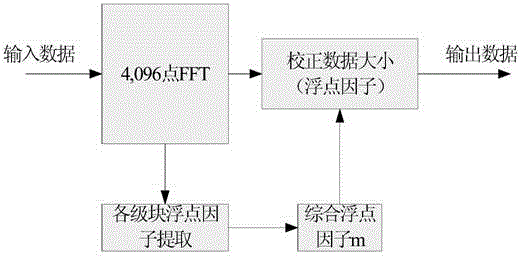

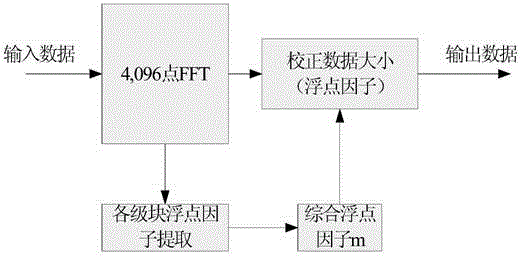

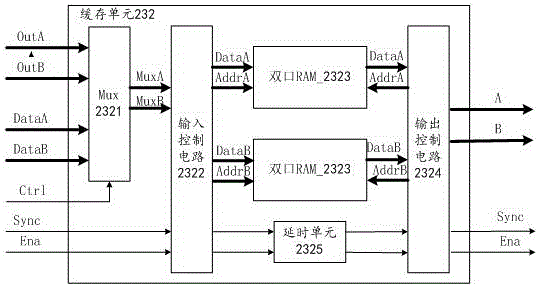

802.11n based FFT/IFFT (fast Fourier transform)/(inverse fast Fourier transform) processor

Inactive | CN102063411A | Highly configurable | Save resources | Complex mathematical operations | Data stream | Random access memory

The invention discloses an 802.11n based FFT / IFFT (Fast Fourier Transformation) / (Inverse Fast Fourier Transformation) processor, comprising a RAM (Random Access Memory), an address generating module, a sequence adjusting module, a shift control module, an exponent generating module and a butterfly operation unit, wherein the processor adopts a RAM in a double ping-pong structure to cache the data stream; the address generating module generates the addresses used for writing data into the RAM and reading data from the RAM; the sequence adjusting module selects the data in the RAM and adjusts its order; the shift control module shares an exponent at each stage; the exponent generating module generates the maximum exponent after the operation at each stage according to the calculated result of the butterfly operation unit; and the butterfly operation unit adopts a block floating point algorithm to complete radix-4 or radix-2 operations. With the invention, FFT and IFFT operations can be carried out conveniently, addressing the problems that traditional FFT / IFFT processors consume excessive resources, have low accuracy and take too long to operate.

Owner:INST OF MICROELECTRONICS CHINESE ACAD OF SCI

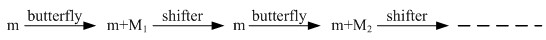

A mixed-radix FFT/IFFT implementation device and method with variable number of points

The invention discloses a mixed-radix FFT / IFFT implementation device and method with a variable number of points. The system serves both FFT and IFFT, and the number of points is configurable. Two blocks of RAM are used in the transformation process, one of which stores only input data; once an operation completes its first-stage butterfly operation, that RAM can accept the data of the next operation, which saves time when multiple consecutive operations are performed. In addition, radix-4 and radix-2 are combined to realize FFT and IFFT operations whose number of points is 2 to the power L (L is a positive integer greater than or equal to 3). Block floating-point operations are used in the butterfly operation, which addresses the data bit-width growth caused by multiplication and addition during the butterfly operation and saves storage space; results are stored in place to approximate inter-stage pipelining. The method and device feature simple control, high efficiency, flexible configuration and good scalability.

Owner:ZHEJIANG UNIV

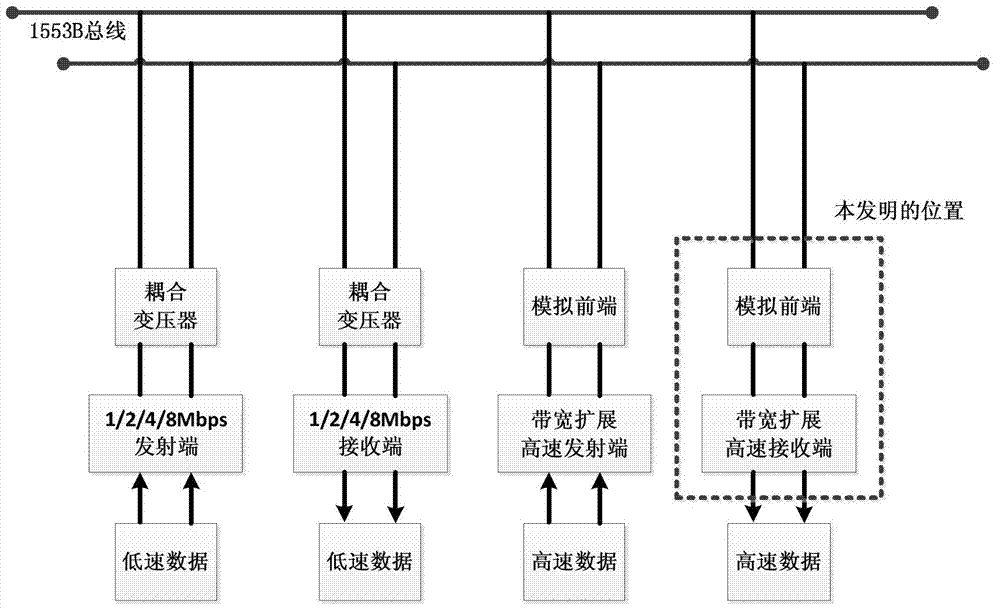

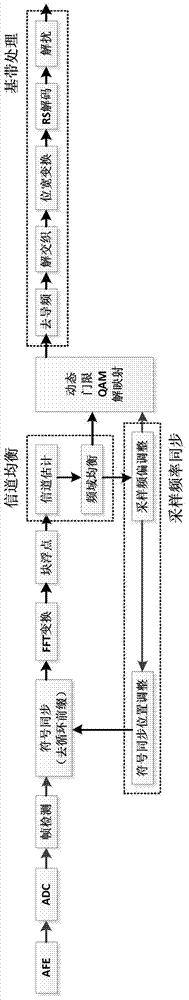

Receiving method for bandwidth expansion of 1553B communication bus

Inactive | CN102821080A | Improve frequency band utilization | Increase data transfer rate | Transmitter/receiver shaping networks | Multiple carrier systems | Communication device | Frequency offset

The invention relates to a 1553B digital communication device. To expand the communication and data-transfer capacity of a 1553B bus and to improve communication properties such as interference resistance, the invention provides a receiving method for bandwidth expansion of a 1553B communication bus. The receiving method provides the following sub-modules: an analog front end (AFE), a frame detecting module, a symbol synchronizing module, an inverse FFT (fast Fourier transformation) module, a block floating point module, a channel balancing module, a frequency offset synchronizing module, a dynamic-threshold QAM (quadrature amplitude modulation) de-mapping module and a base-band processing module, wherein the AFE performs signal amplification and AD (analog-to-digital) conversion, sampling the analog signal into a digital signal and transmitting it; the frame detecting module detects the arrival of a frame; and the IFFT module processes the base-band signal. The method provided by the invention is mainly used for 1553B digital communication.

Owner:TIANJIN UNIV

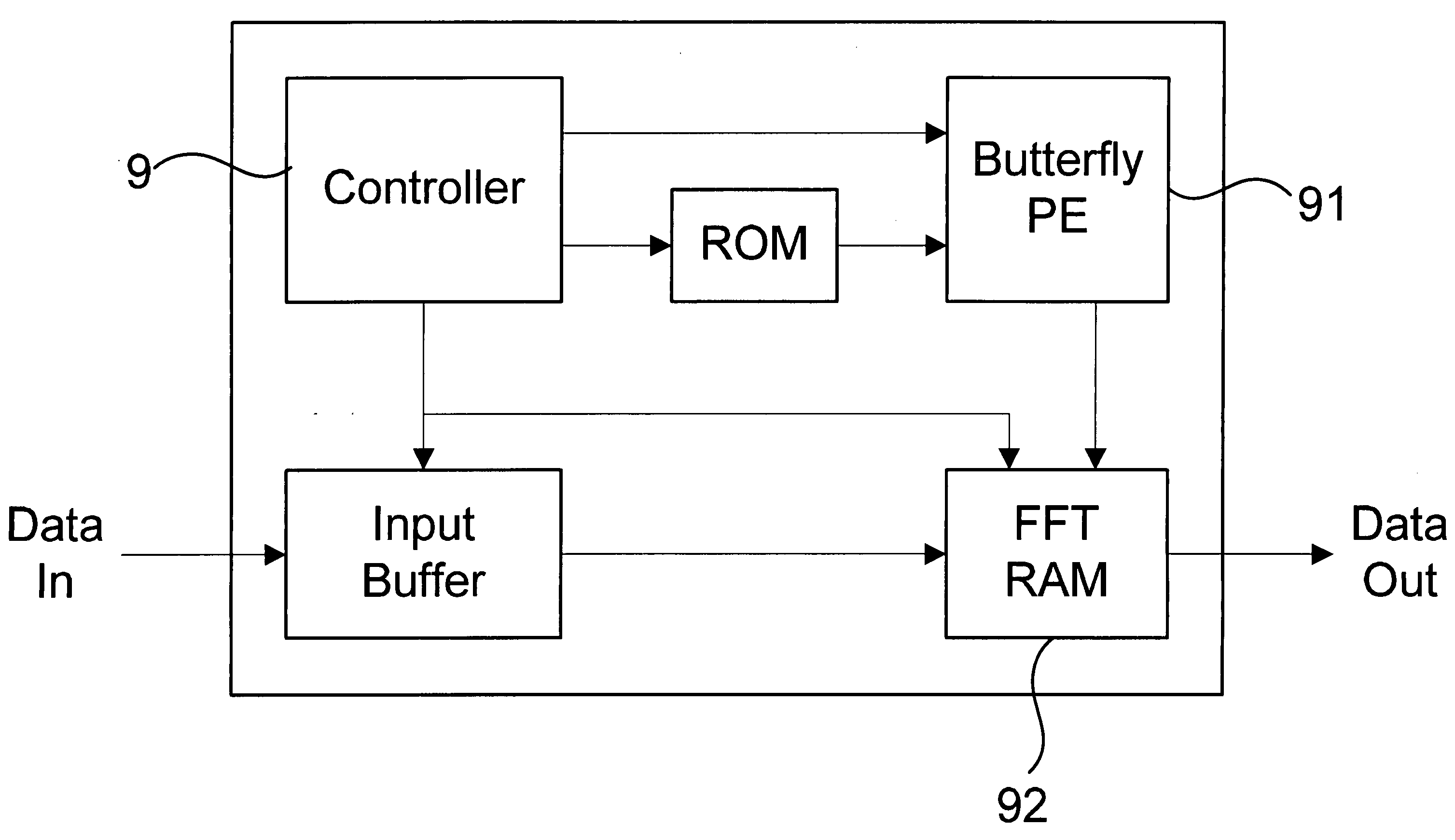

Pipeline-based reconfigurable mixed-radix FFT processor

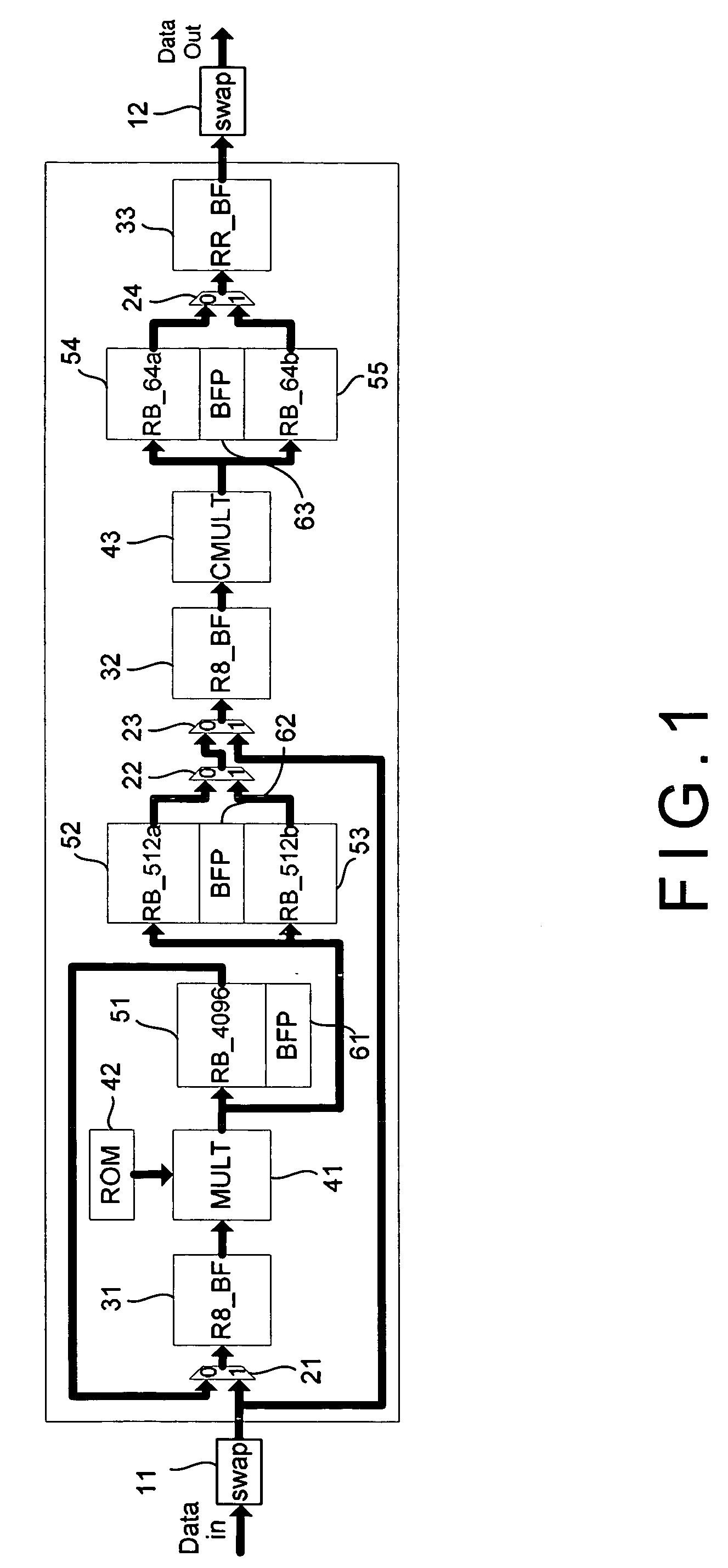

Active | US20080155003A1 | Reduce computing time costs | Reduce hardware costs | Digital computer details | Complex mathematical operations | Signal-to-noise ratio (imaging) | Block floating-point

The present invention discloses a fast Fourier transform (FFT) processor based on multiple-path delay commutator architecture. A pipelined architecture is used and is divided into 4 stages with 8 parallel data paths. Yet, only three physical computation stages are implemented. The processor uses the block floating point method to maintain the signal-to-noise ratio. Internal storage elements are required in the method to hold and switch intermediate data. With good circuit partition, the storage elements can adjust their capacity for different modes, from 16-point to 4096-point FFTs, by turning on or turning off the storage elements.

Owner:NAT CHIAO TUNG UNIV

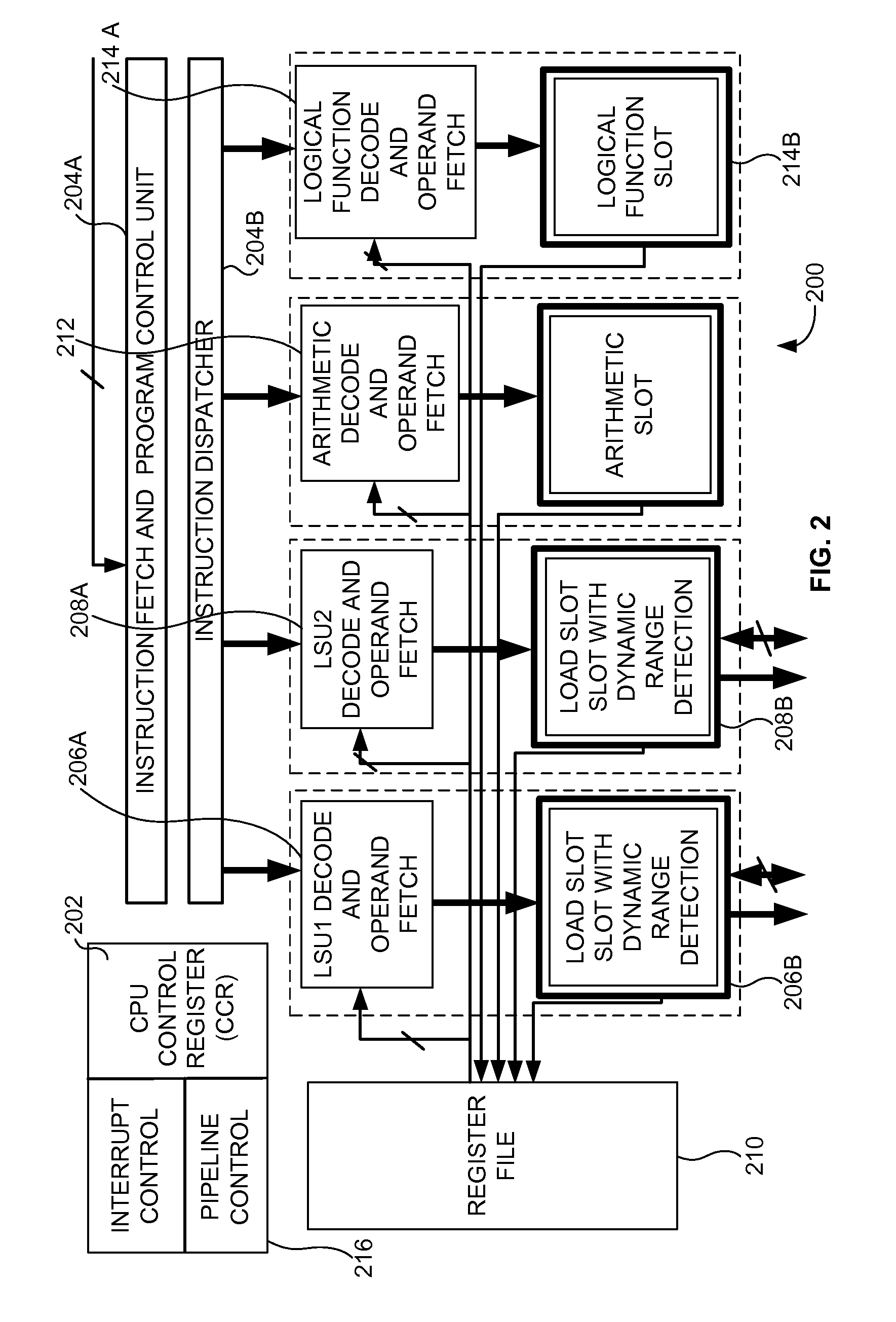

Zero Overhead Block Floating Point Implementation in CPU's

Active | US20120284464A1 | Digital data processing details | Memory systems | Block floating-point | Processing element

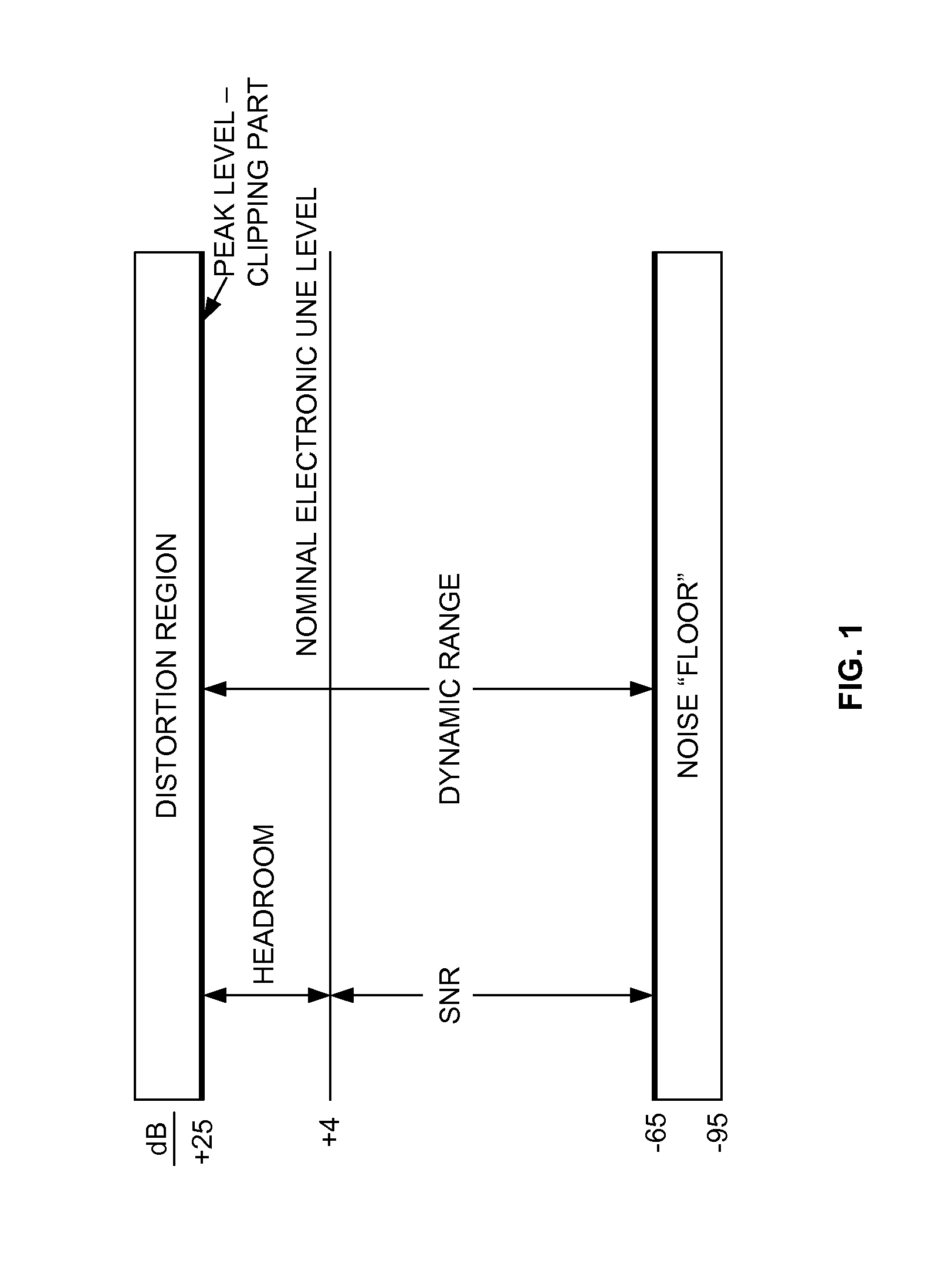

A system for computing a block floating point scaling factor by detecting a dynamic range of an input signal in a central processing unit without additional overhead cycles is provided. The system includes a dynamic range monitoring unit that detects the dynamic range of the input signal by snooping outgoing write data and incoming memory read data of the input signal. The dynamic range monitoring unit includes a running maximum count unit that stores a least value of a count of leading zeros and leading ones, and a running minimum count that stores a least value of the count of trailing zeros. The dynamic range is detected based on the least value of the count of leading zeros and leading ones and the count of trailing zeros. The system further includes a scaling factor computation module that computes the block floating point (BFP) scaling factor based on the dynamic range.

Owner:SAANKHYA LABS PVT
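The leading-bit count that the abstract above relies on can be sketched in Python as follows. This is a software illustration only: the function names and the 16-bit word width are assumptions, and the hardware snooping and trailing-zero count described in the abstract are not modelled.

```python
def redundant_sign_bits(value, word_bits=16):
    """Count leading bits of a two's-complement word that only repeat the sign bit."""
    # Magnitude bits of a non-negative v are v.bit_length(); for a negative v
    # the same count is obtained from its bitwise complement.
    magnitude_bits = (value if value >= 0 else ~value).bit_length()
    return word_bits - 1 - magnitude_bits

def block_headroom(words, word_bits=16):
    """Smallest count over the block: how far every word can be shifted left."""
    return min(redundant_sign_bits(w, word_bits) for w in words)

block = [3, -4, 100]
shift = block_headroom(block)
print(shift)                        # 8
print([w << shift for w in block])  # [768, -1024, 25600]
```

Tracking the running minimum of this count while data is written gives a block scaling factor without a separate pass over the data, which is the effect the abstract describes.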

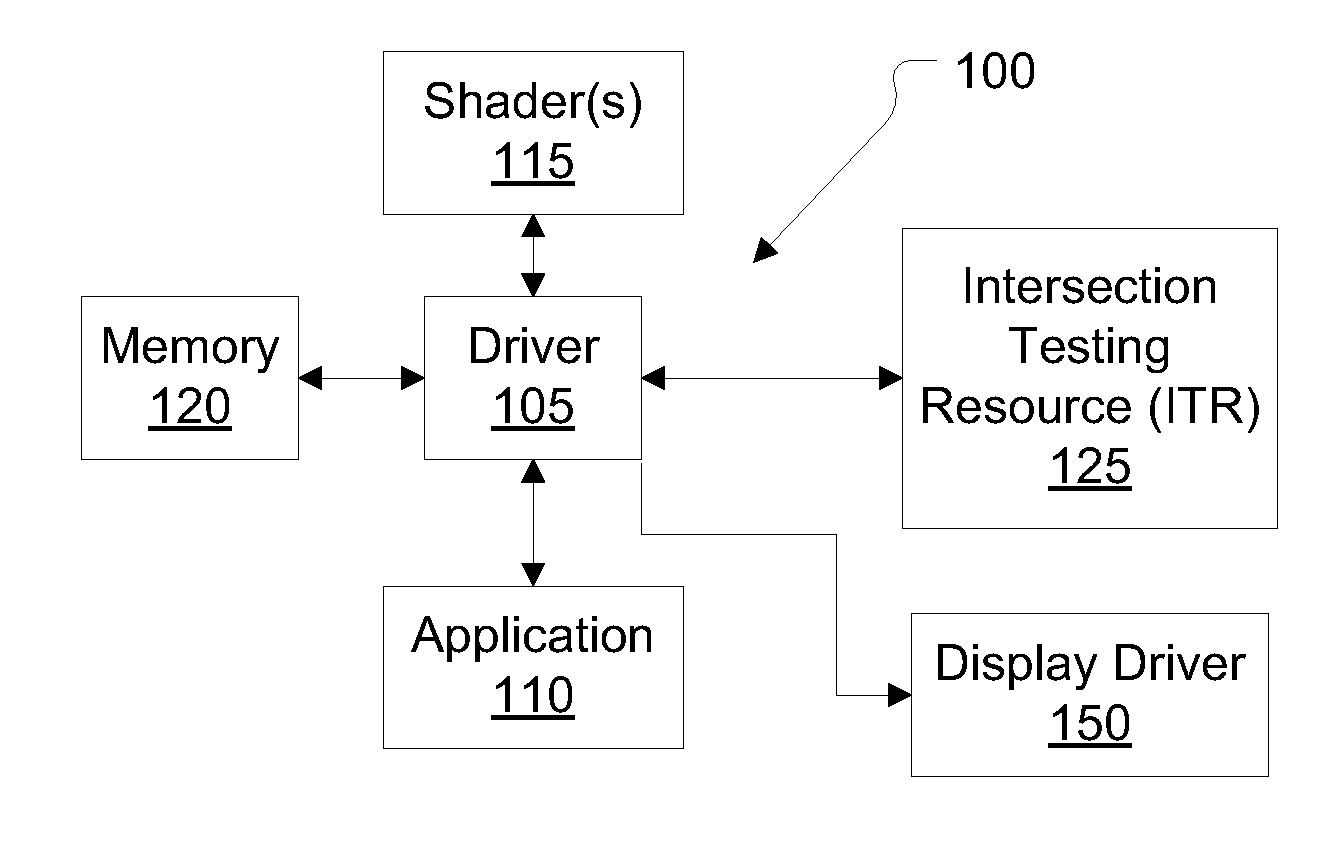

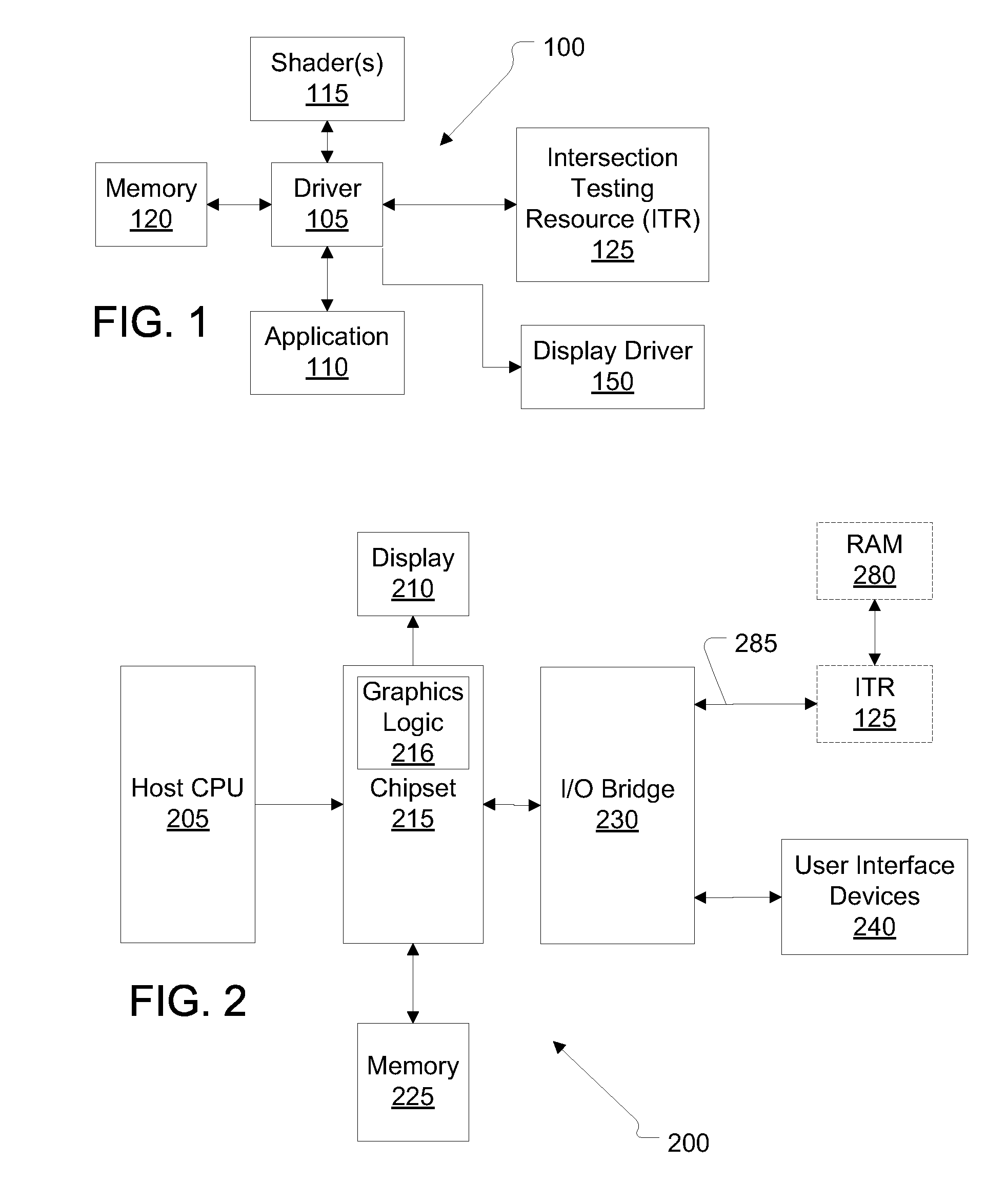

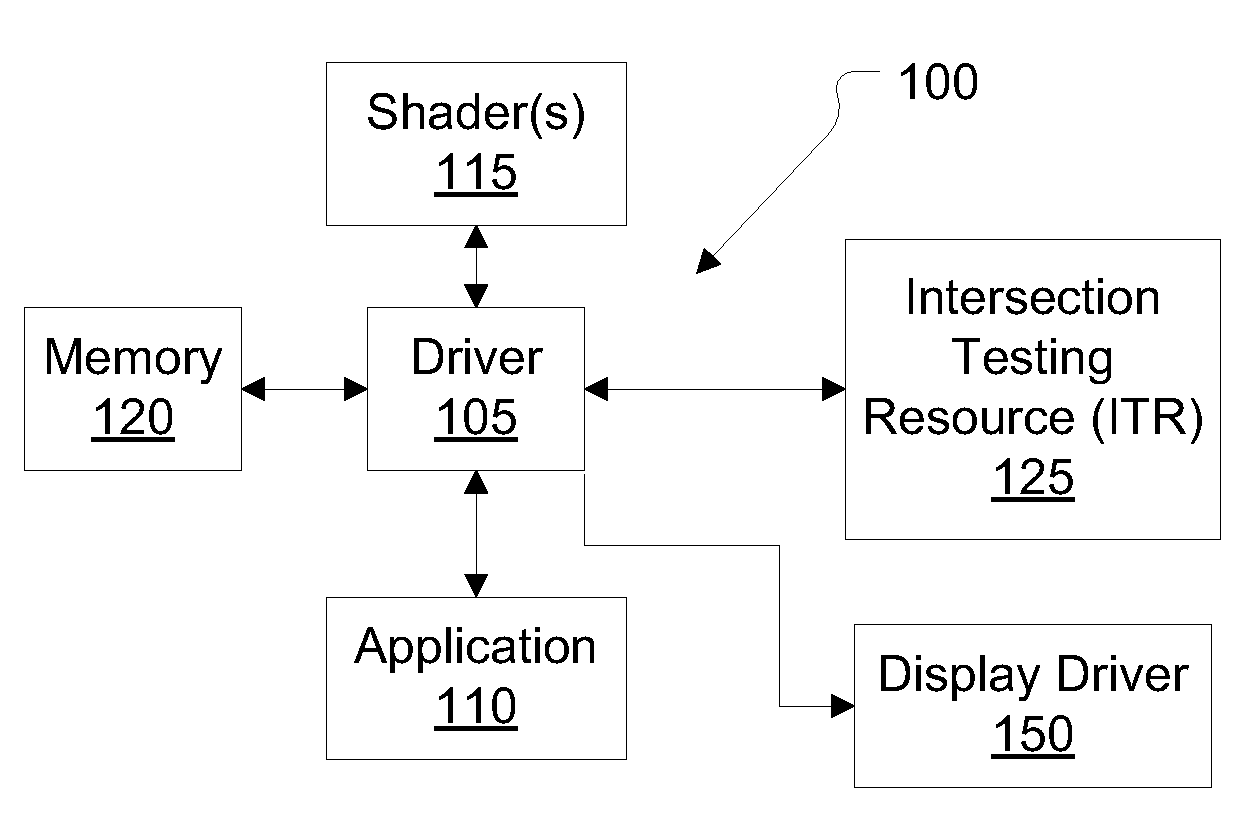

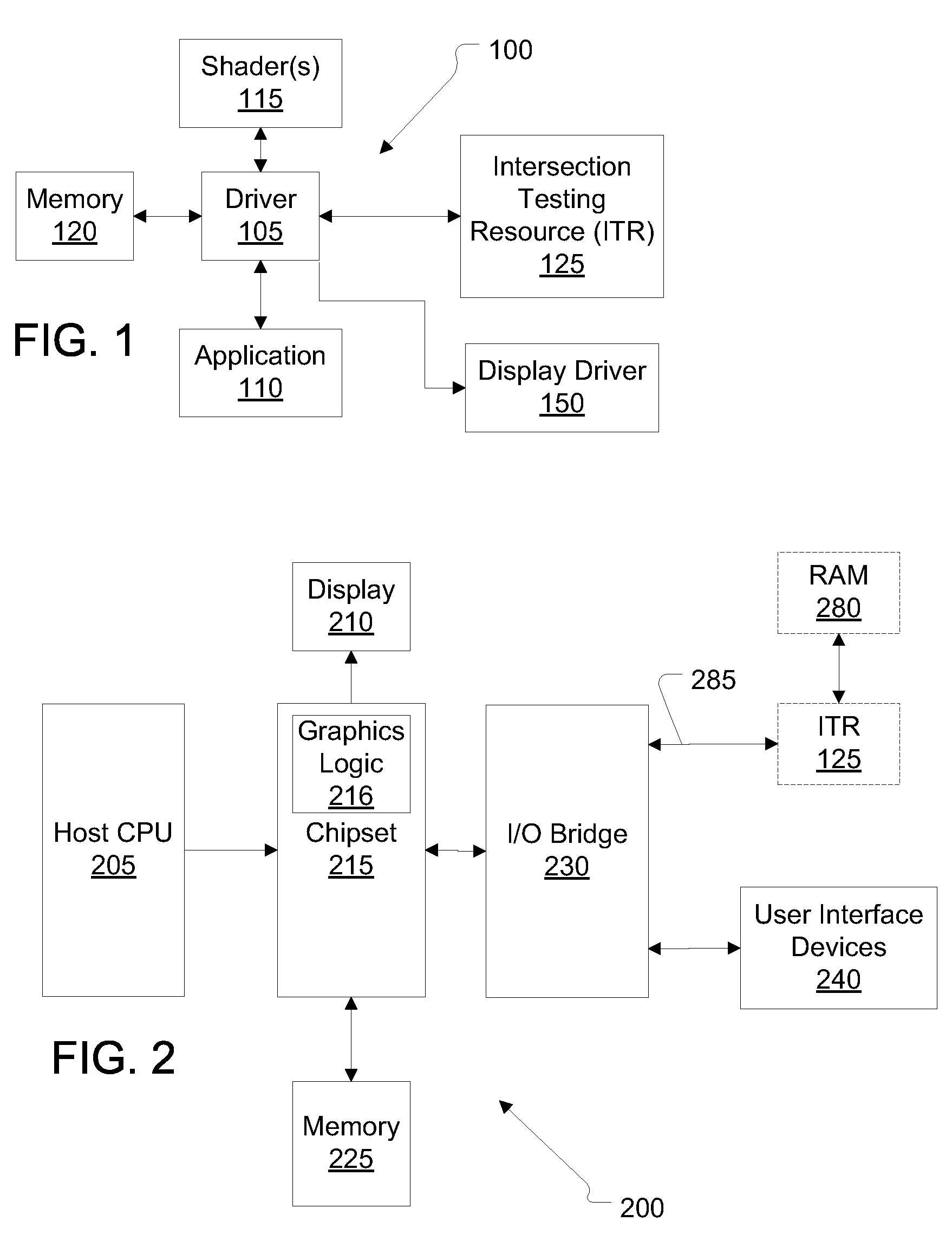

Apparatus and method for ray tracing with block floating point data

Active | US20090244058A1 | Image data processing details | Special data processing applications | General purpose | Parallel computing

Systems and methods include high throughput and / or parallelized ray / geometric shape intersection testing using intersection testing resources accepting and operating with block floating point data. Block floating point data sacrifices precision of scene location in ways that maintain precision where more beneficial, and allow reduced precision where beneficial. In particular, rays, acceleration structures, and primitives can be represented in a variety of block floating point formats, such that storage requirements for storing such data can be reduced. Hardware accelerated intersection testing can be provided with reduced sized math units, with reduced routing requirements. A driver for hardware accelerators can maintain full-precision versions of rays and primitives to allow reduced communication requirements for high throughput intersection testing in loosely coupled systems. Embodiments also can include using BFP formatted data in programmable test cells or more general purpose processing elements.

Owner:IMAGINATION TECH LTD

Apparatus and method for ray tracing with block floating point data

Systems and methods include high throughput and / or parallelized ray / geometric shape intersection testing using intersection testing resources accepting and operating with block floating point data. Block floating point data sacrifices precision of scene location in ways that maintain precision where more beneficial, and allow reduced precision where beneficial. In particular, rays, acceleration structures, and primitives can be represented in a variety of block floating point formats, such that storage requirements for storing such data can be reduced. Hardware accelerated intersection testing can be provided with reduced sized math units, with reduced routing requirements. A driver for hardware accelerators can maintain full-precision versions of rays and primitives to allow reduced communication requirements for high throughput intersection testing in loosely coupled systems. Embodiments also can include using BFP formatted data in programmable test cells or more general purpose processing elements.

Owner:IMAGINATION TECH LTD

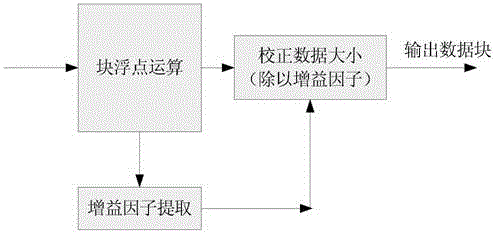

Operation method for FFT

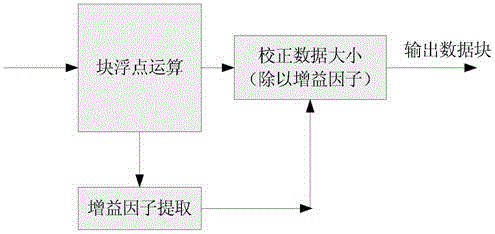

Inactive | CN104679720A | Solve conflicts | Improve the efficiency of floating-point operations | Complex mathematical operations | Block floating-point | Parallel computing

The invention provides an operation method for FFT. The method comprises the following steps: performing block floating point operation, moving data blocks leftwards or rightwards to regulate the signal power of FFT input during operation, performing data regulation according to an internal calculation result of each stage after the data operation of each stage by virtue of a special block floating point operation module, storing a set of data sharing a shift factor with an independent data field on hardware, and dividing result data by a preset gain to obtain correct data after operation, wherein the shift factors of the data blocks in block floating points are determined by maximum values of all the data in the whole data blocks. According to the floating point operation method, conflicts between a fixed-point algorithm and a floating point algorithm are solved, floating point operation efficiency is improved, and cost is lowered.

Owner:CHENGDU GOLDENWAY TECH
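Per-stage block scaling in an FFT can be sketched in Python as below. This is a simplified model under stated assumptions, not the method of the patent above: the hypothetical `bfp_fft` function runs a radix-2 decimation-in-time FFT, uses floating-point twiddle factors for brevity, and after each stage shifts the whole block right until every real and imaginary part fits a signed 16-bit word, counting the shifts in a block exponent. The input length is assumed to be a power of two, at least 2.

```python
import cmath

def bit_reverse(i, bits):
    """Reverse the lowest `bits` bits of the index i."""
    return int(format(i, f"0{bits}b")[::-1], 2)

def bfp_fft(x, word_bits=16):
    """Radix-2 FFT with one shared block exponent updated after every stage."""
    n = len(x)
    limit = (1 << (word_bits - 1)) - 1
    bits = n.bit_length() - 1
    data = [complex(x[bit_reverse(i, bits)]) for i in range(n)]
    block_exp = 0
    size = 2
    while size <= n:
        half = size // 2
        for start in range(0, n, size):
            for k in range(half):
                w = cmath.exp(-2j * cmath.pi * k / size)
                a = data[start + k]
                b = data[start + k + half] * w
                data[start + k] = a + b
                data[start + k + half] = a - b
        # Choose the shift from the largest component produced by this stage.
        peak = max(max(abs(v.real), abs(v.imag)) for v in data)
        shift = 0
        while peak / (1 << shift) > limit:
            shift += 1
        scale = 1 << shift
        # Round back to integer mantissas, as a fixed-point datapath would.
        data = [complex(round(v.real / scale), round(v.imag / scale)) for v in data]
        block_exp += shift
        size *= 2
    return data, block_exp

mantissas, exp = bfp_fft([20000] * 8)
print(mantissas[0].real * 2 ** exp)  # 160000.0
```

The true DC bin of the example is 160000, which exceeds 16 bits; the block exponent of 3 carries the scaling that keeps the mantissas in range.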

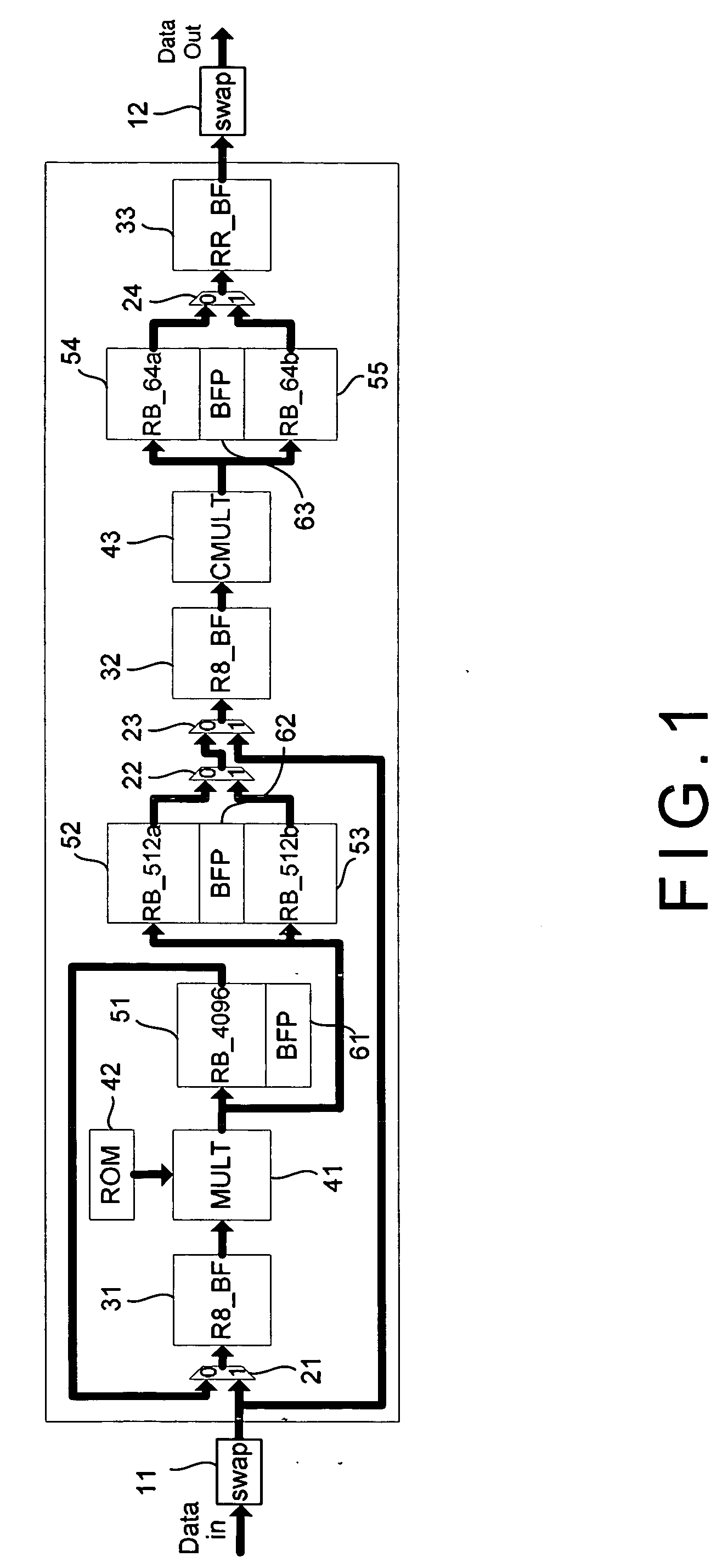

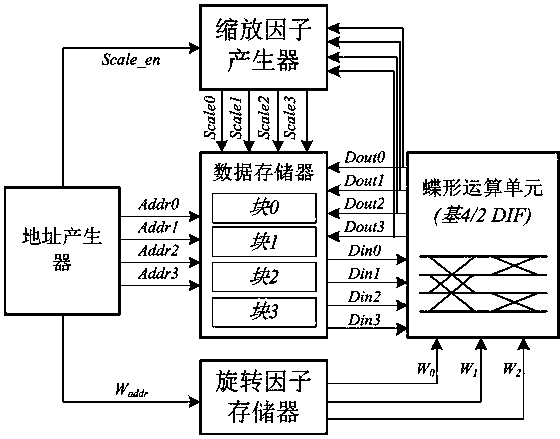

High-precision and low-power-consumption FFT (fast Fourier transform) processor

Inactive | CN103412851A | Flexible configuration | Reduce area | Complex mathematical operations | Address generator | Block floating-point

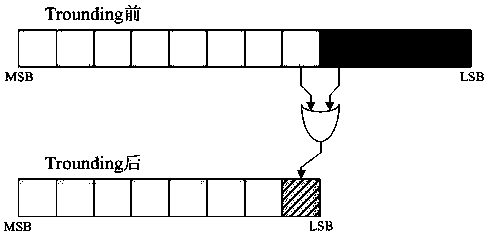

The invention belongs to the technical field of integrated circuit design, and particularly relates to a high-precision and low-power-consumption FFT processor. The FFT processor adopts a memory-interaction-based architecture, and comprises a butterfly computation unit, an address generator, a data memory, a twiddle factor memory and a scaling factor generator. The processor adopts an improved block floating point technology and an OR-gate-based Trounding technology; the data path is equipped with a constant-coefficient multiplier array and a general complex multiplier, and the module used for each multiplication is selected by a mechanism based on the data sequence number. The FFT processor has small error and high overall conversion accuracy, and can effectively reduce the power consumption of multiplication; it is well suited to embedded low-power-consumption systems.

Owner:FUDAN UNIV

Pipeline-based reconfigurable mixed-radix FFT processor

Active | US7849123B2 | Low cost | Shorten the time | Digital computer details | Complex mathematical operations | Signal-to-noise ratio (imaging) | Block floating-point

The present invention discloses a fast Fourier transform (FFT) processor based on multiple-path delay commutator architecture. A pipelined architecture is used and is divided into 4 stages with 8 parallel data paths. Yet, only three physical computation stages are implemented. The processor uses the block floating point method to maintain the signal-to-noise ratio. Internal storage elements are required in the method to hold and switch intermediate data. With good circuit partition, the storage elements can adjust their capacity for different modes, from 16-point to 4096-point FFTs, by turning on or turning off the storage elements.

Owner:NAT CHIAO TUNG UNIV

Floating point unit power reduction scheme

Active | US6922714B2 | Reduce power consumption | Reduce amount | Computations using contact-making devices | Floating-point unit | Block floating-point

A system and method for reducing the power consumption of a floating point unit of a processor wherein the processor iteratively performs floating point calculations based upon one or more input operands. The exponential value of a floating point is precalculated within an iterative loop through a superscalar instruction buffer resident on the processor that holds at least 3 iterations of the largest single cycle iteration possible on the processor, and the precalculated exponent value is used to generate a bit mask that enables a minimal number of fractional data flow bits. Alternately, a look-ahead can be used to obtain the exponent value from at least two subsequent iterations of the loop.

Owner:INTELLECTUAL DISCOVERY INC

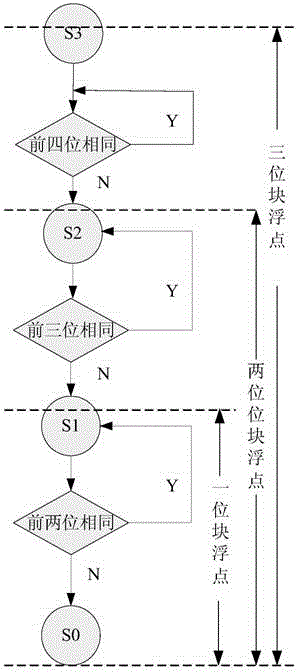

Operation method of FFT (Fast Fourier Transformation) processor

Active | CN104679721A | Solve conflicts | Improve the efficiency of floating-point operations | Complex mathematical operations | Block floating-point | FFT processor

The invention provides an operation method of an FFT (Fast Fourier Transformation) processor. The method comprises the following steps: setting multiple stages of butterfly operation in an FPGA (Field Programmable Gate Array) program, wherein all the stages share a block floating point shifting factor; performing judgment through data according to the judgment state of the previous block floating point factor before each stage of operation to decide a shifting choice during the output of a data memory; controlling a gain which is output finally through the shifting sum of all the stages. The invention provides a floating point operation method, the contradiction between a fixed point algorithm and a floating point algorithm is solved, the floating point operation efficiency is improved, and the cost is reduced.

Owner:南京晶达微电子科技有限公司

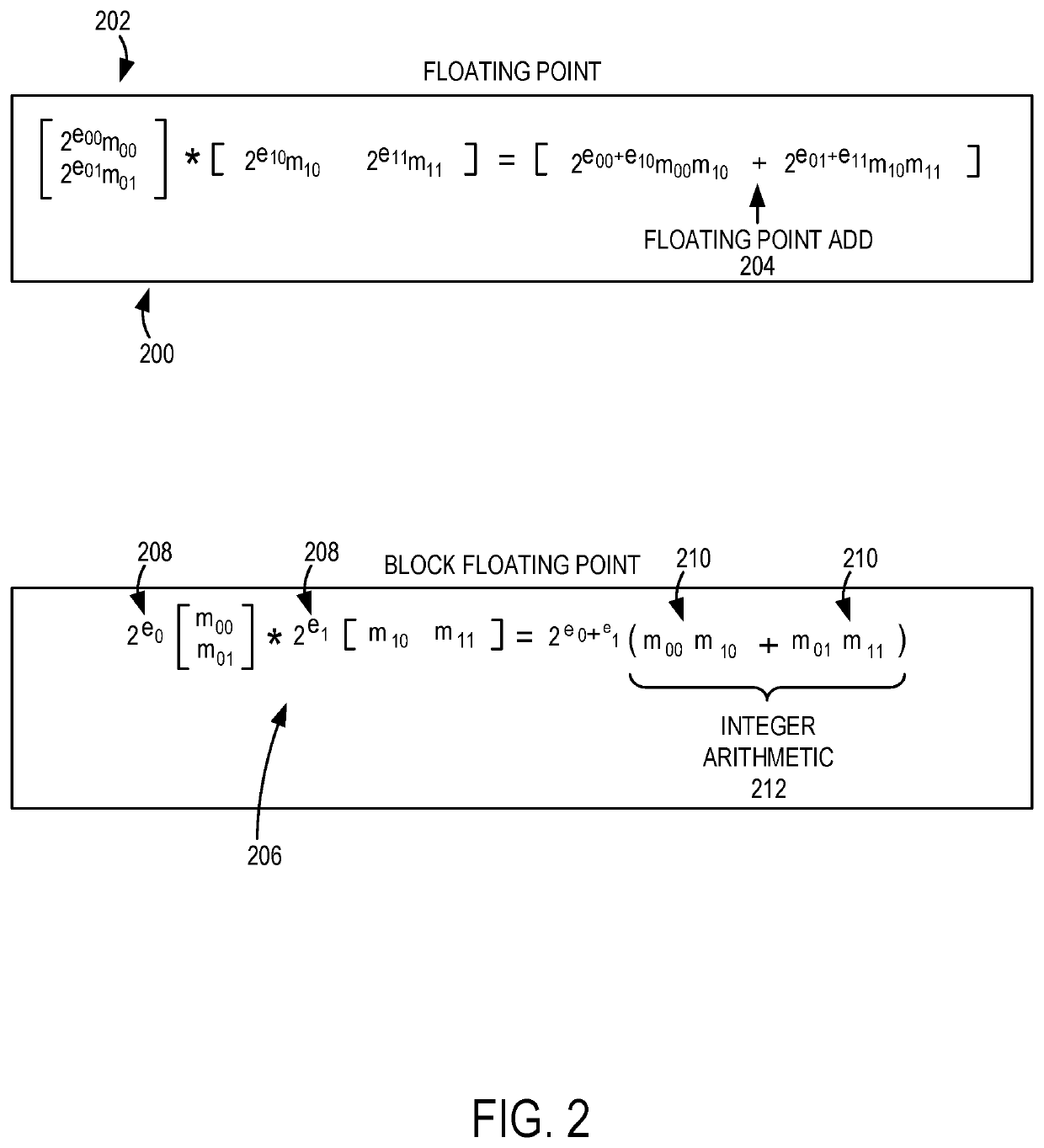

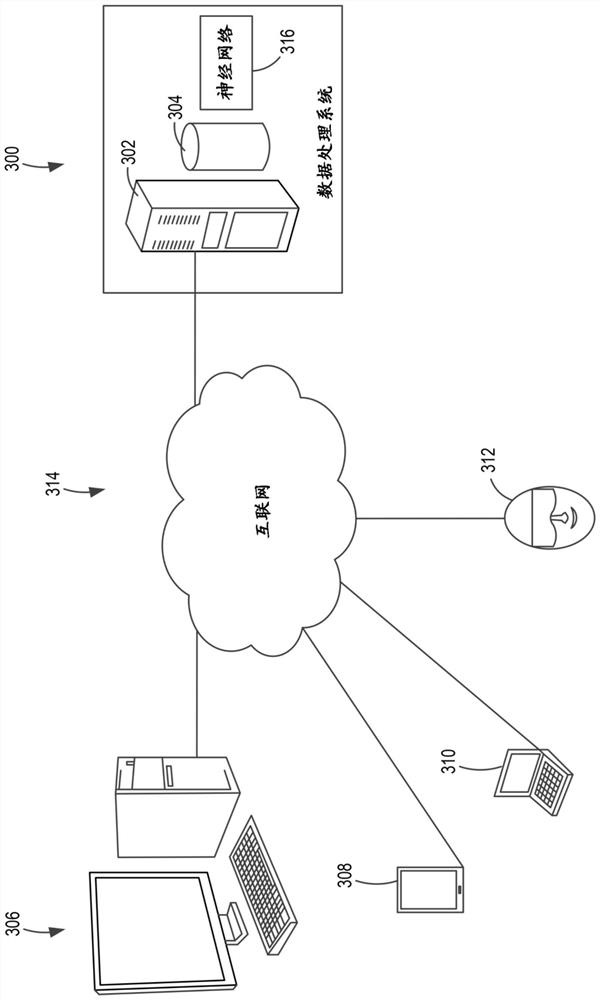

Block floating point computations using shared exponents

Active | US20190347072A1 | Well formed | Easy to appreciate | Digital data processing details | Complex mathematical operations | Block floating-point | Parallel computing

A system for block floating point computation in a neural network receives a plurality of floating point numbers. An exponent value for an exponent portion of each floating point number of the plurality of floating point numbers is identified and mantissa portions of the floating point numbers are grouped. A shared exponent value of the grouped mantissa portions is selected according to the identified exponent values and then removed from the grouped mantissa portions to define multi-tiered shared exponent block floating point numbers. One or more dot product operations are performed on the grouped mantissa portions of the multi-tiered shared exponent block floating point numbers to obtain individual results. The individual results are shifted to generate a final dot product value, which is used to implement the neural network. The shared exponent block floating point computations reduce processing time with less reduction in system accuracy.

Owner:MICROSOFT TECH LICENSING LLC
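The reason a shared exponent helps with dot products can be shown with a short Python sketch: the multiply-accumulate runs entirely on integer mantissas and the exponents are applied once at the end. The name `bfp_dot` is hypothetical, and the sketch uses a single exponent per block rather than the multi-tiered scheme the abstract describes.

```python
def bfp_dot(a_mant, a_exp, b_mant, b_exp):
    """Dot product of two BFP blocks, each with a single shared exponent."""
    # Integer multiply-accumulate over the mantissas only.
    acc = sum(x * y for x, y in zip(a_mant, b_mant))
    # One scaling at the end restores the magnitude for the whole block.
    return acc * 2.0 ** (a_exp + b_exp)

# [16, -8, 4, 96] with exponent -5 represents [0.5, -0.25, 0.125, 3.0].
print(bfp_dot([16, -8, 4, 96], -5, [1, 2, 3, 4], 0))  # 12.375
```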

FFT processor

Active | CN106383807A | High precision | Small granularity | Complex mathematical operations | Time delays | Granularity

The invention discloses an FFT processor. A radix-2 butterfly operation unit of the FFT processor at least comprises an exponent alignment circuit, an operation unit and a normalization circuit. The exponent alignment circuit transmits a unified exponent part of floating point input data after exponent alignment to the normalization circuit; the operation unit receives a cardinal number part of the floating point input data after the exponent alignment and executes corresponding FFT operation; the normalization circuit executes exponent normalization processing on an operation result of the operation unit according to the exponent part to generate floating point output data; and the operation unit comprises one or more fixed point adders, fixed point multipliers and / or fixed point subtractors. According to a pseudo floating point operation structure, a plurality of data sharing exponent fields are not needed, the calculation precision is relatively high, the granularity of prefix alignment operation is relatively small, and the calculation scale and the time delay are both much smaller than those of an existing block floating point structure, so that the pseudo floating point operation structure is more suitably applied to pipeline structure realization.

Owner:SHENZHEN POLYTECHNIC

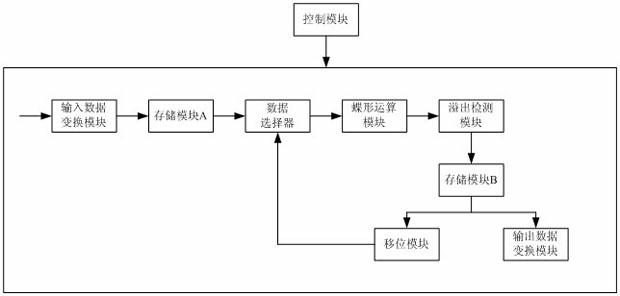

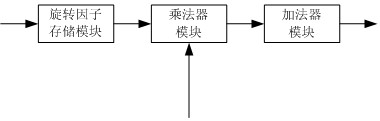

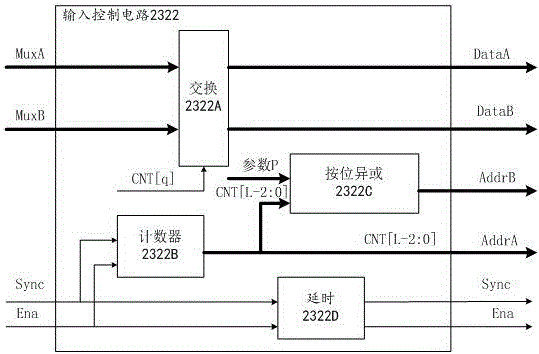

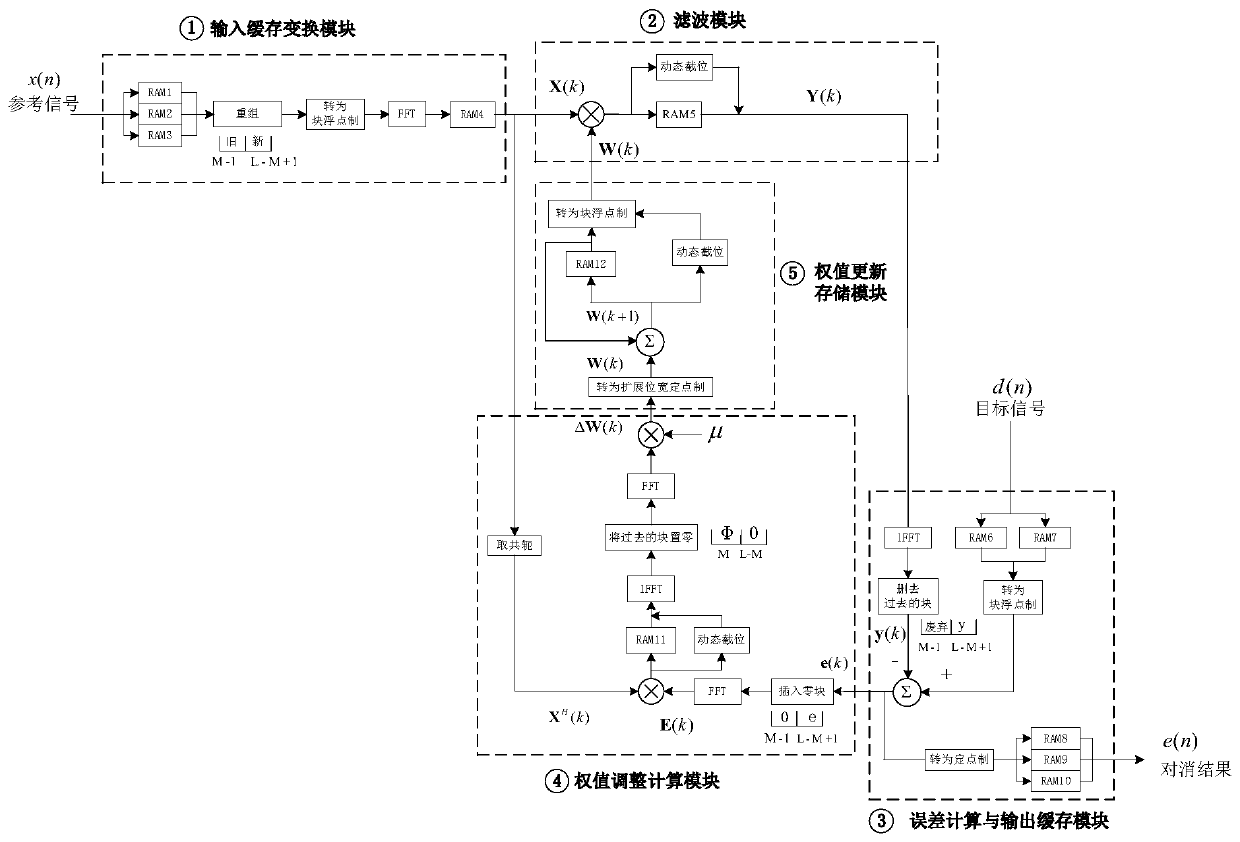

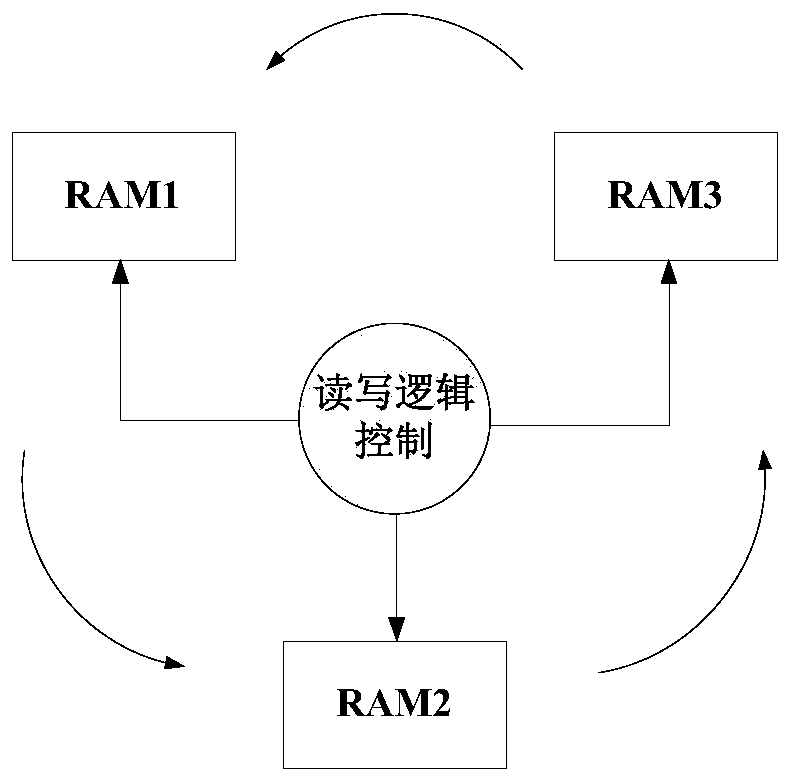

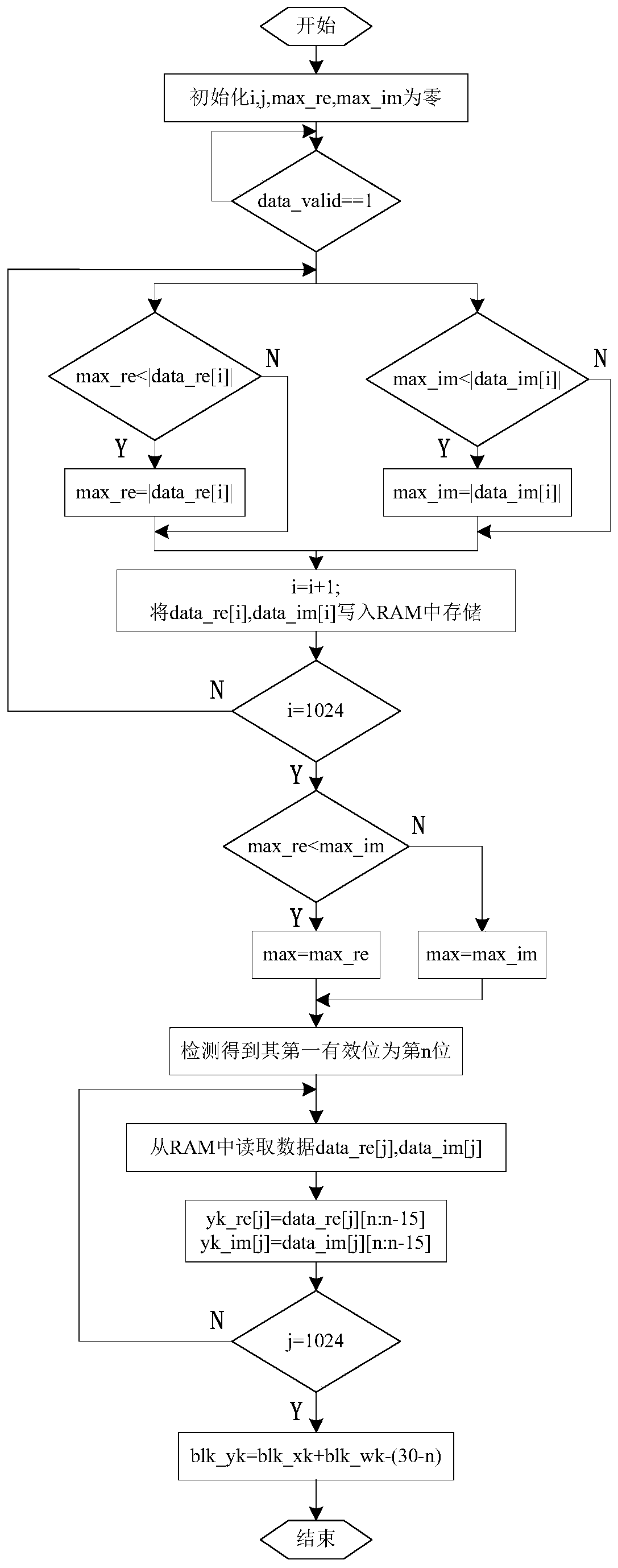

FPGA implementation device and method based on FBLMS algorithm of block floating points

Active | CN111506294A | Guaranteed accuracy | High precision | Digital data processing details | CAD circuit design | Modularity | Block floating-point

The invention belongs to the technical field of real-time adaptive signal processing, particularly relates to an FPGA implementation device and method for a block-floating-point-based FBLMS algorithm, and aims to solve the problem that performance, speed and resources conflict when an existing FPGA device implements the FBLMS algorithm. The method comprises the following steps: an input cache transformation module performs block cache recombination on a reference signal, converts the reference signal into block floating point and then performs an FFT; a filtering module carries out filtering in the frequency domain and carries out dynamic bit cutting; an error calculation and output caching module performs block caching on the target signal, subtracts the filtered output after the target signal is converted into block floating point, and converts the result into fixed point to obtain the final cancellation result; and a weight adjustment calculation module and a weight update storage module obtain the adjustment amount of the weights and update the weights block by block. For the recursive structure of the FBLMS algorithm, a block floating point data format and a dynamic bit cutting method are adopted, so the data has a large dynamic range and high precision, the conflicts among performance, speed and resources are resolved, and reusability and expansibility are also improved through modular design.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +1

Multipath data floating point processor prototype

The invention discloses a multipath data floating point processor prototype. A framework is characterized by aligning structured data, half-structured data and non-structured data to be three paths of arrays; applying a high singular value decomposition, and decomposing the three arrays to be a two-order tensor matrix mode; transforming the matrix mode to a sparse domain, and performing blocked floating point quantization treatment. Finally, the multipath data floating point processor prototype is structured.

Owner:SHANGHAI DATACENT SCI CO LTD

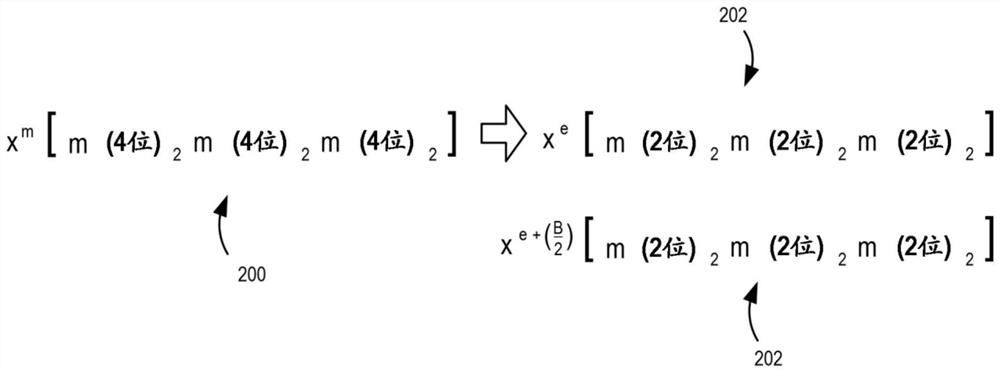

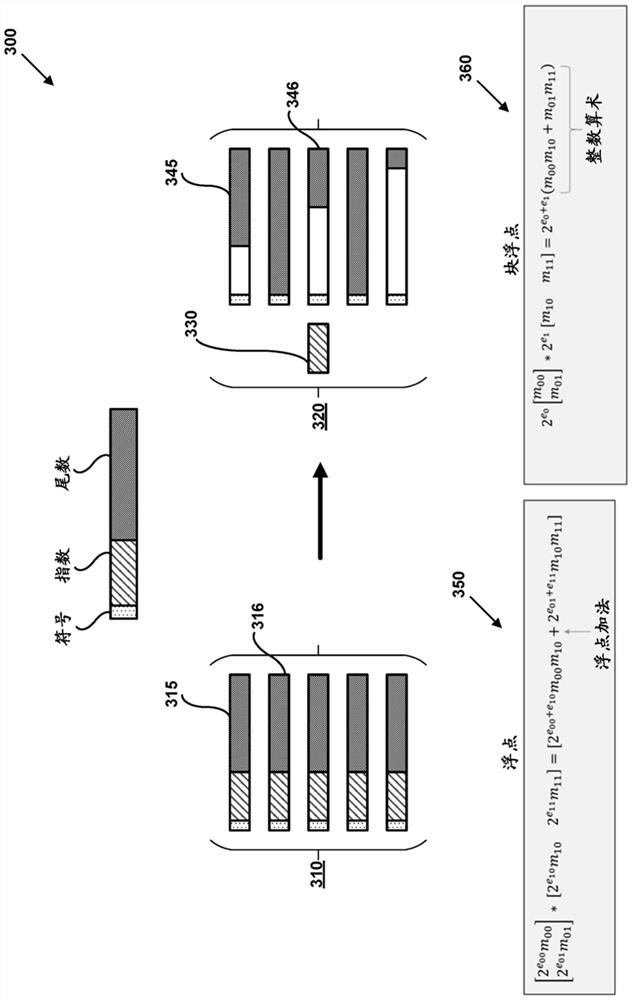

Block floating point computations using reduced bit-width vectors

Pending | CN112074806A | Computation using non-contact making devices | Neural architectures | Algorithm | Parallel computing

A system for block floating point computation in a neural network receives a block floating point number comprising a mantissa portion. A bit-width of the block floating point number is reduced by decomposing the block floating point number into a plurality of numbers each having a mantissa portion with a bit-width that is smaller than a bit-width of the mantissa portion of the block floating point number. One or more dot product operations are performed separately on each of the plurality of numbers to obtain individual results, which are summed to generate a final dot product value. The final dot product value is used to implement the neural network. The reduced bit width computations allow higher precision mathematical operations to be performed on lower-precision processors with improved accuracy.

Owner:MICROSOFT TECH LICENSING LLC
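One way to picture the decomposition described above is to split each mantissa into a high part and a low part, run a narrower dot product on each, and recombine the two results. The Python sketch below assumes a 4-bit low part and hypothetical helper names; it illustrates the arithmetic identity only and is not taken from the patent.

```python
def split_mantissa(m, low_bits=4):
    """Split m into (hi, lo) such that m == hi * 2**low_bits + lo."""
    hi = m >> low_bits               # arithmetic shift keeps the sign in hi
    lo = m & ((1 << low_bits) - 1)   # unsigned low part
    return hi, lo

def dot_reduced(a_mant, b_mant, low_bits=4):
    """Dot product computed as two narrower dot products that are then summed."""
    parts = [split_mantissa(m, low_bits) for m in a_mant]
    hi_dot = sum(hi * b for (hi, _), b in zip(parts, b_mant))
    lo_dot = sum(lo * b for (_, lo), b in zip(parts, b_mant))
    # Re-weight the high-part result before adding the low-part result.
    return (hi_dot << low_bits) + lo_dot

a = [16, -8, 4, 96]
b = [1, 2, 3, 4]
print(dot_reduced(a, b))                 # 396
print(sum(x * y for x, y in zip(a, b)))  # 396
```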

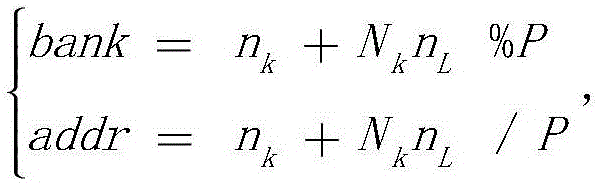

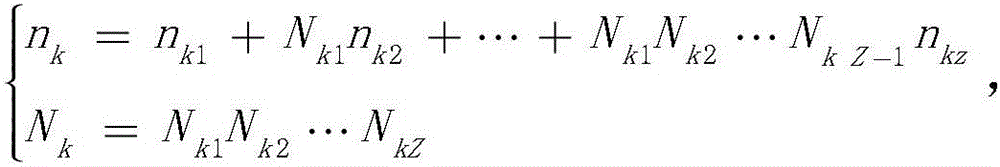

Block-floating-point method for FFT (fast Fourier transform) processor

Inactive | CN106354693A | Adjustable size | Digital data processing details | Complex mathematical operations | Block floating-point | FFT processor

The invention relates to the technical field of FFT (fast Fourier transform) processors, and discloses a block-floating-point method for an FFT processor. The method includes the steps of: at a given stage of the FFT processor, performing the corresponding operations on that stage's data blocks; scaling the data blocks according to the operation results, calculating the block-floating-point exponents of that stage's data blocks, and storing the exponents according to their access formulas; and, at the next stage, reading the block-floating-point exponents according to the access formulas and performing the corresponding alignment operations according to the exponents. The method has the advantage that the sizes of the data blocks can be adjusted as required.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

Zero overhead block floating point implementation in CPU's

A system for computing a block floating point scaling factor by detecting a dynamic range of an input signal in a central processing unit without additional overhead cycles is provided. The system includes a dynamic range monitoring unit that detects the dynamic range of the input signal by snooping outgoing write data and incoming memory read data of the input signal. The dynamic range monitoring unit includes a running maximum count unit that stores a least value of a count of leading zeros and leading ones, and a running minimum count that stores a least value of the count of trailing zeros. The dynamic range is detected based on the least value of the count of leading zeros and leading ones and the count of trailing zeros. The system further includes a scaling factor computation module that computes the block floating point (BFP) scaling factor based on the dynamic range.

Owner:SAANKHYA LABS PVT LTD

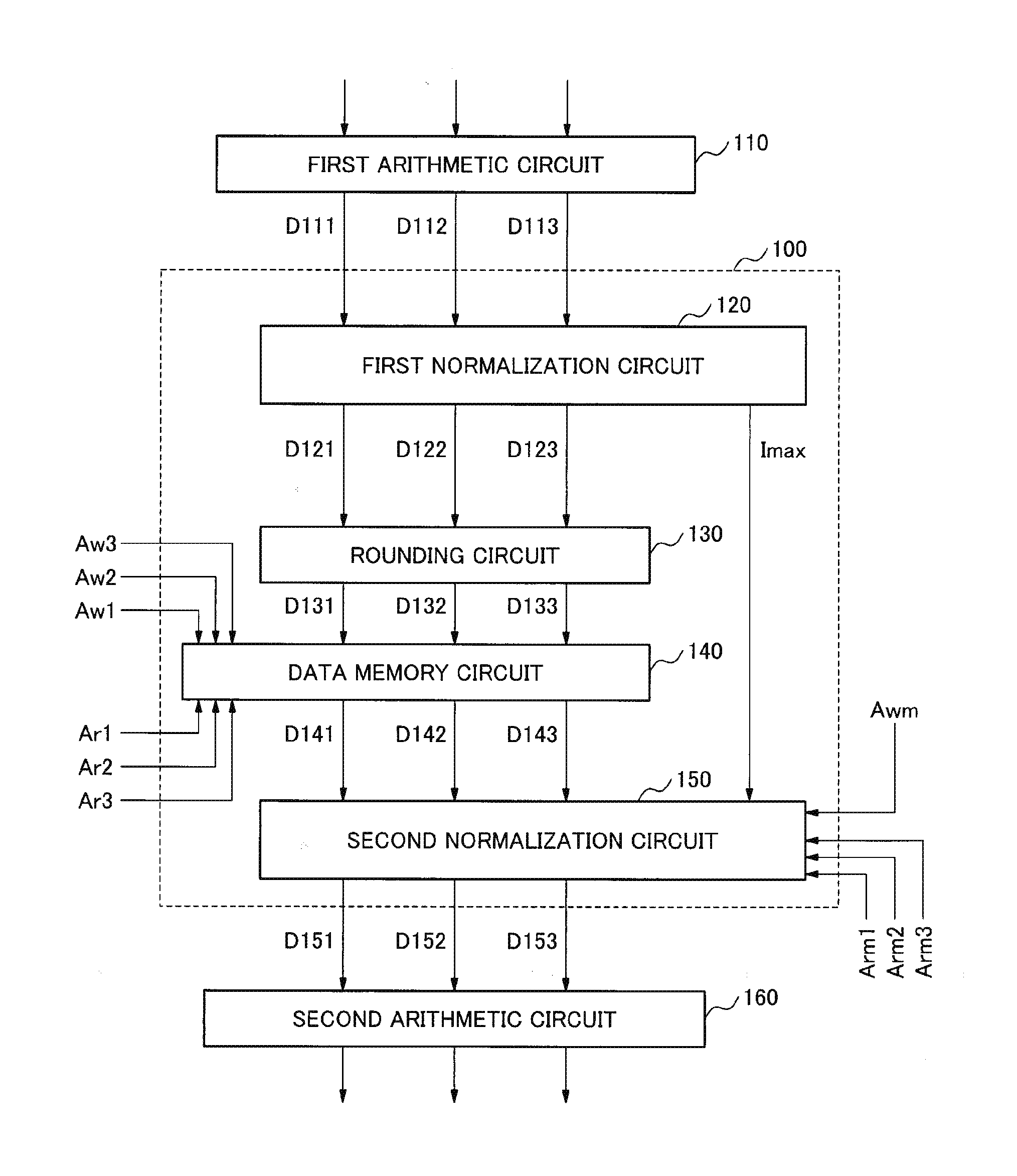

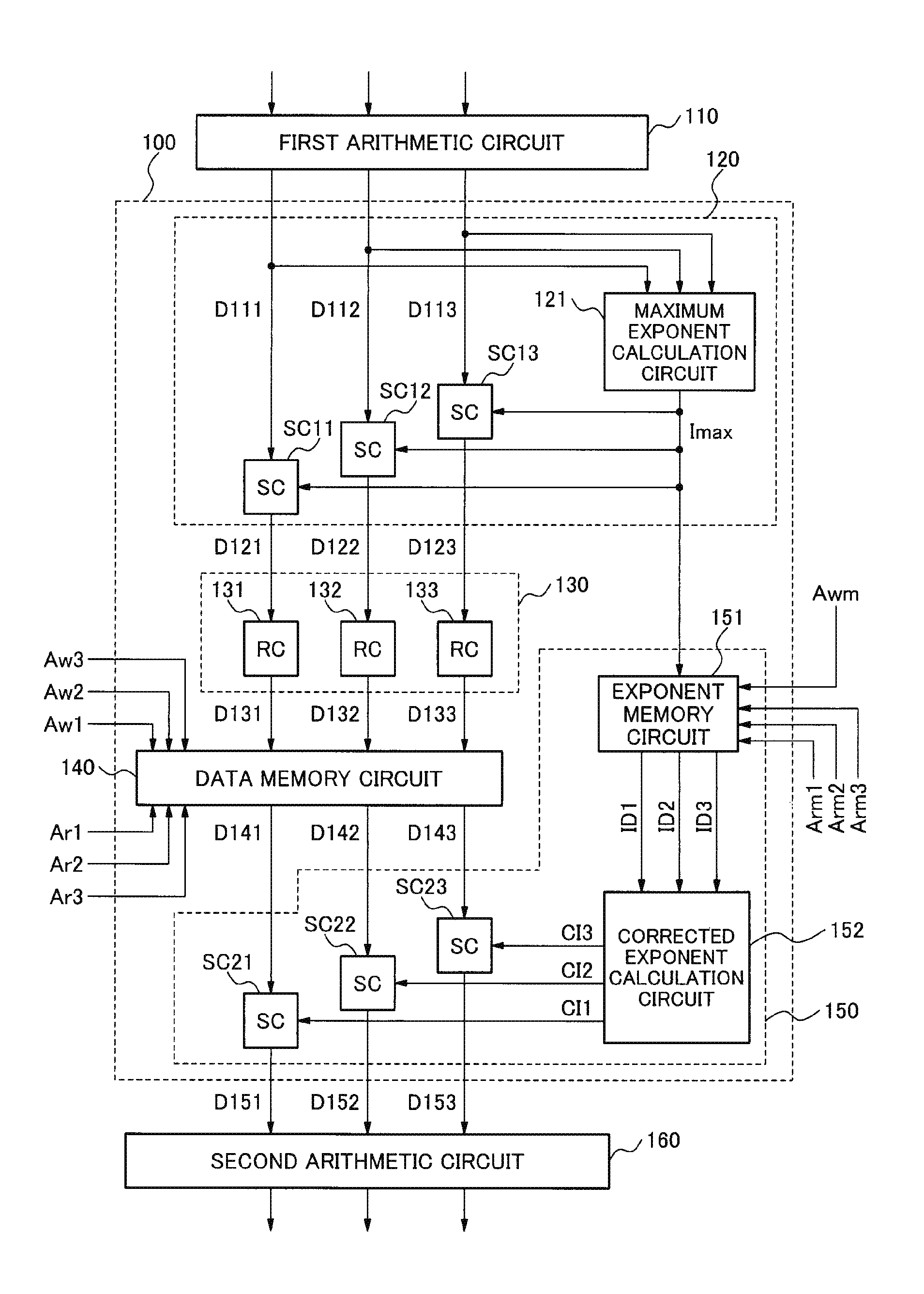

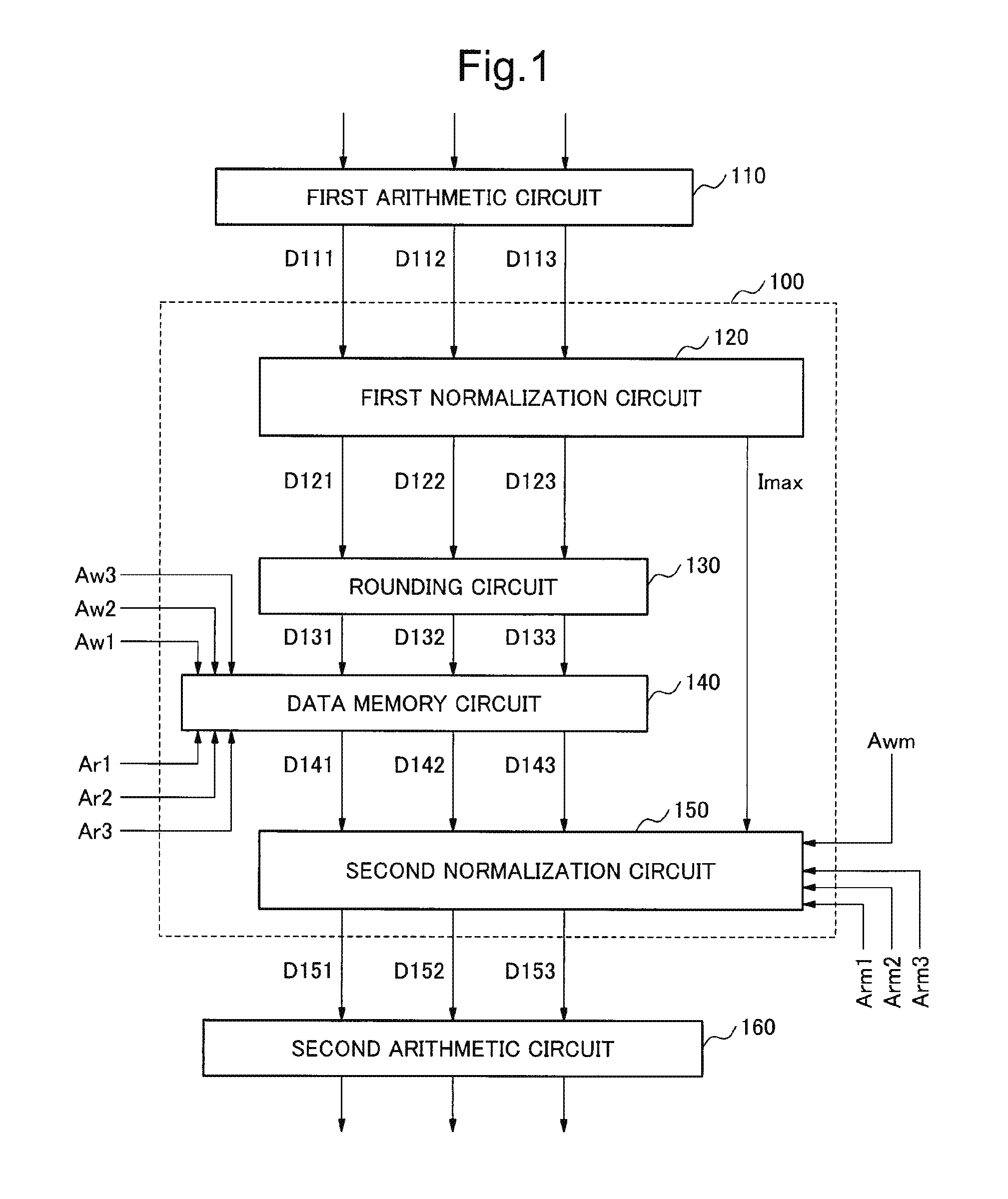

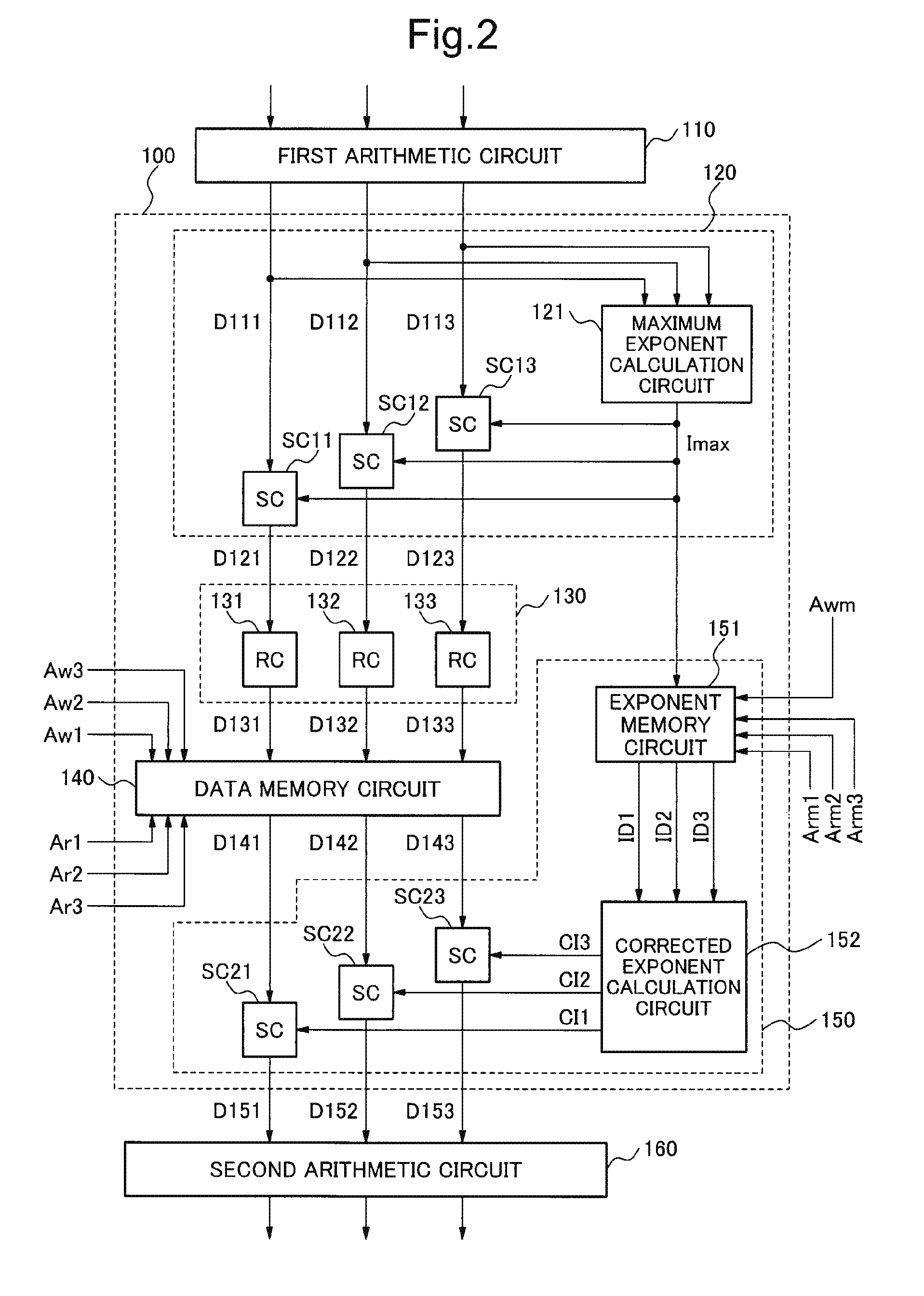

Arithmetic processing apparatus and an arithmetic processing method

Active | US20140089361A1 | Small circuit scale | Improve accuracy | Computation using non-contact making devices | Digital computer details | Algorithm | Block floating-point

Provided is an arithmetic processing apparatus and an arithmetic processing method which can perform block floating point processing with small circuit scale and high precision. A first normalization circuit (120) performs a first normalization, in which a plurality of pieces of data, which have a common exponent and which are either fixed-point number representation data or mantissa portion data of block floating-point number representation, are inputted in each of a plurality of cycles and the plurality of pieces of data inputted in each of the plurality of cycles are respectively normalized with the common exponent on the basis of a maximum exponent for the plurality of pieces of data inputted in a corresponding one of the plurality of cycles. A rounding circuit (130) outputs a plurality of pieces of rounded data which are obtained by reducing a bit width of respective one of the plurality of pieces of data on which the first normalization is performed. A first storage circuit (140) stores a plurality of pieces of rounded data regarding the plurality of cycles in which the first normalization is performed and outputs a plurality of designated pieces of rounded data among the stored plurality of pieces of rounded data. A second normalization circuit (150) performs a second normalization, in which the plurality of designated pieces of rounded data are respectively normalized with an exponent which is common to the plurality of designated pieces of rounded data on the basis of the maximum exponents used in the first normalization for the plurality of designated pieces of rounded data and a maximum value of the maximum exponents, and outputs a result of the second normalization.

Owner:NEC CORP

OFDM receiver and frequency response equalization method thereof

Pending | CN106713199A | Reduce dynamic changes | Multi-frequency code systems | Block floating-point | Equalization

The invention provides an OFDM receiver comprising a fast Fourier transform calculation module and a constellation de-mapping module. The OFDM receiver also comprises a channel compression module which is arranged between the output end of the fast Fourier transform calculation module and the input end of the constellation de-mapping module. The channel compression module performs planarization on the frequency response outputted by the fast Fourier transform module according to block floating point calculation and outputs the frequency response after planarization to the constellation de-mapping module. The invention also discloses a frequency response equalization method of the OFDM. With application of the technical scheme, the process of frequency response planarization can be completed through a small amount of system overhead.

Owner:XINGTERA SEMICON TECH (SHANGHAI) CO LTD

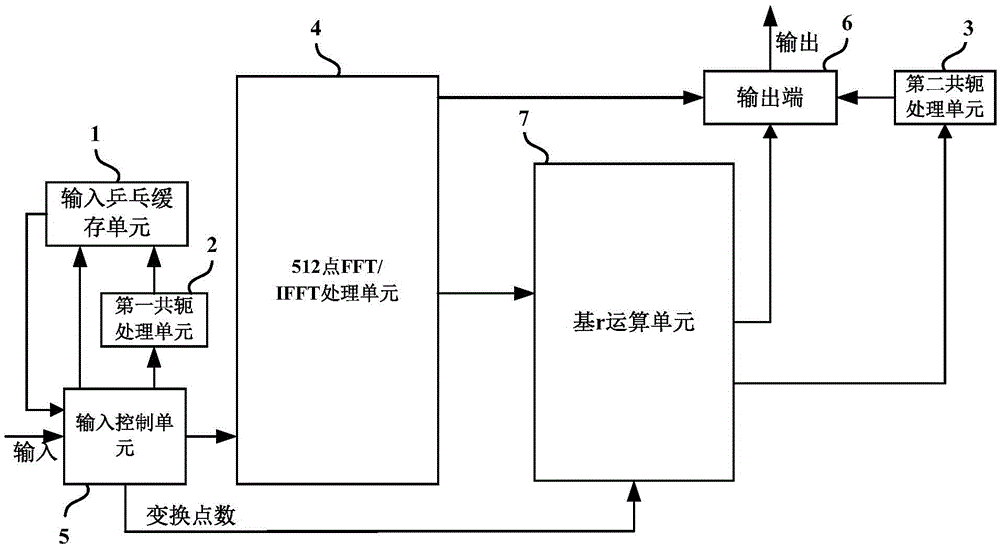

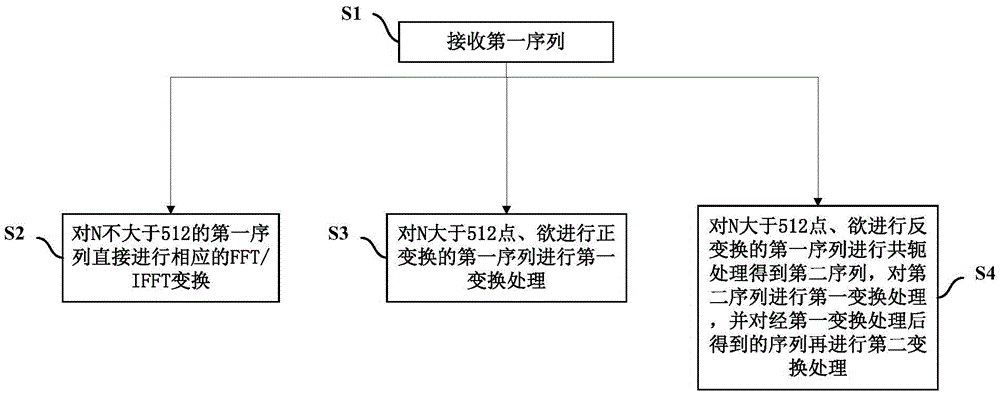

FFT/IFFT device and method based on LTE system

Active | CN105608054A | Reduce occupancy | Simple structure | Complex mathematical operations | Binary multiplier | Block floating-point

The invention discloses an FFT / IFFT device and method based on an LTE system. The six transform sizes defined by the LTE system are divided into two classes, namely small transform sizes (128, 256 and 512) and large transform sizes (1024, 1536 and 2048). The small transform sizes are all integer powers of 2, and the corresponding FFT / IFFT is carried out directly; the large transform sizes are all realized based on a 512-point FFT / IFFT and a radix-r operation. The structure is simple, and few multiplier and adder resources are needed; compared with the prior art, processing for six transmission bandwidths can be supported at the same time, and block-floating-point FFT / IFFT can also be supported. In addition, only 512 twiddle factors need to be used and stored when executing the 1536-point FFT / IFFT, and only 1536 twiddle factors need to be stored in total when executing the 1024-point, 1536-point and 2048-point FFT / IFFT, which reduces resource occupation.

Owner:WUHAN HONGXIN TELECOMM TECH CO LTD
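
As a rough illustration of how a large transform can be built from smaller power-of-two transforms, the sketch below computes an r·M-point FFT from r interleaved M-point radix-2 FFTs (for example 1536 = 3 × 512). It is a generic mixed-radix decomposition in ordinary floating point, not the patented architecture: the function names `fft_pow2` and `fft_radix_r` are hypothetical, twiddle factors are recomputed on the fly rather than stored, and block-floating-point scaling is left out.

```python
import cmath


def fft_pow2(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft_pow2(x[0::2])
    odd = fft_pow2(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def fft_radix_r(x, r):
    """FFT of length r*M assembled from r power-of-two FFTs of length M."""
    n = len(x)
    m = n // r
    assert m * r == n and m > 0 and (m & (m - 1)) == 0
    # Sub-sequence j holds x[j], x[j + r], x[j + 2r], ...
    subs = [fft_pow2(x[j::r]) for j in range(r)]
    out = [0j] * n
    for k in range(m):
        # Twiddle each sub-transform output by W_N^(j*k).
        t = [cmath.exp(-2j * cmath.pi * j * k / n) * subs[j][k]
             for j in range(r)]
        # Small r-point DFT across the r twiddled values.
        for q in range(r):
            out[k + q * m] = sum(
                t[j] * cmath.exp(-2j * cmath.pi * j * q / r)
                for j in range(r)
            )
    return out
```

Under these assumptions, `fft_radix_r(x, 2)`, `fft_radix_r(x, 3)` and `fft_radix_r(x, 4)` applied to inputs of length 1024, 1536 and 2048 all reuse the same 512-point core.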

Arithmetic processing apparatus and an arithmetic processing method

Active | US9519457B2 | Small scale | Improve accuracy | Computation using non-contact making devices | Block floating-point | Computer science

Provided are an arithmetic processing apparatus and an arithmetic processing method which can perform block floating point processing with small circuit scale and high precision. A first normalization circuit (120) performs a first normalization, in which a plurality of pieces of data, which have a common exponent and which are either fixed-point number representation data or mantissa portion data of block floating-point number representation, are inputted in each of a plurality of cycles, and the plurality of pieces of data inputted in each of the plurality of cycles are respectively normalized with the common exponent on the basis of a maximum exponent for the plurality of pieces of data inputted in a corresponding one of the plurality of cycles. A rounding circuit (130) outputs a plurality of pieces of rounded data which are obtained by reducing a bit width of each of the plurality of pieces of data on which the first normalization is performed. A first storage circuit (140) stores a plurality of pieces of rounded data regarding the plurality of cycles in which the first normalization is performed and outputs a plurality of designated pieces of rounded data among the stored plurality of pieces of rounded data. A second normalization circuit (150) performs a second normalization, in which the plurality of designated pieces of rounded data are respectively normalized with an exponent which is common to the plurality of designated pieces of rounded data on the basis of the maximum exponents used in the first normalization for the plurality of designated pieces of rounded data and a maximum value of the maximum exponents, and outputs a result of the second normalization.

Owner:NEC CORP
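
The two-stage normalization described above can be sketched in software as follows. This is only a loose model under stated assumptions, not the circuit in the patent: the 8-bit mantissa width, the round-to-nearest-with-saturation rule, and the names `shift_round`, `first_normalize` and `second_normalize` are all illustrative choices. Each cycle's integers are first reduced to the narrow width with a per-cycle exponent, and designated stored cycles are later brought onto the largest of those exponents.

```python
WIDTH = 8                       # stored mantissa width in bits, sign included (assumed)
LIMIT = (1 << (WIDTH - 1)) - 1  # largest stored magnitude, 127


def shift_round(v, s):
    """Right-shift v by s bits, rounding to nearest and saturating at LIMIT."""
    if s <= 0:
        return v
    r = (v + (1 << (s - 1))) >> s
    return max(-LIMIT, min(LIMIT, r))


def first_normalize(block):
    """Per-cycle step: fit one cycle's integers into WIDTH bits.

    Returns (mantissas, exponent) with value ~= mantissa * 2**exponent.
    """
    peak = max(abs(v) for v in block)
    exp = max(0, peak.bit_length() - (WIDTH - 1))
    return [shift_round(v, exp) for v in block], exp


def second_normalize(stored):
    """Bring several stored cycles onto the largest of their exponents."""
    common = max(exp for _, exp in stored)
    blocks = [[shift_round(m, common - exp) for m in mants]
              for mants, exp in stored]
    return blocks, common


cycles = [[1000, -300, 20], [90, -45, 7], [5000, 12, -2600]]
stored = [first_normalize(c) for c in cycles]
# Designate the first two cycles and align them to one exponent.
aligned, common_exp = second_normalize(stored[:2])
```

Storing the narrow per-cycle mantissas keeps memory small, while the second pass only needs the recorded exponents to align whichever cycles are read back together.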

Methods and apparatus for automatic accuracy-sustaining scaling of block-floating-point operands

Inactive | US8280939B2 | Digital computer details | Computation using denominational number representation | Block floating-point | Operand

A computer-implemented method performs an operation on a set of one or more BFP operands to generate a BFP result. The method is designed to reduce the risks of overflow and loss of accuracy attributable to the operation. The method performs an analysis to determine respective shift values for each of the operands and for the result. The method then calculates the result mantissas by shifting the stored bit patterns representing the operand mantissa values by their associated shift values determined in the analysis step, performing the operation on the shifted operand mantissas to generate preliminary result mantissas, and shifting the preliminary result mantissas by a number of bits determined in the analysis step.

Owner:AVIGILON ANALYTICS CORP
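
To make the analyze-then-shift idea concrete, here is a minimal sketch for element-wise addition of two BFP blocks. It is an illustration under assumptions, not the claimed method: blocks are modeled as `(mantissas, exponent)` tuples with 16-bit signed mantissas, and the helper names `shr`, `analyze_add` and `bfp_add` are hypothetical. The analysis step looks only at peak magnitudes to choose the operand alignment shifts and a result shift that rules out overflow.

```python
WORD_BITS = 16                       # assumed mantissa word width
LIMIT = (1 << (WORD_BITS - 1)) - 1   # 32767


def shr(v, s):
    """Right-shift that truncates toward zero for negative values too."""
    return -((-v) >> s) if v < 0 else v >> s


def analyze_add(a, b):
    """Choose shifts for both operands and for the result of a + b."""
    (ma, ea), (mb, eb) = a, b
    e = max(ea, eb)
    sa, sb = e - ea, e - eb          # align both blocks to the larger exponent
    bound = (max(abs(v) for v in ma) >> sa) + (max(abs(v) for v in mb) >> sb)
    # Extra result shift only if the worst-case sum would not fit the word.
    sr = max(0, bound.bit_length() - (WORD_BITS - 1))
    return sa, sb, sr, e


def bfp_add(a, b):
    """Add two BFP blocks element-wise using the shifts from the analysis."""
    sa, sb, sr, e = analyze_add(a, b)
    prelim = [shr(x, sa) + shr(y, sb) for x, y in zip(a[0], b[0])]
    result = [shr(p, sr) for p in prelim]
    assert all(abs(r) <= LIMIT for r in result)
    return result, e + sr


# Aligned case: exponents differ, no overflow risk after alignment.
r1 = bfp_add(([30000, -20000], 0), ([16000, 8000], 1))
# Overflow-risk case: the analysis adds one bit of result shift.
r2 = bfp_add(([30000, -20000], 0), ([30000, 8000], 0))
```

Because the result shift is derived from a bound rather than from the computed values, it can be fixed before the operation runs, at the cost of sometimes discarding one bit that turned out not to be needed.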

Training neural network accelerators using mixed precision data formats

Technology related to training a neural network accelerator using mixed precision data formats is disclosed. In one example of the disclosed technology, a neural network accelerator is configured to accelerate a given layer of a multi-layer neural network. An input tensor for the given layer can be converted from a normal-precision floating-point format to a quantized-precision floating-point format. A tensor operation can be performed using the converted input tensor. A result of the tensor operation can be converted from the block floating-point format to the normal-precision floating-point format. The converted result can be used to generate an output tensor of the layer of the neural network, where the output tensor is in normal-precision floating-point format.

Owner:MICROSOFT TECH LICENSING LLC

Block floating point computations using shared exponents

Pending | CN112088354A | Digital data processing details | Complex mathematical operations | Algorithm | Parallel computing

A system for block floating point computation in a neural network receives a plurality of floating point numbers. An exponent value for an exponent portion of each floating point number of the plurality of floating point numbers is identified and mantissa portions of the floating point numbers are grouped. A shared exponent value of the grouped mantissa portions is selected according to the identified exponent values and then removed from the grouped mantissa portions to define multi-tiered shared exponent block floating point numbers. One or more dot product operations are performed on the grouped mantissa portions of the multi-tiered shared exponent block floating point numbers to obtain individual results. The individual results are shifted to generate a final dot product value, which is used to implement the neural network. The shared exponent block floating point computations reduce processing time with less reduction in system accuracy.

Owner:MICROSOFT TECH LICENSING LLC
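
A shared-exponent dot product can be sketched in a few lines. The example below is a single-tier simplification, so it does not model the multi-tiered exponents mentioned in the abstract; the 8-bit mantissa width and the names `to_bfp` and `bfp_dot` are assumptions made for illustration. Each vector is quantized to integer mantissas with one exponent, the multiply-accumulate runs on integers only, and a single final scaling applies the two exponents.

```python
import math

MANT_BITS = 8  # signed mantissa width, sign included (assumed)


def to_bfp(values, mant_bits=MANT_BITS):
    """Quantize floats to integer mantissas that share one exponent."""
    # frexp returns (m, e) with v = m * 2**e and 0.5 <= |m| < 1.
    max_exp = max(math.frexp(v)[1] for v in values)
    shared = max_exp - (mant_bits - 1)
    limit = (1 << (mant_bits - 1)) - 1
    mants = [max(-limit, min(limit, round(math.ldexp(v, -shared))))
             for v in values]
    return mants, shared


def bfp_dot(a, b):
    """Dot product on integer mantissas, scaled once at the end."""
    (ma, ea), (mb, eb) = a, b
    acc = sum(x * y for x, y in zip(ma, mb))   # integer multiply-accumulate
    return math.ldexp(acc, ea + eb)            # apply both shared exponents


a = to_bfp([1.0, 0.5, -0.25, 2.0])
b = to_bfp([4.0, 2.0, 1.0, 0.5])
result = bfp_dot(a, b)
```

With inputs that are exact in 8 bits, as here, the result matches the floating-point dot product; in general the small elements of a block lose low-order bits, which is the accuracy trade-off the shared exponent introduces.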

