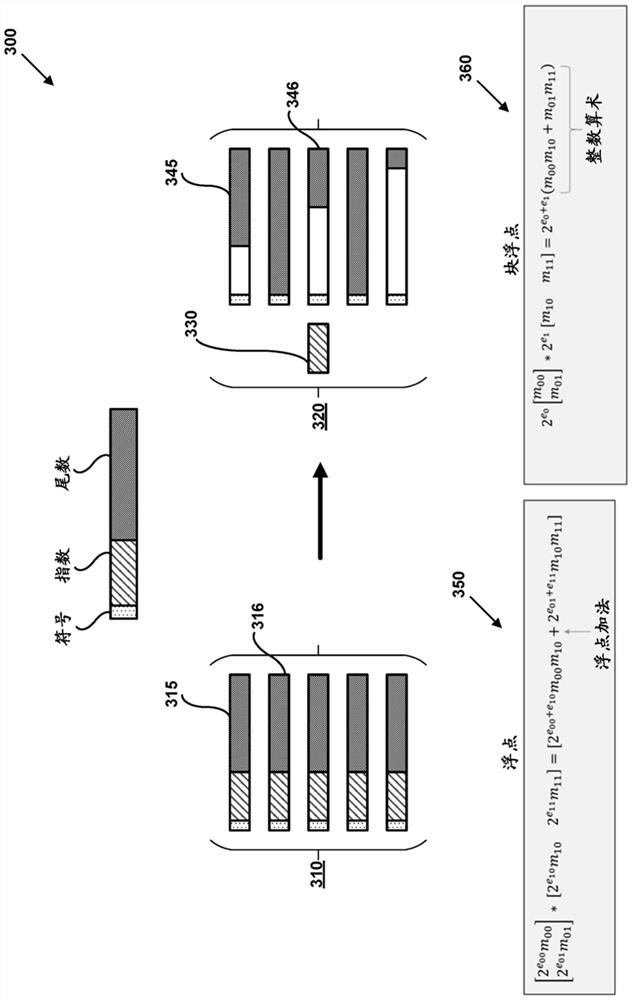

Training neural network accelerators using mixed precision data formats

This neural network and multi-layer neural network technology, applied to training neural network accelerators using mixed-precision data formats, addresses the heavy computational load of using such models.
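The summary names mixed precision but does not show what it looks like in practice. The sketch below illustrates only the general idea. It is not the patented method: the function names `to_half`, `dot_half`, and `train_step`, the choice of IEEE binary16 as the narrow format, and the linear model are all hypothetical. Forward and backward arithmetic runs on half-precision copies of the values, while the weight update is applied to a full-precision "master" copy.

```python
import struct


def to_half(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 binary16 value.

    Uses the stdlib struct 'e' (half-precision) format; raises
    OverflowError if x is outside the binary16 range.
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def dot_half(a, b):
    """Dot product whose operands are quantized to half precision,
    accumulated in full precision (a Python float)."""
    acc = 0.0
    for x, y in zip(a, b):
        acc += to_half(x) * to_half(y)
    return acc


def train_step(master_w, x, target, lr):
    """One SGD step on squared error for a hypothetical linear model y = w . x.

    Forward and backward passes use half-precision operands; the update
    is applied to the full-precision master weights.
    """
    y = dot_half(master_w, x)               # low-precision forward pass
    err = y - target
    # dL/dw_i = 2 * err * x_i, computed from half-precision operands
    grads = [to_half(2.0 * err) * to_half(xi) for xi in x]
    # high-precision weight update on the master copy
    return [w - lr * g for w, g in zip(master_w, grads)]


if __name__ == "__main__":
    w = train_step([1.0], [1.0], target=0.5, lr=1e-4)
    print(w)              # the master copy keeps the small update
    print(to_half(w[0]))  # the same weight stored in binary16 loses it
```

Keeping a full-precision master copy matters because small updates such as 1.0 - 1e-4 fall below the spacing of binary16 values near 1.0 and would round back to 1.0 if the weights were stored only in the narrow format.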


Embodiment Construction

[0013] General considerations

[0014] The present invention is illustrated in the context of representative embodiments, which are not intended to be limiting in any way.

[0015] As used in this application, the singular forms "a," "an," and "the" include plural referents unless the context clearly dictates otherwise. Additionally, the term "includes" means "comprises". Furthermore, the term "coupled" includes mechanical, electrical, magnetic, optical, and other practical means of coupling or linking items together and does not exclude the presence of intervening elements between the coupled items. Also, as used herein, the term "and/or" means any one item or any combination of items in the phrase.

[0016] The systems, methods and devices described herein should not be construed as limiting in any way. Rather, the present disclosure relates to all novel and non-obvious features and aspects of the various disclosed embodiments both individually and in various combinations and subcomb...
