117 results about how to "Reduce training parameters" patented technology

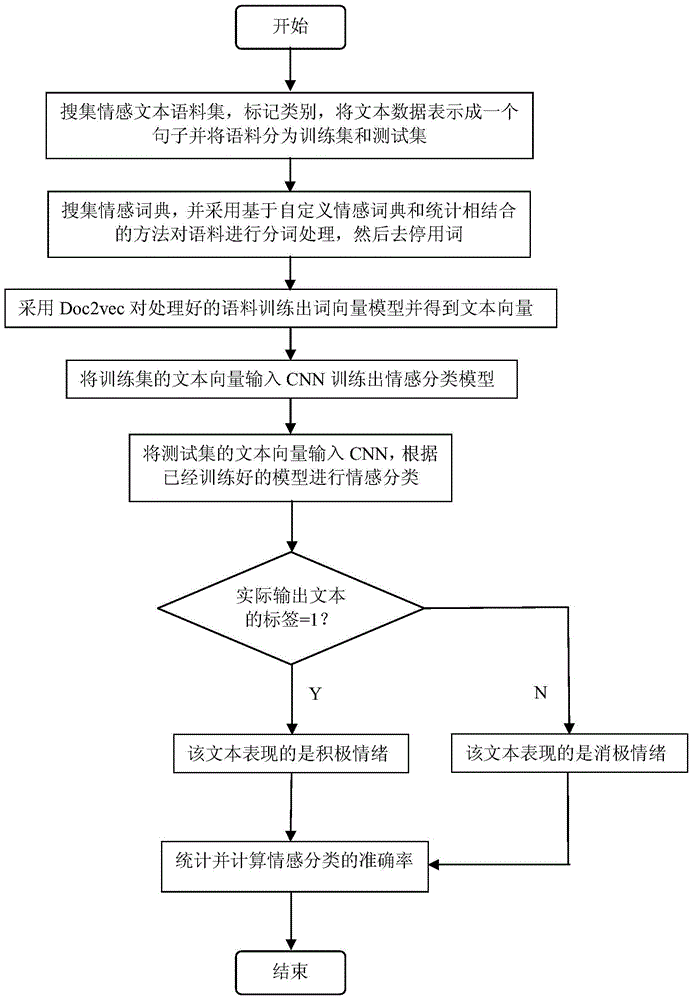

Sentiment classification method capable of combining Doc2vec with convolutional neural network

Active · CN105740349A · Reduce training parameters · The structure of the neural network is simple · Natural language data processing · Special data processing applications · Learning methods · Semantic relationship

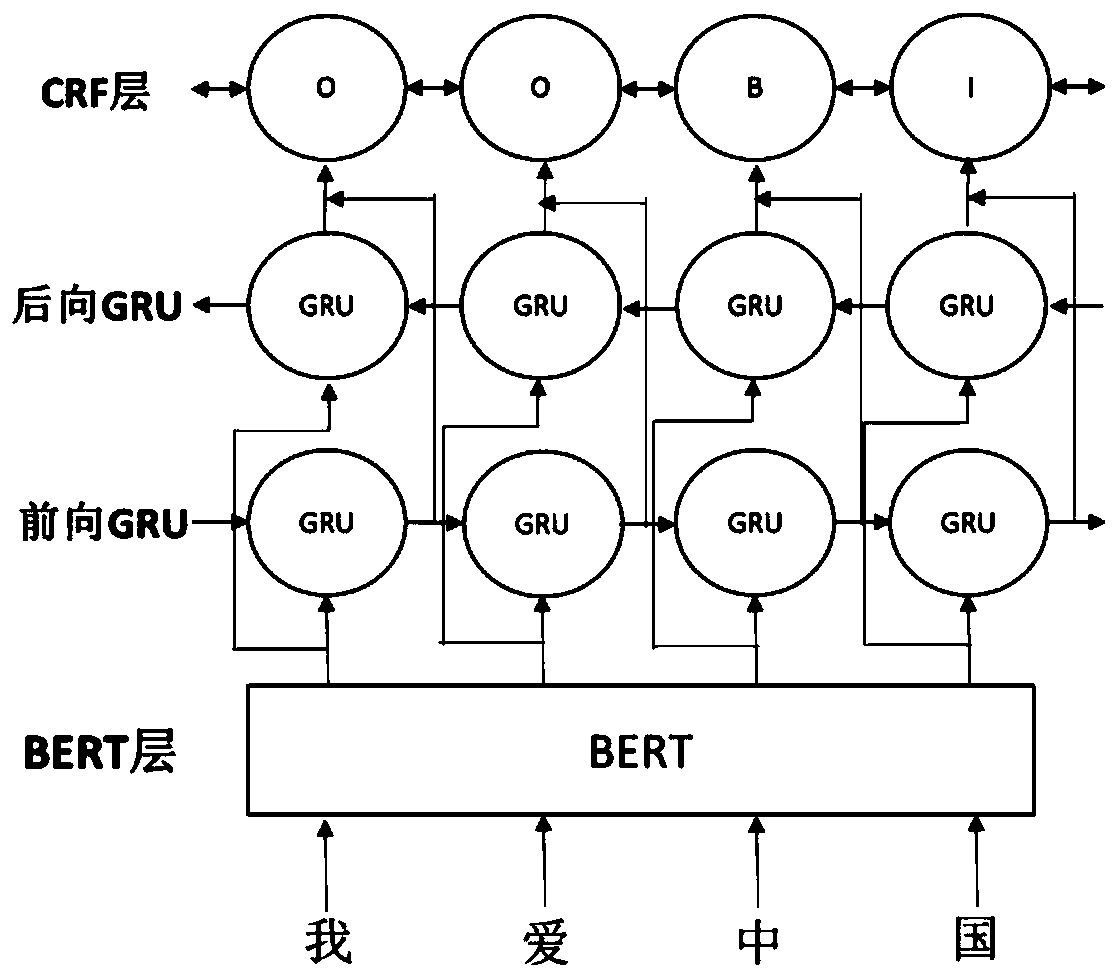

The invention claims a sentiment classification method that combines Doc2vec with a convolutional neural network (CNN). For feature representation, the combined method accounts for semantic relationships between words, mitigates the curse of dimensionality, and preserves word order. By learning a deep nonlinear network structure, the CNN compensates for the shortcomings of shallow feature learning methods. Input data are given a distributed representation, which provides strong feature learning capability and allows feature extraction and pattern classification to be carried out simultaneously. The sparse connectivity and weight sharing of the CNN reduce the network's training parameters, making the network structure simpler and more adaptable. According to the abstract, combining Doc2vec with the CNN for sentiment classification markedly improves classification accuracy.
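The parameter saving attributed to weight sharing can be illustrated with a quick count. The sketch below is not taken from the patent: the layer sizes are hypothetical, and it only compares a fully connected layer with a 1-D convolution applied to the same input.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def conv1d_params(kernel_width: int, in_channels: int, out_channels: int) -> int:
    """Weights plus biases of a 1-D convolution.

    The same kernel is reused at every position (weight sharing), so the
    count does not depend on the sequence length.
    """
    return kernel_width * in_channels * out_channels + out_channels


# Hypothetical sizes: 50 tokens, 100-dimensional embeddings, 128 output features.
seq_len, embed_dim, n_filters, kernel_width = 50, 100, 128, 3

dense = dense_params(seq_len * embed_dim, n_filters)
conv = conv1d_params(kernel_width, embed_dim, n_filters)

print(f"fully connected: {dense:,} parameters")
print(f"convolutional:   {conv:,} parameters")
```

Because each filter only looks at `kernel_width` neighbouring positions (sparse connectivity) and is reused along the sequence, the convolutional layer needs far fewer trainable values than the dense layer for these sizes.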

Owner:CHONGQING UNIV OF POSTS & TELECOMM

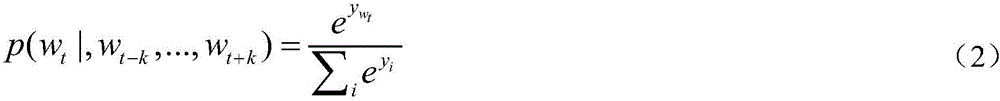

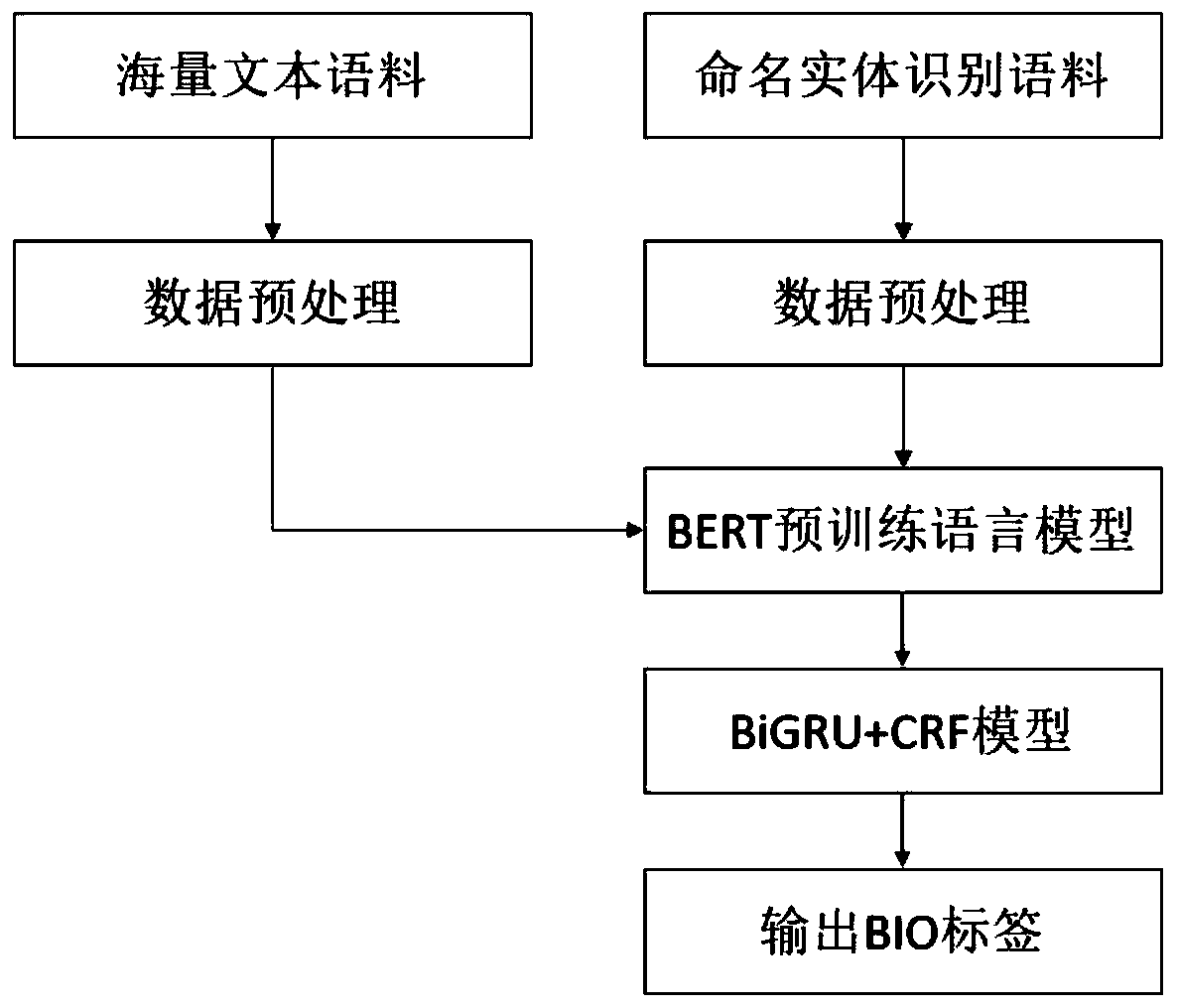

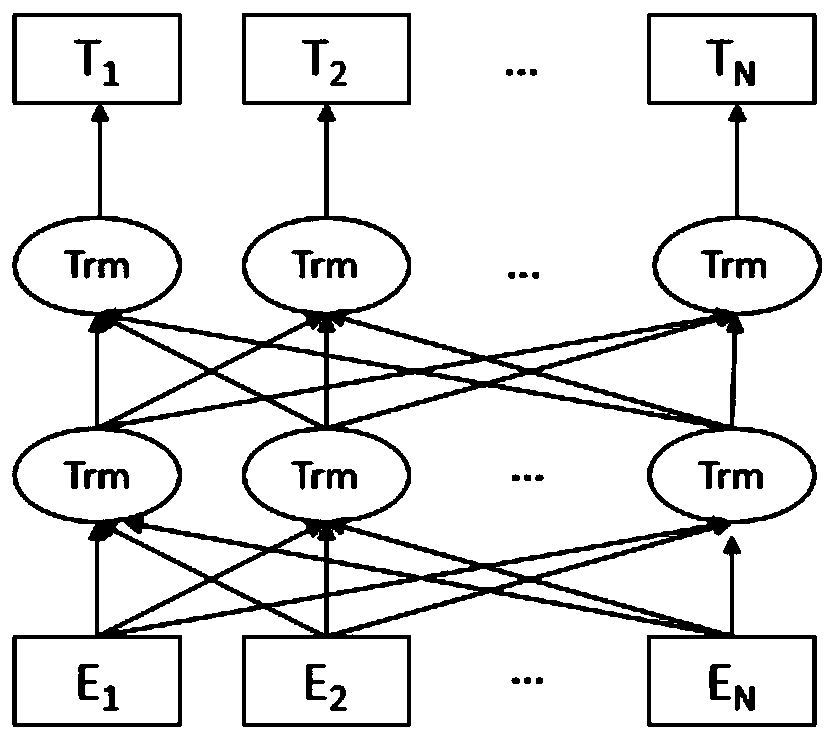

Chinese named entity recognition method based on BERT-BiGRU-CRF

Active · CN110083831A · High precision · Effectively represent ambiguity · Semantic analysis · Energy efficient computing · Semantic vector · Pattern recognition

The invention discloses a Chinese named entity recognition method based on BERT-BiGRU-CRF. The method comprises three stages: in the first stage, a large text corpus is preprocessed and a BERT language model is pre-trained; in the second stage, the named entity recognition corpus is preprocessed and encoded with the trained BERT language model; in the third stage, the encoded corpus is input into a BiGRU+CRF model for training, and the trained model performs named entity recognition on the sentences to be recognized. The BERT pre-trained language model enhances the semantic representation of characters: semantic vectors are generated dynamically from each character's context, so the ambiguity of characters is represented effectively. Compared with methods that fine-tune the language model, this method reduces the training parameters and saves training time.
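The abstract does not describe how the CRF layer produces its final tag sequence. A common choice for linear-chain CRFs is Viterbi decoding, sketched below under that assumption; the tag set, emission scores, and transition scores are made up for illustration.

```python
def viterbi_decode(emissions, transitions):
    """Return the highest-scoring tag sequence for a linear-chain CRF.

    emissions[t][j]   : score of tag j at position t (e.g. from a BiGRU)
    transitions[i][j] : score of moving from tag i to tag j
    """
    n_tags = len(transitions)
    score = list(emissions[0])
    backpointers = []
    for emit in emissions[1:]:
        new_score, pointers = [], []
        for j in range(n_tags):
            # Best previous tag for ending in tag j at this position.
            best_prev = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            pointers.append(best_prev)
            new_score.append(score[best_prev] + transitions[best_prev][j] + emit[j])
        score = new_score
        backpointers.append(pointers)
    # Trace back from the best final tag.
    path = [max(range(n_tags), key=lambda j: score[j])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path


# Hypothetical tag set: 0 = O, 1 = B-ENT, 2 = I-ENT.
emissions = [[2.0, 1.0, 0.0], [0.0, 0.5, 2.0], [1.5, 0.0, 1.0]]
# Strongly penalise the invalid transition O -> I-ENT.
transitions = [[0.0, 0.0, -10.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]

print(viterbi_decode(emissions, transitions))
```

Taking the best tag at each position independently would give O, I-ENT, O, which contains an invalid O to I-ENT step; the transition scores steer the decoder to B-ENT, I-ENT, O instead.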

Owner:WUHAN UNIV

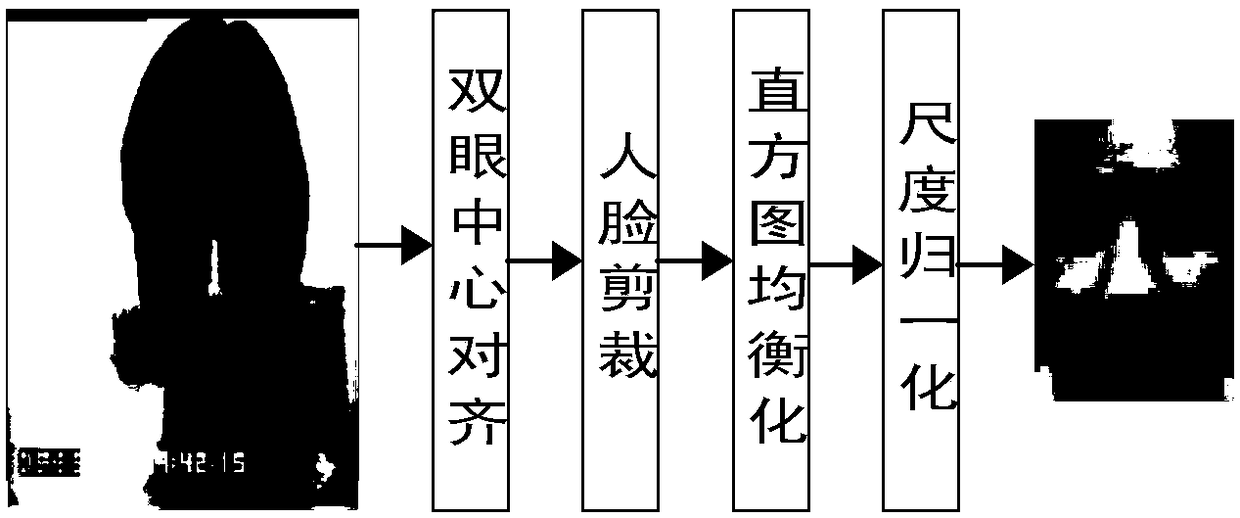

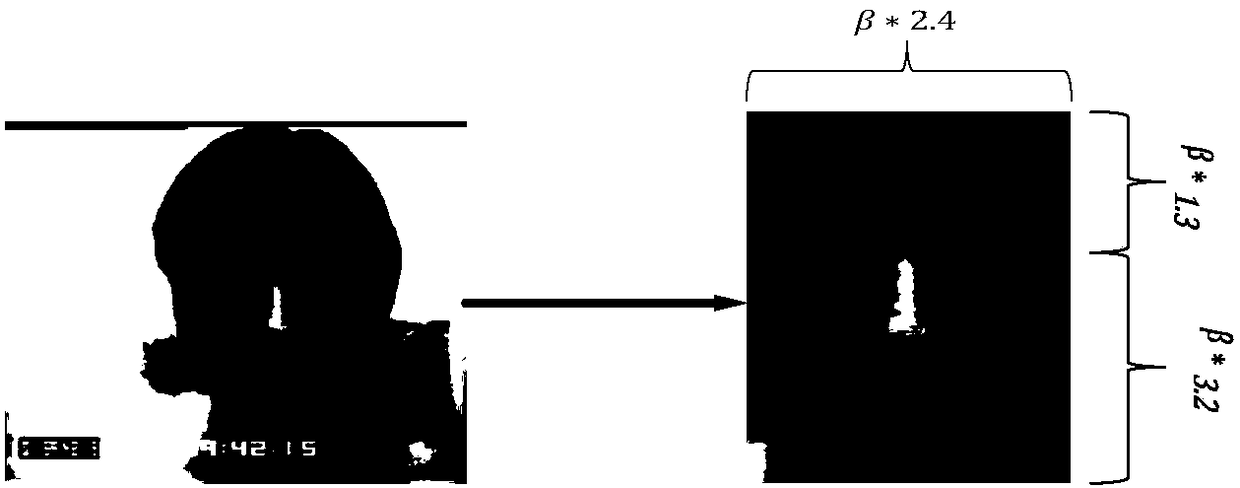

Improved CNN-based facial expression recognition method

Inactive · CN108108677A · Reduce training parameters · Small amount of calculation · Image enhancement · Image analysis · Face detection · SVM classifier

The invention provides an improved CNN-based facial expression recognition method and relates to the field of image classification and identification. The method comprises the following steps: s1, acquiring a facial expression image from a video stream using the JDA algorithm, which integrates face detection and alignment; s2, using the facial expression image obtained in step s1 to correct the face pose found in real environments, removing background information irrelevant to the expression, and applying scale normalization; s3, training the convolutional neural network model to obtain and store the optimal network parameters before extracting features from the normalized facial expression image obtained in step s2; s4, loading the CNN model with the optimal network parameters obtained in step s3 and performing feature extraction on the normalized facial expression images obtained in step s2; s5, classifying and recognizing the facial expression features obtained in step s4 with an SVM classifier. The method has high robustness and good generalization performance.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

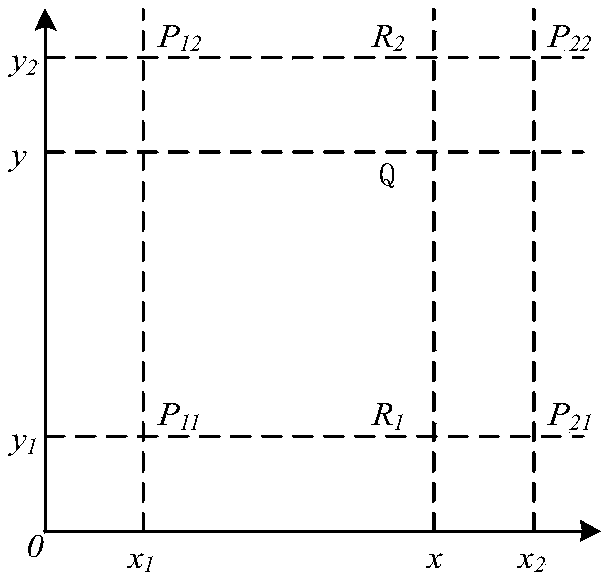

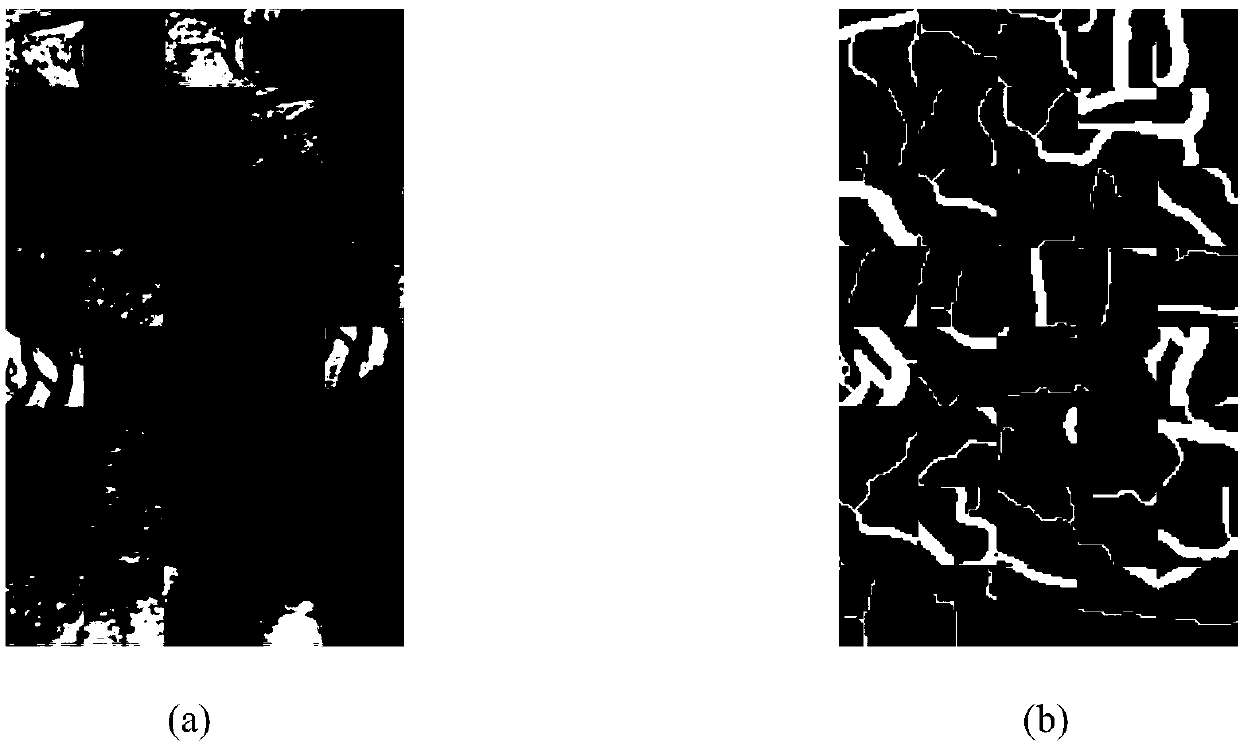

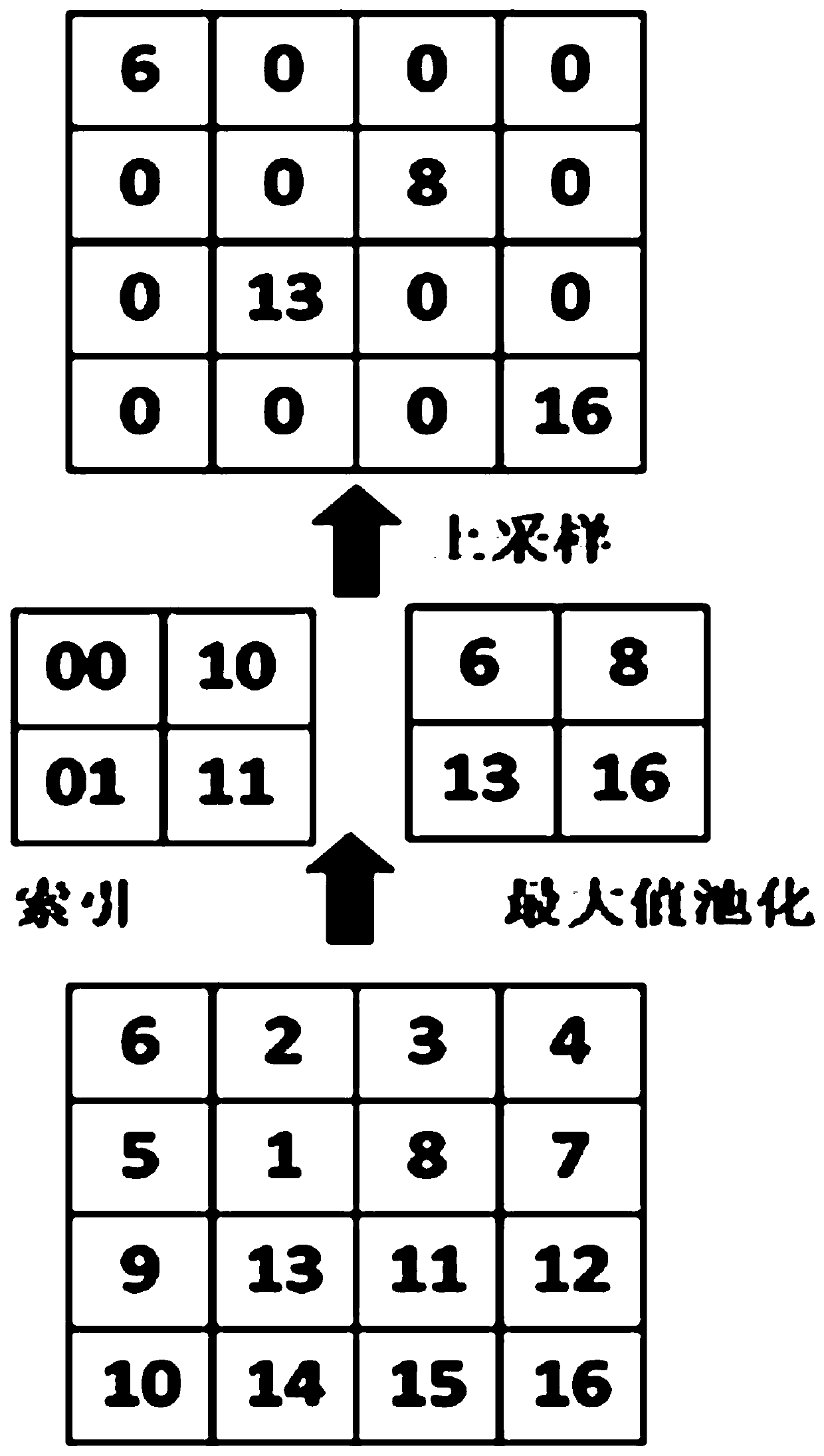

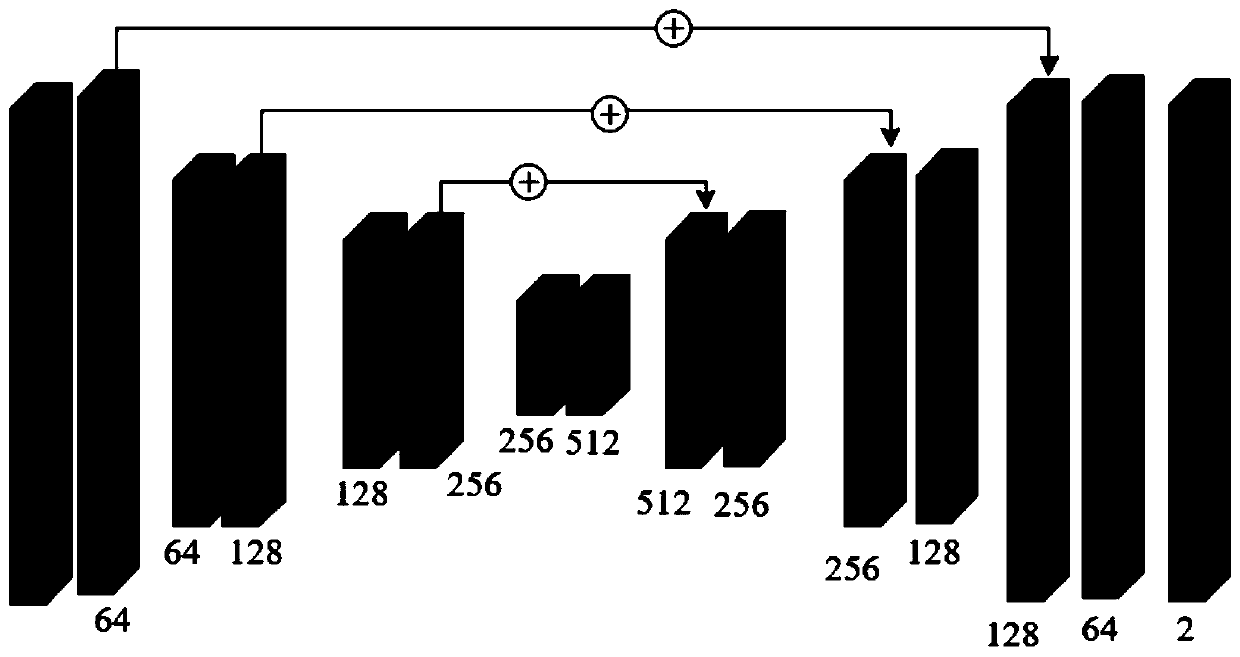

A retinal blood vessel image segmentation method based on a multi-scale feature convolutional neural network

Inactive · CN108986124A · Expand the receptive field · Reduce training parameters · Image enhancement · Image analysis · Adaptive histogram equalization · Histogram

The invention belongs to the technical field of image processing. It aims to extract and segment retinal blood vessels automatically, improve robustness to interference such as vessel shadows and tissue deformation, and raise the average accuracy of the segmentation results. The invention relates to a retinal blood vessel image segmentation method based on a multi-scale feature convolutional neural network. First, retinal images are preprocessed with adaptive histogram equalization and gamma brightness adjustment. Because retinal image data are scarce, the data are also augmented: the experimental images are cropped and divided into blocks. Second, a multi-scale retinal vessel segmentation network is constructed by introducing atrous (dilated) spatial pyramid pooling, rendered in the source translation as "spatial pyramidal cavity pooling", into a convolutional neural network with an encoder-decoder structure. The model parameters are optimized automatically over many iterations to achieve pixel-level automatic segmentation of the retinal blood vessels and produce the vessel segmentation map. The invention is mainly applied to the design and manufacture of medical devices.
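The listed effects "Expand the receptive field" and "Reduce training parameters" fit atrous (dilated) convolution, which is the likely meaning of the "cavity" pooling named in the abstract. The sketch below rests on that assumption; the dilation rates and channel counts are hypothetical and not taken from the patent.

```python
def effective_kernel(kernel: int, dilation: int) -> int:
    """Span covered by a dilated kernel along one axis."""
    return kernel + (kernel - 1) * (dilation - 1)


def conv2d_weights(kernel: int, in_channels: int, out_channels: int) -> int:
    """Weight count of a square 2-D convolution (biases omitted)."""
    return kernel * kernel * in_channels * out_channels


# Hypothetical pyramid of parallel 3x3 branches with growing dilation rates.
kernel, in_channels, out_channels = 3, 64, 64
rates = [1, 6, 12, 18]

spans = [effective_kernel(kernel, rate) for rate in rates]
weights_per_branch = conv2d_weights(kernel, in_channels, out_channels)

for rate, span in zip(rates, spans):
    dense_equivalent = conv2d_weights(span, in_channels, out_channels)
    print(f"rate {rate:2d}: covers {span}x{span}, "
          f"{weights_per_branch:,} weights (dense {span}x{span} kernel: {dense_equivalent:,})")
```

Every branch keeps the weight count of a plain 3x3 convolution while covering a wider area, which is how a pyramid of dilated branches can capture vessels at several scales without a matching growth in parameters.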

Owner:TIANJIN UNIV

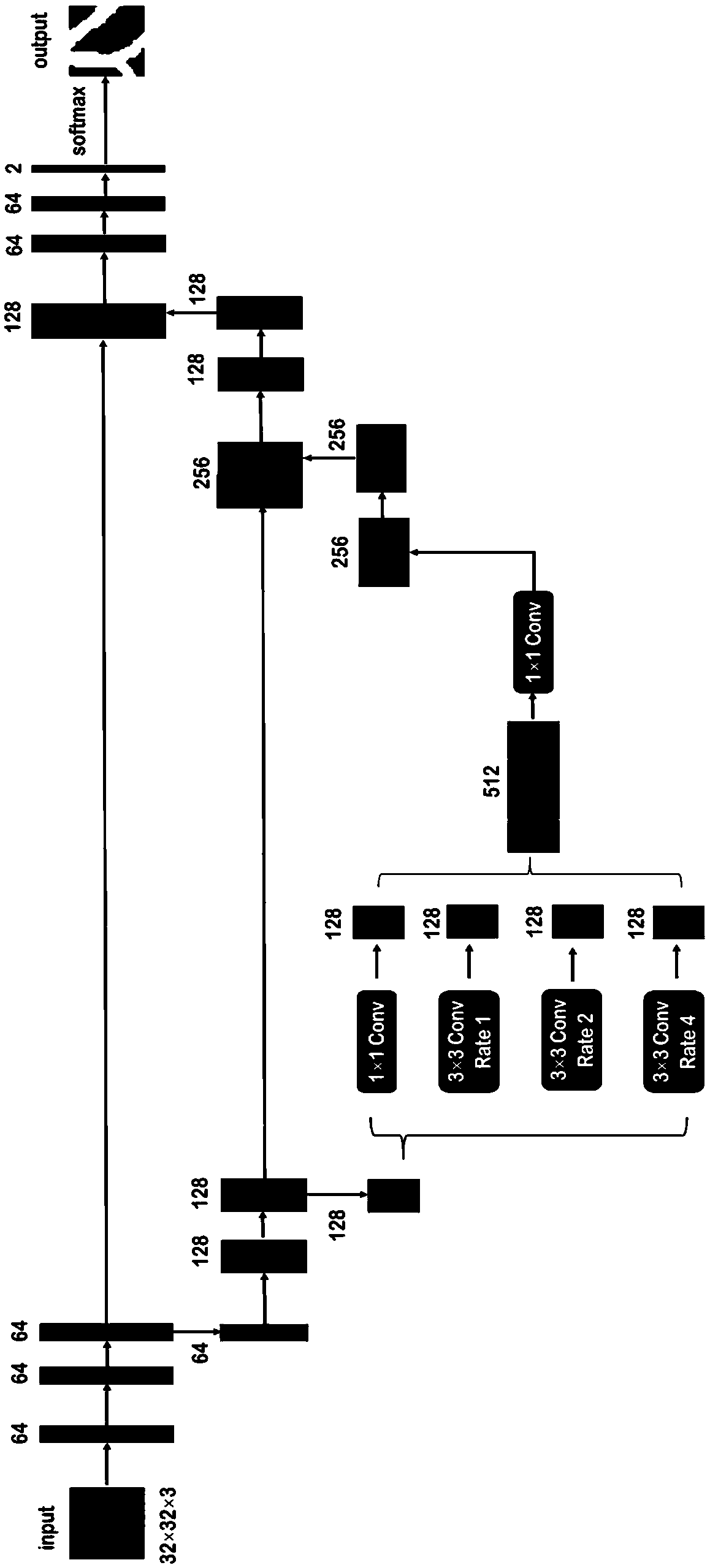

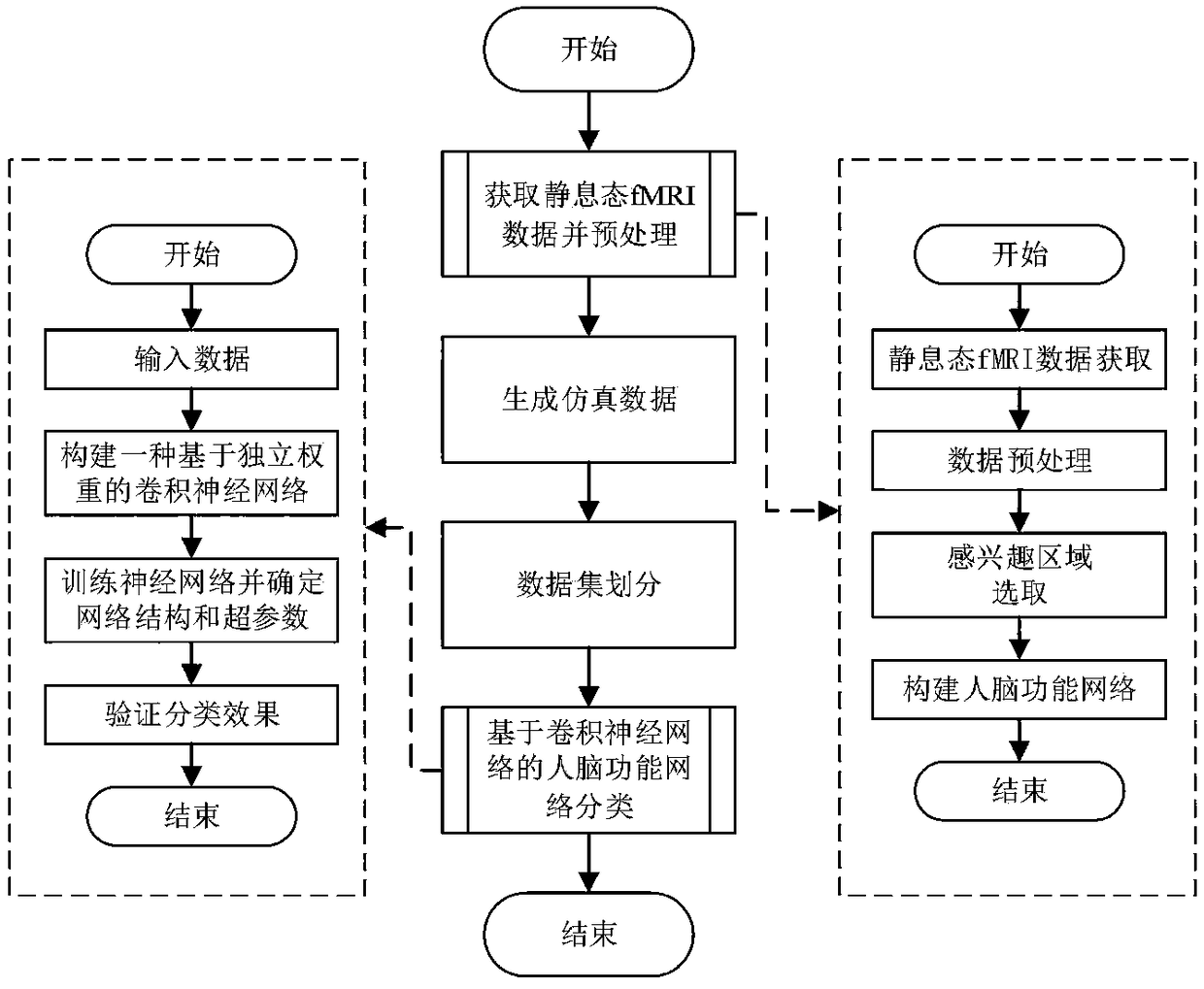

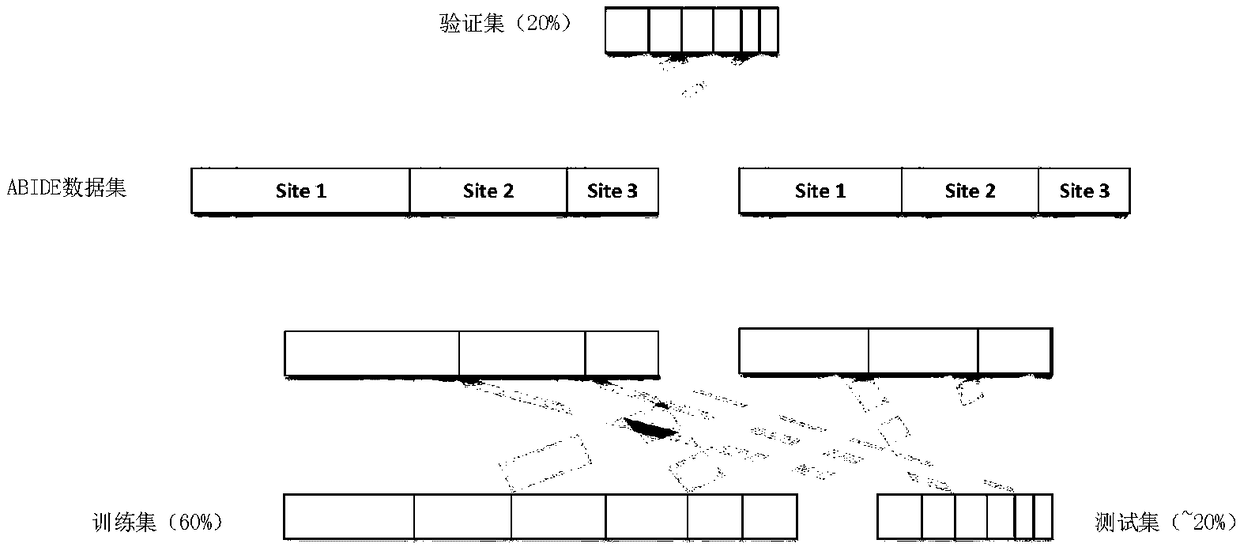

A human brain function network classification method based on a convolution neural network

Active · CN109376751A · Efficient use of · Accurate diagnosis · Character and pattern recognition · Medical automated diagnosis · Human brain · NODAL

The invention discloses a human brain function network classification method based on a convolutional neural network, and belongs to the field of brain science research. The method comprises the following steps: obtaining resting-state fMRI data and preprocessing the data; generating simulation data; partitioning the data set; and classifying the human brain functional network with a convolutional neural network. The method uses element-wise filters to give a unique weight to each edge and node of the human brain functional network data, thereby constructing a multi-layer neural network comprising an edge-to-node layer and a node-to-graph layer. The method makes better use of the topological structure information in the brain function network data when forming feature representations, which improves the classification effect. The method is reasonable and reliable, and can provide strong support for the diagnosis of neuropsychiatric diseases.
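One possible reading of the edge-to-node and node-to-graph layers is sketched below. The aggregation rule (a weighted sum with one weight per edge, then one weight per node) is an assumption made for illustration; the abstract does not give the exact formulas, and nonlinearities and biases are omitted.

```python
def edge_to_node(adjacency, edge_weights):
    """Aggregate each node's edges, using a separate weight for every edge."""
    return [
        sum(a * w for a, w in zip(adj_row, weight_row))
        for adj_row, weight_row in zip(adjacency, edge_weights)
    ]


def node_to_graph(node_features, node_weights):
    """Collapse node features into one graph-level value, one weight per node."""
    return sum(x * w for x, w in zip(node_features, node_weights))


# Hypothetical 3-region functional connectivity matrix (symmetric, zero diagonal).
adjacency = [
    [0, 2, 1],
    [2, 0, 3],
    [1, 3, 0],
]
# Hypothetical learned weights: one per edge, one per node.
edge_weights = [
    [0, 1, 2],
    [1, 0, 1],
    [2, 1, 0],
]
node_weights = [1, 1, 2]

nodes = edge_to_node(adjacency, edge_weights)
graph_feature = node_to_graph(nodes, node_weights)
print(nodes, graph_feature)
```

Unlike an image convolution, no weight is shared between positions here, because edge (i, j) of a brain network has a fixed anatomical meaning rather than being a translatable pixel.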

Owner:BEIJING UNIV OF TECH

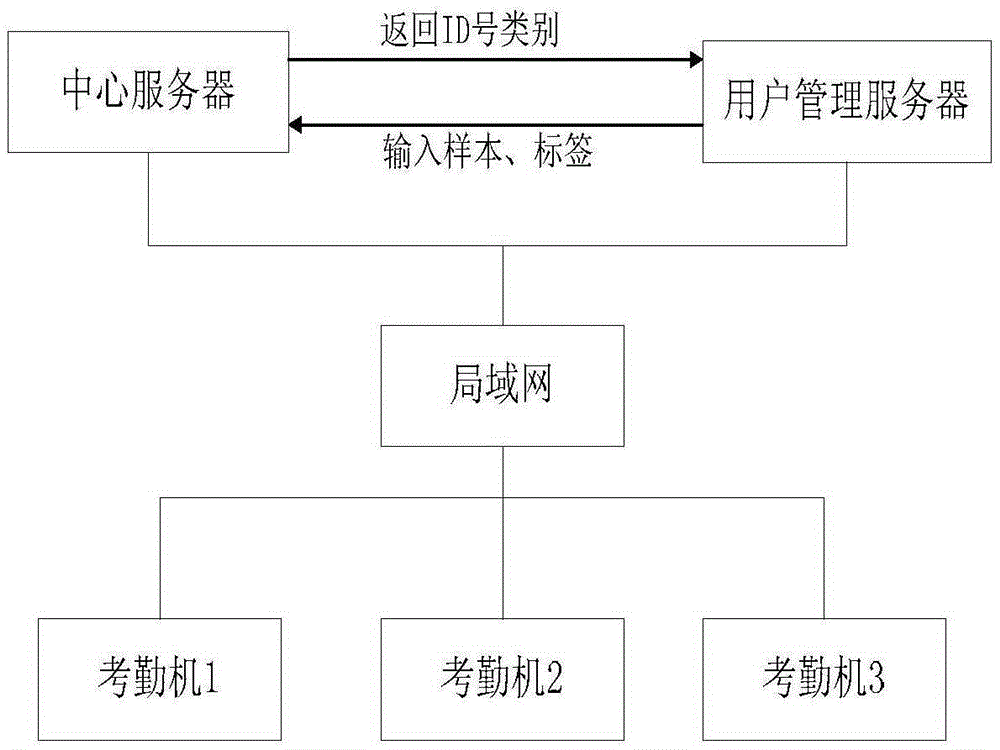

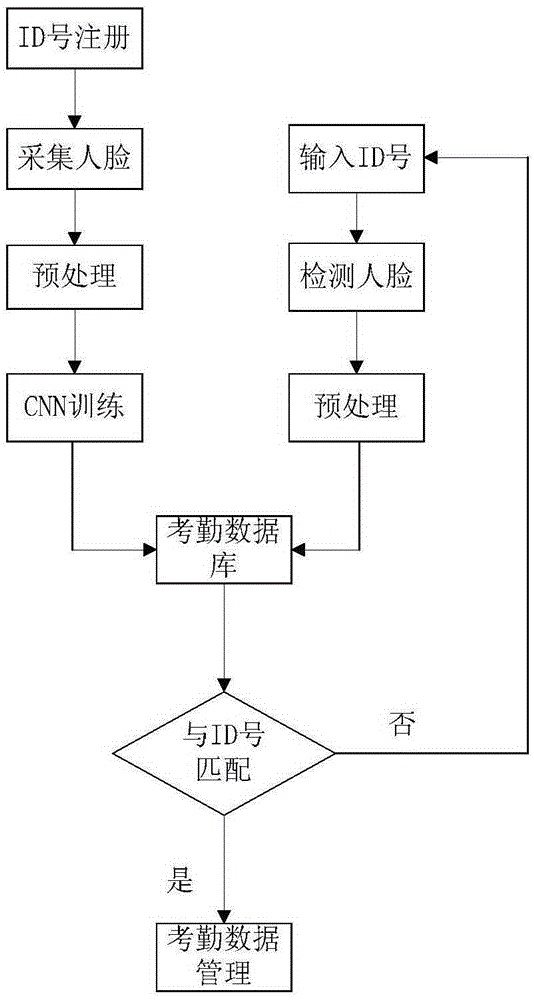

Face identification method and attendance system based on deep convolution neural network

Inactive · CN105426875A · Accurate description · Guaranteed immutability · Registering/indicating time of events · Character and pattern recognition · Nerve network · Manual extraction

The invention relates to a face identification method and an attendance system based on a deep convolutional neural network. User information and face sample labels are entered into a user management server and then sent to a central server. In the central server, the preprocessed sample labels are used to build a face identification model based on the deep convolutional neural network. Employees perform online face identification through the trained neural network on attendance machines at each site, and the identification results are returned to the user management server over an internal local area network. Management staff can check, modify, and otherwise operate on attendance records on the user management server. The face identification algorithm avoids problems such as incomplete and uncertain feature description caused by traditional manual feature extraction, exploits the advantages of receptive fields and weight sharing, and raises the face identification rate, thereby improving the accuracy of the attendance system.

Owner:WUHAN UNIV OF SCI & TECH

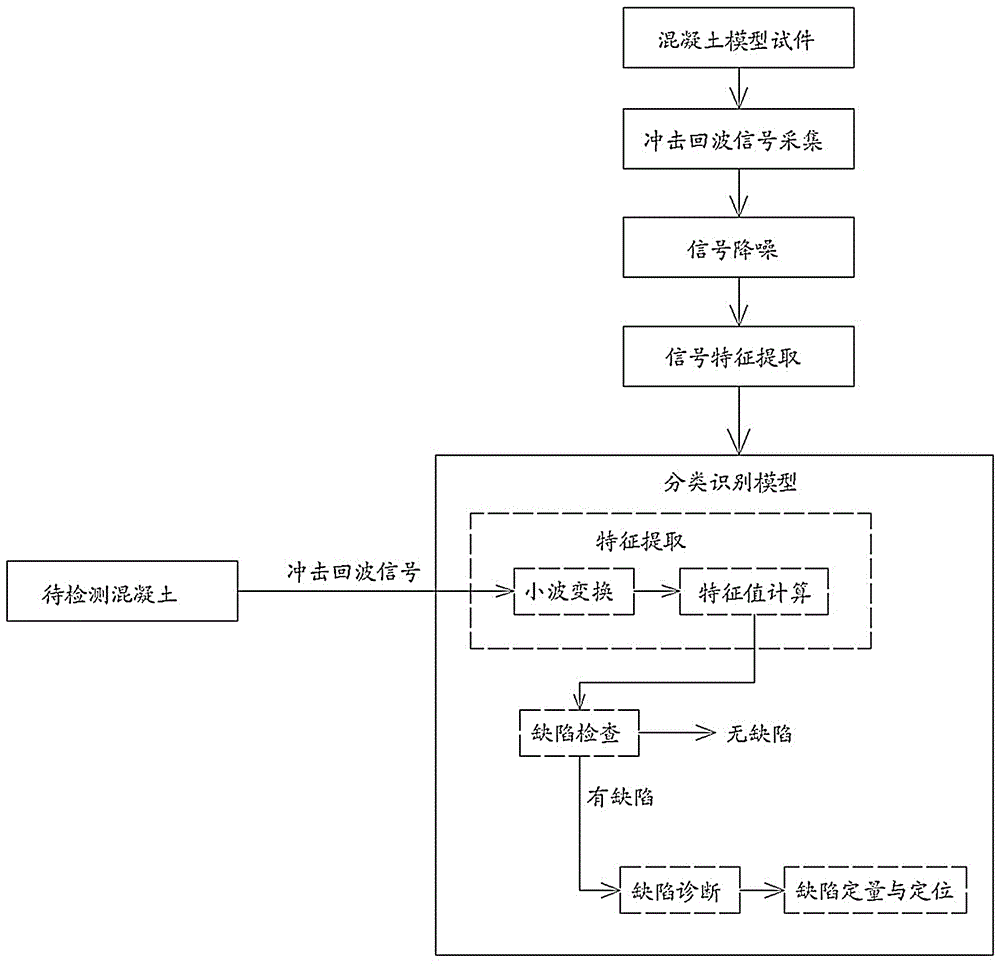

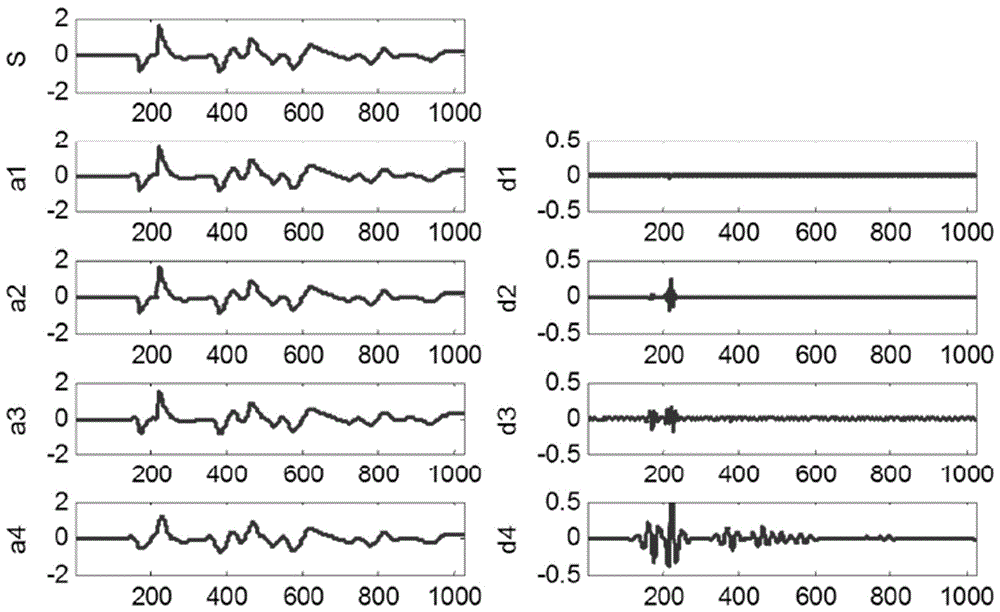

Intelligent detection and quantitative recognition method for defect of concrete

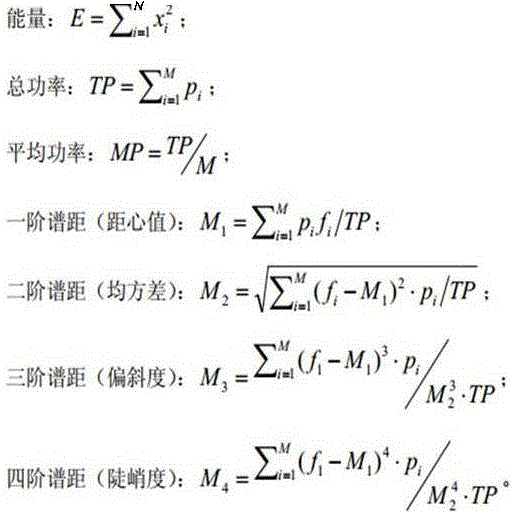

Active · CN105929024A · Improve effectiveness · Improve detection depth · Analysing solids using sonic/ultrasonic/infrasonic waves · Processing detected response signal · Learning machine · Non destructive

The invention discloses an intelligent detection and quantitative recognition method for concrete defects. Impact-echo signal samples are acquired from a concrete test piece, denoised, and reduced to characteristic values in order to construct a recognition model whose analysis components cover feature extraction, defect inspection, defect diagnosis, and defect quantification and positioning; the model is then used to detect and recognize the concrete under test. The method targets the disadvantages of conventional concrete defect detection technology. Based on theoretical analysis, numerical simulation, and model testing, it applies advanced signal processing and artificial intelligence techniques and fully mines the characteristic information of the test signal, establishing a model for intelligent rapid detection and classified recognition based on wavelet analysis and an extreme learning machine. The model has good classification performance, realizes intelligent, rapid, quantitative recognition and evaluation of the type, properties, and extent of concrete defects, and further raises the level of innovation and application of non-destructive testing technology for concrete defects.

Owner:ANHUI & HUAI RIVER WATER RESOURCES RES INST

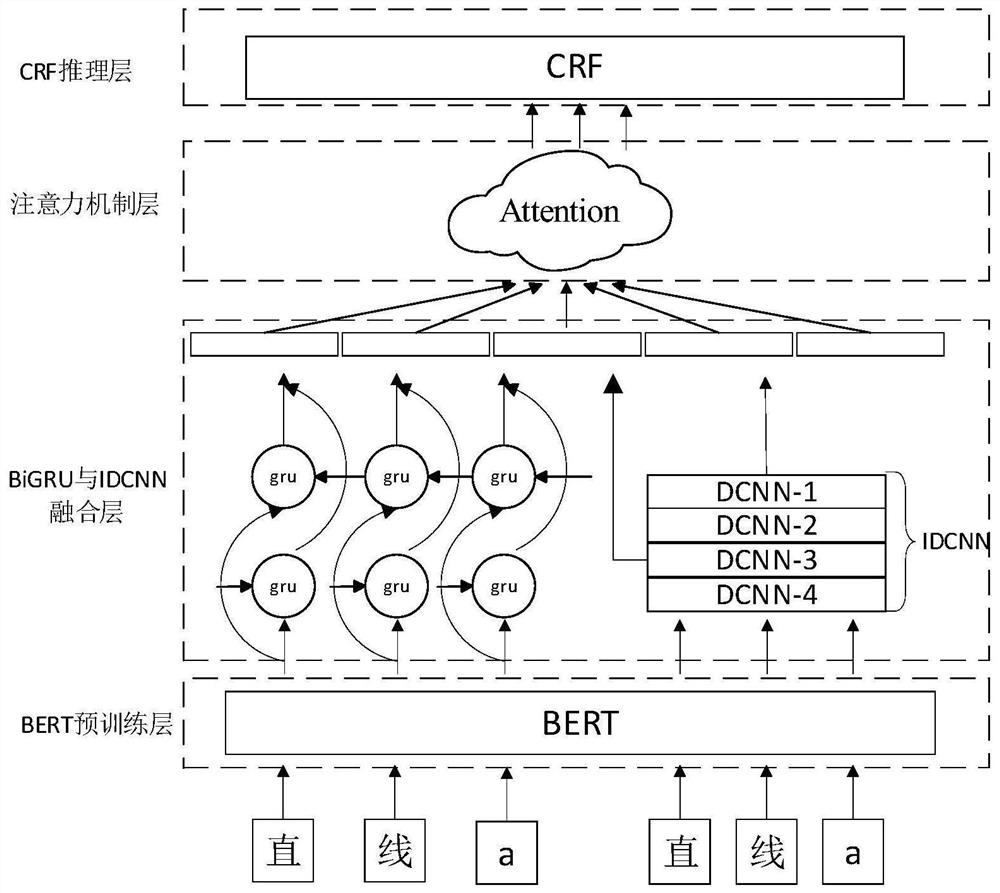

BERT-BiGRU-IDCNN-CRF named entity identification method based on attention mechanism

Pending · CN112733541A · Solve the problem of not being able to characterize polysemy · Improve the shortcomings of ignoring local features · Natural language data processing · Neural architectures · Feature vector · Named-entity recognition

The invention discloses a BERT-BiGRU-IDCNN-CRF named entity recognition method based on an attention mechanism. The method comprises the following steps: training a BERT pre-trained language model on a large-scale unlabeled corpus; on the basis of the trained BERT model, constructing a complete BERT-BiGRU-IDCNN-Attention-CRF named entity recognition model; constructing an entity recognition training set and training the complete entity recognition model on it; and inputting the corpus to be subjected to entity recognition into the trained model and outputting the named entity recognition result. The method combines the feature vectors extracted by the BiGRU and IDCNN neural networks, which overcomes the BiGRU network's tendency to ignore local features while extracting global context features. The attention mechanism then assigns weights to the extracted features, so that features that play a key role in entity recognition are strengthened and irrelevant features are weakened, further improving named entity recognition.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

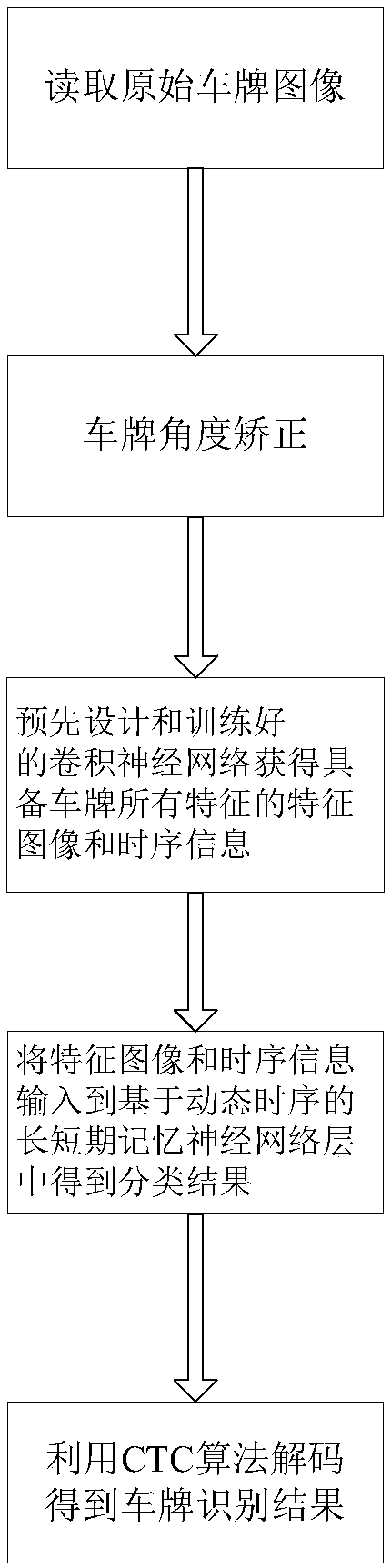

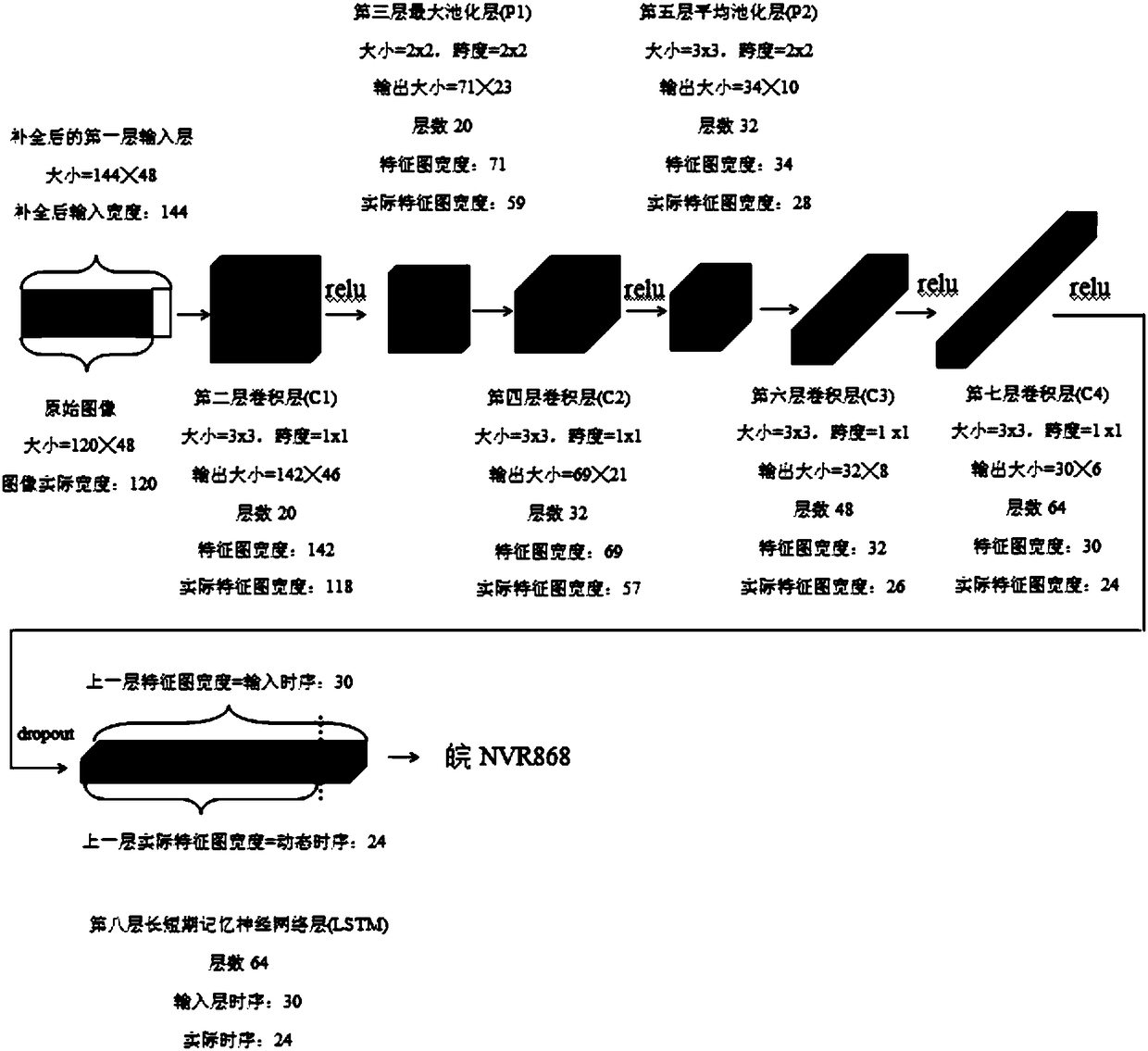

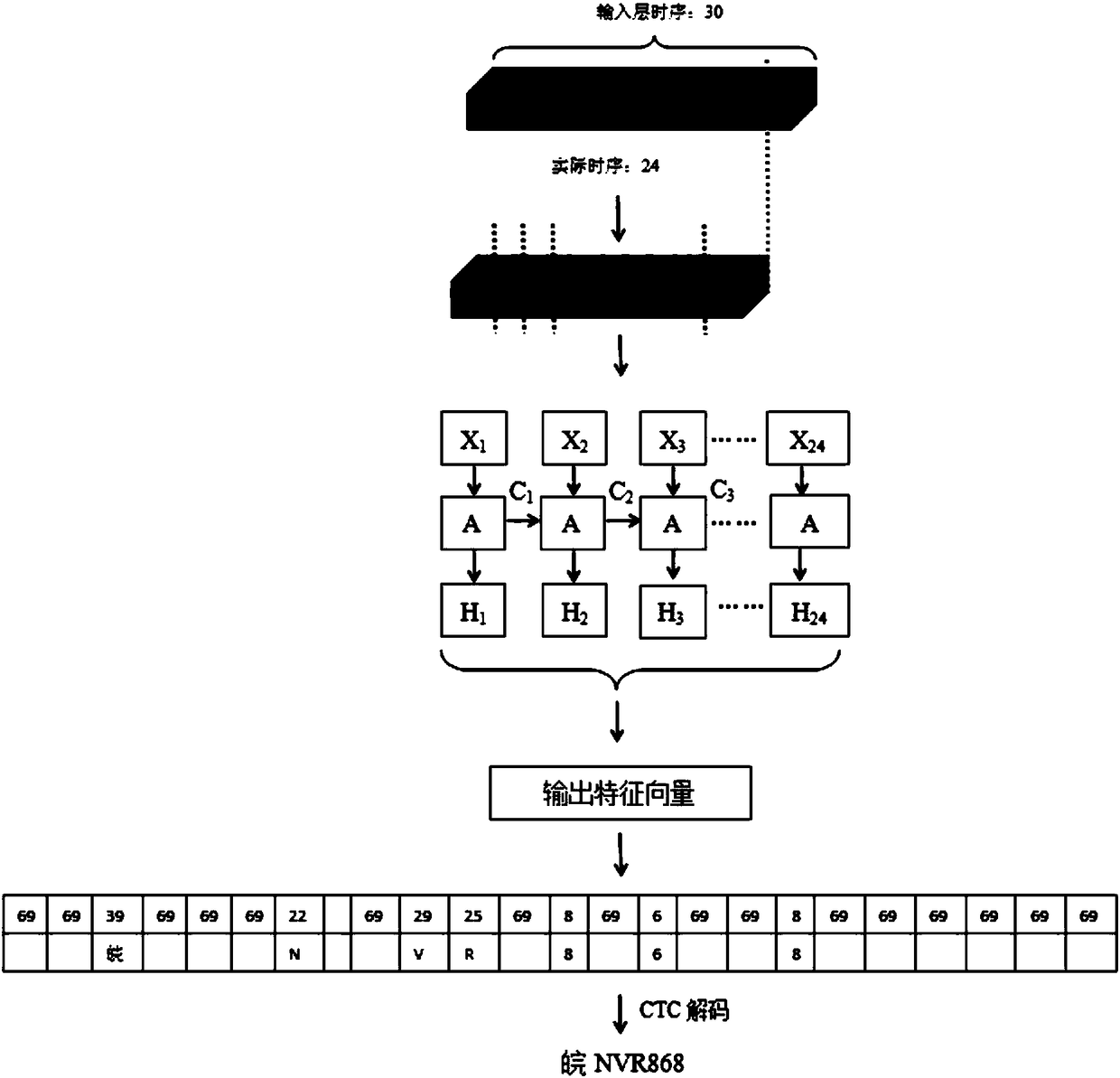

Dynamic time sequence convolutional neural network-based license plate recognition method

Active · CN108388896A · Reduce training parameters · Solve the problem of low accuracy rate and wrong recognition results · Character and pattern recognition · Neural architectures · Short-term memory · Leak detection

The invention discloses a license plate recognition method based on a dynamic time sequence convolutional neural network. The method comprises the following steps: reading an original license plate image; correcting the license plate angle to obtain the license plate image to be recognized; inputting that image into a previously designed and trained convolutional neural network to obtain a feature image and time sequence information, wherein the feature image contains all the features of the license plate; carrying out character recognition by inputting the feature image into the long short-term memory (LSTM) layer of the network on the basis of the time sequence information of the last layer, so as to obtain a classification result; and decoding with the CTC algorithm to obtain the final license plate characters. The method recognizes visual patterns directly from original images using convolutional neural networks, with self-learning and correction. The networks can be reused after being trained once, and a single recognition takes on the order of milliseconds, so the method suits scenes that require real-time license plate recognition. Combining the dynamic time sequence LSTM layer with CTC-based decoding effectively avoids recognition errors such as missed detections and repeated detections, and improves the robustness of the algorithm.
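The abstract names CTC decoding but not the decoding strategy. The simplest variant, greedy (best-path) decoding, is sketched below as an assumption: it takes the most likely label per time step, merges consecutive repeats, and drops the blank symbol. The frame labels and the blank character are made up for illustration.

```python
def ctc_greedy_decode(frame_labels, blank=0):
    """Collapse a per-frame label sequence into the final output sequence.

    Consecutive duplicates are merged first, then blanks are removed, so a
    character that spans several frames is emitted only once.
    """
    decoded = []
    previous = blank
    for label in frame_labels:
        if label != previous and label != blank:
            decoded.append(label)
        previous = label
    return decoded


# Hypothetical per-frame argmax output; "-" stands for the CTC blank.
frames = list("--AA-B-BB-11--")
plate = "".join(ctc_greedy_decode(frames, blank="-"))
print(plate)
```

The blank between the two runs of `B` is what lets the decoder keep a genuinely doubled character while still merging the frames that belong to a single character, which is the mechanism behind avoiding repeated detections.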

Owner:浙江芯劢微电子股份有限公司

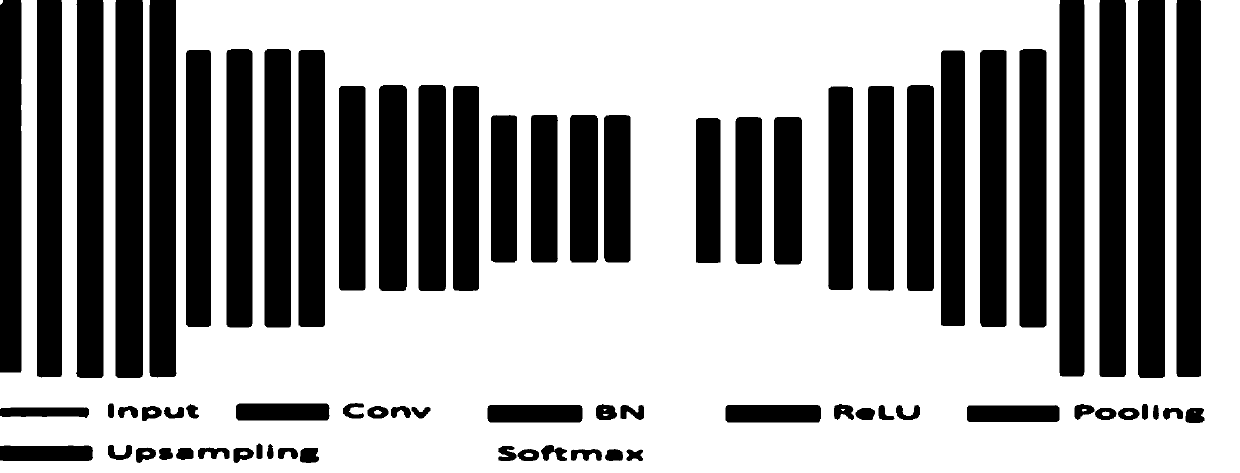

Vehicle driving lane positioning method based on semantic segmentation

Inactive · CN108764137A · Convenient and accurate vehicle positioning · Reduce training parameters · Image analysis · Character and pattern recognition · Encoder decoder · Decoder architecture

The invention discloses a vehicle driving lane positioning method based on semantic segmentation. After a road image is acquired, the method first constructs a double-lane semantic segmentation network on the basis of the SegNet network, performs feature extraction on the road image, and outputs a lane segmentation mask image that labels each pixel of the road image as left lane, right lane, or non-lane. It then carries out target detection on the vehicle in the road image to obtain the position of the vehicle. Finally, it fuses the lane segmentation mask image with the vehicle detection result to determine the lane in which the vehicle is located. The method adopts an encoder-decoder network architecture to realize end-to-end training of the double-lane semantic segmentation model, and meets the requirement for timely detection.
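The abstract does not say how the mask and the detection result are fused. One simple rule, assumed here purely for illustration, is a majority vote over the mask pixels along the bottom edge of the vehicle's bounding box, where the wheels meet the road. The class ids, mask, and boxes below are hypothetical.

```python
from collections import Counter

NON_LANE, LEFT_LANE, RIGHT_LANE = 0, 1, 2


def locate_vehicle_lane(mask, box):
    """Pick the lane class that dominates the bottom edge of a bounding box.

    mask : list of rows, each a list of per-pixel class ids
    box  : (x_min, y_min, x_max, y_max) in inclusive pixel coordinates
    """
    x_min, _, x_max, y_max = box
    bottom_row = mask[y_max][x_min:x_max + 1]
    votes = Counter(label for label in bottom_row if label != NON_LANE)
    if not votes:
        return NON_LANE
    return votes.most_common(1)[0][0]


# Hypothetical 4x8 mask: left lane in columns 1-3, right lane in columns 4-6.
mask = [[0, 1, 1, 1, 2, 2, 2, 0] for _ in range(4)]

print(locate_vehicle_lane(mask, (4, 1, 6, 3)))  # box over the right lane
print(locate_vehicle_lane(mask, (2, 0, 4, 2)))  # box straddling, mostly left
```

Voting on the bottom edge rather than the whole box is a design choice: the upper part of a vehicle often overlaps the neighbouring lane in a perspective image, while the contact line with the road does not.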

Owner:FUZHOU UNIVERSITY

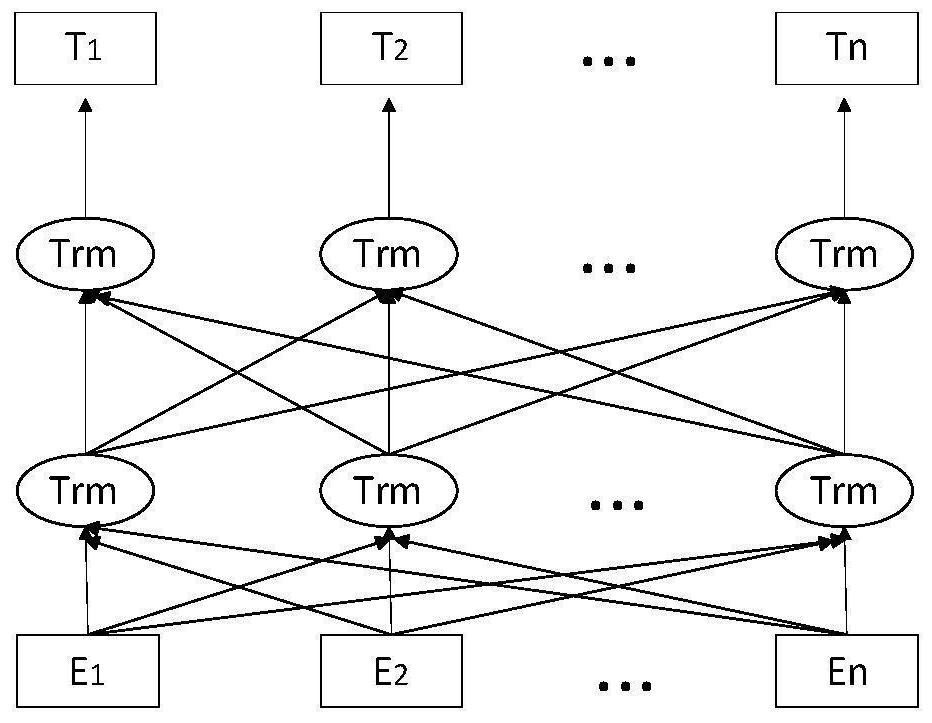

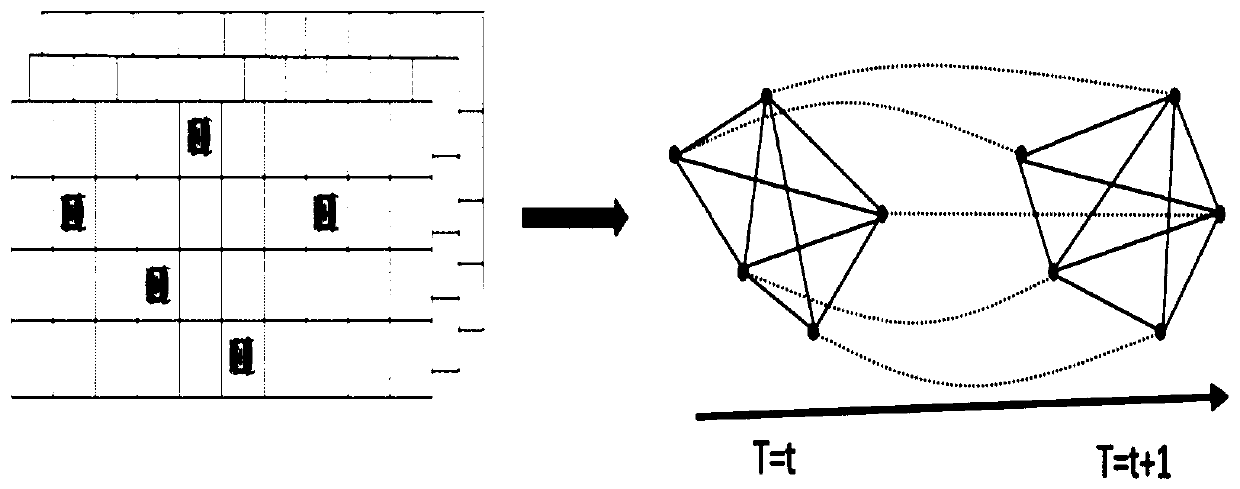

Vehicle track prediction method and device

Active · CN111091708A · High precision · Increase flexibility · Detection of traffic movement · Forecasting · Simulation · Terminal equipment

The invention is applicable to the technical field of intelligent transportation and provides a vehicle track prediction method. The method comprises the following steps: acquiring history track data of multiple vehicles in a preset time period and preprocessing the data to obtain a corresponding time-space diagram sequence, wherein the sequence comprises time-space diagrams for the moments of the preset time period arranged in order, and each time-space diagram comprises nodes corresponding to at least three vehicles; and inputting the time-space diagram sequence into a trained prediction model to obtain a predicted driving track for each vehicle, wherein the prediction model is obtained by training a Long Short-Term Memory (LSTM) network on sample time-space diagrams corresponding to sample track data of multiple sample vehicles in the same time period and on the sample driving track of each sample vehicle. The invention also provides a vehicle track prediction device and terminal equipment. It improves the precision of vehicle track prediction and the flexibility of the prediction model, and enhances robustness, making it better suited to driverless vehicles.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

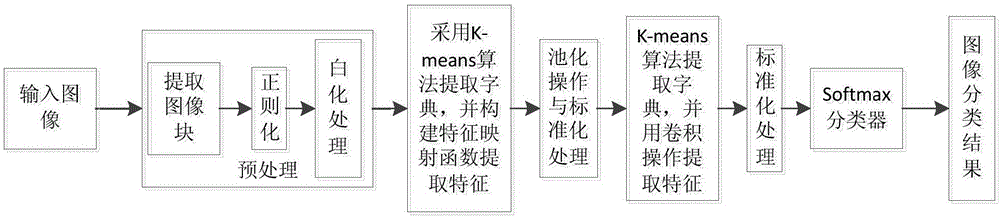

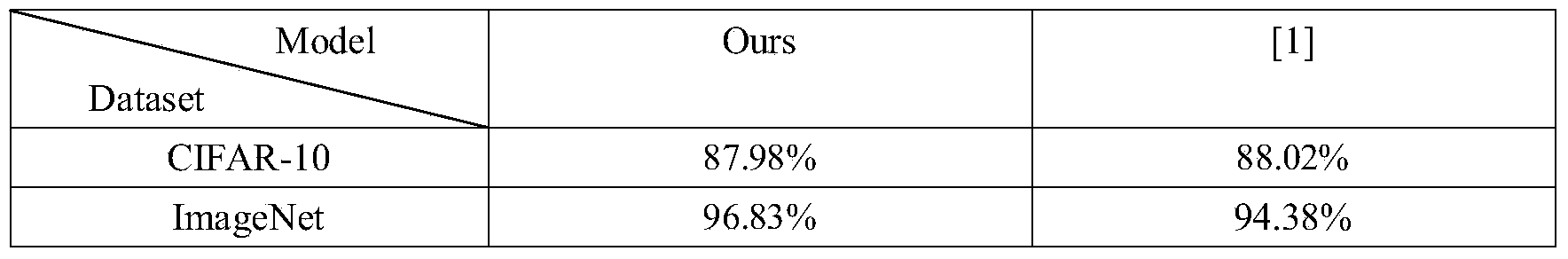

K-means and deep learning-based image classification algorithm

Inactive · CN106845528A · Avoid training · Simplify the training process · Character and pattern recognition · Imaging Feature · High dimensional

The invention discloses a K-means and deep learning-based image classification algorithm. The algorithm comprises the steps of: 1) taking unlabeled images as input and randomly extracting image blocks of equal size to form an unlabeled image set; 2) extracting a primary set of optimal cluster centers with the K-means algorithm; 3) constructing a feature mapping function and extracting image features from the unlabeled image set; 4) performing pooling and normalization; 5) extracting a secondary set of optimal cluster centers with the K-means algorithm, extracting the final image features by convolution, and standardizing them; and 6) classifying the standardized final image features with a classifier. The algorithm is simple and efficient, has few training parameters, and works well for classifying massive high-dimensional images. Preprocessing the input images further improves the classification effect and precision.
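Steps 2 and 5 above rely on K-means to find cluster centers. A minimal sketch of the standard Lloyd iteration follows; the 2-D points and the fixed initial centers are hypothetical stand-ins for flattened image blocks, and the patent's own initialization and stopping rule are not stated in the abstract.

```python
def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, centers, iterations=10):
    """Plain Lloyd iteration: assign points to the nearest center, then re-average."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for point in points:
            nearest = min(range(len(centers)),
                          key=lambda k: squared_distance(point, centers[k]))
            clusters[nearest].append(point)
        # An empty cluster keeps its previous center.
        centers = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else center
            for cluster, center in zip(clusters, centers)
        ]
    return centers


# Hypothetical data: two well-separated groups of "image blocks".
points = [(0.0, 0.0), (0.0, 2.0), (10.0, 10.0), (10.0, 12.0)]
initial_centers = [(0.0, 0.0), (10.0, 10.0)]

centers = kmeans(points, initial_centers)
print(centers)
```

The learned centers play the role of a filter bank: the only quantities fitted are the centers themselves, with no gradient-based training, which is consistent with the "few training parameters" claim.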

Owner:HUBEI UNIV OF TECH

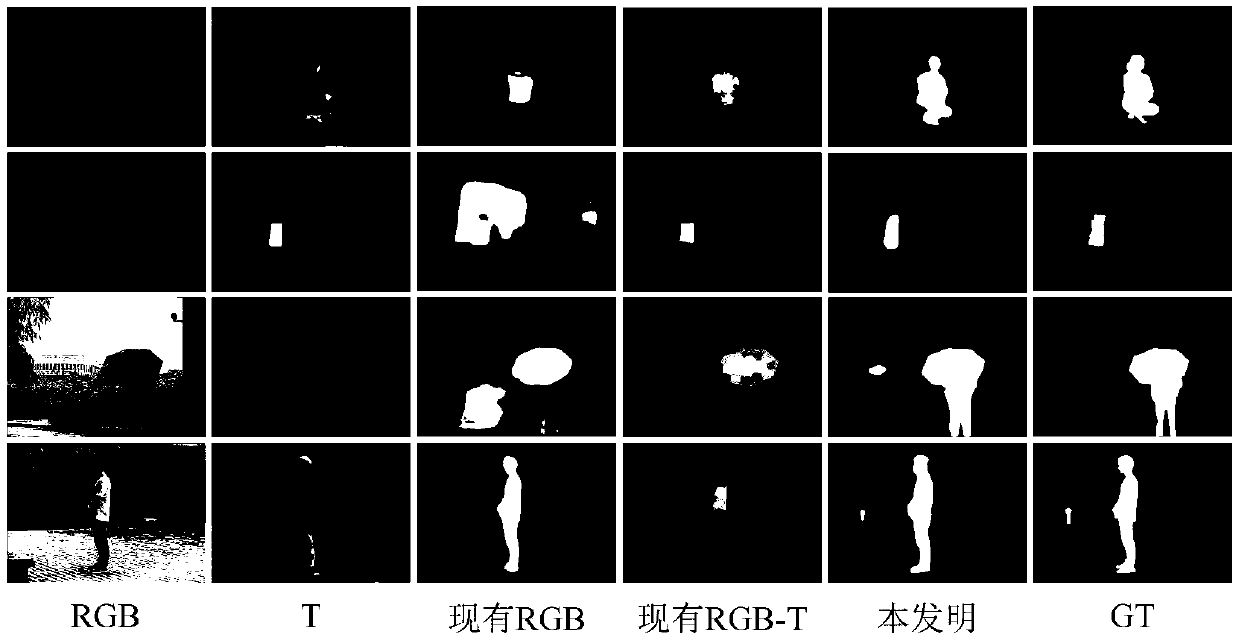

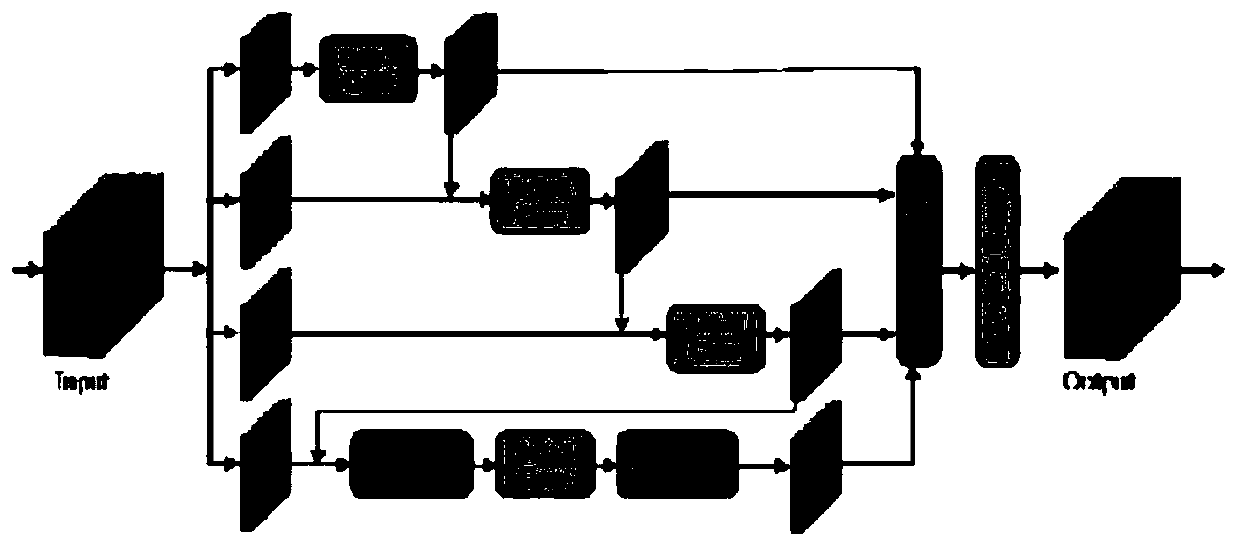

RGB-T image significance target detection method based on multi-level depth feature fusion

Active · CN110210539A · Achieving pixel-level detection · Has a full consistency effect · Character and pattern recognition · Neural architectures · Pattern recognition · Image extraction

The invention discloses an RGB-T image significance target detection method based on multi-level depth feature fusion, which mainly addresses the inability of the prior art to detect a salient target completely and consistently in complex and changeable scenes. The implementation scheme comprises the following steps: 1, extracting rough multi-level features from an input image; 2, constructing an adjacent depth feature fusion module to improve the single-modality features; 3, constructing a multi-branch-group fusion module to fuse the multi-modality features; 4, obtaining a fused output feature map; 5, training the network; 6, predicting a pixel-level saliency map of the RGB-T image. The method effectively fuses complementary information from the different image modalities, detects salient targets completely and consistently in complex and changeable scenes, and can be used as an image preprocessing step in computer vision.

Owner:XIDIAN UNIV

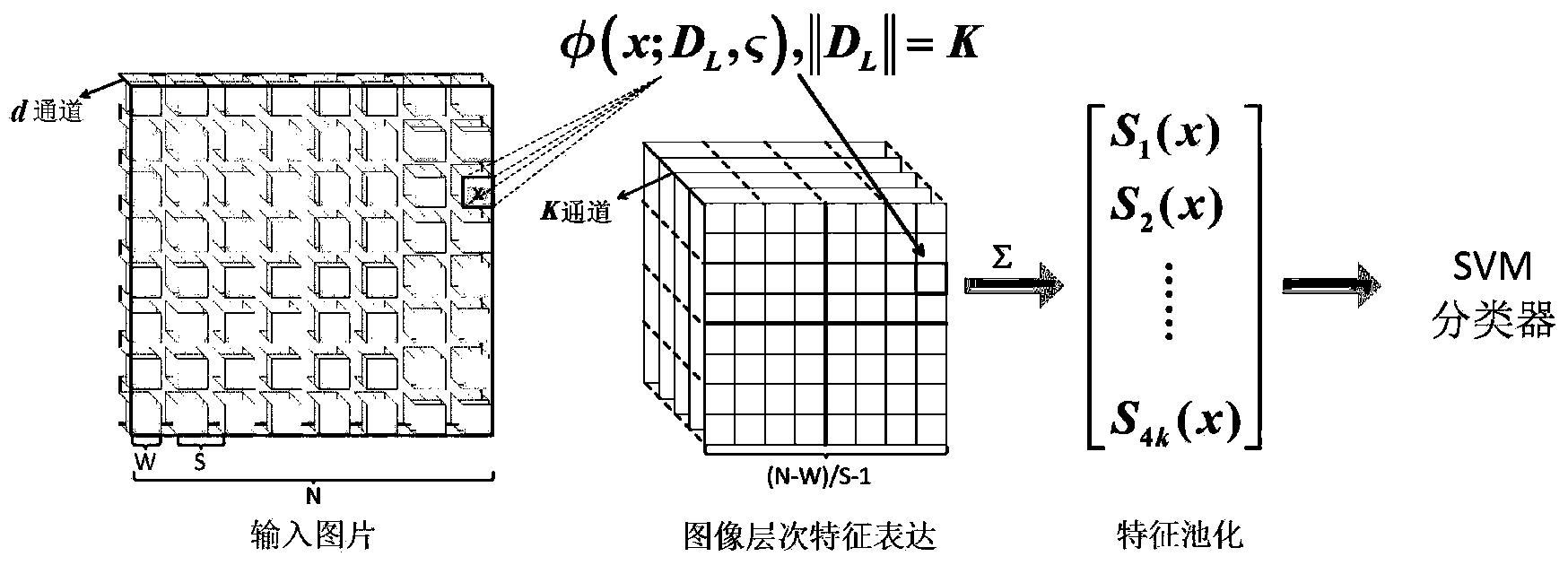

Mass image sorting system based on deep character learning

Active · CN103955707A · Reduce training parameters · Reduce classification complexity · Character and pattern recognition · Receptive field · Machine learning

The invention provides a mass image sorting system based on deep feature learning (rendered "deep character learning" in the title). The system carries out the following steps: first, unlabeled and labeled image data are input, and the unlabeled image data are preprocessed to remove interference information and retain key information; second, K-means feature learning is carried out on the preprocessed images to obtain the dictionary of the current layer; third, if the current layer is the Nth layer, feature mapping is carried out between the dictionary of the layer and the labeled image data, and the fifth step is executed once the deep features are obtained; otherwise, feature mapping is carried out between the dictionary of the layer and the unlabeled image data to obtain the deep features; fourth, highly correlated features are combined into receptive fields according to the correlation of the deep features; if the current layer is the (N-1)th layer, the fifth step is executed; otherwise the result serves as the input of the next layer and is sent to the second step; fifth, in the Nth layer, the learned features are input to an SVM classifier and classification is carried out.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

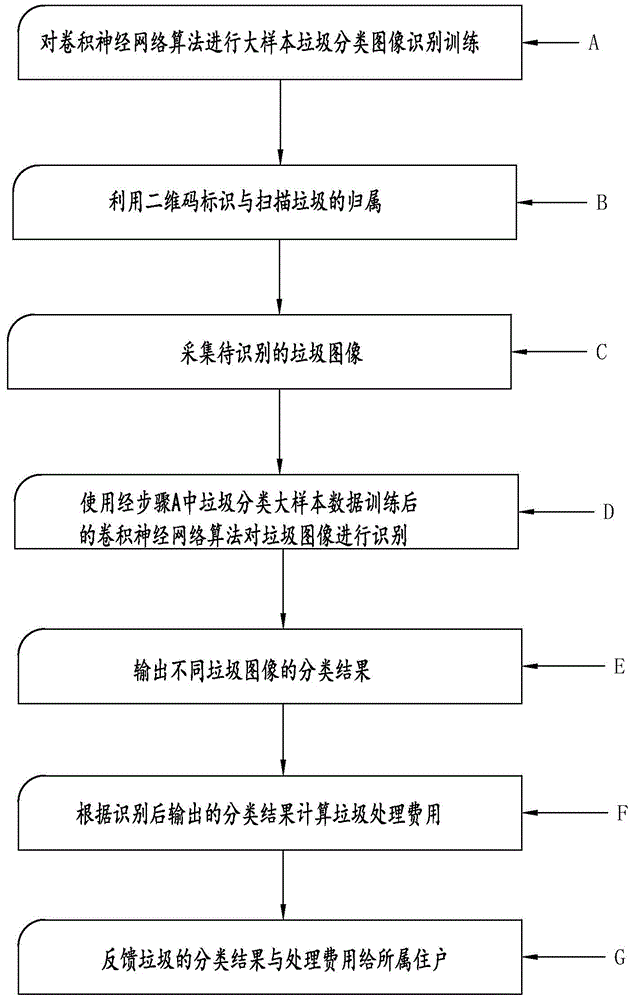

Method for assessing garbage classification based on image identification and two dimensional identification technology

Pending · CN105787506A · Improve processing efficiency · Reduce training parameters · Data processing applications · Biological neural network models · Living environment · Large sample

The invention discloses a method for assessing garbage classification based on image identification and two-dimensional code identification technology. The method includes the following steps: (A) training a convolutional neural network algorithm on a large sample of garbage classification images; (B) scanning a two-dimensional code to identify who the garbage belongs to; (C) acquiring a garbage image to be identified; (D) identifying the garbage image with the convolutional neural network algorithm trained on the large-sample garbage classification data in step A; (E) outputting the classification results for the different garbage images. The method determines which residents the garbage belongs to by means of the two-dimensional code and identifies garbage images with the convolutional neural network algorithm, which substantially increases the efficiency of garbage processing and contributes to a clean and comfortable living environment.

Owner:耿春茂

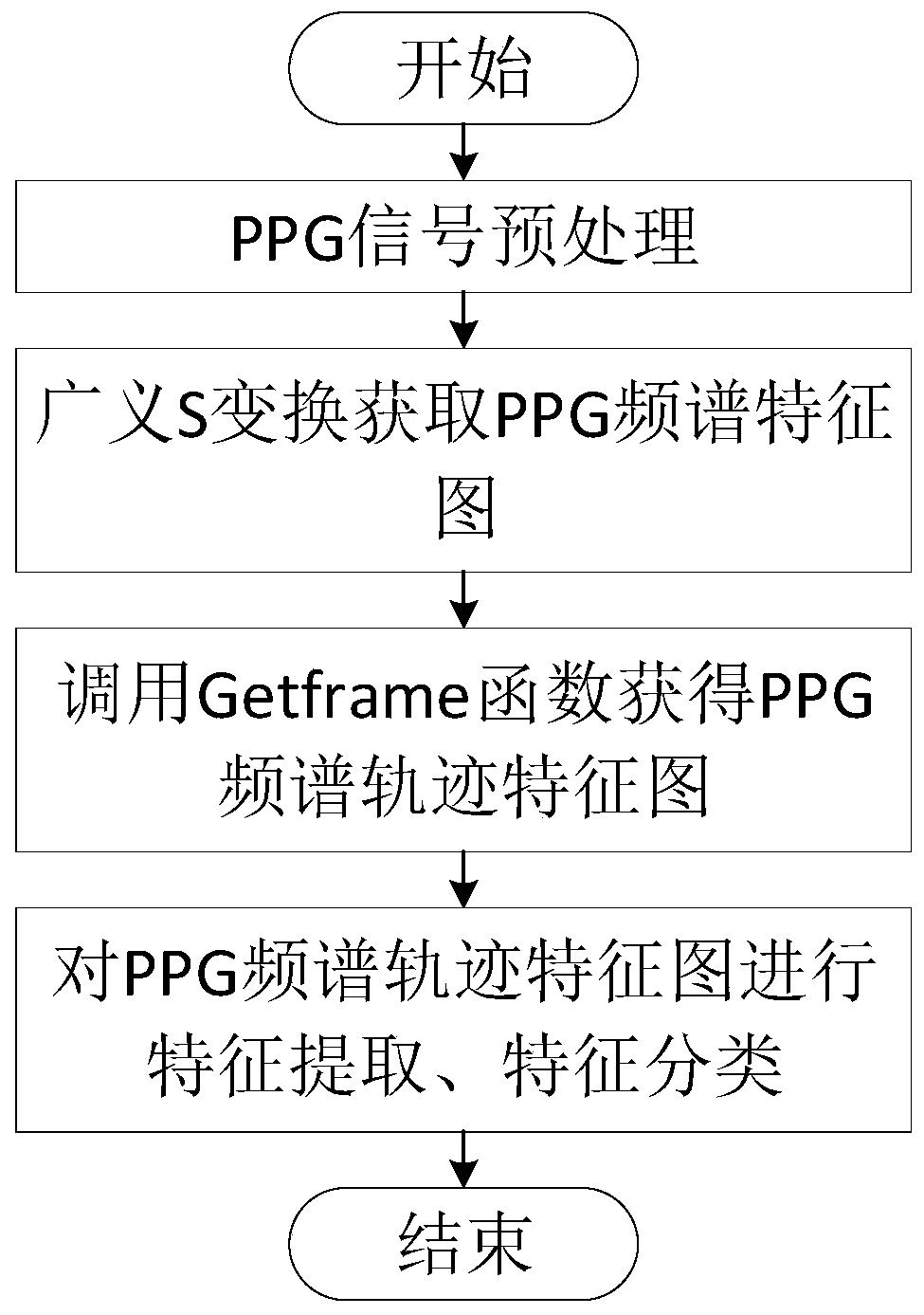

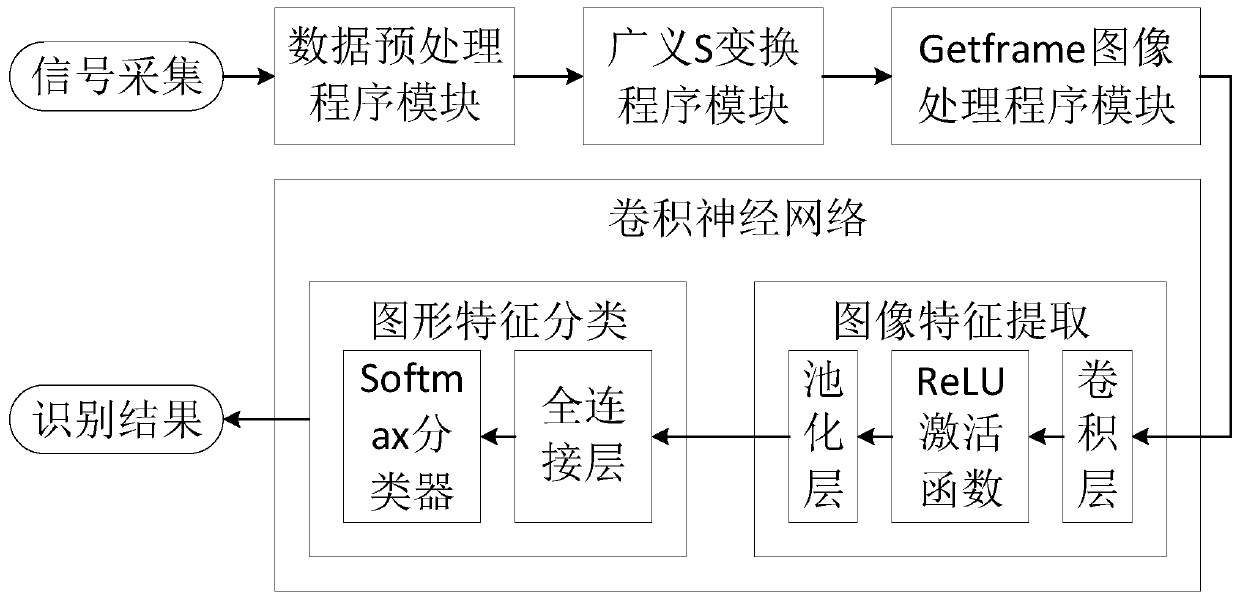

Identity recognition method and system based on photoplethysmography

Inactive · CN110458197A · Realize identification · Reduce complexity · Character and pattern recognition · Digital data authentication · Imaging processing · Feature extraction

The invention relates to an identity recognition method based on photoplethysmography (PPG). The method comprises the following steps: S1, a data preprocessing program module preprocesses the collected PPG signals; S2, a generalized S transformation program module applies the generalized S transform to the preprocessed PPG signal to obtain a PPG spectrum feature map; S3, a Getframe image processing module calls the Getframe function to take a snapshot of the PPG spectrum feature map at each time point, yielding a continuous PPG spectrum trajectory feature map; and S4, a convolutional neural network performs feature extraction and feature classification on the PPG spectrum trajectory feature map to realize identity recognition. The benefit of the method is that the PPG signal is converted from its original one-dimensional form into a two-dimensional image, so that a convolutional neural network can then be used for identity recognition; the data features are easy to extract, the extraction process is simple, and reliability is high.

Owner:广东玖智科技有限公司

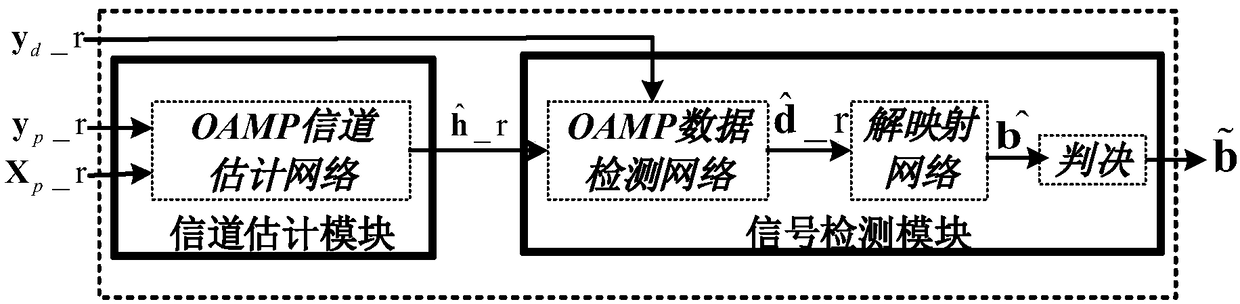

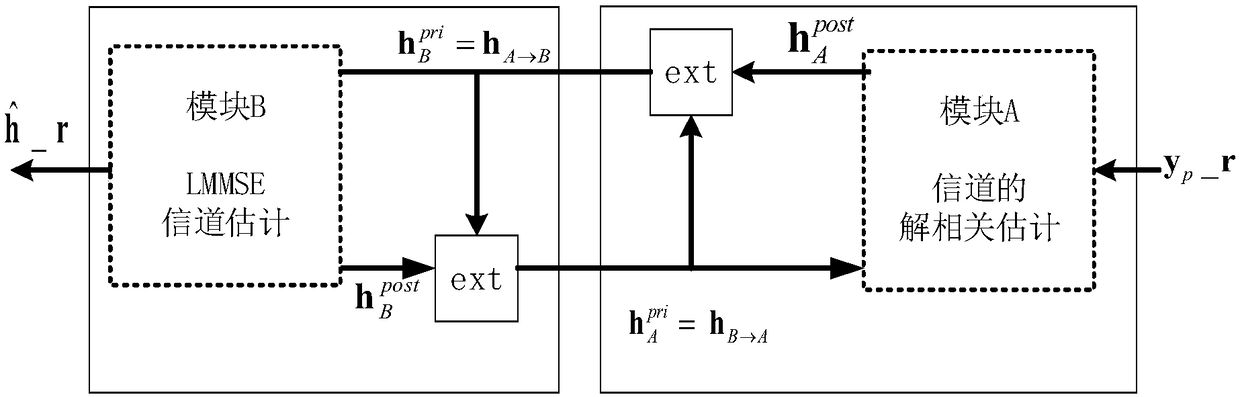

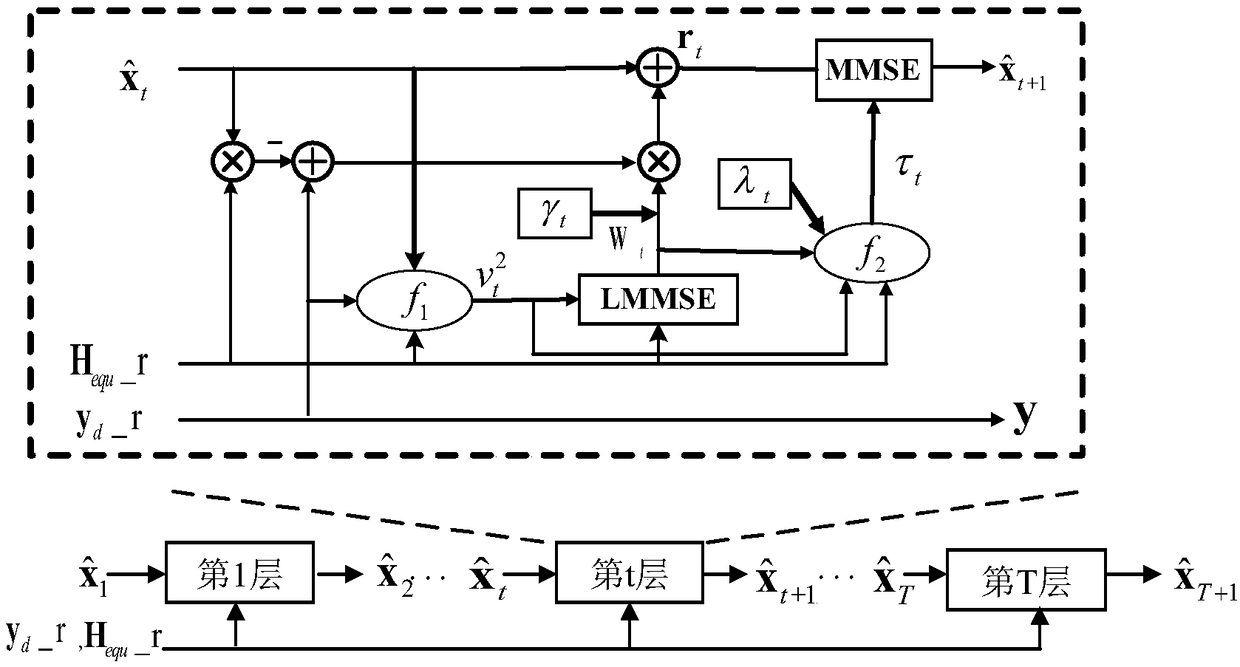

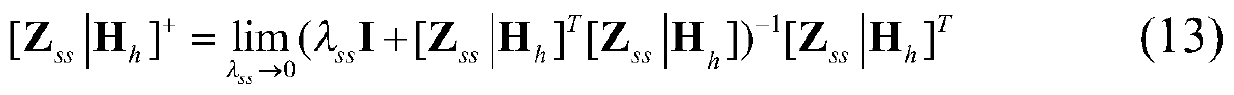

Data model dual-drive GFDM receiver and method

Active · CN109246038A · Improve performance · Adaptable · Error detection/prevention using signal quality detector · Baseband system details · Channel state information · Time domain

The invention discloses a data model dual-drive GFDM receiver and method. The method comprises the following steps: obtaining a channel estimation neural network and a signal detection neural network; taking the real-valued form of a matrix comprising the transmitted pilot information, together with the received time-domain pilot vector, as the input of the channel estimation neural network, and outputting an estimate of the frequency-domain channel state information; obtaining an equivalent channel matrix, taking its real-valued form together with the received time-domain signal vector as the input of the signal detection neural network, and outputting an estimate of the GFDM symbols; establishing a demapping neural network that takes the GFDM symbol estimate output by the signal detection neural network as its input and outputs an estimate of the original bit information; and comparing the output of the demapping network with a set threshold, then outputting the detection result for the original bit information according to the comparison. The advantages of the method are that the training parameters do not change with the data dimension, training is fast, and adaptability to different channel environments is strong.
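Neural networks usually operate on real numbers, so complex channel matrices and signal vectors have to be converted first. The abstract does not spell out the conversion; the sketch below shows the standard block form, in which the complex product y = Hx becomes an equivalent real-valued product. The matrix and vector values are hypothetical.

```python
def to_real_matrix(H):
    """Map a complex matrix H to the real block matrix [[Re, -Im], [Im, Re]]."""
    top = [[h.real for h in row] + [-h.imag for h in row] for row in H]
    bottom = [[h.imag for h in row] + [h.real for h in row] for row in H]
    return top + bottom


def to_real_vector(x):
    """Stack real parts on top of imaginary parts."""
    return [v.real for v in x] + [v.imag for v in x]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


# Hypothetical 2x2 equivalent channel matrix and transmitted symbol vector.
H = [[1 + 2j, 0 - 1j],
     [3 + 0j, 1 + 1j]]
x = [1 + 1j, 2 - 1j]

y_complex = matvec(H, x)
y_real = matvec(to_real_matrix(H), to_real_vector(x))

print(y_complex)
print(y_real)
```

The real-valued result carries the real parts of y followed by its imaginary parts, so nothing is lost by feeding the network the stacked form.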

Owner:SOUTHEAST UNIV

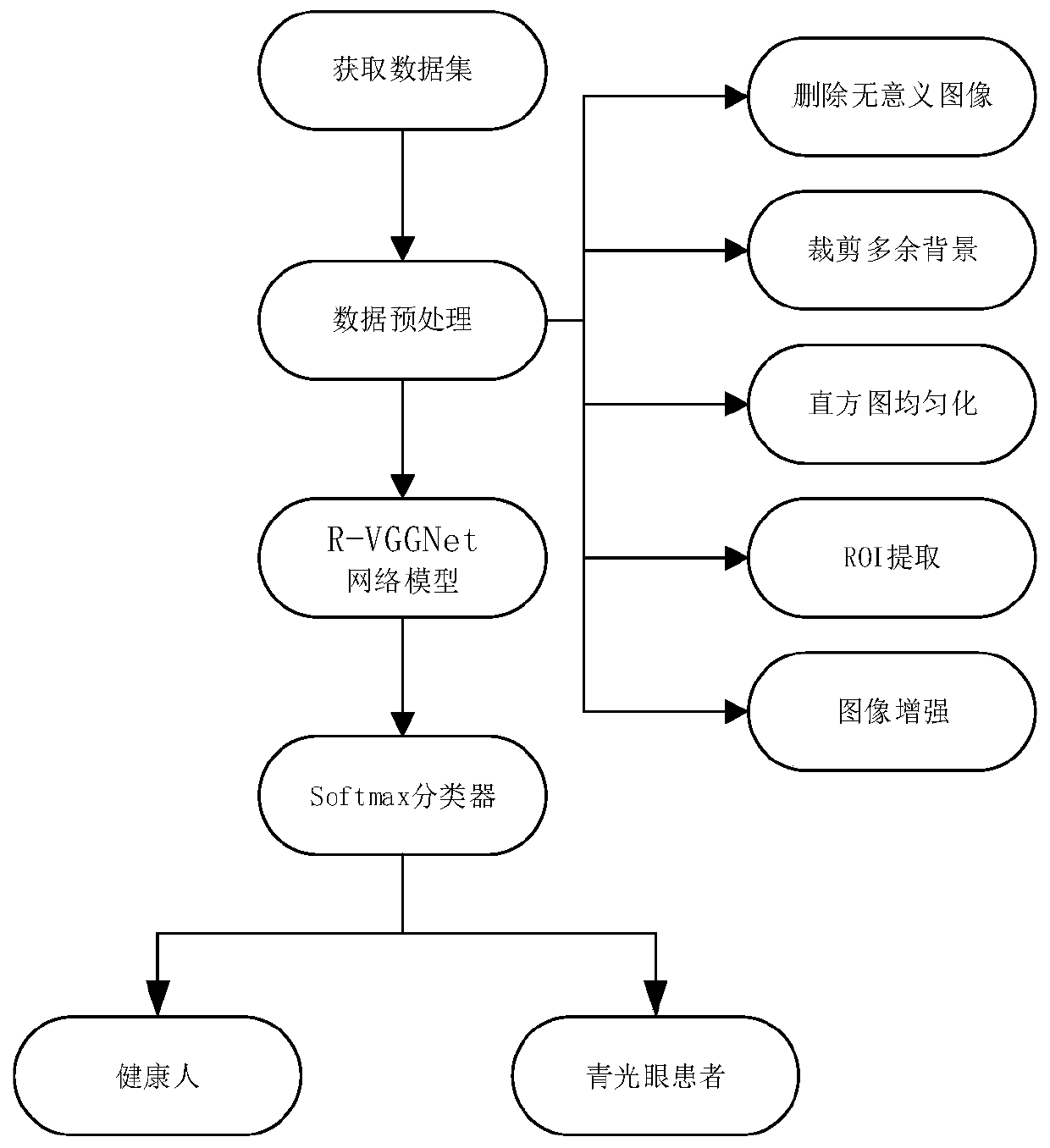

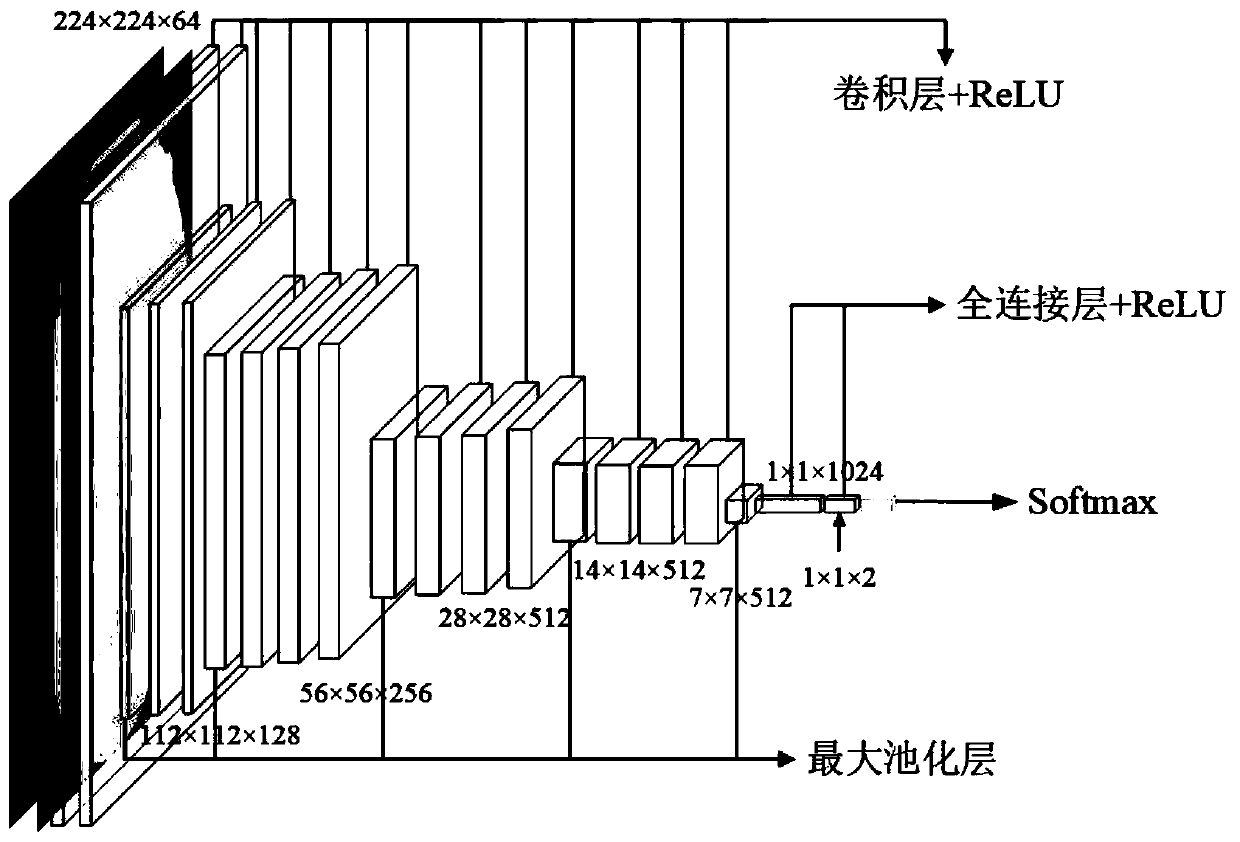

Glaucoma fundus image recognition method based on transfer learning

Pending · CN111476283A · Reduce training parameters · Improve recognition rate · Neural architectures · Neural learning methods · Network on · Image identification

The invention discloses a glaucoma fundus image recognition method based on transfer learning. The method comprises the following steps: 1, obtaining a glaucoma data set and preprocessing the glaucoma fundus images; 2, constructing a convolutional neural network, R-VGGNet; 3, loading the preprocessed training data set into the R-VGGNet model for iterative training and feature extraction; 4, inputting the extracted features into a softmax classifier to complete the classification and recognition of glaucoma and obtain the final recognition model; 5, loading the test data set into the final recognition model and outputting the corresponding classification accuracy. The method introduces transfer learning: using the weight parameters obtained by training a VGG16 network on the ImageNet data set, it freezes the first 13 layers and unfreezes the weights of the last 3 layers, trains the fully connected layers and the softmax classifier on the glaucoma data set, and carries out feature extraction and classification after fine-tuning. The method meets the requirements of deep learning and effectively improves the recognition rate for glaucoma fundus images.
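To see what freezing the first 13 layers means for the parameter count, the sketch below tallies the weights of a standard VGG16 with 224x224 inputs. It assumes that R-VGGNet keeps the standard VGG16 layer sizes and that the classifier has two output classes; neither detail is confirmed by the abstract.

```python
# Output channels of the 13 convolutional layers in standard VGG16.
VGG16_CONV_CHANNELS = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]


def conv_params(in_channels: int, out_channels: int, kernel: int = 3) -> int:
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(n_in: int, n_out: int) -> int:
    return n_in * n_out + n_out


def vgg16_param_split(num_classes: int):
    """Return (frozen, trainable) counts: 13 conv layers frozen, 3 dense layers trained."""
    frozen = 0
    in_channels = 3  # RGB input
    for out_channels in VGG16_CONV_CHANNELS:
        frozen += conv_params(in_channels, out_channels)
        in_channels = out_channels
    # After five 2x2 poolings a 224x224 input becomes a 7x7x512 feature map.
    trainable = (dense_params(512 * 7 * 7, 4096)
                 + dense_params(4096, 4096)
                 + dense_params(4096, num_classes))
    return frozen, trainable


# Assumed two-class problem (glaucoma vs. normal).
frozen, trainable = vgg16_param_split(num_classes=2)
print(f"frozen:    {frozen:,}")
print(f"trainable: {trainable:,}")
```

Under these assumptions the frozen convolutional weights need no gradient computation or optimizer state, although the fully connected layers still hold most of the parameters.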

Owner:SHANGHAI MARITIME UNIVERSITY

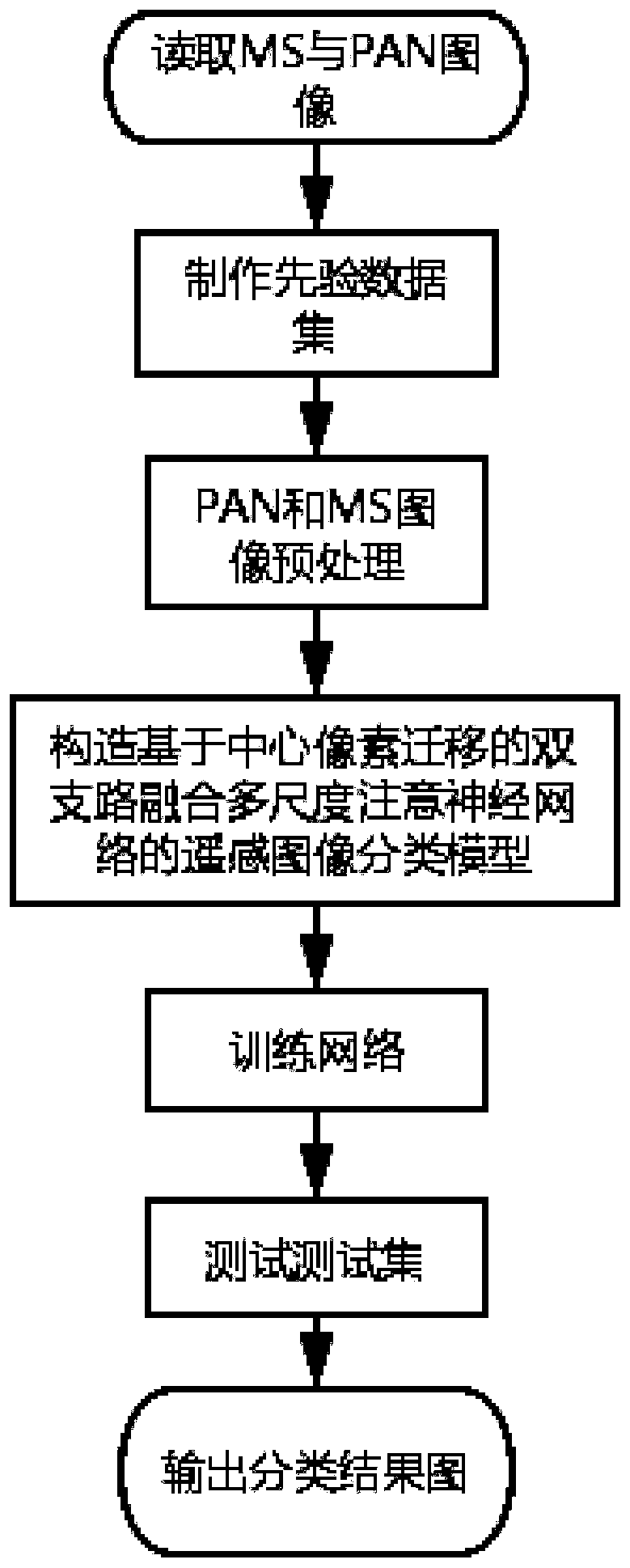

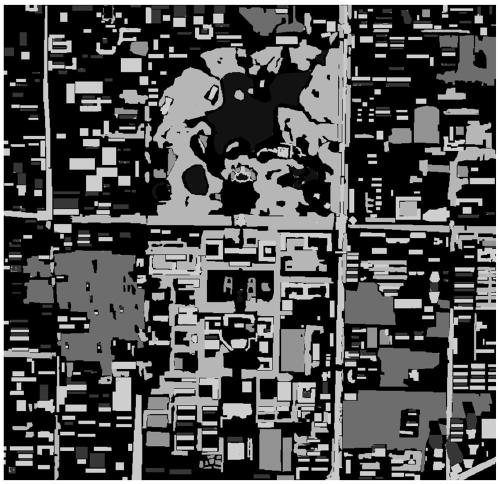

Remote sensing image classification method of double-branch fusion multi-scale attention neural network

Active · CN111523521A · Improve classification accuracy · Accurate classification · Image enhancement · Image analysis · Data set · Classification methods

The invention discloses a remote sensing image classification method of a double-branch fusion multi-scale attention neural network. The method comprises the following steps: reading a multispectral image from a data set; after an image matrix is obtained, preprocessing the image data with superpixels; normalizing the data and taking a block around each pixel of the normalized image matrix to form an image block-based feature matrix; selecting a training set and a test set; constructing a convolutional neural network classification model based on two-channel sparse feature fusion; training the classification model on the training data set; and classifying the test data set with the trained model. Starting from the characteristics of the image, the method extracts features adaptively according to the size of the target region in the image and adopts a new central pixel offset strategy for boundary pixels, which improves the classification accuracy of boundary pixels and also speeds up the whole training process.

Owner:XIDIAN UNIV

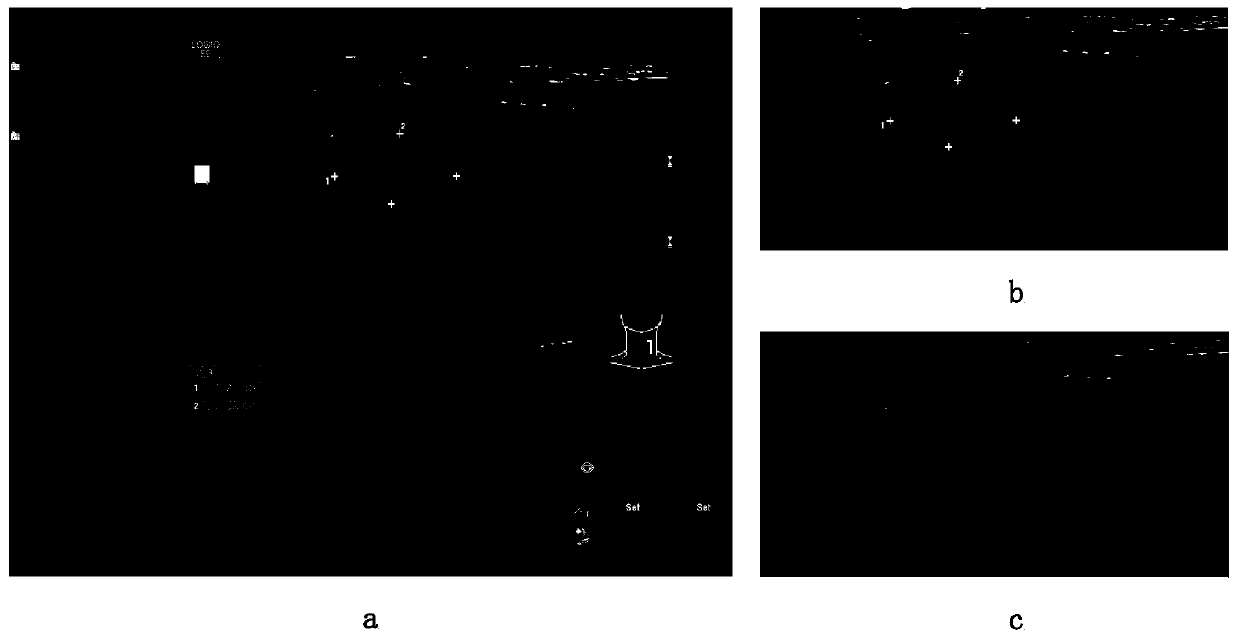

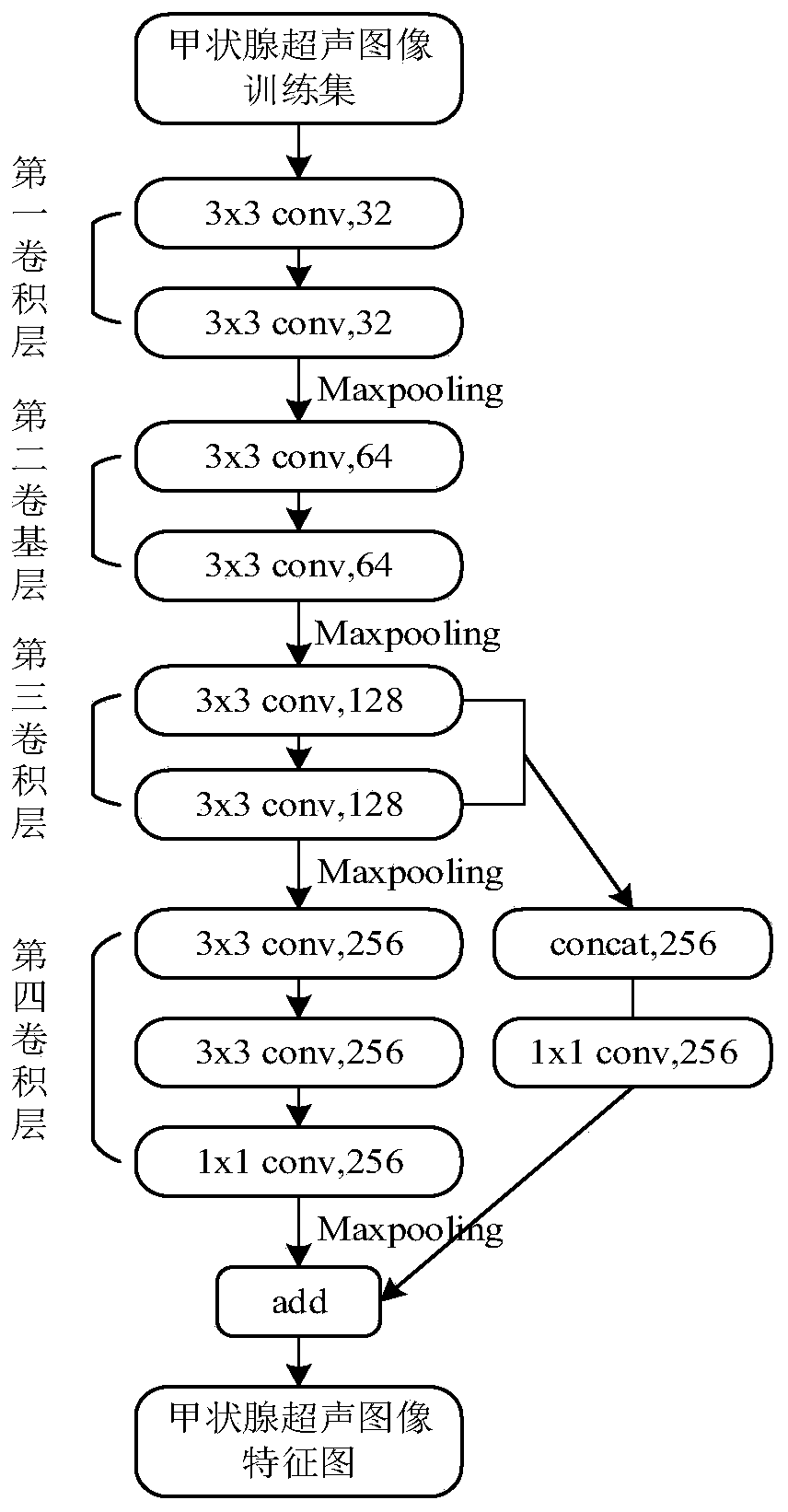

Thyroid ultrasound image nodule automatic positioning and identifying method based on USFaster R-CNN

Pending · CN110490892A · Avoid missed detection of small nodules · Objective · Image enhancement · Image analysis · Feature extraction · Image diagnosis

The invention discloses a thyroid ultrasound image nodule automatic positioning and identifying method based on USFaster R-CNN, and belongs to the field of artificial intelligence and deep learning. The method comprises the steps of preprocessing a thyroid ultrasound image, building a deep neural network model, and training and optimizing the network model, wherein the deep neural network model comprises a bottom convolutional feature extraction network, a candidate box generation network, a feature map pooling layer, and a classification and candidate box regression network. Deep learning is used to extract thyroid ultrasound image features automatically and to generate, screen, and correct the positions of candidate boxes automatically, so that thyroid nodules are located and identified automatically. The method can effectively assist doctors in thyroid ultrasound image diagnosis, improve the objectivity and accuracy of diagnosis, and reduce both the workload of doctors and the miss rate for small nodules.
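Candidate box screening in Faster R-CNN style detectors is commonly done with non-maximum suppression (NMS) based on intersection over union (IoU). Whether USFaster R-CNN uses exactly this procedure is an assumption; the boxes, scores, and threshold below are made up for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Keep the highest-scoring boxes, dropping any that overlap a kept box too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Hypothetical candidate boxes: two near-duplicates and one separate nodule.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]

kept = non_max_suppression(boxes, scores)
print(kept)
```

The threshold is a trade-off: a lower value removes more duplicates but risks suppressing a small nodule that sits close to a larger one.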

Owner:SUN YAT SEN UNIV

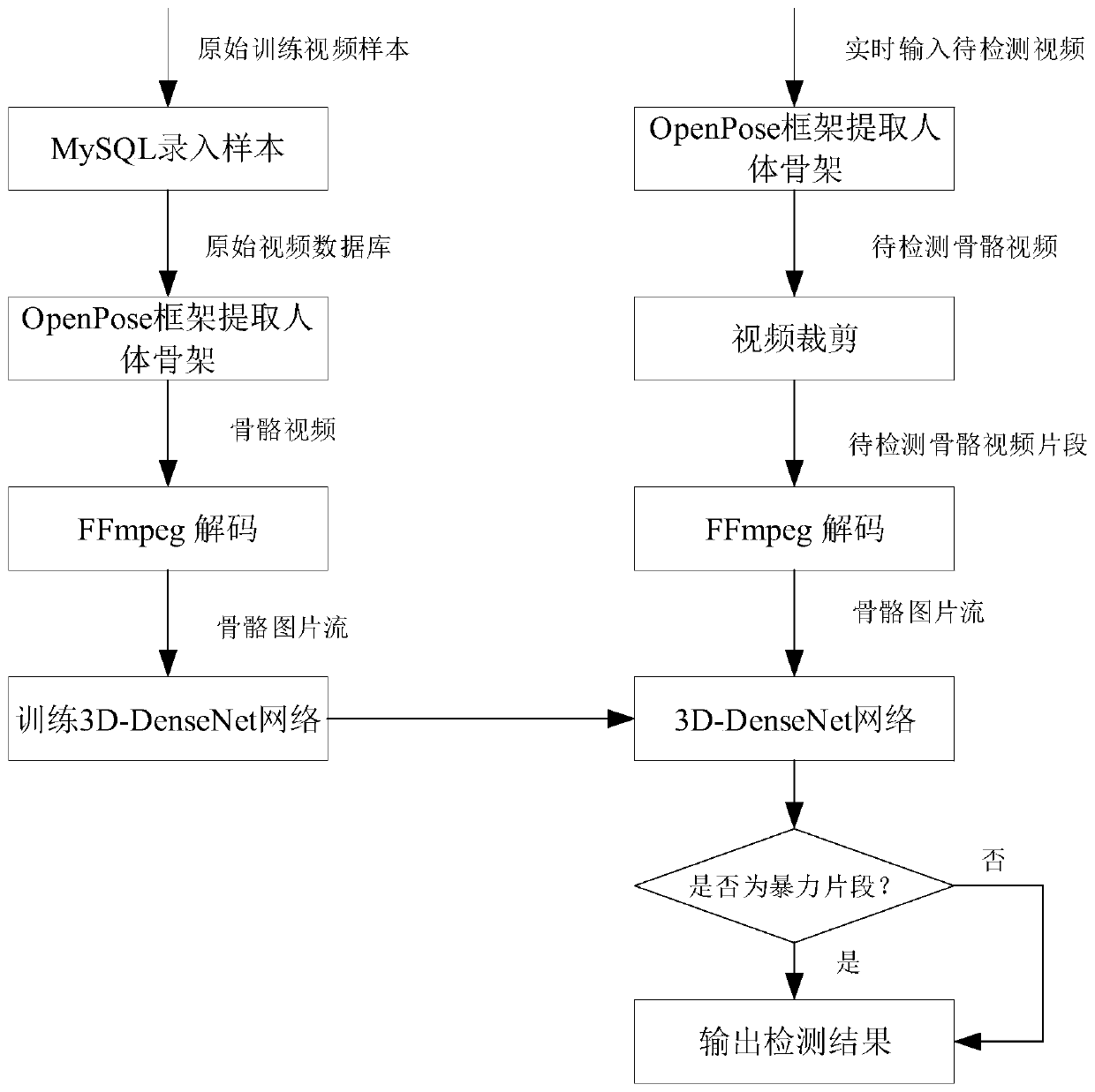

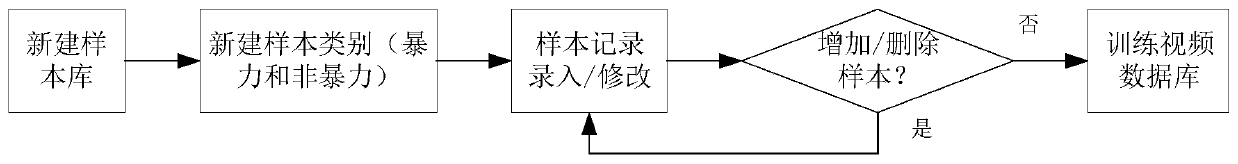

Abnormal behavior detection method and system based on human skeleton, and medium

Active · CN110363131A · Eliminate the effects of mutual occlusion · Add depth · Character and pattern recognition · Human body · Pattern recognition

The invention provides an abnormal behavior detection method and system based on a human skeleton, and a medium. The method comprises the following steps: S1, building a training video sample database by constructing an original video database with a preset frame length from a public violent behavior database; S2, extracting the human skeletons from all video samples in the original video database with the open-source pose estimation algorithm OpenPose to obtain a skeleton video database; S3, splitting all video samples in the skeleton video database into frames with the FFmpeg tool to obtain skeleton picture streams; S4, inputting the skeleton picture streams in sequence into a 3D-DenseNet network model for training to obtain a trained 3D-DenseNet network model. According to the abstract, this is the first use of the human skeleton together with 3D-DenseNet for abnormal behavior detection; detection accuracy and robustness are high, and the detection result can be output and updated in real time.

Owner:SHANGHAI JIAO TONG UNIV

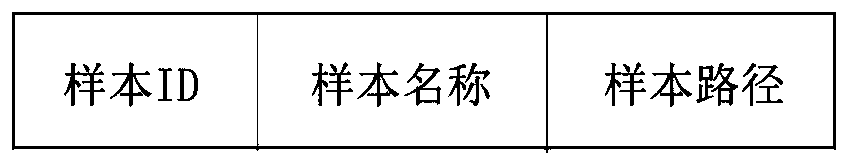

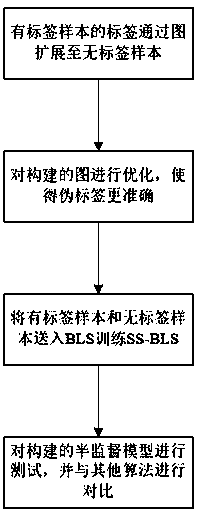

Electroencephalogram signal classification method based on graph semi-supervised broad learning

InactiveCN110717390ARealize the classification of EEG signalsIncrease training rateCharacter and pattern recognitionNeural architecturesSignal classificationClassification methods

The invention relates to a classification method based on graph semi-supervised broad learning. The labels of the labeled samples are extended to the unlabeled samples through a graph extension method, so that both labeled and unlabeled samples are sent to the classifier for training, realizing a semi-supervised algorithm. First, a graph based on the similarity between sample data is constructed, and an inter-sample difference regular term is added during graph construction so that the graph used for label expansion is more accurate. Then the labeled and unlabeled samples are sent to a classifier for training to obtain a semi-supervised classifier model, which is solved by optimization mainly to obtain the weight matrix from the input layer to the output layer, so that the corresponding labels can be obtained from the input samples through the weight matrix when the test set is evaluated. The method has a wide application prospect in electroencephalogram signal processing and brain-computer interface systems.
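The label expansion step can be illustrated with a generic graph label propagation sketch. It assumes a Gaussian similarity graph and simple iterative averaging; it does not implement the inter-sample difference regular term or the classifier described in the abstract, and all names and parameters are hypothetical.

```python
import math


def similarity_graph(samples, sigma=1.0):
    """Dense Gaussian similarity matrix with a zero diagonal."""
    n = len(samples)
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                d2 = sum((a - b) ** 2 for a, b in zip(samples[i], samples[j]))
                weights[i][j] = math.exp(-d2 / (2 * sigma ** 2))
    return weights


def propagate_labels(samples, labels, num_classes, sigma=1.0, iterations=50):
    """Spread labels over the graph; labels[i] is a class index or None."""
    weights = similarity_graph(samples, sigma)
    n = len(samples)
    scores = [[1.0 if labels[i] == c else 0.0 for c in range(num_classes)]
              for i in range(n)]
    for _ in range(iterations):
        updated = []
        for i in range(n):
            total = sum(weights[i])
            if labels[i] is not None or total == 0.0:
                updated.append(scores[i])  # labeled samples stay clamped
                continue
            updated.append([
                sum(weights[i][j] * scores[j][c] for j in range(n)) / total
                for c in range(num_classes)
            ])
        scores = updated
    return [max(range(num_classes), key=lambda c: s[c]) for s in scores]
```

After propagation, every sample carries a label and can be passed to a supervised classifier.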

Owner:HANGZHOU DIANZI UNIV

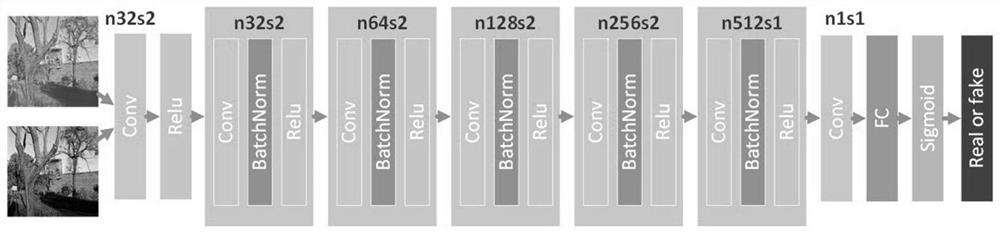

Generative adversarial network image defogging method fusing feature pyramid

InactiveCN111738942AQuality improvementImprove efficiencyImage enhancementImage analysisPattern recognitionImaging processing

The invention discloses a generative adversarial network image defogging method fusing a feature pyramid, in the technical field of image processing. It addresses the following technical problems in the prior art: image enhancement defogging methods lose image information; image restoration defogging methods degrade the restored image when their parameters are improperly selected; and defogging algorithms based on deep learning limit the defogging speed. The method comprises the following steps: inputting a foggy image into a pre-trained generative adversarial network, and obtaining a fogless image corresponding to the foggy image, wherein the generator network of the generative adversarial network is fused with a feature pyramid.

Owner:NANJING UNIV OF POSTS & TELECOMM

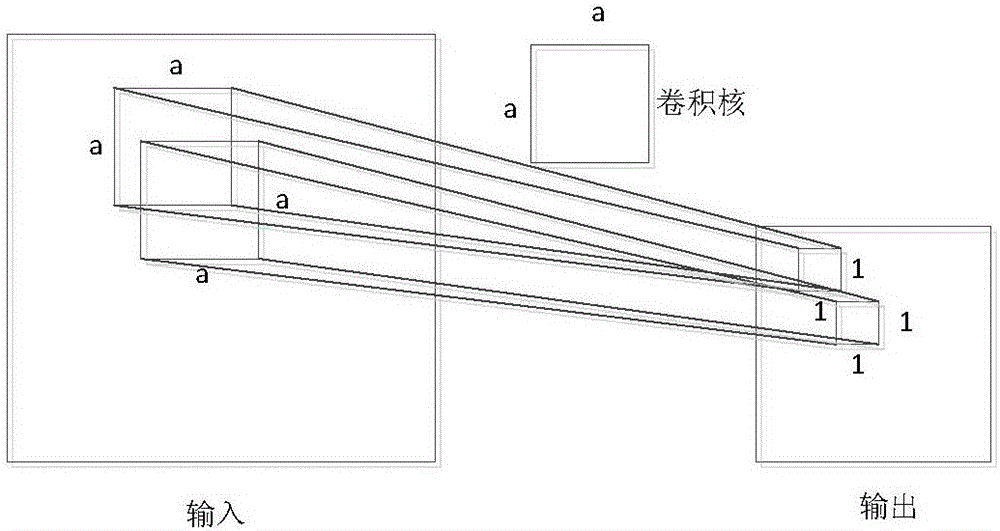

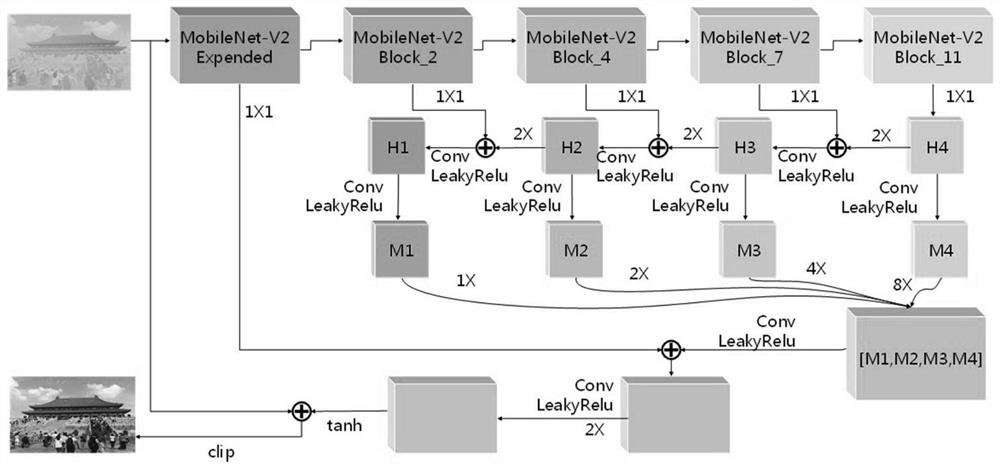

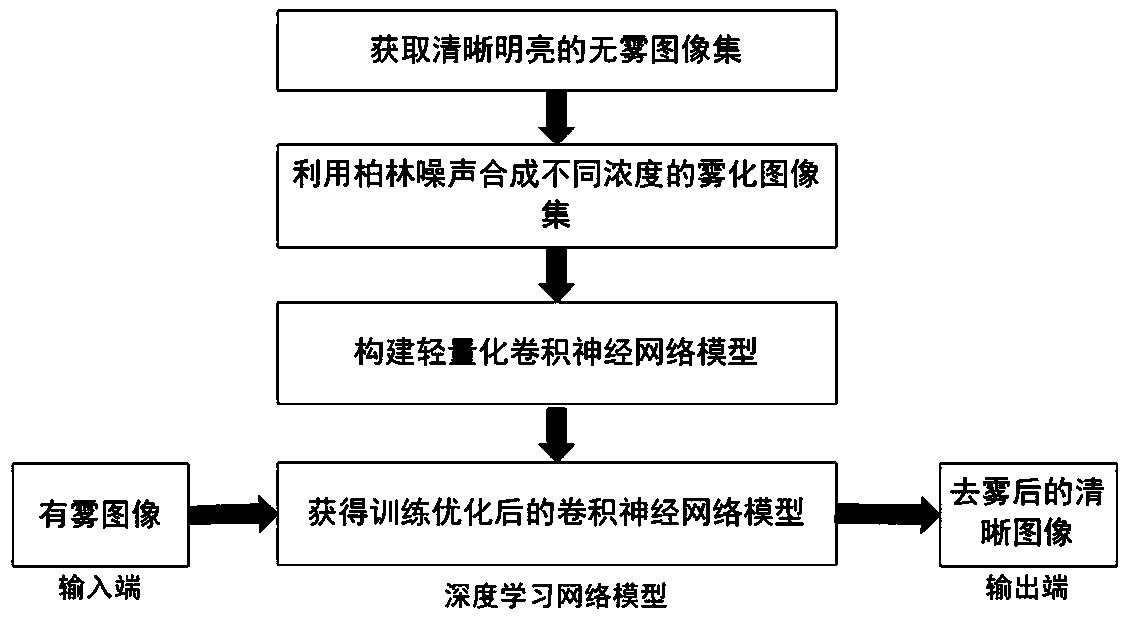

Image defogging method based on lightweight convolutional neural network

ActiveCN110930320AFully extractedReduce training parametersImage enhancementNeural architecturesImaging processingImage restoration

The invention discloses an image defogging method based on a lightweight convolutional neural network, in the technical field of image processing. It addresses the following technical problems in the prior art: image enhancement defogging methods lose image information; image restoration defogging methods degrade the restored image when their parameters are improperly selected; and defogging algorithms based on deep learning limit the defogging speed. The method comprises the following steps: inputting a foggy image into a pre-trained lightweight convolutional neural network to obtain a fogless image, wherein the lightweight convolutional neural network comprises at least two depthwise separable convolutional layers with different scales, and each depthwise separable convolutional layer comprises a depthwise convolution and a pointwise convolution connected in series.
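The parameter saving of a depthwise separable layer over a standard convolution can be checked with simple arithmetic. The sketch below ignores bias terms, and the layer sizes in the usage note are illustrative rather than taken from the patent.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard k x k convolution (bias ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a depthwise k x k convolution followed by a 1 x 1
    pointwise convolution (bias ignored)."""
    depthwise = kernel * kernel * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out            # 1 x 1 convolution mixes channels
    return depthwise + pointwise
```

With a 3 x 3 kernel, 32 input channels, and 64 output channels, the standard layer has 18,432 weights and the separable layer has 2,336, roughly an eightfold reduction.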

Owner:NANJING UNIV OF POSTS & TELECOMM

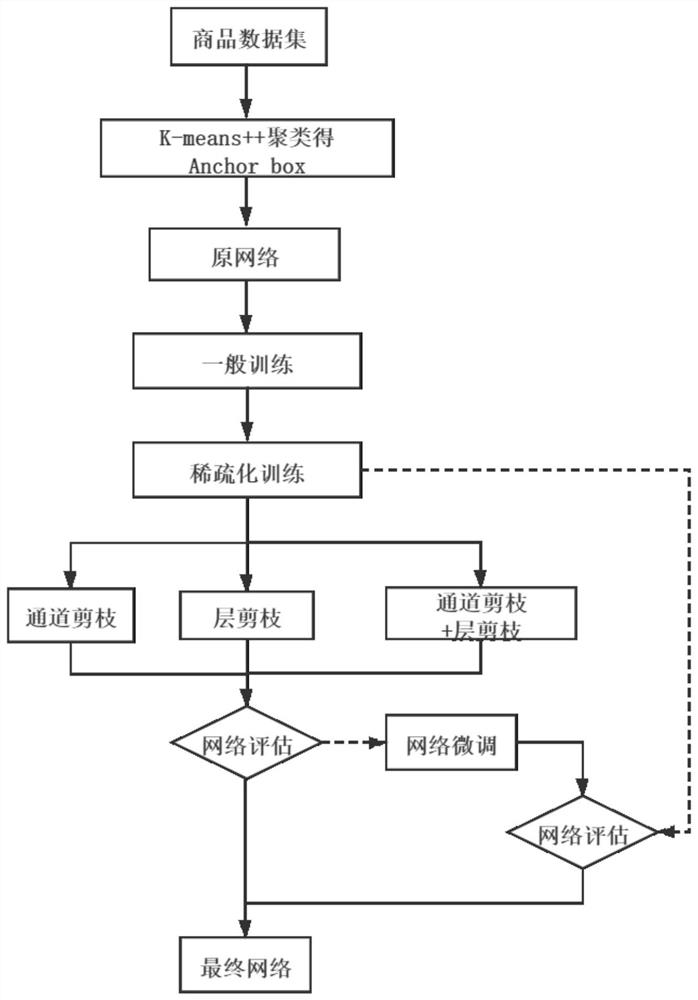

Model optimization algorithm for target detection YOLOv3 based on deep learning

PendingCN112001477ADrop rapidlyReduce mistakesCharacter and pattern recognitionNeural architecturesPattern recognitionCluster algorithm

The invention discloses a model optimization algorithm for target detection YOLOv3 based on deep learning. The model optimization algorithm comprises the following steps: resetting the anchor boxes to suit a commodity data set using the K-means++ clustering algorithm; carrying out general training and sparse training on the YOLOv3 target detection model; taking the final sparse-trained YOLOv3 model as a reference, applying channel pruning and layer pruning together as a double pruning to remove unimportant feature channels and layers; and fine-tuning the pruned model, taking a value with a better effect according to the mAP curve graph, and evaluating the obtained value again. In the provided algorithm, K-means++ improves the clustering effect, and the double pruning that combines layer pruning and channel pruning is used to prune the network so as to improve the performance of the algorithm.
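Channel pruning after sparse training is often implemented by ranking channels on a per-channel scale factor and dropping those below a global threshold. The sketch below assumes that criterion (for example, batch normalization scale factors); the abstract does not state how unimportant channels are identified, and the names and pruning ratio are hypothetical.

```python
def select_channels(layer_scales, prune_ratio=0.5):
    """Return the channel indices to keep for each layer.

    layer_scales maps a layer name to its per-channel scale factors.
    A single global threshold is chosen so that roughly prune_ratio of
    all channels fall below it (assumed criterion, not from the patent).
    """
    all_scales = sorted(abs(g) for scales in layer_scales.values() for g in scales)
    cut = int(len(all_scales) * prune_ratio)
    threshold = all_scales[cut] if cut < len(all_scales) else float("inf")
    keep = {}
    for name, scales in layer_scales.items():
        kept = [i for i, g in enumerate(scales) if abs(g) >= threshold]
        if not kept:
            # Keep the strongest channel so the layer is never emptied.
            kept = [max(range(len(scales)), key=lambda i: abs(scales[i]))]
        keep[name] = kept
    return keep
```

The returned indices would then be used to rebuild a thinner model before fine-tuning.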

Owner:NANJING UNIV OF SCI & TECH +2

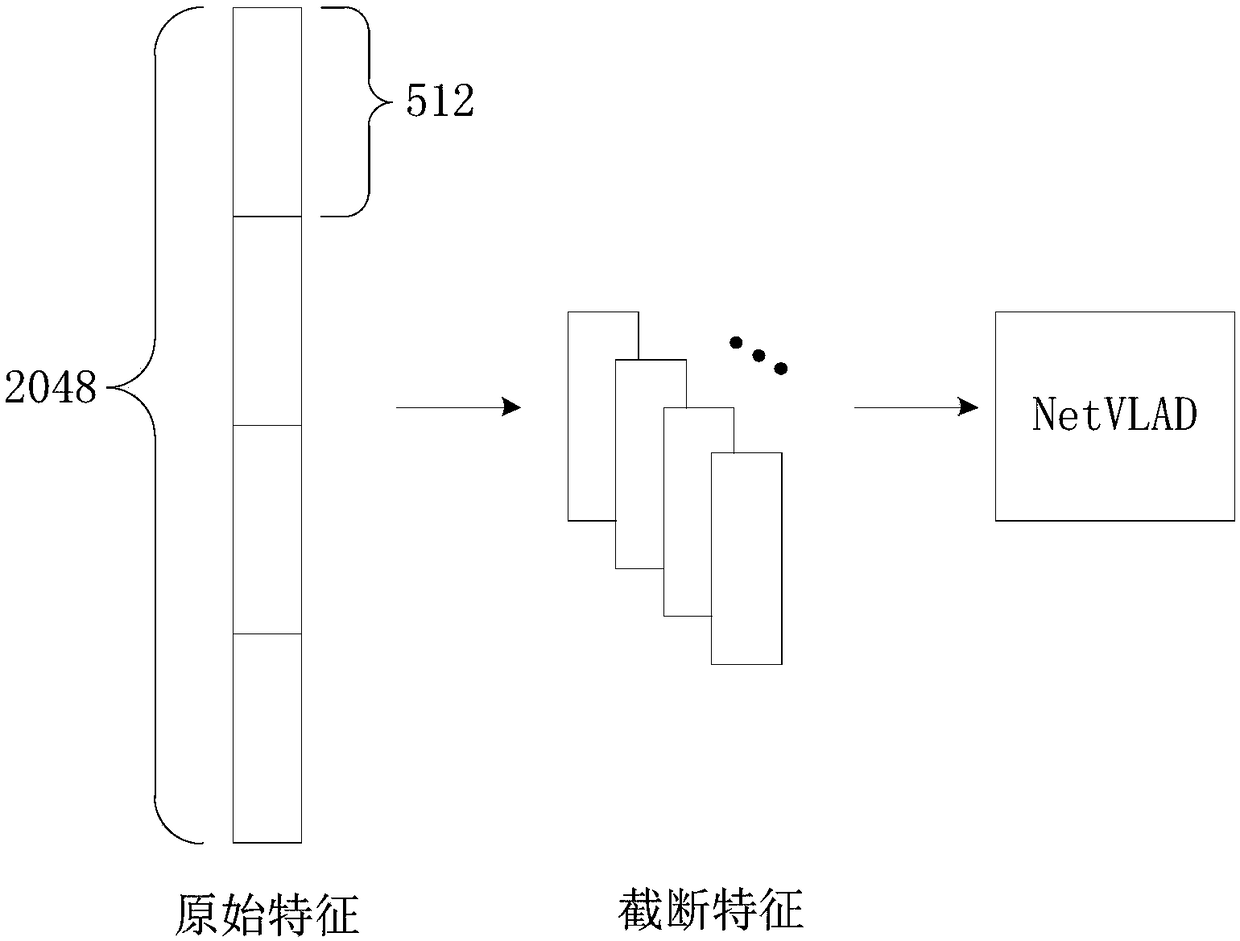

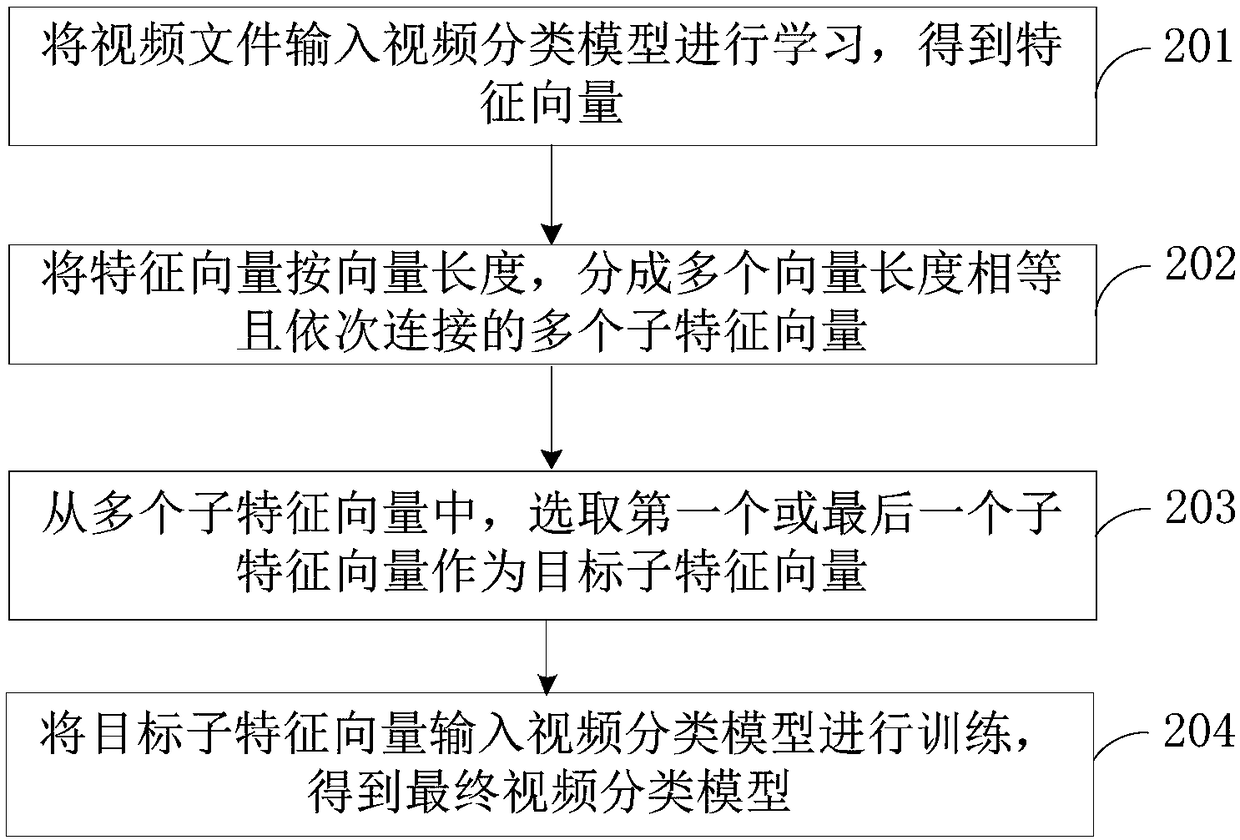

Video classification model training method and device, storage medium and electronic equipment

InactiveCN108154120AReduce sizeReduce training parametersCharacter and pattern recognitionFeature vectorElectric equipment

The invention provides a video classification model training method and device, a storage medium and electronic equipment. The method comprises the steps that video files are input into a video classification model for learning to obtain feature vectors; the feature vectors are divided into multiple sub feature vectors; one of the sub feature vectors is selected as a target sub feature vector; and the target sub feature vector is input into the video classification model for training to obtain a final video classification model. Because only part of each feature vector is taken as the target sub feature vector and input into the video classification model for training, the size of the input data and of the data derived from it is reduced, and therefore training parameters are reduced and training efficiency is improved.
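The split-and-select step can be sketched in a few lines. The abstract does not say how the target sub feature vector is chosen, so the equal-size split and the index-based selection below are assumptions.

```python
def split_feature_vector(features, num_parts):
    """Split a feature vector into num_parts equal-length sub vectors."""
    if num_parts <= 0 or len(features) % num_parts != 0:
        raise ValueError("feature length must be divisible by num_parts")
    size = len(features) // num_parts
    return [features[i * size:(i + 1) * size] for i in range(num_parts)]


def select_target(sub_vectors, index=0):
    """Pick one sub vector as the training input (index is hypothetical)."""
    return sub_vectors[index]
```

Training on one of four sub vectors, for instance, shrinks the input to a quarter of its original length.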

Owner:SHANGHAI QINIU INFORMATION TECH

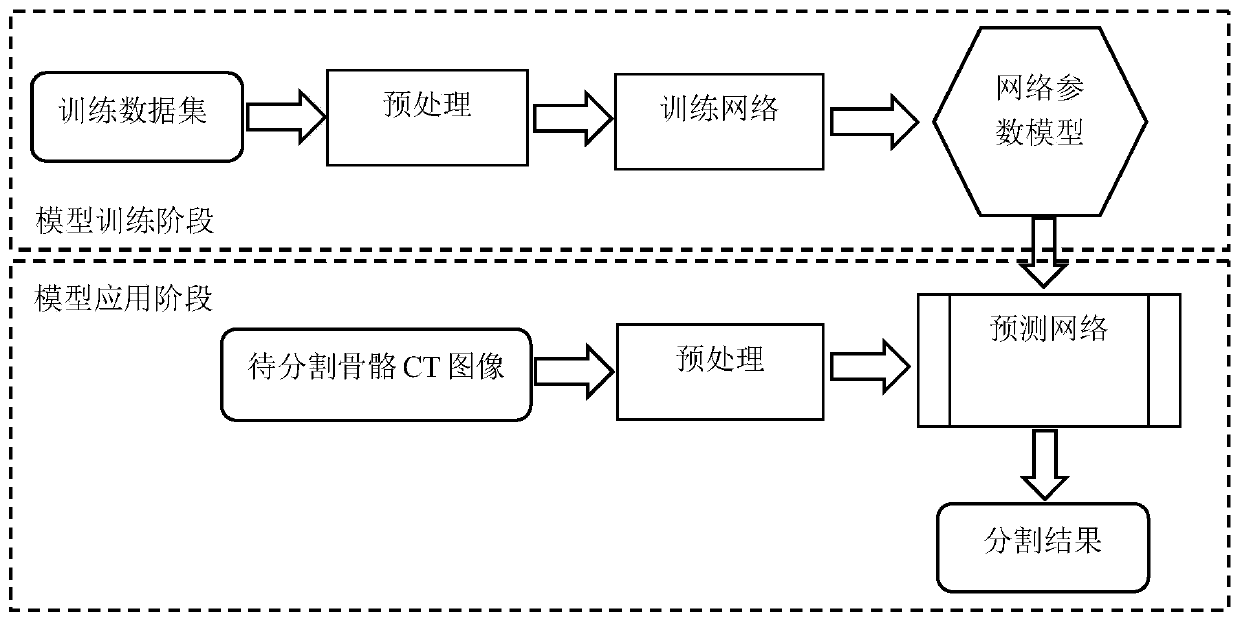

Skeleton CT image three-dimensional segmentation method based on multi-view separation convolutional neural network

PendingCN111145181AAvoid limitationsAccurate 3D SegmentationImage enhancementImage analysis3d imageMultiple view

The invention belongs to the technical field of image processing and provides a three-dimensional CT image segmentation method based on a multi-view separation convolutional neural network, mainly for automatic three-dimensional segmentation of the skeleton in CT images using a novel convolutional neural network. The method aims to solve the problem that neural networks using three-dimensional convolution have models that are too large and occupy too much running memory to run on graphics cards with small video memory or on embedded devices. To improve the network's ability to use three-dimensional spatial context information, a multi-view separation convolution module is introduced: context information is extracted from the multi-view sub-images of a three-dimensional image using several two-dimensional convolutions and fused at multiple levels, so that multi-view and multi-scale context information is extracted and fused and the segmentation precision of the skeleton in three-dimensional CT images is improved. The average accuracy of the improved network structure is markedly higher, and the number of model parameters is markedly lower.
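The multi-view sub-images of a three-dimensional volume can be pictured as two-dimensional slices taken along the three axes. The sketch below assumes a volume stored as nested lists indexed `volume[z][y][x]` and uses the usual axial, coronal, and sagittal naming; it only shows the slicing, not the convolution module itself.

```python
def axial_slice(volume, z):
    """2D slice at a fixed z index (rows are y, columns are x)."""
    return [row[:] for row in volume[z]]


def coronal_slice(volume, y):
    """2D slice at a fixed y index (rows are z, columns are x)."""
    return [plane[y][:] for plane in volume]


def sagittal_slice(volume, x):
    """2D slice at a fixed x index (rows are z, columns are y)."""
    return [[row[x] for row in plane] for plane in volume]
```

Each view can then be processed by an ordinary two-dimensional convolution, which is where the memory saving over full three-dimensional convolution would come from.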

Owner:HUAQIAO UNIVERSITY

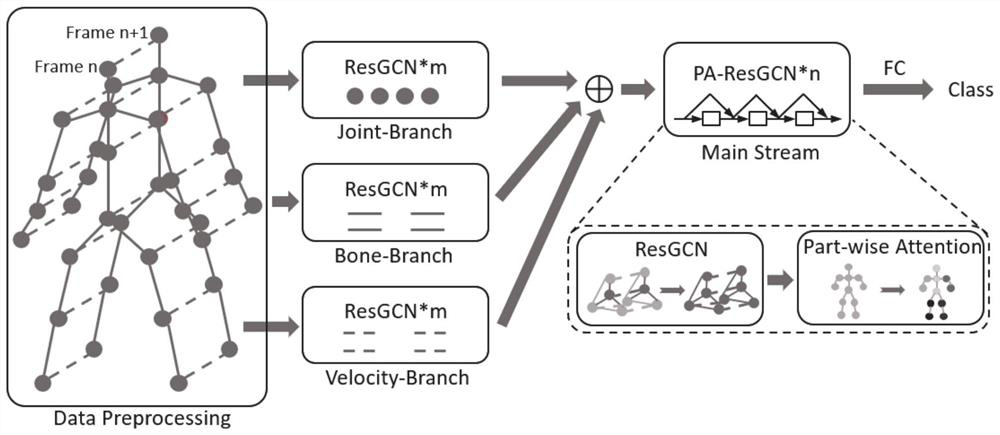

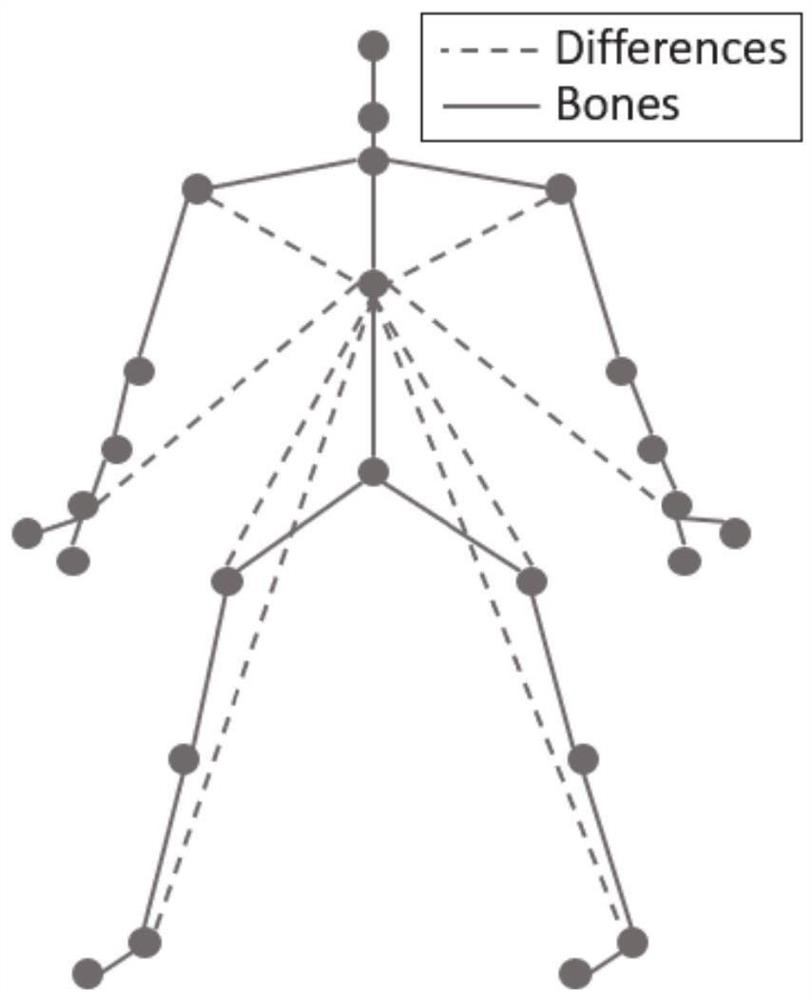

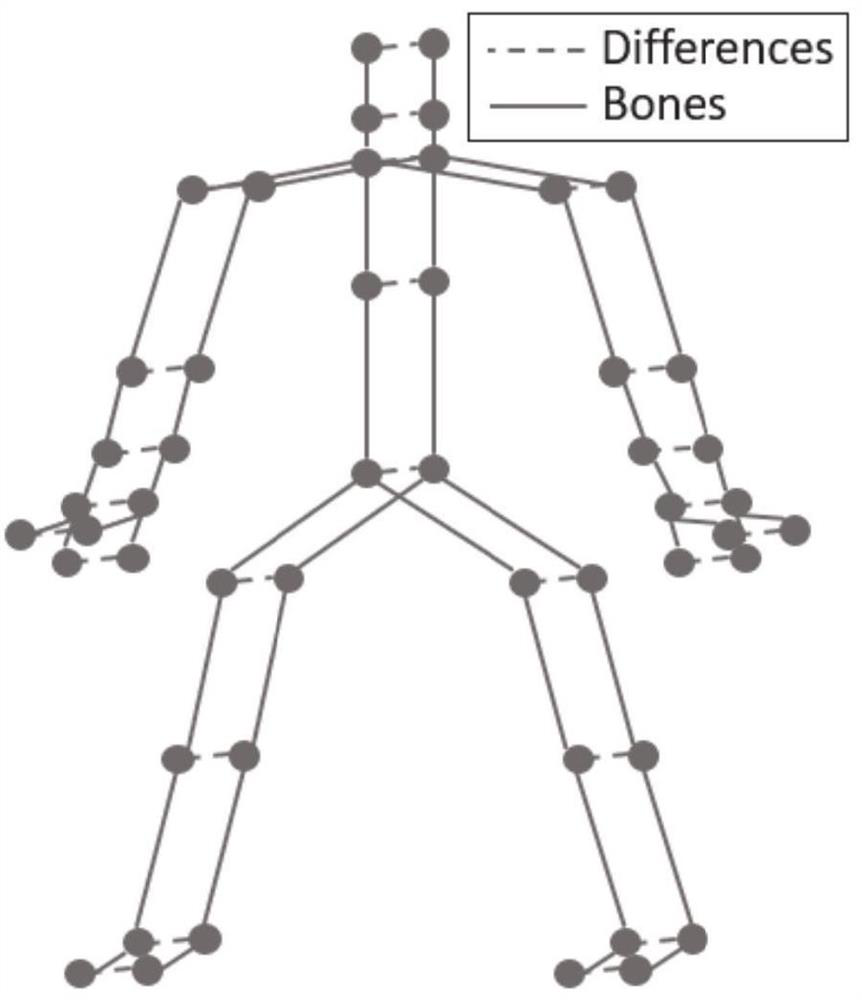

Human body behavior recognition method and system based on human body skeleton

ActiveCN111950485ASolve the distance problemSolve the problem of not being able to make a connectionCharacter and pattern recognitionNeural architecturesHuman bodyHuman behavior

The invention discloses a human body behavior recognition method and system based on a human body skeleton, and the method comprises the steps: obtaining the behavior movement of the human body skeleton and the corresponding skeleton point coordinates, skeleton point inter-frame coordinate differences and skeleton features, and constructing a training set; sequentially training the graph convolution network and the attention mechanism network based on the human body part according to the training set, and constructing a behavior recognition model according to the trained graph convolution network and attention mechanism network; and recognizing the to-be-recognized human skeleton according to the behavior recognition model, and outputting human behavior actions. According to data such as three-dimensional coordinates of human skeleton joint points, coordinate differences between point frames and skeleton features, a graph convolution network is taken as a main body, an attention mechanism network based on human parts is adopted to assist in searching for skeleton points with better distinguishing ability, human behavior actions are classified and recognized, and the recognition precision is improved.
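The inter-frame coordinate differences used as an input feature can be computed directly from the joint coordinates. This is a minimal sketch assuming each frame is a list of (x, y, z) joint positions; the data layout and function name are hypothetical.

```python
def interframe_differences(frames):
    """Per-joint coordinate differences between consecutive frames.

    frames[t][j] is the (x, y, z) position of joint j at frame t.
    The result has one entry fewer than frames.
    """
    diffs = []
    for prev, curr in zip(frames, frames[1:]):
        diffs.append([
            tuple(c - p for p, c in zip(joint_prev, joint_curr))
            for joint_prev, joint_curr in zip(prev, curr)
        ])
    return diffs
```

These differences act as a simple motion cue alongside the raw joint coordinates.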

Owner:中科人工智能创新技术研究院(青岛)有限公司

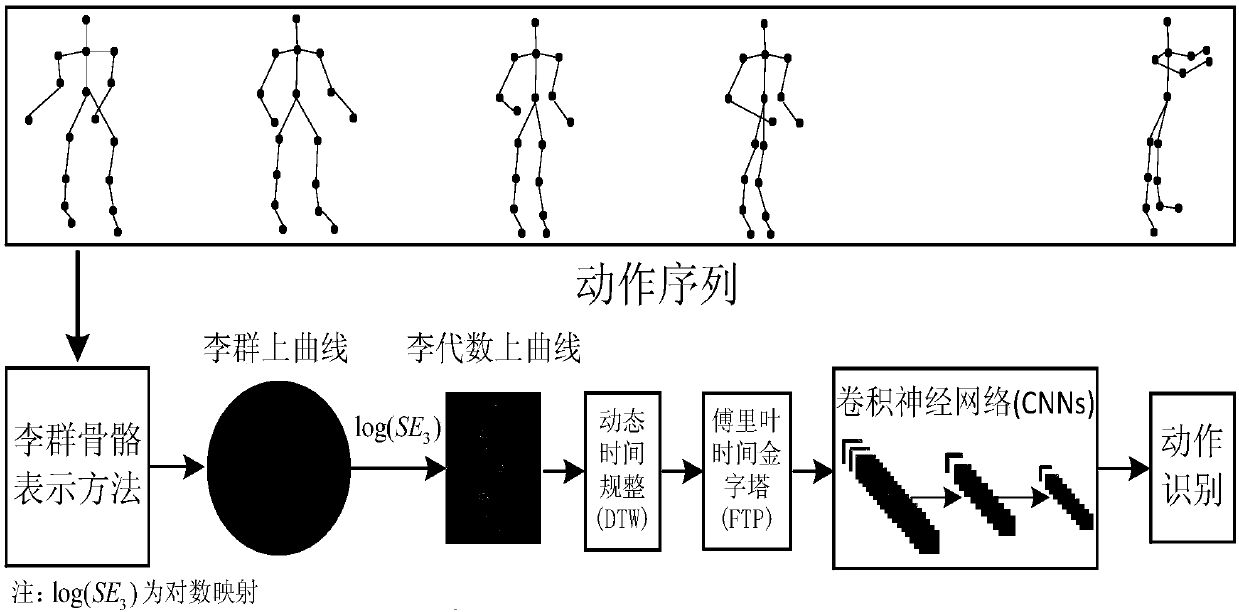

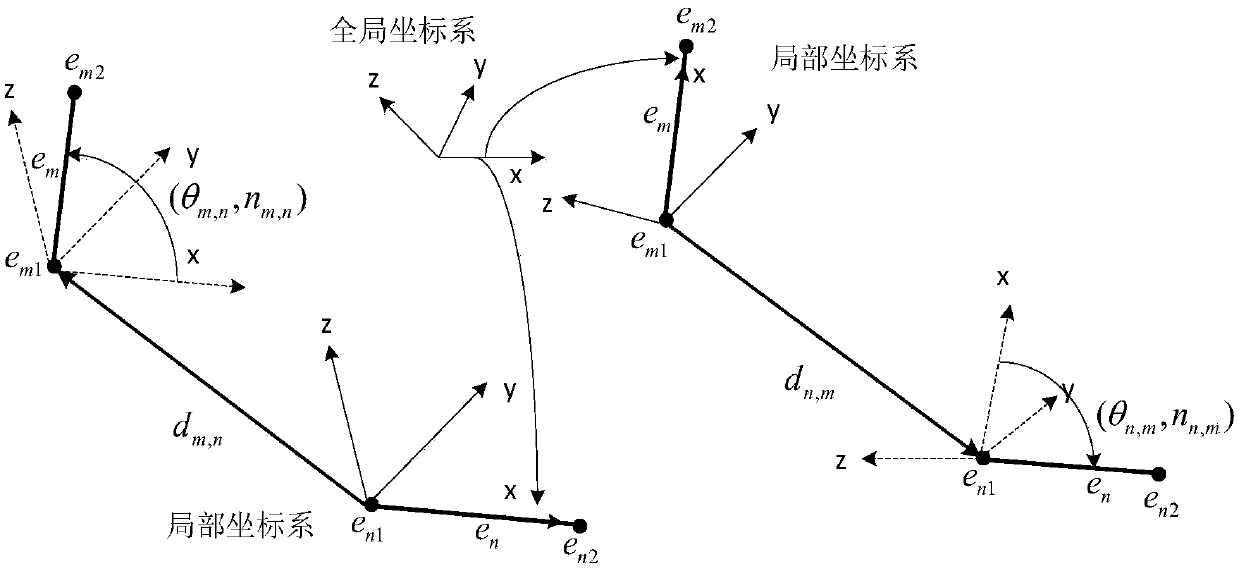

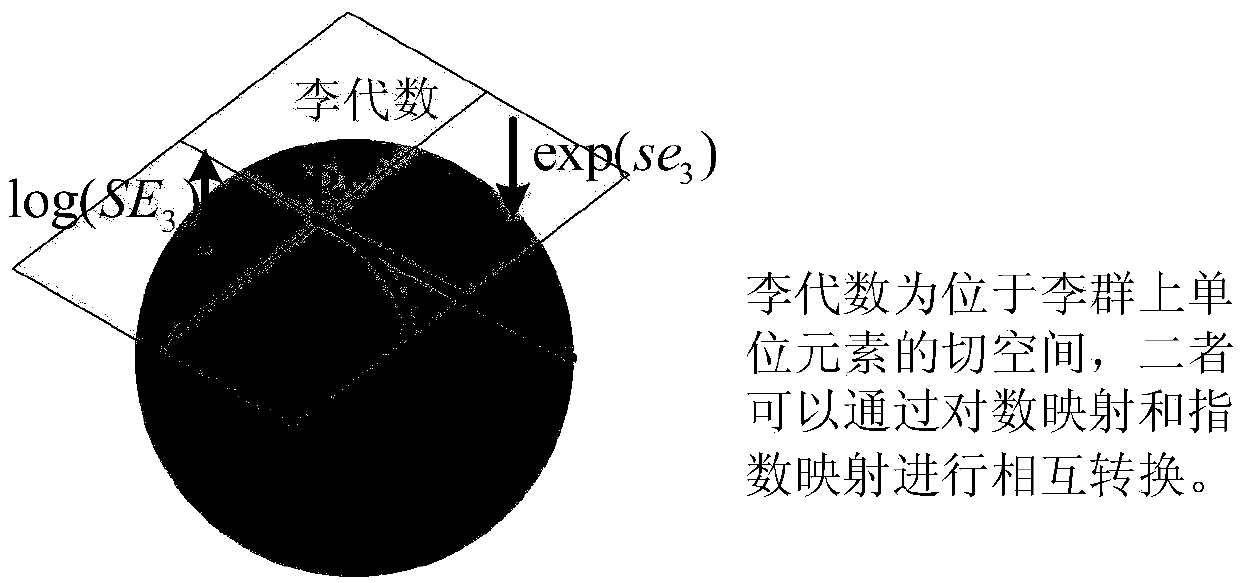

Human motion recognition method based on Lie group features and a convolutional neural network

ActiveCN109614899AThe description is accurate and validOvercome the shortcomings of manual feature extractionCharacter and pattern recognitionHuman bodySomatosensory system

The invention relates to a human motion recognition method based on Lie group features and a convolutional neural network, and belongs to the field of computer pattern recognition. The method comprises the following steps: S1, data acquisition: extracting human skeleton information using the Microsoft motion-sensing device Kinect and acquiring the motion information of an experimenter; S2, extracting Lie group features: a Lie group skeleton representation is adopted that models the relative three-dimensional geometric relationships between the limbs of the human body using rigid limb transformations; human actions are modeled as a series of curves on the Lie group, and these curves are then mapped to curves in the Lie algebra space through the logarithm map, using the correspondence between the Lie group and the Lie algebra; S3, feature classification: fusing the Lie group features with the convolutional neural network by training the network on the Lie group features so that it learns and classifies them, thereby realizing human action recognition. According to the invention, a good recognition effect can be obtained.
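The logarithm map in step S2 can be illustrated on the rotation group SO(3), where it sends a rotation matrix to an axis-angle vector in the Lie algebra. This sketch covers only the rotational part and assumes plain nested-list matrices; the patent's full rigid transformation representation is not reproduced here.

```python
import math


def so3_log(rotation):
    """Logarithm map of a 3 x 3 rotation matrix to an axis-angle vector.

    Note: the formula is ill-conditioned for angles close to pi,
    which this sketch does not handle specially.
    """
    trace = rotation[0][0] + rotation[1][1] + rotation[2][2]
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    theta = math.acos(cos_theta)
    if theta < 1e-8:
        return [0.0, 0.0, 0.0]  # identity maps to the zero vector
    scale = theta / (2.0 * math.sin(theta))
    return [
        scale * (rotation[2][1] - rotation[1][2]),
        scale * (rotation[0][2] - rotation[2][0]),
        scale * (rotation[1][0] - rotation[0][1]),
    ]


def curve_to_lie_algebra(rotations):
    """Map a sequence of rotations (a curve on the group) to vectors."""
    return [so3_log(r) for r in rotations]
```

The resulting vectors live in a flat space, which makes them easier to feed into a convolutional network than the group elements themselves.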

Owner:北京陟锋科技有限公司

Idle traffic light intelligent control method based on reinforcement learning

InactiveCN110930734AReduce training parametersReduce computing requirementsControlling traffic signalsGreedy algorithmSimulation

The invention relates to an idle traffic light control method based on reinforcement learning. The method comprises the following steps: using a SlimYOLOv3 model to sense the environment, analyze the scene, recognize all vehicle-type targets in the scene, and locate each target by defining a bounding box around it; and training a traffic light control intelligent agent with a DQN-based reinforcement learning method by a) defining an action space, in which the traffic lights select actions randomly according to probabilities using a greedy algorithm; b) defining a state space, wherein the road surface state observed at any moment is the number of vehicles in different intervals in each direction, and the observation state value is a six-dimensional vector; c) defining a reward function, wherein a penalty weight is defined for each of the three interval road sections, and the reward value is the sum of the penalty weights of the road sections; and d) learning the strategy that maximizes the reward value with the DQN-based reinforcement learning method to obtain a high-performance traffic light control intelligent agent.
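Steps a) and c) can be sketched with an epsilon-greedy action choice and a weighted penalty reward. The abstract gives no penalty values and no exact reward formula, so the weights, the negative sign, and all names below are assumptions made for illustration.

```python
import random


def choose_action(q_values, epsilon, rng=random):
    """Epsilon-greedy: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def reward(vehicle_counts, penalty_weights):
    """Negative weighted sum of vehicle counts per road section.

    Assumes one weight per count; the weights are hypothetical.
    """
    if len(vehicle_counts) != len(penalty_weights):
        raise ValueError("one penalty weight is needed per count")
    return -sum(w * n for w, n in zip(penalty_weights, vehicle_counts))
```

With epsilon set to zero the agent always picks the action with the highest estimated value, which is how a trained agent would typically be evaluated.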

Owner:TIANJIN UNIV

