30 results about how to "Reduce cache capacity" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

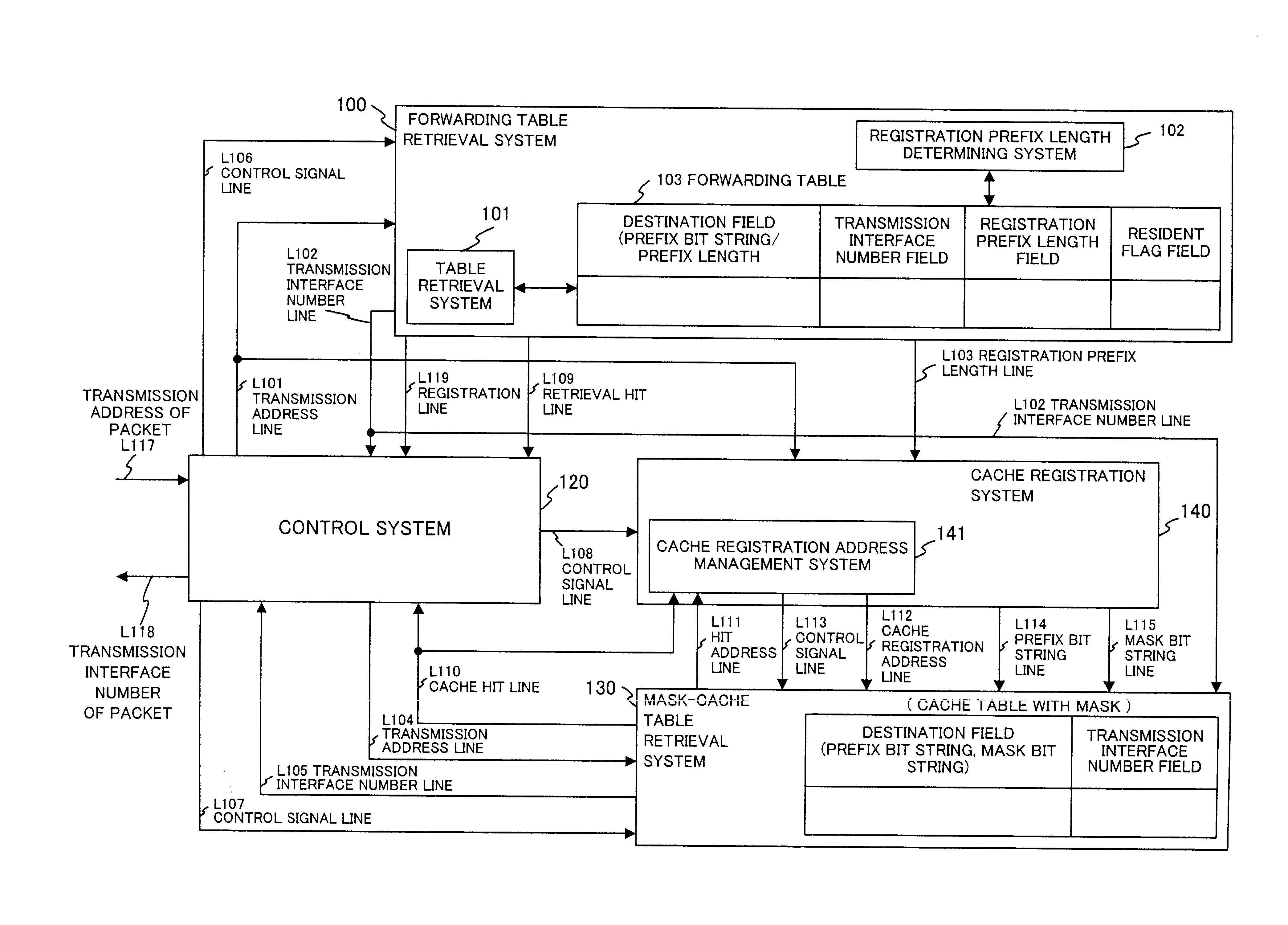

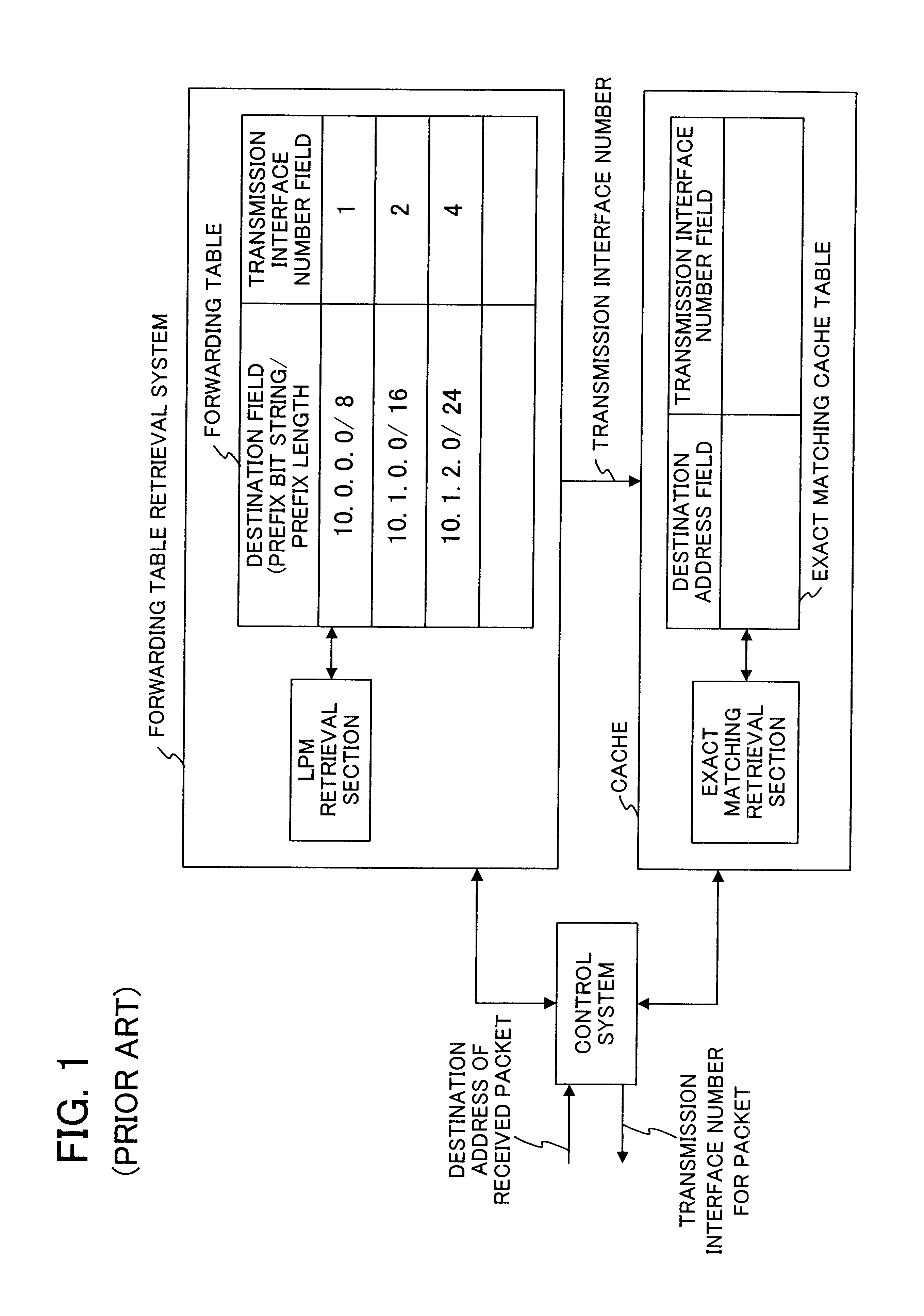

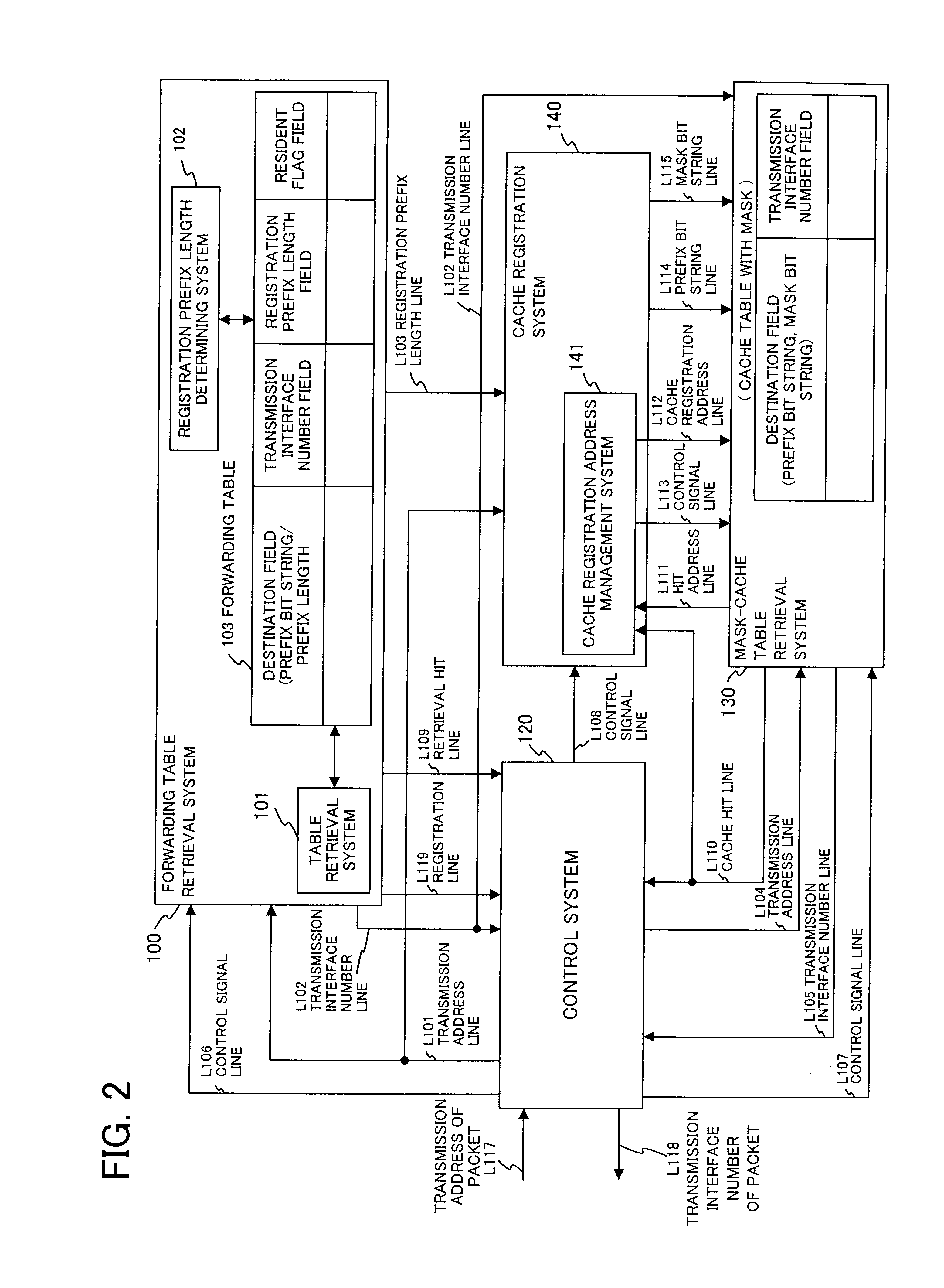

Router with a cache having a high hit probability

Status: Inactive | Publication: US6768739B1 | Tags: Reduce cache capacity, Reduce circuit area, Data switching by path configuration, Memory systems, Longest prefix match, Parallel computing

A router that increases the cache entry hit probability is disclosed. The cache is searched using a different mask for each cache entry. A maximum or optimum cache prefix length is determined as the number of upper bits of the received packet's destination address that are not masked by the corresponding mask. Alternatively, the cache is searched using longest prefix match (LPM). Because a single cache entry can be hit by a plurality of destination addresses, the cache hit probability increases.

Owner:NEC CORP
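
The lookup described above can be sketched in a few lines. This is a minimal illustration, assuming IPv4 destinations and a software cache in which every entry stores its own prefix length (its mask); the class `PrefixCache` and its methods are hypothetical names, not NEC's implementation. Lookup uses longest prefix match over the cached entries only.

```python
import ipaddress
from typing import Dict, Optional, Tuple


class PrefixCache:
    """Hypothetical route cache whose entries each carry their own mask.

    A single entry with a short prefix can be hit by many destination
    addresses, which is what raises the hit probability.
    """

    def __init__(self) -> None:
        # (network address as int, prefix length) -> next hop
        self.entries: Dict[Tuple[int, int], str] = {}

    @staticmethod
    def _mask(prefix_len: int) -> int:
        # Upper `prefix_len` bits set; lower bits are masked out.
        return (0xFFFFFFFF << (32 - prefix_len)) & 0xFFFFFFFF

    def register(self, prefix: str, next_hop: str) -> None:
        net = ipaddress.IPv4Network(prefix)
        self.entries[(int(net.network_address), net.prefixlen)] = next_hop

    def lookup(self, dest: str) -> Optional[str]:
        addr = int(ipaddress.IPv4Address(dest))
        best_len, best_hop = -1, None
        for (net, plen), hop in self.entries.items():
            # Compare only the unmasked upper bits; keep the longest match.
            if addr & self._mask(plen) == net and plen > best_len:
                best_len, best_hop = plen, hop
        return best_hop  # None means a cache miss
```

For example, after registering `10.0.0.0/8` and `10.1.0.0/16`, the address `10.1.2.3` hits the /16 entry while `10.9.9.9` still hits the /8 entry, so one cached /8 entry serves many destinations.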

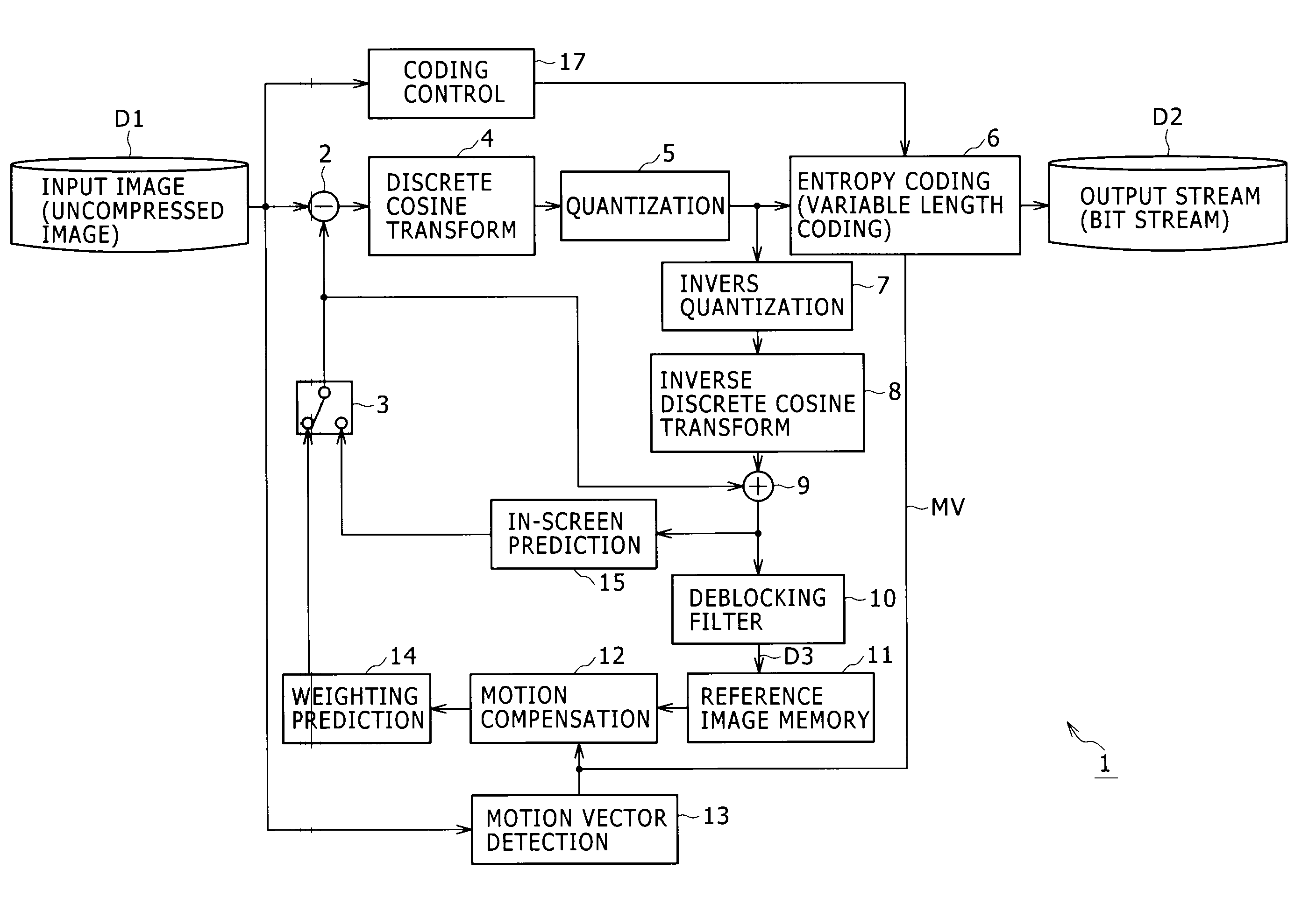

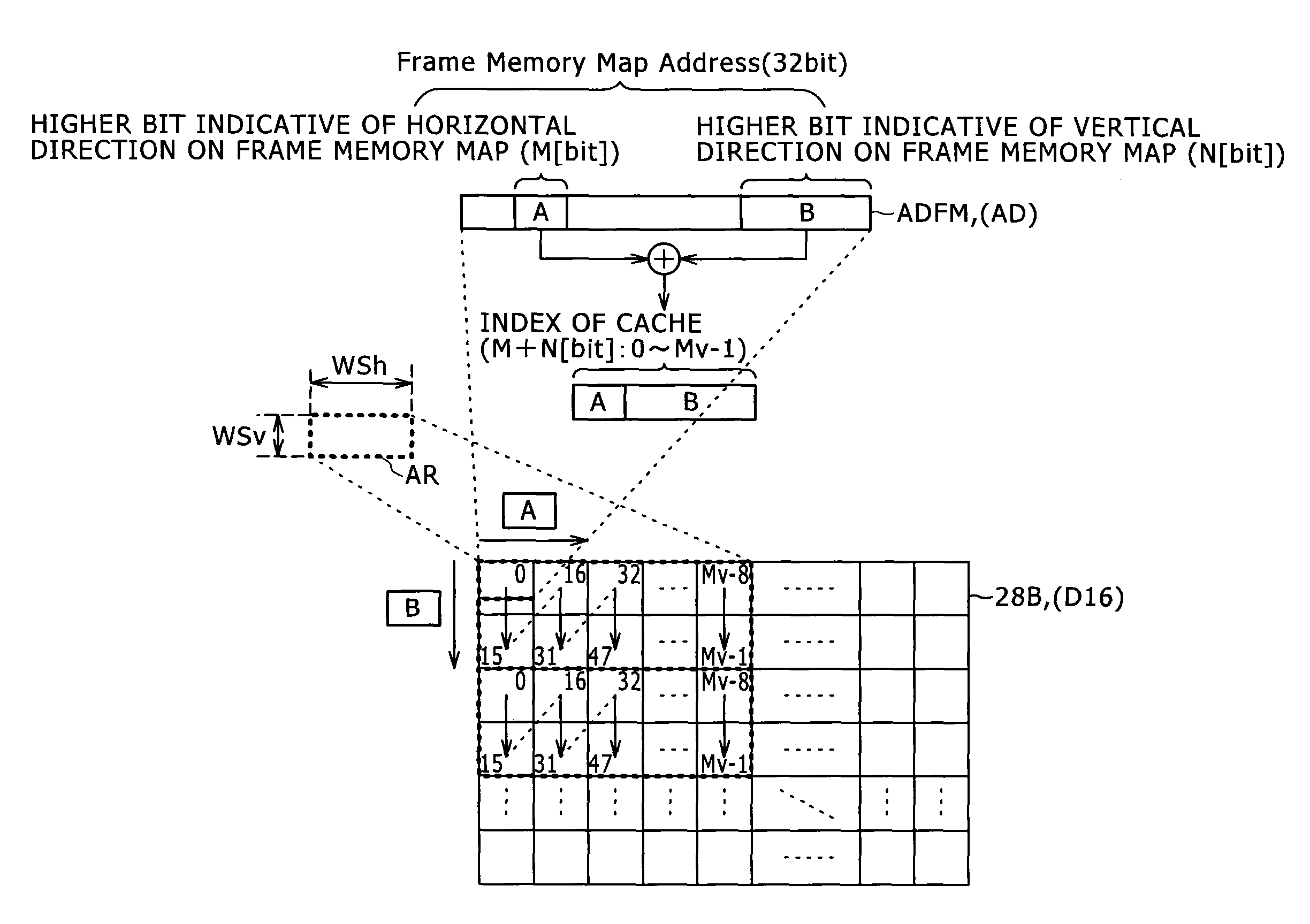

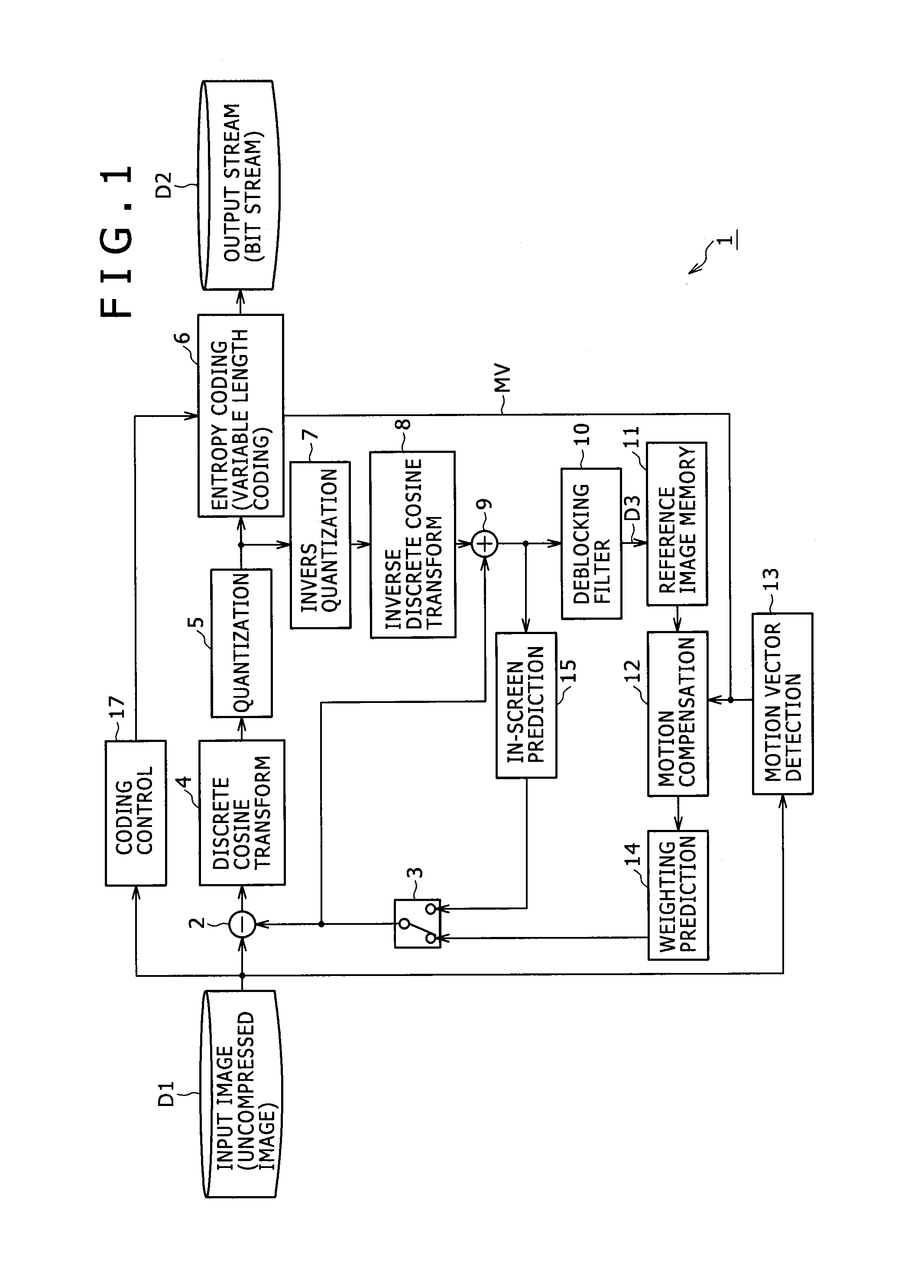

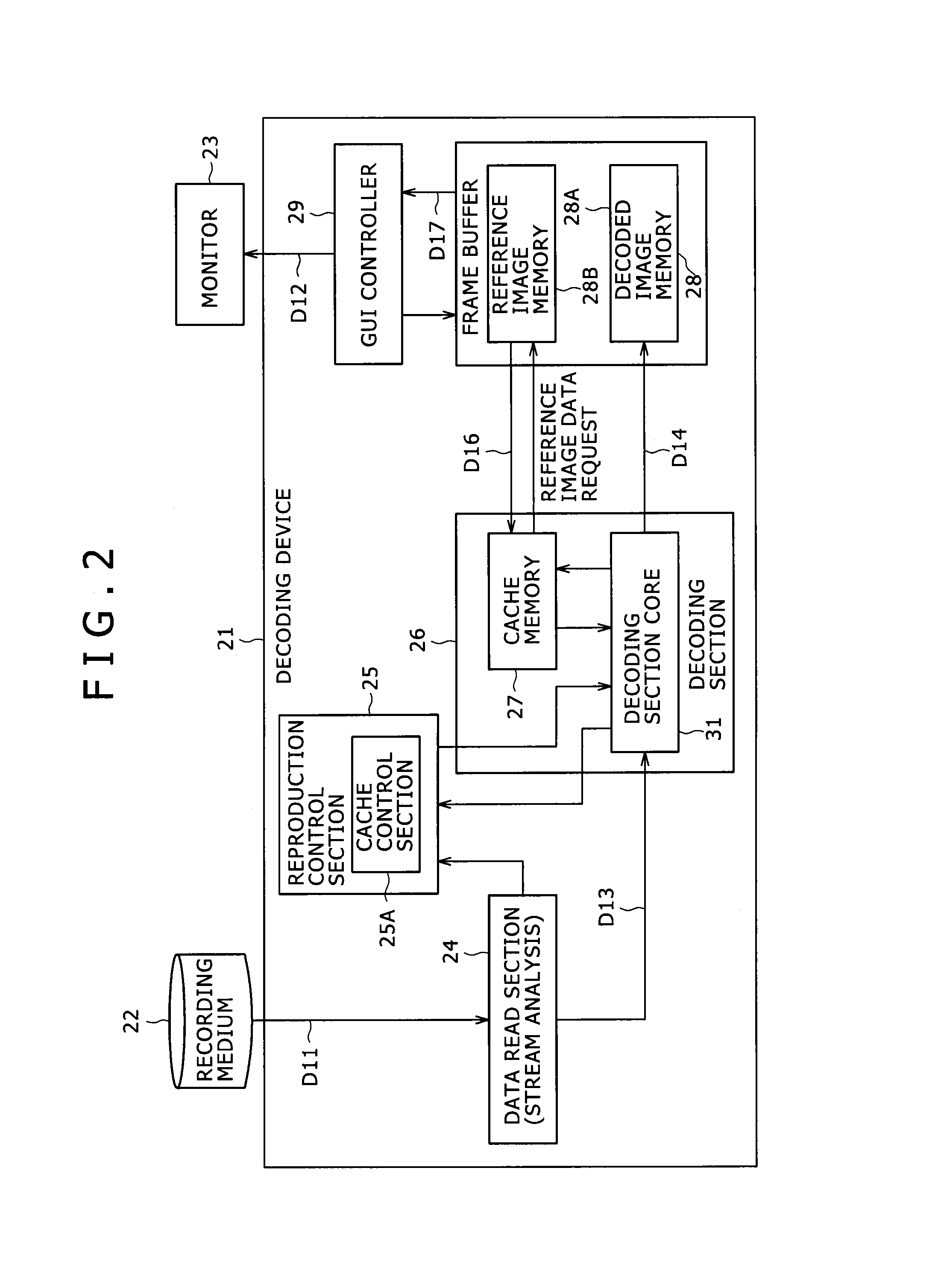

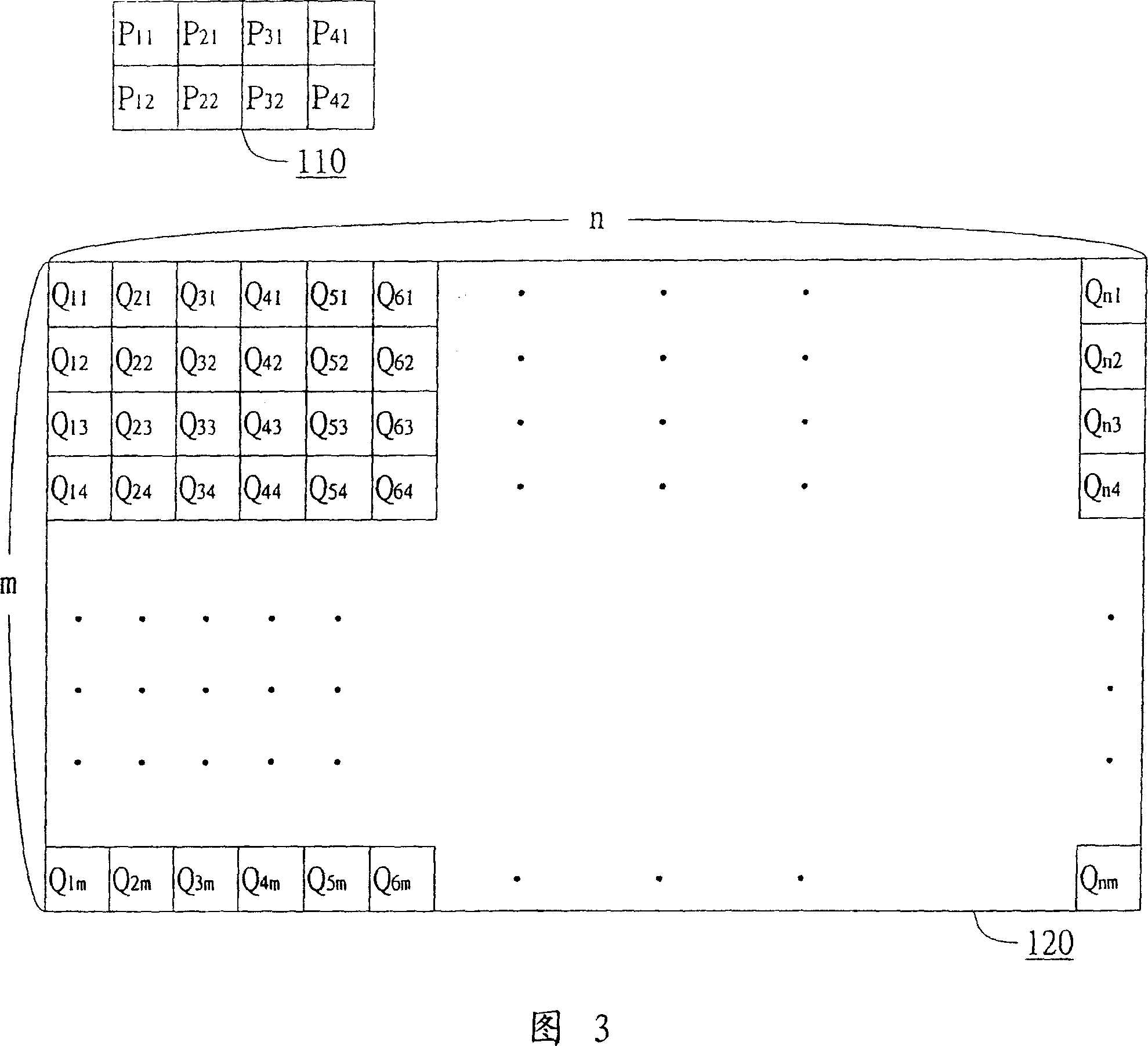

Image data processing method, program for image data processing method, recording medium with recorded program for image data processing method and image data processing device

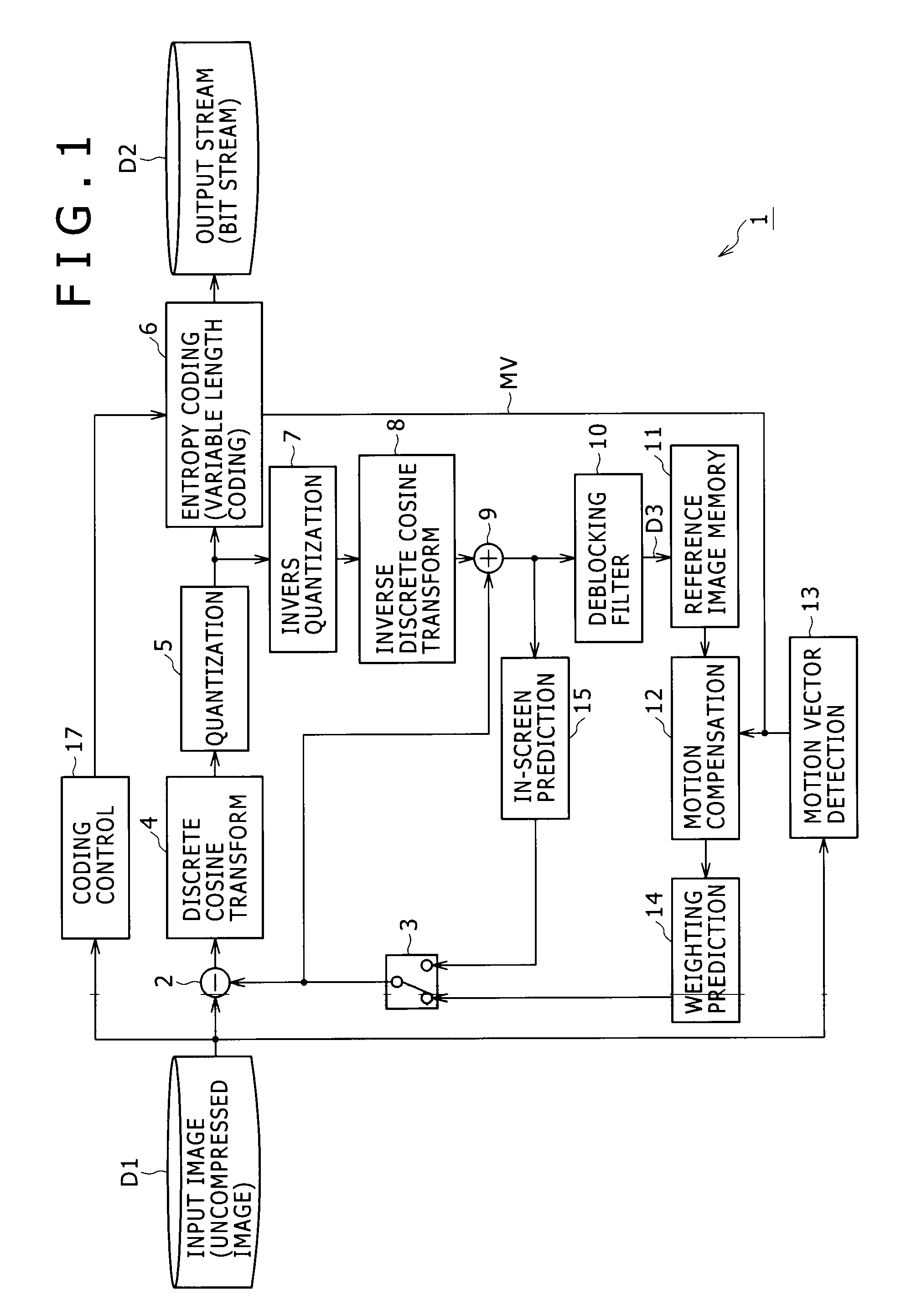

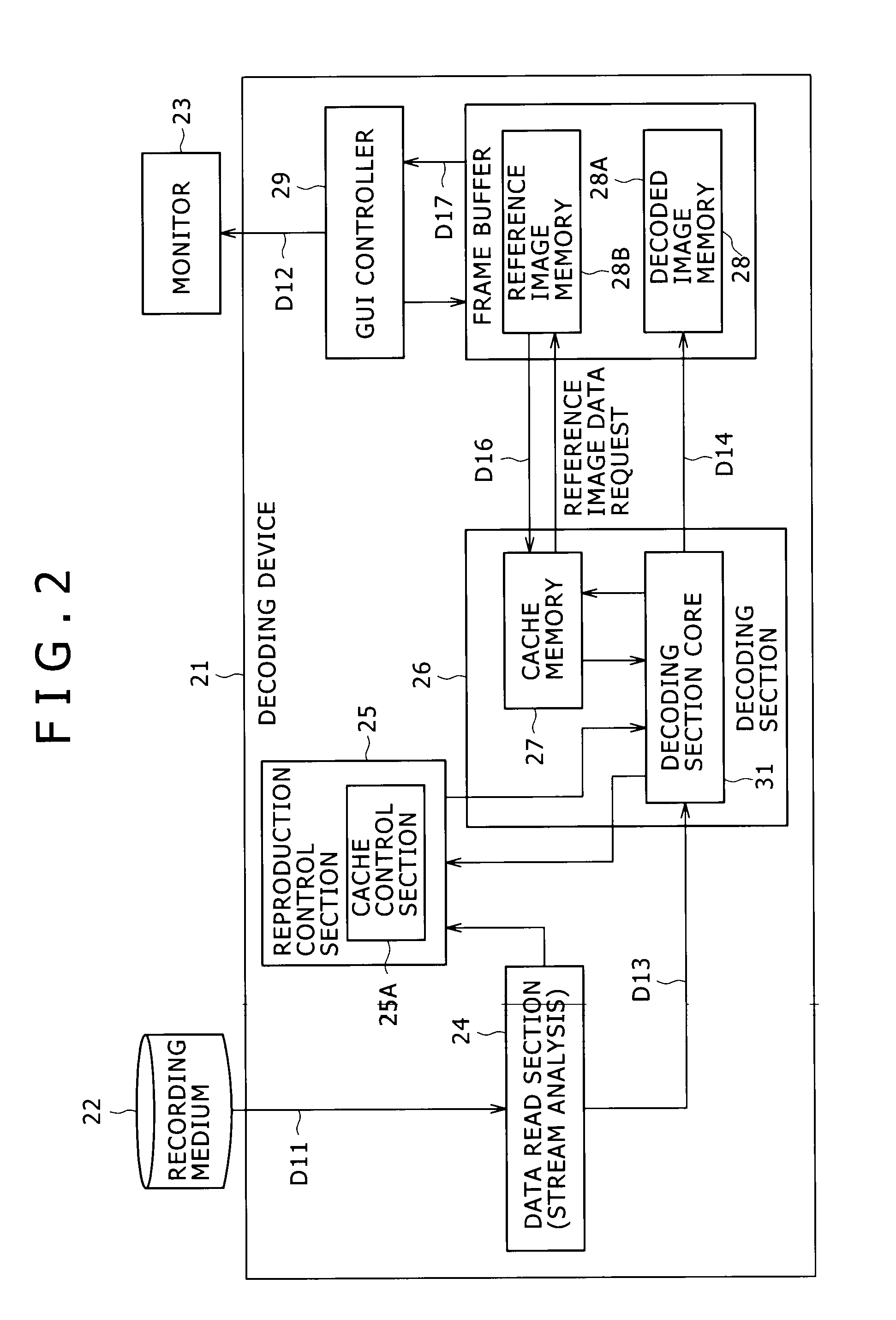

Status: Inactive | Publication: US20090322772A1 | Tags: Reduce capacity, Reduce visit frequency, Memory addressing/allocation/relocation, Cathode-ray tube indicators, Computer hardware, Reference image

The present invention is applied to a coding device and a decoding device for moving image data compliant with, for example, the MPEG-4 AVC/ITU-T H.264 standard. Address data is issued to specify an area spanning a plurality of read units in both the horizontal and the vertical direction, and reference image data is stored in a cache memory.

Owner:SONY CORP
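
A minimal sketch of why addressing a two-dimensional block of read units helps: neighbouring prediction areas overlap, so read units already cached are not fetched again. The read-unit size (16 x 4 pixels), the unbounded cache and the names `read_units_for_area` and `ReferenceCache` are all hypothetical assumptions for illustration, not Sony's design.

```python
from typing import List, Set, Tuple

READ_W, READ_H = 16, 4  # hypothetical size of one read unit, in pixels


def read_units_for_area(x: int, y: int, w: int, h: int) -> List[Tuple[int, int]]:
    """Return the (column, row) indices of every read unit covering the area.

    One request addresses a block of read units in both the horizontal and
    the vertical direction instead of one row at a time.
    """
    cols = range(x // READ_W, (x + w - 1) // READ_W + 1)
    rows = range(y // READ_H, (y + h - 1) // READ_H + 1)
    return [(c, r) for r in rows for c in cols]


class ReferenceCache:
    """Caches reference-picture read units and counts external fetches."""

    def __init__(self) -> None:
        self.lines: Set[Tuple[int, int]] = set()  # unbounded, for simplicity
        self.fetches = 0

    def load_area(self, x: int, y: int, w: int, h: int) -> None:
        for unit in read_units_for_area(x, y, w, h):
            if unit not in self.lines:
                self.fetches += 1  # miss: one external memory access
                self.lines.add(unit)
```

Loading an unaligned 16 x 4 area at (8, 2) touches four read units; a second request for the aligned area at (0, 0) is then served entirely from the cache.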

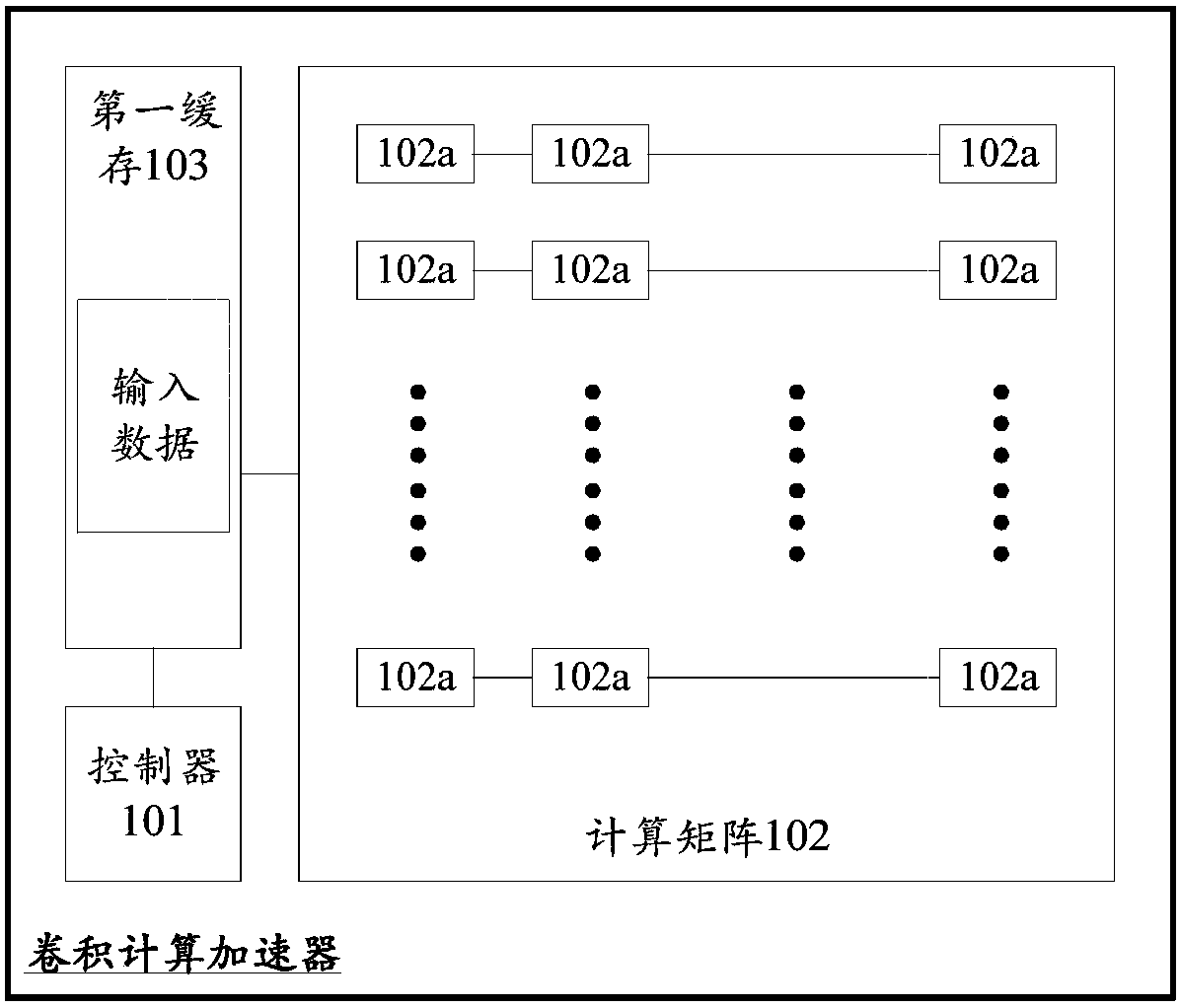

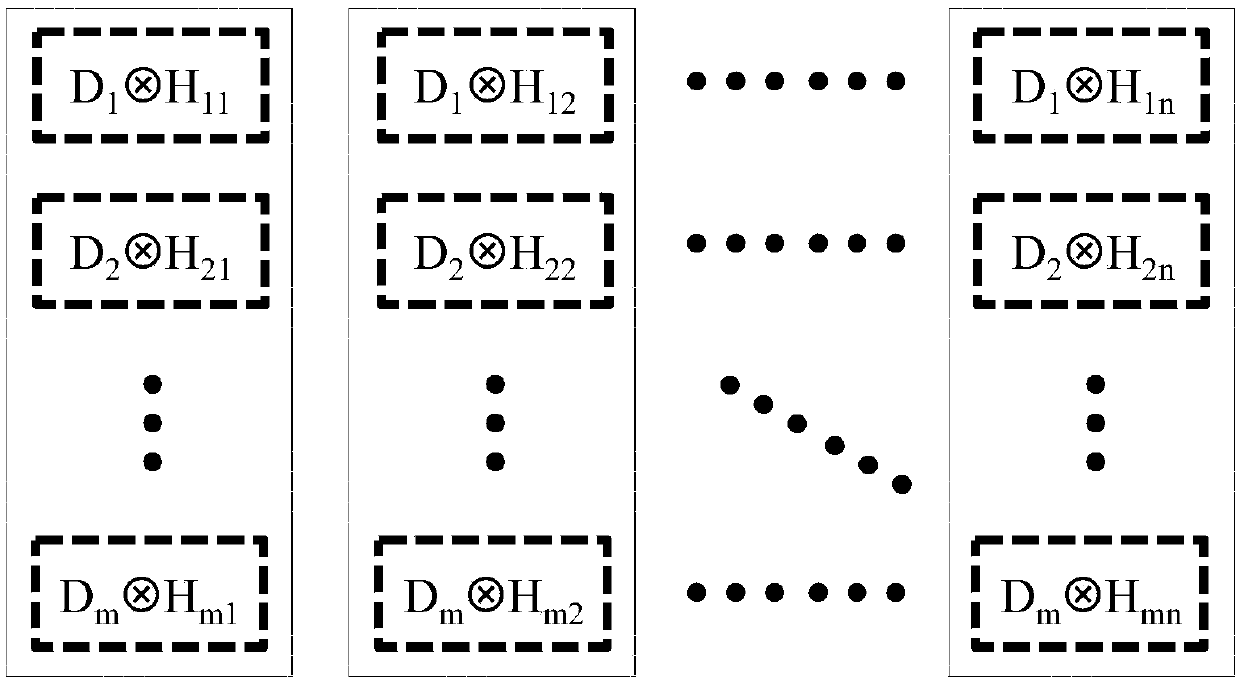

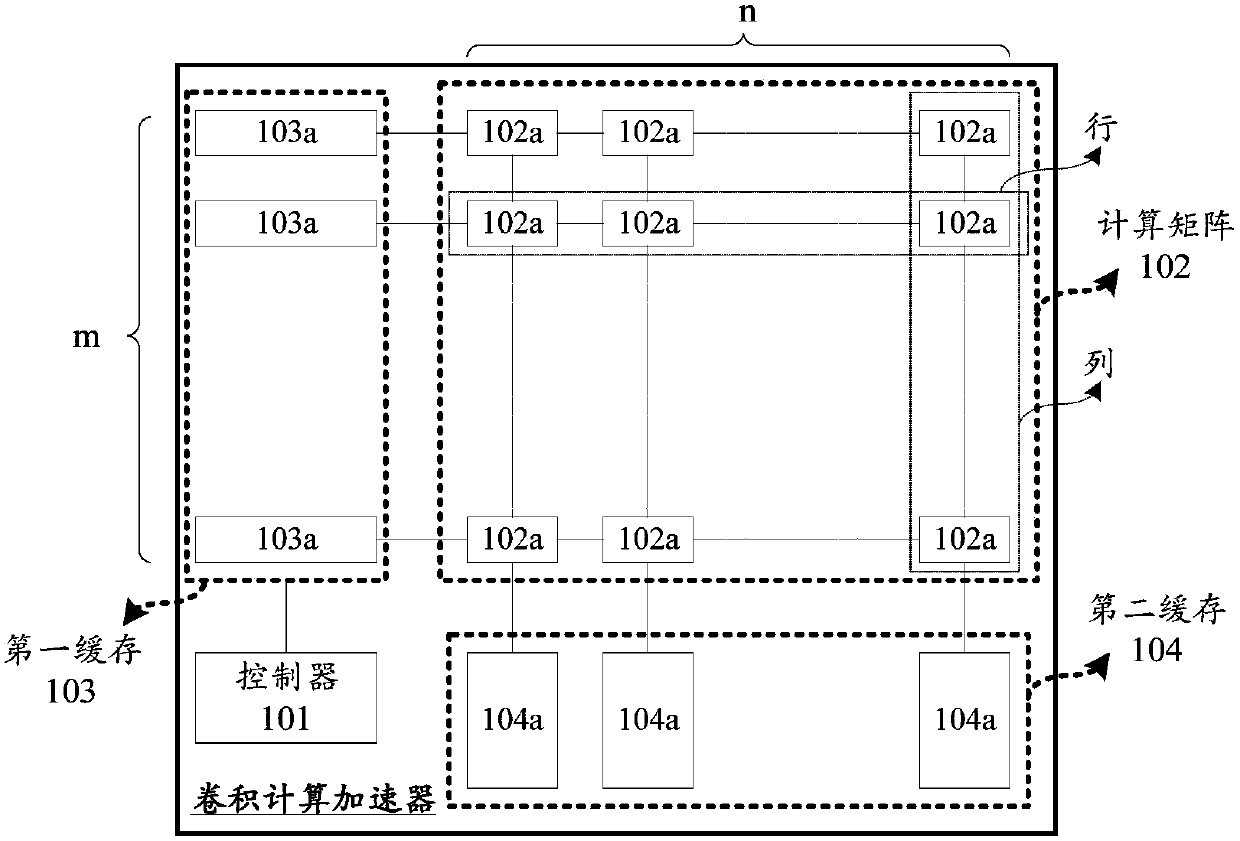

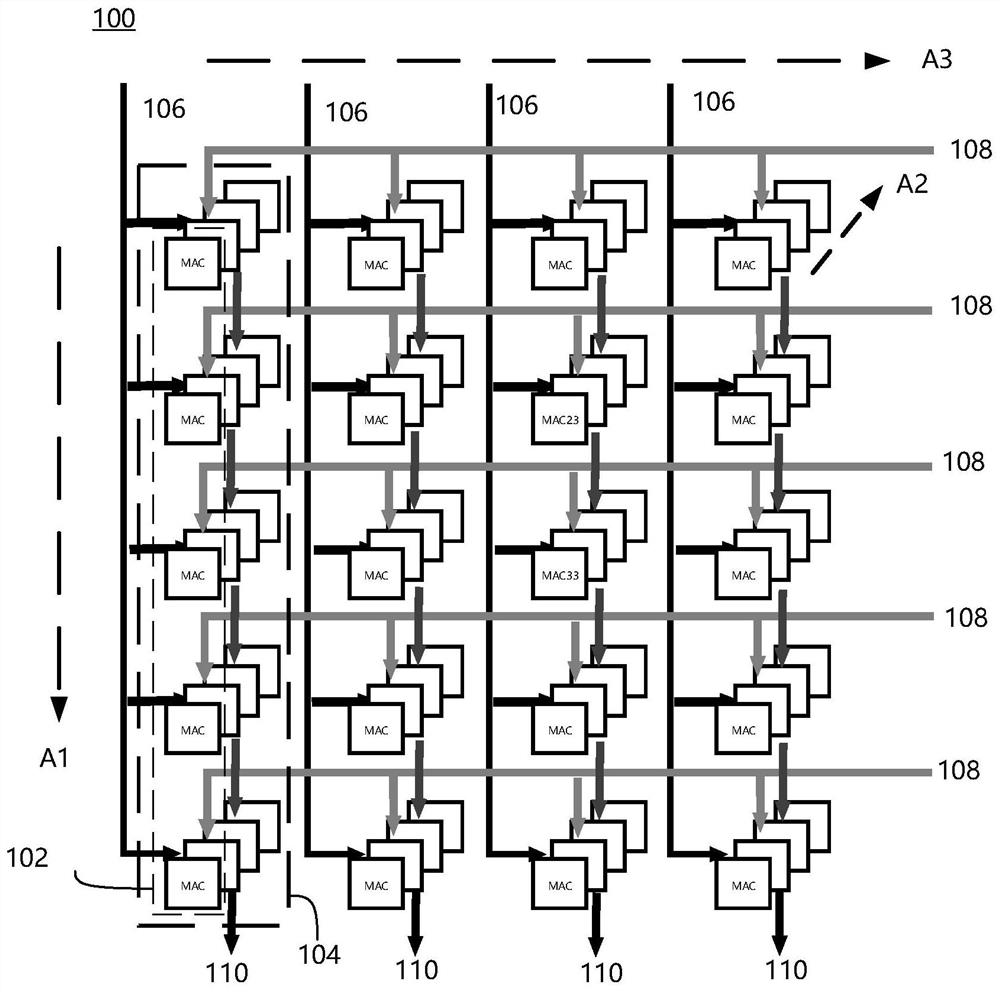

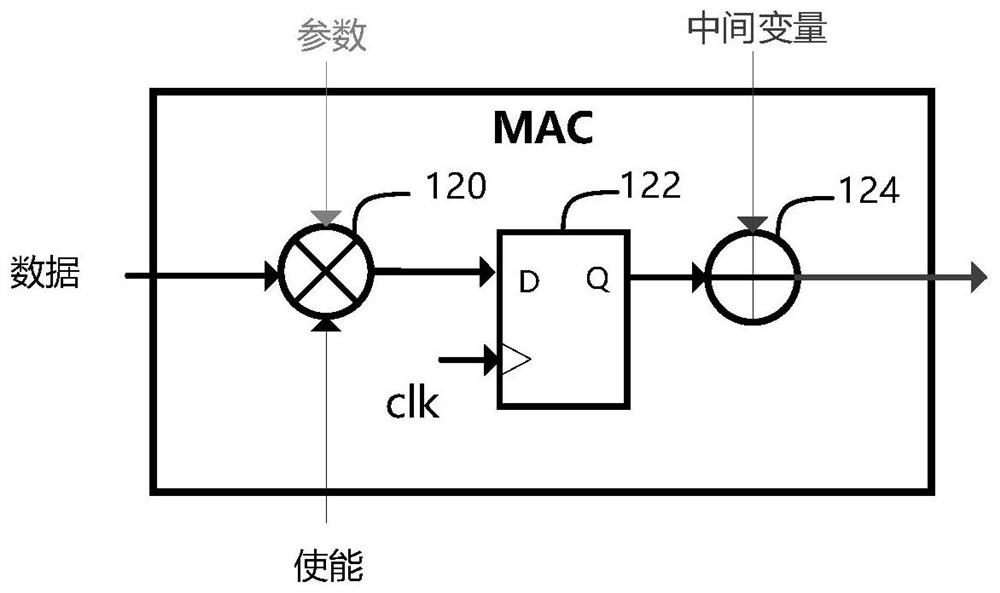

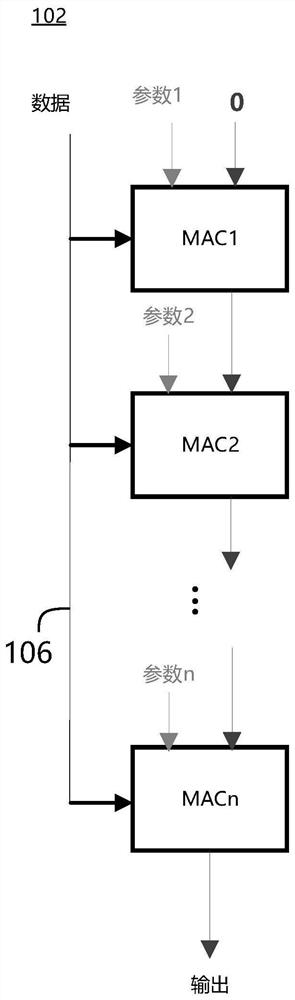

Convolutional calculation accelerator, convolutional calculation method and convolutional calculation equipment

Status: Active | Publication: CN110210610A | Tags: Reduce cache capacity, Reduce demand, Processor architectures/configuration, Program control, Parallel computing, Bandwidth requirement

The invention relates to a convolution calculation accelerator, a convolution calculation method and convolution calculation equipment, in the technical field of electronic circuits. The accelerator comprises a controller, a calculation matrix and a first cache. The calculation matrix comprises at least one row of calculation units, and each row comprises at least two calculation units. The controller inputs the data loaded into the first cache into the calculation units of the corresponding row, and those calculation units pass the input data along the row. Each calculation unit performs a convolution computation on the input data it receives and a pre-stored convolution kernel. Because at least two calculation units in the same row reuse (multiplex) the same input data, only one input channel is needed, which reduces the cache capacity and input bandwidth requirements of the calculation matrix and improves its scalability.

Owner:TENCENT TECH (SHENZHEN) CO LTD
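
The input-reuse idea can be illustrated with a small software simulation, assuming a 1-D "valid" convolution (computed as cross-correlation, as is usual in CNNs) and one kernel per calculation unit. `row_convolve` is a hypothetical name; real hardware would forward data unit to unit in a systolic fashion rather than loop sequentially.

```python
from typing import List, Tuple


def row_convolve(inputs: List[float],
                 kernels: List[List[float]]) -> Tuple[List[List[float]], int]:
    """Simulate one row of calculation units sharing a single input stream.

    Each unit holds one pre-stored kernel. Every input element is read from
    the cache once and forwarded to all units in the row; unit i produces
    output channel i. Returns (outputs, number_of_cache_reads).
    """
    k = len(kernels[0])
    n_out = len(inputs) - k + 1
    outputs = [[0.0] * n_out for _ in kernels]
    cache_reads = 0
    for t, x in enumerate(inputs):
        cache_reads += 1                        # one read per input element
        for unit, kernel in enumerate(kernels):  # x is reused by every unit
            for j in range(k):
                o = t - j                        # output position fed by tap j
                if 0 <= o < n_out:
                    outputs[unit][o] += kernel[j] * x
    return outputs, cache_reads
```

With two kernels and four inputs, the row produces two output channels from only four cache reads, whereas independent units would read the input once each.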

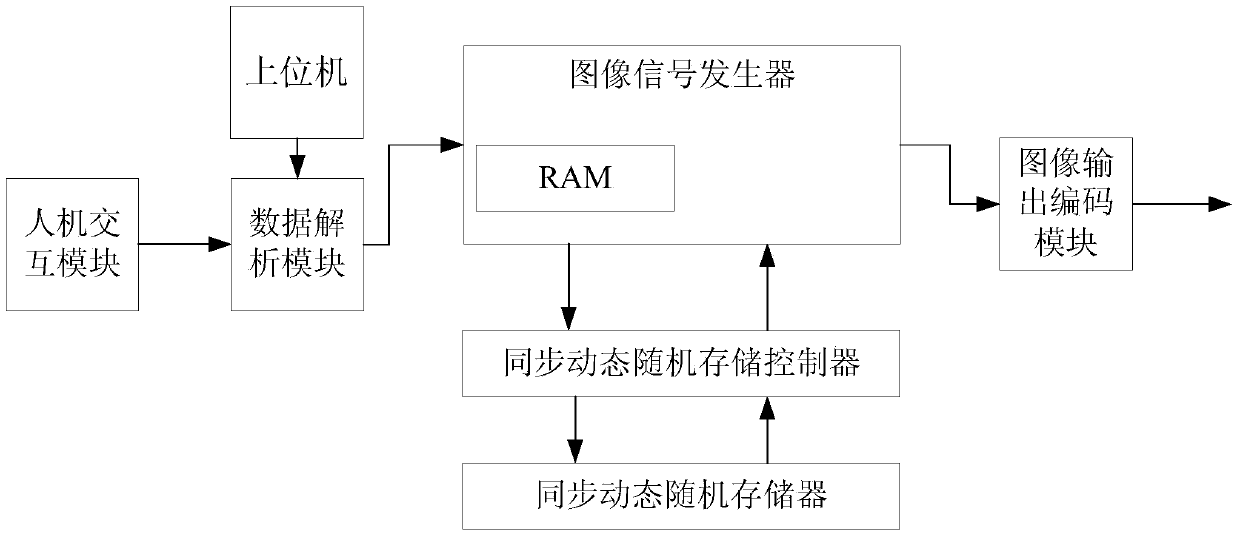

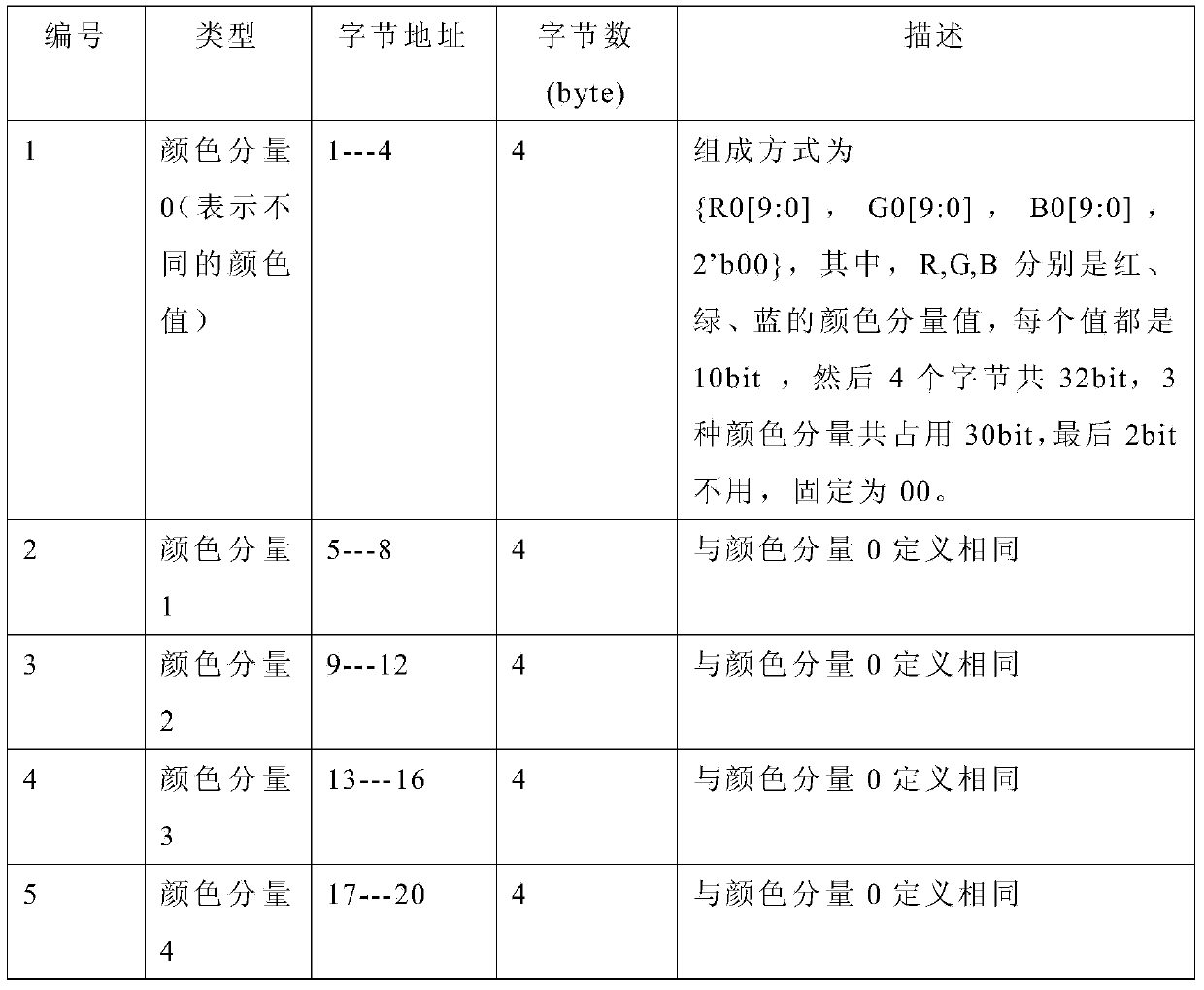

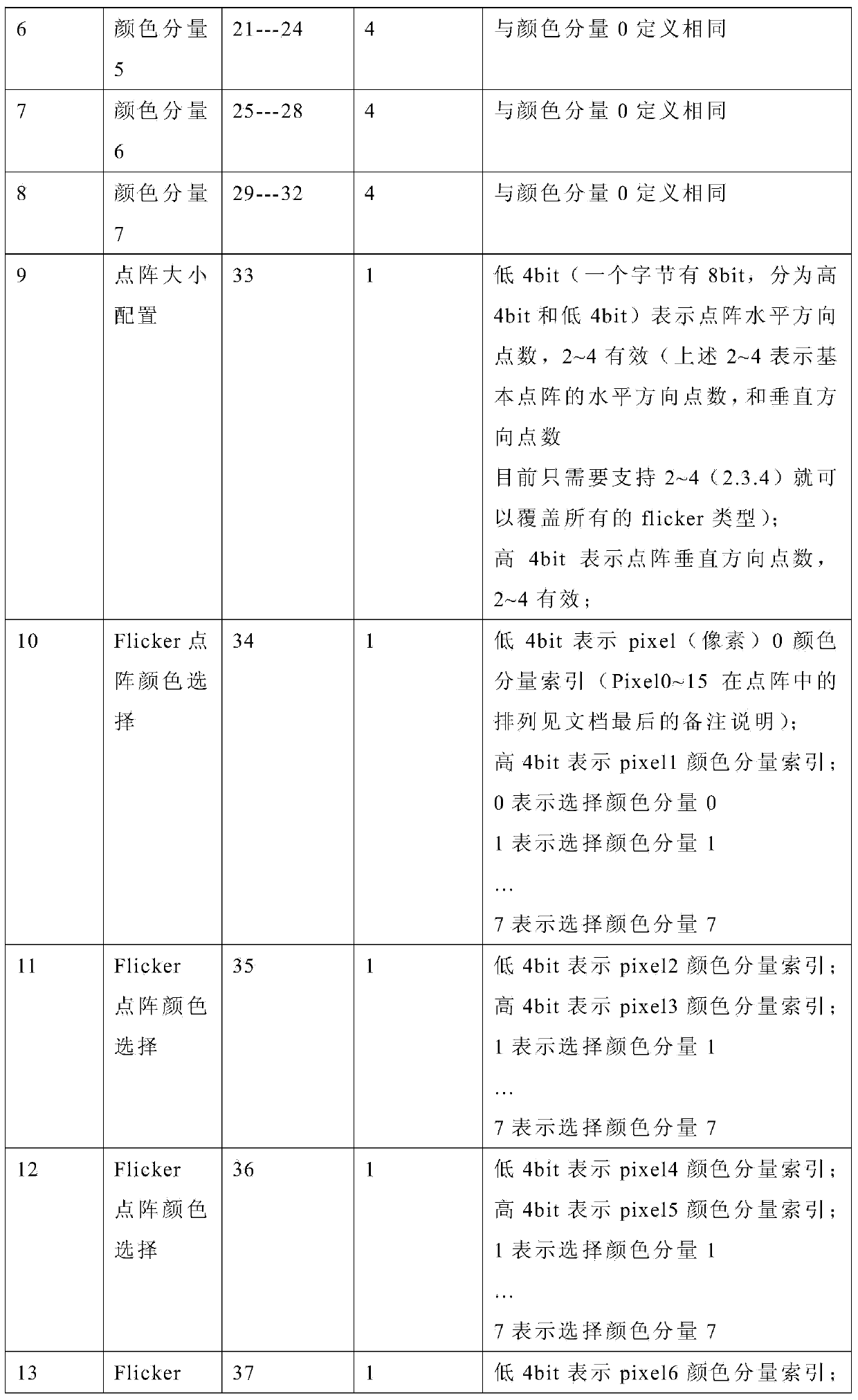

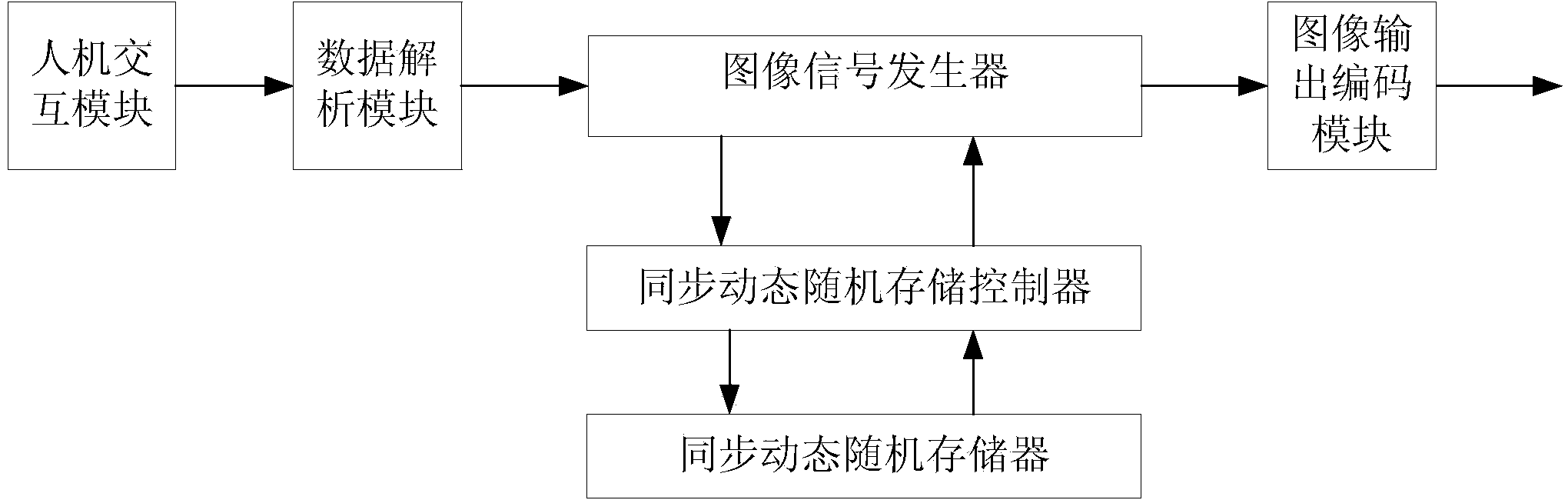

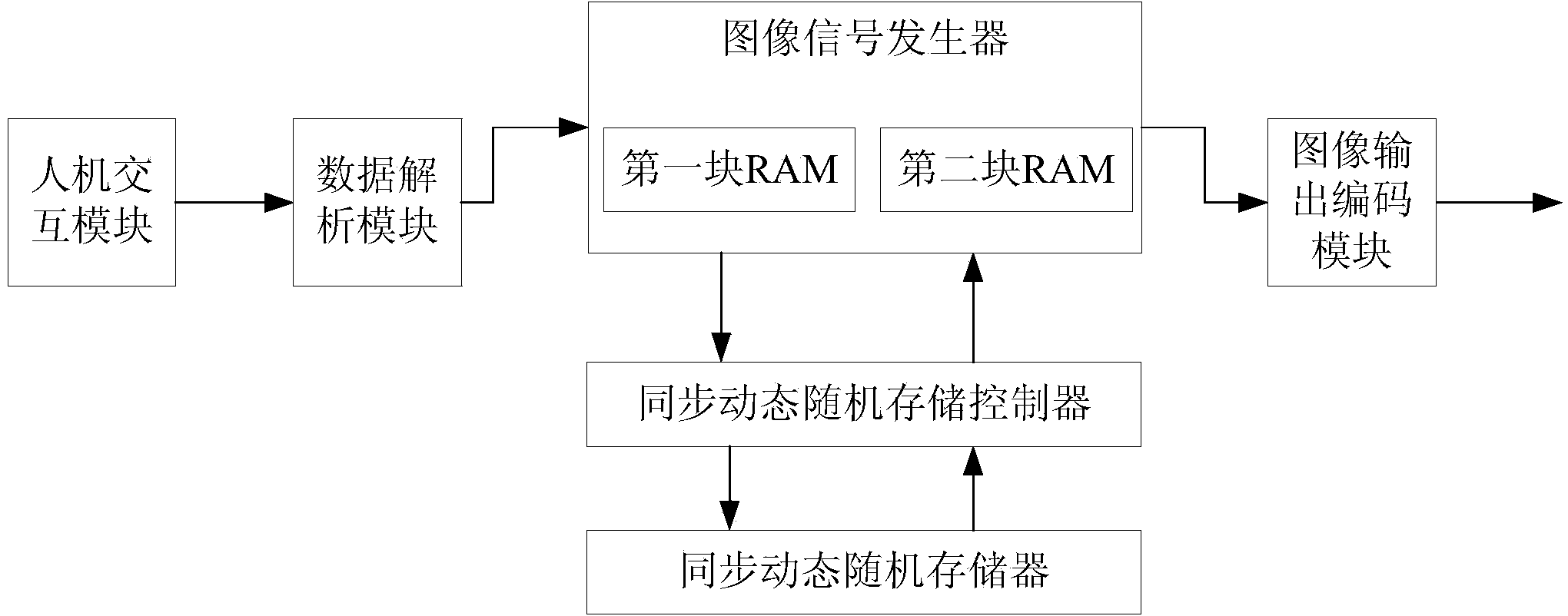

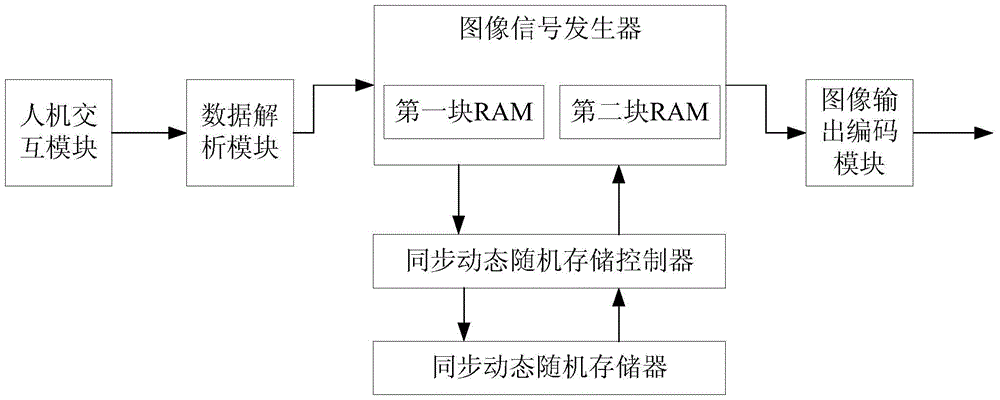

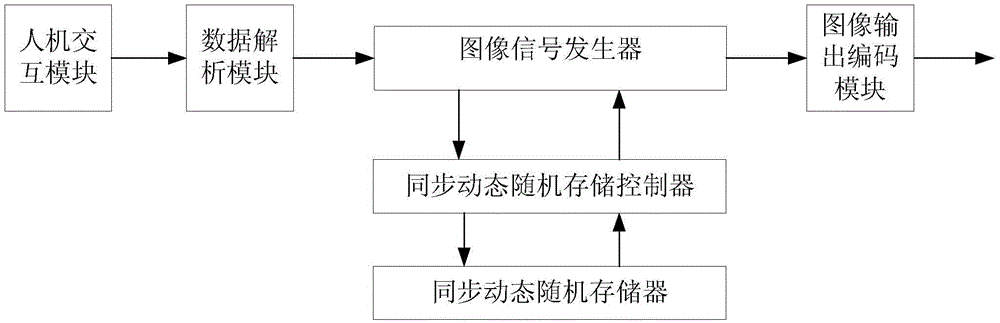

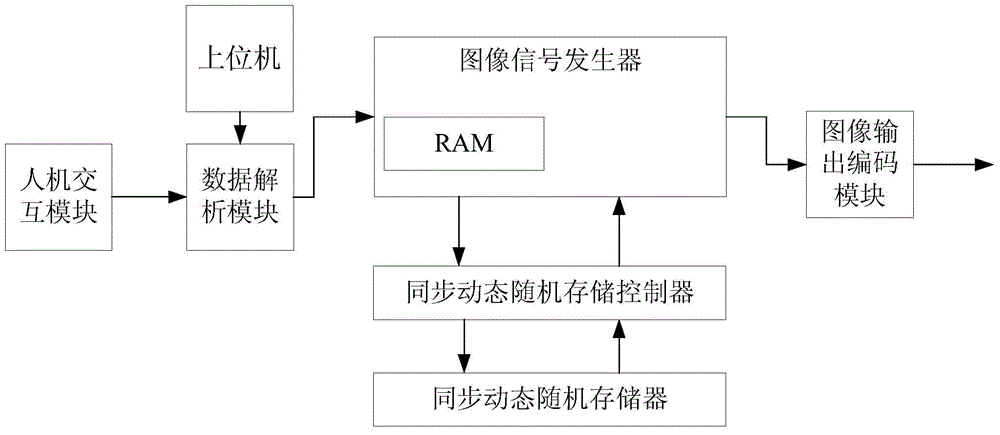

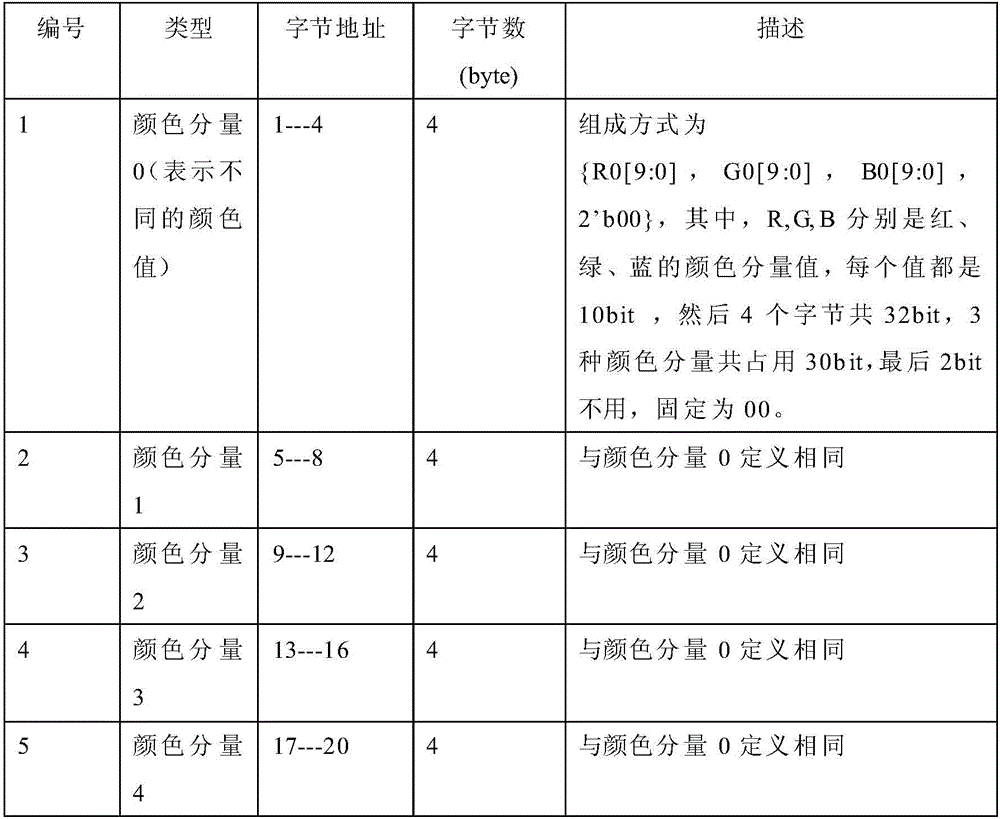

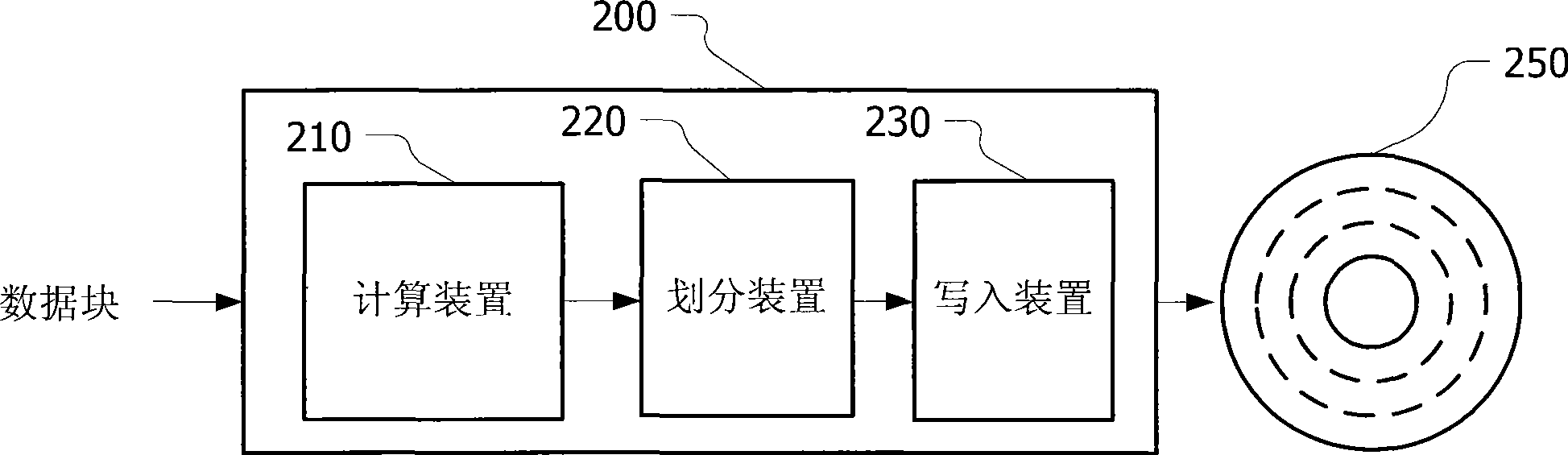

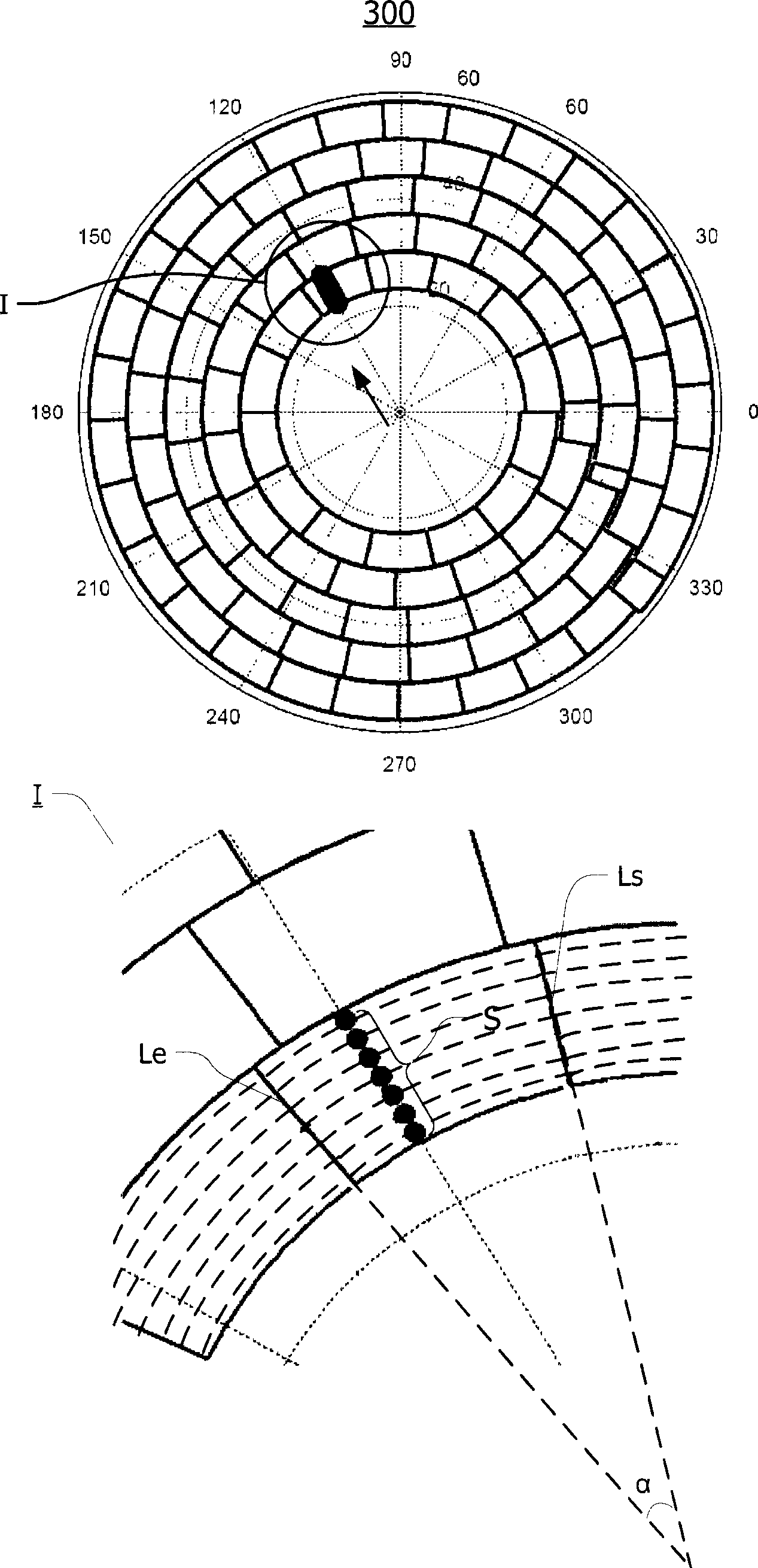

FPGA (Field Programmable Gate Array) based Flicker picture component generation method

Status: Active | Publication: CN104200765A | Tags: High speed, Improve fluency, Static indicating devices, Image resolution, Computer graphics (images)

The invention discloses an FPGA (Field Programmable Gate Array) based Flicker picture component generation method comprising: 1, determining the horizontal and vertical points of the Flicker picture lattice in a host computer, determining the picture vertex coordinates and coloring the points; 2, the host computer transmitting the points, the vertex coordinates, the point colors and the module resolution to a data analysis module for parsing; 3, the data analysis module transmitting the parsed data to an image signal generator; 4, writing the colors into a RAM (Random Access Memory) of the image signal generator, using the number of each point of the picture lattice as the address; 5, scanning the area corresponding to the picture inside the image signal generator and calculating, for every pixel in the scanning area, the address it maps to in the picture lattice; 6, using each mapped lattice address as the RAM read address, reading the color value of the corresponding lattice point and coloring the pixel. The method uses an FPGA to generate complex logic pictures such as the Flicker picture.

Owner:WUHAN JINGCE ELECTRONICS GRP CO LTD
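
Steps 4 to 6 above can be sketched as follows. The abstract does not say how a pixel is mapped into the lattice, so this sketch assumes the lattice simply repeats across the scan area (modulo mapping); `render_flicker` is a hypothetical name. Only the small lattice is stored, not a full frame.

```python
from typing import List


def render_flicker(lattice: List[List[int]], width: int, height: int) -> List[List[int]]:
    """Render a picture by tiling a small color lattice over the scan area.

    lattice[r][c] holds the color of one lattice point. The lattice is
    flattened into a 'RAM' whose address is the point number r * cols + c.
    """
    rows, cols = len(lattice), len(lattice[0])
    ram = [color for row in lattice for color in row]  # step 4: write colors
    frame = []
    for y in range(height):                             # step 5: scan the area
        line = []
        for x in range(width):
            addr = (y % rows) * cols + (x % cols)       # assumed pixel -> lattice mapping
            line.append(ram[addr])                      # step 6: read the color
        frame.append(line)
    return frame
```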

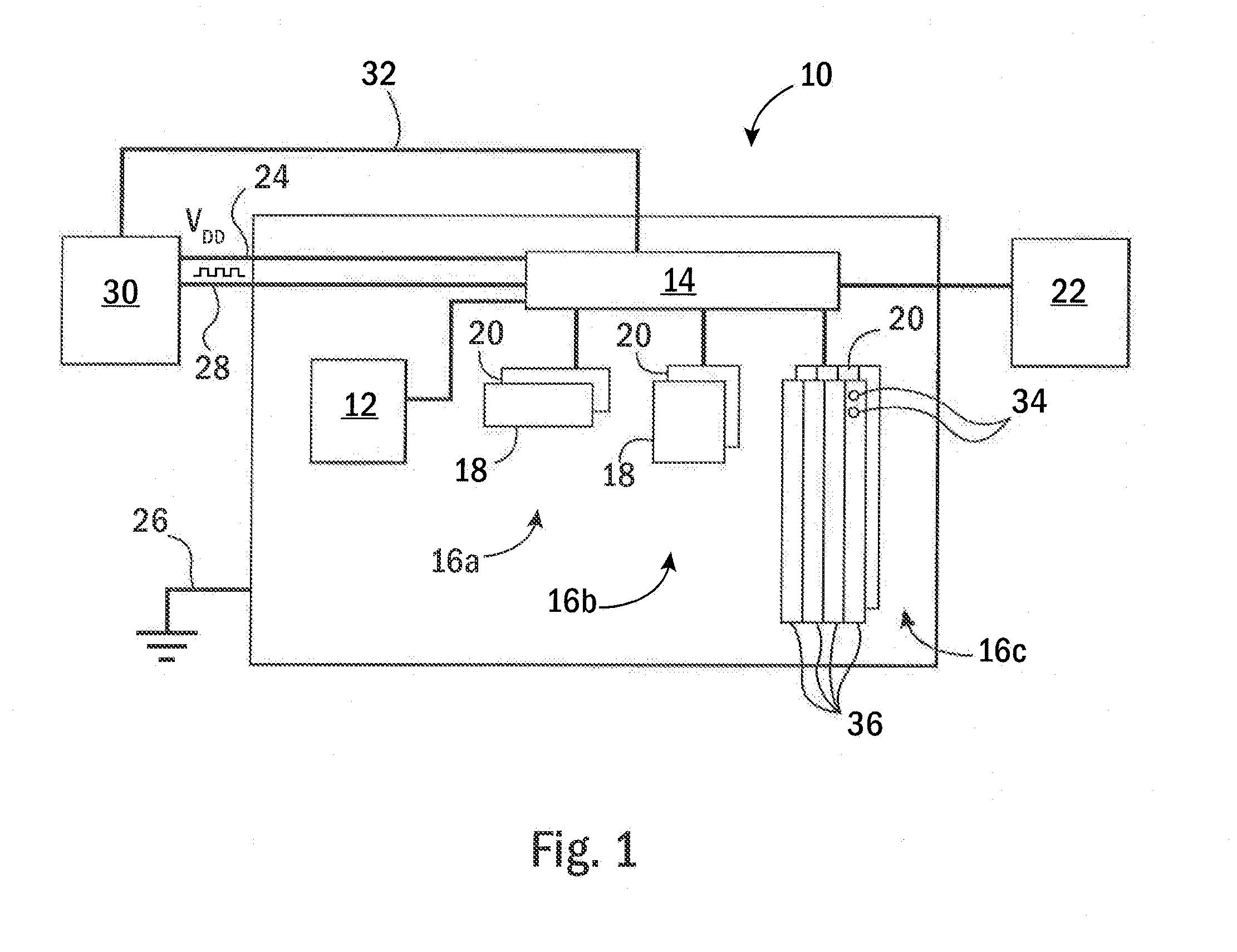

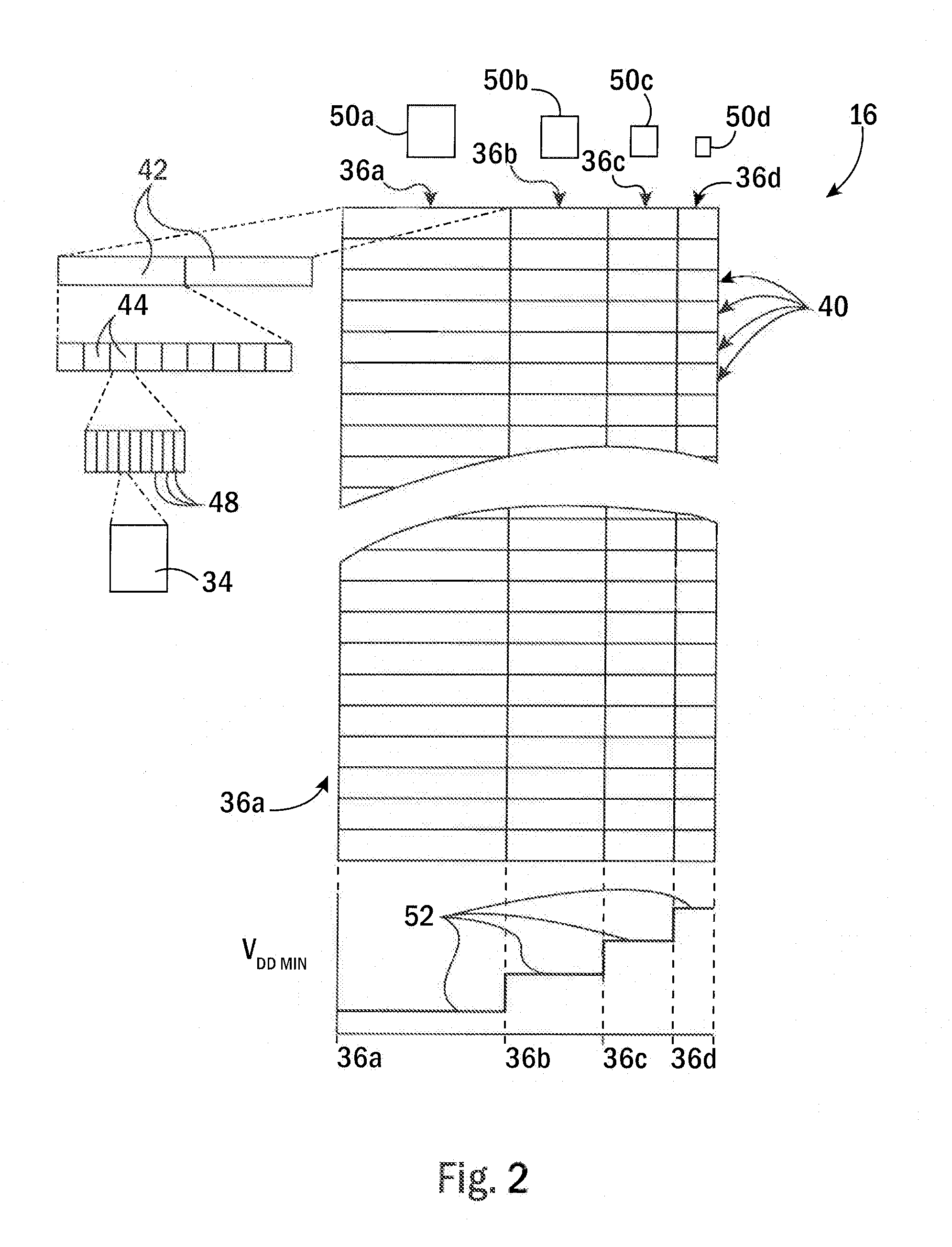

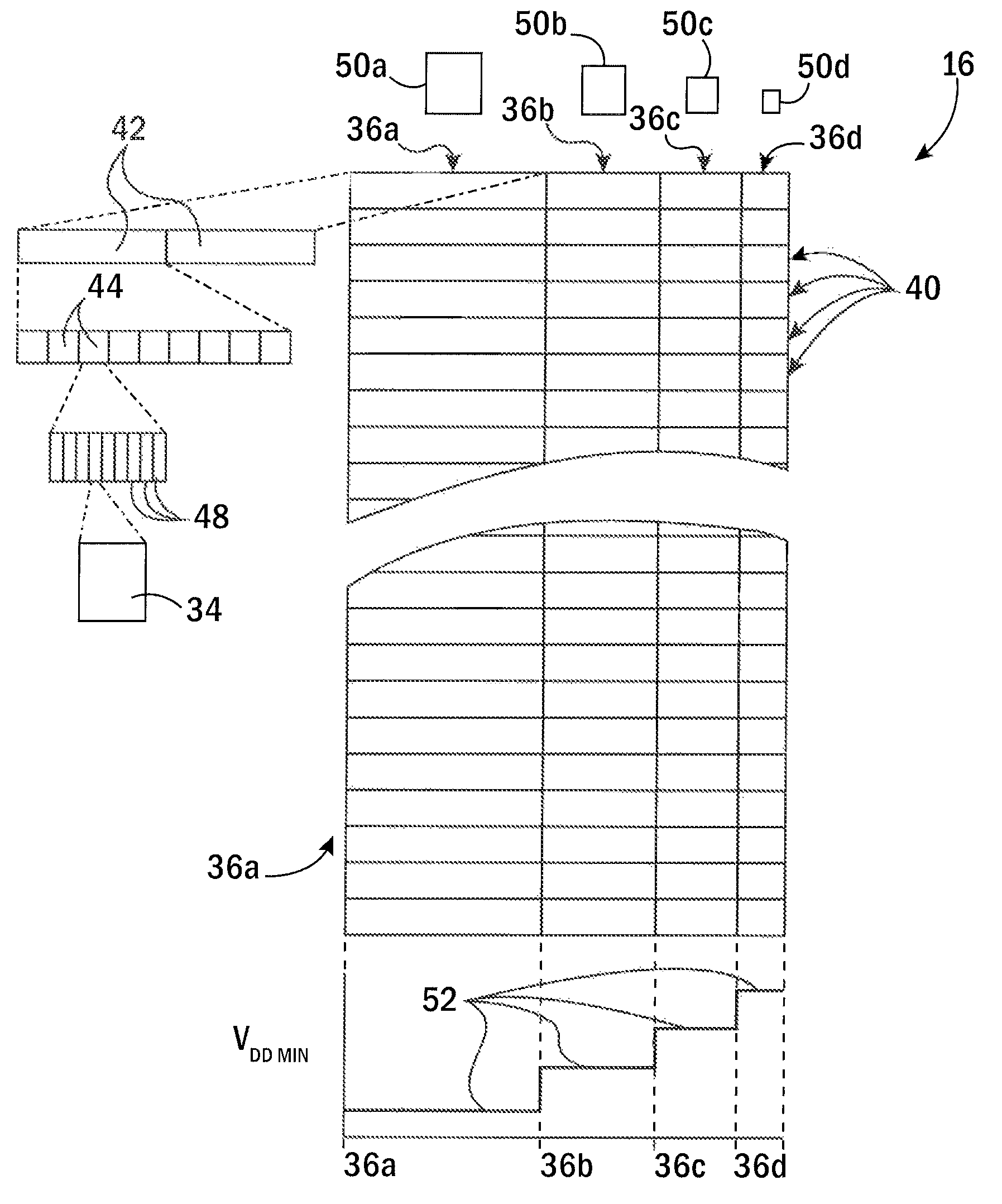

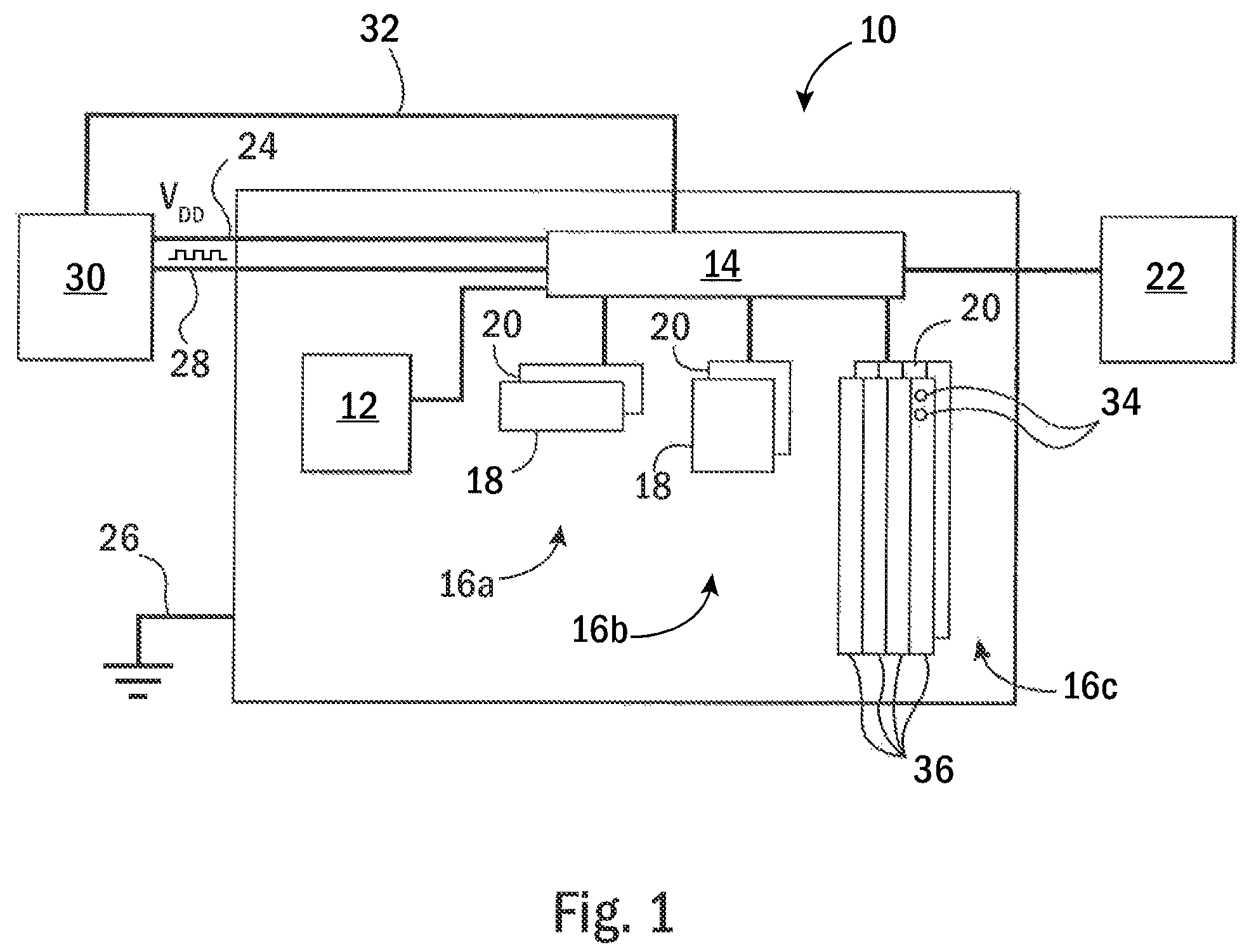

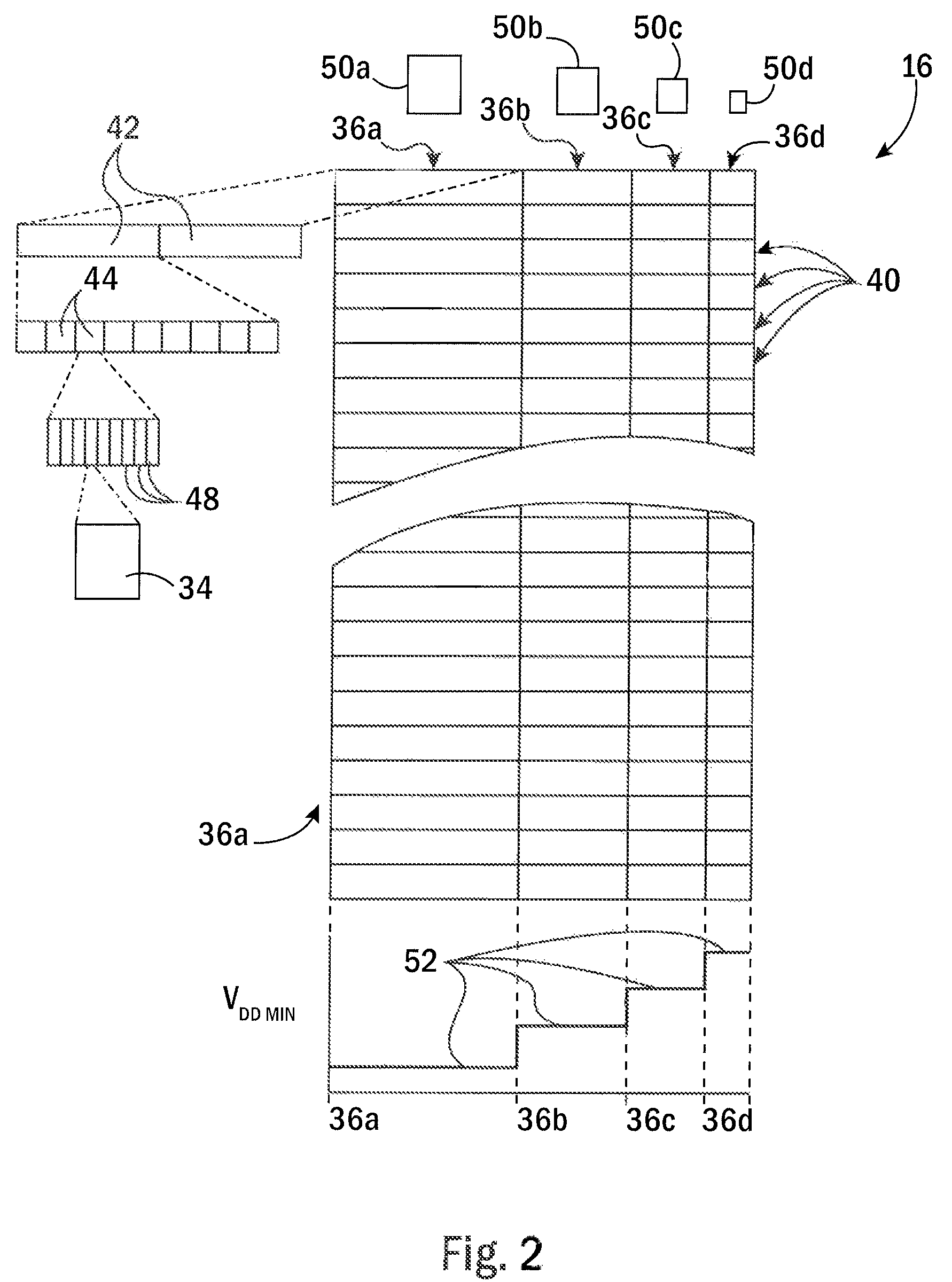

Energy Efficient Processor Having Heterogeneous Cache

Status: Active | Publication: US20130094318A1 | Tags: Reduce cache capacity, Reduce voltage, Memory architecture accessing/allocation, Energy efficient ICT, Parallel computing, Error correcting

A heterogeneous cache structure organizes memory cells into several ways, each associated with a different minimum voltage below which its memory cells produce substantial state errors. Reduced-voltage operation of the cache may be accompanied by deactivating different ways according to the voltage reduction. The differentiation between the memory cells of the ways may be implemented by devoting different amounts of integrated circuit area to each memory cell, either by changing the size of the transistors comprising the memory cell or by devoting additional transistors to each memory cell in the form of shared error-correcting codes or backup memory cells.

Owner:WISCONSIN ALUMNI RES FOUND
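
The way-deactivation rule can be expressed as a one-line policy. This is a sketch under the assumption that each way's minimum reliable voltage (Vmin) is known; the function name `active_ways` and the voltages in the usage example are hypothetical.

```python
from typing import Dict, List


def active_ways(way_vmin: Dict[int, float], supply_v: float) -> List[int]:
    """Return the cache ways that can stay enabled at the given supply voltage.

    Each way is built with different cell sizing or redundancy and so has
    its own Vmin. Ways whose Vmin exceeds the supply voltage are deactivated,
    so usable capacity shrinks as the voltage is lowered.
    """
    return sorted(w for w, vmin in way_vmin.items() if vmin <= supply_v)
```

With hypothetical Vmin values of 0.6, 0.7, 0.8 and 0.9 V for ways 0 to 3, all four ways stay on at 1.0 V, but only ways 0 and 1 remain at 0.75 V.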

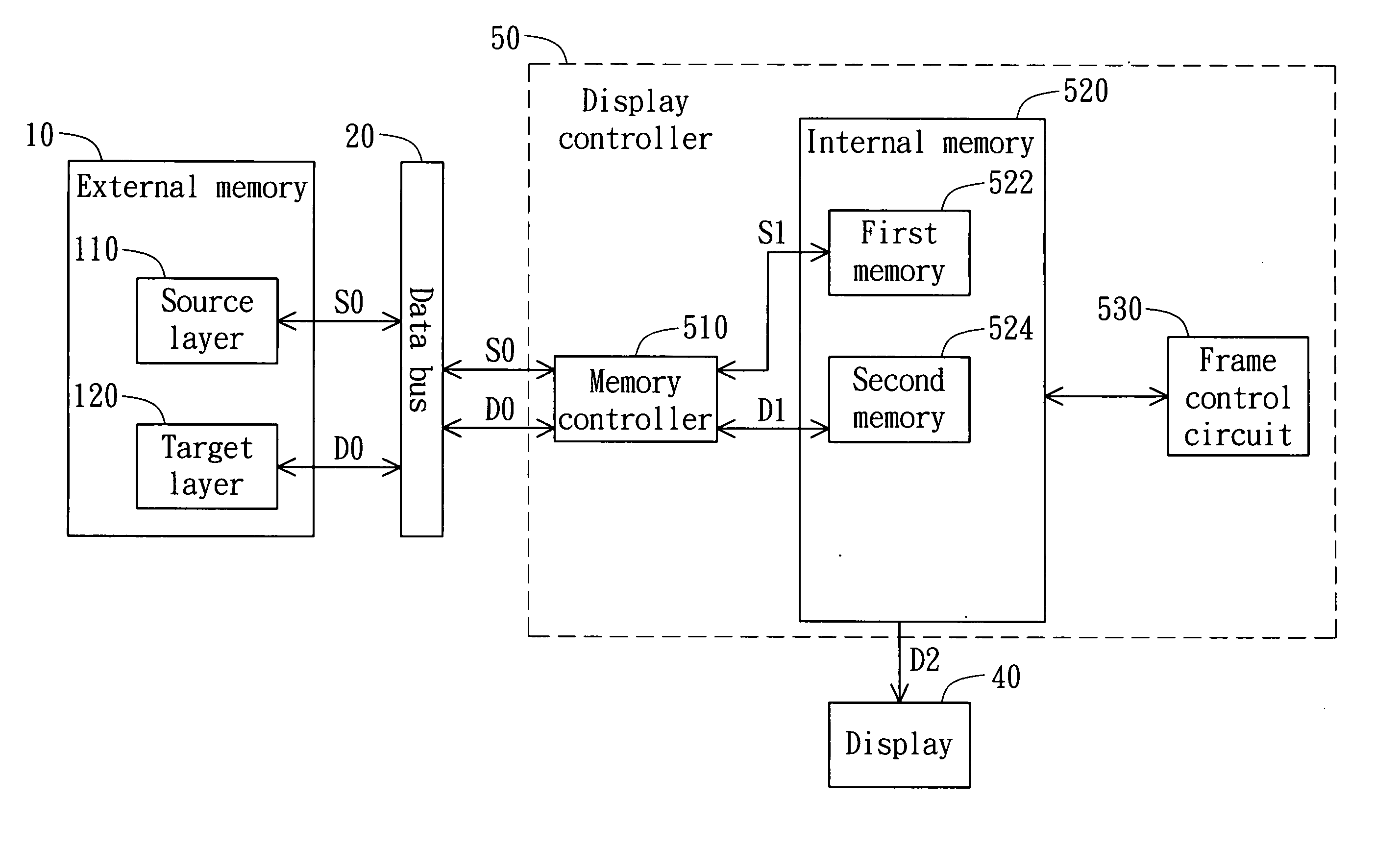

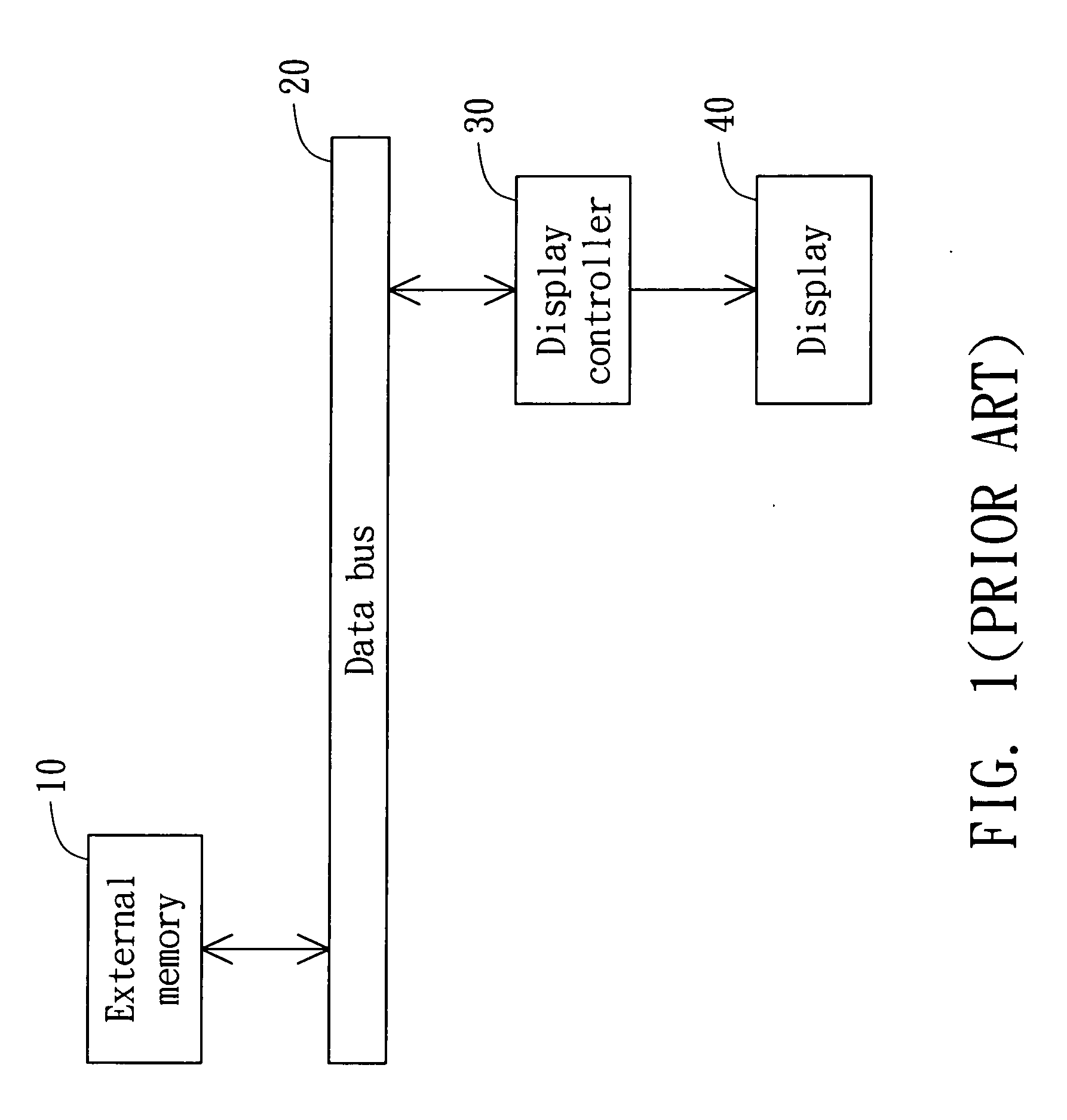

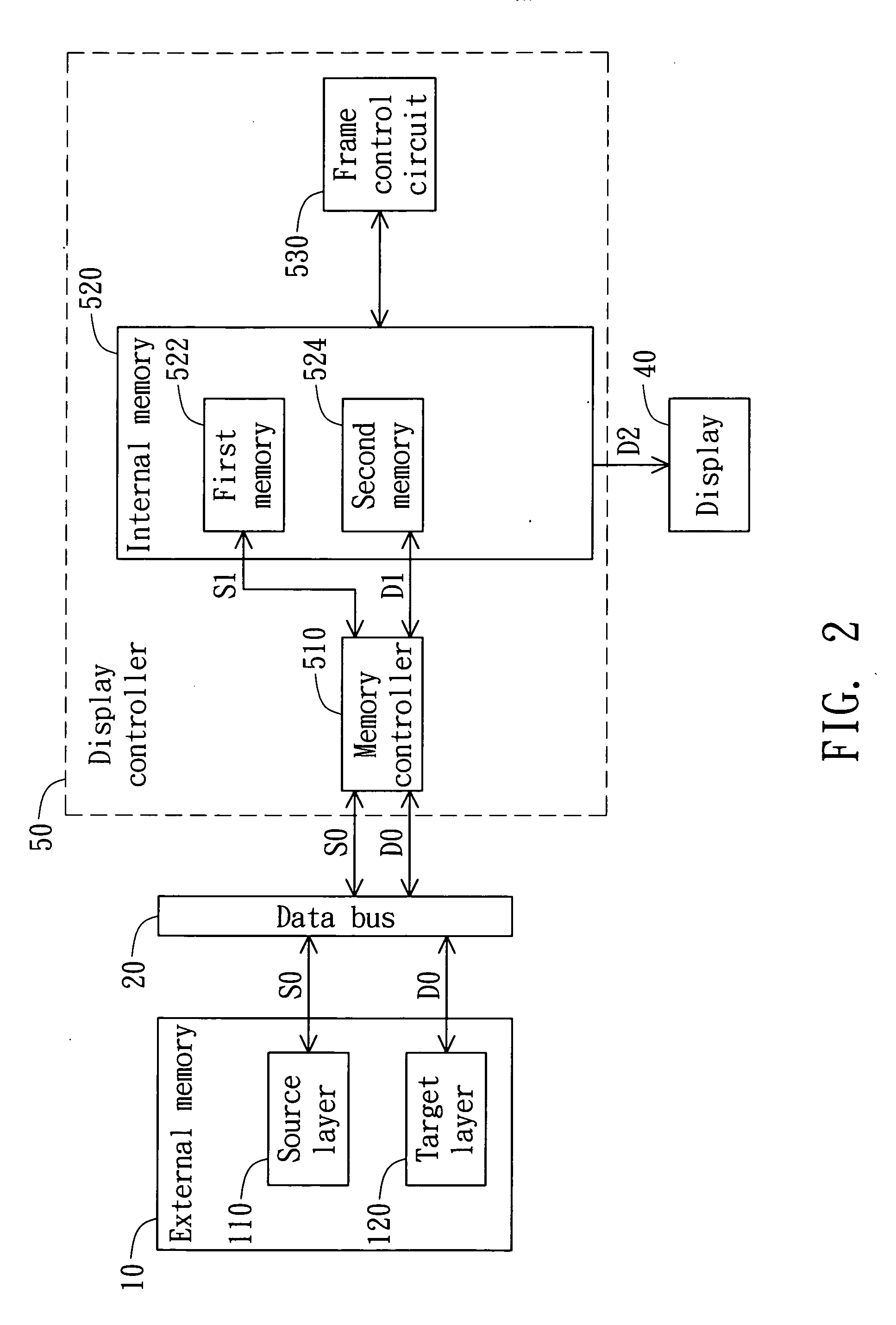

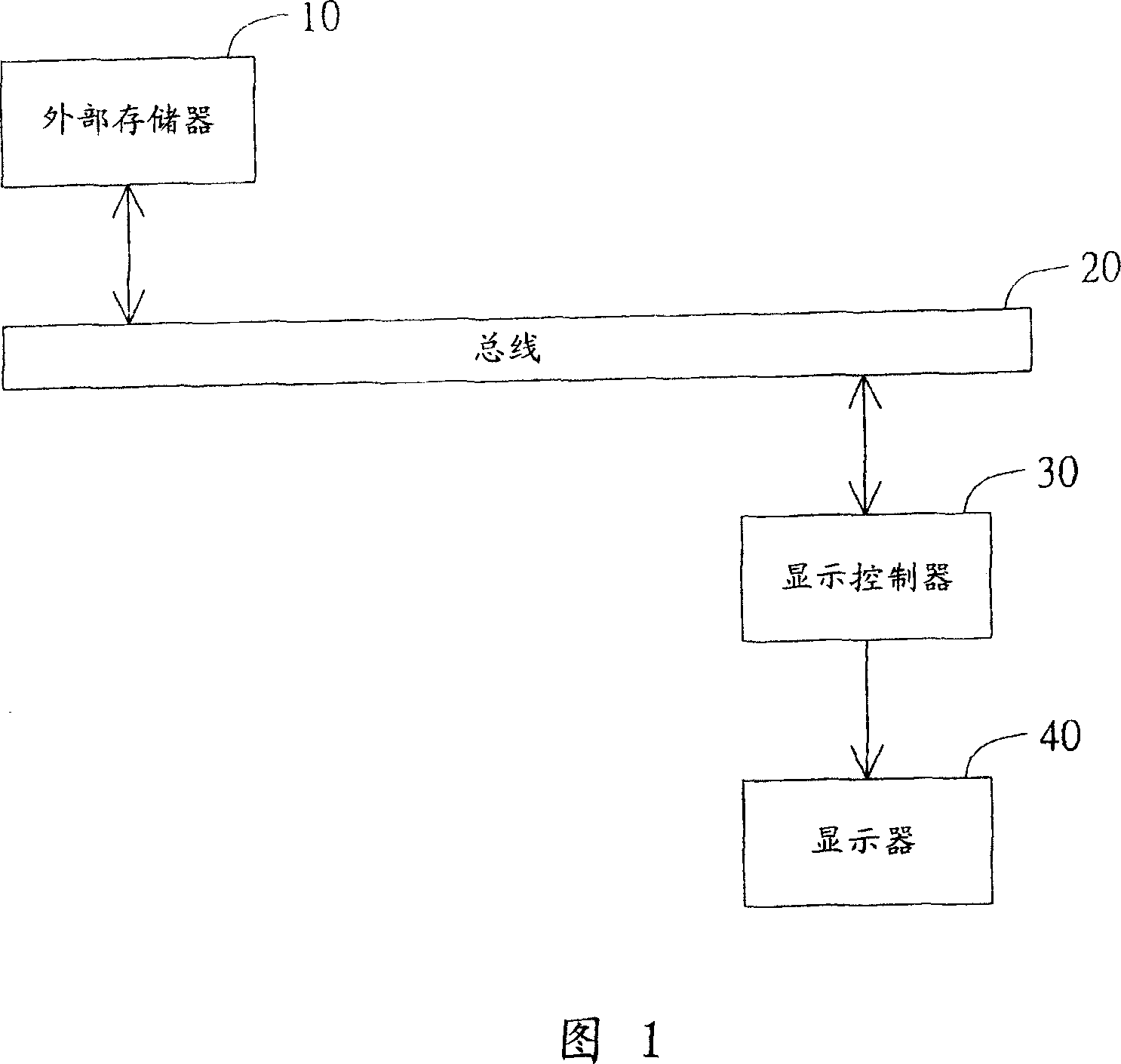

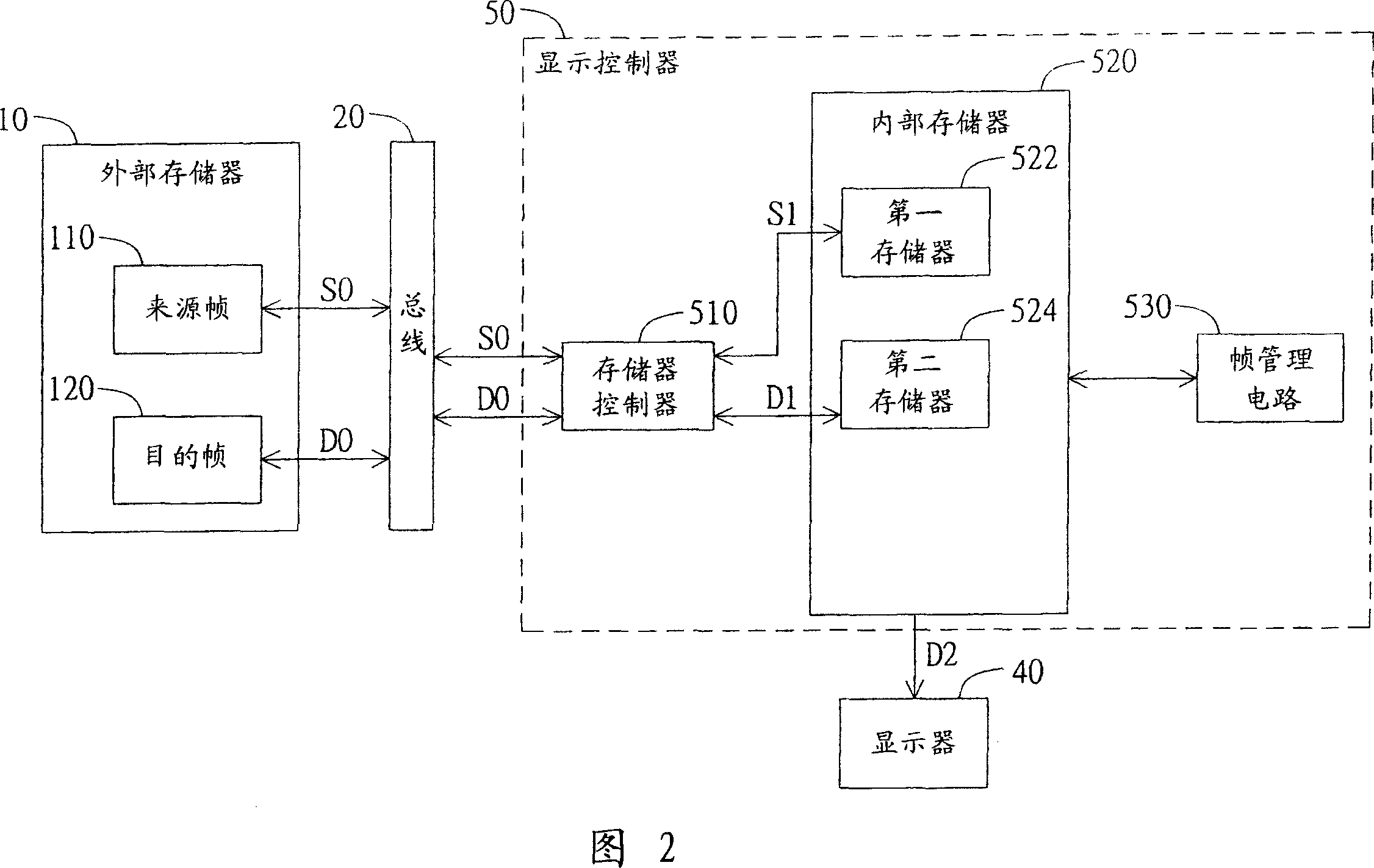

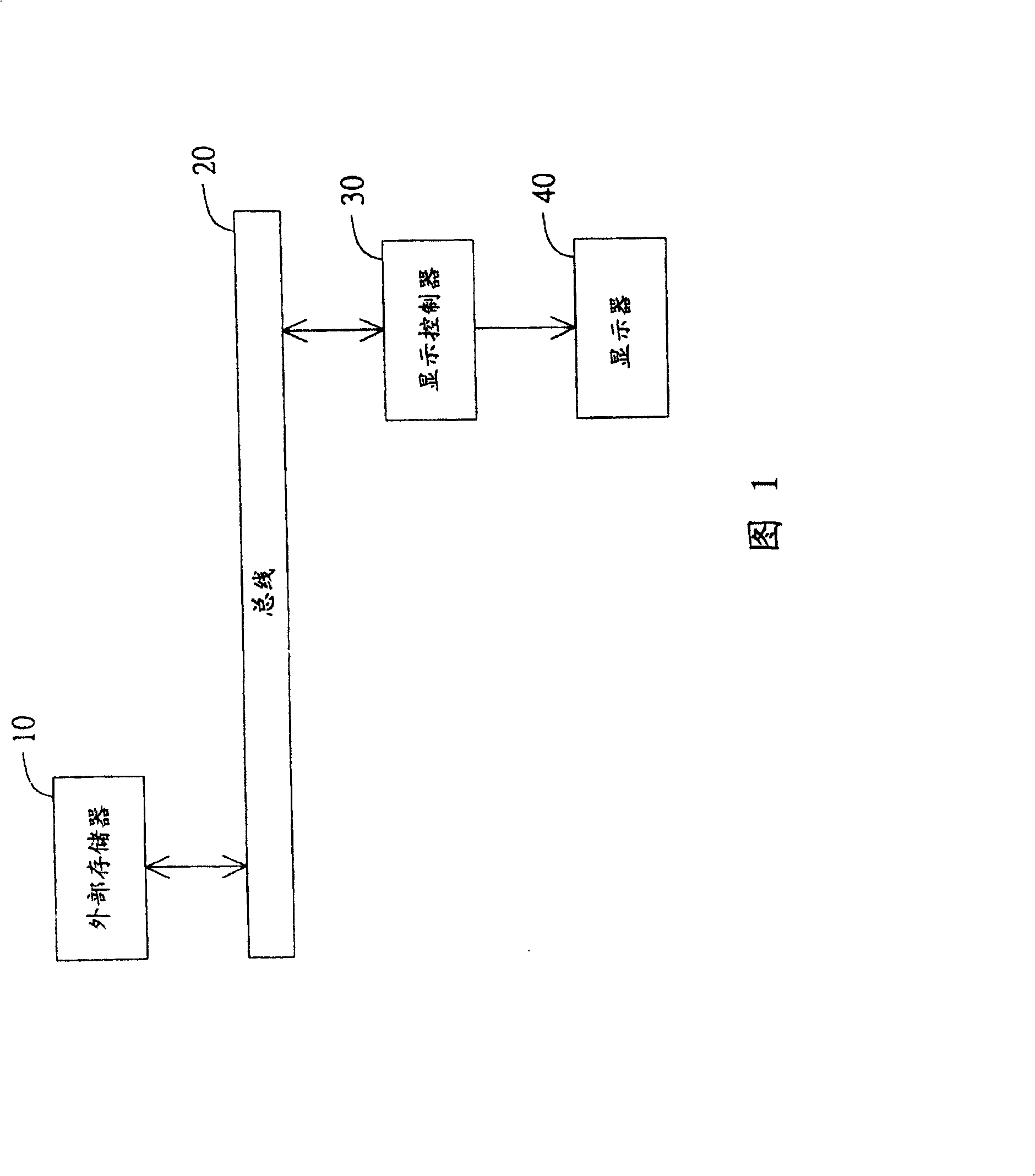

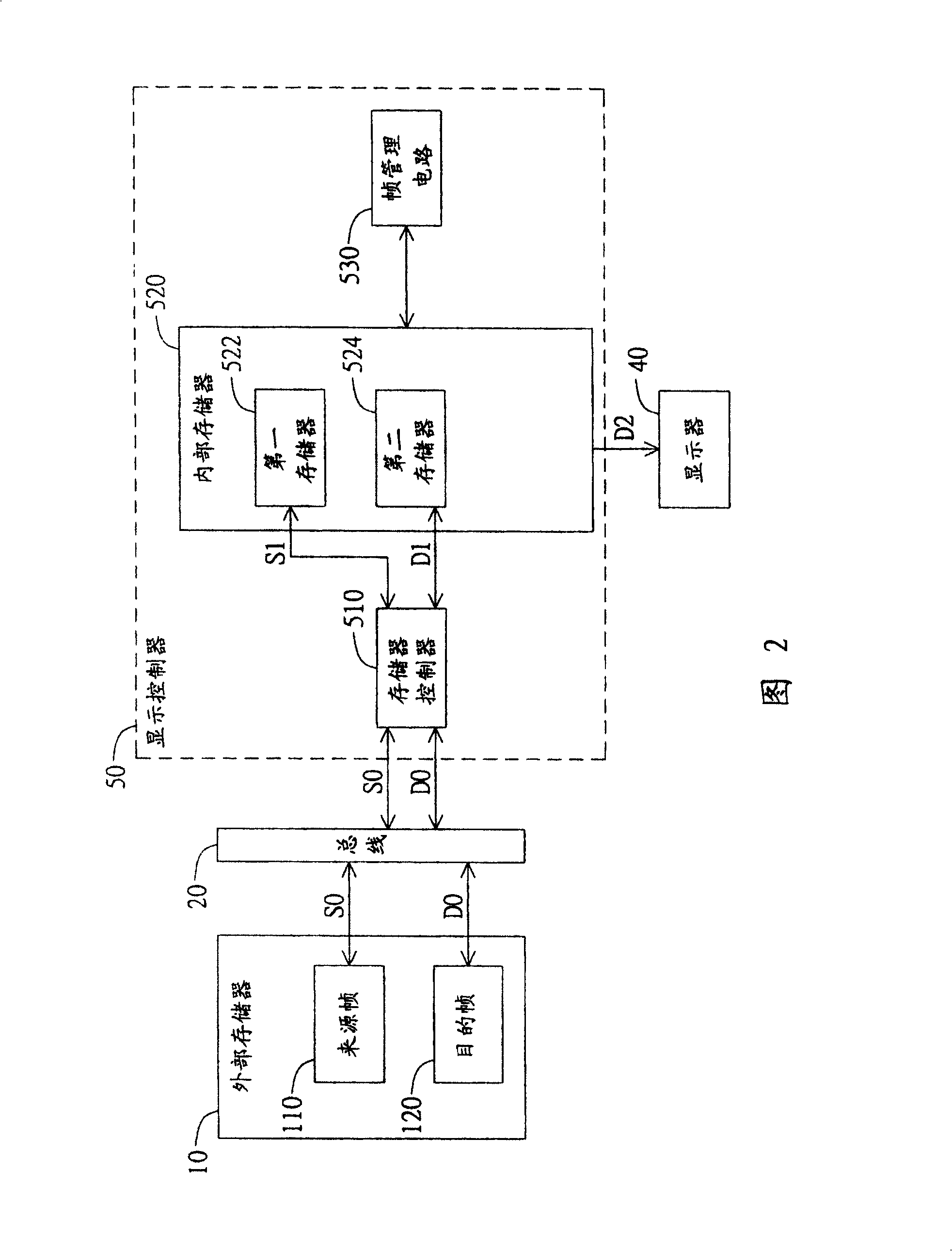

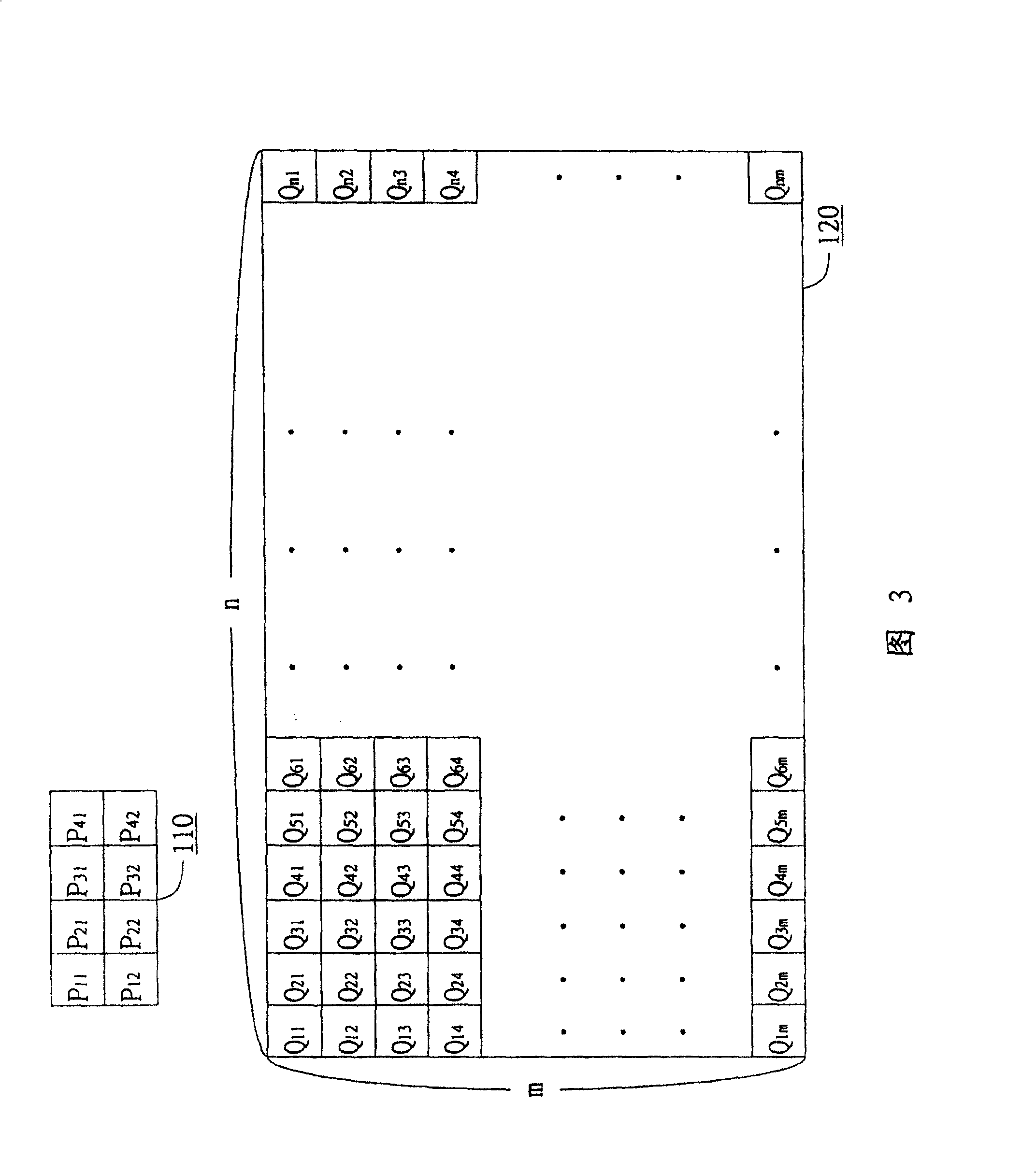

Display controller capable of reducing cache memory and the frame adjusting method thereof

Status: Inactive | Publication: US20070076007A1 | Tags: Reducing cache memory, Shorten access time, Image memory management, Cathode-ray tube indicators, External storage, Data needs

A display controller capable of reducing cache memory and a frame adjusting method thereof are provided. The display controller comprises a memory controller, a first memory, a second memory and a frame control circuit. The memory controller reads part of the image data from a source layer to obtain first image data, and part of the image data from a target layer to obtain second image data. The first memory stores the first image data, and the second memory stores the second image data. The frame control circuit processes the first image data to generate first processed image data, which is overlaid on the second image data to obtain second processed image data. If the second processed image data needs further processing, the display controller writes it to an external memory.

Owner:QUANTA COMPUTER INC

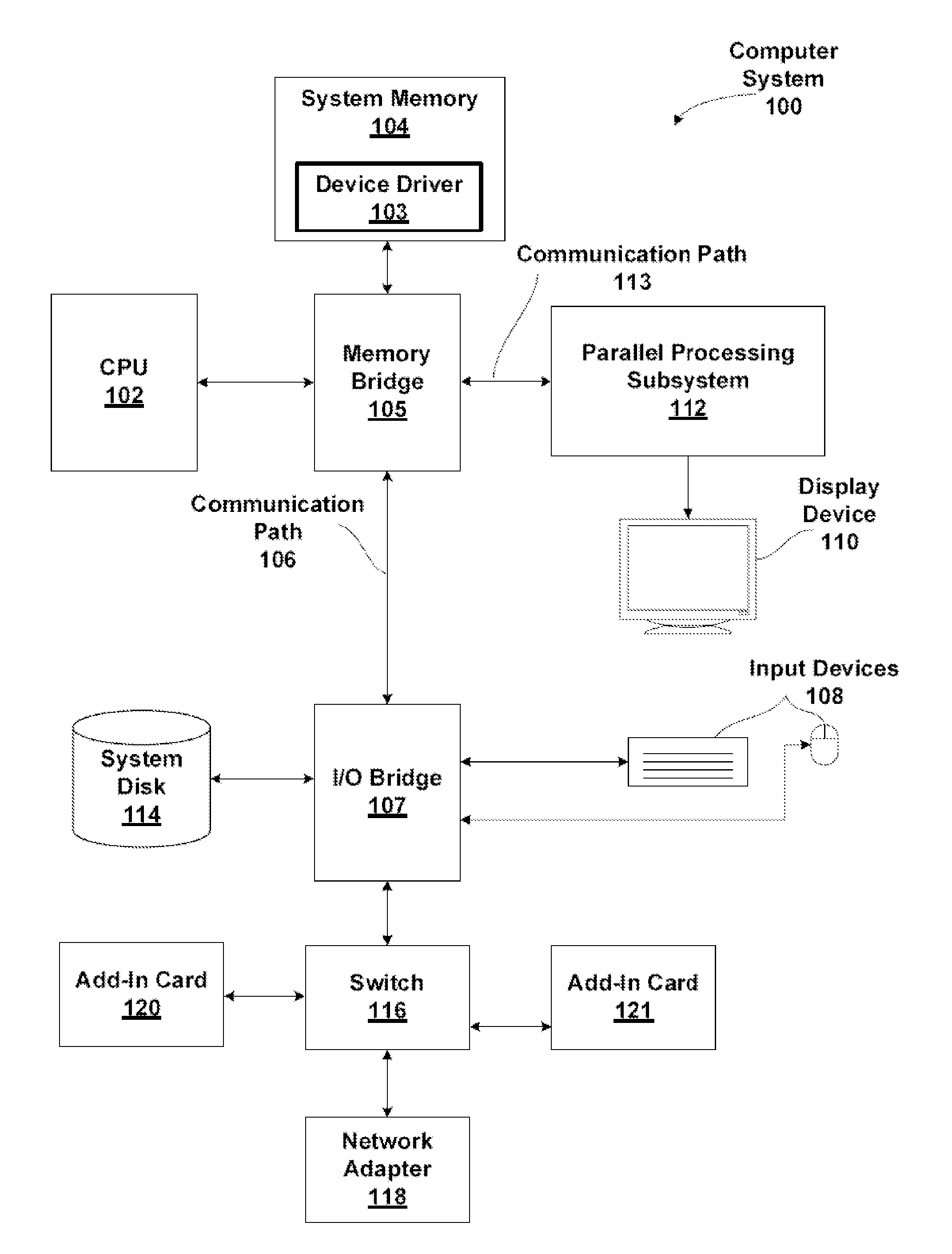

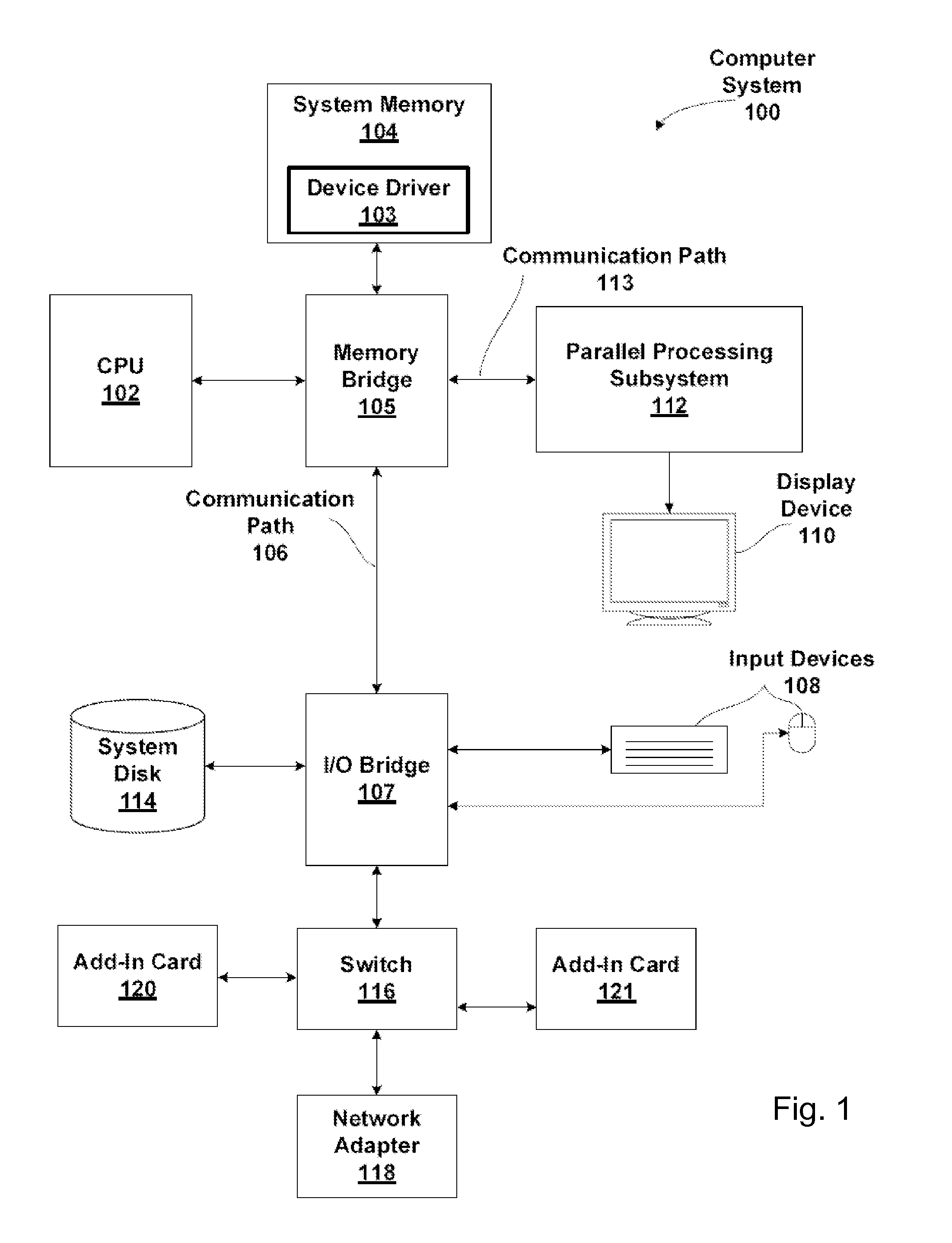

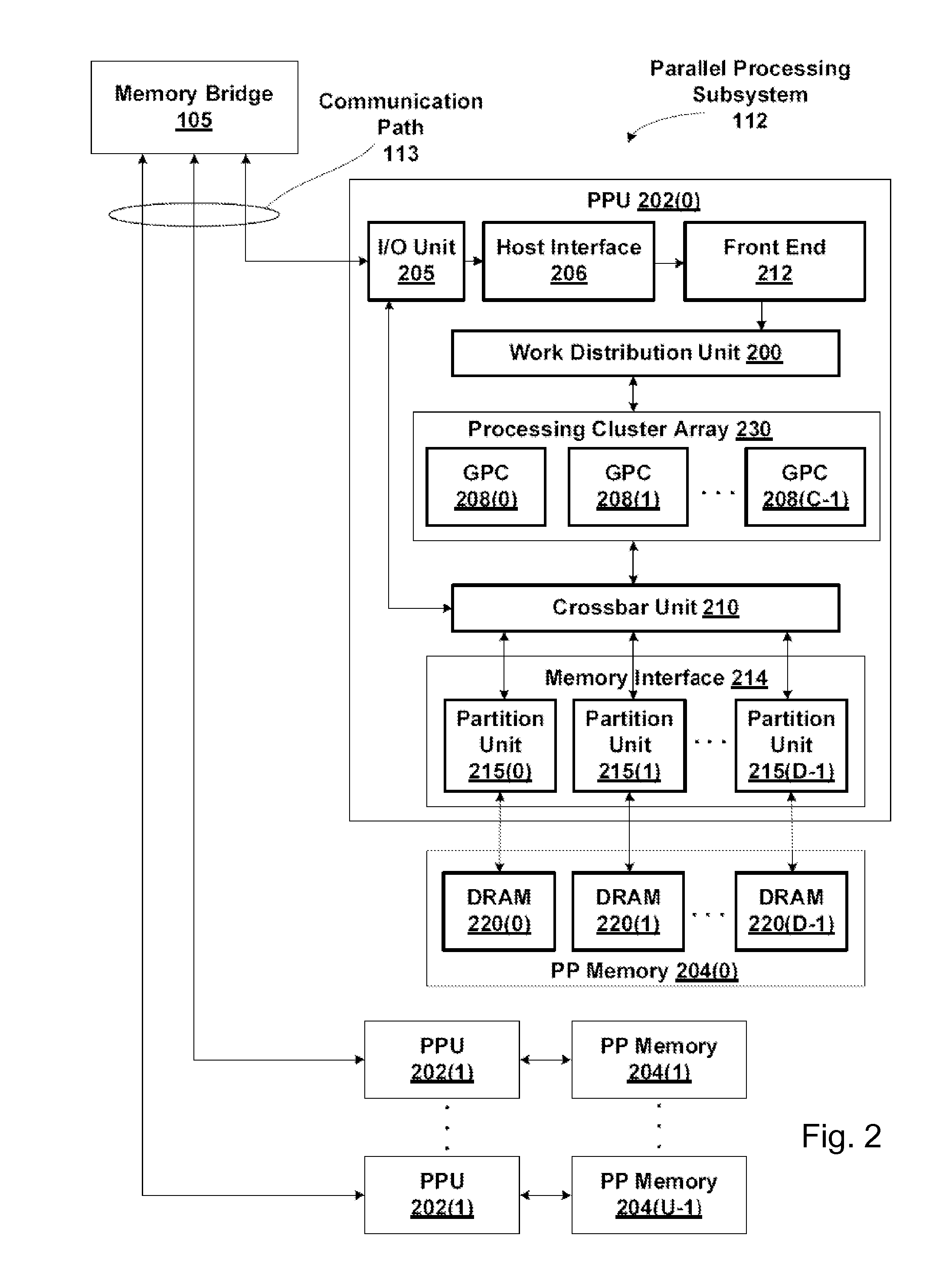

System and method for reduced cache mode

Status: Inactive | Publication: US20140136793A1 | Tags: Avoid small, Cache utilization is reduced, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Graphics, Computer memory

A system and method are described for dynamically changing the size of a computer memory, such as the level 2 cache of a graphics processing unit. In an embodiment, a relatively large cache memory can be implemented in a computing system to meet the needs of memory-intensive applications; when cache utilization is low, the cache capacity can be reduced. Powering down that portion of the cache reduces power consumption.

Owner:NVIDIA CORP
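
A sketch of a utilization-driven resizing policy in the spirit of the abstract. The slice count, the halving/doubling steps and both thresholds are hypothetical assumptions, not NVIDIA's mechanism; flushing dirty lines before a slice is powered down is also not modeled.

```python
from dataclasses import dataclass


@dataclass
class L2Cache:
    total_slices: int = 8     # hypothetical number of independently powered slices
    active_slices: int = 8
    low_util: float = 0.25    # hypothetical thresholds
    high_util: float = 0.75

    def adjust(self, utilization: float) -> int:
        """Power slices down when utilization is low and back up when high.

        `utilization` is the fraction of the currently active capacity in use.
        The gap between the two thresholds adds hysteresis so the cache does
        not oscillate between modes.
        """
        if utilization < self.low_util and self.active_slices > 1:
            self.active_slices //= 2  # reduced cache mode: halve capacity
        elif utilization > self.high_util and self.active_slices < self.total_slices:
            self.active_slices = min(self.total_slices, self.active_slices * 2)
        return self.active_slices
```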

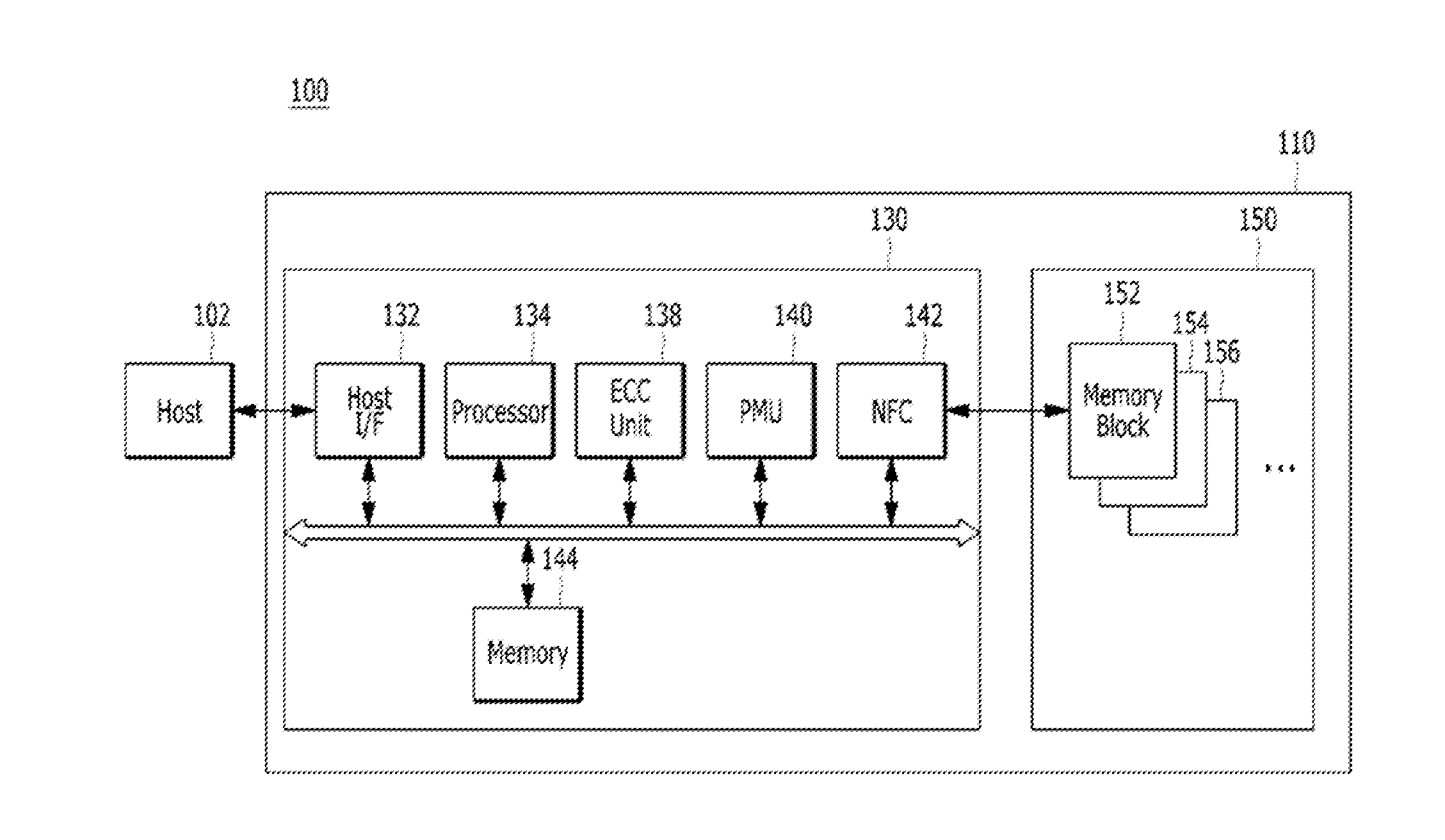

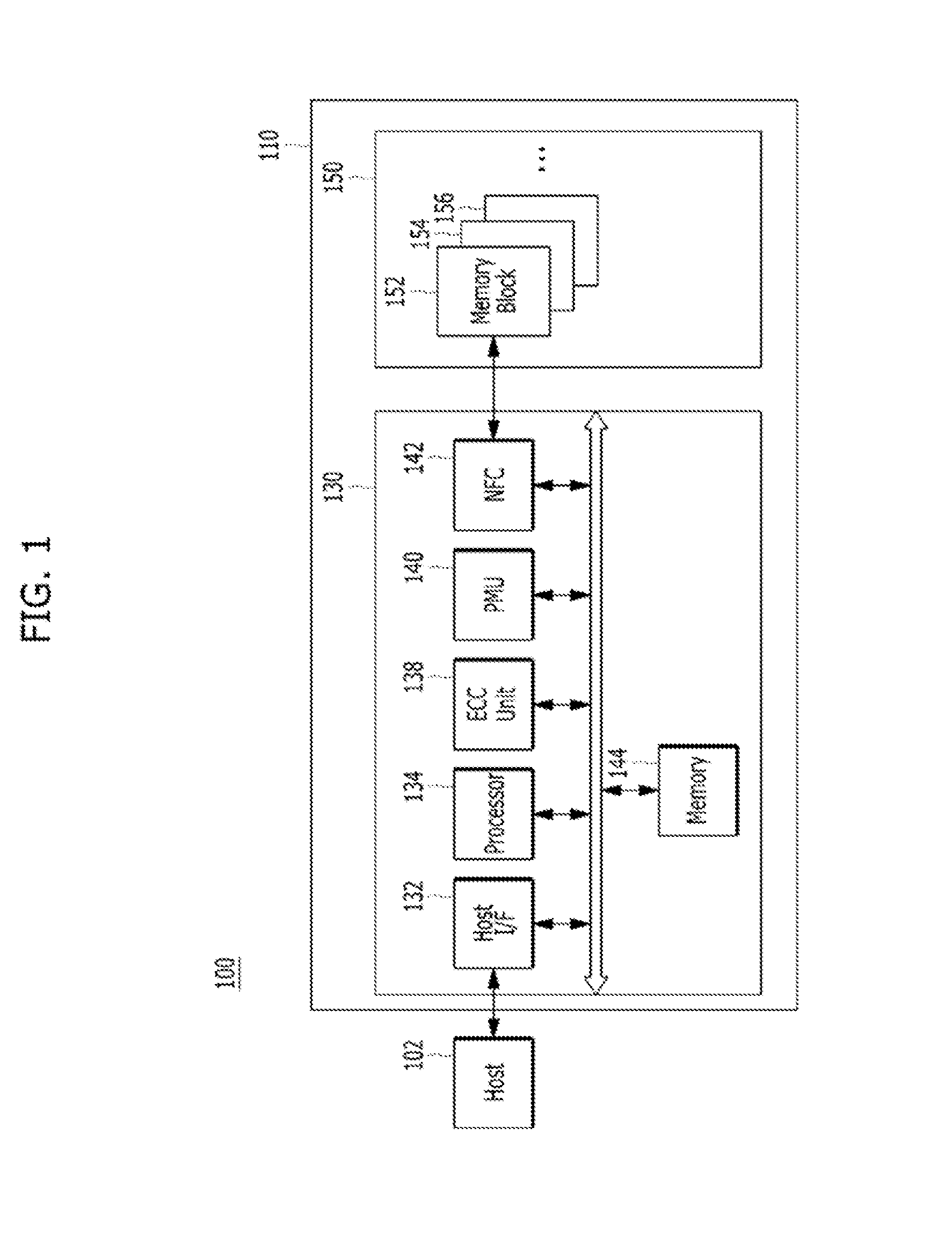

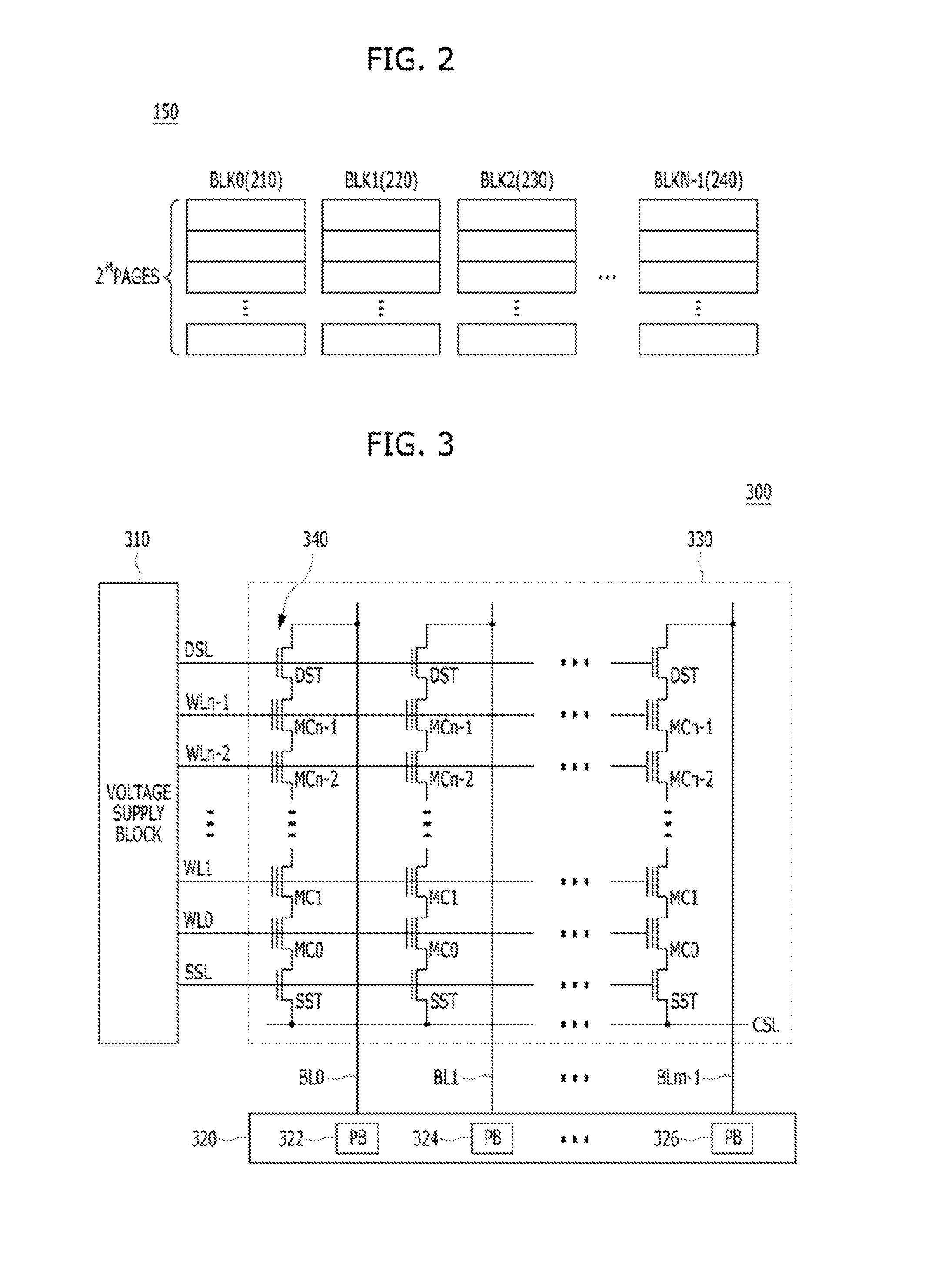

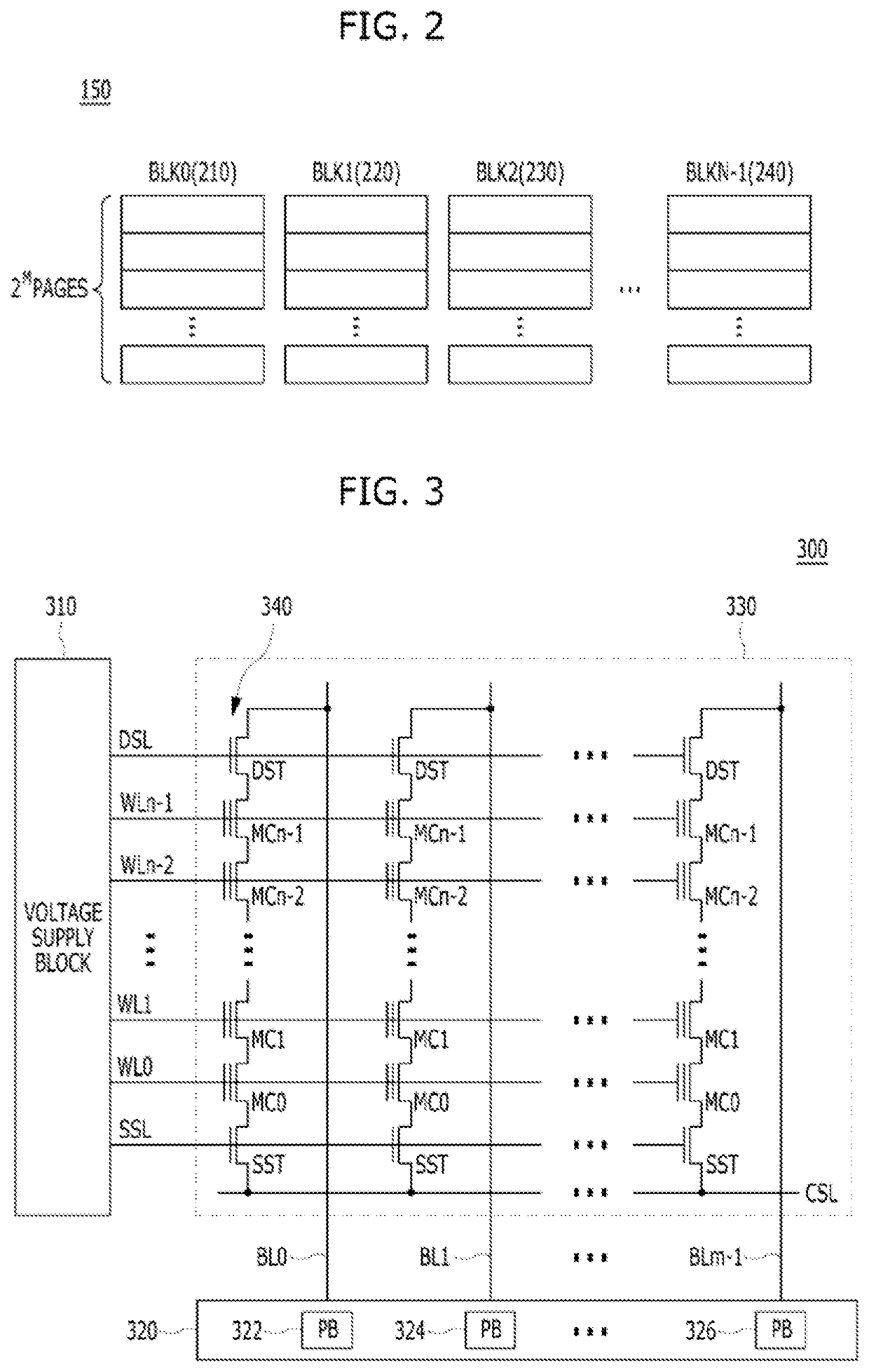

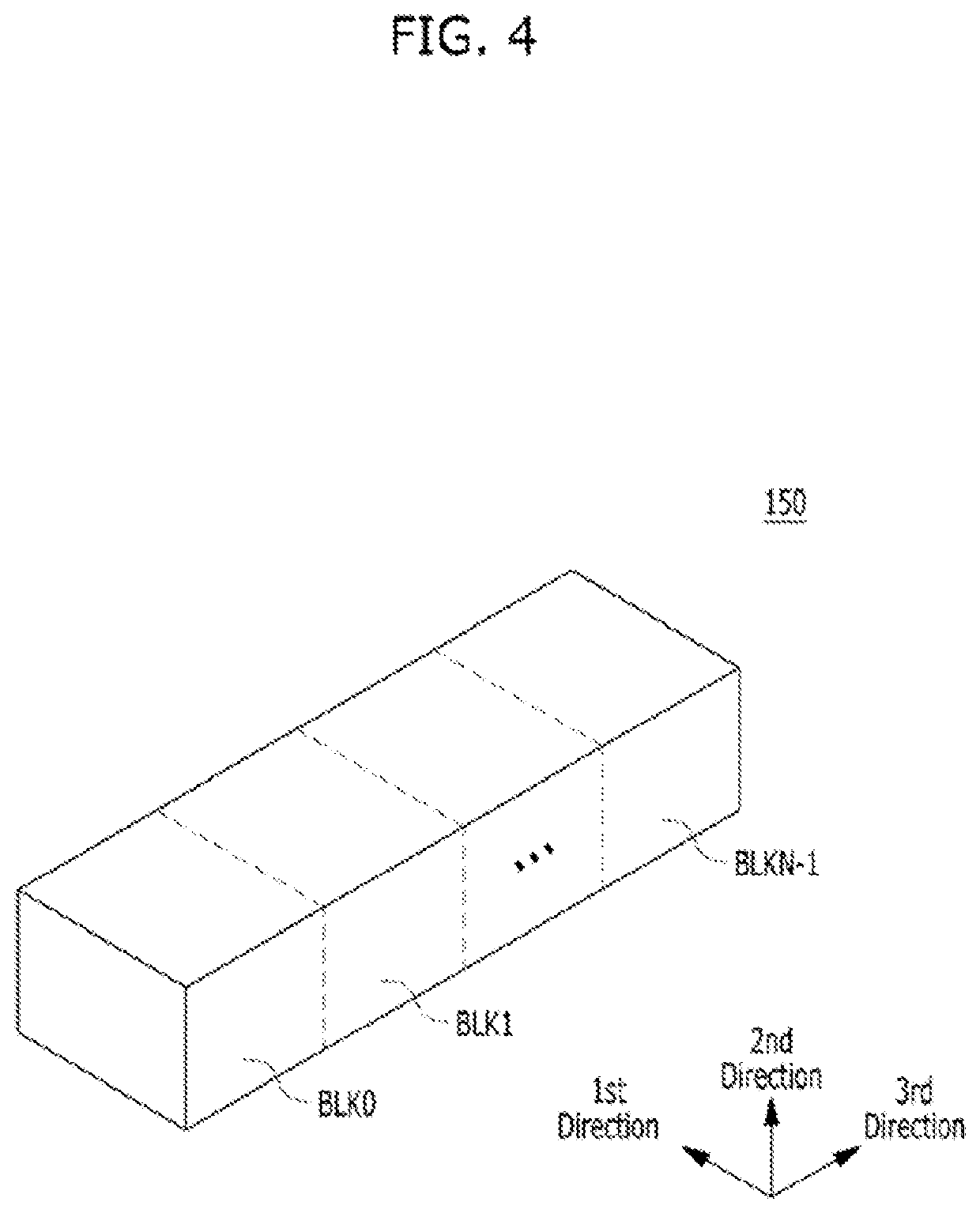

Memory system and operation method thereof

Status: Active | Publication: US20160224466A1 | Tags: Reduce cache capacity, Efficient management, Memory architecture accessing/allocation, Error detection/correction, Parallel computing, Memory systems

A memory system may include a first memory device including a first input/output buffer, a second memory device including a second input/output buffer, and a cache memory suitable for selectively and temporarily storing first and second data to be programmed into the first and second memory devices, respectively. The first data is programmed into the first memory device during a first program section and is stored in the cache memory only during a first monopoly section of that program section. The second data is programmed into the second memory device during a second program section and is stored in the cache memory only during a second monopoly section of that program section. The first and second monopoly sections are set not to overlap each other.

Owner:SK HYNIX INC
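
The non-overlap constraint on monopoly sections can be sketched as a simple greedy scheduler. The interval representation, the fixed hold time, the processing order and the name `schedule_monopoly` are hypothetical assumptions; the abstract only requires that the monopoly sections do not overlap.

```python
from typing import List, Tuple

Interval = Tuple[int, int]  # half-open [start, end) in arbitrary time units


def schedule_monopoly(program_sections: List[Interval], hold_time: int) -> List[Interval]:
    """Place one cache monopoly section inside each device's program section.

    A device may hold its data in the shared cache only during its monopoly
    section, and sections are placed back to back so they never overlap;
    this lets one small cache serve several memory devices in turn.
    """
    windows: List[Interval] = []
    cache_free_at = 0
    for start, end in program_sections:
        begin = max(start, cache_free_at)
        if begin + hold_time > end:
            raise ValueError("no non-overlapping monopoly section fits")
        windows.append((begin, begin + hold_time))
        cache_free_at = begin + hold_time
    return windows
```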

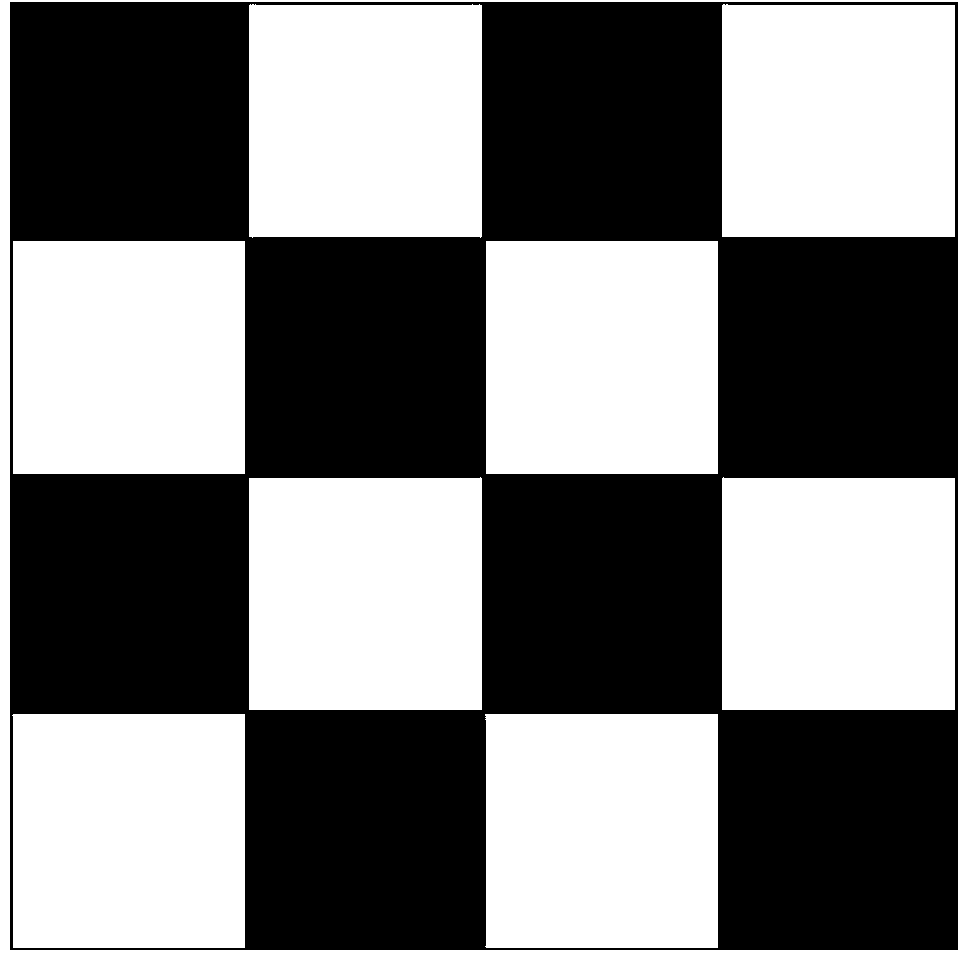

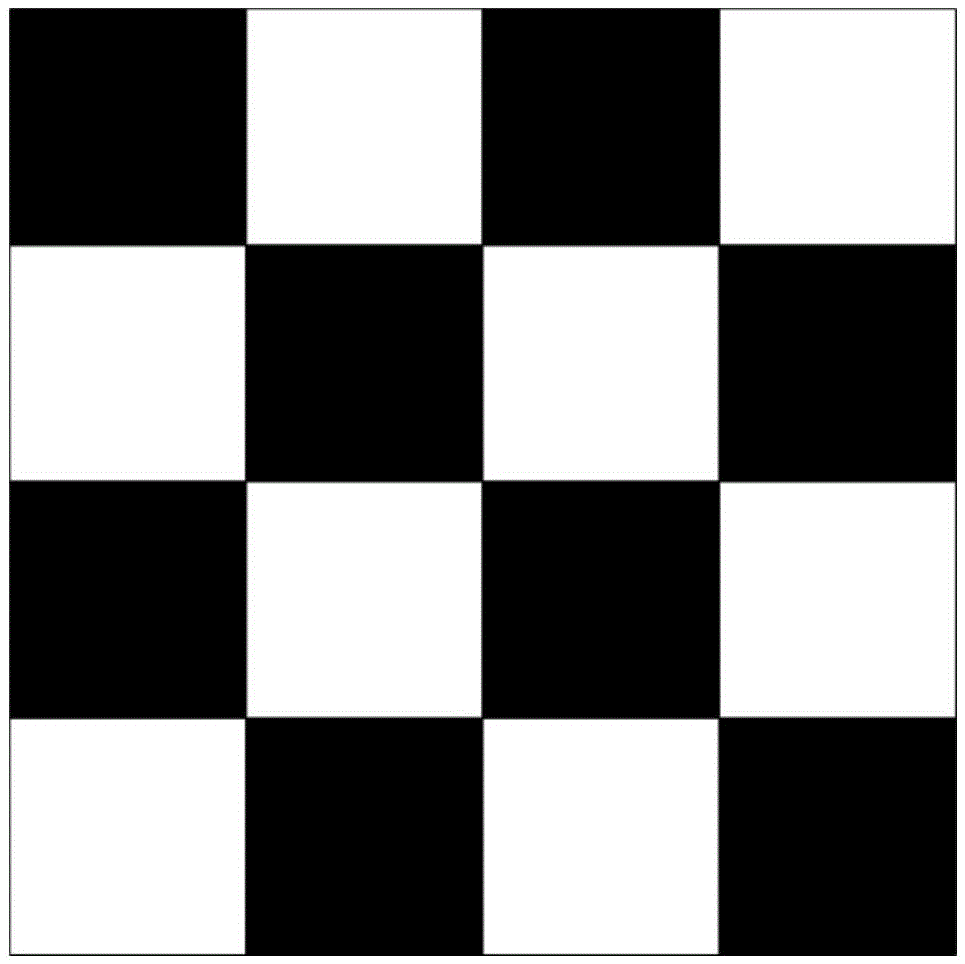

Any-order checker board image assembly generating method based on FPGA

Status: Active | Publication: CN104143303A | Tags: Low cost, Reduce cache capacity, Static indicating devices, Signal generator, Image signal

The invention discloses an FPGA-based method for generating an any-order checkerboard image assembly, comprising the steps: (1) sending checkerboard image vertex coordinate information, color value information and order information to an image signal generator; (2) dividing the horizontal coordinate points within the checkerboard image range into a plurality of consecutive horizontal blocks, tagging odd-numbered horizontal blocks with a first tag value and even-numbered horizontal blocks with a second tag value, and likewise dividing the vertical coordinate points into a plurality of consecutive vertical blocks, tagging odd-numbered vertical blocks with the first tag value and even-numbered vertical blocks with the second tag value; (3) scanning each coordinate point within the checkerboard image range: if the horizontal tag value and the vertical tag value of the scanned point are identical, its color value is set to a first color value; otherwise it is set to a second color value. The method uses an FPGA to generate an any-order checkerboard image assembly, a complex logic image.

Owner:WUHAN JINGCE ELECTRONICS GRP CO LTD
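
Steps (2) and (3) can be sketched as below. Only two small tag tables (one entry per column and per row) are needed instead of a full frame buffer. The interpretation of "order" as the number of blocks per side, the equal-width blocks and the names `block_tags` and `checkerboard` are hypothetical assumptions.

```python
from typing import List


def block_tags(length: int, blocks: int) -> List[int]:
    """Tag each coordinate with the parity of the block it falls in.

    [0, length) is split into `blocks` consecutive blocks; odd-numbered
    blocks (1st, 3rd, ...) get tag 0 and even-numbered blocks get tag 1.
    """
    return [(x * blocks // length) % 2 for x in range(length)]


def checkerboard(width: int, height: int, order: int,
                 color1: int = 1, color2: int = 0) -> List[List[int]]:
    h_tags = block_tags(width, order)   # step (2), horizontal
    v_tags = block_tags(height, order)  # step (2), vertical
    # Step (3): equal tags -> first color, otherwise second color.
    return [[color1 if h == v else color2 for h in h_tags] for v in v_tags]
```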

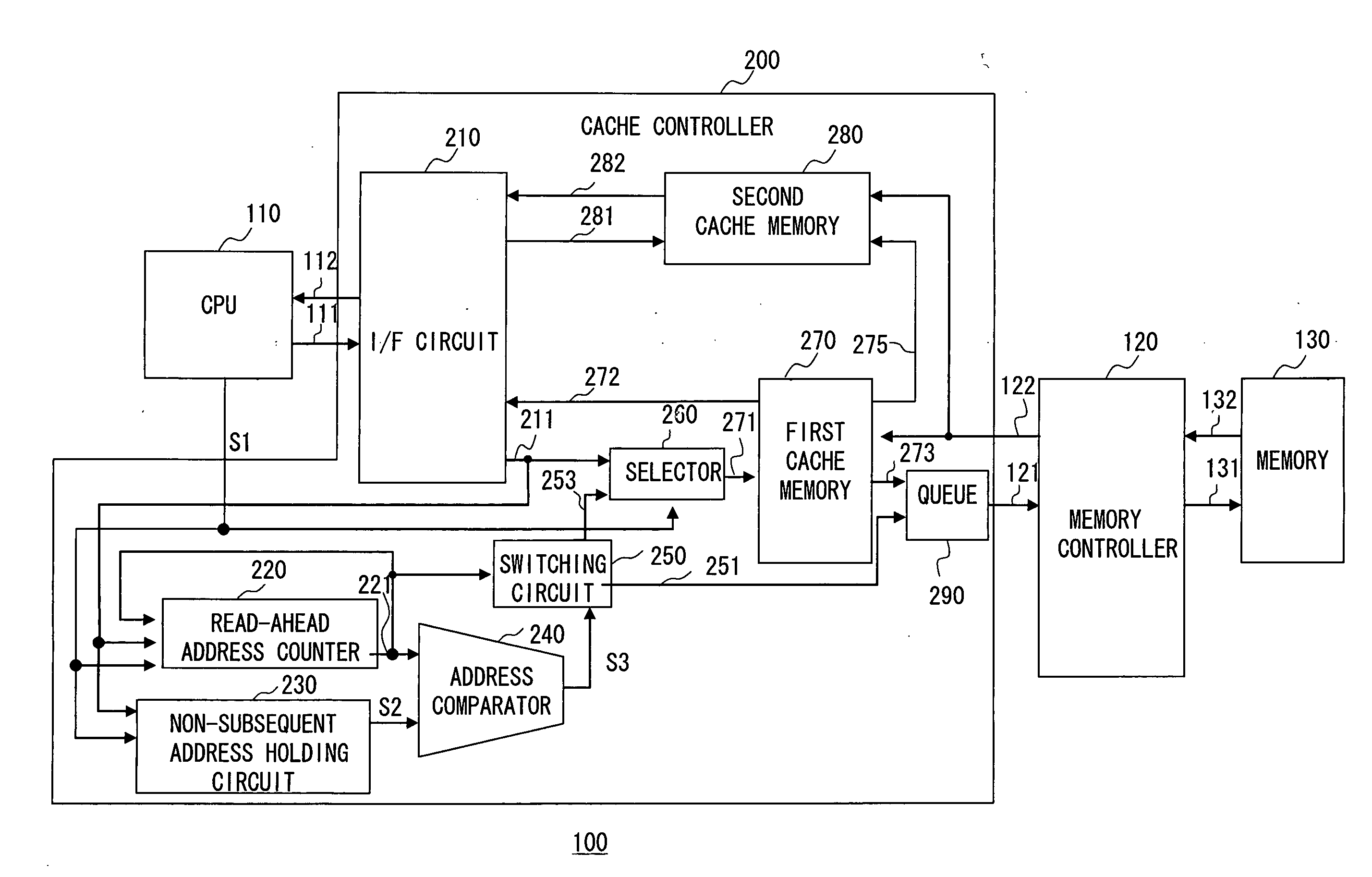

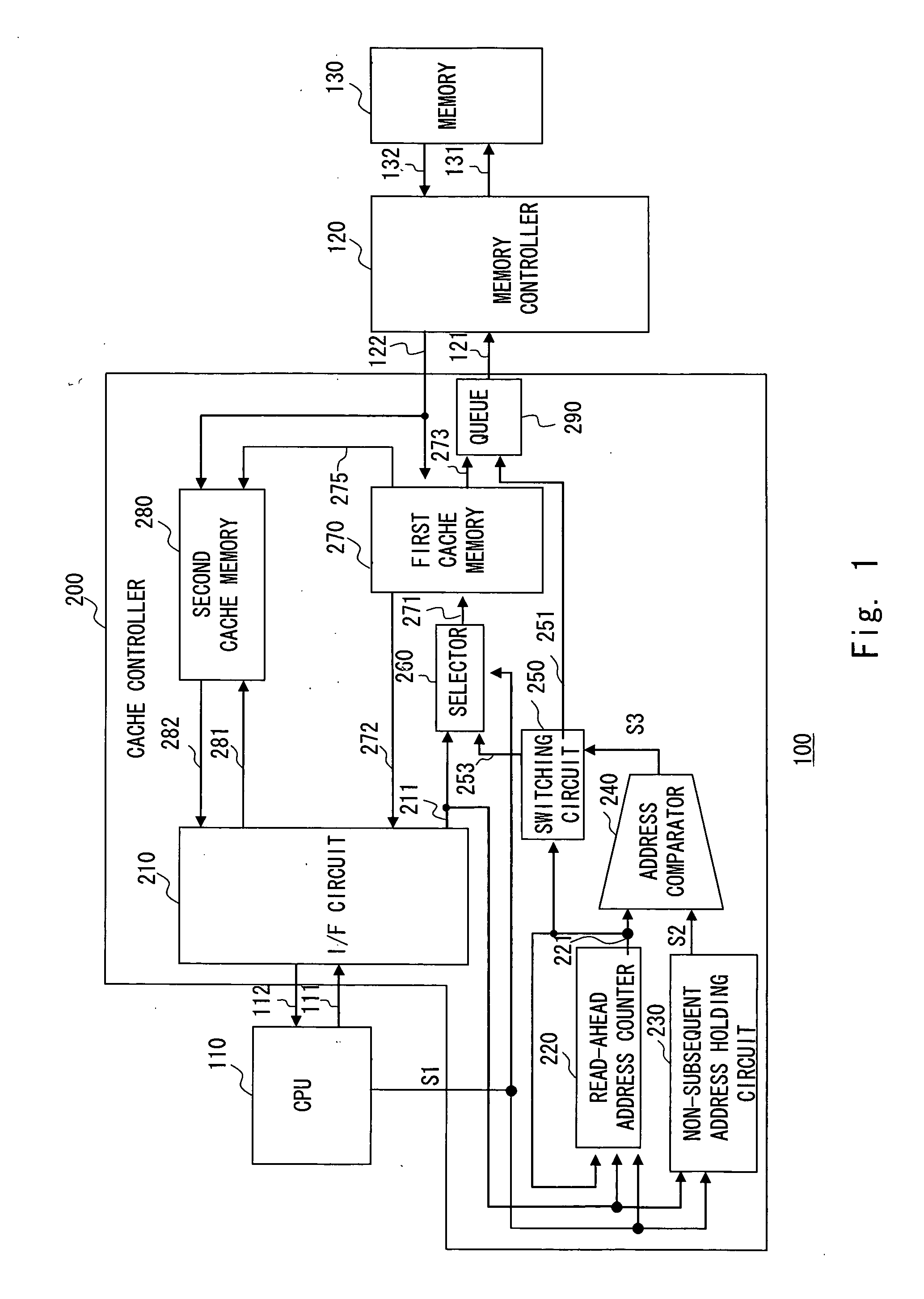

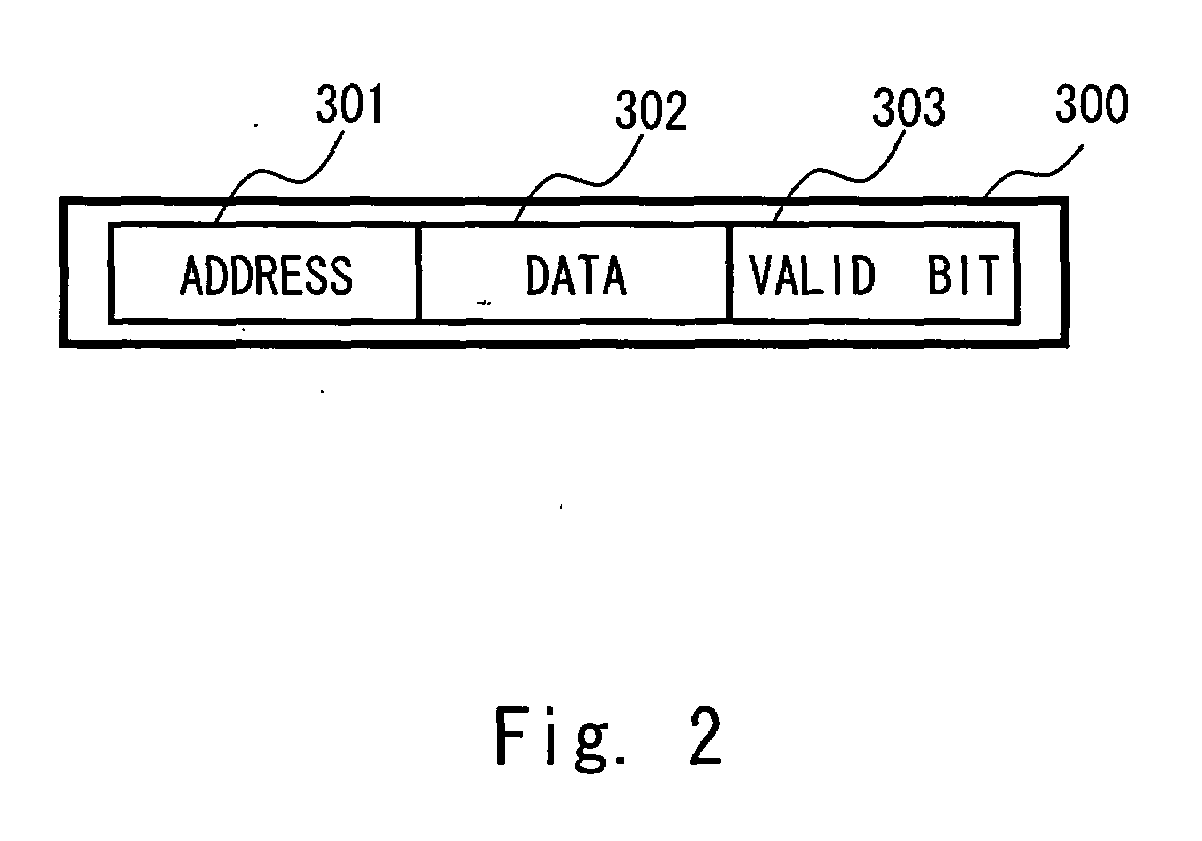

Cache control method, cache device, and microcomputer

Status: Inactive | Publication: US20080250211A1 | Tags: Preventing reduction in CPU performance, Reduce cache capacity, Memory architecture accessing/allocation, Memory systems, Microcomputer, Parallel computing

Owner:RENESAS ELECTRONICS CORP

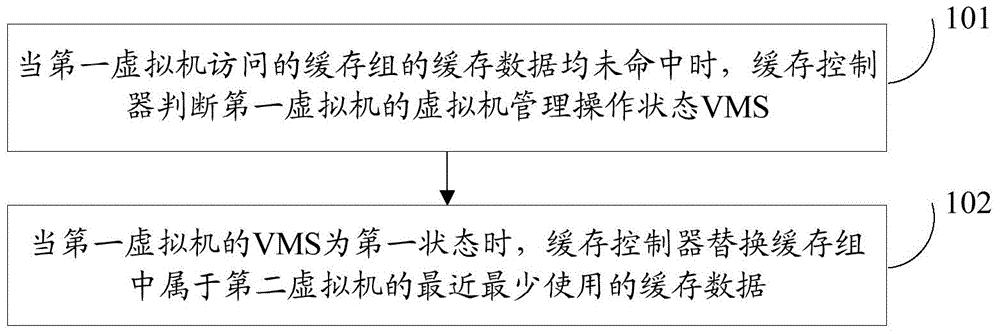

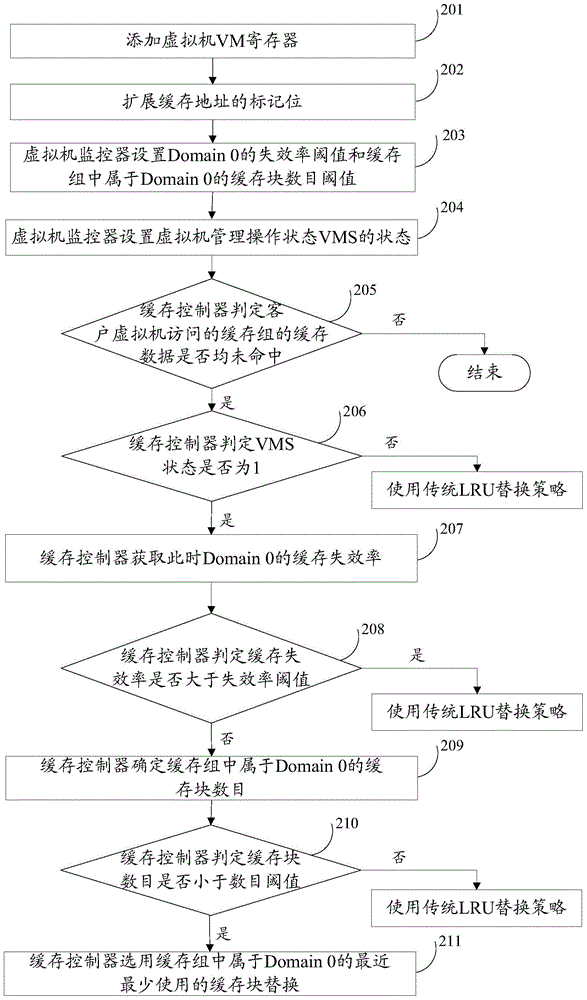

Method and device for dividing caches

Status: Active | Publication: CN104461928A | Tags: Reduce competition, Improve performance, Memory architecture accessing/allocation, Program initiation/switching, Virtualization, Least recently frequently used

An embodiment of the invention discloses a method and device for dividing caches, in the technical field of computers. The method includes: when a first virtual machine misses in a cache group it accesses, determining the virtual machine management operation state (VMS) of the first virtual machine; and, when the VMS of the first virtual machine is a first state, replacing the least recently used cache data in that cache group that belongs to a second virtual machine. The method applies to virtualized environments and can improve virtual machine performance while virtual machines are being started and copied.

Owner:HUAWEI TECH CO LTD +1
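
The replacement rule can be sketched for a single cache set. This assumes the "first state" is a boolean flag supplied by the caller (for example, while the VM is starting up or being copied) and that plain LRU is used otherwise; `CacheSet` and `access` are hypothetical names.

```python
from collections import OrderedDict
from typing import Hashable, Optional


class CacheSet:
    """One cache set shared by virtual machines, kept in LRU order.

    On a miss by `vm` in the special state, the victim is the least recently
    used line owned by a different VM; otherwise plain LRU is used.
    """

    def __init__(self, ways: int) -> None:
        self.ways = ways
        self.lines: OrderedDict = OrderedDict()  # tag -> owner VM, LRU first

    def access(self, tag: Hashable, vm: str, special_state: bool) -> bool:
        if tag in self.lines:
            self.lines.move_to_end(tag)  # hit: becomes most recently used
            return True
        if len(self.lines) >= self.ways:
            victim: Optional[Hashable] = None
            if special_state:
                victim = next((t for t, owner in self.lines.items() if owner != vm), None)
            if victim is None:
                victim = next(iter(self.lines))  # fall back to plain LRU
            del self.lines[victim]
        self.lines[tag] = vm
        return False
```

If `vm1` misses while its own line is the least recently used one, the special state protects that line and evicts `vm2`'s line instead.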

Method for generating an arbitrary triangle filling picture assembly based on FPGA

Status: Active | Publication: CN104143304A | Tags: Increase generation speed, High speed, Static indicating devices, Computer graphics (images), Signal generator

The invention discloses an FPGA-based method for generating an arbitrary triangle filling picture assembly. The method comprises: 1, obtaining the coordinates and color values of the three vertices of the triangle; 2, generating a first RAM and a second RAM inside an image signal generator; 3, initializing the two RAMs; 4, generating the horizontal and vertical coordinates of each pixel on the three edges of the triangle filling picture; 5, obtaining, for each vertical coordinate, the minimum horizontal coordinate among the valid pixels of the triangle filling picture; 6, obtaining, for each vertical coordinate, the maximum horizontal coordinate among the valid pixels of the triangle filling picture; 7, scanning the coordinates within the triangle's circumscribed rectangle, determining whether each pixel lies inside the triangle, and assigning the color values to the pixels inside it. The method uses an FPGA to generate complex logic pictures such as an arbitrary triangle filling picture assembly.

Owner:WUHAN JINGCE ELECTRONICS GRP CO LTD
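
The seven steps map naturally onto a scanline fill. In this sketch the "first RAM" and "second RAM" are assumed to be the per-row minimum and maximum x tables (an interpretation, not confirmed by the abstract), and edges are rasterized with a simple DDA; `fill_triangle` and `_edge_pixels` are hypothetical names. The per-row [min, max] test is valid because a triangle is convex, so each row intersects it in a single span.

```python
from typing import List, Tuple

Point = Tuple[int, int]


def _edge_pixels(p0: Point, p1: Point) -> List[Point]:
    """Integer pixels along an edge (simple DDA, one sample per major-axis step)."""
    (x0, y0), (x1, y1) = p0, p1
    steps = max(abs(x1 - x0), abs(y1 - y0), 1)
    return [(round(x0 + (x1 - x0) * i / steps), round(y0 + (y1 - y0) * i / steps))
            for i in range(steps + 1)]


def fill_triangle(a: Point, b: Point, c: Point, color: int,
                  width: int, height: int) -> List[List[int]]:
    min_x = [width] * height  # assumed "first RAM": leftmost edge x per row
    max_x = [-1] * height     # assumed "second RAM": rightmost edge x per row
    for p, q in ((a, b), (b, c), (c, a)):       # step 4: edge pixels
        for x, y in _edge_pixels(p, q):
            if 0 <= y < height:                 # steps 5 and 6: per-row extremes
                min_x[y] = min(min_x[y], x)
                max_x[y] = max(max_x[y], x)
    frame = [[0] * width for _ in range(height)]
    xs, ys = (a[0], b[0], c[0]), (a[1], b[1], c[1])
    # Step 7: scan only the bounding rectangle.
    for y in range(max(min(ys), 0), min(max(ys), height - 1) + 1):
        for x in range(max(min(xs), 0), min(max(xs), width - 1) + 1):
            if min_x[y] <= x <= max_x[y]:
                frame[y][x] = color
    return frame
```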

Image data processing method, program for image data processing method, recording medium with recorded program for image data processing method and image data processing device

Status: Inactive | Publication: US8400460B2 | Tags: Reduce visit frequency, Reduce cache capacity, Memory addressing/allocation/relocation, Cathode-ray tube indicators, Computer hardware, Reference image

The present invention is applied to a coding device and a decoding device for moving image data compliant with, for example, the MPEG-4 AVC/ITU-T H.264 standard. Address data is issued to specify an area spanning a plurality of read units in both the horizontal and the vertical direction, and reference image data is stored in a cache memory.

Owner:SONY CORP

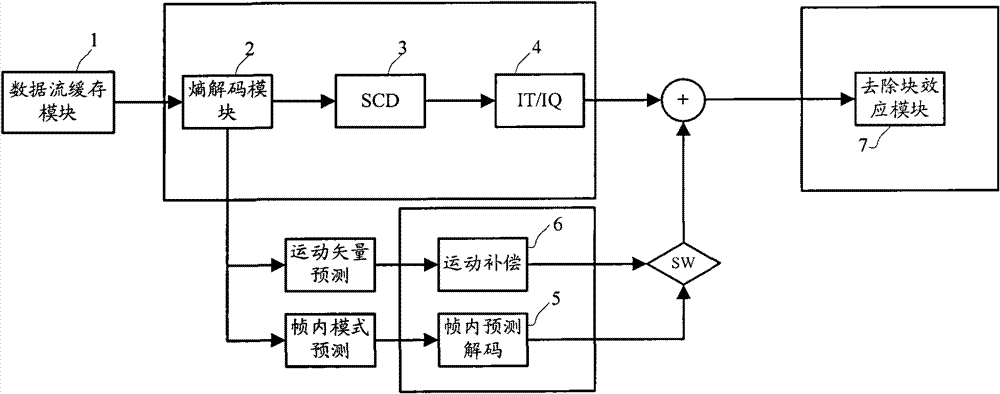

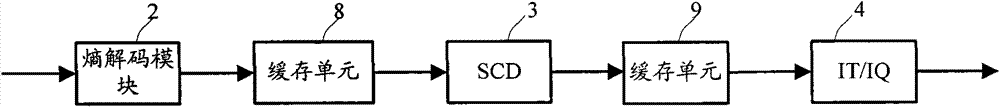

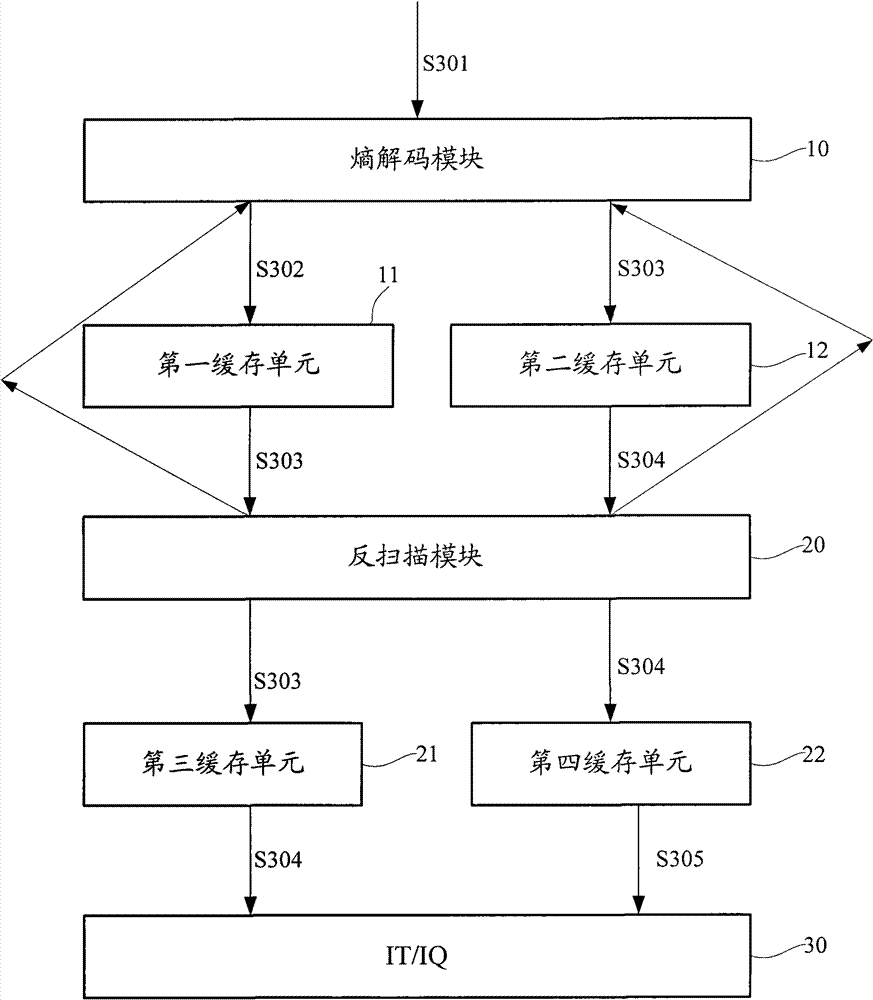

Method and system for video decoding

Status: Inactive | Publication: CN101710994B | Tags: Shorten the time, Improve decoding processing capability, Television systems, Digital video signal modification, Frequency conversion, Parallel computing

The invention provides a method and a system for video decoding. The method comprises: 1, entropy-decoding first primary data and saving the result into a first cache unit to obtain first entropy-decoded data; 2, entropy-decoding second primary data and saving the result into a second cache unit to obtain second entropy-decoded data; while the second primary data is being entropy-decoded, extracting the first entropy-decoded data, reordering the residual coefficients it contains, and saving the reordered result into a third cache unit to obtain first inverse-scan data; and 3, extracting the first inverse-scan data and performing the time-frequency conversion (inverse transform) on it to obtain the first residual value corresponding to the first entropy-decoded data. The method and system reduce the waiting time during data processing found in prior-art video decoding and improve the processing capacity of the video decoding system.

Owner:WUXI ZGMICRO ELECTRONICS CO LTD
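
The overlap between stages can be visualized with a small schedule generator. It assumes each stage takes exactly one time slot per data unit and that the cache units between stages always have room; `pipeline_schedule` and the stage names are hypothetical.

```python
from typing import List, Tuple

STAGES = ("entropy_decode", "inverse_scan", "inverse_transform")


def pipeline_schedule(num_units: int) -> List[List[Tuple[str, int]]]:
    """Return, for each time slot, the (stage, data unit) pairs running in it.

    Stage s works on unit (slot - s): while unit n is being entropy-decoded,
    unit n-1 is being reordered and unit n-2 is being transformed. The cache
    units between stages hold the intermediate results that make this
    overlap possible.
    """
    slots = []
    for slot in range(num_units + len(STAGES) - 1):
        active = [(stage, slot - s) for s, stage in enumerate(STAGES)
                  if 0 <= slot - s < num_units]
        slots.append(active)
    return slots
```

Under these assumptions, four data units finish in 6 slots instead of the 12 needed when the three stages run strictly one after another.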

Energy efficient processor having heterogeneous cache

Status: Active | Publication: US8687453B2 | Tags: Reduce cache capacity, Reduce voltage, Memory architecture accessing/allocation, Energy efficient ICT, Parallel computing, Error correcting

A heterogeneous cache structure organizes memory cells into several ways, each associated with a different minimum voltage below which its memory cells produce substantial state errors. Reduced-voltage operation of the cache may be accompanied by deactivating different ways according to the voltage reduction. The differentiation between the memory cells of the ways may be implemented by devoting different amounts of integrated circuit area to each memory cell, either by changing the size of the transistors comprising the memory cell or by devoting additional transistors to each memory cell in the form of shared error-correcting codes or backup memory cells.

Owner:WISCONSIN ALUMNI RES FOUND

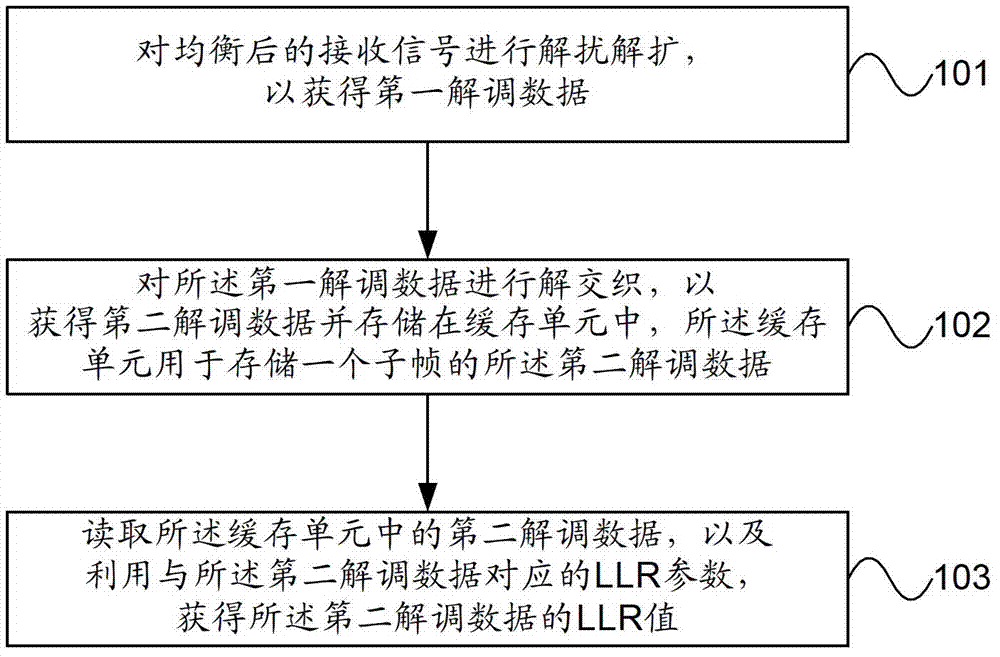

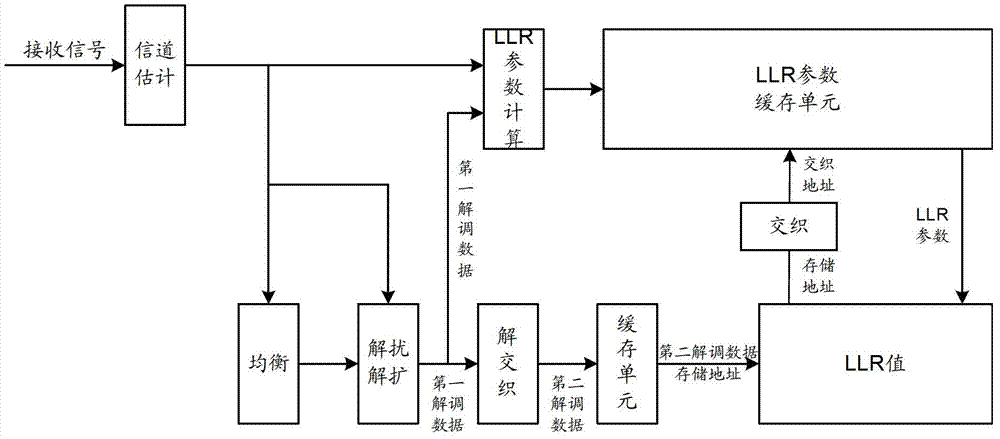

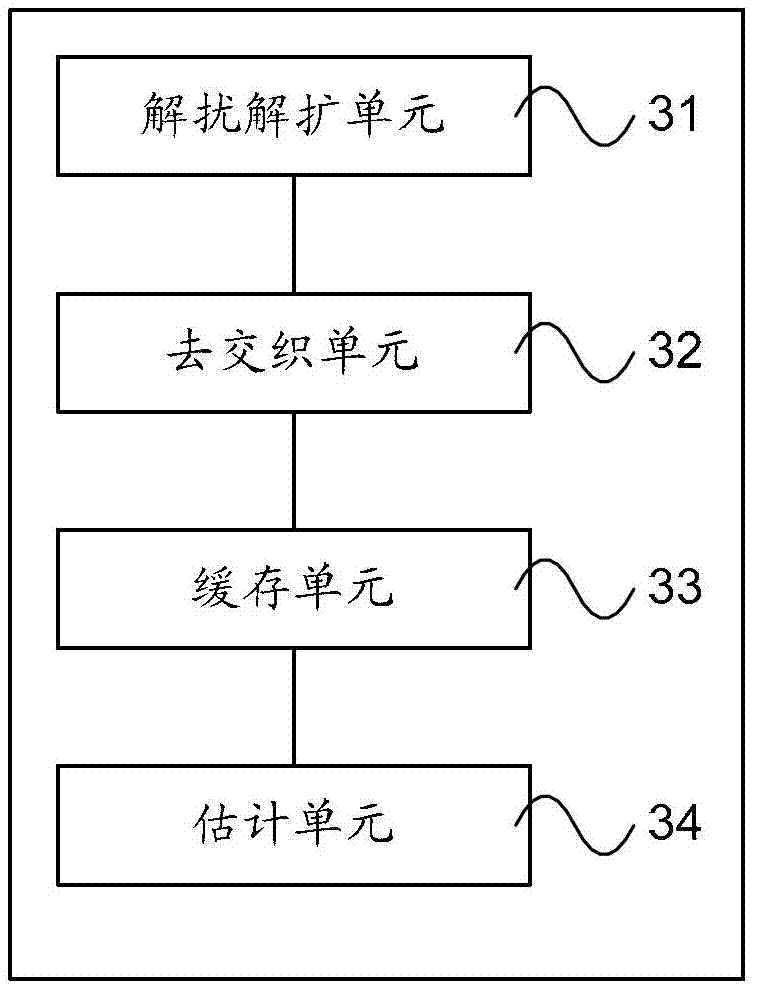

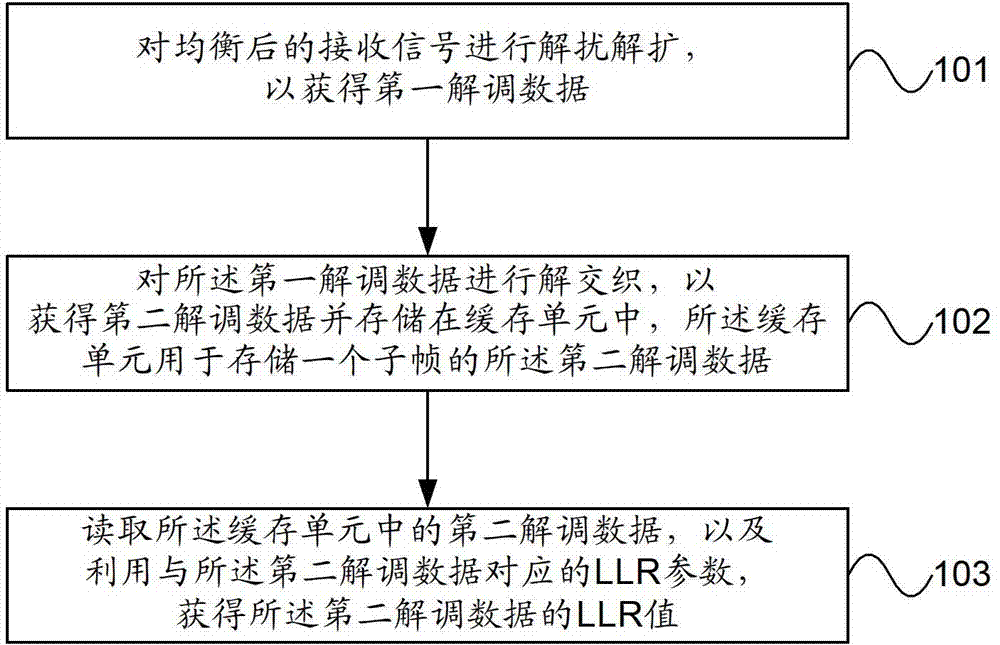

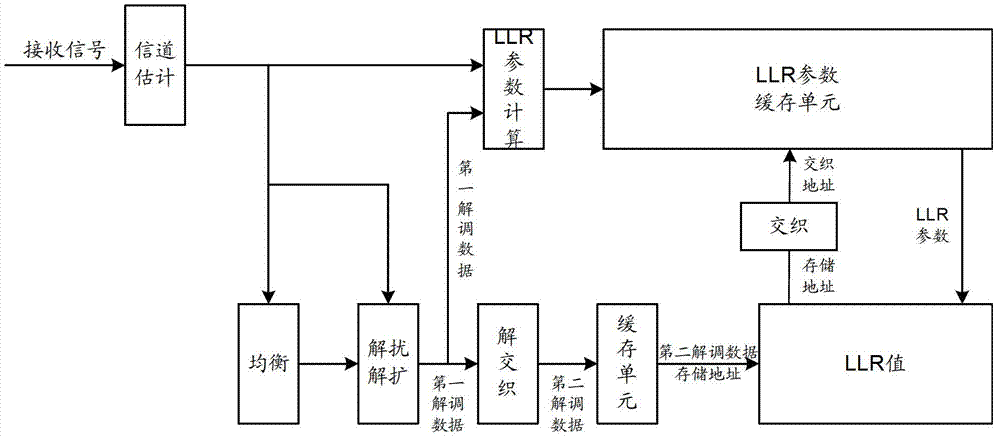

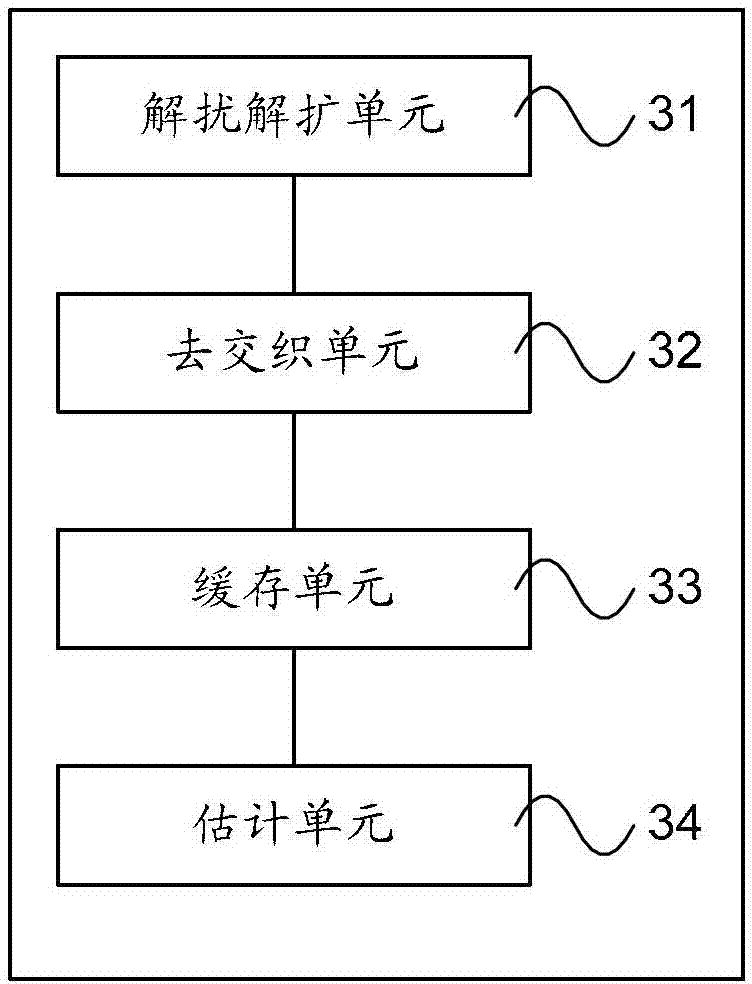

LLR processing method and receiving equipment

The invention provides an LLR (log-likelihood ratio) processing method and receiving equipment. In the embodiment, only one subframe of data needs to be buffered before LLR processing, which saves the buffer memory for one subframe of data and reduces the chip cost of the receiving equipment. The power consumption and processing delay of the receiving equipment can also be reduced.

Owner:HONOR DEVICE CO LTD

Display controller capable of reducing high-speed buffer (cache) usage and its frame adjusting method

Status: Inactive | Publication: CN1956052A | Tags: Reduction adjustment method, Reduce cache size, Cathode-ray tube indicators, External storage, Computer science

A display controller for reducing high-speed buffer (cache) usage operates as follows: a memory controller fetches image data from a source frame and a destination frame to obtain first and second image data, respectively; a second memory stores the second image data; a frame management circuit processes the first image data to generate processed first image data, which is superimposed on the second image data in the second memory to form processed second image data; and, if the processed second image data needs to be reprocessed, the display controller writes it back to external storage.

Owner:QUANTA COMPUTER INC

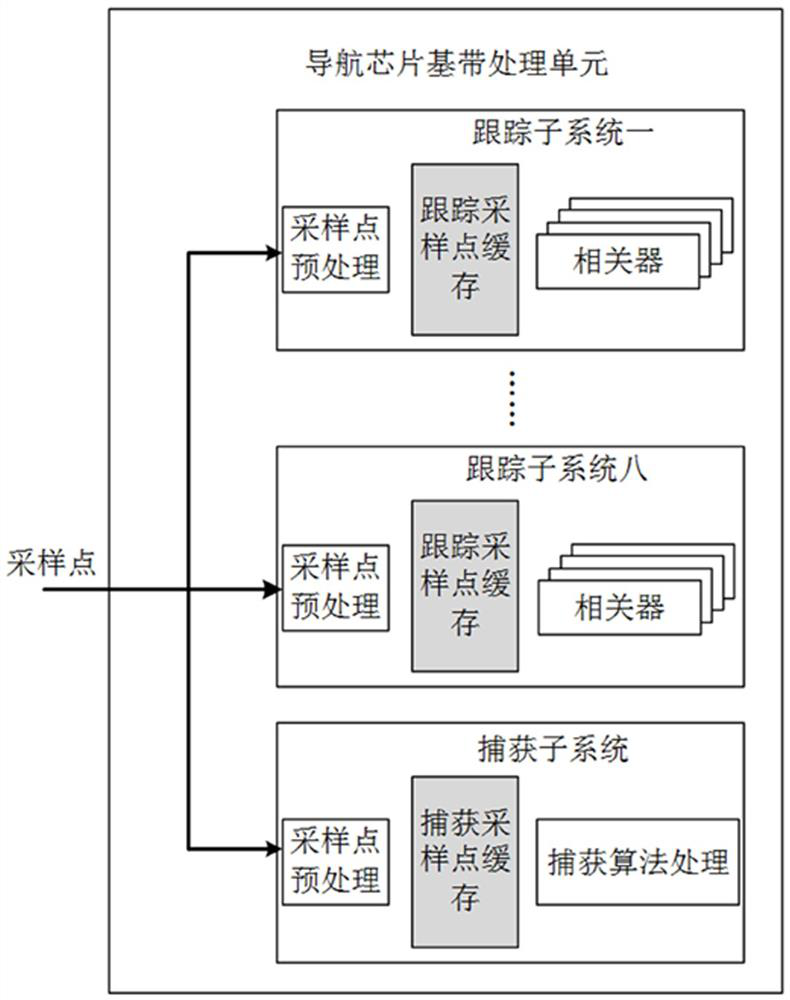

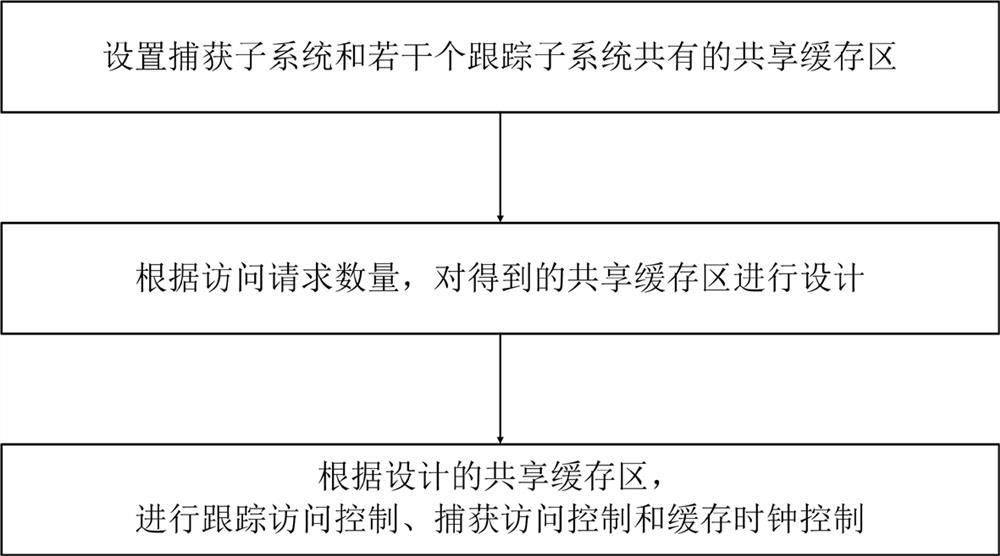

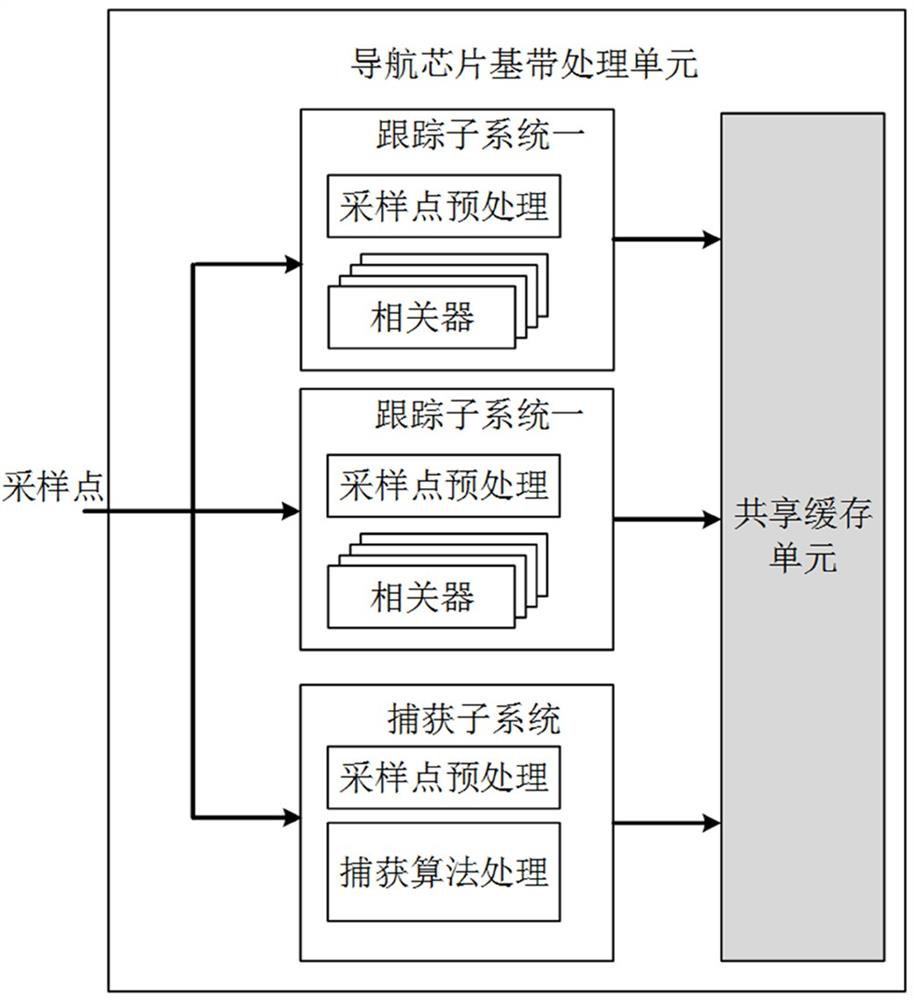

Shared cache method, baseband processing unit and chip thereof

Status: Active | Publication: CN111737191B | Tags: Reduce cache area, Conducive to miniaturization design, Architecture with single central processing unit, Energy efficient computing, Computer architecture, Miniaturization

The invention discloses a shared cache method, which includes: setting a shared cache area shared by a capture subsystem and several tracking subsystems; designing the shared cache area according to the number of access requests; and performing tracking access control, capture access control and cache clock control. The invention also discloses a baseband processing unit using the shared cache method, and a chip including the baseband processing unit. By sharing the cache unit and controlling access to it, the invention improves the utilization of the sampling-point cache and reduces the required cache capacity; it also reduces the cache area of the chip, which benefits miniaturized chip design. In addition, the invention improves the utilization and uniformity of the buffer design, reduces buffer power consumption, and offers high reliability and good practicability.

Owner:长沙金维信息技术有限公司 (Changsha Jinwei Information Technology Co., Ltd.)

Method of Generating Arbitrary Triangular Filled Picture Components Based on FPGA

Status: Active | Publication: CN104143304B | Tags: Increase generation speed, High speed, Static indicating devices, Maximum level, Computer graphics (images)

The invention discloses an FPGA-based method for generating an arbitrary triangle filling picture component, which includes: 1, obtaining the coordinates and color values of the 3 vertices of the triangle; 2, generating a first block of RAM and a second block of RAM inside the image signal generator; 3, initializing the 2 blocks of RAM; 4, generating the horizontal and vertical coordinates of each pixel on the 3 sides of the triangle filling picture; 5, obtaining the minimum horizontal coordinate corresponding to each vertical coordinate among the valid pixels of the triangle filling picture; 6, obtaining the maximum horizontal coordinate corresponding to each vertical coordinate among the valid pixels of the triangle filling picture; 7, scanning each coordinate point within the rectangle circumscribing the triangle to determine whether each pixel lies inside the triangle, and assigning color values to the pixels inside it. The invention can use an FPGA to generate complex logic pictures such as arbitrary triangle filling picture components.

Owner:WUHAN JINGCE ELECTRONICS GRP CO LTD

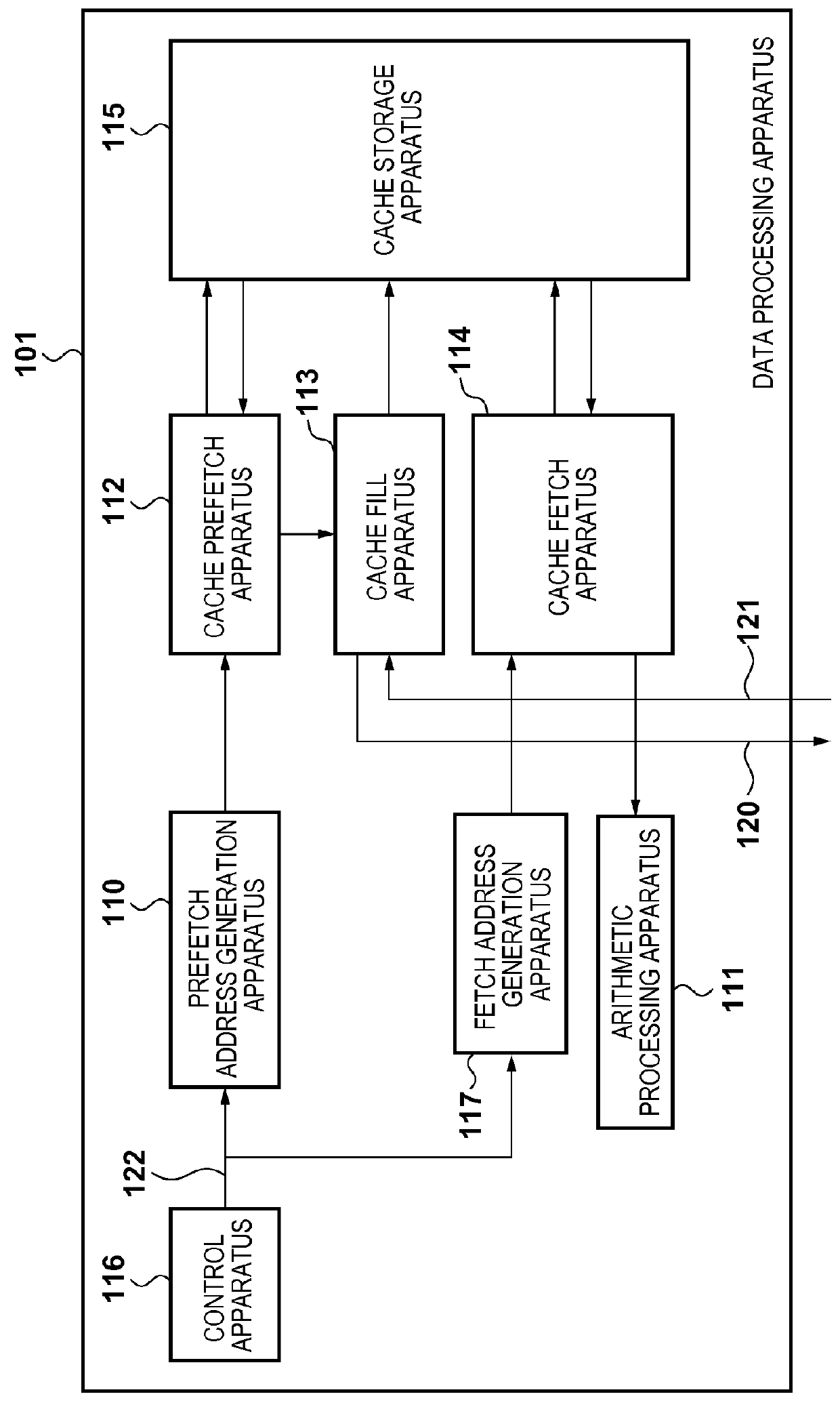

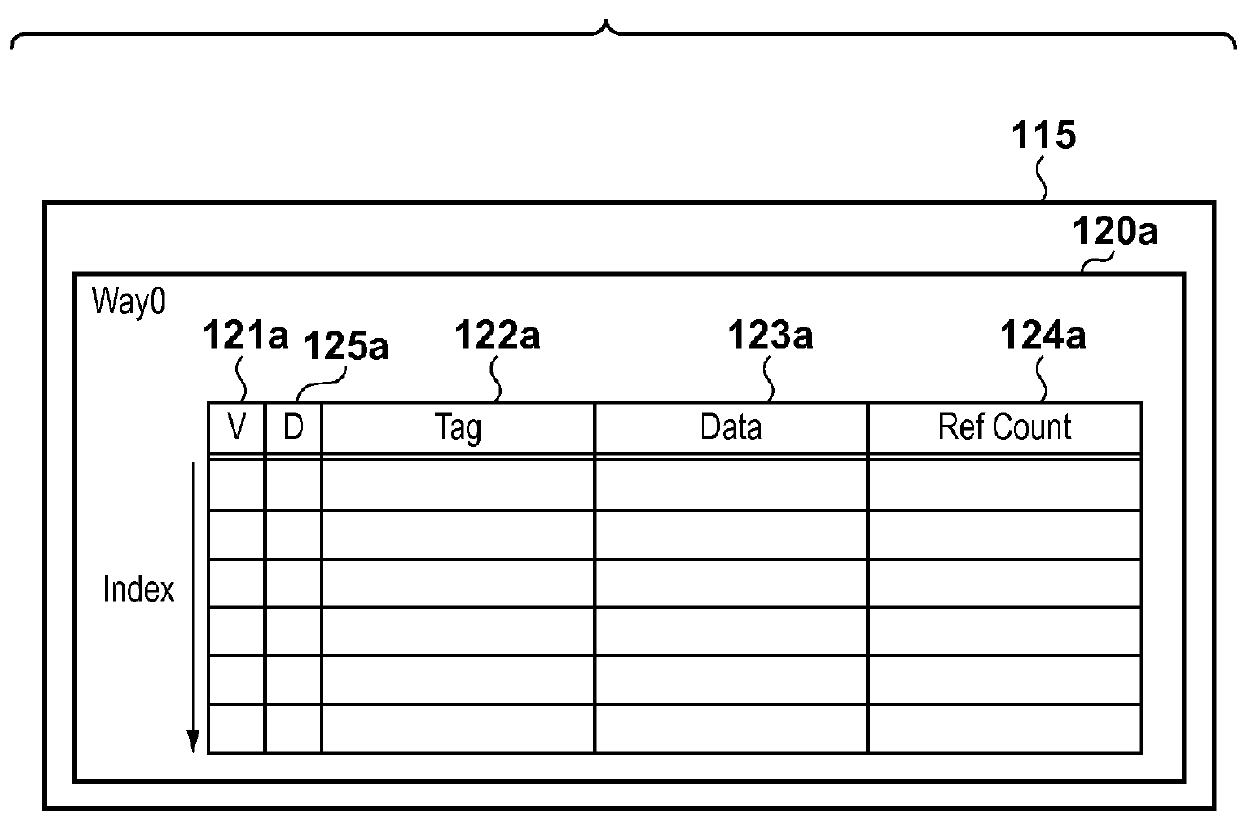

Information processing apparatus, method of controlling the same and computer-readable storage medium

Status: Active | Publication: US9361235B2 | Tags: Reduce cache capacity, Easy to use, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Information processing, Database

Owner:CANON KK

Generation Method of Arbitrary Level Checkerboard Screen Components Based on FPGA

Status: Active | Publication: CN104143303B | Tags: Low cost, Reduce cache capacity, Static indicating devices, Signal generator, Checkerboard

The invention discloses an FPGA-based method for generating a checkerboard picture component of any order. The horizontal coordinate points within the range of the checkerboard picture are divided into multiple consecutive horizontal blocks; odd-numbered horizontal blocks are marked with a first mark value and even-numbered horizontal blocks with a second mark value. The vertical coordinate points within the range of the checkerboard picture are divided into multiple consecutive vertical blocks; odd-numbered vertical blocks are marked with the first mark value and even-numbered vertical blocks with the second mark value. Each coordinate point within the range of the checkerboard picture is then scanned: if the horizontal mark value of the scanned point equals its vertical mark value, the point's color value is set to a first color value; otherwise it is set to a second color value. The invention can use an FPGA to generate complex logic pictures such as checkerboard picture components of any order.

Owner:WUHAN JINGCE ELECTRONICS GRP CO LTD

Display controller capable of reducing high-speed buffer (cache) usage and its frame adjusting method

Status: Inactive | Publication: CN100447858C | Tags: Reduction adjustment method, Reduce cache size, Cathode-ray tube indicators, External storage, Data source

A display controller capable of reducing cache usage and a frame adjustment method thereof are provided. The display controller includes a memory controller, a first memory, a second memory and a frame management circuit. The memory controller reads the image data of the source frame to obtain first image data, and reads the image data of the destination frame to obtain second image data. The first memory stores the first image data, and the second memory stores the second image data. The frame management circuit processes the first image data to generate processed first image data, which is superimposed on the second image data in the second memory to obtain processed second image data. If the processed second image data needs to be reprocessed, the display controller writes it back to the external memory.

Owner:QUANTA COMPUTER INC

LLR processing method and receiving equipment

The invention provides an LLR (log-likelihood ratio) processing method and receiving equipment. In the embodiment, only one subframe of data needs to be buffered before LLR processing, which saves the buffer memory for one subframe of data and reduces the chip cost of the receiving equipment. The power consumption and processing delay of the receiving equipment can also be reduced.

Owner:HONOR DEVICE CO LTD

Memory system and operation method thereof

Status: Active | Publication: US11068335B2 | Tags: Efficient management, Reduce cache capacity, Memory architecture accessing/allocation, Unauthorized memory use protection, Program segment, Computer architecture

A memory system may include a first memory device including a first input/output buffer, a second memory device including a second input/output buffer, and a cache memory suitable for selectively and temporarily storing first and second data to be programmed into the first and second memory devices, respectively. The first data is programmed into the first memory device during a first program section and is stored in the cache memory only during a first monopoly section of that program section. The second data is programmed into the second memory device during a second program section and is stored in the cache memory only during a second monopoly section of that program section. The first and second monopoly sections are set not to overlap each other.

Owner:SK HYNIX INC

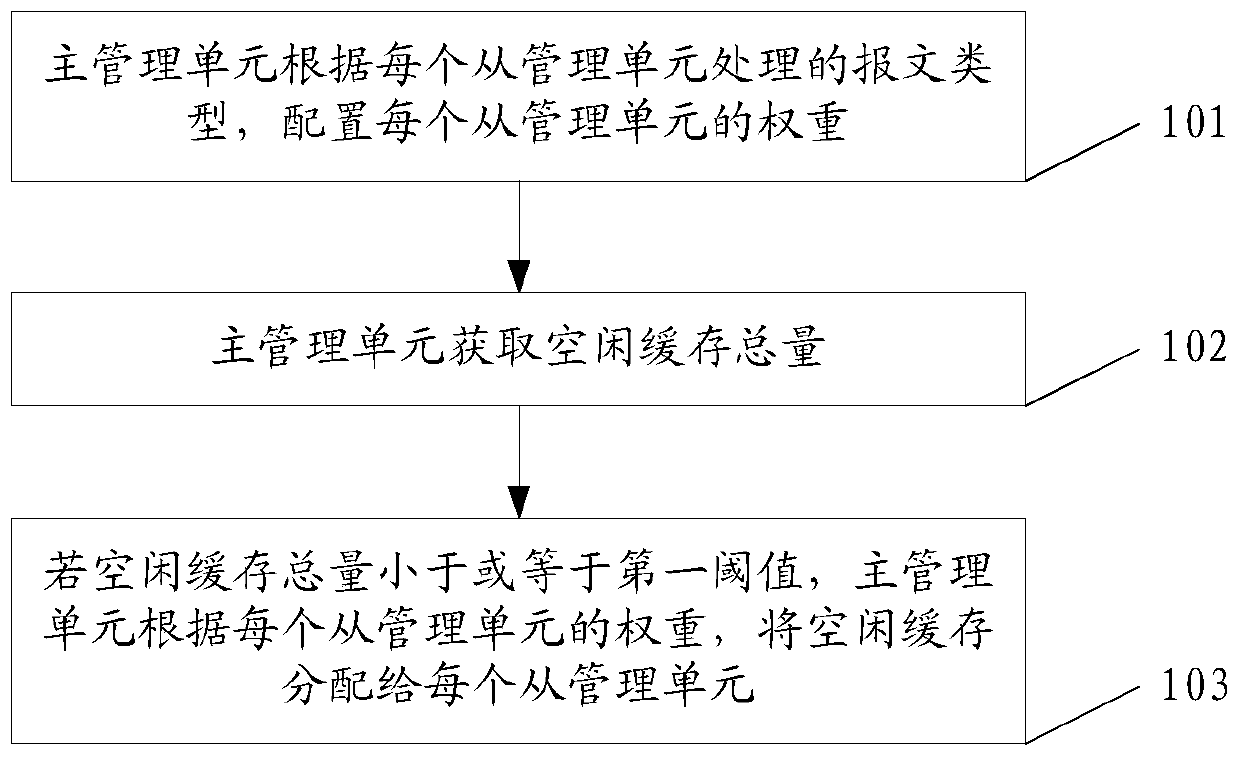

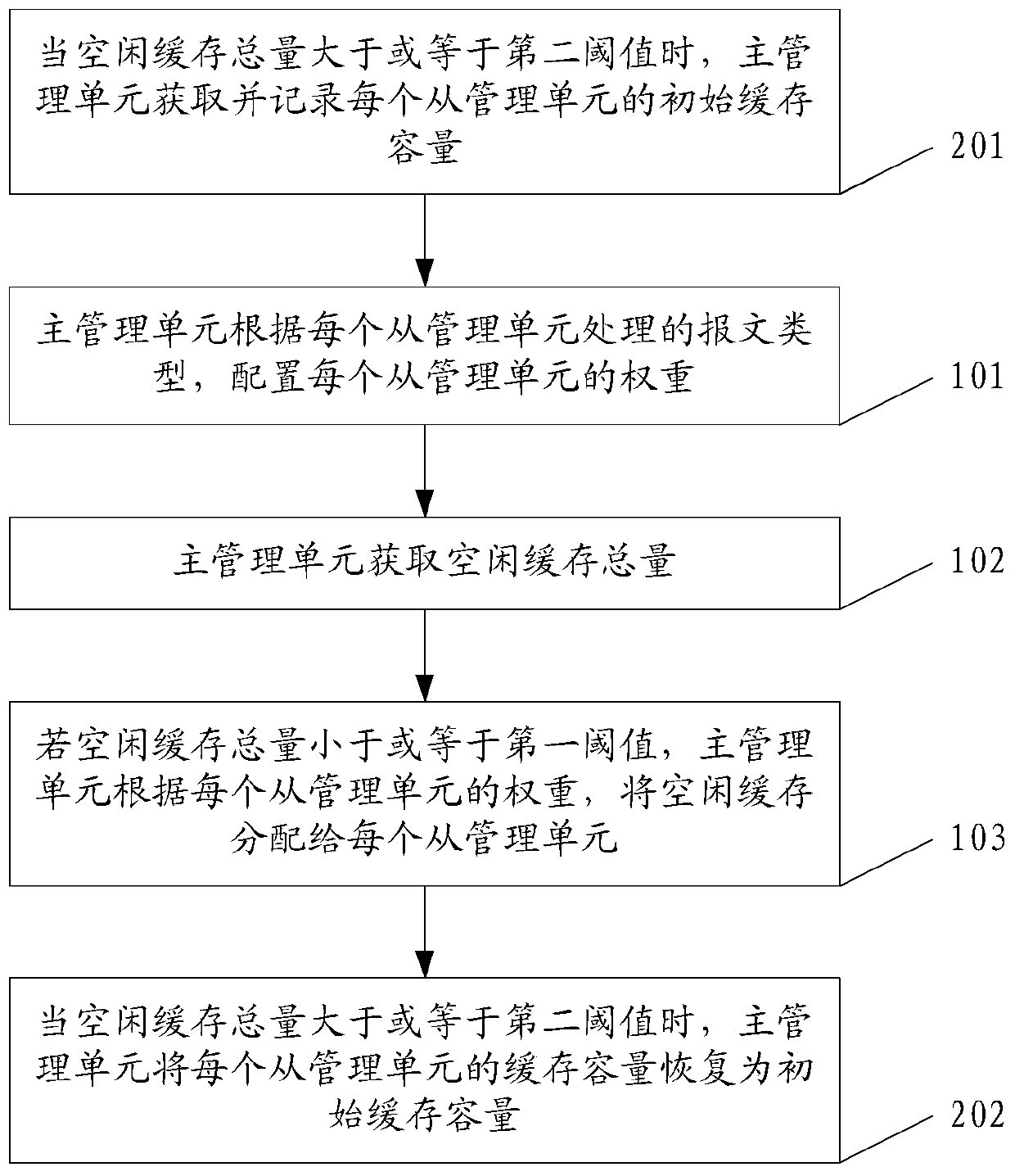

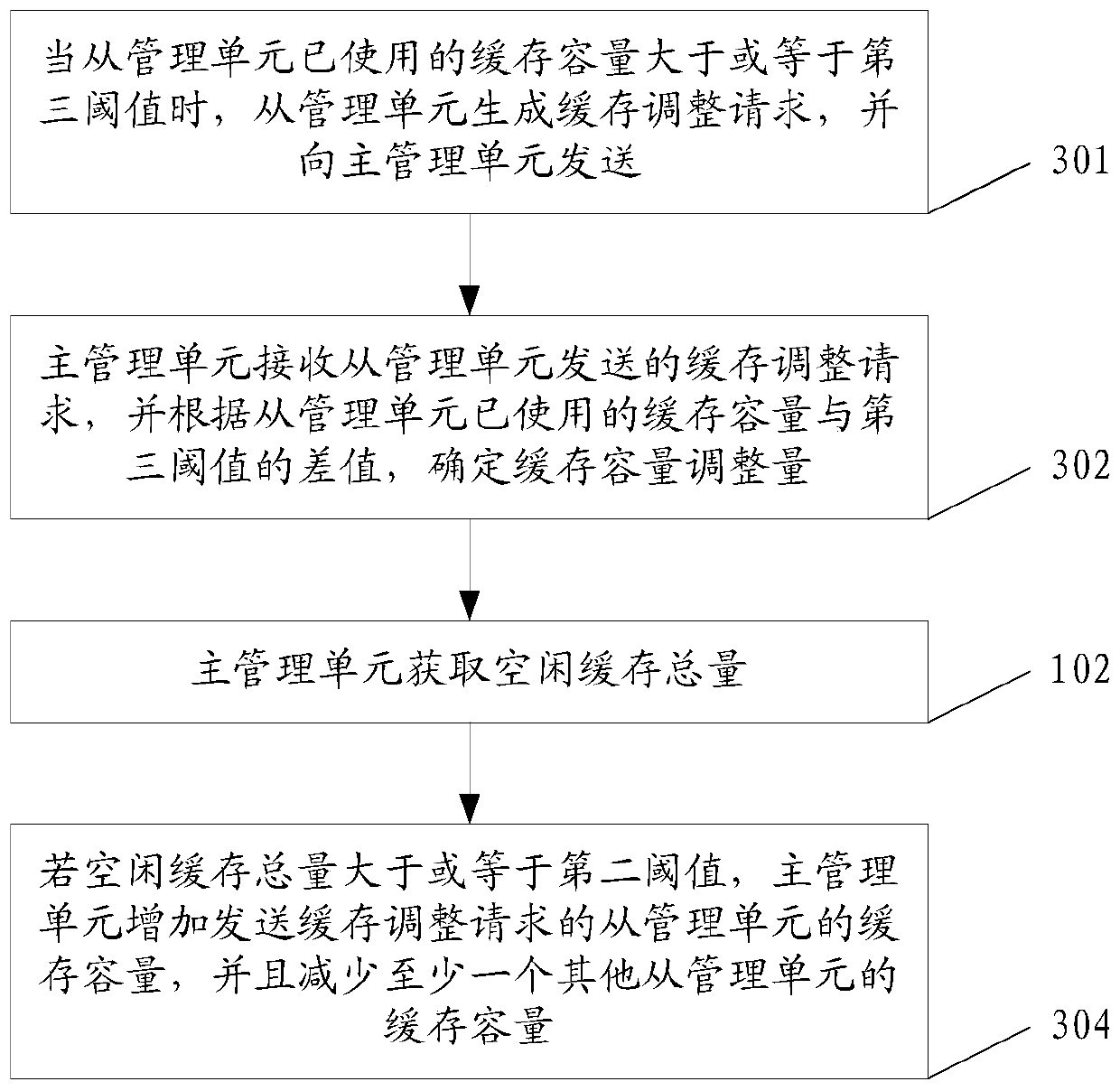

Method and device for managing cache

Status: Active | Publication: CN107302505B | Tags: Large cache capacity, Reduce cache capacity, Data switching networks, Management unit, Cache management

The application discloses a cache management method and device in the technical field of communication networks, addressing the problem of poor cache reliability. The device includes a master management unit and at least two slave management units, each of which processes one type of message. In the method, if the total amount of free cache is less than or equal to a first threshold, the master management unit allocates the free cache to the slave management units according to their weights, with the free cache capacity allocated to each slave management unit positively correlated with its weight. Alternatively, when the cache capacity used by a slave management unit is greater than or equal to a third threshold and the total amount of free cache is greater than or equal to a second threshold, the master management unit increases that slave management unit's cache capacity, temporarily raising its ability to occupy the shared cache, and reduces the cache capacity of at least one other slave management unit by the same amount. The solution is suitable for managing a shared cache.

Owner:MAIPU COMM TECH CO LTD
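
Both branches of the method can be sketched as two small functions. The remainder-distribution rule, the donor choice (the slave with the most unused headroom) and the names `allocate_by_weight` and `rebalance` are hypothetical assumptions; checking the first threshold before calling `allocate_by_weight` is left to the caller.

```python
from typing import Dict


def allocate_by_weight(free: int, weights: Dict[str, int]) -> Dict[str, int]:
    """Split the free cache among slave units in proportion to their weights.

    Intended for the case where free cache <= the first threshold. The
    integer-division remainder goes one unit at a time to the heaviest
    slaves so the whole free pool is assigned.
    """
    total = sum(weights.values())
    shares = {name: free * w // total for name, w in weights.items()}
    leftover = free - sum(shares.values())
    for name in sorted(weights, key=weights.get, reverse=True)[:leftover]:
        shares[name] += 1
    return shares


def rebalance(quota: Dict[str, int], used: Dict[str, int], free: int, busy: str,
              delta: int, t2: int, t3: int) -> Dict[str, int]:
    """Temporarily grow `busy`'s quota by `delta` at another slave's expense.

    Applies only when `busy` has used at least t3 and at least t2 cache is
    free; the total quota is unchanged because the increase equals the
    decrease.
    """
    candidates = [n for n in quota if n != busy]
    if used[busy] < t3 or free < t2 or not candidates:
        return dict(quota)
    donor = max(candidates, key=lambda n: quota[n] - used[n])
    if quota[donor] - used[donor] < delta:
        return dict(quota)  # no slave can give up `delta` without losing data
    new = dict(quota)
    new[busy] += delta
    new[donor] -= delta
    return new
```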

A Fully Pipelined Multiply-Add Cell Array Circuit for Convolutional Neural Networks

Status: Active | Publication: CN112346704B | Tags: Increase the time multiplexing rate, Achieve flow, Digital data processing details, Neural architectures, Multiplexing, Algorithm

Owner:HUAZHONG UNIV OF SCI & TECH

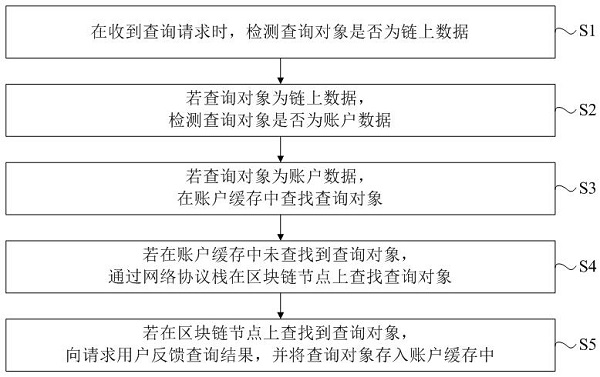

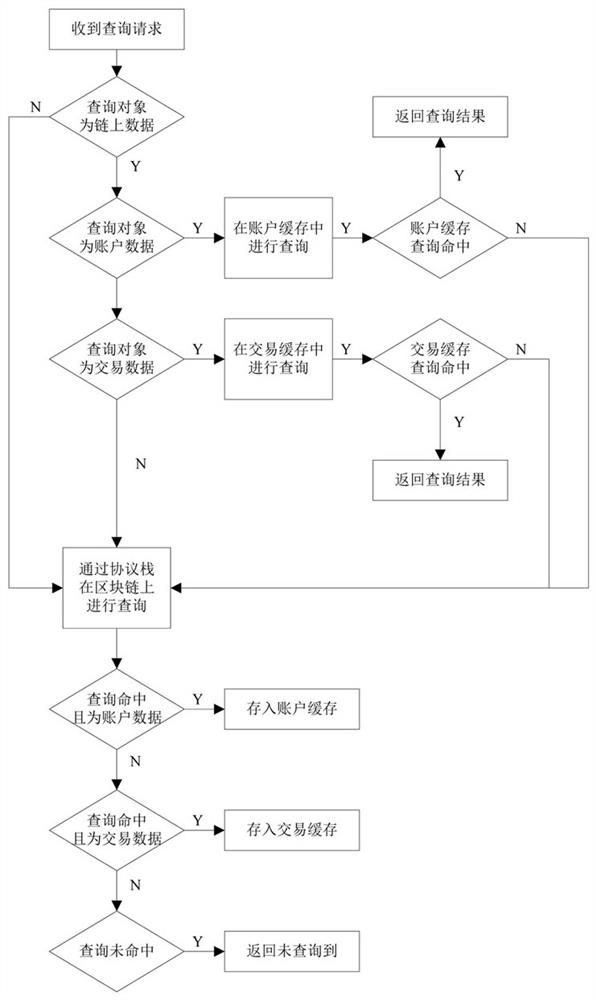

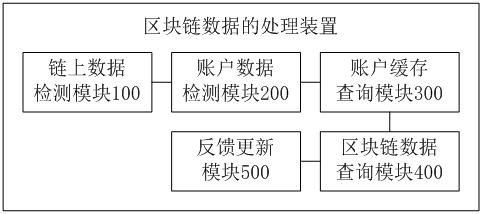

Method and device for processing blockchain data

Status: Active | Publication: CN114490781B | Tags: Extended service life, Short time, Database updating, Special data processing applications, Networking protocol, Protocol stack

An embodiment of the present disclosure discloses a method and apparatus for processing blockchain data. The processing method includes: when a query request comprising the requesting user and the query object is received, detecting whether the query object is on-chain data; if it is, detecting whether the query object is account data; if it is account data, looking up the query object in the account cache; if the query object is not found in the account cache, searching for it on a blockchain node through the network protocol stack; and, if it is found on the blockchain node, returning the query result to the requesting user and storing the query object in the account cache. The embodiments can improve the data processing efficiency of blockchain nodes.

Owner:CHINA ACADEMY OF INFORMATION & COMM
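
The lookup flow above is a cache-aside pattern. In this sketch `fetch_from_node` is a hypothetical stand-in for the query sent to a blockchain node through the network protocol stack, the on-chain and account checks are passed in as flags, and non-account queries are simply out of scope; none of these names come from the patent.

```python
from typing import Callable, Dict, Optional


class AccountQueryService:
    """Hypothetical cache-aside lookup for on-chain account data."""

    def __init__(self, fetch_from_node: Callable[[str], Optional[dict]]) -> None:
        self.account_cache: Dict[str, dict] = {}
        self.fetch_from_node = fetch_from_node  # returns None if not found

    def query(self, user: str, obj: str, on_chain: bool, is_account: bool) -> Optional[dict]:
        # `user` identifies who receives the reply; it does not affect lookup.
        if not (on_chain and is_account):
            return None  # other query paths are outside this sketch
        if obj in self.account_cache:
            return self.account_cache[obj]  # hit: no network round trip
        result = self.fetch_from_node(obj)
        if result is not None:
            self.account_cache[obj] = result  # store for later queries
        return result
```

A second query for the same account is answered from the account cache, so the node is contacted only once.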

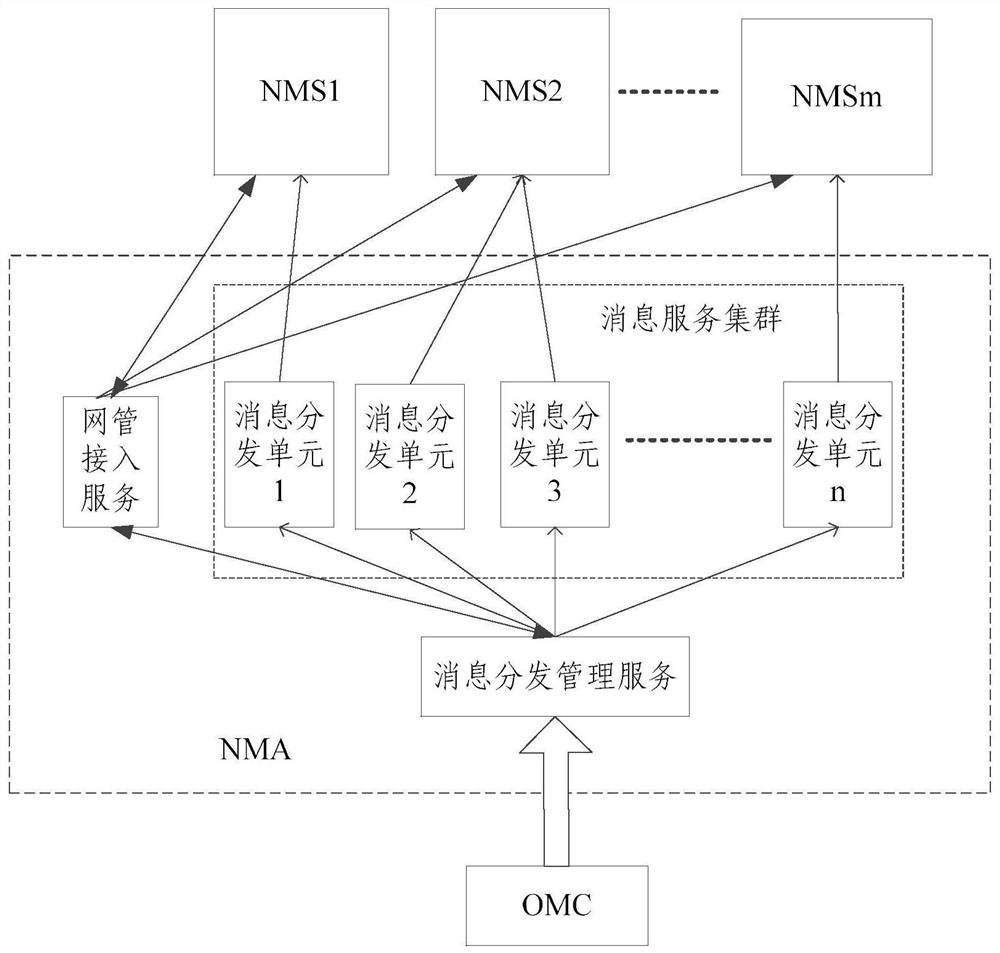

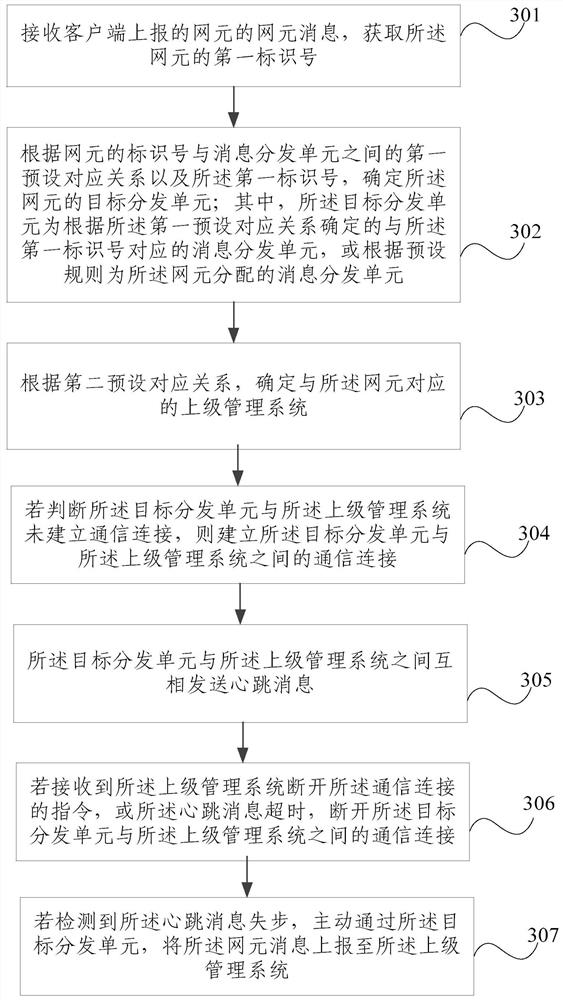

Message interaction method and device

Status: Active | Publication: CN111371573B | Tags: Reduce resource consumption, Reduce management pressure, Data switching networks, Message handling, Engineering

Owner:DATANG MOBILE COMM EQUIP CO LTD

Flicker Screen Component Generation Method Based on FPGA

Status: Active | Publication: CN104200765B | Tags: High speed, Improve fluency, Static indicating devices, Image resolution, Signal generator

The invention discloses a method for generating a Flicker picture component, comprising: 1, determining the horizontal and vertical points of the Flicker picture lattice in a host computer, determining the coordinates of the picture vertices, and filling each point with color; 2, the host computer transmitting the points, vertex coordinates, point colors and module resolution to a data analysis module for parsing; 3, the data analysis module transmitting the parsed data to the image signal generator; 4, writing the colors into the RAM of the image signal generator, using the number of each point of the picture dot matrix as the address; 5, scanning the corresponding area of the picture in the image signal generator and calculating, for each pixel in the scanning area, the address it maps to in the picture dot matrix; 6, using each pixel's mapped dot-matrix address as the RAM read address, reading the color value of the corresponding dot-matrix point and assigning that color to the pixel. The invention can use an FPGA to generate complex logic pictures such as the Flicker picture.

Owner:WUHAN JINGCE ELECTRONICS GRP CO LTD

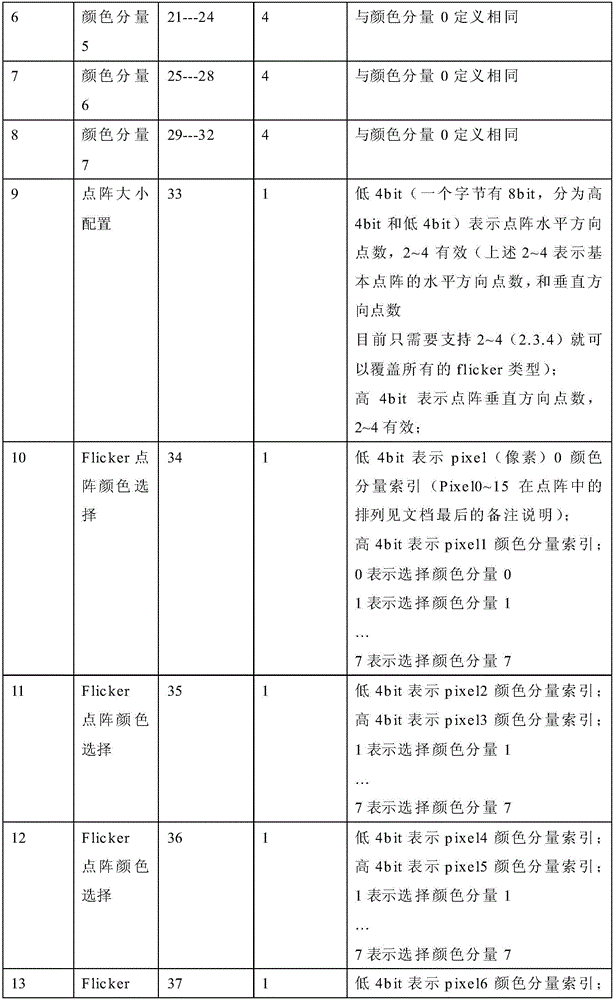

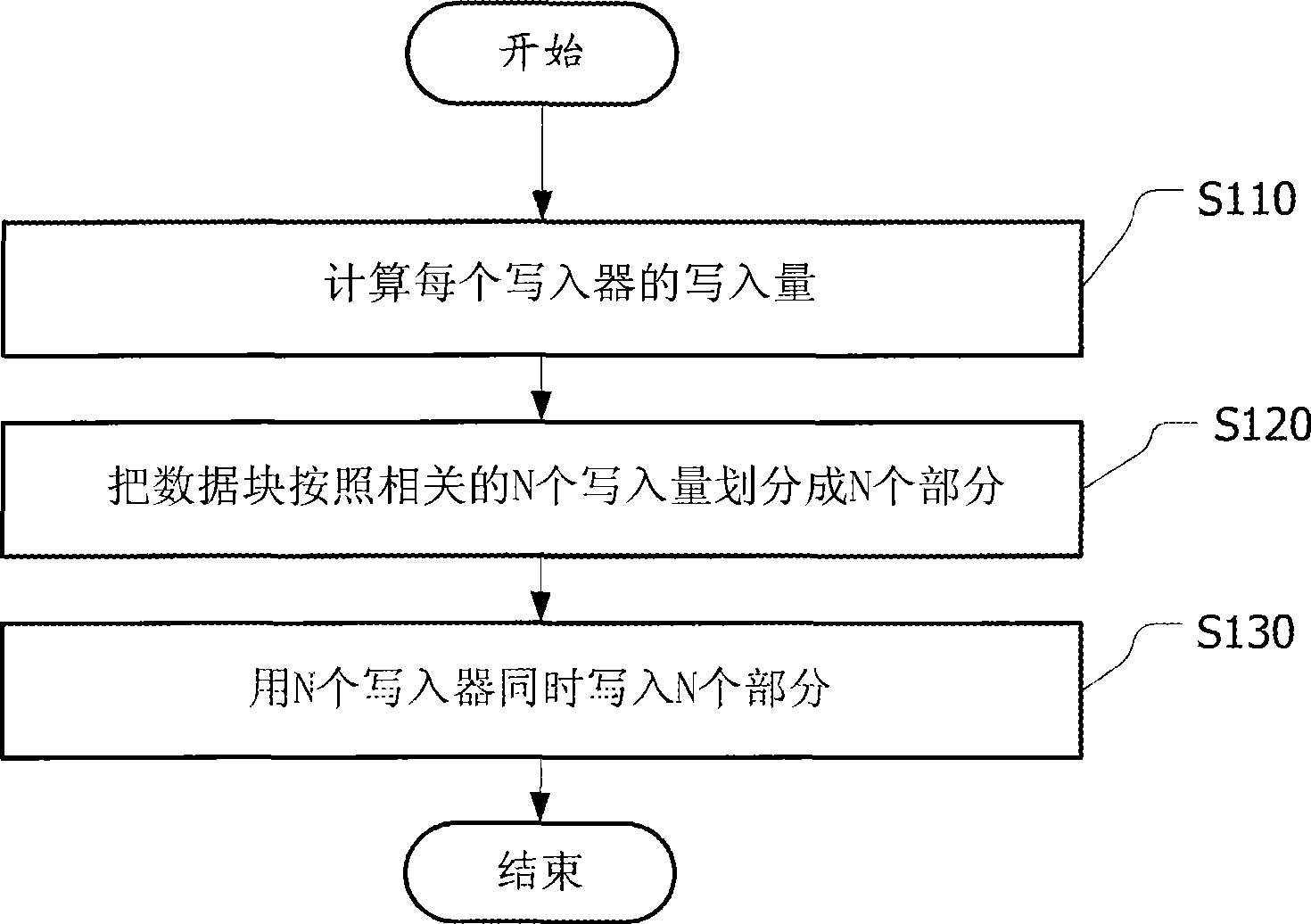

Method and apparatus for writing/reading an information carrier and such an information carrier

Status: Inactive | Publication: CN101401164A | Tags: Shorten the time, High data rate, Data buffering arrangements, Record information storage, Algorithm, Data rate

The present invention proposes a method and apparatus for writing/reading a data block onto/from an information carrier with M-spot schemes, and such an information carrier. The method comprises dividing the data block into N portions according to predetermined writing capacities for each of the N writers of the M-spot scheme, where N and M are integers not less than 2 and N = M, and simultaneously writing the N portions onto the information carrier with the corresponding N writers. Since all writers process different portions of one data block, the data rate for processing one data block increases almost N-fold. The time for which a data block occupies a given buffer capacity therefore decreases substantially, so the total buffer capacity, and with it the cost of the scheme, can be reduced substantially.

Owner:KONINK PHILIPS ELECTRONICS NV
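
The division step can be sketched as a proportional split of a byte block, assuming integer writing capacities per writer; `split_block` is a hypothetical name. With N equal writers each portion is about 1/N of the block, which is why the block occupies the buffer for roughly 1/N as long.

```python
from typing import List


def split_block(block: bytes, capacities: List[int]) -> List[bytes]:
    """Divide a data block into N portions in proportion to writer capacities.

    Portion i goes to writer i; the last portion absorbs rounding so the
    portions always concatenate back to the original block.
    """
    total = sum(capacities)
    portions, start = [], 0
    for i, cap in enumerate(capacities):
        if i == len(capacities) - 1:
            end = len(block)
        else:
            end = start + len(block) * cap // total
        portions.append(block[start:end])
        start = end
    return portions
```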

© 2025 PatSnap. All rights reserved.