234 results about how to "Reduce access pressure" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

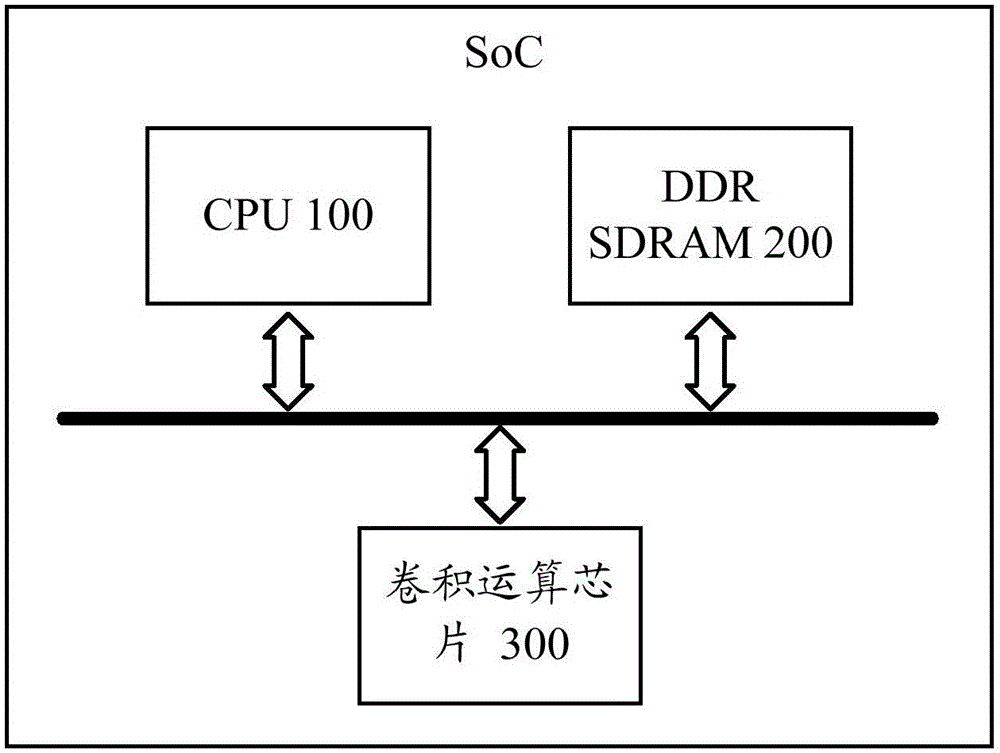

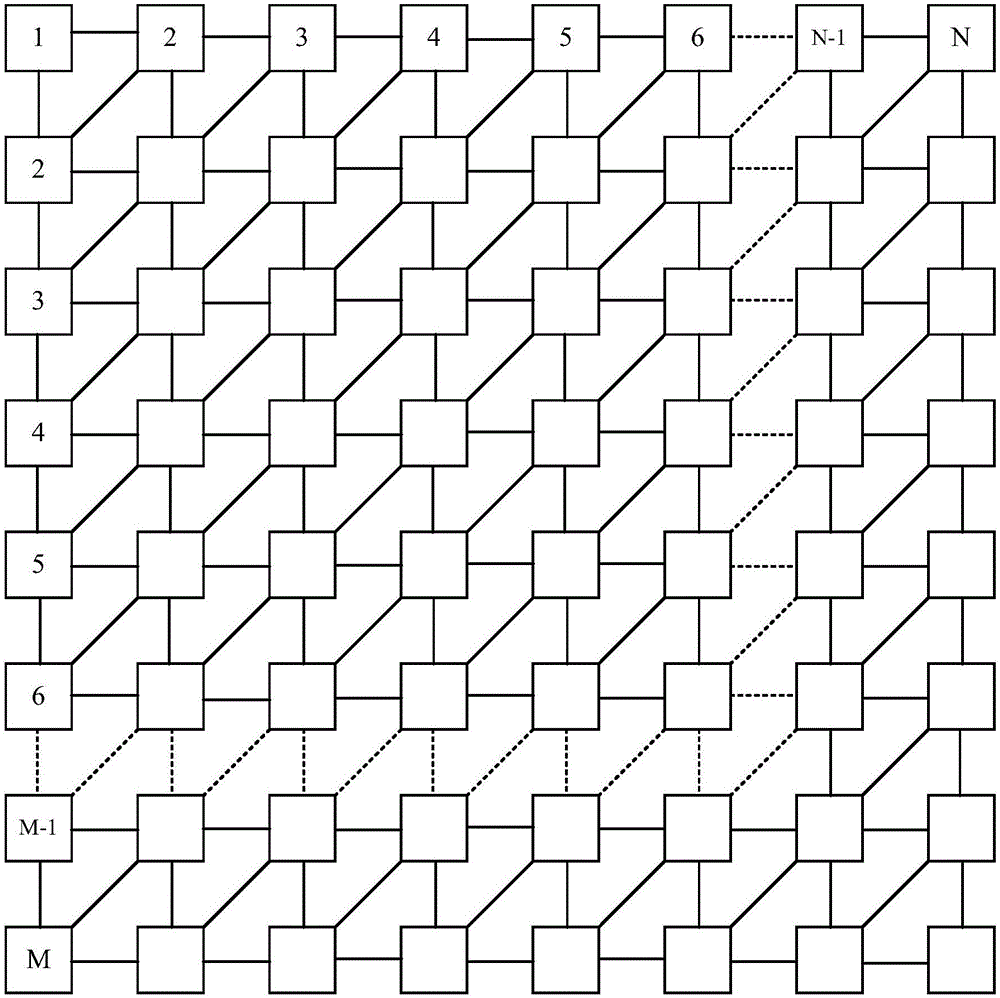

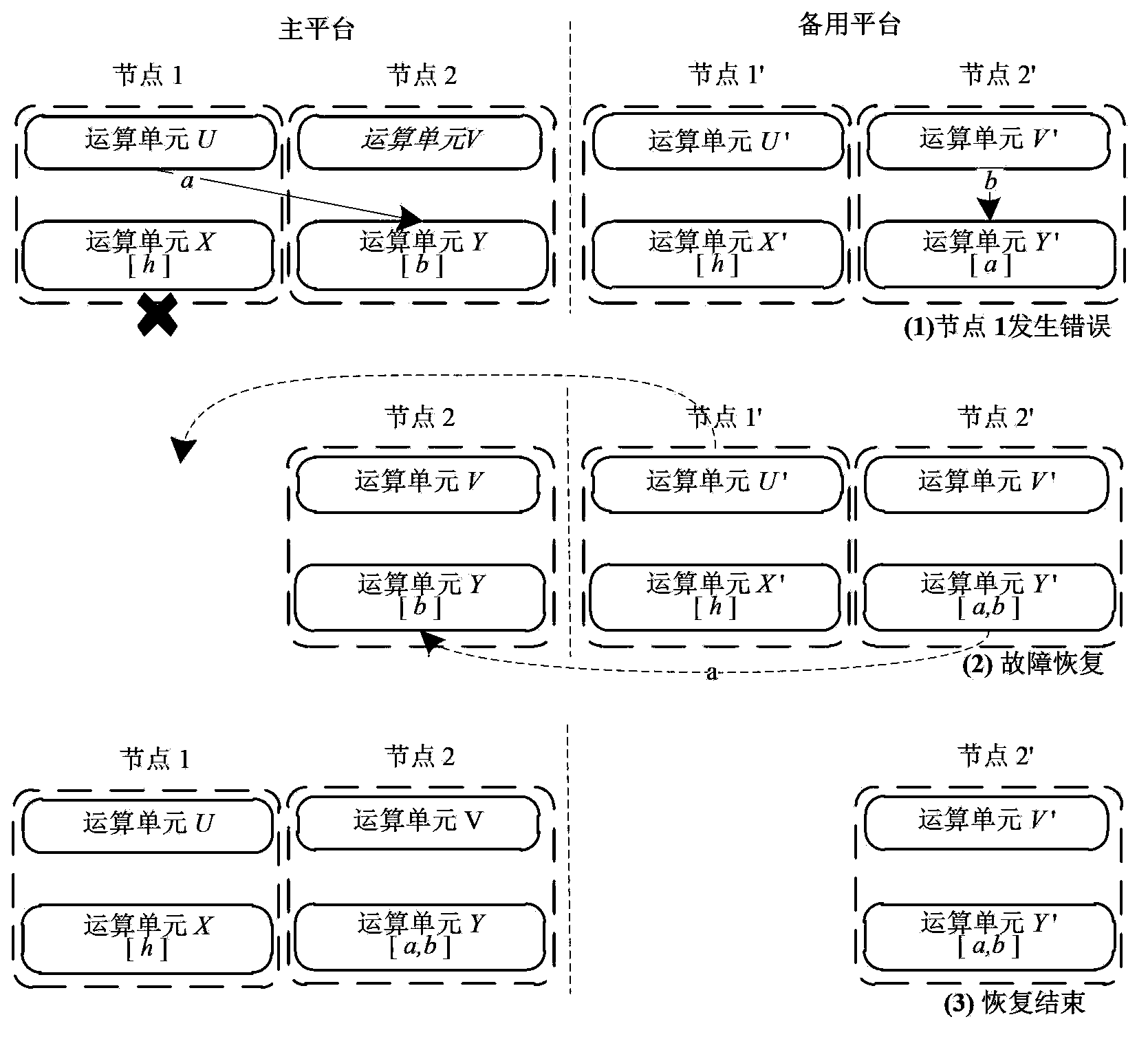

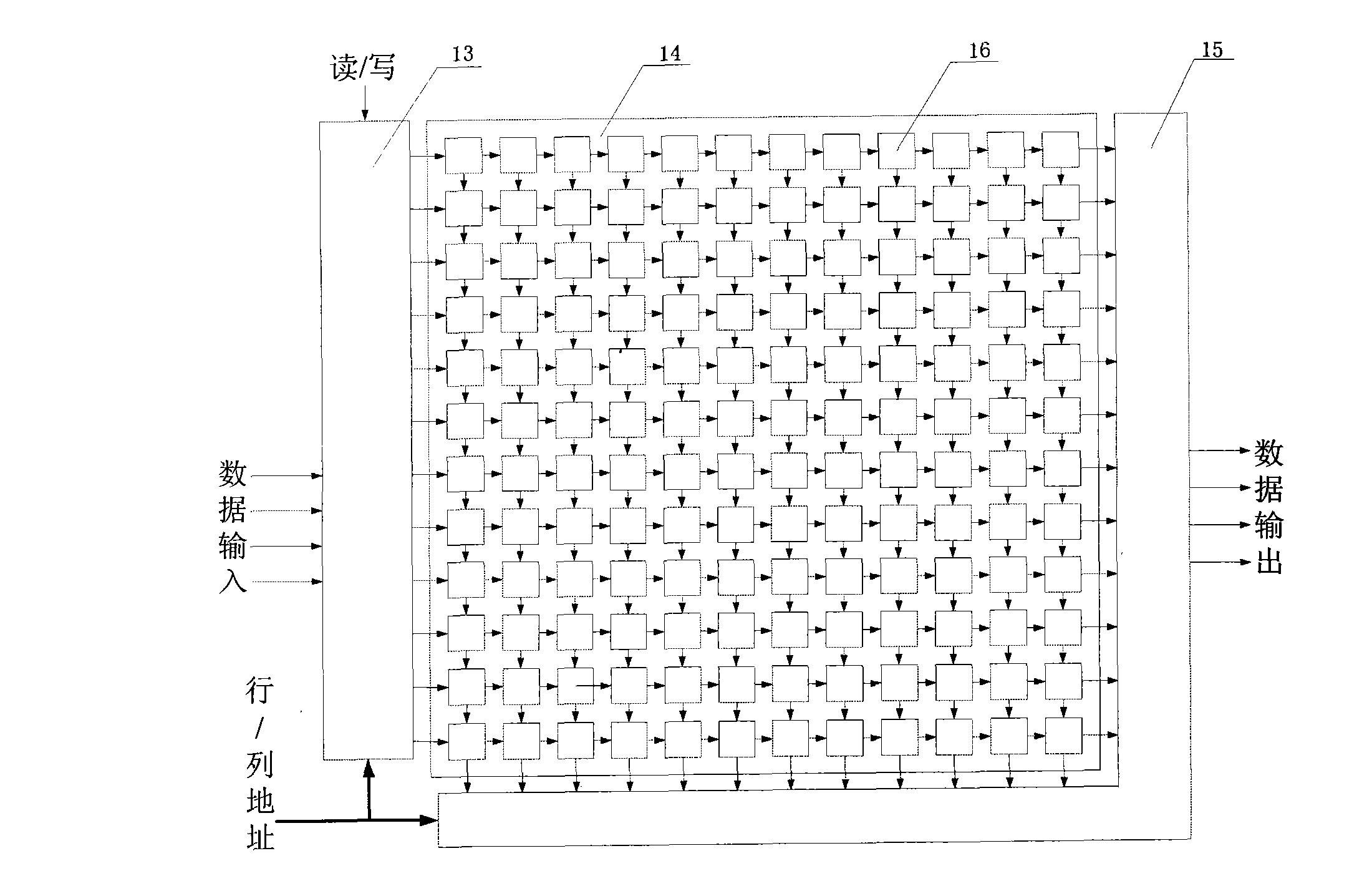

Convolution operation chip and communication equipment

Active | CN106844294A | Reduce visits | Reduce access pressure | Digital data processing details | Biological models | Resource utilization | Computer module

The invention provides a convolution operation chip and communication equipment. The convolution operation chip comprises an M×N multiplier-accumulator array, a data cache module and an output control module. The M×N multiplier-accumulator array comprises a first multiplier-accumulator window. A processing unit PE<X, Y> of the first multiplier-accumulator window multiplies its convolution data by its convolution parameters, passes its convolution parameters to PE<X, Y+1>, and passes its convolution data to PE<X-1, Y+1>, where they serve as operands for the multiplications performed by PE<X, Y+1> and PE<X-1, Y+1>. The data cache module transmits the convolution data and convolution parameters to the first multiplier-accumulator window, and the output control module outputs the convolution result. The chip and communication equipment reduce the RAM access frequency and relieve RAM access pressure while increasing the utilization of the array resources.

Owner:HUAWEI MACHINERY
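
One way to read the PE<X, Y> dataflow above is as a row-stationary pattern: if PE<X, Y> multiplies parameter row X with data row X+Y, then the parameter hop to PE<X, Y+1> keeps X fixed and the data hop to PE<X-1, Y+1> keeps X+Y fixed, so each row fetched from RAM is reused by several PEs. The Python sketch below models that assumption in software only; it is not the chip's design, and `pe_array_conv2d`, `conv1d_valid` and `direct_conv2d` are hypothetical names. The PE model is checked against a direct 2D cross-correlation without padding.

```python
# Illustrative software model; function names are hypothetical, not from the patent.
from typing import List

Matrix = List[List[float]]


def conv1d_valid(weight_row: List[float], data_row: List[float]) -> List[float]:
    """1-D sliding dot product (no padding): the work one PE performs."""
    k = len(weight_row)
    return [
        sum(weight_row[i] * data_row[j + i] for i in range(k))
        for j in range(len(data_row) - k + 1)
    ]


def pe_array_conv2d(weights: Matrix, data: Matrix) -> Matrix:
    """Model of a PE grid where PE<x, y> handles weight row x and data row x + y.

    Assumption: weight row x is shared along PE<x, 0..> (the PE<X, Y> -> PE<X, Y+1>
    hop) and data row x + y is shared along an anti-diagonal (the
    PE<X, Y> -> PE<X-1, Y+1> hop, which keeps x + y constant).
    """
    kh, kw = len(weights), len(weights[0])
    oh, ow = len(data) - kh + 1, len(data[0]) - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    for y in range(oh):          # grid column Y produces output row y
        for x in range(kh):      # grid row X holds weight row x
            partial = conv1d_valid(weights[x], data[x + y])
            for j in range(ow):
                out[y][j] += partial[j]   # accumulate partial sums for row y
    return out


def direct_conv2d(weights: Matrix, data: Matrix) -> Matrix:
    """Reference 2D cross-correlation used to check the PE model."""
    kh, kw = len(weights), len(weights[0])
    oh, ow = len(data) - kh + 1, len(data[0]) - kw + 1
    return [
        [
            sum(weights[a][b] * data[r + a][c + b] for a in range(kh) for b in range(kw))
            for c in range(ow)
        ]
        for r in range(oh)
    ]
```

In this model every data row is read once and consumed by all PEs on its anti-diagonal, which is the kind of reuse that would lower RAM traffic in hardware.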

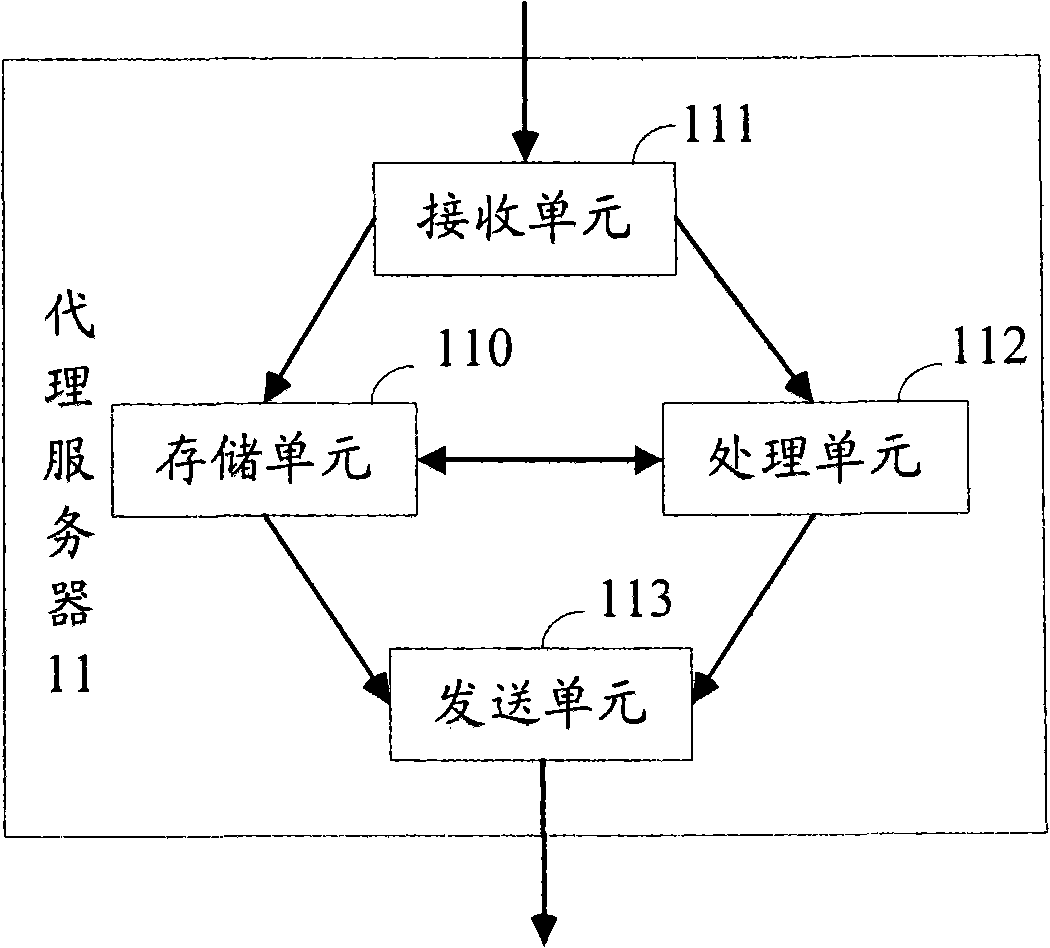

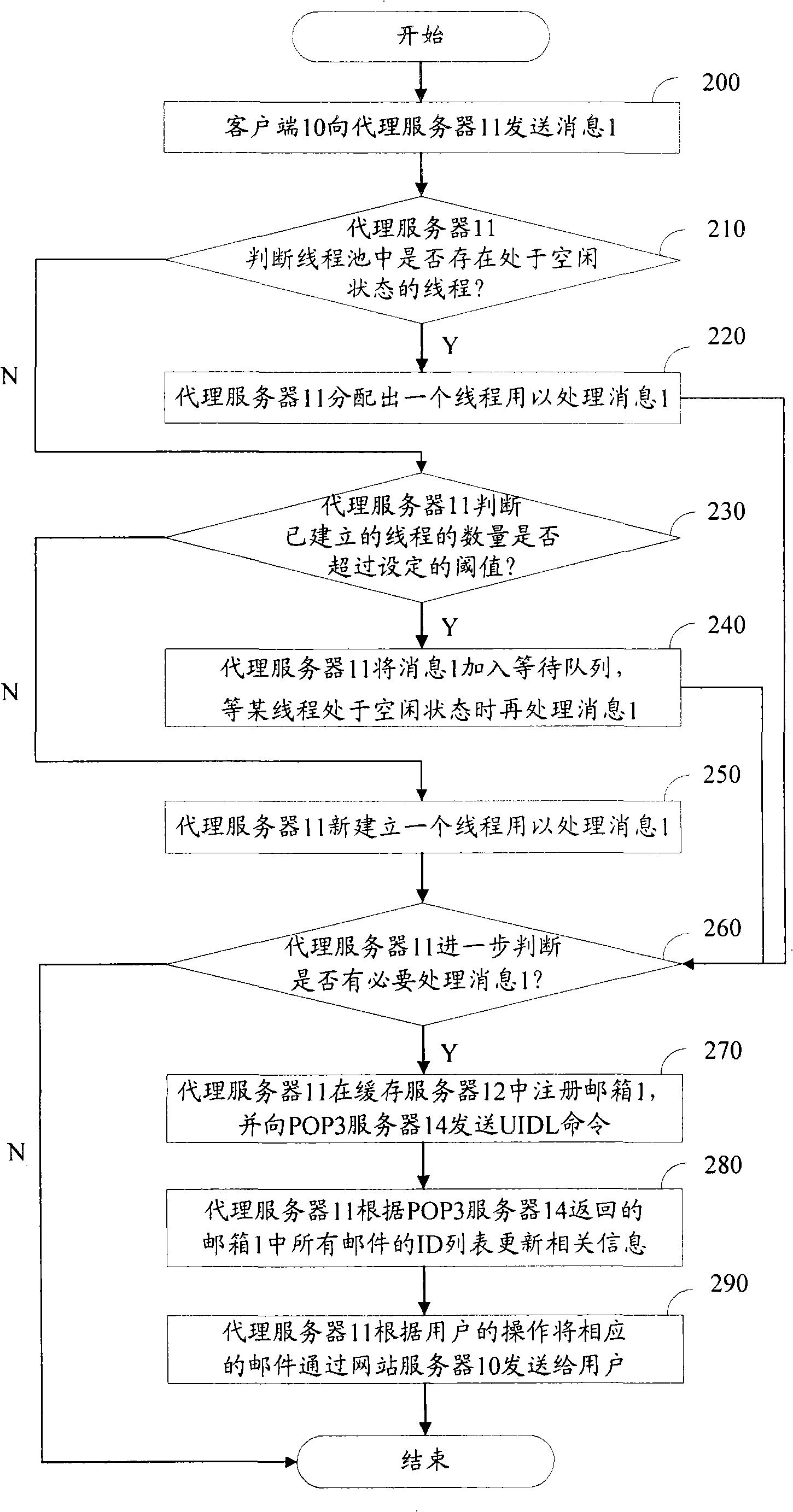

Method, apparatus and system for processing electronic mail

Active | CN101325561A | Improve experience | Reduce access pressure | Multiprogramming arrangements | Data switching networks | Web site | Engineering

The invention discloses a method for processing e-mail. A user logs in to a website server and sends a request message for receiving mail to an agent server through the website server; the request message carries the mailbox identifiers of at least one e-mail mailbox bound to the user. The agent server downloads mail from the corresponding mail server according to each mailbox identifier carried in the request message, and then sends the updated mail information to the user through the website server. The agent server then sends the mail the user selects to the user through the website server. Users can thus receive mail from one or more mail servers directly through the website server, which reduces their operating complexity and improves their experience to a certain extent. The invention also discloses a communication apparatus and a communication system.

Owner:ALIBABA GRP HLDG LTD

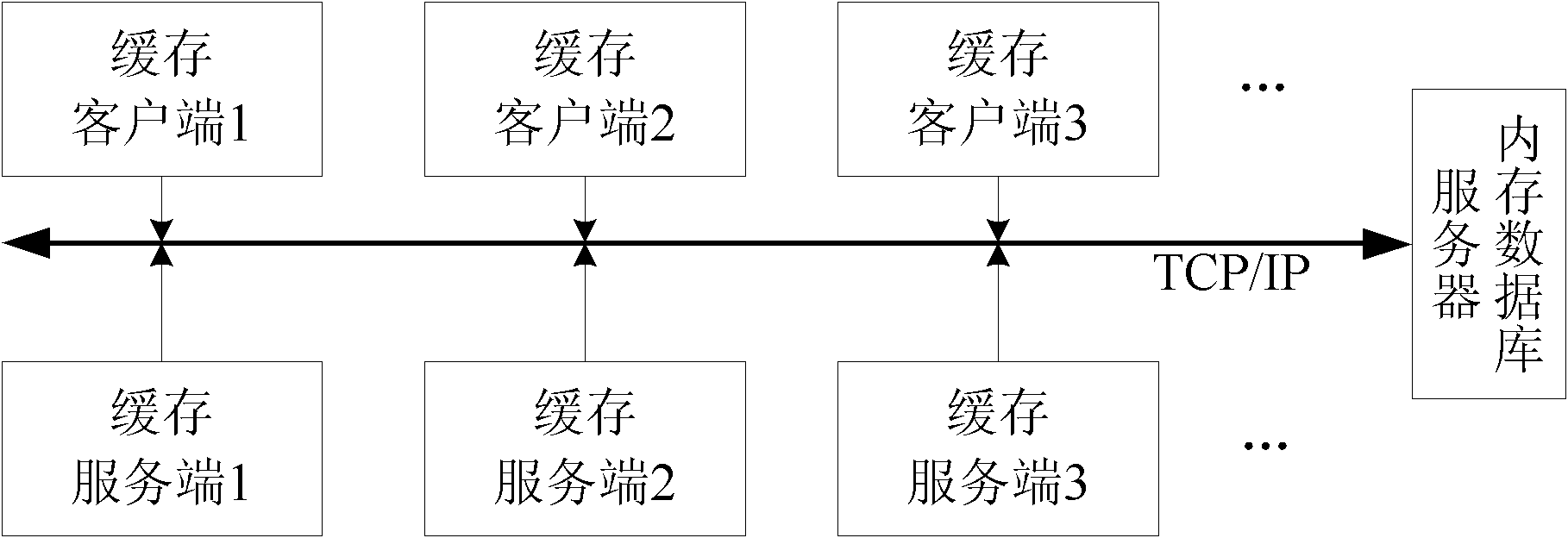

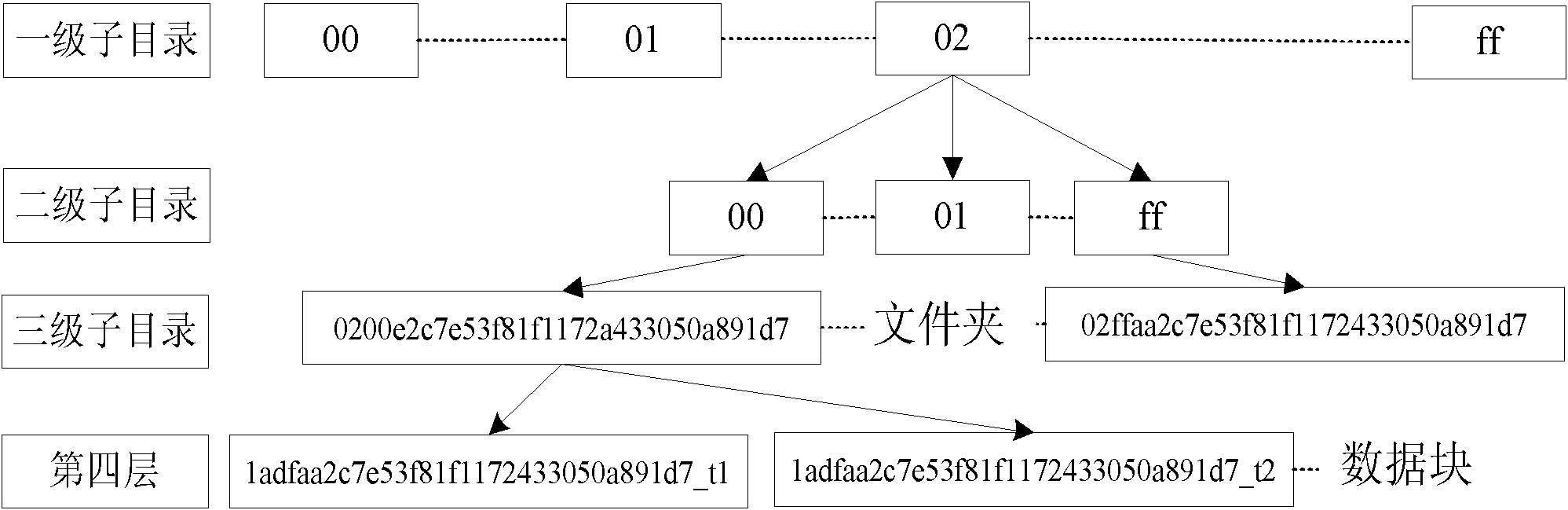

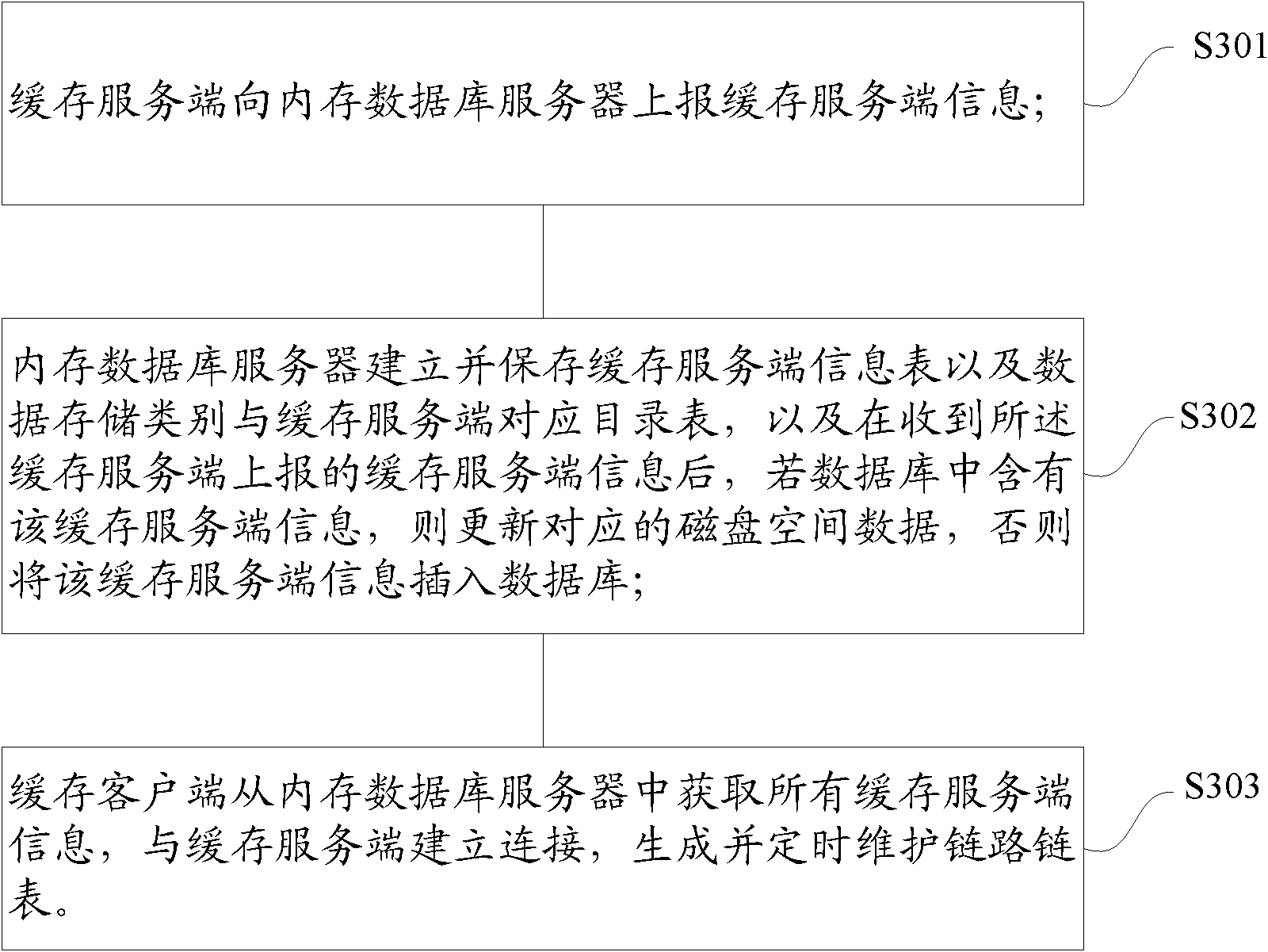

Distributed cache server system and application method thereof, cache clients and cache server terminals

Active | CN102739720A | Easy to set up | Fast access | Information format | Content conversion | Client-side | Database server

The invention provides a distributed cache server system comprising cache clients, a main memory database server and cache server terminals. The cache clients obtain the information of all cache server terminals from the main memory database server, establish connections with the cache server terminals, and generate and periodically maintain links and link tables. The main memory database server establishes and stores a cache server terminal information table and a catalogue table mapping data storage types to cache server terminals, and processes the cache server terminal information reported by the cache server terminals. The cache server terminals report their information to the main memory database server and manage cache data blocks. The invention also provides an application method of the distributed cache server system, as well as the cache clients and cache server terminals. With this technical scheme, the cache server system is simple and convenient to deploy and use, access is fast, and the system can be extended and updated automatically.

Owner:ZTE CORP
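
The three roles above (servers that report in, a registry holding the server table and the type-to-server catalogue, and clients that route by data storage type) can be illustrated with an in-process model. This is a sketch under assumptions: `MainMemoryDB`, `CacheServer` and `CacheClient` are hypothetical classes, the catalogue maps each data type to a single server, and network links are replaced by object references.

```python
# Illustrative in-process model; all class names are hypothetical.
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class CacheServer:
    """Cache server terminal: manages its own cache data blocks."""
    server_id: str
    address: str
    blocks: Dict[str, bytes] = field(default_factory=dict)

    def report(self, registry: "MainMemoryDB", data_type: str) -> None:
        # Report terminal information and the data type it serves to the registry.
        registry.register(self, data_type)


class MainMemoryDB:
    """Main memory database server holding the two tables."""

    def __init__(self) -> None:
        self.server_table: Dict[str, CacheServer] = {}  # server id -> terminal info
        self.catalogue: Dict[str, str] = {}            # data storage type -> server id

    def register(self, server: CacheServer, data_type: str) -> None:
        self.server_table[server.server_id] = server
        self.catalogue[data_type] = server.server_id


class CacheClient:
    """Cache client that builds and refreshes a link table from the registry."""

    def __init__(self, registry: MainMemoryDB) -> None:
        self.registry = registry
        self.links: Dict[str, CacheServer] = {}

    def refresh_links(self) -> None:
        # A real client would run this periodically; here it copies the server table.
        self.links = dict(self.registry.server_table)

    def _server_for(self, data_type: str) -> CacheServer:
        return self.links[self.registry.catalogue[data_type]]

    def put(self, data_type: str, key: str, value: bytes) -> None:
        self._server_for(data_type).blocks[key] = value

    def get(self, data_type: str, key: str) -> Optional[bytes]:
        return self._server_for(data_type).blocks.get(key)
```

Mapping one data type to one server keeps the sketch short; a production catalogue would more likely spread a type over several servers.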

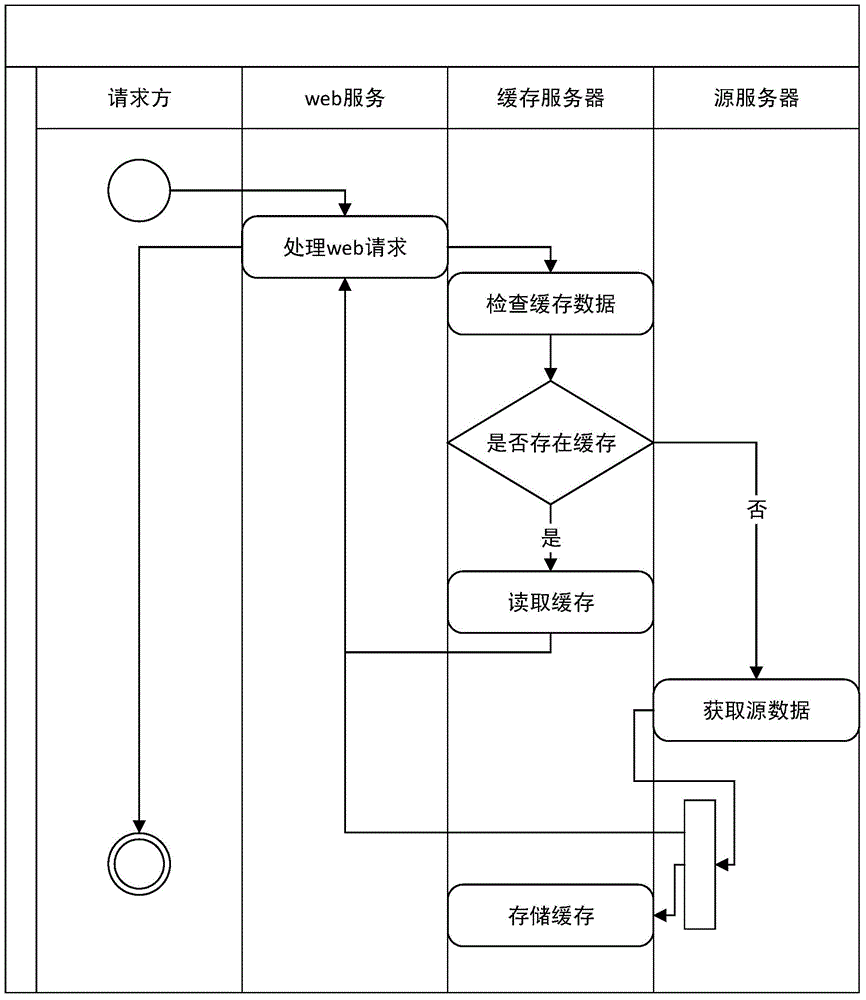

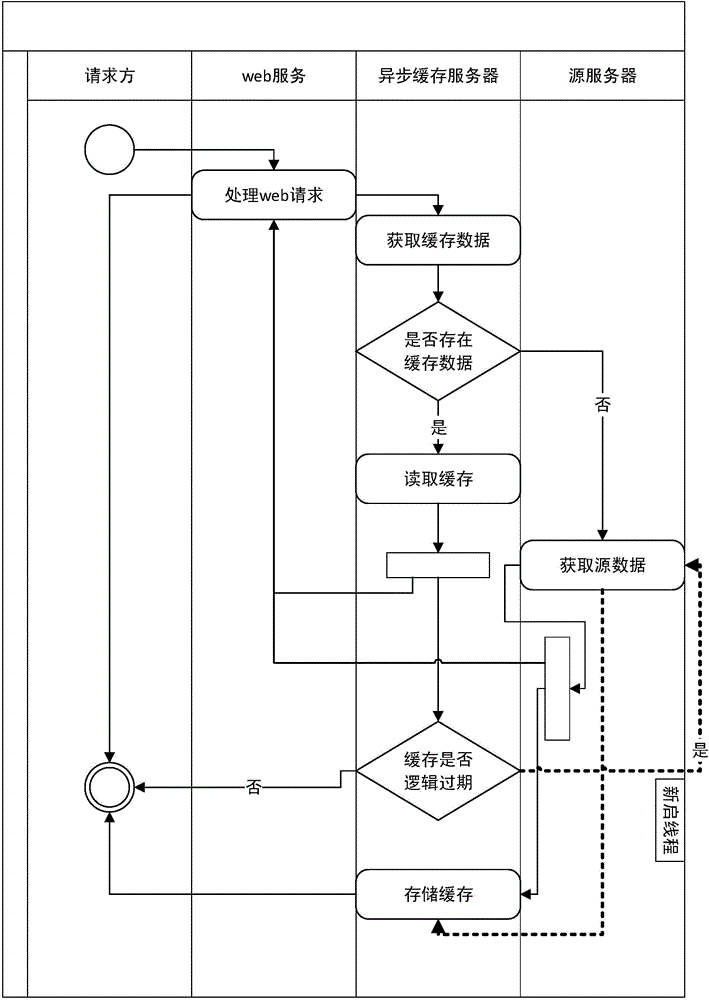

Asynchronous caching method, server and system

Inactive | CN105373369A | Reduce access pressure | Reduce load pressure | Specific program execution arrangements | Expiration Time | Source data

The invention discloses an asynchronous caching method, server and system in the technical field of caching. Data requested by a user is read from the asynchronous caching server and the source server and returned to the user. While the response to the user request is being completed, asynchronous caching data is formed by attaching a logical expiration time to the source data, and this data is stored in the asynchronous caching server, which updates the asynchronous caching server. Meanwhile, whether the asynchronous caching data has expired is judged against the logical expiration time; once it has expired, the source data is read again and the asynchronous caching server is updated again. Through these two updates of the asynchronous caching data, 80%-90% of user requests need to access only the asynchronous caching server and do not need to read the source server. The technical scheme provided by the embodiments can markedly improve the availability and stability of the system.

Owner:BEIJING CHESHANGHUI SOFTWARE
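
The logical-expiration idea can be sketched as follows: each cached value carries its own logical expiry timestamp, a logically expired value is still served, and the reload from the source happens in the background. This is an illustrative sketch, not the patented implementation; `AsyncCache`, the `load_source` callback and the TTL are assumptions, and a thread pool stands in for whatever asynchronous mechanism the real system uses.

```python
# Illustrative sketch; AsyncCache and load_source are hypothetical names.
import threading
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable, Dict, Optional, Tuple


class AsyncCache:
    """Entries store (value, logical_expire_at); expired entries are still served."""

    def __init__(self, load_source: Callable[[str], Any], ttl: float) -> None:
        self.load_source = load_source      # reads from the source server
        self.ttl = ttl
        self.entries: Dict[str, Tuple[Any, float]] = {}
        self.refreshing: set = set()
        self.lock = threading.Lock()
        self.pool = ThreadPoolExecutor(max_workers=2)

    def _store(self, key: str, value: Any) -> None:
        with self.lock:
            self.entries[key] = (value, time.monotonic() + self.ttl)
            self.refreshing.discard(key)

    def _refresh(self, key: str) -> None:
        self._store(key, self.load_source(key))

    def get(self, key: str) -> Tuple[Any, Optional[Future]]:
        """Return the value and, if a background refresh was started, its Future."""
        with self.lock:
            entry = self.entries.get(key)
        if entry is None:
            # First request: read the source synchronously, then cache it.
            value = self.load_source(key)
            self._store(key, value)
            return value, None
        value, expire_at = entry
        future = None
        if time.monotonic() >= expire_at:
            with self.lock:
                if key not in self.refreshing:   # at most one refresh per key
                    self.refreshing.add(key)
                    future = self.pool.submit(self._refresh, key)
        return value, future                     # stale value served immediately
```

Because a logically expired entry is never physically deleted, readers never block on the source server after the first load; only one background task per key touches the source.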

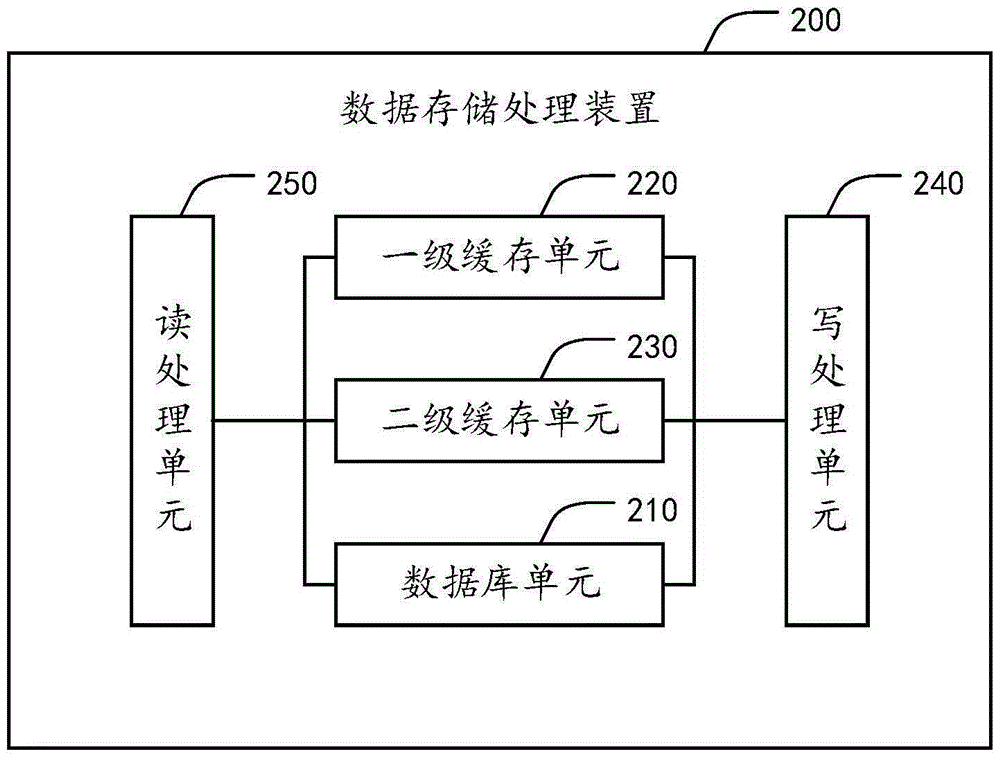

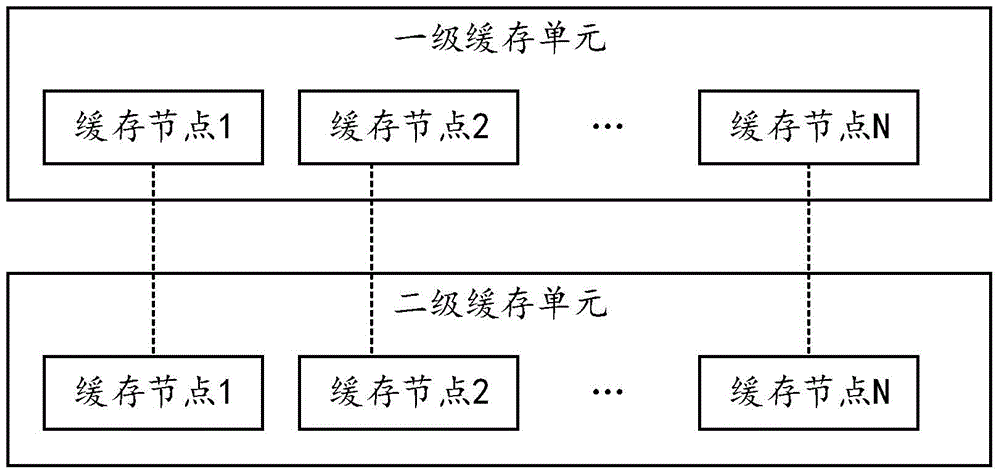

Data storage processing method and device

Active | CN105183394A | Reduce access pressure | Reduce loss | Input/output to record carriers | Special data processing applications | Data query | Data library

The invention discloses a data storage processing method and device. Data is stored in a database, a first-level cache and a second-level cache. When a data query request is received, the first-level cache is queried first; if the requested data is found there, it is returned to the requester. Otherwise the second-level cache is queried, and if the data is found there, it is returned to the requester. Otherwise the database is queried: if the data exists in the database, it is returned to the requester; if not, a query-failure result is returned to the requester. This scheme relieves the access pressure on the database, reduces equipment wear, and lowers maintenance labor costs.

Owner:BEIJING QIHOO TECH CO LTD
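
The lookup order above (first-level cache, then second-level cache, then database) is a read-through chain. A minimal sketch, assuming plain dicts stand in for both cache levels and the database; the class name `TieredStore` and the reported `source` label are hypothetical additions for illustration.

```python
# Illustrative sketch; TieredStore is a hypothetical name, dicts stand in for real stores.
from typing import Any, Dict, Optional, Tuple


class TieredStore:
    """Queries L1, then L2, then the database, in the order the abstract gives."""

    def __init__(self) -> None:
        self.l1: Dict[str, Any] = {}   # first-level cache
        self.l2: Dict[str, Any] = {}   # second-level cache
        self.db: Dict[str, Any] = {}   # stand-in for the database

    def store(self, key: str, value: Any) -> None:
        # The method writes the data to all three places.
        self.db[key] = value
        self.l2[key] = value
        self.l1[key] = value

    def query(self, key: str) -> Tuple[bool, Optional[Any], str]:
        """Return (found, value, source); source names the level that answered."""
        for name, level in (("l1", self.l1), ("l2", self.l2), ("db", self.db)):
            if key in level:
                return True, level[key], name
        return False, None, "miss"   # query-failure result
```

The abstract does not say whether a lower-level hit repopulates the upper caches; many implementations add that back-fill, but this sketch leaves it out.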

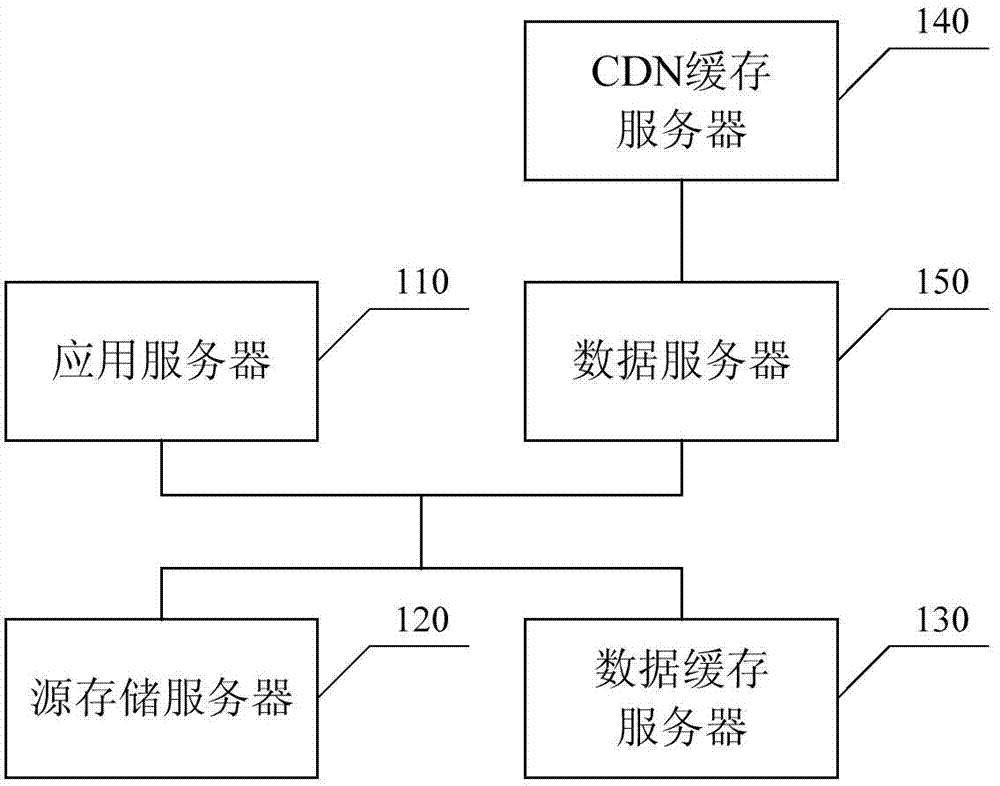

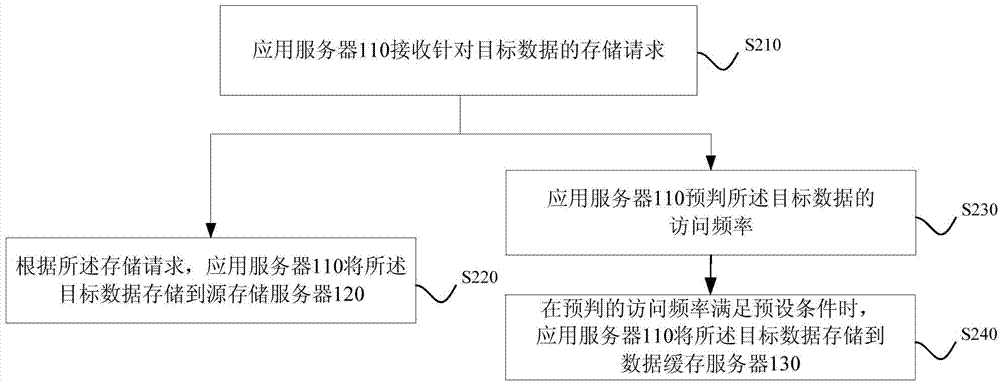

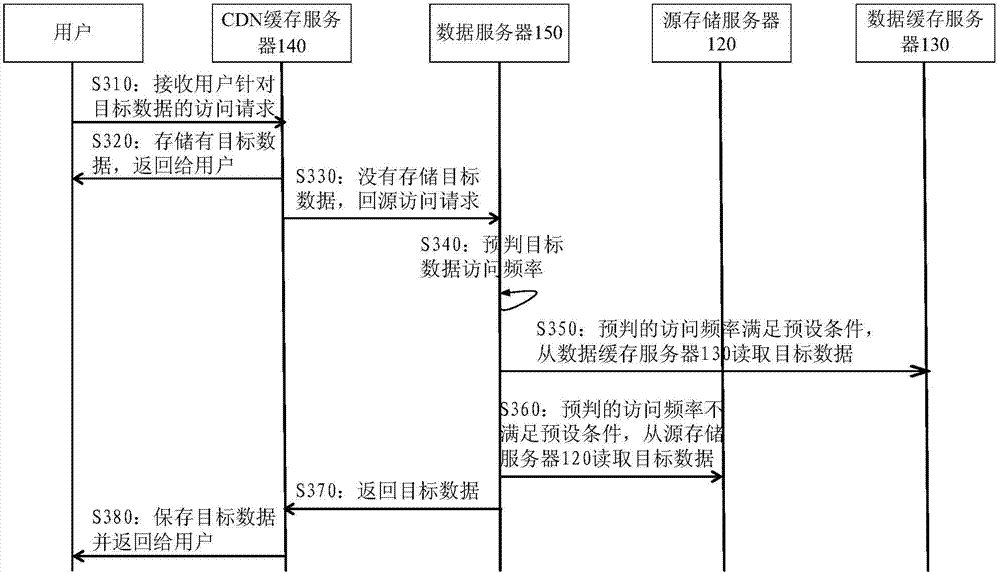

Data storage system as well as data storage method and data access method

The embodiment of the invention discloses a data storage system, a data storage method and a data access method. The data storage system comprises an application server, a source storage server, a data caching server, a CDN caching server and a data server. The application server stores target data to the source storage server or the data caching server according to a storage request. When the CDN caching server already stores the target data of an access request, it returns the target data to the user; otherwise it forwards the access request to the data server, receives and stores the target data returned by the data server, and returns the target data to the user. The data server receives the access request forwarded by the CDN caching server, reads the target data from the data caching server or the source storage server, and returns it to the CDN caching server. The technical scheme provided by the embodiment reduces the access pressure on the source server.

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD

Method and system for sharing Web cached resource based on intelligent father node

Active | CN102843426A | Play the role of intelligent guidance | Reduce back-to-source bandwidth | Transmission | Relationship - Father | Web cache

The invention discloses a method and system for sharing Web cached resources based on an intelligent father node, which further reduce the back-to-source bandwidth of a caching system and the access pressure on the source station. The system comprises multiple Web cache nodes, a source station server, a cached resource list pool and the intelligent father node. The Web cache nodes cache resources, receive user requests, and return the requested resources to users. The source station server stores the source data. The cached resource list pool receives the resource reports of the Web cache nodes and forms a detailed resource list covering all of them. The intelligent father node receives a request from a Web cache node, looks up the cache location of the requested resource in the cached resource list pool, and schedules resource sharing among the Web cache nodes by redirection.

Owner:CHINANETCENT TECH
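
The father node's scheduling step can be modeled as a lookup in the cached resource list pool followed by a redirect decision. This sketch uses hypothetical names (`ResourceListPool`, `FatherNode.route`) and assumes the node falls back to the source station when no other cache node holds the resource; the abstract does not spell out that fallback.

```python
# Illustrative sketch; class names and the fallback rule are assumptions.
from collections import defaultdict
from typing import Dict, Set


class ResourceListPool:
    """Detailed list of which Web cache node holds which resource (URL)."""

    def __init__(self) -> None:
        self.holders: Dict[str, Set[str]] = defaultdict(set)

    def report(self, node: str, resources: Set[str]) -> None:
        # Each cache node reports the resources it currently caches.
        for url in resources:
            self.holders[url].add(node)


class FatherNode:
    def __init__(self, pool: ResourceListPool, source_station: str) -> None:
        self.pool = pool
        self.source_station = source_station

    def route(self, requesting_node: str, url: str) -> str:
        """Return where the requesting cache node should fetch `url` from."""
        peers = sorted(self.pool.holders.get(url, set()) - {requesting_node})
        if peers:
            return peers[0]          # redirect to a peer cache node
        return self.source_station   # assumed fallback: go back to source
```

Picking the alphabetically first peer is only a placeholder; a real scheduler would more plausibly weigh load and network distance.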

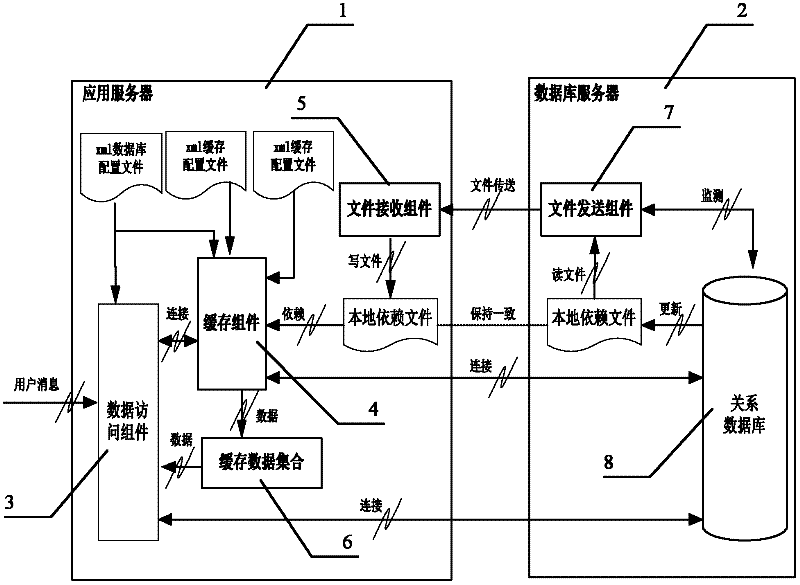

Method and system for realizing data consistency

Active | CN102411598A | Reduce access pressure | Achieve data consistency | Special data processing applications | Data set | Relational database

The invention discloses a method and system for achieving data consistency. A data access component receives a user message and generates dynamic structured query language (SQL) from it; using an extensible markup language (XML) database configuration file, it connects to the relational database, obtains the data, and passes the data to the application layer. A global cache data set is queried by the data access component object name and the data retrieval object name. If the data exists, it is returned to the data access component, which passes it to the application layer. If no data exists, a global cache component is created according to the data access component object name and the data retrieval object name, and the obtained data, the dynamic SQL, the data access component object name and the data retrieval object name are passed to the cache component. The method and system solve the problem of data consistency between the server cache and the relational database: they ensure the validity of the server cache data, reduce connections to the relational database, and speed up access and improve access efficiency.

Owner:HUNAN CRRC TIMES SIGNAL & COMM CO LTD
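
The cache-aside flow above, keyed by the data access component object name and the data retrieval object name, can be sketched with Python's built-in `sqlite3` standing in for the relational database. The class `GlobalCache`, its key layout, and the `refresh` method (re-running the stored dynamic SQL to bring the cache back in line with the database) are assumptions for illustration; the XML configuration step is not modeled.

```python
# Illustrative sketch; GlobalCache and its refresh hook are hypothetical.
import sqlite3
from typing import Any, Dict, List, Tuple

CacheKey = Tuple[str, str]  # (data access component object name, retrieval object name)


class GlobalCache:
    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.entries: Dict[CacheKey, Dict[str, Any]] = {}
        self.db_queries = 0   # counts round trips to the relational database

    def fetch(self, component: str, getter: str, sql: str, params: tuple = ()) -> List[tuple]:
        key = (component, getter)
        entry = self.entries.get(key)
        if entry is not None:
            return entry["rows"]          # served from the server cache
        rows = self._run(sql, params)
        # Create the cache entry together with the dynamic SQL that produced it.
        self.entries[key] = {"rows": rows, "sql": sql, "params": params}
        return rows

    def refresh(self, component: str, getter: str) -> None:
        # Assumed consistency hook: re-run the stored SQL after the table changes.
        entry = self.entries[(component, getter)]
        entry["rows"] = self._run(entry["sql"], entry["params"])

    def _run(self, sql: str, params: tuple) -> List[tuple]:
        self.db_queries += 1
        return self.conn.execute(sql, params).fetchall()
```

Storing the SQL next to the cached rows is what lets the cache re-derive its contents without the caller rebuilding the query.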

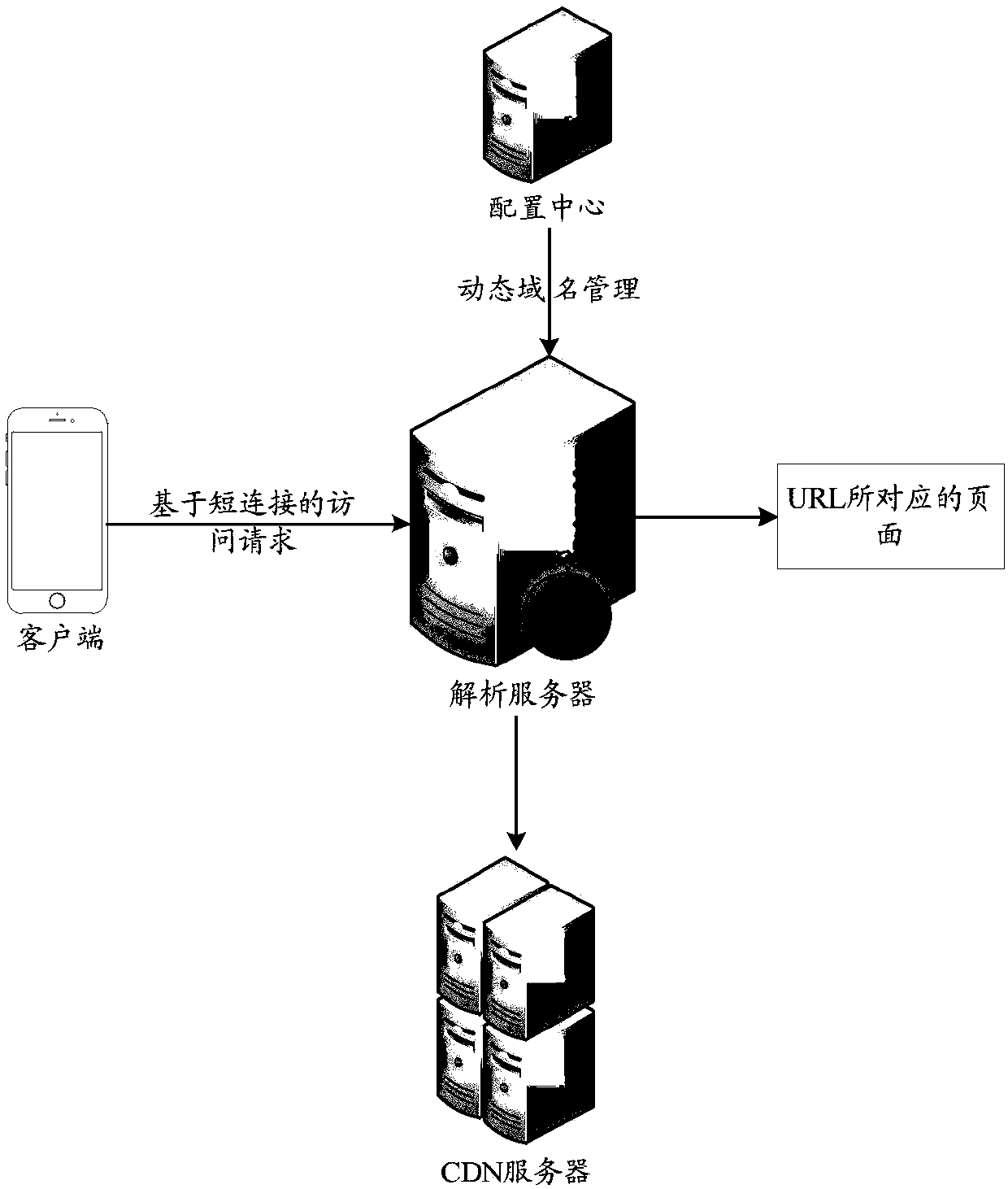

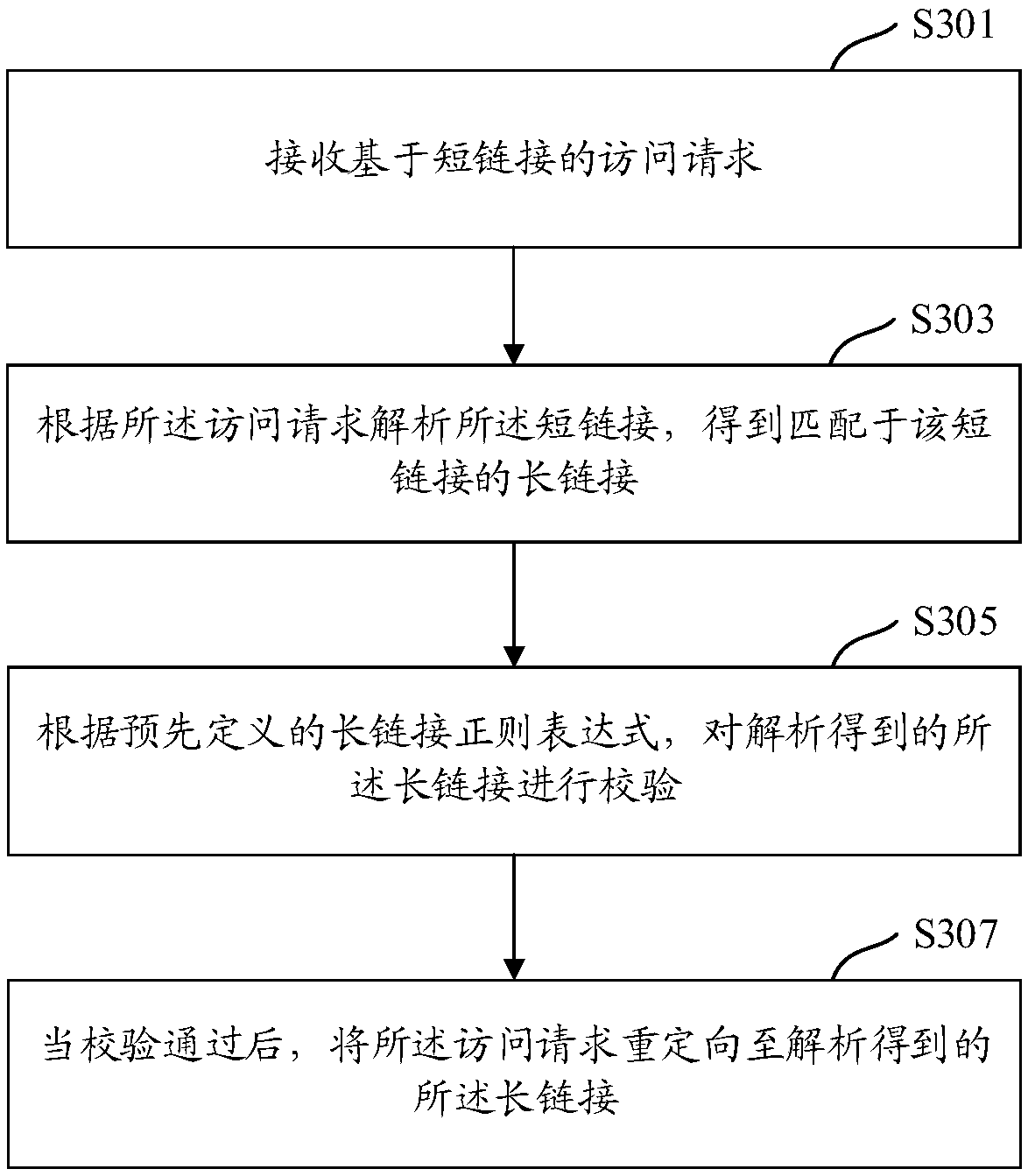

Short link resolution method, short link resolution apparatus and short link resolution device

Active | CN107733972A | Realize dynamic control | Reduce access pressure | Transmission | Special data processing applications | Domain name | Computer network

The invention discloses a short link resolution method, apparatus and device. A configuration server distributes a defined regular expression to each resolution server, and each resolution server uses it to check the long link obtained by resolving a short link. Using the regular expression enables dynamic management and control of secure domain names: when a secure domain name needs to be added, deleted or modified, the configuration server only needs to adjust the regular expression and can synchronize the updated expression to every resolution server in real time.

Owner:ADVANCED NEW TECH CO LTD
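
The check each resolution server performs can be sketched with Python's `re` and `urllib.parse` modules. `ConfigServer`, `ResolutionServer` and the example domains are hypothetical, and pushing the pattern by a direct method call stands in for the real-time synchronization described above.

```python
# Illustrative sketch; class names and domains are hypothetical.
import re
from typing import Dict, List, Optional
from urllib.parse import urlparse


class ResolutionServer:
    def __init__(self, mapping: Dict[str, str]) -> None:
        self.mapping = mapping                 # short code -> long link
        self.allowed: Optional[re.Pattern] = None

    def update_pattern(self, pattern: str) -> None:
        self.allowed = re.compile(pattern)

    def resolve(self, code: str) -> Optional[str]:
        long_link = self.mapping.get(code)
        if long_link is None or self.allowed is None:
            return None
        host = urlparse(long_link).hostname or ""
        # Only return long links whose host matches the secure-domain pattern.
        return long_link if self.allowed.fullmatch(host) else None


class ConfigServer:
    def __init__(self, servers: List[ResolutionServer]) -> None:
        self.servers = servers

    def publish(self, domains: List[str]) -> str:
        # Rebuild one regex from the secure-domain list (subdomains allowed)
        # and push it to every resolution server.
        pattern = r"(?:[\w-]+\.)*(?:" + "|".join(re.escape(d) for d in domains) + r")"
        for server in self.servers:
            server.update_pattern(pattern)
        return pattern
```

Adding or removing a secure domain then only requires calling `publish` with the new list; the resolution servers themselves need no code change.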

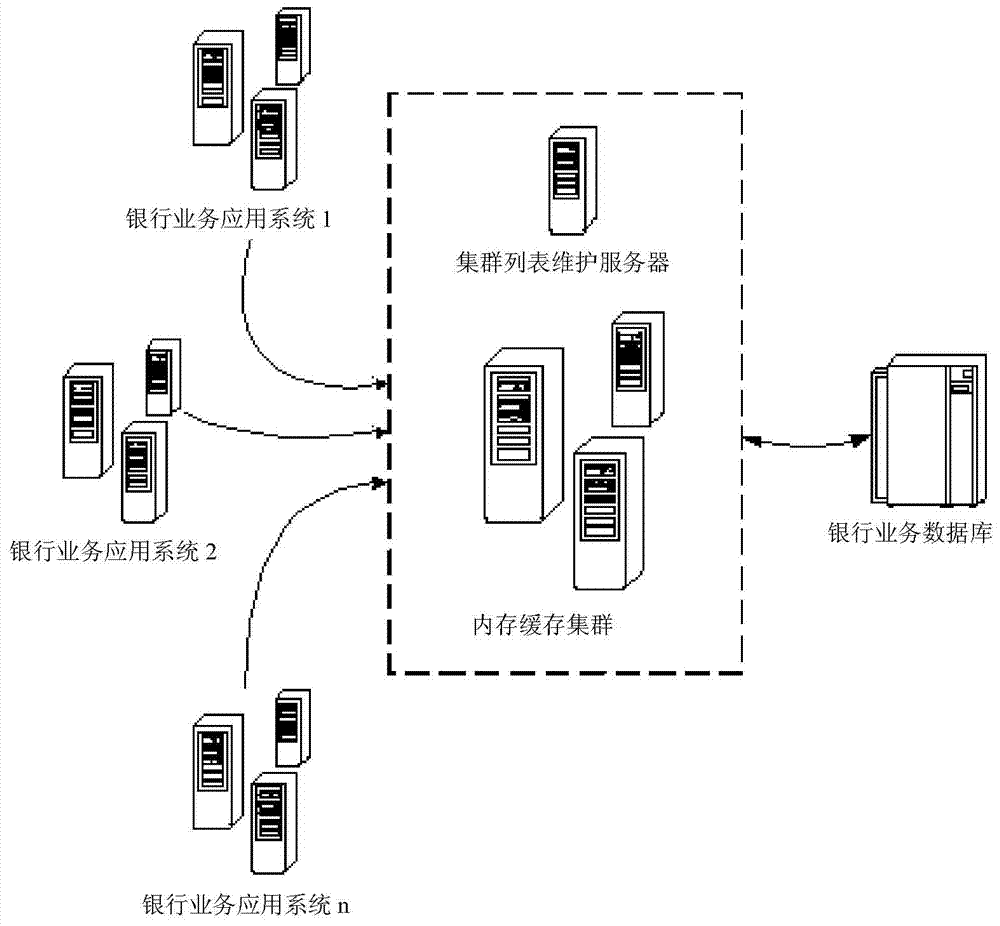

Method and system for operating bank business data memory cache

Active | CN104850509A | Processing speed | Solve the interactive response speed | Memory addressing/allocation/relocation | Special data processing applications | Access method | Cache server

A method and system for operating a bank business data memory cache are provided. The system comprises a bank business application system, a memory cache cluster and a bank business database. The memory cache cluster comprises multiple memory cache servers. The bank business database outputs bank business information data. The bank business application system is connected to the memory cache cluster, receives user operation requests, and sends access requests based on them. The memory cache cluster is connected to both the bank business database and the bank business application system; it converts the bank business information data into an unstructured in-memory data structure and stores it in the memory cache servers, distributes the address information of the memory cache servers to the bank business application system, queries the bank business information data in local application memory, and returns the result to the bank business application system. The method and system thus provide an integrated, concise and standardized data access method and improve the overall interactive response speed of a bank application system under very large-scale concurrent access.

Owner:BANK OF COMMUNICATIONS

Portal access authentication system and method

Active | CN104735078A | Relieve pressure | Reduce access pressure | Data switching networks | Network access server | Computer network

The invention relates to the field of secure WLAN access and discloses a Portal access authentication method and system. The method and system simplify the authentication process, effectively relieve the load on the Portal server, and achieve load balancing for the Portal server. A network access device locally stores user content customized by a content customization and publishing system. When a user requests content through the network access device, the device first determines whether the user has network access authority. If so, it pushes the relevant content to the user terminal. If not, the user terminal sends an HTTP authentication request to the network access device, which verifies the user's authentication information and, once the user passes authentication, grants the user terminal network access authority. The method and system are suitable for fast Portal access authentication of user terminals.

Owner:MAIPU COMM TECH CO LTD

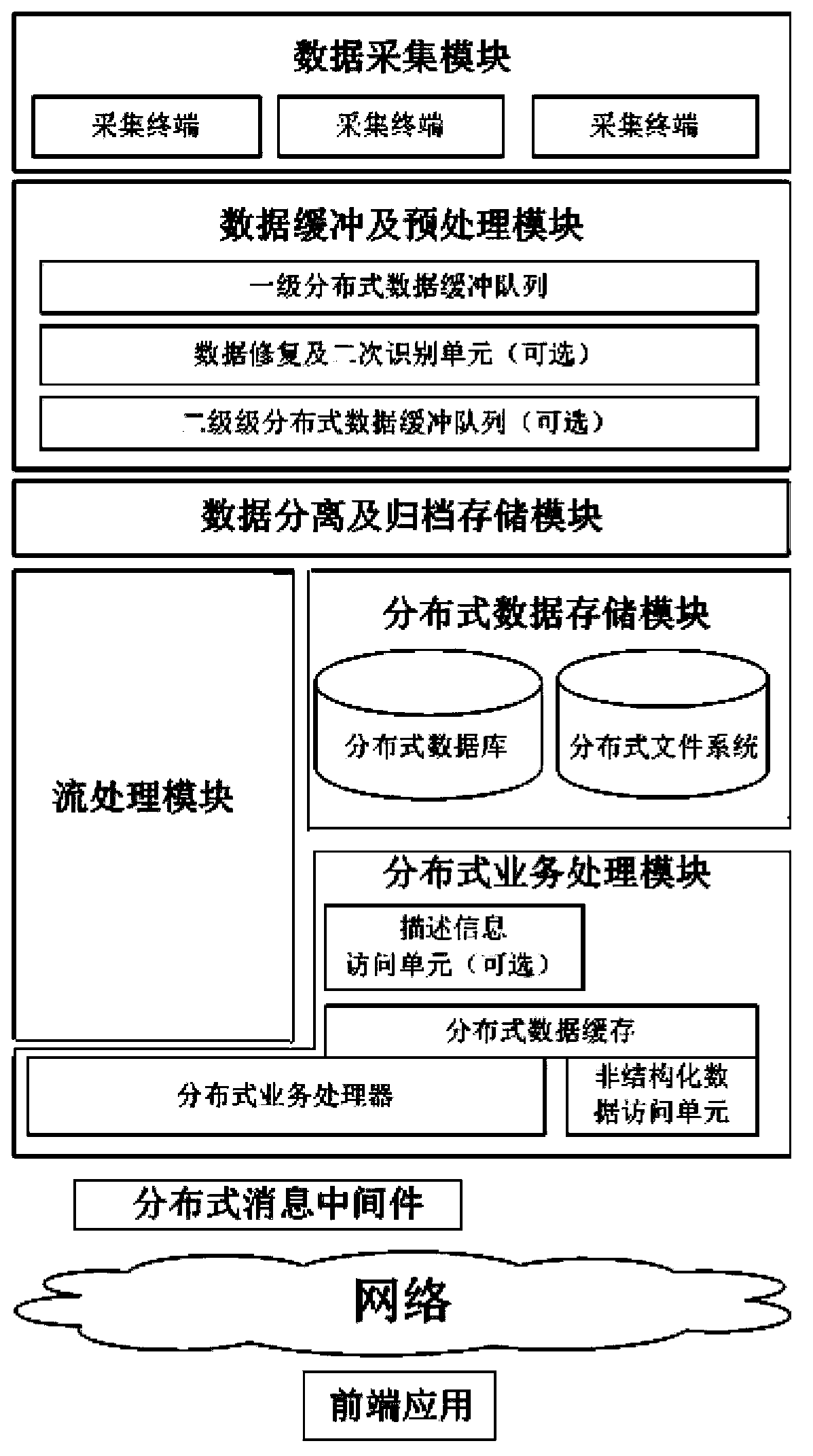

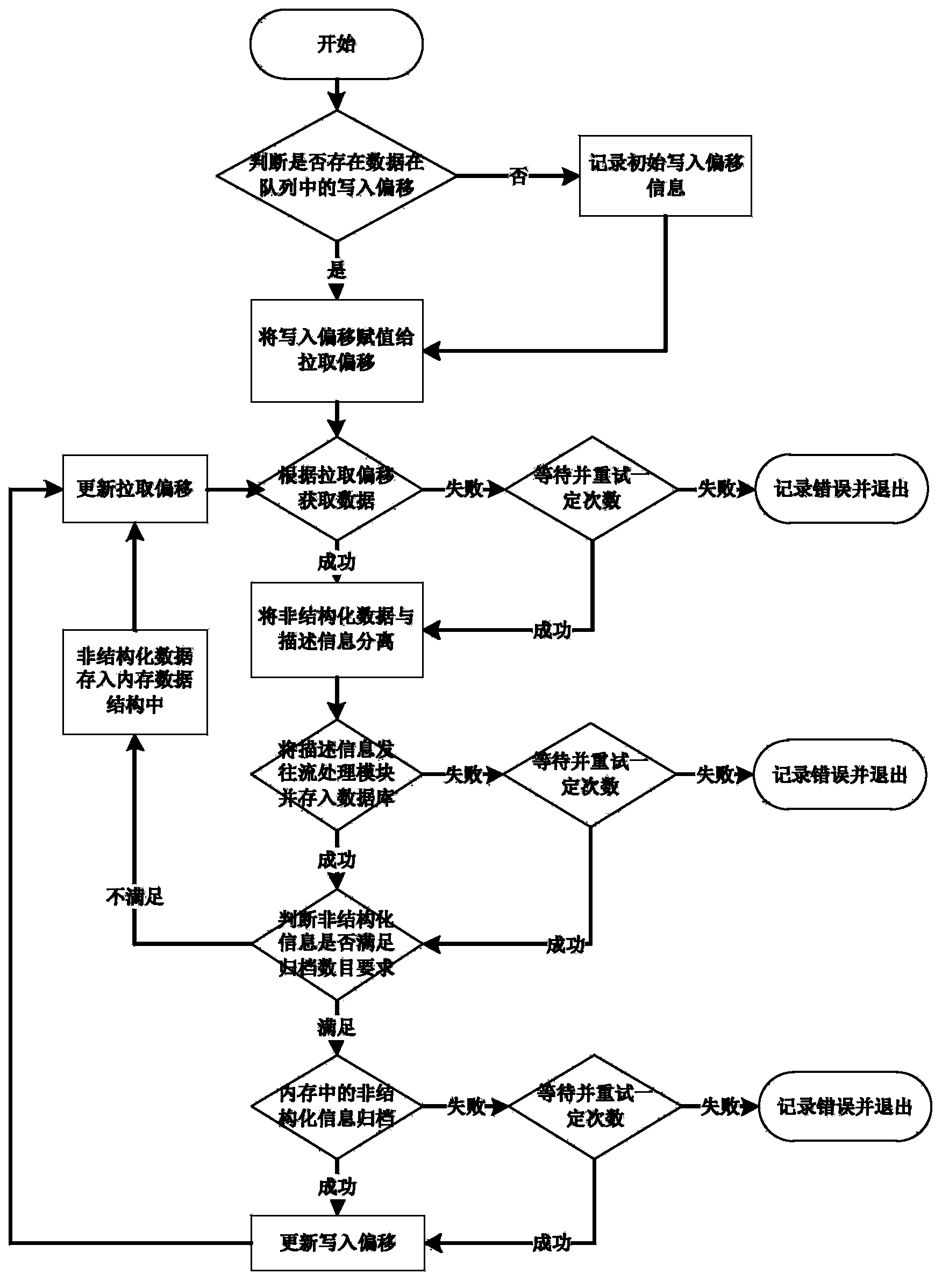

Mass-unstructured data distributed type processing structure for description information

Active | CN104216899A | Reduce access pressure | Save computing resources | Special data processing applications | Data access | Message

The invention discloses a distributed processing architecture for massive unstructured data with description information. The architecture comprises a data collection module, a data buffering and preprocessing module, a data separation and archive storage module, a stream processing module, a distributed data storage module, a distributed service processing module and distributed message-oriented middleware. The data collection module collects unstructured data and sends it to a data buffer queue. The data buffering and preprocessing module temporarily stores the data sent by the data collection module and selectively repairs it or performs secondary processing on it. The data separation and archive storage module takes data from the distributed queue of the preceding module, selectively separates the unstructured data from its description information, and forwards the separated data to, or stores it in, the succeeding module. The stream processing module monitors, compares, computes and processes newly arriving data. The distributed data storage module stores the unstructured data and the description information. The distributed service processing module comprises a service processor, a data access unit and a data buffering component. The distributed message-oriented middleware receives front-end requests for the service processor to execute selectively, and returns back-end processing results to the front end.

Owner:JINAN GRANDLAND DATA TECH

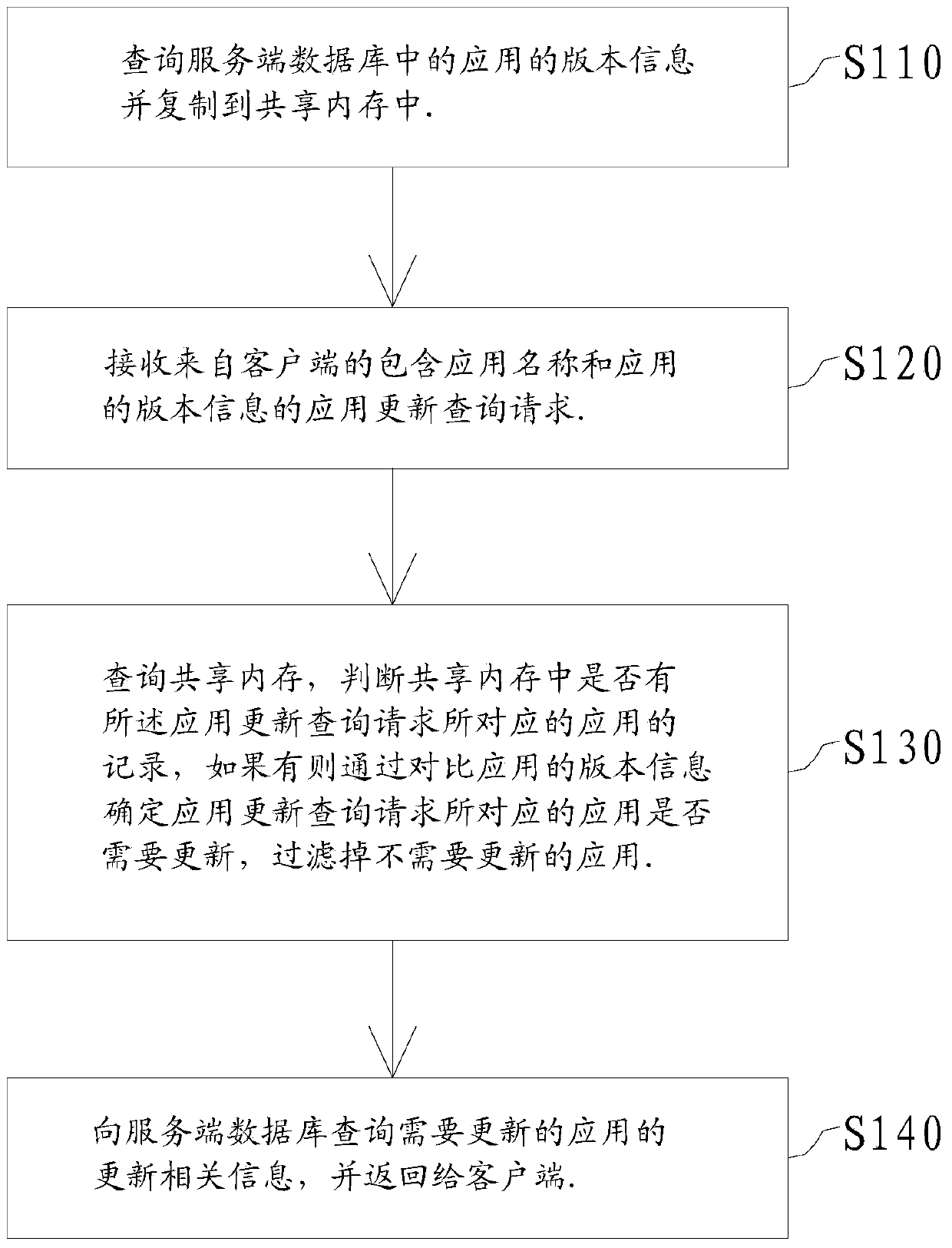

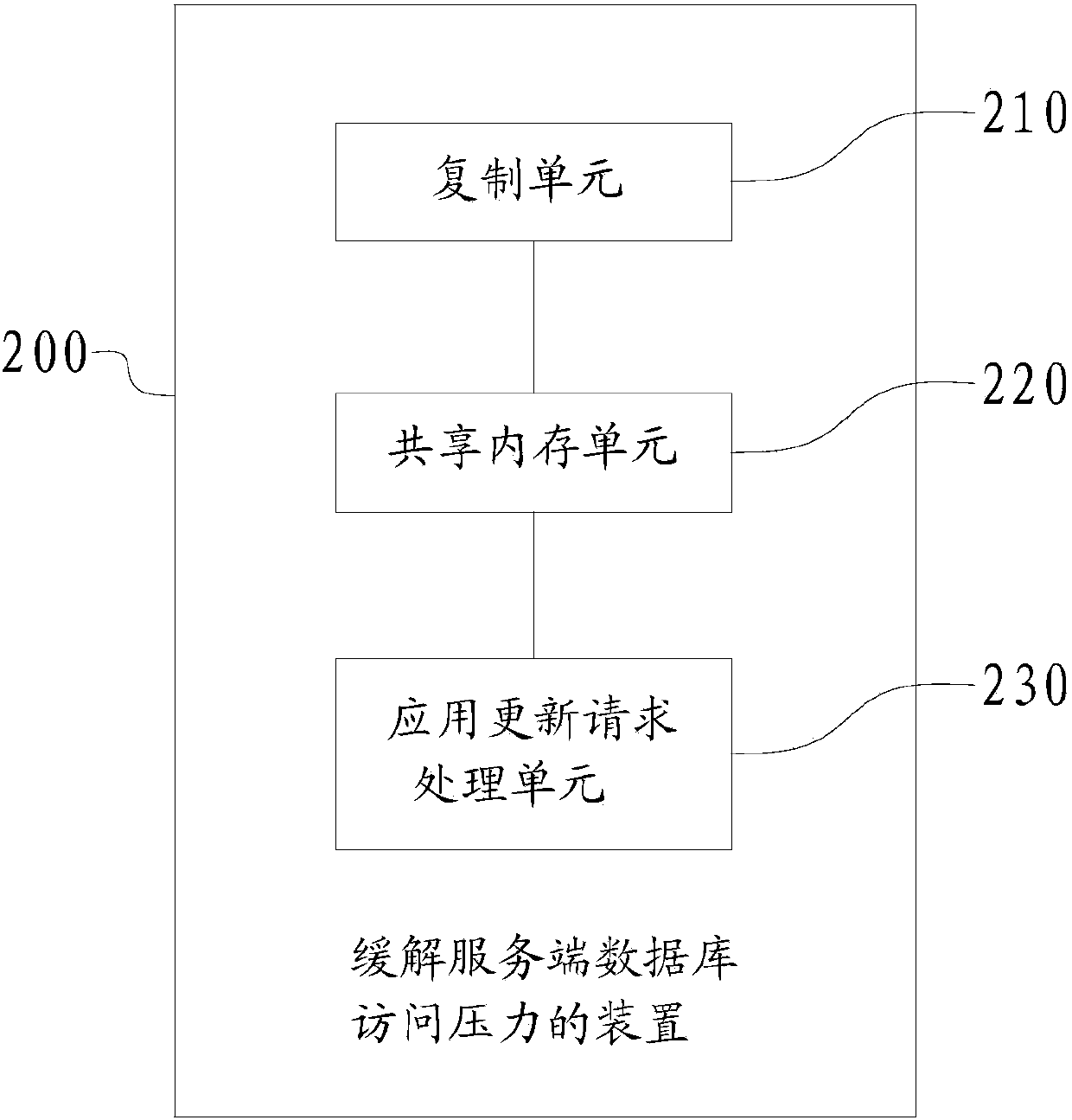

Method and device for releasing access pressure of server-side database

Active | CN103631869A | Reduce access pressure | Special data processing applications | Relevant information | Client-side

The invention discloses a method and device for relieving the access pressure on a server-side database. Version information of applications in the server-side database is queried and copied into shared memory. An application update query request from a client, containing the application's name and version information, is received. The shared memory is queried to determine whether it holds a record of the corresponding application; if it does, the version information is compared to determine whether the application needs updating, and applications that do not need updating are filtered out. Update information for applications that do need updating is queried in the server-side database and returned to the client. Because the shared memory sits in front of the server-side database and filters out the query requests of applications that do not need updating, only valid requests reach the server-side database, which greatly reduces its access pressure.

Owner:BEIJING QIHOO TECH CO LTD
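
The filtering step can be sketched with a plain dict standing in for the shared-memory copy of the version table. `UpdateFilter`, the integer version numbers and the `db_lookup` callback are assumptions; the abstract does not specify how versions are compared, and here applications with no shared-memory record are passed through to the database.

```python
# Illustrative sketch; UpdateFilter and db_lookup are hypothetical names.
from typing import Callable, Dict, List, Optional, Tuple


class UpdateFilter:
    def __init__(self, db_versions: Dict[str, int],
                 db_lookup: Callable[[str], Dict[str, object]]) -> None:
        # Copy the version information out of the database once ("shared memory").
        self.shared: Dict[str, int] = dict(db_versions)
        self.db_lookup = db_lookup   # query for full update information
        self.db_hits = 0

    def check(self, requests: List[Tuple[str, int]]) -> List[Dict[str, object]]:
        """requests: (app name, client version) pairs from one update query."""
        results = []
        for name, client_version in requests:
            latest: Optional[int] = self.shared.get(name)
            if latest is not None and client_version >= latest:
                continue                       # up to date: filtered, no DB access
            self.db_hits += 1
            results.append(self.db_lookup(name))
        return results
```

Only the apps that actually need an update cost a database query, which is the source of the reduced access pressure.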

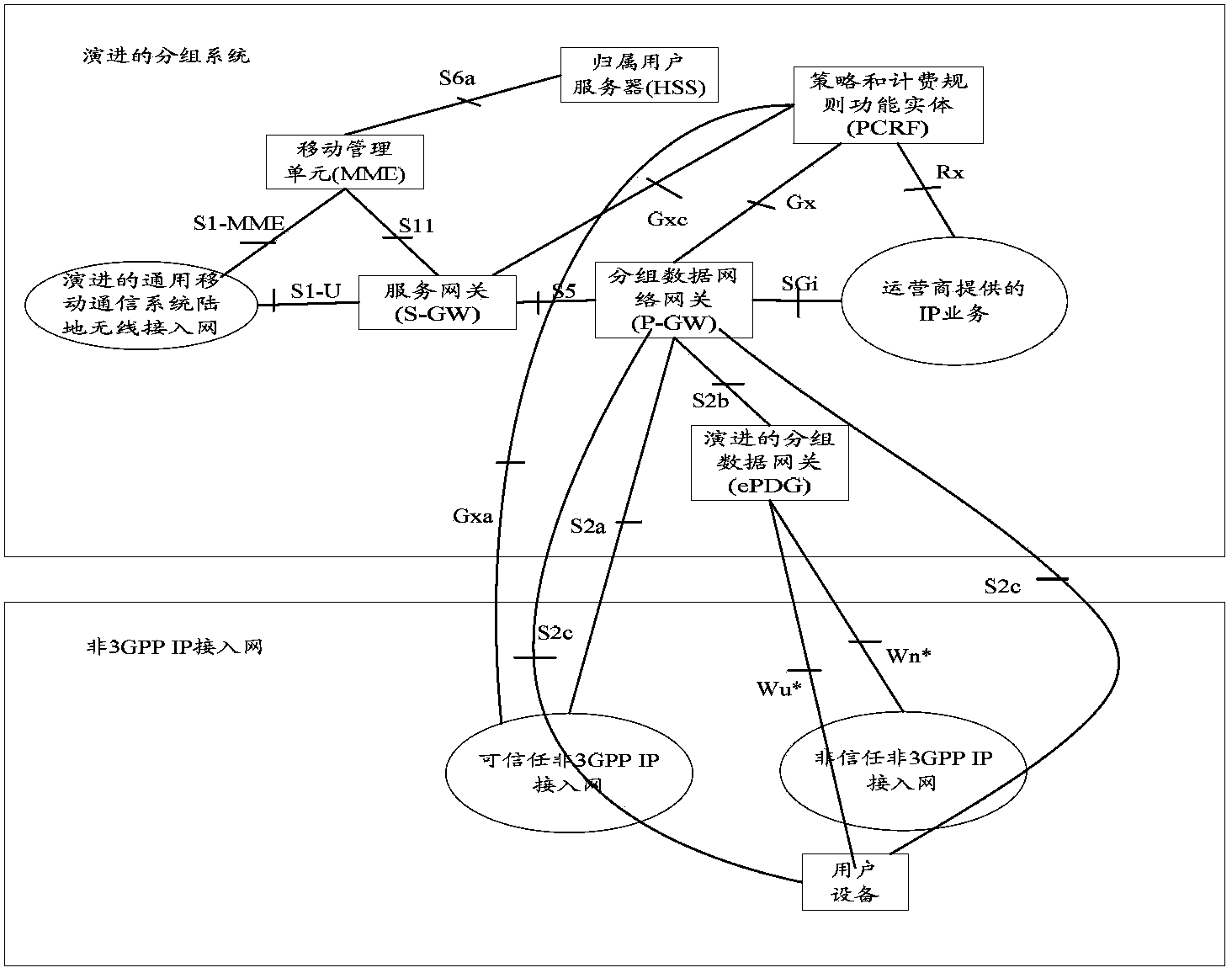

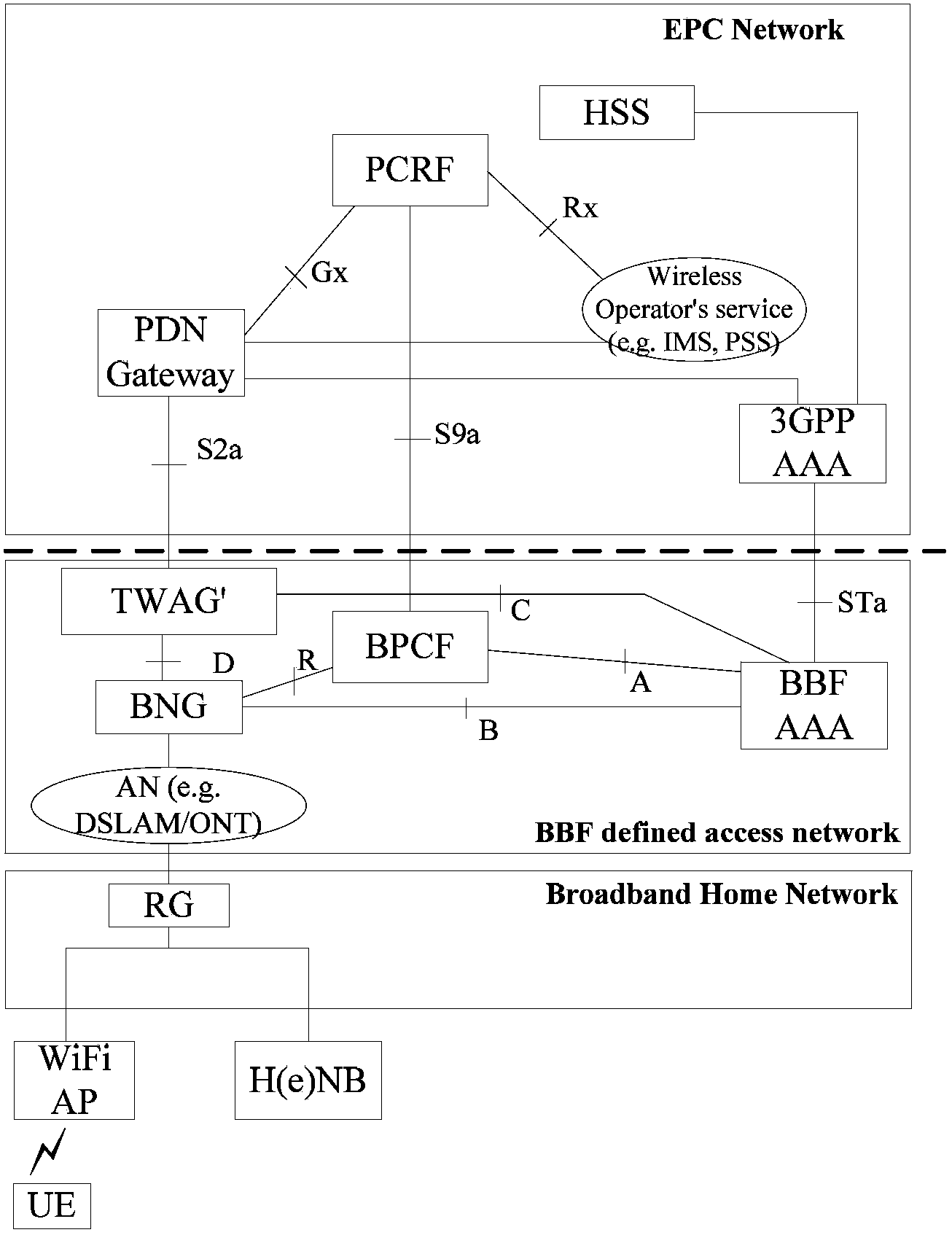

Route selection method and functional network element

Disclosed is a routing selection method applied to a converged network of a WLAN access network and a 3GPP core network, in which a functional network element is arranged between the WLAN access network and the 3GPP core network. When a UE accesses through the WLAN access network, the functional network element forwards the authentication request message sent by the UE to the AAA and forwards the authentication response message sent by the AAA to an access network element of the WLAN access network; and/or the functional network element receives an IP address request message sent by the access network element of the WLAN access network. A functional network element is also disclosed. The invention can perform routing selection for 3GPP UEs according to the type of the accessing UE and the service type, thereby reducing the access pressure on the 3GPP network.

Owner:ZTE CORP

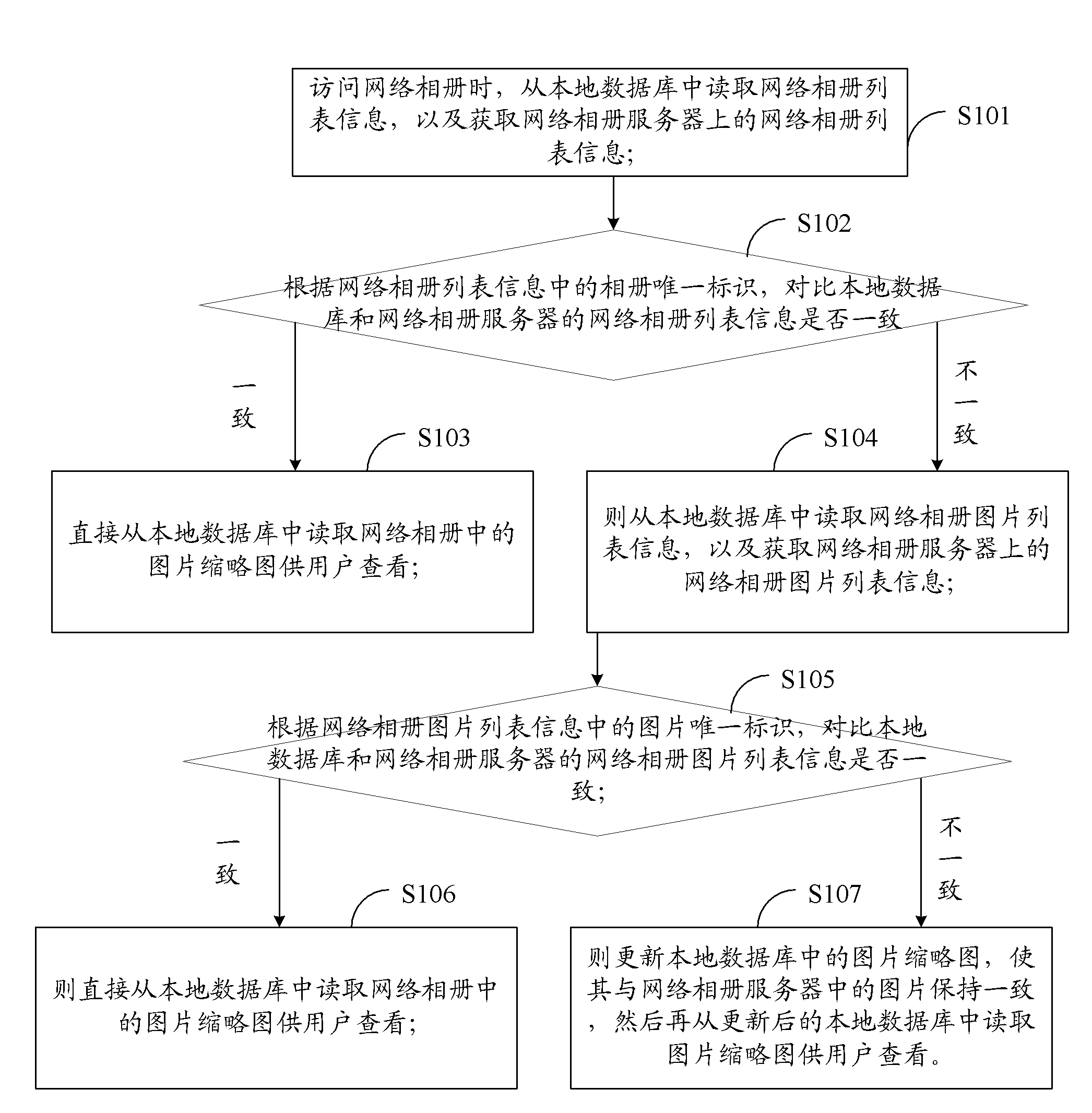

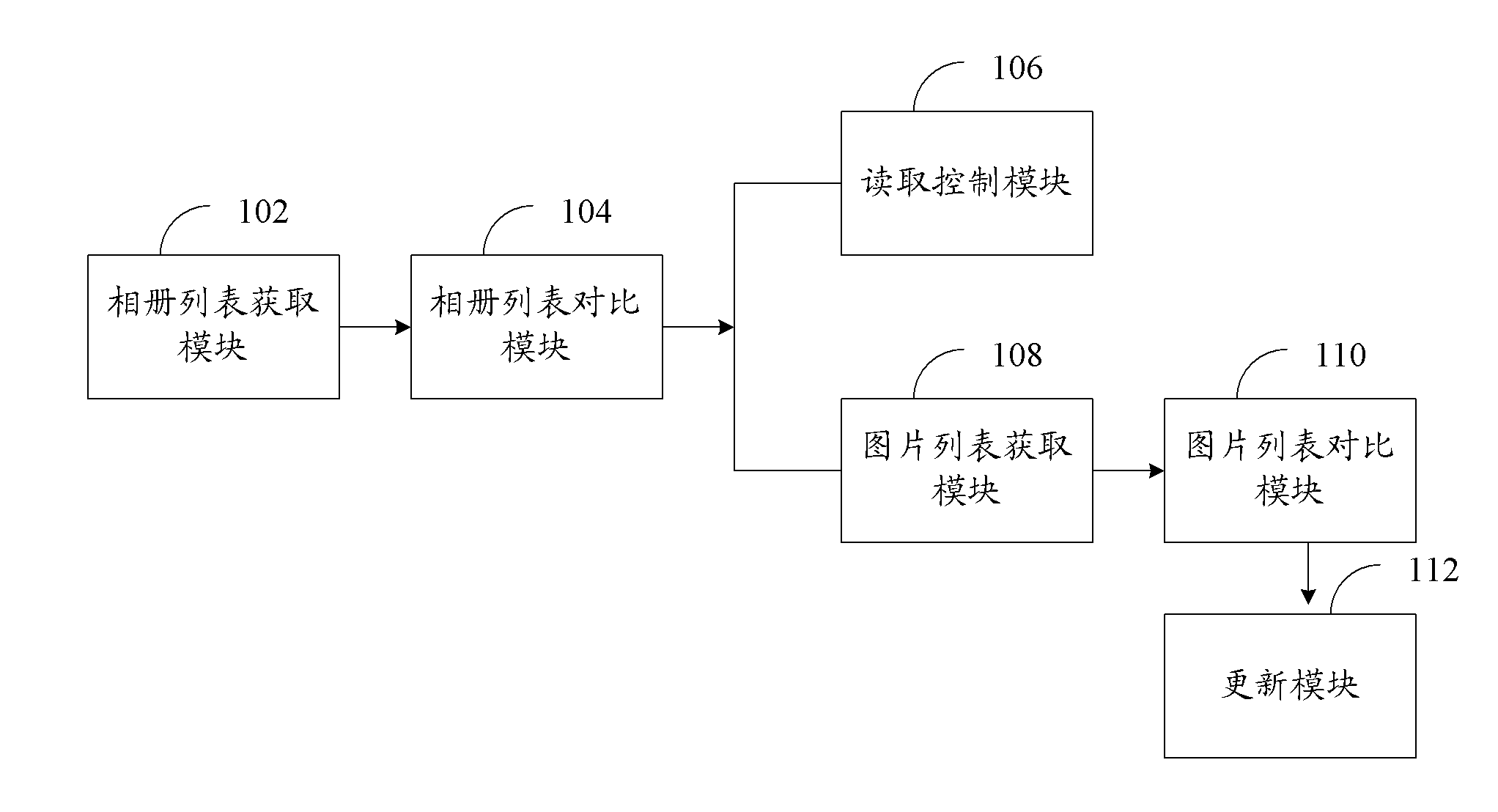

Exhibition method and system of network album

Active | CN102760131A | Reduce access pressure | High speed | Transmission | Special data processing applications | Thumbnail | Data mining

The invention belongs to the field of communication technology and provides a method and system for displaying a network album. When a network album is accessed, the method uses the album's unique identifier and each picture's unique identifier to compare whether the picture list information in a local database matches that on the network album server. If it matches, the album's picture thumbnails are read directly from the local database for the user to view; if not, the thumbnails in the local database are first updated to match the pictures on the network album server and then read from the updated local database. The invention shortens the time from the user clicking a network album to the album being displayed in the UI (user interface). When the comparison shows that the pictures have not changed between the local database and the network album server, the locally stored pictures can be displayed directly, which greatly reduces the access pressure on the network album server.

Owner:TENCENT TECH (SHENZHEN) CO LTD +1
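
The compare-then-refresh logic can be sketched as below. It assumes the picture list information is a mapping from picture unique identifier to a version tag and that only changed thumbnails are re-downloaded; `sync_album`, `fetch_thumbnail` and these data shapes are hypothetical. The list information itself still comes from the server, but thumbnail downloads happen only when something changed.

```python
# Illustrative sketch; sync_album, fetch_thumbnail and the data shapes are hypothetical.
from typing import Callable, Dict, List, Tuple

PictureList = Dict[str, str]   # picture unique id -> version tag (assumed shape)


def sync_album(album_id: str,
               local_lists: Dict[str, PictureList],
               local_thumbs: Dict[Tuple[str, str], bytes],
               server_list: PictureList,
               fetch_thumbnail: Callable[[str, str], bytes]) -> List[bytes]:
    """Return thumbnails to display, downloading only when the lists differ."""
    local_list = local_lists.get(album_id, {})
    if local_list != server_list:
        # Bring the local database in line with the album server.
        for pic_id, version in server_list.items():
            if local_list.get(pic_id) != version:
                local_thumbs[(album_id, pic_id)] = fetch_thumbnail(album_id, pic_id)
        for pic_id in set(local_list) - set(server_list):
            local_thumbs.pop((album_id, pic_id), None)   # picture deleted on server
        local_lists[album_id] = dict(server_list)
    return [local_thumbs[(album_id, pic_id)] for pic_id in sorted(server_list)]
```

On an unchanged album the function returns straight from the local store, so repeated views cost no thumbnail traffic.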

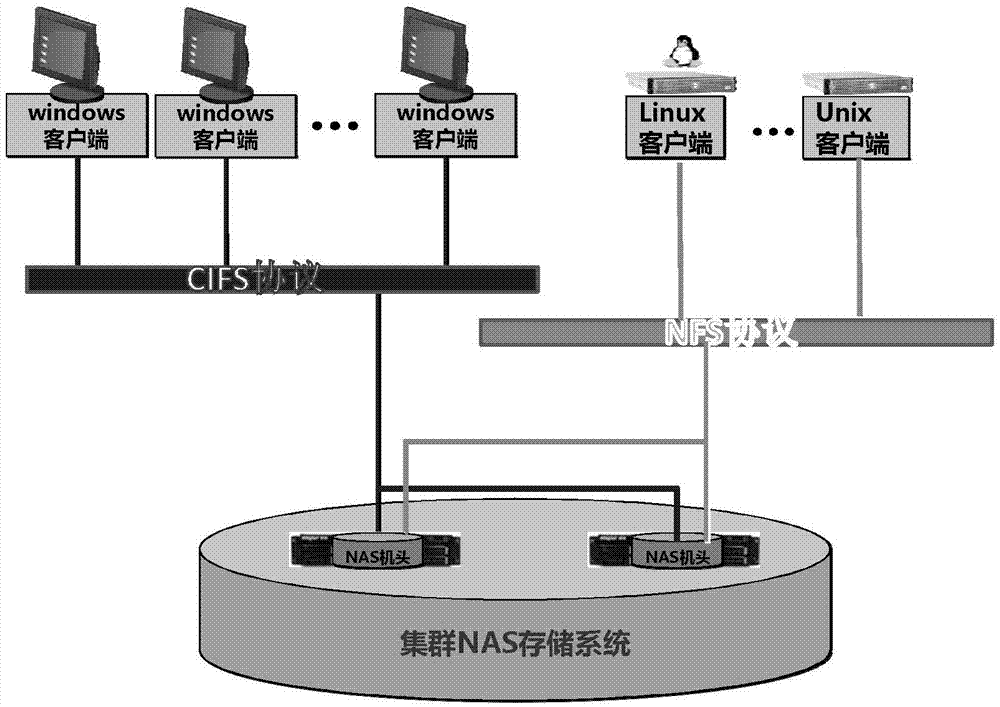

Shared access method of cluster NAS (network attached storage) system

Inactive | CN103685579A | Make sure to modify | Reduce access pressure | Transmission | Access method | Network-attached storage

The invention provides a shared access method for a cluster NAS (network attached storage) system. Different file read, write and operation permissions are set for users of different cluster storage shared access protocols, so that a given file can be modified only by users of one shared access protocol. This avoids the access conflicts that arise when different cluster storage shared access protocols access the same storage directory.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

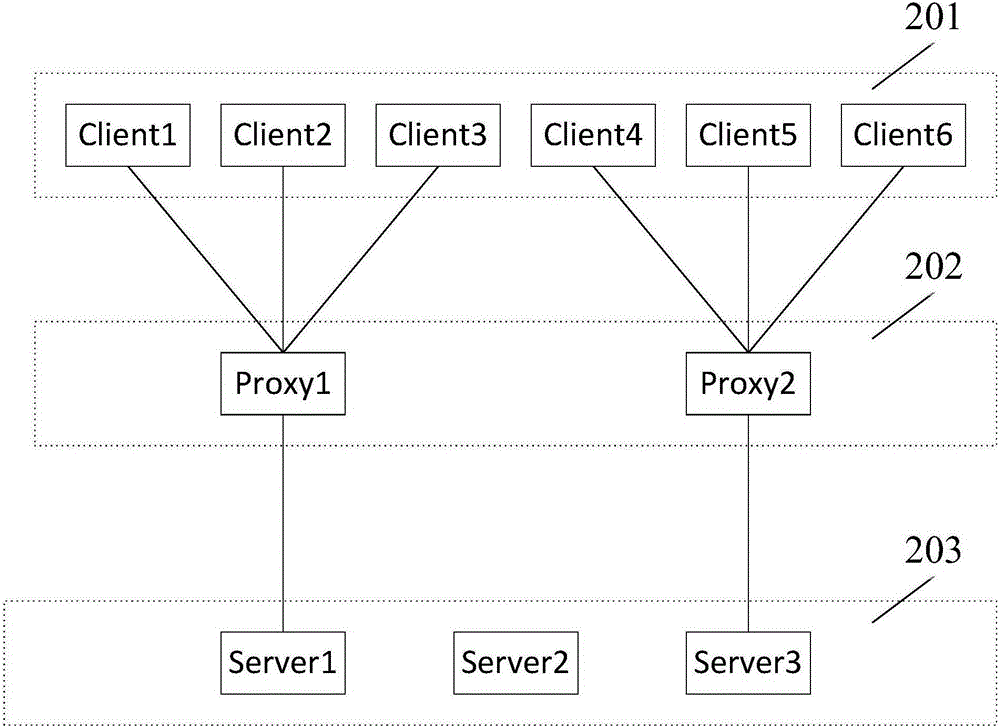

Distributed lock service realization method and device for distributed system

The invention discloses a method and device for implementing a distributed lock service in a distributed system. A session registration request sent by a client is received through a preset agent node and forwarded to the server over a pre-established connection between the agent node and the server. The session identifier that the server returns over that connection for the registration request is received and sent to the corresponding client. A lock operation request, constructed by the client on the basis of the session identifier, is received through the agent node and sent to the server over the connection; the lock operation request is tagged with the session identifier. The method reduces the number of connections to the server, relieves the server's access burden, and improves the performance of the distributed lock service of the distributed system.

Owner:ALIBABA GRP HLDG LTD
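
The agent node's role, multiplexing many client sessions over one pre-established server connection and tagging each lock request with its session identifier, can be modeled in-process. `LockServer`, `AgentNode` and the session-id format are hypothetical; real network transport and lock lease timeouts are omitted.

```python
# Illustrative in-process model; class names and id format are hypothetical.
import itertools
from typing import Dict


class LockServer:
    """Lock service that counts how many connections it must serve."""

    def __init__(self) -> None:
        self.connections = 0
        self._ids = itertools.count(1)
        self.owners: Dict[str, str] = {}     # lock name -> session id

    def connect(self) -> "LockServer":
        self.connections += 1
        return self

    def register_session(self) -> str:
        return f"sess-{next(self._ids)}"

    def lock(self, session_id: str, name: str) -> bool:
        # Grant the lock if free; otherwise succeed only for the current owner.
        return self.owners.setdefault(name, session_id) == session_id

    def unlock(self, session_id: str, name: str) -> bool:
        if self.owners.get(name) == session_id:
            del self.owners[name]
            return True
        return False


class AgentNode:
    """Forwards client requests to the server over one shared connection."""

    def __init__(self, server: LockServer) -> None:
        self.conn = server.connect()          # pre-established connection
        self.sessions: Dict[str, str] = {}    # client id -> session id

    def register(self, client_id: str) -> str:
        session_id = self.conn.register_session()
        self.sessions[client_id] = session_id
        return session_id                     # handed back to the client

    def lock(self, session_id: str, name: str) -> bool:
        # The lock operation request is tagged with the session identifier.
        return self.conn.lock(session_id, name)

    def unlock(self, session_id: str, name: str) -> bool:
        return self.conn.unlock(session_id, name)
```

However many clients register through the agent, the server sees a single connection, which is the connection-count reduction the abstract claims.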

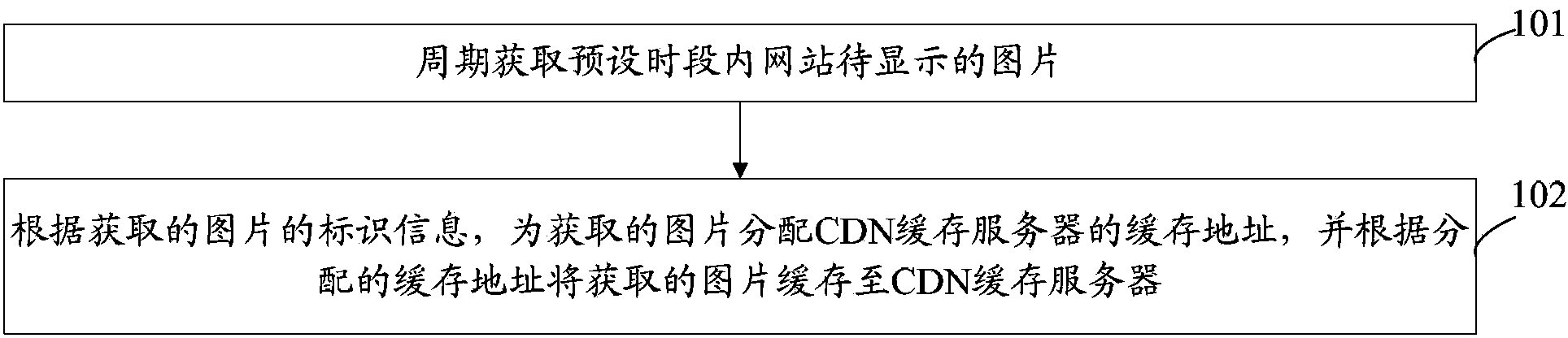

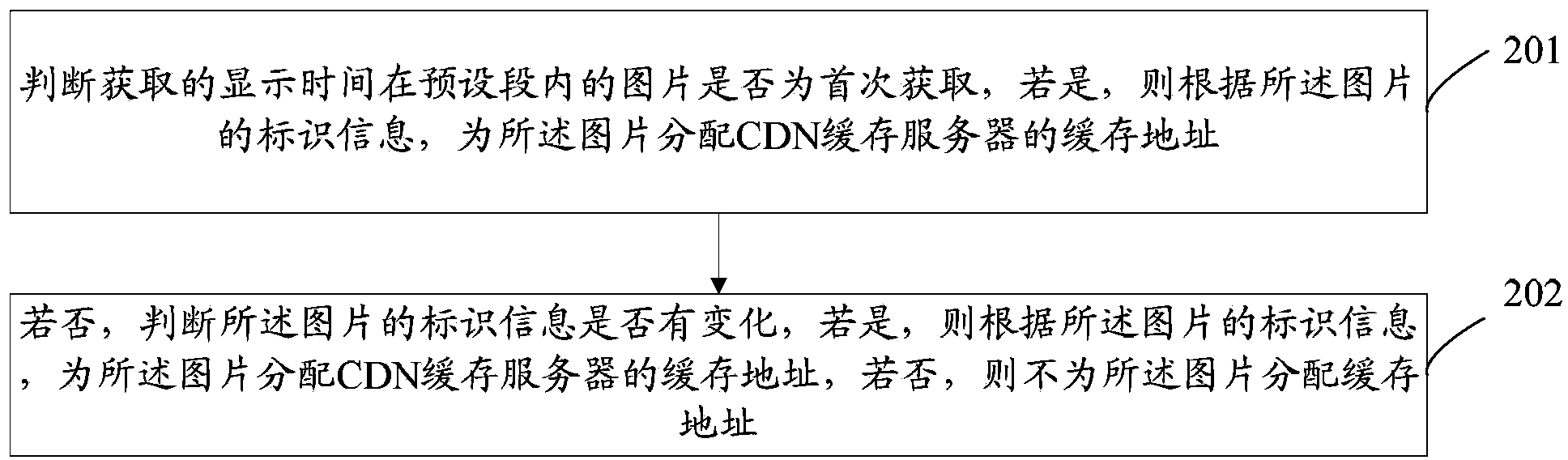

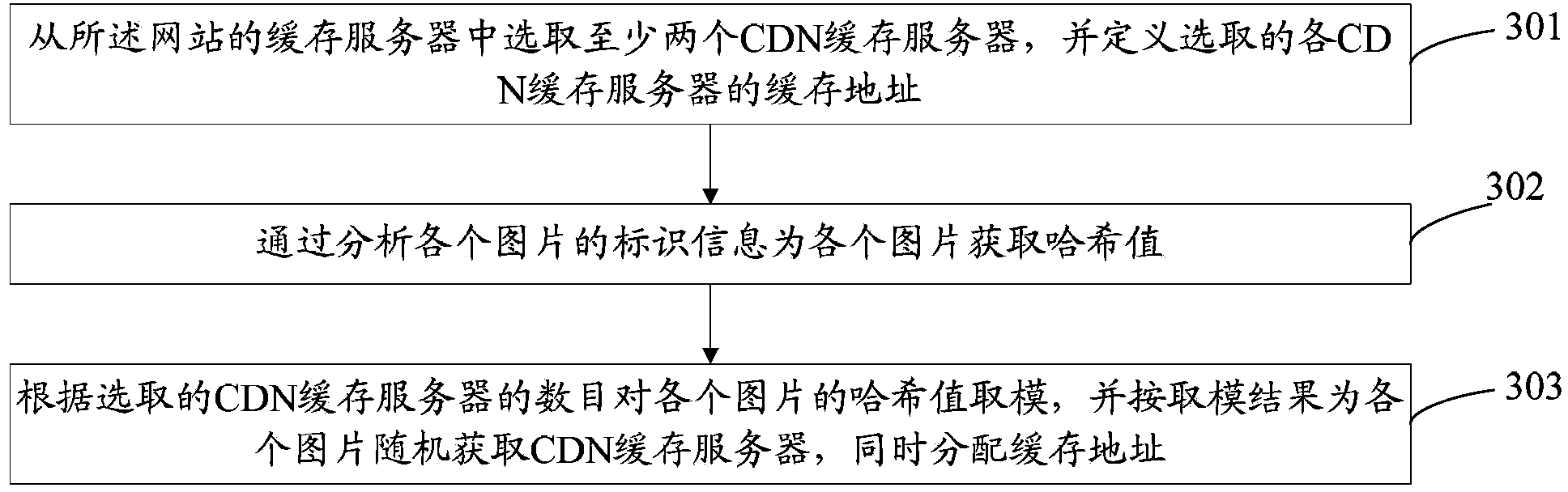

Picture caching method and picture caching system

Active | CN103412827A | Reduce access pressure | Avoid "back to source" pressure | Memory addressing/allocation/relocation | Special data processing applications | Content delivery network | Cache server

The invention discloses a picture caching method and system. The method periodically acquires the pictures a website will display within a preset period, assigns each acquired picture a caching address on a CDN (content delivery network) caching server according to the picture's identification information, and caches the pictures on the CDN caching server at the assigned addresses. Because the pictures to be displayed within the preset period are acquired automatically and periodically and cached on the CDN caching server, caching control becomes more flexible and the website's content can be cached in advance. When a large number of users suddenly visit the website, its access pressure is effectively reduced and "back-to-source" pressure on the picture source website is effectively avoided.

Owner:GUANGZHOU PINWEI SOFTWARE
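
The address-assignment step can be sketched as a stable hash of each picture's identifier onto the CDN cache nodes, run periodically over the pictures scheduled for the coming period. Hashing with `hashlib.md5` modulo the node count, the `schedule` data shape, the `/pics/` path layout and the names `assign_cache_address` and `prewarm` are all assumptions; the abstract does not say how identifiers map to addresses.

```python
# Illustrative sketch; function names, path layout and hashing scheme are assumptions.
import hashlib
from datetime import datetime, timedelta
from typing import Dict, List, Tuple


def assign_cache_address(picture_id: str, cdn_nodes: List[str]) -> str:
    """Map a picture identifier to a CDN cache address deterministically."""
    digest = hashlib.md5(picture_id.encode("utf-8")).hexdigest()
    node = cdn_nodes[int(digest, 16) % len(cdn_nodes)]
    return f"{node}/pics/{picture_id}"


def prewarm(schedule: List[Tuple[str, datetime]], now: datetime,
            period: timedelta, cdn_nodes: List[str]) -> Dict[str, str]:
    """Select pictures shown in [now, now + period) and assign cache addresses.

    schedule: (picture id, display time) pairs taken from the website.
    The caller would then upload each picture to its assigned address.
    """
    window_end = now + period
    return {
        pic: assign_cache_address(pic, cdn_nodes)
        for pic, show_at in schedule
        if now <= show_at < window_end
    }
```

Modulo hashing reassigns most pictures when a node is added or removed; consistent hashing is a common alternative if the node set changes often.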

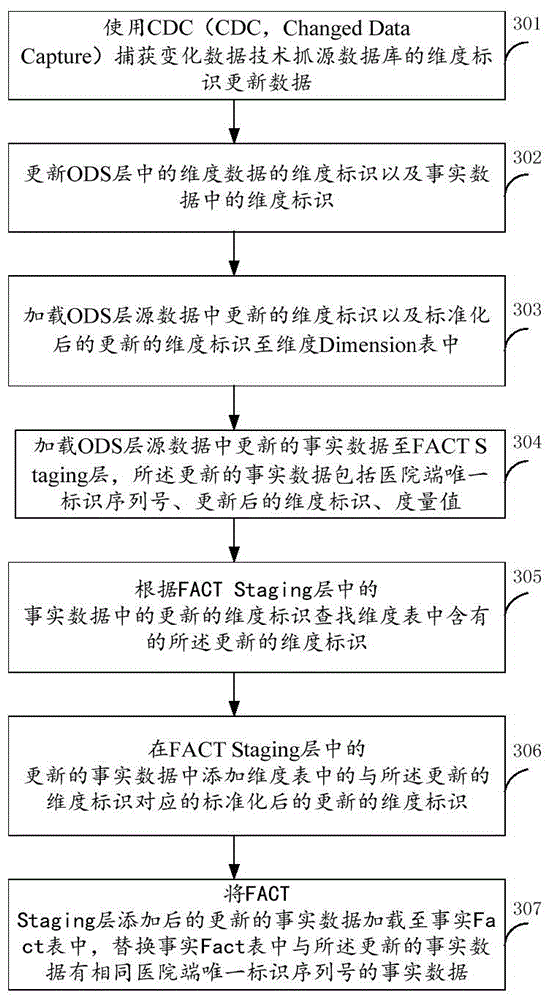

Data warehouse based medical data integration method and system

Active | CN104462082A | Reduce access pressure | Improve efficiency | Special data processing applications | Source Data Verification | Operational data store

The invention belongs to the field of medical data technology and provides a data-warehouse-based medical data integration method and system. Source data from the hospital is acquired, stored in the ODS (operational data store) layer of a data warehouse and standardized; the standardized data and the source data are then combined and loaded into the data warehouse's dimension tables and fact tables, which relieves the access pressure on the hospital server. Meanwhile, preset parameter values replace abnormal data during loading, and when the dimension data of the source data changes, the loaded dimension data is updated automatically. Verification updates are thus performed automatically when data is abnormal, and abnormal data can be processed in a single-node manner without batch reprocessing, which improves processing efficiency.

Owner:SHENZHEN UNITED IMAGING HEALTHCARE DATA SERVICE CO LTD

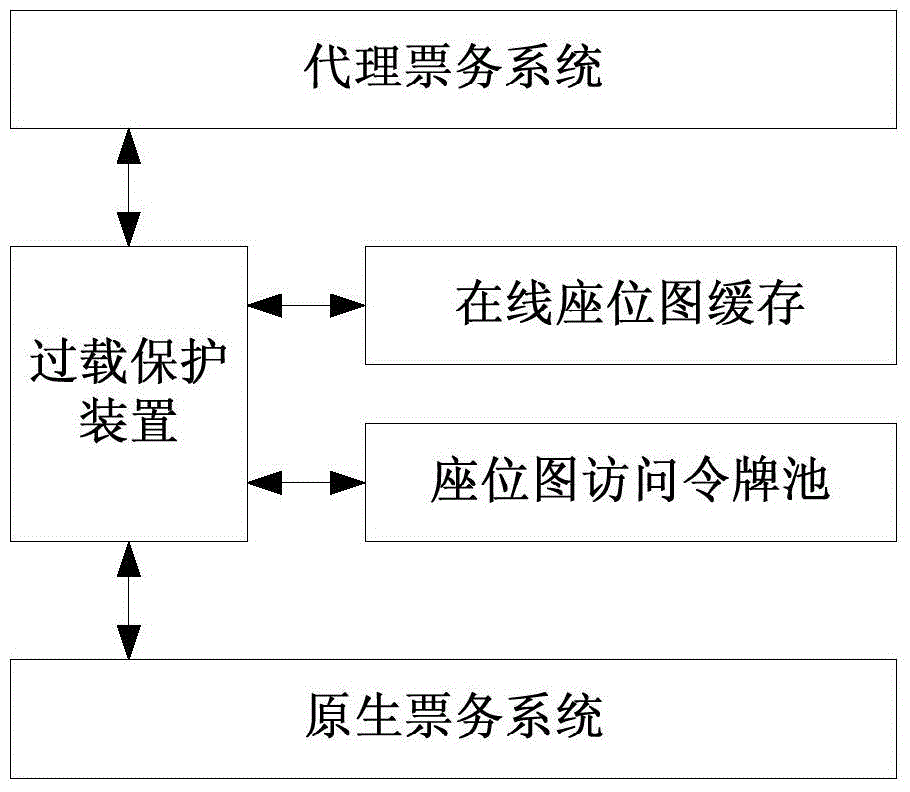

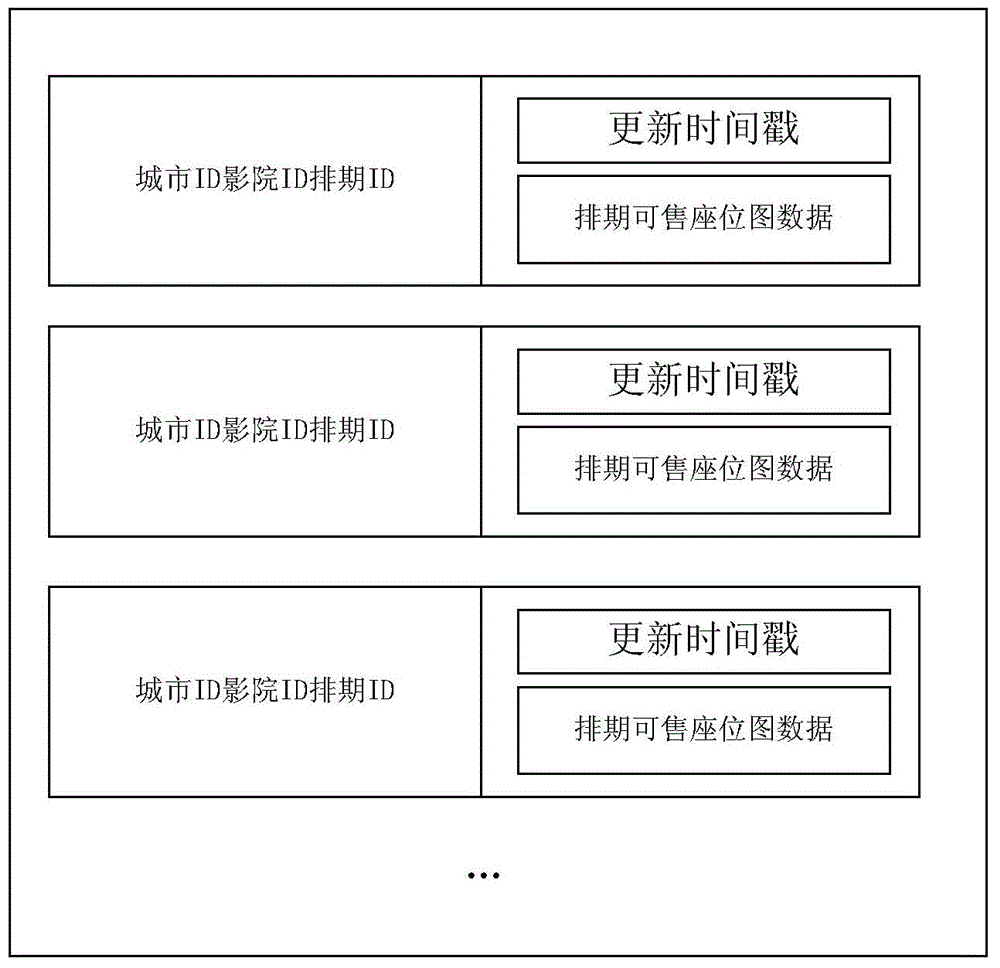

On-line seat-picking method and system, and overload protection device

The invention relates to an online seat-picking method and system and an overload protection device. The method comprises: monitoring the connection requests that each ticketing system sends to the same original ticketing system; determining whether the number of connection requests within a set time exceeds a preset threshold; if it does, intercepting the connection requests and entering overload protection mode, otherwise forwarding the connection requests to the original ticketing system; in response to interception, checking whether the required seat map exists in the online seat map cache; if it does, determining from the seat map's survival time whether it is still usable, and if so, returning the data to the corresponding user; if the required seat map is unusable or not cached, applying for a token to access the original ticketing system; and, once the token is obtained, fetching the required seat map from the original ticketing system and selecting seats according to it. The invention reduces the access pressure on the external original ticketing system under high concurrency.

Owner:CHINA TELECOM CORP LTD
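
The control flow above combines a request-rate threshold, a seat-map cache with a survival time, and a token gate in front of the original ticketing system. The sketch below is one possible reading: the sliding window, the semaphore used as the token pool, and all names (`OverloadGuard`, `fetch_seat_map`) are assumptions, and the clock is injectable so the behavior can be tested.

```python
# Illustrative sketch; OverloadGuard, fetch_seat_map and the token pool are assumptions.
import threading
import time
from collections import deque
from typing import Callable, Deque, Dict, Optional, Tuple


class OverloadGuard:
    def __init__(self, fetch_seat_map: Callable[[str], dict], threshold: int,
                 window: float, seat_map_ttl: float, tokens: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.fetch_seat_map = fetch_seat_map   # call into the original ticketing system
        self.threshold = threshold             # max connection requests per window
        self.window = window
        self.ttl = seat_map_ttl                # survival time of a cached seat map
        self.tokens = threading.BoundedSemaphore(tokens)
        self.clock = clock
        self.recent: Deque[float] = deque()
        self.cache: Dict[str, Tuple[dict, float]] = {}   # show id -> (map, stored at)

    def _overloaded(self) -> bool:
        # Count requests in the sliding window, including this one.
        now = self.clock()
        self.recent.append(now)
        while self.recent and now - self.recent[0] > self.window:
            self.recent.popleft()
        return len(self.recent) > self.threshold

    def get_seat_map(self, show_id: str) -> Optional[dict]:
        if not self._overloaded():
            return self.fetch_seat_map(show_id)        # normal pass-through
        # Overload protection mode: try the seat-map cache first.
        cached = self.cache.get(show_id)
        if cached and self.clock() - cached[1] <= self.ttl:
            return cached[0]
        if not self.tokens.acquire(blocking=False):
            return None                                # no token available: reject
        try:
            seat_map = self.fetch_seat_map(show_id)
            self.cache[show_id] = (seat_map, self.clock())
            return seat_map
        finally:
            self.tokens.release()
```

The token pool caps how many calls can reach the external system concurrently while in protection mode; everything else is answered from the cache or rejected.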

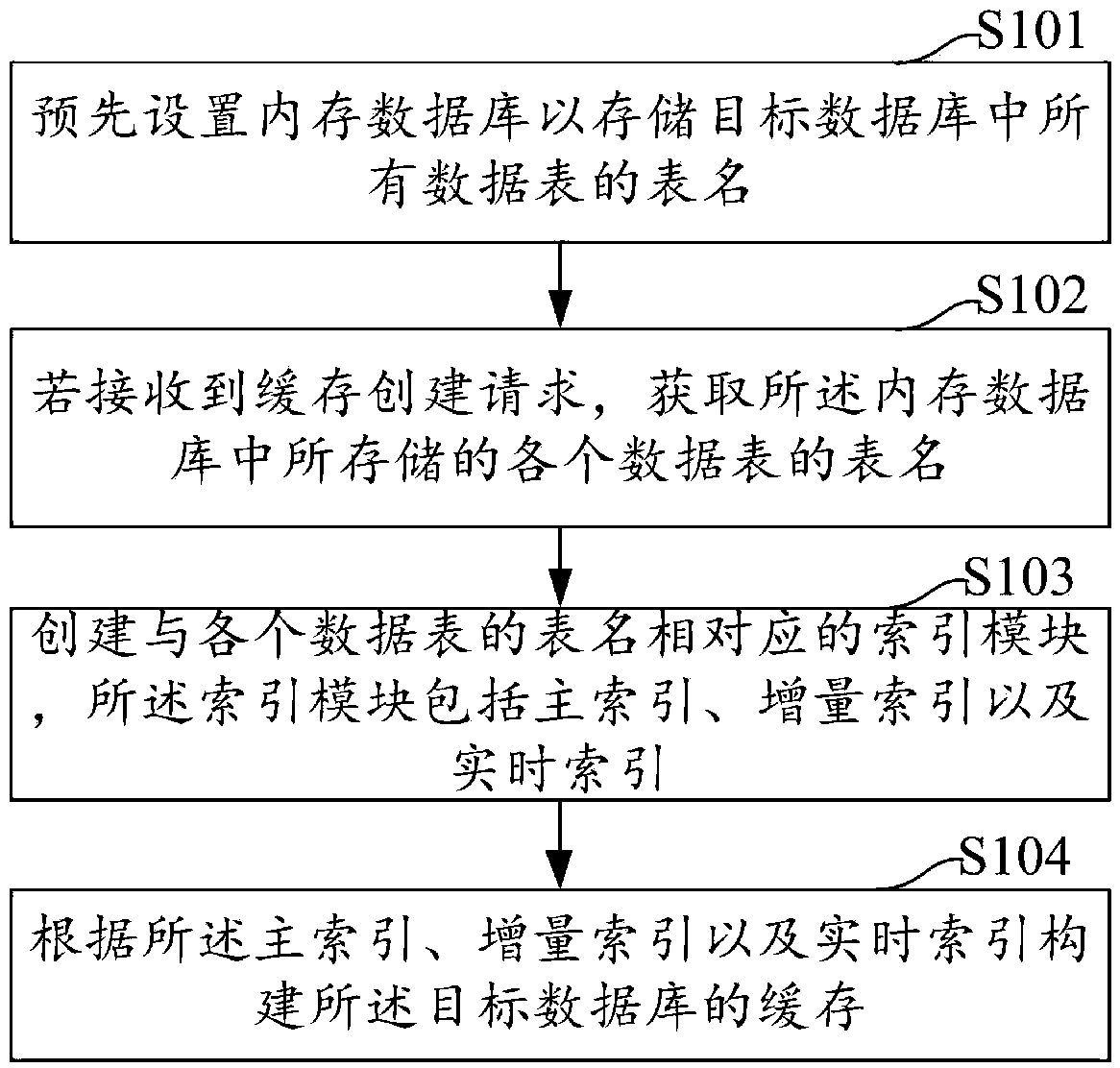

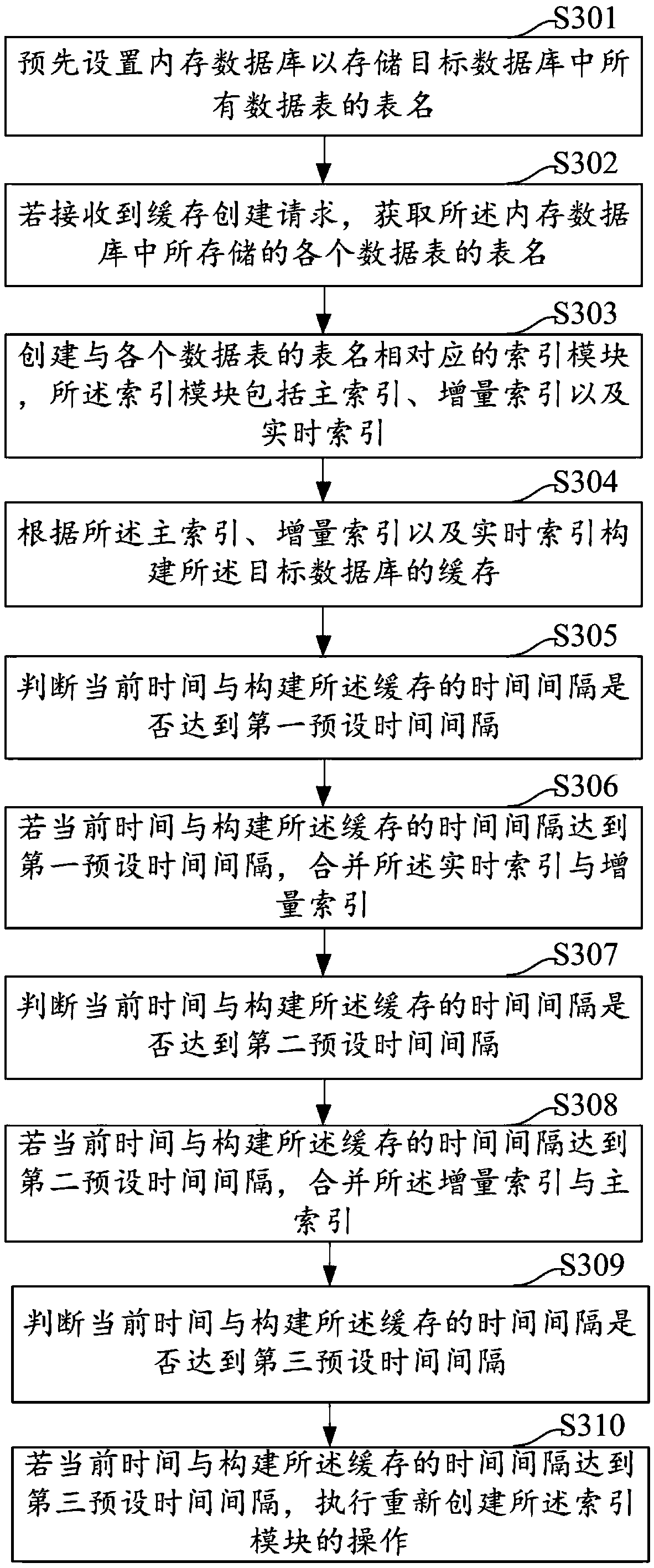

Database cache construction method and apparatus, computer device, and storage medium

Active | CN109542907A | Improve access efficiency | Reduce access pressure | Special data processing applications | Database indexing | In-memory database | Computer module

The embodiment of the invention discloses a database cache construction method and apparatus, a computer device and a storage medium. The method comprises: presetting an in-memory database that stores the table names of all data tables in a target database; when a cache creation request is received, obtaining the table name of each data table stored in the in-memory database; creating an index module for each table name, the index module comprising a primary index, an incremental index and a real-time index; and constructing the cache of the target database from the primary, incremental and real-time indexes. By creating, for each data table in the target database, an index module that caches the table's data, the invention improves data access efficiency while reducing the access pressure on the database.

Owner:WONDERSHARE TECH CO LTD

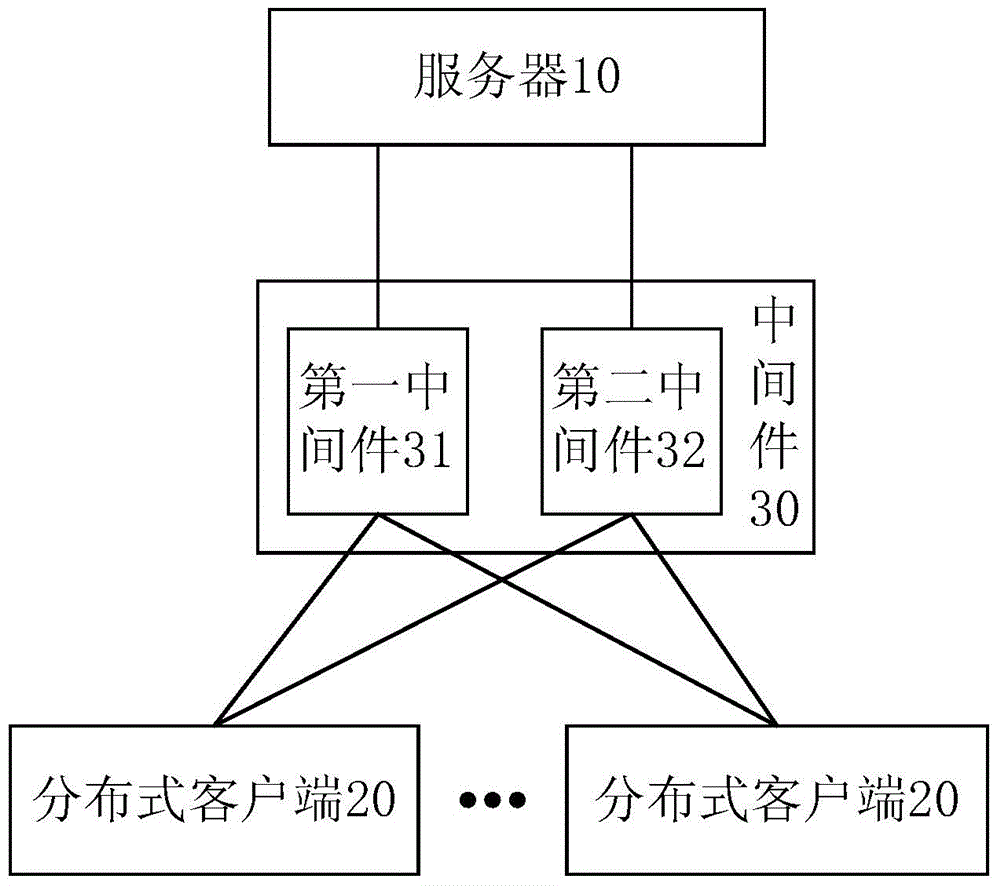

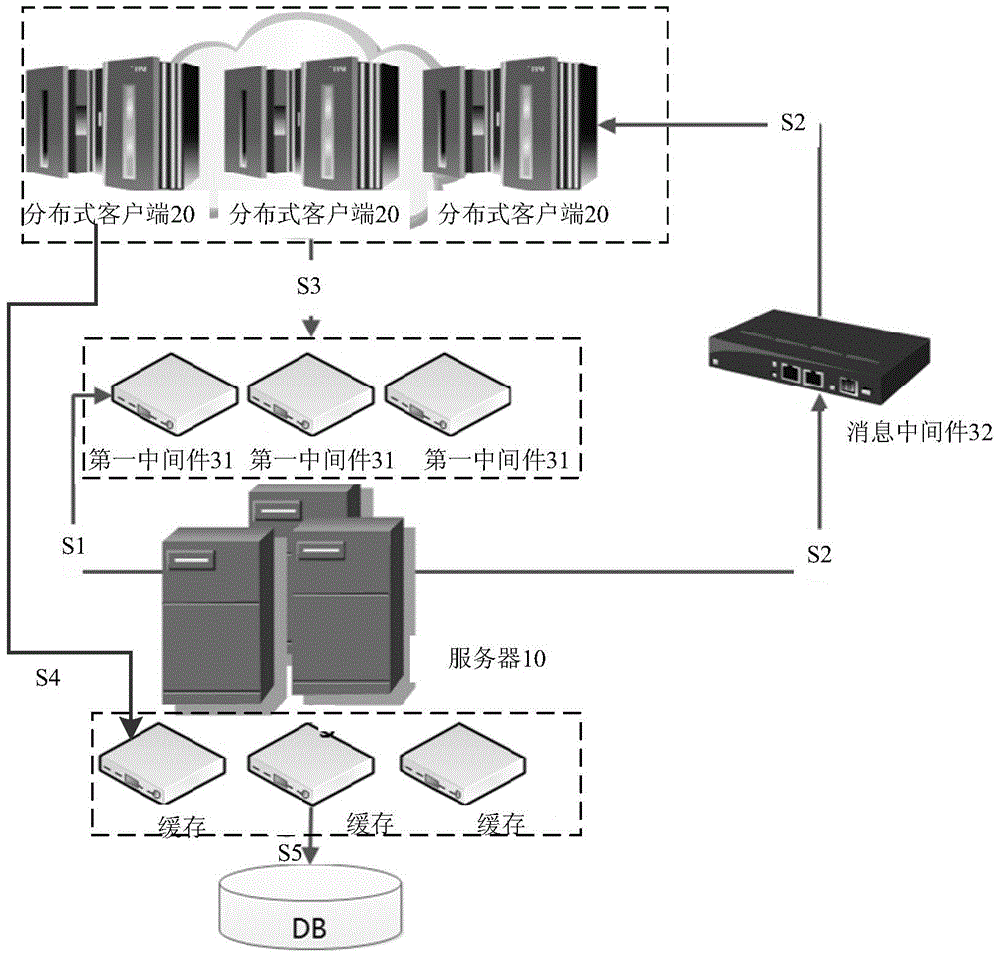

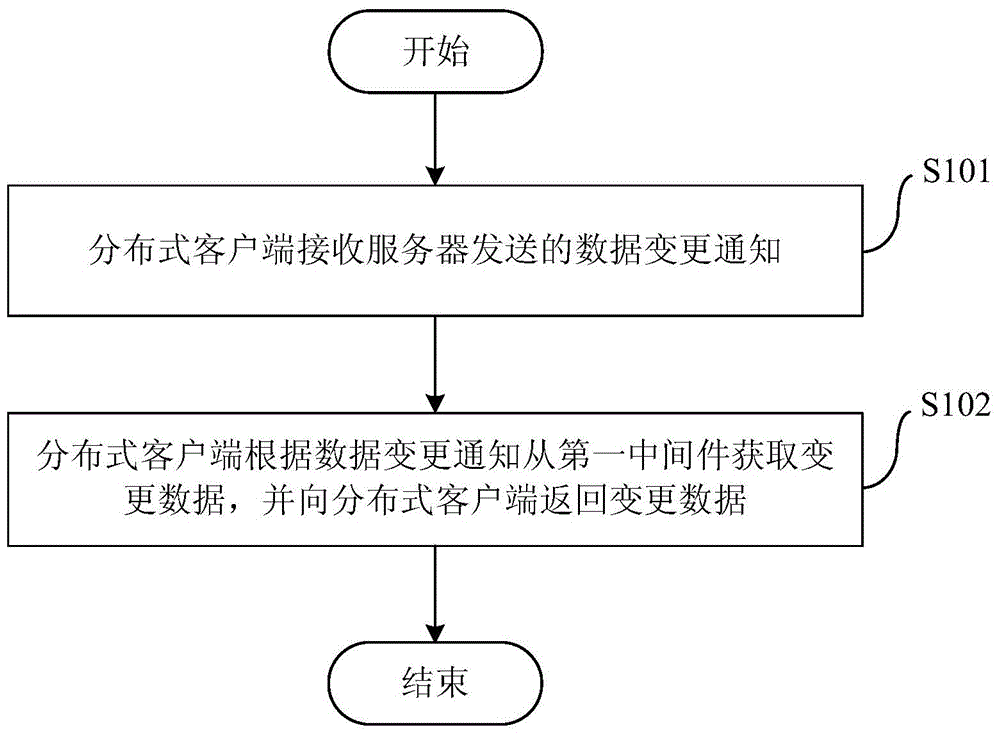

Distributed system and incremental data updating method

Inactive | CN105335170A | Reduce access pressure | Reduce system resource consumption | Program loading/initiating | Resource consumption | Middleware

The present application proposes a distributed system and an incremental data updating method. The system comprises: a server, which sends data change notifications to distributed clients; the distributed clients, which receive a data change notification from the server, send a changed-data acquisition request to first middleware according to the notification, and receive the changed data returned by the first middleware; and the first middleware, which receives the changed-data acquisition request from the distributed clients, obtains the changed data according to that request, and returns it to the distributed clients. The distributed system and incremental data updating method reduce the access pressure on the database in the distributed system, reduce system resource consumption, and improve both the incremental update speed of the distributed clients and the stability of the distributed system.

Owner:ALIBABA GRP HLDG LTD

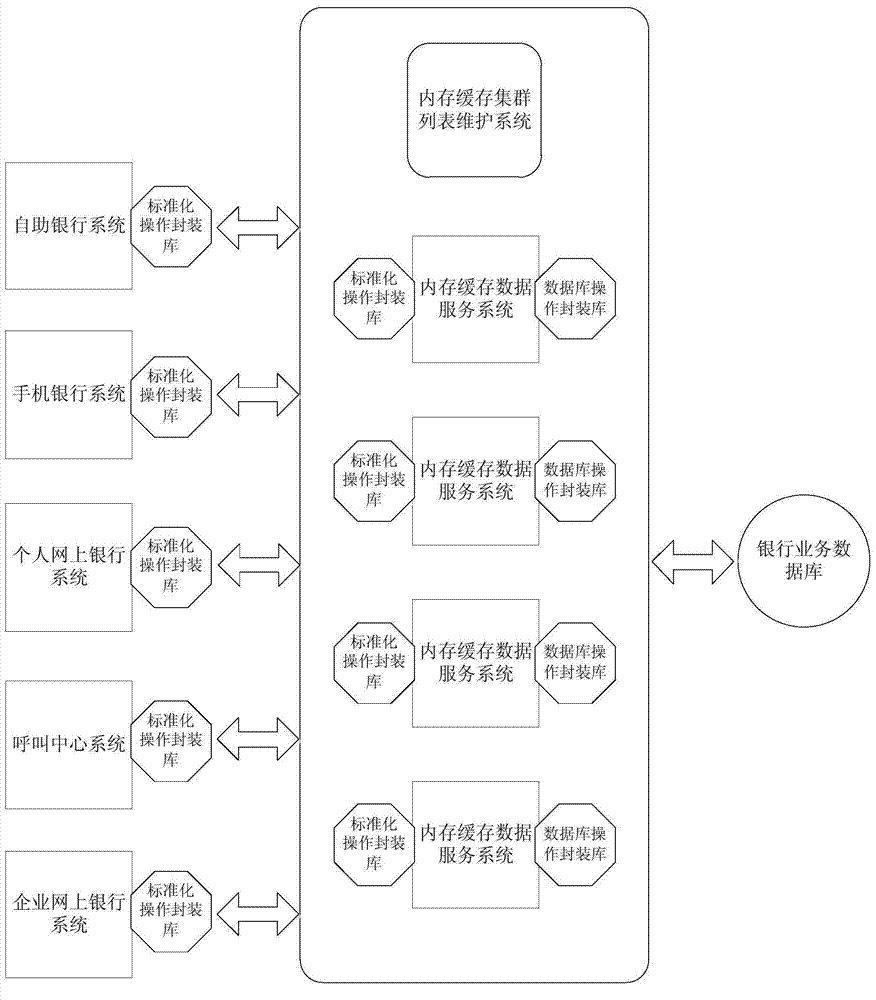

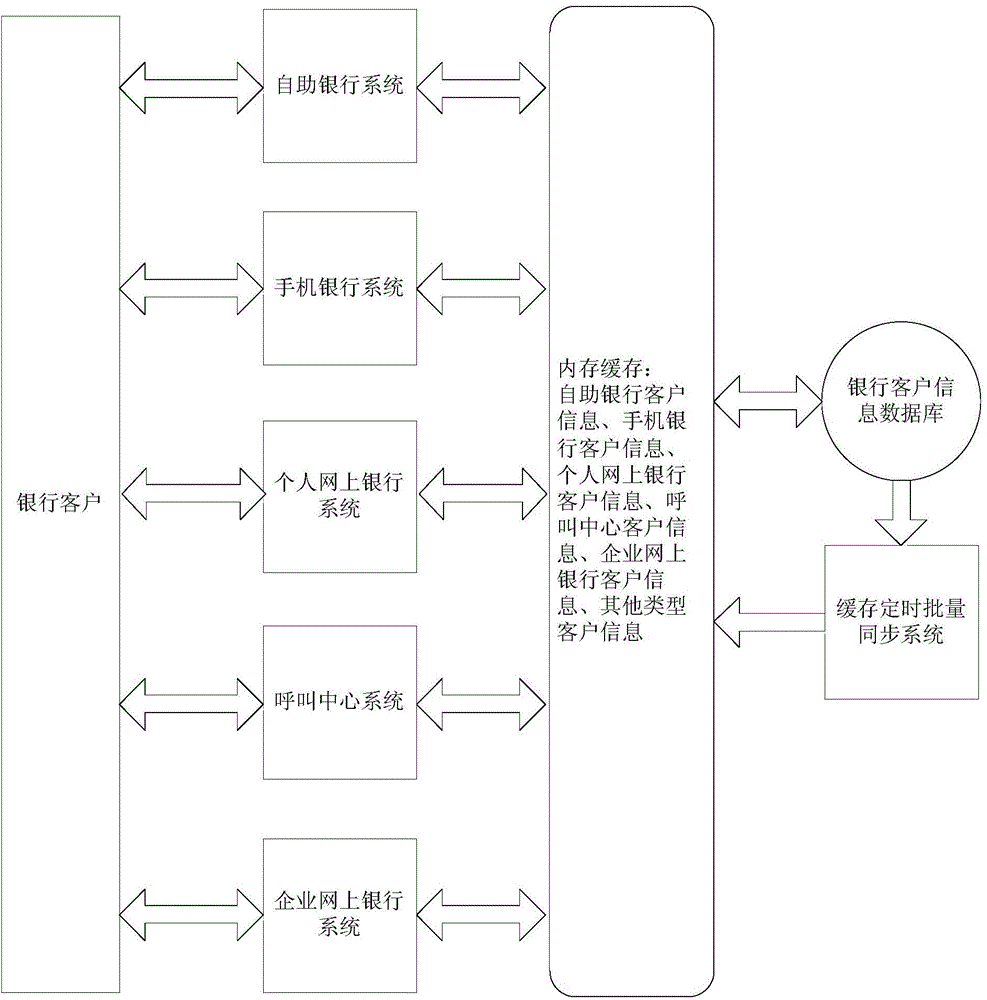

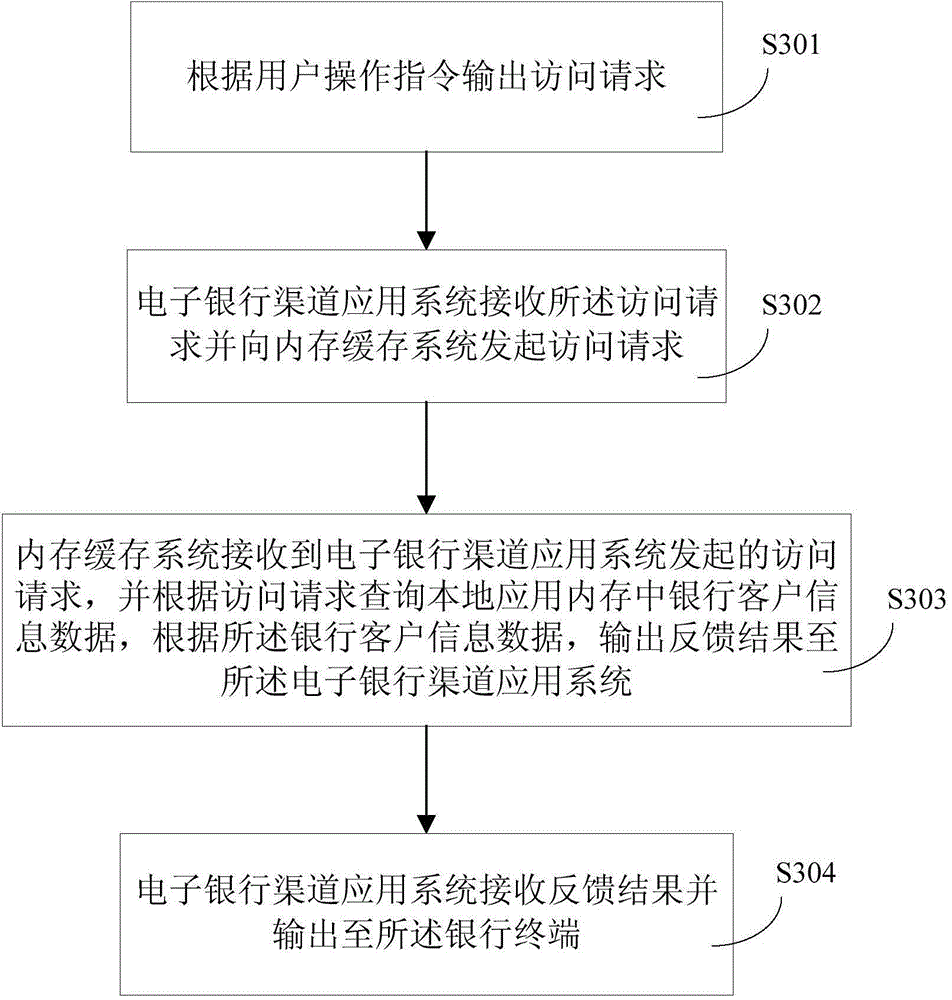

Method and system for quickly accessing information data of clients of banks

Inactive | CN104866531A | Reduce access pressure | Effectively spread the pressure of concurrent access | Finance | Relational databases | Output feedback | Operating system

The invention provides a method and system for quickly accessing bank customer information data. The system includes a bank terminal, a bank digital channel application system and a memory caching system. The bank terminal is communicatively connected to the bank digital channel application system and outputs access requests according to user operation instructions. The bank digital channel application system is communicatively connected to the memory caching system; it receives an access request, sends it to the memory caching system, and receives the feedback result and outputs it to the bank terminal. The memory caching system includes multiple servers that are communicatively connected to a customer information data synchronization layer; they receive access requests from the bank digital channel application system, query the bank customer information data held in local application memory, and output the feedback result to the bank digital channel application system based on that data.

Owner:BANK OF COMMUNICATIONS
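
The request path described above can be pictured with the following sketch. It is only an illustration under assumptions that the abstract does not state. First, the synchronization layer is assumed to copy every client record to every cache server. Second, the channel application is assumed to spread requests over the servers round-robin, which spreads concurrent access. All class names are hypothetical.

```python
class CacheServer:
    """Hypothetical in-memory cache node holding bank client records."""

    def __init__(self, name):
        self.name = name
        self.memory = {}  # client_id -> client information
        self.requests = 0

    def query(self, client_id):
        self.requests += 1
        return self.memory.get(client_id)


class SyncLayer:
    """Stand-in for the client information data synchronization layer: it copies
    every record to every cache server, so any server can answer any request."""

    def __init__(self, servers):
        self.servers = servers

    def publish(self, client_id, info):
        for server in self.servers:
            server.memory[client_id] = info


class ChannelApp:
    """Stand-in for the bank digital channel application system. Requests are
    spread over the cache servers round-robin (an assumed policy)."""

    def __init__(self, servers):
        self.servers = servers
        self._next = 0

    def handle(self, client_id):
        server = self.servers[self._next % len(self.servers)]
        self._next += 1
        info = server.query(client_id)
        return info if info is not None else {"error": "client not found"}


servers = [CacheServer(f"cache-{i}") for i in range(3)]
SyncLayer(servers).publish("C001", {"name": "Alice", "tier": "gold"})
app = ChannelApp(servers)
results = [app.handle("C001") for _ in range(6)]
```

The sketch sends six lookups, and each of the three cache servers answers exactly two of them. None of the six lookups reaches a relational database.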

Mobile phone APP communication system, load balancing method and car renting method

Active · CN105812478A · Increase load capacity · Improve reliability · Apparatus for meter-controlled dispensing · Transmission · Internet Authentication Service · Message queue

The invention discloses a mobile phone APP communication system, a load balancing method and a car renting method. The communication system comprises a mobile phone APP and a network management platform. The mobile phone APP connects through HTTP requests to three parts of the network management platform: the member authentication service, the heartbeat and message push service, and the APP service group. Each of these three is connected to a member login status database. A message queue connects the APP service group with the fee deduction service and the short message service. Because the application terminal is decoupled from the control terminal, the likelihood that an abnormality in the application terminal causes an abnormality in the control terminal is effectively reduced. The client does not process any service logic, which keeps the application terminal lightweight. The disclosed scheme is suitable for all electric vehicle renting systems.

Owner:宁波轩悦行电动汽车服务有限公司 (Ningbo Xuanyuexing Electric Vehicle Service Co., Ltd.)
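
The decoupling through the message queue can be sketched as follows. This is a hypothetical illustration that uses Python's standard `queue.Queue` as a stand-in for a real message broker. The event name `rental_ended` and its fields are assumptions, and the sketch does not model the patent's load balancing method.

```python
import queue


class AppServiceGroup:
    def __init__(self, bus):
        self.bus = bus

    def end_rental(self, order_id, fee):
        # Publish an event instead of calling billing/SMS directly, so a slow or
        # failing downstream service cannot block the request from the phone APP.
        self.bus.put({"type": "rental_ended", "order": order_id, "fee": fee})
        return "accepted"


class FeeDeductionService:
    def __init__(self):
        self.charged = {}

    def handle(self, msg):
        self.charged[msg["order"]] = msg["fee"]


class ShortMessageService:
    def __init__(self):
        self.sent = []

    def handle(self, msg):
        self.sent.append(f"Order {msg['order']} closed, fee {msg['fee']:.2f}")


def drain(bus, consumers):
    """Deliver every queued message to every consumer (simple fan-out)."""
    while not bus.empty():
        msg = bus.get()
        for consumer in consumers:
            consumer.handle(msg)
        bus.task_done()


bus = queue.Queue()
app = AppServiceGroup(bus)
fees, sms = FeeDeductionService(), ShortMessageService()
status = app.end_rental("R42", 18.5)
drain(bus, [fees, sms])
```

`end_rental` returns as soon as the event is queued. Fee deduction and SMS delivery then happen independently of the APP-facing request.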

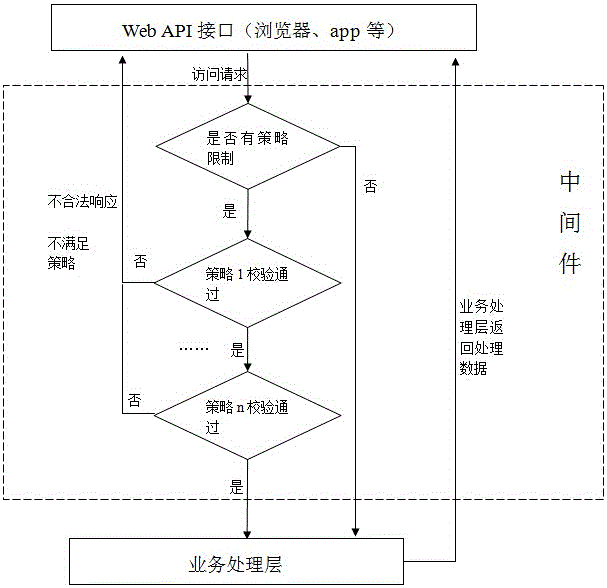

Web API regulating and controlling method based on middleware

Active · CN105786630A · Reduce access pressure · Avoid a logical judgment · Interprogram communication · Transmission · Middleware · World Wide Web

The invention discloses a middleware-based method for regulating and controlling Web APIs, in the field of Web architecture optimization. The method aims to make Web API regulation and control uniform, stable and secure. In the technical scheme, middleware is added between the Web API and the business processing layer. The middleware sets corresponding policies for the access requests sent by the Web API. The access requests sent by one Web API correspond to one or more policies, and the middleware verifies each access request against its corresponding policies in sequence.

Owner:INSPUR COMMON SOFTWARE
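
Below is a minimal sketch of per-API policy chains checked in sequence by a middleware layer. The two sample policies (a token check and an IP allowlist) are assumptions chosen for illustration, as are the names `PolicyMiddleware`, `require_token` and `ip_allowlist`. The abstract does not say which policies are used.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Request:
    api: str
    client_ip: str
    token: Optional[str] = None


# A policy returns None if the request passes, or an error message if it fails.
Policy = Callable[[Request], Optional[str]]


def require_token(req: Request) -> Optional[str]:
    return None if req.token else "missing token"


def ip_allowlist(allowed: set) -> Policy:
    def check(req: Request) -> Optional[str]:
        return None if req.client_ip in allowed else f"ip {req.client_ip} not allowed"
    return check


class PolicyMiddleware:
    """Sits between the Web API and the business processing layer."""

    def __init__(self, business_layer: Callable[[Request], str]):
        self.business_layer = business_layer
        self.policies: Dict[str, List[Policy]] = {}

    def register(self, api: str, *policies: Policy) -> None:
        self.policies.setdefault(api, []).extend(policies)

    def handle(self, req: Request) -> dict:
        # Check the request against each policy bound to its Web API, in order;
        # the first failure rejects it before the business layer is reached.
        for policy in self.policies.get(req.api, []):
            error = policy(req)
            if error is not None:
                return {"status": 403, "error": error}
        return {"status": 200, "body": self.business_layer(req)}


mw = PolicyMiddleware(lambda req: f"orders for {req.client_ip}")
mw.register("/orders", require_token, ip_allowlist({"10.0.0.5"}))
ok = mw.handle(Request("/orders", "10.0.0.5", token="t0k"))
no_token = mw.handle(Request("/orders", "10.0.0.5"))
bad_ip = mw.handle(Request("/orders", "10.0.0.9", token="t0k"))
```

Rejected requests never reach the business layer, which is one way such middleware can reduce load on it. In this sketch, an API with no registered policies passes straight through.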

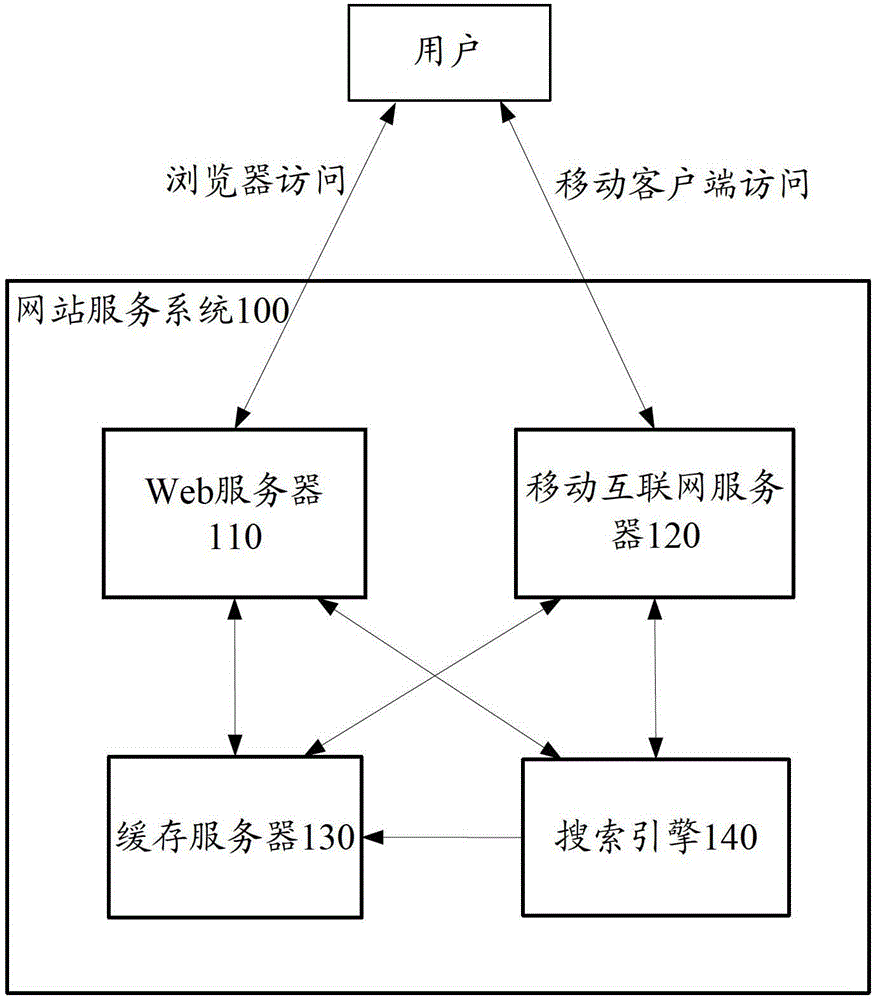

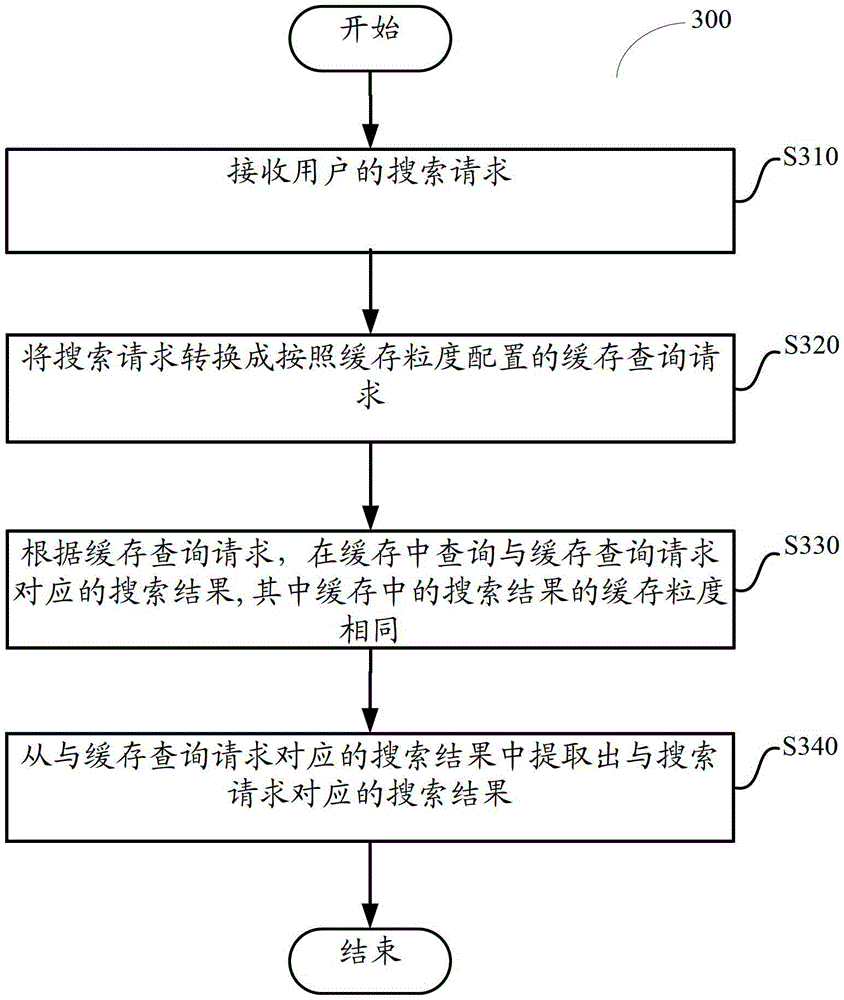

Search method and device

Active · CN104424199A · Reduce access pressure · Improve experience · Web data indexing · Transmission · Granularity · Cache hit rate

Generating cache query requests is disclosed, including: receiving a user query from a device; generating a display type specific search request based at least in part on the user query; generating a cache query request based at least in part on the display type specific search request and a cache granularity; identifying search results corresponding to the cache query request stored in a cache; and extracting at least a portion of the search results corresponding to the cache query request based at least in part on a starting position parameter associated with the display type specific search request and a quantity of requested search results parameter associated with the display type specific search request.

Owner:ALIBABA GRP HLDG LTD
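
The cache granularity idea can be illustrated with the following sketch. It assumes that results are cached in fixed-size blocks keyed by (query, display type, block start). A request's start position and result count are mapped onto the aligned blocks that cover it, and the requested slice is then cut out. The block size of 20 and the function names are hypothetical.

```python
GRANULARITY = 20  # hypothetical cache block size (results per cached block)


def cache_keys(query, display_type, start, count, granularity=GRANULARITY):
    """Map a (start, count) window onto the aligned cache blocks that cover it."""
    first = (start // granularity) * granularity
    last = ((start + count - 1) // granularity) * granularity
    return [(query, display_type, block) for block in range(first, last + 1, granularity)]


def extract(cache, query, display_type, start, count, granularity=GRANULARITY):
    """Return results[start:start+count] from cached blocks, or None on a miss."""
    keys = cache_keys(query, display_type, start, count, granularity)
    merged = []
    for key in keys:
        block = cache.get(key)
        if block is None:
            return None  # miss: the caller would run the real search and fill the block
        merged.extend(block)
    offset = start - keys[0][2]
    return merged[offset:offset + count]


cache = {
    ("phone", "list", 0): [f"r{i}" for i in range(0, 20)],
    ("phone", "list", 20): [f"r{i}" for i in range(20, 40)],
}
page = extract(cache, "phone", "list", start=15, count=10)
```

Requests with different page sizes or offsets reuse the same aligned blocks. This design choice tends to raise the cache hit rate.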

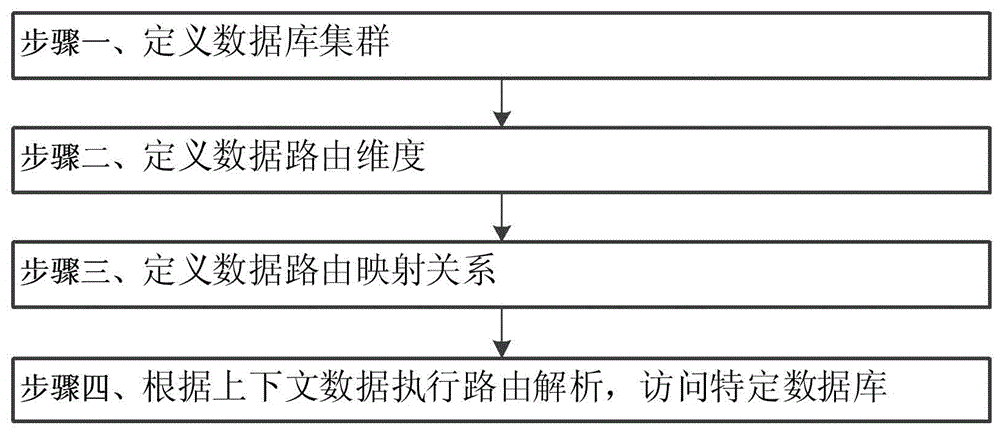

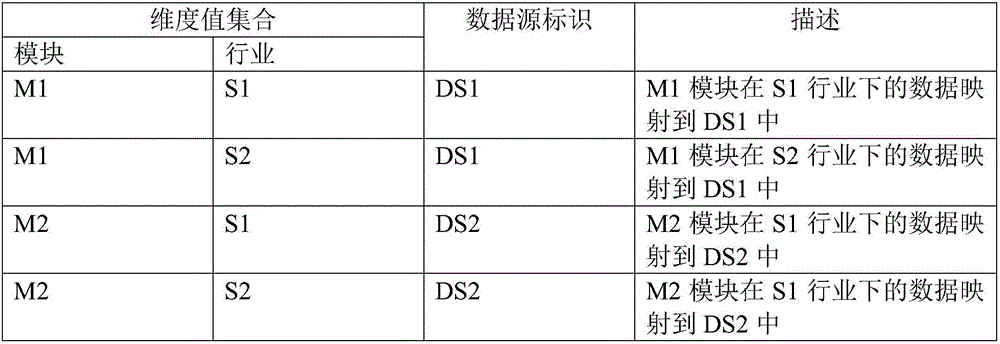

Method for enabling the database of a large-scale application system to be horizontally expanded

Inactive · CN106202540A · Avoid performance bottlenecks · Improve performance · Special data processing applications · Context data · Large applications

The invention discloses a method for horizontally expanding (scaling out) the database of a large-scale application system. The implementation comprises three steps: define a database cluster and determine its components; define a data routing dimension and a data routing mapping relation; and perform routing resolution according to context data, then access the specific database it selects. Large-scale application systems face large data volumes and high concurrency. Compared with the prior art, the method's data routing allocation and resolution mechanisms effectively distribute data access across different databases in that situation. This reduces the access pressure that the large-scale application system places on any single database. It also resolves the performance bottleneck of a single database and improves the overall operating performance of the large-scale application.

Owner:INSPUR COMMON SOFTWARE
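
The three steps (cluster definition, routing dimension plus mapping, resolution from context data) can be sketched like this. The sketch uses in-memory SQLite databases as stand-ins for the cluster members, and "tenant" as an assumed routing dimension. The database names, the default-route rule and the class names are hypothetical.

```python
import sqlite3


class DatabaseCluster:
    """Hypothetical cluster definition: database name -> connection."""

    def __init__(self, names):
        # Each ":memory:" connection is a separate, independent database.
        self.dbs = {name: sqlite3.connect(":memory:") for name in names}
        for conn in self.dbs.values():
            conn.execute("CREATE TABLE orders (tenant TEXT, amount REAL)")


class Router:
    """Routing dimension + mapping relation + resolution from context data."""

    def __init__(self, cluster, dimension, mapping, default):
        self.cluster = cluster
        self.dimension = dimension  # assumed routing dimension, e.g. "tenant"
        self.mapping = mapping      # dimension value -> database name
        self.default = default

    def resolve(self, context):
        key = context[self.dimension]
        return self.cluster.dbs[self.mapping.get(key, self.default)]


cluster = DatabaseCluster(["db_east", "db_west"])
router = Router(cluster, "tenant", {"acme": "db_east", "globex": "db_west"}, default="db_east")

for tenant, amount in [("acme", 10.0), ("globex", 5.0), ("acme", 2.5)]:
    conn = router.resolve({"tenant": tenant})
    conn.execute("INSERT INTO orders VALUES (?, ?)", (tenant, amount))


def row_count(name):
    return cluster.dbs[name].execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

In this sketch, writes for different tenants land in different databases, so no single database receives all the traffic.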

Circuit and method based on AVS motion compensation interpolation

Inactive · CN101778280A · Improve parallelism · Improve performance · Television systems · Digital video signal modification · Multiplexer · Memory interface

The invention relates to a circuit and a method based on AVS motion compensation interpolation, in the technical field of digital audio and video encoding and decoding. The circuit comprises the following components:

- integer-pixel memories I and II
- bh and j pixel memories
- a memory interface module
- half-pixel and quarter-pixel interpolation filters
- a multiplexer
- an adjusting amplitude limiter

The output ends of integer-pixel memories I and II and of the bh and j pixel memories are connected to the input end of the memory interface module. The output end of the memory interface module is connected to the input ends of both the half-pixel and the quarter-pixel interpolation filters. The output ends of both filters are connected to the input ends of the multiplexer. The output end of the half-pixel interpolation filter is also connected to the input ends of the bh and j pixel memories. The output end of the multiplexer is connected to the input end of the adjusting amplitude limiter, which outputs the interpolation results. The invention performs interpolation with increased system parallelism, thereby effectively improving system performance.

Owner:SHANDONG UNIV
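
The abstract does not give filter coefficients. The sketch below assumes the 4-tap luma half-pixel filter (-1, 5, 5, -1)/8 commonly cited for AVS Part 2, with rounding before the shift. Check these taps against the AVS standard before relying on them. The sketch shows the two stages the circuit chains together: half-pixel filtering, then the amplitude limiter that clips results to the sample range. It covers only one horizontal row. The b/h/j positions, quarter-pixel filtering and the hardware parallelism are not modelled.

```python
HALF_PEL_TAPS = (-1, 5, 5, -1)  # assumed AVS luma half-pixel filter, normalised by 8


def clip_pixel(value, bit_depth=8):
    """Amplitude limiter: clamp a filtered value to the valid sample range."""
    return max(0, min((1 << bit_depth) - 1, value))


def half_pel_row(row):
    """Horizontal half-pixel samples between row[i] and row[i + 1].

    Pixels outside the row repeat the nearest edge pixel (a simplification).
    """
    n = len(row)

    def pixel(i):
        return row[min(max(i, 0), n - 1)]

    out = []
    for i in range(n - 1):
        # Taps apply to row[i-1], row[i], row[i+1], row[i+2].
        acc = sum(tap * pixel(i - 1 + k) for k, tap in enumerate(HALF_PEL_TAPS))
        out.append(clip_pixel((acc + 4) >> 3))  # round, divide by 8, then clip
    return out
```

Consider a sharp edge such as `[0, 0, 255, 255]`. The negative taps undershoot below 0 on one side and overshoot above 255 on the other. This is why the limiter stage is needed after the filters.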

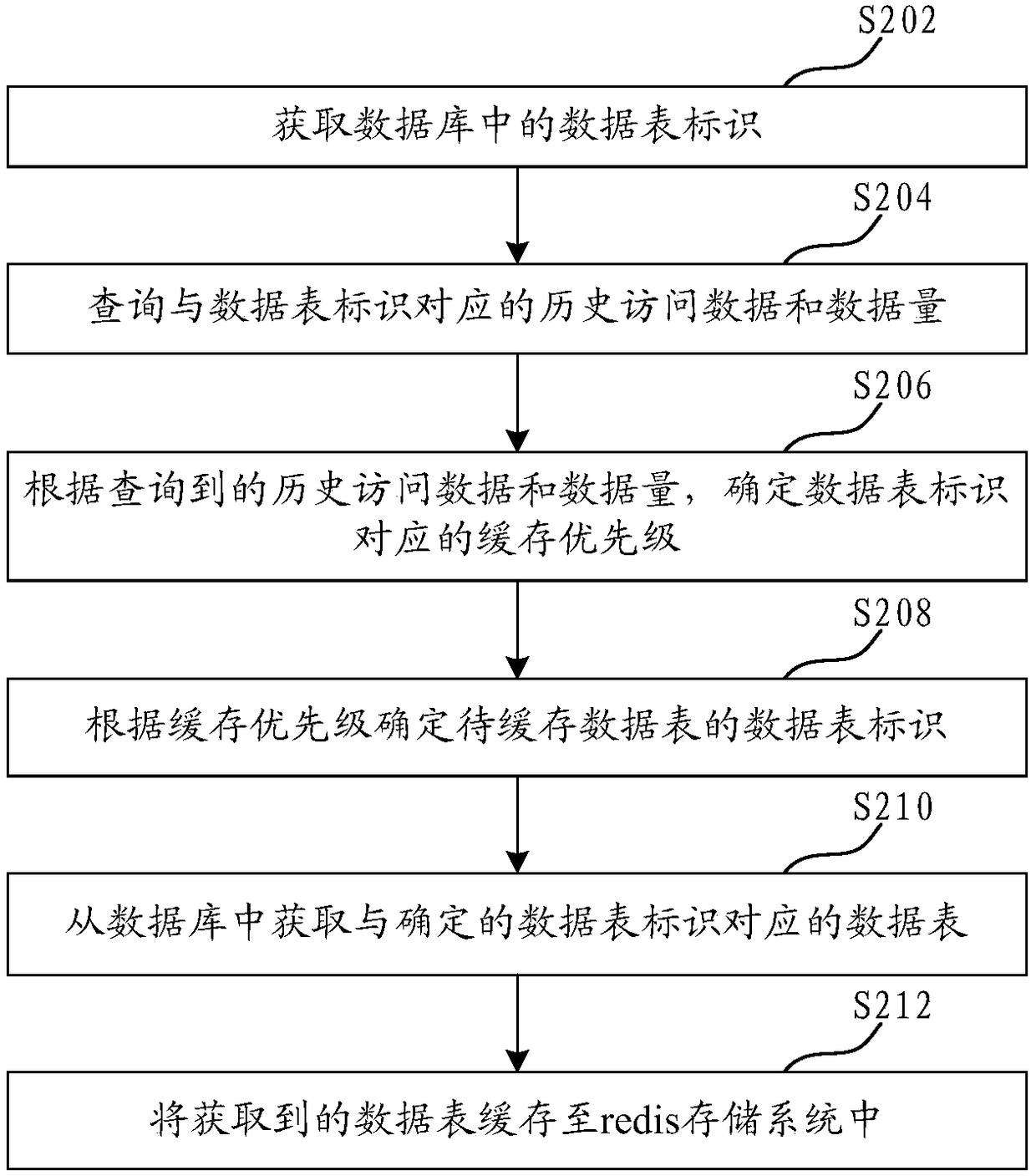

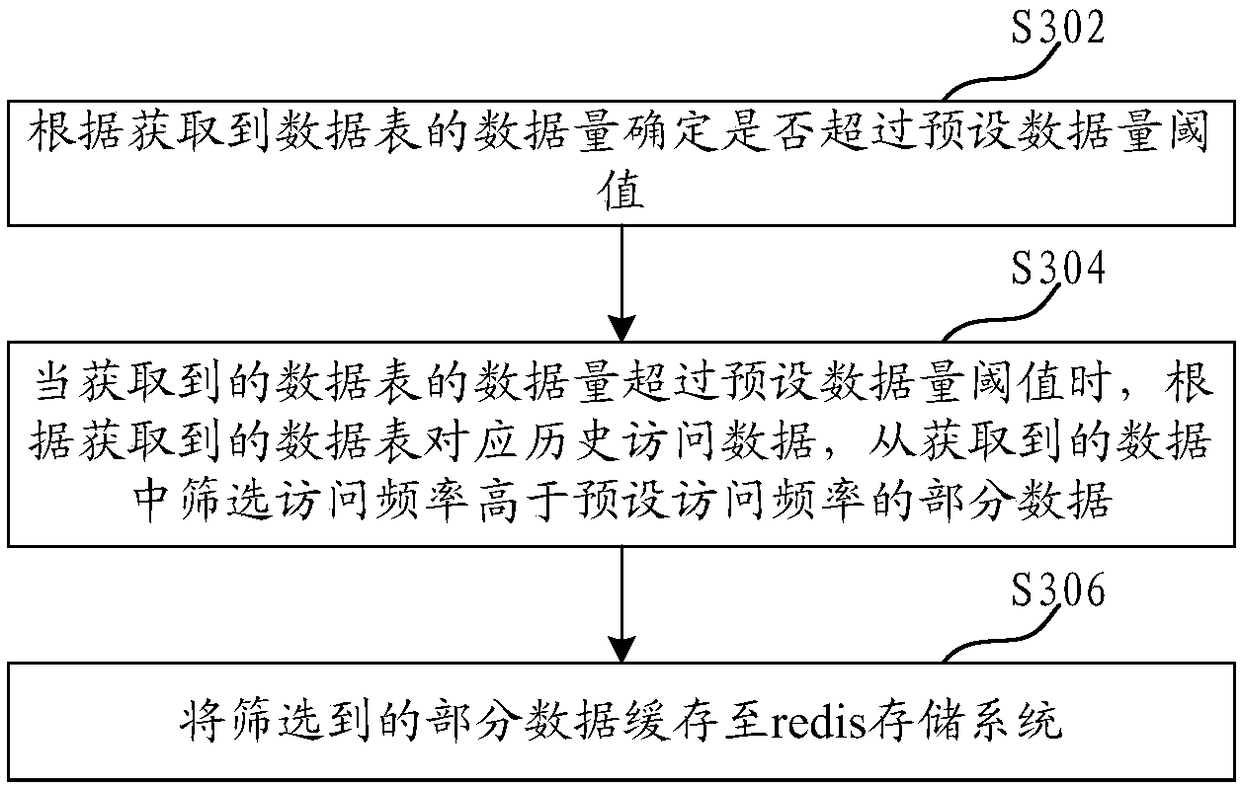

Data caching method and device, server and storage medium

Active · CN108491450A · High cache priority · Improve access efficiency · Special data processing applications · Database · Data cache

The invention relates to a data caching method and device, a server and a storage medium. The method comprises the following steps:

1. Obtain the data table identifiers in a database.
2. Query the historical access data and data sizes corresponding to those identifiers.
3. Determine the caching priority of each identifier from its historical access data and data size.
4. Determine, according to the caching priorities, the identifiers of the data tables to be cached.
5. Retrieve the corresponding data tables from the database.
6. Cache the retrieved data tables in a Redis storage system.

The method is not constrained by local cache space. When data tables in the database are accessed, the cached copies are queried from the Redis storage system instead. This improves data table access efficiency and reduces the access pressure on the database.

Owner:PINGAN PUHUI ENTERPRISE MANAGEMENT CO LTD
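
The priority-then-cache steps might look like the sketch below. The abstract does not give a scoring formula, so the sketch assumes "accesses per megabyte" (frequently read, small tables rank first) and a fixed top-k cut-off. A plain dict stands in for the Redis storage system; with a real Redis client, the table data would need to be serialized before storing. `TableStats`, `caching_priority` and `select_tables` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class TableStats:
    table_id: str
    access_count: int  # from historical access data
    size_bytes: int


def caching_priority(stats: TableStats) -> float:
    # Assumed scoring rule: accesses per megabyte, so hot, small tables rank first.
    return stats.access_count / max(stats.size_bytes / 1_000_000, 1e-6)


def select_tables(all_stats, top_k):
    ranked = sorted(all_stats, key=caching_priority, reverse=True)
    return [s.table_id for s in ranked[:top_k]]


def load_into_cache(table_ids, database, cache):
    """cache is any dict-like store standing in for the Redis storage system."""
    for table_id in table_ids:
        cache[table_id] = database[table_id]


stats = [
    TableStats("users", 9_000, 2_000_000),      # 4,500 accesses per MB
    TableStats("logs", 12_000, 500_000_000),    # 24 accesses per MB
    TableStats("config", 3_000, 50_000),        # 60,000 accesses per MB
]
database = {"users": ["u1", "u2"], "logs": ["l1"], "config": [("k", "v")]}
cache = {}
chosen = select_tables(stats, top_k=2)
load_into_cache(chosen, database, cache)
```

In this example, the large `logs` table is the most accessed in absolute terms. Even so, it is not cached, because its priority per unit of size is the lowest.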

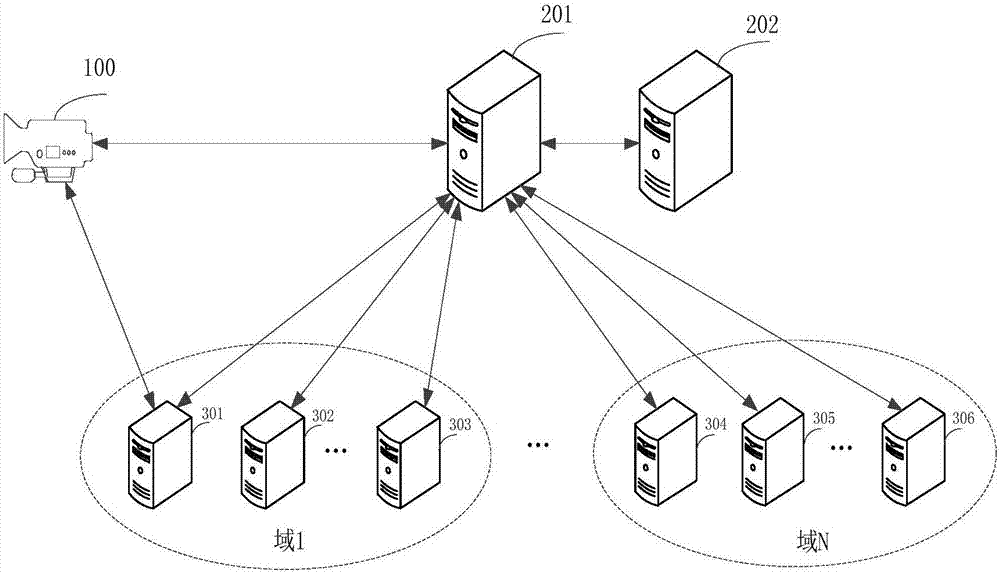

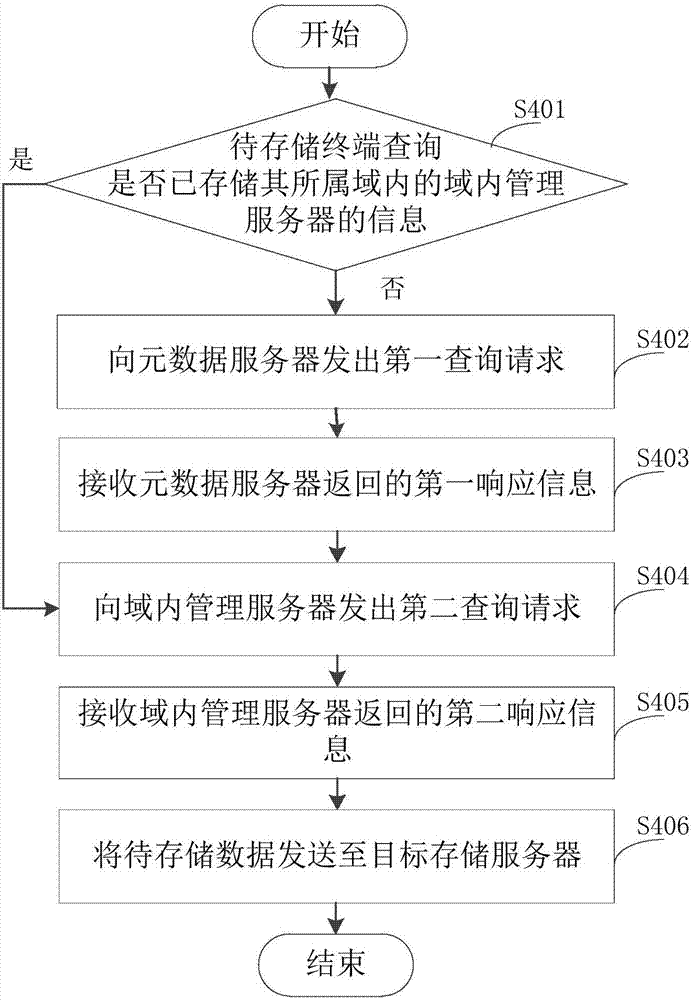

Data storage method and device

Active · CN107229425A · Reduce access pressure · Improve continuity · Input/output to record carriers · NO storage · Computer terminal

The invention provides a data storage method and device. The method proceeds as follows. A storage terminal with data to be stored checks whether it holds information about the intra-domain management server of the domain it belongs to. If it does not, it sends a first query request to a metadata server, receives the first response information returned by the metadata server, and then sends a second query request to the intra-domain management server. If it does, it sends the second query request directly to the intra-domain management server. The terminal then receives the second response information returned by the intra-domain management server, which includes information about a target storage server, and sends the data to be stored to that target storage server. With this method and device, the access capability of the whole storage system no longer depends solely on the hardware performance of the metadata server. The access pressure on the metadata server is therefore greatly reduced, which improves the continuity of the storage service for the storage terminal.

Owner:XIAN UNIVIEW INFORMATION TECH CO LTD
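
The two-stage lookup can be sketched as shown below. The terminal contacts the metadata server only when it has no cached information about its domain's management server. After that, it asks the management server directly for a target storage server. The round-robin choice of storage server and all class names are assumptions made for illustration.

```python
class StorageServer:
    def __init__(self, name):
        self.name = name
        self.blobs = []

    def store(self, data):
        self.blobs.append(data)


class DomainManager:
    """Hypothetical intra-domain management server."""

    def __init__(self, storage_servers):
        self.storage_servers = storage_servers
        self._next = 0

    def pick_storage_server(self):
        # Assumed policy: round-robin over the domain's storage servers.
        server = self.storage_servers[self._next % len(self.storage_servers)]
        self._next += 1
        return server


class MetadataServer:
    def __init__(self, domain_managers):
        self.domain_managers = domain_managers  # domain -> DomainManager
        self.queries = 0

    def lookup_manager(self, domain):
        self.queries += 1
        return self.domain_managers[domain]


class StorageTerminal:
    def __init__(self, domain, metadata_server):
        self.domain = domain
        self.metadata_server = metadata_server
        self.manager = None  # cached intra-domain management server information

    def store(self, data):
        if self.manager is None:  # first query request, made only once
            self.manager = self.metadata_server.lookup_manager(self.domain)
        target = self.manager.pick_storage_server()  # second query request
        target.store(data)
        return target.name


s1, s2 = StorageServer("s1"), StorageServer("s2")
meta = MetadataServer({"campus-a": DomainManager([s1, s2])})
terminal = StorageTerminal("campus-a", meta)
names = [terminal.store(f"frame-{i}") for i in range(4)]
```

In this sketch, the terminal stores four items but queries the metadata server only once. This illustrates how the metadata server's load stops growing with the number of store operations.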

© 2025 PatSnap. All rights reserved.