34 results about How to "Increase cache" patented technology

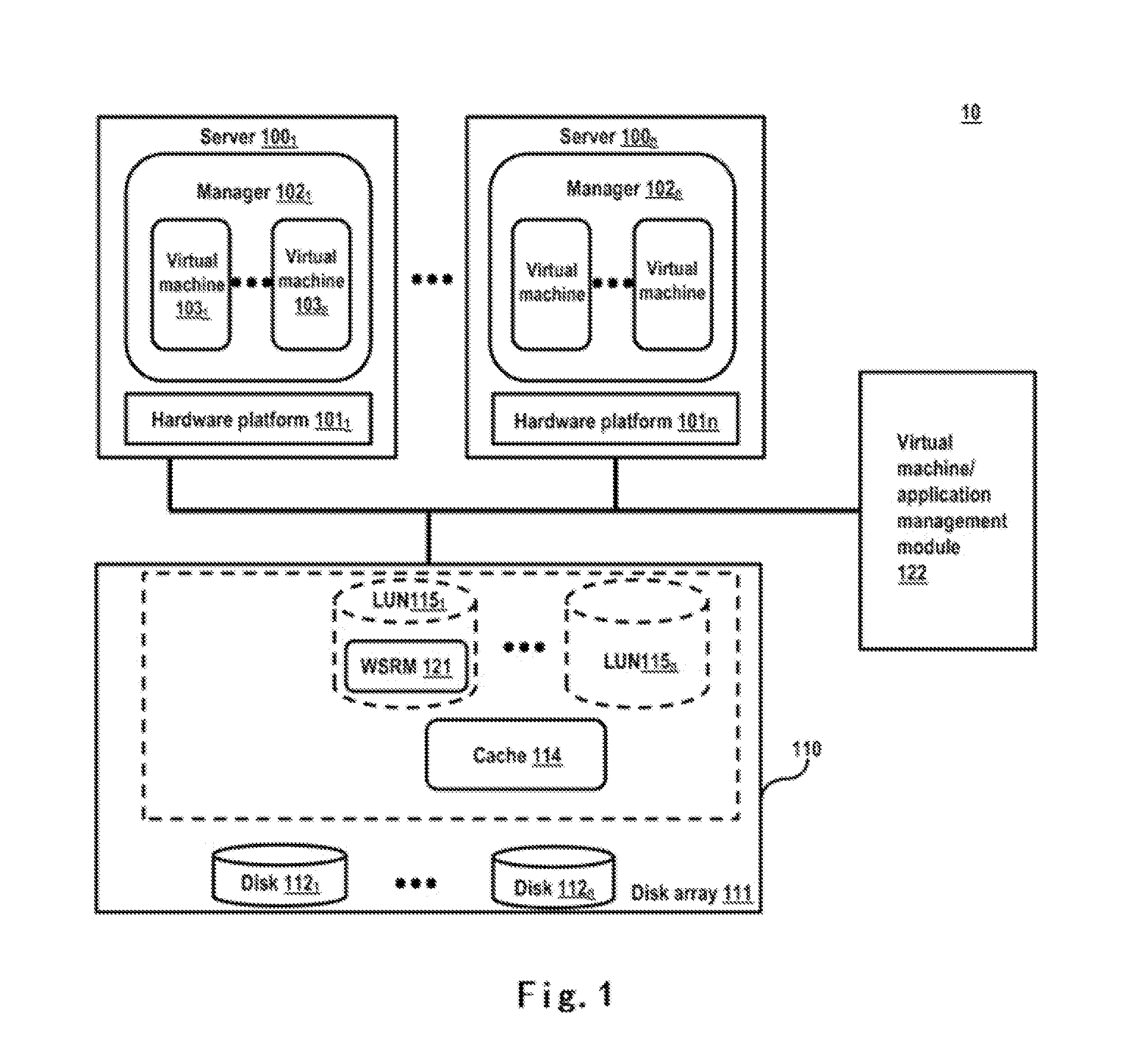

Distributed multicast caching technique

Inactive | US7240105B2 | Improve caching | Increase cache | Special service provision for substation | Digital computer details | Client-side | Recursion

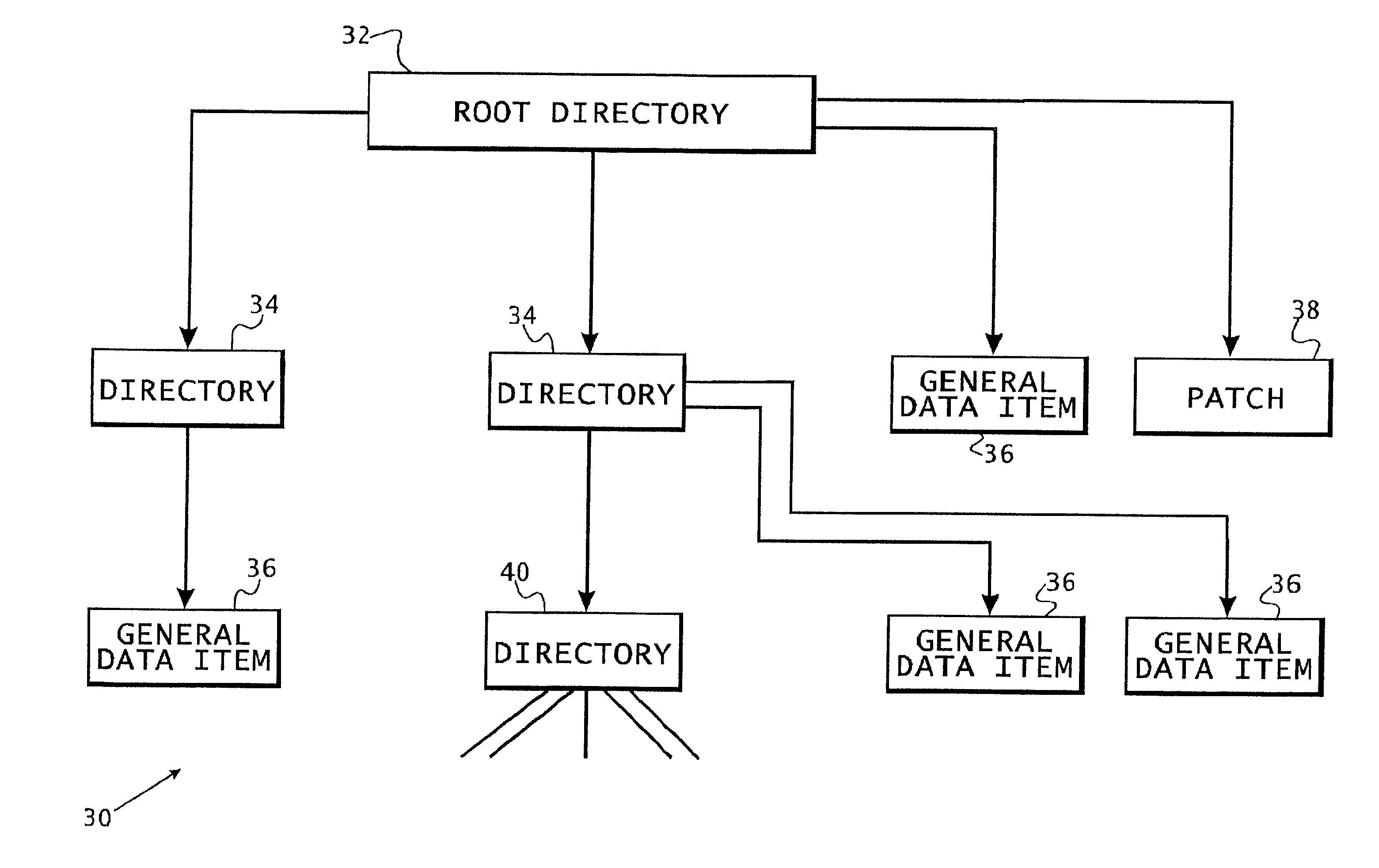

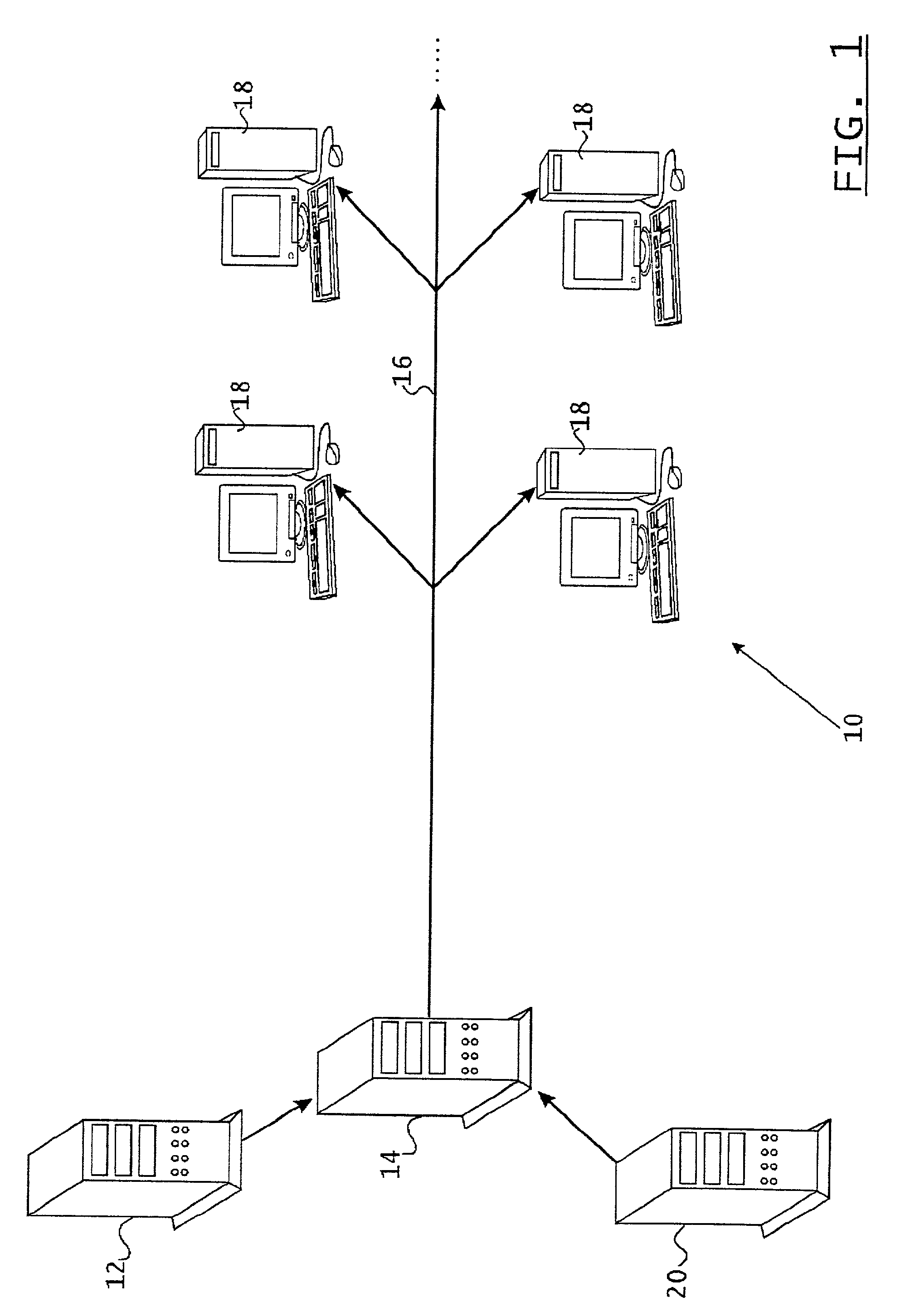

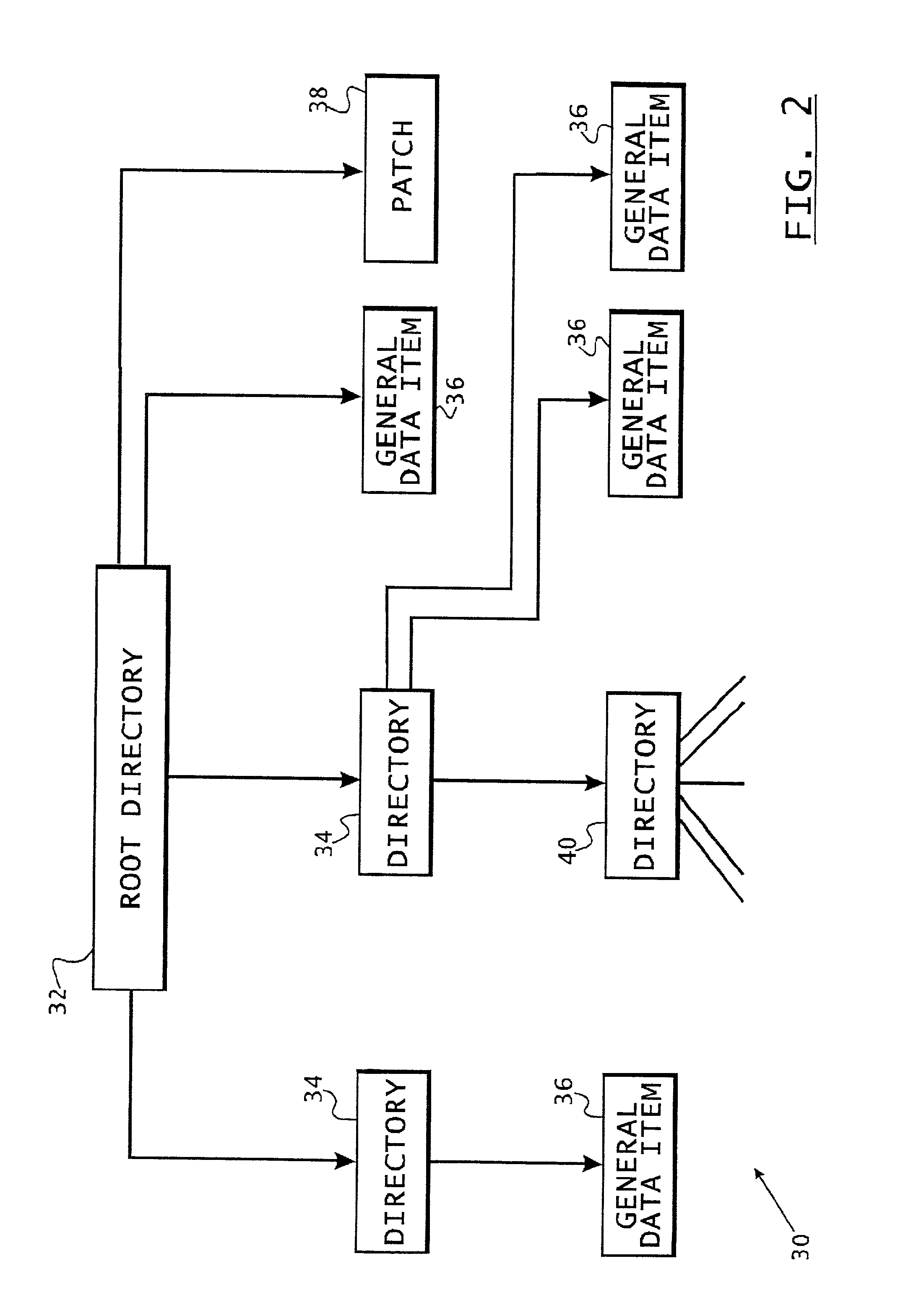

A caching arrangement for the content of multicast transmission across a data network utilizes a first cache which receives content from one or more content providers. Using the REMADE protocol, the first cache constructs a group directory. The first cache forms the root of a multilevel hierarchical tree. In accordance with configuration parameters, the first cache transmits the group directory to a plurality of subsidiary caches. The subsidiary caches may reorganize the group directory, and relay it to a lower level of subsidiary caches. The process is recursive, until a multicast group of end-user clients is reached. Requests for content by the end-user clients are received by the lowest level cache, and forwarded as necessary to higher levels in the hierarchy. The content is then returned to the requesters. Various levels of caches retain the group directory and content according to configuration options, which can be adaptive to changing conditions such as demand, loading, and the like. The behavior of the caches may optionally be modified by the policies of the content providers.

Owner:IBM CORP
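
The request path described in the abstract above (a miss at the lowest-level cache is forwarded to higher levels, and the content is returned to the requester) can be illustrated with a minimal sketch. The class `CacheNode`, its fields, and the rule that every level retains what it relays are assumptions made for illustration; the patent makes retention configurable, and the group directory and the REMADE protocol are not modelled here.

```python
from __future__ import annotations


class CacheNode:
    """One level in a cache hierarchy; the root has no parent (hypothetical model)."""

    def __init__(self, name: str, parent: CacheNode | None = None,
                 origin: dict[str, bytes] | None = None) -> None:
        self.name = name
        self.parent = parent
        self.origin = origin or {}  # content provider data, only used at the root
        self.store: dict[str, bytes] = {}

    def get(self, key: str) -> tuple[bytes, str]:
        """Return (content, name of the level that served it)."""
        if key in self.store:
            return self.store[key], self.name
        if self.parent is not None:
            # Miss: forward the request one level up the tree.
            content, served_by = self.parent.get(key)
        else:
            # Root miss: fetch from the content provider.
            content, served_by = self.origin[key], "origin"
        # Assumption: every level retains what it relays.
        self.store[key] = content
        return content, served_by


root = CacheNode("root", origin={"clip": b"data"})
mid = CacheNode("mid", parent=root)
leaf = CacheNode("leaf", parent=mid)
print(leaf.get("clip"))  # first request travels up to the origin
print(leaf.get("clip"))  # second request is served by the leaf itself
```

A sibling leaf under `mid` would be served by `mid` on its first request, which is the benefit of retaining content at intermediate levels.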

Charging-invariant and origin-server-friendly transit caching in mobile networks

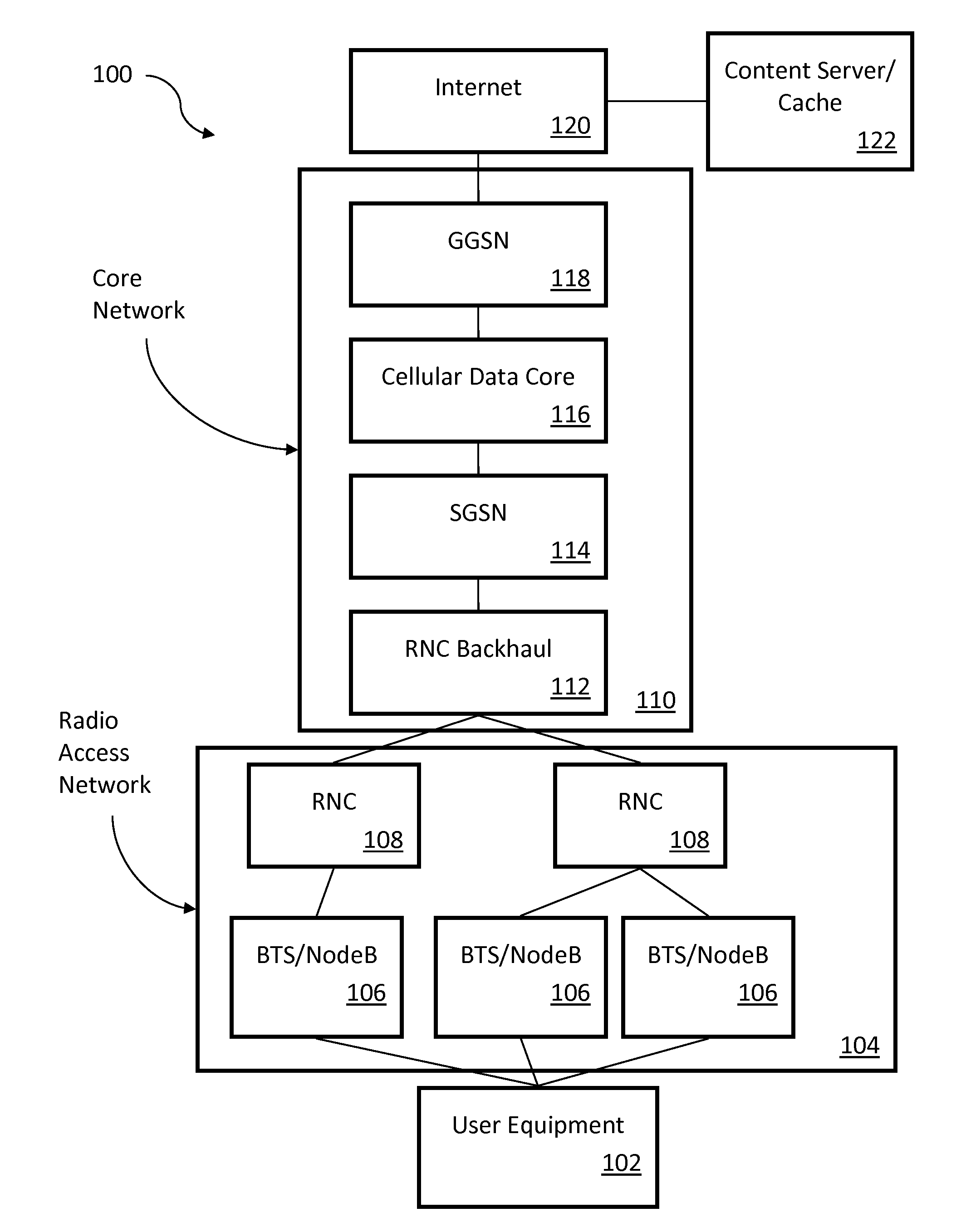

Inactive | US20110202634A1 | Reduce network latency | Faster downloads | Metering/charging/billing arrangements | Telephonic communication | Radio access network | Mobile device

A method for serving content from a radio-access network cache includes detecting a request from a mobile device for content in the cache. The request is sent to a content-origin server, and a response is received therefrom.

Owner:MOVIK NETWORKS

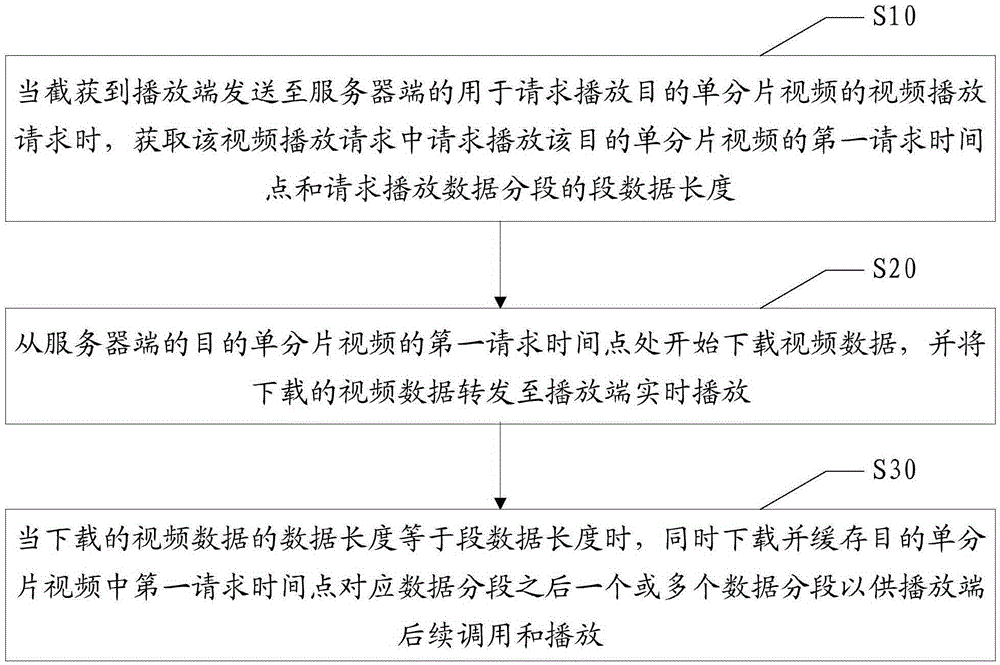

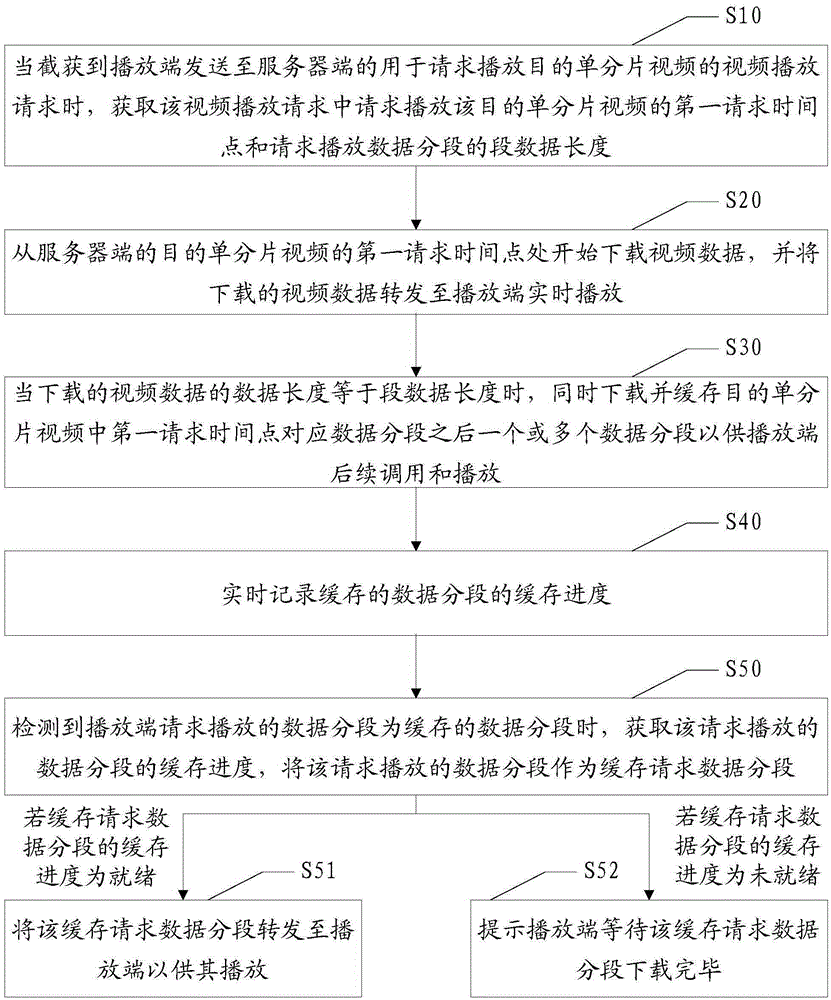

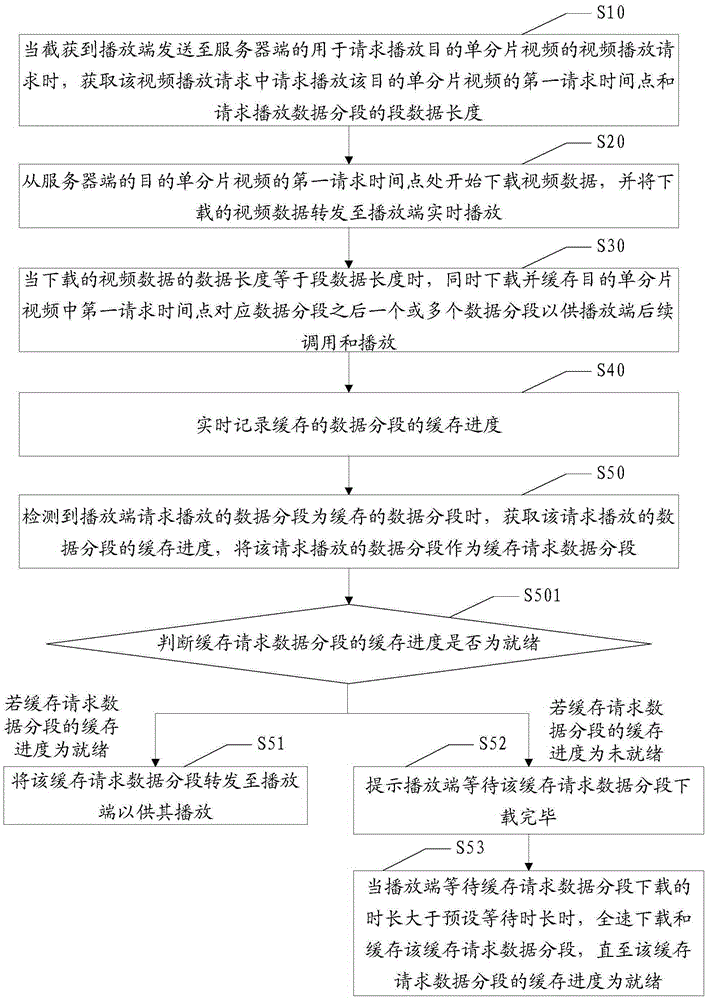

Method and device for accelerating playing of single-fragment video

Active | CN105430425A | Smooth playback | Increase cache | Transmission | Selective content distribution | Computer graphics (images) | Data fragment

The invention discloses a method and a device for accelerating playing of a single-fragment video. The method comprises the steps of: when intercepting a video playing request sent by a playing end, obtaining a first request time point and a fragment data length of the single-fragment video in the video playing request; starting to download video data from the first request time point of the target single-fragment video, and forwarding the downloaded video data to the playing end for playing in real time; and when the data length of the downloaded video data is equal to the fragment data length, simultaneously downloading and caching one or more data fragments after the data fragment corresponding to the first request time point in the target single-fragment video. According to the method and device provided by the invention, while ensuring that the playing end plays the single-fragment video data smoothly, downloading and caching of the single-fragment video data are accelerated, so that the data fragments to be played are, as far as possible, already available when the playing end requests them, and playing of the single-fragment video is thereby accelerated.

Owner:SHENZHEN TCL NEW-TECH CO LTD

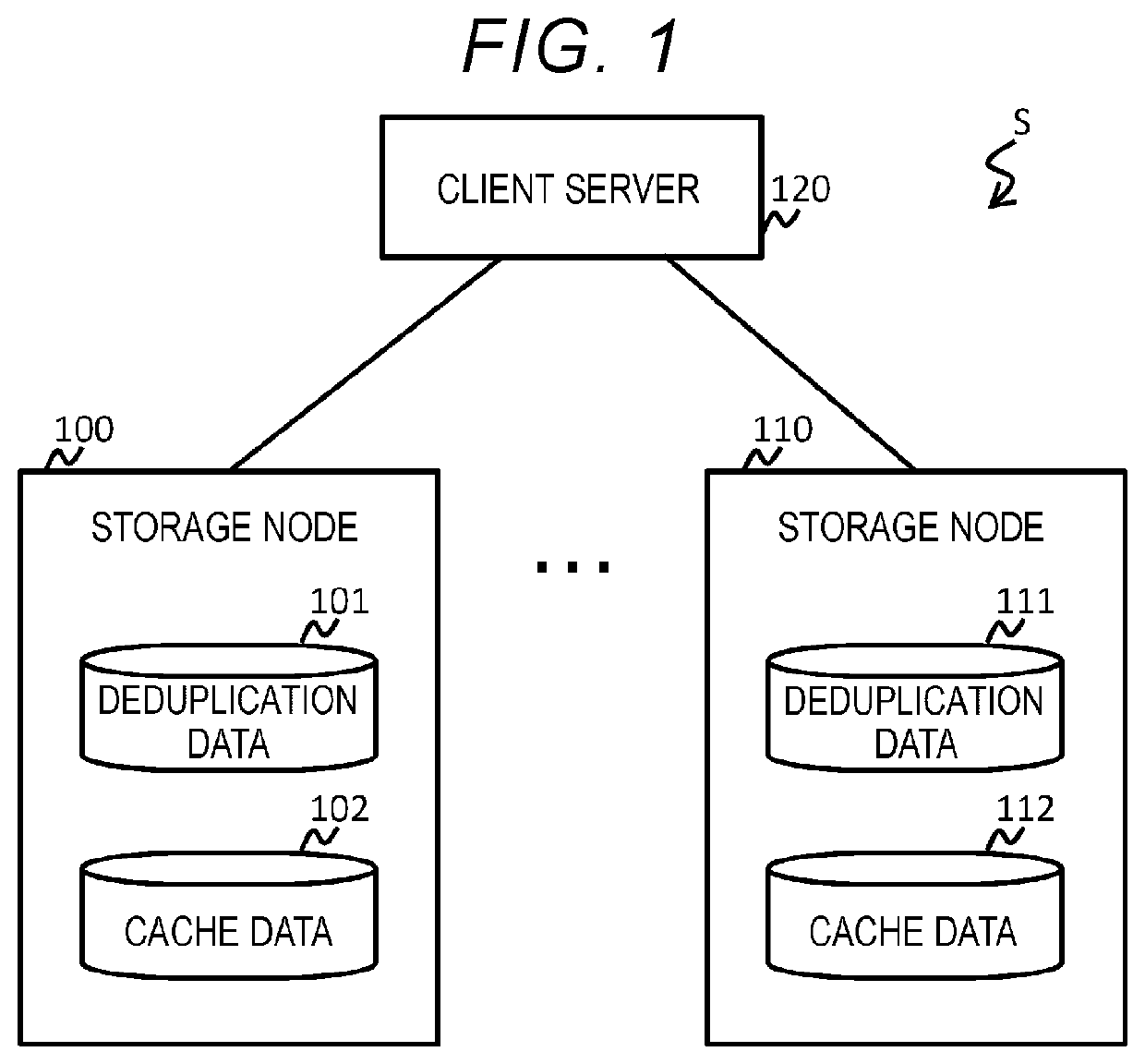

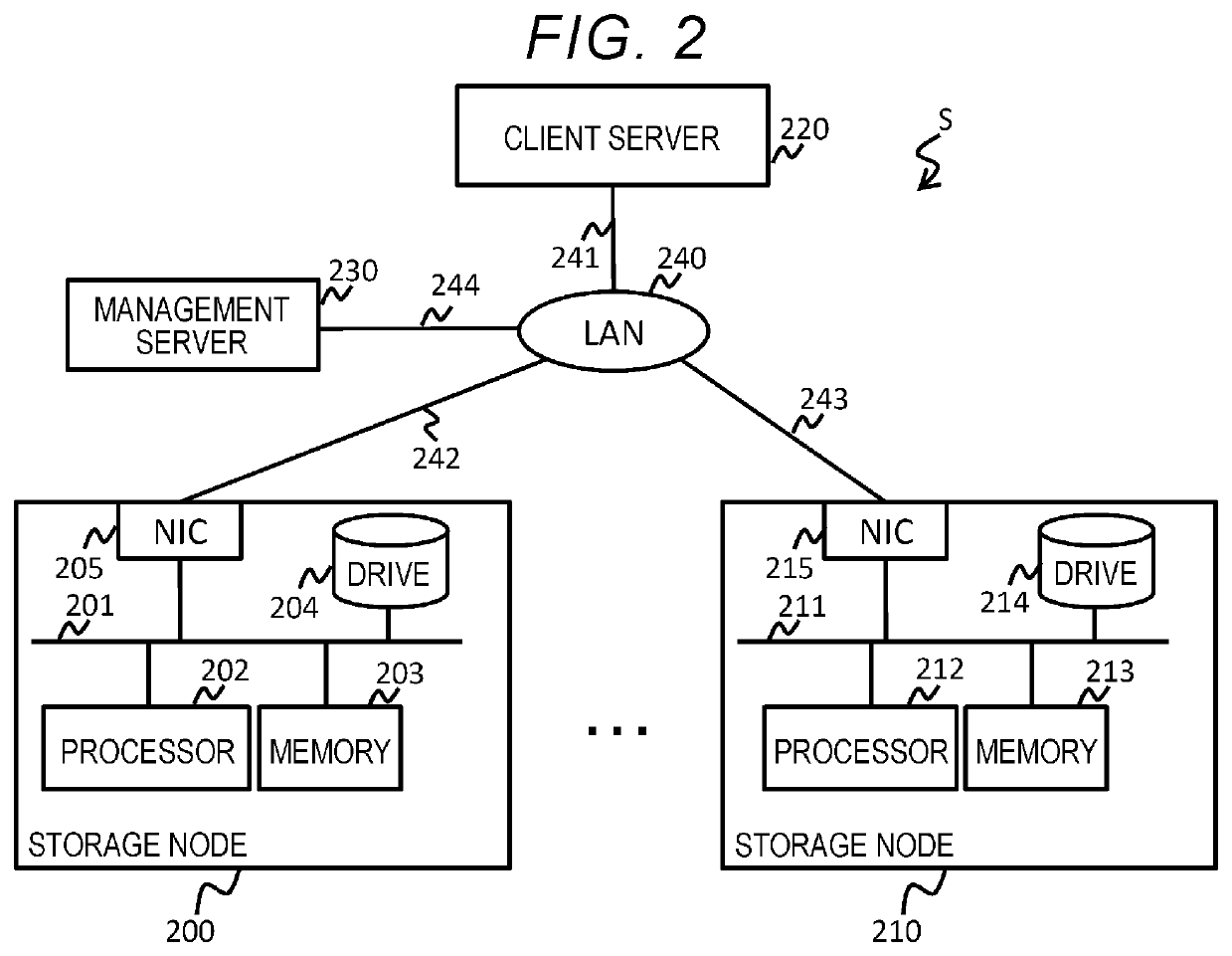

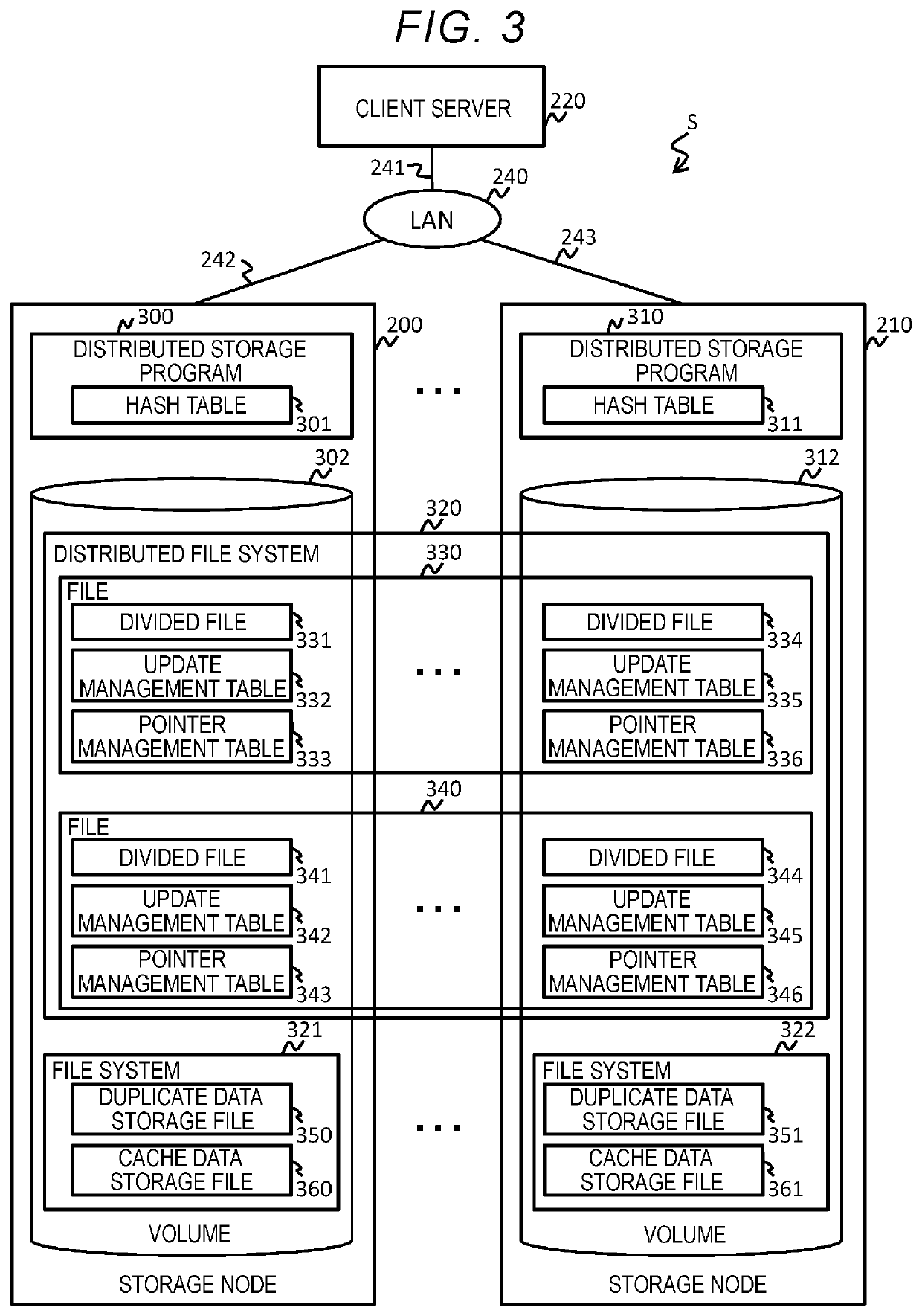

Distributed storage device and data management method in distributed storage device

Active | US20210374105A1 | Improve capacity efficiency | Performance stability high | Special data processing applications | File system functions | Computer network | Data storing

The number of inter-node communications in inter-node deduplication can be reduced and both performance stability and high capacity efficiency can be achieved. A storage drive of storage nodes stores files that are not deduplicated in the plurality of storage nodes, duplicate data storage files in which deduplicated duplicate data is stored, and cache data storage files in which cache data of duplicate data stored in another storage node is stored, in which when a read access request for the cache data is received, the processors of the storage nodes read the cache data if the cache data is stored in the cache data storage file, and request another storage node to read the duplicate data related to the cache data if the cache data is discarded.

Owner:HITACHI LTD

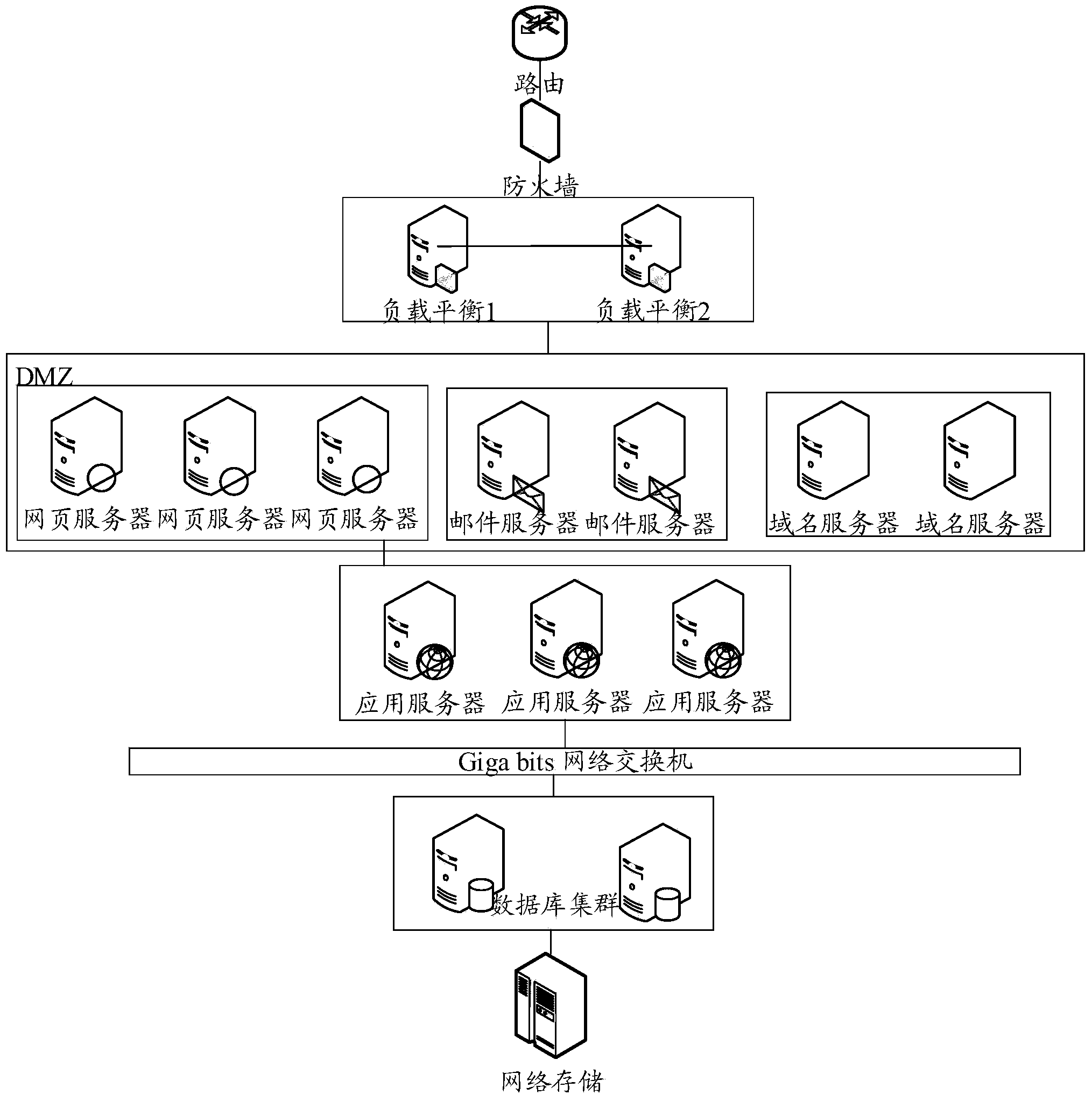

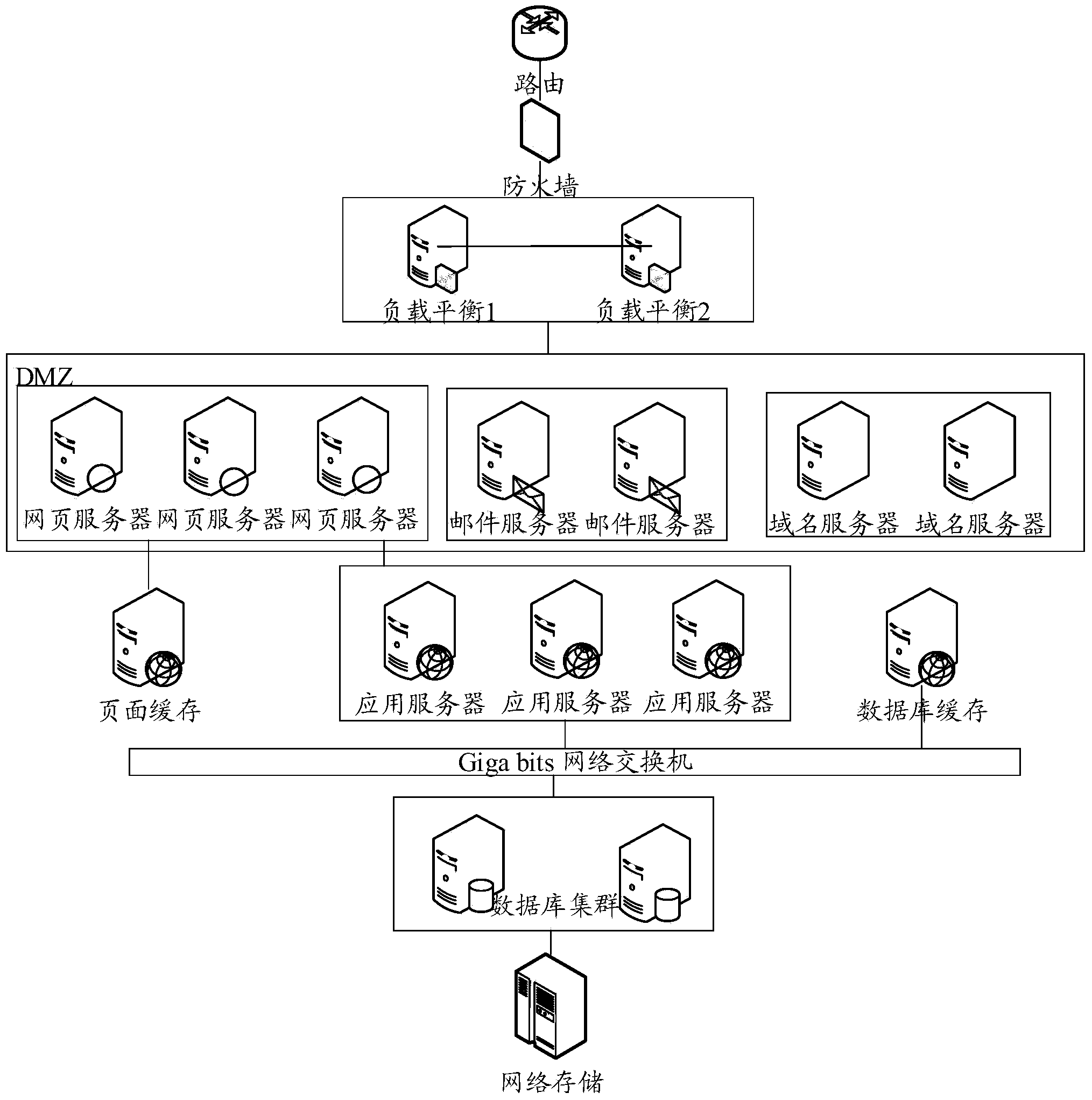

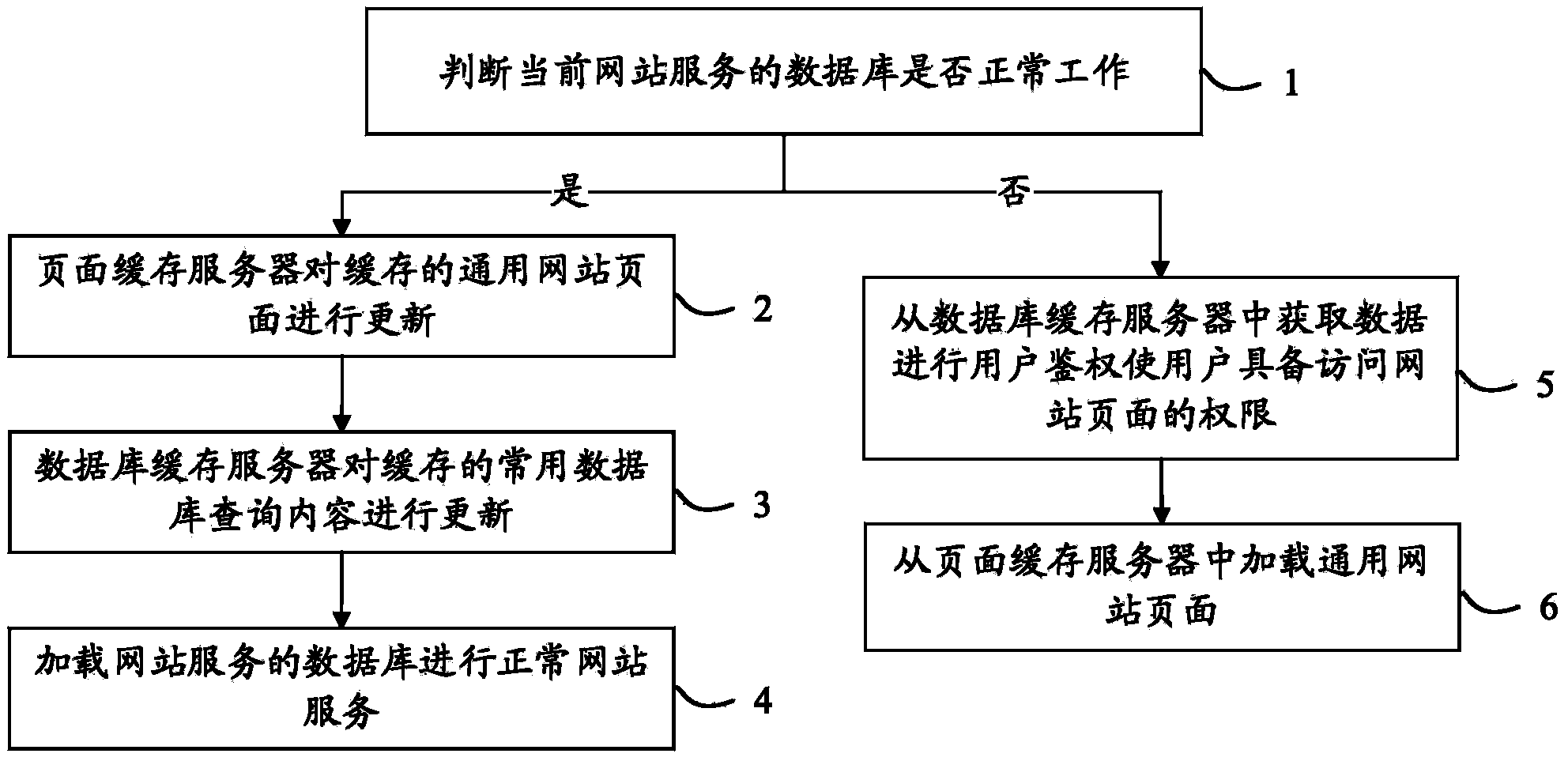

System and method for realizing restoration of web service in case of crash of database

Inactive | CN103793538A | Increase cache | Function increase | Database updating | Error detection/correction | Personalization | Web site

The invention relates to a system and method for realizing the restoration of a web service in case of a crash of a database. The system comprises a page caching server and a database caching server, wherein the page caching server is used for acquiring a general web page from a web server of the web service for caching, and the database caching server is used for caching general database query content of the web service. The structure is adopted for the system and method for realizing the restoration of the web service in case of the crash of the database, page caching and database caching are reasonably set, asynchronous personalized data are acquired, reasonable strategies and services are set, and web page caching, asynchronous personalized data loading, database caching and other technologies are applied comprehensively, as a result, the web service can be quickly restored in case of the crash of the database, a user website can still provide all or part of service under the circumstance that the database has the crash, normal access by users of the web site can be guaranteed, and the application range is wider.

Owner:CERTUS NETWORK TECHNANJING

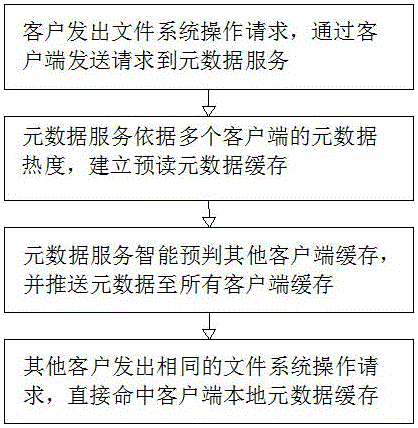

Intelligent pre-reading implementation method for distributed file system

Inactive | CN106686113A | Improve cache hit ratio | Realize intelligent learning | Transmission | Special data processing applications | File system | Distributed File System

The invention discloses an intelligent pre-reading implementation method for a distributed file system, and belongs to the field of distributed file systems. The method comprises the following steps: S1, a user sends a file system operation request, and the request is transmitted to a meta-data service through the client; S2, the meta-data service builds a pre-reading meta-data cache according to the access heat of the meta-data across a plurality of clients; S3, the meta-data service intelligently predicts what the other clients' caches will need and pushes the meta-data to all client caches; S4, when other users send the same file system operation request, it hits the client's local meta-data cache directly. With a plurality of clients accessing the file system at the same time, the method increases the meta-data cache through intelligent pushing of meta-data, reduces the number of direct interactions between the clients and the meta-data service, and improves file operation performance.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

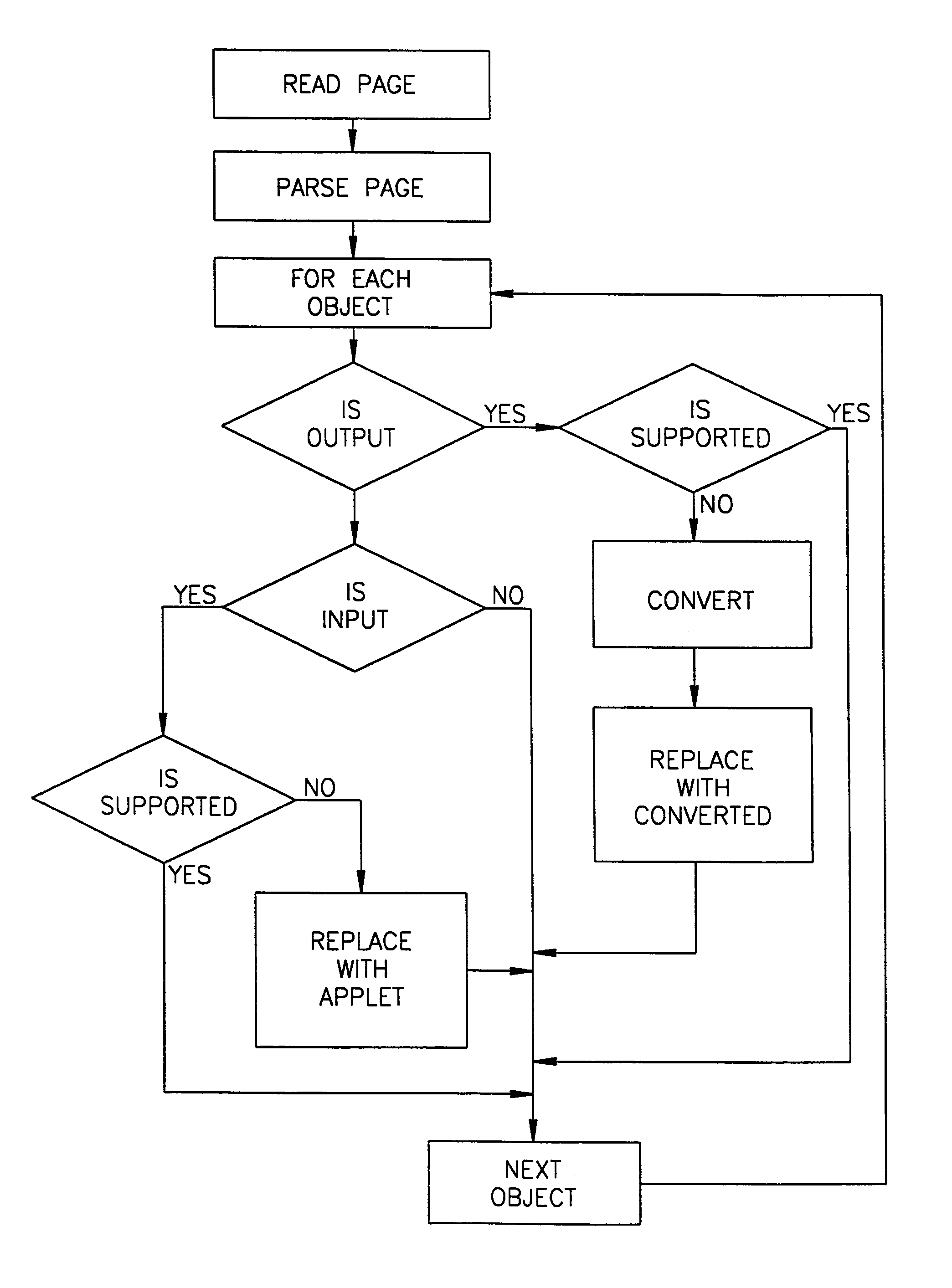

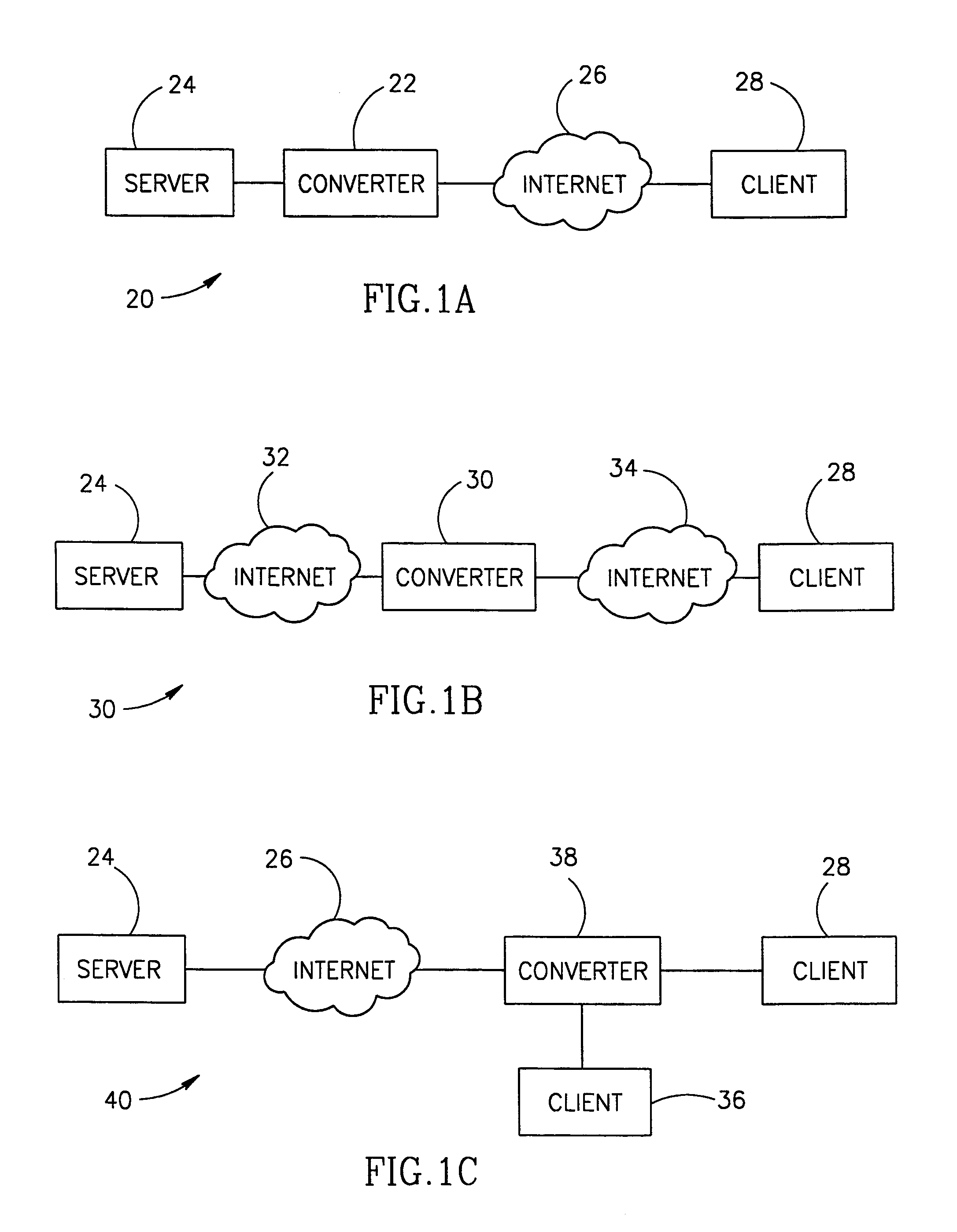

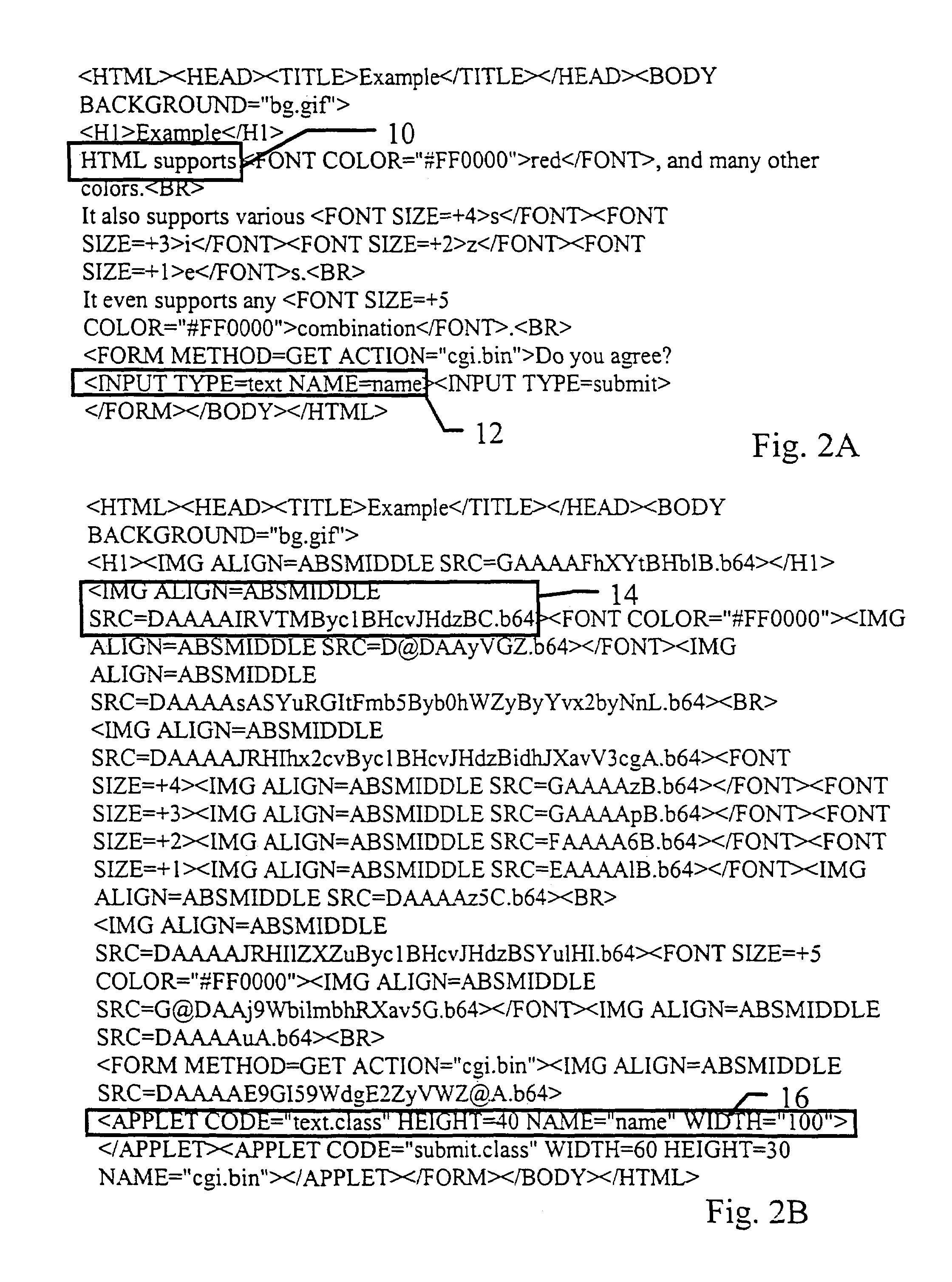

Non-intrusive digital rights enforcement

Inactive | US7167925B2 | Increase cache | Improve efficiency | Computer security arrangements | Multiple digital computer combinations | The Internet | Syntax

A method for transferring information between a server (24) and a client (28), through a converter (22), comprising: analyzing at least a portion of the information by the converter (22), to determine a standard used by the server (24) to encode the information in the portion; and replacing at least a portion of the analyzed information with other information, which other information uses a second standard, wherein analyzing comprises parsing the information on a syntactic level and wherein the information comprises at least one Internet hypertext document.

Owner:GOOGLE LLC

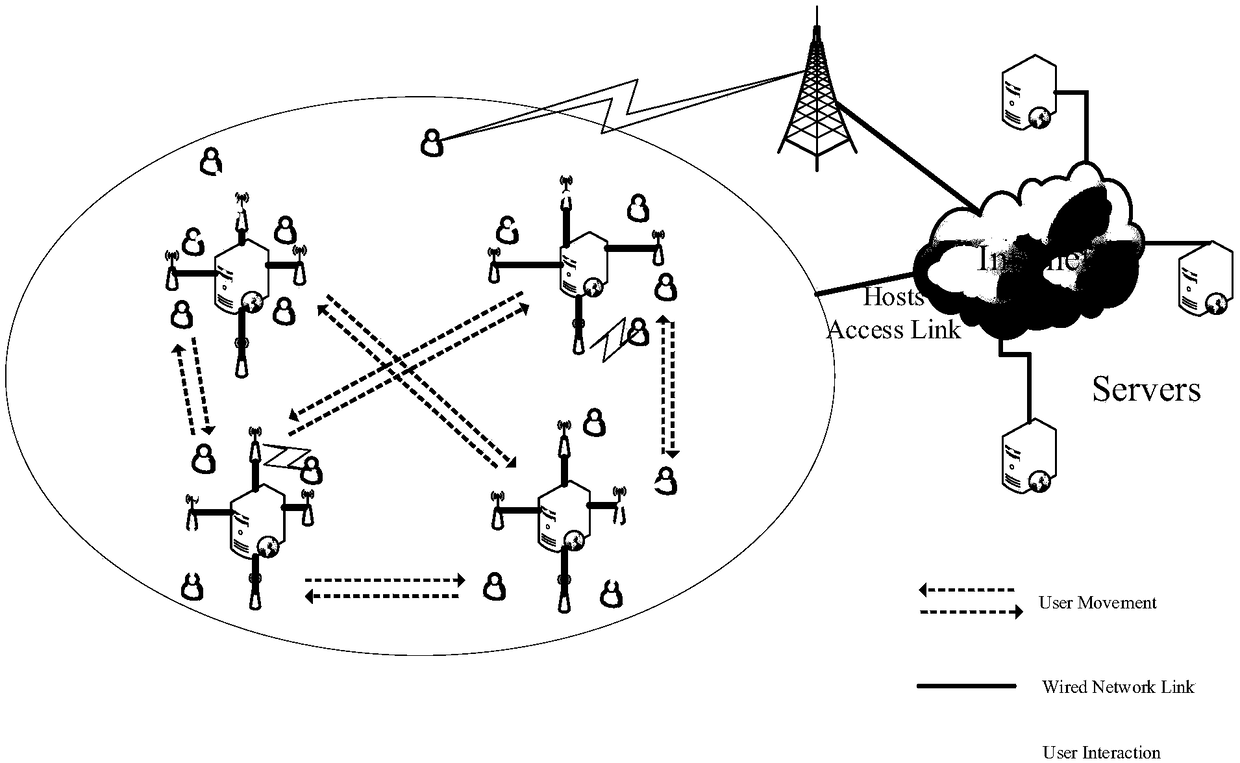

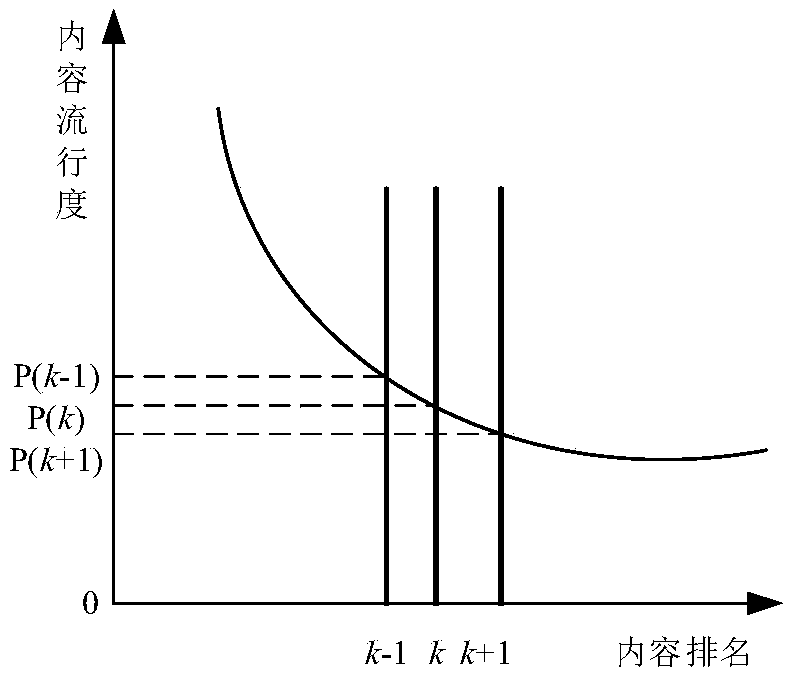

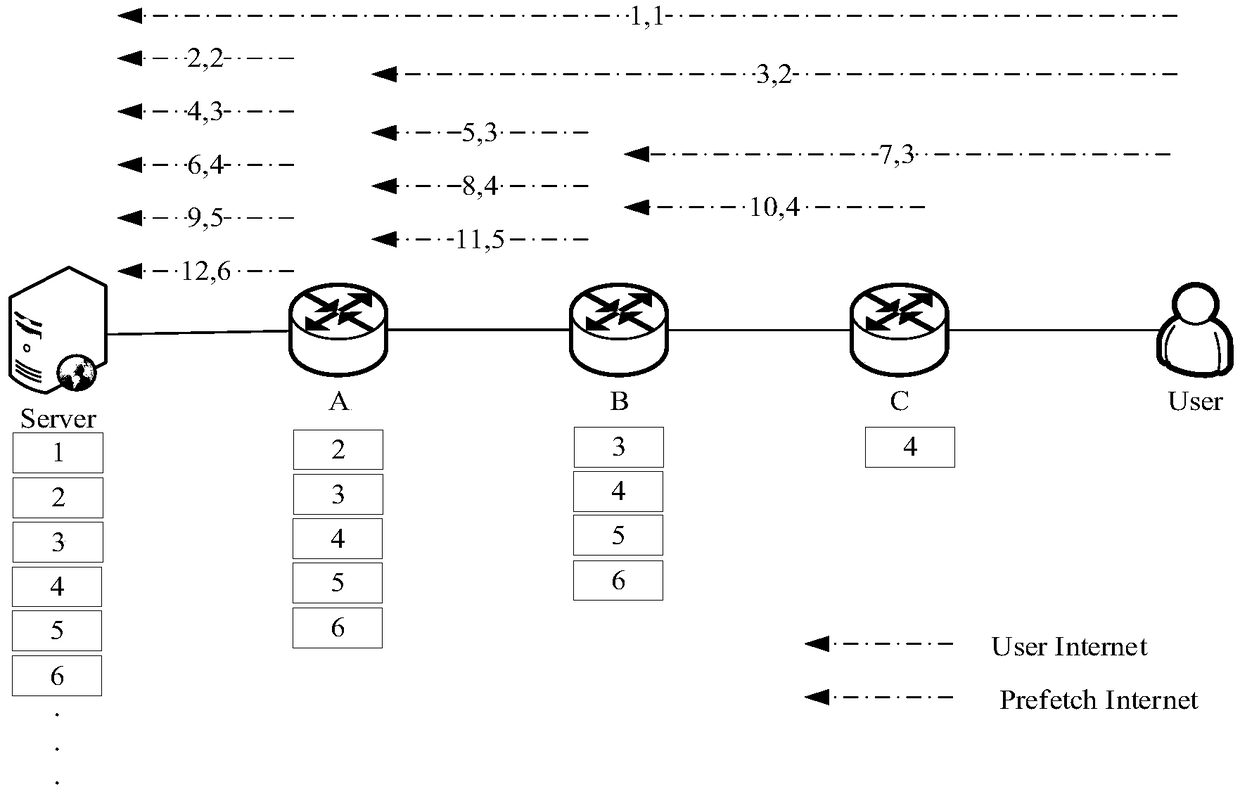

Solution method for reduction of content obtaining delay in mobile content center network

Inactive | CN109195180A | Much cache | High popularity | Network traffic/resource management | Mobile content | Distributed computing

The present invention provides a scheme for reducing content obtaining delay in a mobile content center network, belonging to the technical field of communication. The scheme mainly comprises the steps of: establishing a content popularity list, establishing a prefetching list, congestion control, and the concrete prefetch operation. The content popularity list and the prefetching list are used to determine the content that needs to be prefetched; a cache node prefetches the content according to a pre-triggering mode, uses explicit congestion control to dynamically adjust the content prefetching window according to the network state, and combines this with the concrete prefetch operation to complete prefetching of the content. The scheme exploits the fact that content in the content center network can be further segmented into blocks: it prefetches the follow-up blocks of the given content and gradually pushes them to nodes close to the users, so as to reduce the delay users experience in obtaining content.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

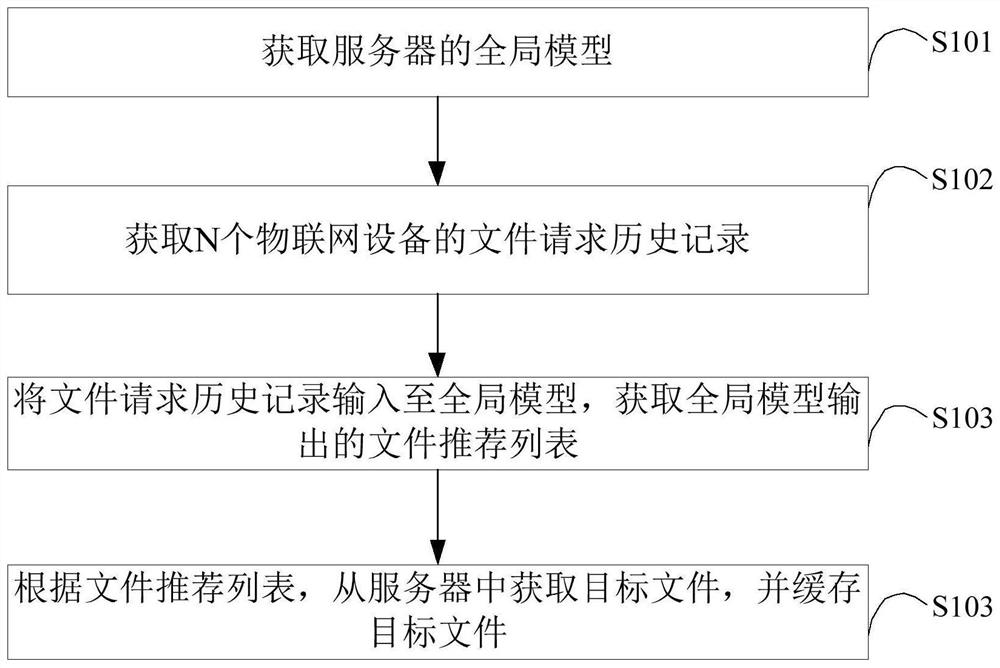

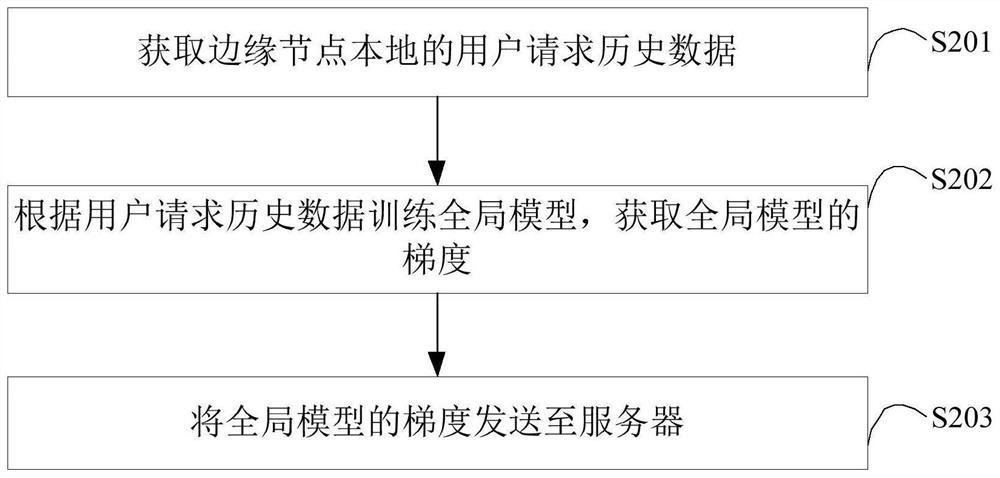

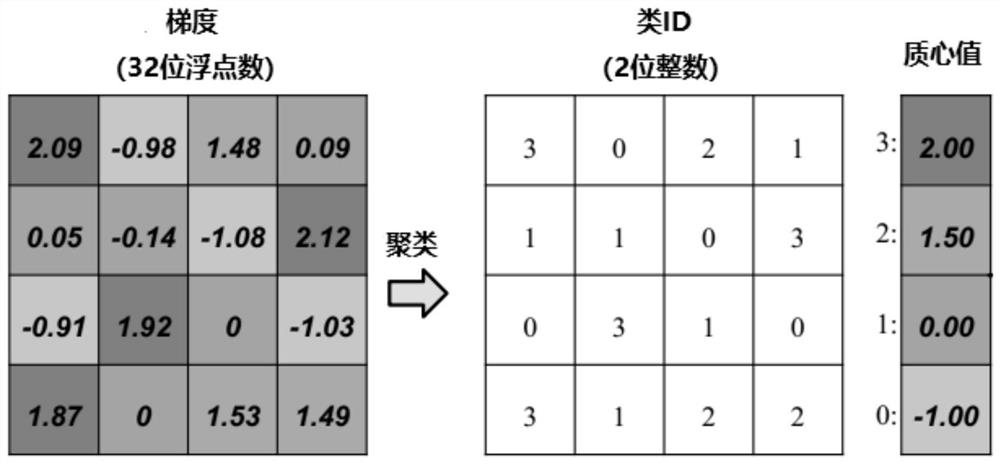

File caching method and device, edge node and computer readable storage medium

Pending | CN112118295A | Increase cache | Resolve delay | Digital data information retrieval | Transmission | Edge node | The Internet

The invention is applicable to the technical field of Internet of Things, and particularly relates to a file caching method and device, an edge node and a computer readable storage medium. The method comprises the steps of connecting a server and N pieces of Internet of Things equipment through an edge node; obtaining a global model from the server; obtaining a file request historical record from the Internet of Things equipment; inputting the file request historical record into the global model to obtain a file recommendation list; obtaining and caching a target file from the server according to the file recommendation list. Thus, the edge node can pre-cache the files with relatively high relevance with the Internet of Things equipment, so that the probability that the Internet of Things equipment hits the file cached in the edge node when requesting the file from the edge node can be improved, and the problem of network delay of data transmission can be solved.

Owner:SHENZHEN UNIV

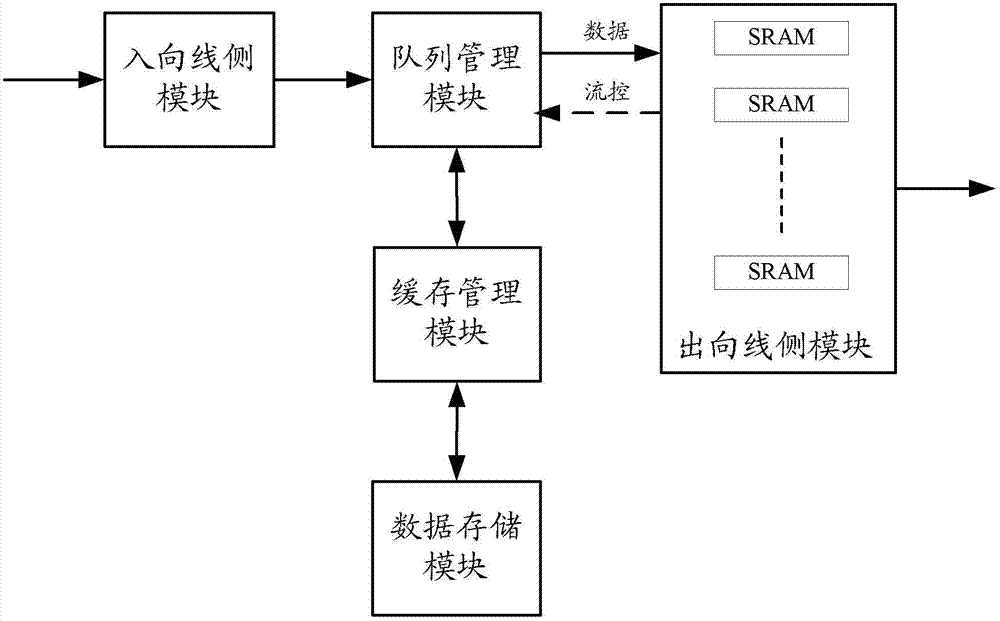

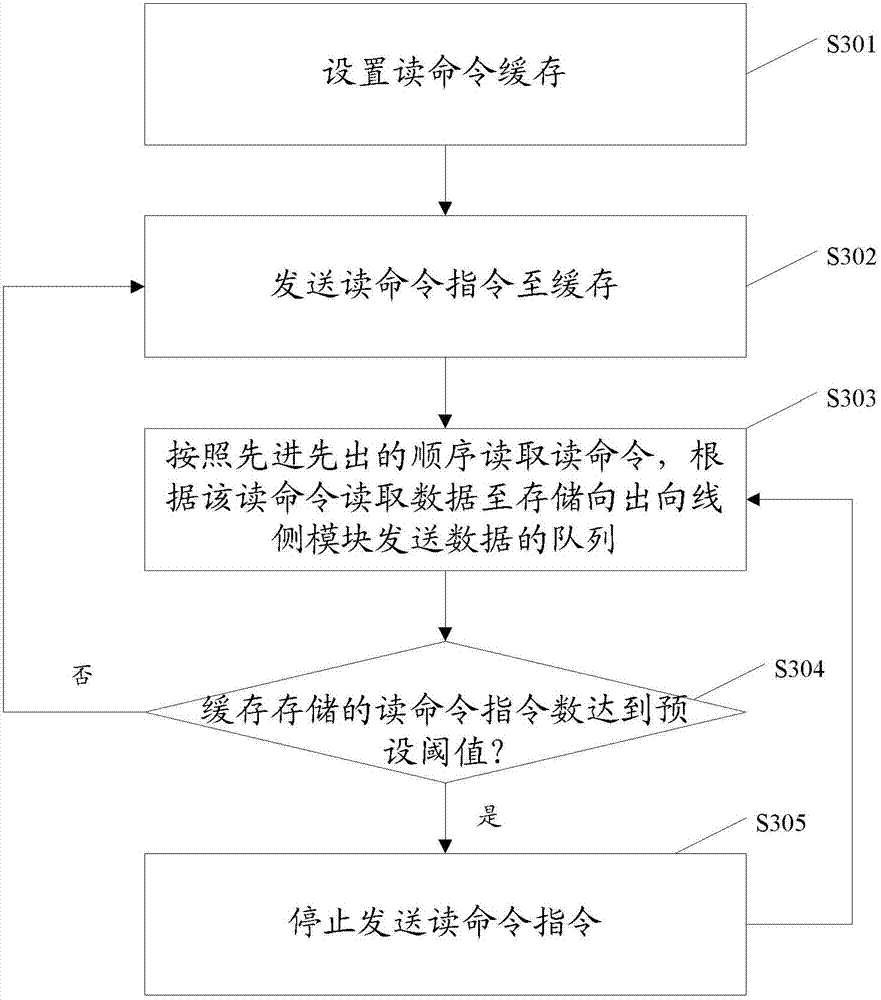

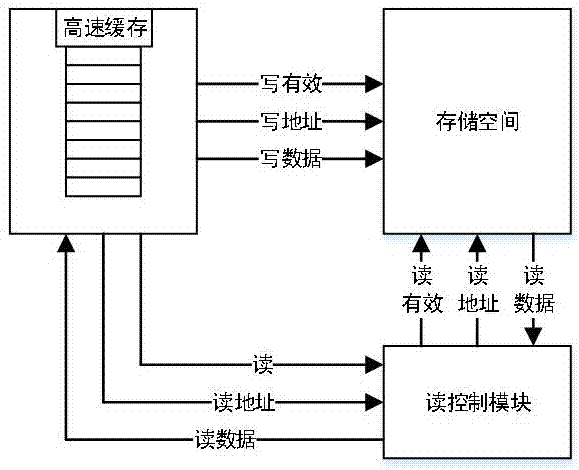

Flow management device and method for saving cache resource

Active | CN102831077A | Reduce cache usage | Reduce cache | Memory addressing/allocation/relocation | Traffic capacity | Flow management

The invention provides a flow management device and a method for saving a cache resource. The flow management method comprises the following steps of: setting a read command cache; sending read commands to the cache; reading the read commands according to a first-in first-out sequence, reading data according to the read commands to a queue to which a storing outbound line side module sends the data; judging whether the read command stored by the cache reaches a preset threshold value; and stopping sending the read command if the preset threshold value is reached, and continuously reading the read command in the cache according to the first-in first-out sequence. The flow management device and the method reduce cache usage in a flow management system.

Owner:ZTE CORP
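
The flow-control rule in the abstract above (read commands are queued first-in first-out, and the sender stops once the number of queued commands reaches a preset threshold) can be sketched as follows. `ReadCommandCache`, `try_send`, and `read_next` are hypothetical names, and a plain dictionary stands in for the data store; this is an illustration of the idea, not the patented device.

```python
from __future__ import annotations

from collections import deque


class ReadCommandCache:
    """Bounded FIFO of pending read commands (illustrative only)."""

    def __init__(self, threshold: int) -> None:
        self.threshold = threshold
        self.commands: deque[int] = deque()

    def try_send(self, address: int) -> bool:
        """Queue a read command unless the threshold has been reached."""
        if len(self.commands) >= self.threshold:
            return False  # sender must pause until commands drain
        self.commands.append(address)
        return True

    def read_next(self, memory: dict[int, str]) -> str | None:
        """Pop the oldest command and fetch the data it refers to."""
        if not self.commands:
            return None
        return memory[self.commands.popleft()]


cache = ReadCommandCache(threshold=2)
memory = {0: "a", 1: "b", 2: "c"}
cache.try_send(0)
cache.try_send(1)
print(cache.try_send(2))        # refused: threshold reached
print(cache.read_next(memory))  # oldest command is served first
print(cache.try_send(2))        # accepted again after draining
```

Because sending stops at the threshold, the amount of data in flight is bounded by the threshold, which is how the downstream data cache can be kept small.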

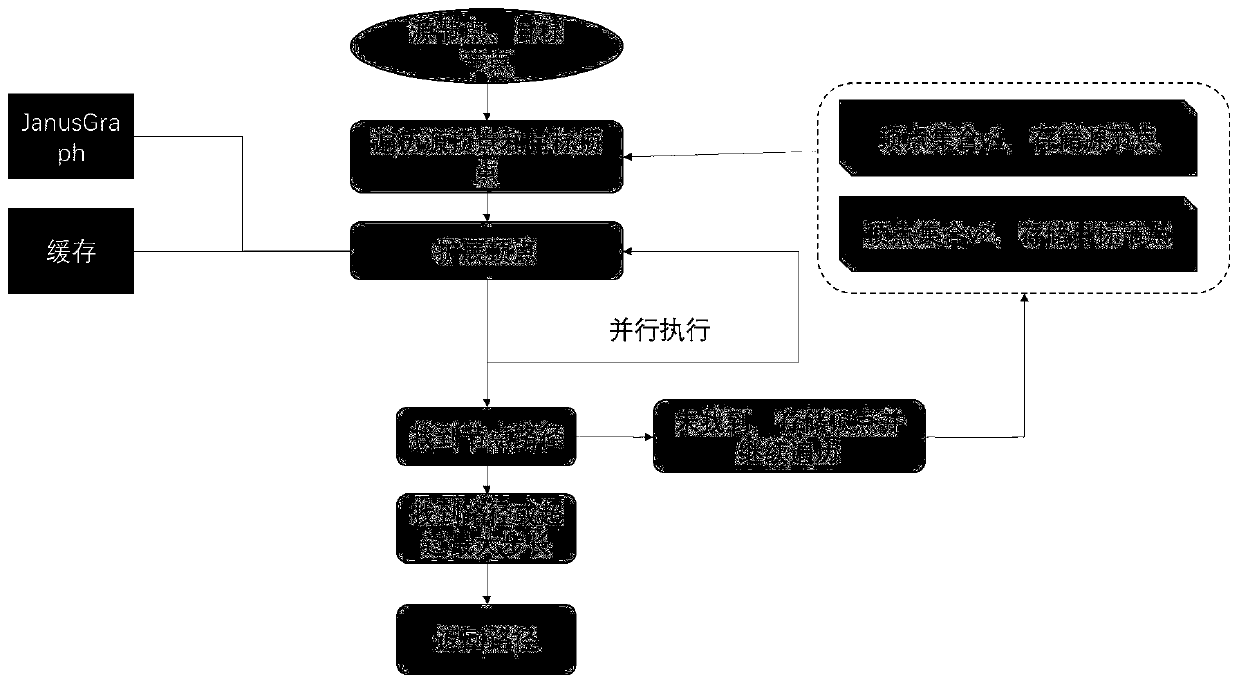

Method for improving exploration performance of Janus Graph path

Pending | CN110765319A | Increase the maximum step limit | Increase cache | Other databases indexing | Other databases querying | PathPing | Theoretical computer science

The invention discloses a method for improving exploration performance of a Janus Graph path, and belongs to the technical field of graph calculation of an application data mining technology. A bidirectional breadth-first traversal algorithm is used: the process of gradual transition from an original node to a target node is changed into a process of simultaneous traversal from both nodes, so the total number of vertexes that need to be traversed and the number of iterations are reduced. Consumption of storage memory resources can be reduced. Meanwhile, the response time is shortened, the requirements for large data volume, real-time calculation and low response time are met, and the method has good application and popularization value.

Owner:INSPUR SOFTWARE CO LTD
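
The bidirectional breadth-first traversal named in the abstract above can be sketched in plain Python. This is a generic textbook version over an in-memory adjacency list, not JanusGraph or Gremlin code; the function name `bidirectional_bfs` and the assumption that the graph is undirected are illustrative choices.

```python
from __future__ import annotations

from collections import deque


def bidirectional_bfs(graph: dict[str, list[str]], source: str, target: str) -> int | None:
    """Return the hop count of a shortest path in an undirected graph, or None."""
    if source == target:
        return 0
    dist_s, dist_t = {source: 0}, {target: 0}
    frontier_s, frontier_t = deque([source]), deque([target])
    while frontier_s and frontier_t:
        # Expand the smaller frontier by one full level.
        if len(frontier_s) <= len(frontier_t):
            frontier, dist, other = frontier_s, dist_s, dist_t
        else:
            frontier, dist, other = frontier_t, dist_t, dist_s
        best = None
        for _ in range(len(frontier)):
            node = frontier.popleft()
            for nxt in graph.get(node, []):
                if nxt in other:
                    # The two searches meet: combine both partial distances.
                    candidate = dist[node] + 1 + other[nxt]
                    best = candidate if best is None else min(best, candidate)
                if nxt not in dist:
                    dist[nxt] = dist[node] + 1
                    frontier.append(nxt)
        if best is not None:
            return best
    return None  # one side ran out of vertices: no path exists


graph = {
    "a": ["b", "c"], "b": ["a", "d"], "c": ["a", "d"],
    "d": ["b", "c", "e"], "e": ["d"], "z": [],
}
print(bidirectional_bfs(graph, "a", "e"))
```

Each side only has to explore about half the path length, so on graphs with a high branching factor far fewer vertices are visited than in a one-directional search. For a directed graph, the backward search would need a reverse adjacency list.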

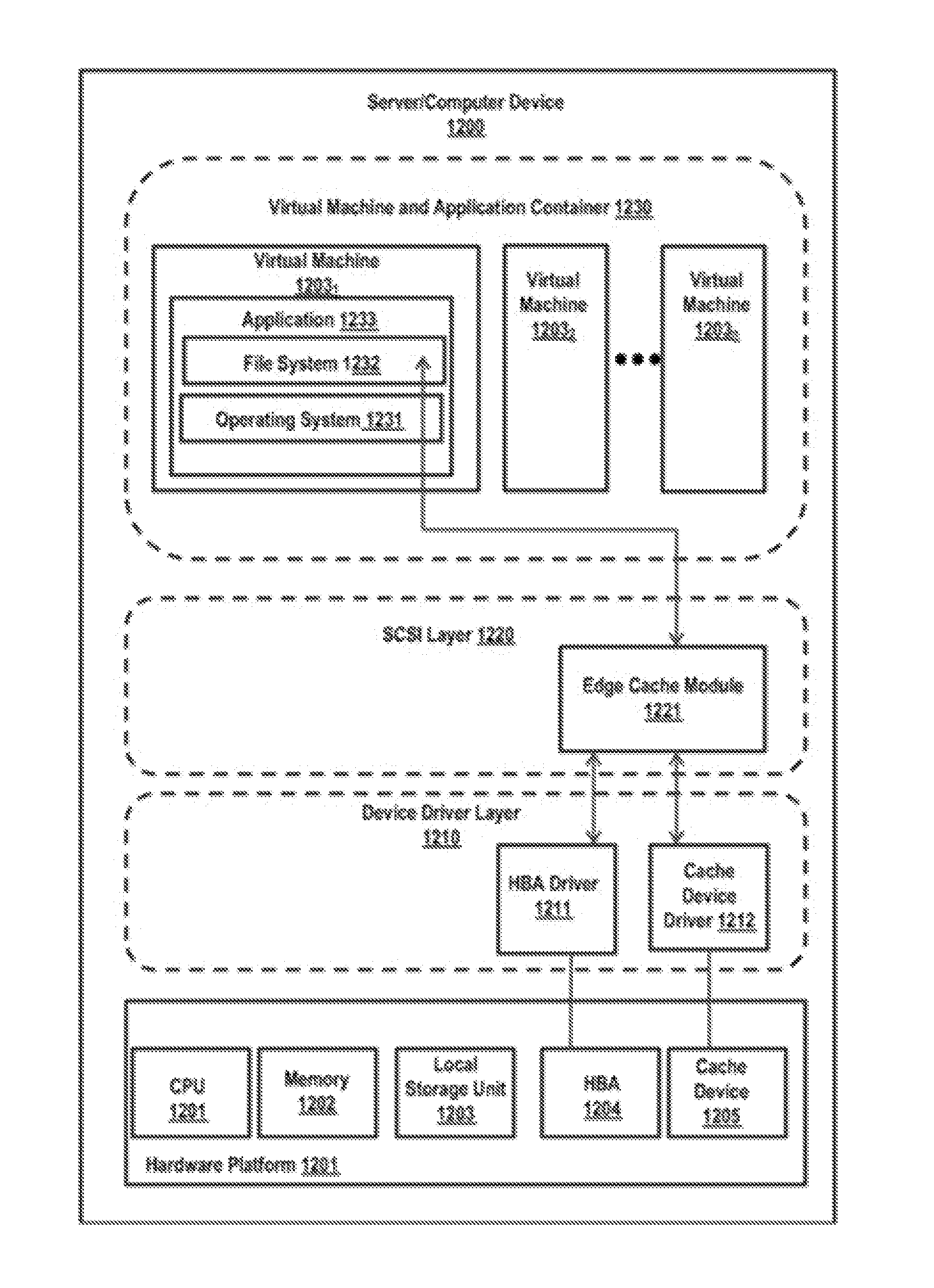

Extending a cache of a storage system

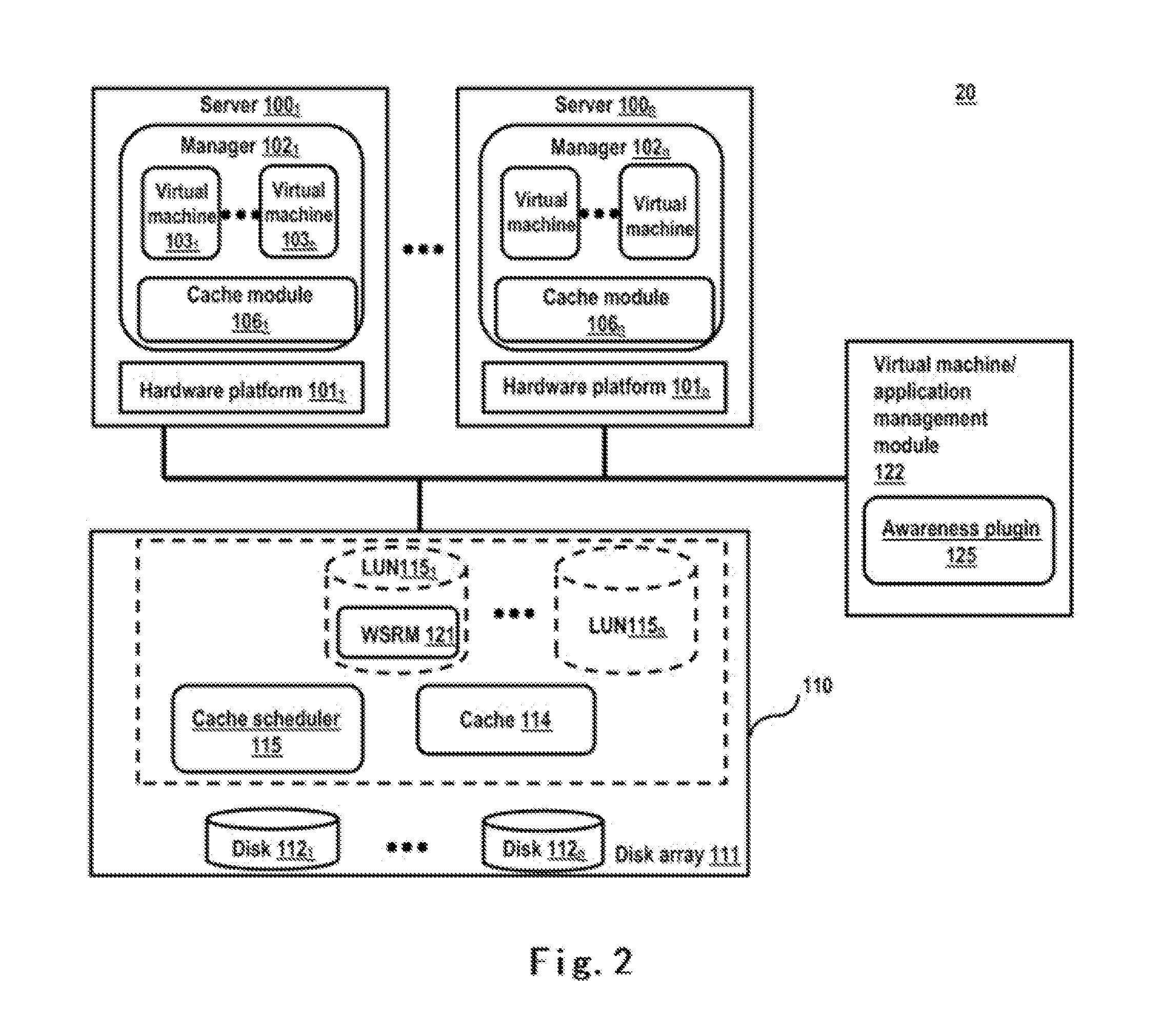

Active | US20160335199A1 | Increase cache | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Client-side | Computer science

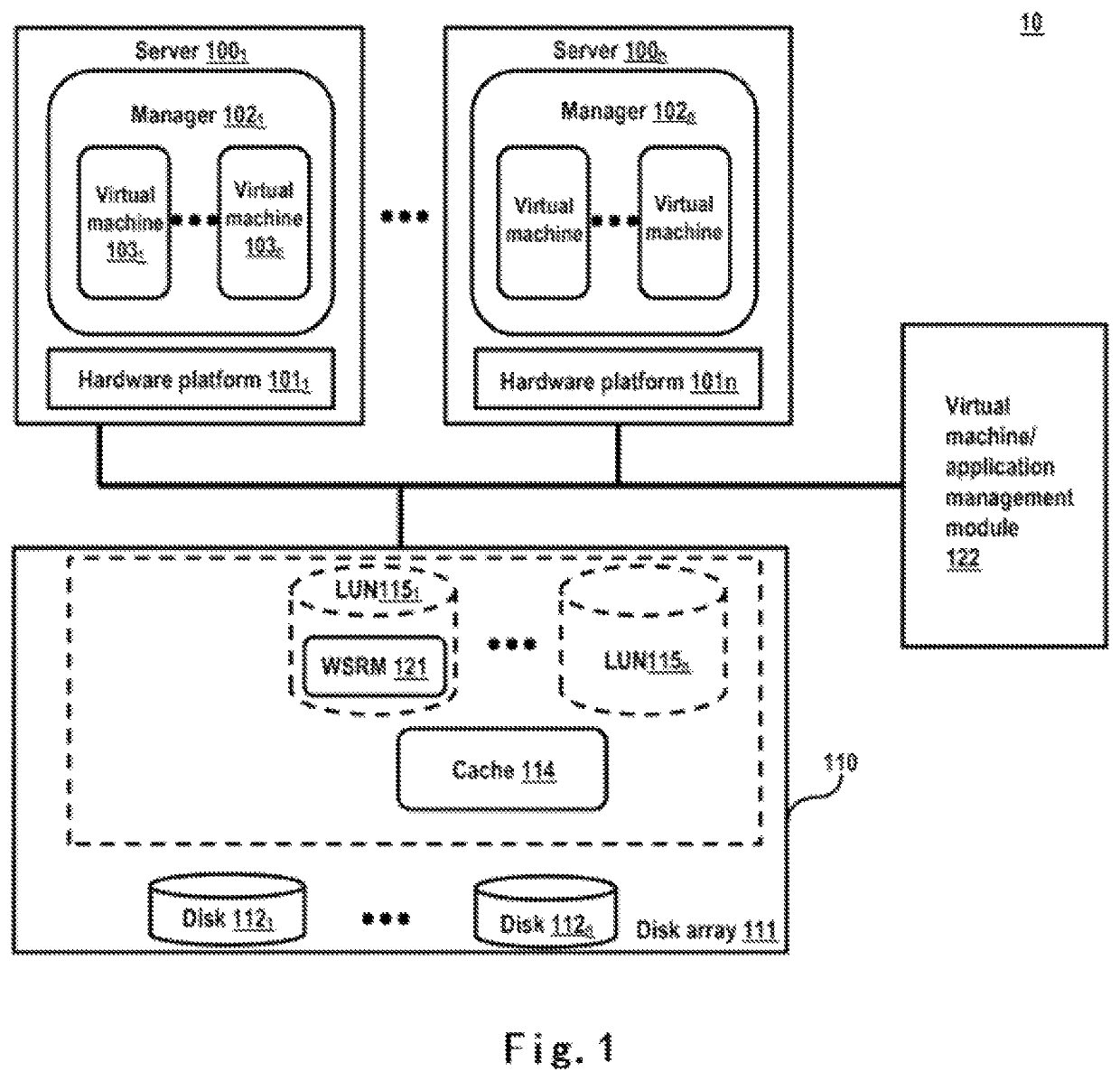

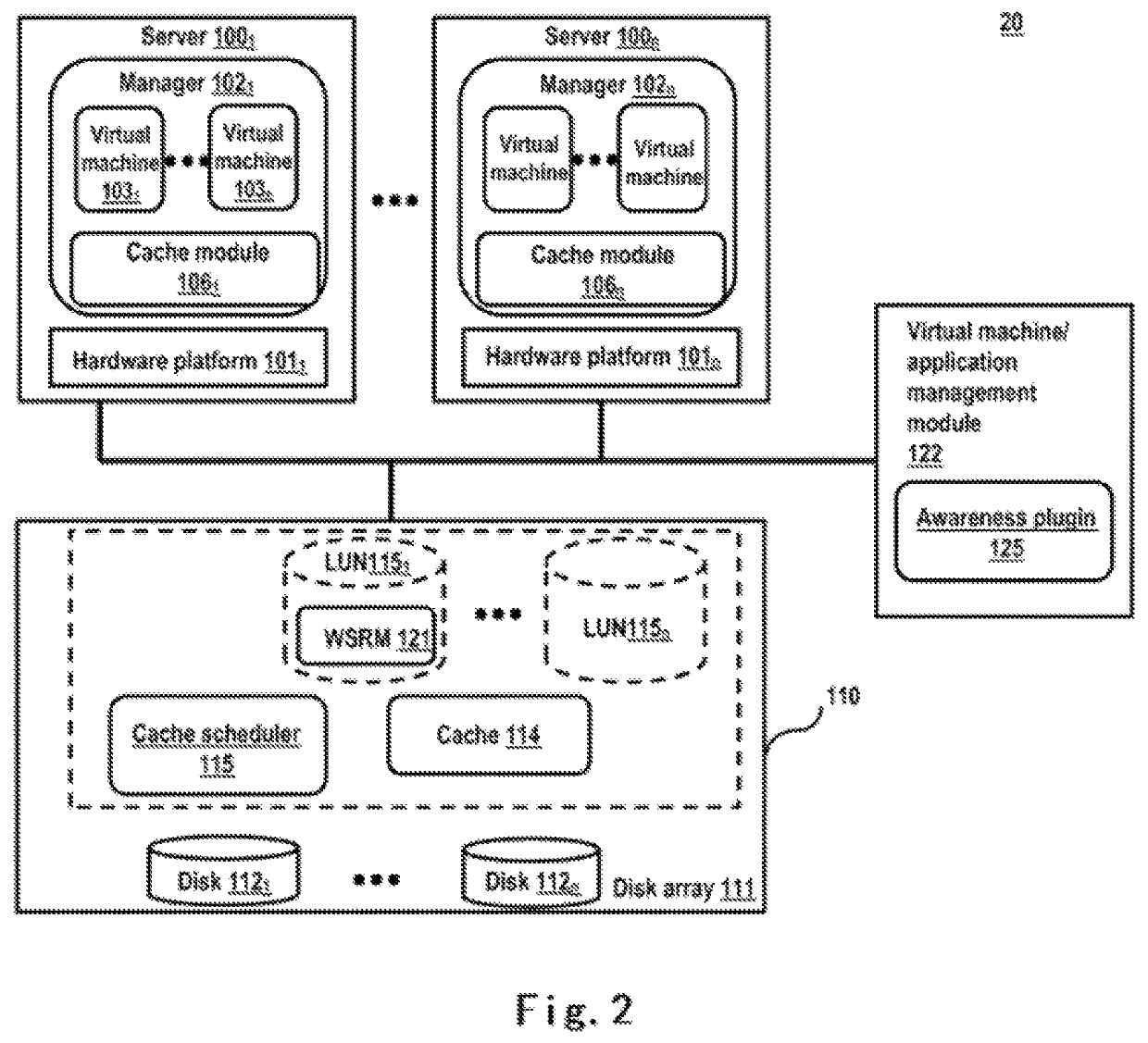

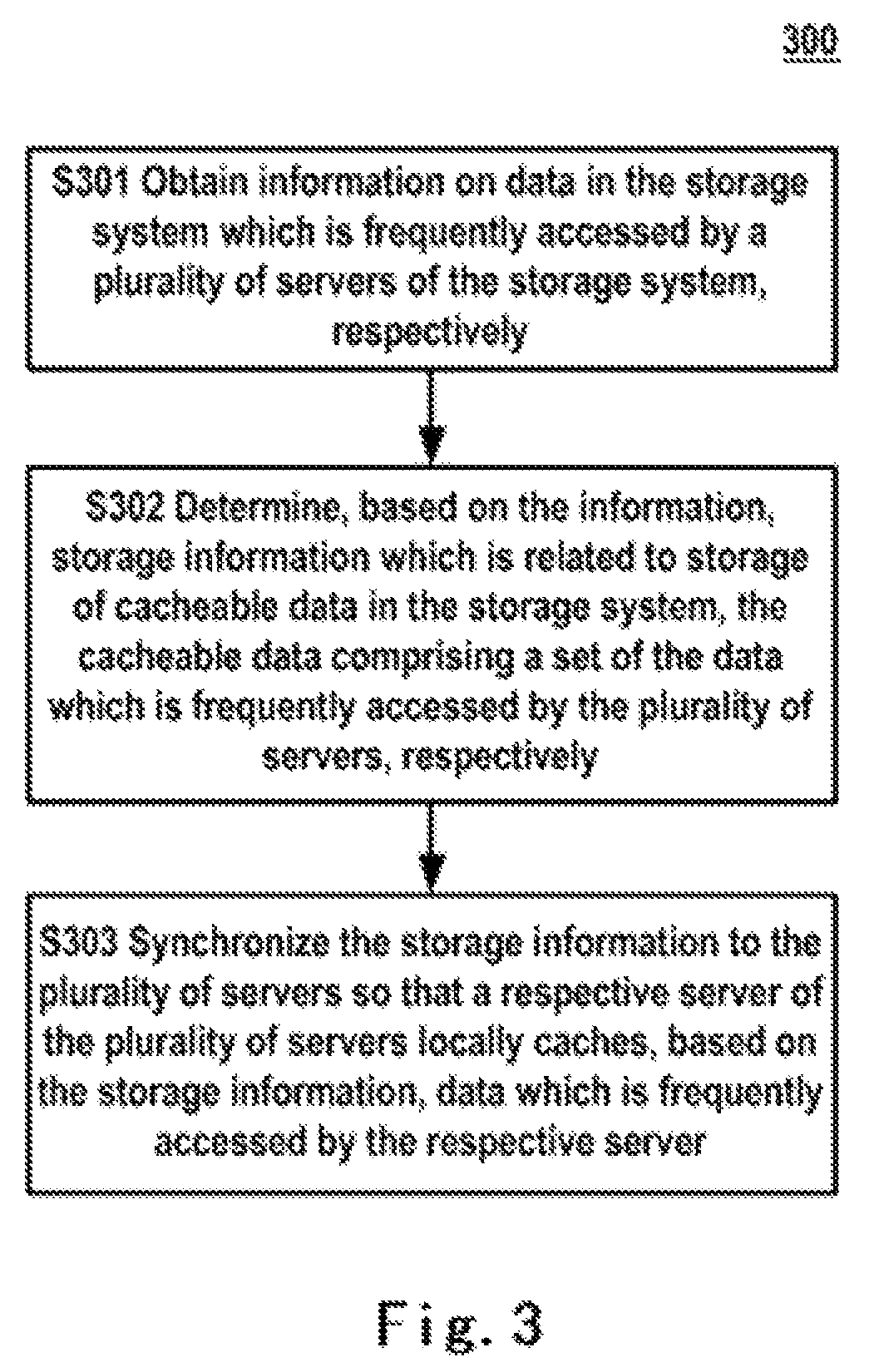

Embodiments of the present disclosure provide a method and system for extending a cache of a storage system, by obtaining information on data in a storage system frequently accessed by a plurality of clients of the storage system; determining, based on the obtained information, storage information related to storage of cacheable data in the storage system, the cacheable data comprising a set of the data frequently accessed by the plurality of clients; and synchronizing the storage information amongst the plurality of clients so that a respective client of the plurality of clients locally caches, based on the storage information, data frequently accessed by the respective client.

Owner:EMC IP HLDG CO LLC

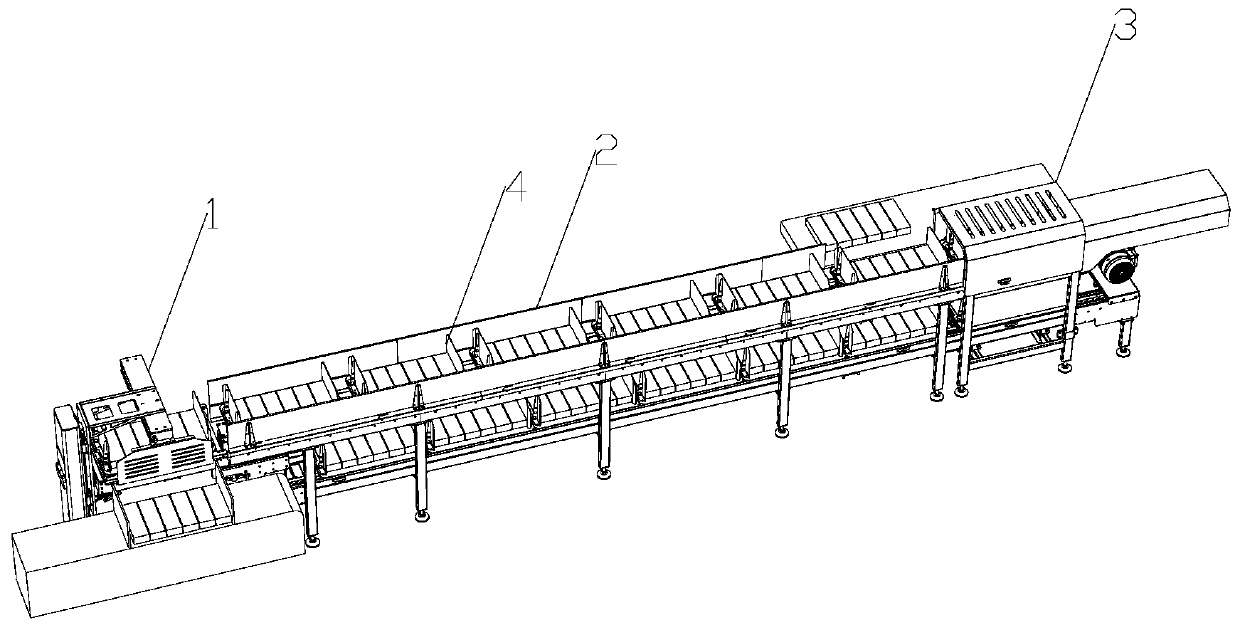

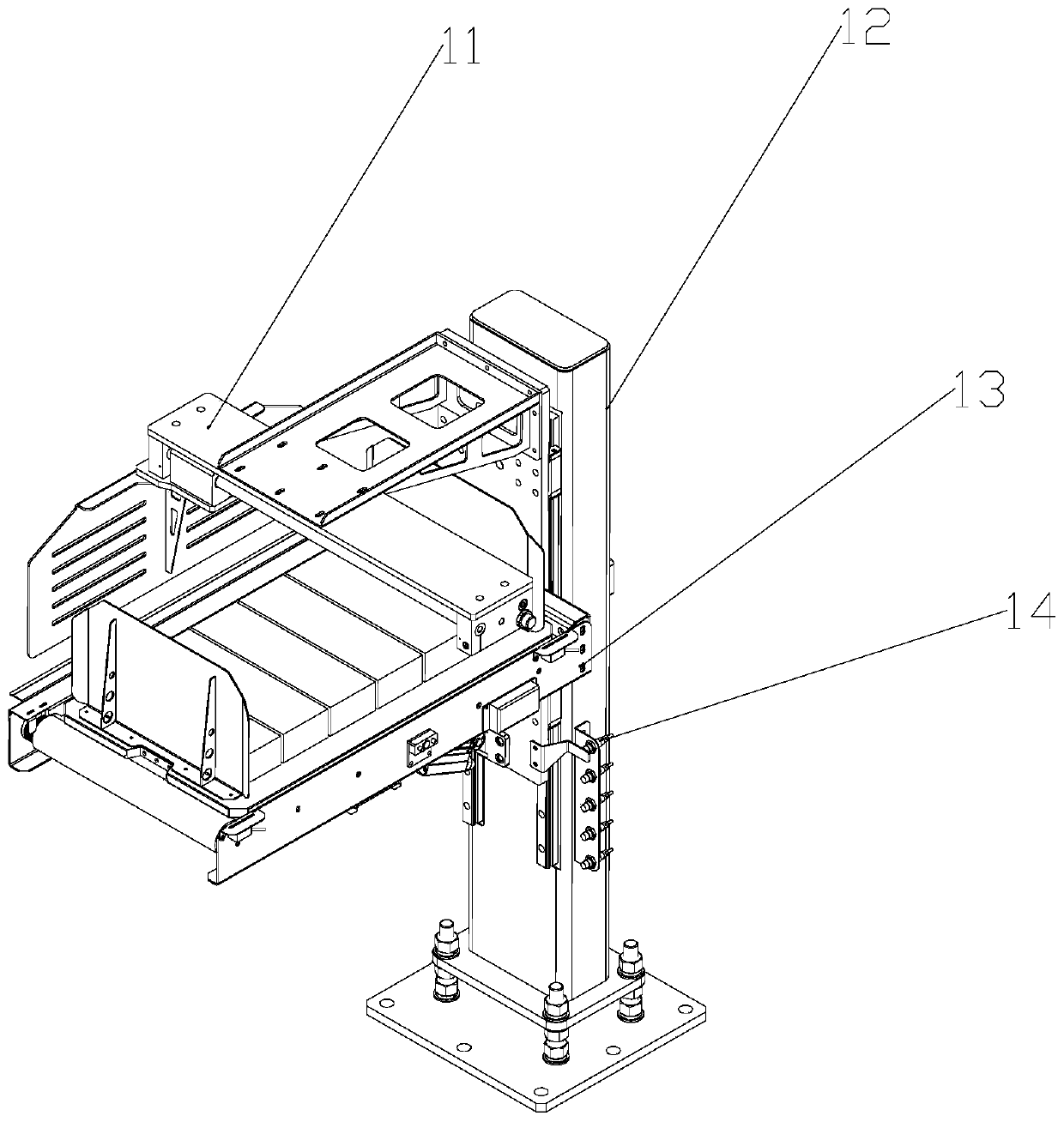

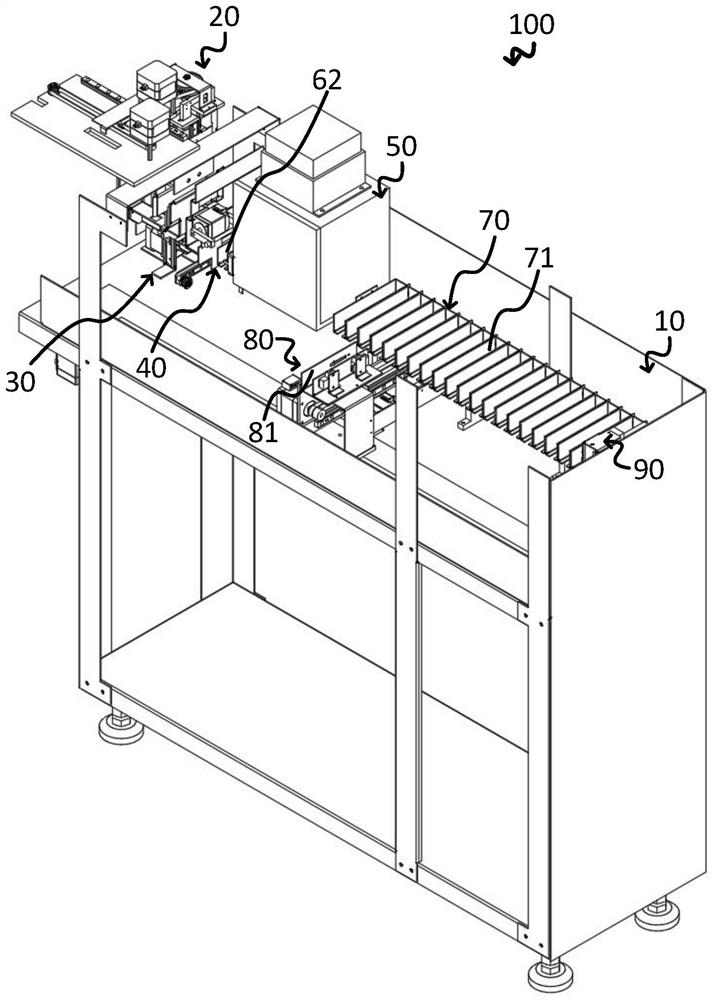

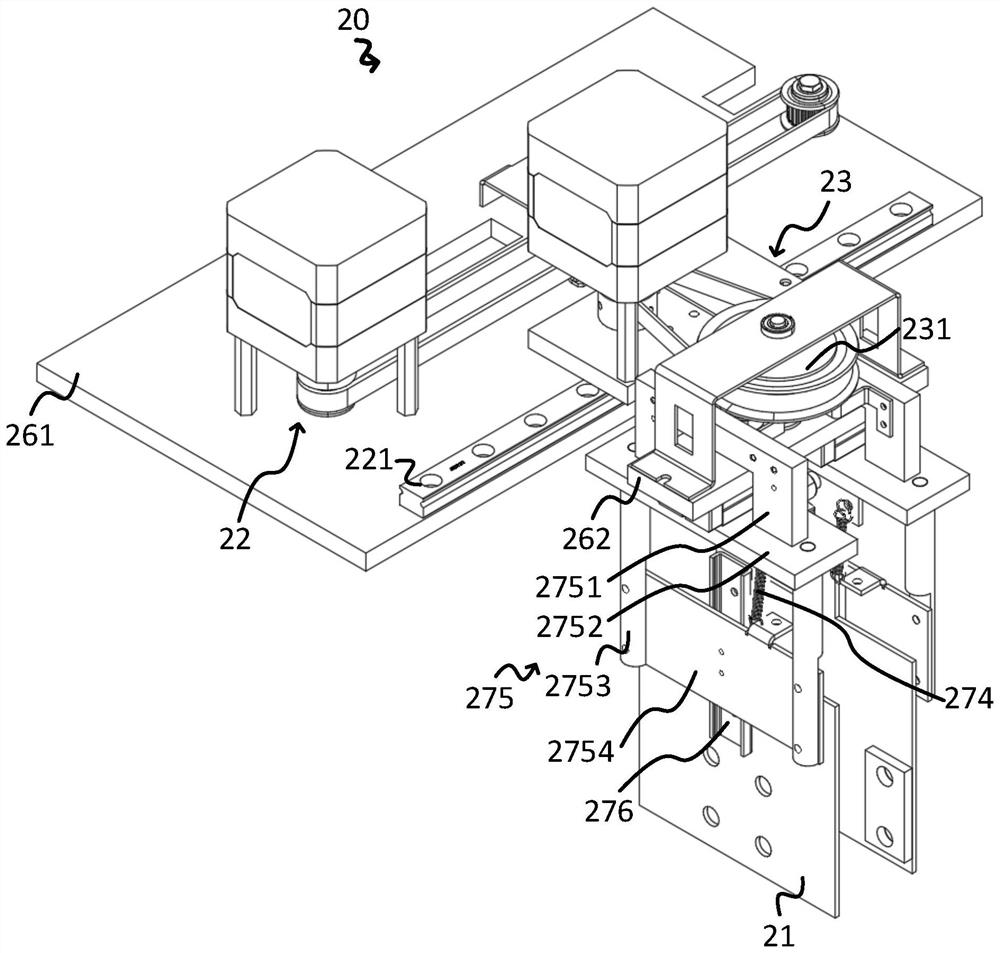

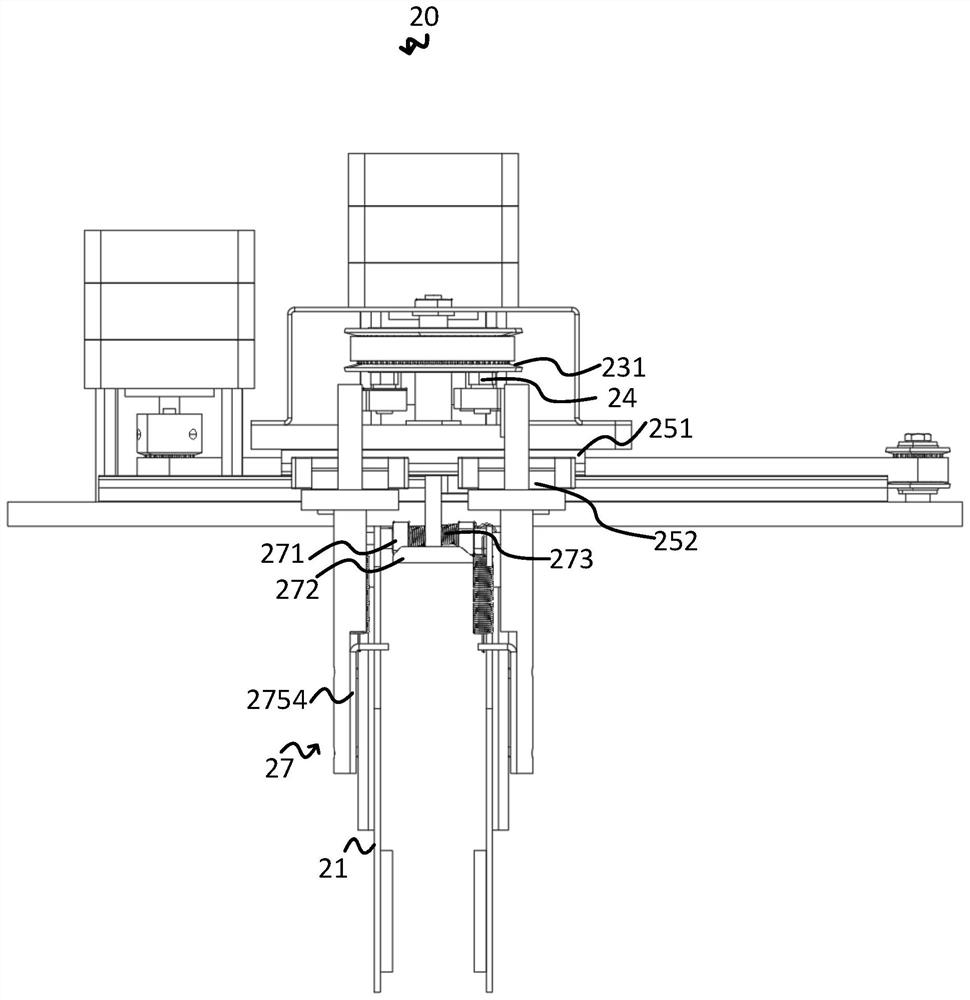

Special-shaped cigarette packaging cache system

Pending | CN110015464A | Increase cache | Increase the amount of cache | Packaging automatic control | Engineering | Mechanical engineering

The invention discloses a special-shaped cigarette packaging cache system. The special-shaped cigarette packaging cache system comprises a lifting cigarette pushing device, a conveying device and a tray jacking device. The lifting cigarette pushing device is located at one end of the conveying device and used for receiving trays conveyed by the conveying device, combining special-shaped cigarettes on the trays with conventional cigarettes and sending the empty trays after combination onto the conveying device. The tray jacking device is located at the other end of the conveying device and used for receiving the trays conveyed by the conveying device and conveying the trays onto the conveying device again. The conveying device is located between the lifting cigarette pushing device and the tray jacking device and used for conveying the trays between the lifting cigarette pushing device and the tray jacking device. The special-shaped cigarette packaging cache system has the beneficial effects that caches of multiple stations are improved, meanwhile each station does not interfere with another, each station is independent and does not affect another, the cache amount and the cache flexibility of the special-shaped cigarettes are greatly improved, and cyclic utilization of the trays can be realized.

Owner:中烟物流技术有限责任公司

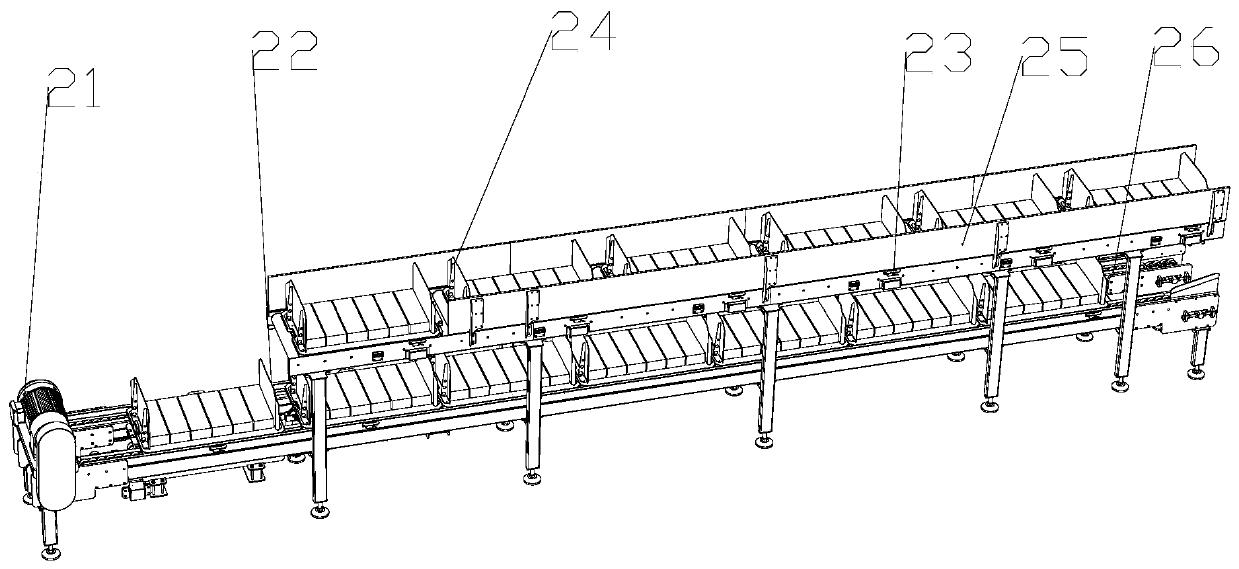

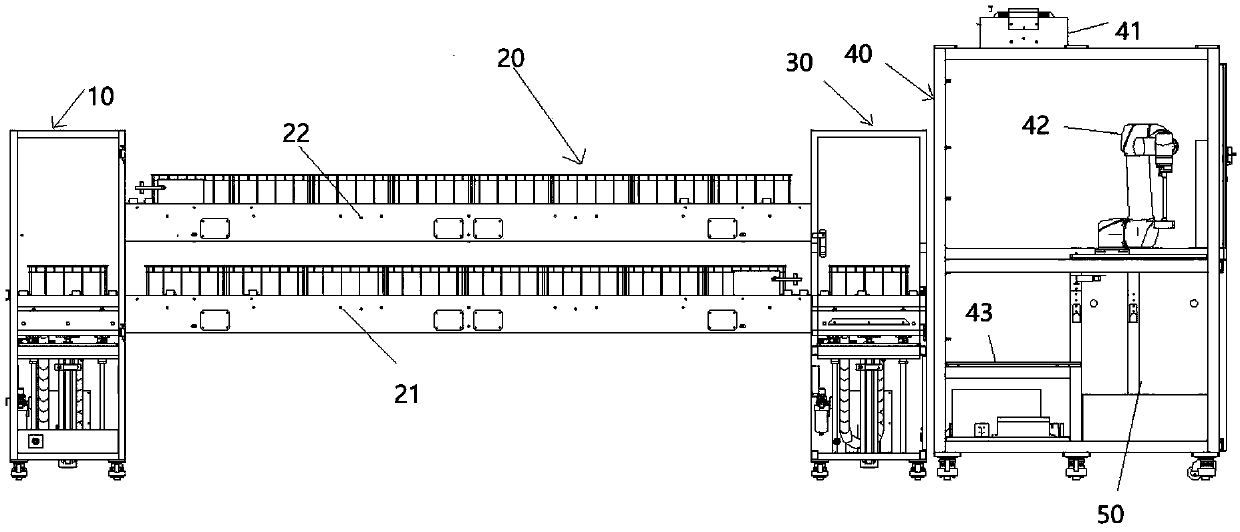

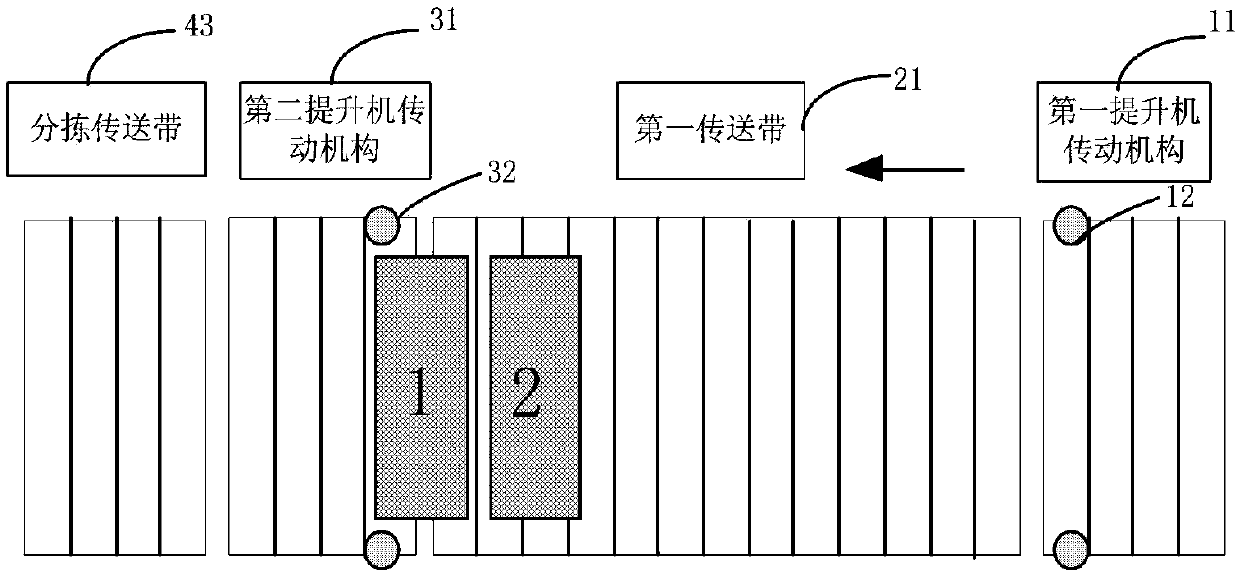

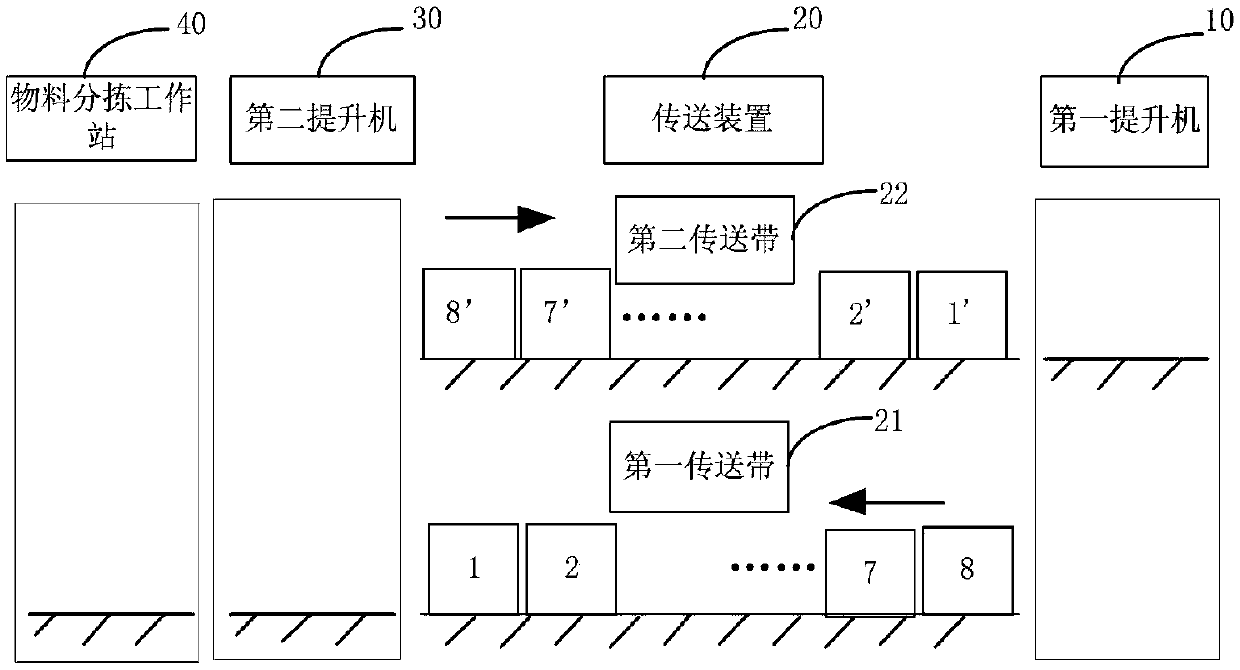

Material sorting and feeding system

Pending | CN109533780A | Increase cache | Reduce the number of manual feeding | Conveyors | Sorting | Time sequence | Mechanical engineering

The invention discloses a material sorting and feeding system. The material sorting and feeding system comprises a first lifter, a conveying device, a second lifter, a material sorting work station and a control device, wherein the conveying device at least comprises a first conveying belt and a second conveying belt which are opposite in the conveying direction; the first lifter conveys a workbin to the first conveying belt or receives a workbin from the second conveying belt; the second lifter is used for conveying the workbin to the material sorting work station or the second conveying belt, or receiving the workbin from the first conveying belt or the material sorting work station; the material sorting work station recognizes and sorts materials inside the workbin; and the control device controls the above devices to work according to the time sequence. The material sorting and feeding system can greatly increase the temporary storage of workbins, can greatly reduce the frequency of manual material replenishment while raising the utilization rate of the system, and has the characteristics of being simple in structure, highly intelligent, accurate in feeding and the like.

Owner:武汉库柏特科技有限公司

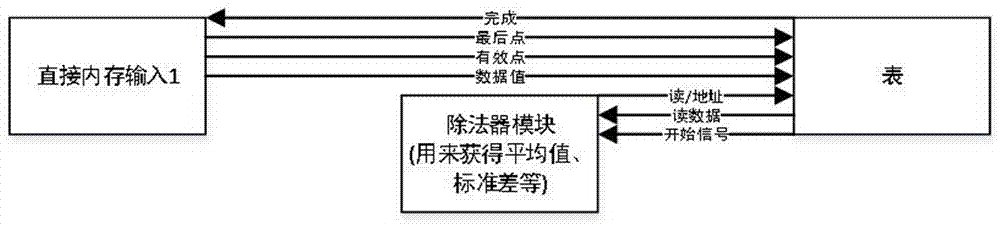

Background processing and table establishing method for gray-scale image

Active | CN106940875A | Optimizing the table creation scheme | Shorten the time | Image memory management | Details involving image processing hardware | Method selection | Grayscale

The invention discloses a background processing and table establishing method for a gray-scale image. A table establishing module is additionally arranged in a detection module. In the table establishing manner, the statistics on the to-be-acquired image information is conducted. After the completion of the first traversal process, the table establishing is completed. The statistics and the calculation are conducted based on data in an established table, so that parameters, which cannot be obtained through the one-time traversal process, can be obtained. Compared with the existing counting manner, the table establishing manner is selected. When an image is fed for the first time, the number of occurrence times of each pixel value in the image is counted. Meanwhile, the table-building scheme is optimized, and the table-building time is greatly reduced. During the solving process of a difference sum, an average deviation and other parameters, only the number of different pixel values is traversed. Therefore, the working time is greatly reduced for million pixel points in the image.

Owner:杭州朔天科技有限公司 +1
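
The table-building idea in the abstract above (count the occurrences of each pixel value in one pass, then derive further statistics from the table instead of revisiting every pixel) can be sketched as follows. The function names are hypothetical, 256 gray levels are assumed, and "average deviation" is interpreted here as the mean absolute deviation, which the source does not define.

```python
def build_table(pixels: list[int], levels: int = 256) -> list[int]:
    """Single pass over the image: count how often each gray level occurs."""
    table = [0] * levels
    for value in pixels:
        table[value] += 1
    return table


def mean_and_average_deviation(table: list[int]) -> tuple[float, float]:
    """Derive the mean and the mean absolute deviation from the table alone."""
    total = sum(table)
    mean = sum(level * count for level, count in enumerate(table)) / total
    # Second pass runs over gray levels (at most 256), not over pixels.
    deviation = sum(abs(level - mean) * count
                    for level, count in enumerate(table)) / total
    return mean, deviation


table = build_table([0, 0, 4, 4])
print(mean_and_average_deviation(table))
```

The deviation needs the mean, so it cannot be computed in the same pass over the pixels. With the table in hand, the second step costs a loop over the gray levels regardless of how many million pixels the image holds.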

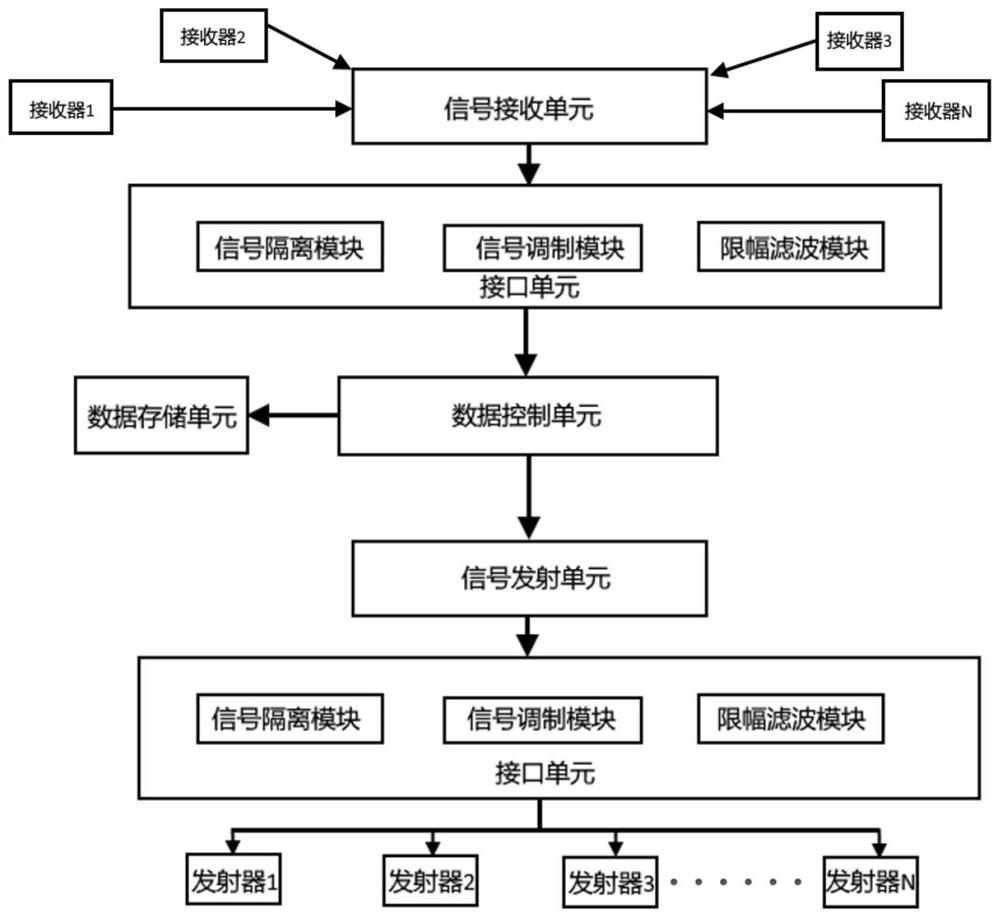

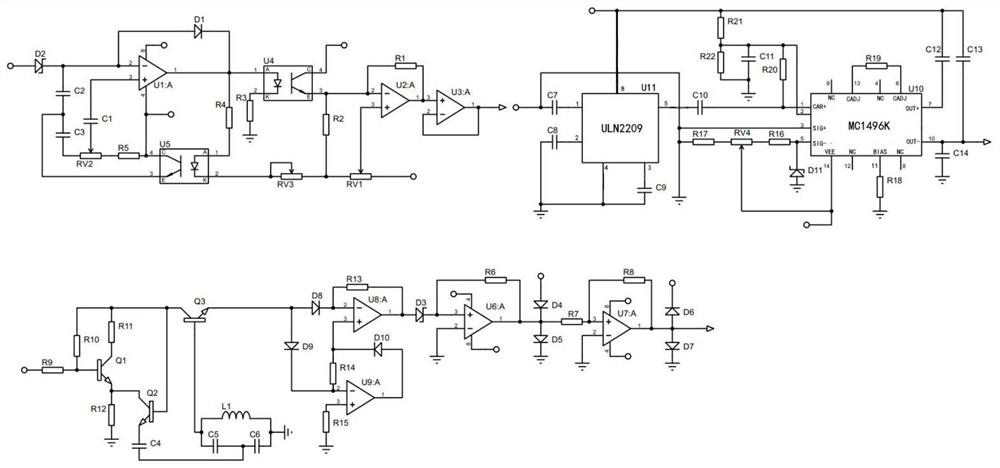

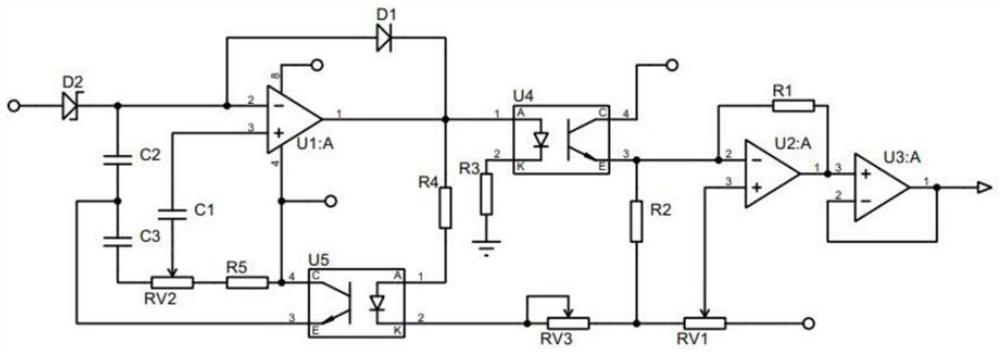

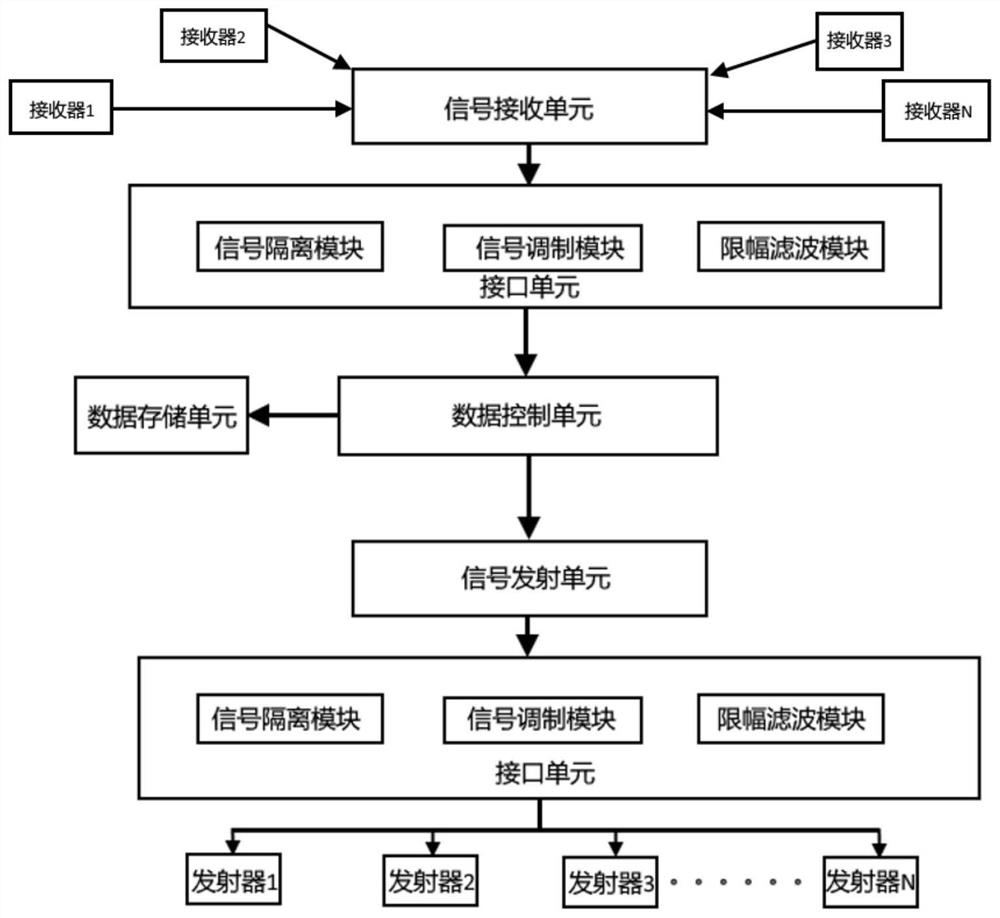

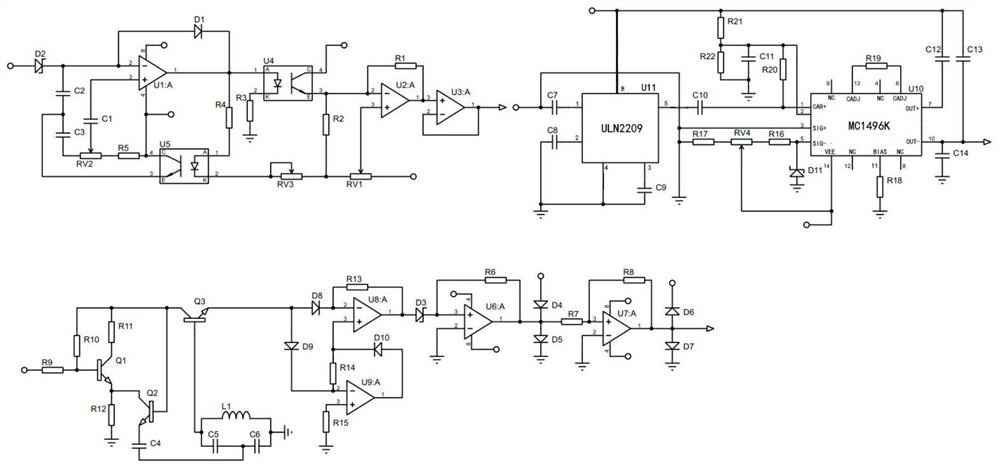

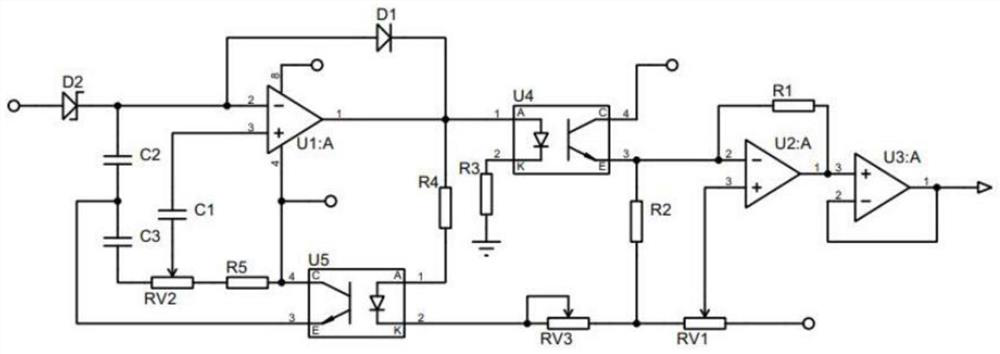

Surge protection circuit and surge protection method for Internet of Things mobile base station

Active | CN112234589A | Increase conversion time | Increase cache | Emergency protective arrangements for limiting excess voltage/current | Data control | Signal modulation

The invention discloses a surge protection circuit and a surge protection method for an Internet of Things mobile base station, and belongs to the field of Internet of Things mobile base stations. The surge protection circuit comprises a signal transmitting unit, a signal receiving unit, a data control unit, a data storage unit and an interface unit, wherein the interface unit comprises a signal isolation module, a signal modulation module and an amplitude limiting filtering module. When the receiver receives a user signal, the user signal is transferred to the data control unit through the interface, and the signal is output to the emitter through the interface unit during signal output and emission, so that the conversion time and cache of the signal can be prolonged, the signal can be better stabilized, and stable transmission can be realized in an environment with strong thunderstorm signal interference; and during signal emission, each emission set can increase a time delay, and sub-signals in each emission set can be subjected to signal isolation, so that signals do not interfere with each other.

Owner:NANJING YUNTIAN ZHIXIN INFORMATION TECH CO LTD +2

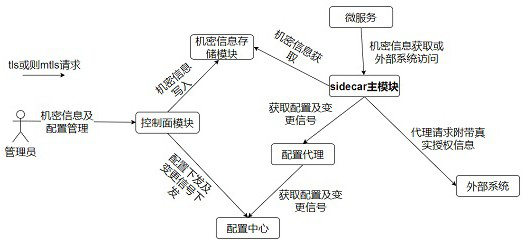

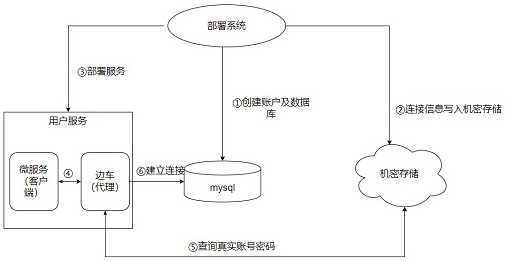

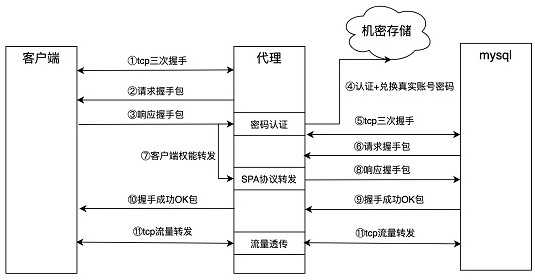

Data confidential information protection system based on zero-trust network

Active | CN114465827A | Improve information security | Improve security | Digital data information retrieval | Digital data protection | Computer security | Micro services

The invention discloses a data confidential information protection system based on a zero-trust network, and belongs to the technical field of communication. The system comprises a control plane module, a confidential information storage module, a configuration center, a configuration agent, a sidecar main module and an external system, the control plane module is used for adding, deleting, modifying and checking confidential information, verifying authority information of operators, storing configuration information into a configuration center, and sending a configuration updating signal to the configuration center; the confidential information storage module is used for storing confidential information; receiving and storing the configuration updating signal by using the configuration center; calling an update signal and actual configuration from a configuration center by using a configuration agent, applying the configuration, and communicating with a sidecar main module; management and verification of confidential information are realized by using the sidecar main module; an external system is used for receiving micro-service calling, and a checking request is initiated for confidential information content.

Owner:南京智人云信息技术有限公司

Extending a cache of a storage system

Active | US10635604B2 | Increase cache | Memory architecture accessing/allocation | Transmission | Data pack | Engineering

Owner:EMC IP HLDG CO LLC

Sample caching device

The invention provides a sample caching device, comprising: a frame for forming a support structure; the clamping mechanism is used for clamping a sample frame loaded with at least one sample tube; the stopping mechanism is used for stopping the sample rack on the transmission module in the to-be-clamped area; the transfer mechanism is provided with a first X-direction moving assembly, a first motor and a push rod; the refrigerator is used for refrigerating the sample rack loaded with the sample tubes to be subjected to quality control; the first containing groove and the second containing groove are formed in the two sides of the transferring mechanism correspondingly. The second containing groove is located between the transfer mechanism and the refrigerator. The caching mechanism is provided with a plurality of caching grooves which are sequentially arranged in parallel; the carrying mechanism is provided with a third accommodating groove and is used for transferring the sample rack; the clamping mechanism clamps the sample frame and then places the sample frame in the first containing groove. The structure is simple and compact, operation is easy and convenient, sample frame transfer is stable, the transmission module and the analysis module are matched, the full-automatic detection function of analysis equipment is achieved, and meanwhile cache and detection efficiency is improved.

Owner:南京国科精准医学科技有限公司

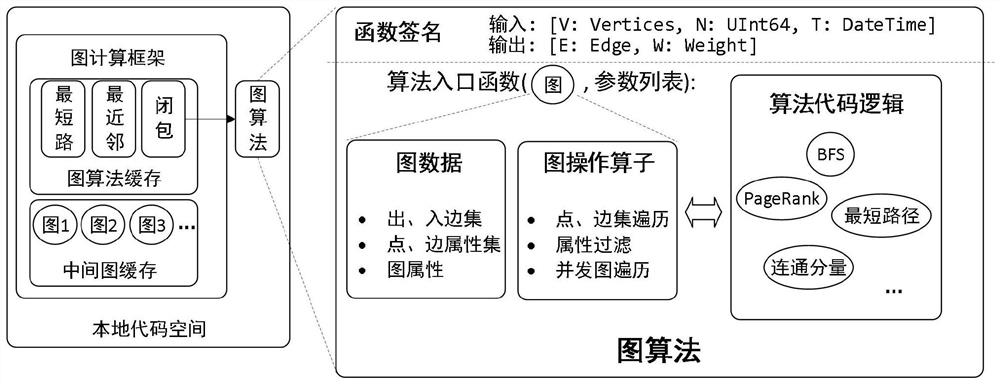

A graph computing method and system based on dynamic code generation

Active | CN110287378B | Eliminate overhead | Avoid preprocessing overhead | Other databases indexing | Special data processing applications | Graph operations | Code generation

The present invention proposes a graph calculation method and system based on dynamic code generation, including: constructing an intermediate graph structure containing graph operation primitives according to a graph construction request, and storing the intermediate graph structure in an intermediate graph buffer after associating it with a graph name; according to a graph algorithm request, generating a graph algorithm structure composed of external code bytecodes, and sending it to the graph algorithm buffer; searching the intermediate graph buffer and the graph algorithm buffer according to the execution request to obtain a triplet composed of the intermediate graph structure to be executed, the graph algorithm structure to be executed and the parameter list, looking up the triplet in the local code cache to obtain the execution object, and executing it to obtain the result. The invention injects the generated code into the local code space, eliminating the overhead of data exchange; it constructs an intermediate graph structure that can be recompiled, so that the access code for graph data can be compiled and optimized; meanwhile, it adds a cache of the intermediate graph structure and a cache of the graph algorithm, which avoids the preprocessing overhead of graph calculations.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

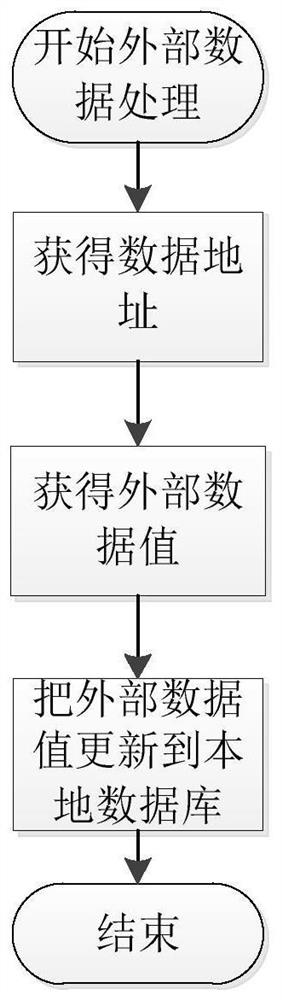

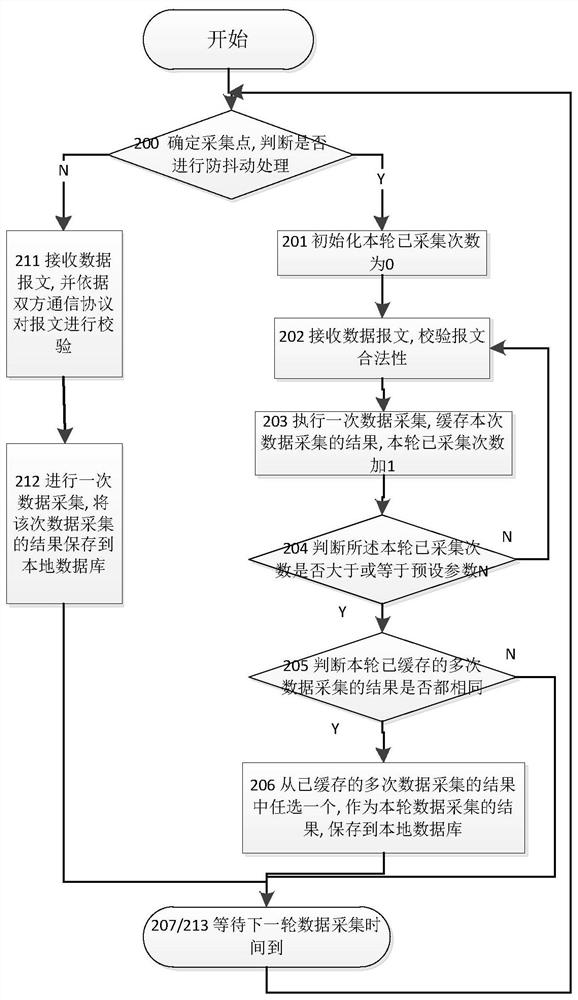

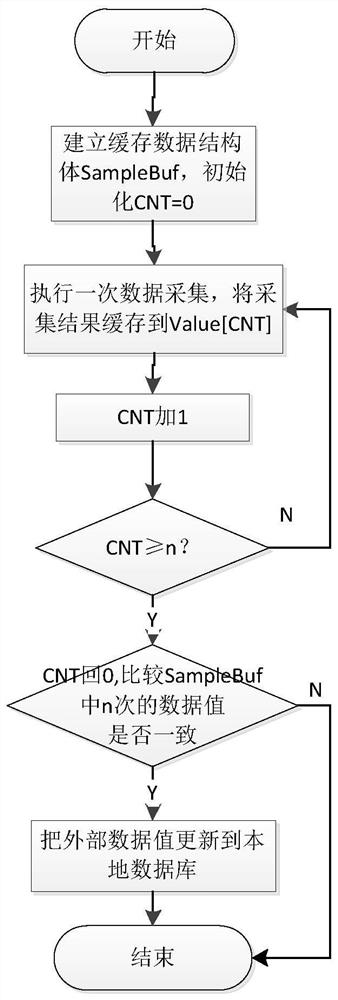

A data acquisition method and device

Owner:北京和利时系统集成有限公司

Method for tapping on independent research board card block sheet for satisfying heat dissipation

Inactive | CN104102315A | Improve performance | Increase cache | Digital data processing details | Engineering | Independent research

The invention discloses a method for tapping on an independent research board card block sheet for satisfying heat dissipation, and belongs to the computer communication field. An independent research board card block sheet comprises a block sheet body and a block sheet hook, wherein the block sheet body is provided with alveolate heat dissipation holes; the edge of the block sheet body is provided with a heat dissipation groove; the side length of each alveolate heat dissipation hole is 0.2-0.5cm; the cover area of the alveolate heat dissipation holes does not exceed 4 / 5 of the area of the block sheet body; and the area of a vertical split cross section of the heat dissipation groove is trapezoidal. The method for the independent research board card block sheet comprises the following specific steps: 1) according to the specification of a board card, selecting the specification of the independent research board card block sheet; 2) installing the independent research board card block sheet onto a corresponding hook hole of the board card; and 3) stretching the independent research board card block sheet, and confirming that the independent research board card block sheet is firmly installed. The invention has the benefits that heat dissipation effect is enhanced, and damages caused by refitting the board cards are avoided.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

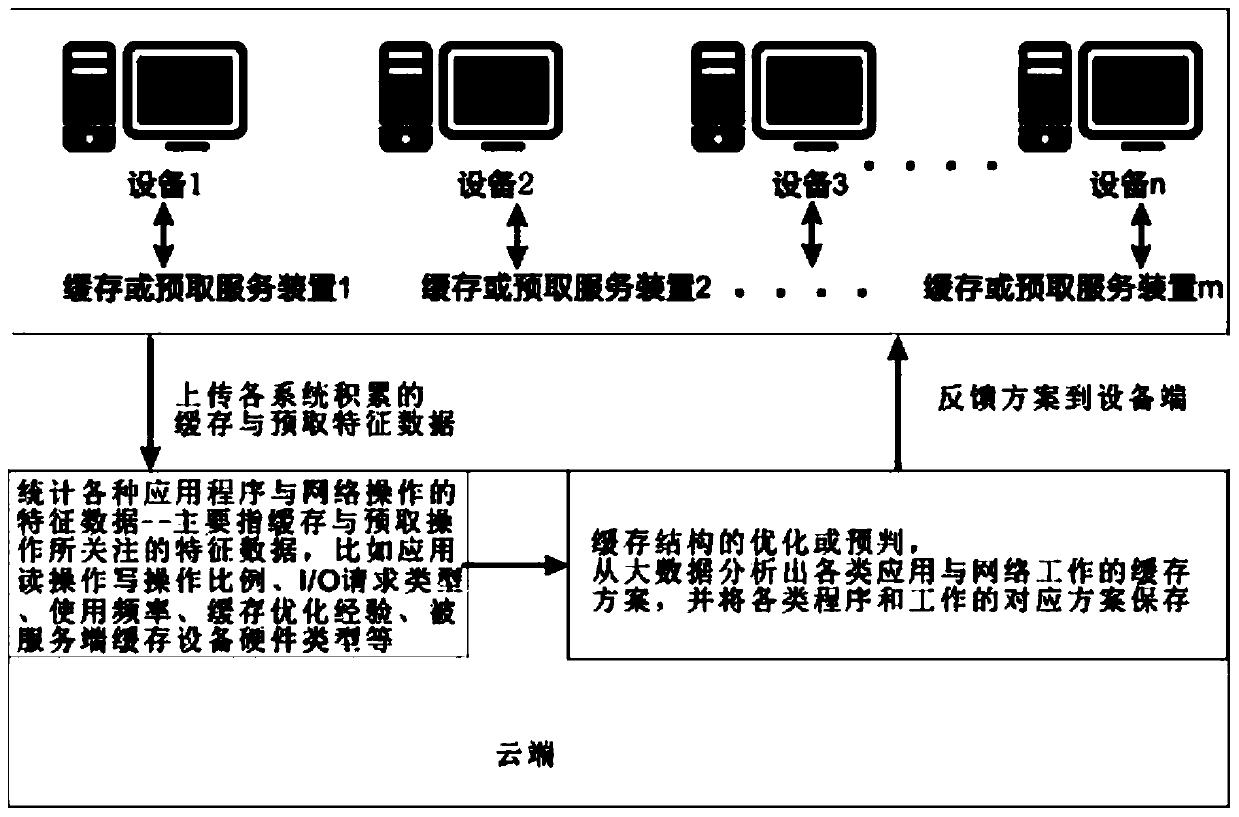

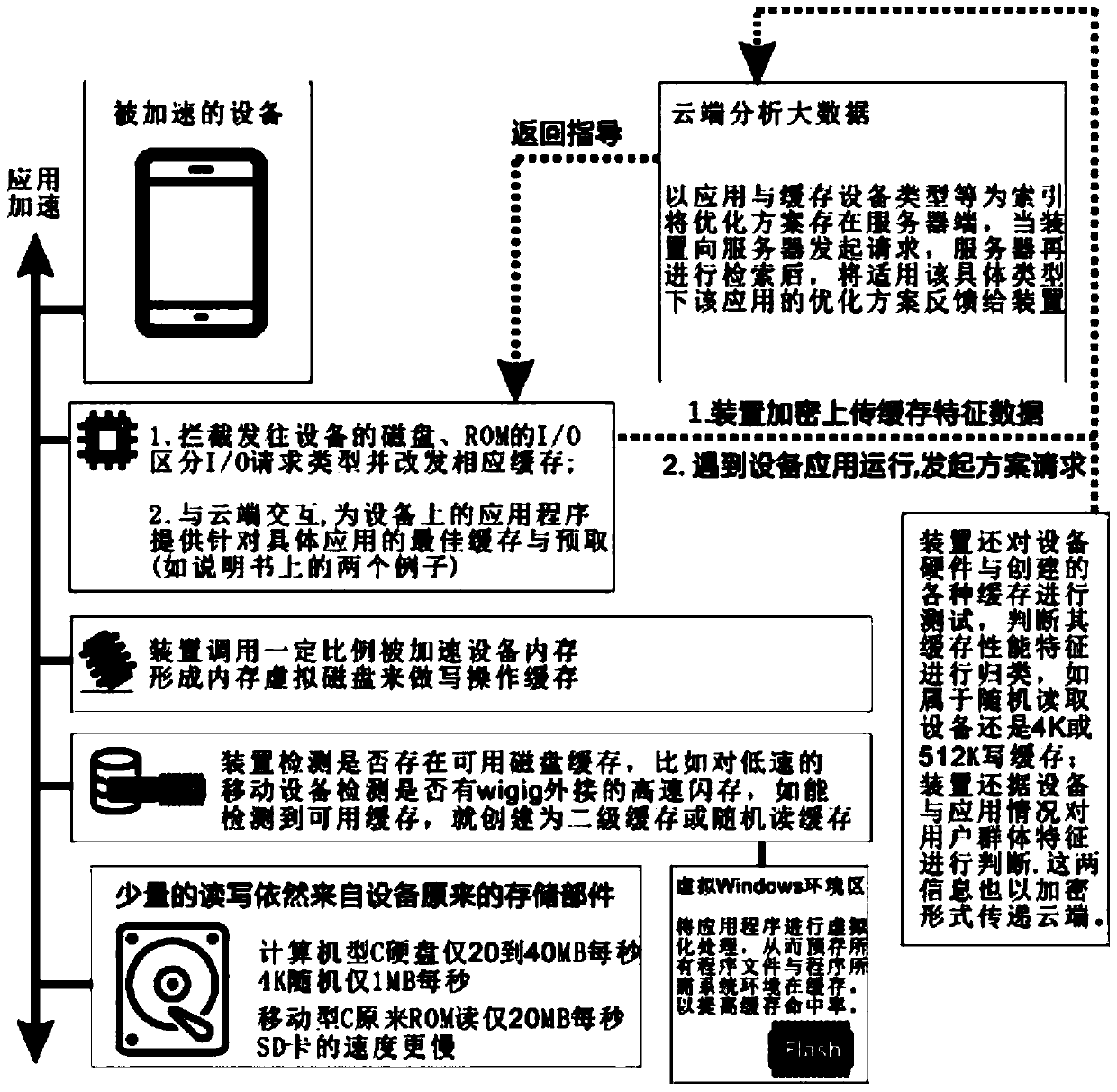

A caching and prefetching acceleration method and device for computing equipment based on big data

Active | CN104320448B | Improve the effect | Improve caching acceleration | Transmission | Active feedback | Web operations

A caching and prefetching acceleration method and device for computing equipment based on big data. Unlike the traditional caching mode, in which each device is optimized on its own, in this method a large number of caching or prefetching service devices submit data to the cloud, including part of the characteristic data of the various applications or network operations on the devices they serve. The characteristic data mainly refers to the data relevant to cache and prefetch operations, such as the proportion of application read and write operations, I/O request type, file size, frequency of use, cache optimization experience, hardware type of the cached device on the server side, user group characteristics, etc. After receiving the data, the cloud performs statistics and analysis and derives optimized cache or prefetch solutions for different applications. Then, by means of active feedback or passive response, the optimized caching scheme and prediction scheme are returned to the caching service device for processing, so that predictive and targeted optimization can be performed directly without re-accumulating cache data over a long time.

Owner:张维加

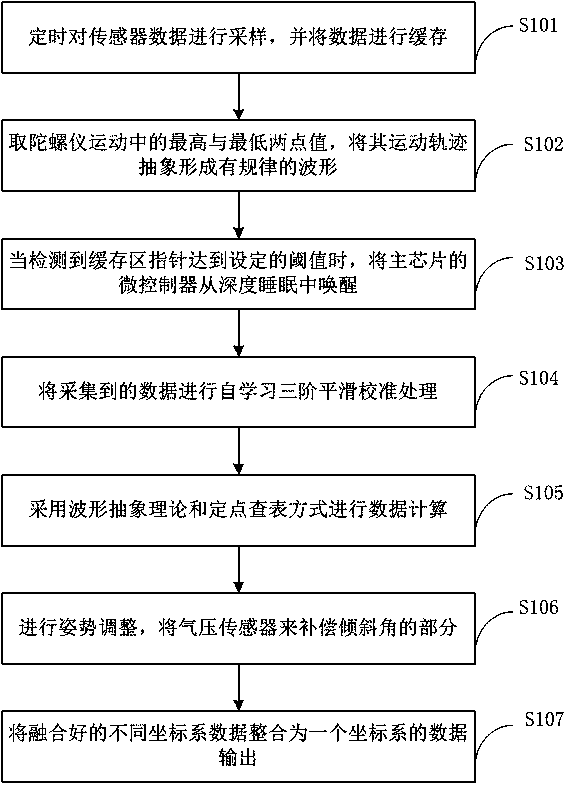

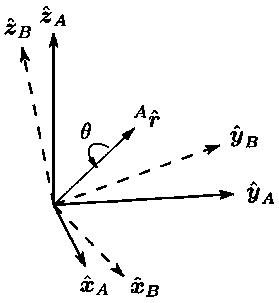

Fast and low-power sensor fusion system and method

Active | CN106370186B | Increase cache | Reduce power consumption | Navigational calculation instruments | Microcontroller | Gyroscope

The invention discloses a rapid low-power-consumption fusion system and method for a sensor. The method is characterized by including the steps: sampling data of the sensor at regular time and caching the data; taking the maximum value and the minimum value in gyroscope movement and abstracting movement tracks to form regular waveforms; awakening a microcontroller of a main chip from deep sleep when detecting that a cache region pointer reaches a set threshold value; performing self-learning three-order smooth calibration for the acquired data; calculating the data by the aid of the waveform abstract theory and a fixed-point table look-up mode; adjusting posture and compensating an inclination angle by an air pressure sensor. The calculating process is reduced in a simple table look-up and waveform abstracting mode, fusion algorithm can be realized by a simple eight-bit chip, and power consumption and calculating time can be greatly reduced.

Owner:时瑞科技(深圳)有限公司

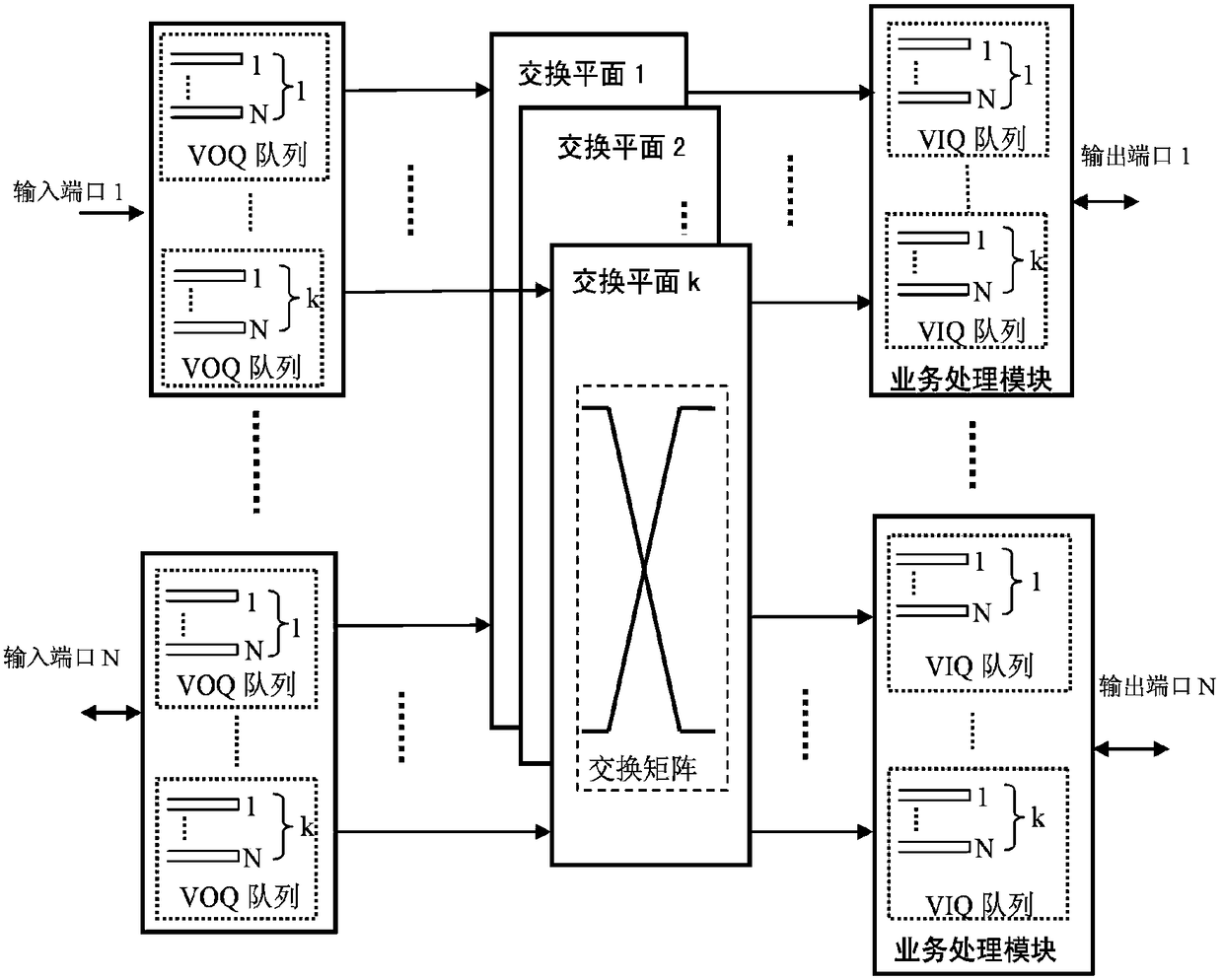

A Data Exchange Method Based on Multiple Switching Planes

Active | CN105812290B | Improve reliability | Easy to implement | Data switching networks | Network packet | Dependability

The invention discloses a data switching method based on multiple switching planes, and relates to a technology for reliably switching access services through multiple switching planes in the field of communication switching. The method uses multiple switching planes to transmit data packets, and a fault in any switching plane does not affect the other switching planes or the switching system as a whole. Integrity verification is performed on the data packets, so that the integrity of the data packets is ensured, and the uniqueness of the data is ensured through a time tag and ID that are unique in the system. The method has the advantages of simple realization, high reliability, strong expandability and the like, and is suitable for environments with special requirements for high reliability, such as poor equipment operating conditions or environments where components are difficult to replace.

Owner:NO 54 INST OF CHINA ELECTRONICS SCI & TECH GRP
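
The receiving side of the scheme in the abstract above (copies of a packet arrive over several switching planes, each copy is integrity-checked, and uniqueness is enforced through a time tag and ID) might look like the sketch below. The packet layout, the use of CRC-32 from Python's `zlib` as the integrity check, and the names `make_packet` and `Receiver` are all assumptions for illustration; the source does not specify them.

```python
import zlib


def make_packet(timestamp: int, packet_id: int, payload: bytes) -> dict:
    """Build a packet carrying a time tag, an ID and a checksum (assumed layout)."""
    return {"ts": timestamp, "id": packet_id, "payload": payload,
            "crc": zlib.crc32(payload)}


class Receiver:
    """Accepts the first intact copy of each packet, whichever plane delivers it."""

    def __init__(self) -> None:
        self.seen: set[tuple[int, int]] = set()
        self.delivered: list[bytes] = []

    def on_packet(self, packet: dict) -> bool:
        if zlib.crc32(packet["payload"]) != packet["crc"]:
            return False  # corrupted on this plane; wait for another copy
        key = (packet["ts"], packet["id"])
        if key in self.seen:
            return False  # duplicate copy from another plane
        self.seen.add(key)
        self.delivered.append(packet["payload"])
        return True


rx = Receiver()
good = make_packet(100, 1, b"hello")
damaged = dict(good, payload=b"hellx")  # simulates corruption on one plane
print(rx.on_packet(damaged), rx.on_packet(good), rx.on_packet(dict(good)))
```

A real receiver would also have to expire old `(ts, id)` keys so the `seen` set does not grow without bound; that is left out of this sketch.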

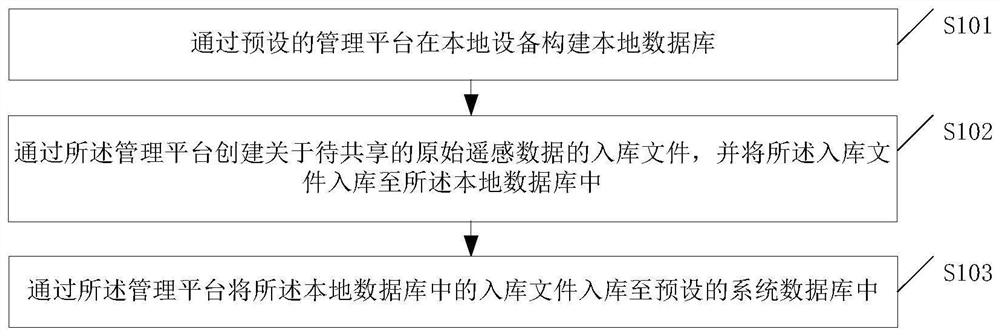

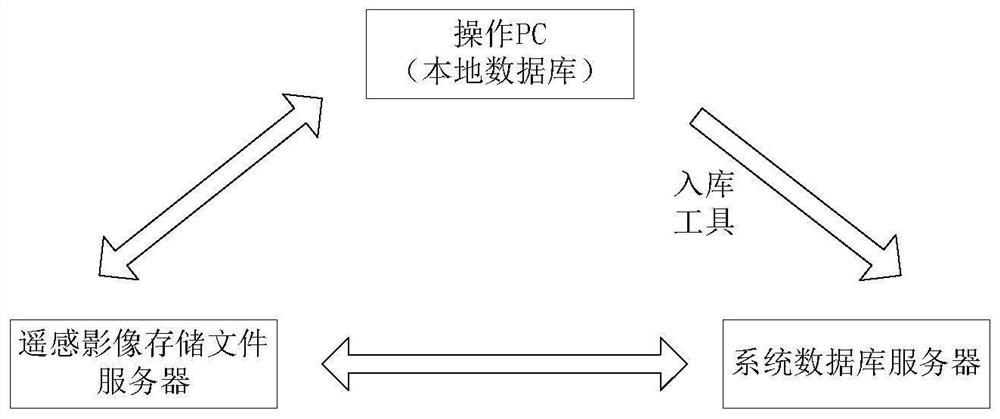

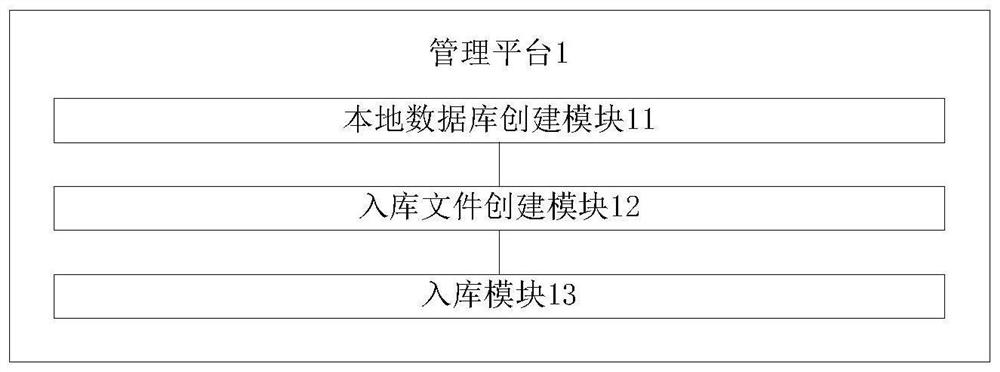

Remote sensing data distribution method, device and management platform based on mosaic dataset

Active | CN111966670B | Reduce frequent operations | Reduce access | Database management systems | Special data processing applications | Sensing data | Data set

The embodiment of the present application discloses a remote sensing data distribution method, device and management platform based on a mosaic dataset. The method includes: constructing a local database on a local device through a preset management platform; storage files of the original remote sensing images, and store the storage files into the local database; store the storage files in the local database into the preset system database through the management platform. Through the solution of this embodiment, the local database is added to cache and correct the data to be shared, so as to process the wrong data in advance, and effectively avoid data errors published to the system database.

Owner:CHENGDU STAR ERA AEROSPACE TECH CO LTD

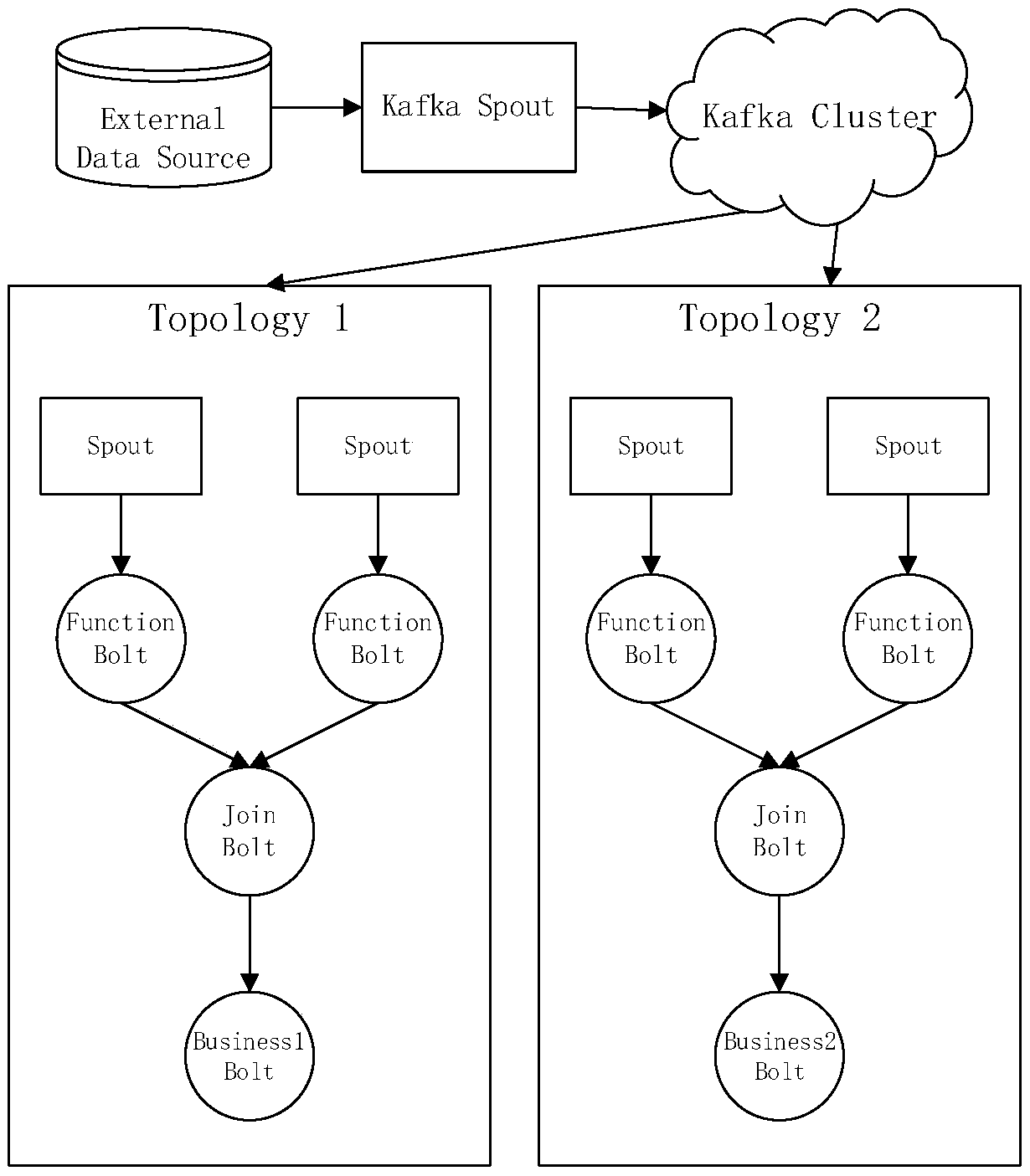

A Storm-based industrial signaling data stream type computing framework

Inactive · CN109902107A · Solve streaming complex problems · Improve transmission efficiency · Digital data information retrieval · Special data processing applications · Streaming data · Data stream

The invention provides a Storm-based streaming computing framework for industrial signaling data. Current industrial big data is large in scale, varied in type and low in quality, and stream data mining involves complex patterns. To address these difficulties, the framework is designed to make reasonable use of limited memory while a data mining algorithm executes, so that more data can be obtained at one time. To mine valuable information from industrial signaling data that exists in stream form, Storm is used to mine the real-time data stream. Different real-time data mining tasks may use the same type of data source, so Spouts in different Topologies share data sources through Kafka message middleware, and reliable message transmission, message returning and the like are guaranteed. The framework can effectively improve the effectiveness and efficiency of information extraction during industrial signaling data mining, addresses the varied types and low quality of industrial big data, and meets the requirements of intelligent industrial production and development.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)
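The Storm abstract above has several Topologies share one data source through Kafka. The property that makes this possible is that each consumer group keeps its own read position in the same log. The sketch below is an in-memory toy model of that behaviour only. It does not use the Kafka or Storm APIs, and `SharedLog` and its methods are hypothetical names.

```python
class SharedLog:
    """One append-only message log read independently by several groups."""

    def __init__(self):
        self.messages = []
        self.committed = {}    # group name -> offset of the next unread message

    def append(self, message):
        self.messages.append(message)

    def poll(self, group, max_messages):
        """Return up to max_messages (offset, message) pairs for this group.

        Bounding the batch size keeps the memory used per read predictable.
        Polling again without committing returns the same messages.
        """
        start = self.committed.get(group, 0)
        batch = self.messages[start:start + max_messages]
        return list(enumerate(batch, start))

    def commit(self, group, offset):
        # Mark everything up to and including `offset` as processed.
        current = self.committed.get(group, 0)
        self.committed[group] = max(current, offset + 1)
```

Because offsets are tracked per group, two mining tasks can consume the same stream at different speeds, and a task that fails before committing sees its batch again on the next poll.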

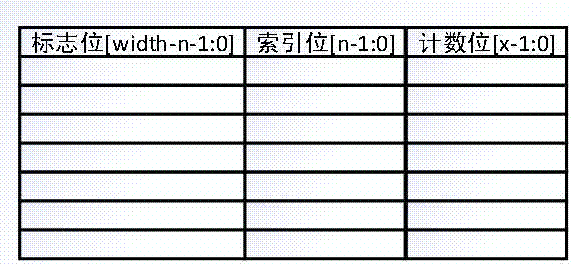

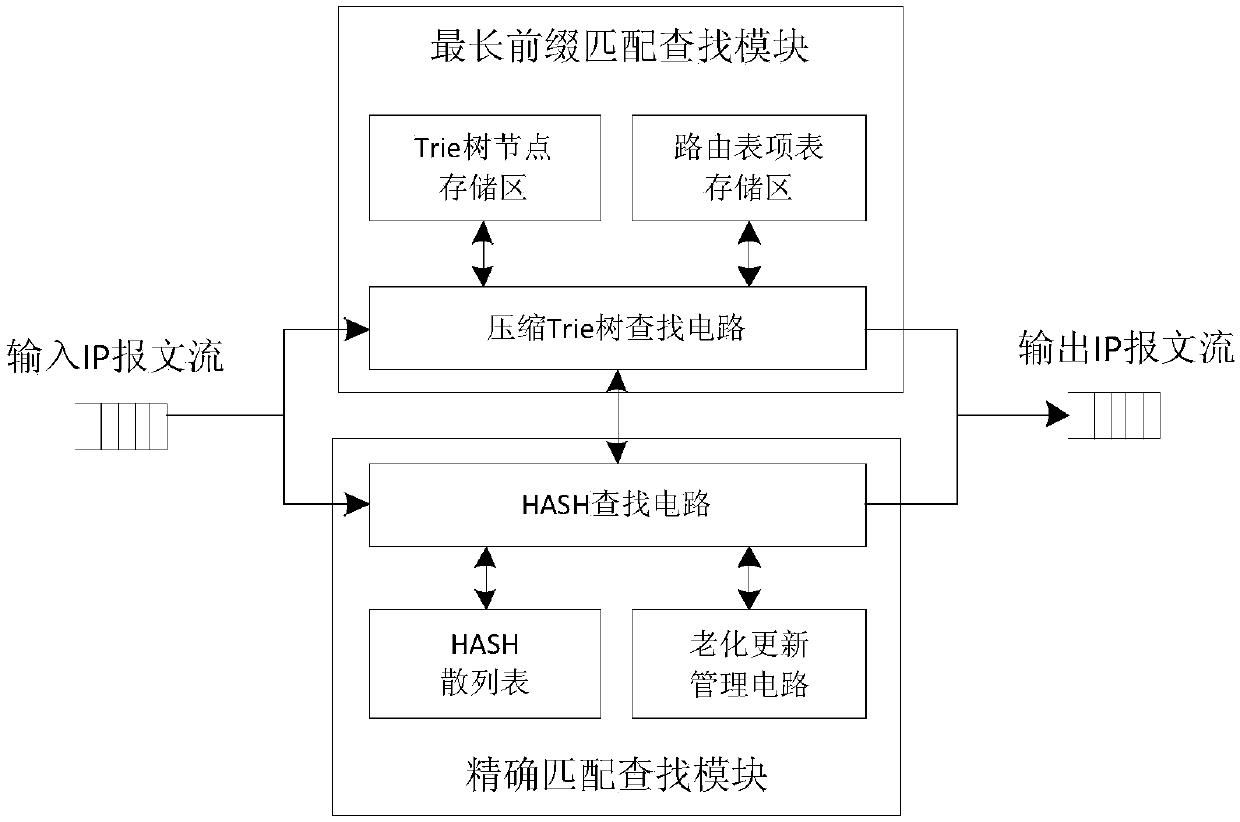

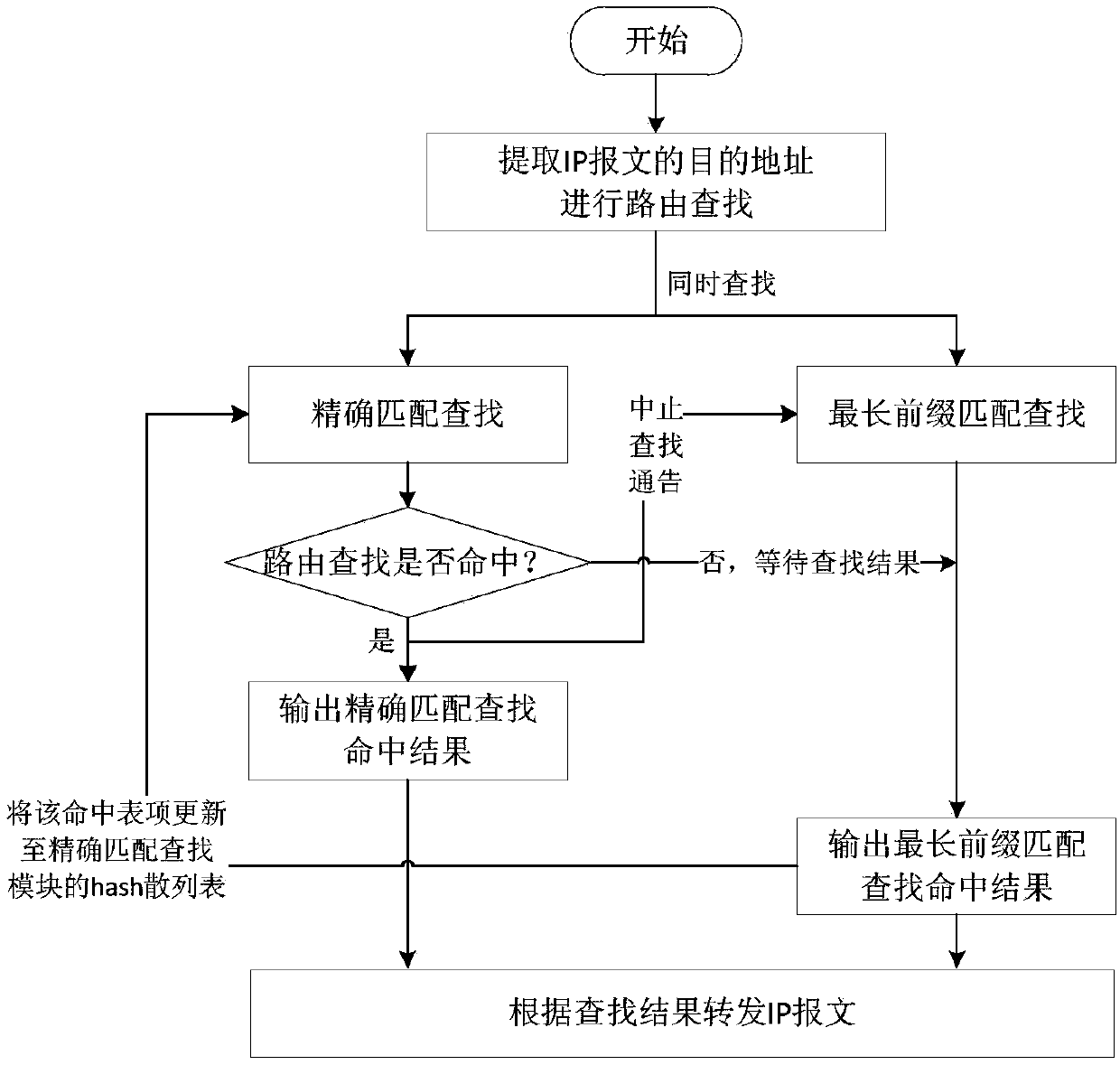

On-board fast route lookup system combined with longest prefix match and exact match

Active · CN106549872B · Improve route lookup speed · Increase cache · Data switching networks · Longest prefix match · Theoretical computer science

The on-board fast route lookup system combines longest prefix match and exact match and includes an exact match lookup module and a longest prefix match lookup module. The exact match lookup module includes a hash lookup circuit, a hash table and an aging update management circuit; the longest prefix match lookup module includes a compressed Trie tree search circuit, a Trie tree node storage area and a routing table entry storage area. Compared with the traditional longest prefix match lookup structure, the invention builds a route lookup structure that combines longest prefix match with exact match, adding exact match lookup as a high-speed cache of active routing table entries. This greatly improves the route lookup speed for IP packet streams and effectively reduces the average lookup time. In addition, the aging update management circuit for exact match lookup automatically learns and adds active routing table entries and performs aging checks and deletion of routing table entries, ensuring efficient management and lookup performance for the active entries.

Owner:XIAN INSTITUE OF SPACE RADIO TECH
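The route lookup abstract above places an exact match table in front of a longest prefix match structure and ages out idle entries. A minimal software sketch of that combination follows. It is not the patented circuit: it handles IPv4 only, uses a plain binary trie instead of the compressed Trie the abstract names, and its class names and caller-supplied `now` timestamps are assumptions.

```python
import ipaddress


class TrieNode:
    __slots__ = ("children", "next_hop")

    def __init__(self):
        self.children = [None, None]
        self.next_hop = None


class RouteLookup:
    """Exact-match cache of active destinations in front of a binary LPM trie."""

    def __init__(self, max_age):
        self.root = TrieNode()
        self.cache = {}        # destination as int -> (next_hop, last_used)
        self.max_age = max_age

    def add_route(self, prefix, next_hop):
        net = ipaddress.ip_network(prefix)
        bits = int(net.network_address)
        node = self.root
        for i in range(net.prefixlen):
            bit = (bits >> (31 - i)) & 1
            if node.children[bit] is None:
                node.children[bit] = TrieNode()
            node = node.children[bit]
        node.next_hop = next_hop
        self.cache.clear()     # cached answers may no longer be the longest match

    def _longest_prefix_match(self, addr):
        node, best = self.root, self.root.next_hop
        for i in range(32):
            node = node.children[(addr >> (31 - i)) & 1]
            if node is None:
                break
            if node.next_hop is not None:
                best = node.next_hop   # remember the deepest match so far
        return best

    def lookup(self, dest, now):
        addr = int(ipaddress.IPv4Address(dest))
        hit = self.cache.get(addr)
        if hit is not None:
            self.cache[addr] = (hit[0], now)   # refresh the entry's age
            return hit[0], "exact"
        next_hop = self._longest_prefix_match(addr)
        if next_hop is not None:
            self.cache[addr] = (next_hop, now) # learn the active destination
        return next_hop, "lpm"

    def age_out(self, now):
        """Delete cached entries that have been idle longer than max_age."""
        stale = [a for a, (_, used) in self.cache.items() if now - used > self.max_age]
        for a in stale:
            del self.cache[a]
        return len(stale)
```

The second element of the `lookup` result reports which path answered, which makes it easy to see that repeated lookups for an active destination skip the trie walk until the entry ages out.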

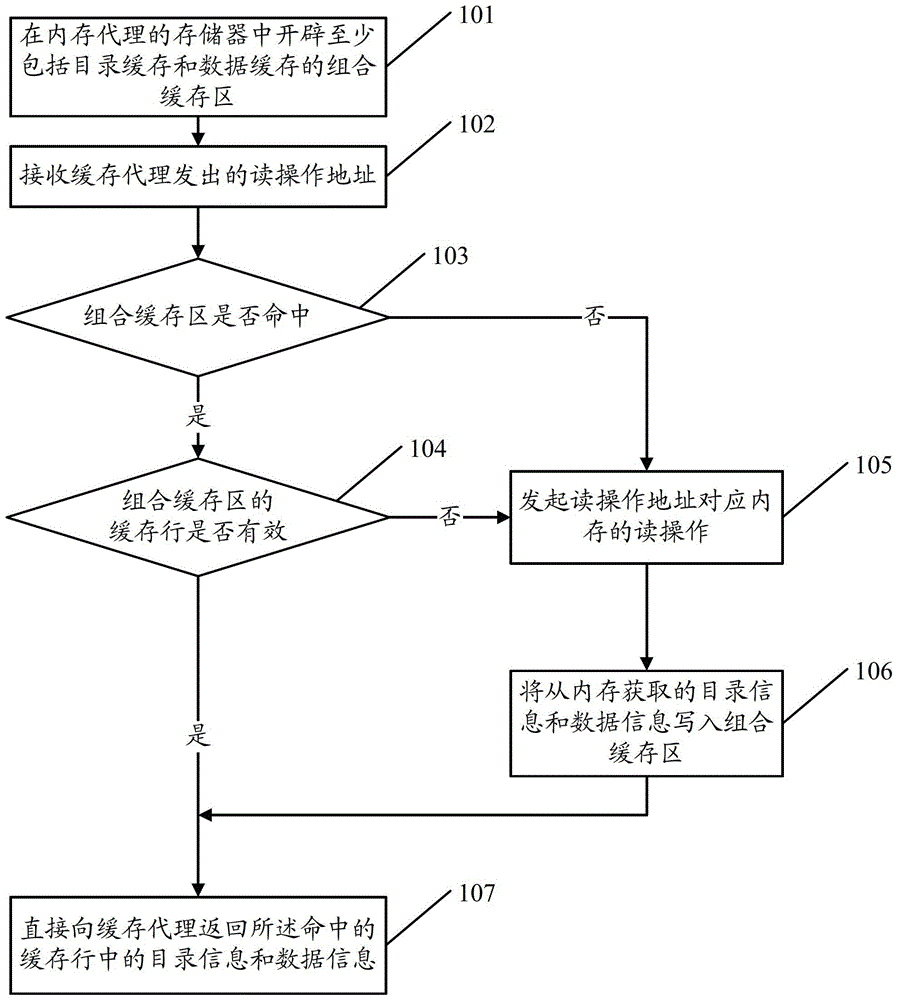

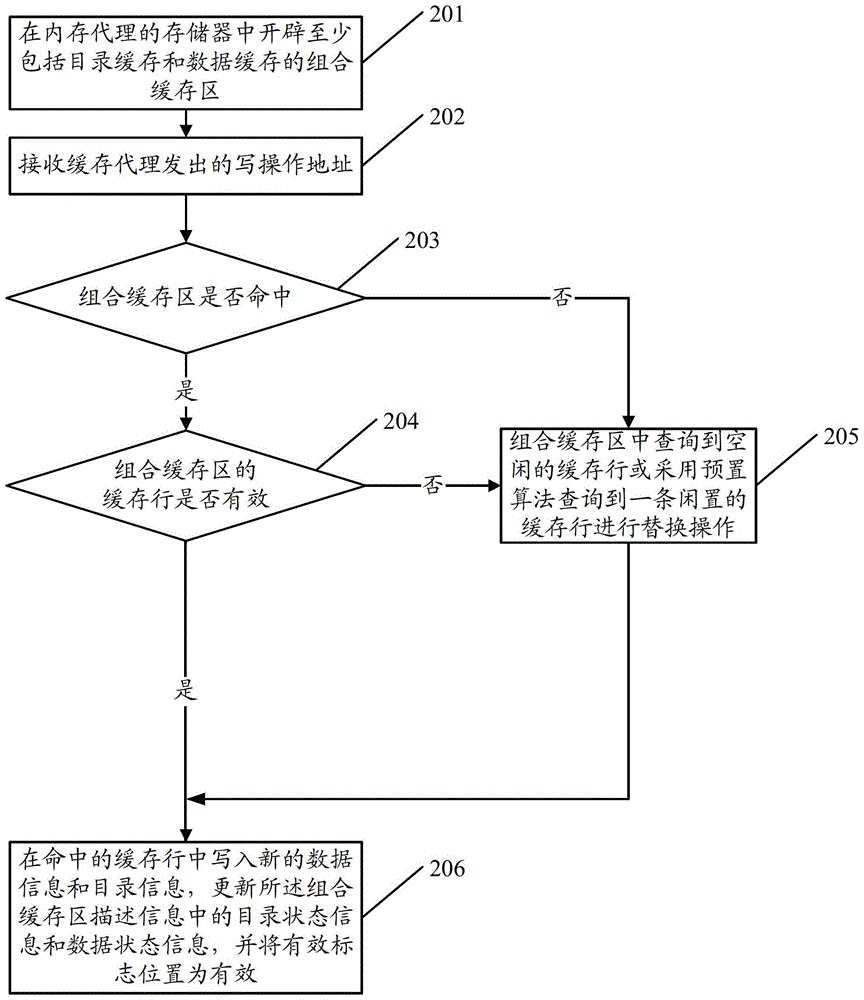

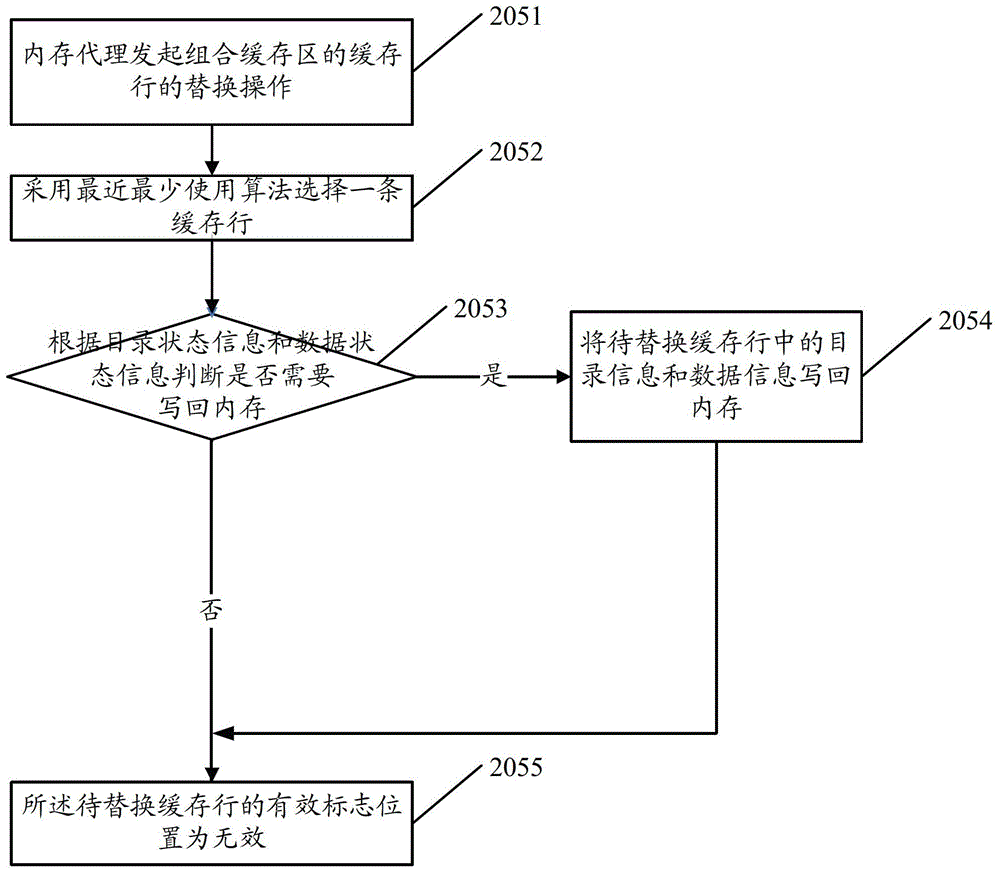

A memory data buffering method and device

Active · CN103076992B · Increase cache · Lower latency · Input/output to record carriers · Parallel computing · Data acquisition

The embodiment of the invention discloses a memory data buffering method. The method comprises the following steps: forming, in the memory of a home agent, a combined cache region that comprises at least a directory cache and a data cache, wherein the cache lines in the directory cache and the data cache are in one-to-one correspondence; receiving an operation address sent by a cache agent, and judging according to the operation address whether the combined cache region has been formed and is valid; and if so, performing the corresponding operation directly on the combined cache region. The invention reduces the frequency of memory access and reduces data acquisition delay.

Owner:XFUSION DIGITAL TECH CO LTD
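The memory buffering abstract above pairs each directory cache line with a data cache line, so a hit serves both from the combined region without touching memory. The sketch below models that behaviour in software. It is only an illustration under stated assumptions: the cache is direct-mapped, the directory is a set of sharers, and the names `HomeAgent`, `read` and `sharers` are hypothetical.

```python
class HomeAgent:
    """Direct-mapped combined cache: one directory entry per cached data line."""

    def __init__(self, memory_data, num_lines):
        self.memory_data = dict(memory_data)   # address -> data held in memory
        self.memory_directory = {}             # address -> sharers held in memory
        self.lines = [None] * num_lines        # combined directory + data lines
        self.memory_accesses = 0

    def read(self, address, requester):
        index = address % len(self.lines)
        line = self.lines[index]
        if line is not None and line["valid"] and line["tag"] == address:
            # Hit: update the directory and return data without a memory access.
            line["directory"].add(requester)
            return line["data"]
        if line is not None and line["valid"]:
            # Evict: write the old line's directory state back to memory.
            self.memory_directory[line["tag"]] = set(line["directory"])
            self.memory_accesses += 1
        # Miss: one memory access fetches the directory state and the data.
        self.memory_accesses += 1
        directory = set(self.memory_directory.get(address, set()))
        directory.add(requester)
        data = self.memory_data[address]
        self.lines[index] = {"tag": address, "valid": True,
                             "directory": directory, "data": data}
        return data

    def sharers(self, address):
        """Inspection helper: report sharers from the cache if present, else memory."""
        line = self.lines[address % len(self.lines)]
        if line is not None and line["valid"] and line["tag"] == address:
            return set(line["directory"])
        return set(self.memory_directory.get(address, set()))
```

The `memory_accesses` counter makes the claimed benefit observable: repeated requests for a cached address update the directory and return data while the counter stays unchanged.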

A surge protection circuit and protection method for an Internet of Things mobile base station

Active · CN112234589B · Increase conversion time · Increase cache · Emergency protective arrangements for limiting excess voltage/current · Data control · Signal modulation

The invention discloses a surge protection circuit and a protection method for an Internet of Things mobile base station, belonging to the field of Internet of Things mobile base stations. The circuit comprises a signal transmitting unit, a signal receiving unit, a data control unit, a data storage unit and an interface unit; the interface unit includes a signal isolation module, a signal modulation module and an amplitude limiting filter module. When user signals are received through the receiver, the data is passed through the interface unit to the data control unit; likewise, when a signal is transmitted, it is output to the transmitter through the interface unit. This increases the conversion time and buffering of the signal, which better stabilizes it in environments with strong thunderstorm interference. During signal transmission, a delay is added to each transmission set, and the sub-signals in each transmission set are isolated from one another so that the signals do not interfere with each other.

Owner:NANJING YUNTIAN ZHIXIN INFORMATION TECH CO LTD +2

© 2025 PatSnap. All rights reserved.