95 results about "PCI-X" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

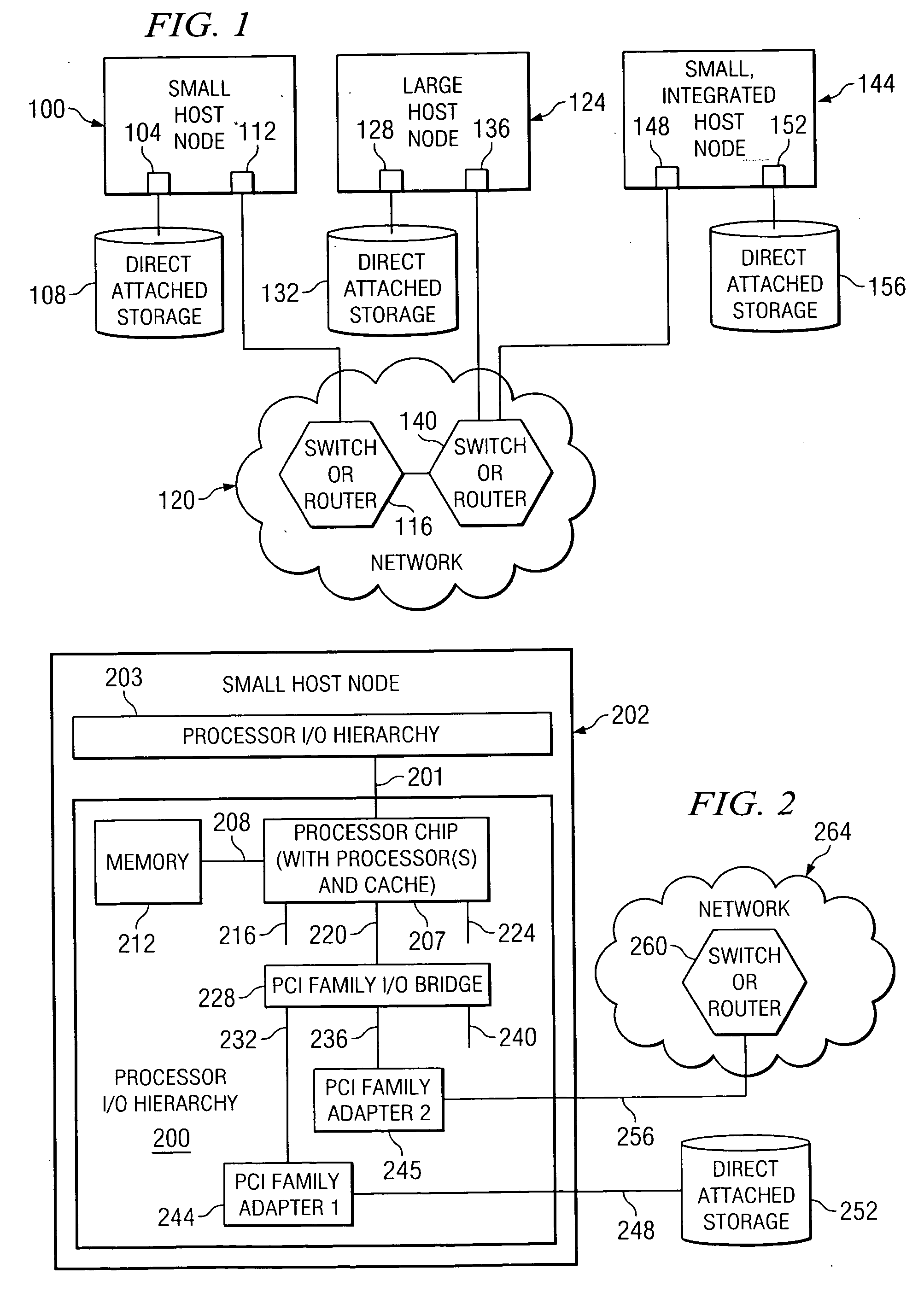

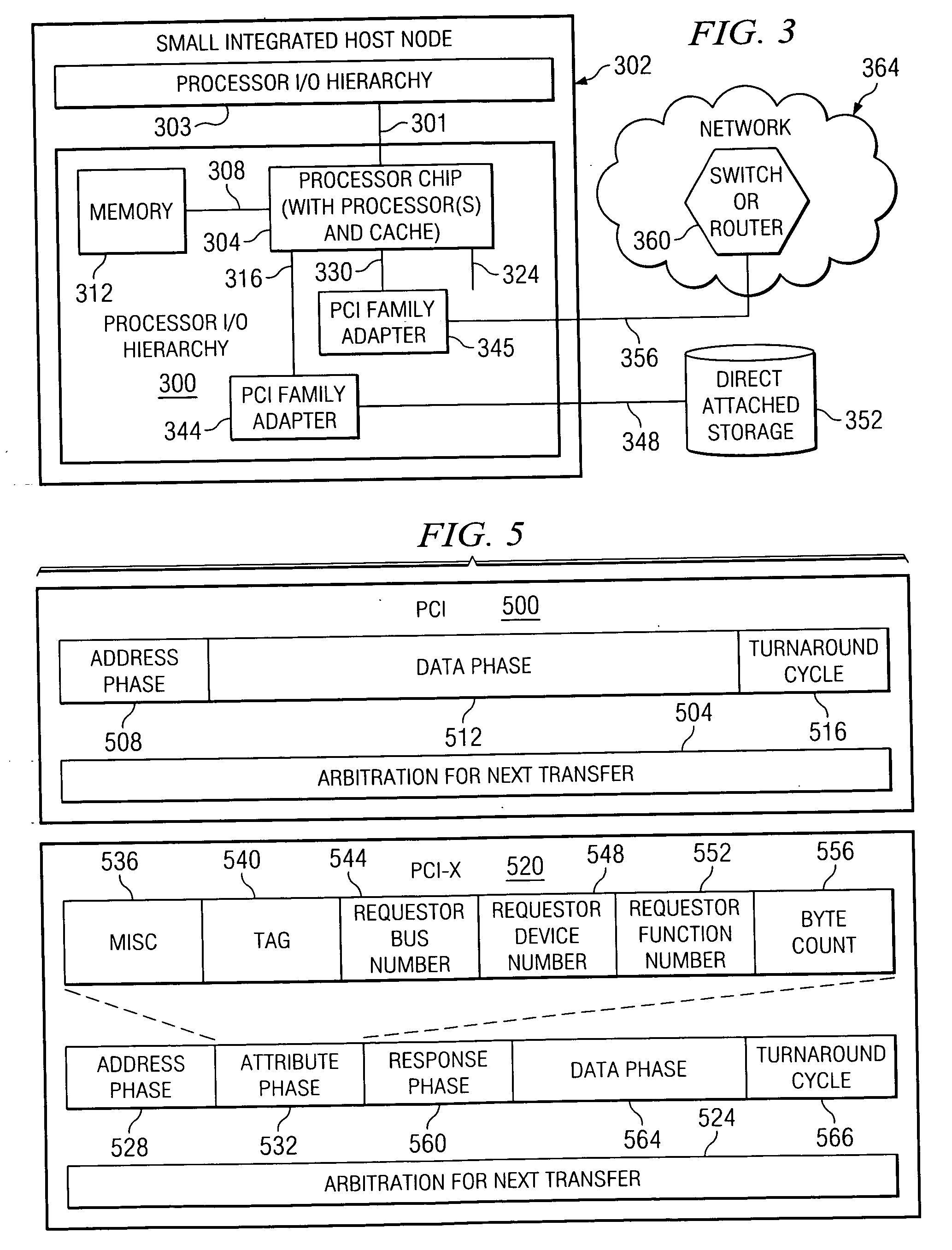

PCI-X, short for Peripheral Component Interconnect eXtended, is a computer bus and expansion card standard that enhances the 32-bit PCI local bus for higher bandwidth demanded mostly by servers and workstations. It uses a modified protocol to support higher clock speeds (up to 133 MHz), but is otherwise similar in electrical implementation. PCI-X 2.0 added speeds up to 533 MHz, with a reduction in electrical signal levels.

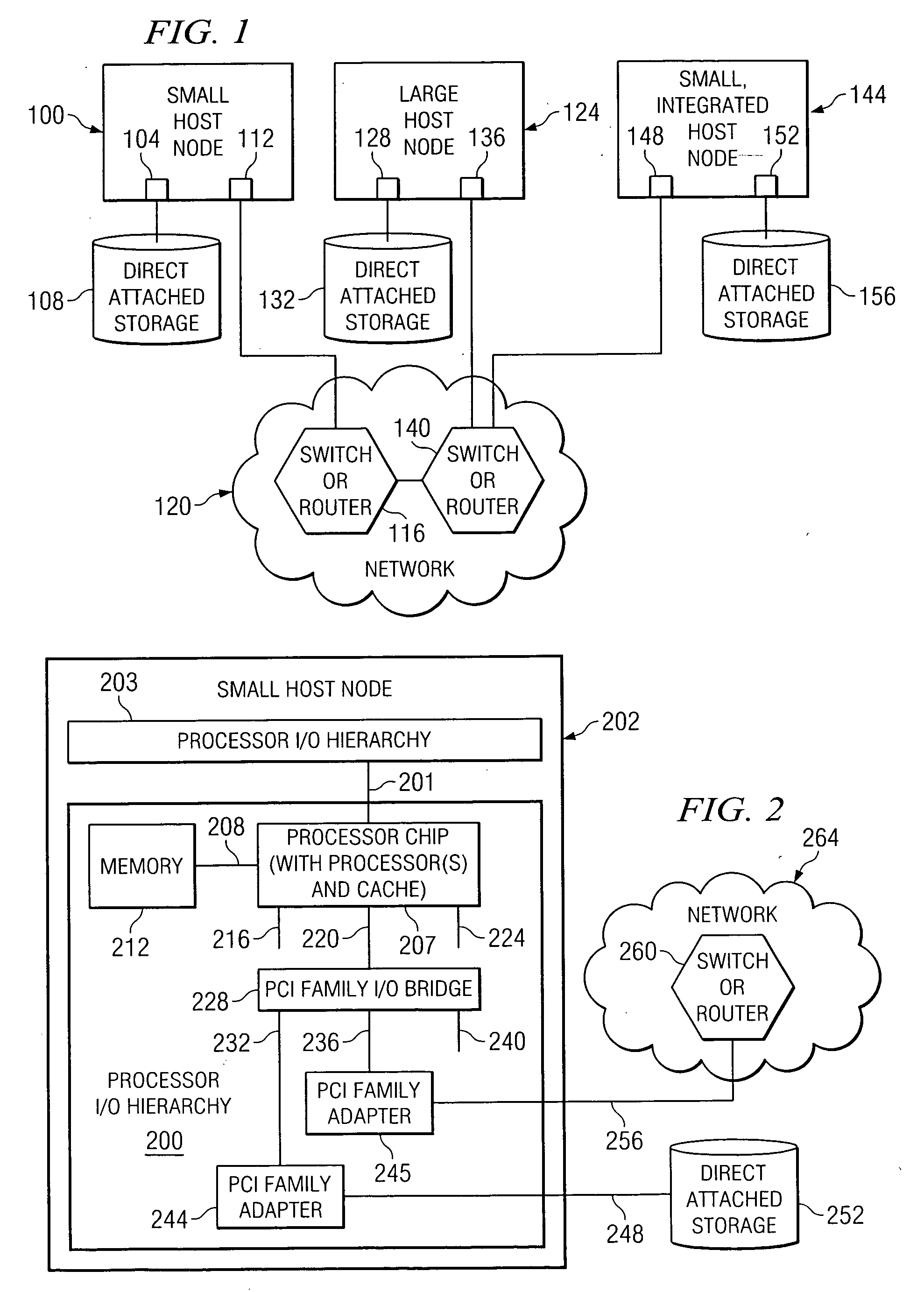

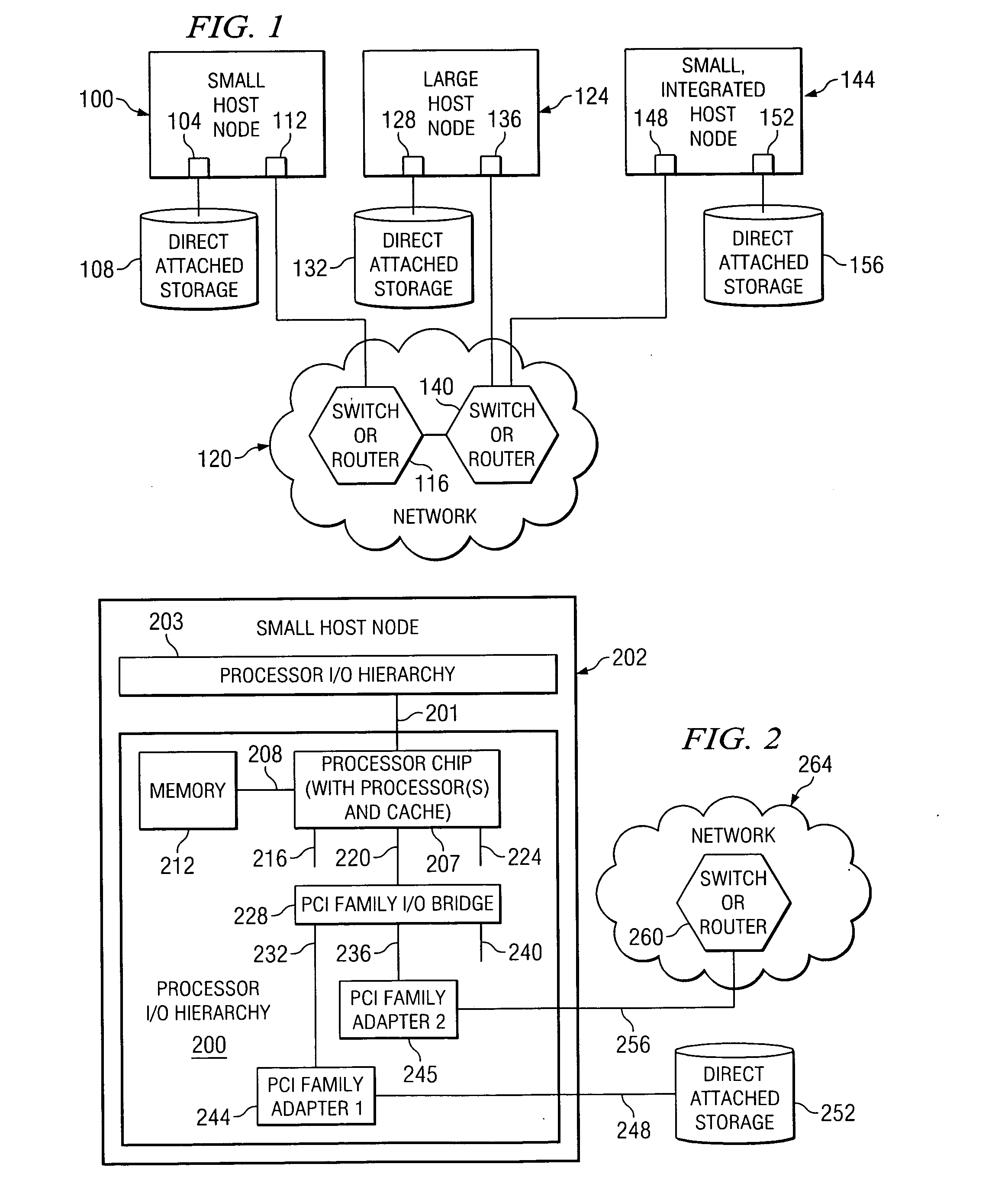

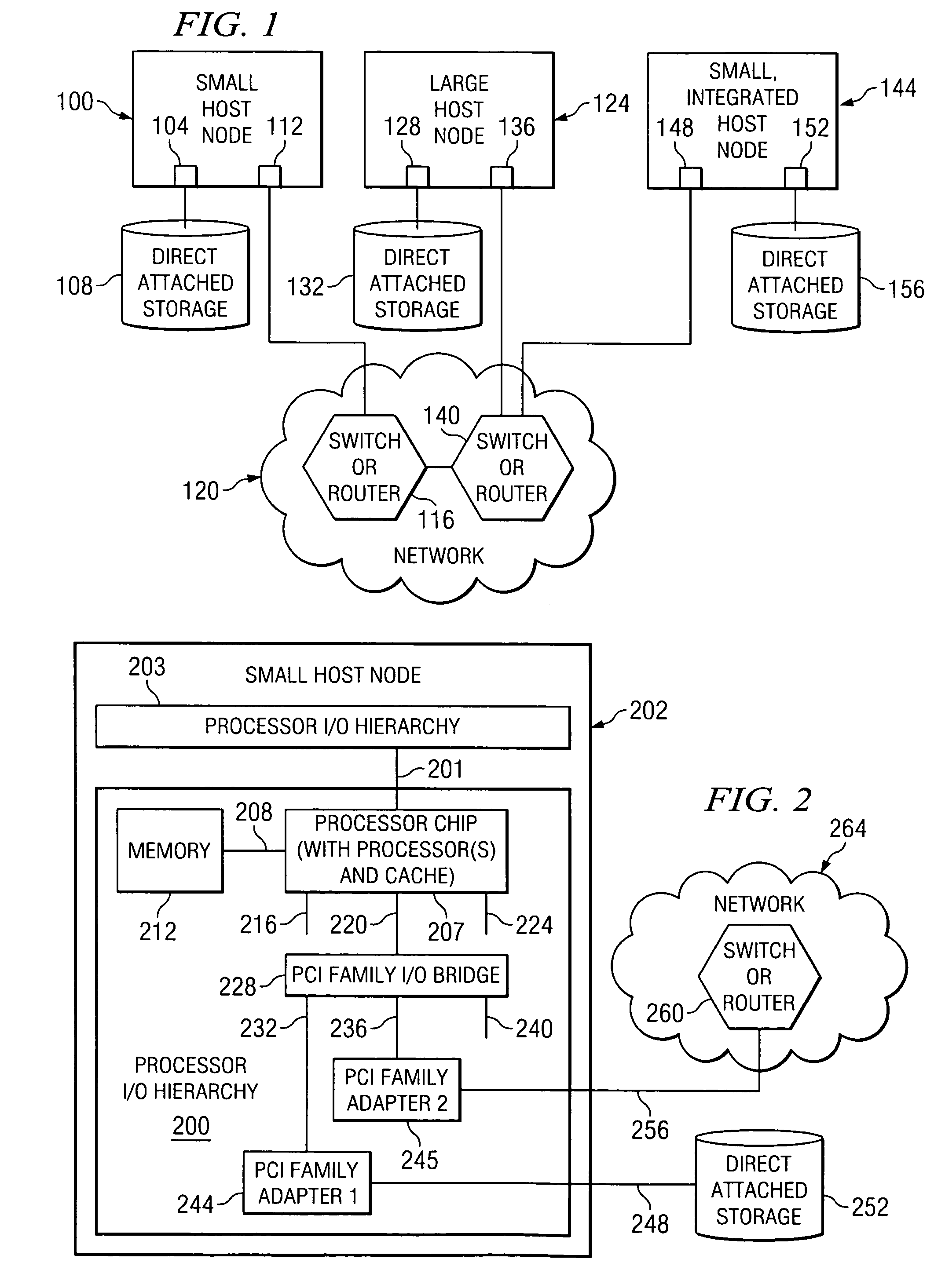

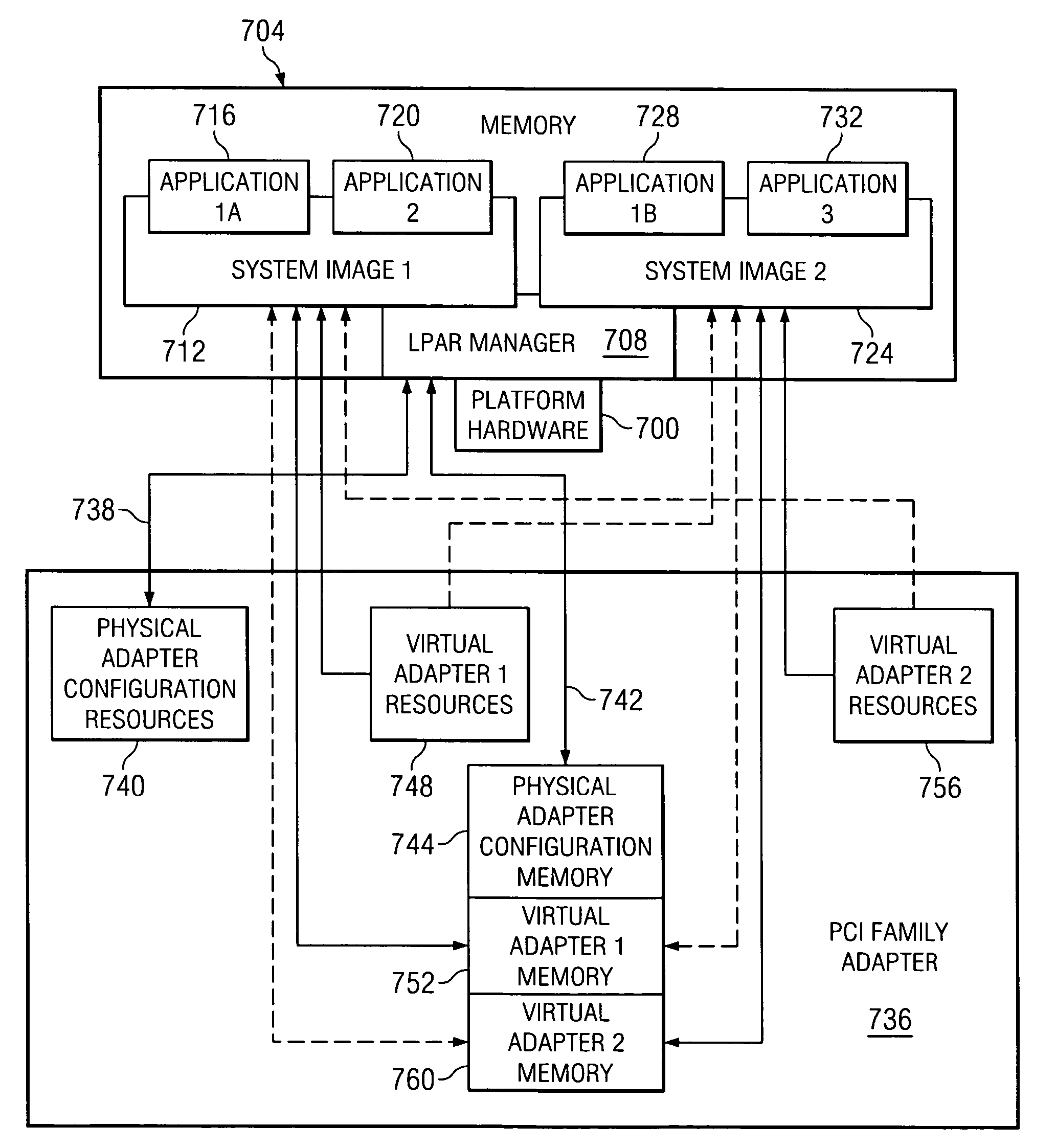

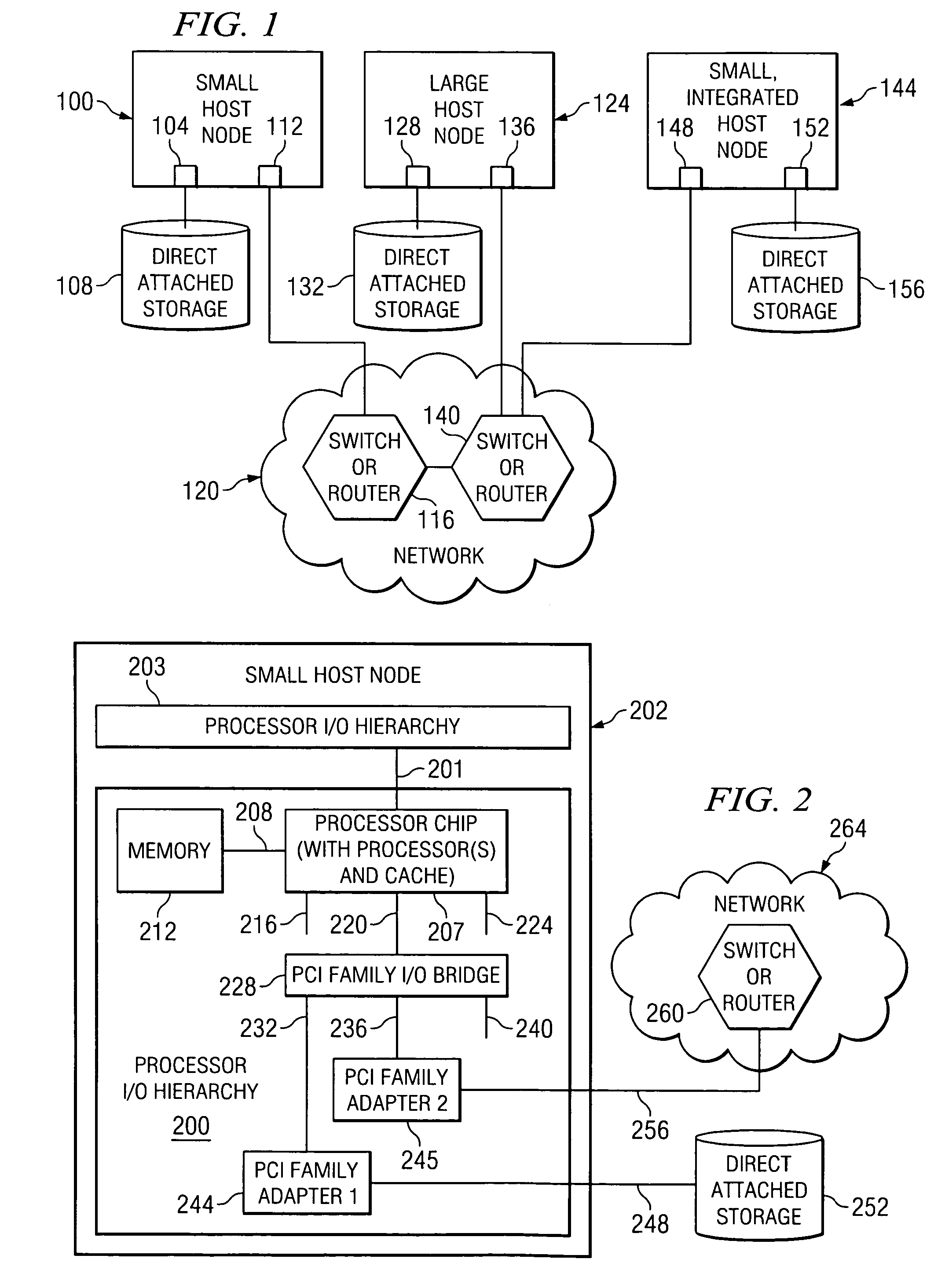

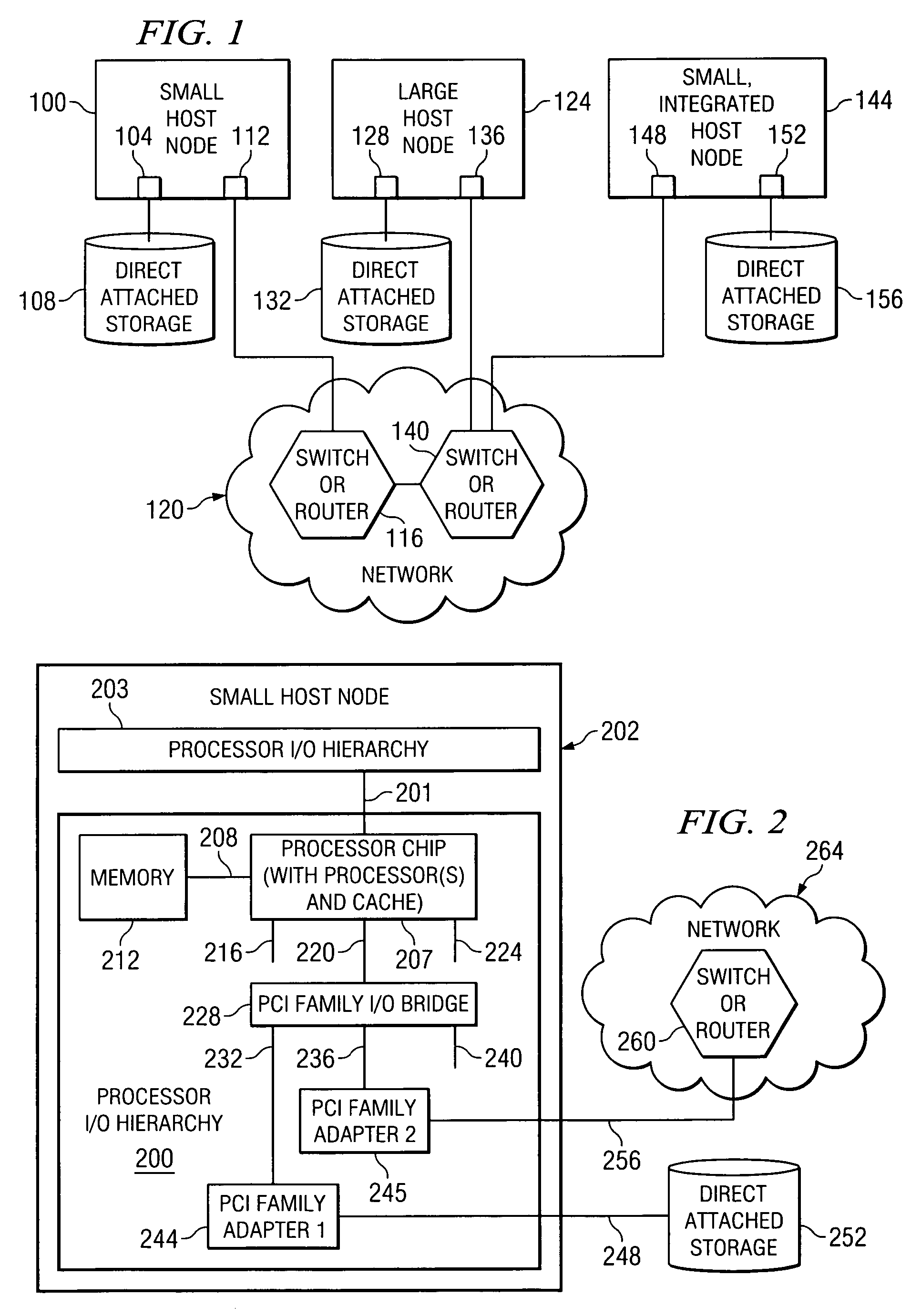

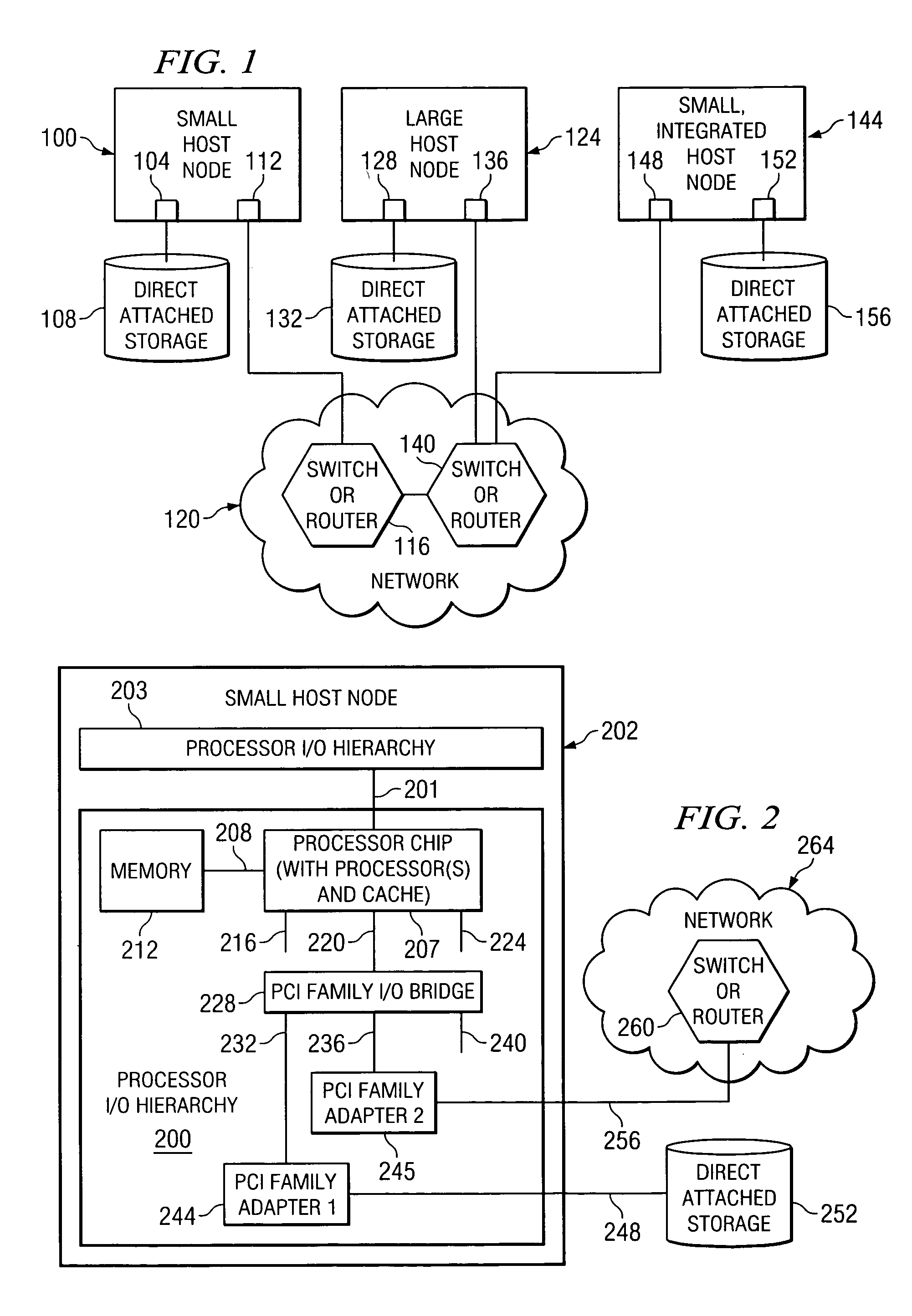

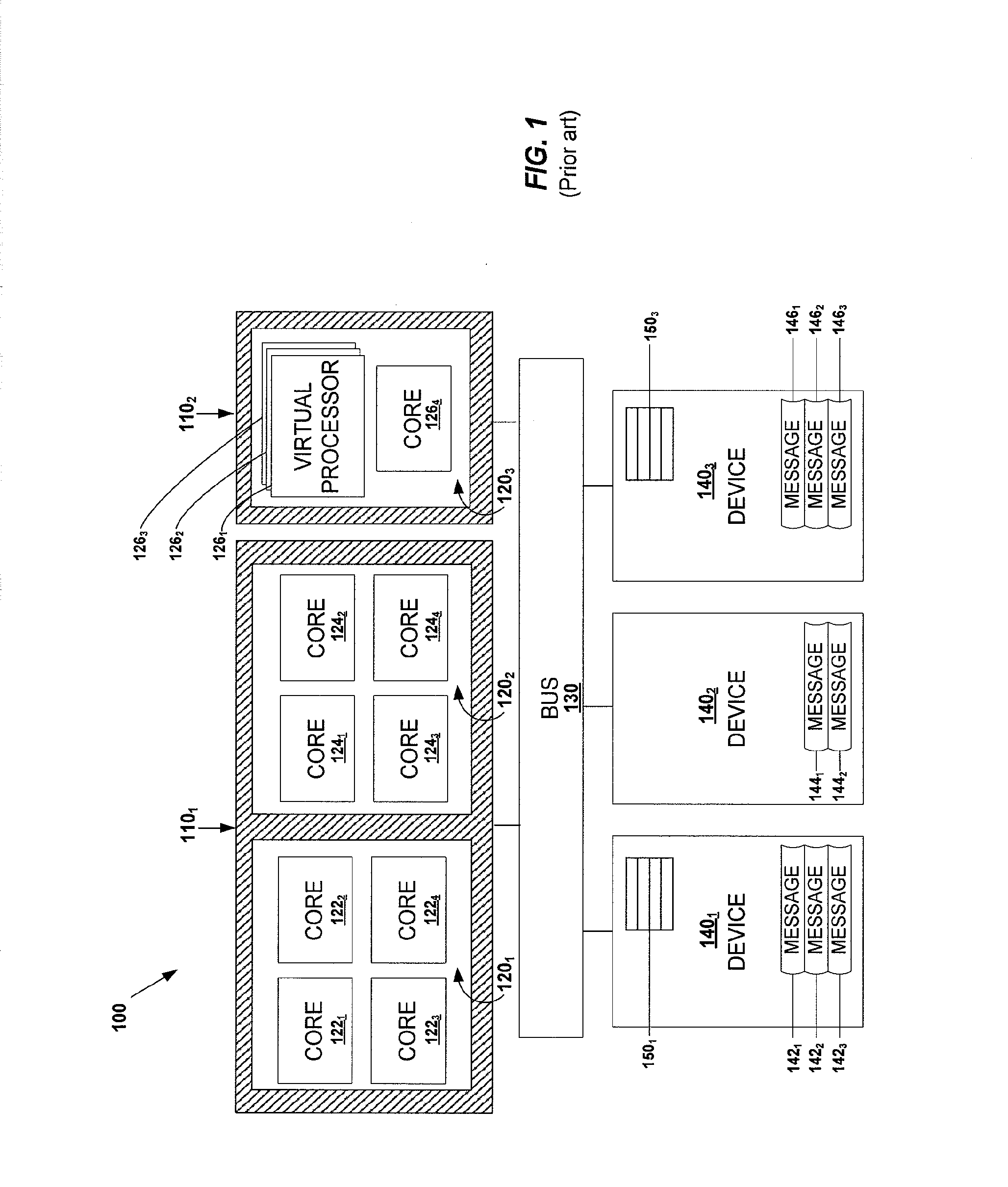

Method and system for native virtualization on a partially trusted adapter using adapter bus, device and function number for identification

A method, computer program product, and distributed data processing system are provided that allow a single physical I/O adapter, such as a PCI, PCI-X, or PCI-E adapter, to use a PCI adapter identifier to associate its resources with a system image and isolate them from other system images, thereby providing I/O virtualization. Specifically, the invention is directed to a mechanism for sharing, among multiple system images, conventional PCI (Peripheral Component Interconnect) I/O adapters, PCI-X I/O adapters, PCI-Express I/O adapters, and, in general, any I/O adapter that uses a memory-mapped I/O interface for communications.

Owner:IBM CORP
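
The "bus, device and function number" in the title above is the standard PCI address triple. The following is a minimal sketch of how such an identifier could key an ownership check, assuming the conventional packing of an 8-bit bus, 5-bit device, and 3-bit function number into 16 bits. The `owner_of` table, the image names, and `may_access` are hypothetical illustrations, not the patented mechanism.

```python
from typing import Tuple

def pack_bdf(bus: int, device: int, function: int) -> int:
    """Pack bus (8 bits), device (5 bits), function (3 bits) into a 16-bit ID."""
    if not (0 <= bus < 256 and 0 <= device < 32 and 0 <= function < 8):
        raise ValueError("bus/device/function out of range")
    return (bus << 8) | (device << 3) | function

def unpack_bdf(bdf: int) -> Tuple[int, int, int]:
    """Split a 16-bit ID back into (bus, device, function)."""
    return (bdf >> 8) & 0xFF, (bdf >> 3) & 0x1F, bdf & 0x07

# Hypothetical ownership table: adapter identifier -> system image.
owner_of = {
    pack_bdf(2, 0, 0): "system-image-A",
    pack_bdf(2, 0, 1): "system-image-B",
}

def may_access(image: str, bdf: int) -> bool:
    """Allow access only if the identifier is associated with this image."""
    return owner_of.get(bdf) == image
```

With this table, `may_access("system-image-A", pack_bdf(2, 0, 1))` is false, because function 1 belongs to the other image.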

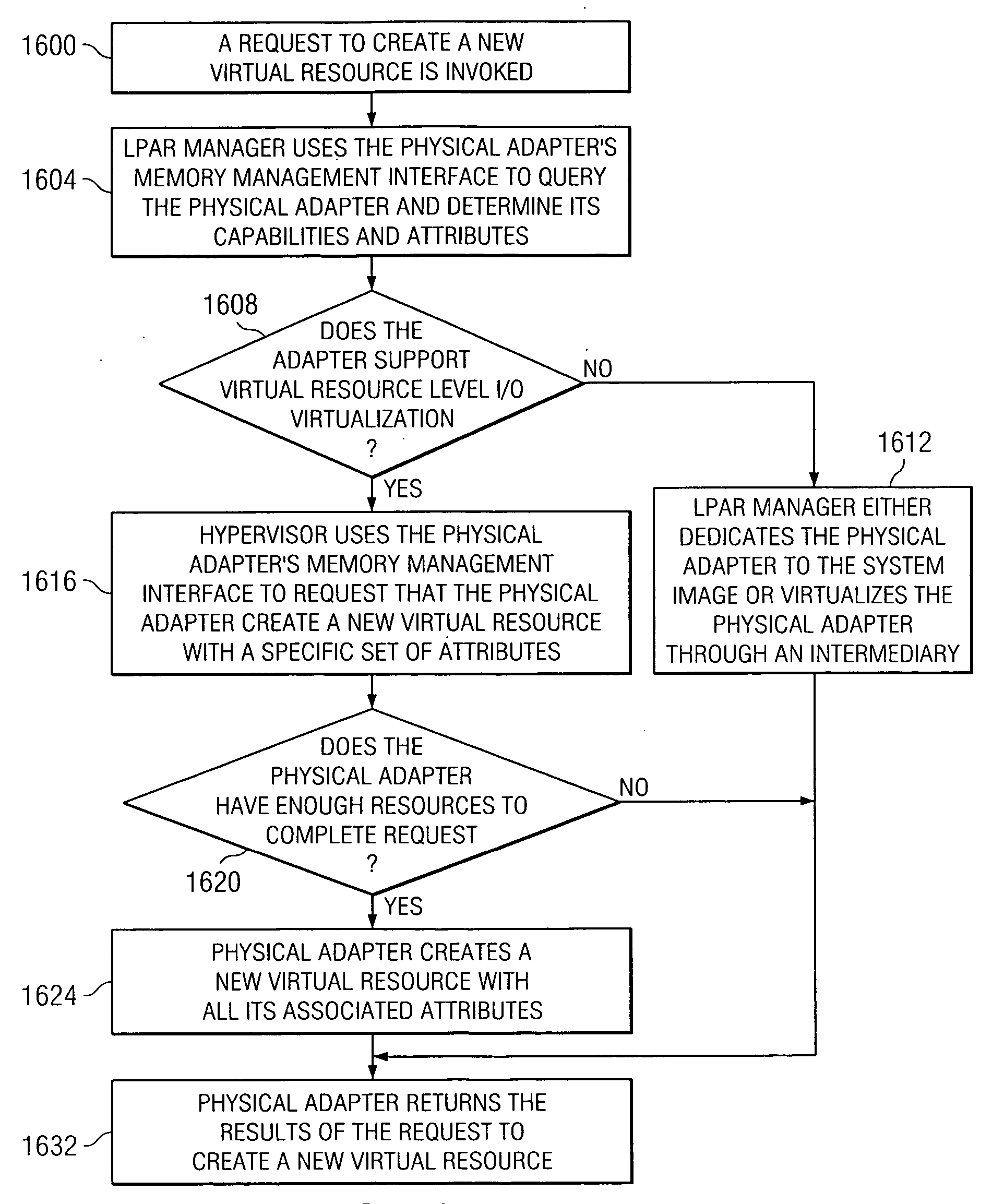

System and method of virtual resource modification on a physical adapter that supports virtual resources

A method, computer program product, and distributed data processing system are provided for directly modifying one or more virtual resources that reside within a physical adapter, such as a Peripheral Component Interconnect (PCI), PCI-X, or PCI-E adapter, and that are associated with a virtual host. Specifically, the invention is directed to a mechanism for sharing conventional PCI I/O adapters, PCI-X I/O adapters, PCI-Express I/O adapters, and, in general, any I/O adapter that uses a memory-mapped I/O interface for host-to-adapter communications.

Owner:IBM CORP

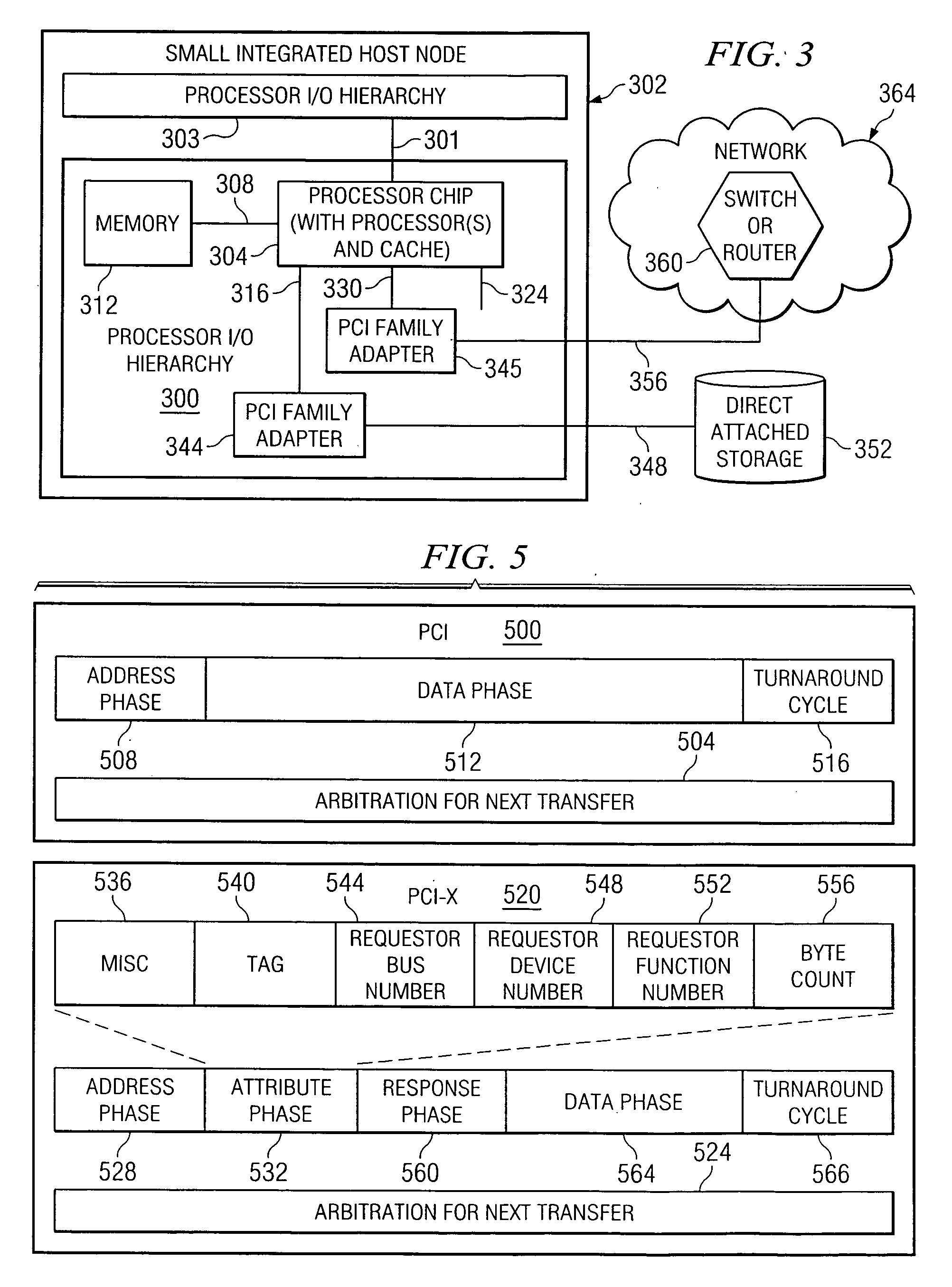

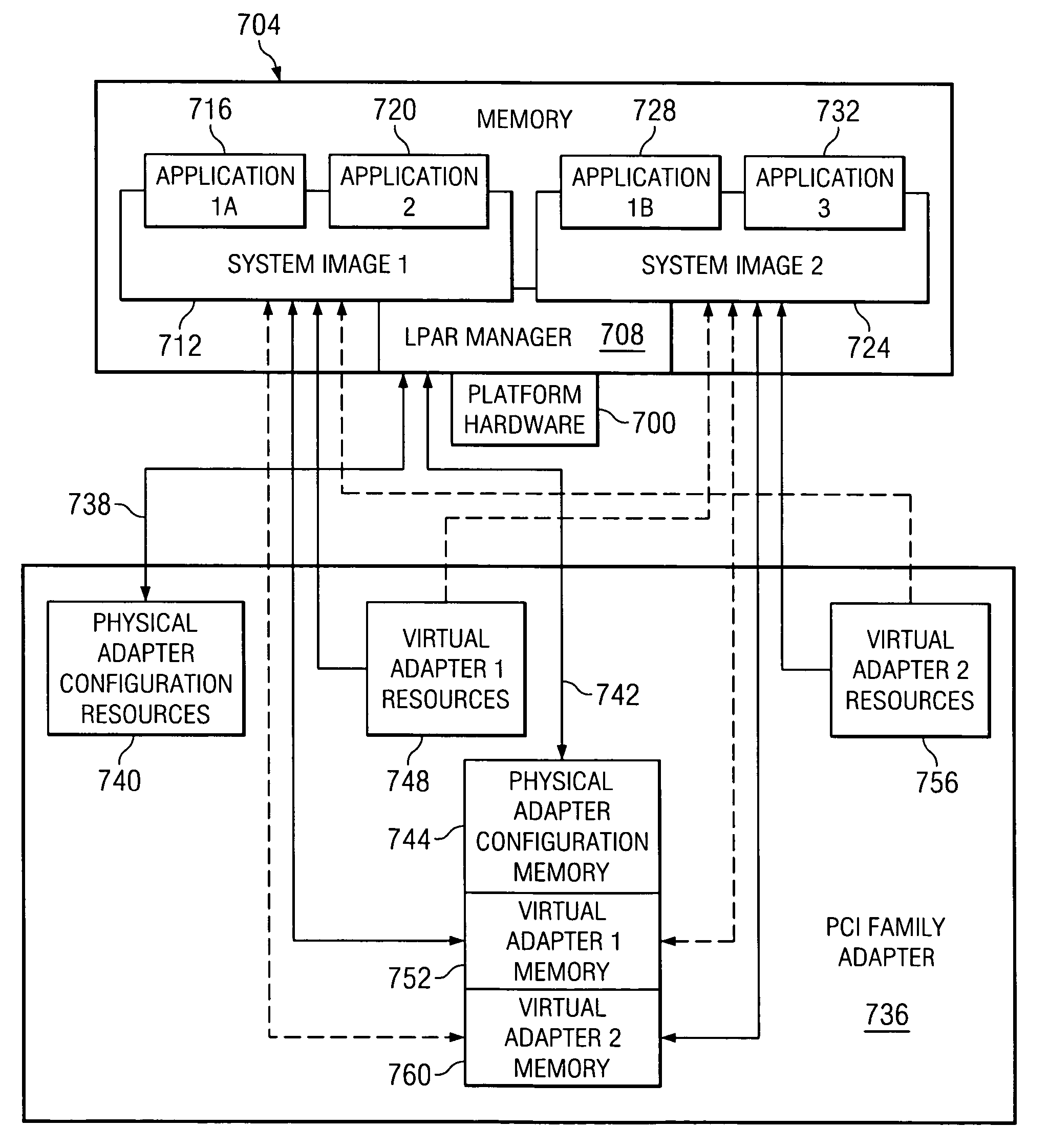

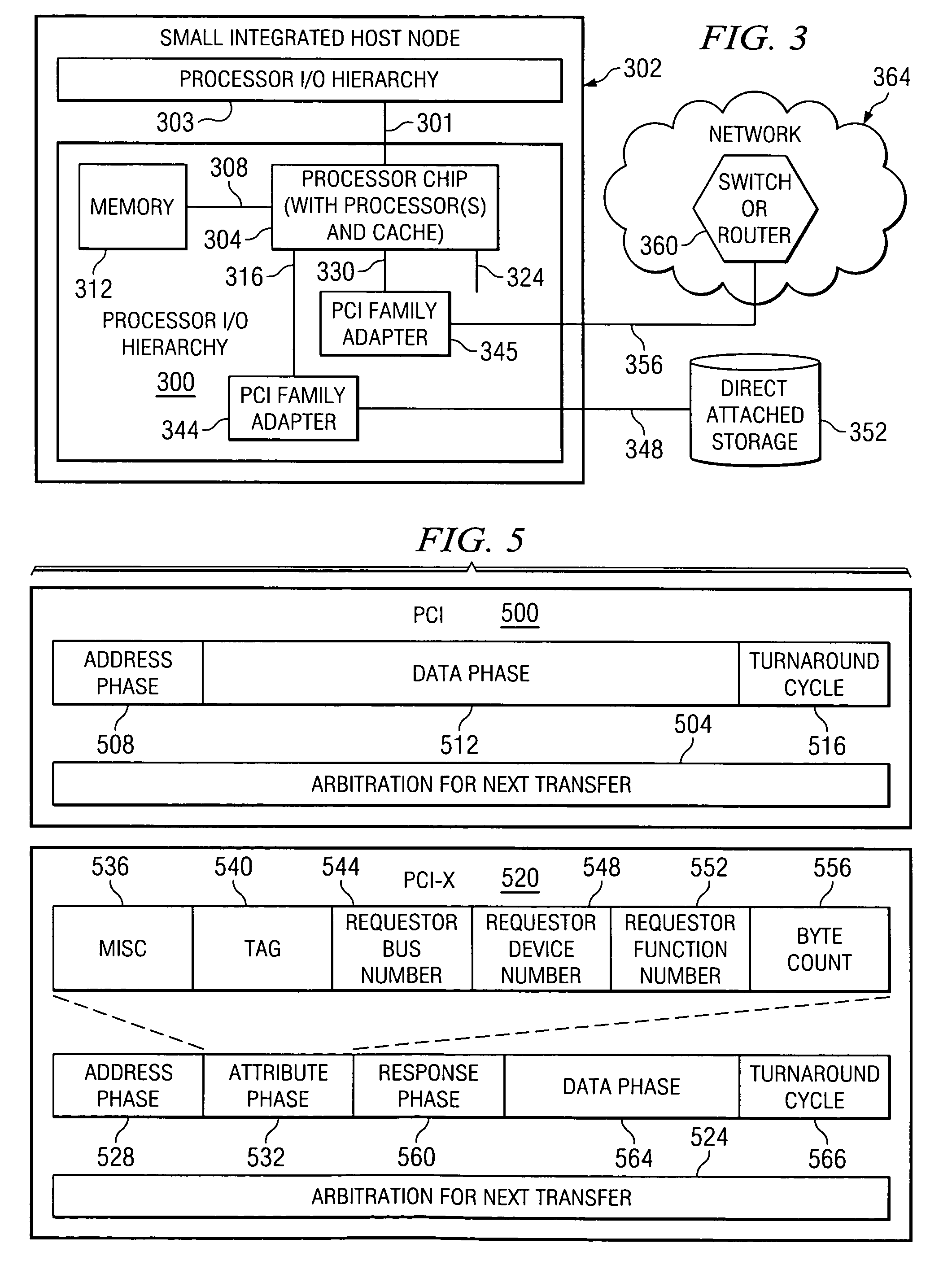

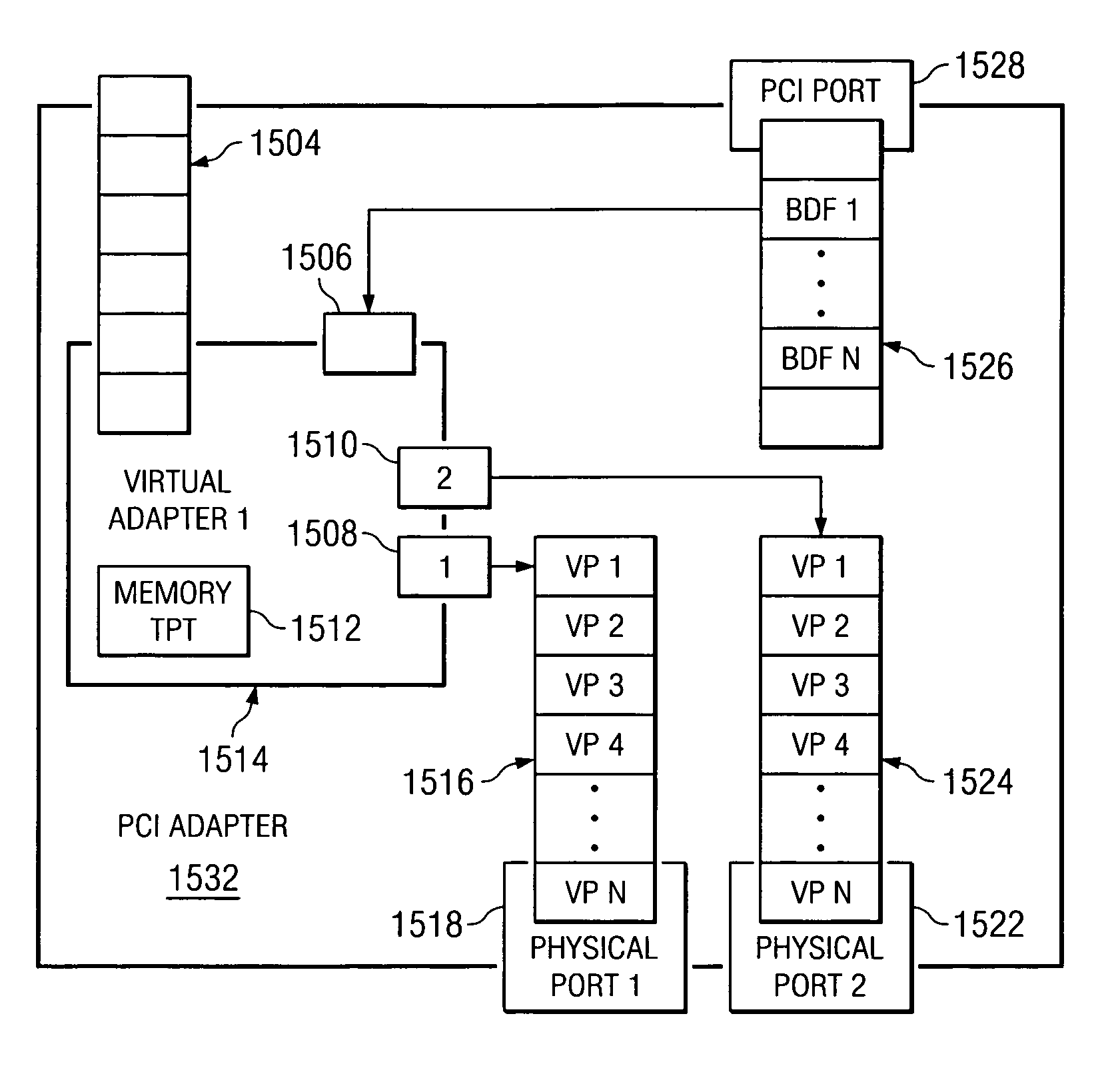

Data processing system, method, and computer program product for creation and initialization of a virtual adapter on a physical adapter that supports virtual adapter level virtualization

Inactive | US20060195618A1 | Program control | Input/output processes for data processing | Memory address | Data processing system

A method, computer program product, and distributed data processing system are provided for directly sharing an I/O adapter that directly supports adapter virtualization and does not require an LPAR manager or other intermediary to be invoked on every I/O transaction. The invention also provides for directly creating and initializing a virtual adapter and its associated resources on a physical adapter, such as a PCI, PCI-X, or PCI-E adapter. Specifically, the invention is directed to a mechanism for sharing conventional PCI (Peripheral Component Interconnect) I/O adapters, PCI-X I/O adapters, PCI-Express I/O adapters, and, in general, any I/O adapter that uses a memory-mapped I/O interface for communications. Each virtual adapter has an associated set of host-side resources, such as memory addresses and interrupt levels, and adapter-side resources, such as adapter memory addresses and processing queues, and each virtual adapter is isolated from accessing the host-side and adapter-side resources that belong to another virtual or physical adapter.

Owner:IBM CORP

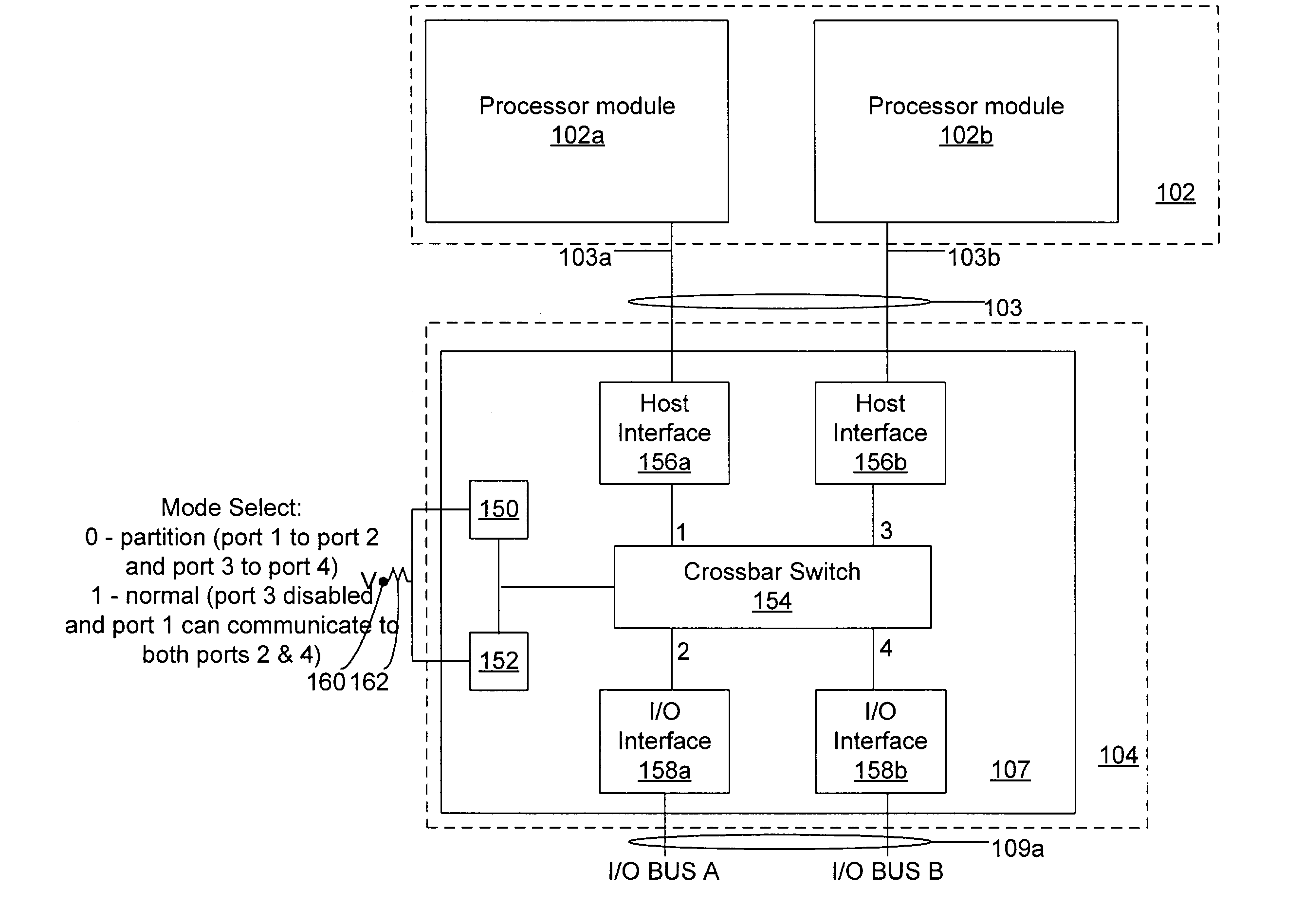

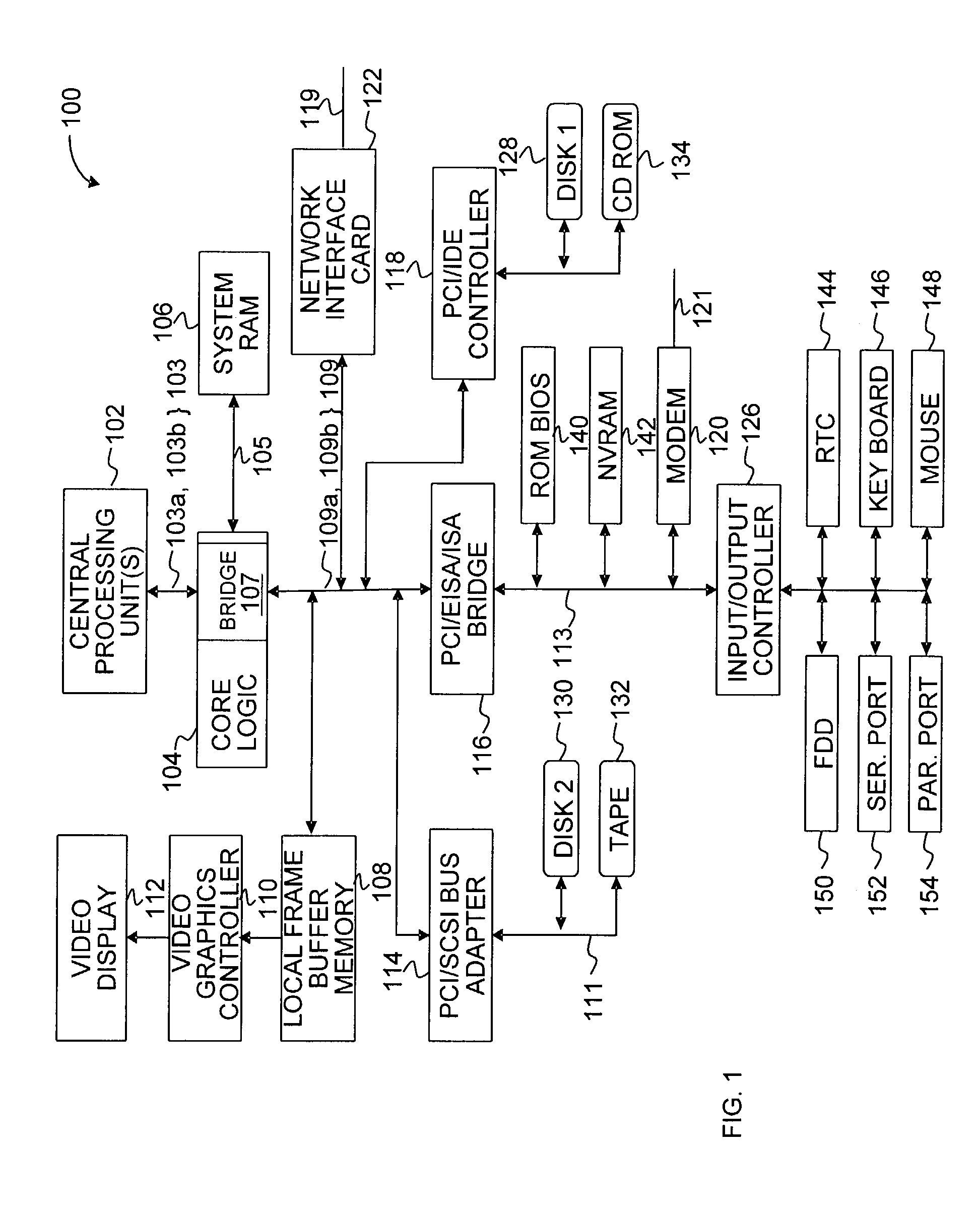

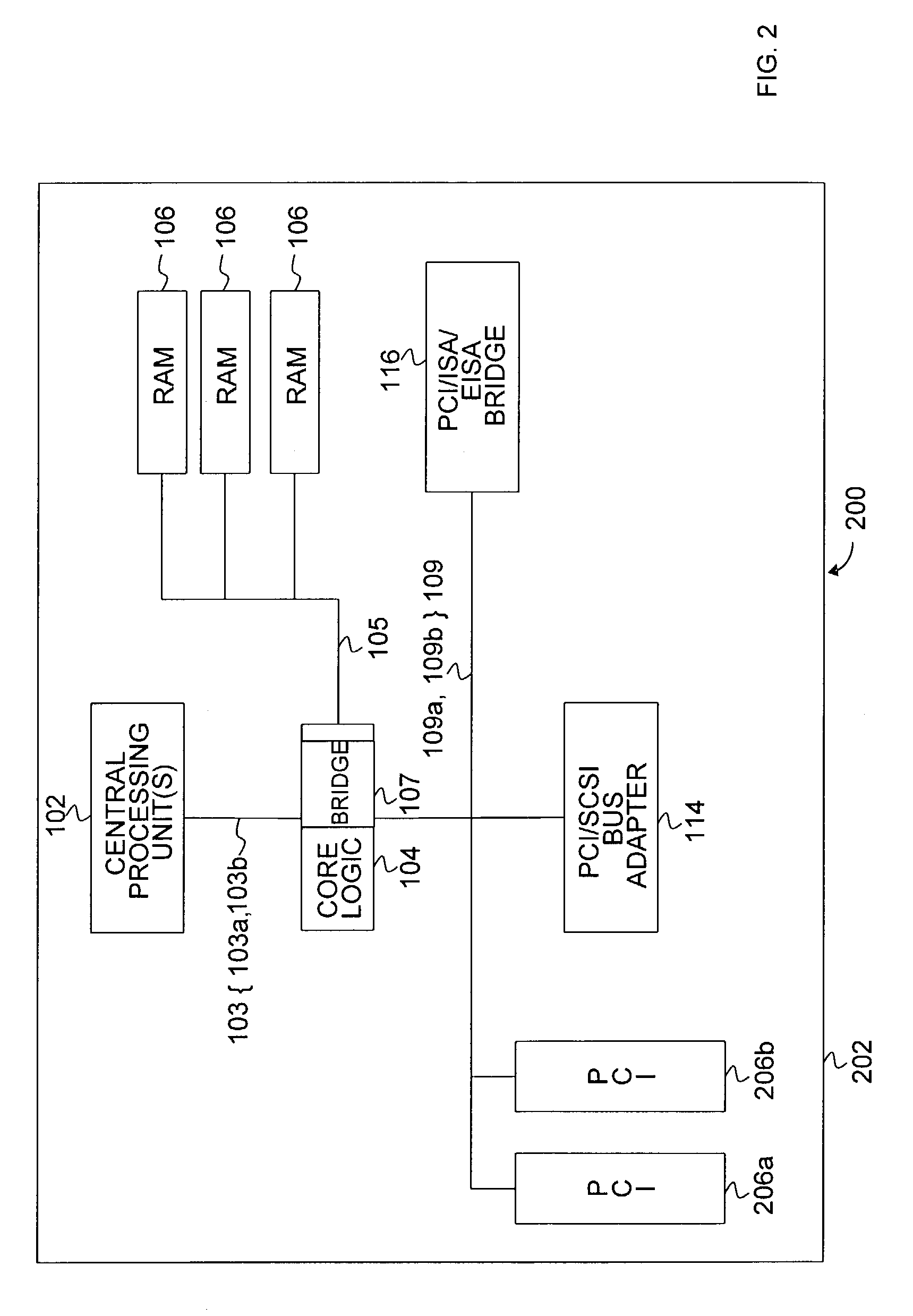

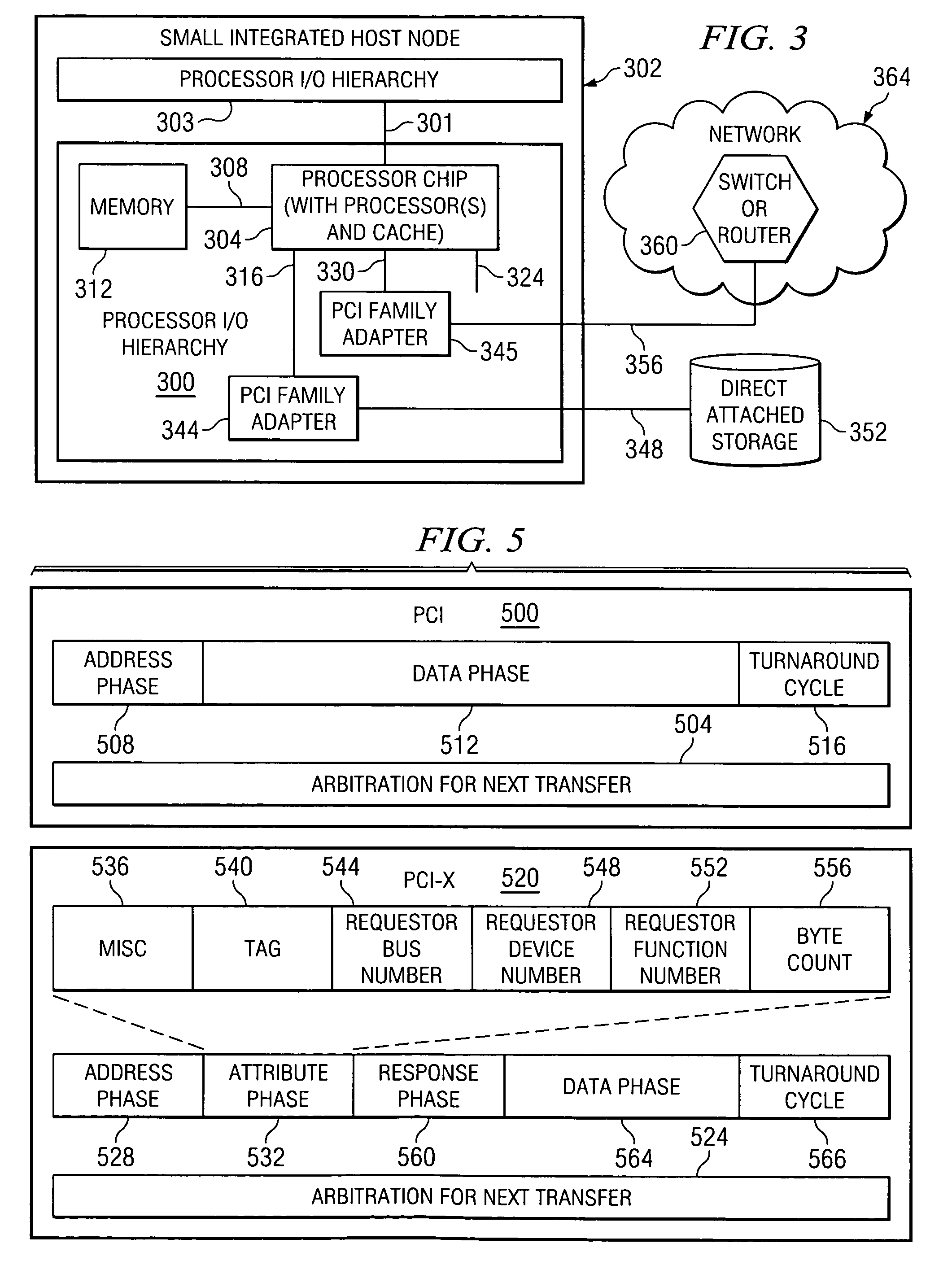

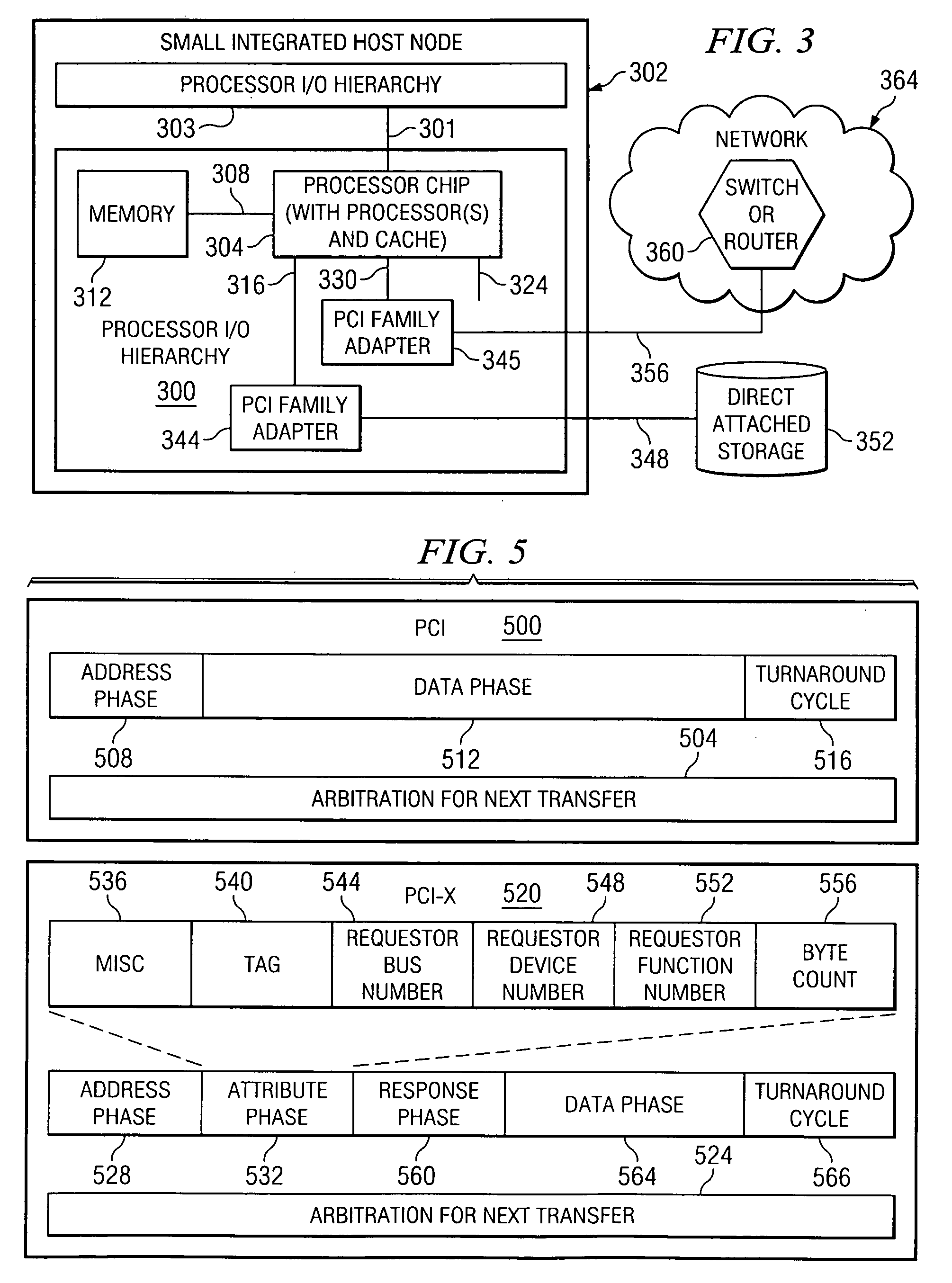

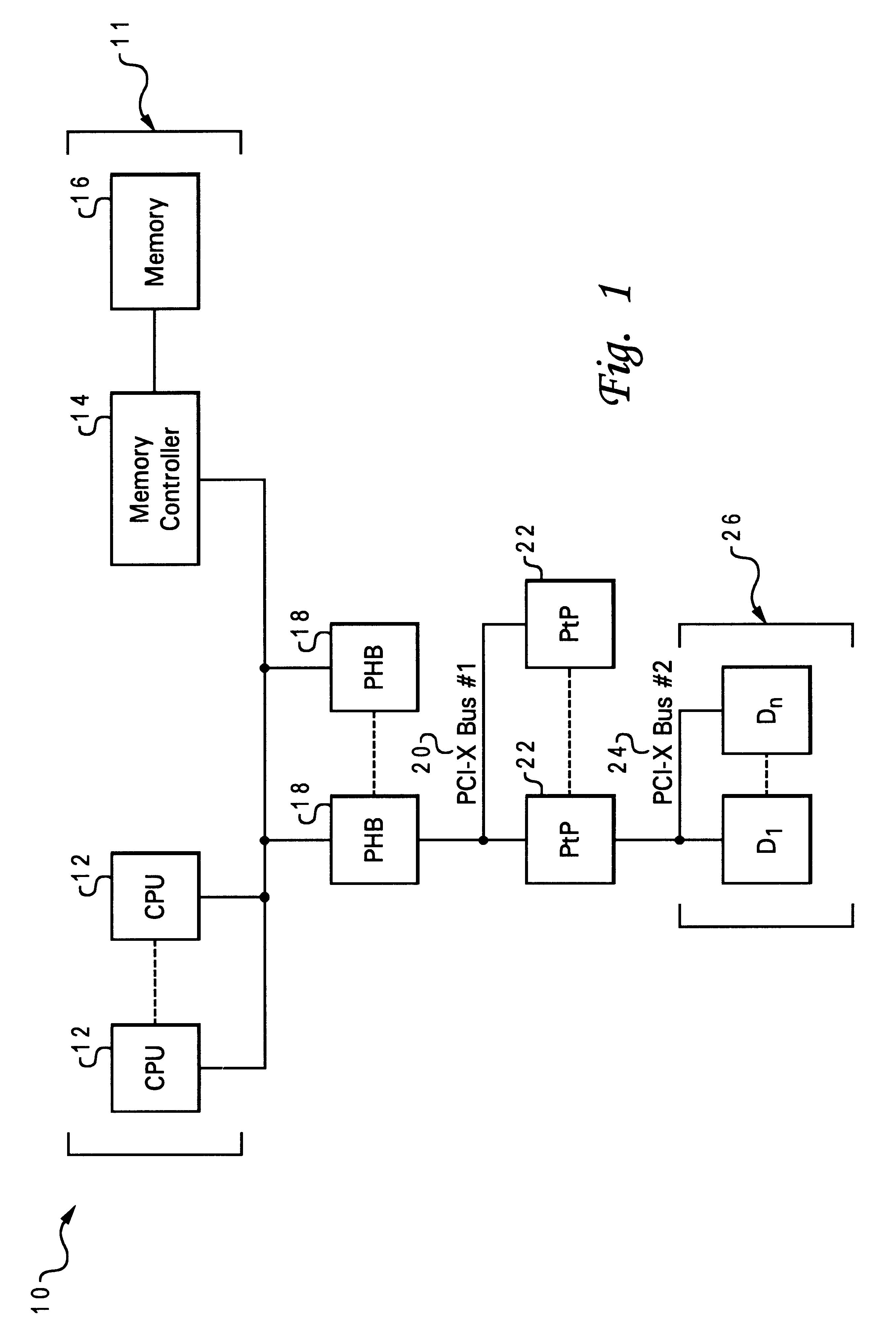

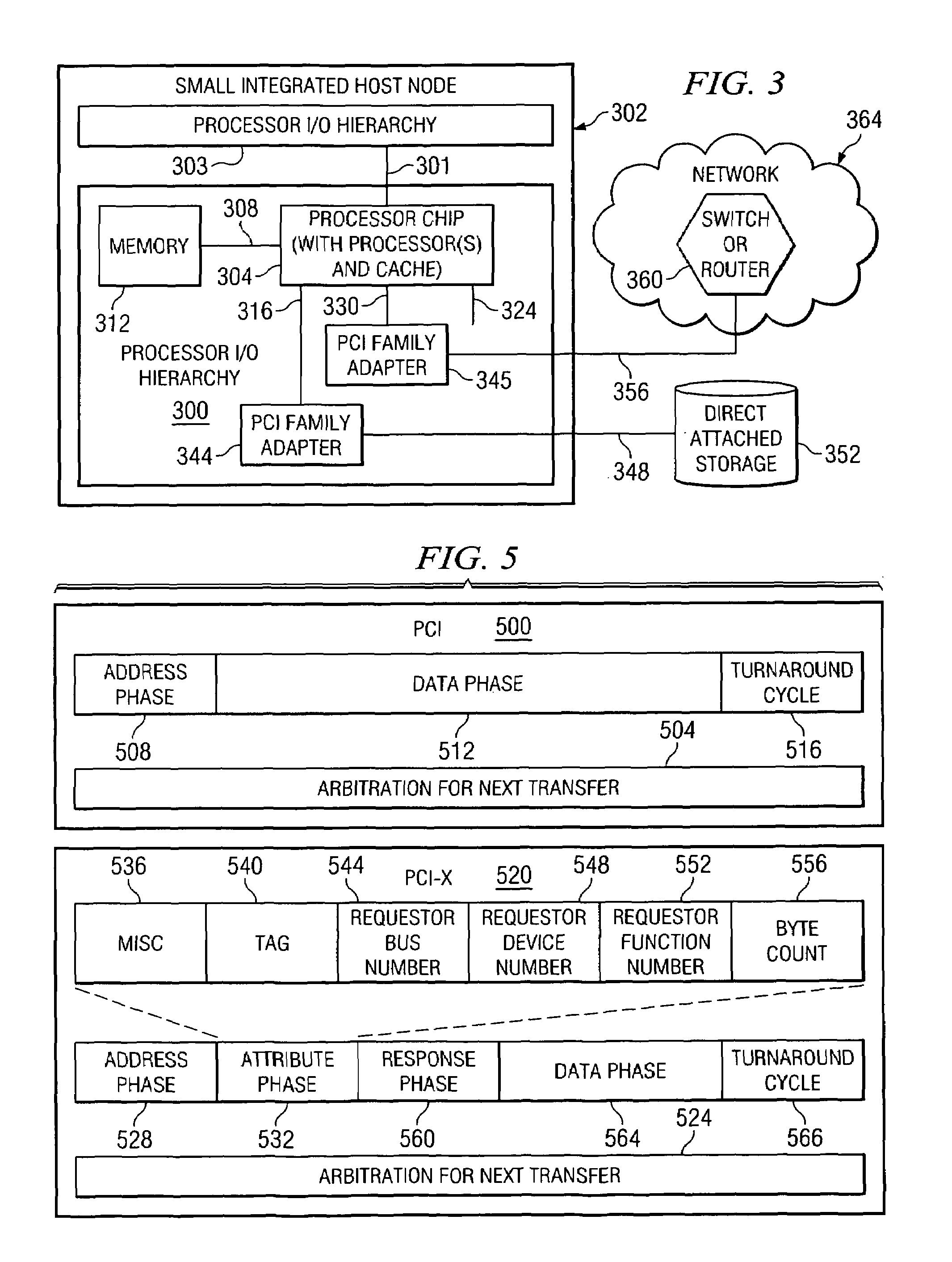

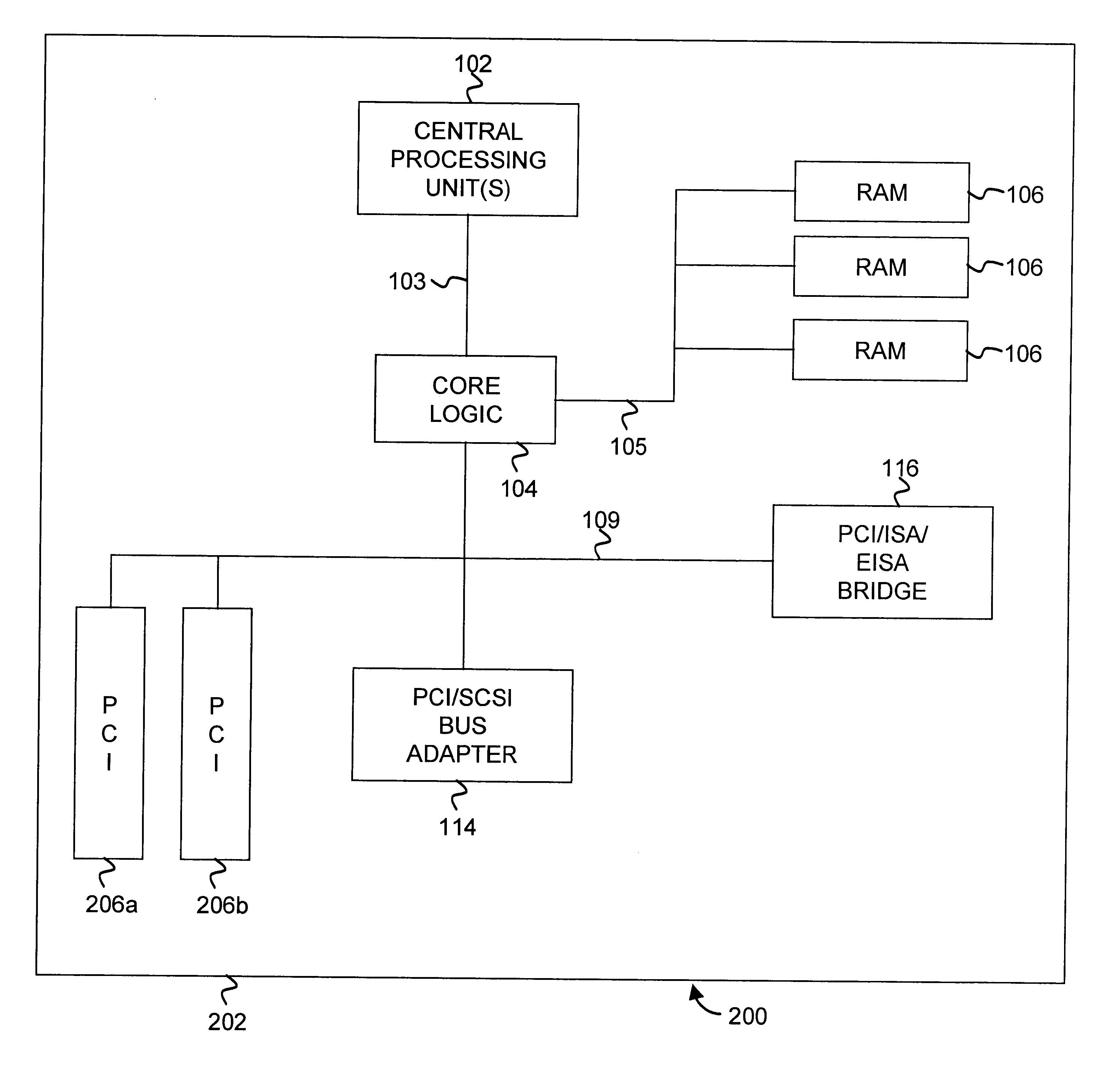

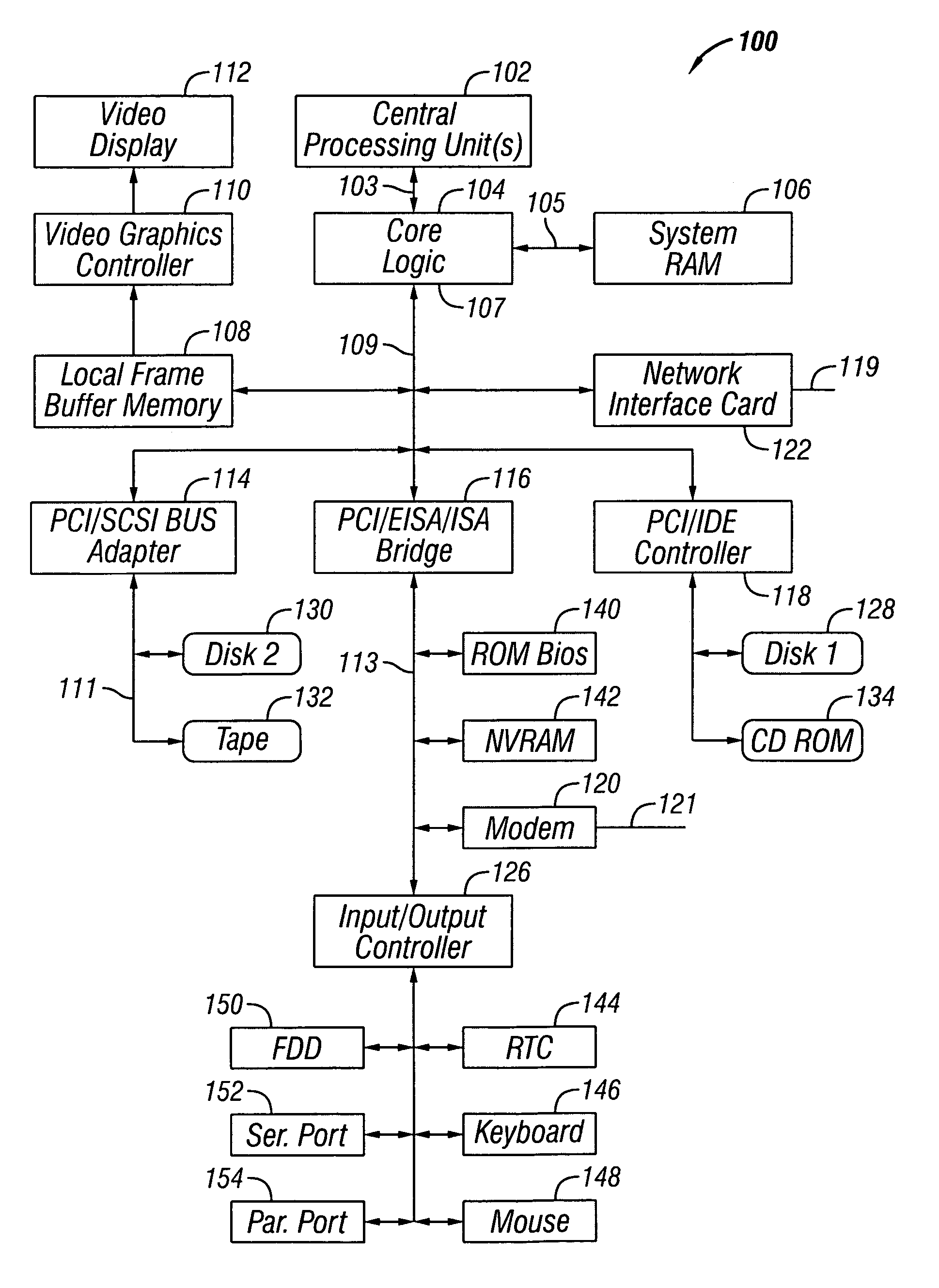

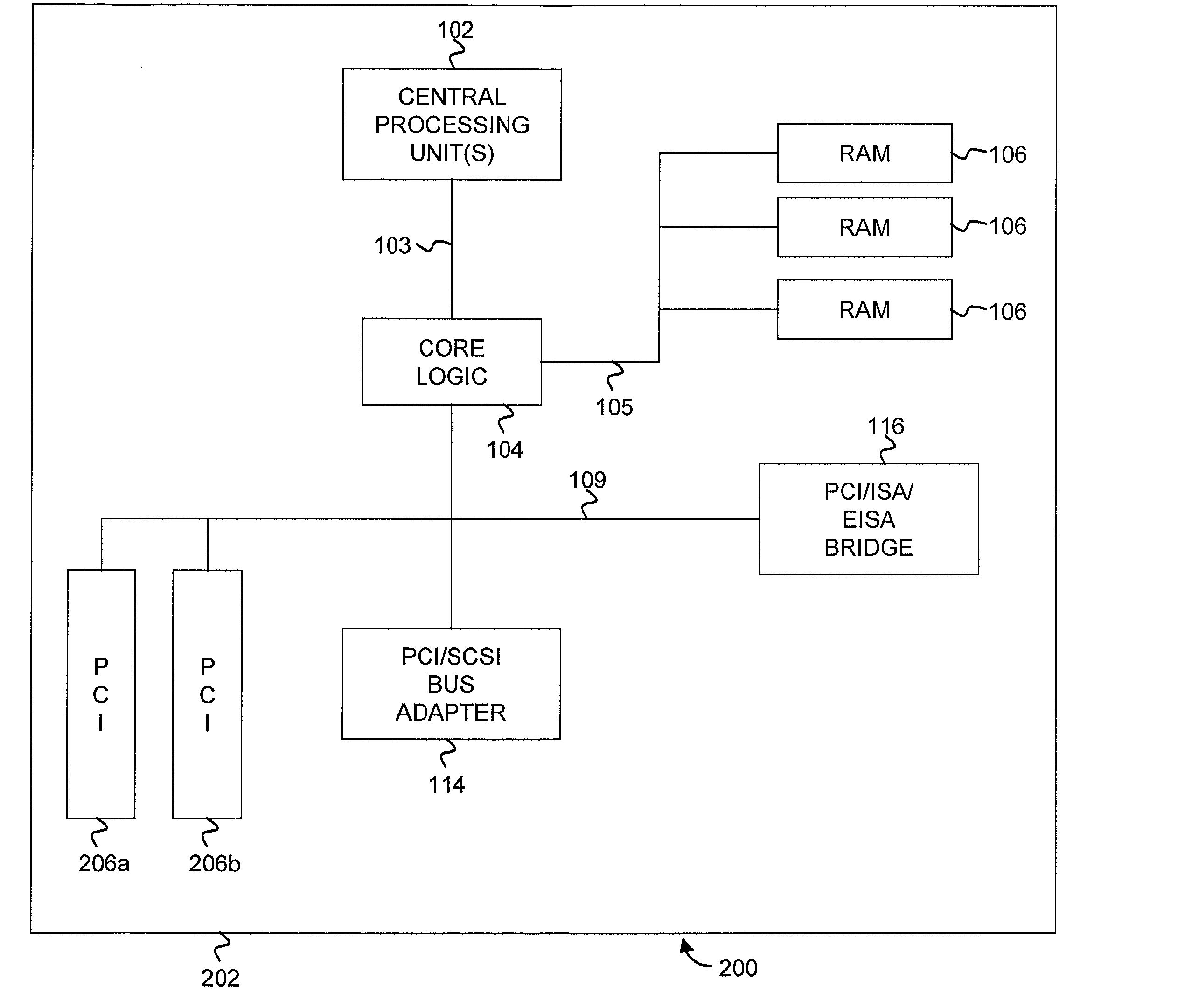

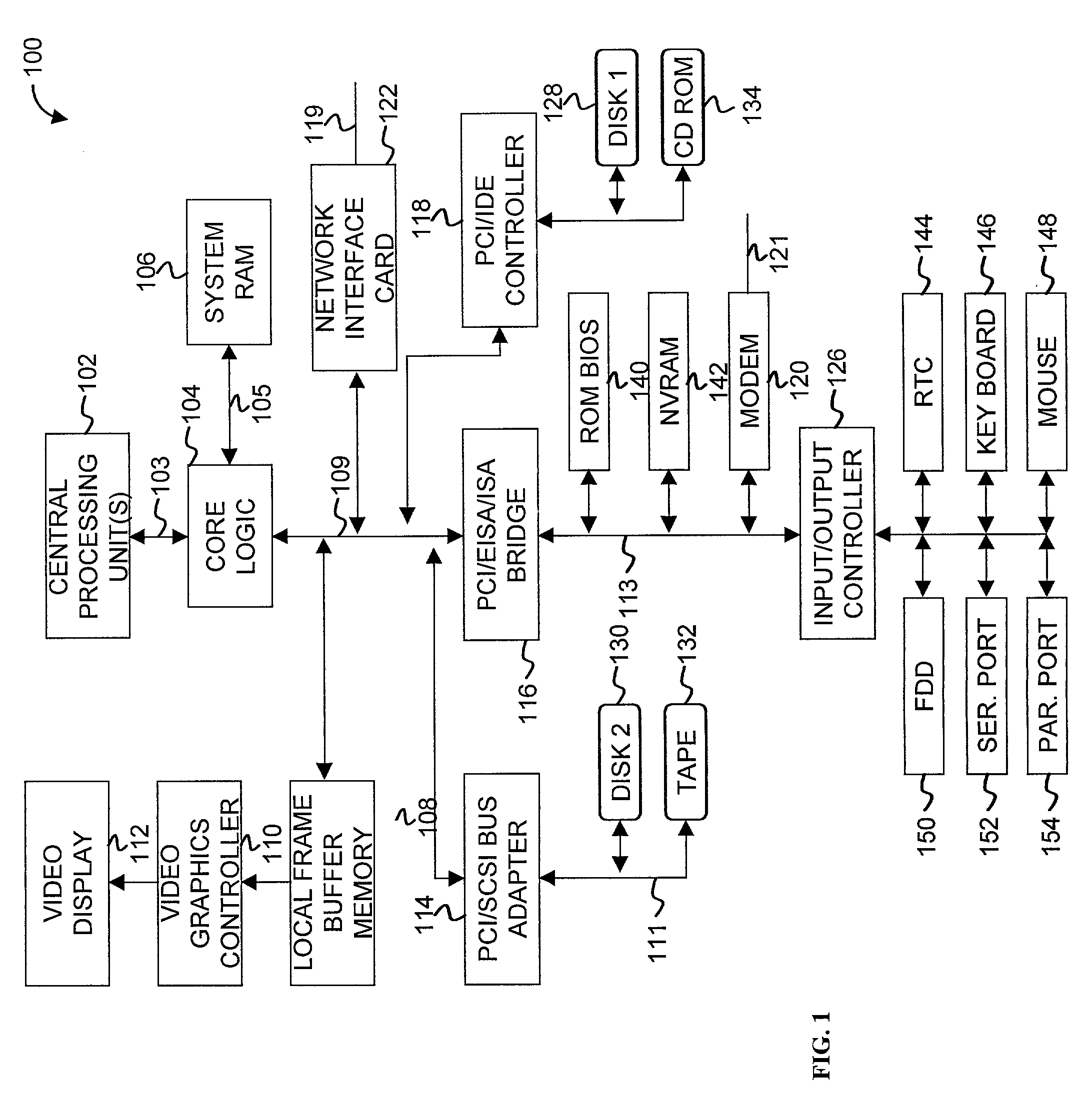

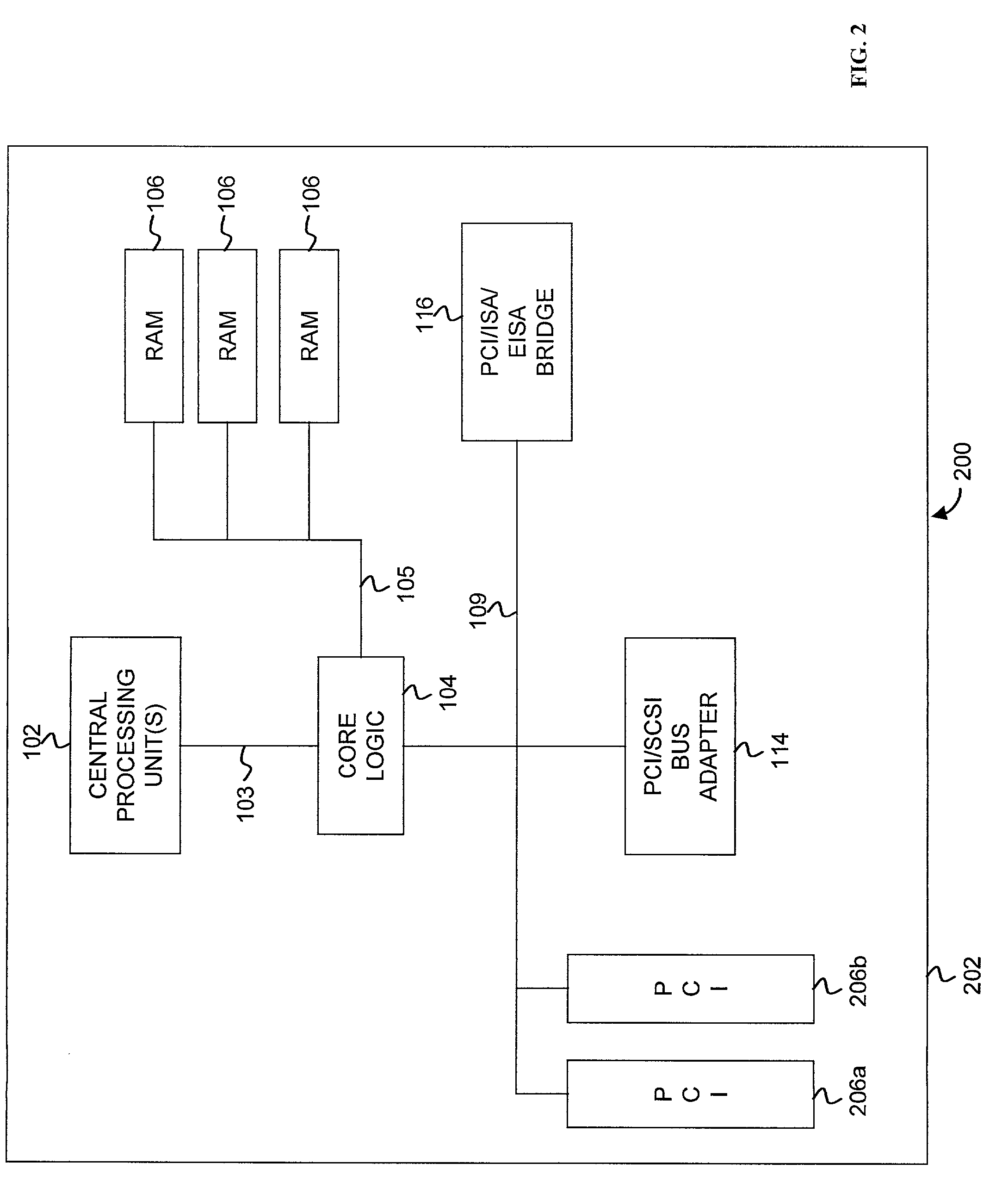

Supporting a host-to-input/output (I/O) bridge

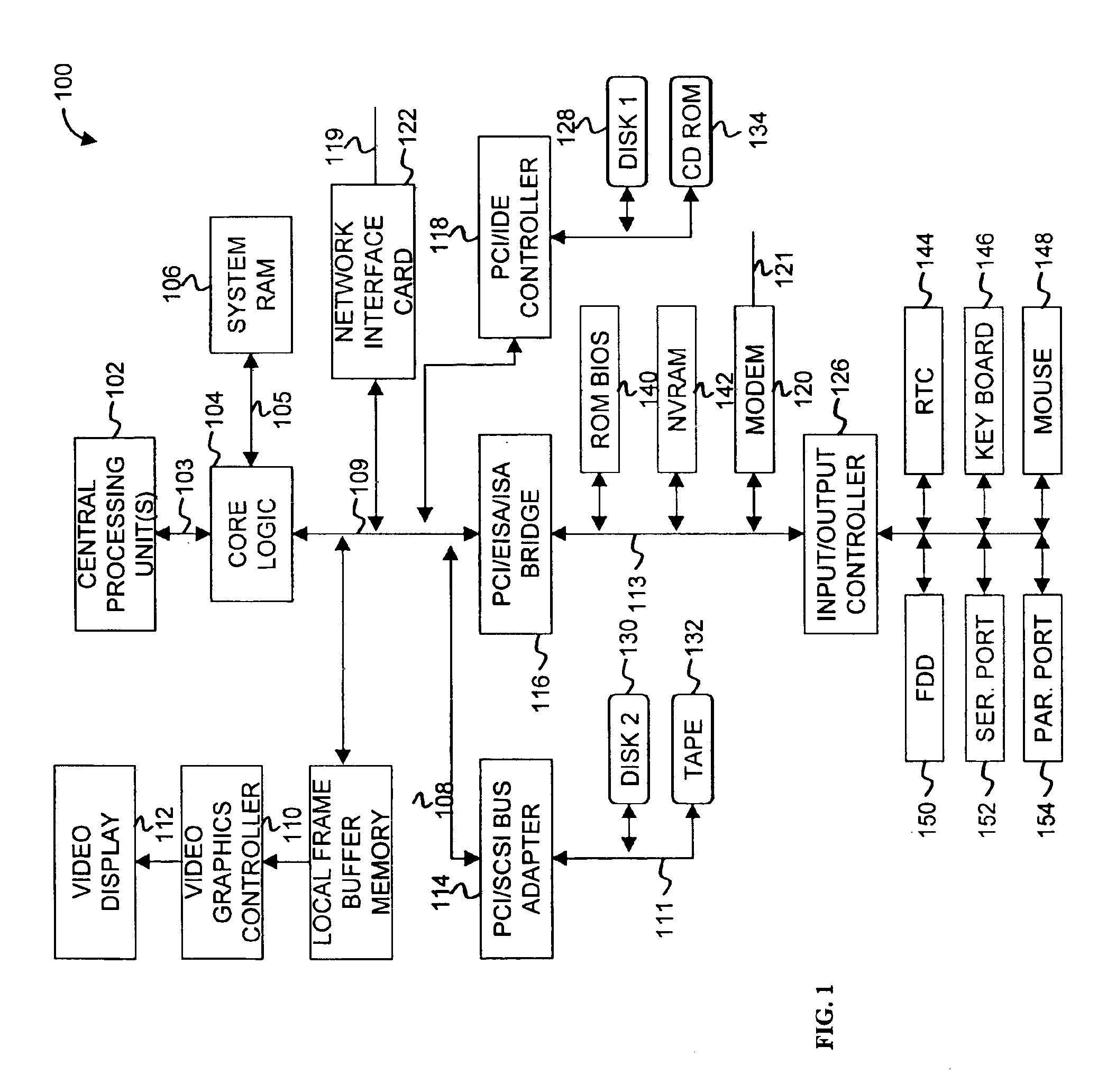

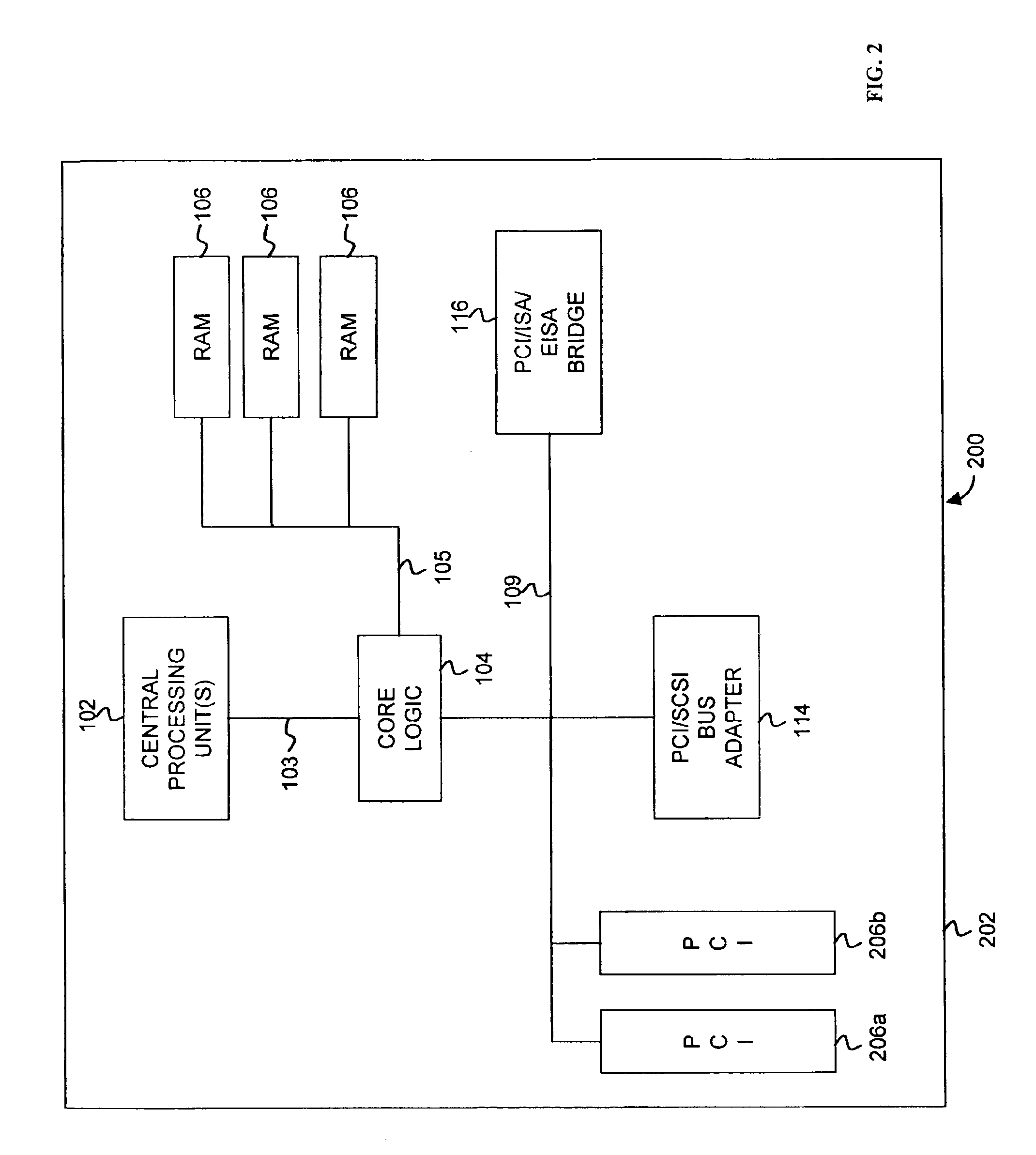

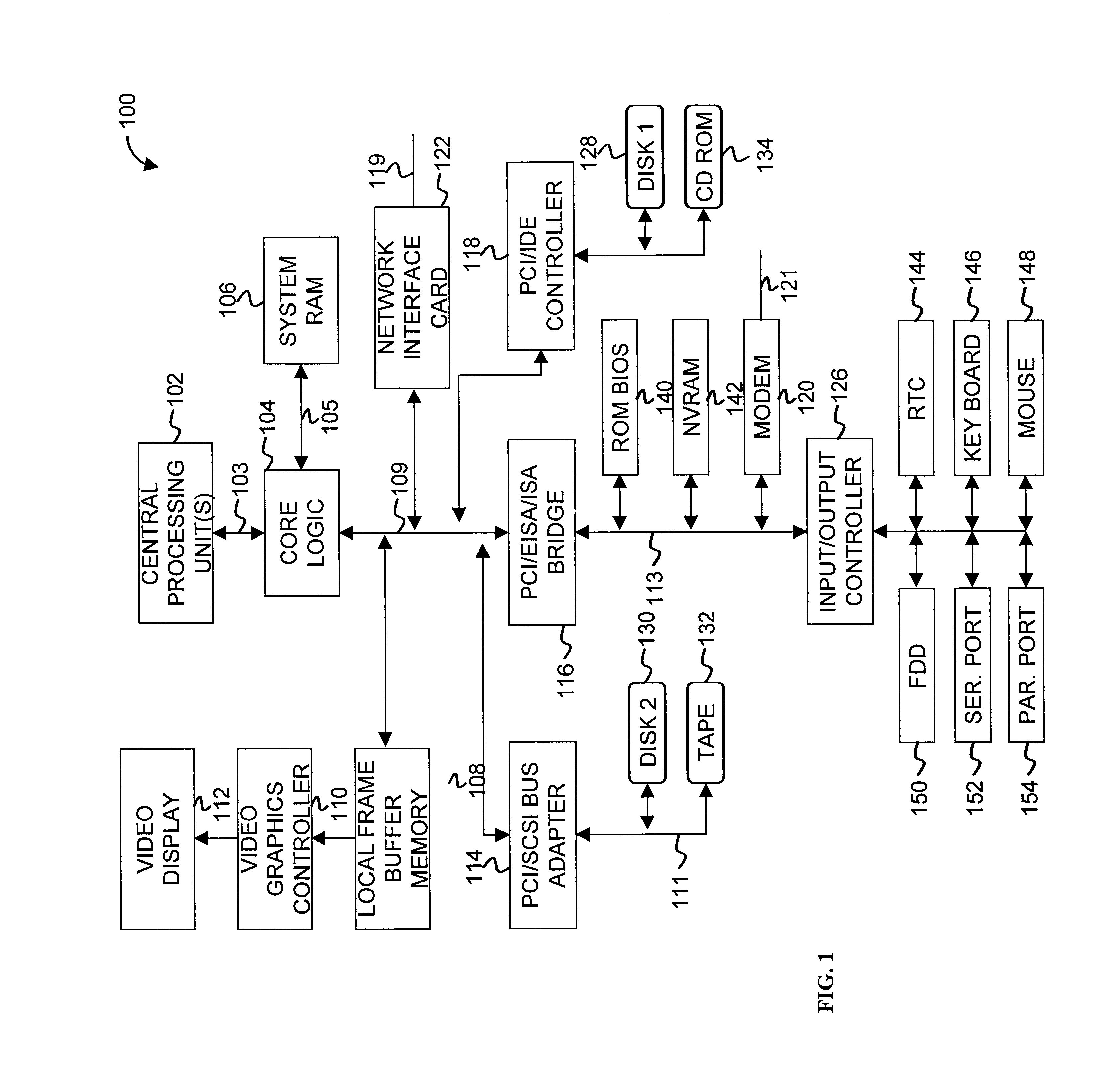

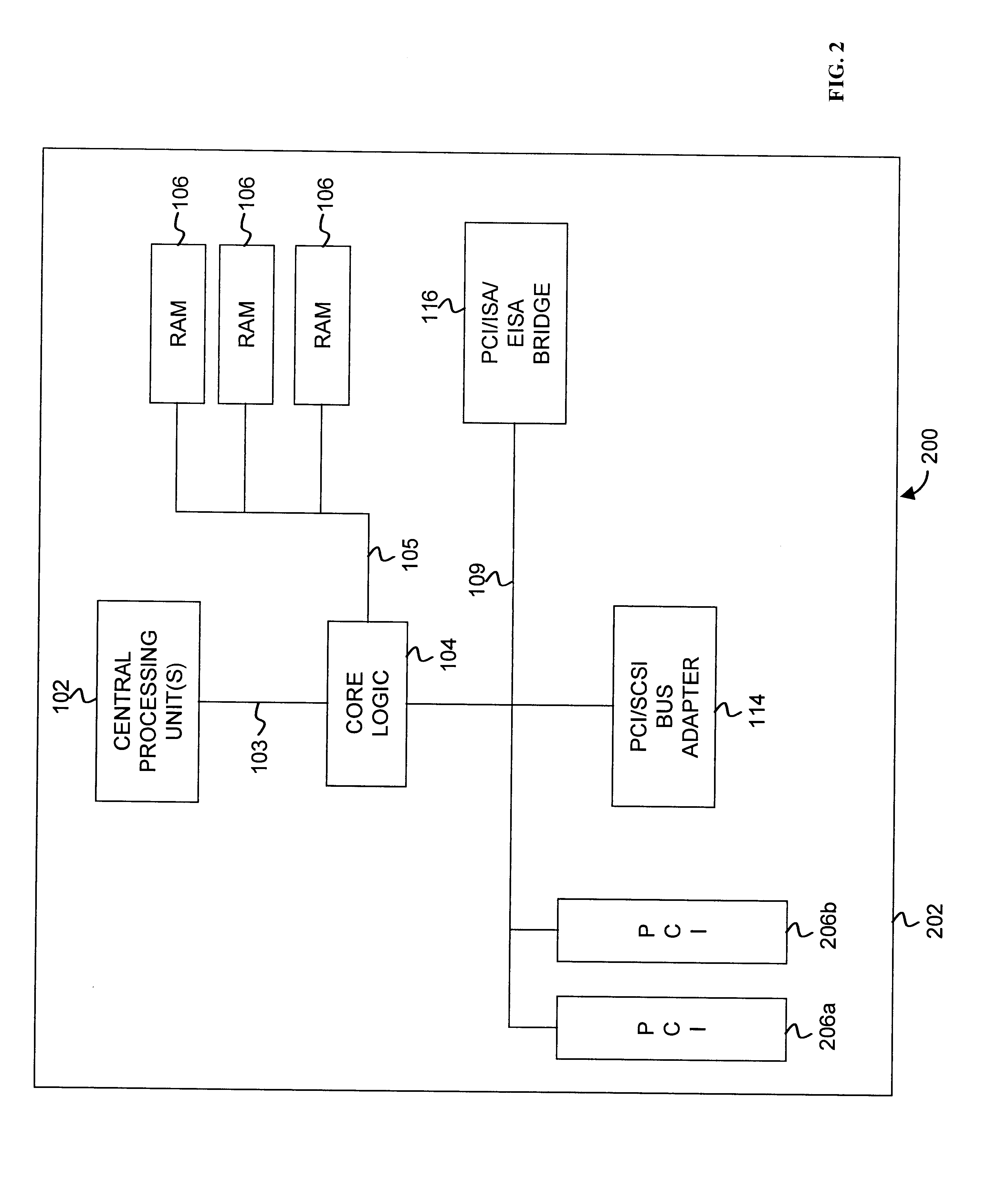

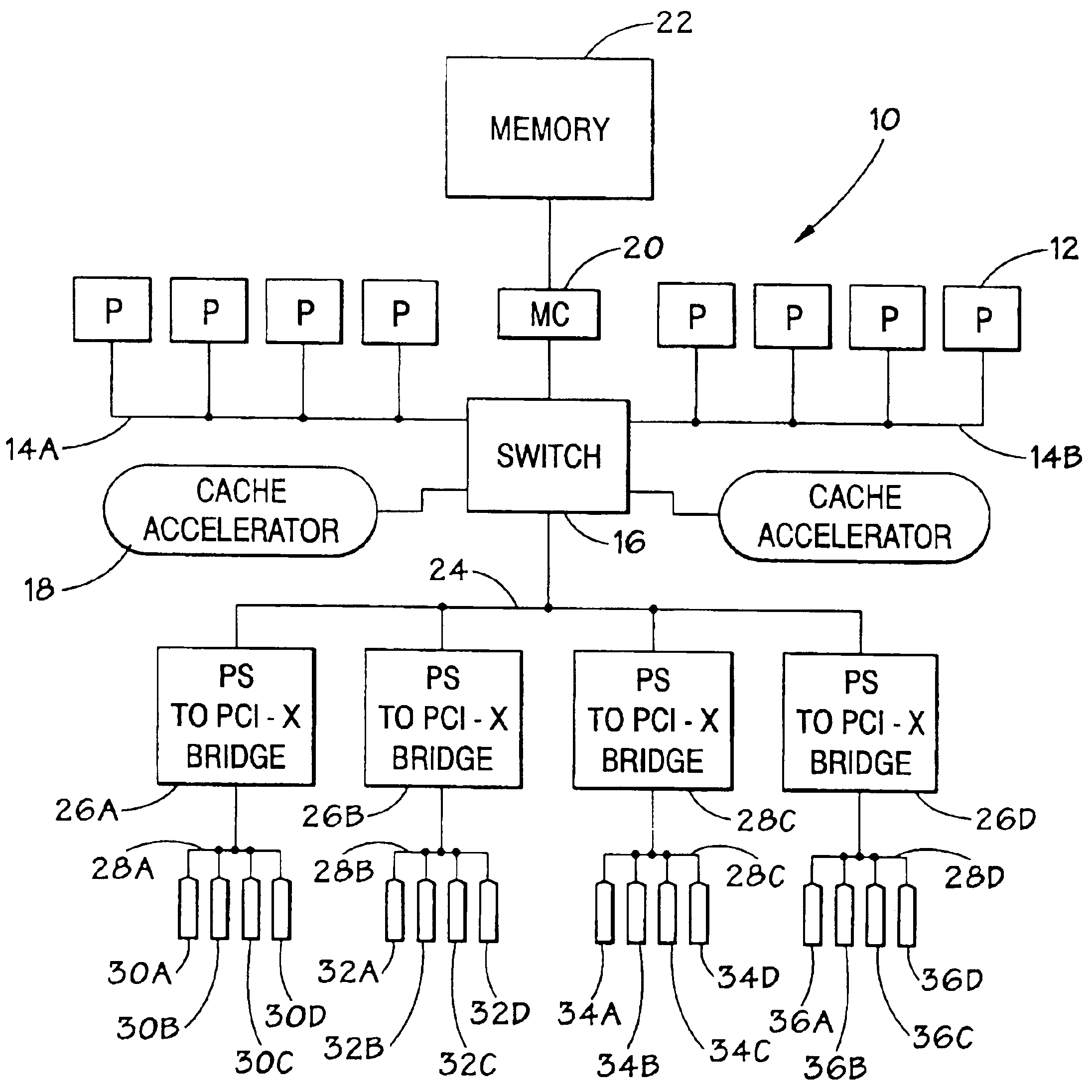

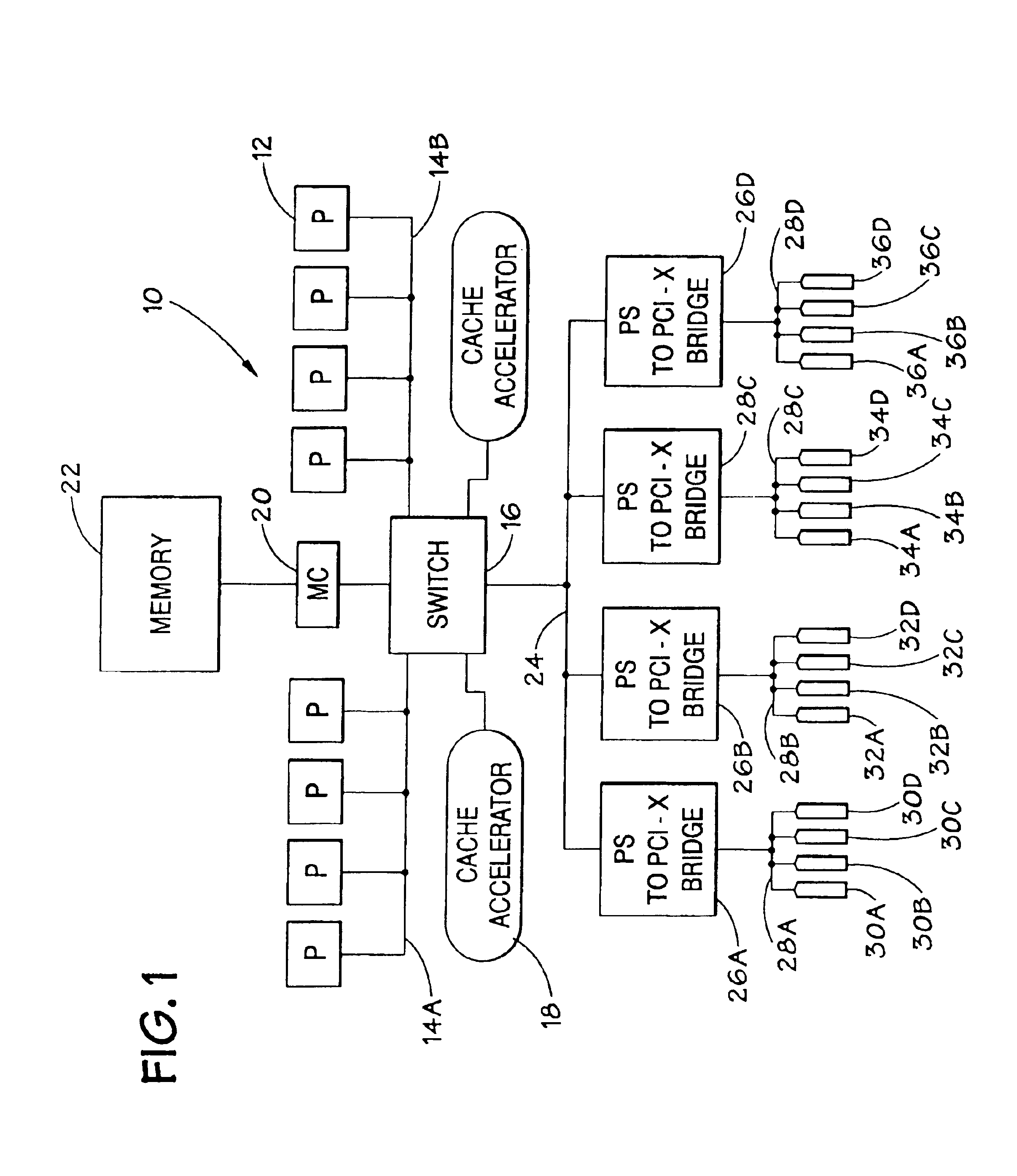

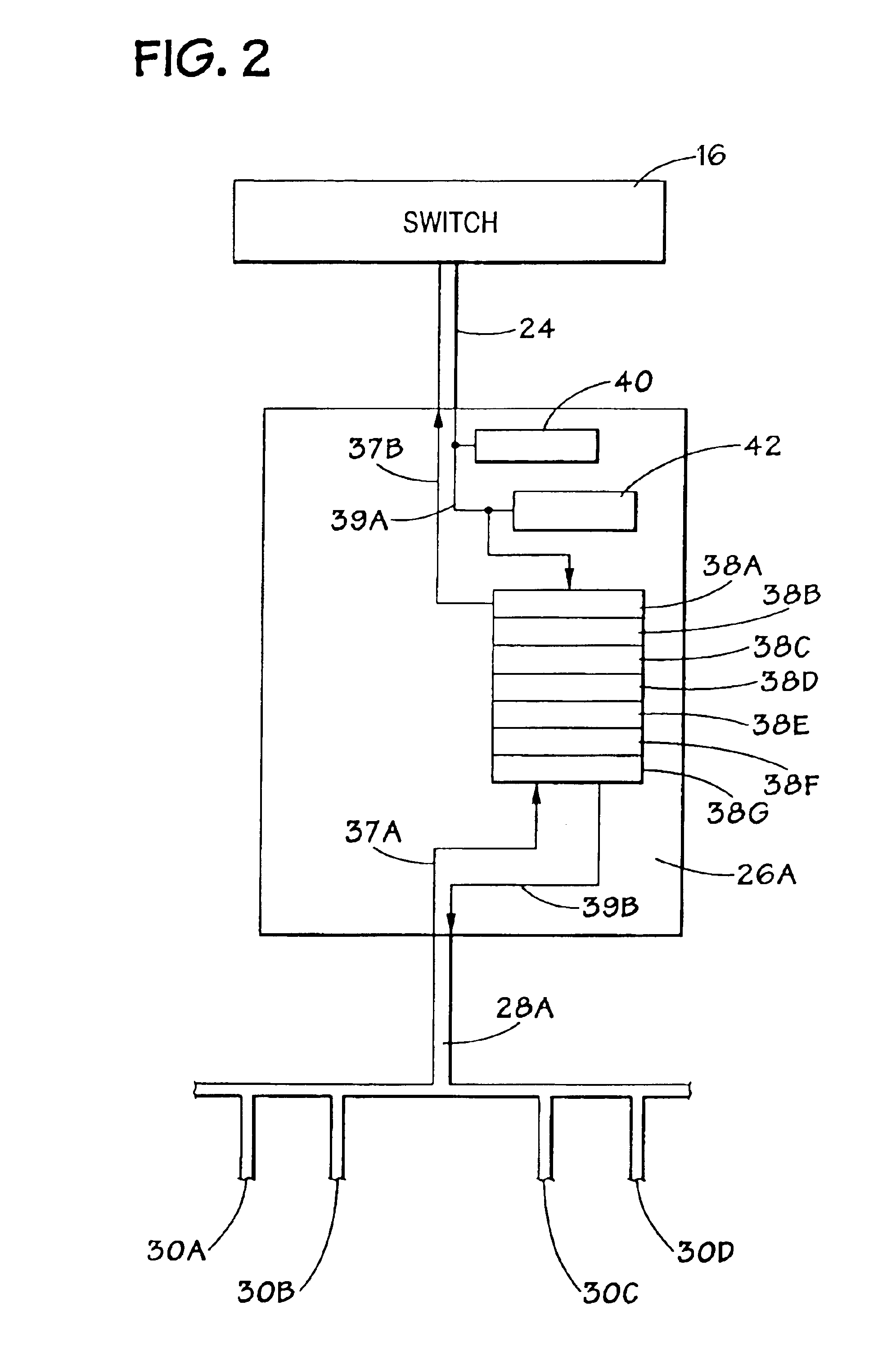

In a computer system, a host-to-I/O bridge (e.g., a host-to-PCI-X bridge) includes core logic that interfaces with at least two host buses coupling one or more central processing units to the bridge, and with at least two PCI-X buses coupling at least two PCI-X devices to the bridge. The core logic includes at least two PCI-X configuration registers whose bits can be set to partition the core logic for resource allocation. Embodiments of the invention allow the system to program logical bus zero to behave as a single logical bus zero or to be partitioned into two or more separate “bus zero” PCI-X buses, each with its own configuration resources. Partitioning allows performance and functional isolation and transparent I/O sharing.

Owner:HEWLETT PACKARD DEV CO LP

Association of host translations that are associated to an access control level on a PCI bridge that supports virtualization

Inactive | US20060195675A1 | Memory addressing/allocation/relocation | Input/output processes for data processing | Trusted components | PCI Express

A method, computer program product, and distributed data processing system allow a system image within a multiple-system-image virtual server to directly expose a portion, or all, of its associated system memory to a shared PCI adapter without having to go through a trusted component, such as a hypervisor. Specifically, the invention is directed to a mechanism for sharing conventional PCI I/O adapters, PCI-X I/O adapters, PCI-Express I/O adapters, and, in general, any I/O adapter that uses a memory-mapped I/O interface for communications.

Owner:IBM CORP

Association of host translations that are associated to an access control level on a PCI bridge that supports virtualization

Inactive | US7398337B2 | Memory addressing/allocation/relocation | Input/output processes for data processing | Trusted components | PCI Express

A method, computer program product, and distributed data processing system allow a system image within a multiple-system-image virtual server to directly expose a portion, or all, of its associated system memory to a shared PCI adapter without having to go through a trusted component, such as a hypervisor. Specifically, the invention is directed to a mechanism for sharing conventional PCI I/O adapters, PCI-X I/O adapters, PCI-Express I/O adapters, and, in general, any I/O adapter that uses a memory-mapped I/O interface for communications.

Owner:INT BUSINESS MASCH CORP

System and method for virtual resource initialization on a physical adapter that supports virtual resources

Active | US20060195620A1 | Memory addressing/allocation/relocation | Computer security arrangements | Resource virtualization | Term memory

A method, computer program product, and distributed data processing system are provided for directly sharing a network stack offload I/O adapter that directly supports resource virtualization and does not require an LPAR manager or other intermediary to be invoked on every I/O transaction. The invention also provides for directly creating and initializing one or more virtual resources that reside within a physical adapter, such as a PCI, PCI-X, or PCI-E adapter, and that are associated with a virtual host. Specifically, the invention is directed to a mechanism for sharing conventional PCI (Peripheral Component Interconnect) I/O adapters, PCI-X I/O adapters, PCI-Express I/O adapters, and, in general, any I/O adapter that uses a memory-mapped I/O interface for host-to-adapter communications.

Owner:IBM CORP

Method, system, and computer program product for virtual adapter destruction on a physical adapter that supports virtual adapters

Inactive | US20060224790A1 | Memory addressing/allocation/relocation | Multiprogramming arrangements | Data processing system | PCI-X

A method, computer program product, and distributed data processing system are provided for directly destroying the resources associated with one or more virtual adapters that reside within a physical adapter, such as a PCI, PCI-X, or PCI-E adapter.

Owner:IBM CORP

System and method for destroying virtual resources in a logically partitioned data processing system

Inactive | US20060195619A1 | Memory addressing/allocation/relocation | Multiprogramming arrangements | Term memory | PCI Express

A method, computer program product, and distributed data processing system are provided for directly destroying one or more virtual resources that reside within a physical adapter and that are associated with a virtual host. Specifically, the invention is directed to a mechanism for sharing conventional Peripheral Component Interconnect (PCI) I/O adapters, PCI-X I/O adapters, PCI-Express I/O adapters, and, in general, any I/O adapter that uses a memory-mapped I/O interface for host-to-adapter communications.

Owner:IBM CORP

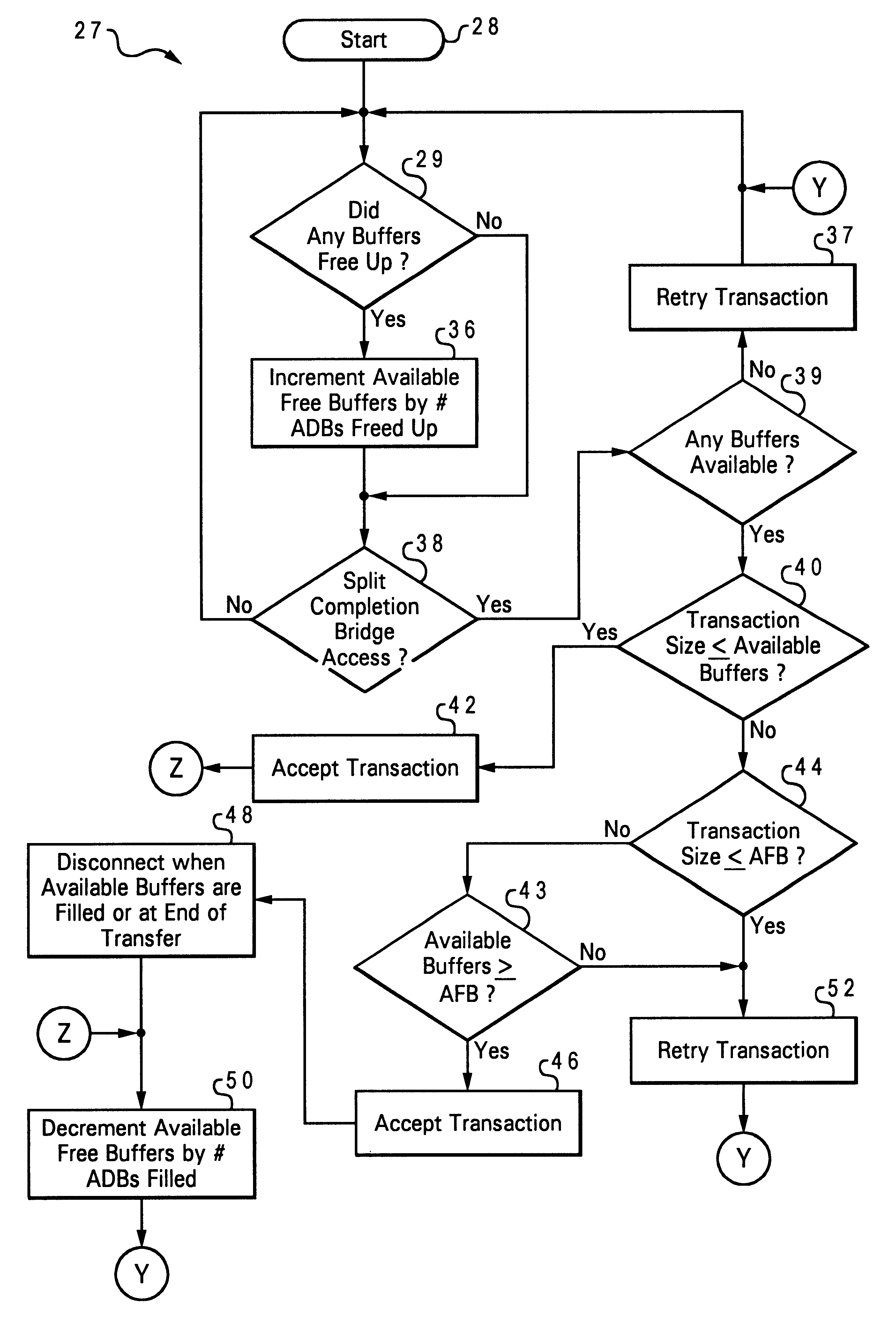

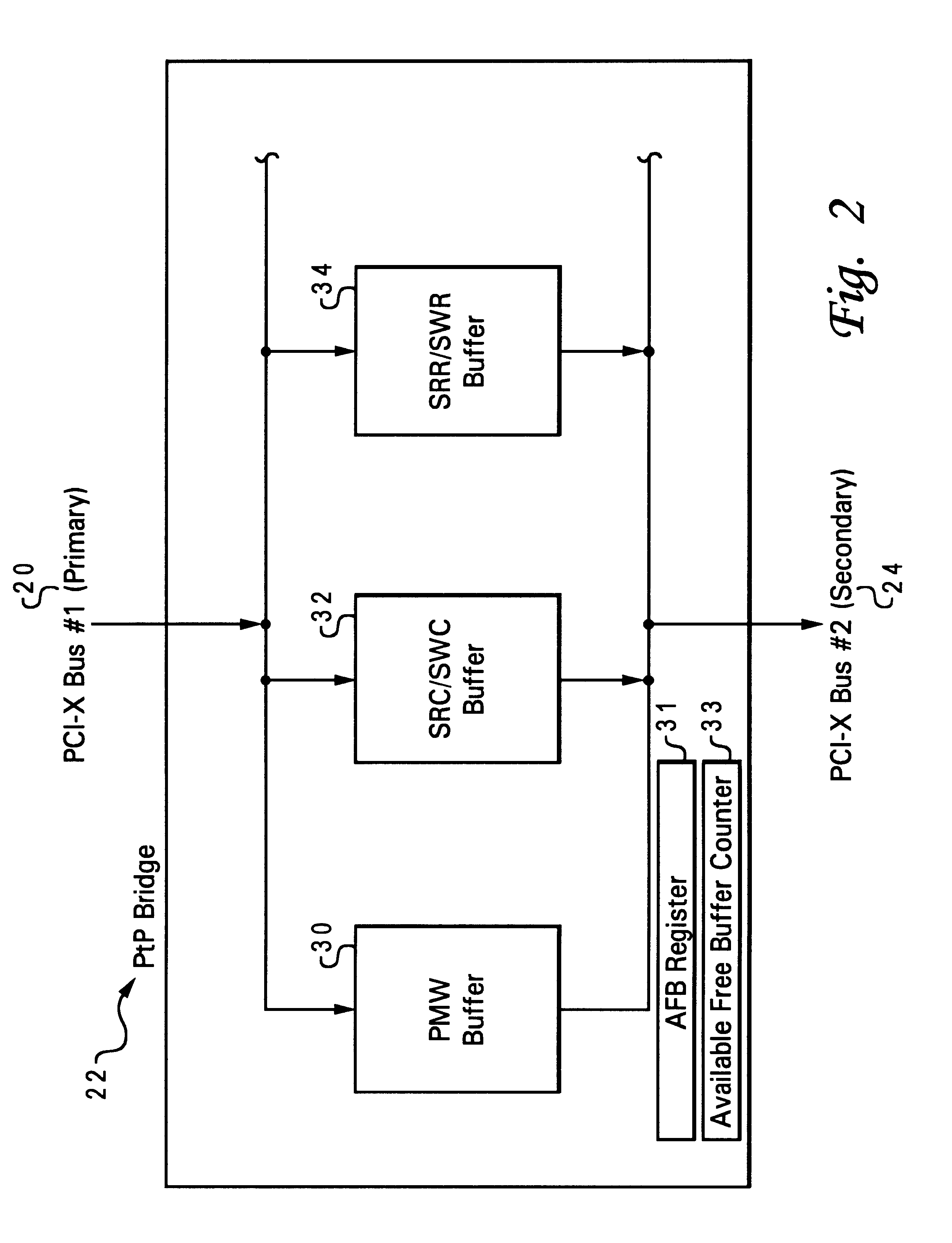

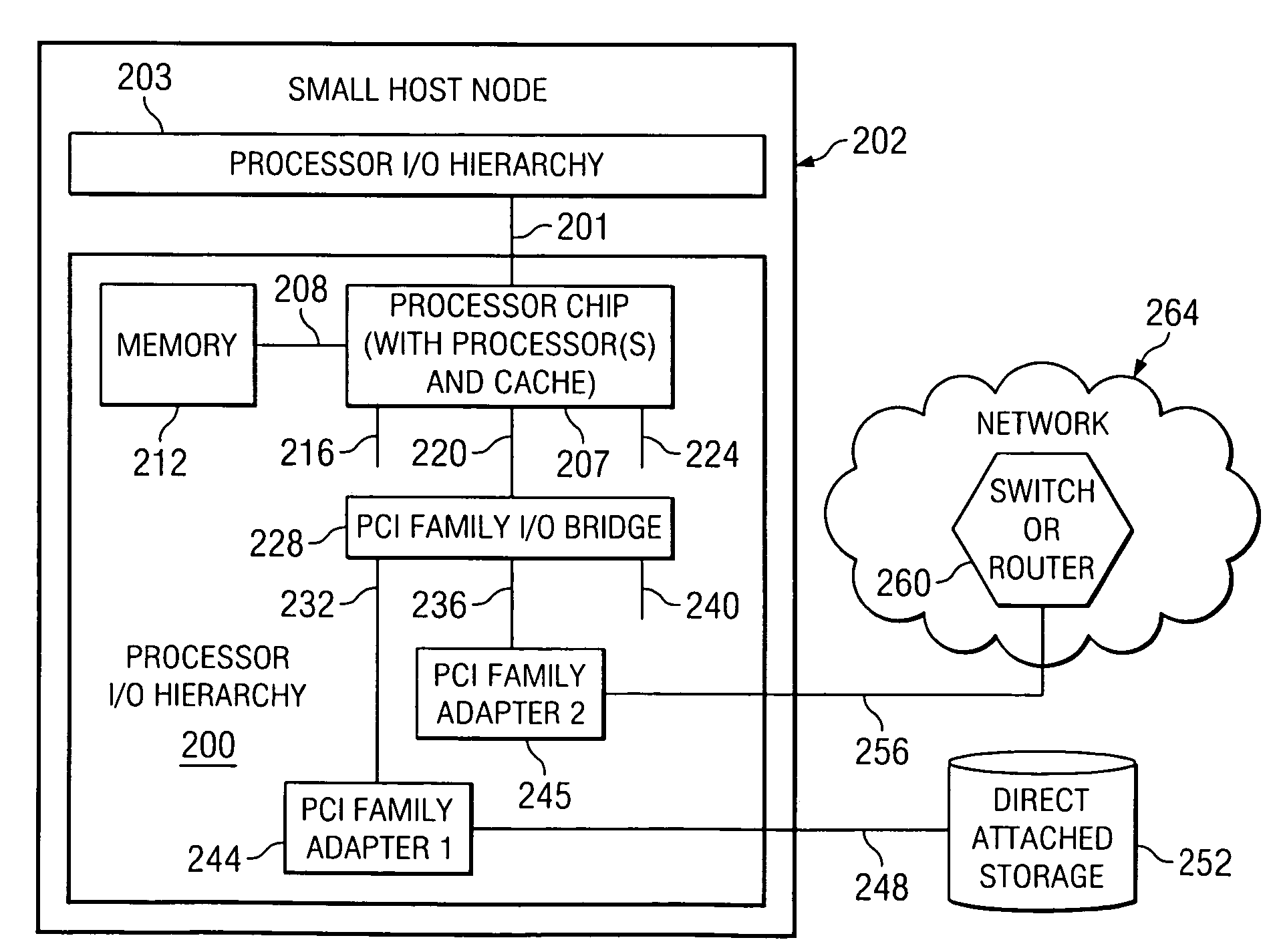

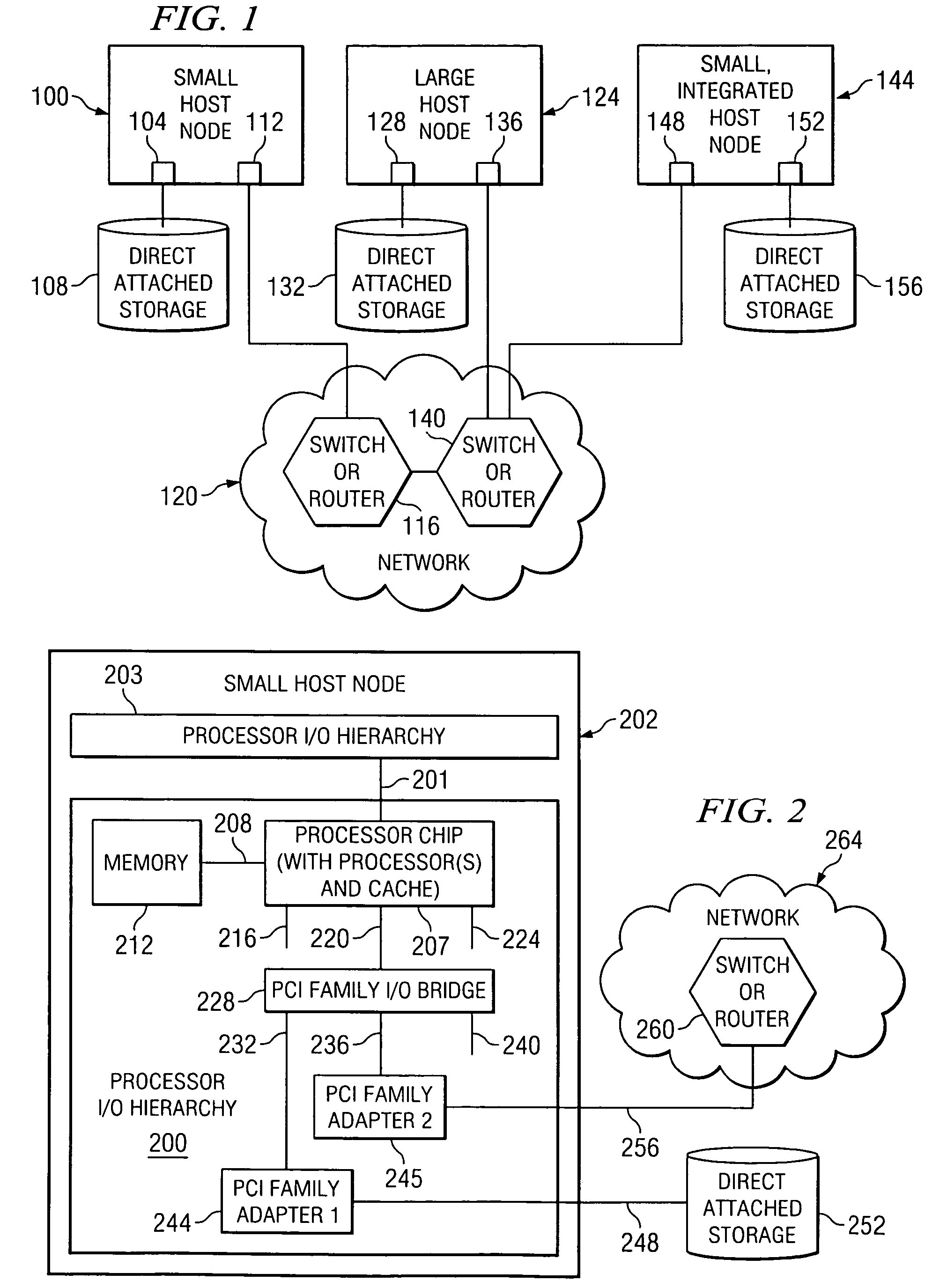

Buffer management for improved PCI-X or PCI bridge performance

Inactive | US6425024B1 | Memory systems | Input/output processes for data processing | Large size | Computer science

A system and method for managing transactions across a PCI-X or PCI bridge, and for waiting for, increasing, and/or optimizing the buffers available for a given transaction size or sizes. Transactions are processed across the bridge, which has buffers with actual available buffer space for receiving and processing them. The size of each transaction is determined. The system and method set an available free block, a set amount of buffer space that must be freed before certain larger transactions are processed, and wait for the actual available buffer space to reach that amount; the larger transactions are then processed. Processing a transaction involves: accepting it if its size is not greater than the actual available buffer space; retrying it when its size is less than the available free block but greater than the actual available buffer space; retrying it when its size is greater than both the available free block and the available buffer space, until the available buffer space is greater than or equal to the available free block; and then accepting it and disconnecting once the actual available buffers are filled or the transaction ends.

Owner:IBM CORP
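
The accept/retry/disconnect cases in the abstract above can be read as a small decision function. This is a sketch of one reading only: the function name, the string results, and the choice to treat a transaction exactly equal to the free block like a smaller one are assumptions, and sizes are in arbitrary byte units.

```python
def decide(size: int, available: int, free_block: int) -> str:
    """Classify a transaction arriving at the bridge.

    size       -- transaction size
    available  -- actual available buffer space right now
    free_block -- threshold of free space required before large
                  transactions are admitted
    """
    if size <= available:
        # Fits in the space that is free right now.
        return "accept"
    if size <= free_block:
        # Would fit once enough space frees up; ask the initiator to retry.
        return "retry"
    # Larger than the free block: admit only once the threshold is reached,
    # then disconnect when the buffers fill or the transaction ends.
    if available >= free_block:
        return "accept_then_disconnect"
    return "retry"
```

For example, with a 256-byte free block, a 512-byte transaction is retried while only 128 bytes are free and is accepted (then disconnected) once 256 bytes are free.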

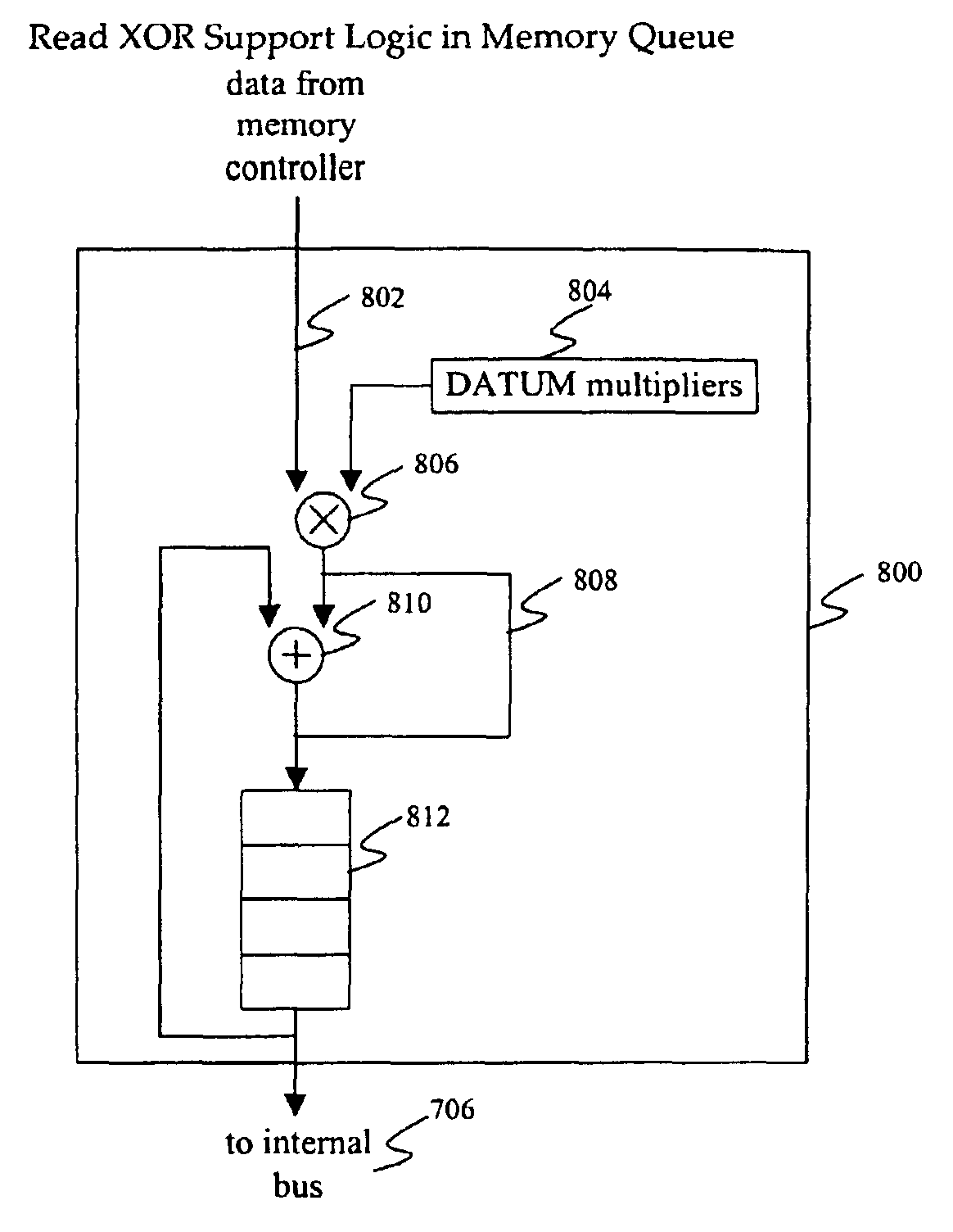

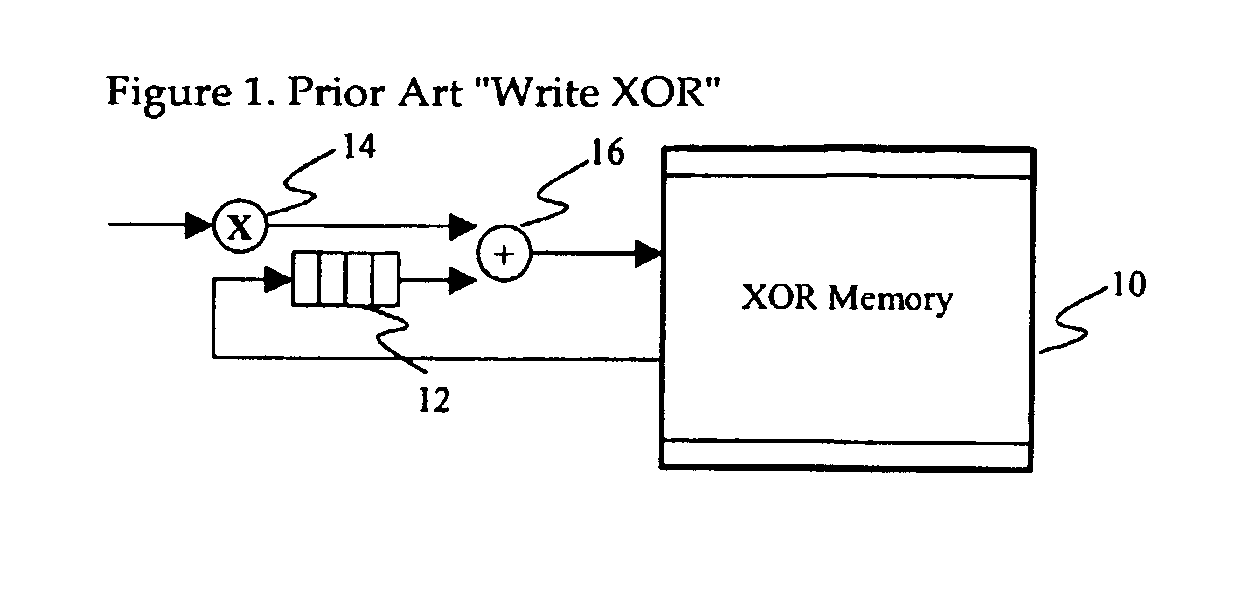

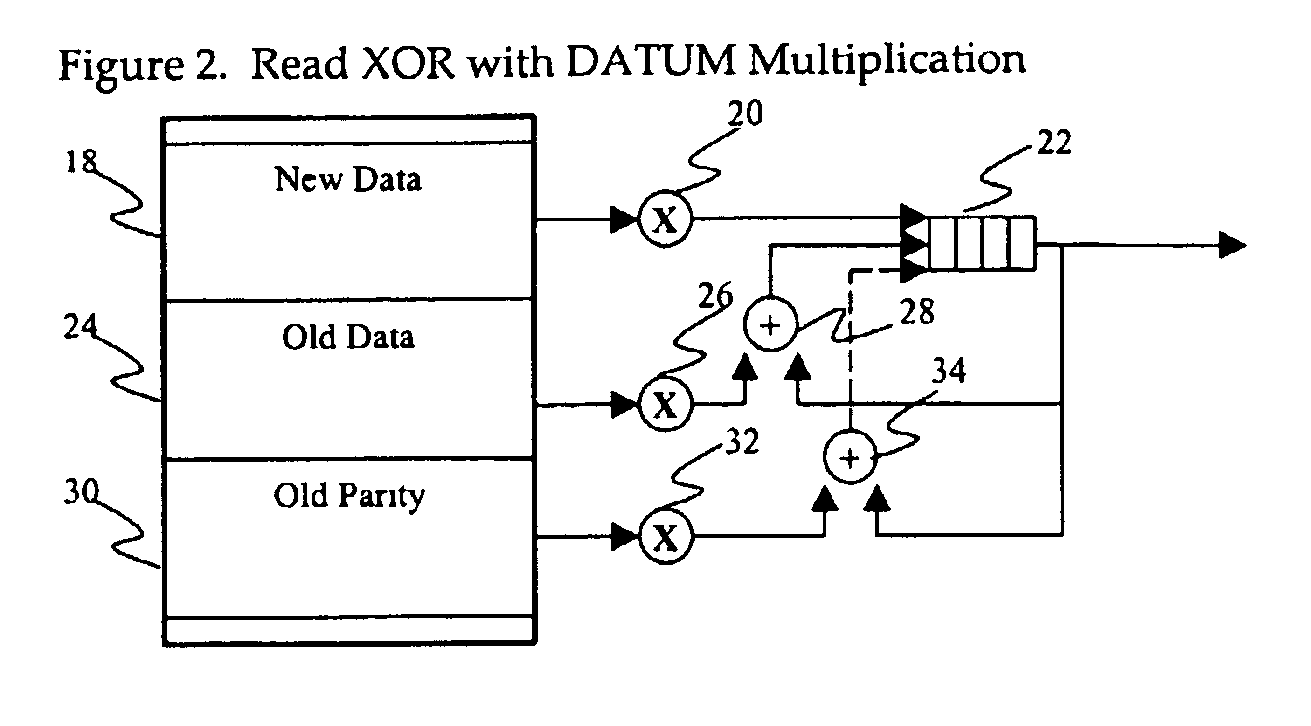

Memory controller interface with XOR operations on memory read to accelerate RAID operations

Active | US6918007B2 | Improve performance | Reduce in quantity | Redundant data error correction | Memory systems | RAID | Memory controller

A single read request to a memory controller generates multiple read actions along with XOR/DATUM manipulation of that read data. Fewer memory transfers are required to accomplish a RAID5/DATUM parity update. This allows for higher system performance when memory bandwidth is the limiting system component. In implementation, a read buffer with XOR capability is tightly coupled to a memory controller. New parity does not need to be stored in the controller's memory. Instead, a memory read initiates multiple reads from memory based on an address decode. The data from the reads are multiplied and XOR'd before being returned to the requestor. In the case of a PCI-X requestor, this occurs as a split-completion.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
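
The RAID5 case of the read-with-XOR idea above can be modeled in software: one logical read fans out into several block reads whose contents are XORed before being returned. This is only a sketch under stated assumptions. The `memory` dictionary and its addresses are hypothetical, and the multiplication step used for DATUM parity is not modeled.

```python
from functools import reduce

def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    blocks = list(blocks)
    if not blocks or any(len(b) != len(blocks[0]) for b in blocks):
        raise ValueError("need one or more blocks of equal length")
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))

def xor_read(memory, addresses):
    """Model of a single read that returns the XOR of several memory blocks."""
    return xor_blocks(memory[a] for a in addresses)

# Hypothetical stripe with two data blocks and their parity.
old_data = b"\x0f\xf0"
other_data = b"\x33\x55"
old_parity = xor_blocks([old_data, other_data])
new_data = b"\xff\x00"

# RAID5 parity update: new parity = old data XOR new data XOR old parity.
memory = {0x000: old_data, 0x100: new_data, 0x200: old_parity}
new_parity = xor_read(memory, [0x000, 0x100, 0x200])
```

The requester receives `new_parity` from one read, without the intermediate result being written back to controller memory first.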

System and method for managing metrics table per virtual port in a logically partitioned data processing system

Inactive | US7308551B2 | Fine granularity | Digital computer details | Multiprogramming arrangements | Data processing system | Granularity

A method, computer program product, and distributed data processing system are provided that allow a single physical I/O adapter, such as a PCI, PCI-X, or PCI-E adapter, to track performance and reliability statistics per virtual upstream and downstream port, so that system and network management can be performed at a finer granularity than is possible with conventional physical port statistics. In particular, a mechanism is provided for managing per-virtual-port performance metrics in a logically partitioned data processing system, including allocating a subset of the resources of a physical adapter to one virtual adapter of a plurality of virtual adapters. The subset of resources includes a virtual port having an identifier assigned to it. The identifier of the virtual port is associated with an address of a physical port. A metric table is associated with the virtual port and includes metrics of operations that target the virtual port.

Owner:IBM CORP

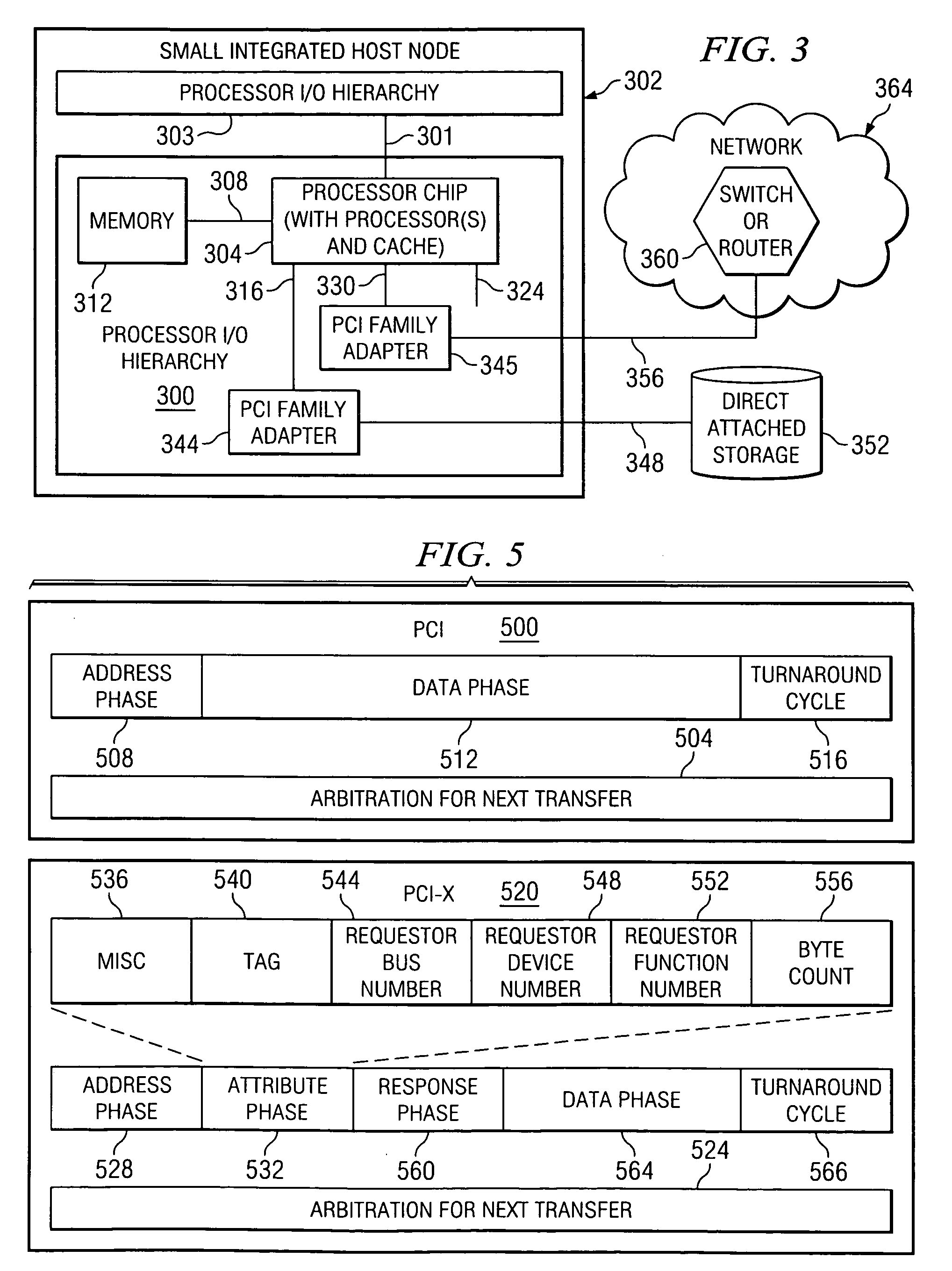

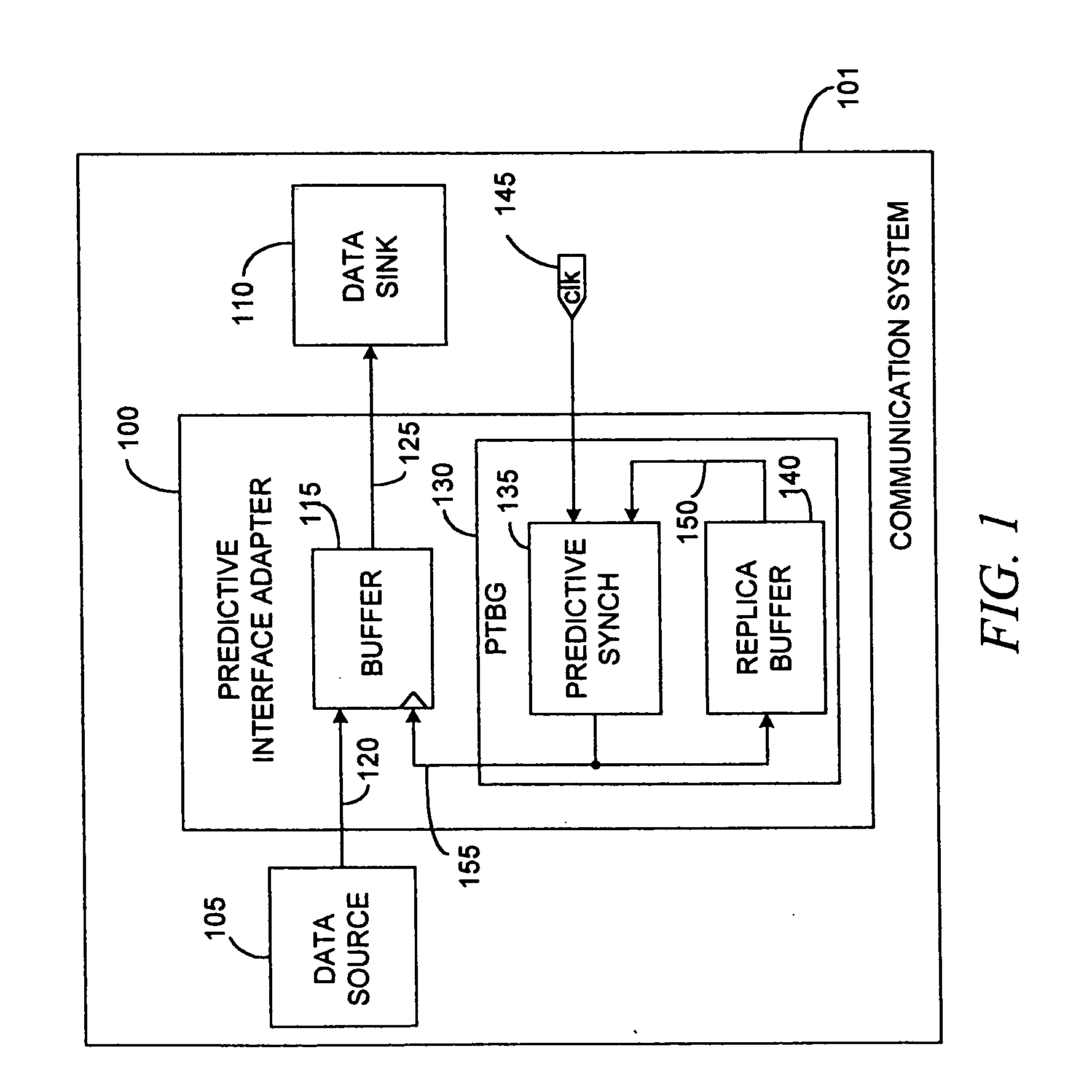

Integrated gigabit ethernet PCI-X controller

Inactive | US20060143344A1 | Reduce data latency | Energy efficient ICT | Volume/mass flow measurement | Transceiver | Bus interface

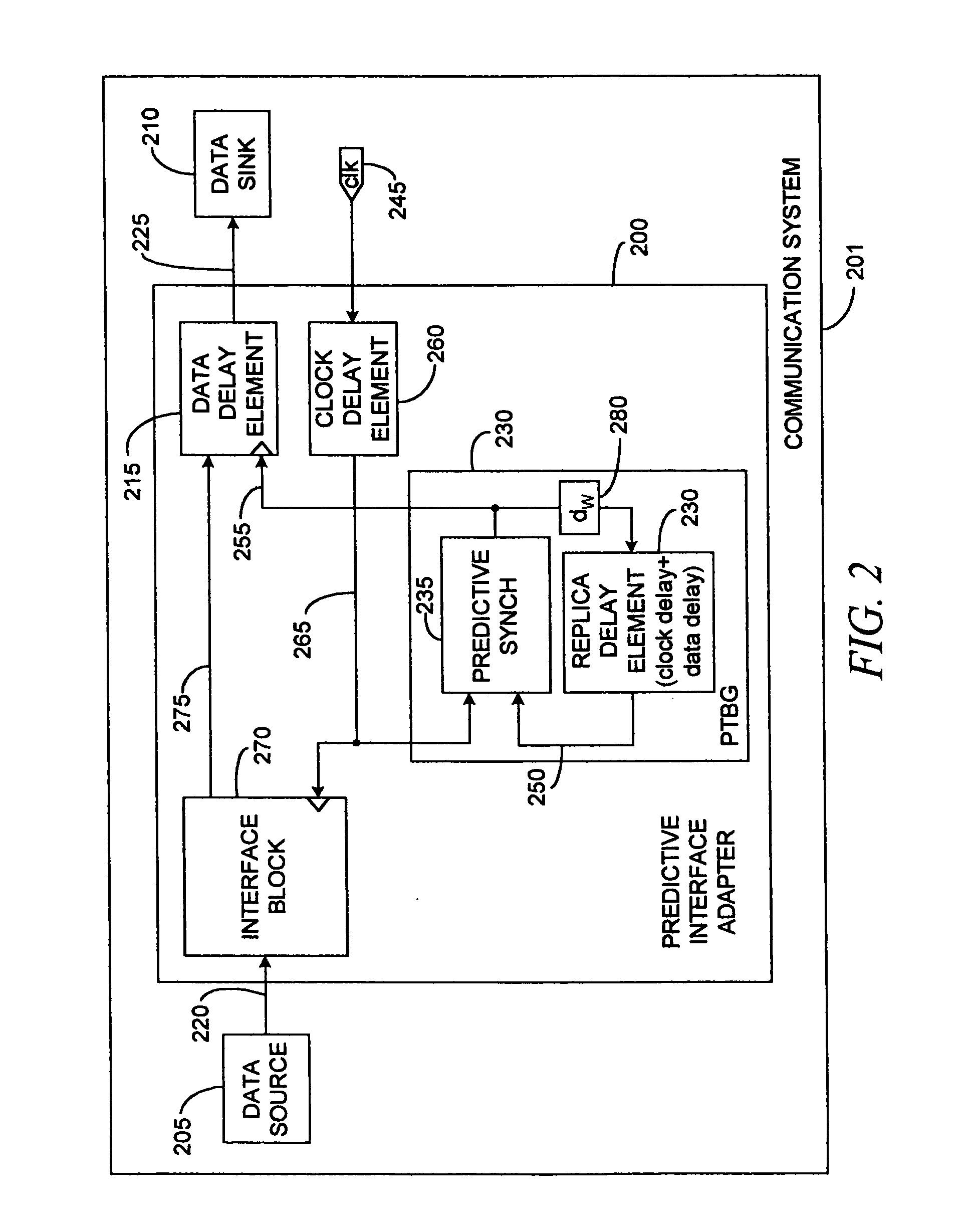

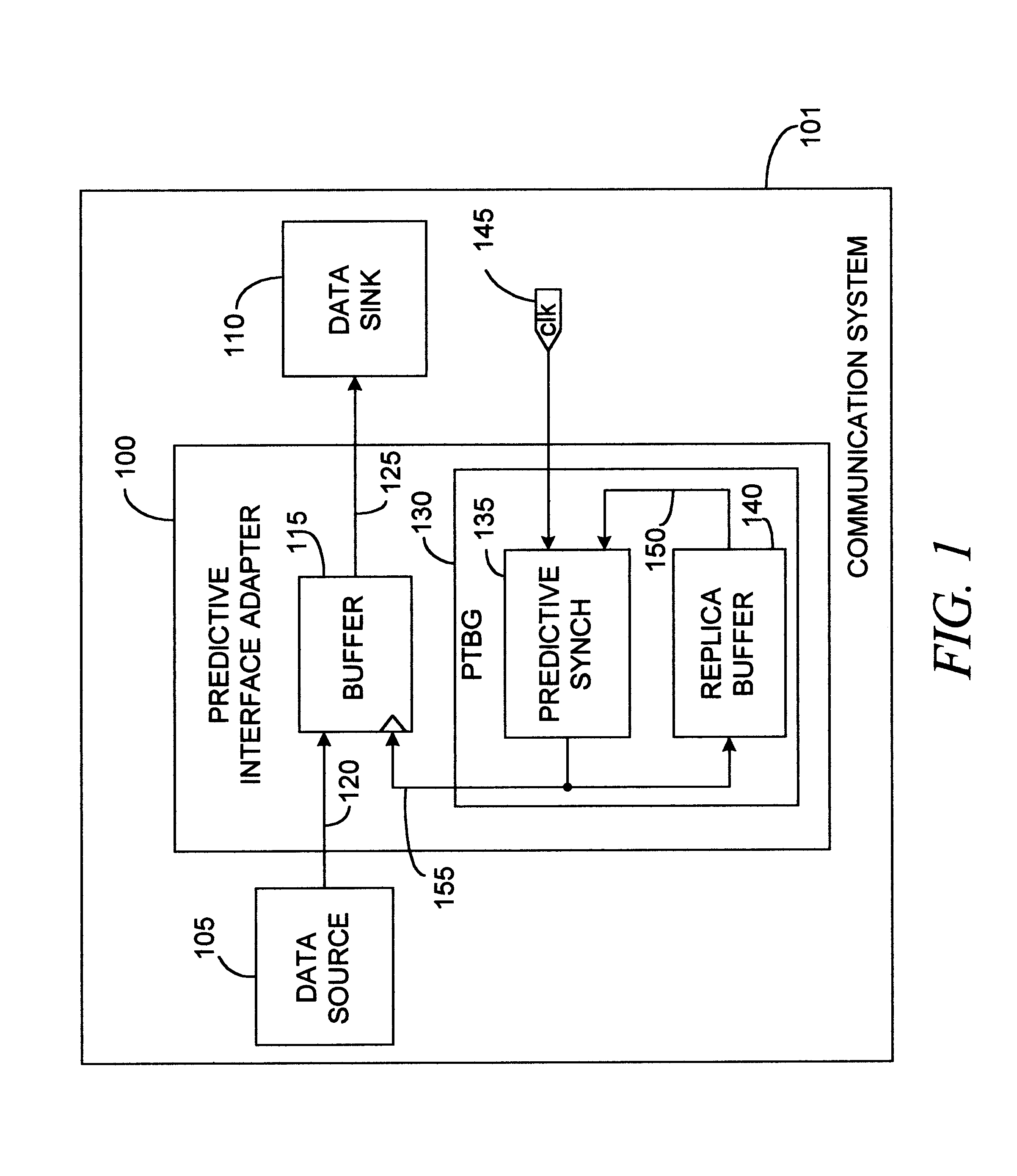

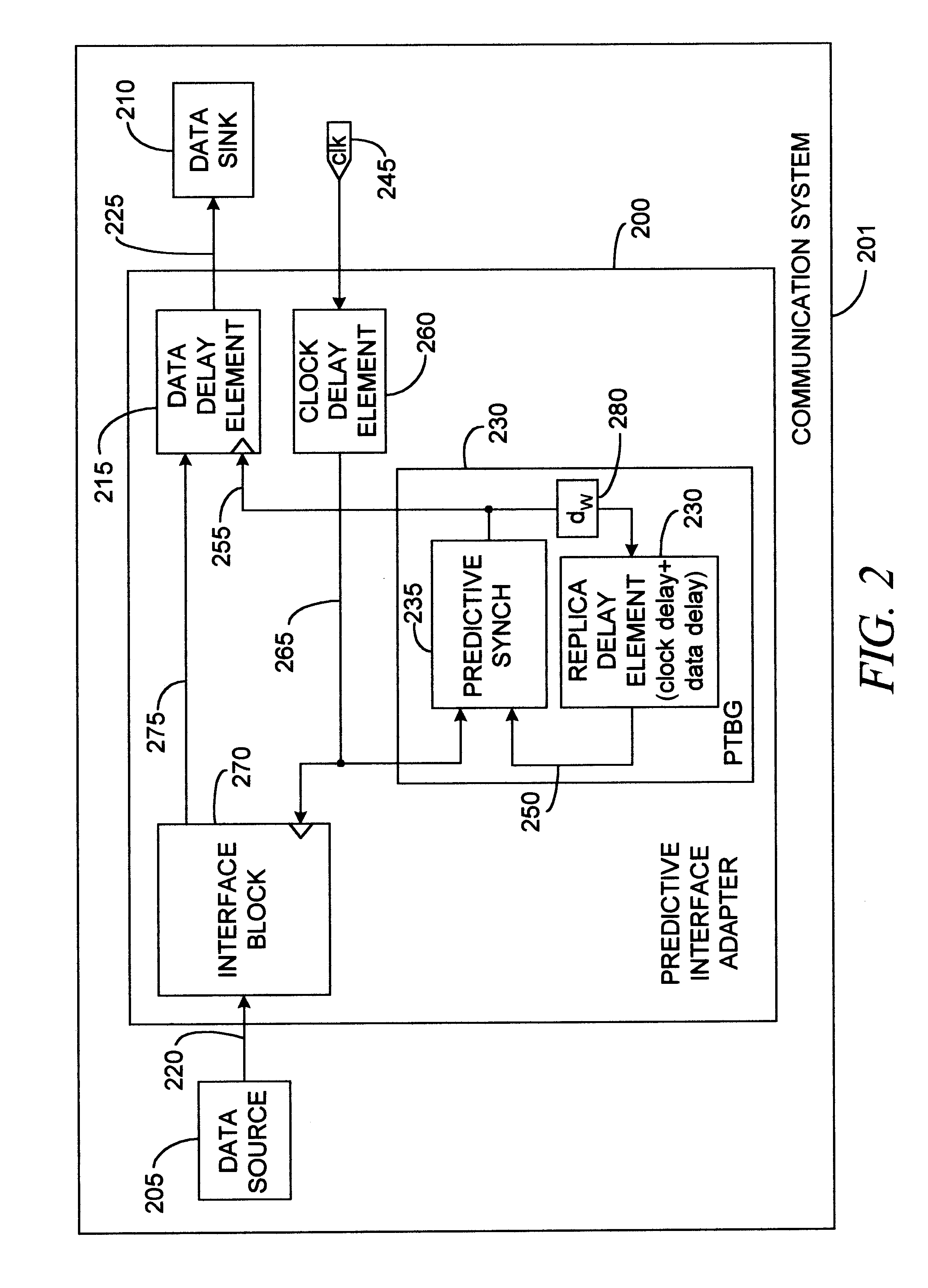

A network controller has a multiprotocol bus interface adapter coupled between a communication network and a computer bus, the adapter including a predictive time base generator, and a management bus controller adapted to monitor and manage preselected components coupled with one of the communication network and the computer bus. The management bus controller is adapted to employ an Alert Standard Format (ASF) specification protocol, a System Management Bus (SMBus) specification protocol, an Intelligent Platform Management Interface (IPMI) specification protocol, a Simple Network Management Protocol (SNMP), or a combination thereof. The network controller also includes a 10/100/1000BASE-T IEEE Std. 802.3-compliant transceiver and media access controller (MAC) coupled with the communication network; a buffer memory coupled with the MAC, wherein the buffer memory includes one of a packet buffer memory, a frame buffer memory, a queue memory, and a combination thereof; and a transmit CPU and a receive CPU coupled with the multiprotocol bus interface adapter and the management bus controller. The network controller can be a single-chip VLSI device in a 0.18-micron CMOS VLSI implementation.

Owner:AVAGO TECH INT SALES PTE LTD

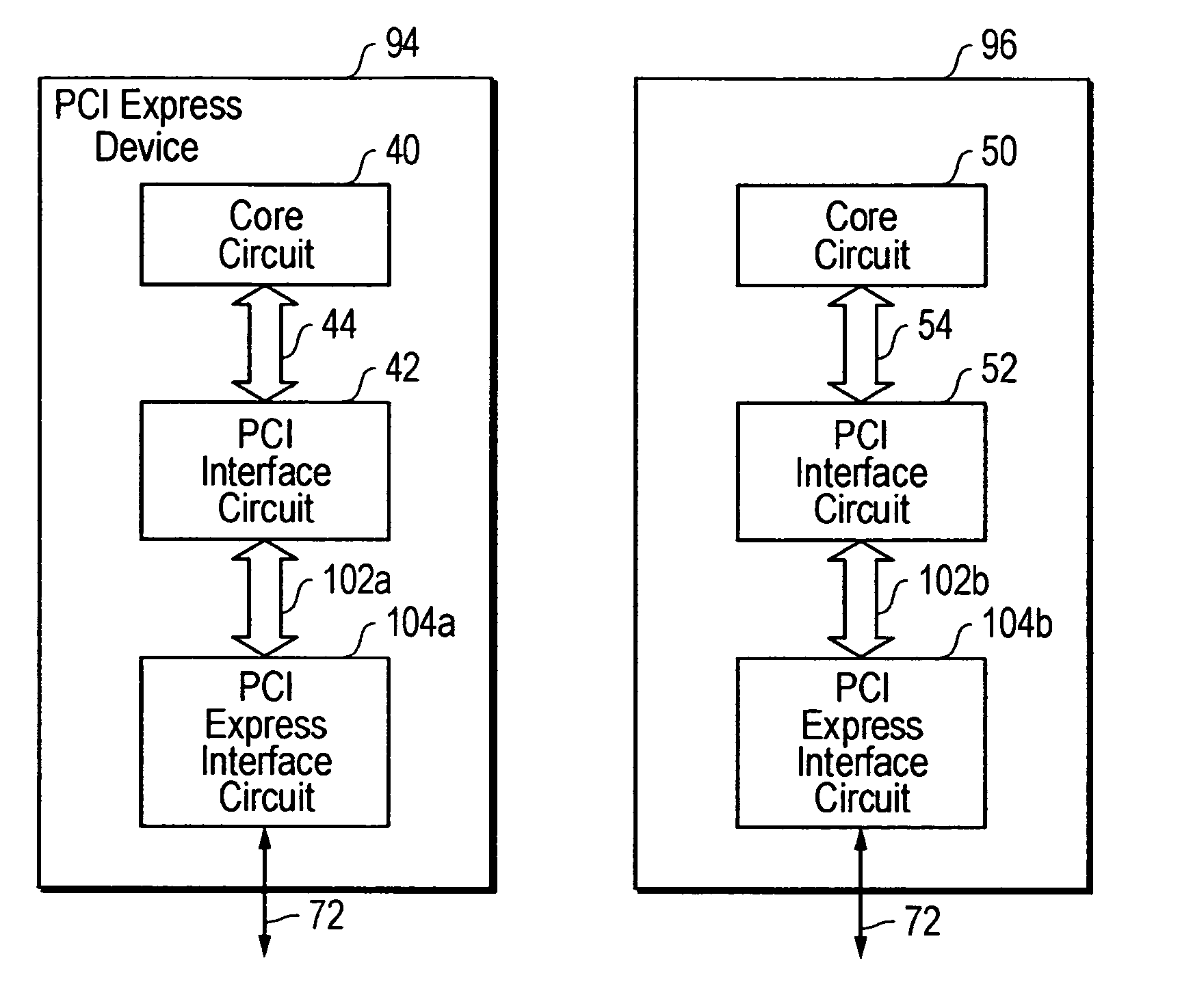

PCI-express to PCI/PCI X translator

An apparatus for converting a PCI/PCI X device into a PCI-Express device. The apparatus may include a first circuit configured to receive first data, wherein the first circuit is configured to translate the first data into PCI formatted data. The apparatus may also include a PCI data bus and a second circuit coupled to the first circuit via the PCI data bus. The second circuit is configured to receive the PCI formatted data from the first circuit via the PCI data bus. The second circuit is configured to translate the PCI formatted data received from the first circuit into PCI-Express formatted data. However, the PCI data bus transmits data between only the first and second circuits.

Owner:RENESAS ELECTRONICS AMERICA

Native virtualization on a partially trusted adapter using PCI host memory mapped input/output memory address for identification

Inactive | US20060195623A1 | Internal/peripheral component protection | Program control | Memory address | Term memory

A method, computer program product, and distributed data processing system are provided that allow a single physical I/O adapter, such as a PCI, PCI-X, or PCI-E adapter, to associate its resources with a system image and isolate them from other system images, thereby providing I/O virtualization. Specifically, the invention is directed to a mechanism for sharing conventional PCI (Peripheral Component Interconnect) I/O adapters, PCI-X I/O adapters, PCI-Express I/O adapters, and, in general, any I/O adapter that uses a memory-mapped I/O interface for communications.

Owner:IBM CORP

System and method for virtual adapter resource allocation matrix that defines the amount of resources of a physical I/O adapter

Inactive | US7376770B2 | Memory addressing/allocation/relocation | Program control | Data processing system | Resource allocation

A method, computer program product, and distributed data processing system are provided that enable host software or firmware to allocate virtual resources to one or more system images from a single physical I/O adapter, such as a PCI, PCI-X, or PCI-E adapter. Adapter resource groups are assigned to respective system images. An adapter resource group is exclusively available to the system image to which it was assigned. Adapter resource groups may be assigned per a relative resource assignment or an absolute resource assignment. In another embodiment, adapter resource groups are assigned to system images on a first-come, first-served basis.

Owner:IBM CORP
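
The abstract above distinguishes relative from absolute resource assignment without defining them here. One plausible reading, sketched below, is that an absolute assignment names a fixed count of adapter resources per system image, while a relative assignment names a share of the total. Both functions, their names, and the rounding rule are assumptions for illustration.

```python
def allocate_absolute(total: int, requests: dict) -> dict:
    """Give each system image exactly the count it asks for."""
    if sum(requests.values()) > total:
        raise ValueError("requests exceed adapter resources")
    return dict(requests)

def allocate_relative(total: int, shares: dict) -> dict:
    """Split the adapter's resources in proportion to each image's share.

    Integer division rounds down, so a few resources may stay unassigned.
    """
    weight = sum(shares.values())
    if weight <= 0:
        raise ValueError("shares must sum to a positive value")
    return {image: total * share // weight for image, share in shares.items()}
```

For an adapter with 64 queue pairs, `allocate_relative(64, {"A": 3, "B": 1})` assigns 48 to image A and 16 to image B, and each group is then used only by its own image.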

Method for virtual resource initialization on a physical adapter that supports virtual resources

Active | US7546386B2 | Memory addressing/allocation/relocation | Computer security arrangements | Data processing system | Resource virtualization

A method is provided for directly sharing a network stack offload I/O adapter that directly supports resource virtualization and does not require an LPAR manager or other intermediary to be invoked on every I/O transaction. The invention also provides a method, computer program product, and distributed data processing system for directly creating and initializing one or more virtual resources that reside within a physical adapter, such as a PCI, PCI-X, or PCI-E adapter, and that are associated with a virtual host. Specifically, the invention is directed to a mechanism for sharing conventional PCI (Peripheral Component Interconnect) I/O adapters, PCI-X I/O adapters, PCI-Express I/O adapters, and, in general, any I/O adapter that uses a memory-mapped I/O interface for host-to-adapter communications.

Owner:IBM CORP

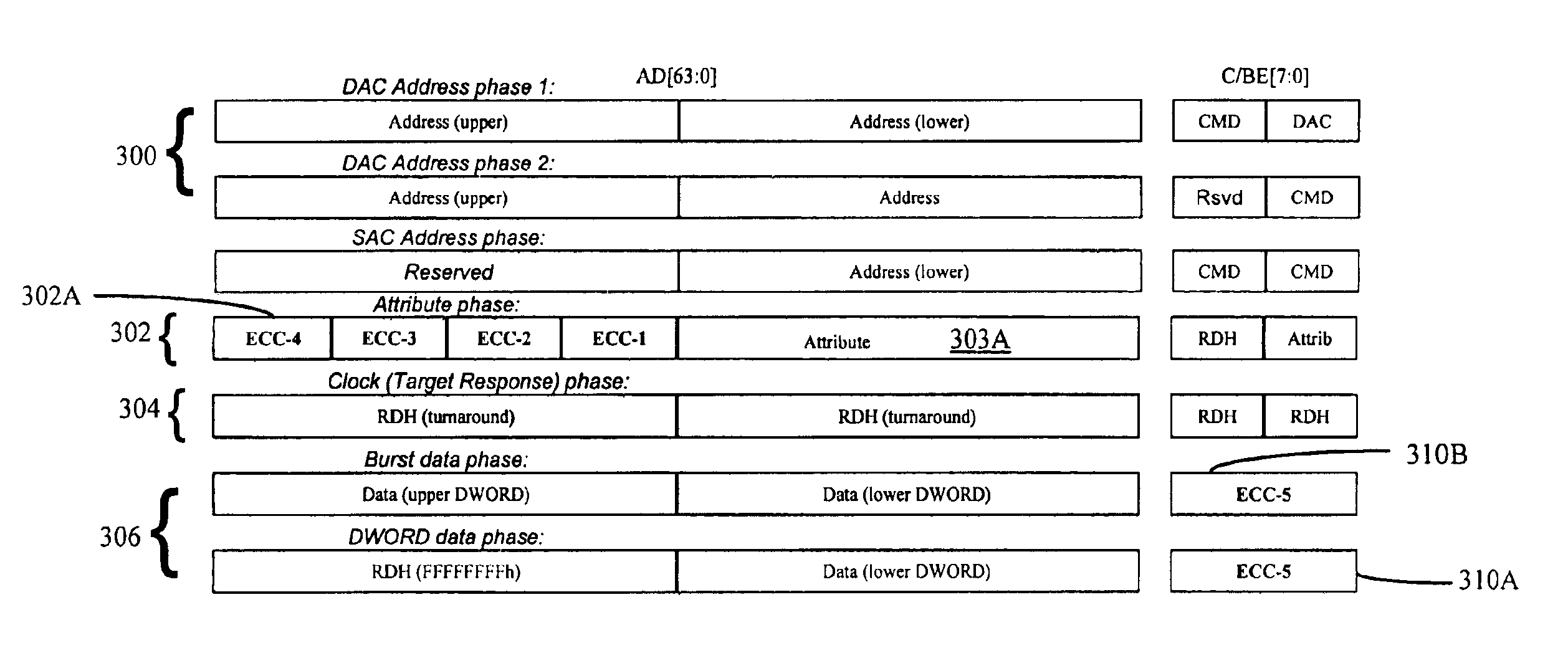

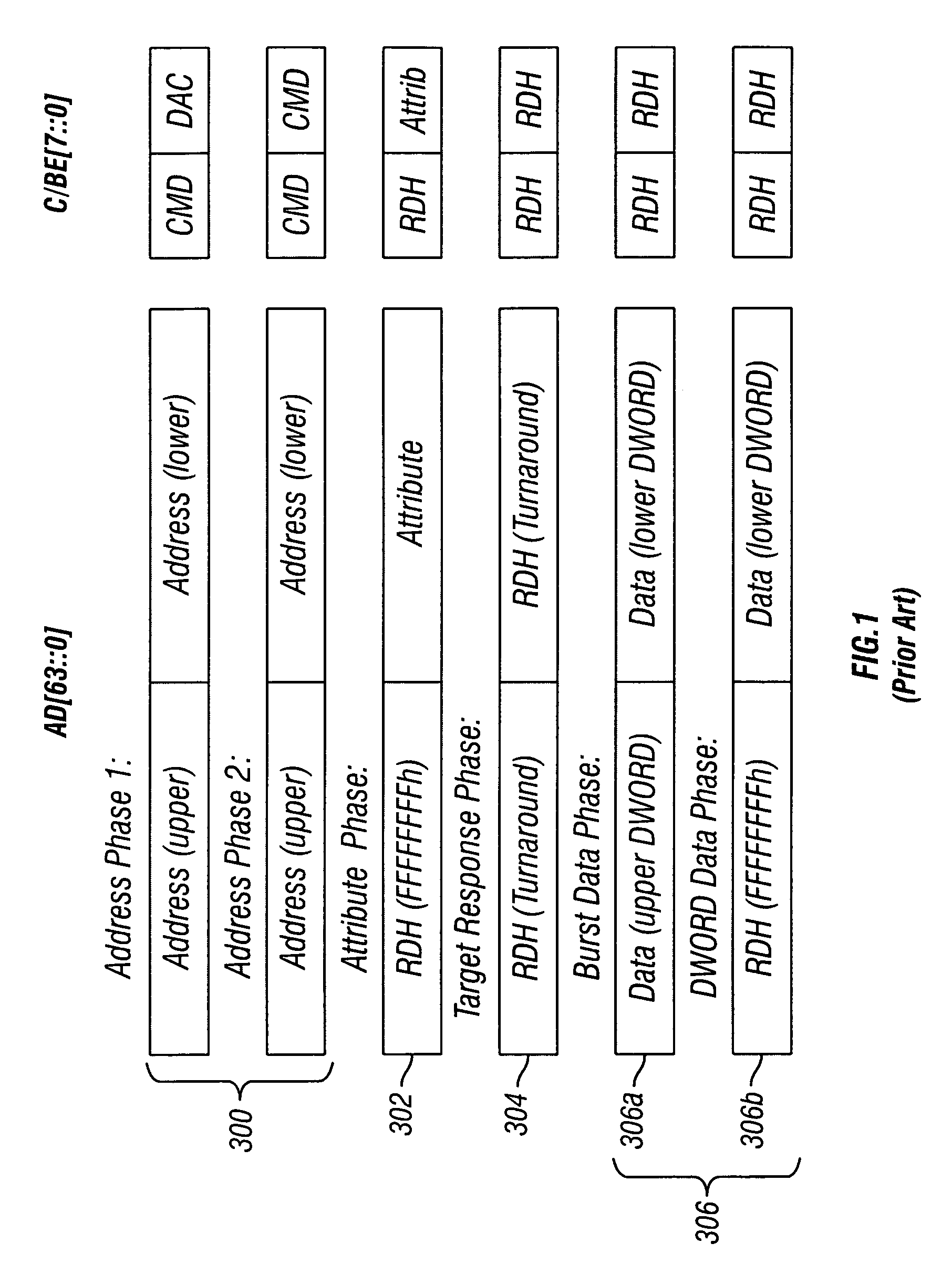

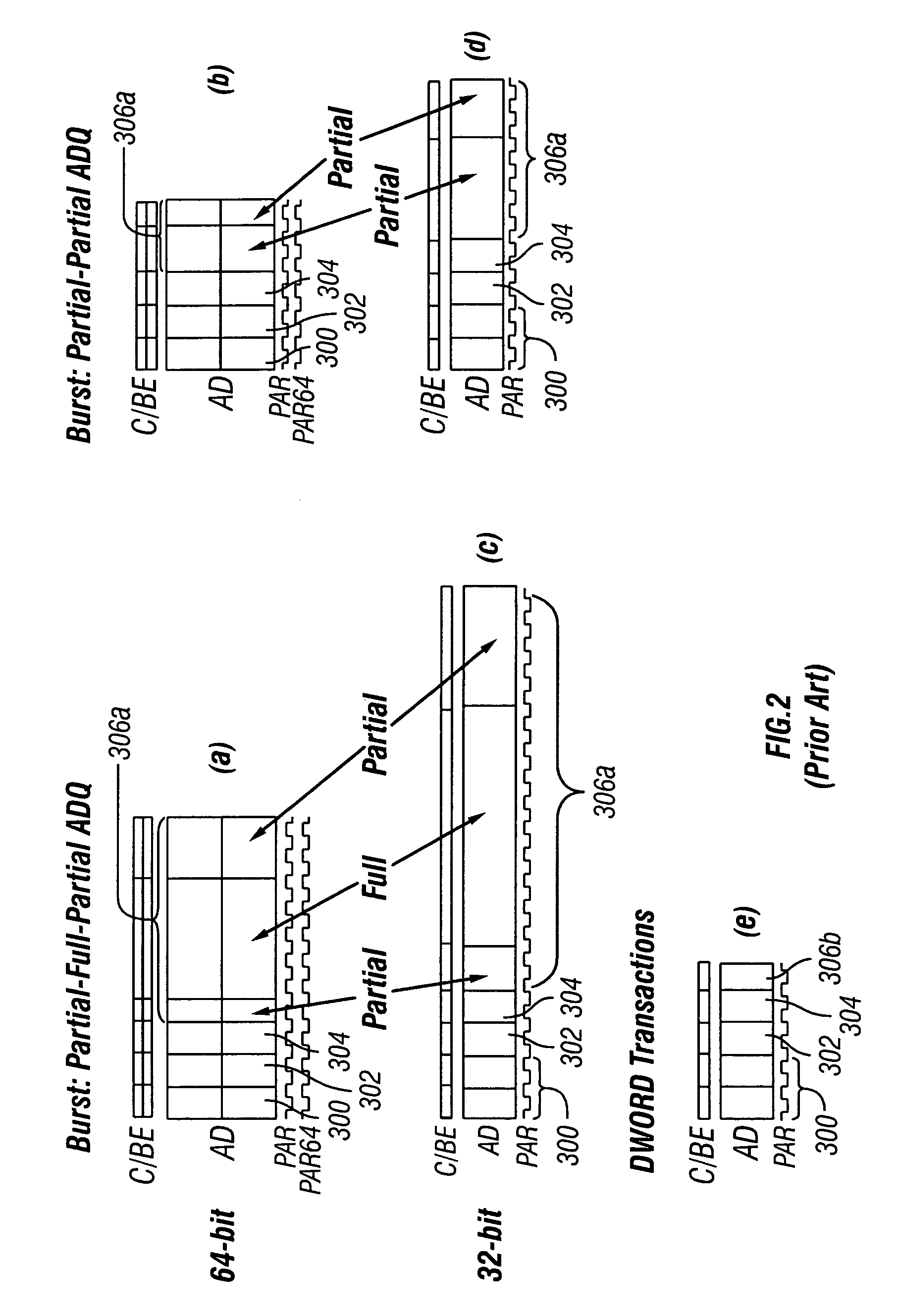

Supporting error correction and improving error detection dynamically on the PCI-X bus

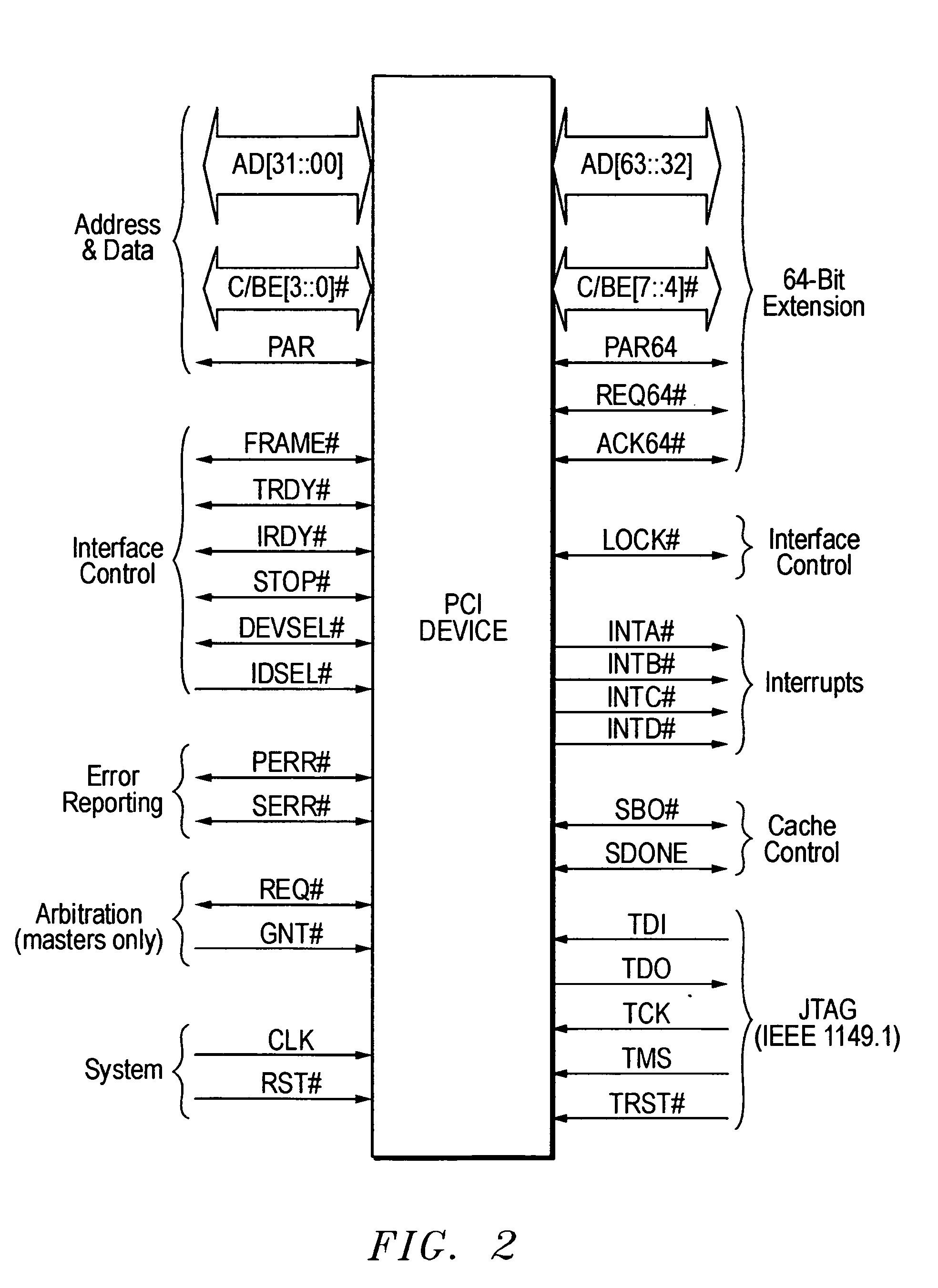

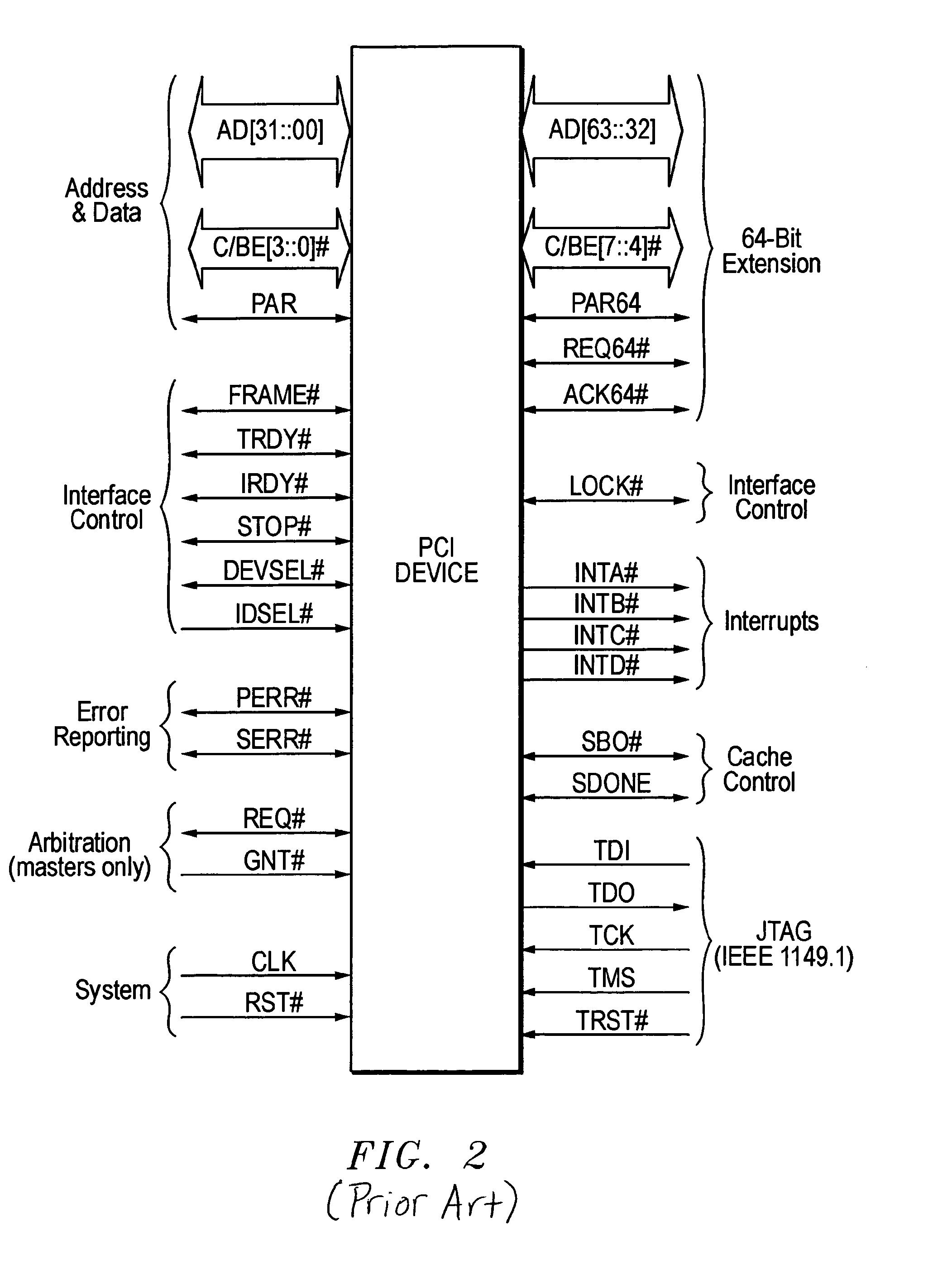

An error correction code mechanism for the extensions to the Peripheral Component Interconnect bus system (PCI-X) used in computer systems is fully backward compatible with the full PCI protocol. The error correction code check-bits can be inserted to provide error correction capability for the header address and attribute phases, as well as for burst and DWORD transaction data phases. The error correction code check-bits are inserted into unused attribute, clock phase, reserved, or reserved drive high portions of the AD and/or C/BE# lanes of the PCI-X phases.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

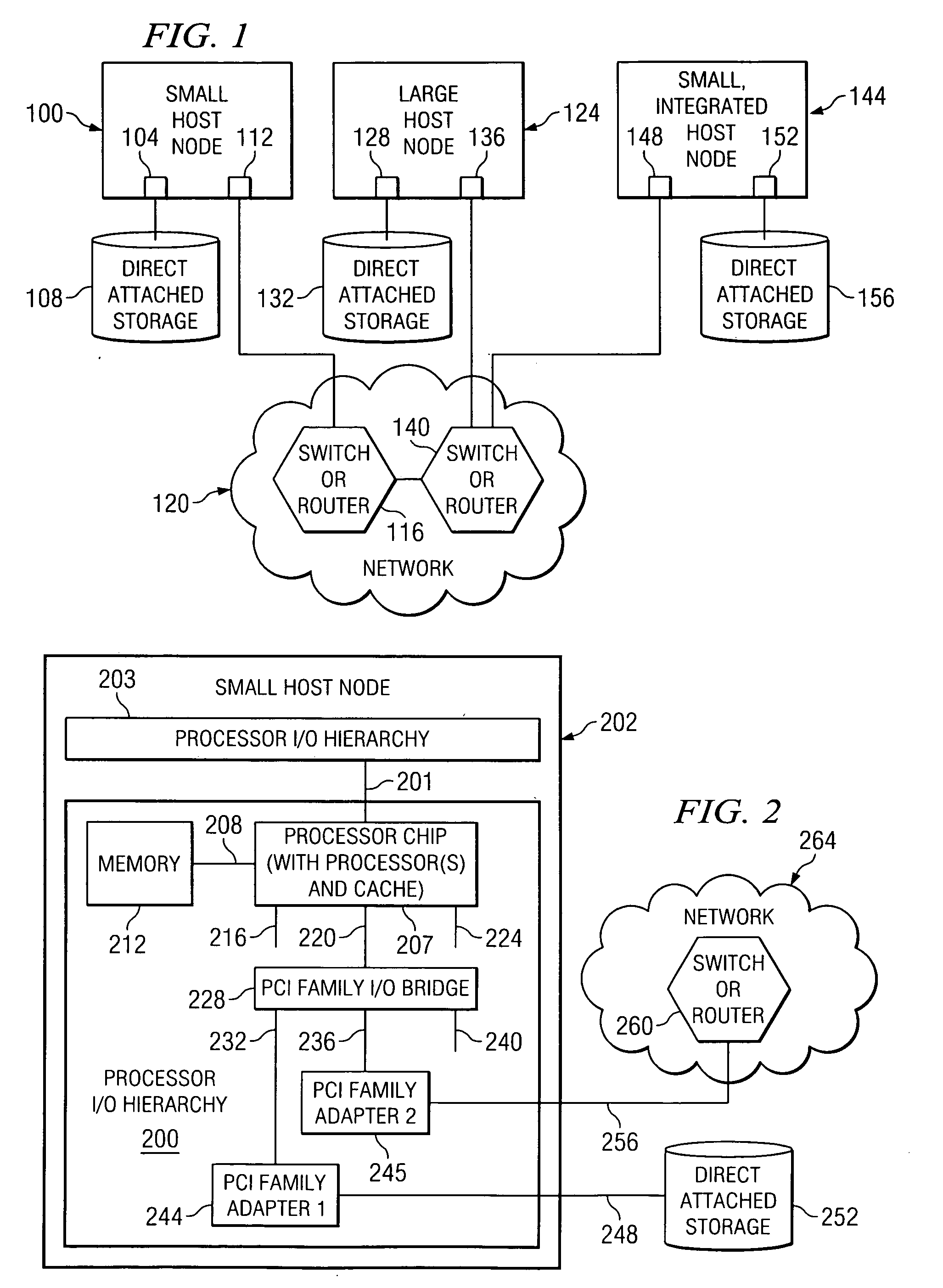

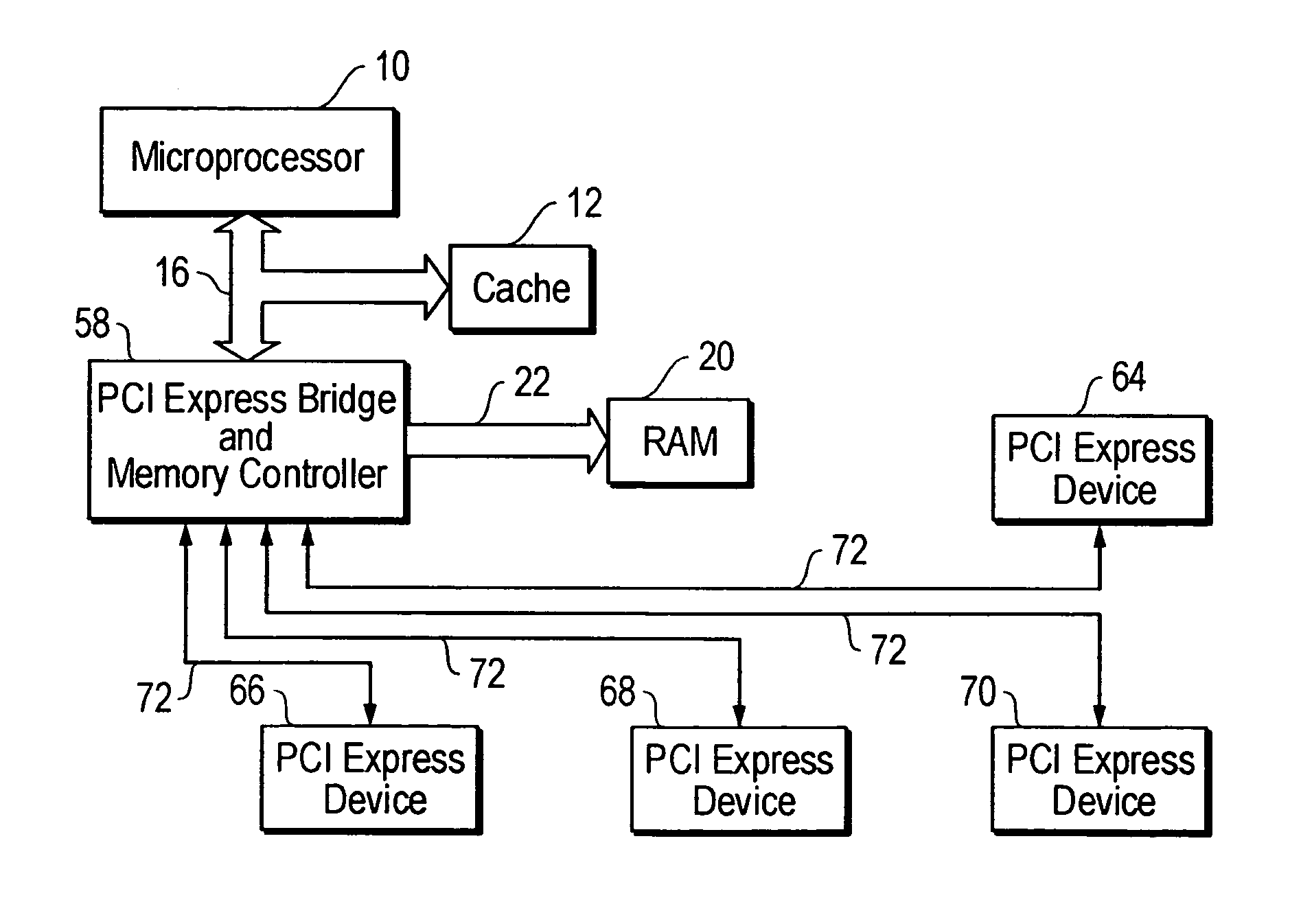

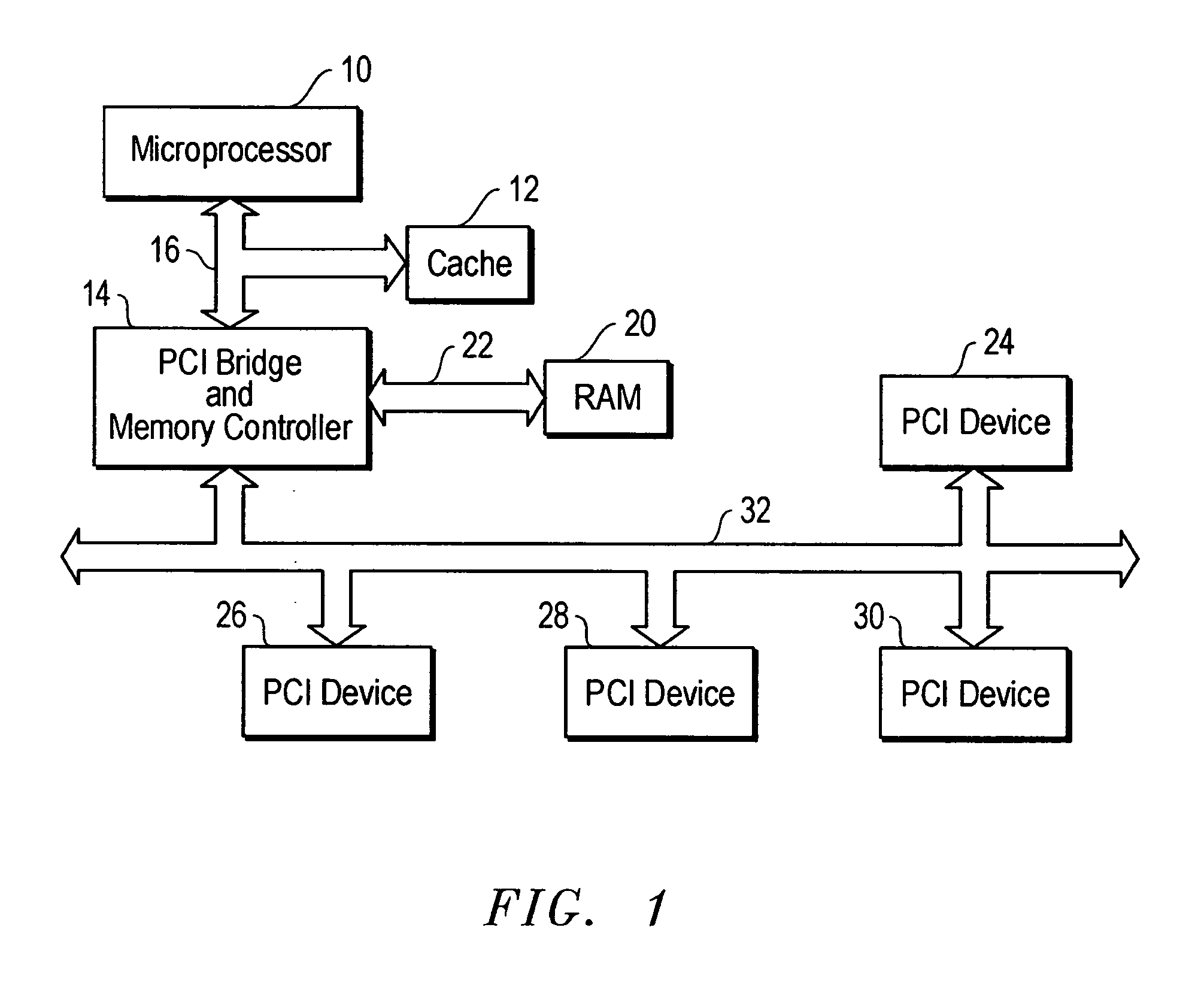

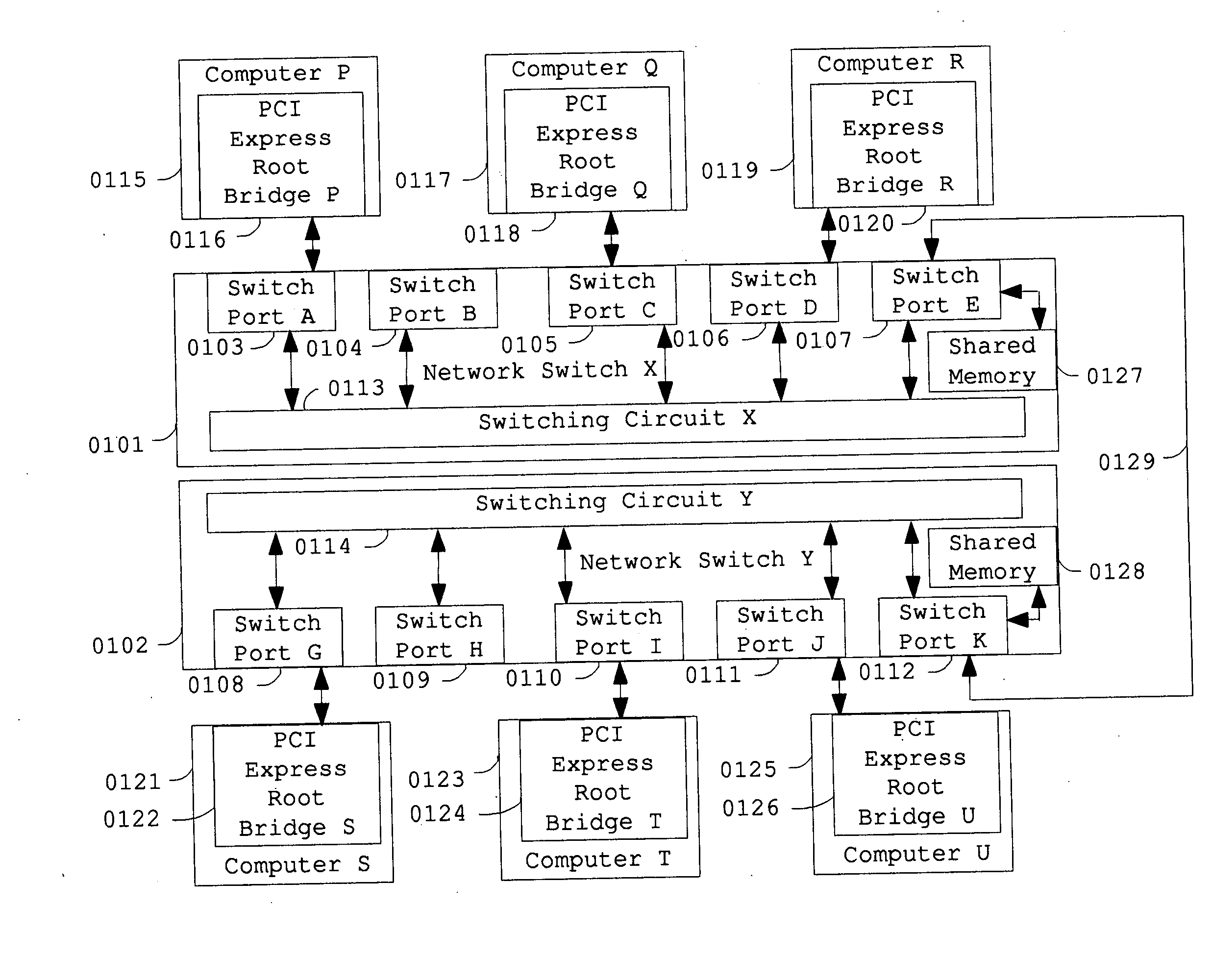

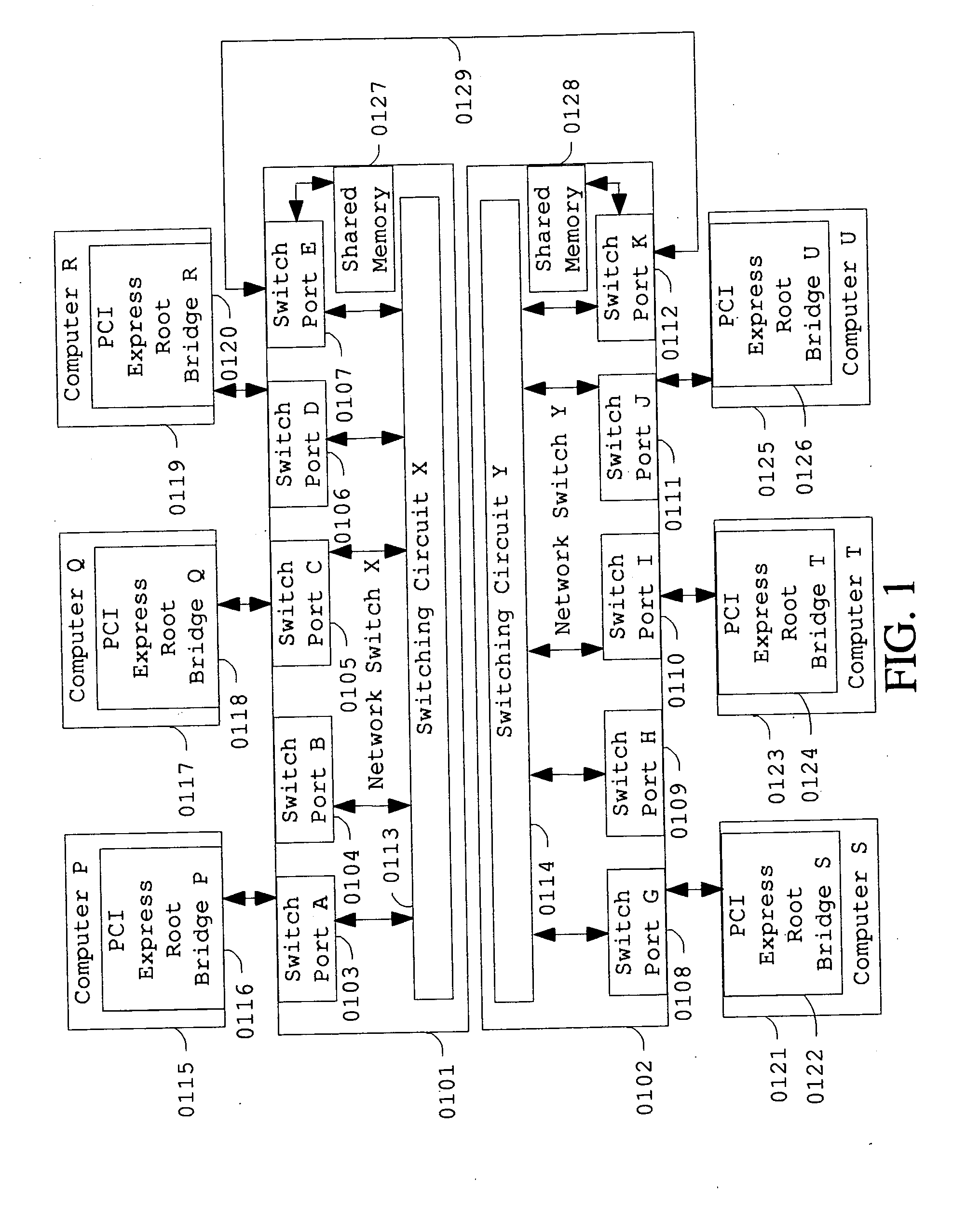

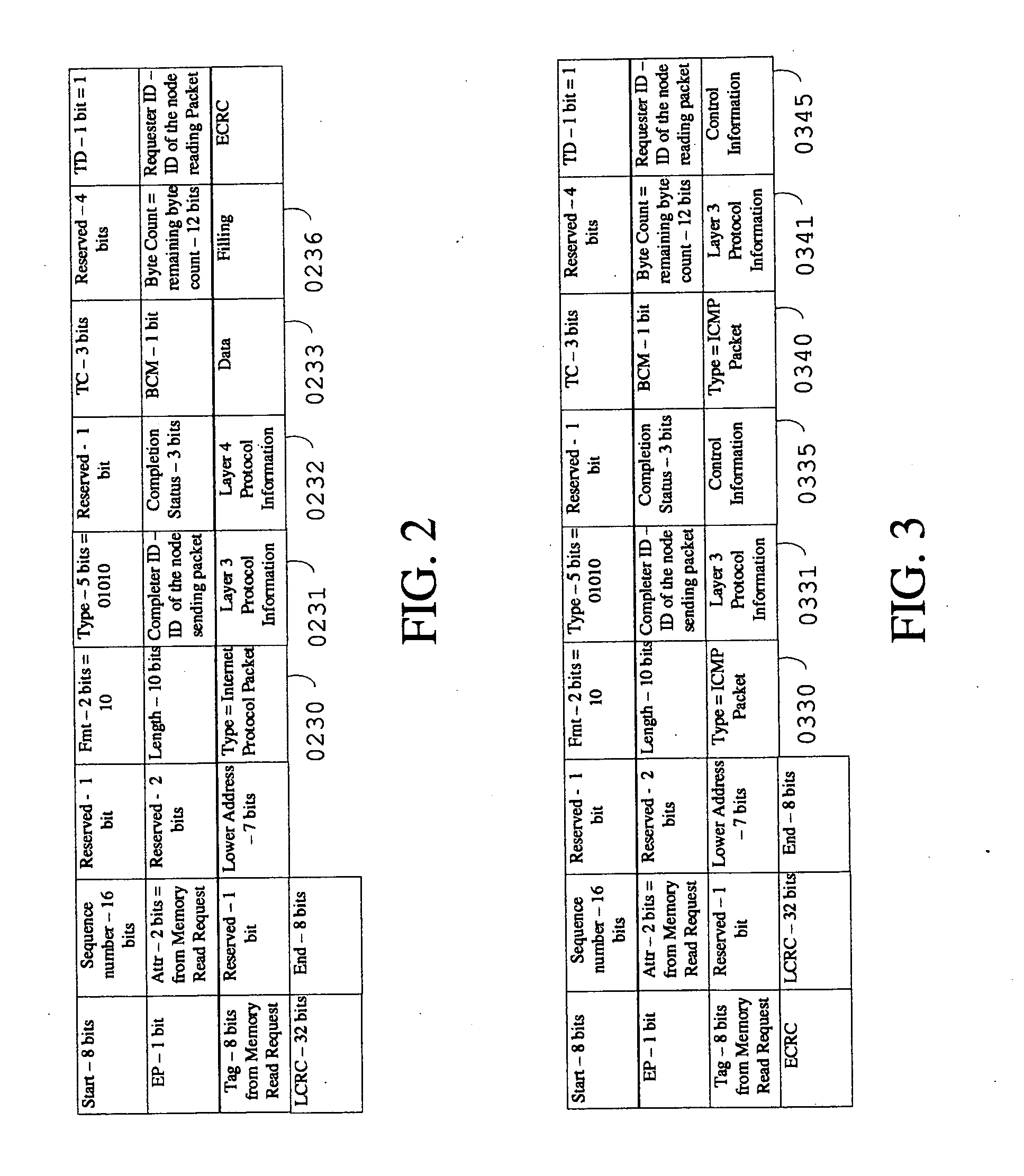

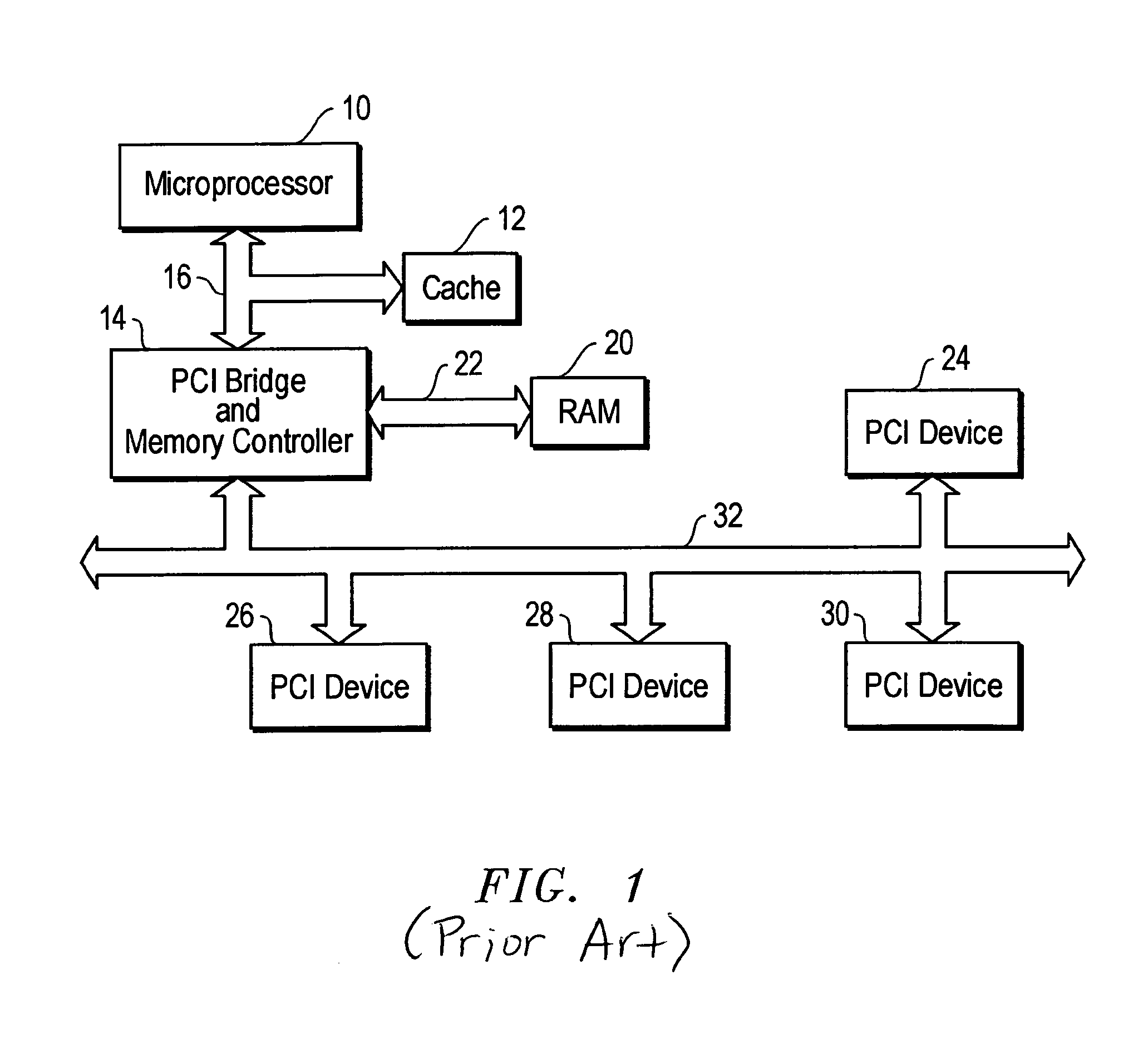

PCI express network

Inactive | US20100002714A1 | Low cost | Reduces network | Data switching by path configuration | Mass storage | Storage area network

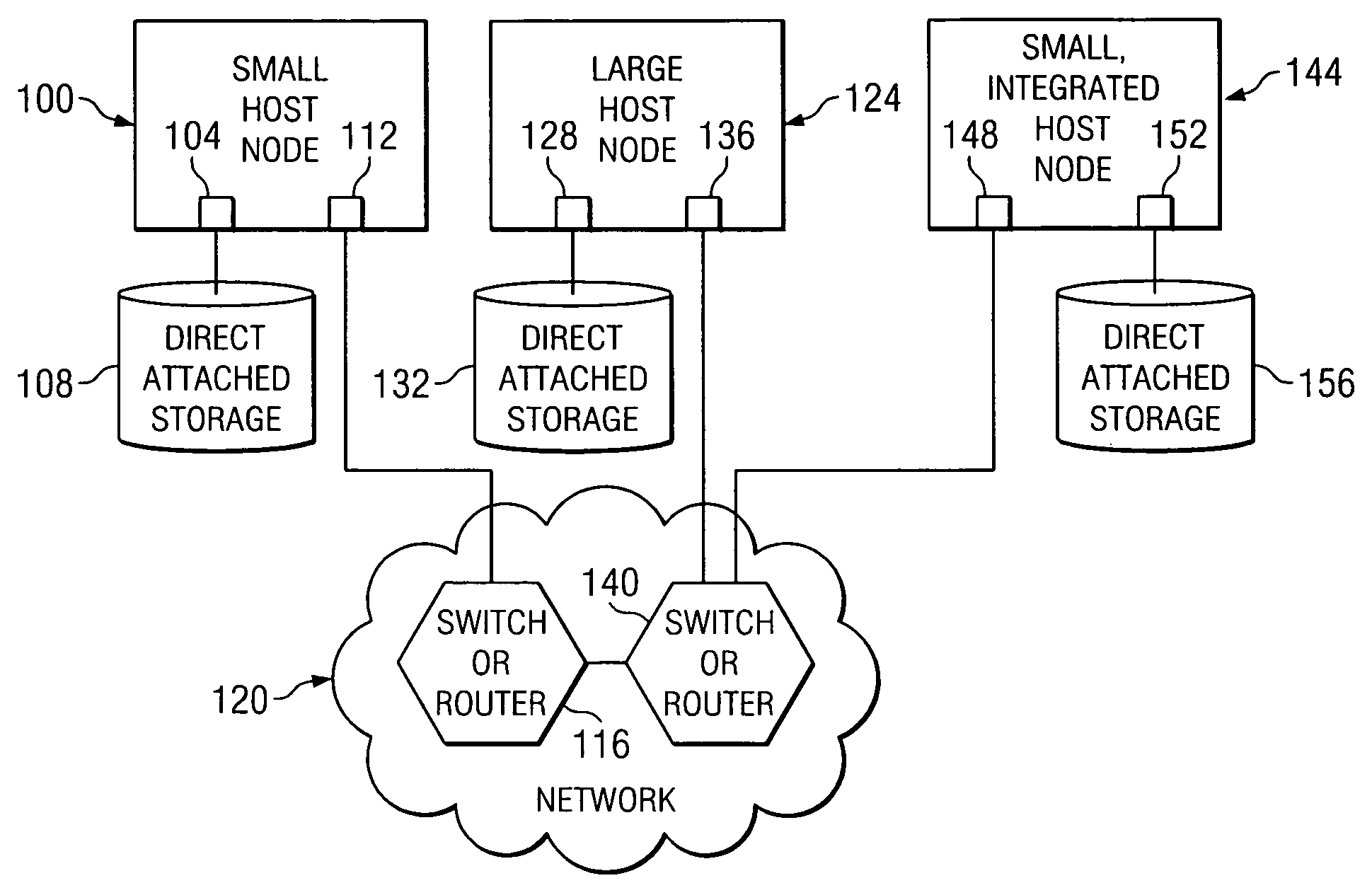

Low communication latency, low cost, and high scalability can be achieved by using PCI, PCI-X, or PCI Express for connectivity between computers or embedded systems and network switches, and between network switches. These technologies can also be used for interconnecting storage area network switches, computers, and mass-memory controllers. PCI Express root bridges in computers or embedded systems can be connected directly to network switch ports.

Owner:GEORGE GEORGE MADATHILPARAMBIL +1

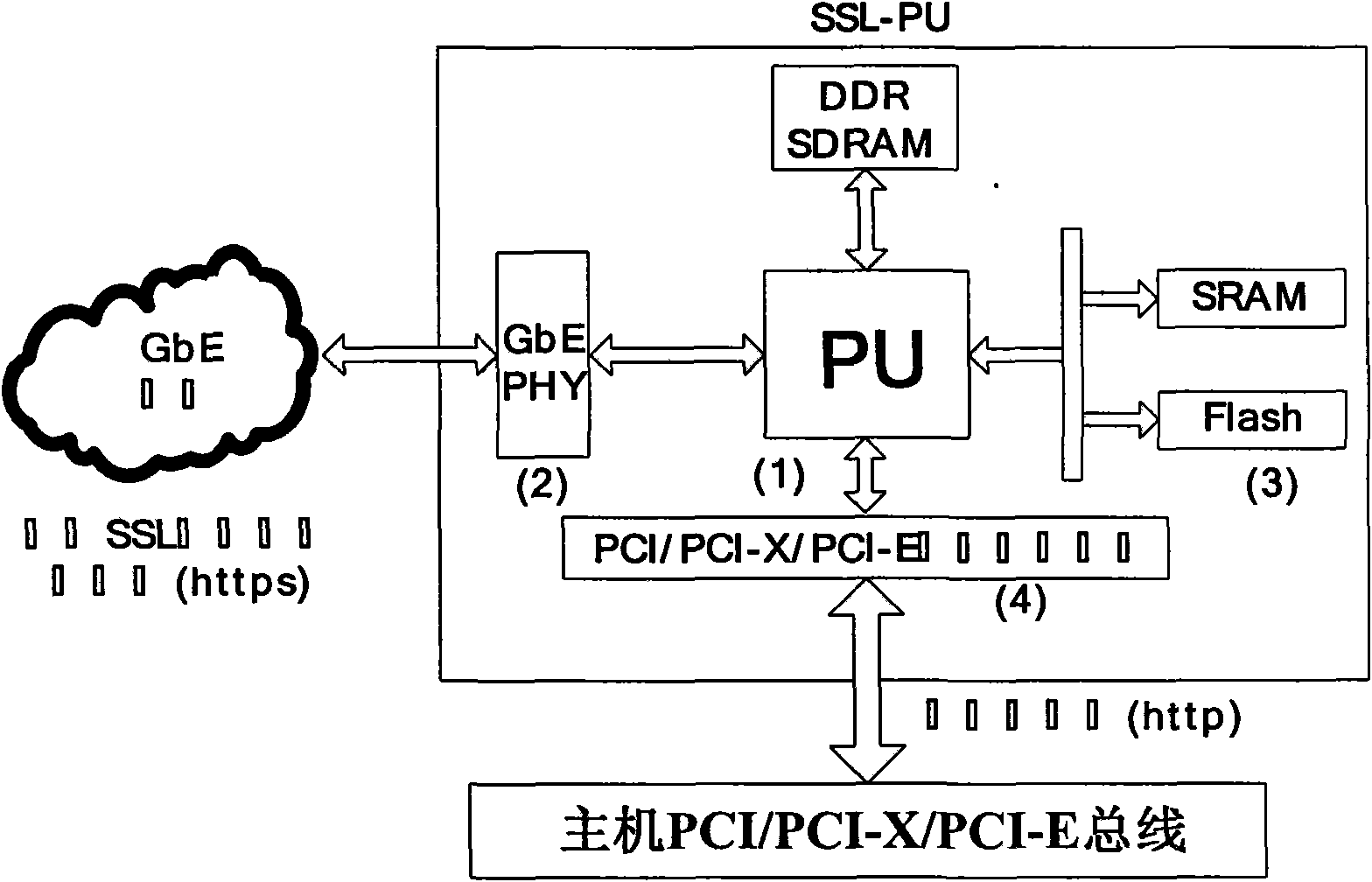

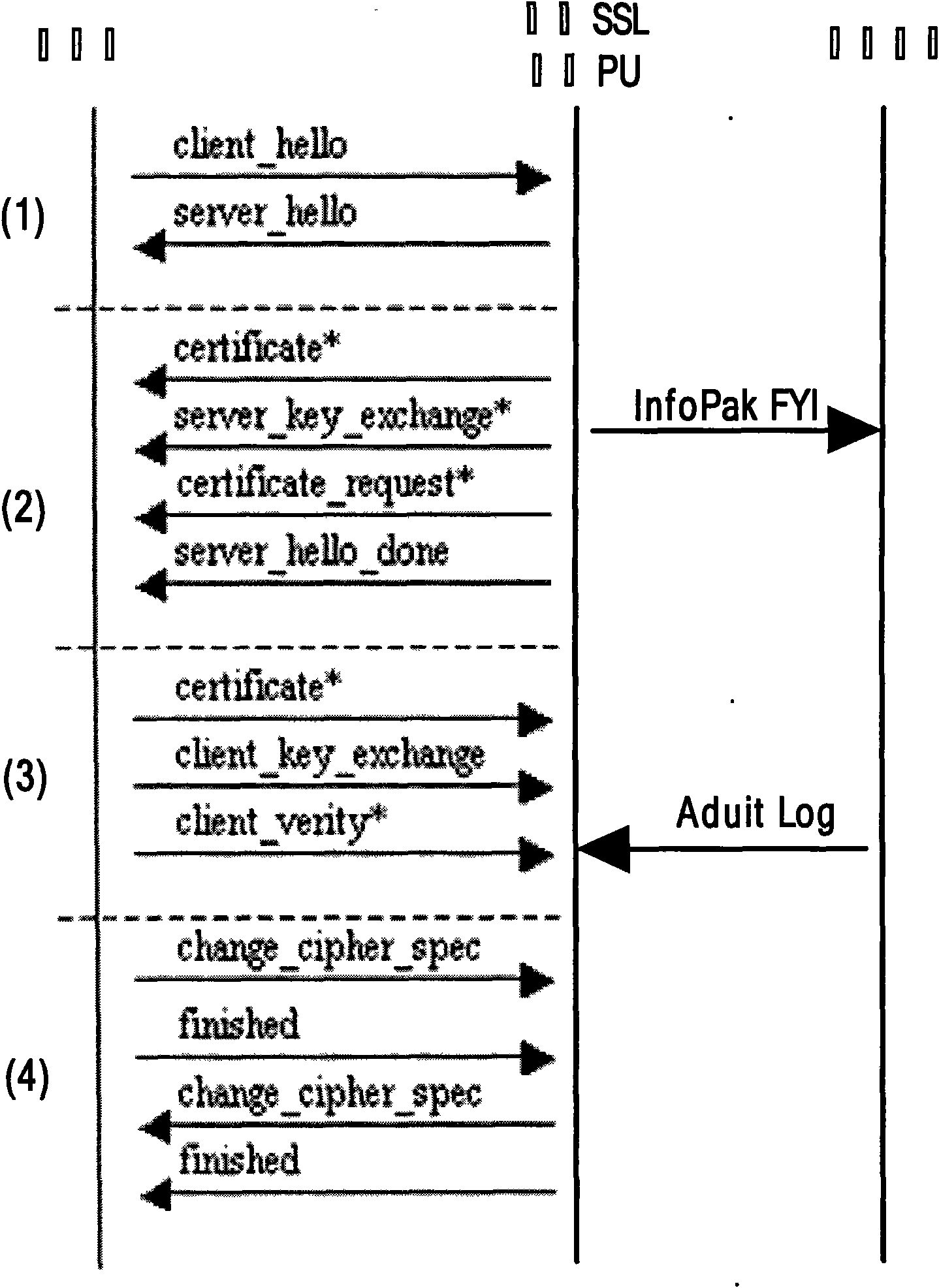

Design architecture and method for secure load balancing by utilizing SSL communication protocol

The invention discloses a design architecture and method for secure load balancing using the SSL communication protocol, in particular to provide secure and reliable data communication between a client and load-balancing equipment by introducing the SSL (Secure Sockets Layer) protocol. The invention mainly designs an SSL-encryption-based processor, the SSL-PU, which is loaded in a load balancer and comprises a processing unit (PU), memory (Flash, SRAM, DDR SDRAM, etc.), an Ethernet network controller (PCI, PCI-X, PCI-E), and a GbE PHY (RJ45 interface). The SSL-PU addresses the security problem caused by clear-text traffic between traditional load-balancing equipment and clients, as well as the problem that traditional software-based SSL encryption consumes excessive system CPU and memory resources, thereby saving server bandwidth, increasing throughput, and improving the flexibility and availability of the network. The processor exchanges data securely with the client and delivers it in clear text to a load-balancing module; the load-balancing module dispatches the request to a corresponding server according to a load-balancing algorithm; the server transmits data to the load-balancing equipment; and the load balancer with the SSL-PU module transmits the encrypted data to the client, completing the secure exchange. The invention is particularly suitable for secure load-balancing scheduling in load-balancing equipment whose back end is a cluster system.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

Multiprotocol computer bus interface adapter and method

Owner:AVAGO TECH INT SALES PTE LTD

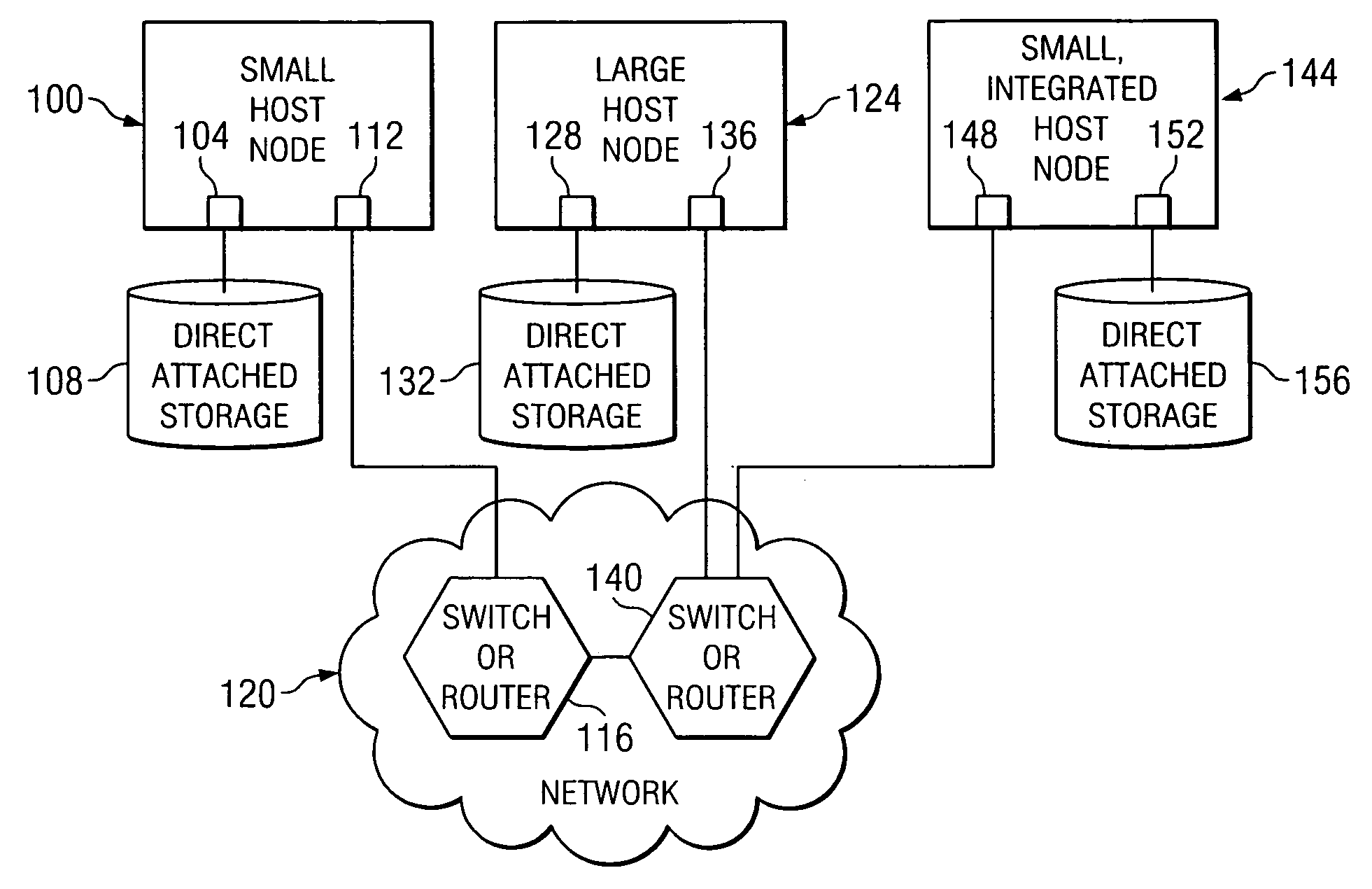

Priority transaction support on the PCI-X bus

Inactive | US6801970B2 | Multiprogramming arrangements | Data switching by path configuration | Multiplexing | Processing Instruction

Support for indicating and controlling transaction priority on a PCI-X bus. Embodiments of the invention provide indicia that can be set to communicate to PCI-X-to-PCI-X bridges and the Completer that a transaction should be handled specially and scheduled ahead of any other transaction whose corresponding indicia are not set. A special handling instruction allows the priority transaction to be scheduled first or early. The indicia are implemented by setting one or more bits in an unused portion of a PCI-X attribute field, or multiplexed with a used portion, to schedule the associated transaction as the priority transaction over other transactions that do not have their corresponding bit set. The invention can be used for interrupt messaging, audio streams, video streams, isochronous transactions, or for high-performance, low-bandwidth control structures used for communication in a multiprocessor architecture across PCI-X.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
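
The scheduling effect of a priority bit in the attribute field can be sketched as follows. The bit position `PRIORITY_BIT` is a placeholder chosen for illustration and is not taken from the patent or from the PCI-X specification. The `schedule` function and the transaction tuples are likewise hypothetical.

```python
# Hypothetical position of the priority indicator within a 32-bit attribute word.
PRIORITY_BIT = 1 << 31

def schedule(transactions):
    """Return transaction names in service order.

    transactions -- list of (name, attribute_word) in arrival order.
    Transactions with the priority bit set are served first; arrival
    order is preserved within each class.
    """
    high = [name for name, attr in transactions if attr & PRIORITY_BIT]
    low = [name for name, attr in transactions if not attr & PRIORITY_BIT]
    return high + low

queue = [
    ("bulk-dma", 0x0000_0010),
    ("interrupt-msg", PRIORITY_BIT | 0x0000_0004),
    ("disk-read", 0x0000_0020),
    ("audio-frame", PRIORITY_BIT | 0x0000_0008),
]
order = schedule(queue)
```

Here `order` places the two flagged transactions ahead of the bulk and disk traffic while keeping their relative arrival order.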

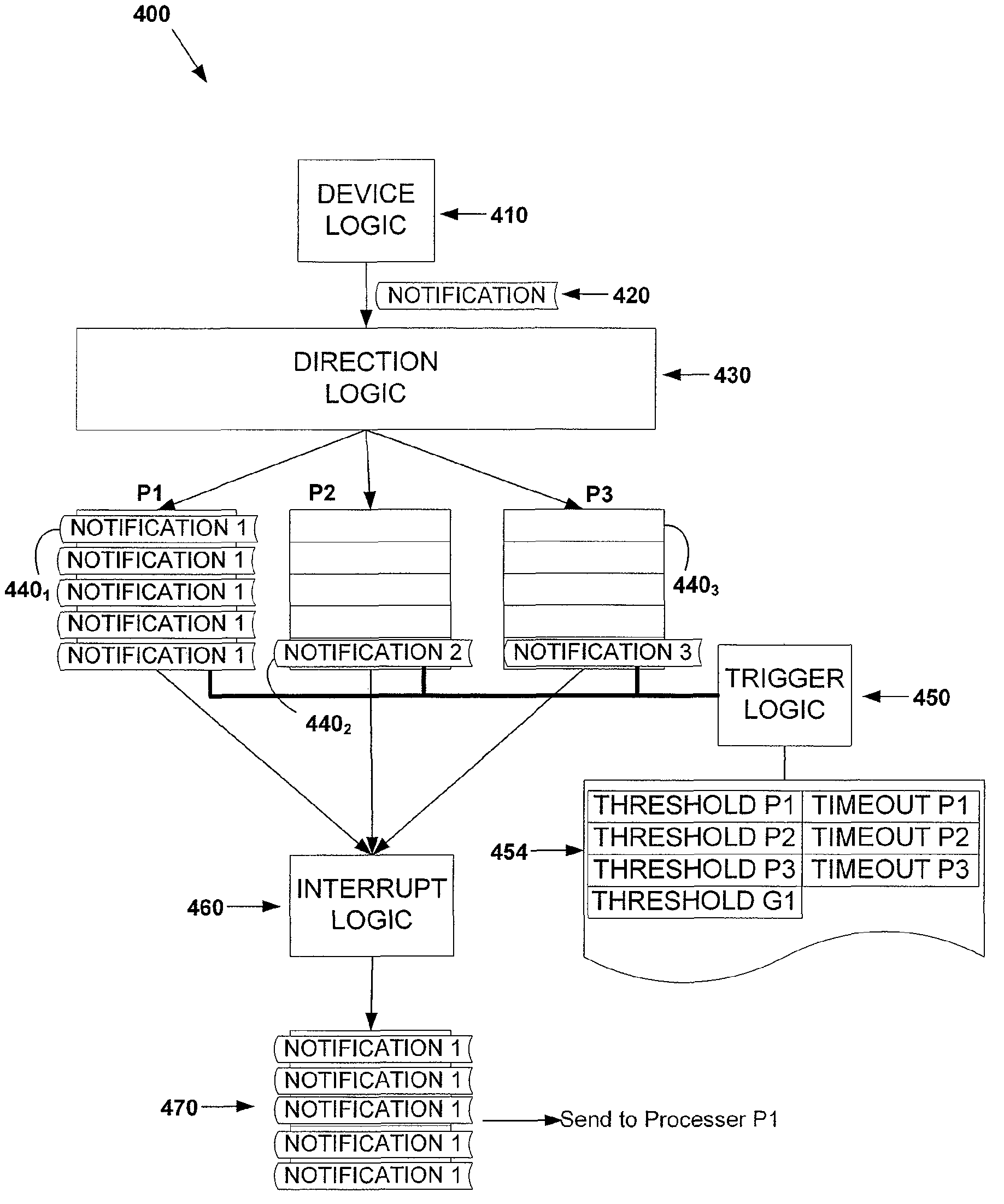

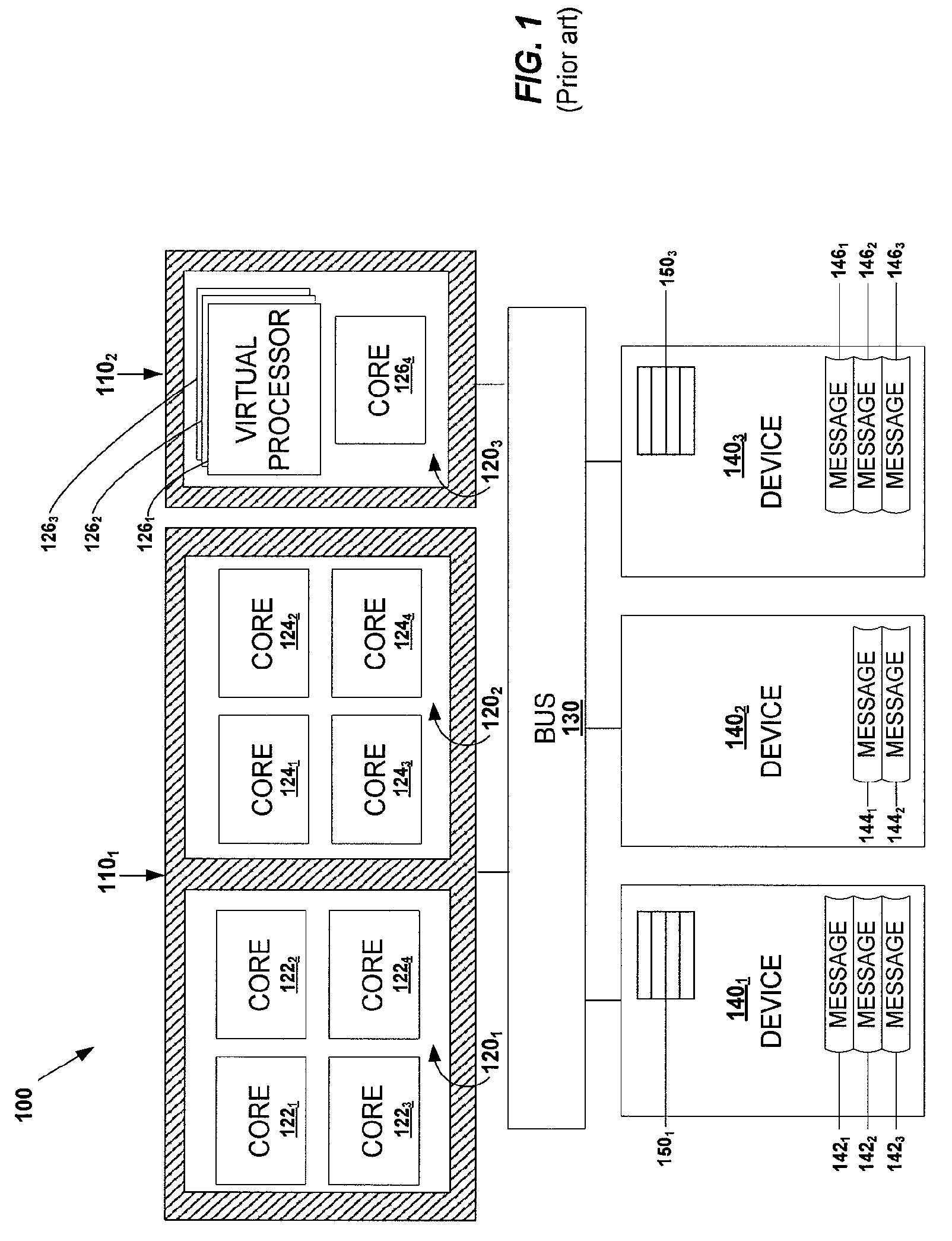

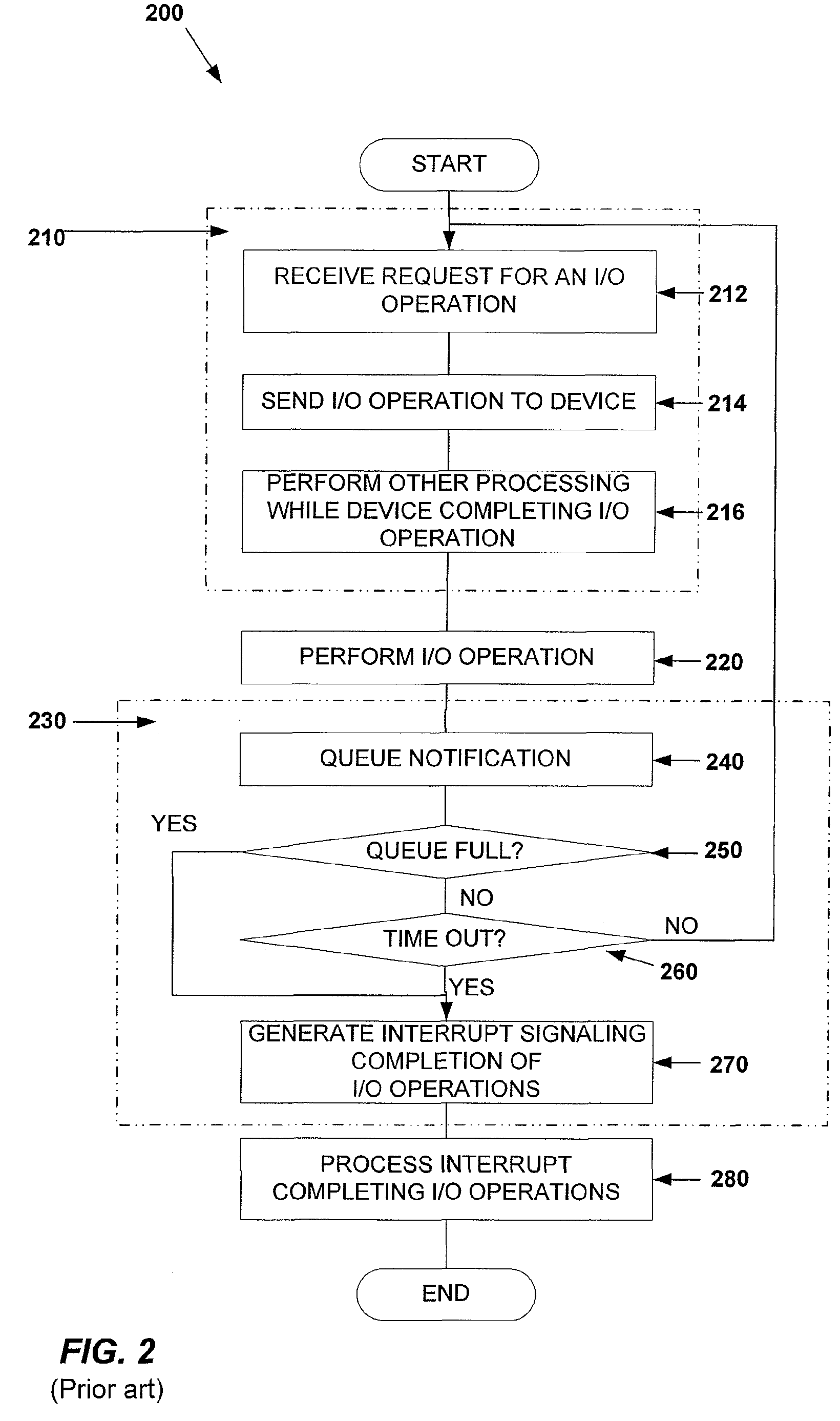

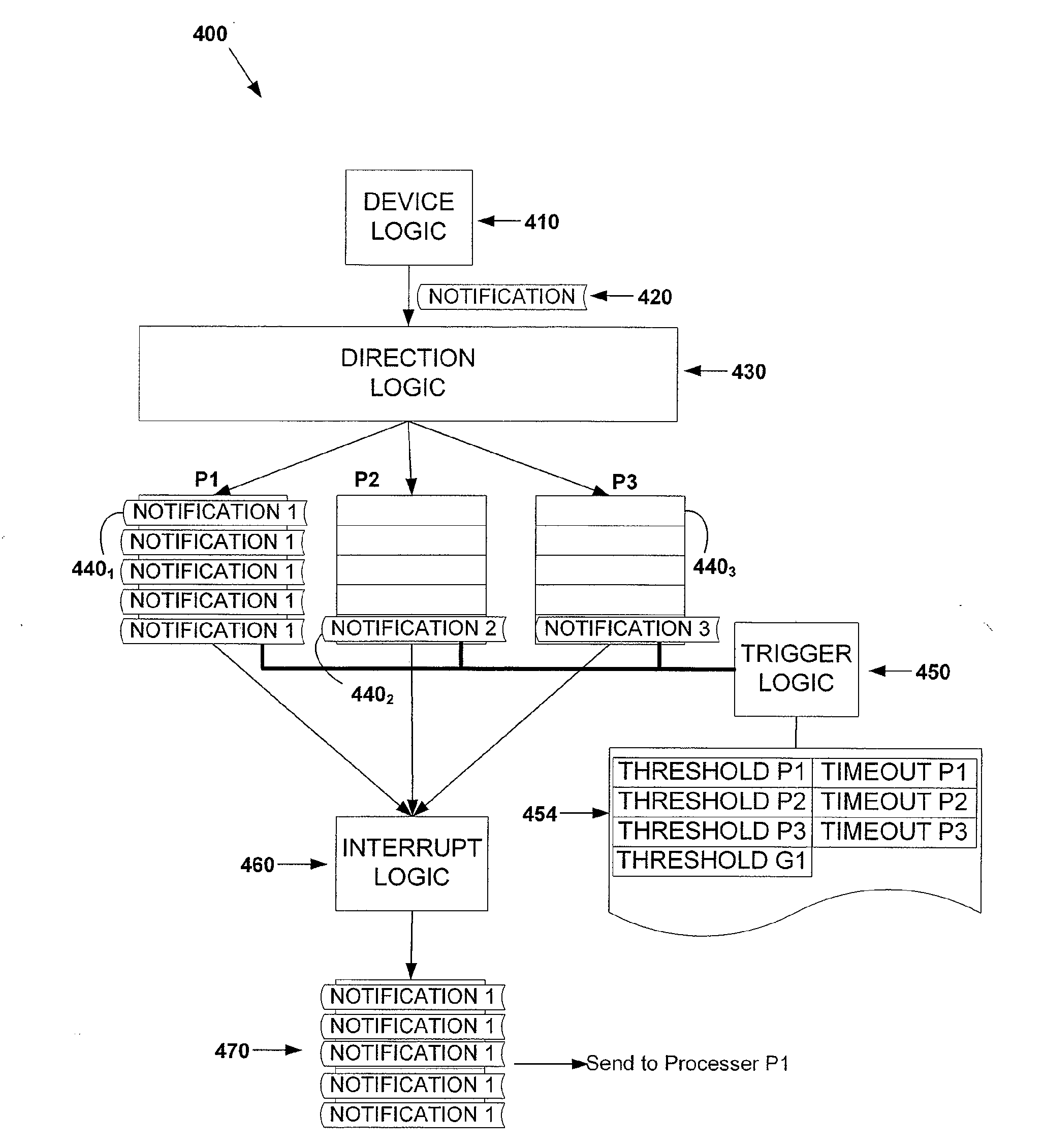

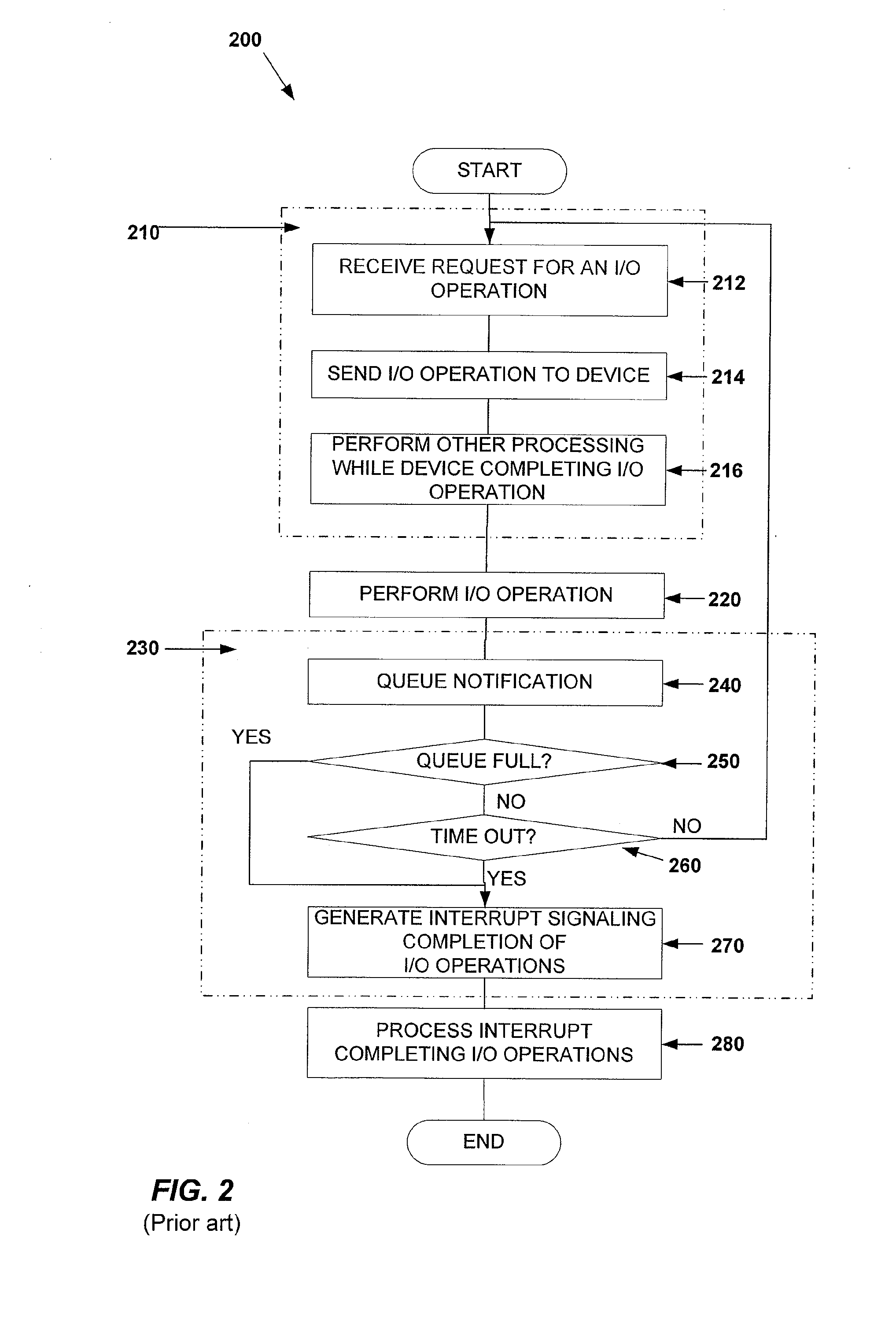

Interrupt redirection with coalescing

Inactive | US7788435B2 | Improve efficiency | Distributed load | Program control | Multi processor | Computerized system

An interrupt redirection and coalescing system for a multi-processor computer. Devices interrupt a processor or group of processors using pre-defined message address and data payloads communicated with a memory write transaction over a PCI, PCI-X, or PCI Express bus. The efficiency of processing may be improved by combining multiple interrupt notifications into a single interrupt message to a processor. For some interrupts on a multi-processor computer, such as those signaling completion of an input/output (I/O) operation assigned to a device, the efficiency of processing the interrupt may vary from processor to processor. Processing efficiency and overall computer system operation may be improved by appropriately coalescing interrupt messages within and/or across a plurality of queues, where interrupts are queued on the basis of which processor they target.

Owner:MICROSOFT TECH LICENSING LLC
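
Queueing interrupts by target processor and combining them into a single message can be sketched as below. The `Coalescer` class, its method names, and the flush-on-demand policy are assumptions made for illustration. The abstract does not say when or how queues are drained.

```python
from collections import defaultdict

class Coalescer:
    """Queue interrupt notifications per target processor and merge them."""

    def __init__(self):
        self.queues = defaultdict(list)

    def post(self, cpu: int, source: str) -> None:
        """Record a pending interrupt from `source` aimed at processor `cpu`."""
        self.queues[cpu].append(source)

    def flush(self) -> dict:
        """Emit one combined message per processor and empty the queues."""
        messages = {cpu: tuple(sources)
                    for cpu, sources in self.queues.items() if sources}
        self.queues.clear()
        return messages

c = Coalescer()
c.post(0, "nic-rx")
c.post(0, "disk-done")
c.post(1, "nic-tx")
messages = c.flush()
```

Processor 0 receives a single message covering both of its pending notifications instead of two separate interrupts.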

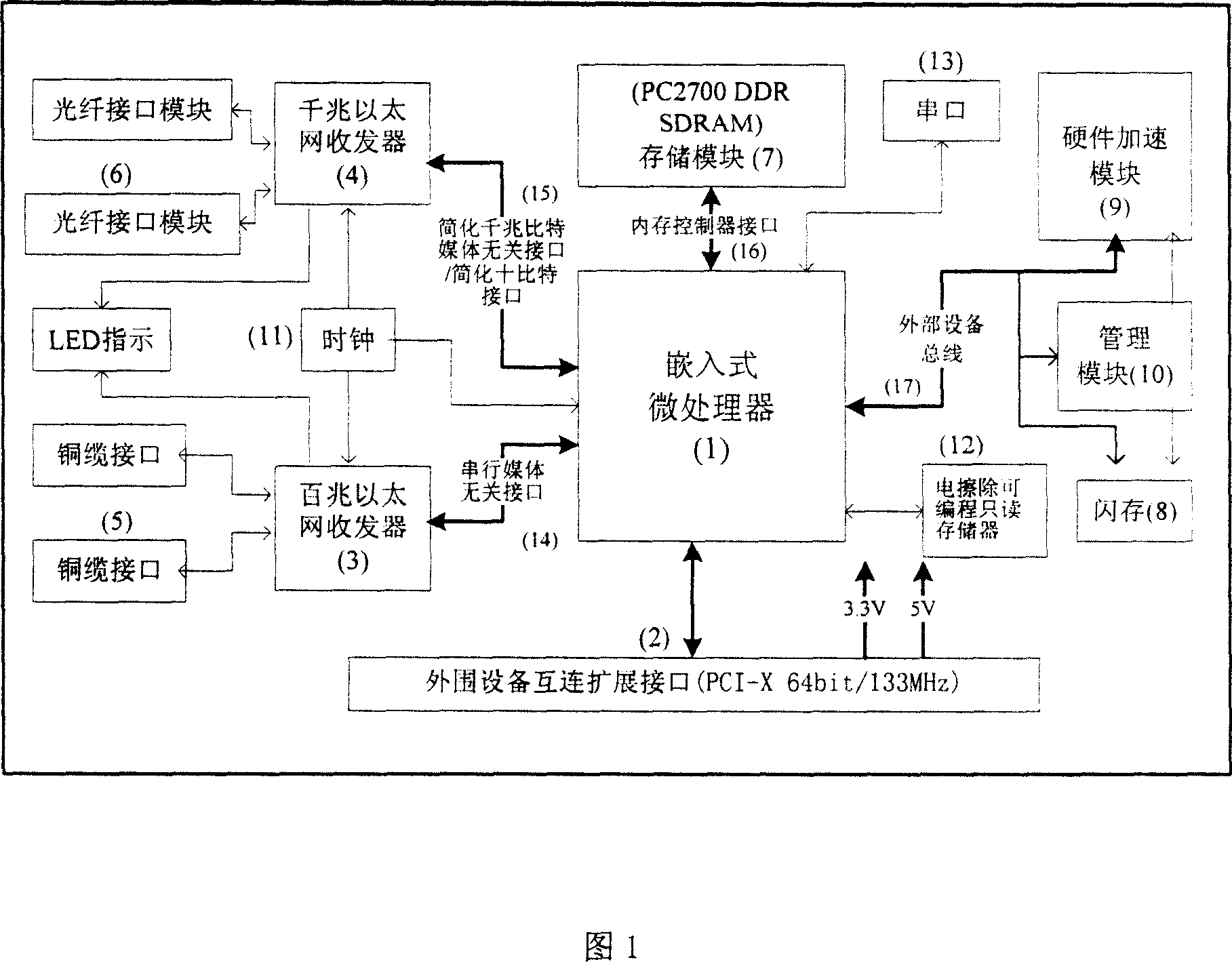

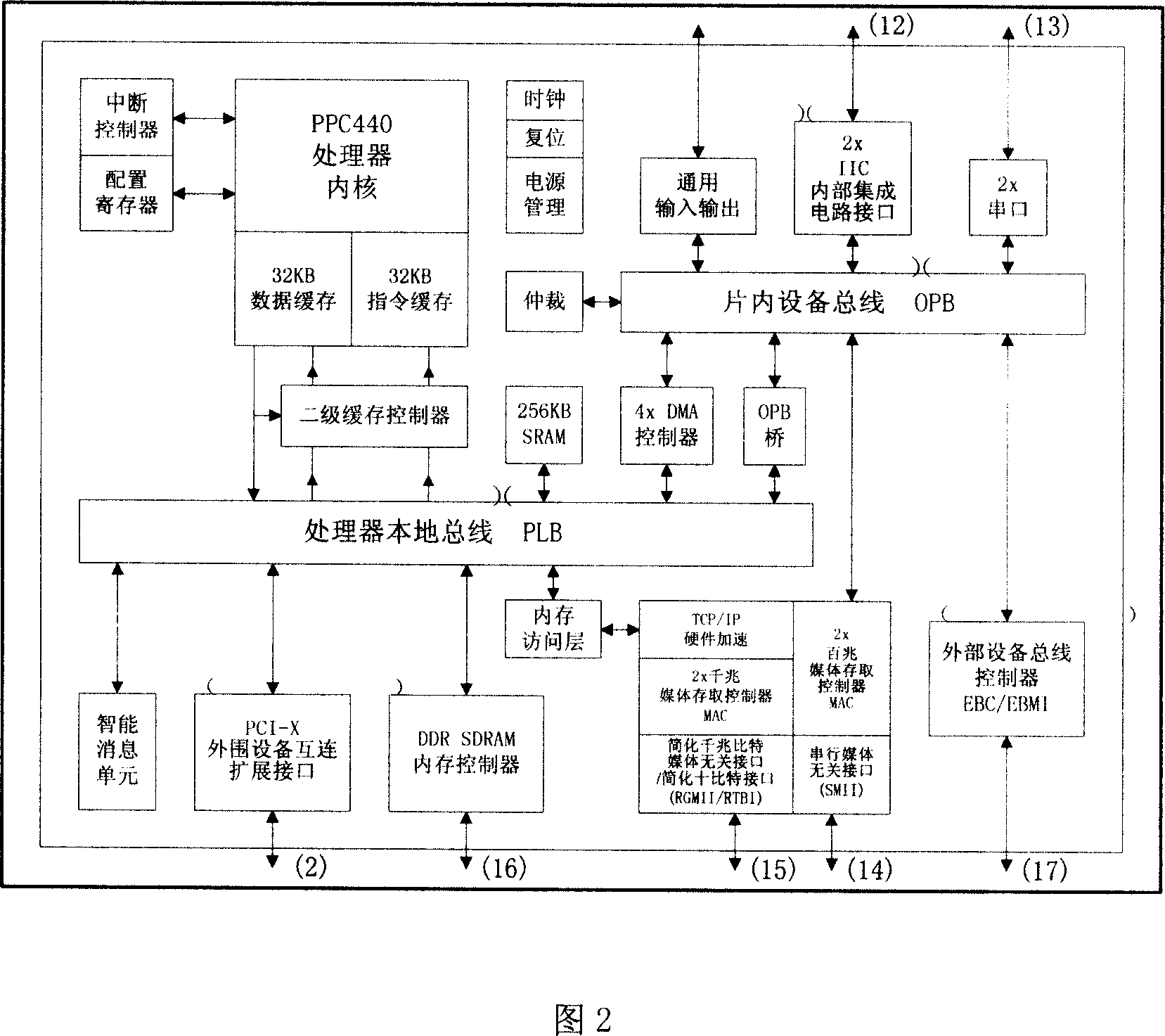

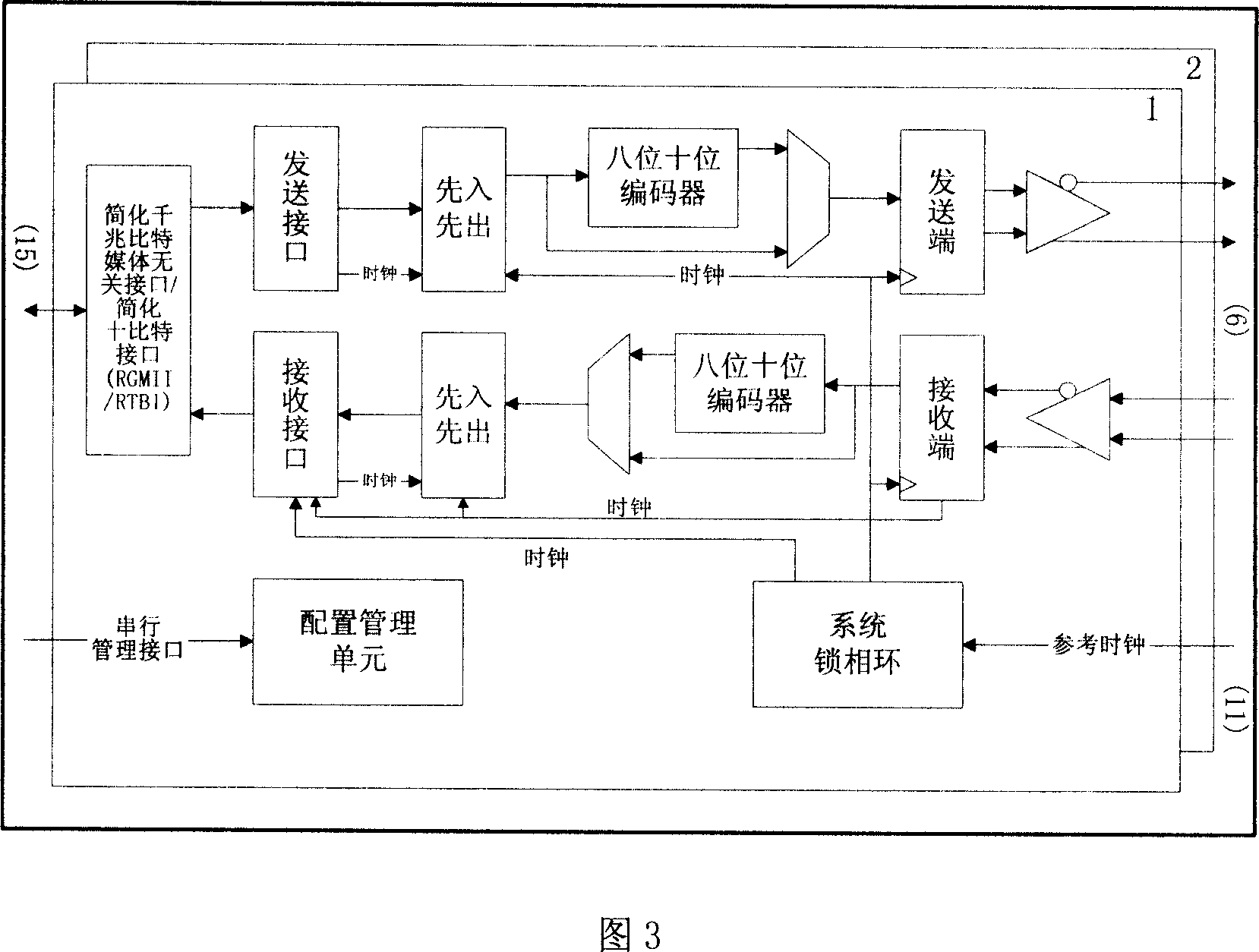

Intelligent Ethernet card with function of hardware acceleration

Inactive | CN1992610A | Relieve pressure | Easy to handle | Data switching details | Computer science | Network interface controller

This invention discloses an intelligent Ethernet card with a hardware acceleration function. The network card has an embedded high-performance processor, two fiber Gigabit Ethernet interfaces, and two copper Fast Ethernet interfaces, for a network bandwidth of up to 2.2 Gbps, together with large-capacity memory and a hardware acceleration module that can be configured by user programs. The card uses a 64-bit/133 MHz PCI-X interface conforming to the PCI-X V1.0A and PCI V2.3 bus specifications. It can be used in servers with high network processing requirements: many of the server's tasks can be offloaded to the card and handled by its processor and hardware acceleration module, reducing the load on the server and increasing overall system processing power.

Owner:HUAWEI TECH CO LTD
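
A quick arithmetic check shows why a 64-bit/133 MHz PCI-X interface suits the 2.2 Gbps of network ports quoted above. The sketch assumes one bus-width transfer per clock at the nominal 133 MHz and ignores protocol overhead and full-duplex traffic. The function name is hypothetical.

```python
def peak_bandwidth_mb_s(width_bits: int, clock_mhz: float) -> float:
    """Peak rate in MB/s for a parallel bus moving one word per clock."""
    return width_bits / 8 * clock_mhz

bus_mb_s = peak_bandwidth_mb_s(64, 133)   # 64-bit / 133 MHz PCI-X -> 1064.0
network_mbit_s = 2 * 1000 + 2 * 100       # two GbE + two Fast Ethernet -> 2200
network_mb_s = network_mbit_s / 8         # -> 275.0
```

The bus peak of about 1064 MB/s is roughly four times the 275 MB/s that the four ports can carry in one direction.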

Interrupt redirection with coalescing

Inactive | US20090177829A1 | Improve efficiency | Efficiently perform processing | Specific program execution arrangements | Multi processor | PCI Express

An interrupt redirection and coalescing system for a multi-processor computer. Devices interrupt a processor or group of processors using pre-defined message address and data payloads communicated with a memory write transaction over a PCI, PCI-X, or PCI Express bus. The efficiency of processing may be improved by combining multiple interrupt notifications into a single interrupt message to a processor. For some interrupts on a multi-processor computer, such as those signaling completion of an input/output (I/O) operation assigned to a device, the efficiency of processing the interrupt may vary from processor to processor. Processing efficiency and overall computer system operation may be improved by appropriately coalescing interrupt messages within and/or across a plurality of queues, where interrupts are queued on the basis of which processor they target.

Owner:MICROSOFT TECH LICENSING LLC

Supporting cyclic redundancy checking for PCI-X

Active | US7447975B2 | Improves error detection | Code conversion | Error detection only | Target Response | Transaction data

A cyclic redundancy check (CRC) mechanism for the extensions (PCI-X) to the Peripheral Component Interconnect (PCI) bus system used in computer systems is fully backward compatible with the full PCI-X protocol. CRC check-bits are inserted to provide error detection capability for the header address and attribute phases, and for burst and DWORD transaction data phases. The CRC check-bits are inserted into unused attribute or clock (or target response) phases, into reserved or reserved-drive-high portions (bits) of the address/data (AD) or command/byte enable (C/BE#) lanes, or into the parity lanes of the PCI-X phases.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
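
How CRC check-bits over the header and data phases detect corruption can be illustrated with a generic CRC. The abstract does not name a polynomial or width, so the use of CRC-32 through Python's `zlib.crc32` is an assumption, as are the `protect` and `check` helpers. The placement of check-bits in unused bus phases is not modeled.

```python
import zlib

def protect(header: bytes, data: bytes) -> int:
    """Compute check-bits over the header (address/attribute) and data phases."""
    return zlib.crc32(header + data) & 0xFFFFFFFF

def check(header: bytes, data: bytes, crc: int) -> bool:
    """Recompute the CRC at the receiver and compare with the sent value."""
    return protect(header, data) == crc

header = b"\x12\x34\x56\x78"
data = b"burst payload"
crc = protect(header, data)

# Flip one bit in the data to simulate a transmission error.
corrupted = bytes([data[0] ^ 0x01]) + data[1:]
```

`check(header, data, crc)` succeeds, while `check(header, corrupted, crc)` fails because a CRC detects every single-bit error.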

Method and system for fully trusted adapter validation of addresses referenced in a virtual host transfer request

A method, computer program product, and distributed data processing system are provided that allow a single physical I/O adapter, such as a PCI, PCI-X, or PCI-E adapter, to validate that one or more direct memory access addresses referenced by an incoming I/O transaction initiated through a memory-mapped I/O operation are associated with the virtual adapter or virtual resource referenced by that memory-mapped I/O operation. Specifically, the invention is directed to a mechanism for sharing conventional PCI (Peripheral Component Interconnect) I/O adapters, PCI-X I/O adapters, PCI-Express I/O adapters, and, in general, any I/O adapter that uses a memory-mapped I/O interface for communications.

Owner:IBM CORP

Priority transaction support on the PCI-X bus

Inactive | US20030065842A1 | Multiprogramming arrangements | Data switching by path configuration | Multiplexing | Processing Instruction

Support for indicating and controlling transaction priority on a PCI-X bus. Embodiments of the invention provide indicia that can be set to communicate to PCI-X-to-PCI-X bridges and the Completer that a transaction should be handled specially and scheduled ahead of any other transaction whose corresponding indicia are not set. A special handling instruction allows the priority transaction to be scheduled first or early. The indicia are implemented by setting one or more bits in an unused portion of a PCI-X attribute field, or multiplexed with a used portion, to schedule the associated transaction as the priority transaction over other transactions that do not have their corresponding bit set. The invention can be used for interrupt messaging, audio streams, video streams, isochronous transactions, or for high-performance, low-bandwidth control structures used for communication in a multiprocessor architecture across PCI-X.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

Enhancing a PCI-X split completion transaction by aligning cachelines with an allowable disconnect boundary's ending address

Inactive | US6901467B2 | Unauthorized memory use protection | Input/output processes for data processing | Computer science | Operating system

A method for processing a PCI-X transaction in a bridge is disclosed, wherein data is retrieved from a memory device, stored in the bridge, and then delivered to a requesting device. The method may comprise the acts of allocating a buffer in the bridge for the PCI-X transaction; retrieving data comprising a plurality of cachelines from a memory device; storing the cachelines in the buffer, where they are tracked and marked for delivery as they are received; and delivering the cachelines to the requesting device in address order, the cachelines being transmitted when one of the cachelines in the buffer aligns to an ending address of an allowable disconnect boundary (ADB) and the remaining cachelines are in address order.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1
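
The delivery rule in the abstract above can be approximated in a few lines: cachelines may arrive out of order, and the bridge releases data only up to the furthest allowable disconnect boundary that is covered by a contiguous run of received cachelines. The sketch assumes PCI-X's 128-byte ADB alignment and a hypothetical 64-byte cacheline. The function name and the set-based buffer model are illustrative only.

```python
ADB = 128  # PCI-X allowable disconnect boundaries are 128-byte aligned

def deliverable_end(next_addr: int, received: set, line_size: int = 64) -> int:
    """Return the address up to which data can be delivered now.

    next_addr -- first byte not yet delivered to the requester
    received  -- start addresses of cachelines already in the buffer
    Returns next_addr itself when nothing can be delivered yet.
    """
    end = next_addr
    best = next_addr
    # Walk the contiguous run of received cachelines in address order.
    while end in received:
        end += line_size
        if end % ADB == 0:
            best = end  # this run ends exactly on an ADB
    return best
```

With cachelines at 0, 64, and 128 buffered, delivery can proceed up to address 128, and the line at 128 waits until the line at 192 arrives. If only 64 and 128 are buffered, nothing is delivered because the line at address 0 is still missing.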

© 2025 PatSnap. All rights reserved.