34 results about "General purpose graphic processing unit" patented technology

GPGPU (general purpose graphics processing unit): A general-purpose GPU (GPGPU) is a graphics processing unit (GPU) that performs non-specialized calculations that would typically be conducted by the CPU (central processing unit). Ordinarily, the GPU is dedicated to graphics rendering.

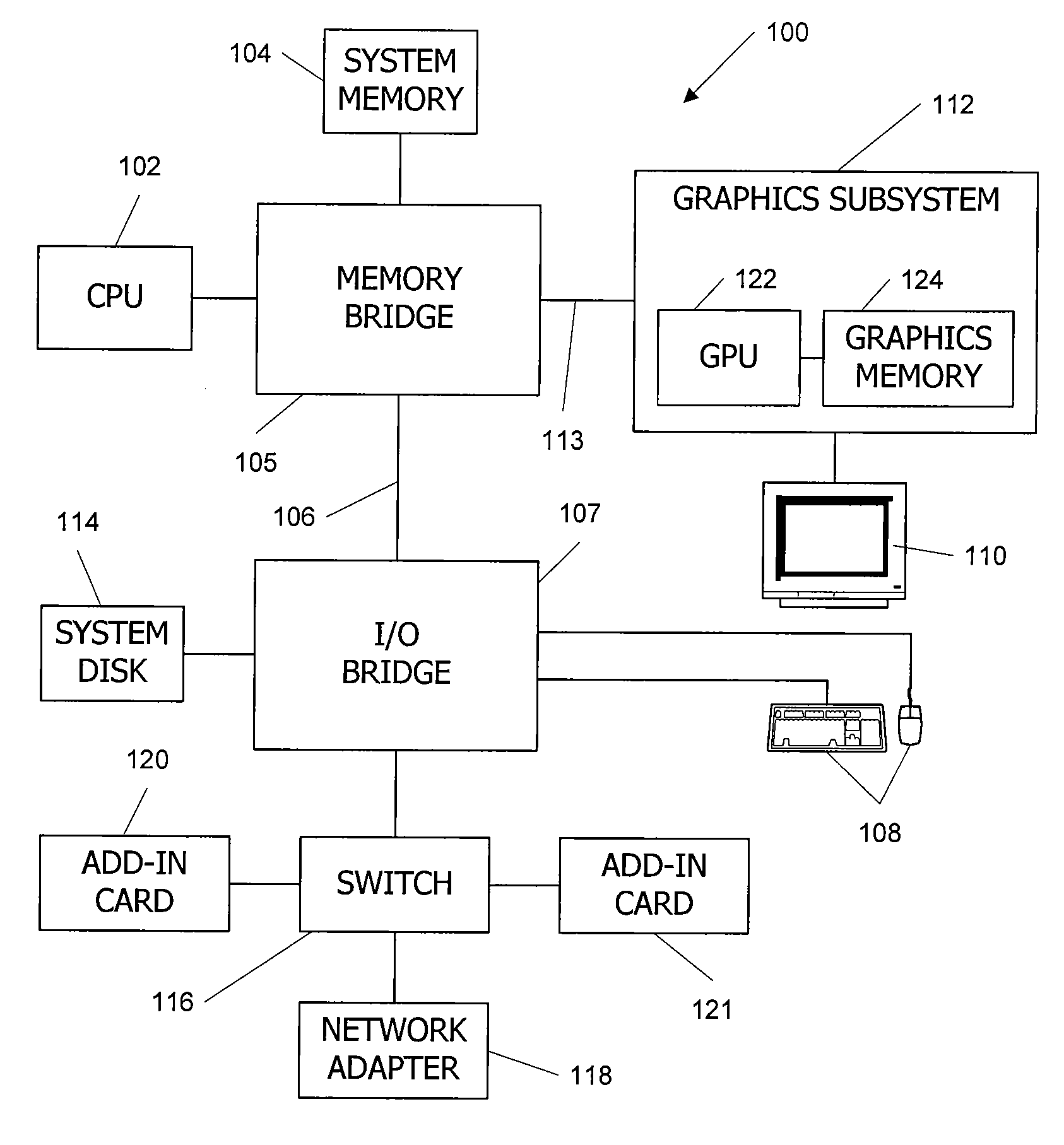

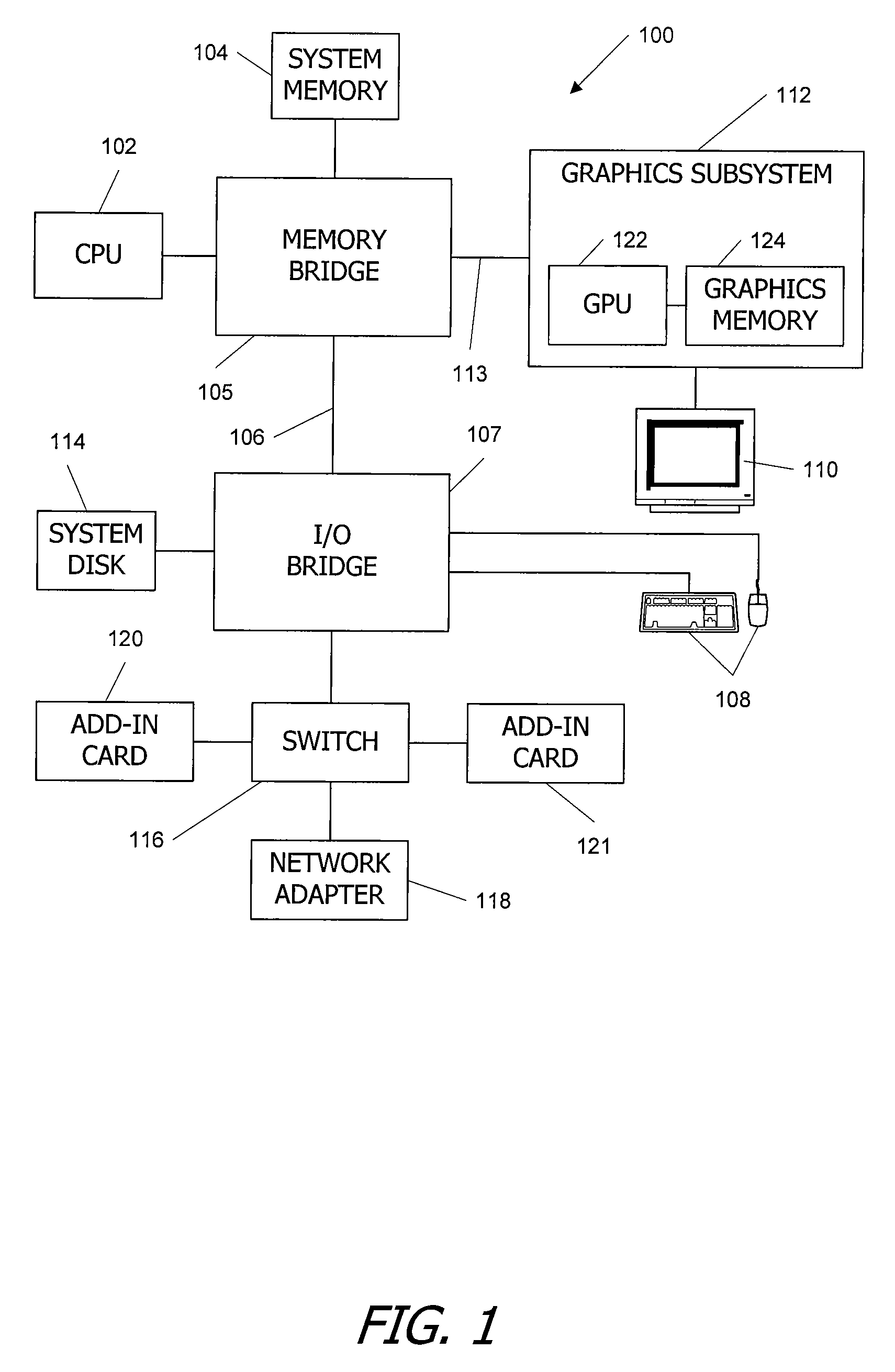

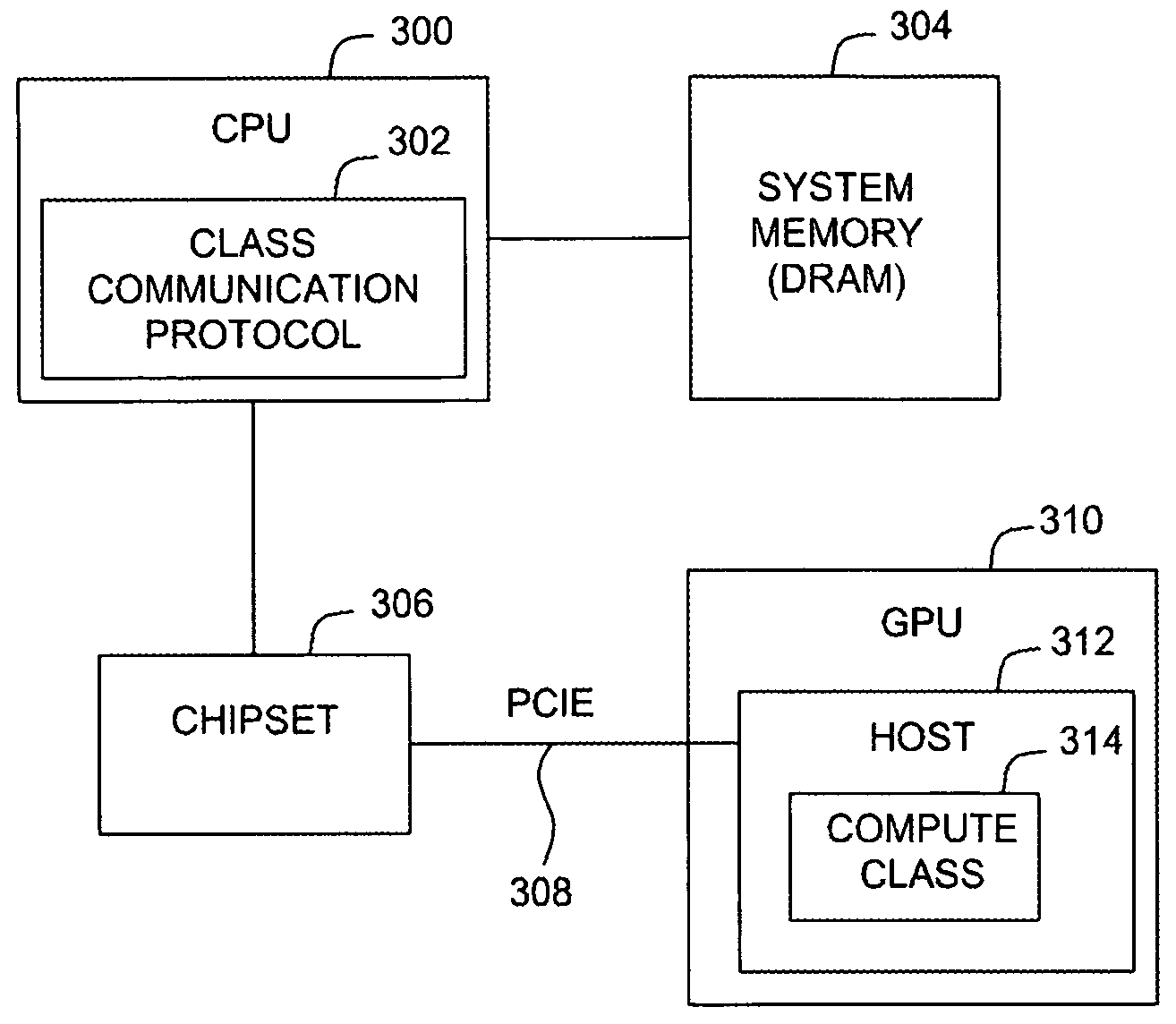

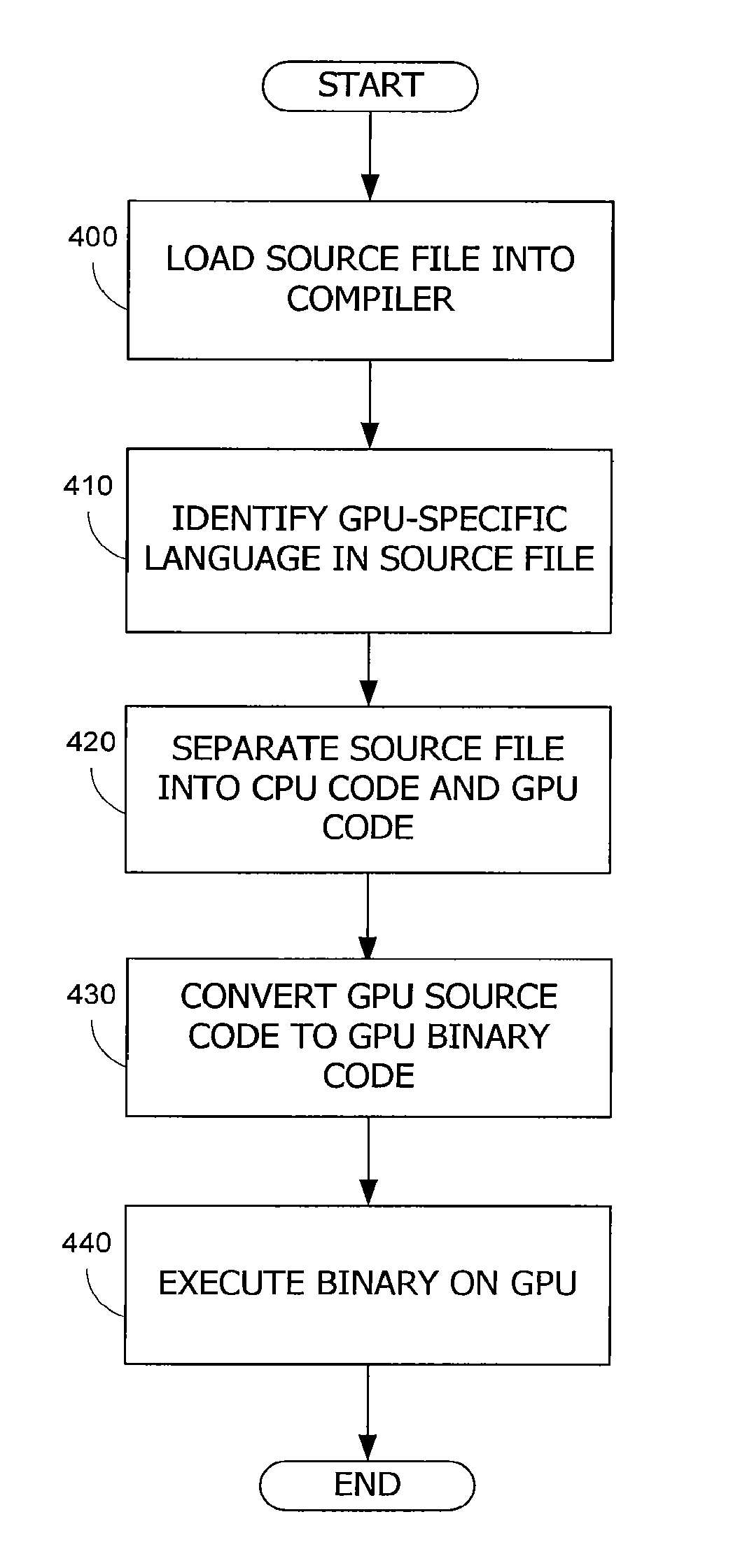

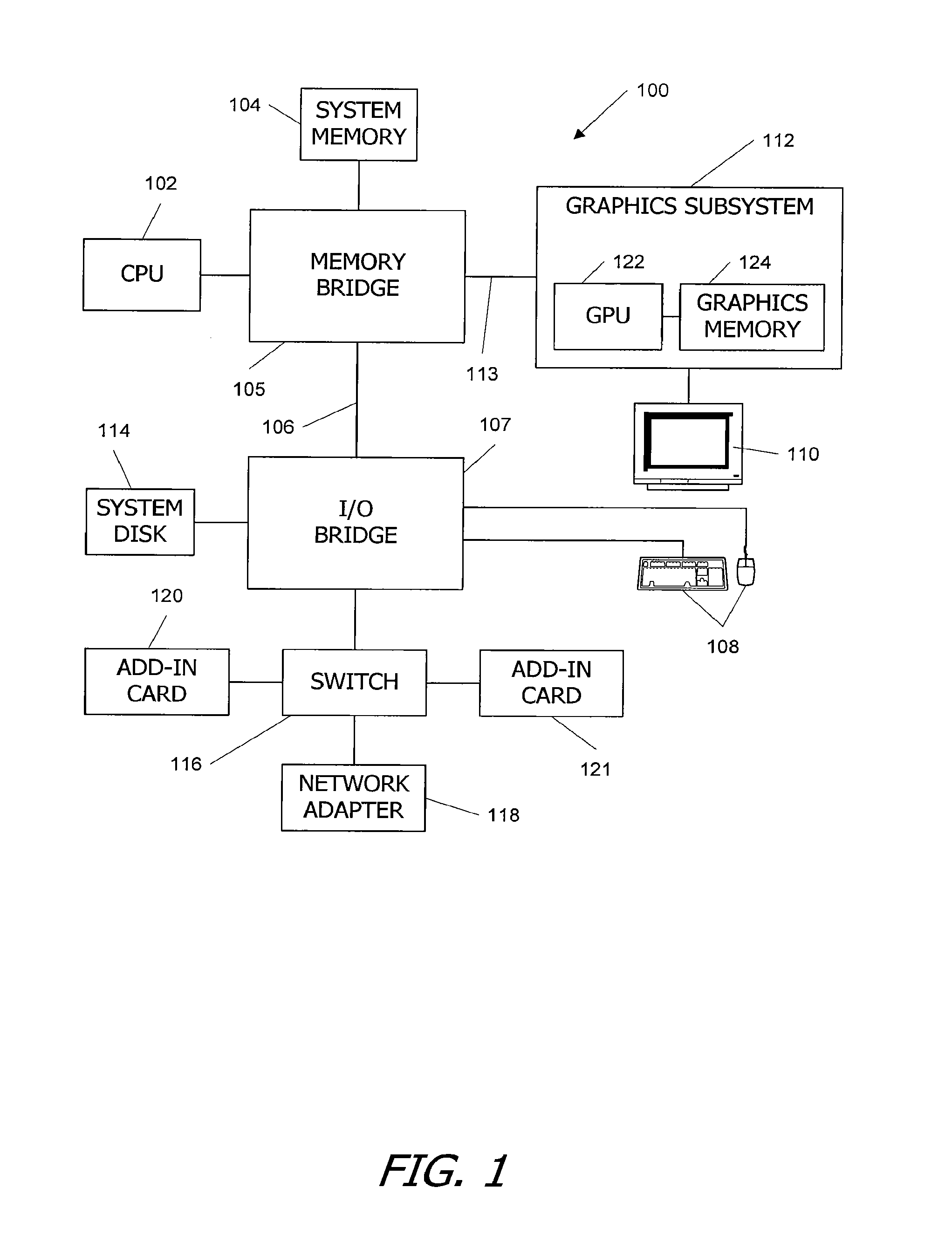

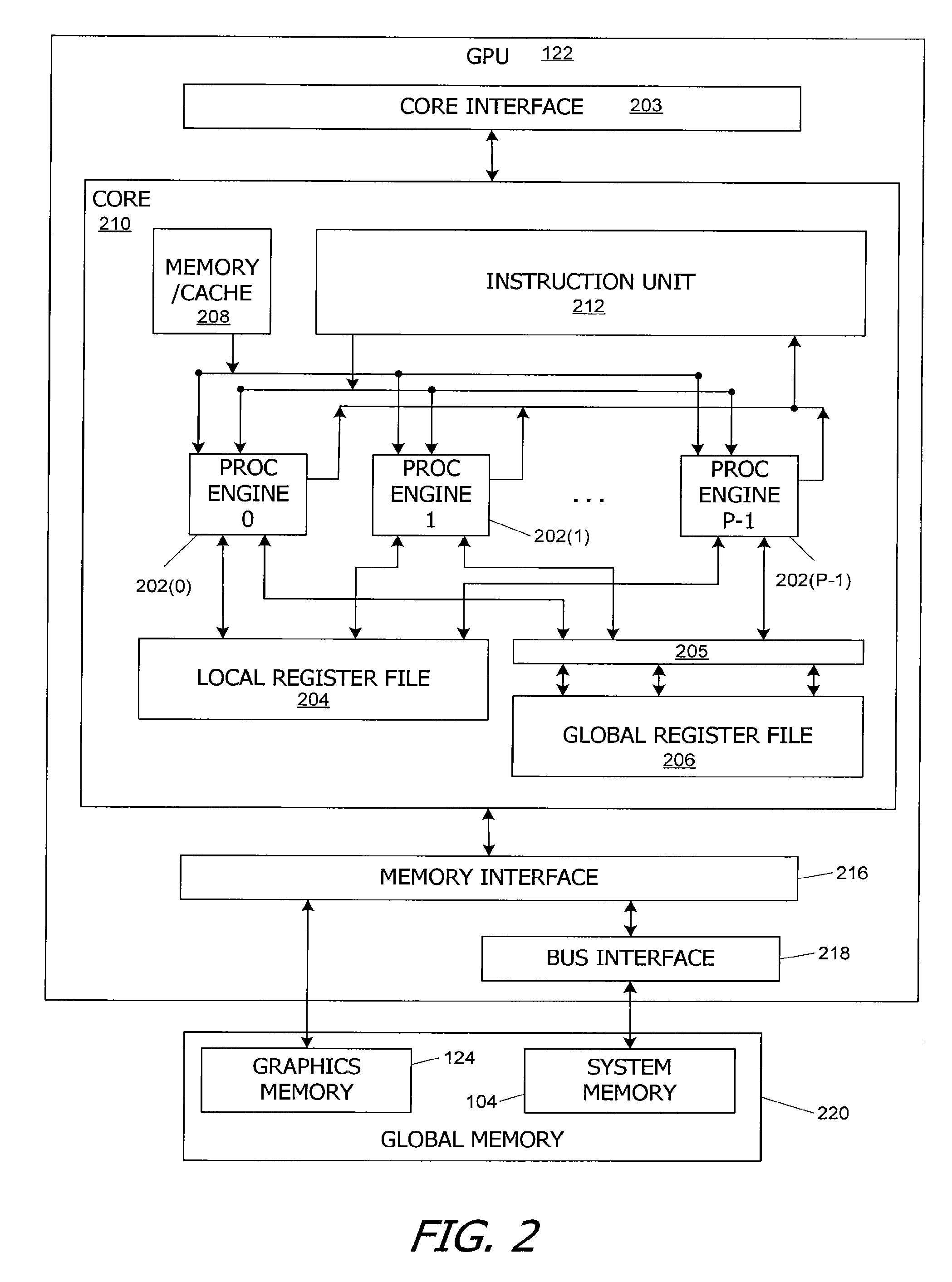

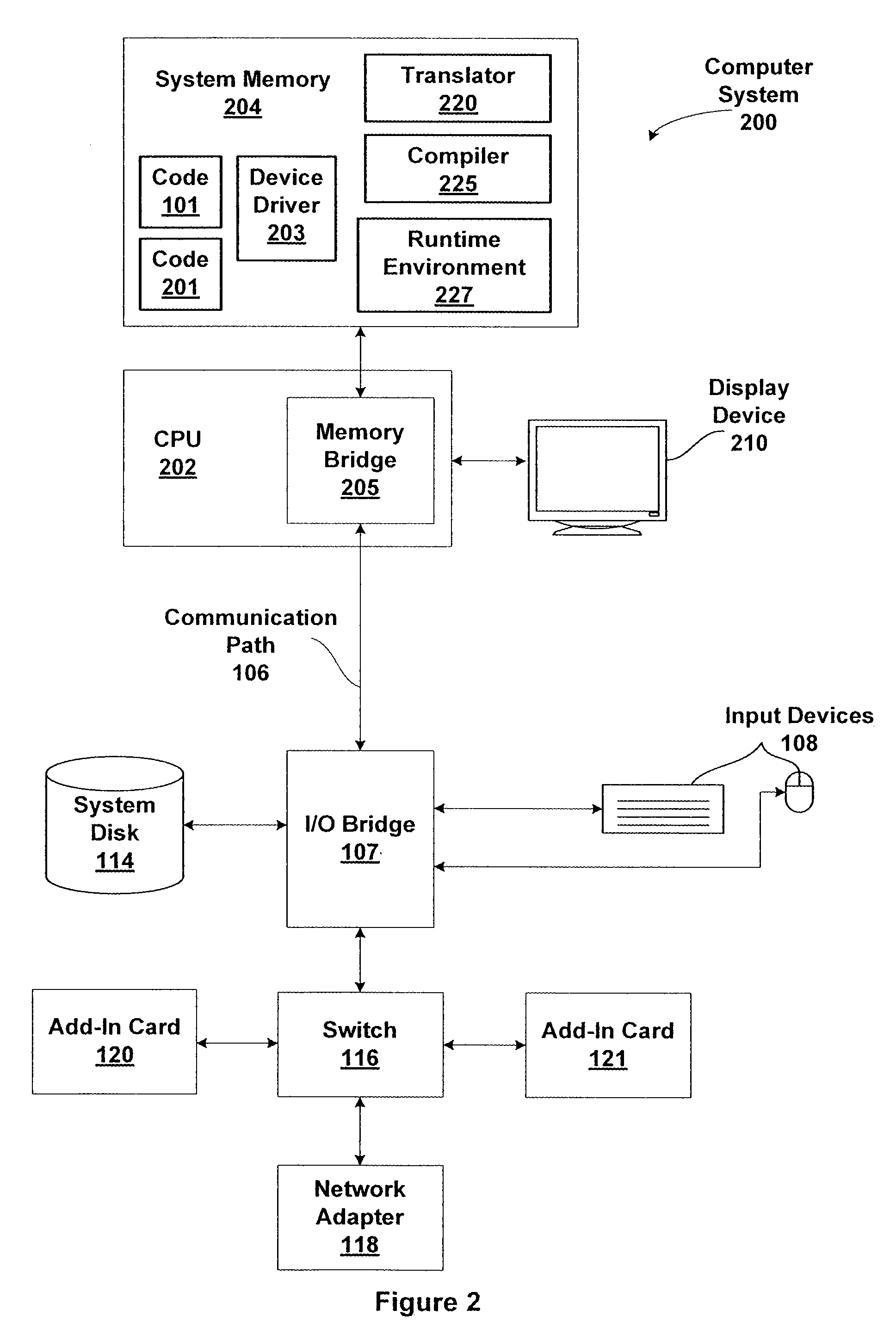

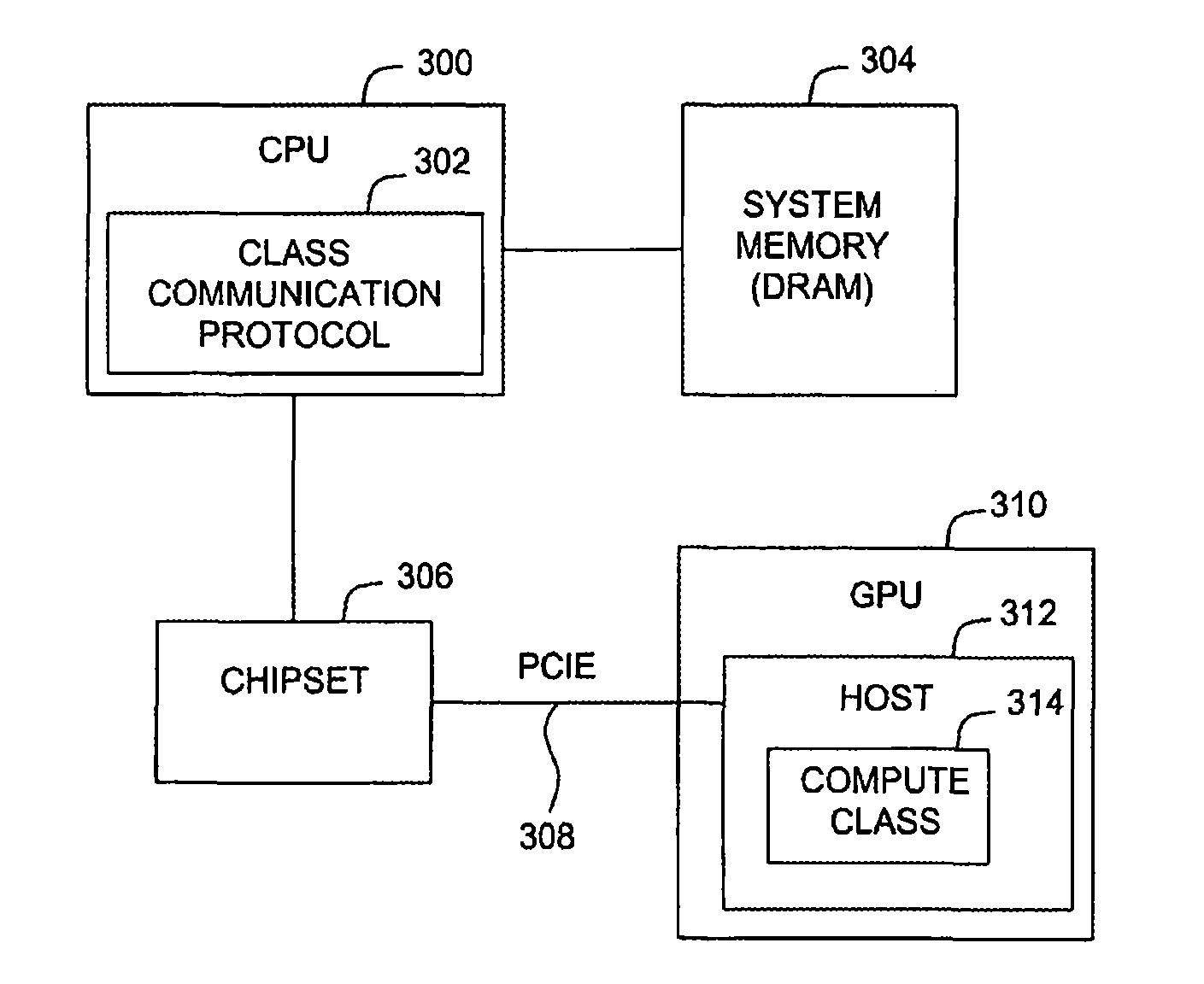

C/C++ language extensions for general-purpose graphics processing unit

A general-purpose programming environment allows users to program a GPU as a general-purpose computation engine using familiar C / C++ programming constructs. Users may use declaration specifiers to identify which portions of a program are to be compiled for a CPU or a GPU. Specifically, functions, objects and variables may be specified for GPU binary compilation using declaration specifiers. A compiler separates the GPU binary code and the CPU binary code in a source file using the declaration specifiers. The location of objects and variables in different memory locations in the system may be identified using the declaration specifiers. CTA threading information is also provided for the GPU to support parallel processing.

Owner:NVIDIA CORP
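
As a rough illustration of the separation step described in this abstract, the Python sketch below sorts the lines of a tiny source file into GPU and CPU parts by looking for declaration specifiers. The specifier names `__global__` and `__device__` are borrowed from CUDA as an assumption (the abstract does not name them), and `split_by_specifier` is a hypothetical helper. A real compiler works on a parsed program, not on text lines.

```python
import re

# Toy C-like source; the specifier spellings are assumed, not taken from the abstract.
SOURCE = """
__global__ void scale(float *v) { }
__device__ float helper(float x) { return x; }
int main() { return 0; }
"""

def split_by_specifier(source):
    """Return (gpu_lines, cpu_lines) based on a leading declaration specifier."""
    gpu, cpu = [], []
    for line in source.strip().splitlines():
        # A line starting with a GPU specifier is routed to the GPU part.
        if re.match(r"\s*__(global|device)__\b", line):
            gpu.append(line)
        else:
            cpu.append(line)
    return gpu, cpu

gpu_part, cpu_part = split_by_specifier(SOURCE)
print(len(gpu_part), "GPU declarations,", len(cpu_part), "CPU declarations")
```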

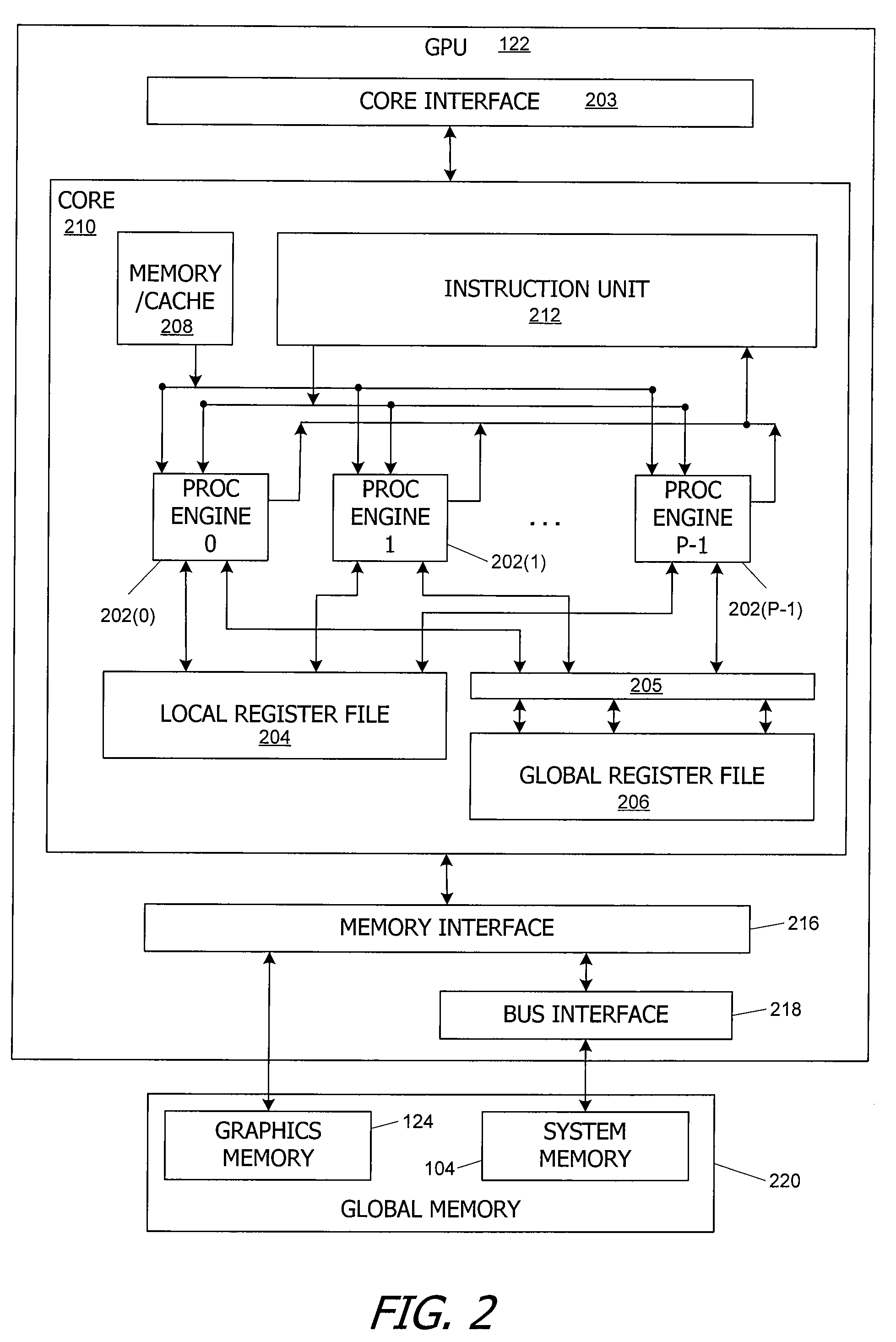

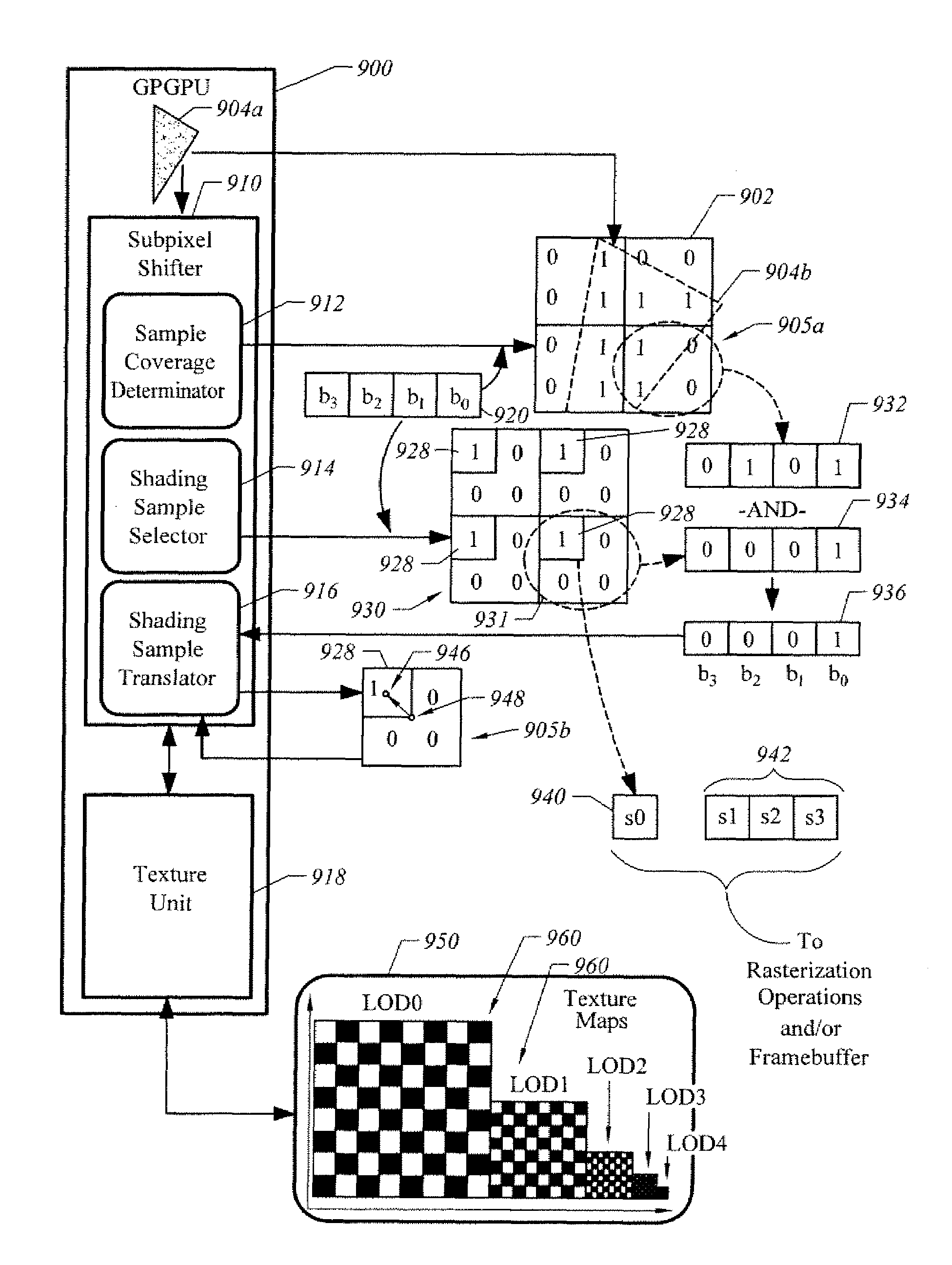

Graphical processing system, graphical pipeline and method for implementing subpixel shifting to anti-alias texture

Status: Active | Patent: US7456846B1 | Tags: Reduce aliasing, Reduce texture, Details involving antialiasing, Cathode-ray tube indicators, General purpose, Graphics

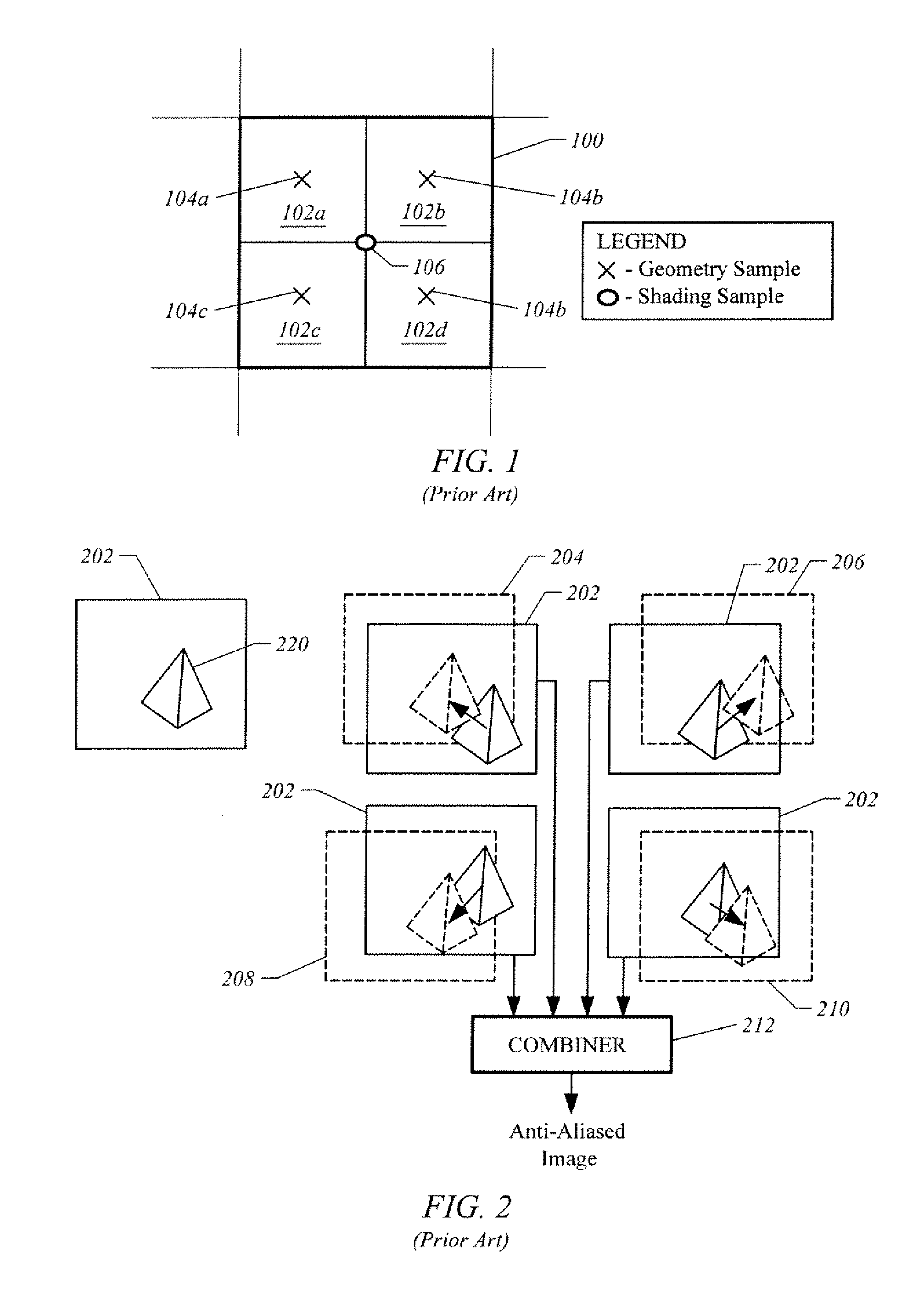

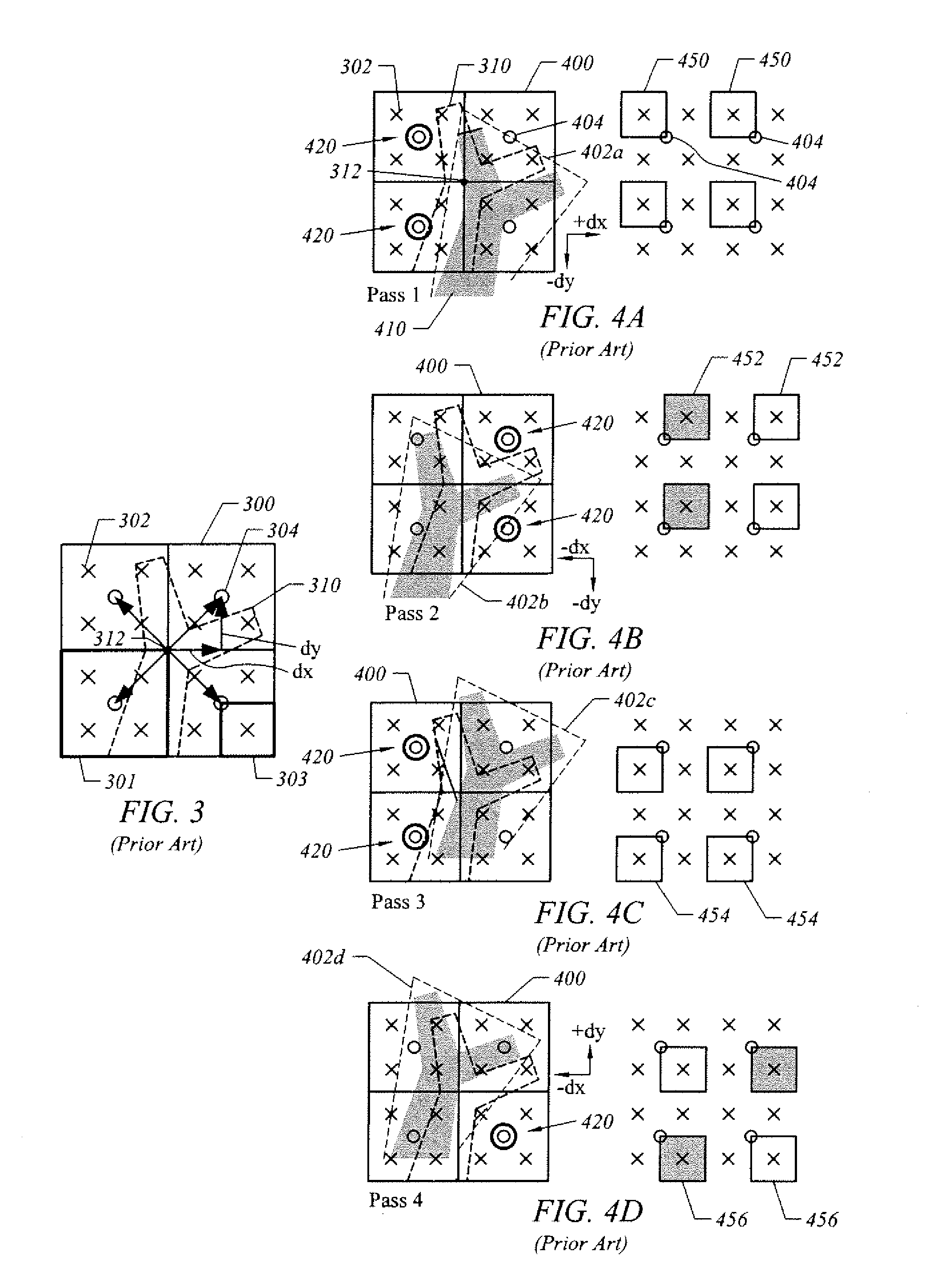

A system, apparatus, and method are disclosed for modifying positions of sample positions for selectably oversampling pixels to anti-alias non-geometric portions of computer-generated images, such as texture, at least in part, by shifting shading sample positions relative to a frame of reference. There is generally no relative motion between the geometries and the coverage sample positions. In one embodiment, an apparatus, such as a graphics pipeline and / or a general purpose graphics processing unit, anti-aliases geometries of a computer-generated object. The apparatus includes at least a texture unit and a pipeline front end unit to determine geometry coverage and a subpixel shifter to shift shading sample positions relative to the frame of reference. The apparatus can receive subpixel shifting masks to select subsets of shading sample positions. Each of the shading sample positions is shifted to a coverage sample position to reduce level of detail (“LOD”) artifacts.

Owner:NVIDIA CORP

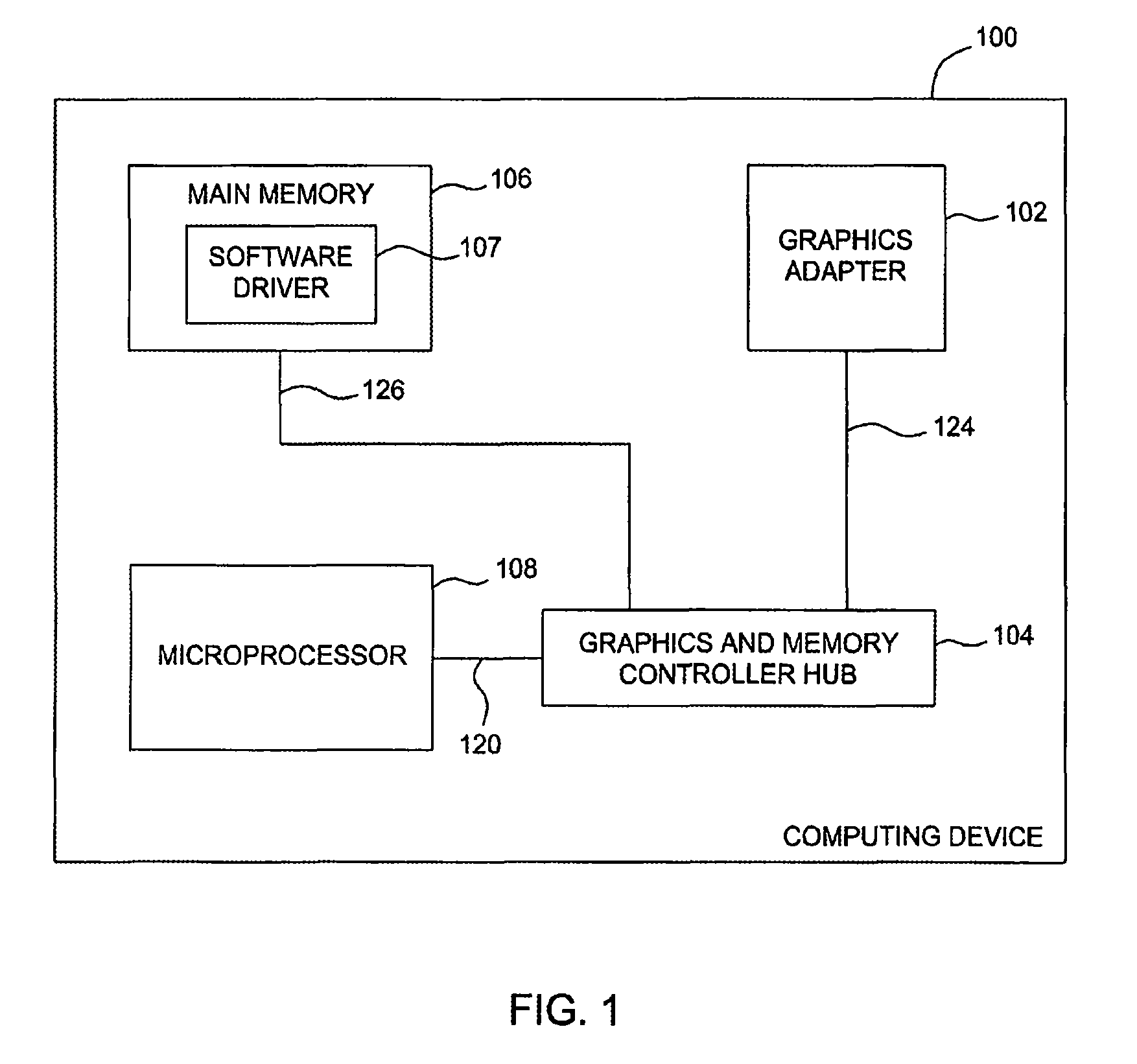

Cryptographic computations on general purpose graphics processing units

Status: Active | Patent: US7746350B1 | Tags: Release resources, Faster and efficient performance, Unauthorized memory use protection, Hardware monitoring, General purpose, General purpose graphic processing unit

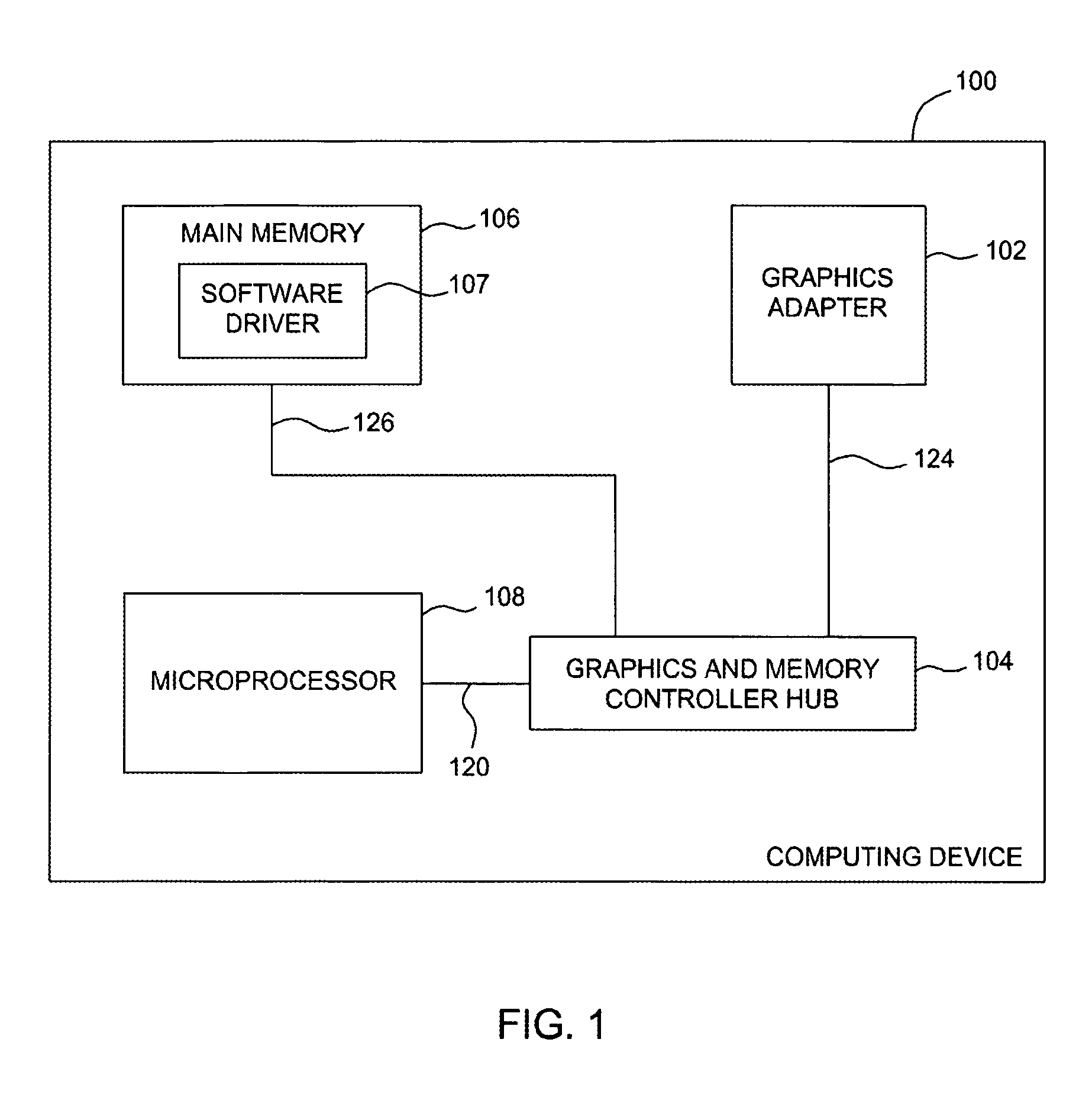

One embodiment of the invention sets forth a computing system for performing cryptographic computations. The computing system comprises a central processing unit, a graphics processing unit, and a driver. The central processing unit requests a cryptographic computation. In response, the driver downloads microcode to perform the cryptographic computation to the graphics processing unit, and the graphics processing unit executes the microcode. This offloads cryptographic computations from the CPU. As a result, cryptographic computations are performed faster and more efficiently on the GPU, freeing resources on the CPU for other tasks.

Owner:NVIDIA CORP

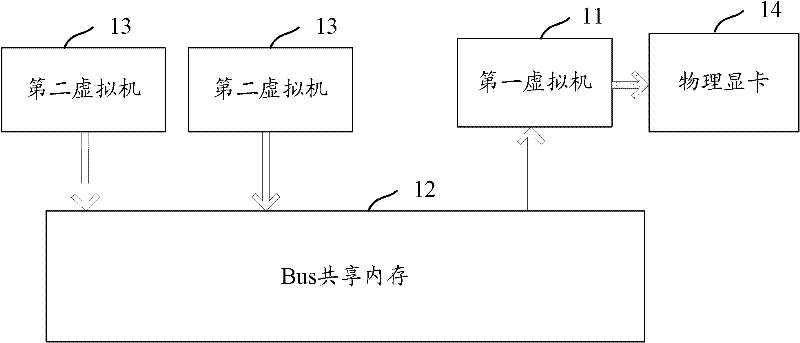

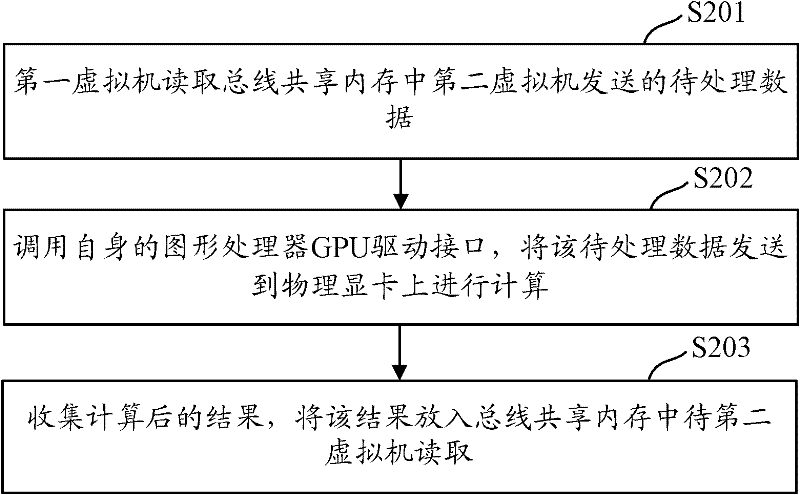

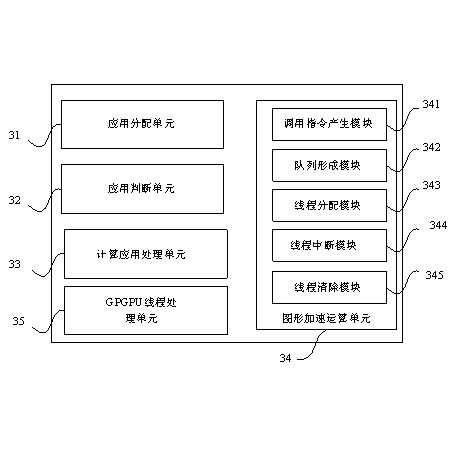

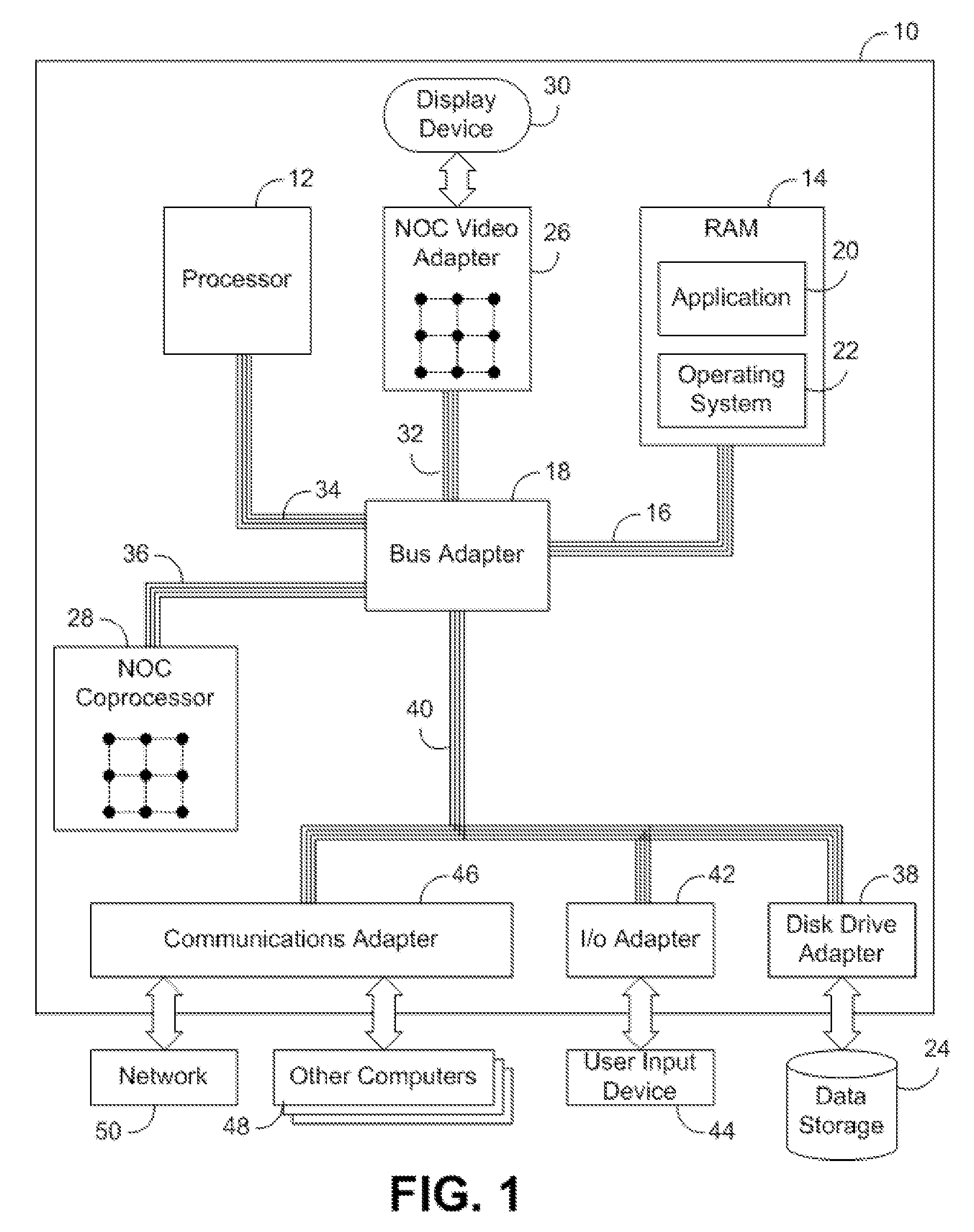

Implementation method, system and device for virtualization of universal graphic processor

Status: Active | Patent: CN102541618A | Tags: Implement virtualization, Processor architectures/configuration, Software simulation/interpretation/emulation, General purpose, Graphics

The invention discloses an implementation method, system and device for the virtualization of a GPGPU (General Purpose Graphics Processing Unit), addressing the problem that GPGPU virtualization cannot be achieved in the prior art. The implementation method comprises the following steps: a first virtual machine reads data to be processed from a shared memory of a bus, wherein the data to be processed is written by a second virtual machine; and the first virtual machine invokes a CPU (Central Processing Unit) driver interface, sends the data to be processed to a physical graphics card for calculation, collects the calculated results, and writes the results to the shared memory of the bus to be read by the second virtual machine. Because the first virtual machine can access the physical graphics card and can exchange information with the second virtual machine, the virtualization of the GPGPU is realized.

Owner:CHINA MOBILE COMM GRP CO LTD

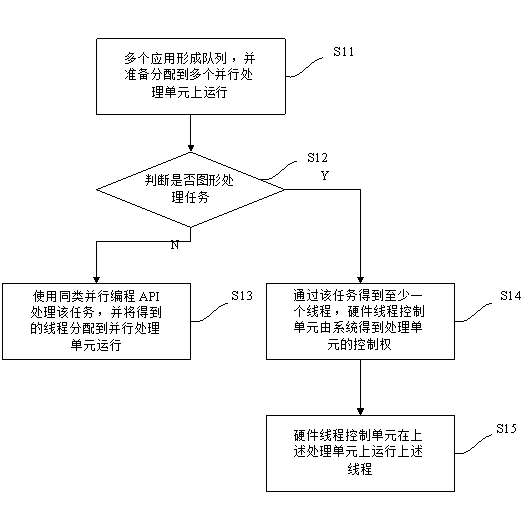

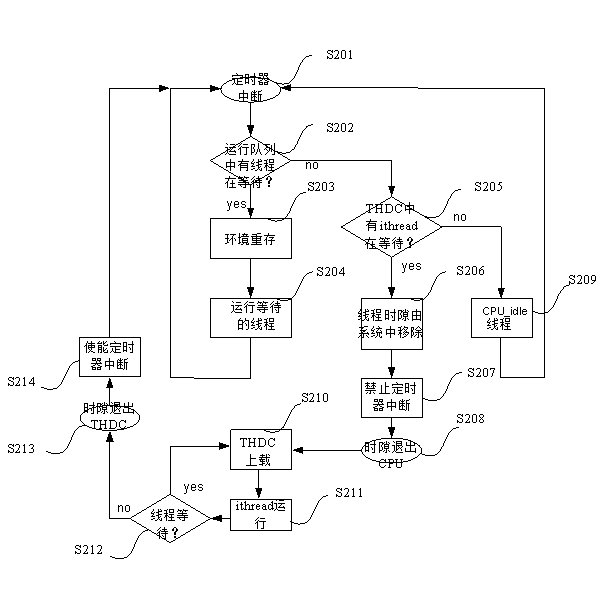

Method and device for achieving multi-application parallel processing on single processors

Status: Active | Patent: CN103064657A | Tags: Resource allocation, Concurrent instruction execution, Hardware thread, Computer architecture

The invention relates to a method for achieving multi-application parallel processing on a single processor such as a graphics processing unit (GPU) or a general purpose graphics processing unit (GPGPU). The method comprises the steps of: placing a plurality of applications in queues, ready to be distributed to a plurality of parallel processing units to run in parallel; and judging, for each application, whether it is an image rendering application. If it is, the application generates at least one shading thread for a hardware thread control unit, and the GPU driver starts rendering on the processing units controlled by a hardware manager; otherwise, a homogeneous parallel programming application programming interface (API) processes at least one thread generated by the application, and the processed thread is configured to run on the processing units serving as symmetrical multiprocessing (SMP) cores. The invention further relates to a device for achieving the method. The method and device have the advantage that two or more different kinds of applications can be processed simultaneously on one processor.

Owner:SHENZHEN ZHONGWEIDIAN TECH
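
The queue-then-classify flow in this abstract can be sketched in a few lines of Python. The `App` record, its `is_rendering` flag, and the `dispatch` function are hypothetical names chosen for illustration; the abstract does not say how an application is recognized as a rendering application, so the flag is simply supplied by the caller here.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class App:
    name: str
    is_rendering: bool  # assumed to be known up front; the patent "judges" this itself
    threads: int

def dispatch(apps):
    """Route queued applications to the rendering path or the compute path."""
    pending = deque(apps)  # applications wait in a queue before distribution
    render_path, compute_path = [], []
    while pending:
        app = pending.popleft()
        if app.is_rendering:
            # Rendering apps: threads go to the hardware thread control unit.
            render_path.append((app.name, app.threads))
        else:
            # Other apps: threads go through a parallel programming API to SMP-style cores.
            compute_path.append((app.name, app.threads))
    return render_path, compute_path

render, compute = dispatch([
    App("game", True, 64),
    App("fft", False, 128),
    App("ui", True, 16),
])
print(render)
print(compute)
```

Both lists describe work that would share one processor at the same time, which is the advantage the abstract claims.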

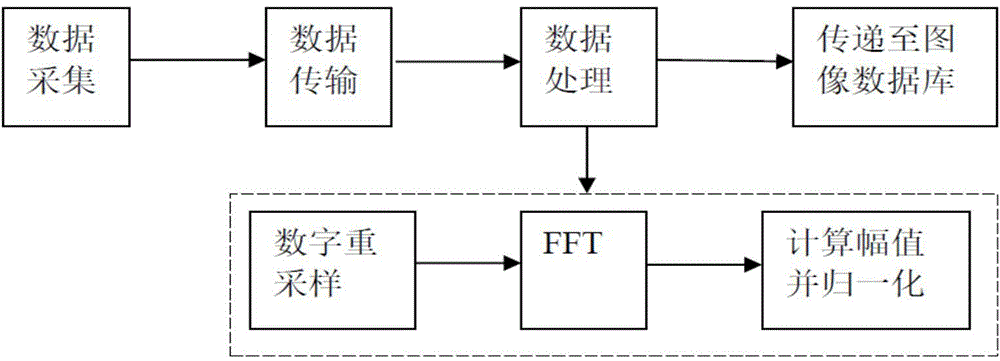

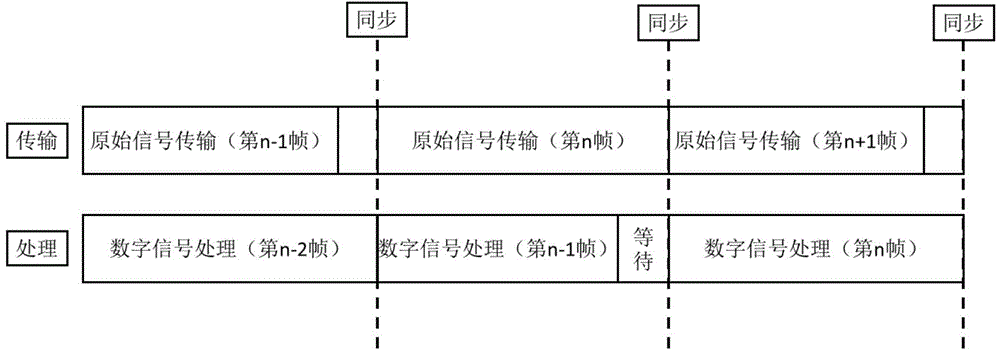

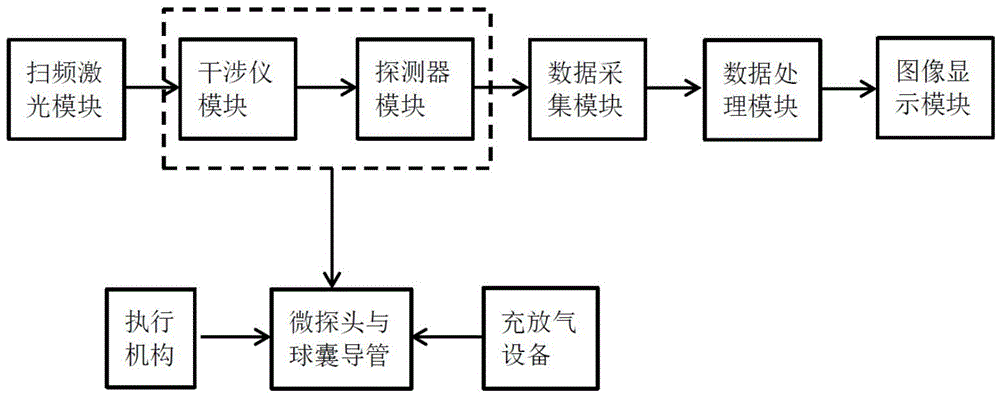

Method and system for processing OCT (Optical Coherence Tomography) signal by using general purpose graphic processing unit

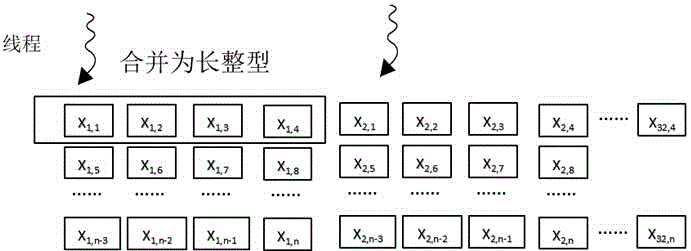

Status: Inactive | Patent: CN104794740A | Tags: Evenly distributed, Increased maximum imaging depth, Balloon catheter, 2D-image generation, Digital signal processing, Original data

The invention provides a method for processing an OCT (Optical Coherence Tomography) signal in an OCT system by using a general purpose graphic processing unit (GPGPU). The method comprises the following four steps: (1) acquiring data; (2) transmitting the data; (3) processing the data; and (4) transmitting the data to a graphic display library. While data are being transmitted, the general purpose graphic processing unit processes in parallel the OCT original data that were transmitted to the device memory in the previous transfer, and the processed data are stored in the memory of the graphic display library. The method improves FD-OCT digital signal processing efficiency and enables real-time processing and display of images; it reduces the requirements and cost of digital signal processing equipment, improves software portability, and reduces software development cost; and it integrates seamlessly with current graphic display libraries, which improves the flexibility of software display.

Owner:MICRO TECH (NANJING) CO LTD

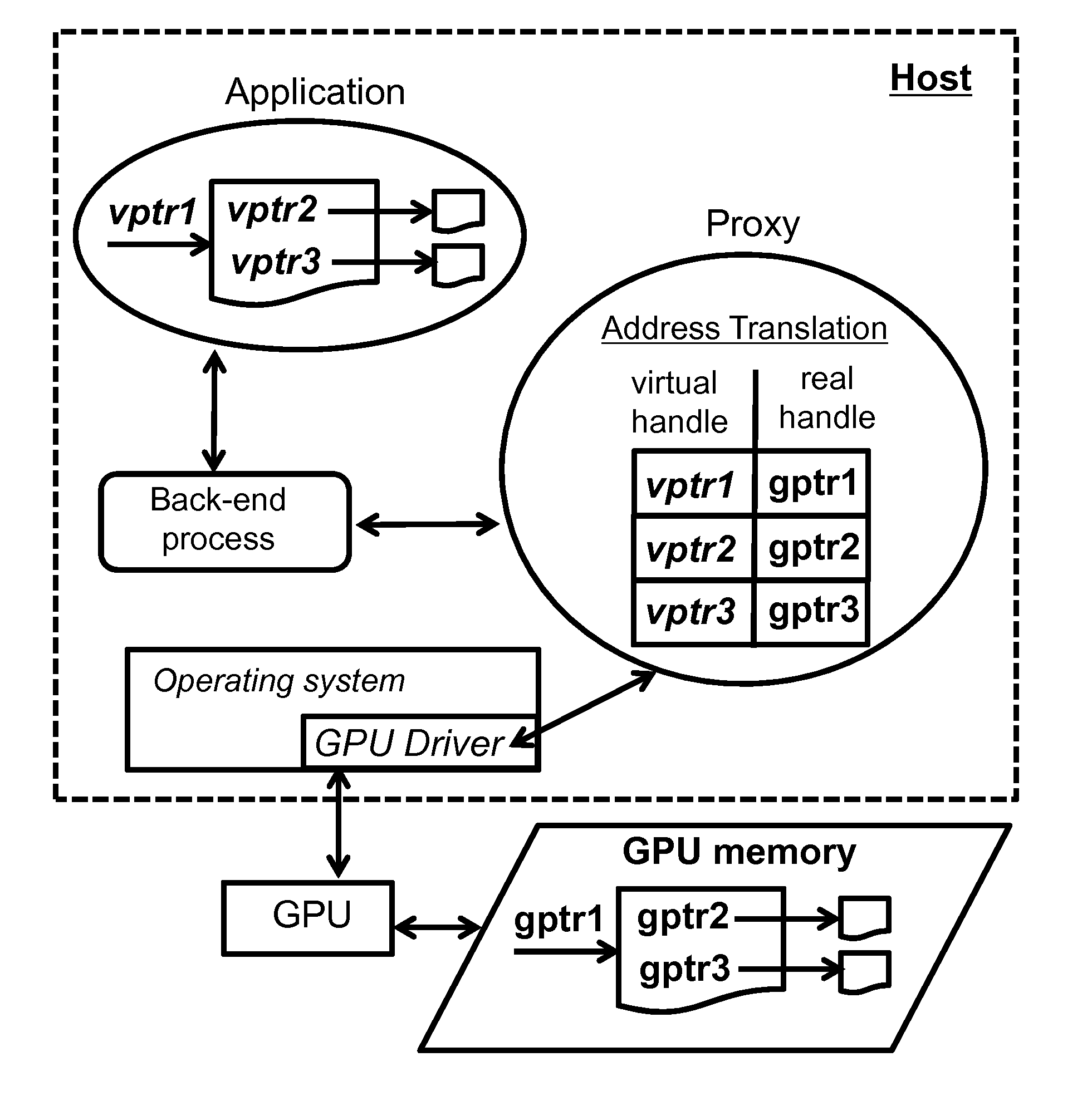

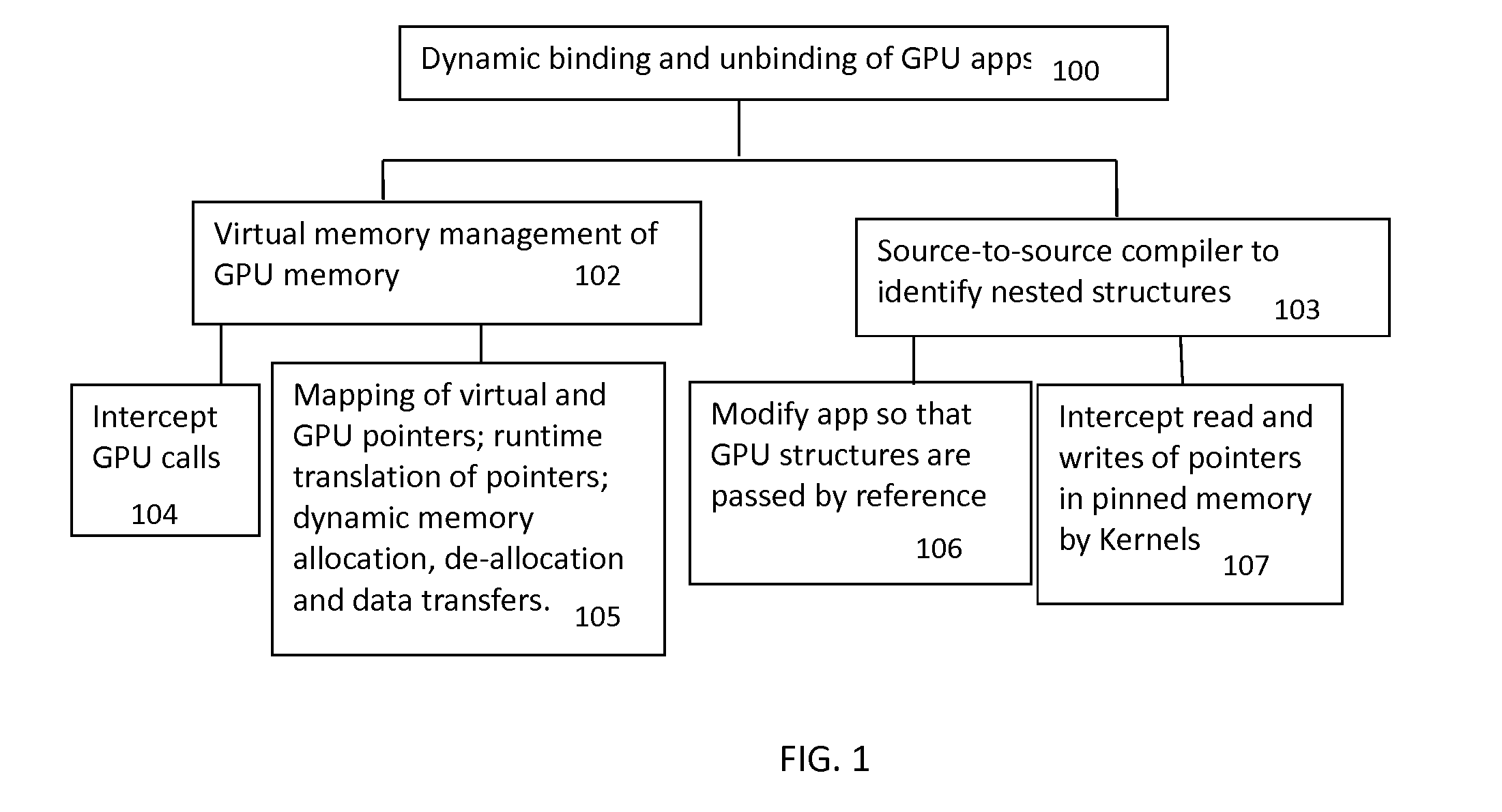

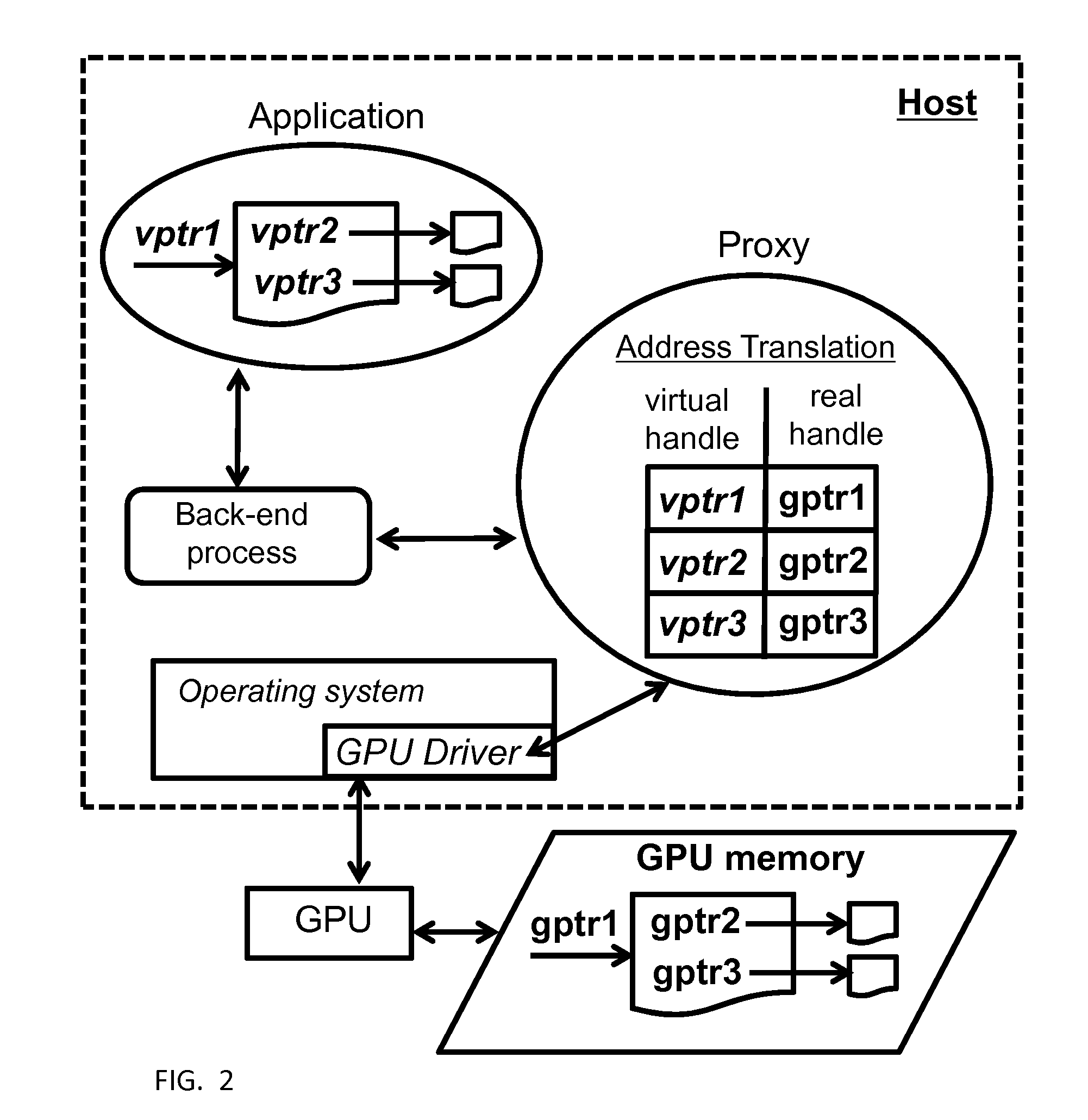

Method and system to dynamically bind and unbind applications on a general purpose graphics processing unit

Status: Inactive | Patent: US20120188263A1 | Tags: Memory addressing/allocation/relocation, Image memory management, Computational science, General purpose

A system for dynamically binding and unbinding graphics processing unit (GPU) applications includes a memory management component for tracking the GPU memory used by an application, and a source-to-source compiler for identifying nested structures allocated on the GPU, so that the virtual memory management can track these nested structures, and for identifying all instances where nested structures on the GPU are modified inside kernels.

Owner:NEC CORP

C/C++ language extensions for general-purpose graphics processing unit

A general-purpose programming environment allows users to program a GPU as a general-purpose computation engine using familiar C / C++ programming constructs. Users may use declaration specifiers to identify which portions of a program are to be compiled for a CPU or a GPU. Specifically, functions, objects and variables may be specified for GPU binary compilation using declaration specifiers. A compiler separates the GPU binary code and the CPU binary code in a source file using the declaration specifiers. The location of objects and variables in different memory locations in the system may be identified using the declaration specifiers. CTA threading information is also provided for the GPU to support parallel processing.

Owner:NVIDIA CORP

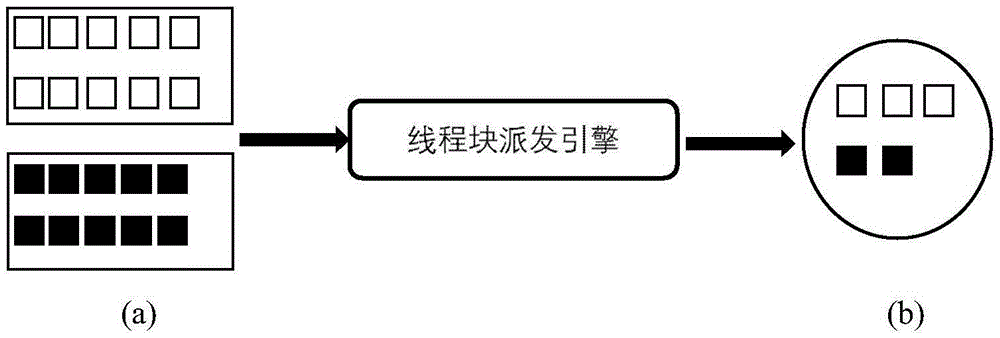

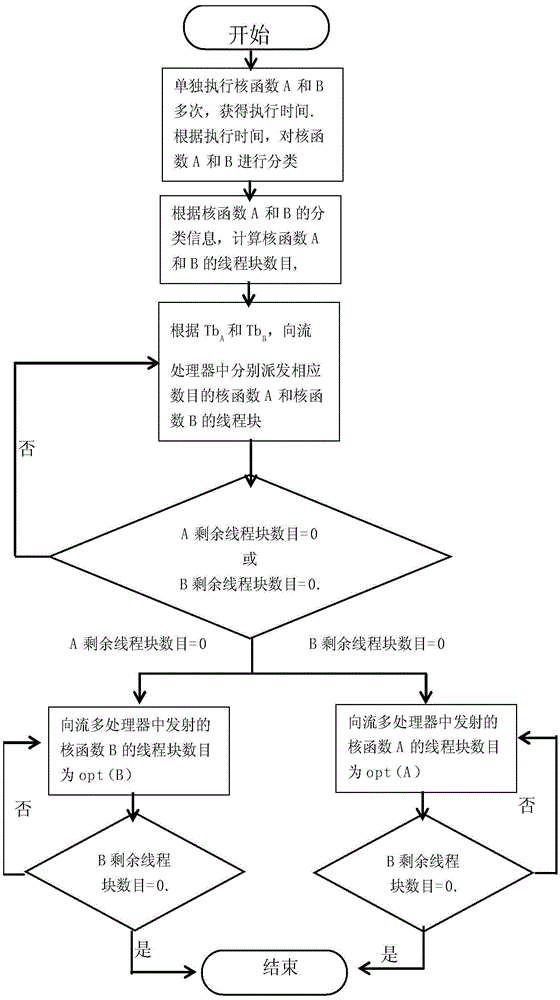

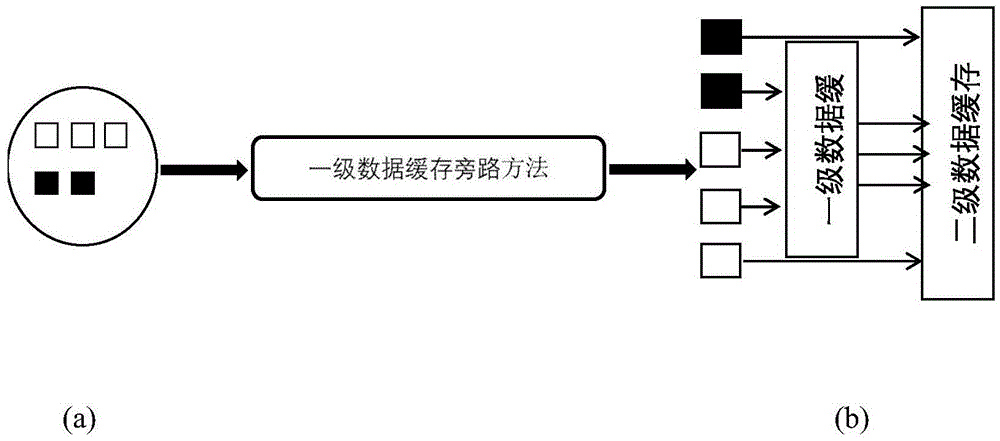

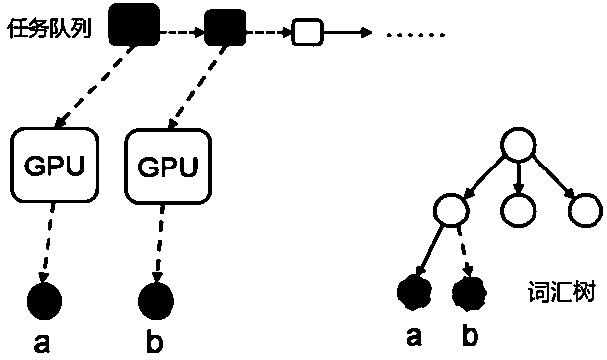

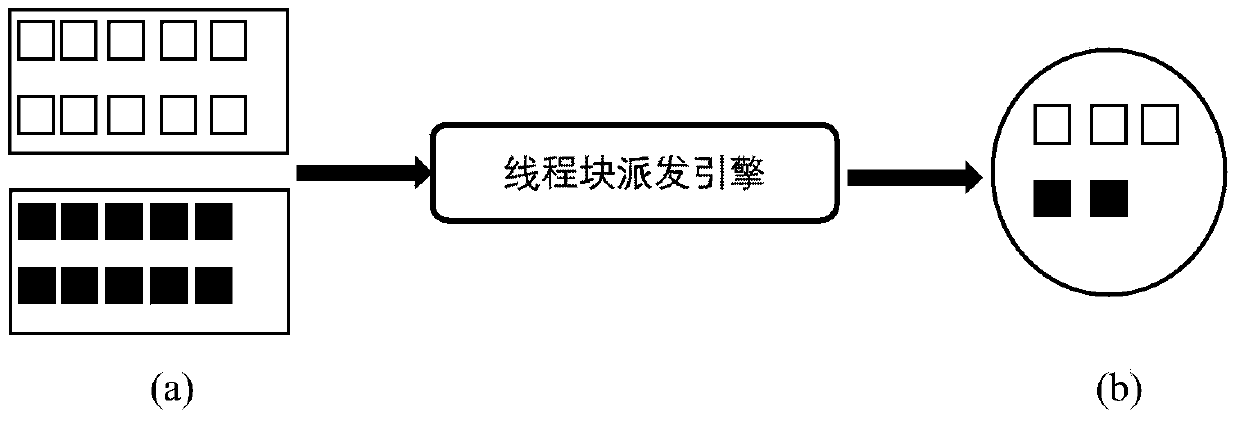

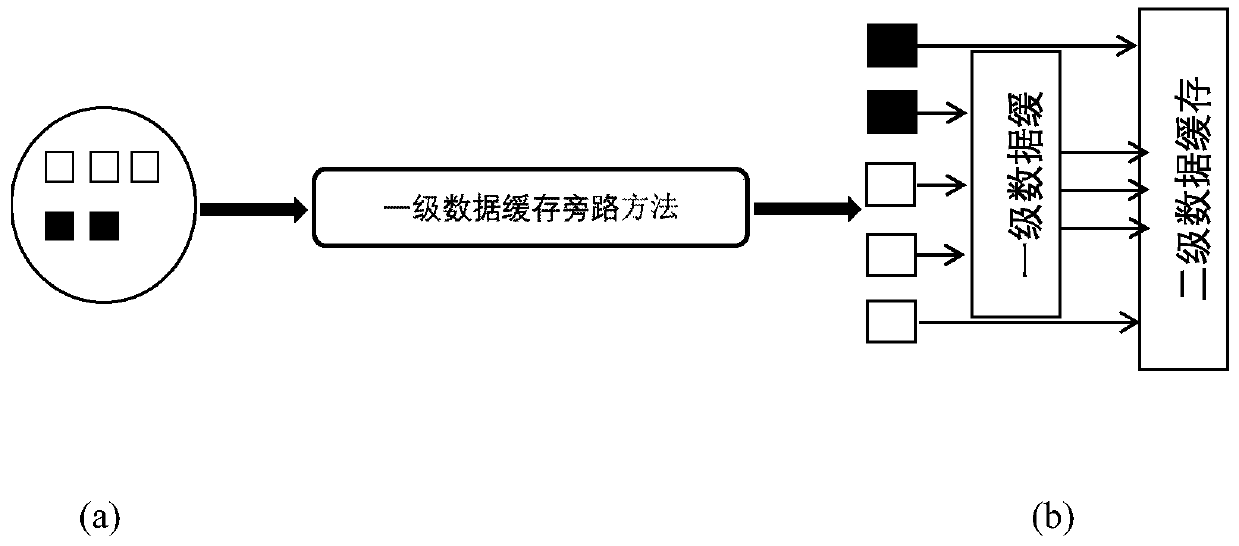

Method for distributing tasks by general purpose graphic processing unit in multi-task concurrent execution manner

Status: Active | Patent: CN105653243A | Tags: Relieve pressure, Overcome low resource utilization, Concurrent instruction execution, Image data processing details, Dynamic method, Business efficiency

The invention discloses a method for distributing tasks by a general purpose graphic processing unit in a multi-task concurrent execution manner. The method comprises the following steps: first, classifying kernel functions through a thread block distribution engine method; performing classified counting on the kernel functions to obtain the number of thread blocks of each kernel function to be distributed to a streaming multiprocessor; and distributing the corresponding numbers of thread blocks of different kernel functions to the same streaming multiprocessor, so as to improve the resource utilization rate of each streaming multiprocessor in the general purpose graphic processing unit and enhance system performance and the energy efficiency ratio. A level-1 data cache bypass method can further be used: a dynamic method determines which kernel function's thread blocks are to be bypassed, and bypassing is then carried out according to the number of bypassed thread blocks of each kernel function, so as to relieve pressure on the level-1 data cache and further improve performance.

Owner:PEKING UNIV
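
The abstract does not say how the per-kernel thread block counts are computed. Purely as an assumed stand-in, the Python sketch below brute-forces the mix of thread blocks from two kernels that best fills one streaming multiprocessor. The resource limits, the per-block demands, the kernel names, and the scoring rule (sum of register and shared-memory utilization) are all hypothetical.

```python
from itertools import product

# Hypothetical per-SM capacities and per-thread-block demands.
SM_LIMITS = {"regs": 65536, "smem": 49152, "blocks": 16}
KERNELS = {
    "compute_k": {"regs": 8192, "smem": 0},      # register-hungry kernel
    "memory_k":  {"regs": 1024, "smem": 12288},  # shared-memory-hungry kernel
}

def best_mix(kernels, limits):
    """Return the per-kernel block counts that maximize utilization of one SM."""
    names = list(kernels)
    best, best_score = None, -1.0
    for counts in product(range(limits["blocks"] + 1), repeat=len(names)):
        if sum(counts) > limits["blocks"]:
            continue
        regs = sum(c * kernels[n]["regs"] for c, n in zip(counts, names))
        smem = sum(c * kernels[n]["smem"] for c, n in zip(counts, names))
        if regs > limits["regs"] or smem > limits["smem"]:
            continue
        # Assumed objective: fill both register file and shared memory.
        score = regs / limits["regs"] + smem / limits["smem"]
        if score > best_score:
            best, best_score = dict(zip(names, counts)), score
    return best

mix = best_mix(KERNELS, SM_LIMITS)
print(mix)  # {'compute_k': 7, 'memory_k': 4}
```

Running either kernel alone leaves one resource idle in this toy setup, whereas the mixed allocation uses most of both, which is the kind of utilization gain the abstract describes.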

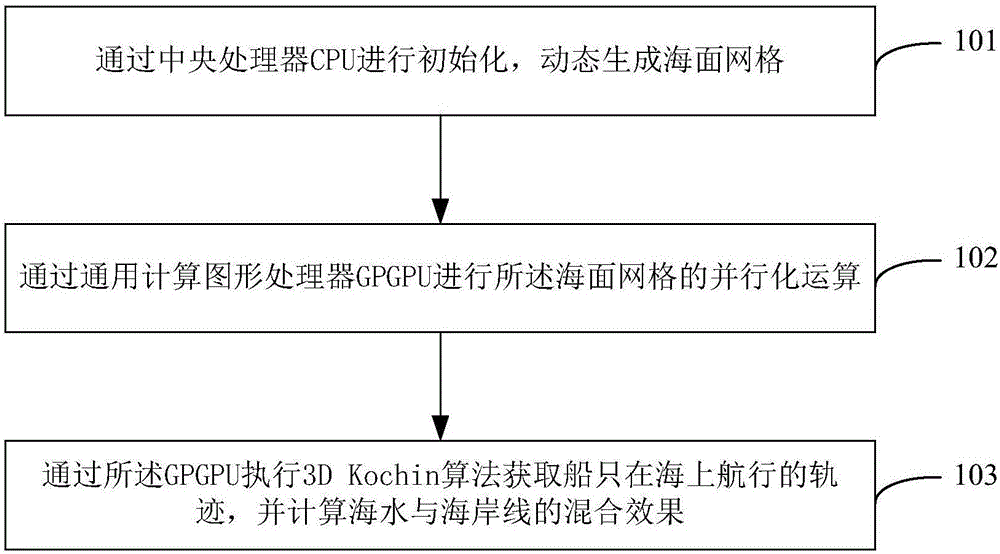

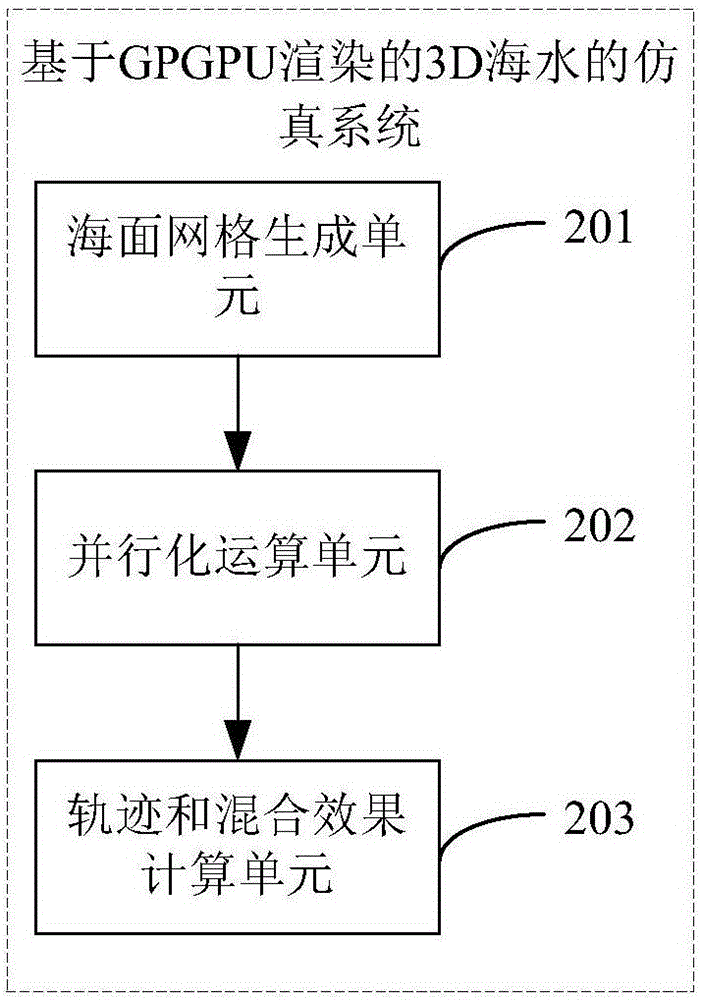

GPGPU-rendering-based simulation method and simulation system for 3D sea water

The application provides a general-purpose-graphics-processing-unit (GPGPU)-rendering-based simulation method and simulation system for 3D sea water. The simulation method comprises: a CPU is used for initialization and an ocean-surface grid is generated dynamically; parallel computing is carried out on the ocean-surface grid by using the GPGPU; and the GPGPU executes a 3D Kochin algorithm to obtain a marine navigation track of a ship and calculates a mixing effect of sea water and a coastline. According to the method provided by the application, all computing and rendering are arranged in the GPGPU and sea water module computing and rendering form an integrated flow in the GPGPU, thereby reducing communication between an internal memory and a video memory and thus realizing the GPGPU performances fully and improving the simulation efficiency. On the basis of the method, rendering, reflecting, and refraction of sea water and waves as well as good coordination of the visual effect reality sense of the ship track and simulation real-time performance are realized.

Owner:姜雪伟

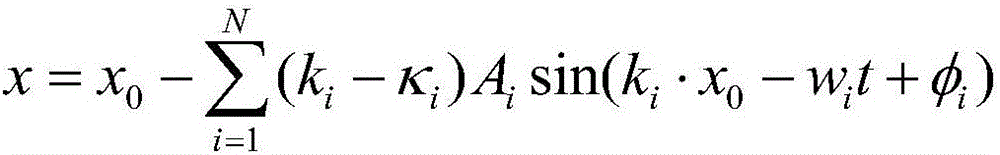

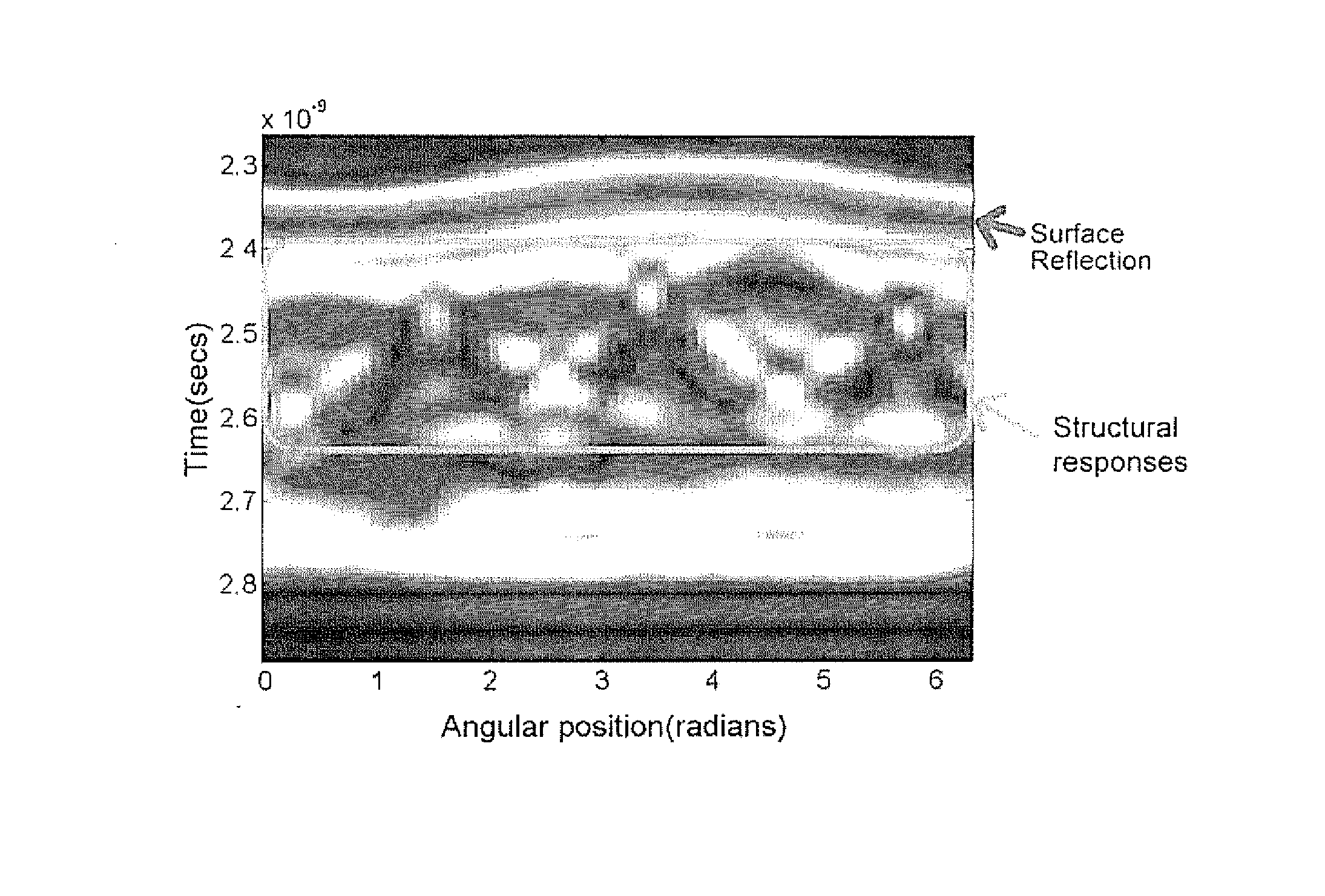

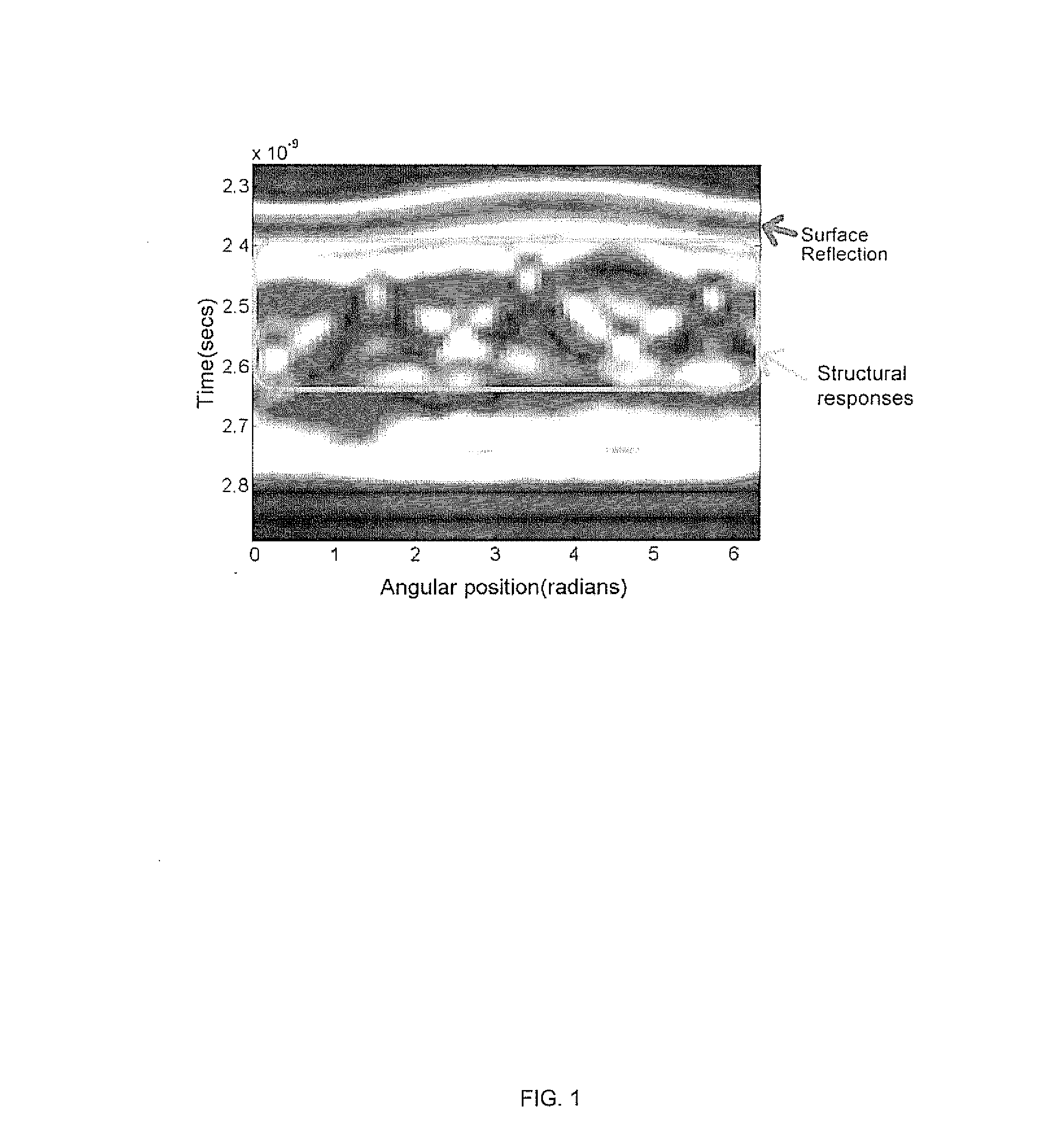

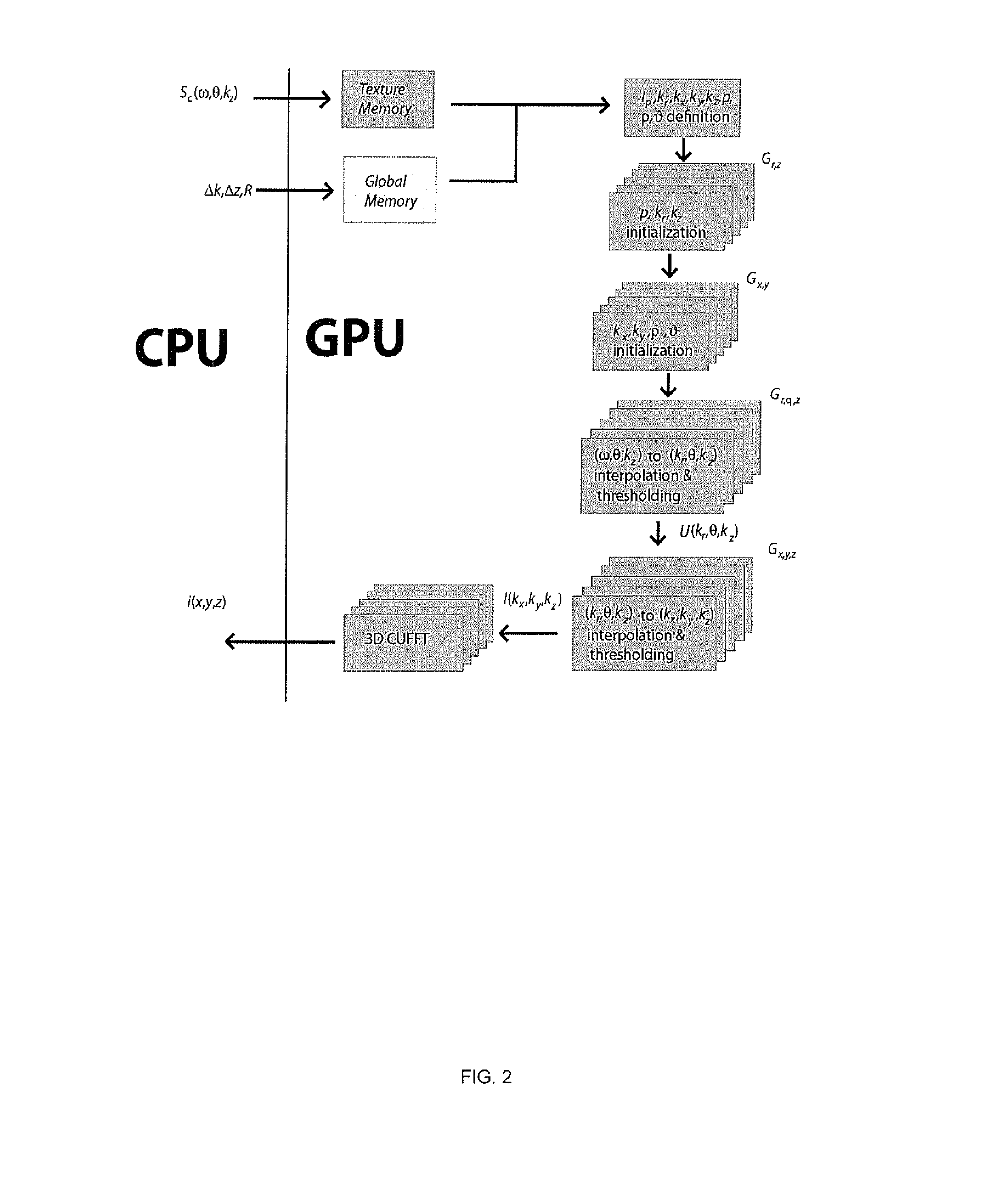

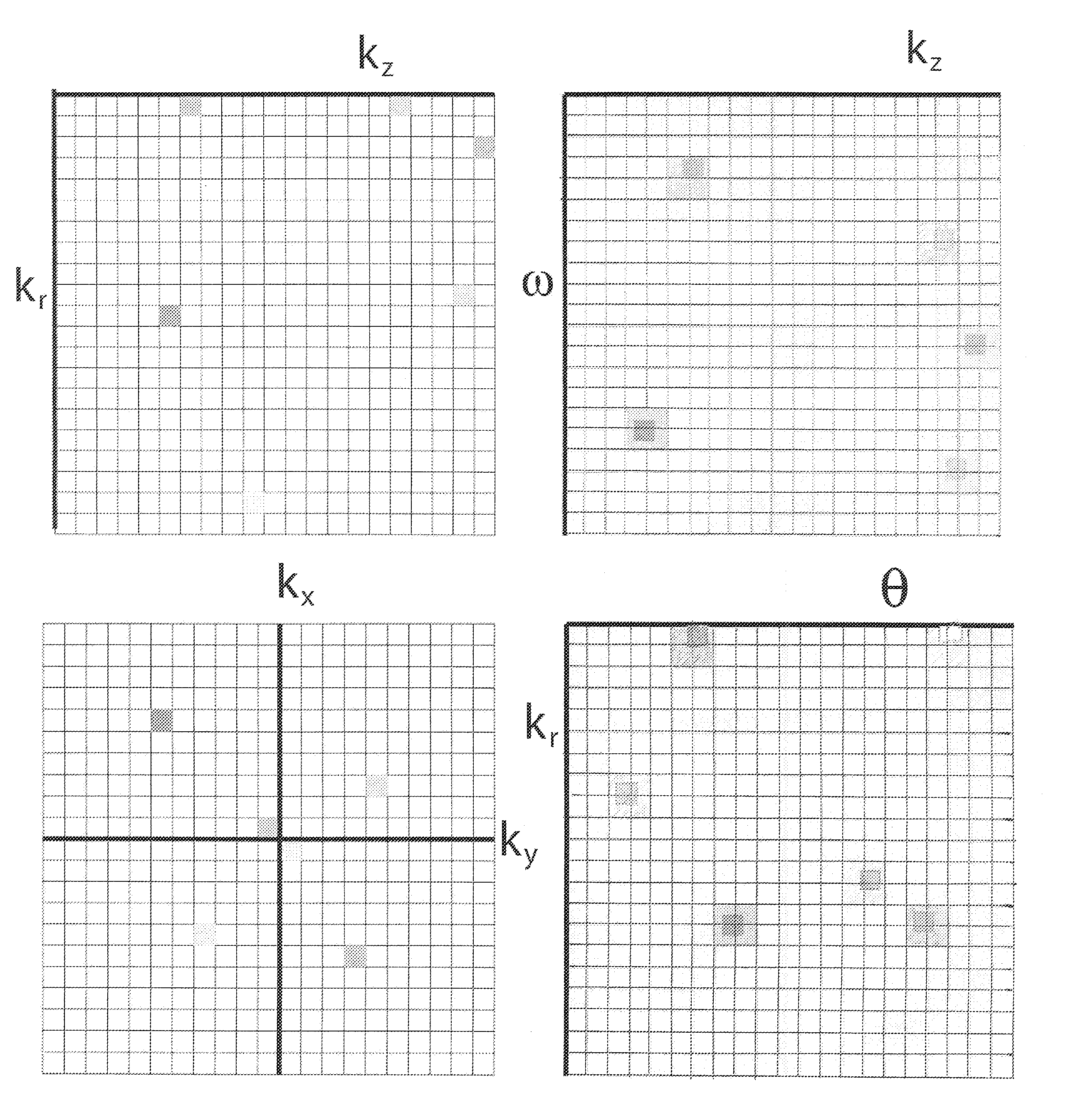

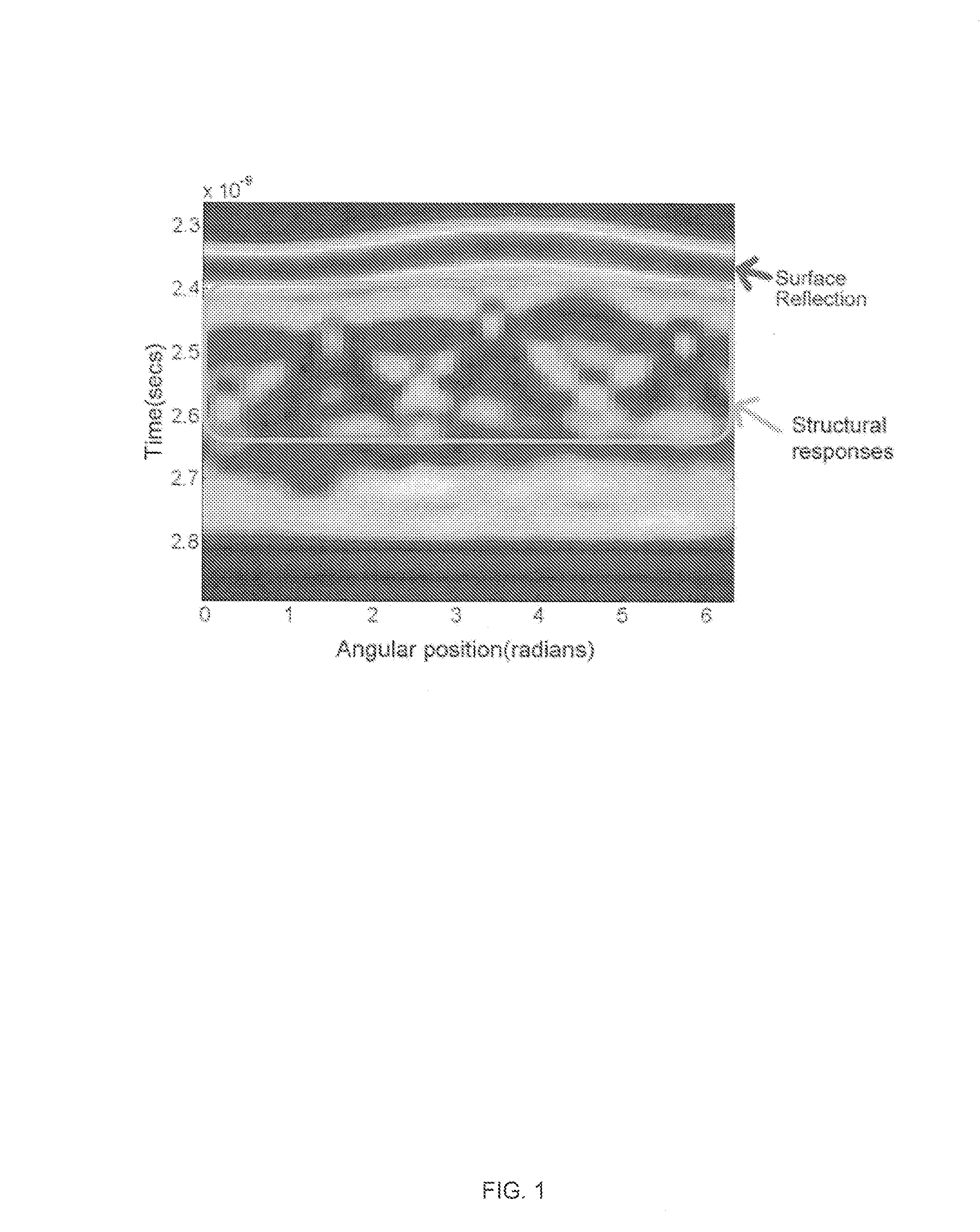

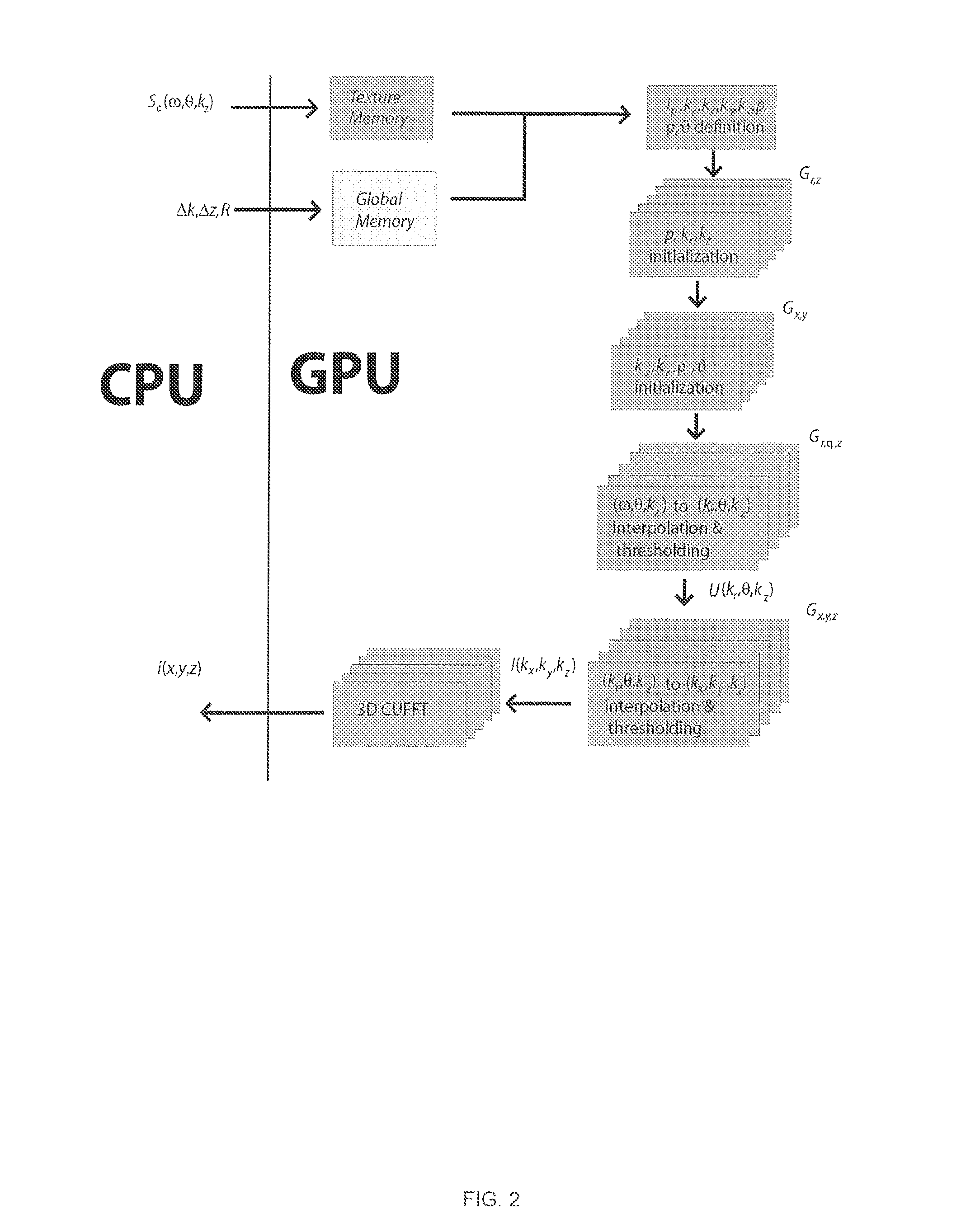

Real Time Reconstruction of 3D Cylindrical Near Field Radar Images Using a Single Instruction Multiple Data Interpolation Approach

Status: Inactive | Patent: US20130044022A1 | Tags: Low cost, High bandwidth, Radio wave reradiation/reflection, Performance computing, Problem space

The present invention uses a Single Instruction Multiple Data (SIMD) architecture to form real time 3D radar images recorded in cylindrical near field scenarios using a wavefront reconstruction approach. A novel interpolation approach is executed in parallel, significantly reducing the reconstruction time without compromising the spatial accuracy and signal to noise ratios of the resulting images. Since each point in the problem space can be processed independently, the proposed technique was implemented using an approach on a General Purpose Graphics Processing Unit (GPGPU) to take advantage of the high performance computing capabilities of this platform.

Owner:TAPIA DANIEL FLORES +1
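
The key property this abstract relies on is that every output point can be computed independently. The Python sketch below shows that property with plain linear interpolation, which is swapped in here only for illustration and is not the patent's novel interpolation approach. `interp_point` and `resample` are hypothetical names, and query positions are assumed to lie within the sample range.

```python
def interp_point(samples, x):
    """Linearly interpolate `samples` (unit spacing) at fractional index x."""
    i = min(int(x), len(samples) - 2)  # clamp so that i + 1 stays in range
    frac = x - i
    return samples[i] * (1 - frac) + samples[i + 1] * frac

def resample(samples, xs):
    # Each call depends only on its own x, so on a SIMD device every
    # output point could be handled by a separate thread or lane.
    return [interp_point(samples, x) for x in xs]

resampled = resample([0.0, 10.0, 20.0], [0.0, 0.5, 1.25, 2.0])
print(resampled)  # [0.0, 5.0, 12.5, 20.0]
```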

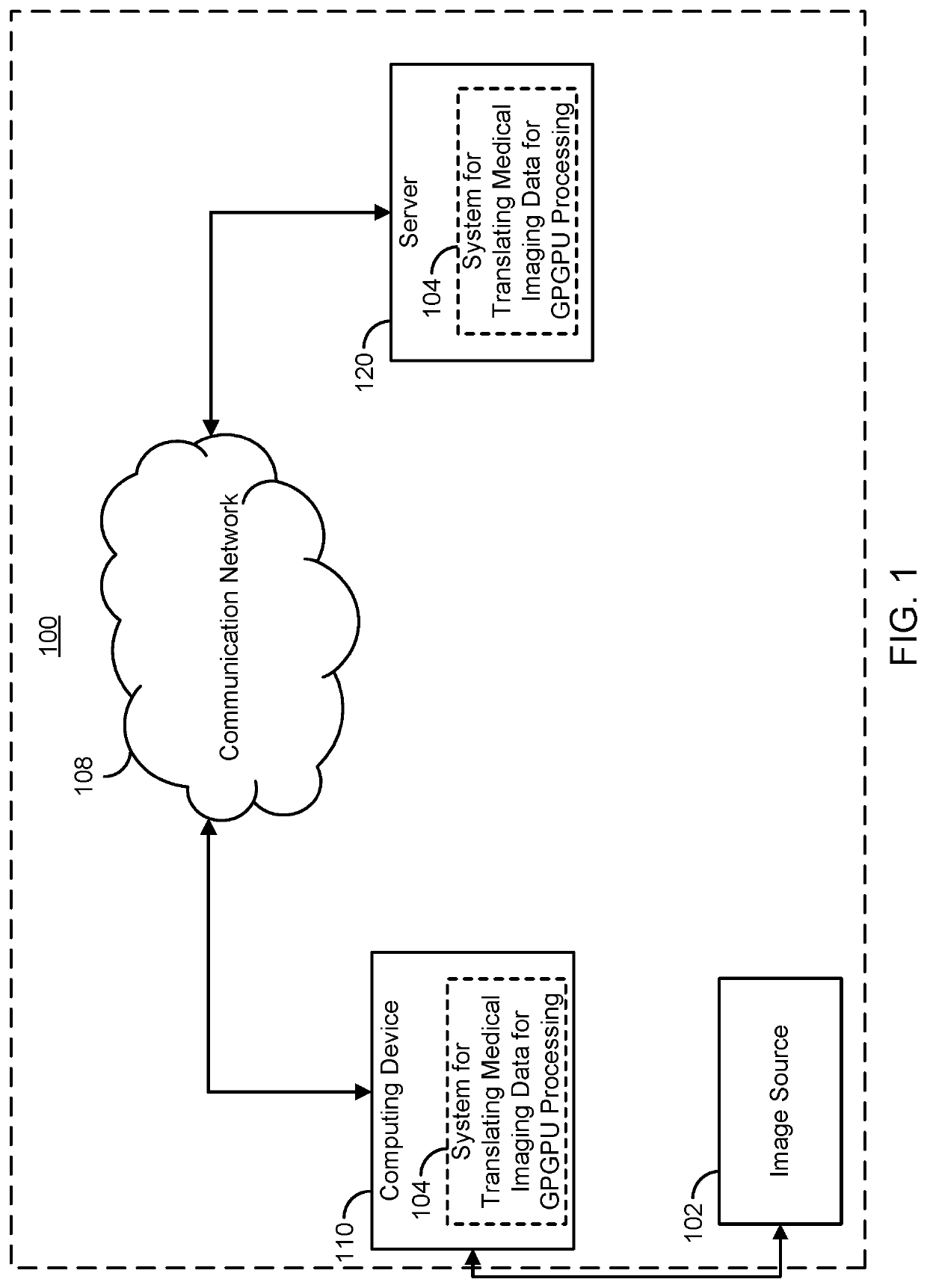

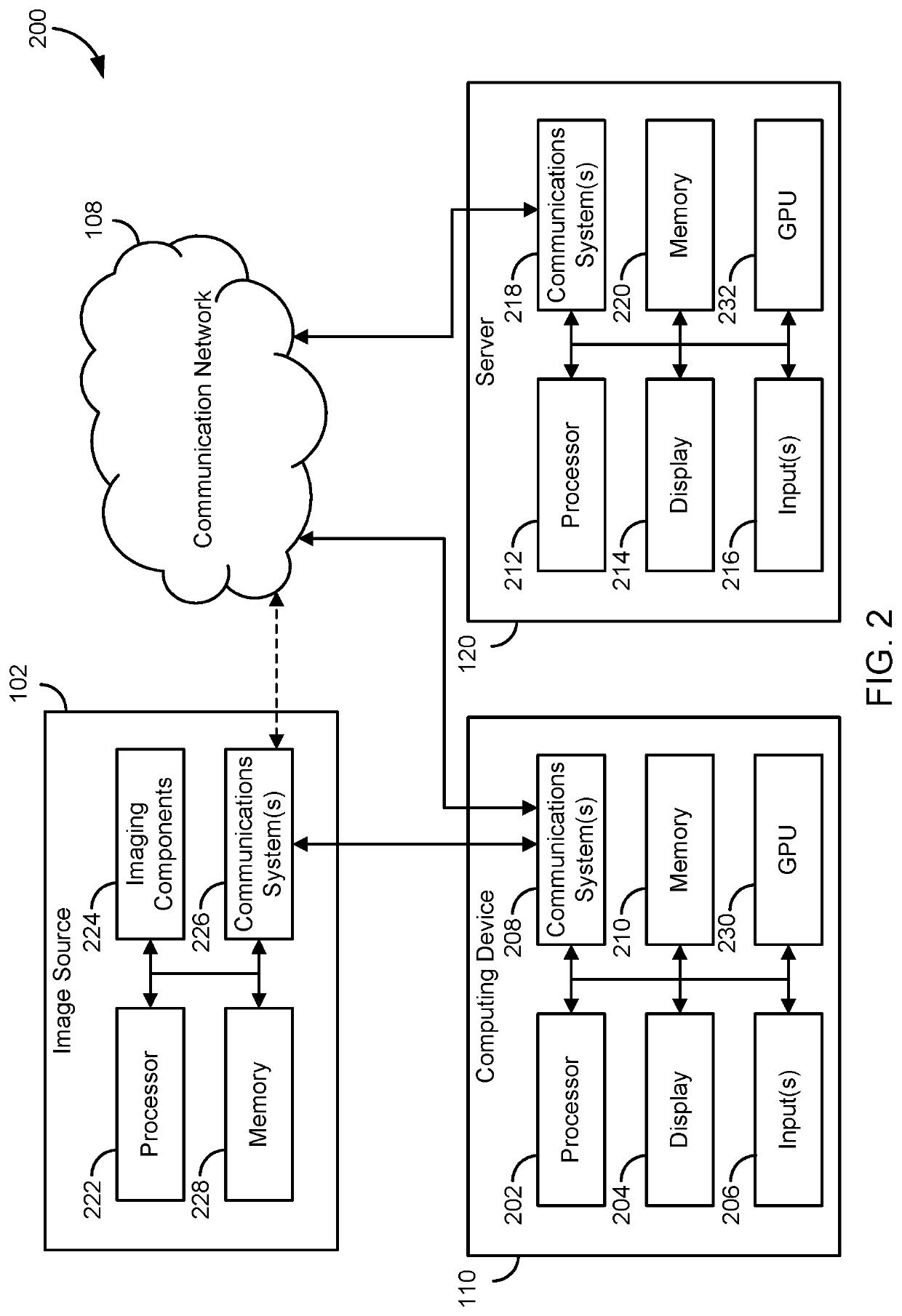

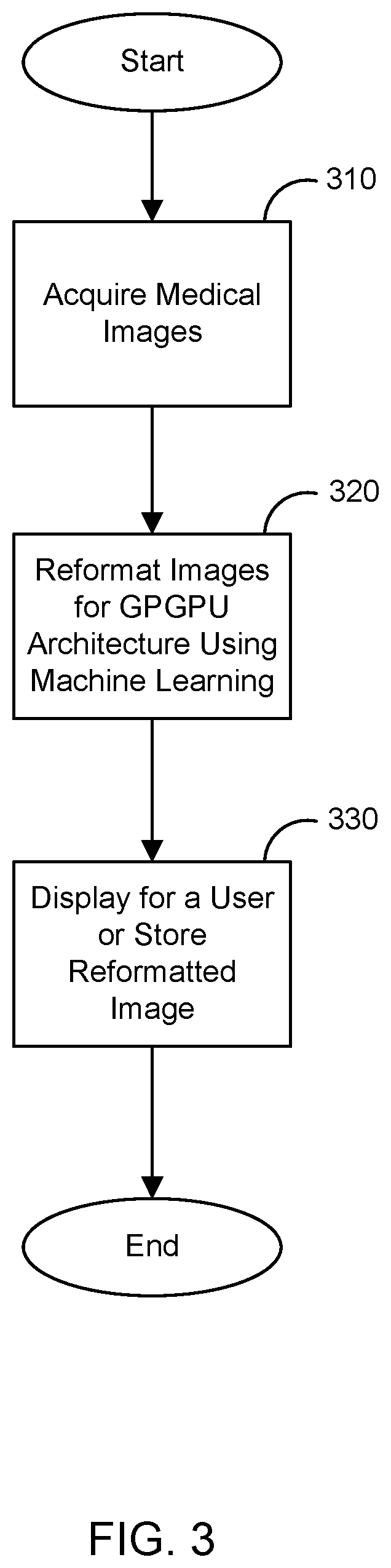

System and method for utilizing general-purpose graphics processing units (GPGPU) architecture for medical image processing

Status: Inactive | Patent: US20200265578A1 | Tags: Enhance the image, Easy to resolve, Ultrasonic/sonic/infrasonic diagnostics, Image enhancement, Medical imaging data, Data set

Systems and methods for translating medical imaging data for processing using a general-purpose graphics processing unit (GPGPU) architecture are provided. Medical imaging data acquired from a patient and having data characteristics incompatible with processing on the GPGPU architecture, including at least one of bit-resolution, memory capacity requirements for processing, or bandwidth requirements for processing, is translated for processing by the GPGPU architecture. The translation process is performed by determining a plurality of window level settings using a machine learning network to increase conspicuity of an object in an image generated from the medical imaging data or generate at least two channel image datasets from the medical imaging data. Translated medical image data is created using at least one of the window level settings or at least two channel image datasets and then processed using the GPGPU architecture to generate medical images of the patient.

Owner:THE GENERAL HOSPITAL CORP
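
To make the window level idea concrete, the Python sketch below maps high bit-depth pixel values to 8-bit values with a conventional center/width window and stacks several windows into channels. In the abstract the window settings come from a machine learning network; here they are fixed, made-up numbers, and `apply_window` and `to_channels` are hypothetical helpers.

```python
def apply_window(pixels, center, width, out_max=255):
    """Map raw intensities to 0..out_max using a center/width window."""
    lo = center - width / 2
    hi = center + width / 2
    out = []
    for p in pixels:
        if p <= lo:
            out.append(0)            # below the window: black
        elif p >= hi:
            out.append(out_max)      # above the window: white
        else:
            # Linear ramp inside the window; int() truncates toward zero.
            out.append(int((p - lo) / (hi - lo) * out_max))
    return out

def to_channels(pixels, windows):
    """Build one 8-bit channel per (center, width) window setting."""
    return [apply_window(pixels, c, w) for c, w in windows]

raw = [0, 1000, 1040, 1080, 4095]  # example 12-bit values
channels = to_channels(raw, [(1040, 80), (2048, 4096)])
print(channels[0])  # [0, 0, 127, 255, 255]
print(channels[1])  # [0, 62, 64, 67, 254]
```

The narrow window spreads a small intensity range over the full 8-bit scale, while the wide window keeps the overall dynamic range at lower contrast.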

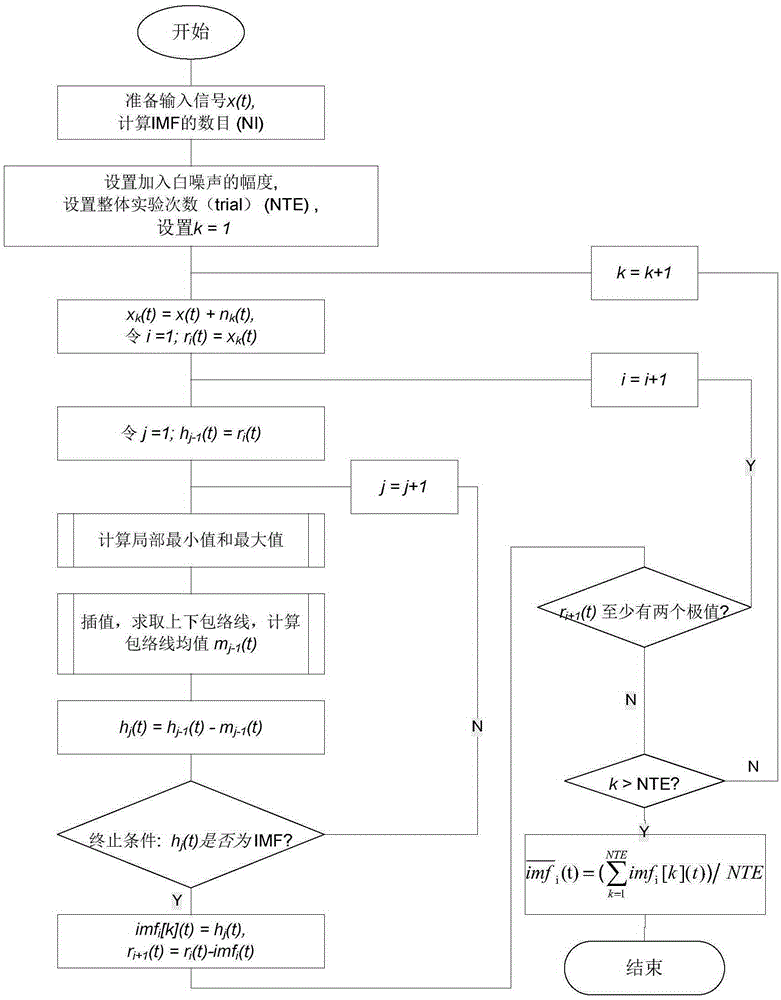

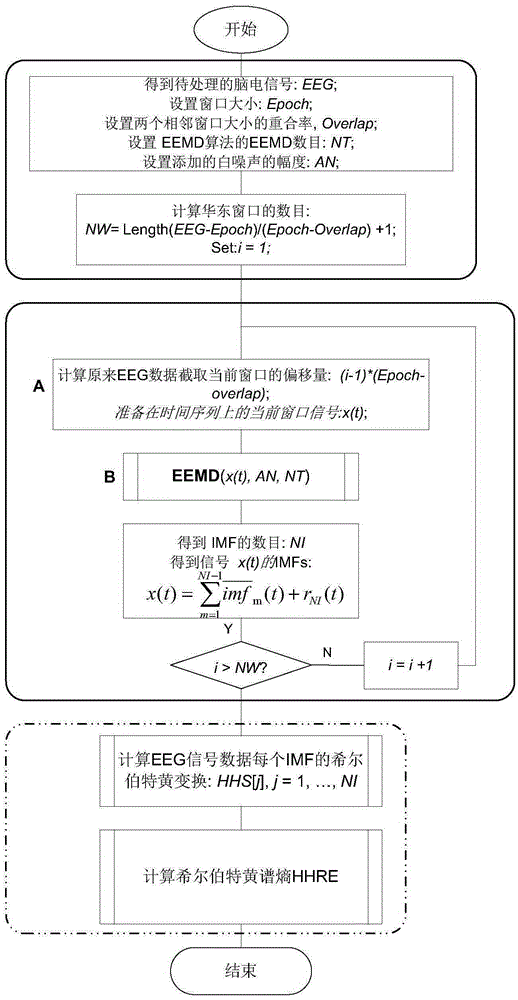

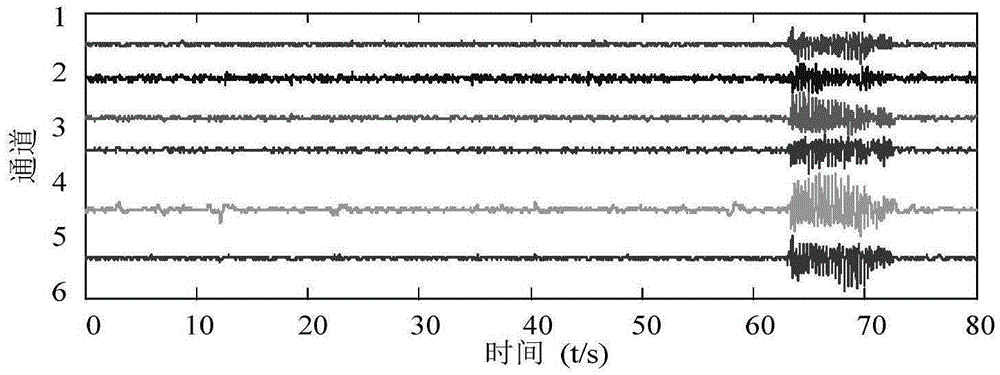

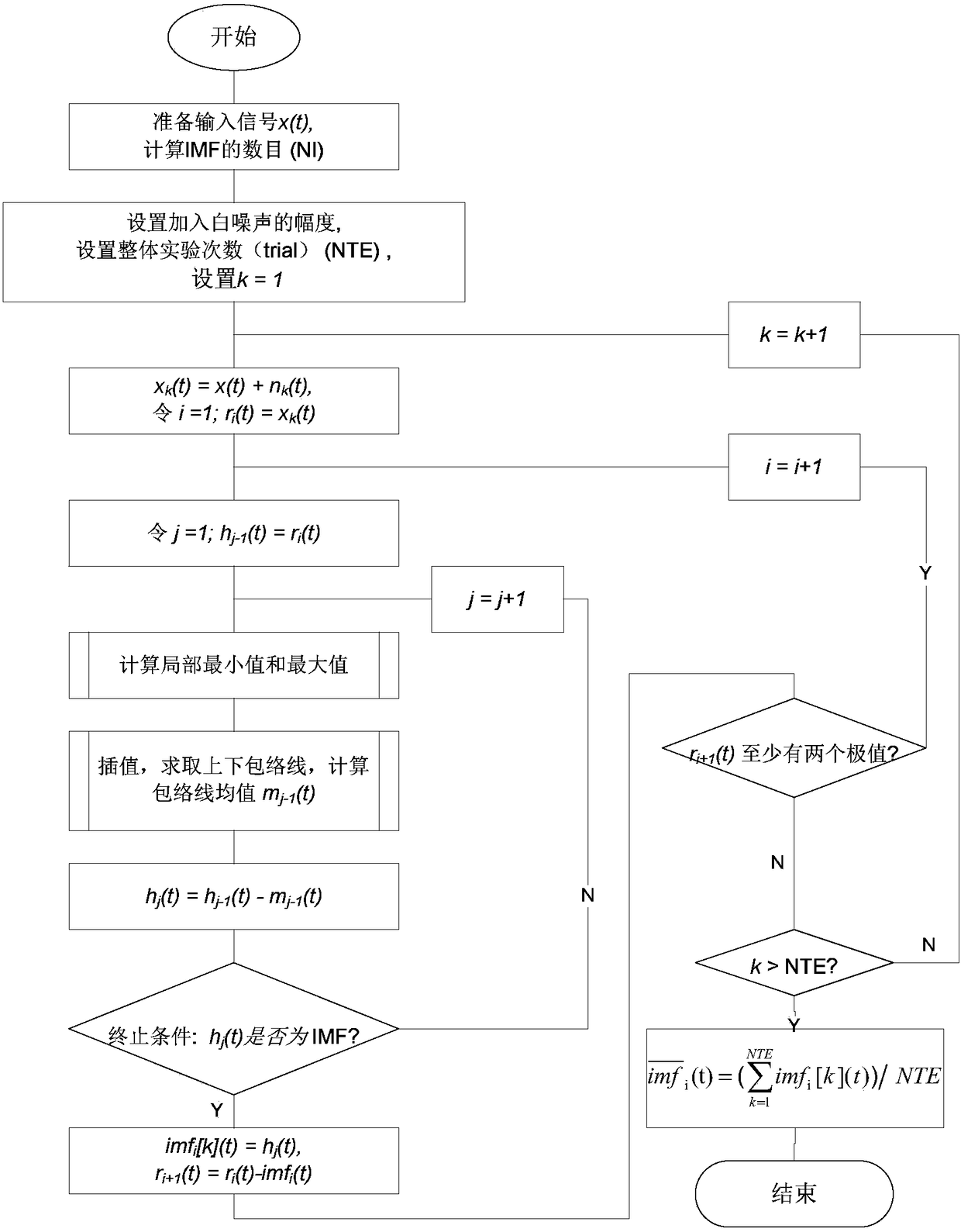

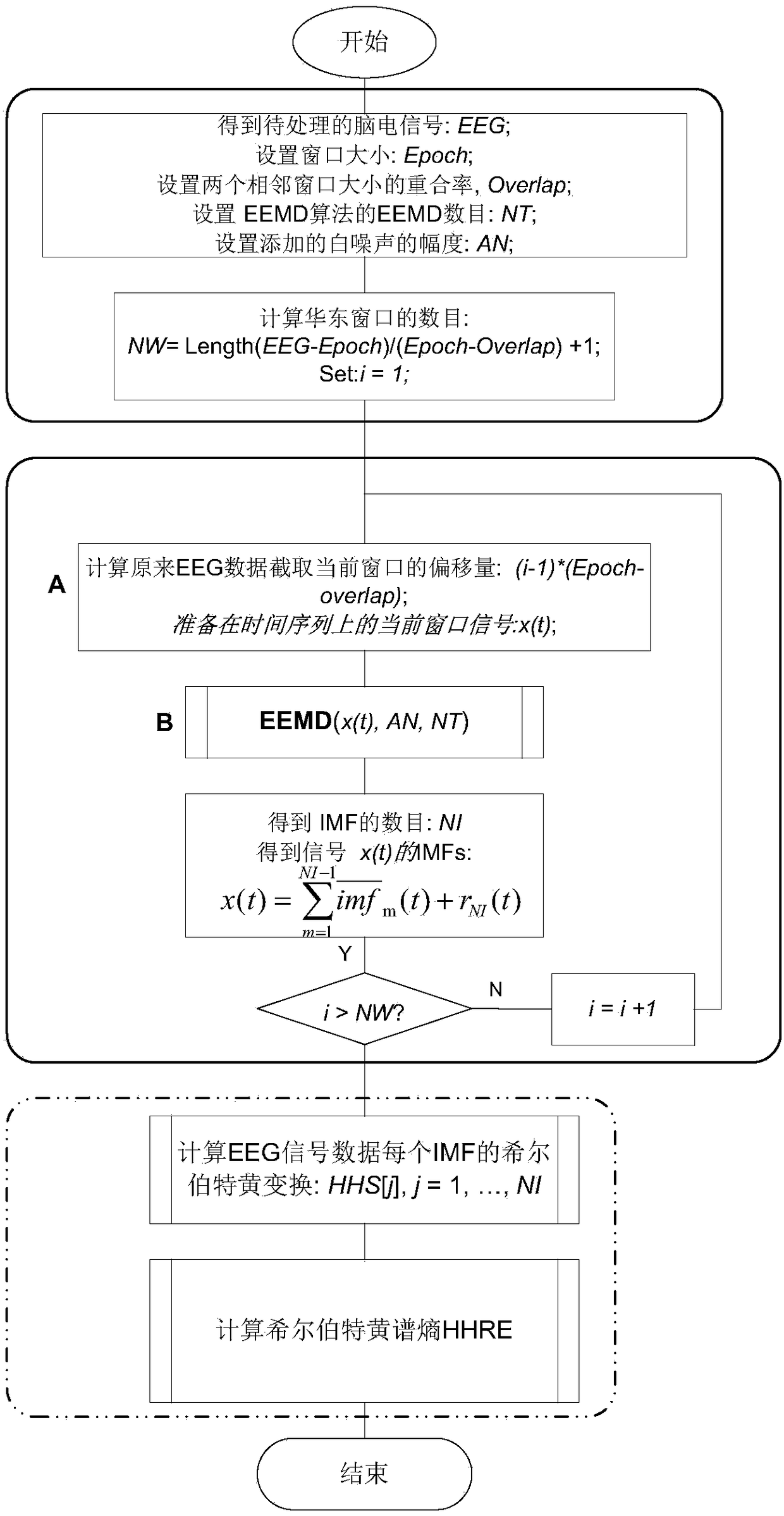

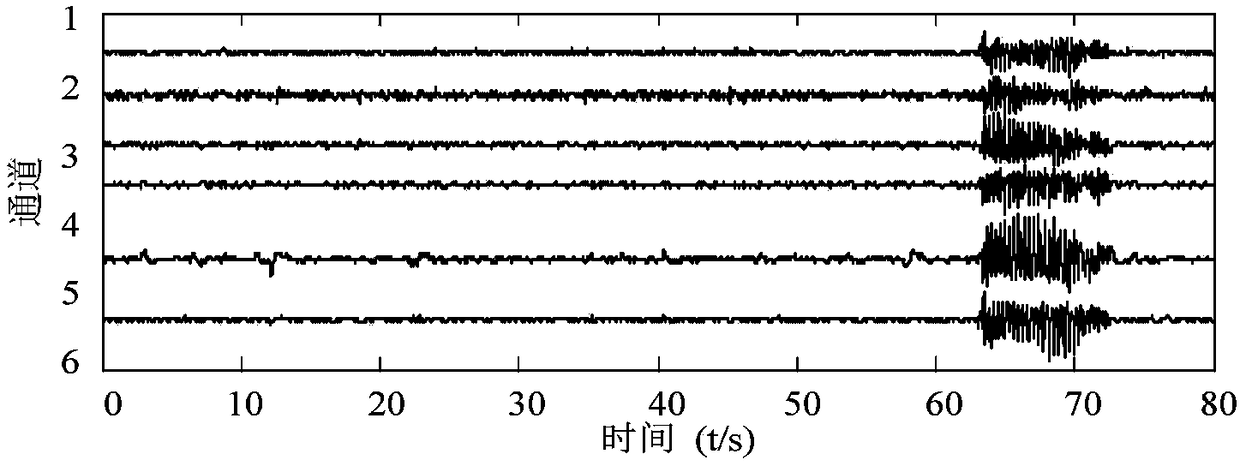

Nonlinear unsteady-state complex signal self-adapting decomposition method based on GPGPU

Status: Active | Patent: CN105279376A | Tags: Easy to handle, To achieve the purpose of parallelization, Special data processing applications, General purpose, Graphics

The invention discloses a nonlinear unsteady-state complex signal self-adapting decomposition method based on GPGPU (General Purpose Graphics Processing Units), and belongs to the field of signal analysis. When a conventional EEMD (Ensemble Empirical Mode Decomposition) algorithm processes large-scale signal data, it is limited by the computational intensity of the algorithm itself, so the conventional algorithm cannot meet real-time performance demands in practical applications. The highly parallel computation steps of the EEMD algorithm are analyzed, and a GPGPU method based on CUDA (Compute Unified Device Architecture) is used to parallelize the EEMD algorithm, so that the algorithm reaches an optimal state in terms of data precision and time consumption. The Hilbert-Huang transform is then combined: the Hilbert transform and the Shannon entropy concept are used to obtain the Hilbert-Huang spectral entropy for further study of the decomposed signals. Experiments show that the method is efficient and usable in practical signal decomposition analysis.

Owner:WUHAN UNIV
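
The spectral entropy mentioned in this abstract is a Shannon entropy taken over a normalized spectrum. The Python sketch below shows only that final step, assuming the spectrum is already available as a list of non-negative energies per bin. `spectral_entropy` is a hypothetical helper, and the EEMD and Hilbert transform stages are not reproduced.

```python
import math

def spectral_entropy(energies):
    """Shannon entropy (in bits) of a spectrum given as non-negative energies."""
    total = sum(energies)
    # Normalize to probabilities; empty bins contribute nothing to the sum.
    probs = [e / total for e in energies if e > 0]
    return -sum(p * math.log2(p) for p in probs)

flat = spectral_entropy([1, 1, 1, 1])    # energy spread evenly over 4 bins
peaked = spectral_entropy([5, 0, 0, 0])  # all energy in one bin
print(flat, peaked)
```

A flat spectrum gives the maximum entropy for its number of bins, and a single-peak spectrum gives zero, so the value summarizes how concentrated the decomposed signal's energy is.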

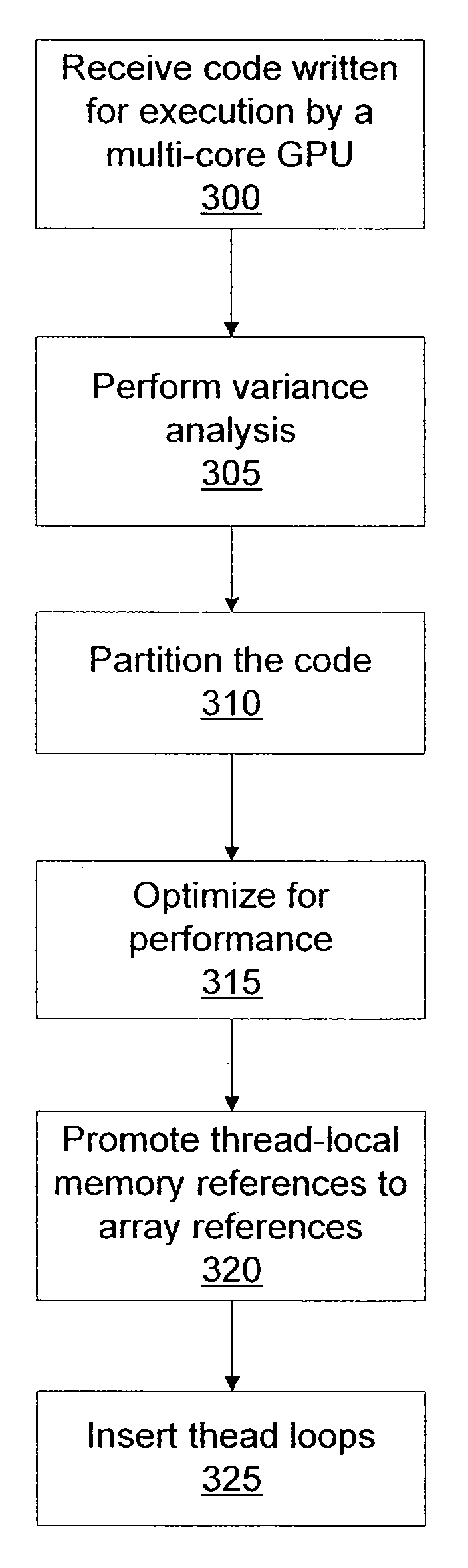

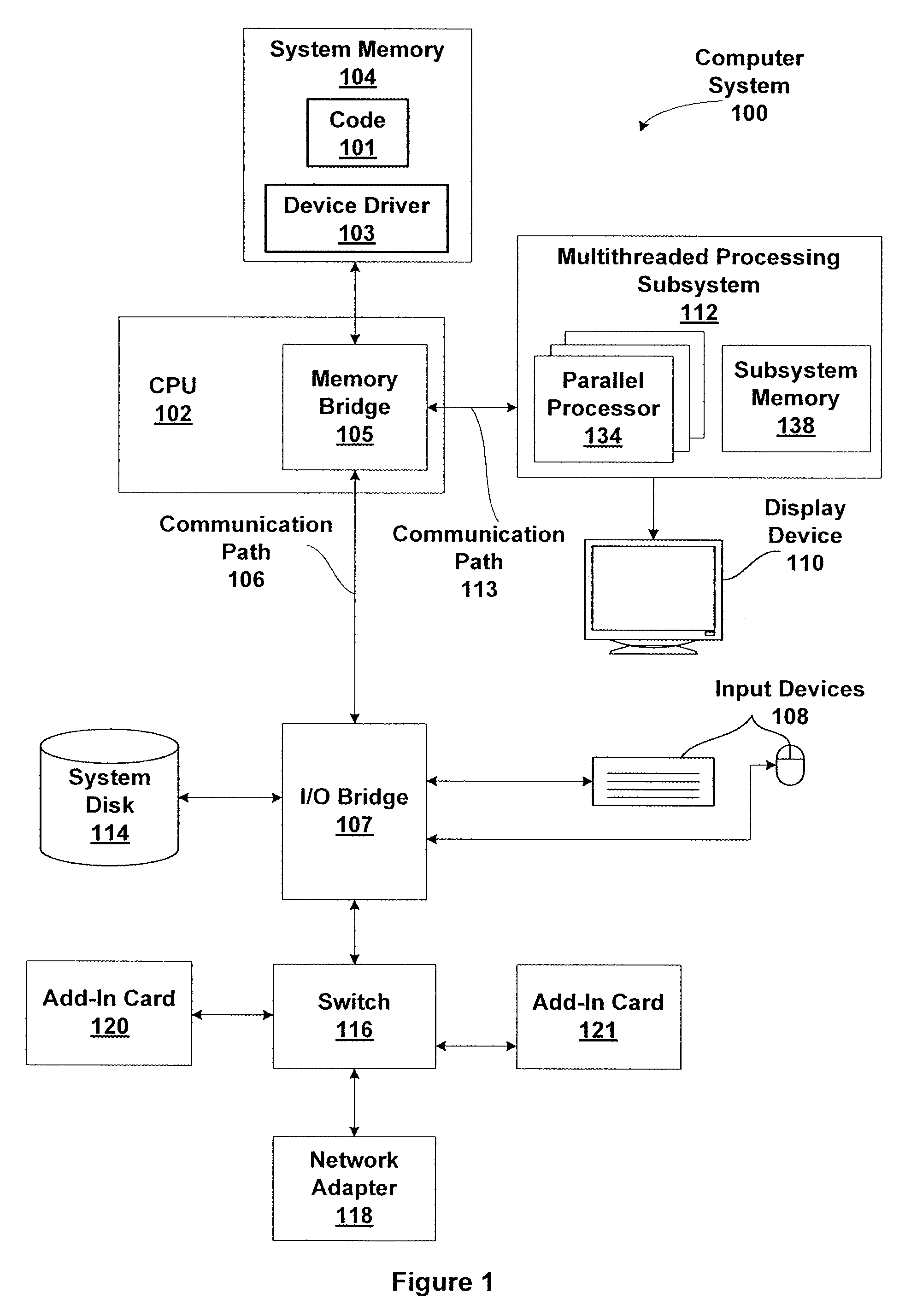

Thread-local memory reference promotion for translating CUDA code for execution by a general purpose processor

One embodiment of the present invention sets forth a technique for translating application programs written using a parallel programming model for execution on a multi-core graphics processing unit (GPU) so that they can be executed by a general purpose central processing unit (CPU). Portions of the application program that rely on specific features of the multi-core GPU are converted by a translator for execution by a general purpose CPU. The application program is partitioned into regions of synchronization independent instructions. The instructions are classified as convergent or divergent and divergent memory references that are shared between regions are replicated. Thread loops are inserted to ensure correct sharing of memory between various threads during execution by the general purpose CPU.

Owner:NVIDIA CORP
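
The thread-loop idea in this abstract can be illustrated with a hand-translated toy kernel. The imagined GPU kernel has each thread double its input, store it in shared memory, wait at a barrier, and then add its neighbour's value. The Python sketch below is an assumed, simplified rendering of what a translator might emit for a CPU; `kernel_on_cpu` and its variable names are hypothetical.

```python
def kernel_on_cpu(data):
    n = len(data)          # number of emulated GPU threads in one block
    shared = [0] * n       # stands in for GPU shared memory
    # A per-thread local that is live across the barrier is replicated,
    # one slot per emulated thread.
    local_x = [0] * n

    # Region 1: code before the barrier, wrapped in a thread loop.
    for tid in range(n):
        local_x[tid] = data[tid] * 2
        shared[tid] = local_x[tid]

    # The barrier needs no code here: region 2 starts only after the
    # loop above has run region 1 for every emulated thread.

    # Region 2: code after the barrier, wrapped in a second thread loop.
    out = [0] * n
    for tid in range(n):
        out[tid] = local_x[tid] + shared[(tid + 1) % n]
    return out

translated = kernel_on_cpu([1, 2, 3, 4])
print(translated)  # [6, 10, 14, 10]
```

Splitting at the barrier is what keeps the result correct: a single loop over both regions would read `shared` entries that later iterations had not yet written.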

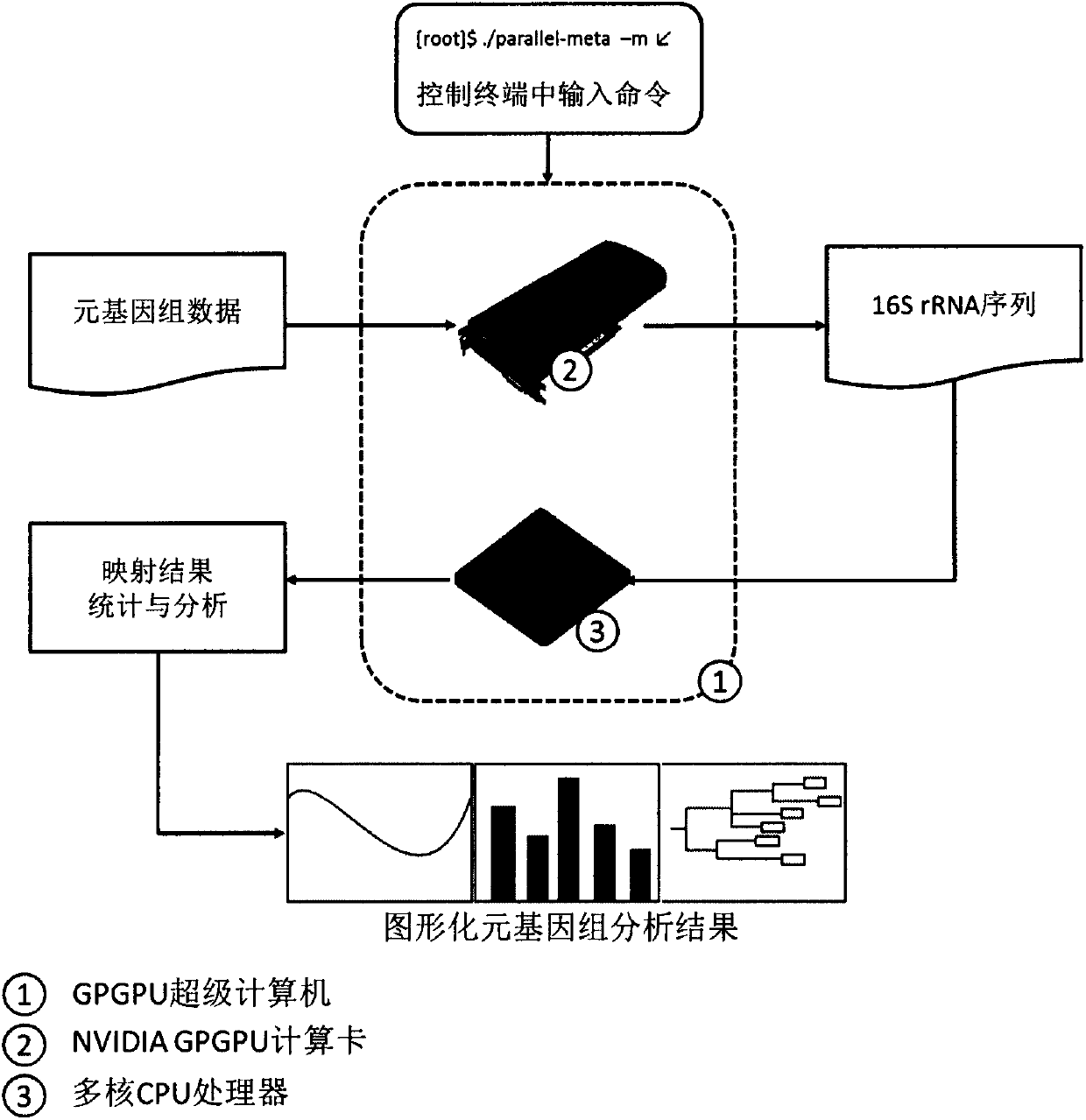

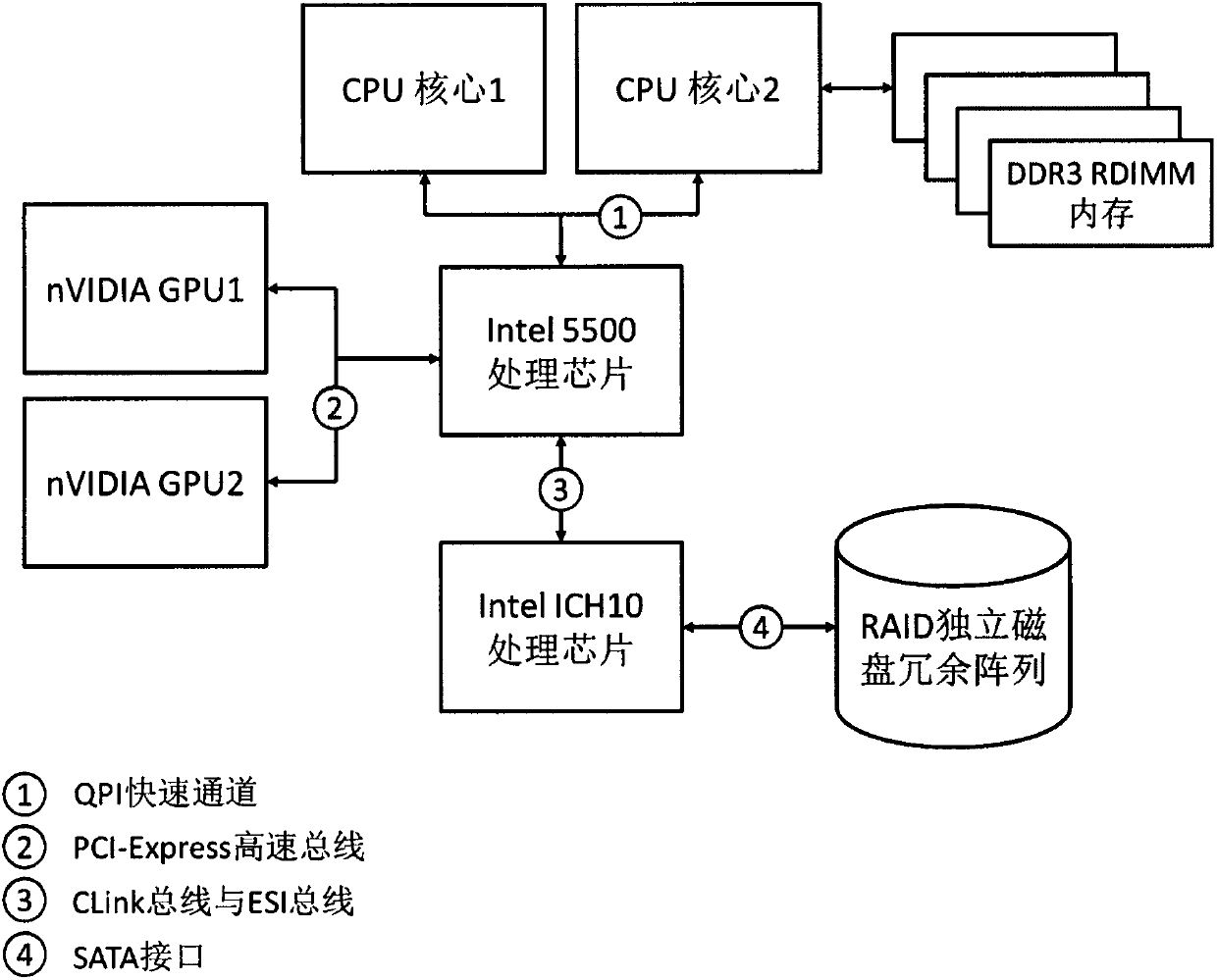

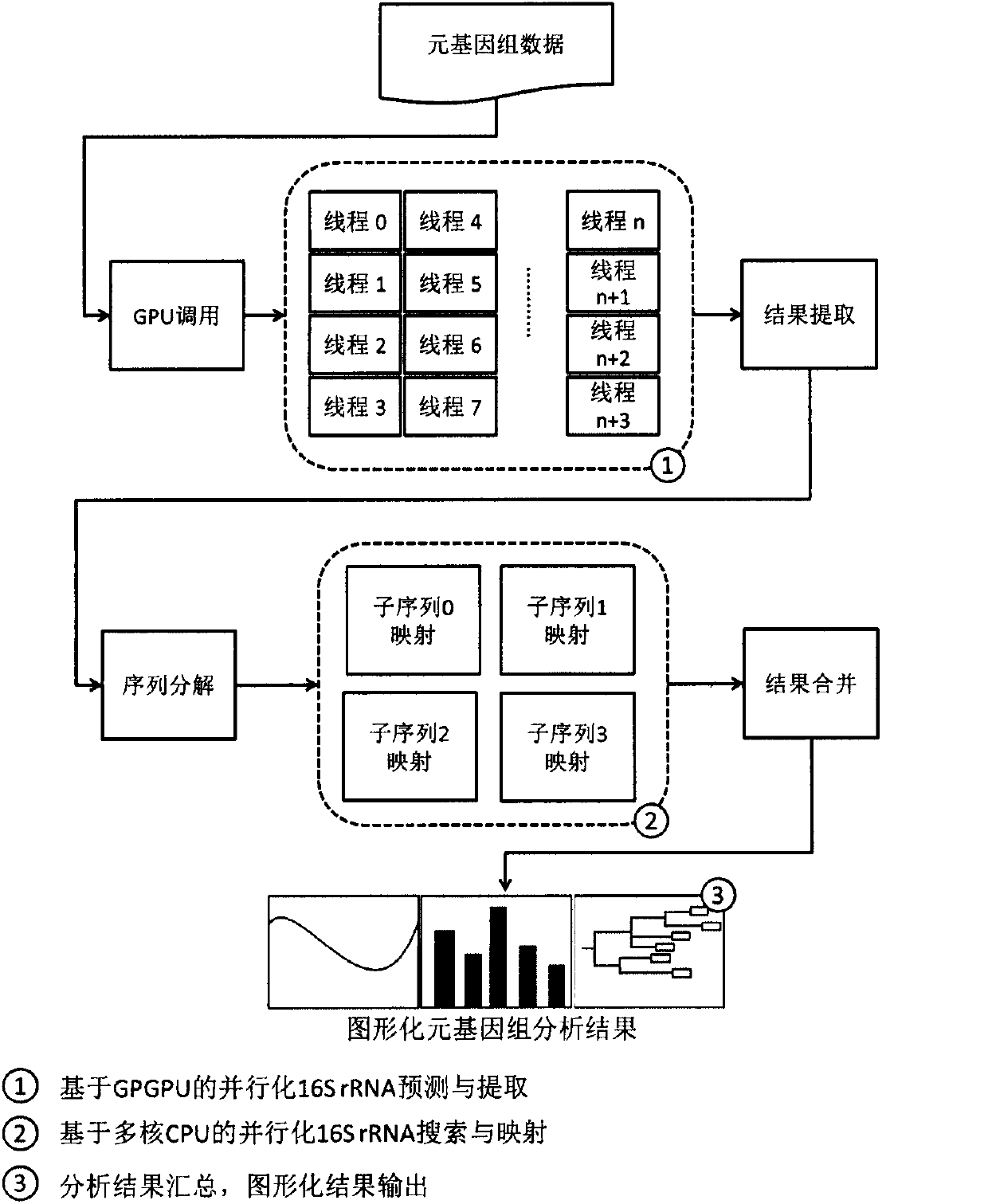

High-performance metagenomic data analysis system on basis of GPGPU (General Purpose Graphics Processing Units) and multi-core CPU (Central Processing Unit) hardware

Status: Inactive | Patent: CN103310125A | Tags: Solve Computational Bottlenecks, Speed up, Special data processing applications, Data analysis system, Genomic data

The invention relates to a high-performance metagenomic data analysis system on the basis of GPGPU (General Purpose Graphics Processing Units) and multi-core CPU (Central Processing Unit) hardware. Aiming at the condition that a conventional computer cannot meet the requirement on analysis of mass metagenomic data and according to the characteristic that the mass data in metagenomic data processing can be processed in parallel, the invention discloses a calculation analysis system which is on the basis of the GPGPU and the multi-core CPU hardware and combines software and hardware methods. A main module of the metagenomic calculation and analysis system on the basis of a GPGPU super computer comprises a GPGPU and multi-core CPU computer and a uniform software platform. The high-performance metagenomic data analysis system on the basis of the GPGPU and multi-core CPU hardware has the characteristics of (1) a high-performance parallel calculation and storage hardware system and (2) the high-performance uniform configurable software platform. Metagenomic sequence processing on the basis of the GPGPU hardware can obviously improve analysis efficiency of the metagenomic data.

Owner:QINGDAO INST OF BIOENERGY & BIOPROCESS TECH CHINESE ACADEMY OF SCI

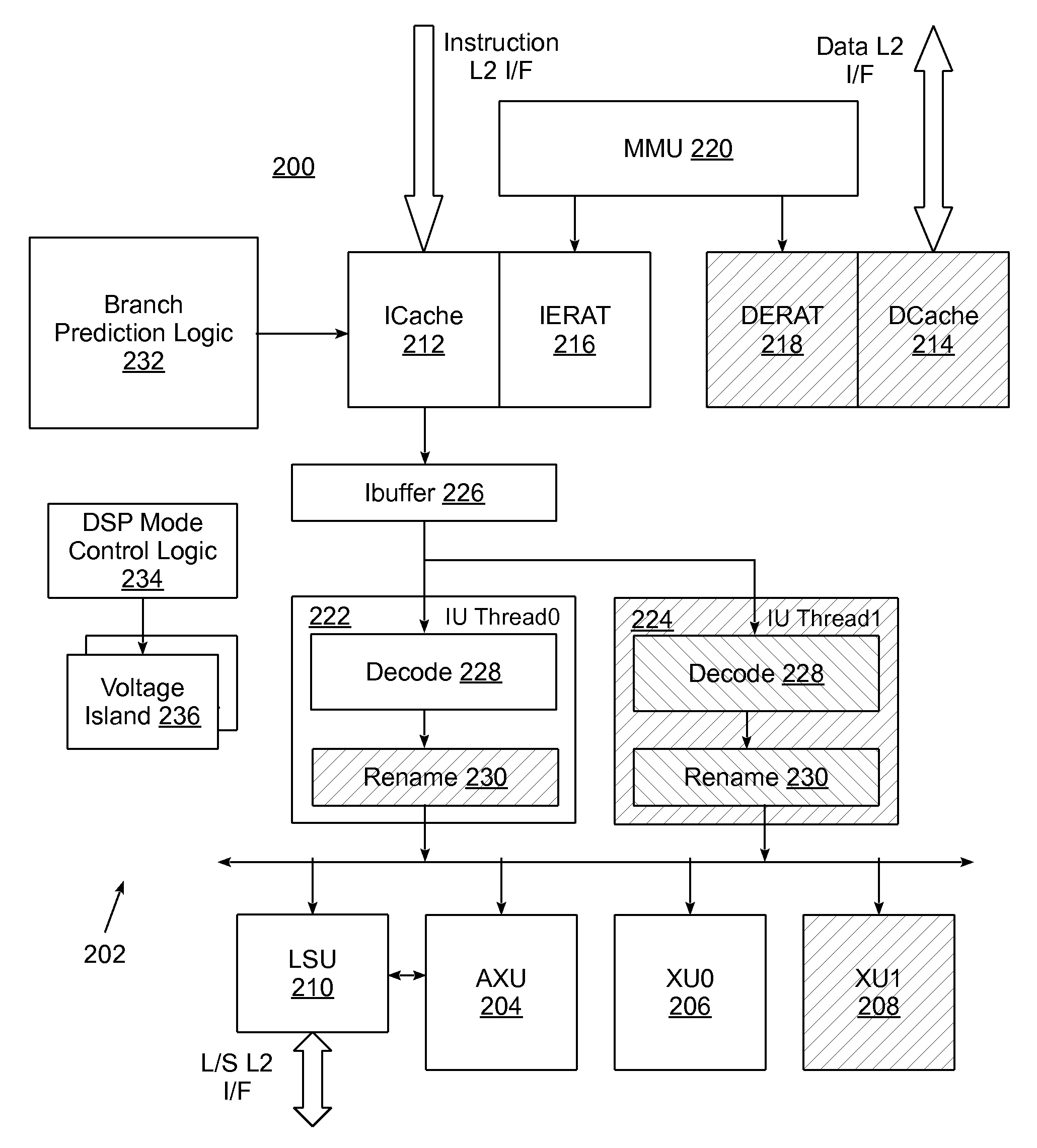

General purpose processing unit with low power digital signal processing (DSP) mode

Status: Inactive | Patent: US9274591B2 | Tags: Reduce power consumption, Energy efficient ICT, Power supply for data processing, Digital signal processing, General purpose

A method and circuit arrangement utilize a general purpose processing unit having a low power DSP mode for reconfiguring the general purpose processing unit to efficiently execute DSP workloads with reduced power consumption. When in a DSP mode, one or more of a data cache, an execution unit, and simultaneous multithreading may be disabled to reduce power consumption and improve performance for DSP workloads. Furthermore, partitioning of a register file to support multithreading, and register renaming functionality, may be disabled to provide an expanded set of registers for use with DSP workloads. As a result, a general purpose processing unit may be provided with enhanced performance for DSP workloads with reduced power consumption, while also not sacrificing performance for other non-DSP / general purpose workloads.

Owner:GLOBALFOUNDRIES INC

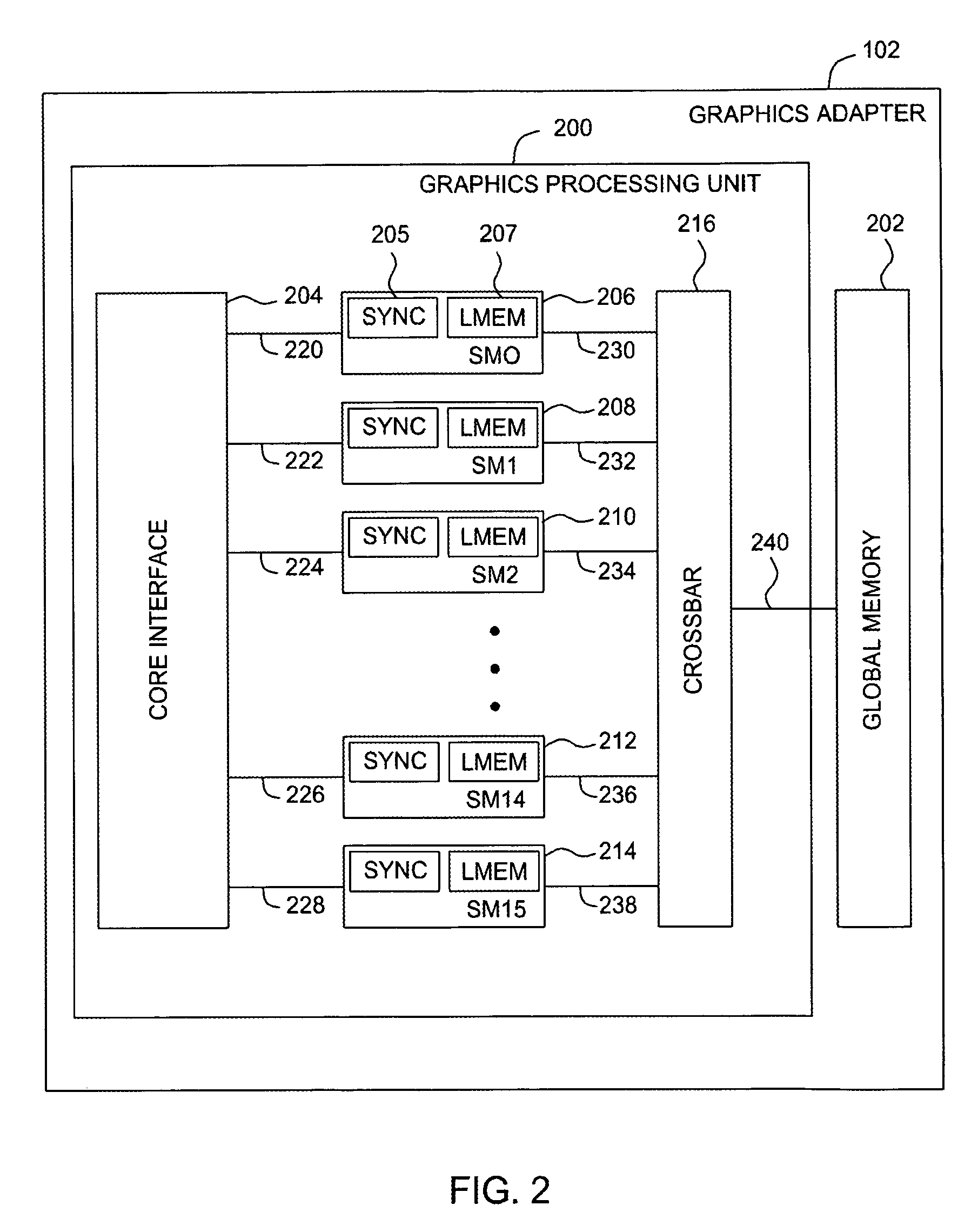

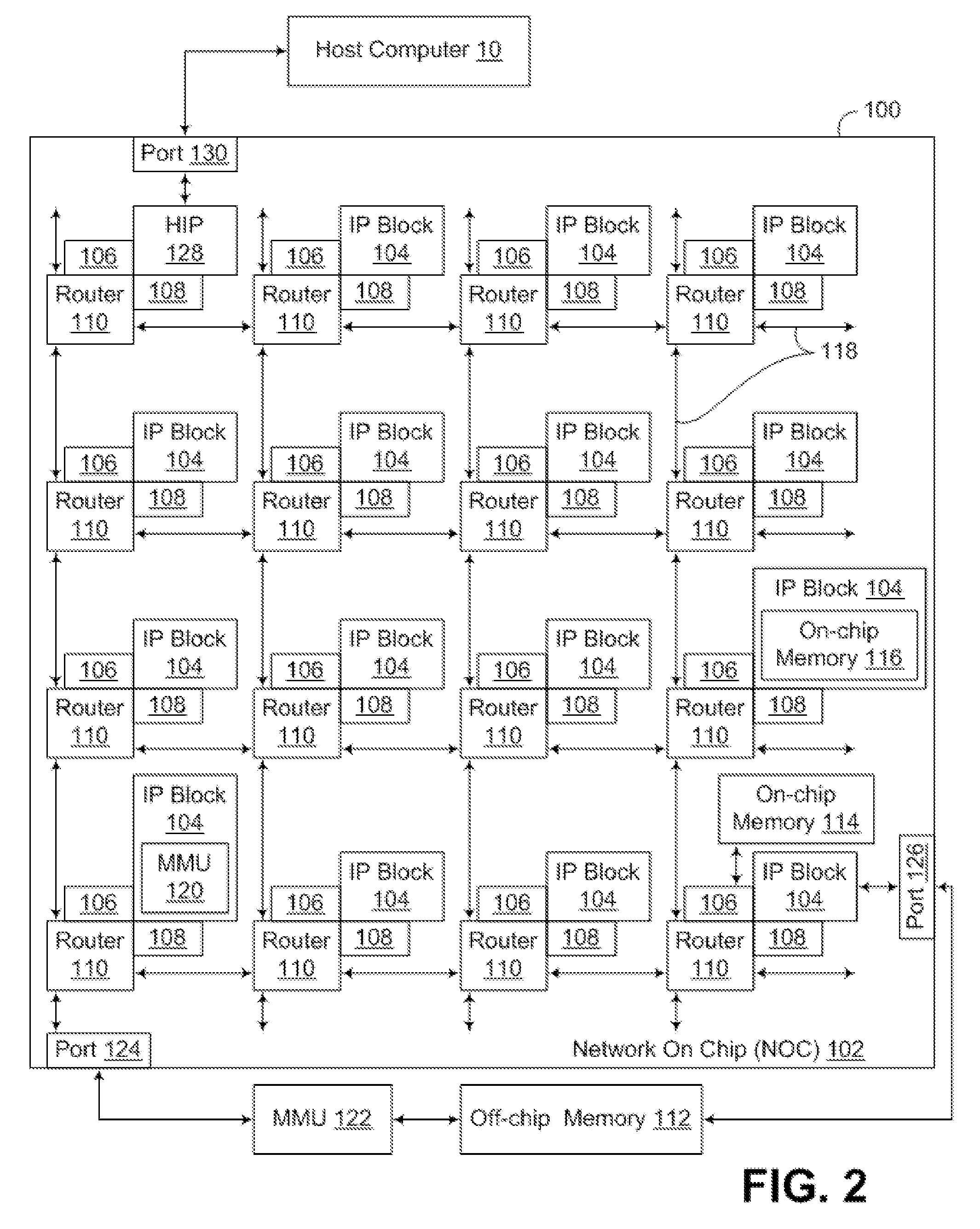

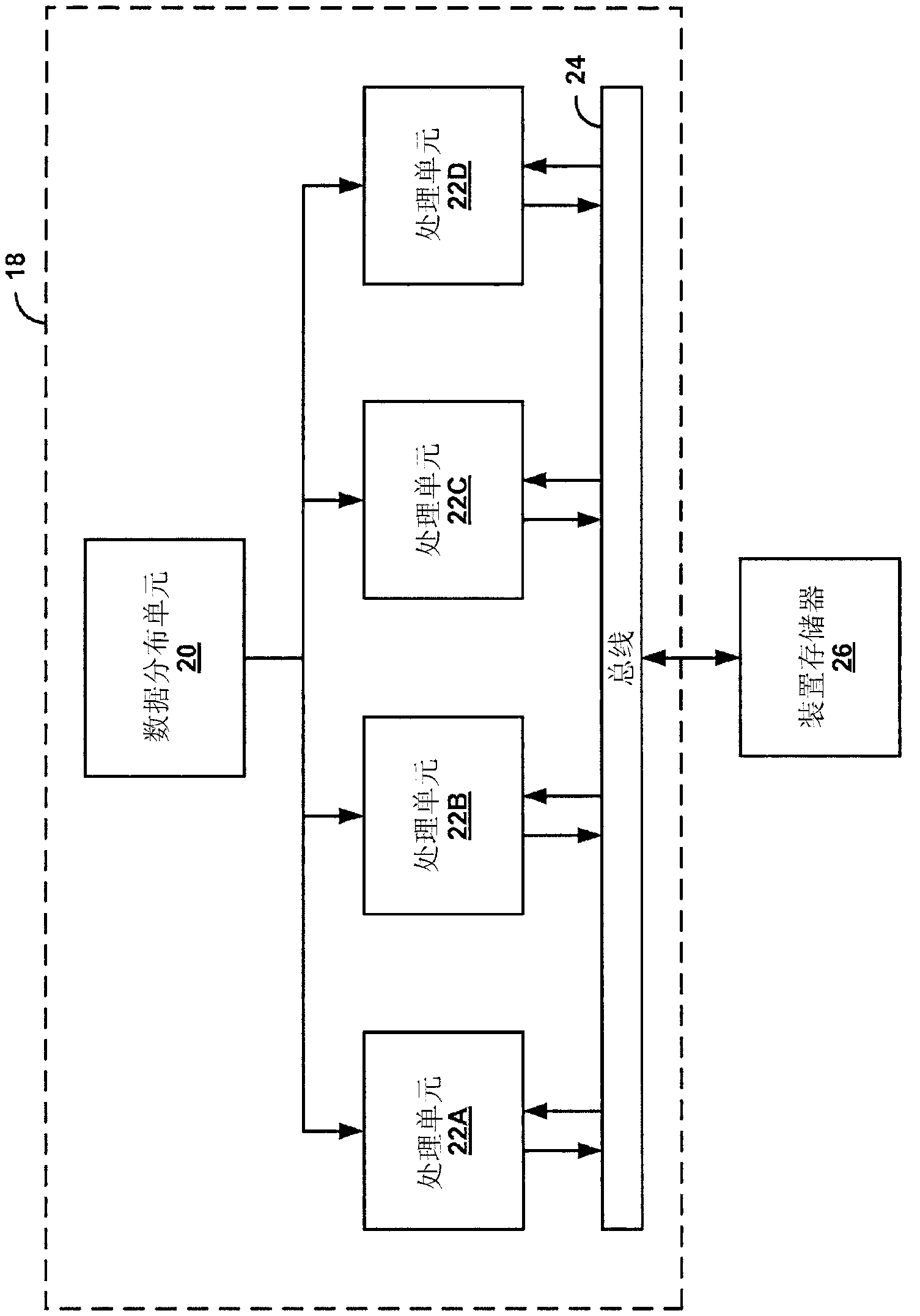

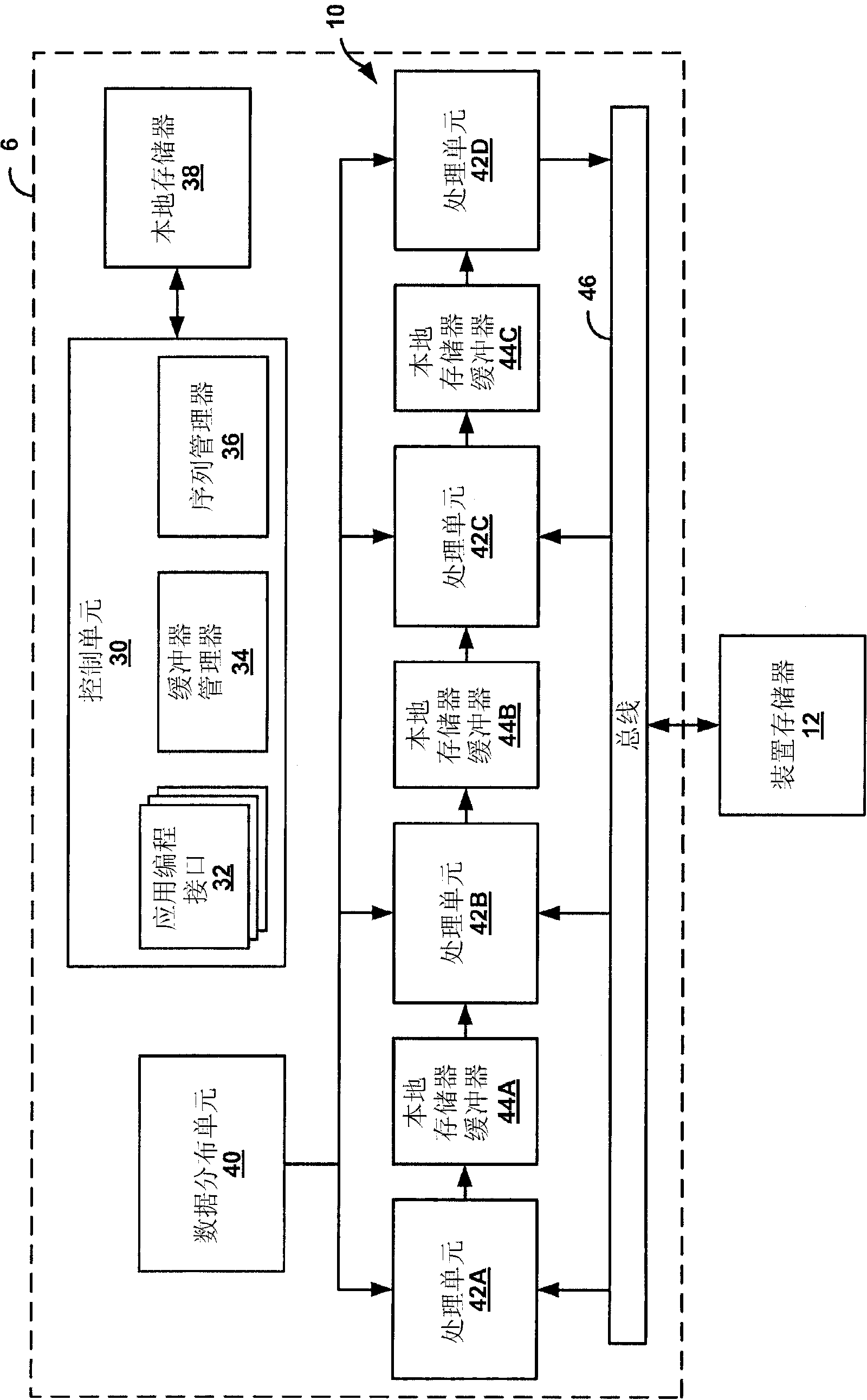

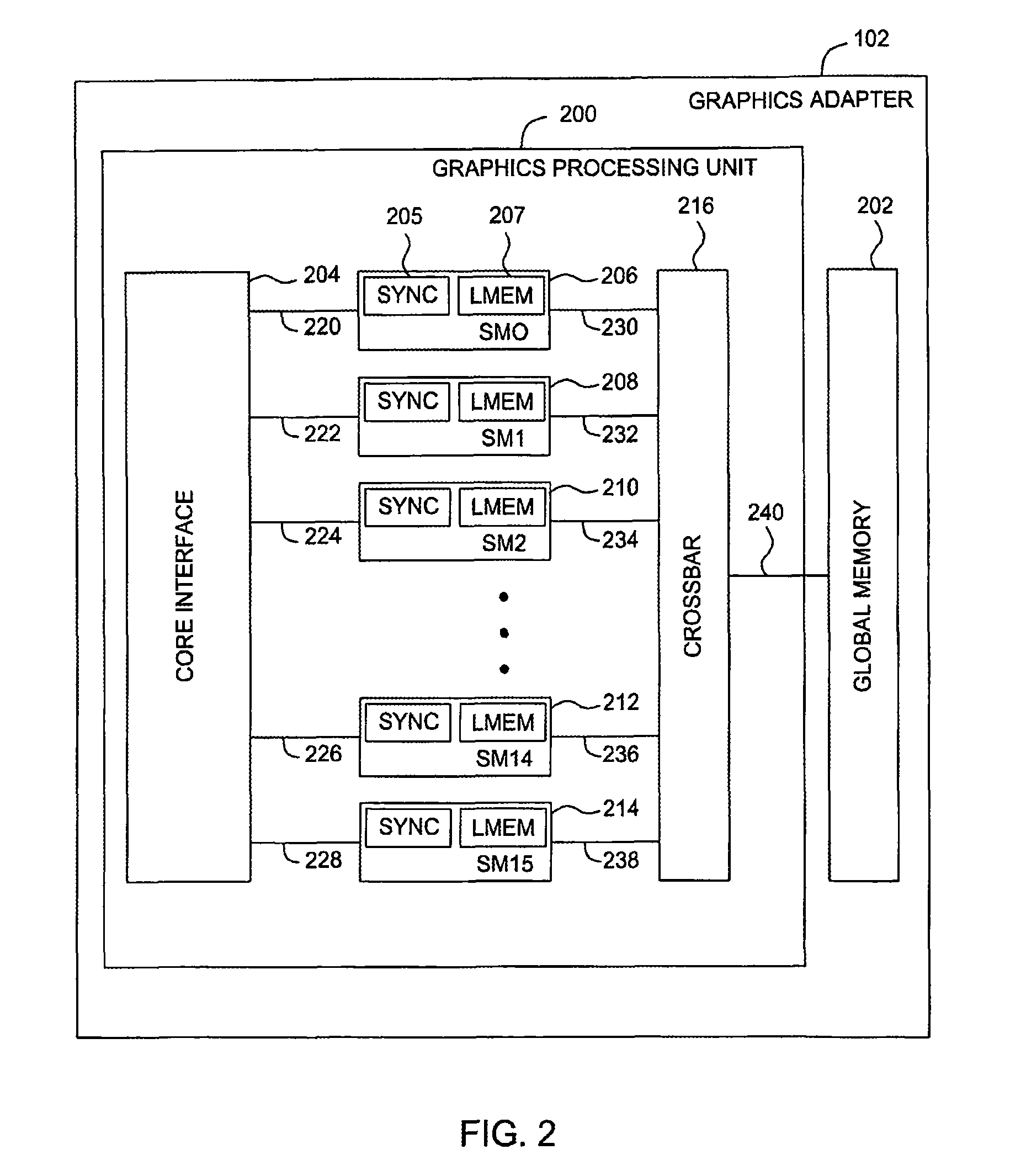

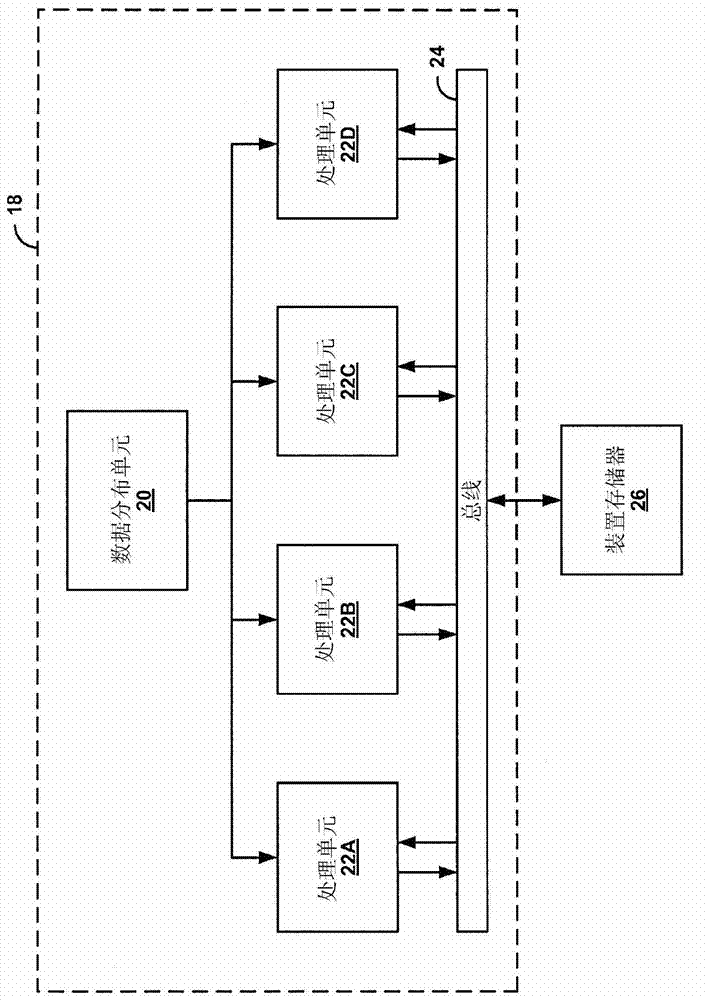

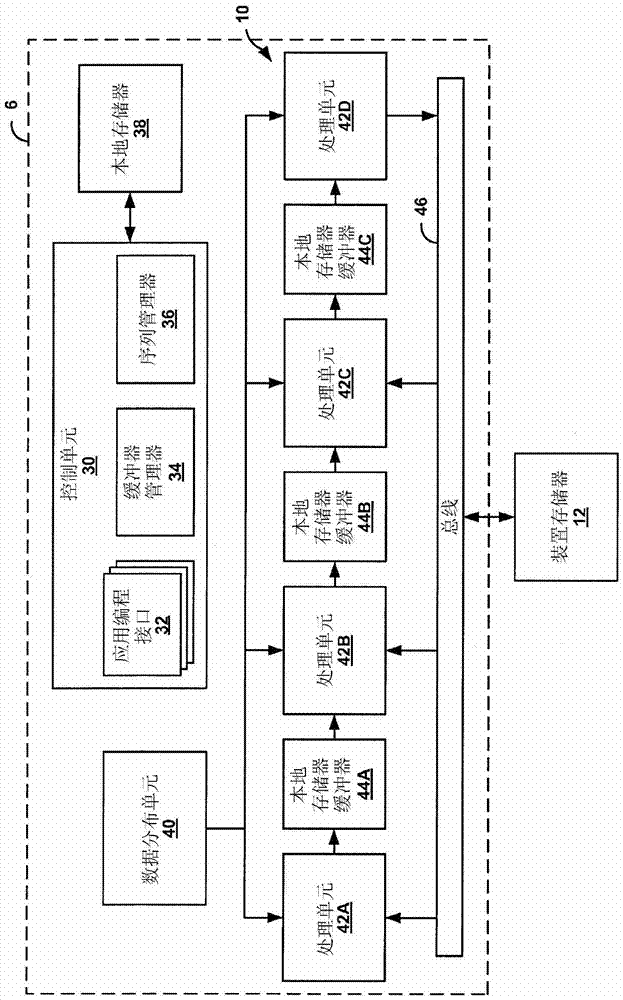

Computational resource pipelining in general purpose graphics processing unit

This disclosure describes techniques for extending the architecture of a general purpose graphics processing unit (GPGPU) with parallel processing units to allow efficient processing of pipeline-based applications. The techniques include configuring local memory buffers connected to parallel processing units operating as stages of a processing pipeline to hold data for transfer between the parallel processing units. The local memory buffers allow on-chip, low-power, direct data transfer between the parallel processing units. The local memory buffers may include hardware-based data flow control mechanisms to enable transfer of data between the parallel processing units. In this way, data may be passed directly from one parallel processing unit to the next parallel processing unit in the processing pipeline via the local memory buffers, in effect transforming the parallel processing units into a series of pipeline stages.

Owner:QUALCOMM INC
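
A software analogy may help picture the buffered pipeline in this abstract. In the Python sketch below, each stage is a thread standing in for a parallel processing unit, and a small bounded `queue.Queue` between stages stands in for a local memory buffer with flow control (a full buffer blocks the producer, an empty one blocks the consumer). `stage`, `run_pipeline`, and the `depth` parameter are hypothetical names, and threads only model the data flow, not the hardware.

```python
import queue
import threading

def stage(fn, inbox, outbox):
    """Apply fn to each item from inbox and pass the result downstream."""
    while True:
        item = inbox.get()
        if item is None:       # None marks the end of the stream
            outbox.put(None)
            break
        outbox.put(fn(item))

def run_pipeline(items, fns, depth=2):
    # Only the buffers between stages are bounded; the input and output
    # queues are unbounded so the caller can fill and drain them freely.
    buffers = ([queue.Queue()]
               + [queue.Queue(maxsize=depth) for _ in range(len(fns) - 1)]
               + [queue.Queue()])
    workers = [threading.Thread(target=stage, args=(fn, buffers[i], buffers[i + 1]))
               for i, fn in enumerate(fns)]
    for w in workers:
        w.start()
    for item in items:
        buffers[0].put(item)
    buffers[0].put(None)
    for w in workers:
        w.join()
    results = []
    while (r := buffers[-1].get()) is not None:
        results.append(r)
    return results

piped = run_pipeline(range(5), [lambda x: x * x, lambda x: x + 1])
print(piped)  # [1, 2, 5, 10, 17]
```

Data moves from one stage straight into the next through the small buffer, without a round trip through a shared global store, which mirrors the direct transfer the abstract describes.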

Cryptographic computations on general purpose graphics processing units

Status: Active | Patent: US8106916B1 | Tags: Release resources, Cryptographic computations are performed faster and more efficiently, Unauthorized memory use protection, Hardware monitoring, General purpose, Graphics processing unit

One embodiment of the invention sets forth a computing system for performing cryptographic computations. The computing system comprises a central processing unit, a graphics processing unit, and a driver. The central processing unit requests a cryptographic computation. In response, the driver downloads microcode to perform the cryptographic computation to the graphics processing unit, and the graphics processing unit executes the microcode. This offloads cryptographic computations from the CPU. As a result, cryptographic computations are performed faster and more efficiently on the GPU, freeing resources on the CPU for other tasks.

Owner:NVIDIA CORP

Real time reconstruction of 3D cylindrical near field radar images using a single instruction multiple data interpolation approach

Status: Inactive | Patent: US8872697B2 | Tags: Low cost, High bandwidth, Detection using electromagnetic waves, Radio wave reradiation/reflection, Performance computing, Signal-to-quantization-noise ratio

The present invention uses a Single Instruction Multiple Data (SIMD) architecture to form real time 3D radar images recorded in cylindrical near field scenarios using a wavefront reconstruction approach. A novel interpolation approach is executed in parallel, significantly reducing the reconstruction time without compromising the spatial accuracy and signal to noise ratios of the resulting images. Since each point in the problem space can be processed independently, the proposed technique was implemented using an approach on a General Purpose Graphics Processing Unit (GPGPU) to take advantage of the high performance computing capabilities of this platform.

Owner:TAPIA DANIEL FLORES +1

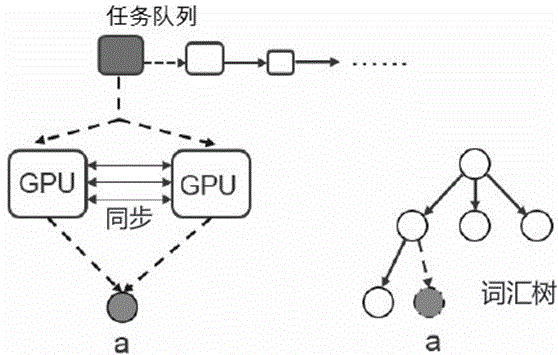

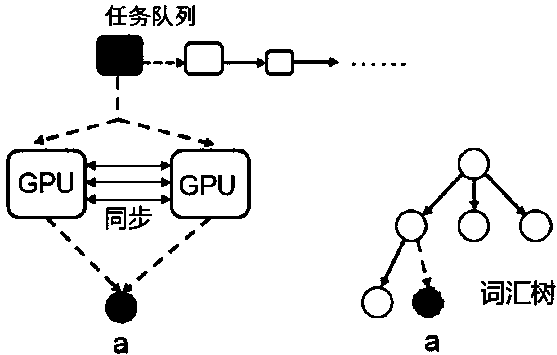

High-dimensional-data-oriented vocabulary tree building method based on heterogeneous platform

The invention belongs to the technical field of a parallel processing unit, and particularly relates to a high-dimensional-data-oriented vocabulary tree building method based on a heterogeneous platform. According to the method, the strong parallel calculation capability and the programmability of a graphics processing unit arranged on a heterogeneous processing platform (a mixed architecture of a general purpose processor and a GPGPU (General Purpose Graphics Processing Unit)) are used for accelerating the speed of the building process of a high-dimensional vocabulary tree. The characteristic of high concurrency of the graphics processing unit is used for accelerating the speed of the core process of a high-dimensional vocabulary tree algorithm; the characteristics of the high-dimensional vocabulary tree algorithm and the memory access mode of the graphics processing unit are used for optimizing the memory accessing process of the algorithm; and a coordination strategy of a host and the graphics processing unit in the operation process of the high-dimensional vocabulary tree algorithm is designed. The method has the advantage that the building speed of the high-dimensional-data-oriented vocabulary tree can be effectively accelerated.

Owner:FUDAN UNIV

Pipelining Computational Resources in General-Purpose Graphics Processing Units

Status: Active | Patent: CN103348320B | Tags: Multiprogramming arrangements, Concurrent instruction execution, Data stream, General purpose graphic processing unit

This disclosure describes techniques for extending the architecture of a general-purpose graphics processing unit, GPGPU, with parallel processing units to allow efficient processing of pipeline-based applications. The technique includes configuring local memory buffers connected to parallel processing units operating as stages of a processing pipeline to hold data for transfer between the parallel processing units. The local memory buffers allow on-chip, low power, direct data transfer between the parallel processing units. The local memory buffer may include a hardware-based data flow control mechanism to enable transfer of data between the parallel processing units. In this way, data can be passed directly from one parallel processing unit to the next parallel processing unit in the processing pipeline via the local memory buffer, effectively transforming the parallel processing unit into a series of pipeline stages.

Owner:QUALCOMM INC

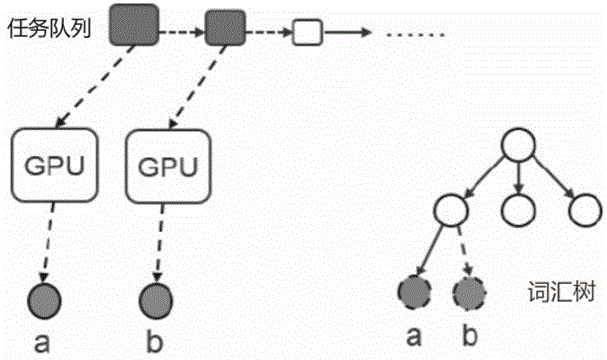

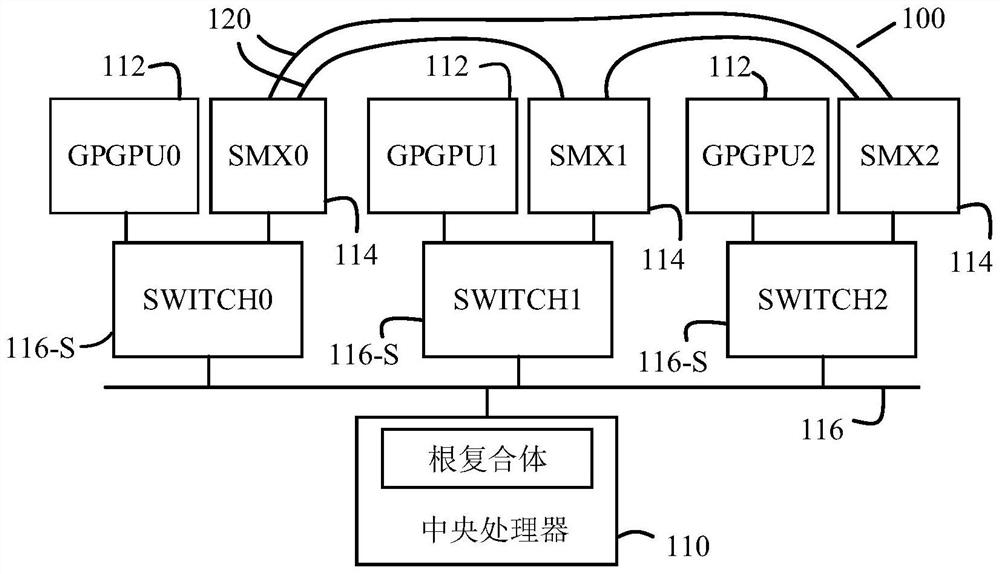

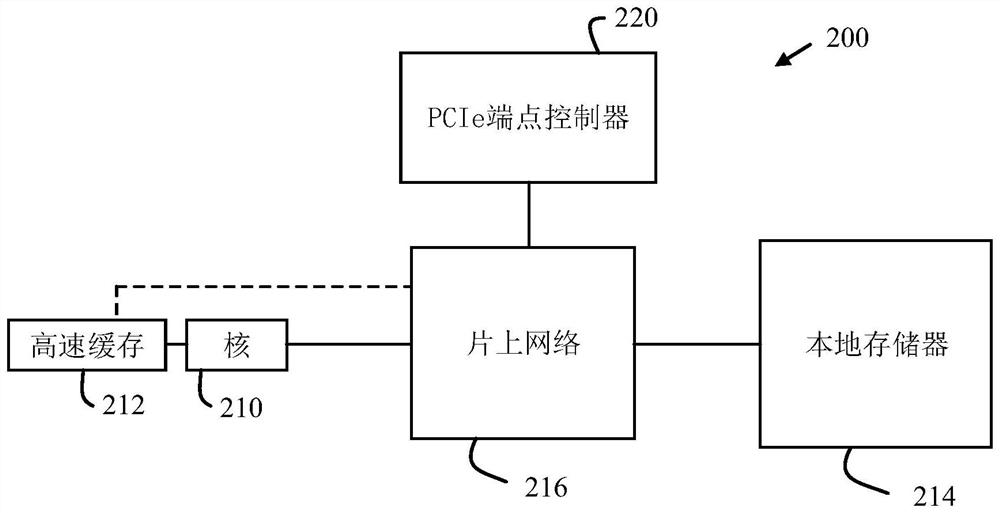

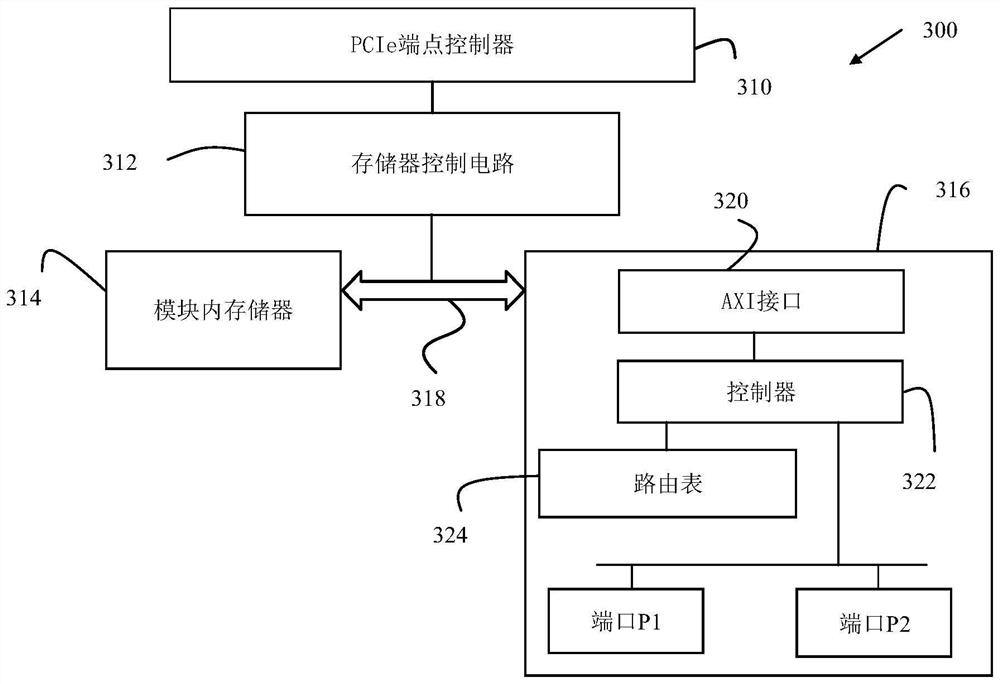

Processing system and method of operating processing system

Status: Pending | Patent: CN114238156A | Tags: Big space, Easy to understand, Memory addressing/allocation/relocation, Processor architectures/configuration, General purpose, Graphics

Owner:ALIBABA DAMO (HANGZHOU) TECH CO LTD

A GPGPU-based adaptive decomposition method for nonlinear unsteady complex signals

Status: Active | Patent: CN105279376B | Tags: Easy to handle, To achieve the purpose of parallelization, Character and pattern recognition, Complex mathematical operations, Graphics, General purpose

The invention discloses a nonlinear unsteady-state complex signal self-adapting decomposition method based on GPGPU (General Purpose Graphics Processing Units), and belongs to the field of signal analysis. When a conventional EEMD (Ensemble Empirical Mode Decomposition) algorithm processes large-scale signal data, it is limited by the computational intensity of the algorithm itself, so the conventional algorithm cannot meet real-time performance demands in practical applications. The highly parallel computation steps of the EEMD algorithm are analyzed, and a GPGPU method based on CUDA (Compute Unified Device Architecture) is used to parallelize the EEMD algorithm, so that the algorithm reaches an optimal state in terms of data precision and time consumption. The Hilbert-Huang transform is then combined: the Hilbert transform and the Shannon entropy concept are used to obtain the Hilbert-Huang spectral entropy for further study of the decomposed signals. Experiments show that the method is efficient and usable in practical signal decomposition analysis.

Owner:WUHAN UNIV

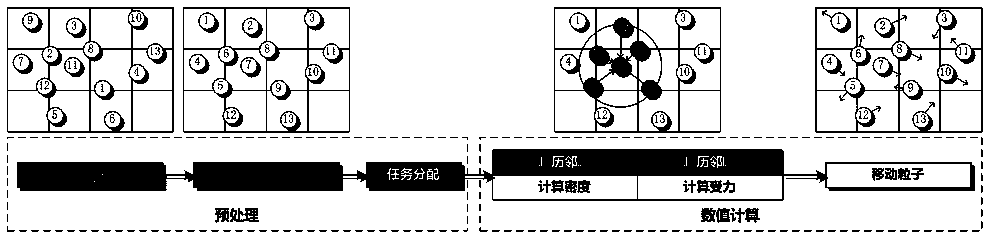

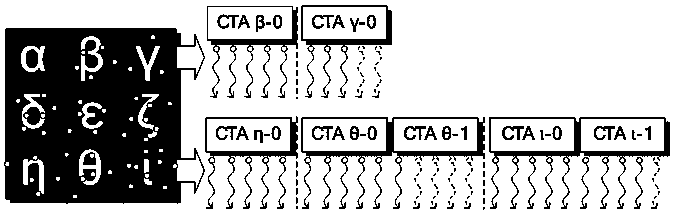

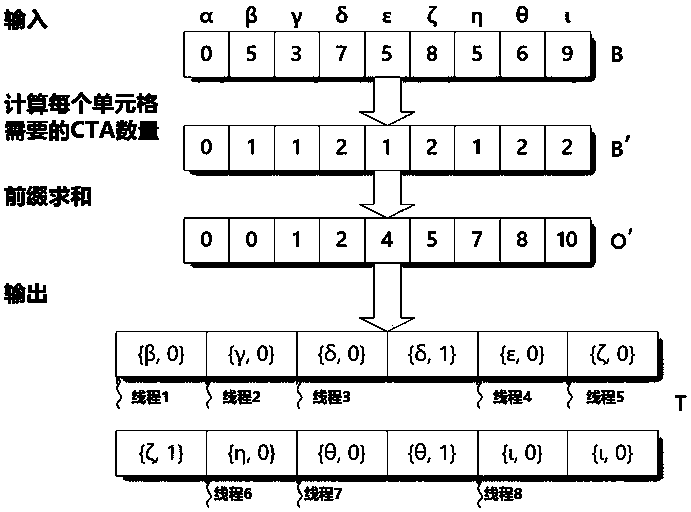

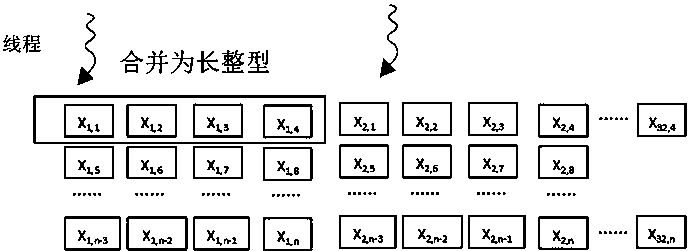

A GPGPU parallel computing method for SPH fluid simulation

Status: Active | Patent: CN106484532B | Tags: Improve performance, Resource allocation, Interprogram communication, Smoothed-particle hydrodynamics, Level structure

The invention discloses a GPGPU (General Purpose Graphics Processing Unit) parallel computing method oriented to SPH (Smoothed Particle Hydrodynamics) fluid simulation. The method comprises the step of grouping particle computing tasks in the SPH method to match the thread level structure of a GPGPU, so that the threads in a cooperative thread array (CTA) process logically similar work. The grouping method is designed around the CTA scheduling rule of the GPGPU and is implemented on the GPGPU, so the cache hit rate of the system can be improved while tasks are allocated rapidly. On the basis of this allocation method, an on-chip shared memory can be used to cache data read from global memory; the memory bandwidth can be fully utilized according to the access characteristics of the global memory; and, by exploiting the SIMD (Single Instruction Multiple Data) characteristics of the GPGPU, the thread synchronization overhead incurred by using shared memory can be effectively avoided.

Owner:EAST CHINA NORMAL UNIV
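
The abstract says particle tasks are grouped so that the threads of one CTA do logically similar work, but it does not give the grouping criterion. The Python sketch below assumes one plausible criterion, spatial proximity on a uniform grid: particles are ordered by grid cell and then cut into CTA-sized chunks. `group_into_ctas`, `cell_size`, and `cta_size` are hypothetical names, and the 2D positions are made-up data.

```python
def group_into_ctas(positions, cell_size, cta_size):
    """Return lists of particle indices, one list per CTA, grouped by grid cell."""
    def cell_key(p):
        x, y = p
        # Row-major cell coordinates; particles in one cell share a key.
        return (int(y // cell_size), int(x // cell_size))

    # Sorting by cell places spatial neighbours next to each other
    # (sorted() is stable, so ties keep their original order).
    order = sorted(range(len(positions)), key=lambda i: cell_key(positions[i]))
    return [order[i:i + cta_size] for i in range(0, len(order), cta_size)]

particles = [(0.1, 0.1), (2.5, 0.2), (0.3, 0.4), (2.7, 0.1)]
ctas = group_into_ctas(particles, cell_size=1.0, cta_size=2)
print(ctas)  # [[0, 2], [1, 3]]
```

Threads that work on particles from the same cell tend to read the same neighbour data, which is one way a grouping like this could raise the cache hit rate the abstract mentions.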

A Construction Method of High Dimensional Vocabulary Tree Based on Heterogeneous Platform

The invention belongs to the technical field of a parallel processing unit, and particularly relates to a high-dimensional-data-oriented vocabulary tree building method based on a heterogeneous platform. According to the method, the strong parallel calculation capability and the programmability of a graphics processing unit arranged on a heterogeneous processing platform (a mixed architecture of a general purpose processor and a GPGPU (General Purpose Graphics Processing Unit)) are used for accelerating the speed of the building process of a high-dimensional vocabulary tree. The characteristic of high concurrency of the graphics processing unit is used for accelerating the speed of the core process of a high-dimensional vocabulary tree algorithm; the characteristics of the high-dimensional vocabulary tree algorithm and the memory access mode of the graphics processing unit are used for optimizing the memory accessing process of the algorithm; and a coordination strategy of a host and the graphics processing unit in the operation process of the high-dimensional vocabulary tree algorithm is designed. The method has the advantage that the building speed of the high-dimensional-data-oriented vocabulary tree can be effectively accelerated.

Owner:FUDAN UNIV

A task dispatching method for multi-task concurrent execution of a general-purpose graphics processor

Status: Active | Patent: CN105653243B | Tags: Relieve pressure, Overcome low resource utilization, Concurrent instruction execution, Image data processing details, Graphics, Computer architecture

The invention discloses a method for distributing tasks by a general purpose graphic processing unit in a multi-task concurrent execution manner. The method comprises the following steps: first, classifying kernel functions through a thread block distribution engine method; performing classified counting on the kernel functions to obtain the number of thread blocks of each kernel function to be distributed to a streaming multiprocessor; and distributing the corresponding numbers of thread blocks of different kernel functions to the same streaming multiprocessor, so as to improve the resource utilization rate of each streaming multiprocessor in the general purpose graphic processing unit and enhance system performance and the energy efficiency ratio. A level-1 data cache bypass method can further be used: a dynamic method determines which kernel function's thread blocks are to be bypassed, and bypassing is then carried out according to the number of bypassed thread blocks of each kernel function, so as to relieve pressure on the level-1 data cache and further improve performance.

Owner:PEKING UNIV
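
The abstract above gives neither the classification criterion nor the counting rule, so the following is only an illustrative sketch of the general idea of co-locating thread blocks from different kernels on one streaming multiprocessor. The `Kernel` fields, the `mem_ops_ratio` metric, the 0.3 threshold, the equal resource split and the per-SM limits are all assumptions and are not taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class Kernel:
    name: str
    regs_per_block: int    # registers needed by one thread block
    smem_per_block: int    # shared memory (bytes) needed by one thread block
    mem_ops_ratio: float   # hypothetical metric: fraction of memory instructions


def classify(kernel, threshold=0.3):
    """Label a kernel as memory- or compute-intensive (assumed criterion)."""
    return "memory" if kernel.mem_ops_ratio >= threshold else "compute"


def blocks_per_sm(kernels, sm_regs=65536, sm_smem=49152):
    """Split one SM's resources evenly between the kernels (an assumption)
    and count how many thread blocks of each kernel fit into its share."""
    share_regs = sm_regs // len(kernels)
    share_smem = sm_smem // len(kernels)
    quota = {}
    for k in kernels:
        by_regs = share_regs // k.regs_per_block
        # A kernel that uses no shared memory is limited by registers only.
        by_smem = share_smem // k.smem_per_block if k.smem_per_block else by_regs
        quota[k.name] = min(by_regs, by_smem)
    return quota


# Illustrative kernels only; the numbers are made up.
a = Kernel("A", regs_per_block=8192, smem_per_block=4096, mem_ops_ratio=0.1)
b = Kernel("B", regs_per_block=4096, smem_per_block=16384, mem_ops_ratio=0.6)
classes = {k.name: classify(k) for k in (a, b)}
quota = blocks_per_sm([a, b])
```

Here kernel A is limited by registers and kernel B by shared memory, so running both on the same streaming multiprocessor uses resources that either kernel alone would leave idle. This is the utilization argument the abstract makes, shown with a much simpler policy than a real dispatcher would use.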

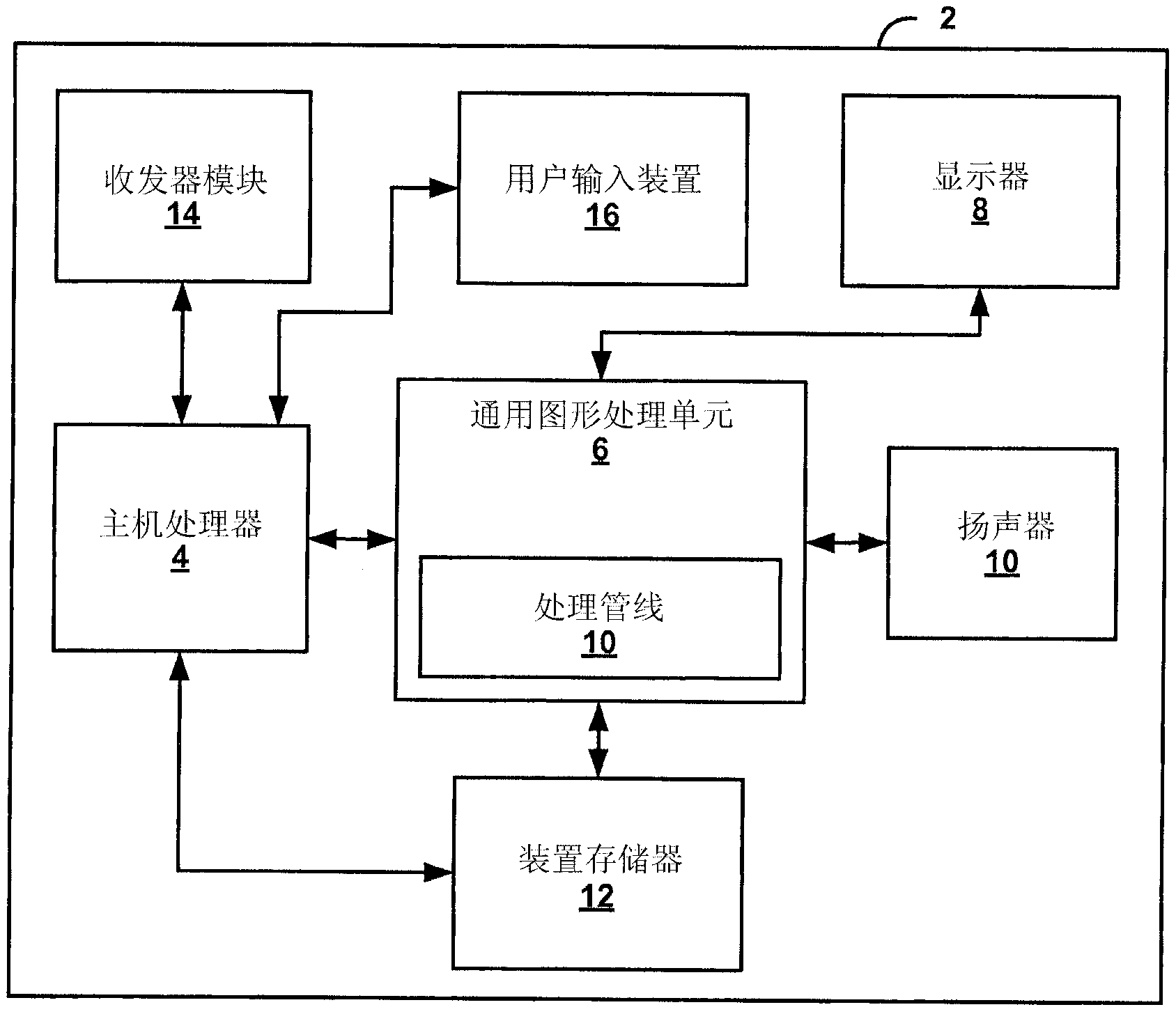

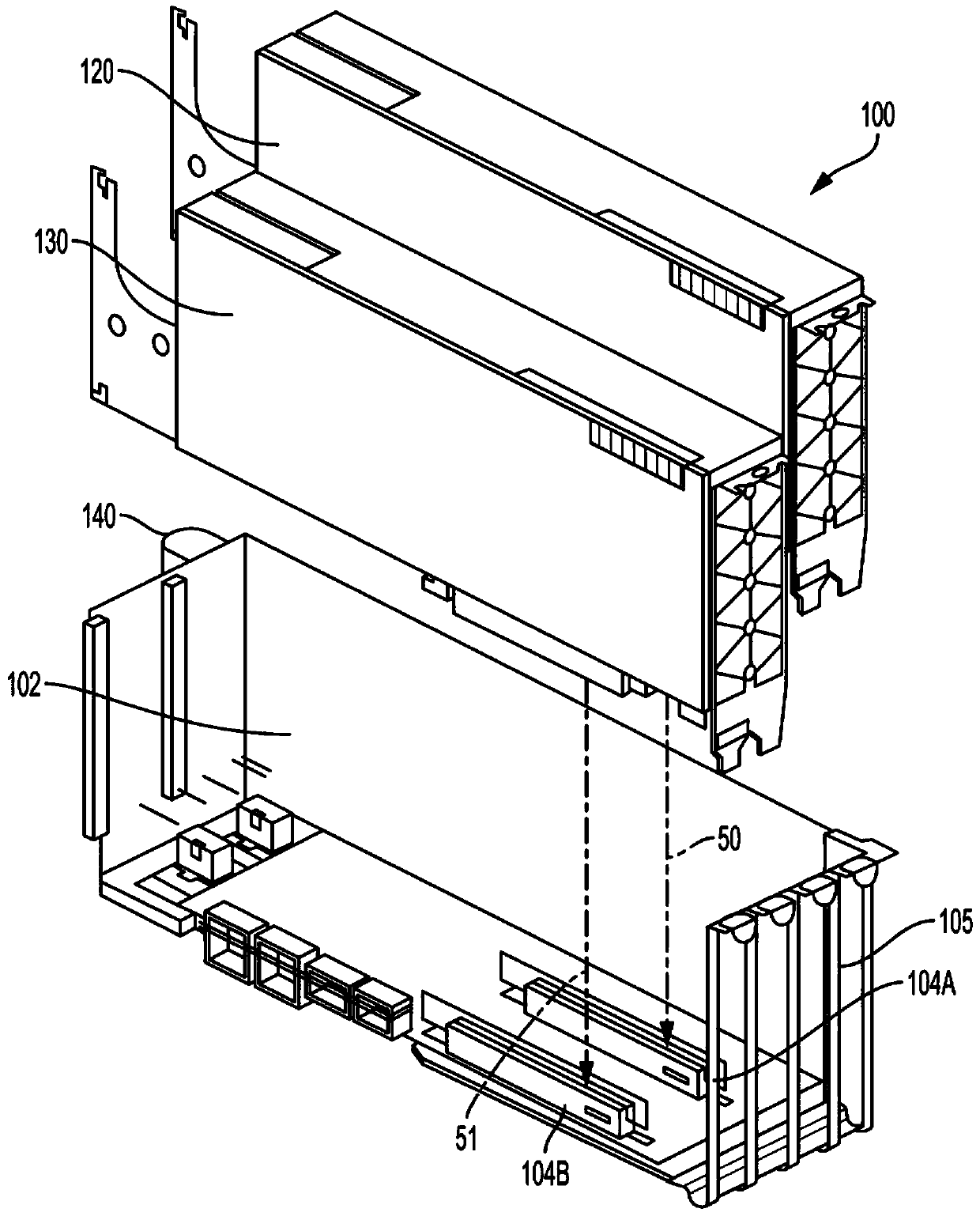

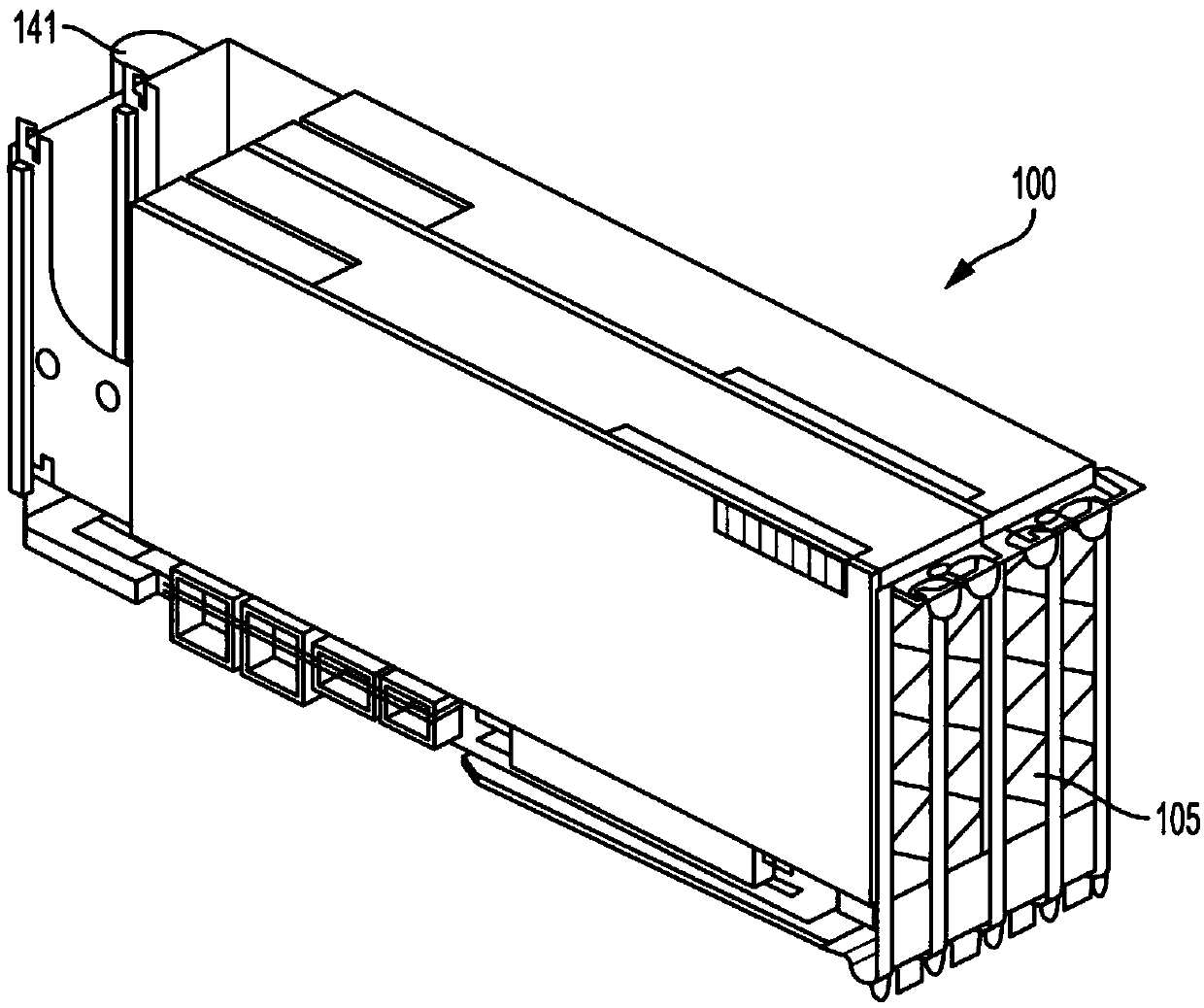

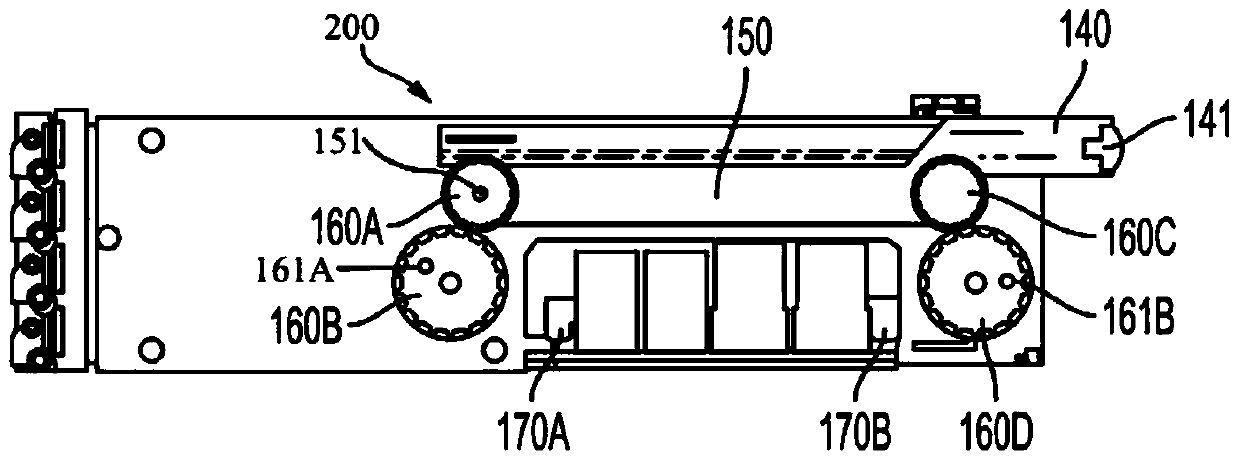

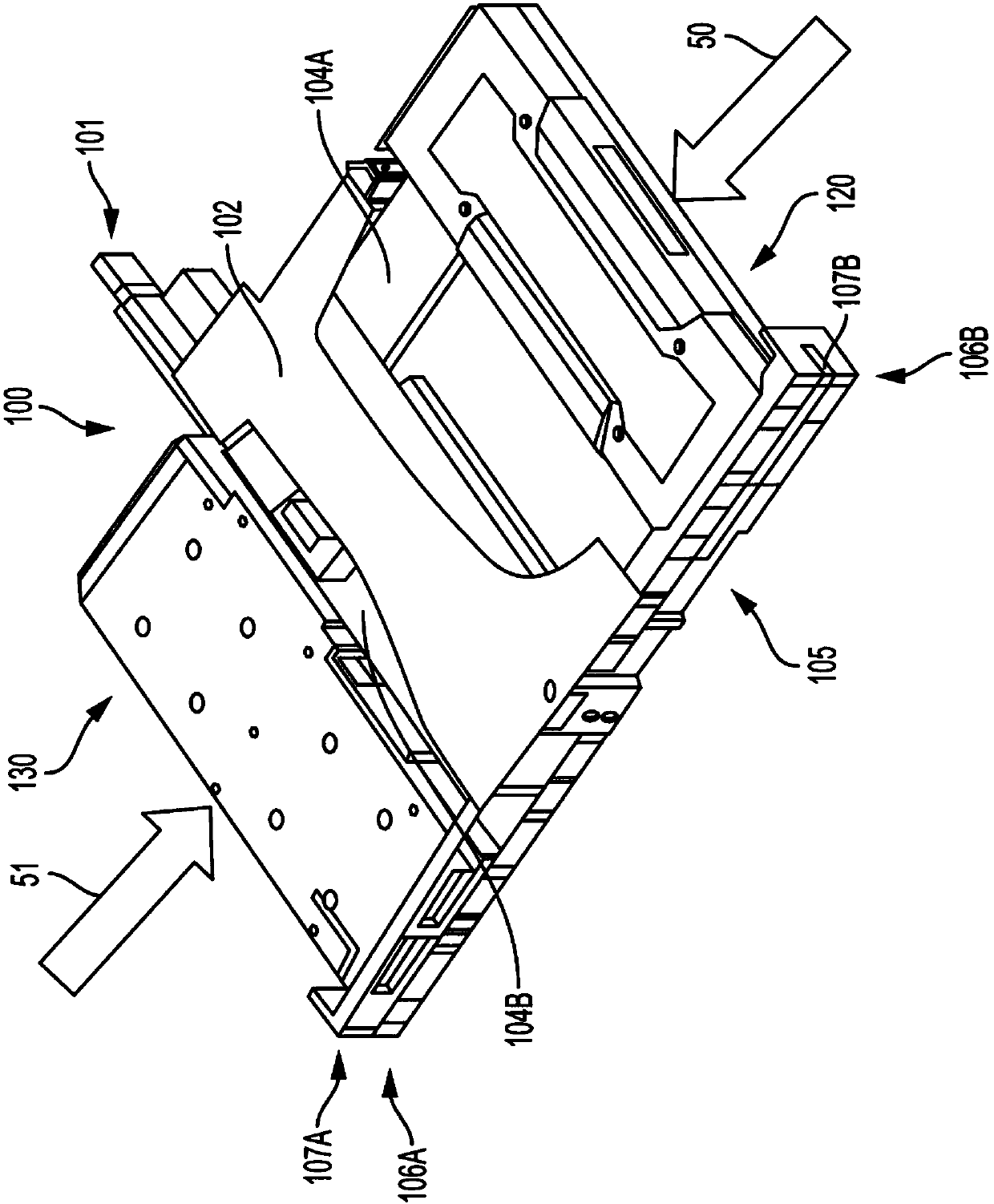

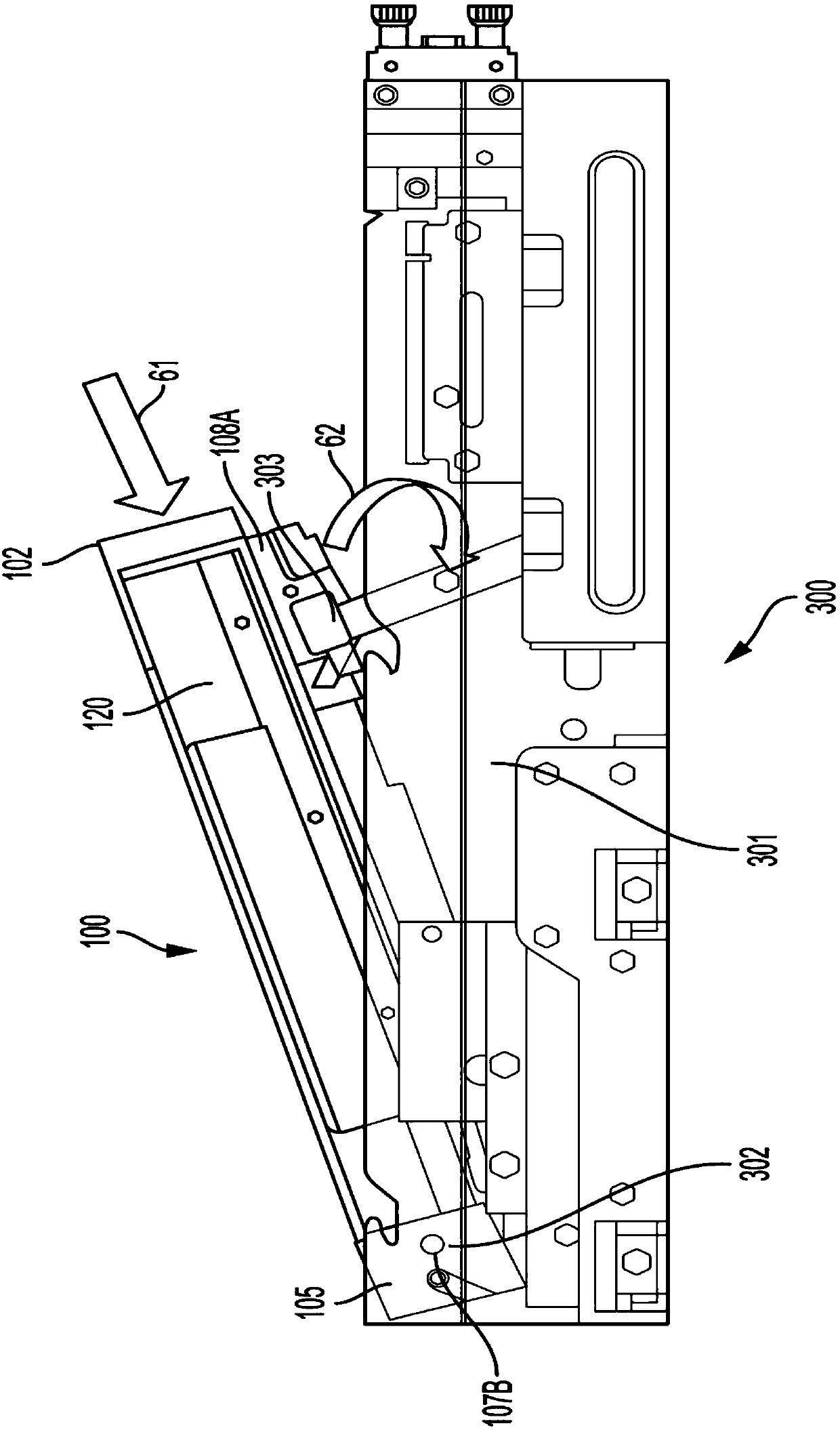

Computing device and general purpose graphic processing units (GPGPU) carrier

The invention provides a computing device and a general purpose graphic processing unit (GPGPU) carrier. The computing device is provided with a slot that includes at least one locking element configured to receive a removable general purpose graphic processing unit (GPGPU) carrier. The GPGPU carrier includes a bracket with a first receiving space for securing a first GPGPU, and a second receiving space for securing a second GPGPU. The GPGPU carrier also includes a locking mechanism connected to the bracket and configured to secure the at least one locking element of the computing device to at least one securing mechanism corresponding to the at least one locking element. The locking mechanism includes a lever connected to gear drives configured to actuate the at least one securing mechanism upon actuating the lever.

Owner:QUANTA COMPUTER INC

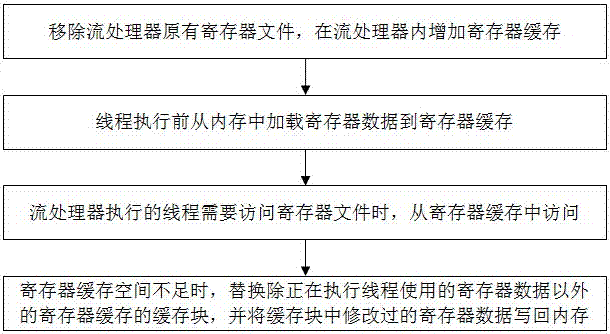

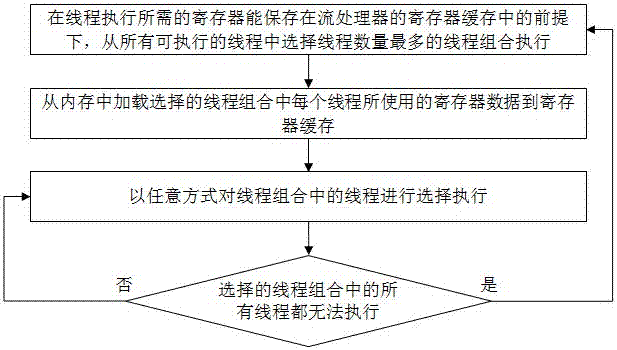

A method for implementing a GPGPU register cache

Active | CN104182281B | Reduce energy consumption | Reduce chip area | Resource allocation | Energy efficient computing | General purpose | Graphics

The invention discloses a method for implementing register caches for GPGPUs (general purpose graphics processing units). The method includes: removing the original register files in the stream processors and adding caches that hold the registers required by the threads being executed, with register data loaded from memory into the register caches before the threads execute; selecting, from the executable threads, the thread combination with the largest number of threads, on the premise that the registers required to execute those threads fit in the register cache of the stream processor; executing the selected thread combination, which involves loading from memory into the register cache the register data used by each thread in the combination and then selectively executing the threads in the combination; and reselecting another group of threads when none of the threads in the selected combination can be executed. The method reduces the storage space required by the register files of the stream processors, decreases energy consumption and chip area, removes the constraint imposed by the insufficient number of registers in existing stream processors, and improves system efficiency.

Owner:ZHEJIANG UNIV CITY COLLEGE
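
The selection step in the abstract above (choose the largest group of executable threads whose registers fit in the register cache) can be sketched as follows. This is a minimal illustration and not the patented procedure: the function name `select_threads`, the dictionary of per-thread register demands and the capacity value are hypothetical. It relies on the fact that taking the threads with the smallest demands first maximizes how many fit under a fixed capacity.

```python
def select_threads(reg_demand, cache_capacity):
    """Pick as many threads as possible whose total register demand fits
    in the register cache.

    reg_demand: dict mapping a thread id to the number of registers it needs.
    Returns (chosen thread ids, registers used).
    """
    chosen, used = [], 0
    # Smallest demand first; ties are broken by thread id for determinism.
    for tid, regs in sorted(reg_demand.items(), key=lambda kv: (kv[1], kv[0])):
        if used + regs > cache_capacity:
            break  # every remaining thread needs at least this many registers
        chosen.append(tid)
        used += regs
    return chosen, used


# Illustrative demands only; the numbers are made up.
demand = {"t0": 32, "t1": 16, "t2": 24, "t3": 64}
chosen, used = select_threads(demand, cache_capacity=80)
```

With these numbers the three smallest threads fit (16 + 24 + 32 = 72 registers) and `t3` is left for a later round, which corresponds to the abstract's step of reselecting another group once the current combination can no longer run.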

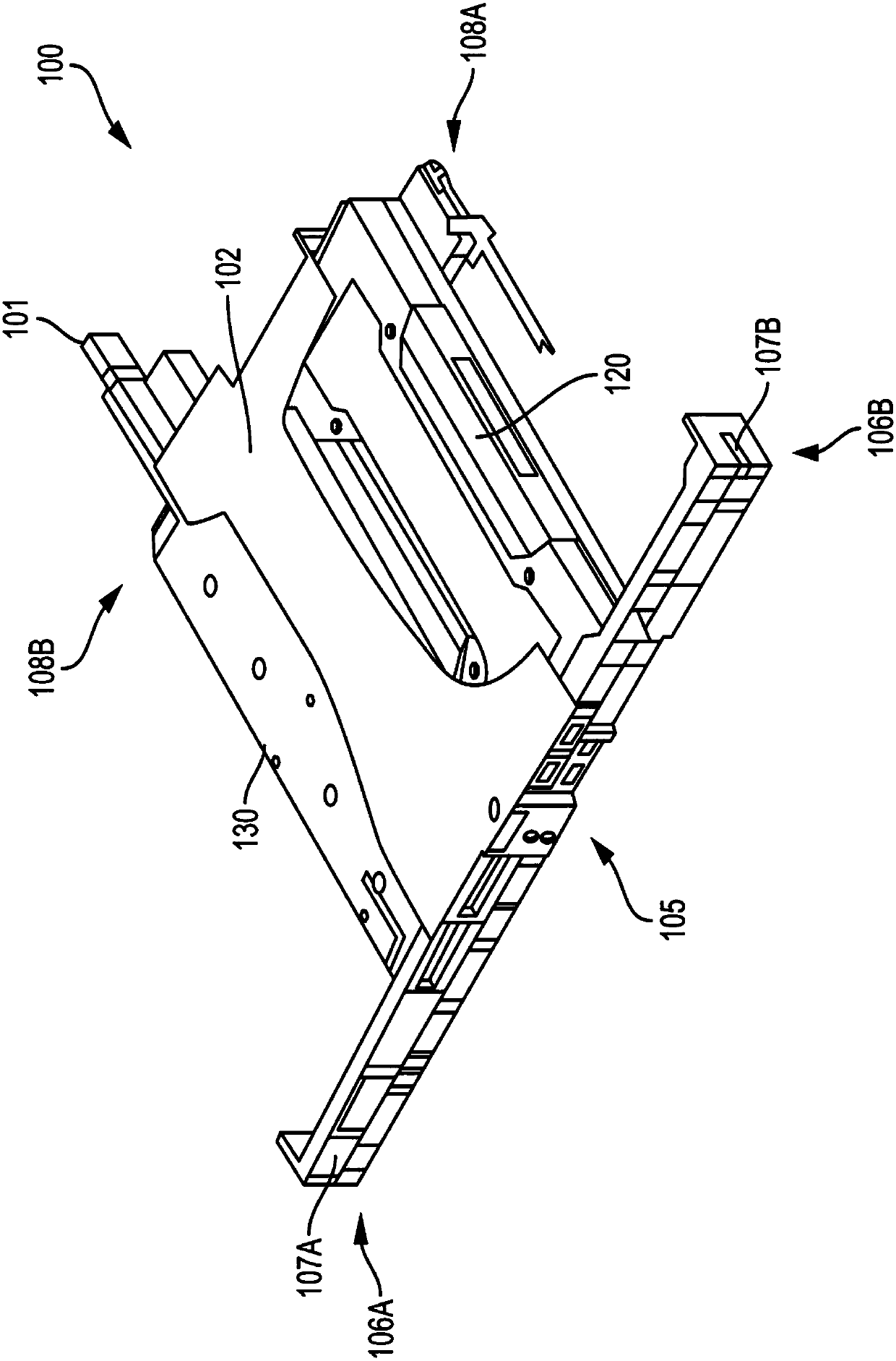

Computing device and general purpose graphic processing unit carrier

The invention discloses a computing device and a general purpose graphic processing unit carrier. The computing device is provided with a slot that includes a first locking element and a second locking element configured to receive a removable general purpose graphic processing unit (GPGPU) carrier. The GPGPU carrier includes a bracket for securing a first GPGPU in a first receiving space, and a second GPGPU in a second receiving space. The bracket also includes a first latching mechanism configured to secure the first locking element of the slot, and a second latching mechanism configured to secure the second locking element of the slot. The GPGPU carrier also includes a frame secured to the bracket. The frame includes a first end with a first guide slot, and a second end with a second guide slot, configured to secure the GPGPU carrier within the slot. The first and second guide slots are configured to enable the GPGPU carrier to adjust from a first position to a second position while secured within the slot.

Owner:QUANTA COMPUTER INC

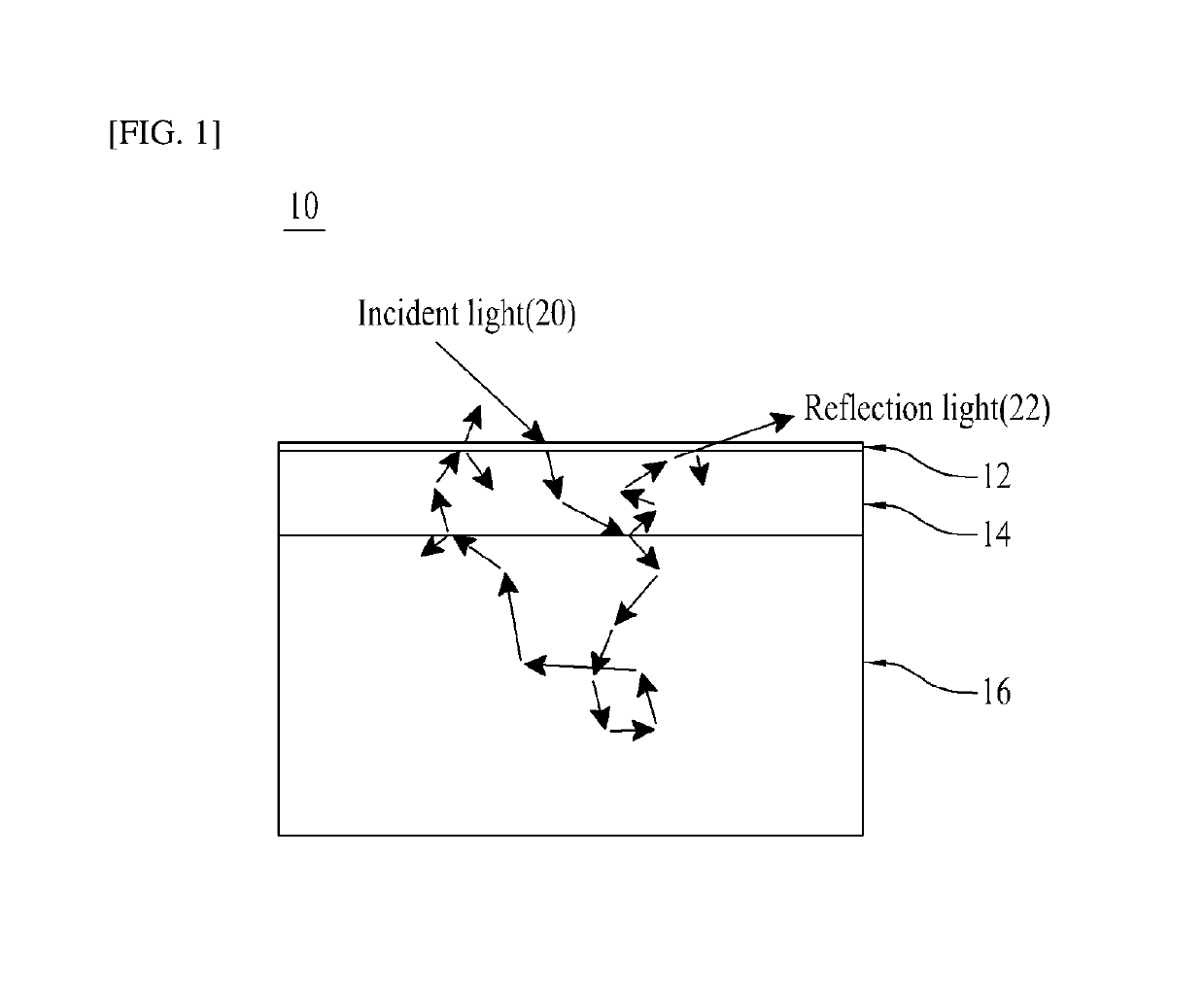

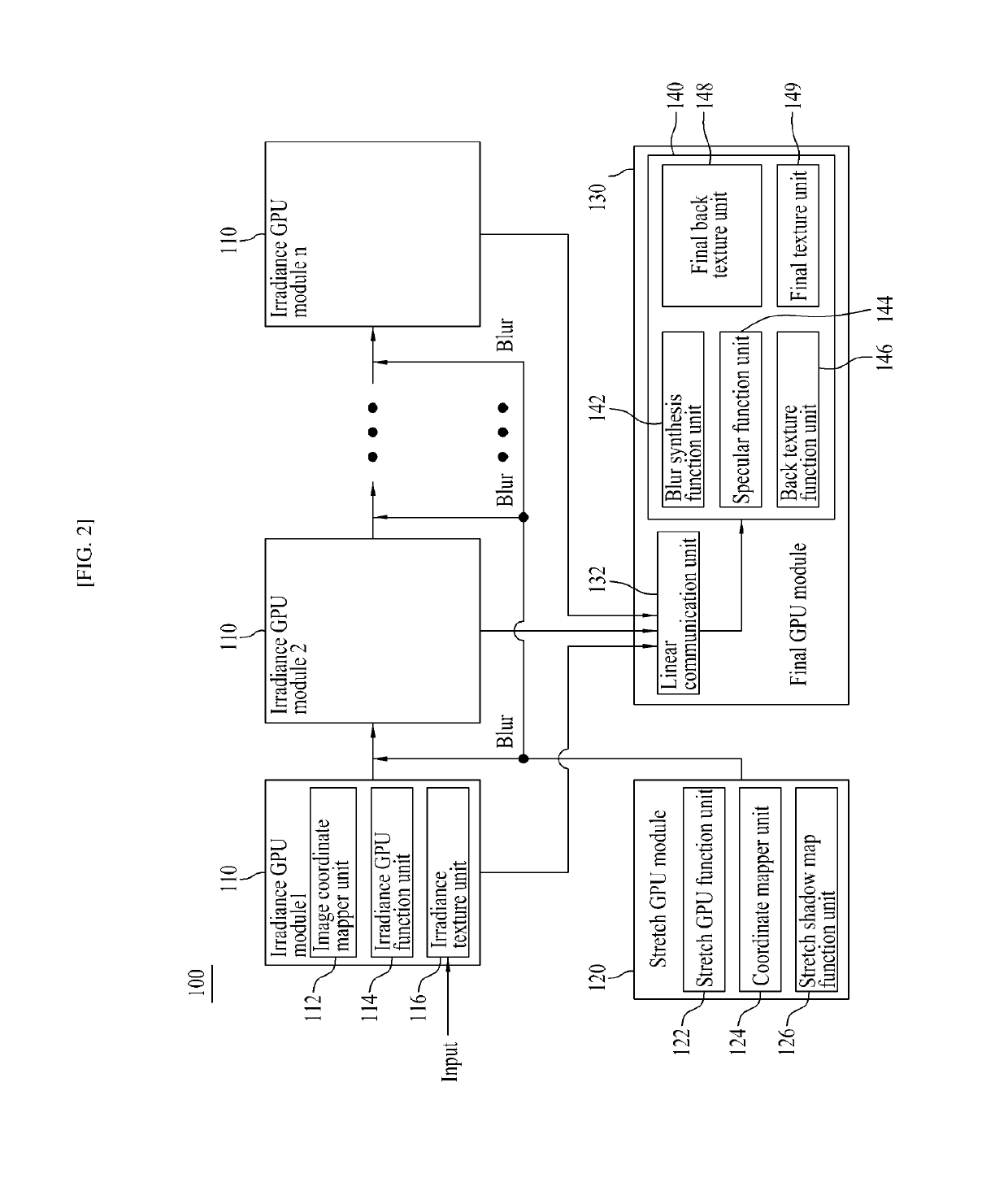

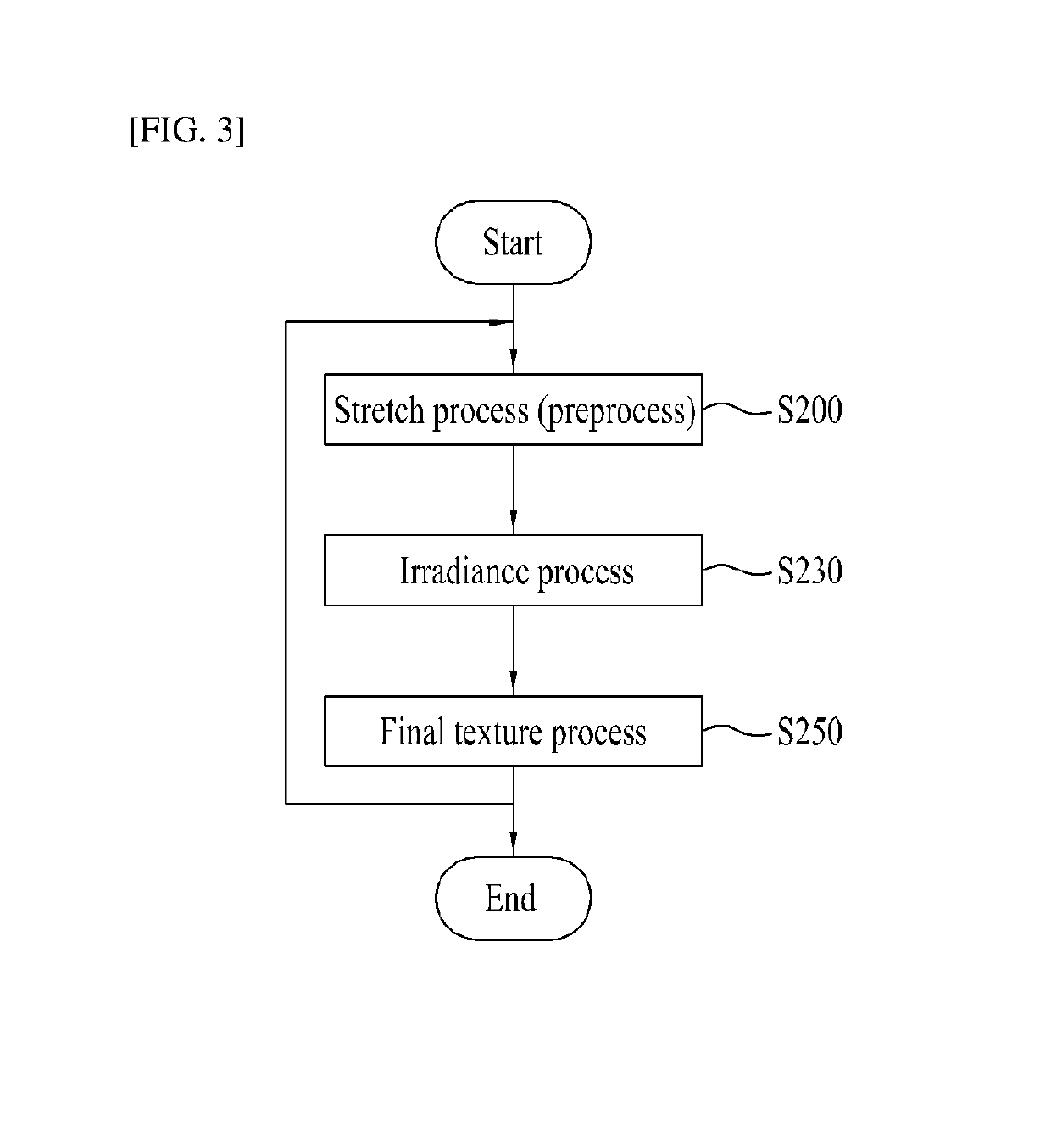

Three-dimensional character rendering system using general purpose graphic processing unit, and processing method thereof

Active | US10403023B2 | Reduce system load | Fast real-time | Animation | Image rendering | General purpose graphic processing unit | Irradiance

The present invention relates to a system for rendering a three-dimensional character and a processing method thereof. The system renders a three-dimensional character model, for example skin with a multilayered structure such as a person's face, using a GPGPU to enable realistic skin expressions based on the reflection and scattering of light. To this end, the system includes a plurality of GPGPU modules corresponding to a render pass. According to the present invention, an irradiance texture of an image for each layer of the skin is created and processed using the GPGPU without passing through a render pass of a rendering library, thereby reducing the rendering load on the system and enabling realistic skin expressions in real time.

Owner:HEO YOON JU


© 2025 PatSnap. All rights reserved.