37 results about "Deep cnn" patented technology

ML-based methods for pseudo-CT and HR MR image estimation

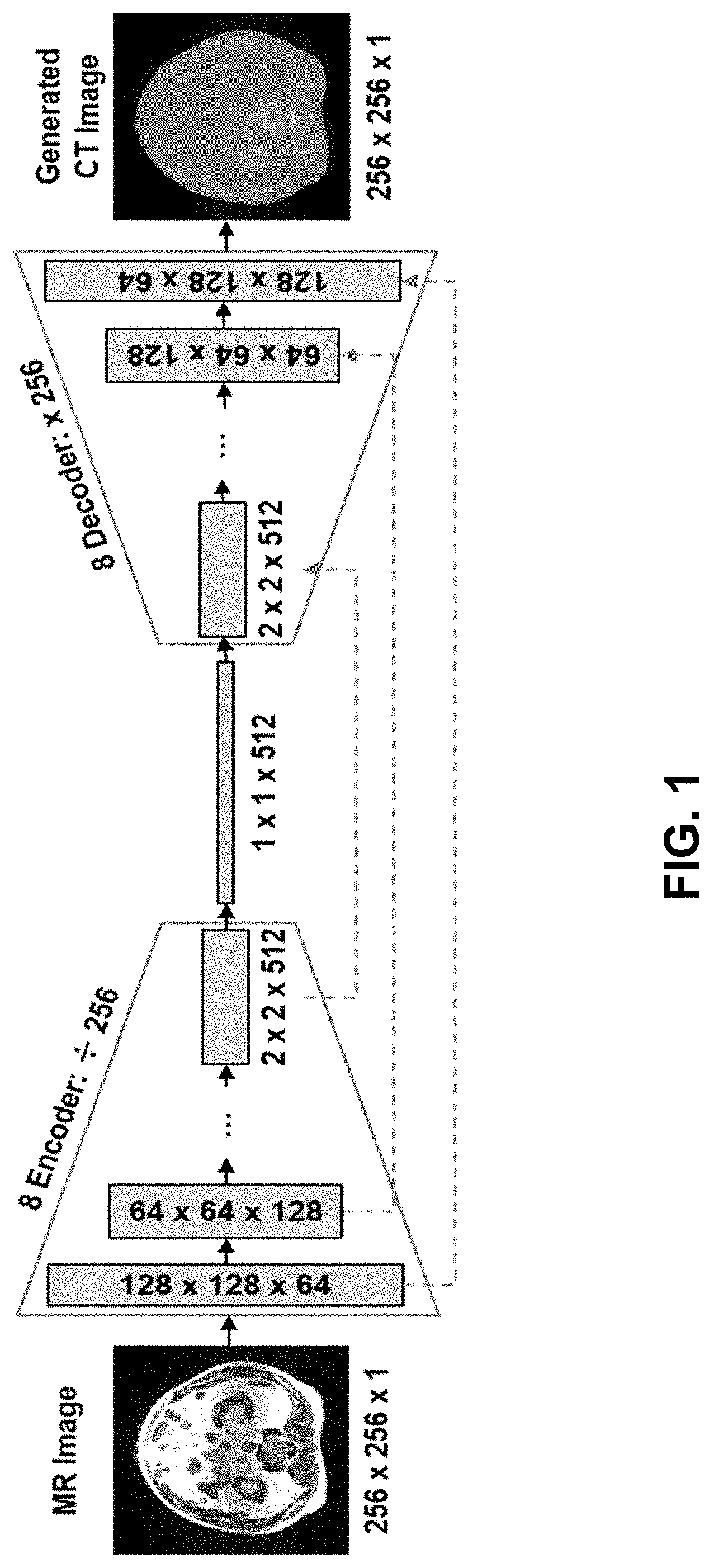

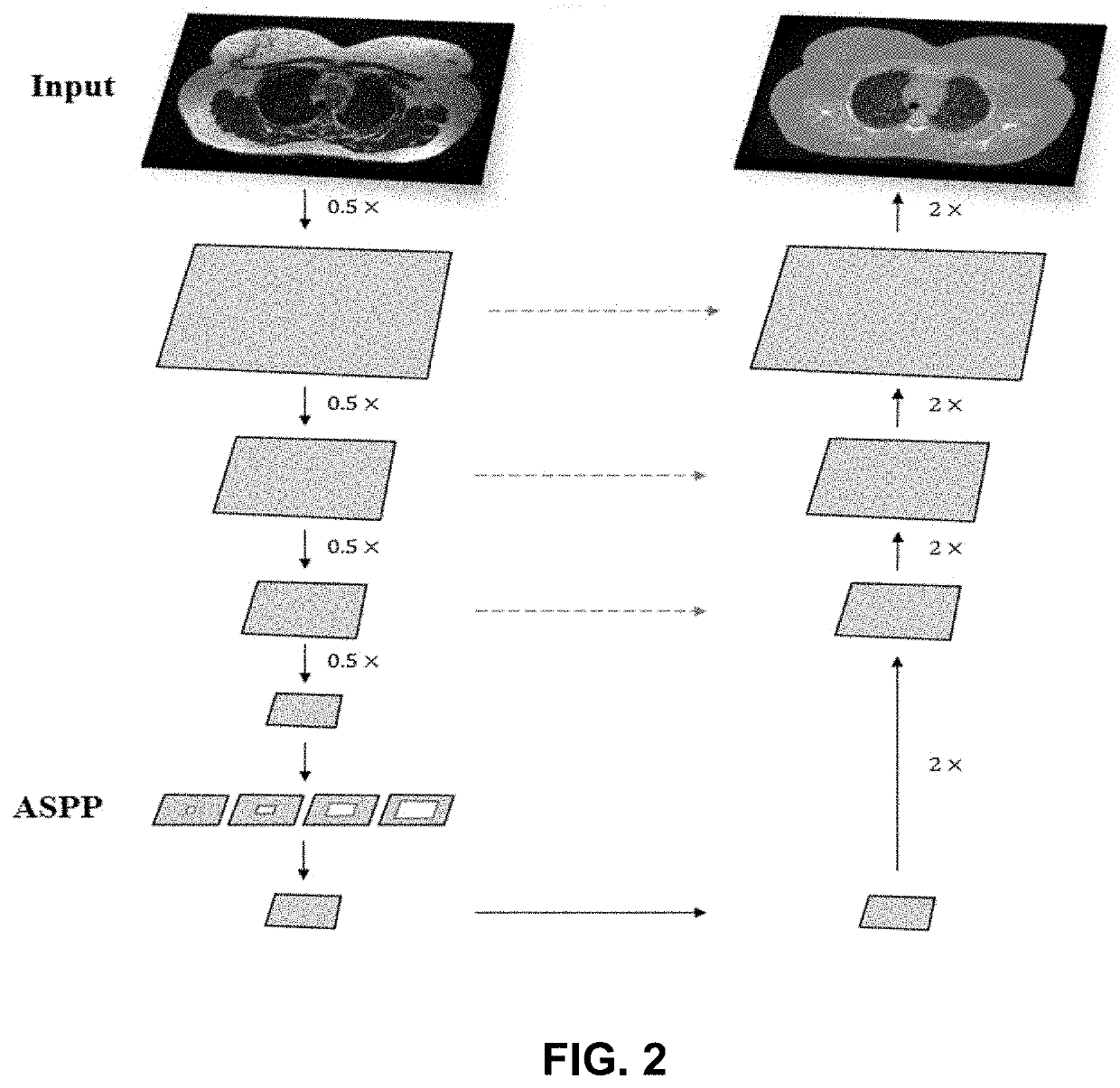

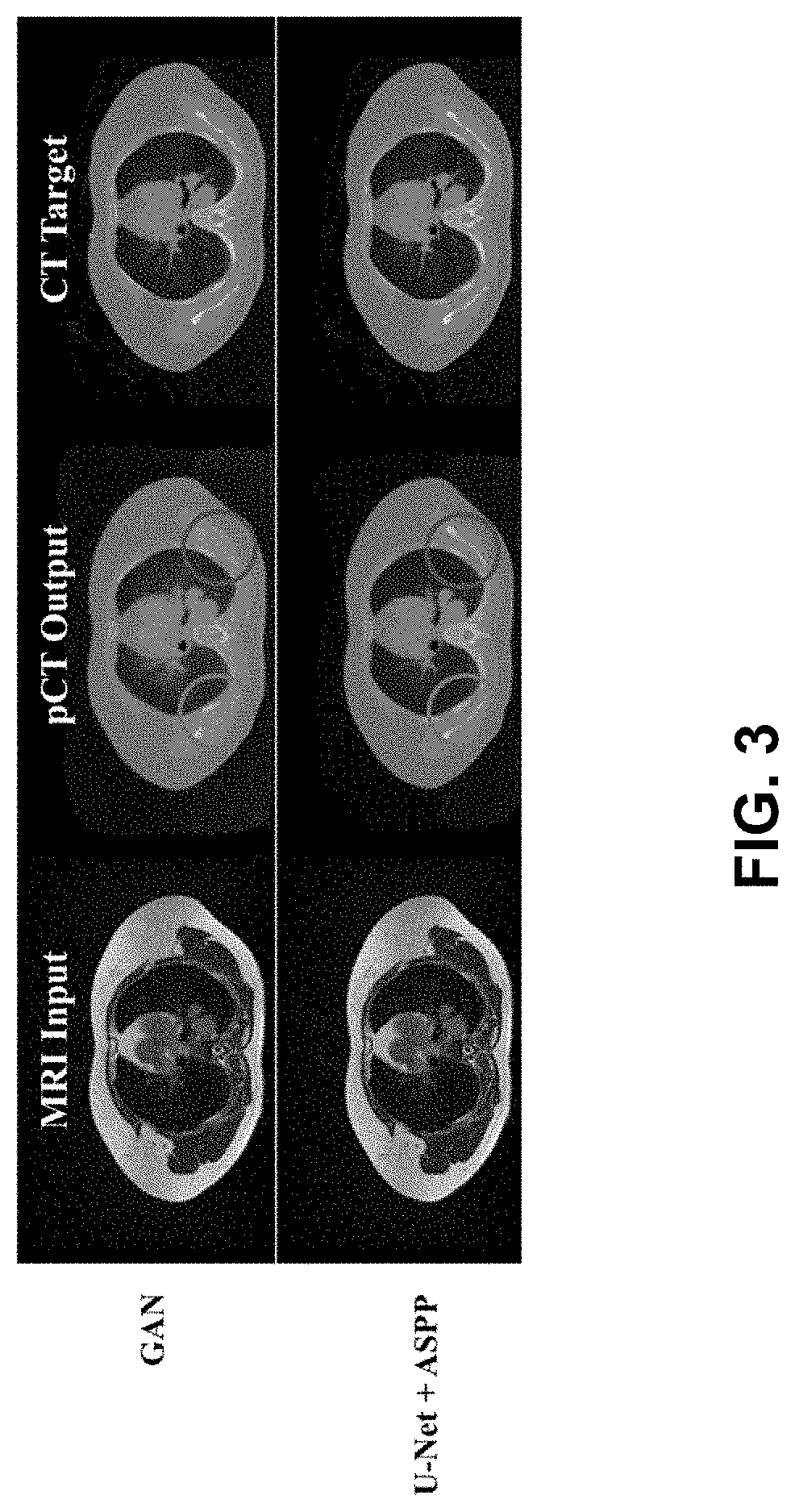

The present disclosure describes a computer-implemented method of transforming a low-resolution MR image into a high-resolution MR image using a deep CNN-based MRI super-resolution (SR) network, and a computer-implemented method of transforming an MR image into a pseudo-CT (sCT) image using a deep CNN-based sCT network. The present disclosure further describes an MR image-guided radiation treatment system that includes a computing device to implement the MRI SR and sCT networks and to produce a radiation plan based on the resulting high-resolution MR images and sCT images.

Owner:WASHINGTON UNIV IN SAINT LOUIS

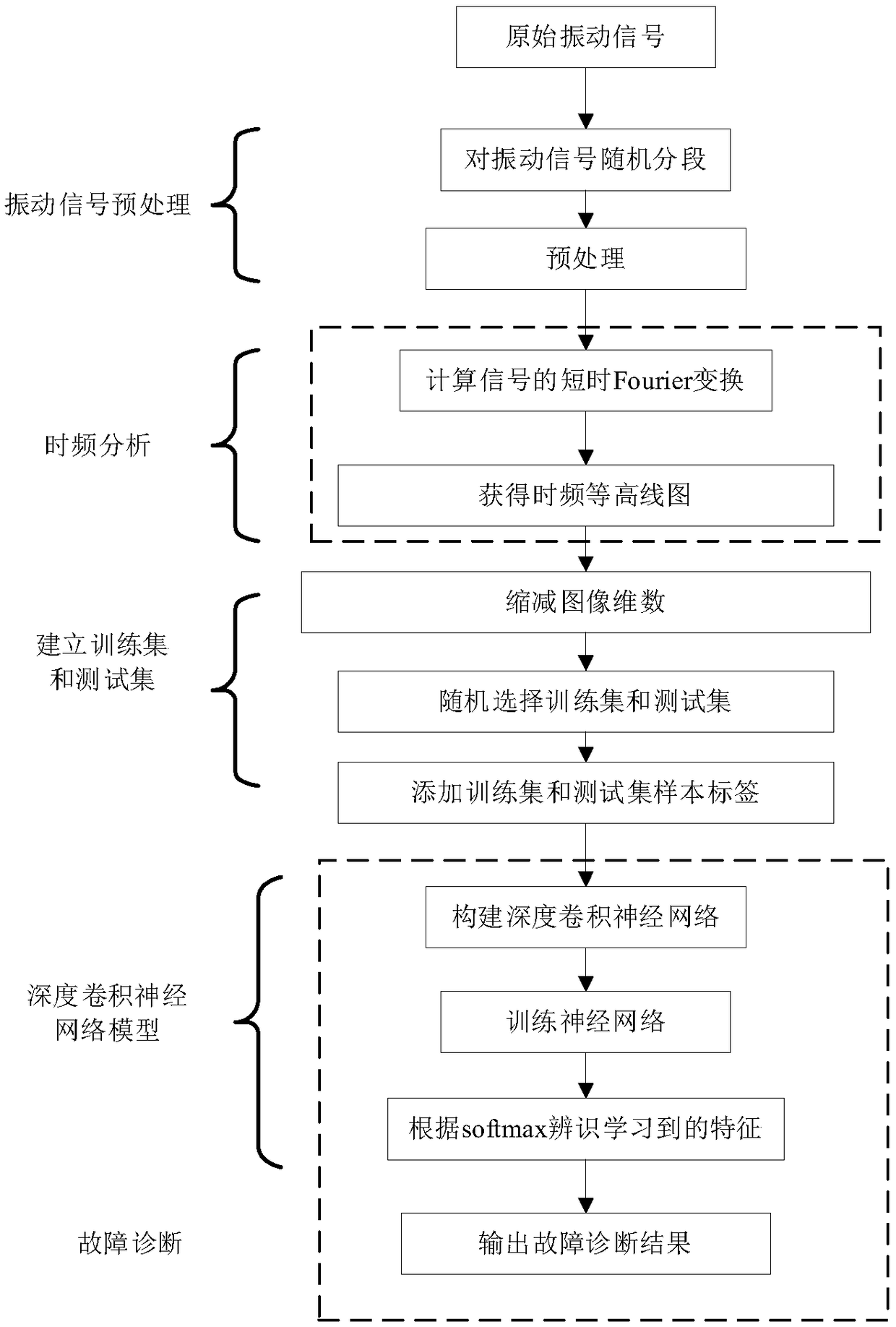

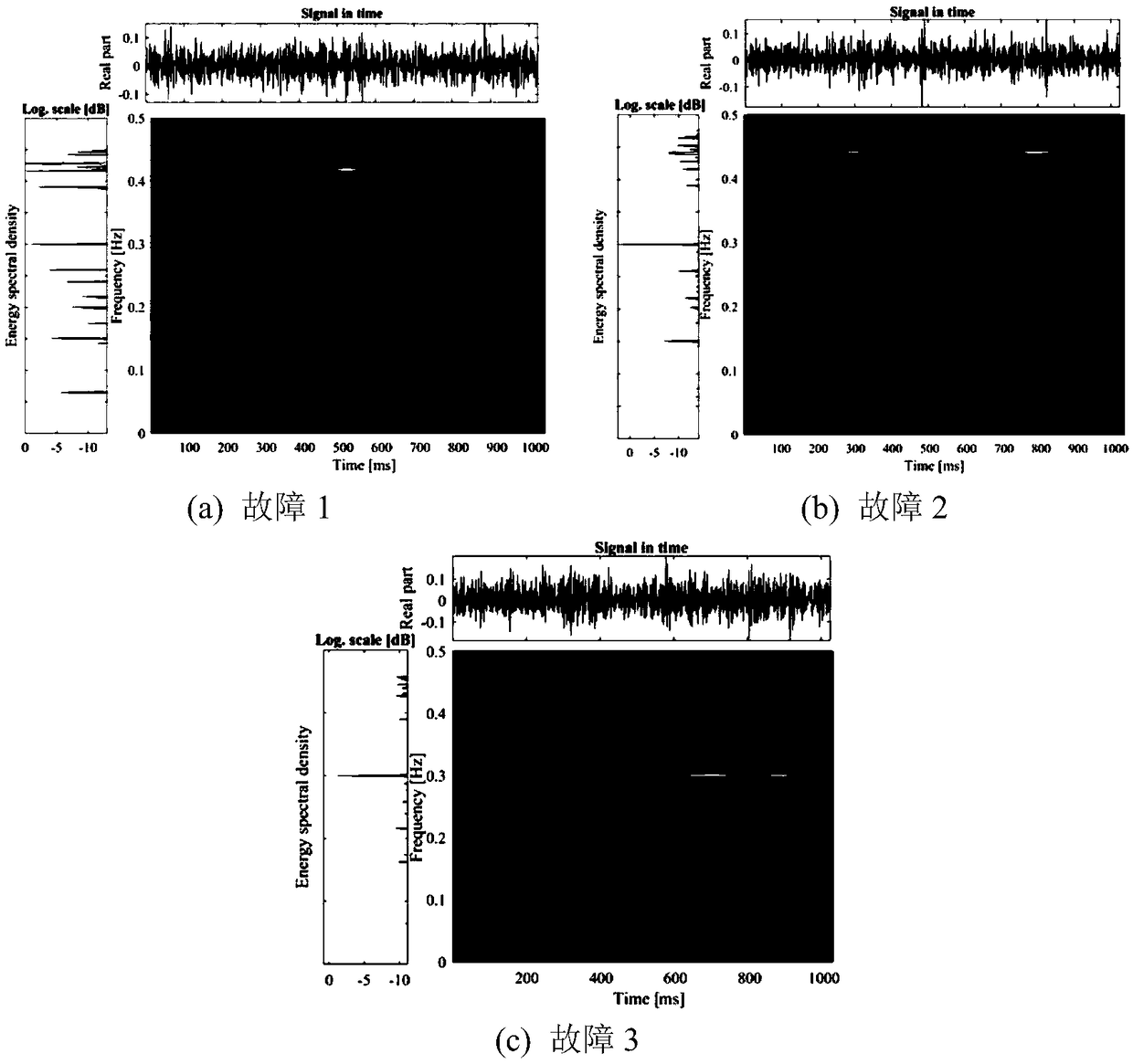

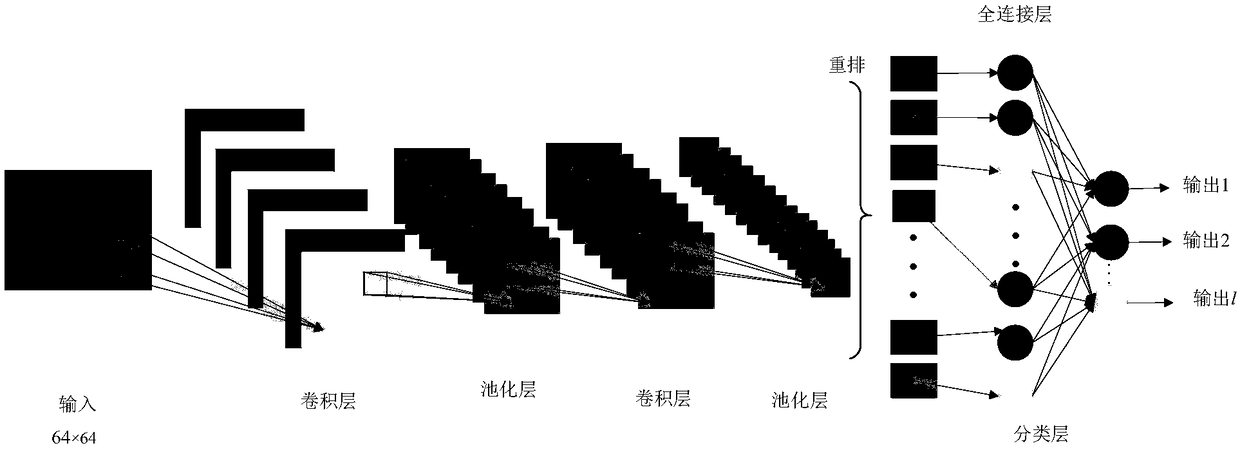

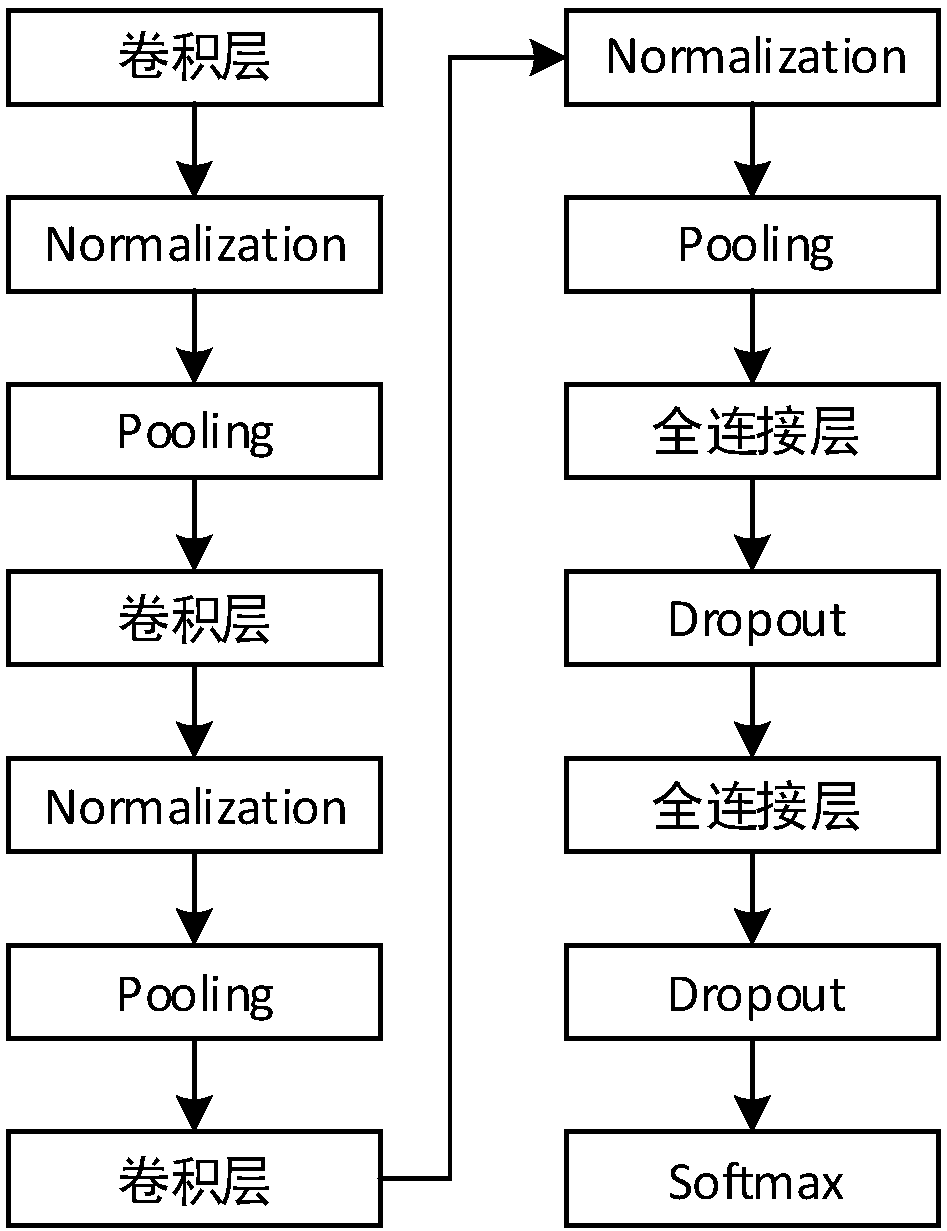

Method for intelligently diagnosing rotating machine fault feature based on deep CNN model

Active | CN108830127A | Accurately reflect the characteristics | Avoid features | Character and pattern recognition | Neural architectures | Algorithm | Network model

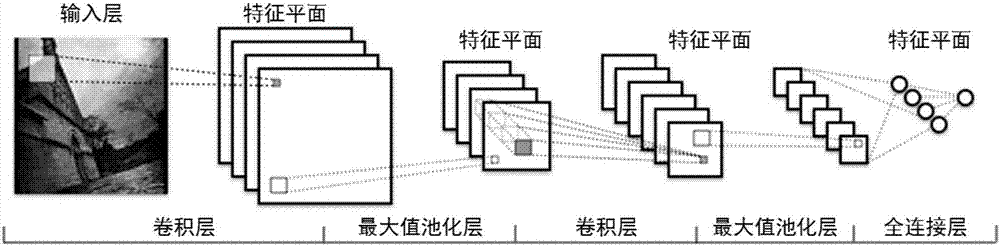

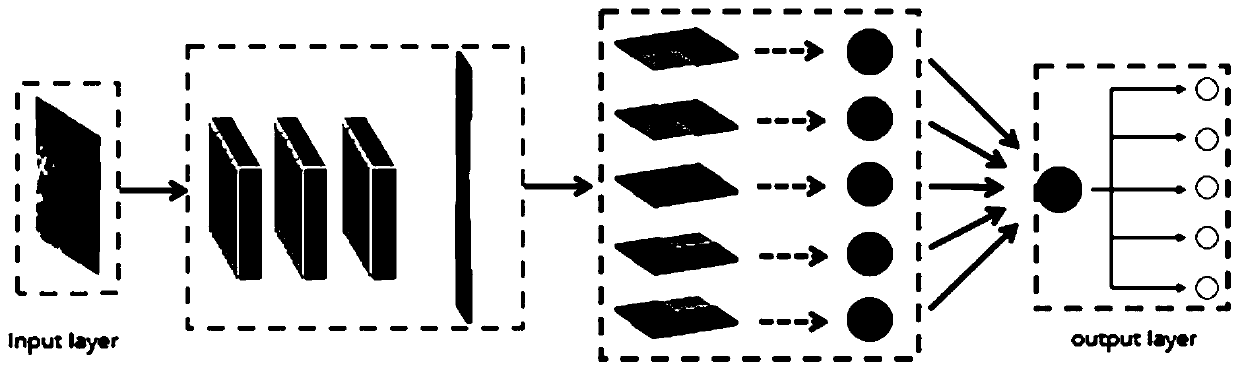

The invention discloses a method for intelligently diagnosing rotating machine fault features based on a deep CNN model. The method comprises: (1) acquiring rotating machine fault vibration signal data, segmenting the data, and detrending it as preprocessing; (2) applying short-time Fourier transform (STFT) time-frequency analysis to the signal data to obtain the time-frequency representation of each vibration signal, and displaying that representation as a pseudo-color map; (3) reducing the image resolution by interpolation and stacking the resulting images to form training and test samples as inputs to the CNN; (4) constructing the deep CNN model, which includes an input layer, two convolution layers, two pooling layers, a fully connected layer, a softmax classification layer, and an output layer; and (5) feeding the training samples into the model for training, obtaining the convolution features, pooling features, and neural network structural parameters, and diagnosing unknown fault signals with the constructed deep CNN. The method has better accuracy and stability than existing time-domain or frequency-domain methods.
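
A minimal Python sketch of the segmentation and detrending in step (1). The abstract does not say how the trend is removed, so the least-squares linear fit, the non-overlapping segments, and the names `segment` and `detrend_linear` are assumptions made for illustration only.

```python
def segment(signal, length):
    """Split a signal into non-overlapping segments, dropping any short tail."""
    return [signal[i:i + length]
            for i in range(0, len(signal) - length + 1, length)]


def detrend_linear(x):
    """Subtract the least-squares straight line from one segment (assumed trend model)."""
    n = len(x)
    t_mean = (n - 1) / 2
    x_mean = sum(x) / n
    denom = sum((t - t_mean) ** 2 for t in range(n))
    # A single-sample segment has no slope to estimate.
    slope = (sum((t - t_mean) * (v - x_mean) for t, v in enumerate(x)) / denom
             if denom else 0.0)
    return [v - (x_mean + slope * (t - t_mean)) for t, v in enumerate(x)]
```

Each detrended segment would then go through the time-frequency transform of step (2); that stage is not sketched here.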

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

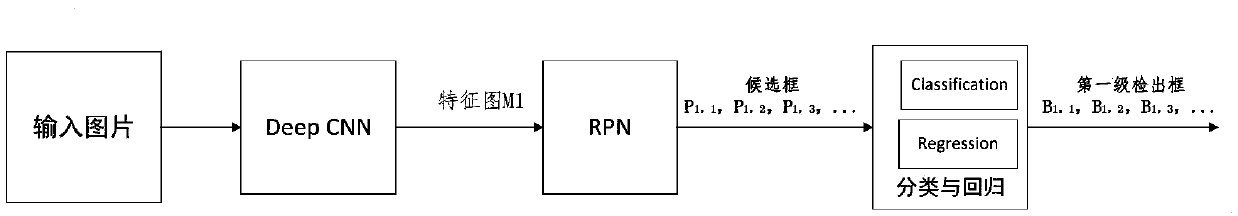

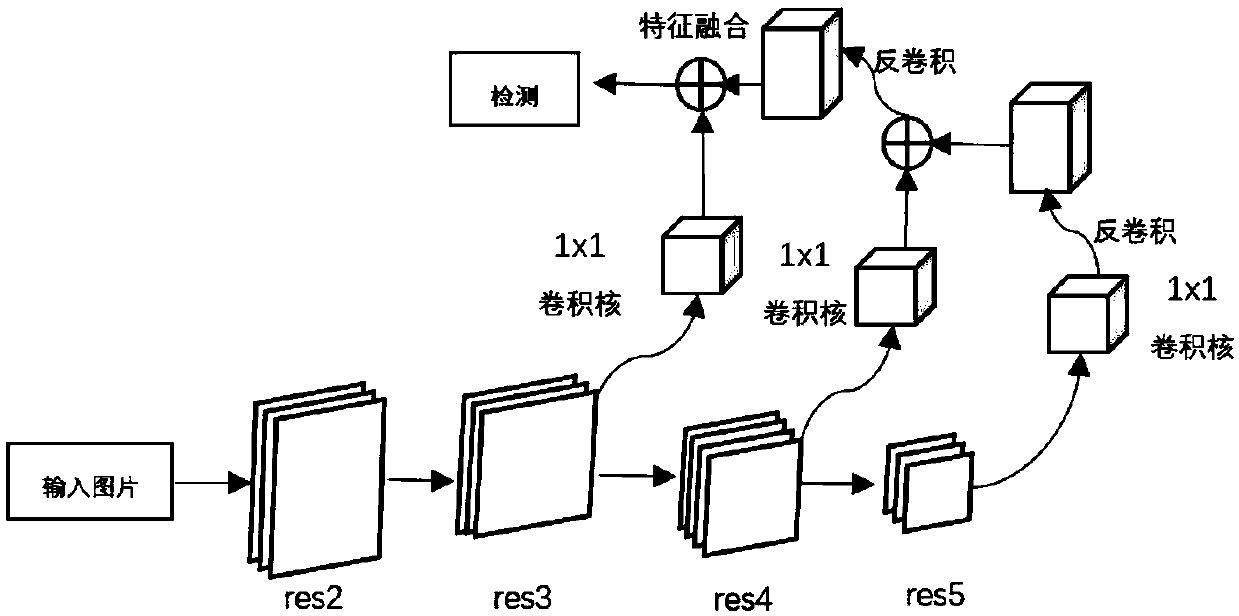

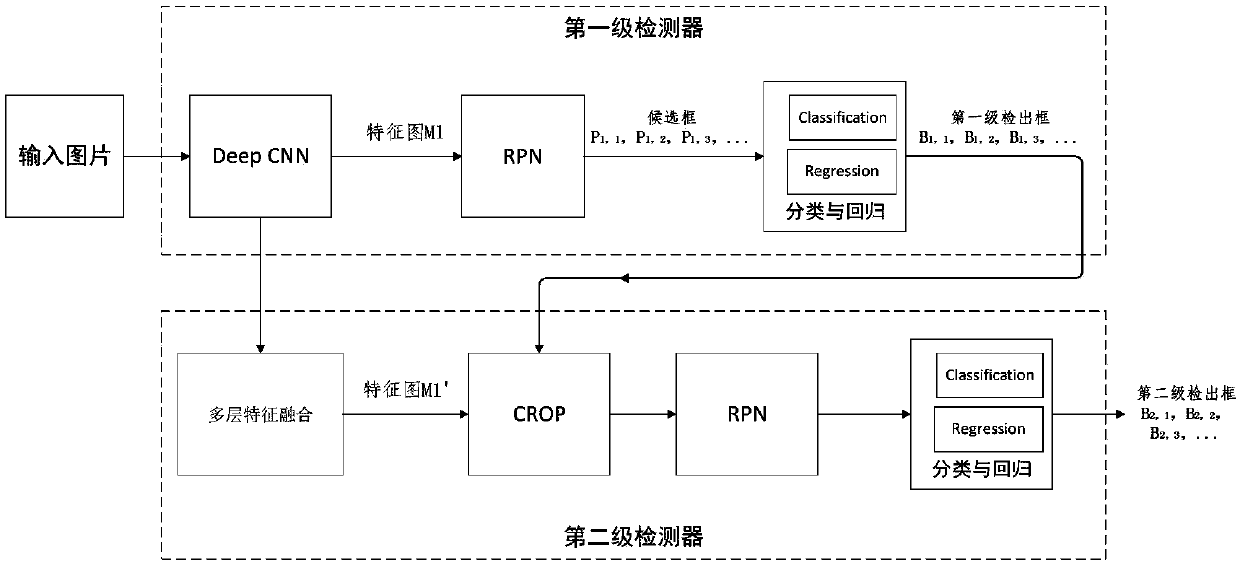

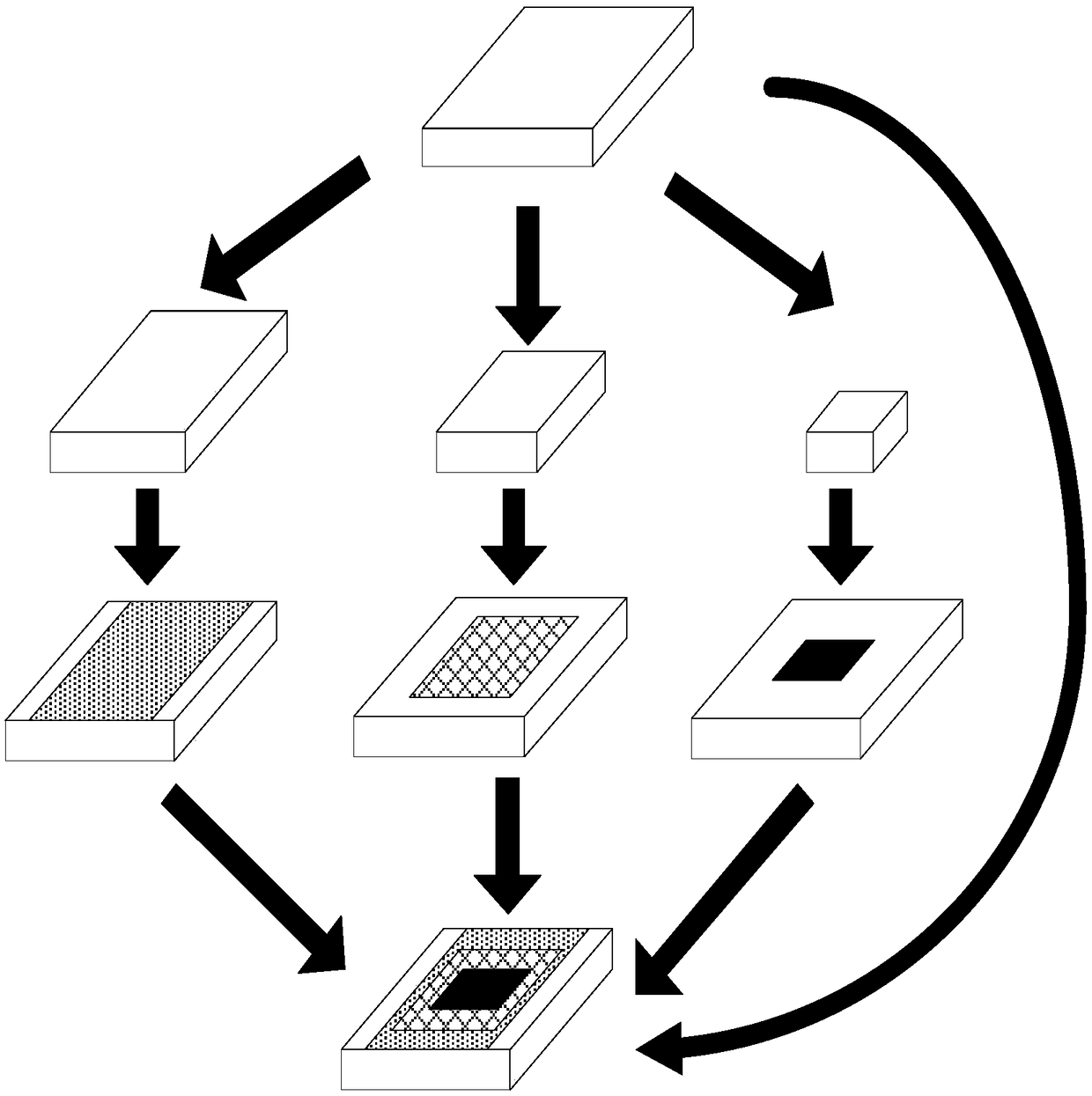

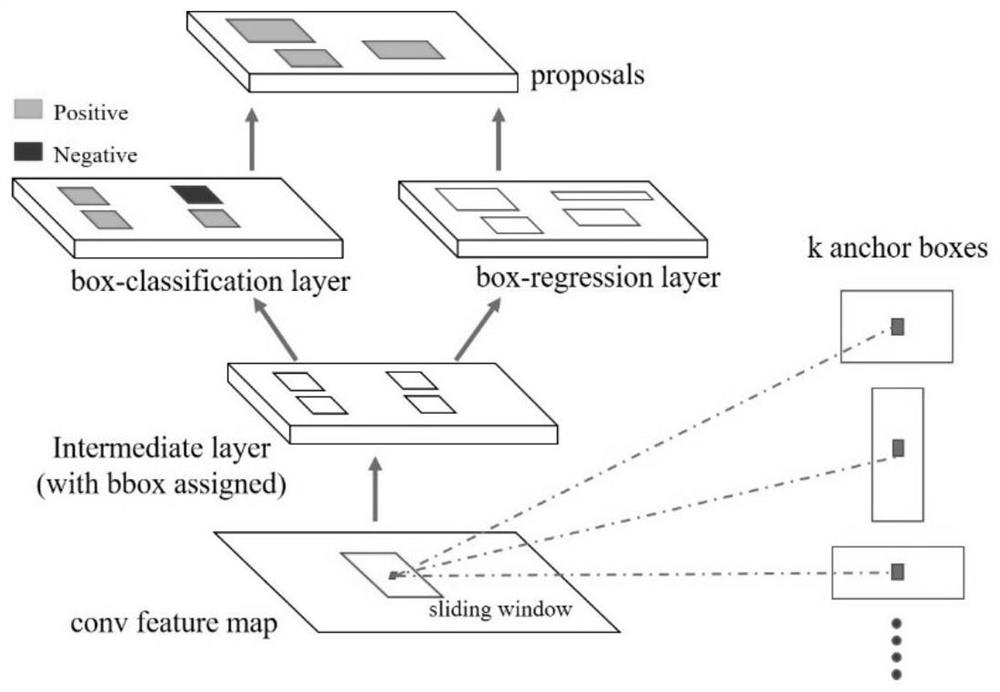

An image small target detection method based on combination of two-stage detection

Pending | CN109598290A | Fully excavated | Reduce the problem of false detection and missed detection of small targets | Character and pattern recognition | Pattern recognition | Network model

The invention discloses a small target detection method based on a combination of two-stage detection. The method includes: sending the original image into a first detector to detect a first-stage target B1; fusing the output features of the shallow CNN with the output features of the deep CNN to obtain M1', and using B1 to select a corresponding feature map M2 from M1'; sending M2 as the input feature map to the RPN module and the classification and regression module of a second-stage detector to detect and locate a second-stage target; and adding the losses obtained from the two detection stages to form the total loss of the whole network, yielding an end-to-end detection network model. The two-stage detection network first detects a large target accurately and then detects a small target within the large target's area. The detection frame of the small target is thereby confined to the local area where it is most likely and most easily detected, namely the area where the large target is located, so that complex background interference is effectively removed, the false detection probability is reduced, and the detection precision for small targets in the image is improved.

Owner:SHANGHAI JIAO TONG UNIV

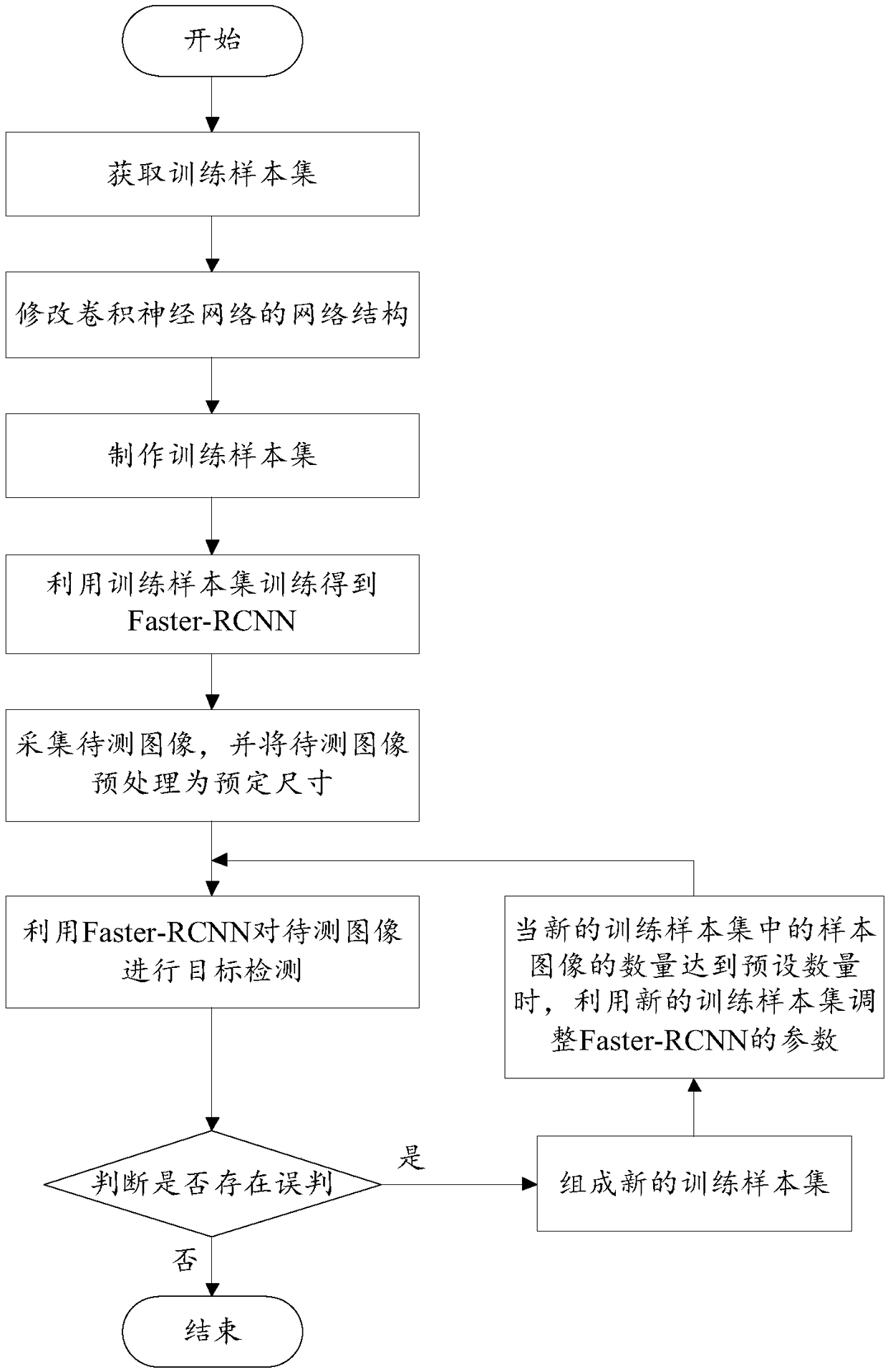

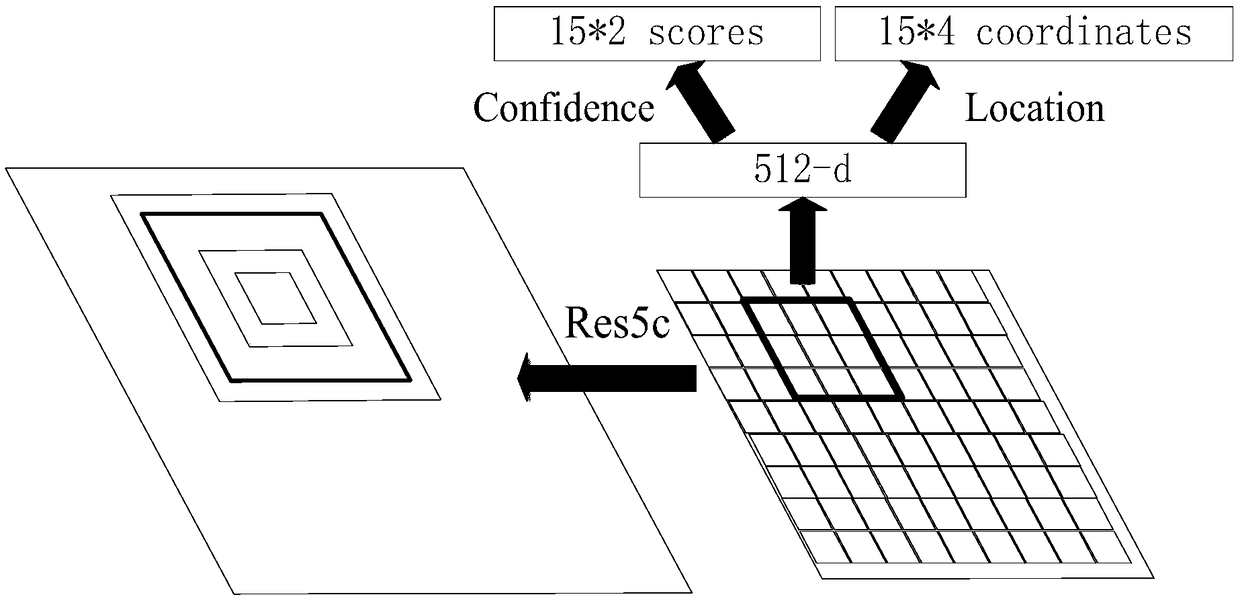

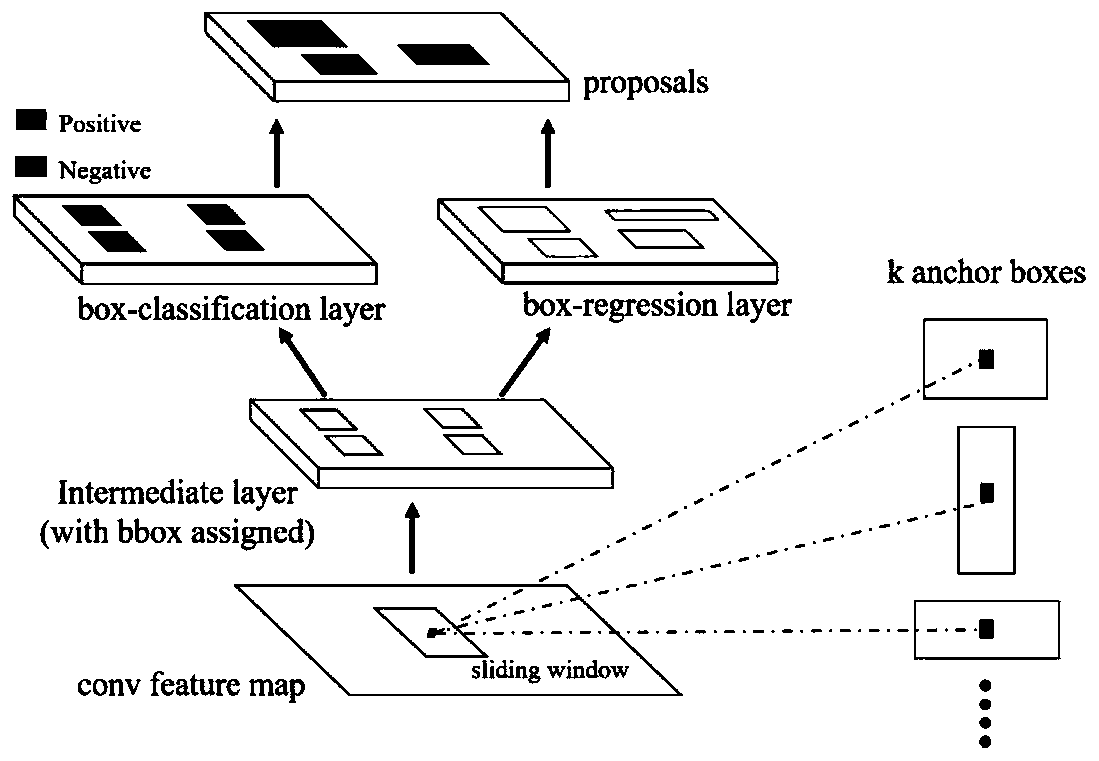

Target detection method based on Faster-RCNN reinforcement learning

Active | CN108830285A | Reduce false positives | Improve performance | Character and pattern recognition | Neural architectures | Feature extraction | Imaging processing

The invention discloses a target detection method based on Faster-RCNN reinforcement learning and relates to the field of image processing. The method comprises: acquiring an image to be tested; feeding the image into a Faster-RCNN; modifying the network structure of the CNN in the Faster-RCNN so that the convolution module at the last scale is replaced with an hourglass module; extracting features of the image with the CNN to generate feature maps; feeding the last feature map into an RPN; and vectorizing the feature map corresponding to each candidate region selected by the RPN, then classifying it with a classifier to obtain a detection result. By replacing the ordinary convolution module in the deep network with the hourglass module, the method performs reinforcement learning on the semantic information carried by the deep features extracted by the deep CNN, hierarchically highlights the semantic information of an object, and reduces false negatives and false positives to a certain extent.

Owner:TOP LEARNING BEIJING EDUCATION TECH CO LTD

Convolution Neural Network (CNN) structure improving method

Pending | CN106960243A | Improve performance | Avoid vanishing gradients | Neural architectures | Neural learning methods | Network structure | Max pooling

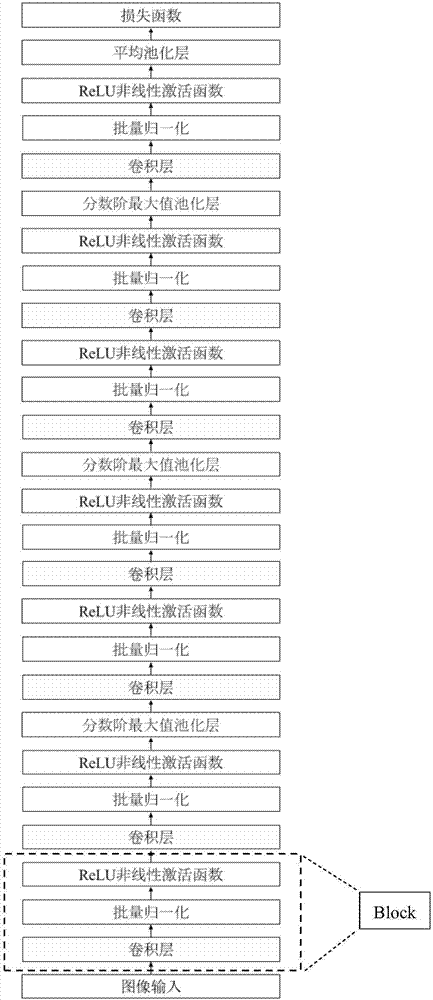

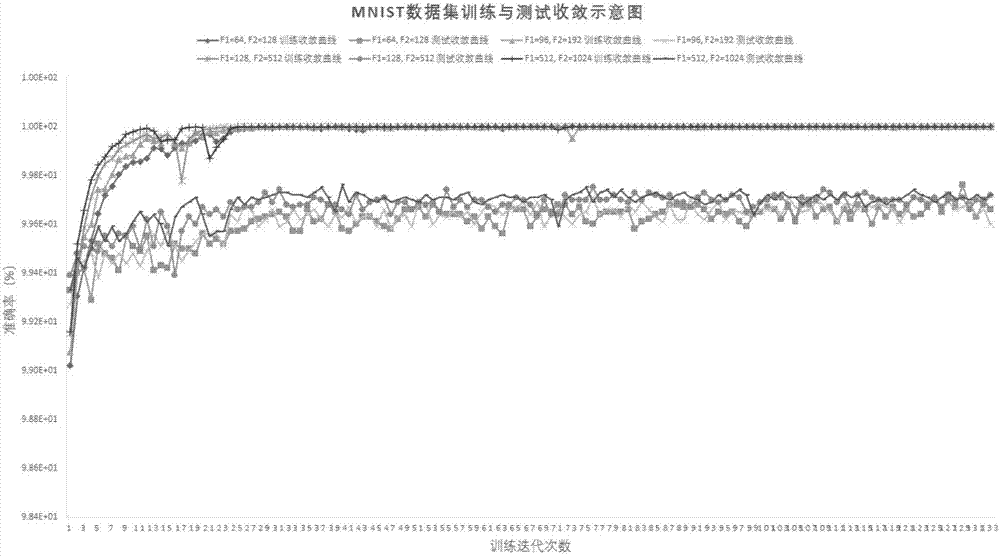

The invention relates to the field of deep learning, and in particular to a method for improving the structure of a convolutional neural network (CNN). The method comprises the following steps: (a) using the fractional max pooling (FMP) principle to change the max pooling layers in a conventional CNN structure to fractional order, thereby achieving downsampling to a randomly chosen dimension; (b) keeping the network structure shallow while continuously widening it and combining it with the fractional max pooling layers, thereby improving network performance. Because the network stays shallow and grows in width instead, the method avoids the vanishing gradients and weight failure that arise when training a deep network and make the CNN structure hard to train. The method achieves performance equal to or better than a deep CNN structure while using fewer network parameters.
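
One way to picture step (a) is a one-dimensional pooling pass whose windows have random sizes of 1 or 2, so the output length can be any value between half and all of the input length. The sketch below uses disjoint, randomly ordered windows; the function names, the 1-D simplification, and the fixed seed are assumptions for illustration and are not taken from the patent.

```python
import random


def fmp_boundaries(n_in, n_out, rng):
    """Random window boundaries: n_out windows of size 1 or 2 that cover n_in inputs."""
    if not (n_out <= n_in <= 2 * n_out):
        raise ValueError("need n_out <= n_in <= 2 * n_out")
    # (n_in - n_out) windows of size 2 and the rest of size 1 sum to n_in.
    steps = [2] * (n_in - n_out) + [1] * (2 * n_out - n_in)
    rng.shuffle(steps)
    bounds = [0]
    for step in steps:
        bounds.append(bounds[-1] + step)
    return bounds


def fractional_max_pool_1d(x, n_out, rng=None):
    """Max-pool a 1-D list down to n_out values using random disjoint windows."""
    rng = rng or random.Random(0)  # fixed seed only to make the sketch repeatable
    b = fmp_boundaries(len(x), n_out, rng)
    return [max(x[b[i]:b[i + 1]]) for i in range(n_out)]
```

For example, pooling 9 inputs to 6 outputs gives a reduction ratio of 1.5, which ordinary 2x2 max pooling cannot express.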

Owner:CENT SOUTH UNIV

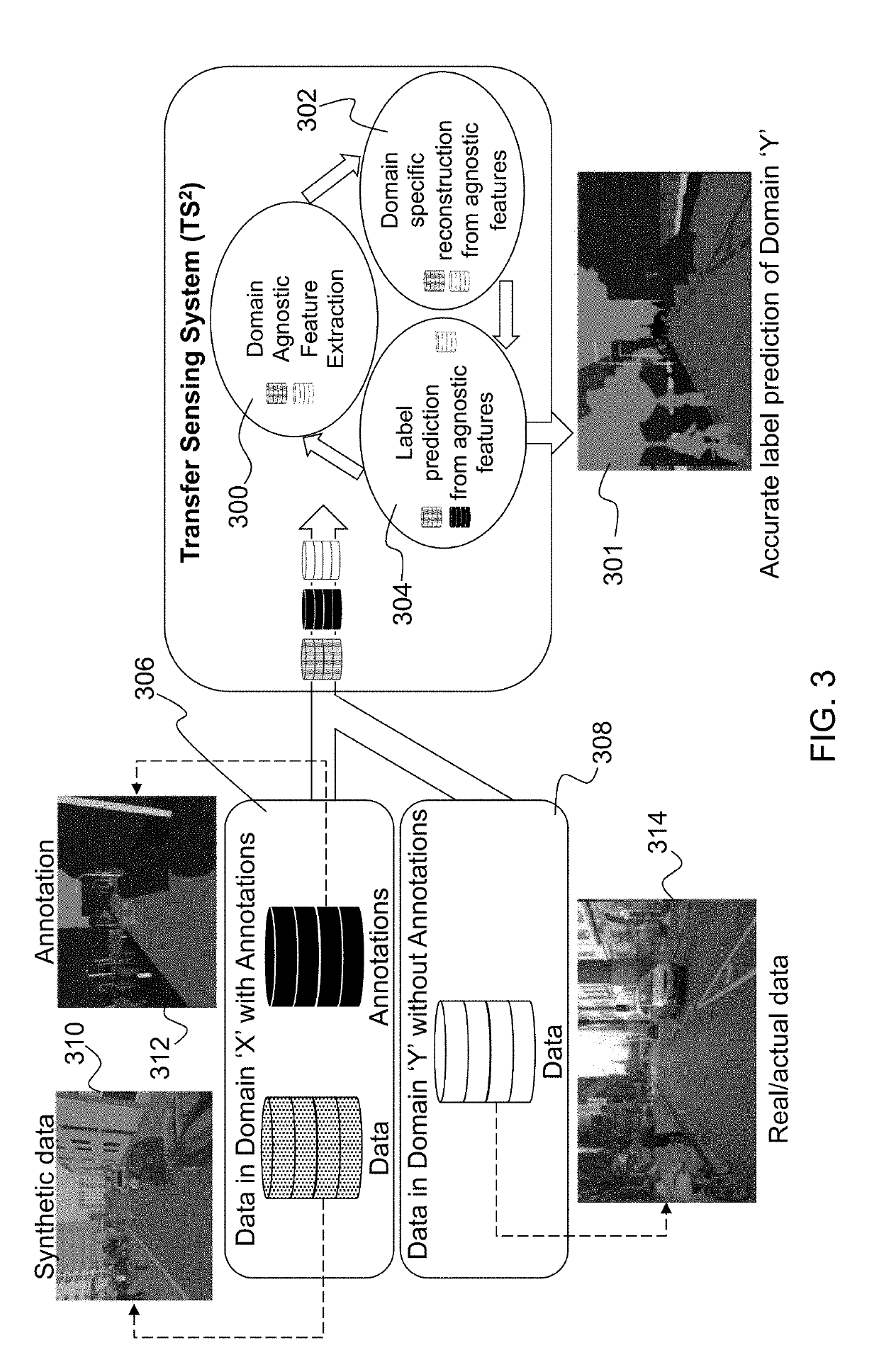

Domain adaption learning system

Described is a system for adapting a deep convolutional neural network (CNN). A deep CNN is first trained on an annotated source image domain. The deep CNN is adapted to a new target image domain without requiring new annotations by determining domain agnostic features that map from the annotated source image domain and a target image domain to a joint latent space, and using the domain agnostic features to map the joint latent space to annotations for the target image domain.

Owner:HRL LAB

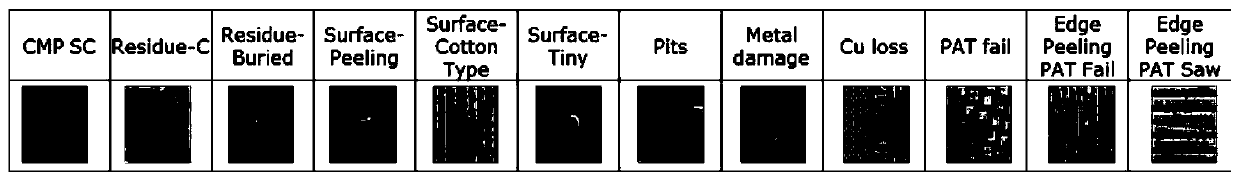

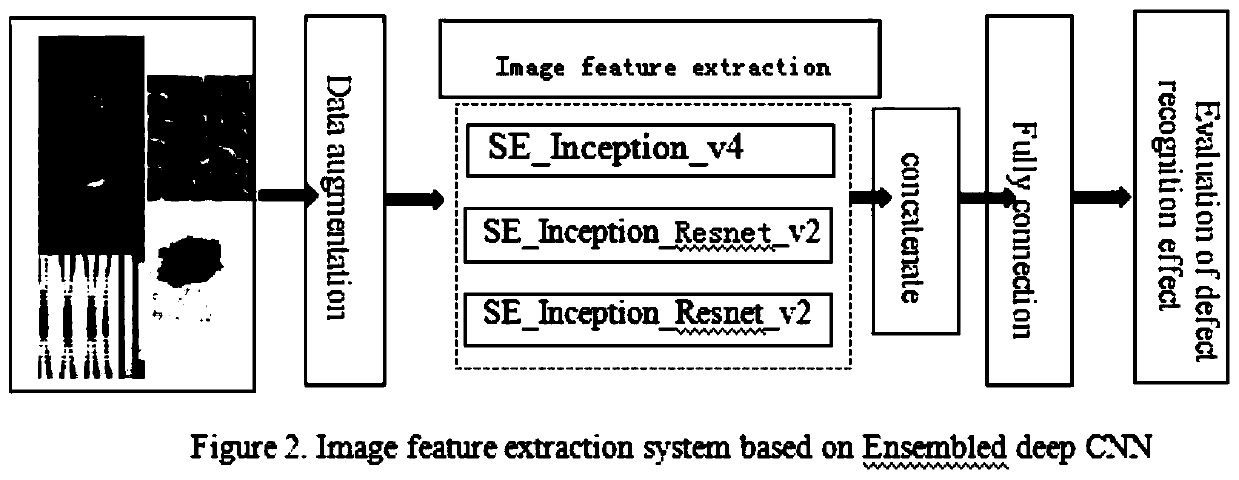

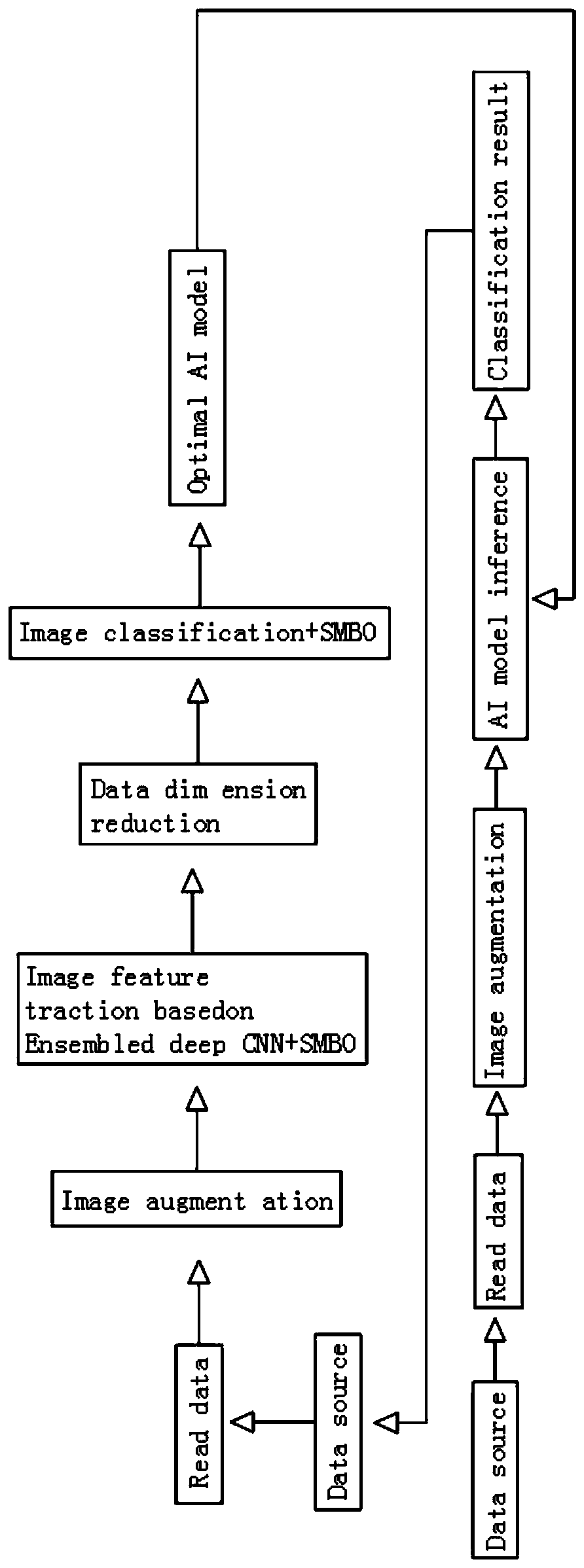

Integrated circuit defect image recognition and classification system based on fusion deep learning model

Inactive | CN110766660A | Increase the level of automation | Increase workload | Image enhancement | Image analysis | Feature extraction | Wafer

The invention discloses an integrated circuit defect image recognition and classification system based on a fusion deep learning model. It uses a fusion model based on deep convolutional neural networks (CNNs) to recognize and classify wafer defect images automatically and online, so that changes in the number of each type of wafer defect are detected in time. The core mechanism is a defect image feature extraction method built from two deep learning models integrated into one learning mechanism. In the deep CNN fusion model, a Combined3 defect image classification model is constructed on the basis of two frameworks, SE_Inception_V4 and SE_Inception_ResNet_V2, and a sequential model-based optimization (SMBO) algorithm is used to optimize the hyper-parameters of the fused deep CNN recognition model, which improves recognition precision. The system raises the level of automation, reduces identification cost because the AI model takes over work previously done by an engineer, and greatly improves working efficiency. Based on the real-time recognition and classification results, engineers can count defect data and search for causes in time, adjust process parameters accordingly, and improve yield.

Owner:上海众壹云计算科技有限公司

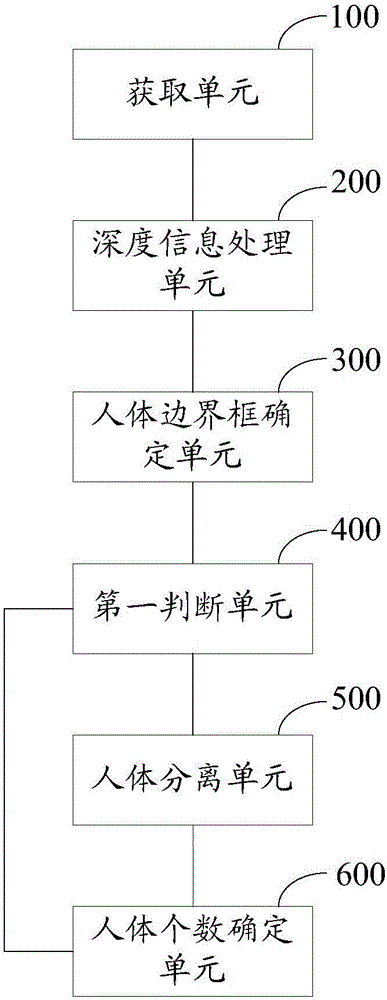

Human body identification method and device

Active | CN106778614A | Improve accuracy | Avoid being misidentified as a human body | Biometric pattern recognition | Color image | Human body

The invention provides a human body identification method and a human body identification device. The method comprises: acquiring image information; processing the image information to obtain a depth image and a color image; performing human body detection on the color image with a deep CNN (Convolutional Neural Network) and determining a human body bounding box in the color image; judging whether exactly one human body exists in the region of the depth image that corresponds to the bounding box; if two or more human bodies exist in that region, separating them; and determining the number of human bodies in the image information from the number of human bodies found in the bounding box region of the depth image. The depth image thus provides a second identification pass for human bodies detected in the color image, which prevents overlapping human bodies from being misjudged as one and improves the accuracy of human body identification.

Owner:INT INTELLIGENT MACHINES CO LTD

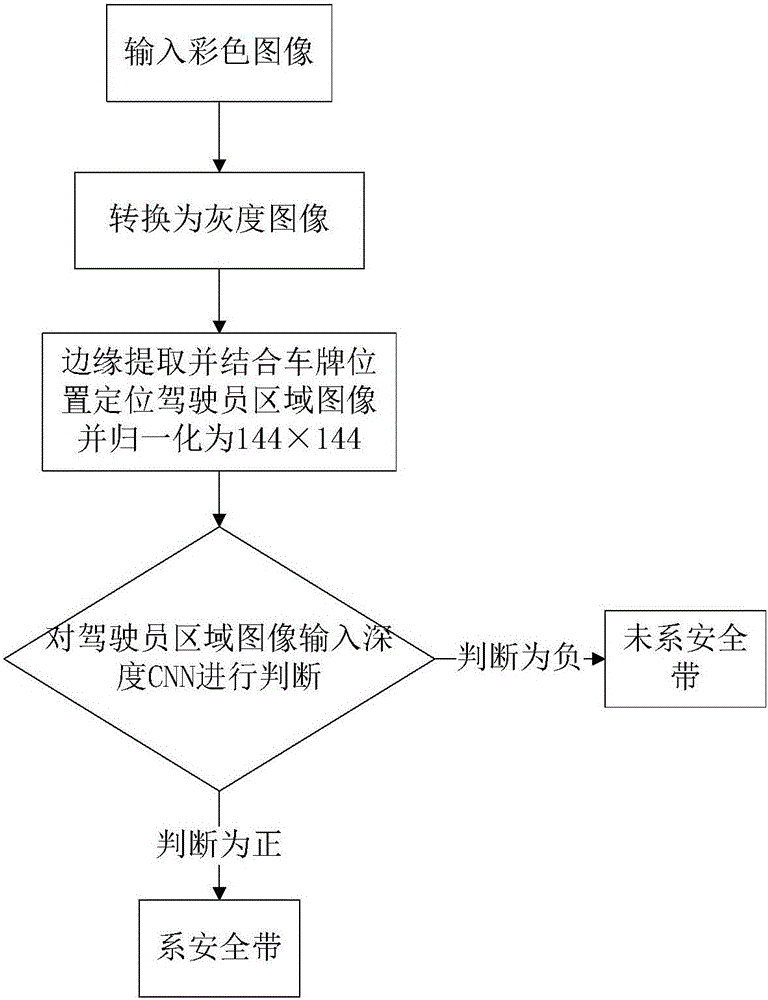

Method for detecting safety belt based on deep CNN

Inactive | CN106203499A | Improve judgment accuracy | The principle of the judgment method is simple | Character and pattern recognition | Neural architectures | Grayscale | Application Context

The invention belongs to the field of intelligent transportation and relates to a method for detecting safety belts by applying a deep CNN to images from traffic monitoring points. The method builds a sample database, trains a deep CNN on it, and uses the trained parameters to determine whether the driver in a newly input image is wearing a safety belt. The method comprises the following steps: extracting a grayscale image of the driver area, building the sample database, designing the deep CNN, training the deep CNN, and determining the result for an individual image. The method extracts the window area using the upper and lower edge information of the vehicle's front window, uses the license plate area to help locate the driver area, does not require manually designed classification features, and determines with high precision whether the driver is wearing a safety belt. The method is simple in principle, easy to operate, low in error, safe and reliable, of strong practical value, and environmentally friendly.

Owner:QINGDAO UNIV

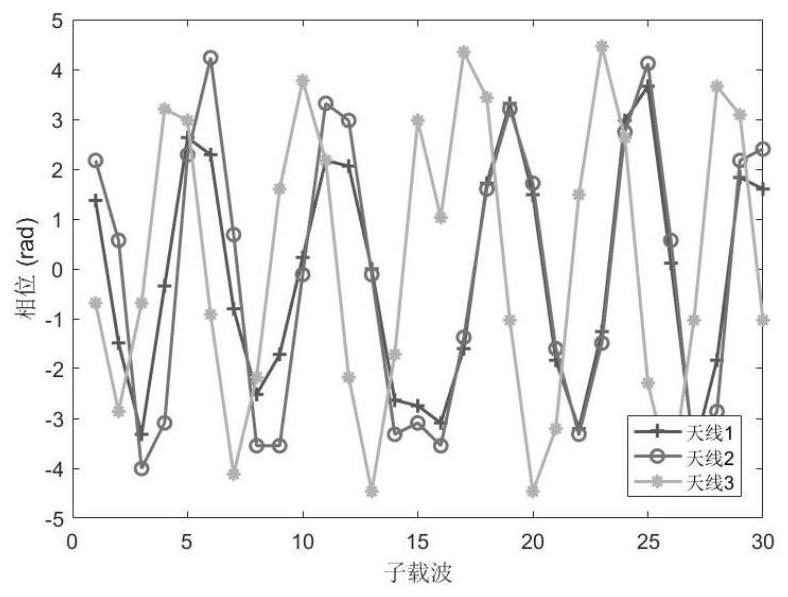

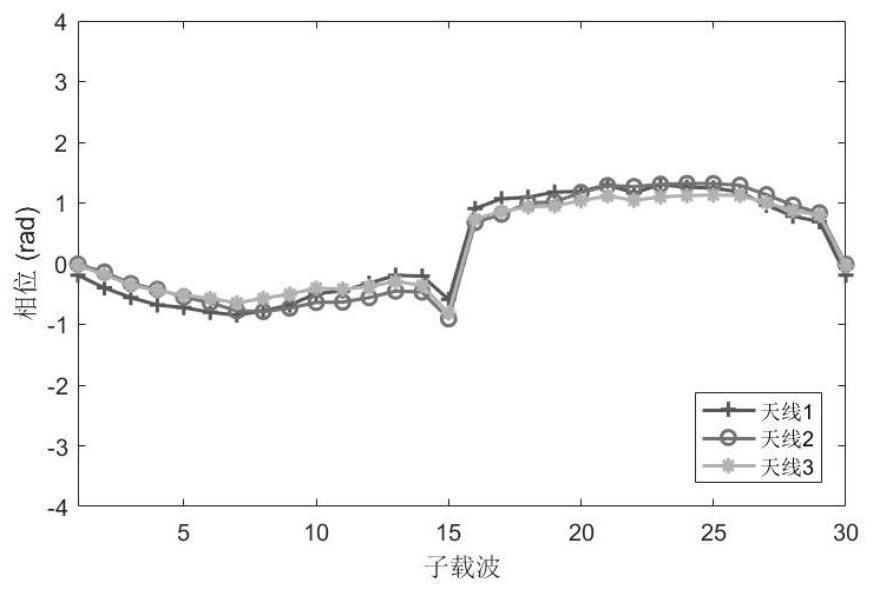

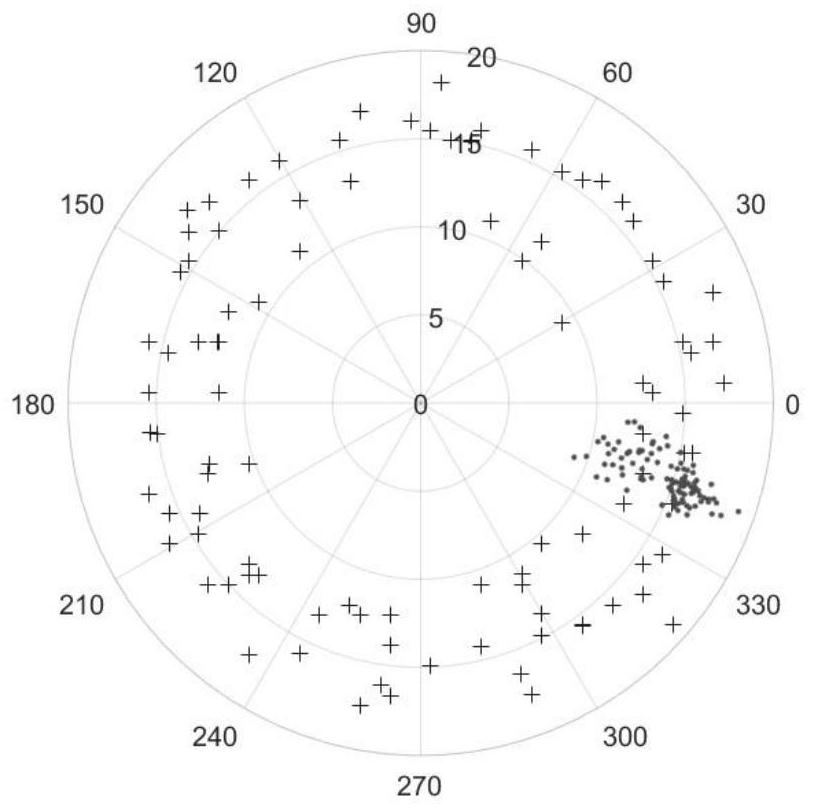

Wi-Move behavior perception method based on CNN (Convolutional Neural Network)

Active | CN112036433A | Improve recognition accuracy | Character and pattern recognition | Transmission monitoring | Feature extraction | Network model

The invention relates to a Wi-Move behavior perception method based on a CNN. The method comprises the following steps: (1) preprocessing Wi-Move data; (2) performing human body behavior perception based on the CNN; (3) constructing a Wi-Move input feature map; (4) designing a Wi-Move network; and (5) optimizing the Wi-Move network model. Perception methods based on feature extraction and classification extract features incompletely and are only suitable for sensing a small number of behavior types. Compared with them, the CNN-based Wi-Move method has higher recognition accuracy when sensing many types of behavior. A deep CNN hierarchically extracts the amplitude and phase information of all CSI subcarriers, so the feature information is more comprehensive.

Owner:TIANJIN CHENGJIAN UNIV

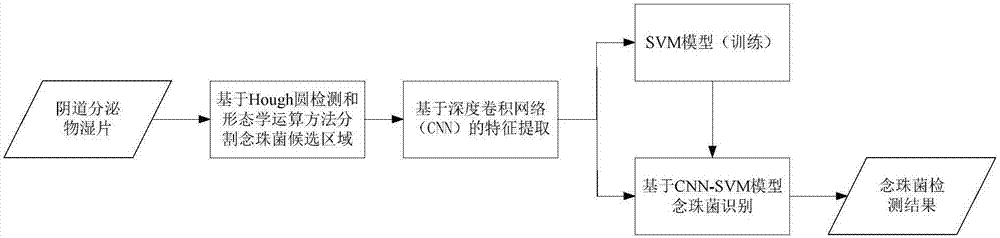

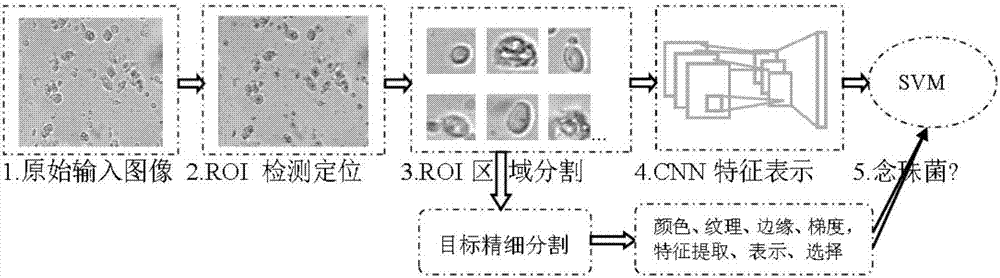

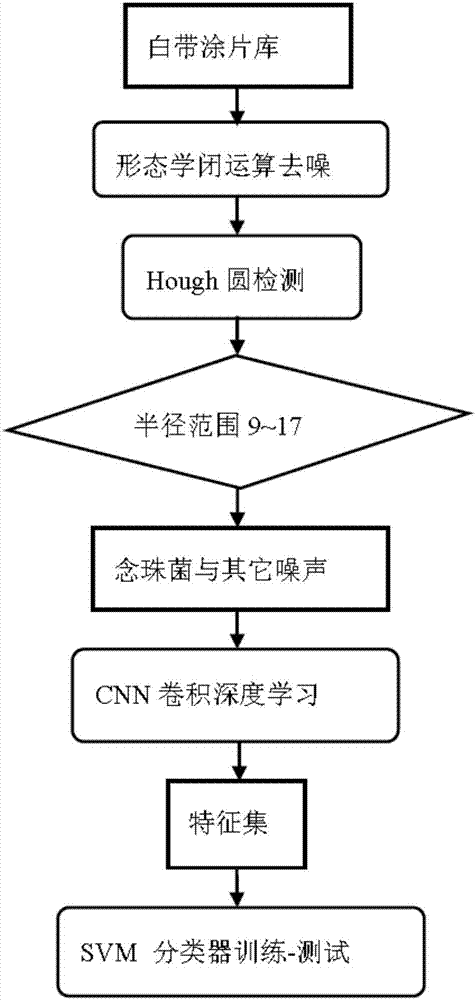

Vaginal secretion wet sheet candida detection method based on Hough round detection and deep CNN (convolutional neural network)

Inactive | CN107099577A | Realize identification | Realize detection | Microbiological testing/measurement | Biological material analysis | Support vector machine | Imaging processing

The invention discloses a vaginal secretion wet sheet candida detection method based on Hough round detection and a deep CNN (convolutional neural network). The method comprises the following steps: (1) exploiting the rounded shape of candida (oval or figure-8), segmenting candidate candida regions in the vaginal secretion wet sheet by Hough round detection and morphological operations; (2) extracting features with the deep CNN; (3) building a CNN-SVM (support vector machine) classification model to identify candida. With this image processing and recognition method, candida in vaginal secretion wet sheets can be detected conveniently, economically, and efficiently, meeting the intelligence, speed, and precision requirements of medical applications.

Owner:SOUTH CHINA UNIV OF TECH

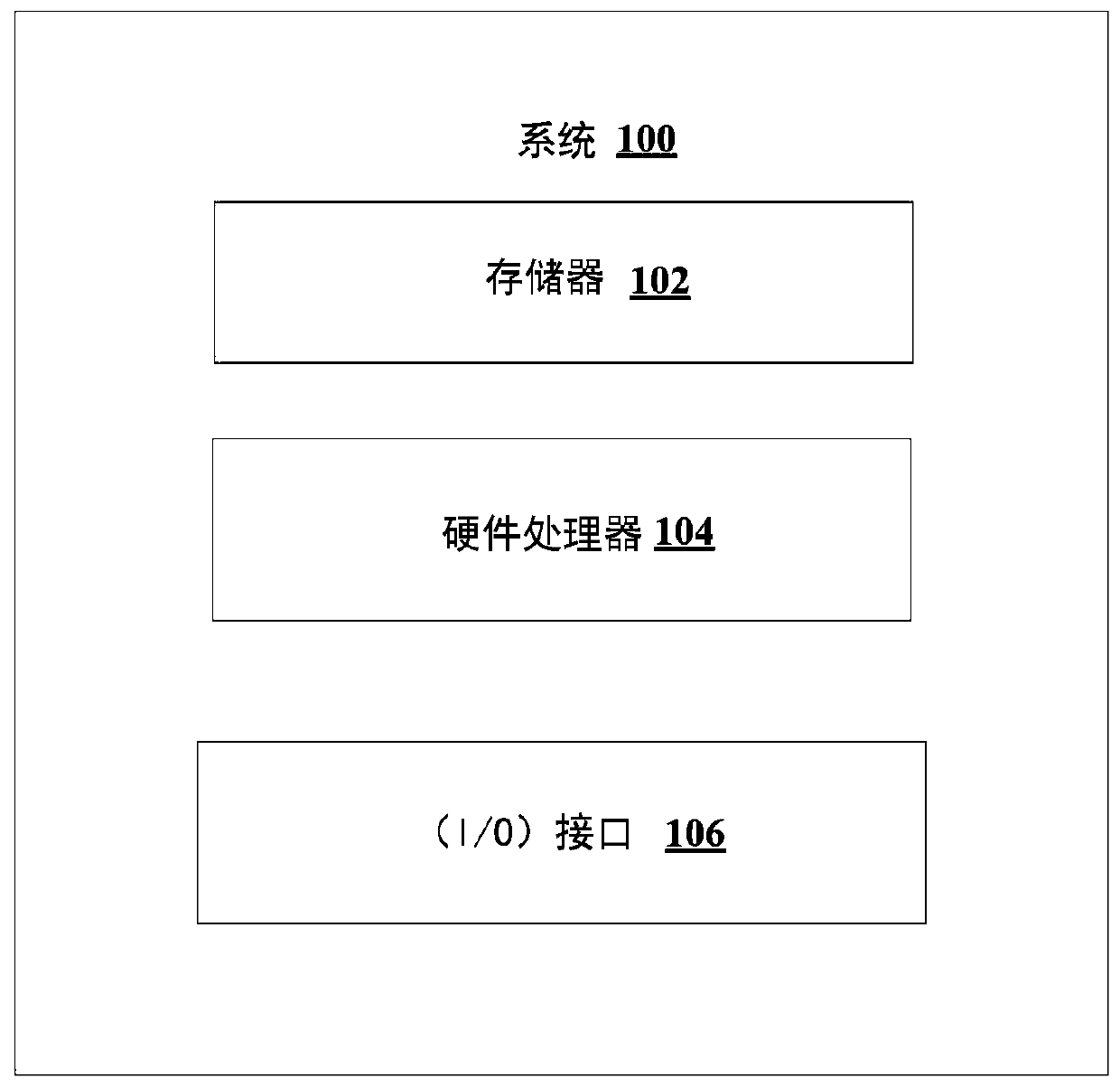

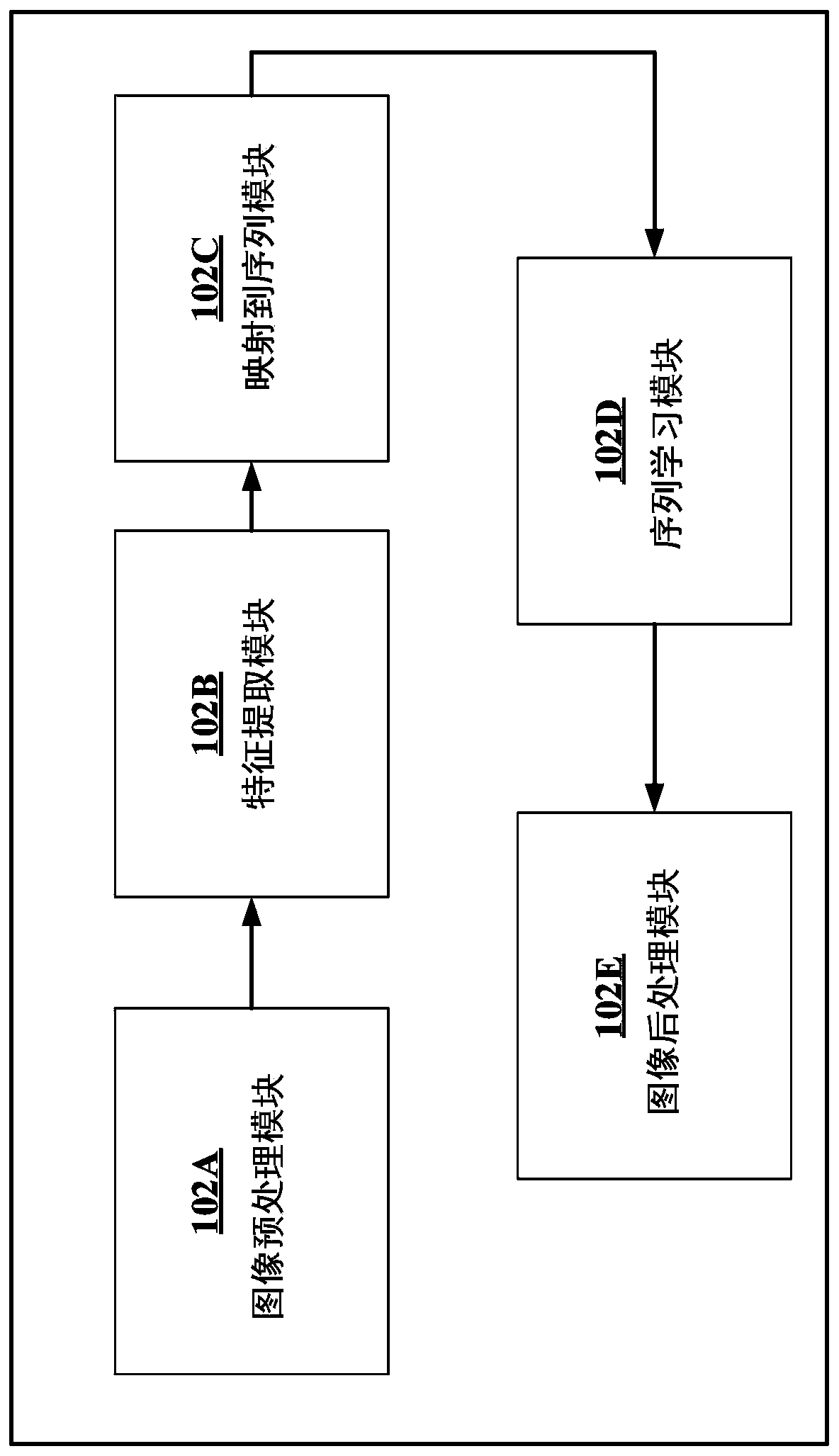

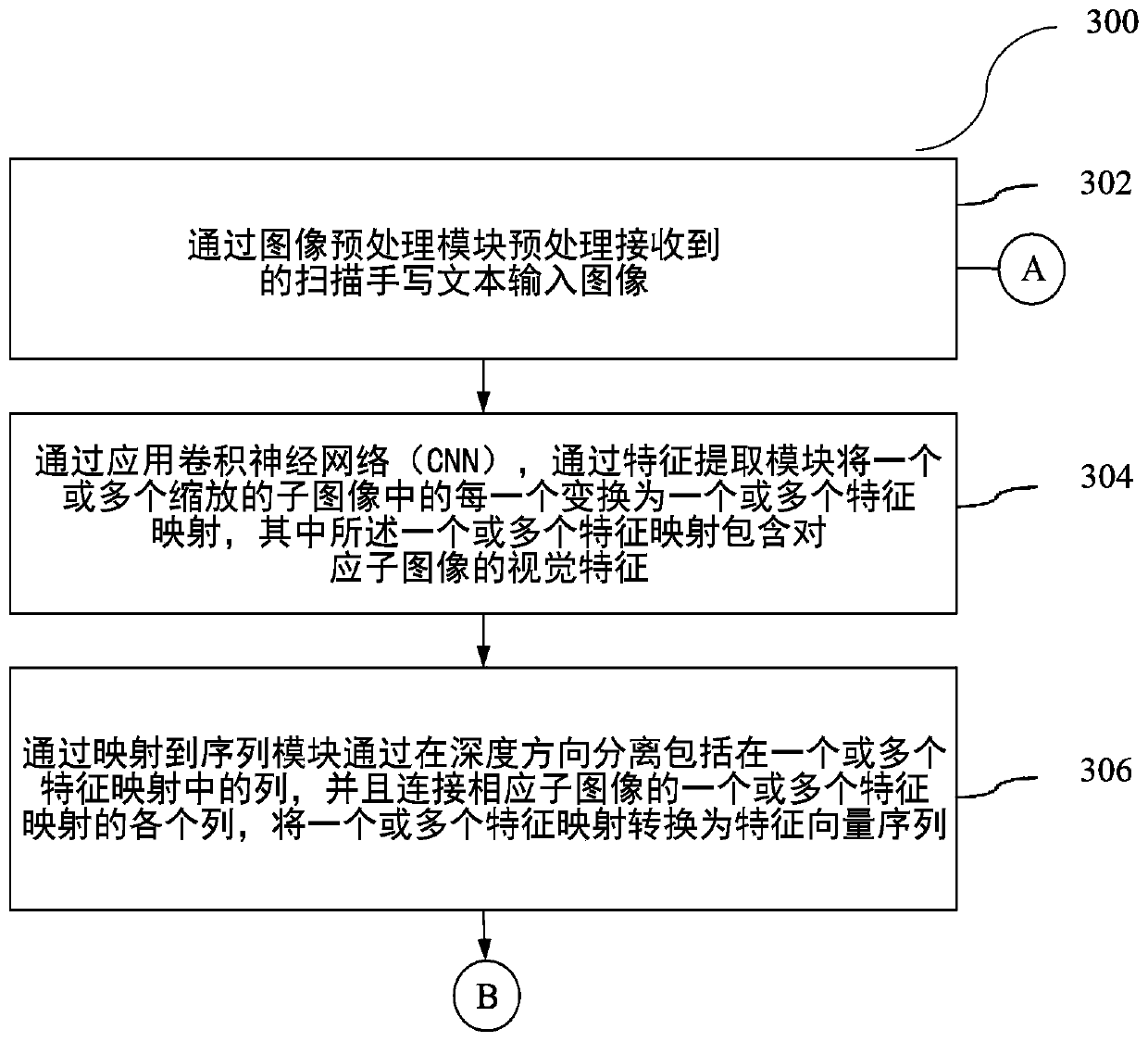

Systems and methods for end-to-end handwritten text recognition using neural networks

Pending | CN110738090A | Geometric image transformation | Neural architectures | Text recognition | Transformation of text

The present disclosure provides systems and methods for end-to-end handwritten text recognition using neural networks. Most existing hybrid architectures involve high memory consumption and a large number of computations to convert offline handwritten text into machine-readable text, with varying conversion accuracy. The method combines a deep convolutional neural network (CNN) with an RNN (Recurrent Neural Network) based encoder unit and decoder unit to map a handwritten text image to a sequence of characters corresponding to the text present in the scanned handwritten text input image. The deep CNN extracts features from the handwritten text image, whereas the RNN-based encoder and decoder units generate the converted text as a set of characters. The disclosed method requires less memory and fewer computations, with better conversion accuracy, than the existing hybrid architectures.

Owner:TATA CONSULTANCY SERVICES LTD

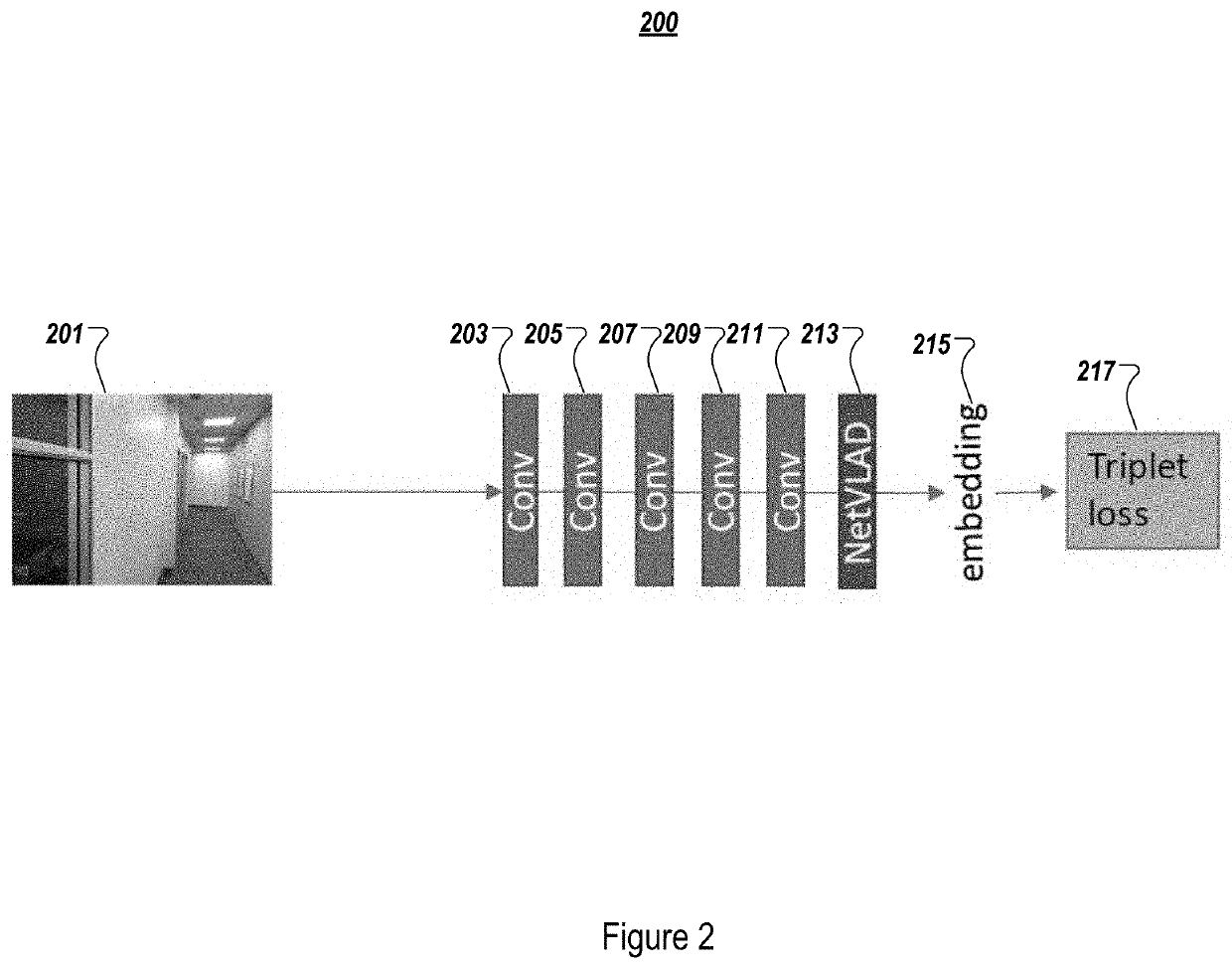

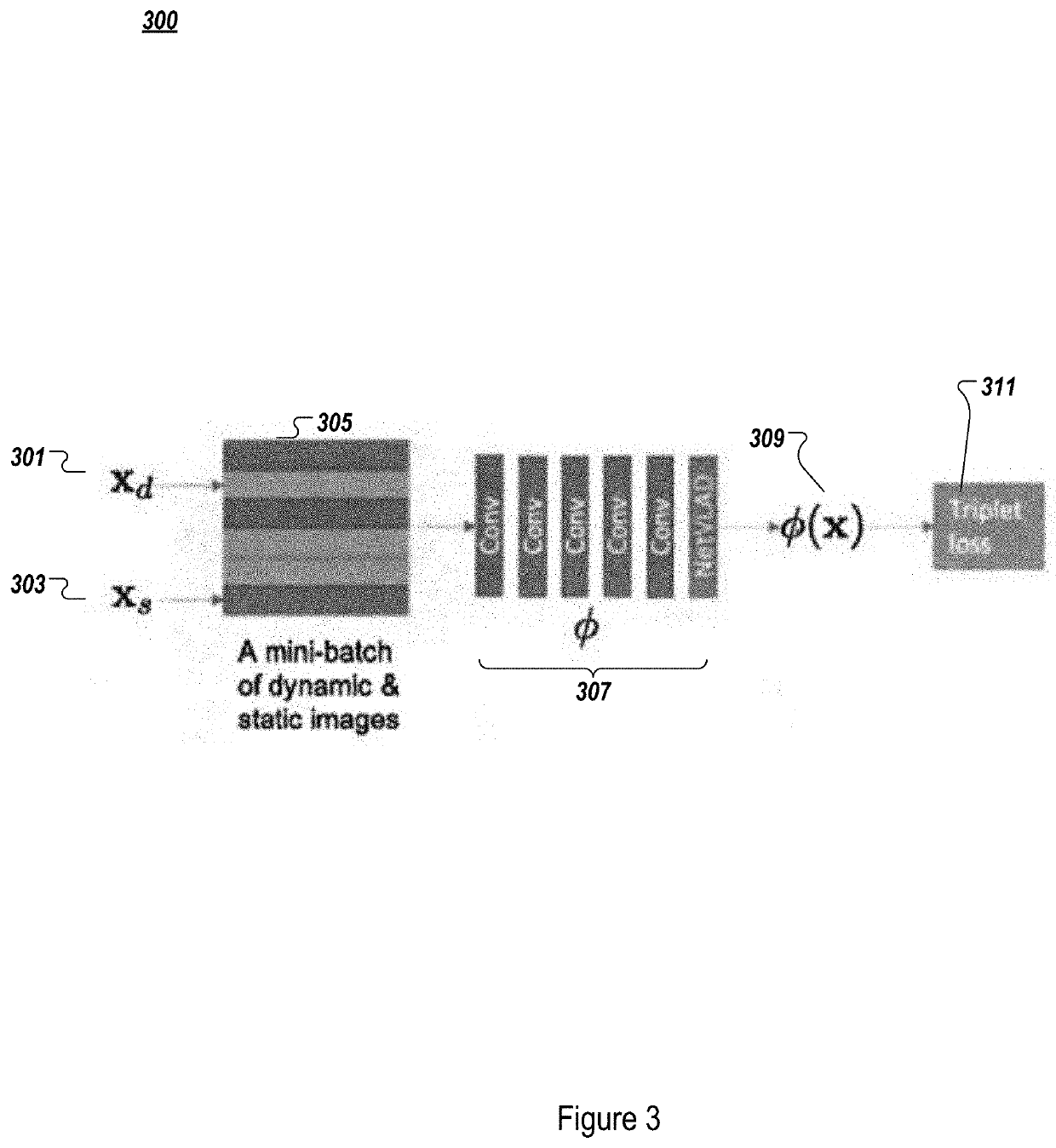

Indoor localization using real-time context fusion of visual information from static and dynamic cameras

Active | US20200311468A1 | Television system details | Digital data information retrieval | Dynamic query | Visual perception

A computer-implemented method of localization for an indoor environment is provided, including receiving, in real-time, a dynamic query from a first source, and static inputs from a second source; extracting features of the static inputs by applying a metric learning convolutional neural network (CNN), and aggregating the extracted features of the static inputs to generate a feature transformation; and iteratively extracting features of the dynamic query on a deep CNN as an embedding network and fusing the feature transformation into the deep CNN, and applying a triplet loss function to optimize the embedding network and provide a localization result.
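
The triplet loss named above is commonly written as max(0, d(a, p) - d(a, n) + margin), where a is the anchor embedding, p a matching (positive) embedding, and n a non-matching (negative) one. A minimal sketch, assuming squared Euclidean distance and a hypothetical default margin of 0.2; the abstract specifies neither.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length embeddings."""
    if len(u) != len(v):
        raise ValueError("embeddings must have the same length")
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Zero once the negative is farther from the anchor than the positive by `margin`."""
    return max(0.0,
               squared_distance(anchor, positive)
               - squared_distance(anchor, negative)
               + margin)
```

Minimizing this loss pulls embeddings of the same location together and pushes embeddings of different locations apart, which is what lets a nearest-neighbor lookup in embedding space serve as a localization result.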

Owner:FUJIFILM BUSINESS INNOVATION CORP

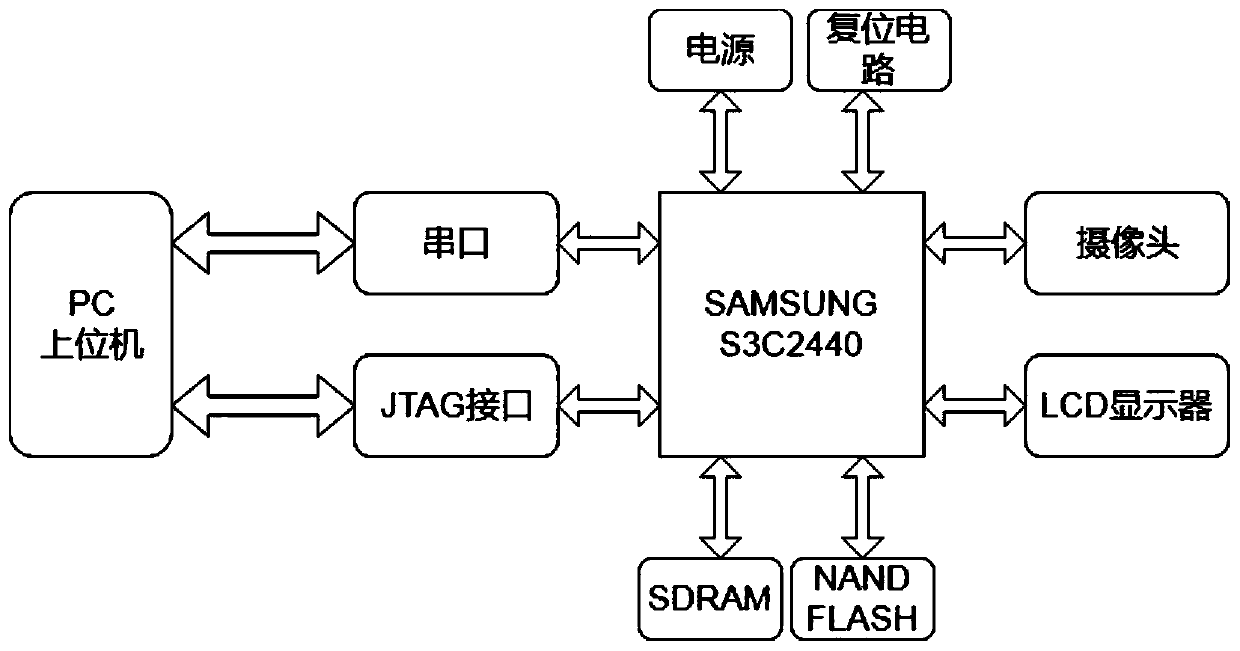

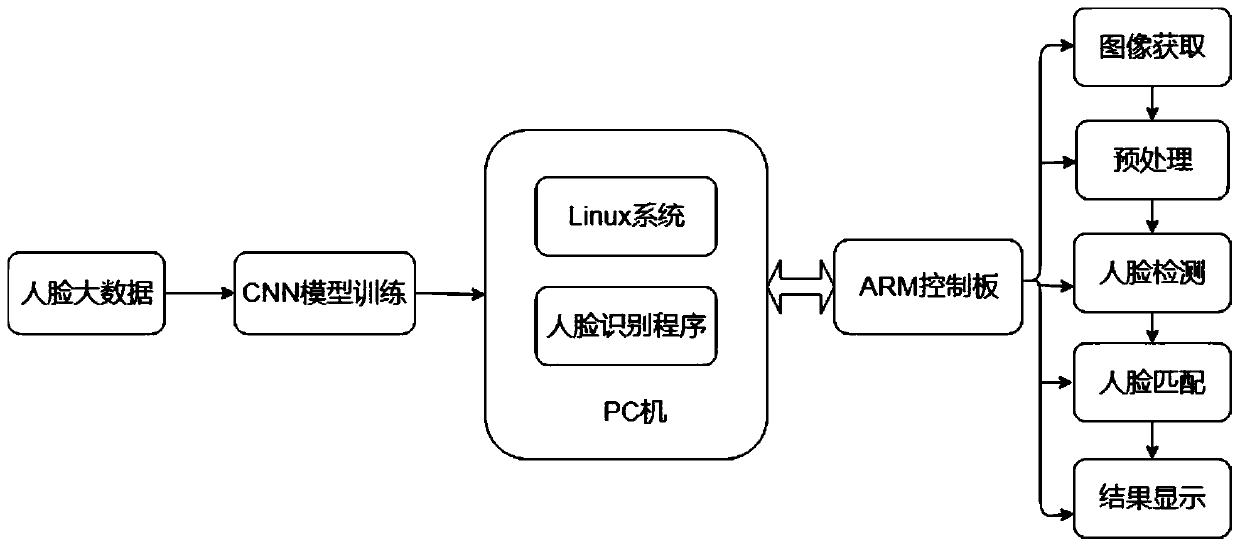

An embedded face recognition system based on an ARM microprocessor and deep learning

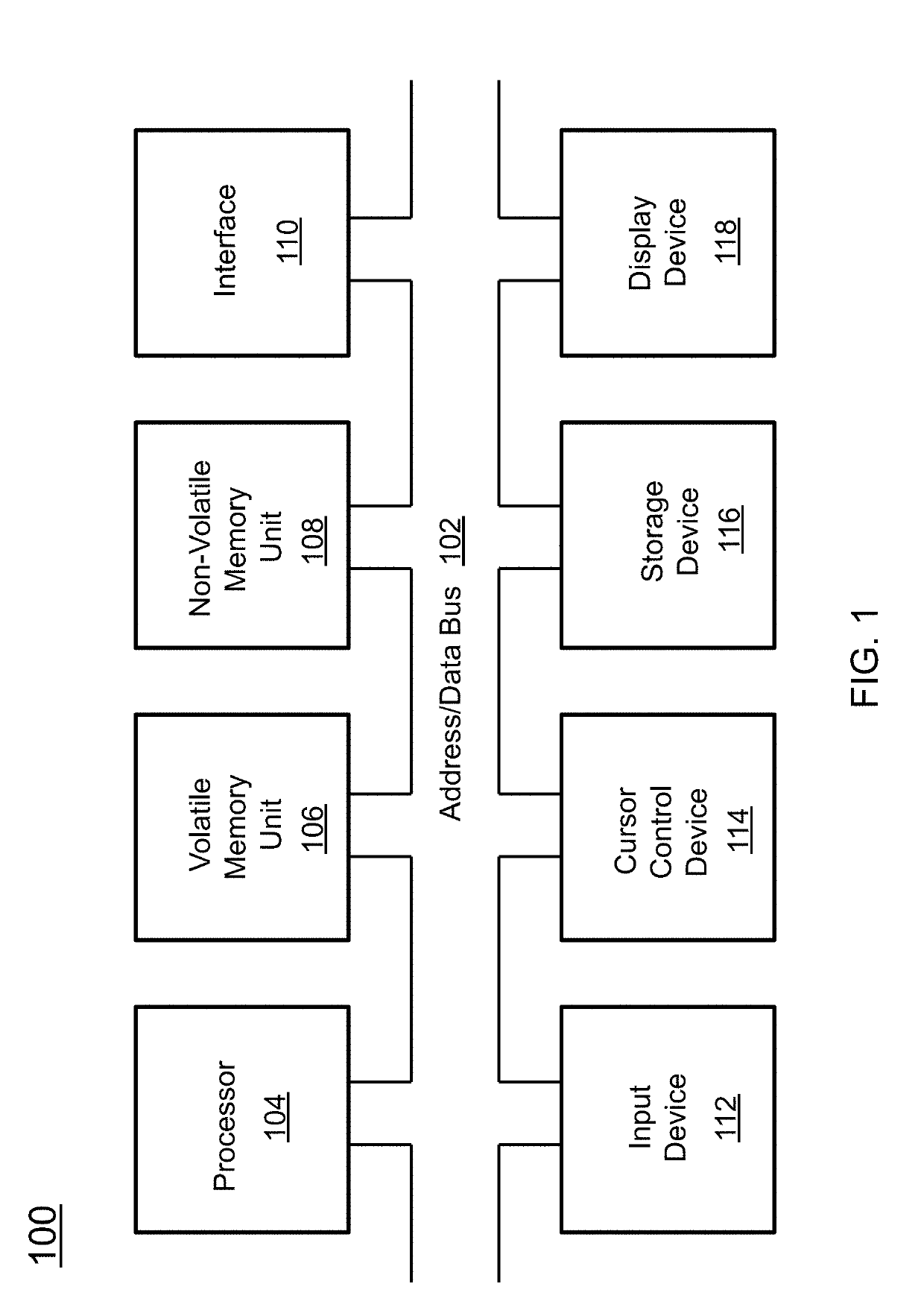

Pending | CN109948568A | Improve practicality | Overcome the phenomenon of low or even unrecognizable recognition accuracy | Character and pattern recognition | Face detection | Display device

The invention relates to an embedded face recognition system based on an ARM microprocessor and deep learning. The system comprises an upper computer, which is used to port a driver program and a pre-trained face recognition program to a control panel, and the control panel, which runs the face recognition program and shows the recognition result on a display. The face recognition program comprises the following steps: pre-training the network model, in which a Facenet face recognition neural network is built and trained; acquiring a face image, in which the control panel starts an image acquisition device to take a face photo; preprocessing the face photo, in which the photo is rescaled to form an image pyramid; detecting the face, in which the preprocessed picture is sent to a pre-trained deep CNN face detection neural network to obtain a picture of the face region; and matching the face, in which the picture of the face region is sent to the pre-trained Facenet face recognition neural network to obtain a matching result.
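
The preprocessing step rescales the photo into an image pyramid so the detector can find faces of different sizes. A minimal sketch of how the pyramid's image sizes might be computed; the scale factor of 0.709, the minimum side of 12 pixels, and the name `pyramid_sizes` are assumptions chosen for illustration, since the abstract gives no values.

```python
def pyramid_sizes(width, height, factor=0.709, min_side=12):
    """List the (width, height) of each pyramid level, largest first."""
    if not 0 < factor < 1:
        raise ValueError("factor must be between 0 and 1")
    if min_side < 1:
        raise ValueError("min_side must be at least 1")
    sizes = []
    scale = 1.0
    while True:
        w, h = int(width * scale), int(height * scale)
        # Stop once the shorter side is too small for the detector input.
        if min(w, h) < min_side:
            break
        sizes.append((w, h))
        scale *= factor
    return sizes
```

Each listed size would correspond to one resized copy of the photo passed to the face detection network.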

Owner:DONGHUA UNIV

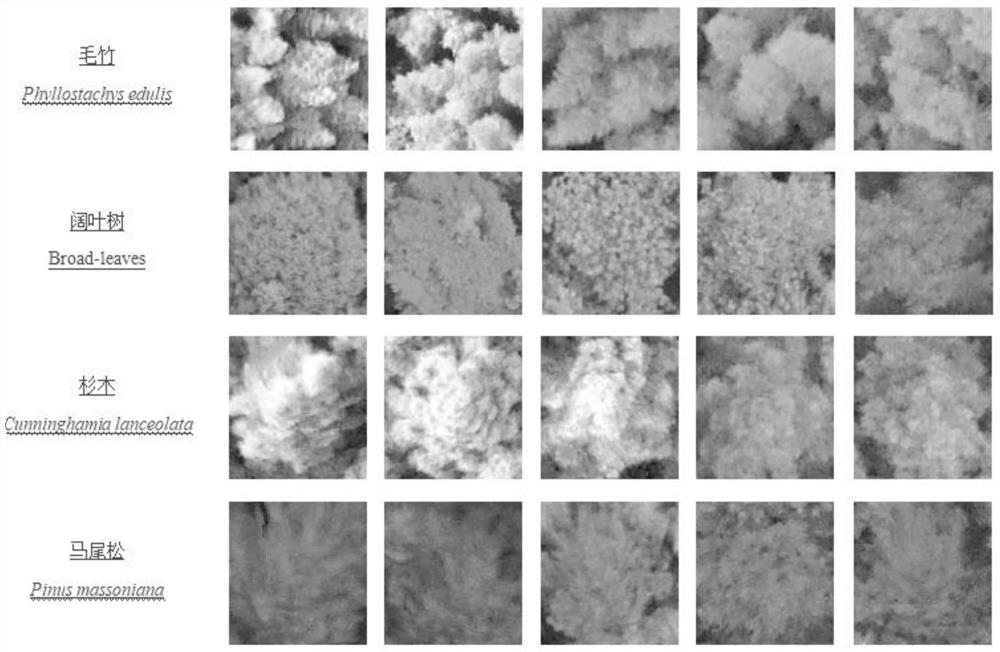

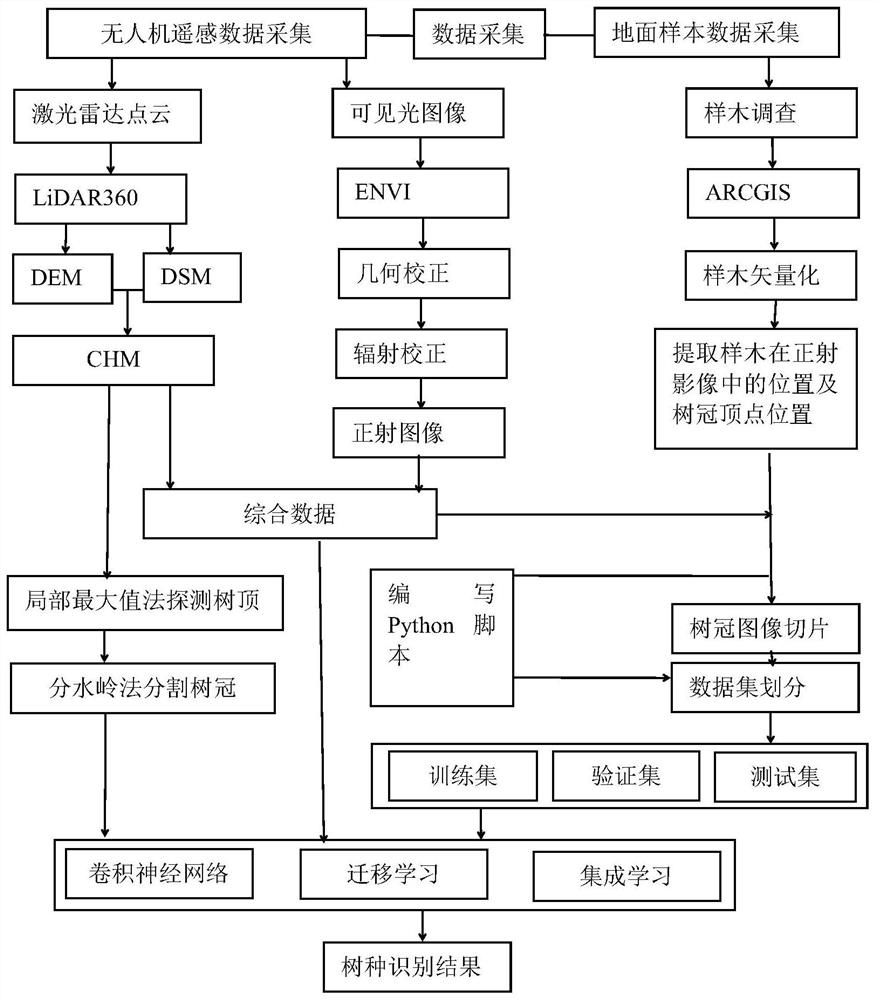

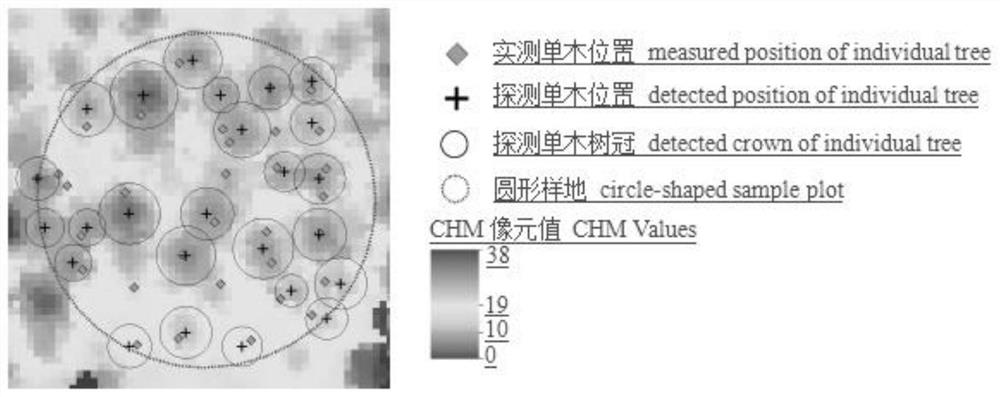

Tree species identification method based on multi-source remote sensing of unmanned aerial vehicle

Active | CN113591766A | Improve accuracy | Character and pattern recognition | Neural architectures | Data set | Point cloud

The invention discloses a tree species identification method based on multi-source remote sensing from an unmanned aerial vehicle (UAV). The method comprises the steps of: obtaining a visible light image and a laser radar (lidar) point cloud, and preprocessing both; detecting tree crowns in the canopy height model (CHM) of the lidar point cloud with a local maximum method, and segmenting the crowns with a watershed method to obtain segmented crown boundaries; building a crown data set and a sample data set, taking the segmented crown boundary as the outer boundary and the visible light orthoimage brightness values and the lidar CHM as features; and applying transfer learning and ensemble learning to the crown data set and the sample data set with a convolutional neural network, then outputting a tree species identification result. By combining the UAV visible light image with the lidar point cloud and applying transfer learning and ensemble learning with a deep CNN model, the method improves the accuracy of UAV remote sensing tree species identification.
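
The local maximum method treats a CHM cell as a treetop when it is higher than every neighboring cell. A minimal sketch on a small grid, assuming an 8-cell neighborhood and a hypothetical minimum height of 2.0 to ignore ground and shrubs; neither value comes from the abstract, and the grid is assumed to be non-empty and rectangular.

```python
def local_maxima(chm, min_height=2.0):
    """Return (row, col) of cells strictly higher than all of their neighbors."""
    rows, cols = len(chm), len(chm[0])
    tops = []
    for r in range(rows):
        for c in range(cols):
            h = chm[r][c]
            if h < min_height:
                continue
            # Collect the up-to-8 surrounding cells, clipped at the grid edge.
            neighbours = [chm[rr][cc]
                          for rr in range(max(0, r - 1), min(rows, r + 2))
                          for cc in range(max(0, c - 1), min(cols, c + 2))
                          if (rr, cc) != (r, c)]
            if all(h > n for n in neighbours):
                tops.append((r, c))
    return tops
```

The detected treetops would then seed the watershed segmentation that delineates each crown; that step is not sketched here.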

Owner:RES INST OF FOREST RESOURCE INFORMATION TECHN CHINESE ACADEMY OF FORESTRY

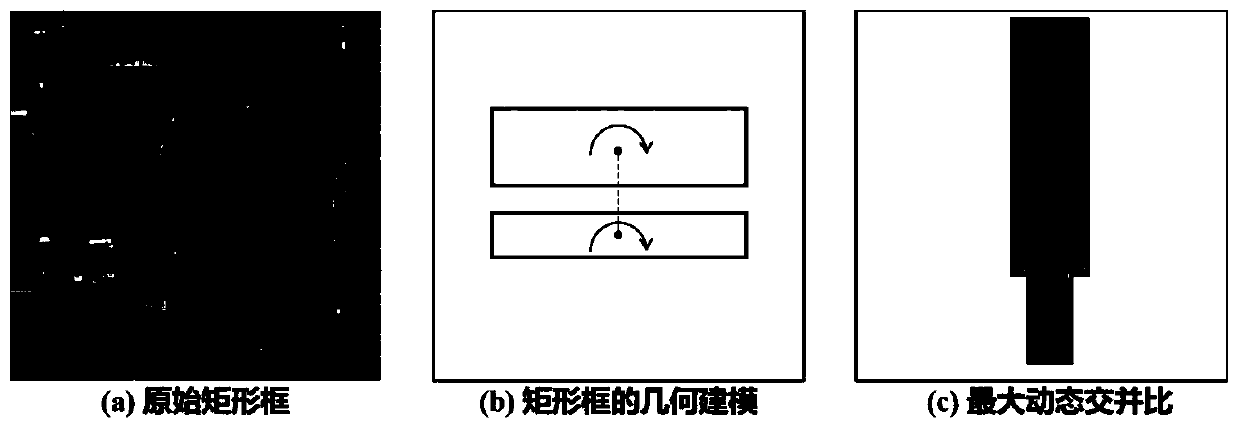

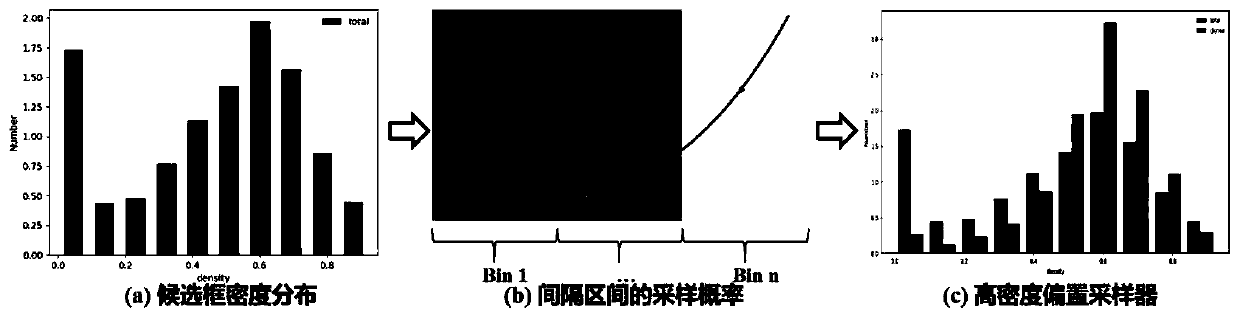

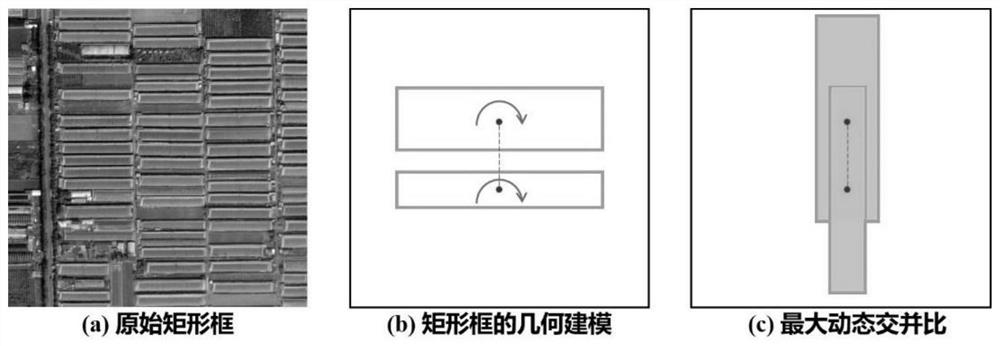

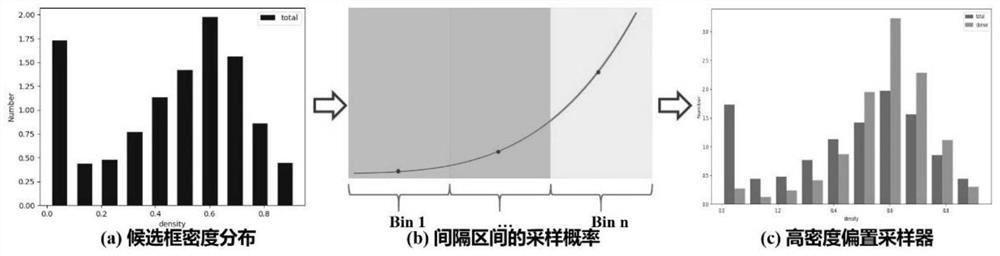

Remote sensing image dense target deep learning detection method

Active | CN111126287A | Achieve positioning | Effective Convolutional Features | Climate change adaptation | Scene recognition | High density | Remote sensing

The invention discloses a deep learning method for detecting dense targets in remote sensing images. An image is fed into a deep CNN backbone network to obtain a feature map, and the deep convolution features are fed into a dense target extraction framework for region of interest extraction (the RPN branch), object classification, and rectangular frame regression. For the RPN branch, a high-density bias sampler mines more high-density samples (difficult samples) to improve detection performance. Soft-NMS is applied after the dense target extraction framework to retain more positive objects. Finally, refined rectangular frames are output, so that the number of dense objects can be counted.
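
Soft-NMS keeps overlapping detections by lowering their scores instead of deleting them, which matters when real objects are packed closely together. A minimal sketch of the Gaussian variant; the `sigma` and `score_threshold` values and the `(x1, y1, x2, y2)` box format are assumptions, since the abstract does not state which variant or parameters are used.

```python
import math


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Return (box, score) pairs in selection order, with overlapping scores decayed."""
    remaining = list(zip(boxes, scores))
    kept = []
    while remaining:
        best = max(range(len(remaining)), key=lambda i: remaining[i][1])
        box, score = remaining.pop(best)
        kept.append((box, score))
        decayed = []
        for other, s in remaining:
            # Gaussian penalty: the larger the overlap, the stronger the decay.
            s *= math.exp(-(iou(box, other) ** 2) / sigma)
            if s >= score_threshold:
                decayed.append((other, s))
        remaining = decayed
    return kept
```

With hard NMS, a box that fully overlaps a higher-scoring one is discarded outright; here it survives with a reduced score and can still be counted if it stays above the threshold.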

Owner:WUHAN UNIV

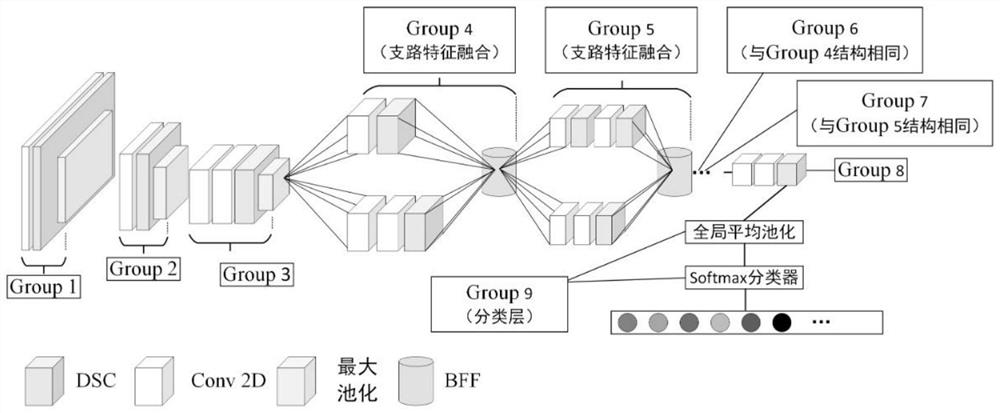

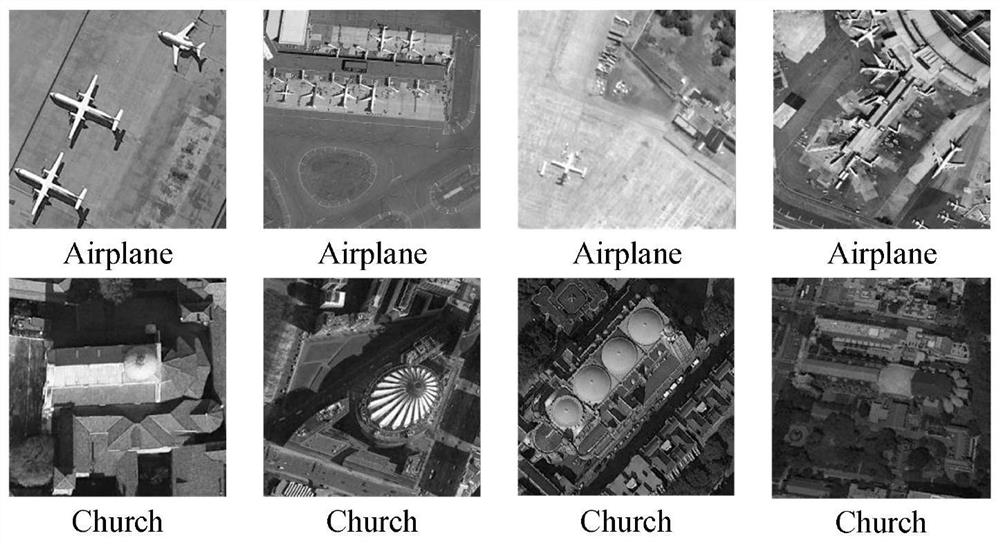

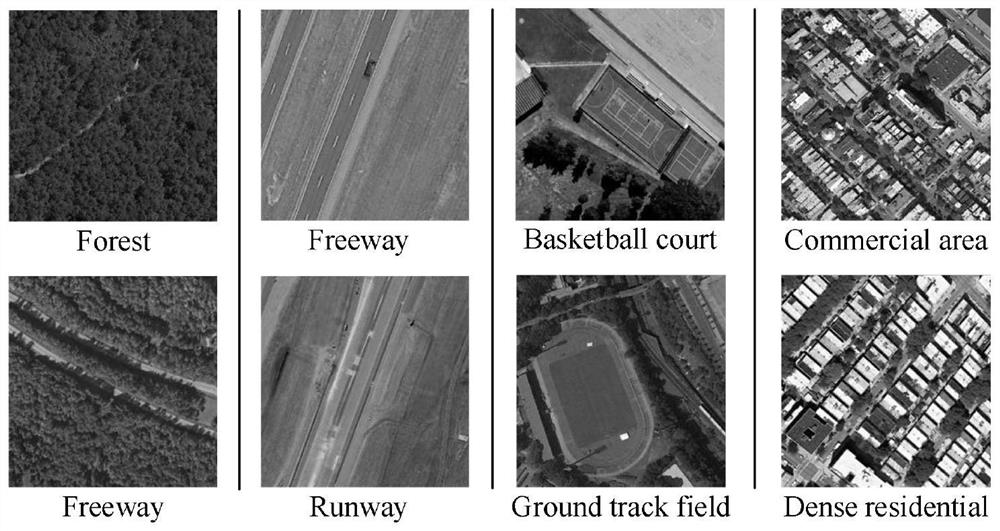

Remote sensing scene classification method based on branch feature fusion convolutional network

Active | CN111723685A | Reduce complexity | Improve classification accuracy | Scene recognition | Neural architectures | Data set | Classification methods

The invention discloses a remote sensing scene classification method based on a branch feature fusion convolutional network. Remote sensing scene images have a complex spatial structure; most existing deep CNN models that classify them well have high complexity, while shallow CNN models have low complexity but classification accuracy that does not meet the requirements of practical remote sensing applications. The invention aims to solve these problems. The method comprises the following steps: 1, establishing an LCNN-BFF network model; 2, training the network model on the data set; 3, verifying the accuracy of the pre-trained model on the test set, accepting the model as trained if the accuracy meets the requirement, and otherwise continuing training until it does; and 4, using the trained model to classify the remote sensing scenes to be identified. The method is applied in the field of remote sensing scene classification.

Owner:QIQIHAR UNIVERSITY

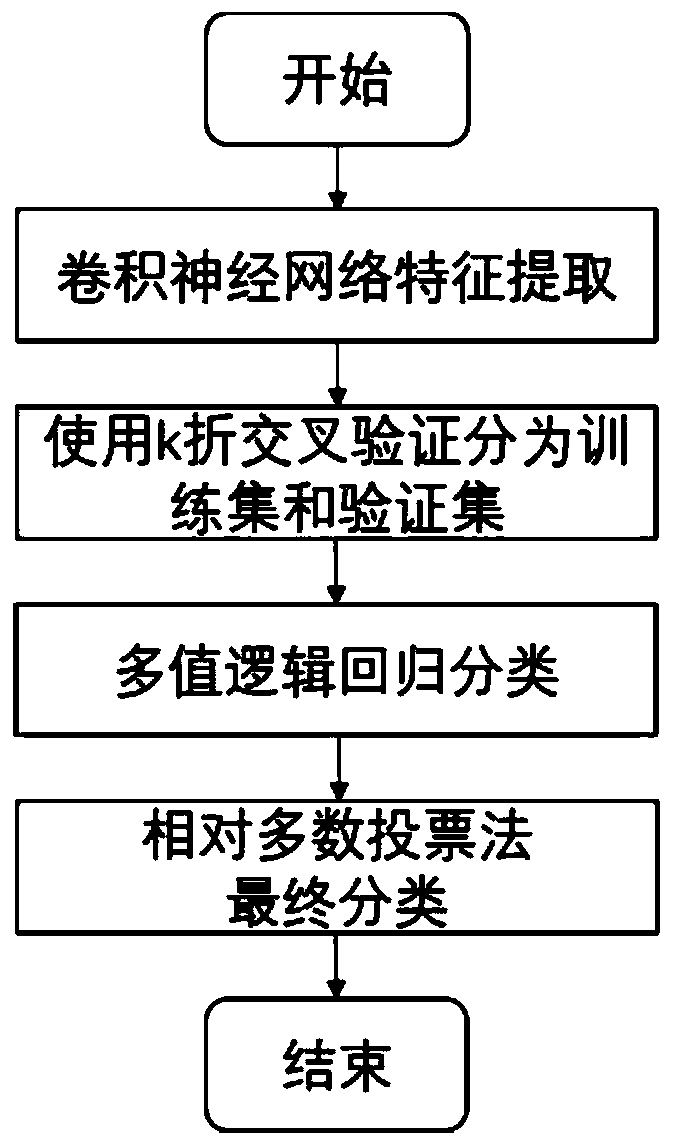

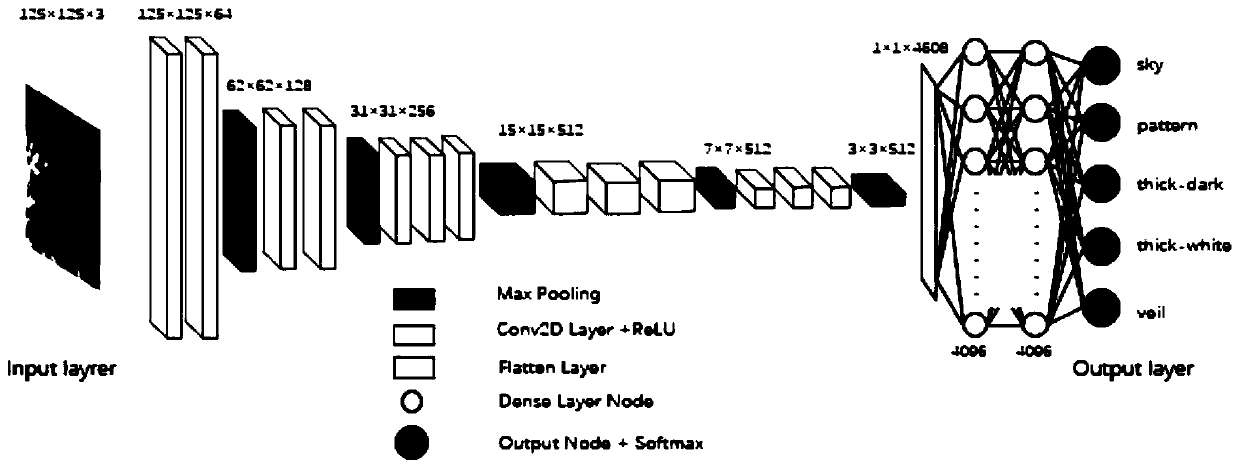

Ground-based meteorological cloud image classification method based on cross-validation deep CNN feature integration

Inactive | CN111310820A | Avoid complex preprocessing | Reduce computational complexity | Character and pattern recognition | Algorithm | Classification methods

The invention belongs to the technical field of ground-based meteorological cloud image classification, and particularly relates to a ground-based meteorological cloud image classification method based on cross-validation deep CNN feature integration. The method first uses a convolutional neural network model to extract deep CNN features from a ground-based meteorological cloud image, then resamples the CNN features multiple times based on cross validation, and finally identifies the cloud type of the image with a voting strategy over the multiple cross-validation resampling results. The method classifies ground-based meteorological cloud images automatically and realizes an adaptive, end-to-end cloud recognition algorithm that works directly on the original cloud images without any image preprocessing. The proposed algorithm draws on computer vision, machine learning, image recognition, and related fields. It overcomes both the lack of robustness of a cloud classification result based on a single CNN feature and the high computational overhead of integrating multiple deep convolutional neural networks, while maintaining high classification accuracy and stability against noise.
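
The final voting step can be sketched as a plain majority vote over the labels predicted from each cross-validation resampling. The abstract does not say how votes are weighted or how ties are broken, so the unweighted vote and the name `majority_vote` are assumptions; the cloud type names in the usage note are illustrative only.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the label predicted most often across the resampling rounds."""
    if not predictions:
        raise ValueError("need at least one prediction")
    # On a tie, Counter.most_common returns the label encountered first.
    return Counter(predictions).most_common(1)[0][0]
```

For instance, if five resampling rounds predict cumulus, cirrus, cumulus, stratus, and cumulus for the same image, the image is labeled cumulus.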

Owner:SHANXI UNIV

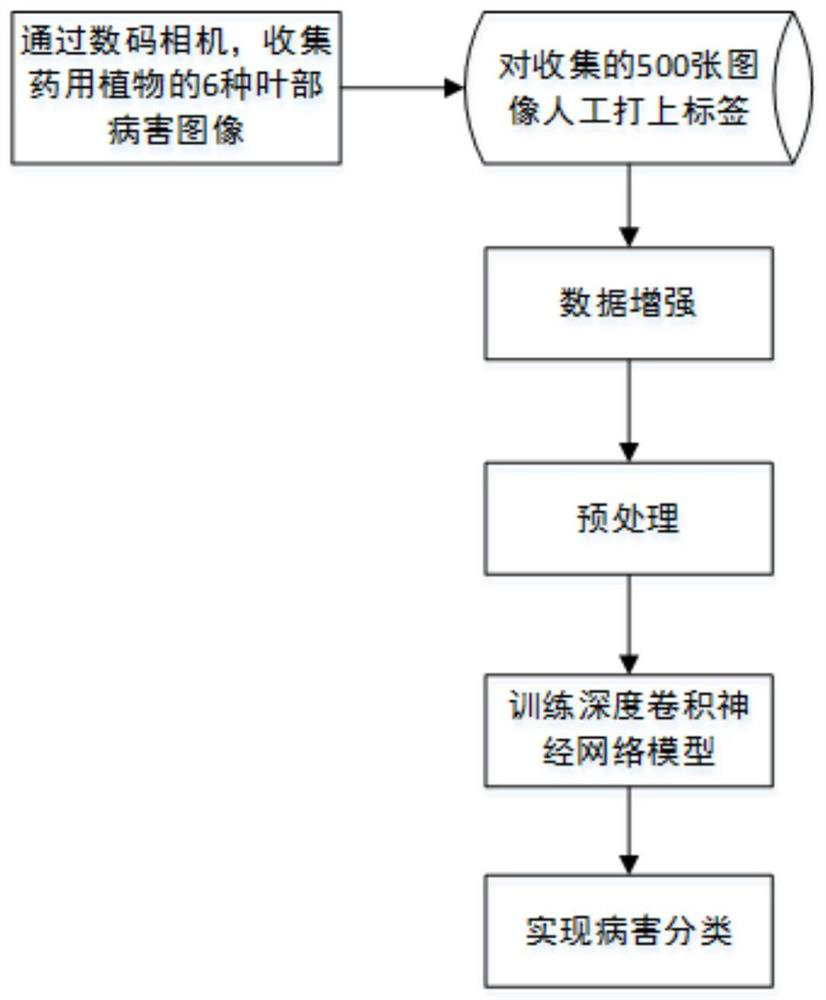

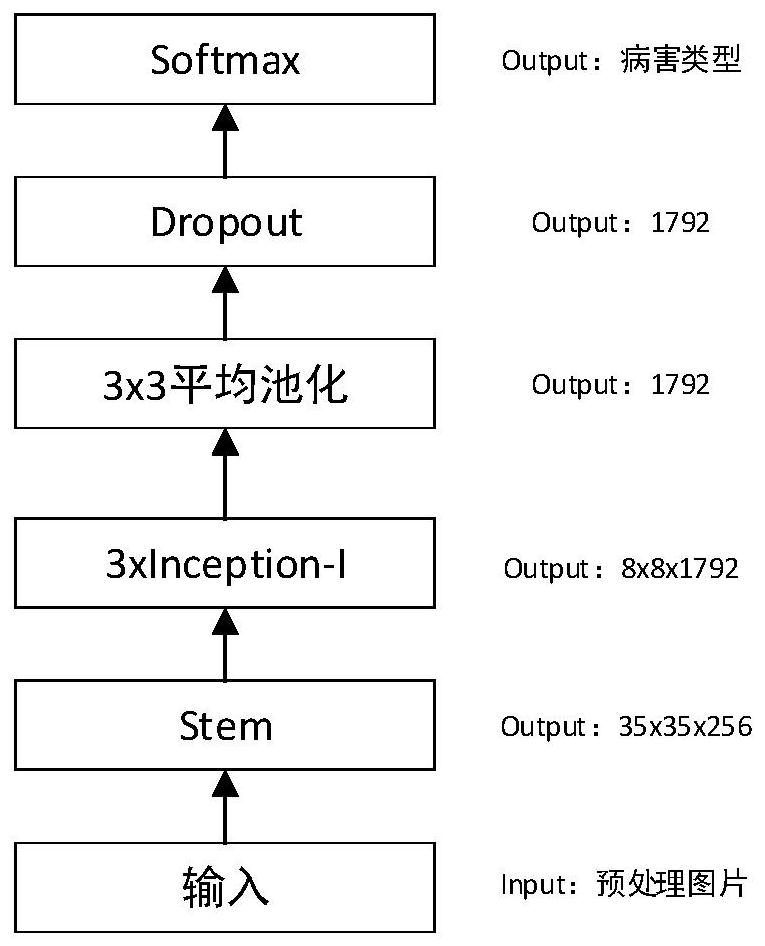

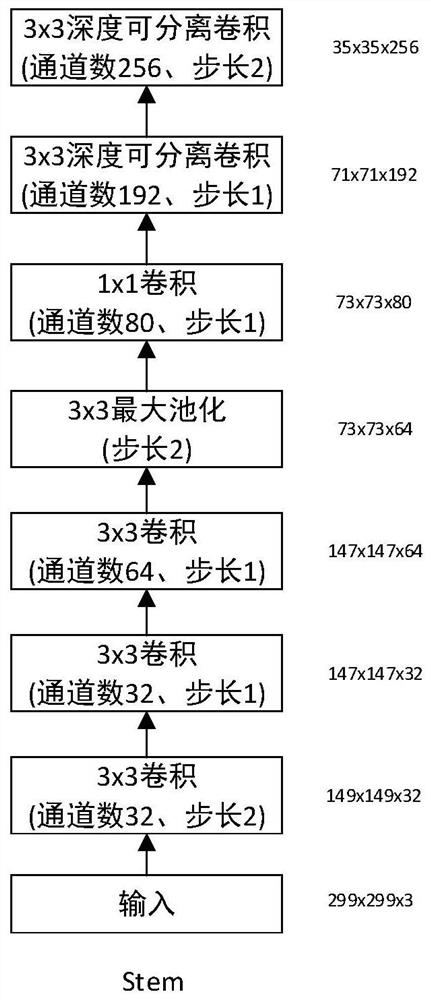

Medicinal plant leaf disease image recognition method based on deep learning

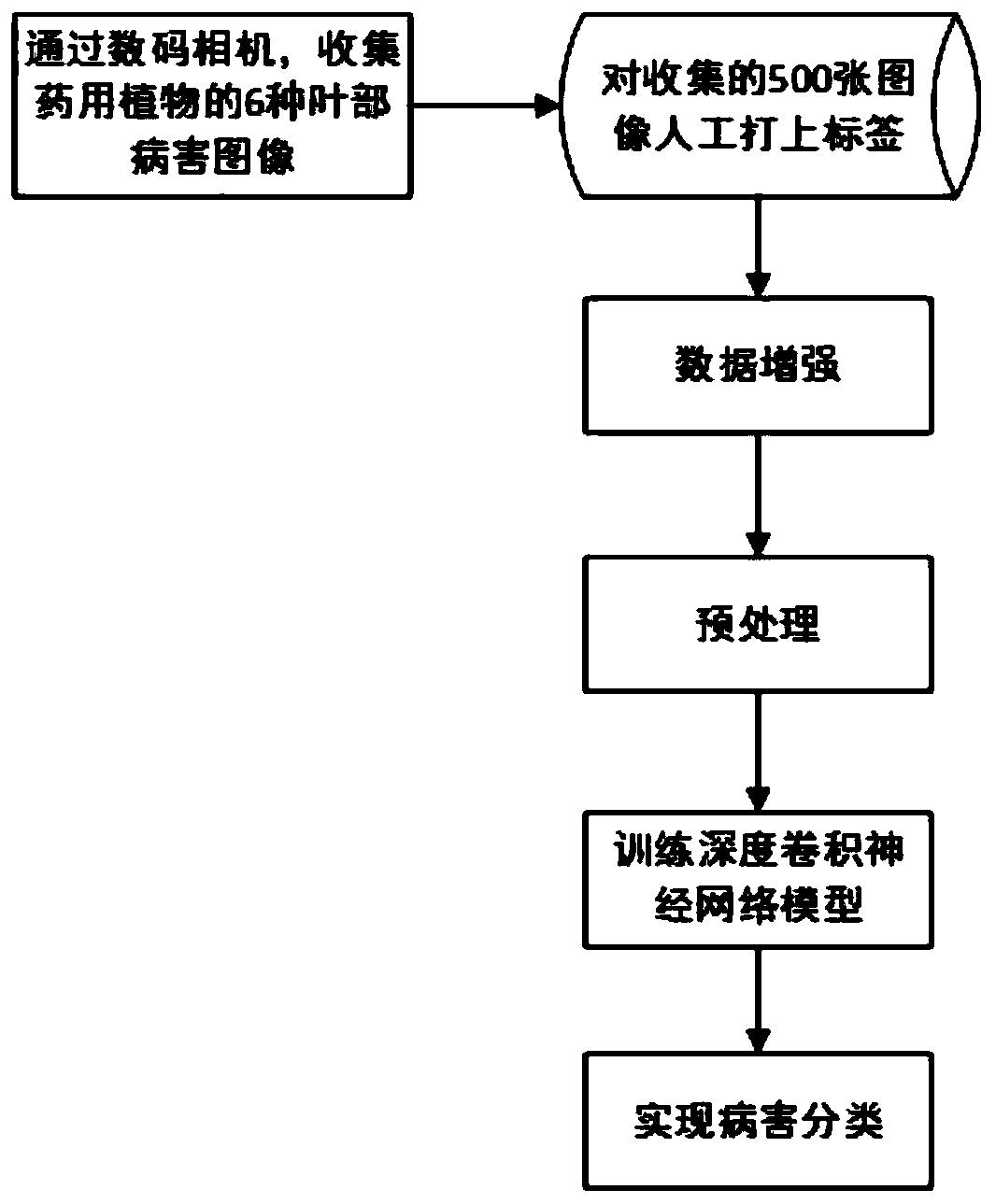

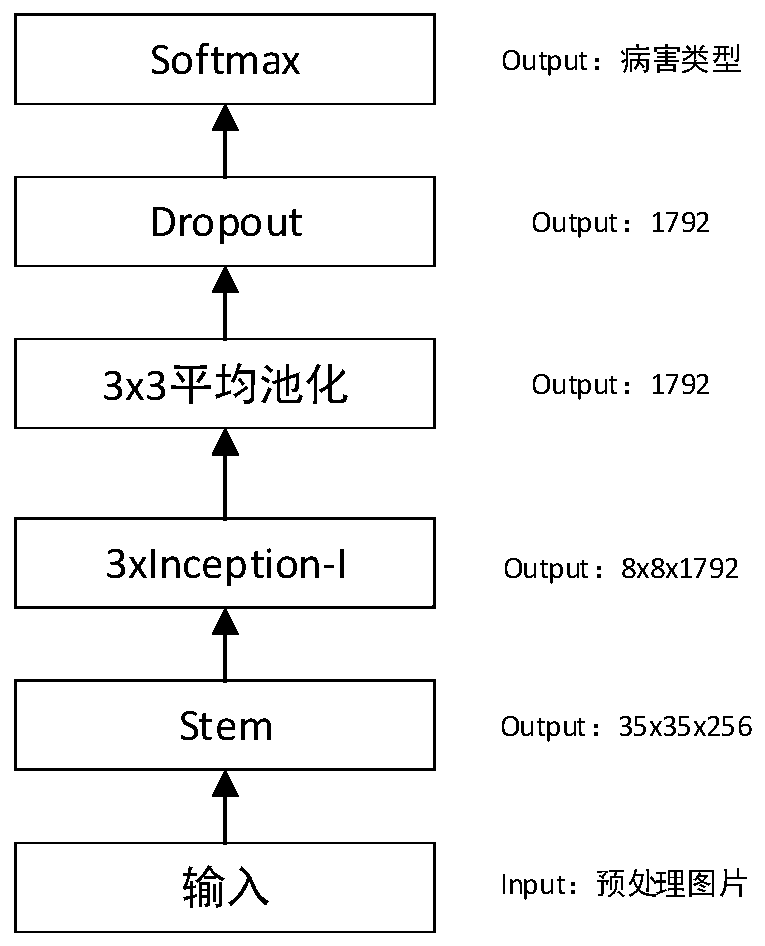

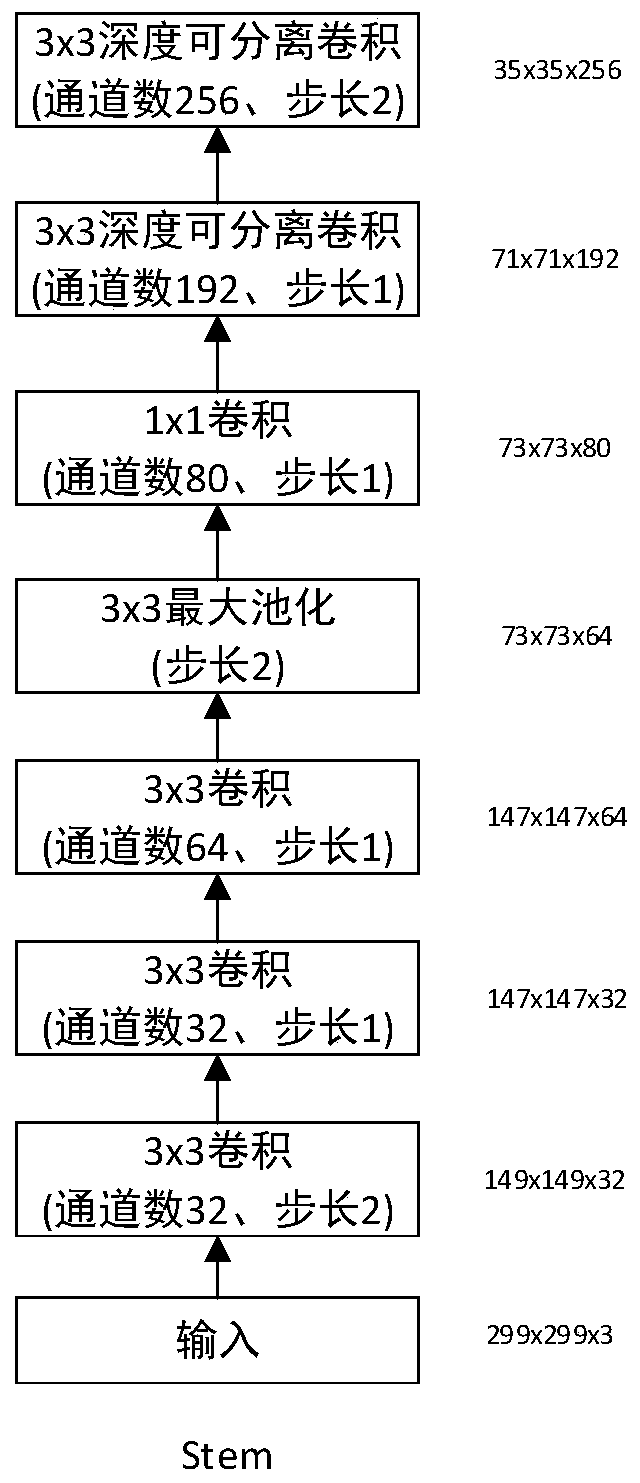

Active | CN110717451A | Increase diversity | Improve computing efficiency | Image enhancement | Geometric image transformation | Pattern recognition | Biology

The invention discloses a medicinal plant leaf disease image recognition method based on deep learning and relates to the technical field of medicinal plant leaf disease prevention. The method comprises the steps of: collecting a plurality of medicinal plant leaf disease images; applying enhancement processing to the images; uniformly resizing each enhanced medicinal plant leaf disease image to 299 × 299; training a deep CNN model, which comprises a convolution pooling network, an Inception-I network, an average pooling network, a Dropout layer, and a Softmax layer connected in series, where the last two convolution layers of the convolution pooling network are depthwise separable convolution layers and the Inception-I network includes a random pooling layer; and identifying the resized leaf disease images with the deep CNN model, the recognition result being the type of disease on each medicinal plant leaf, and classifying the leaf diseases based on that result. The recognition method can effectively help planters diagnose diseases and improves diagnosis efficiency.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

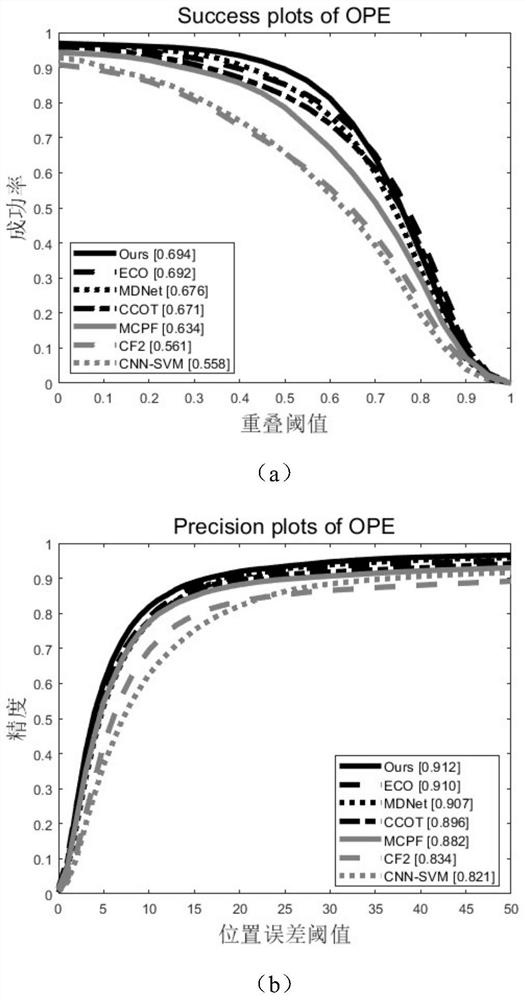

Tracking method based on dual-model adaptive kernel correlation filtering

Active | CN109858454A | Improve accuracy | Guaranteed real-time | Internal combustion piston engines | Character and pattern recognition | Dimensionality reduction | Adaptive kernel

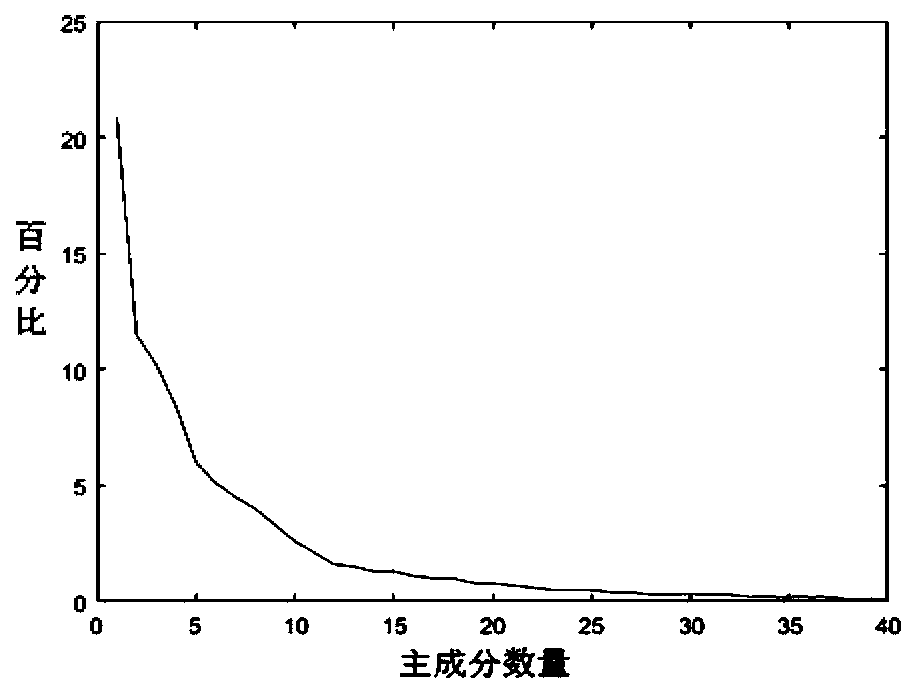

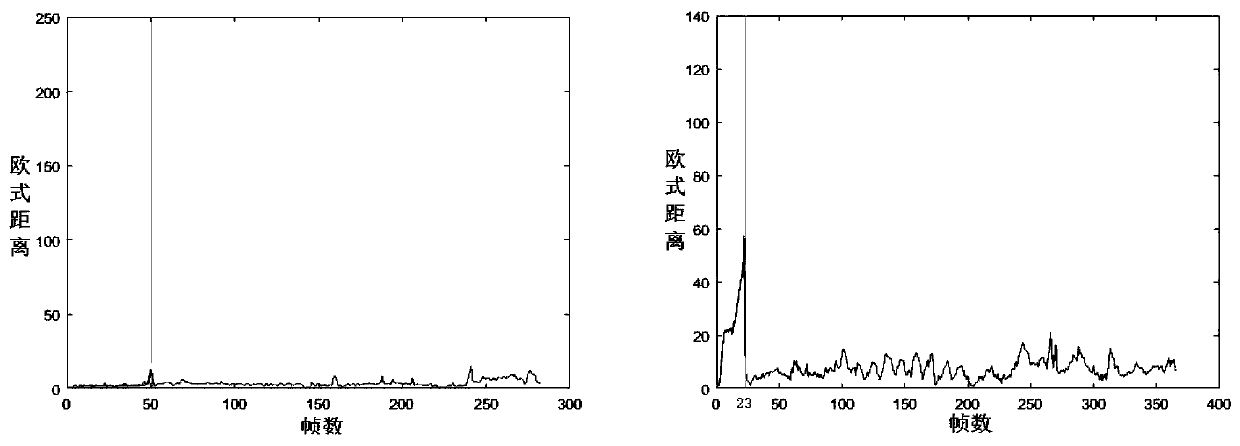

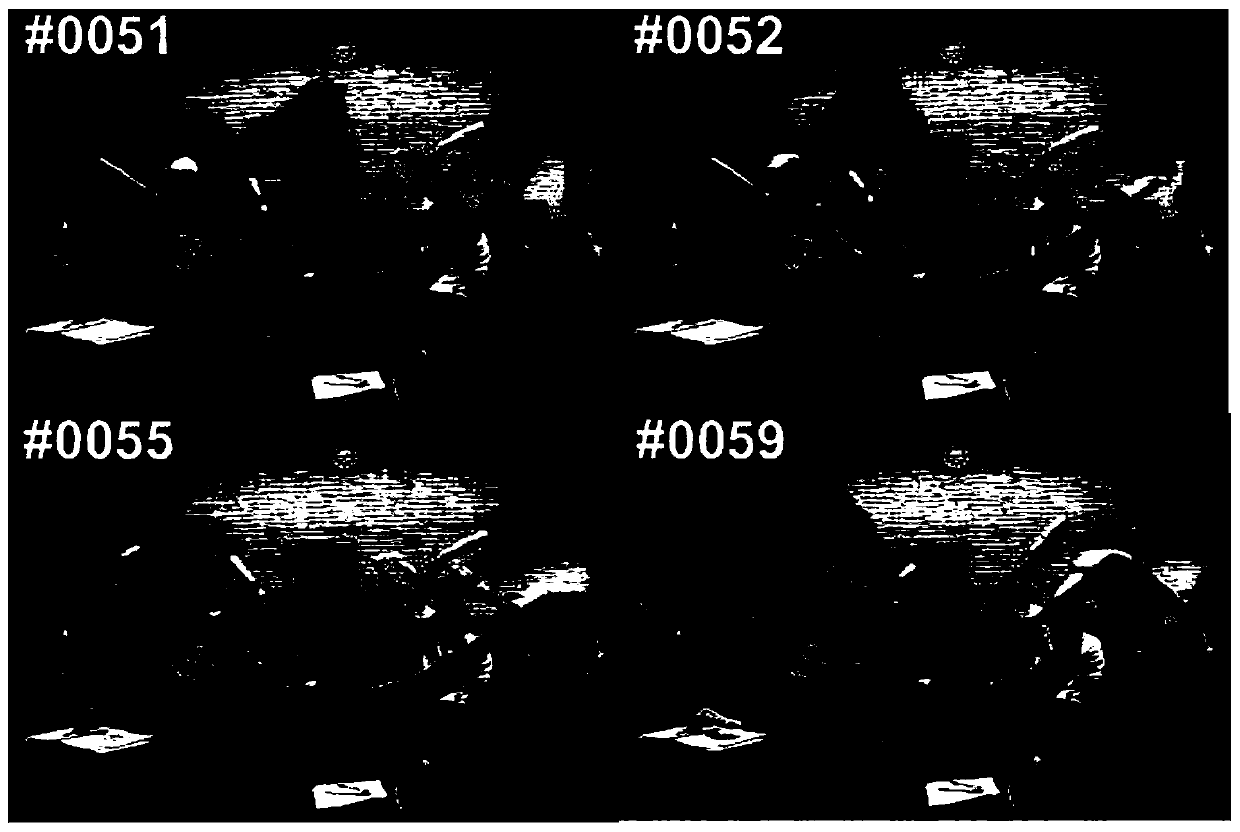

The invention provides a tracking method based on dual-model adaptive kernel correlation filtering, which comprises the following steps: initializing the position of the pre-estimated target, calculating a Gaussian label, and establishing a main feature model and an auxiliary feature model; extracting HOG features as the features of the main feature model, extracting deep convolution features as the features of the auxiliary feature model, and setting initialization parameters; calculating a response layer for the pre-estimated target with the main feature model, and obtaining the optimal position and optimal scale of the target from the response layer by Newton iteration; if the maximum confidence response value max of the response layer at the optimal scale is greater than an empirical threshold u, determining the pre-estimated target position and updating the main feature model; if max is smaller than or equal to u, stopping updates of the main feature model, expanding the search area, extracting CNN features of the target pre-selection area, reducing the dimensionality of the deep CNN features with PCA, estimating a new target position from the reduced CNN features, and updating the auxiliary feature model. These steps repeat until the video sequence ends.

Owner:NORTHEASTERN UNIV

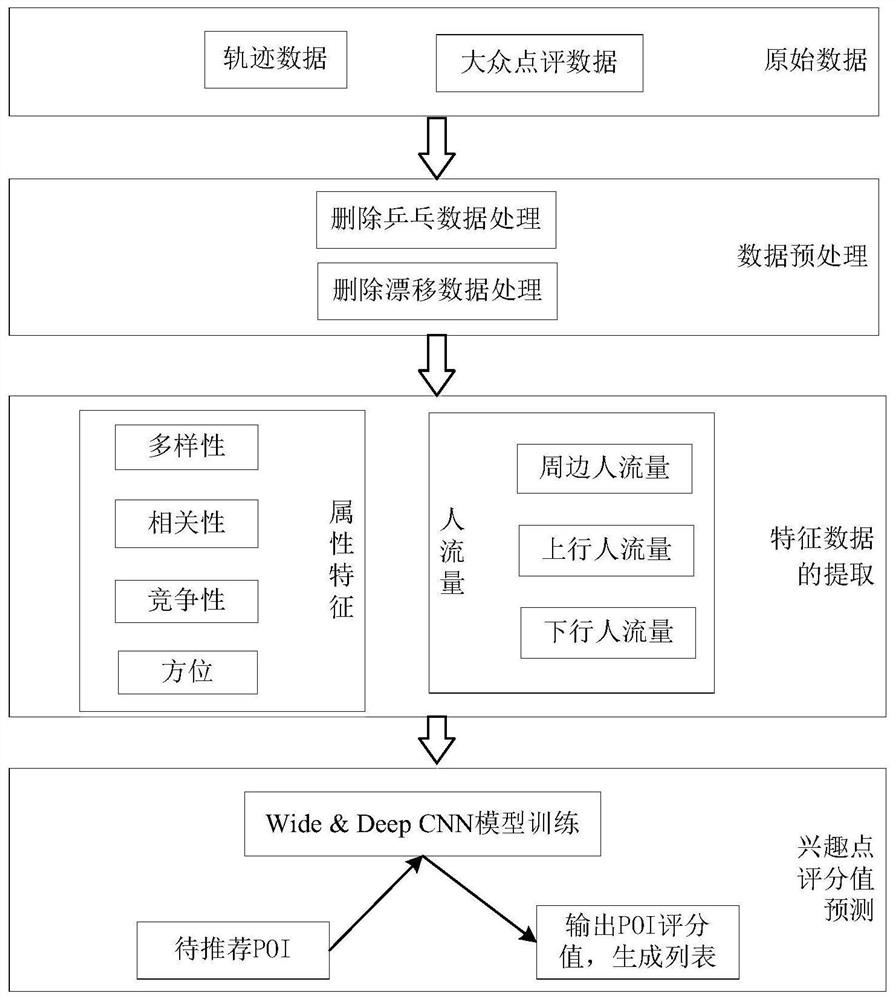

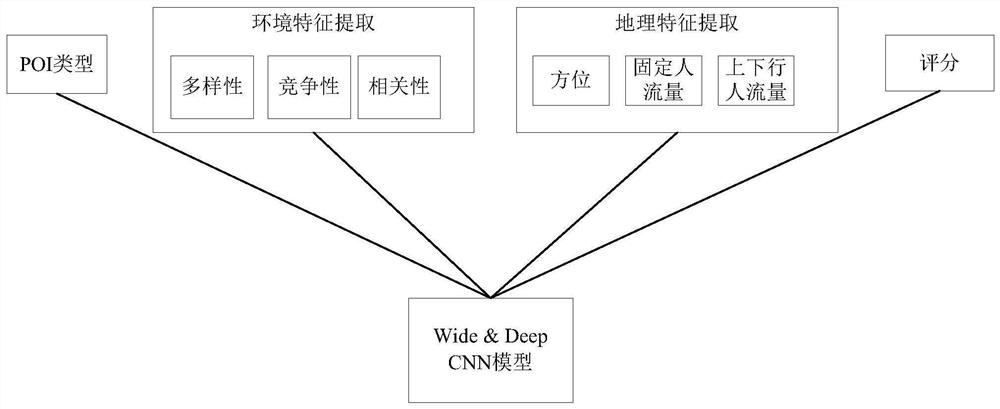

User trajectory-based interest point recommendation method

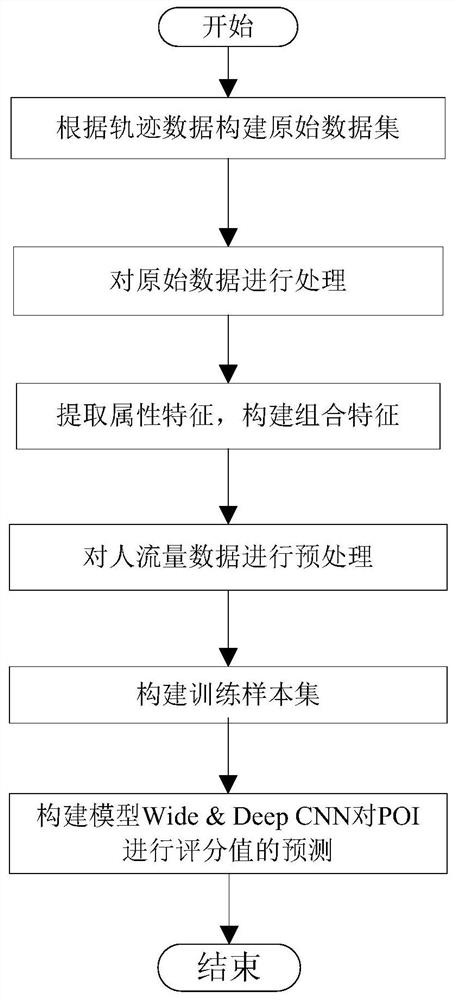

Active | CN112579922A | Reduce the impact of accuracy | Improve accuracy | Digital data information retrieval | Neural architectures | Original data | Engineering

The invention provides a user trajectory-based point of interest (POI) recommendation method, which comprises the following steps: first, collecting user trajectory data and deleting ping-pong data and drift data from the original data set to reduce the influence of noisy data on recommendation accuracy; then extracting attribute features in different regions; computing statistics on the surrounding pedestrian flow and the uplink and downlink pedestrian flow at each POI's location; constructing a training sample set; designing a WideDeep CNN model by combining a Wide model with a Deep CNN model; and using the WideDeep CNN model to predict the score of each candidate POI in the region to be monitored. The training sample set is built entirely from user trajectory data, scores for different types of POIs are generated by the neural network model, and a POI list is then generated from the scores. The method mines the spatio-temporal information contained in mobile big data, analyzes the needs of the public, and better addresses the point of interest recommendation problem.

Owner:NORTHEASTERN UNIV

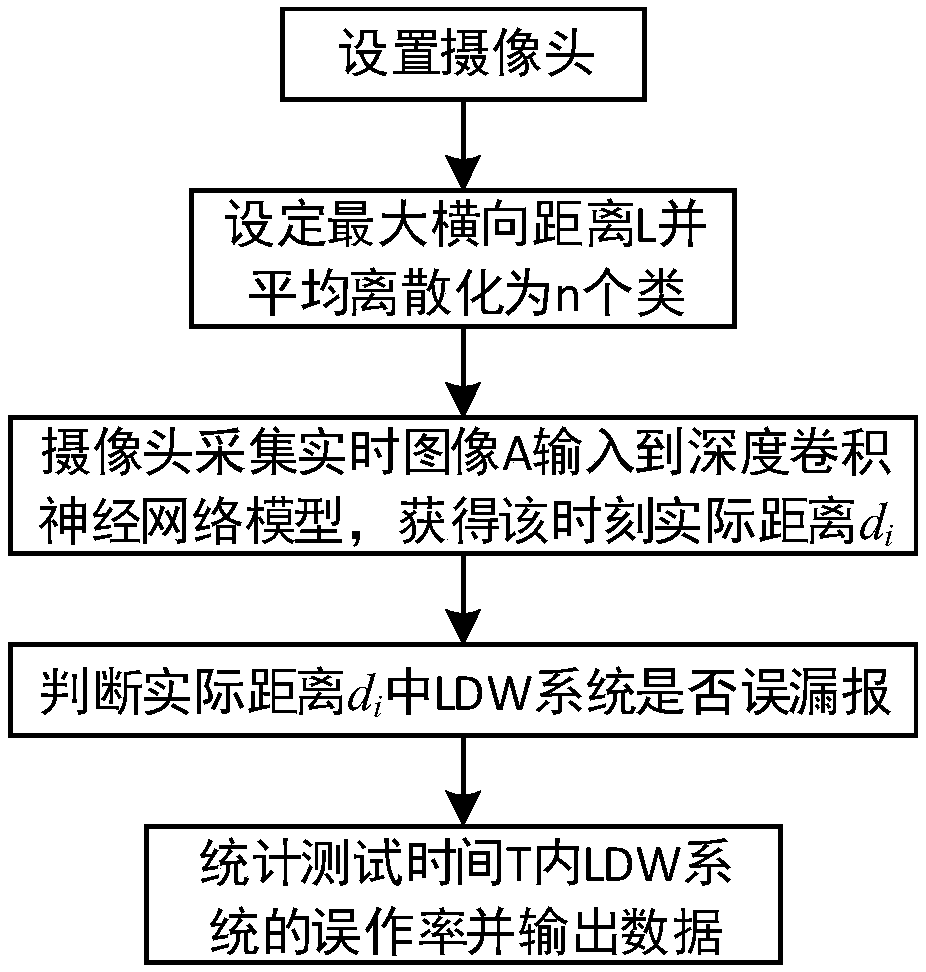

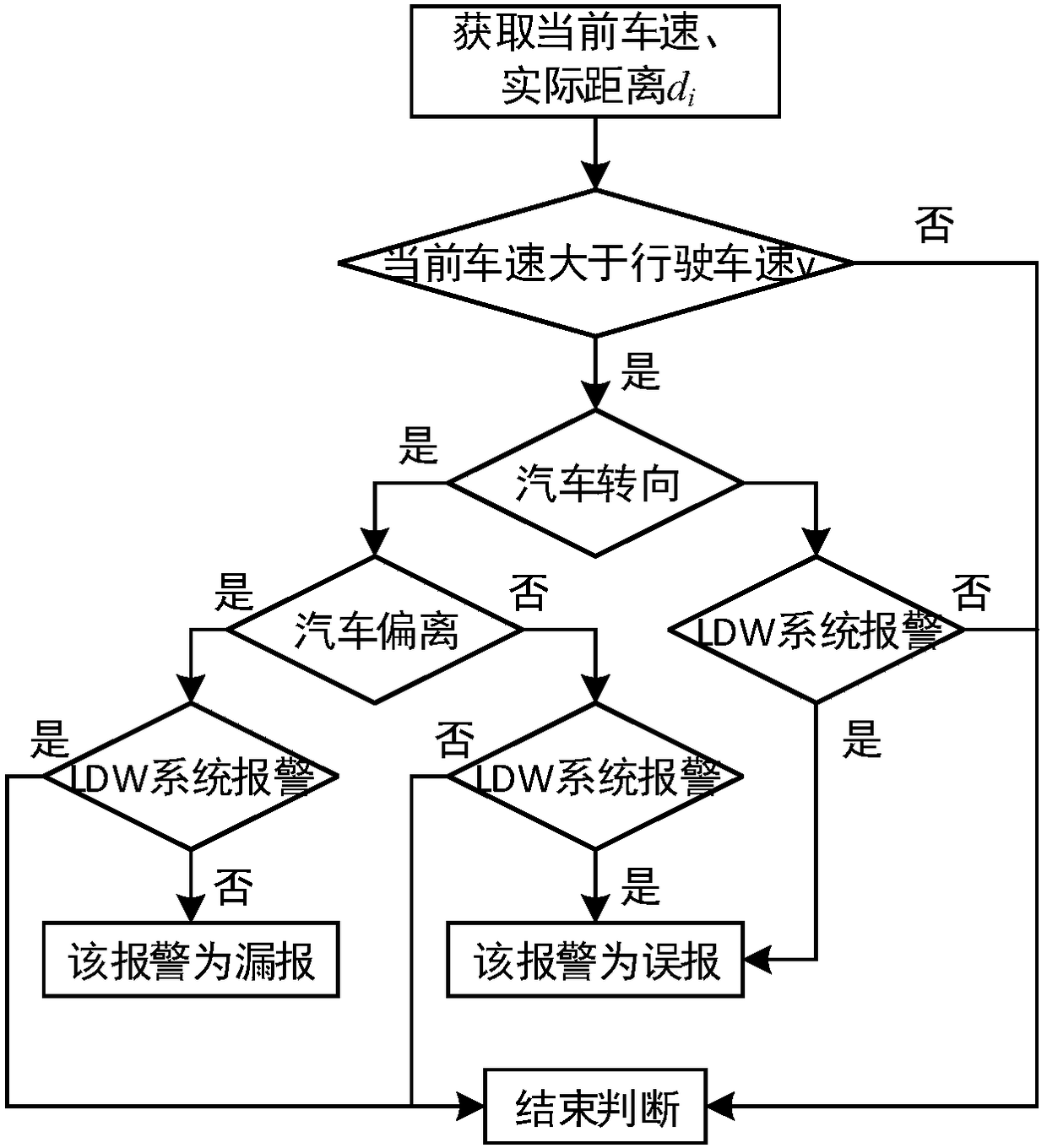

LDW false and omitted alarm test method and system based on convolutional neural network

Active | CN108445866A | Fast recognition | High speed | Programme control | Electric testing/monitoring | Data acquisition | Computer science

The invention discloses an LDW false and omitted alarm test method based on a convolutional neural network (CNN). The method comprises the steps of: S1, mounting a camera; S2, setting a maximum lateral distance L and discretizing it evenly into n categories; S3, acquiring a real-time image A, feeding it into a deep CNN model, and calculating the actual distance di to the lane line; S4, determining whether the LDW system has raised a false alarm or omitted an alarm; and S5, obtaining the misoperation rate of the LDW system. The test system comprises an image acquisition device, an onboard data acquisition mechanism, an analyzer, and an operation processor. The image acquisition device is connected to the analyzer, and the operation processor is connected to the analyzer and the onboard data acquisition mechanism. The method is easy to operate, fast and precise in recognition, and applicable to lanes under various road conditions. In its simplest form the test system needs only the image acquisition device, the onboard data acquisition mechanism, the analyzer, and the operation processor, and it identifies deviations fully automatically without an extra lane line marking ruler.
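
Step S2 turns distance estimation into a classification problem: the range from 0 to L is cut into n equal-width bins, and the CNN predicts a bin. A minimal sketch of the mapping in both directions; reporting the bin center as the recovered distance, and the function names, are assumptions rather than details from the abstract.

```python
def distance_to_class(d, max_distance, n):
    """Map a lateral distance in [0, max_distance] to a class index in [0, n - 1]."""
    if not 0 <= d <= max_distance:
        raise ValueError("distance out of range")
    width = max_distance / n
    # The upper edge d == max_distance falls into the last bin.
    return min(int(d / width), n - 1)


def class_to_distance(index, max_distance, n):
    """Map a class index back to a representative distance (the bin center, by assumption)."""
    if not 0 <= index < n:
        raise ValueError("class index out of range")
    width = max_distance / n
    return (index + 0.5) * width
```

With L = 2.0 and n = 4, each class spans 0.5 units, so the distance resolution of the test is bounded by L / n.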

Owner:CHONGQING UNIV

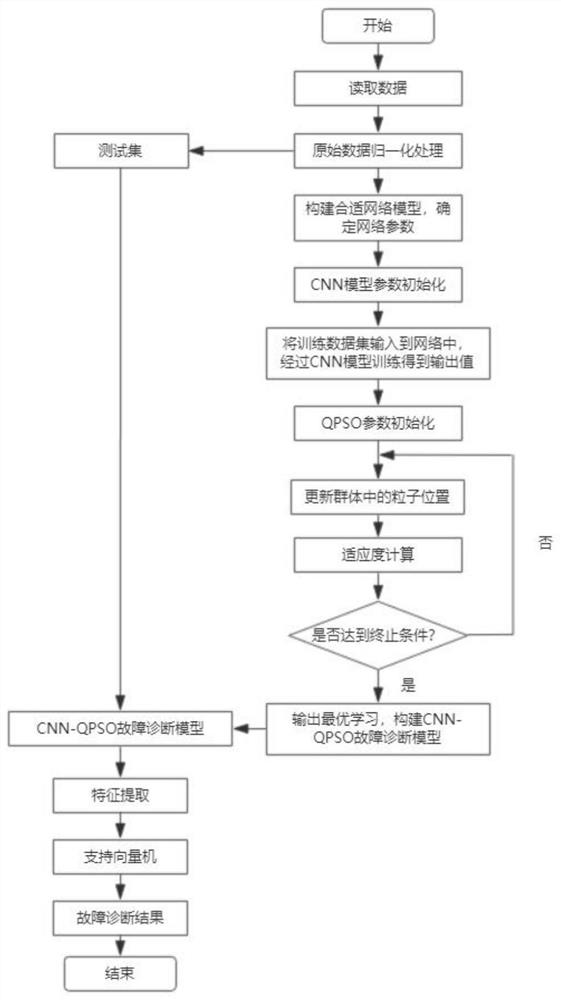

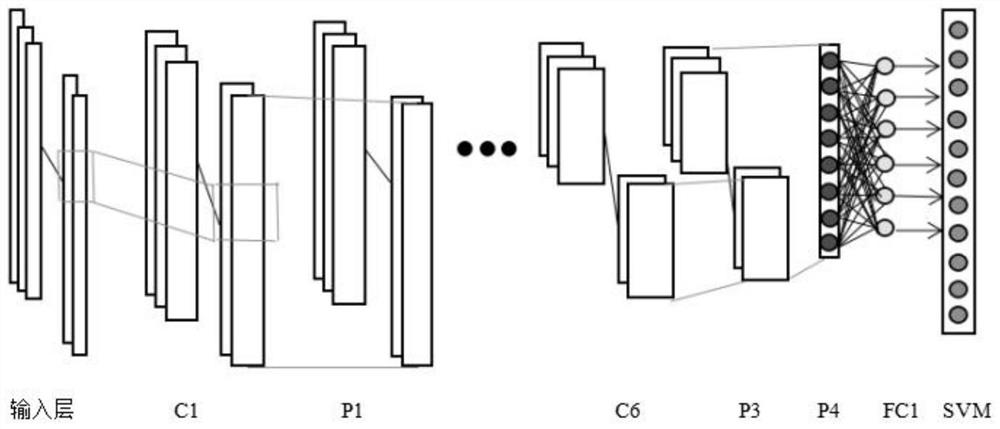

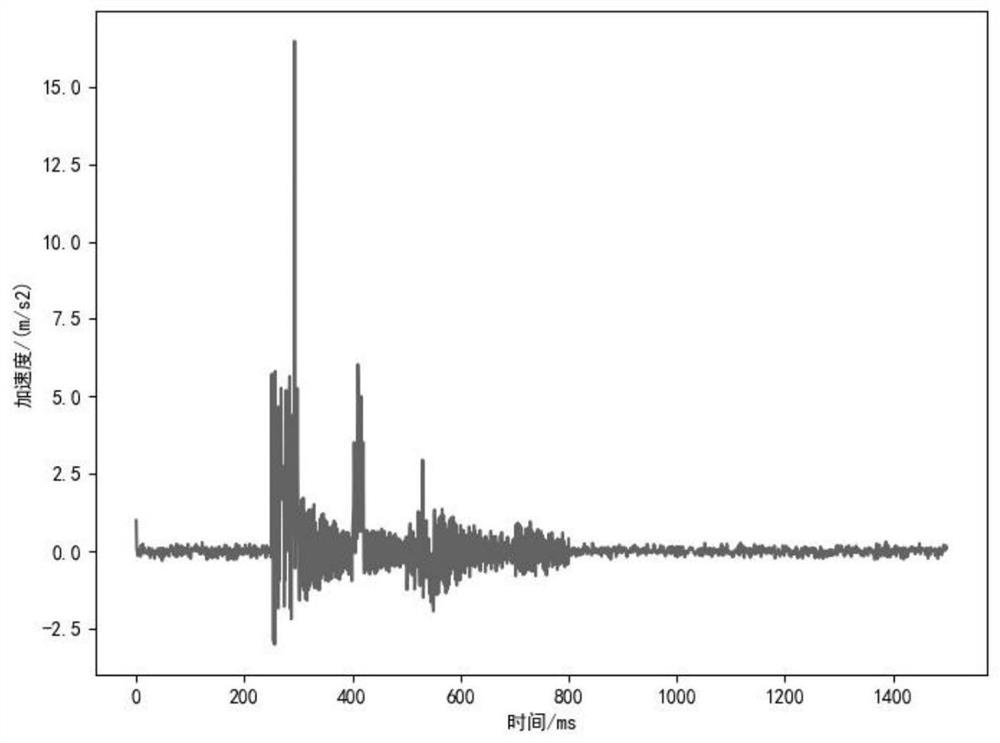

Medium-voltage circuit breaker fault diagnosis method based on deep learning and intelligent optimization

Pending | CN113988136A | Efficient extraction | Well represented | Kernel methods | Character and pattern recognition | Algorithm | Original data

The invention discloses a medium-voltage circuit breaker fault diagnosis method based on deep learning and intelligent optimization. The method comprises the following steps: 1) collecting medium-voltage circuit breaker vibration signals in a normal state, tripping closing electromagnet blockage, main shaft blockage and half shaft blockage as an original data set; 2) performing normalization processing on training set data and test set data; 3) constructing a deep CNN model; 4) performing optimization training on an SVM classifier by combining the trained deep CNN model with quantum particle swarm optimization; and 5) inputting test sample data into a trained fault diagnosis model to carry out circuit breaker fault diagnosis. According to the invention, data features are effectively extracted by using the advantage of strong feature extraction capability of a convolutional neural network; and furthermore, the accuracy of data classification is improved by utilizing the advantage that the quantum particle swarm optimization can effectively eliminate a local optimum phenomenon.

Owner:ZHEJIANG UNIV OF TECH

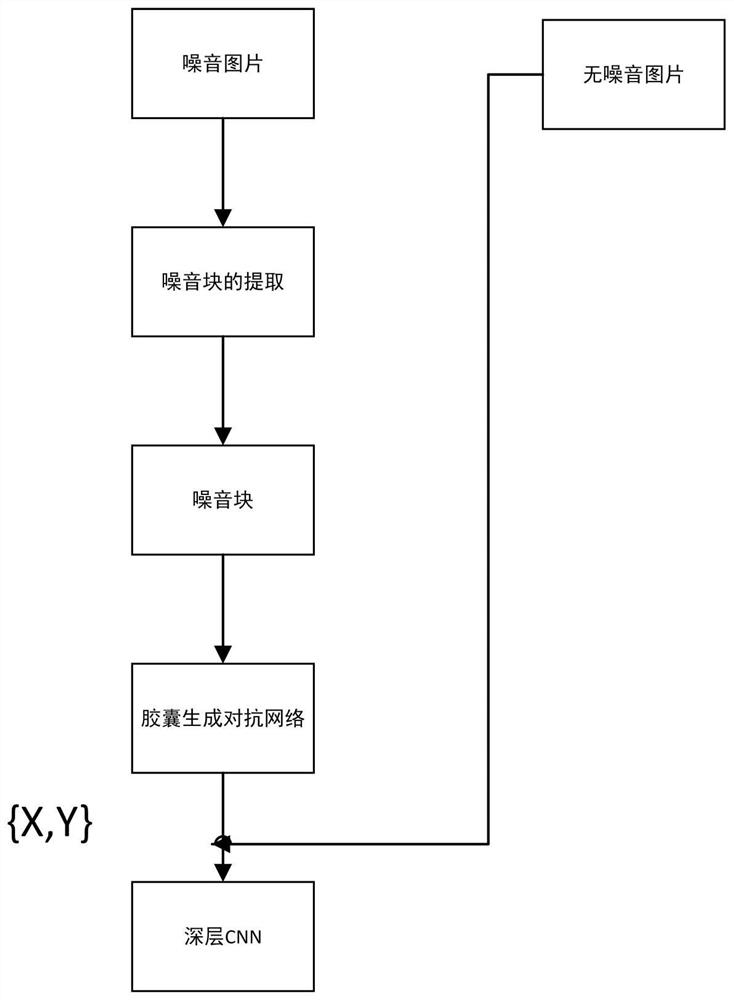

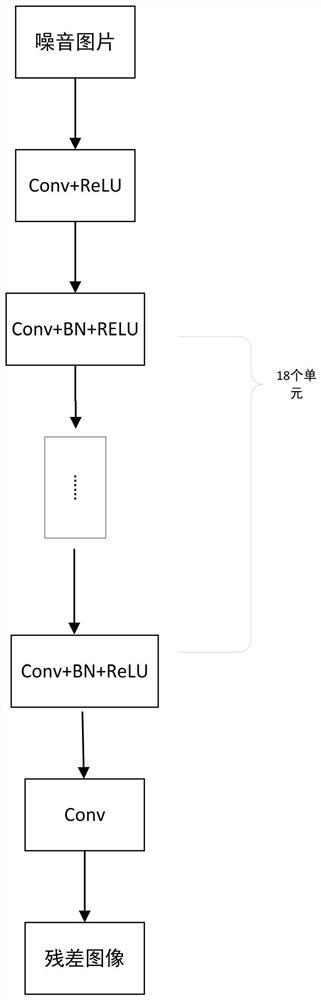

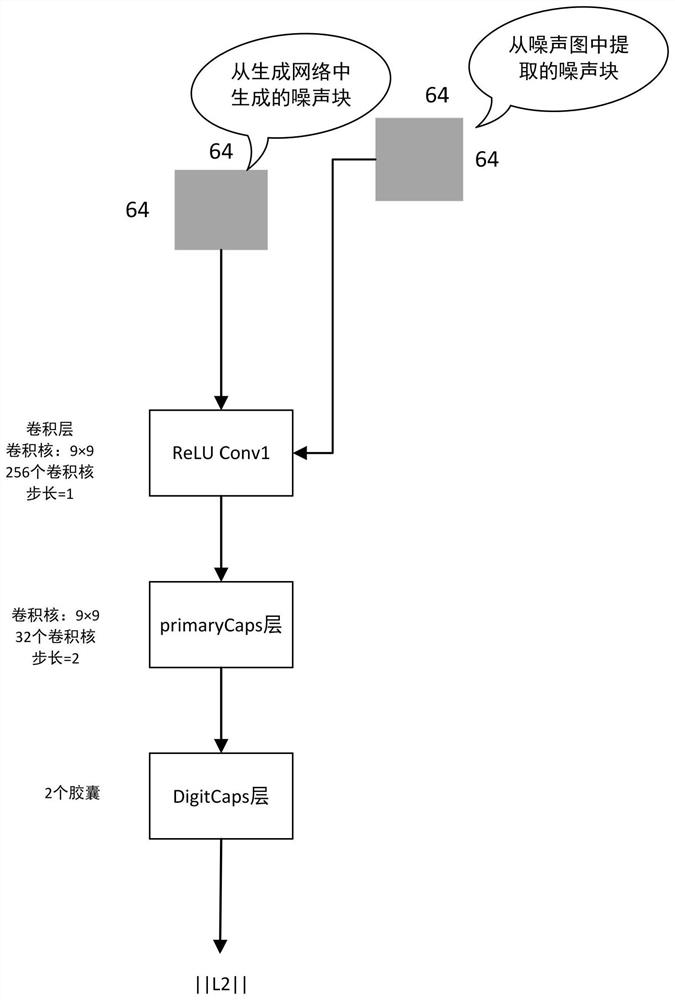

Image blind denoising method for noise modeling based on capsule generative adversarial network

Inactive · CN112200748A · Improve the characteristics of not fully utilizing the human brain · Improve the disadvantage of wasting some information · Image enhancement · Image analysis · Pattern recognition · Generative adversarial network

The invention discloses an image blind denoising method for noise modeling based on a capsule generative adversarial network. The method comprises the steps of: 1, extracting smooth noise blocks from a given noisy image; 2, modeling the noise with the capsule generative adversarial network; and 3, training a deep CNN to obtain a noise reduction model, so as to achieve blind denoising of an image. The method overcomes the poor noise reduction that conventional methods show when the noise information is unknown or the sensor is uncertain, so that the noise reduction effect is improved.

Owner:HEFEI UNIV OF TECH

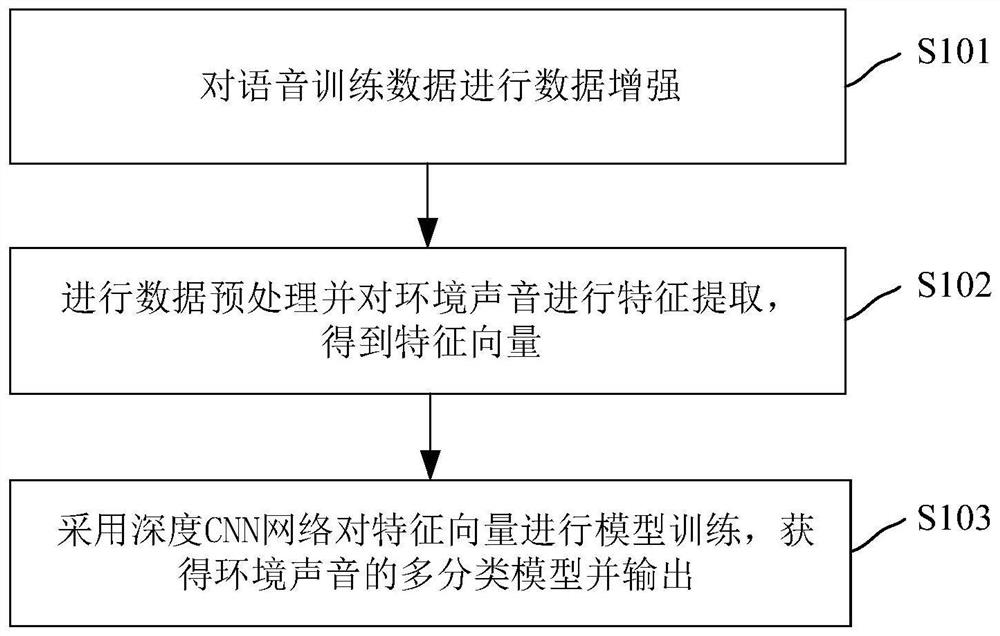

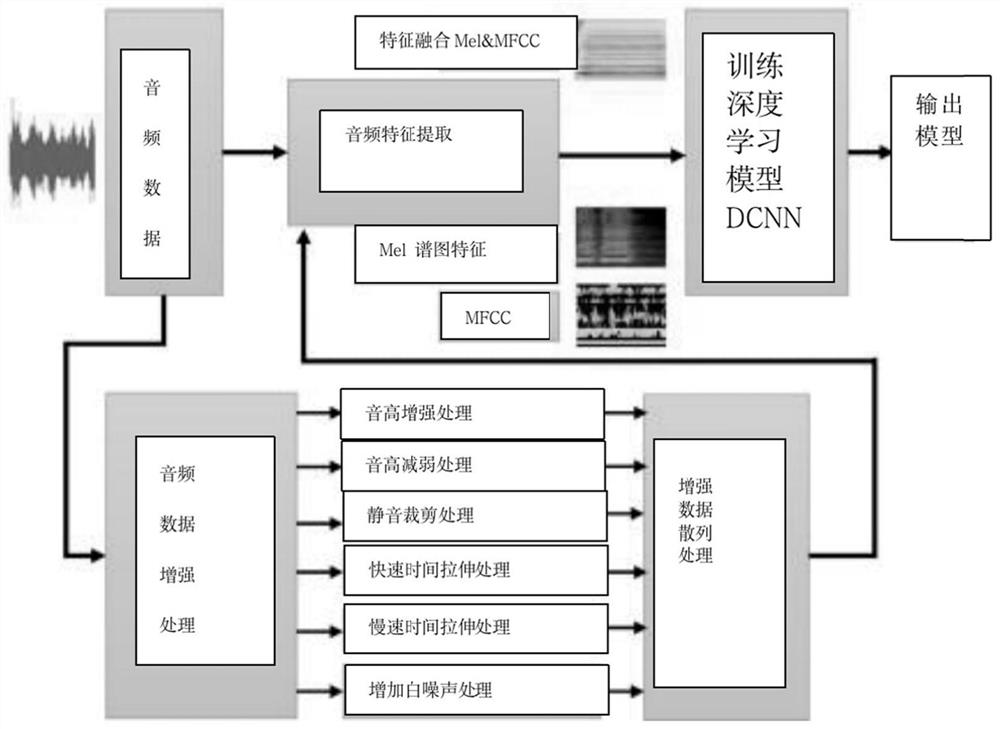

Environmental sound classification analysis method and device and medium

Pending · CN114882909A · Improve training effect · High practical value · Speech analysis · Feature vector · Environmental sound classification

The invention discloses an environmental sound classification analysis method and device and a medium. The method comprises the steps of: performing data enhancement on voice training data; performing data preprocessing and feature extraction on the environmental sound to obtain a feature vector; and training a deep CNN network on the feature vector to obtain and output a multi-classification model of the environmental sound. Experiments show that, with data enhancement, the training effect is greatly improved compared with training without it, which raises the practical value of the system.

Owner:ZHUHAI GAOLING INFORMATION TECH COLTD

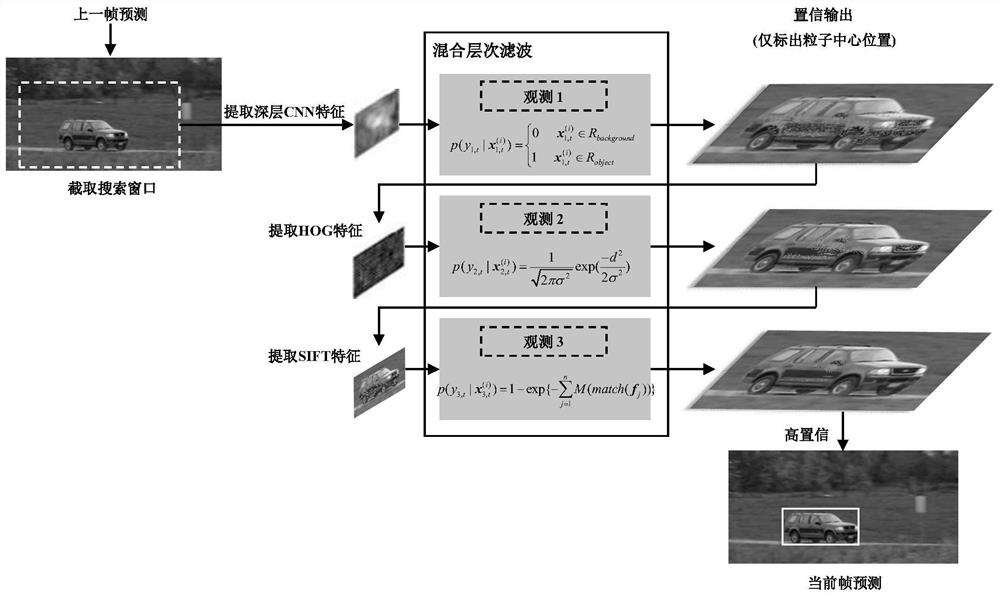

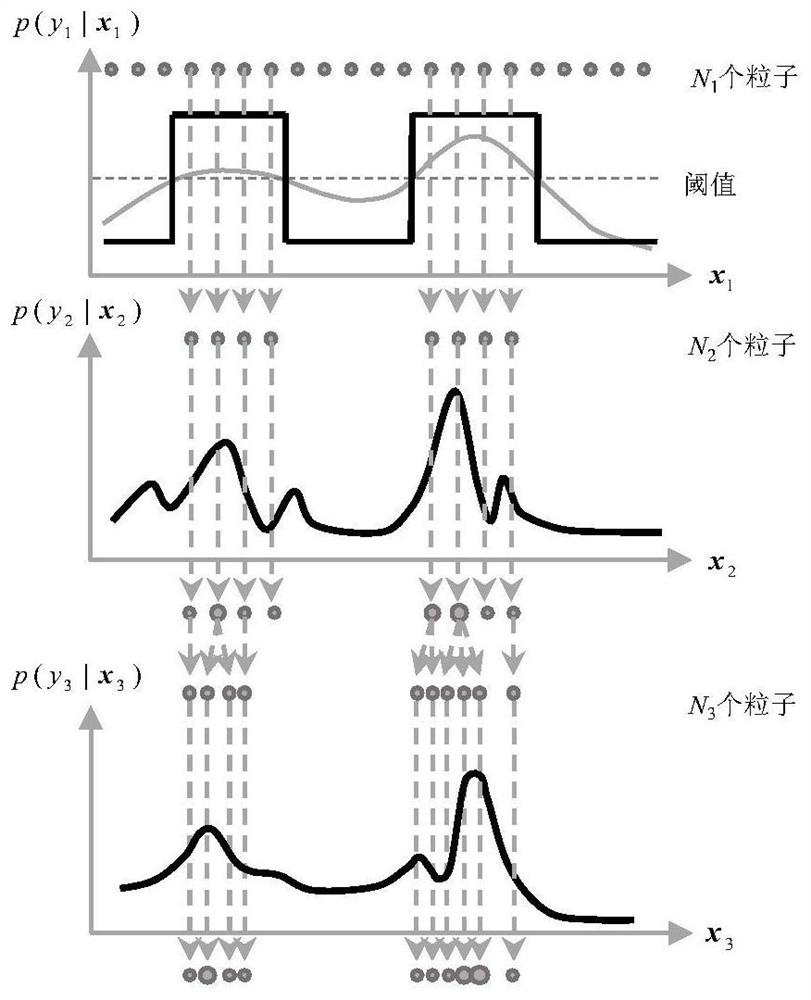

Visual tracking method based on hybrid hierarchical filtering and complementarity characteristics

Active · CN112232359A · Solve the small target tracking problem · Improve tracking accuracy · Character and pattern recognition · Artificial life · Pattern recognition · Computer vision

The invention discloses a visual tracking method based on hybrid hierarchical filtering and complementarity characteristics. The method establishes a three-stage hybrid hierarchical filtering target tracking framework and estimates the tracking result through a coarse-to-fine search strategy using the confidence output of each stage. Specifically, the method comprises the following steps: 1, establishing a first-stage observation model (observation 1) using deep CNN features, so as to separate the target from the background and roughly position the target; 2, establishing a second-stage observation model (observation 2) using HOG features, and adjusting the target position; and 3, establishing a third-stage observation model (observation 3) using SIFT features, and finally positioning the target. The method improves tracking precision and robustness, and it tracks well under rapid target movement, background clutter and similar conditions.

Owner:PLA ARMY ACADEMY OF ARTILLERY AND AIR DEFENSE (中国人民解放军陆军炮兵防空兵学院)

A Deep Learning Detection Method for Dense Targets in Remote Sensing Images

Active · CN111126287B · Achieve positioning · Effective Convolutional Features · Climate change adaptation · Scene recognition · High density · Remote sensing

The invention discloses a deep learning detection method for extracting dense targets from remote sensing images. First, the image is input into the deep CNN base network to obtain a feature map. Second, the deep convolutional features are input into the dense object extraction framework for region of interest extraction (RPN branch), object classification and rectangular frame regression. For the RPN branch, a high-density bias sampler is proposed to mine more high-density samples (hard samples) and so improve detection performance. Soft‑NMS is applied after the dense object extraction framework to retain more positive objects. Finally, the refined rectangular frames are output so that the dense objects can be counted.

Owner:WUHAN UNIV
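The Soft‑NMS step mentioned in the abstract above can be sketched as follows. The abstract does not say which Soft‑NMS variant or parameters are used, so this minimal pure-Python version assumes the Gaussian score decay with illustrative values for `sigma` and `score_threshold`; boxes are assumed to be `(x1, y1, x2, y2)` tuples.

```python
import math


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Gaussian Soft-NMS: decay overlapping scores instead of discarding boxes.

    sigma and score_threshold are illustrative defaults, not values from the patent.
    Returns (box, score) pairs in the order they were selected.
    """
    candidates = list(zip(boxes, scores))
    kept = []
    while candidates:
        # Select the highest-scoring remaining box.
        best = max(range(len(candidates)), key=lambda i: candidates[i][1])
        best_box, best_score = candidates.pop(best)
        kept.append((best_box, best_score))
        # Decay every other score according to its overlap with the selected box.
        rescored = []
        for box, score in candidates:
            overlap = iou(best_box, box)
            score *= math.exp(-(overlap ** 2) / sigma)
            if score >= score_threshold:
                rescored.append((box, score))
        candidates = rescored
    return kept
```

With hard NMS at a typical IoU threshold of 0.5, a box that overlaps a stronger neighbour by about 0.68 would be deleted outright; here it survives with a reduced score, which is why Soft‑NMS tends to retain more true objects in densely packed scenes.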

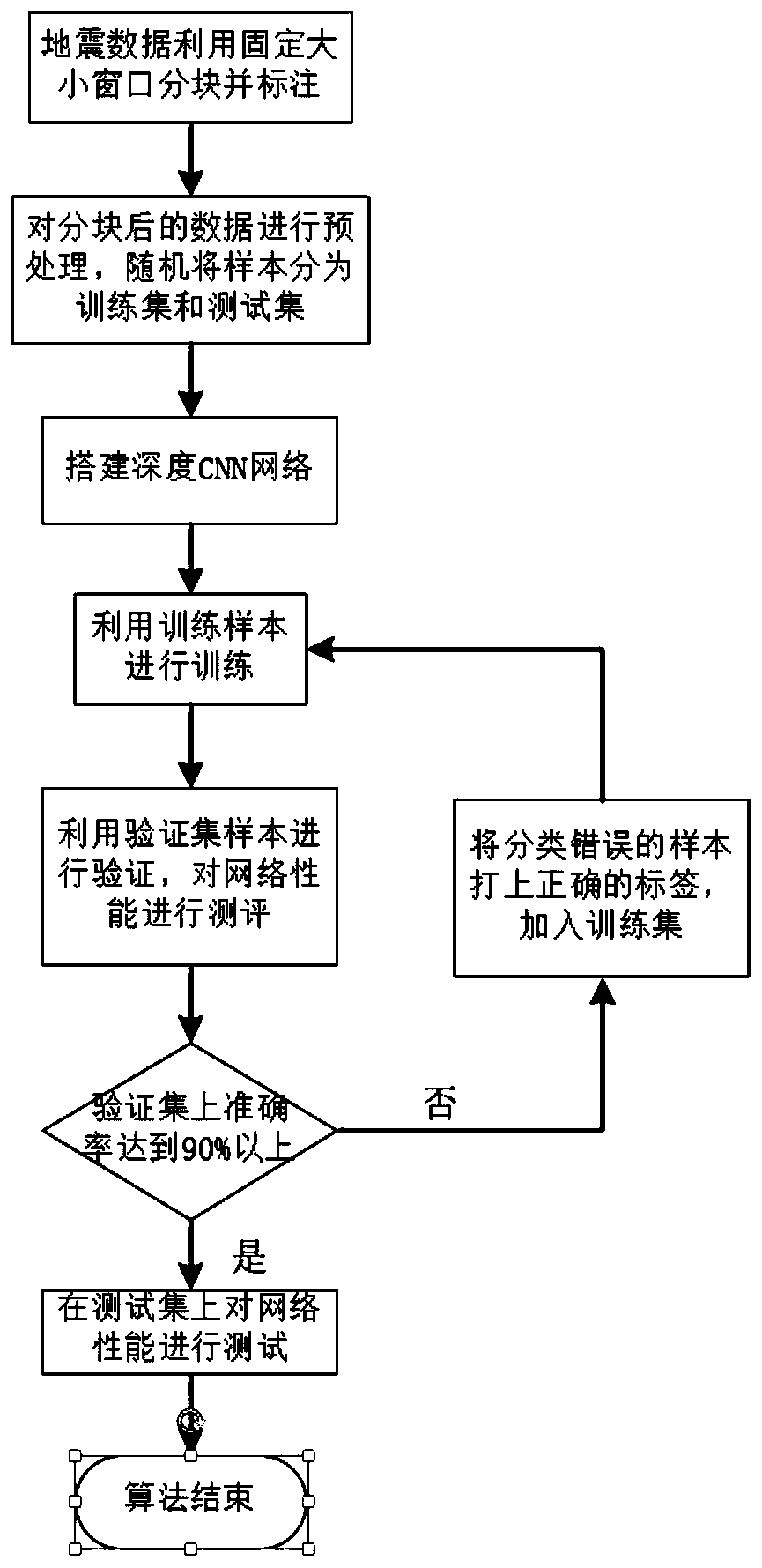

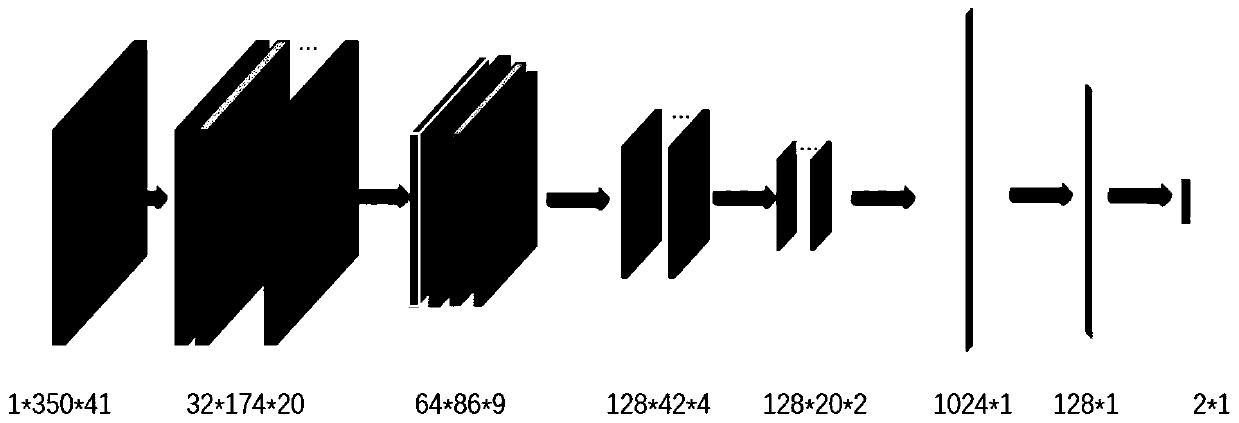

Pumping unit noise positioning method based on deep learning

Pending · CN110619383A · Improve intelligence · Save time and cost · Neural architectures · Test sample · Slide window

The invention discloses a pumping unit noise positioning method based on deep learning. The method comprises the following steps: S1, partitioning the seismic data by columns using single-step sliding of a sliding window to obtain a plurality of fixed-size local seismic trace gathers; if the number of seismic traces containing pumping unit noise in a gather reaches a threshold Th1, the gather is marked 1, and if that number is lower than a threshold Th2, the gather is marked 0; S2, preprocessing each local seismic trace gather by calculating its energy spectrum, applying mean filtering and then downsampling, and randomly selecting part of the data as a training set and taking the rest as a test set; S3, establishing a deep CNN network, training it with the training set obtained in step S2, and supplementing the training set with misclassified data for repeated training during the training process; S4, testing the CNN trained in step S3 with test samples, and quantitatively evaluating the positioning function of the CNN; and S5, estimating the width of the positioned pumping unit noise according to the positioning result of step S4.

Owner:CHINA PETROLEUM & CHEM CORP +1
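The labelling rule in step S1 of the abstract above can be sketched in a few lines of Python. The sketch assumes each seismic trace already carries a 0/1 flag saying whether it contains pumping unit noise, and it leaves windows whose noisy-trace count falls between Th2 and Th1 unlabelled (`None`), since the abstract does not say how those are treated; the function name and signature are hypothetical.

```python
def label_windows(trace_is_noisy, window_size, th1, th2):
    """Slide a window one trace at a time and label each local trace gather.

    trace_is_noisy: sequence of 0/1 flags, one per seismic trace (assumed input).
    Returns (start_index, label) pairs with label 1, 0, or None (assumed: undecided).
    """
    labels = []
    for start in range(len(trace_is_noisy) - window_size + 1):
        noisy_count = sum(trace_is_noisy[start:start + window_size])
        if noisy_count >= th1:
            label = 1      # enough noisy traces: gather contains pumping unit noise
        elif noisy_count < th2:
            label = 0      # too few noisy traces: gather counts as clean
        else:
            label = None   # between Th2 and Th1: not specified in the abstract
        labels.append((start, label))
    return labels
```

For example, `label_windows([0, 0, 0, 1, 1, 1], window_size=3, th1=2, th2=1)` yields one clean window, one undecided window and two noisy windows.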

A deep learning-based image recognition method for leaf diseases of medicinal plants

Active · CN110717451B · Increase diversity · Improve computing efficiency · Image enhancement · Geometric image transformation · Pattern recognition · Biology

The invention discloses a method for identifying images of leaf diseases of medicinal plants based on deep learning, and relates to the technical field of protection against leaf diseases of medicinal plants. The method includes: collecting several images of leaf diseases of medicinal plants; enhancing the images; uniformly resizing each enhanced image to 299x299; training a deep CNN model that includes a serial convolution-pooling network, an Inception‑I network, an average pooling network, a Dropout layer and a Softmax layer, where the last two convolutional layers of the serial convolution-pooling network are depthwise separable convolutional layers and the Inception‑I network includes a random pooling layer; and identifying the leaf disease image of each medicinal plant, where the identification result is the type of disease on the leaf and the leaf diseases are classified based on that result. The identification method can effectively assist planters in diagnosing diseases and improve diagnosis efficiency.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

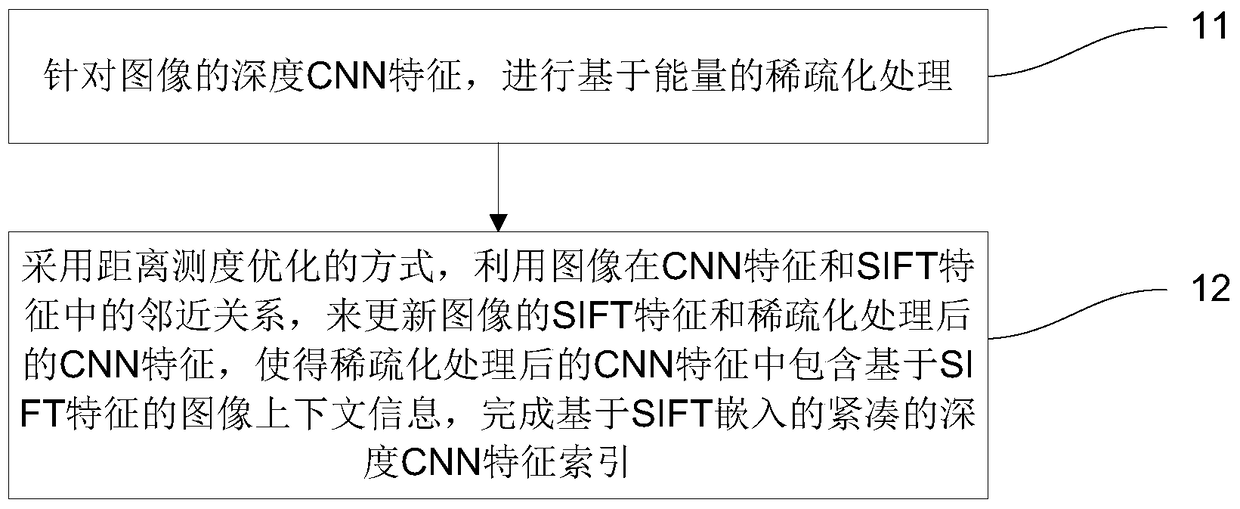

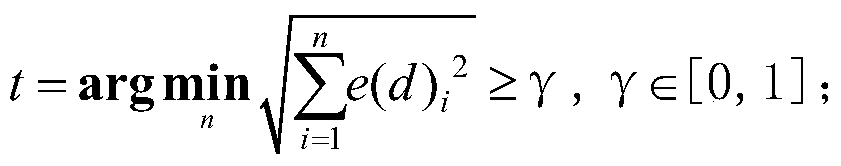

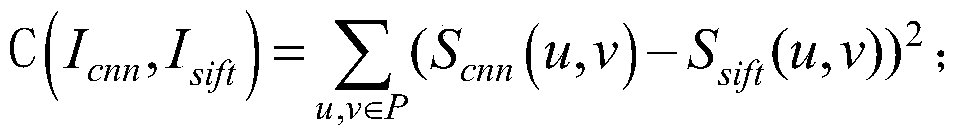

A compact deep CNN feature indexing method based on SIFT embeddings

Active · CN105022836B · Improve sparsity · Easy maintenance · Character and pattern recognition · Still image data indexing · Pattern recognition · Energy based

The invention discloses a compact deep CNN feature indexing method based on SIFT embedding. The method includes: performing energy-based sparse processing on the deep CNN features of images; and adopting a distance measurement optimization method that uses the images' CNN features and SIFT features to update both the SIFT features and the sparsified CNN features, so that the sparsified CNN features contain image context information based on SIFT features, thereby completing the compact deep CNN feature index based on SIFT embedding. The method efficiently stores image features for very large databases and effectively reduces the time required for online retrieval.

Owner:UNIV OF SCI & TECH OF CHINA


© 2025 PatSnap. All rights reserved.