260 results about "Voice Training" patented technology

A variety of techniques used to help individuals utilize their voice for various purposes and with minimal use of muscle energy.

Speech training method with alternative proper pronunciation database

Inactive | US6963841B2 | Improving speech pattern | Speech recognition | Electrical appliances | Speech training | User input

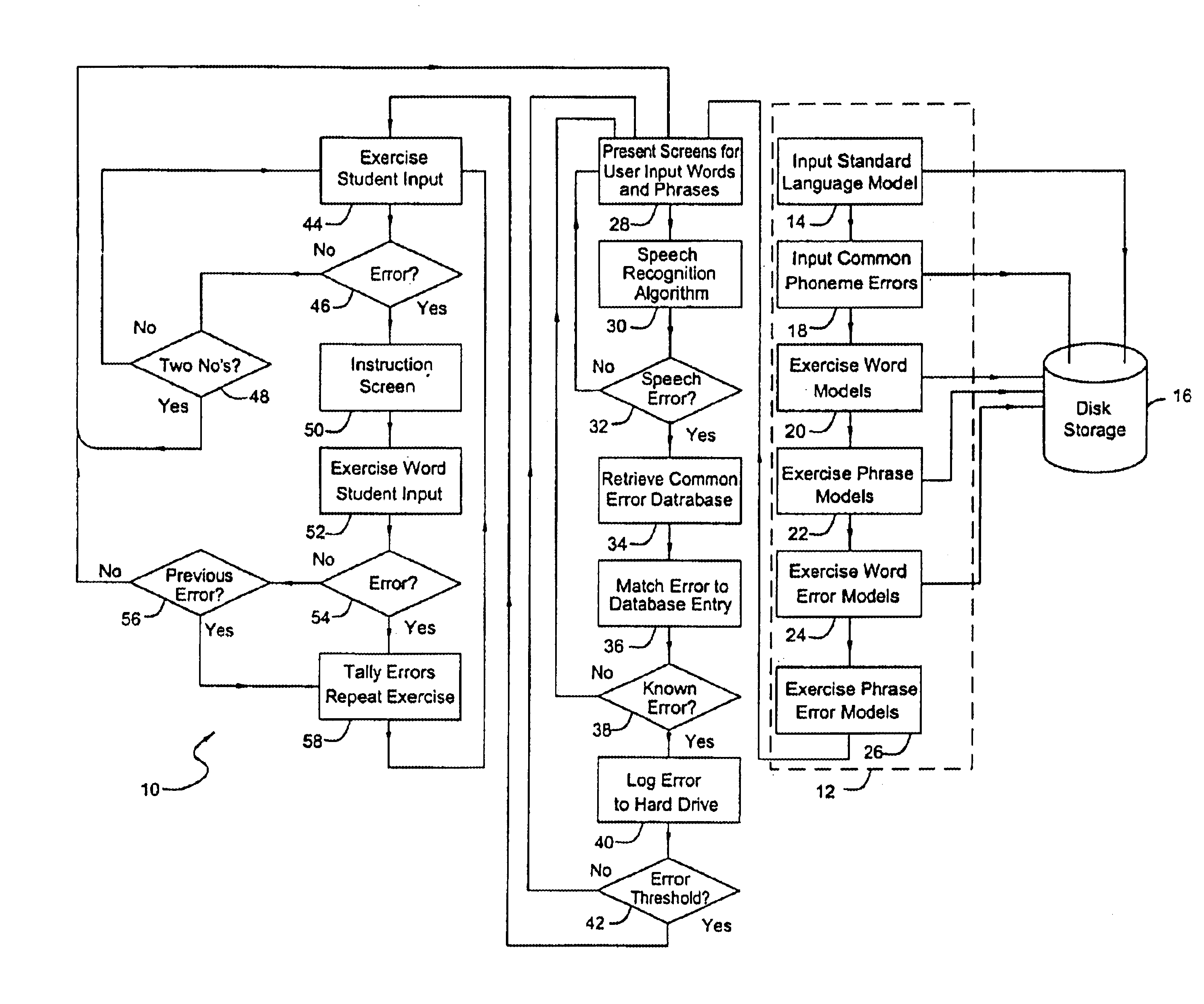

In accordance with the present invention, a speech training system is disclosed. It uses a microphone to receive audible sounds input by a user into a first computing device having a program with a database consisting of (i) digital representations of known audible sounds and associated alphanumeric representations of the known audible sounds, and (ii) digital representations of known audible sounds corresponding to mispronunciations resulting from known classes of mispronounced words and phrases. The method is performed by receiving the audible sounds in the form of the electrical output of the microphone. A particular audible sound to be recognized is converted into a digital representation of the audible sound. The digital representation of the particular audible sound is then compared to the digital representations of the known audible sounds to determine which of the known audible sounds in the database is most likely to be the particular audible sound. In response to a determination of error corresponding to a known type or instance of mispronunciation, the system presents an interactive training program from the computer to the user to enable the user to correct such mispronunciation.

Owner:LESSAC TECH INC

Method and apparatus for providing voice recognition service to a wireless communication device

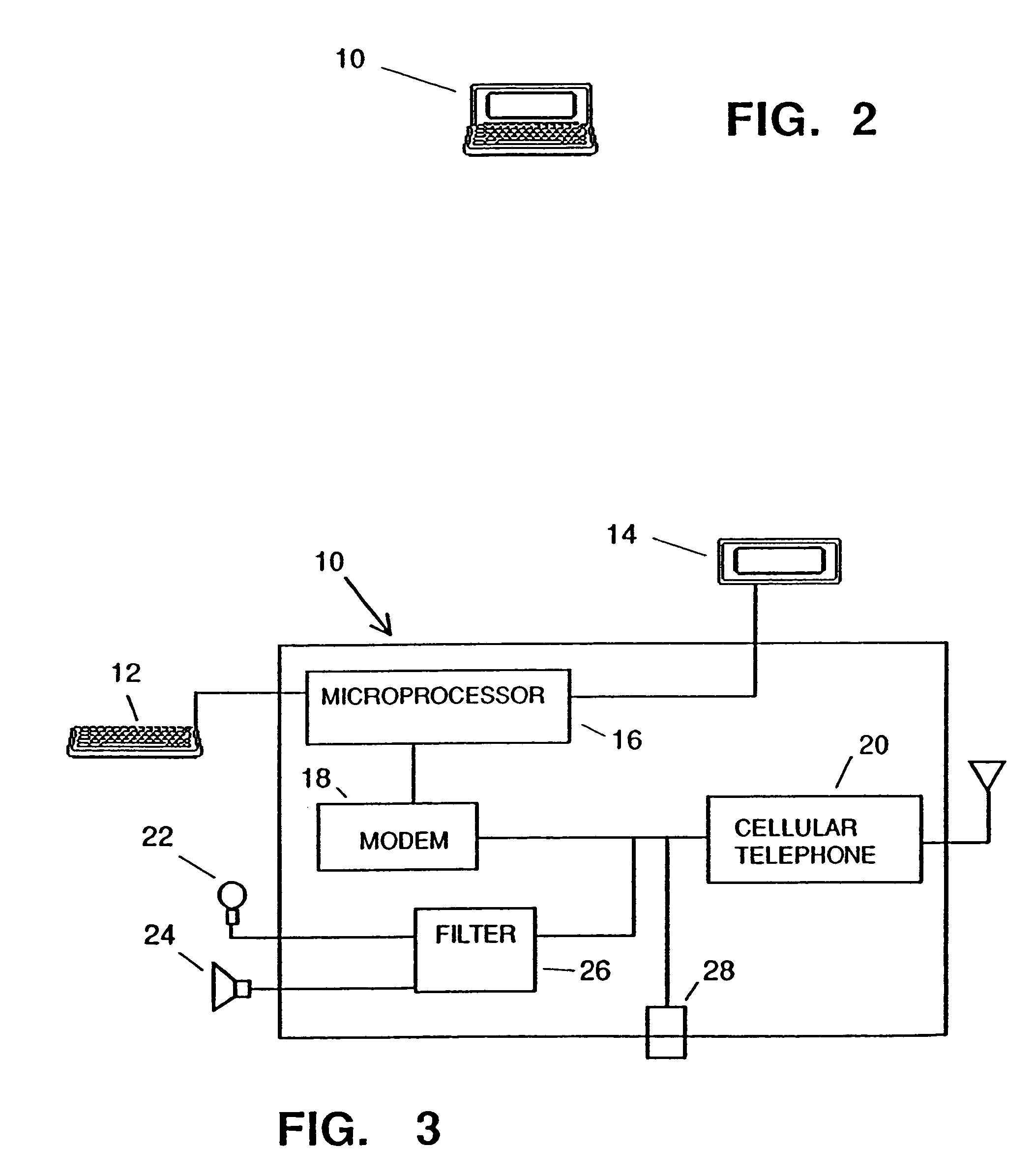

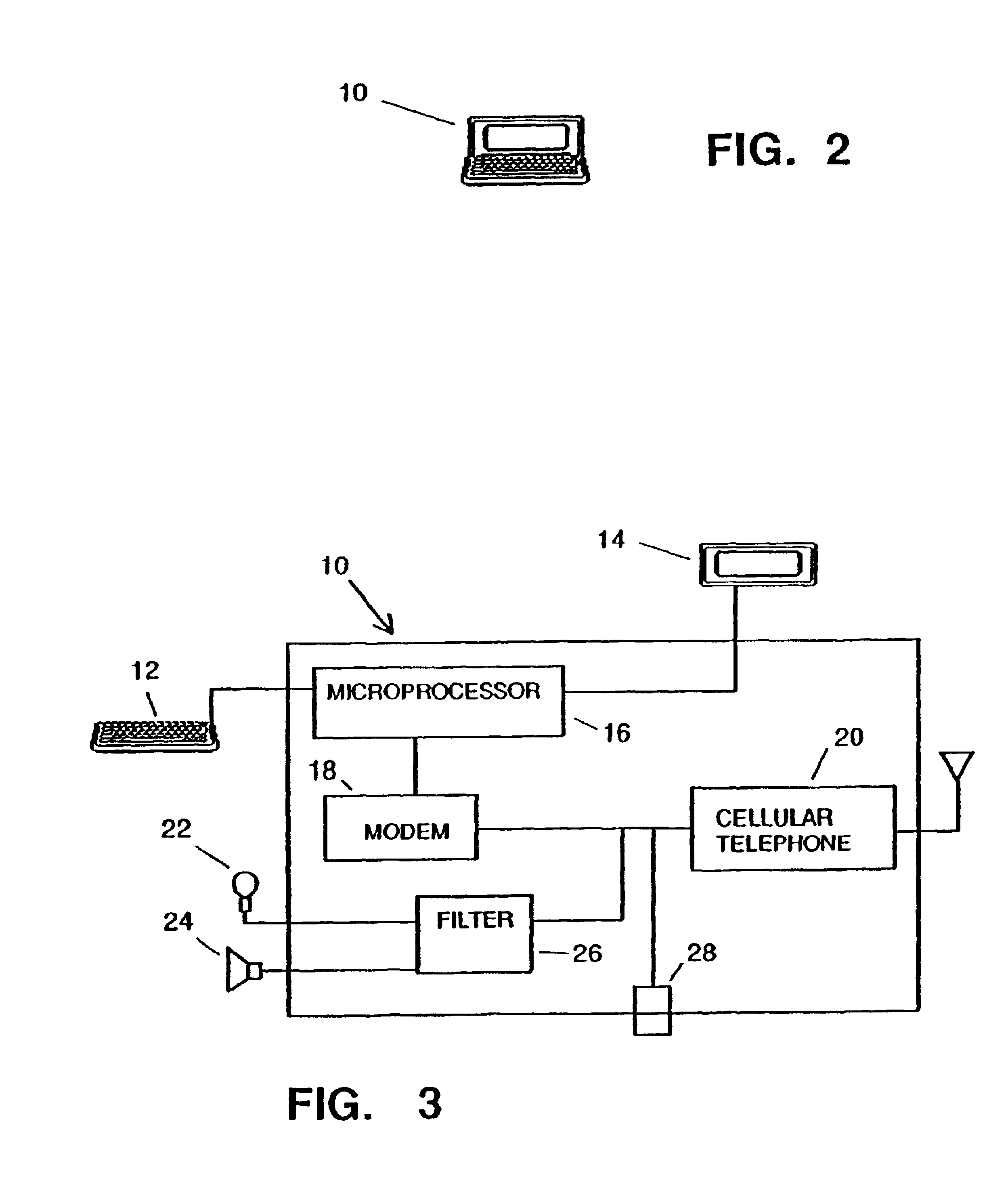

Inactive | US20020178003A1 | Devices with voice recognition | Substation equipment | Communications system | Context model

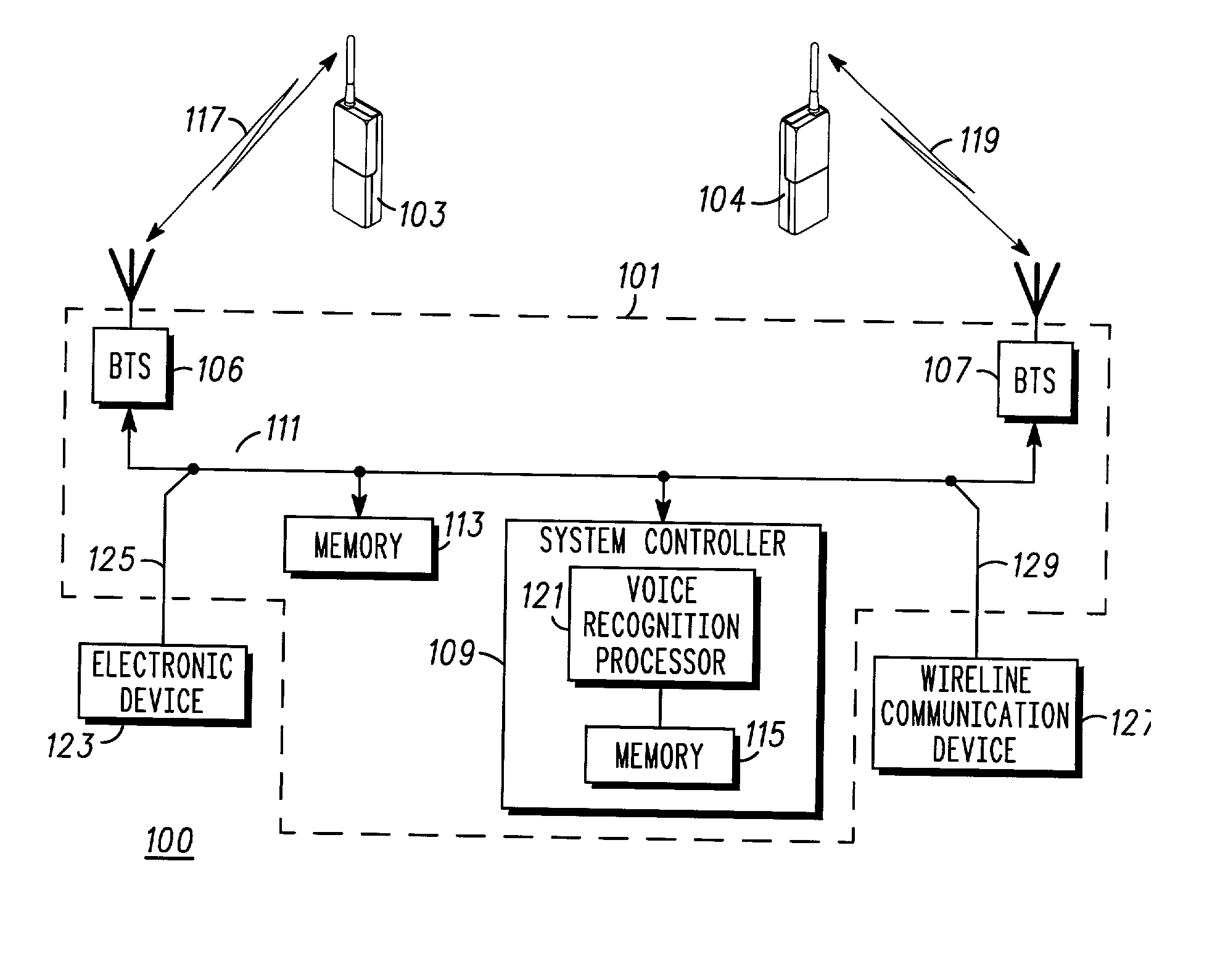

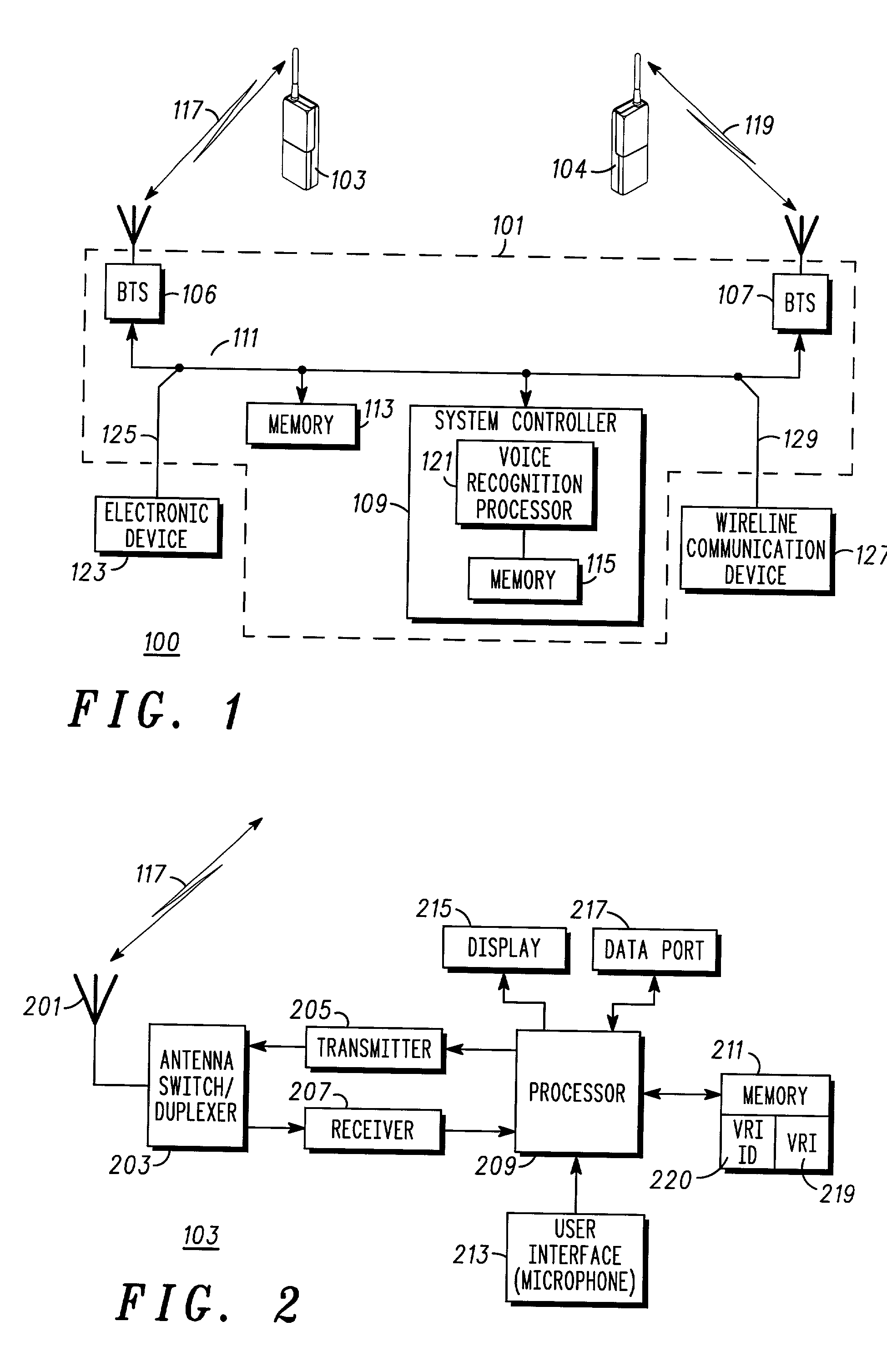

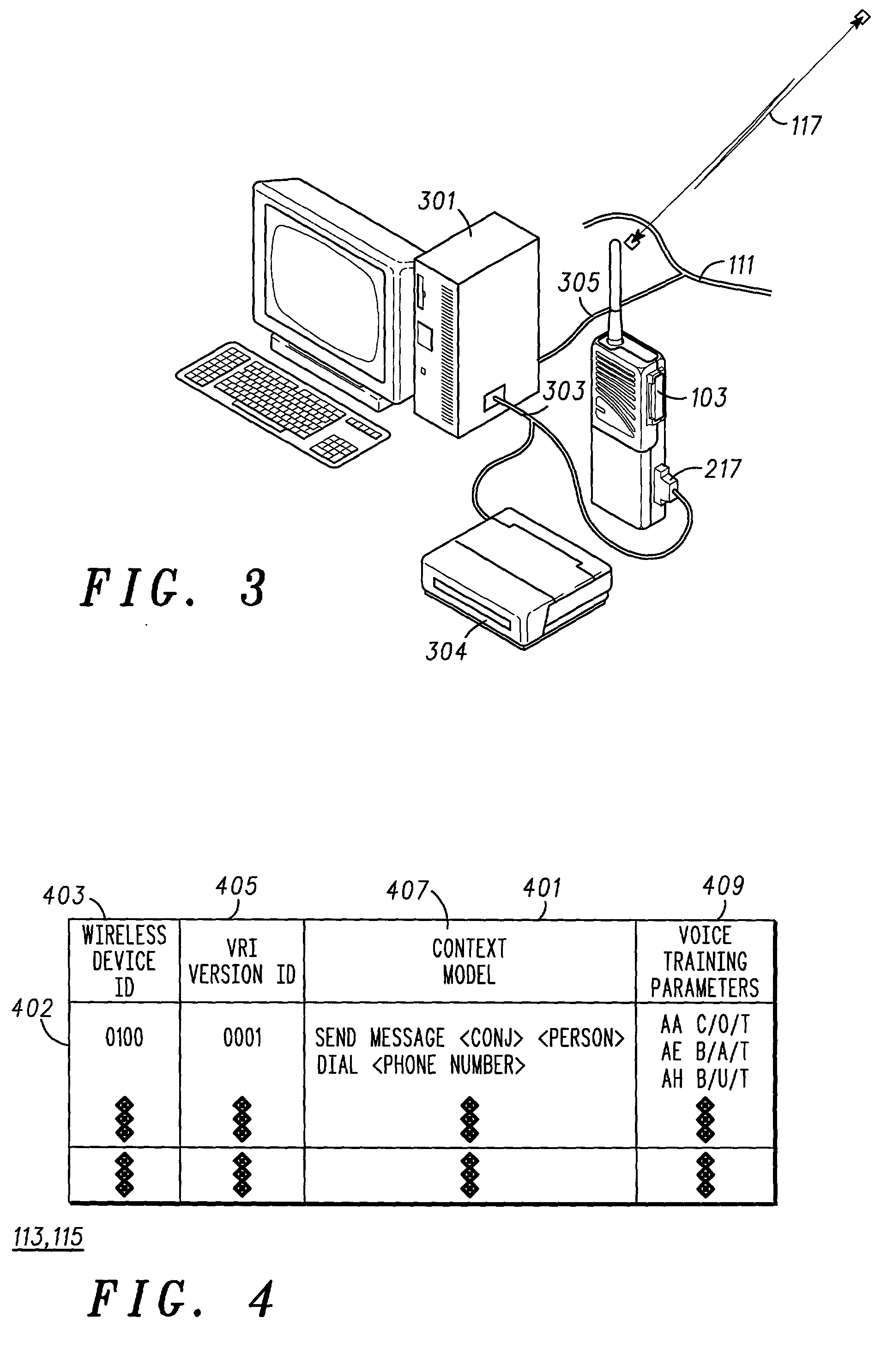

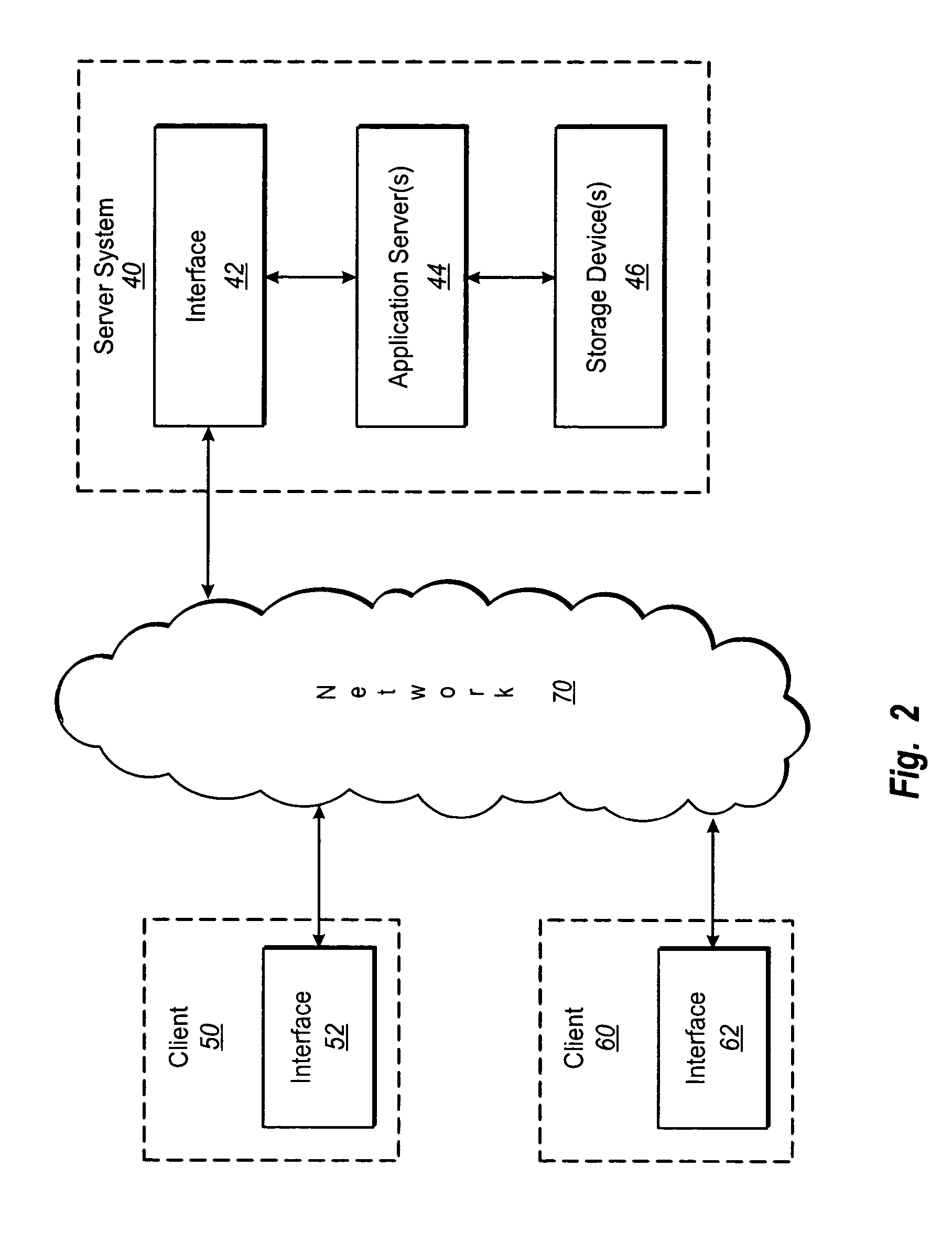

A wireless communication system employs a method and apparatus for providing voice recognition service to a wireless communication device. Voice recognition information (e.g., a context model and voice training parameters) is generated by a wireless device user and stored in a memory (e.g., a SIM card) of the wireless device to form one portion of a voice recognition processing engine. Another portion of the voice recognition processing engine (e.g., a voice recognition processor and operating software therefor) is implemented in a wireless system infrastructure of the wireless communication system. The wireless device transmits the voice recognition information to the system infrastructure preferably upon request for such information by the system infrastructure. The system infrastructure then uses both portions of the voice recognition processing engine to provide voice recognition service to the wireless device and its user during operation of the wireless device.

Owner:MOTOROLA INC

Relay for personal interpreter

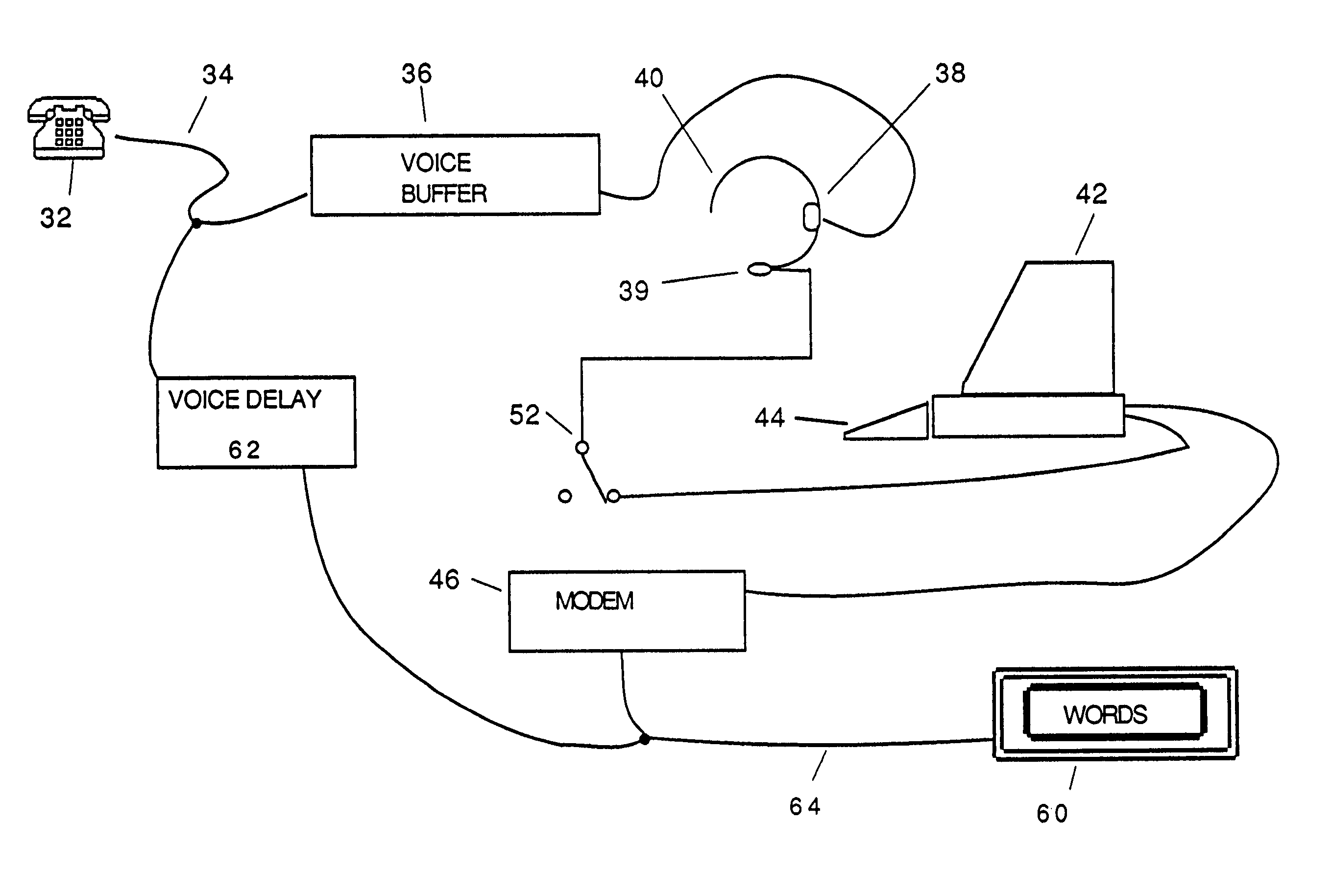

Inactive | US7006604B2 | Fast communication speed | Valve arrangements | Automatic call-answering/message-recording/conversation-recording | Voice communication | Typing

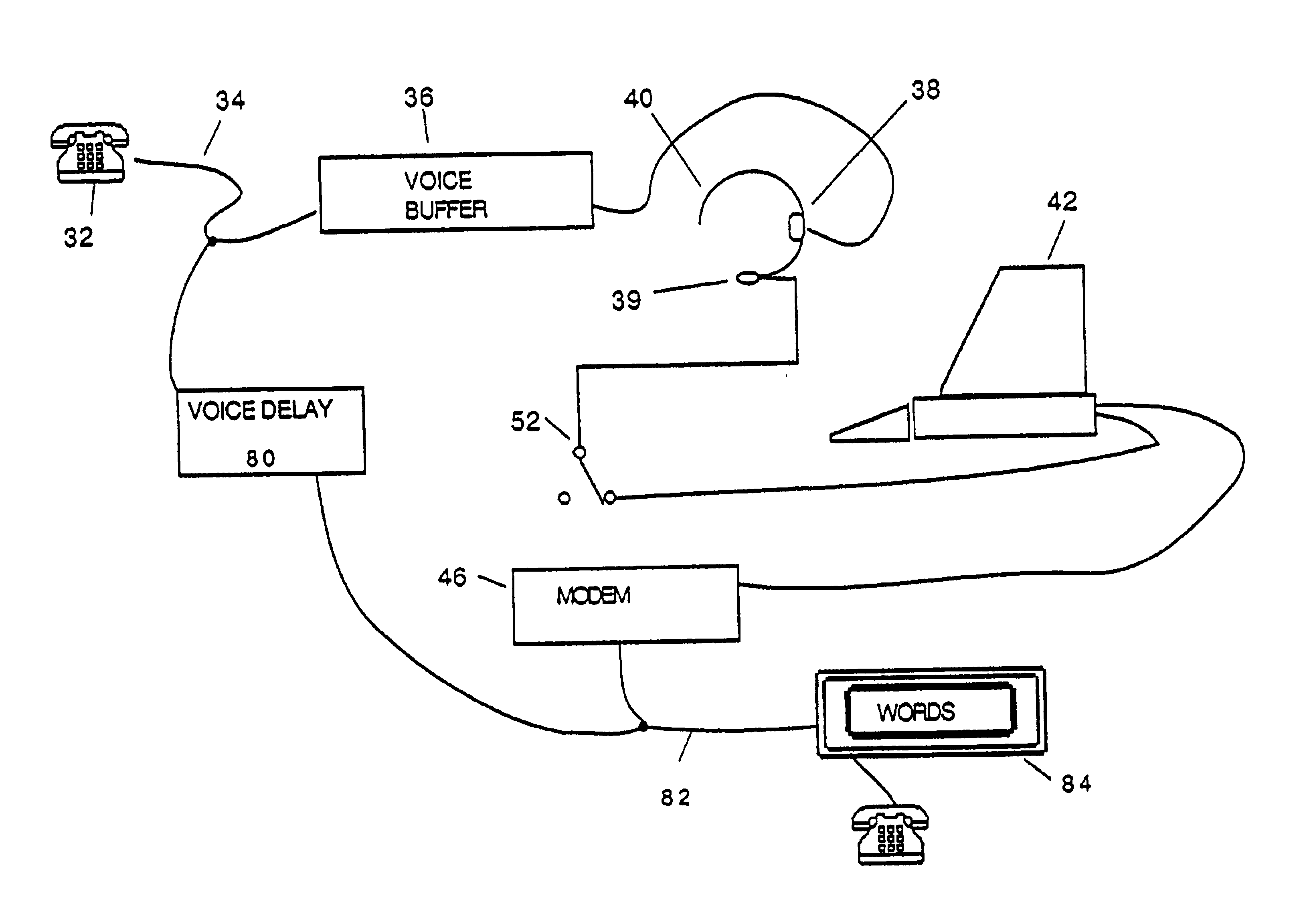

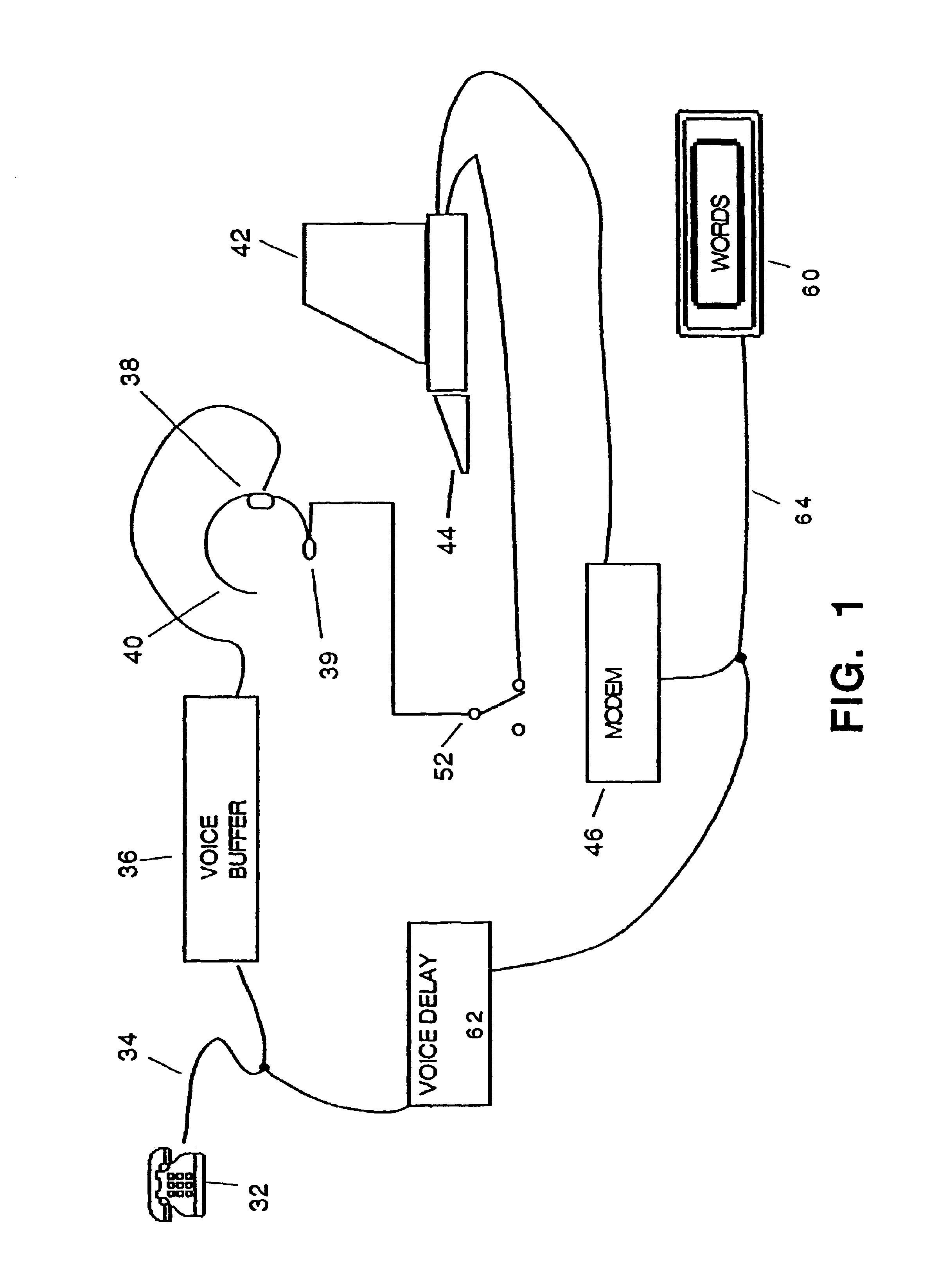

A relay is described to facilitate communication through the telephone system between hearing users and users who need or desire assistance in understanding voice communications. To overcome the speed limitations inherent in typing, the call assistant at the relay does not type most words but, instead, re-voices the words spoken by the hearing user into a computer operating a voice recognition software package trained to the voice of that call assistant. The text stream created by the computer and the voice of the hearing user are both sent to the assisted user so that the assisted user can be supplied with a visual text stream to supplement the voice communications. A time delay in the transmission of the voice of the hearing user through the relay is of assistance to the assisted user in comprehending the communications session.

Owner:ULTRATEC INC

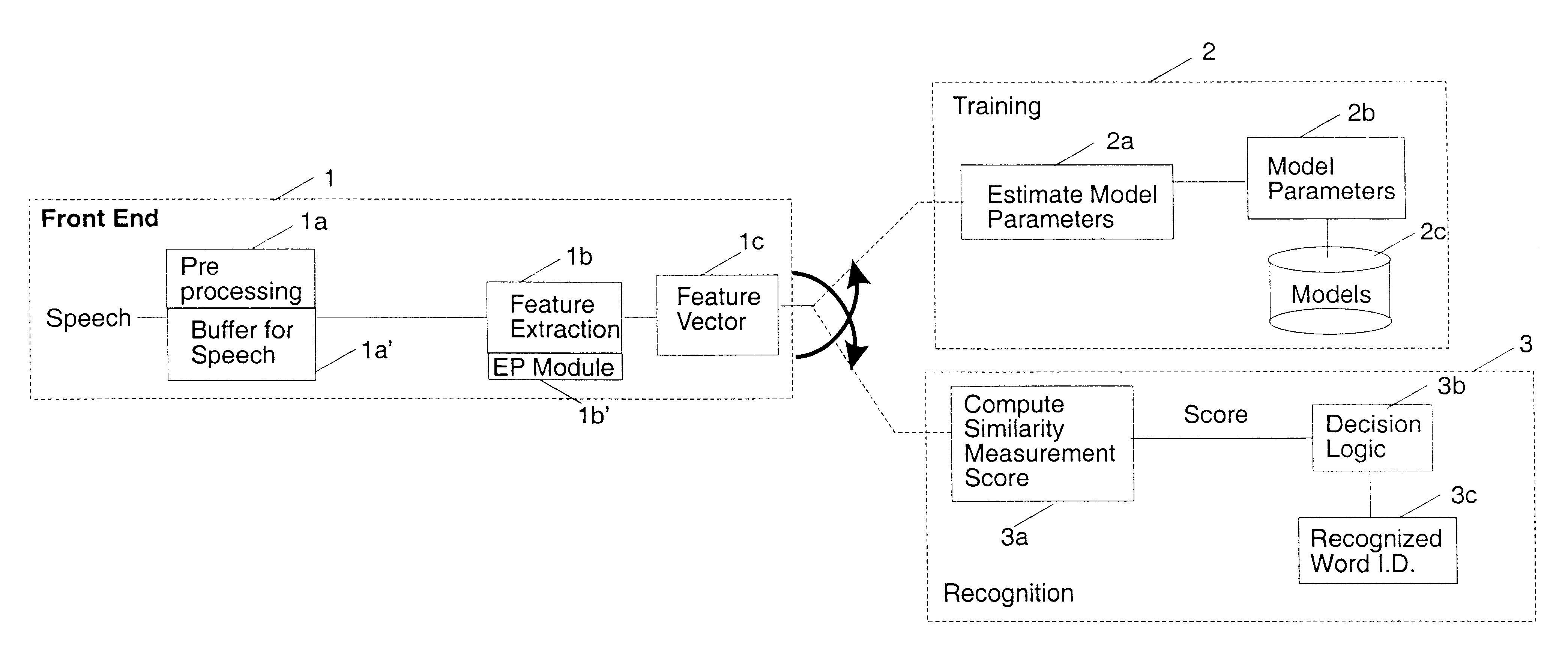

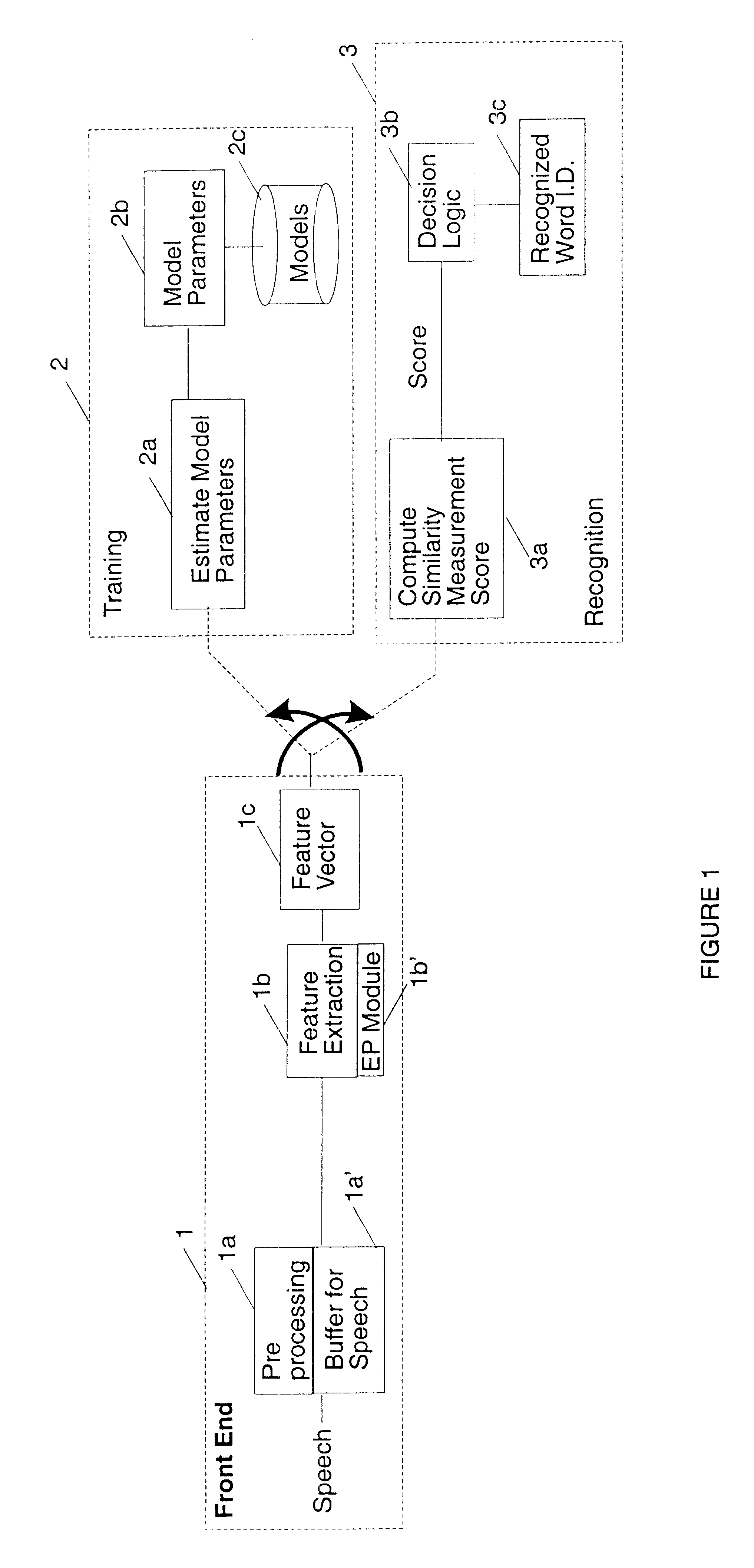

Smart training and smart scoring in SD speech recognition system with user defined vocabulary

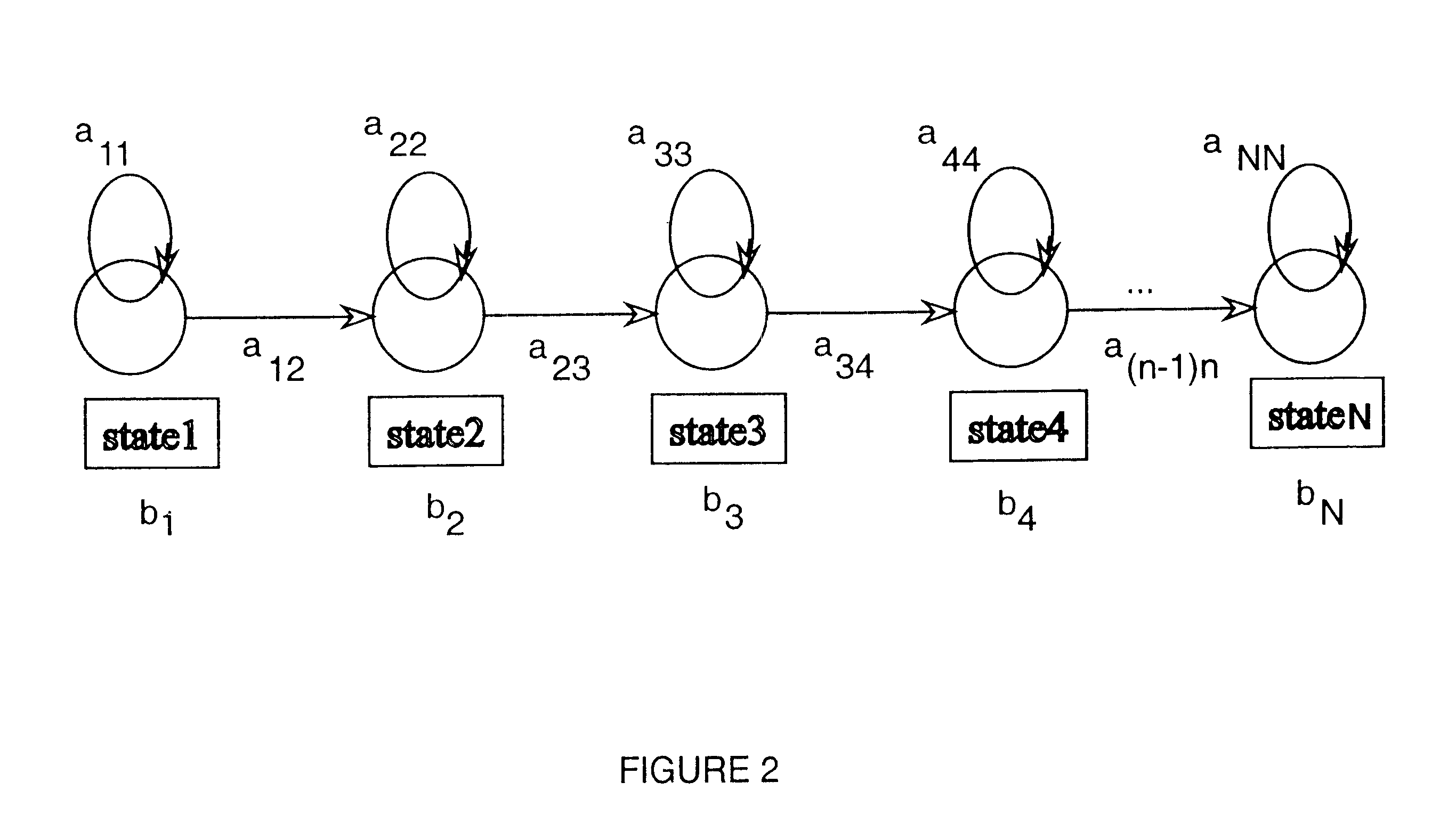

In a speech training and recognition system, the invention detects vocabulary entries that sound similar to existing ones and warns the user. It still permits entry of such confusingly similar terms, which are marked together with the stored similar terms so that the similar words can be identified. In addition, the states in similar words are weighted to place more emphasis on the differences between the words than on their similarities. Another aspect of the invention uses a modified scoring algorithm to improve recognition performance when confusing entries were added to the vocabulary despite the warning. Yet another aspect detects and warns the user about potential problems with new entries, such as short words and entries of two or more words with long silence periods between the words. Finally, the invention alerts the user when multiple tokens of the same vocabulary item are dissimilar during multiple-token training.

Owner:WIAV SOLUTIONS LLC

Relay for personal interpreter

Inactive | US6934366B2 | Fast communication speed | Increase speed | Cordless telephones | Automatic call-answering/message-recording/conversation-recording | Voice communication | Typing

A relay is described to facilitate communication through the telephone system between hearing users and users who need or desire assistance in understanding voice communications. To overcome the speed limitations inherent in typing, the call assistant at the relay does not type most words but, instead, re-voices the words spoken by the hearing user into a computer operating a voice recognition software package trained to the voice of that call assistant. The text stream created by the computer and the voice of the hearing user are both sent to the assisted user so that the assisted user can be supplied with a visual text stream to supplement the voice communications. A time delay in the transmission of the voice of the hearing user through the relay is of assistance to the assisted user in comprehending the communications session.

Owner:ULTRATEC INC

Speech recognition and voice training data storage and access methods and apparatus

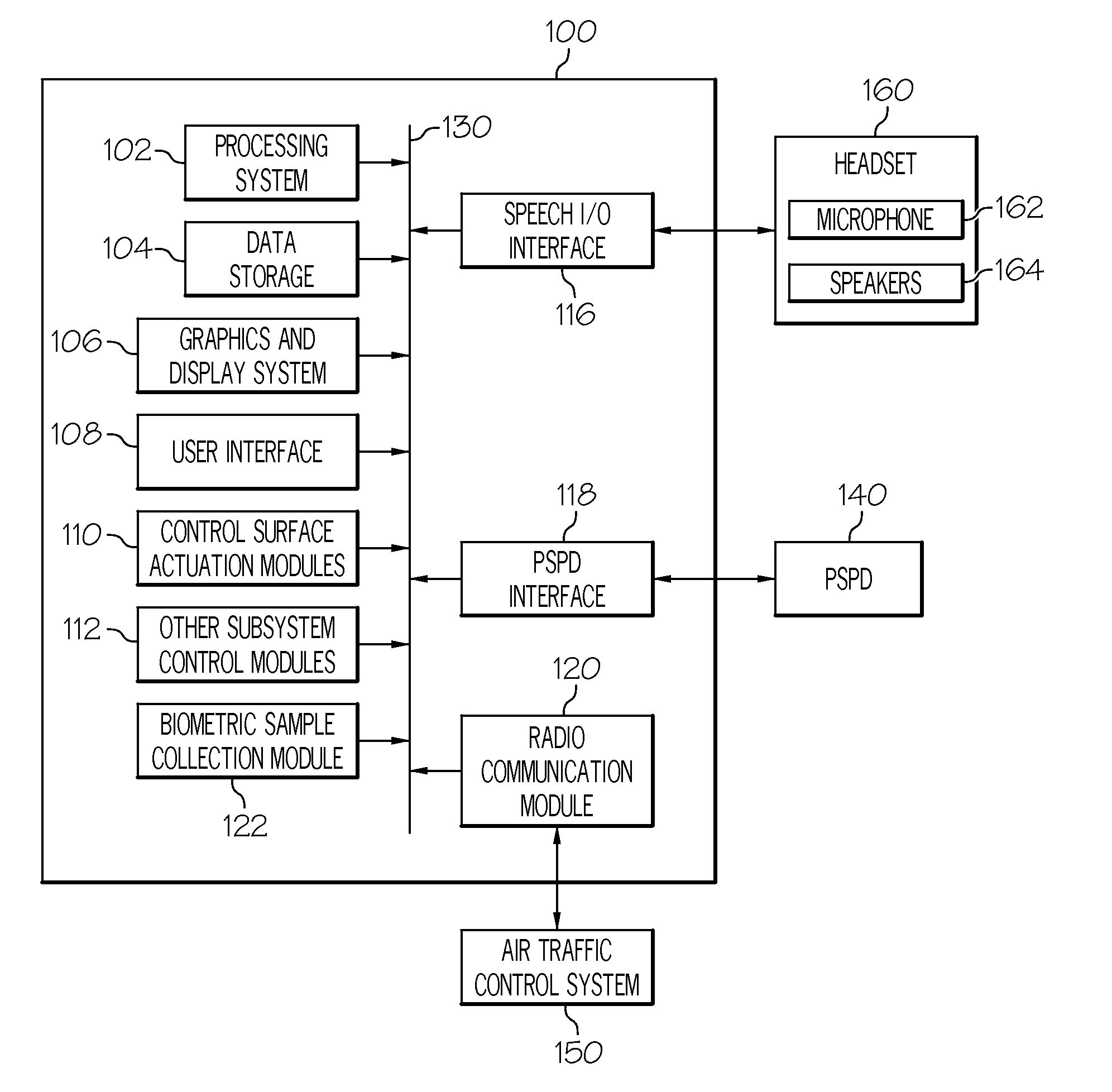

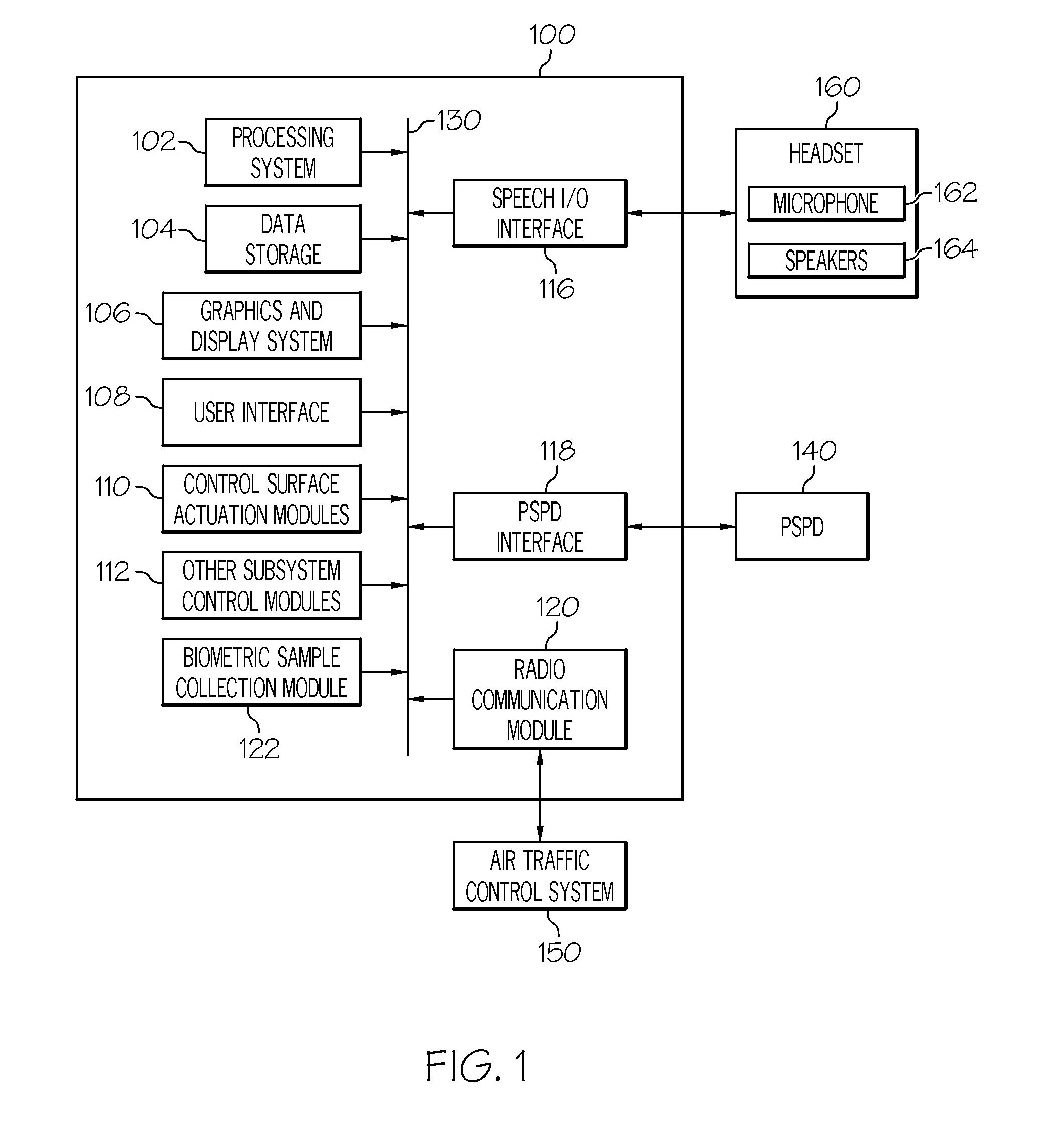

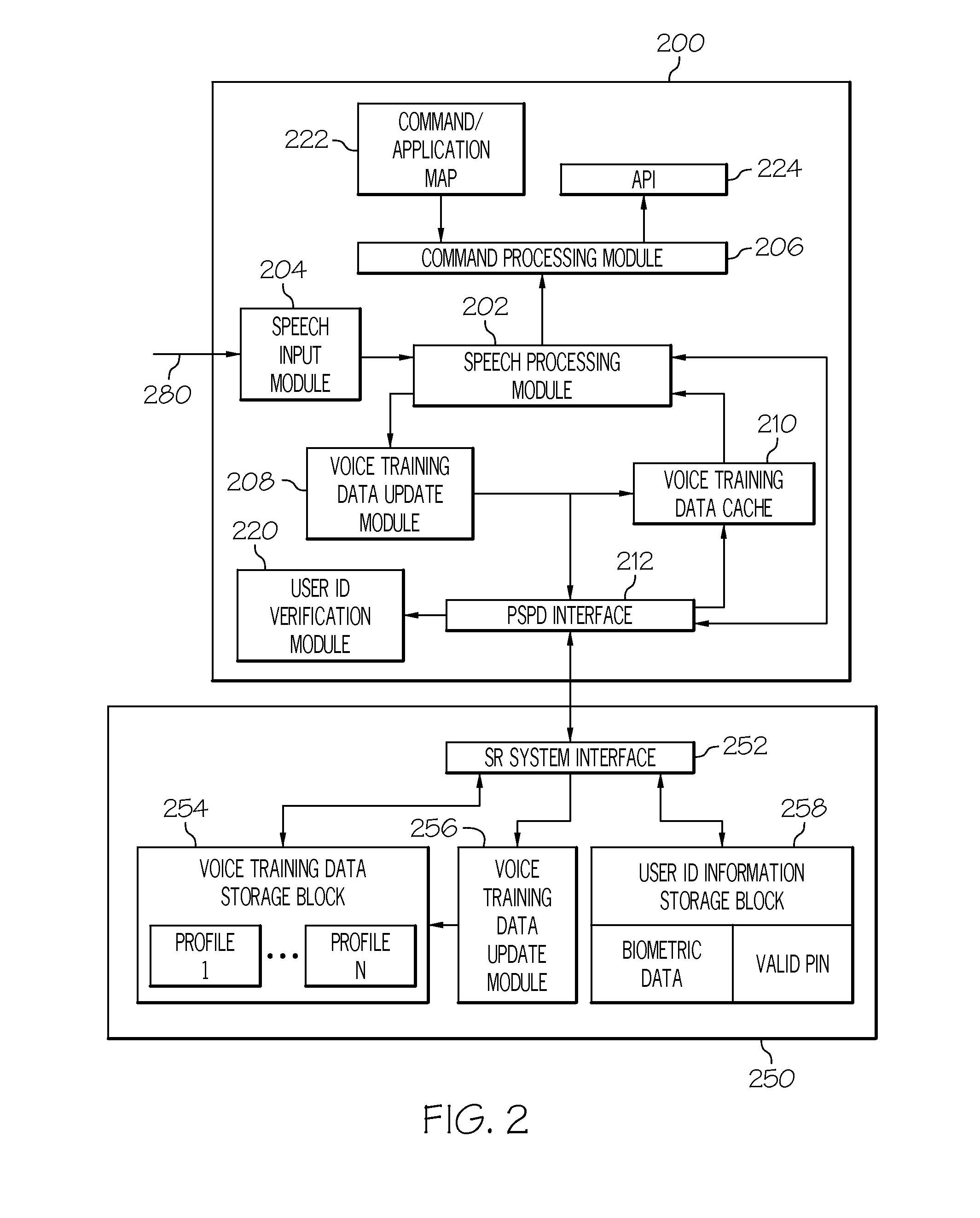

Embodiments include a speech recognition system and a personal speech profile data (PSPD) storage device that is physically distinct from the speech recognition system. In the speech recognition system, a PSPD interface receives voice training data, which is associated with an individual, from the PSPD storage device. A speech input module produces a digital speech signal derived from an utterance made by a system user. A speech processing module accesses voice training data stored on the PSPD storage device through the PSPD interface, and executes a speech processing algorithm that analyzes the digital speech signal using the voice training data, in order to identify one or more recognized terms from the digital speech signal. A command processing module initiates execution of various applications based on the recognized terms. Embodiments may be implemented in various types of host systems, including an aircraft cockpit-based system.

Owner:HONEYWELL INT INC

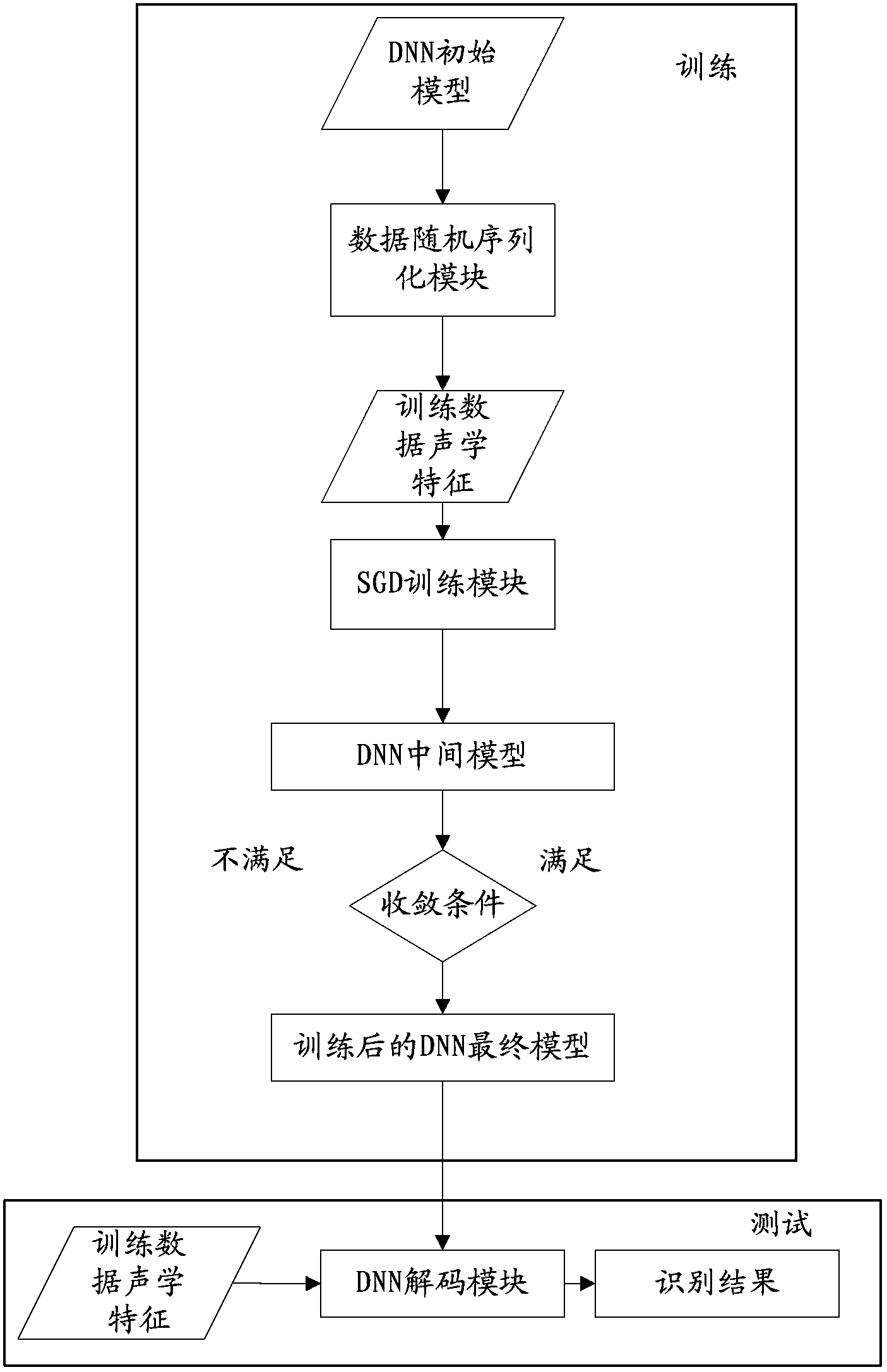

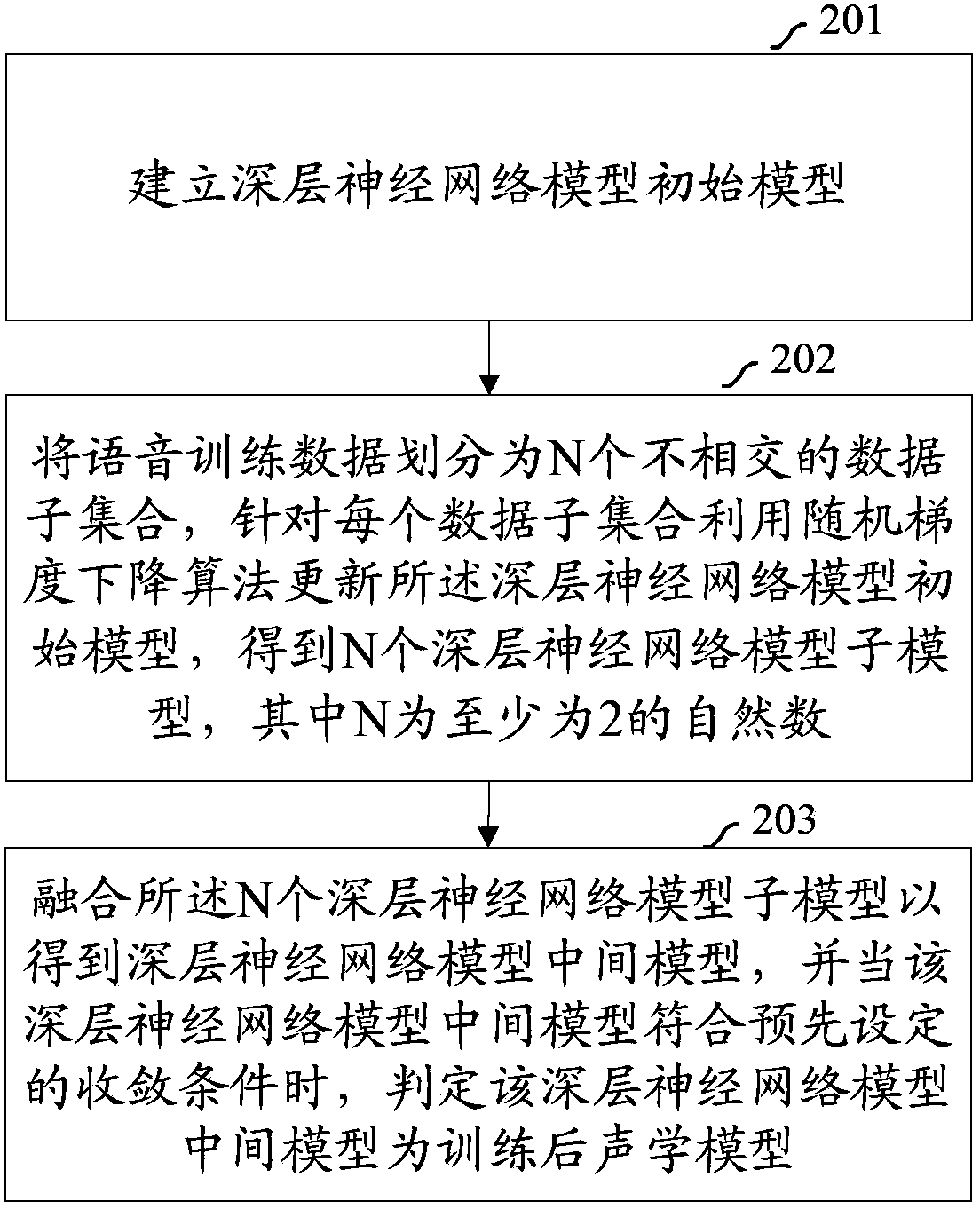

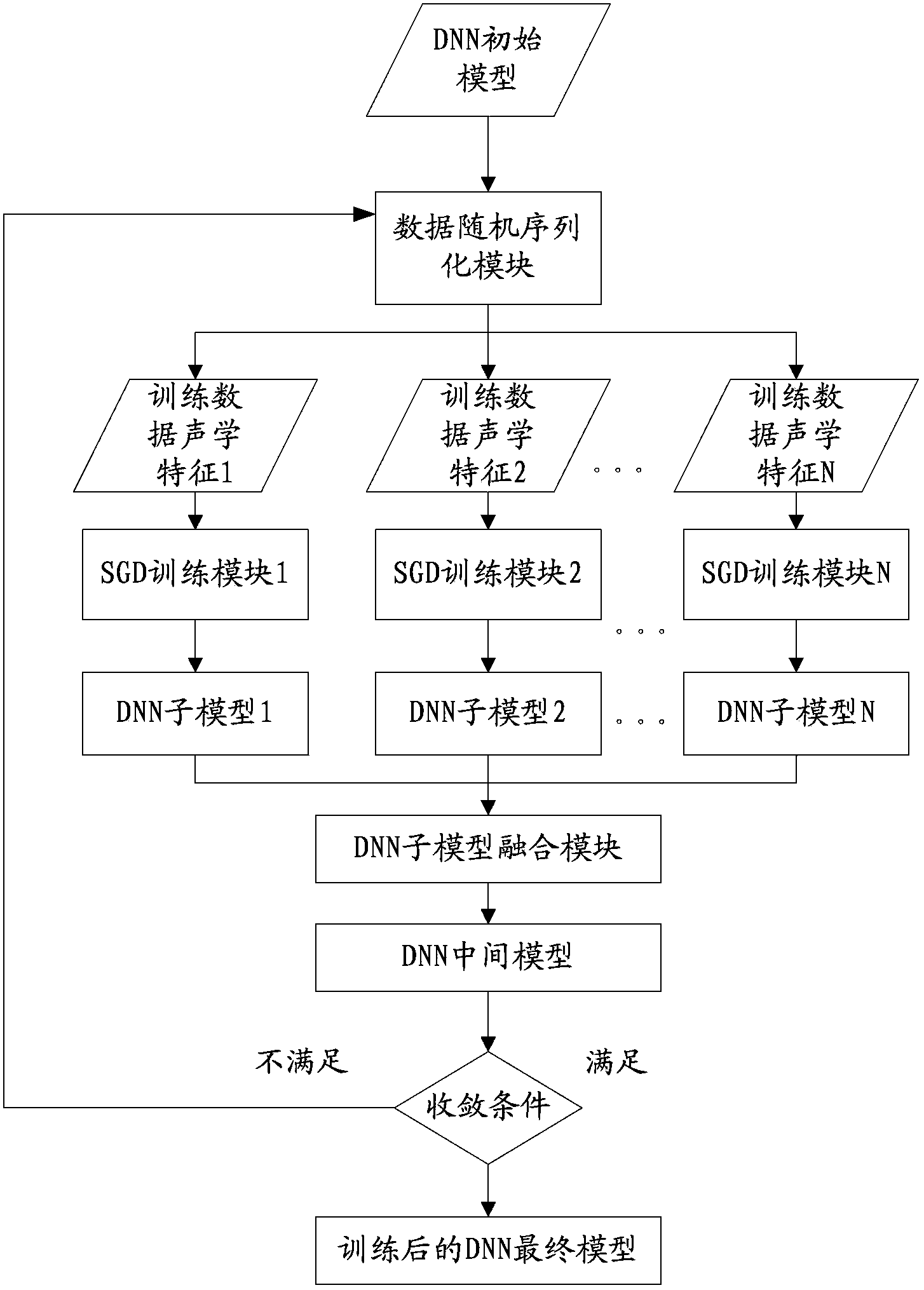

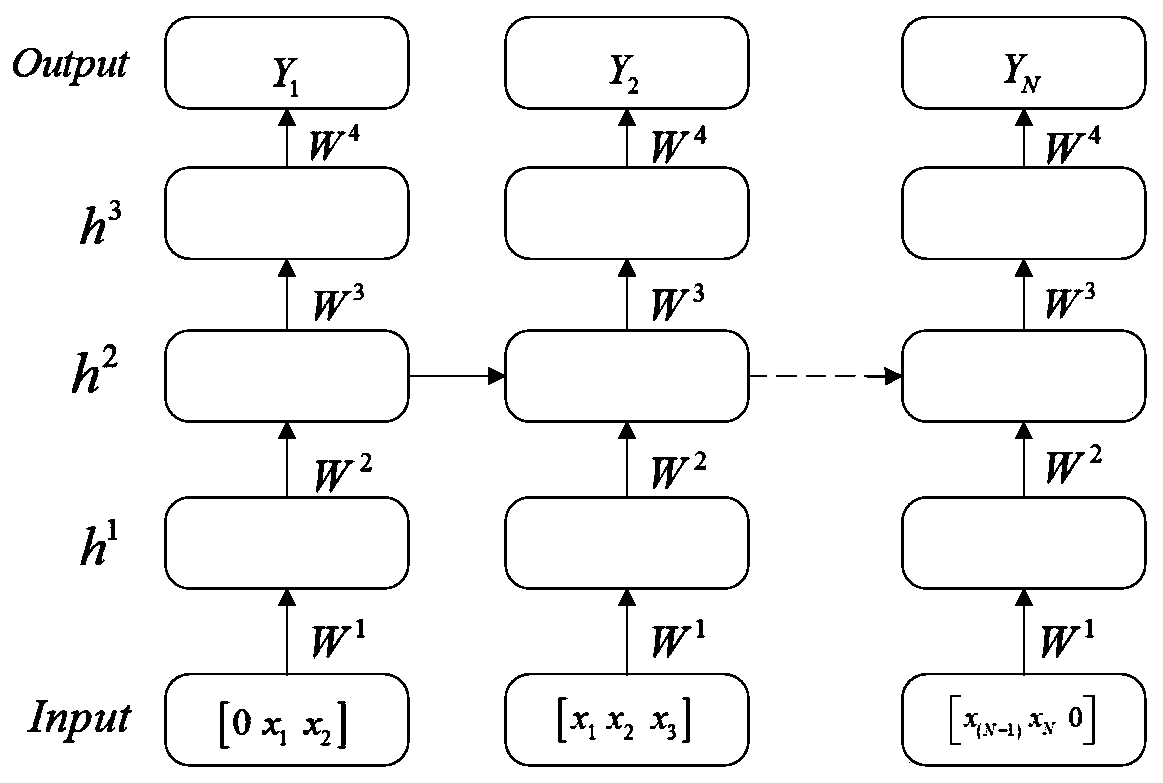

Acoustic model training method and device

Active | CN104143327A | Reduce bias | Improve speech recognition performance | Speech recognition | Stochastic gradient descent | Algorithm

The embodiment of the invention provides an acoustic model training method and device. The method includes the steps of: establishing an initial deep neural network model; dividing voice training data into N non-intersecting data subsets and updating the initial deep neural network model on each data subset by means of a stochastic gradient descent algorithm to obtain N deep neural network sub-models, where N is an integer greater than or equal to 2; integrating the N sub-models to obtain an intermediate deep neural network model; and judging the intermediate model to be the trained acoustic model when it conforms to preset convergence conditions. The method and device improve the training efficiency of the acoustic model without reducing voice recognition performance.

Owner:TENCENT CLOUD COMPUTING BEIJING CO LTD
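
The split, train and integrate loop in this abstract can be illustrated with a toy model. The following is a minimal sketch only: it swaps the deep neural network for a small linear model, assumes the sub-models are integrated by plain parameter averaging, and assumes "convergence" means the merged parameters stop changing. None of these choices is stated in the abstract, and all function names are hypothetical.

```python
def sgd_pass(weights, subset, lr=0.1):
    """One stochastic-gradient-descent pass over a subset for a toy linear model y = w . x."""
    w = list(weights)
    for x, y in subset:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


def split_disjoint(data, n):
    """Divide the training data into n non-intersecting subsets (round-robin)."""
    return [data[i::n] for i in range(n)]


def average_models(models):
    """Integrate sub-models by element-wise averaging (an assumed merge rule)."""
    return [sum(ws) / len(models) for ws in zip(*models)]


def train(data, dim, n_subsets=2, tol=1e-6, max_rounds=200):
    """Repeat: update one sub-model per subset, merge, then test for convergence."""
    model = [0.0] * dim  # initial model
    subsets = split_disjoint(data, n_subsets)
    for _ in range(max_rounds):
        sub_models = [sgd_pass(model, s) for s in subsets]
        merged = average_models(sub_models)
        change = max(abs(a - b) for a, b in zip(merged, model))
        model = merged
        if change < tol:  # assumed convergence condition
            break
    return model
```

Because each subset is processed independently from the same starting model, the `sgd_pass` calls could run in parallel, which is where the efficiency gain described in the abstract would come from.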

System and method for speech therapy

The present disclosure relates to speech therapy and voice training methods. A system is provided which utilizes tonal and rhythm visualization components to allow a person to “see” their words as they attempt to improve or regain their ability to speak clearly. The system is also applicable to vocal music instruction and allows students to improve their singing abilities by responding to visual feedback which incorporates both color and shape. The system may comprise a step-by-step instruction method, live performance abilities, and recording and playback features. Certain embodiments incorporate statistical analysis of student progress, remote access for teacher consultation, and video games for enhancing student interest.

Owner:MASTER KEY LLC

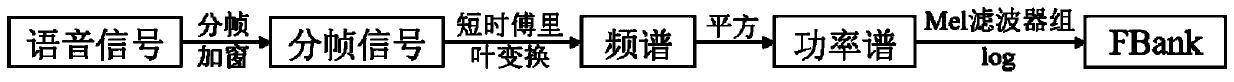

Method for recognizing speaker based on conversion of neutral and emotional voiceprint models

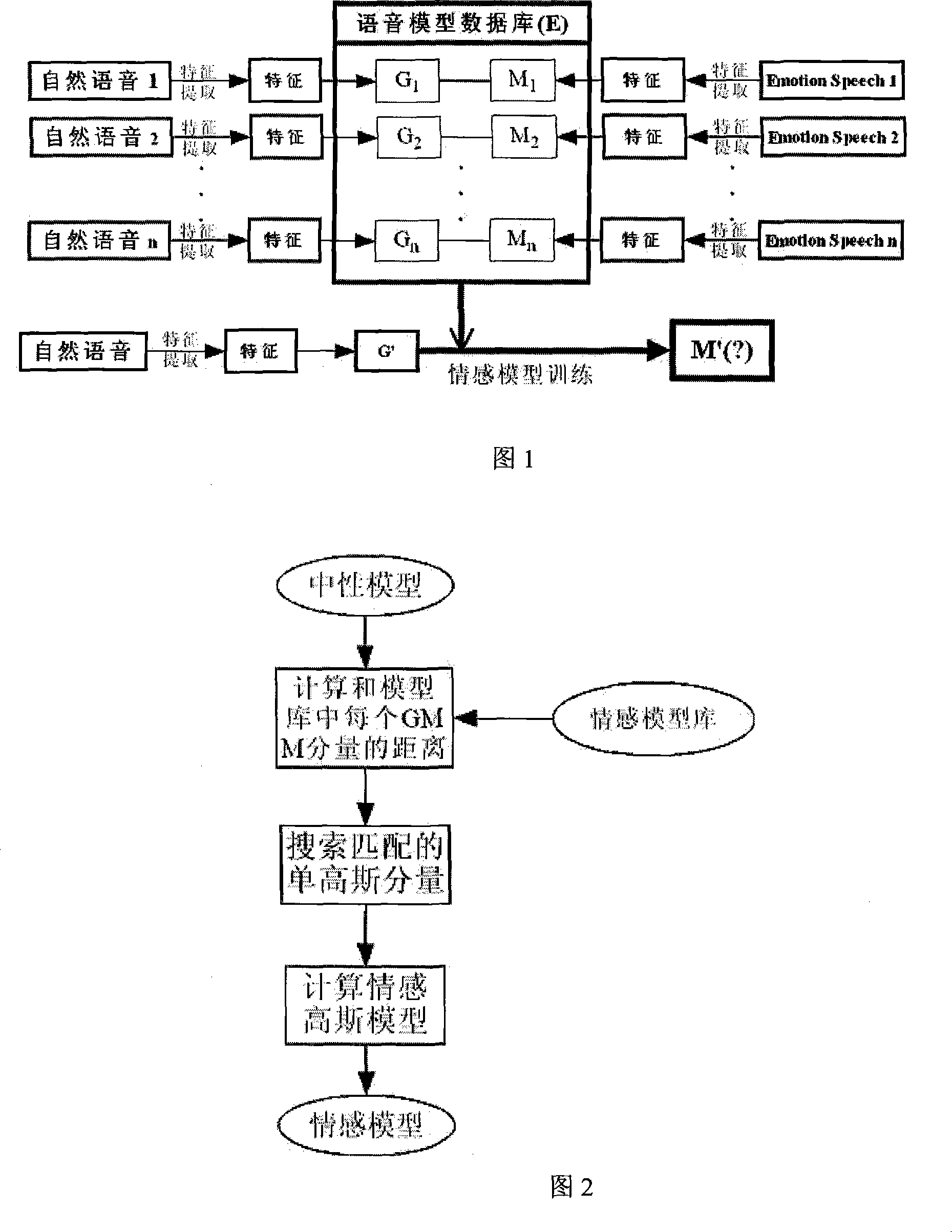

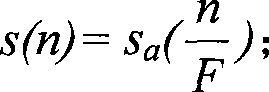

The invention relates to a speaker identification method based on neutral-to-emotional voiceprint model conversion. The steps comprise: (1) extracting voice features, by first pre-processing the audio (described as three parts, including sampling and quantization and zero-drift elimination) and then extracting MFCC cepstral features; (2) building an emotion model library, by training Gaussian mixture models, training a neutral model from the user's neutral voice, and obtaining the emotional voice model through a neutral-to-emotional model conversion algorithm; and (3) scoring the test voice to identify the speaker. The advantage of the invention is that the neutral-to-emotional model conversion algorithm increases the identification rate for emotional speech: the technique derives the user's emotional voice model from the user's neutral voice model, which increases the identification rate of the system.

Owner:ZHEJIANG UNIV

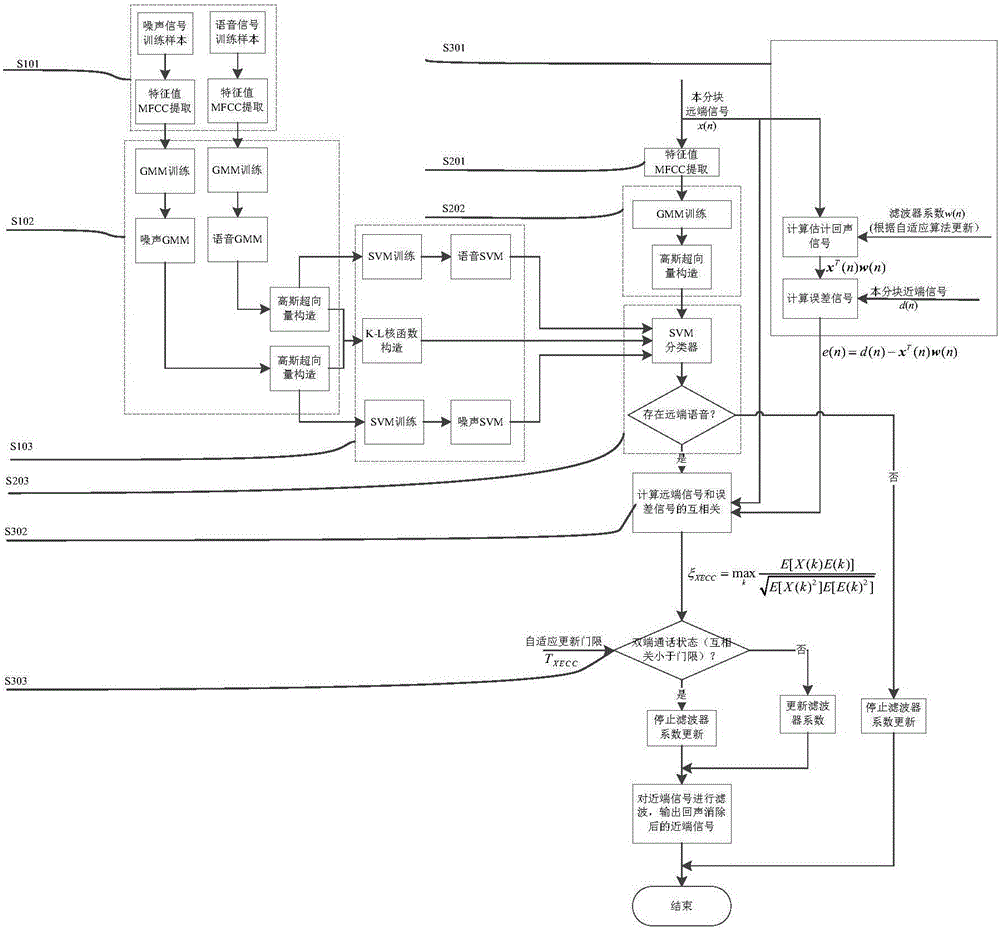

Voice state detection method suitable for echo cancellation system

Active | CN105957520A | Improve accuracy | Overcoming the problem of inaccurate detection | Speech recognition | Proximal point | SVM classifier

The invention relates to a voice state detection method suitable for an echo cancellation system, in the field of voice interaction technologies based on an IP network. The method comprises the steps of: constructing a support vector machine (SVM) classifier from noise training samples and voice training samples, where the signals to be detected are the far-end and near-end signals after blocking; carrying out a VAD judgment on the blocked far-end signal with the constructed SVM classifier based on a Gaussian mixture model; if the judgment is that no voice exists, stopping updating and filtering of the filter and outputting the near-end voice signal directly; if voice exists at the far end, carrying out a double-end conversation (double-talk) judgment; during double-end conversation, stopping updating the filter coefficients while still filtering the near-end signal; otherwise, updating the coefficients and filtering according to the far-end signal. The method improves the accuracy of voice activity detection, prevents a double-end mute state from being misjudged as a double-end conversation state, and prevents erroneous updating and filtering of the filter when there is no reference signal.

Owner:BEIJING UNIV OF POSTS & TELECOMM
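
The control decisions described in this abstract reduce to a small decision table. The sketch below covers only that table: the SVM-based voice activity detector and the double-talk detector are not implemented, and their results are passed in as booleans. The names `FilterAction` and `decide_action` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FilterAction:
    update_coefficients: bool  # adapt the echo-cancellation filter on this block
    apply_filter: bool         # filter the near-end block; if False, pass it through


def decide_action(far_end_has_voice: bool, double_talk: bool) -> FilterAction:
    """Map the two per-block judgments to the filter behavior described in the abstract."""
    if not far_end_has_voice:
        # No reference signal: neither update nor filter, output near-end directly.
        return FilterAction(update_coefficients=False, apply_filter=False)
    if double_talk:
        # Both ends talking: freeze the coefficients but keep filtering.
        return FilterAction(update_coefficients=False, apply_filter=True)
    # Far-end speech only: update the coefficients and filter.
    return FilterAction(update_coefficients=True, apply_filter=True)
```

Checking the far-end judgment first means a block with silence at both ends can never reach the double-talk branch, which matches the stated goal of not misjudging double-end mute as double-end conversation.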

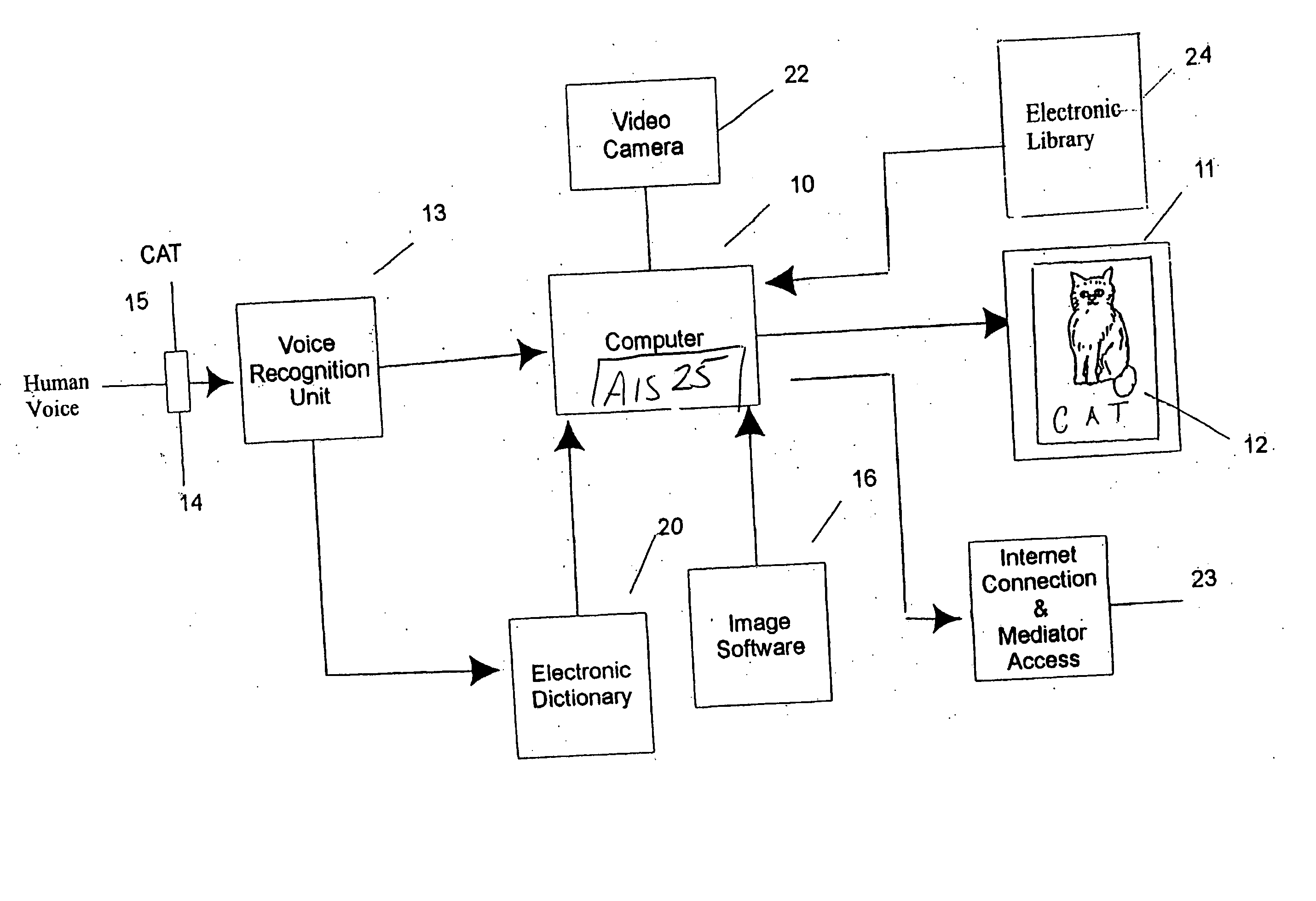

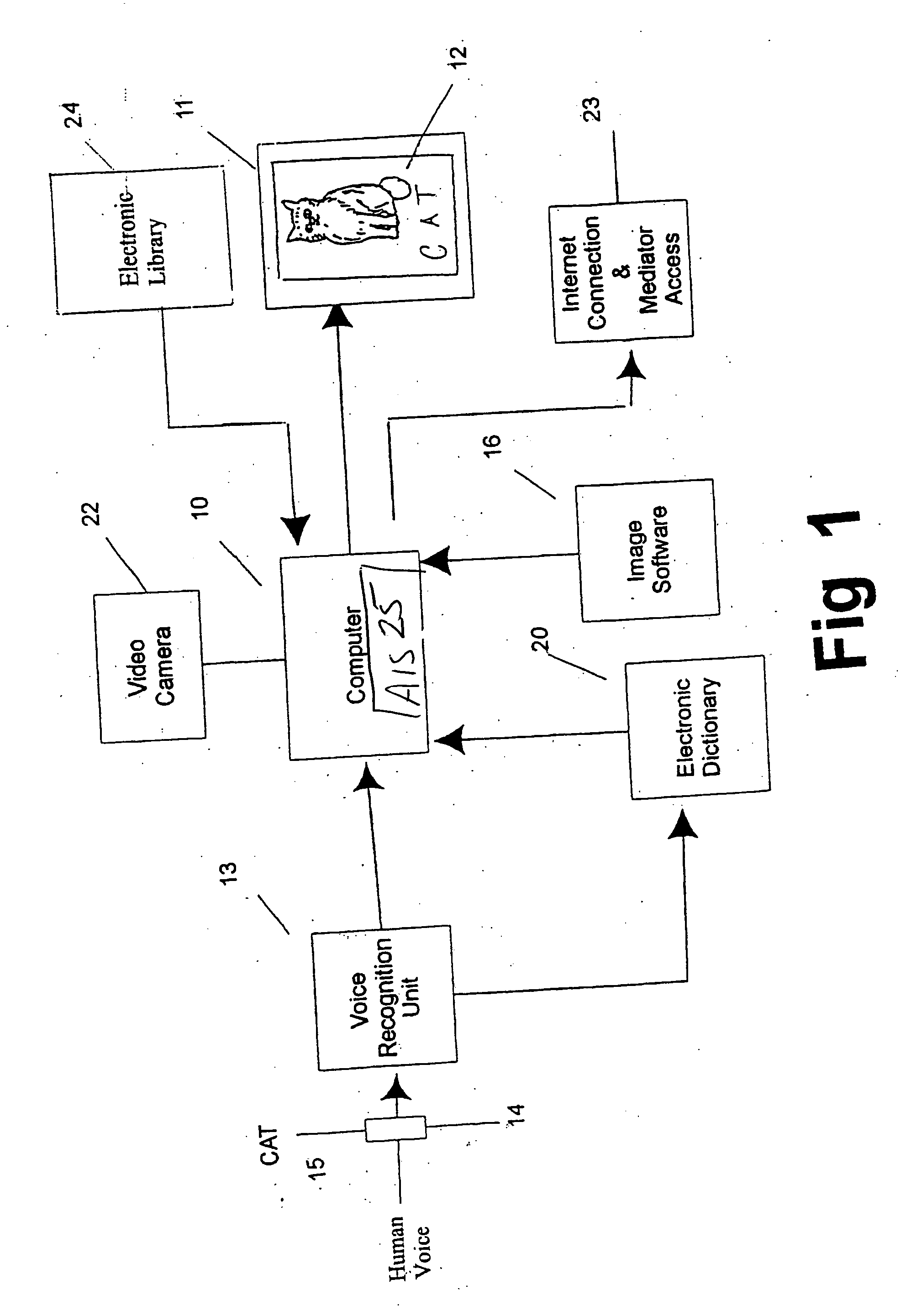

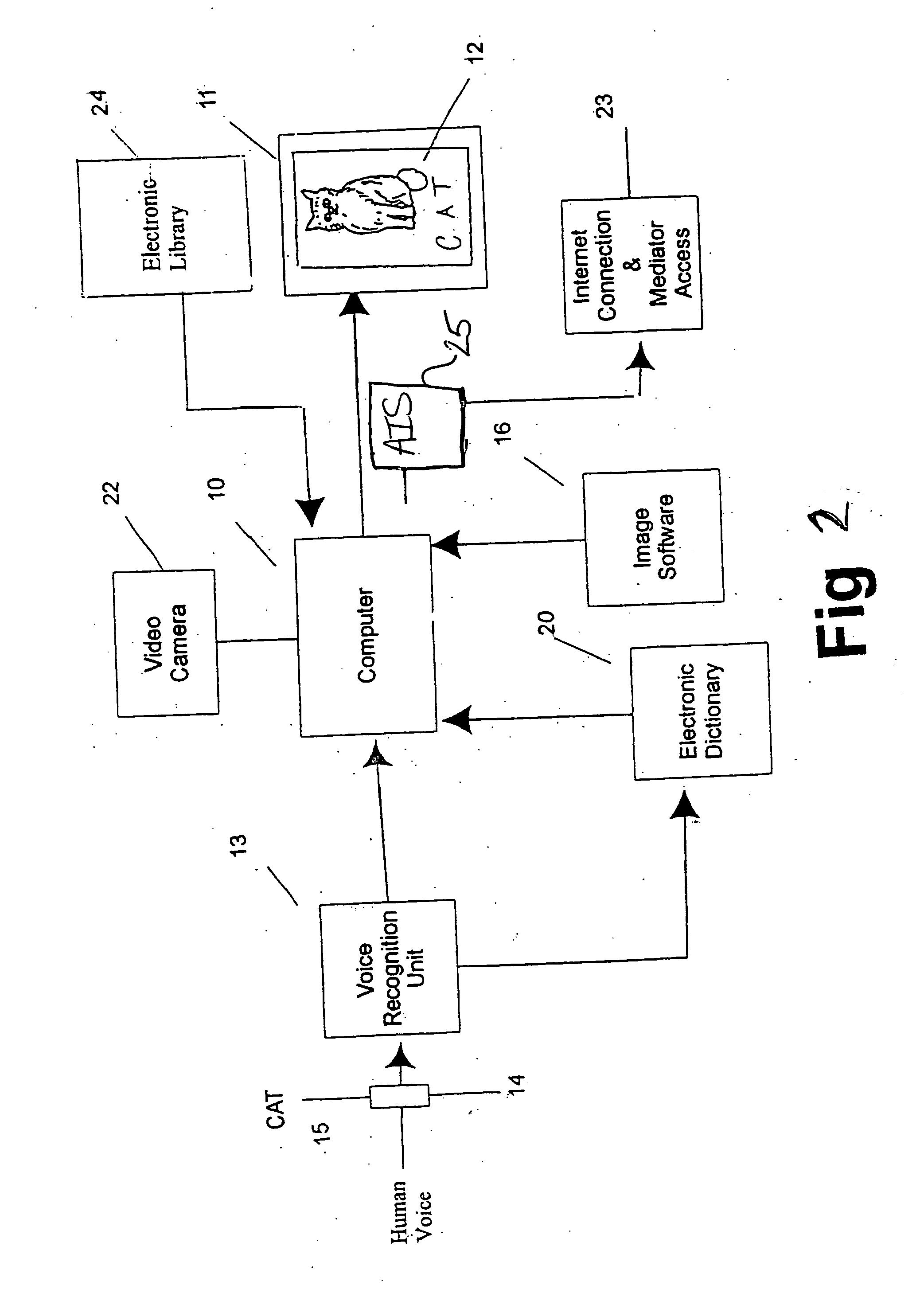

System and method for training users with audible answers to spoken questions

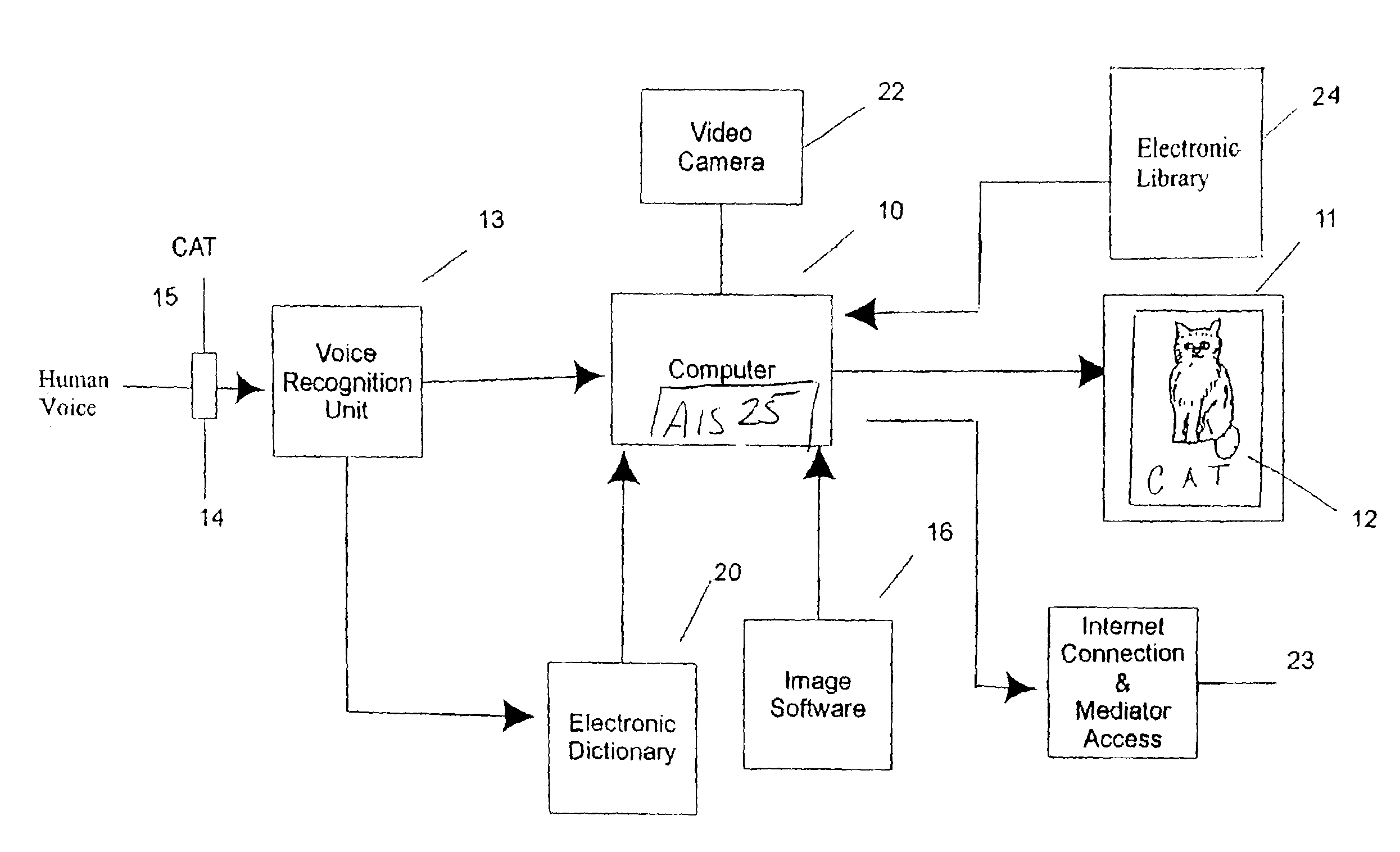

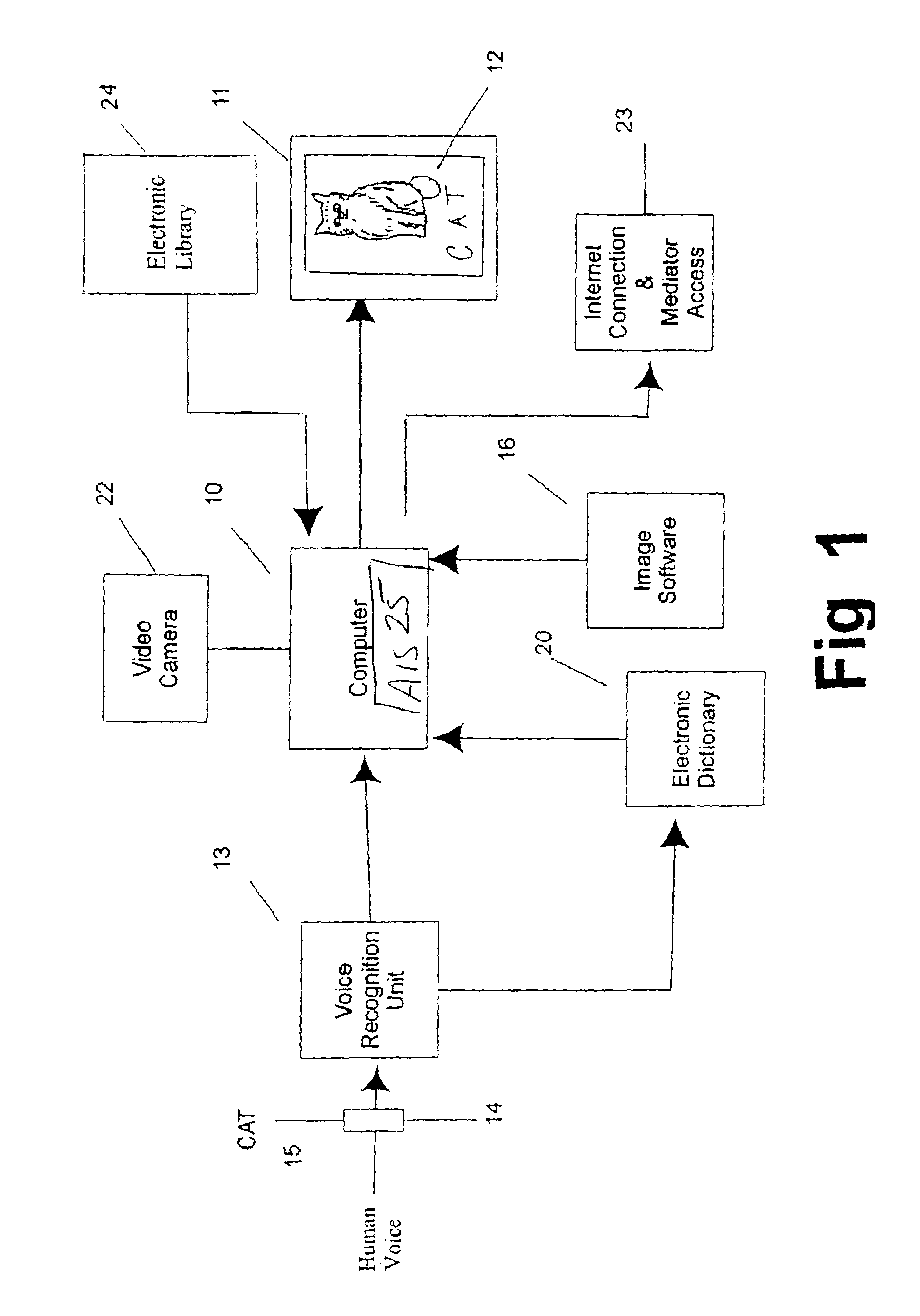

A phonics training system provides immediate, audible and virtual answers to questions regarding various images such as objects, animals and people, posed by a user when the user views such images on a video display terminal of the system. The system can provide virtual answers to questions without the need for an instructor or teacher and includes a computer having a video output terminal and an electronic library containing common answers to basic questions. This system can also include an artificial intelligence system.

Owner:HANGER SOLUTIONS LLC

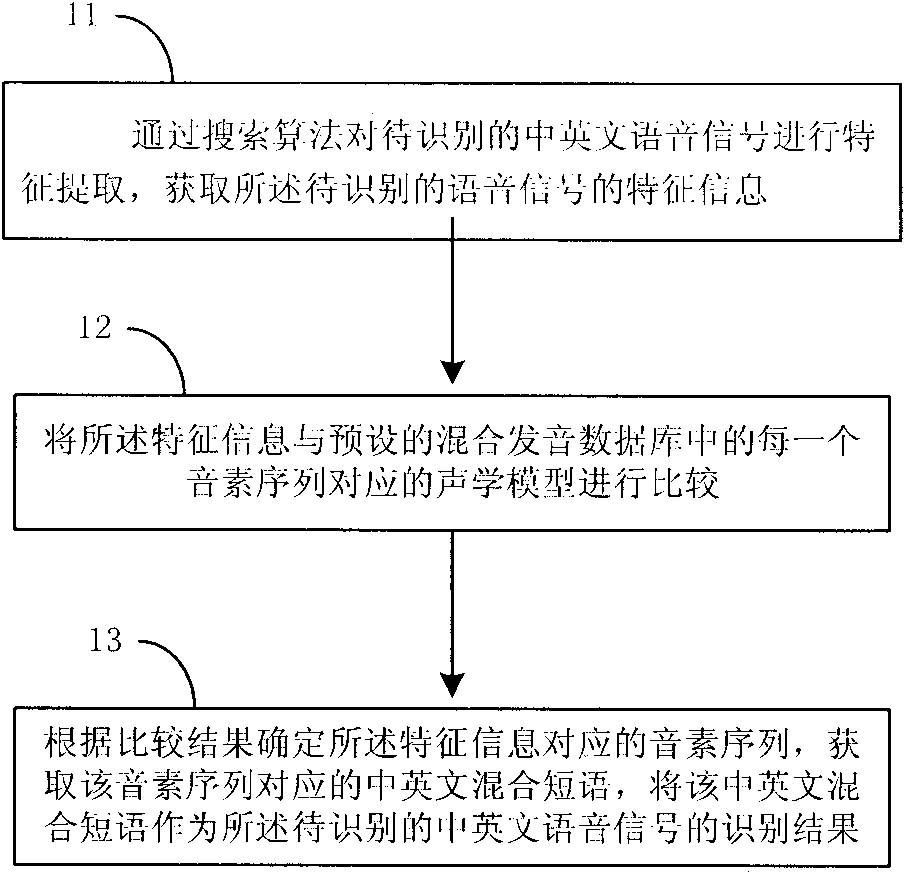

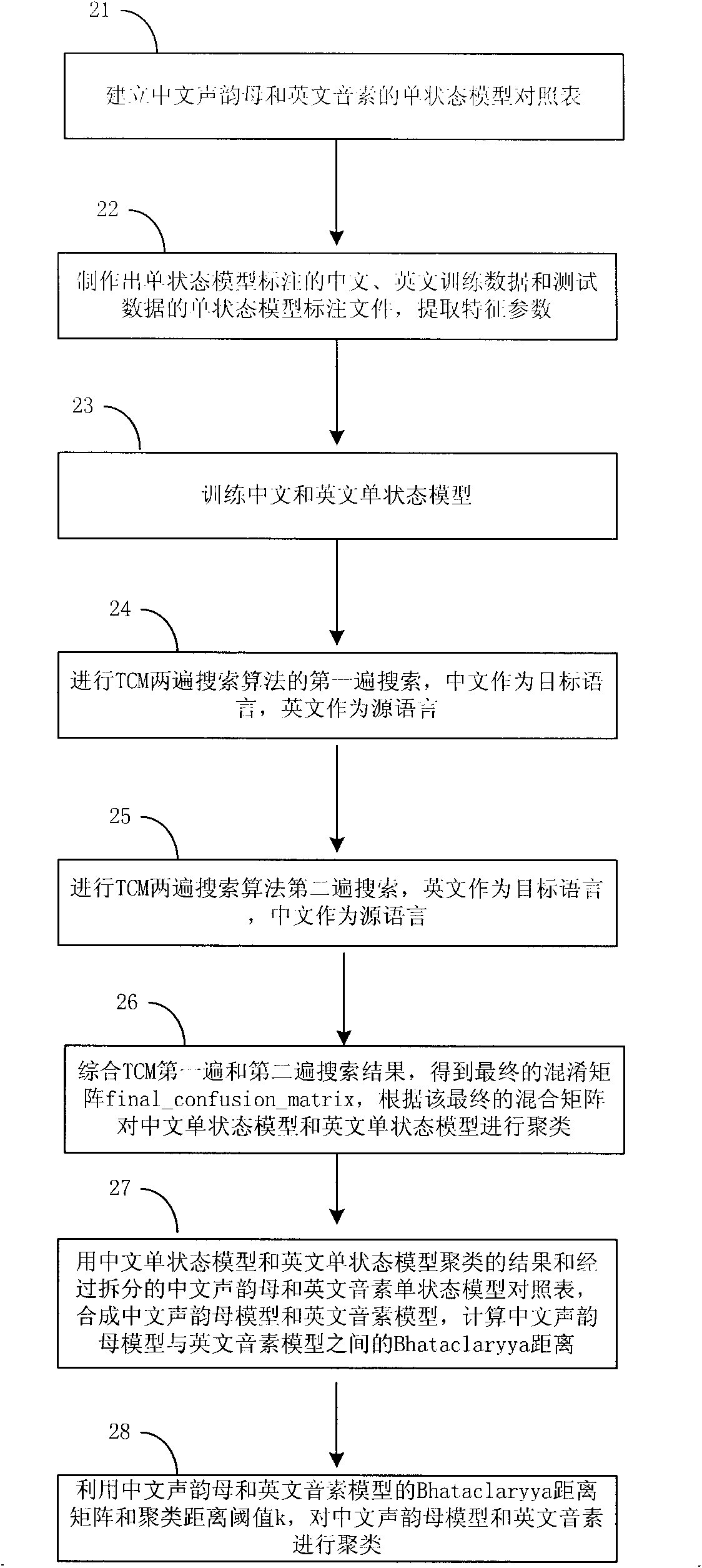

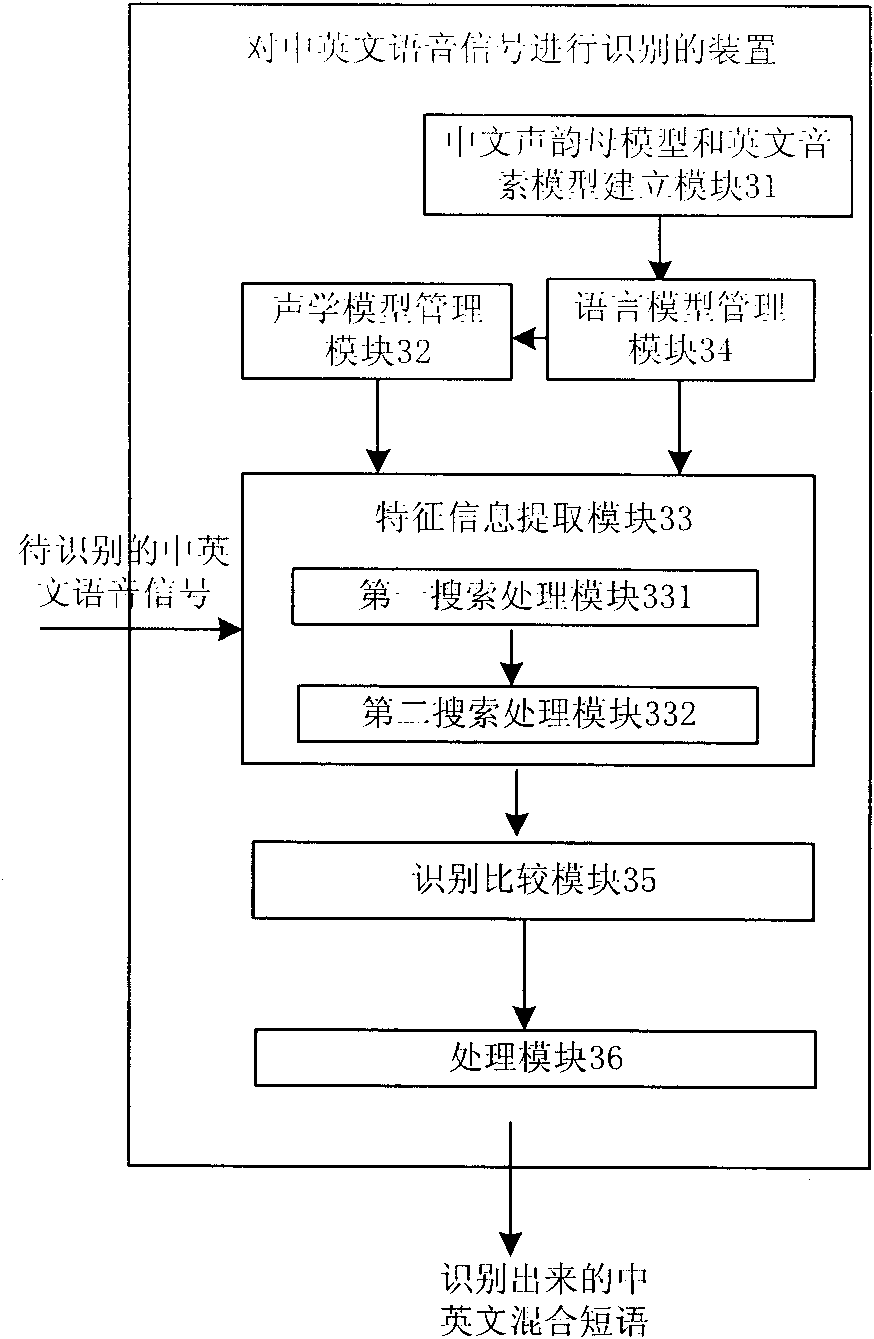

Method and device for identifying Chinese and English speech signal

The invention provides a method and a device for identifying a Chinese and English speech signal. The method mainly comprises the following steps of: carrying out feature extraction on a Chinese and English speech signal to be identified by a searching algorithm to acquire the feature information of the speech signal to be identified; and comparing the feature information with an acoustic model corresponding to each phoneme sequence in a mixed speech database, determining a phoneme sequence corresponding to the feature information based on the comparative result, and acquiring a Chinese and English mixed phrase corresponding to the phoneme sequence, wherein the Chinese and English mixed phrase is taken as an identification result of the Chinese and English speech signal to be identified. The invention can establish the acoustic model with less confusion, and does not need a large amount of labeled speech training data, thereby saving system resources. The invention can effectively raise the identification rate of the Chinese and English speech signal.

Owner:GLOBAL INNOVATION AGGREGATORS LLC

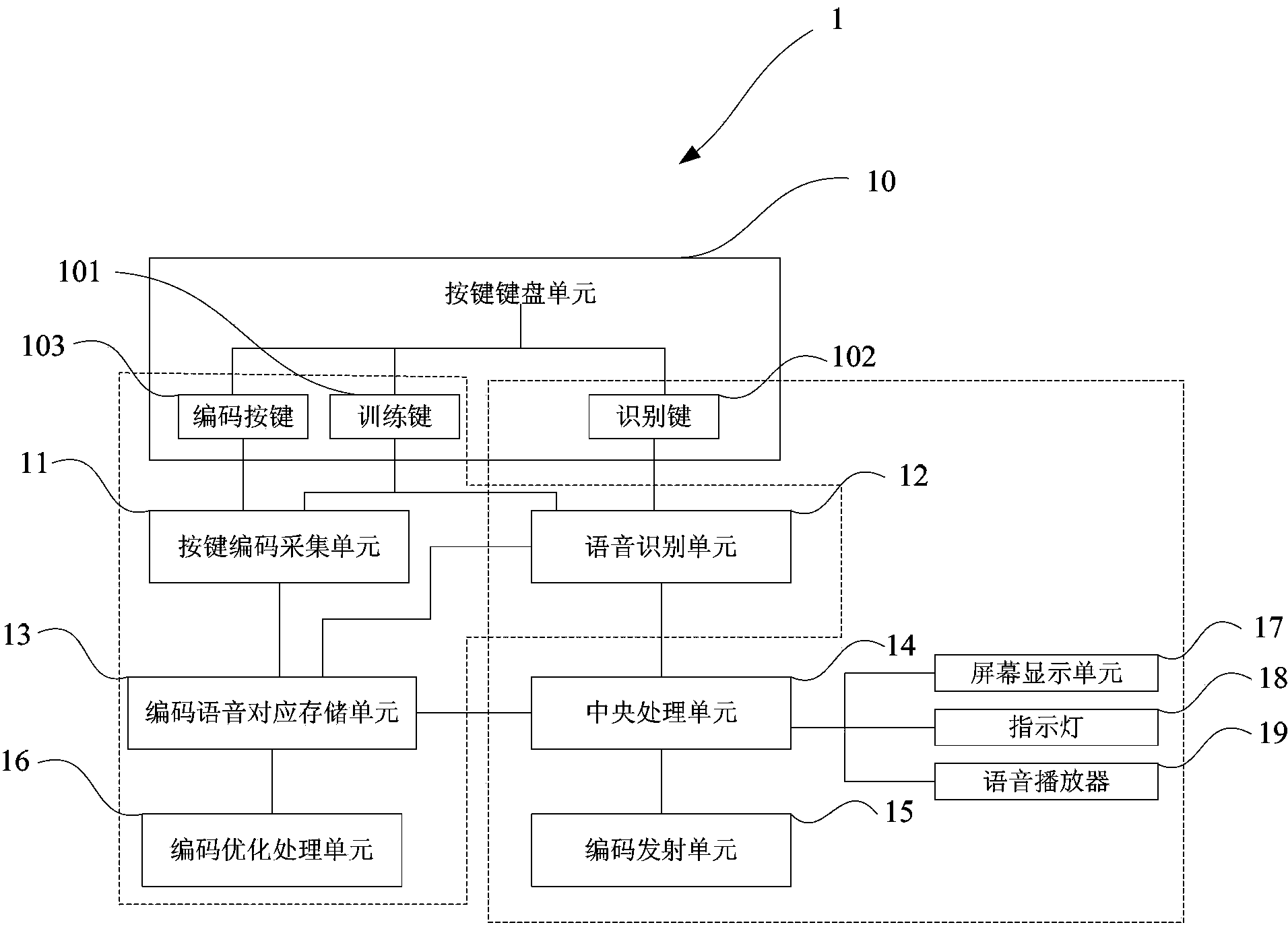

A method for a voice control remote controller and a voice remote controller

Inactive | CN103632669A | Improve applicability | Practical | Speech recognition | Selective content distribution | Speech sound | Subvocal recognition

The invention provides a method for a voice controlled remote controller and a voice remote controller. The method comprises the following steps: 1) the voice controlled remote controller enters a voice training mode, codes of buttons are set, and character voice instructions are set for the functions of the to-be-remotely-controlled device that correspond to the button codes; 2) the button codes are collected and the character voice instructions are identified; 3) the collected button codes and the identified voice instructions are associated with each other, and the associated button codes and voice instructions are stored; 4) the voice remote controller enters a voice recognition mode, and input voice control instructions are identified; 5) the identified voice control instructions are matched against the stored character voice instructions, and after the matching succeeds, the button codes corresponding to the matched character voice instructions are obtained; and 6) the button codes are emitted to the to-be-remotely-controlled device. The invention solves the problem that existing voice remote controllers cannot select a single channel through several different voice instructions.

Owner:上海闻通信息科技有限公司
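
The training and recognition modes in this abstract amount to storing and looking up an association between voice instructions and button codes. The sketch below assumes the speech recognizer has already turned the audio into text, and the class name `VoiceRemote`, its methods, and the example codes are hypothetical.

```python
from typing import Dict, Optional


class VoiceRemote:
    """Toy mapping from recognized voice instructions to button codes."""

    def __init__(self) -> None:
        self._codes: Dict[str, int] = {}

    @staticmethod
    def _normalize(text: str) -> str:
        # Case and spacing should not affect matching (an assumed design choice).
        return " ".join(text.lower().split())

    def train(self, instruction: str, button_code: int) -> None:
        """Training mode: associate an instruction with a button code and store it."""
        self._codes[self._normalize(instruction)] = button_code

    def recognize(self, instruction: str) -> Optional[int]:
        """Recognition mode: return the stored button code, or None if nothing matches."""
        return self._codes.get(self._normalize(instruction))
```

Because several instructions may be trained against the same code, "news channel" and "channel one" can both select the same channel, which is the limitation of existing remotes that the abstract says it addresses.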

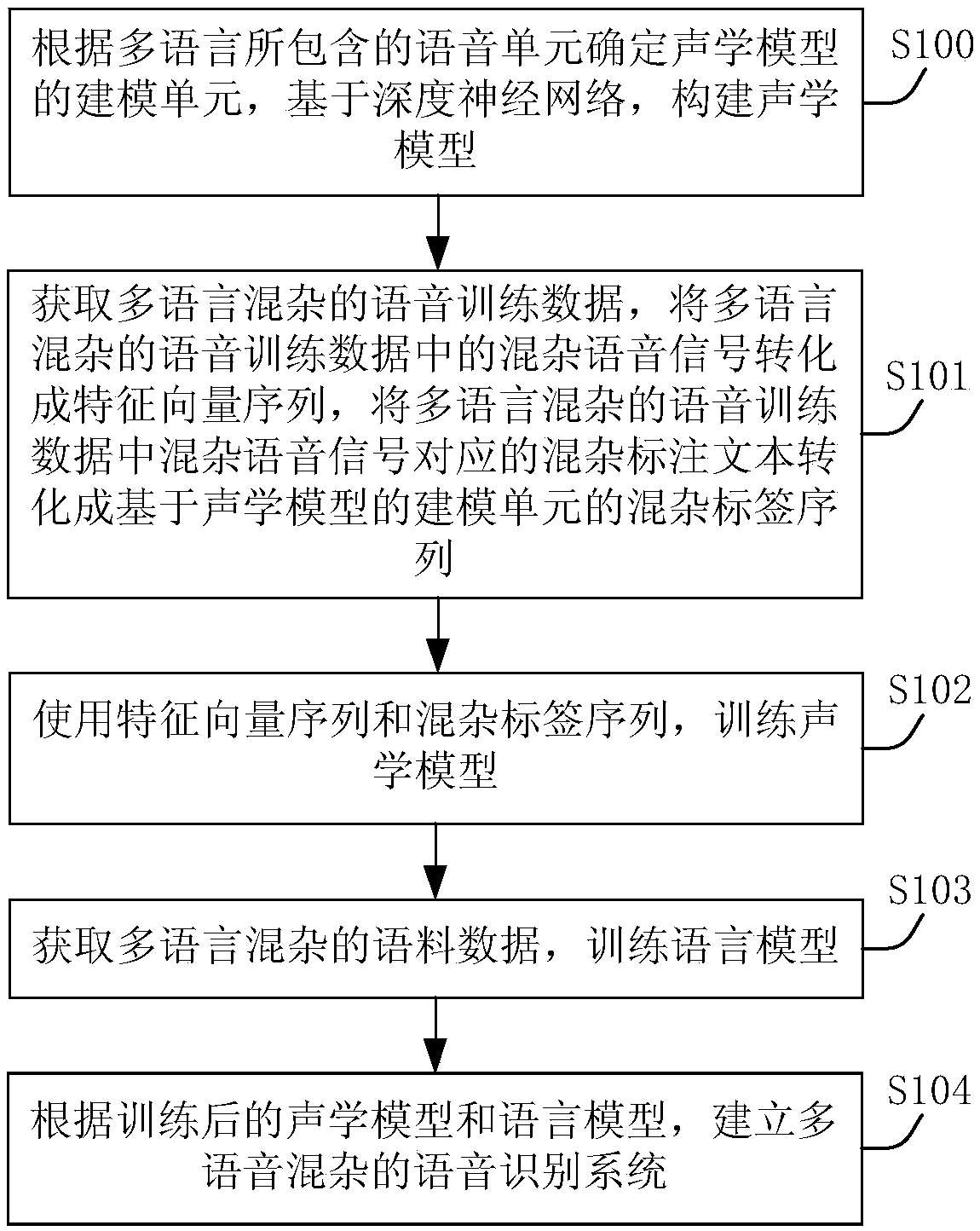

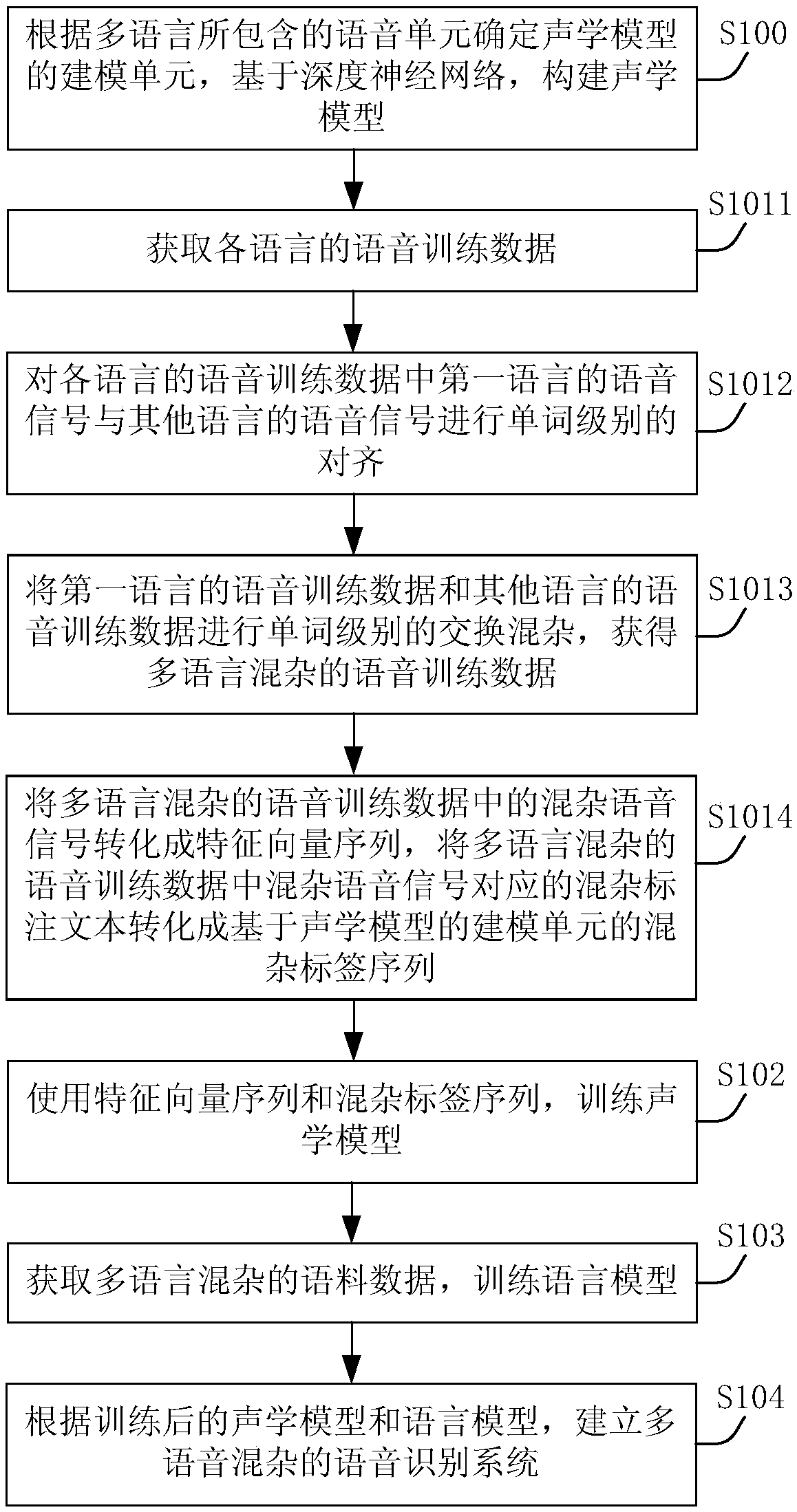

Method and device for multilingual hybrid model establishment and data acquisition, and electronic equipment

Active | CN108711420A | Solve classification problems | Improve recognition accuracy | Speech recognition | Speech synthesis | Data acquisition | Labelling

Embodiments of the invention provide a method and device for multilingual hybrid model establishment and data acquisition, and electronic equipment. The method includes determining a modeling unit of an acoustic model according to the speech units contained in multiple languages, and establishing the acoustic model based on a deep neural network, wherein the modeling unit is the context-free speech unit; obtaining multilingual hybrid speech training data, converting a hybrid speech signal in the multilingual hybrid speech training data into an eigenvector sequence, and converting a hybrid labelling text corresponding to the hybrid speech signal into a hybrid label sequence of the modeling unit based on the acoustic model; training the acoustic model by using the eigenvector sequence and the hybrid label sequence; obtaining multilingual hybrid corpus data to train a language model; and establishing a multilingual hybrid speech recognition system according to the acoustic model and the language model. According to the embodiments, recognition accuracy of speech data mixing multiple languages can be enhanced.

Owner:BEIJING ORION STAR TECH CO LTD

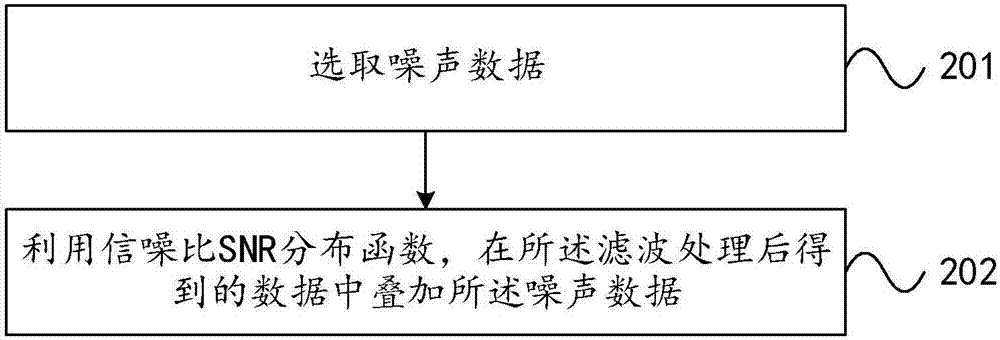

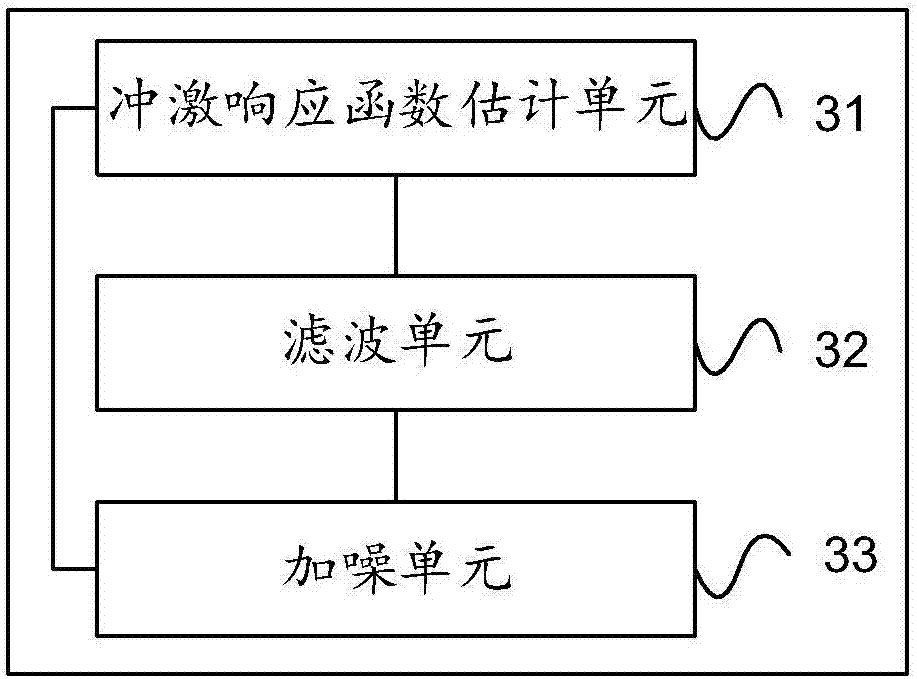

Voice data enhancing method and system

The invention provides a voice data enhancing method and system. The method comprises: estimating an impulse response function in a far-field environment; filtering the near-field voice training data with the impulse response function; and adding noise to the filtered data to obtain far-field voice training data. The invention avoids the large time and economic cost that the prior art requires to record far-field voice training data, and so reduces the time and economic cost of obtaining such data.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
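
The filter-then-add-noise pipeline in this abstract can be sketched in a few lines. The example below takes the impulse response as given (estimating it is not shown), treats "filtering" as direct convolution, and assumes Gaussian noise at a chosen signal-to-noise ratio. The abstract specifies none of these details, and the function names are hypothetical.

```python
import math
import random


def convolve(signal, impulse_response):
    """Filter a signal with an impulse response by direct (full) convolution."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def add_noise(signal, snr_db, rng):
    """Add zero-mean Gaussian noise scaled to the requested SNR (assumed noise model)."""
    power = sum(v * v for v in signal) / len(signal)
    sigma = math.sqrt(power / (10 ** (snr_db / 10)))
    return [v + rng.gauss(0.0, sigma) for v in signal]


def simulate_far_field(near_field, impulse_response, snr_db=20.0, seed=0):
    """Near-field samples -> simulated far-field samples: convolve, then add noise."""
    rng = random.Random(seed)  # seeded so the augmentation is reproducible
    return add_noise(convolve(near_field, impulse_response), snr_db, rng)
```

For real audio lengths, an FFT-based convolution would be the usual replacement for the nested loop; the direct form is used here only to keep the sketch dependency-free.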

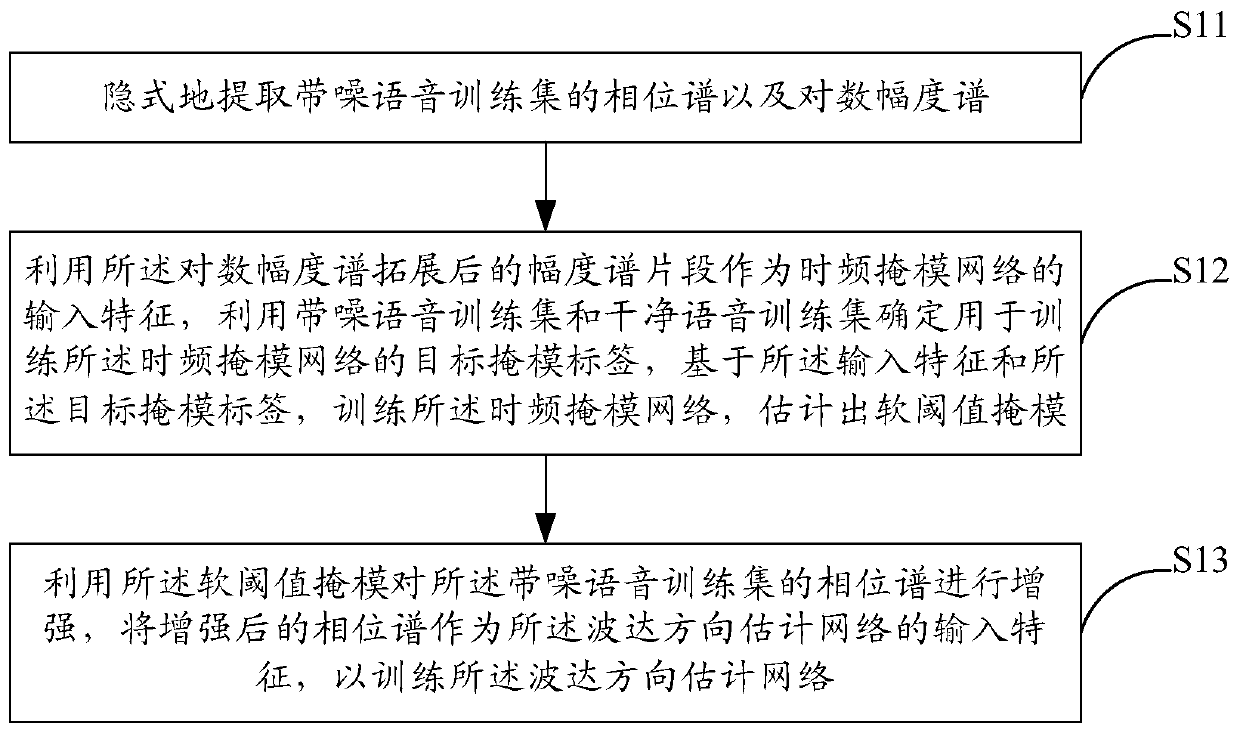

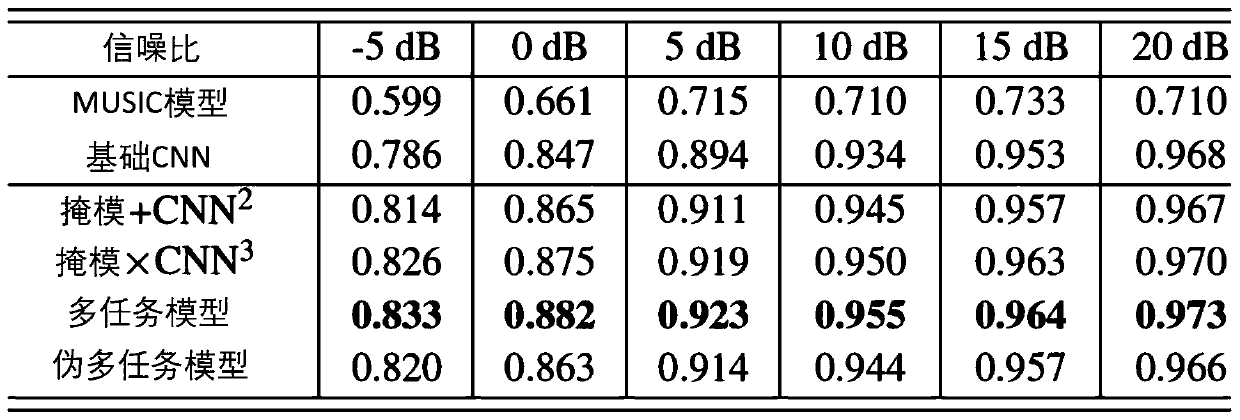

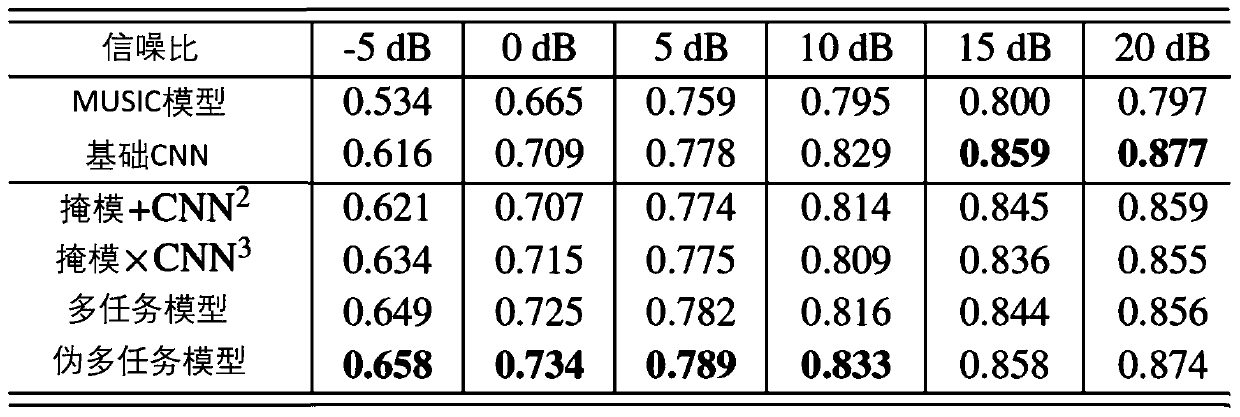

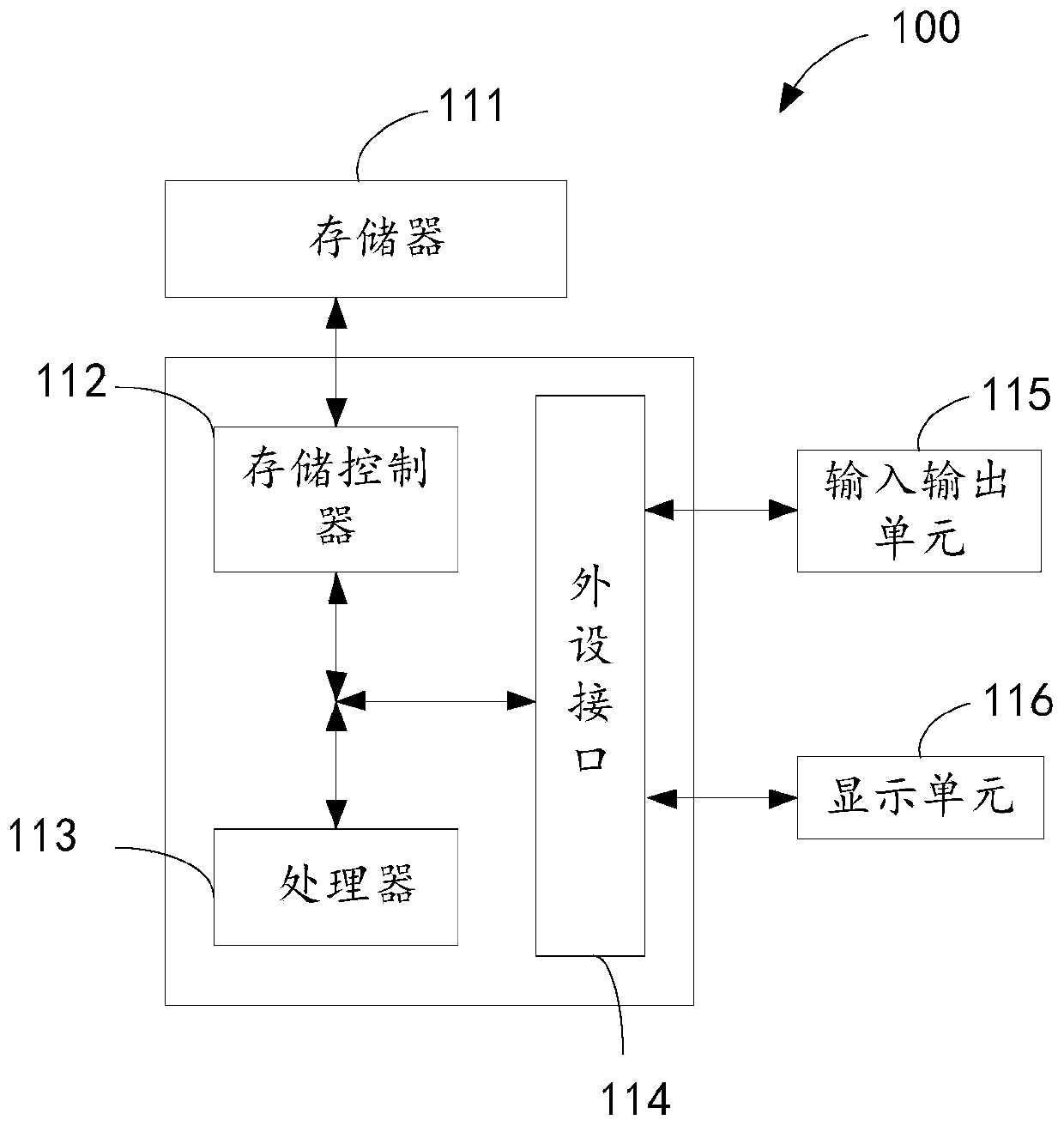

Combined model training method and system

Active | CN109712611A | Accurately estimate the effect | Improve accuracy | Speech recognition | Direction/deviation determination systems | Phase spectrum | Time frequency masking

The embodiment of the invention provides a combined model training method. The method comprises the following steps: extracting the phase spectrum and the logarithm magnitude spectrum of a noisy voice training set in an implicit manner; by utilizing the magnitude spectrum fragments of the logarithm magnitude spectrum after expansion as the input features of a time frequency masking network, and by utilizing the noisy voice training set and a clear voice training set, determining a target masking label used for training the time frequency masking network; based on the input features and the target masking label, training the time frequency masking network, and estimating a soft threshold mask; and enhancing the phase spectrum of the noisy voice training set by utilizing the soft threshold mask, wherein the enhanced phase spectrum is adopted as the input features of a DOA (direction of arrival) estimation network, and training the DOA estimation network. The embodiment of the invention further provides a combined model training system. According to the embodiment of the invention, by setting the target masking label, the input features are extracted in an implicit manner, and the time frequency masking network and DOA estimation network combined training is more suitable for the DOA estimation task.

Owner:AISPEECH CO LTD

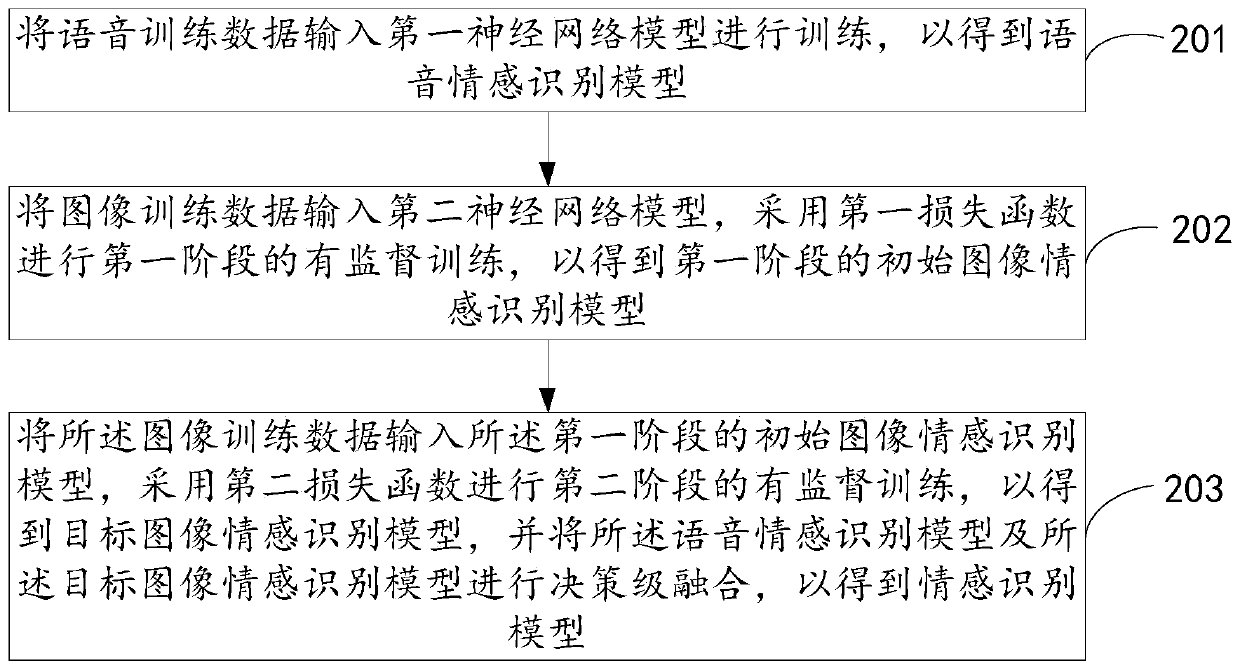

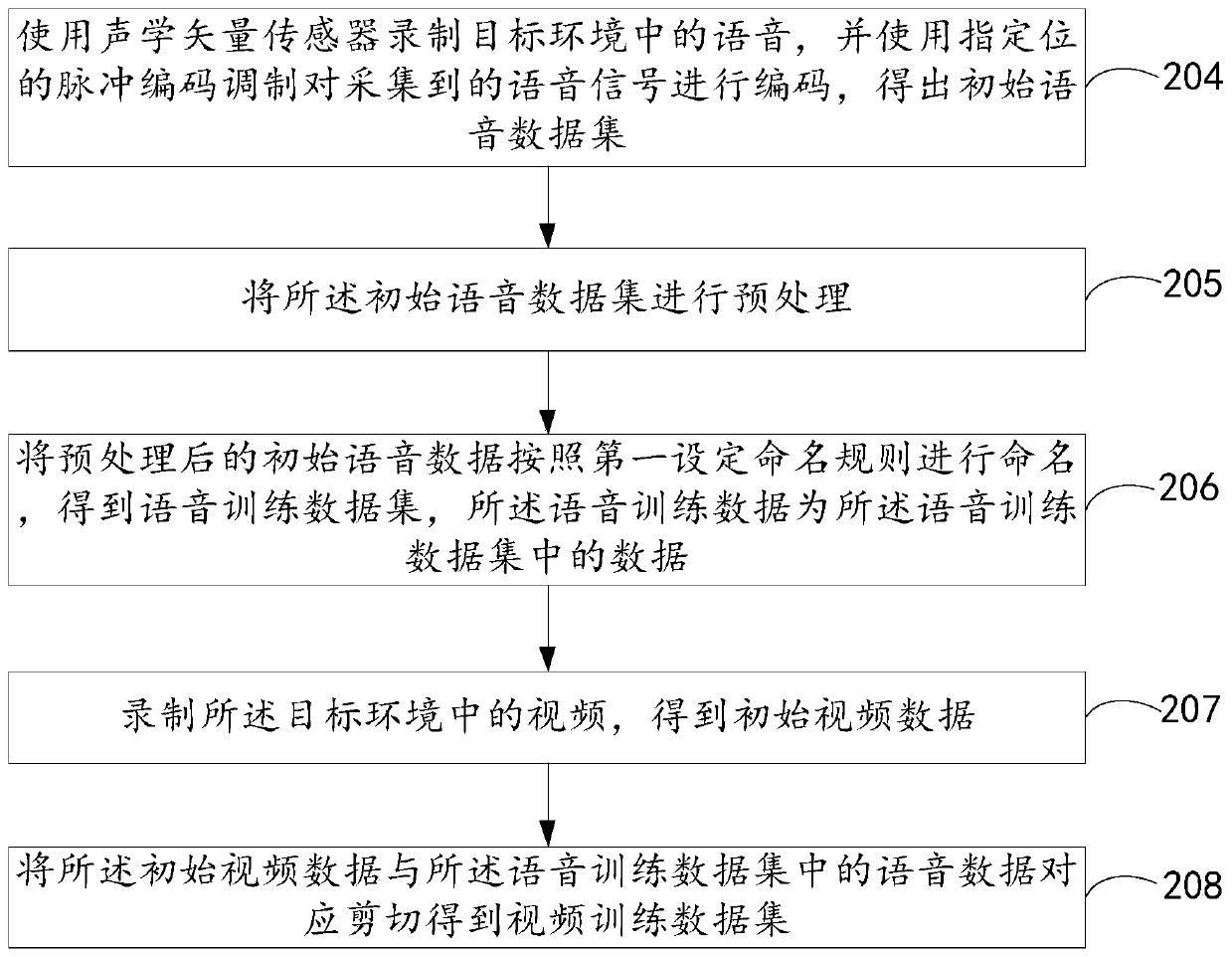

Training method for bimodal emotion recognition model and bimodal emotion recognition method

Active | CN110556129A | Realize identification | The importance of effective matching | Speech analysis | Character and pattern recognition | Pattern recognition | Network model

The invention provides a training method for a bimodal emotion recognition model and a bimodal emotion recognition method. The training method for the bimodal emotion recognition model comprises the following steps: inputting voice training data into a first neural network model for training, so that a voice emotion recognition model is obtained; inputting image training data into a second neural network model, and carrying out supervised training of the first stage by adopting a first loss function, so that an initial image emotion recognition model of the first stage is obtained; and inputting the image training data into the initial image emotion recognition model of the first stage, carrying out supervised training of the second stage by adopting a second loss function, so that a target image emotion recognition model is obtained, and carrying out decision fusion on the voice emotion recognition model and the target image emotion recognition model, so that the bimodal emotion recognition model is obtained.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL
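
The final decision fusion step can be illustrated as follows. The abstract does not say which fusion rule is used, so this sketch assumes a weighted average of the per-class probabilities produced by the two models. The function name, the weight parameter, and the emotion labels are all hypothetical.

```python
def fuse_decisions(voice_probs, image_probs, voice_weight=0.5):
    """Combine two per-class probability dicts and return (best_label, fused_scores)."""
    if voice_probs.keys() != image_probs.keys():
        raise ValueError("both models must score the same set of emotion labels")
    fused = {
        label: voice_weight * voice_probs[label]
        + (1.0 - voice_weight) * image_probs[label]
        for label in voice_probs
    }
    best = max(fused, key=fused.get)
    return best, fused
```

With equal weights, a confident image model can override a less certain voice model; raising `voice_weight` shifts the balance the other way.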

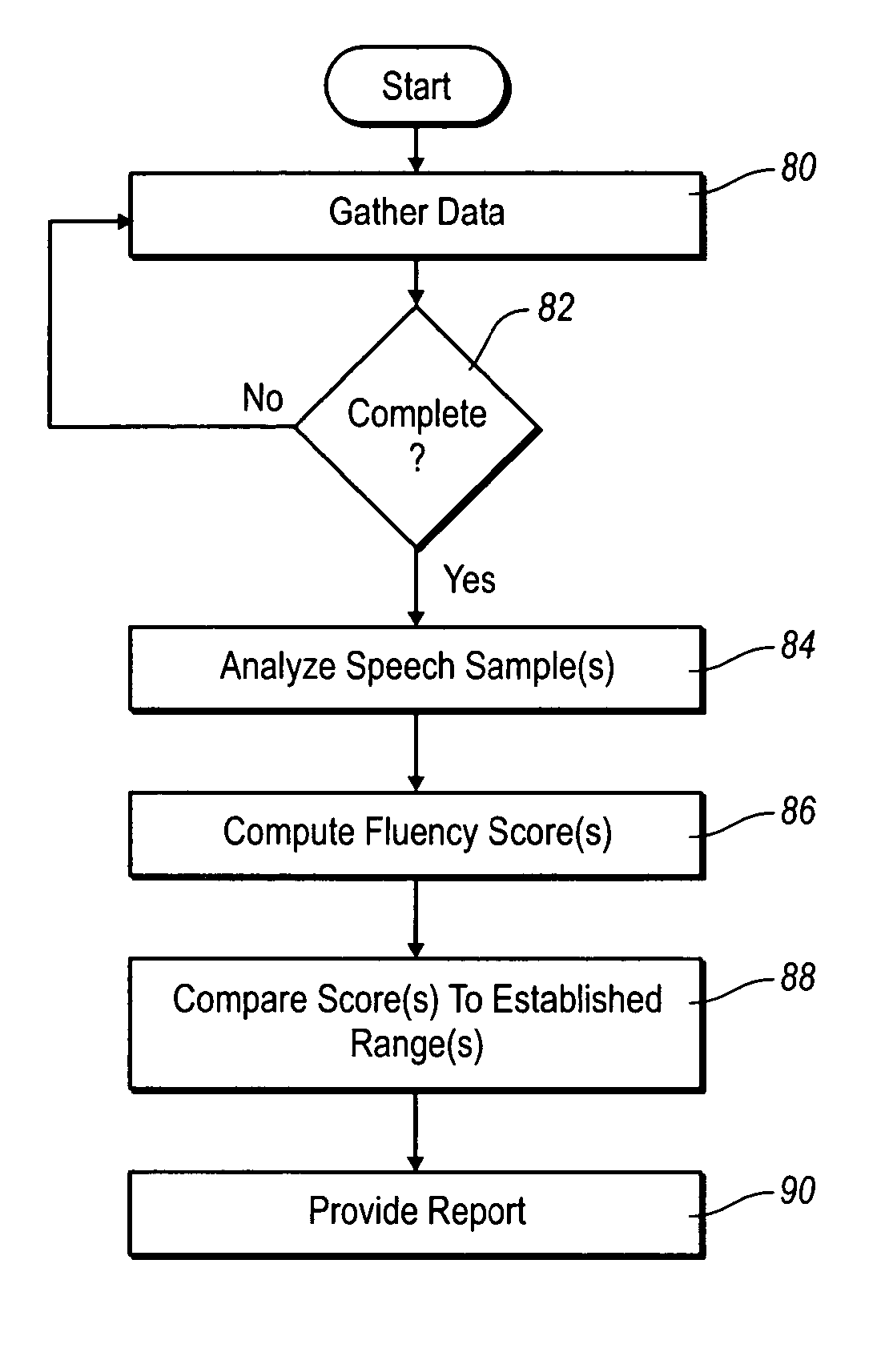

Systems and methods for dynamically analyzing temporality in speech

Systems and methods for dynamically analyzing temporality in an individual's speech in order to selectively categorize the speech fluency of the individual and/or to selectively provide speech training based on the results of the dynamic analysis. Temporal variables in one or more speech samples are dynamically quantified. The temporal variables in combination with a dynamic process, which is derived from analyses of temporality in the speech of native speakers and language learners, are used to provide a fluency score that identifies a proficiency of the individual. In some implementations, temporal variables are measured instantaneously.

Owner:BRIGHAM YOUNG UNIV

Digital media adaptor with voice control function and its voice control method

Inactive | CN101025860A | Reliable performance | Improve scalability | Non-electrical signal transmission systems | Speech recognition | Information repository | Remote control

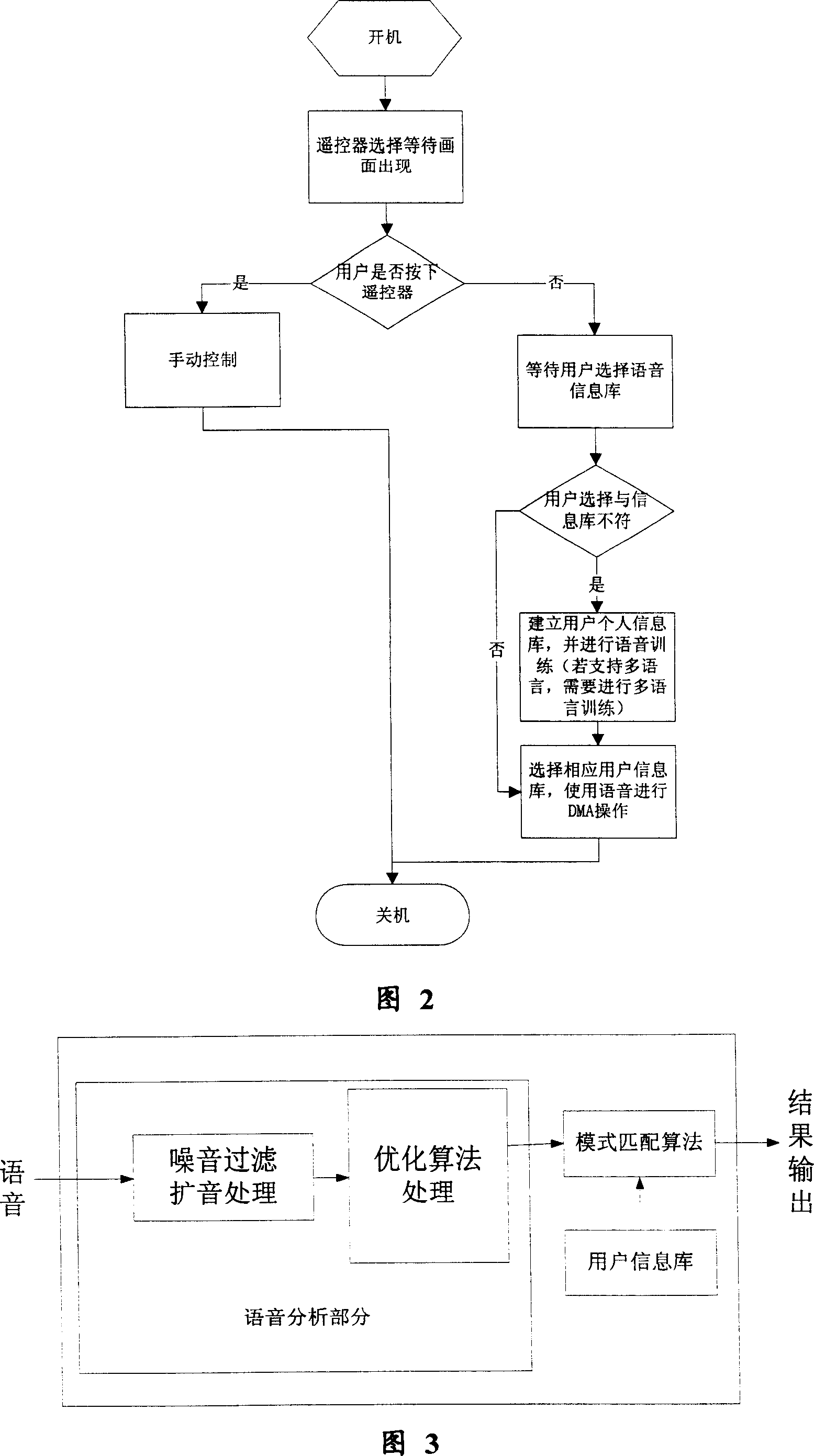

This invention relates to a digital media adapter with a voice control function and its voice control method. The adapter includes a voice input module, an ADC module, a speech recognition module, a voice prompt and playback module, a user information library, an infrared remote control signal input module and a system control module. The method includes: judging whether an infrared remote control signal arrives within a certain time; establishing the user information library and performing voice training; receiving voice information and selecting the user's information library; performing analog-to-digital conversion on the voice information; judging whether the voice information matches the selected personal information library; and recognizing and handling the corresponding user's voice command.

Owner:HUANDA COMPUTER (SHANGHAI) CO LTD

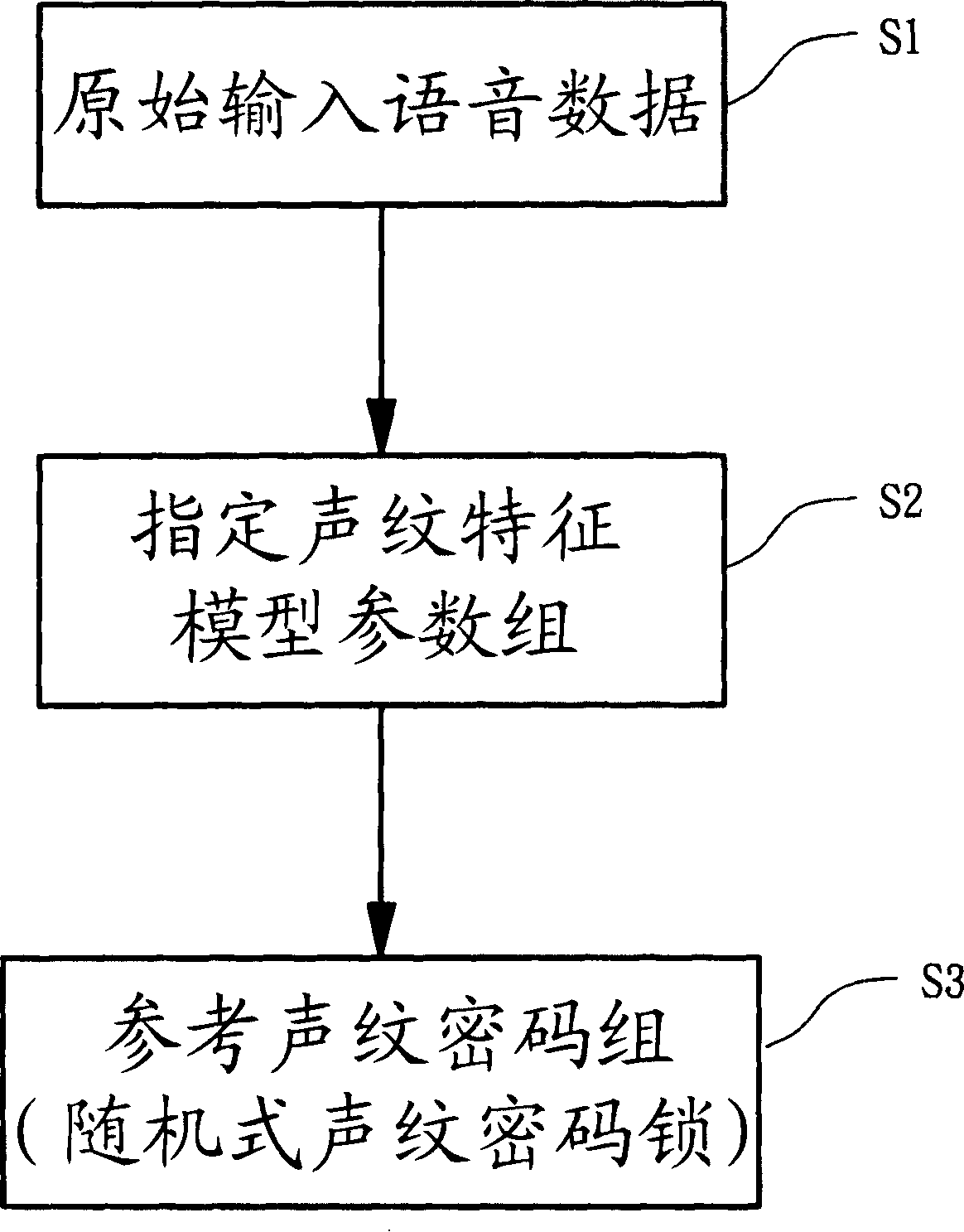

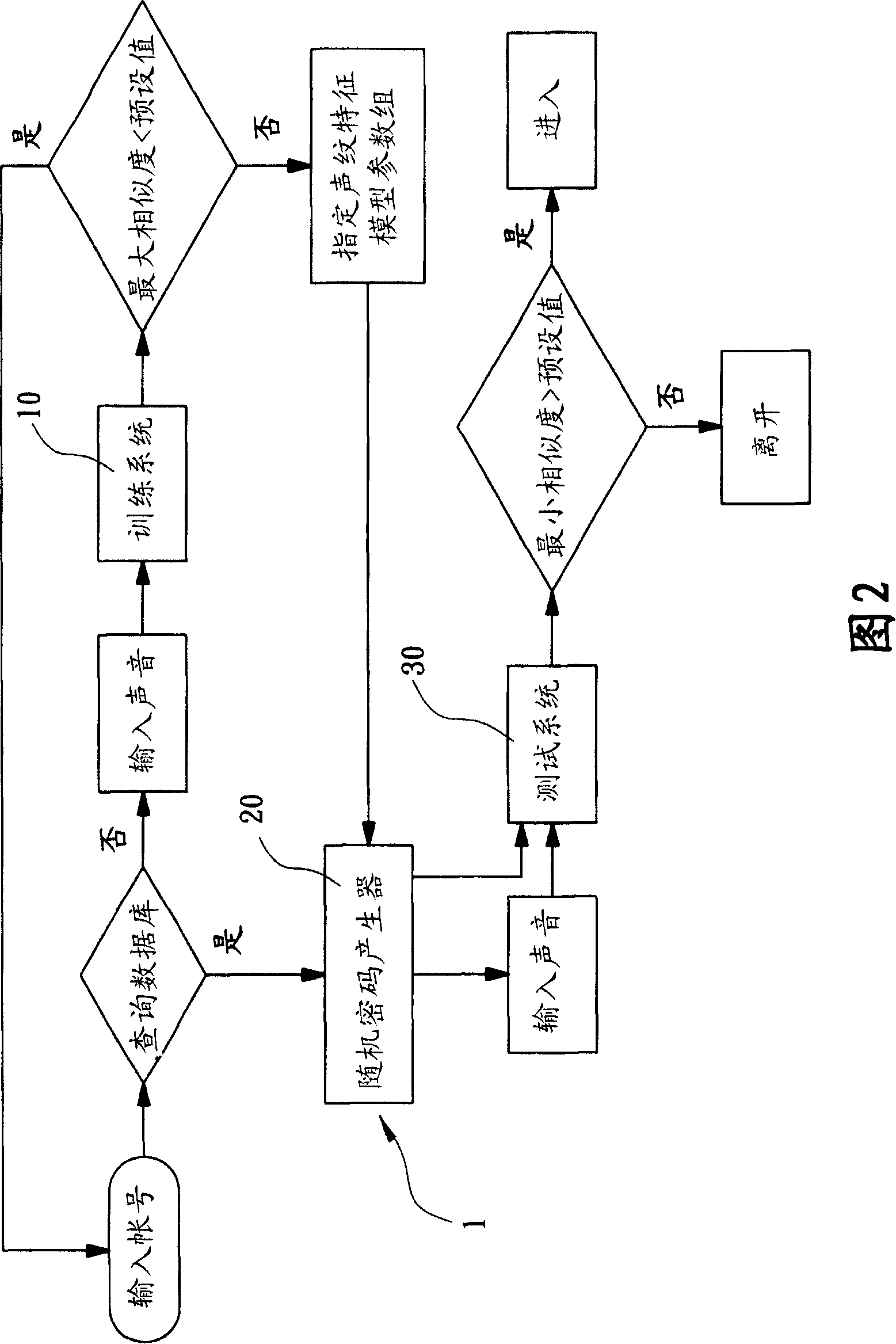

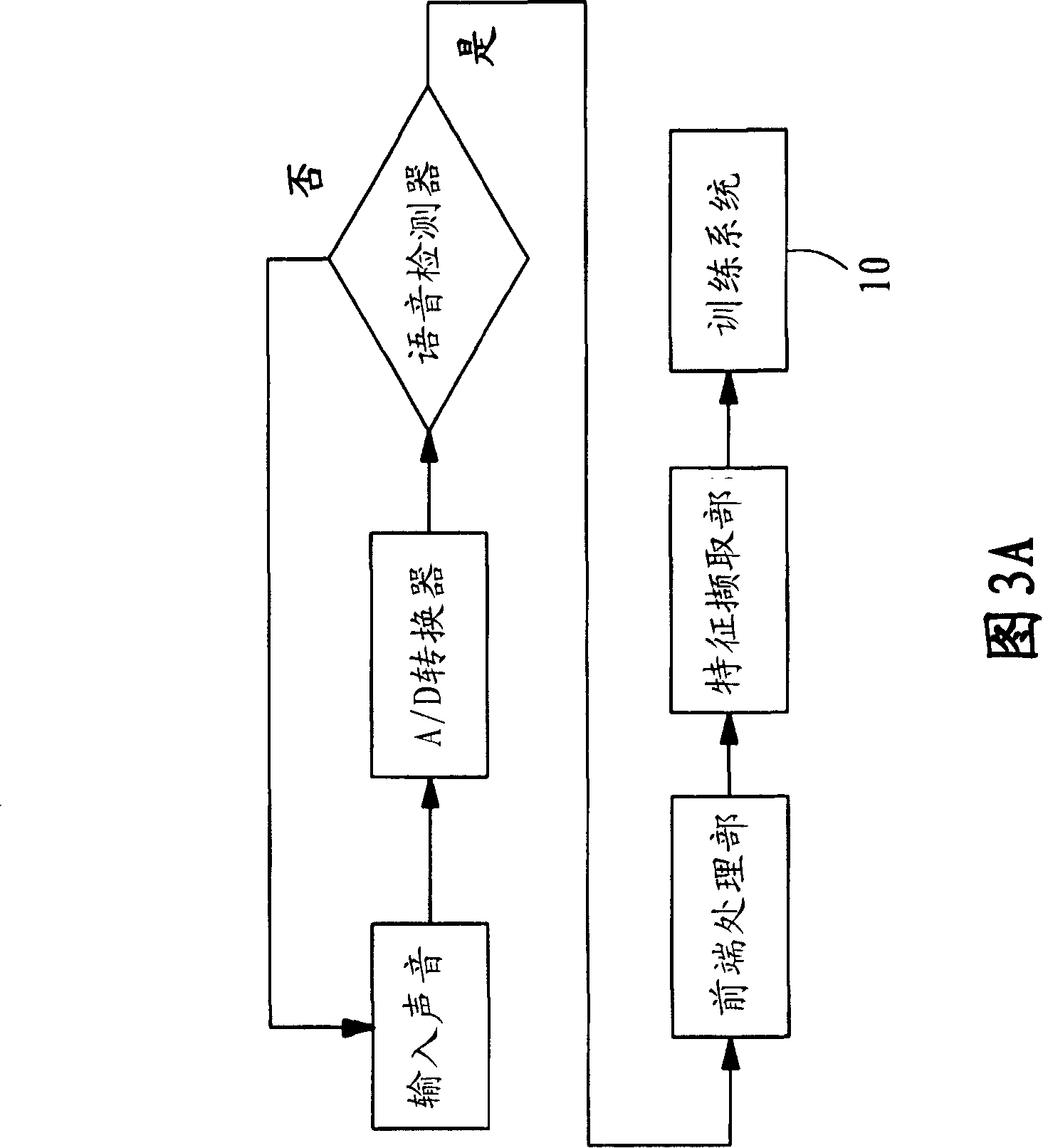

Random voiceprint password verification system, random voiceprint password lock and its generation method

Inactive | CN101197131A | Not easy to crack illegally | Not vulnerable to illegal cracking | User identity/authority verification | Digital data authentication | Password | Model parameters

The invention provides a random voiceprint verification system comprising a training system, a random password generator and a testing system, which perform training and testing operations on the original input voice data. During voice training, the training system acquires a designated voiceprint feature model parameter set from the original input voice data, derives a plurality of voiceprint feature units from that parameter set, and incorporates them into at least one reference voiceprint password set for use by the testing system. During voice testing, the random password generator randomly generates at least one reference voiceprint password set from the voiceprint feature units of the designated parameter set to form a random voiceprint password lock. By randomly generating one or more reference voiceprint password sets, the invention makes the lock hard to crack illegally; the random voiceprint verification system thereby completes the setup of the random voiceprint password lock.

Owner:TOP DIGITAL

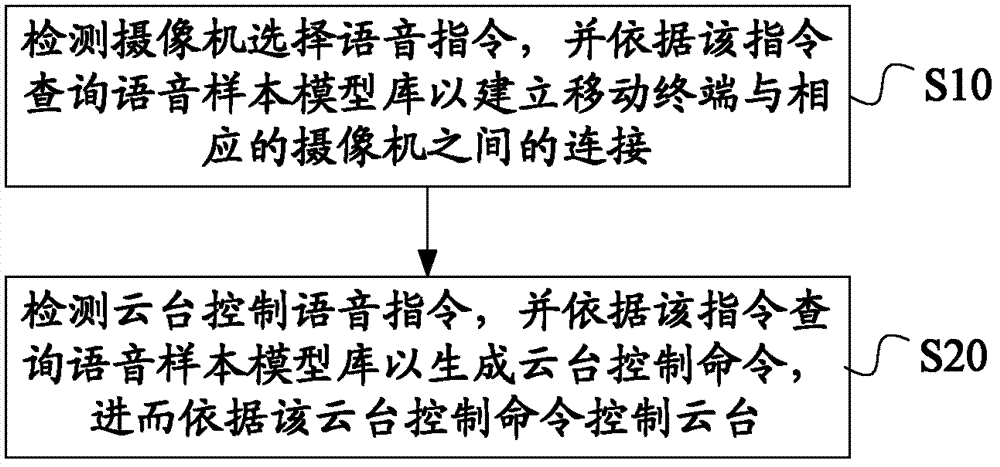

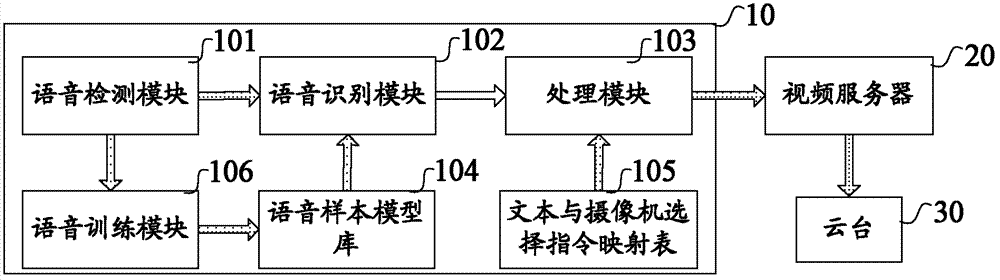

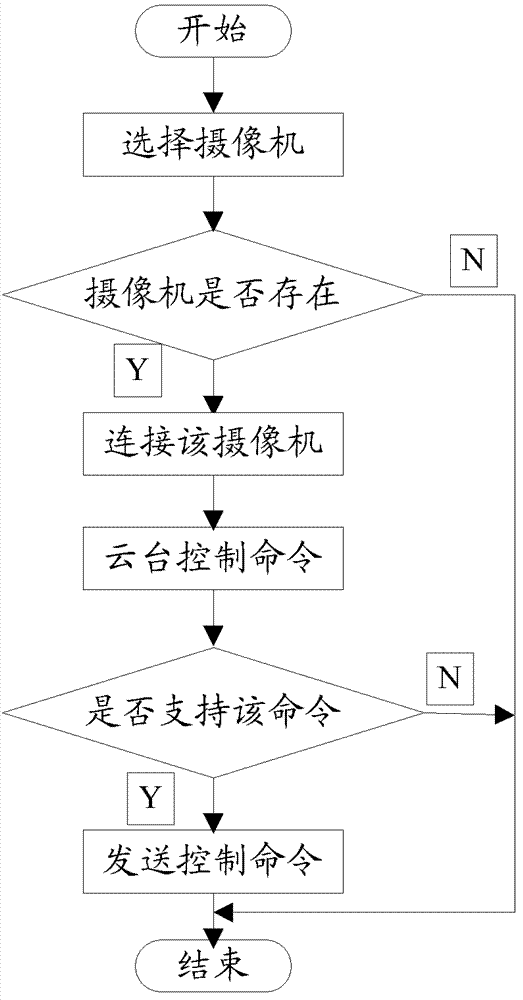

PTZ control method and system

Active | CN103248633A | Avoid the problem of not being able to recognize voices with different accents | Improve practicality | Television system details | Color television details | Control system | Speech sound

The invention discloses a PTZ control method and system. The method comprises the following steps: detecting a camera voice selection command, and inquiring a voice sample model library according to the command so as to build a connection between a mobile terminal and a corresponding camera; and detecting a PTZ control voice command, and inquiring the voice sample model library according to the command so as to generate a PTZ control command based on which the PTZ is controlled. According to the invention, when the PTZ is controlled, first, a camera is selected through the voice; and second, the PTZ bearing the camera is controlled through the voice command. Besides, the invention also discloses a PTZ control system which comprises a voice training module used for training the voice sample model library to reinforce voice recognition ability, and accordingly, the technical problem that a correct control command can not be generated as different voices and dialects can not be recognized during the voice recognition process is solved.

Owner:SHENZHEN ZTE NETVIEW TECH

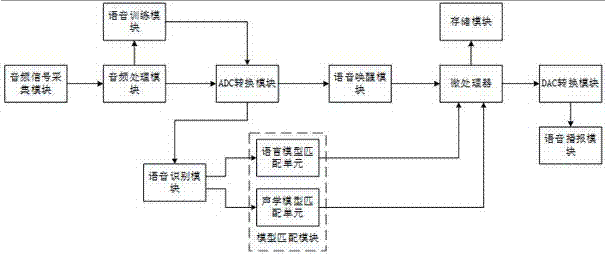

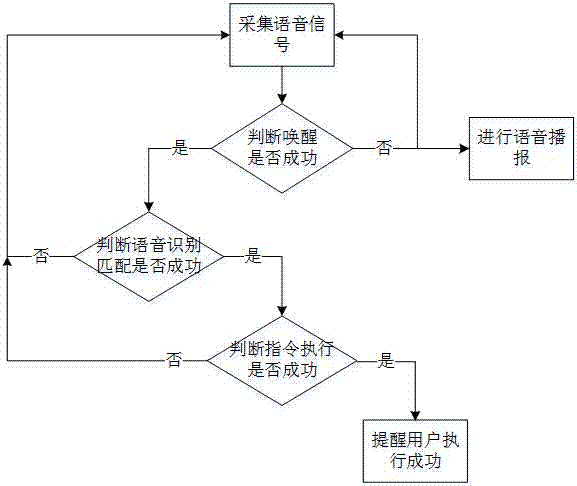

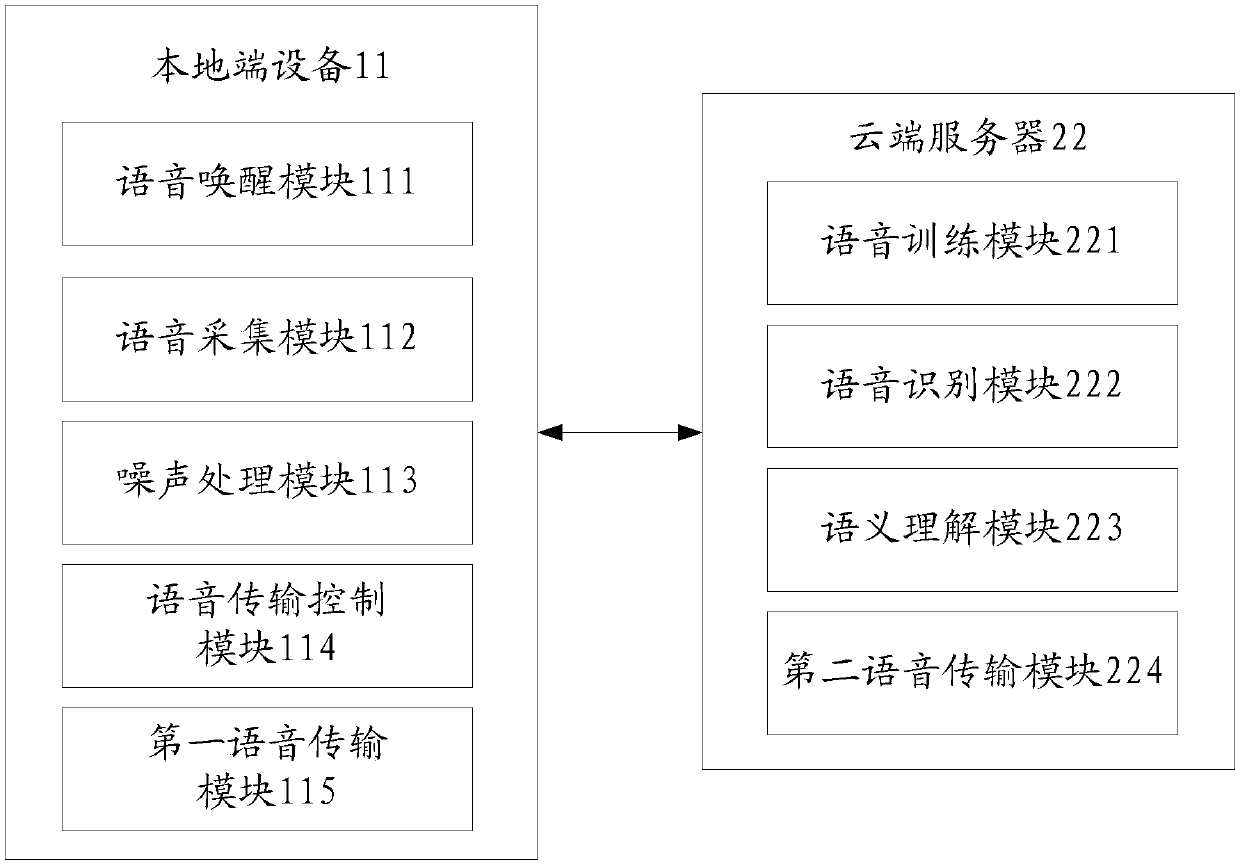

A voice interaction system and method of a smart home

Pending | CN107016993A | Problems preventing incorrect voice input | Avoid misuse | Data switching by path configuration | Speech recognition | Interaction systems | Phonological memory

The invention discloses a voice interaction system for a smart home, which includes an audio signal acquisition module, an audio processing module, a voice training module, a voice recognition module, a voice wake-up module, a model matching module, an ADC conversion module, a microprocessor, a storage module, a DAC conversion module and a voice playback module. The steps of the method are as follows: S1, collecting voice and audio signals; S2, judging whether the voice and audio signals wake up the smart home device; S3, judging whether the voice recognition matching is successful; S4, completing the task according to the voice command. Forming a voice memory through voice training for the user effectively prevents wrong voice input to smart home devices from non-user voices; setting a voice wake-up effectively prevents misoperation when the user does not intend to command the smart home devices.

Owner:成都铅笔科技有限公司

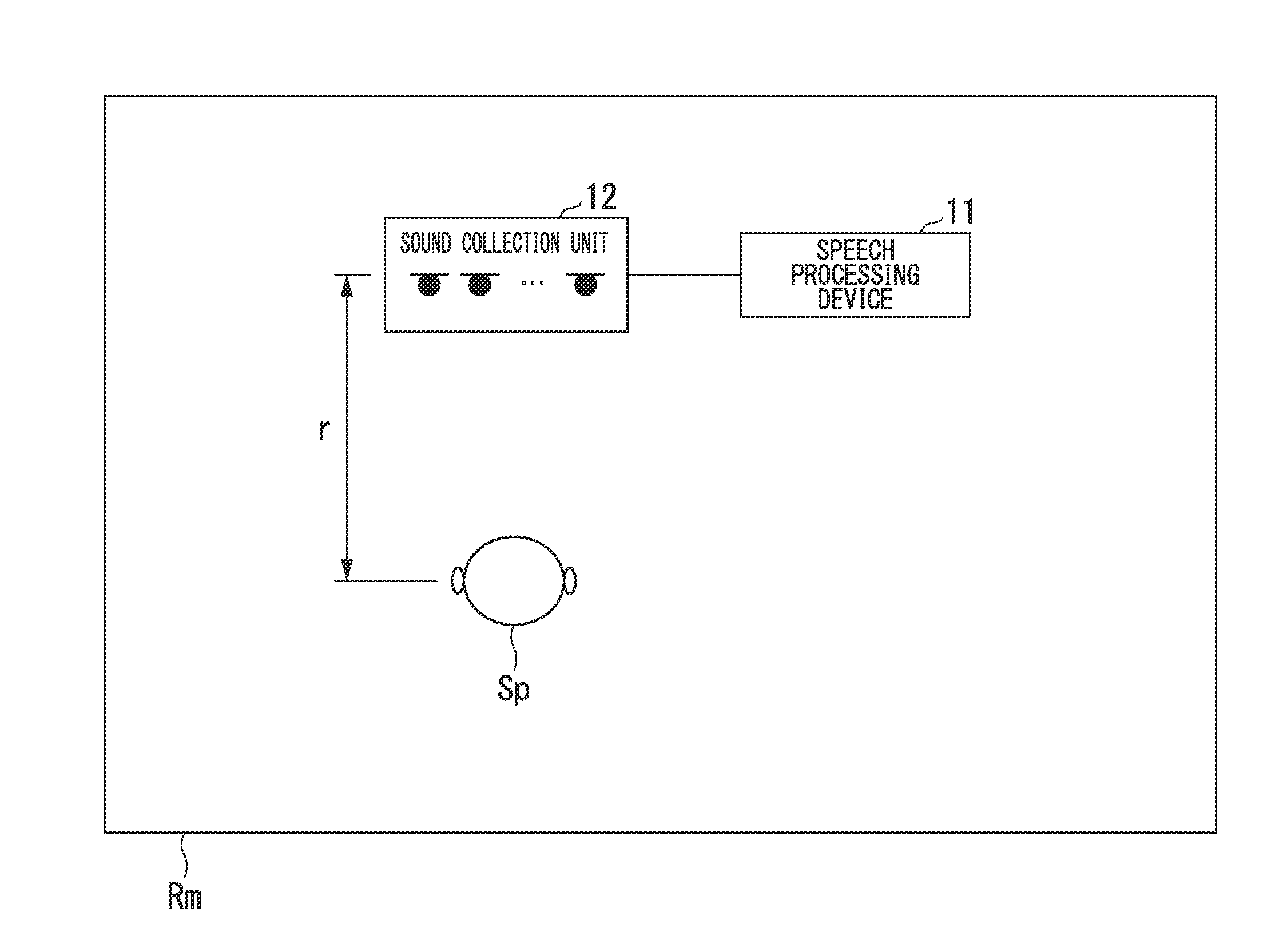

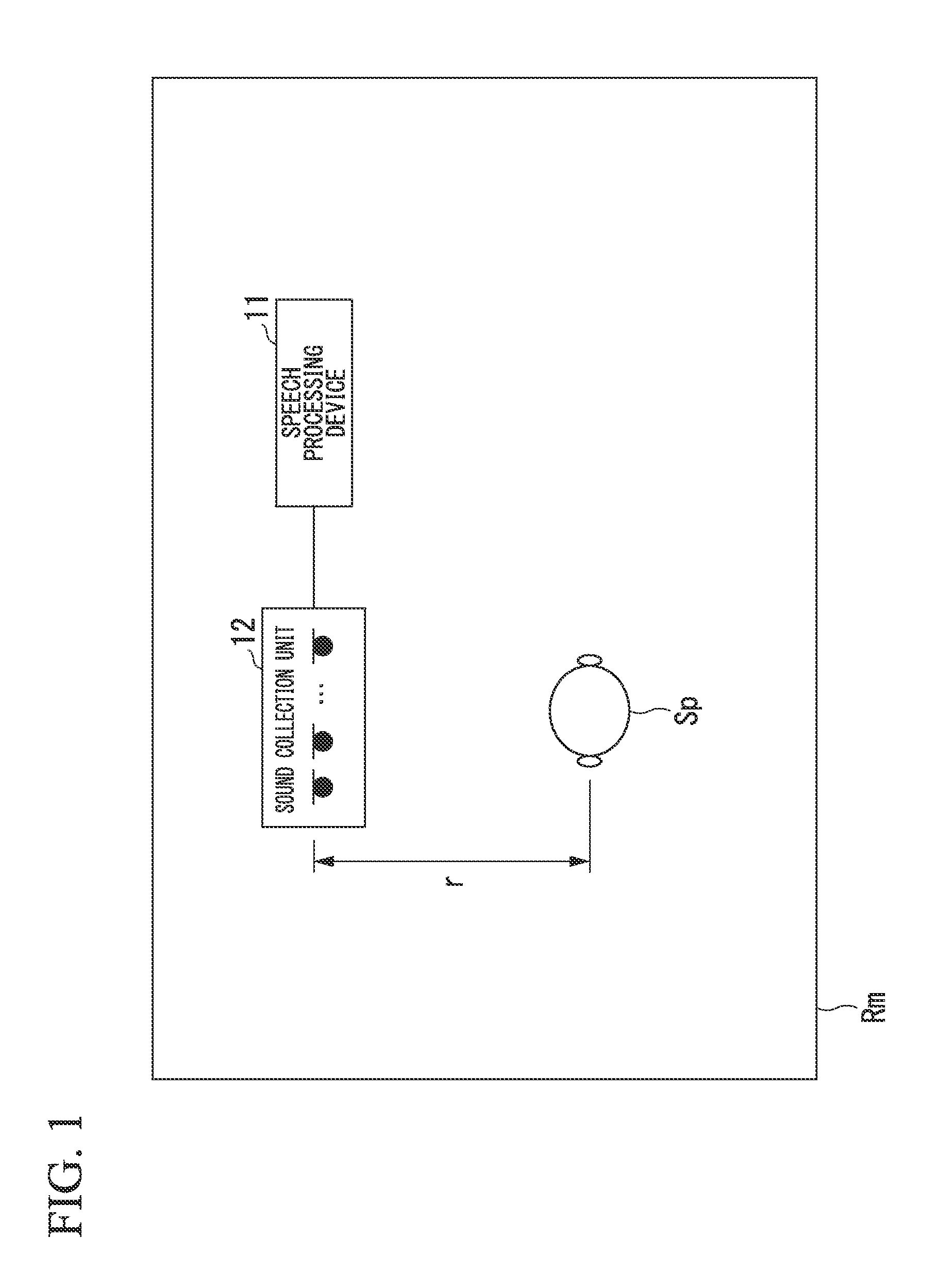

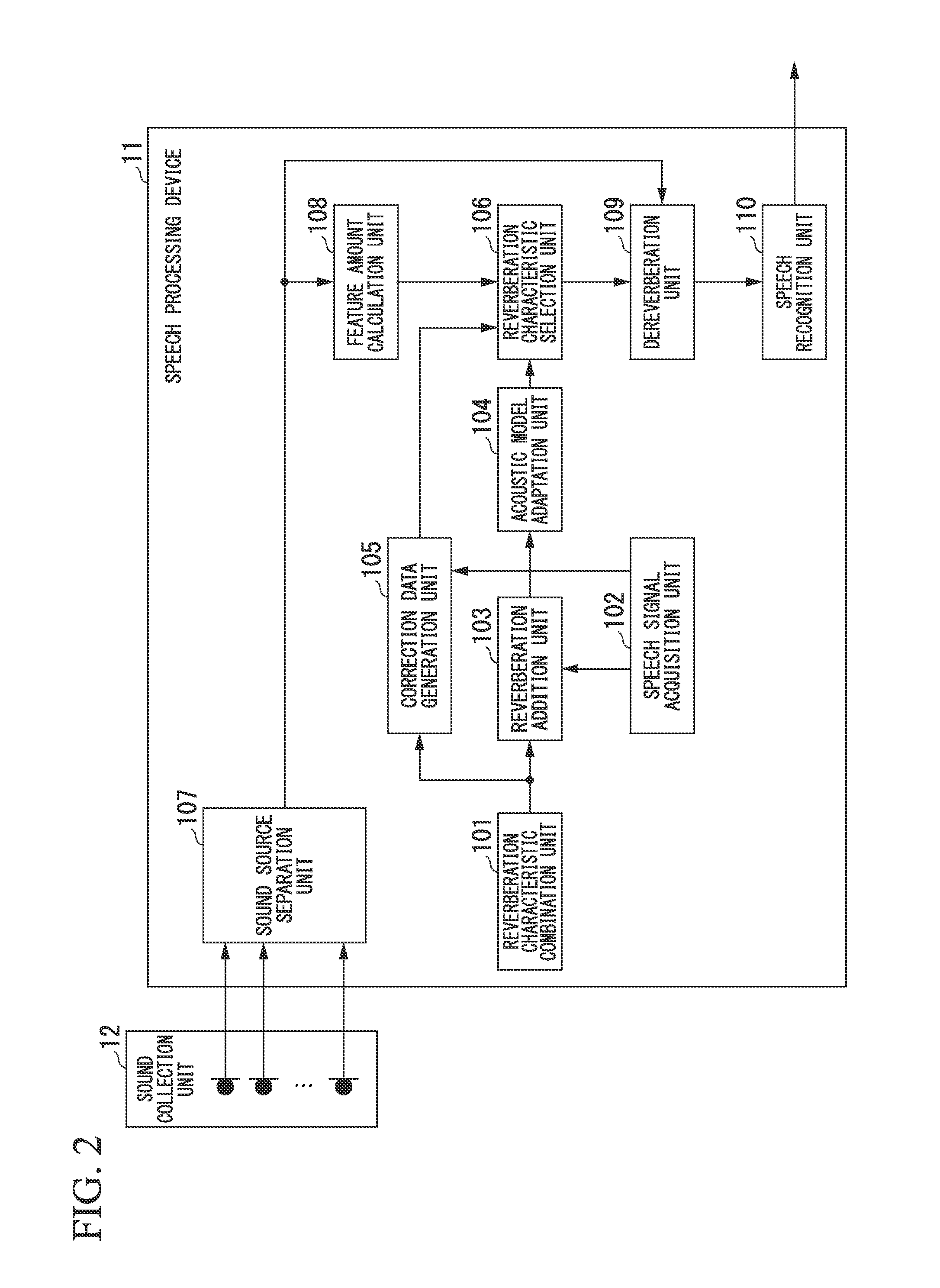

Speech processing device, speech processing method, and speech processing program

Active | US20150012268A1 | Improve speech recognition accuracy | Speech recognition | Acoustic model | Self adaptive

A speech processing device includes a reverberation characteristic selection unit configured to correlate correction data indicating a contribution of a reverberation component based on a corresponding reverberation characteristic with an adaptive acoustic model which is trained using reverbed speech to which a reverberation based on the corresponding reverberation characteristic is added for each of reverberation characteristics, to calculate likelihoods based on the adaptive acoustic models for a recorded speech, and to select correction data corresponding to the adaptive acoustic model having the calculated highest likelihood, and a dereverberation unit configured to remove the reverberation component from the speech based on the correction data.

Owner:HONDA MOTOR CO LTD

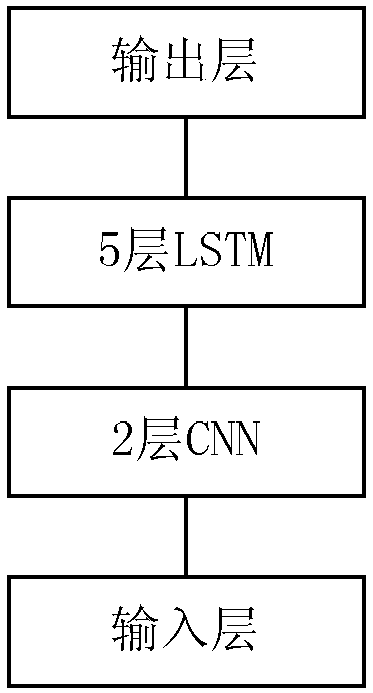

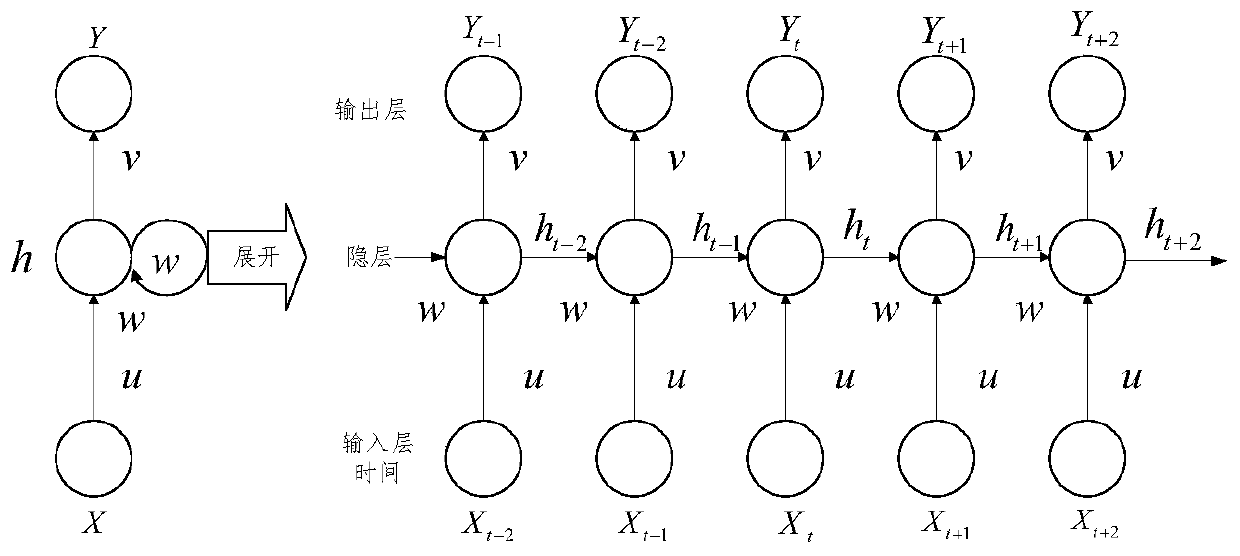

Voice noise lowering method based on RNN and voice recognizing method

Inactive | CN109712628A | Increase the number of layers | Effective utilization | Speech analysis | Hidden layer | Speech identification

The invention discloses a voice noise lowering method based on RNN and a voice recognizing method, and belongs to the field of voice recognizing. In a noise environment, voice signal feature parameters with better performance can be extracted. The voice noise lowering method includes the steps that a DRNN noise lowering model is established; based on RNN, two hidden layers are added, there are no connecting layers on the two hidden layers, an original hidden layer of RNN is located between the two added hidden layers, and the three hidden layers are located between an input layer and an output layer; a voice training signal X with noise is subjected to zero fill so that dimensions are kept uniform, after zero fill, the signal is divided into N groups, each group includes three data points, the grouped data is input into the DRNN noise lowering model to be trained, and parameters of the DRNN noise lowering model are determined; the DRNN noise lowering model with the determined parameters is used for conducting noise lowering on the voice signal or the feature parameters. The voice recognizing method adopts the DRNN noise lowering model for conducting noise lowering on the feature parameters before recognizing and training on the basis.

Owner:HARBIN UNIV OF SCI & TECH
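
The zero-fill and grouping step described in this abstract can be sketched as below. The abstract does not say whether the groups of three overlap, so this example assumes consecutive, non-overlapping groups with zeros appended at the end. The function name is hypothetical, and the DRNN itself is not shown.

```python
def zero_pad_and_group(signal, group_size=3):
    """Append zeros until the length is a multiple of group_size, then split into groups."""
    remainder = len(signal) % group_size
    padding = (group_size - remainder) % group_size
    padded = list(signal) + [0.0] * padding
    return [padded[i:i + group_size] for i in range(0, len(padded), group_size)]
```

Each returned group would form one fixed-size input to the noise-lowering model, which is why the padding is needed to keep dimensions uniform.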

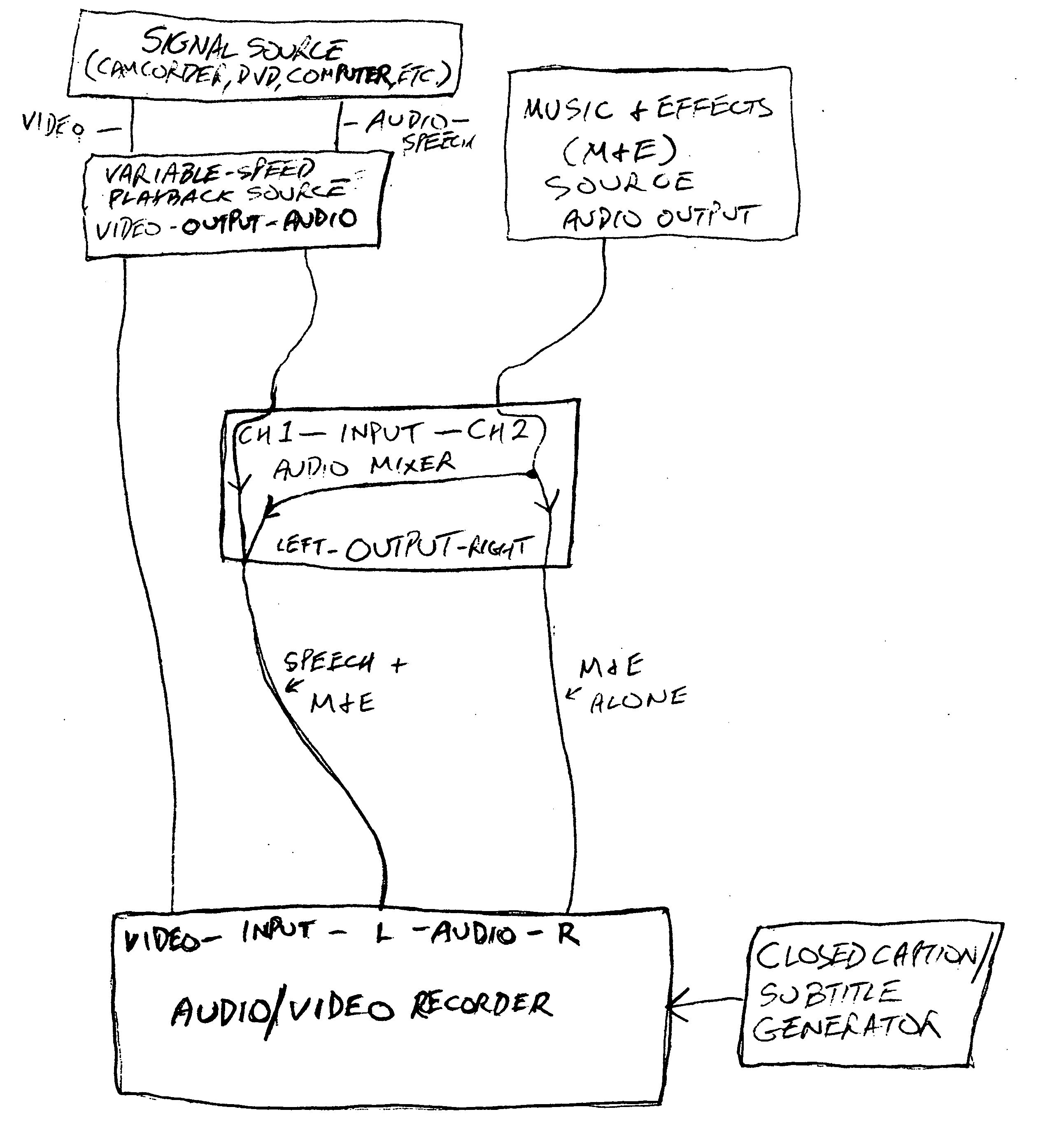

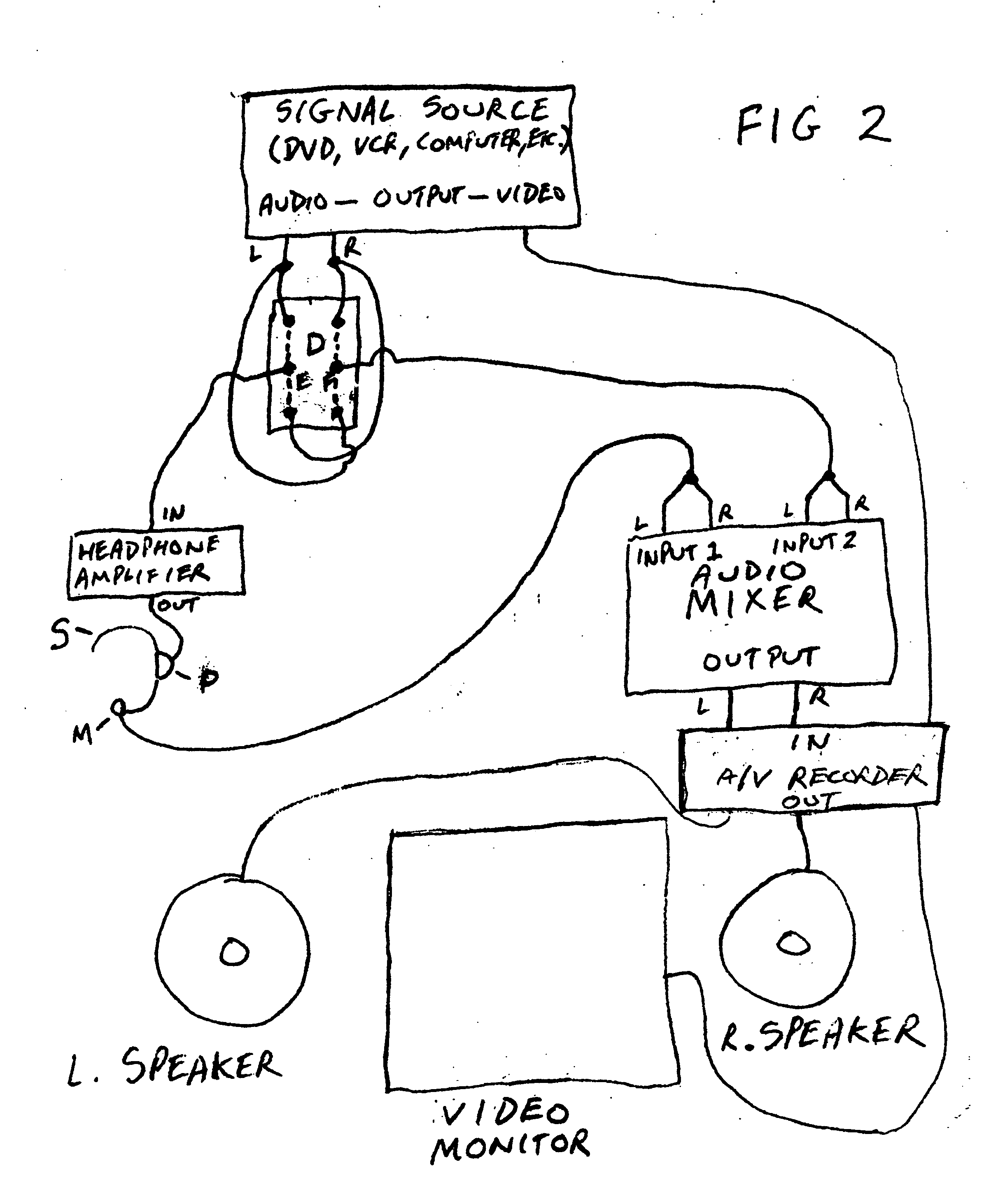

Encoding and decoding system for making and using interactive language training and entertainment materials

Inactive | US20060141437A1 | Reduce embarrassment | Add fun | Electrical appliances | Teaching apparatus | The Internet | Headphones

This invention is a system for interactive learning for language and other studies, providing an immersion experience with other students. Program material, which can be easily and inexpensively recorded by teachers, students and other users, is encoded to make it interactive when it is decoded upon playback, in such a way that the part that the student is to speak, sing or play is played back through the student's headphones, prompting the student to perform the part properly in response to on-screen action, while the rest of the program material's audio, such as other characters' dialogue, is played back through a loudspeaker. The student-performed part is then recorded, so that upon playback the student can hear how his or her efforts sound, compare with the original and/or mesh with the rest of the program. This permits the user to write dialogues, skits, words, expressions, lyrics, etc., to record them, and to use the recordings for effective interactive voice training. These encoded pieces can be exchanged with other users, even via the internet across the world, to promote linguistic and cultural exchange and understanding.

Owner:WAKAMOTO CARL ISAMU

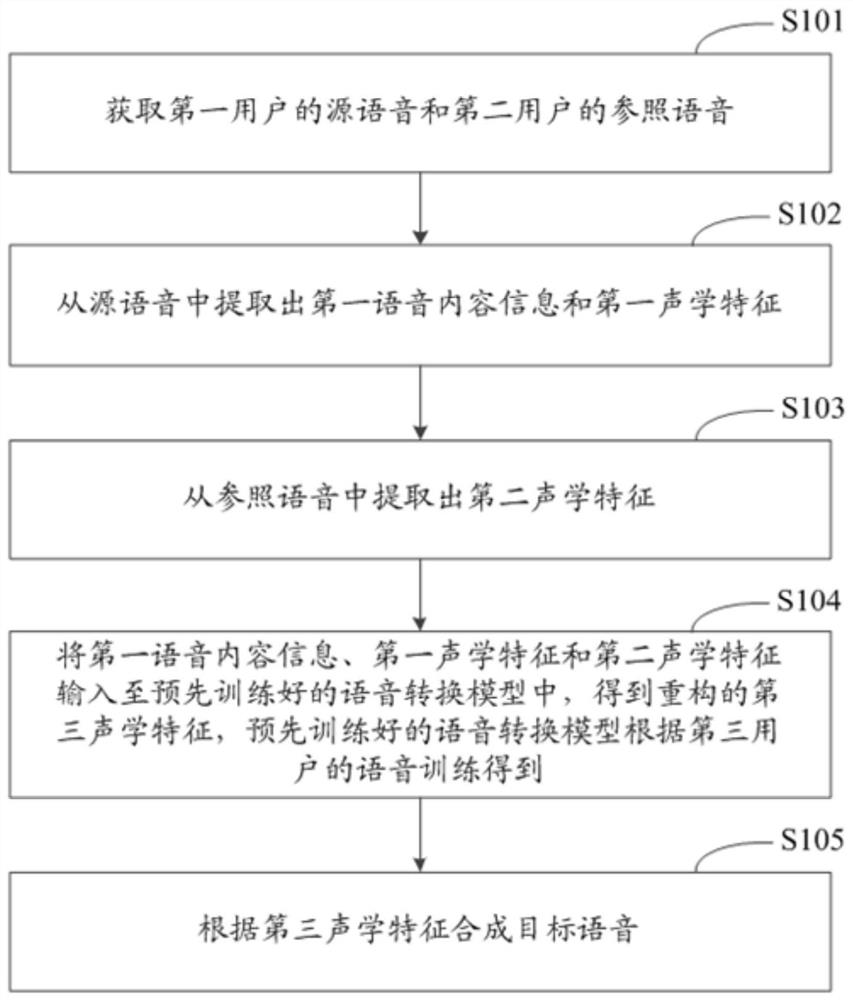

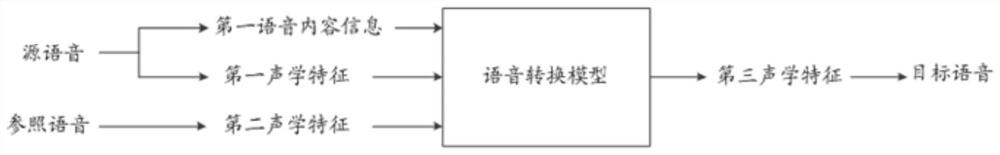

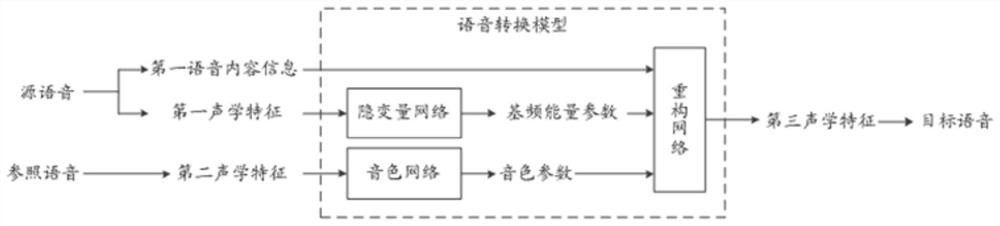

Voice conversion method and device and electronic equipment

Pending | CN112259072A | Easy to understand | Speech recognition | Neural learning methods | Acoustics | Voice transformation

The invention discloses a voice conversion method, a device and electronic equipment, and relates to the technical fields of voice conversion, voice interaction, natural language processing and deep learning. In the specific implementation scheme, the method comprises the following steps: acquiring source voice of a first user and reference voice of a second user; extracting first voice content information and a first acoustic feature from the source voice; extracting a second acoustic feature from the reference voice; inputting the first voice content information, the first acoustic feature and the second acoustic feature into a pre-trained voice conversion model to obtain a reconstructed third acoustic feature, the pre-trained voice conversion model having been obtained by training on the voice of a third user; and synthesizing the target voice according to the third acoustic feature. Because the first voice content information and the first acoustic feature of the source voice and the second acoustic feature of the reference voice are input into the pre-trained voice conversion model, and the target voice is synthesized from the reconstructed third acoustic feature, the waiting time of voice conversion can be shortened.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

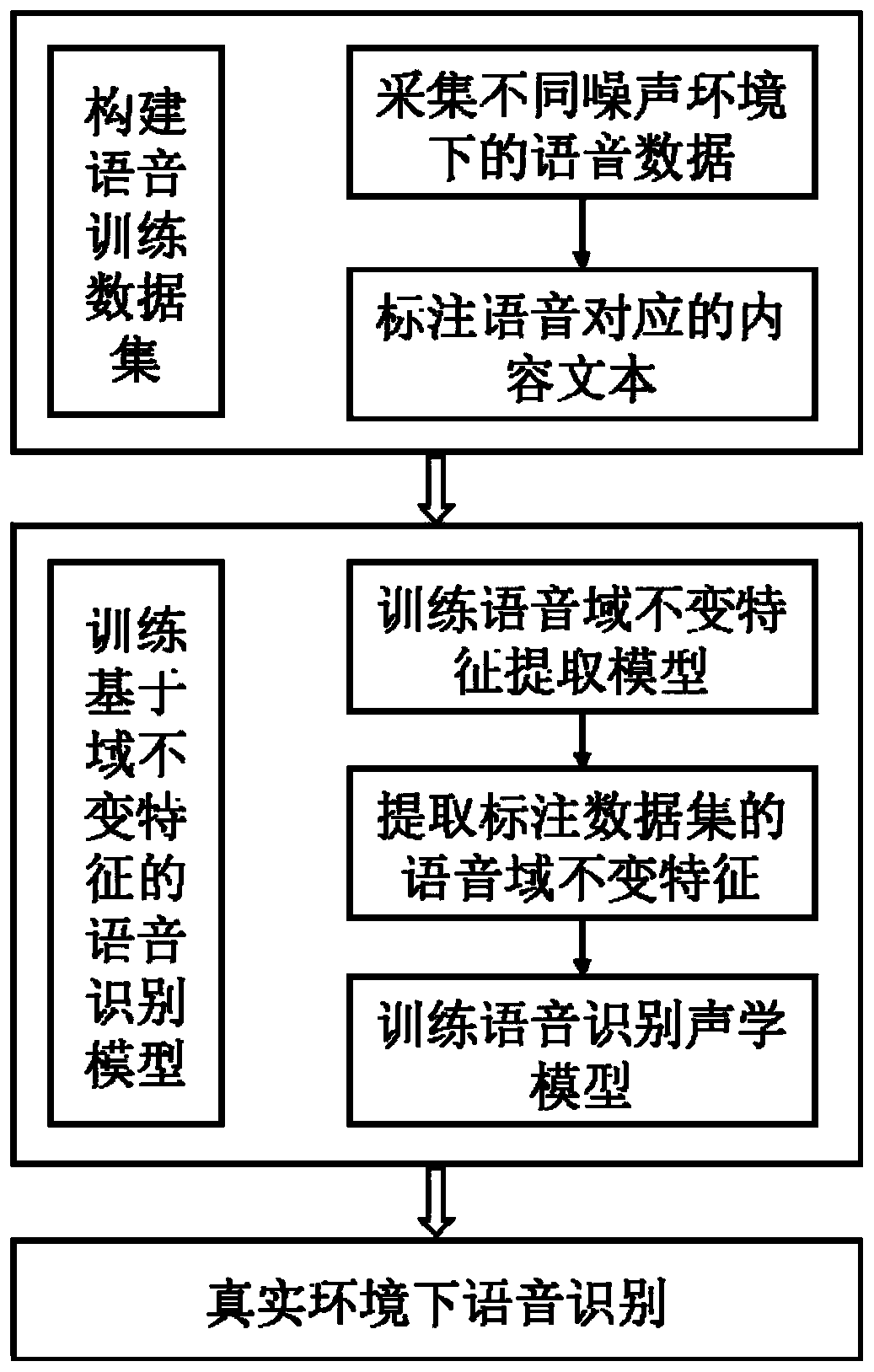

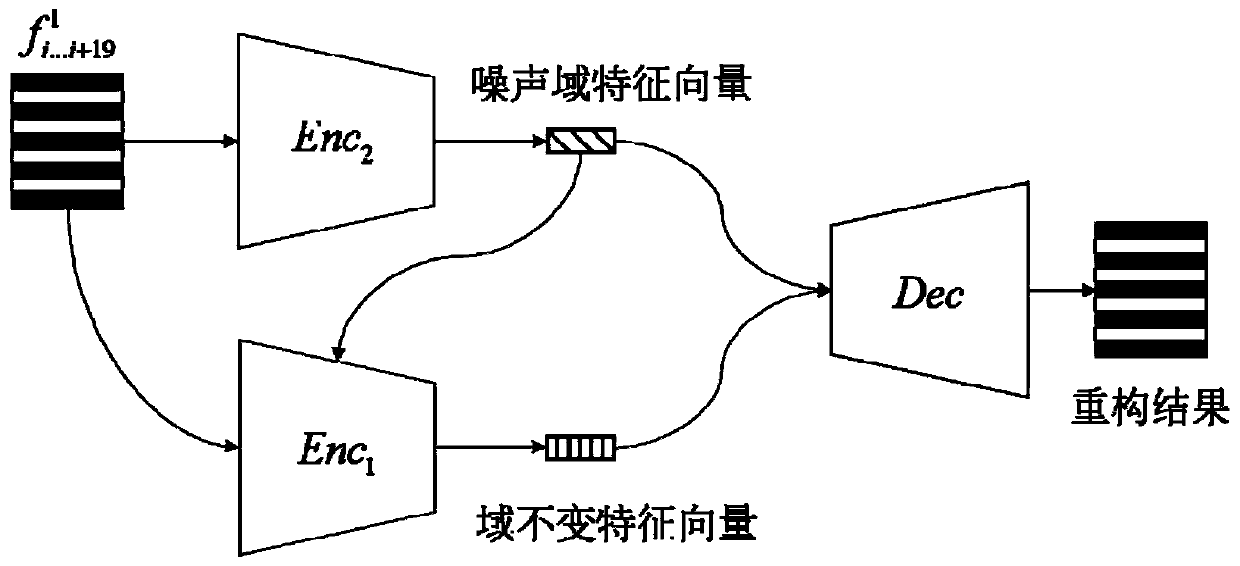

Speech recognition method based on domain-invariant feature

Active | CN110570845A | Good noise robustness | Improve recognition accuracy | Speech recognition | Text annotation | Speech identification

The invention discloses a speech recognition method based on a domain-invariant feature, which applies a speech domain-invariant feature extraction model to an end-to-end speech recognition model. The feature extraction model targets the robustness problem: by adding more types of speech data to train it, better parameters and a better domain-invariant feature extraction model can be obtained. The method uses unlabeled pure speech data to train the feature extraction model and a small amount of speech with text annotation to train an end-to-end acoustic model, providing important technical support for improving the robustness of the end-to-end acoustic model. Compared with the prior art, the method has higher recognition accuracy in different noise environments, a smaller speech annotation workload, and faster model training and testing.

Owner:WUHAN UNIV OF TECH

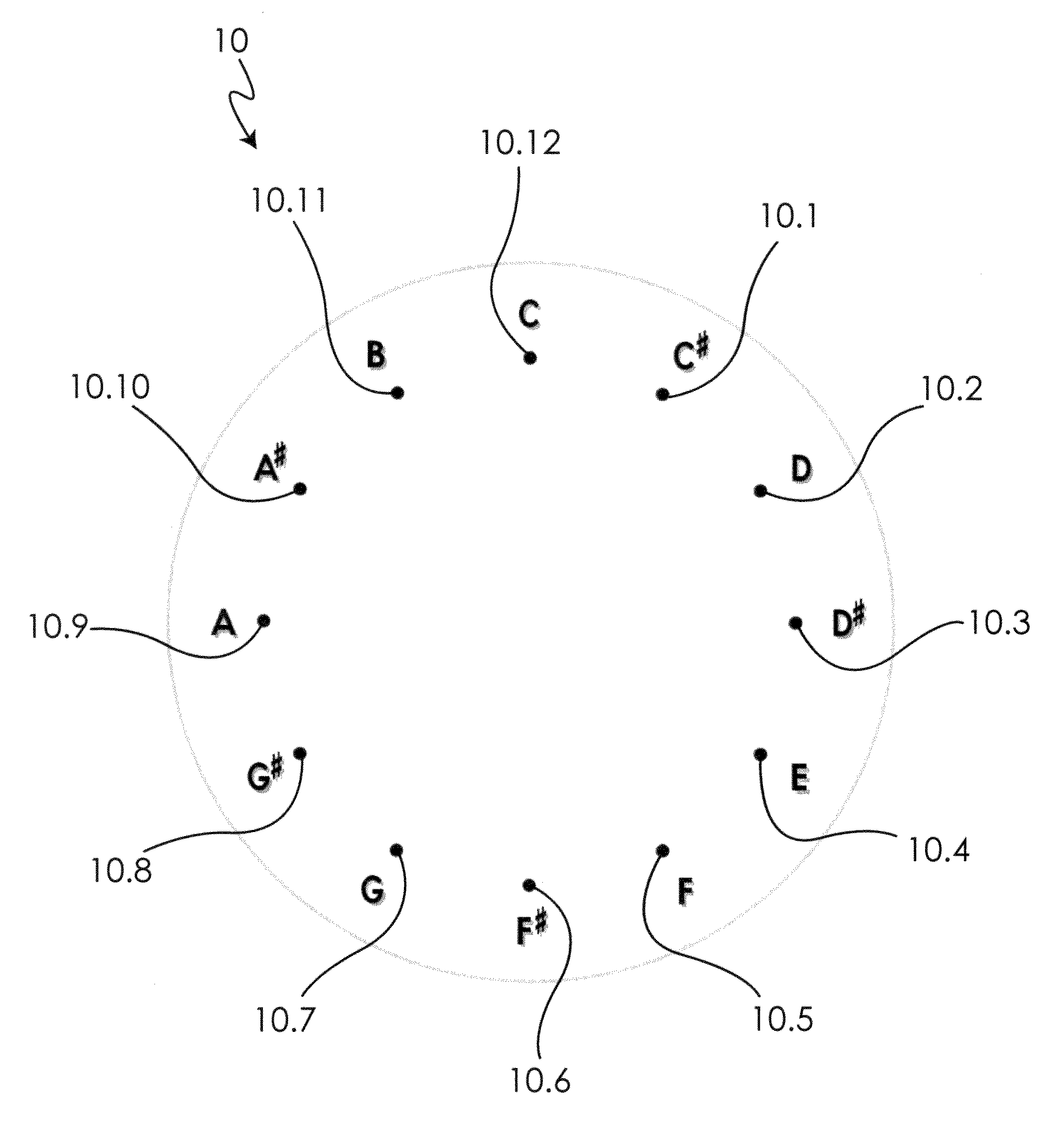

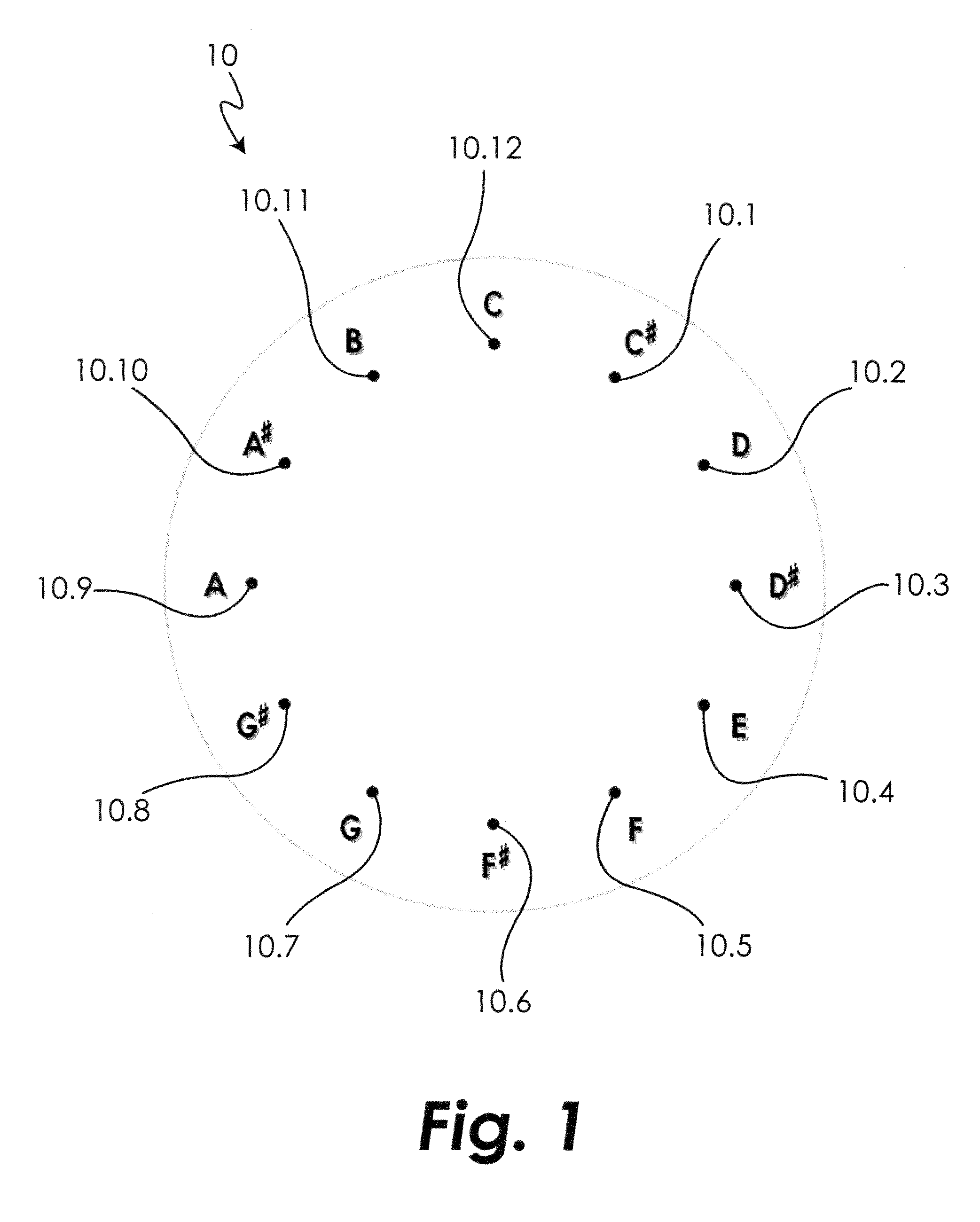

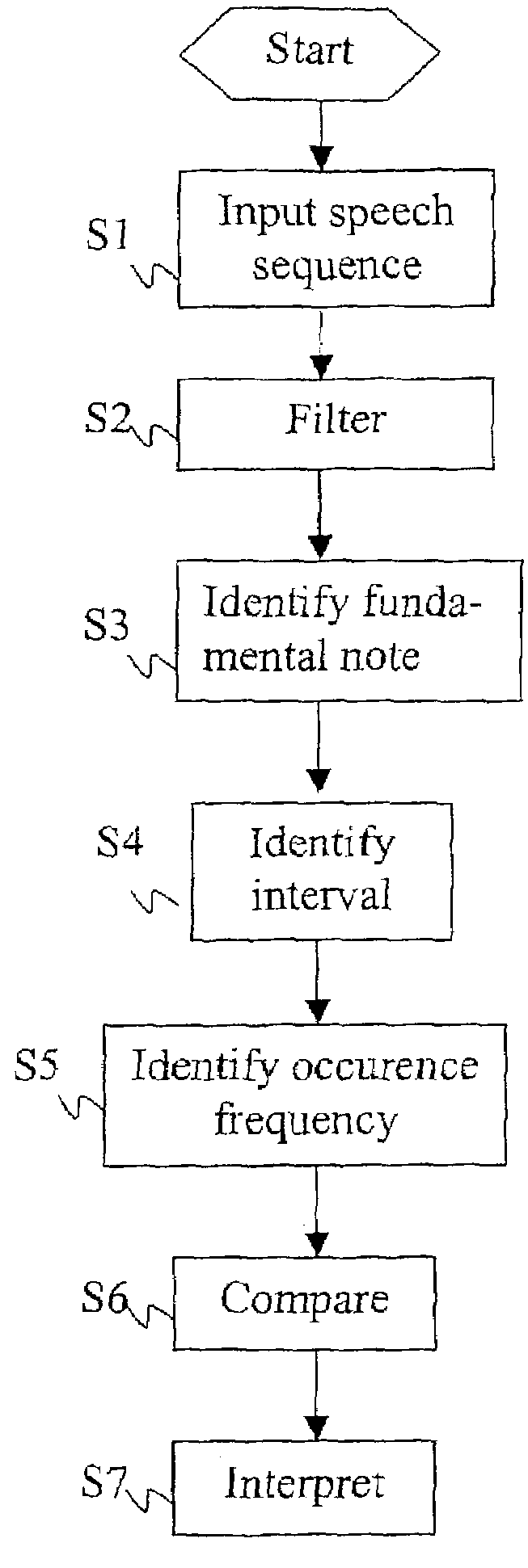

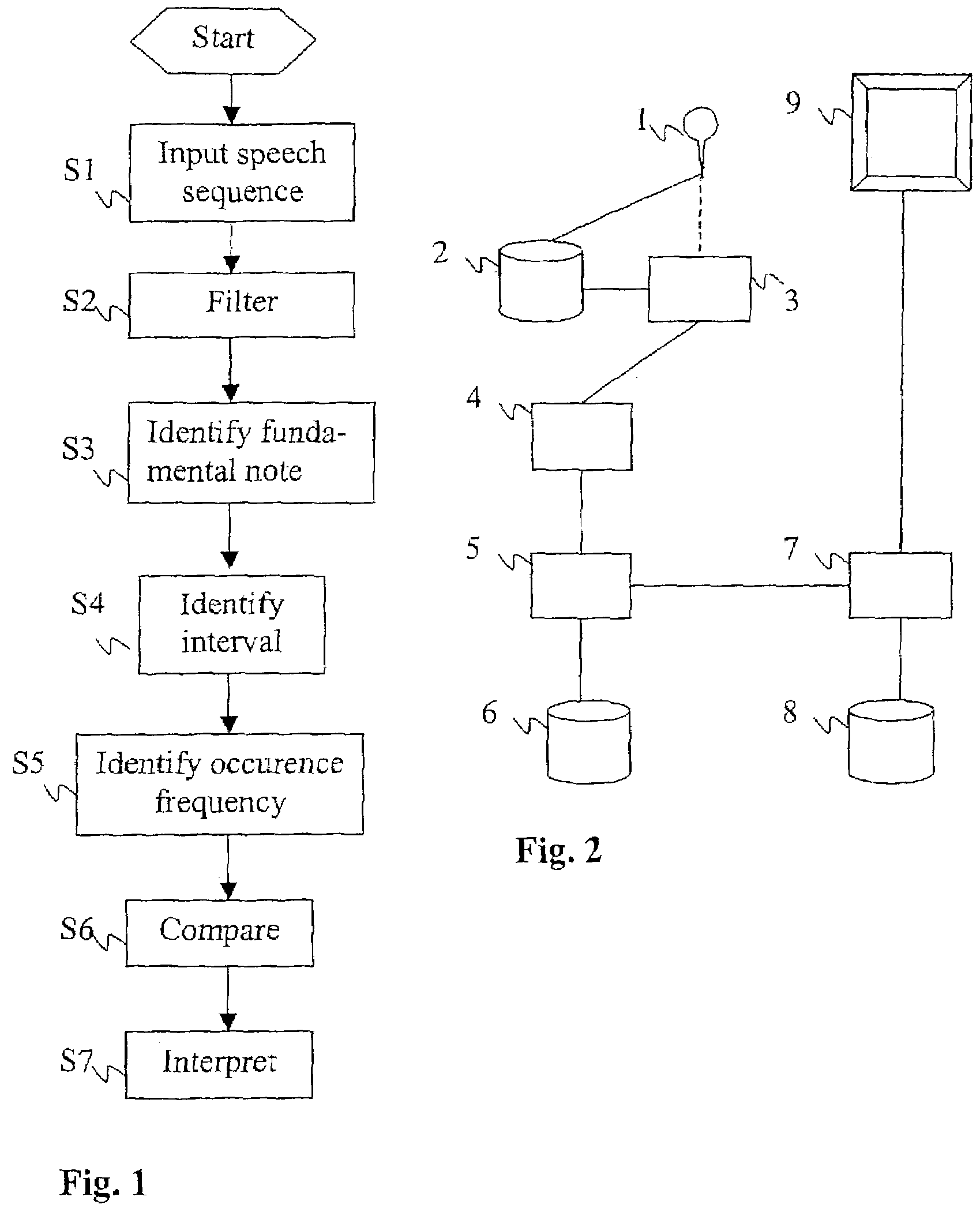

Method and device for speech analysis

A device and a method for speech analysis are provided, comprising measuring fundamental notes of a speech sequence to be analysed and identifying frequency intervals between at least some of said fundamental notes. An assessment is then made as to the frequency at which at least some of these thus identified intervals occur in the speech sequence to be analysed. Among other applications are speech training and diagnosis of pathological conditions.

Owner:TRANSPACIFIC INTELLIGENCE

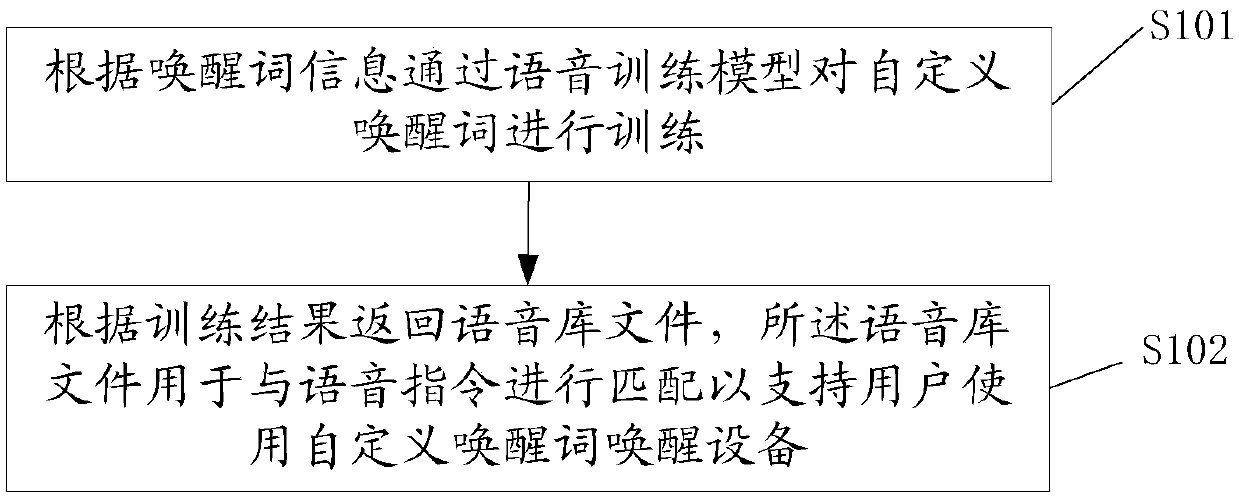
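The interval analysis described in the speech analysis abstract above can be illustrated with a short sketch. It assumes the fundamental frequencies have already been measured and are given in Hz, that intervals are taken between consecutive notes, and that they are expressed in whole semitones; none of these choices is stated in the abstract, and the function names are hypothetical.

```python
import math
from collections import Counter


def semitone_intervals(f0_hz):
    """Intervals between consecutive fundamental frequencies, in whole semitones.

    Assumption: 12 * log2(f2 / f1), rounded to the nearest integer.
    """
    return [round(12 * math.log2(b / a)) for a, b in zip(f0_hz, f0_hz[1:])]


def interval_frequencies(f0_hz):
    """Relative frequency with which each interval occurs in the sequence."""
    intervals = semitone_intervals(f0_hz)
    if not intervals:
        return {}
    counts = Counter(intervals)
    return {interval: n / len(intervals) for interval, n in counts.items()}


# Hypothetical measured fundamentals (Hz) for a short speech sequence.
notes = [100.0, 200.0, 400.0, 200.0]
print(semitone_intervals(notes))
print(interval_frequencies(notes))
```

The resulting distribution is the kind of summary that could feed the assessment step the abstract mentions; how that assessment is made for training or diagnosis is not specified in the source.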

Voice wake-up word defining method and system

Active | CN109584860A | Implement customization | Real-time recognition | Digital data authentication | Speech recognition | Match algorithms | Speech sound

The invention provides a voice wake-up word defining method and system, and relates to the technical field of voice interaction. In the method, a customized wake-up word is trained by a voice training model according to wake-up word information, and a sound bank file is returned according to the training result. The sound bank file is used to match voice instructions so that a user can wake up a device with the customized wake-up word, and it comprises either an audio file corresponding to the wake-up word information, or a voice matching algorithm and voice matching parameters corresponding to the wake-up word information. Because the customized wake-up word is trained by the voice training model to form the sound bank file, the wake-up word of a voice system can be customized by the user.

Owner:JOYOUNG CO LTD


© 2025 PatSnap. All rights reserved.