324 results for "Improve speech recognition performance" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

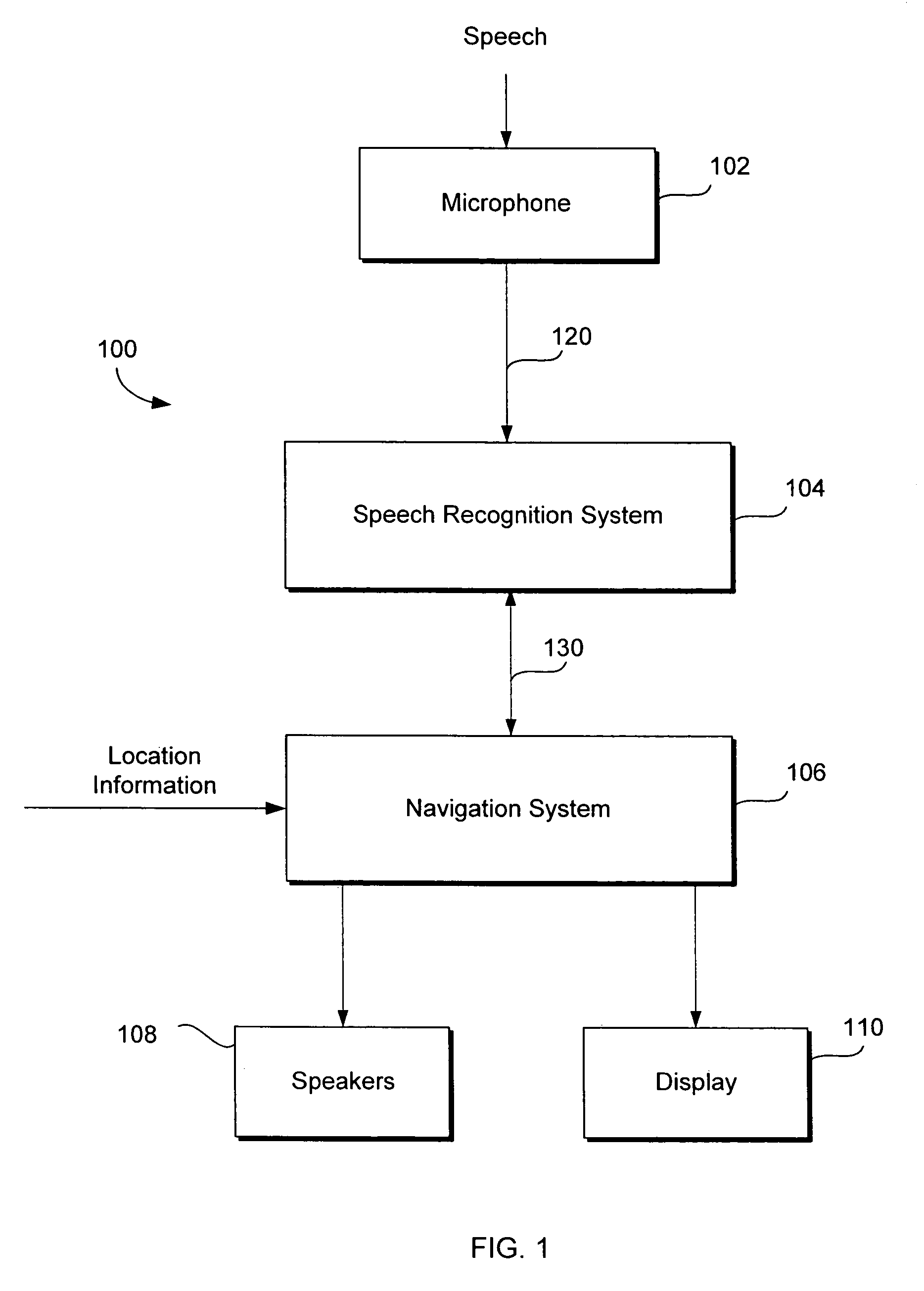

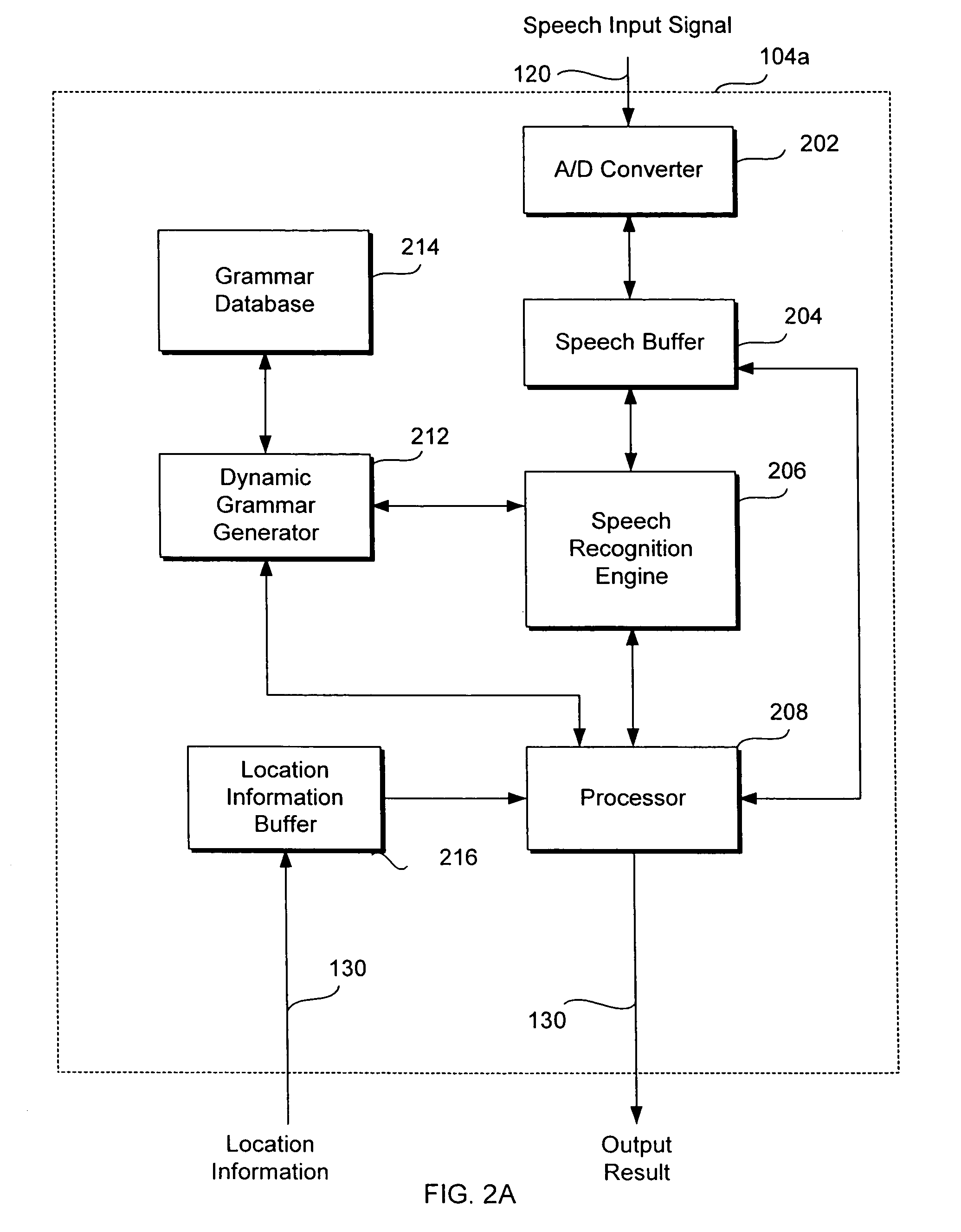

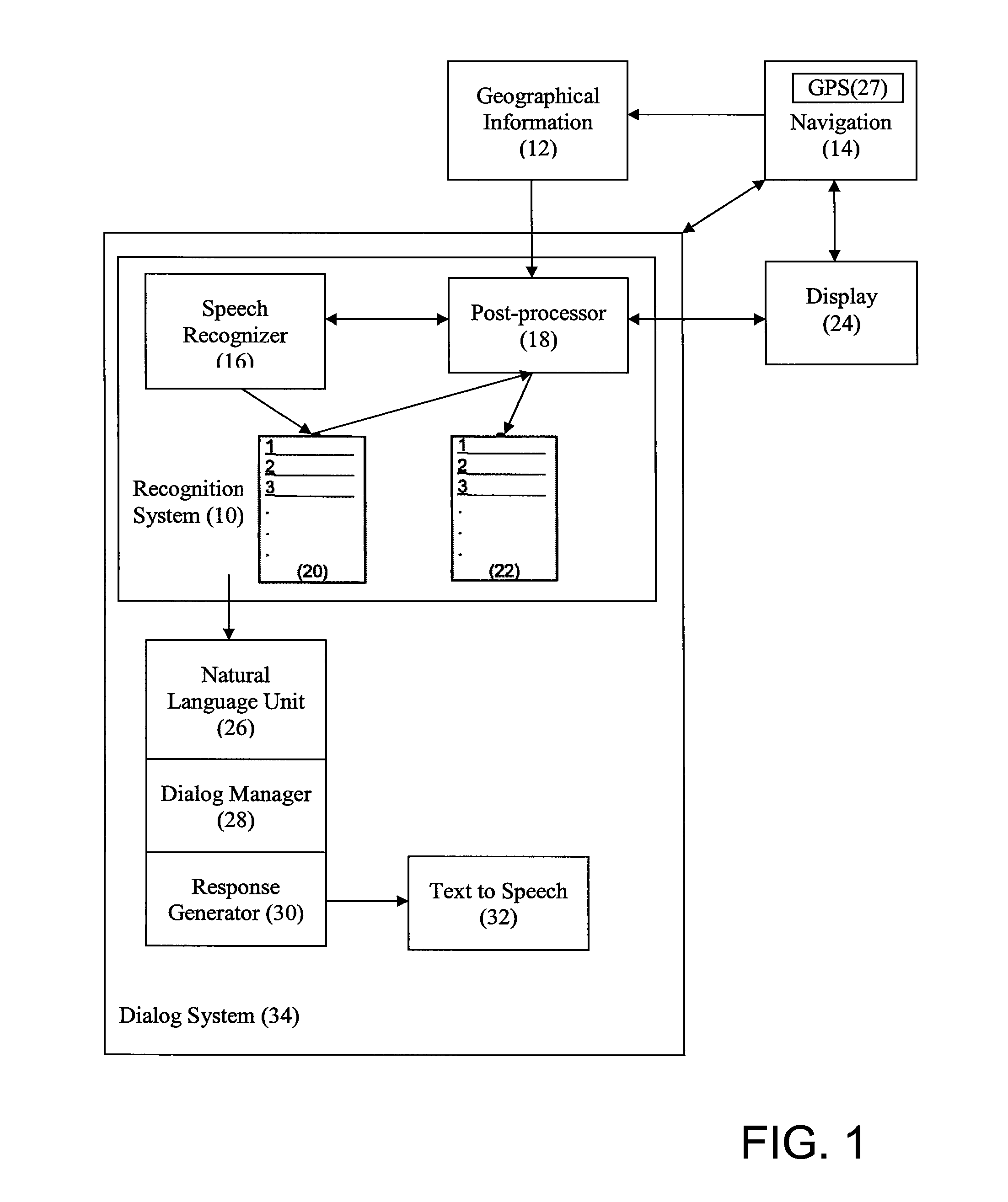

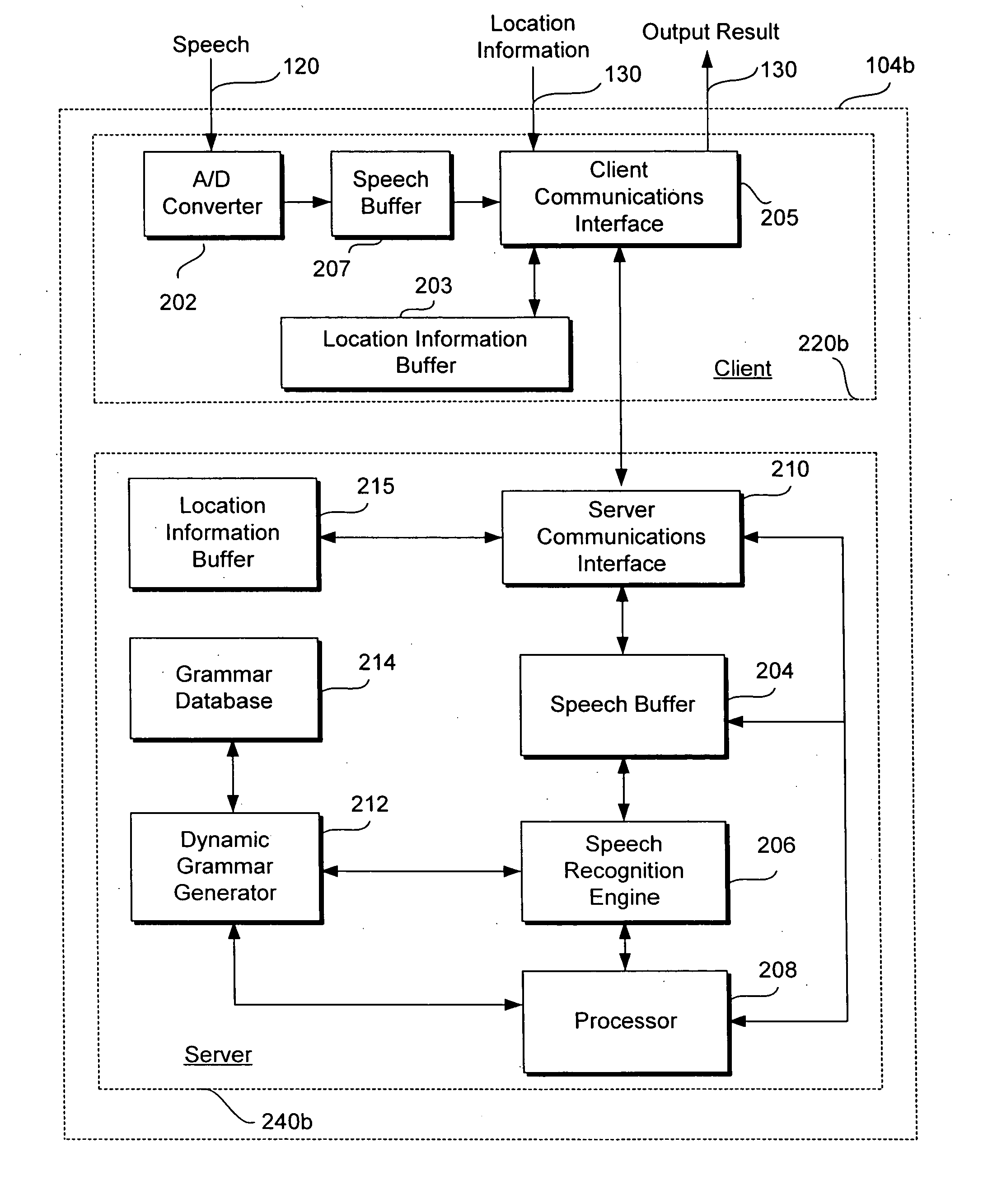

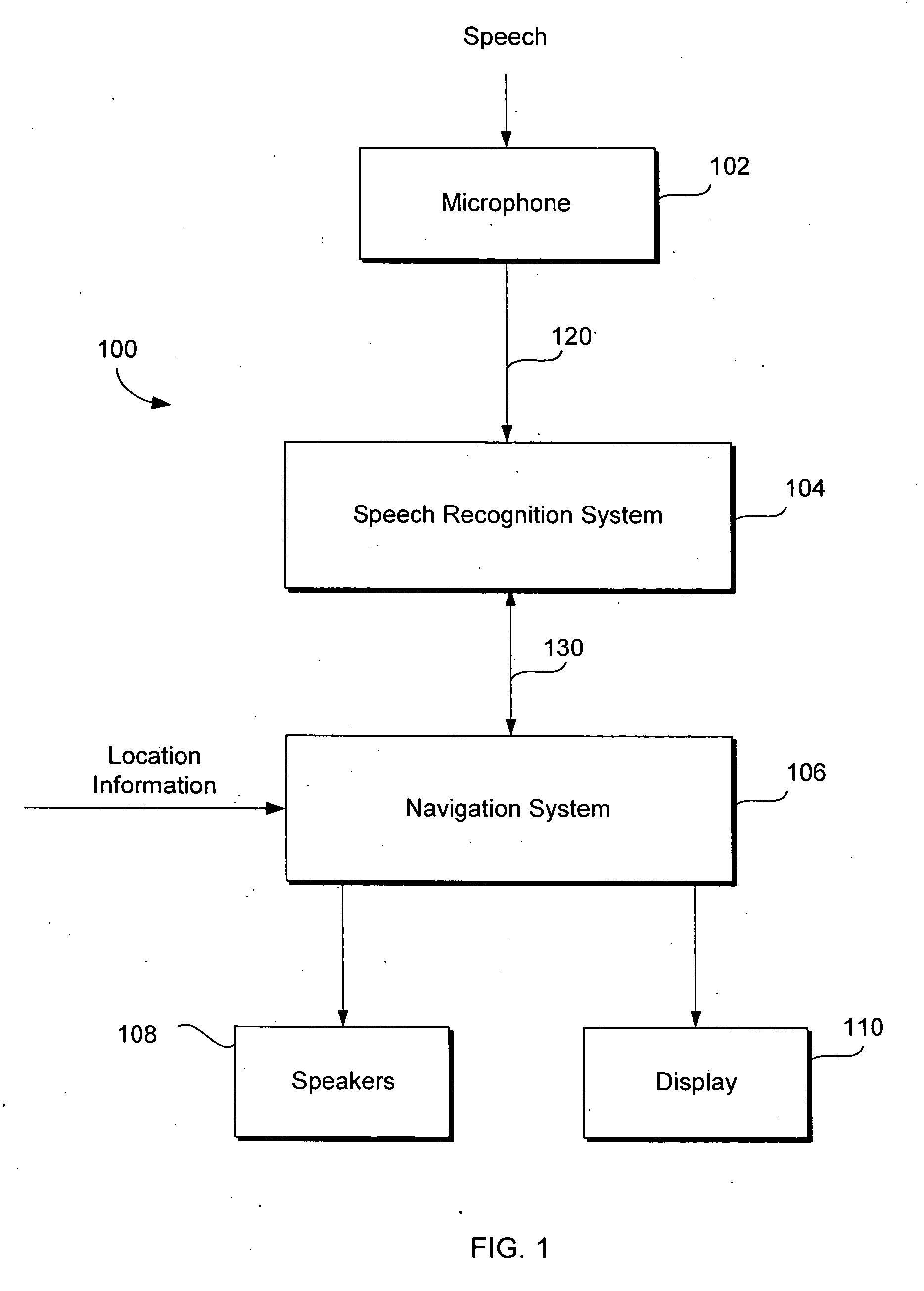

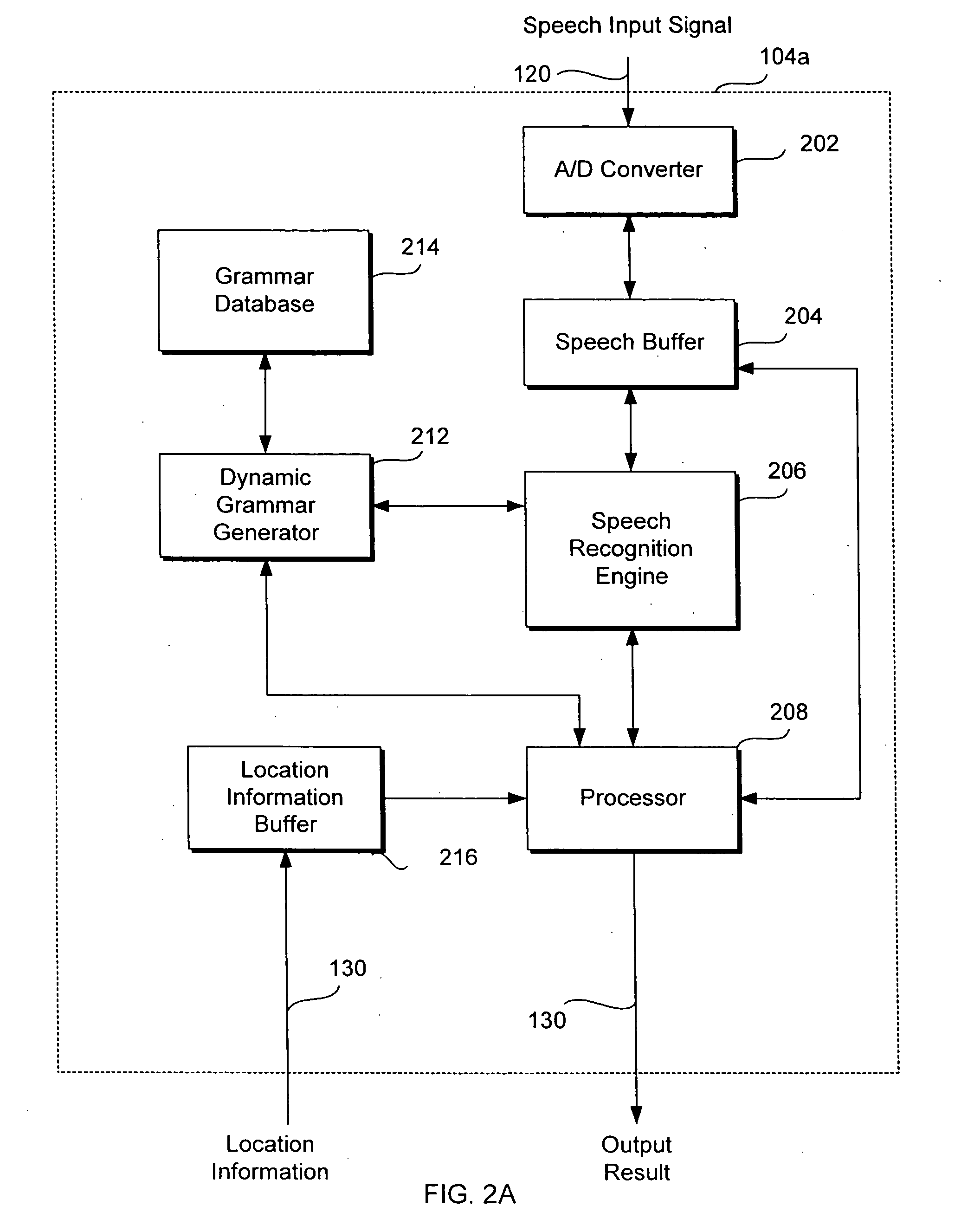

Method and system for speech recognition using grammar weighted based upon location information

Inactive | US7328155B2 | Overcome inaccurate recognition; The recognition result is accurate; Speech recognition; Speech identification; Navigation system

A speech recognition method and system for use in a vehicle navigation system use grammars weighted according to geographical information about the locations corresponding to the tokens in the grammars and/or the location of the vehicle in which the navigation system is used, in order to enhance speech recognition performance. The geographical information includes the distances between the vehicle location and the locations corresponding to the tokens, as well as the size, population, and popularity of those locations.

Owner:TOYOTA INFOTECHNOLOGY CENT CO LTD
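
The location-based weighting can be sketched as a prior over grammar tokens that is combined with acoustic scores. This is a minimal illustration under stated assumptions, not the patented method: the exponential distance decay, the log-population term (a stand-in for both size and population), the `popularity` scale, the `lm_scale` factor, and the town names and coordinates are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class GrammarToken:
    name: str
    lat: float          # token location, degrees
    lon: float
    population: int     # stand-in for the size/population factors
    popularity: float   # hypothetical 0..1 score


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def grammar_weights(tokens, vehicle_lat, vehicle_lon, scale_km=50.0):
    """Return a normalized prior weight per token (weights sum to 1)."""
    raw = {}
    for t in tokens:
        distance = haversine_km(vehicle_lat, vehicle_lon, t.lat, t.lon)
        proximity = math.exp(-distance / scale_km)   # nearer places weigh more
        size = math.log10(1 + t.population)          # larger places weigh more
        raw[t.name] = proximity * size * (1.0 + t.popularity)
    total = sum(raw.values())
    return {name: w / total for name, w in raw.items()}


def rescore(acoustic_logprob, weights, lm_scale=1.0):
    """Pick the token maximizing acoustic log-probability plus scaled log prior."""
    return max(acoustic_logprob,
               key=lambda n: acoustic_logprob[n] + lm_scale * math.log(weights[n]))


tokens = [GrammarToken("Oakdale", 39.80, -89.64, 100_000, 0.5),
          GrammarToken("Riverton", 41.00, -88.00, 100_000, 0.5)]
weights = grammar_weights(tokens, vehicle_lat=39.78, vehicle_lon=-89.65)
print(rescore({"Oakdale": -5.0, "Riverton": -4.8}, weights))  # → Oakdale
```

Even though "Riverton" scores slightly better acoustically here, the nearby town wins once the location prior is applied, which is the effect the abstract describes.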

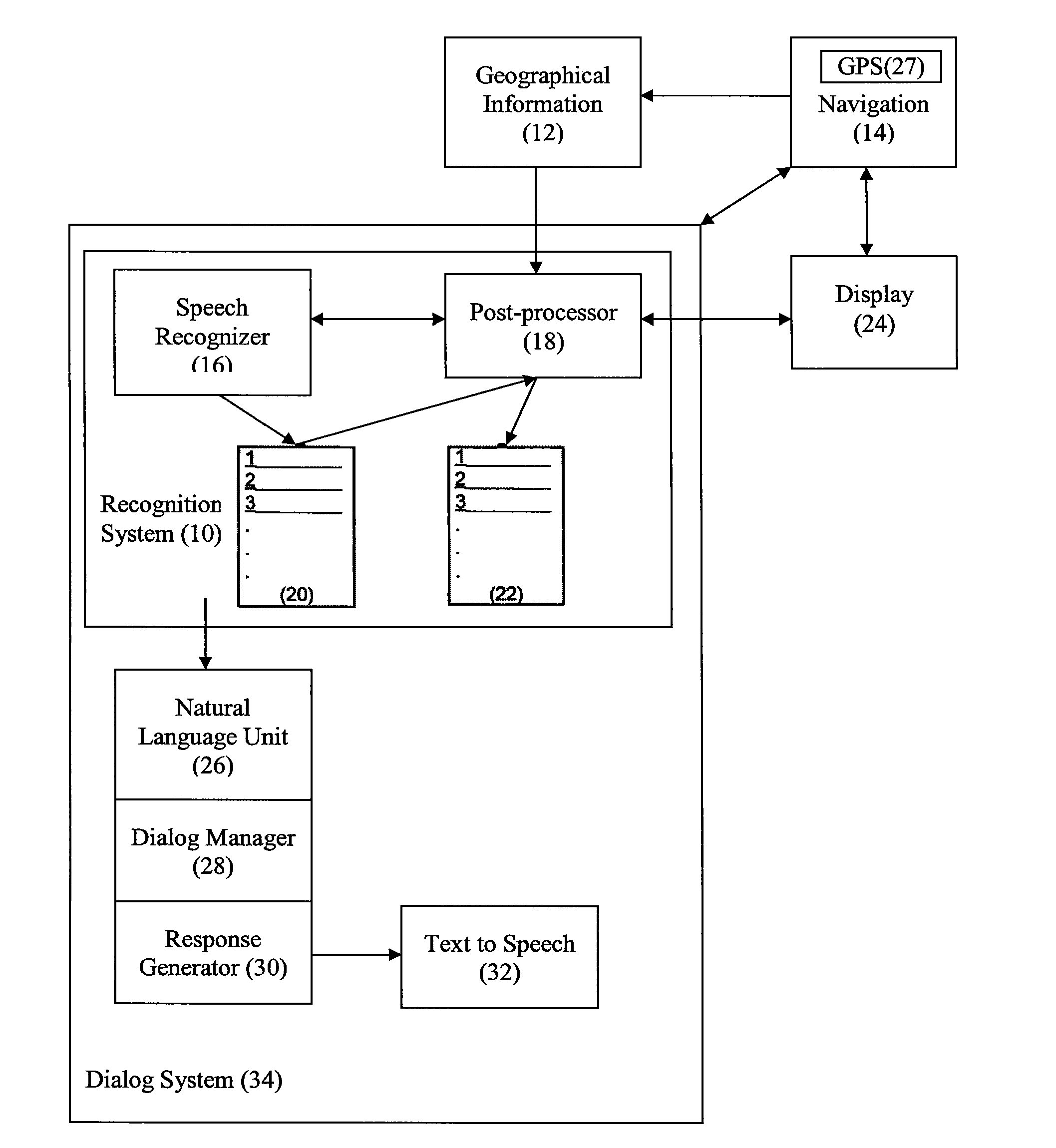

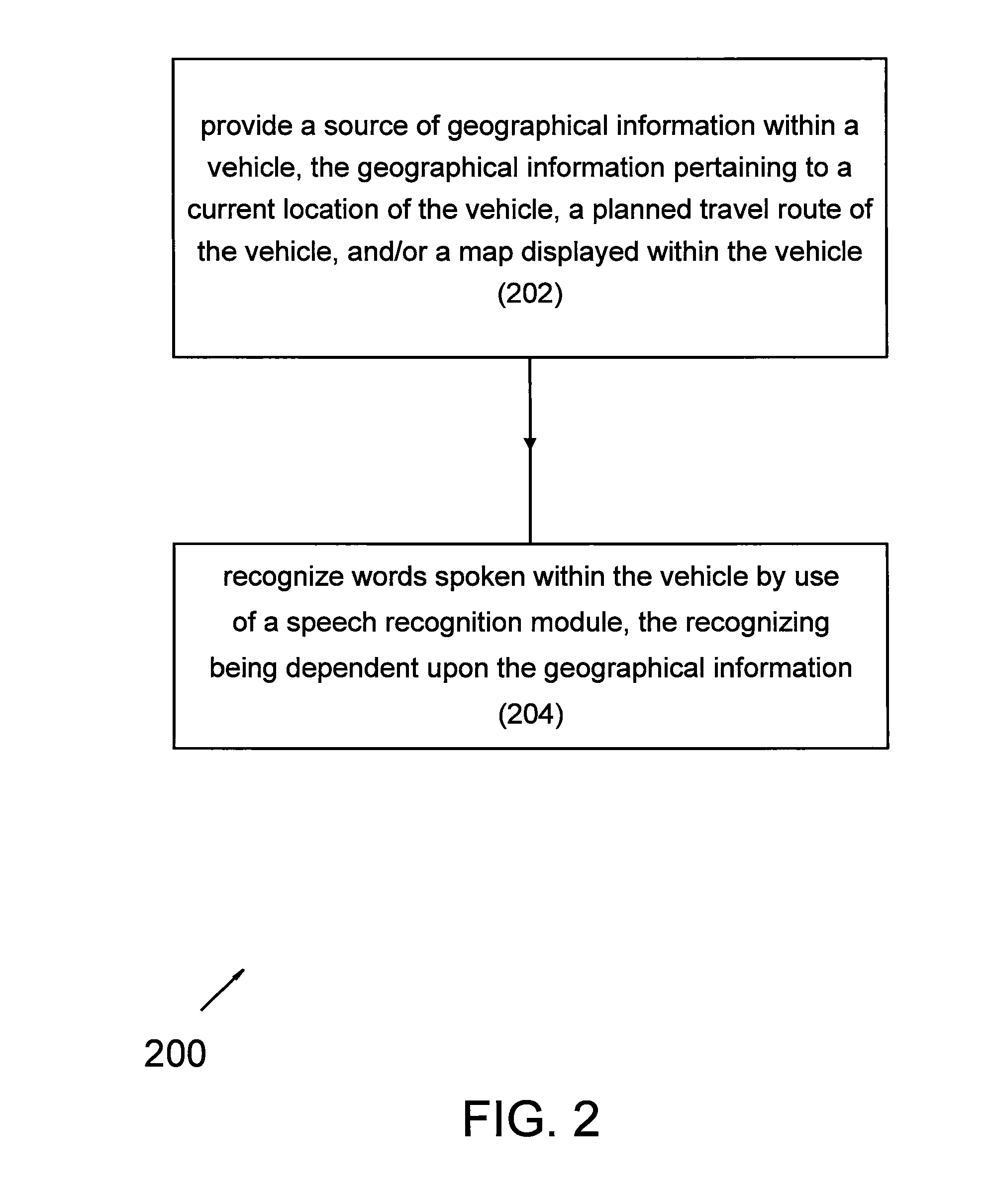

Method and system for improving speech recognition accuracy by use of geographic information

Active | US20110022292A1 | Improve speech recognition performance; Reduce misidentification; Instruments for road network navigation; Road vehicles traffic control; Program planning; Speech identification

A method for speech recognition includes providing a source of geographical information within a vehicle. The geographical information pertains to a current location of the vehicle, a planned travel route of the vehicle, a map displayed within the vehicle, and / or a gesture marked by a user on a map. Words spoken within the vehicle are recognized by use of a speech recognition module. The recognizing is dependent upon the geographical information.

Owner:ROBERT BOSCH GMBH

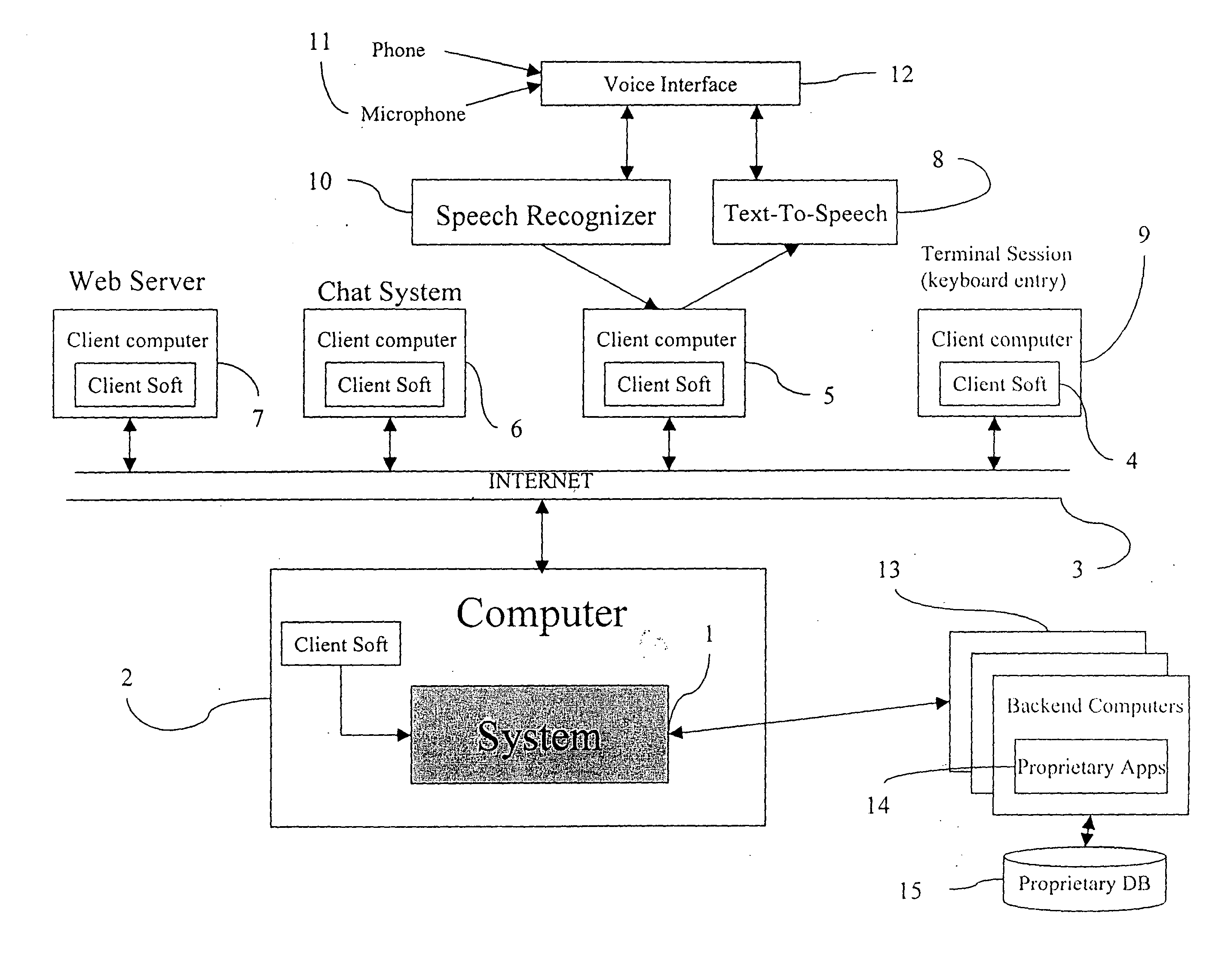

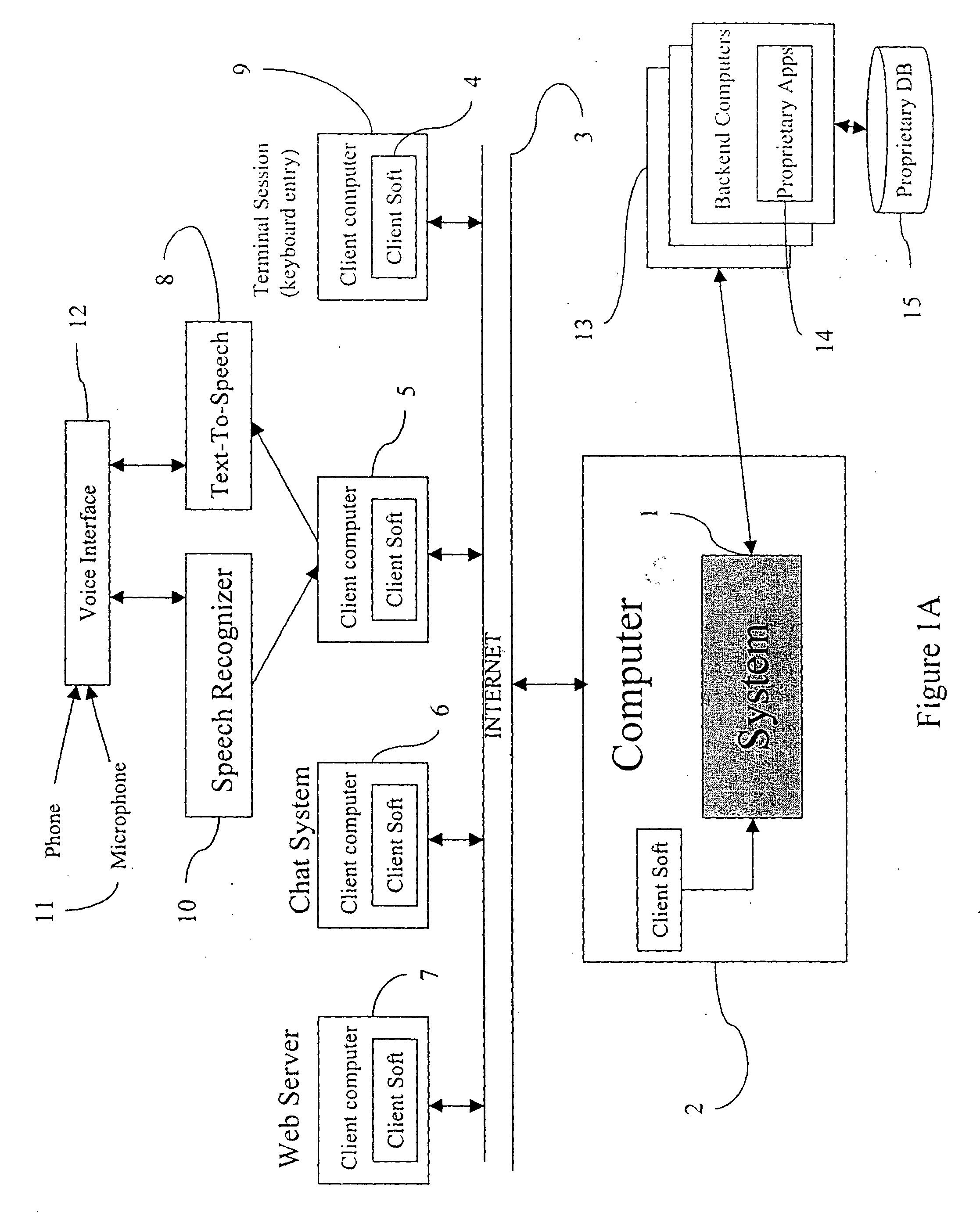

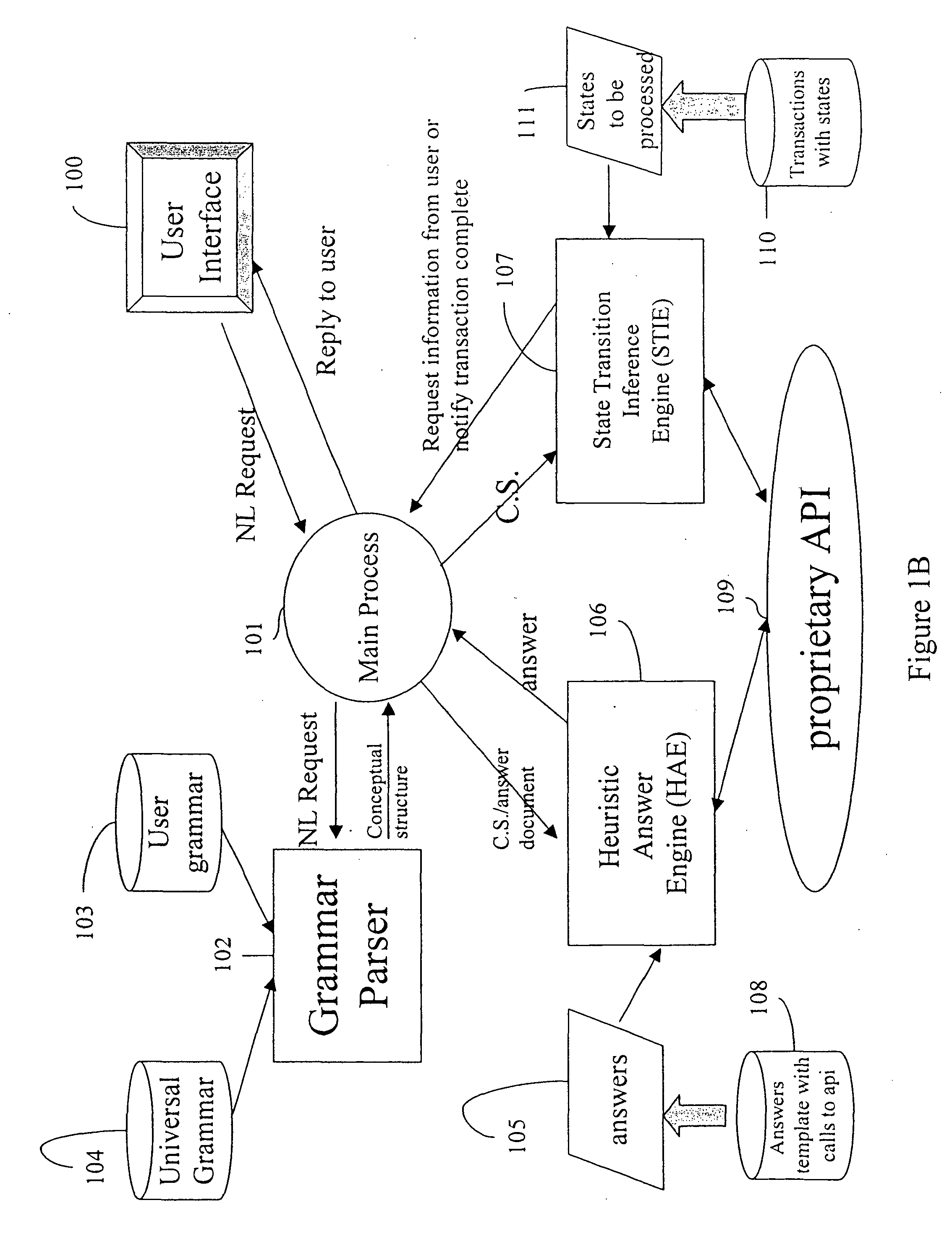

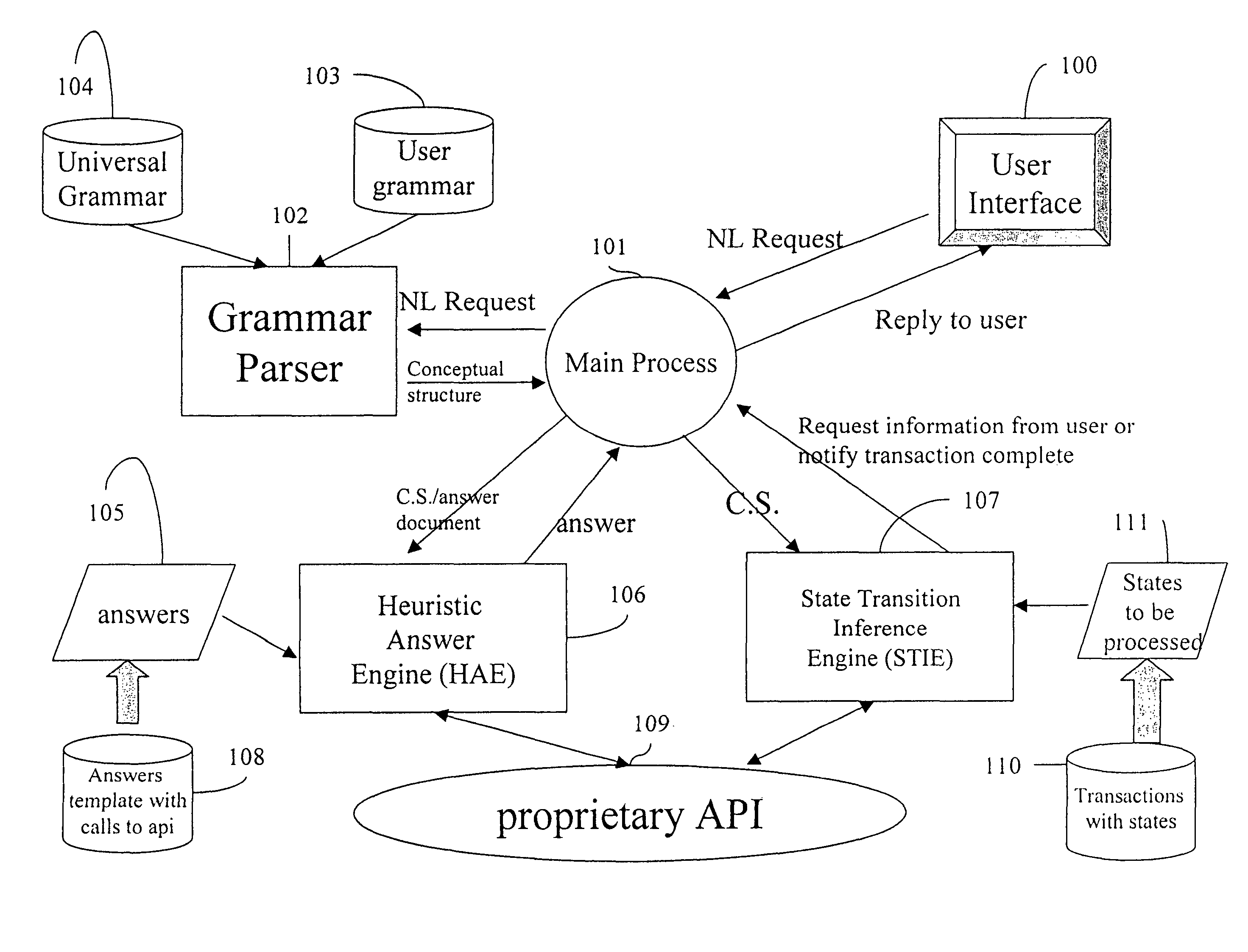

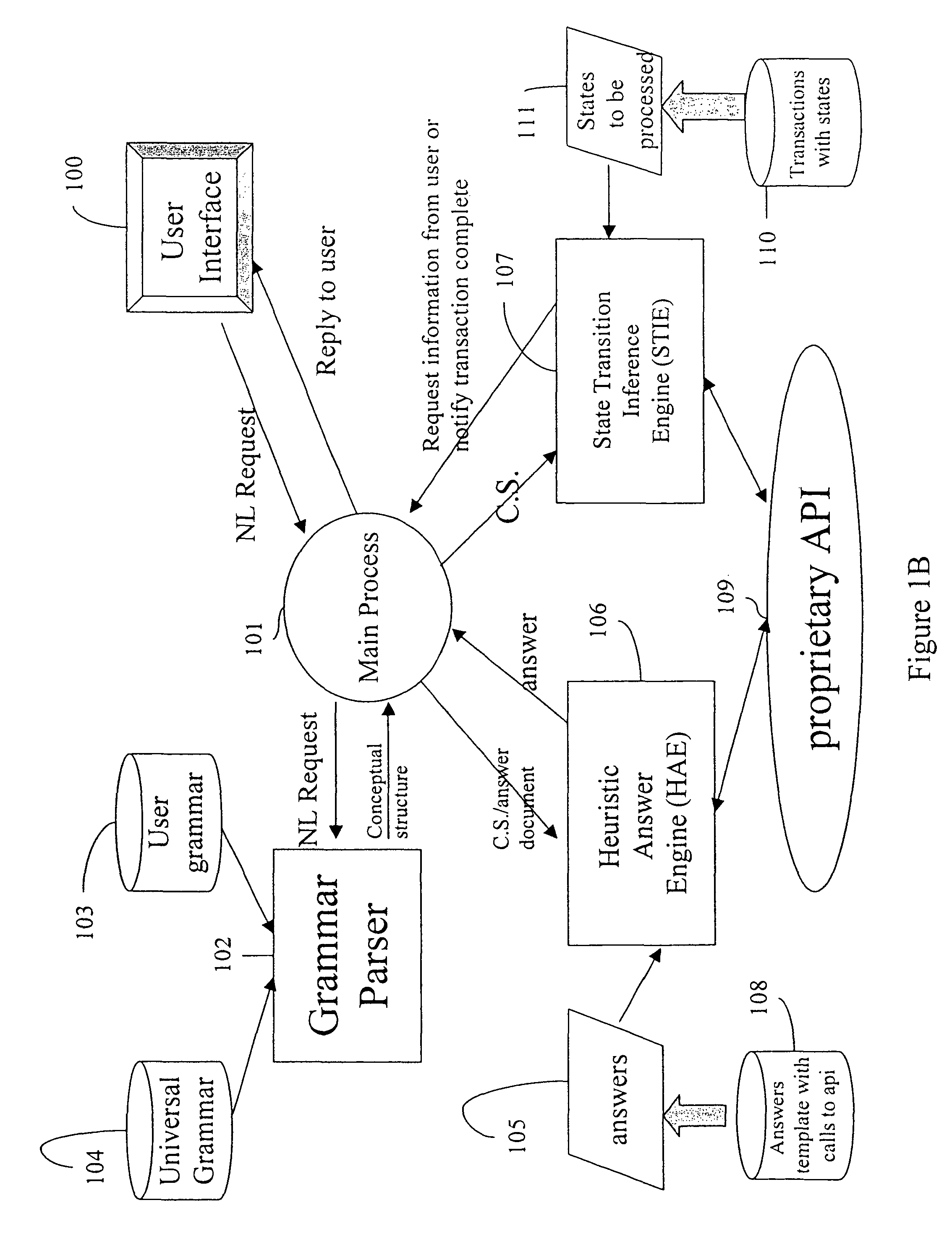

Apparatus and methods for developing conversational applications

Inactive | US20040083092A1 | Improve speech recognition performance; Quick fix; Semantic analysis; Speech recognition; State switching; Application software

Owner:GYRUS LOGIC INC

Method and system for speech recognition using grammar weighted based upon location information

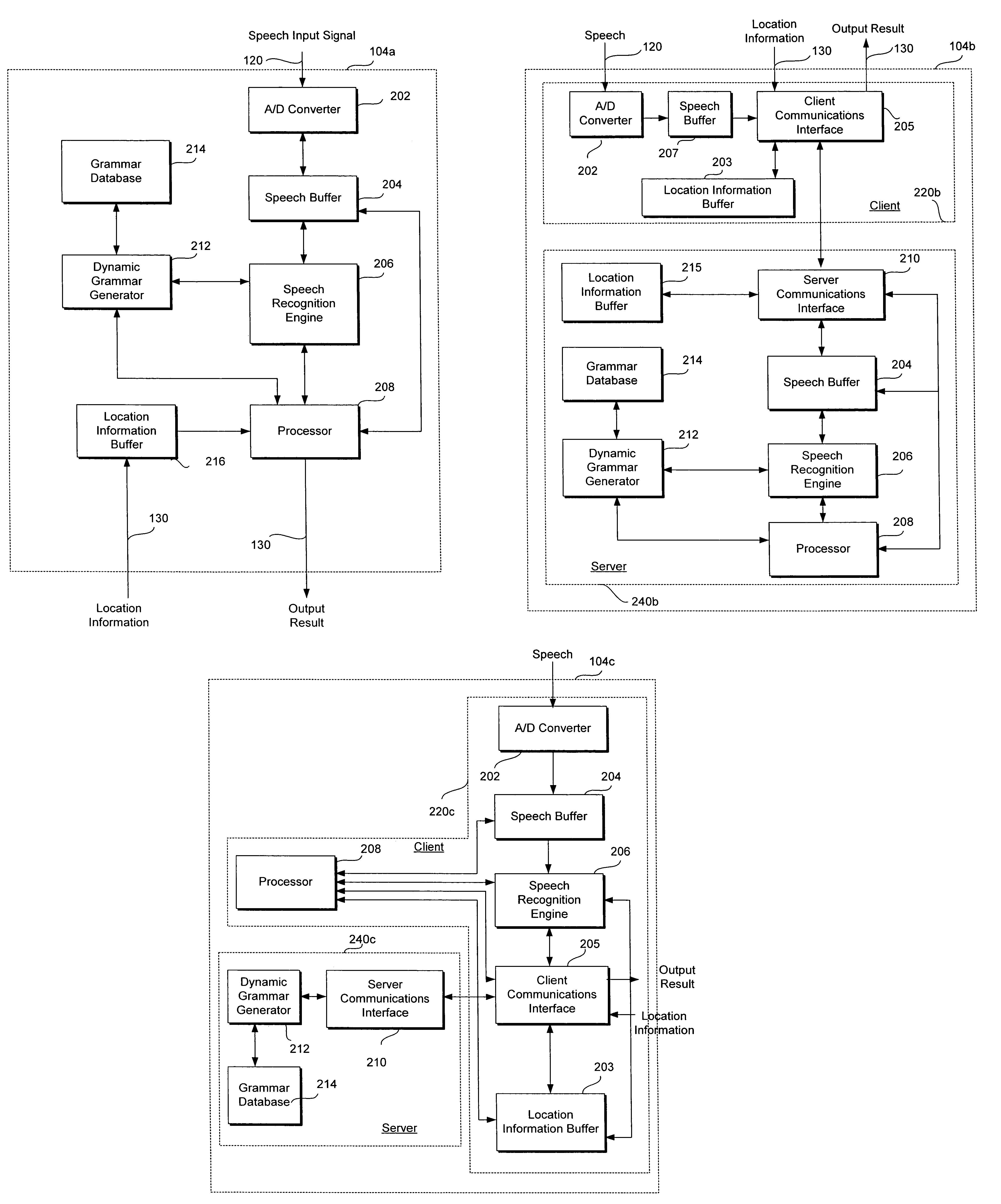

Inactive | US20050080632A1 | Overcome inaccurate recognition; The recognition result is accurate; Speech recognition; Navigation system; Speech sound

A speech recognition method and system for use in a vehicle navigation system use grammars weighted according to geographical information about the locations corresponding to the tokens in the grammars and/or the location of the vehicle in which the navigation system is used, in order to enhance speech recognition performance. The geographical information includes the distances between the vehicle location and the locations corresponding to the tokens, as well as the size, population, and popularity of those locations.

Owner:TOYOTA INFOTECHNOLOGY CENT CO LTD

Digital Signatures for Communications Using Text-Independent Speaker Verification

Active | US20120296649A1 | Improve speech recognition performance; Speech recognition; Transmission; Text independent; Digital signature

A speaker-verification digital signature system is disclosed that provides greater confidence in communications having digital signatures because a signing party may be prompted to speak a text-phrase that may be different for each digital signature, thus making it difficult for anyone other than the legitimate signing party to provide a valid signature.

Owner:NUANCE COMM INC

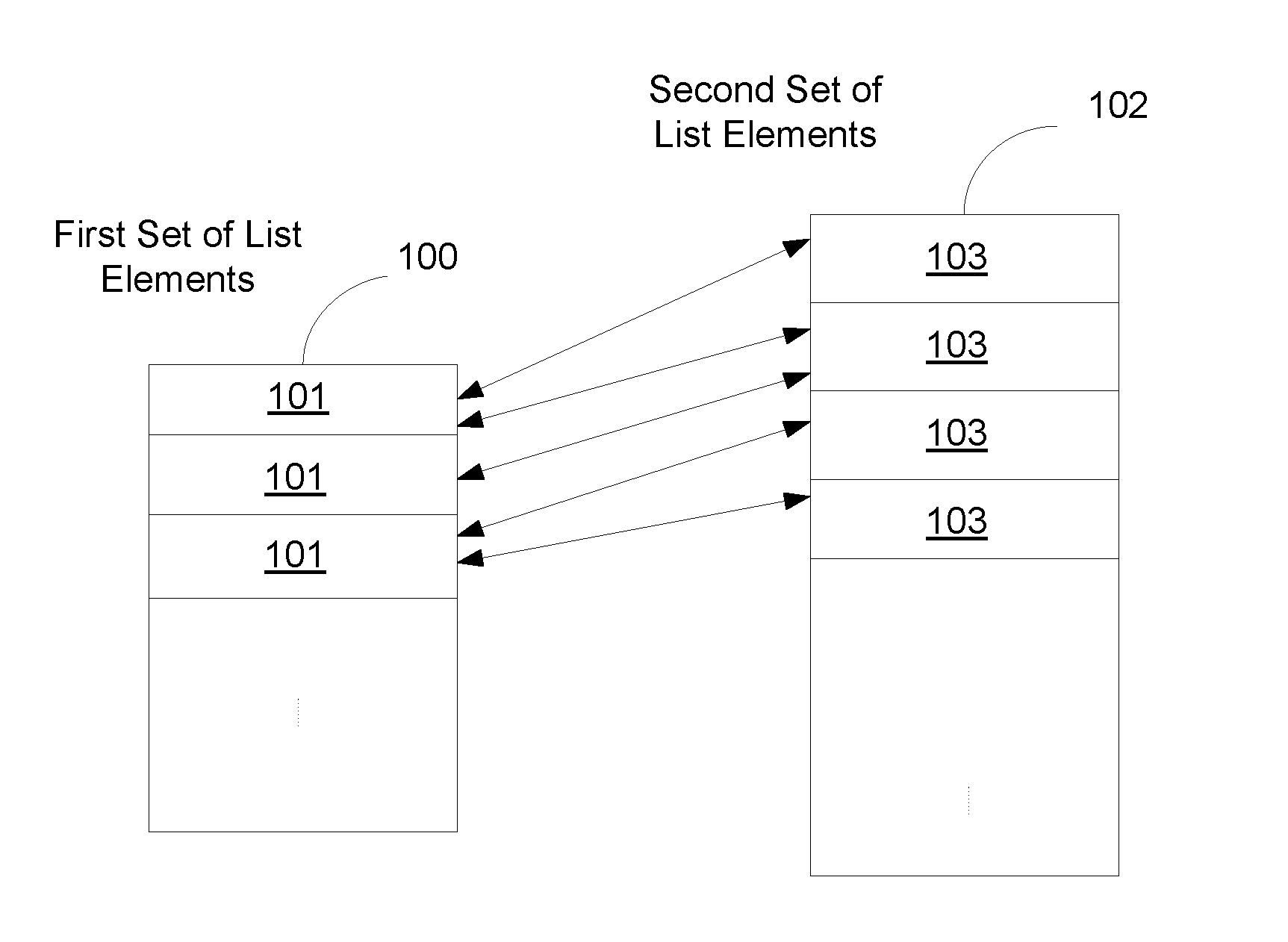

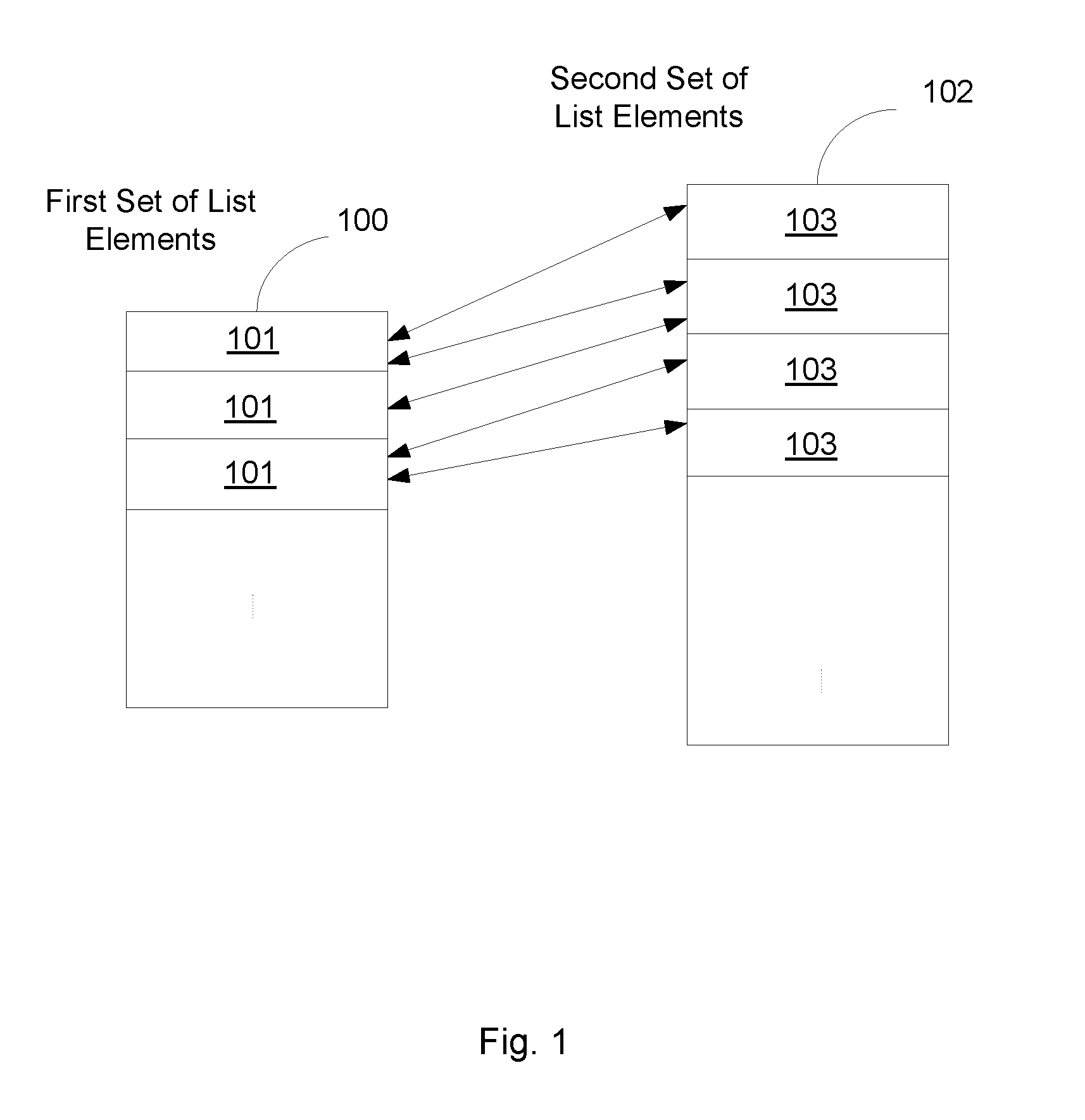

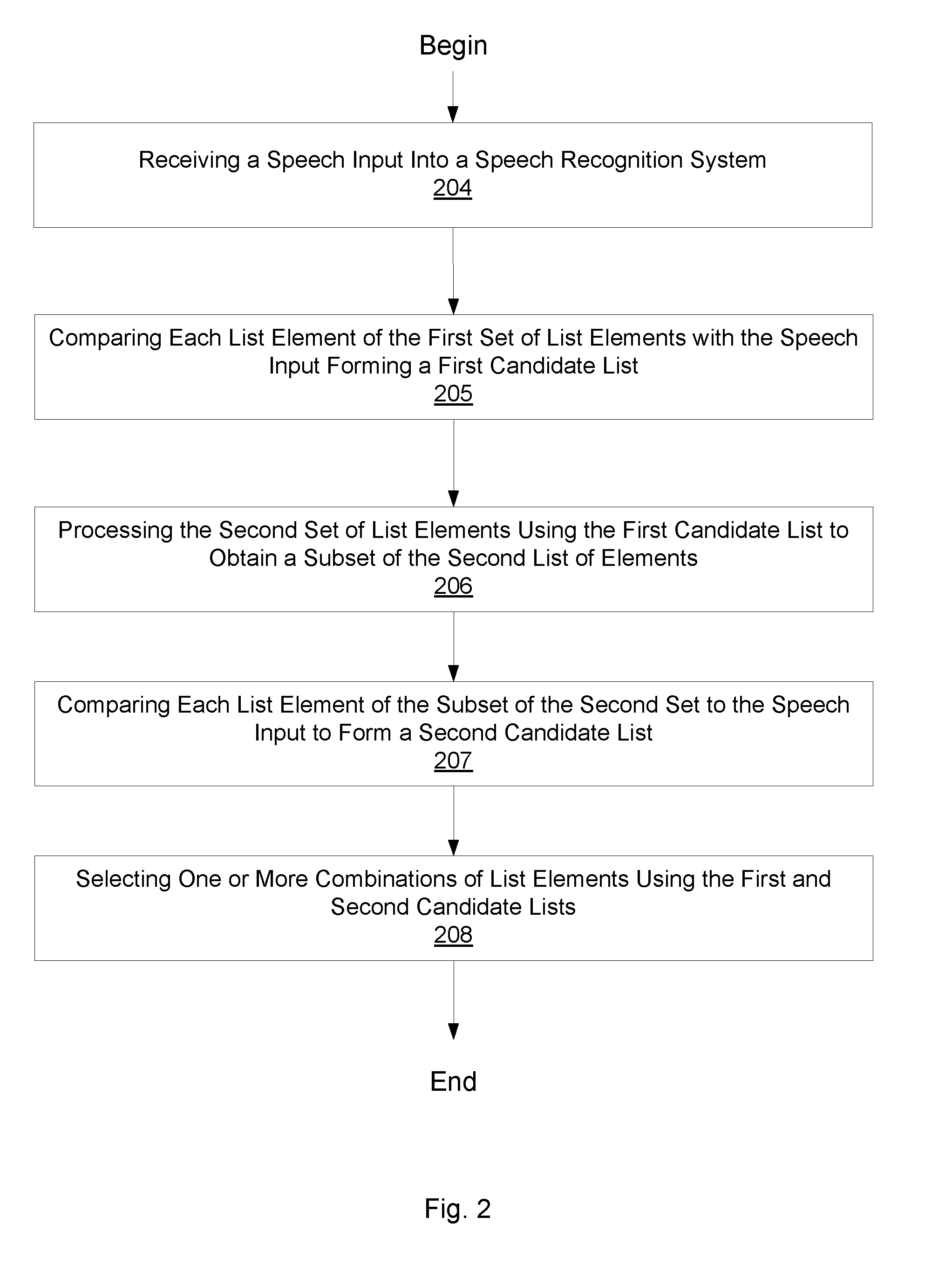

Speech Recognition Method for Selecting a Combination of List Elements via a Speech Input

The invention provides a speech recognition method for selecting a combination of list elements via a speech input, wherein a first list element of the combination is part of a first set of list elements and a second list element of the combination is part of a second set of list elements, the method comprising the steps of receiving the speech input, comparing each list element of the first set with the speech input to obtain a first candidate list of best matching list elements, processing the second set using the first candidate list to obtain a subset of the second set, comparing each list element of the subset of the second set with the speech input to obtain a second candidate list of best matching list elements, and selecting a combination of list elements using the first and the second candidate list.

Owner:CERENCE OPERATING CO
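
A text-level sketch of the two-pass selection, assuming a city/street address use case. Real systems compare the speech input acoustically; here `difflib` string similarity against a transcribed utterance stands in for that comparison, and the sample lists, the window-matching helper, and `k` are hypothetical.

```python
import difflib


def match_score(element, utterance):
    """Best similarity between `element` and any same-length word window of the utterance."""
    words = utterance.lower().split()
    n = len(element.split())
    best = 0.0
    for i in range(len(words) - n + 1):
        window = " ".join(words[i:i + n])
        best = max(best, difflib.SequenceMatcher(None, element.lower(), window).ratio())
    return best


def select_combination(first_set, second_set_by_first, utterance, k=3):
    # Pass 1: compare every element of the first set, keep the k best.
    first_candidates = sorted(first_set, key=lambda e: match_score(e, utterance),
                              reverse=True)[:k]
    # Restrict the second set to elements linked to a first-pass candidate.
    subset = [(a, b) for a in first_candidates for b in second_set_by_first.get(a, [])]
    # Pass 2: compare only the subset, keep the k best.
    second_candidates = sorted(subset, key=lambda ab: match_score(ab[1], utterance),
                               reverse=True)[:k]
    # Combine evidence from both passes to pick the final pair.
    return max(second_candidates,
               key=lambda ab: match_score(ab[0], utterance) + match_score(ab[1], utterance))


cities = ["Berlin", "Bern", "Munich"]
streets = {"Berlin": ["Hauptstrasse", "Unter den Linden"],
           "Bern": ["Kramgasse"],
           "Munich": ["Hauptstrasse", "Leopoldstrasse"]}
print(select_combination(cities, streets, "Unter den Linden Berlin"))
# → ('Berlin', 'Unter den Linden')
```

The point of the second pass is that only streets belonging to the shortlisted cities are ever compared, which keeps the search small when the second set is large.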

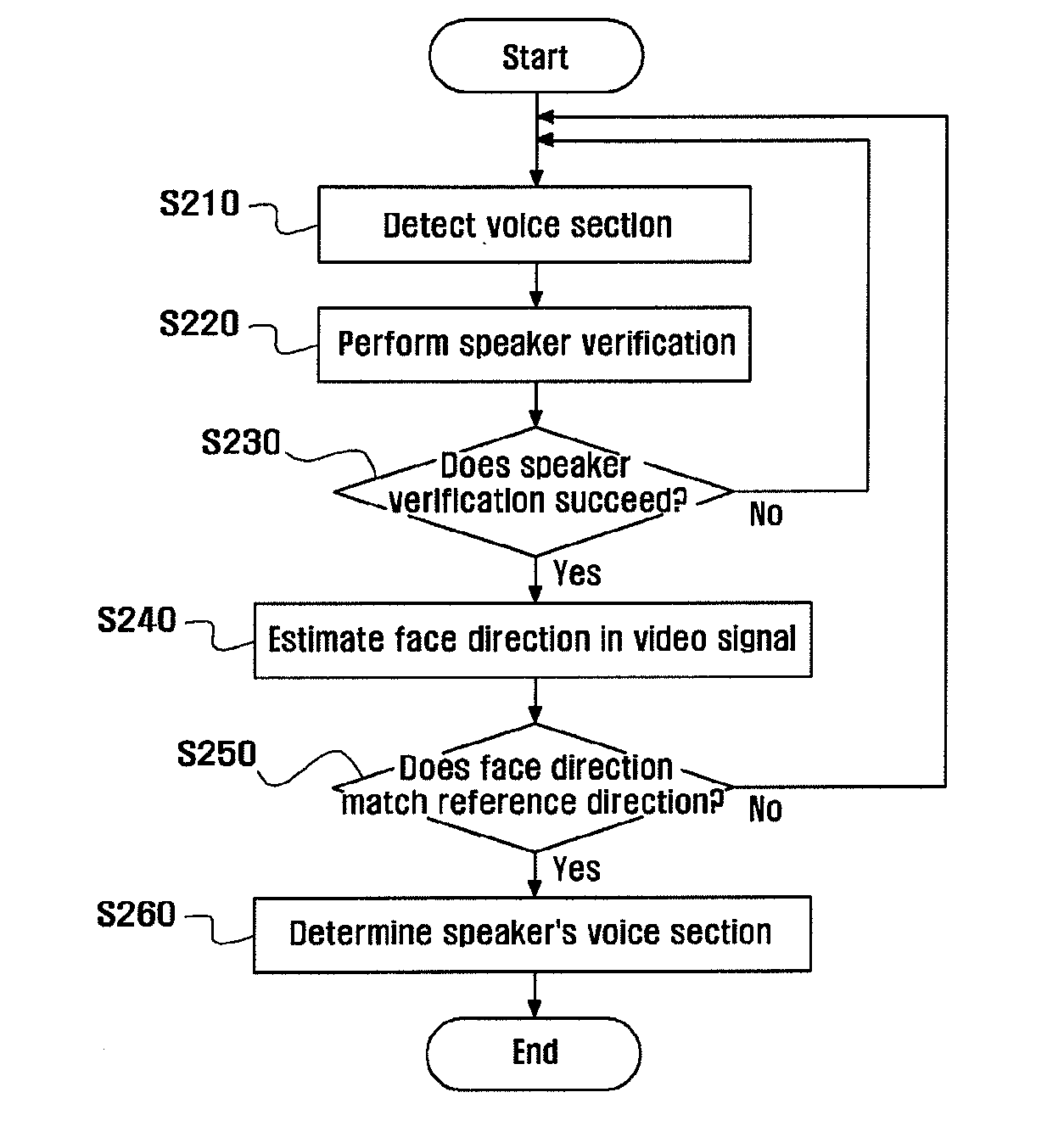

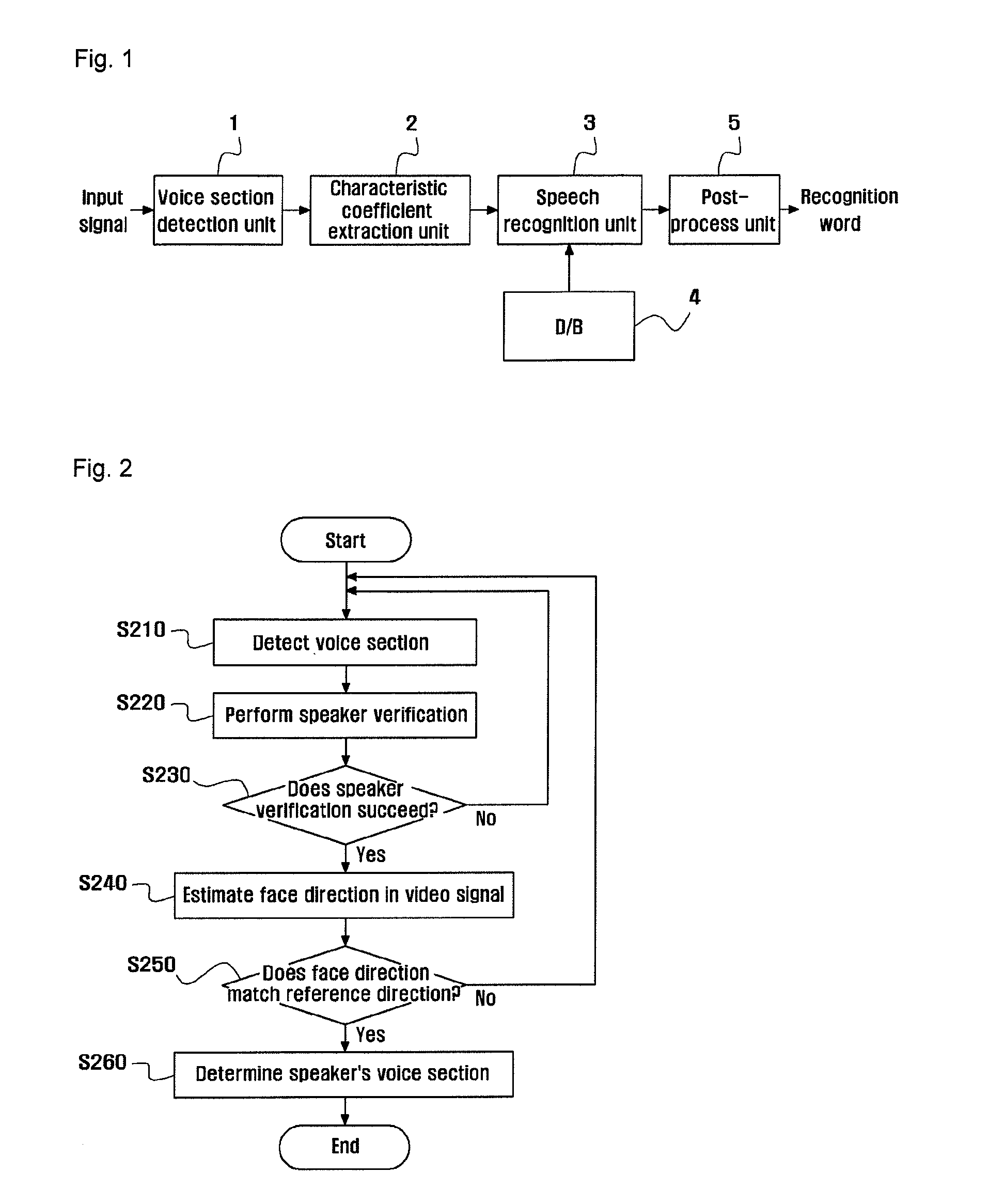

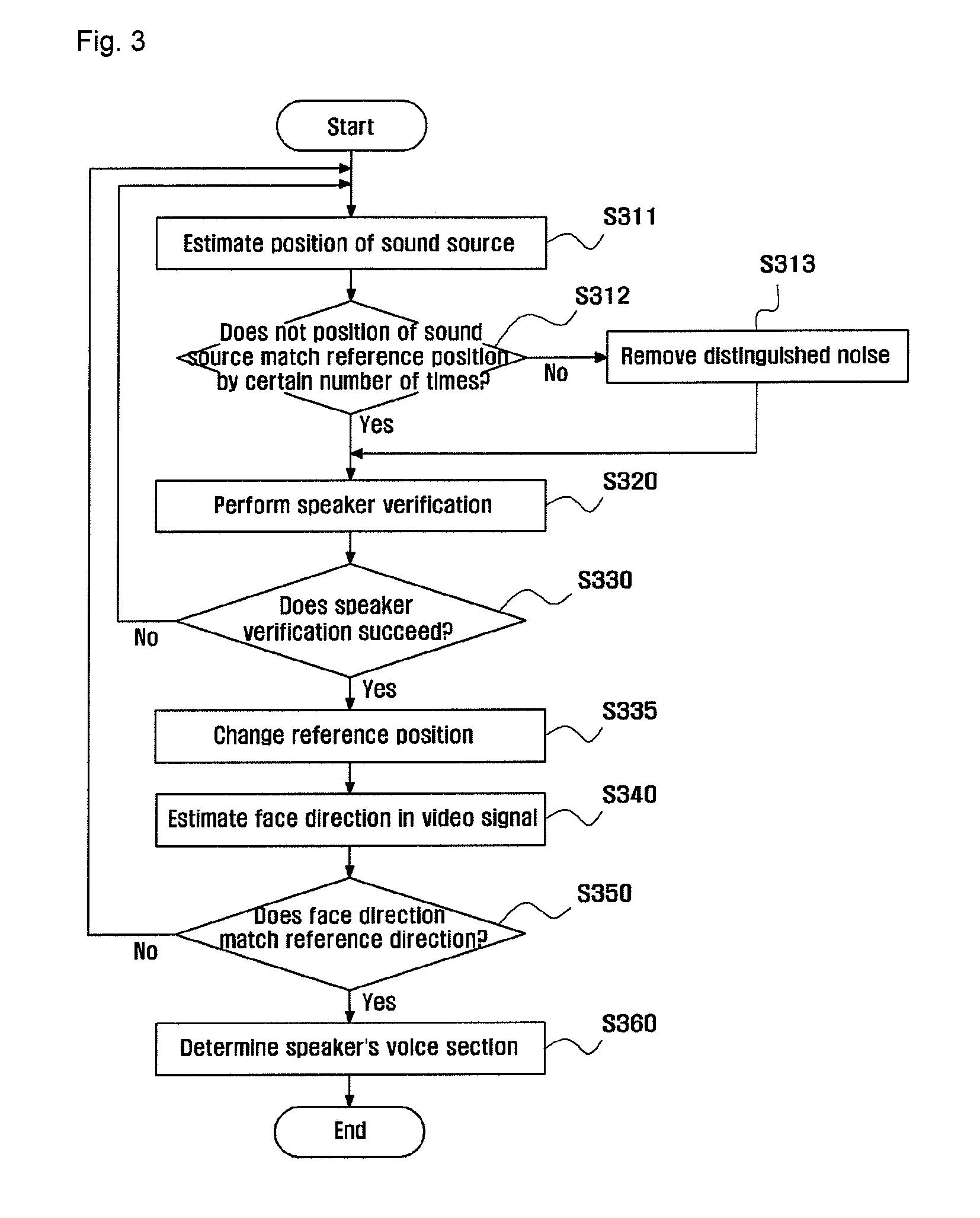

Method for detecting voice section from time-space by using audio and video information and apparatus thereof

Active | US20120078624A1 | Improve performance; Improve speech recognition performance; Speech recognition; Loudspeaker; Audio signal

The present invention relates to a method for detecting a voice section in time-space by using audio and video information. According to an embodiment of the present invention, a method for detecting a voice section from time-space by using audio and video information comprises the steps of: detecting a voice section in an audio signal which is inputted into a microphone array; verifying a speaker from the detected voice section; sensing the face of the speaker by using a video signal which is inputted into a camera if the speaker is successfully verified, and then estimating the direction of the face of the speaker; and determining the detected voice section as the voice section of the speaker if the estimated face direction corresponds to a reference direction which is previously stored.

Owner:KOREA UNIV IND & ACADEMIC CALLABORATION FOUND
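
The final decision step described above can be sketched as a simple gate. Voice activity detection, speaker verification, and face-direction estimation are assumed to run upstream; the `VoiceSection` fields, the yaw-angle representation, and the 15-degree tolerance are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VoiceSection:
    start_s: float
    end_s: float
    speaker_verified: bool          # result of the speaker-verification step
    face_yaw_deg: Optional[float]   # estimated face direction; None if no face found


def angle_diff_deg(a, b):
    """Signed smallest difference a - b, wrapped to [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


def accept_section(section, reference_yaw_deg=0.0, tolerance_deg=15.0):
    """Keep a detected voice section only if the verified speaker faces the reference direction."""
    if not section.speaker_verified:
        return False
    if section.face_yaw_deg is None:
        return False
    return abs(angle_diff_deg(section.face_yaw_deg, reference_yaw_deg)) <= tolerance_deg
```

Wrapping the angle difference matters when the stored reference direction lies near 0/360 degrees.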

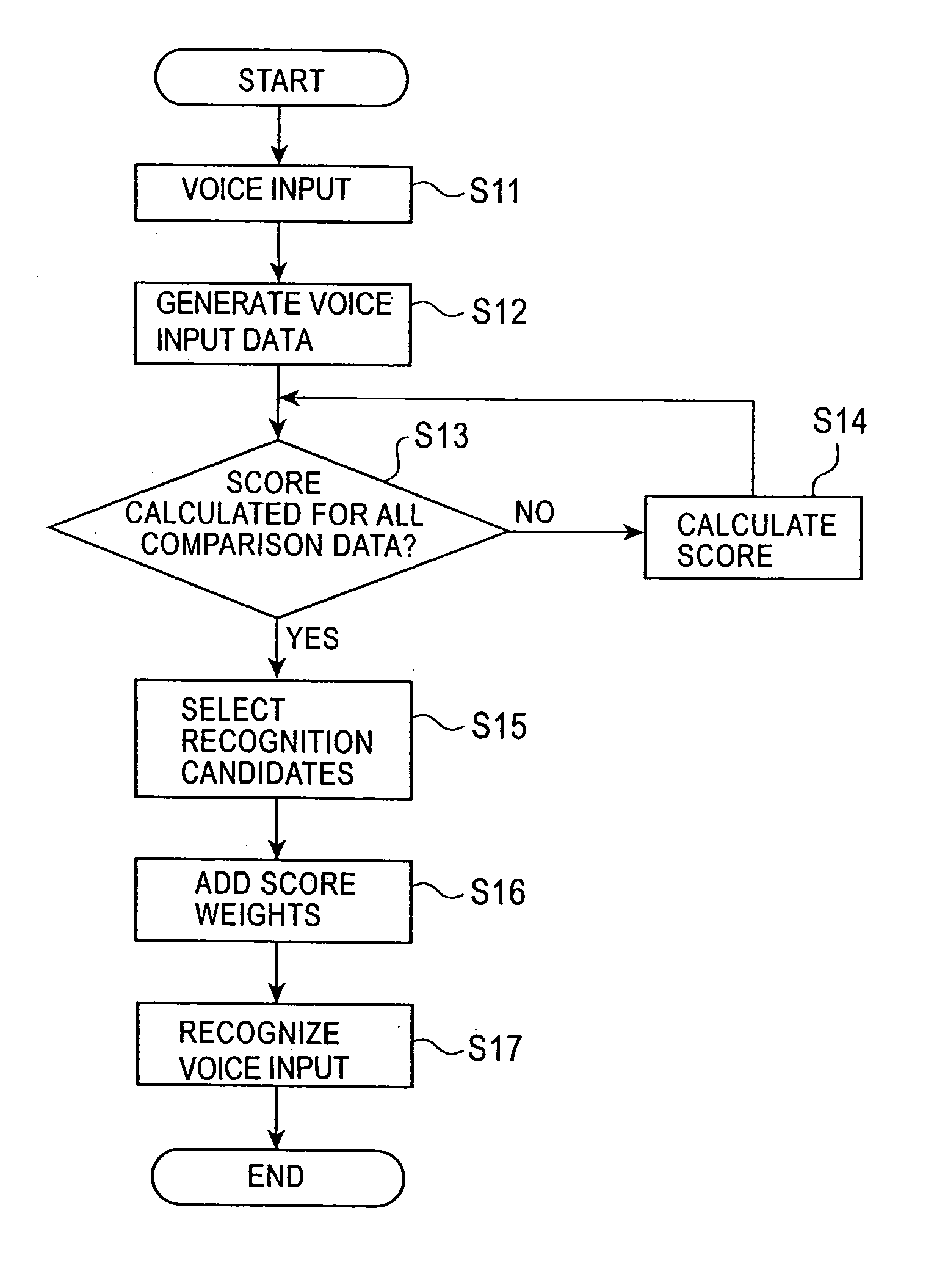

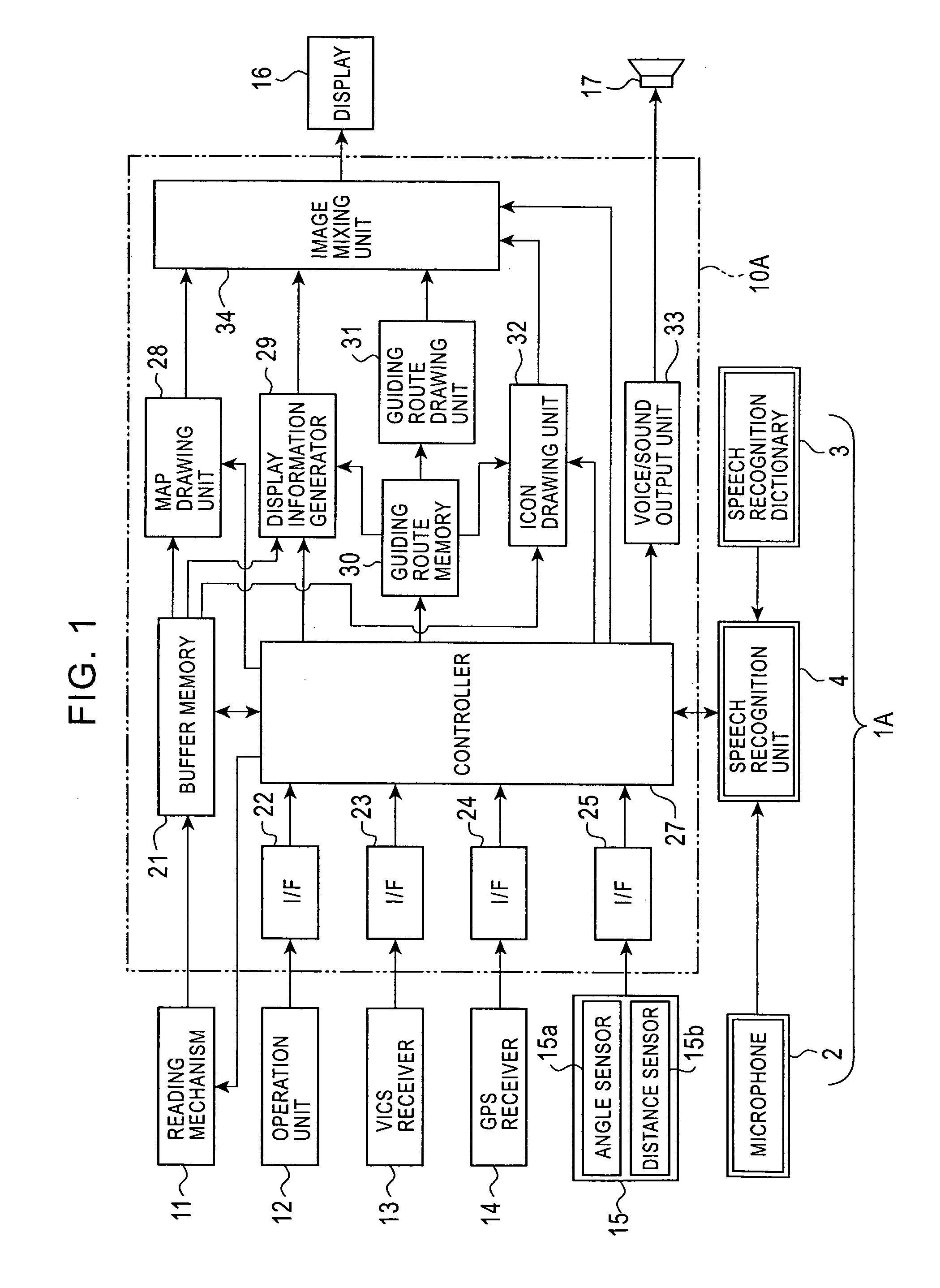

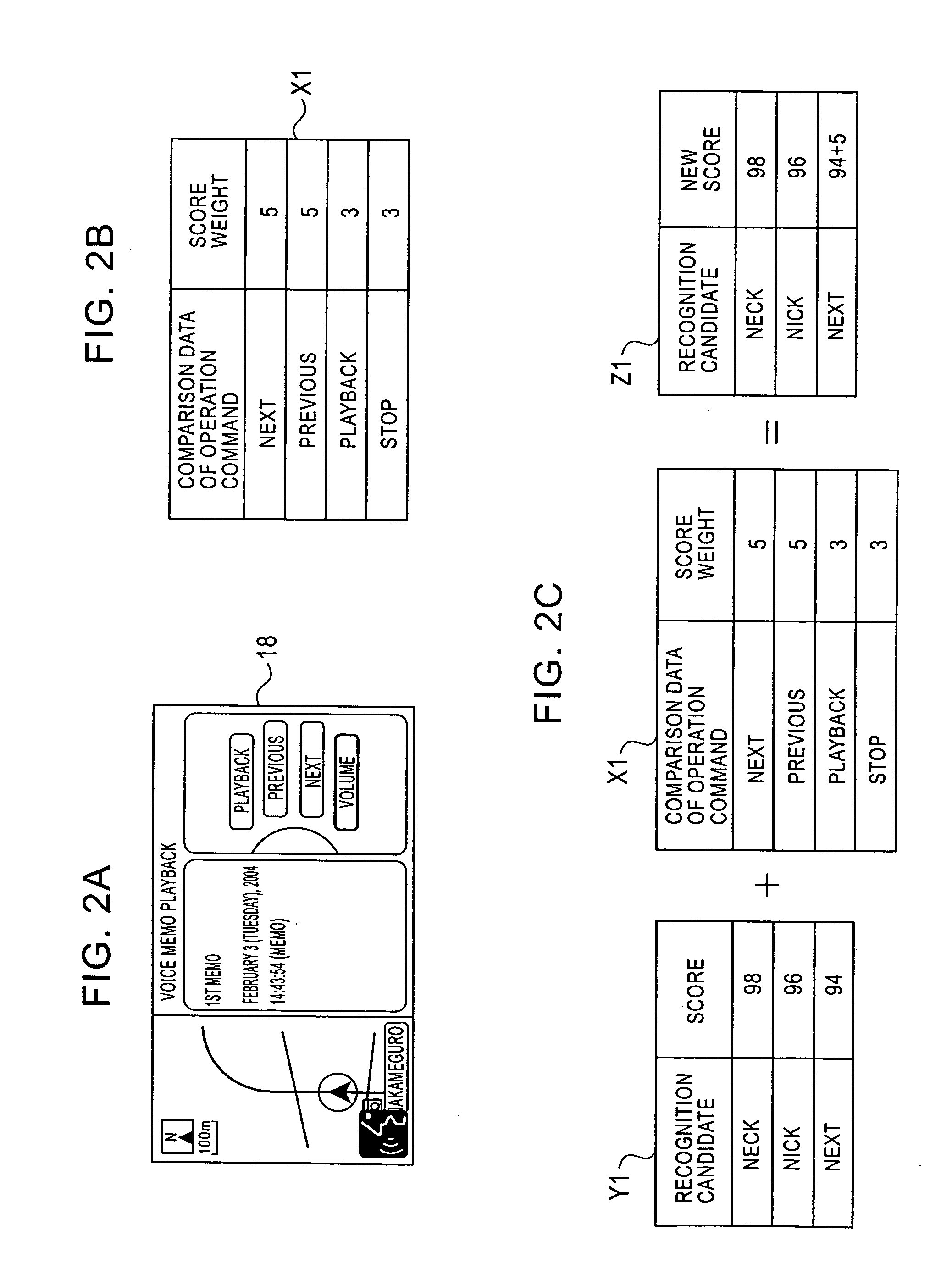

Speech recognition apparatus, navigation apparatus including a speech recognition apparatus, and speech recognition method

Inactive | US20070033043A1 | Improve accuracy; Improve speech recognition performance; Navigation instruments; Speech recognition; Identification device; Speech input

A speech recognition apparatus includes a speech recognition dictionary and a speech recognition unit. The speech recognition dictionary includes comparison data used to recognize a voice input. The speech recognition unit is adapted to calculate the score for each comparison data by comparing voice input data generated based on the voice input with each comparison data, recognize the voice input based on the score, and produce the recognition result of the voice input. The speech recognition apparatus further includes data indicating score weights associated with particular comparison data, used to weight the scores calculated for the particular comparison data. After the score is calculated for each comparison data, the score weights are added to the scores of the particular comparison data, and the voice input is recognized based on total scores including the added score weights.

Owner:ALPINE ELECTRONICS INC
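
The additive weighting step can be sketched in a few lines. The score scale, the example phrases, and the weight value are hypothetical; the abstract only specifies that per-entry weights are added to the computed scores before the recognition decision.

```python
def recognize(scores, score_weights):
    """Add per-entry score weights to the raw scores and return the best entry.

    scores: raw match score per comparison-data entry (higher is better).
    score_weights: extra score for particular entries; all others get 0.
    """
    totals = {entry: s + score_weights.get(entry, 0.0) for entry, s in scores.items()}
    best = max(totals, key=totals.get)
    return best, totals


raw = {"Main Street": 0.70, "Maine Street": 0.72}
best, totals = recognize(raw, {"Main Street": 0.05})
print(best)  # → Main Street
```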

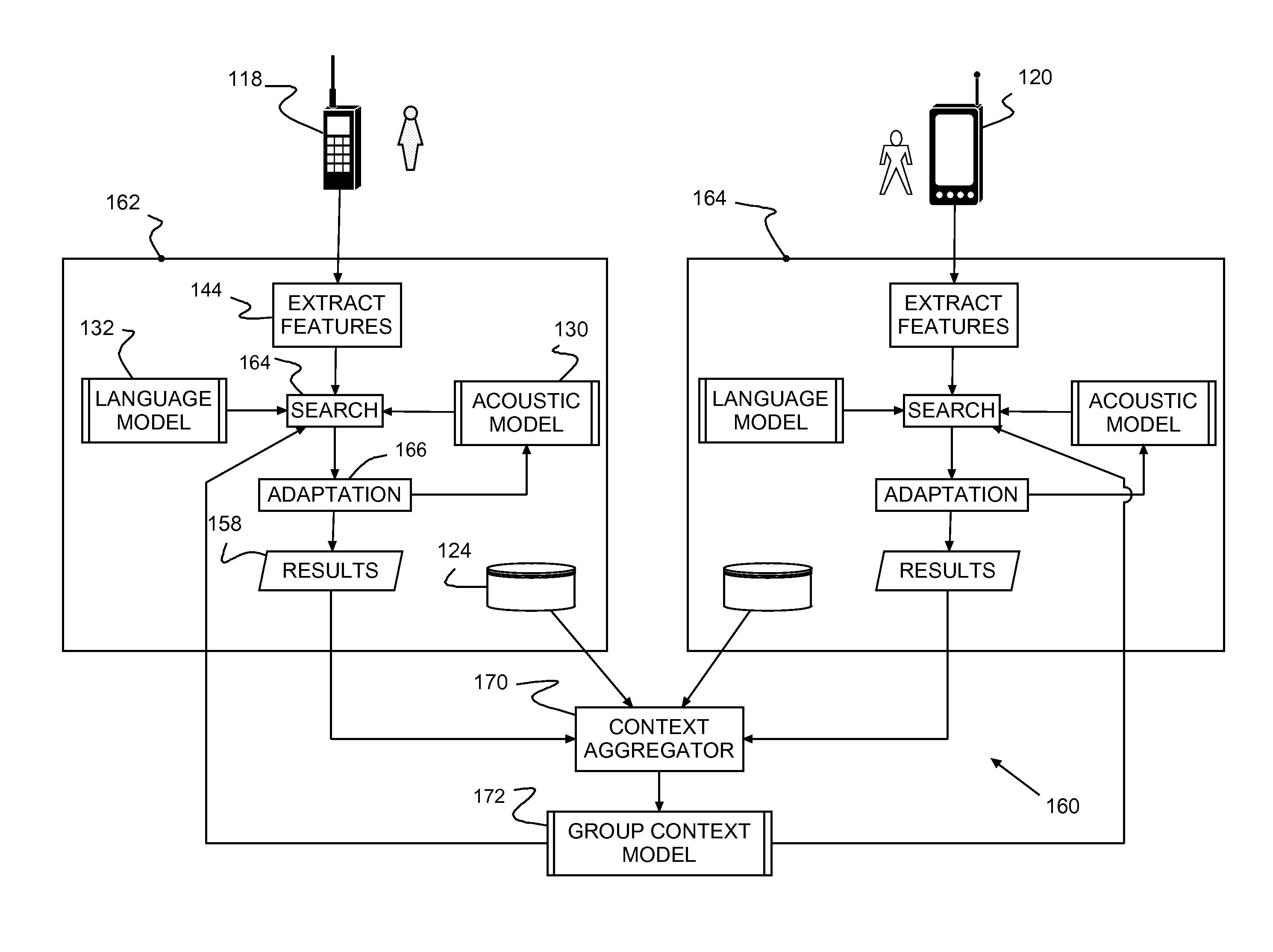

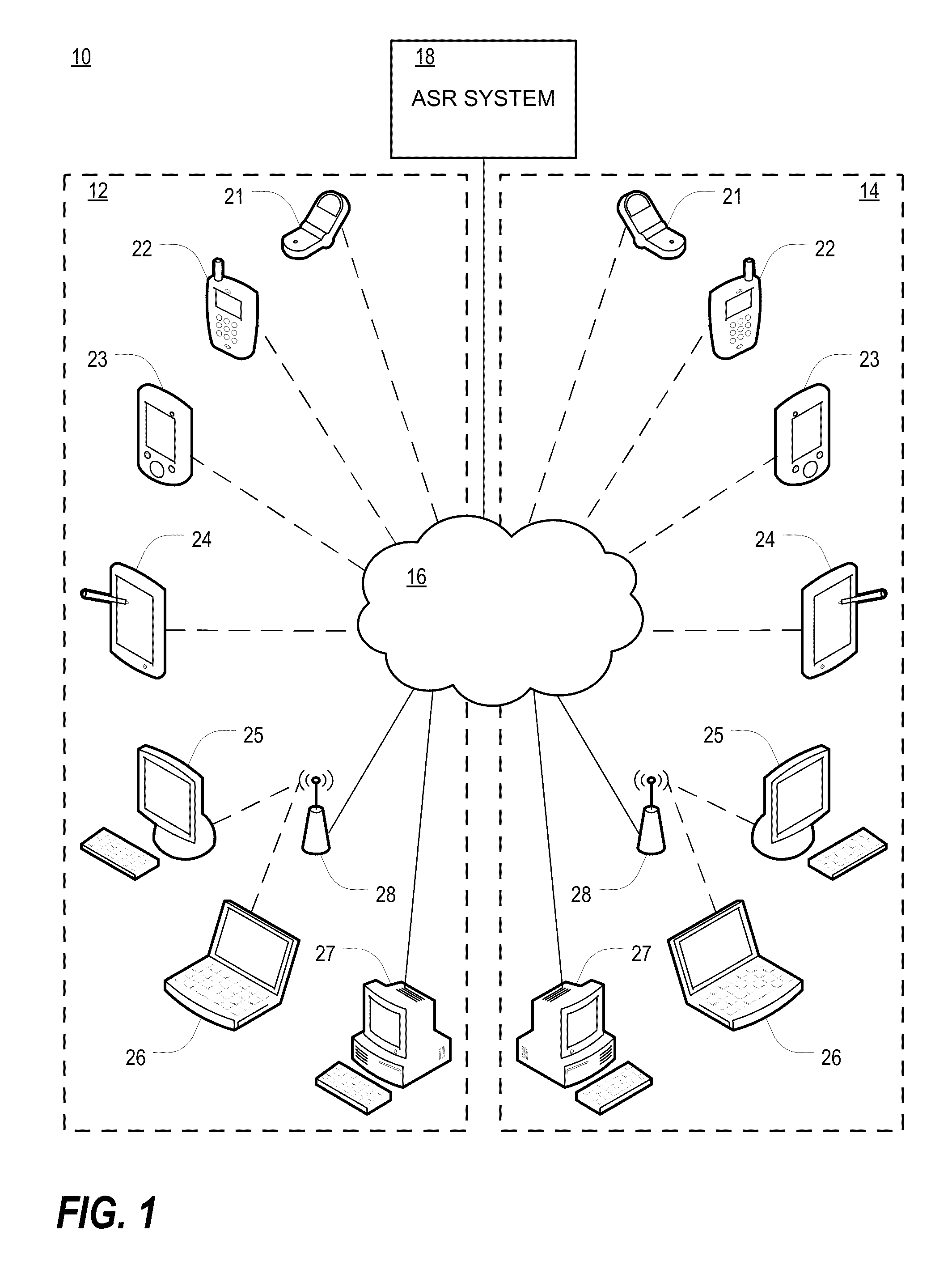

System, method and program product for providing automatic speech recognition (ASR) in a shared resource environment

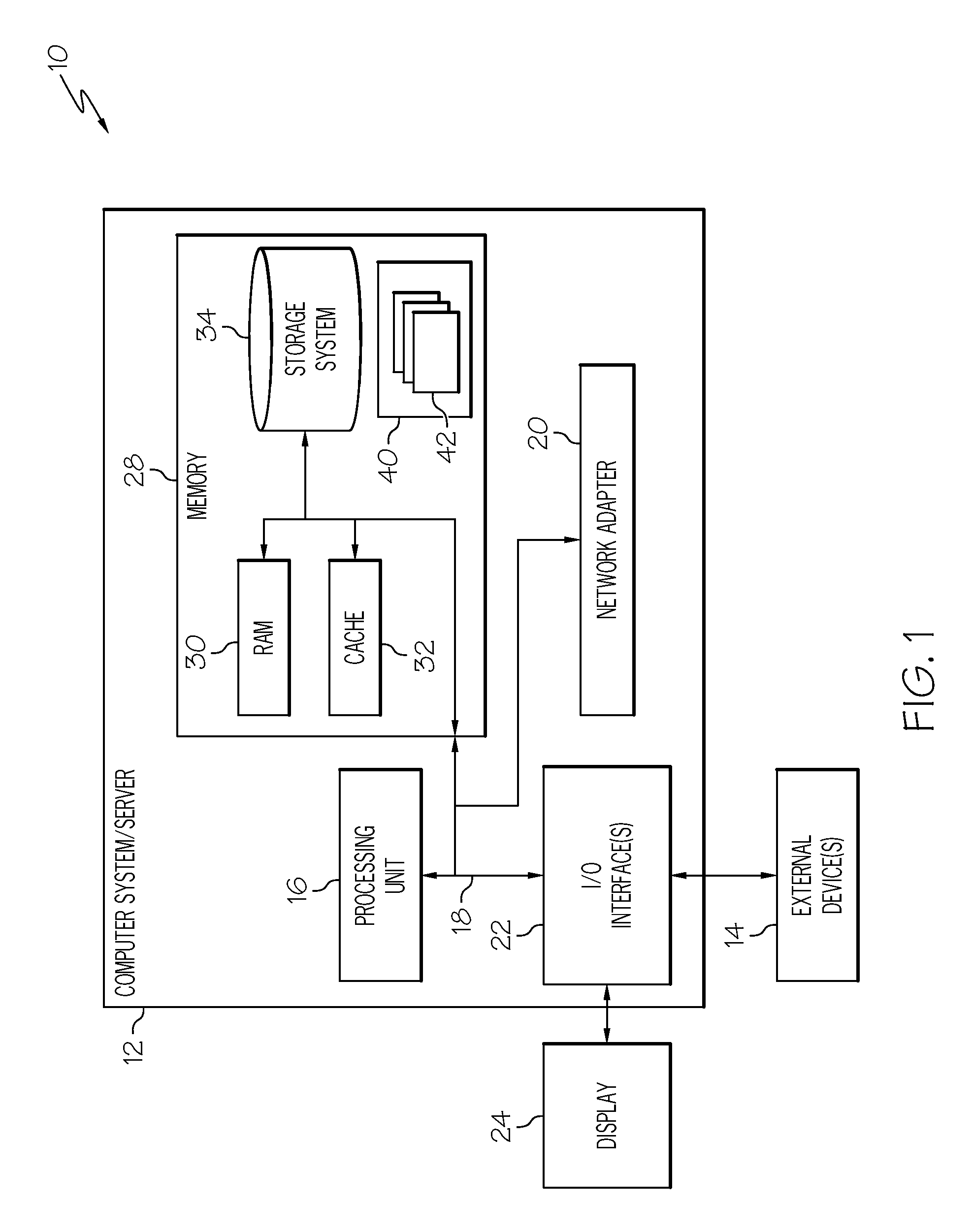

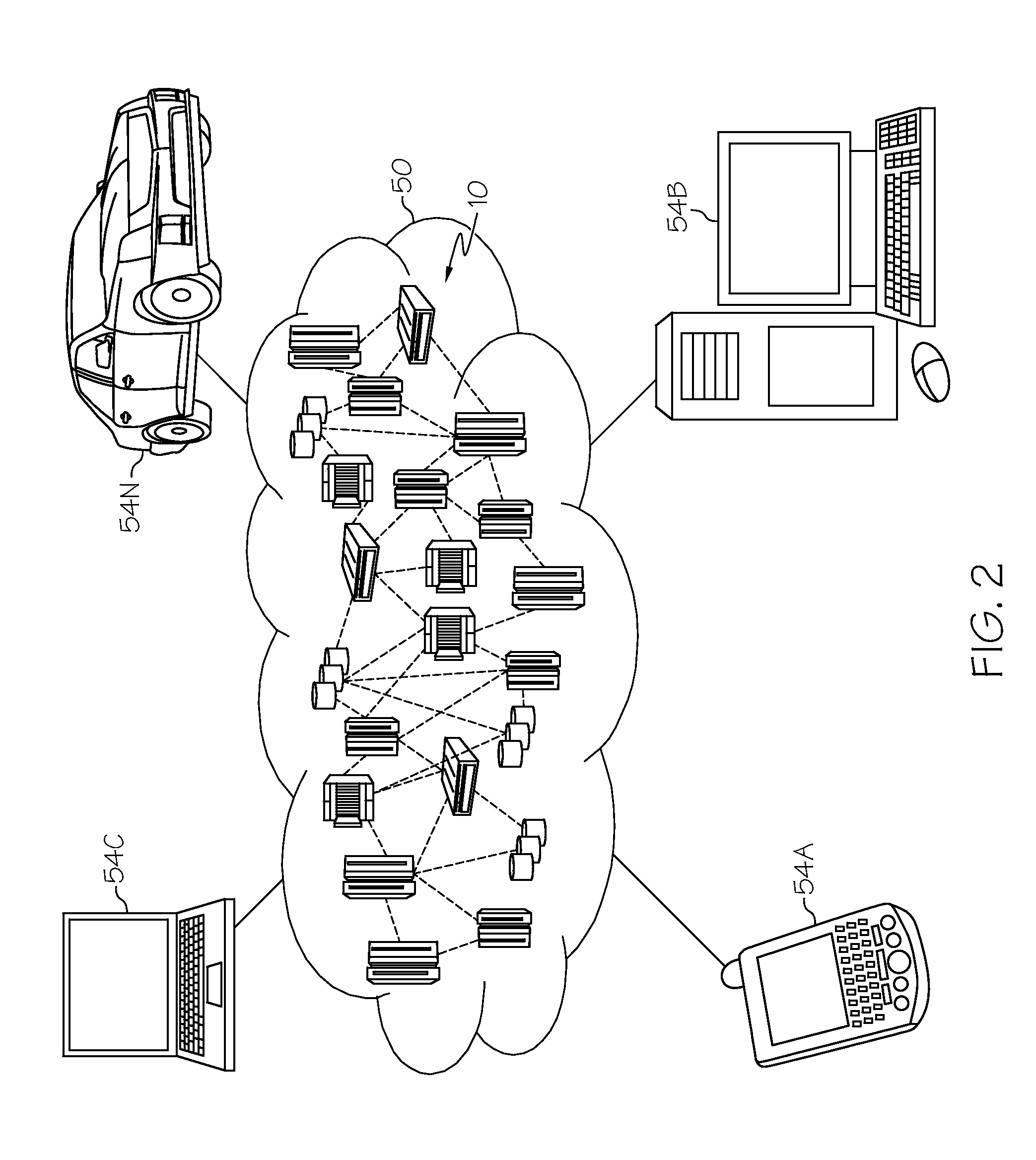

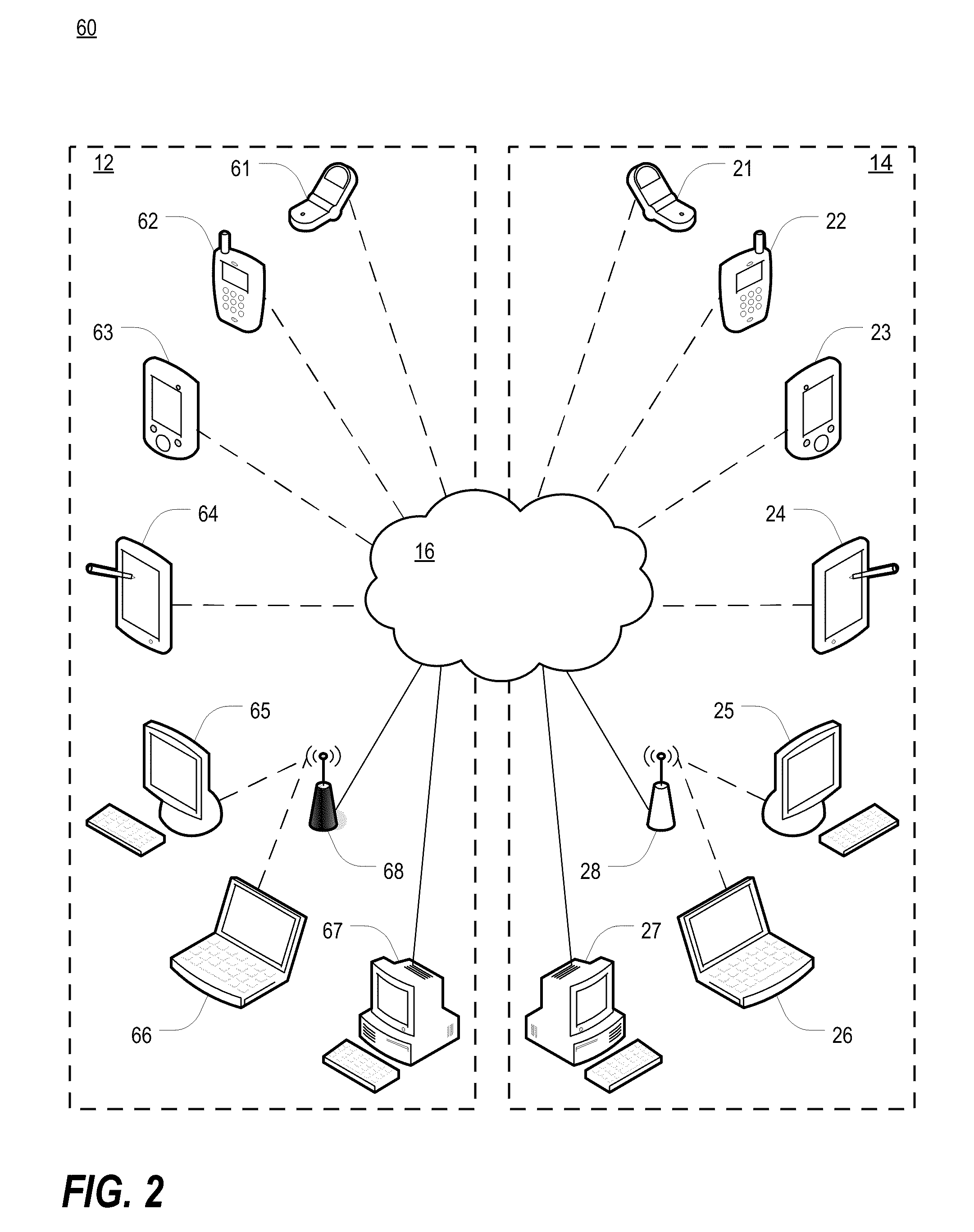

Inactive | US9043208B2 | Improve speech recognition performance; Leveling precision; Speech recognition; Context model; Ambiguity

A speech recognition system, a method of recognizing speech, and a computer program product therefor are disclosed. A client device, identified with a context for an associated user, selectively streams audio to a provider computer, e.g., a cloud computer. The speech recognizer receives the streaming audio, maps utterances to specific textual candidates, and determines the likelihood of a correct match for each mapped candidate. Whenever multiple textual candidates are recognized as potential matches for the same mapped utterance, a context model selectively winnows the candidates to resolve the ambiguity according to context. Matches are used to update the context model, which may be shared by multiple users in the same context.

Owner:INT BUSINESS MASCH CORP
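
A minimal sketch of context-based winnowing, assuming candidates arrive as (text, likelihood) pairs. The ambiguity margin, the count-based preference, and the `ContextModel` class are hypothetical illustrations, not IBM's implementation.

```python
from collections import defaultdict


class ContextModel:
    """Per-context counts of confirmed matches, shared by all users in a context."""

    def __init__(self, ambiguity_margin=0.1):
        self.ambiguity_margin = ambiguity_margin
        self.counts = defaultdict(lambda: defaultdict(int))

    def winnow(self, context, candidates):
        """candidates: list of (text, likelihood). Returns the chosen text."""
        ranked = sorted(candidates, key=lambda c: c[1], reverse=True)
        top_likelihood = ranked[0][1]
        close = [c for c in ranked if top_likelihood - c[1] <= self.ambiguity_margin]
        if len(close) == 1:
            return close[0][0]          # unambiguous: keep the recognizer's choice
        seen = self.counts[context]
        # Ambiguous: prefer what this context has confirmed most often,
        # falling back to likelihood on ties.
        return max(close, key=lambda c: (seen[c[0]], c[1]))[0]

    def update(self, context, text):
        """Record a confirmed match for the context."""
        self.counts[context][text] += 1
```

Keying the counts by context rather than by user is what lets several users in the same context benefit from each other's confirmed matches.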

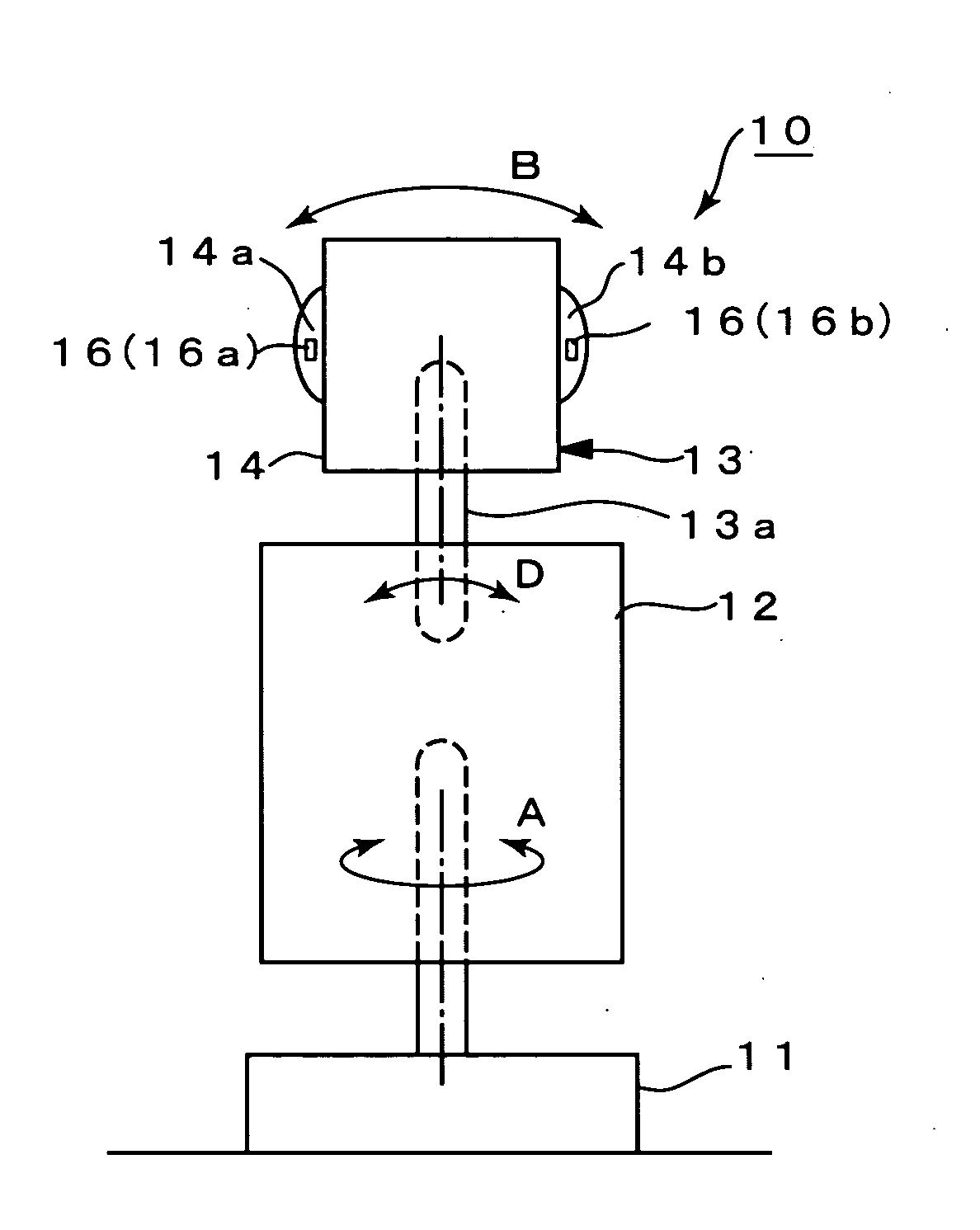

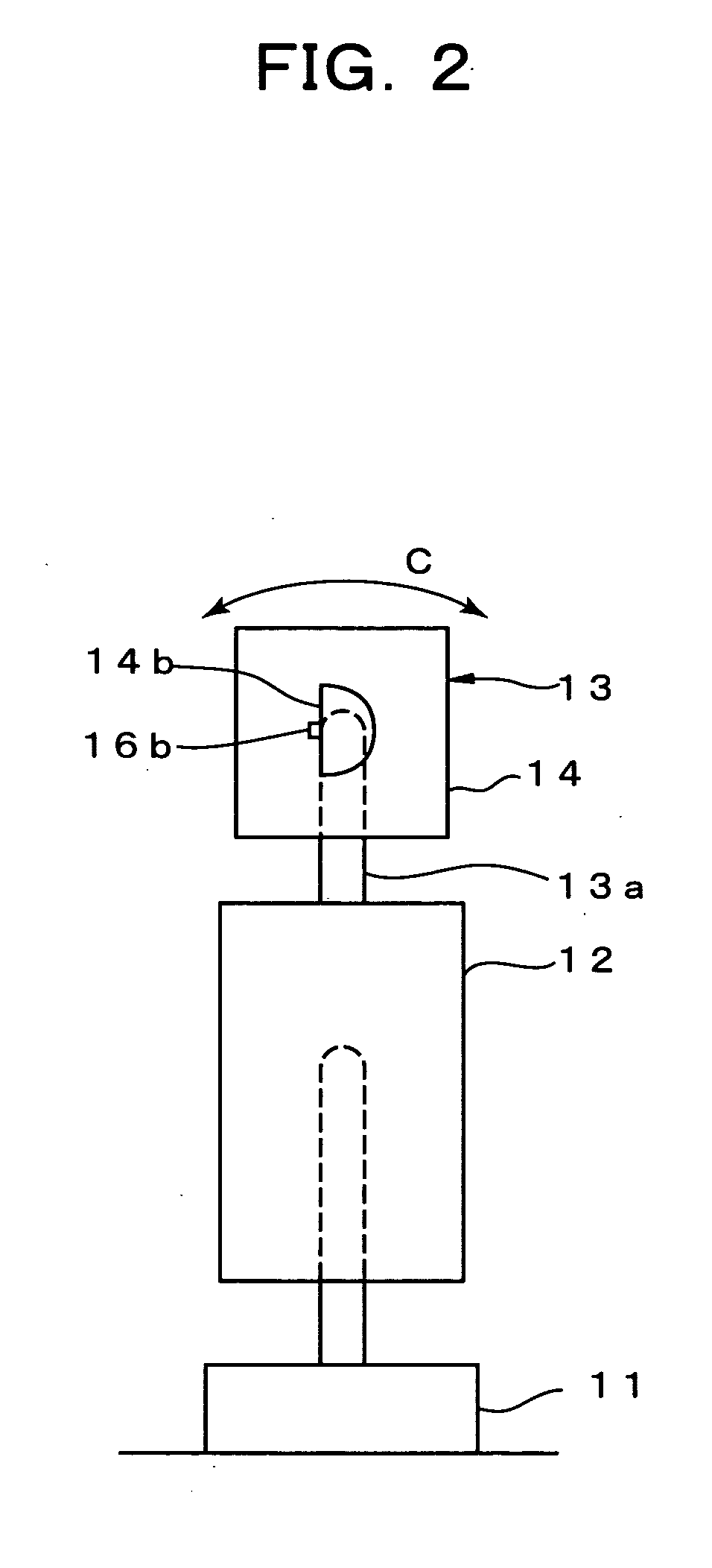

Robotics visual and auditory system

Inactive | US20090030552A1 | Accurate collection; Accurately localize; Programme control; Computer control; Sound source separation; Phase difference

A robotics visual and auditory system is provided with an auditory module (20), a face module (30), a stereo module (37), a motor control module (40), and an association module (50) that controls these modules. Based on accurate sound source direction information from the association module (50), the auditory module (20) uses an active direction pass filter (23a) to collect the sub-bands whose interaural phase difference (IPD) or interaural intensity difference (IID) lies within a predetermined range. In keeping with auditory characteristics, the filter's pass range is narrowest in the frontal direction and widens as the angle moves to the left or right. The auditory module then separates sound sources by reconstructing each source's waveform, performs speech recognition on the separated signals using a plurality of acoustic models (27d), integrates the results from each acoustic model with a selector, and judges which recognition result is the most reliable.

Owner:JAPAN SCI & TECH CORP

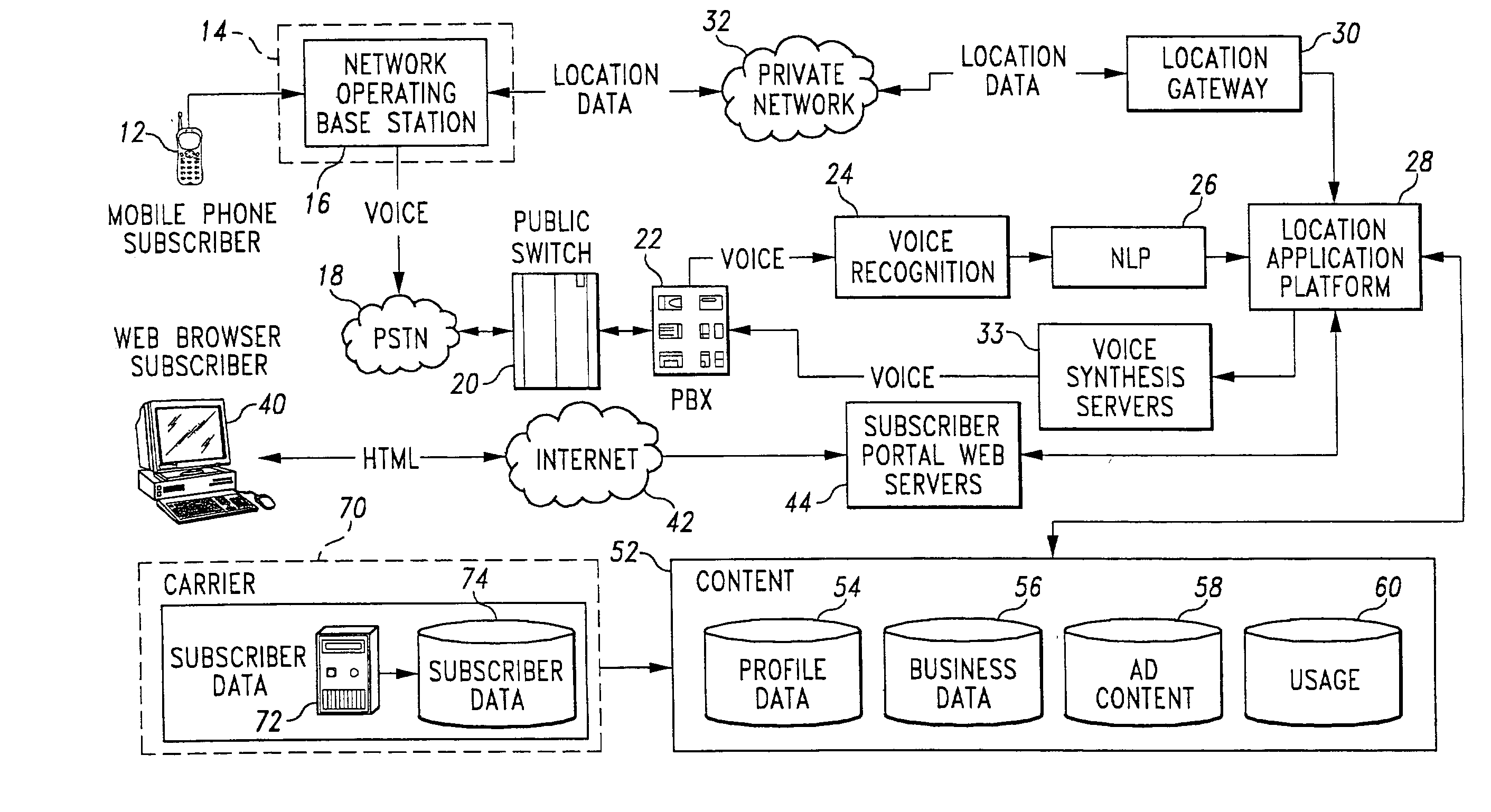

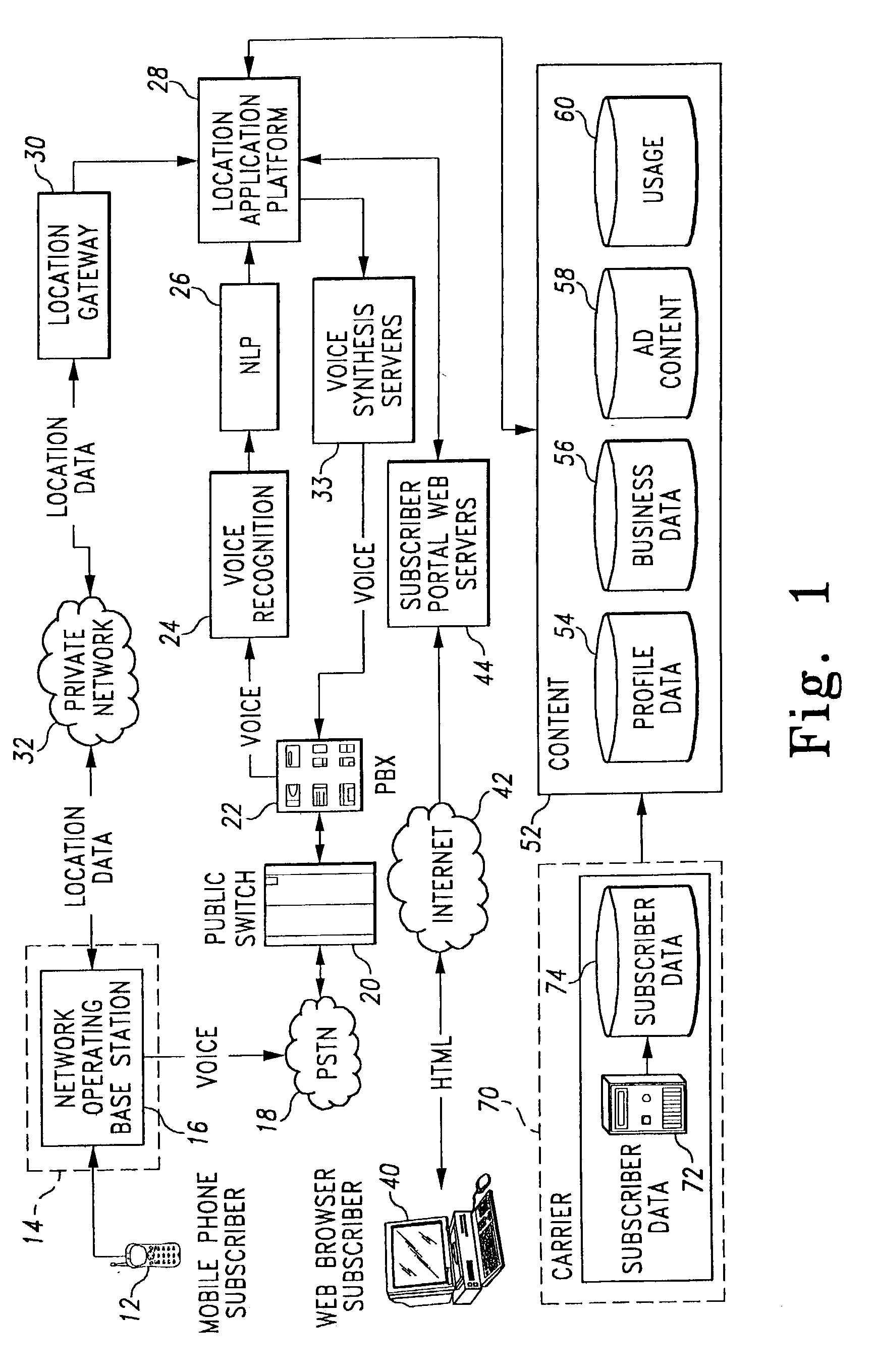

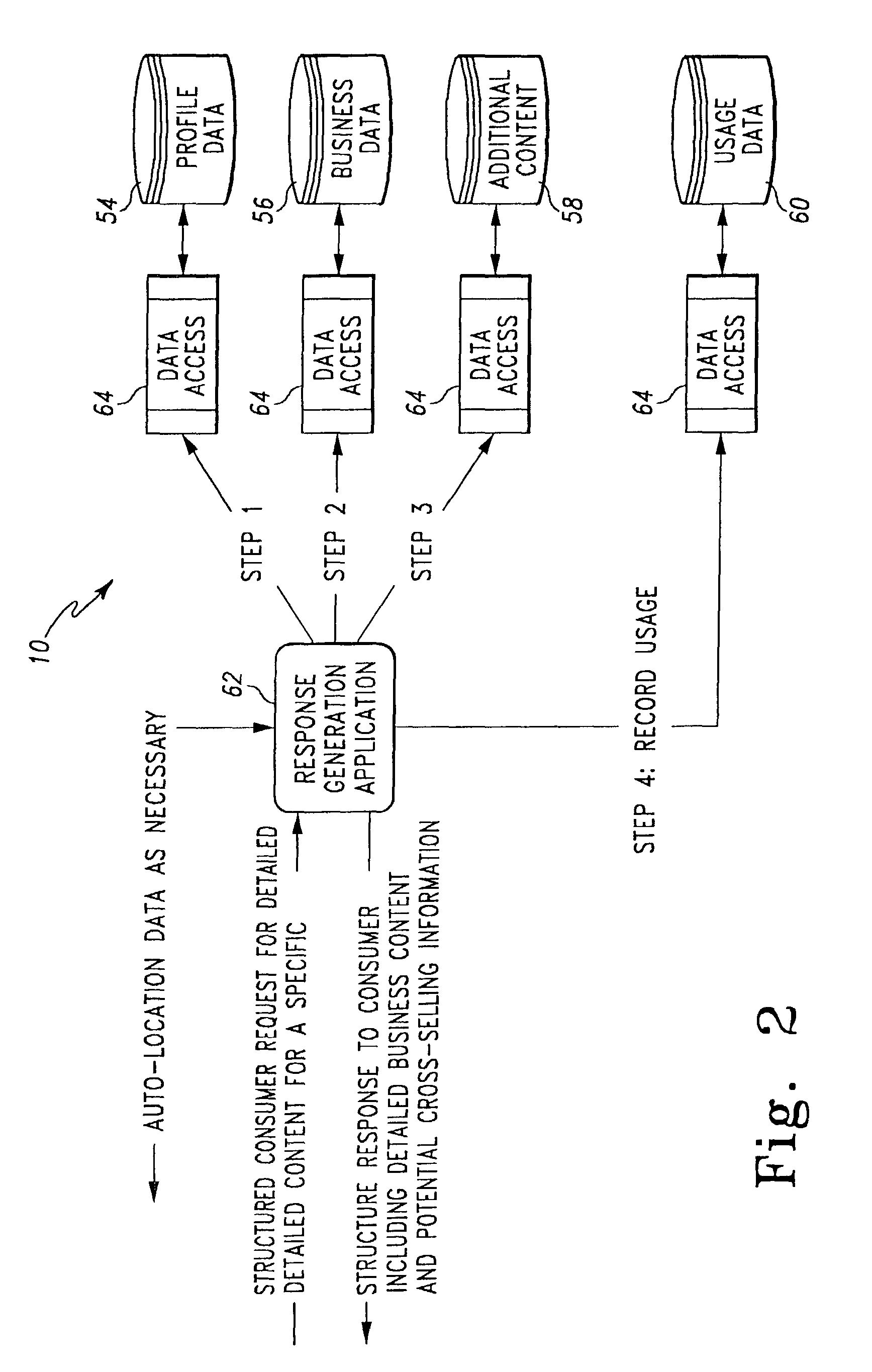

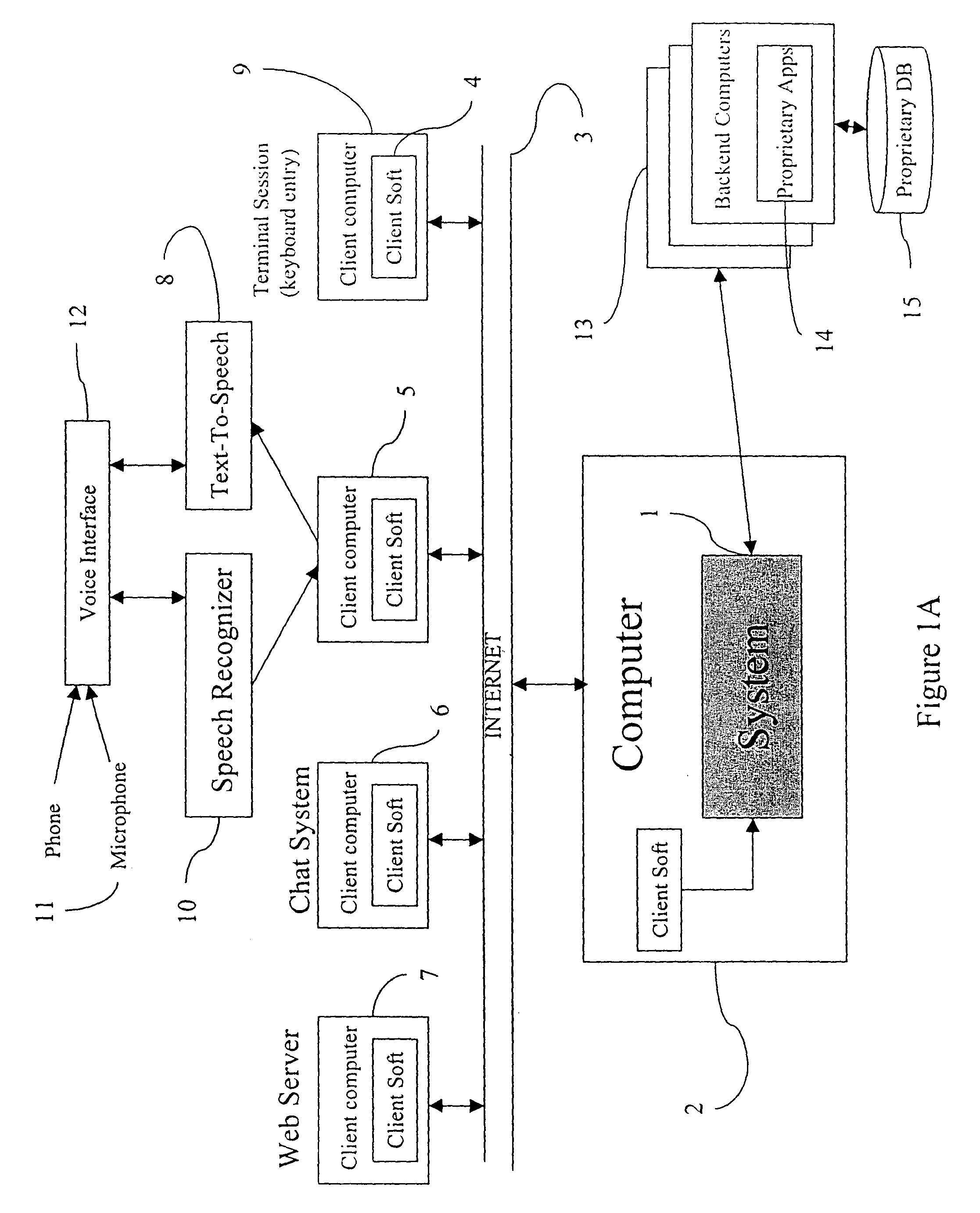

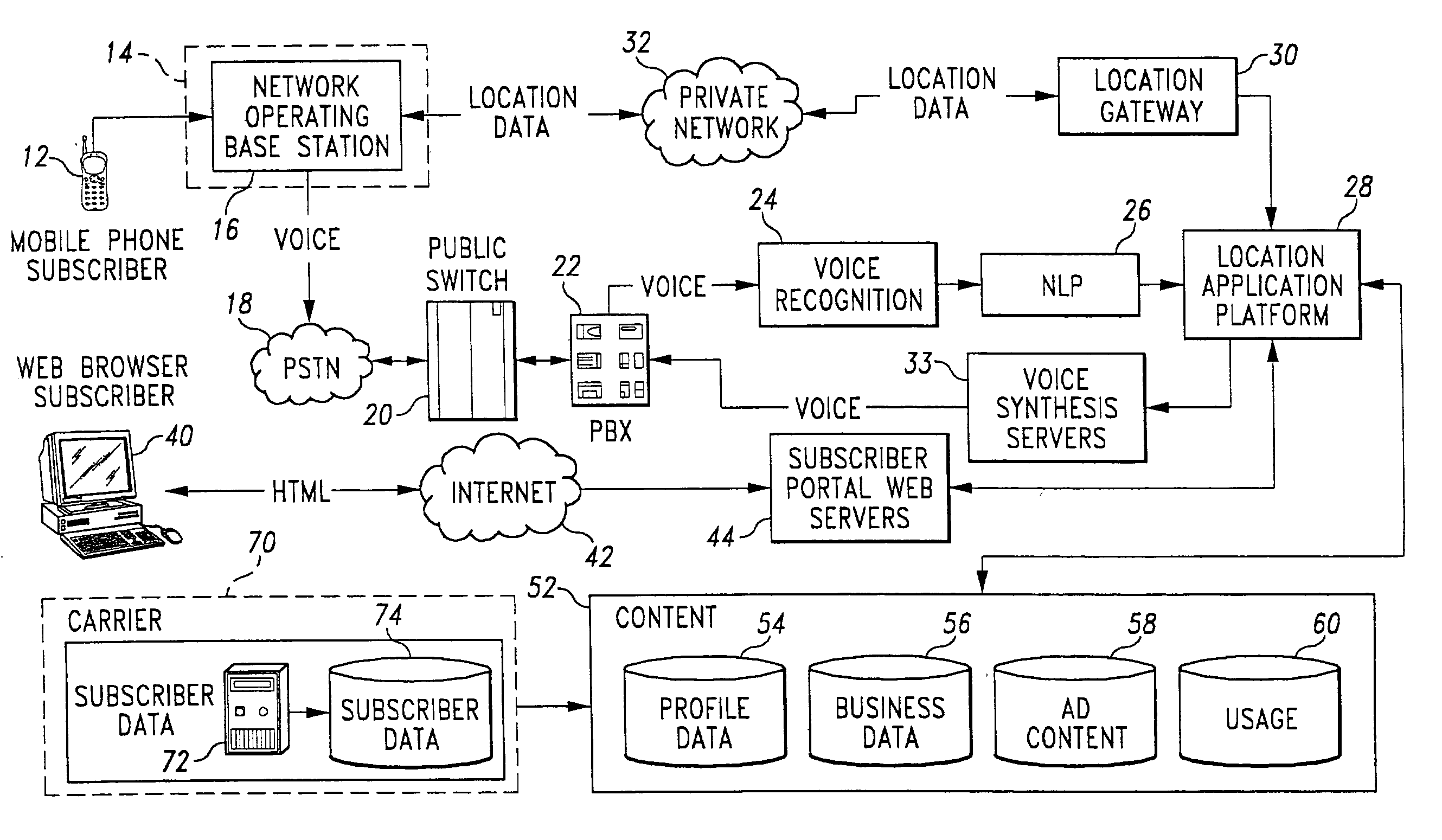

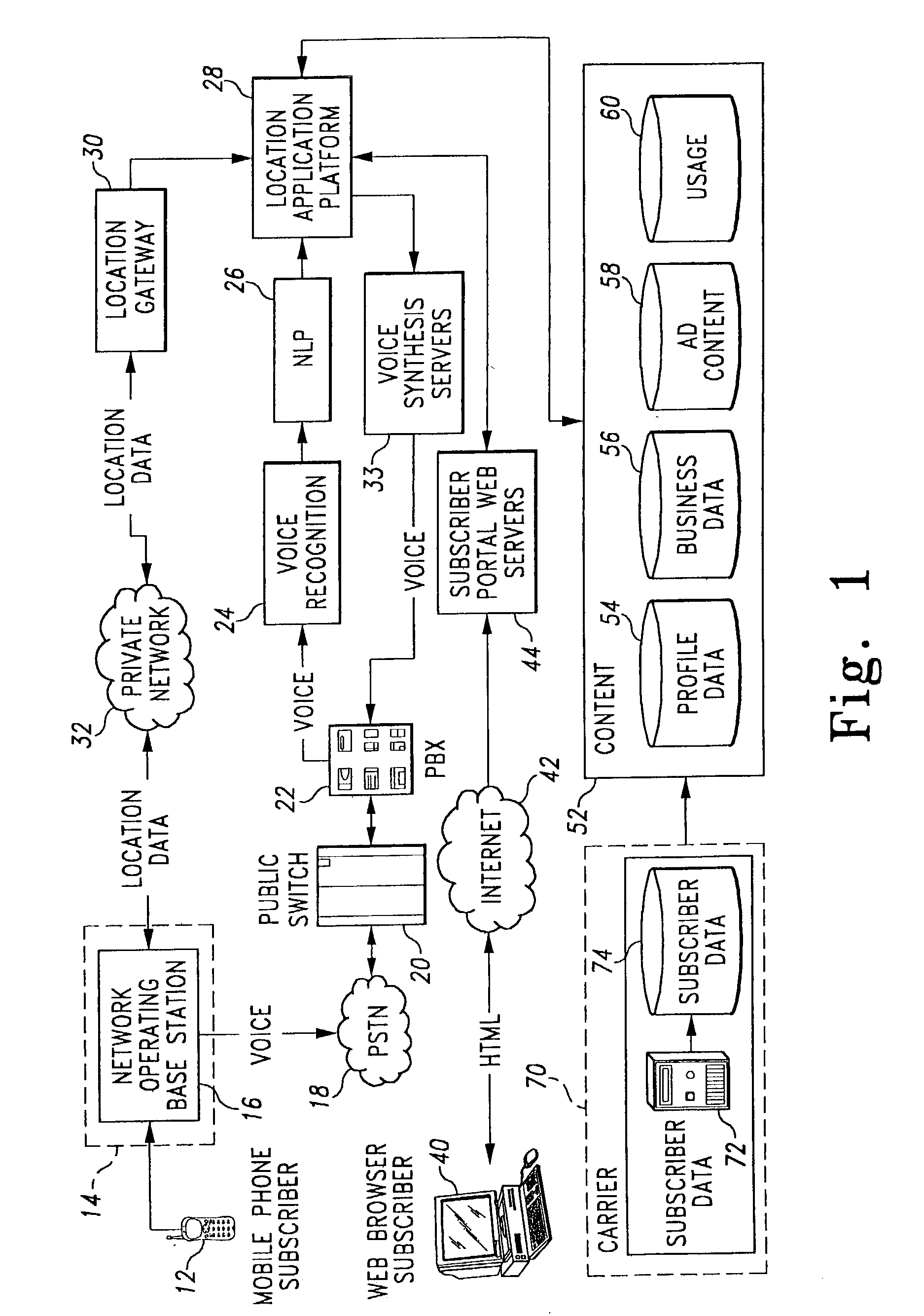

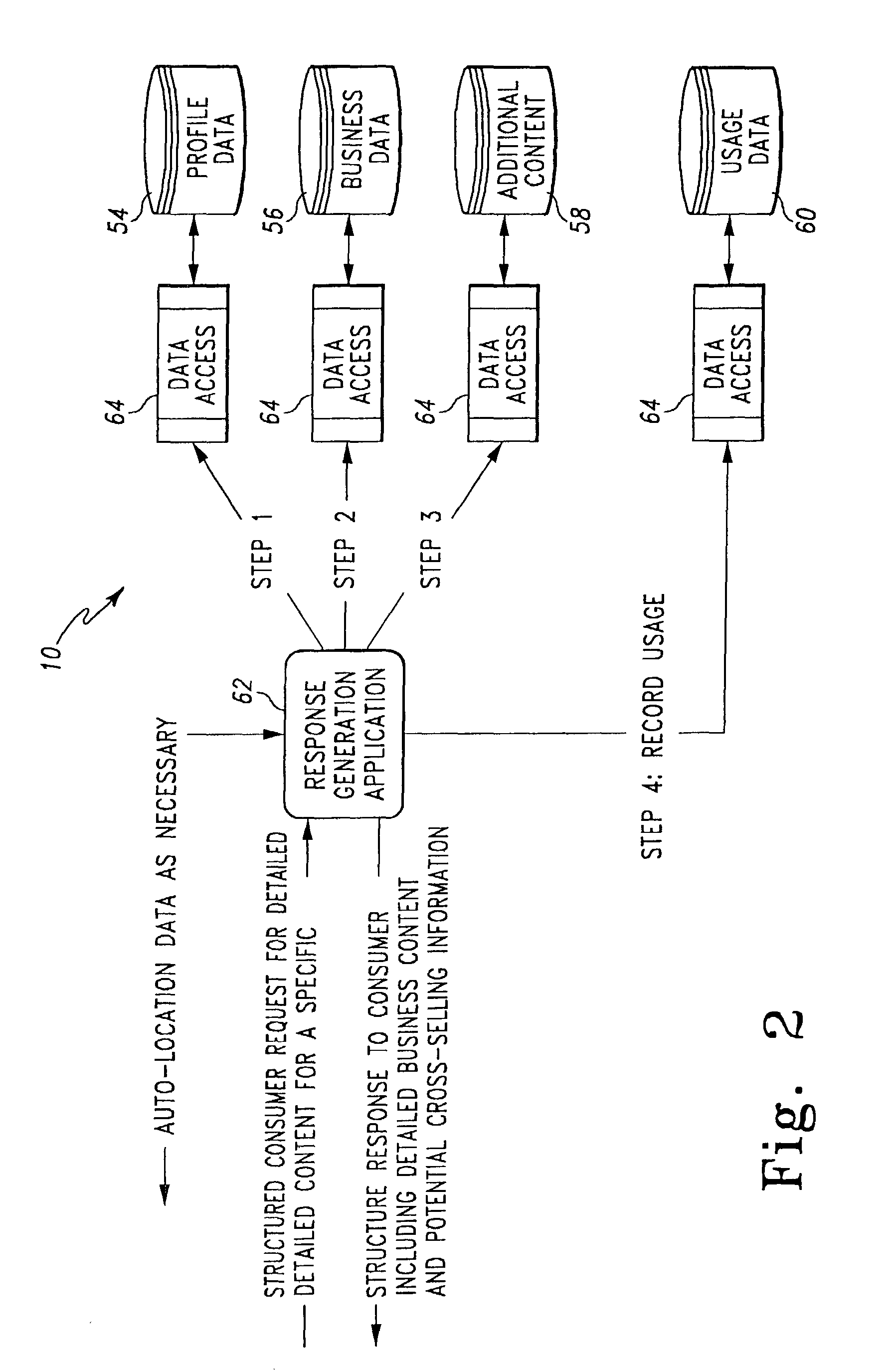

Natural language processing for a location-based services system

Active | US20020161587A1 | Improve user experience; Improve speech recognition performance; Digital data information retrieval; Special service for subscribers; Natural language; Application software

A method and system for providing natural language processing in a communication system is disclosed. A voice request is generated at a remote terminal and transmitted to a base station. A voice recognition application then identifies the words contained in the voice request. After the words are identified, the grammar associated with each word is identified, and each word is categorized into its respective grammar category. A response generation application then generates a structured response to the voice request.

Owner:ACCENTURE GLOBAL SERVICES LTD
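
The categorize-then-respond flow can be sketched as follows, assuming recognition has already produced a word list. The grammar categories, their vocabularies, and the shape of the structured response are hypothetical; the abstract does not specify them.

```python
# Hypothetical grammar categories and vocabularies for a location-based service.
GRAMMARS = {
    "service": {"restaurant", "hotel", "atm", "pharmacy"},
    "cuisine": {"italian", "thai", "mexican"},
    "proximity": {"nearest", "closest"},
}


def categorize(words):
    """Map each recognized word to the grammar category whose vocabulary contains it."""
    categorized = {}
    for word in words:
        w = word.lower().strip(".,?!")
        for category, vocab in GRAMMARS.items():
            if w in vocab:
                categorized.setdefault(category, []).append(w)
    return categorized


def structured_response(categorized):
    """Build a structured query from the categorized words."""
    return {
        "service": categorized.get("service", [None])[0],
        "filters": {k: v[0] for k, v in categorized.items() if k != "service"},
    }


request = categorize("Find the nearest Thai restaurant".split())
print(structured_response(request))
```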

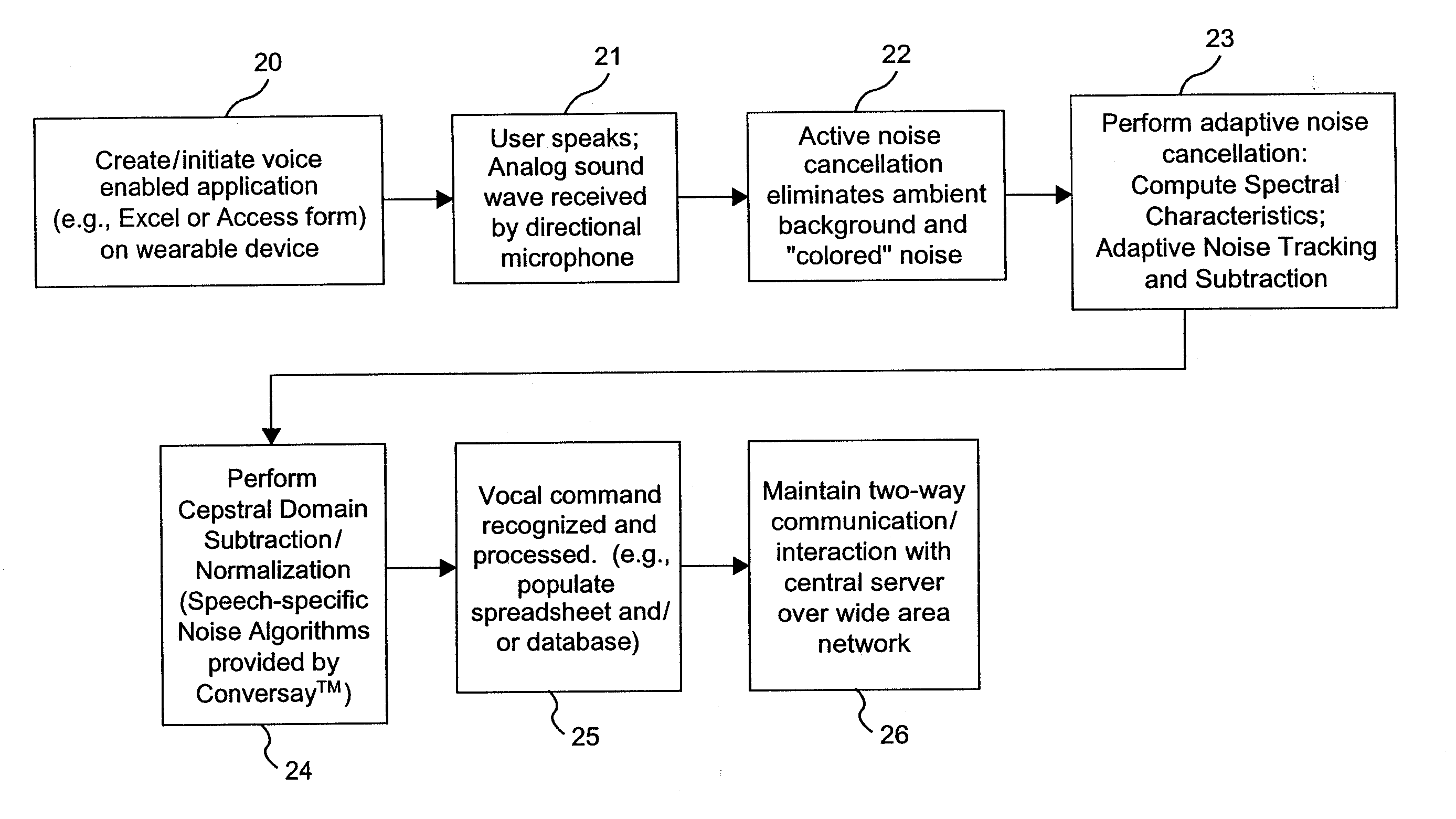

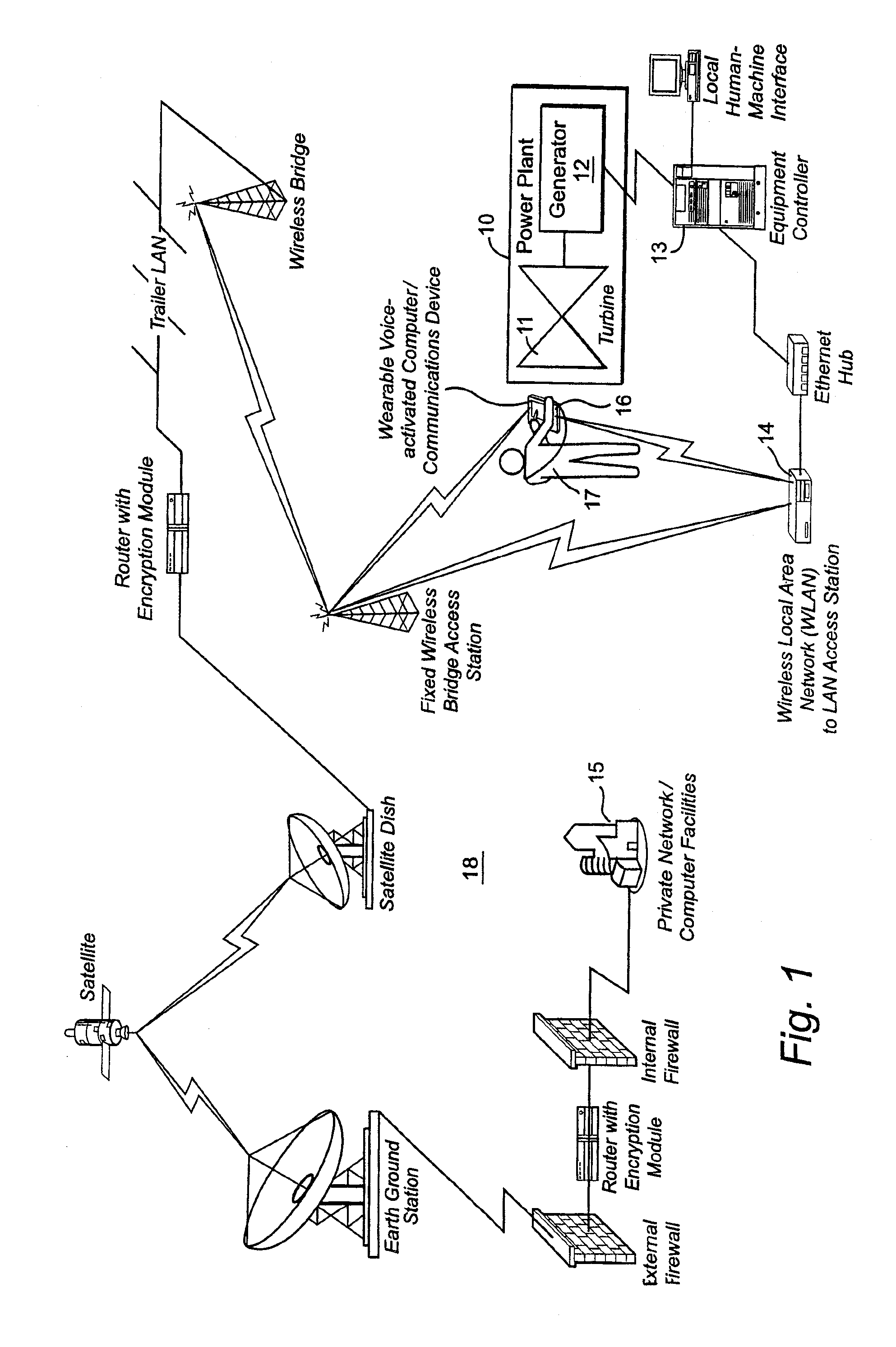

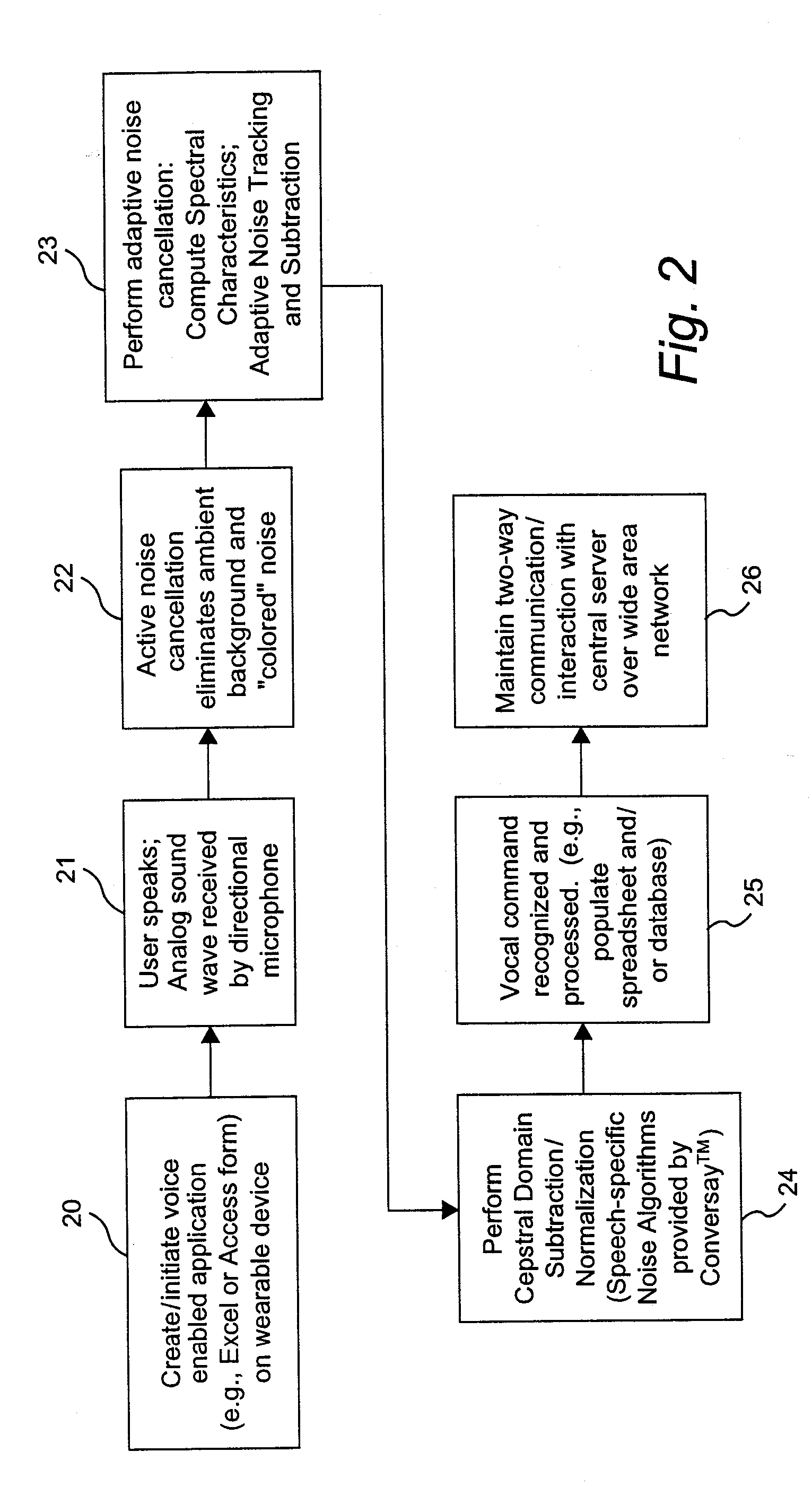

Digitization of work processes using wearable wireless devices capable of vocal command recognition in noisy environments

Inactive | US20050080620A1 | High bandwidth connectivity; Improve productivity; Speech analysis; Information processing; Frequency spectrum

In an information processing system having an equipment controller for enabling the digitization of complex work processes conducted during testing and / or operation of machinery and equipment, a local wireless communications network is implemented using at least one fixed point wireless communications access station and one or more voice-responsive wireless mobile computing / communications devices that are capable of accurate vocal command recognition in noisy industrial environments. The computing / communications device determines spectral characteristics of ambient non-speech background noises and provides active noise cancellation through the use of a directional microphone and an adaptive noise tracking and subtraction process. The device is carried by a user and is responsive to one or more vocal utterances of the user for communicating data to the information processing system and / or generating operational control commands to provide to the equipment controller for controlling the machinery or equipment.

Owner:GENERAL ELECTRIC CO
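
The adaptive noise tracking and subtraction step can be sketched as classic magnitude spectral subtraction. This is an illustration under assumptions: the exponential smoothing constant, over-subtraction factor, spectral floor, and the upstream speech/non-speech flags are hypothetical, and the directional microphone is not modeled.

```python
def track_and_subtract(frames, is_speech, alpha=0.9, over_subtraction=1.0, floor=0.05):
    """Adaptive noise tracking plus magnitude spectral subtraction.

    frames: list of magnitude spectra (lists of floats, all the same length).
    is_speech: per-frame flags from an upstream voice activity detector.
    The noise estimate is updated only on non-speech frames, by exponential smoothing.
    """
    noise = None
    cleaned = []
    for frame, speech in zip(frames, is_speech):
        if not speech:
            if noise is None:
                noise = list(frame)
            else:
                noise = [alpha * n + (1 - alpha) * f for n, f in zip(noise, frame)]
        if noise is None:
            cleaned.append(list(frame))     # no noise estimate yet: pass through
        else:
            # Subtract the noise estimate, but never go below a fraction of the input.
            cleaned.append([max(f - over_subtraction * n, floor * f)
                            for f, n in zip(frame, noise)])
    return cleaned
```

The spectral floor keeps bins from going to zero or negative, which would otherwise produce audible "musical noise" artifacts.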

Apparatus and methods for developing conversational applications

Inactive | US7302383B2 | Improve speech recognition performance; Quick fix; Semantic analysis; Speech recognition; External application; Application software

Owner:GYRUS LOGIC INC

Natural language processing for a location-based services system

Inactive | US20040243417A9 | Improve speech recognition performance; Improve user experience; Digital data information retrieval; Special service for subscribers; Response generation; Communications system

A method and system for providing natural language processing in a communication system is disclosed. A voice request is generated at a remote terminal and transmitted to a base station. A voice recognition application then identifies the words contained in the voice request. After the words are identified, the grammar associated with each word is identified, and each word is categorized into its respective grammar category. A response generation application then generates a structured response to the voice request.

Owner:ACCENTURE GLOBAL SERVICES LTD

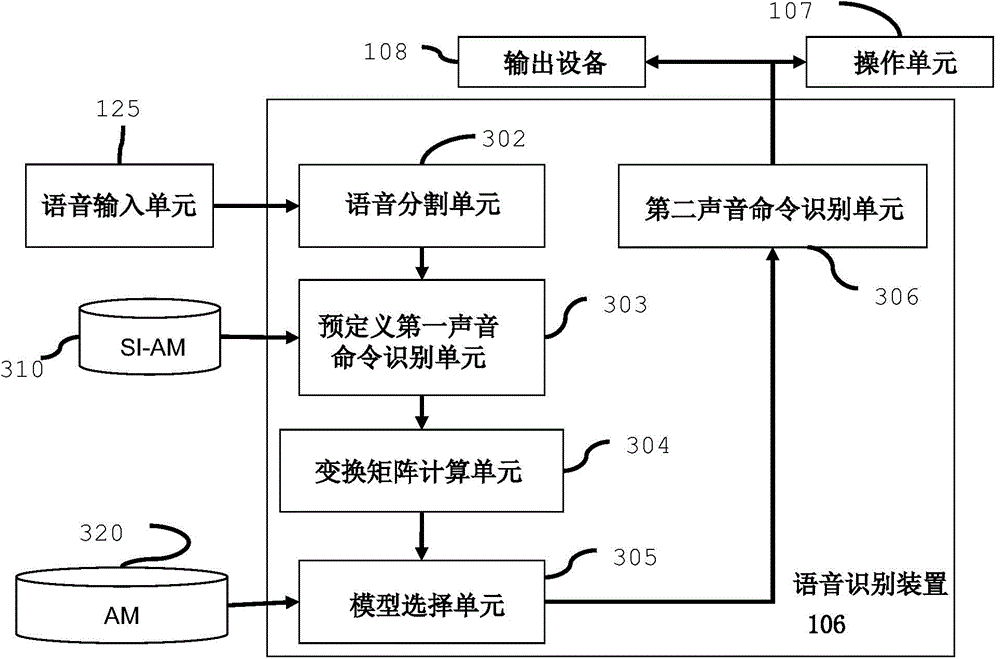

Speech recognition device and speech recognition method

Inactive | CN105869641A | Improve speech recognition performance; Speech recognition; Speech identification; Acoustic model

The invention discloses a speech recognition device and a speech recognition method. The device comprises: a unit configured to obtain speech input by a current user; a unit configured to split the obtained speech and output at least two voice command segments; a unit configured to recognize a first predefined voice command among the segments using a speaker-independent acoustic model; a unit configured to calculate a transformation matrix for the current user based on the segment recognized as the first predefined voice command; a unit configured to select an acoustic model for the current user from the acoustic models registered in the device, based on the calculated transformation matrix; and a unit configured to recognize a second voice command among the segments using the selected acoustic model. Using the selected acoustic model (AM) improves speech recognition performance.

Owner:CANON KK
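
The model-selection step can be sketched as a nearest-neighbour lookup over transformation matrices. The abstract does not say how the calculated matrix is compared with the registered models; this sketch assumes each registered model stores a reference transform and uses Frobenius distance, and both the `max_distance` fallback and the example matrices are hypothetical. How the user's transform is estimated (for example by an MLLR-style adaptation) is outside the sketch.

```python
import math


def frobenius_distance(a, b):
    """Frobenius norm of the difference between two equally sized matrices (nested lists)."""
    return math.sqrt(sum((x - y) ** 2
                         for row_a, row_b in zip(a, b)
                         for x, y in zip(row_a, row_b)))


def select_acoustic_model(user_transform, registered, max_distance=None):
    """Pick the registered model whose reference transform is closest to the user's.

    registered: model name -> reference transformation matrix stored at registration.
    Returns None when no model is within max_distance, so the caller can keep
    using the speaker-independent model.
    """
    best = min(registered, key=lambda name: frobenius_distance(user_transform, registered[name]))
    if max_distance is not None and frobenius_distance(user_transform, registered[best]) > max_distance:
        return None
    return best


registered = {"speaker_a": [[1.0, 0.0], [0.0, 1.0]],
              "speaker_b": [[2.0, 0.0], [0.0, 2.0]]}
print(select_acoustic_model([[1.9, 0.1], [0.0, 2.1]], registered))  # → speaker_b
```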

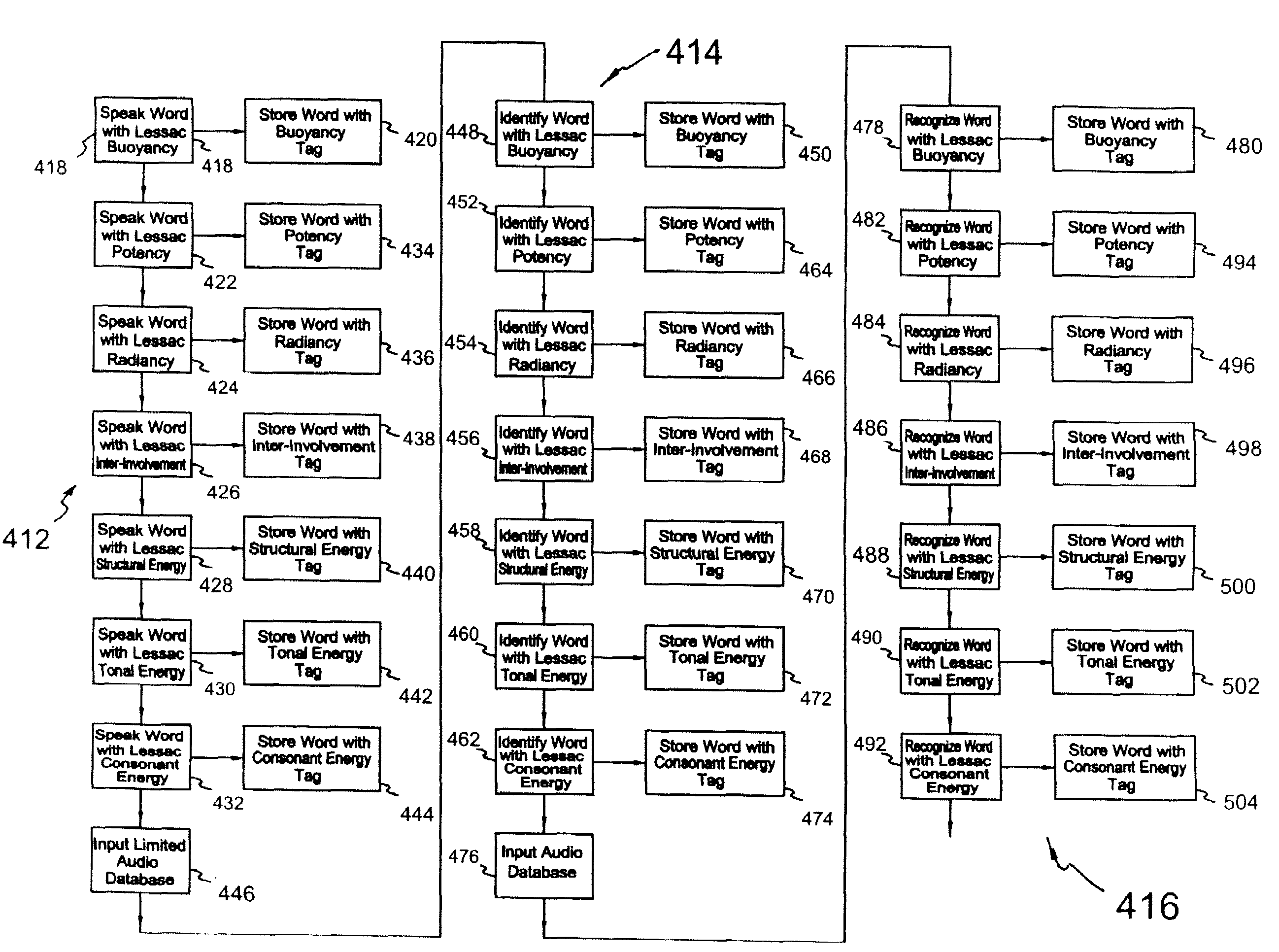

Method of recognizing spoken language with recognition of language color

Inactive | US7280964B2 | Reduce in quantity; Improvement speech pattern; Speech recognition; Electrical appliances; Alphanumeric; Speech identification

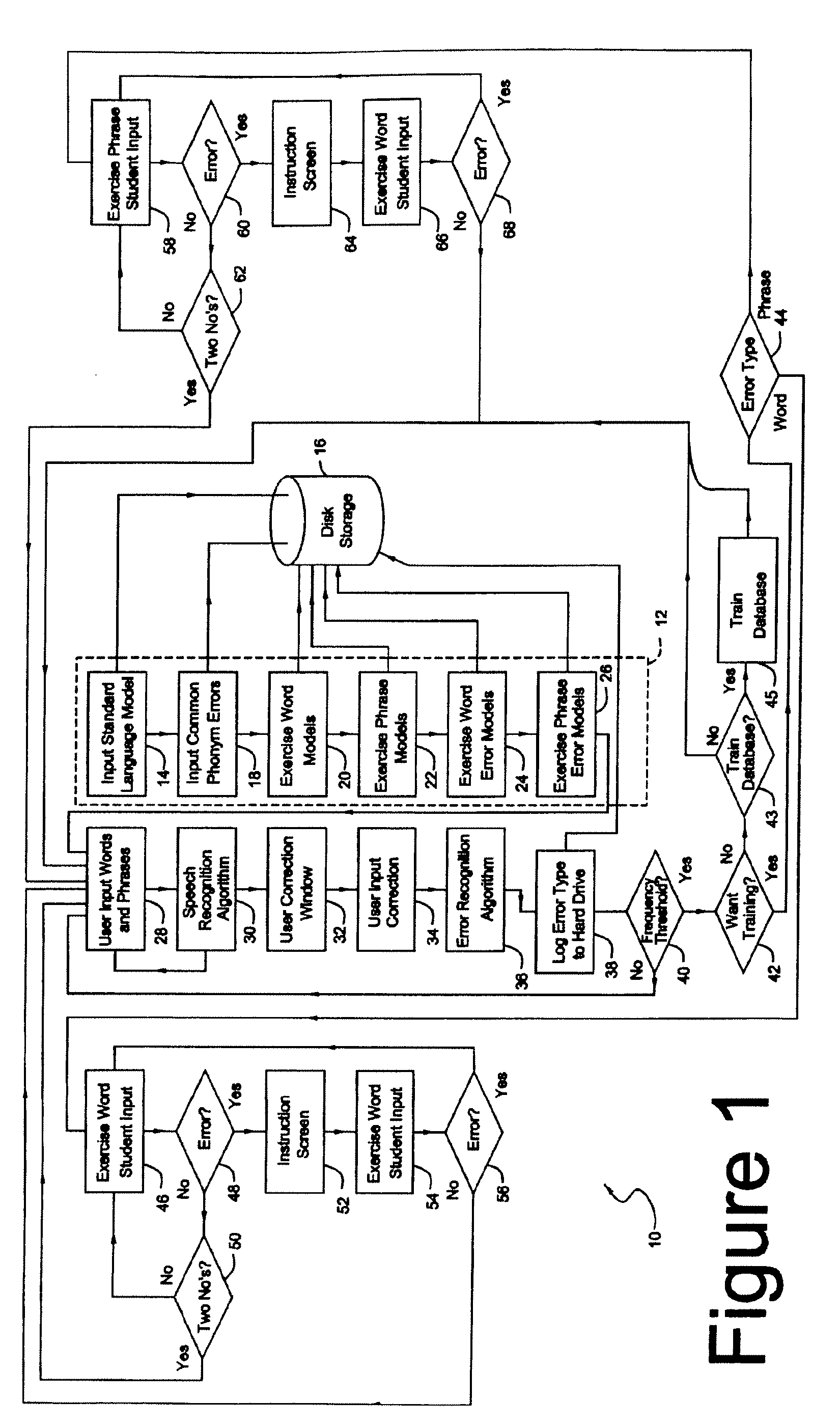

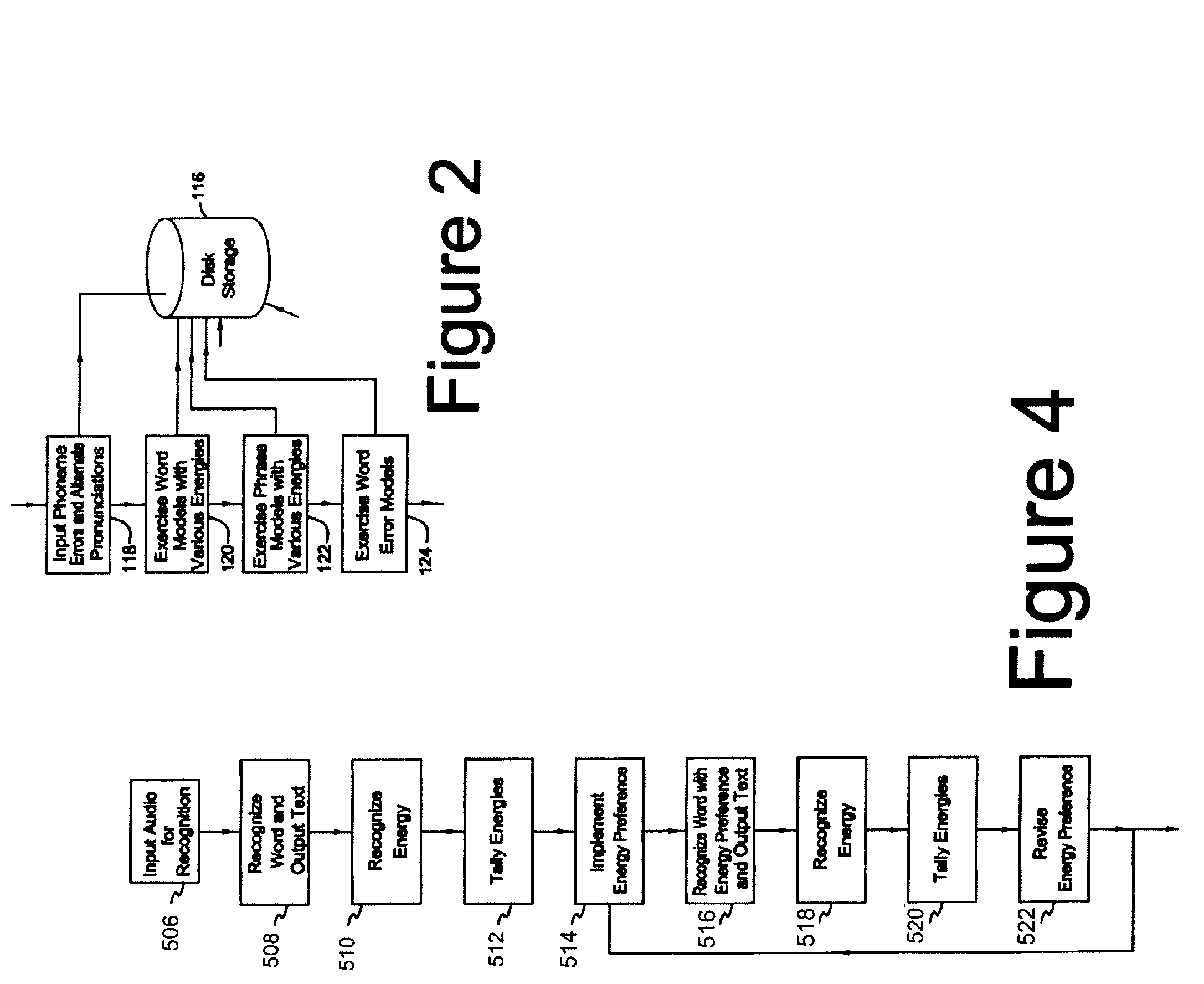

In accordance with the present invention, a speech recognition method is disclosed. A microphone receives audible sounds input by a user into a first computing device running a program with a database consisting of (i) digital representations of known audible sounds and the associated alphanumeric representations of those sounds and (ii) digital representations of known audible sounds corresponding to mispronunciations resulting from known classes of mispronounced words and phrases. The method is performed by receiving the audible sounds in the form of the microphone's electrical output. A particular audible sound to be recognized is converted into a digital representation, which is then compared with the digital representations of the known audible sounds to determine which known sound is most likely to be the particular sound. A speech recognition output consisting of the alphanumeric representation associated with that most likely sound is then produced. An error indication is then received from the user, indicating that there is an error in recognition, and the user also indicates the proper alphanumeric representation of the particular audible sound. This allows the system to determine whether the error results from a known type or instance of mispronunciation. If it does, the system presents an interactive training program from the computer to the user to enable the user to correct the mispronunciation.

Owner:LESSAC TECH INC
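
The error-diagnosis step can be sketched as a second lookup restricted to known mispronunciations of the corrected word. Phoneme strings compared with `difflib` stand in for the digital sound representations; the `Entry` layout, the similarity threshold, and the example entries are hypothetical. A caller would launch the training program whenever a class is returned.

```python
import difflib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Entry:
    sound: str                                      # stand-in for the digital representation
    text: str                                       # associated alphanumeric representation
    mispronunciation_class: Optional[str] = None    # set only for known mispronunciations


def similarity(a, b):
    return difflib.SequenceMatcher(None, a, b).ratio()


def recognize(sound, database):
    """Return the entry whose stored sound is most similar to the input."""
    return max(database, key=lambda e: similarity(sound, e.sound))


def diagnose_error(sound, corrected_text, database, min_similarity=0.8):
    """After the user supplies the correct text, look for a known mispronunciation
    of that text matching the input sound; return its class, or None."""
    known = [e for e in database
             if e.text == corrected_text and e.mispronunciation_class is not None]
    if not known:
        return None
    best = recognize(sound, known)
    if similarity(sound, best.sound) < min_similarity:
        return None
    return best.mispronunciation_class


db = [Entry("p ih n", "pin"), Entry("p eh n", "pen"),
      Entry("p ih n", "pen", "pin-pen merger")]
print(diagnose_error("p ih n", "pen", db))  # → pin-pen merger
```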

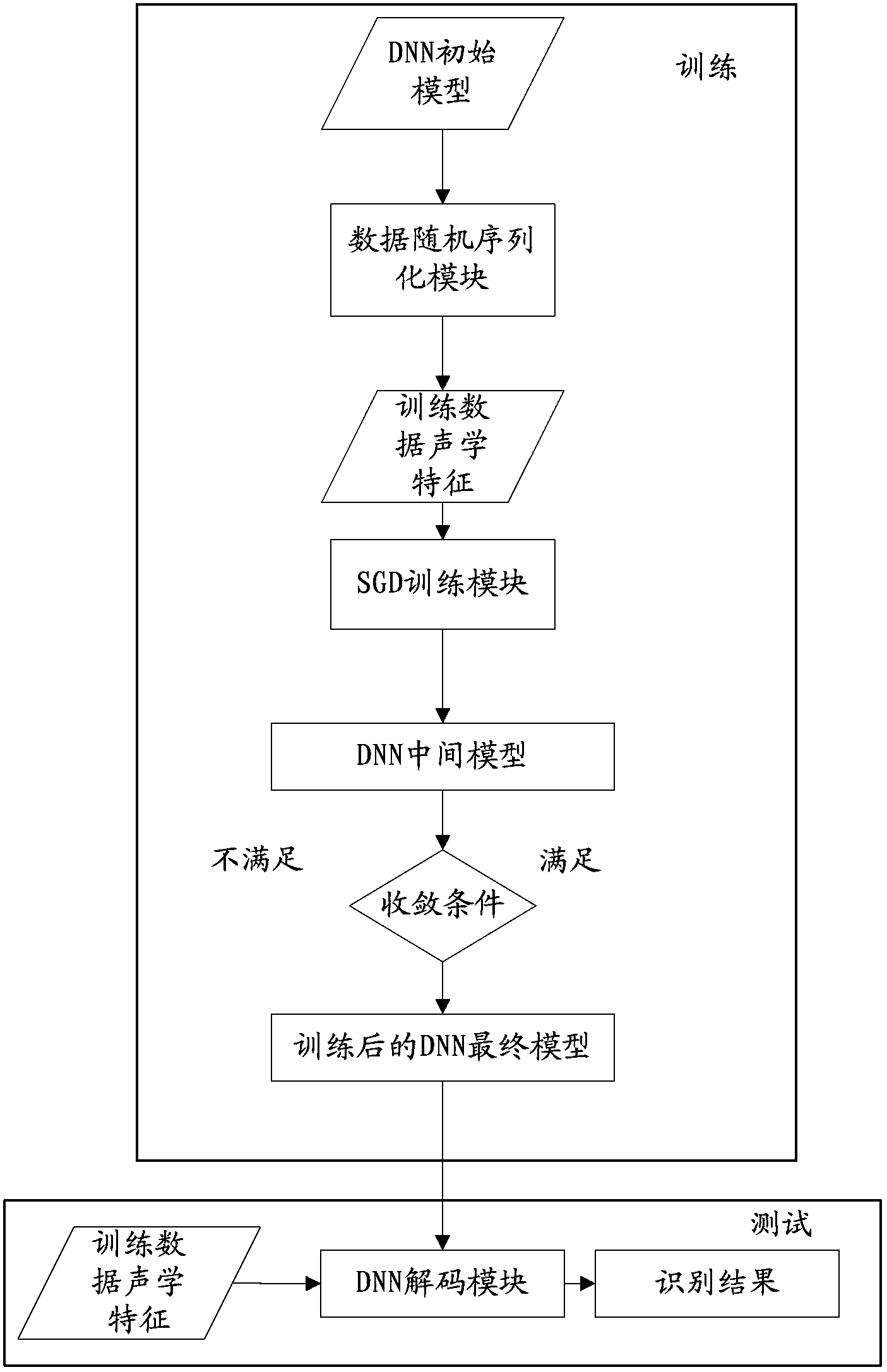

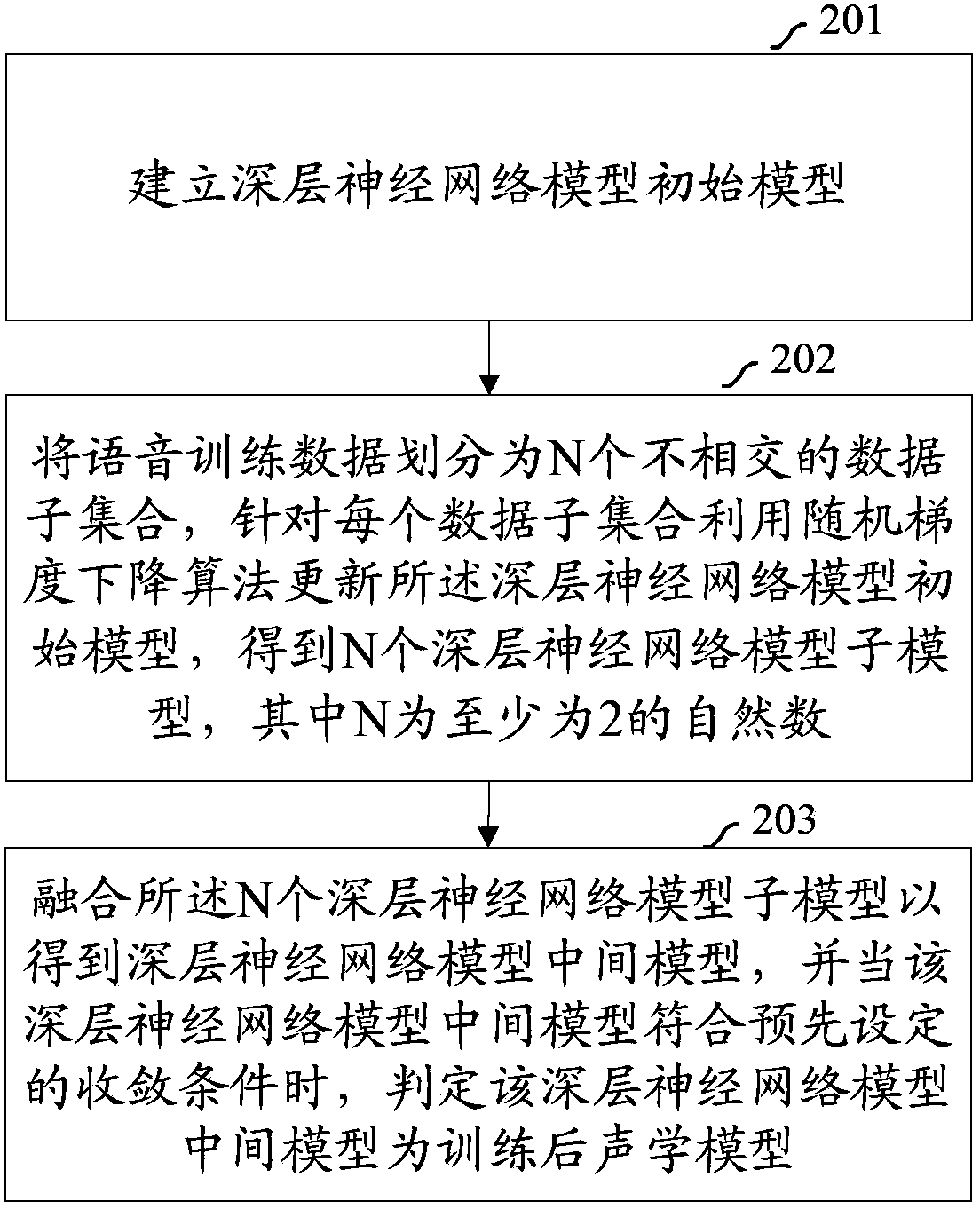

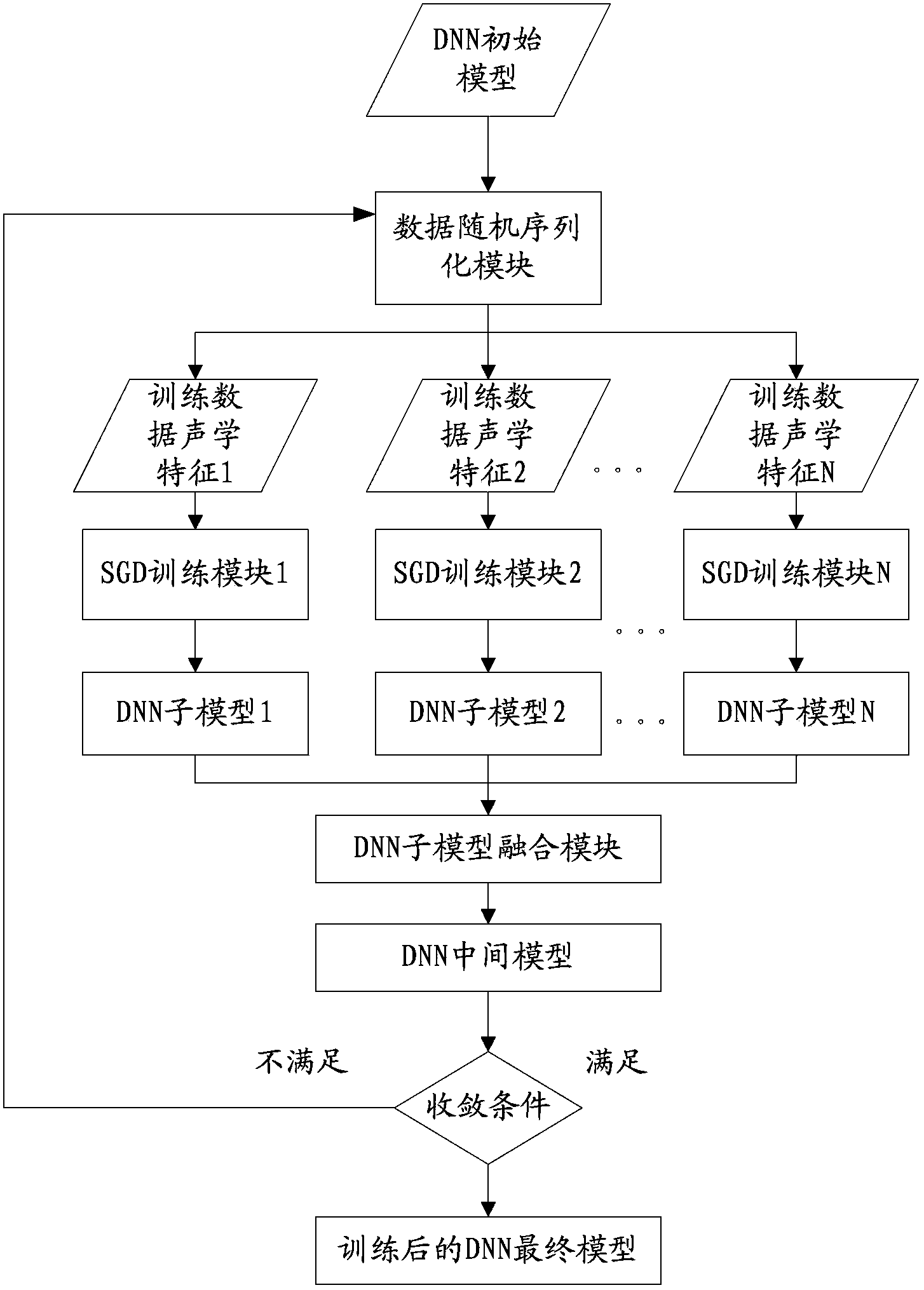

Acoustic model training method and device

Active | CN104143327A | Reduce bias; Improve speech recognition performance; Speech recognition; Stochastic gradient descent; Algorithm

The embodiment of the invention provides an acoustic model training method and device. The method includes the steps of: establishing an initial deep neural network (DNN) model; dividing the speech training data into N non-overlapping data subsets, where N is an integer greater than or equal to 2, and updating the initial model on each subset with stochastic gradient descent to obtain N DNN sub-models; merging the N sub-models into an intermediate DNN model; and accepting the intermediate model as the trained acoustic model once it satisfies preset convergence conditions. The method and device improve the training efficiency of the acoustic model without reducing speech recognition performance.

Owner:TENCENT CLOUD COMPUTING BEIJING CO LTD
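
The split, train, and merge loop can be sketched with a toy model. To stay self-contained, a one-variable linear regressor replaces the deep neural network, sub-models are merged by parameter averaging, and convergence is a loss-change tolerance; all three are assumptions, since the abstract does not specify the merge rule or the convergence test. The sub-models are trained one after another here, but each starts from the same parameters, so they could equally run in parallel.

```python
import random


def sgd_epoch(params, data, lr):
    """One pass of stochastic gradient descent on squared error for y = w*x + b."""
    w, b = params
    for x, y in data:
        err = (w * x + b) - y
        w -= lr * err * x
        b -= lr * err
    return (w, b)


def mse(params, data):
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train_by_model_averaging(data, n_subsets=4, lr=0.05, tol=1e-6, max_rounds=200, seed=0):
    assert n_subsets >= 2
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    # N non-overlapping subsets.
    subsets = [shuffled[i::n_subsets] for i in range(n_subsets)]
    params, prev_loss = (0.0, 0.0), float("inf")
    for _ in range(max_rounds):
        # Each sub-model starts from the current intermediate model.
        sub_models = [sgd_epoch(params, s, lr) for s in subsets]
        # Merge: average the sub-model parameters into the intermediate model.
        params = (sum(m[0] for m in sub_models) / n_subsets,
                  sum(m[1] for m in sub_models) / n_subsets)
        loss = mse(params, data)
        if abs(prev_loss - loss) < tol:     # assumed convergence condition
            break
        prev_loss = loss
    return params


data = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]
print(train_by_model_averaging(data))  # approximately (2.0, 1.0)
```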

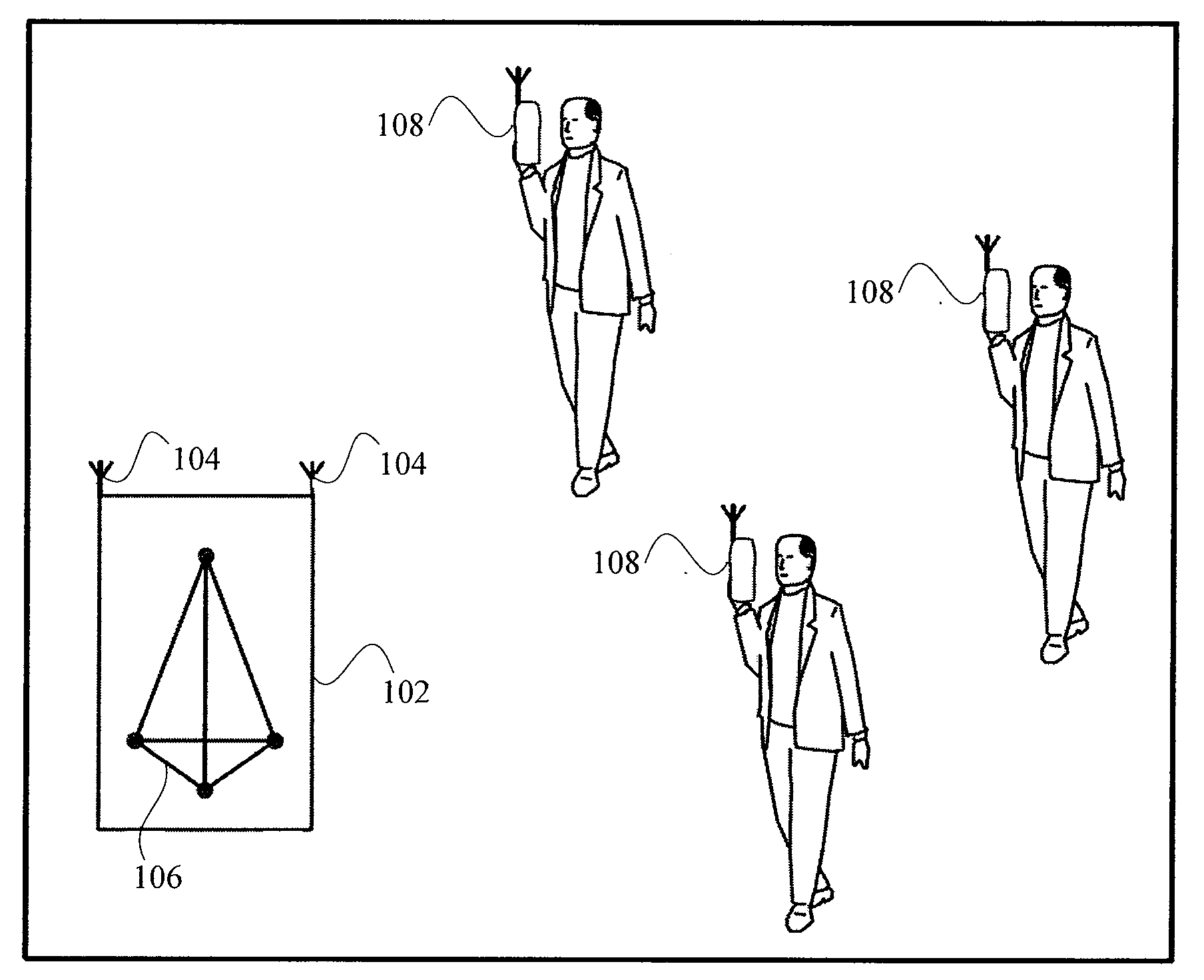

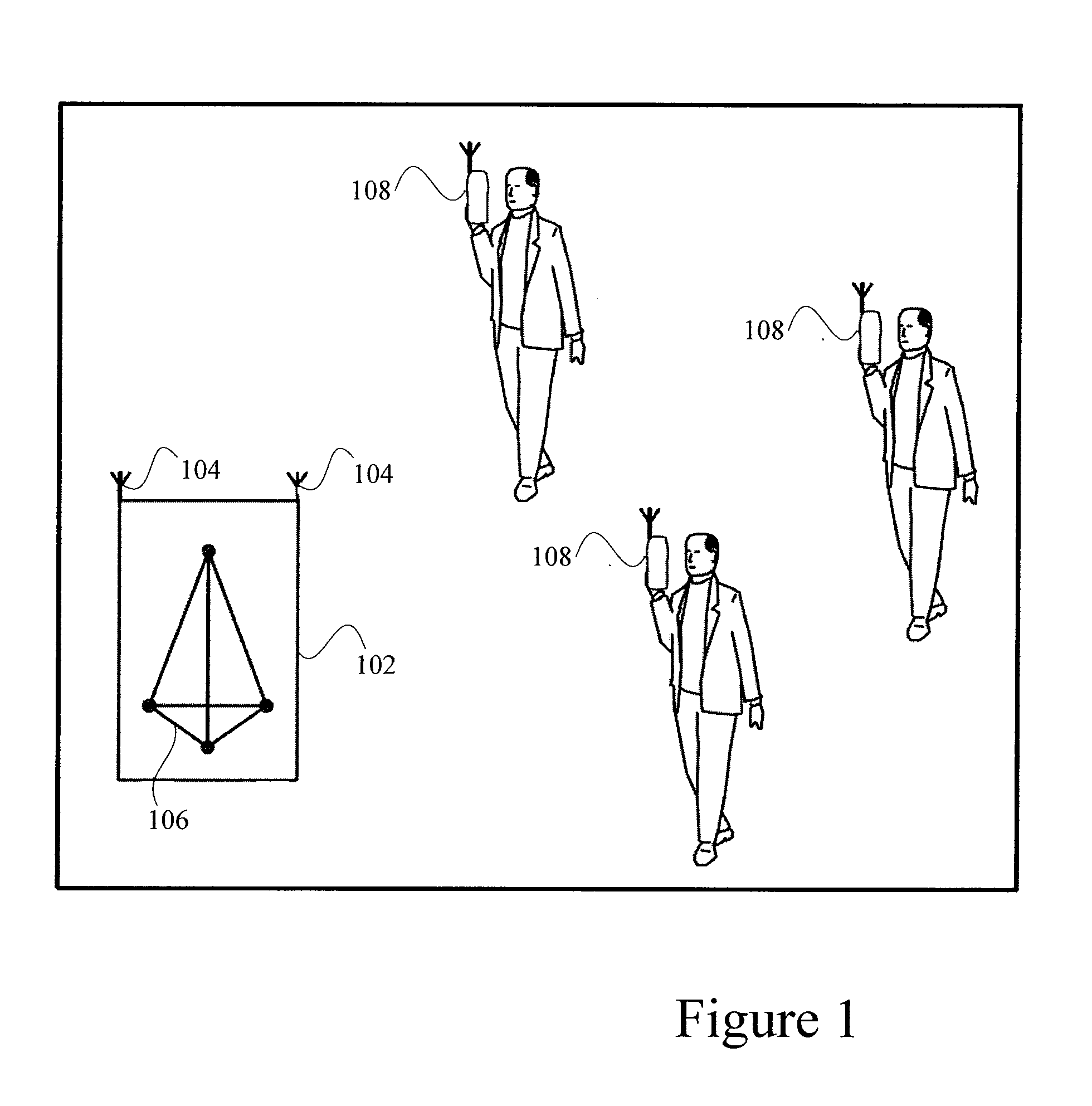

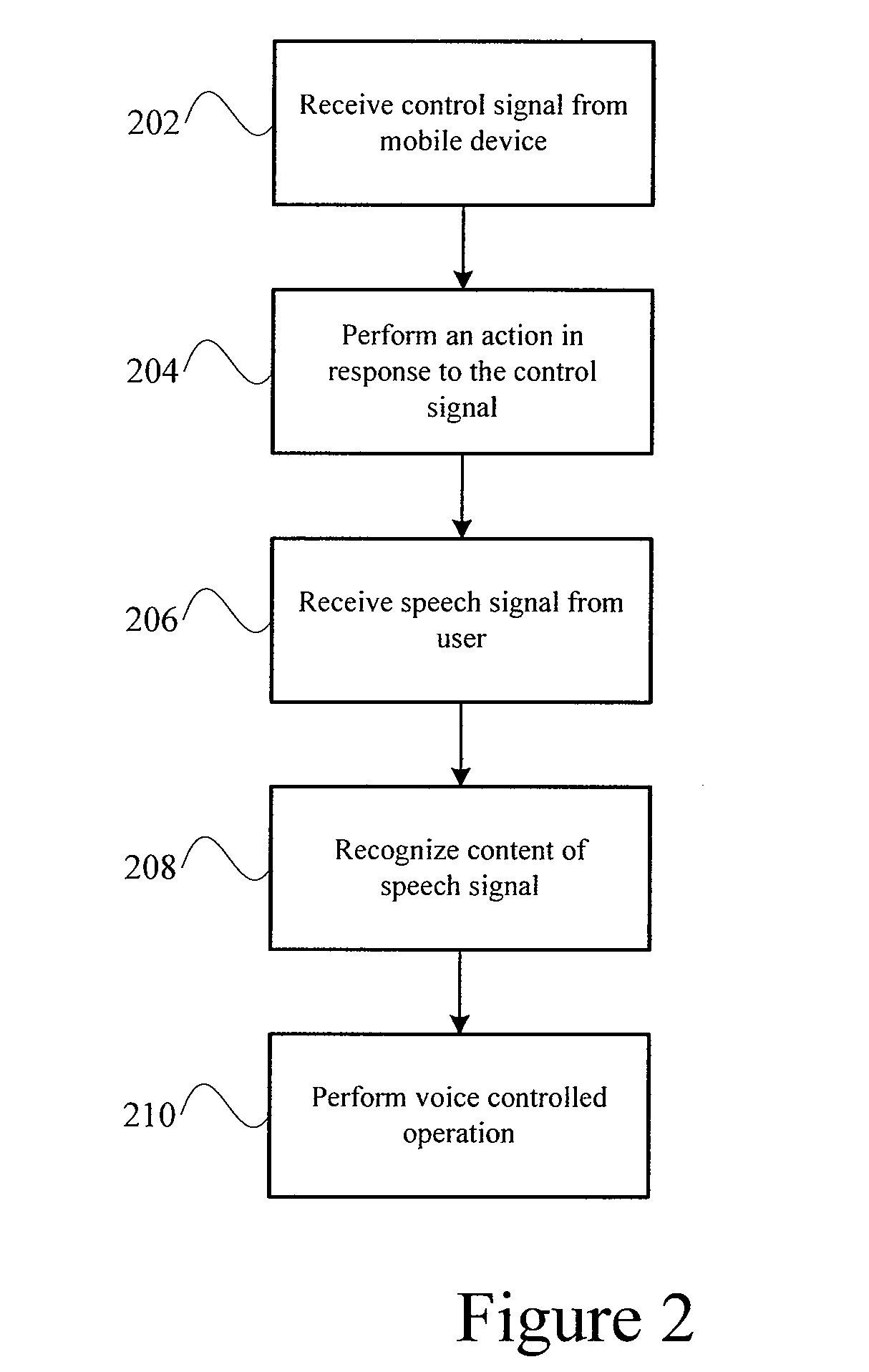

Voice control system

Inactive | US20080262849A1 | Improve speech recognition performance; Speech recognition; Control signal; Control system

A voice control system allows a user to control a device through voice commands. The voice control system includes a speech recognition unit that receives a control signal from a mobile device and a speech signal from a user. The speech recognition unit configures speech recognition settings in response to the control signal to improve speech recognition.

Owner:NUANCE COMM INC

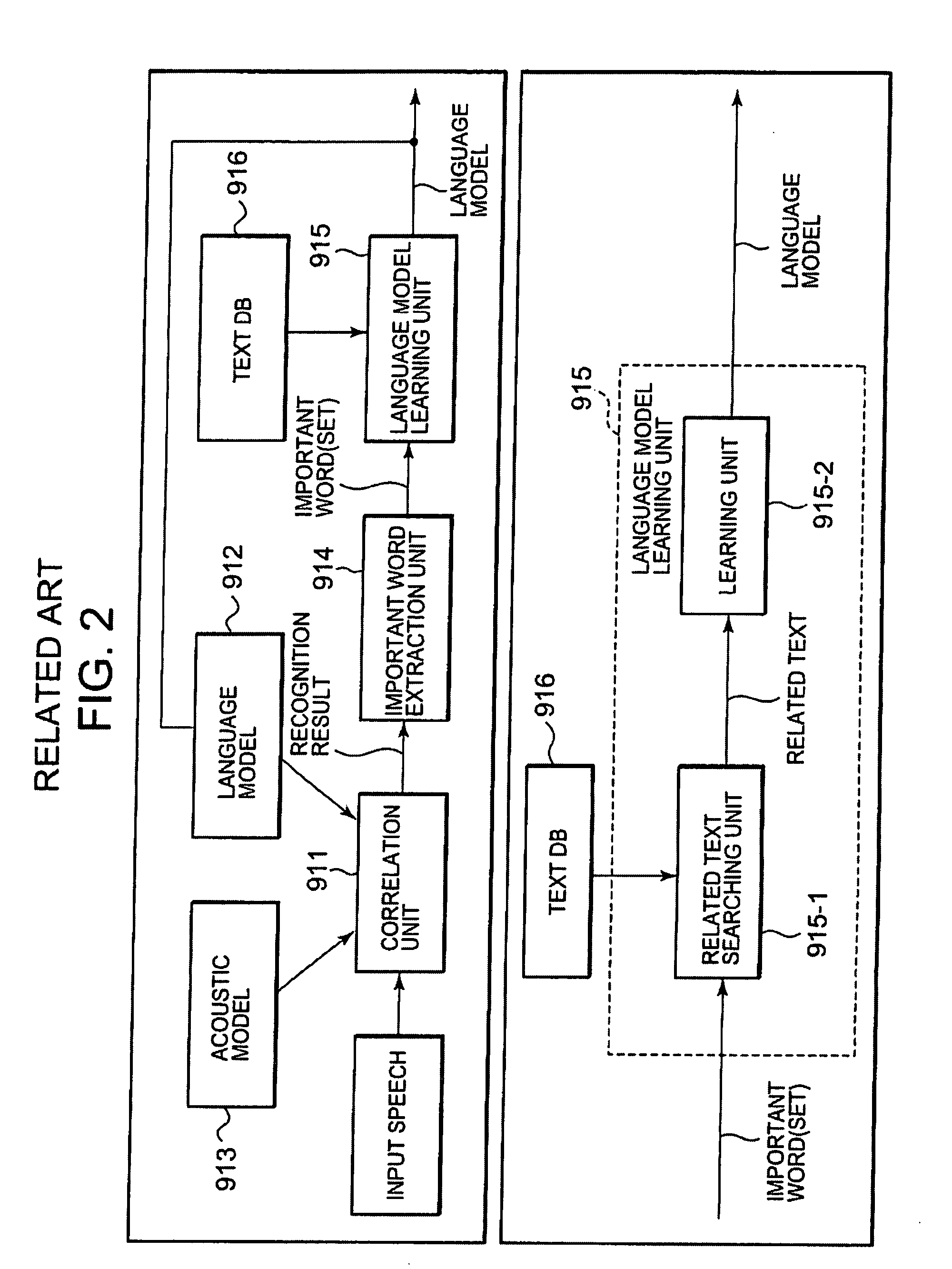

Speech recognition method, speech recognition system, and server thereof

Inactive | US20080228480A1 | Improve speech recognition rate; Improve speech recognition performance; Speech recognition; Model selection; Speech identification

A speech recognition method comprises a model selection step, which selects a recognition model based on characteristic information of the input speech, and a speech recognition step, which translates the input speech into text data based on the selected recognition model.

Owner:NEC CORP

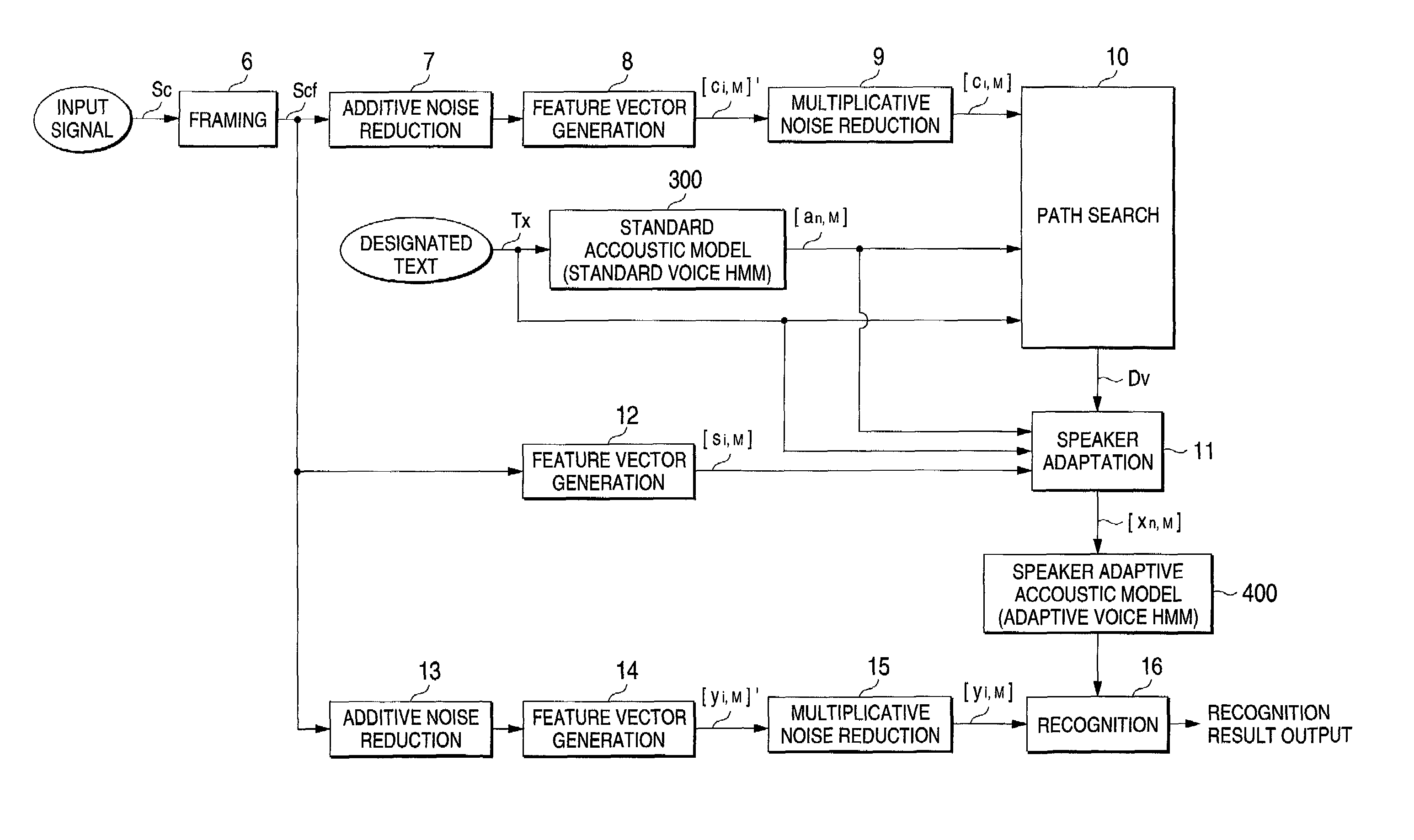

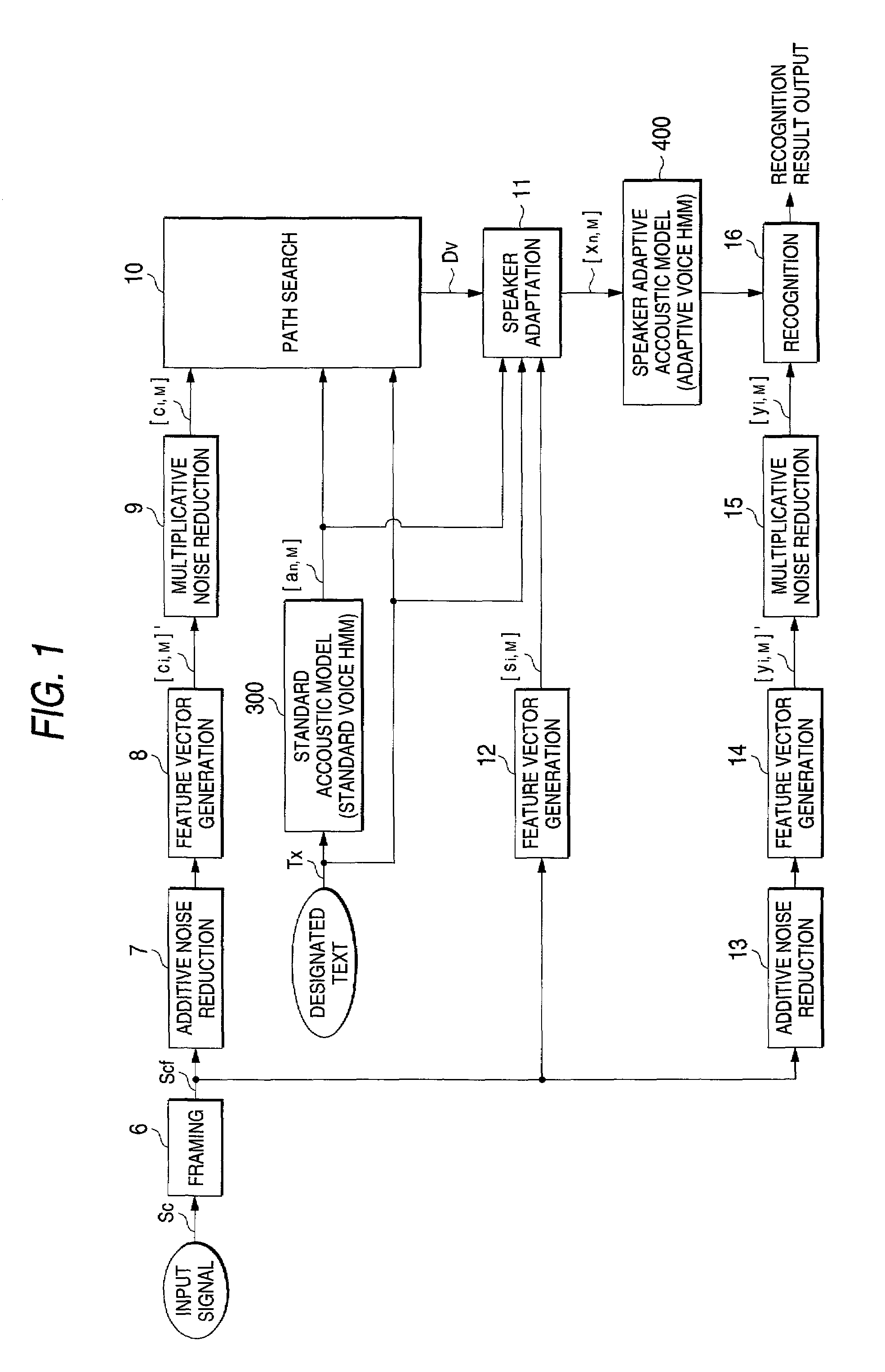

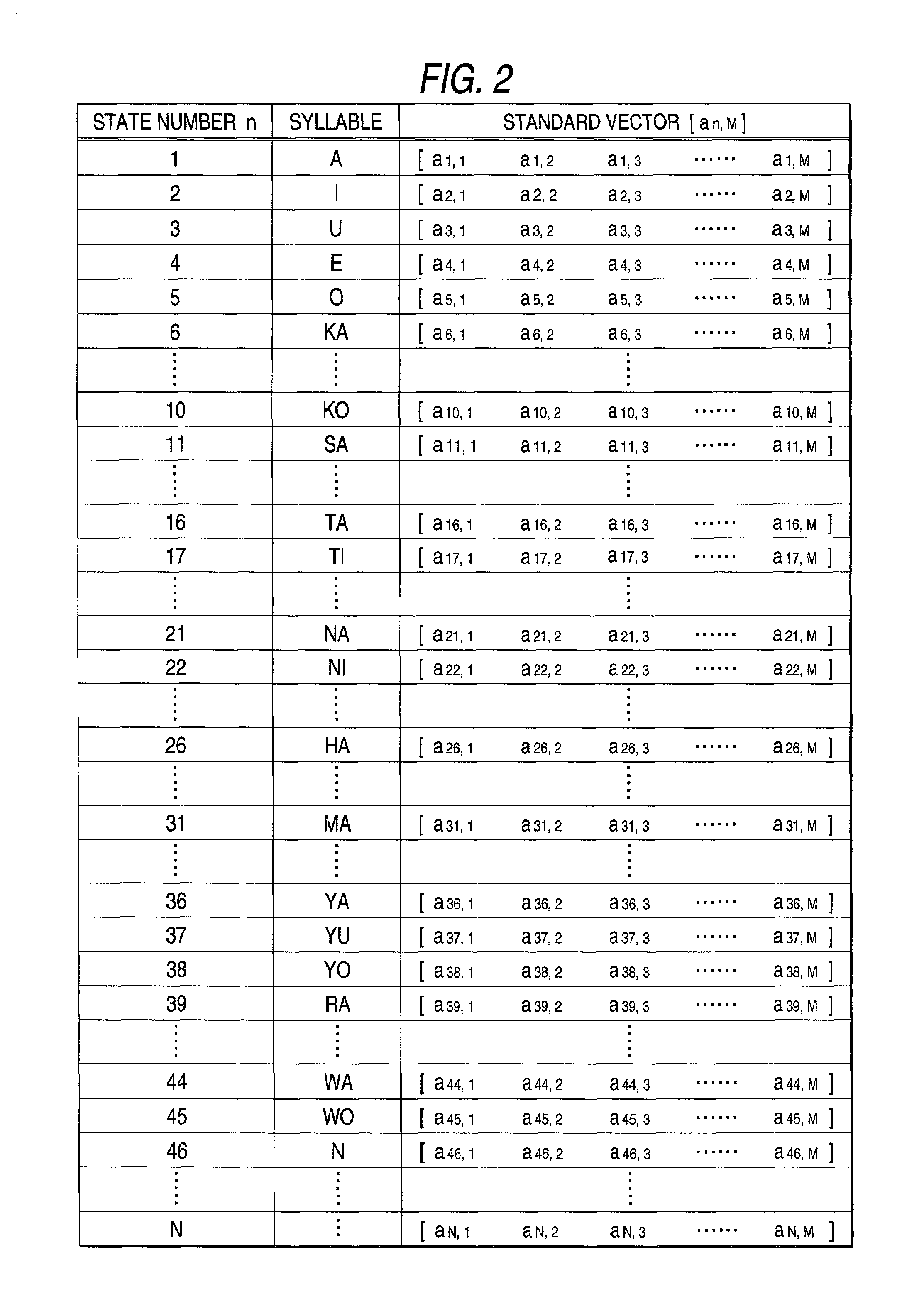

Speech recognition system with an adaptive acoustic model

Inactive | US7065488B2 | Improve speech recognition performance; Reduce noise; Speech recognition; Feature vector; Acoustic model

During speaker adaptation, first feature vector generation sections (7, 8, 9) generate a feature vector series [Ci,m] from which the additive and multiplicative noise have been removed. A second feature vector generation section (12) generates a feature vector series [Si,m] that includes the features of the additive and multiplicative noise. A path search section (10) conducts a path search by comparing the feature vector series [Ci,m] with the standard vectors [an,m] of the standard voice HMM (300). When the speaker adaptation section (11) performs a correlation operation on the average feature vector [Ŝn,m] of the standard vector [an,m] corresponding to the path search result Dv and on the feature vector series [Si,m], the adaptive vector [xn,m] is generated. The adaptive vector [xn,m] updates the feature vectors of the speaker-adaptive acoustic model (400) used for speech recognition.

Owner:PIONEER CORP

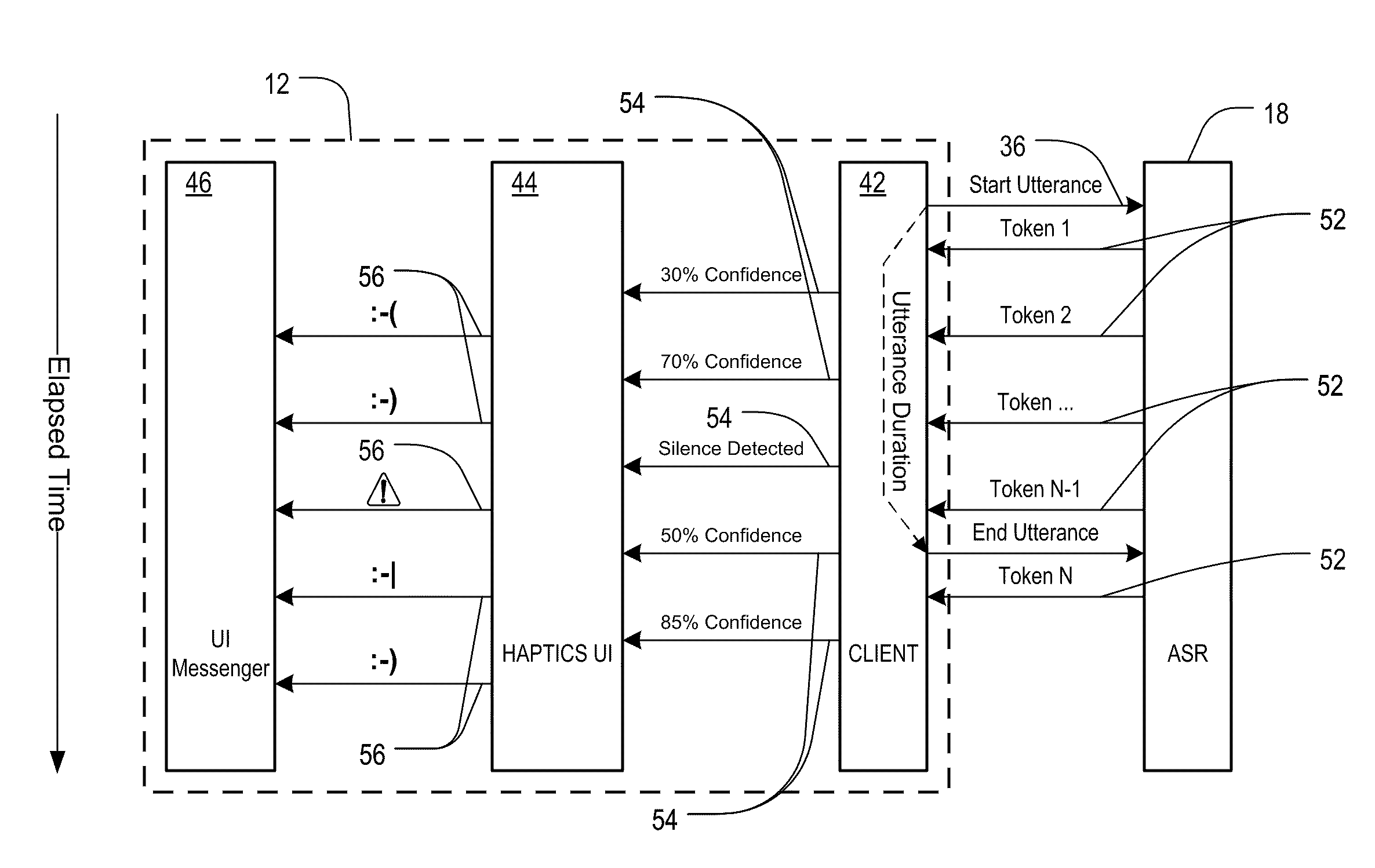

Methods, apparatuses, and systems for providing timely user cues pertaining to speech recognition

Active | US20100049525A1 | Improve understanding; Easy to identify; Speech recognition; Speech synthesis; Electronic communication; World Wide Web

A method is provided for giving cues from an electronic communication device to a user while an utterance is being captured. A plurality of cues associated with the user utterance are provided by the device to the user in at least near real time. For each of a plurality of portions of the utterance, data representative of that portion is communicated from the electronic communication device to a remote electronic device. In response, data representative of at least one parameter associated with that portion of the utterance is received at the electronic communication device. The device provides one or more cues to the user based on the at least one parameter. At least one of the cues is provided to the user before capture of the user utterance is complete.

Owner:YAP +1

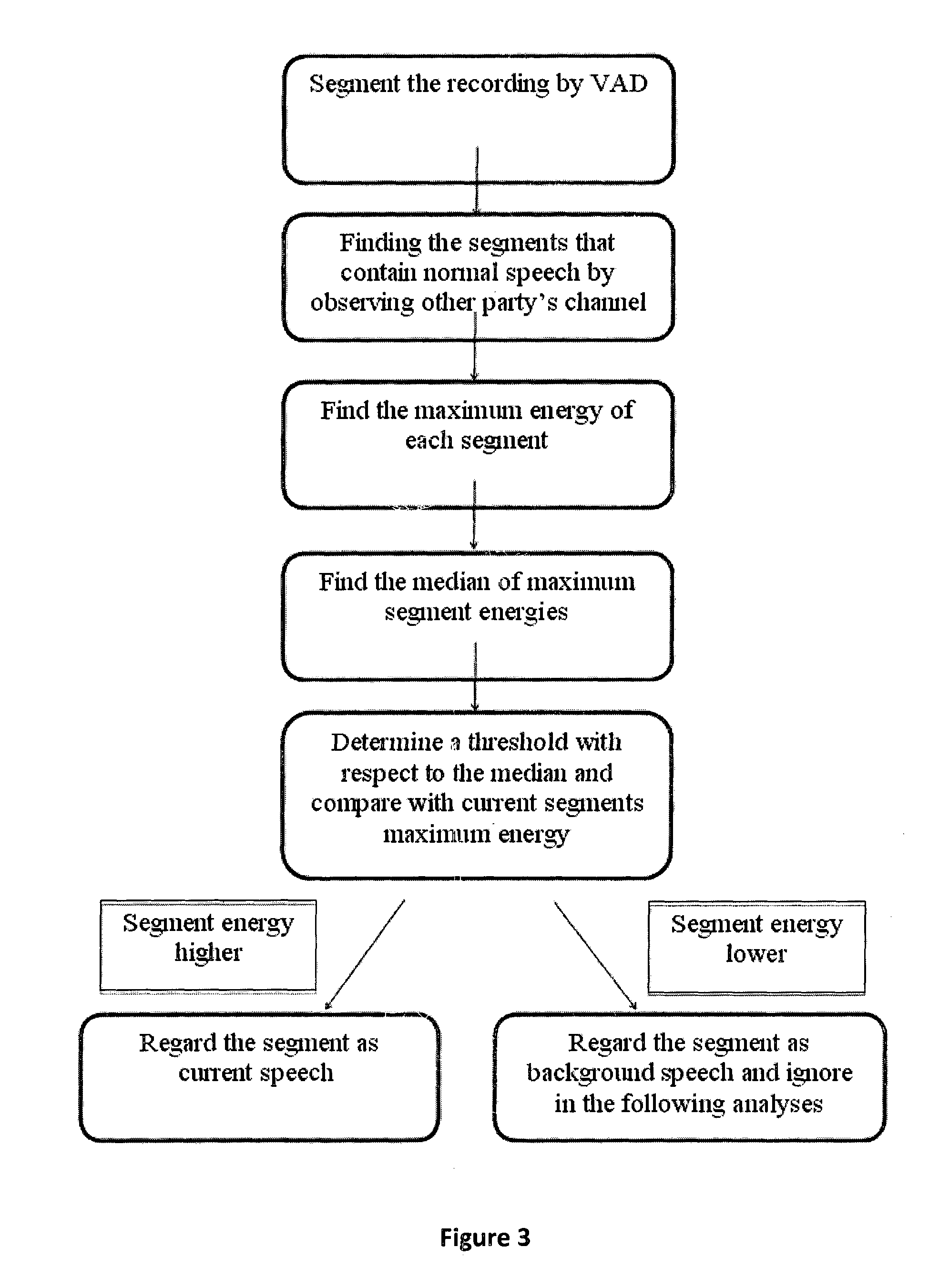

Speech analytics system and methodology with accurate statistics

Active | US20150350438A1 | Eliminate disadvantage; Low cost; Automatic call-answering/message-recording/conversation-recording; Special service for subscribers; Speech analytics; Speech sound

The present invention relates to new ways of automatically and robustly evaluating agent performance, customer satisfaction, campaigns, and competitors in a call center. The system comprises an analysis consumer server, a call pre-processing module, a speech-to-text module, an emotion recognition module, a gender identification module, and a fraud detection module.

Owner:SESTEK SES & ILETISIM BILGISAYAR TEKNOLOJILERI SANAYII & TICARET ANONIM SIRKETI

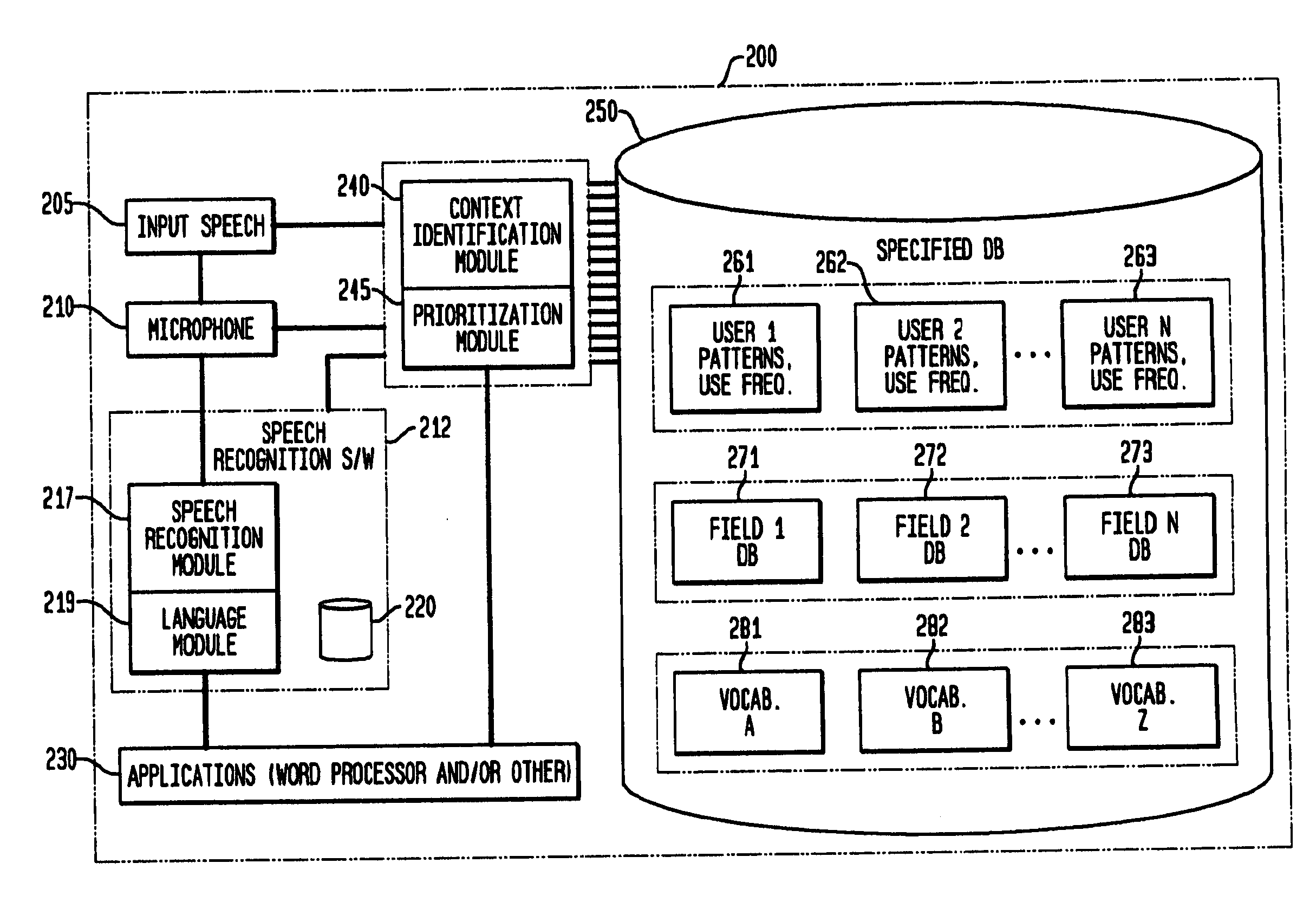

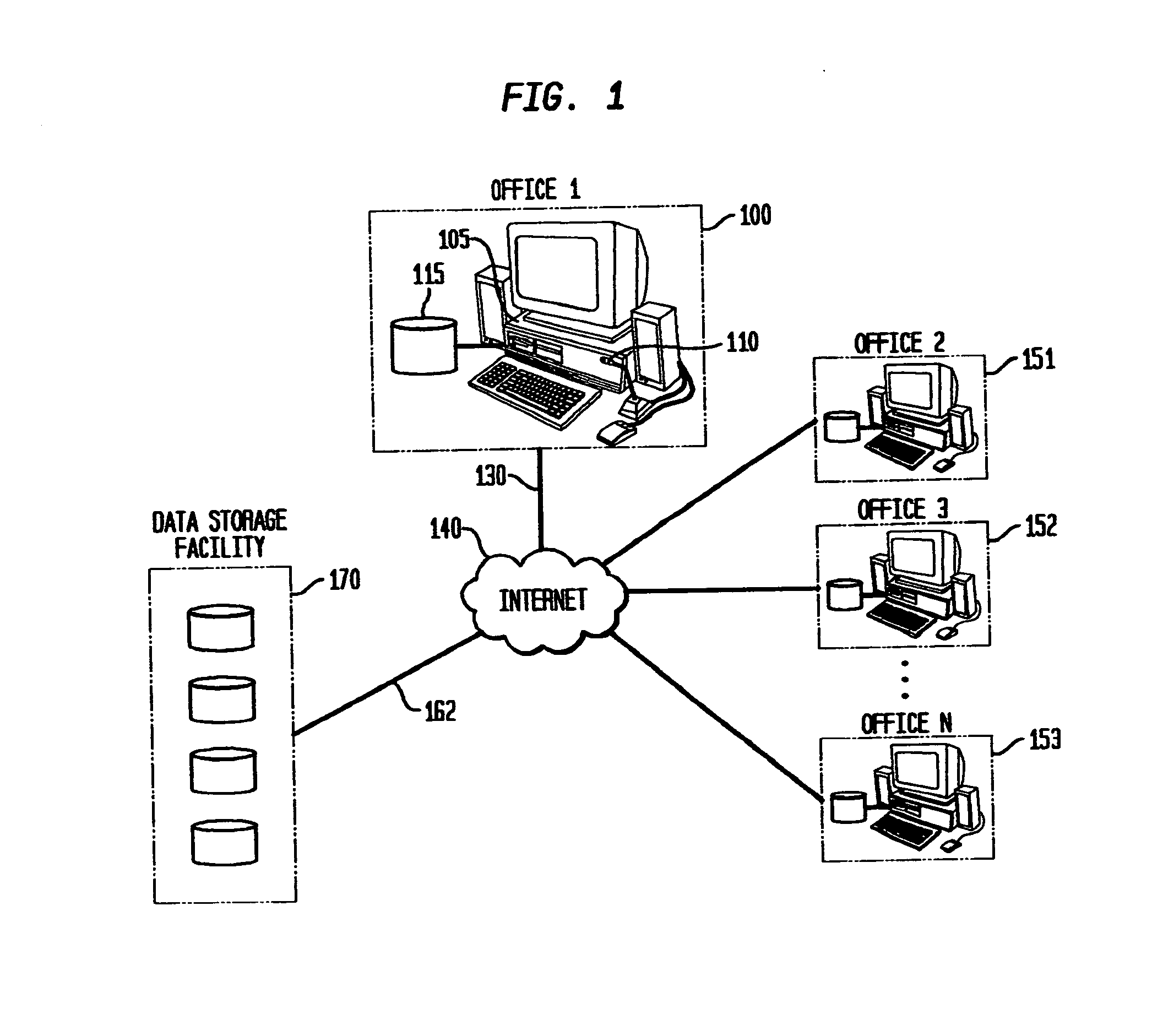

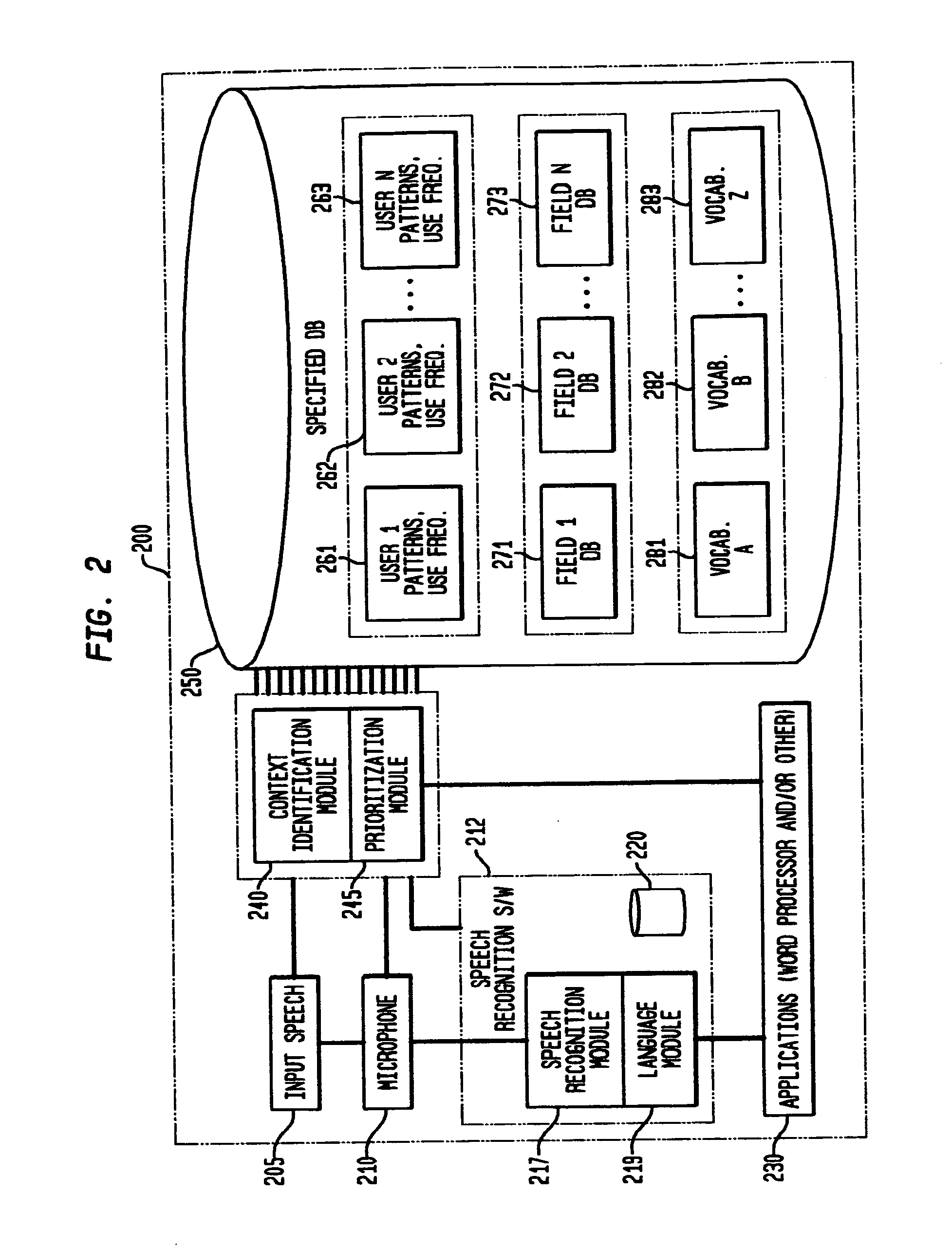

Method and apparatus for improving the transcription accuracy of speech recognition software

Active | US20110153620A1 | Improve accuracy; Improve speech recognition performance; Digital data processing details; Speech recognition; Speech identification; Numeric data

A virtual vocabulary database is provided for use with a particular user database as part of a speech recognition system. Vocabulary elements in the virtual database are imported from the user database and tagged with numerical data corresponding to each element's historical use within the user database. For each speech input, the potential vocabulary element matches from the speech recognition system are passed to the virtual database software, which creates virtual sub-vocabularies according to predefined criteria templates. The software then derives vocabulary element weighting adjustments from the virtual sub-vocabulary weightings and applies them to the default weightings provided by the speech recognition system. The modified weightings are returned with the associated vocabulary elements to the speech engine, which selects an appropriate match for the input speech.

Owner:COIFMAN ROBERT E
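
The weighting adjustment can be sketched as multipliers attached to usage-based sub-vocabularies. The criteria templates, their thresholds and multipliers, and the example terms are hypothetical; the abstract only states that sub-vocabulary weightings adjust the engine's default weightings.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    default_weight: float   # weighting supplied by the speech engine


# Hypothetical criteria templates: (sub-vocabulary name, predicate on usage count, multiplier).
TEMPLATES = [
    ("frequent", lambda count: count >= 10, 1.5),
    ("never_used", lambda count: count == 0, 0.8),
]


def adjust_weights(candidates, usage_counts, templates=TEMPLATES):
    """Apply sub-vocabulary multipliers to the engine's default weights.

    usage_counts: historical use of each vocabulary element in the user database.
    Returns (text, adjusted_weight) pairs, best first, for the engine to choose from.
    """
    adjusted = []
    for c in candidates:
        count = usage_counts.get(c.text, 0)
        factor = 1.0
        for _name, predicate, multiplier in templates:
            if predicate(count):          # element falls in this virtual sub-vocabulary
                factor *= multiplier
        adjusted.append((c.text, c.default_weight * factor))
    return sorted(adjusted, key=lambda pair: pair[1], reverse=True)


result = adjust_weights([Candidate("ileum", 0.50), Candidate("ilium", 0.45)], {"ilium": 25})
print(result[0][0])  # → ilium
```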

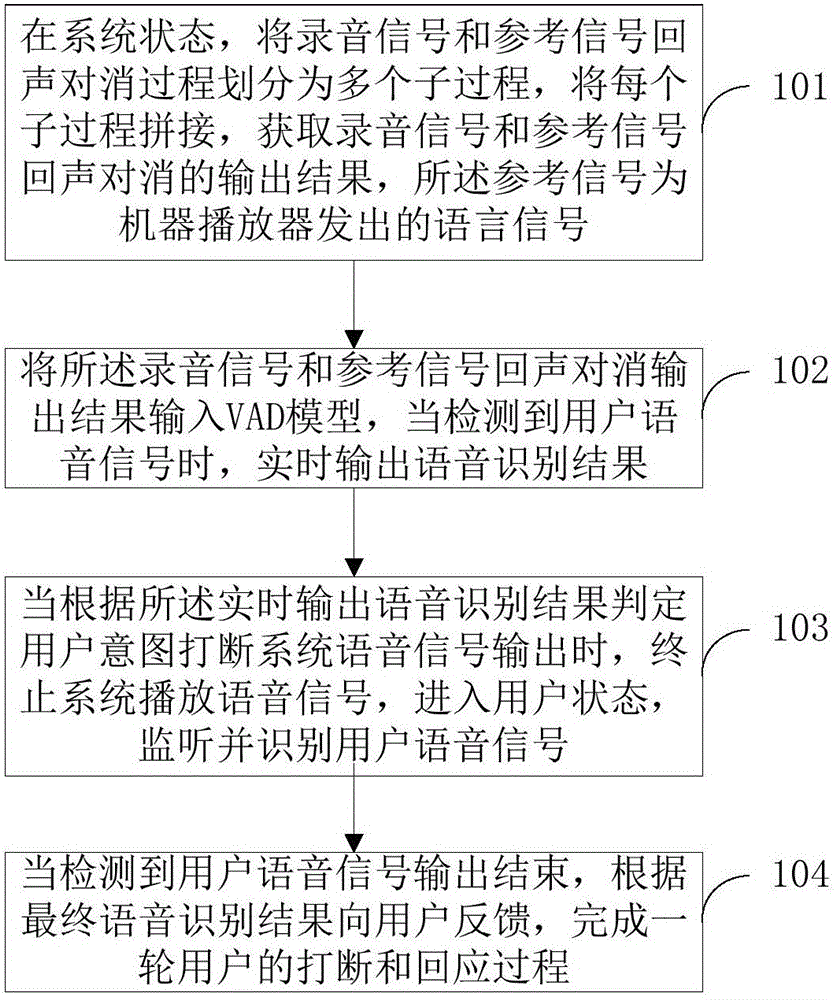

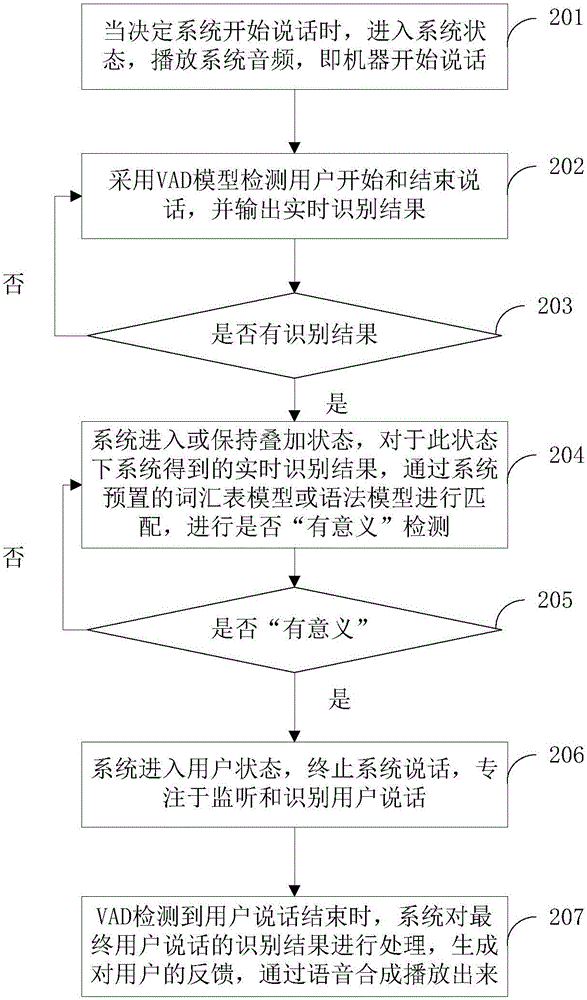

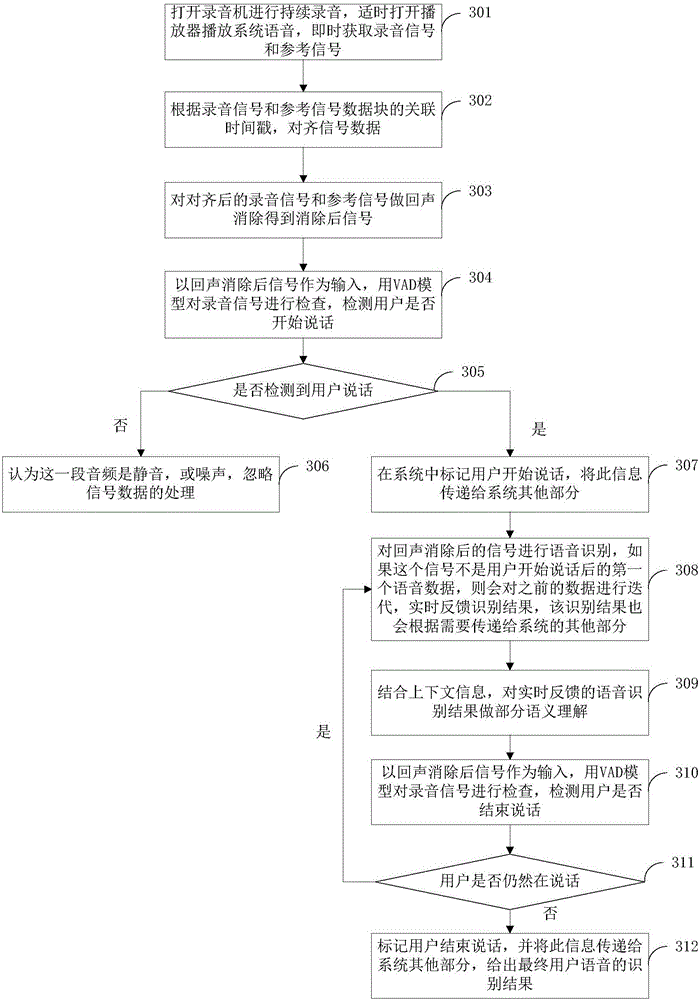

Man-machine voice interaction method and system

Inactive | CN105070290A | Dialogue naturally; Fast interactive response; Speech recognition; Man machine; Speech identification

The invention discloses a man-machine voice interaction method comprising the following steps: in the system state, dividing the echo cancellation of the recording signal against the reference signal into multiple sub-processes, splicing the sub-processes together, and obtaining the echo-cancelled output, where the reference signal is the voice signal played by the machine; feeding the echo-cancelled output into a VAD (voice activity detection) model and outputting speech recognition results in real time once a user voice signal is detected; when the user's intention is determined from the real-time recognition results, stopping the system from playing its voice signal, entering the user state, and monitoring and recognizing the user voice signal; and, when the end of the user voice signal is detected, giving feedback to the user based on the final recognition result, which completes one round of user interruption and response. The method can effectively detect user interruptions during interaction and decide when the machine should speak and what it should say, making the machine smarter.

Owner:AISPEECH CO LTD
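
The system-state/user-state switching can be sketched as a two-state controller. Echo cancellation, VAD, and streaming recognition are assumed to run upstream and feed the callbacks; the filler-word check is a crude, hypothetical stand-in for the intent determination the abstract describes.

```python
from enum import Enum


class State(Enum):
    SYSTEM = "system"   # machine is speaking
    USER = "user"       # machine is listening


# Hypothetical stand-in for intent detection: ignore empty or filler partial results.
FILLERS = {"", "uh", "um", "mm", "hmm"}


class BargeInController:
    """Two-state interruption logic driven by real-time recognition results."""

    def __init__(self):
        self.state = State.SYSTEM
        self.playing = True
        self.log = []

    def on_partial_result(self, text):
        # Real-time recognition result while the machine may still be talking.
        if self.state is State.SYSTEM and text.strip().lower() not in FILLERS:
            self.playing = False            # stop system playback
            self.state = State.USER         # switch to listening
            self.log.append("interrupt")

    def on_end_of_speech(self, final_text):
        # User finished speaking: respond, which ends one interruption round.
        if self.state is State.USER:
            self.log.append(f"respond:{final_text}")
            self.state = State.SYSTEM
            self.playing = True
```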

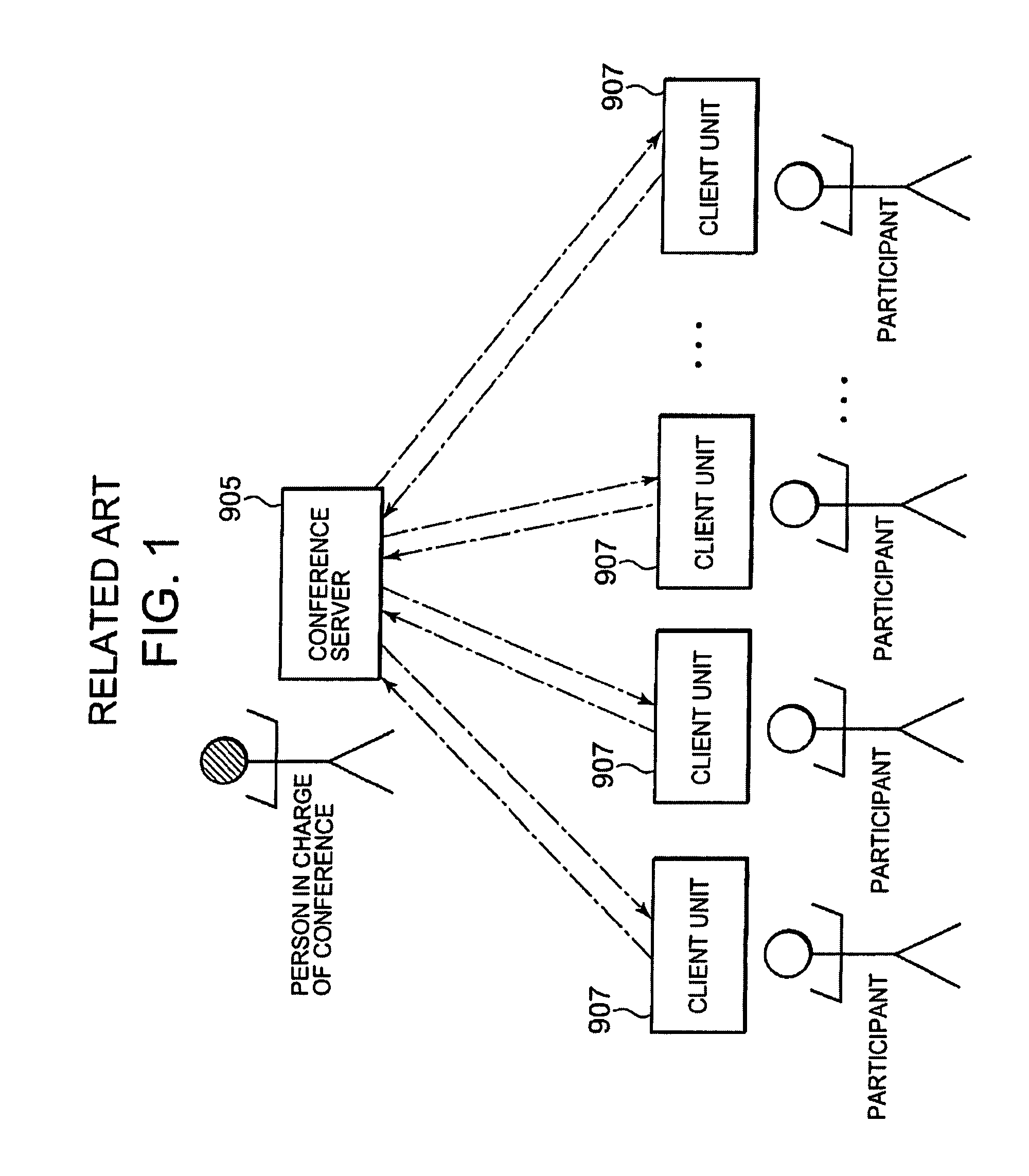

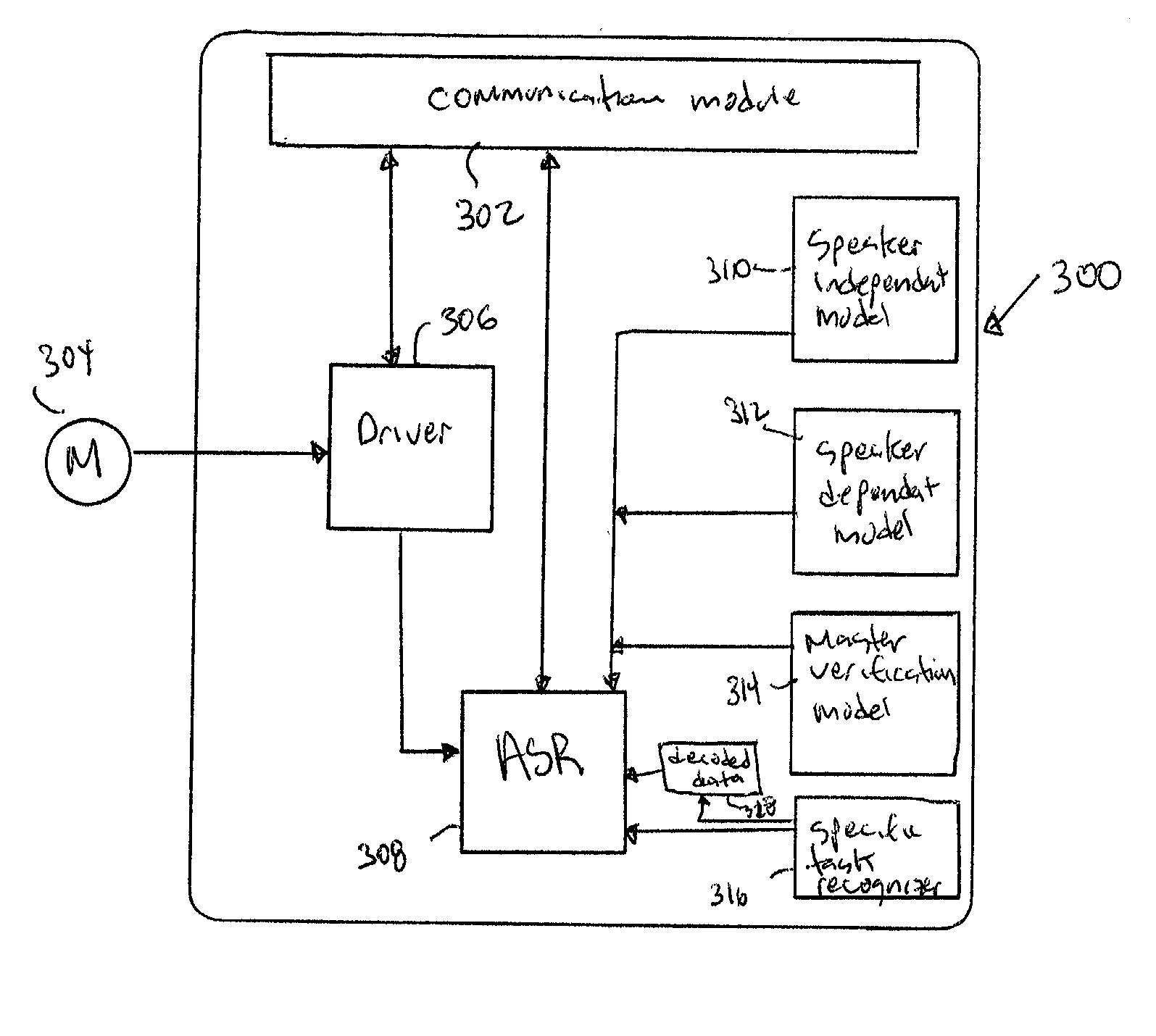

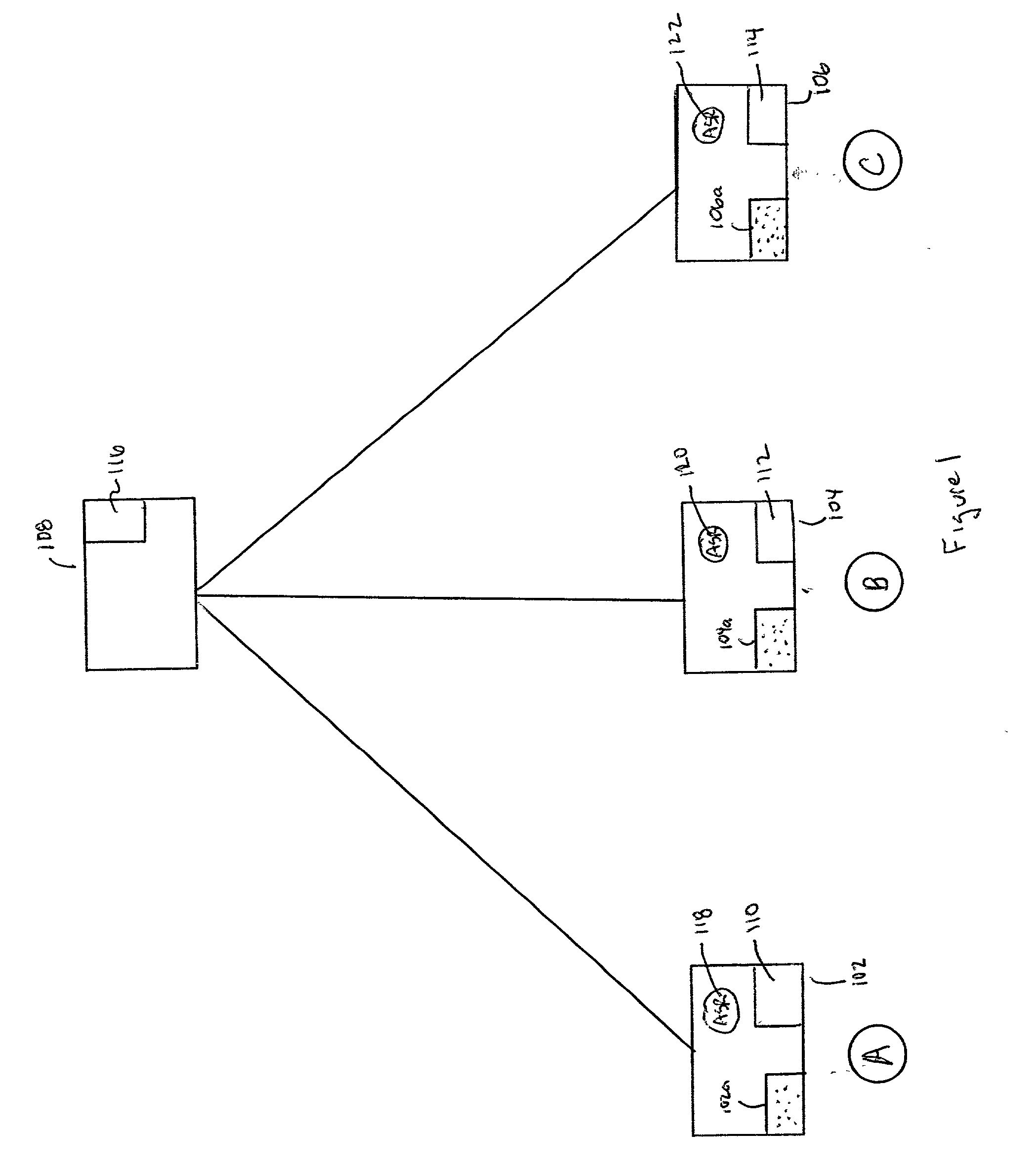

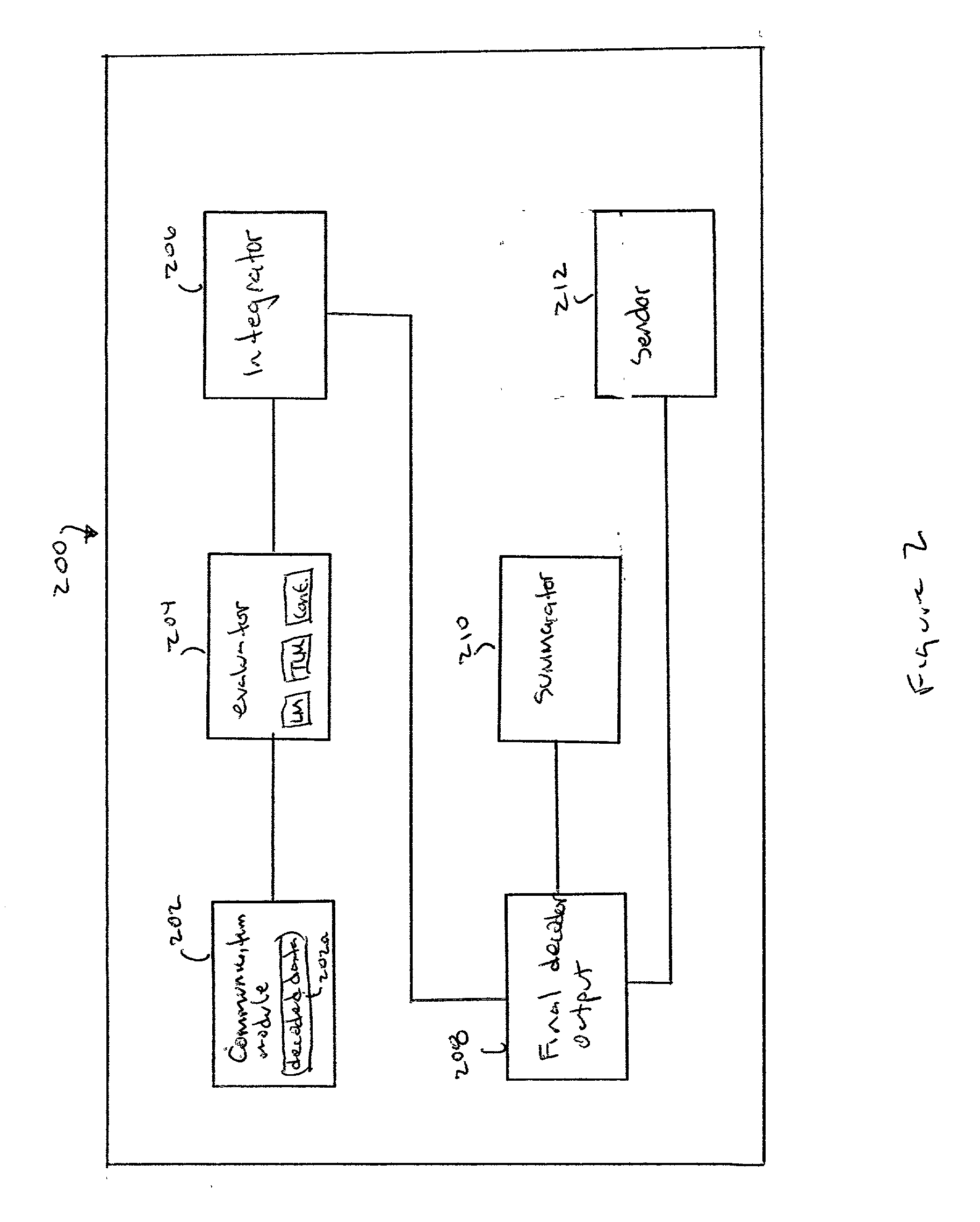

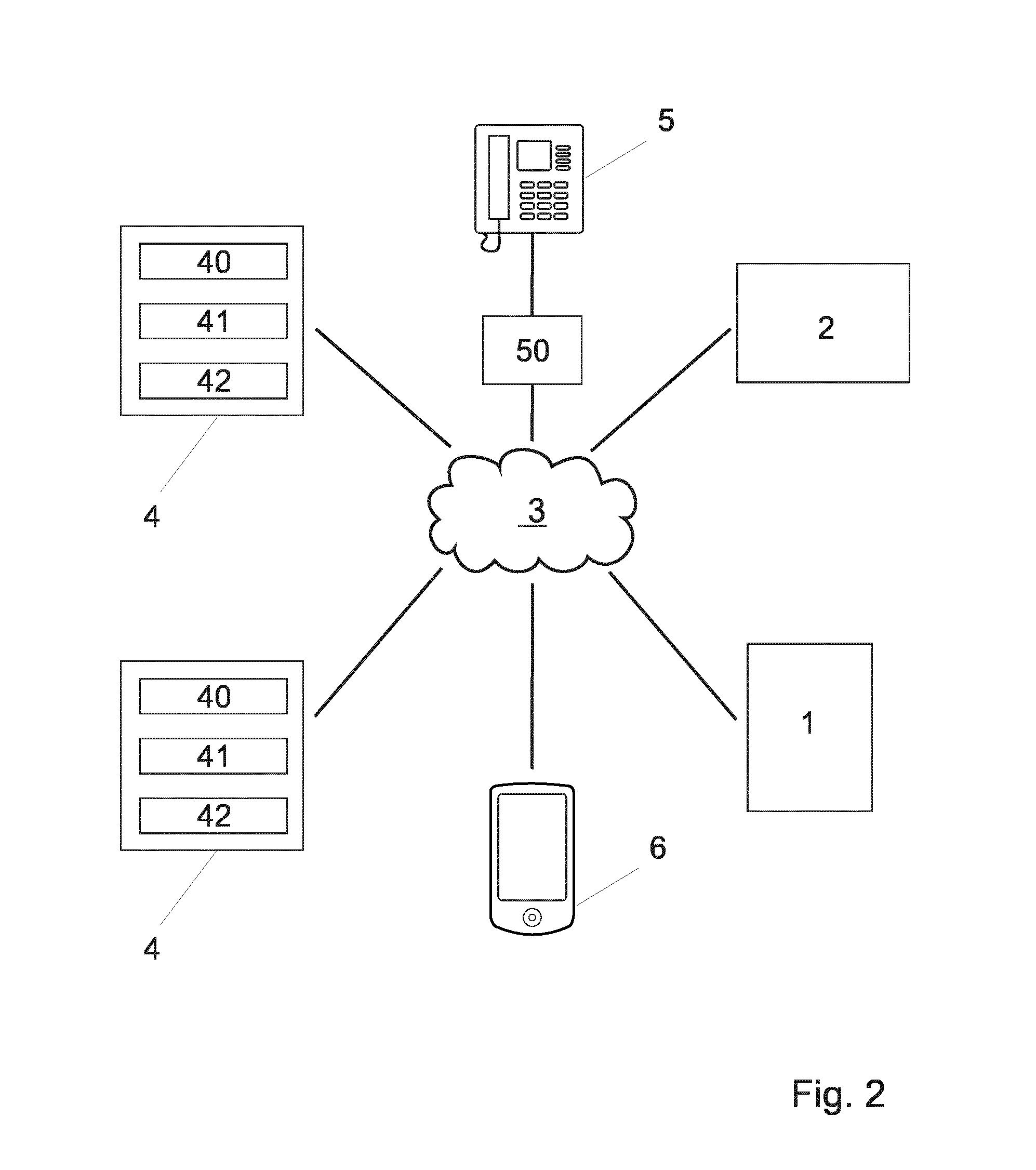

Collaboration of multiple automatic speech recognition (ASR) systems

Inactive | US20030144837A1 | Optimization; Improve speech recognition performance; Speech recognition; Speech identification; Automatic speech

A system and method for collaboration among multiple ASR (automatic speech recognition) systems. The system and method analyze voice data on various computers that have speech recognition installed; the speech recognition systems on the different computers may differ. Each speech recognition system detects voice data and recognizes its respective master. The master's computer, as well as the computers that did not recognize their master, may analyze (evaluate) the voice data and then integrate the analyzed voice data into a single decoded output. In this way, the voice data of many different speakers can be analyzed and integrated into a single decoded output, regardless of which ASR systems they use.

Owner:IBM CORP

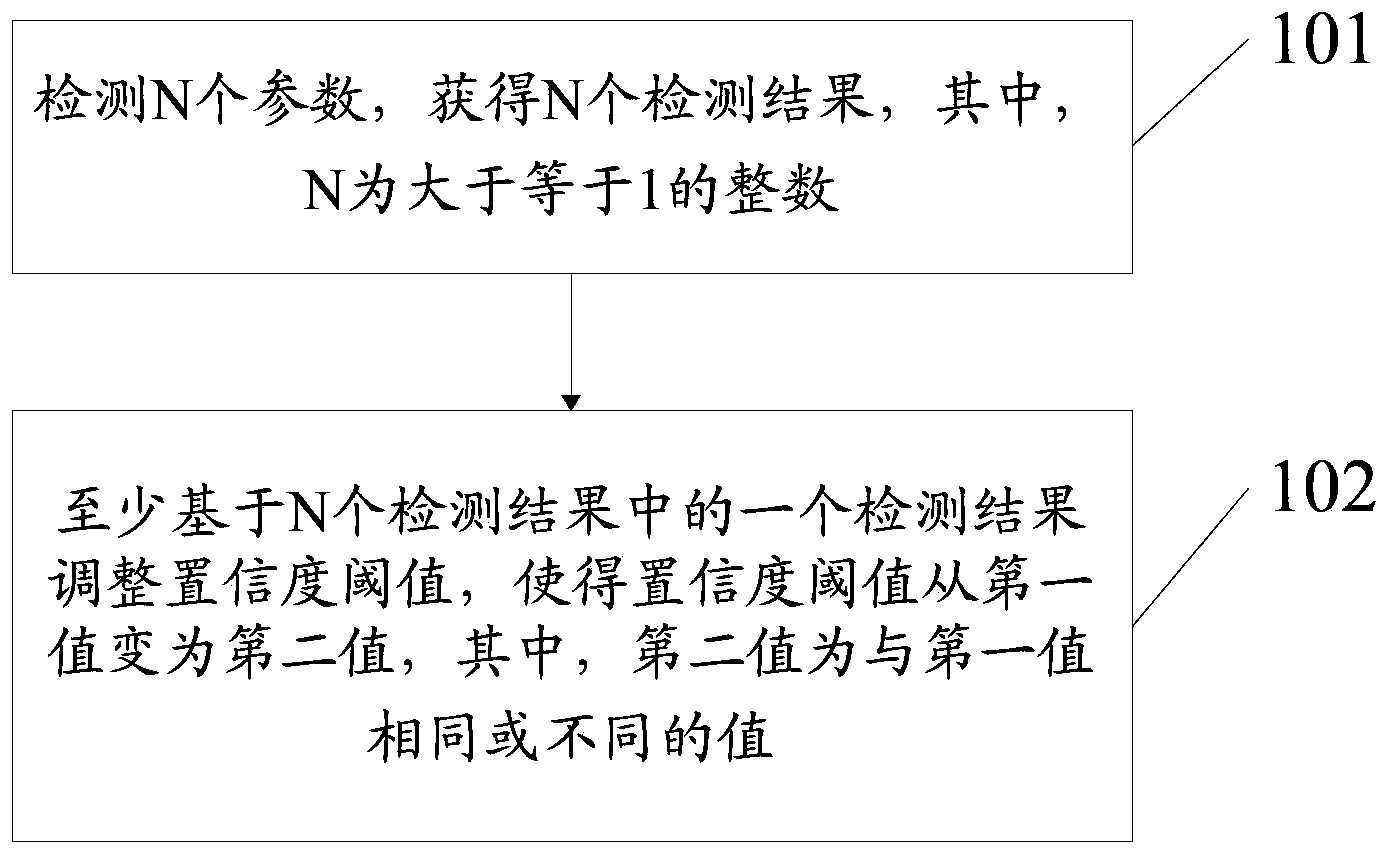

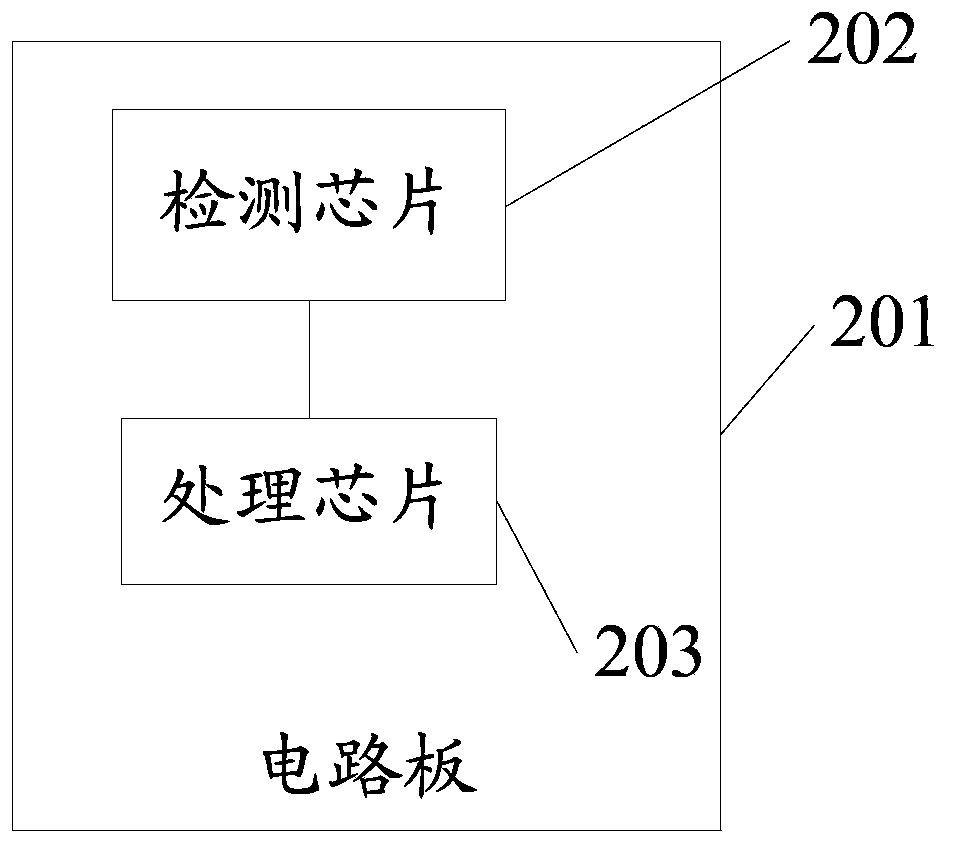

Method for adjusting confidence coefficient threshold of voice recognition and electronic device

Active | CN103578468A | Reasonable judgment; Accurate judgment; Speech recognition; Pattern recognition; Subvocal recognition

Owner:LENOVO (BEIJING) CO LTD

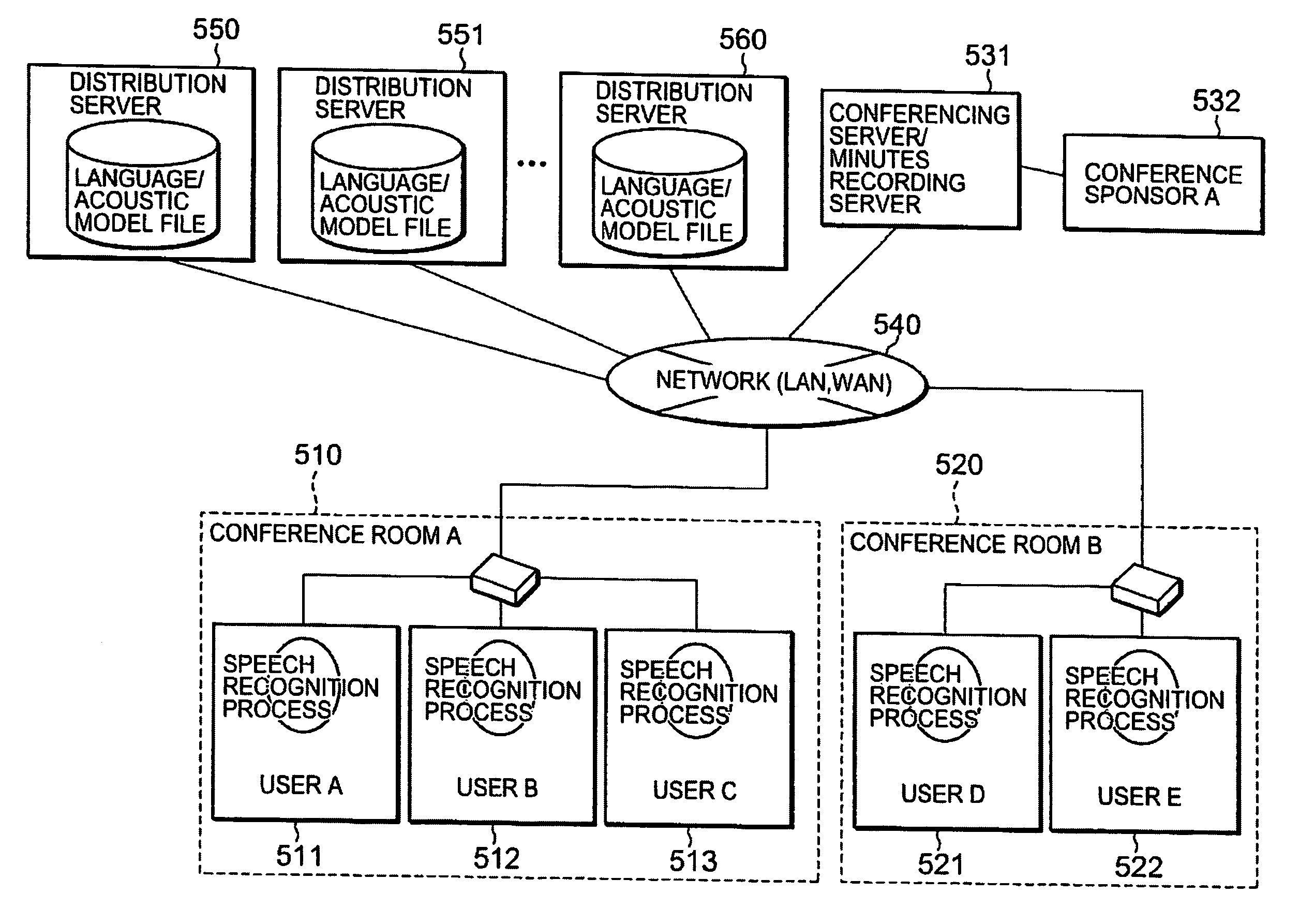

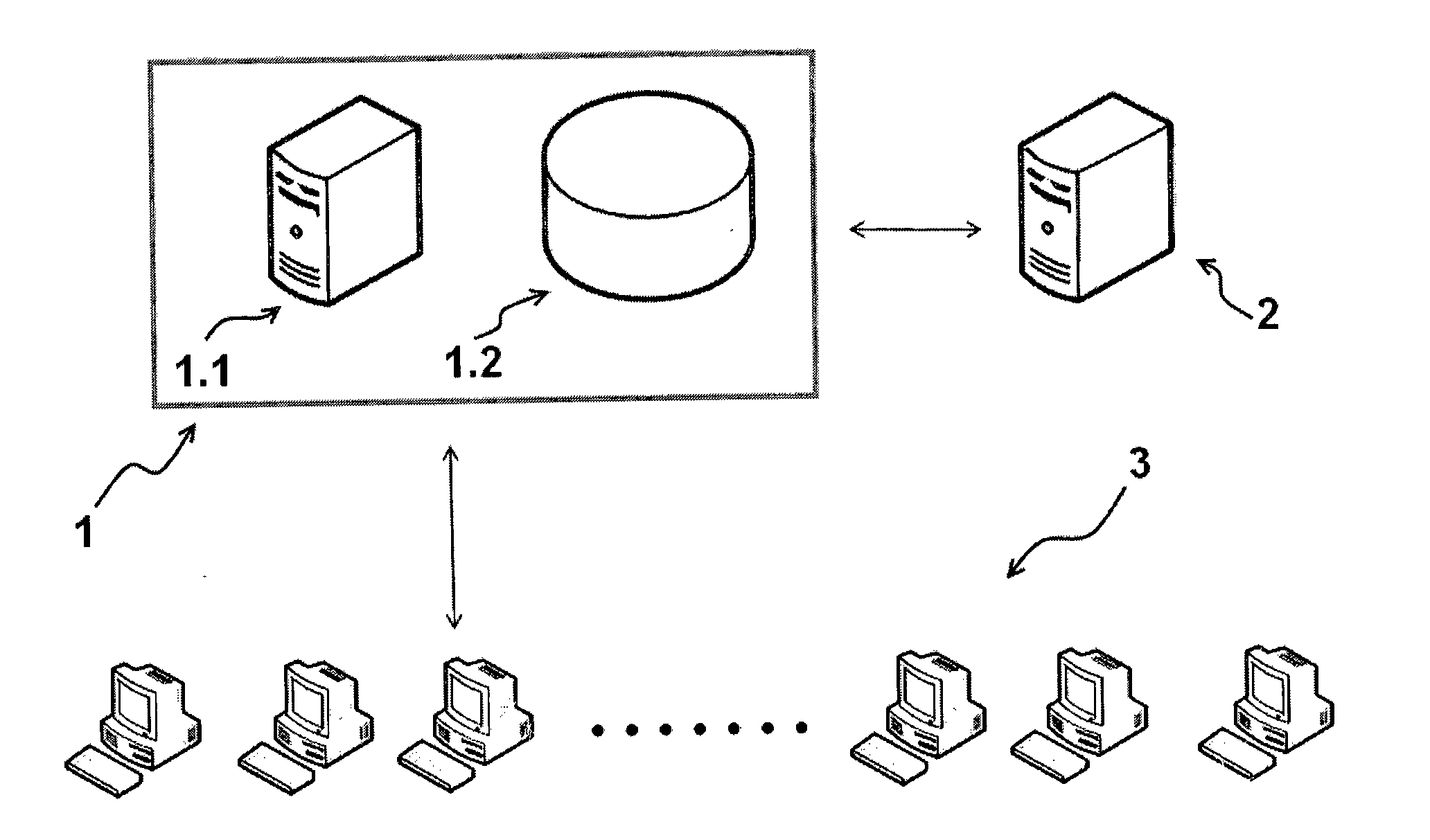

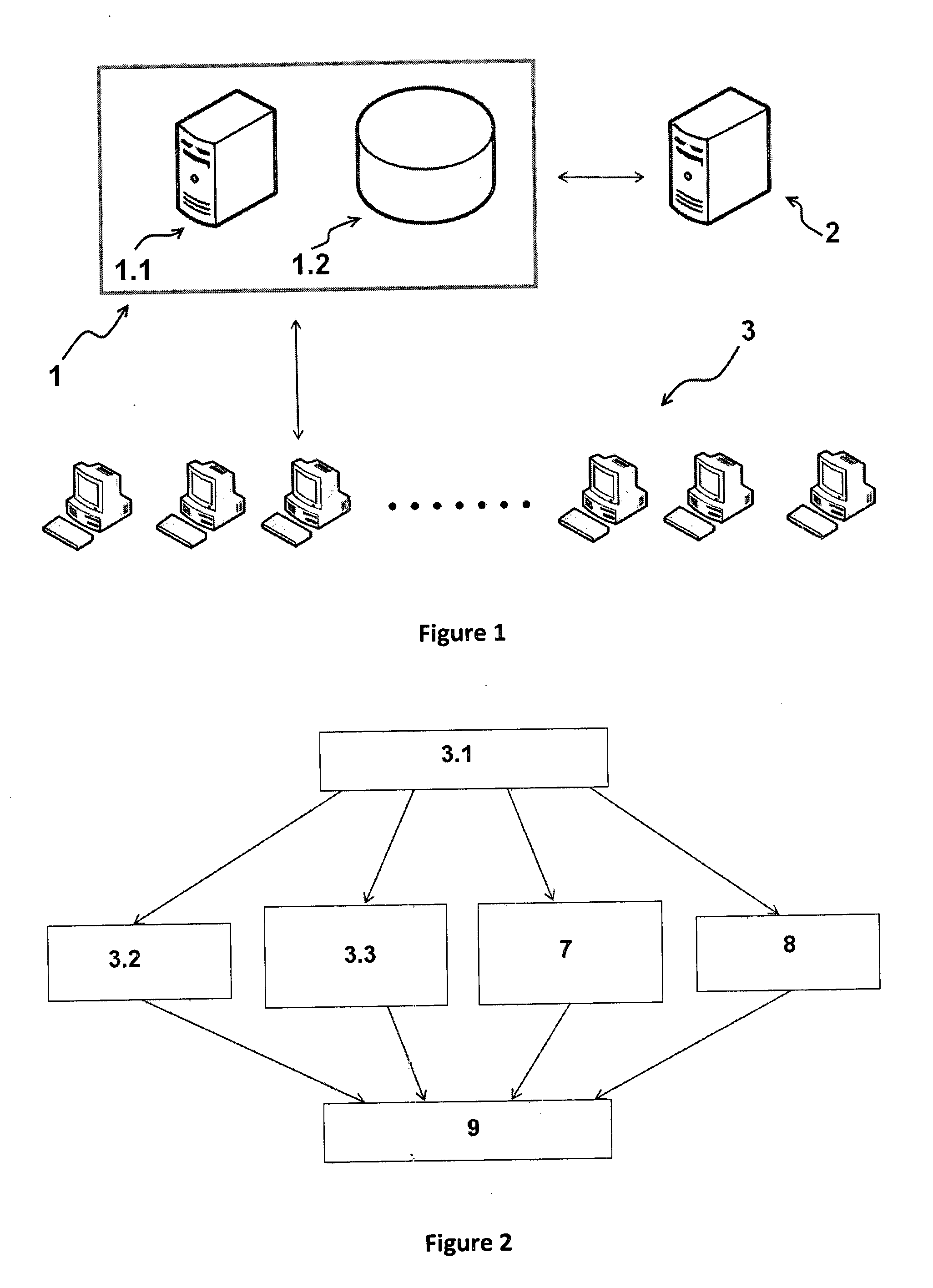

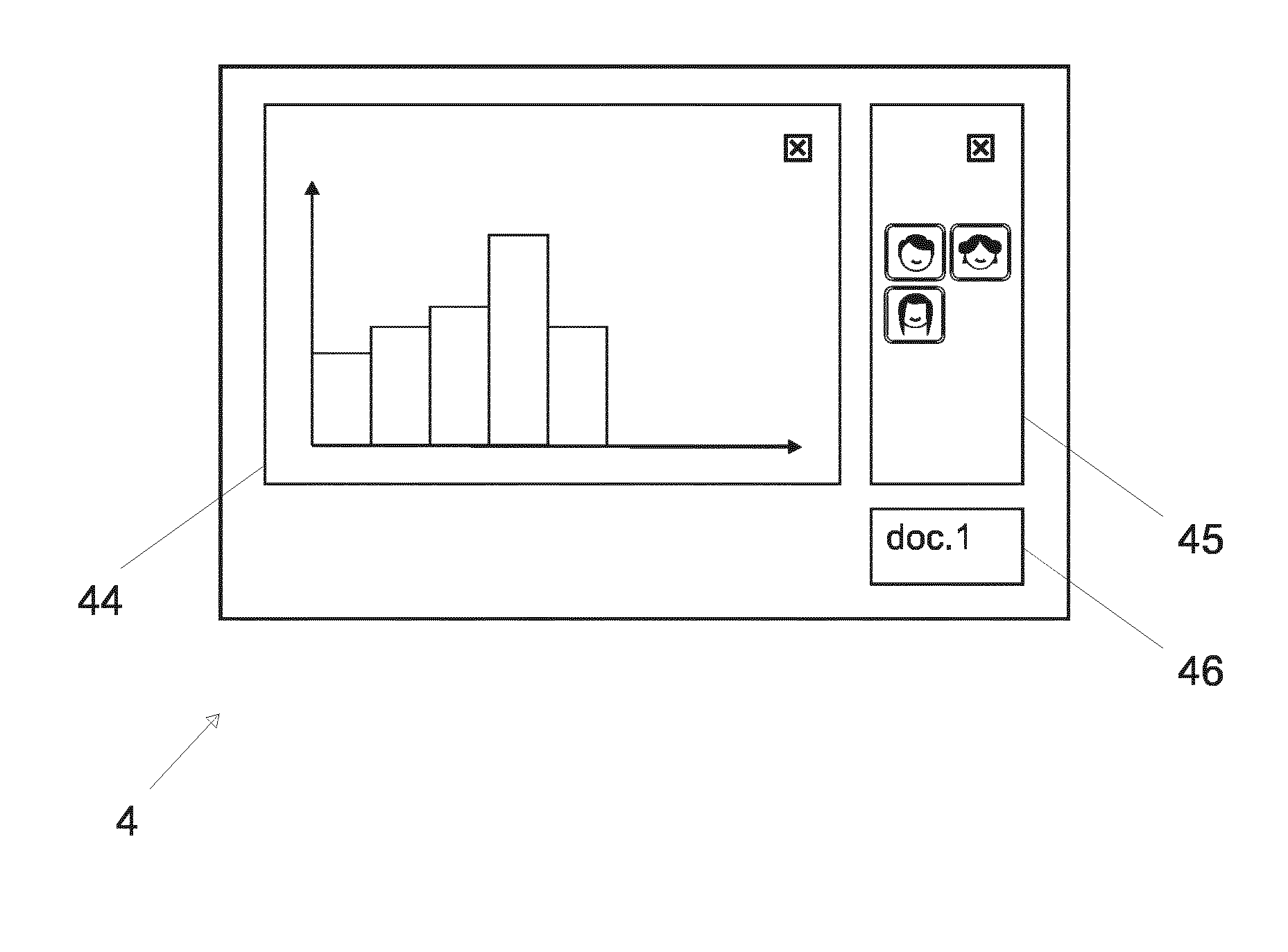

Method for preparing a transcript of a conversion

Inactive | US20140244252A1 | Quality improvement; Improve speech recognition performance; Speech recognition; Data switching networks; Application programming interface; Automatic speech

A method for providing participants in a multiparty meeting with a transcript of the meeting, comprising the steps of: establishing a meeting among two or more participants; exchanging voice data and documents during the meeting; uploading at least part of the voice data and at least part of the documents to a remote speech recognition server (1) using an application programming interface of that server; converting at least part of the voice data to text with an automatic speech recognition system (13) in the remote speech recognition server, wherein the automatic speech recognition system uses the documents to improve the quality of speech recognition; building, in the remote speech recognition server, a computer object (120) embedding at least part of the voice data, at least part of the documents, and the text; and making the computer object (120) available to at least one of the participants.

Owner:KOEMEI
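
The abstract says the uploaded documents improve recognition quality but not how. One plausible approach, sketched here purely as an assumption, is to extract frequent content words from the documents and use them to rerank the recognizer's n-best hypotheses. The stop-word list, the length cutoff, the bonus value, and the example texts are hypothetical.

```python
import re
from collections import Counter

# Hypothetical minimal stop-word list; a real system would use a fuller one.
STOPWORDS = {"with", "from", "that", "this", "have", "will", "about", "their"}


def document_vocabulary(documents, top_n=20, min_len=4):
    """Collect the most frequent content words from the meeting documents."""
    counts = Counter()
    for doc in documents:
        for token in re.findall(r"[A-Za-z][A-Za-z-]+", doc):
            word = token.lower()
            if len(word) >= min_len and word not in STOPWORDS:
                counts[word] += 1
    return [word for word, _ in counts.most_common(top_n)]


def rerank(hypotheses, vocabulary, bonus=0.5):
    """Boost ASR hypotheses (text, log score) by how many document terms they contain."""
    vocab = set(vocabulary)

    def boosted(hyp):
        text, score = hyp
        return score + bonus * sum(1 for w in text.lower().split() if w in vocab)

    return max(hypotheses, key=boosted)[0]


docs = ["Quarterly roadmap: Kubernetes migration", "Kubernetes cluster budget and roadmap"]
print(rerank([("cooper netties migration", -1.0), ("kubernetes migration", -1.2)],
             document_vocabulary(docs)))  # → kubernetes migration
```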

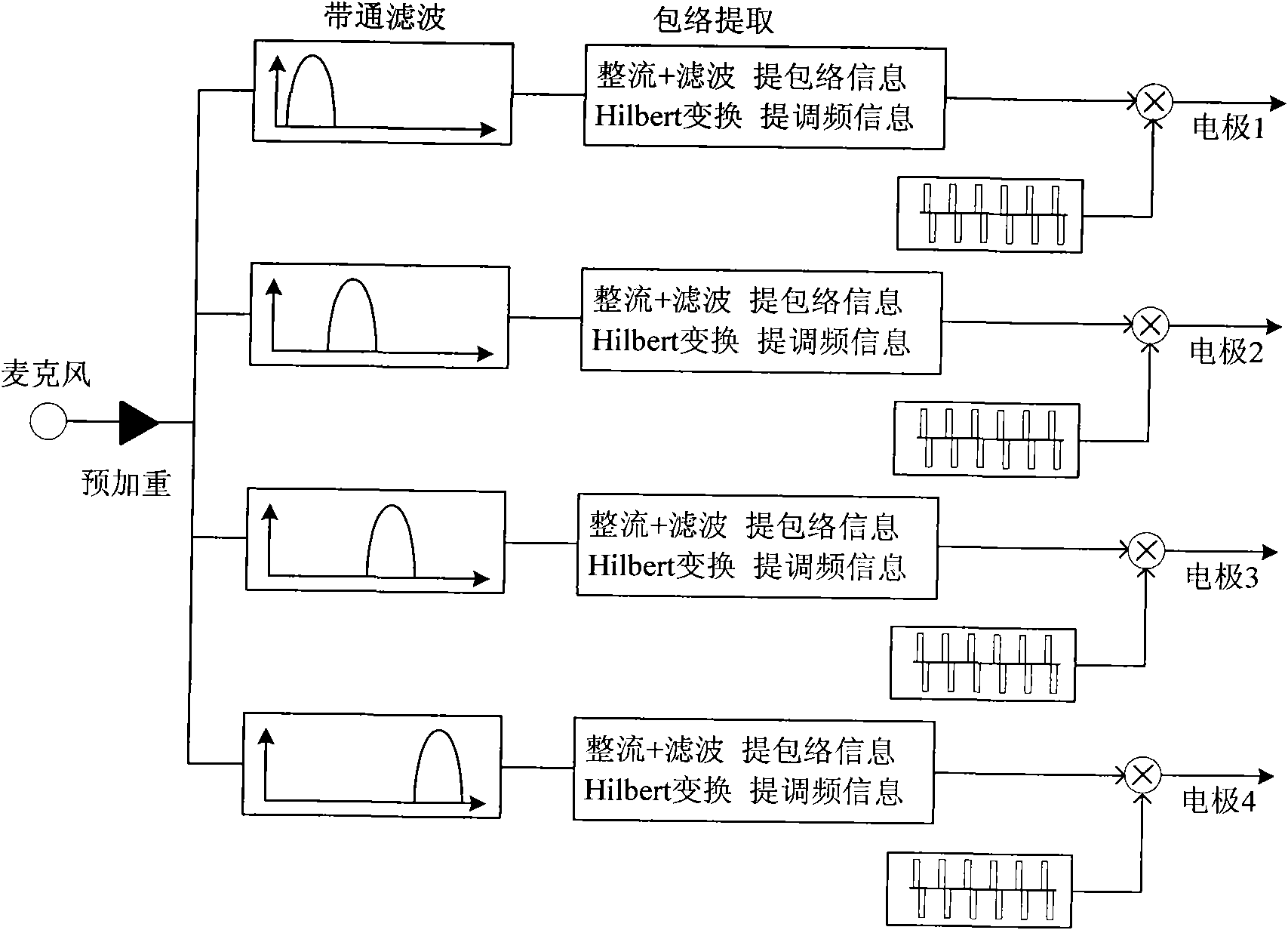

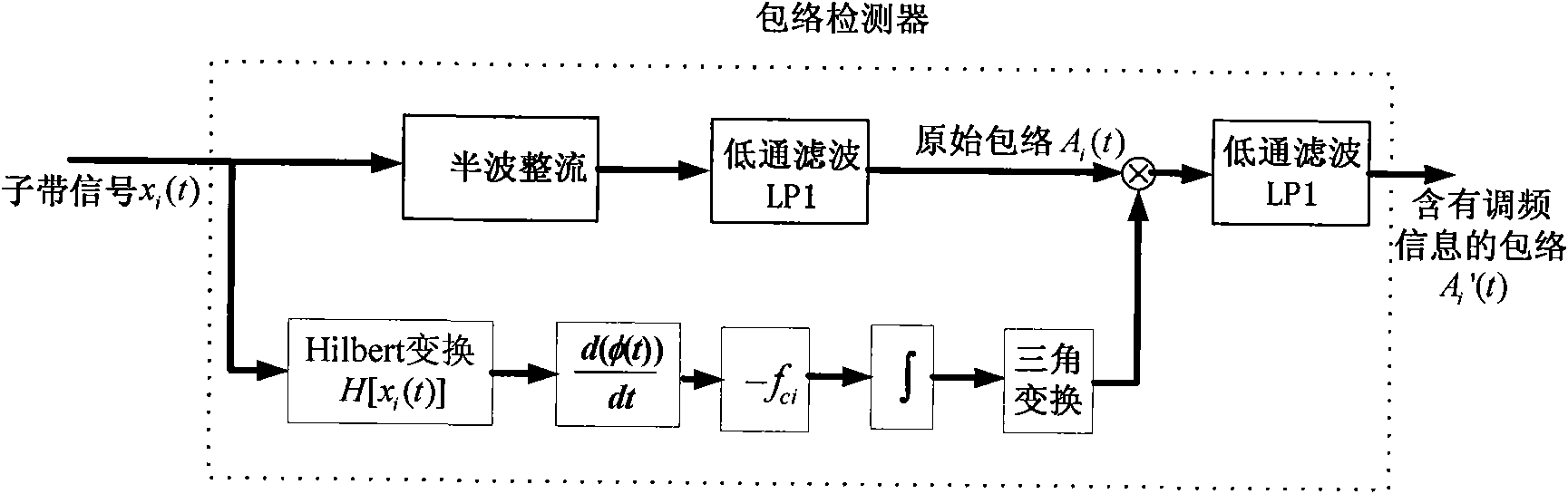

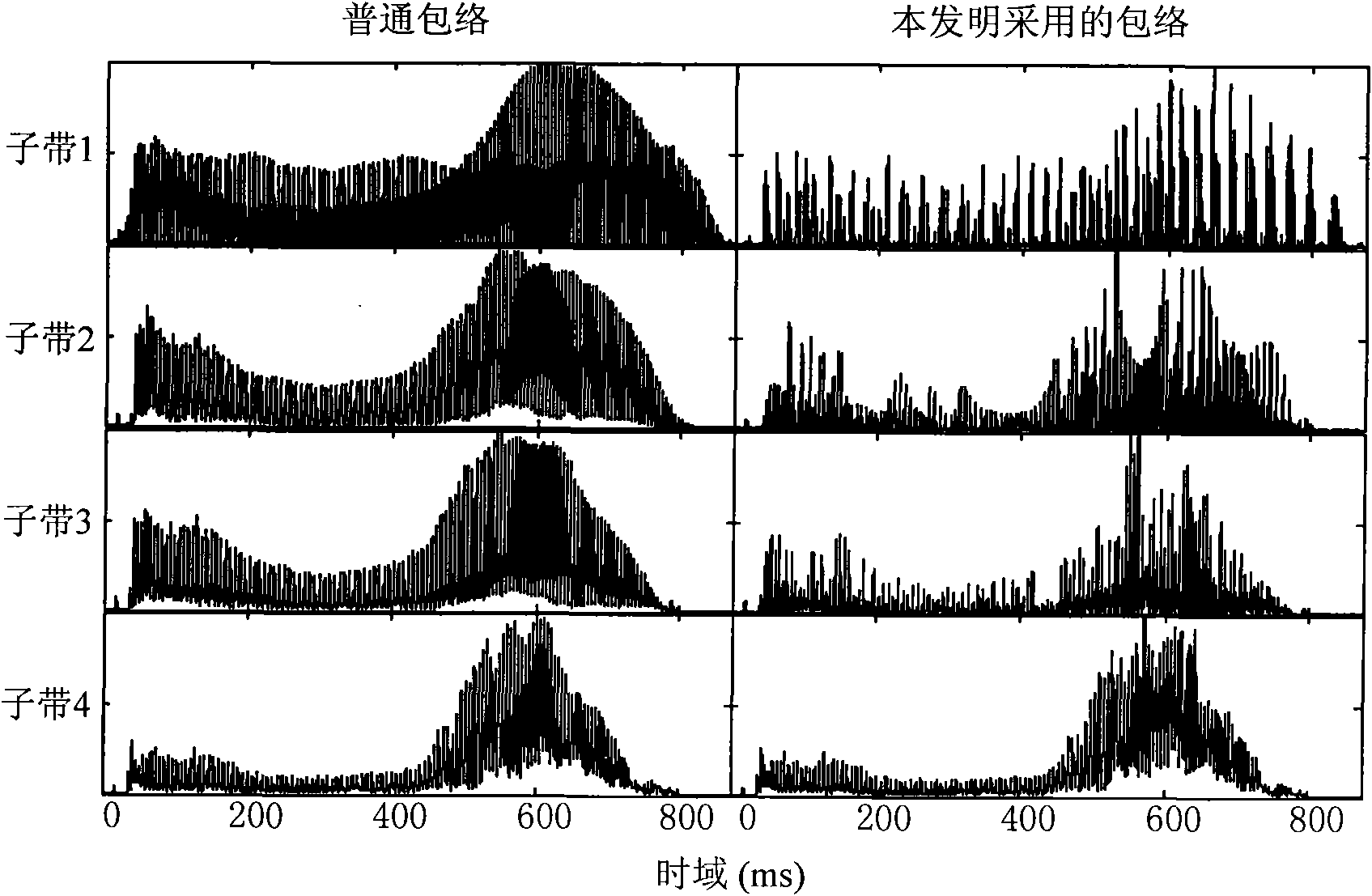

Artificial cochlea speech processing method based on frequency modulation information and artificial cochlea speech processor

Active | CN101642399A | Improve speech recognition performance; Good effect; Ear treatment; Speech analysis; Speech Processor; Electrical impulse

The invention provides an artificial cochlea speech processing method based on frequency modulation information, and an artificial cochlea speech processor. The method comprises the following steps: pre-emphasizing a speech signal; decomposing the speech signal into a plurality of sub-bands with an analysis filter; extracting the time-domain envelope of each sub-band signal; using the Hilbert transform to extract the frequency modulation information of the low-frequency part and multiplying it by the time-domain envelopes to obtain a synthetic time-domain envelope containing the frequency modulation information; using the resulting sub-band envelopes to modulate a pulse sequence from a pulse generator; summing the modulated pulses of all sub-bands to obtain the final synthesized stimulus signal; and sending the stimulus signal to an electrode, which generates electric pulses to stimulate the auditory nerve. The processor is intended to help deaf patients whose native language is Chinese recognize speech in noisy environments. Its noise robustness lets these patients perceive finer speech structure information, improves their speech recognition in noise, and benefits tone recognition.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
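
The Hilbert-transform step can be sketched for a single sub-band: the analytic signal gives the temporal envelope (its magnitude) and the frequency modulation (the derivative of its phase). This covers only that step; pre-emphasis, the analysis filter, the envelope/FM combination, and pulse generation are not shown. A naive O(N²) DFT keeps the sketch dependency-free; a real processor would use an FFT-based routine such as `scipy.signal.hilbert`.

```python
import cmath
import math


def analytic_signal(x):
    """Analytic signal via DFT: keep DC and Nyquist, double positive bins, zero negative bins."""
    n = len(x)
    X = [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]
    h = [0.0] * n
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        for k in range(1, n // 2):
            h[k] = 2.0
    else:
        for k in range(1, (n + 1) // 2):
            h[k] = 2.0
    return [sum(X[k] * h[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def envelope_and_inst_freq(x, fs):
    """Temporal envelope |z| and instantaneous frequency (Hz) from the analytic signal z."""
    z = analytic_signal(x)
    env = [abs(v) for v in z]
    phase = [cmath.phase(v) for v in z]
    freqs = []
    for a, b in zip(phase, phase[1:]):
        d = (b - a + math.pi) % (2 * math.pi) - math.pi   # wrap phase step to [-pi, pi)
        freqs.append(d * fs / (2 * math.pi))
    return env, freqs


fs = 1000.0
x = [math.cos(2 * math.pi * 4 * t / 64) for t in range(64)]   # 62.5 Hz test tone
env, freqs = envelope_and_inst_freq(x, fs)
```

For this pure tone the envelope is flat at 1.0 and the instantaneous frequency is constant at 62.5 Hz; for real speech sub-bands both vary over time, and those variations are the envelope and FM cues the method combines.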

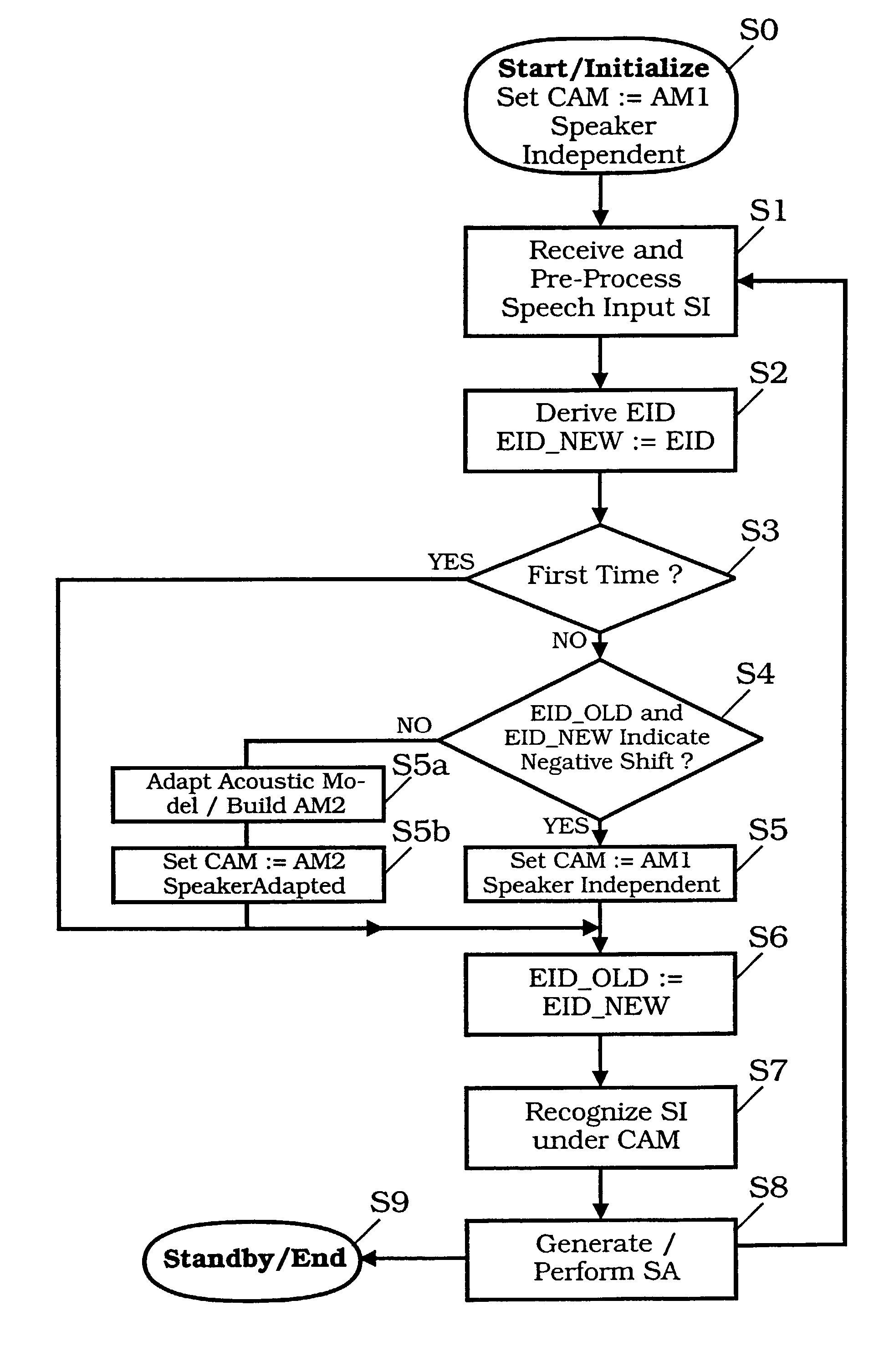

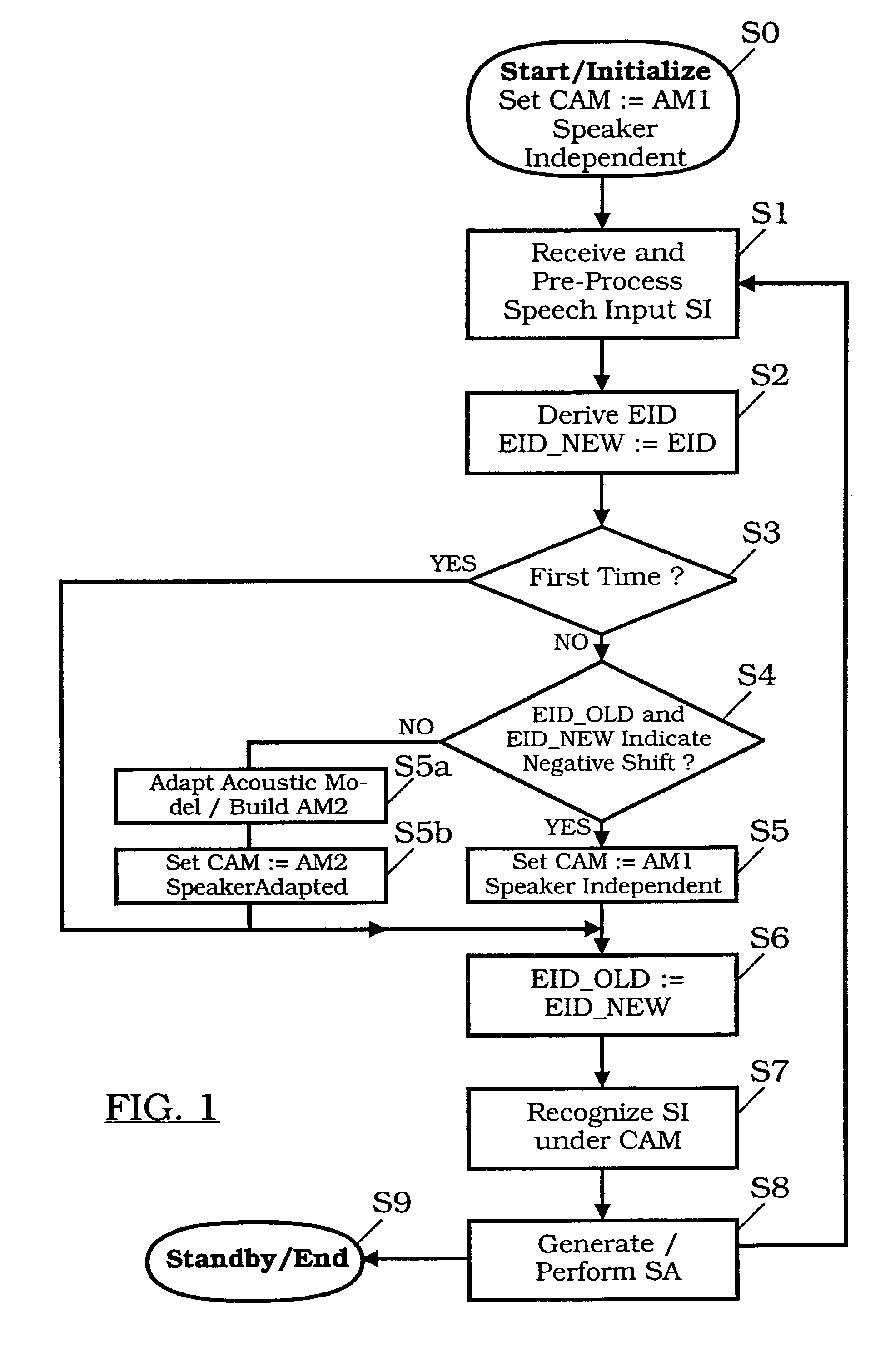

Method for recognizing speech/speaker using emotional change to govern unsupervised adaptation

Inactive | US7684984B2 | Improve user satisfaction; Improve recognition rate; Speech recognition; Identification rate; Speech input

To improve the performance and recognition rate of a method for recognizing speech in a dialogue system or the like, emotion information data (EID) describing the speaker's emotional state, or a change in it, is derived from the speech input (SI), and the recognition process is chosen and/or designed based on this data.

Owner:SONY DEUT GMBH

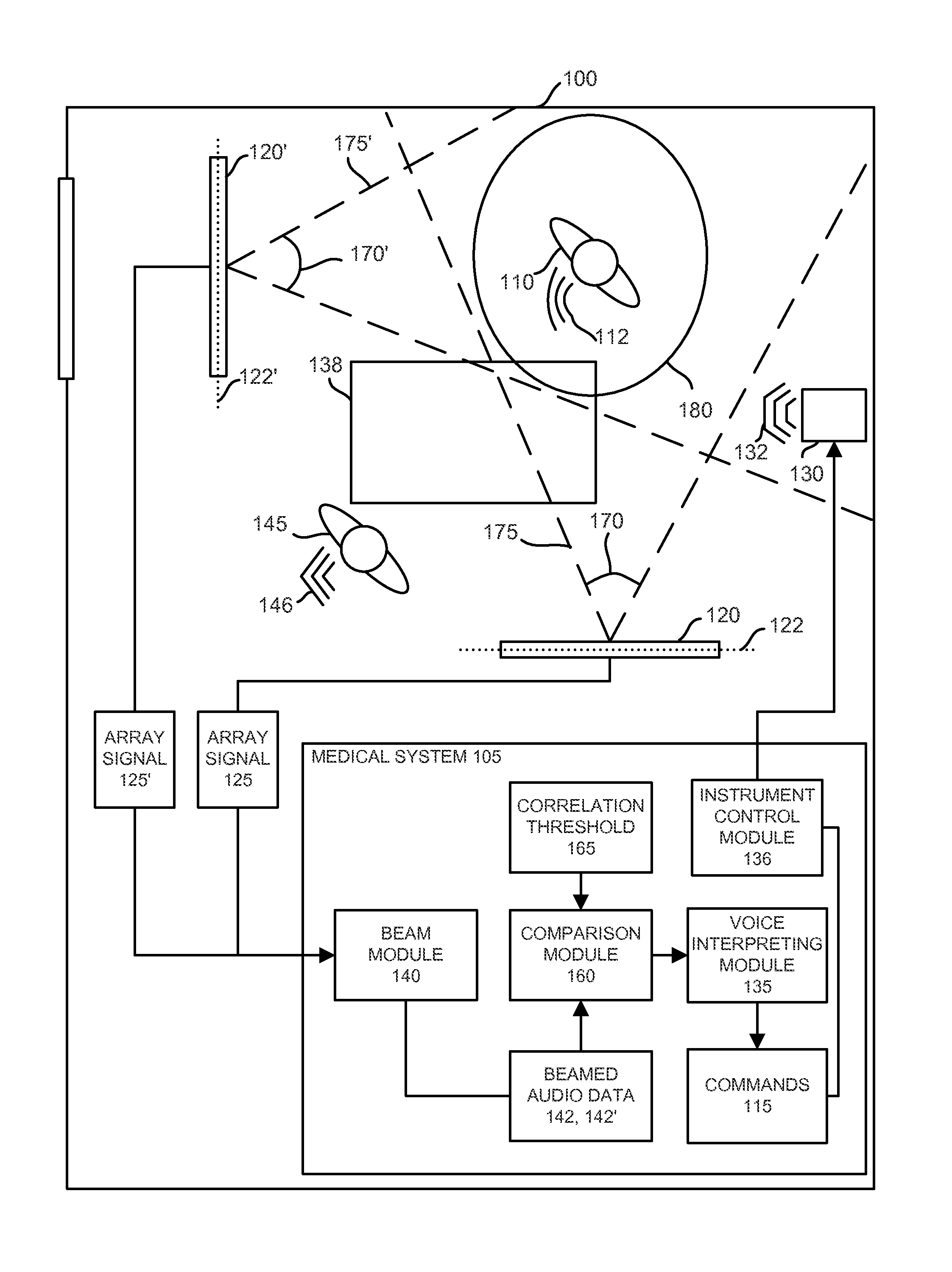

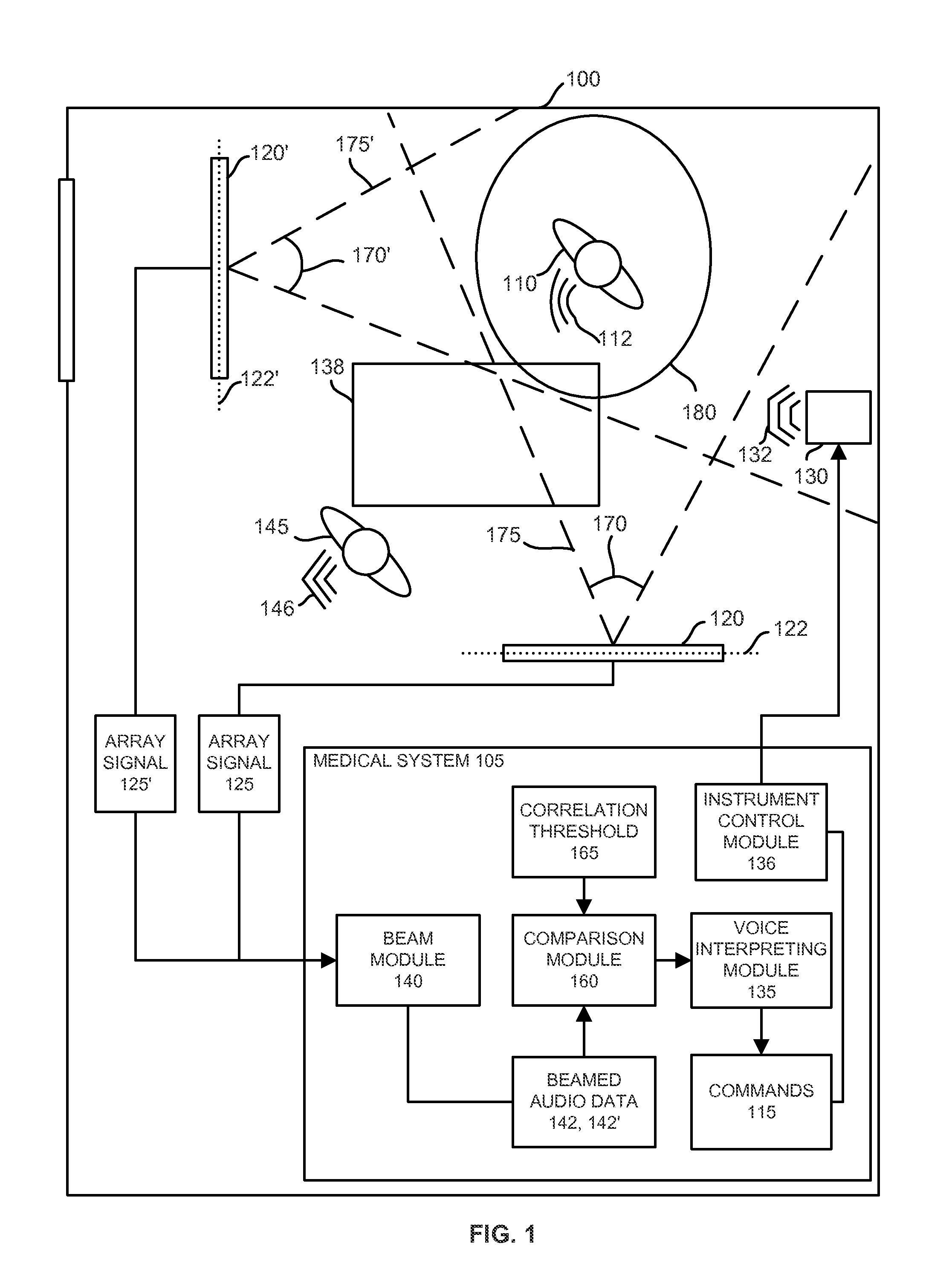

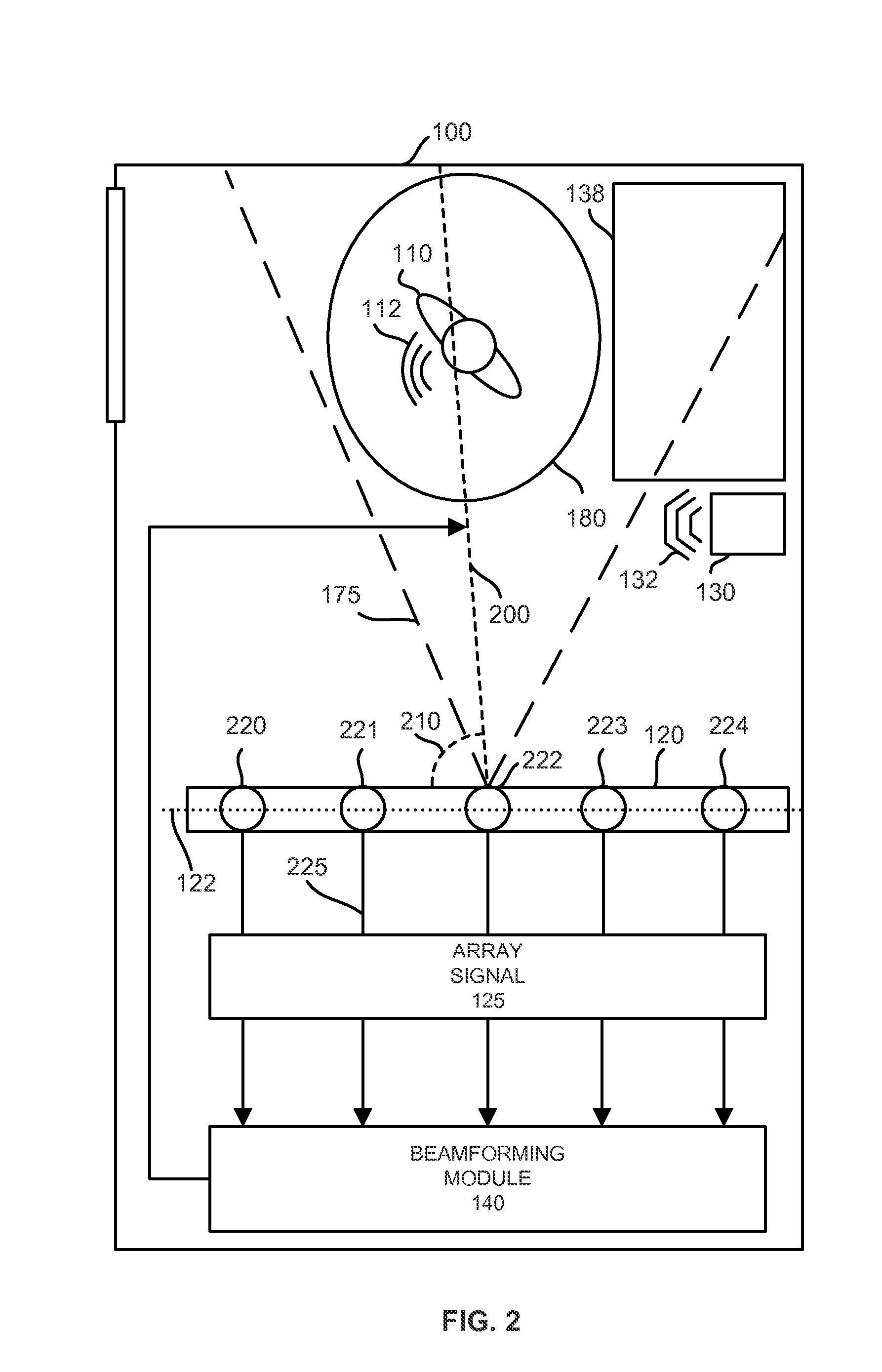

Voice Control System with Multiple Microphone Arrays

Active | US20160125882A1 | Improve speech recognition performance; Improve signal-to-noise ratio; Microphones signal combination; Mouthpiece/microphone attachments; Control system; Computer module

A voice controlled medical system with improved speech recognition includes a first microphone array, a second microphone array, a controller in communication with the first and second microphone arrays, and a medical device operable by the controller. The controller includes a beam module that generates a first beamed signal using signals from the first microphone array and a second beamed signal using signals from the second microphone array. The controller also includes a comparison module that compares the first and second beamed signals and determines a correlation between the first and second beamed signals. The controller also includes a voice interpreting module that identifies commands within the first and second beamed signals if the correlation is above a correlation threshold. The controller also includes an instrument control module that executes the commands to operate said medical device.

Owner:STORZ ENDOSKOP PRODN
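
The beam-and-compare gate can be sketched with delay-and-sum beamforming and a Pearson correlation check. The abstract names neither the beamforming method nor the correlation measure; delay-and-sum with integer steering delays, Pearson correlation, the 0.7 threshold, and the test signals are all assumptions.

```python
import math


def delay_and_sum(channels, delays):
    """Delay each channel by a non-negative integer number of samples and average them."""
    n = len(channels[0])
    out = [0.0] * n
    for channel, d in zip(channels, delays):
        for t in range(d, n):
            out[t] += channel[t - d]
    return [v / len(channels) for v in out]


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length signals."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / math.sqrt(va * vb)


def should_interpret(beam1, beam2, threshold=0.7):
    """Pass commands to the voice interpreter only if the two beams agree."""
    return pearson(beam1, beam2) > threshold


s = [math.sin(0.3 * t) for t in range(200)]
late = [0.0, 0.0] + s[:-2]                     # same source, arriving 2 samples later
beam1 = delay_and_sum([s, late], [2, 0])       # array 1, steered at the source
beam2 = delay_and_sum([late, s], [0, 2])       # array 2, steered at the source
print(should_interpret(beam1, beam2))  # → True
```

Requiring agreement between two independently placed arrays is what screens out sounds that only one array picks up strongly.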

© 2025 PatSnap. All rights reserved.