60 results about "Time frequency masking" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

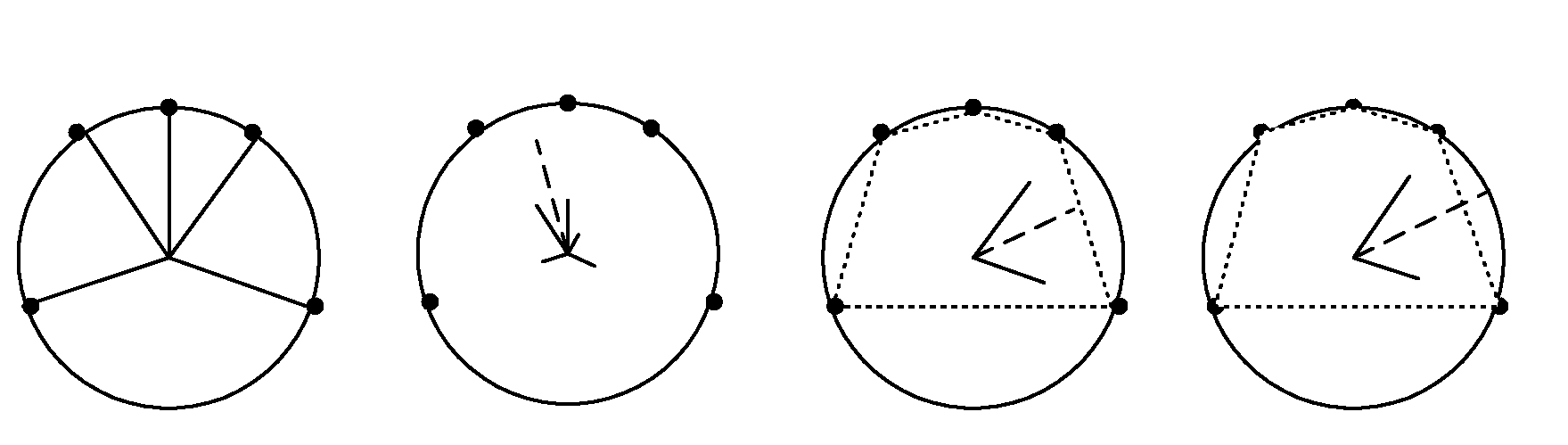

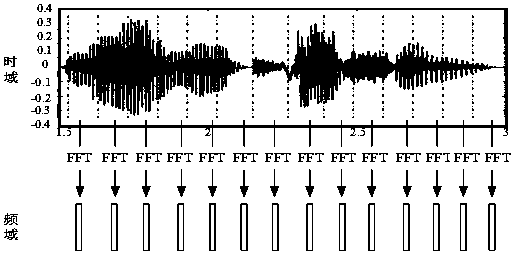

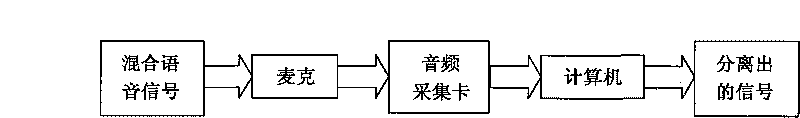

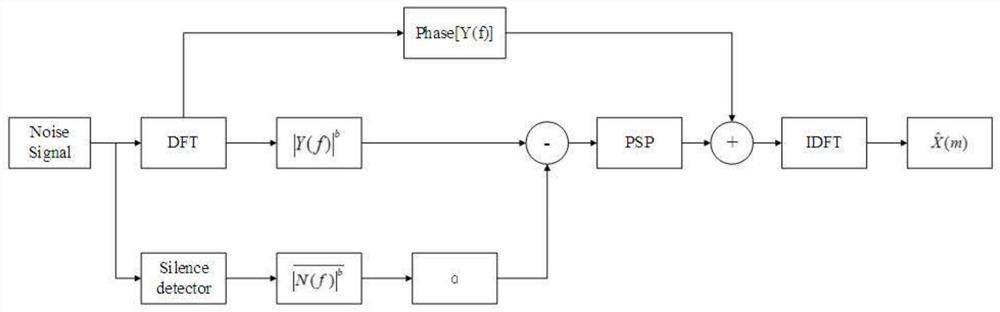

Time-frequency masking (TFM) method [1] separates sound sources by masking unwanted sounds in the time-frequency domain. The method primarily relies on clustering of the mixed signals with respect to their amplitudes and time delays.
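
To make the clustering idea concrete, here is a minimal pure-Python sketch in the style of DUET. It assumes two microphones, an anechoic model in which microphone 2 sees each source scaled by an amplitude ratio and delayed by some samples, and per-source centers that have already been estimated. The function names are illustrative and are not taken from [1] or from any patent below.

```python
import cmath


def duet_masks(X1, X2, omegas, centers):
    """Binary masks by assigning each TF point to the nearest (amplitude, delay) center.

    X1, X2  : complex STFT values of the same TF points at microphones 1 and 2
    omegas  : angular frequency of each point in rad/sample (must be nonzero)
    centers : hypothetical (amplitude_ratio, delay_samples) pair per source,
              assumed to be estimated beforehand, e.g. from histogram peaks
    """
    masks = [[0] * len(X1) for _ in centers]
    for i, (x1, x2, w) in enumerate(zip(X1, X2, omegas)):
        if x1 == 0:
            continue  # no energy at mic 1: leave the point unassigned
        ratio = x2 / x1                   # model: x2 = a * exp(-1j * w * delta) * x1
        a = abs(ratio)                    # relative amplitude
        delta = -cmath.phase(ratio) / w   # relative delay; unambiguous only if |w * delta| < pi
        k = min(range(len(centers)),
                key=lambda j: (a - centers[j][0]) ** 2 + (delta - centers[j][1]) ** 2)
        masks[k][i] = 1
    return masks


def apply_mask(mask, X):
    """Keep only the TF points selected by a binary mask."""
    return [m * x for m, x in zip(mask, X)]
```

Each point goes to exactly one source, which is the W-disjoint orthogonality assumption in its simplest form; weighting amplitude and delay equally in the distance is a simplification.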

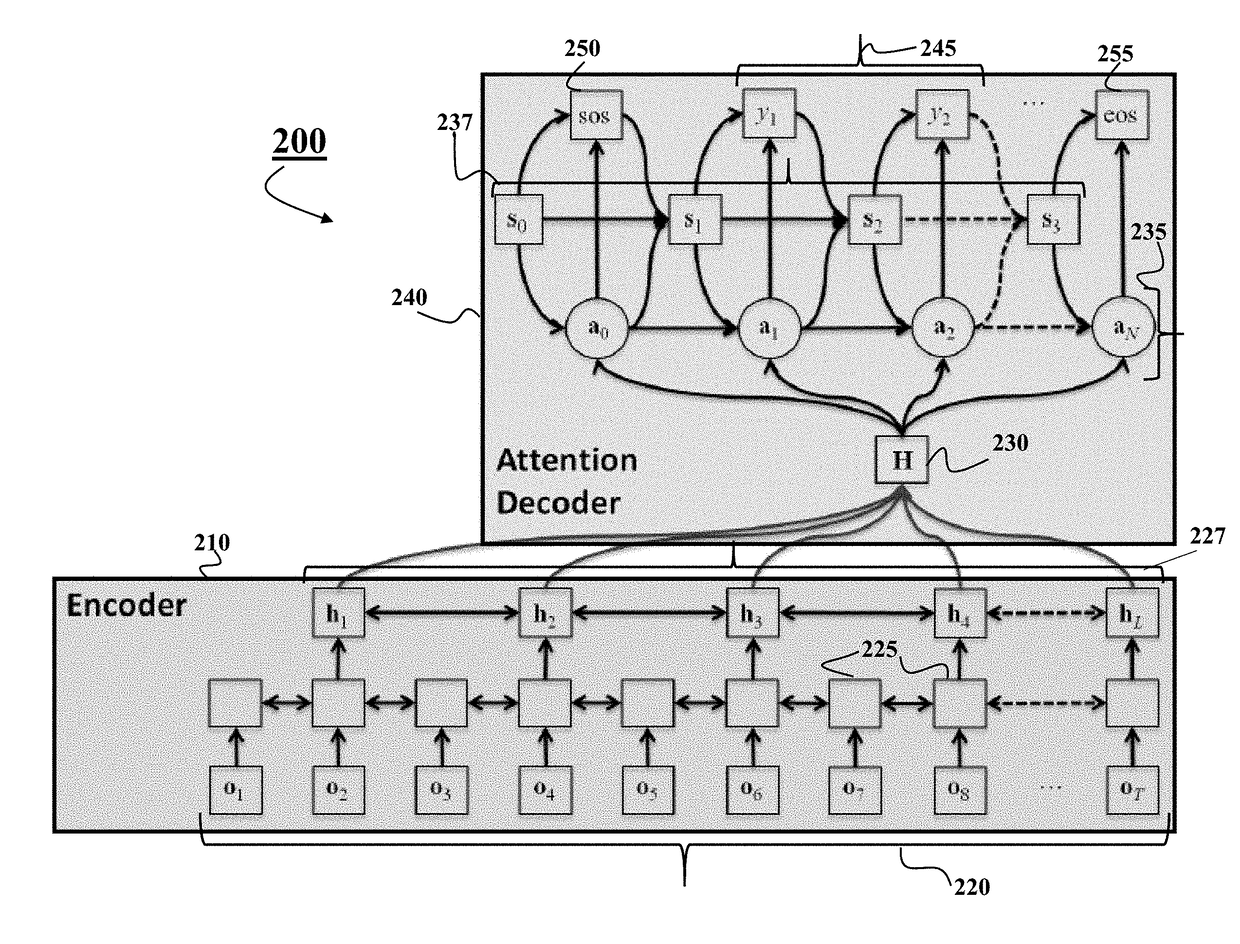

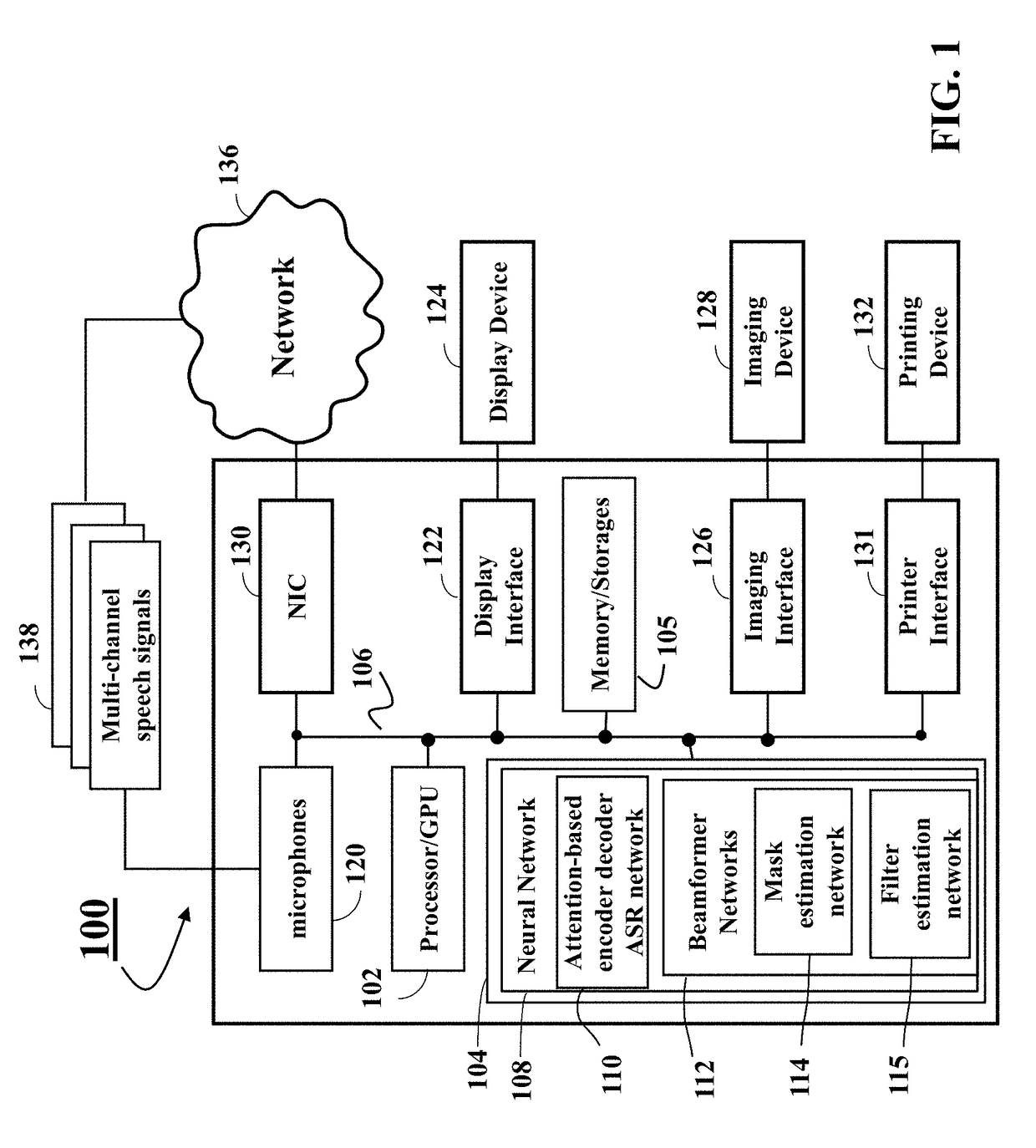

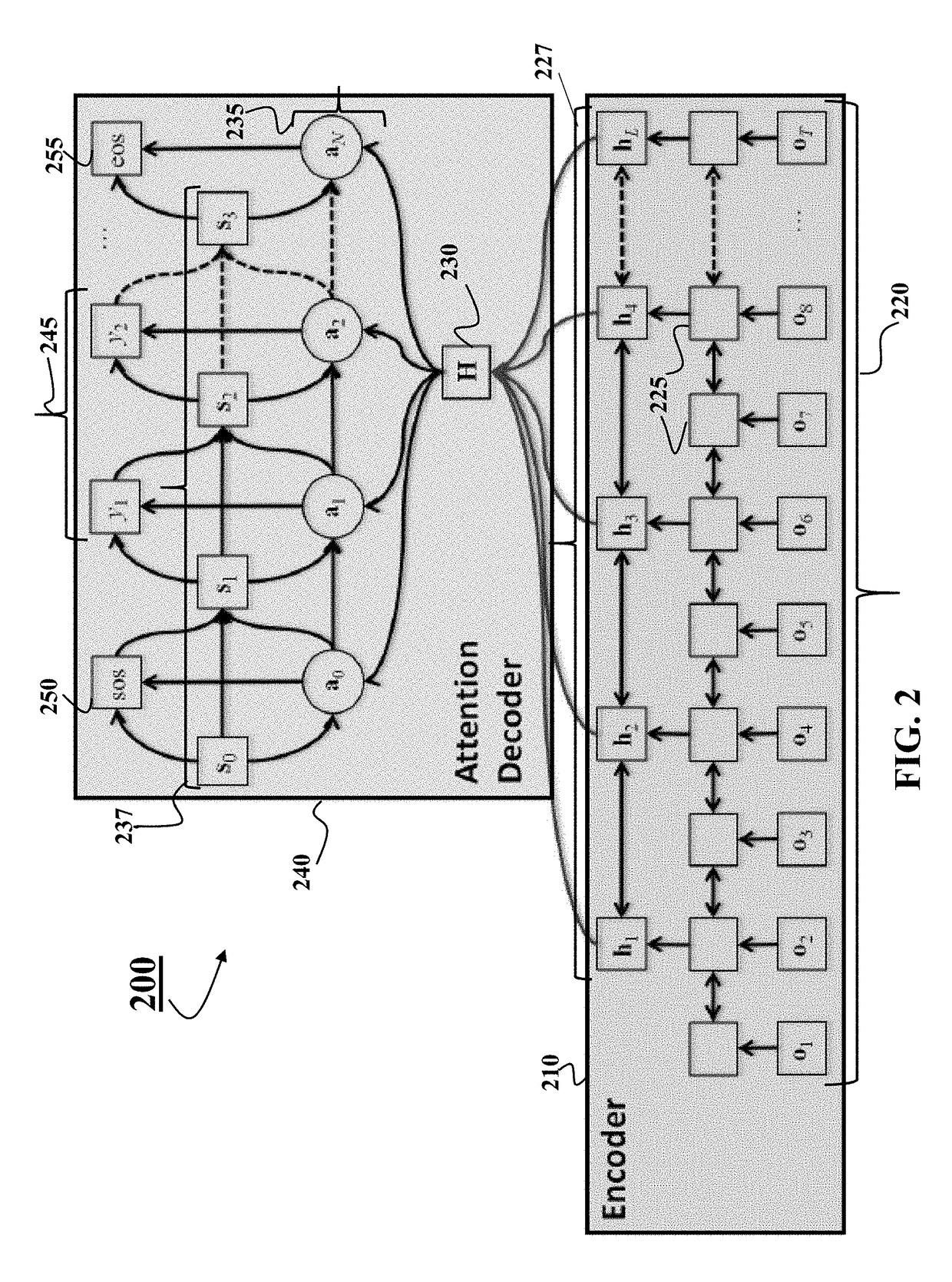

System and Method for Multichannel End-to-End Speech Recognition

Status: Active | Publication: US20180261225A1 | Tags: Improve performance; Attenuation bandwidth; Speech recognition; Encoder decoder; Data set

A speech recognition system includes a plurality of microphones to receive acoustic signals including speech signals, an input interface to generate multichannel inputs from the acoustic signals, one or more storages to store a multichannel speech recognition network, wherein the multichannel speech recognition network comprises mask estimation networks to generate time-frequency masks from the multichannel inputs, a beamformer network trained to select a reference channel input from the multichannel inputs using the time-frequency masks and generate an enhanced speech dataset based on the reference channel input and an encoder-decoder network trained to transform the enhanced speech dataset into a text. The system further includes one or more processors, using the multichannel speech recognition network in association with the one or more storages, to generate the text from the multichannel inputs, and an output interface to render the text.

Owner:MITSUBISHI ELECTRIC RES LAB INC

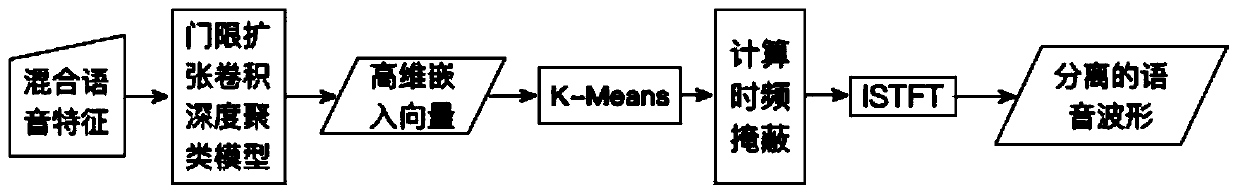

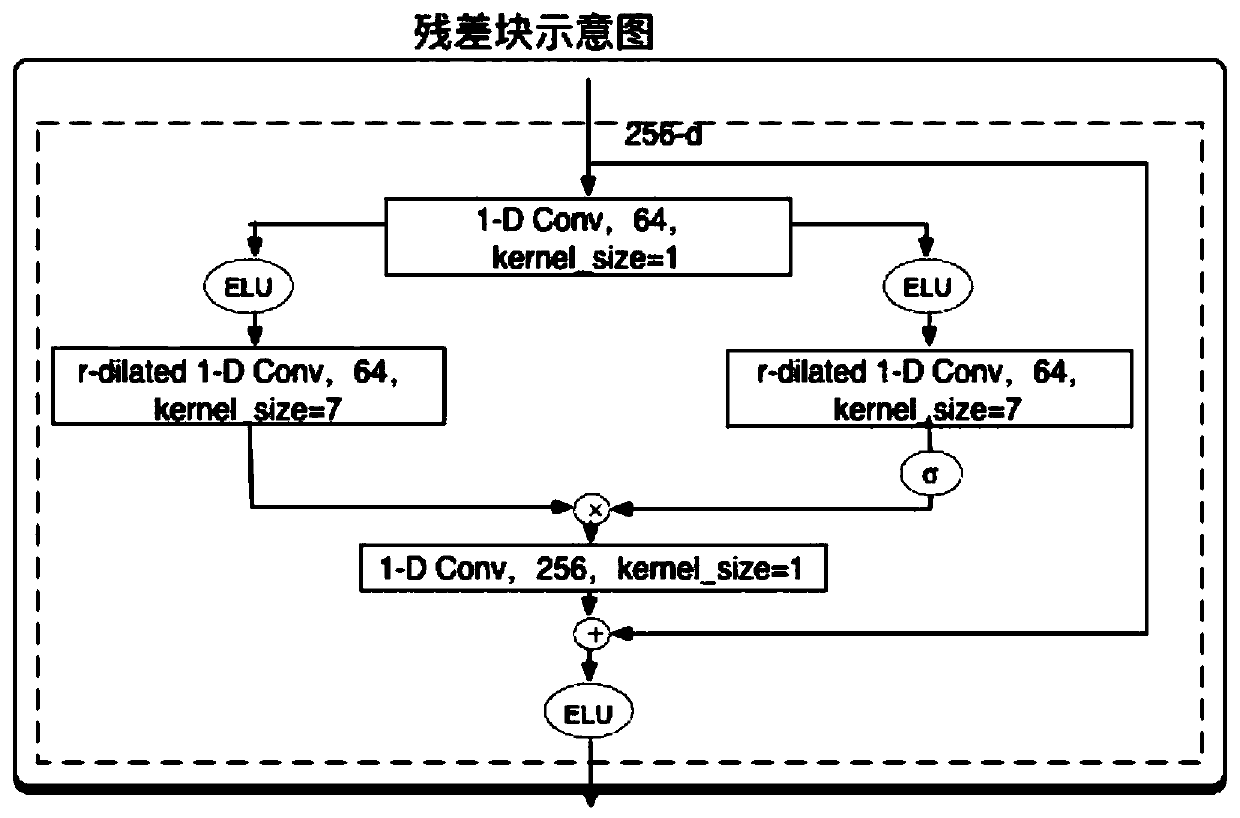

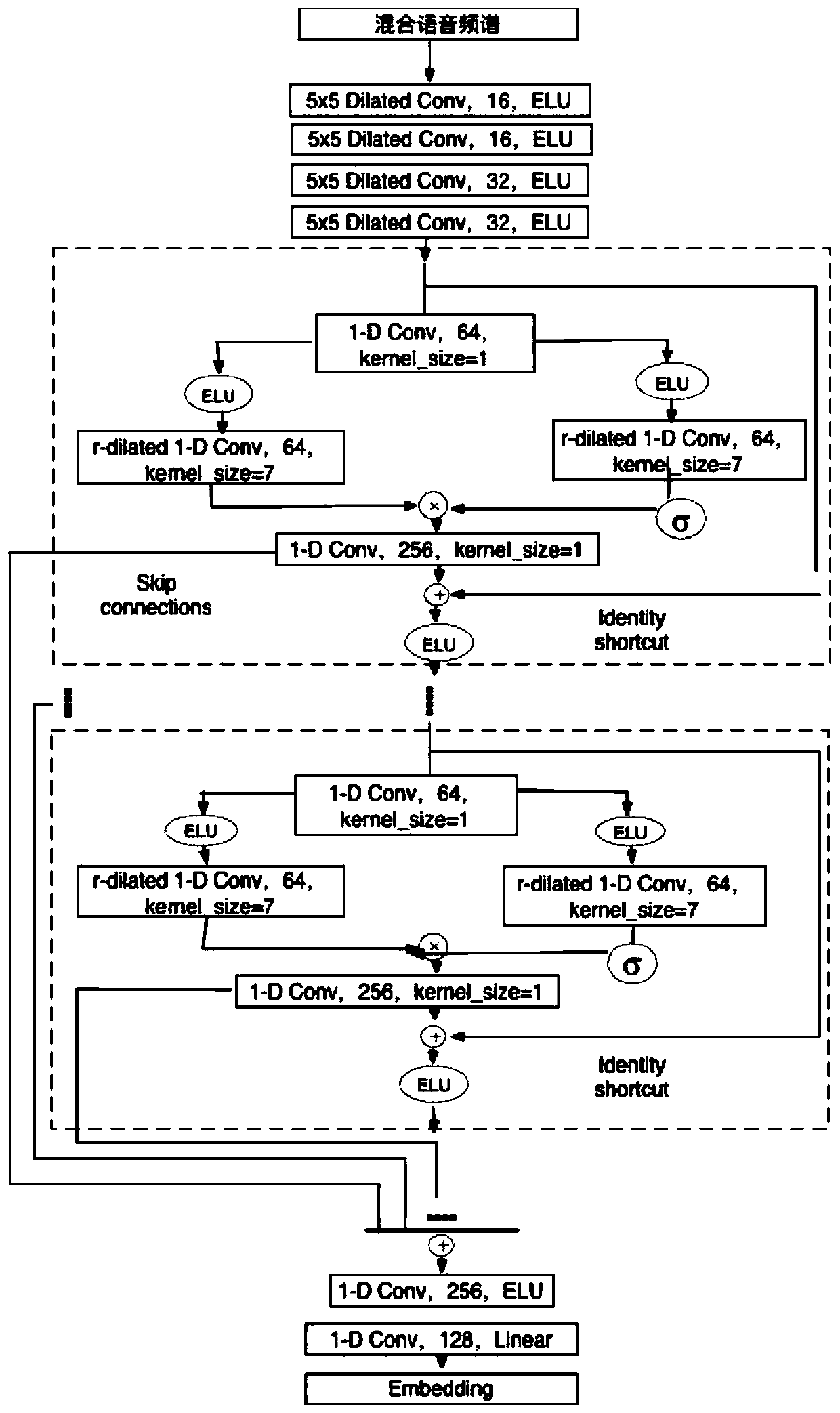

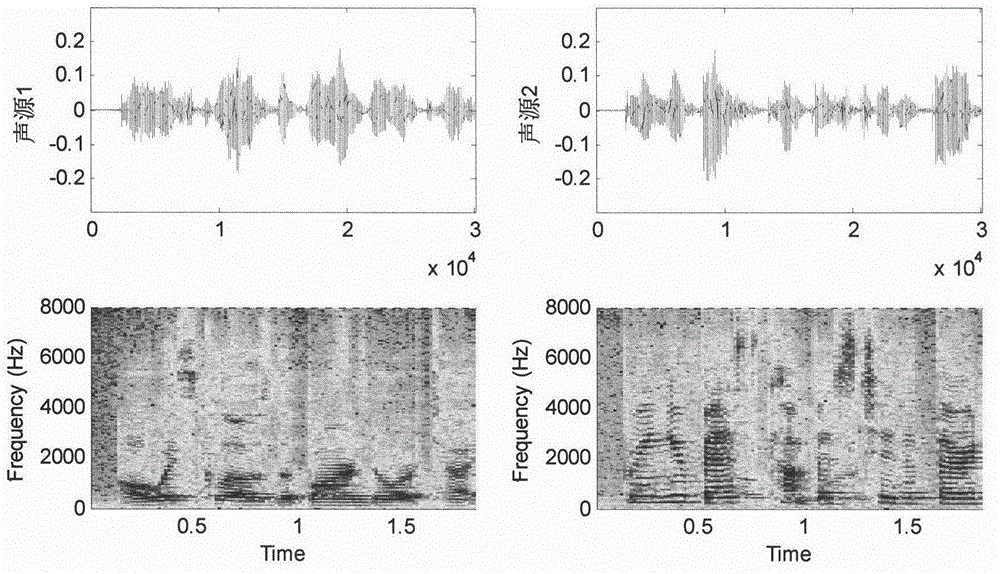

Multi-speaker voice separation method based on convolutional neural network and deep clustering

Status: Inactive | Publication: CN110459240A | Tags: Expand the receptive field; Few parameters; Speech analysis; Character and pattern recognition; Frequency spectrum; Sound sources

The invention discloses a multi-speaker voice separation method based on a convolutional neural network and deep clustering. The method comprises the following steps: (1) a training stage: performing framing, windowing and short-time Fourier transform on the single-channel multi-speaker mixed speech and on the corresponding single-speaker speech, and using the mixed-speech magnitude spectrum and the single-speaker magnitude spectra as inputs for training the neural network model; (2) a testing stage: feeding the mixed-speech magnitude spectrum into a gated dilated convolutional deep clustering model to obtain a high-dimensional embedding vector for each time-frequency unit of the mixed spectrum; classifying the vectors with the K-means clustering algorithm according to a preset number of speakers; obtaining a time-frequency masking matrix for each sound source from the time-frequency units corresponding to the vectors, and multiplying each matrix with the mixed-speech magnitude spectrum to obtain each speaker's spectrum; and combining each speaker spectrum with the mixed-speech phase spectrum and applying the inverse short-time Fourier transform to obtain the separated time-domain waveforms.

Owner:XINJIANG UNIVERSITY
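
The testing stage of CN110459240A ends with a clustering-and-masking step that can be shown in isolation. This sketch assumes the network's per-bin embeddings are already available as plain Python lists and uses a small hand-written k-means with a deterministic farthest-point initialisation (an assumption; the abstract only says K-means is used). The function names and the toy embeddings in the usage check are hypothetical, not network output.

```python
import math


def kmeans(points, k, iters=50):
    """Plain k-means on small lists of equal-length embedding vectors."""
    # farthest-point initialisation keeps the sketch deterministic
    centers = [list(points[0])]
    while len(centers) < k:
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centers))
        centers.append(list(far))
    labels = [0] * len(points)
    for _ in range(iters):
        # assignment step: nearest center by Euclidean distance
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        # update step: each center moves to the mean of its members
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def separate(embeddings, mixture_mag, n_speakers):
    """Turn cluster labels into binary masks and apply them to the mixture magnitudes."""
    labels = kmeans(embeddings, n_speakers)
    masks = [[1.0 if lab == s else 0.0 for lab in labels] for s in range(n_speakers)]
    spectra = [[m * y for m, y in zip(mask, mixture_mag)] for mask in masks]
    return masks, spectra
```

Cluster labels are arbitrary, so which output corresponds to which speaker is not fixed; the phase recombination and inverse STFT from the abstract are omitted.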

Time frequency mask-based single acoustic vector sensor (AVS) target voice enhancement method

Status: Active | Publication: CN104103277A | Tags: Reduce complexity; Target Direction Speech Enhancement; Speech analysis; Point correlation; Acoustic vector sensor

The invention relates to a time-frequency mask-based target speech enhancement method for a single acoustic vector sensor (AVS). With the direction of arrival (DOA) of the target speech known, the method combines a fixed beamformer with a Wiener post-filter to enhance the target speech; computing the post-filter weights requires an estimate of the target speech auto-power spectrum. Exploiting the time-frequency sparsity of speech, the method estimates the DOA at each time-frequency point of the received signals by computing the inter-sensor data ratio (ISDR) between the outputs of the two gradient sensors in the AVS, designs a time-frequency mask from the error between each point's DOA and the target DOA, and thereby obtains the auto-power spectrum estimate of the target speech. The method requires no prior knowledge of the noise, effectively enhances the target speech in complex environments with multiple speakers, and suppresses both interfering speech and background noise. In addition, its computational complexity is low and the sensor array is small (about 1 cm³), which makes it well suited to portable devices.

Owner:SHENZHEN HIAN SPEECH SCI & TECH CO LTD
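
A minimal sketch of the mask-design step in CN104103277A, assuming an ideal AVS whose two gradient channels are U = cos(θ)·S and V = sin(θ)·S for a source at azimuth θ, so the inter-sensor data ratio V/U equals tan(θ). The Gaussian mask shape and the 10-degree width are hypothetical choices; the abstract only says the mask is derived from the error between each point's DOA and the target DOA.

```python
import math


def isdr_doa_deg(U, V):
    """Per-TF-point azimuth (degrees) from the inter-sensor data ratio V/U.

    Assumes U = cos(theta) * S and V = sin(theta) * S, so Re(V / U) = tan(theta);
    atan limits the estimates to [-90, 90] degrees.
    """
    out = []
    for u, v in zip(U, V):
        # degenerate point with no U energy: treat the ratio as infinite (90 degrees)
        isdr = (v / u).real if u != 0 else math.inf
        out.append(math.degrees(math.atan(isdr)))
    return out


def doa_error_mask(doas_deg, target_deg, width_deg=10.0):
    """Soft mask decaying with the DOA error; the Gaussian shape and width are hypothetical."""
    return [math.exp(-((d - target_deg) / width_deg) ** 2) for d in doas_deg]
```

Points whose DOA lies near the target keep a gain close to 1, so the masked power can serve as the target auto-power estimate that the Wiener post-filter needs.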

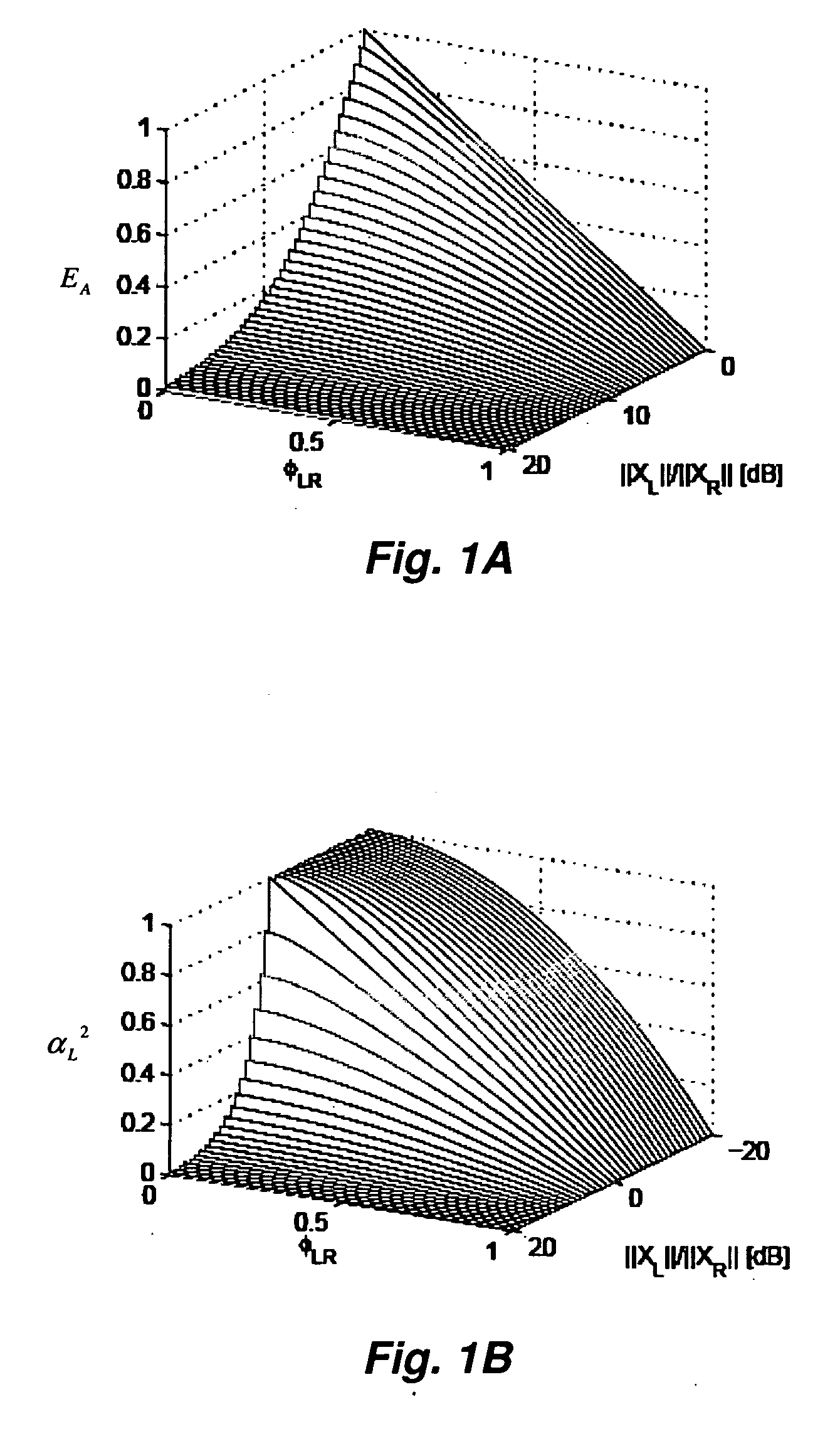

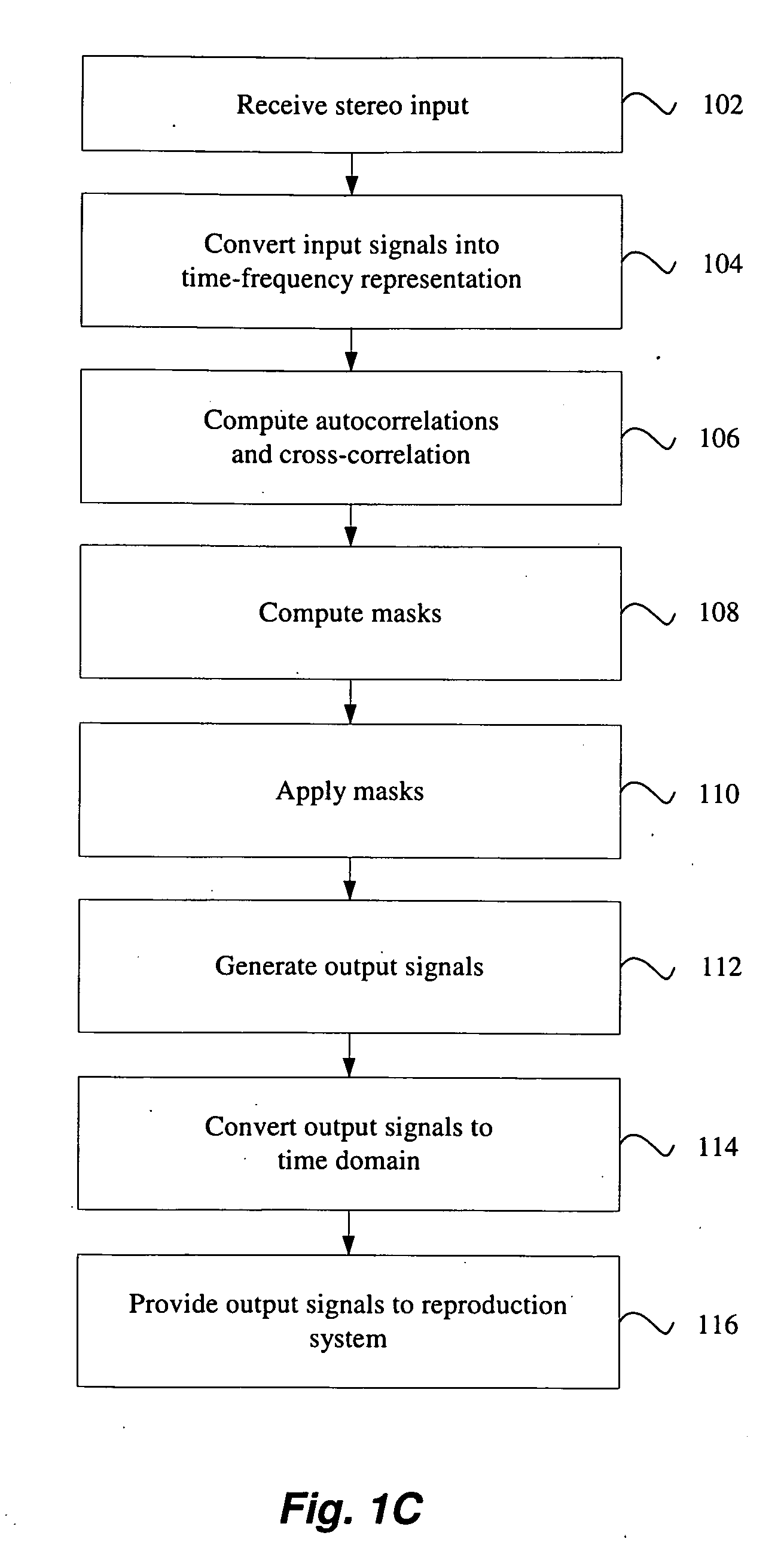

Correlation-based method for ambience extraction from two-channel audio signals

Owner:CREATIVE TECH CORP

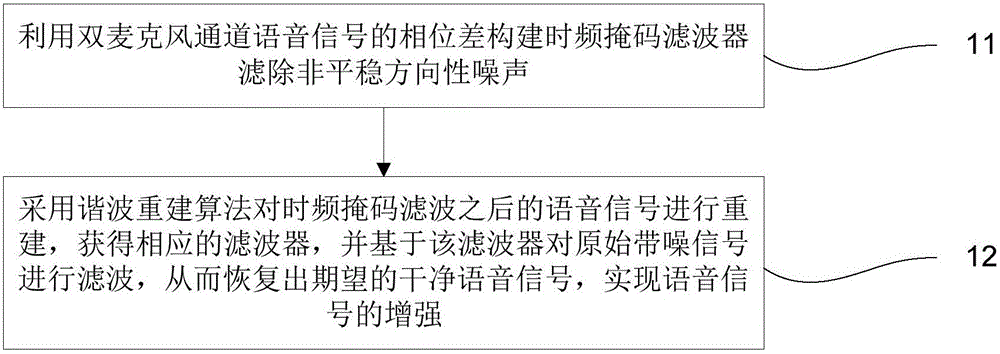

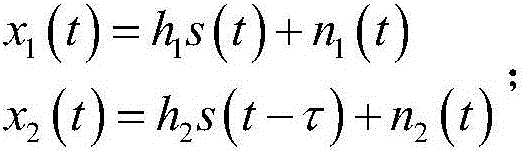

Speech enhancement method applied to dual-microphone array

The invention discloses a speech enhancement method for a dual-microphone array. A time-frequency mask filter is constructed from the phase difference between the two microphone channels to remove non-stationary directional noise; a harmonic reconstruction algorithm is then applied to the mask-filtered speech to derive a corresponding filter, the original noisy signals are filtered with this filter, and the desired clean speech is recovered, thereby enhancing the speech.

Owner:UNIV OF SCI & TECH OF CHINA

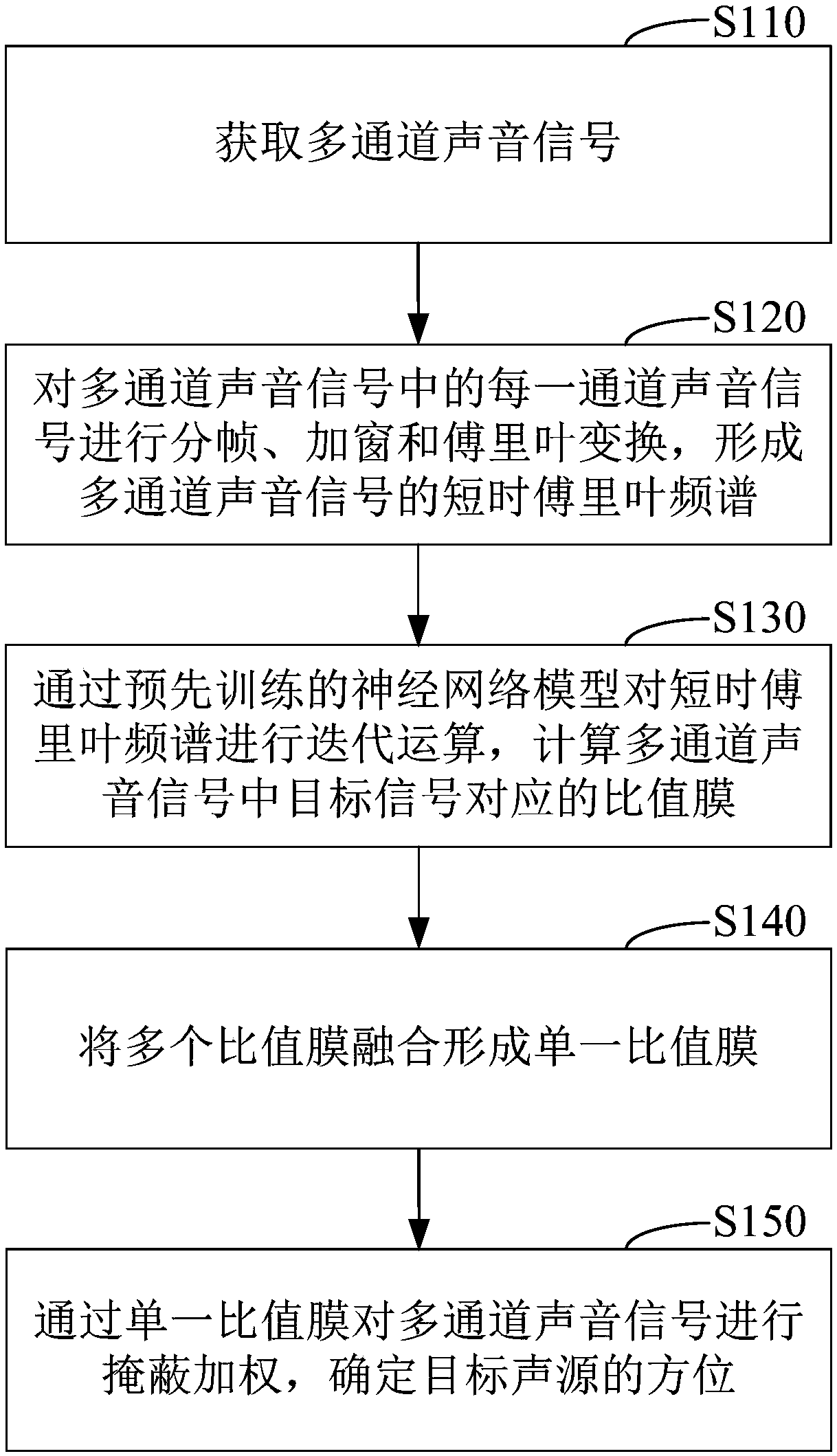

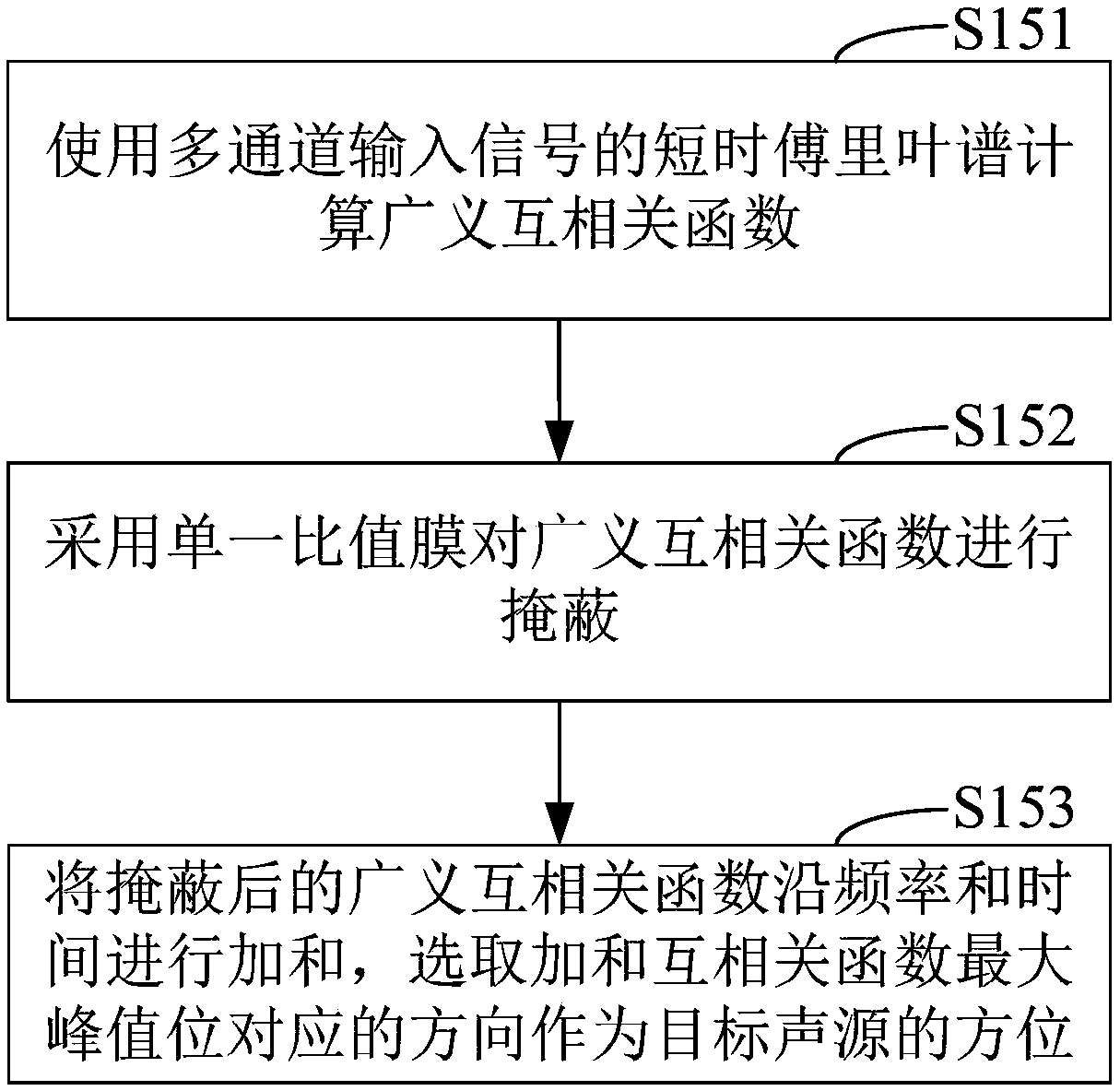

Method for sound source direction estimation based on time frequency masking and deep neural network

Status: Active | Publication: CN109839612A | Tags: Improve accuracy; Improve stability; Direction/deviation determination systems; Sound sources; Signal-to-noise ratio (imaging)

The invention discloses a method and device for sound source direction estimation based on time-frequency masking and a deep neural network, as well as electronic equipment and a storage medium, in the field of computer technology. The method comprises: acquiring a multichannel sound signal; framing, windowing and Fourier-transforming each channel to form a short-time Fourier spectrum of the multichannel signal; iteratively processing the short-time Fourier spectrum with a pre-trained neural network model to compute ratio masks corresponding to the target signals in the multichannel sound signal, and fusing the multiple ratio masks into a single ratio mask; and masking and weighting the multichannel sound signal with the single ratio mask to determine the direction of the target sound source. The method is robust in environments with a low signal-to-noise ratio and strong reverberation, and improves the accuracy and stability of direction estimation for the target sound source.

Owner:ELEVOC TECH CO LTD
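
One plausible reading of the fuse-then-weight step in CN109839612A, sketched for a two-microphone pair: the per-channel ratio masks are averaged into a single mask (an assumption), and that mask then weights a GCC-PHAT steered response scanned over candidate angles. The far-field model, the 343 m/s speed of sound, and all function names are assumptions, not the patented procedure.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def fuse_masks(channel_masks):
    """Average per-channel ratio masks into one mask (averaging is an assumption)."""
    return [sum(vals) / len(vals) for vals in zip(*channel_masks)]


def masked_srp_phat(X1, X2, freqs_hz, mask, mic_distance_m, angles_deg):
    """Candidate angle with the highest mask-weighted GCC-PHAT steered response.

    Far-field two-microphone model: X2 = X1 * exp(-2j*pi*f*tau),
    with tau = mic_distance_m * sin(theta) / SPEED_OF_SOUND.
    """
    best_angle, best_score = None, -math.inf
    for ang in angles_deg:
        tau = mic_distance_m * math.sin(math.radians(ang)) / SPEED_OF_SOUND
        score = 0.0
        for x1, x2, f, m in zip(X1, X2, freqs_hz, mask):
            cross = x1 * x2.conjugate()
            if cross == 0:
                continue
            phat = cross / abs(cross)  # PHAT weighting: keep only the phase
            # each term equals cos(2*pi*f*(true_tau - tau)), largest when tau matches
            score += m * (phat * cmath.exp(-2j * math.pi * f * tau)).real
        if score > best_score:
            best_angle, best_score = ang, score
    return best_angle
```

Bins dominated by interference receive low mask values, so they barely influence the scan; microphone spacing should stay below half a wavelength at the highest frequency used to avoid spatial aliasing.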

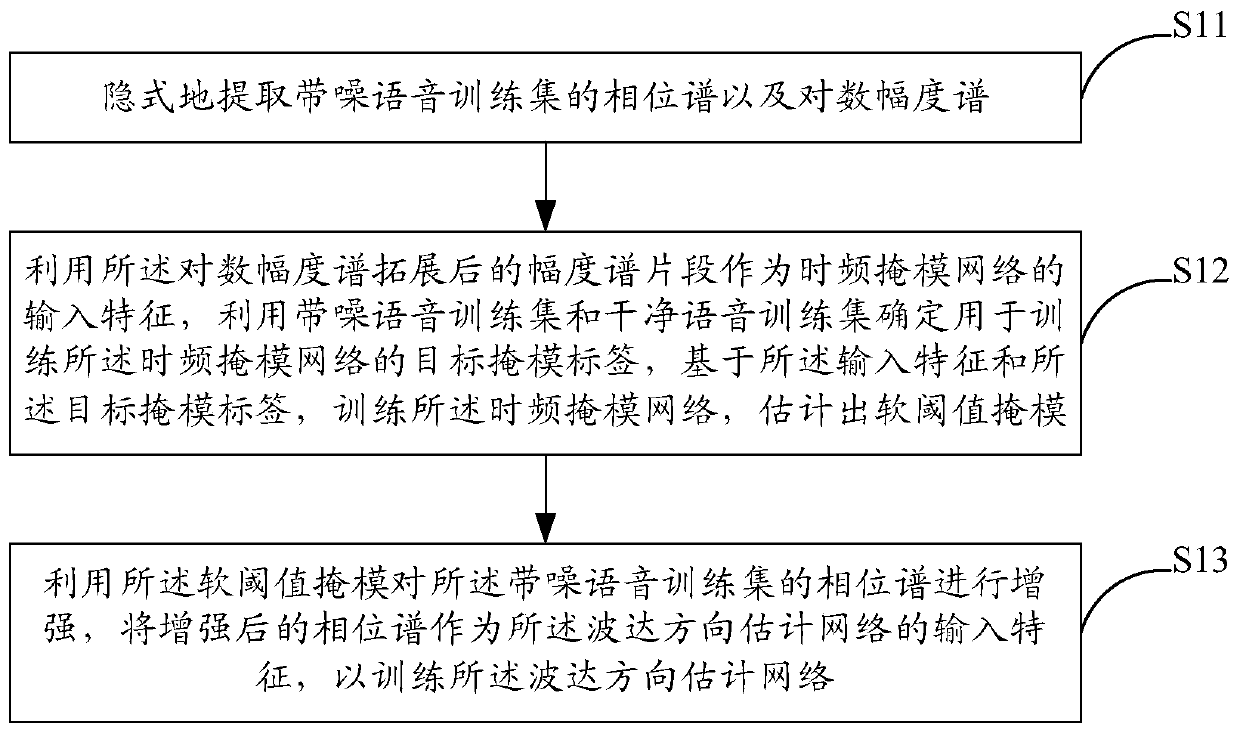

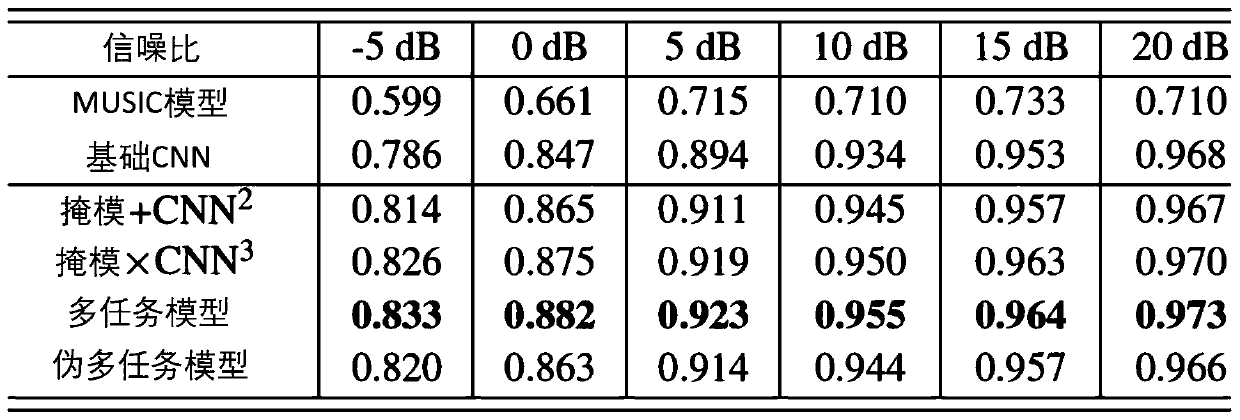

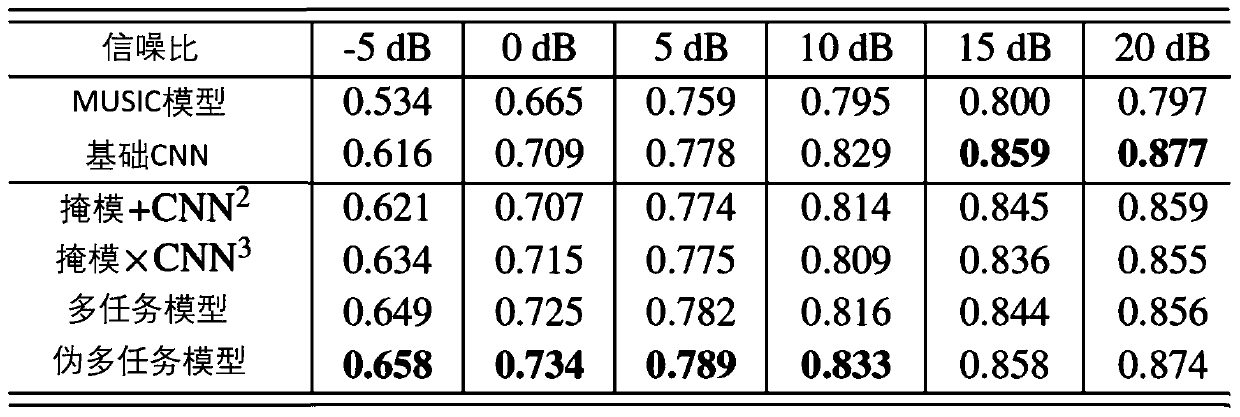

Combined model training method and system

Status: Active | Publication: CN109712611A | Tags: Accurately estimate the effect; Improve accuracy; Speech recognition; Direction/deviation determination systems; Phase spectrum; Time frequency masking

An embodiment of the invention provides a combined model training method. The method comprises: implicitly extracting the phase spectrum and the log-magnitude spectrum of a noisy speech training set; using expanded magnitude-spectrum segments of the log-magnitude spectrum as the input features of a time-frequency masking network; determining, from the noisy speech training set and a clean speech training set, a target masking label for training the time-frequency masking network; training the time-frequency masking network on the input features and the target masking label, and estimating a soft-threshold mask; and enhancing the phase spectrum of the noisy speech training set with the soft-threshold mask, using the enhanced phase spectrum as the input features of a DOA (direction of arrival) estimation network, and training the DOA estimation network. An embodiment further provides a combined model training system. By setting the target masking label, the input features are extracted implicitly, and joint training of the time-frequency masking network and the DOA estimation network is better suited to the DOA estimation task.

Owner:AISPEECH CO LTD

Voice processing method, voice processing device and device for processing voice

Status: Inactive | Publication: CN110808063A | Tags: Phase accurate; Reduce voice distortion; Speech analysis; Frequency spectrum; Time frequency masking

An embodiment of the invention discloses a voice processing method, a voice processing device, and a device for processing voice. The method comprises: performing time-frequency analysis on noisy speech to obtain its complex-domain spectrum; inputting this complex-domain spectrum into a pre-trained time-frequency masking prediction model to obtain a predicted complex-domain time-frequency mask for the noisy speech; multiplying the predicted mask by the complex-domain spectrum of the noisy speech to generate the complex-domain spectrum of the target speech contained in the noisy speech; and synthesizing the target speech from that spectrum. The embodiment reduces speech distortion and improves noise reduction.

Owner:BEIJING SOGOU TECHNOLOGY DEVELOPMENT CO LTD

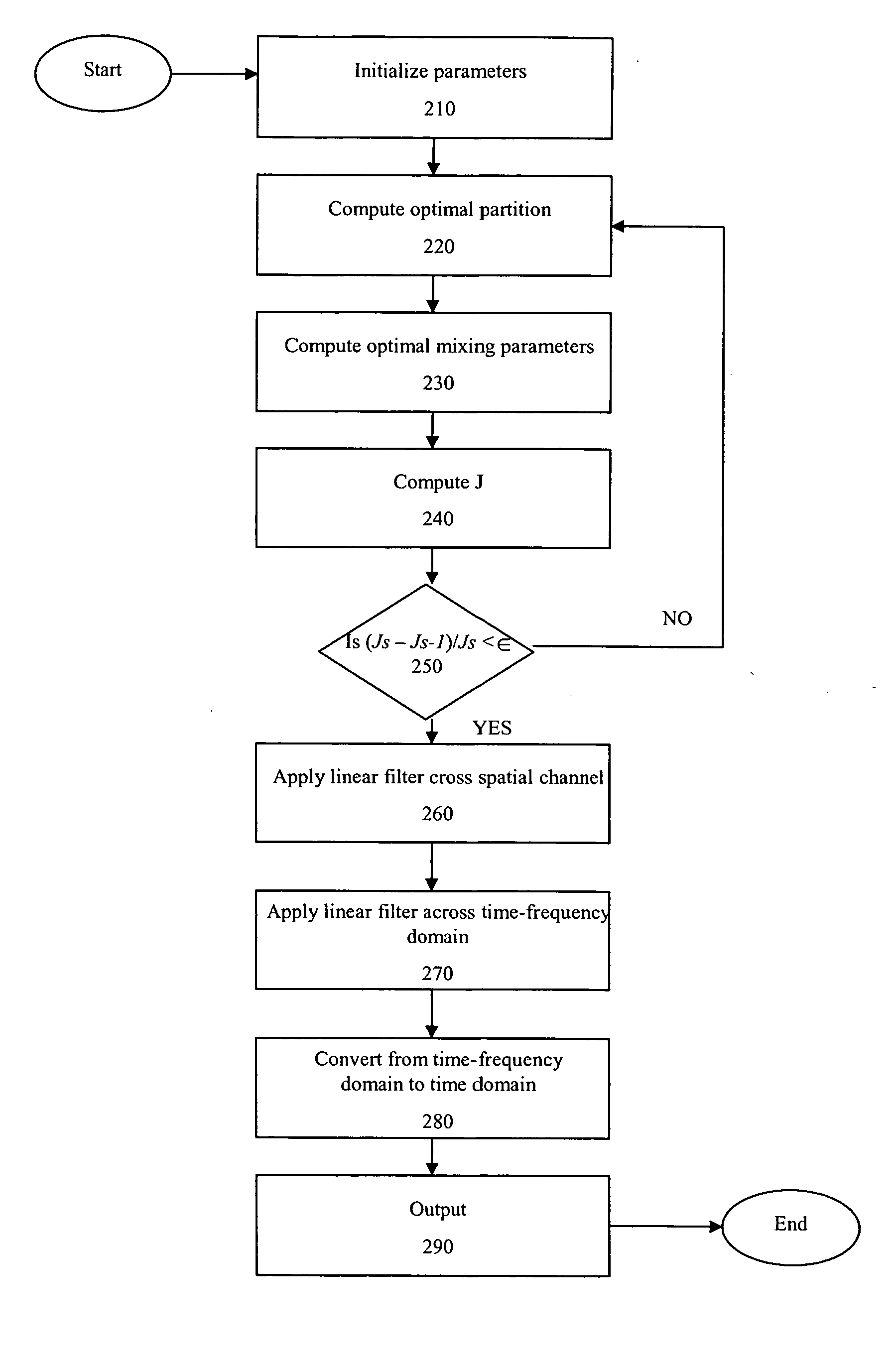

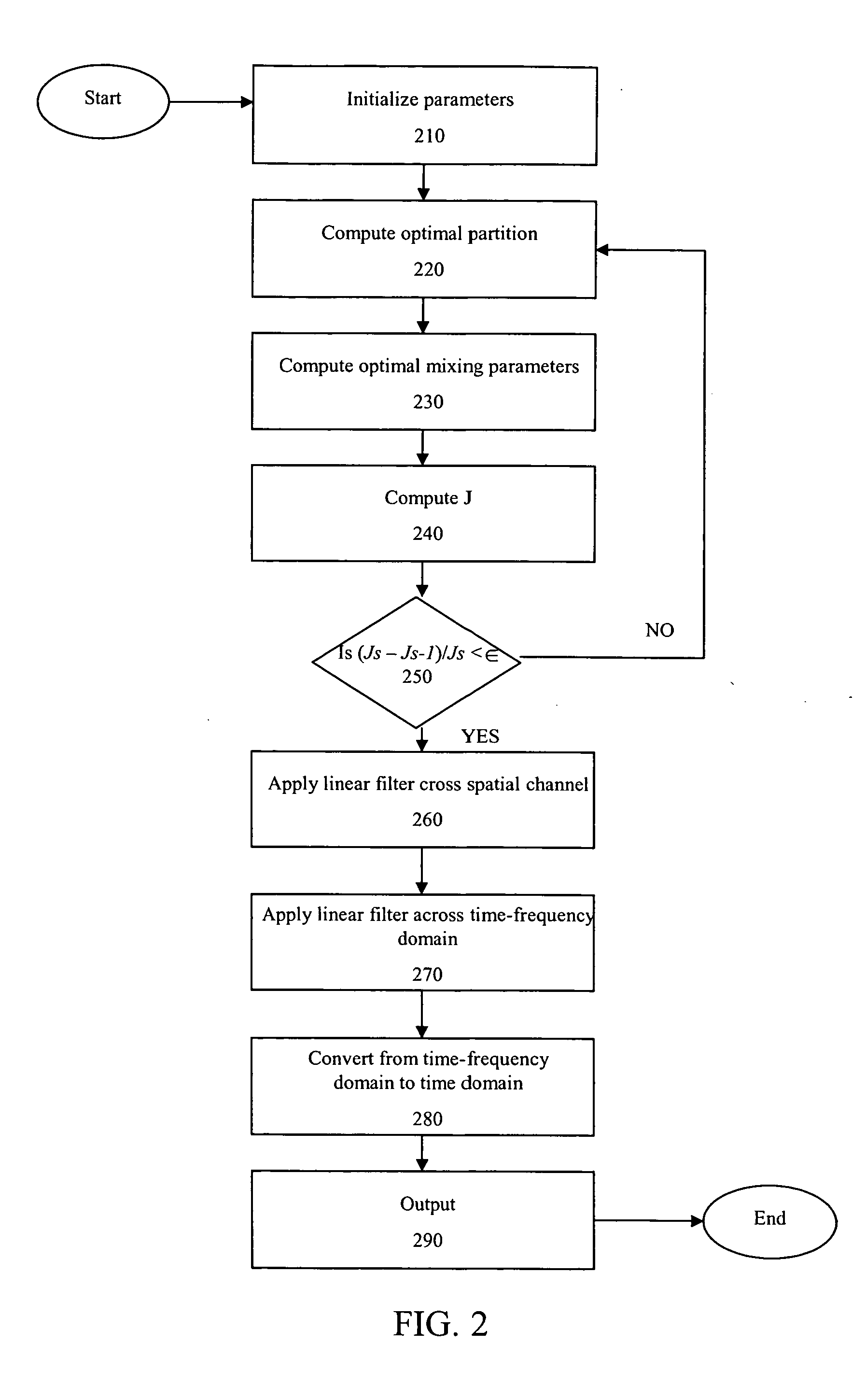

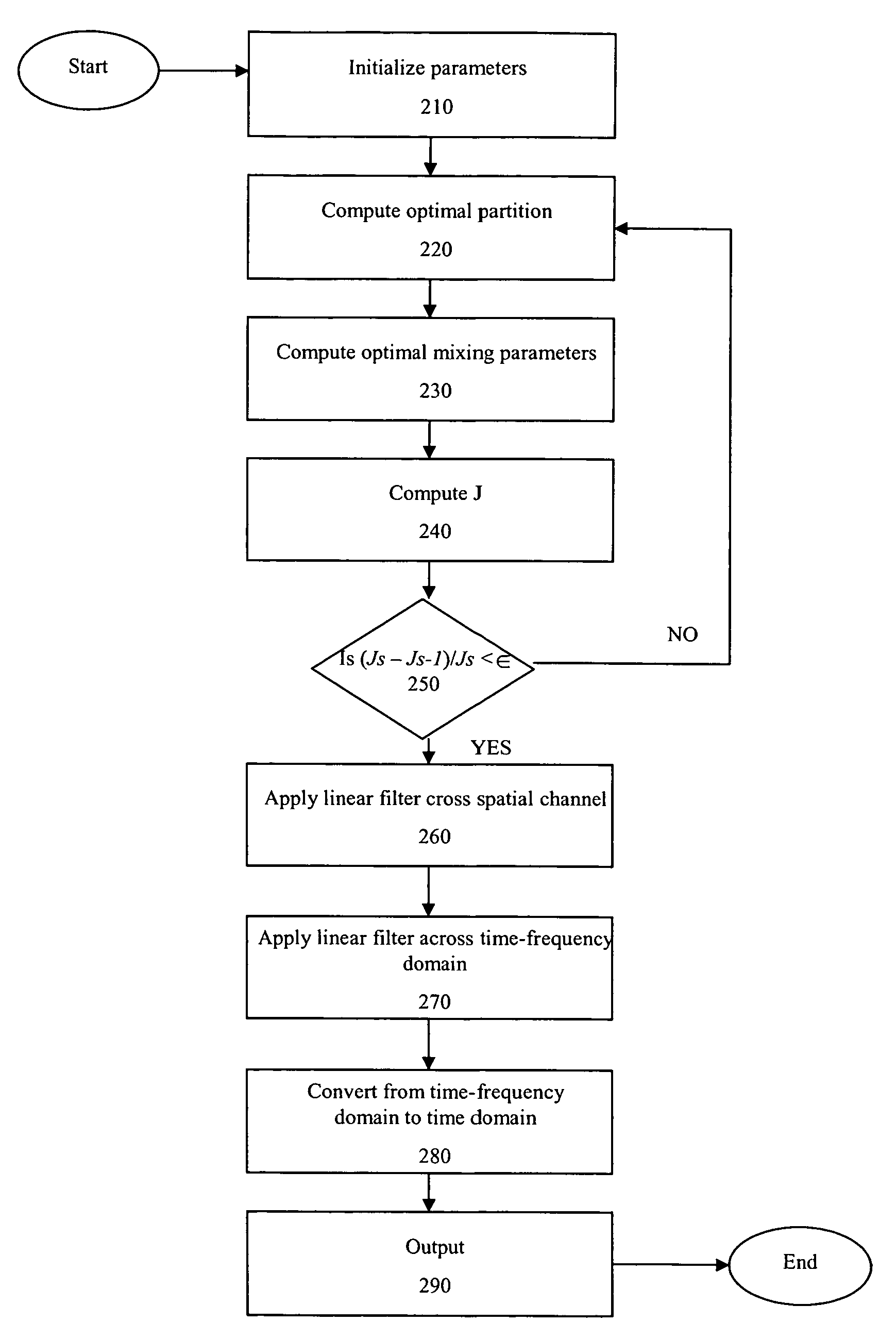

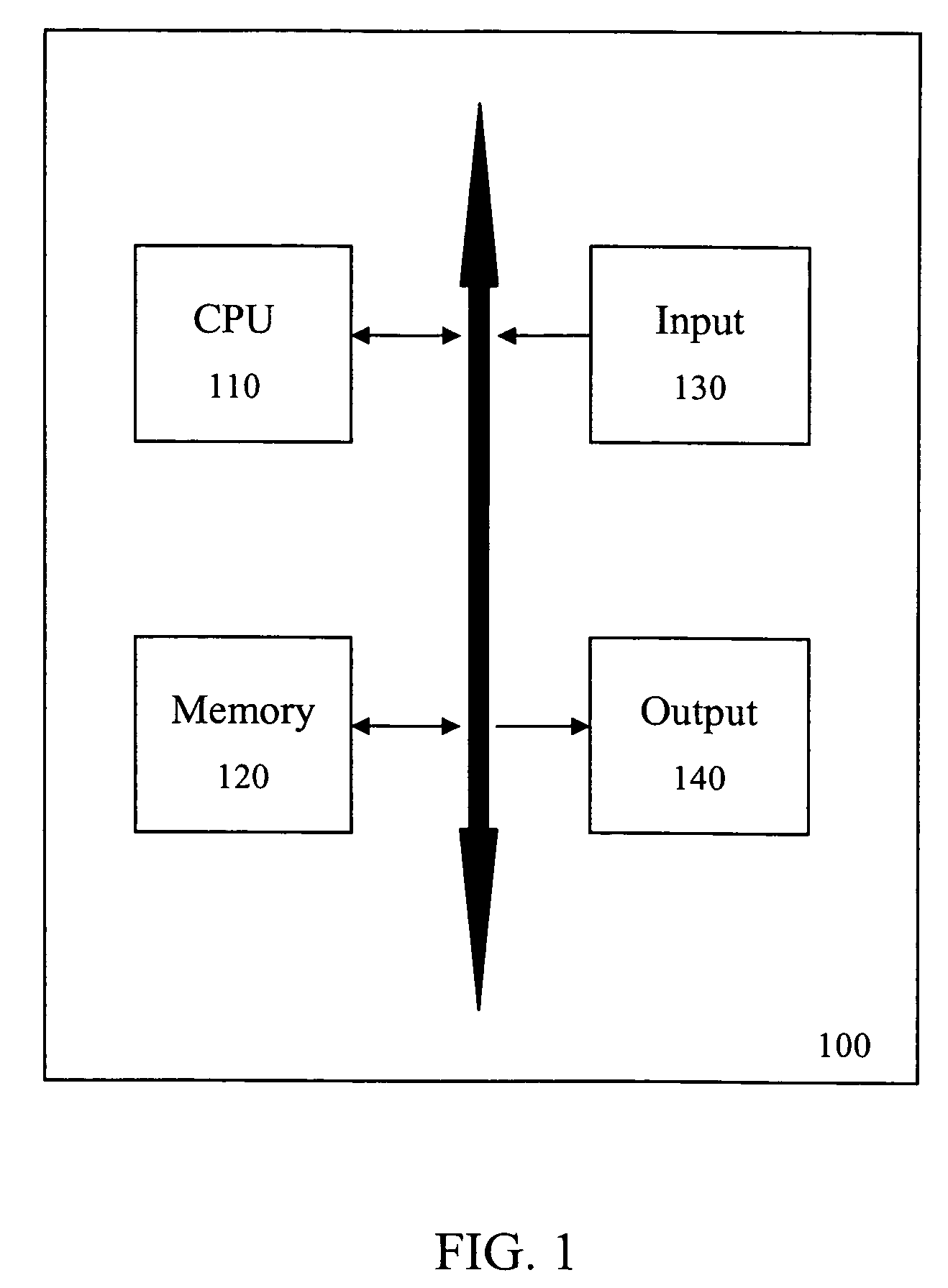

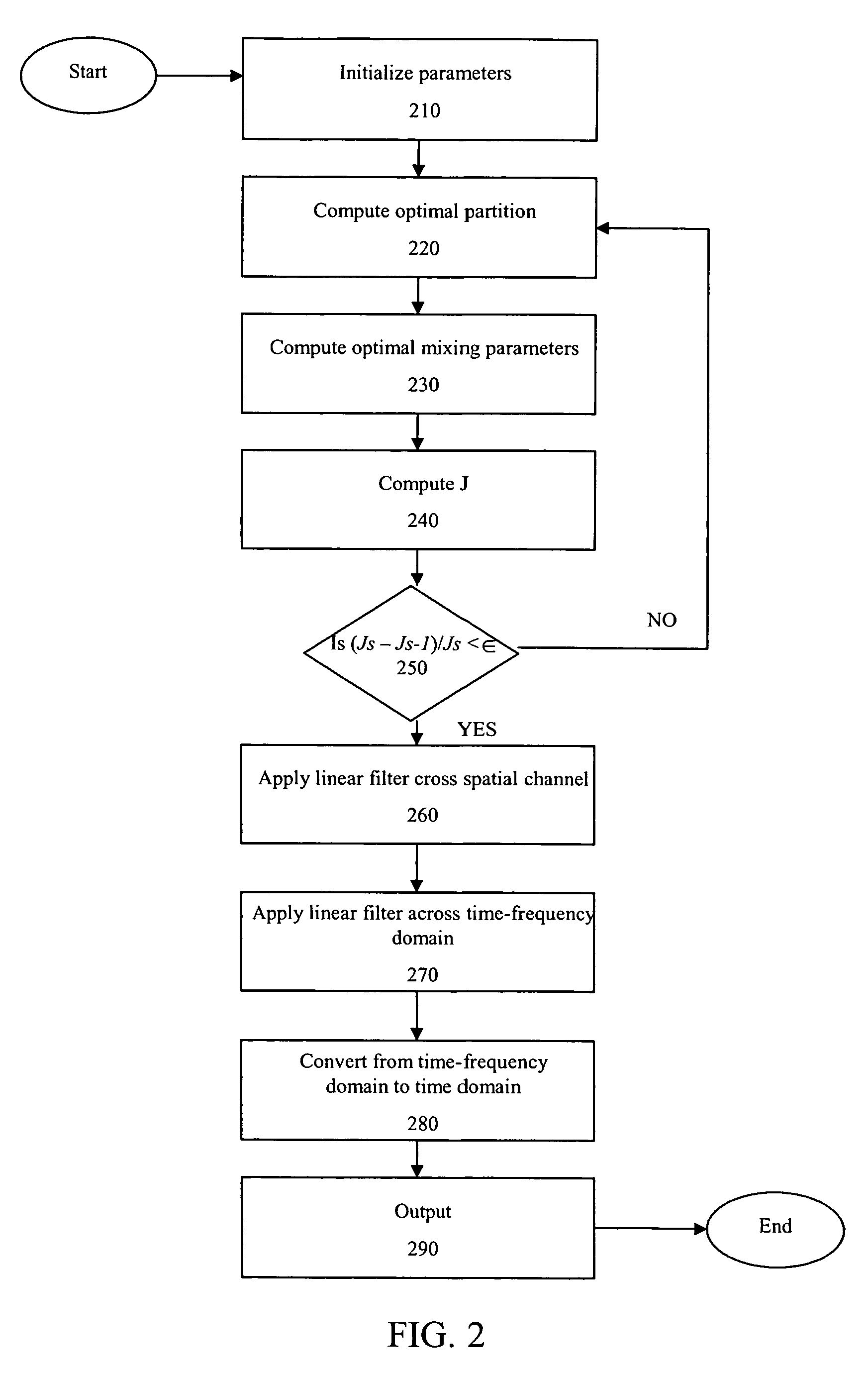

System and method for non-square blind source separation under coherent noise by beamforming and time-frequency masking

Status: Active | Publication: US20040158821A1 | Tags: Amplifier modifications to reduce noise influence; Digital computer details; Linear filter; Engineering

A system and method for non-square blind source separation (BSS) under coherent noise. The system and method estimate the mixing parameters of a mixed source signal and filter the estimated mixing parameters so that output noise is reduced and the mixed source signal is separated from the noise. The filtering is accomplished by one linear filter that performs beamforming to reduce the noise and another linear filter that solves the source separation problem by selecting time-frequency points where, according to the W-disjoint orthogonality assumption, only one source is active.

Owner:SIEMENS CORP

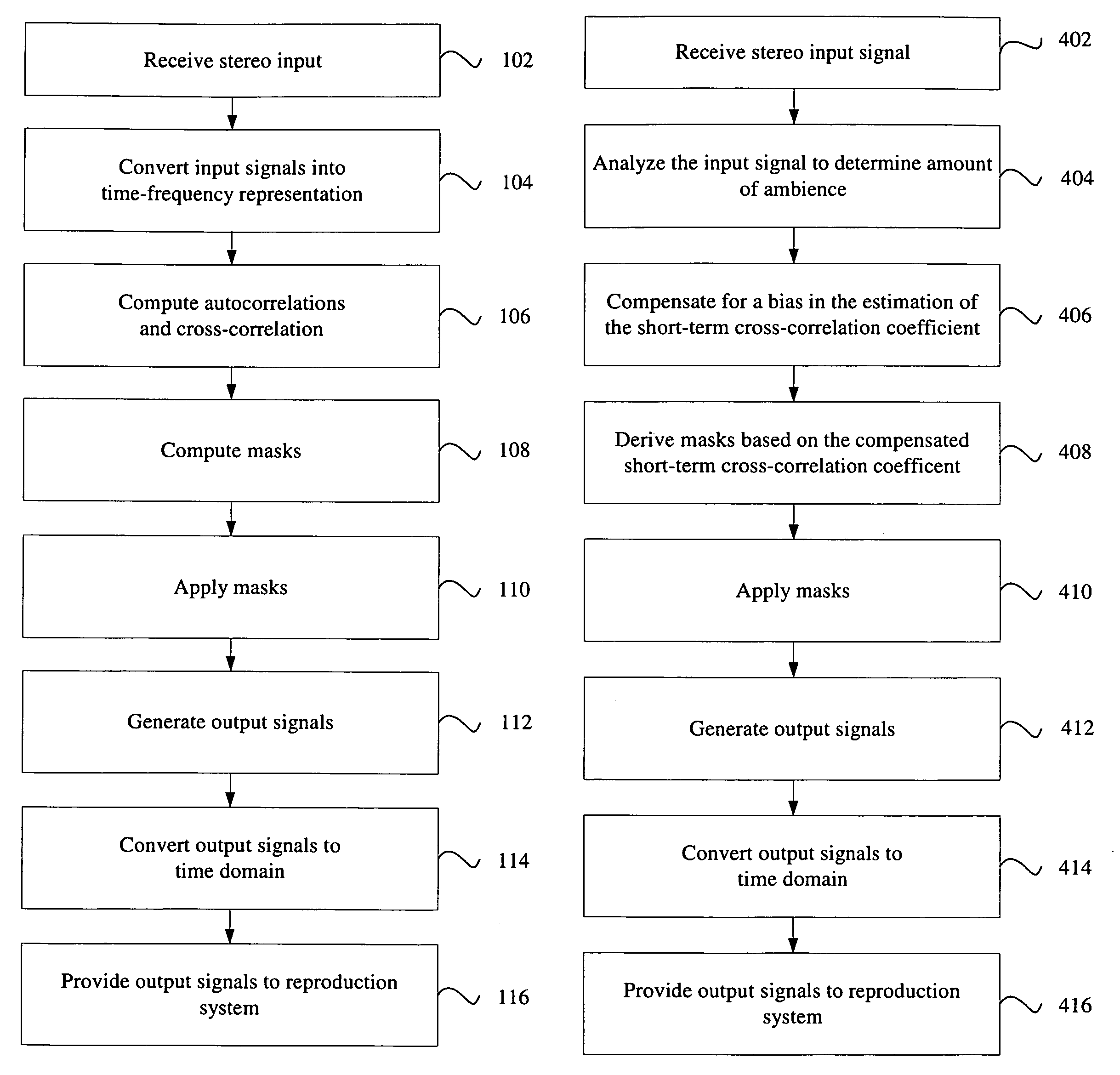

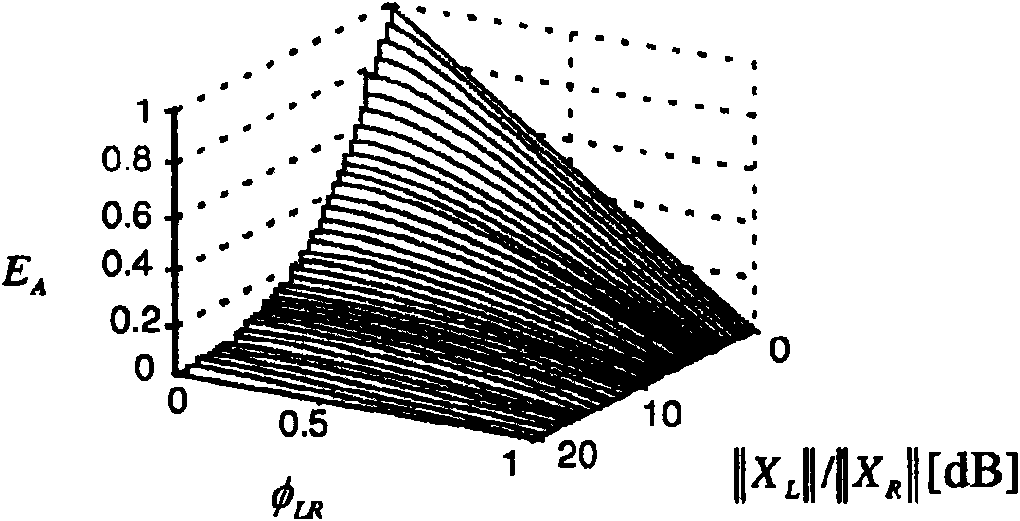

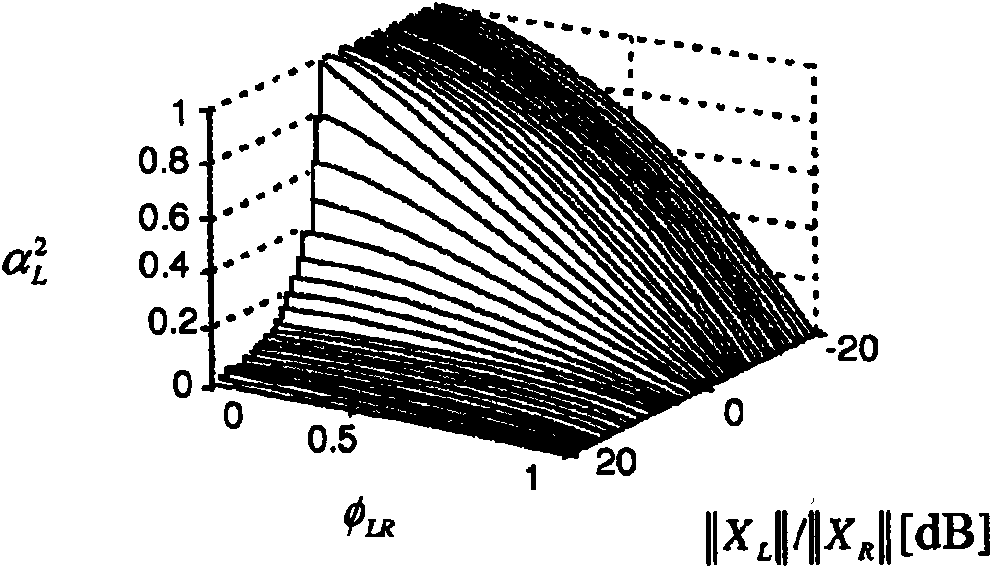

Correlation-based method for ambience extraction from two-channel audio signals

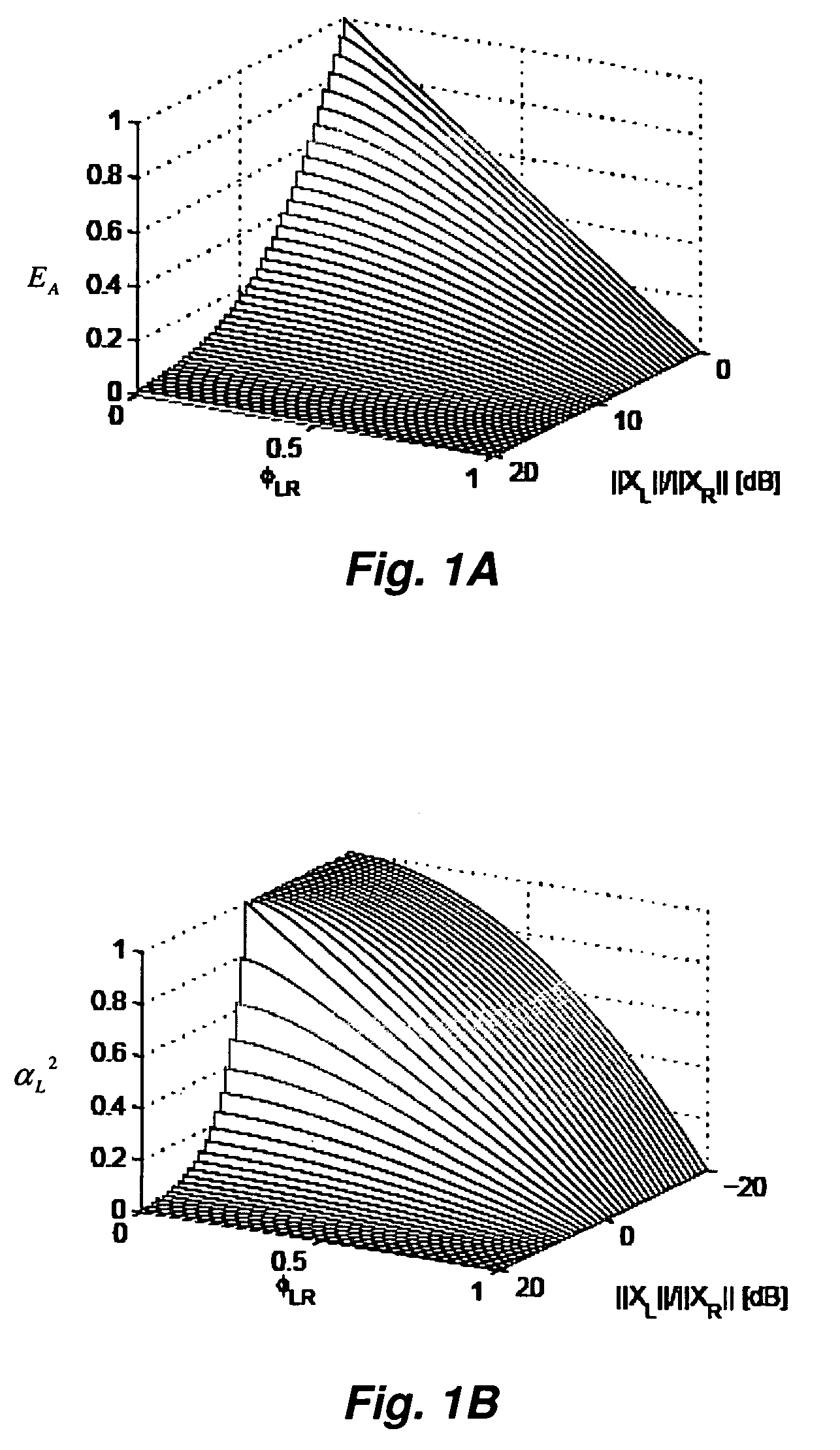

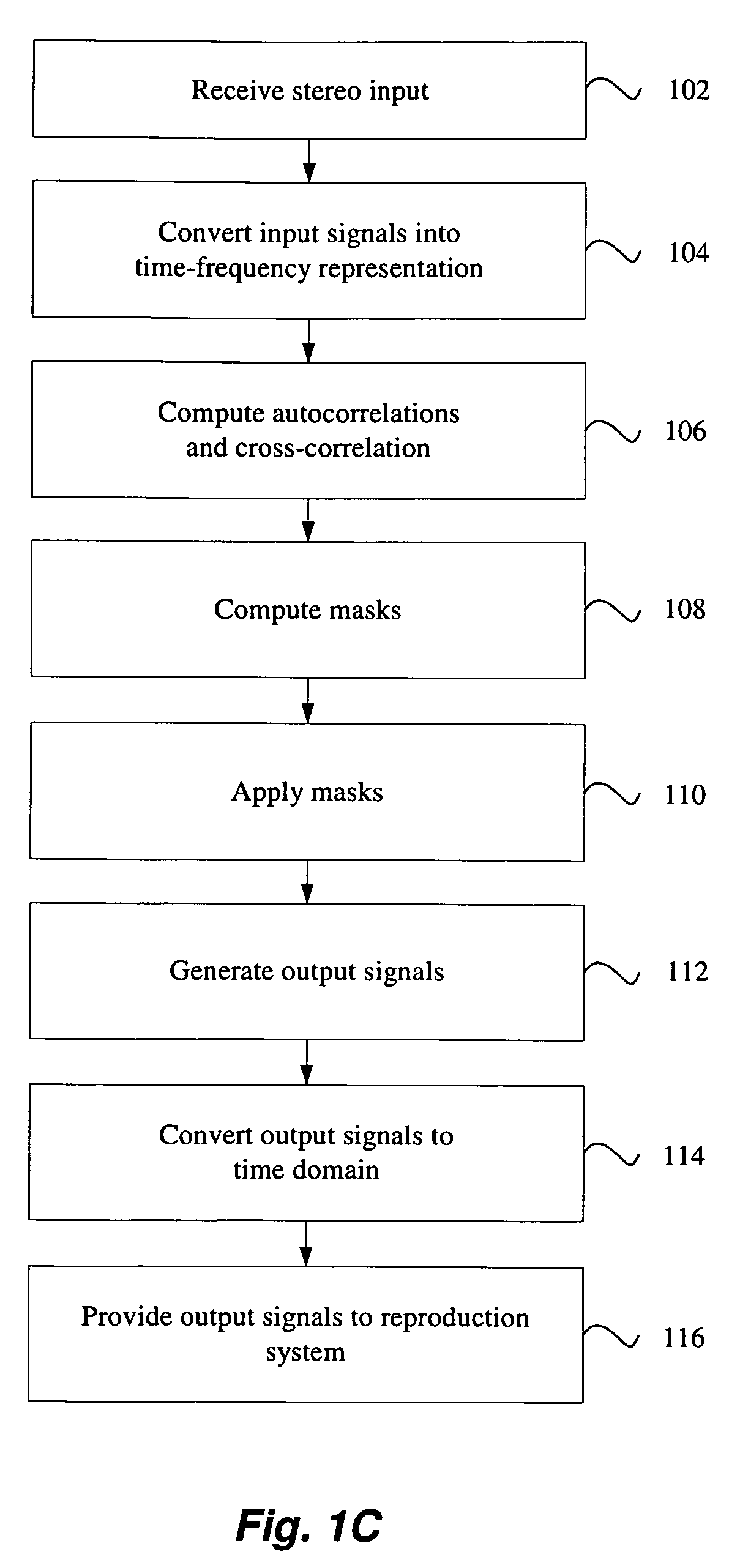

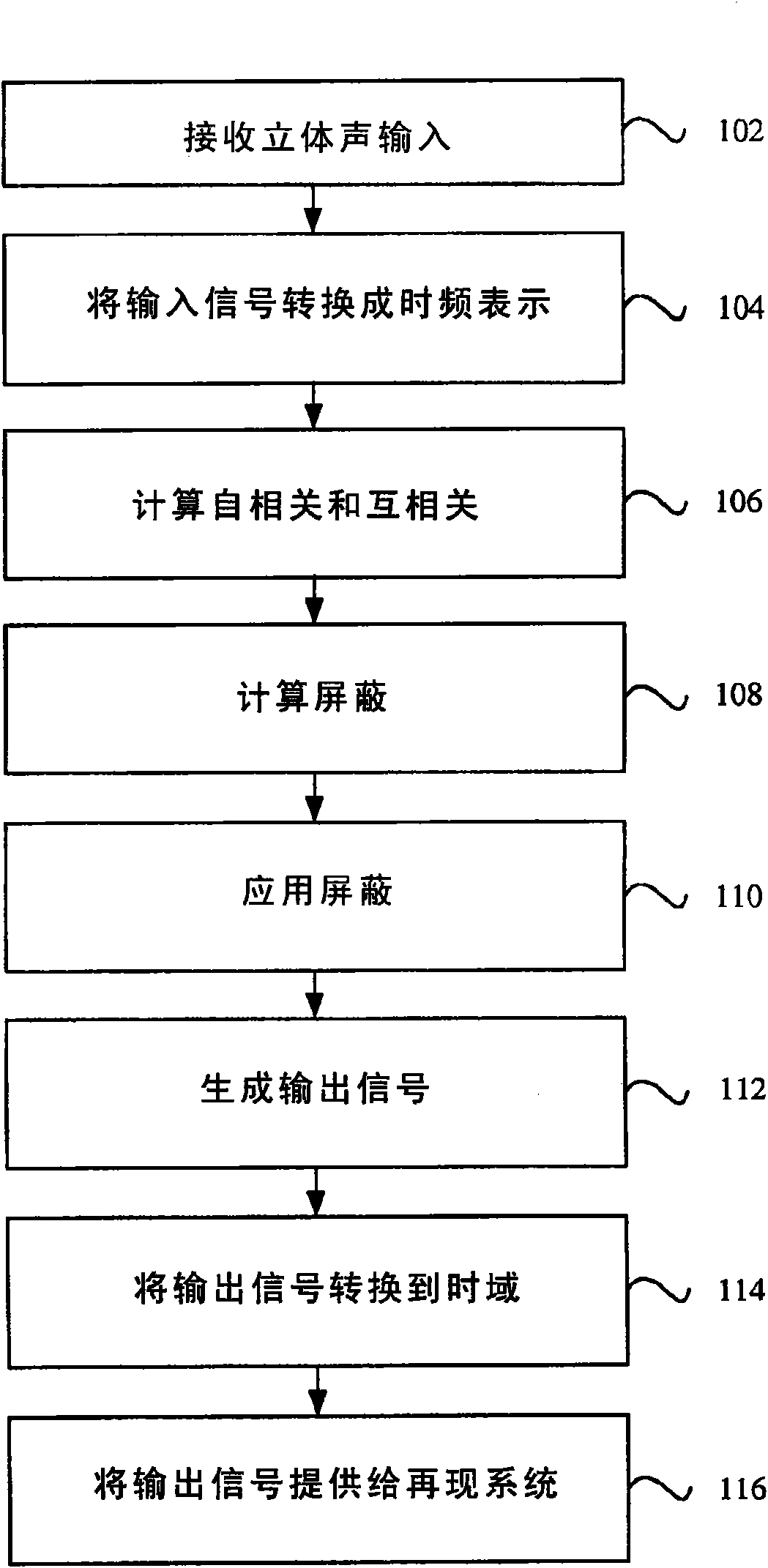

A method of ambience extraction includes analyzing an input signal to determine the time-dependent and frequency-dependent amount of ambience in the input signal, wherein the amount of ambience is determined based on a signal model and correlation quantities computed from the input signals and wherein the ambience is extracted using a multiplicative time-frequency mask. Another method of ambience extraction includes compensating a bias in the estimation of a short-term cross-correlation coefficient. In addition, systems having various modules for implementing the above methods are disclosed.

Owner:CREATIVE TECH CORP
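
A sketch of how a correlation-derived multiplicative mask can be computed for one frequency bin, under a deliberately simple signal model assumed here rather than taken from the patent: both channels share one direct component, and their ambience components are uncorrelated and of equal power. Under that model the normalized cross-correlation phi equals the direct-to-total power ratio, so sqrt(1 - phi) is the ambience amplitude fraction. The forgetting factor is a hypothetical choice, and the bias compensation mentioned in the abstract is not included.

```python
import math


def ambience_mask(L, R, lam=0.9, eps=1e-12):
    """Per-frame ambience mask for one frequency bin of a stereo STFT.

    Assumed model: L = D + A_L, R = D + A_R with A_L, A_R uncorrelated and of equal
    power. Short-term correlations use first-order recursive averaging (factor lam).
    """
    r_ll = r_rr = 0.0
    r_lr = 0j
    masks = []
    for l, r in zip(L, R):
        r_ll = lam * r_ll + (1 - lam) * abs(l) ** 2
        r_rr = lam * r_rr + (1 - lam) * abs(r) ** 2
        r_lr = lam * r_lr + (1 - lam) * l * r.conjugate()
        phi = abs(r_lr) / (math.sqrt(r_ll * r_rr) + eps)  # short-term cross-correlation coefficient
        masks.append(math.sqrt(max(0.0, 1.0 - phi)))
    return masks
```

Multiplying each channel's STFT value by the mask gives an ambience estimate: fully correlated content is suppressed, and uncorrelated content passes almost unchanged.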

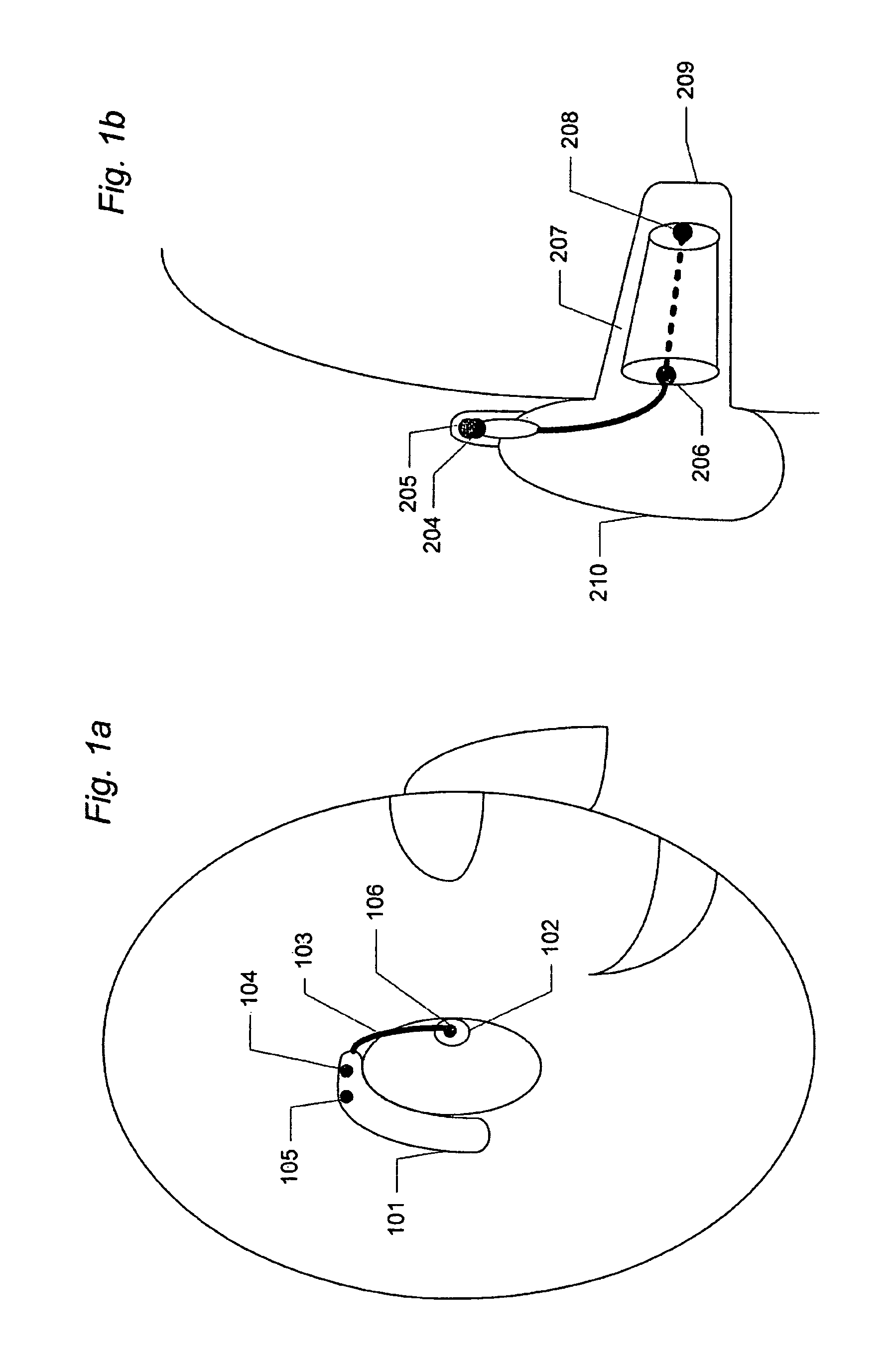

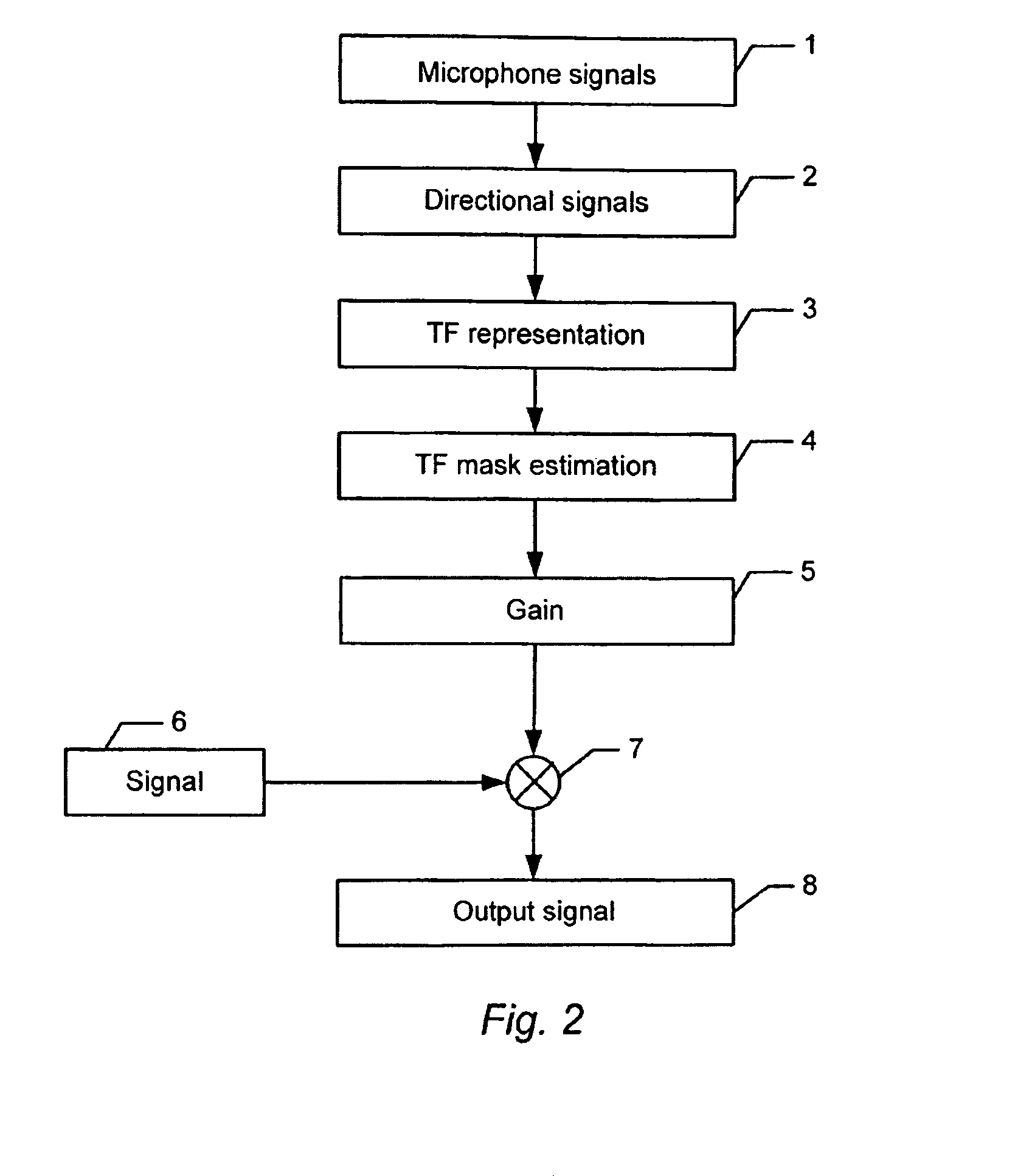

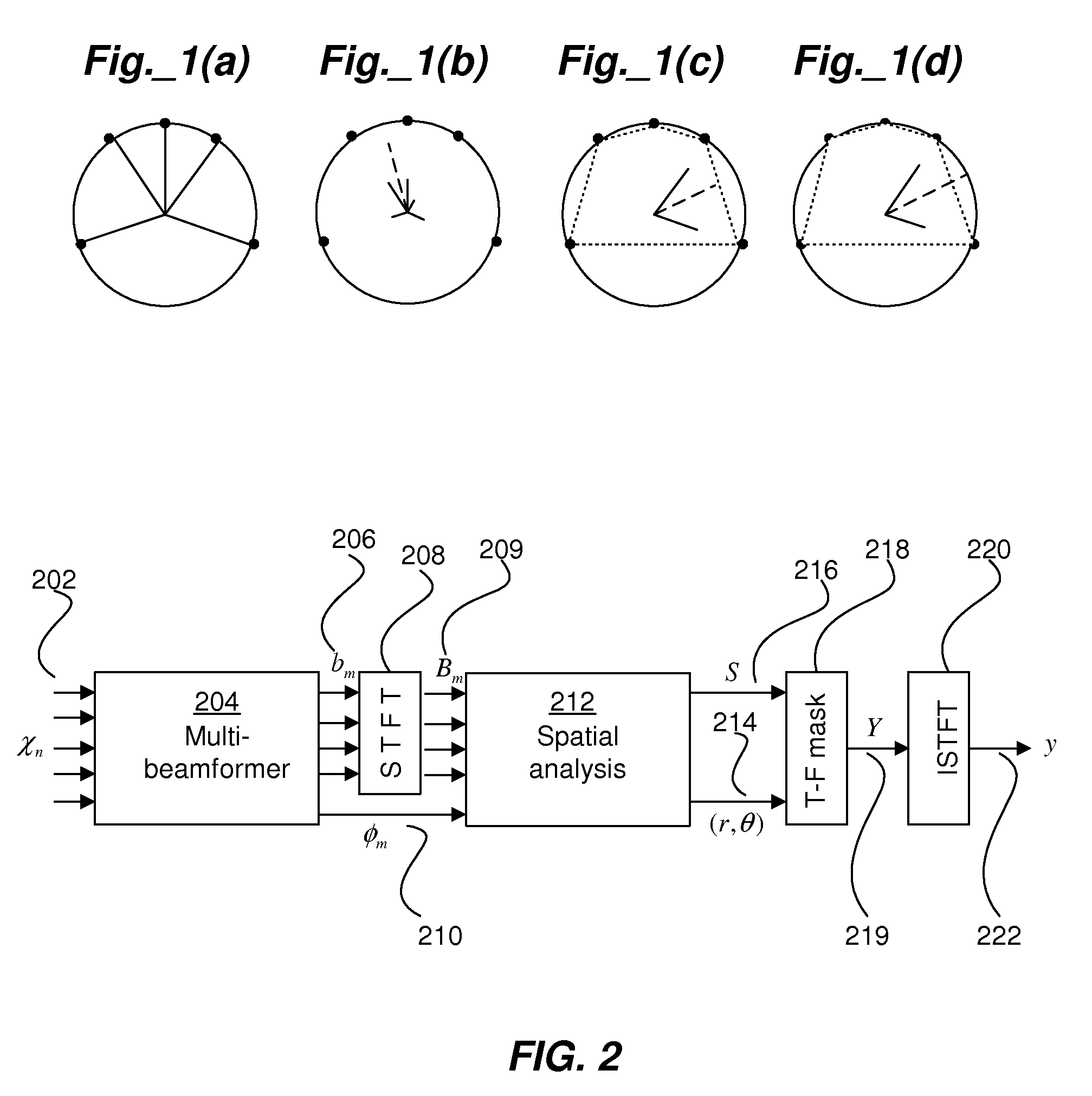

Microphone Array Processor Based on Spatial Analysis

Status: Active | Publication: US20090103749A1 | Tags: Increase spatial selectivity; Increase height; Signal processing; Microphones signal combination; Light beam; Spatial analysis

An array processing system improves the spatial selectivity by forming multiple steered beams and carrying out a spatial analysis of the acoustic scene. The analysis derives a time-frequency mask that, when applied to a reference look-direction beam (or other reference signal), enhances target sources and substantially improves rejection of interferers that are outside of the specified region.

Owner:CREATIVE TECH CORP

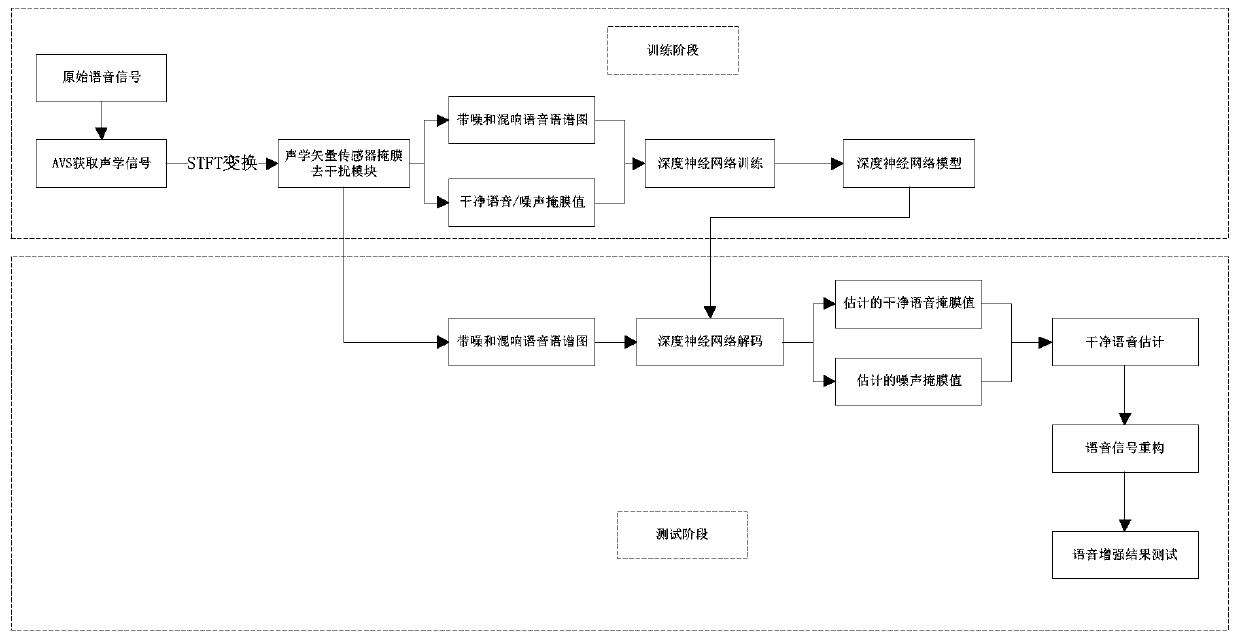

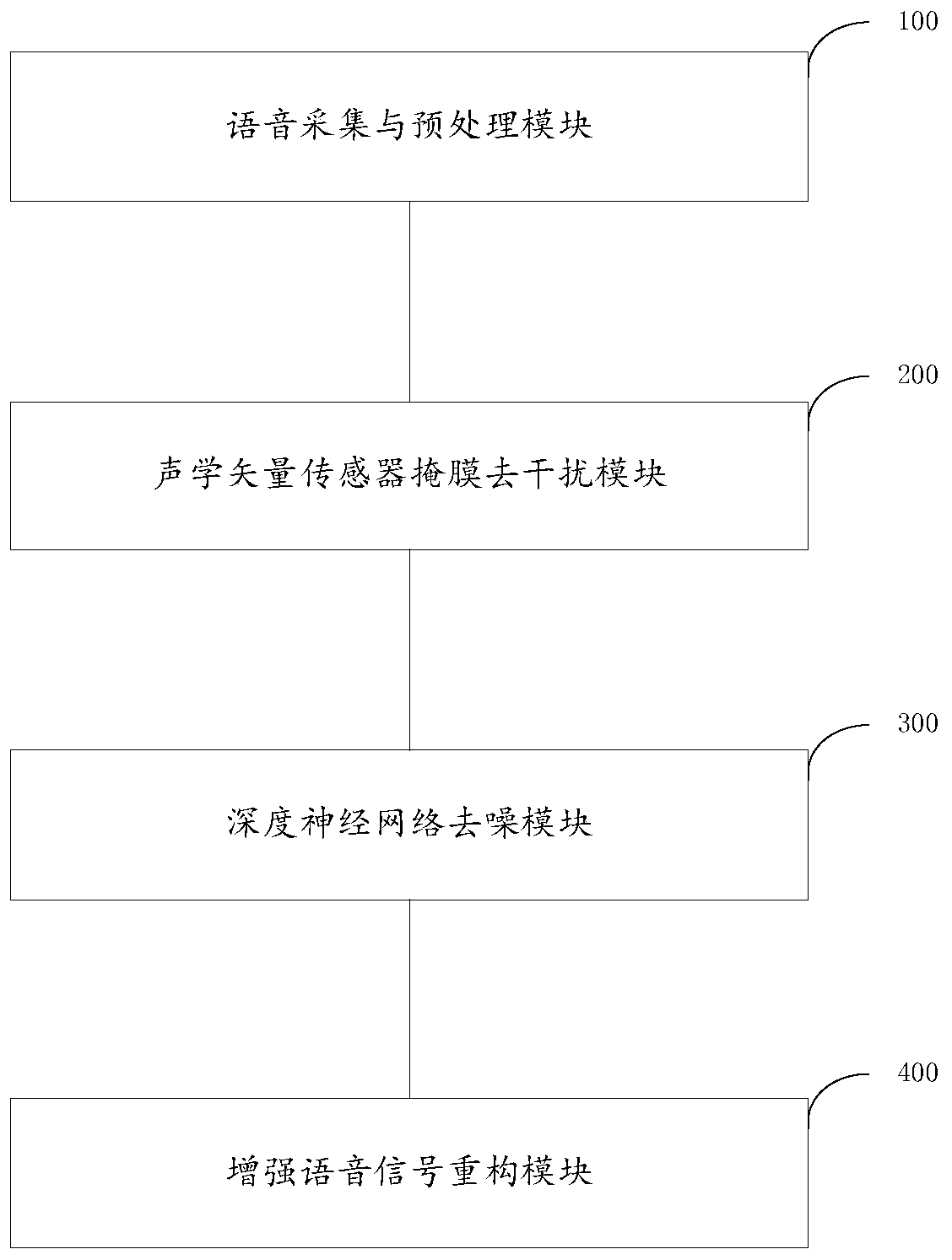

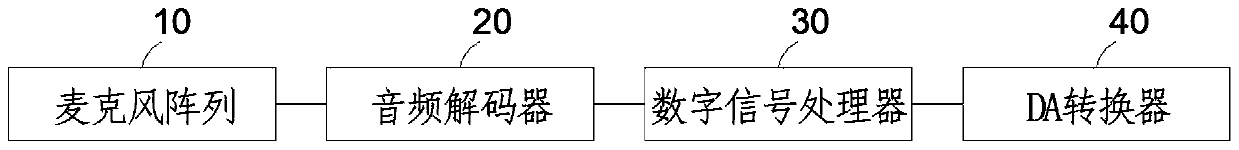

Speech enhancement method and system, computer equipment and storage medium

Status: Active | Publication: CN110503972A | Tags: Voice enhancement; Reduce hardware costs; Speech analysis; Frequency spectrum; Collection system

The invention provides a speech enhancement method and system, computer equipment, and a storage medium in the field of human-machine speech interaction. The method comprises: collecting multichannel acoustic signals with an acoustic vector sensor, preprocessing them to obtain a time-frequency spectrum, filtering the time-frequency spectrum, and outputting a set of signal spectrograms; masking the spectrogram set with a nonlinear mask and outputting an enhanced single-channel speech spectrogram; inputting the single-channel spectrogram into a deep neural network mask estimation model and outputting a mask spectrogram; applying time-frequency masking to the spectrogram set with the mask spectrogram to obtain an enhanced magnitude spectrogram; and reconstructing an enhanced target speech signal from the enhanced magnitude spectrogram. This addresses the high hardware cost, large acquisition-system size, and high computational complexity of multichannel speech enhancement, and achieves good enhancement under different interference-noise types and strengths and different room reverberation conditions.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL

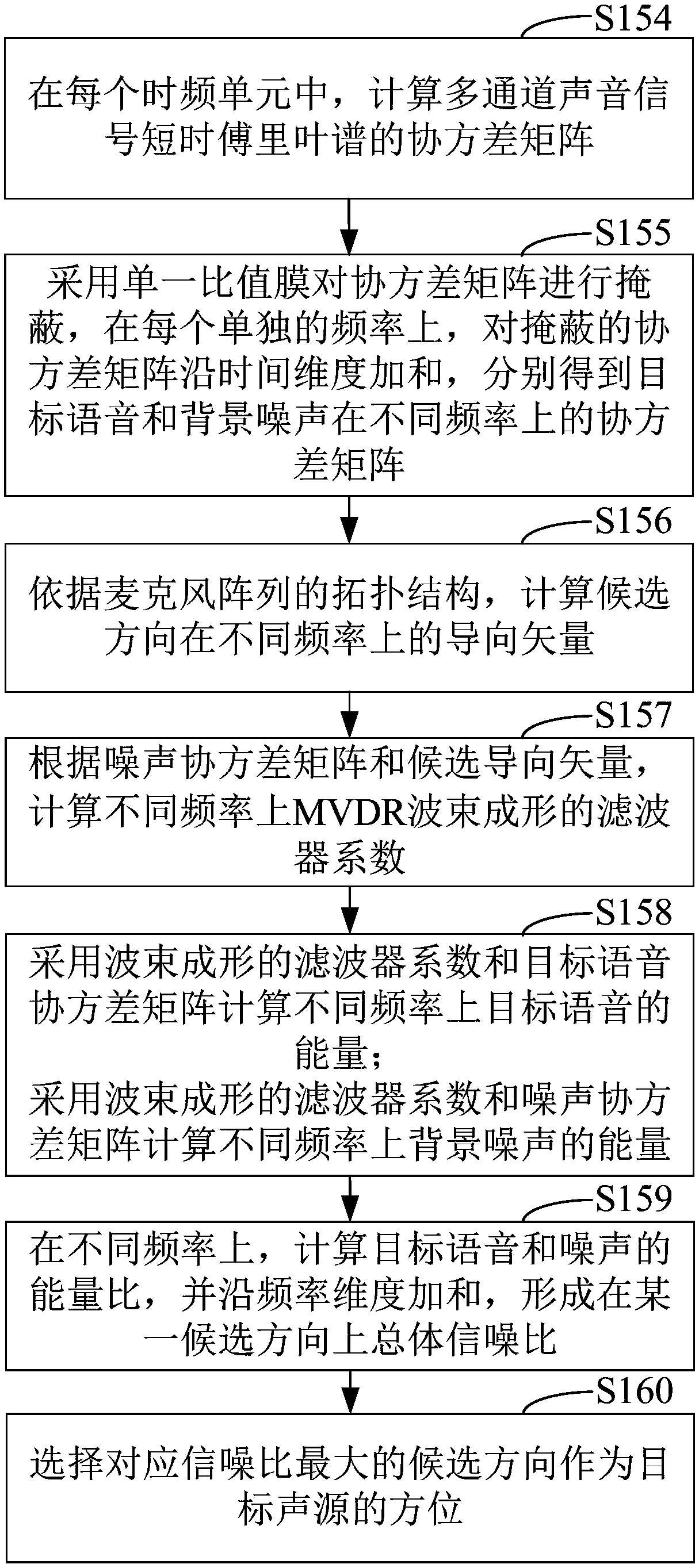

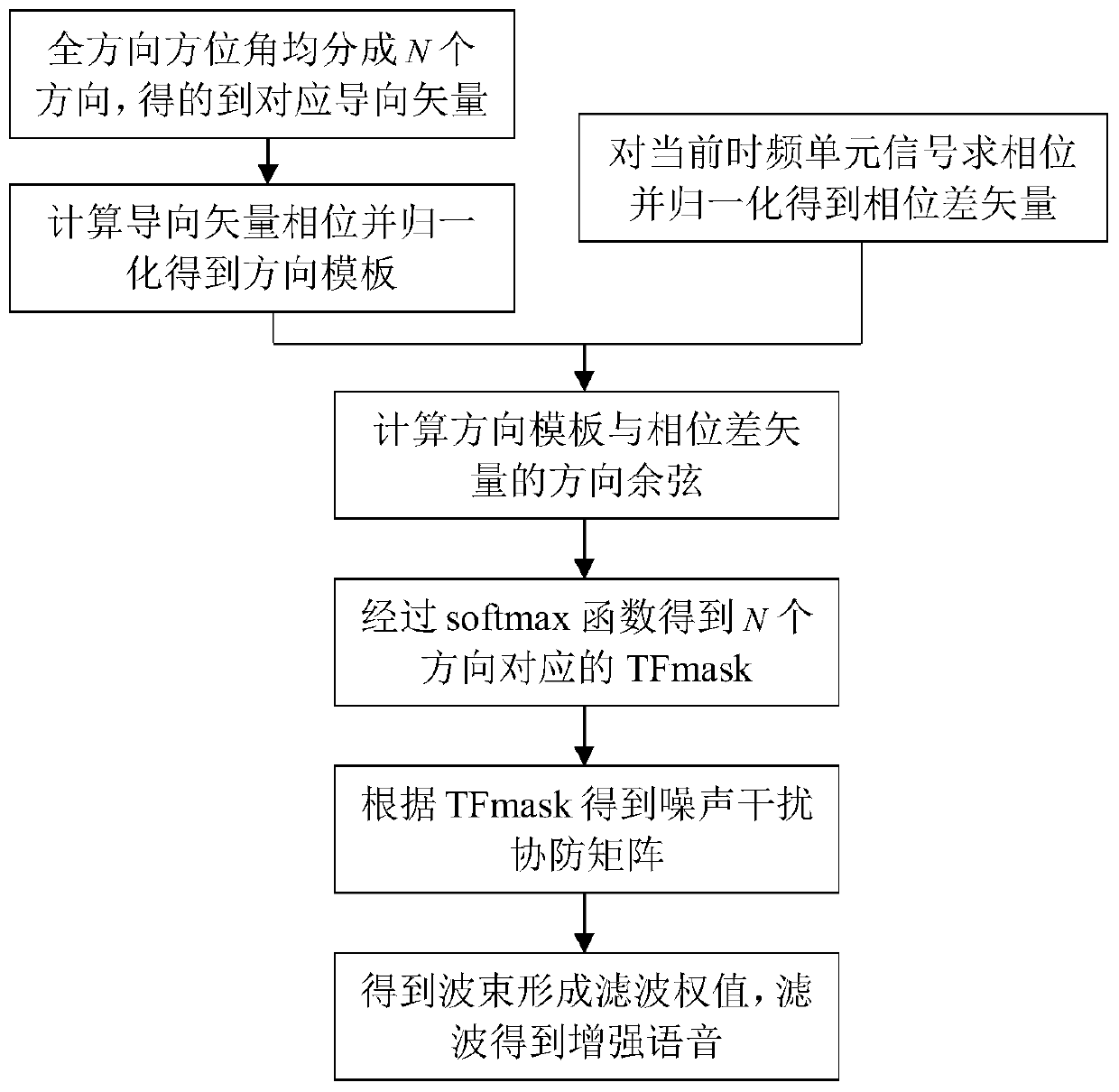

Microphone array beam forming method

Status: Active | Publication: CN110931036A | Tags: Voice Interference Distinguishment; Achieve maximum compression; Speech analysis; Frequency Unit; Noise

The invention discloses a microphone array beamforming method that addresses the inability of conventional microphone array beamforming algorithms to estimate the noise-and-interference covariance matrix and the steering vector of the source speech well in noisy environments with multiple interfering talkers. Building on conventional microphone array beamforming, the method constructs direction templates and obtains a time-frequency masking value (TFmask) from the cosine of the angle between the phase difference vector of each time-frequency unit and the different direction templates. This distinguishes the source speech from speech interference arriving from other directions as far as possible, in the short-time Fourier transform domain and at relatively low computational cost.

Owner:HANGZHOU NATCHIP SCI & TECH
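
A minimal sketch of the template-matching idea in CN110931036A for a linear array, assuming far-field propagation, a 343 m/s speed of sound, and a binary mask that keeps a time-frequency unit only when the target direction's template matches best. The abstract does not say whether the TFmask is binary or continuous, so that choice and all names below are hypothetical.

```python
import cmath
import math

C = 343.0  # speed of sound in m/s (assumed)


def template(positions_m, angle_deg, freq_hz):
    """Unit-modulus phase-difference template for a linear array, relative to mic 0."""
    s = math.sin(math.radians(angle_deg))
    return [cmath.exp(-2j * math.pi * freq_hz * p * s / C) for p in positions_m]


def cosine(obs, tmpl):
    """Real part of the normalized inner product (cosine of the included angle)."""
    num = sum(o * t.conjugate() for o, t in zip(obs, tmpl)).real
    den = math.sqrt(sum(abs(o) ** 2 for o in obs) * sum(abs(t) ** 2 for t in tmpl))
    return num / den


def tf_mask(X, freq_hz, positions_m, target_deg, other_degs):
    """1.0 if the target template is the best match for this TF unit, else 0.0."""
    ref = X[0]
    obs = []
    for x in X:
        p = x * ref.conjugate()
        obs.append(p / abs(p))  # phase difference relative to mic 0 (assumes nonzero bins)
    target_score = cosine(obs, template(positions_m, target_deg, freq_hz))
    best_other = max(cosine(obs, template(positions_m, d, freq_hz)) for d in other_degs)
    return 1.0 if target_score >= best_other else 0.0
```

Only phase differences enter the comparison, so the test is insensitive to the source level in each unit; a soft variant could return the cosine itself instead of a 0/1 decision.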

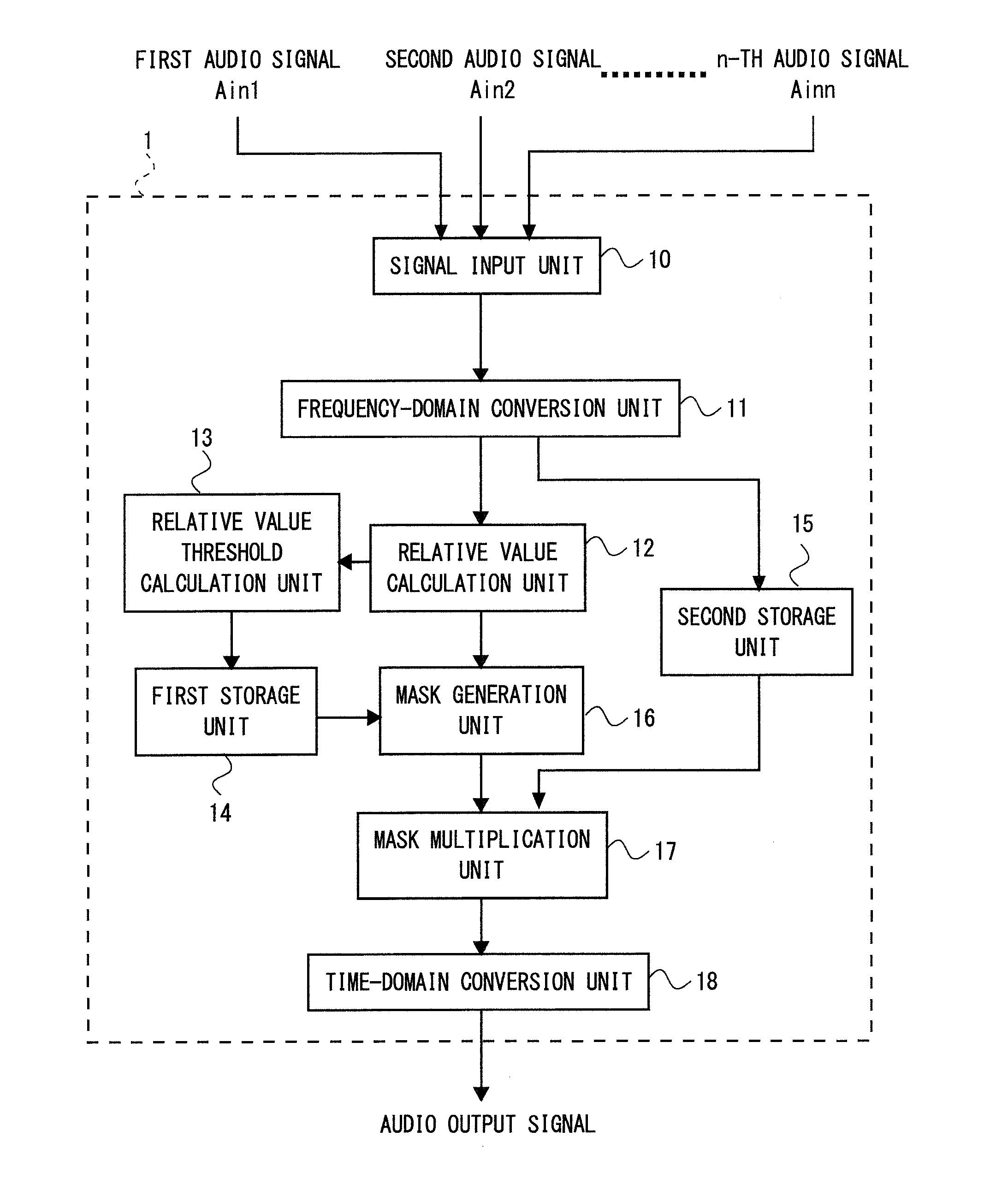

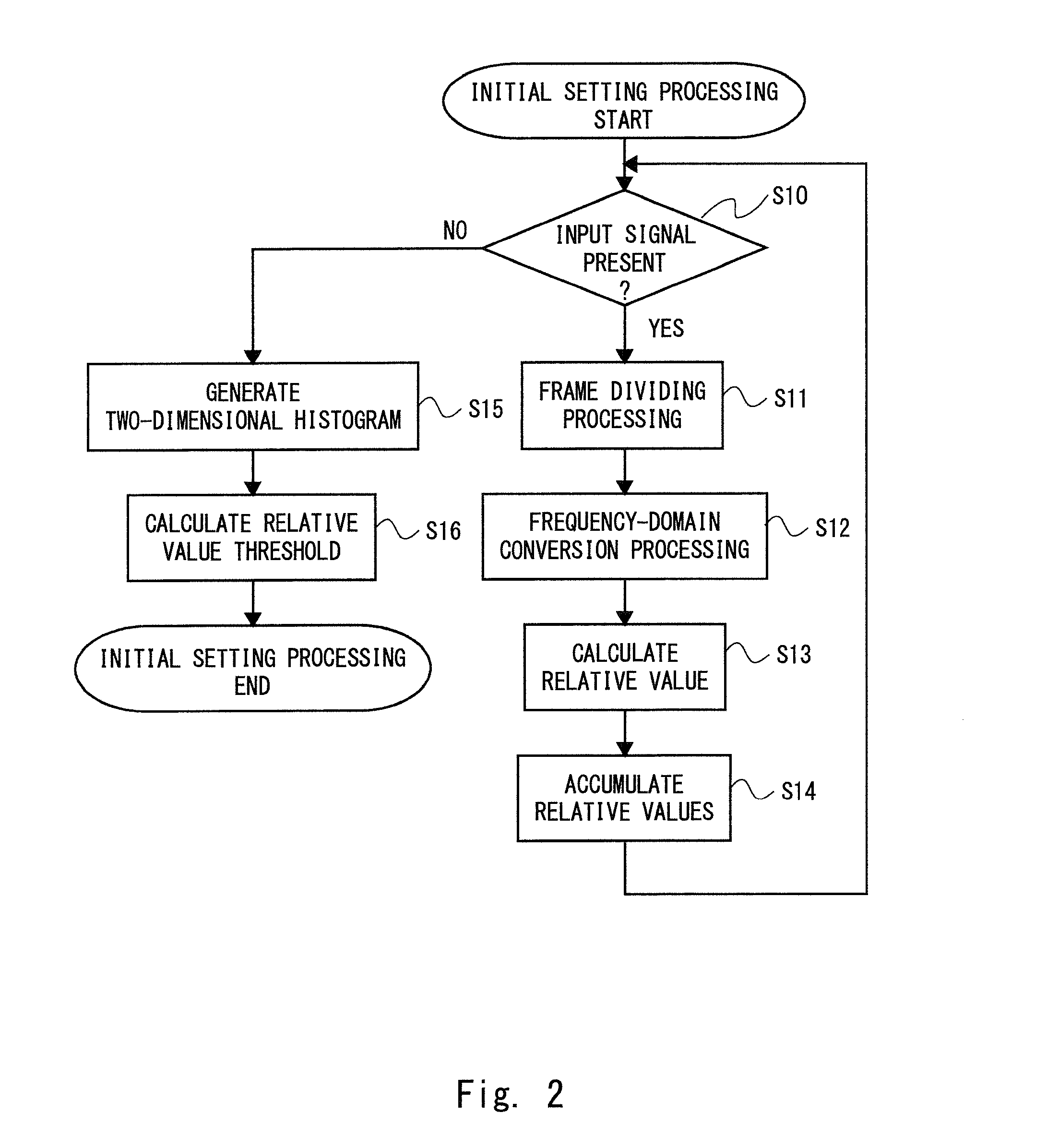

Audio signal processing device

Status: Active | Publication: US20150245137A1 | Tags: Improve accuracy; Easy to produce; Speech analysis; Transducer acoustic reaction prevention; Time domain; Time frequency masking

An audio signal processing device includes: a frequency-domain conversion unit that generates a plurality of pieces of frequency-domain information from a plurality of audio input signals acquired at different positions; a relative value calculation unit that calculates, for each piece of frequency-domain information, a relative value between a time-frequency component included in one frequency-domain information and a time-frequency component included in another frequency-domain information; a mask generation unit that compares the relative value with an emphasized range set based on a relative value threshold stored in advance to generate a time-frequency mask that decreases a value of the frequency-domain information corresponding to the relative value which is outside the emphasized range; a mask multiplication unit that multiplies the time-frequency mask by the frequency-domain information to generate emphasized frequency-domain information; and a time-domain conversion unit that converts the emphasized frequency-domain information into an audio output signal indicated as being time-domain information.

Owner:JVC KENWOOD CORP A CORP OF JAPAN
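
The mask-generation and mask-multiplication units can be sketched as follows. The abstract does not fix what the relative value is, so this sketch assumes the inter-channel level ratio in dB, an emphasized range given directly as lower and upper bounds, and a hypothetical floor gain of 0.1 for components outside the range. All names are illustrative.

```python
import math


def relative_level_db(X1, X2, eps=1e-12):
    """Relative value per TF component: level of channel 1 over channel 2 in dB (assumed choice)."""
    return [20 * math.log10((abs(a) + eps) / (abs(b) + eps)) for a, b in zip(X1, X2)]


def emphasis_mask(rel_db, low_db, high_db, floor=0.1):
    """Gain 1 inside the emphasized range, `floor` outside it (floor value is hypothetical)."""
    return [1.0 if low_db <= r <= high_db else floor for r in rel_db]


def apply_mask(mask, X):
    """Mask multiplication unit: scale each frequency-domain component by its gain."""
    return [m * x for m, x in zip(mask, X)]
```

Using a nonzero floor instead of zeroing out-of-range components is one common way to limit musical-noise artifacts; the inverse transform back to the time domain is omitted.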

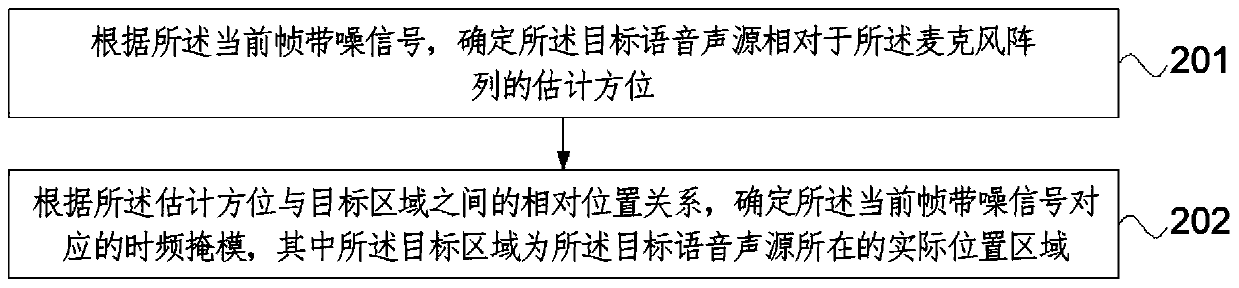

Voice enhancing method, voice enhancing device, voice enhancing equipment and storage medium

Status: Inactive | Publication: CN110085246A | Tags: Improve speech enhancement performance; Small amount of calculation; Speech analysis; Sound sources; Time frequency masking

The invention relates to voice signal processing and provides a voice enhancement method, device, equipment and storage medium, aiming to solve the problem that existing voice enhancement methods are computationally heavy and cannot meet real-time requirements. The method comprises: obtaining the current frame of a noisy signal collected by a microphone array, the frame containing at least the sound signals emitted by a target speech source and by other sound sources; determining a time-frequency mask for the current frame from that frame; determining the filter coefficients for the current frame from the time-frequency mask; and using those filter coefficients to enhance the noisy signal. Because computing the time-frequency mask requires processing only one frame of the noisy signal, the computational load is small and real-time requirements can be met.

Owner:BEIJING SINOVOICE TECH CO LTD

System and method for non-square blind source separation under coherent noise by beamforming and time-frequency masking

Status: Active | Publication: US7474756B2 | Tags: Amplifier modifications to reduce noise influence; Digital computer details; Linear filter; Engineering

A system and method for non-square blind source separation (BSS) under coherent noise. The system and method estimate the mixing parameters of a mixed source signal and filter the estimated mixing parameters so that output noise is reduced and the mixed source signal is separated from the noise. The filtering is accomplished by one linear filter that performs beamforming to reduce the noise and another linear filter that solves the source separation problem by selecting time-frequency points where, according to the W-disjoint orthogonality assumption, only one source is active.

Owner:SIEMENS CORP

Reverberation elimination method and device, computer equipment and storage medium

Status: Inactive | Publication: CN111312273A | Tags: Reverb removal; Reduce speech impairment; Speech analysis; Acoustics; Time frequency masking

The invention relates to a reverberation elimination method and device, computer equipment, and a storage medium. The method comprises: acquiring a reverberant speech signal; processing it to obtain a first magnitude spectrum, and deriving reverberant speech features from the first magnitude spectrum; determining a time-frequency masking quantity from the reverberant speech features, and applying it to the first magnitude spectrum to remove reverberation and obtain a second magnitude spectrum; and determining the dereverberated speech signal from the second magnitude spectrum. The method improves both the dereverberation effect and the speech quality after dereverberation.

Owner:TENCENT TECH (SHENZHEN) CO LTD

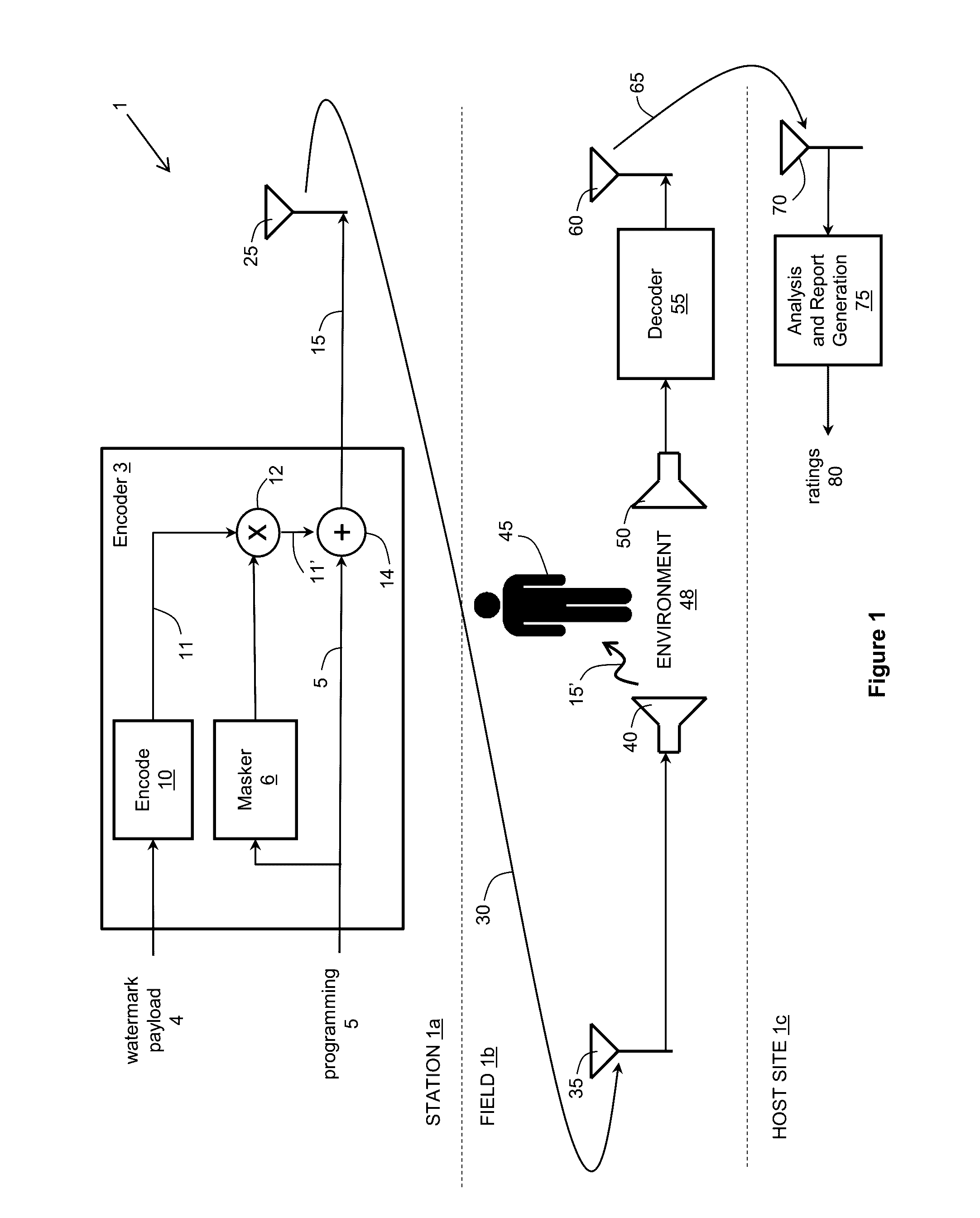

Inserting watermarks into audio signals that have speech-like properties

A method for a machine or group of machines to watermark an audio signal includes receiving an audio signal and a watermark signal comprising multiple symbols, and inserting at least some of the symbols into multiple spectral channels of the audio signal, each spectral channel corresponding to a different frequency range. The design is optimized to minimize the perceptibility of the watermark channels to the human auditory system by taking into account perceptual time-frequency masking, pattern detection of watermark messages, and the statistics of worst-case program content such as speech and speech-like programs.

Owner:TLS CORP

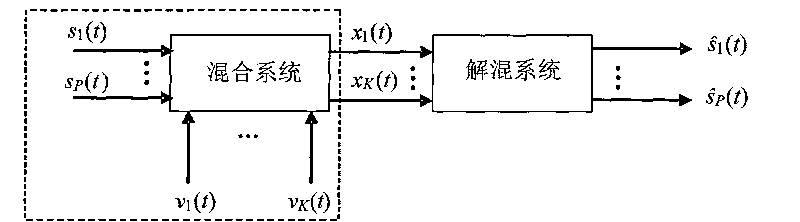

Blind source separation method based on mixed signal local peak value variance detection

Status: Inactive | Publication: CN101727908A | Tags: Efficient and accurate estimate; Improve estimation performance; Speech analysis; Ultrasound attenuation; Time domain

The invention discloses a blind source separation method based on local peak variance detection of mixed signals. It improves on the DUET method and solves the problem that the existing DUET blind source separation method cannot detect peaks effectively. The method specifically comprises: finding all N×N grid subregions of the attenuation-delay histogram of the source signals; selecting, as peak subregions, those subregions whose center point holds the maximum value; for each selected peak subregion, computing the mean of the three-dimensional coordinates of all its data points, then the distance from each data point to this mean point, and the variance; sorting all variances and extracting the P largest; and transferring the horizontal and vertical coordinates of the corresponding P peaks to an attenuation-delay array, extracting the peaks with binary time-frequency masks, separating the sources in the time-frequency domain, and transforming back to the time domain to obtain the final separated source signals. The method is applicable to general peak detection, and in particular to peak detection in the DUET blind source separation method.

Owner:HARBIN INST OF TECH
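
The histogram-and-peak stage that DUET-style methods such as CN101727908A rely on can be sketched as below. This is a simpler stand-in, not the patent's method: it builds a weighted attenuation-delay histogram and keeps the strongest 3x3 local maxima, whereas the patent ranks peak subregions by variance. The bin edges and function names are assumptions.

```python
def _bin_index(value, edges):
    """Index of the half-open bin [edges[k], edges[k+1]) containing value, else None."""
    for k in range(len(edges) - 1):
        if edges[k] <= value < edges[k + 1]:
            return k
    return None


def _center(edges, k):
    """Midpoint of bin k."""
    return (edges[k] + edges[k + 1]) / 2


def histogram_peaks(alphas, deltas, weights, a_edges, d_edges, n_peaks):
    """Weighted 2-D attenuation-delay histogram and its n_peaks strongest local maxima.

    Returns (alpha, delta) bin centers, strongest first.
    """
    na, nd = len(a_edges) - 1, len(d_edges) - 1
    H = [[0.0] * nd for _ in range(na)]
    for a, d, w in zip(alphas, deltas, weights):
        i, j = _bin_index(a, a_edges), _bin_index(d, d_edges)
        if i is not None and j is not None:
            H[i][j] += w
    peaks = []
    for i in range(na):
        for j in range(nd):
            # compare with the (up to 8) surrounding bins
            neighbours = [H[p][q]
                          for p in range(max(0, i - 1), min(na, i + 2))
                          for q in range(max(0, j - 1), min(nd, j + 2))
                          if (p, q) != (i, j)]
            if H[i][j] > 0 and all(H[i][j] >= v for v in neighbours):
                peaks.append((H[i][j], i, j))
    peaks.sort(reverse=True)
    return [(_center(a_edges, i), _center(d_edges, j)) for _, i, j in peaks[:n_peaks]]
```

The returned centers are exactly what a nearest-center binary masking step needs; flat plateaus can yield duplicate peaks, which is one weakness the patent's variance criterion is meant to address.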

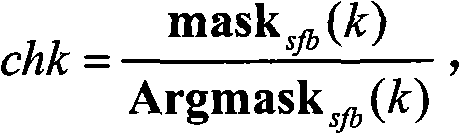

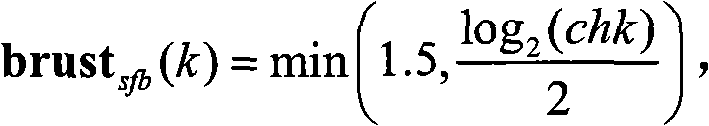

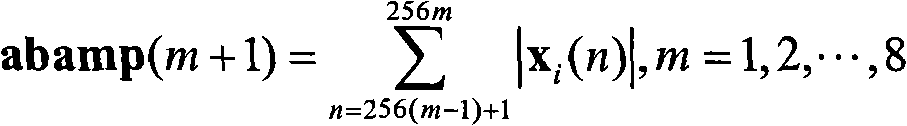

Psychoacoustic model processing method based on advanced audio encoder

Status: Active | Publication: CN101308659A | Tags: Improve coding efficiency; Improve encoding quality; Speech analysis; Rate distortion; Diffusion matrix

The invention discloses a psychoacoustic model processing method based on an advanced audio encoder, comprising the following steps: A, obtaining the perceptual entropy threshold and the masking threshold of each coding sub-band from the psychoacoustic spectral energy of the sub-bands of the bitstream to be encoded, using a masking diffusion matrix algorithm; B, calculating the expected bit consumption of each sub-band from its perceptual entropy threshold and masking threshold, applying time-frequency correction and pre-echo correction; C, outputting the expected bit consumption of each sub-band from the psychoacoustic model as a parameter for rate-distortion control in the encoding process. Because perceptual entropy yields a more accurate estimate of sub-band bit consumption, and the encoder uses this estimate as its rate-distortion control parameter, the method substantially improves the quantization and coding efficiency and quality of the encoder.

Owner:ZTE CORP

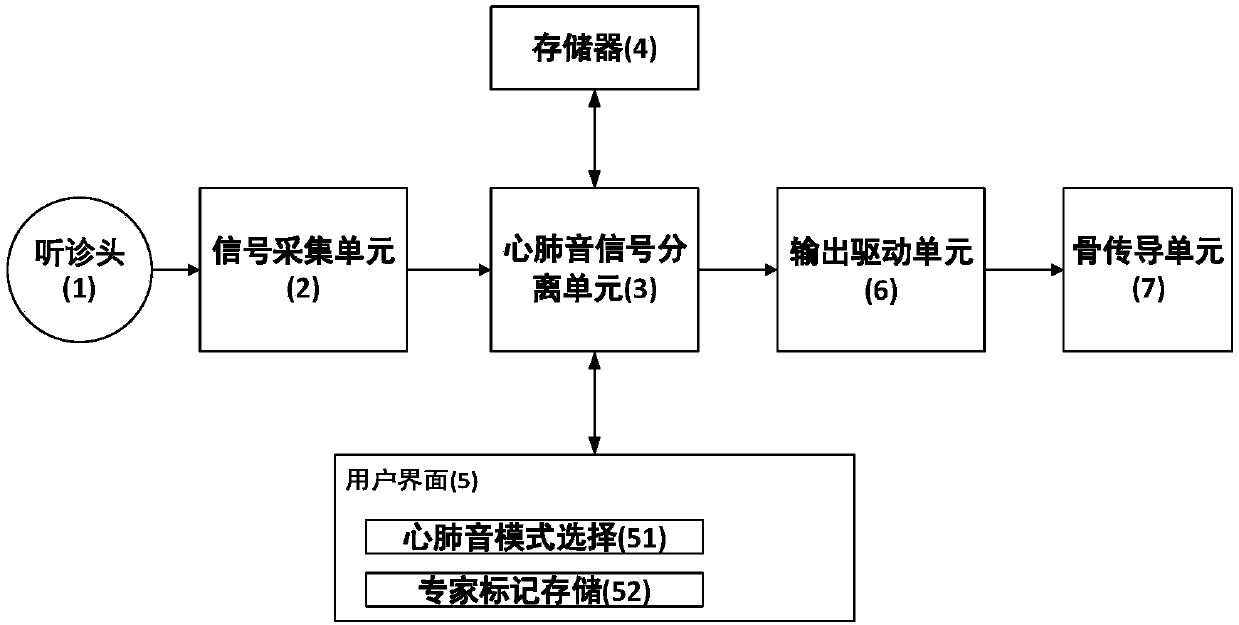

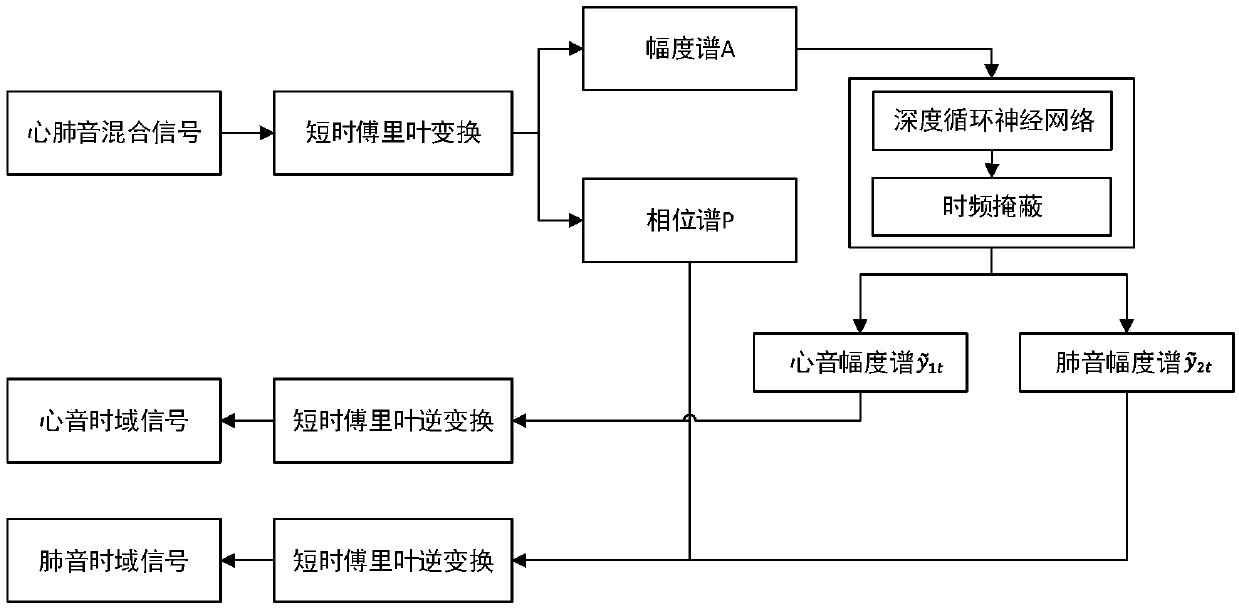

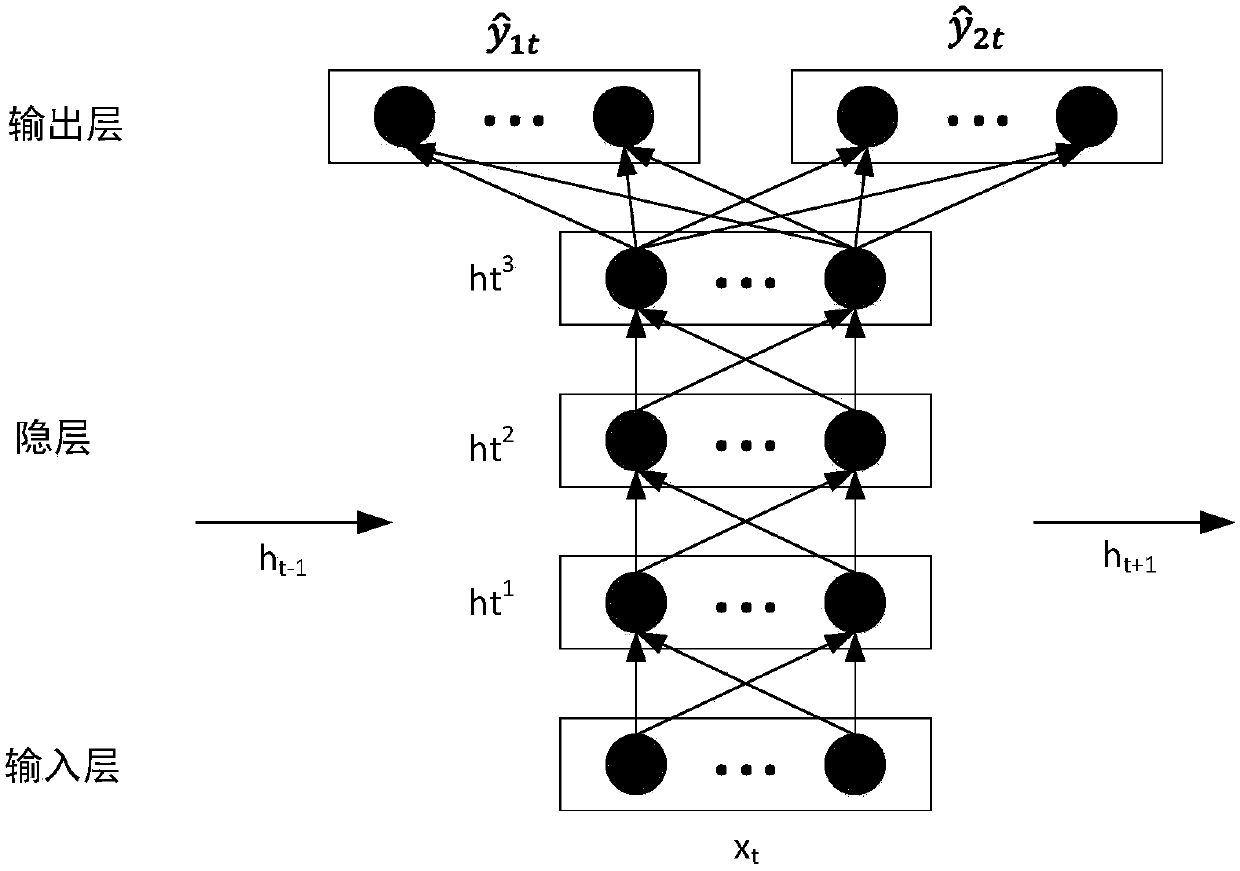

Bone-conduction digital auscultation system for automatic separation of heart and lung sounds

Status: Inactive | Publication: CN110251152A | Tags: Effective nonlinear decoupling; Easy to manage; Stethoscope; Neural learning methods; Time domain; Heart sounds

The invention discloses a deep-learning-based auscultation system for heart and lung sound separation that uses bone conduction for auscultation. The device includes an auscultation head, a signal acquisition module, a heart and lung sound separation module, a memory, a user interface, an output driving unit, and a bone conduction unit. The signal acquisition module acquires and digitizes the heart and lung sound signals, and the separation module separates them with a time-frequency masking deep recurrent neural network; the user interface allows the user to select heart or lung sound mode and to store expert labels. The output driving unit amplifies the separated heart or lung sound time-domain signal from the previous stage and drives the bone conduction unit to vibrate. The stethoscope automatically separates clean heart and lung sounds from overlapping heart and lung sounds, facilitates data acquisition and post-analysis, and improves the auscultation experience.

Owner:PEKING UNIV

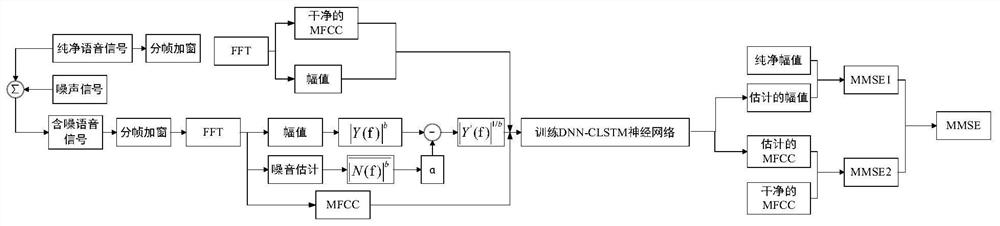

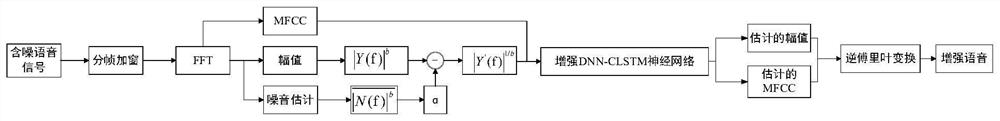

Voice enhancement method based on DNN-CLSTM network

Owner:XIAN UNIV OF POSTS & TELECOMM

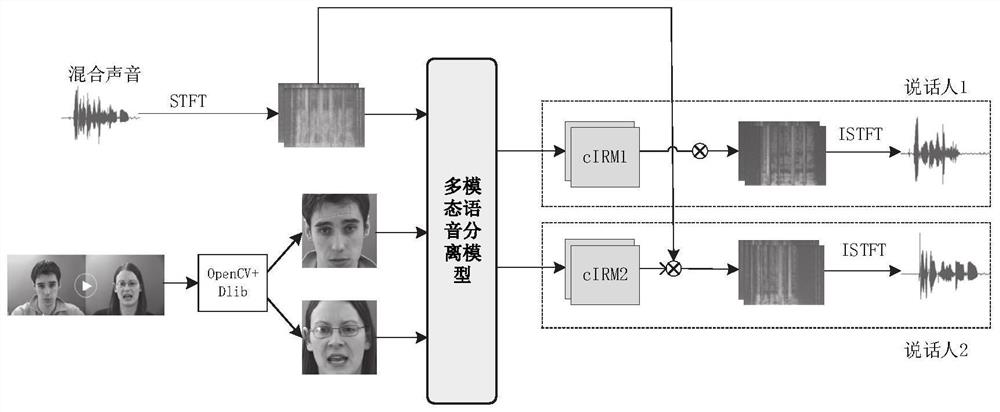

Multi-mode voice separation method and system

Status: Active | Publication: CN113035227A | Tags: Flexible; Strong voice separation ability; Speech analysis; Character and pattern recognition; Face detection; Time domain

The invention provides a multimodal voice separation method and system. The method comprises: receiving the mixed speech of the objects to be recognized together with their facial visual information; performing face detection with the Dlib library to obtain the number of speakers; processing this information to obtain a multilingual spectrogram and the speakers' face images, feeding them to a multimodal voice separation model, and dynamically adjusting the model structure according to the number of speakers. The model is trained with the complex ideal ratio mask as its target, defined as the ratio between the clean-speech spectrogram and the mixed-speech spectrogram in the complex domain; it has a real part and an imaginary part and therefore carries both the magnitude and the phase information of the sound. The model outputs one time-frequency mask per detected face; each output mask is multiplied, as complex numbers, with the mixed-speech spectrogram to obtain the clean-speech spectrogram, and the inverse short-time Fourier transform of that spectrogram yields the clean time-domain signal, completing the separation. The model is suitable for most application scenarios.

Owner:SHANDONG UNIV
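
The complex ideal ratio mask used as the training target in CN113035227A is the complex quotient of the clean and mixed spectra. The sketch below writes out its real and imaginary parts explicitly and checks that complex multiplication restores the clean spectrum; the small `eps` guard and the function names are assumptions.

```python
def cirm(Y, S, eps=1e-12):
    """Complex ideal ratio mask M = S / Y, written out as real and imaginary parts."""
    masks = []
    for y, s in zip(Y, S):
        denom = y.real ** 2 + y.imag ** 2 + eps
        m_r = (y.real * s.real + y.imag * s.imag) / denom  # Re(S * conj(Y)) / |Y|^2
        m_i = (y.real * s.imag - y.imag * s.real) / denom  # Im(S * conj(Y)) / |Y|^2
        masks.append(complex(m_r, m_i))
    return masks


def apply_complex_mask(M, Y):
    """Complex multiplication restores both the magnitude and the phase of the target."""
    return [m * y for m, y in zip(M, Y)]
```

Unlike a real-valued ratio mask, which can only rescale magnitudes and must reuse the mixture phase, this mask can also rotate each bin's phase.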

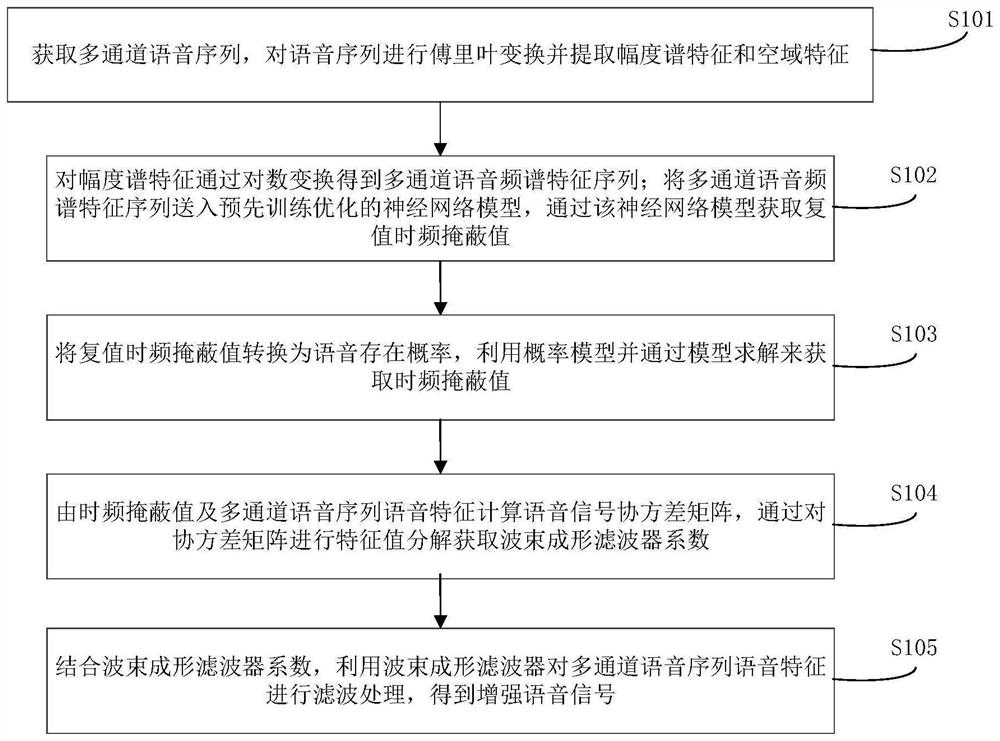

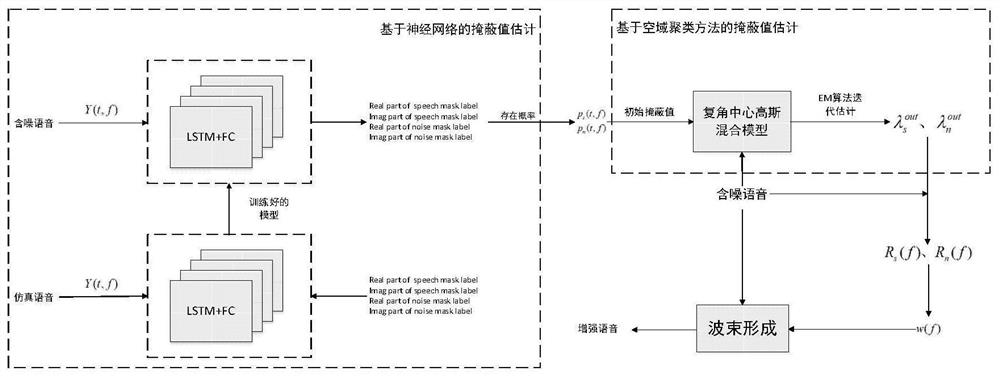

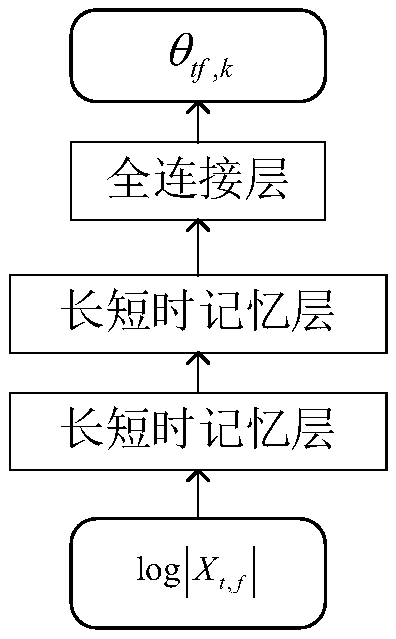

Beam forming method and system based on time-frequency masking value estimation

The invention belongs to the technical field of speech enhancement and relates to a beamforming method and system based on time-frequency masking value estimation. The method comprises: obtaining a multichannel speech sequence and extracting magnitude-spectrum features and spatial features via the Fourier transform; applying a logarithmic transform to the magnitude-spectrum features to obtain a multichannel speech spectral feature sequence, and feeding it to a pre-trained and optimized neural network model to obtain a complex-valued time-frequency masking value; converting the complex-valued masking value into a speech presence probability and obtaining a time-frequency masking value with a probabilistic model; computing the speech covariance matrix from the time-frequency masking value and the multichannel speech feature sequence, and performing eigenvalue decomposition on it to obtain the beamforming filter coefficients; and filtering the multichannel speech features with a beamformer using those coefficients to obtain the enhanced speech signal. By integrating the neural network with spatial clustering to estimate the time-frequency masking value, the invention improves beamforming and speech recognition performance.

Owner:PLA STRATEGIC SUPPORT FORCE INFORMATION ENG UNIV PLA SSF IEU +1
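
The covariance and eigendecomposition steps in the entry above can be sketched for one frequency bin. The sketch assumes the time-frequency masking value is already available as one weight per frame, uses power iteration in place of a full eigendecomposition, and takes the principal eigenvector of the mask-weighted speech covariance directly as the filter. The abstract does not say which eigenvector-based design is used, so this is an illustration only, and the function names are hypothetical.

```python
import math


def masked_covariance(frames, mask):
    """Phi = sum_t m_t x_t x_t^H / sum_t m_t for one frequency bin."""
    n_mics = len(frames[0])
    phi = [[0j] * n_mics for _ in range(n_mics)]
    for x, m in zip(frames, mask):
        for i in range(n_mics):
            for j in range(n_mics):
                phi[i][j] += m * x[i] * x[j].conjugate()
    total = sum(mask)
    return [[v / total for v in row] for row in phi]


def principal_eigenvector(A, iters=100):
    """Dominant eigenvector of a Hermitian PSD matrix by power iteration."""
    n = len(A)
    # start from the largest-norm column, which is nonzero whenever A is nonzero
    k = max(range(n), key=lambda c: sum(abs(A[r][c]) ** 2 for r in range(n)))
    v = [A[r][k] for r in range(n)]
    for _ in range(iters):
        w = [sum(A[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(abs(c) ** 2 for c in w))
        v = [c / norm for c in w]
    return v


def beamform(w, x):
    """Beamformer output y = w^H x for one TF point."""
    return sum(wi.conjugate() * xi for wi, xi in zip(w, x))
```

Frames with low masking values contribute little to the covariance, so the principal eigenvector tracks the speech steering vector even when noise-dominated frames are present.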

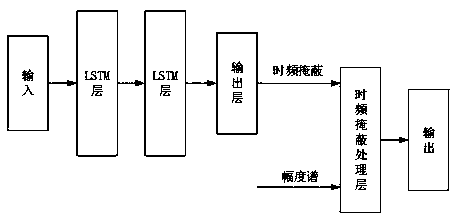

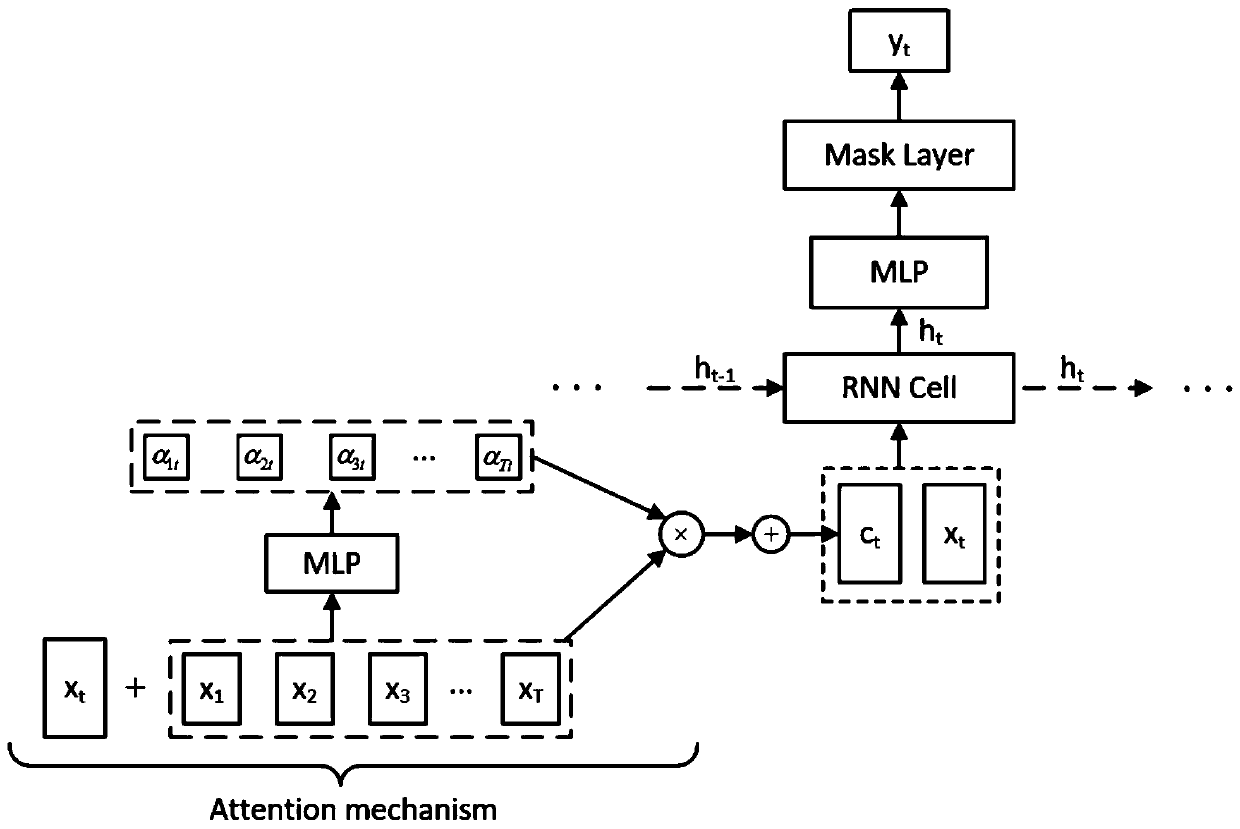

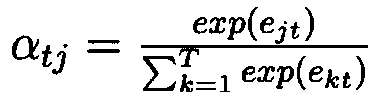

Speech enhancement algorithm based on attention mechanism

Status: Inactive | Publication: CN110299149A | Tags: Troubleshoot voice noise reduction quality; Good handling mechanism; Speech analysis; Biological neural network models; Feature vector; Algorithm

The invention discloses a speech enhancement algorithm based on an attention mechanism. An attention-based neural network speech enhancement model is constructed with three components: an attention-based neural network, a standard deep recurrent neural network, and a time-frequency masking layer. At each time step, the model computes attention between the incoming frame and the speech frames of the entire segment to obtain the feature vector for the current time step. The model input is formed by concatenating this feature vector with the current speech frame, and the standard deep recurrent neural network encodes it to produce a predicted time-frequency mask. The predicted mask is multiplied with the mixed speech step by step to obtain the enhanced speech segment. The algorithm approaches speech enhancement from the standpoint of improving model generalization and can effectively enhance speech in noise conditions not seen during training.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

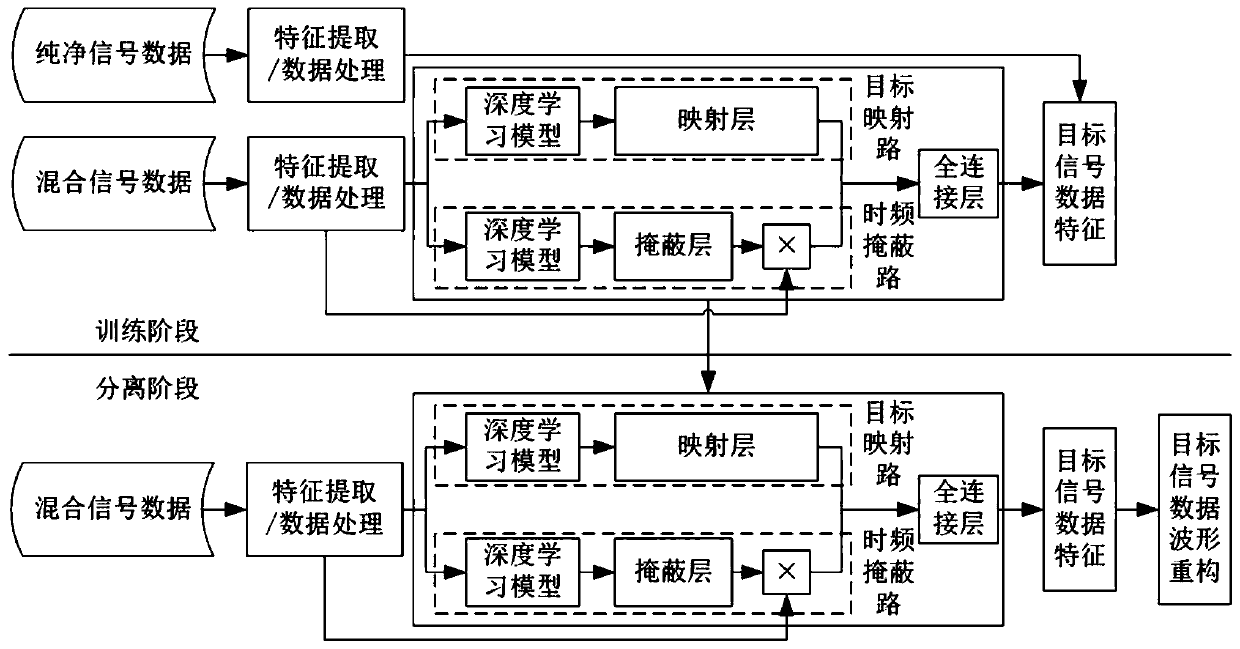

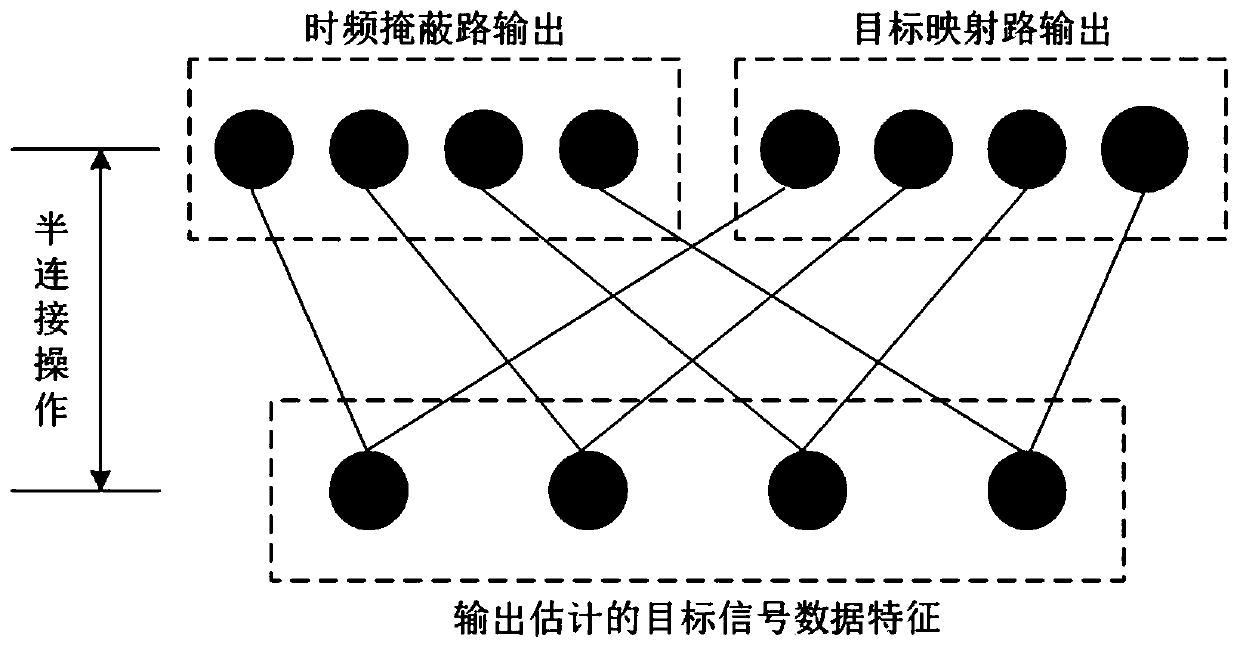

Single-channel signal double-channel separation method and device, storage medium and processor

Status: Inactive | Publication: CN110321810A | Tags: Improve generalization; Improve performance; Speech analysis; Character and pattern recognition; Neural network learning; Target signal

The invention discloses a single-channel signal double-channel separation method and device, a storage medium, and a processor. The method builds a multi-path neural network learning model comprising a target mapping path, a time-frequency masking path, and a fully connected layer: the target mapping path separates the single-channel signal data by target mapping while, in parallel, the time-frequency masking path separates it by time-frequency masking. The outputs of the two paths are merged by the fully connected layer and arranged to the specification of the target data, and the estimated target signal features are output. The method combines the advantages of time-frequency masking and target mapping, partly overcomes the shortcomings of each, and generalizes well even though the phase of the signal data is not considered.

Owner:SOUTH CHINA NORMAL UNIVERSITY
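The two-path structure described above can be illustrated with a minimal NumPy sketch. It assumes each path is a single linear layer with hypothetical weights (`W_map`, `W_mask`) and that fusion is one fully connected layer `W_fc` over the concatenated path outputs; the real model is trained and deeper. Only magnitudes are used, matching the abstract's note that phase is not considered.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def two_path_separate(mix_mag, W_map, W_mask, W_fc):
    """Fuse a target-mapping path and a time-frequency masking path.

    mix_mag: (T, F) magnitude spectrogram of the single-channel mixture.
    W_map, W_mask: (F, F) hypothetical one-layer stand-ins for each path.
    W_fc: (2F, F) hypothetical fully connected fusion layer.
    """
    # Target-mapping path: regress the target magnitude directly.
    mapped = np.maximum(mix_mag @ W_map, 0.0)
    # Masking path: predict a mask in (0, 1) and apply it to the mixture.
    masked = sigmoid(mix_mag @ W_mask) * mix_mag
    # Fusion: concatenate both estimates and project back to F bins.
    fused = np.concatenate([mapped, masked], axis=1) @ W_fc
    return np.maximum(fused, 0.0)  # keep estimated magnitudes non-negative


# Usage with random (untrained) weights.
rng = np.random.default_rng(0)
T, F = 40, 32
mix = np.abs(rng.standard_normal((T, F)))
est = two_path_separate(mix,
                        0.1 * rng.standard_normal((F, F)),
                        0.1 * rng.standard_normal((F, F)),
                        0.1 * rng.standard_normal((2 * F, F)))
```

Letting a learned fusion layer weigh the two estimates per bin is one plausible reading of how the paths "compensate for each other's defects"; the abstract does not say how the fully connected layer is parameterised.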

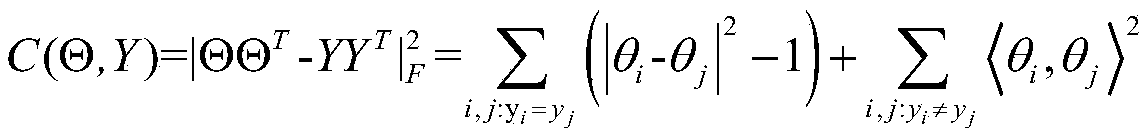

Feature extraction method for blind source separation

Inactive | CN109614943A | No outliers | Easy to separate | Character and pattern recognition | Time domain | Frequency spectrum

The invention belongs to the technical field of communication and relates in particular to a feature extraction method for blind source separation. The method mainly comprises the following steps: (1) preprocess the mixed blind source signal; (2) obtain its time-frequency map and input the data into a neural network as training data; (3) using a deep learning method, fit a neural network whose objective function has the following property: when the objective function is minimized and converges, the sum of Euclidean distances between time-frequency points of the same source signal reaches its minimum and the sum of Euclidean distances between time-frequency points of different source signals reaches its maximum; (4) input the mixed blind source signals into the trained neural network, cluster the time-frequency points of the different sources according to the network output, construct a time-frequency masking matrix from the resulting feature set, calculate the frequency spectra, and obtain the separated time-domain signals. The beneficial effect of the invention is that it can separate mixtures of multiple unknown source signals.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
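The final stage of the abstract above (cluster the network output per time-frequency point, then build a time-frequency masking matrix) resembles deep-clustering-style separation. The NumPy sketch below assumes the trained network already yields a D-dimensional embedding for every time-frequency point; k-means with farthest-point initialisation is our own choice, since the abstract only says "clustering", and the inverse STFT back to time-domain signals is omitted.

```python
import numpy as np


def kmeans(X, k, iters=50):
    """Plain k-means on the rows of X; returns one label per row."""
    # Farthest-point initialisation: start from the first row, then
    # repeatedly add the row farthest from all chosen centres.
    centers = [X[0]]
    for _ in range(1, k):
        d = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[d.argmax()])
    centers = np.array(centers, dtype=float)
    for _ in range(iters):
        d = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)  # (N, k)
        labels = d.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = X[labels == j].mean(axis=0)
    return labels


def masks_from_embeddings(emb, k):
    """emb: (T, F, D) embedding per time-frequency point (assumed network
    output). Returns k binary masks of shape (T, F) that partition the bins."""
    T, F, D = emb.shape
    labels = kmeans(emb.reshape(-1, D), k).reshape(T, F)
    return np.stack([(labels == j).astype(float) for j in range(k)])


def separate(mix_stft, emb, k):
    """Apply each cluster's mask to the complex mixture STFT of shape (T, F)."""
    return masks_from_embeddings(emb, k) * mix_stft[None, :, :]
```

Since the binary masks partition the time-frequency plane, the masked spectra sum back to the mixture exactly; each one would then be inverted with an inverse STFT to get a time-domain source estimate.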

Correlation-based method for ambience extraction from two-channel audio signals

A method of ambience extraction includes analyzing an input signal to determine the time-dependent and frequency-dependent amount of ambience in the input signal, wherein the amount of ambience is determined based on a signal model and correlation quantities computed from the input signals and wherein the ambience is extracted using a multiplicative time-frequency mask. Another method of ambience extraction includes compensating a bias in the estimation of a short-term cross-correlation coefficient. In addition, systems having various modules for implementing the above methods are disclosed.

Owner:CREATIVE TECH LTD
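A minimal NumPy sketch of correlation-based ambience masking for a two-channel STFT. The abstract names neither its signal model nor its bias correction, so this sketch assumes a plain recursive (short-term) estimate of the inter-channel correlation coefficient per bin and the hypothetical mapping `mask = 1 - |coherence|`: highly correlated bins are treated as direct sound, weakly correlated bins as ambience.

```python
import numpy as np


def ambience_mask(XL, XR, lam=0.9, eps=1e-12):
    """Per-bin ambience mask from the short-term inter-channel correlation.

    XL, XR: complex STFTs of the left/right channels, shape (F, T).
    lam: forgetting factor of the recursive averages (hypothetical value).
    The ambience estimates are mask * XL and mask * XR.
    """
    F, T = XL.shape
    rLL = np.zeros(F)
    rRR = np.zeros(F)
    rLR = np.zeros(F, dtype=complex)
    mask = np.empty((F, T))
    for t in range(T):
        # Recursive estimates of auto- and cross-power in each frequency bin.
        rLL = lam * rLL + (1 - lam) * np.abs(XL[:, t]) ** 2
        rRR = lam * rRR + (1 - lam) * np.abs(XR[:, t]) ** 2
        rLR = lam * rLR + (1 - lam) * XL[:, t] * np.conj(XR[:, t])
        # Magnitude of the short-term correlation coefficient, nominally in [0, 1].
        phi = np.abs(rLR) / np.maximum(np.sqrt(rLL * rRR), eps)
        # Hypothetical mapping; clip guards against rounding just above 1.
        mask[:, t] = np.clip(1.0 - phi, 0.0, 1.0)
    return mask


# Usage: identical channels give a near-zero mask, independent ones do not.
rng = np.random.default_rng(0)
S = rng.standard_normal((32, 200)) + 1j * rng.standard_normal((32, 200))
N = rng.standard_normal((32, 200)) + 1j * rng.standard_normal((32, 200))
m_same = ambience_mask(S, S)
m_indep = ambience_mask(S, N)
```

For uncorrelated channels the short-term coefficient stays noticeably above zero because it is averaged over only a few frames, and the smaller the effective window (smaller `lam`), the larger this overestimate. That finite-averaging effect is presumably the kind of bias the second claimed method compensates; this sketch leaves it uncorrected.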

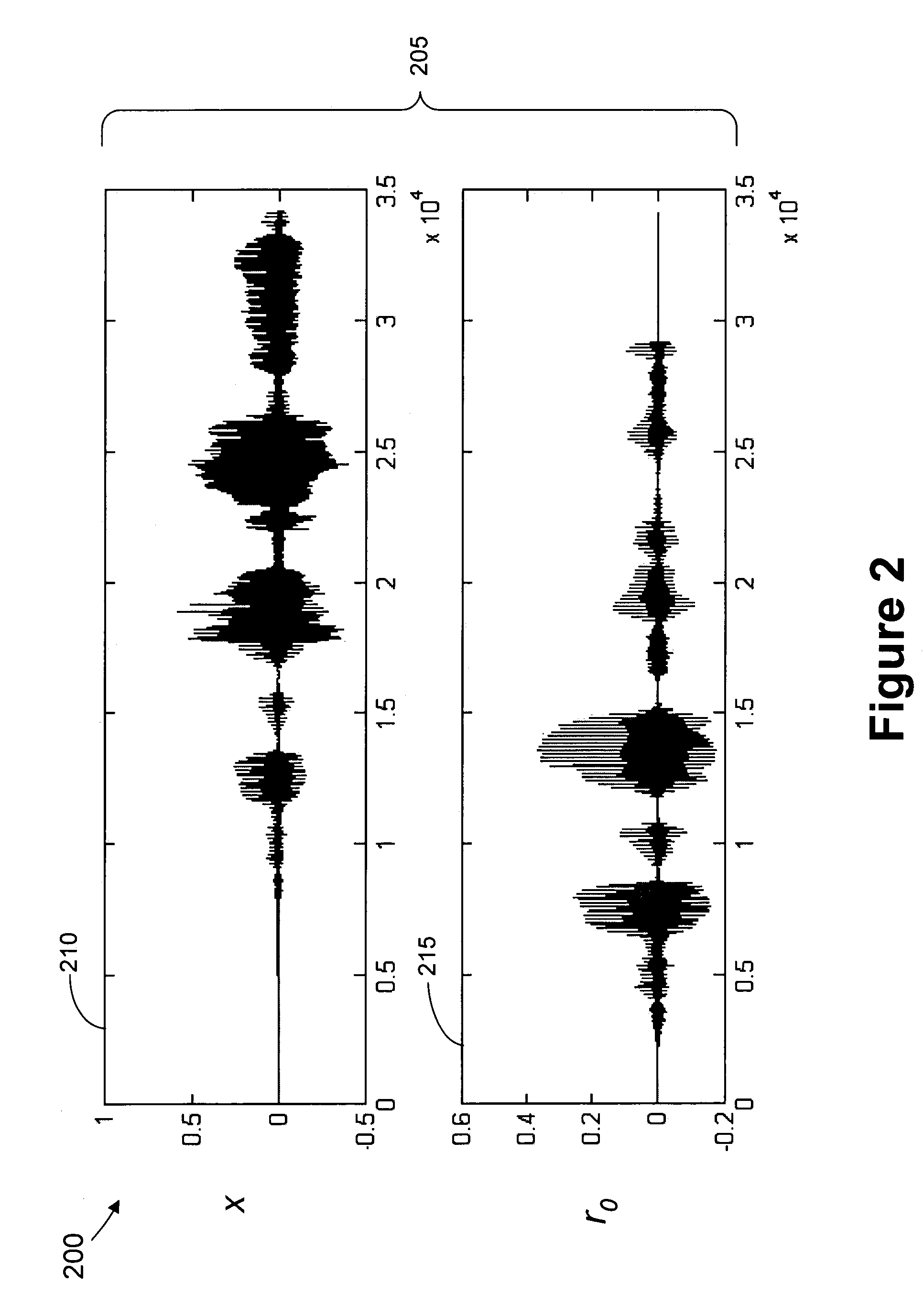

Method for eliminating an unwanted signal from a mixture via time-frequency masking

Active | US7302066B2 | Reduce signaling | Transducer acoustic reaction prevention | Speech recognition | Engineering | Time frequency masking

A method is presented for eliminating an unwanted signal (e.g., background music, interference, etc.) from a mixture of a desired signal and the unwanted signal via time-frequency masking. Given a mixture of the desired signal and the unwanted signal, the goal of the present invention is to eliminate or at least reduce the effects of the unwanted signal to obtain an estimate of the desired signal.

Owner:SIEMENS CORP
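The abstract does not state how the mask is computed, so the following is only a generic sketch of removing an unwanted signal with a binary time-frequency mask. It assumes an estimate of the unwanted signal's magnitude spectrogram is available (for example from a reference copy of the background music; this is a hypothetical input here), estimates the desired power by power subtraction, and keeps a bin only when the estimated local SNR exceeds a threshold. Power subtraction and the 0 dB threshold are swapped-in choices, not the patented procedure.

```python
import numpy as np


def remove_unwanted(X, U_mag, thresh_db=0.0):
    """Binary time-frequency mask keeping bins where the desired signal
    is estimated to dominate the unwanted one.

    X: complex STFT of the mixture, shape (F, T).
    U_mag: estimated magnitude spectrogram of the unwanted signal, same
           shape (hypothetical input, assumed available).
    Returns (masked mixture STFT, mask).
    """
    unwanted_pow = U_mag ** 2
    # Power subtraction gives a rough estimate of the desired signal's power.
    desired_pow = np.maximum(np.abs(X) ** 2 - unwanted_pow, 0.0)
    local_snr_db = 10 * np.log10((desired_pow + 1e-12) / (unwanted_pow + 1e-12))
    mask = (local_snr_db > thresh_db).astype(float)
    return mask * X, mask
```

When the two signals occupy disjoint time-frequency bins (approximate W-disjoint orthogonality, the assumption behind many masking methods), this mask recovers the desired signal exactly; where they overlap, the binary decision inevitably keeps some interference or discards some of the desired signal.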

© 2025 PatSnap. All rights reserved.