126 patent results for "Save cache space"


Cache management method and device, equipment and storage medium

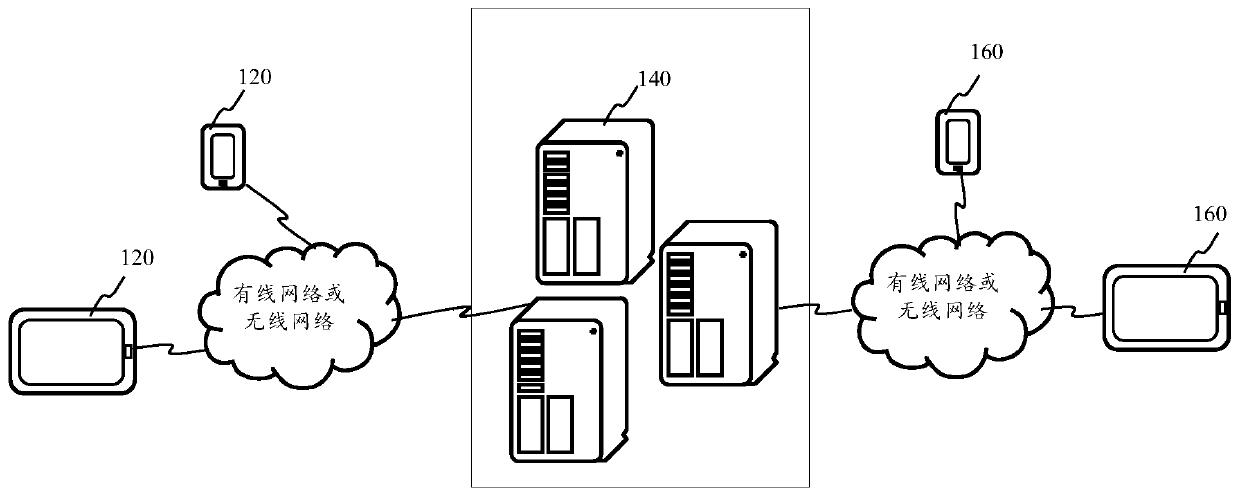

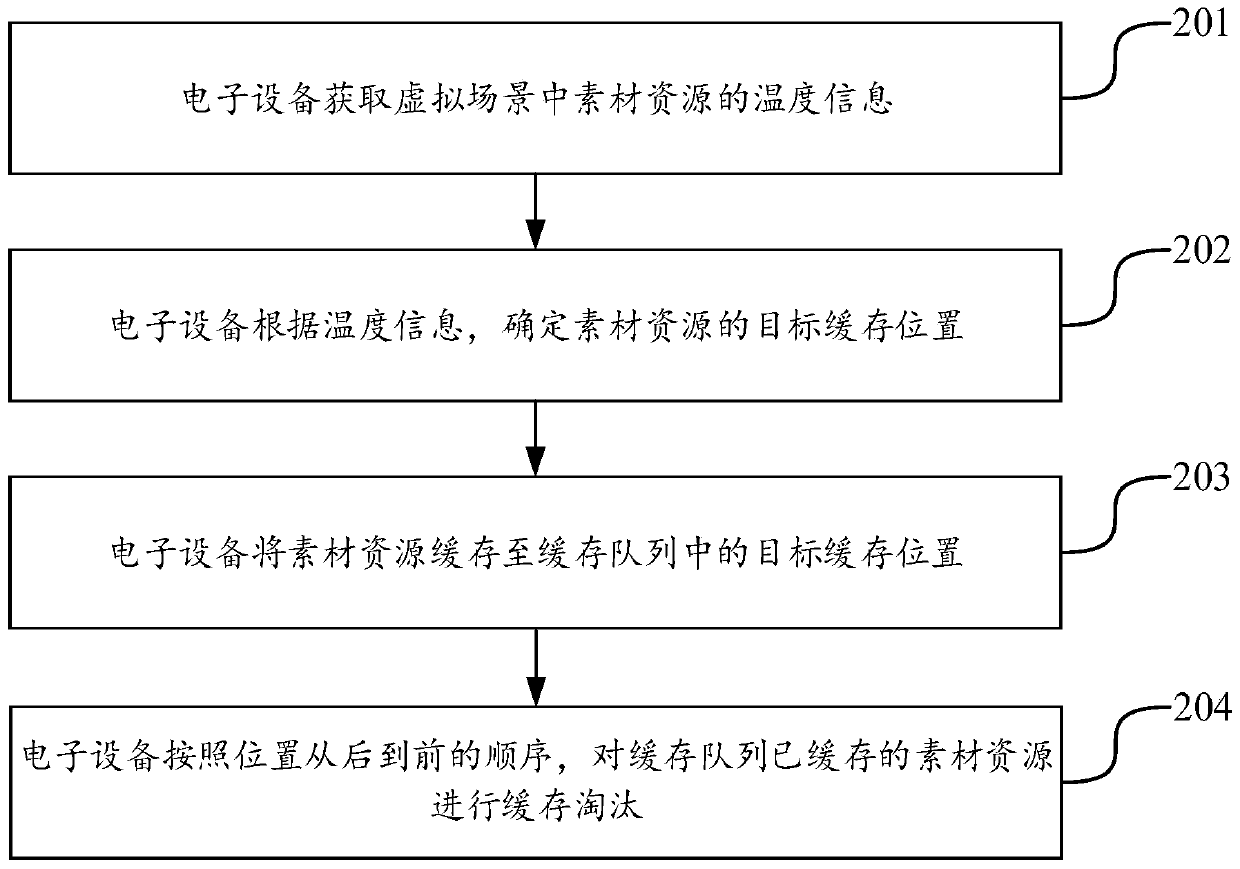

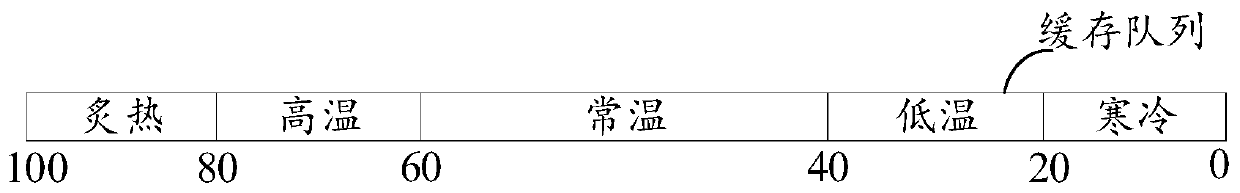

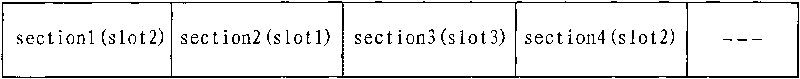

Status: Active | Publication No.: CN110908612A | Tags: Not easy to eliminate; Extended dwell time; Input/output to record carriers; Parallel computing; Material resources

The invention discloses a cache management method and device, equipment, and a storage medium in the field of storage technology. An embodiment provides a method for caching and evicting the material resources of a virtual scene based on temperature information. The temperature information represents the probability that a material resource is accessed in the virtual scene; each material resource is cached at the position in the cache queue that corresponds to its temperature, and eviction proceeds through the queue from back to front. Cold resources of the virtual scene are therefore evicted first and hot resources last. On one hand, keeping hotspot resources in the cache longer raises the probability that accesses to them hit the cache, which improves the cache hit rate and addresses cache pollution. On the other hand, cold resources are removed from the cache as early as possible, which saves cache space.

Owner: TENCENT TECH (SHENZHEN) CO LTD
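The ordering rule in this abstract can be sketched as a queue kept sorted by temperature, with eviction always taken from the back. This is a minimal illustration, not Tencent's implementation: the class name, the float temperature scale, and the fixed entry-count capacity are all assumptions.

```python
from __future__ import annotations


class TemperatureCache:
    """Cache queue ordered by temperature: hottest at the front, coldest at the back.

    Assumption (not from the patent): temperature is a float where a larger
    value means a higher estimated probability of access in the virtual scene.
    """

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.queue: list[tuple[float, str]] = []  # (temperature, resource id), hottest first

    def put(self, resource_id: str, temperature: float) -> None:
        # Drop any earlier entry so each resource occupies exactly one position.
        self.queue = [e for e in self.queue if e[1] != resource_id]
        # Place the resource at the position that corresponds to its temperature.
        pos = 0
        while pos < len(self.queue) and self.queue[pos][0] >= temperature:
            pos += 1
        self.queue.insert(pos, (temperature, resource_id))
        # Evict from the back towards the front, so cold resources leave first.
        while len(self.queue) > self.capacity:
            self.queue.pop()

    def __contains__(self, resource_id: str) -> bool:
        return any(rid == resource_id for _, rid in self.queue)
```

A cold resource inserted into a full cache lands at the back and is the first candidate for eviction, which is the "cold first, hot last" behavior the abstract describes.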

Method for dynamically caching DVB data

Status: Inactive | Publication No.: CN101720037A | Tags: Easy to read; Increase profit; Pulse modulation television signal transmission; Memory addressing/allocation/relocation; Distribution control; Data segment

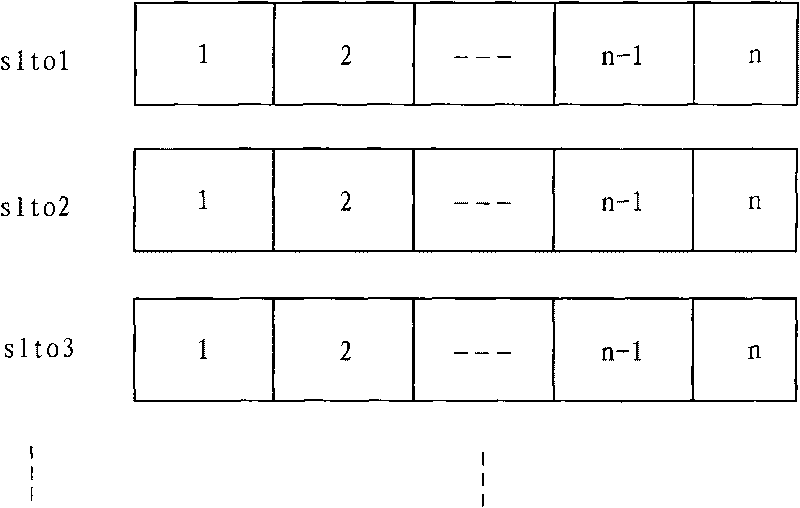

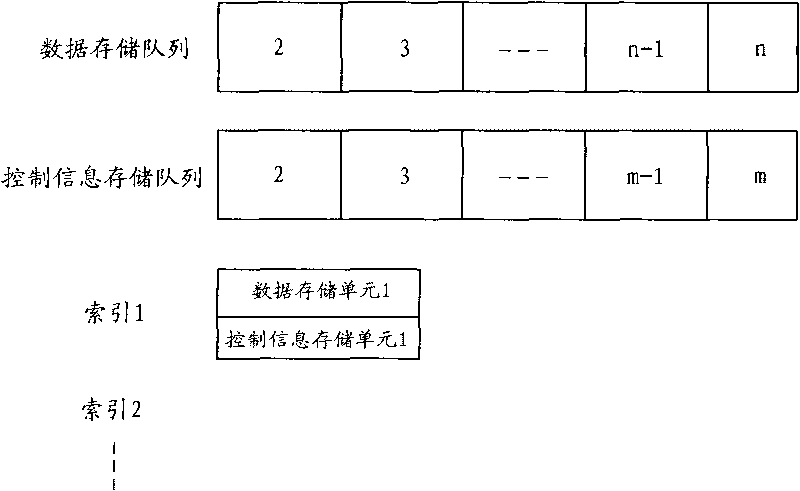

The invention discloses a method for dynamically caching DVB data, comprising the following steps: partitioning a data storage array and a control information storage array in memory as shared arrays, used to store data information and control information, respectively; taking the hardware channel unit (slot) and the filtering unit (filter) as basic units and setting up a plurality of indexes; and, when one of the indexes receives a data segment, allocating a data storage unit in the data storage array to that index to store the segment's data information, and allocating a control information storage unit in the control information storage array to that index to store the segment's control information. Because cache space is allocated dynamically to each slot, the data load is balanced across slots, the memory required for data processing is reduced, and memory utilization is improved.

Owner: HISENSE BROADBAND MULTIMEDIA TECH

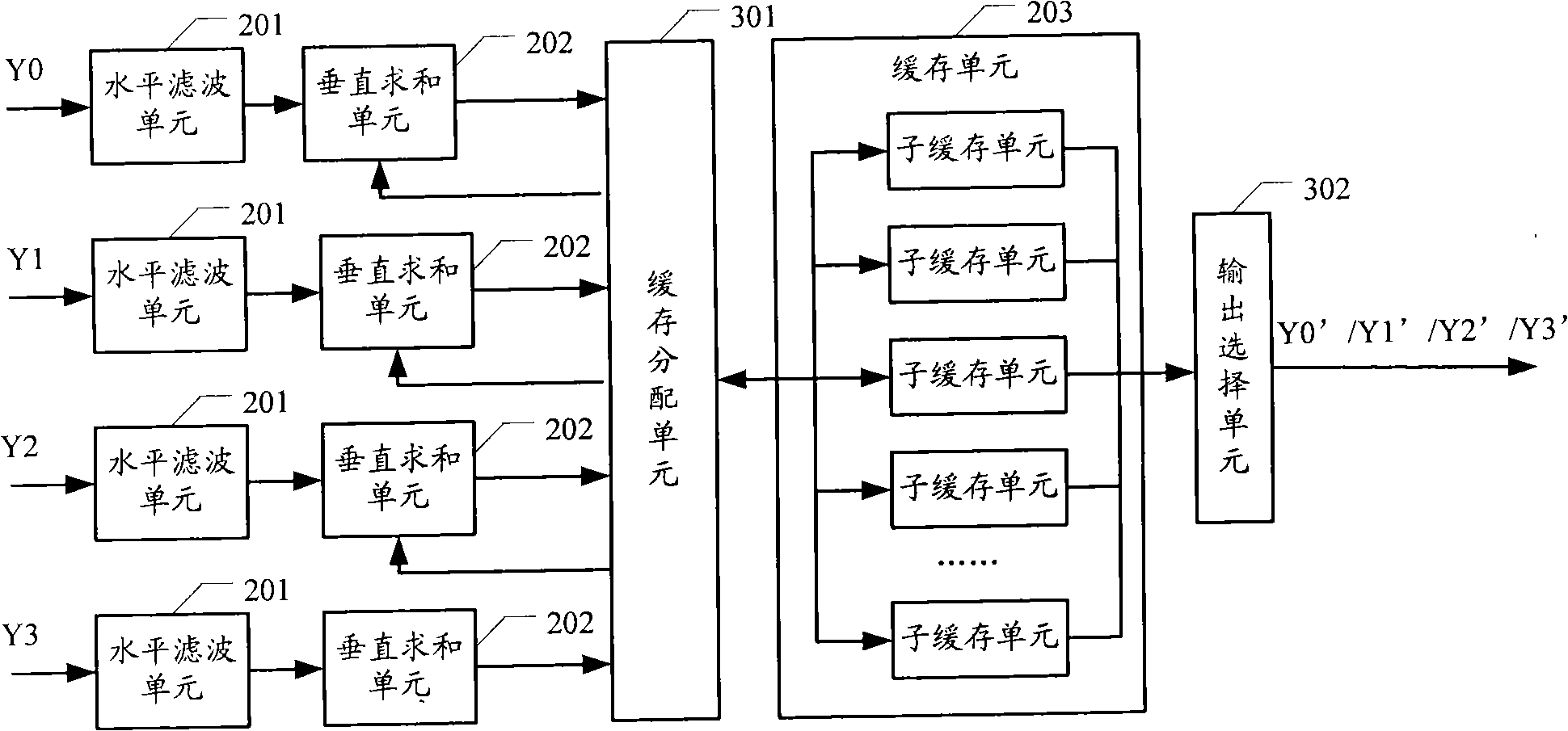

Diminished image digital filtering method and device

Status: Inactive | Publication No.: CN101286226A | Tags: Save cache space; Digital technique network; Television conference systems; Original data; Digital filter

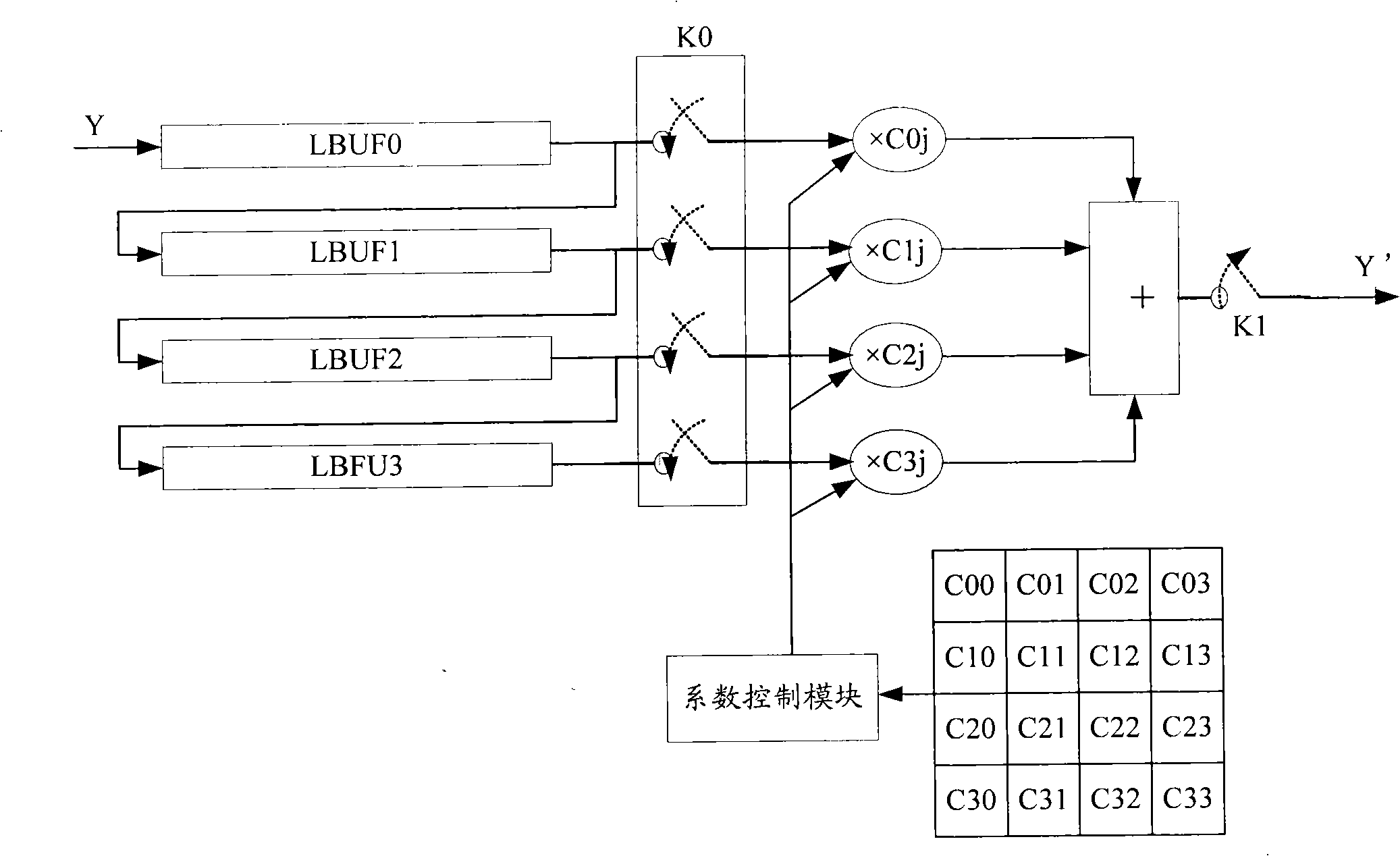

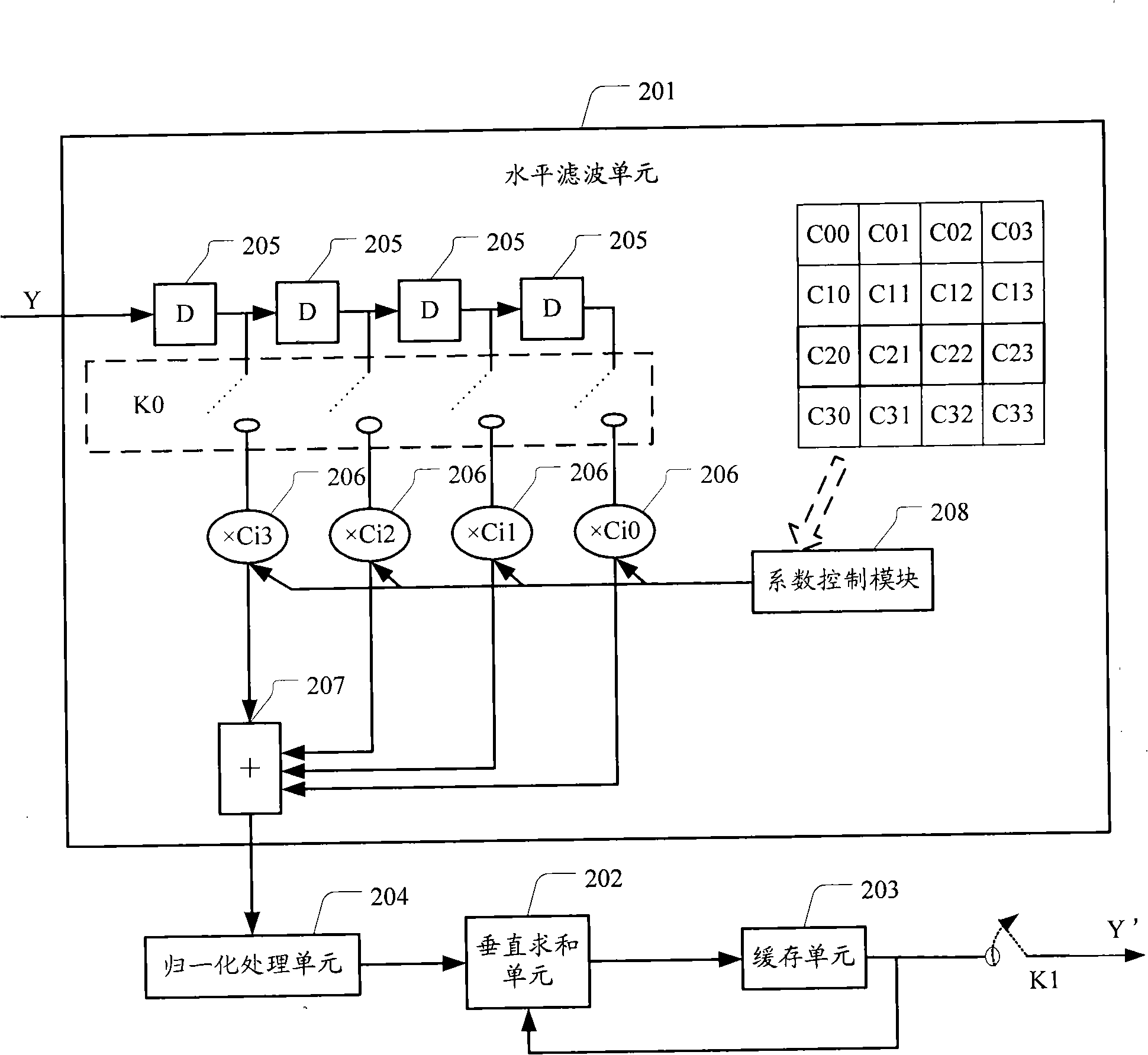

The invention discloses a digital filtering method for image downscaling. Horizontal filtering is applied to the first line of the current source image data according to the horizontal widths of the source image and the target image, and the result is stored in a cache unit. The next line of the current source image data is filtered horizontally in the same way; the result is added element by element to the data in the cache unit, and the sum overwrites the original data in the cache unit. This repeats until the last line of the current source image data, after which the data in the cache unit is output as the target image data for the current source image data. The technical solution does not consume a large amount of storage.

Owner: NEW H3C TECH CO LTD
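The single-line-buffer idea can be sketched as follows: every source line is filtered horizontally, then accumulated into one buffer the width of a target line. This sketch assumes a simple box filter and a source width that is an integer multiple of the target width; the patent abstract specifies neither, and the function names are hypothetical.

```python
def horizontal_filter(row: list[float], target_width: int) -> list[float]:
    """Box-filter one source row down to target_width samples.

    Assumes len(row) is an integer multiple of target_width.
    """
    factor = len(row) // target_width
    return [sum(row[i * factor:(i + 1) * factor]) / factor for i in range(target_width)]


def downscale_block(rows: list[list[float]], target_width: int) -> list[float]:
    """Produce one target row from a block of source rows using a single line buffer."""
    buffer = horizontal_filter(rows[0], target_width)  # first line initializes the cache unit
    for row in rows[1:]:
        filtered = horizontal_filter(row, target_width)
        # Add element by element and overwrite the buffer in place.
        for i, value in enumerate(filtered):
            buffer[i] += value
    # Normalize the vertical sum (box-filter assumption) before output.
    return [value / len(rows) for value in buffer]
```

Only one target-width line is ever held in memory, regardless of how many source lines feed a target line.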

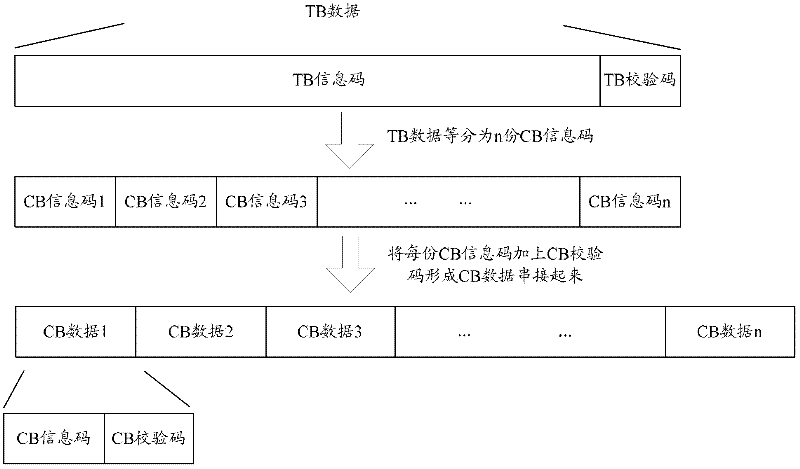

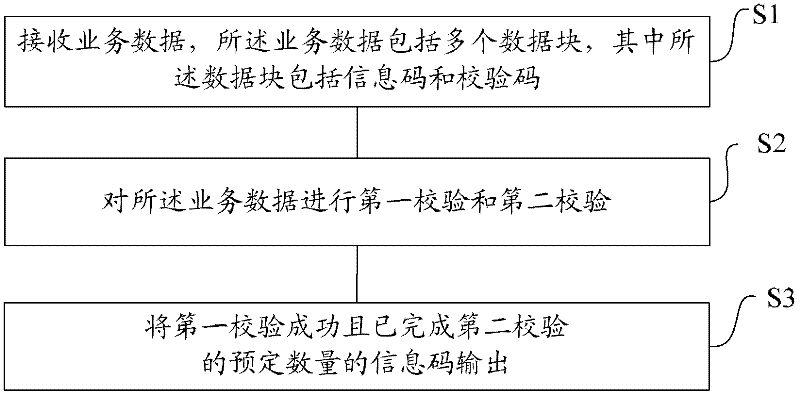

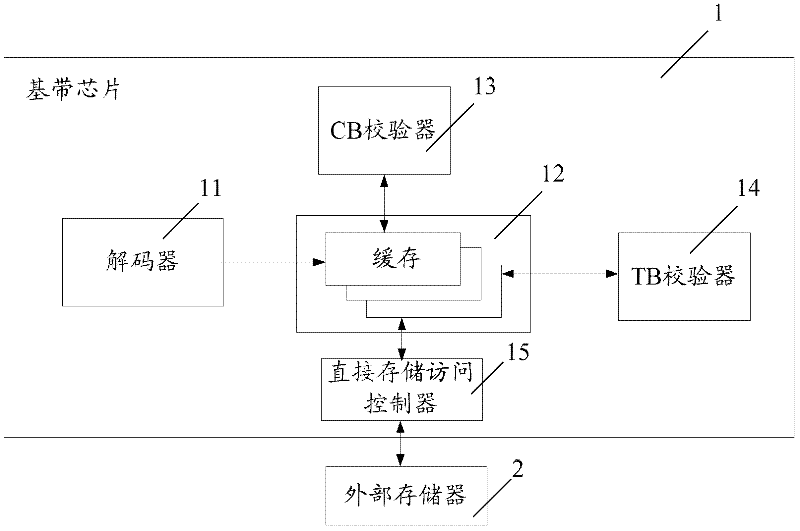

Method and device for receiving service data in communication system, and baseband chip

Status: Active | Publication No.: CN102571272A | Tags: Save cache space; Save memory; Multi-frequency code systems; Error prevention/detection by diversity reception; Communications system; Computer science

The invention discloses a method and device for receiving service data in a communication system, and a baseband chip. The receiving method comprises: receiving service data made up of a plurality of data blocks, each containing an information code and a check code; performing a primary check and a secondary check on the service data; and outputting a preset number of information codes that pass the primary check and have undergone the secondary check. The technical solution saves memory in the baseband chip.

Owner: SPREADTRUM COMM (SHANGHAI) CO LTD

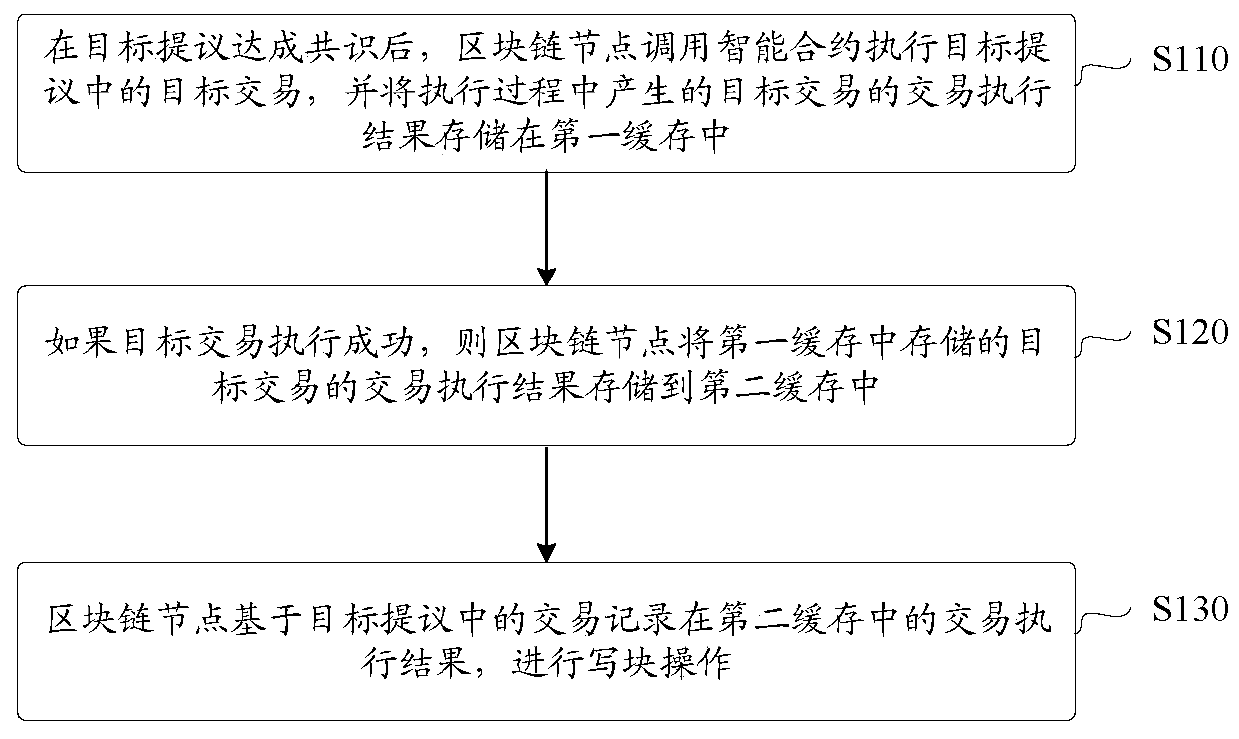

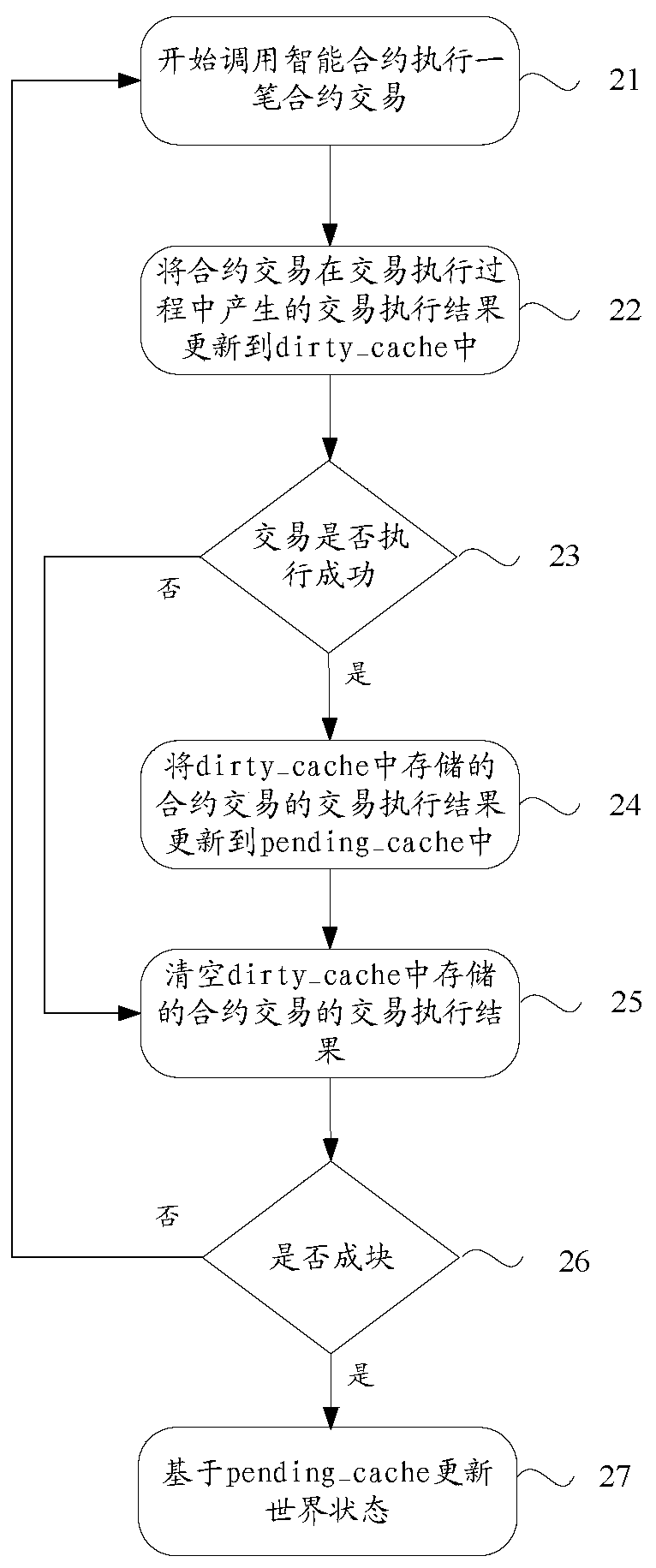

Intelligent contract execution method and system in blockchain, and electronic equipment

Status: Active | Publication No.: CN111383031A | Tags: Save cache space; Improve the efficiency of rolling back modification history operations; Database updating; Database distribution/replication; Financial transaction; Smart contract

The invention discloses a smart contract execution method and system in a blockchain, and electronic equipment. The method comprises the following steps: after a target proposal reaches consensus, a blockchain node calls a smart contract to execute a target transaction in the proposal and stores the execution result generated during execution in a first cache; if the target transaction executes successfully, the node moves the execution result from the first cache into a second cache; and the node then performs the block-writing operation based on the execution results in the second cache.

Owner: ALIPAY (HANGZHOU) INFORMATION TECH CO LTD
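The two-cache flow can be sketched as a per-transaction scratch cache that is promoted only on success, so a failed transaction needs no rollback. This is an illustrative sketch, not Alipay's code: the `Transaction` calling convention (a function that reads through `read`, writes into a dict, and signals failure by raising) and the integer state values are assumptions.

```python
from __future__ import annotations

from typing import Callable

# Assumed convention: a transaction reads state through `read` and records its
# writes in the dict it is given; raising an exception means it failed.
Transaction = Callable[[Callable[[str], int], dict[str, int]], None]


class ProposalExecutor:
    def __init__(self, ledger: dict[str, int]) -> None:
        self.ledger = ledger                     # state already written to blocks
        self.second_cache: dict[str, int] = {}   # results of successful transactions

    def read(self, key: str) -> int:
        # Later transactions in the same proposal see earlier successful results.
        return self.second_cache.get(key, self.ledger.get(key, 0))

    def execute(self, tx: Transaction) -> bool:
        first_cache: dict[str, int] = {}  # per-transaction execution results
        try:
            tx(self.read, first_cache)
        except Exception:
            return False  # failed: discard the first cache, nothing to roll back
        self.second_cache.update(first_cache)  # succeeded: promote to the second cache
        return True

    def write_block(self) -> None:
        # Persist the accumulated results, then start a fresh second cache.
        self.ledger.update(self.second_cache)
        self.second_cache.clear()
```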

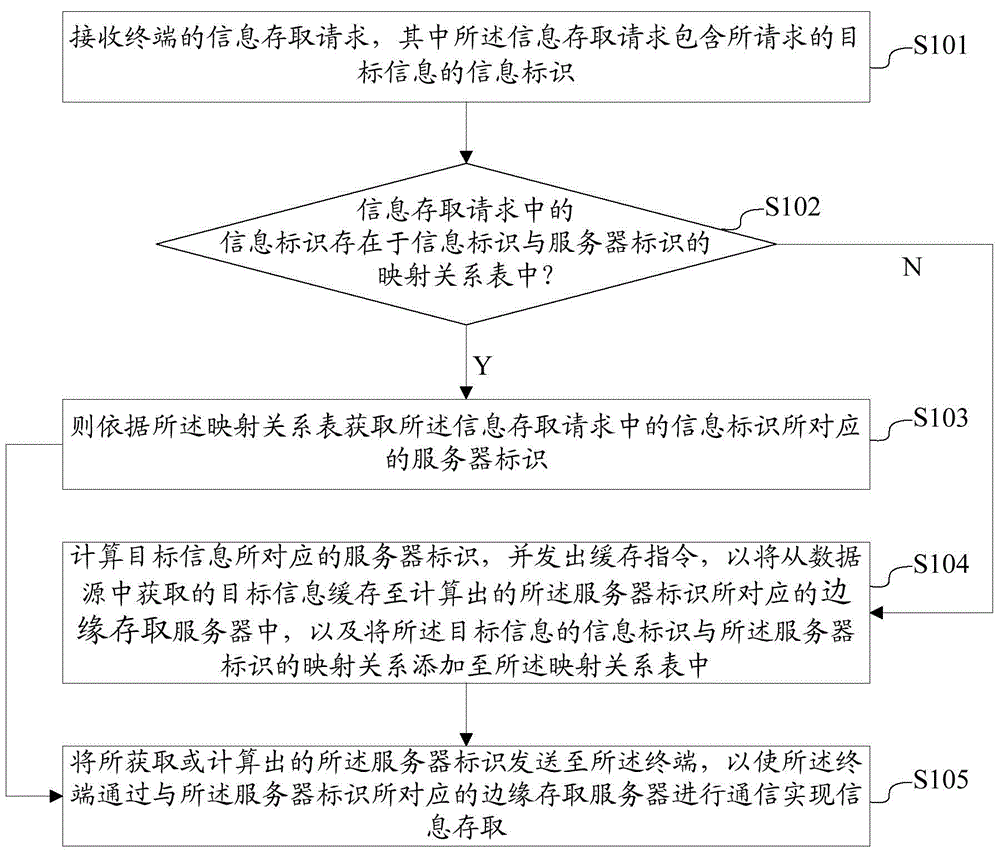

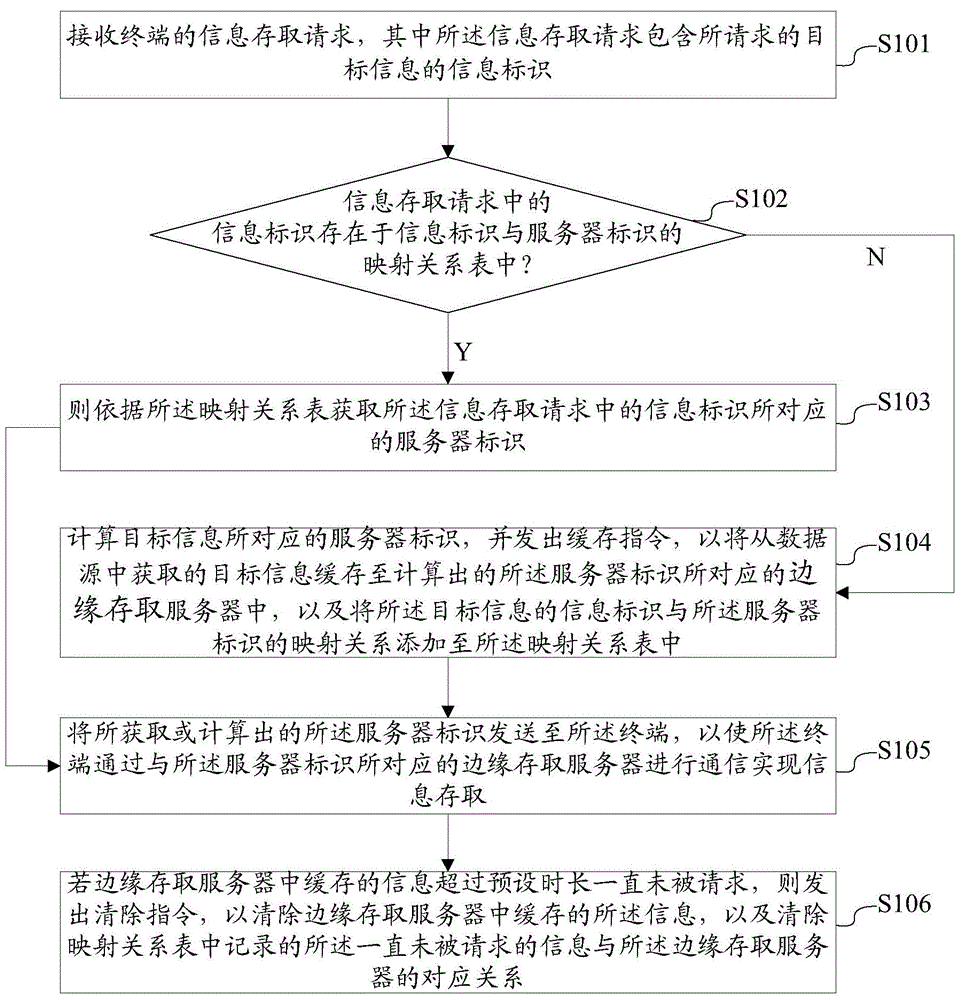

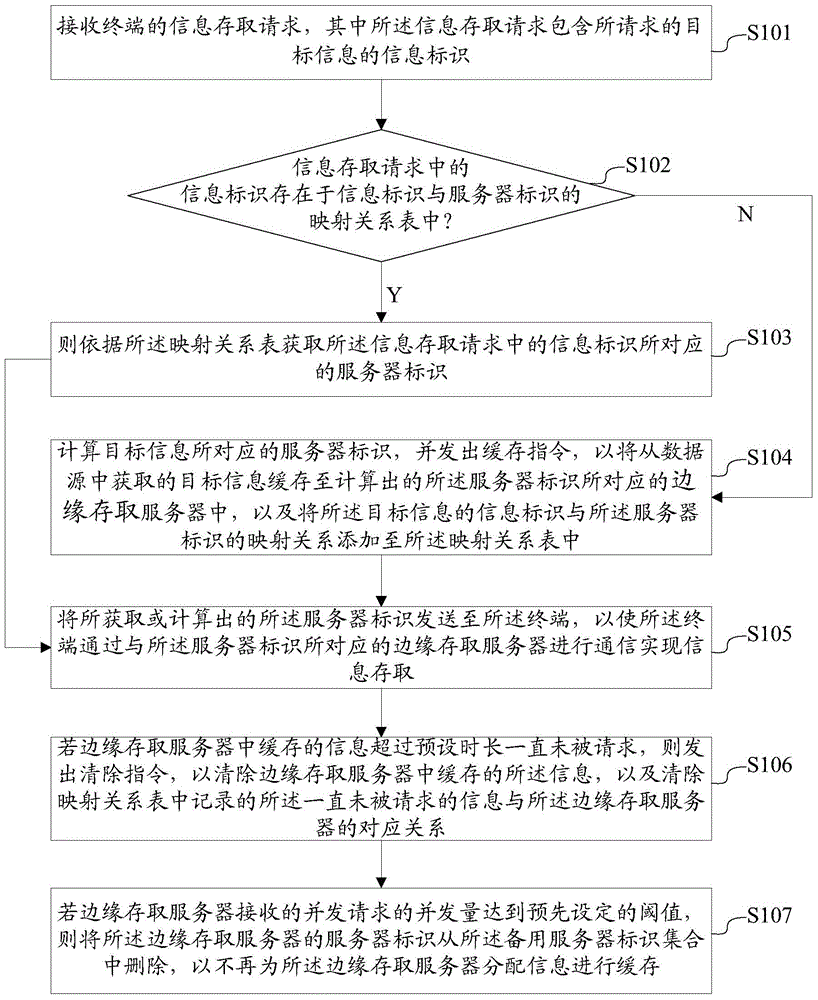

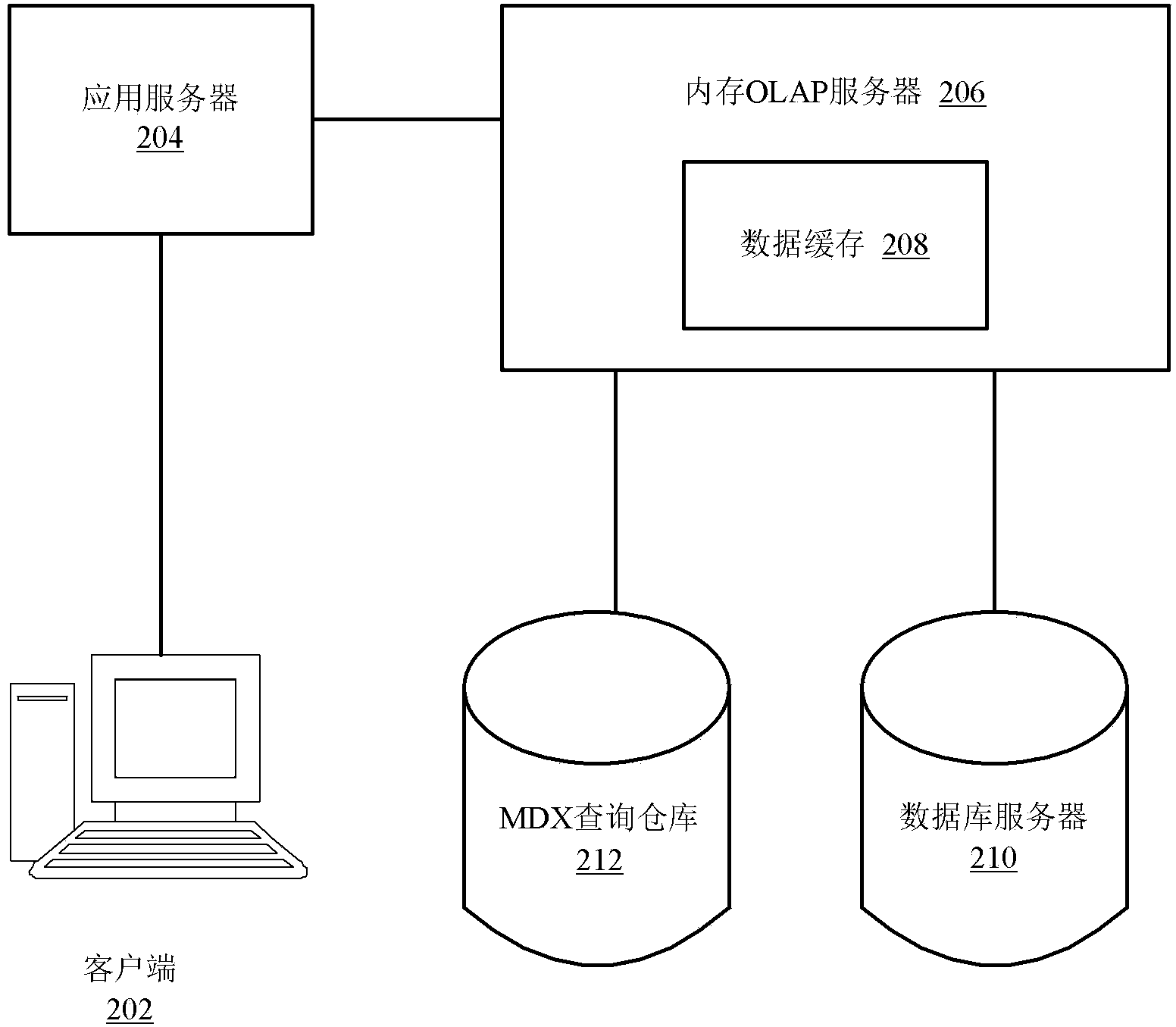

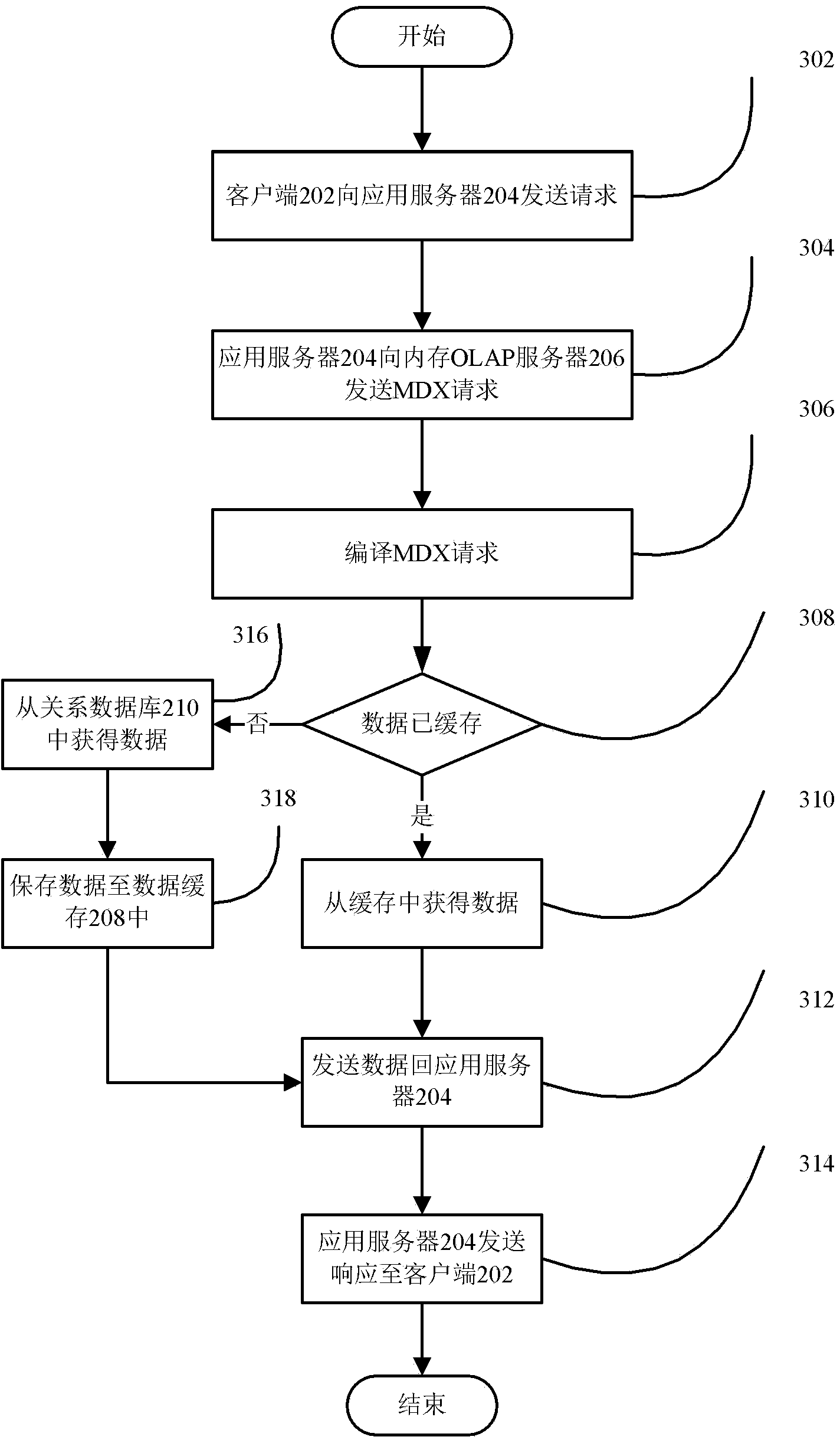

Method and system for accessing information

Status: Inactive | Publication No.: CN104092776A | Tags: Multiple copies avoid; Save cache space; Transmission; Objective information; Data source

The invention discloses a method and system for accessing information. Information from a data source is cached according to a one-to-one mapping, so that each piece of content is cached on one specific edge access server, and the one-to-one mappings between information contents and edge access servers are kept in a mapping relation table. When an access request from a terminal is received and the requested content is in the table, the target information is already cached, and the server holding it is read directly from the table. If the requested content is not in the table, the edge access server corresponding to the target information is calculated, the target information is cached from the data source onto that server, and the new mapping is added to the table. The terminal is then told which server holds the target information, so that it can communicate with that server to access the information. The method and system avoid keeping multiple copies of the same content and therefore save cache space.

Owner: BEIJING CYCLE CENTURY DIGITAL TECH
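A minimal sketch of the mapping relation table is shown below. The abstract does not say how the edge server is "calculated" for new content, so hashing the content ID is an assumption, as are all names.

```python
from __future__ import annotations

import hashlib


class EdgeDirectory:
    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)
        self.table: dict[str, str] = {}  # mapping relation table: content -> edge server
        self.caches: dict[str, set[str]] = {s: set() for s in self.servers}

    def _compute_server(self, content_id: str) -> str:
        # Hypothetical placement rule: hash the content ID onto one server.
        digest = hashlib.sha256(content_id.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]

    def locate(self, content_id: str) -> str:
        server = self.table.get(content_id)
        if server is None:                       # not yet cached anywhere
            server = self._compute_server(content_id)
            self.caches[server].add(content_id)  # copy from the data source, once
            self.table[content_id] = server
        return server                            # the terminal then talks to this server
```

Repeated requests for the same content always resolve to the same server, so exactly one copy exists across the edge tier.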

Method and device for managing memory space

Status: Active | Publication No.: CN103049393A | Tags: Improve stability and reliability; Improve hit rate; Input/output to record carriers; Memory addressing/allocation/relocation; Data migration; Traffic volume

The invention discloses a method and device for managing memory space. If the stale data in a first memory space reaches a threshold, the method determines whether the first memory space still contains valid data. If it does, valid data is taken out of the first memory space starting from the initial position, until all valid data has been removed; if it does not, the first memory space is released. A hash operation is applied to each piece of data taken from the first memory space to obtain its memory address in a second memory space, and the data is written to that address. The method and device meet the need to expand or shrink cache capacity while ensuring that users can still access cached data normally during data migration. They also reduce the load on the database or disk during traffic peaks and improve the stability of the overall service.

Owner: BEIJING QIHOO TECH CO LTD
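The migration loop can be sketched as moving one valid entry at a time from the front of the old space into a hash-addressed new space, while reads consult both spaces. This is a sketch under stated assumptions: `zlib.crc32` stands in for the unspecified hash operation, the stale-data threshold check is left to the caller, and the class and method names are hypothetical.

```python
from __future__ import annotations

import zlib
from collections import OrderedDict


def bucket_for(key: str, n_buckets: int) -> int:
    # Stand-in hash; the patent only says "hash operation".
    return zlib.crc32(key.encode()) % n_buckets


class MigratingCache:
    def __init__(self) -> None:
        self.first: OrderedDict[str, str] | None = OrderedDict()  # valid data, in storage order
        self.second: list[dict[str, str]] | None = None

    def start_migration(self, n_buckets: int) -> None:
        # Assumed trigger: the caller invokes this once stale data reaches a threshold.
        self.second = [{} for _ in range(n_buckets)]

    def migrate_one(self) -> bool:
        """Move one valid entry; release the first space once it is empty."""
        if self.second is None or self.first is None:
            return False
        if self.first:
            key, value = self.first.popitem(last=False)  # take from the initial position
            self.second[bucket_for(key, len(self.second))][key] = value
            return True
        self.first = None  # no valid data left: release the first memory space
        return False

    def get(self, key: str) -> str | None:
        # Readers keep working mid-migration: try the new space, then the old one.
        if self.second is not None:
            value = self.second[bucket_for(key, len(self.second))].get(key)
            if value is not None:
                return value
        return self.first.get(key) if self.first is not None else None
```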

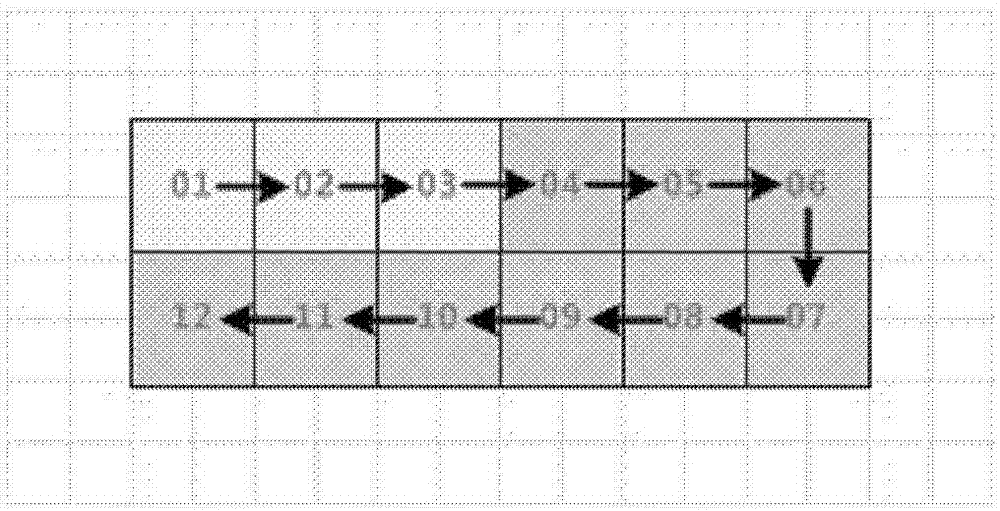

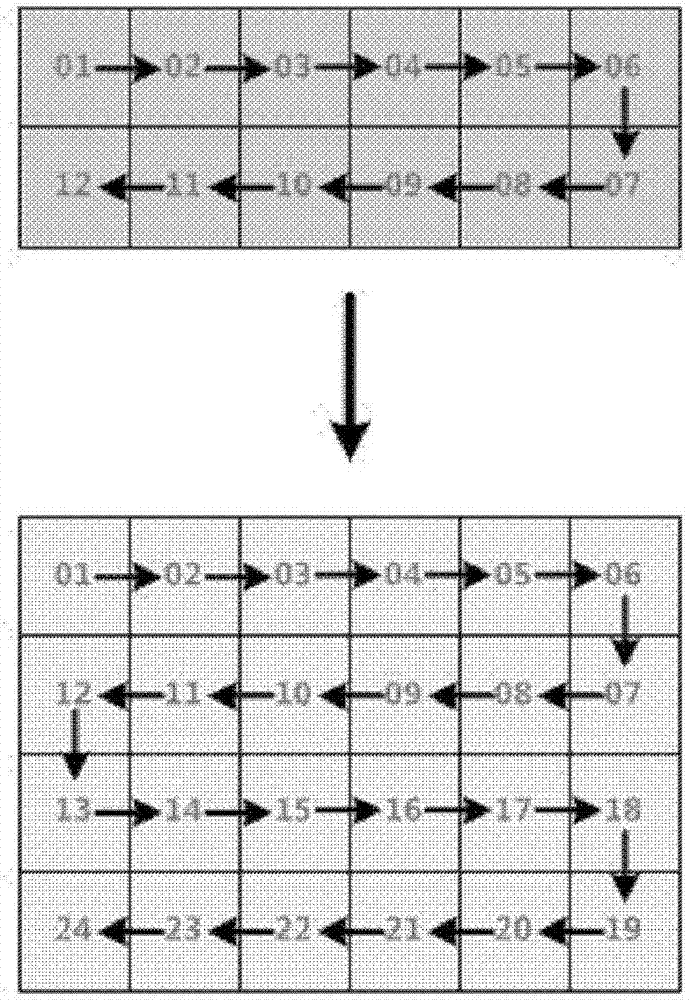

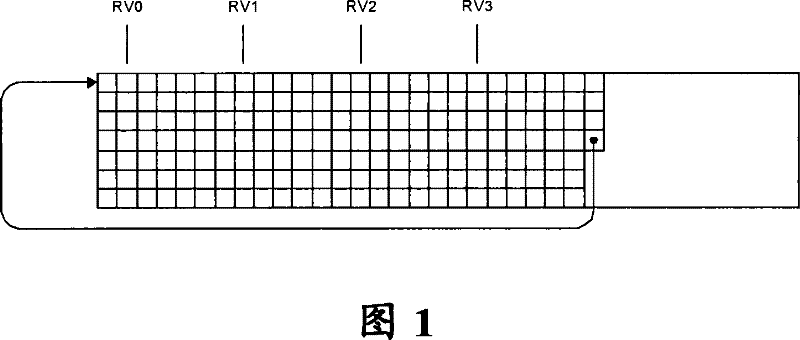

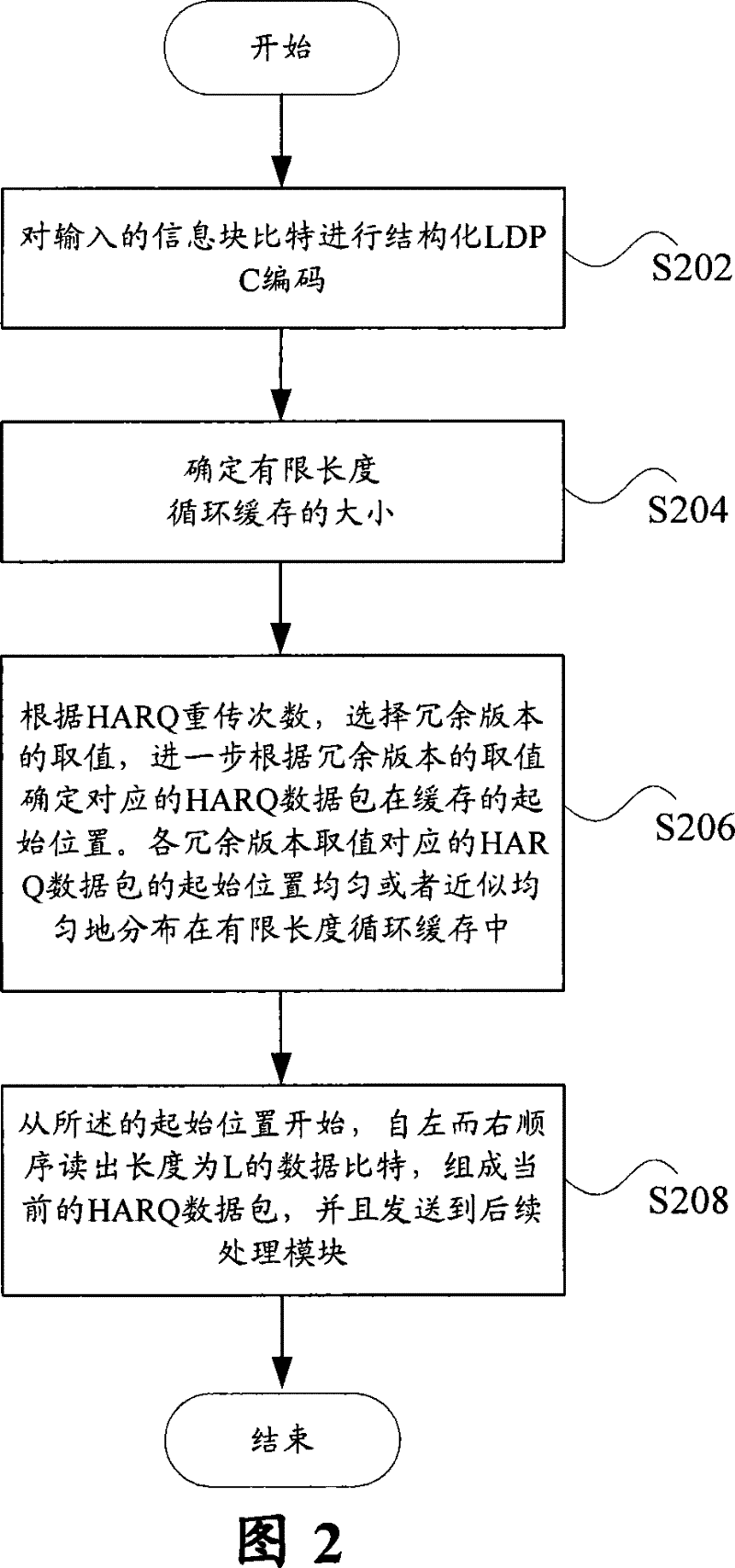

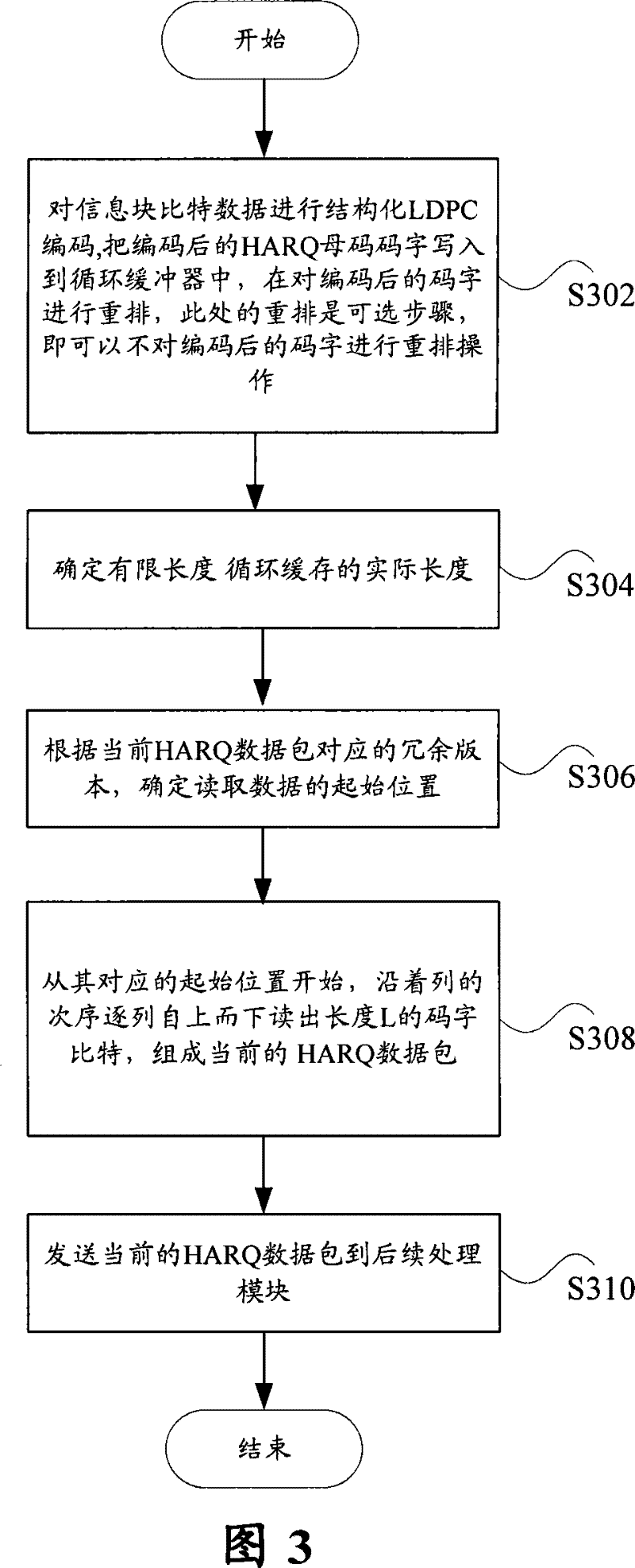

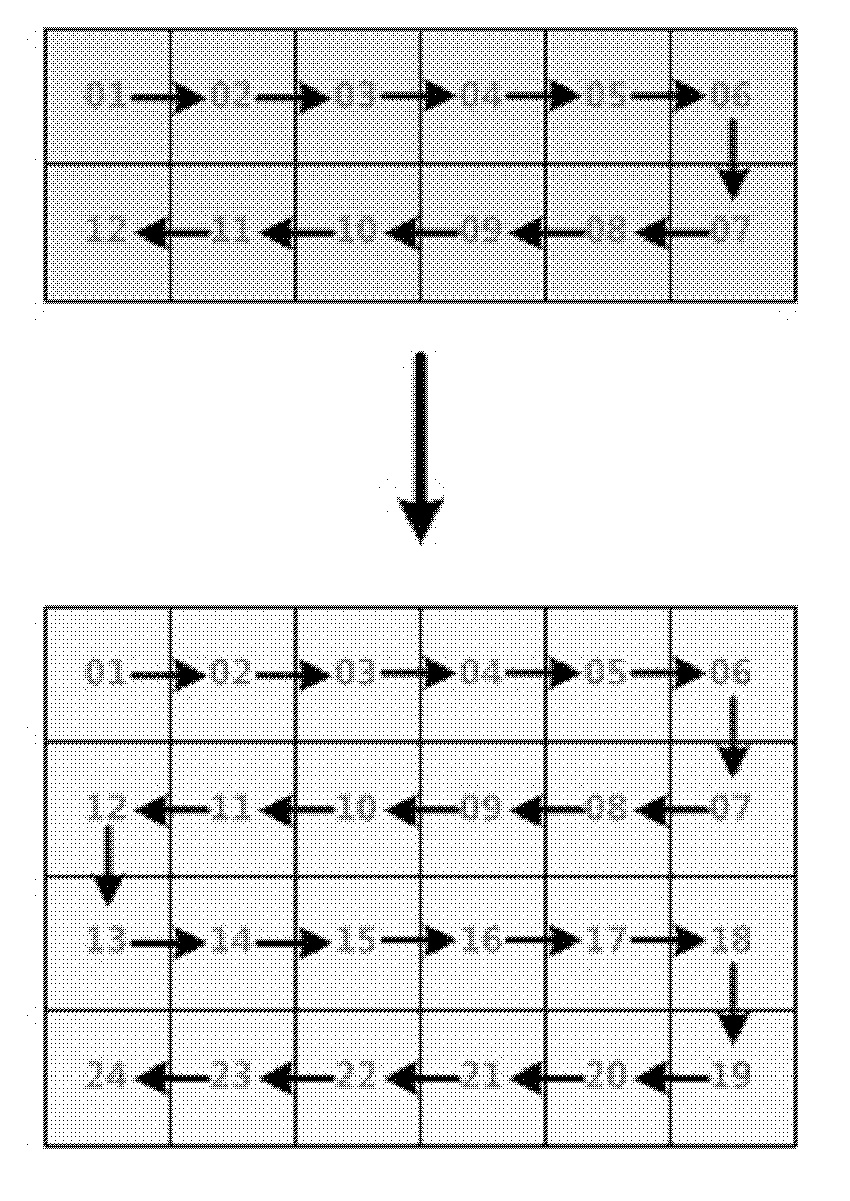

Rate matching method for the limited-length circular buffer of an LDPC code

Status: Active | Publication No.: CN101188428B | Tags: Improve retransmission performance; Save cache space; Error correction/detection using multiple parity bits; Data pack; Algorithm

The invention discloses a rate matching method for the limited-length circular buffer of a low-density parity-check (LDPC) code. The method comprises: applying structured LDPC encoding to the data bits of an input information block, and determining the size of the one-dimensional limited-length circular buffer from the encoding result; selecting a redundancy version value from several preset values according to the number of hybrid automatic repeat request (HARQ) retransmissions, and using the selected value to determine the starting position from which the data bits of the HARQ packet are read out of the circular buffer; and reading data of a specific length sequentially from that starting position to form the HARQ packet, then sending the packet. The starting positions corresponding to the preset redundancy version values are distributed evenly, or almost evenly, over the circular buffer.

Owner: ZTE CORP
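Reading a HARQ packet from a circular buffer at a redundancy-version-dependent start can be sketched as below. The even spacing formula `rv * N // num_rv` matches the "evenly or almost evenly distributed" wording, but the exact positions used in the patent are not given. The RV cycling order 0, 2, 3, 1 is borrowed from the LTE convention and is an assumption here.

```python
def rv_start_positions(buffer_len: int, num_rv: int = 4) -> list[int]:
    """Starting offsets spread evenly (up to rounding) over the circular buffer."""
    return [rv * buffer_len // num_rv for rv in range(num_rv)]


# Assumed RV cycling order, borrowed from the LTE convention 0, 2, 3, 1.
RV_ORDER = (0, 2, 3, 1)


def harq_packet(circular_buffer: list[int], transmission_index: int, packet_len: int) -> list[int]:
    rv = RV_ORDER[transmission_index % len(RV_ORDER)]
    start = rv_start_positions(len(circular_buffer), len(RV_ORDER))[rv]
    # Read packet_len bits from the start position, wrapping around the buffer.
    return [circular_buffer[(start + i) % len(circular_buffer)] for i in range(packet_len)]
```

For a 10-bit buffer the four starting points are 0, 2, 5, and 7, so each retransmission begins at a different region of the codeword.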

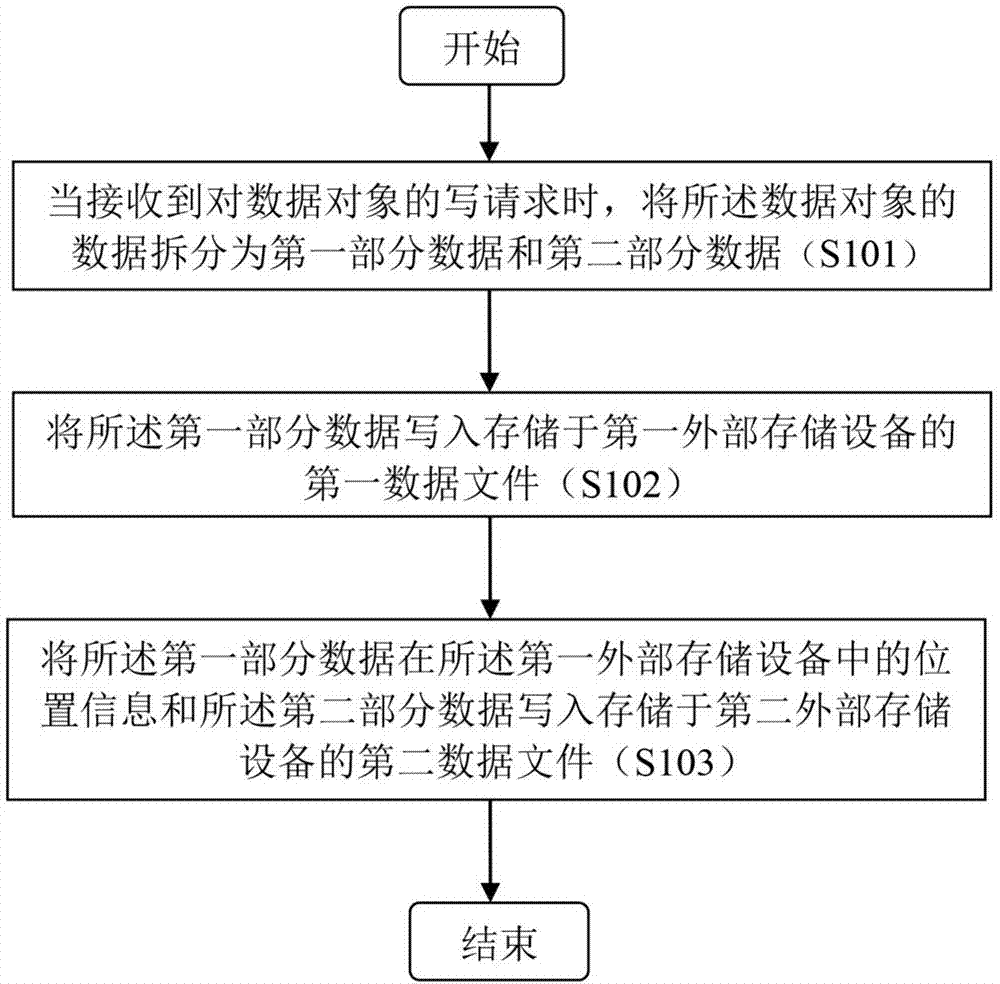

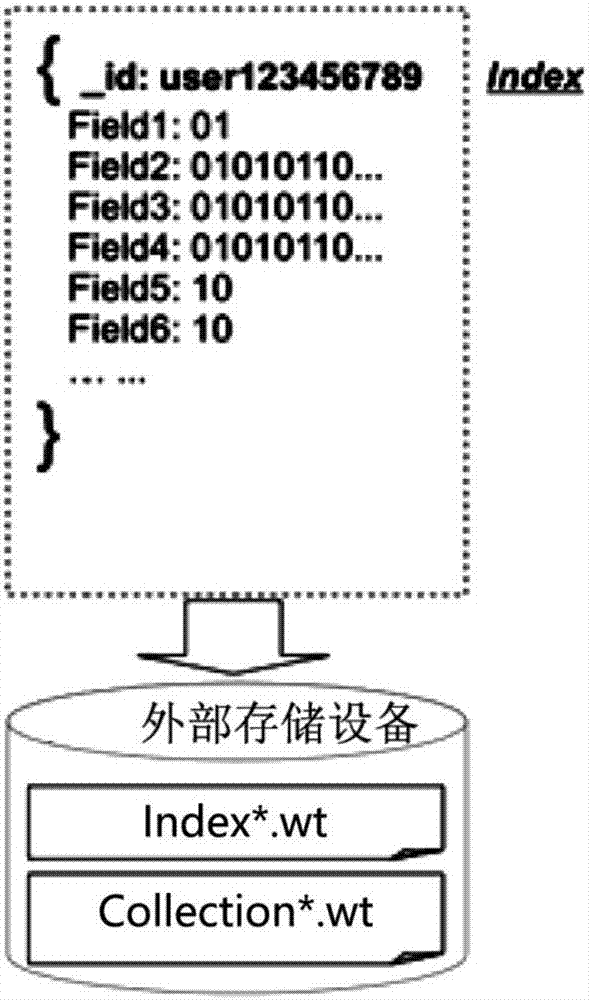

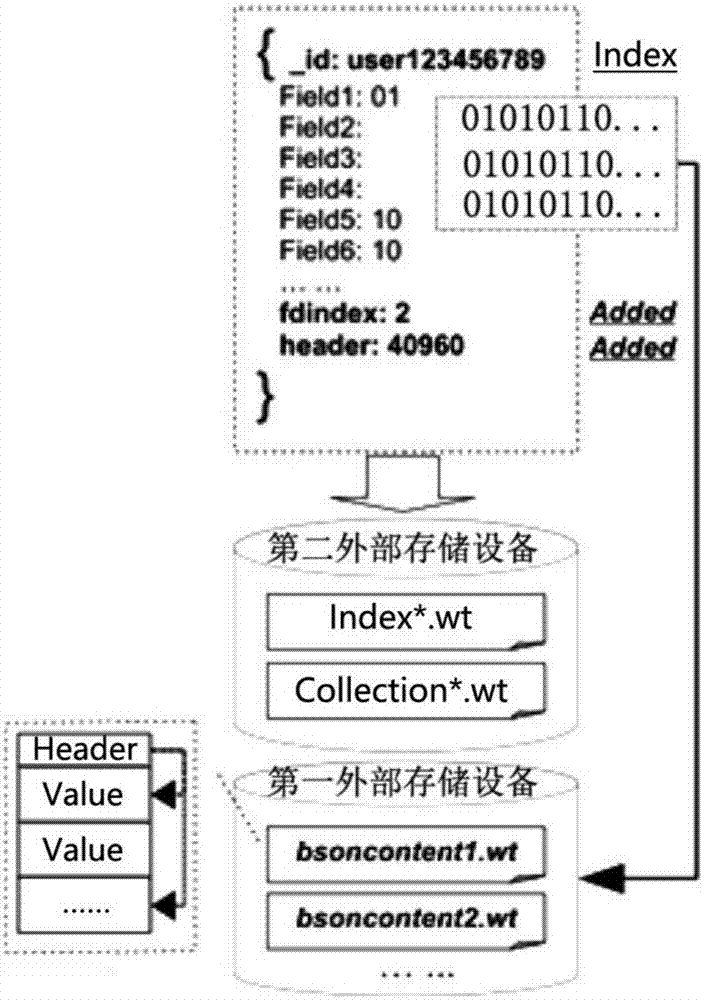

Method and device for storing data objects

Status: Active | Publication No.: CN107491523A | Tags: Save cache space; Reduce swap-in and swap-out operations; File access structures; Special data processing applications; External storage; Data retrieval

The invention provides a method and device for storing data objects. When a write request for a data object is received, the object's data is split into first partial data, which includes the object's data fields, and second partial data, which includes the object's metadata. The first partial data is written to a first data file on a first external storage device; the second partial data, together with the location of the first partial data on the first external storage device, is written to a second data file on a second external storage device. Because only the frequently accessed data needed for object retrieval is loaded into the cache region, the cache space occupied by each data object is effectively reduced while retrieval speed is maintained.

Owner: SAMSUNG (CHINA) SEMICONDUCTOR CO LTD +1
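The split between a bulk data file and a small metadata-plus-location file can be sketched with two ordinary files. Here the two paths stand in for the two external storage devices, the JSON-lines metadata format is an assumption, and the in-memory `index` plays the role of the cached portion.

```python
from __future__ import annotations

import json
import os
import tempfile


class ObjectStore:
    def __init__(self, data_path: str, meta_path: str) -> None:
        self.data_path = data_path  # first data file: data fields only
        self.meta_path = meta_path  # second data file: metadata + location records
        # Small in-memory index (the cached part): key -> (metadata, offset, length).
        self.index: dict[str, tuple[dict, int, int]] = {}

    def put(self, key: str, metadata: dict, payload: bytes) -> None:
        with open(self.data_path, "ab") as f:
            offset = f.seek(0, os.SEEK_END)  # location of the data fields in the first file
            f.write(payload)
        record = {"key": key, "meta": metadata, "offset": offset, "length": len(payload)}
        with open(self.meta_path, "a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")
        self.index[key] = (metadata, offset, len(payload))

    def get(self, key: str) -> bytes:
        _, offset, length = self.index[key]
        with open(self.data_path, "rb") as f:
            f.seek(offset)
            return f.read(length)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        store = ObjectStore(os.path.join(tmp, "data.bin"), os.path.join(tmp, "meta.jsonl"))
        store.put("photo-1", {"type": "image"}, b"hello")
        print(store.get("photo-1"))  # b'hello'
```

Only the compact index needs to stay in memory; payloads are fetched from the data file on demand.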

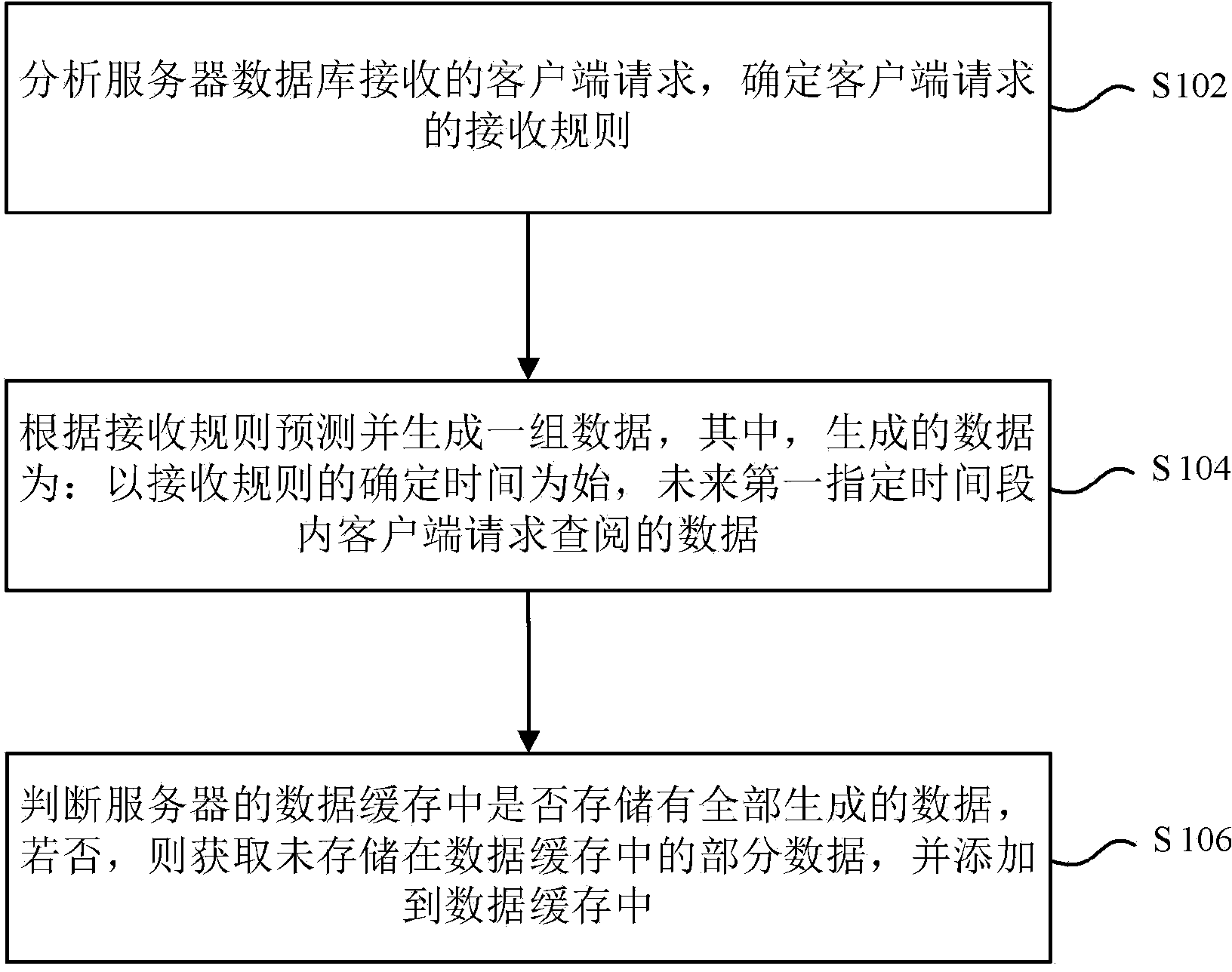

Data caching method and device

Status: Active | Publication No.: CN103886038A | Tags: Caching is reasonably efficient; Clear in time; Special data processing applications; Client-side; Database

The invention provides a data caching method and device. The method comprises: analyzing the client requests received by a server database to determine the rule (pattern) that those requests follow; predicting, from that rule, a group of data that clients will request to view within a first specified future period, starting from the moment the rule is determined; and checking whether all of the predicted data is already in the server's data cache and, if not, fetching the missing part and adding it to the cache. With this method, the data that clients will request can be identified from the request rule, data that clients do not need is evicted promptly, cache space is saved, data is cached more reasonably and efficiently, and the prior-art problem that not all data can be held in memory is addressed.

Owner: CHINA STANDARD SOFTWARE

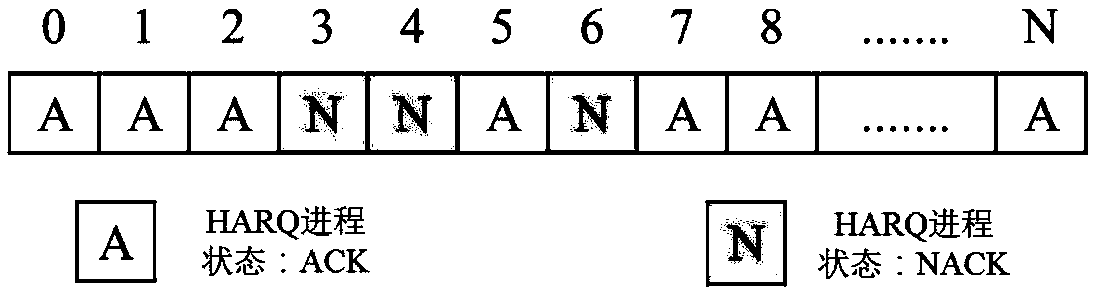

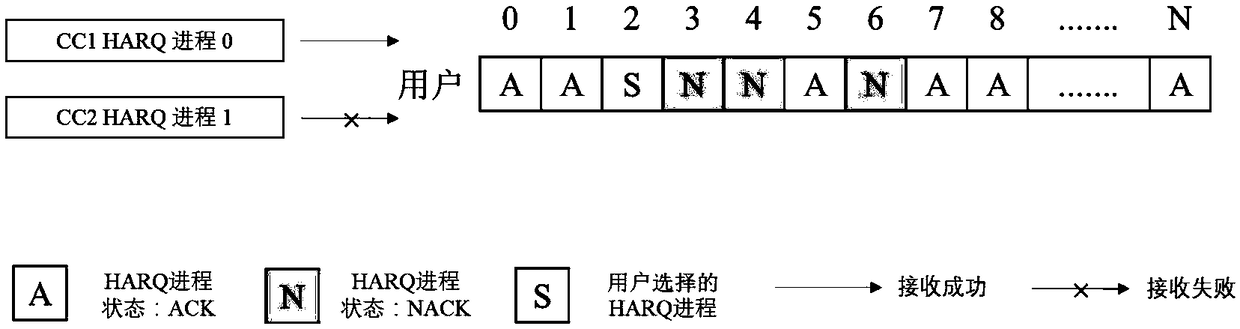

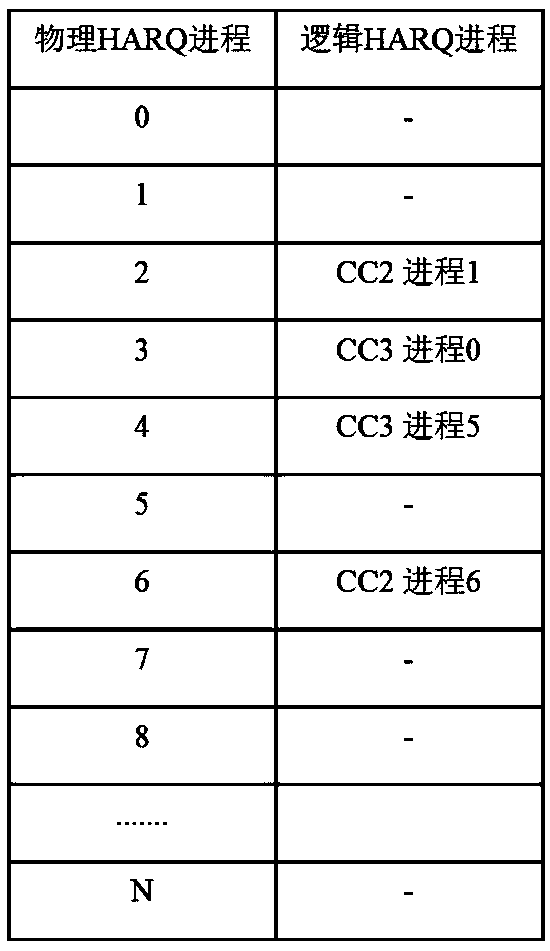

Method for multiplexing HARQ (Hybrid Automatic Repeat reQuest) processes

Status: Active | Publication No.: CN108768597A | Tags: Save number of HARQ processes; Save cache space; Error prevention/detection by using return channel; Network traffic/resource management; Multiplexing; Computer science

The invention provides a method for multiplexing hybrid automatic repeat request (HARQ) processes, in the field of mobile communication. At the user side, HARQ processes are divided into physical HARQ processes and logical HARQ processes, and a physical process storage table and a logical process storage table are created. The physical process storage table holds the state of each physical HARQ process, the mapping between physical and logical HARQ processes, and the addresses of the space used to cache soft bits. The logical process storage table holds the new data indicators (NDIs) that the user side expects. When a downlink transmission to the user is received unsuccessfully, an available physical process is selected to cache the soft bits, the mapping between the logical and physical process is recorded, and the expected NDI of the logical process is left unchanged. When the downlink transmission is received successfully, only the expected NDI of the corresponding logical HARQ process is toggled, and no soft bits are cached. The method reduces the number of HARQ processes and the cache space required at the user side.

Owner: BEIJING UNIV OF POSTS & TELECOMM
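The two tables can be sketched as a per-logical-process expected-NDI list plus a small pool of physical soft-bit buffers that are only claimed on decoding failure. The chase-combining step (adding new soft bits to stored ones) and all names are assumptions; the abstract only says soft bits are cached.

```python
from __future__ import annotations


class HarqManager:
    def __init__(self, num_logical: int, num_physical: int) -> None:
        self.expected_ndi = [0] * num_logical  # logical process storage table
        self.mapping: dict[int, int] = {}      # logical -> physical process
        self.soft_buffers: list[list[float] | None] = [None] * num_physical  # None = free

    def on_decode_result(self, logical: int, soft_bits: list[float], success: bool) -> None:
        if success:
            # Toggle the expected NDI; no soft bits are stored.
            self.expected_ndi[logical] ^= 1
            physical = self.mapping.pop(logical, None)
            if physical is not None:
                self.soft_buffers[physical] = None  # release the physical process
            return
        # Failure: keep the expected NDI, cache soft bits in a physical process.
        physical = self.mapping.get(logical)
        if physical is None:
            physical = self.soft_buffers.index(None)  # raises ValueError if none is free
            self.mapping[logical] = physical
        previous = self.soft_buffers[physical]
        # Chase combining (an assumption): add new soft bits to the stored ones.
        self.soft_buffers[physical] = (
            list(soft_bits) if previous is None
            else [a + b for a, b in zip(previous, soft_bits)]
        )
```

Physical buffers are consumed only by logical processes that are actually awaiting a retransmission, which is why fewer physical processes can serve many logical ones.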

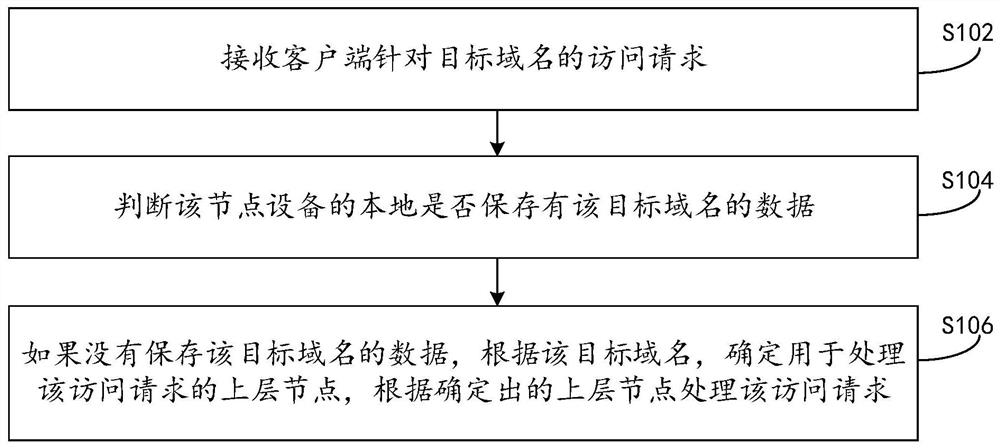

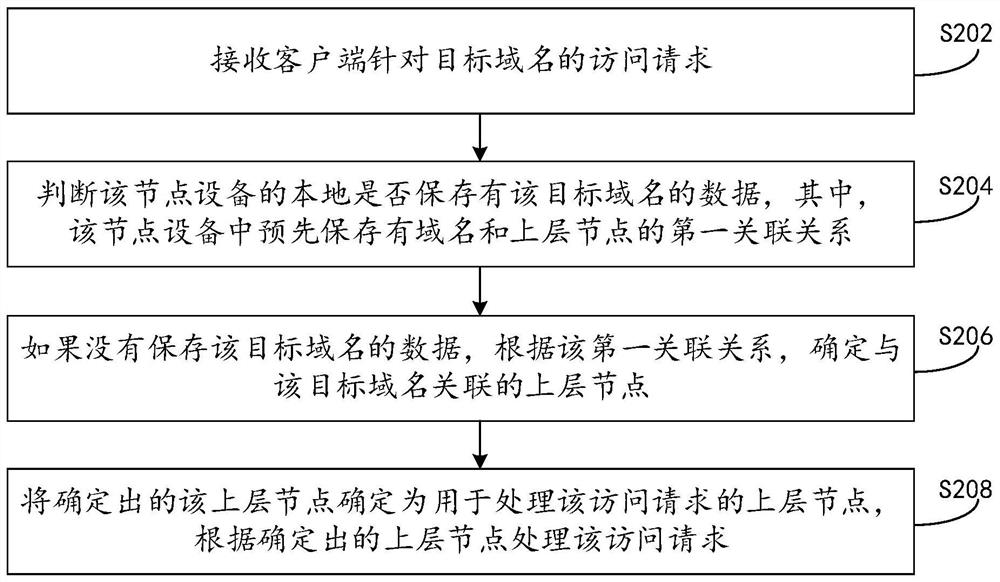

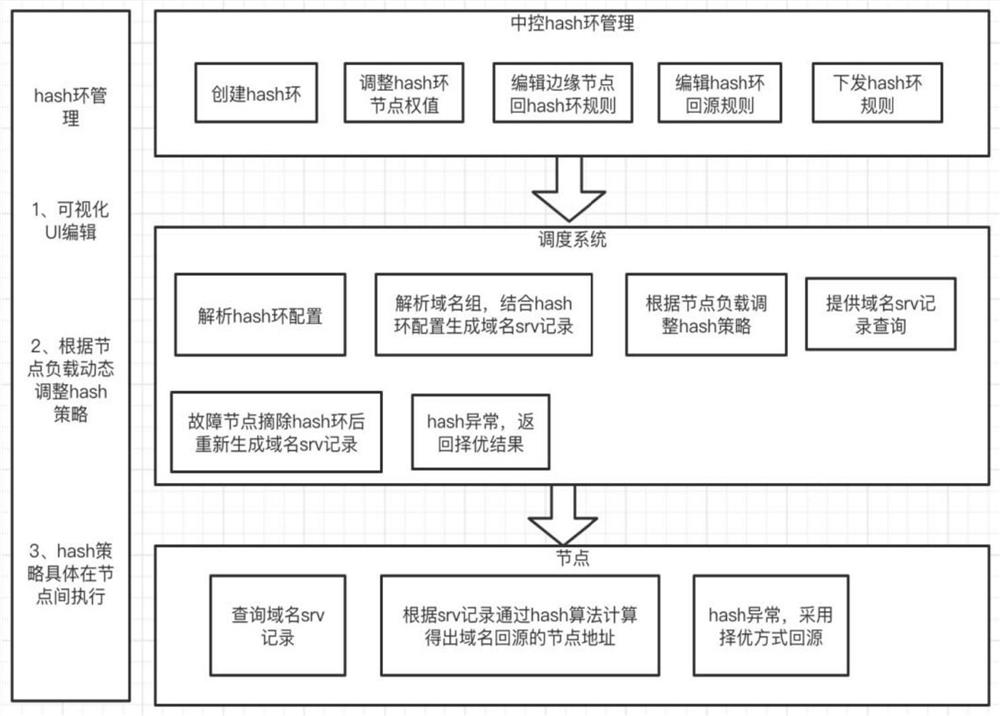

Access request processing method and device and electronic equipment

Status: Pending | Publication No.: CN112153160A | Tags: Save cache space; Improve caching capacity; Data switching networks; Engineering; Computer network

The invention provides an access request processing method and device and electronic equipment, in the field of Internet technology. The method comprises: receiving an access request from a client for a target domain name; determining whether data for the target domain name is stored locally on the node device; and, if it is not, determining the upper-layer node that should process the request according to the target domain name, and processing the request through that node. In the embodiments, an association between each domain name and a back-to-source node is preset. When the requested content for the target domain name is not stored on the node device, the associated back-to-source node can be determined from the domain name, so the resources of that domain name only need to be cached on the associated upper-layer node. This effectively alleviates the redundant storage that arises when a preferred back-to-source mode caches the same resources on multiple upper-layer nodes, and improves the overall caching capacity of the CDN.

Owner: BEIJING KINGSOFT CLOUD NETWORK TECH CO LTD
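The preset domain-to-back-to-source association can be sketched as a routing dict consulted on every local miss. Modeling nodes as in-memory dicts and passing the origin fetch as a callable are simplifications, and the names are hypothetical.

```python
from __future__ import annotations

from typing import Callable


class EdgeNode:
    def __init__(self, origin_map: dict[str, str], upper_nodes: dict[str, dict[str, str]]) -> None:
        self.local: dict[str, str] = {}  # content cached on this edge node
        self.origin_map = origin_map     # preset: domain name -> back-to-source node
        self.upper_nodes = upper_nodes   # upper-layer node name -> its cache

    def handle(self, domain: str, fetch_origin: Callable[[str], str]) -> str:
        if domain in self.local:  # local hit
            return self.local[domain]
        upper = self.upper_nodes[self.origin_map[domain]]  # associated node only
        if domain not in upper:
            upper[domain] = fetch_origin(domain)  # only this upper node caches it
        self.local[domain] = upper[domain]
        return self.local[domain]
```

Because every edge node routes a given domain to the same upper-layer node, the origin is contacted once and other upper-layer nodes hold no duplicate.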

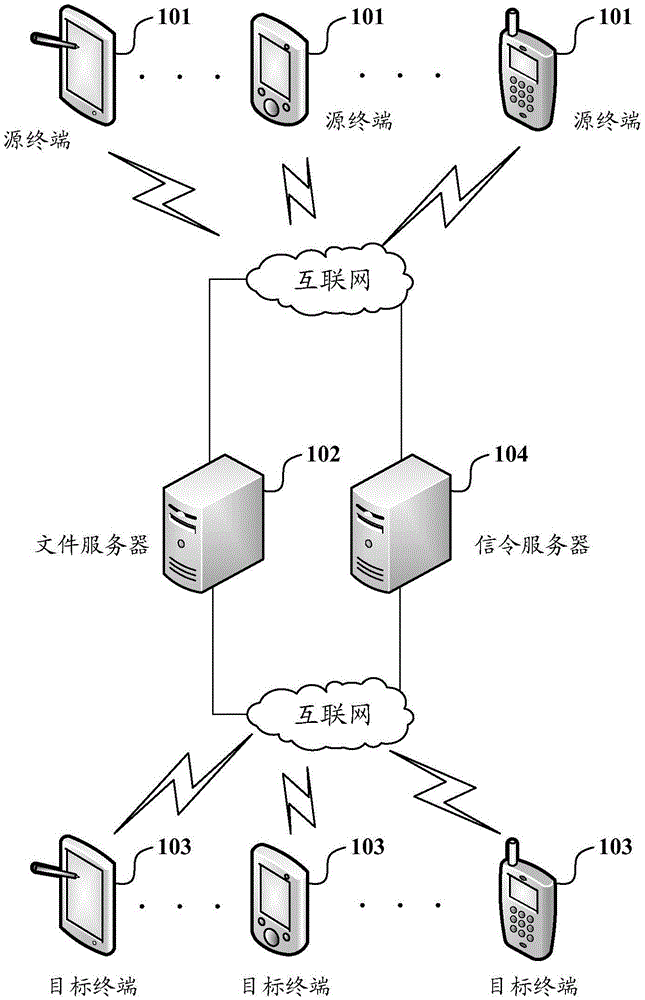

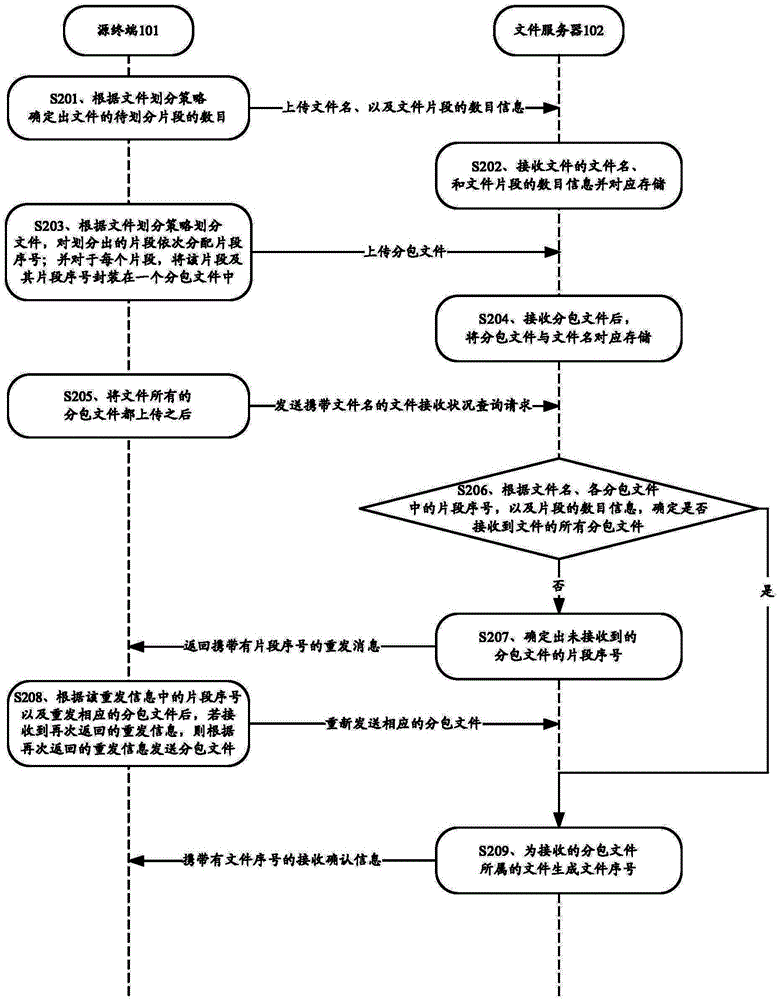

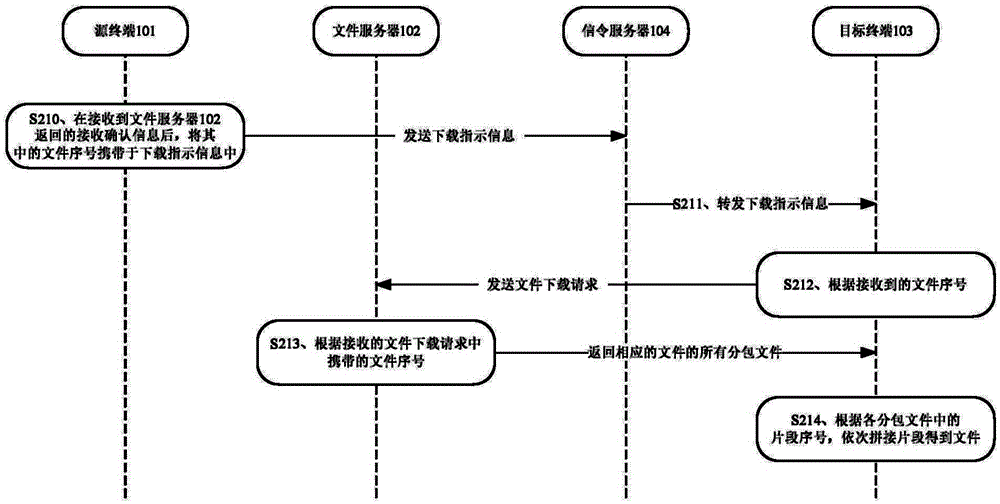

File server, terminal and file subpackage transmission method

Status: Inactive | Publication No.: CN105491132A | Tags: Save bandwidth; Save cache space; Error prevention/detection by using return channel; File transmission; Computer terminal

The embodiments of the invention provide a file server, a terminal, and a file subpackage transmission method. A source terminal determines, according to a file division strategy, how many fragments the file will be divided into, and uploads the file name and fragment count to the file server. The source terminal then divides the file according to the strategy, assigns a sequence number to each fragment, encapsulates each fragment and its sequence number in a subpackage file, and uploads the subpackage files to the file server. It next sends the file server a query about the file's reception status, and retransmits the corresponding subpackage files after receiving retransmission information from the server. The file server determines the retransmission information from the file name, the fragment sequence number carried in each subpackage file, and the fragment count. The method shortens file transmission time, improves transmission efficiency, and saves bandwidth and system resources.

Owner: BEIJING YUANXIN SCI & TECH
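The server-side bookkeeping can be sketched as below: the announced fragment count plus the sequence numbers received are enough to compute which fragments to request again. The fixed-size division strategy and all names are assumptions.

```python
from __future__ import annotations


def split_file(data: bytes, fragment_size: int) -> list[tuple[int, bytes]]:
    """Fixed-size division strategy (an assumption): returns (sequence number, fragment)."""
    count = -(-len(data) // fragment_size)  # ceiling division
    return [(i, data[i * fragment_size:(i + 1) * fragment_size]) for i in range(count)]


class FileServer:
    def __init__(self) -> None:
        self.expected: dict[str, int] = {}               # file name -> announced fragment count
        self.received: dict[str, dict[int, bytes]] = {}  # file name -> fragments by sequence number

    def announce(self, name: str, count: int) -> None:
        self.expected[name] = count
        self.received[name] = {}

    def upload(self, name: str, seq: int, fragment: bytes) -> None:
        self.received[name][seq] = fragment

    def missing(self, name: str) -> list[int]:
        # Retransmission information: sequence numbers not yet received.
        return [i for i in range(self.expected[name]) if i not in self.received[name]]

    def assemble(self, name: str) -> bytes:
        if self.missing(name):
            raise ValueError("file is incomplete")
        return b"".join(self.received[name][i] for i in range(self.expected[name]))
```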

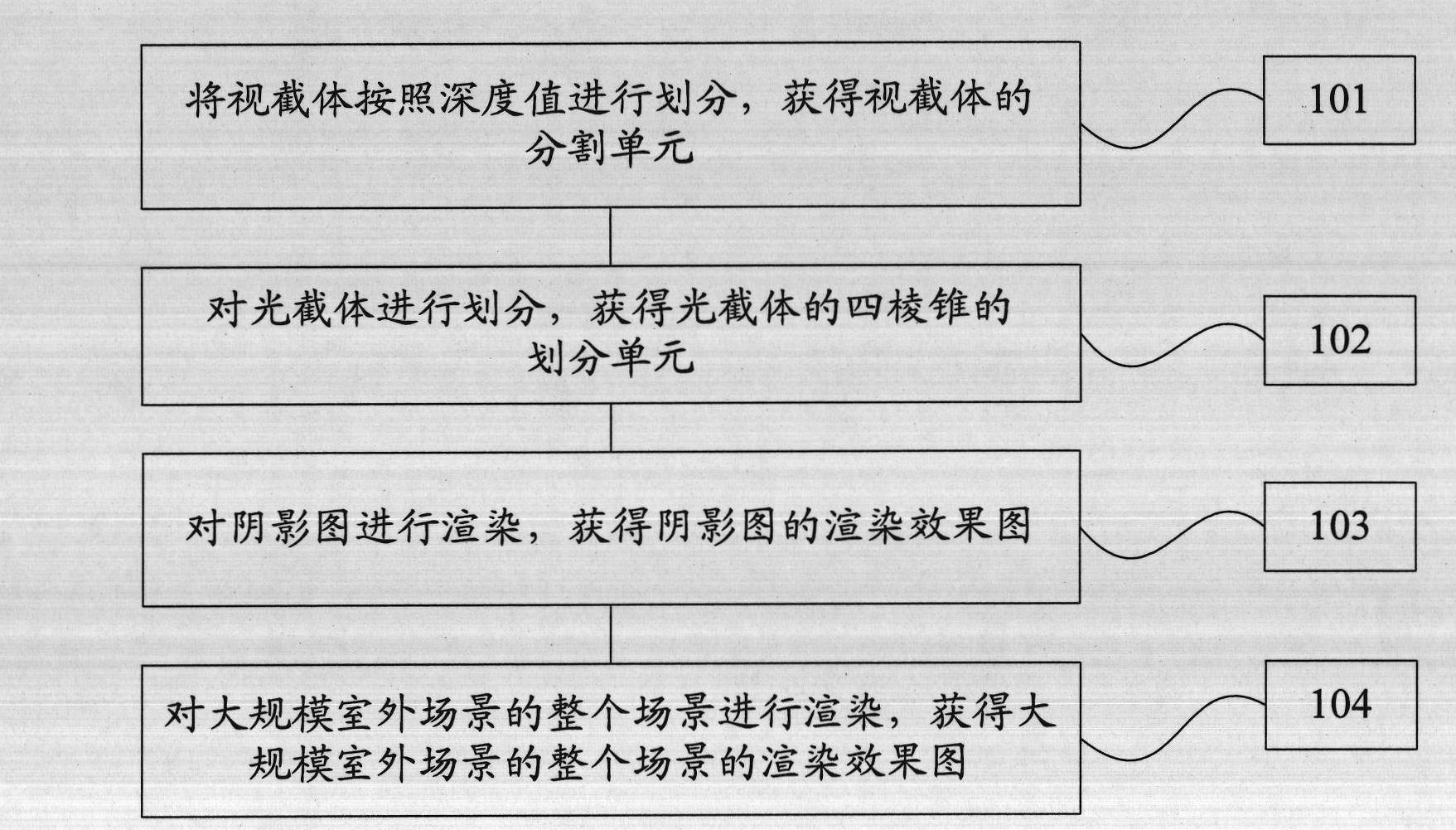

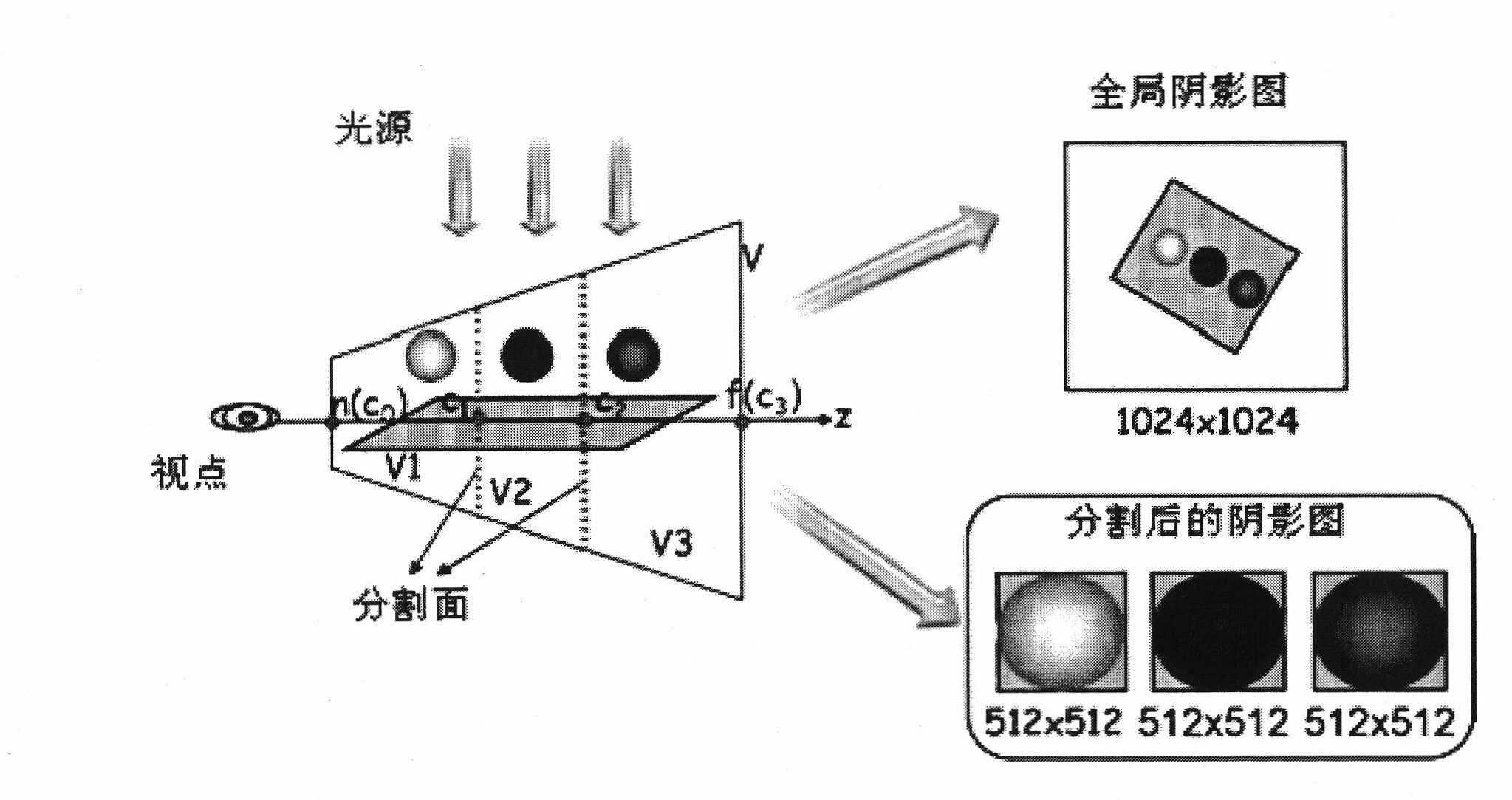

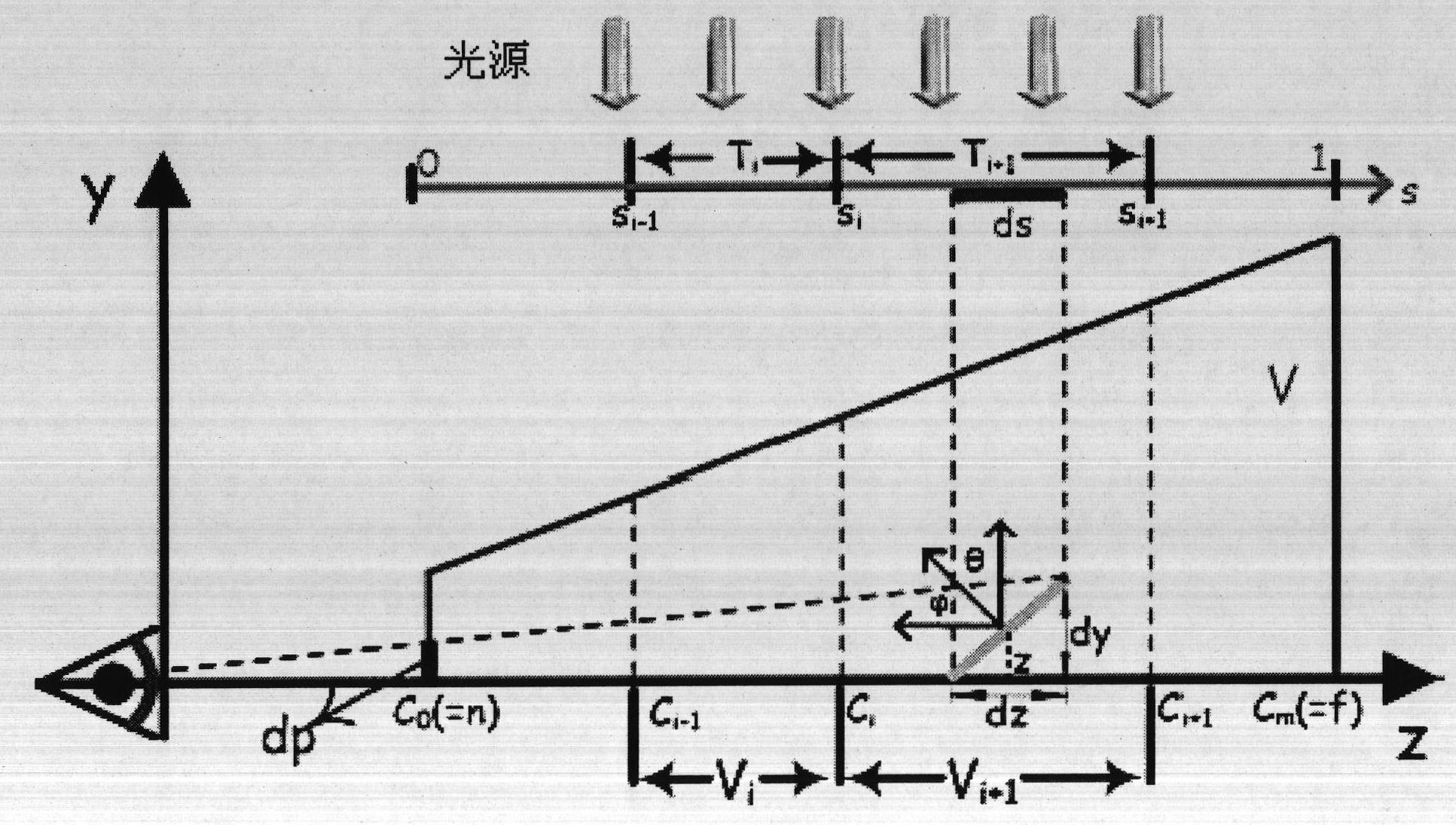

Shadow drafting method of large-scale outdoor scene

Status: Inactive | Publication No.: CN102436673A | Tags: Improve realism; Guaranteed drawing speed; 3D-image rendering; Computer graphics (images); Viewing frustum

The invention provides a shadow drafting (rendering) method for large-scale outdoor scenes. The method comprises: dividing the view frustum according to depth values to obtain its segmentation units; dividing the optic frustum to obtain its rectangular-pyramid-shaped division units; rendering the shadow maps to obtain their rendered result; and rendering the whole large-scale outdoor scene to obtain the rendered result of the whole scene. The method can be implemented quickly and occupies little memory.

Owner: KARAMAY HONGYOU SOFTWARE

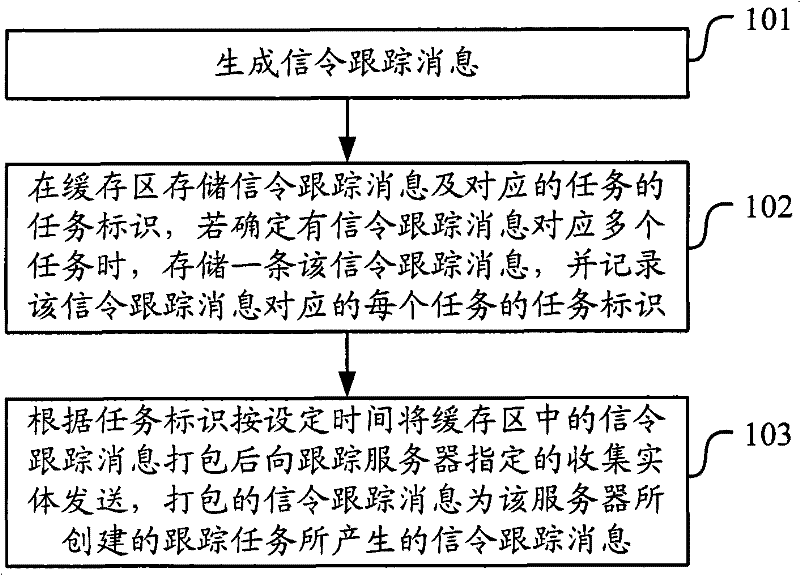

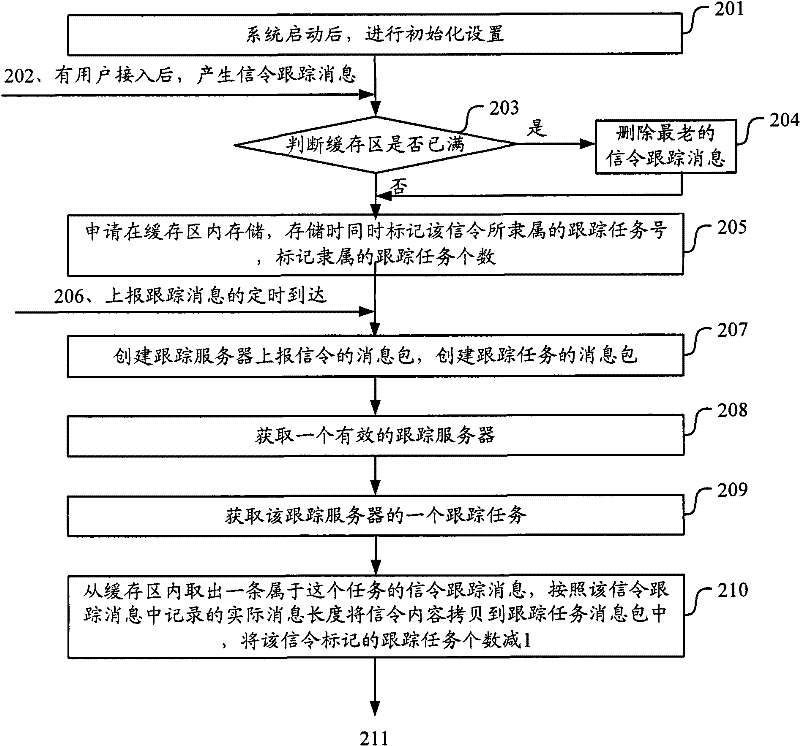

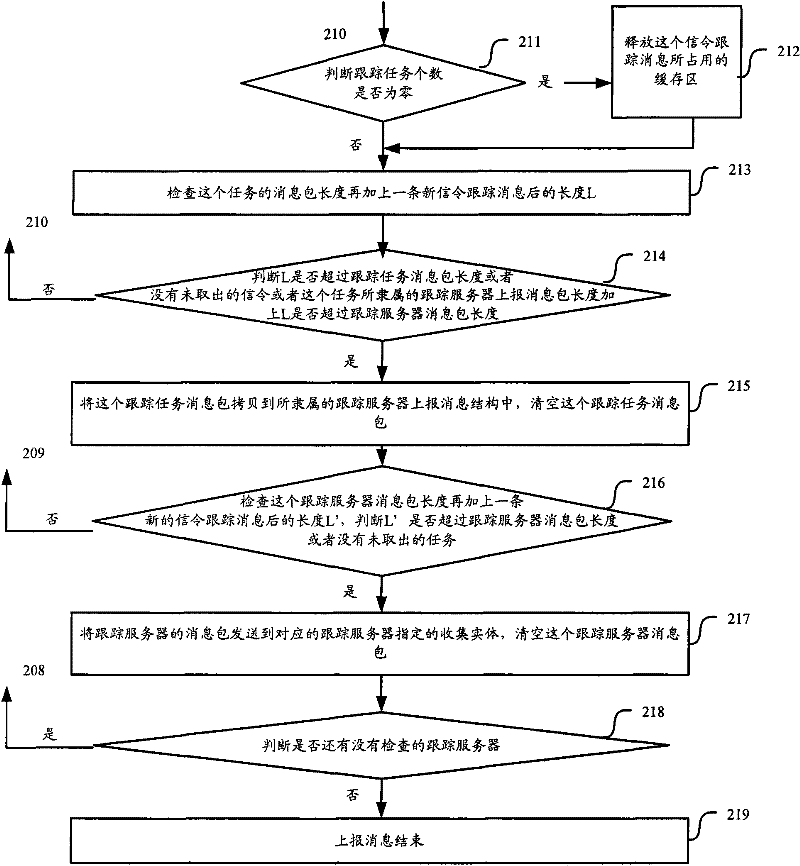

Method and equipment for sending signaling tracing message

Status: Active | Publication No.: CN102196392A | Tags: Reduce duplicate saves; Save cache space; Network traffic/resource management; Services signalling; Parallel computing; Network element

The invention discloses a method and equipment for sending signaling tracing messages. The method comprises: generating a signaling tracing message; storing the message in a cache region together with the task identifiers of the corresponding tasks, where a message that corresponds to multiple tasks is stored only once, with the task identifier of each of those tasks recorded against it; and, at set times, packing the signaling tracing messages in the cache region by task identifier and sending the packed messages to the acquisition entity specified by the tracing server, the packed messages being those generated by the traced tasks that the server created. The method does not cause network congestion in any given period and does not delay the discovery of network element faults. Because signaling tracing messages are not stored repeatedly in the cache region, the cache holds as many distinct messages as possible and the trace is more complete.

Owner: DATANG MOBILE COMM EQUIP CO LTD
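The store-once, tag-many idea can be sketched as a buffer where each message keeps a set of task IDs and is fanned out per task only at packing time. Message and task representations are hypothetical simplifications.

```python
from __future__ import annotations

from collections import defaultdict


class TraceBuffer:
    def __init__(self) -> None:
        # Each entry stores one message once, plus every task ID that traced it.
        self.entries: list[tuple[str, set[str]]] = []

    def add(self, message: str, task_ids: list[str]) -> None:
        self.entries.append((message, set(task_ids)))

    def flush(self) -> dict[str, list[str]]:
        """Pack buffered messages per task ID (run at the set time) and clear the buffer."""
        packets: dict[str, list[str]] = defaultdict(list)
        for message, task_ids in self.entries:
            for task_id in sorted(task_ids):
                packets[task_id].append(message)
        self.entries.clear()
        return dict(packets)
```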

Decoder buffer control method under frame field adaptive decoding schema

Status: Active | Publication No.: CN101389033A | Tags: Increase profit; Improve execution efficiency; Television systems; Digital video signal modification; Parallel computing; Reference image

This invention relates to image decoding technology, and in particular to a decoder cache control method for the frame/field adaptive decoding mode. It targets the low memory utilization, large storage requirement, and high decoder cost of existing techniques in this mode. The method comprises the following steps: a. allocating an image cache area within the decoder's storage area; b. predetermining the span used to access the current image and the reference image data according to the coding mode of the current image; and c. letting frames and fields share the image cache area during decoding. The method effectively improves storage utilization, saves cache space, and improves the execution efficiency of the decoder.

Owner: SICHUAN CHANGHONG ELECTRIC CO LTD

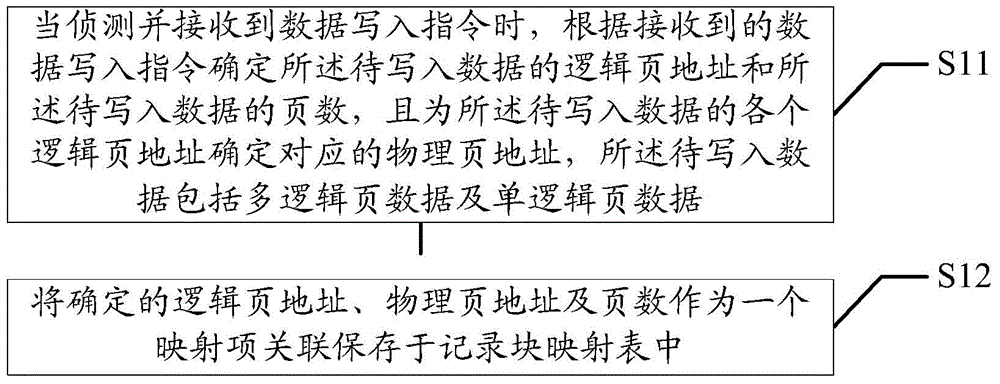

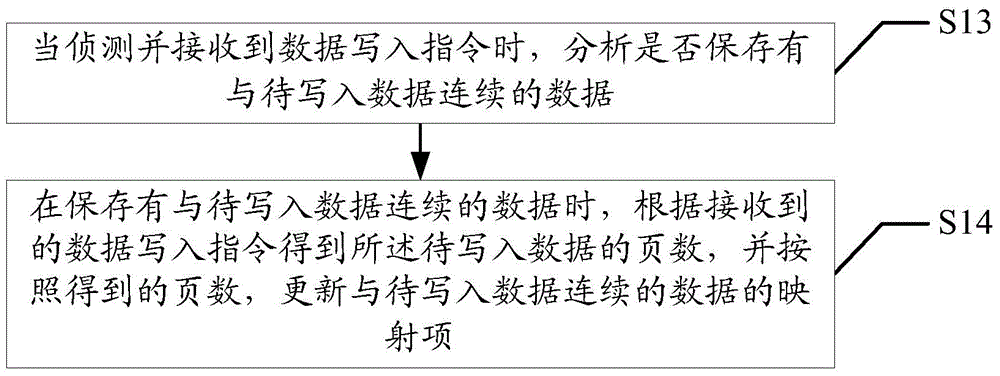

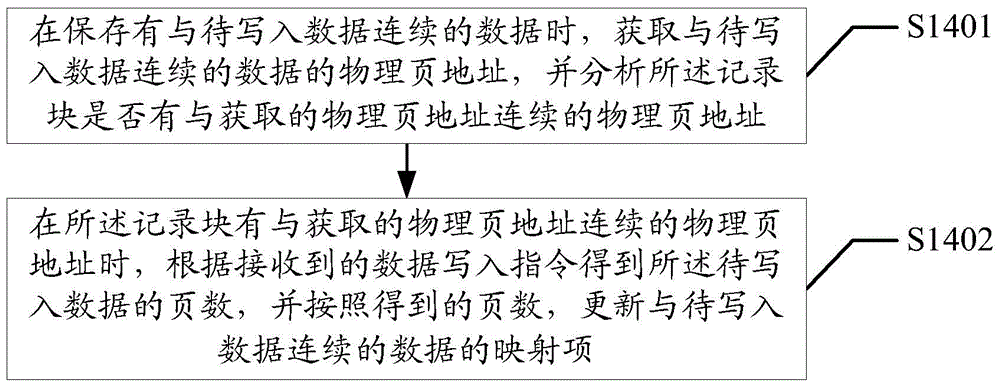

Data storage method and device

Status: Active | Publication No.: CN103955432A | Tags: Avoid creating; Reduce manufacturing cost; Input/output to record carriers; Memory addressing/allocation/relocation; Page count; Database

The invention discloses a data storage method. When a data write instruction is received, the logical page addresses of the data to be written and its page count are determined from the instruction, and the physical page address corresponding to each logical page address is determined; the data to be written includes multi-logical-page data and single-logical-page data. The determined logical page addresses, physical page addresses, and page count are then stored together as one mapping entry in a record block mapping table. The invention also discloses a data storage device. This avoids creating a mapping entry for every page of stored data, saves buffer memory, and thereby reduces the manufacturing cost of Nand Flash storage devices.

Owner: 合肥致存微电子有限责任公司 (Hefei Zhicun Microelectronics Co., Ltd.)
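One mapping entry per multi-page write, instead of one per page, can be sketched as an extent table searched with binary search. This assumes the pages of one write land on consecutive physical pages and that overlapping rewrites are handled elsewhere; neither detail comes from the abstract.

```python
from __future__ import annotations

import bisect


class ExtentMap:
    """One mapping entry per write: (logical start page, physical start page, page count)."""

    def __init__(self) -> None:
        self.starts: list[int] = []                    # sorted logical start pages
        self.entries: list[tuple[int, int, int]] = []  # entries in the same order

    def record_write(self, logical_start: int, physical_start: int, page_count: int) -> None:
        # Assumes the pages were written to consecutive physical pages and that
        # writes do not overlap existing entries (overwrites are not modeled).
        i = bisect.bisect_left(self.starts, logical_start)
        self.starts.insert(i, logical_start)
        self.entries.insert(i, (logical_start, physical_start, page_count))

    def lookup(self, logical_page: int) -> int | None:
        i = bisect.bisect_right(self.starts, logical_page) - 1
        if i >= 0:
            start, physical, count = self.entries[i]
            if logical_page < start + count:
                return physical + (logical_page - start)
        return None
```

An 8-page write costs one table entry here, where a per-page table would need eight.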

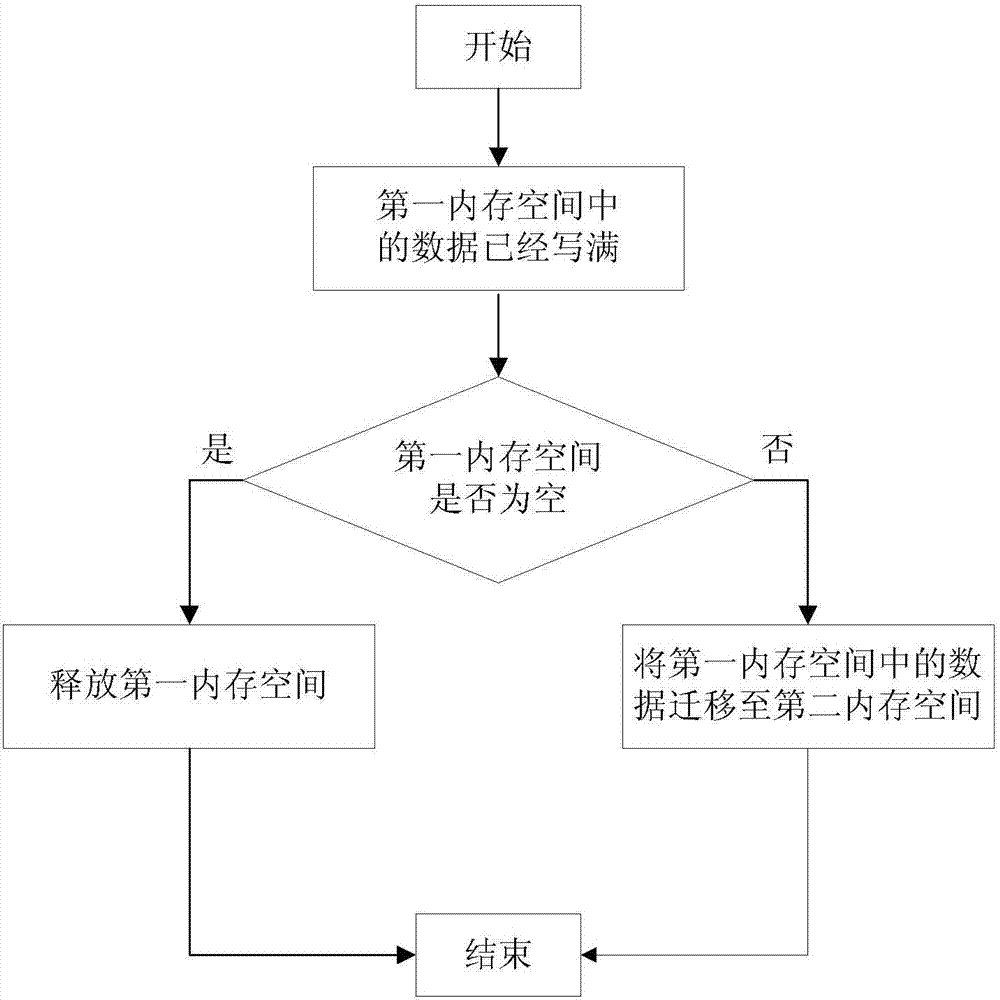

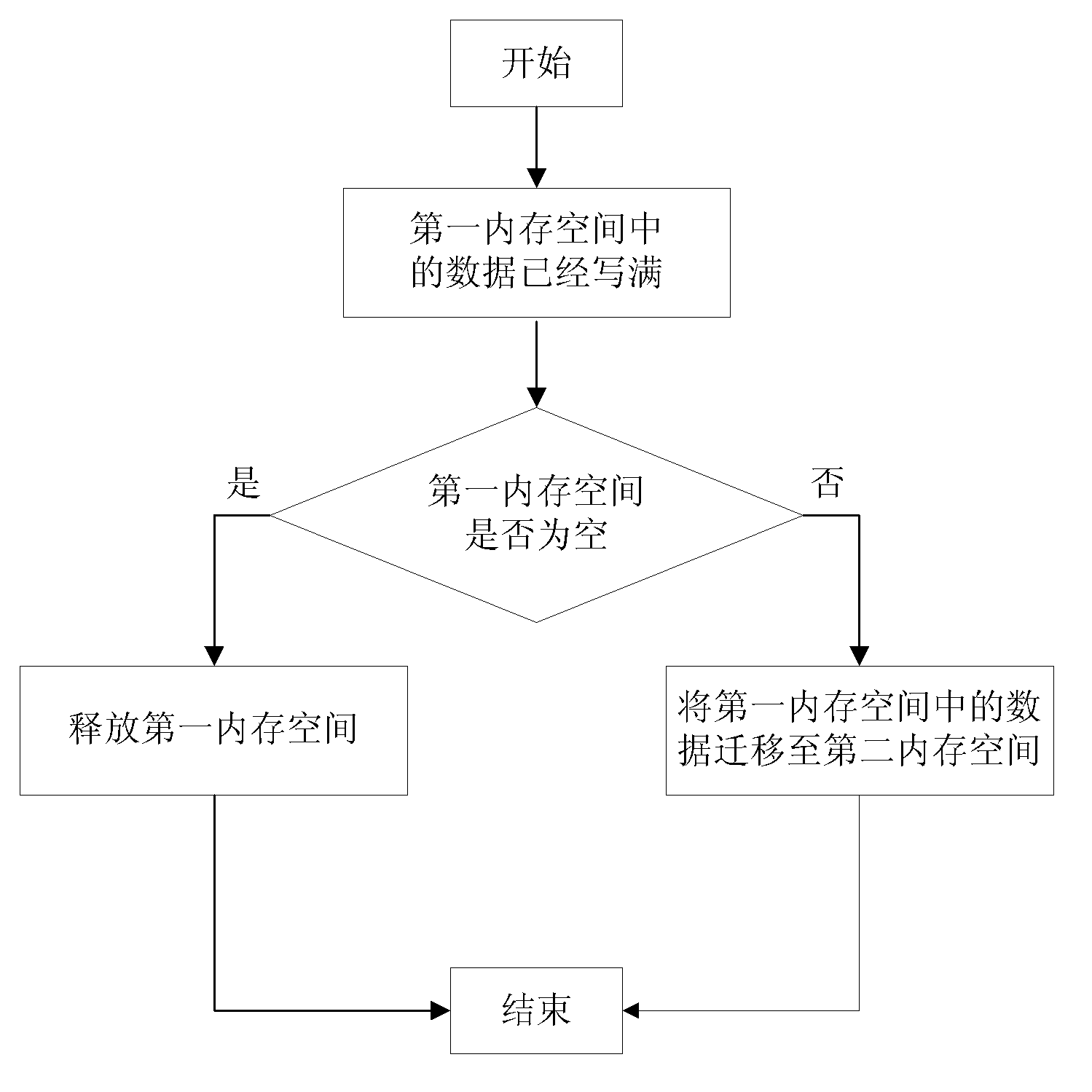

Method and device for operating cache data

Status: Active | Publication No.: CN103019956A | Tags: Improve hit rate; Smooth expansion; Memory addressing/allocation/relocation; Special data processing applications; Traffic capacity; Internal memory

The invention discloses a method and device for operating cache data. When a first memory space is full, data is taken out of it, starting from the initial position, and written into a larger second memory space; this continues while the first memory space is not empty, until all of its data has been moved, and the first memory space is released once it is empty. The invention also provides a method for transferring the cached data in the first memory space into a smaller second memory space, and a device corresponding to the methods. The method and device meet the need to enlarge or reduce cache capacity while letting users access cached data normally during the transfer. They also relieve pressure on the database or disk during traffic peaks and improve overall service stability.

Owner: BEIJING QIHOO TECH CO LTD

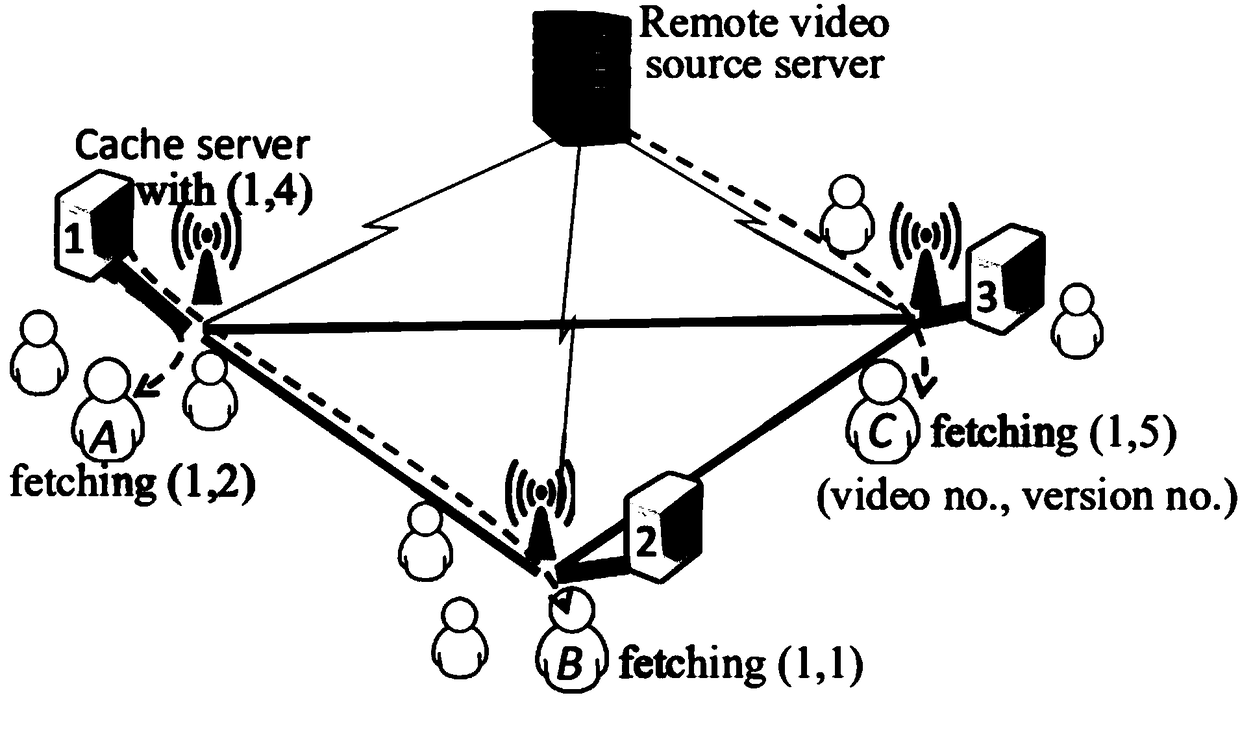

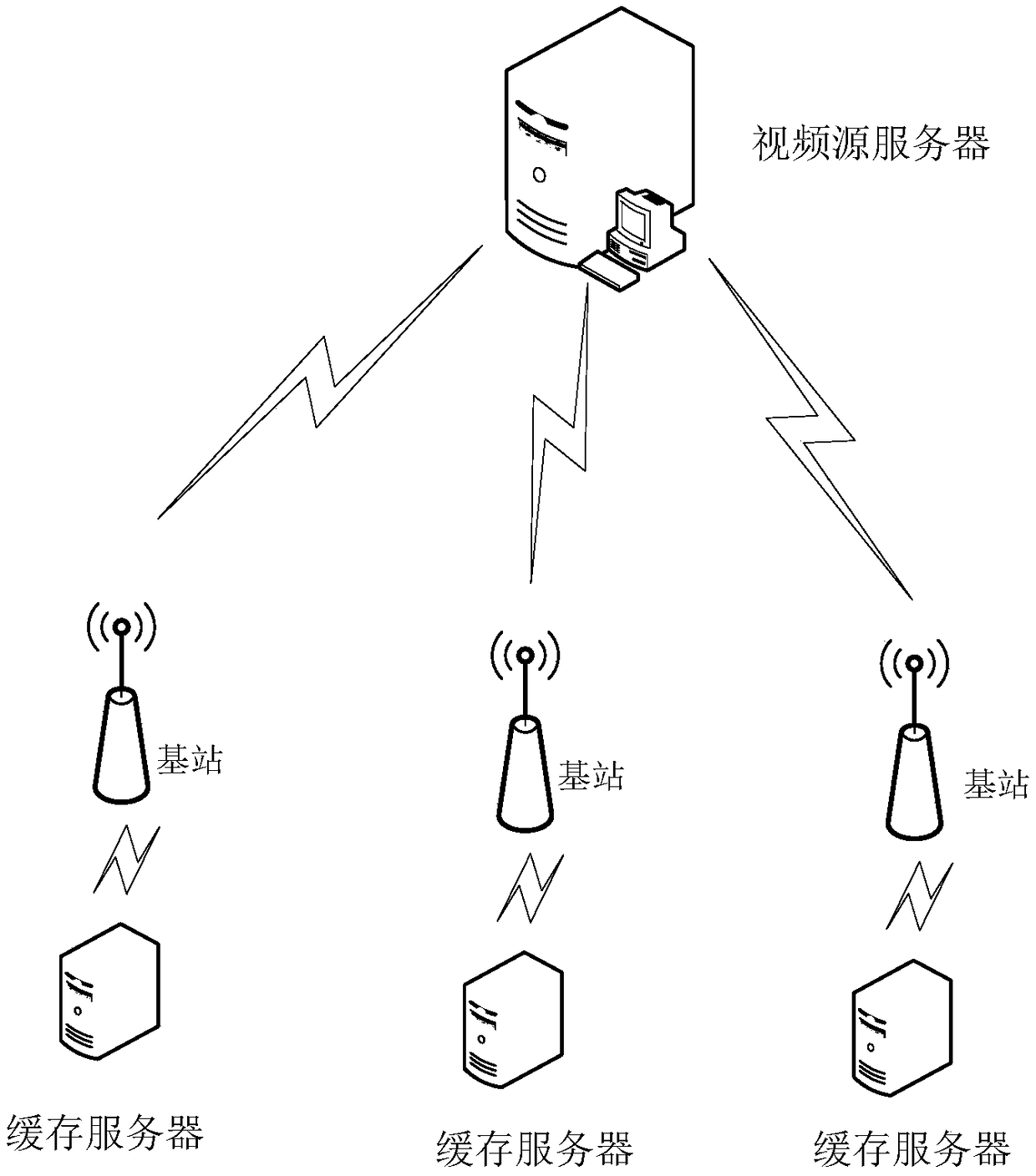

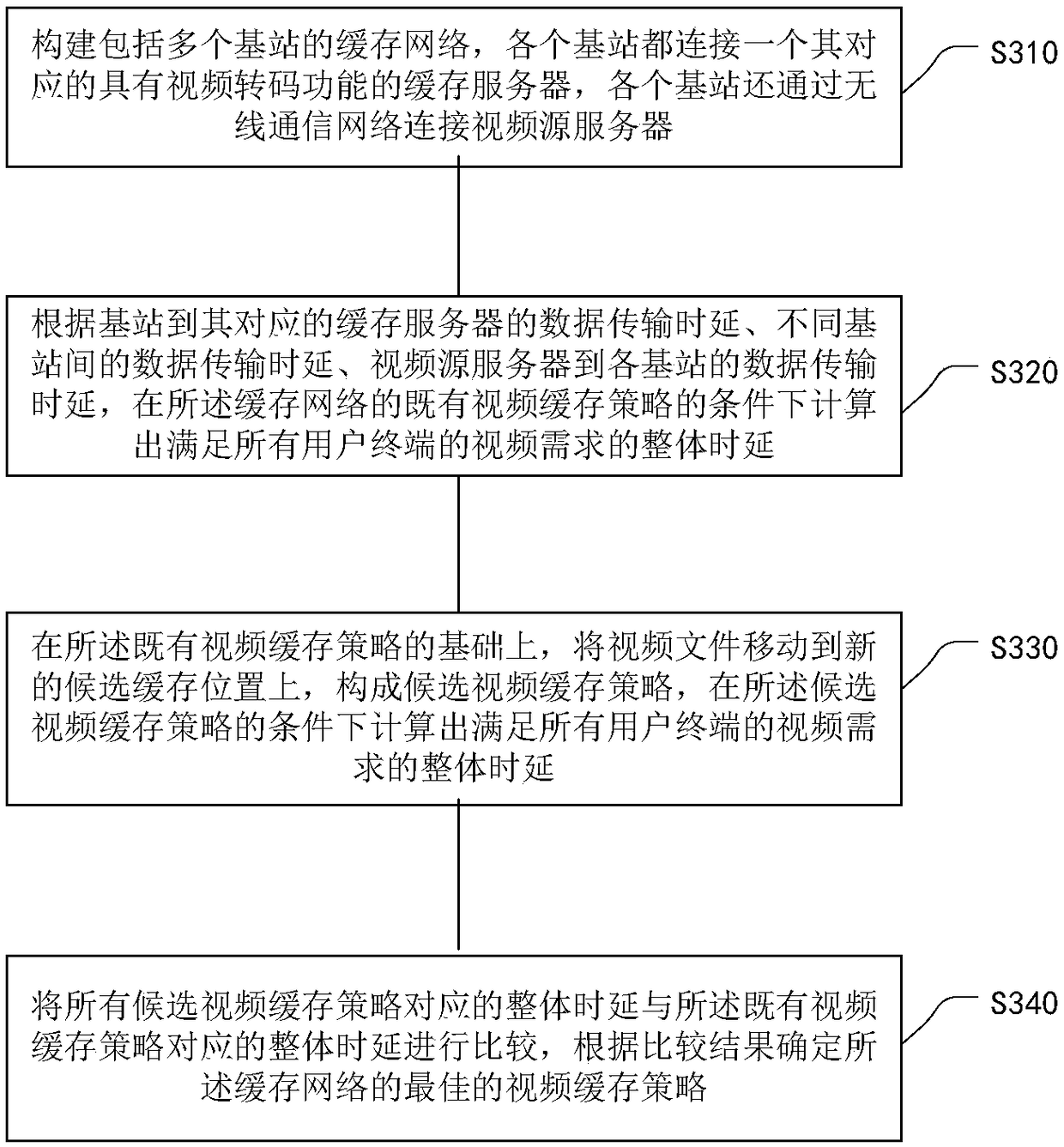

A method and system for video caching based on cooperation among multi-cache servers

Status: Inactive | Publication No.: CN109040771A | Tags: Save cache space; Increase profit; Selective content distribution; Data switching networks; Data transmission; Real-time computing

The invention provides a method and system for video caching based on cooperation among multiple cache servers. Under the cache network's existing video caching strategy, the method calculates the overall delay of satisfying the video requests of all user terminals, using the data transmission delay from each base station to its cache server, the delay between different base stations, and the delay from the video source server to each base station. Video files are then moved to new candidate cache locations to form candidate caching strategies. The overall delay of each candidate strategy is compared with that of the existing strategy, and the optimal caching strategy for the cache network is selected from the comparison results. The method exploits video transcoding to reduce the cache space needed and extends caching to a mechanism in which multiple cache servers cooperate, effectively improving the utilization of video resources.

Owner: BEIJING JIAOTONG UNIV

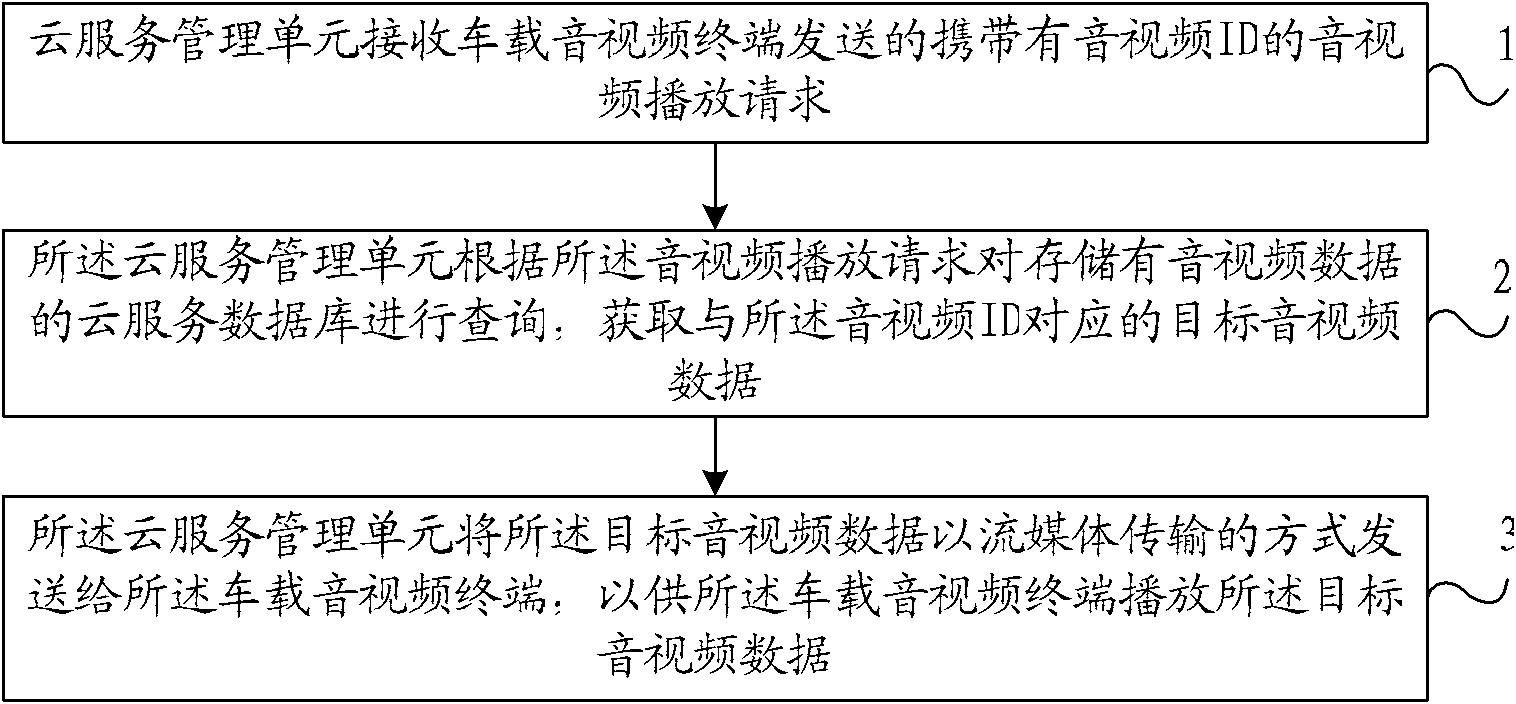

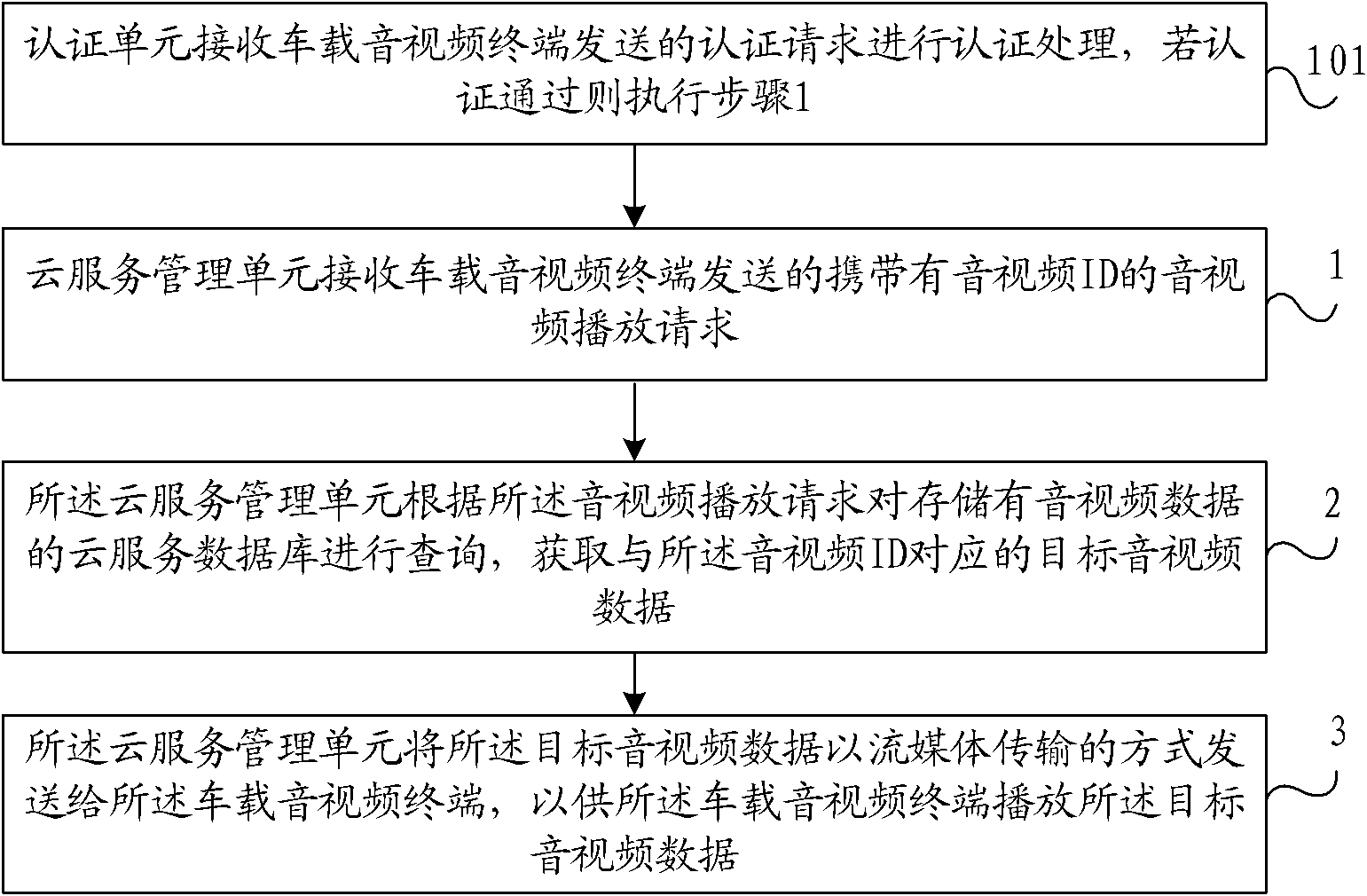

Vehicle-mounted audio and video playing method based on cloud service and cloud computing center

Status: Active | Publication No.: CN103108013A | Tags: Play in real time; Reduce storage requirements; Transmission; Computer terminal; Data storing

The invention provides a vehicle-mounted audio and video playback method based on a cloud service, and a cloud computing center. A cloud service management unit receives an audio/video playback request carrying an audio/video ID from a vehicle-mounted audio/video terminal; according to the request, it queries a cloud service database that stores audio/video data, obtains the target audio/video data corresponding to the ID, and sends that data to the terminal for playback. Because the audio/video data is kept in the cloud service database and streamed to the terminal on request, playback is highly real-time and the storage requirements on the vehicle-mounted audio device are low.

Owner: CHINA UNITED NETWORK COMM GRP CO LTD

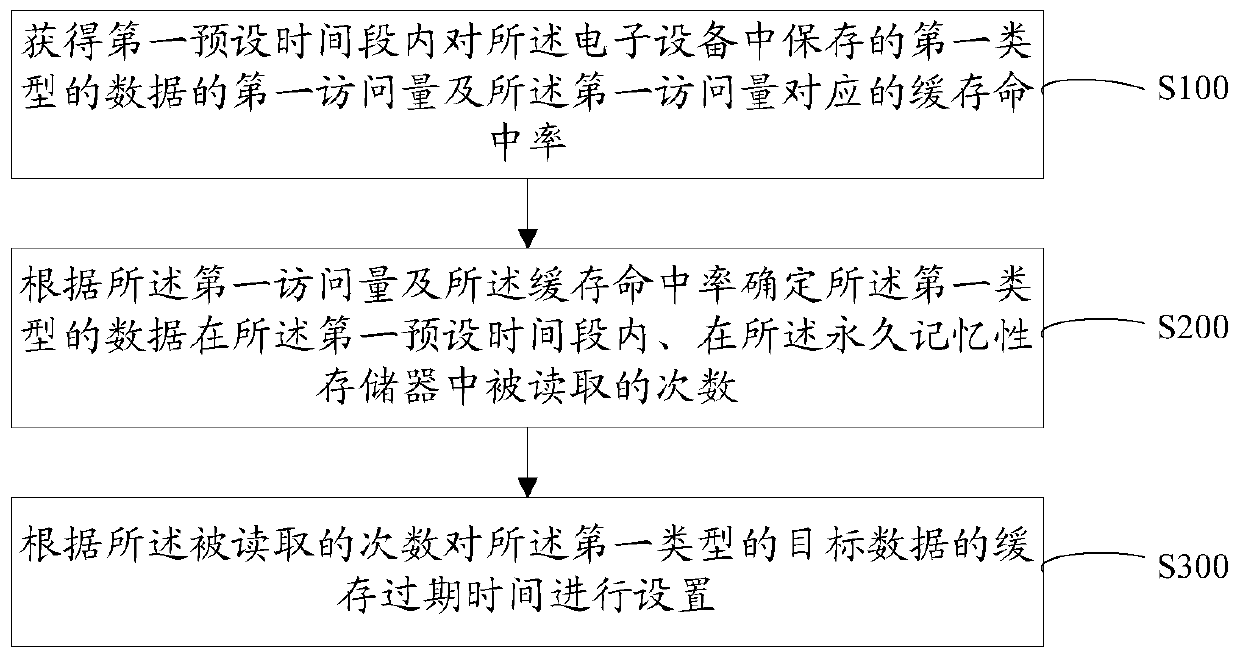

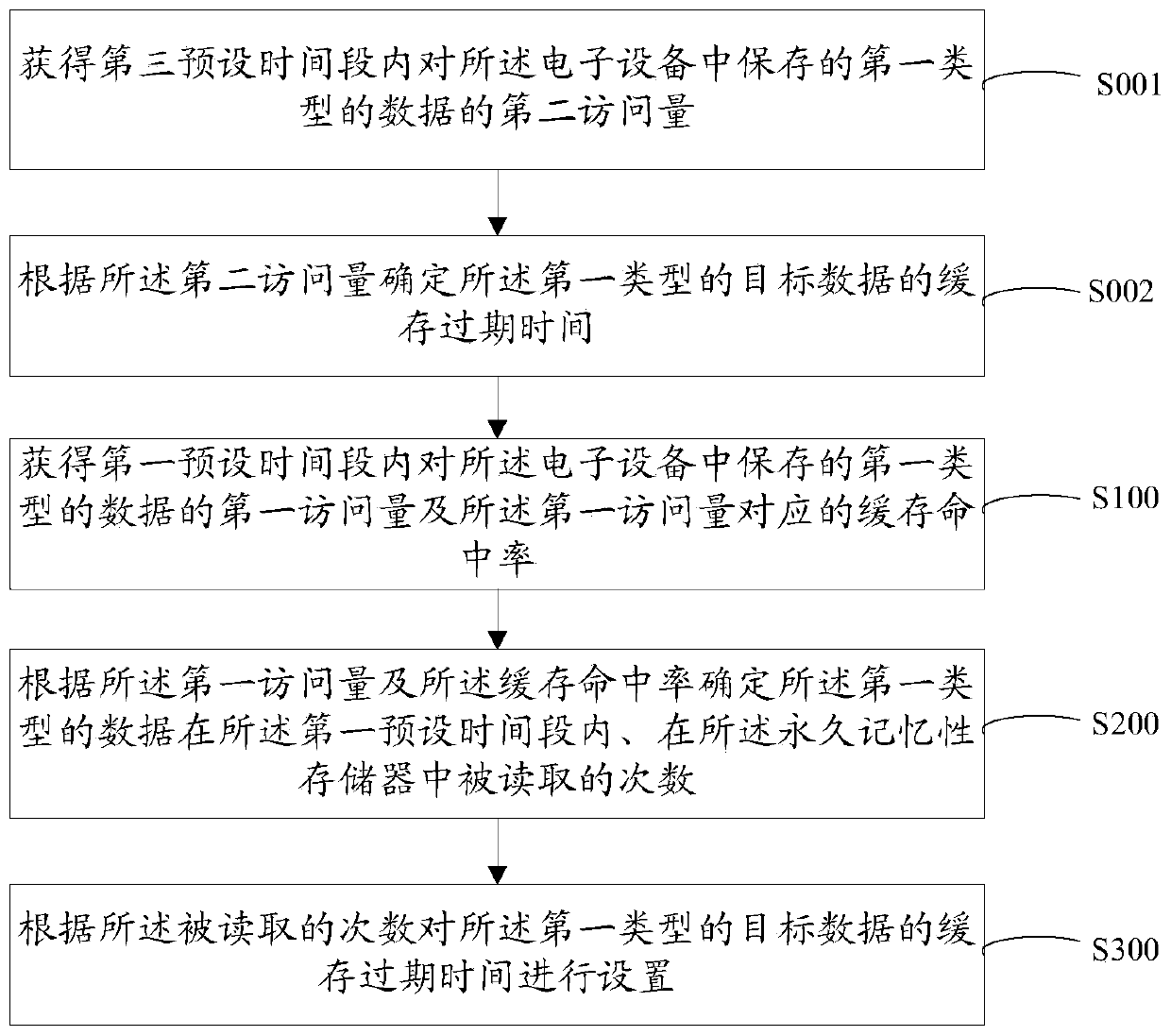

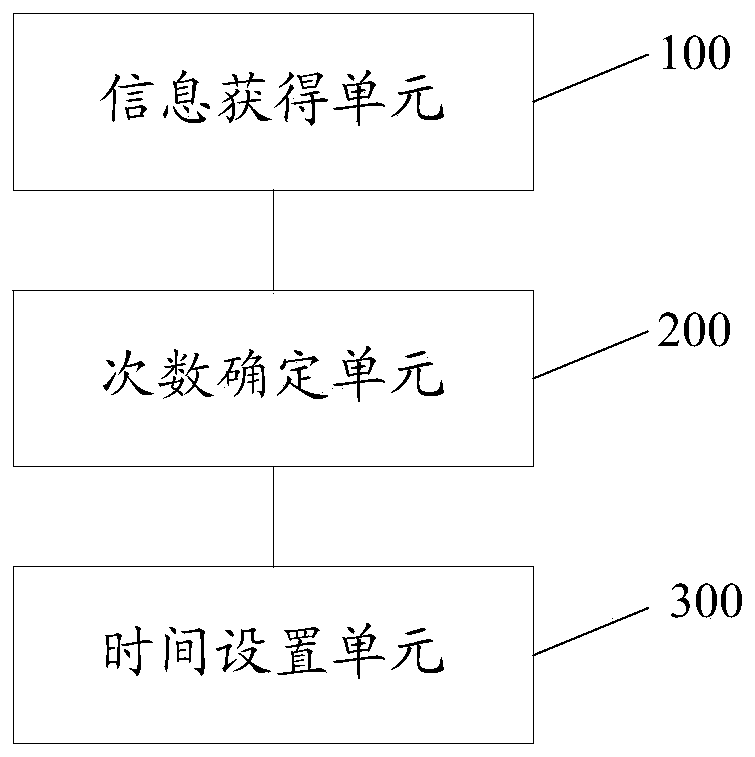

Cache expiration time setting method and device, electronic equipment and storage medium

Status: Pending | Publication No.: CN110489063A | Tags: Reduce read pressure; Realize fine management; Input/output to record carriers; Energy efficient computing; Expiration Time; Cache hit rate

Embodiments of the invention provide a cache expiration time setting method and device, electronic equipment, and a storage medium. The method comprises: obtaining the page views of a first type of data stored in the electronic equipment during a first preset time period, together with the cache hit rate corresponding to those page views; determining, from the page views and the cache hit rate, how many times the first type of data was read from persistent storage during that period; and setting the cache expiration time of target data of the first type according to that read count. Because the expiration time is matched to the number of reads from persistent storage, cached data is managed at a fine granularity, read pressure on persistent storage is reduced, and cache space is saved.

Owner: BEIJING QIYI CENTURY SCI & TECH CO LTD
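The read-count estimate follows directly from the abstract: requests that miss the cache go to persistent storage, so reads ≈ page views × (1 − hit rate). How the read count maps to an expiration time is not specified, so the tier table below (more storage reads keep a longer TTL) is a hypothetical policy for illustration only.

```python
def storage_reads(page_views: int, hit_rate: float) -> int:
    """Requests that missed the cache and therefore read persistent storage."""
    return round(page_views * (1.0 - hit_rate))


# Hypothetical tiers: (minimum storage reads in the period, expiration in seconds).
TTL_TIERS = ((10_000, 3600), (1_000, 600), (0, 60))


def expiration_seconds(reads: int) -> int:
    # Data read from storage often keeps a long TTL; rarely read data expires
    # quickly and frees cache space.
    for min_reads, ttl in TTL_TIERS:
        if reads >= min_reads:
            return ttl
    return TTL_TIERS[-1][1]
```

For example, 100,000 page views at a 90% hit rate imply about 10,000 storage reads, which lands in the longest tier of this assumed table.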

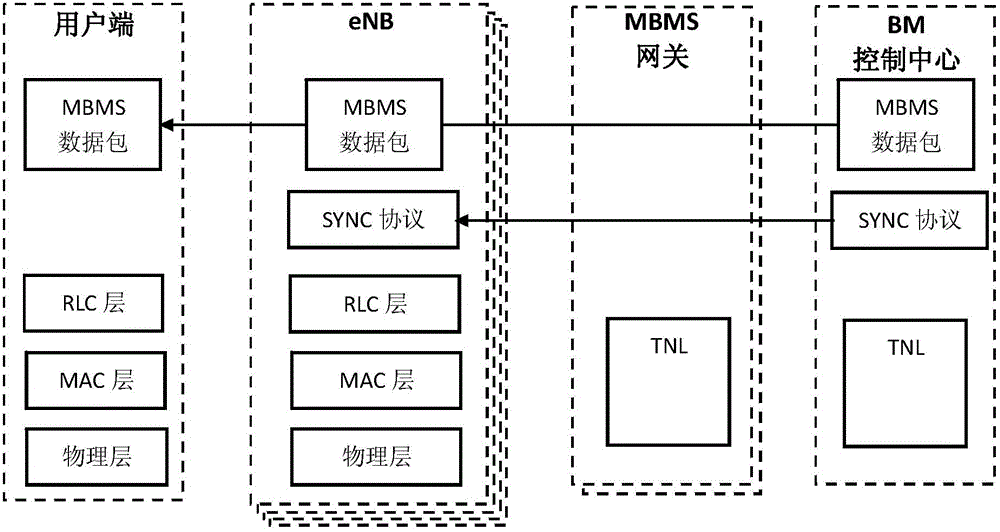

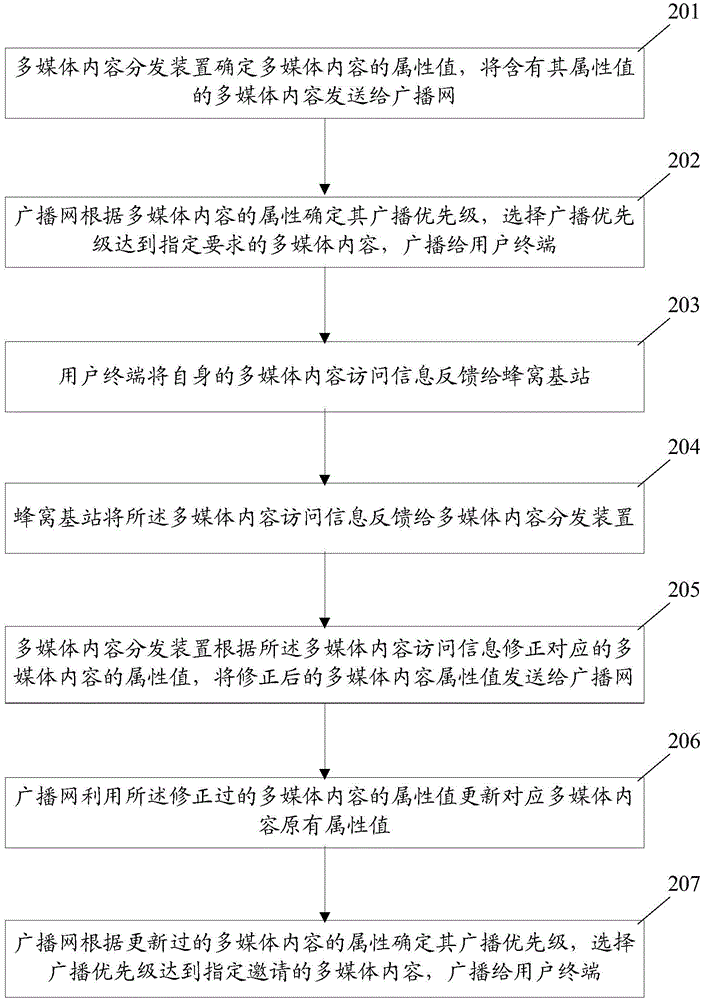

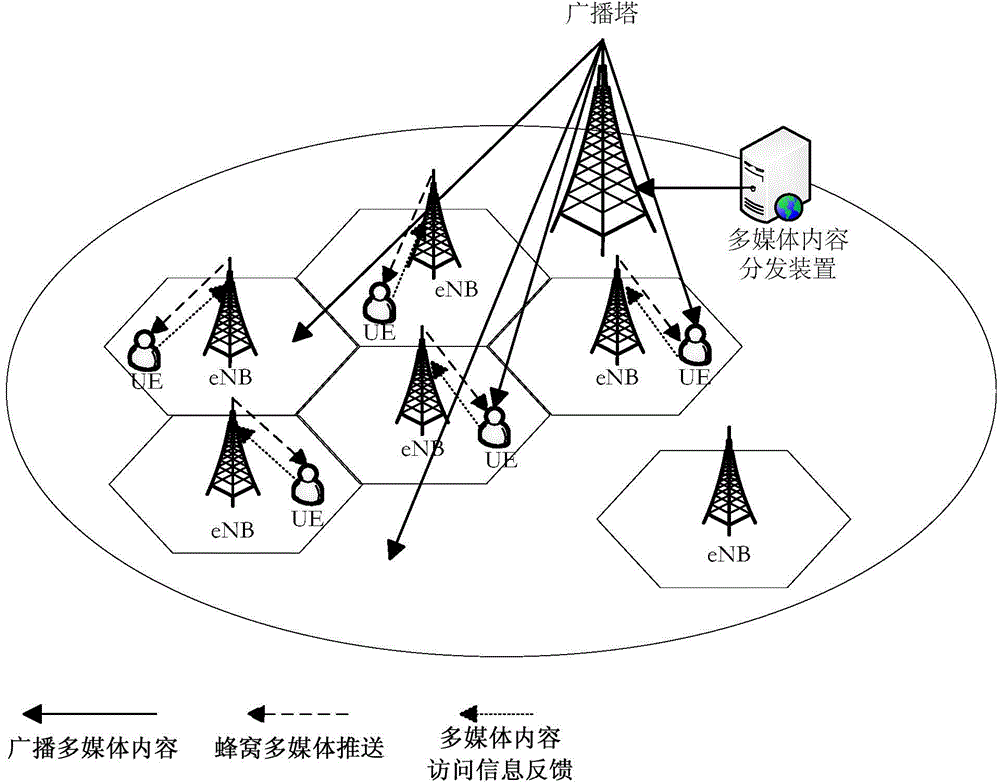

Transmission method and system for multimedia content, and corresponding method, subscriber terminal and device

Status: Inactive | Publication No.: CN105228027A | Tags: Improve utilization efficiency; High degree of compliance; Selective content distribution; Content distribution; System capacity

The invention discloses a transmission method and system for multimedia content, together with a corresponding method, subscriber terminal, and device. In the transmission method, a multimedia content distribution device determines an attribute value for the multimedia content and sends the content, with that attribute value, to a broadcast network; the broadcast network determines the content's broadcast priority from its attributes and broadcasts content whose priority meets a specified requirement to subscriber terminals; each subscriber terminal reports access information about its multimedia content to a cellular eNB, which forwards it to the distribution device; and the distribution device amends the content's attribute value according to the access information and sends the amended value to the broadcast network, which then updates its broadcasts and rebroadcasts. The invention relieves the load that growing service volume places on the communication transmission pipeline and improves the utilization efficiency of system capacity.

Owner: BEIJING SAMSUNG TELECOM R&D CENT +1

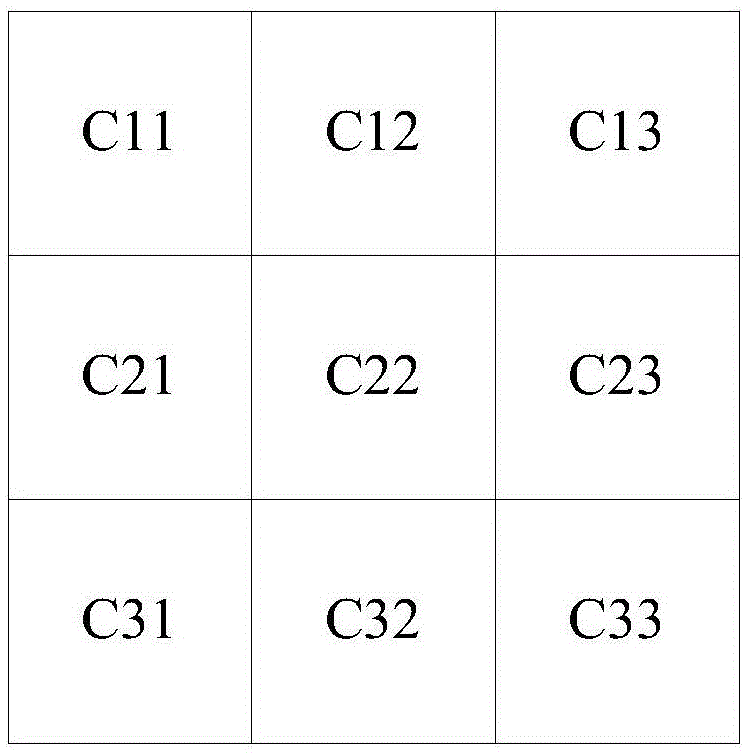

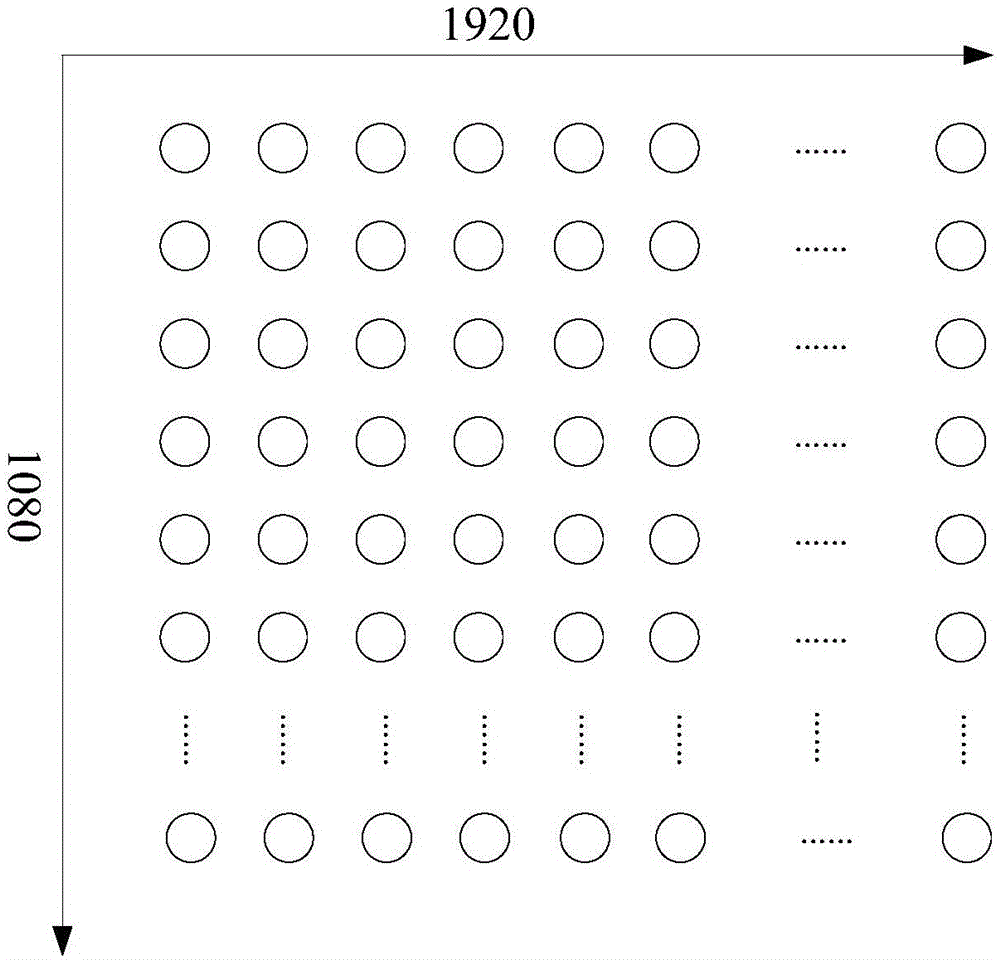

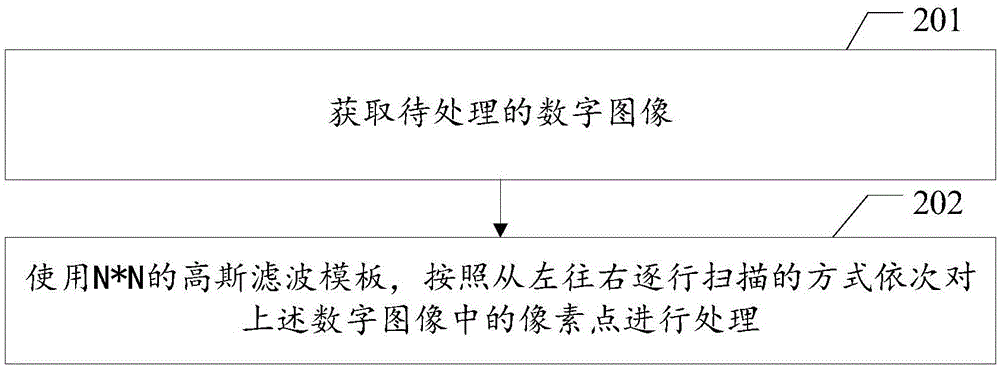

Digital image processing method digital image processing device

The invention discloses a digital image processing method digital image processing device, wherein the digital image processing method comprises the steps of: using an N*N gauss filtering template to successively process pixel points in a digital image by scanning from left to right line by line; in the processing process of the pixel points in the digital image, introducing a row vector [n1,n2...n3] to store weight values from a second column to an Nth column which are generated in the processing process of the pixel points; and in the processing process of the pixel points in the digital image except those in the (N+1) / 2th column, utilizing two columns of weighted values which are previously stored in the gauss filtering template to calculate new gray values of the pixel points. According to the scheme, the calculating amount for processing the pixel points is effectively reduced, and the cache space needed in the pixel points processing process is saved.

Owner:TCL CORPORATION
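The saving comes from reusing work between neighbouring pixels. One common way to get that reuse, assuming the N*N Gaussian template is separable (the outer product of a 1-D weight vector), is to keep the last N vertical column sums in a ring buffer so that each step to the right computes only one new column. The Python below is a sketch of that general column-reuse idea, not the patent's exact bookkeeping with the stored row vector; `gaussian_weights` and `filter_row` are hypothetical names, and border pixels are skipped.

```python
import math
from collections import deque
from typing import List

def gaussian_weights(n: int, sigma: float) -> List[float]:
    """1-D Gaussian weights of odd length n, normalized to sum to 1."""
    half = n // 2
    w = [math.exp(-((k - half) ** 2) / (2 * sigma ** 2)) for k in range(n)]
    total = sum(w)
    return [x / total for x in w]

def filter_row(image: List[List[float]], row: int, weights: List[float]) -> List[float]:
    """Filter one interior row (half <= row < height - half) with a separable N*N Gaussian.

    Returns new gray values for columns half .. width - half - 1.
    """
    n = len(weights)
    half = n // 2
    width = len(image[0])

    def column_sum(col: int) -> float:
        # Vertical pass: weighted sum of the N pixels in one column of the window.
        return sum(weights[i] * image[row - half + i][col] for i in range(n))

    # Prime the cache with the first N-1 column sums; maxlen drops the oldest automatically.
    cache = deque((column_sum(c) for c in range(n - 1)), maxlen=n)
    out = []
    for right in range(n - 1, width):
        cache.append(column_sum(right))  # only one new column is computed per step
        # Horizontal pass over the N cached column sums.
        out.append(sum(w * c for w, c in zip(weights, cache)))
    return out
```

Only N column sums are held at any time, so the working buffer grows with the template size rather than with the image width.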

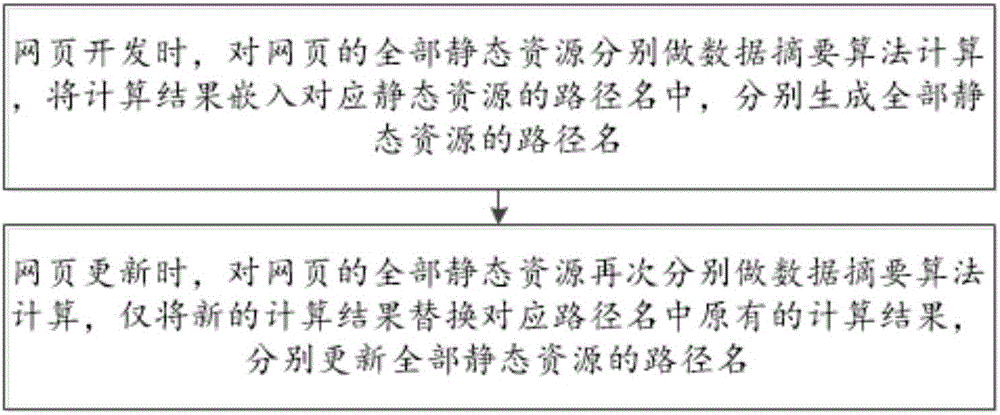

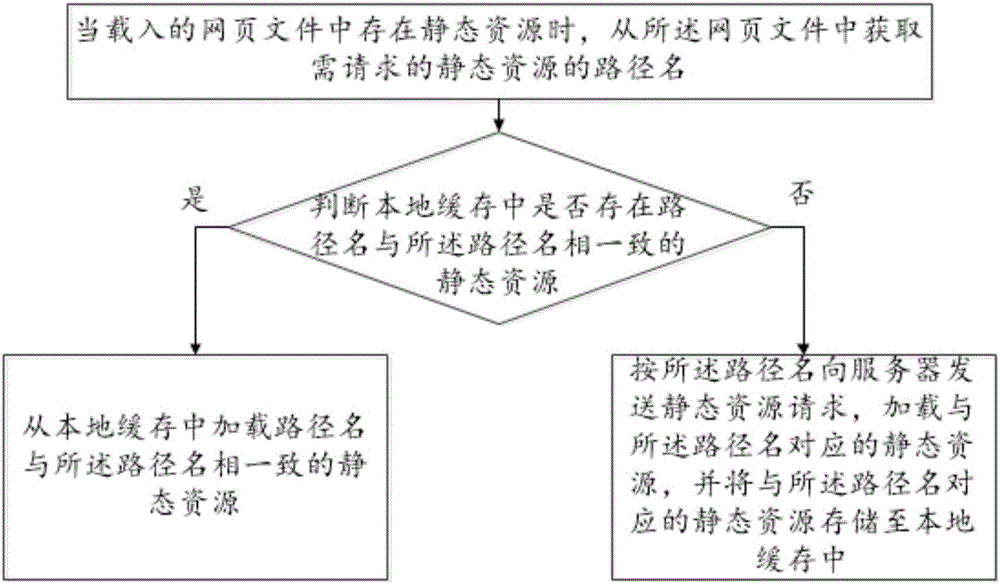

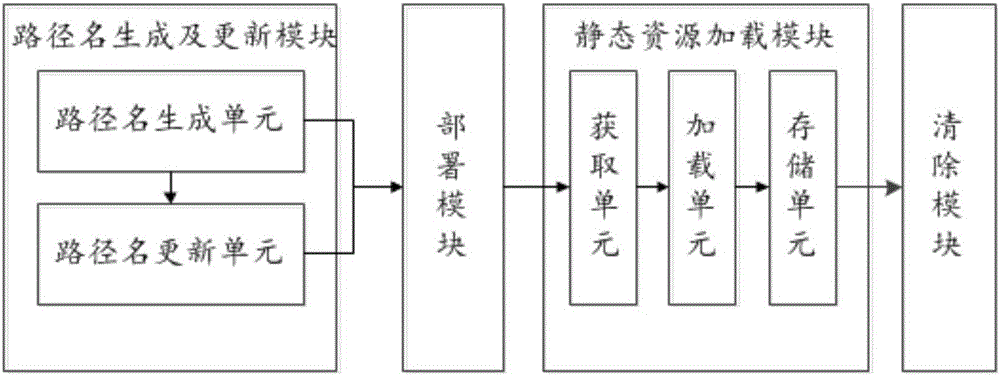

Static resource real-time effecting method and system

Inactive | CN106021300A | Implement non-covered publishing | Real-time quick effect | Website content management | Special data processing applications | Coverage Type | Time effect

The invention relates to a method and system for making static resources take effect in real time. The method includes computing a digest of each static resource with a data digest algorithm and embedding the result in the resource's path name. Because the digest of a static resource changes only when the resource itself changes, its path name changes only then: the resource is loaded from the server only when its path name changes, while a static resource whose path name is unchanged is loaded from the local cache. The method and system thereby achieve non-overwriting publication and rapid, real-time effect of static resources.

Owner:BEIJING SI-TECH INFORMATION TECHNOLOGY CO LTD (北京思特奇信息技术股份有限公司)
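This is the familiar content-hash ("fingerprinted") file-name technique. A minimal sketch, assuming SHA-256 as the digest algorithm and an 8-character digest placed before the file extension; `fingerprinted_path`, `ResourceLoader` and the injected `fetch` callable are hypothetical.

```python
import hashlib
from pathlib import PurePosixPath
from typing import Callable, Dict

def fingerprinted_path(path: str, content: bytes, digest_len: int = 8) -> str:
    """Embed a truncated SHA-256 digest of the content in the file name,
    e.g. static/app.js -> static/app.<digest>.js."""
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))

class ResourceLoader:
    """Client side: fetch from the server only when a fingerprinted path is new."""

    def __init__(self, fetch: Callable[[str], bytes]) -> None:
        self.fetch = fetch                  # hypothetical: path -> bytes from the server
        self.cache: Dict[str, bytes] = {}   # local cache keyed by fingerprinted path

    def load(self, fp_path: str) -> bytes:
        if fp_path not in self.cache:       # new path means the content changed
            self.cache[fp_path] = self.fetch(fp_path)
        return self.cache[fp_path]
```

Since unchanged resources keep their old path, old and new versions can coexist on the server, which is what allows publishing without overwriting.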

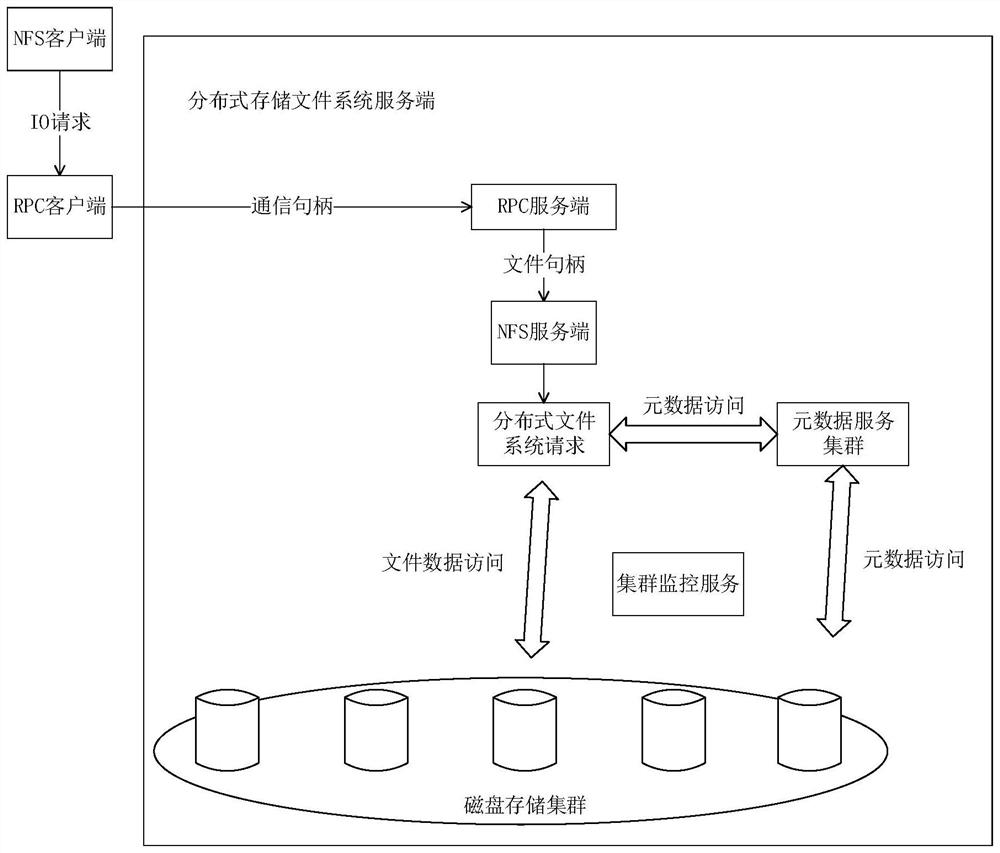

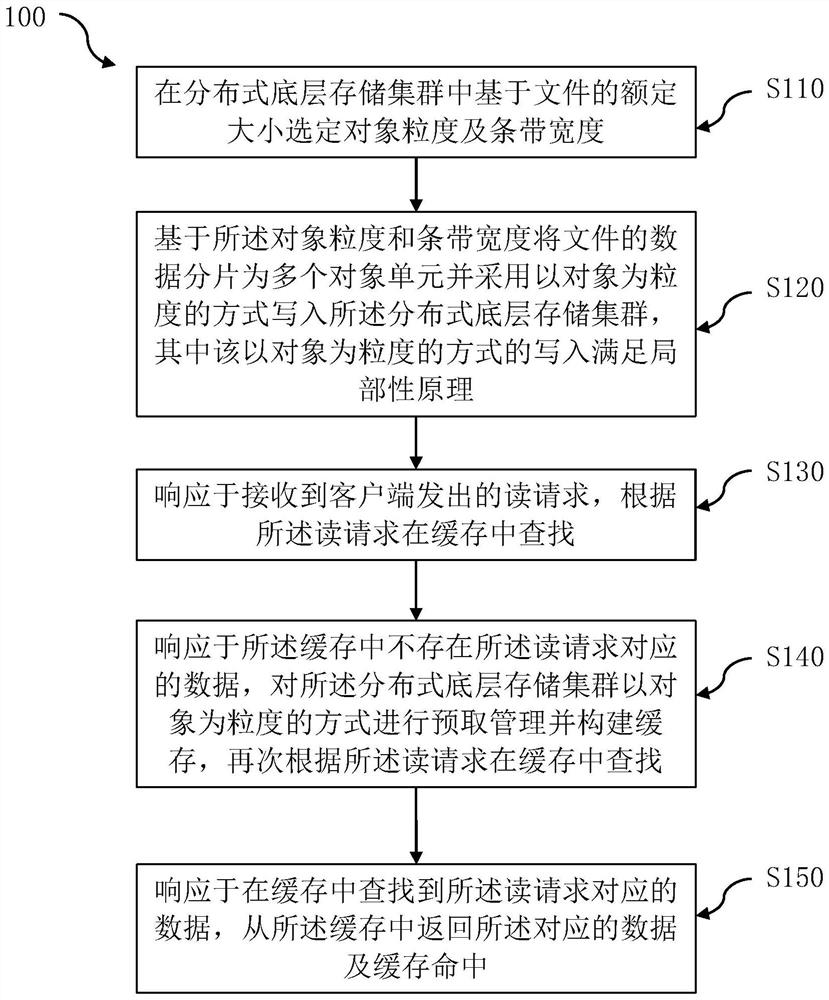

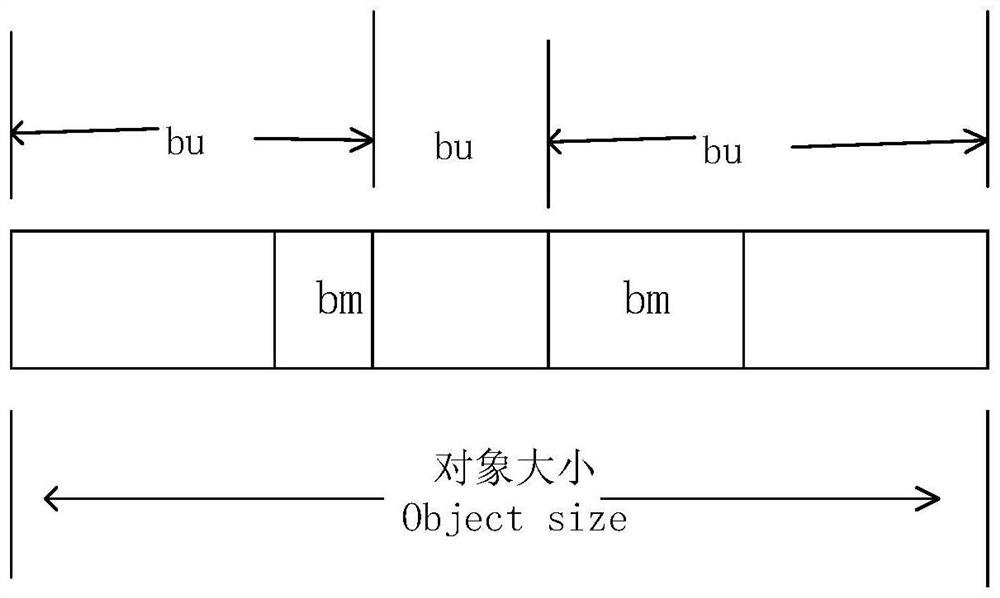

Distributed storage cache management method and system, storage medium and equipment

Pending | CN114817195A | Fair use | Easy to use | File access structures | Special data processing applications | Parallel computing | Cache management

The invention provides a distributed storage cache management method and system, a storage medium and equipment. The method comprises the following steps: selecting an object granularity and a stripe width in a distributed underlying storage cluster based on the rated size of a file; splitting the file's data into a plurality of object units according to the object granularity and stripe width, and writing the object units into the distributed underlying storage cluster at object granularity, which satisfies the principle of locality; in response to a read request from a client, searching the cache according to the read request; if the data corresponding to the read request is not in the cache, prefetching from the distributed underlying storage cluster at object granularity, building the cache, and searching the cache again according to the read request; and once the data corresponding to the read request is found in the cache (a cache hit), returning it from the cache. The method saves cache space, increases the prefetch hit rate, and improves the overall I/O performance of the storage.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
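The abstract leaves the placement and prefetch policy open. The sketch below assumes objects are placed round-robin across `stripe_width` storage nodes, that a miss prefetches the whole object containing the requested offset into an LRU cache, and that the cluster is modelled as in-memory dictionaries. `write_file` and `ObjectCache` are hypothetical names.

```python
from collections import OrderedDict
from typing import Dict, List

def write_file(data: bytes, object_size: int, stripe_width: int) -> List[Dict[int, bytes]]:
    """Split a file into fixed-size objects and place them round-robin on stripe_width nodes."""
    nodes: List[Dict[int, bytes]] = [{} for _ in range(stripe_width)]
    num_objects = (len(data) + object_size - 1) // object_size
    for idx in range(num_objects):
        nodes[idx % stripe_width][idx] = data[idx * object_size:(idx + 1) * object_size]
    return nodes

class ObjectCache:
    """Read-through LRU cache that, on a miss, prefetches the whole object holding the offset."""

    def __init__(self, nodes: List[Dict[int, bytes]], object_size: int,
                 stripe_width: int, capacity_objects: int) -> None:
        self.nodes = nodes
        self.object_size = object_size
        self.stripe_width = stripe_width
        self.capacity = capacity_objects
        self.cache: "OrderedDict[int, bytes]" = OrderedDict()  # object index -> bytes, LRU order
        self.hits = 0
        self.misses = 0

    def _get_object(self, idx: int) -> bytes:
        if idx in self.cache:
            self.hits += 1
            self.cache.move_to_end(idx)  # mark as most recently used
            return self.cache[idx]
        self.misses += 1
        data = self.nodes[idx % self.stripe_width][idx]  # fetch the whole object from its node
        self.cache[idx] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used object
        return data

    def read(self, offset: int, length: int) -> bytes:
        out = bytearray()
        end = offset + length
        while offset < end:
            idx, inner = divmod(offset, self.object_size)
            chunk = self._get_object(idx)[inner:inner + (end - offset)]
            if not chunk:  # ran past the end of the last object
                break
            out += chunk
            offset += len(chunk)
        return bytes(out)
```

Caching whole objects means a later read that falls in an already-prefetched object is served without touching the cluster.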

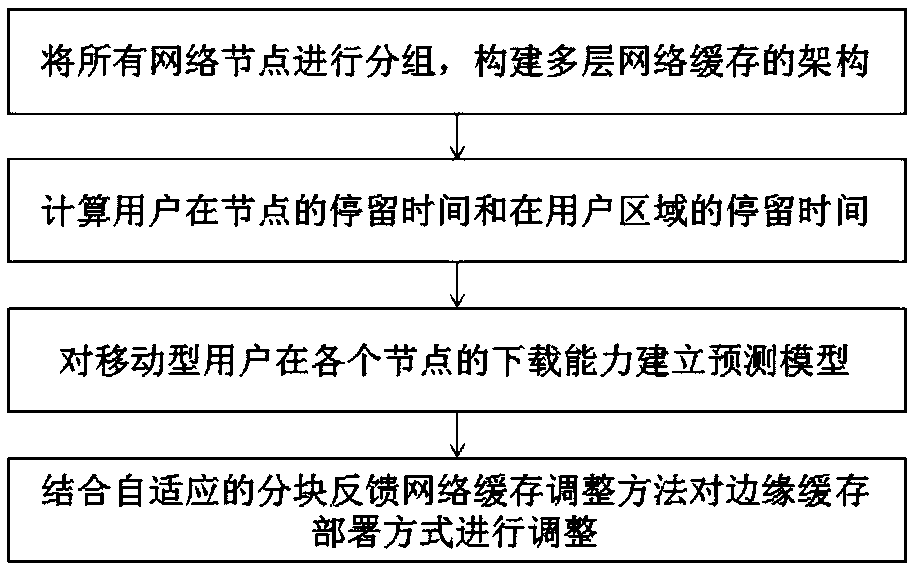

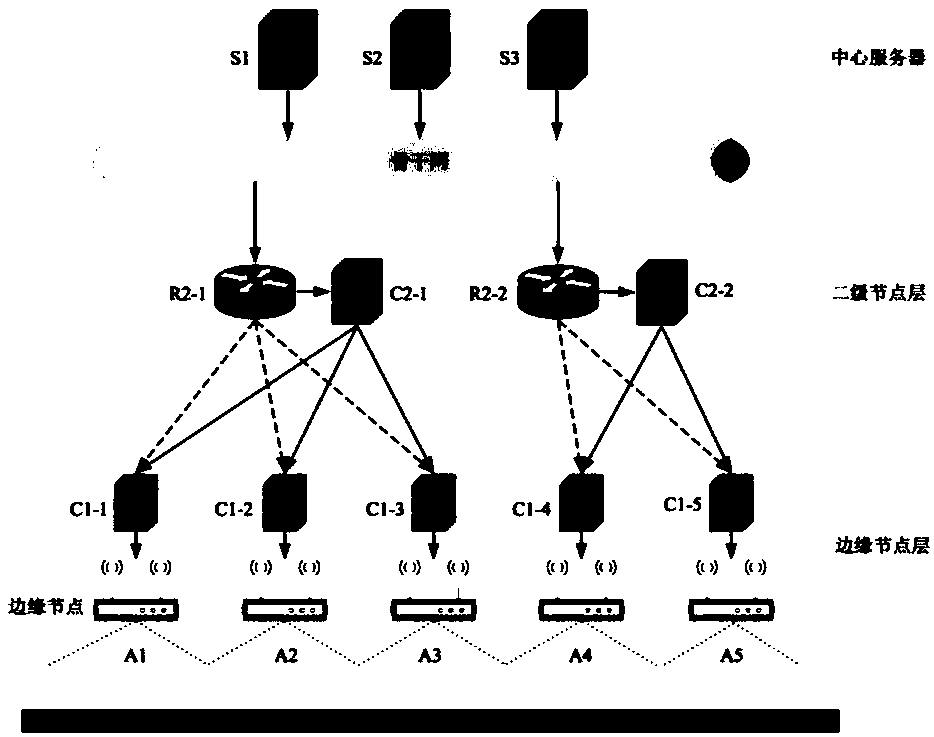

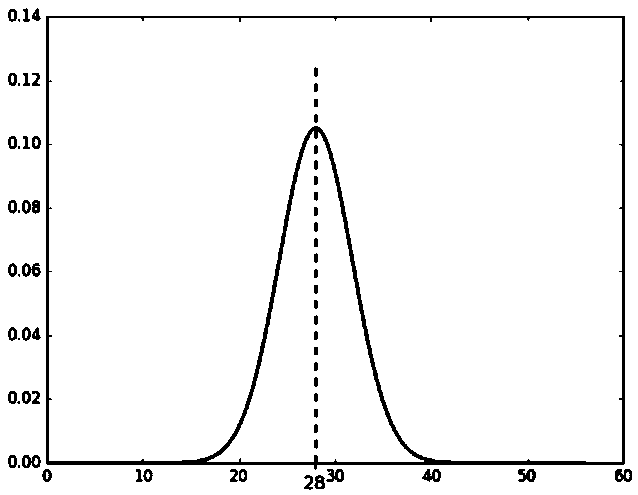

Network edge cache adjusting method oriented to user mobility

The invention discloses a network edge cache adjustment method oriented to user mobility. The method comprises the following steps: S1, grouping all network nodes to construct a multilayer network cache architecture; S2, computing in turn the user's dwell time at each node and in each user region; S3, building a model that predicts the mobile user's download capacity at each node from the user's movement and the network status; and S4, adjusting how the edge cache is deployed by combining the download-capacity prediction model with a self-adaptive, block-based feedback cache adjustment method. By redesigning where cache space is deployed, the technical scheme further reduces the backbone network load; the added download-status feedback mechanism corrects the prediction limitations of the download-capacity model, so that an appropriate amount of cache service can be provided according to user demand. This satisfies the content access demand of highly mobile users and can greatly save cache space.

Owner:SOUTHWEST JIAOTONG UNIV
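The abstract does not give the prediction model's form. Purely as an illustration, the sketch below assumes the predicted download volume at a node is the user's expected dwell time multiplied by the available throughput, converts that into a number of cached blocks, and uses a simple feedback factor to correct the prediction from observed downloads. Every function name and formula here is a hypothetical stand-in, not the patented model.

```python
import math

def predict_download_bytes(dwell_time_s: float, throughput_bps: float) -> float:
    """Hypothetical model: bytes a user can fetch at a node = expected dwell time * throughput."""
    return dwell_time_s * throughput_bps / 8

def blocks_to_cache(predicted_bytes: float, block_size: int, correction: float = 1.0) -> int:
    """Number of content blocks to place at the node, scaled by a feedback correction factor."""
    return math.ceil(predicted_bytes * correction / block_size)

def update_correction(correction: float, predicted_bytes: float, observed_bytes: float,
                      rate: float = 0.5) -> float:
    """Feedback step: move the factor part-way toward the observed/predicted ratio."""
    if predicted_bytes <= 0:
        return correction
    target = observed_bytes / predicted_bytes
    return correction + rate * (target - correction)
```

Caching only as many blocks as the user is likely to download before moving on is what keeps edge cache usage proportional to demand.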

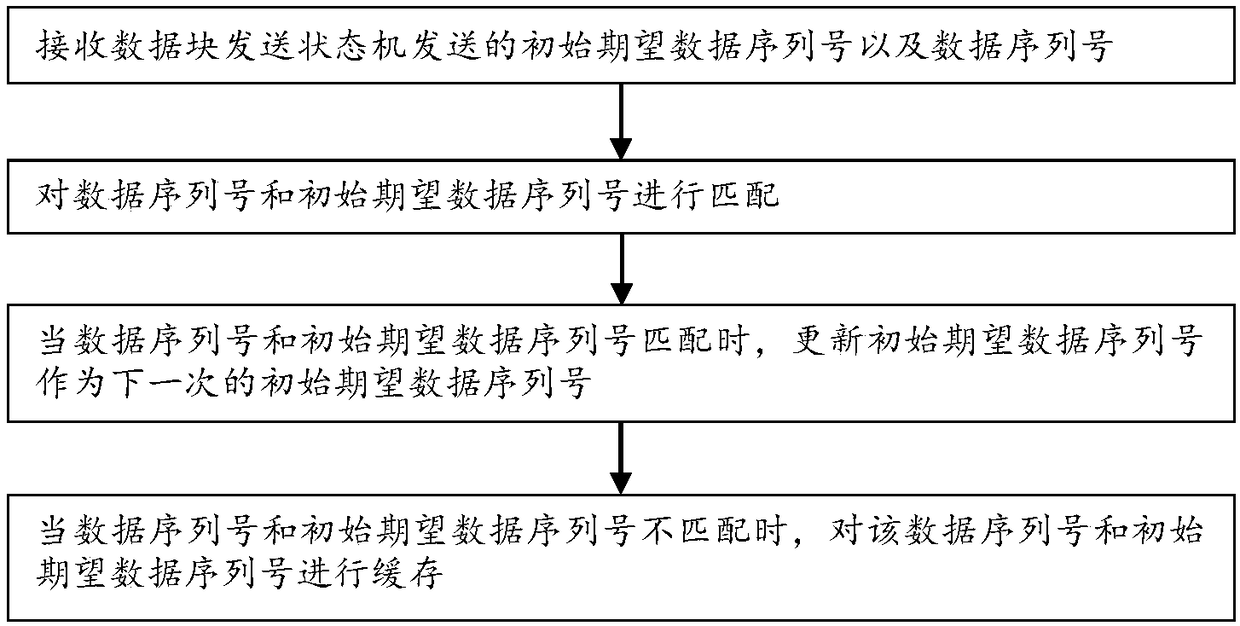

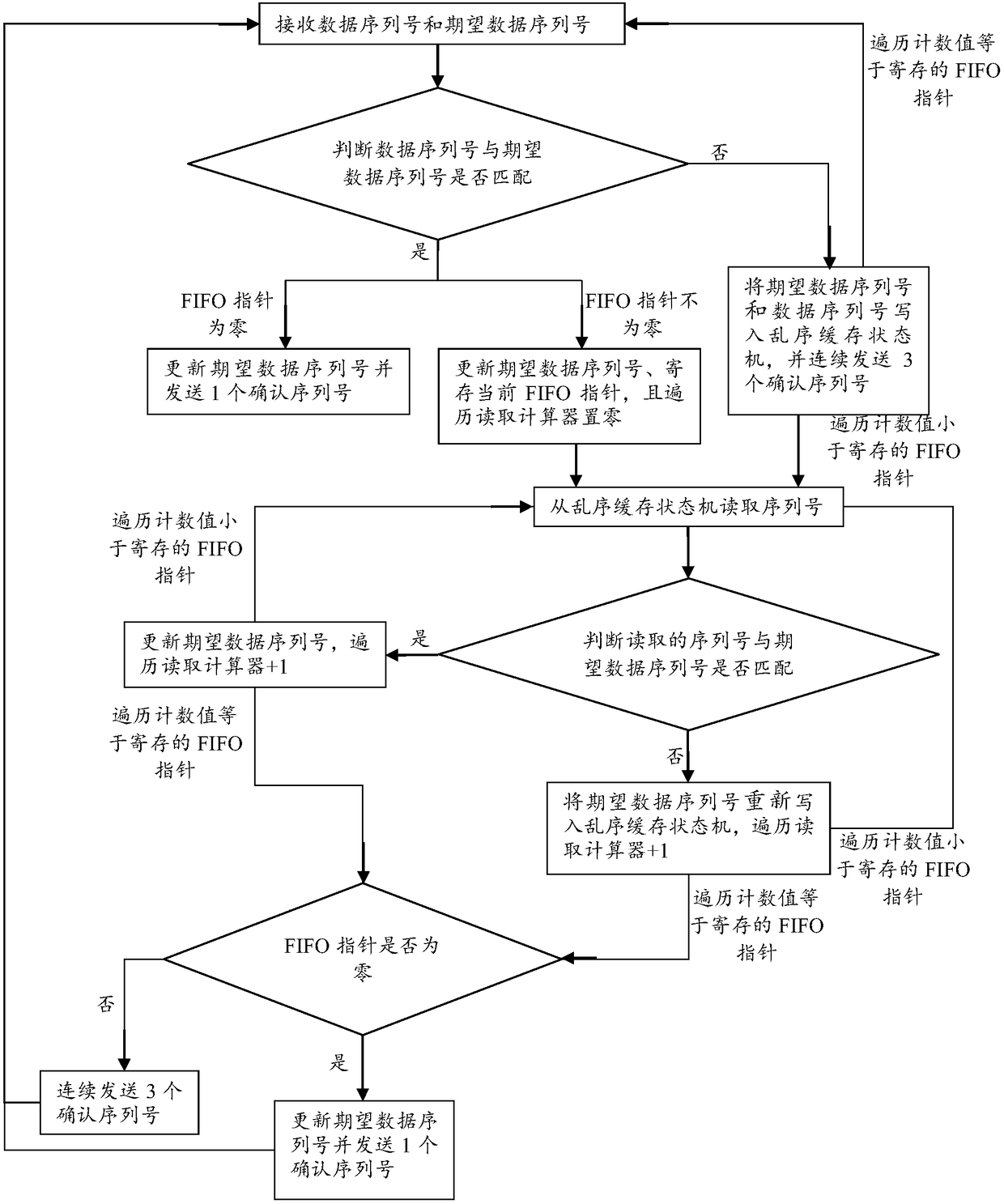

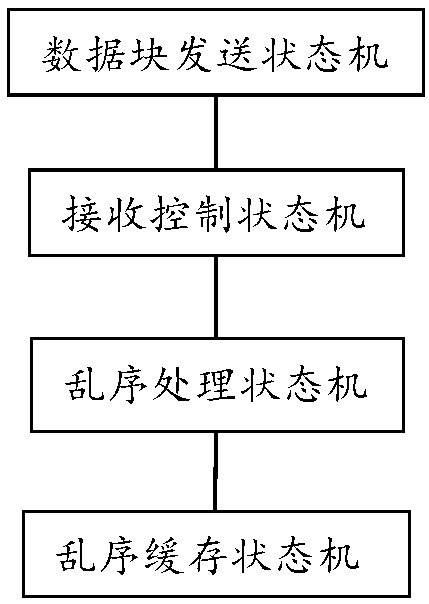

RFC6349-based data block out-of-order test method and apparatus

Pending | CN108600041A | Save cache space | Improve data transfer efficiency | Data switching networks | Data sequences | Serial code

The invention provides an RFC6349-based data block out-of-order test method and apparatus. The method comprises the following steps: receiving an initial expected data sequence number and a data sequence number sent by a data block sending state machine; matching the data sequence number against the initial expected data sequence number; when they match, updating the initial expected data sequence number to serve as the expected number for the next comparison; and when they do not match, caching the data sequence number and the initial expected data sequence number. With this method and apparatus, only sequence numbers are cached rather than the data blocks themselves, which saves cache space.

Owner:OPWILL TECH BEIJING
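A minimal sketch of the matching step, assuming sequence numbers advance by a fixed step per block. As the abstract literally reads, the expected number is left unchanged after a mismatch; only the (received, expected) pair is recorded, never the block payload. `OutOfOrderChecker` is a hypothetical name.

```python
from typing import List, Tuple

class OutOfOrderChecker:
    """Compare each received block sequence number with the expected one."""

    def __init__(self, initial_expected: int, step: int = 1) -> None:
        self.expected = initial_expected
        self.step = step  # assumption: sequence numbers advance by a fixed step per block
        self.mismatches: List[Tuple[int, int]] = []  # (received, expected) pairs only

    def on_block(self, seq: int) -> None:
        if seq == self.expected:
            self.expected += self.step  # becomes the expected number for the next block
        else:
            # Record the two numbers; the block's data is never buffered.
            self.mismatches.append((seq, self.expected))
```

For the arrival order 1, 2, 4, 3 starting from an expected value of 1, a single mismatch pair (4, 3) is recorded.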

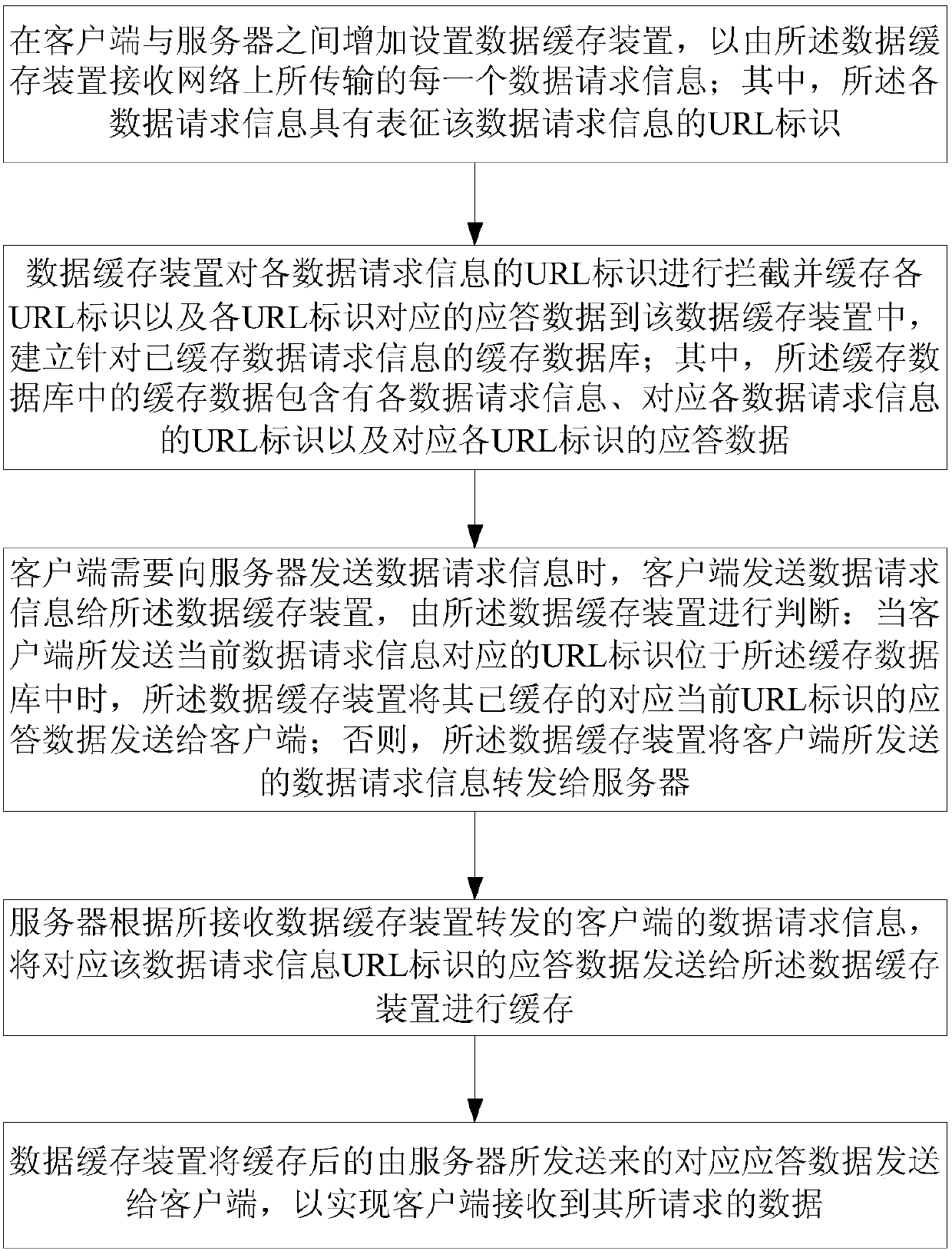

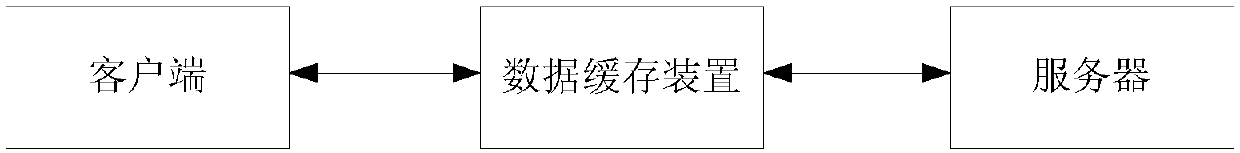

Data caching method and system for network request

The invention relates to a data caching method and system for network requests. The method comprises the following steps: adding a data caching device between a client and a server to receive data request information that is transmitted over the network and carries a URL identifier; the caching device intercepts and caches the URL identifier of each piece of data request information and builds a caching database. When the client sends a data request to the server, the data request information is first sent to the data caching device for judgment: if the URL identifier of the current data request information is in the caching database, the data caching device sends the cached response data for that URL identifier to the client; otherwise, the data caching device forwards the data request information to the server, the server sends the response data corresponding to the URL identifier to the data caching device for caching, and the data caching device sends the cached response data to the client, so that the client receives the requested data.

Owner:NINGBO FOTILE KITCHEN WARE CO LTD
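The request flow amounts to a read-through cache keyed by URL. A minimal sketch, assuming the server is modelled as a callable that maps a URL to response bytes and that entries never expire (the abstract does not mention invalidation); `UrlCachingDevice` is a hypothetical name.

```python
from typing import Callable, Dict

class UrlCachingDevice:
    """Sits between client and server and caches response data keyed by URL."""

    def __init__(self, server: Callable[[str], bytes]) -> None:
        self.server = server                  # hypothetical: url -> response bytes
        self.cache_db: Dict[str, bytes] = {}  # the "caching database"

    def handle(self, url: str) -> bytes:
        if url in self.cache_db:              # hit: answer without contacting the server
            return self.cache_db[url]
        response = self.server(url)           # miss: forward the request to the server
        self.cache_db[url] = response         # cache the response before replying
        return response
```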

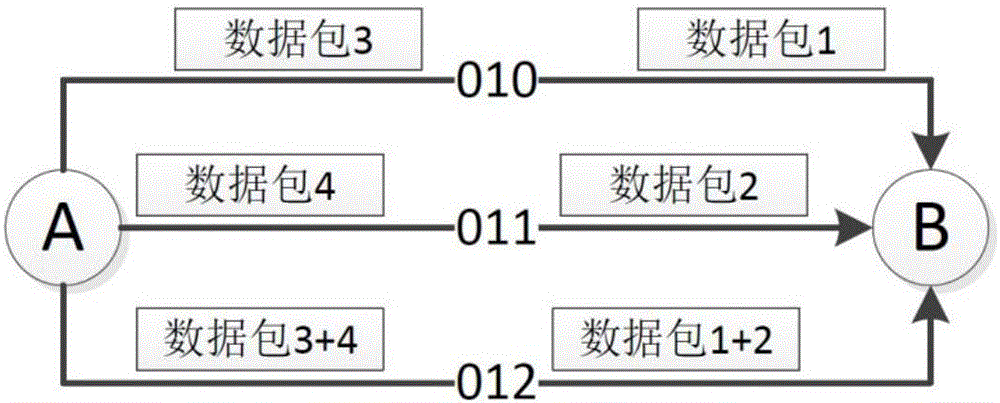

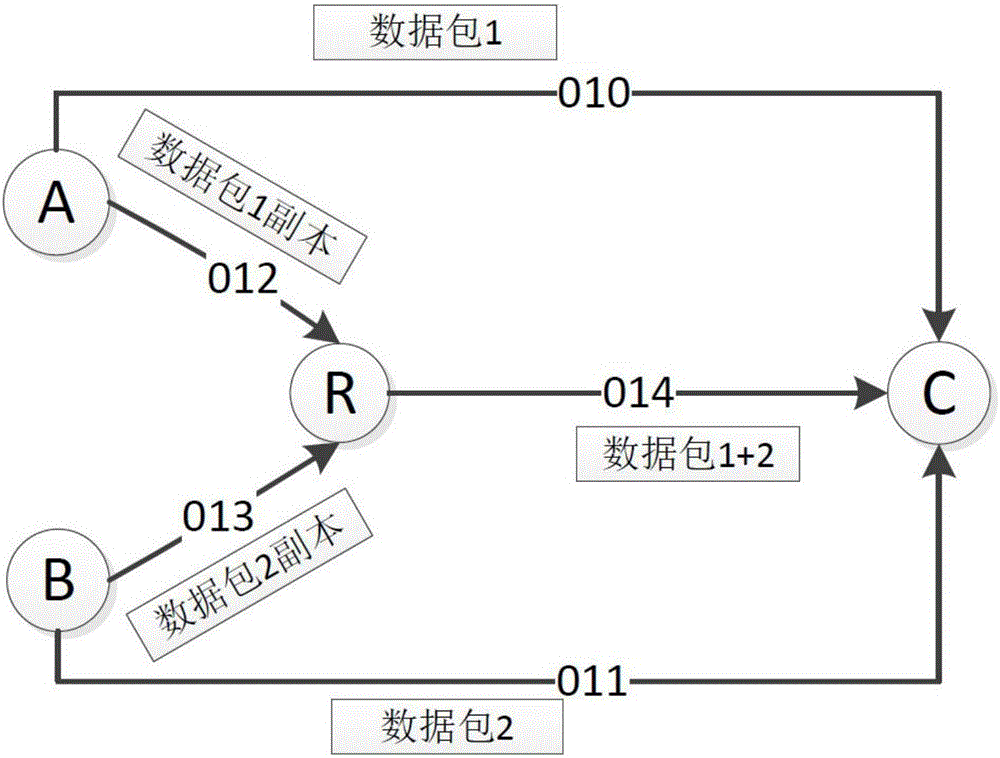

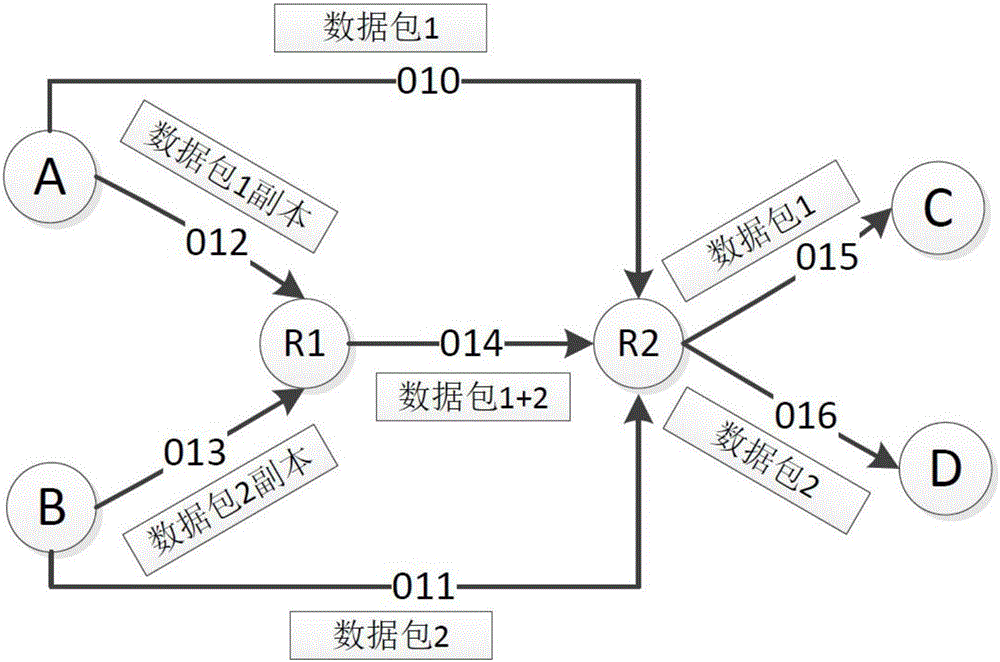

Network coding and path protection-based optical network single-link fault protection method

Inactive | CN106656416A | Guaranteed normal transmission | No overflow | Electromagnetic transmission | Forward error control use | Network link | Protection mechanism

The present invention relates to an optical network single-link fault protection method based on network coding and path protection. The method combines 1+1 path protection with network coding, with relay nodes responsible for the network coding. When the buffer of a given pair of nodes overflows, the data packet that has been buffered the longest is transmitted along a protection path using 1+1 path protection. The packet header carries an identification bit indicating whether the packet has been network-coded: 1 means it has been network-coded, and 0 means it has not. A destination node that receives a packet over the protection path reads the header, determines whether the packet has been network-coded, and processes it accordingly. The method ensures normal data transmission when a single-link fault occurs on the network links and reduces the redundant data packets that the protection mechanism generates in the network.

Owner:TIANJIN UNIV
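The abstract does not name the coding operation. The sketch below assumes the common XOR scheme for two equal-length packets, a one-bit `coded` header flag, and a bounded buffer whose oldest packet is diverted to the protection path on overflow. All names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List

@dataclass
class Packet:
    coded: int      # header identification bit: 1 = network-coded, 0 = not coded
    payload: bytes

def xor_bytes(a: bytes, b: bytes) -> bytes:
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal-length payloads")
    return bytes(x ^ y for x, y in zip(a, b))

def relay_encode(p1: bytes, p2: bytes) -> Packet:
    """Relay node: XOR-combine two payloads and set the identification bit to 1."""
    return Packet(coded=1, payload=xor_bytes(p1, p2))

def destination_receive(pkt: Packet, known: bytes) -> bytes:
    """Destination: read the bit; decode a coded packet with a payload it already holds."""
    if pkt.coded == 1:
        return xor_bytes(pkt.payload, known)
    return pkt.payload

def enqueue(buffer: Deque[Packet], pkt: Packet, capacity: int,
            protection_path: List[Packet]) -> None:
    """On overflow, send the longest-buffered packet over the 1+1 protection path."""
    buffer.append(pkt)
    if len(buffer) > capacity:
        protection_path.append(buffer.popleft())
```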

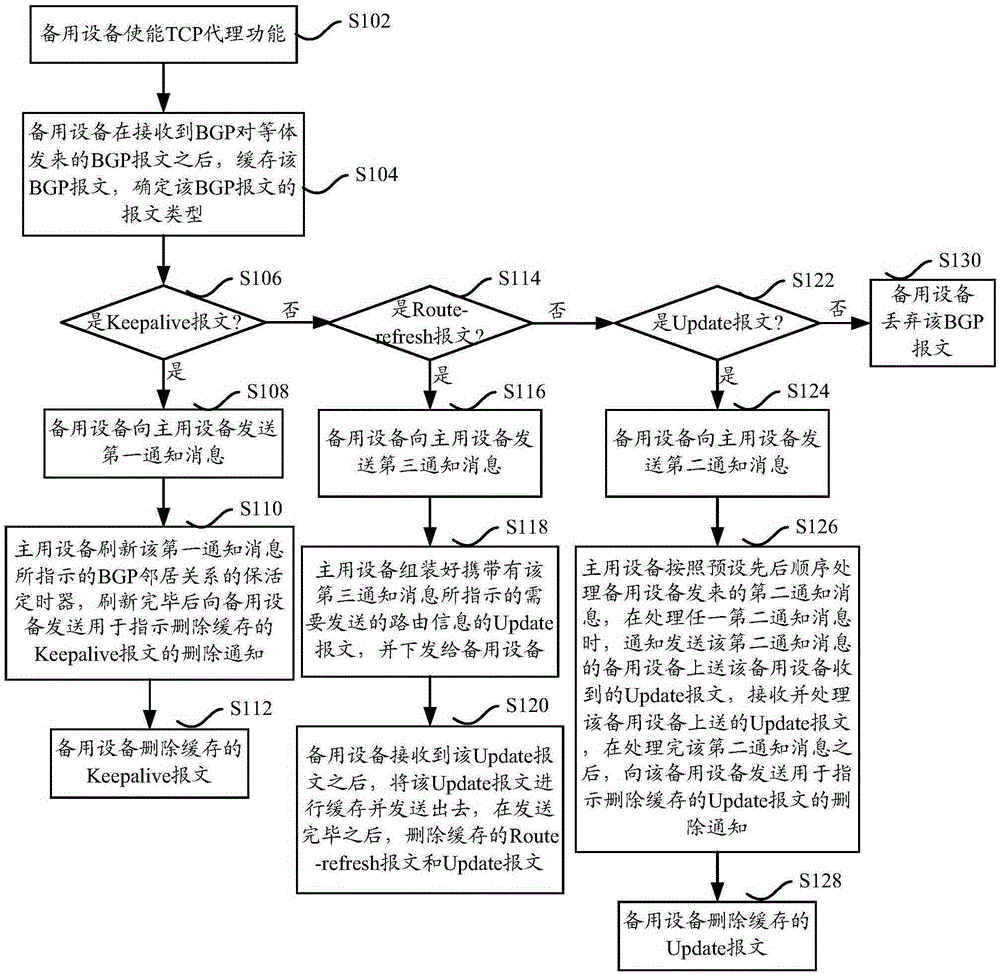

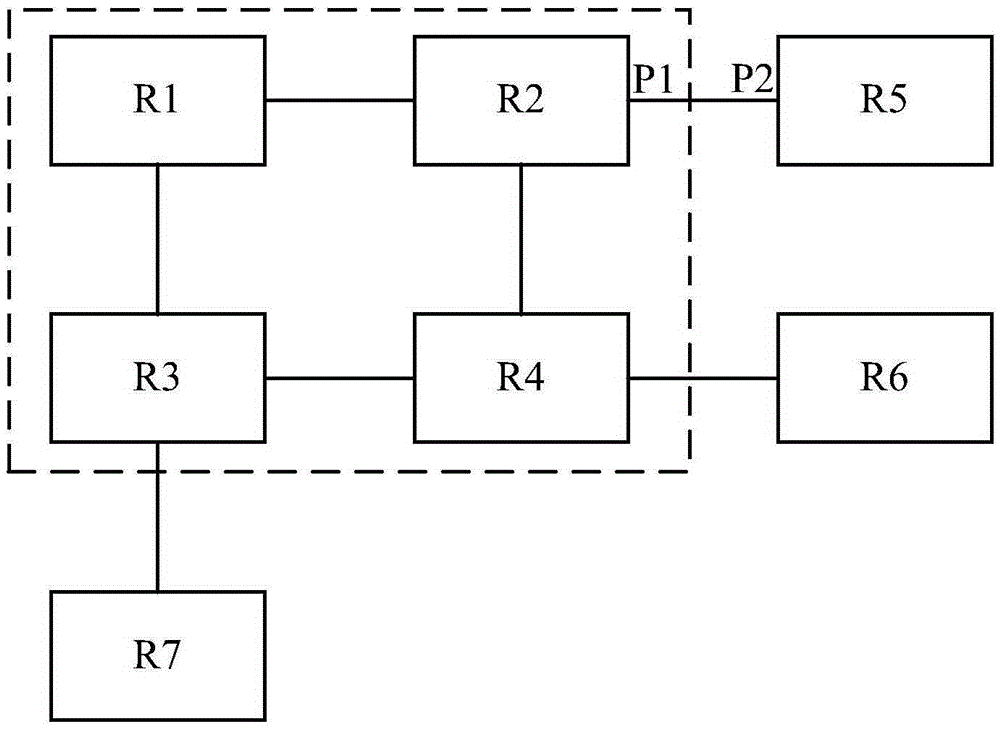

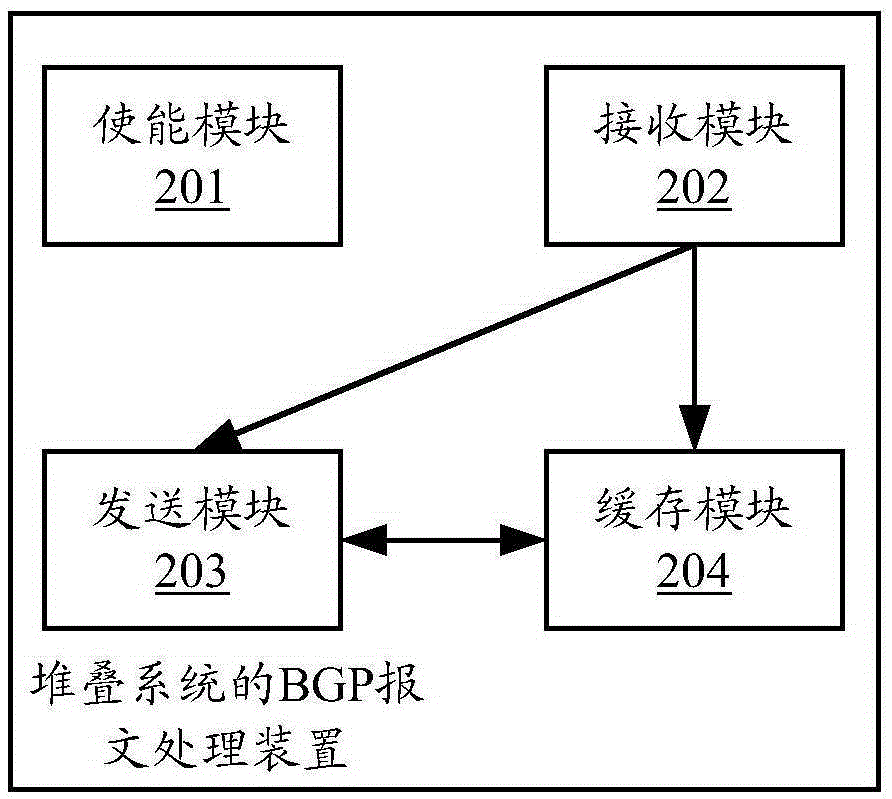

BGP message processing method and device of stack system

Active | CN105591951A | Reduce occupancy | Save cache space | Data switching networks | High level techniques | Border Gateway Protocol | Message processing

The present invention discloses a BGP (Border Gateway Protocol) message processing method and device for a stack system. The stack system runs the BGP protocol and comprises a master and a slave. In the method, the slave provides a TCP (Transmission Control Protocol) proxy function; after receiving a BGP message sent by a BGP peer, the slave buffers the message and sends a notification message to the master; and the buffered BGP message is deleted after the master has processed the notification message. This saves buffer space on the master and reduces the master's CPU usage.

Owner:NEW H3C TECH CO LTD
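A minimal sketch of the buffer handoff, assuming the master reads a message from the slave on demand when notified and that notification is a synchronous call. `Master`, `Slave` and their methods are hypothetical; real stack members would communicate over an internal channel.

```python
from typing import Dict, List

class Master:
    """Master member of the stack: processes notifications without buffering messages itself."""

    def __init__(self) -> None:
        self.processed: List[str] = []

    def notify(self, slave: "Slave", msg_id: int) -> None:
        msg = slave.fetch(msg_id)    # assumption: master reads the message from the slave
        self.processed.append(msg)   # stand-in for real BGP processing
        slave.release(msg_id)        # slave may now delete its buffered copy

class Slave:
    """Slave member acting as TCP proxy: buffers peer BGP messages and notifies the master."""

    def __init__(self, master: Master) -> None:
        self.master = master
        self.buffer: Dict[int, str] = {}
        self._next_id = 0

    def receive_from_peer(self, msg: str) -> None:
        msg_id = self._next_id
        self._next_id += 1
        self.buffer[msg_id] = msg
        self.master.notify(self, msg_id)  # synchronous here for simplicity

    def fetch(self, msg_id: int) -> str:
        return self.buffer[msg_id]

    def release(self, msg_id: int) -> None:
        del self.buffer[msg_id]
```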

© 2025 PatSnap. All rights reserved.