
37 results about how to achieve "Close to life" with patented technology

Meal replacement protein powder solid beverage for managing body weight

Inactive | CN104287049A | Guaranteed intake, Intake control, Milk preparation, Sugar food ingredients, Fiber, Additive ingredient

The invention discloses a meal replacement protein powder solid beverage for body weight management, in the field of nutritional foods. The solid beverage comprises soy protein isolate, skimmed milk powder, whey protein concentrate, resistant dextrin, inulin, L-carnitine, white kidney bean extract, guarana extract, soy dietary fiber powder, pea fiber, oat fiber, erythritol, stevioside, phospholipid, gum arabic, xanthan gum, pectin, alkalized cocoa powder, xylooligosaccharide and edible flavoring. The formula takes into account the different nutrients the human body needs during the day and at night, so it is well balanced and serves both to supplement nutrients and to control body weight. The production process is simple and avoids a drying step, which prevents damage to the plant extracts and other components and preserves the effect of the nutrients. In addition, no sugar is added to the formula, so the beverage is suitable for people with diabetes.

Owner:JIANGSU ALAND NOURISHMENT

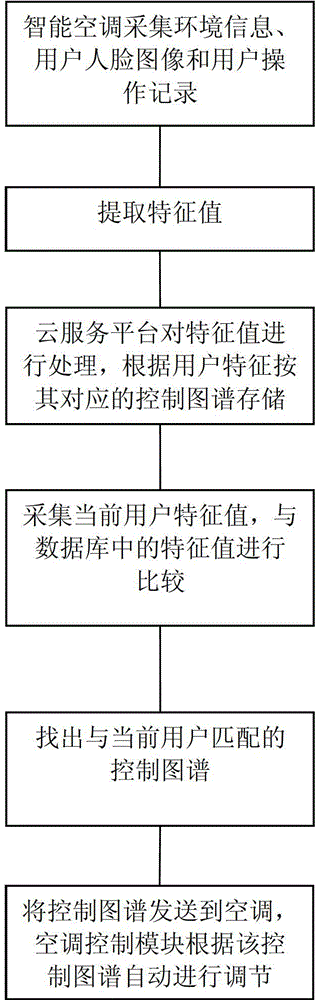

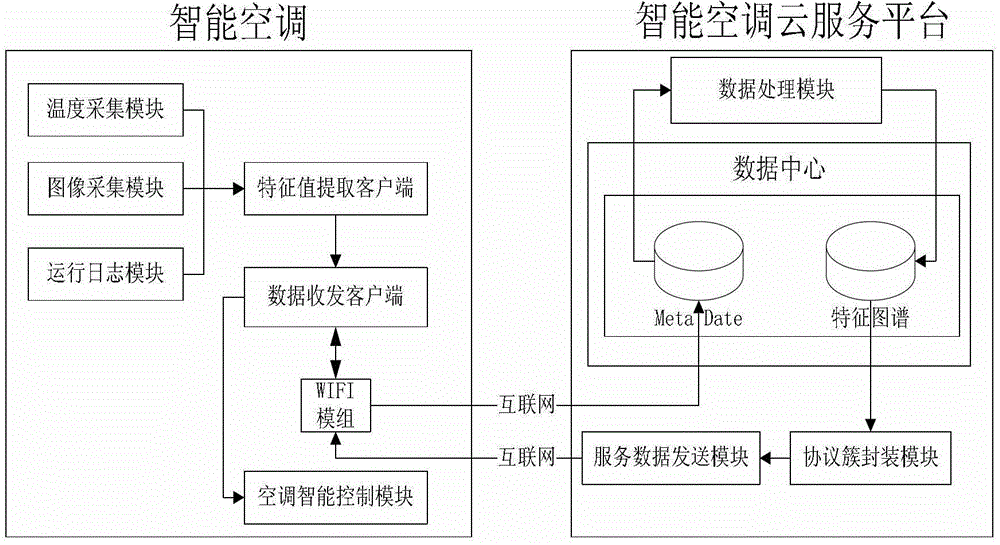

Intelligent air-conditioner control method and system based on self-adaptive temperature control technique

Active | CN102927660A | Run smart, Simple and fast operation, Space heating and ventilation safety systems, Lighting and heating apparatus, Temperature control, Personalization

The invention relates to intelligent control, and in particular to an intelligent air-conditioner control method and system based on a self-adaptive temperature control technique. The method mainly comprises the following steps: the intelligent air conditioner acquires environment information, facial images of users and the users' operation records, and extracts feature values from them; a cloud service platform processes the feature values and stores them in control maps corresponding to the user features; the platform then acquires the feature values of the current user, compares them with the feature values in its database, finds the control map that matches the current user, and transmits it to the air conditioner; finally, an air-conditioner control module adjusts the air conditioner automatically according to that control map. The method lets the intelligent air conditioner provide personalized temperature adjustment for different users and situations, so that it is simpler to operate, works more intelligently, and fits more closely into people's daily lives. It is particularly applicable to intelligent air conditioners.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD

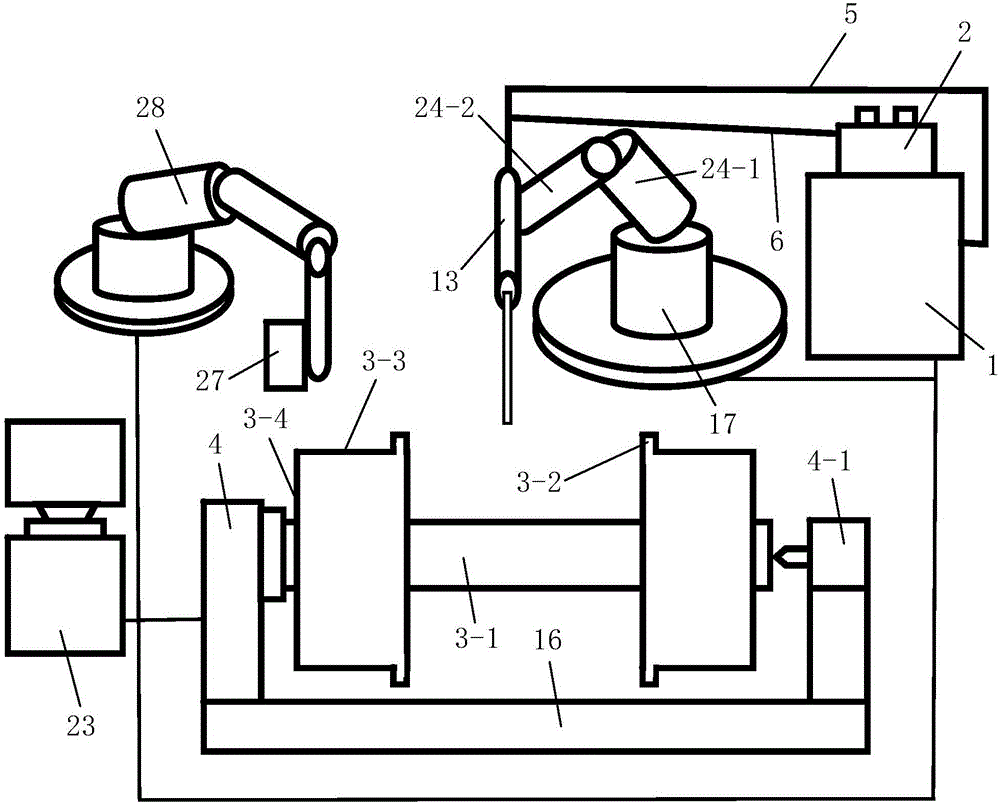

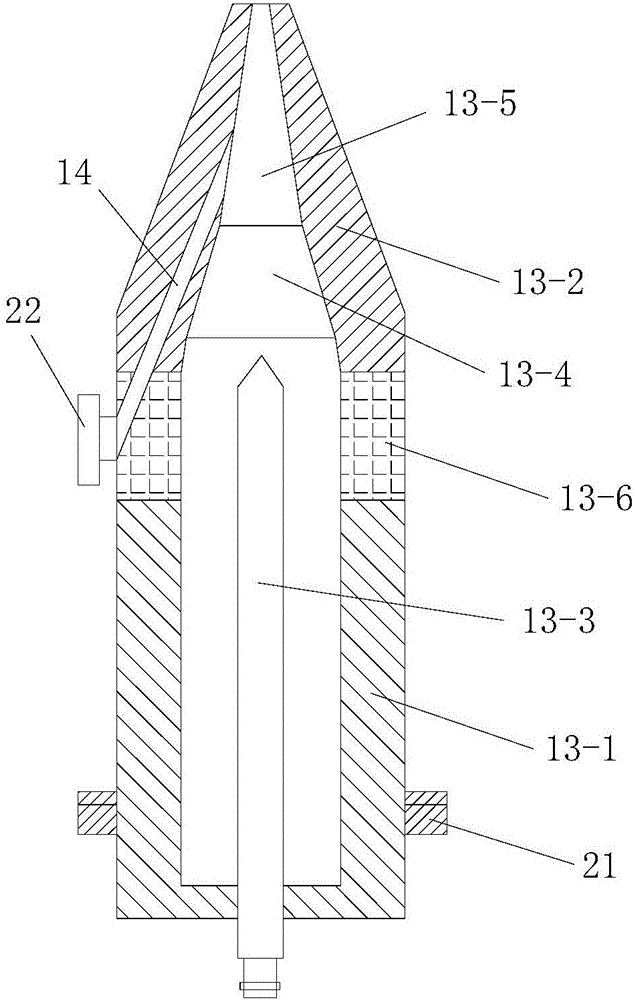

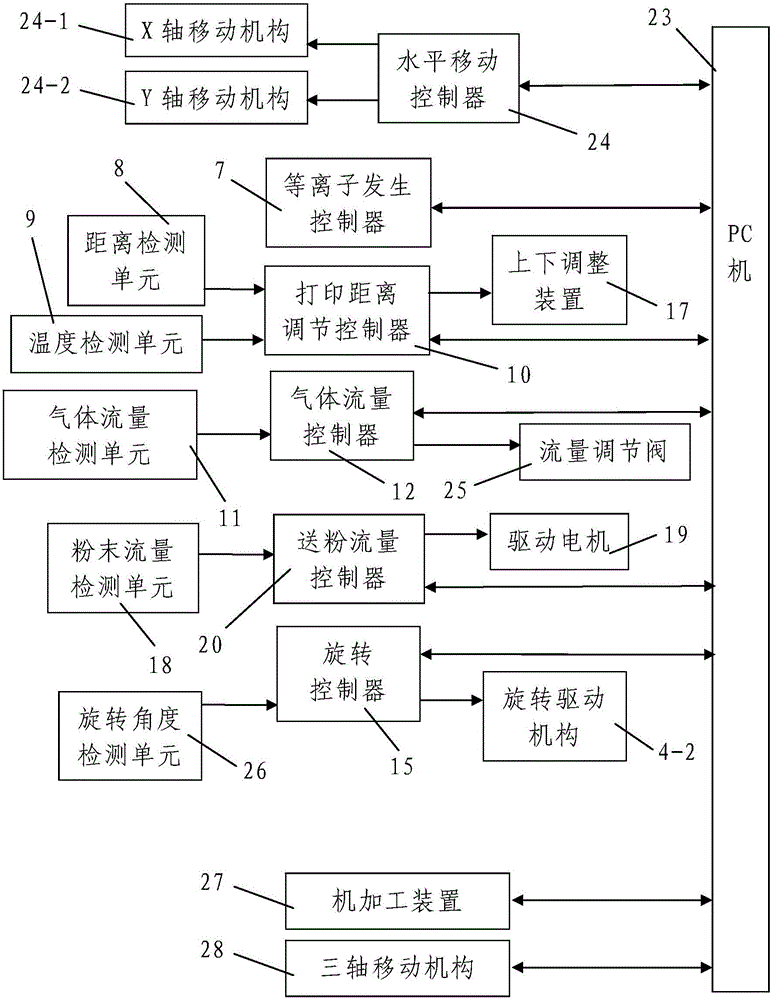

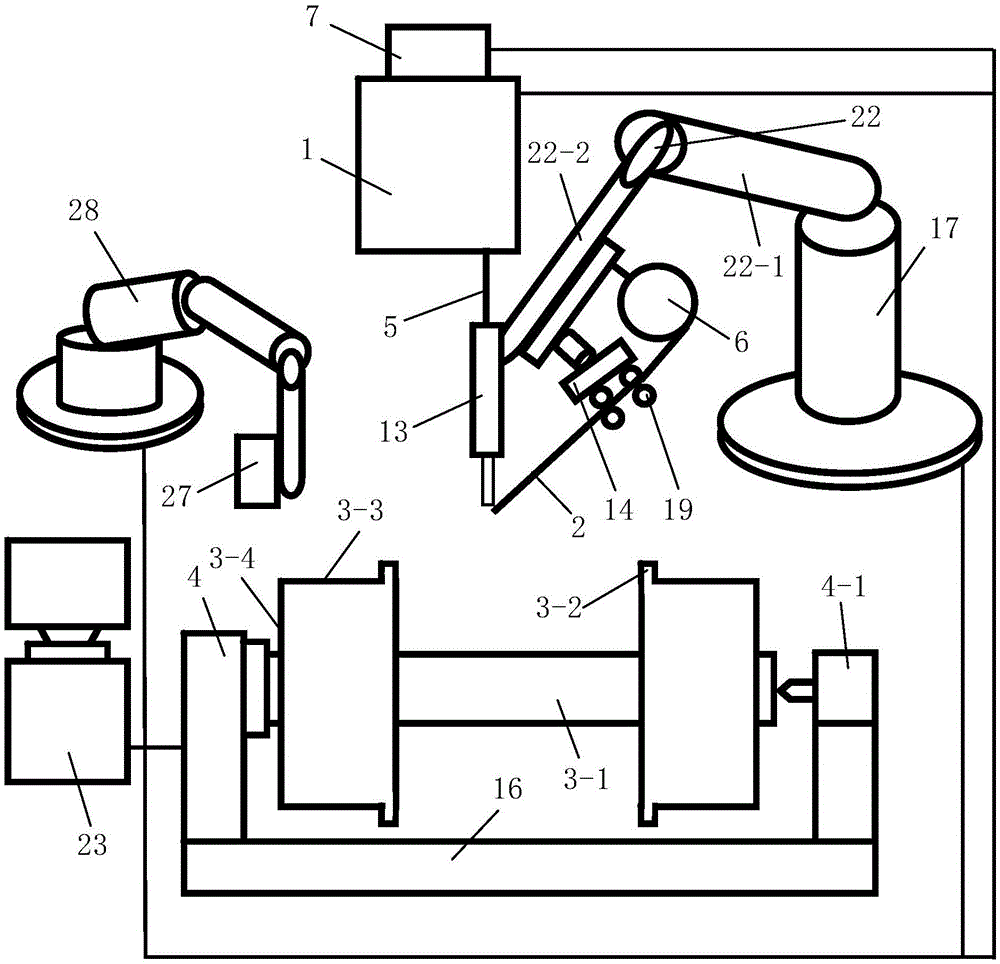

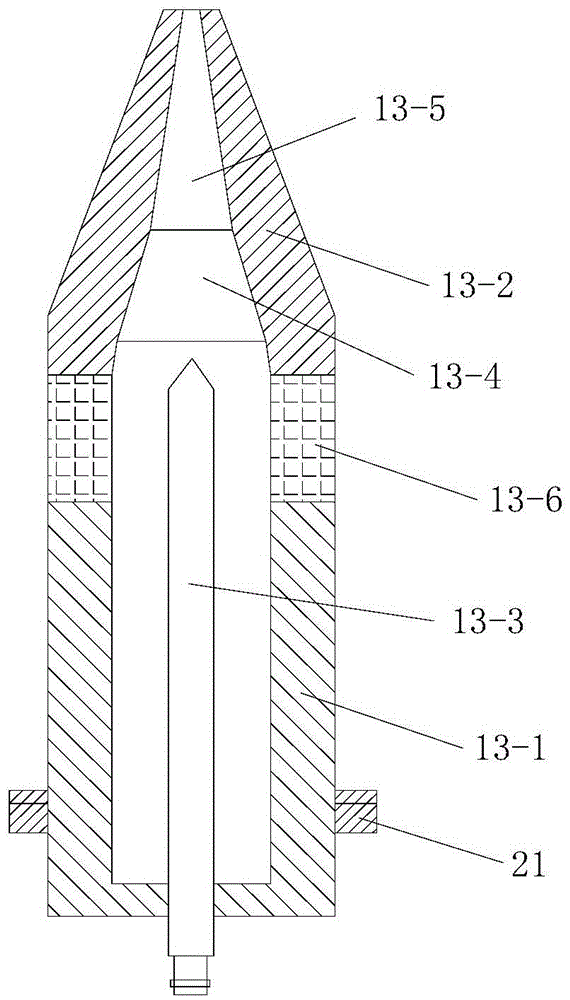

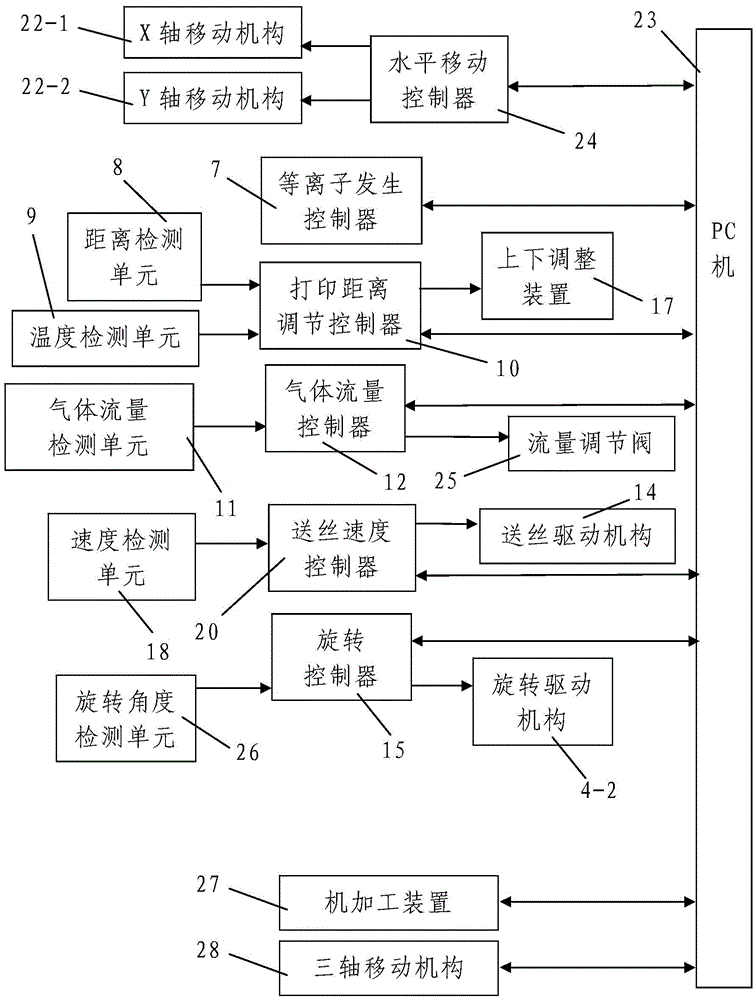
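The matching step in the air-conditioner abstract (compare the current user's feature values with stored ones and return the matching control map) can be sketched as a nearest-neighbour lookup. This is a minimal sketch under assumptions the patent does not state: feature values are plain numeric vectors, "matching" means smallest Euclidean distance, and a control map is reduced to a hypothetical `ControlMap` holding a target temperature and a fan level.

```python
import math
from dataclasses import dataclass
from typing import Dict, Sequence, Tuple

@dataclass(frozen=True)
class ControlMap:
    """Hypothetical control map: target temperature (deg C) and fan level."""
    target_temp_c: float
    fan_level: int

def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Distance between two feature vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def match_control_map(current: Sequence[float],
                      stored: Dict[Tuple[float, ...], ControlMap]) -> ControlMap:
    """Return the control map whose stored feature vector is nearest to `current`."""
    if not stored:
        raise ValueError("no stored control maps")
    nearest = min(stored, key=lambda features: euclidean(features, current))
    return stored[nearest]

stored = {
    (0.9, 0.1, 26.0): ControlMap(24.0, 2),  # hypothetical profile A
    (0.2, 0.8, 30.0): ControlMap(22.0, 3),  # hypothetical profile B
}
print(match_control_map((0.85, 0.15, 27.0), stored))
# ControlMap(target_temp_c=24.0, fan_level=2)
```

A real system would also need a distance threshold, so that an unknown user is not silently given the closest stored profile.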

Plasma 3D printing remanufacturing equipment and method for train wheel

Inactive | CN105710371A | Simple structure, Reasonable design, Additive manufacturing apparatus, Increasing energy efficiency, Distance detection, Plasma beam

The invention discloses plasma 3D printing remanufacturing equipment and a method for train wheels. The equipment consists of a monitoring system, a plasma beam processing system, a machining device for machining the train wheel to be repaired, a three-axis moving mechanism for driving the machining device, and a horizontal printing platform on which the train wheel to be repaired is placed. The plasma beam processing system consists of a plasma generator, a printing position adjusting device, an air supply device and a powder feeder; the monitoring system comprises a horizontal movement controller, a temperature detection unit, a distance detection unit, a printing distance adjustment controller and a rotation controller, and the temperature detection unit and the printing distance adjustment controller together form a temperature regulation and control device. The remanufacturing method comprises two steps: first, detecting defects in the train wheel; second, repairing the train wheel. The equipment is reasonable in design, simple and convenient to operate, efficient and effective in use; the repair is carried out directly in the atmospheric environment without a closed forming chamber, and the repaired train wheels are of good quality.

Owner:SINOADDITIVE MFG EQUIP CO LTD

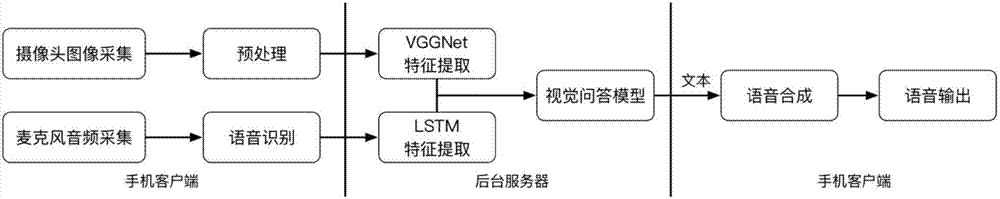

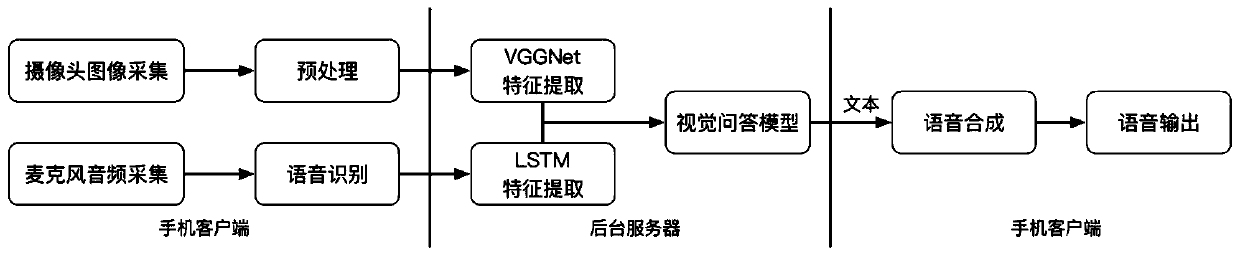

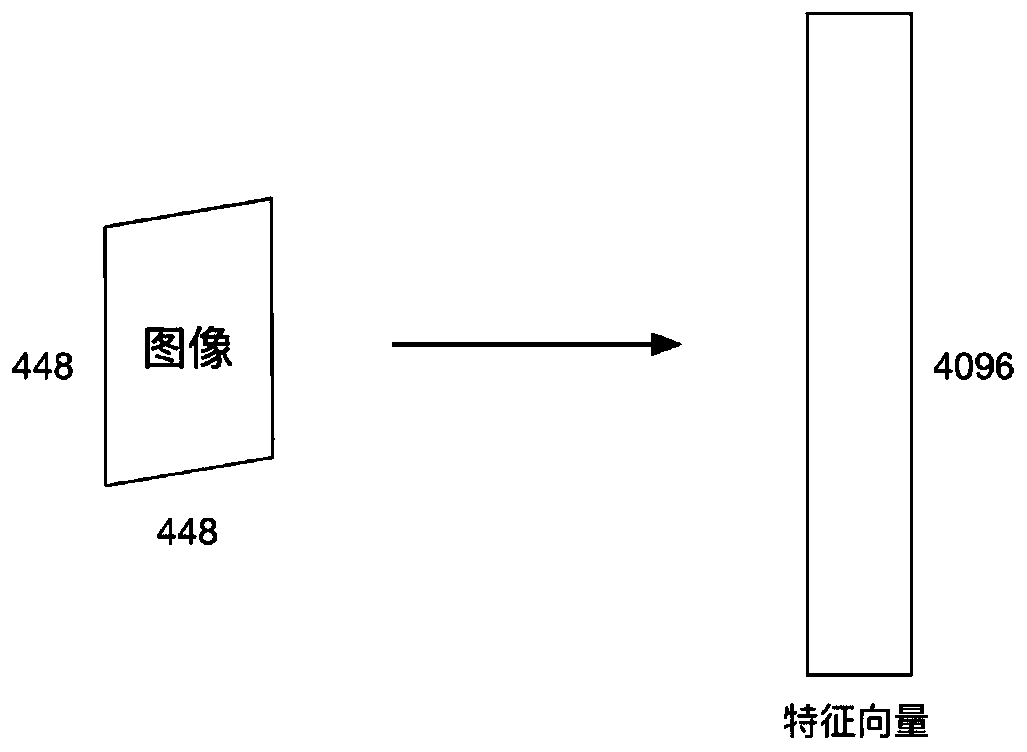

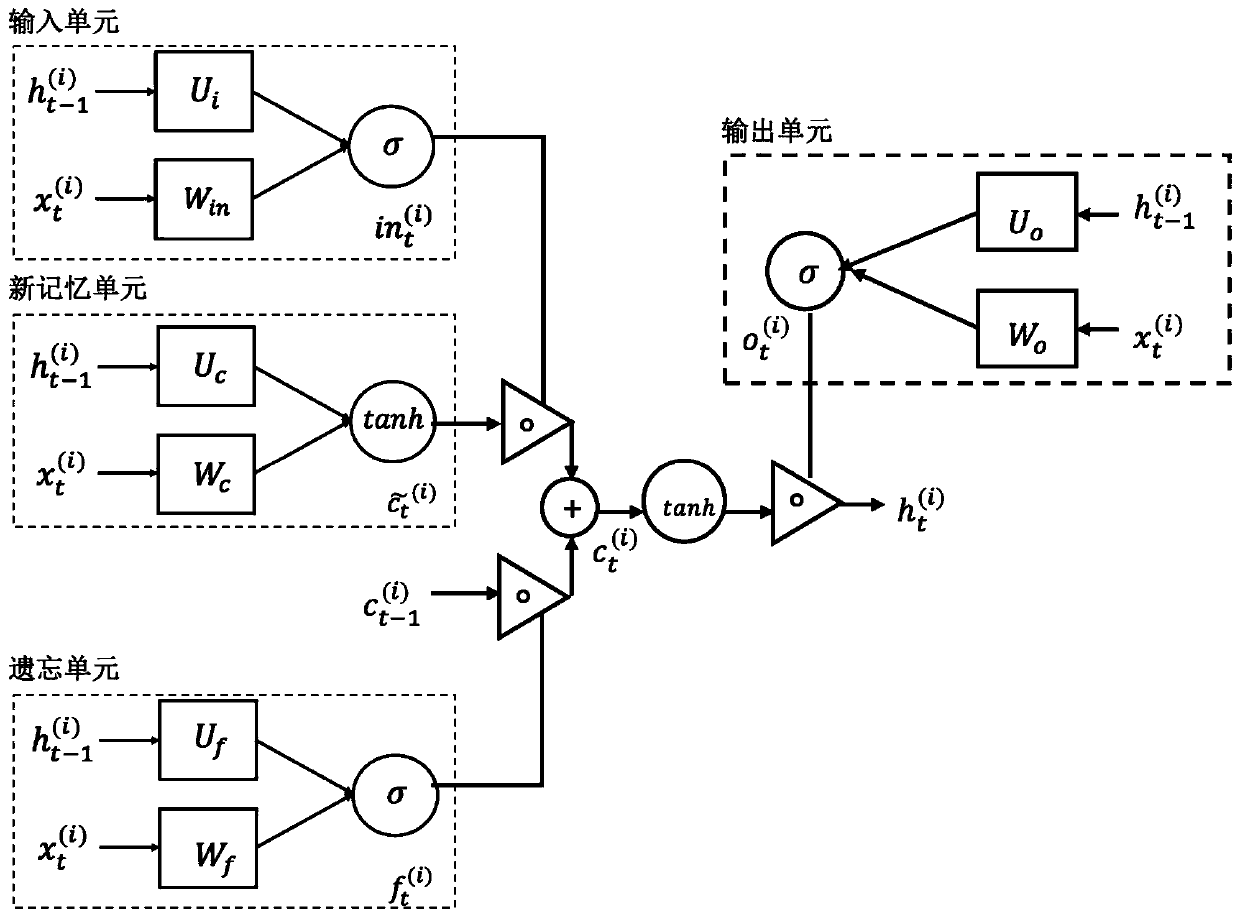

Method for constructing deep visual Q&A system for visually impaired persons

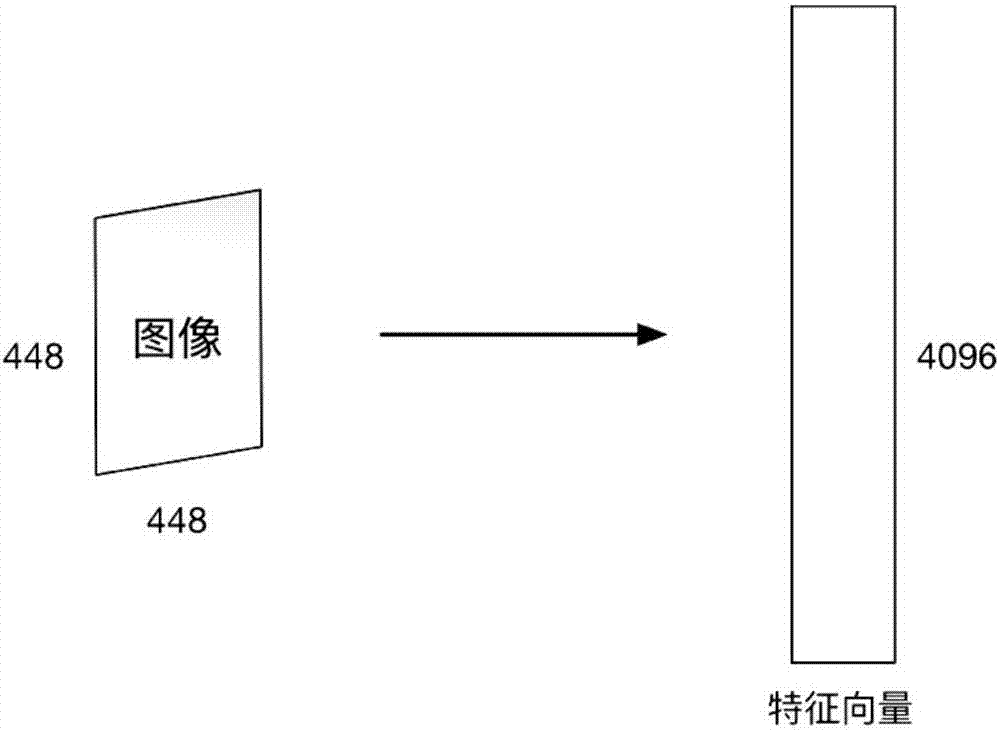

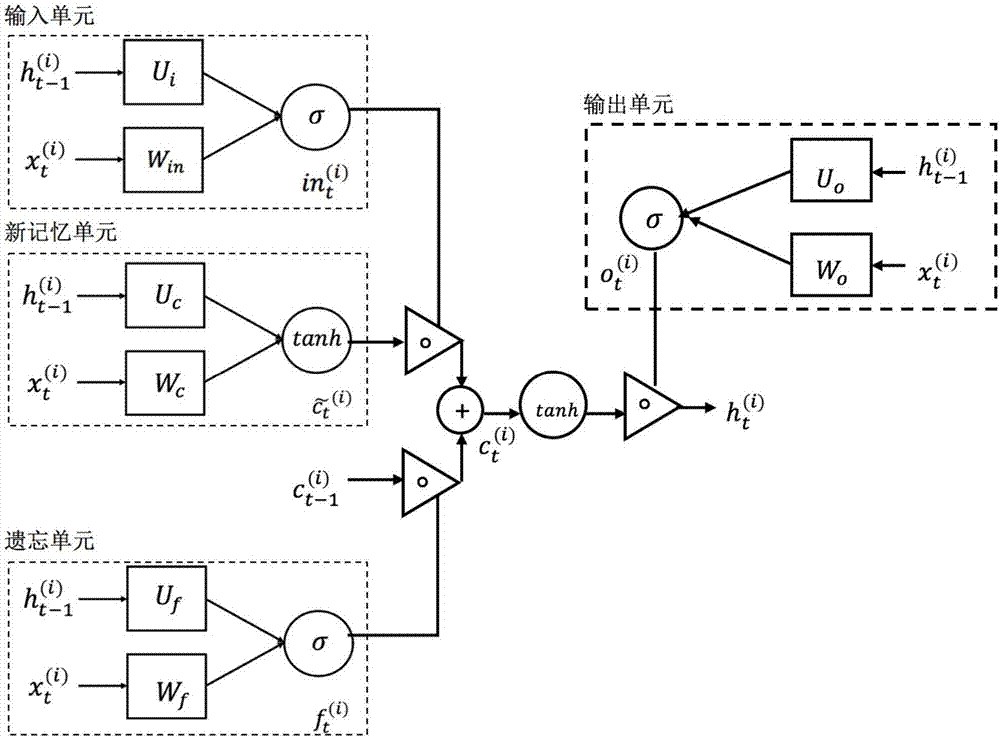

Active | CN106951473A | Limited recognition, Shorten the time, Character and pattern recognition, Neural architectures, Training phase, Back propagation algorithm

The present invention discloses a method for constructing a deep visual question-answering (Q&A) system for visually impaired persons. In the training phase, the method comprises: forming a training set from collected pictures and their corresponding Q&A texts; extracting picture features with a convolutional neural network; for each question text, converting the question into a list of word vectors using word-embedding techniques and feeding that list into an LSTM to extract question features; and finally, computing the element-wise product of the picture features and the question features, classifying the fused features to obtain an answer prediction, comparing the prediction with the answer label in the training set, calculating the loss, and optimizing the model with the back-propagation algorithm. In the running phase, a client obtains a photo taken by the user and the question text and uploads them to a server; the server feeds the uploaded photo and question text into the trained model, extracts the question features in the same manner, outputs the corresponding answer prediction with the classifier, and returns it to the client; the client then delivers the answer to the user by voice.

Owner:ZHEJIANG UNIV
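The fusion and classification step in the visual Q&A abstract (element-wise product of picture and question features, then a classifier that outputs an answer prediction and a training loss) can be illustrated in plain Python. This sketch assumes the CNN and LSTM features have already been computed as equal-length vectors and uses a hypothetical single-layer linear classifier with softmax; it does not reproduce the patented network, its dimensions or its training loop.

```python
import math
from typing import List, Sequence, Tuple

def elementwise_product(a: Sequence[float], b: Sequence[float]) -> List[float]:
    """Fuse picture and question features by element-wise multiplication."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return [x * y for x, y in zip(a, b)]

def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over the answer scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict_answer(image_feat: Sequence[float], question_feat: Sequence[float],
                   weights: Sequence[Sequence[float]],
                   answers: Sequence[str]) -> Tuple[str, float]:
    """Return the most probable answer and its probability.

    `weights` holds one row per candidate answer (hypothetical linear classifier).
    """
    if len(weights) != len(answers):
        raise ValueError("need one weight row per candidate answer")
    fused = elementwise_product(image_feat, question_feat)
    logits = [sum(w * f for w, f in zip(row, fused)) for row in weights]
    probs = softmax(logits)
    best = max(range(len(answers)), key=probs.__getitem__)
    return answers[best], probs[best]

def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Training loss of the predicted distribution against the labelled answer."""
    return -math.log(probs[label])
```

During training, the gradient of `cross_entropy` would be back-propagated into the classifier weights and the feature extractors; that part is omitted here.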

Plasma 3D fast forming and remanufacturing method and equipment of train wheels

Inactive | CN105522155A | Simple structure, Reasonable design, Additive manufacturing apparatus, Increasing energy efficiency, Monitoring system, Plasma generator

The invention discloses a plasma 3D fast forming and remanufacturing method and equipment for train wheels. The equipment comprises a monitoring system, a plasma beam machining system, a machining device for machining the train wheels to be repaired, a three-axis moving mechanism for driving the machining device, and a horizontal printing platform on which the train wheels to be repaired are placed. The plasma beam machining system comprises a plasma generator, a gas supply device, a feeding device and a printing position adjusting device. The monitoring system comprises a horizontal movement controller, a temperature detecting unit, a distance detecting unit, a printing distance adjusting controller and a rotation controller, wherein the temperature detecting unit and the printing distance adjusting controller form a temperature regulation and control device. The method comprises first detecting train wheel defects and then repairing the train wheels. The equipment is reasonable in design, simple to operate, efficient and effective in use; no closed forming chamber is needed because the repair is performed directly in the atmospheric environment, and the repaired train wheels are of good quality.

Owner:SINOADDITIVE MFG EQUIP CO LTD

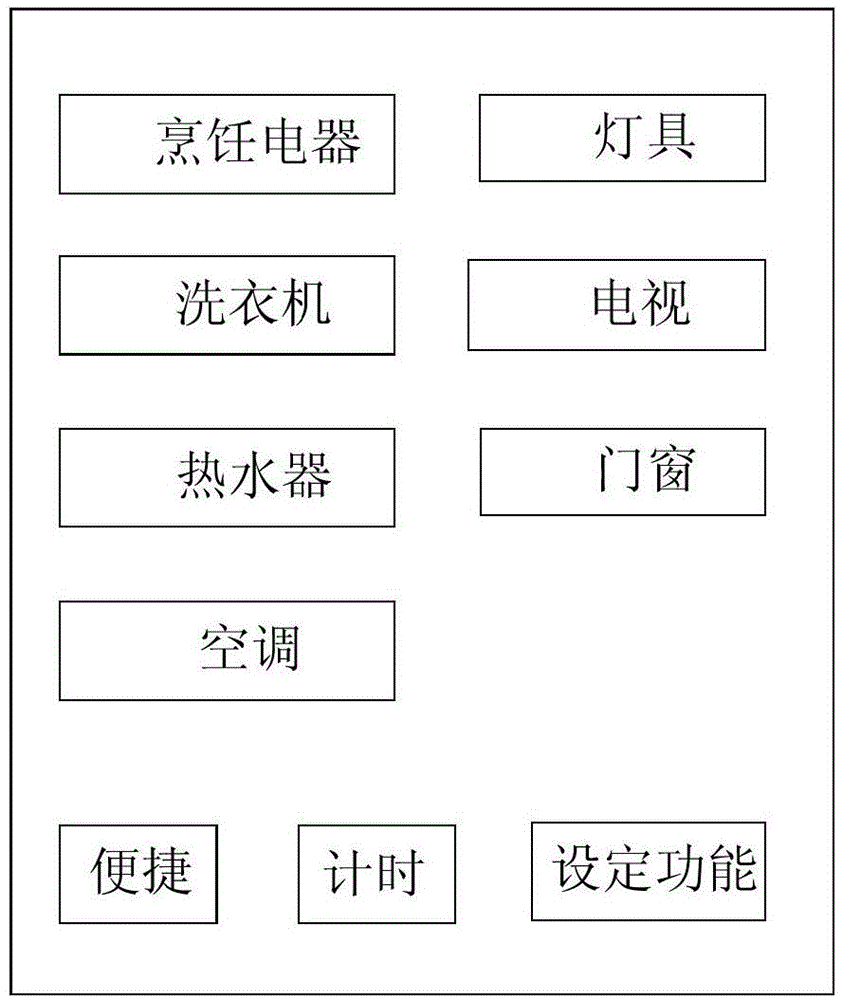

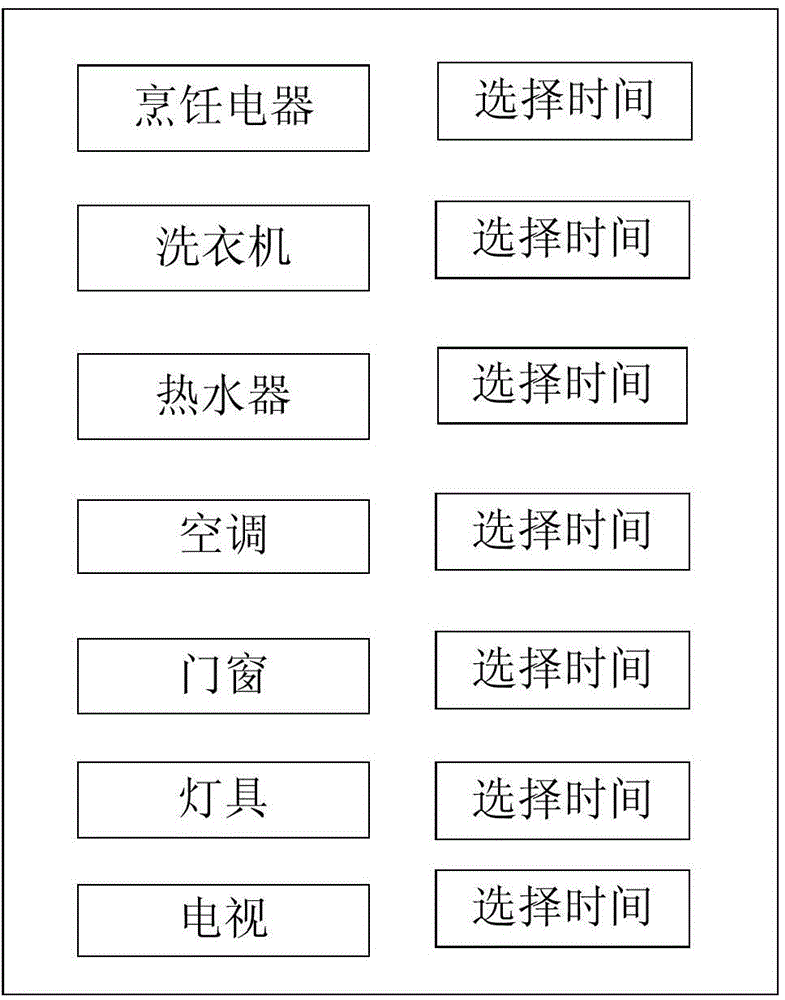

Smart household control system

Inactive | CN105785774A | Improve convenience, Improve intelligence, Computer control, Programme total factory control, Start time, Control system

The invention discloses a smart household control system that includes at least a smart object module, an easy module and a timing module. The smart object module contains the available smart household appliances. The timing module provides a countdown function: based on the working duration of the one or more selected smart household appliances and the time at which the user will arrive home, it counts down to the start time of the selected appliance or appliances. The smart object module (and/or the smart household devices available for selection in it), the easy module and the timing module are displayed on an internet-connected smart device terminal, where they can be selected and operated. The internet-connected smart device terminal is connected to, and controls, the smart household. Because the system determines the start time of the smart household more precisely from the distance between the user and the home, it makes the smart household more intelligent and better suited to the user's daily life.

Owner:BEIJING QIHOO TECH CO LTD +1

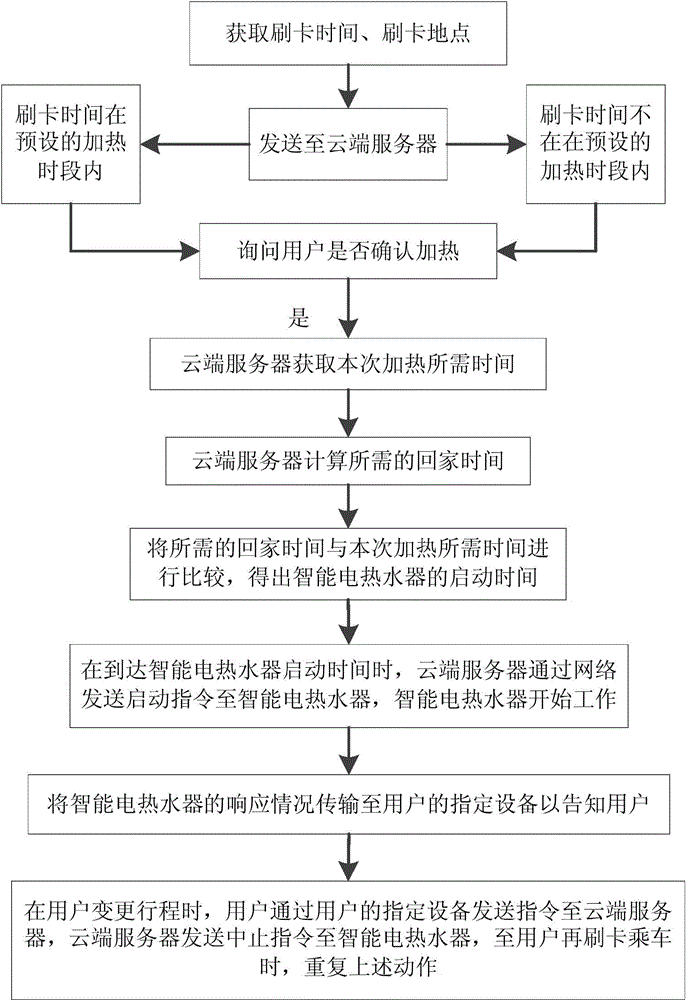
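The countdown in the smart household abstract (start the appliance early enough that it finishes its work as the user arrives) reduces to simple time arithmetic. The sketch below assumes, as hypotheses not given in the abstract, that arrival time is estimated from the remaining distance and an average travel speed, and that the countdown is clamped to zero when there is not enough time.

```python
from datetime import datetime, timedelta

def estimate_arrival(now: datetime, distance_km: float, speed_kmh: float) -> datetime:
    """Estimate arrival time from remaining distance and an assumed average speed."""
    if speed_kmh <= 0:
        raise ValueError("speed must be positive")
    return now + timedelta(hours=distance_km / speed_kmh)

def countdown_to_start(now: datetime, distance_km: float, speed_kmh: float,
                       working_duration: timedelta) -> timedelta:
    """Time left before the appliance must start so that it finishes on arrival.

    Returns timedelta(0) when the appliance should start immediately.
    """
    arrival = estimate_arrival(now, distance_km, speed_kmh)
    start_time = arrival - working_duration
    return max(start_time - now, timedelta(0))

now = datetime(2024, 1, 1, 18, 0)
print(countdown_to_start(now, 15, 30, timedelta(minutes=20)))  # 0:10:00
```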

Intelligent control system and method for controlling intelligent home equipment by using bus card

Active | CN104615107A | Improve convenience, Improve intelligence, Computer control, Location information based service, Start time, Intelligent control system

The invention provides an intelligent control system and method for controlling intelligent home equipment by using a bus card. The method comprises the following steps: when the user swipes the bus card, the card-swiping time and location are sent to a cloud server; the cloud server asks the user to confirm the task; if the user confirms, the cloud server acquires the time the task needs and calculates the required journey time; by comparing the journey time with the task time, the cloud server obtains the start time of the intelligent home equipment; when the start time arrives, the cloud server sends a start command to the equipment over the network to set it working; and the equipment reports back to the user's designated device through the cloud server. This improves the operating convenience and intelligence of intelligent home equipment and makes the control method and system fit more closely into users' daily lives.

Owner:BEIJING QIHOO TECH CO LTD

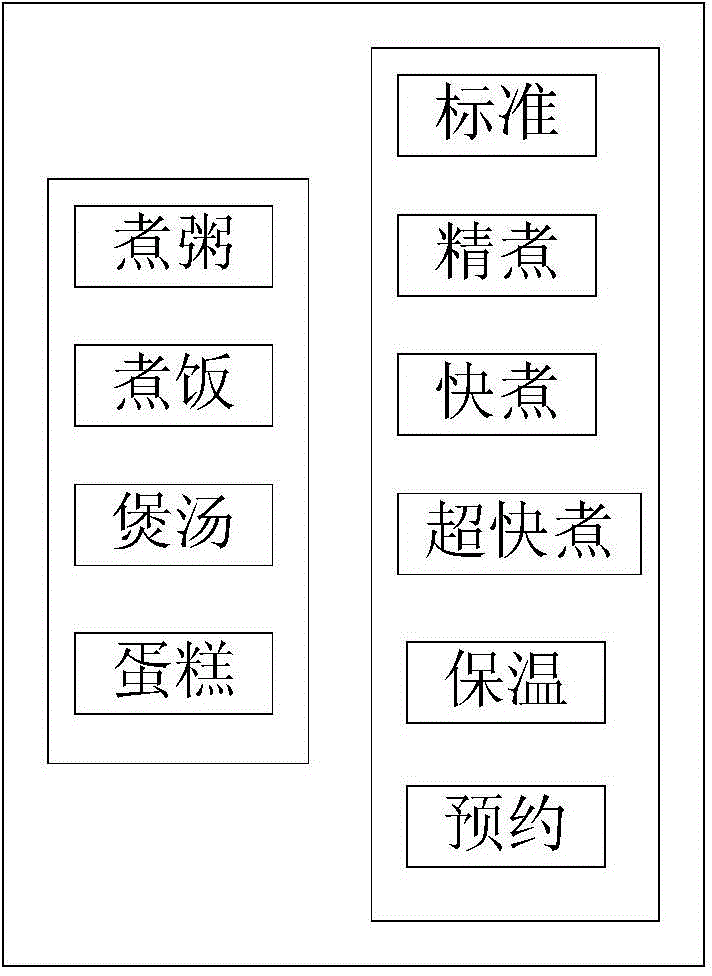

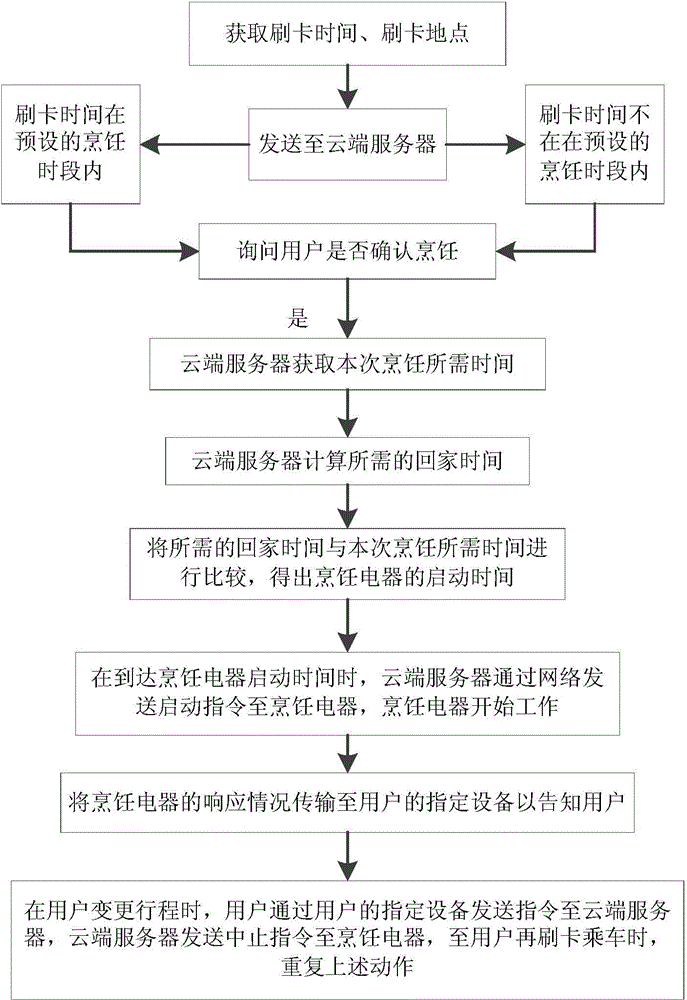

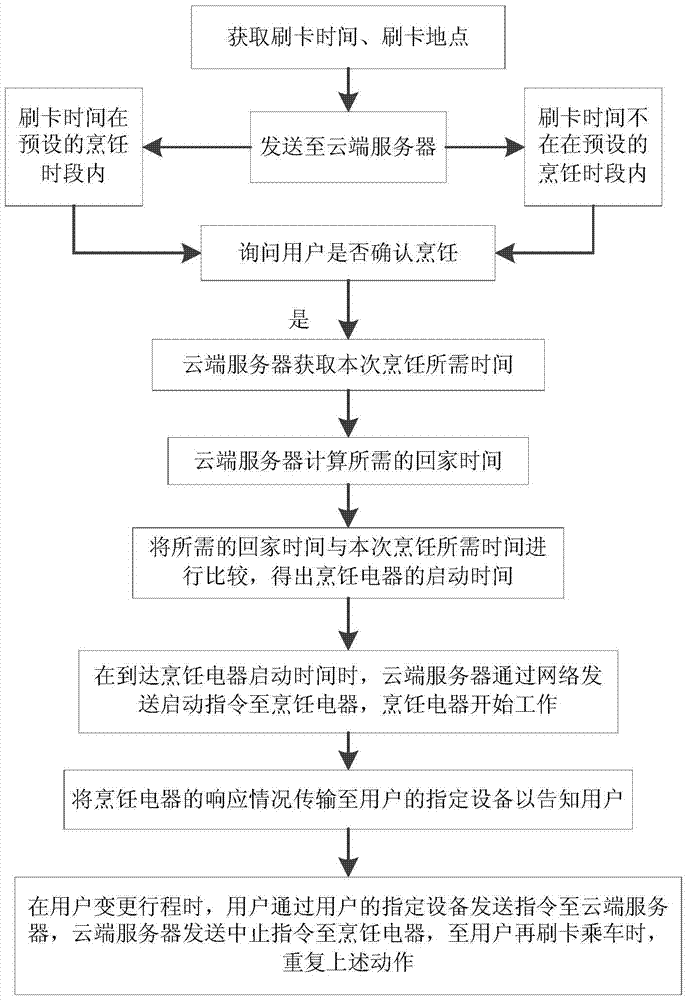
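The start-time rule in the bus-card abstract (compare the journey time with the time the task needs) can be written as a small function. How the cloud server estimates journey time from the swipe location is not specified, so `journey_time` is simply passed in; the choice to start immediately when the task takes at least as long as the journey is likewise an assumption.

```python
from datetime import datetime, timedelta
from typing import Optional

def plan_start_time(swipe_time: datetime, journey_time: timedelta,
                    task_duration: timedelta, confirmed: bool) -> Optional[datetime]:
    """Return when the cloud server should send the start command.

    None means the user did not confirm the task, so nothing is scheduled.
    """
    if not confirmed:
        return None
    slack = journey_time - task_duration
    if slack <= timedelta(0):
        # The task needs at least as long as the journey: start right away.
        return swipe_time
    # Otherwise delay the start so the task finishes as the user arrives.
    return swipe_time + slack

swipe = datetime(2024, 1, 1, 8, 0)
print(plan_start_time(swipe, timedelta(minutes=40), timedelta(minutes=30), True))
# 2024-01-01 08:10:00
```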

Intelligent control system and method for controlling cooking appliance by using bus card

Active | CN104615021A | Improve certainty, Avoid misuse, Programme control in sequence/logic controllers, Start time, Intelligent control system

The invention provides a method and a system for controlling a cooking appliance by using a bus card. The method comprises the following steps: when the user swipes the bus card, the card-swiping time and location are sent to a cloud server; the cloud server asks the user to confirm the cooking; if the user confirms, the cloud server acquires the time needed for the cooking and calculates the required journey time; by comparing the journey time with the cooking time, the cloud server obtains the start time of the cooking appliance; when the start time arrives, the cloud server sends a start command to the cooking appliance over the network to set it working; and the cooking appliance reports back to the user's designated device through the cloud server. If the user changes the journey, the user sends a command to the cloud server, which sends a halt command to the cooking appliance; the above steps are repeated the next time the user boards a bus and swipes the bus card. This improves the operating convenience and intelligence of the smart home and makes the control method and system fit more closely into users' daily lives.

Owner:BEIJING QIHOO TECH CO LTD

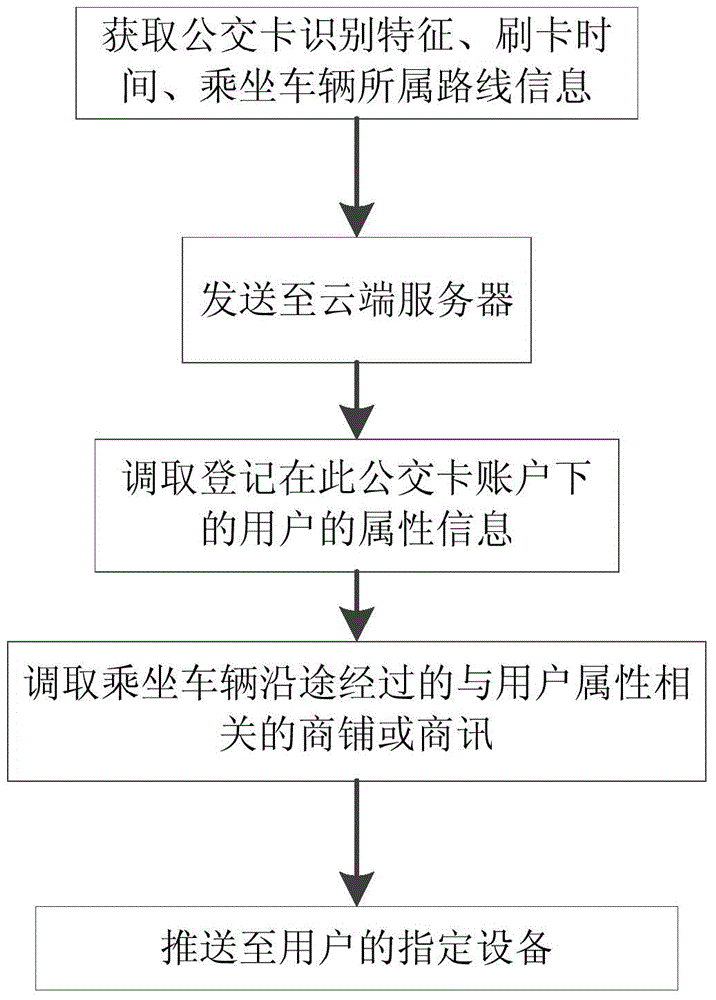
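The cooking variant adds a halt when the user changes the journey and a fresh schedule on the next swipe. One way to model that on the server side is a small state object; `CookingScheduler`, its method names and the command log are hypothetical, and commands are only recorded here rather than sent to a real appliance.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

@dataclass
class CookingScheduler:
    """Hypothetical cloud-side state for one cooking appliance."""
    cook_duration: timedelta
    start_at: Optional[datetime] = None
    commands: List[Tuple[str, Optional[datetime]]] = field(default_factory=list)

    def on_swipe(self, swipe_time: datetime, journey_time: timedelta) -> None:
        """Schedule (or reschedule) the start so that cooking ends on arrival."""
        slack = max(journey_time - self.cook_duration, timedelta(0))
        self.start_at = swipe_time + slack

    def on_journey_change(self) -> None:
        """The user changed plans: cancel any pending start and halt the appliance."""
        self.start_at = None
        self.commands.append(("halt", None))

    def tick(self, now: datetime) -> bool:
        """Record the start command once the scheduled time is reached."""
        if self.start_at is not None and now >= self.start_at:
            self.commands.append(("start", now))
            self.start_at = None
            return True
        return False
```

A periodic job would call `tick` with the current time; keeping the schedule in one object makes the halt and re-swipe cases simple state changes.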

Intelligent control method for message forwarding by means of bus cards

Active | CN104618858A | Improve intelligence and convenience, Improve convenience, Database distribution/replication, Messaging/mailboxes/announcements, Real-time computing, Intelligent control

The invention provides an intelligent control method for message forwarding by means of bus cards. The method comprises the following steps: S1, when a user boards a bus and swipes the bus card, the identifying feature of the user's bus card and the route information of the bus are sent to a cloud server over the network; and S2, after receiving this information, the cloud server forwards relevant messages to the user according to the user's attributes. The card swipe triggers the cloud server to obtain the user attributes registered with the bus card account, including the user's hobbies, and to obtain the bus route information to determine the travel route, so that while riding the bus the user receives messages from shops and businesses near his or her location. Mobile phone users do not need to search for messages actively, and although many kinds of messages are forwarded, the operation is not tedious; users receive the messages and shop information they care about most. This makes obtaining messages more convenient and intelligent for users and fits the method more closely into their daily lives.

Owner:BEIJING QIHOO TECH CO LTD +1
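Step S2 of the message-forwarding abstract (forward messages about shops near the route that match the user's hobbies) amounts to a filter. In this sketch the route is a list of stop names and each message is tagged with its nearest stop and a category; `ShopMessage` and its fields are hypothetical, since the abstract does not say how shop locations or user attributes are stored.

```python
from dataclasses import dataclass
from typing import Iterable, List

@dataclass(frozen=True)
class ShopMessage:
    shop: str
    nearest_stop: str  # hypothetical: bus stop closest to the shop
    category: str      # hypothetical: matched against the user's hobbies

def select_messages(route_stops: Iterable[str], hobbies: Iterable[str],
                    messages: Iterable[ShopMessage]) -> List[ShopMessage]:
    """Keep messages for shops along the route whose category matches a hobby."""
    stops = set(route_stops)
    interests = set(hobbies)
    return [m for m in messages if m.nearest_stop in stops and m.category in interests]
```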

Konjac soybean juice noodles and preparation method thereof

Inactive | CN107691965A | Fragrant taste, Improve immune function, Food preservation, Food ingredient functions, Economic benefits, Food processing

The invention discloses konjac soybean juice noodles and a preparation method thereof, in the technical field of food processing. The noodles combine konjac powder with soybean juice and are rich in nutrients. Compared with ordinary konjac noodles, they have a faintly fragrant mouthfeel and supply certain nutrients the human body needs. The preparation process is simple, low in cost and economically beneficial.

Owner:四川森态源生物科技有限公司

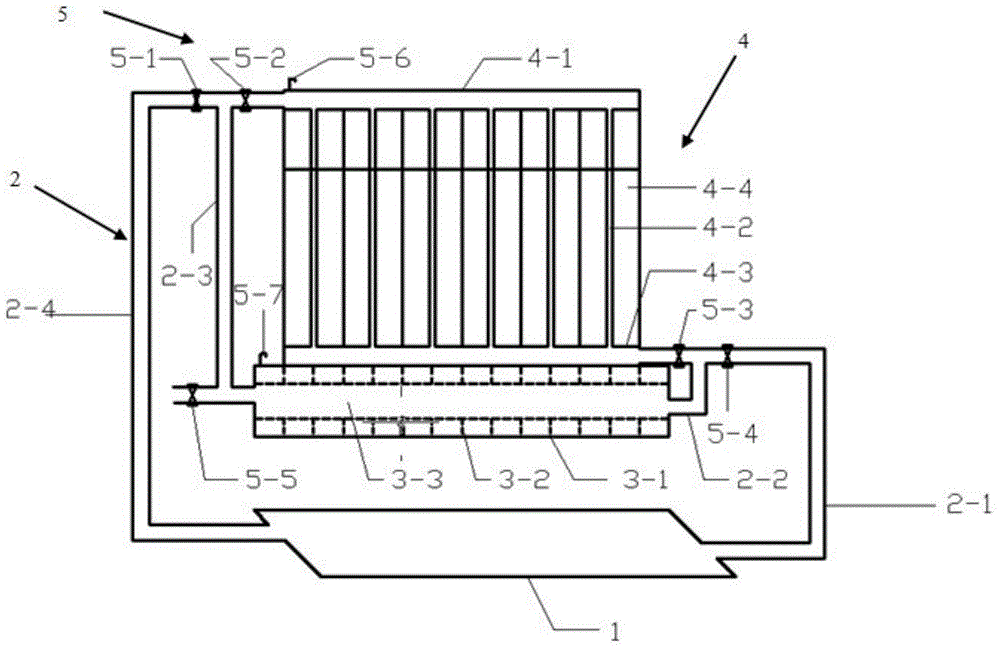

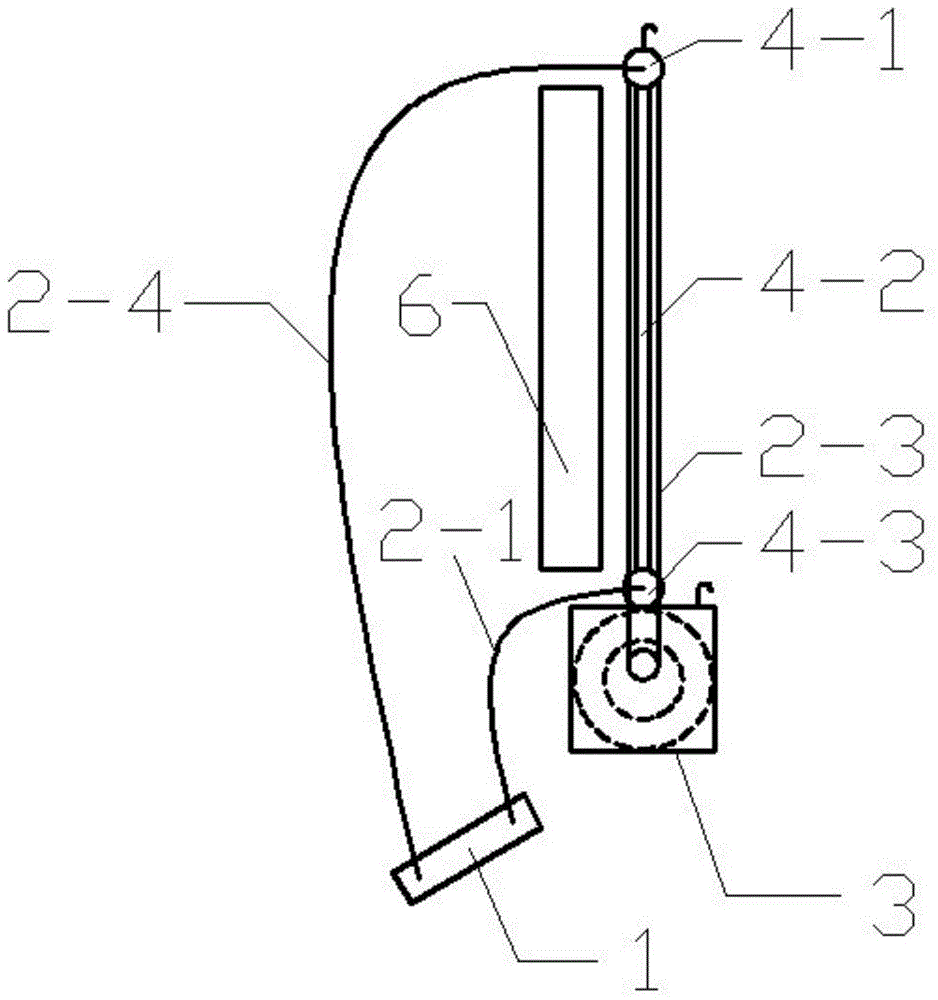

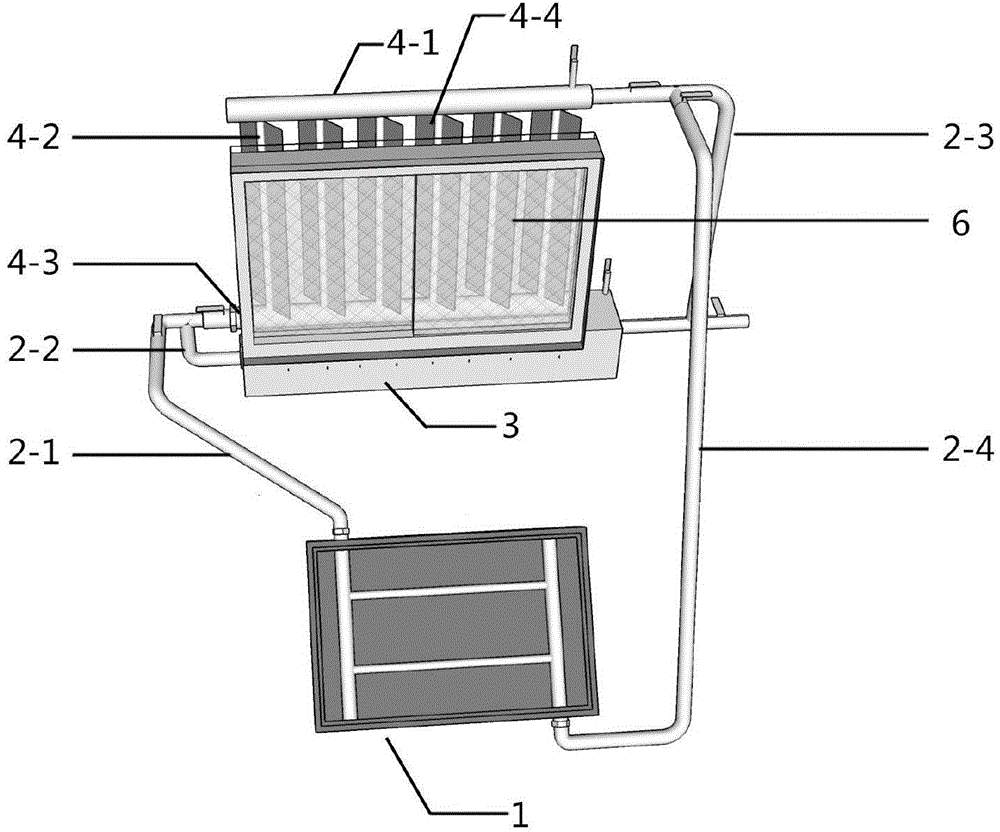

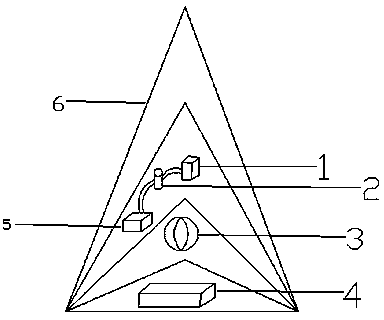

Heat-storing and energy-saving window device coupled with solar heat source

Active | CN104963621A | Increase use, Close to life, Solar heat devices, Light protection screens, Engineering, Phase-change material

The invention relates to a heat-storing and energy-saving window device coupled with a solar heat source. The device comprises a heat dissipation shutter and is characterized by further comprising a plate-type solar heat collector, a heating-medium water pipeline and a phase-change material heat storage tank. The plate-type solar heat collector is installed on the upper portion of an outdoor lower window; the angle between its heat-collecting surface and the horizontal ranges from 10 to 50 degrees; its length equals that of the window on which it is installed, and its width is one third to one half of the window height. The heat dissipation shutter comprises a water separator, heat dissipation water pipes, a water collector and heat-conducting slats; the water separator and the water collector are arranged horizontally above and below the window, respectively; the heat dissipation water pipes are evenly distributed between the water separator and the water collector, and each pipe carries a heat-conducting slat that can rotate through 360 degrees about that pipe as its axis.

Owner:HEBEI UNIV OF TECH

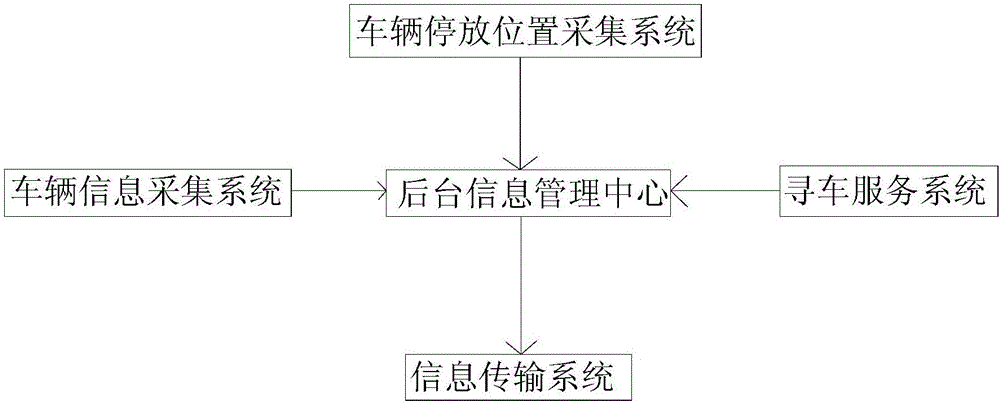

Parking lot vehicle parking-searching system based on smartphone

Pending | CN106384530A | Accurate record, Guaranteed accuracy, Road vehicles traffic control, Parking area, Information transmission

The invention relates to the field of information systems, and in particular to a smartphone-based parking and vehicle-finding system for parking lots. The system comprises a vehicle information collection system, a parking position collection system, an information transmission system, a background information management center, a vehicle-finding service system and a smartphone with the parking-lot vehicle-finding app installed; the vehicle information collection system, the parking position collection system, the information transmission system and the vehicle-finding service system are connected to the background information management center, and the information transmission system is connected to the smartphone. The system provides automatic vehicle identification and laser-tag tracking and positioning, can accurately guide the user's vehicle to a free parking space, lets the user park and find the vehicle quickly and accurately, and saves the user time. It is highly practical and suitable for wide adoption in the field.

Owner:HECHI UNIV

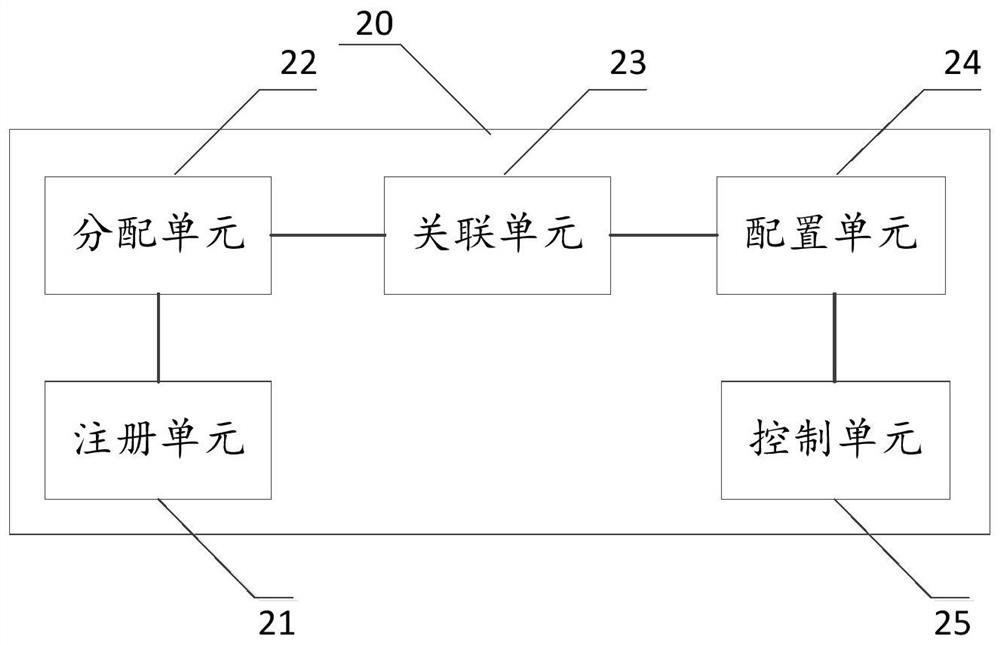

Integrated management method, device and equipment based on smart home and smart community

Pending | CN112904739A | Quality improvement, Realize interconnection, Computer control, Total factory control, Computer network, Community setting

The invention discloses an integrated management method based on a smart home and a smart community. The method comprises the following steps: responding to a registration request from a user entering a cloud service management system, the cloud service management system being used to manage a smart home system and a smart community system; based on the registration request, allocating to the user a first identification number and a second identification number corresponding to the smart home system and the smart community system, respectively; associating the smart home system with the smart community system by establishing a correspondence between the first and second identification numbers; and pushing the smart home system page so that the user can set a home mode and configure the control rules of the smart home equipment for that mode. The cloud service management system then runs or stops the corresponding smart home equipment according to the home mode and correspondingly starts the smart community equipment of the associated smart community system.

Owner:厦门立林科技有限公司

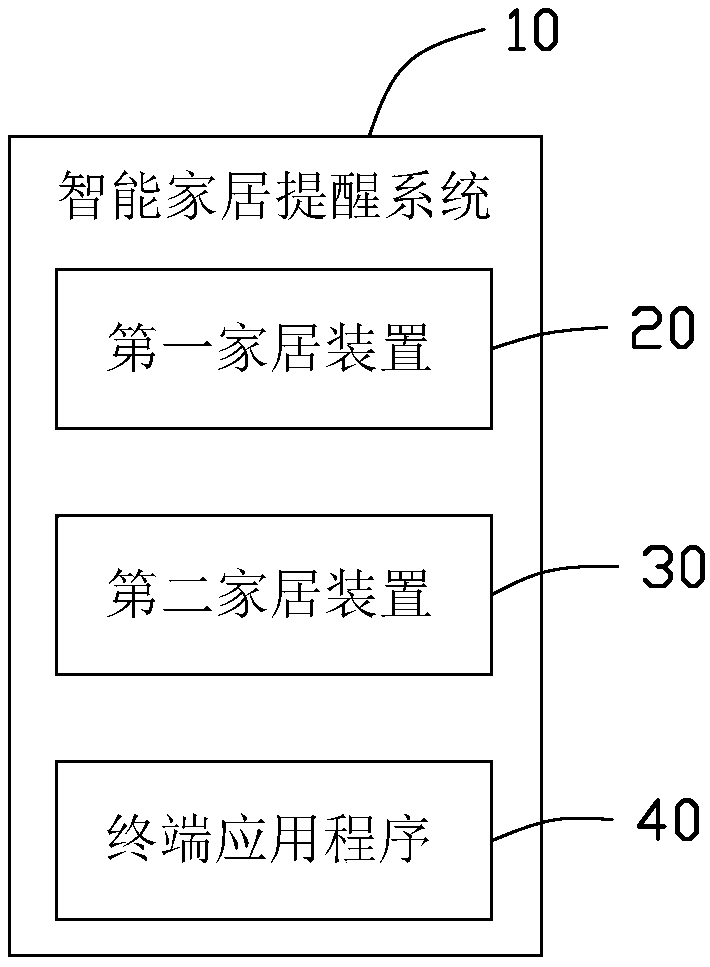

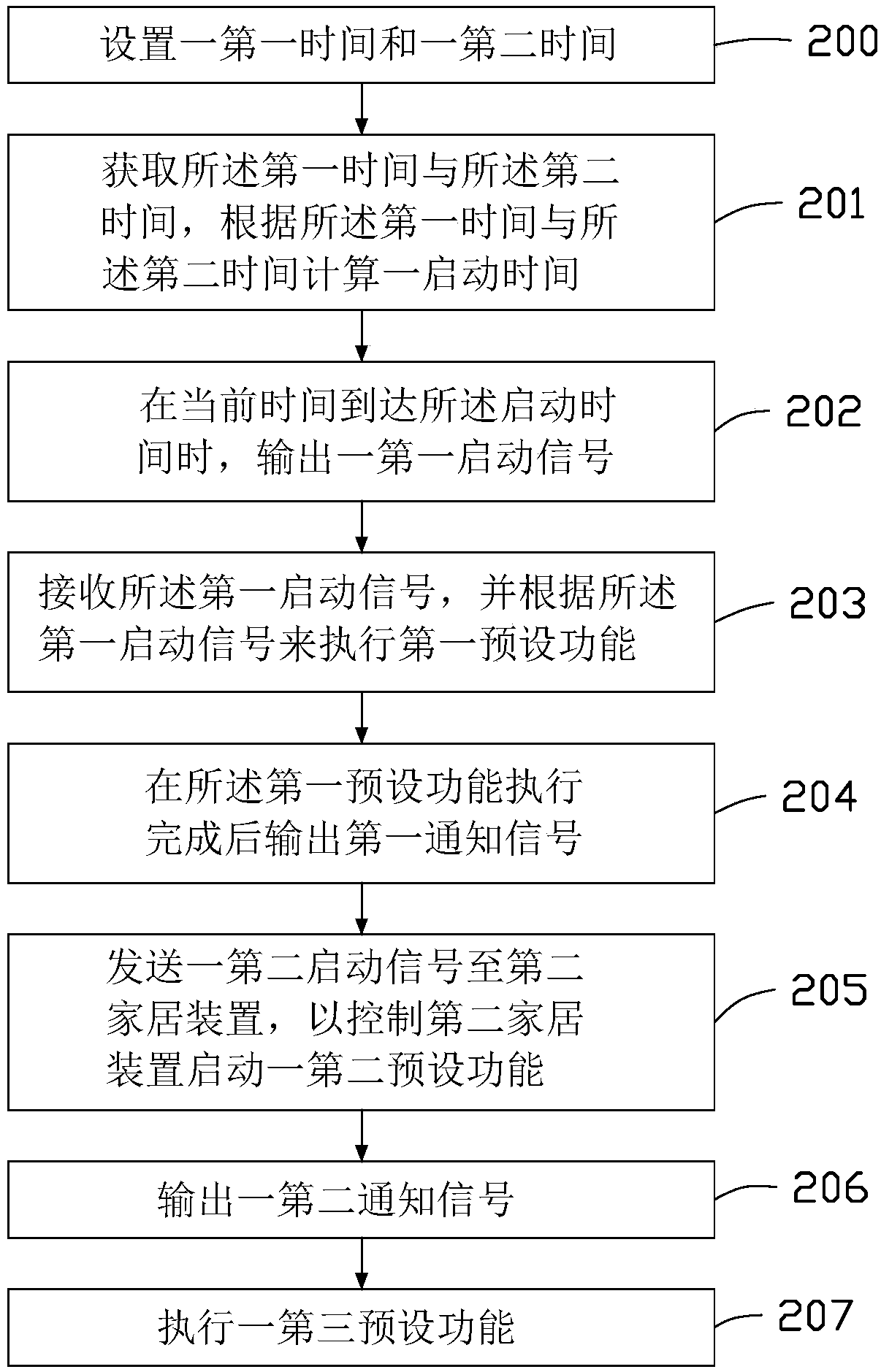
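The registration and association flow in the smart home / smart community abstract can be sketched as an in-memory service. Everything here is illustrative: the class name, the ID ranges, the mode names and the way community equipment is linked to a mode are assumptions, not details from the patent.

```python
import itertools
from typing import Dict, List, Tuple

class CloudServiceManager:
    """Hypothetical in-memory model of the cloud service management system."""

    def __init__(self) -> None:
        self._home_ids = itertools.count(1001)       # first identification numbers
        self._community_ids = itertools.count(5001)  # second identification numbers
        self.users: Dict[str, Tuple[int, int]] = {}
        self.home_rules: Dict[Tuple[int, str], Dict[str, str]] = {}
        self.community_rules: Dict[str, List[str]] = {}

    def register(self, user: str) -> Tuple[int, int]:
        """Allocate a home ID and a community ID and record their association."""
        if user in self.users:
            return self.users[user]
        ids = (next(self._home_ids), next(self._community_ids))
        self.users[user] = ids
        return ids

    def configure_mode(self, user: str, mode: str, actions: Dict[str, str]) -> None:
        """Store home-device rules for a mode, e.g. {"light": "run"}."""
        home_id, _ = self.users[user]
        self.home_rules[(home_id, mode)] = dict(actions)

    def link_community(self, mode: str, devices: List[str]) -> None:
        """Declare which community devices start when a home enters this mode."""
        self.community_rules[mode] = list(devices)

    def activate(self, user: str, mode: str) -> dict:
        """Return home commands plus community devices to start for the linked ID."""
        home_id, community_id = self.users[user]
        return {
            "home_id": home_id,
            "home_commands": self.home_rules.get((home_id, mode), {}),
            "community_id": community_id,
            "community_start": self.community_rules.get(mode, []),
        }
```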

Intelligent home reminding system

Inactive | CN110058529A | Improve convenience, Improve intelligence, Computer control, Total factory control, Relevant information, Start time

The invention relates to an intelligent home reminding system comprising a terminal application program and a first home device. The terminal application program acquires a first time and a second time, calculates a start time from them, and outputs a first start signal when the current time reaches the start time; the first home device executes a first preset function in response to the received first start signal. By obtaining the user's relevant information in advance and controlling the start of the smart home accordingly, the system improves the convenience and intelligence of smart home control and fits more closely into the user's daily life.

Owner:SHENZHEN FUTAIHONG PRECISION IND CO LTD +1

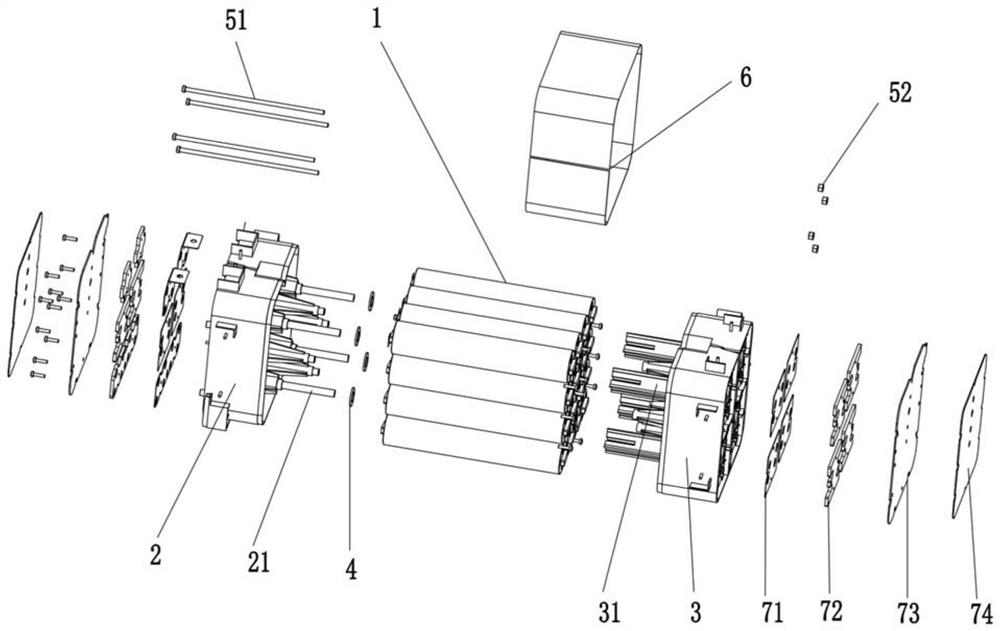

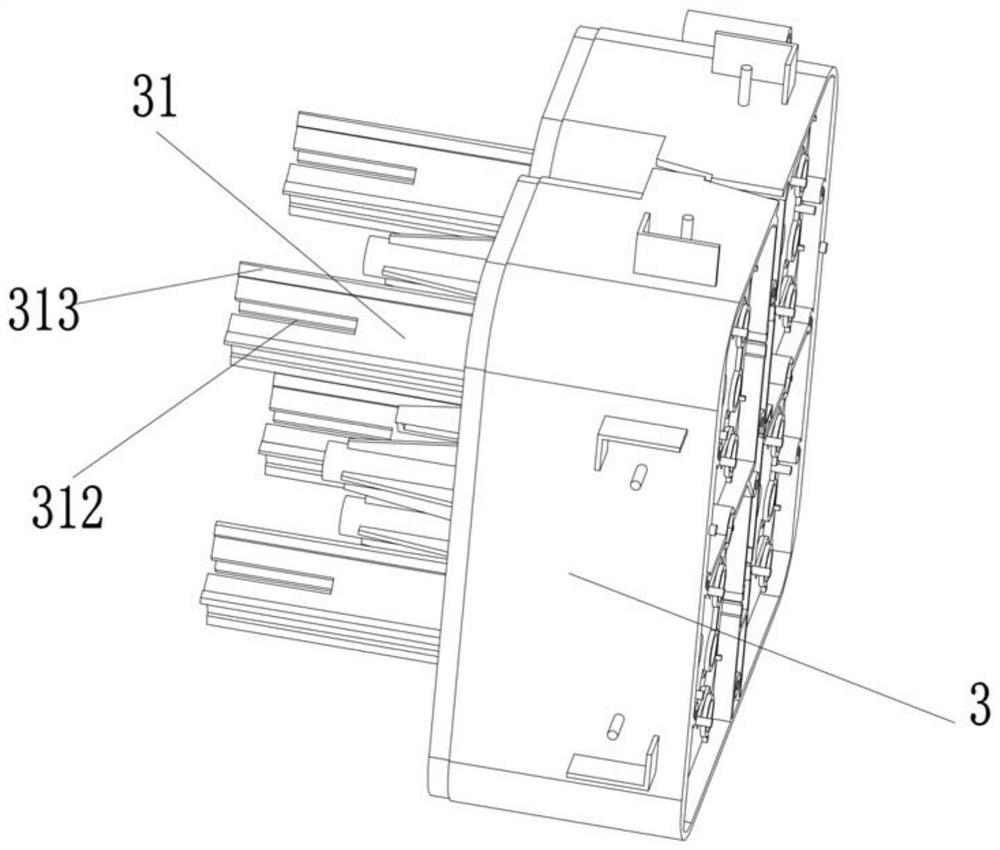

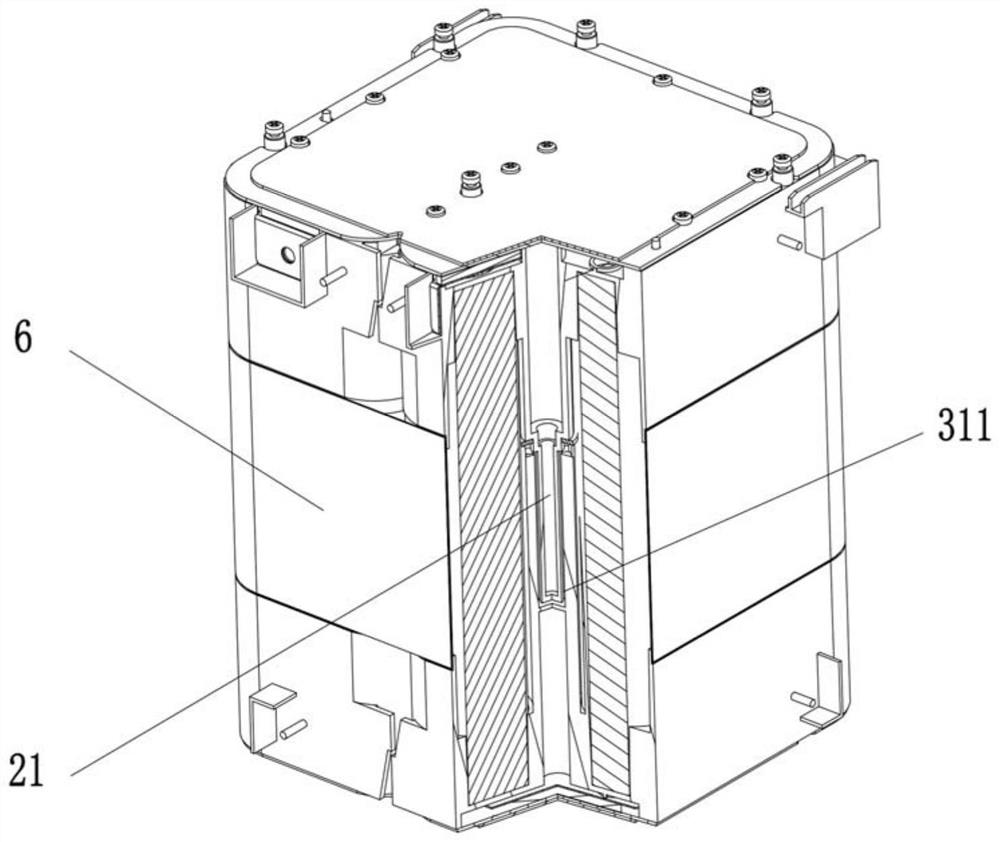

Novel grouping structure of large cylindrical battery cells

Pending | CN114094269A | Improve stability, Ensure a reasonable distance, Vent arrangements, Secondary cells, Busbar, Engineering

The invention discloses a novel grouping structure for large cylindrical battery cells. The structure comprises a plurality of battery cells, with an upper bracket and a lower bracket at the two ends of the cells forming a battery cell group. The middle parts of the upper and lower brackets are each provided with a plurality of mutually matching filling columns and glue-filling columns; the filling columns carry gaskets that restrain the movement of the surrounding battery cells; glue-filling holes matched with the filling columns are formed in the centers of the glue-filling columns, and glue is placed in these holes. Busbars are arranged at both ends of the battery cell group, and a heat-conducting pad, a heating film and a heat-preservation pad are arranged in sequence on the outer sides of the busbars. The structure improves the resistance of the aluminum tabs of large cylindrical cells to weld tearing, balances the heated area of each cell, and ensures a consistent temperature rise.

Owner:福建飞毛腿动力科技有限公司

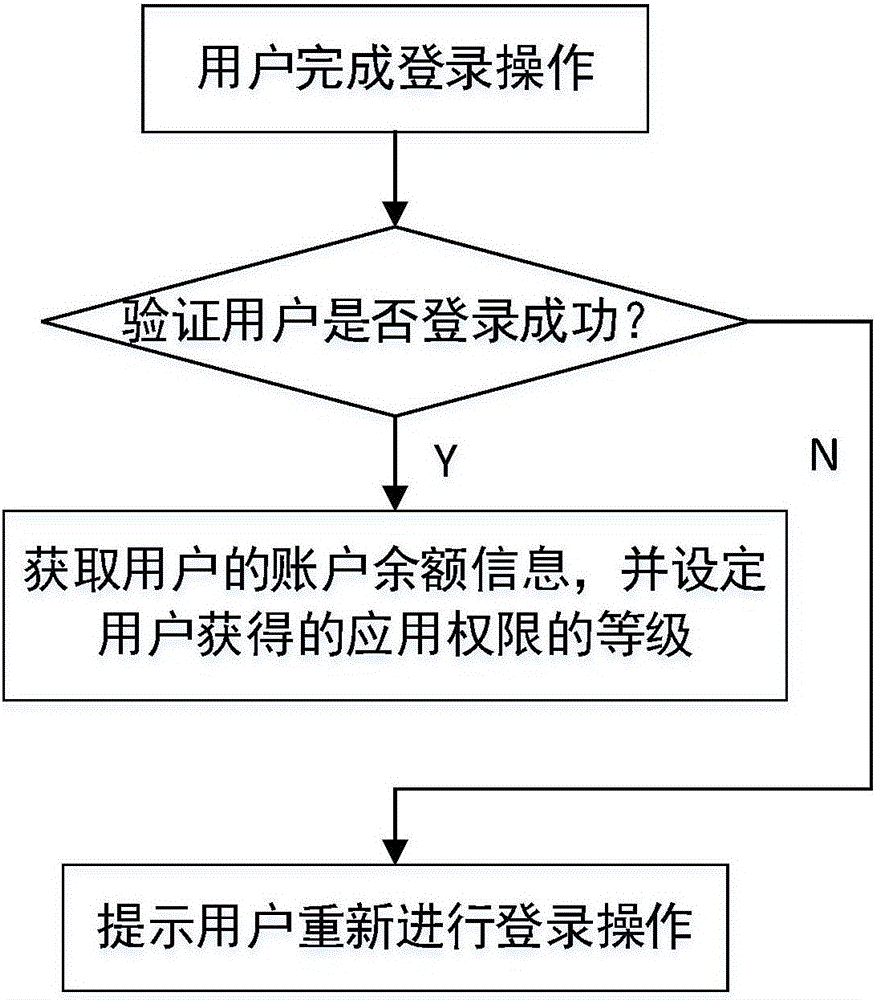

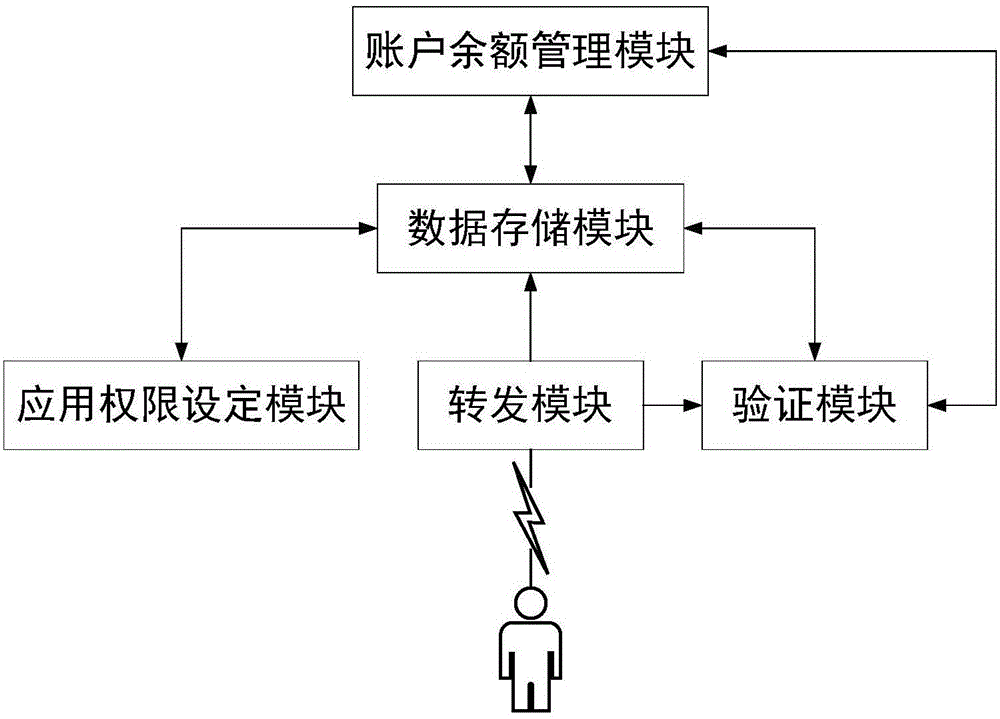

Social method based on user account balance and server

Inactive | CN106850624A | Close to life, Enhanced interaction, Transmission, Protocol authorisation, Internet privacy, Income level

The invention discloses a social method and a server based on a user's account balance. The method comprises acquiring the account balance information of a logged-in user and setting the user's social permission level accordingly, so that the user may browse the social data of other users whose social permission levels are not higher than his or her own. This provides a social mode based on the user's income level, brings social interaction closer to the user's daily life, and greatly increases interaction among users.

Owner:SICHUAN YANBAO TECH CO LTD

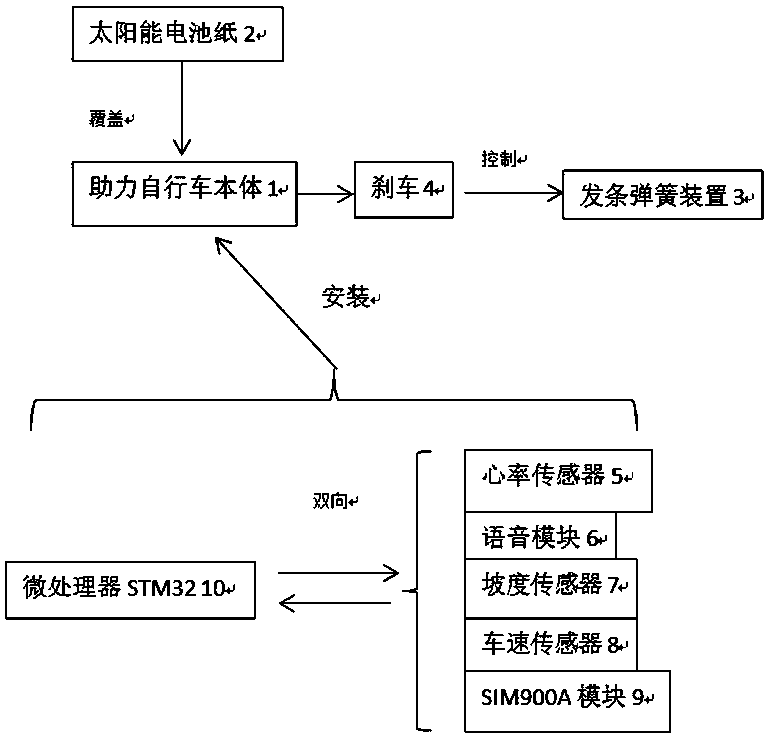
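The permission rule in the account-balance abstract (a user may browse the social data of users whose level is not higher than his or her own) can be sketched as follows. The balance thresholds that map an account balance to a level are hypothetical; the abstract only says that the level is set from the balance.

```python
import bisect
from typing import Dict, List

# Hypothetical thresholds: a balance >= LEVEL_THRESHOLDS[i] reaches level i + 1.
LEVEL_THRESHOLDS = [0, 1_000, 10_000, 100_000]

def social_level(balance: float) -> int:
    """Map an account balance to a social permission level (1 to 4)."""
    if balance < 0:
        raise ValueError("balance must be non-negative")
    return bisect.bisect_right(LEVEL_THRESHOLDS, balance)

def browsable_users(viewer_level: int, user_levels: Dict[str, int]) -> List[str]:
    """Users whose permission level is not greater than the viewer's."""
    return [name for name, level in user_levels.items() if level <= viewer_level]
```

`bisect_right` places a balance exactly on a threshold into the higher level, so 1,000 maps to level 2.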

Intelligent power assisted bicycle

The invention discloses an intelligent power-assisted bicycle comprising a power-assisted bicycle body. The bicycle body is covered with solar cell paper; the chain is connected to a coiled spring device, which is in turn connected to the brake; a heart rate sensor and a voice module are mounted on the two handlebars; a gradient sensor and a speed sensor are mounted at the bearings in the wheel centers; and a SIM900A module is installed at the rear of the bicycle. The heart rate sensor, the voice module and the gradient sensor are controlled by an STM32 microprocessor installed inside the bicycle frame. Braking triggers the coiled spring device, much like winding a mechanical clock, so that mechanical energy is stored as elastic potential energy in the spring; the gradient sensor then detects the steepness of the slope ahead, and the corresponding amount of stored energy is released intelligently.

Owner:林义艳

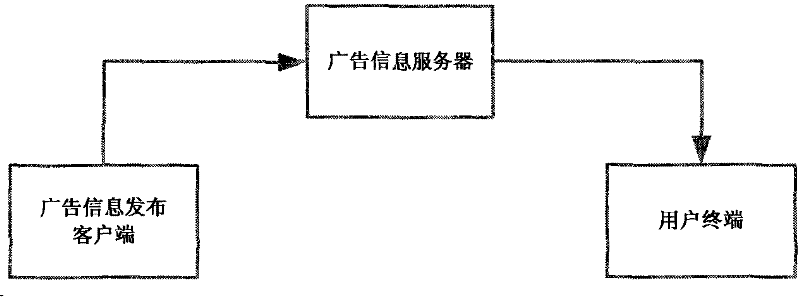

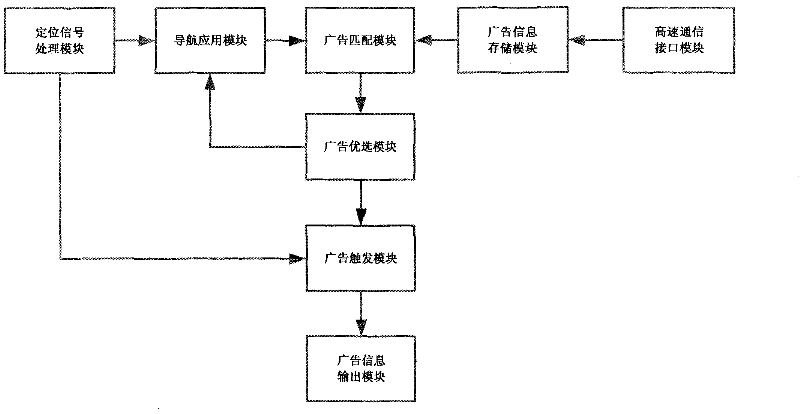

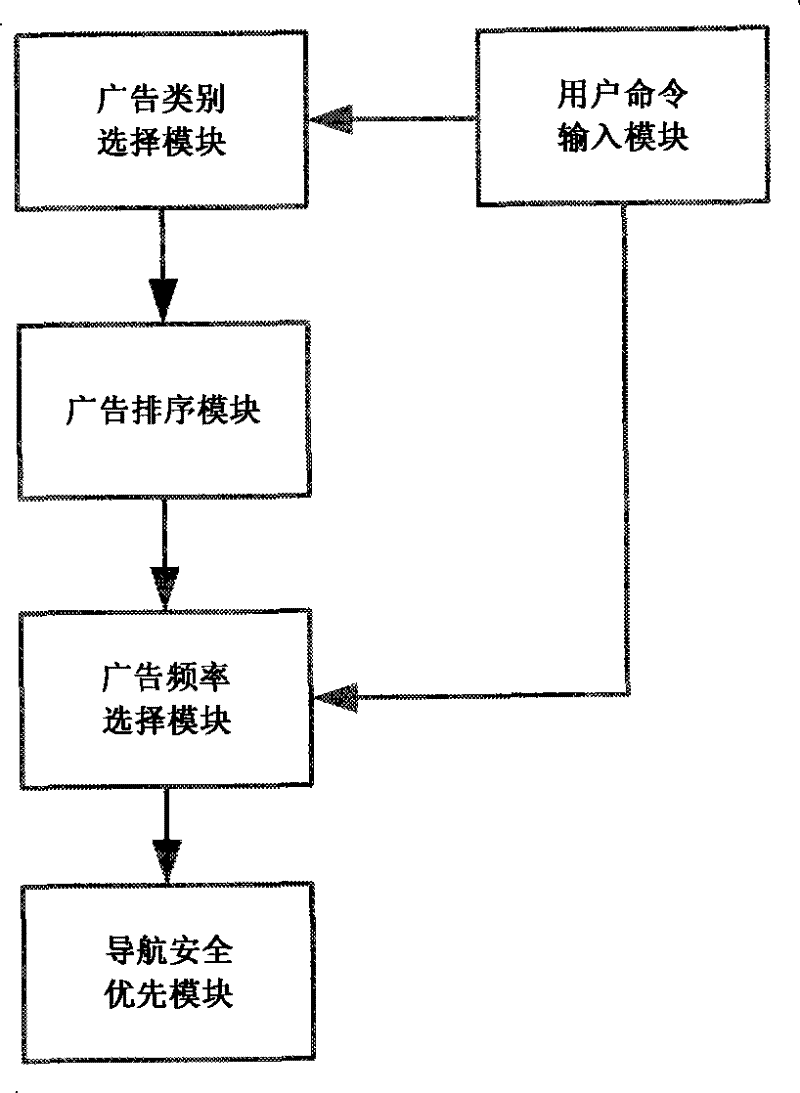

Advertising information issuing system combined with positioning navigation

Inactive | CN101534315B | Targeted, Improve timeliness, Instruments for road network navigation, Transmission, Information dispersal, Geolocation

Owner:深圳市莱科文化科技有限公司

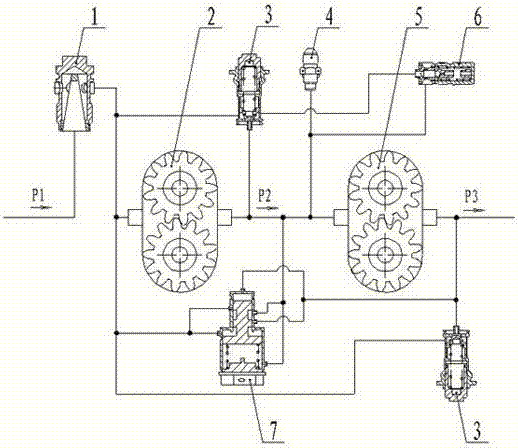

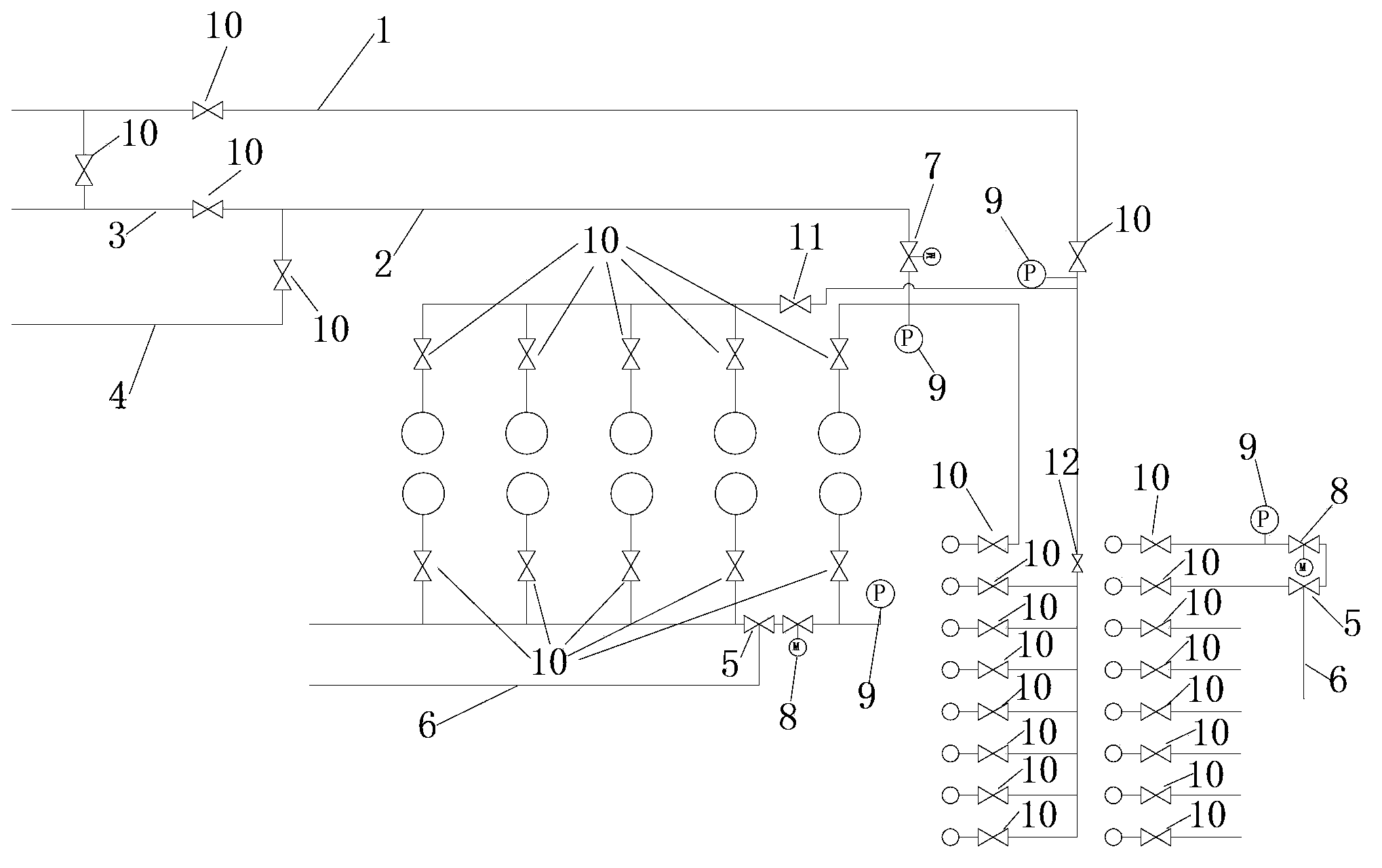

Automatic partial pressure double gear pump

Active | CN105370567B | Load average, Load sharing, Rotary piston pumps, Rotary/oscillating piston combinations, Gear pump, Distribution control

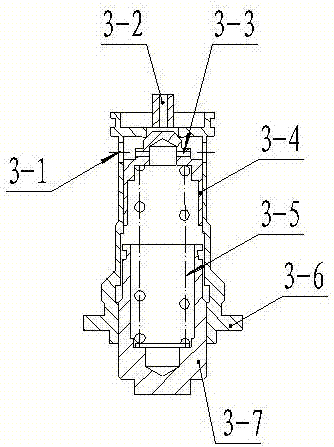

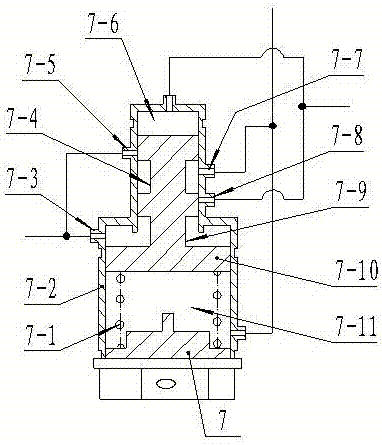

The invention discloses a pressure-distribution-controlled dual gear pump, which aims to provide a dual gear pump that balances the load between its stages. The pump comprises two gear pump stages connected in series and a differential pressure adjusting device (7). The differential pressure adjusting device (7) consists of a piston (7-10) and a spring (7-1) located in a housing (7-2); a first oil port (7-3), a second oil port (7-5), a third oil port (7-7) and a fourth oil port (7-8) are formed in the housing (7-2), and two annular grooves are formed in the piston (7-10). The first oil port (7-3) and the second oil port (7-5) are each connected to the inlet of the first-stage gear pump (2); the high-pressure cavity (7-6) and the fourth oil port (7-8) are each connected to the outlet of the second-stage gear pump (5); and the low-pressure cavity (7-11) and the third oil port (7-7) are each connected to the oil passage between the first-stage gear pump (2) and the second-stage gear pump (5). The dual gear pump offers stable operation, long service life, high speed, high pressure and large flow.

Owner:GUIZHOU HONGLIN MACHINERY

Method and intelligent control system for controlling cooking appliances by bus card

Active | CN104615021B | Improve certainty, Avoid misuse, Programme control in sequence/logic controllers, Start time, Intelligent control system

Owner:BEIJING QIHOO TECH CO LTD

Desert preservation box device

Inactive | CN104071458A | No pollution in the process, No noise, Domestic cooling apparatus, Lighting and heating apparatus, Thermodynamics, Process engineering

The invention relates to a desert preservation box device comprising a circulating refrigeration device and a heat preservation box device. The circulating refrigeration device consists of a zeolite box, a radiator and a refrigerating water tank connected by pipelines; the whole refrigeration device is fully sealed and evacuated. The heat preservation box device comprises a heat preservation shell, a degassing and water-retaining system and a heat preservation box. The circulating refrigeration system circulates water and produces cooling during the circulation, and the heat preservation box device controls the temperature inside the preservation box.

Owner:蔡传阳

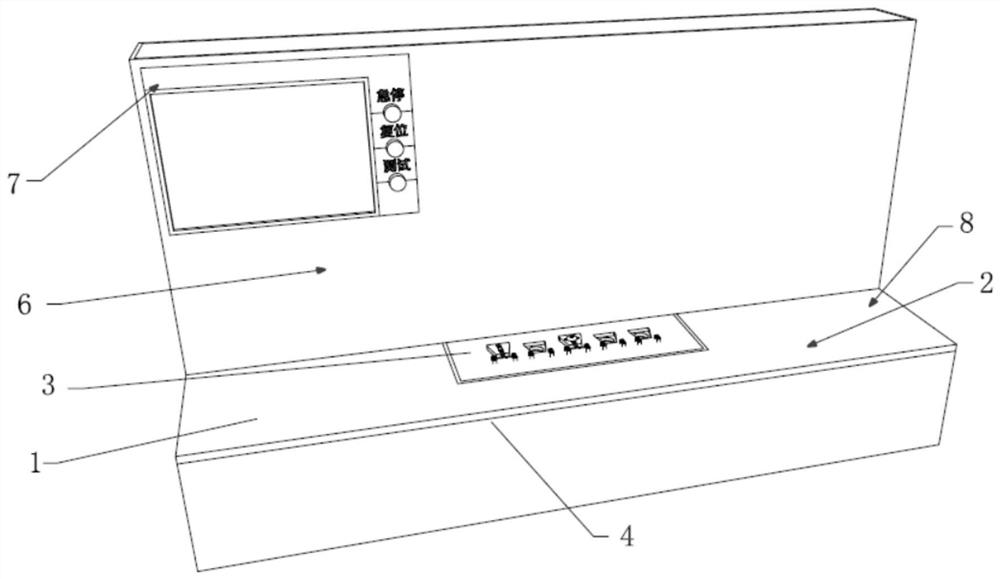

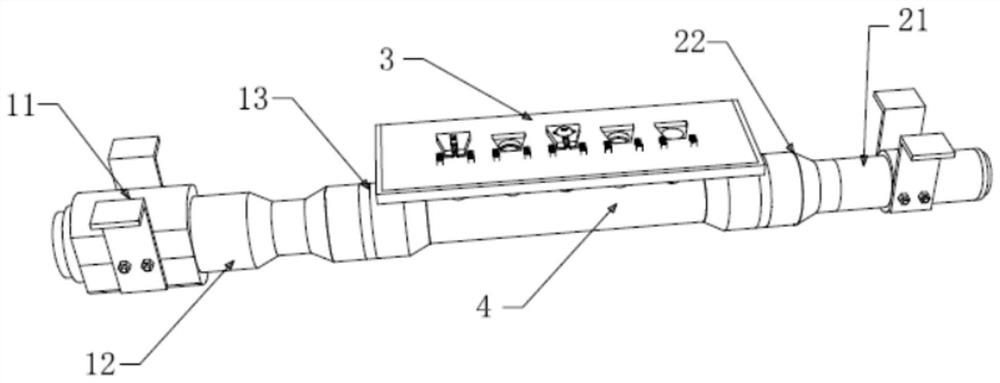

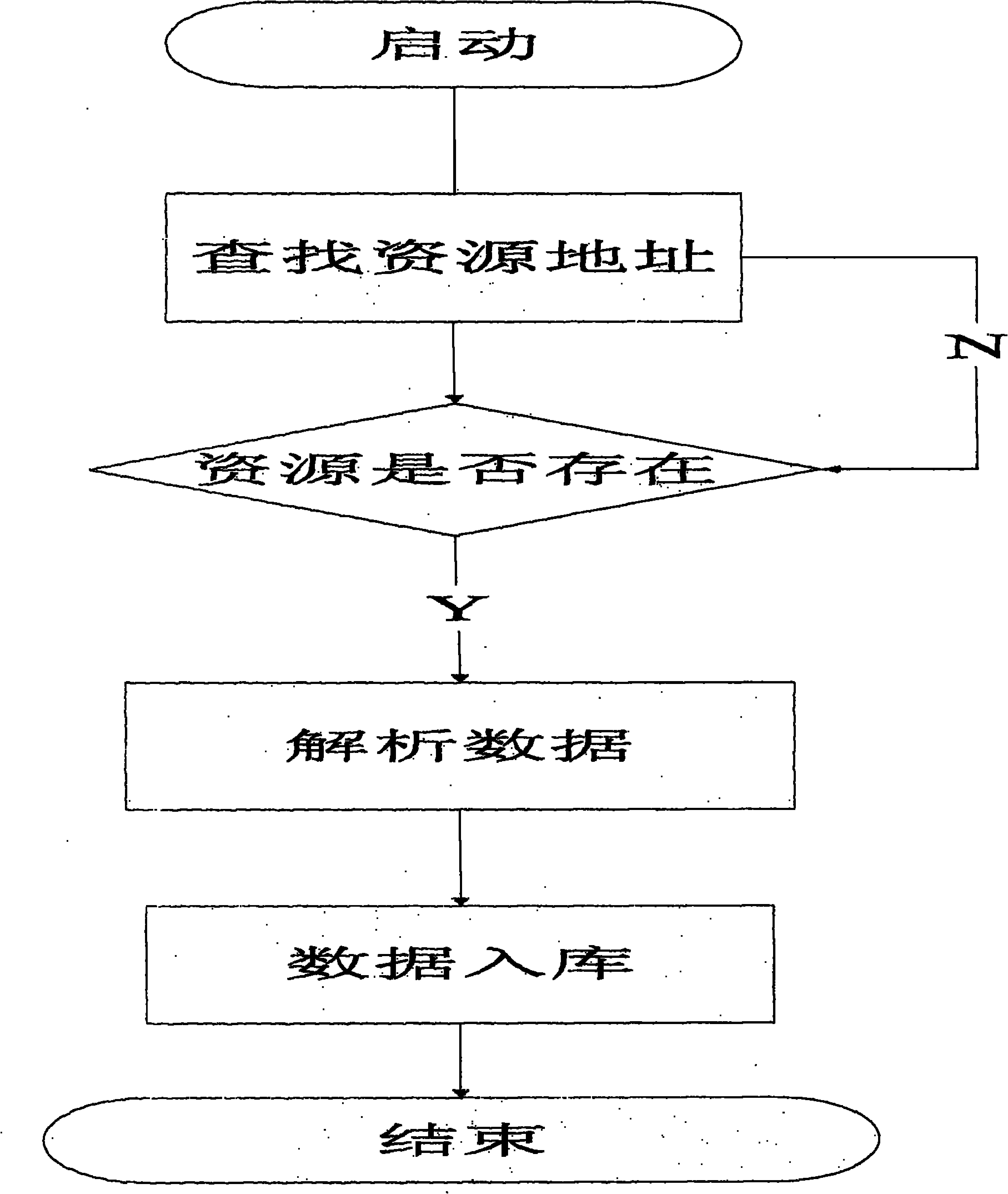

Temperature limiter service life testing device

Pending | CN112269366A | Reduce the impact, Improve stability, Programme control, Electric testing/monitoring, Thermodynamics, Engineering

The invention discloses a service life testing device for temperature limiters, relating to the technical field of air-conditioner component testing. It solves the problem in the prior art that testing the service life of a temperature limiter with an electric hair dryer gives unstable results. The device comprises a heating assembly, a heat dissipation assembly, a test carrier and a first air duct. The temperature limiter under test is mounted on the test carrier above the air outlet of the first air duct, and the heating assembly and the heat dissipation assembly are located at the two ends of the first air duct. Hot air generated by the heating assembly flows out through the air outlet of the first air duct and heats the temperature limiter under test; the heat dissipation assembly draws outside room-temperature air into the first air duct, and this air flows out of the air outlet to cool the limiter. The heating and heat dissipation assemblies thus provide the temperatures required to open and close the contact of the temperature limiter under test, and its service life is measured by recording the number of times the contact opens and/or closes. Service life results measured with the device are highly stable.

Owner:GREE ELECTRIC APPLIANCES WUHAN +1
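The life measurement in the temperature-limiter abstract counts how many times the limiter's contact opens and closes as the rig alternates heating and cooling. Assuming the contact state is sampled periodically as a sequence of booleans (`True` = closed), which the abstract does not specify, the counting step could look like this:

```python
from typing import Sequence, Tuple

def count_contact_operations(states: Sequence[bool]) -> Tuple[int, int]:
    """Count (openings, closings) in a sampled contact-state sequence.

    An opening is a closed->open transition and a closing is open->closed;
    repeated samples of the same state are not counted.
    """
    openings = closings = 0
    for prev, cur in zip(states, states[1:]):
        if prev and not cur:
            openings += 1
        elif not prev and cur:
            closings += 1
    return openings, closings

print(count_contact_operations([True, True, False, False, True, False]))  # (2, 1)
```

Counting transitions rather than samples keeps the result independent of the sampling rate, provided samples are taken faster than the contact switches.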

TV program notice system based on 3G wireless network

Inactive | CN101924783A | Convenient query, Quick search, Substation equipment, Two-way working systems, Wireless internet access, Third generation

The invention provides a TV program notice system based on a 3G wireless network, in which a mobile phone terminal is connected to the 3G wireless network and a server is connected to both the 3G wireless network and the internet. The mobile phone terminal sends a wireless internet access request signal; a 3G wireless network receiver identifies the signal and establishes a wireless network connection for the request; the mobile phone terminal can then access the internet, check TV program notices on the server side, and learn about the various services and resources in the system. Compared with the prior art, the system promotes the application and popularization of mobile phones, makes querying program notices more convenient, faster, more practical and more efficient, fits more closely into people's daily lives, and helps people plan their time around the broadcast schedule.

Owner:重庆寰坤科技发展有限公司

Charger with music player

Inactive | CN105552975A | Close to life, Batteries circuit arrangements, Electric power, Music player, Mobile phone

The invention provides a charger with a music player arranged on one side of the charger body. While the charger is charging a battery, the music player can be turned on to play music. The charger is thus closer to everyday life and suits the tastes of elderly users. It is easy to carry when going out, and it can be used both to charge a mobile phone and to play music.

Owner:XIANGYANG NO 25 HIGH SCHOOL

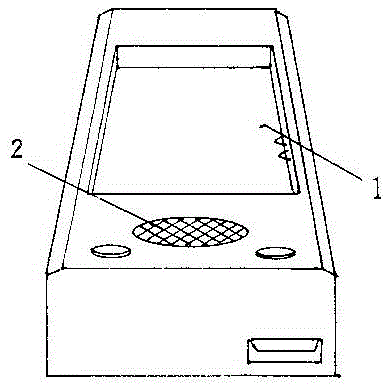

Ageing test device of liquid treatment device

Inactive | CN104251785A | Close to life, Close to performance testing, Structural/machines measurement, Water source, Engineering

The invention discloses an ageing test device for a liquid treatment device. The ageing test device comprises a life test water pipe and a performance test water pipe. One end of the life test water pipe is connected to the water inlet of a life test device, and the other end is connected to a circulating water pool; the drain outlet of the life test device is also connected to the circulating water pool. One end of the performance test water pipe is connected to the water inlet of a performance test device, and the other end splits into two branch pipes, one connected to the circulating water pool and the other to tap water. The drain outlet of the performance test device is connected to the circulating water pool and to a direct drain pipe through a manual three-way valve. Both the life test device and the performance test device contain resin tanks. Performance testing covers different incoming water sources, and resin softening tanks are added to simulate actual use. When tap water is supplied, water hardness can be measured before and after softening. A pressure gauge and an electromagnetic flowmeter monitor the test process, and a control cabinet handles opening and closing of the water circuits, raising and lowering of pressure, and performance monitoring, which makes the test easy to observe.

Owner:NANJING FOBRITE ENVIRONMENTAL TECH

Intelligent air-conditioner control method and system based on self-adaptive temperature control technique

Active | CN102927660B | Run smart, Simple and fast operation, Space heating and ventilation safety systems, Lighting and heating apparatus, Temperature control, Personalization

The invention relates to intelligent control technology, and in particular to an intelligent air-conditioner control method and system based on a self-adaptive temperature control technique. The method mainly comprises the following steps: the intelligent air-conditioner collects environment information, facial images of users and the users' operation records, and extracts feature values from them; a cloud service platform processes the feature values and stores them in control maps corresponding to the user features, then obtains the feature values of the current user, compares them with the feature values in a databank, finds the control map that matches the current user, and sends it to the air-conditioner; and an air-conditioner control module automatically adjusts the air-conditioner according to that control map. The method enables the intelligent air-conditioner to provide personalized temperature adjustment for different users and situations, so that the air-conditioner is simpler to operate, works more intelligently, and fits more closely into people's daily lives. The method is particularly applicable to intelligent air-conditioners.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD
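The matching step in the abstract above, comparing the current user's feature values with those in the databank and picking the matching control map, can be illustrated with a nearest-neighbour lookup. This is a minimal Python sketch under stated assumptions: the abstract does not say how feature values are compared, so the Euclidean distance, the `max_distance` cutoff, the function name `match_control_map`, and the control-map fields (`target_temp_c`, `fan_level`) are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical types: a control map is a dict of named set-points, and each
# databank entry pairs a stored feature vector with its control map.
ControlMap = Dict[str, float]
Entry = Tuple[Sequence[float], ControlMap]


def match_control_map(
    current: Sequence[float],
    databank: List[Entry],
    max_distance: float = math.inf,
) -> Optional[ControlMap]:
    """Return the control map whose stored features are nearest to `current`.

    Uses Euclidean distance; returns None if the databank is empty or the
    nearest entry is farther than `max_distance`.
    """
    best_map: Optional[ControlMap] = None
    best_dist = math.inf
    for features, control_map in databank:
        dist = math.dist(current, features)  # vectors must have equal length
        if dist < best_dist:
            best_dist, best_map = dist, control_map
    if best_map is None or best_dist > max_distance:
        return None
    return best_map


# Illustrative databank with made-up, already-normalized feature values;
# normalization keeps any single feature from dominating the distance.
DATABANK: List[Entry] = [
    ((0.2, 0.8, 0.5), {"target_temp_c": 24.0, "fan_level": 1.0}),
    ((0.9, 0.1, 0.7), {"target_temp_c": 22.0, "fan_level": 3.0}),
]

print(match_control_map((0.25, 0.75, 0.55), DATABANK))
# prints {'target_temp_c': 24.0, 'fan_level': 1.0}
```

The `max_distance` cutoff is one possible way to handle a user who matches no stored profile well; in that case the sketch returns None, and a real system might fall back to a default control map instead.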

Shoe box with transparent window

Inactive | CN104369963A | Easy to use, Solve the problem that you must open the box to understand the shoes, Garments, Container/bottle construction, Engineering

Owner:ZAOYANG NO 1 EXPERIMENTAL ELEMENTARY SCHOOL

Novel refreshing box device for desert

Inactive | CN104071459A | No pollution in the process, No noise, Domestic cooling apparatus, Lighting and heating apparatus, Engineering, Heat conservation

The invention relates to a novel refreshing box device for the desert. The device comprises a circulating refrigeration device and a heat-preserving box device. The circulating refrigeration device comprises a zeolite box, a radiator and a refrigerating water tank connected by pipelines, and the whole refrigeration device is fully sealed and evacuated. The heat-preserving box device comprises a heat-preserving housing, an air-removal and moisture-retention system, and a heat-preserving box. The circulating refrigeration system circulates water and produces cooling as the water circulates, while the heat-preserving box device controls the internal temperature of the refreshing box. A thermometer is arranged on the housing, so the internal temperature is displayed at all times, which improves convenience.

Owner:蔡传阳

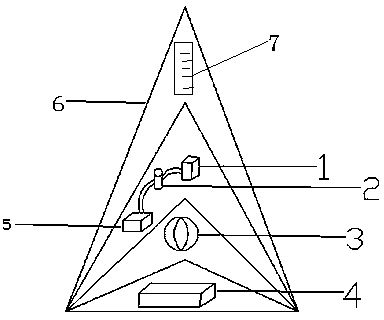

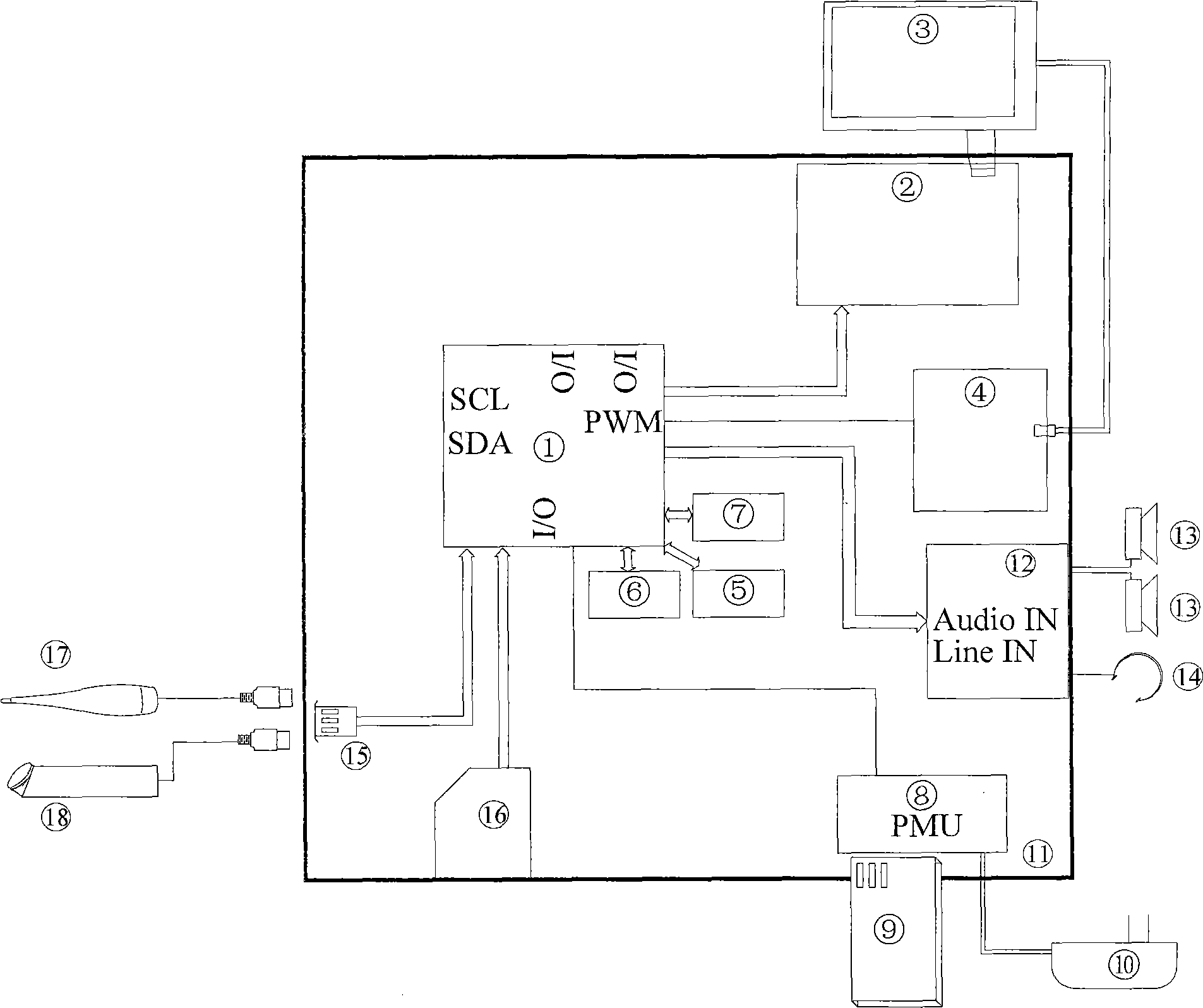

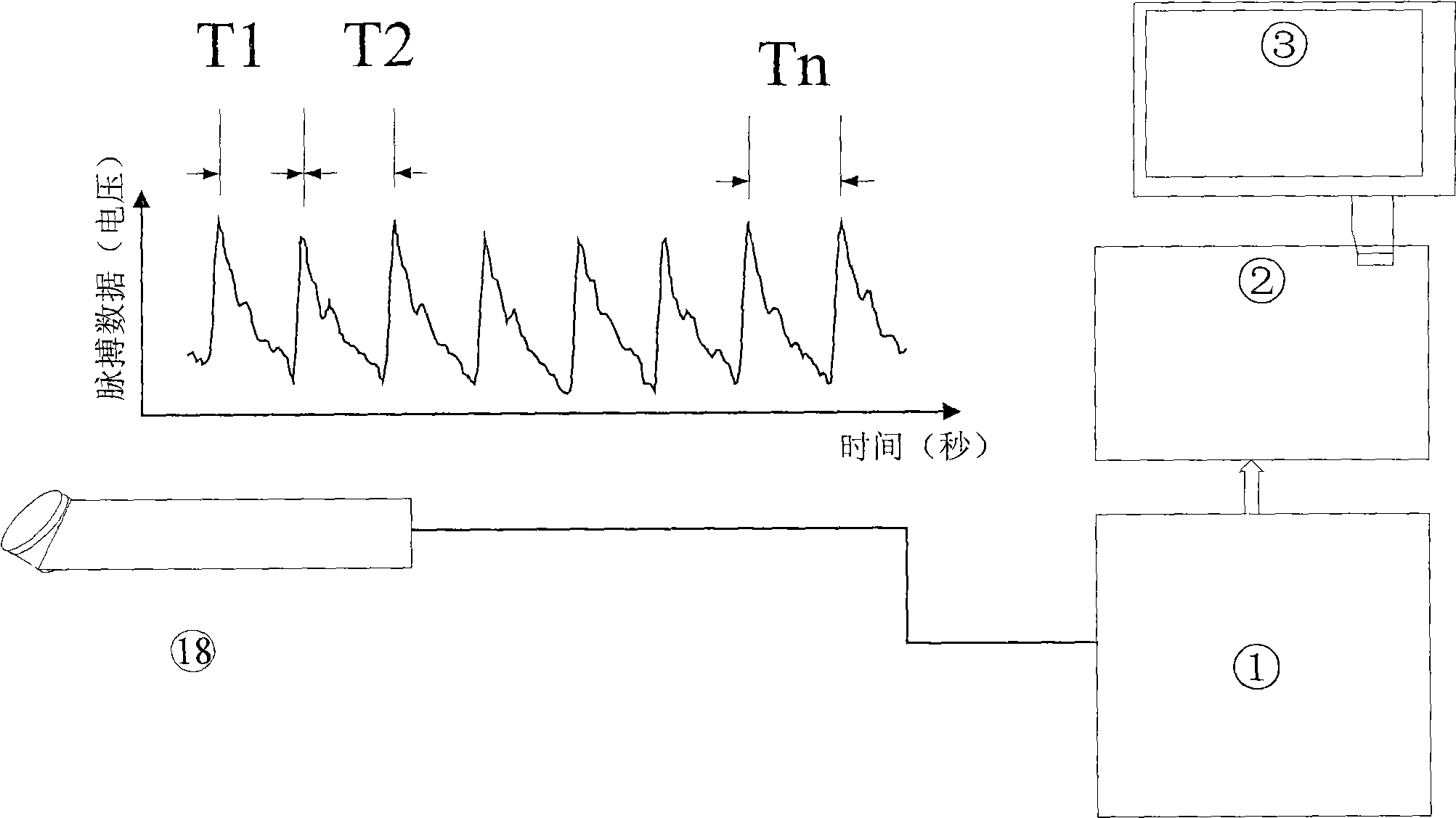

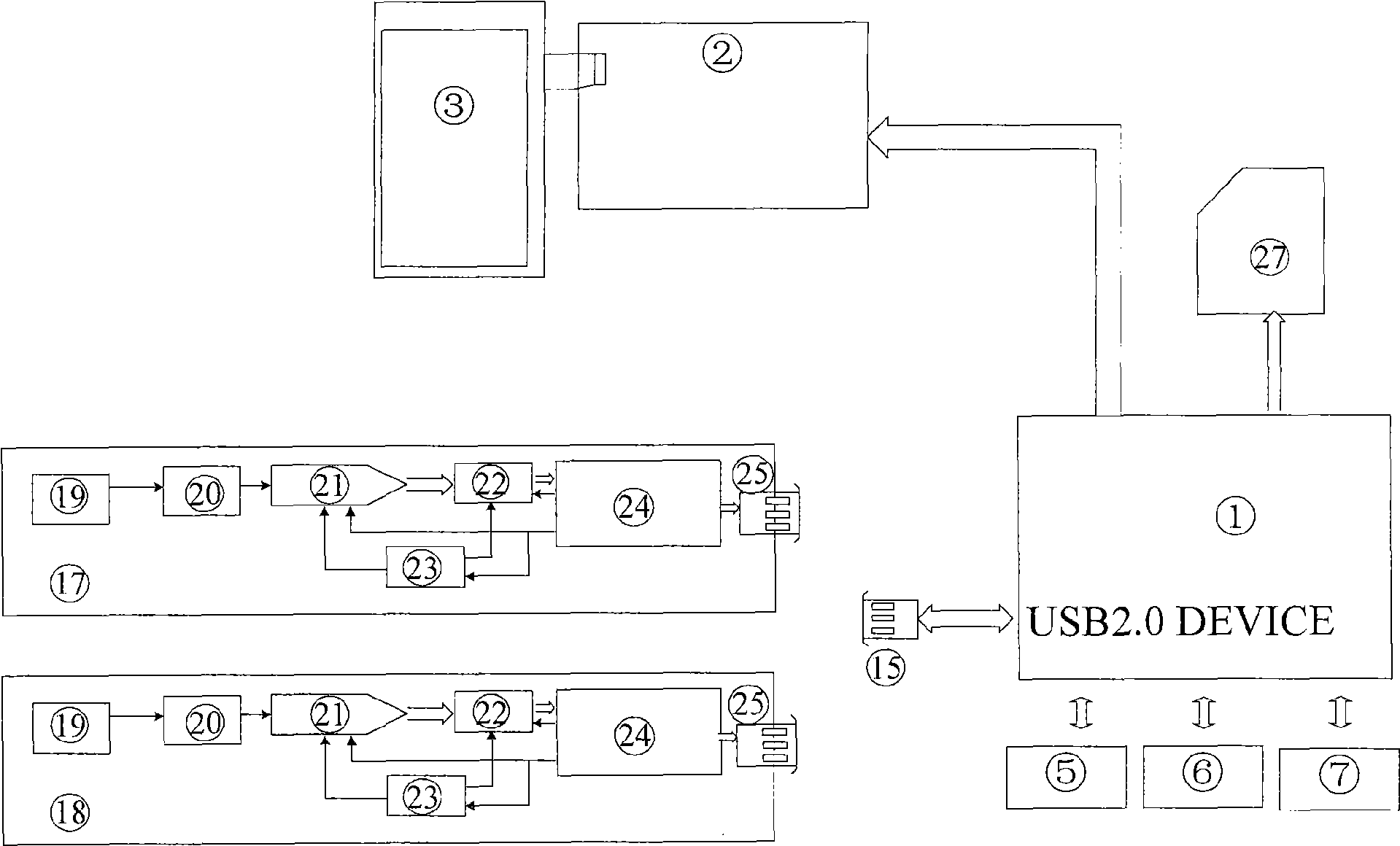

Multifunctional digital photo frame

Inactive | CN101504831A | Close to life, Meet needs, Cathode-ray tube indicators, Diagnostic recording/measuring, Microcontroller, Heart rate measurement

The invention discloses a multifunctional digital photo frame comprising a circuit main board, a display screen and a power supply unit. The circuit main board carries a microcontroller and a display screen drive circuit electrically connected between the microcontroller and the display screen. The digital photo frame also includes an electronic thermometer module and a pulse-test pressure sensor module, both controlled by the microcontroller, with their readings shown on the display screen. The photo frame thus offers the user convenient, practical auxiliary health checks such as body temperature, pulse and heart rate measurement, fitting more closely into the user's daily life and meeting the user's needs.

Owner:SUZHOU SHUANGLIN PLASTICS & RUBBER ELECTRONICS

Construction method of deep visual question answering system for the visually impaired

Active | CN106951473B | Limited recognition, Shorten the time, Character and pattern recognition, Neural architectures, Visually impaired, Back propagation algorithm

Owner:ZHEJIANG UNIV


© 2025 PatSnap. All rights reserved.