48 results about "Visual behavior" patented technology

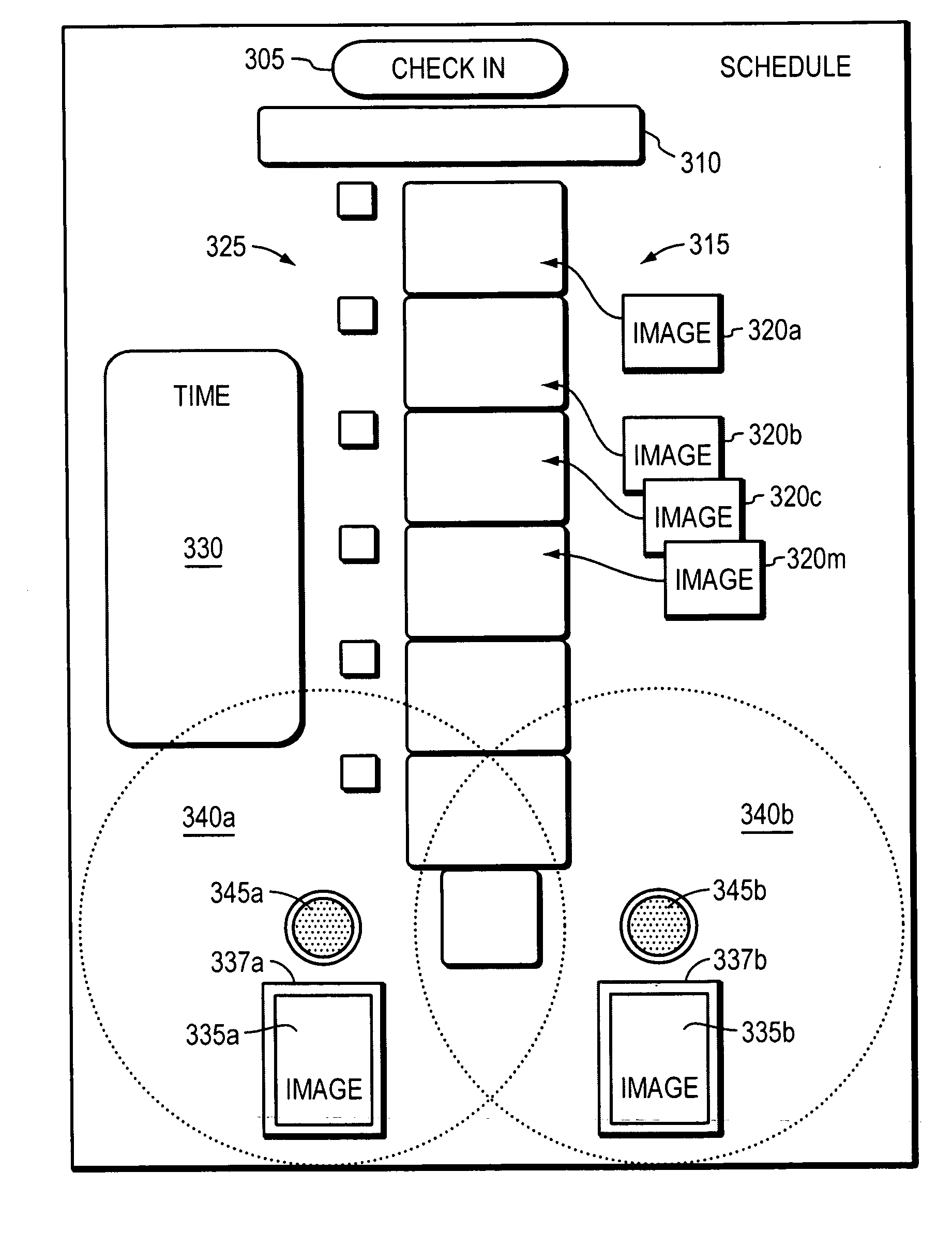

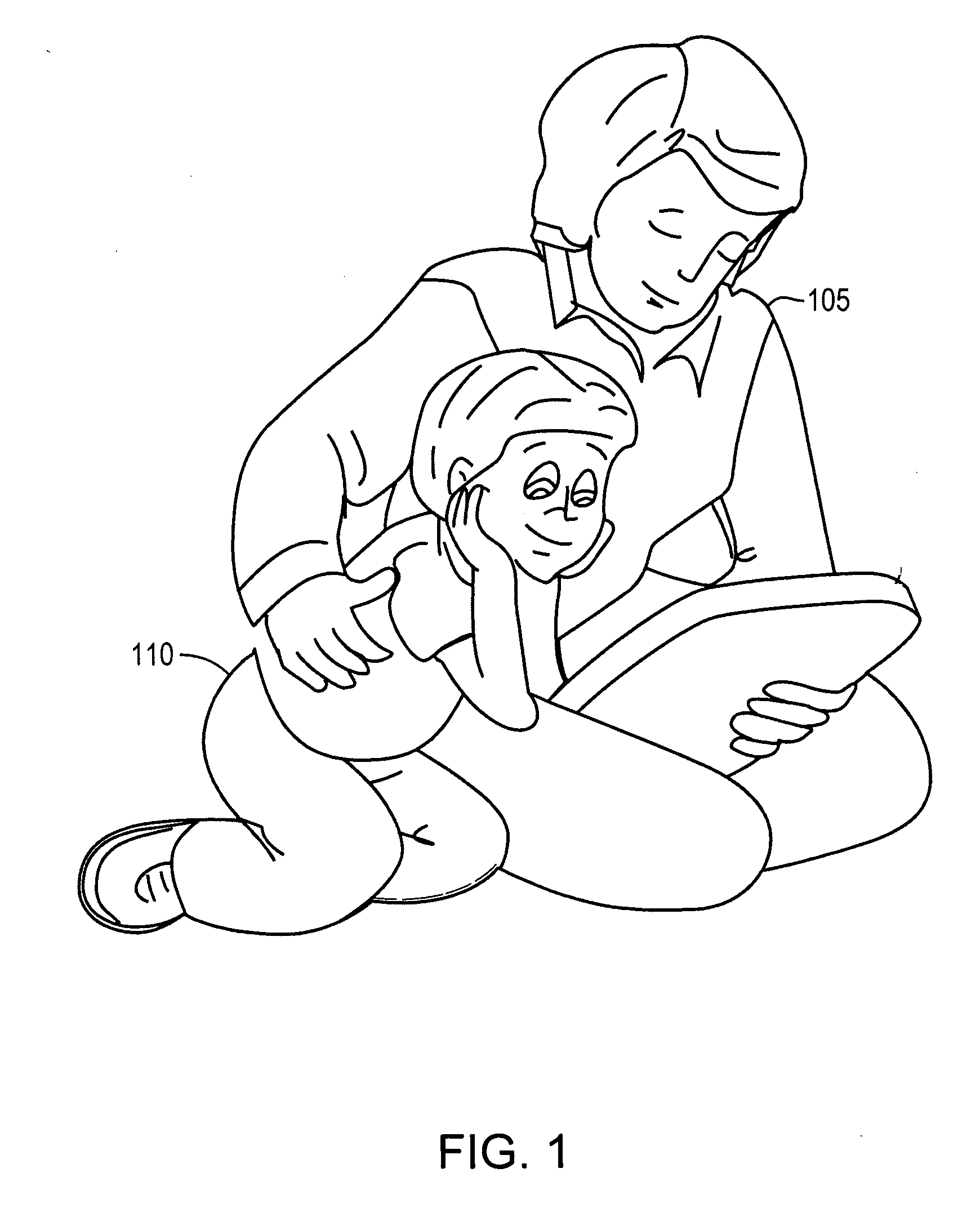

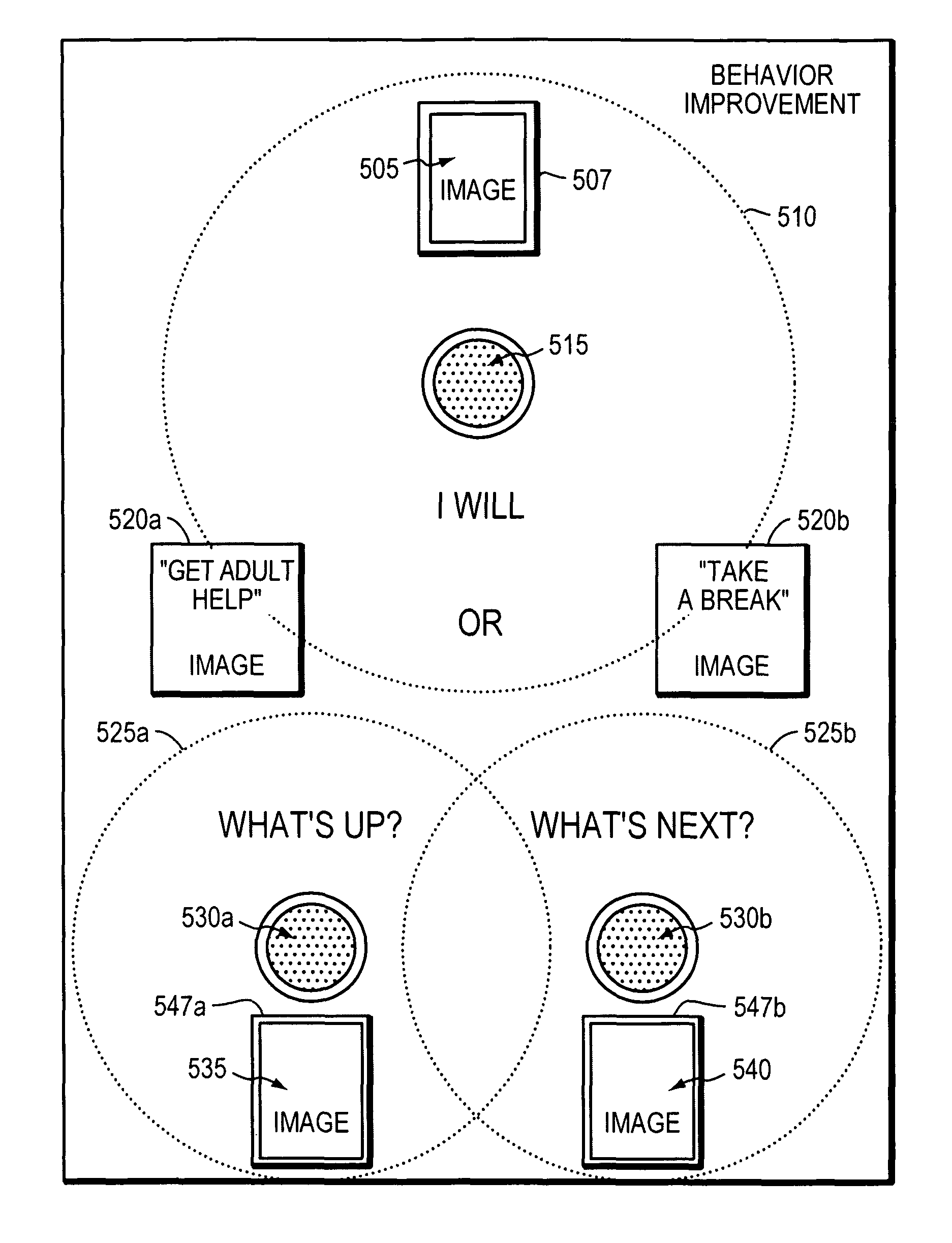

Method and apparatus for developing a person's behavior

Active | US20070117073A1 | Assist in developing the behavior in the person | Electrical appliances | Teaching apparatus | Human behavior | Adaptive response

An embodiment of an apparatus, or corresponding method, for developing a person's behavior according to the principles of the present invention comprises at least one visual behavior indicator that represents a behavior desired of a person viewing the at least one visual behavior indicator. The apparatus, or corresponding method, further includes at least two visual choice indicators viewable with the at least one visual behavior indicator that represent choices available to the person, the choices assisting in developing the behavior in the person by assisting the person in choosing an appropriately adaptive response supporting the desired behavior or as an alternative to behavior contrary to the desired behavior.

Owner:BEE VISUAL

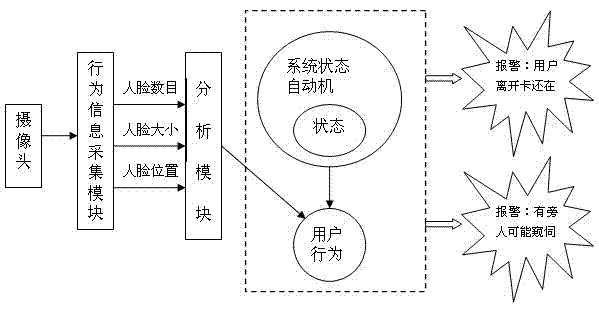

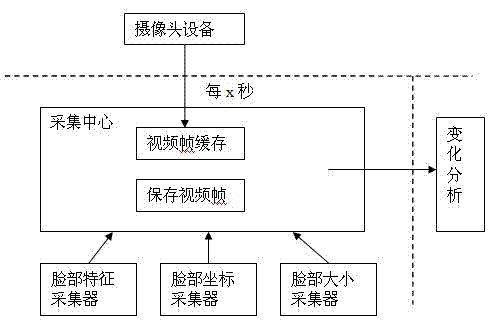

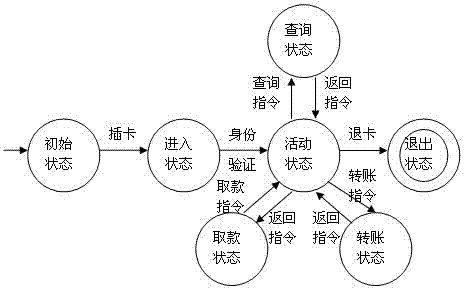

Visual behavior early warning prompting method and system for automatic teller machine (ATM) bank card

Inactive | CN102176266A | Avoid safety hazards | Avoid danger | Complete banking machines | Acquisition time | Phases of clinical research

The invention discloses a visual behavior early warning prompting method and a visual behavior early warning prompting system for an automatic teller machine (ATM) bank card. The method comprises the following steps of: 1, periodically acquiring face information about a user using an ATM and people around the user; 2, establishing an ATM user behavior state automaton, and judging a current behavior state of the user; 3, comparing the face information acquired by the step 1 according to acquisition time; and 4, performing comprehensive analysis by combining information returned by the steps 2 and 3, making a judgment on normal behaviors and abnormal behaviors, and if the abnormal behaviors are judged, performing early warning prompting. In the method and the system, the influence of user behaviors on the safety of the ATM is focused, potential hazards are adaptively identified from the stages and changes of the behaviors by analyzing the behaviors of the ATM user, and the user is warned, so the hazards are prevented, the potential safety hazards of the ATM caused by the user behaviors are avoided, and the method for the safety of the ATM is more active, low in cost and efficient.

Owner:WUHAN UNIV

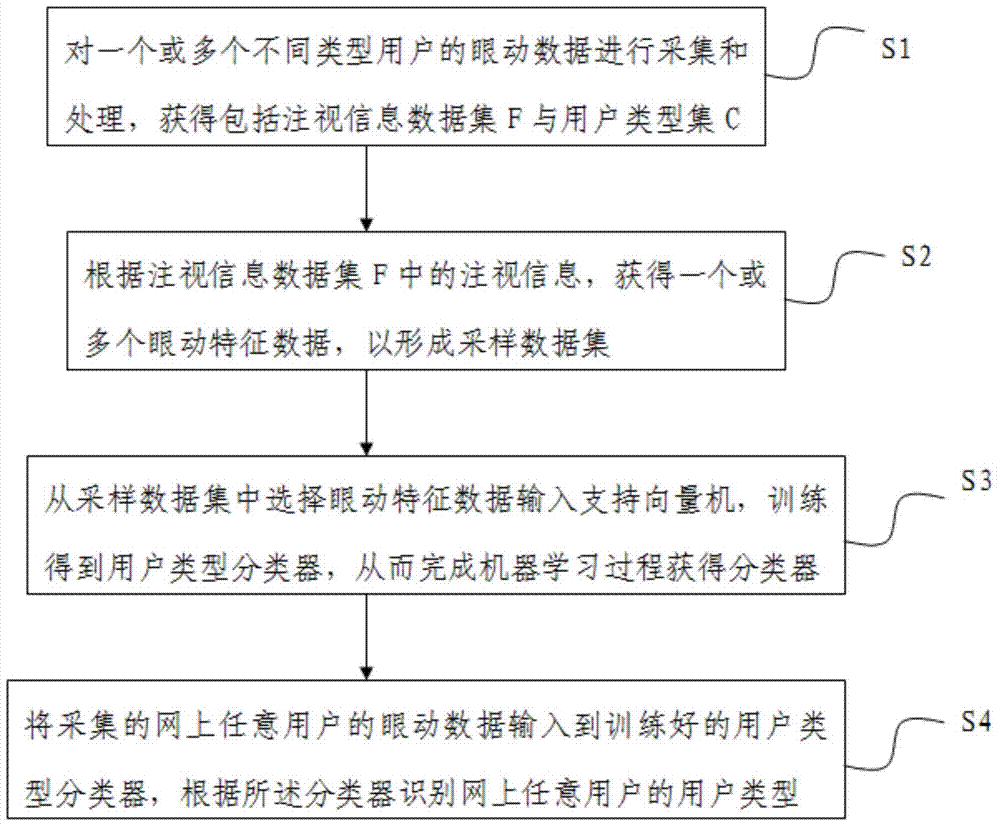

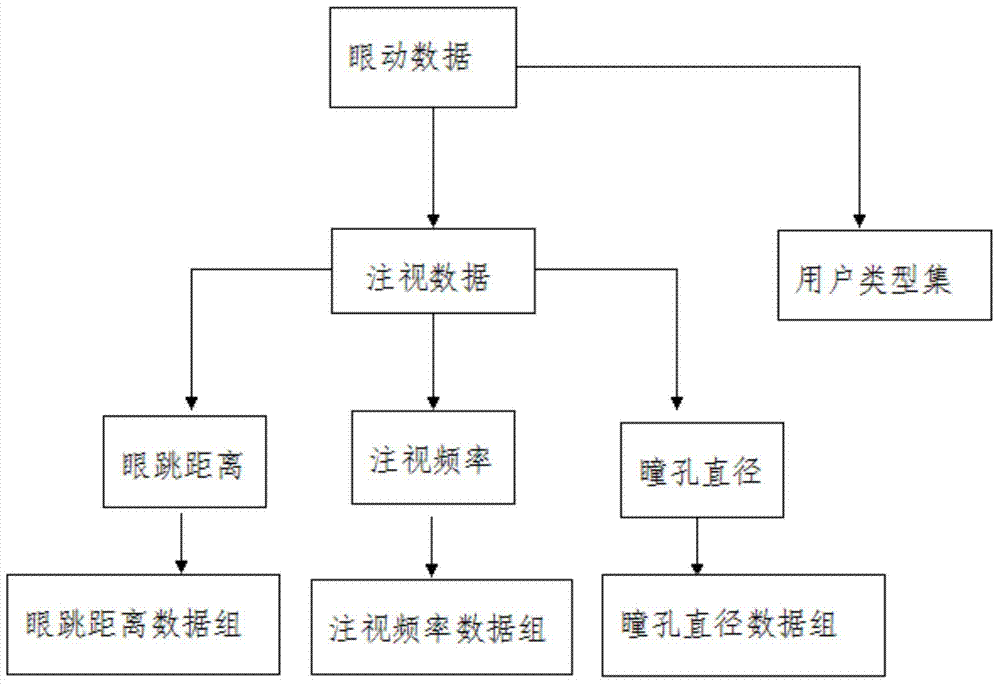

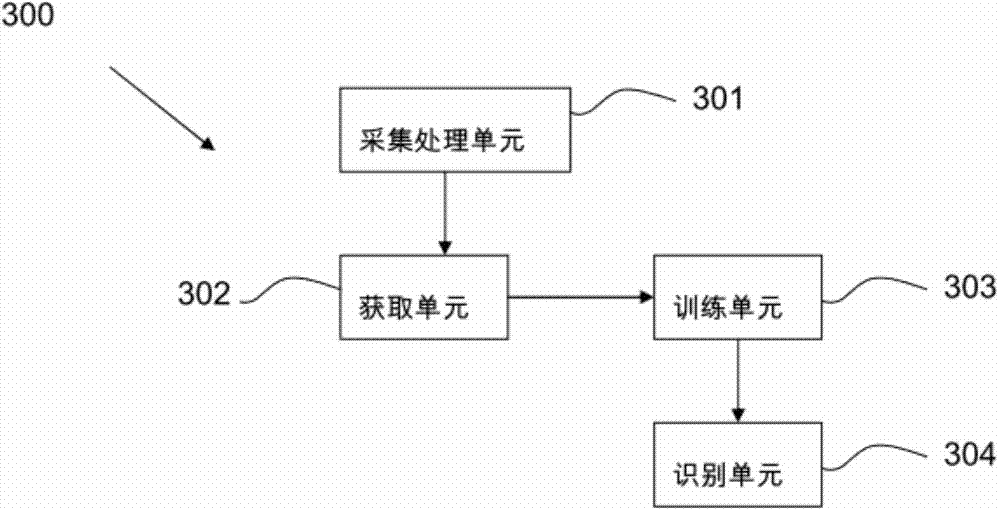

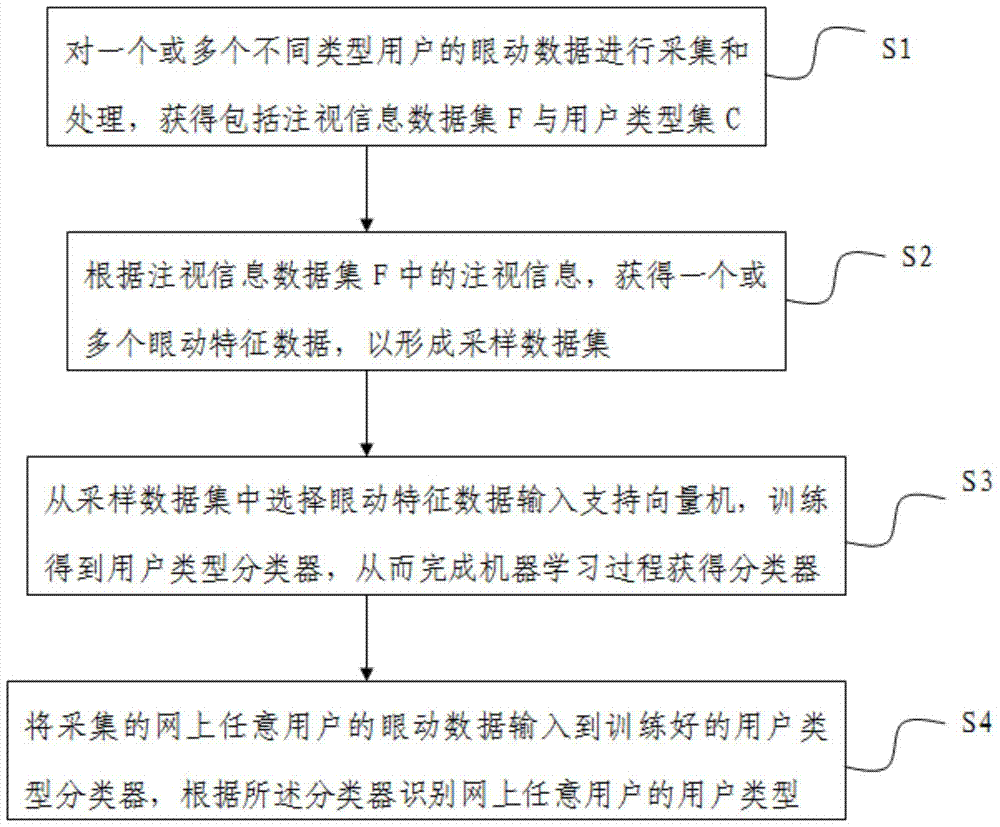

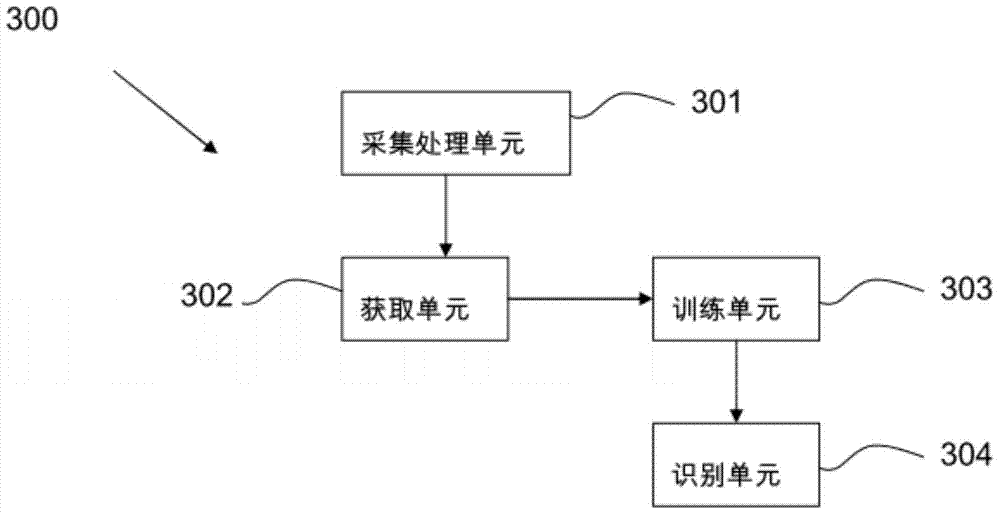

Online user type identification method and system based on visual behavior

Active | CN104504404A | Easy and reliable data extraction | Improve accuracy | Character and pattern recognition | Support vector machine | Data set

The invention discloses an online user type identification method and an online user type identification system based on visual behavior. The online user type identification method based on the visual behavior includes: collecting and processing eye movement data of one type or various types of users, obtaining a watching information data set and a user type set, obtaining a piece or multiple pieces of eye movement feature data according to watching information concentrated in the watching information data set so as to generate a sampling data set, selecting a part of the eye movement feature data from the sampling data set, inputting the selected eye movement feature data into a support vector machine, obtaining a user type classifier through training, completing a machine learning process so as to obtain a classifier, inputting collected eye movement data of each arbitrary online user into the user type classifier after being trained, and identifying a user type of each arbitrary online user according to the obtained classifier. The online user type identification method based on the visual behavior proactively uses an eye movement tracking technology to obtain and calculate three types of the eye movement feature data of each user when each user browses a webpage, and judges the types of the online users according to differences of the eye movement feature data. The online user type identification method and the online user type identification system based on the visual behavior perform user identification based on the visual behavior, can proactively record the eye movement data of the online users, simply and conveniently extract the data, and are high in accuracy and reliability.

Owner:BEIJING UNIV OF TECH

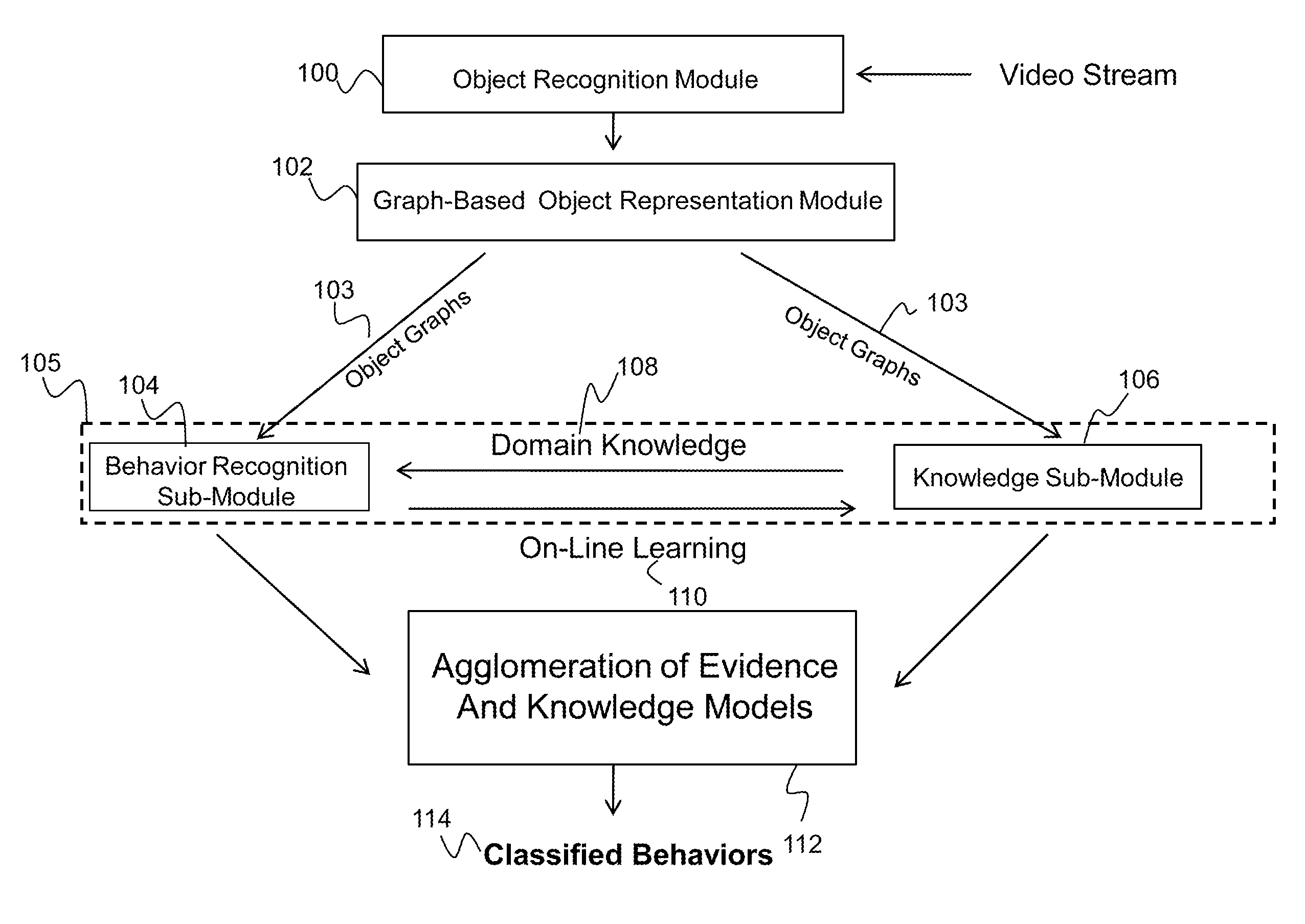

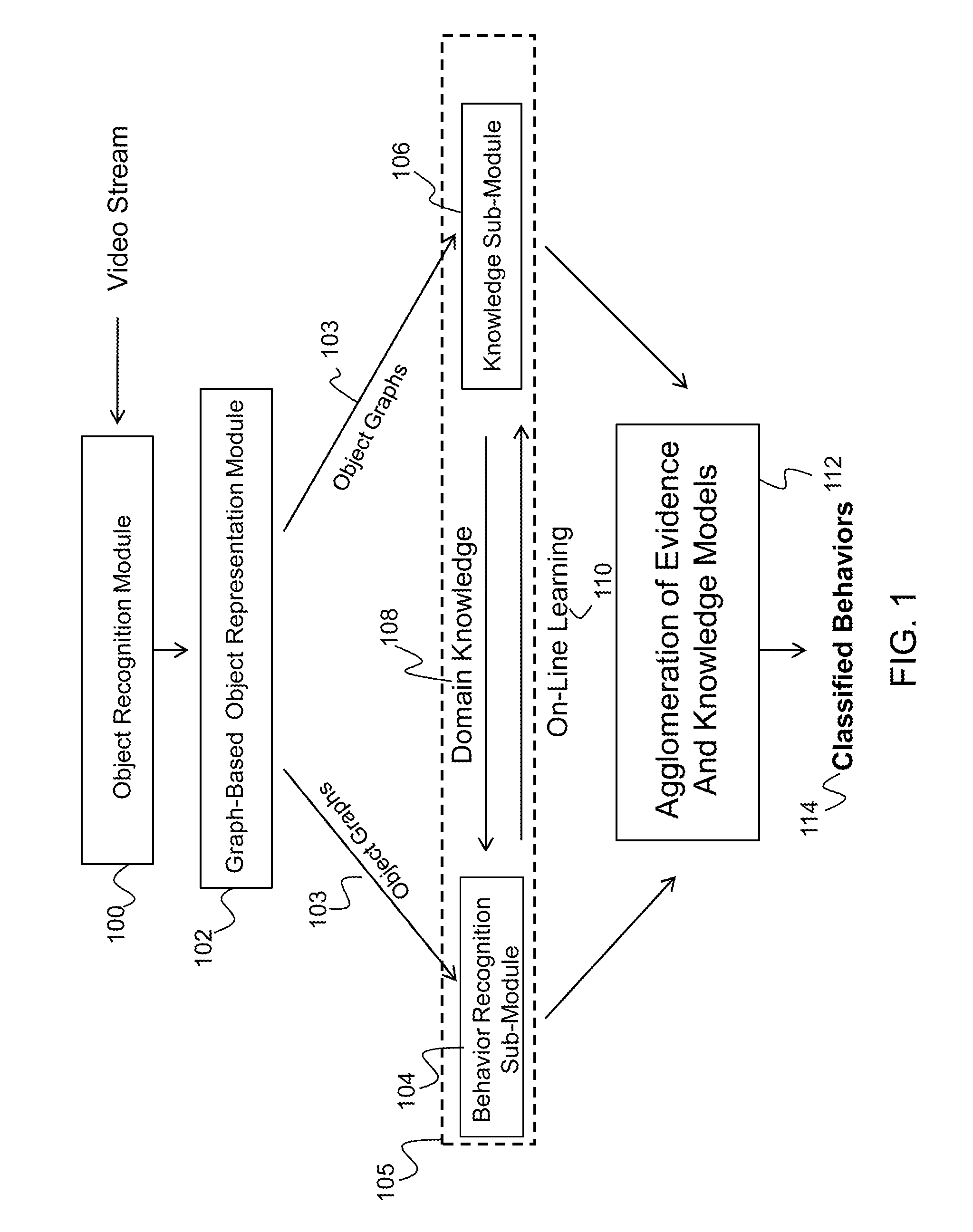

Method for online learning and recognition of visual behaviors

Described is a system for object and behavior recognition which utilizes a collection of modules which, when integrated, can automatically recognize, learn, and adapt to simple and complex visual behaviors. An object recognition module utilizes a cooperative swarm algorithm to classify an object in a domain. A graph-based object representation module is configured to use a graphical model to represent a spatial organization of the object within the domain. Additionally, a reasoning and recognition engine module consists of two sub-modules: a knowledge sub-module and a behavior recognition sub-module. The knowledge sub-module utilizes a Bayesian network, while the behavior recognition sub-module consists of layers of adaptive resonance theory clustering networks and a layer of a sustained temporal order recurrent temporal order network. The described invention has applications in video forensics, data mining, and intelligent video archiving.

Owner:HRL LAB

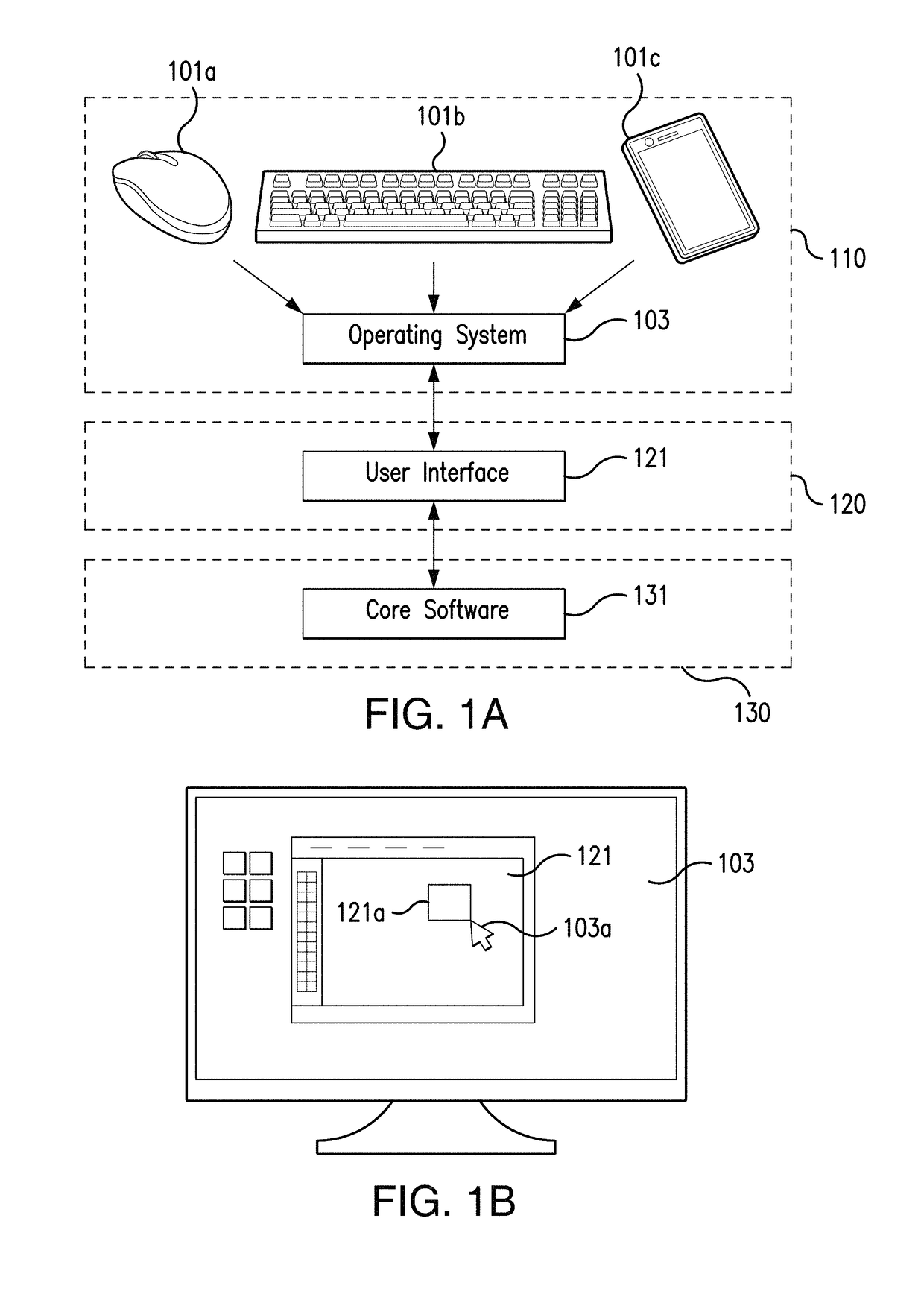

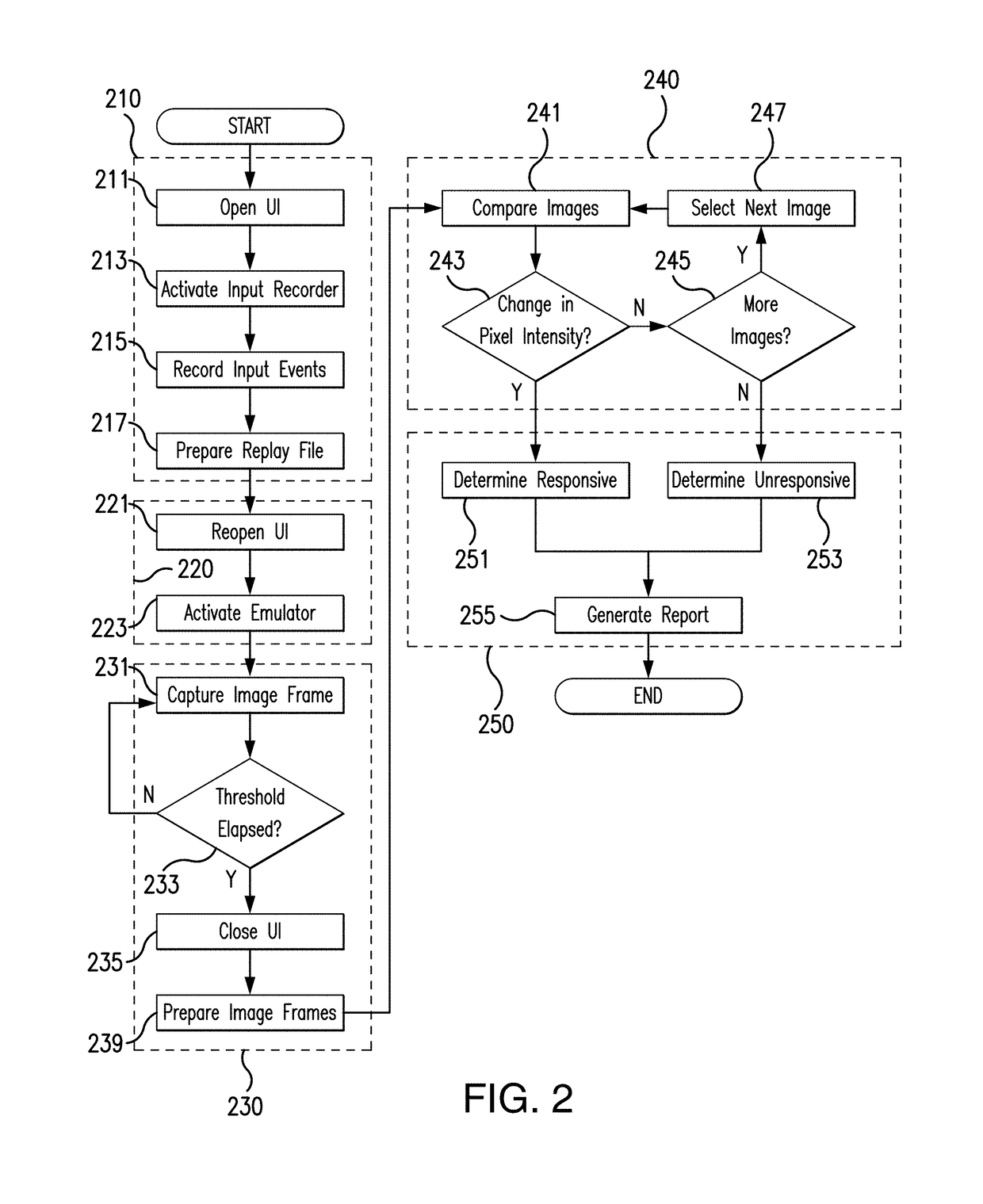

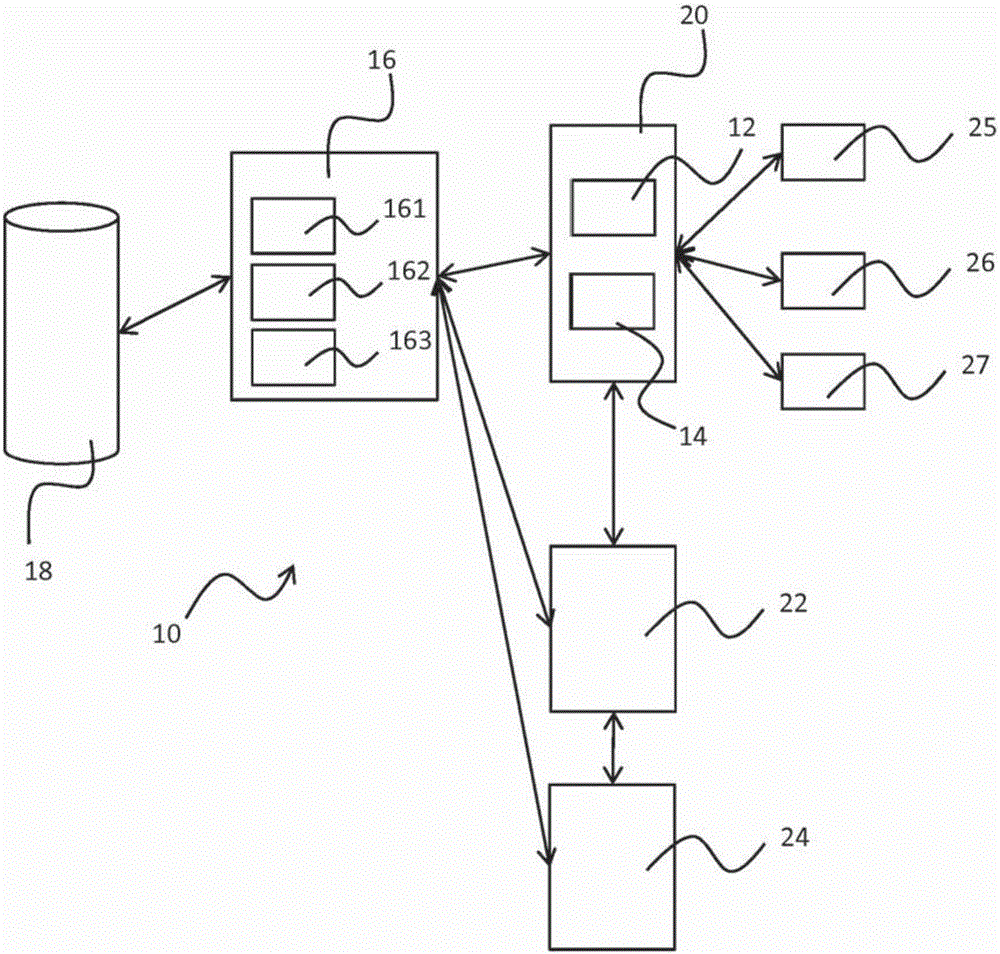

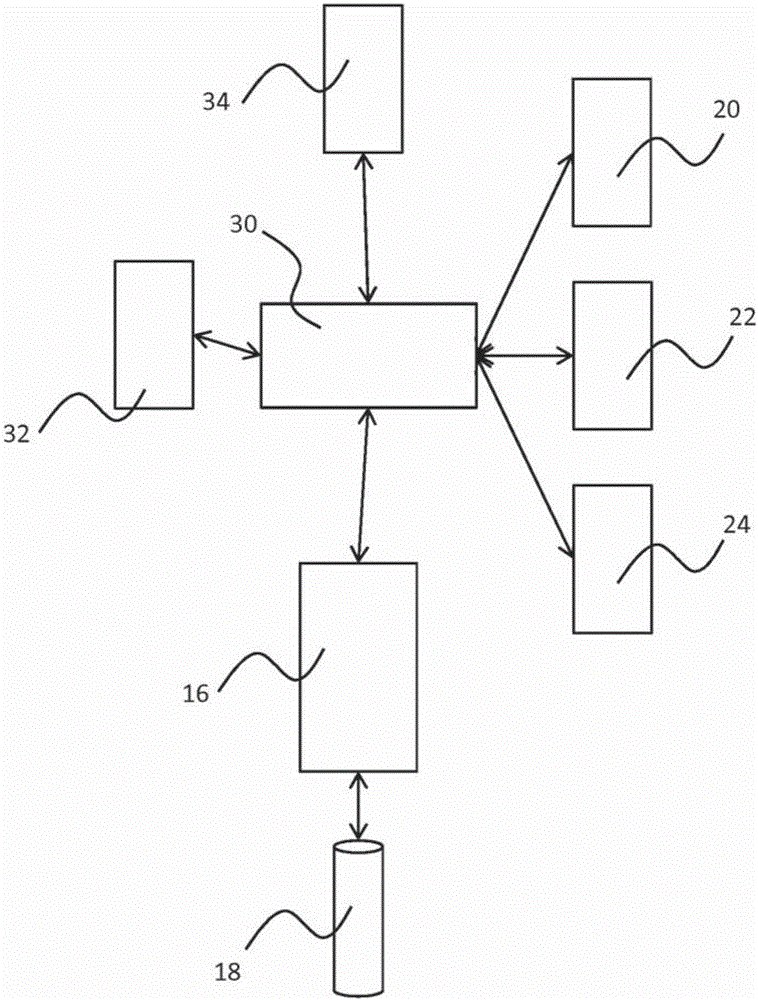

System and method for automated testing of user interface software for visual responsiveness

A benchmark test system captures and records root, or input, behavior from a user input device as one or more time-displaced samples of input. The system also separately captures and records the canvas, or visual, behavior of a user interface in response to the captured input as a series of time-displaced image frames. The image frames are analyzed for visual prompts occurring responsive to the input, and parameters of the image frames are determined. A parametric difference between corresponding ones of the root events and canvas responses is thereby computed, in order to determine a degree of visual responsiveness for the user interface software respective to the root input.

Owner:CADENCE DESIGN SYST INC
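The comparison between input events and visual responses described in the entry above can be sketched roughly as follows. This is a minimal illustration, not the patented method: it assumes timestamps in milliseconds and reduces each image frame to an integer signature (for example a pixel hash). The names `InputSample`, `Frame` and `response_latencies` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class InputSample:
    t_ms: float  # time the root (input) event was captured


@dataclass
class Frame:
    t_ms: float     # capture time of the image frame
    signature: int  # assumed stand-in for frame content, e.g. a pixel hash


def response_latencies(inputs: List[InputSample],
                       frames: List[Frame]) -> List[Optional[float]]:
    """For each input, return the delay until the first frame at or after the
    input whose content differs from the preceding frame (None if no such frame)."""
    latencies: List[Optional[float]] = []
    for sample in inputs:
        latency = None
        for prev, cur in zip(frames, frames[1:]):
            if cur.t_ms >= sample.t_ms and cur.signature != prev.signature:
                latency = cur.t_ms - sample.t_ms
                break
        latencies.append(latency)
    return latencies


inputs = [InputSample(100.0), InputSample(400.0)]
frames = [Frame(0.0, 1), Frame(50.0, 1), Frame(150.0, 2),
          Frame(250.0, 2), Frame(450.0, 3)]
latencies = response_latencies(inputs, frames)
print(latencies)
```

A real benchmark would likely compare richer frame parameters than a single signature; the time difference here is only one possible "parametric difference".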

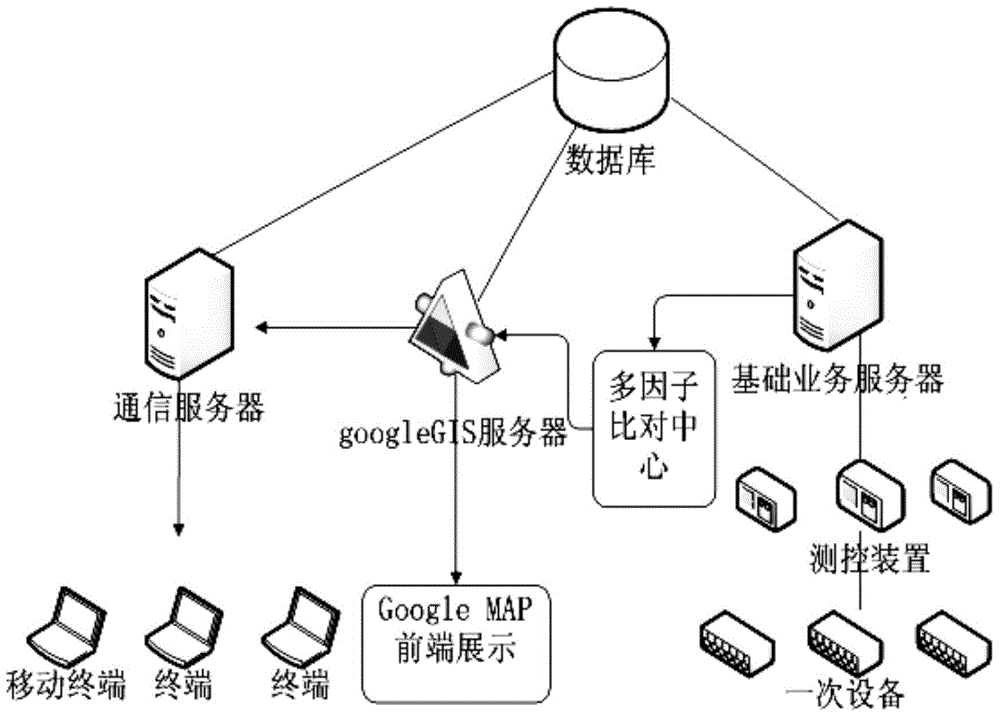

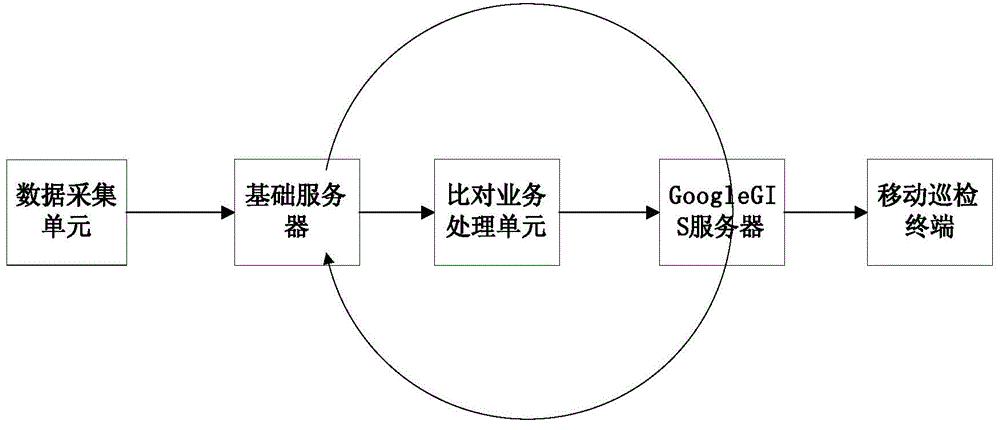

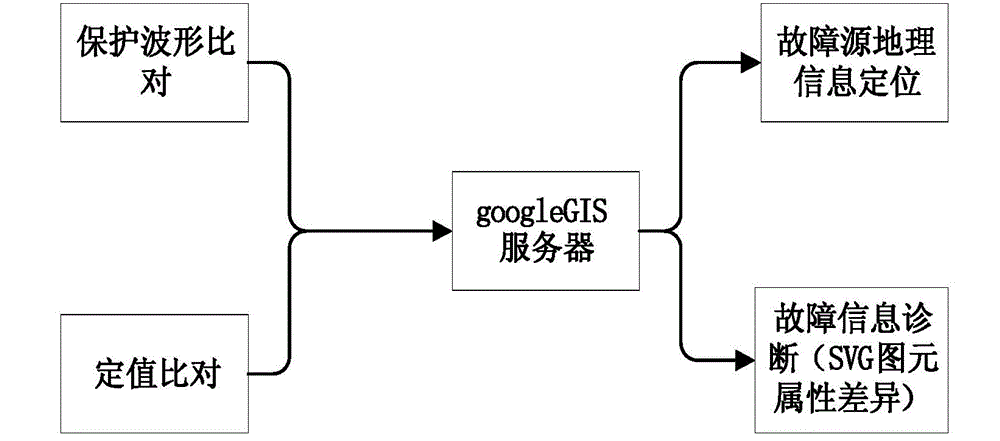

Intelligent substation fault diagnosing and positioning method based on multi-factor comparison visualization

Active | CN104539047A | Realize dynamic visual behavior operation | Circuit arrangements | Special data processing applications | Visual behavior | Smart substation

The invention discloses an intelligent substation fault diagnosing and positioning method based on multi-factor comparison visualization. The method includes the steps of generating SVG graphic primitives of a relay protection device, indicating GoogleGIS geographic information, converting COMTRADE files into SVG files, comparing protection waveforms with definite value information, and positioning fault information. The dynamic visual behavior operation of the relay protection device is truly achieved; the healthy and stable running of a whole electric system can be globally and remotely monitored; through multi-factor comparison, the cause of faults can be more accurately and efficiently found; the fault location is indicated in time through a GoogleGIS; a main station dispatching center can completely visually monitor the running of the whole electric system in a responsibility area in real time; once a circuit and an electric device fail, the fault source location can be instantly positioned, and inspection and maintenance personnel near a fault source can be dispatched in time, and the real intelligent visual running maintenance of the whole electric system is achieved.

Owner:STATE GRID CORP OF CHINA +3

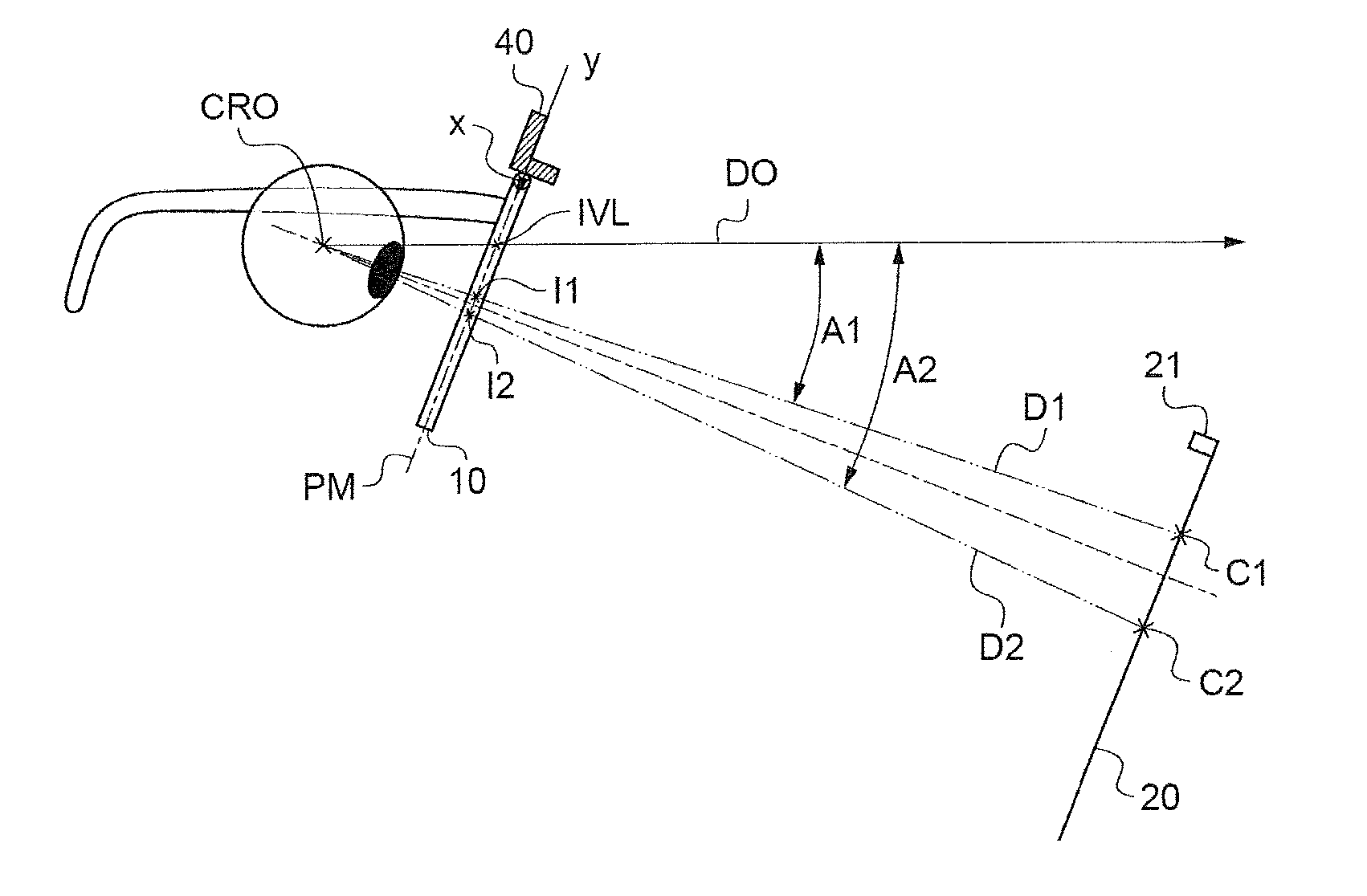

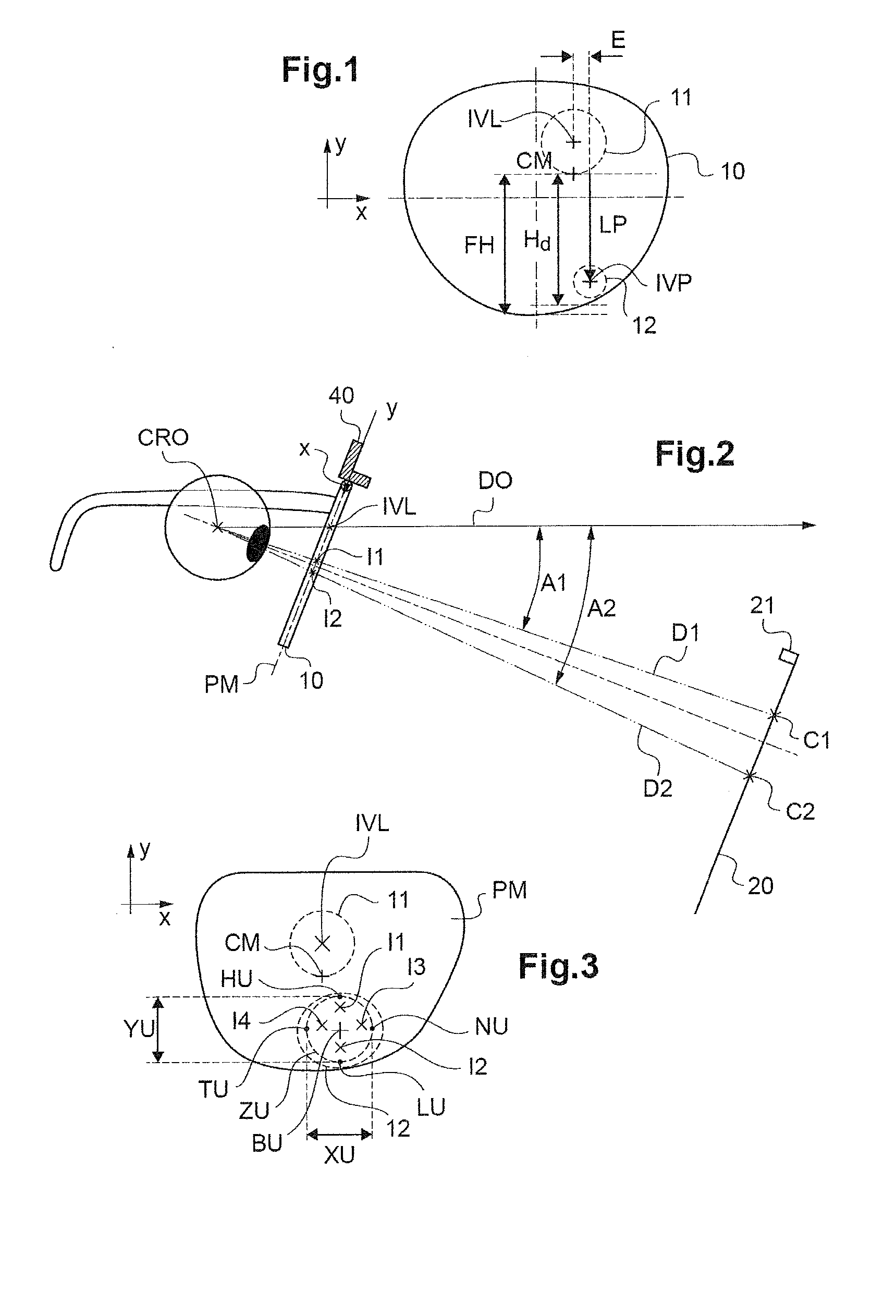

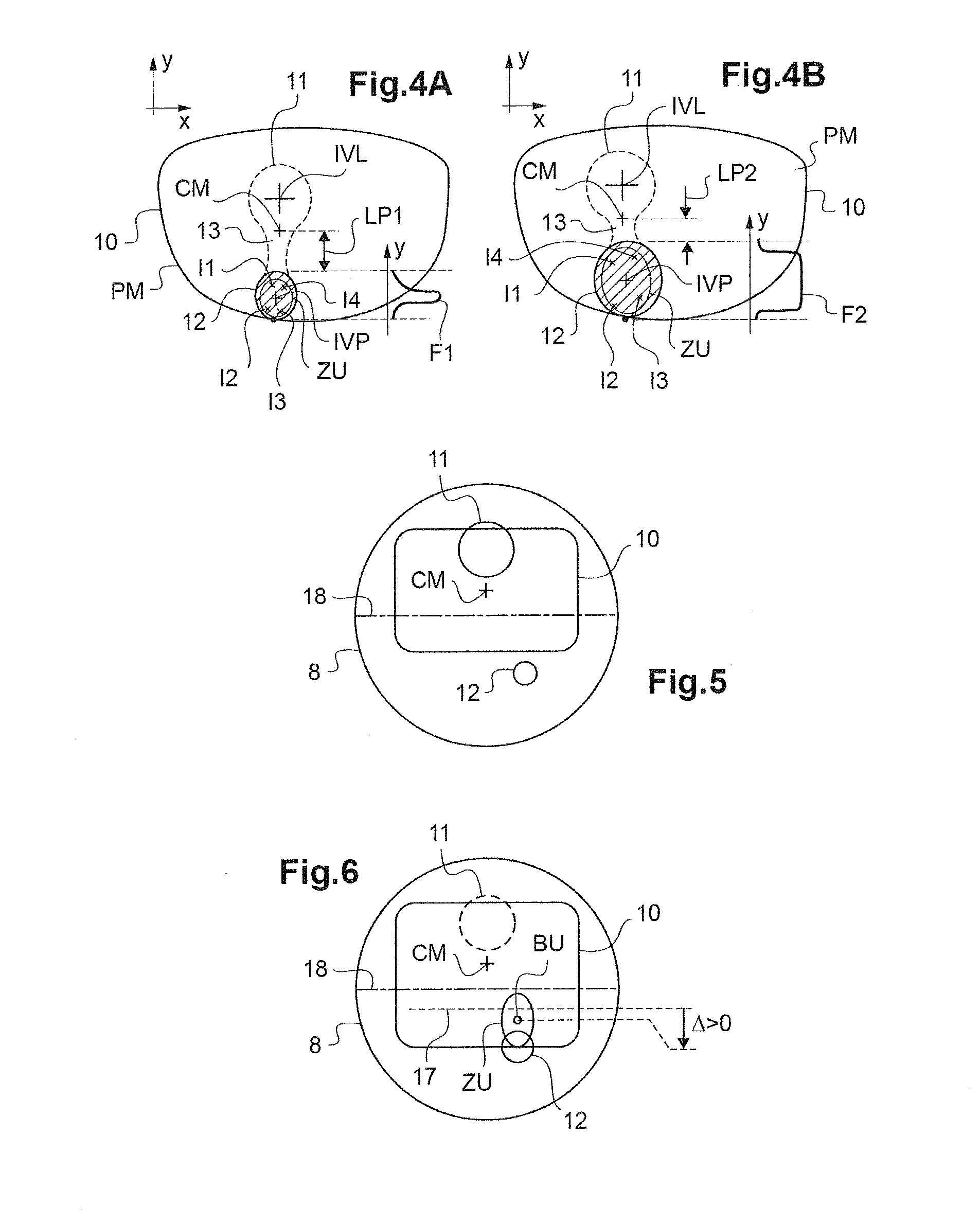

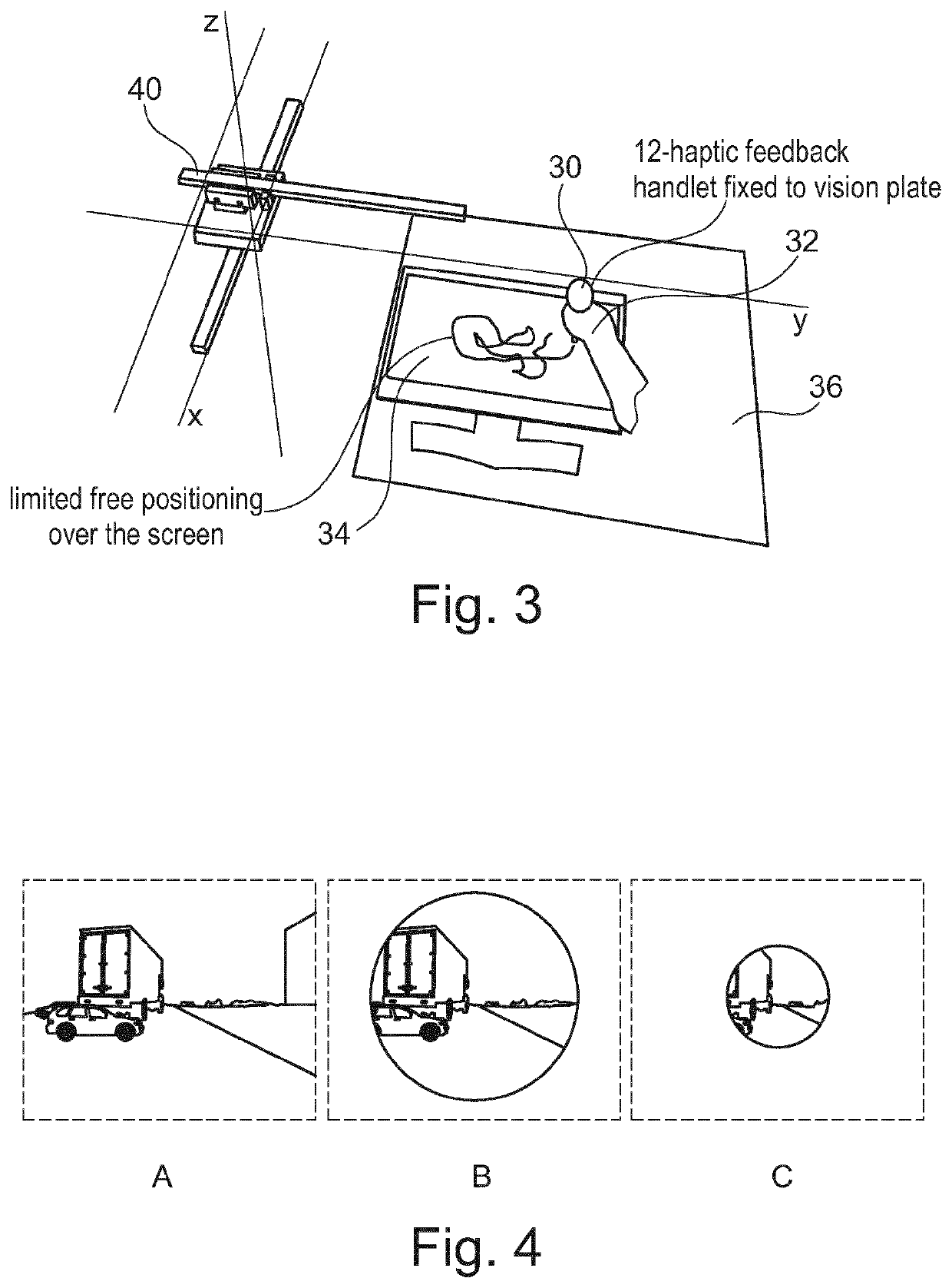

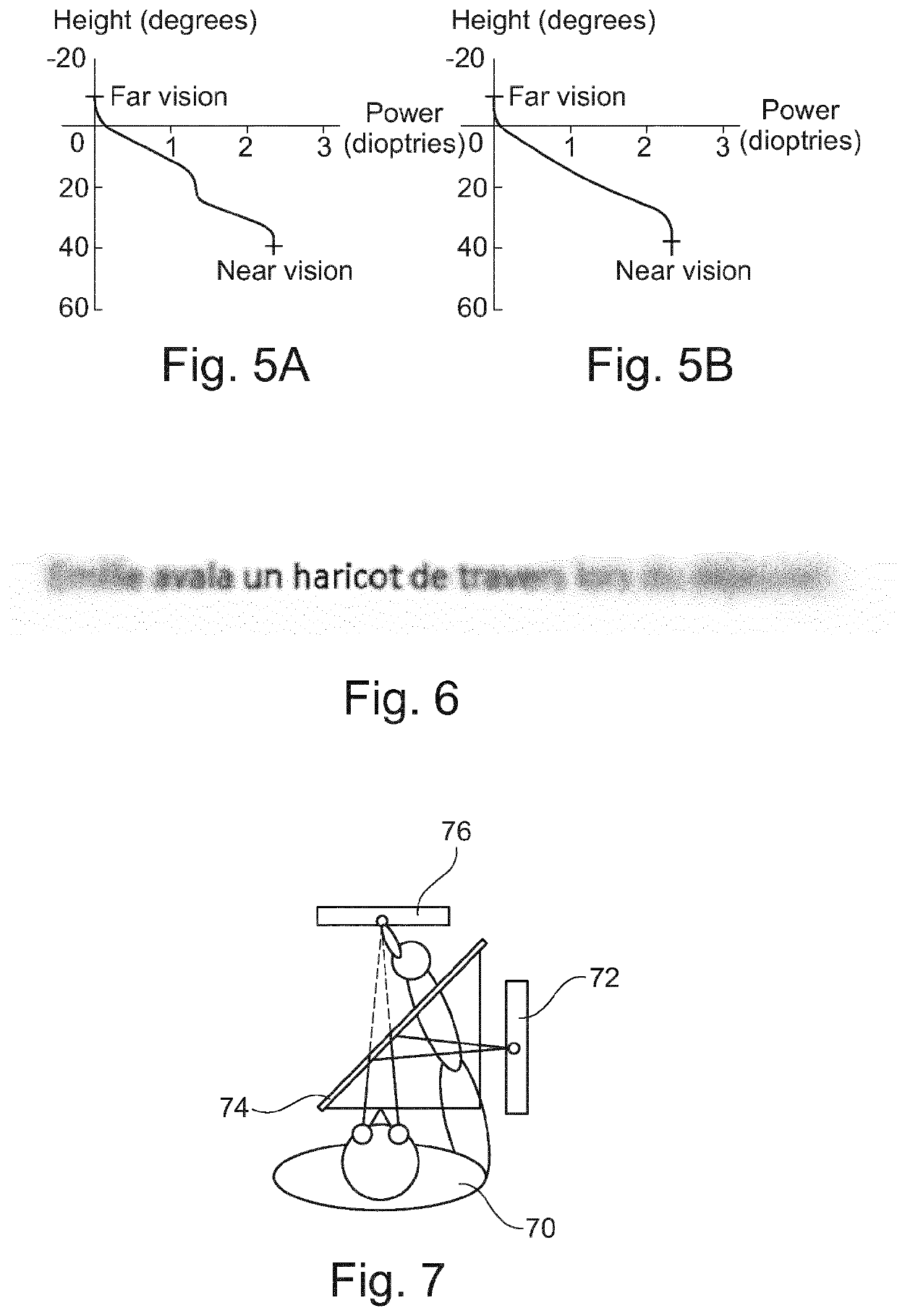

Method For Determining At Least One Optical Design Parameter For A Progressive Ophthalmic Lens

Active | US20160274383A1 | Improve visual comfort | Spectacles/goggles | Eye diagnostics | Gaze directions | Computer science

A method for determining at least one optical design parameter for a progressive ophthalmic lens intended to equip a frame of a wearer, depending on the visual behavior of the wearer. The method comprises the following steps: a) collecting a plurality of behavioral measurements relating to a plurality of gaze directions and/or positions of the wearer during a visual task; b) statistically processing said plurality of behavioral measurements in order to determine a zone of use of the area of an eyeglass fitted in said frame, said zone of use being representative of a statistical spatial distribution of said plurality of behavioral measurements; and c) determining at least one optical design parameter for said progressive ophthalmic lens depending on a spatial extent and/or position of the zone of use.

Owner:ESSILOR INT CIE GEN DOPTIQUE
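Step b) of the entry above, the statistical processing of gaze measurements into a zone of use, could be approximated as in the sketch below. The abstract does not say which statistic is used, so the rectangle of mean plus or minus `k` standard deviations is an assumption. The function name `zone_of_use` and the millimetre coordinates are likewise hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict, List, Tuple


def zone_of_use(points: List[Tuple[float, float]], k: float = 2.0) -> Dict:
    """Summarise gaze intersection points (x, y) on the lens plane as a
    rectangle centred on the mean and spanning k standard deviations
    on each side along each axis."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return {
        "center": (mean(xs), mean(ys)),   # position of the zone
        "width": 2 * k * pstdev(xs),      # horizontal extent
        "height": 2 * k * pstdev(ys),     # vertical extent
    }


# Hypothetical gaze points in mm relative to the fitting cross.
points = [(-2.0, -4.0), (0.0, -6.0), (2.0, -8.0), (0.0, -6.0)]
zone = zone_of_use(points, k=1.0)
print(zone)
```

The position and extent returned here correspond to the quantities step c) relies on when choosing a lens design parameter.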

Method and apparatus for developing a person's behavior

Active | US8740623B2 | Assist in developing the behavior in the person | Electrical appliances | Teaching apparatus | Human behavior | Adaptive response

An embodiment of an apparatus, or corresponding method, for developing a person's behavior according to the principles of the present invention comprises at least one visual behavior indicator that represents a behavior desired of a person viewing the at least one visual behavior indicator. The apparatus, or corresponding method, further includes at least two visual choice indicators viewable with the at least one visual behavior indicator that represent choices available to the person, the choices assisting in developing the behavior in the person by assisting the person in choosing an appropriately adaptive response supporting the desired behavior or as an alternative to behavior contrary to the desired behavior.

Owner:BEE VISUAL

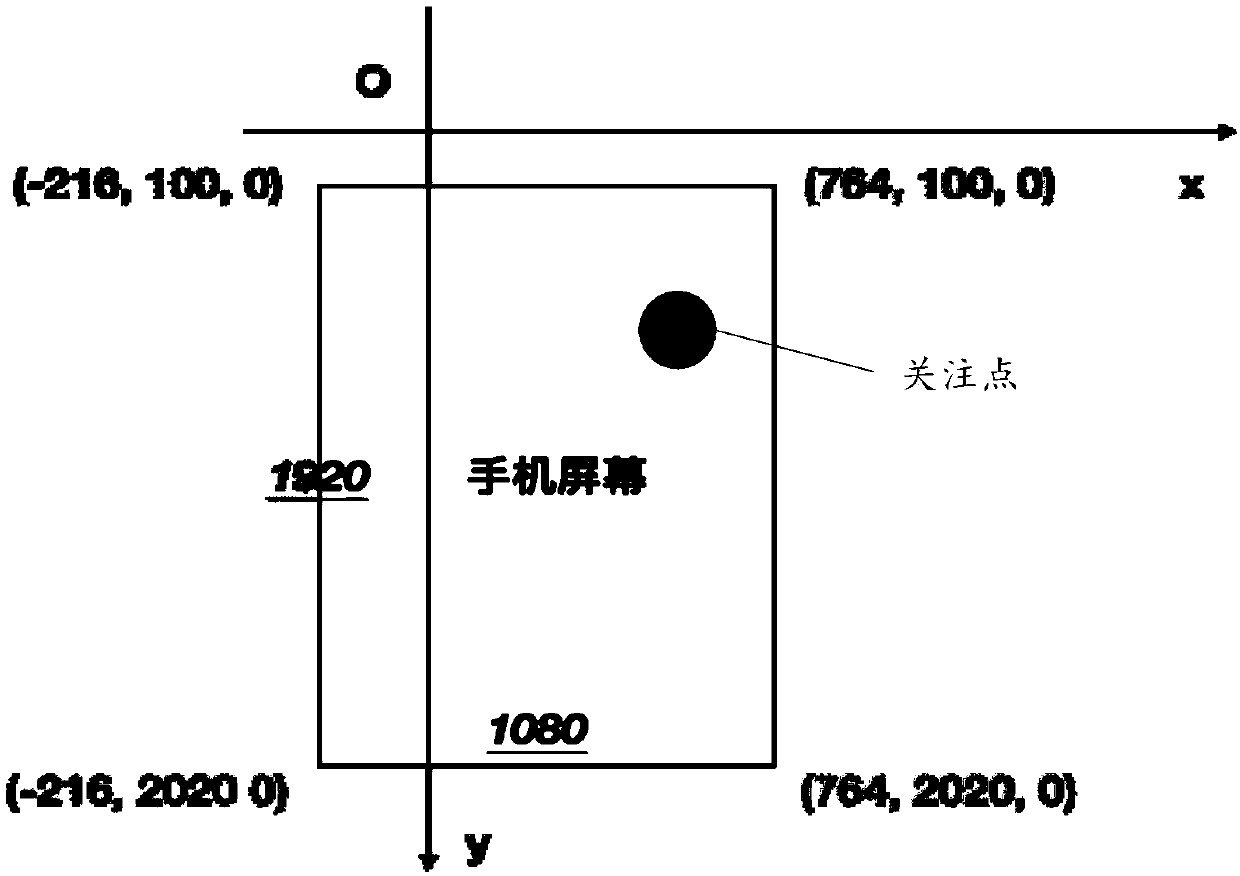

Method and device for obtaining user behavior data

Pending | CN110658907A | Easy to display | Input/output for user-computer interaction | Graph reading | Engineering | Human–computer interaction

The embodiment of the invention discloses a method and device for obtaining user behavior data, applied to client equipment with a depth sensing device. The method comprises the steps of: obtaining, through the depth sensing device on the client equipment, the focus point of the user's line of sight on a user interface; tracking the focus point to obtain the stay duration and the attention range of the user's line of sight on the user interface; and determining visual activity data of the user on the user interface based on the stay duration and the attention range. With this technical scheme, the visual behavior data of the user can be collected without the user being aware of it, and the accuracy of the obtained user behavior data is improved.

Owner:阿里宋汉信息技术有限公司
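One way to derive stay duration and attention range from tracked focus points, as the entry above describes, is sketched below. The segmentation rule (a new segment starts once the gaze moves more than `radius` pixels from the segment's first sample) is an assumption. The names `dwell_segments` and `_summarise` are hypothetical.

```python
from math import hypot
from typing import Dict, List, Tuple

Sample = Tuple[float, float, float]  # (time in seconds, x, y) on the user interface


def _summarise(seg: List[Sample]) -> Dict:
    xs = [s[1] for s in seg]
    ys = [s[2] for s in seg]
    return {
        "duration": seg[-1][0] - seg[0][0],            # stay duration
        "range": (min(xs), min(ys), max(xs), max(ys)),  # attention bounding box
    }


def dwell_segments(samples: List[Sample], radius: float = 50.0) -> List[Dict]:
    """Split a gaze trace into segments that stay within `radius` pixels of
    the segment's first sample, and summarise each segment."""
    segments: List[Dict] = []
    current: List[Sample] = []
    for s in samples:
        if current and hypot(s[1] - current[0][1], s[2] - current[0][2]) > radius:
            segments.append(_summarise(current))
            current = []
        current.append(s)
    if current:
        segments.append(_summarise(current))
    return segments


trace = [(0.0, 100, 100), (0.5, 110, 105), (1.0, 105, 95),
         (1.5, 400, 300), (2.0, 410, 310)]
segments = dwell_segments(trace)
print(segments)
```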

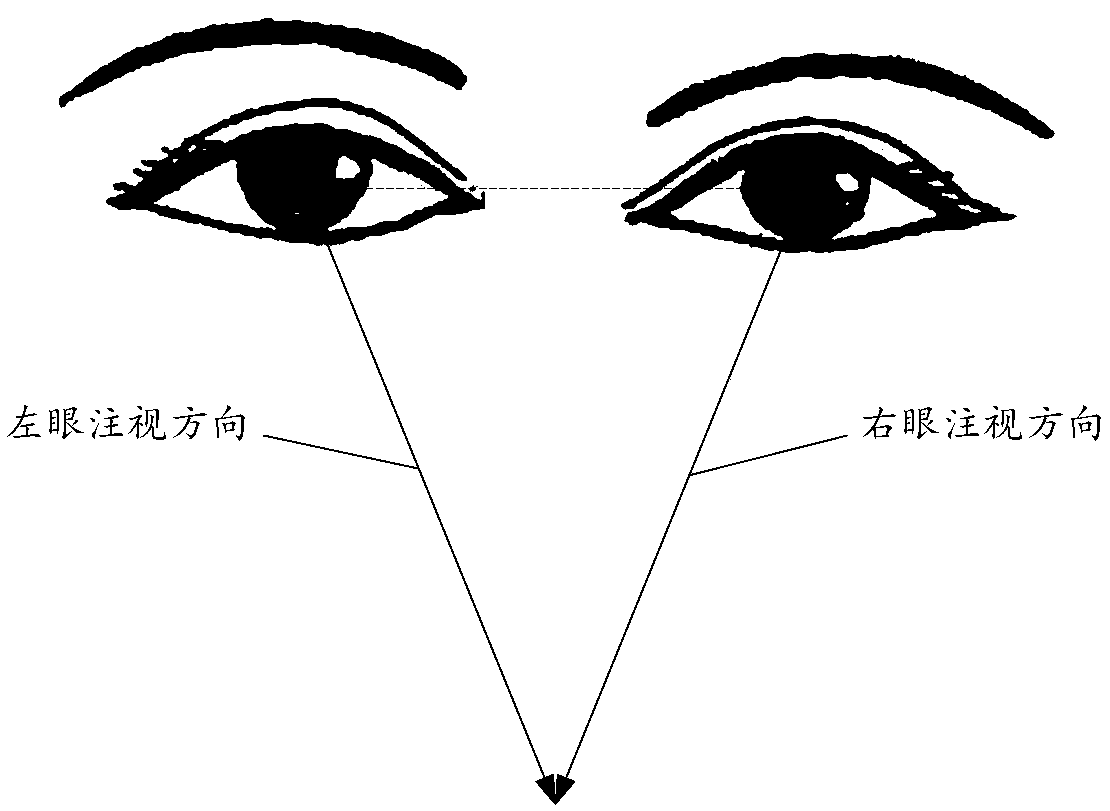

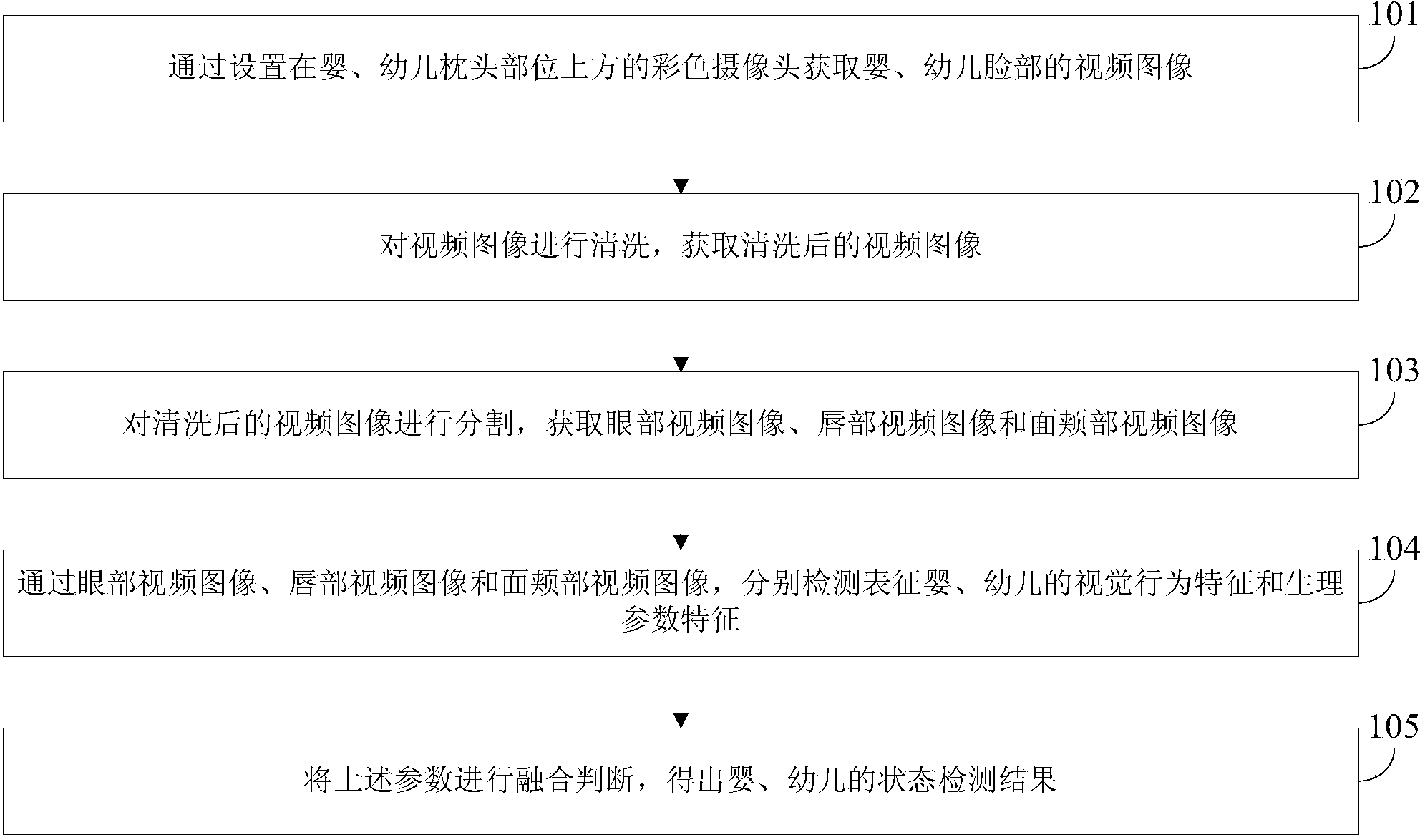

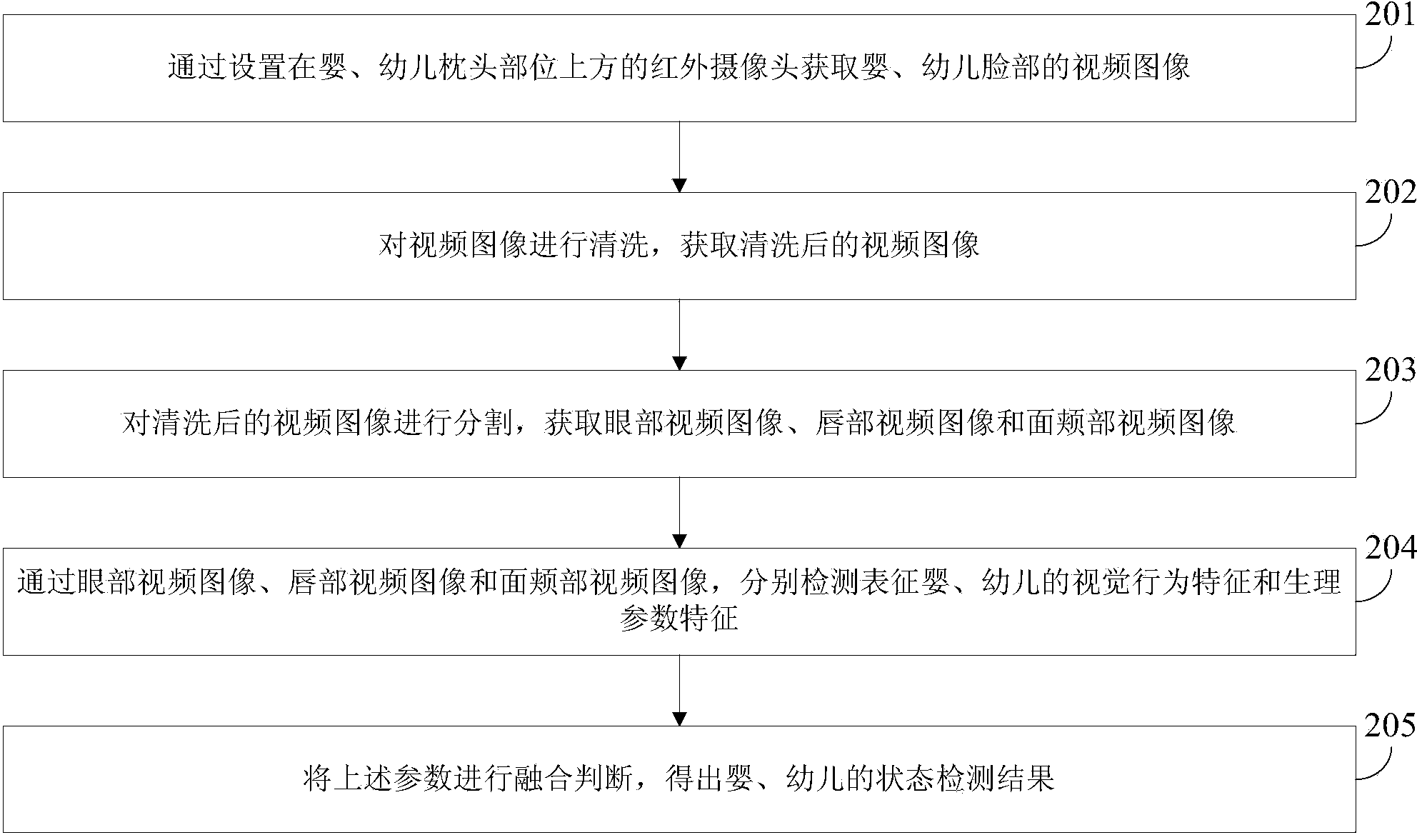

Infant monitoring method and device based on robot vision

Inactive | CN104077881A | Does not affect behavior | Increased wearing burden | Diagnostics | Surgery | Video image | Visual perception

The invention discloses an infant monitoring method and device based on robot vision. The method includes the steps that visual behavior characteristics and physiological parameter characteristics of an infant are detected and represented according to eye video images, lip video images and cheek video images; the parameters are fused and judged to obtain a sleep state detection result of the infant. The device comprises an embedded system used for extracting the visual behavior characteristics and the physiological parameter characteristics of a testee, fusing and processing the characteristic information and sounding an alarm, wherein the visual behavior characteristics include mouth openness frequency, the head position, duration, posture changing frequency of the infant, and the physiological parameter characteristics include pulses and the like. When the sleep state of the infant is abnormal, an alarm signal is output to an alarm, and an abnormal signal is output to a parent or related guardianship personnel at the same time. Only one camera is used for obtaining the information, more information including physiological parameters of the infant is obtained, and health condition monitoring reliability of the infant is greatly improved.

Owner:TIANJIN UNIV

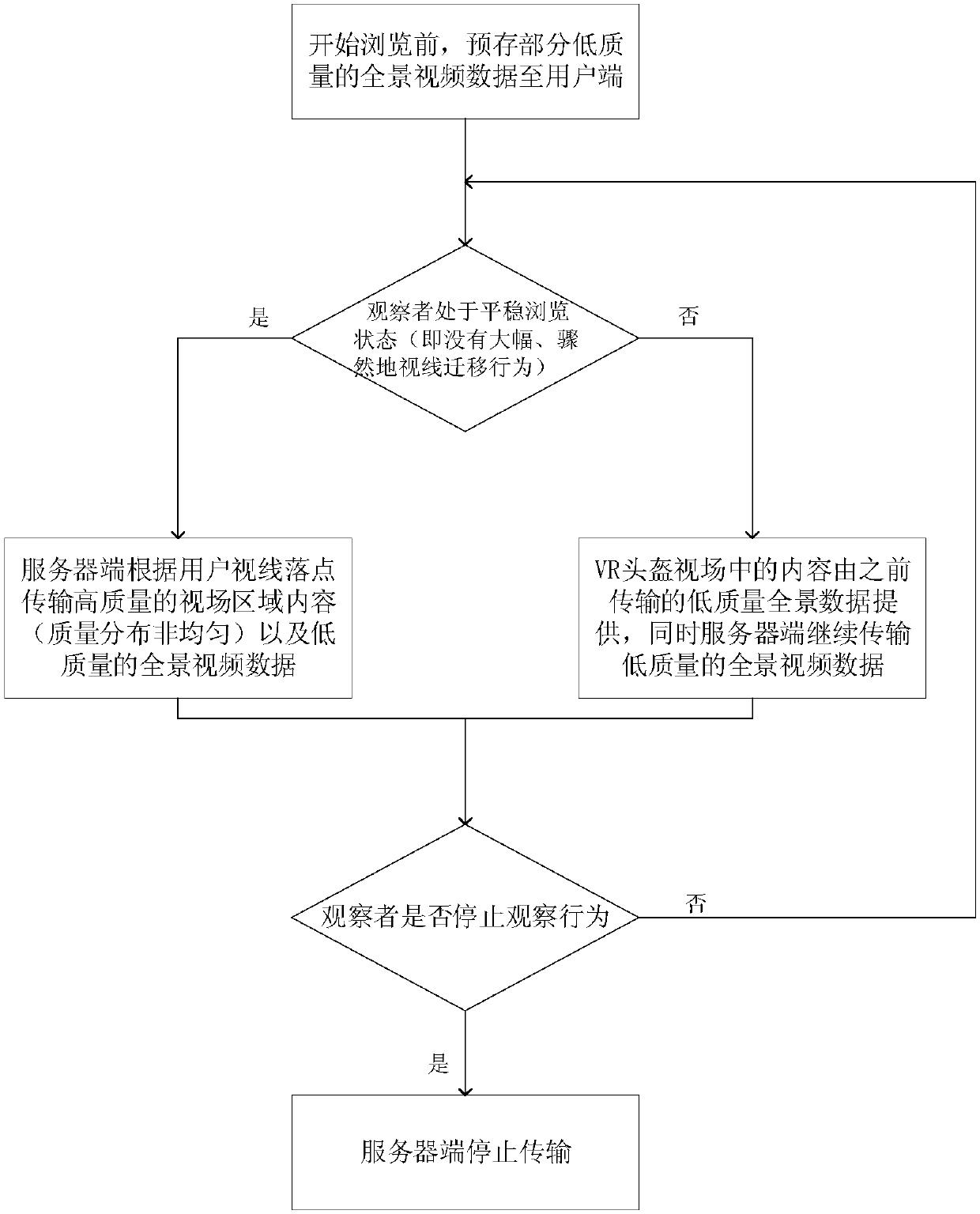

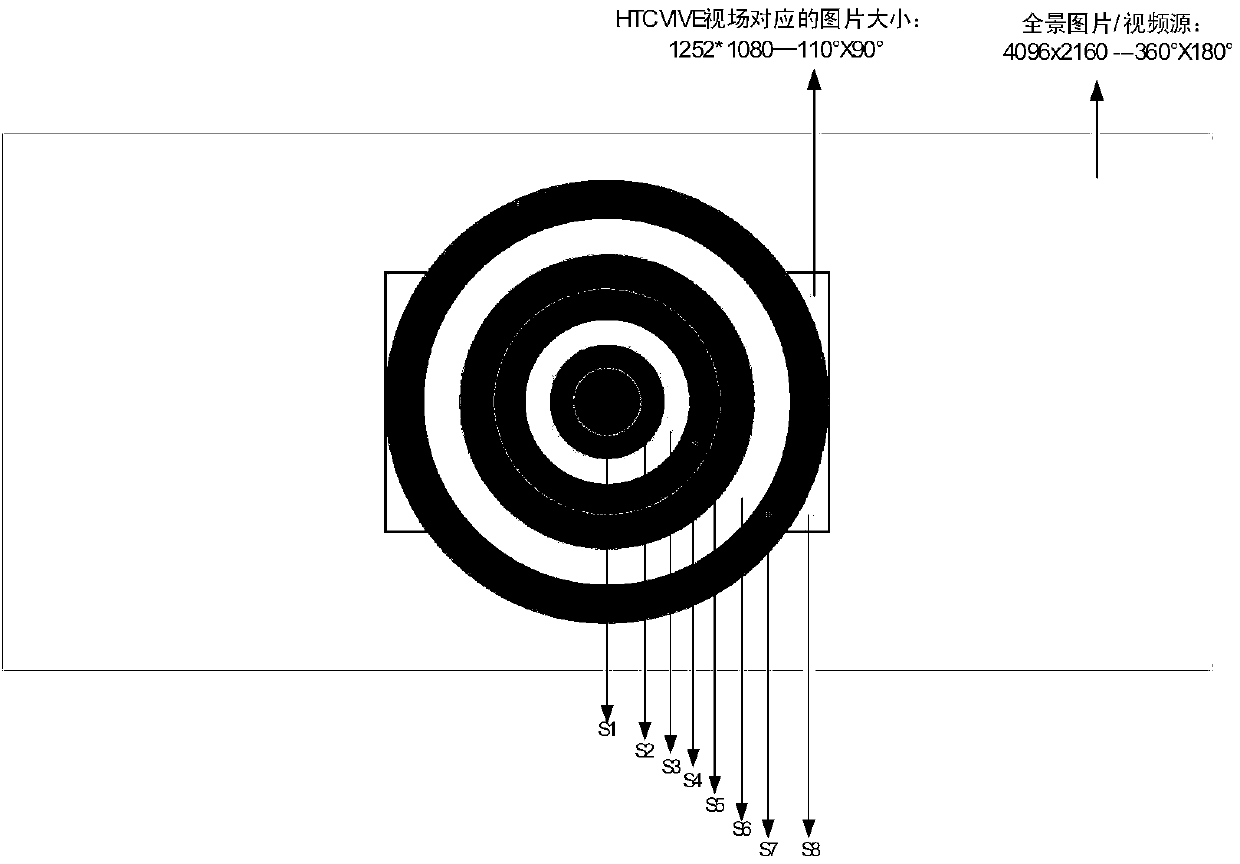

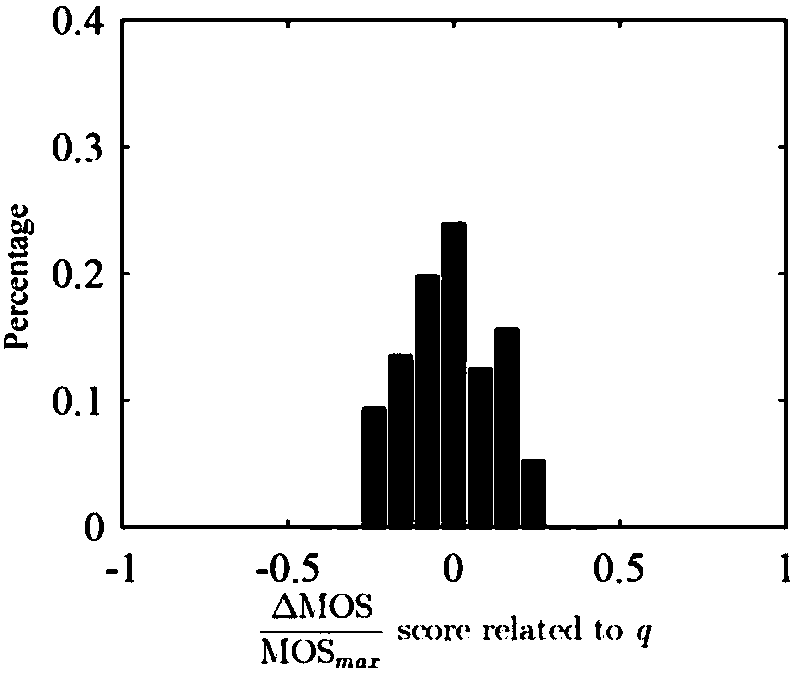

Immersive media data transmission method adapting to human eye perception condition

Inactive | CN110072121A | Subjective perceived quality unchanged | Reduce size | Input/output for user-computer interaction | Transmission | Data stream | Imaging quality

The invention discloses an immersive media data transmission method adapting to a human eye perception condition. The method comprises the following steps: S1, before a user starts to browse, pre-storing part of the low-quality panoramic video data on the user side; S2, when the user starts to browse stably, the server side transmits the high-quality view field area content according to the current visual behavior of the user, and allocates non-uniformly distributed image quality within the current view field based on the user's current gaze point; S3, while the visual focus of the user migrates from the current region to the next region, the data in the view field of the VR helmet is provided by the pre-stored low-quality panoramic video data, and low-quality panoramic video data continues to be pre-stored on the user side; and S4, when the user resumes stable browsing and the line of sight migrates again, repeating steps S2 and S3 until observation stops. The method can effectively reduce the size of the VR data flow while ensuring that the subjective perceived quality of the video content in the whole view field area is not changed.

Owner:NANJING UNIV
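The quality allocation in steps S2 and S3 of the entry above can be illustrated with a small sketch. The three quality levels, the distance thresholds and the tile layout in normalised view coordinates are all assumptions made for illustration. `tile_quality` is a hypothetical name.

```python
from math import hypot
from typing import Dict, Tuple


def tile_quality(tile_centers: Dict[str, Tuple[float, float]],
                 gaze: Tuple[float, float],
                 stable: bool,
                 high_radius: float = 0.2,
                 mid_radius: float = 0.5) -> Dict[str, str]:
    """Choose a quality level per tile.

    While the gaze is migrating (stable=False) every tile falls back to the
    pre-stored low-quality panorama; during stable browsing, quality drops
    with distance from the gaze point."""
    if not stable:
        return {tile: "low" for tile in tile_centers}
    levels = {}
    for tile, (x, y) in tile_centers.items():
        d = hypot(x - gaze[0], y - gaze[1])
        if d <= high_radius:
            levels[tile] = "high"
        elif d <= mid_radius:
            levels[tile] = "medium"
        else:
            levels[tile] = "low"
    return levels


tiles = {"a": (0.5, 0.5), "b": (0.8, 0.5), "c": (0.1, 0.9)}
stable_levels = tile_quality(tiles, gaze=(0.5, 0.5), stable=True)
moving_levels = tile_quality(tiles, gaze=(0.5, 0.5), stable=False)
print(stable_levels, moving_levels)
```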

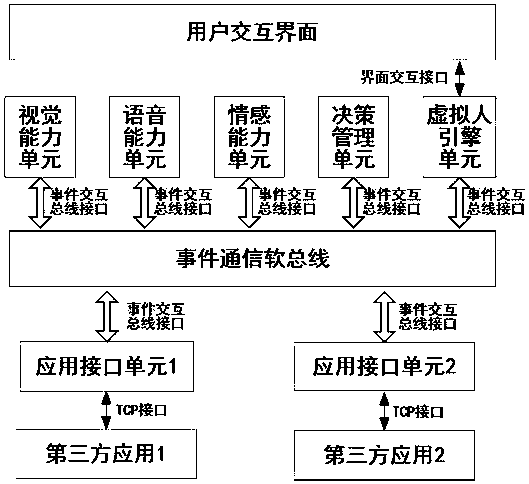

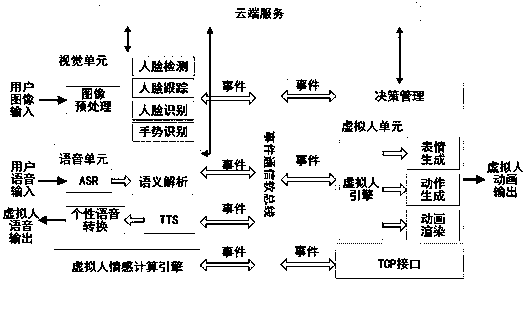

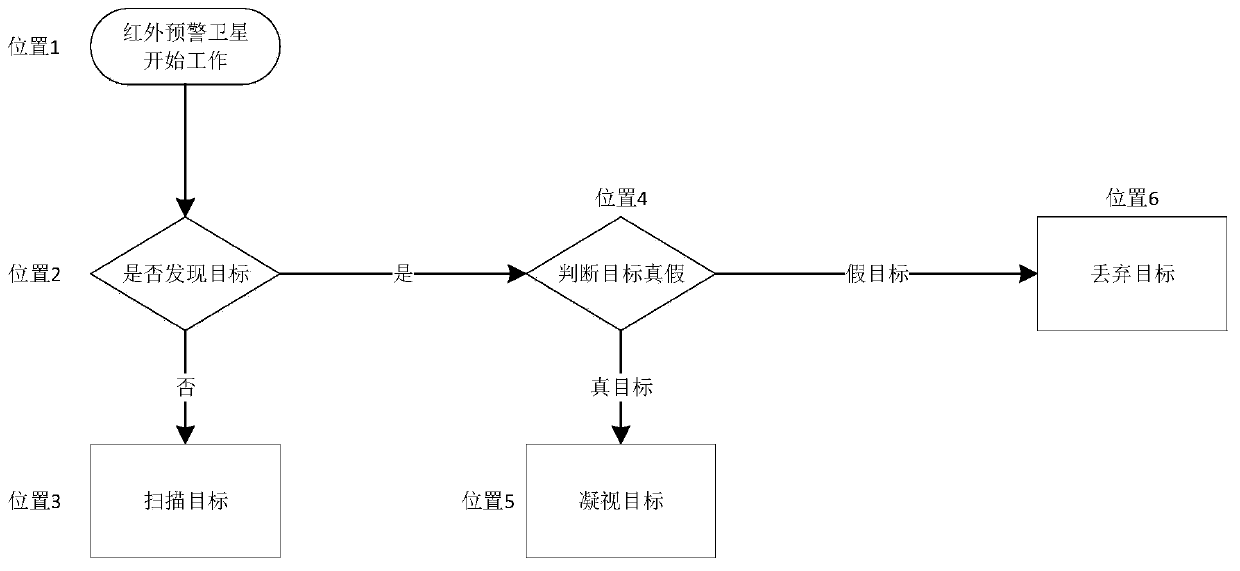

Virtual human interaction software bus system and an implementation method thereof

Inactive | CN109917917A | Improve interactivity | Quick interaction | Input/output for user-computer interaction | Software design | Software bus | Human interaction

The invention discloses a virtual human interaction software bus system and an implementation method thereof. The system comprises: an event communication soft bus, used for soft bus communication between modules based on a publish-subscribe mechanism; a visual ability unit and a voice ability unit, used for virtual human vision and voice interaction; an emotional ability unit, used for calculating the emotional state of the virtual human; a decision management unit, used for generating behavior control instructions for the virtual human; a virtual human engine unit, used for driving the visual behavior display of the virtual human; and an application interface unit, used for interacting with third-party applications. Each unit is mounted on the event communication soft bus through a bus interface. Third-party applications can access the system according to the interface protocol, so that integrated control of virtual human images, actions, expressions, sounds, clothing changes and other operations is realized, the problem of compatibility between different application systems and virtual human control is solved, the virtual human is easier to integrate into an intelligent human-machine interaction process, and the user experience is effectively improved.

Owner:南京七奇智能科技有限公司
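The publish-subscribe mechanism named in the entry above can be sketched in a few lines. This is a generic in-process event bus, not the patented system. The class name `EventBus` and the topic names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class EventBus:
    """Minimal in-process publish-subscribe bus: units register handlers
    for topics and never call each other directly."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> None:
        # Copy the list so handlers may subscribe during delivery.
        for handler in list(self._subscribers.get(topic, [])):
            handler(payload)


bus = EventBus()
displayed = []  # stands in for the engine unit that renders behavior

# Engine unit: consumes behavior commands.
bus.subscribe("behavior.command", displayed.append)
# Decision unit: turns recognised speech into a behavior command.
bus.subscribe(
    "speech.recognized",
    lambda text: bus.publish("behavior.command",
                             {"action": "wave", "reply_to": text}),
)
# Voice unit: publishes what it heard.
bus.publish("speech.recognized", "hello")
print(displayed)
```

Because units only share topic names, a third-party application could be attached by subscribing to the same topics, which matches the decoupling the abstract aims for.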

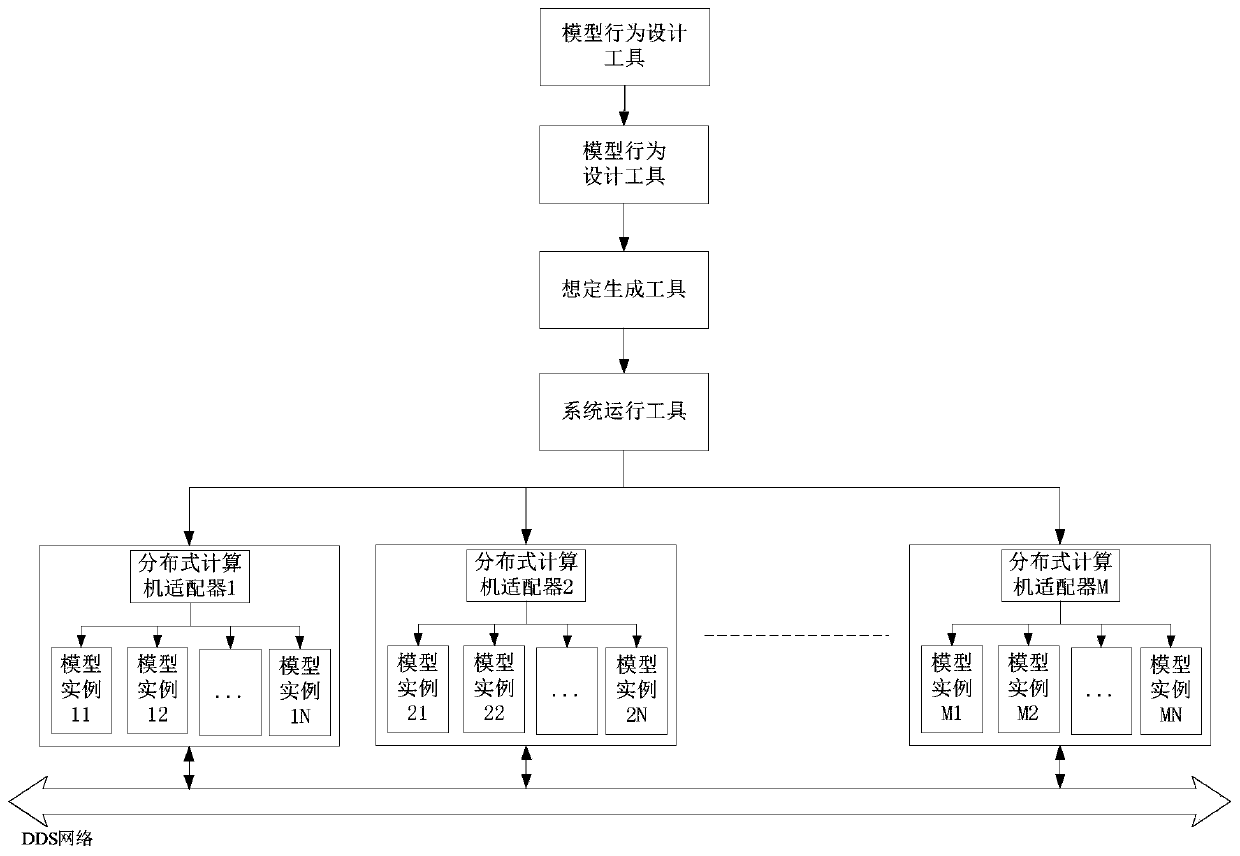

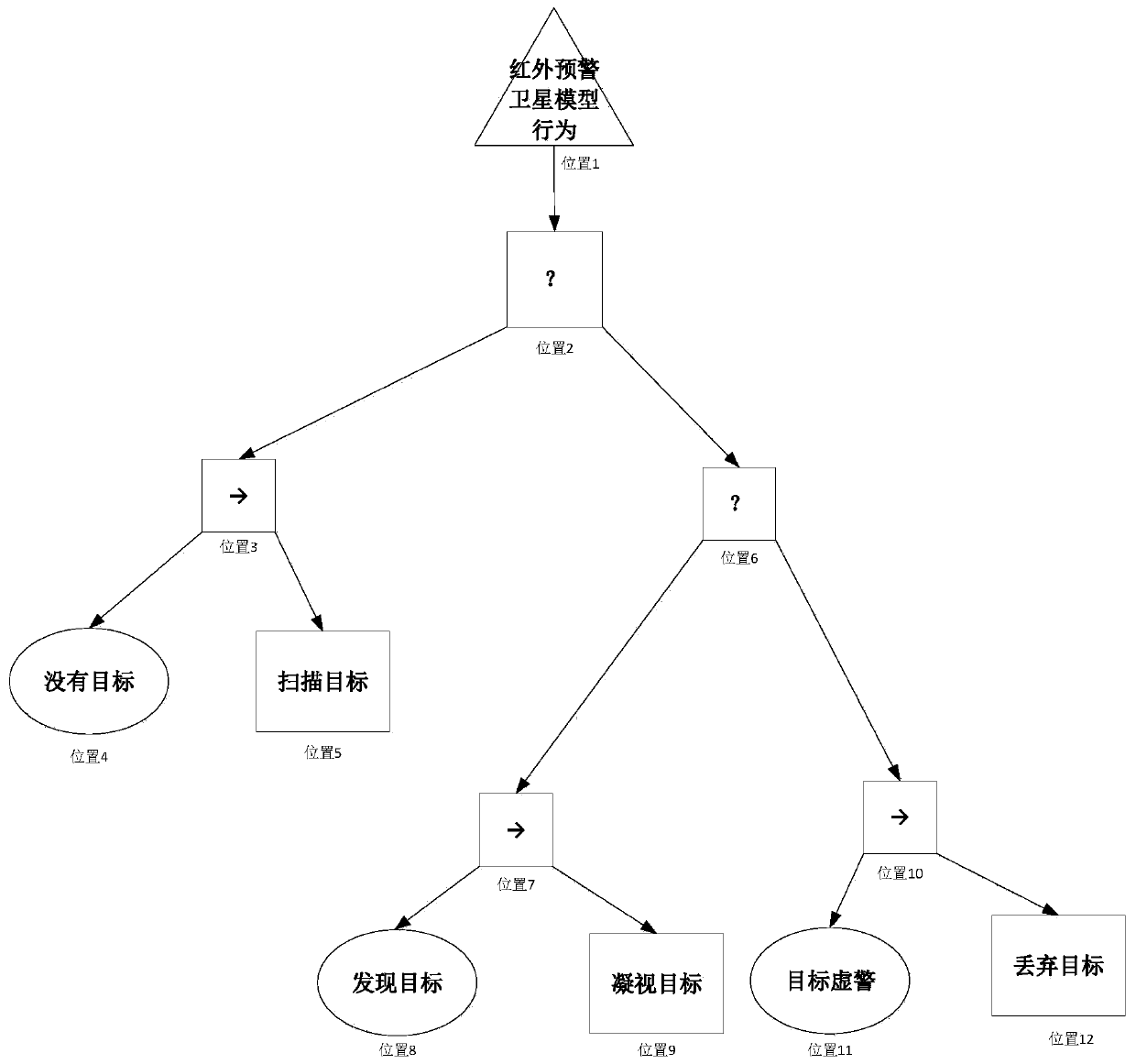

Distributed simulation platform based on behavior tree

Active | CN111079244A | Improve efficiency | Effective protection | Design optimisation/simulation | Geographical information databases | Logisim | XML

The invention discloses a distributed simulation platform based on a behavior tree. Model behaviors are designed through a behavior tree method, the design is direct and convenient, the model behaviors can be dynamically adjusted in a visual behavior tree mode before a simulation system operates, a simulation platform calls the model behaviors according to an adjusted logic sequence, and the model behaviors can be changed quickly and dynamically; the simulation platform comprises a model behavior design tool, a model behavior development tool, a scenario generation tool, a system operation control tool and a distributed computer adapter, the five tools form a complete whole, a user can control full-cycle use of the whole simulation system through the five tools, and the use efficiency of the system is greatly improved; a model used by the simulation platform is in a dynamic link library form, so that a model source code can be effectively protected; and the used scenario file format, model behavior description file format and model initialization file format are XML file formats, so that the method has very strong universality.

Owner:中国航天系统科学与工程研究院
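As a rough illustration of the entry above, the sketch below loads a behavior tree from an XML description and ticks it. The XML element names (`selector`, `sequence`, `action`) and the action registry are assumptions; the abstract says only that the behavior description file is XML.

```python
import xml.etree.ElementTree as ET

# Hypothetical registry of model behaviors; each takes the shared state
# and returns True (success) or False (failure).
ACTIONS = {
    "detect_target": lambda state: state.get("target_visible", False),
    "track_target": lambda state: state.setdefault("log", []).append("track") or True,
    "patrol": lambda state: state.setdefault("log", []).append("patrol") or True,
}


def tick(node: ET.Element, state: dict) -> bool:
    """Evaluate one node of the tree against the shared state."""
    if node.tag == "sequence":   # succeeds only if every child succeeds, in order
        return all(tick(child, state) for child in node)
    if node.tag == "selector":   # succeeds at the first child that succeeds
        return any(tick(child, state) for child in node)
    if node.tag == "action":
        return ACTIONS[node.get("name")](state)
    raise ValueError(f"unknown node type: {node.tag}")


BEHAVIOR_XML = """
<selector>
  <sequence>
    <action name="detect_target"/>
    <action name="track_target"/>
  </sequence>
  <action name="patrol"/>
</selector>
""".strip()

tree = ET.fromstring(BEHAVIOR_XML)

seen = {"target_visible": True}
unseen = {}
tick(tree, seen)
tick(tree, unseen)
print(seen["log"], unseen["log"])
```

Editing the XML reorders or replaces behaviors without touching the action code, which is the kind of pre-run adjustment the abstract describes.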

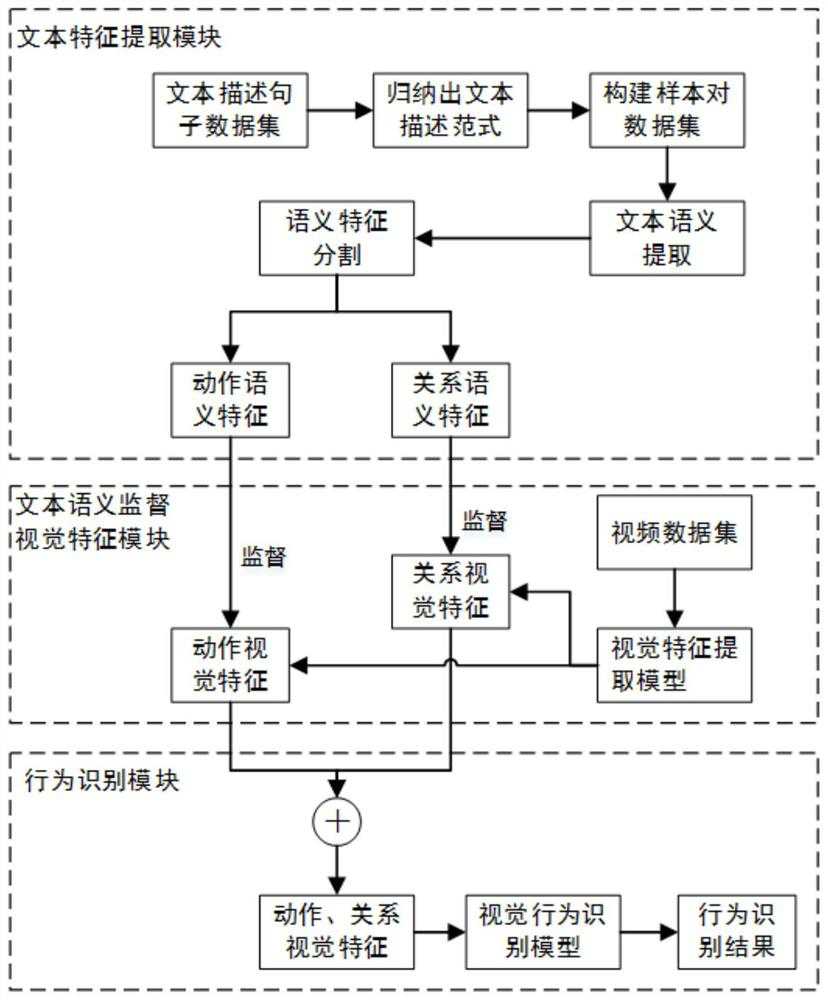

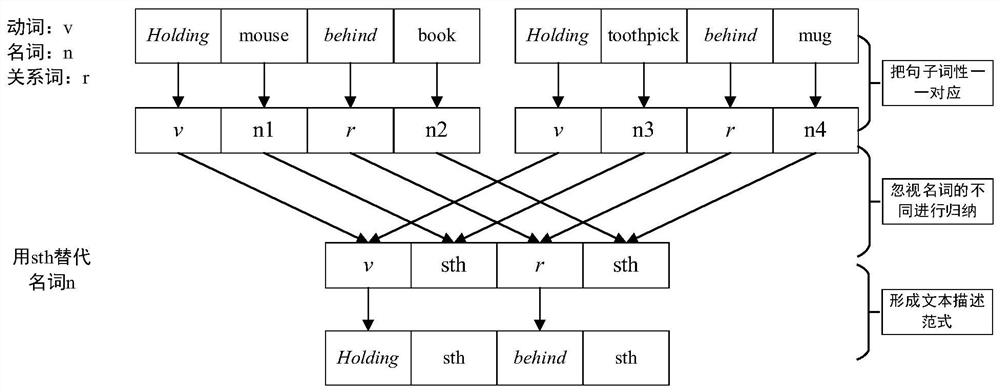

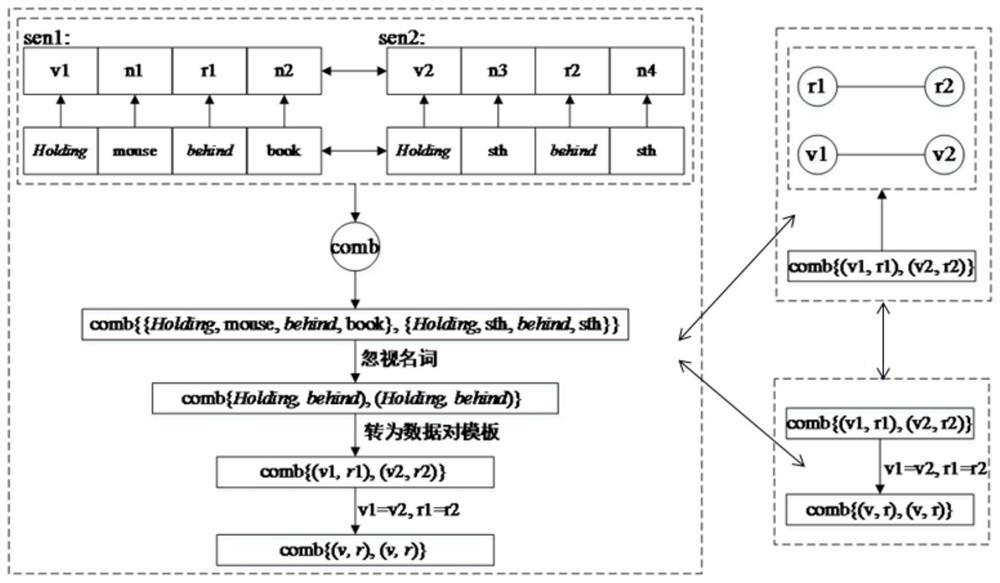

Visual behavior recognition method and system based on text semantic supervision and computer readable medium

Pending | CN112580362A | Large supervisory role | Improve efficiency | Semantic analysis | Neural architectures | Feature vector | Feature extraction

The invention discloses a visual behavior recognition method and system based on text semantic supervision and a computer readable medium. The method comprises the steps of text semantic feature extraction, visual feature extraction based on text semantic supervision and visual behavior recognition construction. According to the method, text description normal forms of various behaviors are concluded on the basis of text description sentences of a video sample set of the same type of behaviors, a sample pair data set is constructed, and action semantic feature vectors and relation semantic feature vectors of the text description sentences are extracted from a text semantic extraction model; the extracted action visual feature vector and the relation visual feature vector are supervised by using the action semantic feature vector and the relation semantic feature vector, and behavior recognition is performed by using the extracted action visual feature vector and the relation visual feature vector; therefore, the problems that in the current visual behavior recognition field, the accuracy of visual behavior recognition is not high, the efficiency of text semantic supervision is not high, and actions and relations between behaviors cannot be accurately recognized are solved.

Owner:XIDIAN UNIV

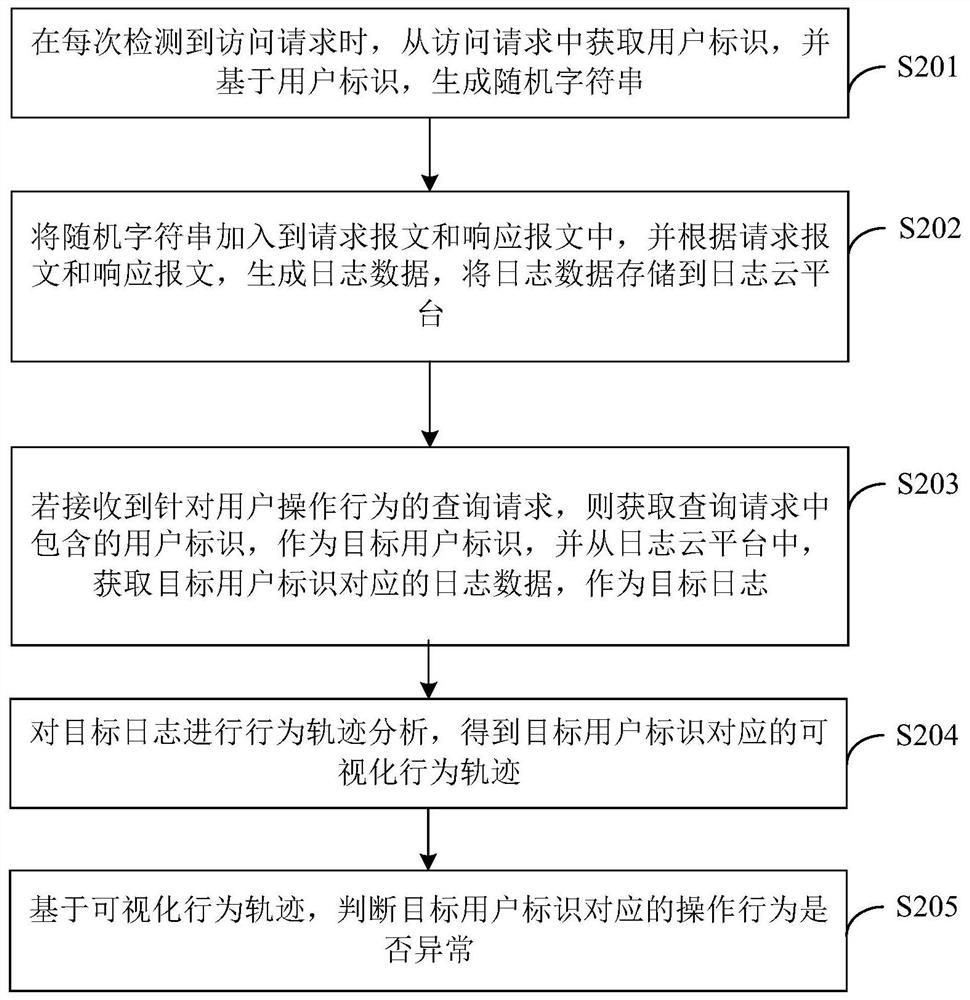

Behavior data monitoring method and device, computer equipment and medium

Pending | CN112491602A | Improve monitoring efficiency | Realize analysis and judgment | Hardware monitoring | File metadata searching | Engineering | Behavioral data

The invention relates to the field of artificial intelligence, and discloses a behavior data monitoring method and device, computer equipment and a storage medium. The method comprises the steps of: each time an access request is detected, obtaining a user identifier from the access request, generating a random character string based on the user identifier, and adding the random character string to a request message and a response message; generating log data according to the request message and the response message, and storing the log data on a log cloud platform; and, when a query request for user operation behavior is received, obtaining the user identifier contained in the query request as a target user identifier, and obtaining the log data corresponding to the target user identifier from the log cloud platform as a target log. Behavior track analysis is carried out on the target log to obtain the visual behavior track corresponding to the target user identifier, and whether the operation behavior corresponding to the target user identifier is abnormal is judged based on the visual behavior track, so that behavior data monitoring efficiency is improved.

Owner:CHINA PING AN PROPERTY INSURANCE CO LTD
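The logging and query flow in the entry above might look roughly like the sketch below. An in-memory dictionary stands in for the log cloud platform, and the trace string format (user identifier plus a random suffix) is an assumption. The names `handle_request` and `behavior_track` are hypothetical.

```python
import uuid
from collections import defaultdict
from typing import Dict, List

# In-memory stand-in for the "log cloud platform" (assumption).
LOG_STORE: Dict[str, List[dict]] = defaultdict(list)


def handle_request(user_id: str, path: str, timestamp: float) -> dict:
    """Tag the request and response with a random string derived from the
    user identifier, then store both as one log entry."""
    trace_id = f"{user_id}-{uuid.uuid4().hex[:8]}"
    request = {"user_id": user_id, "path": path, "trace_id": trace_id}
    response = {"status": 200, "trace_id": trace_id}
    LOG_STORE[user_id].append(
        {"t": timestamp, "request": request, "response": response}
    )
    return response


def behavior_track(target_user_id: str) -> List[str]:
    """Return the time-ordered sequence of paths the target user accessed."""
    entries = sorted(LOG_STORE.get(target_user_id, []), key=lambda e: e["t"])
    return [e["request"]["path"] for e in entries]


handle_request("u1", "/login", 1.0)
handle_request("u2", "/home", 2.0)
handle_request("u1", "/transfer", 3.0)
track_u1 = behavior_track("u1")
print(track_u1)
```

Judging whether a track is abnormal is left out here, since the abstract does not describe the criterion.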

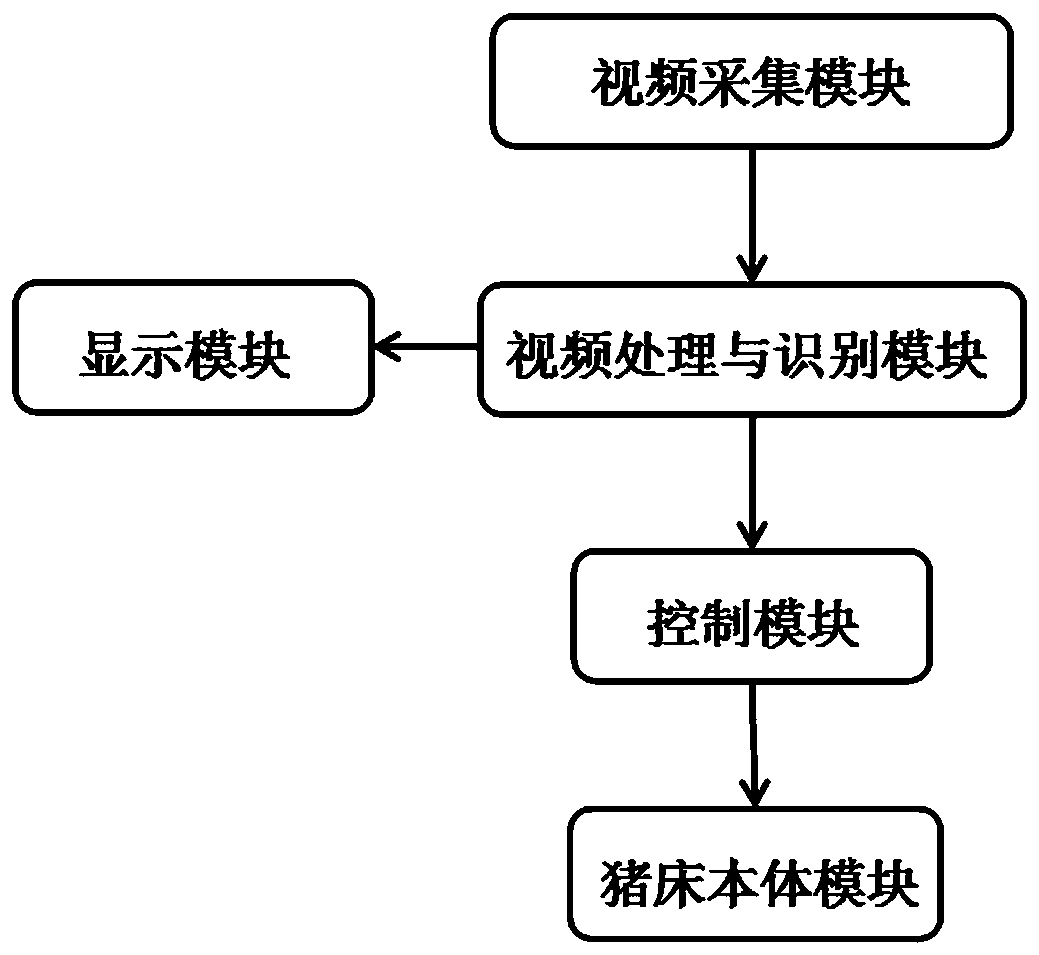

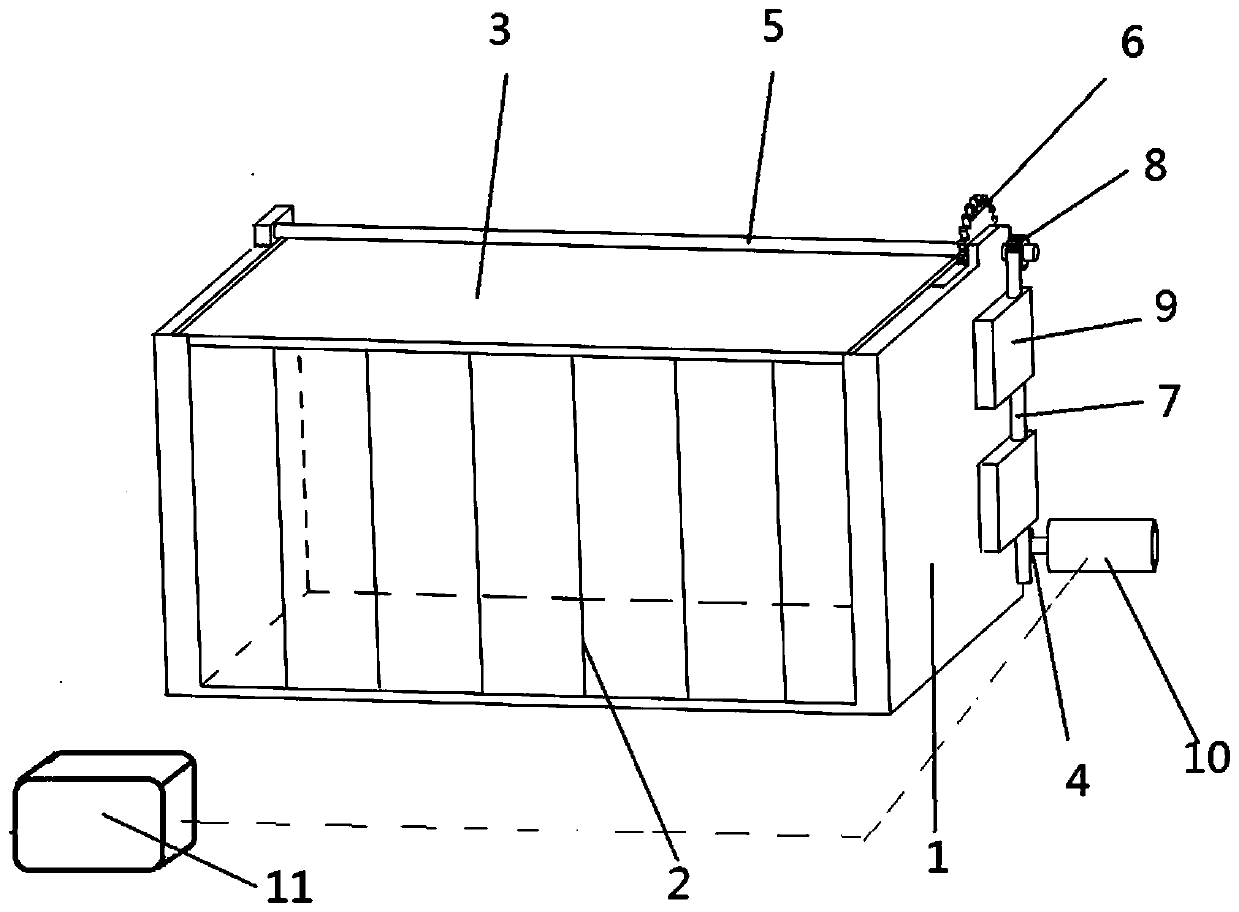

Pighouse temperature control method and system based on visual behavior feedback

Active | CN110338067A | Temperature control | Character and pattern recognition | Animal housing | Temperature control | Pattern recognition

The invention particularly relates to a pighouse temperature control method and system based on visual behavior feedback. The system adopts a pig bed body module, a video collection module, a video image processing and recognition module, a control module and a display module, wherein the pig bed body module is a place used for allowing pigs to lie at rest and observing the pigs; the video collection module is used for collecting video data information; the video image processing and recognition module is used for collecting and saving video images and identifying and classifying behaviors of the pigs in the images; the control module receives identifying and classifying results and outputs control quantity to a servo motor in the pig bed body module, and opening or closing control over a top plate is achieved by controlling the servo motor, thereby regulating the temperature in a pig bed body; the display module plays received collected videos and displays the results recognized by the video image processing and recognition module. According to the pighouse temperature control method and system based on the visual behavior feedback, behavior information of the pigs in a pig bed is monitored by using a camera, the behaviors of the pigs are recognized in time through the video image processing and recognition module, and the temperature in the pig bed is regulated according to the behaviors of the pigs.

Owner:HENAN UNIV OF ANIMAL HUSBANDRY & ECONOMY

A monitoring system for monitoring head mounted device wearer

Active | CN106462895A | Monitor visual behavior | Health-index calculation | Medical automated diagnosis | Communication unit | Monitoring system

A monitoring system for monitoring the visual behavior of a wearer of a head-mounted device, the monitoring system comprises: - at least one wearer's visual behavior sensor configured to sense at least one wearer's visual behavior data relating to the visual behavior of the wearer of the head-mounted device, - a communication unit associated with the at least one wearer's visual behavior sensor and configured to communicate said visual behavior data to a wearer information data generating unit, - a wearer information data generating unit configured to: - receive said wearer's visual behavior data, - store said wearer's visual behavior data, and - generate a wearer information data indicative of at least one of: wearer's vision or general health condition of the wearer or wearer's activity or wearer's authentication based, at least, on the evolution over time of said wearer's visual behavior data.

Owner:ESSILOR INT CIE GEN DOPTIQUE

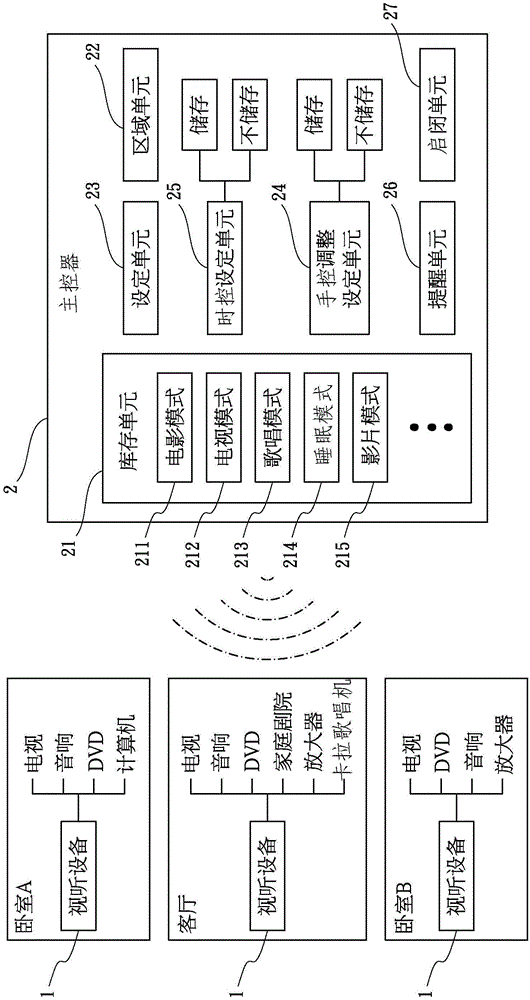

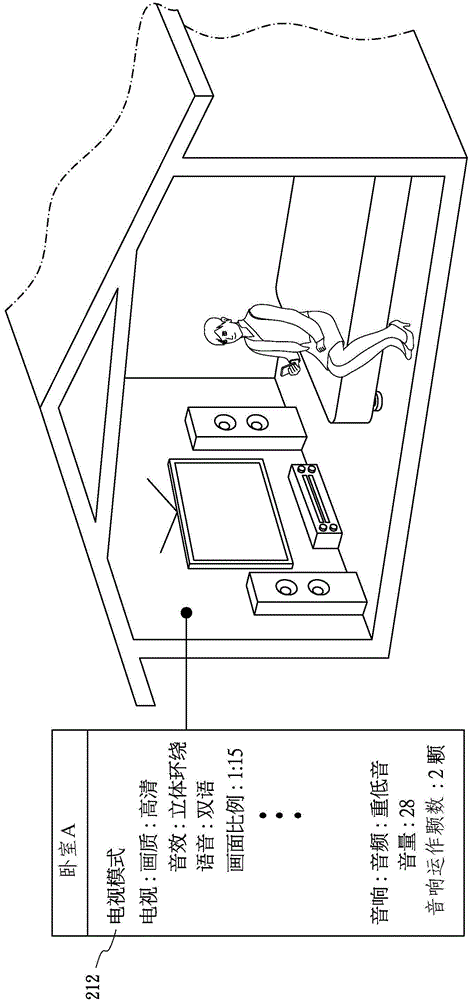

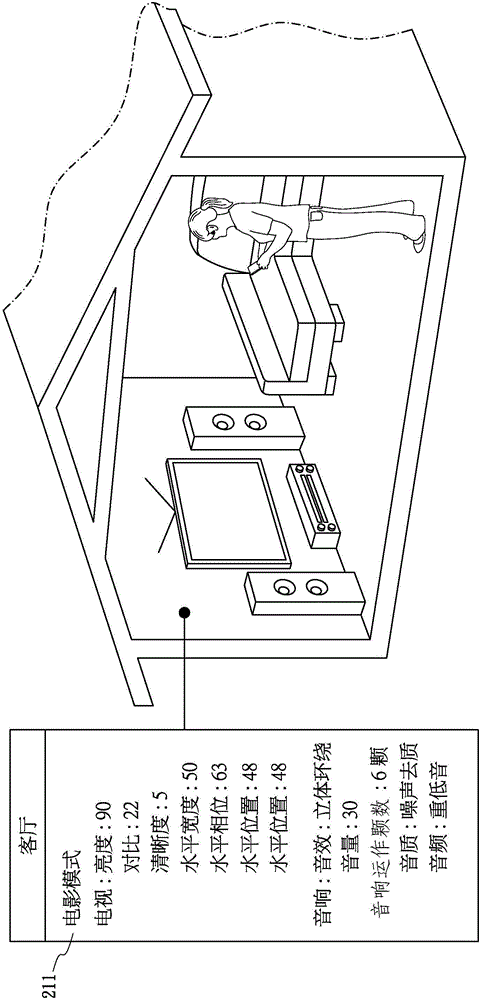

Intelligent audio-visual integration device

Inactive | CN104950825A | Avoid wasting time | Transmission systems | Programme total factory control | Visual integration | Engineering

The invention discloses an intelligent audio-visual integration device, which comprises an audio-visual device and a main controller. The main controller is wirelessly connected with the audio-visual device. The main controller controls various adjustment of the audio-visual device and is internally provided with a stock keeping unit, a region unit and a setting unit, wherein the stock keeping unit is provided with a plurality of menus according to audio-visual behaviors; the region unit is provided with a plurality of region menus according to places in the home; and the setting unit carries out detail setting on the audio-visual device according to the audio-visual behaviors. A region menu is selected according to the region unit, a to-be-executed audio-visual behavior is further selected, the audio-visual device is controlled by the main controller to be automatically adjusted to detail setting of the corresponding mode, and the time for adjustment by a user himself or herself is reduced.

Owner:李文嵩

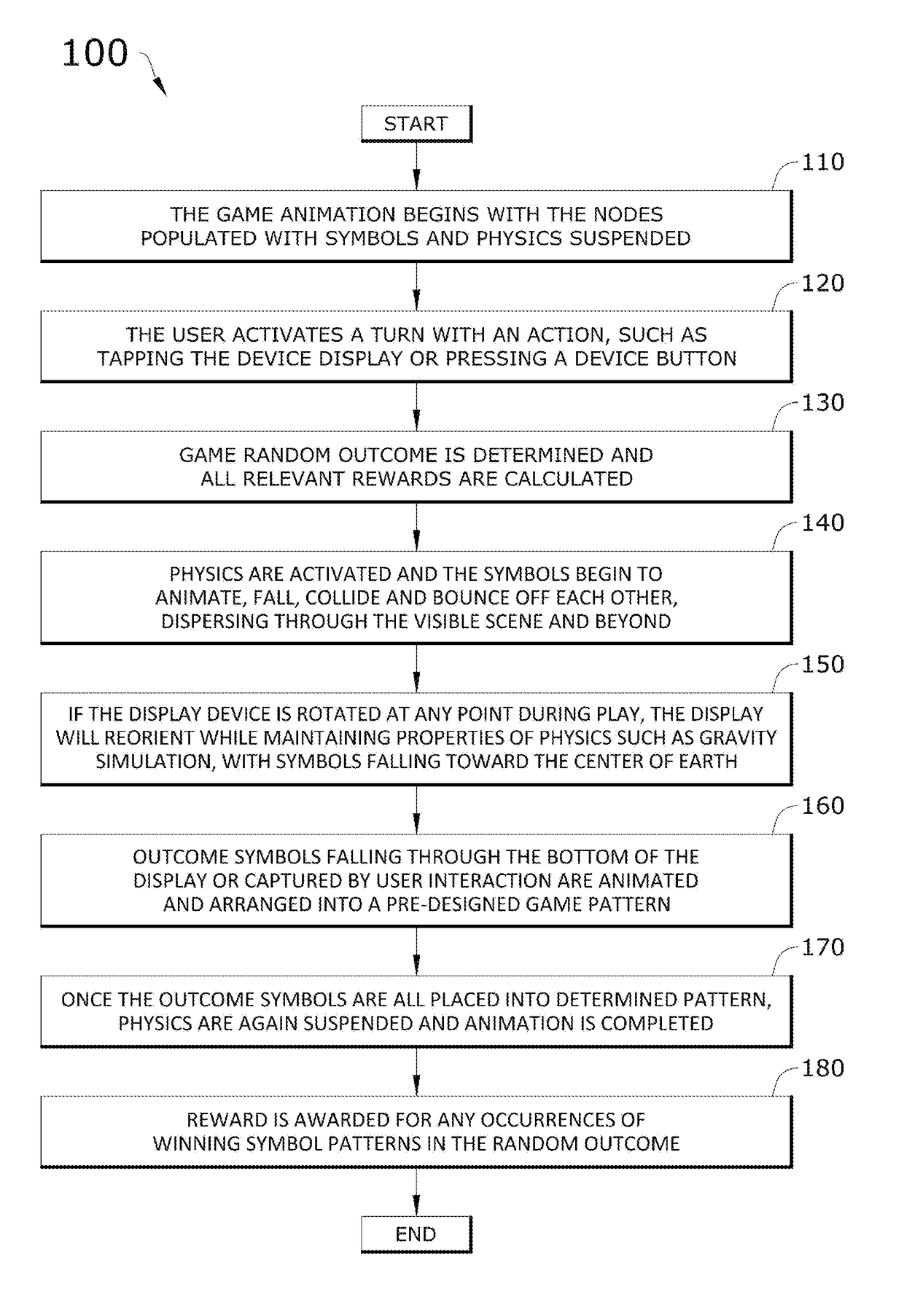

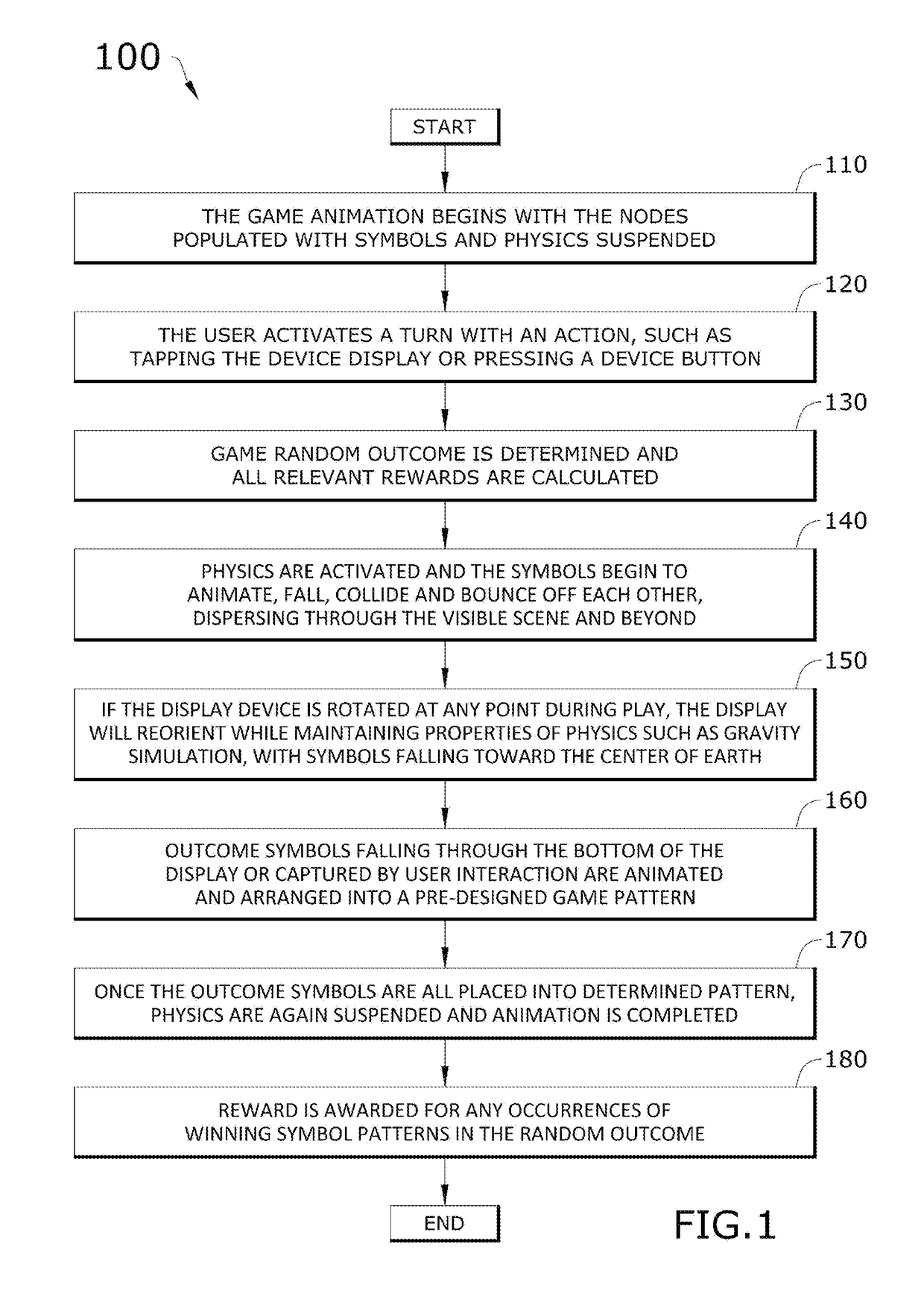

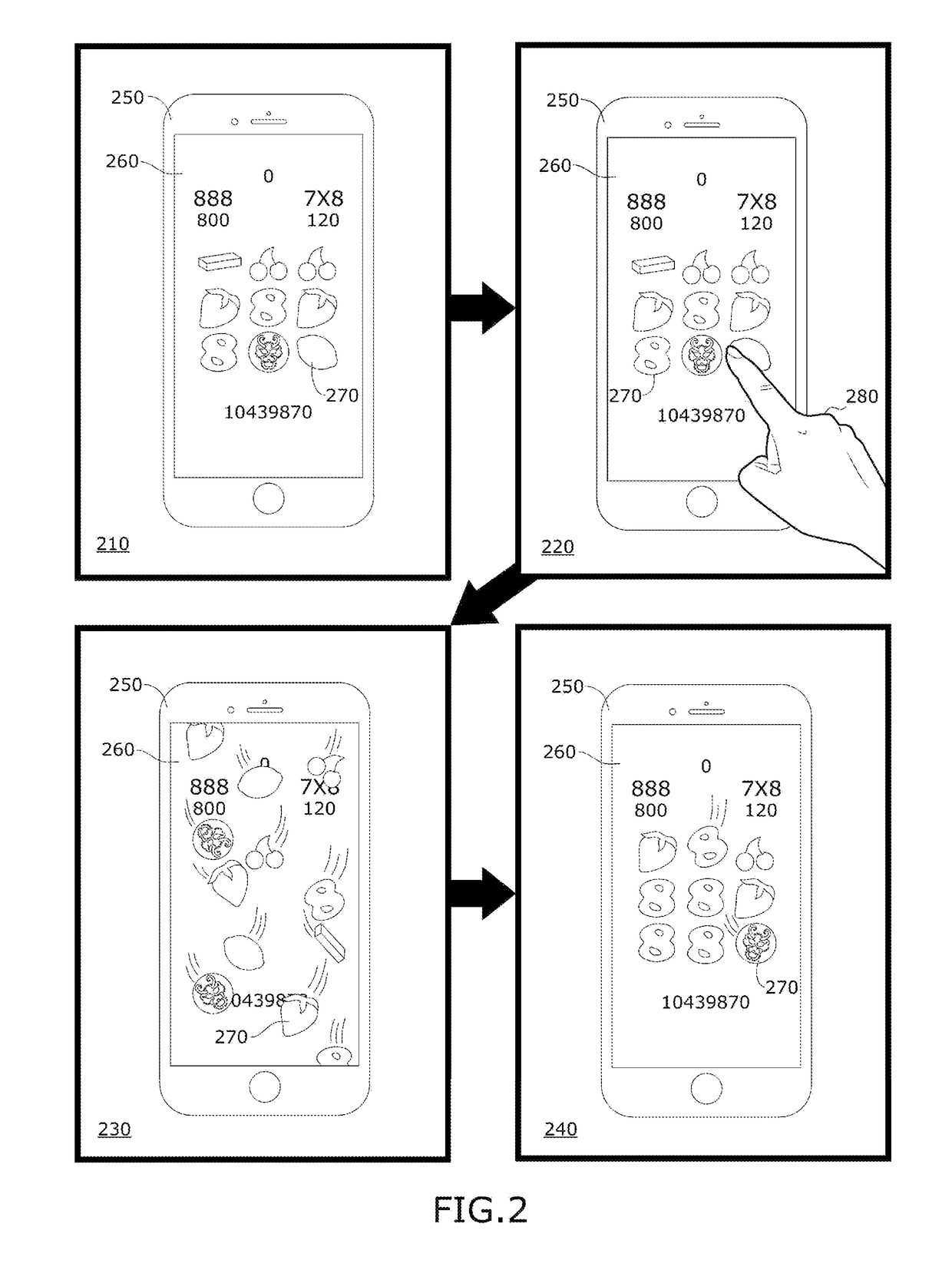

Method for using simulated physics to animate slot symbol objects of a slot video game

Inactive | US20180311581A1 | Apparatus for meter-controlled dispensing | Video games | Animation | Laws of thermodynamics

A method for using simulated physics to animate slot symbol objects of a slot video game is disclosed. The method goes beyond the visual concept of reels spinning up or down by applying physics calculations to video slot symbol objects, resulting in new animated behavior of the video slot symbol objects outside of the constraints of a reel boundary or a pattern. Symbols exist as independent virtual objects susceptible to the laws of physics, within a virtual scene. The resulting visual behavior of flying, falling and otherwise interacting objects within the game scene produces a unique visual random experience every time the game is played. The applied method results extend the player game enjoyment with a new pseudo-random visual behavior of animating slot symbols.

Owner:PATTA JULIUS

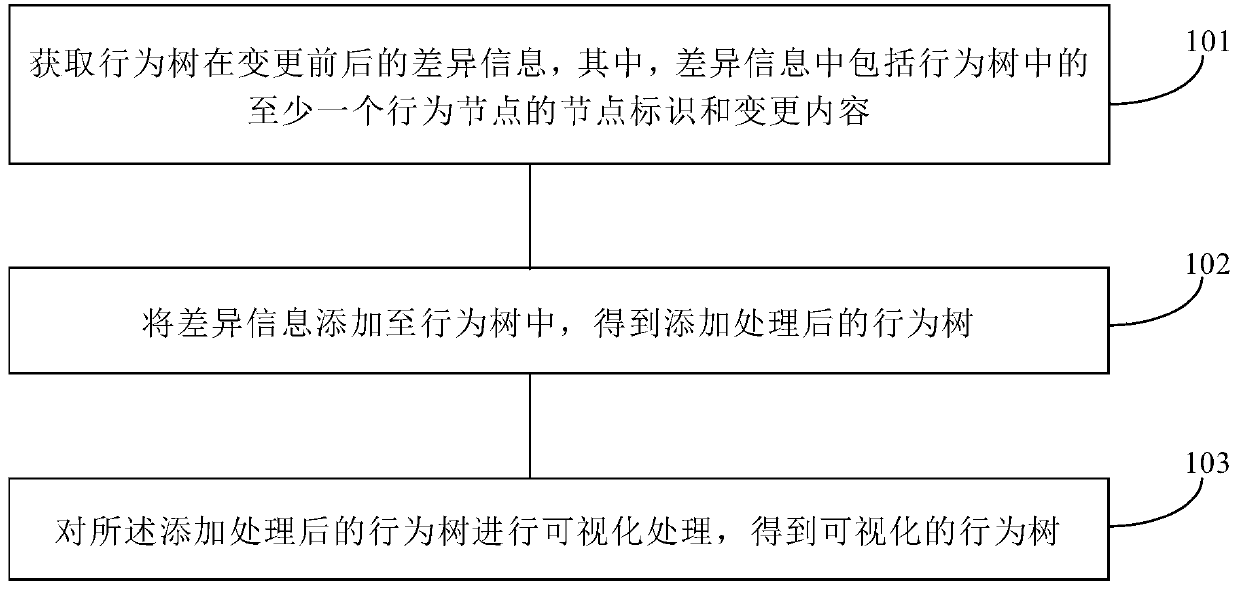

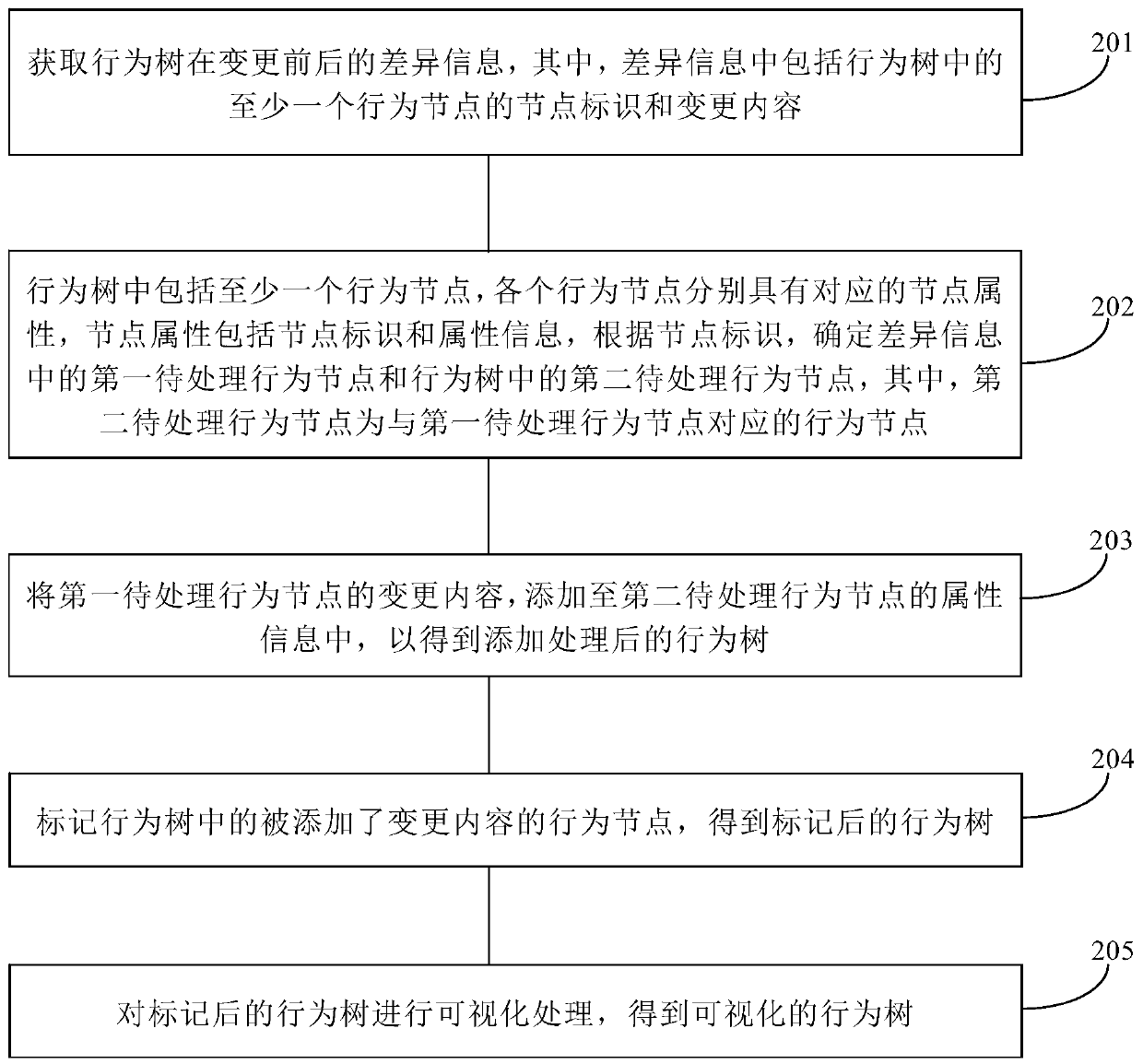

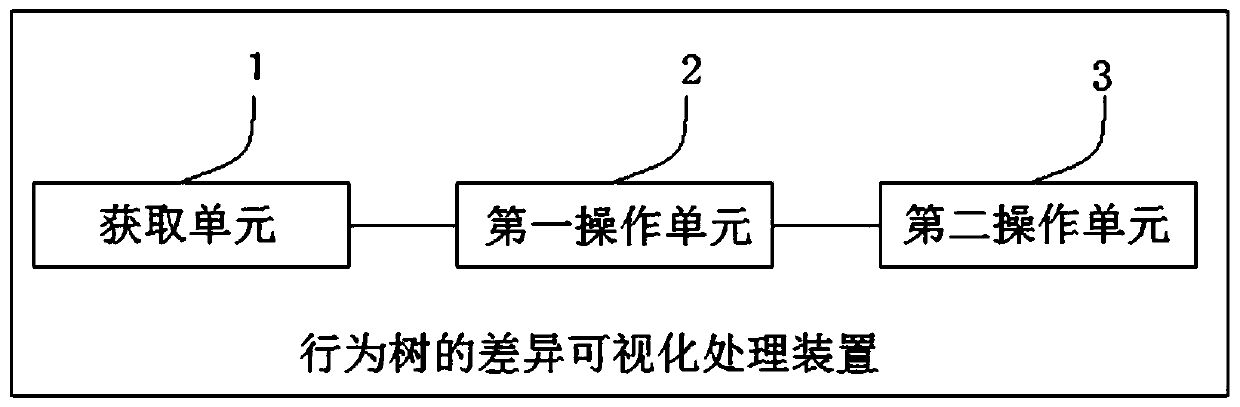

Difference visualization processing method and device for behavior tree

Pending | CN110008304A | Realize visualization | Realize visual display | Semi-structured data mapping/conversion | Text database indexing | NODAL | Theoretical computer science

The invention provides a difference visualization processing method and device for a behavior tree, equipment and a storage medium. The method comprises the steps of: obtaining the difference information of a behavior tree before and after a change, the difference information comprising the node identifier and change content of at least one behavior node in the behavior tree; adding the difference information into the behavior tree to obtain a behavior tree subjected to addition processing; and performing visualization processing on the behavior tree subjected to the addition processing to obtain a visual behavior tree. By obtaining the difference information of the behavior tree before and after the change, adding it to the behavior tree, and visualizing the resulting tree, the difference information can be displayed visually, a tester can accurately and rapidly identify and determine the difference information of the behavior tree, and the quality and efficiency of game management and game test work can be ensured.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
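The diff-then-annotate flow of the entry above can be sketched as follows, assuming each behavior tree is stored as a mapping from node identifier to a label and child list. The storage format, the text rendering and the marker symbols are assumptions. `diff_nodes` and `render` are hypothetical names.

```python
from typing import Dict, List

Tree = Dict[str, dict]  # node id -> {"label": str, "children": [node ids]}
MARKERS = {"added": "+", "removed": "-", "modified": "~"}


def diff_nodes(before: Tree, after: Tree) -> Dict[str, str]:
    """Map each changed node identifier to the kind of change."""
    changes = {}
    for node_id in before.keys() | after.keys():
        if node_id not in before:
            changes[node_id] = "added"
        elif node_id not in after:
            changes[node_id] = "removed"
        elif before[node_id] != after[node_id]:
            changes[node_id] = "modified"
    return changes


def render(nodes: Tree, changes: Dict[str, str],
           node_id: str, depth: int = 0) -> List[str]:
    """Render the tree as indented text, prefixing changed nodes with a marker."""
    marker = MARKERS.get(changes.get(node_id), " ")
    lines = [f"{marker} {'  ' * depth}{nodes[node_id]['label']}"]
    for child in nodes[node_id]["children"]:
        lines.extend(render(nodes, changes, child, depth + 1))
    return lines


before = {
    "root": {"label": "Selector", "children": ["attack", "idle"]},
    "attack": {"label": "Attack(range=5)", "children": []},
    "idle": {"label": "Idle", "children": []},
}
after = {
    "root": {"label": "Selector", "children": ["attack", "flee", "idle"]},
    "attack": {"label": "Attack(range=8)", "children": []},
    "flee": {"label": "Flee", "children": []},
    "idle": {"label": "Idle", "children": []},
}

changes = diff_nodes(before, after)
rendered = render(after, changes, "root")
print("\n".join(rendered))
```

This sketch renders only the tree after the change, so removed nodes appear in `changes` but not in the drawing; a fuller version would merge both trees before rendering.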

Intelligent analysis system and method for nuclear power construction site

Pending | CN114333273A | Realize security management and control | Realize smart work | Television system details | Character and pattern recognition | Video monitoring | Data stream

The invention provides an intelligent analysis system and method for a nuclear power construction site. The intelligent video analysis system for the nuclear power construction site comprises a user database, an AI intelligent visual behavior analysis module, a video monitoring alarm system, a gun dome camera module and a label recognizer. The label recognizer is used for uniquely recognizing identity label information of a monitoring target in a nuclear power construction site and transmitting information data obtained through recognition to the AI intelligent visual behavior analysis module. The gun dome camera module is used for collecting video picture information in a nuclear power construction site and transmitting a data stream to the video monitoring alarm system; the video monitoring alarm system is used for uploading collected video stream data to the AI intelligent visual behavior analysis module; and the AI intelligent visual behavior analysis module is used for processing and analyzing the received video stream data, judging whether a monitoring target meets the standard requirement of on-site safety construction, and sending out an alarm signal through the video monitoring alarm system.

Owner:JIANGSU NUCLEAR POWER CORP

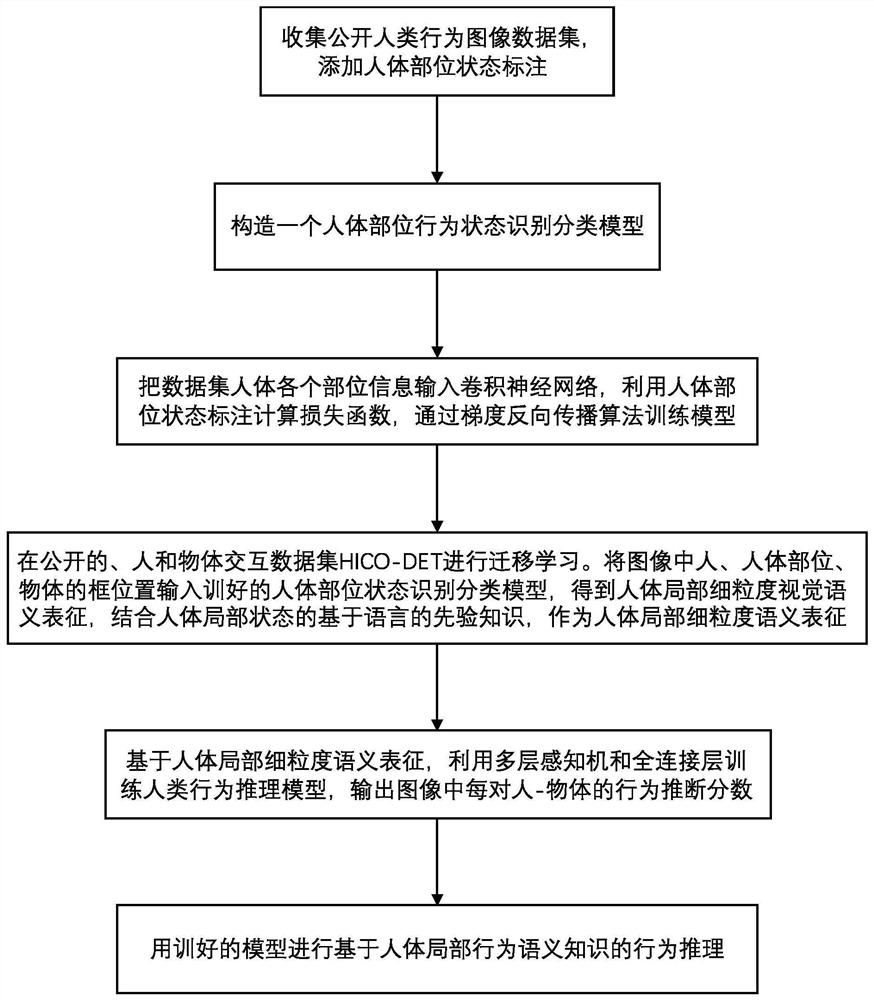

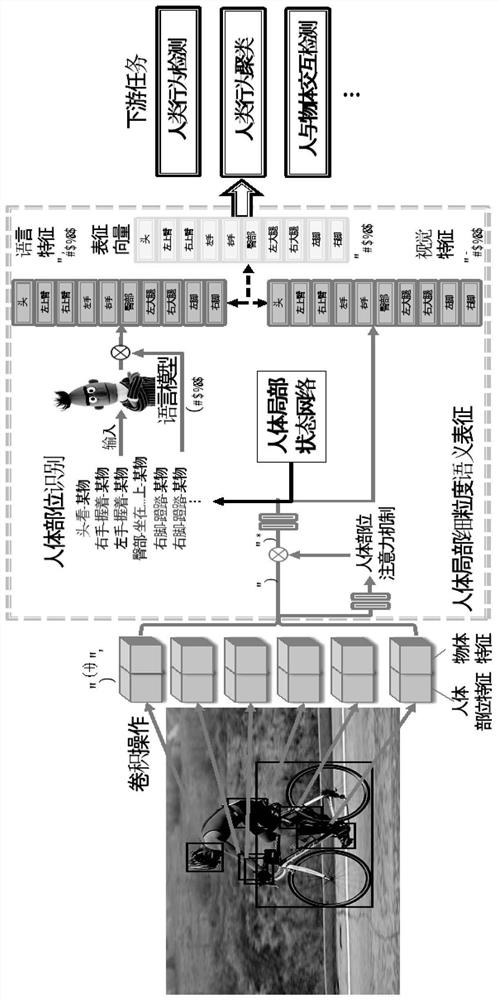

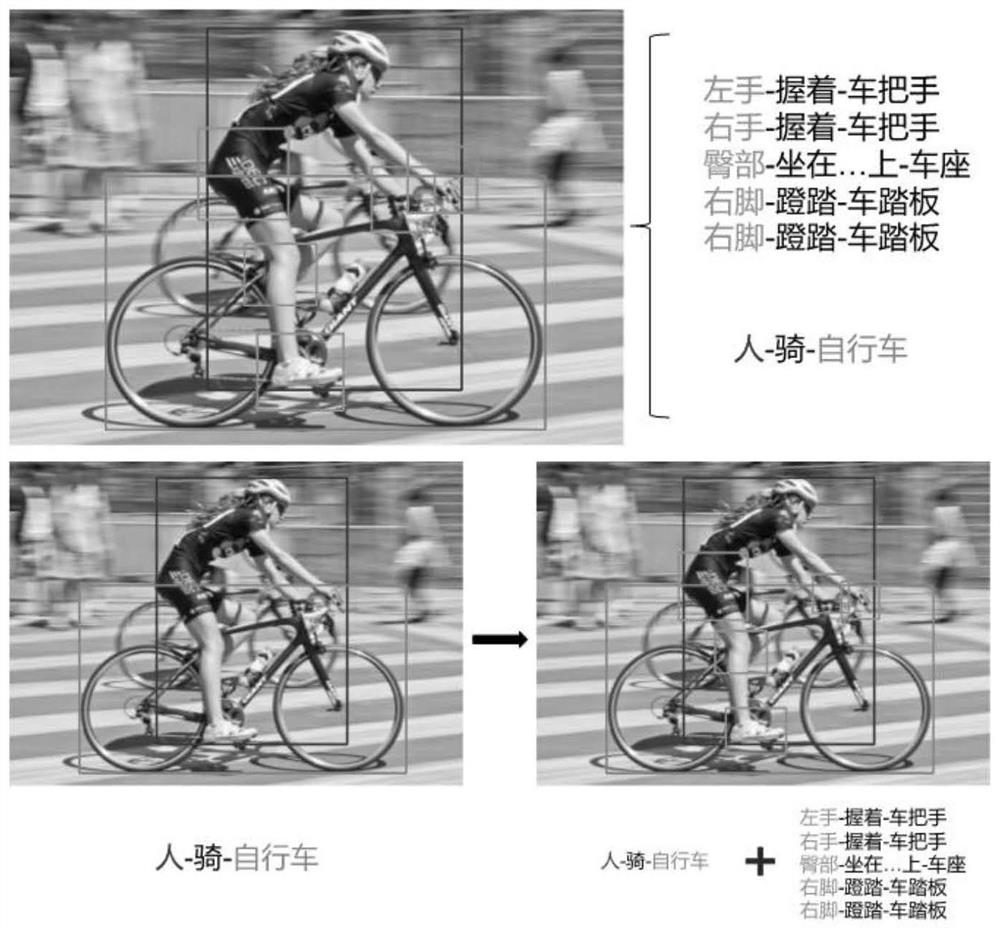

Behavior image classification method based on human body local semantic knowledge

Active | CN113449564A | Achieve knowledge transfer | Solve the problems that are not conducive to transfer learning | Character and pattern recognition | Neural learning methods | Language understanding | Human body

The invention discloses an image classification method based on human body local behavior semantic knowledge. The method comprises the following steps: establishing a human body part behavior state recognition model for obtaining human body local fine-grained semantic representation, and performing model training; then converting visual information in an image to be detected into language-based priori knowledge by utilizing natural language understanding, fusing the priori knowledge and the visual information to generate a fine-grained behavior representation vector, and migrating the fine-grained behavior representation vector to a computer visual behavior and recognition task; and finally, reasoning the overall behavior by combining the local fine-grained features of the human body to complete a behavior understanding process to obtain a classification result. According to the method, very ideal recognition performance improvement is achieved in various complex behavior understanding tasks; and meanwhile, the method has the advantages of one-time pre-training and multiple-time diverse migration, and has generalization and flexibility.

Owner:SHANGHAI JIAO TONG UNIV

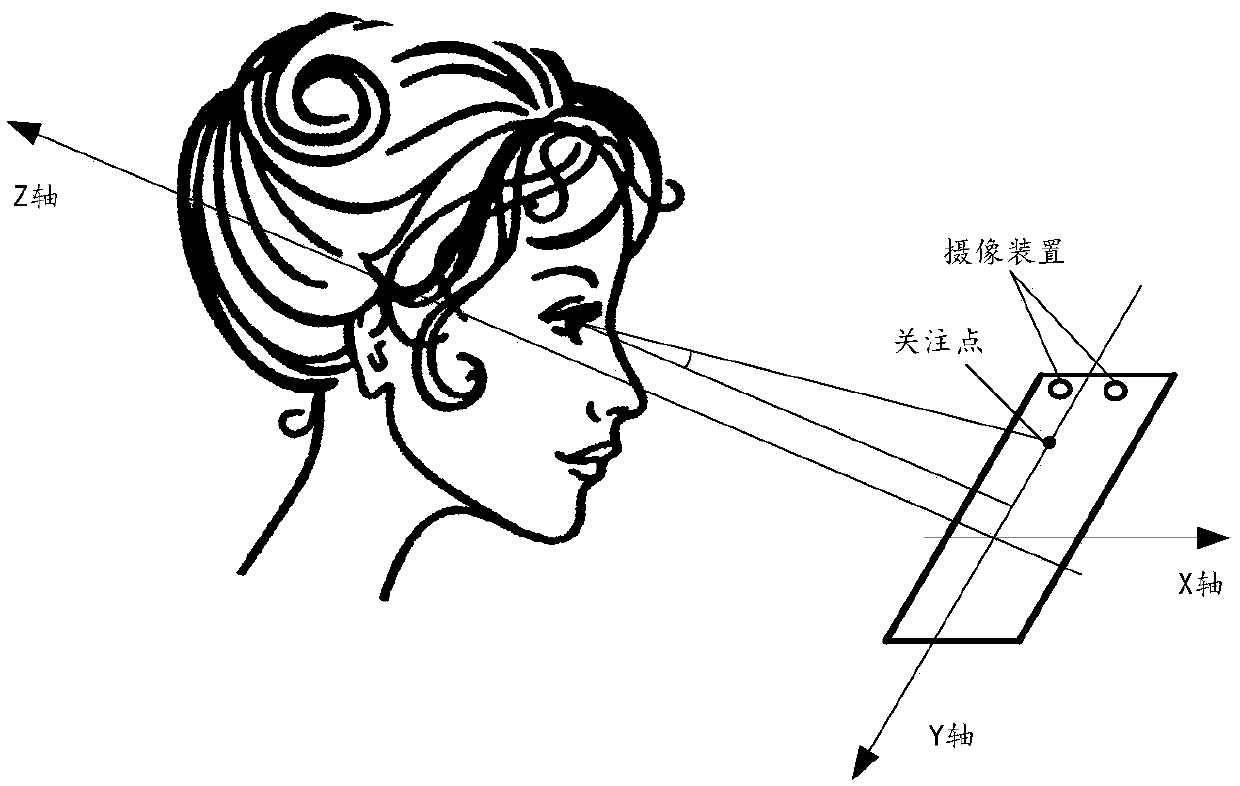

Eye tracking device

Inactive | US20190149703A1 | Improve acquisition | Cost effective | Television system details | Character and pattern recognition | Driver/operator | Human eye

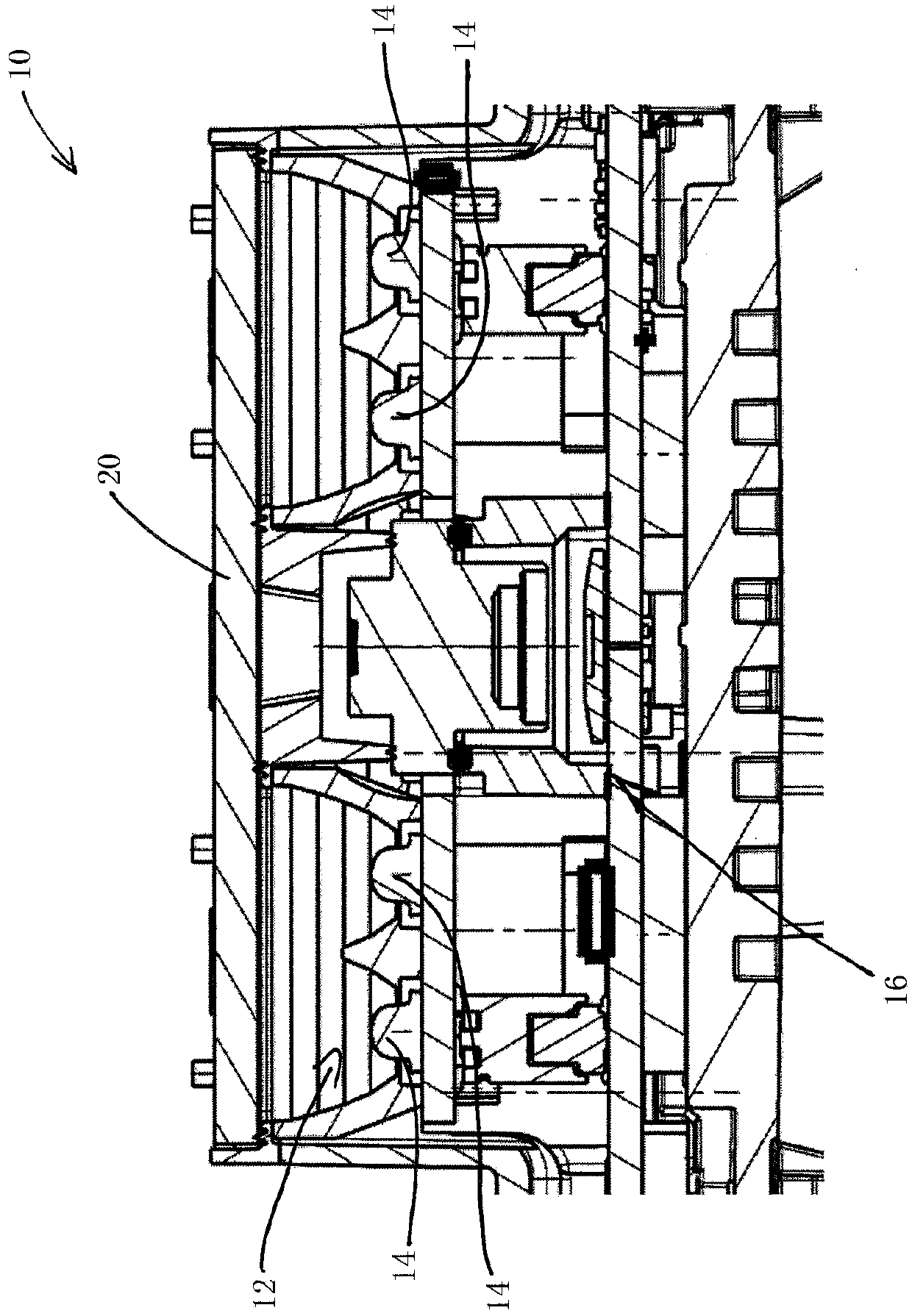

Eye tracking device, in particular for the detection of a driver's visual behavior, comprising an illuminator for illuminating a person's eye, an imaging device for detecting light reflected by the person's eye, and a cover for covering the illuminator and the imaging device, the cover comprising a light leak prevention structure for preventing light leaks between the illuminator and the imaging device through the cover.

Owner:APTIV TECH LTD

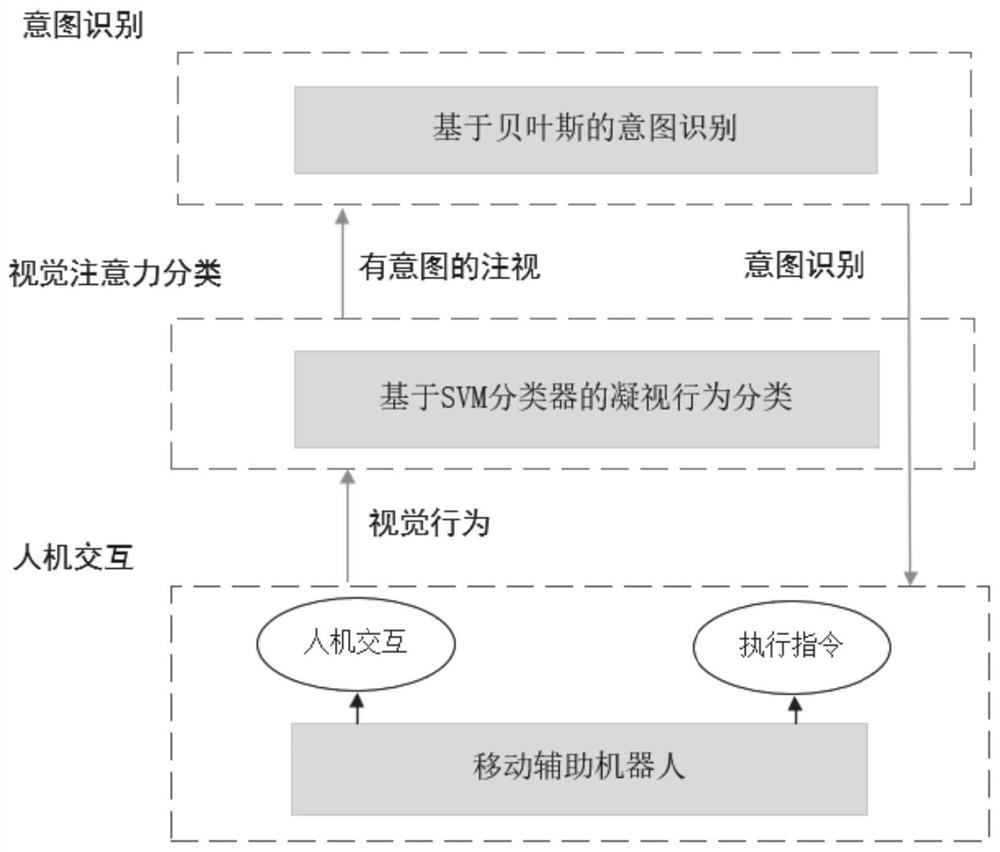

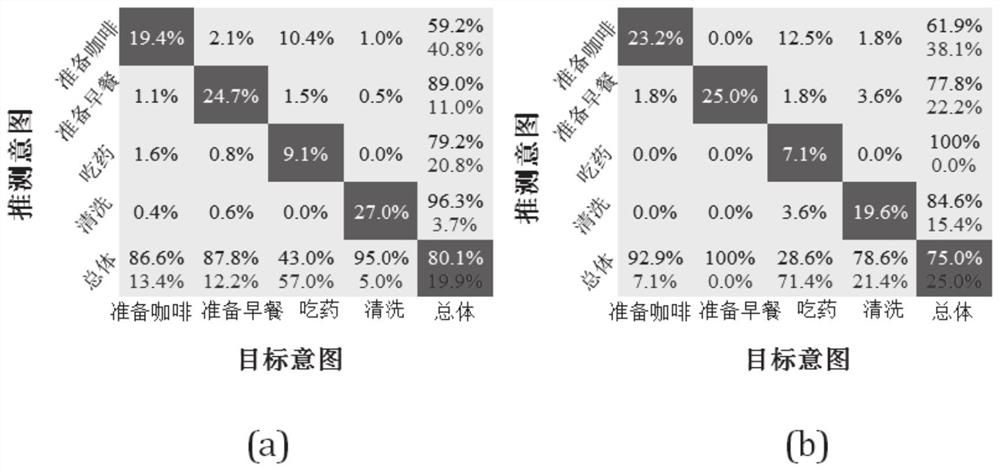

Gaze intention recognition method and system based on historical visual behaviors

Active | CN112861828A | Improve classification accuracy | Eliminate distractions | Character and pattern recognition | Pattern recognition | SVM classifier

The invention provides a gaze intention recognition method and system based on historical visual behaviors. The method comprises the following steps: firstly, extracting eye movement characteristics of a user for each object based on historical visual behaviors, including fixation duration, fixation frequency, fixation interval and fixation speed; then inputting eye movement features of the user to each object to an SVM classifier, judging whether the user intentionally watches the object, and if so, adding the object into an intentionally historical watched object sequence; and finally, inputting a historical staring object sequence with intentions to a naive Bayes classifier, and determining the intentions of the user. Compared with a method based on a single object, the gaze intention recognition method based on the historical visual behaviors has the advantage that the intention recognition accuracy is remarkably improved.

Owner:SHANDONG UNIV
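The two-stage pipeline described in the abstract above can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the linear decision function with hand-picked weights only stands in for a trained SVM, and the feature scaling, object names and intent labels are all hypothetical.

```python
import math
from collections import Counter, defaultdict

# Hypothetical weights standing in for a trained linear SVM decision function.
# Assumed feature order: fixation duration, fixation frequency,
# fixation interval, fixation speed.
SVM_W = (1.5, 0.8, -0.5, -0.2)
SVM_B = -1.0

def is_intentional(features):
    """Stage 1: an object counts as intentionally gazed at if w.x + b > 0."""
    return sum(w * x for w, x in zip(SVM_W, features)) + SVM_B > 0

def intentional_sequence(gaze_log):
    """Keep only the objects whose eye movement features pass stage 1."""
    return [obj for obj, feats in gaze_log if is_intentional(feats)]

class NaiveBayesIntent:
    """Stage 2: multinomial naive Bayes over object sequences (Laplace smoothing)."""

    def fit(self, sequences, intents):
        self.intent_counts = Counter(intents)
        self.object_counts = defaultdict(Counter)
        self.vocab = set()
        for seq, intent in zip(sequences, intents):
            self.object_counts[intent].update(seq)
            self.vocab.update(seq)
        return self

    def predict(self, seq):
        total = sum(self.intent_counts.values())
        best, best_score = None, -math.inf
        for intent, n in self.intent_counts.items():
            denom = sum(self.object_counts[intent].values()) + len(self.vocab)
            score = math.log(n / total)  # log prior
            for obj in seq:              # add log likelihood of each object
                score += math.log((self.object_counts[intent][obj] + 1) / denom)
            if score > best_score:
                best, best_score = intent, score
        return best

# Made-up training data: intentional object sequences with known intents.
model = NaiveBayesIntent().fit(
    [["cup", "kettle", "teabag"], ["cup", "kettle"],
     ["pen", "notebook"], ["notebook", "pen", "lamp"]],
    ["make_tea", "make_tea", "write", "write"],
)

# Made-up gaze log: (object, eye movement features) pairs.
gaze_log = [
    ("cup", (0.9, 3, 0.4, 0.5)),
    ("lamp", (0.1, 1, 2.0, 3.0)),
    ("kettle", (1.2, 2, 0.6, 0.4)),
]
seq = intentional_sequence(gaze_log)
intent = model.predict(seq)
```

Filtering before classification is what separates this from a single-object approach: a brief, incidental glance at the lamp is dropped in stage 1, so it cannot pull the stage 2 decision toward an unrelated intent.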

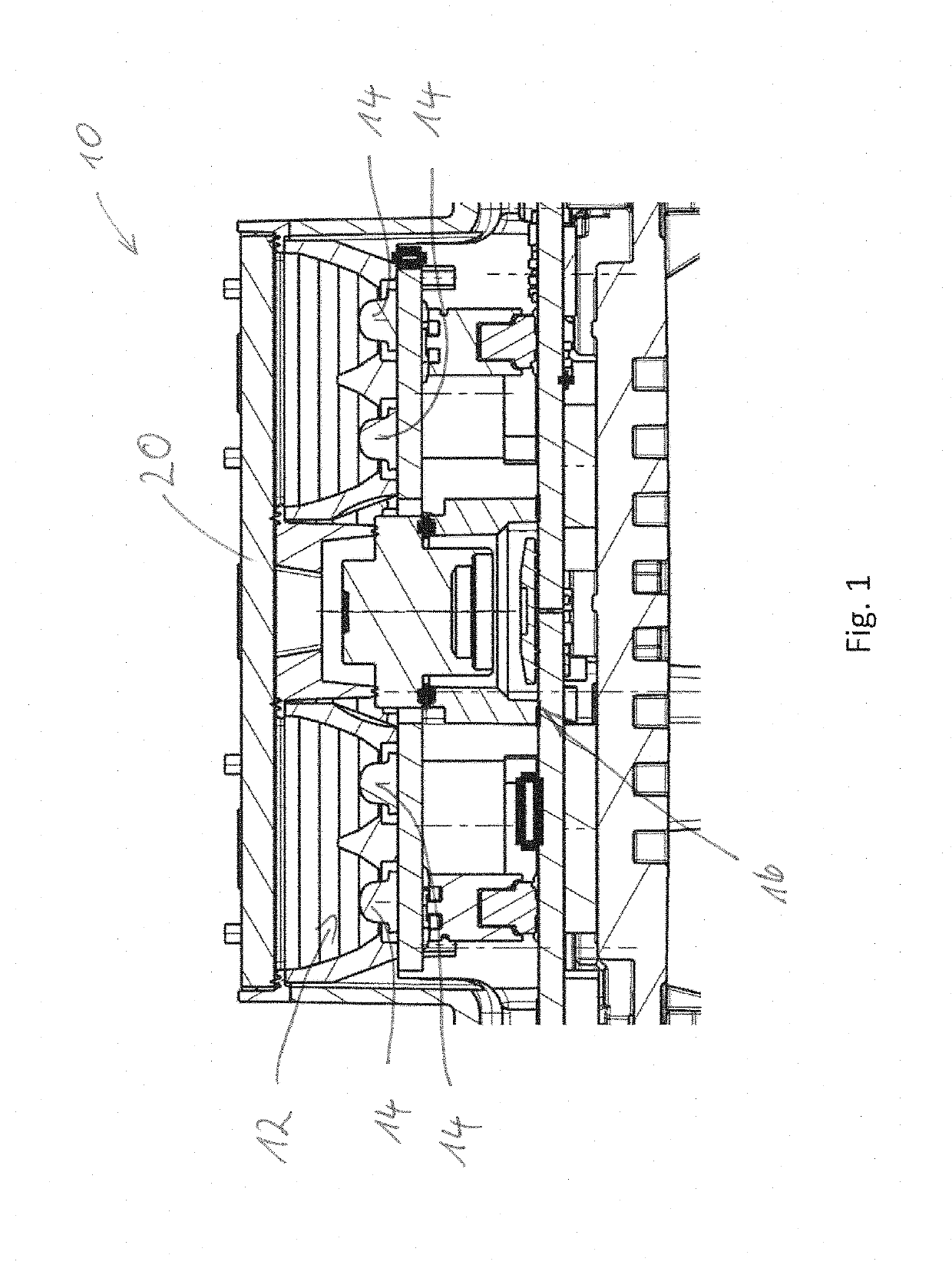

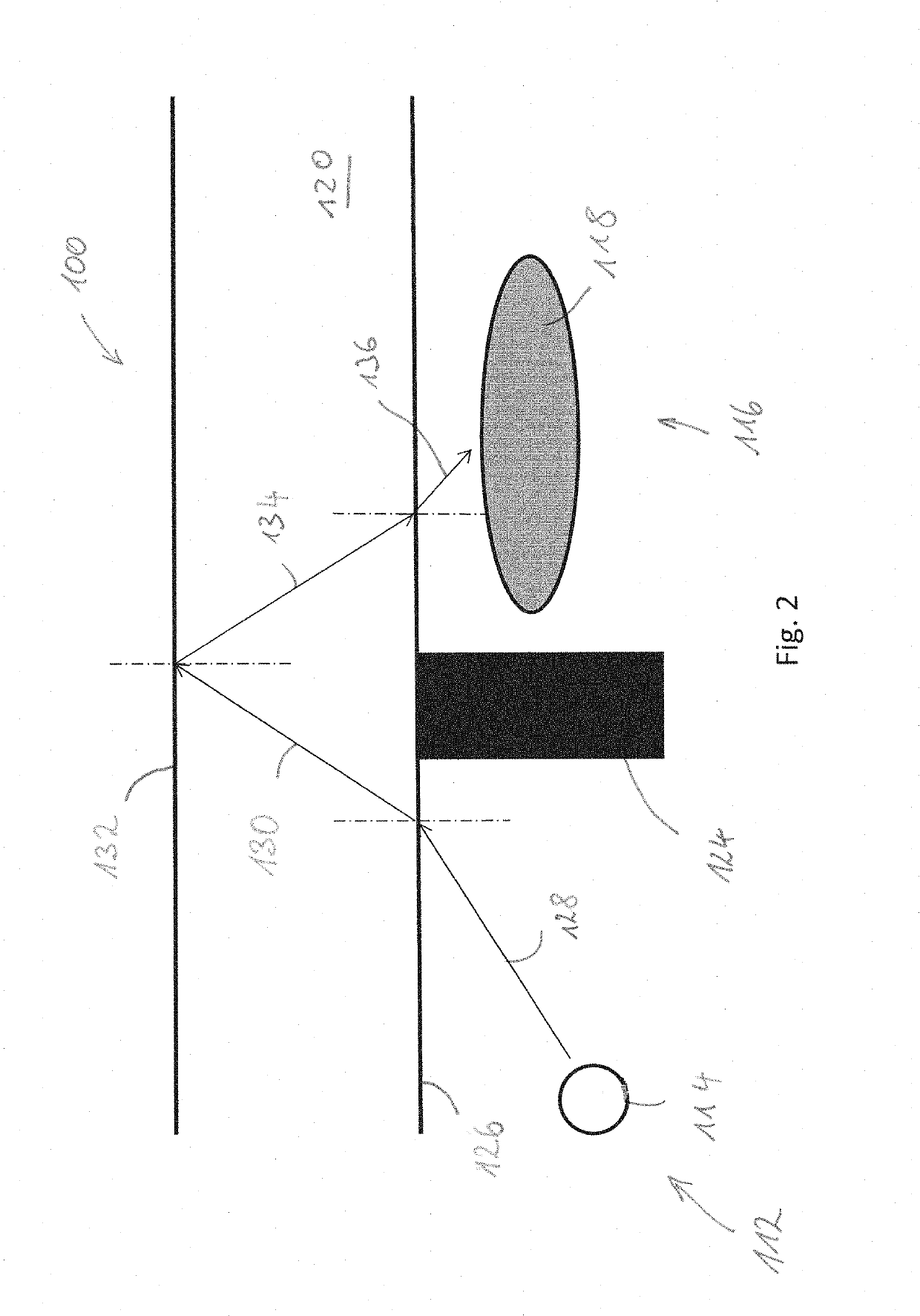

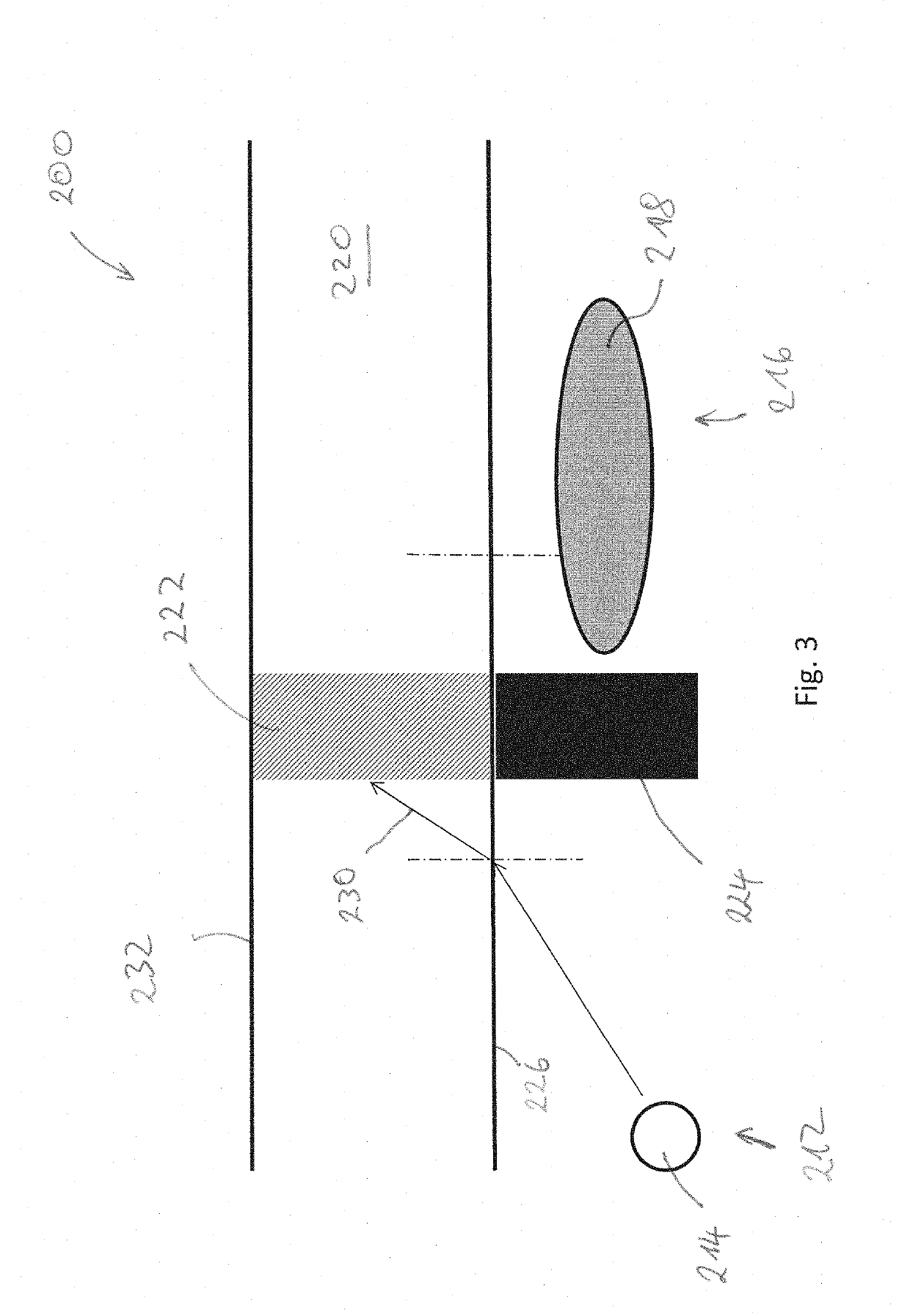

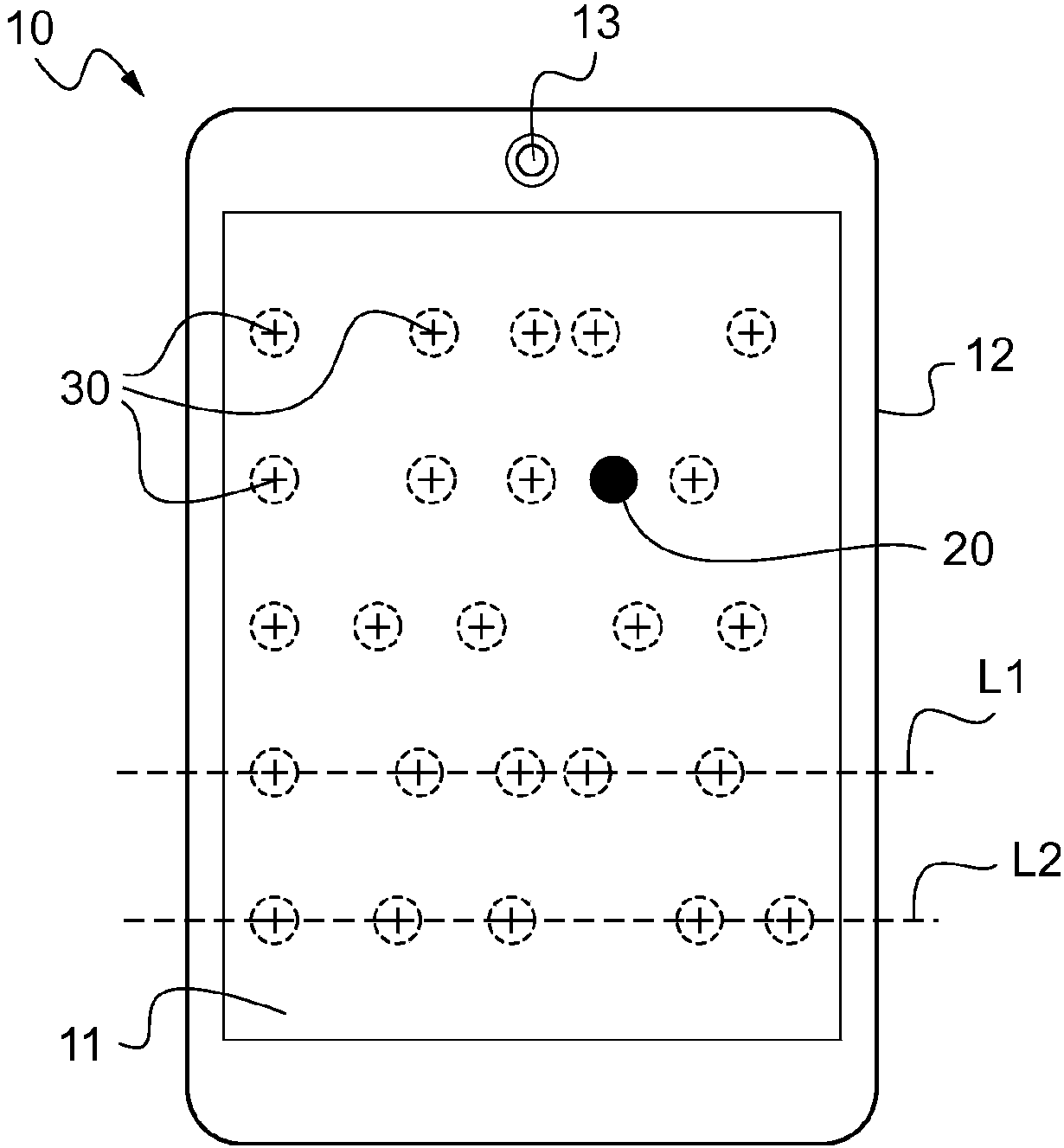

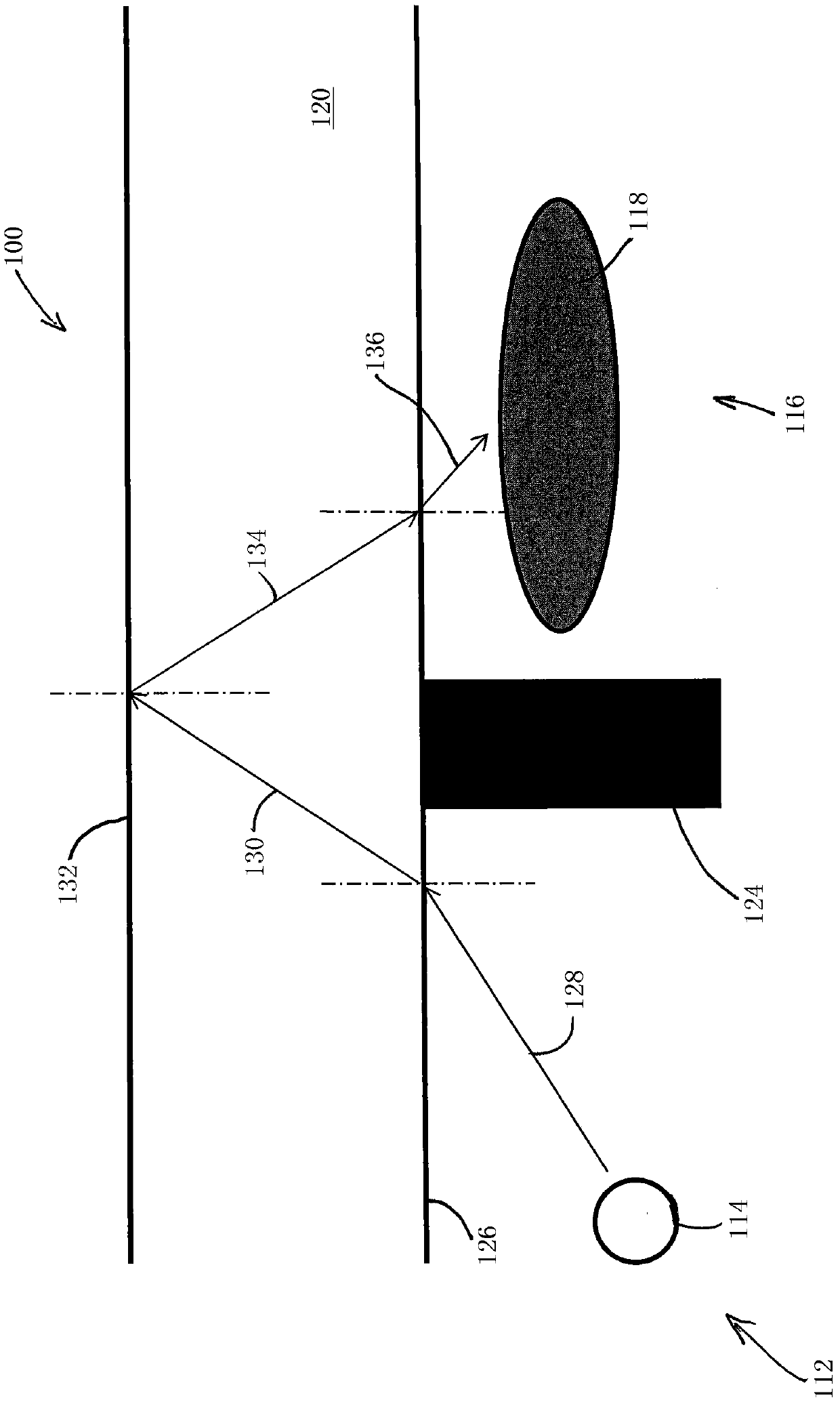

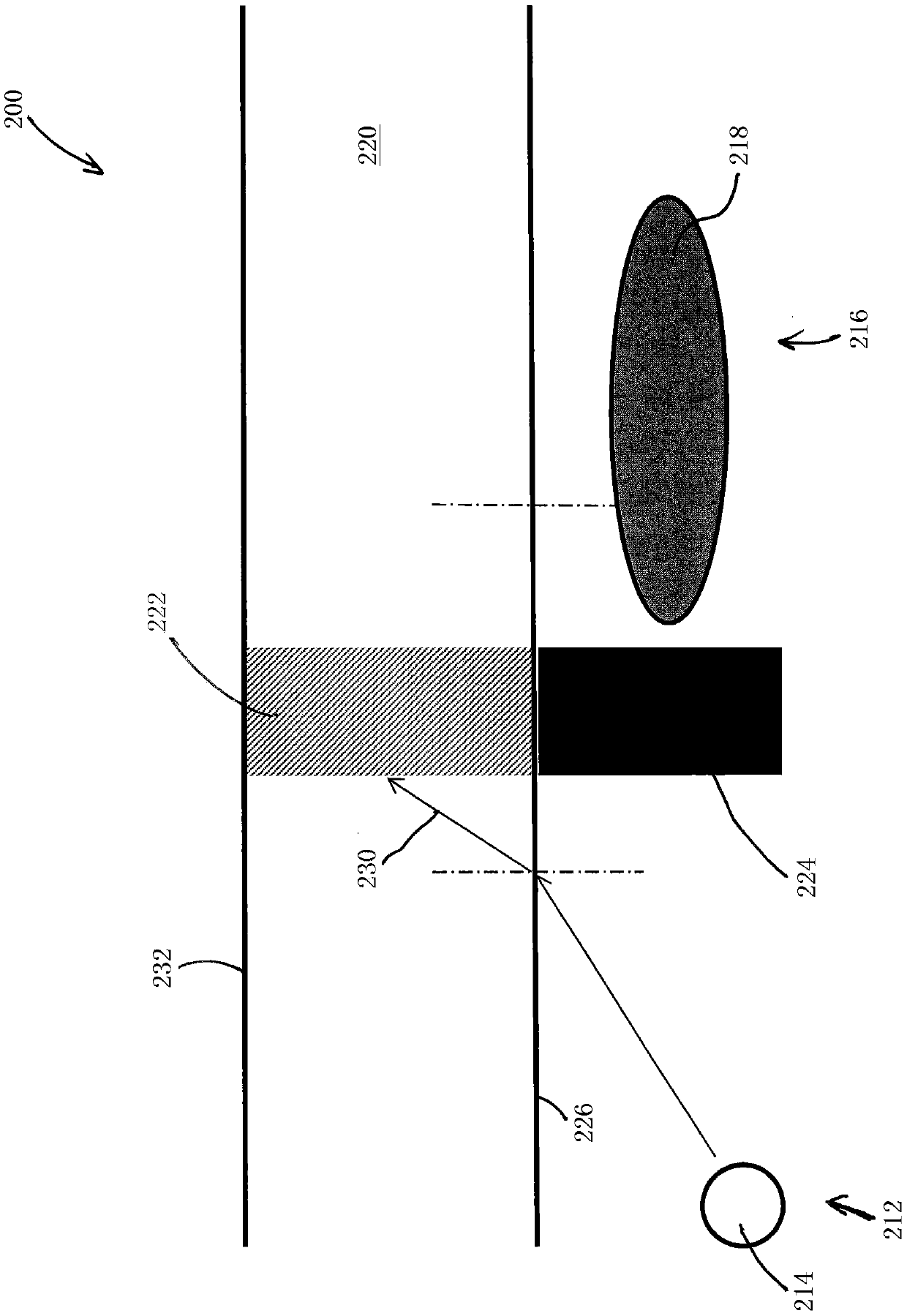

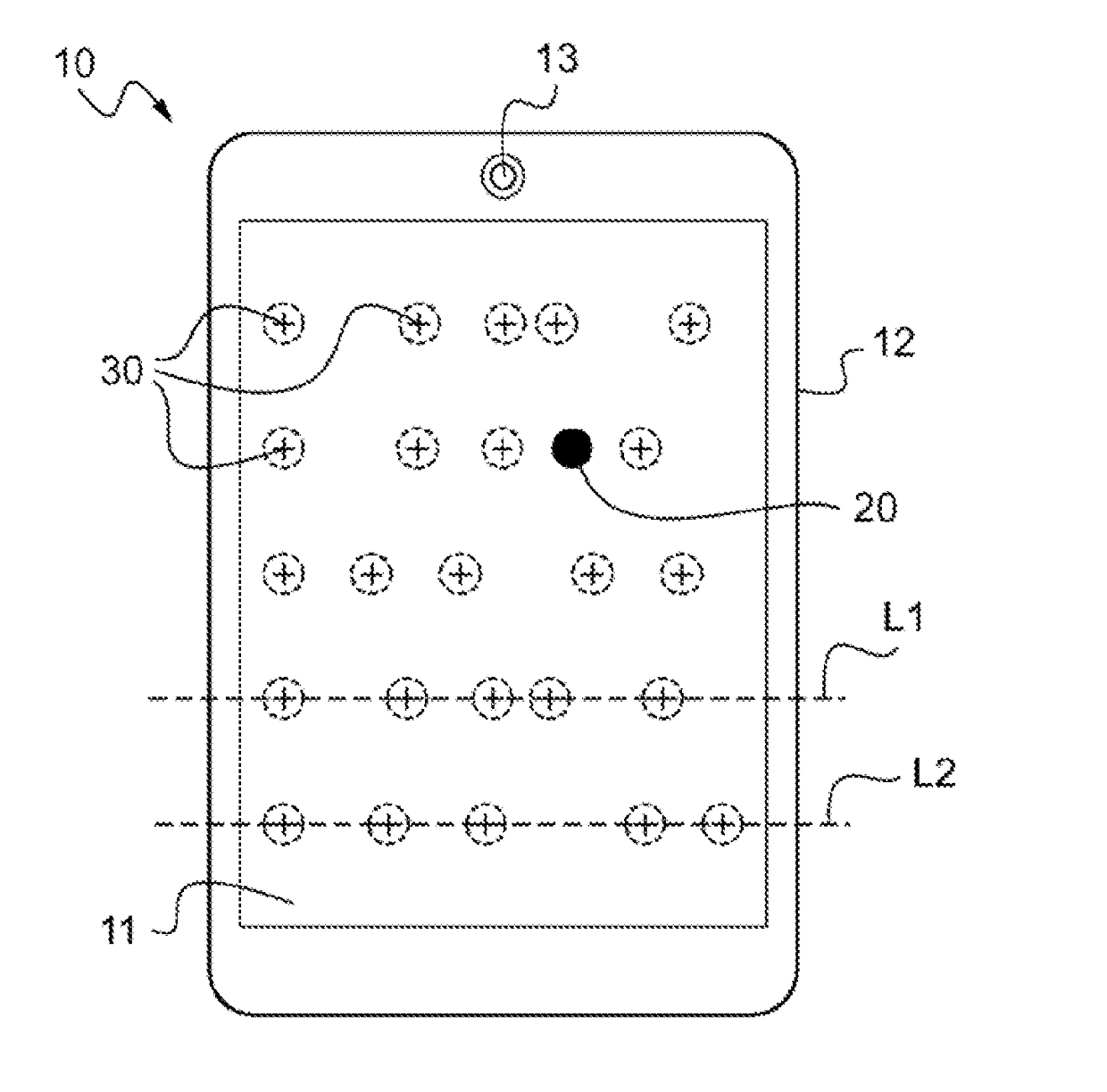

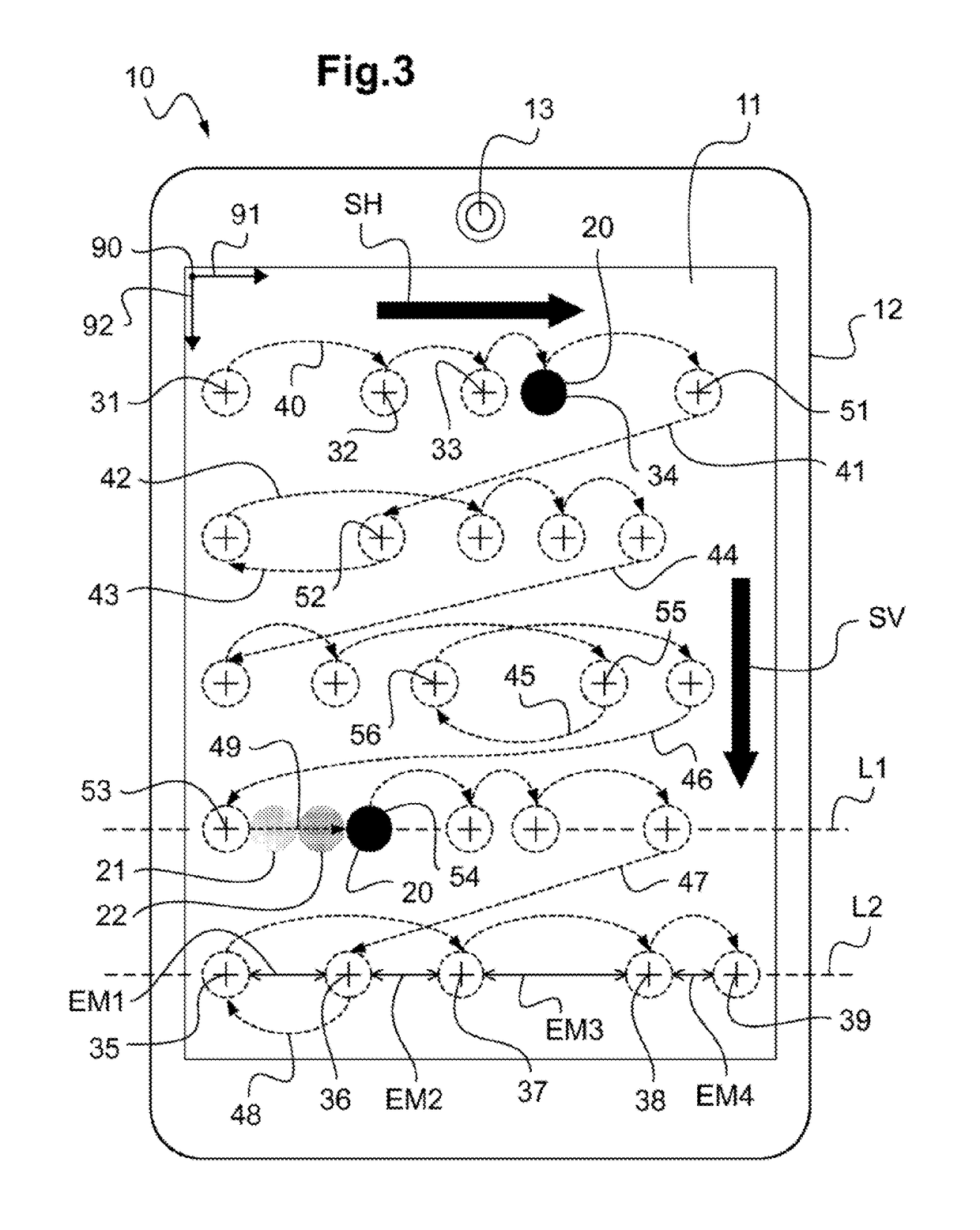

Device for testing the visual behavior of a person, and method for determining at least one optical design parameter of an ophthalmic lens using such a device

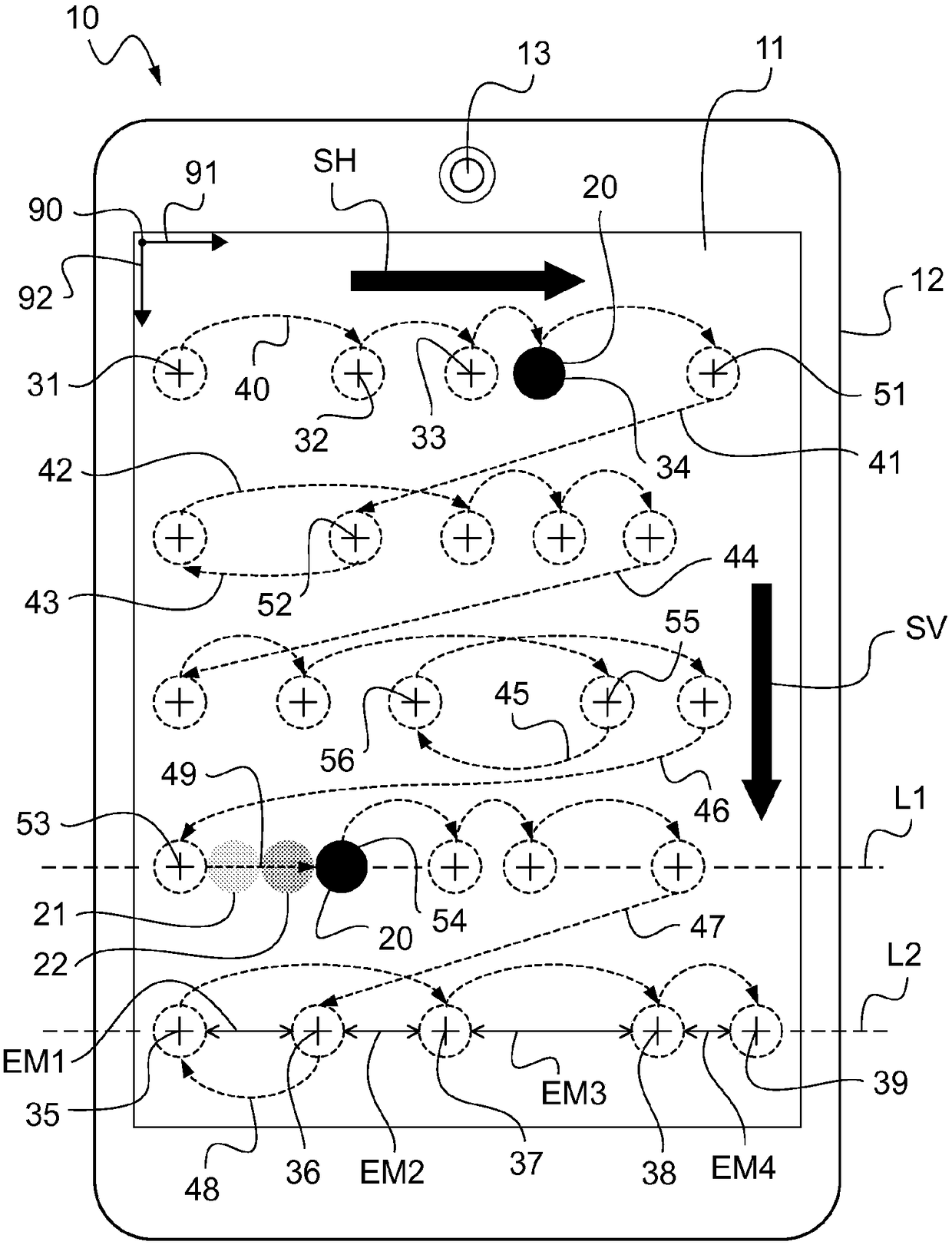

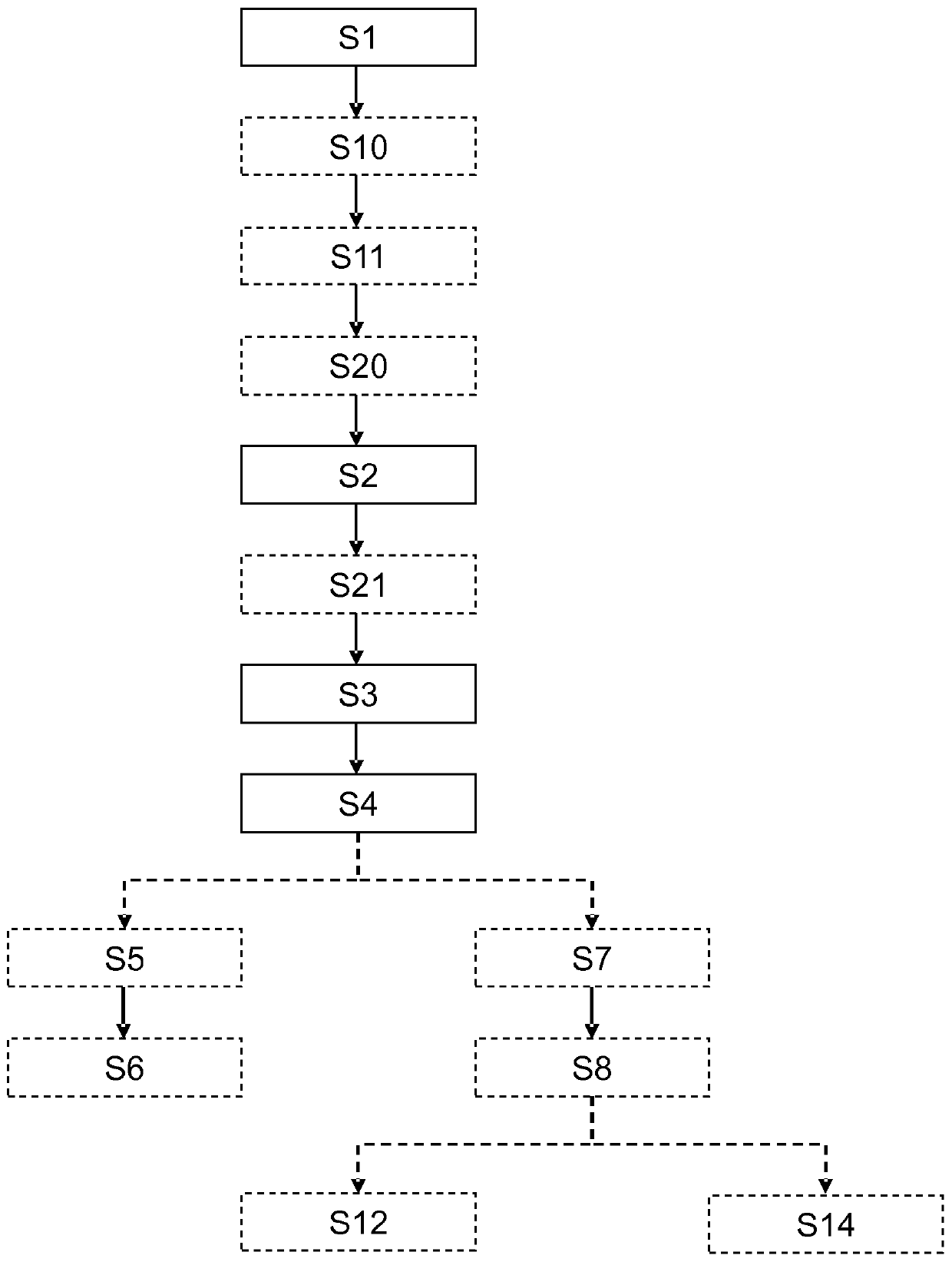

The invention relates to a device (10) for testing the visual behavior of a person. Said device comprises: an active display (11) capable of displaying at least one visually dominant target (20) in a plurality of positions (30) that are variable over time and that are aligned along at least one line (L1, L2) or column, and a unit for controlling the display. Said unit is programmed so that the consecutive display positions of the target follow, over time, a visual tracking protocol.

Owner:ESSILOR INT CIE GEN DOPTIQUE

A method for determining a postural and visual behavior of a person

Active | CN110892311A | Suitable for optical functions | Image enhancement | Medical imaging | Context data | Computer vision

A method for determining a postural and visual behavior of a person, the method comprising: a person image receiving step, during which a plurality of images of the person are received; a context determining step, during which the plurality of images of the person are analyzed so as to determine context data representative of the context in which the person is on each image of the plurality of images; an analyzing step, during which the plurality of images of the person are analyzed so as to determine at least one oculomotor parameter of the person; and a postural and visual behavior determining step, during which a postural and visual behavior of the person is determined based at least on the at least one oculomotor parameter and the context data.

Owner:ESSILOR INT CIE GEN DOPTIQUE

Eye tracking device

Pending | CN109799610A | Character and pattern recognition | Diagnostic recording/measuring | Driver/operator | Ophthalmology

Owner:APTIV TECH LTD

Method and system for determining an optical system intended to equip a person on the basis of the adaptability of the person to a visual and/or proprioceptive modification of his/her environment

A method for determining an optical system intended to equip a person on the basis of the adaptability of the person to a visual and/or proprioceptive modification of his environment, the method including: a person visual behaviour parameter providing step, during which a person visual behaviour parameter indicative of the visual behaviour of the person relative to a given state of the environment is provided; a reference value providing step, during which a first value of the person visual behaviour parameter corresponding to a reference state of the environment is provided; a visual and/or proprioceptive modification providing step, during which a visual and/or proprioceptive modification of the reference state of the environment is provided so as to define a modified state of the environment; and a determining step, during which an optical parameter of the optical system is determined based on the first value of the person visual behaviour parameter and on a second value of the person visual behaviour parameter associated with the modified state of the environment.

Owner:ESSILOR INT CIE GEN DOPTIQUE +1

Device for testing the visual behavior of a person, and method for determining at least one optical design parameter of an ophthalmic lens using such a device

A device for testing visual behavior of a person, including: an active display configured to display at least one visually predominant target in a plurality of positions that are variable over time and that are aligned along at least one line or column, and a unit for controlling the display. The unit is programmed so that consecutive display positions of the target follow, over time, a visual tracking protocol.

Owner:ESSILOR INT CIE GEN DOPTIQUE
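To make the idea of a control unit stepping a target through aligned positions more concrete, here is a small sketch. The abstract above does not specify the protocol, so the reading-like ordering (left to right along each line, then down to the next line), the pixel coordinates and the dwell time are all assumptions chosen for illustration.

```python
def reading_protocol(n_lines, n_positions, step=60, line_spacing=40, origin=(100, 100)):
    """Yield (x, y) target positions along each line in turn, top to bottom.

    All geometry parameters are hypothetical display coordinates in pixels.
    """
    x0, y0 = origin
    for line in range(n_lines):
        for pos in range(n_positions):
            yield (x0 + pos * step, y0 + line * line_spacing)

def schedule(positions, dwell_s=0.5):
    """Pair each consecutive position with the time at which it is displayed."""
    return [(i * dwell_s, xy) for i, xy in enumerate(positions)]

# Two lines of three positions each, shown half a second apart.
timeline = schedule(reading_protocol(n_lines=2, n_positions=3))
for onset, (x, y) in timeline:
    print(f"t={onset:.1f}s  target at ({x}, {y})")
```

Keeping the position generator separate from the timing means a different tracking protocol, such as a column-wise or randomized order, could be swapped in without touching the scheduling code.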

A method and system for identifying online user types based on visual behavior

Active | CN104504404B | Easy and reliable data extraction | Improve accuracy | Character and pattern recognition | Support vector machine | Data set

The invention discloses a visual behavior-based method and system for identifying online user types. Eye movement data from one or more users of different types are collected and processed to obtain a gaze information data set and a user type set. One or more kinds of eye movement feature data are computed from the gaze information to form a sampling data set; eye movement features selected from that set are input into a support vector machine, which is trained to obtain a user type classifier. The eye movement data of any online user are then collected and input into the trained classifier, which identifies that user's type. The method mainly uses eye-tracking technology to obtain and compute three types of eye movement feature data while users browse the web, and judges the type of an online user from differences in those features. Identification based on visual behavior can actively record the eye movement data of online users; data extraction is simple and reliable, and the identification is highly accurate.

Owner:BEIJING UNIV OF TECH
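The train-then-classify flow in the abstract above can be illustrated with a small linear SVM trained by sub-gradient descent on the hinge loss. This is only a sketch under stated assumptions: the abstract does not name its three eye movement features or its SVM variant, so the feature values (assumed scaled to the range 0 to 1), the two user types and every hyperparameter here are hypothetical.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Minimise (lam/2)*||w||^2 + hinge loss by sub-gradient descent.

    X: list of feature tuples, y: labels in {-1, +1}.
    """
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:
                # Inside the margin: step along the hinge-loss sub-gradient.
                w = [wj - lr * (lam * wj - yi * xj) for wj, xj in zip(w, xi)]
                b += lr * yi
            else:
                # Outside the margin: only the regulariser shrinks w.
                w = [wj - lr * lam * wj for wj in w]
    return w, b

def predict(w, b, x):
    """Return +1 or -1 according to the side of the separating hyperplane."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1

# Made-up sampling data set: three scaled eye movement features per user.
# Label +1 and -1 denote two hypothetical user types.
X = [(0.9, 0.8, 0.7), (0.8, 0.9, 0.6), (0.85, 0.7, 0.8),
     (0.2, 0.1, 0.3), (0.1, 0.2, 0.2), (0.15, 0.3, 0.1)]
y = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(X, y)

# Classify the eye movement features of previously unseen online users.
type_a = predict(w, b, (0.9, 0.9, 0.9))
type_b = predict(w, b, (0.1, 0.1, 0.1))
```

In practice a library SVM with a kernel and cross-validated parameters would be the more usual choice; the hand-written version is used here only to keep the example dependency-free.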


© 2025 PatSnap. All rights reserved.