38 results about "Processor mapping" patented technology

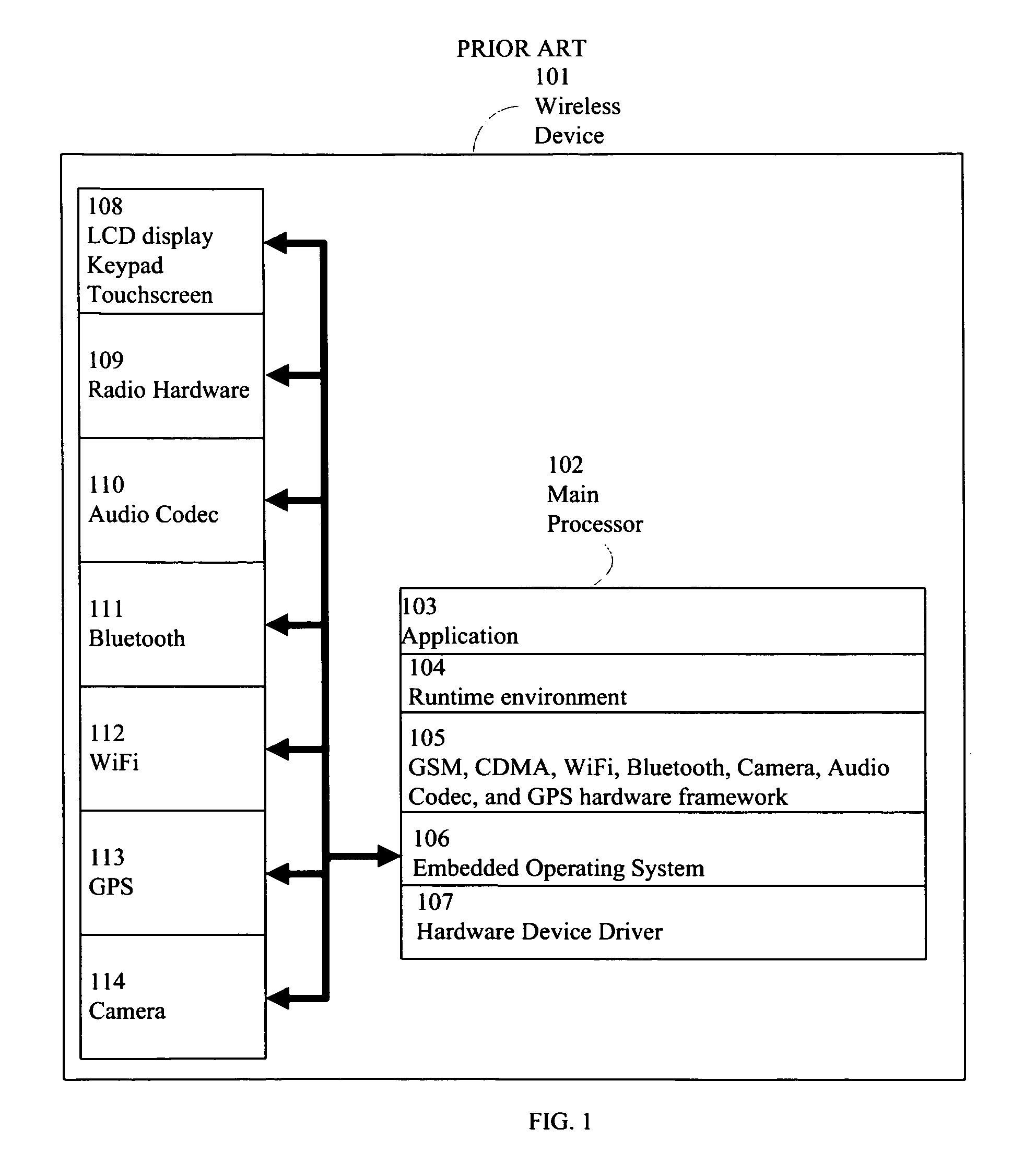

System and method for implementing a remote input device using virtualization techniques for wireless device

Inactive | US20100199008A1 | Low cost | Short development time | Cathode-ray tube indicators | Program control | Virtualization | Removable media

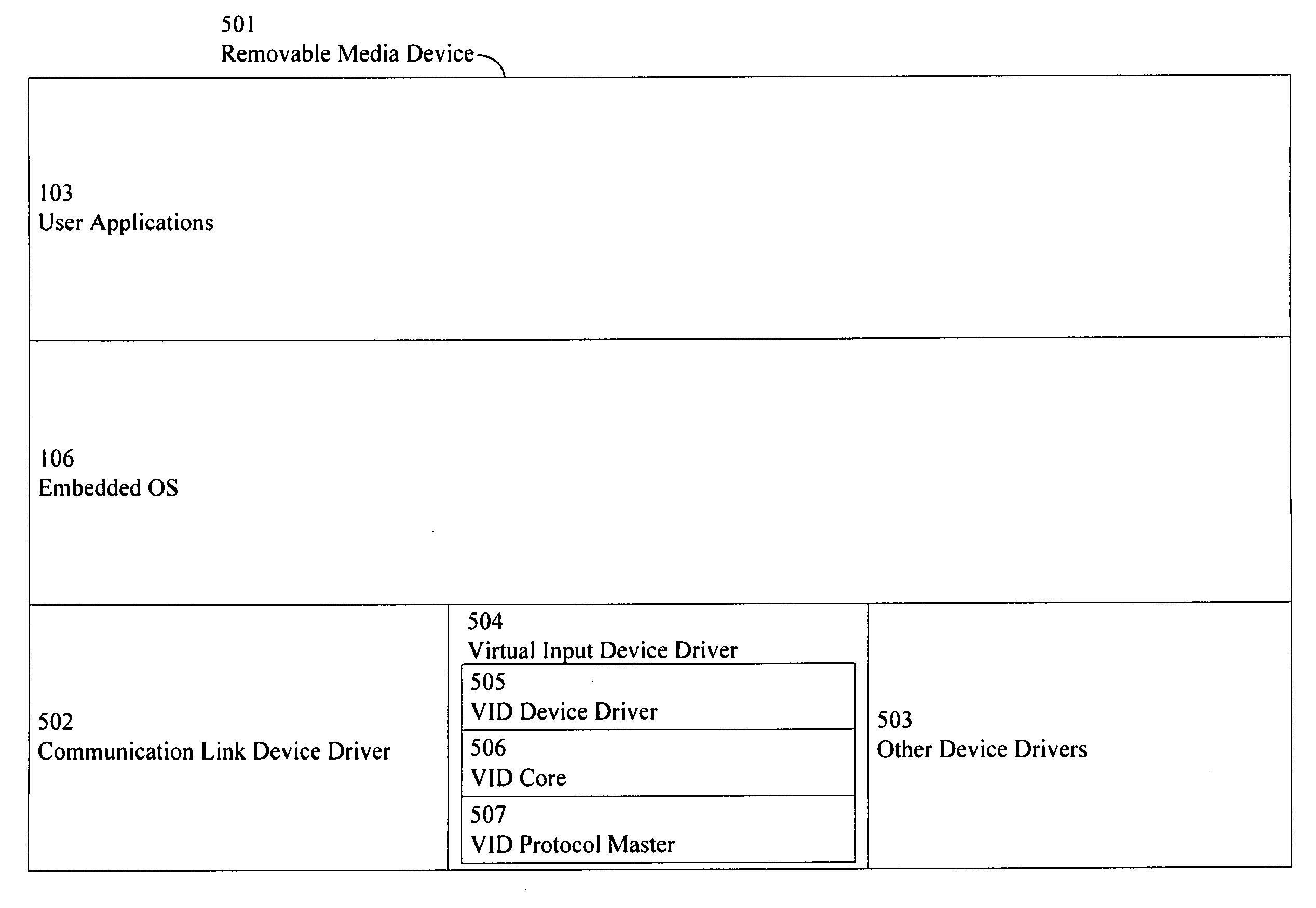

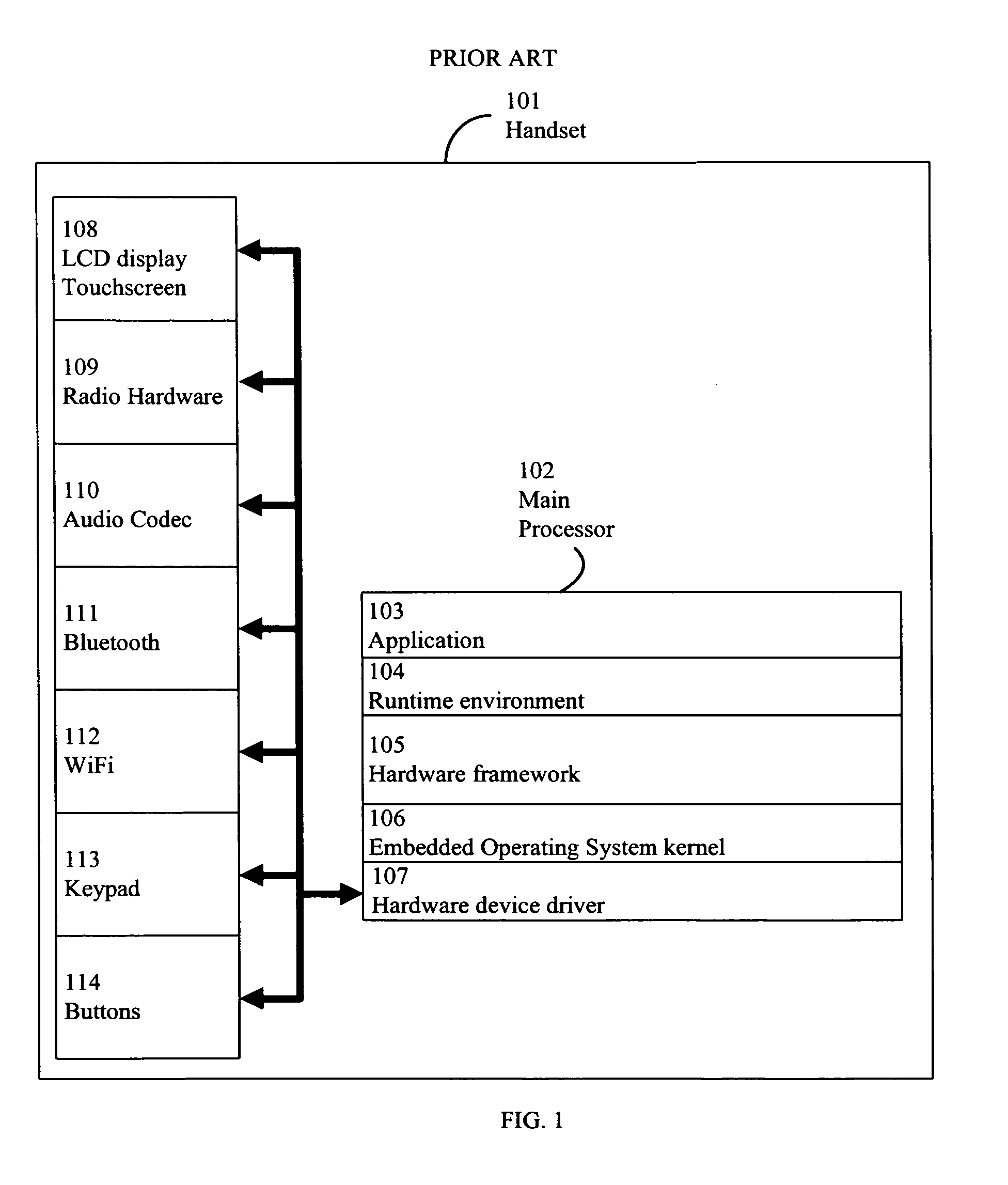

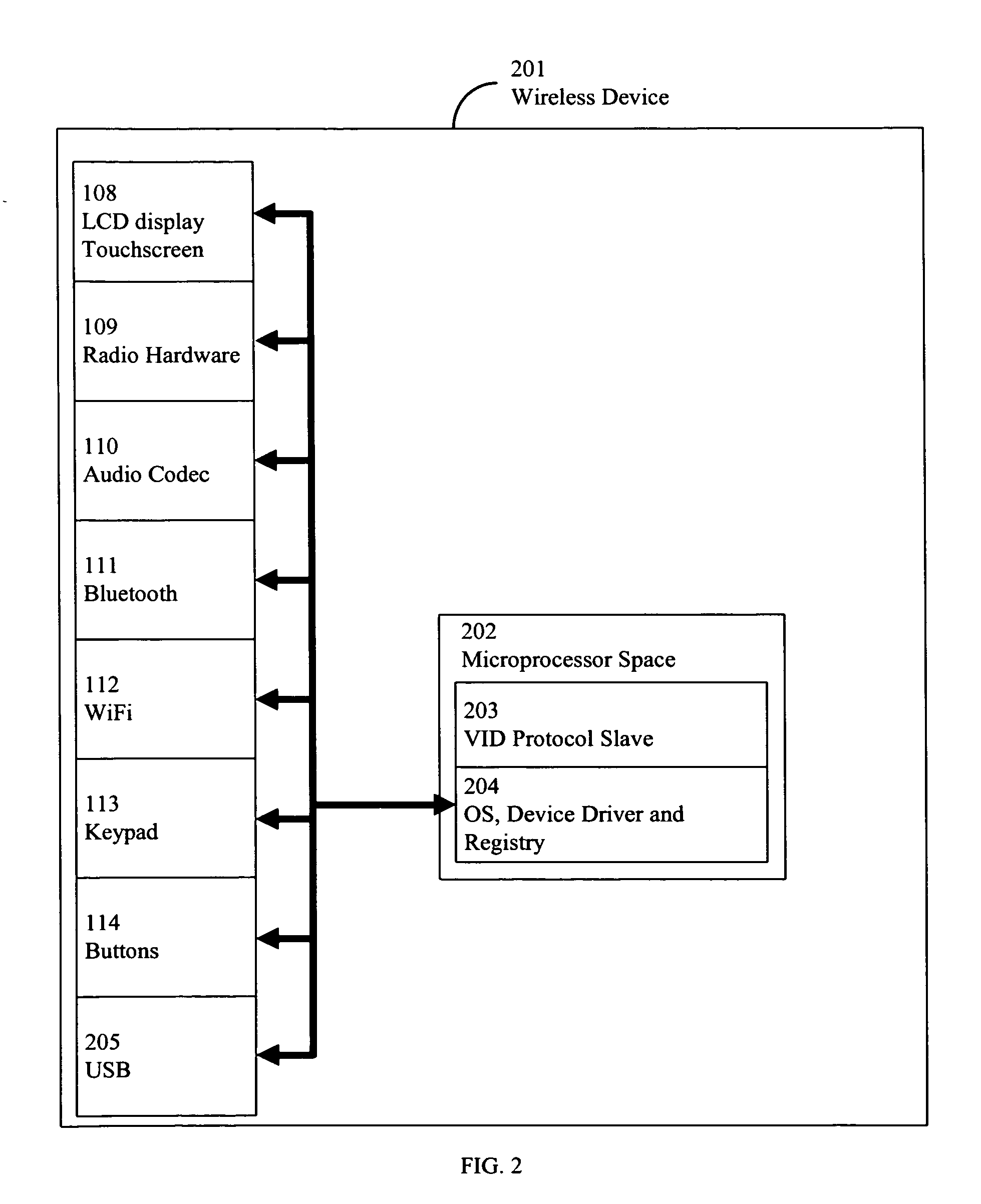

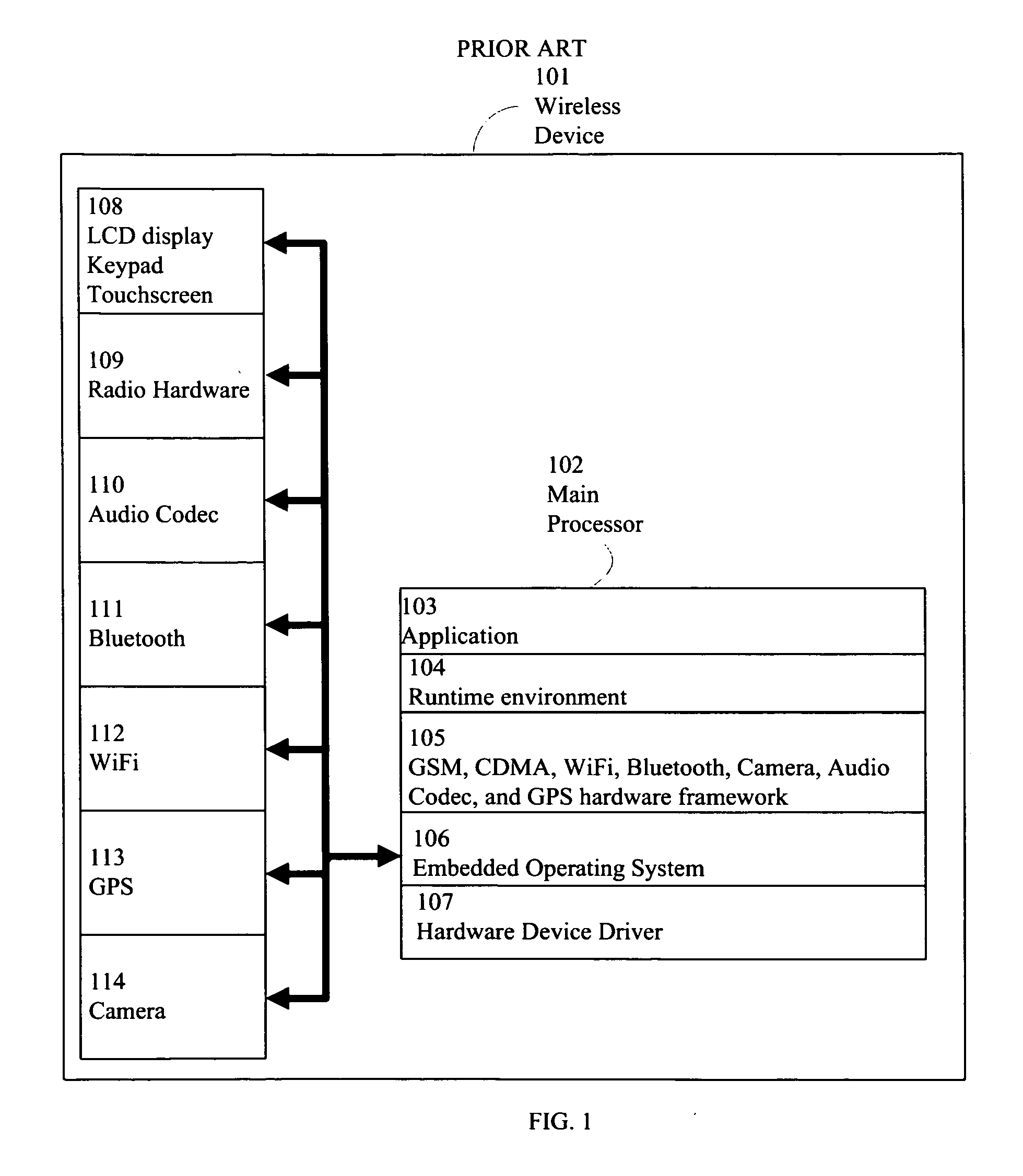

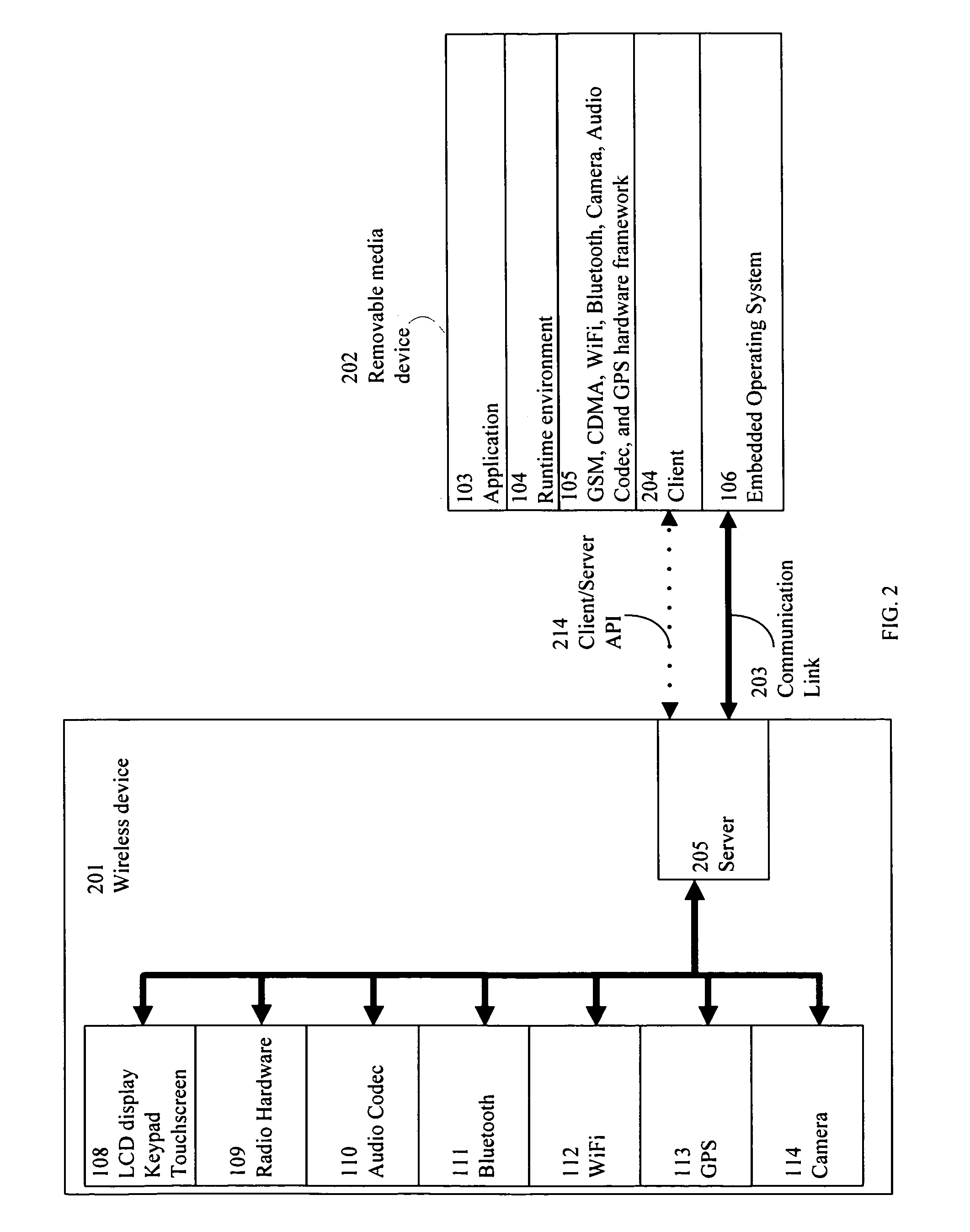

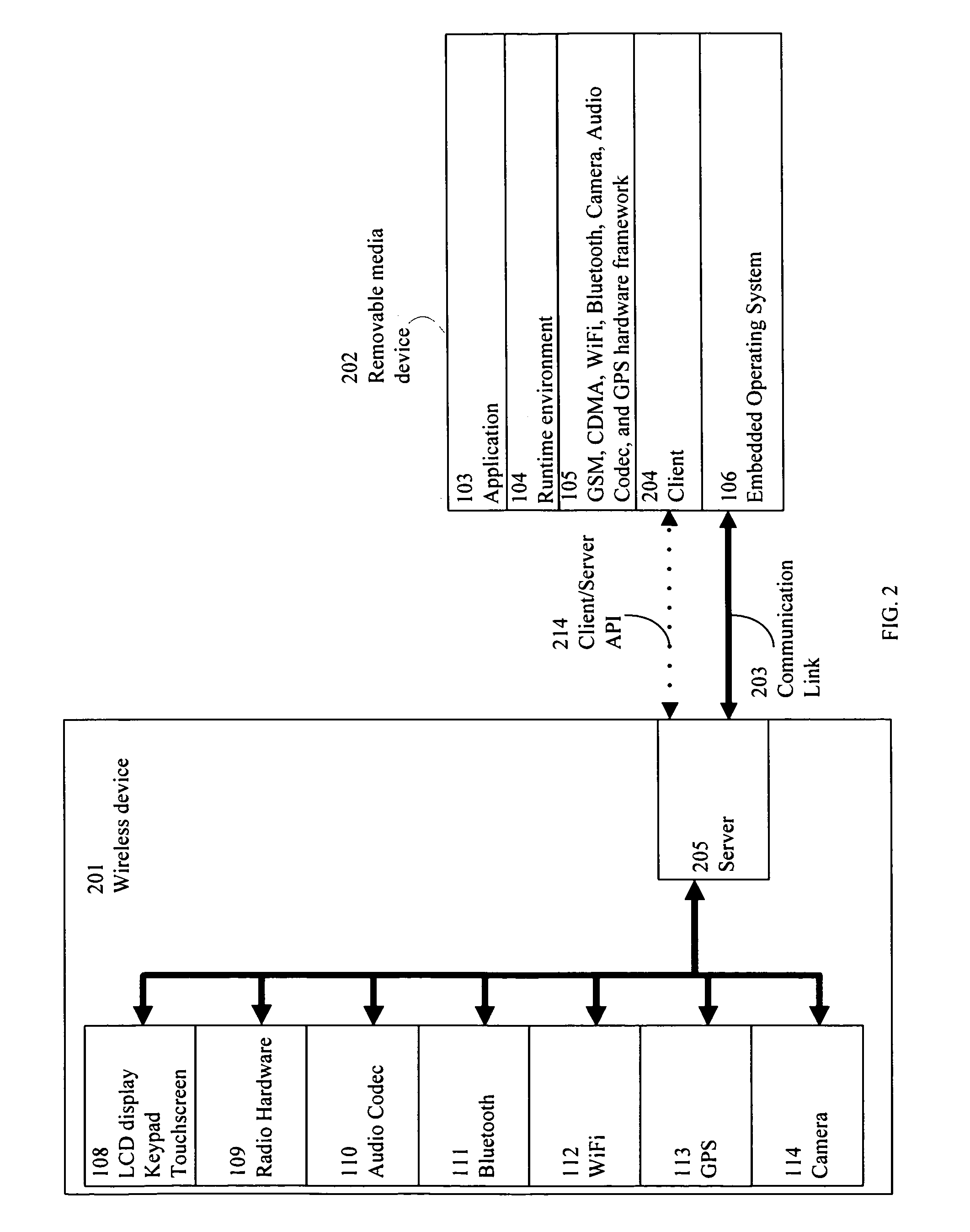

Systems and methods for implementing a remote input device using virtualization techniques for wireless devices are described. In one aspect, the system may comprise a wireless device that includes a processor, a memory, input hardware, and a protocol slave adapted to communicate with the input hardware; and a removable media device that includes a memory, a processor, and a protocol master adapted to communicate with the protocol slave of the wireless device. In another embodiment, the method may comprise emulating a hardware interface on a removable media device; mapping input hardware of a wireless device to the interface; mapping a processor of the media device to the input hardware; wrapping and sending input hardware commands from a protocol master of the media device to a protocol slave of the wireless device; and executing the commands on the input device.

Owner:CASSIS INT PTE

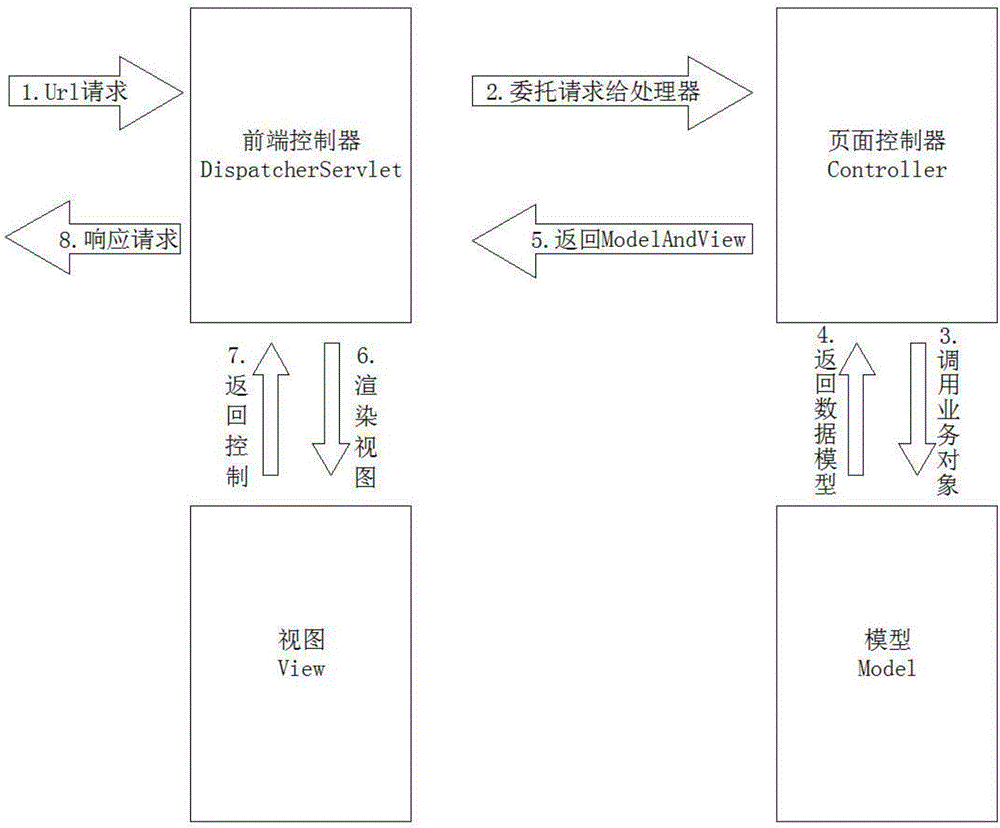

MVC framework based on Spring and MyBatis

Inactive | CN105843609A | Eliminate shelf-level maintenance workload | Conform to access style | Software design | Software reuse | Computer graphics (images) | Computer software

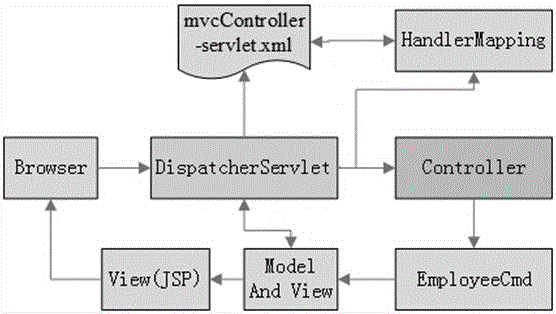

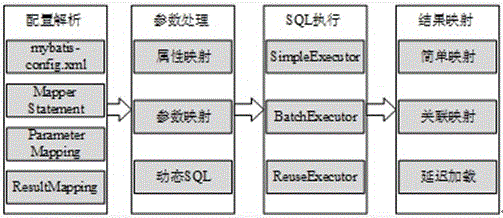

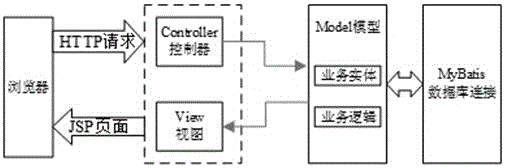

The invention discloses an MVC framework based on Spring and MyBatis, belonging to the technical field of computer software. The front-end controller is DispatcherServlet; the mapper (Handler Mapping) performs processor management, and the view resolver (View Resolver) performs view management; the page controller / action / processor is an implementation of the Controller interface. The invention establishes a development platform based on mature ideas such as MVC and provides a more concise Web layer.

Owner:INSPUR QILU SOFTWARE IND

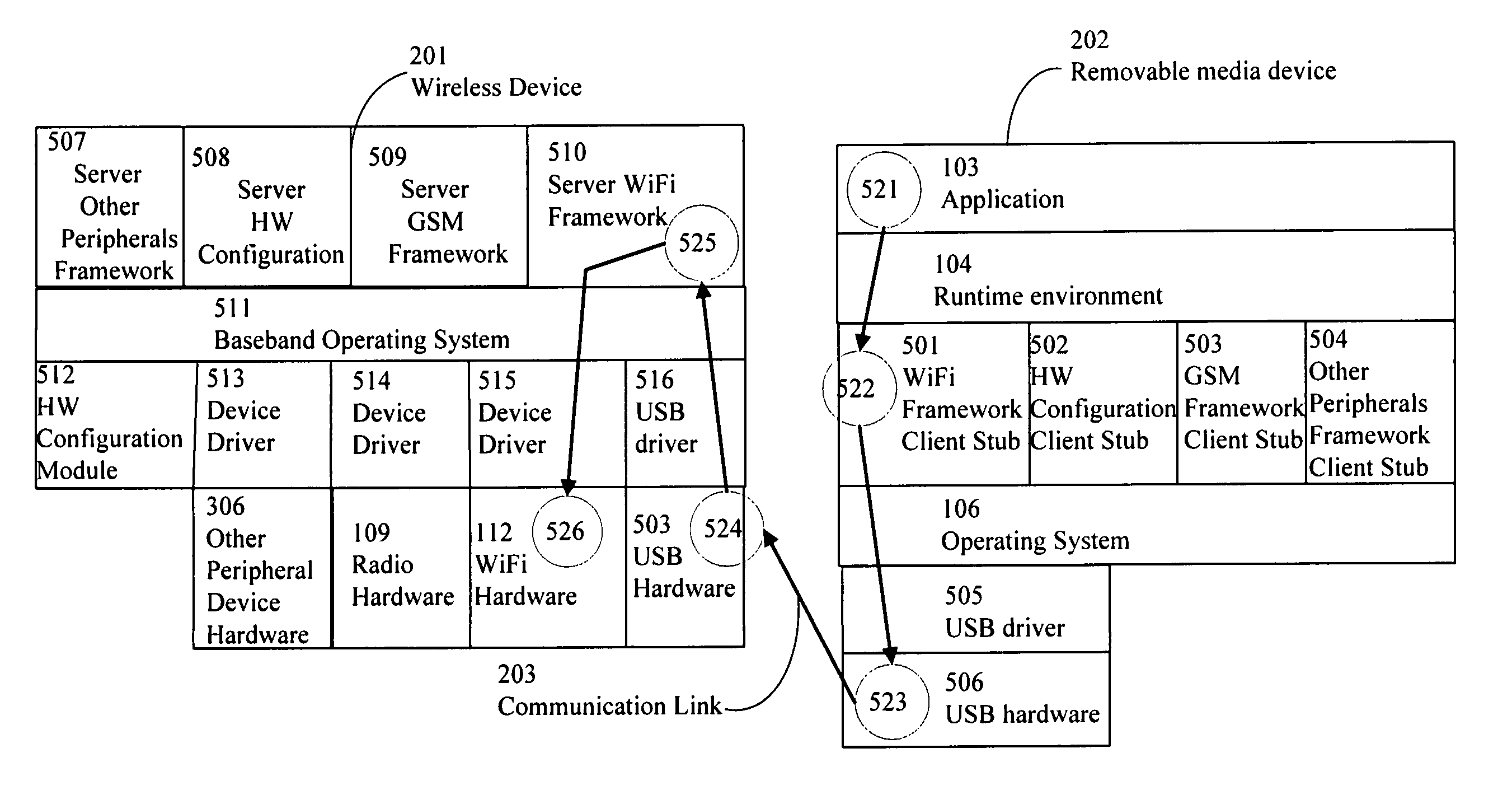

System and method for remotely operating a wireless device using a server and client architecture

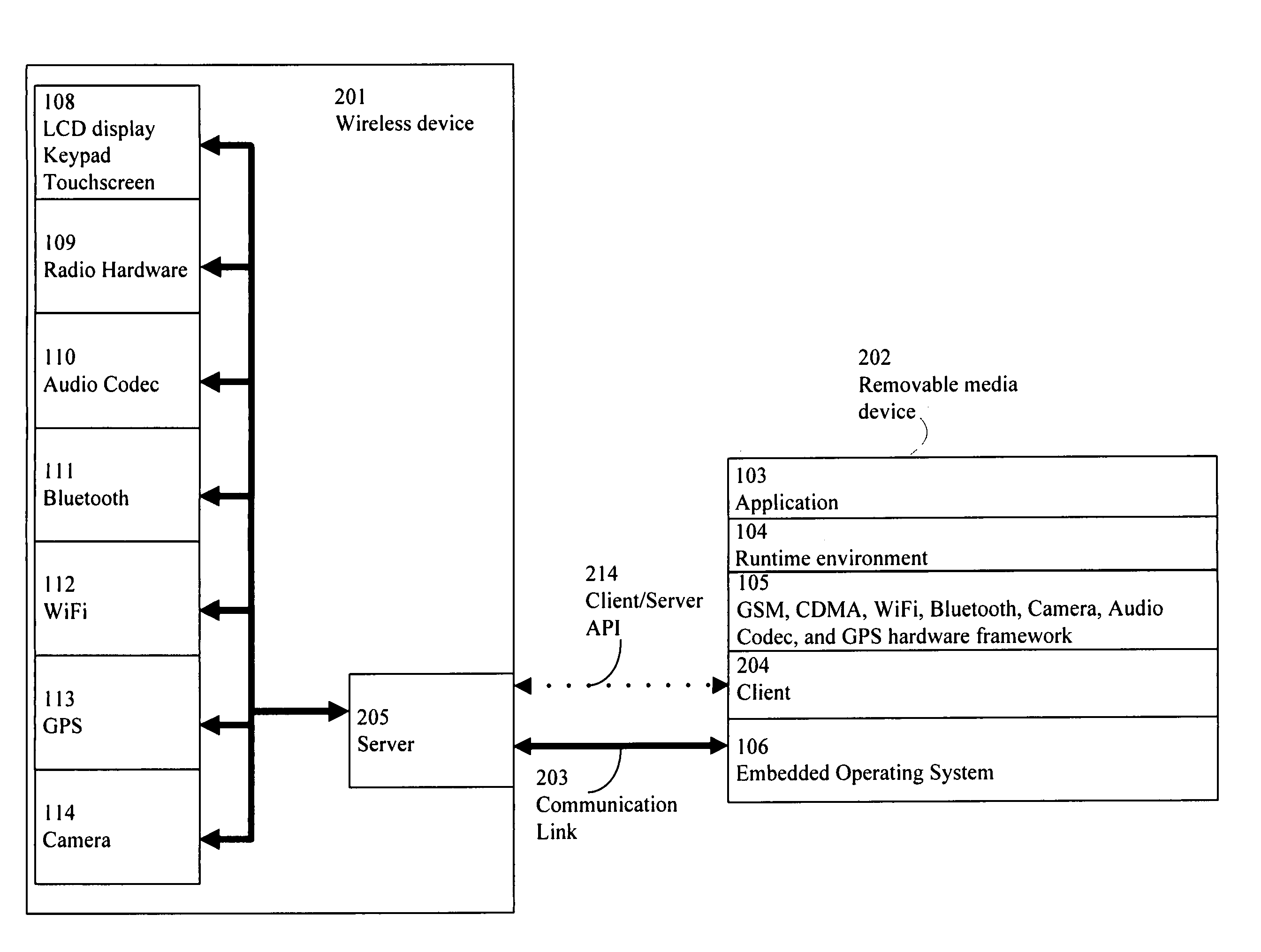

The present disclosure relates to a system and method for remotely operating one or more peripheral devices of a wireless device using a server and client architecture. In one aspect, the system may comprise a wireless device that includes a processor, a memory, a peripheral device, and a server adapted to communicate with the peripheral device; and a removable media device that includes a memory, a processor, and a client adapted to communicate with the server of the wireless device. In another aspect, the method may comprise the steps of emulating a hardware interface on a removable media device; mapping a peripheral device of a wireless device to the interface; mapping a processor of the media device to the peripheral device; wrapping and sending hardware commands from a client of the media device to a server of the wireless device; and executing the commands on the peripheral device.

Owner:CASSIS INT PTE

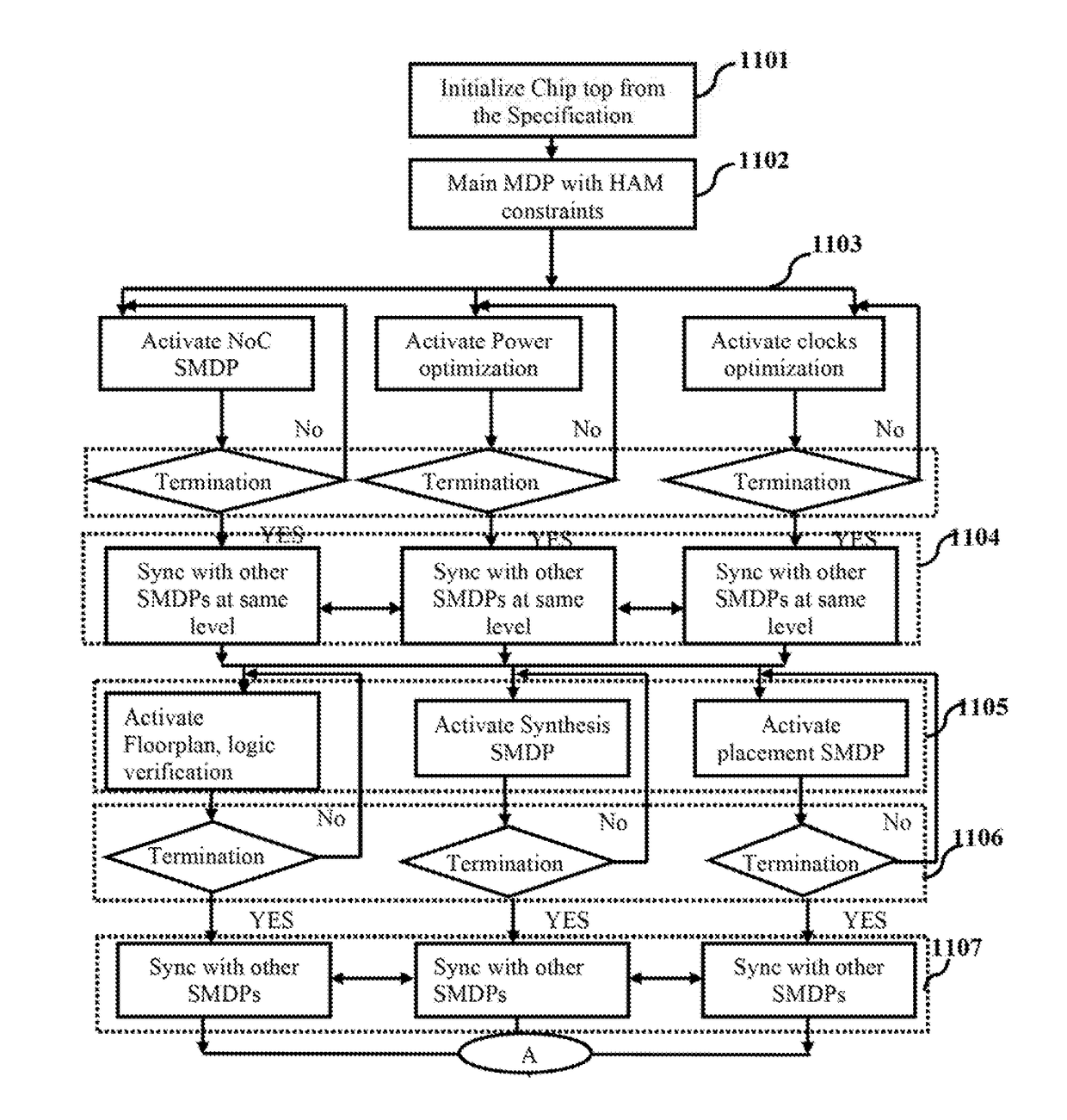

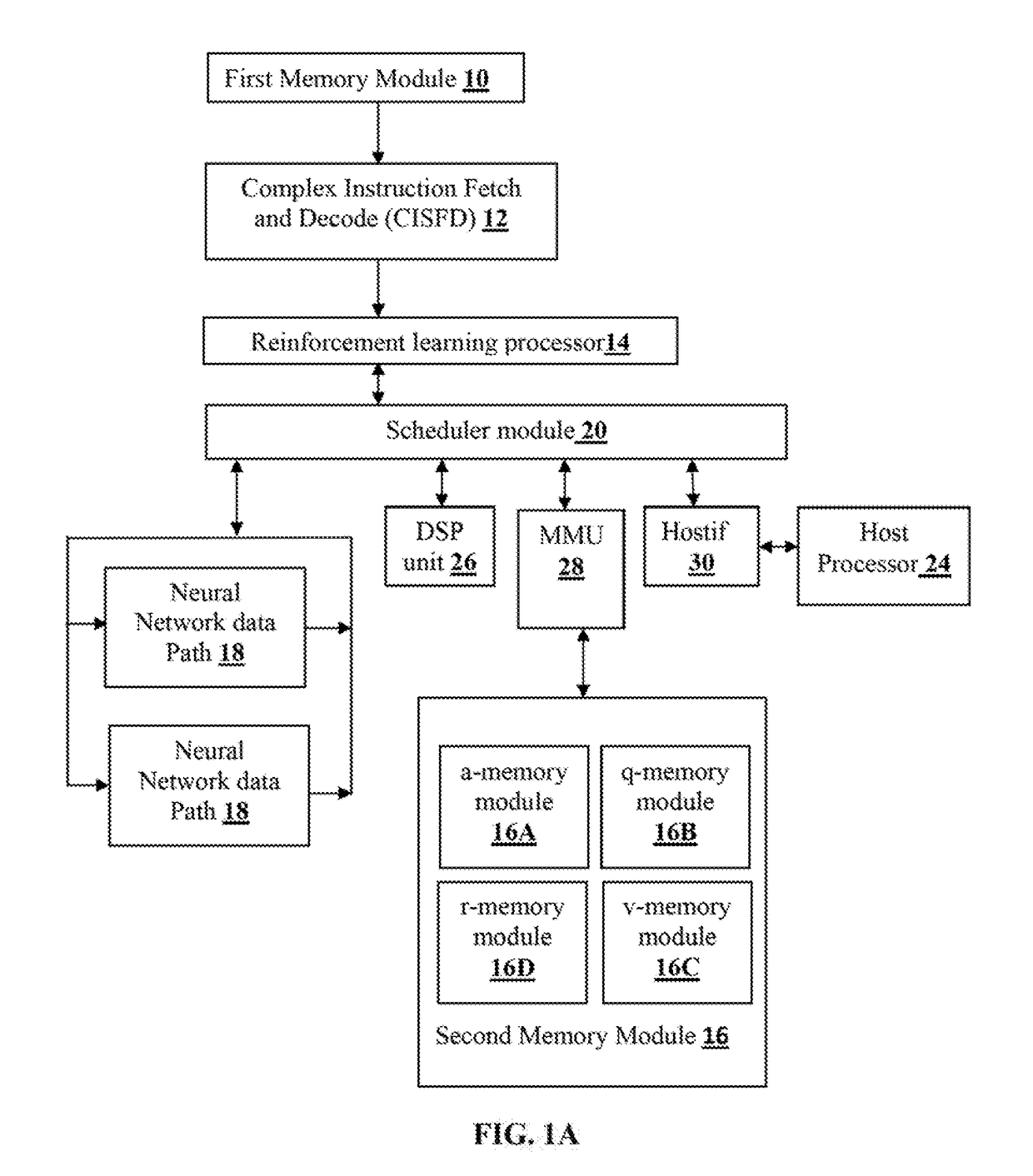

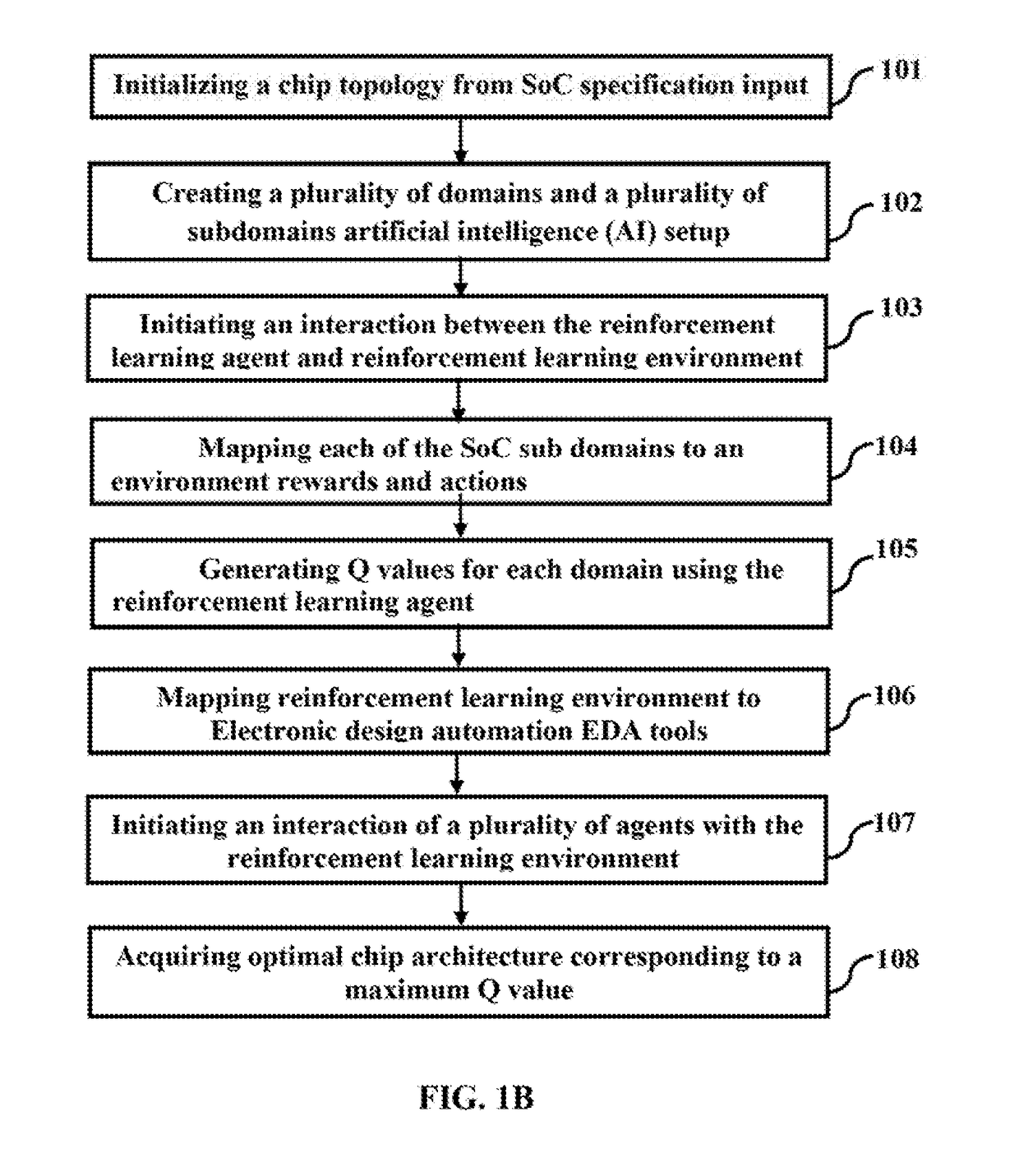

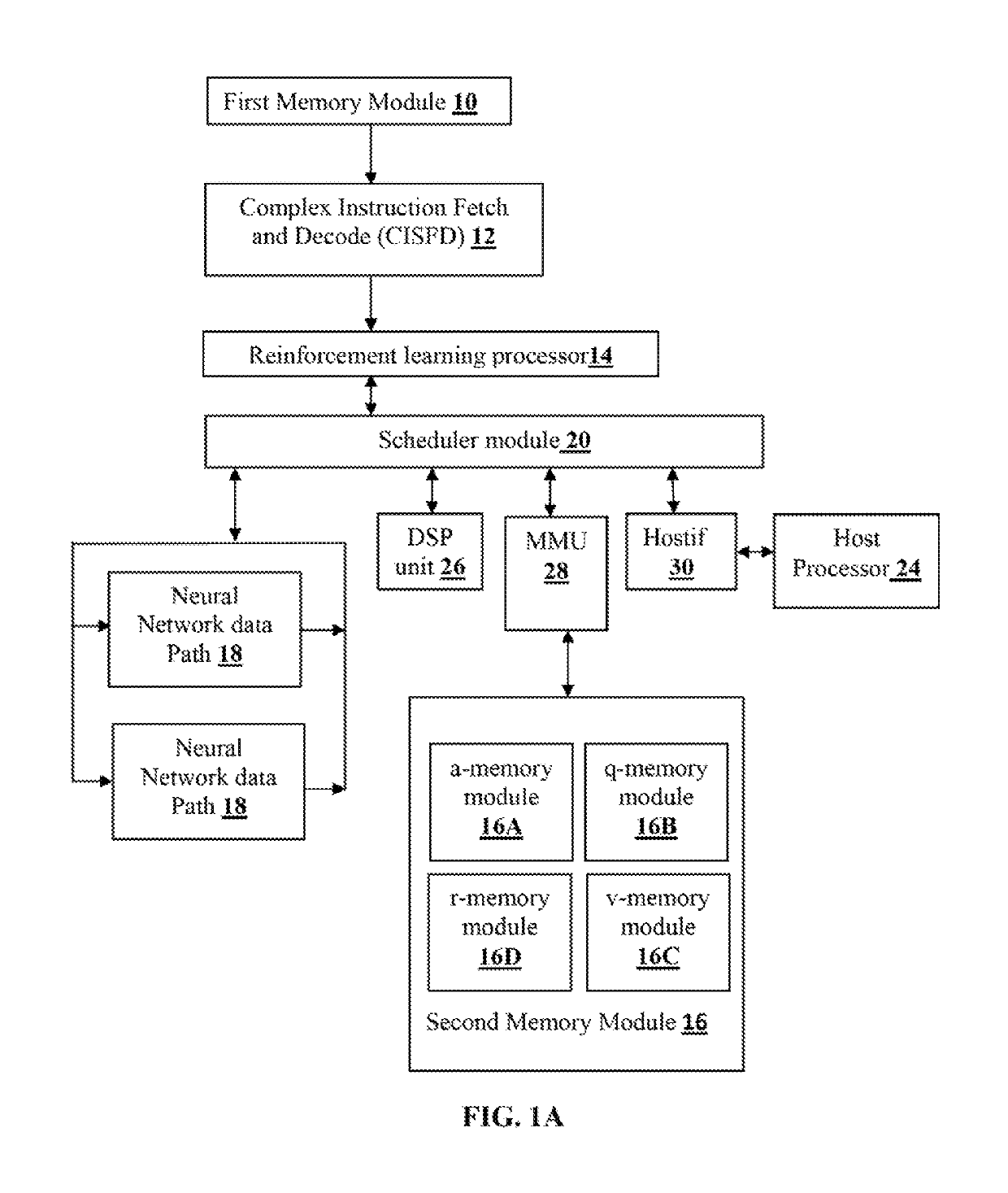

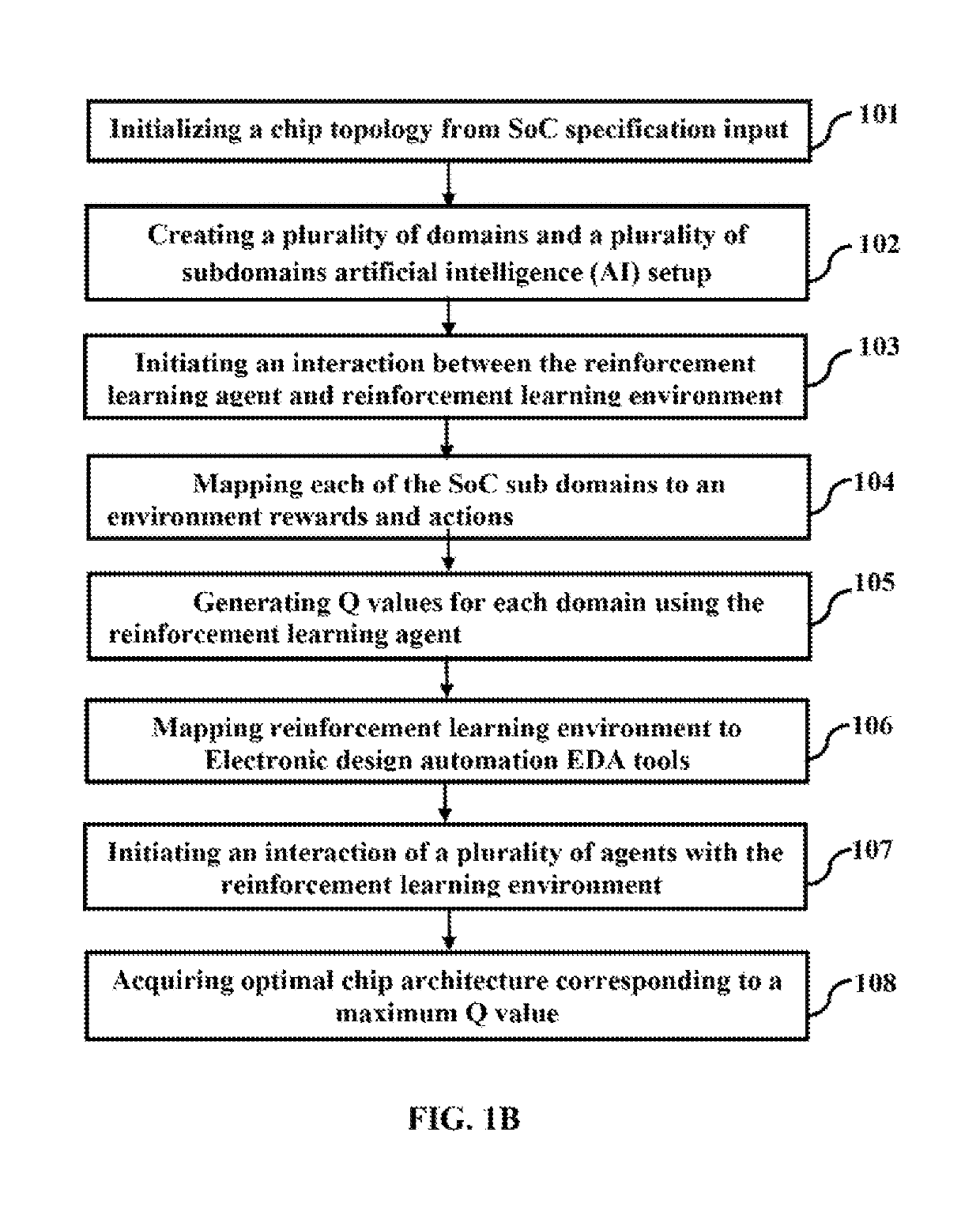

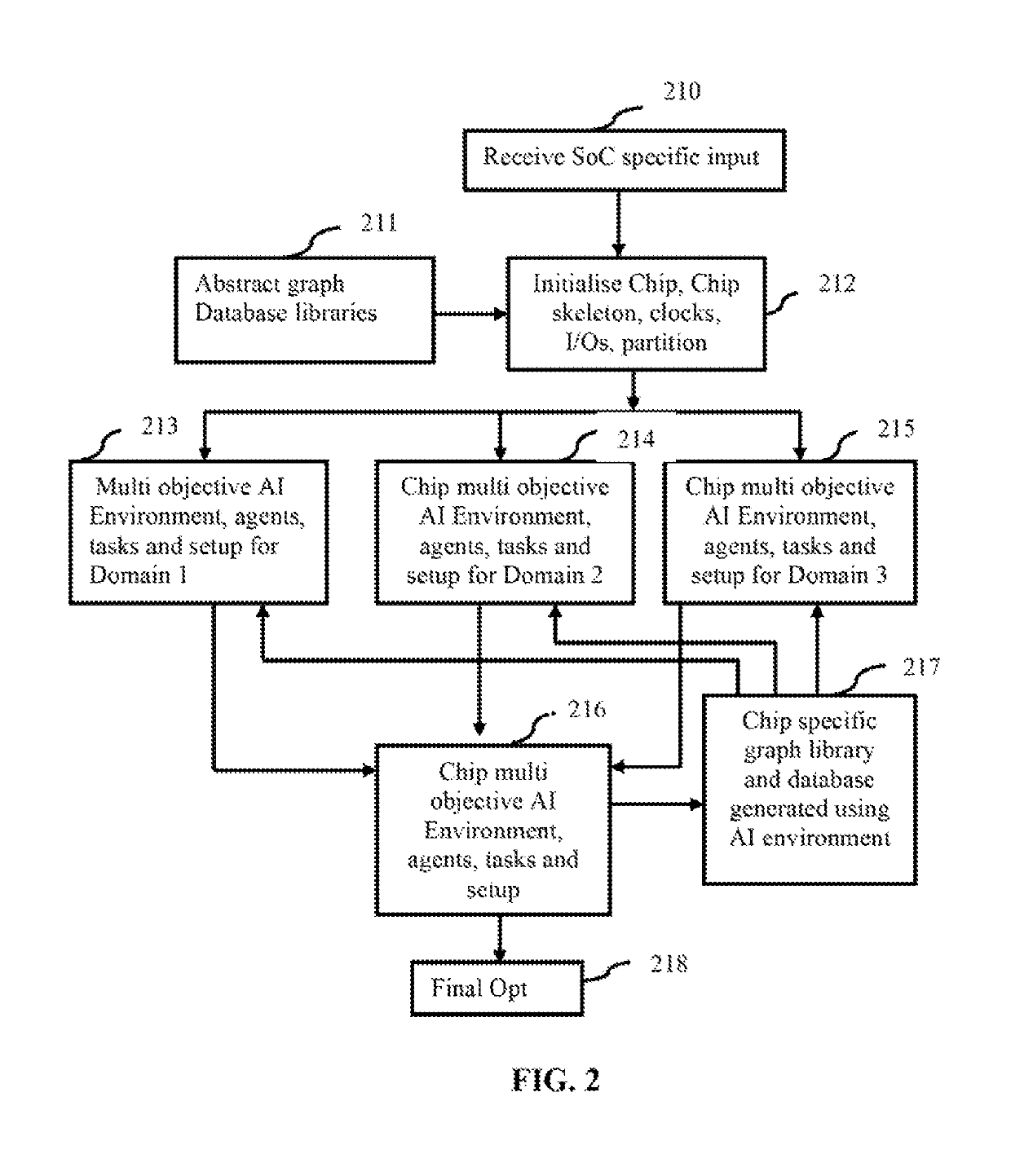

SYSTEM AND METHOD FOR DESIGNING SYSTEM ON CHIP (SoC) CIRCUITS USING SINGLE INSTRUCTION MULTIPLE AGENT (SIMA) INSTRUCTIONS

Active | US20180260498A1 | Computer aided design | Special data processing applications | Computer architecture | Chip architecture

The embodiments herein disclose a system and method for designing an SoC by using a reinforcement learning processor. An SoC specification input is received, and a plurality of domains and a plurality of subdomains are created using an application-specific instruction set to generate a chip-specific graph library. An interaction is initiated between the reinforcement learning agent and the reinforcement learning environment using the application-specific instructions. Each of the SoC subdomains is mapped to a combination of environment, rewards and actions by a second processor. Further, interaction of a plurality of agents with the reinforcement learning environment is initiated for a predefined number of times, and the Q value, V value, R value, and A value are updated in the second memory module. Thereby, an optimal chip architecture for designing the SoC is acquired using the application-domain specific instruction set (ASI).

Owner:ALPHAICS CORP

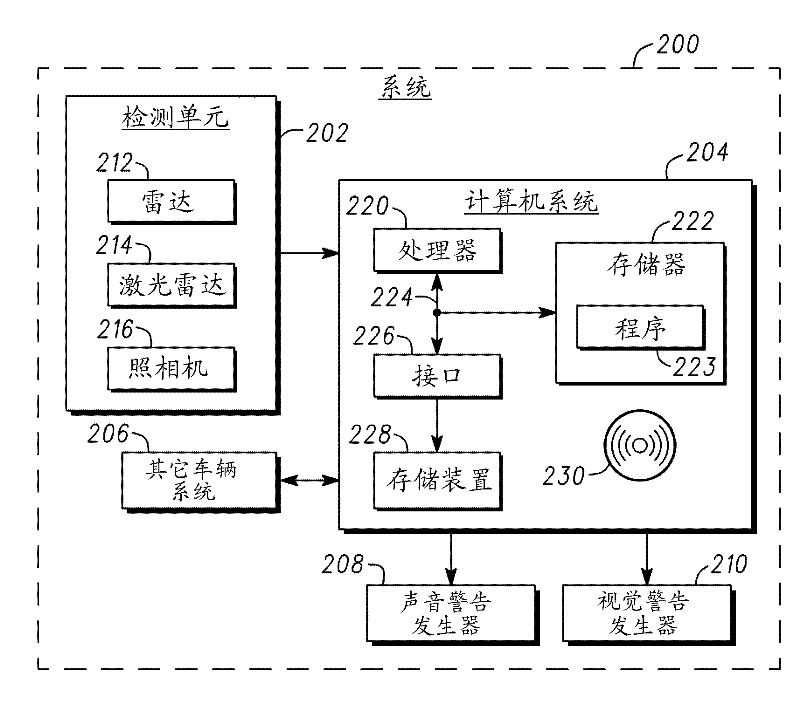

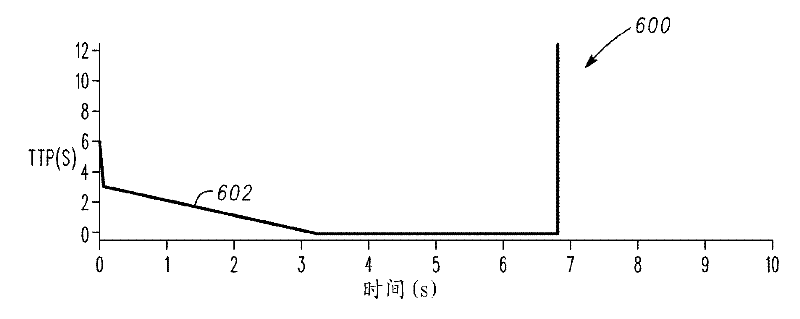

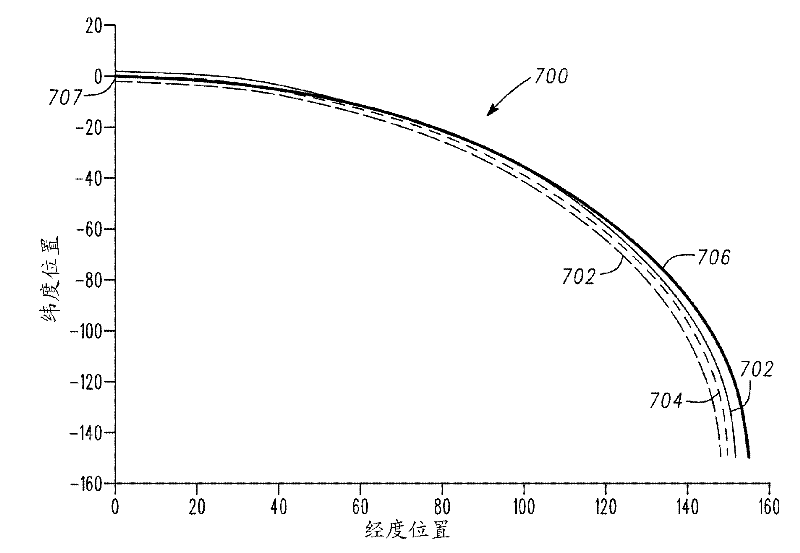

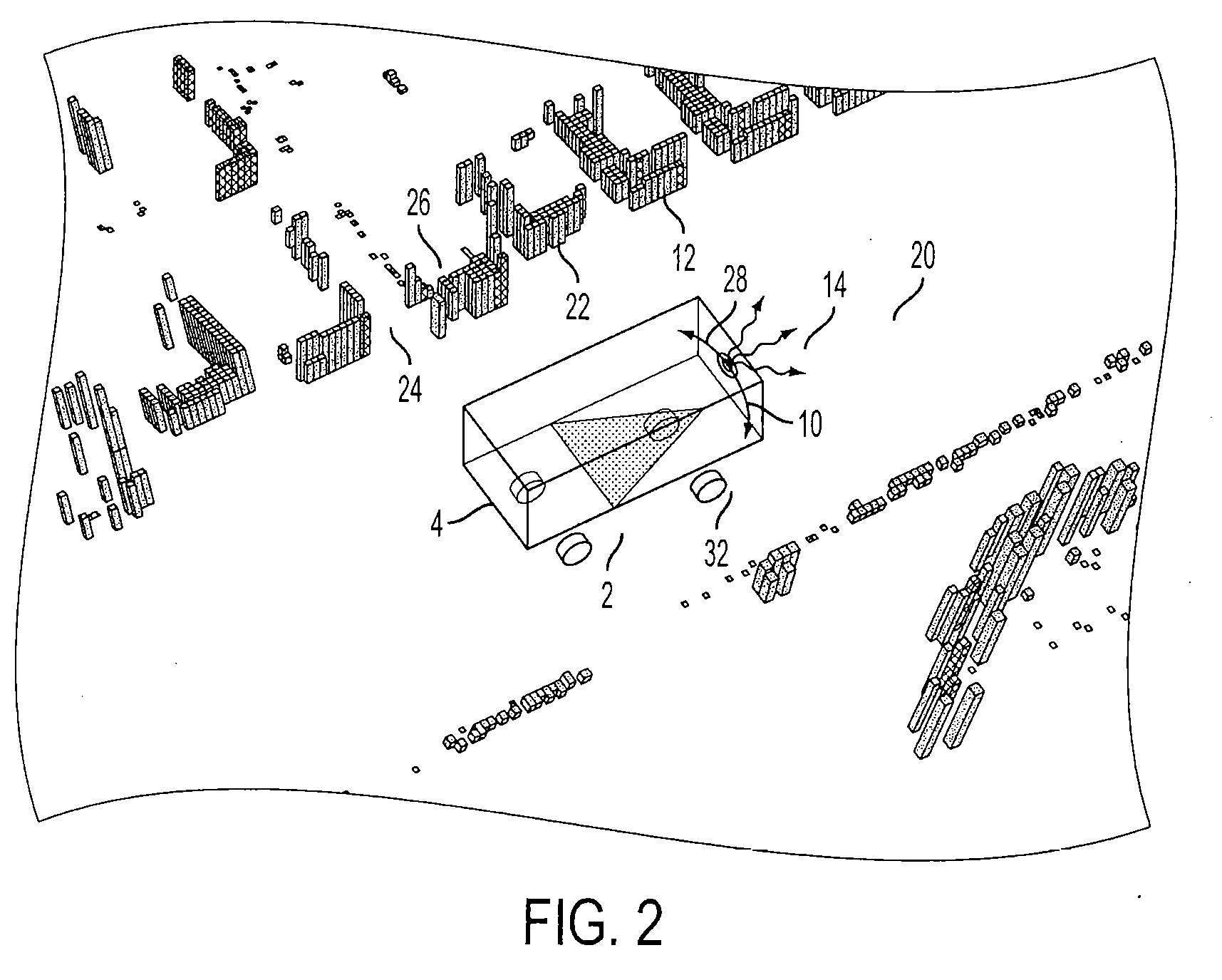

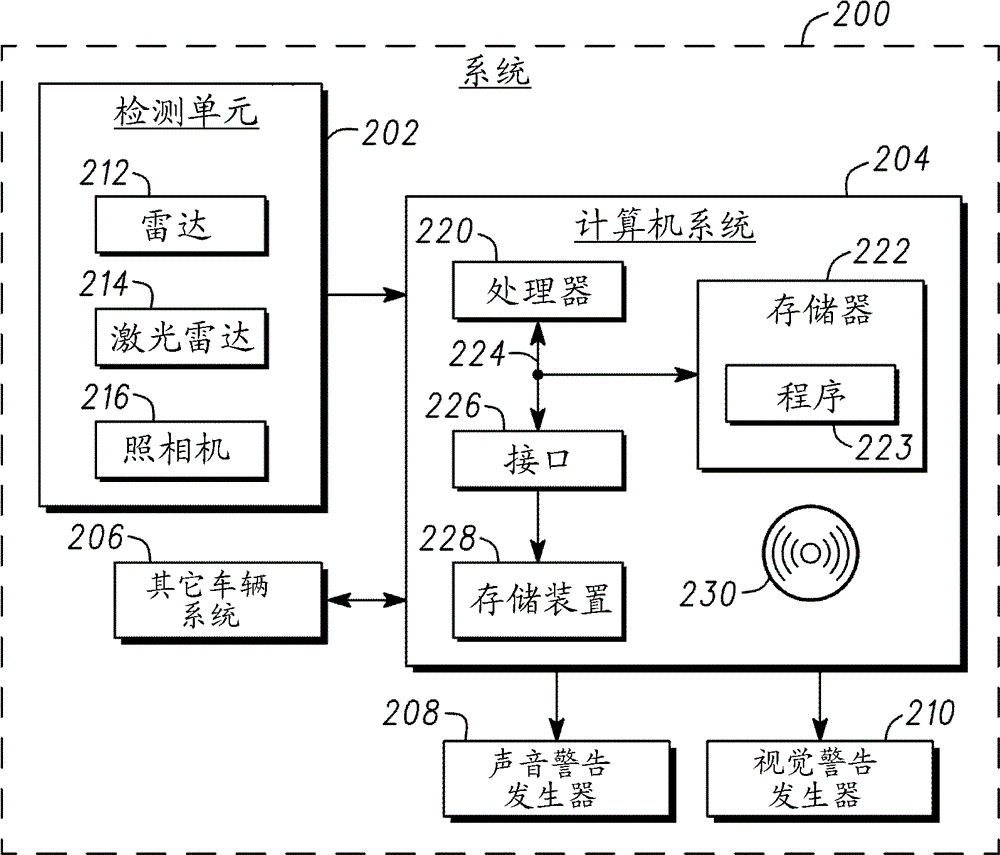

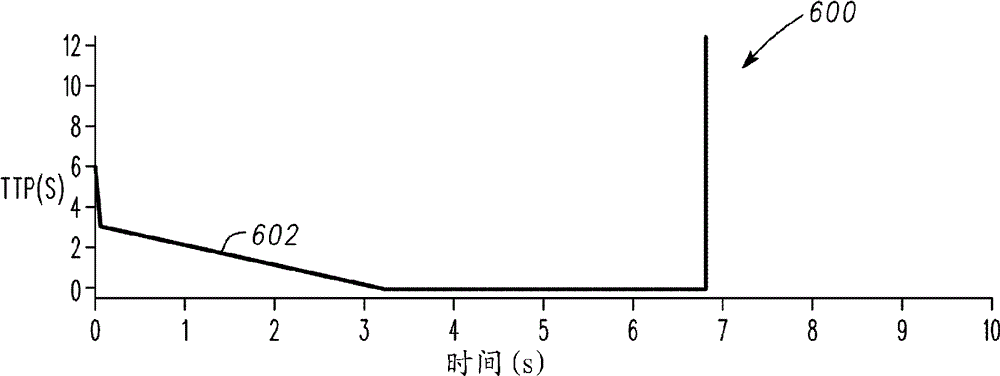

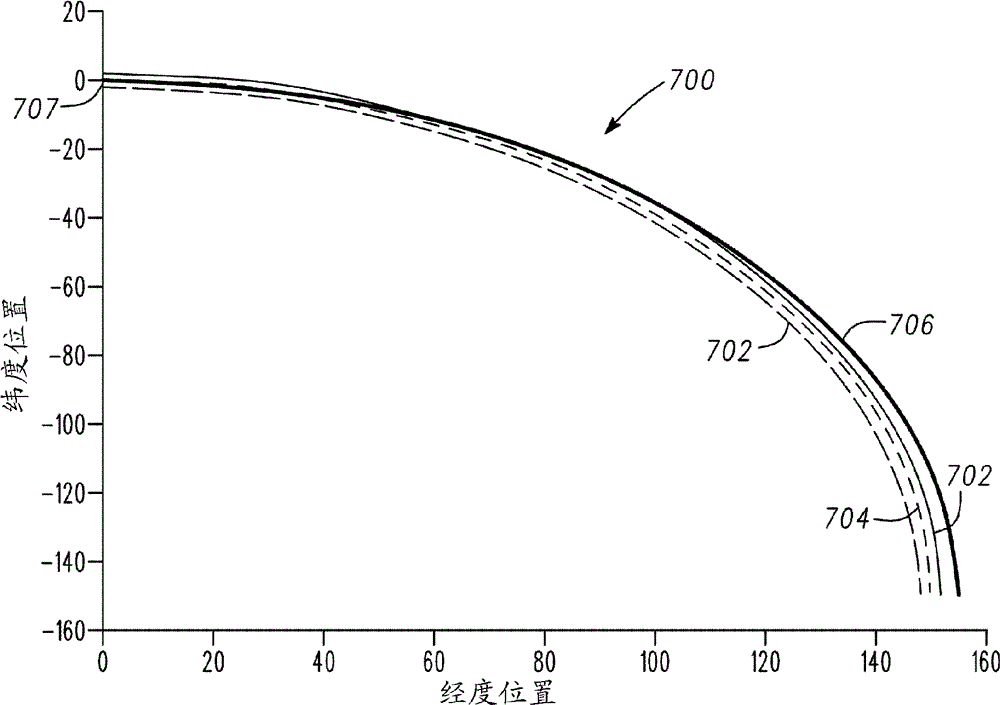

Method and system for collision assessment for vehicles

Active | CN102328656A | Road vehicles traffic control | Pedestrian/occupant safety arrangement | Processor mapping | Environmental geology

Methods and systems are provided for assessing a target proximate a vehicle. A location and a velocity of the target are obtained. The location and the velocity of the target are mapped onto a polar coordinate system via a processor. A likelihood that the vehicle and the target will collide is determined using the mapping.

Owner:GM GLOBAL TECH OPERATIONS LLC
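
The polar-coordinate mapping described in the collision-assessment abstract above can be illustrated with a minimal Python sketch. It is not the patented method: the function names, the time-to-collision rule, and both thresholds are assumptions chosen for illustration. The vehicle is placed at the origin, and the target's position and velocity are given relative to it.

```python
import math


def to_polar(x, y, vx, vy):
    """Map a target's relative position and velocity onto polar coordinates.

    Returns (range, bearing, range_rate, bearing_rate). A negative
    range_rate means the target is closing on the vehicle.
    """
    rng = math.hypot(x, y)
    if rng == 0:
        raise ValueError("target coincides with the vehicle")
    bearing = math.atan2(y, x)
    range_rate = (x * vx + y * vy) / rng           # radial speed
    bearing_rate = (x * vy - y * vx) / (rng * rng)  # angular speed, rad/s
    return rng, bearing, range_rate, bearing_rate


def time_to_collision(rng, range_rate):
    """Seconds until range reaches zero, or infinity if the target is not closing."""
    if range_rate >= 0:
        return math.inf
    return rng / -range_rate


def collision_likely(x, y, vx, vy, ttc_threshold=3.0, bearing_rate_threshold=0.05):
    """Hypothetical rule: closing fast on a nearly constant bearing."""
    rng, _, range_rate, bearing_rate = to_polar(x, y, vx, vy)
    ttc = time_to_collision(rng, range_rate)
    return ttc < ttc_threshold and abs(bearing_rate) < bearing_rate_threshold
```

A target heading straight at the vehicle has a bearing rate near zero and a negative range rate, which is why the sketch tests both quantities; a target crossing at constant range is never flagged.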

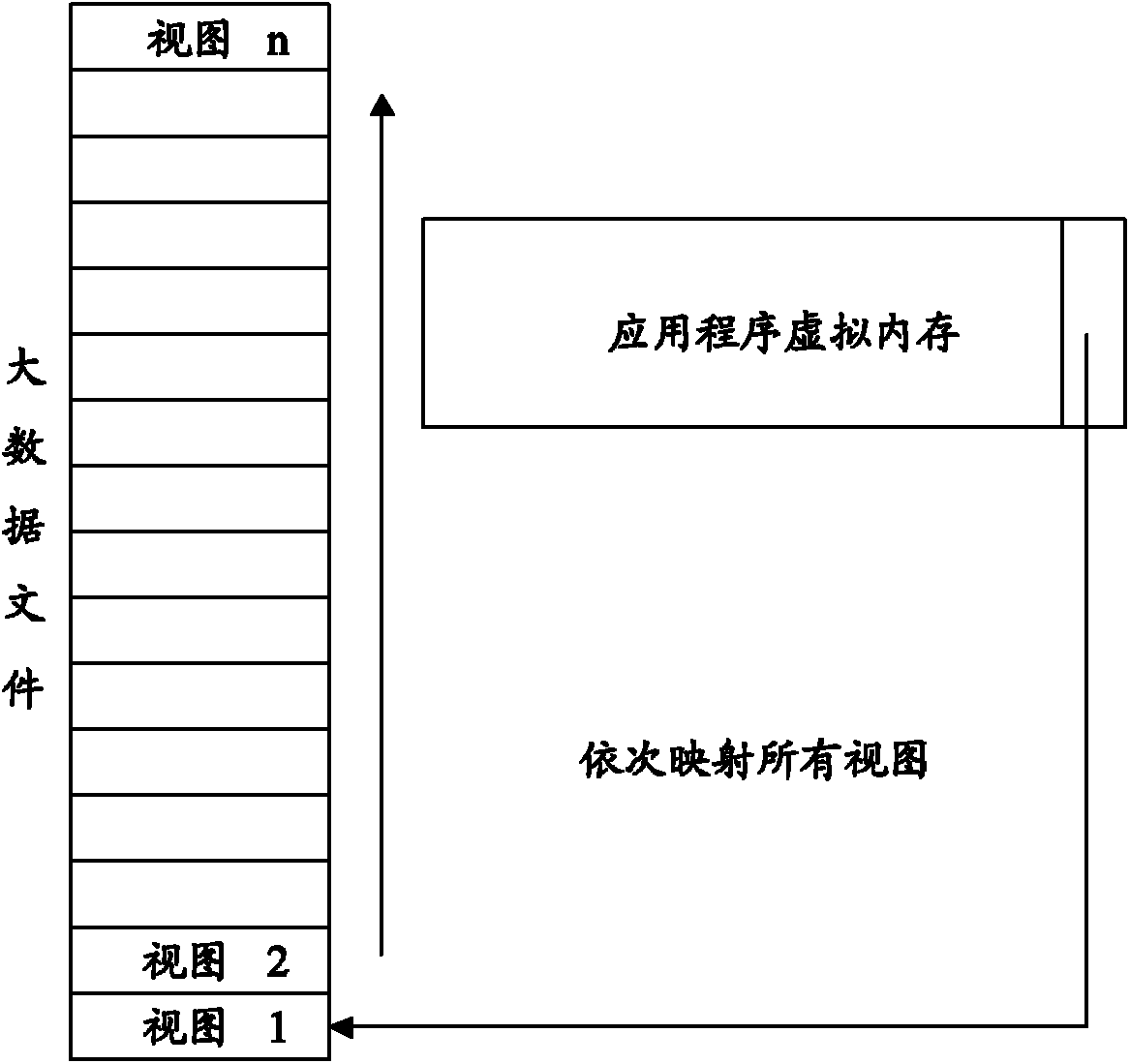

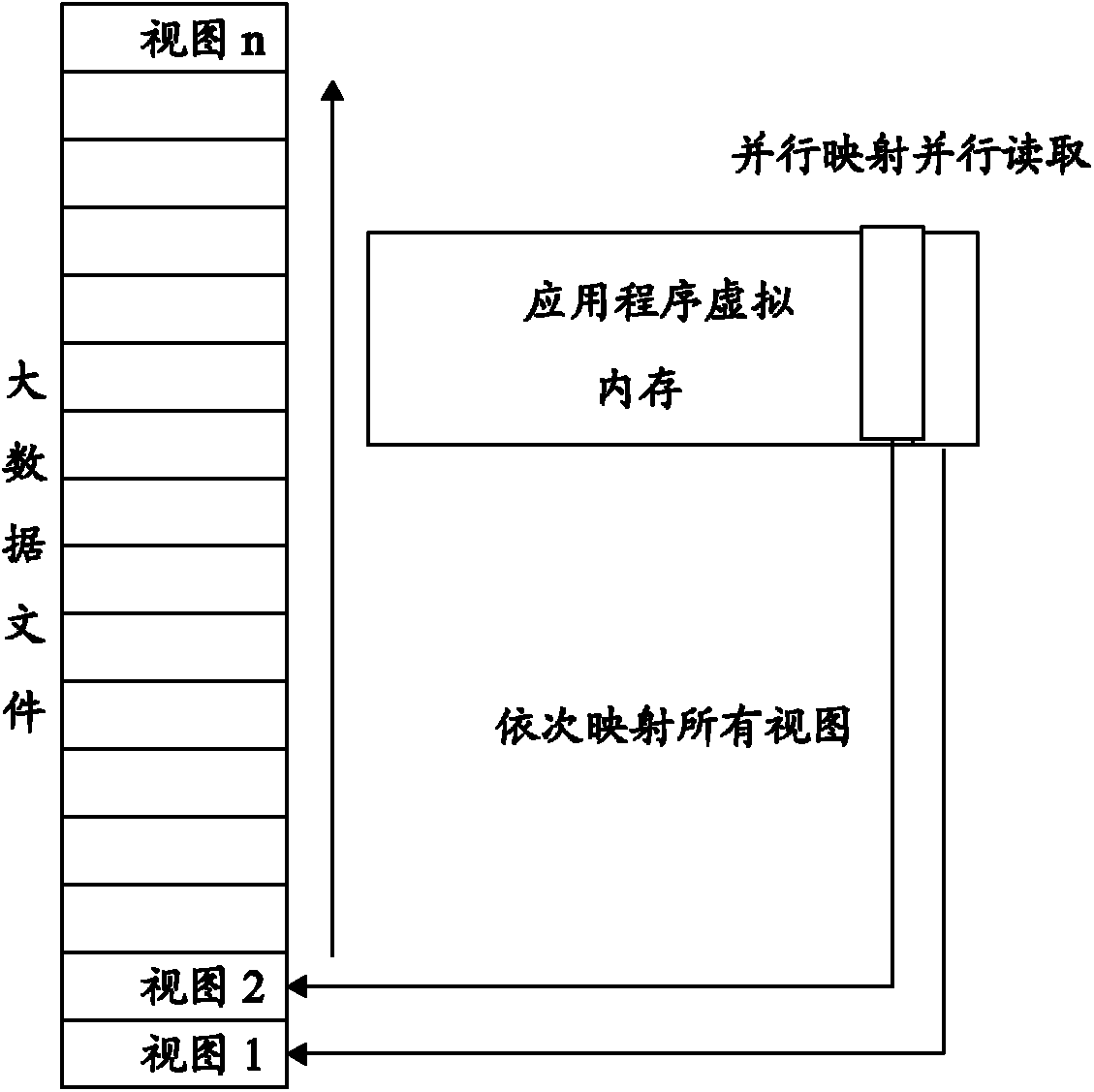

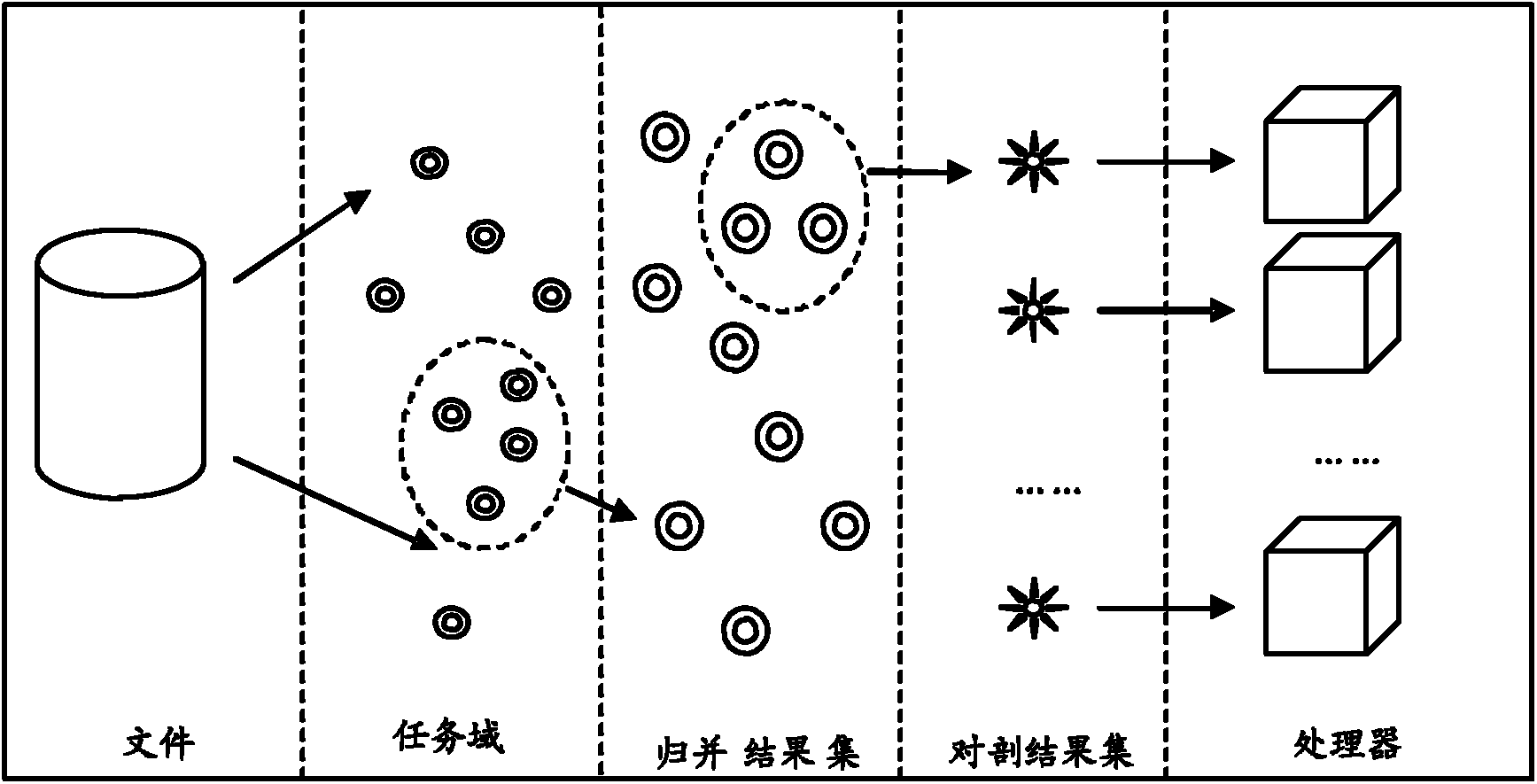

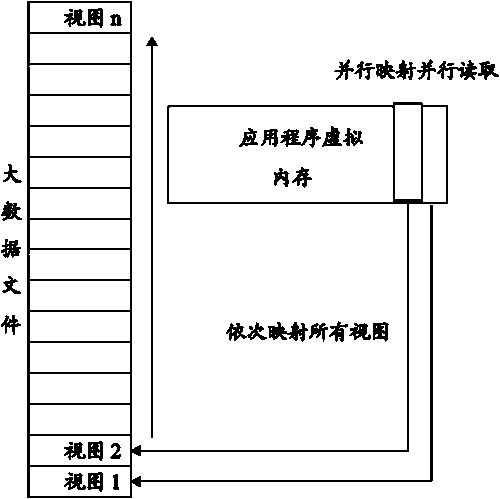

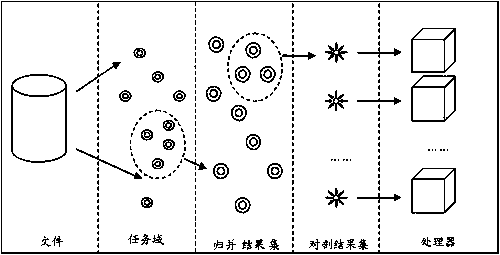

Method for rapidly extracting massive data files in parallel based on memory mapping

Inactive | CN102231121A | Improve efficiency | Break through the bottleneck of processing speed | Multiprogramming arrangements | Special data processing applications | Internal memory | Task mapping

The invention discloses a method for rapidly extracting massive data files in parallel based on memory mapping. The method comprises the following steps: generating a task domain, in which the task domain is formed from task blocks and the task blocks are the elements of the task domain; generating a task pool, in which sub-task domains are merged from the elements of the task domain according to a rule of low communication cost, the set of elements in the task domain is taken as the task pool for scheduling tasks, and tasks to be executed by a processor are extracted according to the scheduling selection; scheduling the tasks, in which the scheduling granularity is determined according to the remaining quantity of tasks, and the tasks that meet the requirements are extracted from the task pool and prepared for mapping; and mapping a processor, in which the extracted tasks are mapped to a currently idle processor for execution. The method exploits the advantages of multi-core processors and increases the efficiency of mapping files into memory. It is applicable to reading a single large file with a capacity below 4GB; the reading speed of such files can be effectively increased, and the I/O (input/output) throughput rate of disk files can be increased.

Owner:NORTH CHINA UNIVERSITY OF TECHNOLOGY
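
The four steps in the abstract above (task domain, task pool, scheduling granularity, mapping to an idle processor) can be sketched in Python with the standard `mmap` and `concurrent.futures` modules. This is an illustrative sketch only: the function names, the block size, the shrinking-batch rule, and the byte-counting workload are assumptions, and the merge-by-communication-cost step of the patent is not modelled.

```python
import mmap
import os
from concurrent.futures import ThreadPoolExecutor


def make_task_blocks(file_size, block_size):
    """Task domain: split the file into (offset, length) blocks."""
    return [(off, min(block_size, file_size - off))
            for off in range(0, file_size, block_size)]


def schedule_batches(tasks, workers):
    """Yield batches whose size shrinks as fewer tasks remain (assumed rule)."""
    pool = list(tasks)
    while pool:
        grain = max(1, len(pool) // (2 * workers))
        batch, pool = pool[:grain], pool[grain:]
        yield batch


def count_in_batch(mm, batch, needle):
    """Work done by one worker: count a single byte value in its blocks."""
    return sum(mm[off:off + length].count(needle) for off, length in batch)


def parallel_count(path, needle, block_size=1 << 16, workers=4):
    """Memory-map the file once and let idle workers pull batches of blocks."""
    size = os.path.getsize(path)
    if size == 0:
        return 0  # an empty file cannot be memory-mapped
    with open(path, "rb") as f, \
            mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        tasks = make_task_blocks(size, block_size)
        with ThreadPoolExecutor(max_workers=workers) as executor:
            futures = [executor.submit(count_in_batch, mm, batch, needle)
                       for batch in schedule_batches(tasks, workers)]
            return sum(fut.result() for fut in futures)
```

The executor's internal queue plays the role of the task pool here: whichever thread becomes idle takes the next batch. Because `needle` is a single byte, a match can never straddle a block boundary; a multi-byte pattern would need overlapping blocks.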

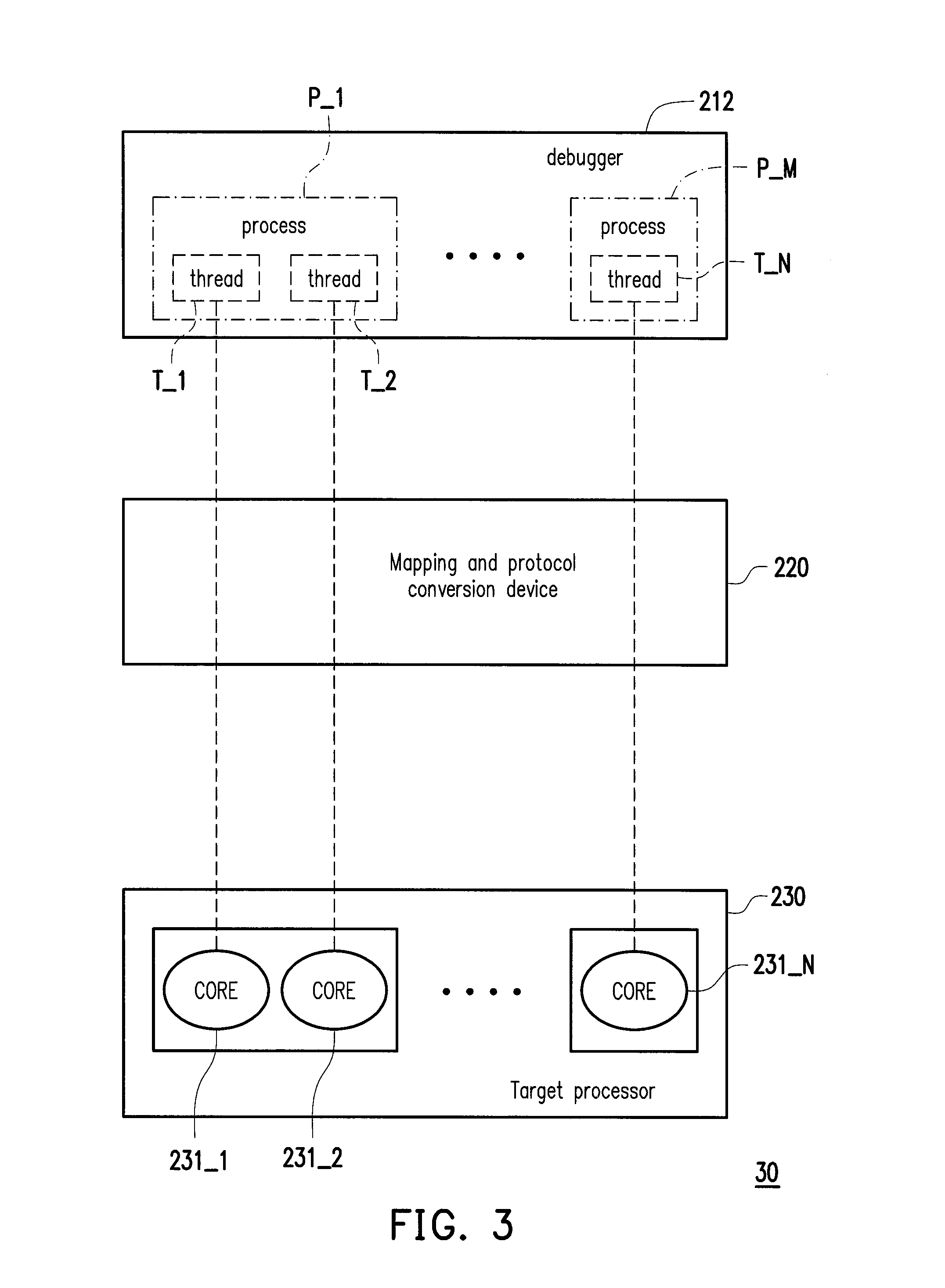

Debugging system and debugging method of multi-core processor

Active | US20160092327A1 | Powerful | Low cost | Functional testing | Software testing/debugging | Computer architecture | Engineering

The invention relates to a debugging system and a debugging method of a multi-core processor. The debugging system includes a debugging host, a target processor, and a mapping and protocol conversion device. The debugging host includes a debugger, and the target processor includes a plurality of cores. The mapping and protocol conversion device is connected between the debugging host and the target processor, identifies a core architecture to which each of the cores belongs, and maps each of the cores respectively to at least one thread of at least one process according to the core architecture to which each of the cores belongs. Afterwards, the debugger executes a debugging procedure on the target processor according to the process and the thread corresponding to each of the cores.

Owner:ALI (CHENGDU) CORP
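
One way to read the core-to-thread mapping in the abstract above is sketched below in Python. The grouping rule (cores that share an architecture share one process, and each core gets its own thread) is an assumption, as are the function name and the ID numbering; the abstract only says that cores are mapped to threads of processes according to their architecture.

```python
def map_cores(cores):
    """Map (core_id, architecture) pairs to (process_id, thread_id) pairs.

    Assumed rule: one process per distinct architecture, one thread per core.
    IDs start at 1 and follow the order in which cores are listed.
    """
    process_of = {}   # architecture -> process id
    next_thread = {}  # process id -> last thread id handed out
    mapping = {}
    for core_id, arch in cores:
        pid = process_of.setdefault(arch, len(process_of) + 1)
        tid = next_thread.get(pid, 0) + 1
        next_thread[pid] = tid
        mapping[core_id] = (pid, tid)
    return mapping
```

With such a table, a debugger that already understands processes and threads can select a core by selecting the thread it is mapped to.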

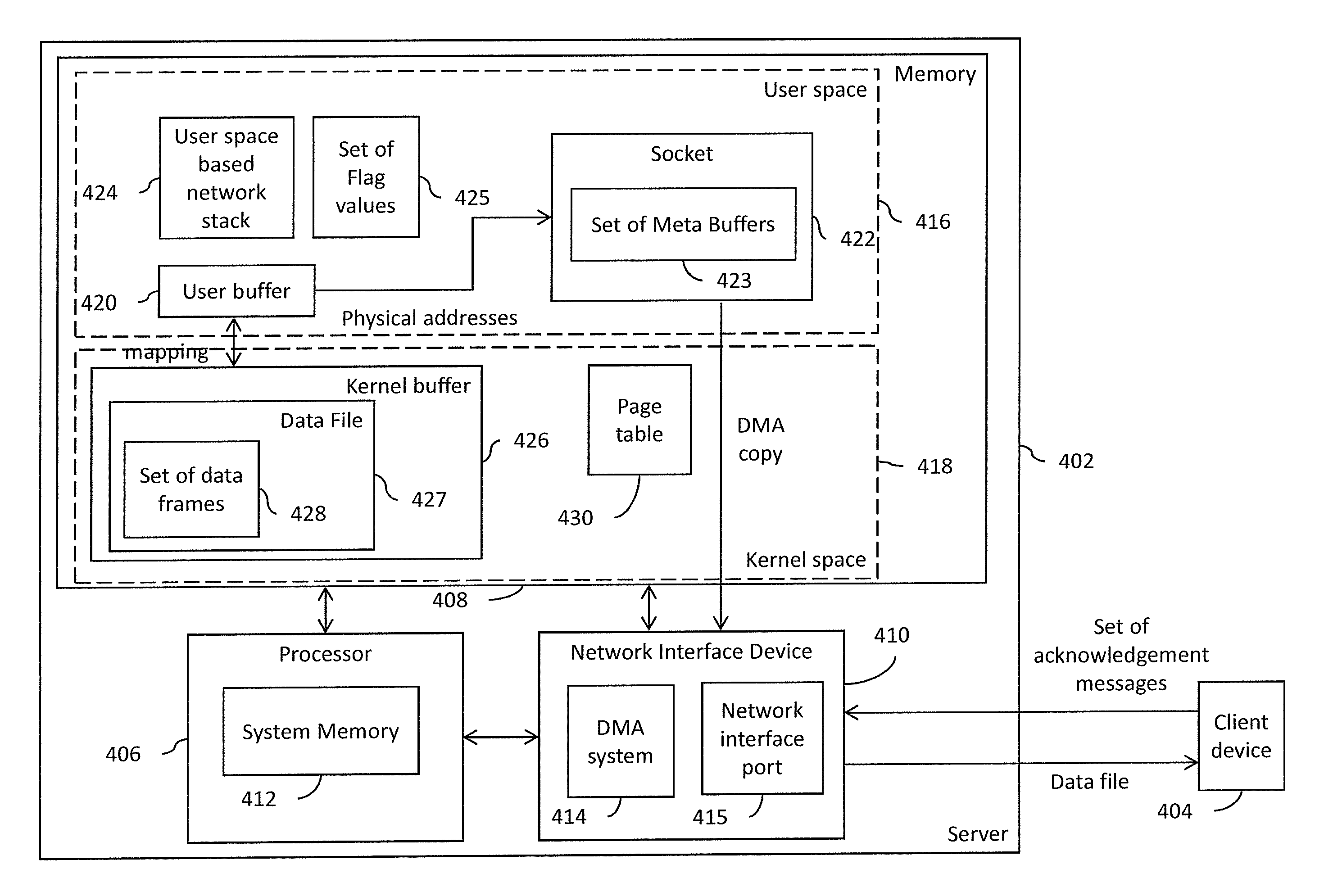

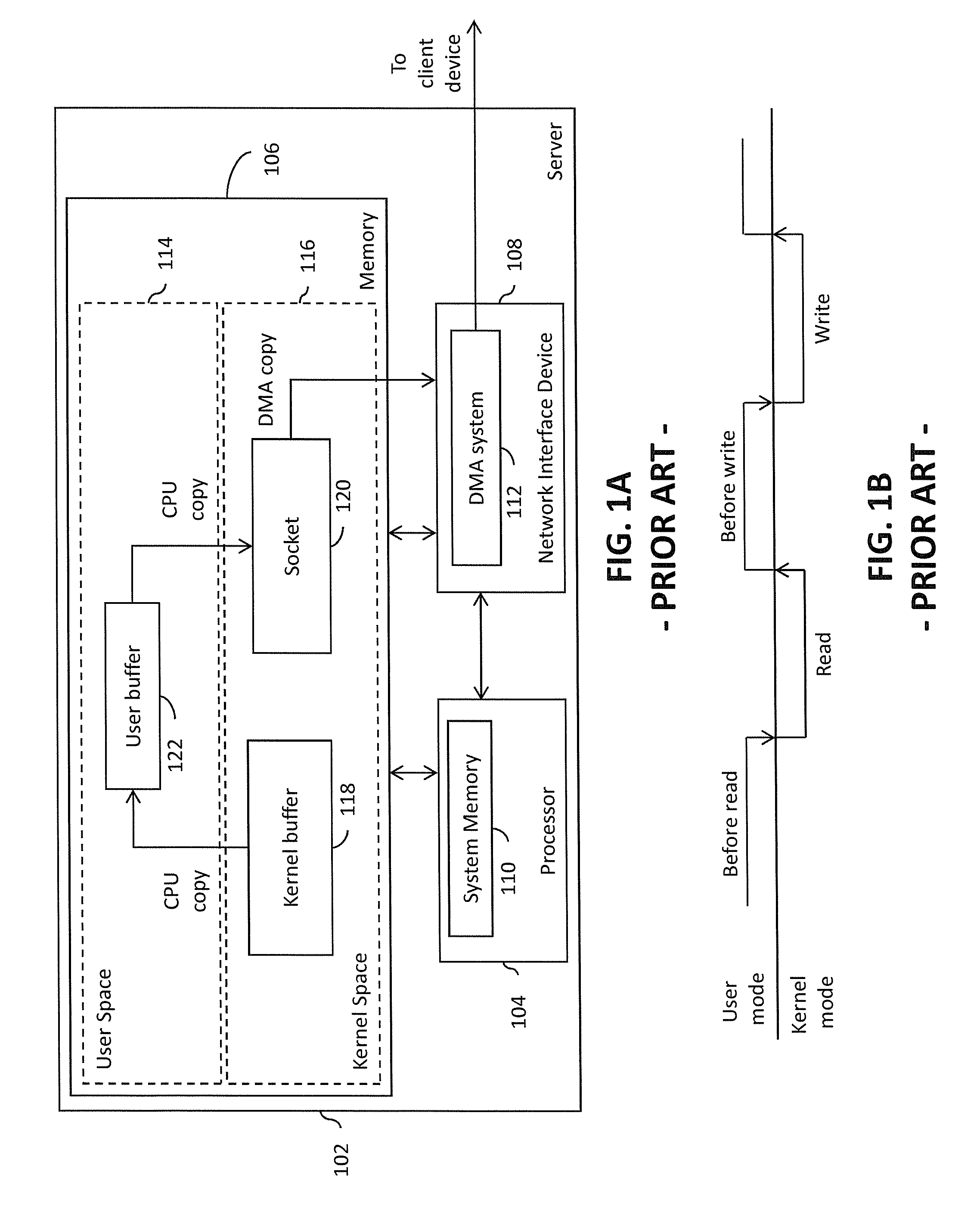

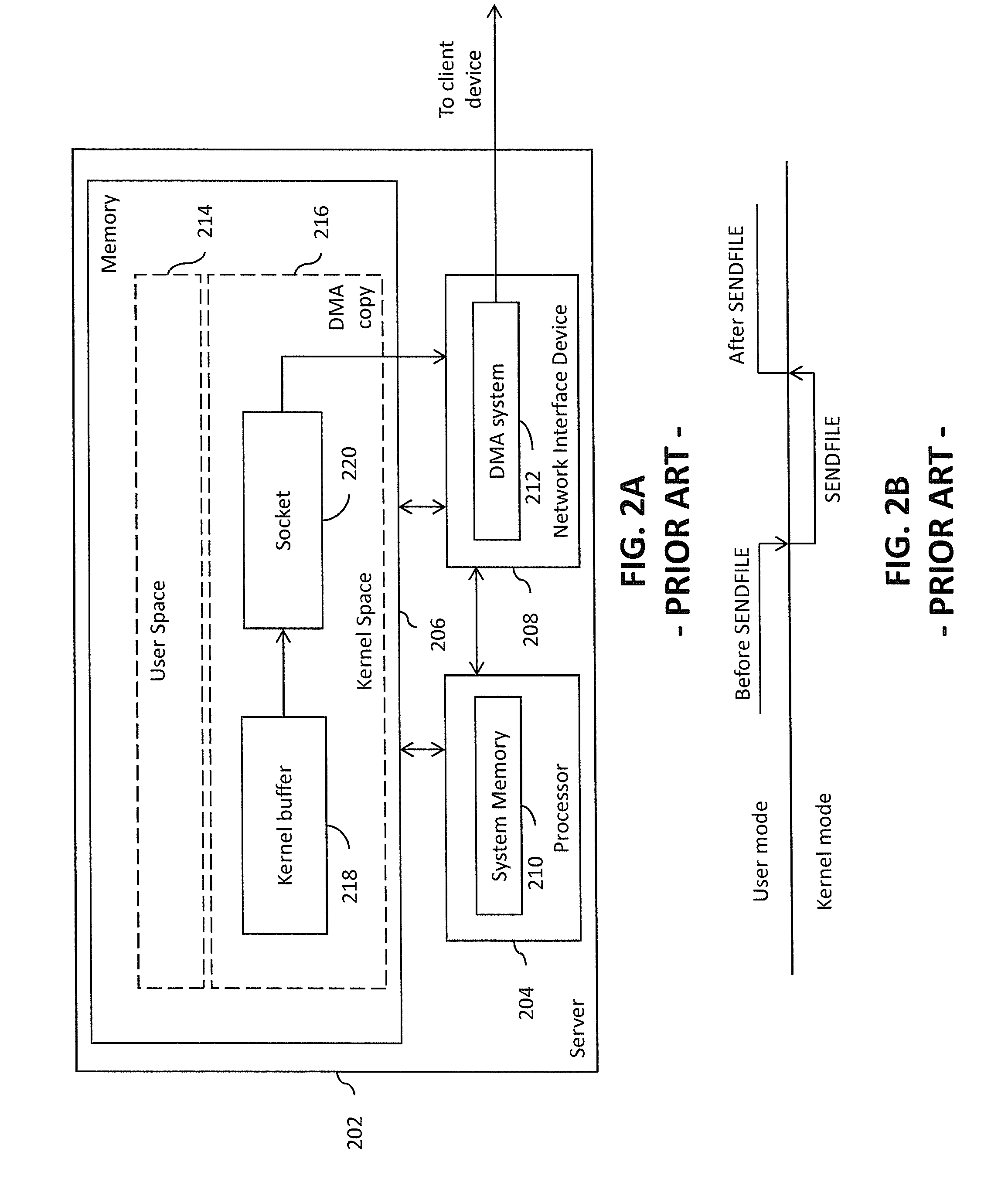

Zero-copy data transmission system

Inactive | US20160275042A1 | Interprogram communication | Digital computer details | Zero-copy | Network interface device

A data transmission system for transmitting a data file from a server to a client device includes a processor, a memory and a network interface device. The memory includes a user space and a kernel space. The data file is stored in the kernel space. The processor receives a transmission request from the client device for transmitting the data file. The processor maps a set of virtual addresses corresponding to the data file to the user space as a mapped data file, and stores a set of physical addresses corresponding to the set of virtual addresses in a set of meta-buffers of a socket created in the user space. The network interface device retrieves the data file from the kernel space based on the set of physical addresses from the set of meta-buffers, and transmits the data file to the client device.

Owner:NXP USA INC

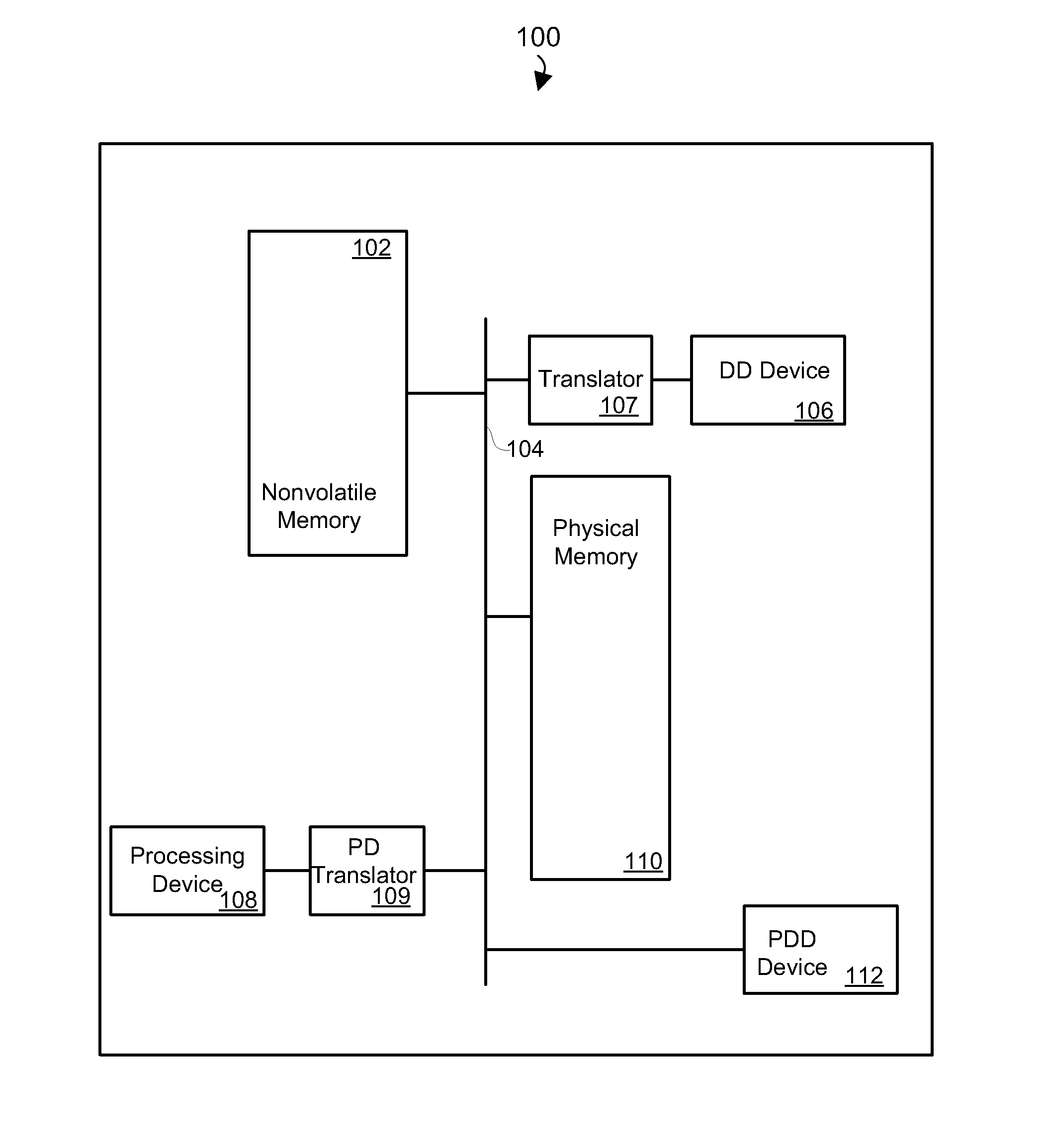

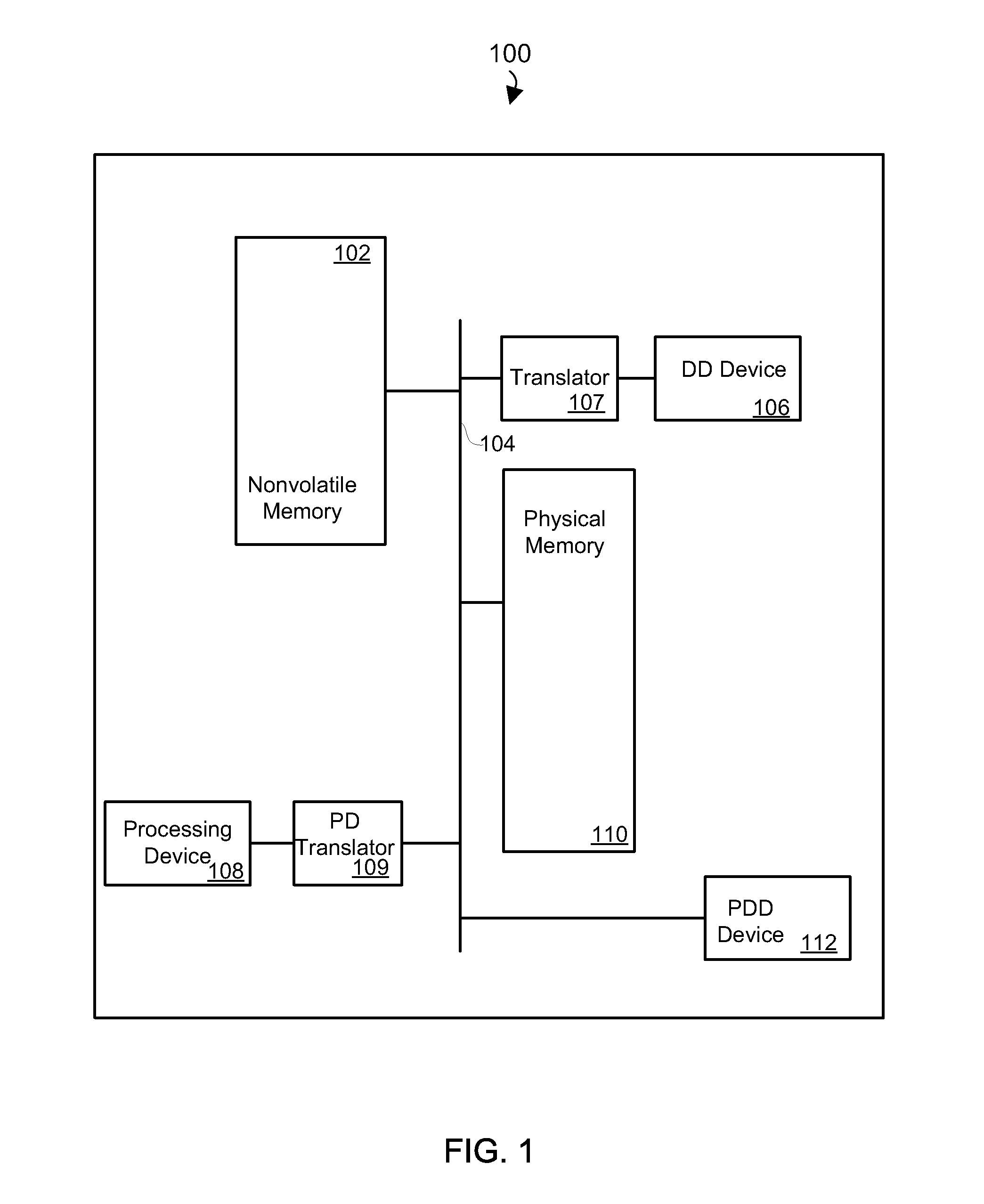

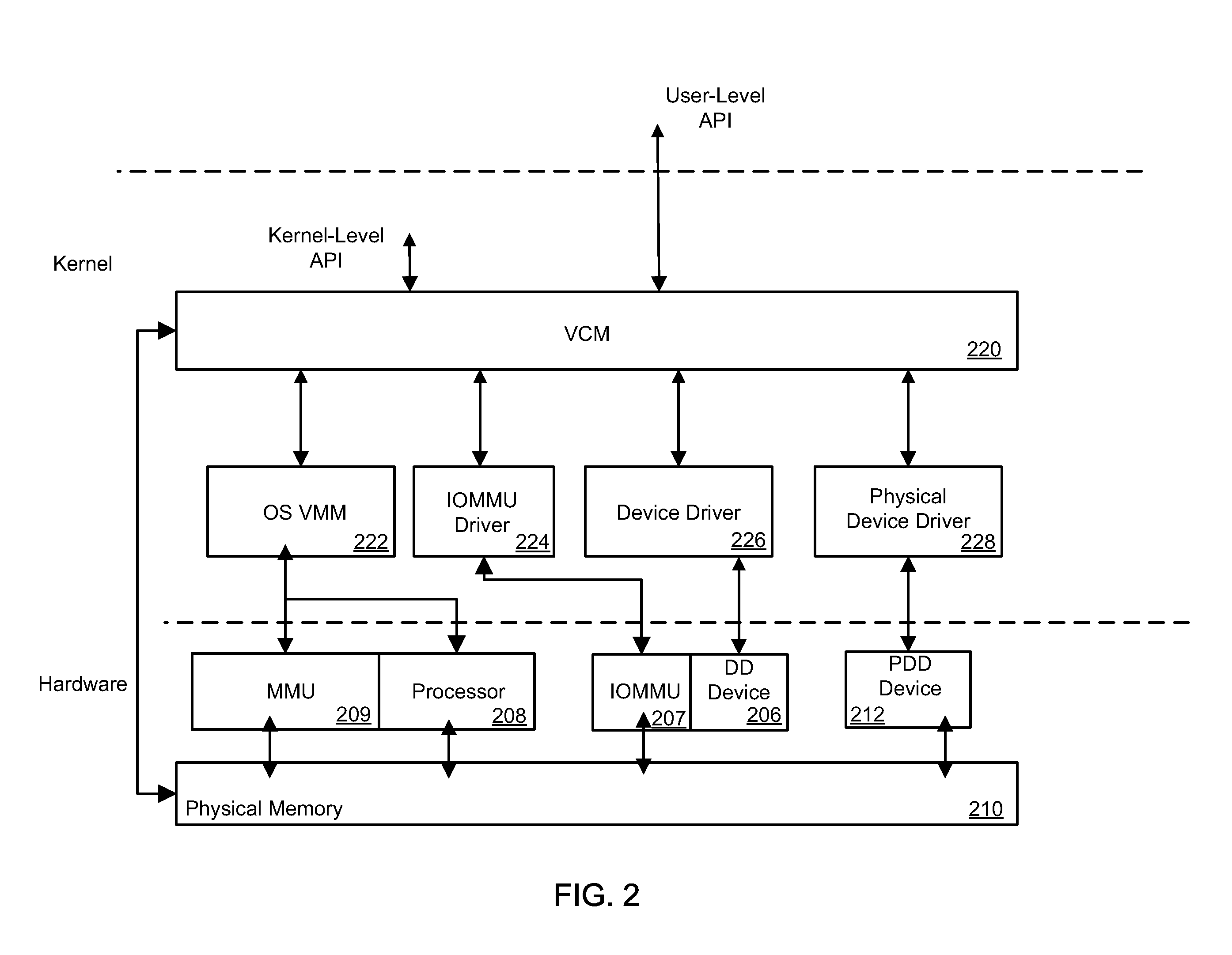

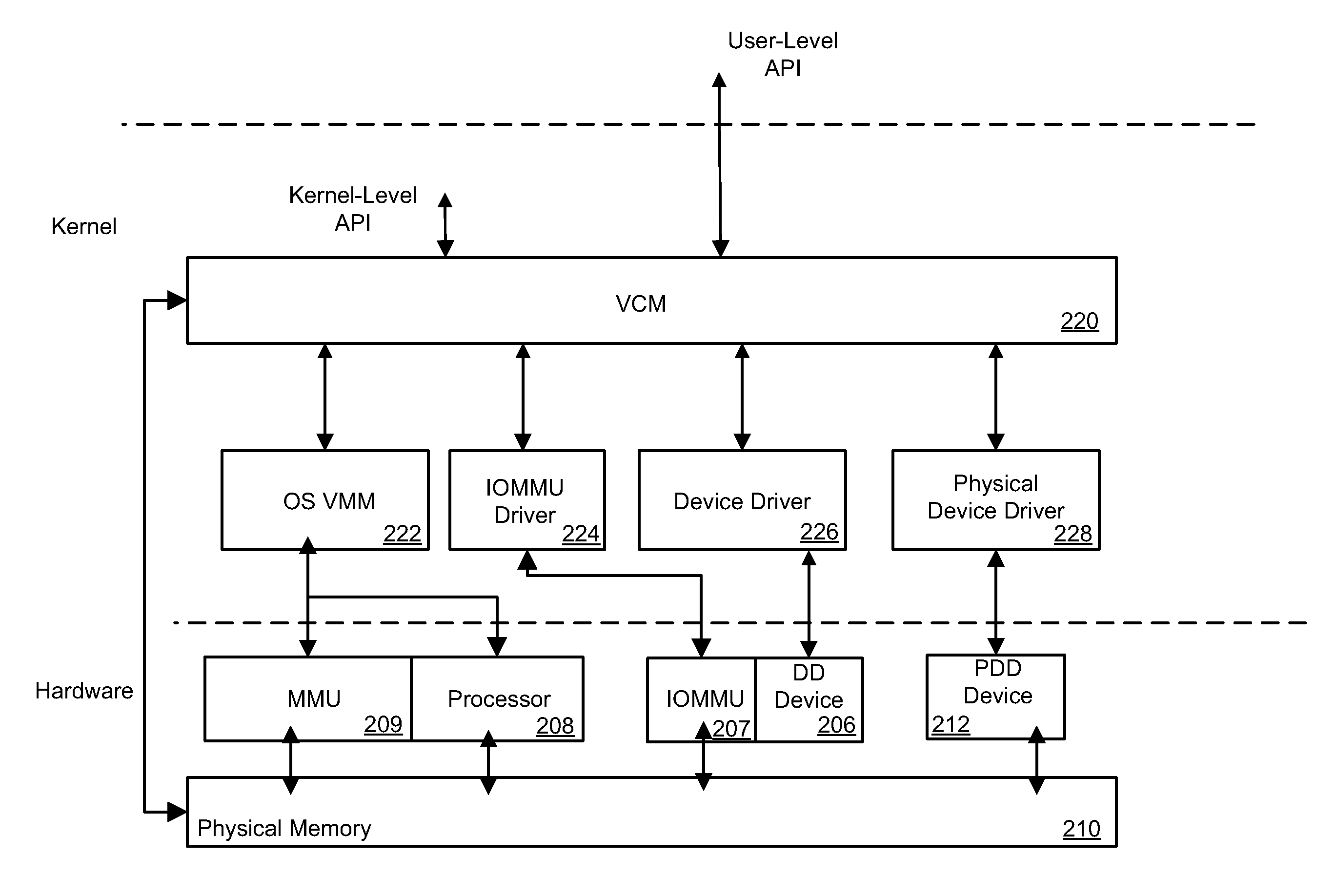

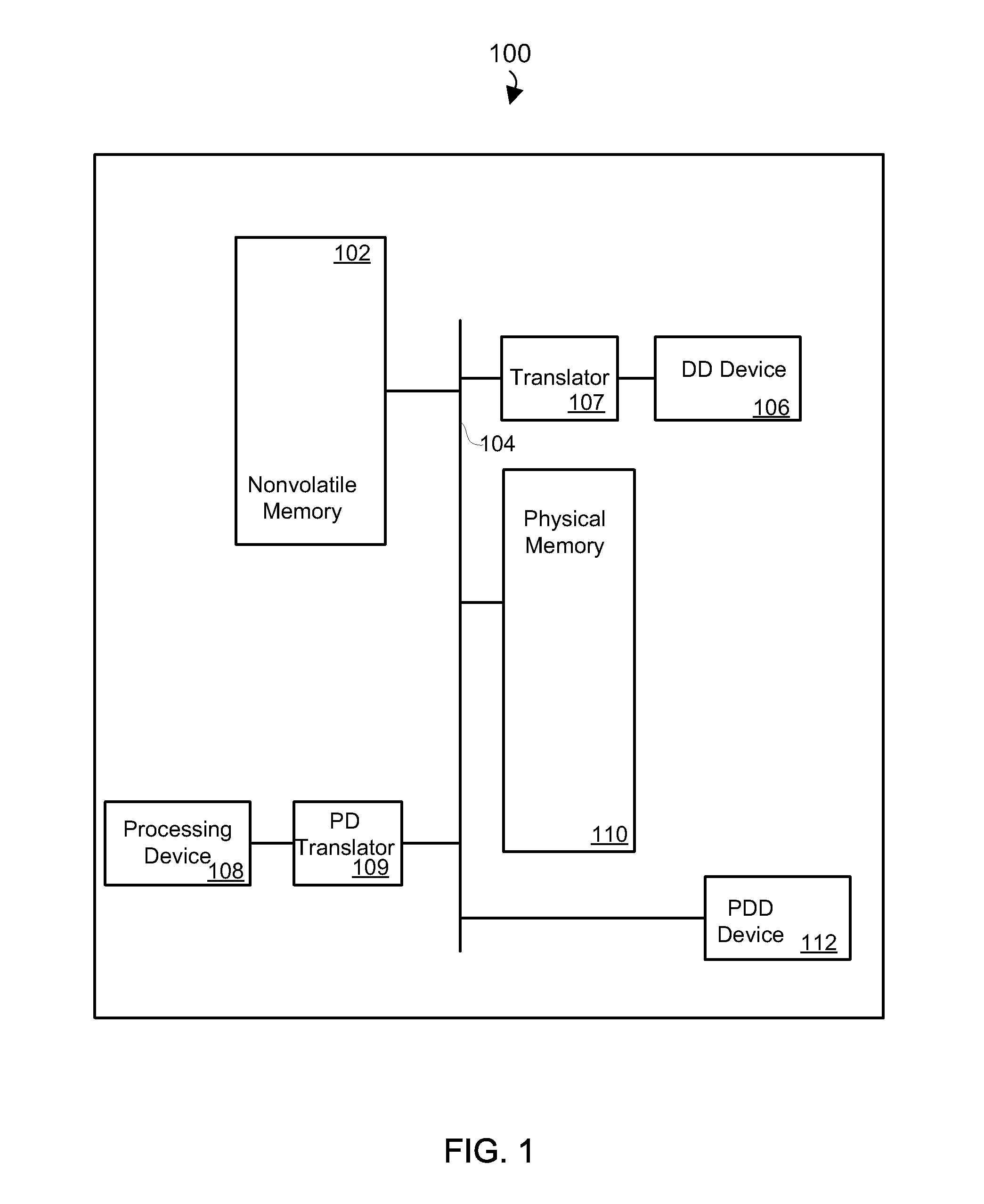

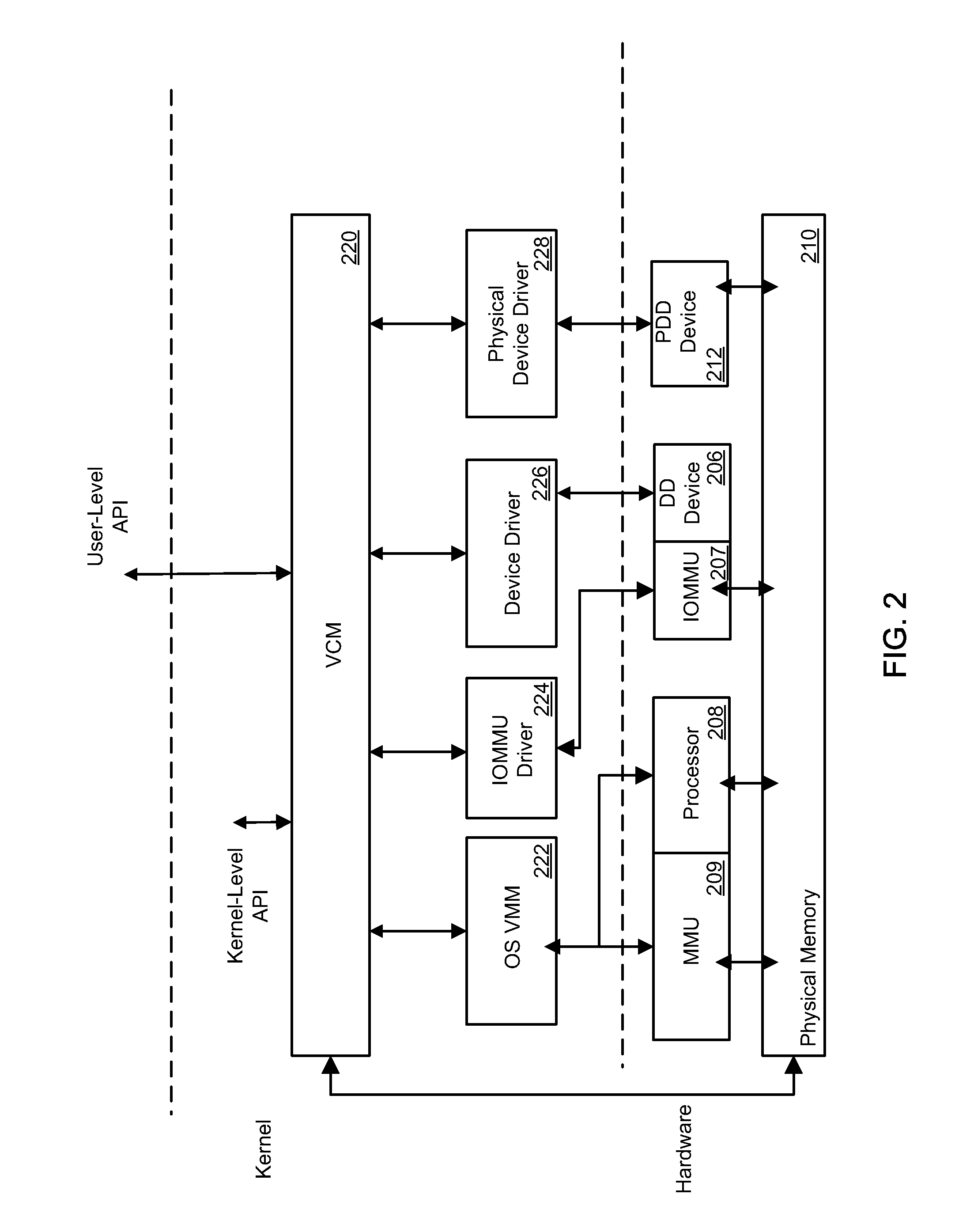

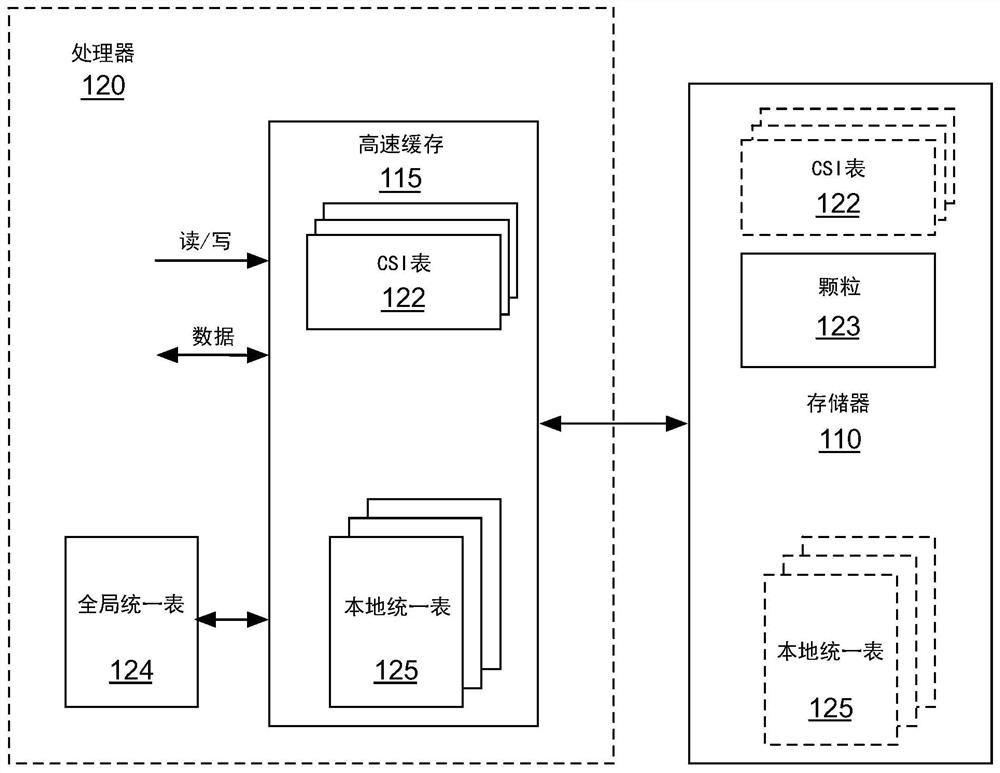

Unified Virtual Contiguous Memory Manager

Inactive | US20110225387A1 | Memory addressing/allocation/relocation | Computer security arrangements | Management unit | Data mining

Memory management methods and computing apparatus with memory management capabilities are disclosed. One exemplary method includes mapping an address from an address space of a physically-mapped device to a first address of a common address space so as to create a first common mapping instance, and encapsulating an existing processor mapping that maps an address from an address space of a processor to a second address of the common address space to create a second common mapping instance. In addition, a third common mapping instance between an address from an address space of a memory-management-unit (MMU) device and a third address of the common address space is created, wherein the first, second, and third addresses of the common address space may be the same address or different addresses, and the first, second, and third common mapping instances may be manipulated using the same function calls.

Owner:QUALCOMM INNOVATION CENT

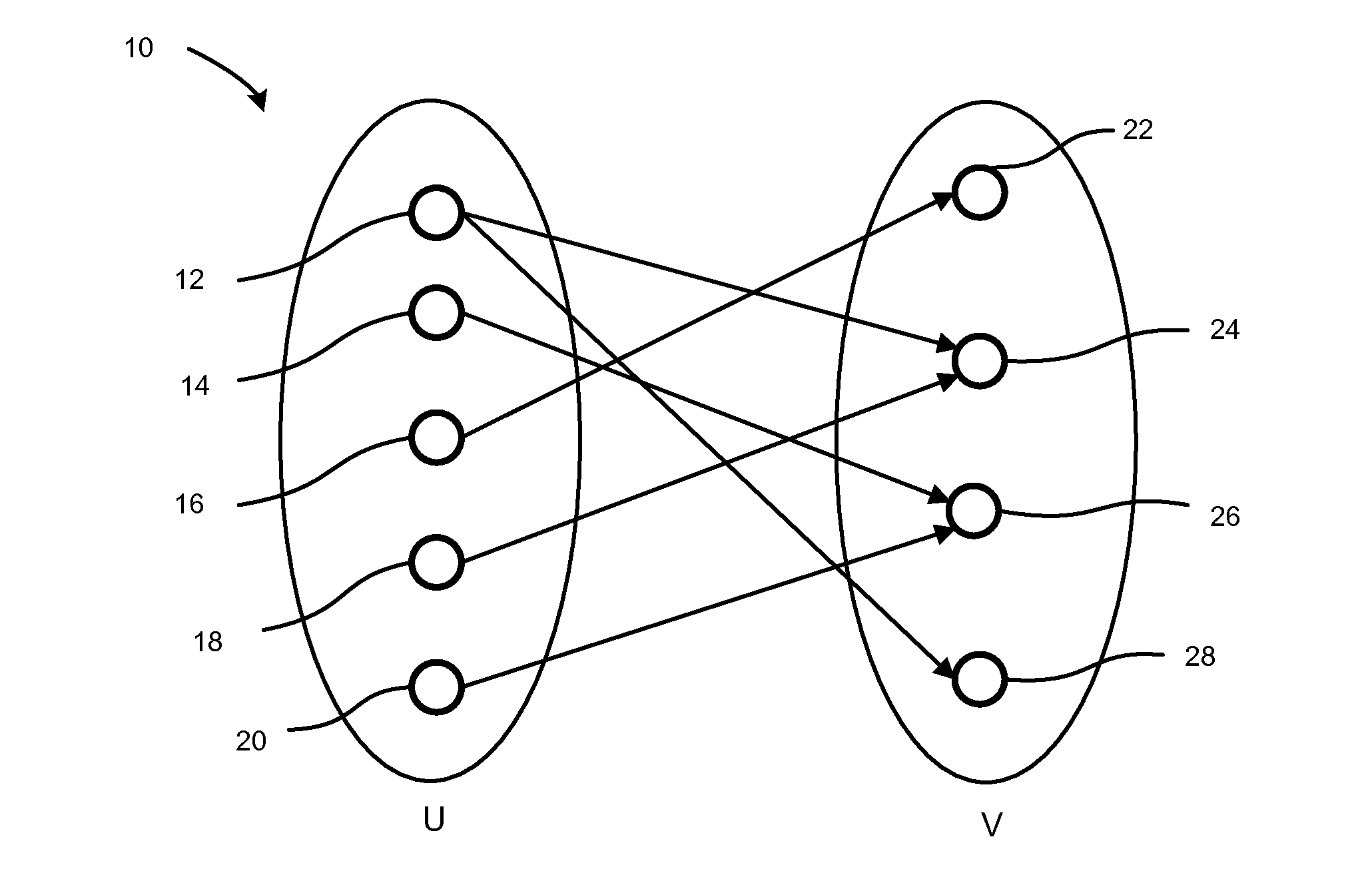

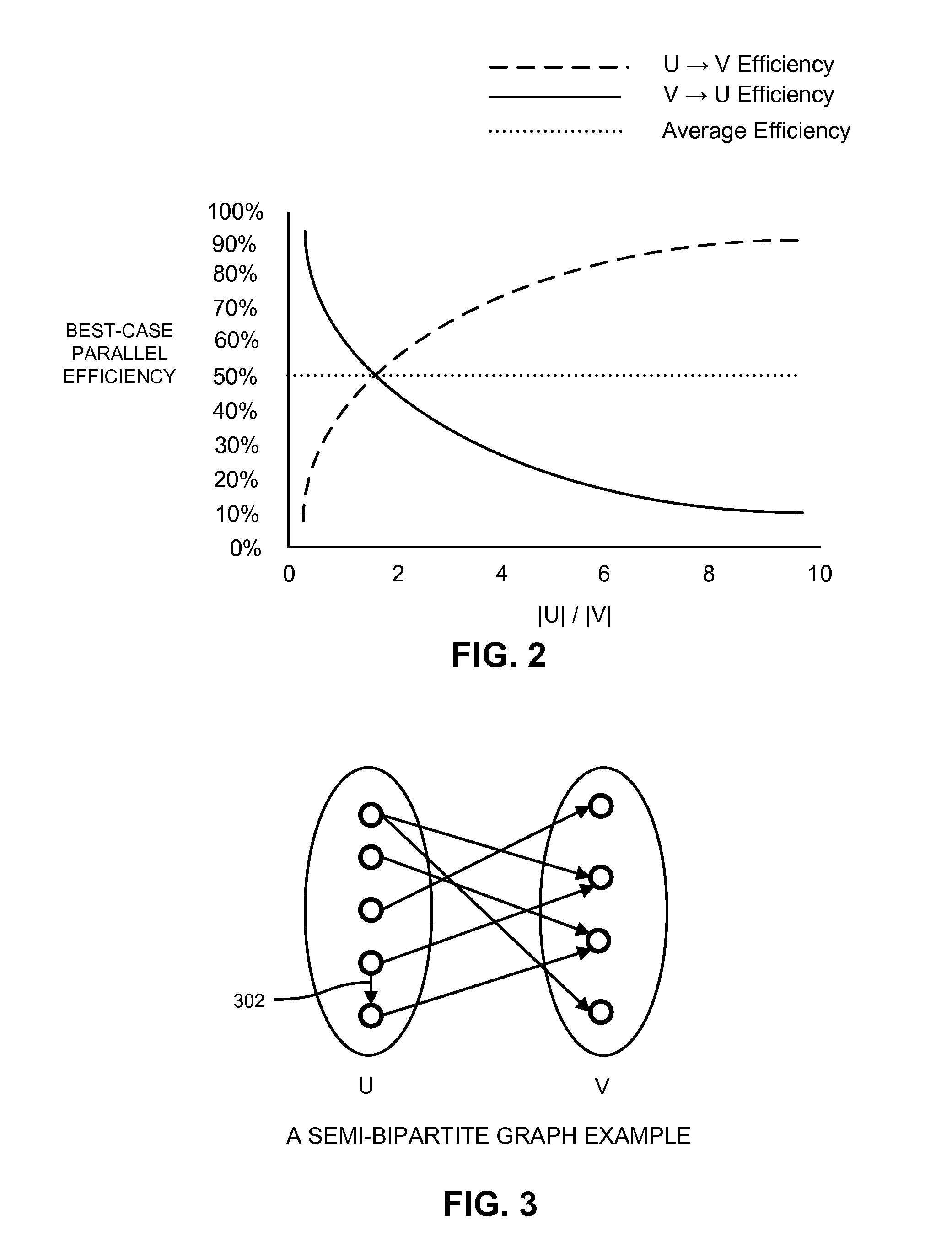

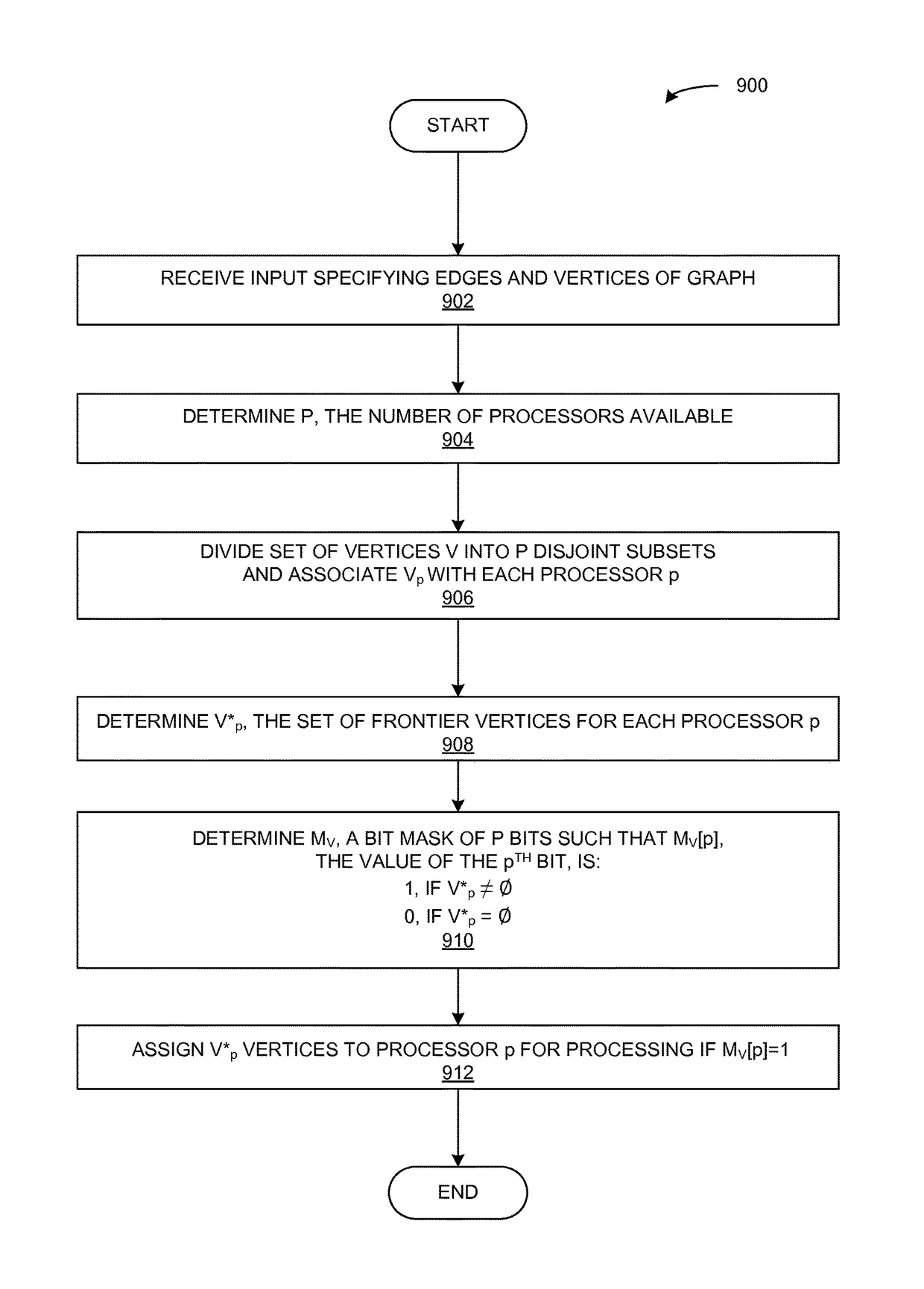

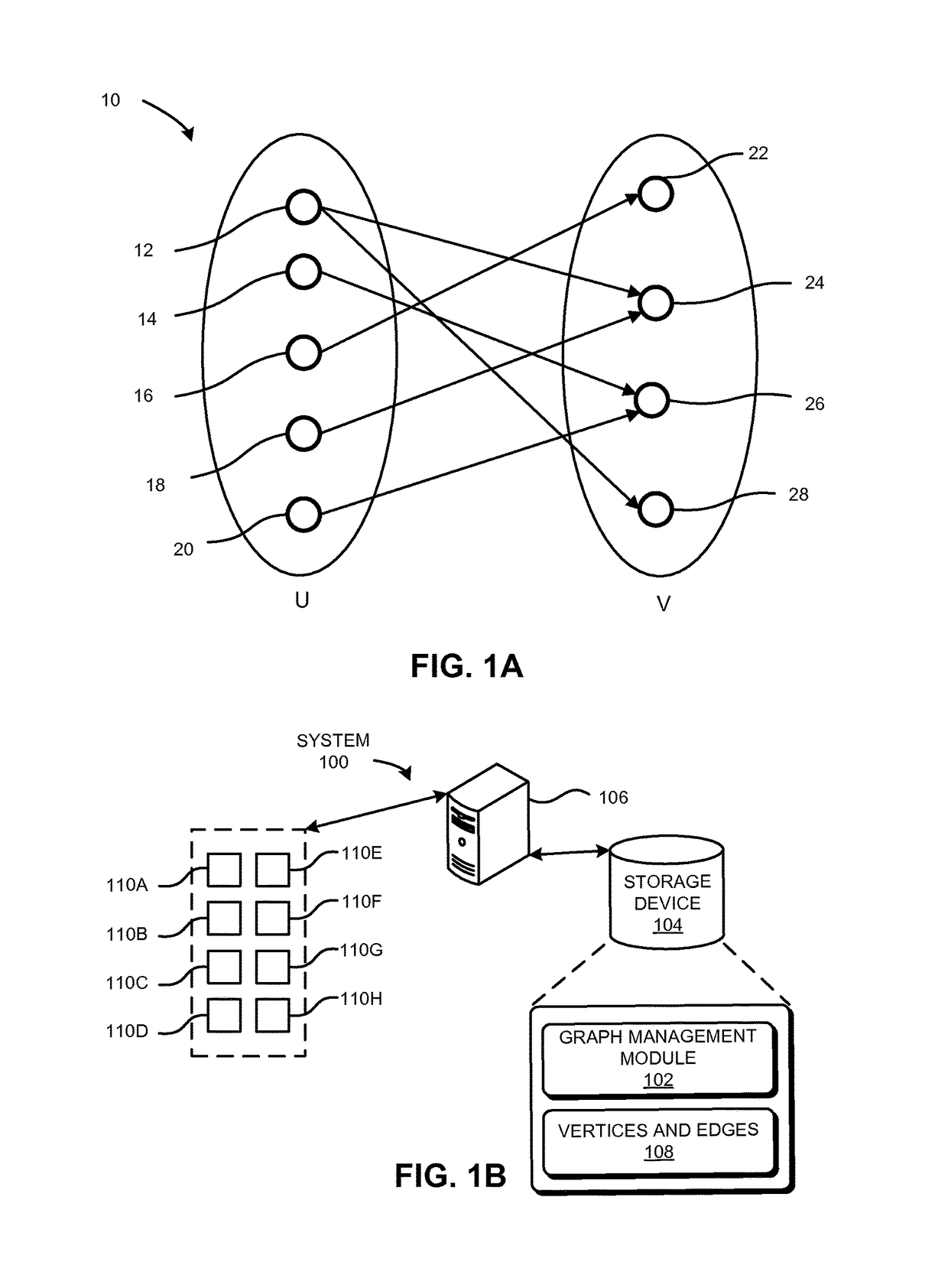

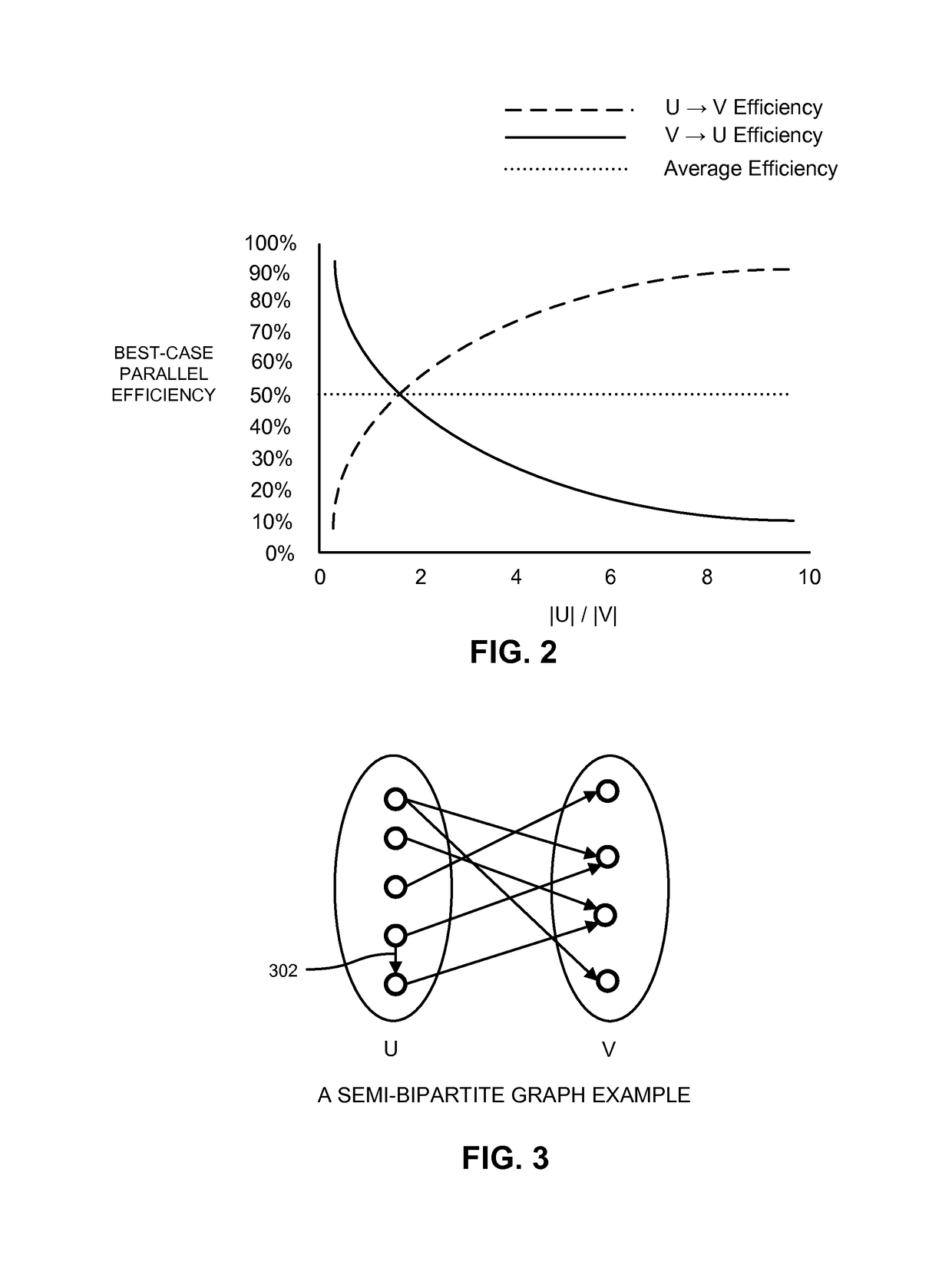

System and method for improved parallel search on bipartite graphs using dynamic vertex-to-processor mapping

One embodiment of the present invention provides a system for dynamically assigning vertices to processors to generate a recommendation for a customer. During operation, the system receives graph data with customer and product vertices and purchase edges. The system traverses the graph from a customer vertex to a set of product vertices. The system divides the set of product vertices among a set of processors. Subsequently, the system determines a set of product frontier vertices for each processor. The system traverses the graph from the set of product frontier vertices to a set of customer vertices. The system divides the set of customer vertices among a set of processors. Then, the system determines a set of customer frontier vertices for each processor. The system traverses the graph from the set of customer frontier vertices to a set of recommendable product vertices. The system generates one or more product recommendations for the customer.

Owner:XEROX CORP
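
The traversal in the abstract above (customer to products, products to other customers, those customers to recommendable products, with each frontier divided among processors) can be sketched in Python. This is a single-threaded illustration under stated assumptions: the round-robin partitioning, the function names, and the sample data are hypothetical, and a real system would run each partition on its own processor.

```python
def partition(vertices, n_procs):
    """Divide a frontier among n_procs 'processors' (round-robin, assumed rule)."""
    ordered = sorted(vertices)
    return [ordered[i::n_procs] for i in range(n_procs)]


def expand(parts, adjacency):
    """Each processor expands its own share of the frontier; results are merged."""
    reached = set()
    for frontier in parts:  # each iteration would run in parallel in practice
        for vertex in frontier:
            reached.update(adjacency.get(vertex, ()))
    return reached


def recommend(customer, purchases, n_procs=2):
    """purchases maps customer -> set of products (the purchase edges)."""
    bought_by = {}
    for cust, products in purchases.items():
        for product in products:
            bought_by.setdefault(product, set()).add(cust)

    own = set(purchases.get(customer, ()))
    # Customer -> products -> other customers who bought them.
    peers = expand(partition(own, n_procs), bought_by) - {customer}
    # Other customers -> products the customer does not already own.
    candidates = expand(partition(peers, n_procs), purchases) - own
    return sorted(candidates)
```

The frontier is re-partitioned at every hop, which is the "dynamic" part: the assignment of vertices to processors depends on the current frontier rather than on a fixed split of the whole graph.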

Interactive virtual display

Active | US20190220149A1 | Input/output for user-computer interaction | Static indicating devices | Map Location | Light beam

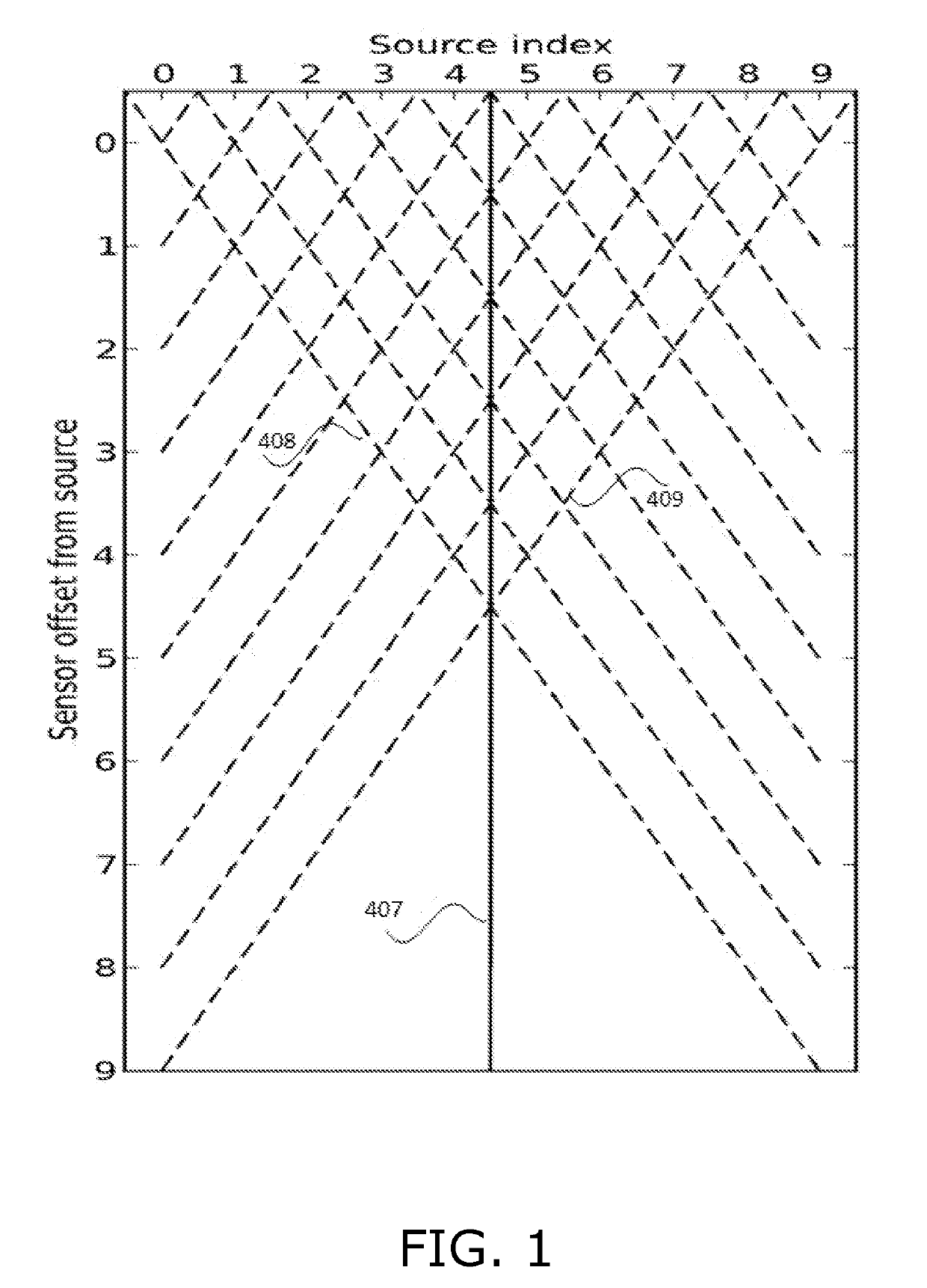

A sensor including optics configured in accordance with a display that presents a GUI, the optics projecting the GUI above the display such that the GUI is visible in-air, a reflectance sensor including light emitters projecting light beams towards the projected GUI, light detectors detecting reflections of the beams by objects interacting with the projected GUI, and a lens maximizing detection of light at each detector for light entering the lens at a respective location along the lens at a specific angle θ, whereby for each emitter-detector pair, maximum detection of light projected by the emitter of the pair, reflected by an object and detected by the detector of the pair, corresponds to the object being at a specific 2D location in the projected GUI, and a processor mapping detections of light for emitter-detector pairs to their corresponding 2D locations, and translating the mapped locations to display coordinates.

Owner:NEONODE

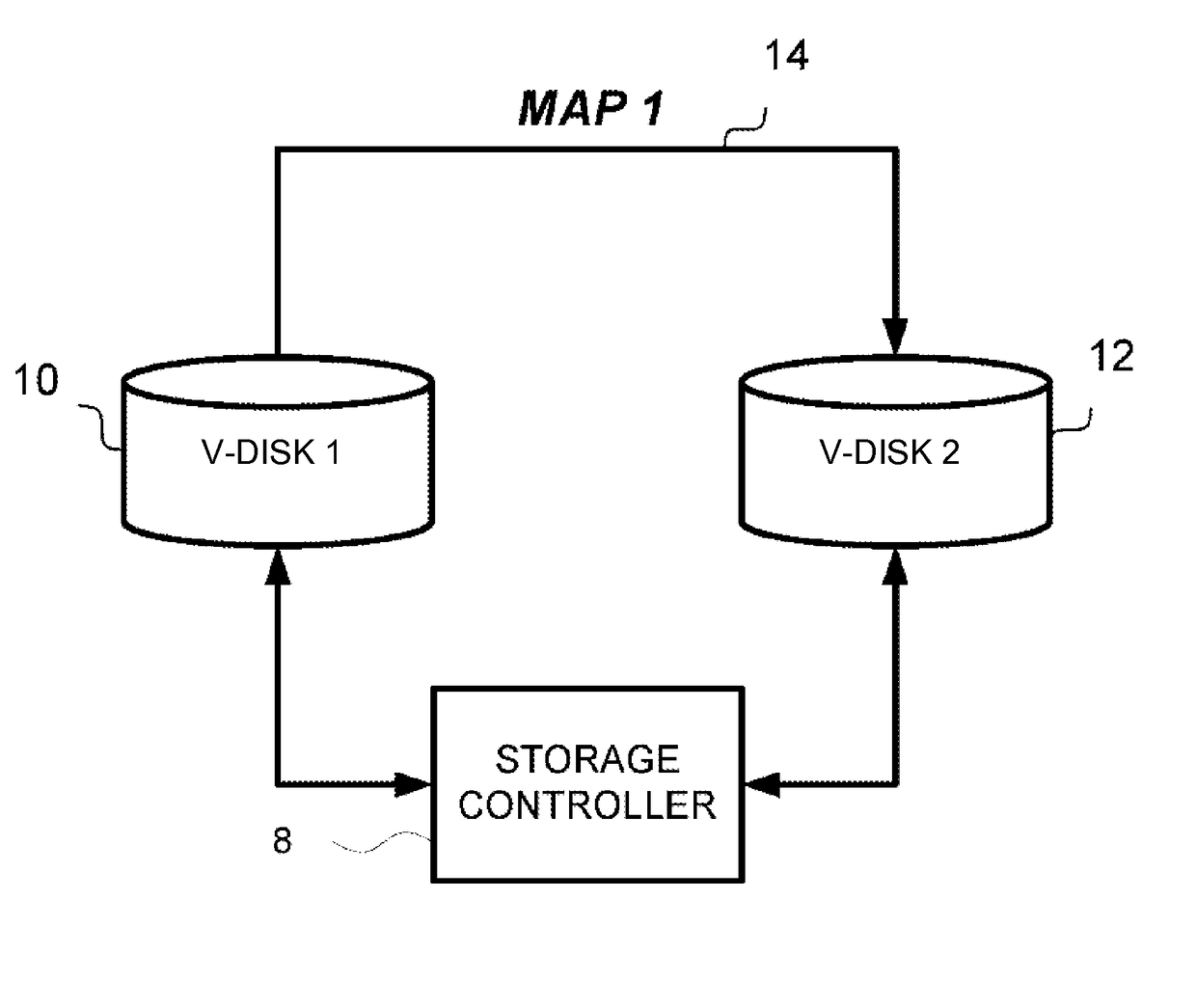

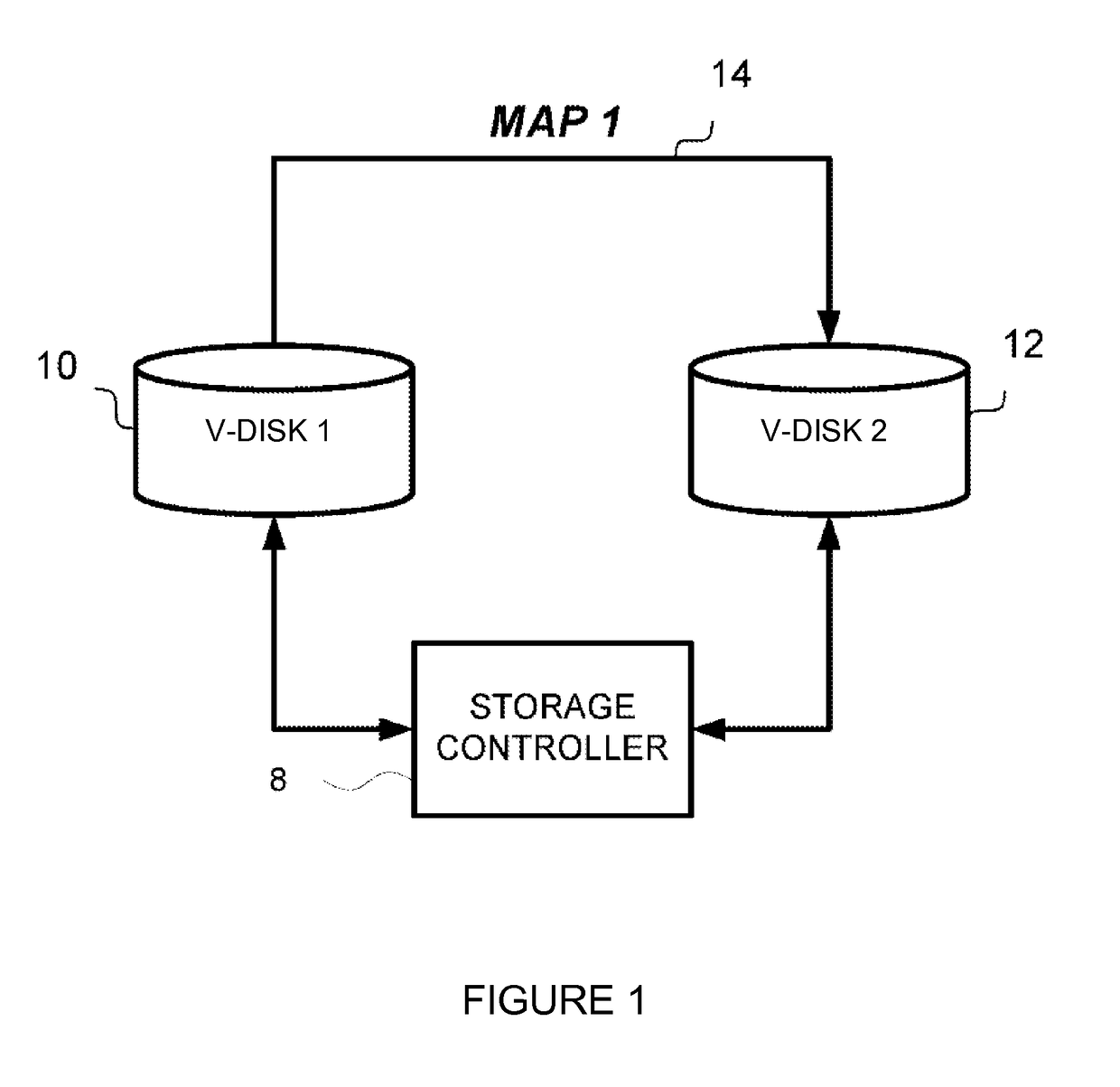

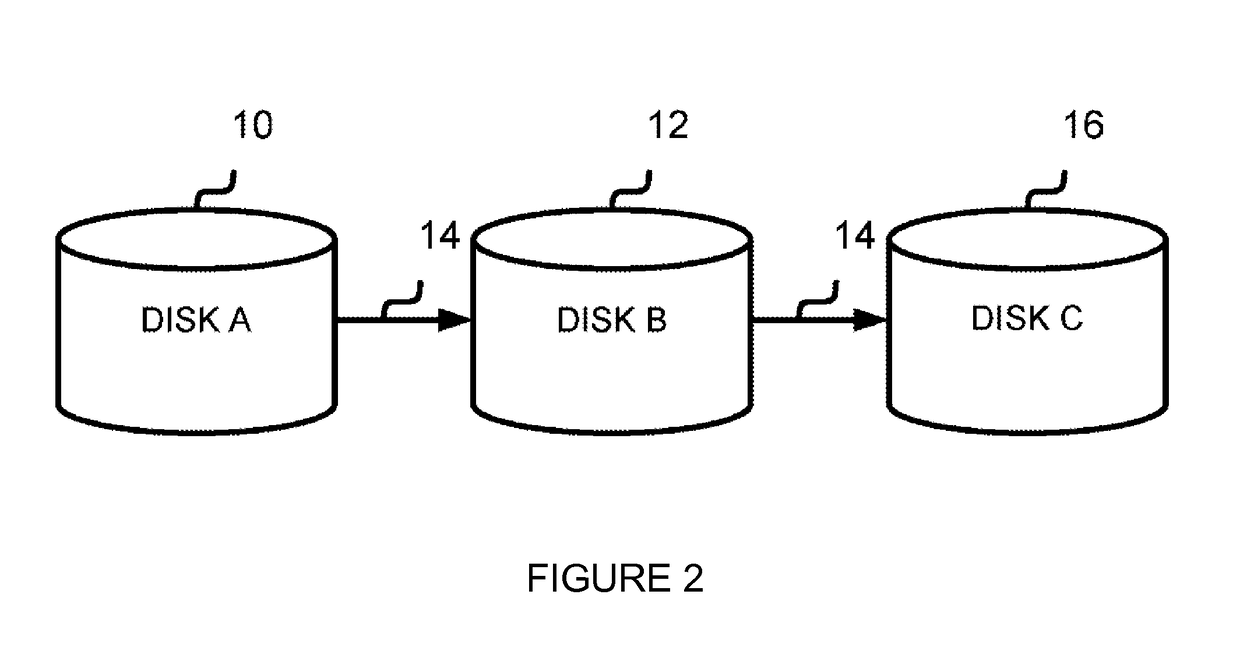

Point-in-time copy on write for golden image

Active | US9886349B2 | Input/output to record carriers | Redundant operation error correction | Computer hardware | Processor mapping

A system, method, and computer program product for managing storage volumes. A processor creates a first point-in-time copy cascade, where the first point-in-time copy cascade comprises a source volume, a first snapshot point-in-time copy volume, and a second snapshot point-in-time copy volume; the first volume is a snapshot copy of the source volume and the second volume is a snapshot copy of the first volume; and the source volume, the first volume, and the second volume include a host portion. A processor creates a third snapshot point-in-time copy volume from the first volume. A processor maps the third volume to create a second cascade, wherein the second cascade comprises the source volume, the first volume, and the third volume but not the second volume. A processor directs an I / O operation for the first copy volume to the third volume.

Owner:INT BUSINESS MASCH CORP

System and method for remotely operating a wireless device using a server and client architecture

The present disclosure relates to a system and method for remotely operating one or more peripheral devices of a wireless device using a server and client architecture. In one aspect, the system may comprise a wireless device that includes a processor, a memory, a peripheral device, and a server adapted to communicate with the peripheral device; and a removable media device that includes a memory, a processor, and a client adapted to communicate with the server of the wireless device. In another aspect, the method may comprise the steps of emulating a hardware interface on a removable media device; mapping a peripheral device of a wireless device to the interface; mapping a processor of the media device to the peripheral device; wrapping and sending hardware commands from a client of the media device to a server of the wireless device; and executing the commands on the peripheral device.

Owner:CASSIS INT PTE

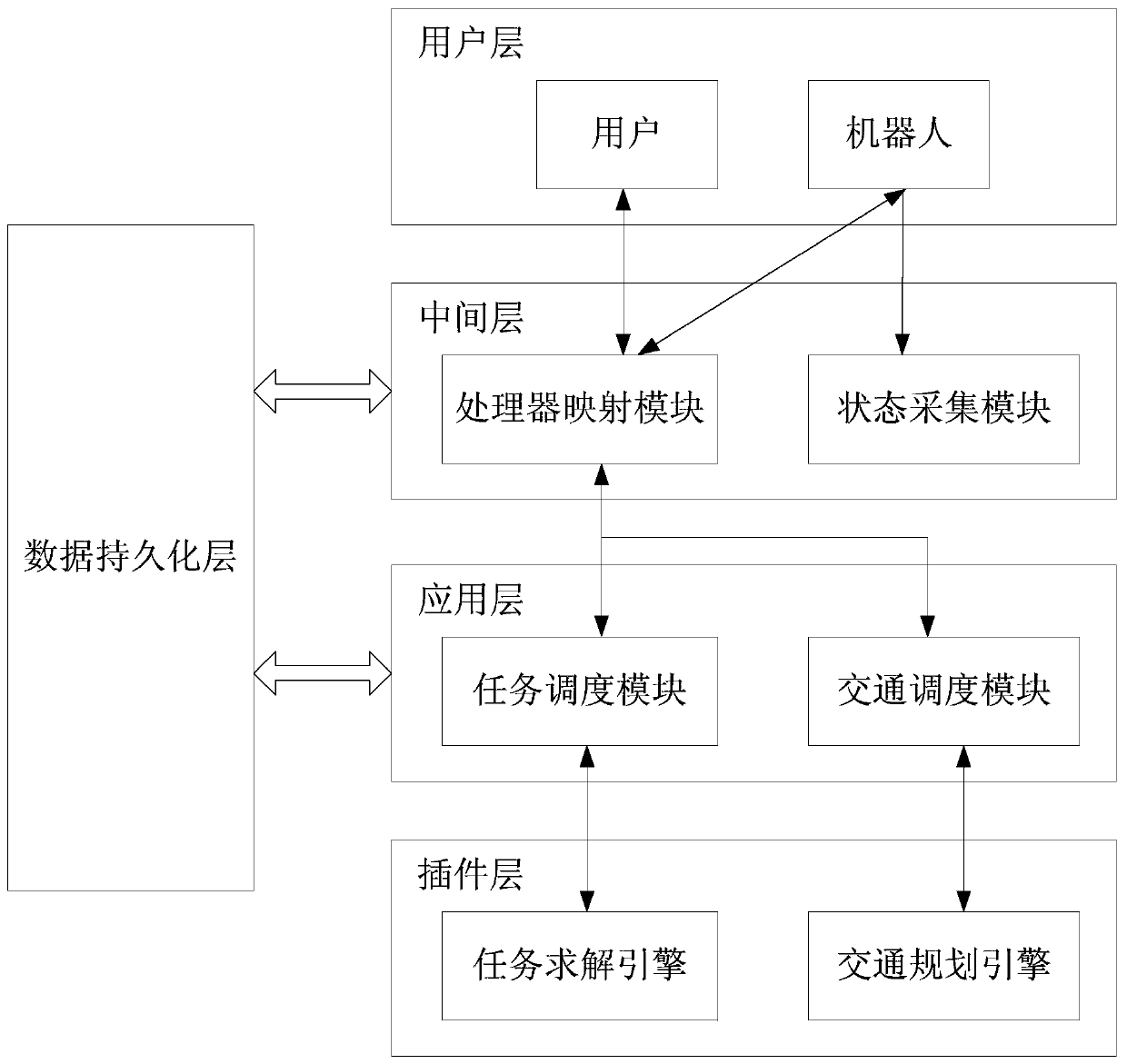

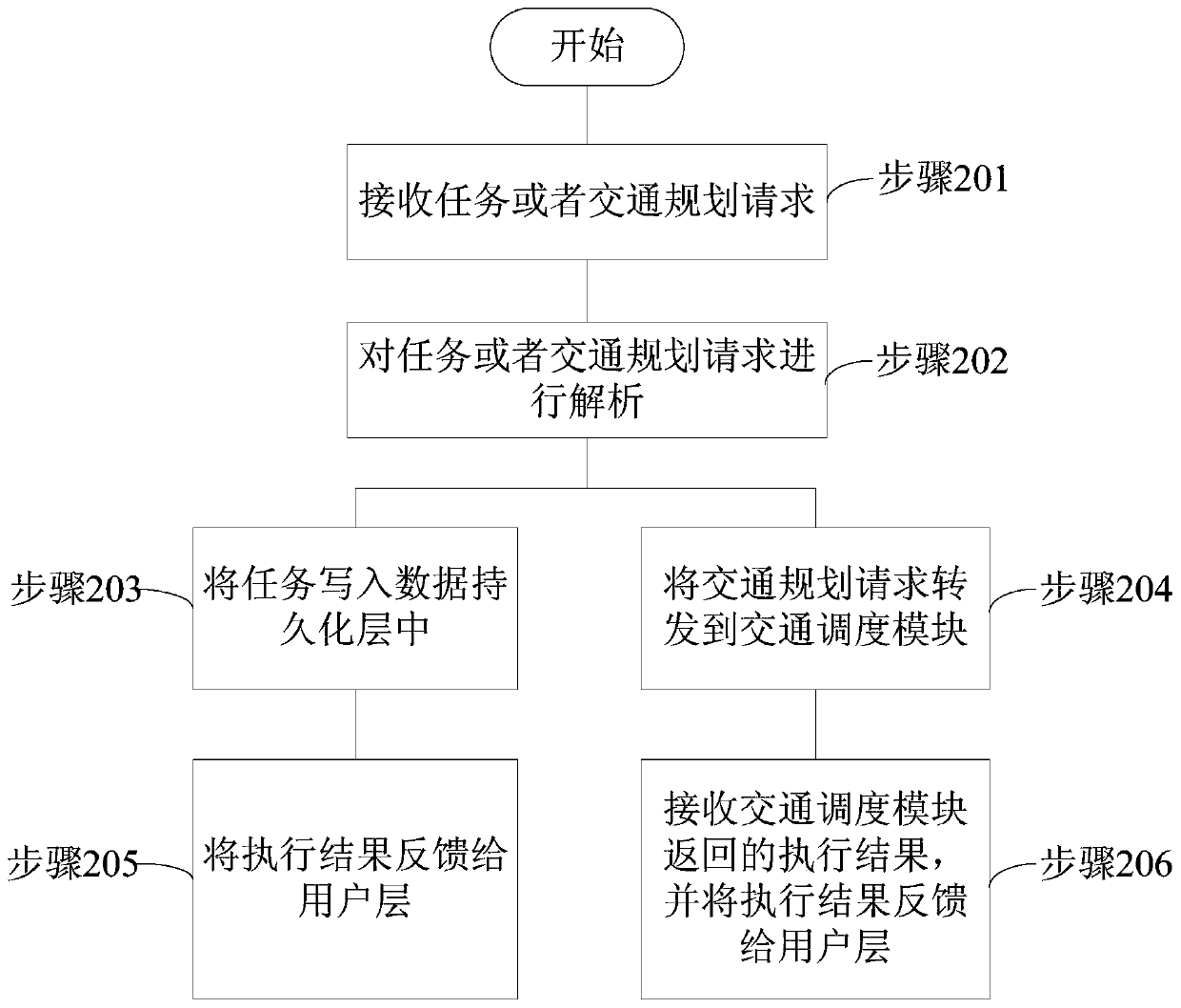

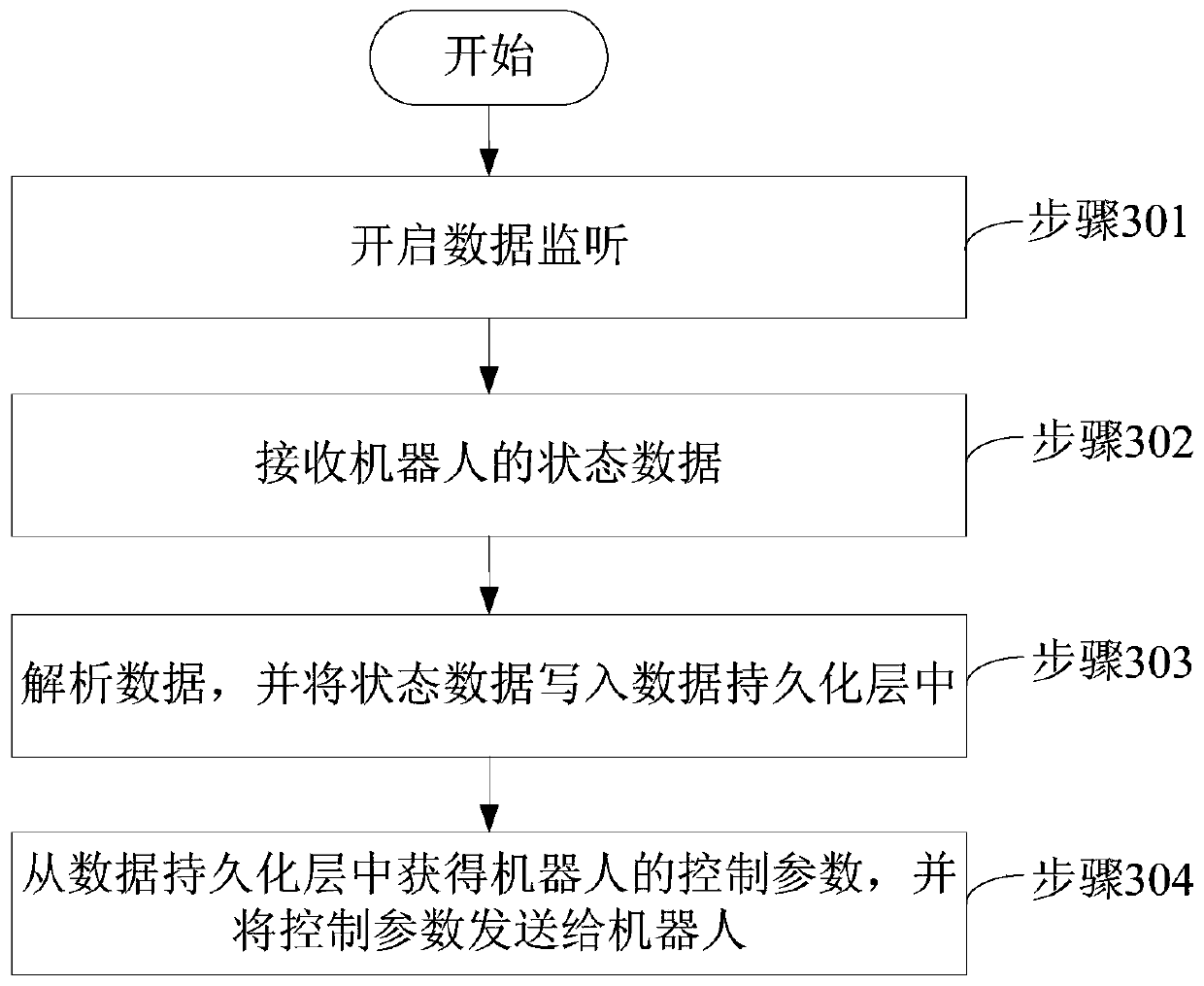

Robot cluster scheduling system

Active | CN109800937A | Intelligent planning of traffic routes | Avoid traffic jams | Programme-controlled manipulator | Relational databases | Simulation | Traffic congestion

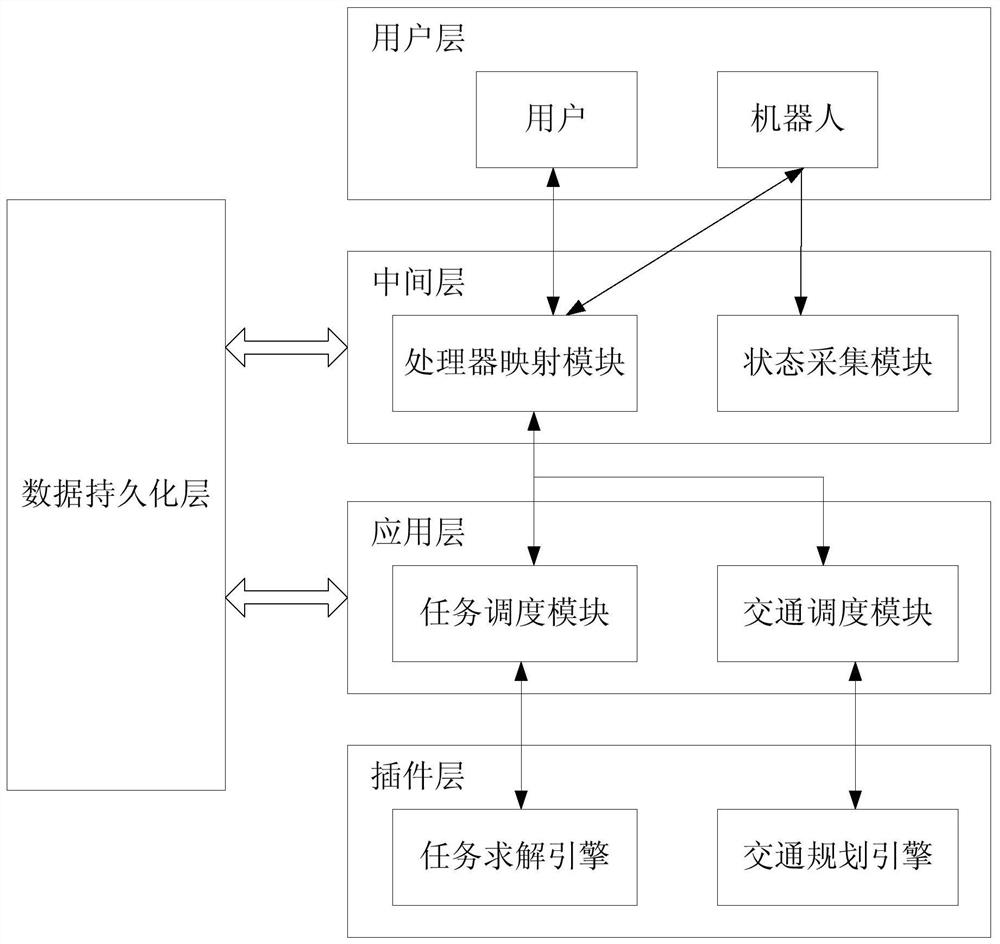

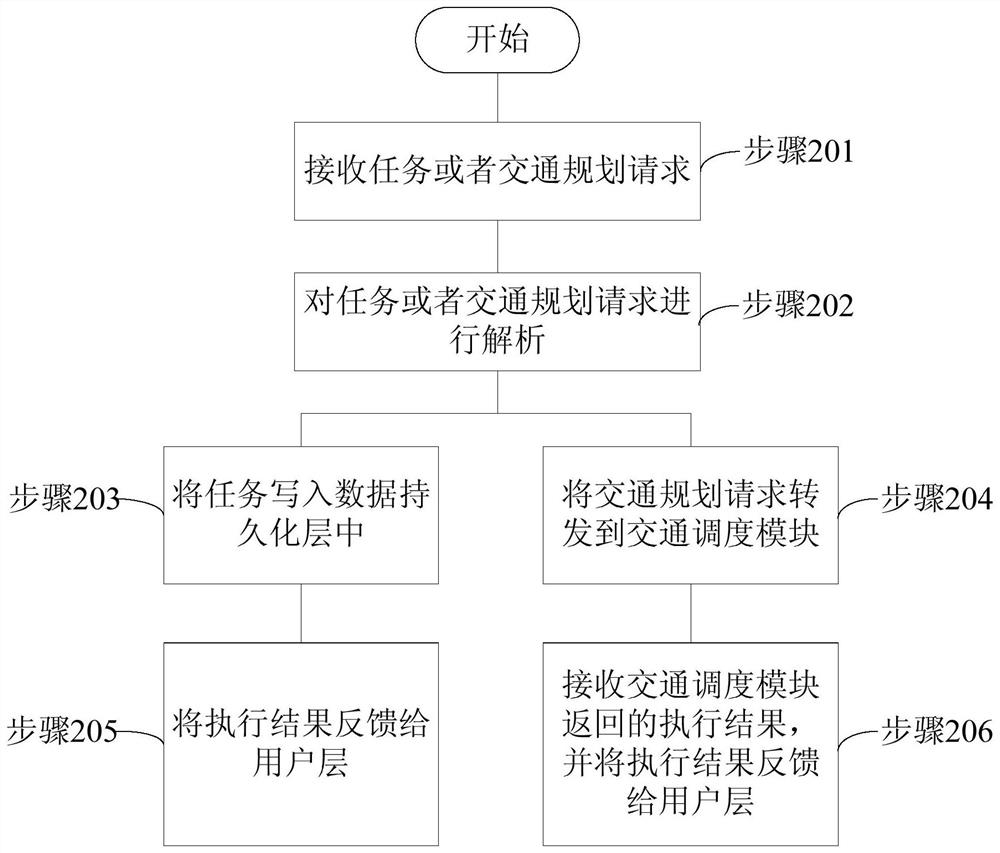

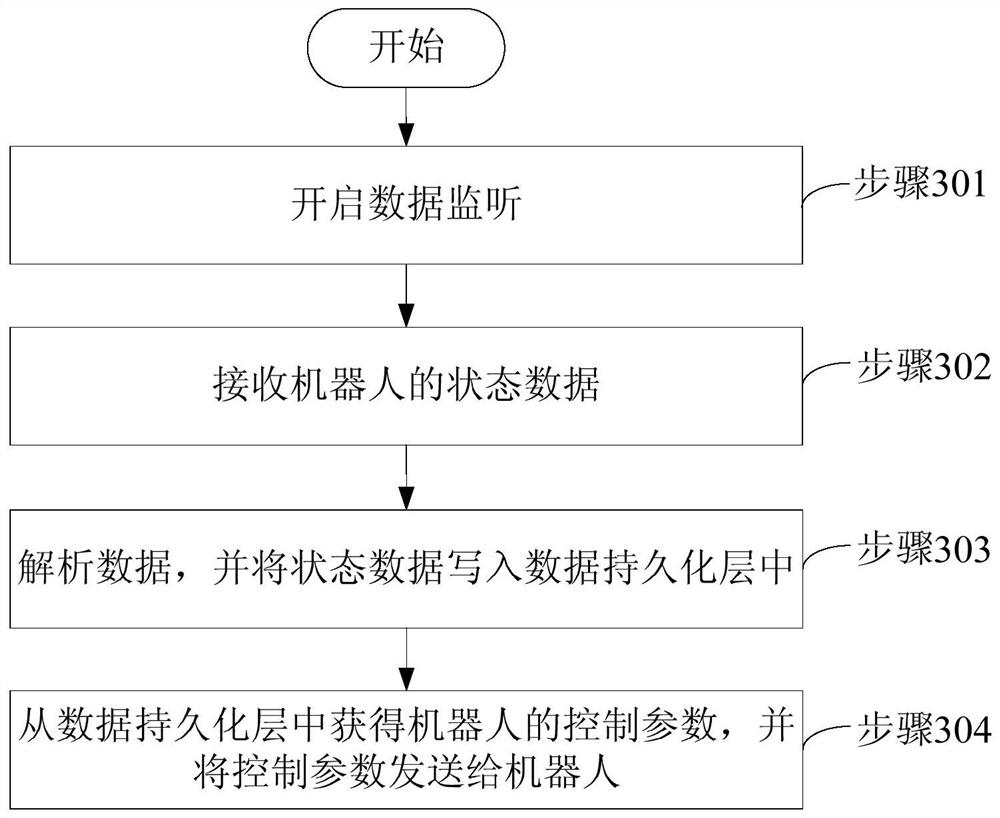

The invention discloses a robot cluster scheduling system including a user layer, a middle layer, an application layer, a plug-in layer and a data persistence layer. The middle layer comprises a processor mapping module and a state acquisition module. The application layer comprises a task scheduling module and a traffic scheduling module. The plug-in layer comprises a task solving engine and a traffic planning engine. The task solving engine is used for determining a target robot according to the parameters and the state data of the task. The traffic planning engine is used for determining a target route. The task solving engine and the traffic planning engine both provide APIs. First, a robot cluster scheduling system that supports secondary development is realized, with a simple structure and low system coupling. Second, the target robot is determined according to the task, so that robot tasks are optimally distributed and the execution efficiency of the scheduled tasks is improved. Third, the traffic route of the robot can be intelligently planned, which prevents robot traffic jams.

Owner:BOZHON PRECISION IND TECH CO LTD +1

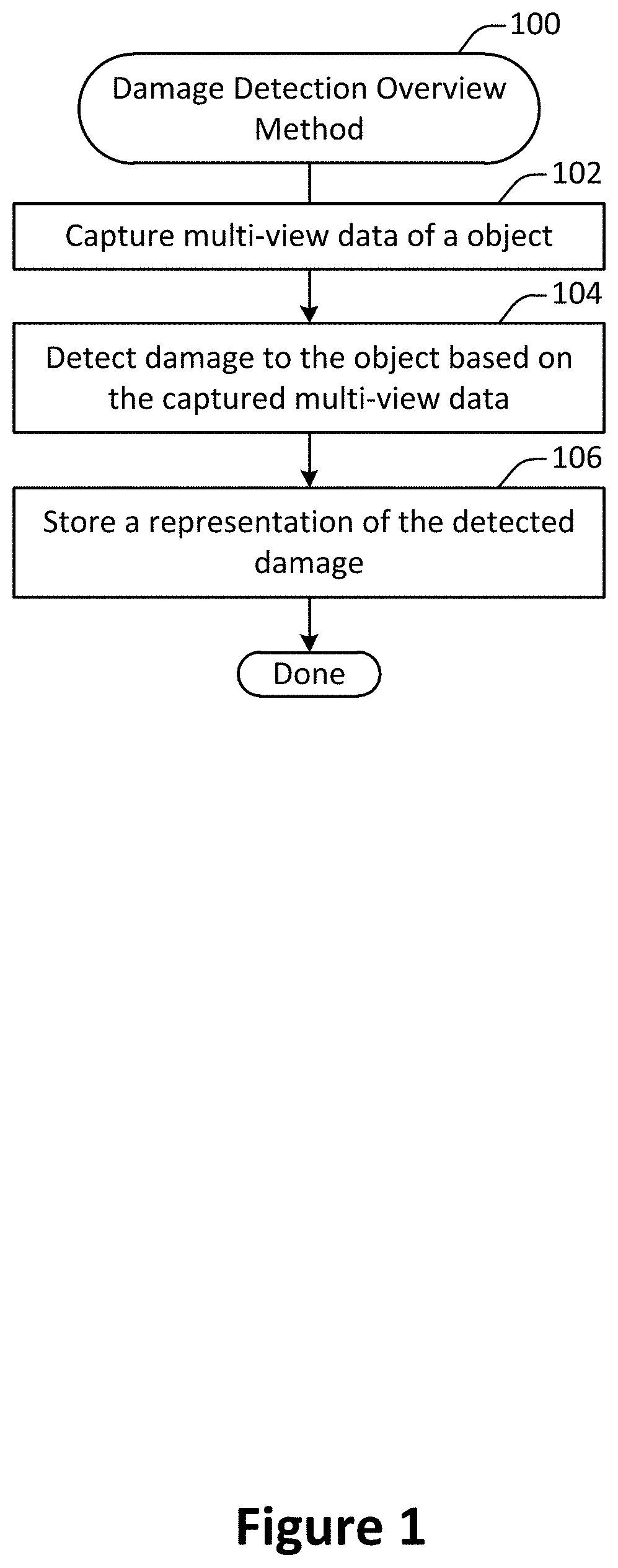

Damage detection from multi-view visual data

Reference images of an object may be mapped to an object model to create a reference object model representation. Evaluation images of the object may also be mapped to the object model via the processor to create an evaluation object model representation. Object condition information may be determined by comparing the reference object model representation with the evaluation object model representation. The object condition information may indicate one or more differences between the reference object model representation and the evaluation object model representation. A graphical representation of the object model that includes the object condition information may be displayed on a display screen.

Owner:FUSION

System and method for designing system on chip (SoC) circuits using single instruction multiple agent (SIMA) instructions

Active | US10372859B2 | Computer aided design | Software simulation/interpretation/emulation | Computer architecture | Chip architecture

Owner:ALPHAICS CORP

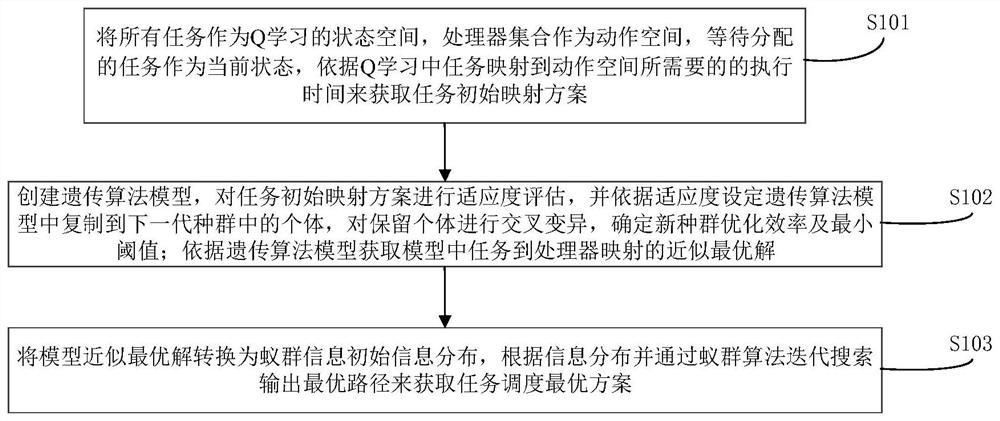

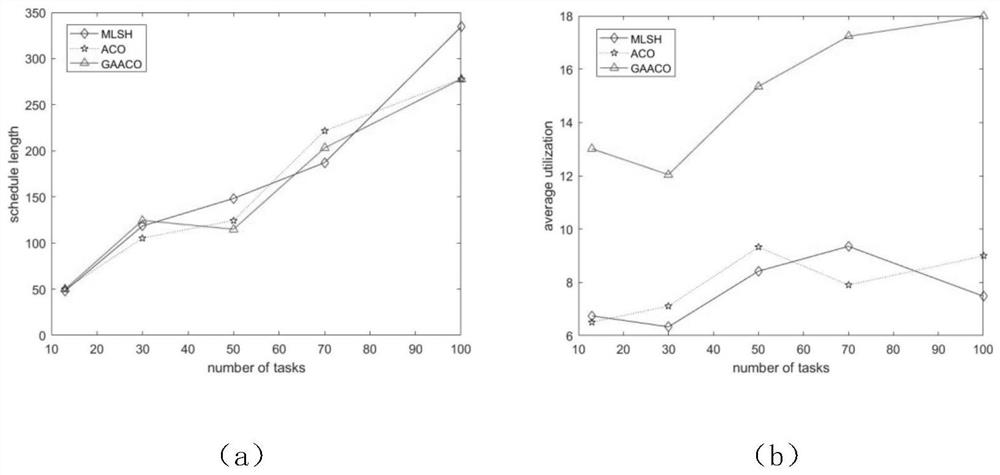

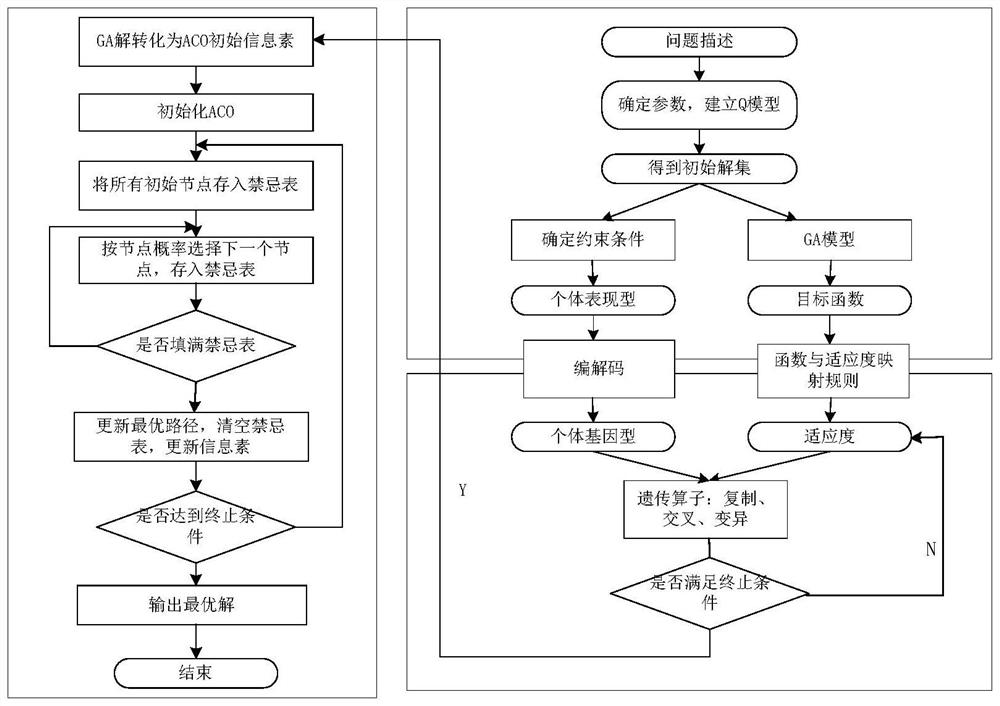

Heterogeneous platform task scheduling method and system based on Q learning

Active | CN112256422A | Improve local search capabilities | Diversity guaranteed | Program initiation/switching | Resource allocation | Multi processor | Theoretical computer science

The invention belongs to the technical field of heterogeneous multi-processor computing, and particularly relates to a heterogeneous platform task scheduling method and system based on Q learning, which take all tasks as the Q learning state space, take the processor set as the action space, and take the task waiting to be allocated as the current state. The method comprises the following steps: obtaining an initial task mapping scheme according to the execution time required for mapping a task to the action space in Q learning; creating a genetic algorithm model, carrying out fitness evaluation on the initial task mapping scheme, selecting the individuals copied to the next generation of the population according to fitness, carrying out crossover and mutation on the retained individuals, and determining the optimization efficiency of the new population and a minimum threshold value; obtaining an approximately optimal task-to-processor mapping from the genetic algorithm model; and converting this approximately optimal solution into the initial information distribution of an ant colony algorithm, then iteratively searching for and outputting an optimal path through the ant colony algorithm according to that distribution to obtain an optimal task scheduling scheme, so as to improve the performance of the heterogeneous platform.

Owner:PLA STRATEGIC SUPPORT FORCE INFORMATION ENG UNIV PLA SSF IEU

Unified virtual contiguous memory manager

Inactive | US8458434B2 | Memory addressing/allocation/relocation | Computer security arrangements | Management unit | Memory management unit

Memory management methods and computing apparatus with memory management capabilities are disclosed. One exemplary method includes mapping an address from an address space of a physically-mapped device to a first address of a common address space so as to create a first common mapping instance, and encapsulating an existing processor mapping that maps an address from an address space of a processor to a second address of the common address space to create a second common mapping instance. In addition, a third common mapping instance between an address from an address space of a memory-management-unit (MMU) device and a third address of the common address space is created, wherein the first, second, and third addresses of the common address space may be the same address or different addresses, and the first, second, and third common mapping instances may be manipulated using the same function calls.

Owner:QUALCOMM INNOVATION CENT

System and method for improved parallel search on bipartite graphs using dynamic vertex-to-processor mapping

One embodiment of the present invention provides a system for dynamically assigning vertices to processors to generate a recommendation for a customer. During operation, the system receives graph data with customer and product vertices and purchase edges. The system traverses the graph from a customer vertex to a set of product vertices. The system divides the set of product vertices among a set of processors. Subsequently, the system determines a set of product frontier vertices for each processor. The system traverses the graph from the set of product frontier vertices to a set of customer vertices. The system divides the set of customer vertices among a set of processors. Then, the system determines a set of customer frontier vertices for each processor. The system traverses the graph from the set of customer frontier vertices to a set of recommendable product vertices. The system generates one or more product recommendations for the customer.

Owner:XEROX CORP

Double-publicized production data front-end display system and method based on AngularJS and Bootstrap

Inactive | CN106446023A | Implement two-way binding | Achieve mutual influence | Special data processing applications | Coupling | Computer module

The present invention discloses a double-publicized production data front-end display system based on AngularJS and Bootstrap. The system comprises a server and a client. The server adopts the lightweight, request-driven Java Spring MVC Web framework, and the front-end controller is DispatcherServlet. An application controller comprises a processor mapper and a view parser, wherein the processor mapper performs processor management and the view parser performs view management. A page controller / action / processor is implemented by a Controller. The client constructs pages by using Bootstrap and accesses data by using AngularJS. Because AngularJS is used to access data, the learning curve for front-end developers of the display system is reduced, which makes program development and maintenance simpler; two-way binding of the data is also realized, so that data in the View layer and the model layer stay synchronized and affect each other, and the degree of coupling between the functional modules of the system is decreased.

Owner:江苏数加数据科技有限责任公司

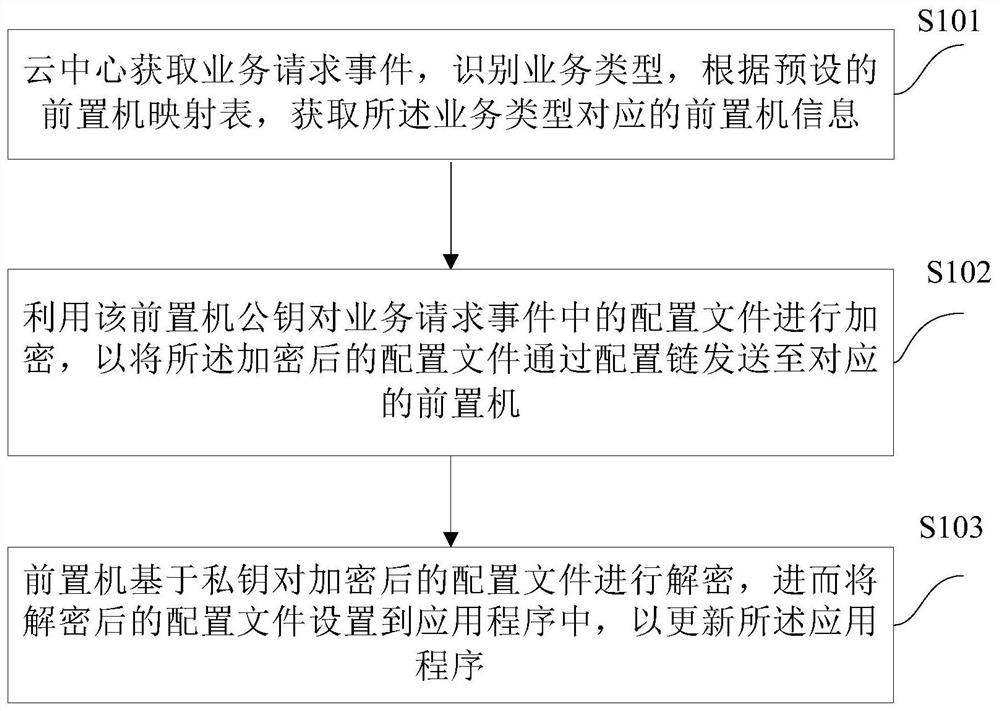

Application program updating method and system

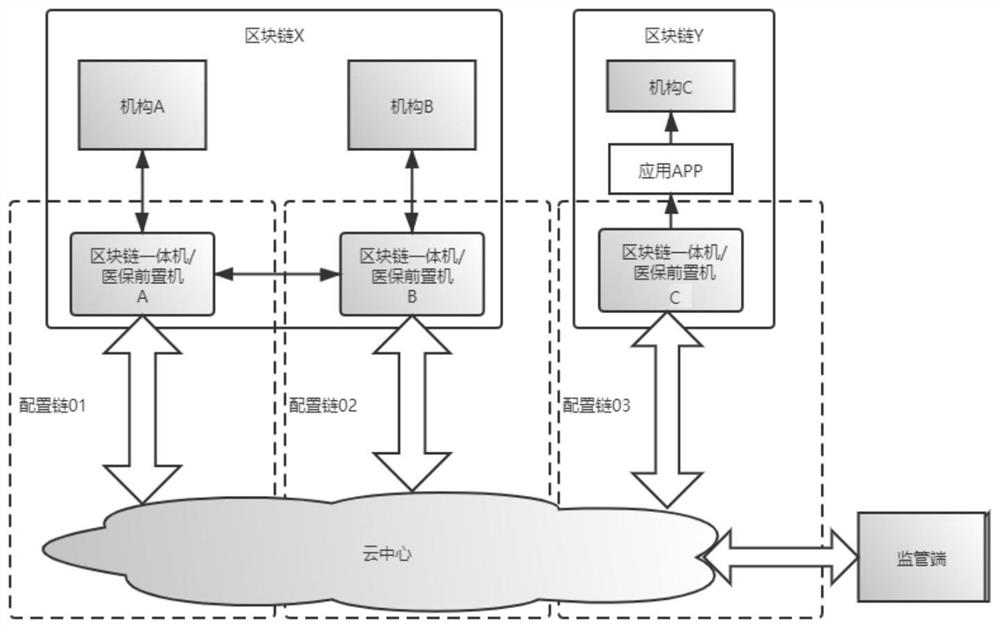

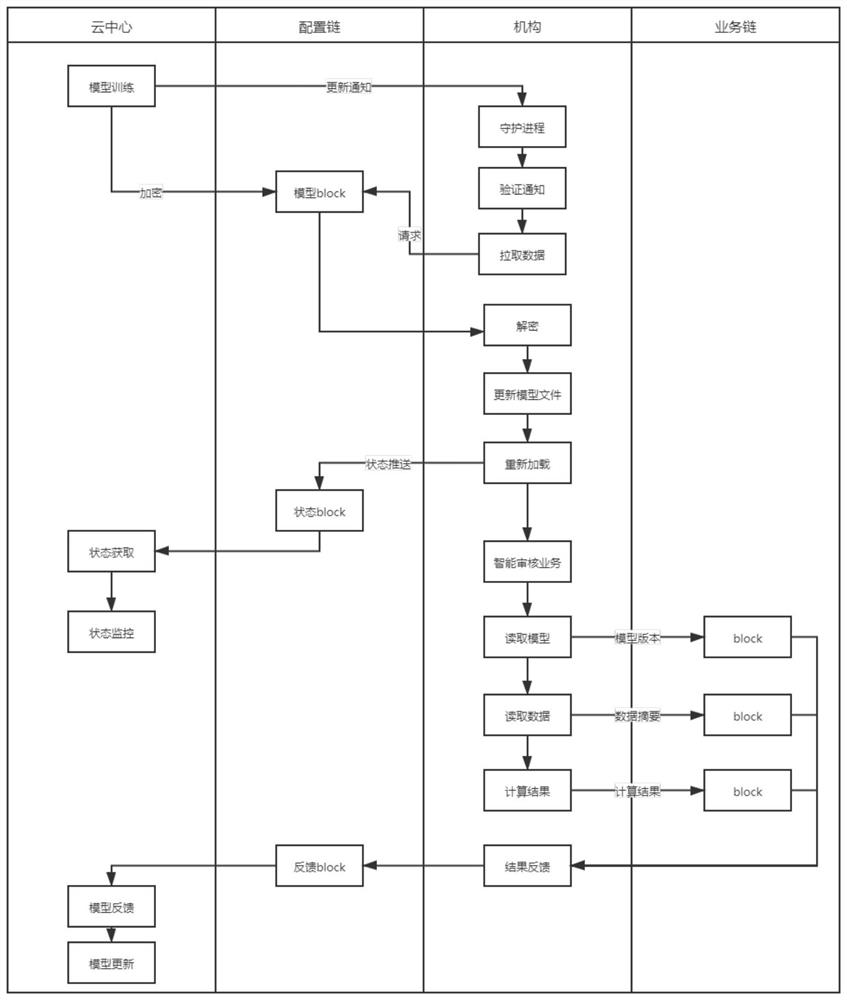

Pending | CN112416396A | Improve satisfaction | Solve monitoring problems | Database distribution/replication | Digital data protection | Engineering | Operating system

The invention discloses an application program updating method and system. In one specific embodiment of the method, a cloud center obtains a service request event, identifies a service type, obtains the front-end processor information corresponding to the service type according to a preset front-end processor mapping table, and updates the service type of the front-end processor information. The front-end processor's public key is used to encrypt a configuration file in the service request event, and the encrypted configuration file is sent to the corresponding front-end processor through a configuration chain, wherein the cloud center is associated with each front-end processor in the blockchain node through a corresponding configuration chain. The front-end processor decrypts the encrypted configuration file with its private key and then applies the decrypted configuration file to the application to update the application. The problems of high cost and low efficiency in existing localized project deployment can thereby be solved.

Owner:TAIKANG LIFE INSURANCE CO LTD +1

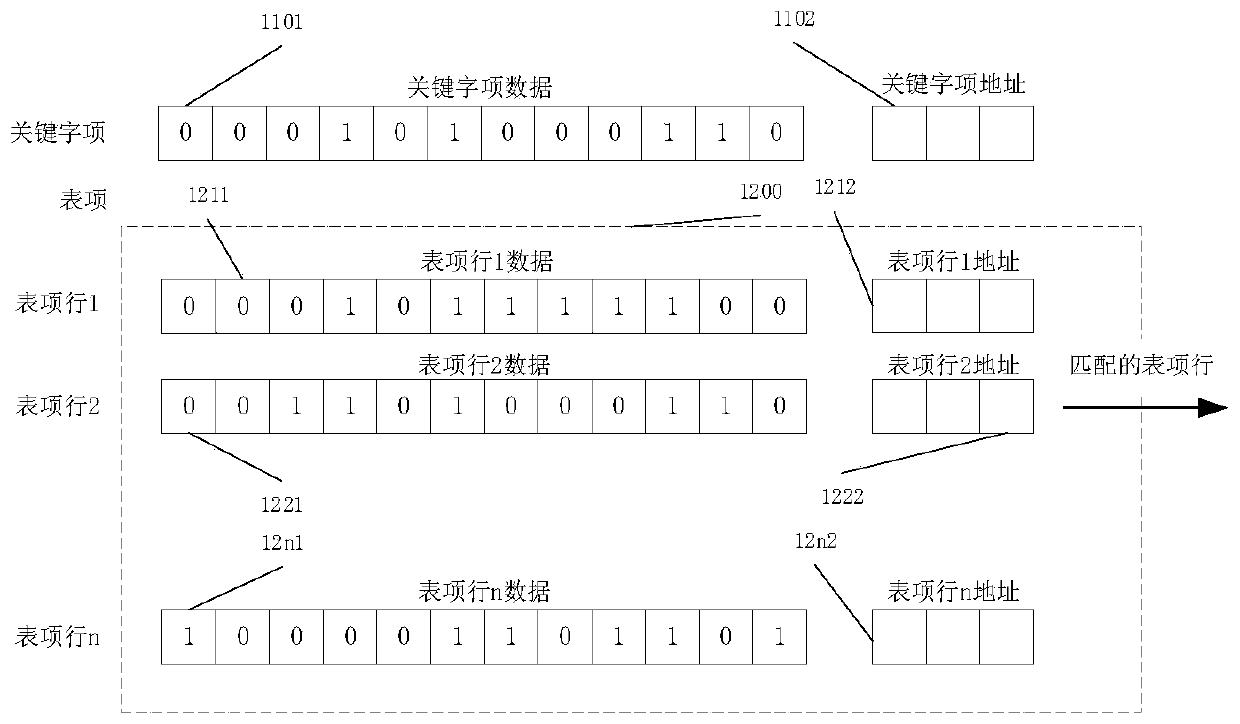

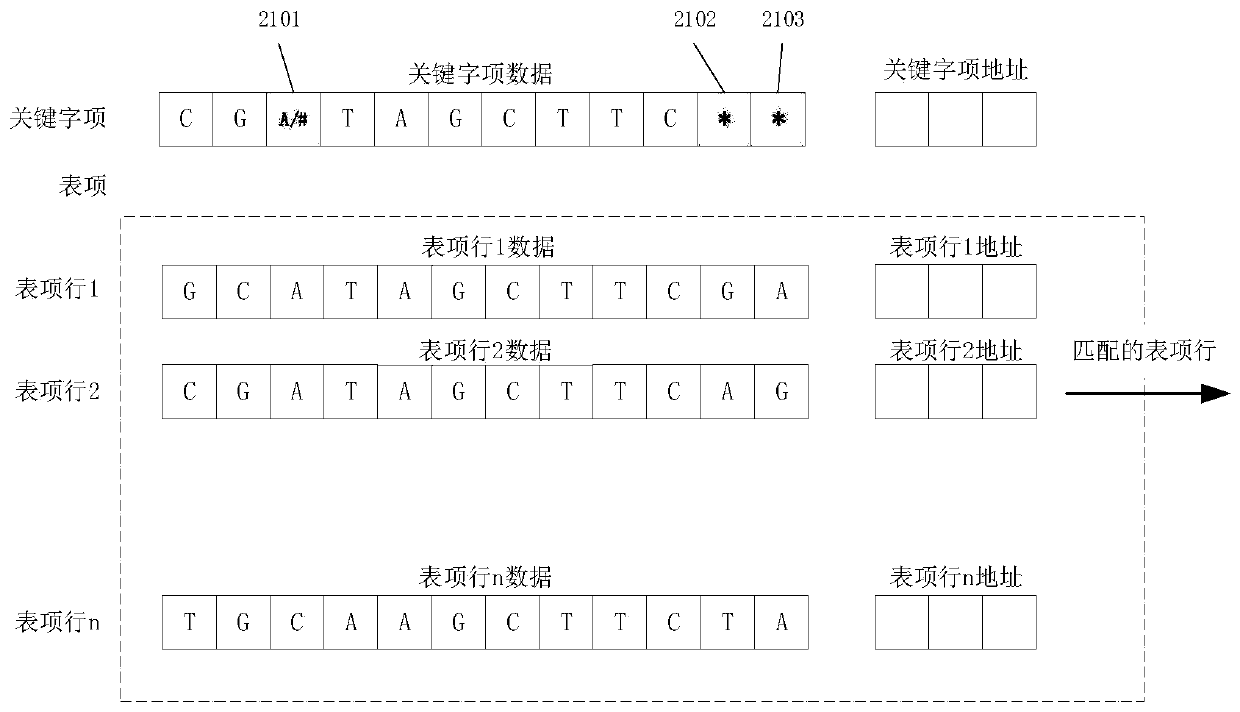

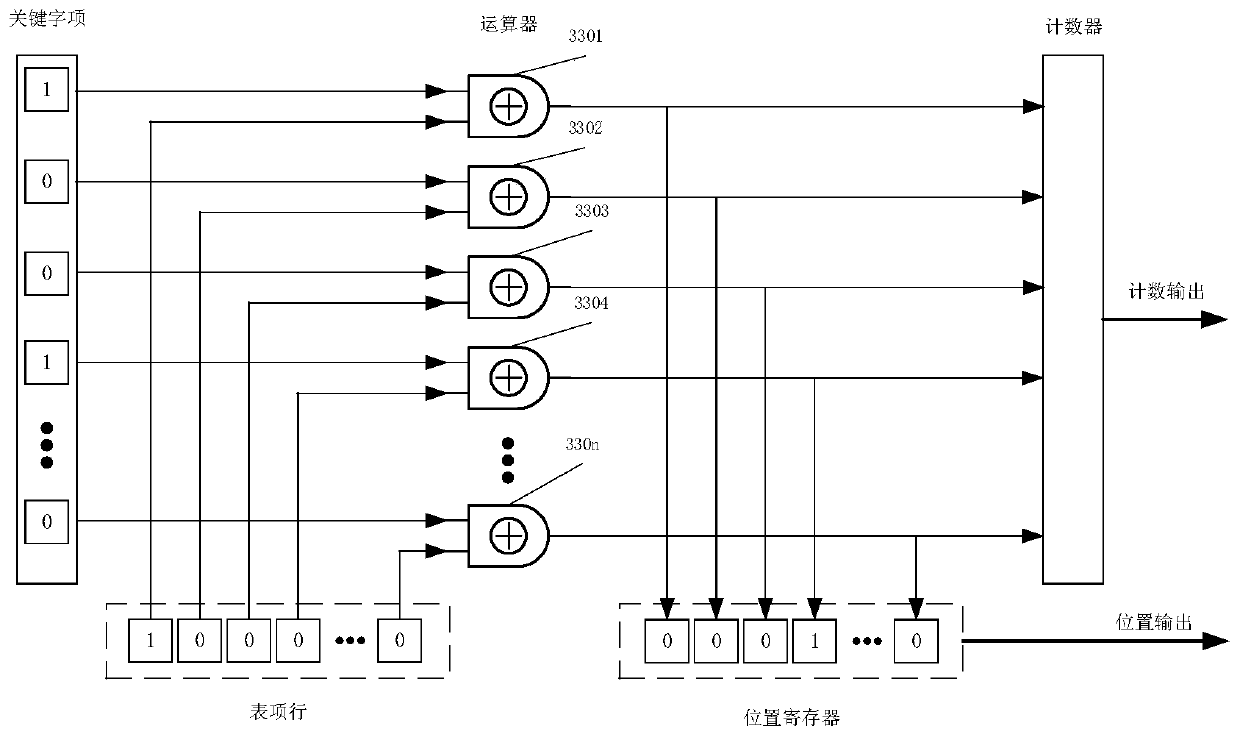

Super-parallel comparison method and system

Active | CN110647665A | Realize the intention of the invention | Improve the speed of comparison | Other databases querying | Special data processing applications | Communication interface | Multi dimensional data

A single-period super-parallel comparison method is designed using an FPGA (Field Programmable Gate Array), programmable logic or a TCAM (Ternary Content Addressable Memory) chip, so that a keyword item is compared bit by bit with a plurality of table item rows simultaneously within a single logic period, and the matched table item row address, statistics on matching and differing sites, and position information are output. The algorithm supports table item reconfiguration, dissimilar site processing, filtering, table item mapping, and comparison of one-dimensional arrays, two-dimensional data and multi-dimensional data. The system comprises a comparator array, reconfigurable logic, a dissimilar site processor, a mapping memory, a filter and a communication interface. An independent comparison server and an independent PCIE acceleration card can be formed. According to the method, when comparison is carried out on 10M table item rows, the speed is increased by more than 109 orders of magnitude compared with the comparison algorithm of a von Neumann computer using the fastest CPU at present.

Owner:丁贤根

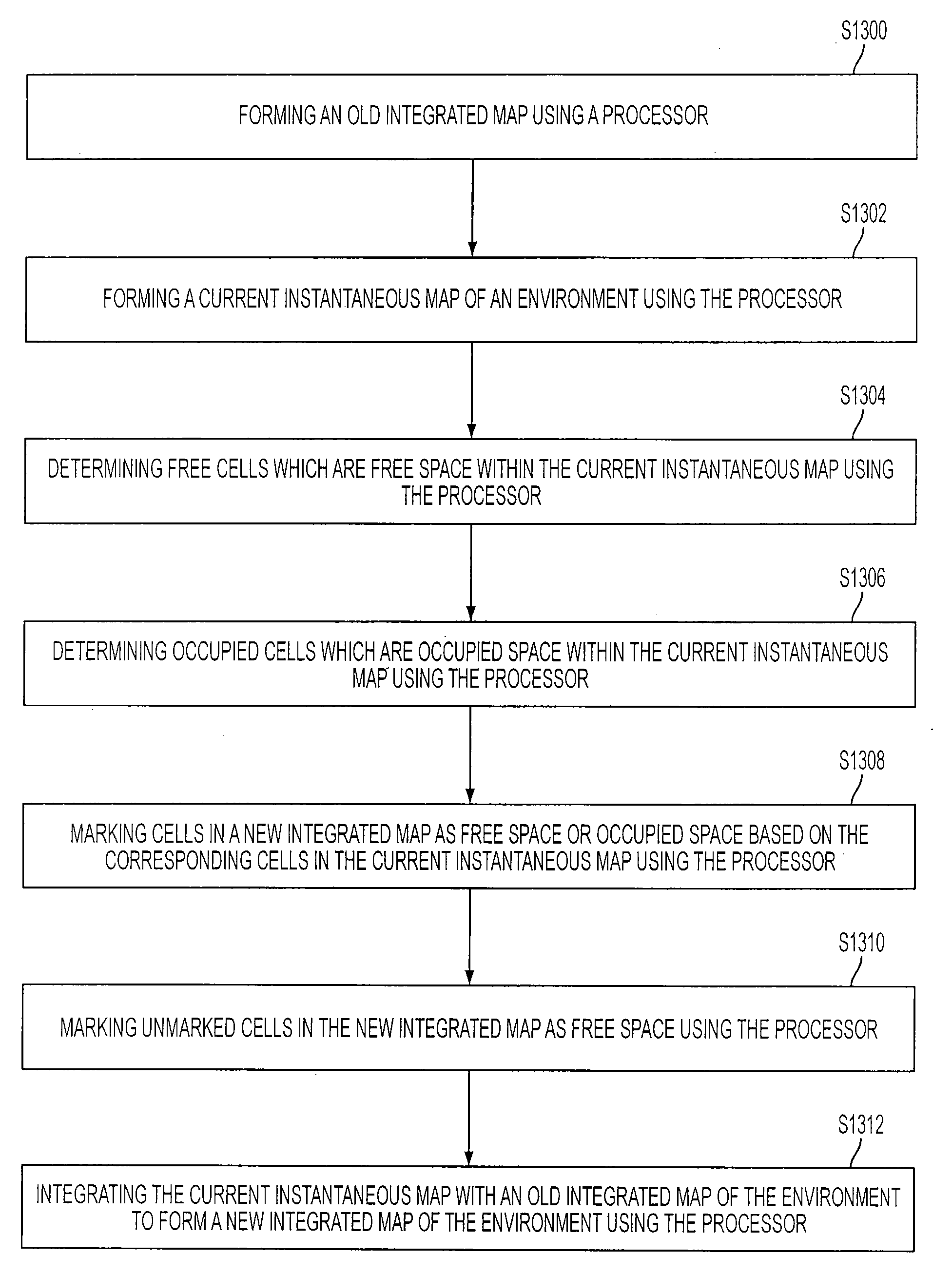

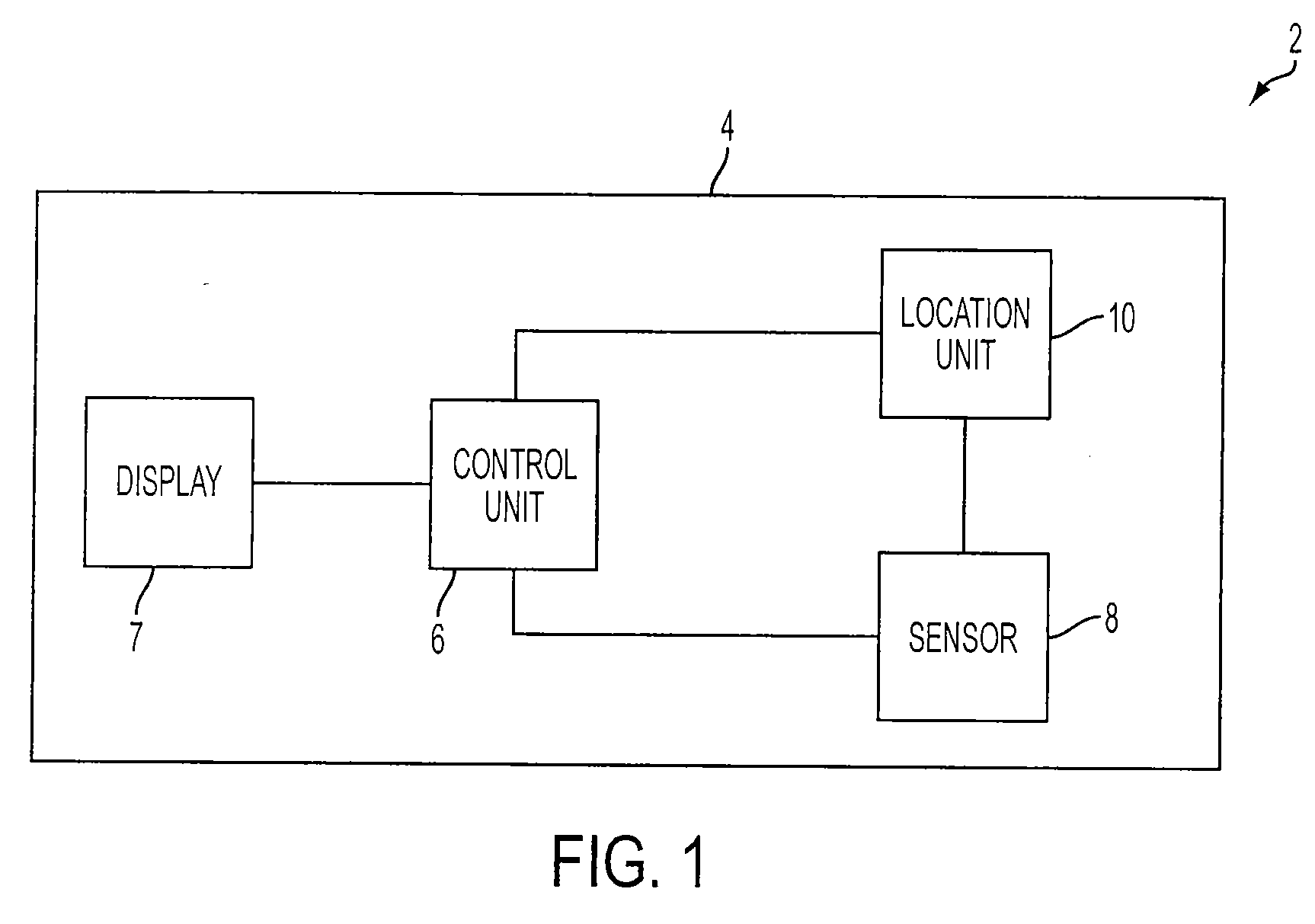

Method and system for mapping environments containing dynamic obstacles

Active | US8108148B2 | Road vehicles traffic control | Automatic initiations | Computer science | Processor mapping

Owner:TOYOTA MOTOR CO LTD

Robot Swarm Scheduling System

Active | CN109800937B | Intelligent planning of traffic routes | Avoid traffic jams | Programme-controlled manipulator | Relational databases | Simulation | Processor mapping

The invention discloses a robot cluster scheduling system comprising a user layer, an intermediate layer, an application layer, a plug-in layer and a data persistence layer. The intermediate layer includes a processor mapping module and a state acquisition module, the application layer includes a task scheduling module and a traffic scheduling module, and the plug-in layer includes a task solving engine and a traffic planning engine. The task solving engine is used to determine the target robot according to the parameters and state data of the task. The traffic planning engine is used to determine the target route. Both the task solving engine and the traffic planning engine provide APIs. First, the invention implements a robot cluster scheduling system that supports secondary development and has low system coupling. Second, it determines the target robot according to the task, which achieves optimal allocation of robot tasks and improves the execution efficiency of tasks after scheduling. Third, it can intelligently plan the traffic route of the robot to prevent robot traffic jams.

Owner:BOZHON PRECISION IND TECH CO LTD +1

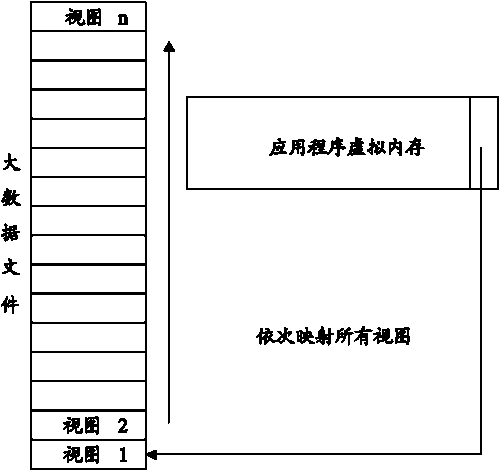

A big data file rapid parallel extraction method based on memory mapping

Inactive | CN109815249A | Improve efficiency | Break through the bottleneck of processing speed | Database updating | Transmission | Granularity | Data file

The invention discloses a big data file rapid parallel extraction method based on memory mapping, which comprises the following steps: generating a task domain, in which the task domain is formed from task blocks and the task blocks are the elements of the task domain; generating a task pool, in which sub-task domains are merged from the elements of the task domain according to the principle of low communication cost, the set of elements in the task domain is taken as the task pool for task scheduling, and tasks are extracted for execution by a processor according to the scheduling selection; scheduling tasks, in which the scheduling granularity is decided according to the remaining quantity of tasks, and the tasks meeting the requirements are extracted from the task pool and prepared for mapping; and mapping the processor, in which the extracted task is mapped to a currently idle processor for execution. According to the method, the multi-core advantage can be exploited and the efficiency of mapping files into memory is improved. The method can be applied to reading a single large file with a capacity below 4GB; the reading speed of such files can be effectively increased, and the I/O throughput rate of disk files is increased.

Owner:苏州华必讯信息科技有限公司

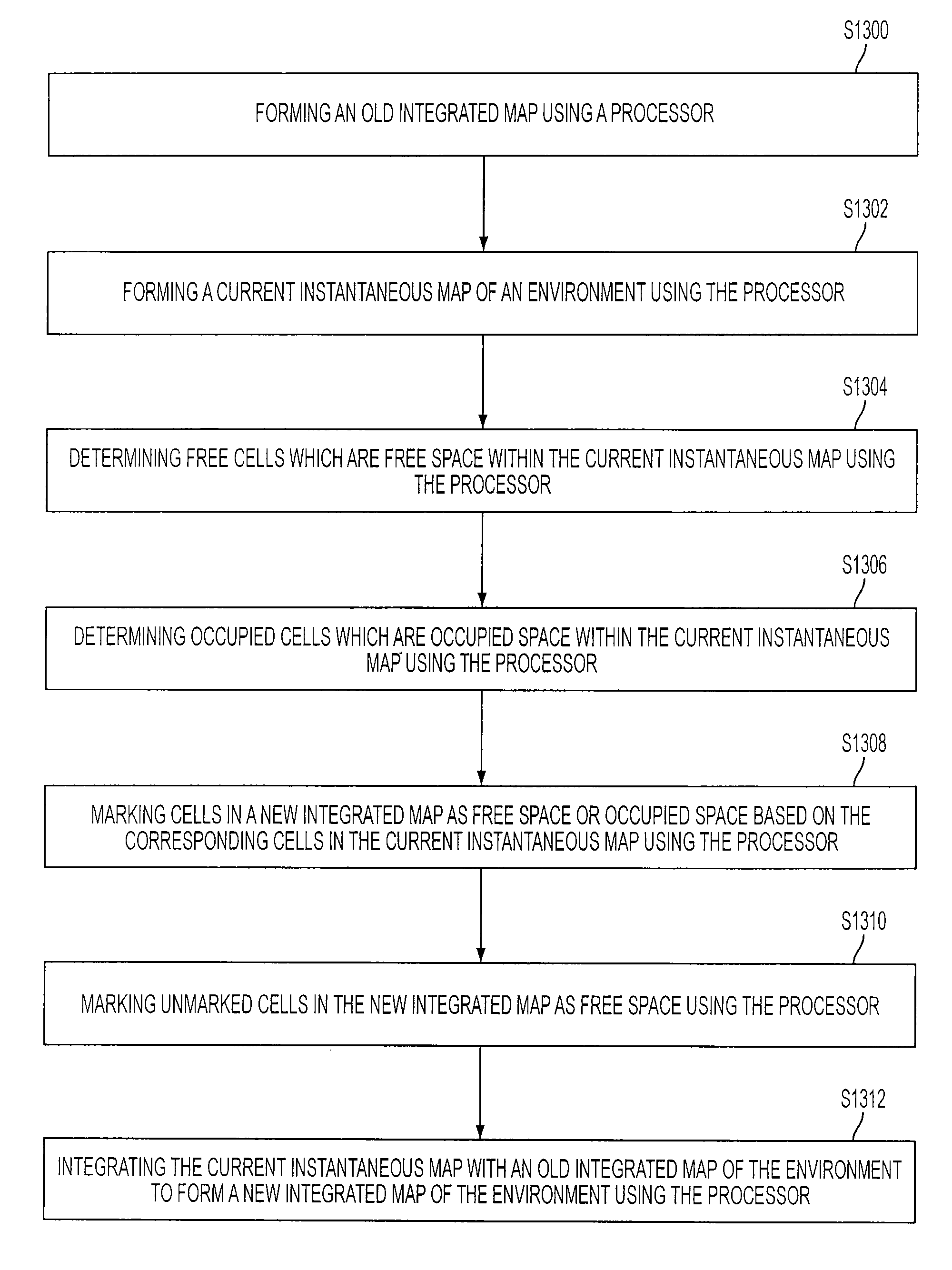

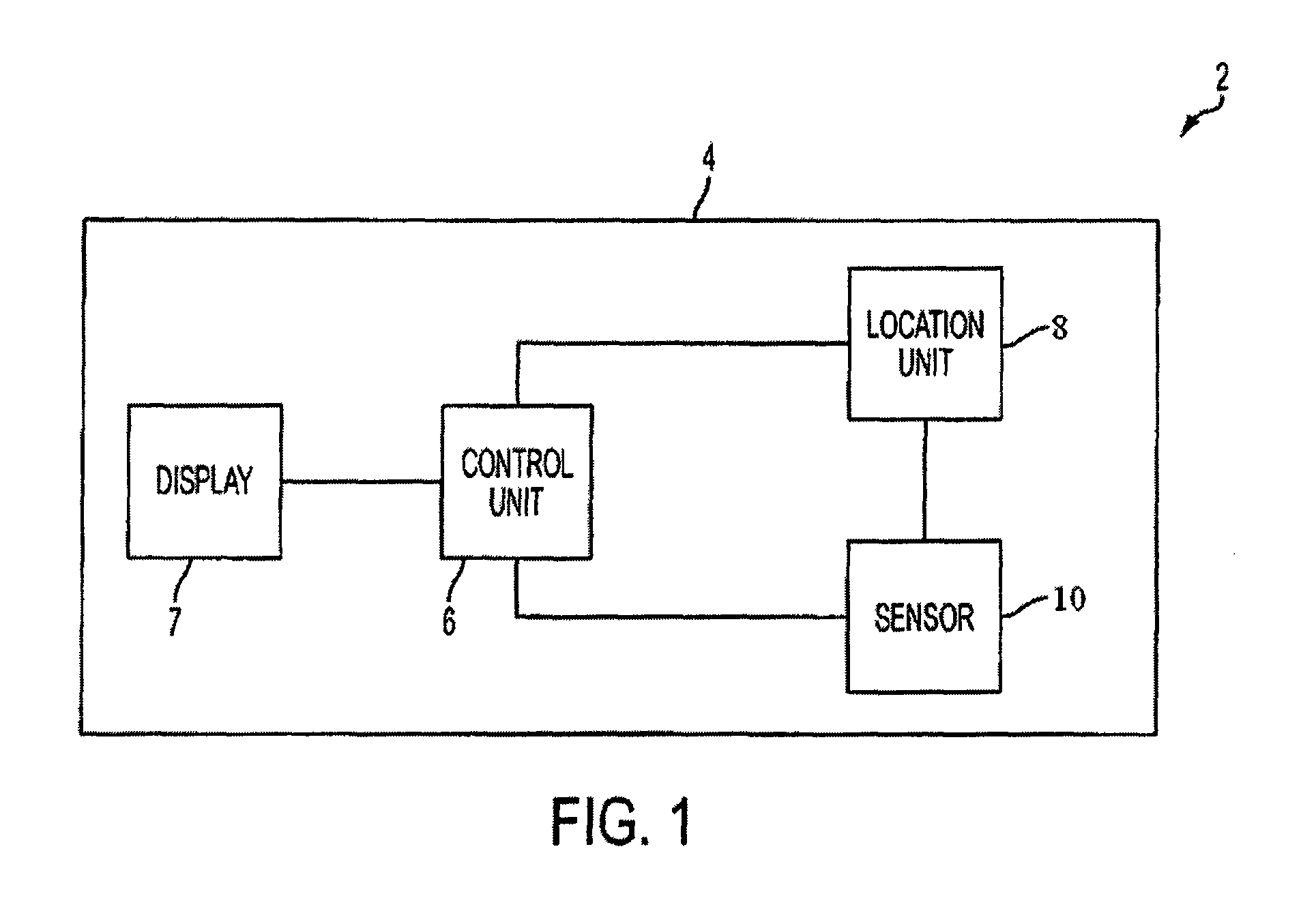

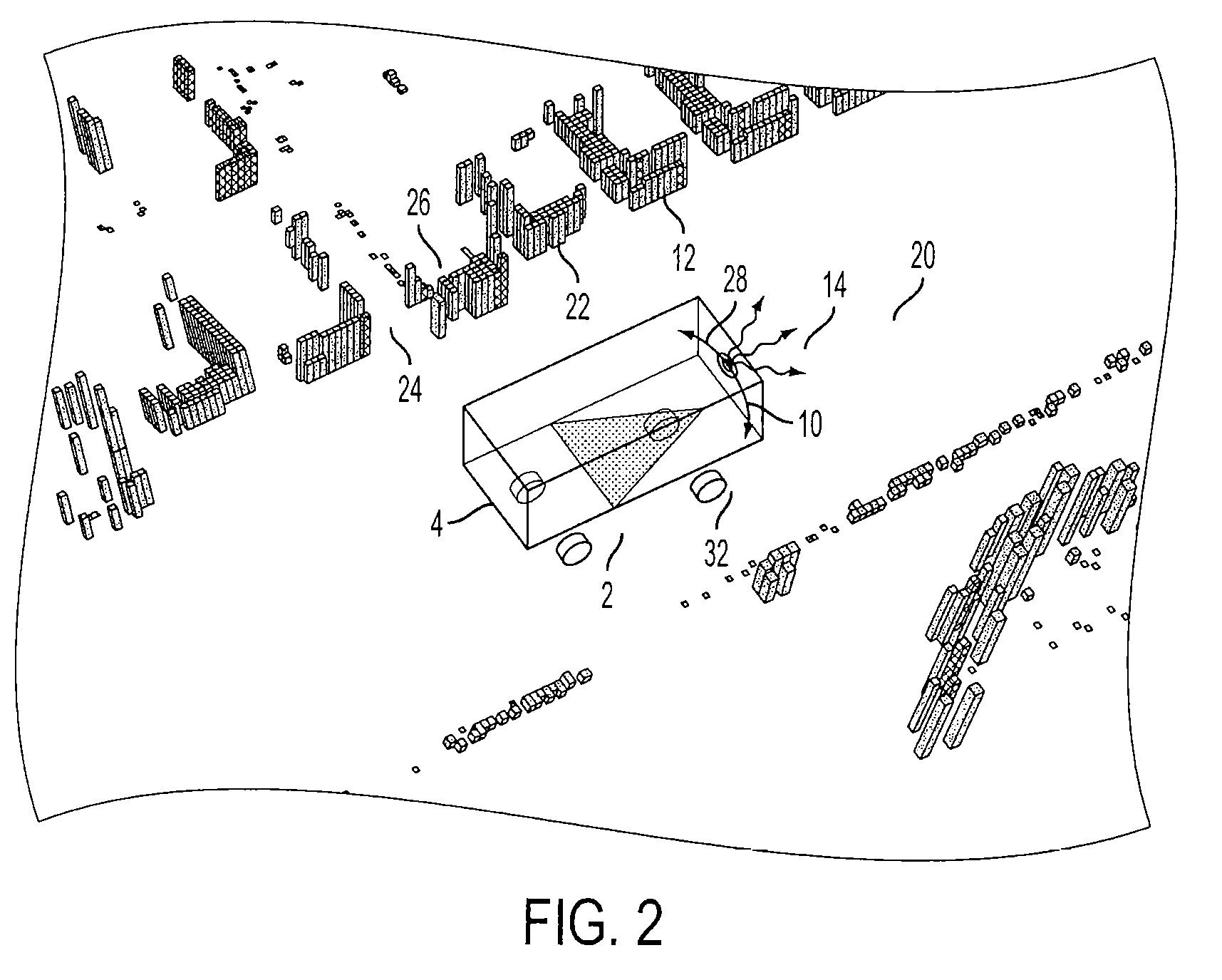

Method and system for mapping environments containing dynamic obstacles

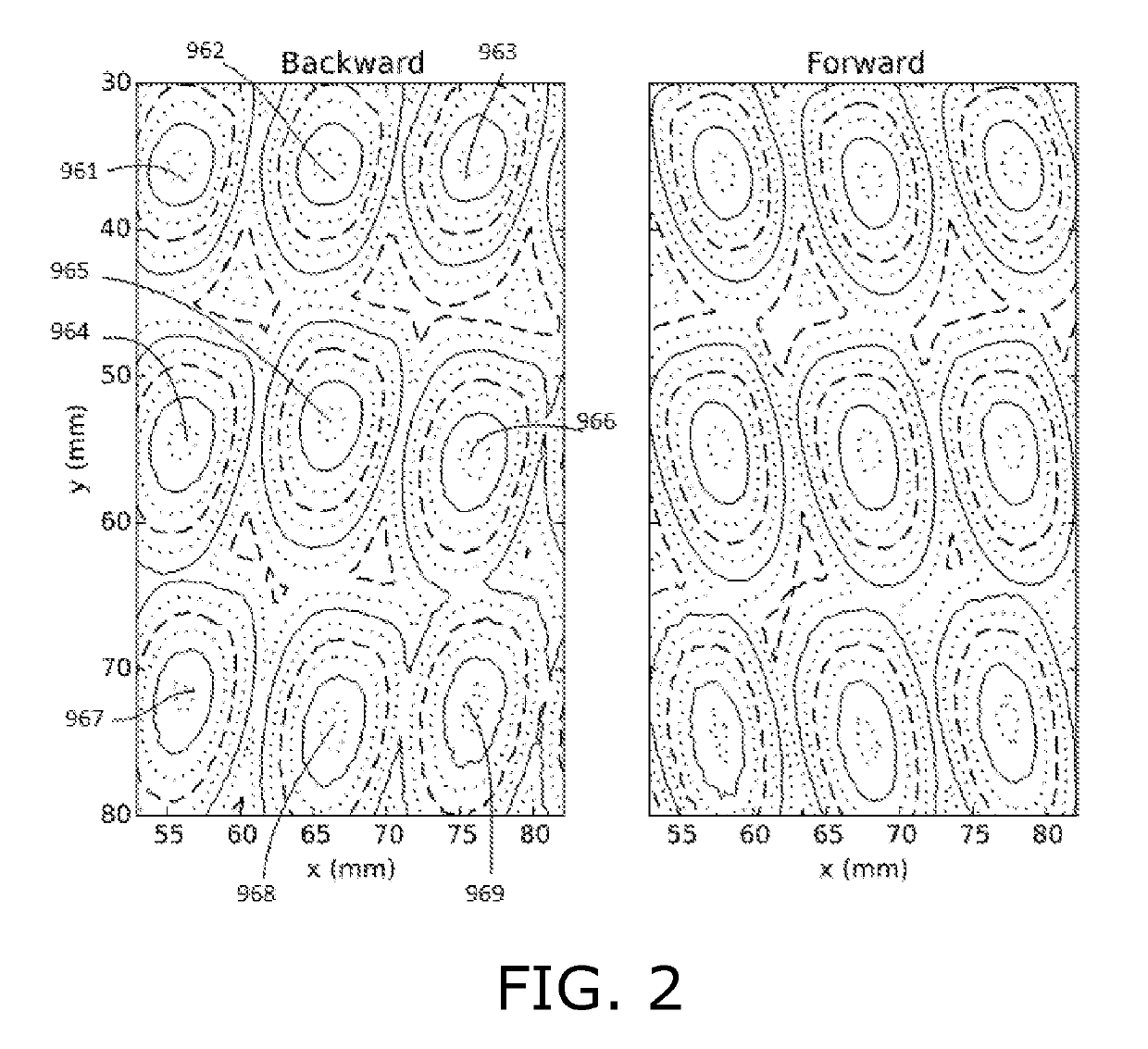

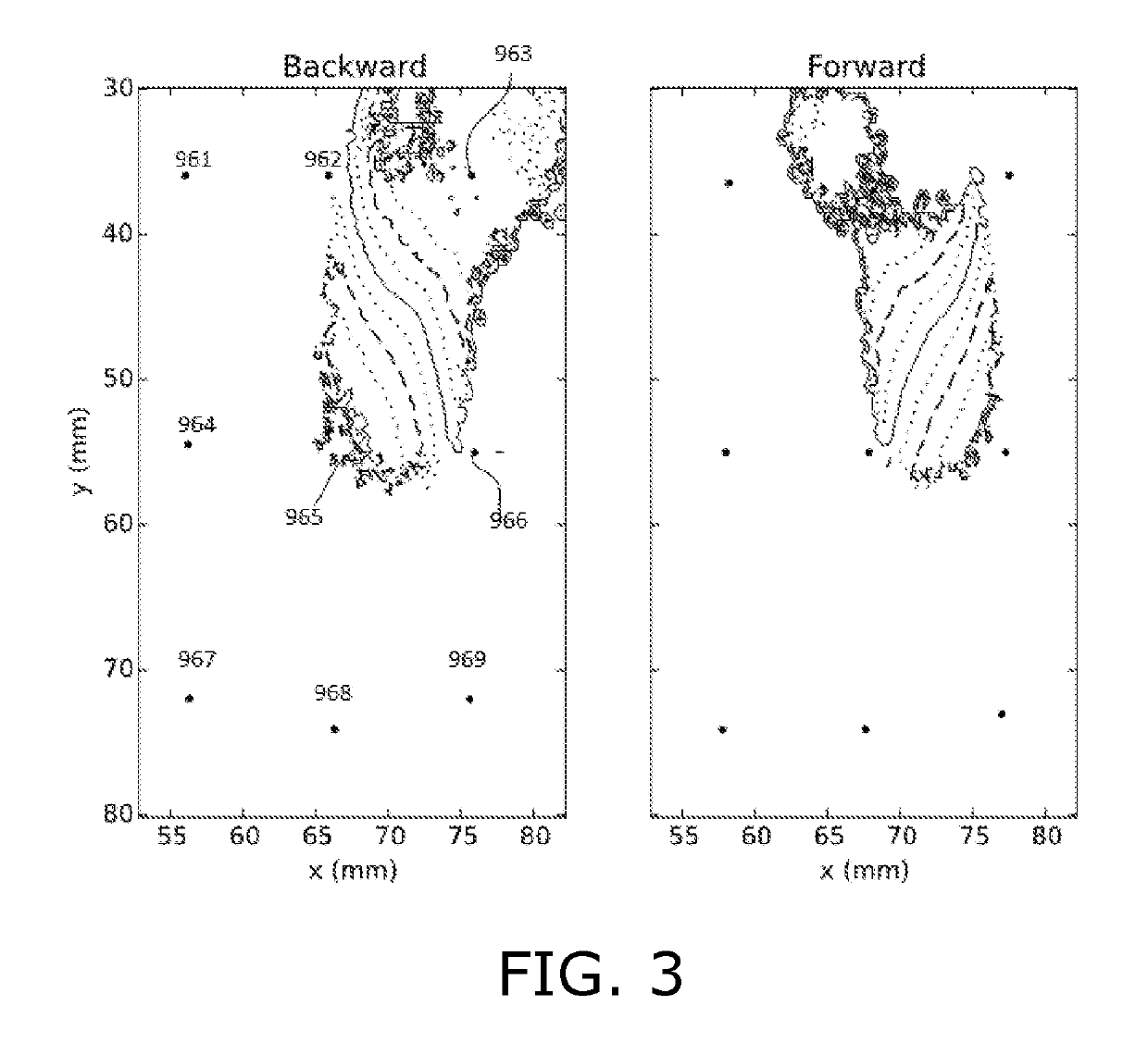

Active | US20100223007A1 | Road vehicles traffic control | Automatic initiations | Computer science | Processor mapping

The present invention relates to a method and system for mapping environments containing dynamic obstacles. In one embodiment, the present invention is a method for mapping an environment containing dynamic obstacles using a processor including the steps of forming a current instantaneous map of the environment, determining cells which are free space within the current instantaneous map, determining cells which are occupied space within the current instantaneous map, and integrating the current instantaneous map with an old integrated map of the environment to form a new integrated map of the environment.

Owner:TOYOTA MOTOR CO LTD
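
The map-integration step in the abstract above can be illustrated with a small Python occupancy-grid sketch. The cell encoding, the function names, and the integration rule (a cell observed in the current instantaneous map overrides the old value, while an unobserved cell keeps it) are assumptions made for illustration; the abstract does not state the actual rule.

```python
FREE, OCCUPIED, UNKNOWN = 0, 1, -1


def instantaneous_map(rows, cols, free_cells, occupied_cells):
    """Build the current instantaneous map from the cells seen in one scan."""
    grid = [[UNKNOWN] * cols for _ in range(rows)]
    for r, c in free_cells:
        grid[r][c] = FREE
    for r, c in occupied_cells:
        grid[r][c] = OCCUPIED
    return grid


def integrate(old_map, current_map):
    """Form a new integrated map from the old integrated map and the current scan.

    Assumed rule: a fresh observation wins, so a dynamic obstacle that has
    moved away is cleared as soon as its old cell is seen to be free.
    """
    return [[old if new == UNKNOWN else new
             for old, new in zip(old_row, new_row)]
            for old_row, new_row in zip(old_map, current_map)]
```

For example, if the old integrated map marks a cell as occupied and the current scan sees it as free, the new integrated map marks it free, while cells outside the sensor's view carry over unchanged.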

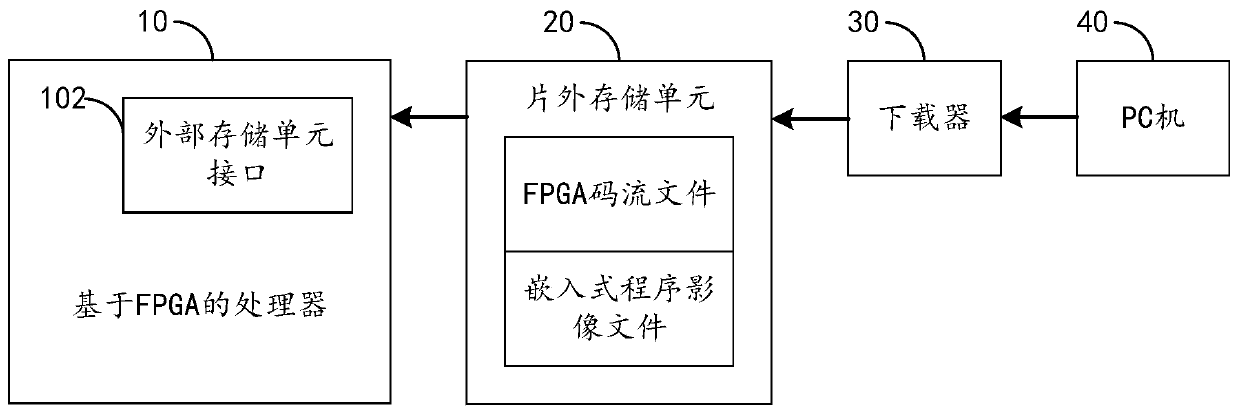

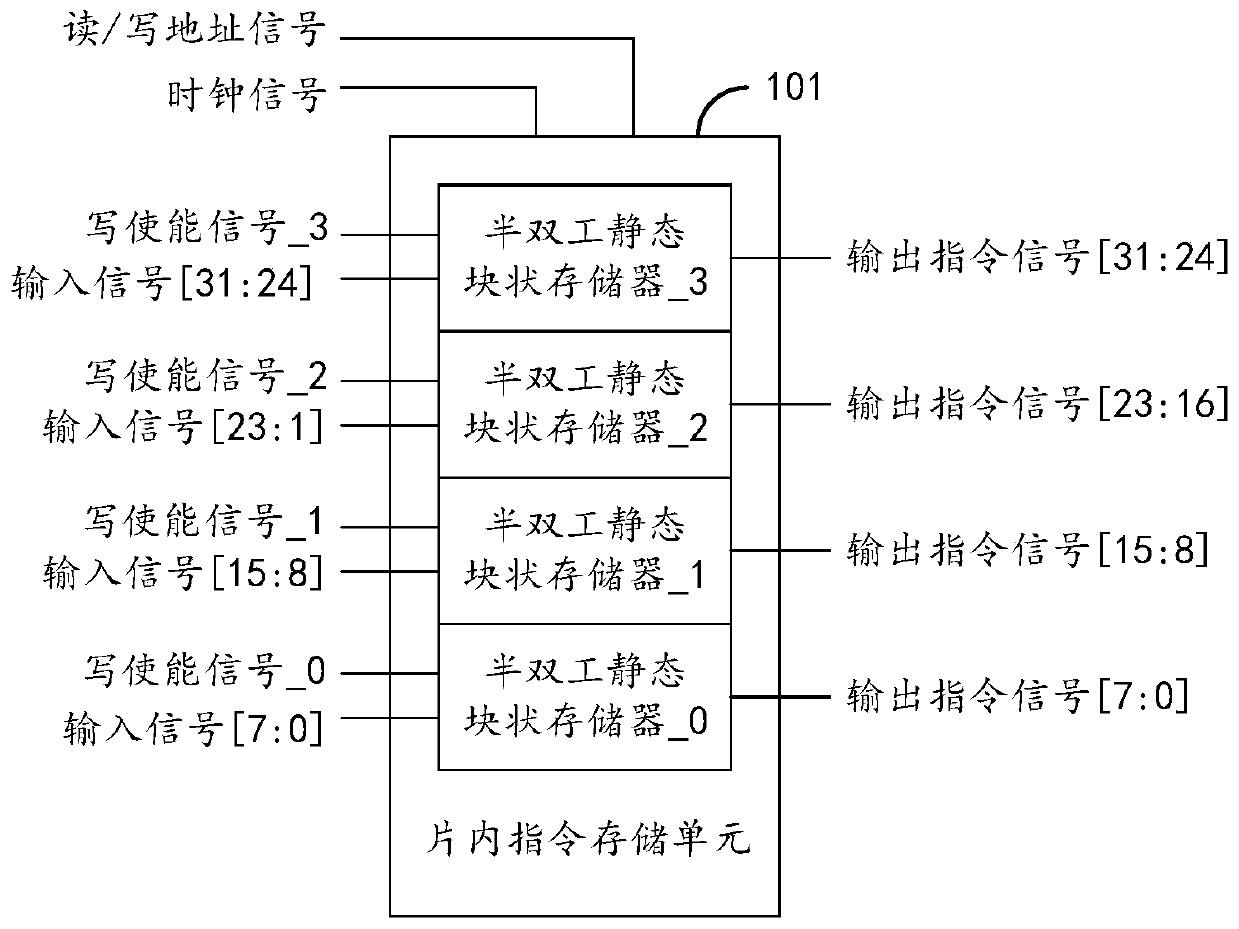

Processor starting method based on FPGA and processor

Inactive | CN111198718A | Avoid design | Reduce development complexity | Bootstrapping | Computer architecture | Engineering

The invention discloses a processor starting method based on an FPGA, and a processor. The method comprises the following steps: running a hard-coded startup bootstrap program, and obtaining the address segment in the processor core address space that the processor maps to an off-chip storage unit used for downloading an embedded program image file; loading the embedded program image file from the off-chip storage unit corresponding to that address segment into an on-chip instruction storage unit; and running the embedded program image file in the on-chip instruction storage unit. When the embedded program needs to be updated, this scheme avoids reloading the processor core hardware design, reduces development complexity and improves development efficiency.

Owner:GOWIN SEMICON CORP LTD

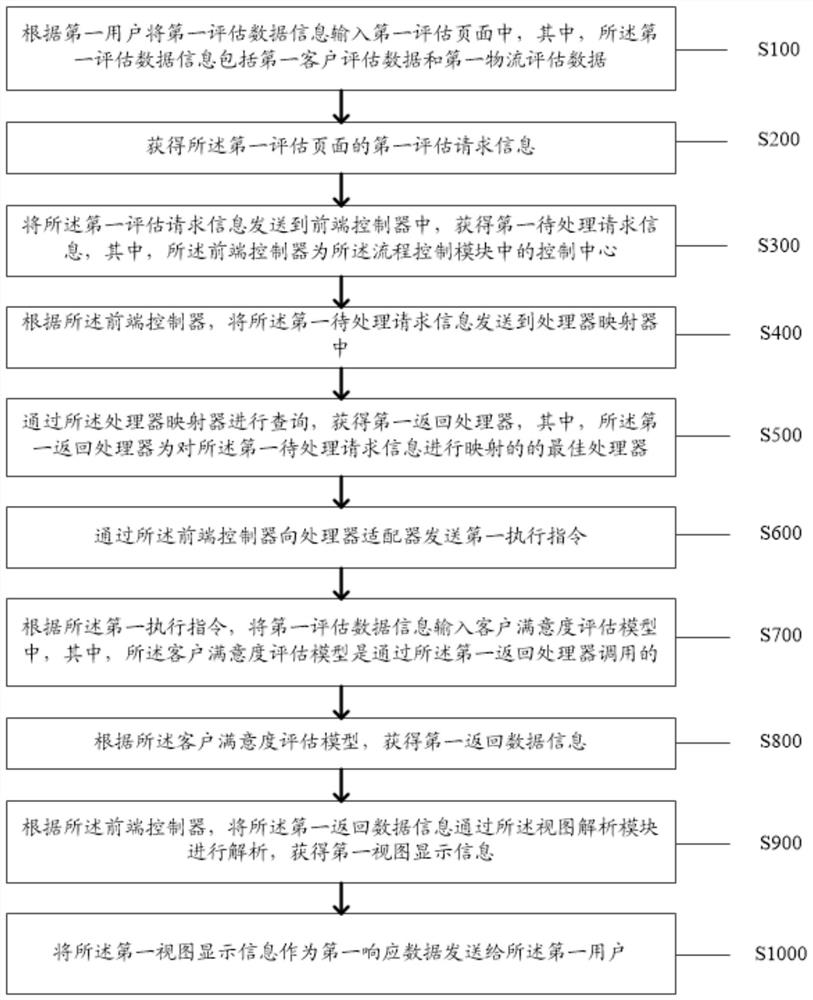

Customer evaluation method and system based on big data

Pending | CN112766769A | Improve evaluation efficiency | Customer relationship | Resources | Logistics management | Customer delight

The invention discloses a customer evaluation method and system based on big data. The method comprises the steps of obtaining first evaluation request information of a first evaluation page; sending the first evaluation request information to a front-end controller to obtain first to-be-processed request information; obtaining a first return processor from the processor mapper sent by the front-end controller; sending a first execution instruction to a processor adapter through the front-end controller, and inputting first evaluation data information into a customer satisfaction evaluation model; obtaining first return data information according to the customer satisfaction evaluation model; according to the front-end controller, analyzing the first return data information through the view analysis module, and obtaining first view display information; and sending the first view display information as first response data to the first user. Thus, the technical problem of low evaluation efficiency caused by too much customer evaluation data of logistics service in the prior art is solved.

Owner:南京利特嘉软件科技有限公司

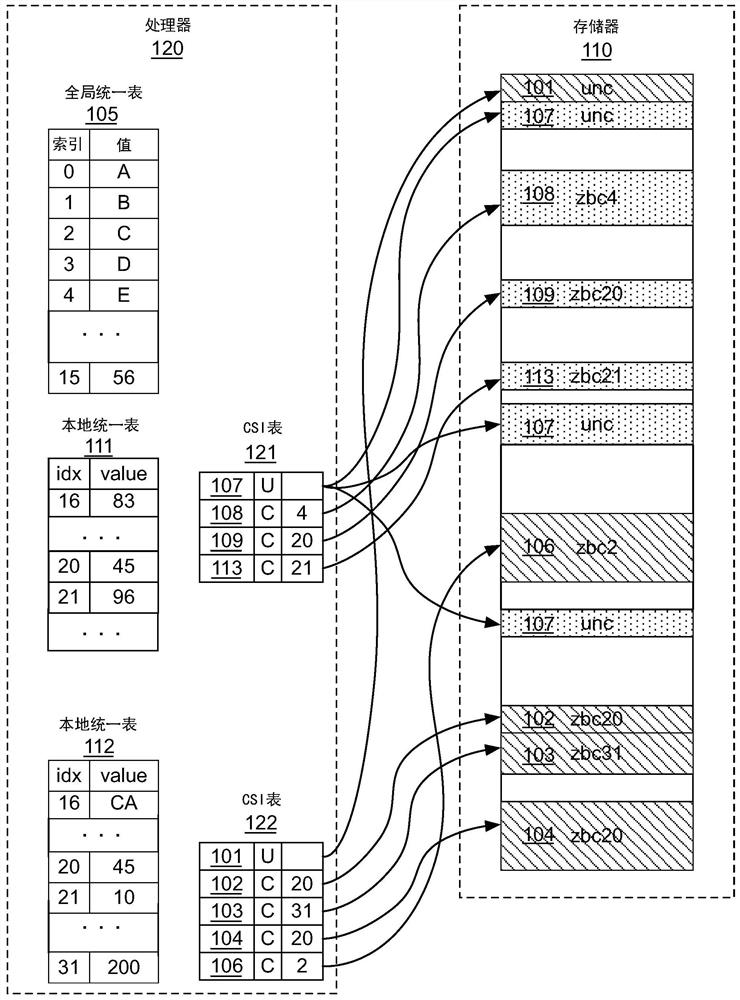

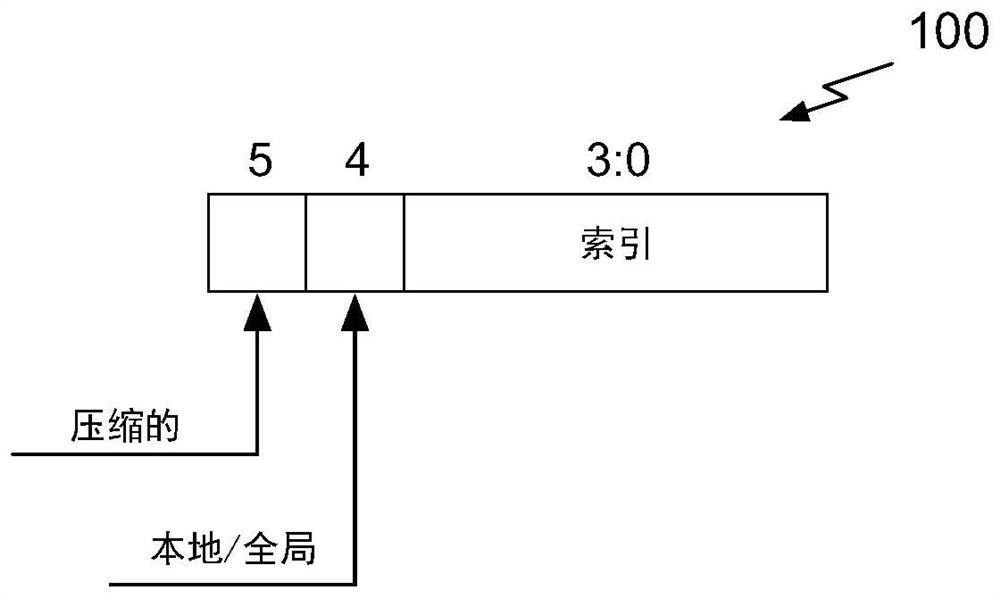

Techniques for dynamically compressing memory regions having a uniform value

Active | CN113609029A | Memory addressing/allocation/relocation | Code conversion | Computer architecture | External storage

The invention discloses techniques for dynamically compressing memory regions having a uniform value. Accesses between a processor and its external memory are reduced when the processor internally maintains a compressed version of values stored in the external memory. The processor can then refer to the compressed version rather than access the external memory. One compression technique involves maintaining a dictionary on the processor that maps portions of a memory to values. When all of the values of a portion of memory are uniform (e.g., the same), the value is stored in the dictionary for that portion of memory. Thereafter, when the processor needs to access that portion of memory, the value is retrieved from the dictionary rather than from external memory. Techniques are disclosed herein to extend, for example, the capabilities of such dictionary-based compression so that the number of accesses between the processor and its external memory is further reduced.

Owner:NVIDIA CORP
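
The dictionary-based technique described in the abstract above can be modelled with a short Python sketch. It is a software illustration only: the class name, the region size, the `bytearray` standing in for external memory, and the read counter are all assumptions, and the extensions the patent claims are not modelled.

```python
class UniformRegionCache:
    """Processor-side dictionary mapping uniform memory regions to their value."""

    def __init__(self, memory, region_size):
        self.memory = memory            # bytearray standing in for external memory
        self.region_size = region_size
        self.uniform = {}               # region index -> the single value it holds
        self.external_reads = 0         # how often external memory was read

    def write_region(self, region, data):
        """Store a whole region and record it in the dictionary if it is uniform."""
        if len(data) != self.region_size:
            raise ValueError("data must fill exactly one region")
        start = region * self.region_size
        self.memory[start:start + self.region_size] = data
        if len(set(data)) == 1:
            self.uniform[region] = data[0]
        else:
            self.uniform.pop(region, None)

    def write(self, addr, value):
        """Store one byte; a differing value makes the region non-uniform."""
        region = addr // self.region_size
        if self.uniform.get(region) != value:
            self.uniform.pop(region, None)
        self.memory[addr] = value

    def read(self, addr):
        """Serve the value from the dictionary when the region is uniform."""
        region = addr // self.region_size
        if region in self.uniform:
            return self.uniform[region]
        self.external_reads += 1
        return self.memory[addr]
```

Reads that hit a uniform region never touch `memory`, so `external_reads` only grows for regions holding mixed values or regions that a later write has made non-uniform.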

Method and system for collision assessment for vehicles

Active | CN102328656B | Anti-collision systems | Complex mathematical operations | Processor mapping | Environmental geology

Methods and systems are provided for assessing a target proximate a vehicle. A location and a velocity of the target are obtained. The location and the velocity of the target are mapped onto a polar coordinate system via a processor. A likelihood that the vehicle and the target will collide is determined using the mapping.

Owner:GM GLOBAL TECH OPERATIONS LLC
