Rapid parallel extraction method for large data files based on memory mapping

This memory-mapping-based extraction technique applies to the fast parallel extraction of large data files. It solves the problem that the content of the file cannot be determined, and achieves good scalability together with improved performance and efficiency.


Embodiment Construction

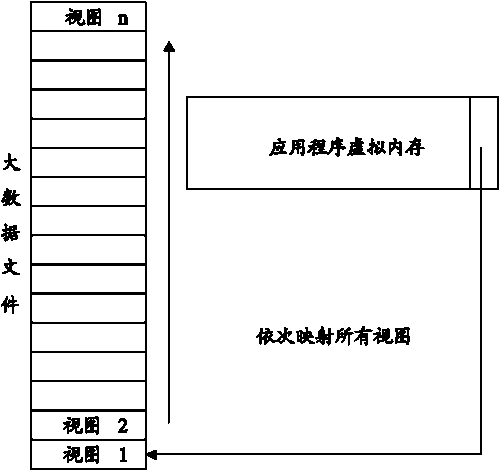

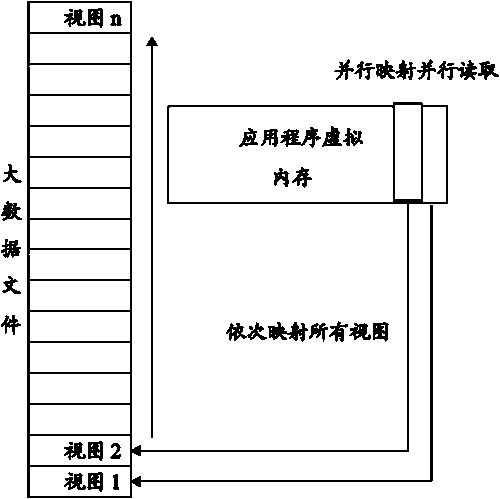

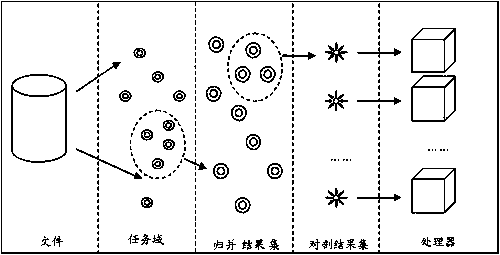

[0093] Without increasing hardware cost, the present invention proposes a general-purpose, efficient solution to the problem of reading large data files by combining multi-core technology with memory-mapped file technology. The core problem it addresses is improving the efficiency with which application programs read and process large files holding several GB of data, and overcoming the efficiency bottleneck of the conventional memory-mapped file method by making appropriate use of a multi-core environment. The proposed solution also addresses the problem of generality in reading large data files.
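The combination of memory mapping and multi-core processing described in [0093] can be illustrated with a minimal sketch. It assumes newline-delimited records, a POSIX system where the `fork` start method is available, and a simple keyword filter standing in for "extraction". The function names (`parallel_extract`, `_chunk_bounds`, `_extract_range`, `_align_to_line_start`) and the equal-byte split are hypothetical and are not the invention's own task-division scheme. The file is cut into roughly equal byte ranges whose boundaries are moved forward to the next line start, so no record straddles two workers; each worker process maps the file read-only and scans only its own range.

```python
import mmap
import os
from multiprocessing import get_context


def _align_to_line_start(mm: mmap.mmap, pos: int) -> int:
    """Return the first line start at or after byte offset pos."""
    if pos == 0:
        return 0
    # If the byte just before pos is b"\n", pos already starts a line.
    nl = mm.find(b"\n", pos - 1)
    return len(mm) if nl == -1 else nl + 1


def _chunk_bounds(path: str, n_chunks: int) -> list[tuple[int, int]]:
    """Split the file into roughly equal byte ranges aligned to line starts."""
    size = os.path.getsize(path)
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        raw = [size * i // n_chunks for i in range(n_chunks)]
        starts = [_align_to_line_start(mm, p) for p in raw] + [size]
    # Drop empty ranges created when two raw offsets align to the same line.
    return [(s, e) for s, e in zip(starts, starts[1:]) if s < e]


def _extract_range(args: tuple[str, int, int, bytes]) -> list[bytes]:
    """Worker: map the file read-only and collect matching lines in [start, end)."""
    path, start, end, keyword = args
    hits = []
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        pos = start
        while pos < end:
            nl = mm.find(b"\n", pos, end)
            line_end = end if nl == -1 else nl  # last line may lack a newline
            line = mm[pos:line_end]
            if keyword in line:
                hits.append(line)
            pos = line_end + 1
    return hits


def parallel_extract(path: str, keyword: bytes, workers: int = 0) -> list[bytes]:
    """Return every line containing keyword, in file order, using worker processes."""
    workers = workers or os.cpu_count() or 1
    if os.path.getsize(path) == 0:
        return []  # an empty file cannot be memory-mapped
    tasks = [(path, s, e, keyword) for s, e in _chunk_bounds(path, workers)]
    # Assumption: POSIX "fork" start method, so workers need not re-import __main__.
    with get_context("fork").Pool(processes=workers) as pool:
        parts = pool.map(_extract_range, tasks)  # map() preserves chunk order
    return [line for part in parts for line in part]
```

Each worker reopens and maps the file itself because `mmap` objects cannot be pickled for transfer to another process; read-only mappings of the same file share pages through the operating system's page cache, so the file is not copied once per process.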

[0094] The present invention makes the following adjustments to the traditional cyclic (loop) mapping method. Cyclic mapping tends to allocate tasks to the processors one by one in turn, so that the task units allocated each time are essentially equal. The present invention divides tasks ...


© 2024 PatSnap. All rights reserved.