107 results about "Motion computation" patented technology

Realtime object tracking system

Owner:JOLLY SEVEN SERIES 70 OF ALLIED SECURITY TRUST I

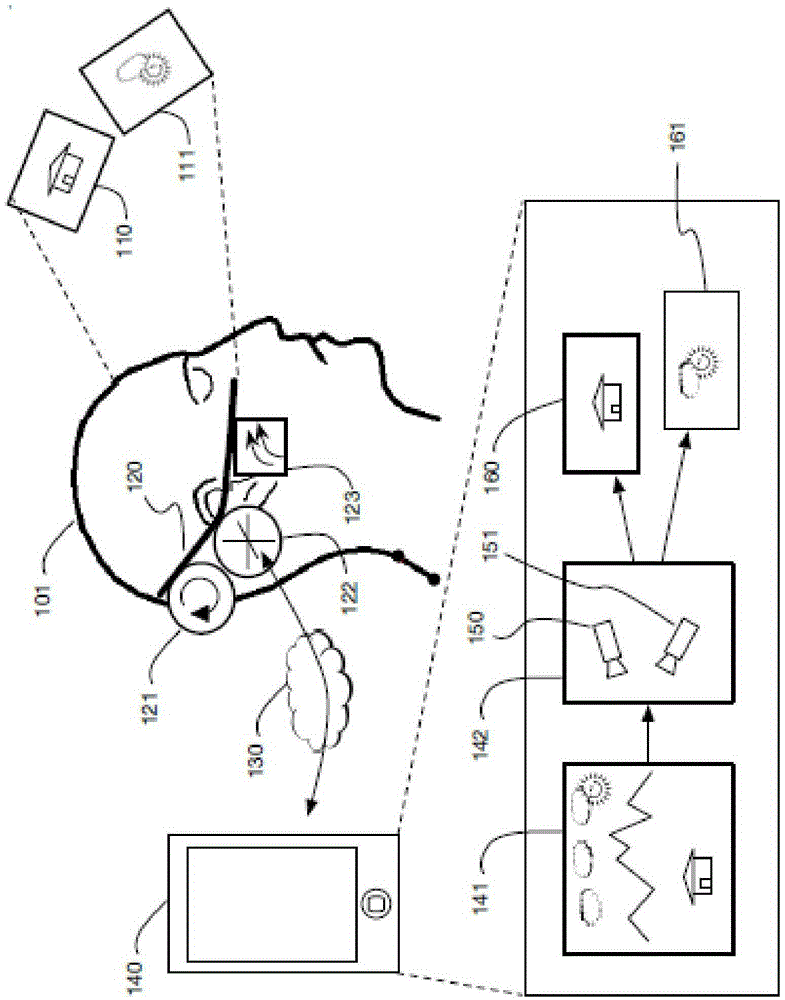

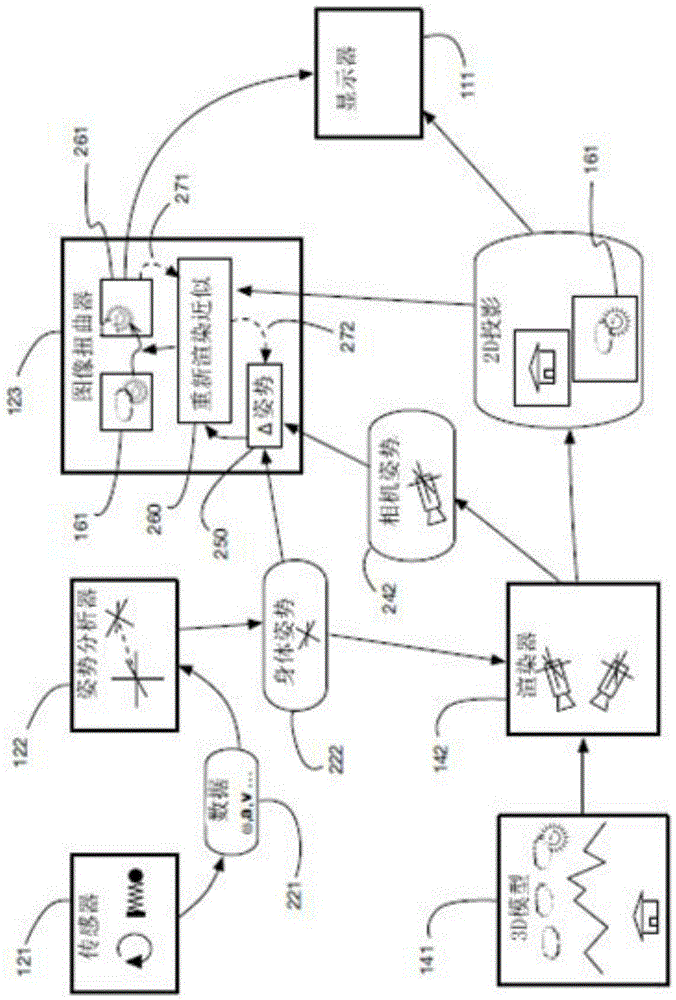

Vision Based Human Activity Recognition and Monitoring System for Guided Virtual Rehabilitation

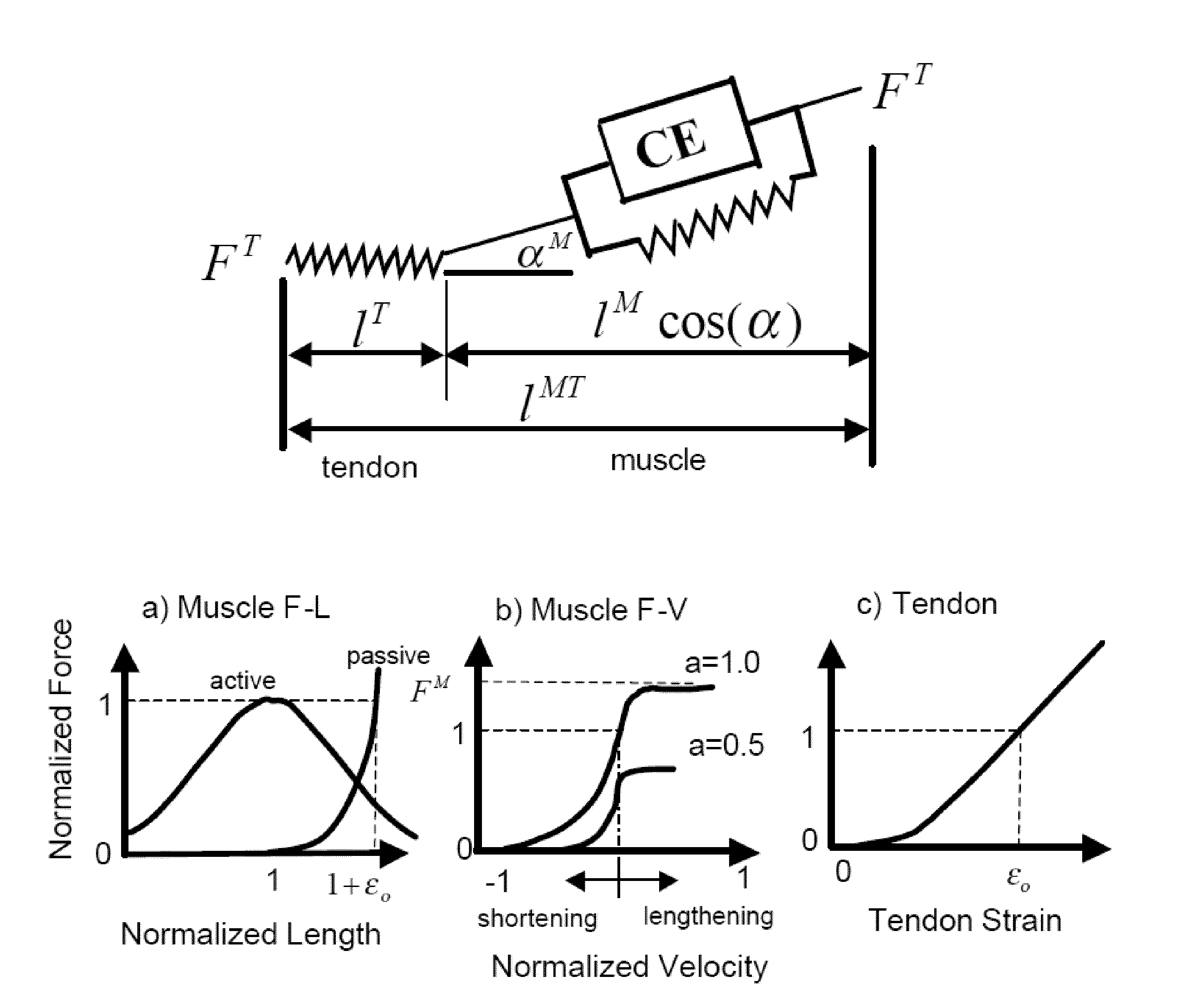

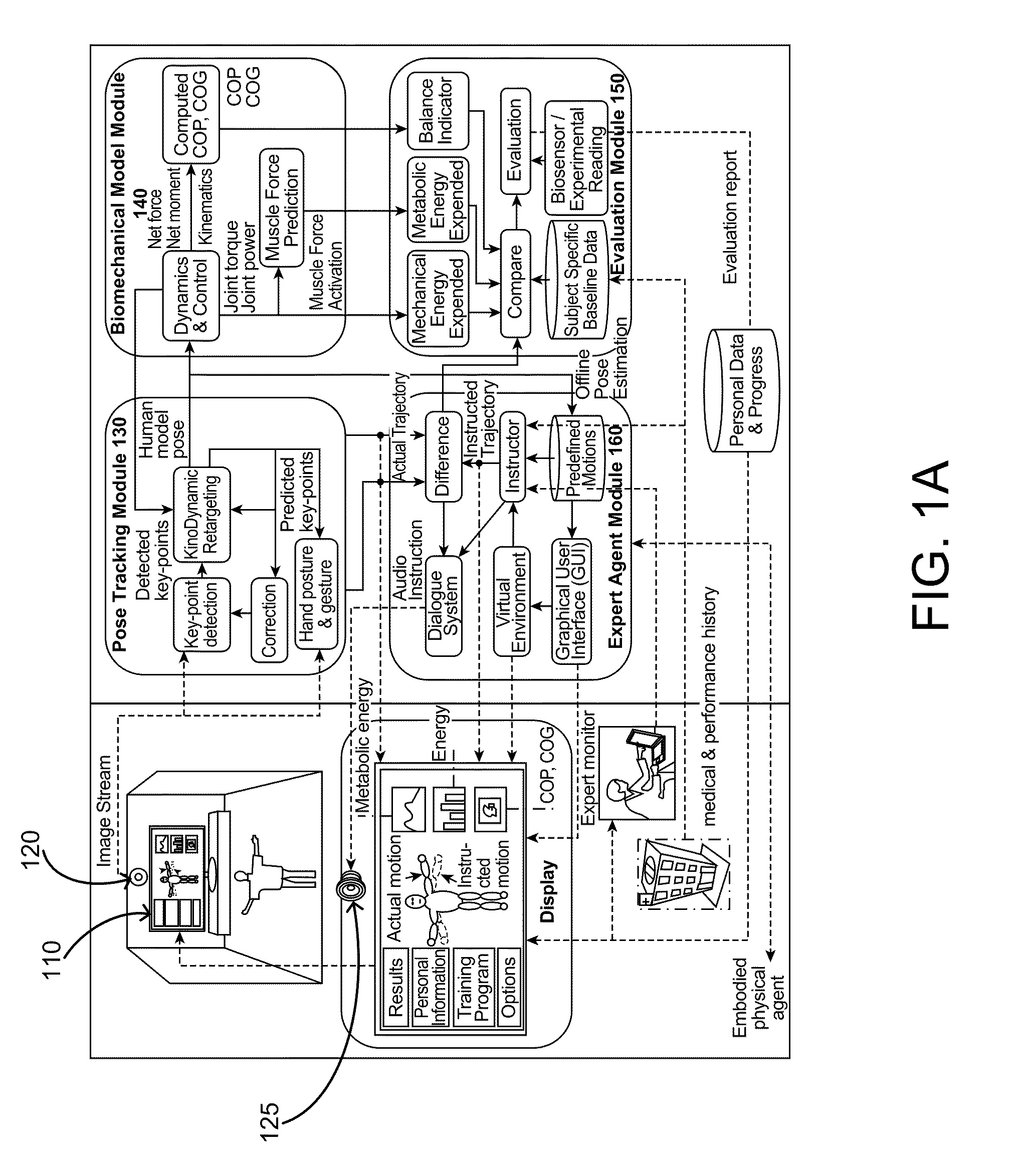

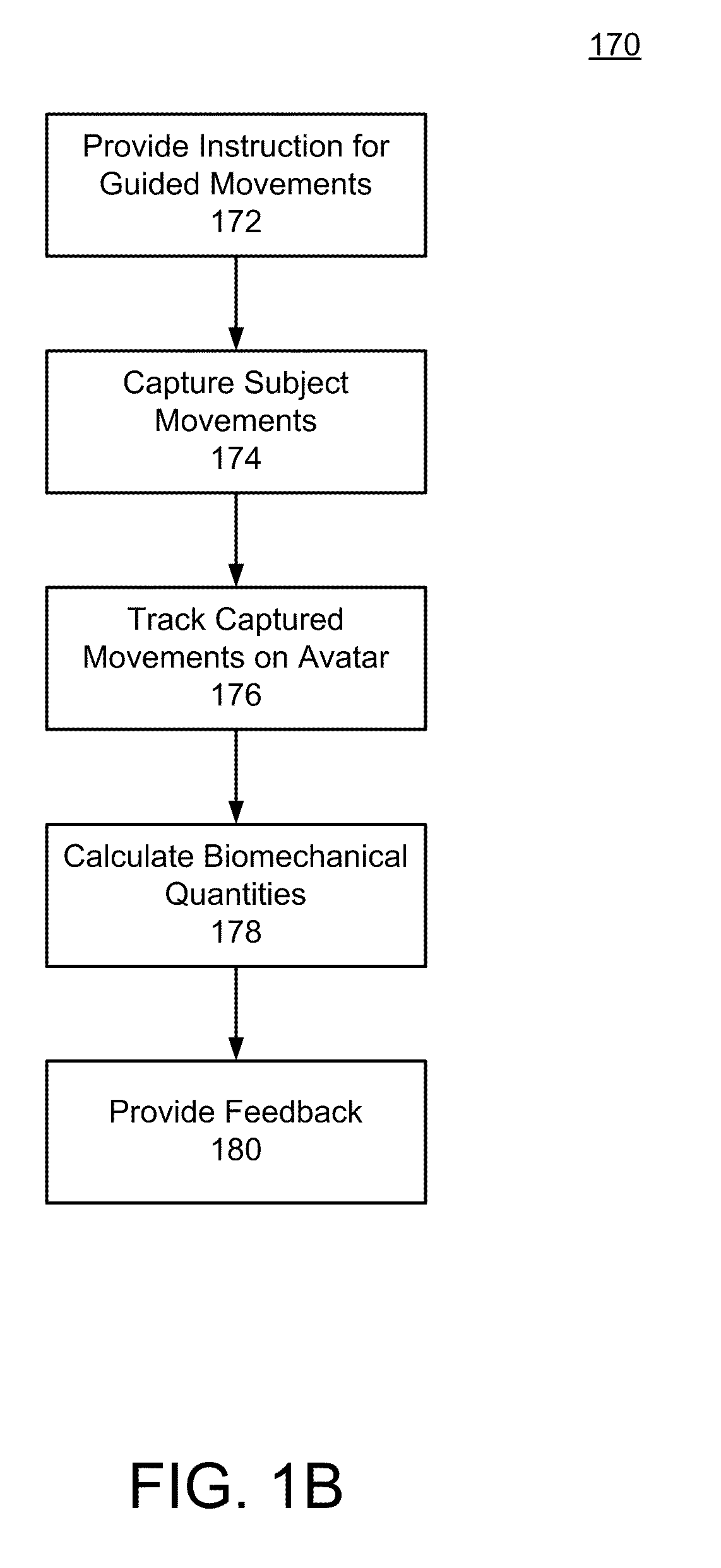

A system, method, and computer program product for providing a user with a virtual environment in which the user can perform guided activities and receive feedback are described. The user is provided with guidance to perform certain movements. The user's movements are captured in an image stream. The image stream is analyzed to estimate the user's movements, which are tracked by a user-specific human model. Biomechanical quantities such as center of pressure and muscle forces are calculated based on the tracked movements. Feedback, such as the biomechanical quantities and the differences between the guided movements and the captured actual movements, is provided to the user.

Owner:HONDA MOTOR CO LTD

Moving object tracking method, and image processing apparatus

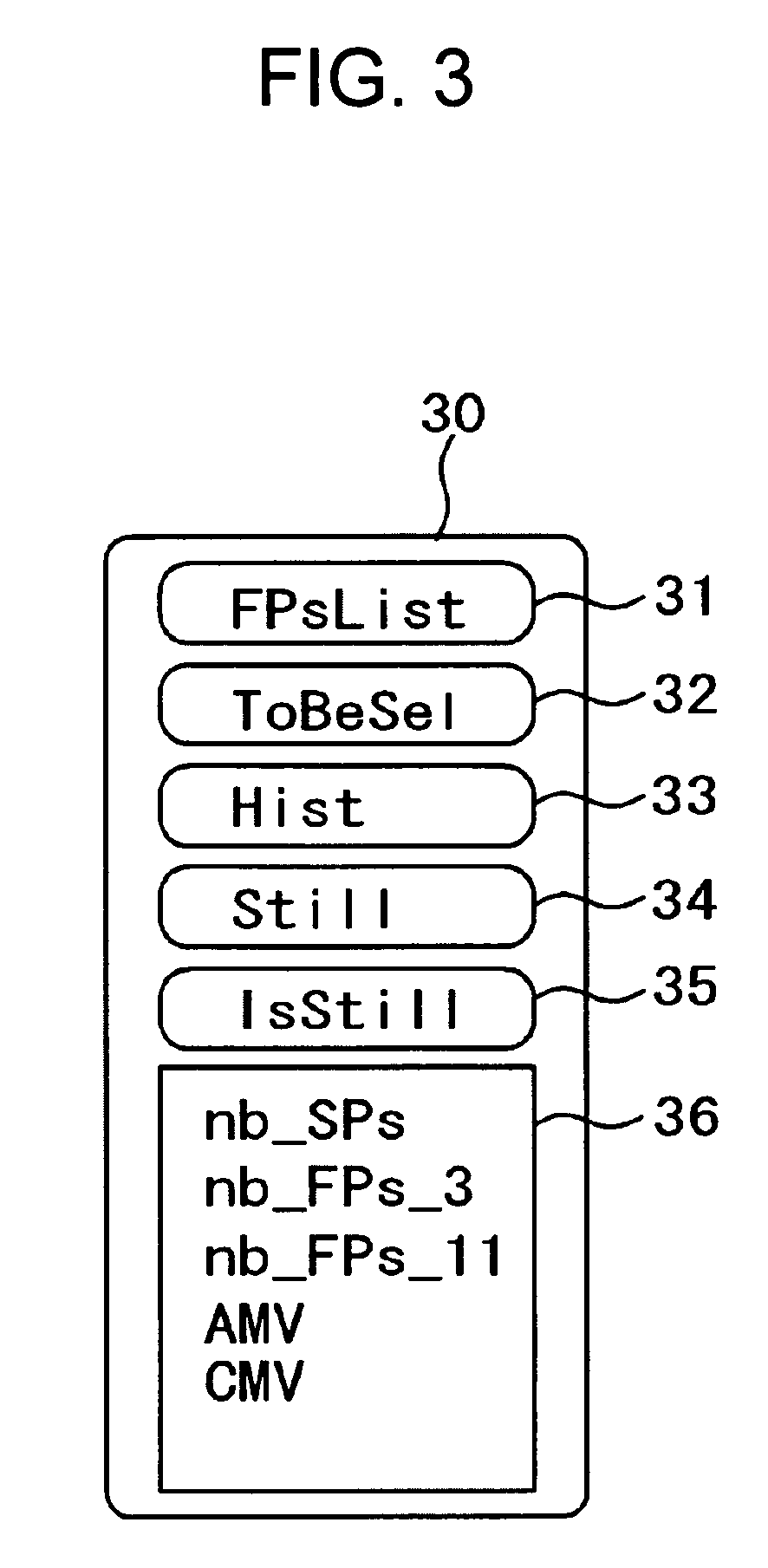

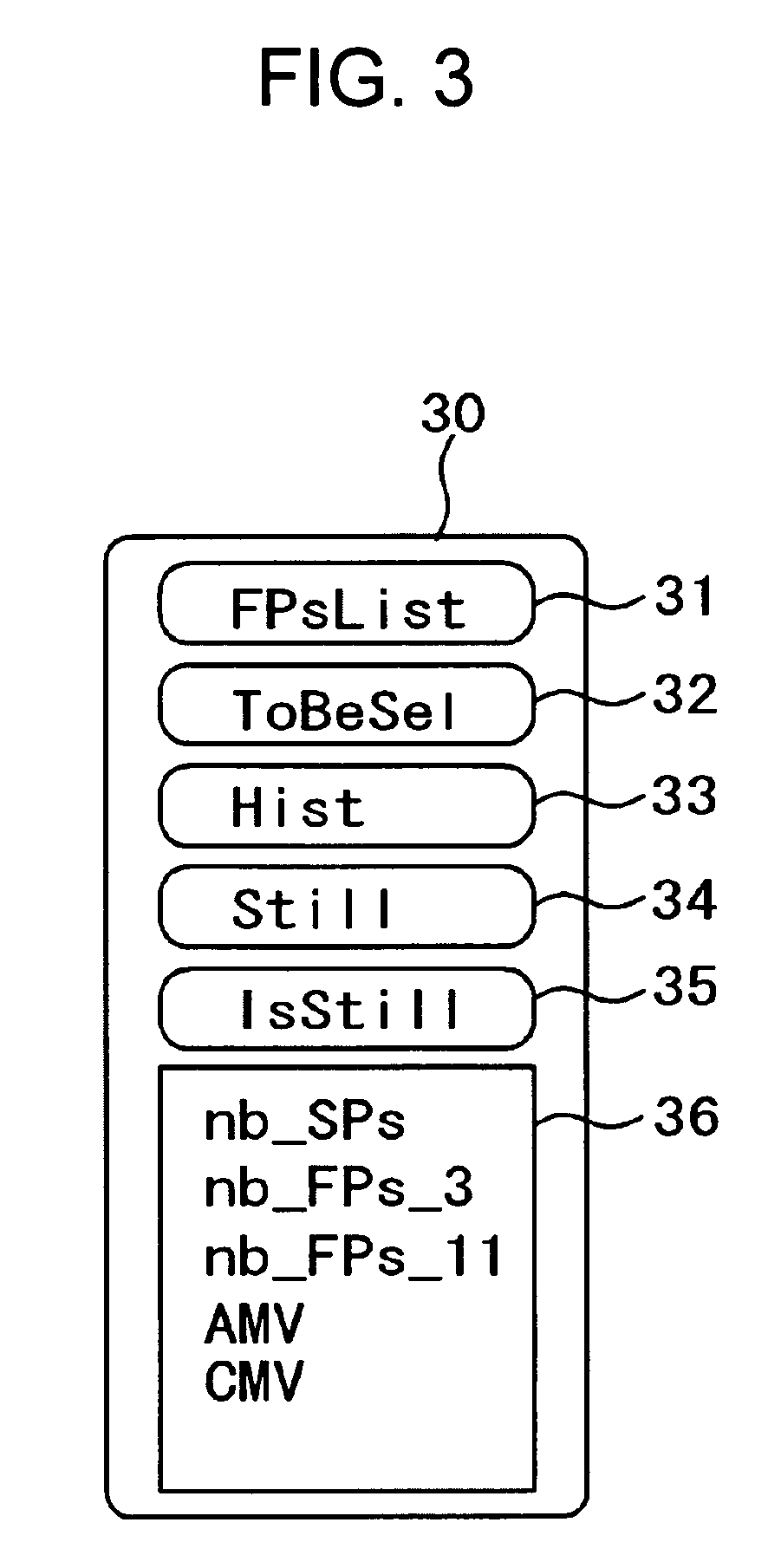

An image processing apparatus tracks a moving object by setting a tracking area corresponding to a moving object appearing in a video image, setting a plurality of feature points in the tracking area, and tracking the plurality of feature points. The apparatus comprises a tracking processing unit for tracking each of the plurality of feature points from the preceding frame to the present frame, and a motion calculation processing unit for specifying a position of the tracking area in the present frame by estimating the motion of the tracking area based on the tracking result of each of the feature points. The tracking processing unit calculates, for each feature point, a reliability indicating how likely it is that the feature point lies on the moving object, and the motion calculation processing unit calculates the motion of the tracking area by using the reliability.

Owner:SONY CORP
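
The reliability-weighted motion estimate described in the abstract above can be illustrated with a small Python sketch. This is not the patented method: the function names, the weighted-mean rule, and the `(x, y, width, height)` layout of the tracking area are assumptions made only for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def weighted_area_motion(prev_pts: List[Point],
                         curr_pts: List[Point],
                         reliabilities: List[float]) -> Tuple[float, float]:
    """Return the reliability-weighted mean displacement (dx, dy) of the feature points."""
    total = sum(reliabilities)
    if total <= 0:
        # No trustworthy feature point: assume the area did not move.
        return (0.0, 0.0)
    dx = sum(w * (c[0] - p[0])
             for p, c, w in zip(prev_pts, curr_pts, reliabilities)) / total
    dy = sum(w * (c[1] - p[1])
             for p, c, w in zip(prev_pts, curr_pts, reliabilities)) / total
    return (dx, dy)


def shift_area(area, motion):
    """Move a tracking area given as (x, y, width, height) by the estimated motion."""
    x, y, w, h = area
    return (x + motion[0], y + motion[1], w, h)
```

A feature point with reliability 0 (for example, one that has drifted onto the background) has no influence on the result, which is the practical benefit of weighting each point rather than averaging all of them equally.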

Low-latency virtual reality display system

Inactive · CN105404393A · Lower latency · Improve synchronicity · Input/output for user-computer interaction · Graph reading · Computer graphics (images) · Display device

Owner:ARIADNES THREAD USA INC DBA IMMEREX

Picture rate conversion system for high definition video

Active · US8184200B1 · Television system details · Picture reproducers using cathode ray tubes · High definition tv · Motion vector

Systems and methods for converting a picture frame rate between a source video at a first rate and a target video at a second rate. A system may include a phase plane correlation calculator configured to determine a first motion vector estimate. The system may further include a global motion calculator configured to determine a second motion vector estimate based on the previous frame data, the current frame data, and the first motion vector estimate. The system may also include a motion compensated interpolator for assigning a final motion vector through a quality calculation and an intermediate frame generator for generating the intermediate frame using the final motion vector.

Owner:SYNAPTICS INC +1

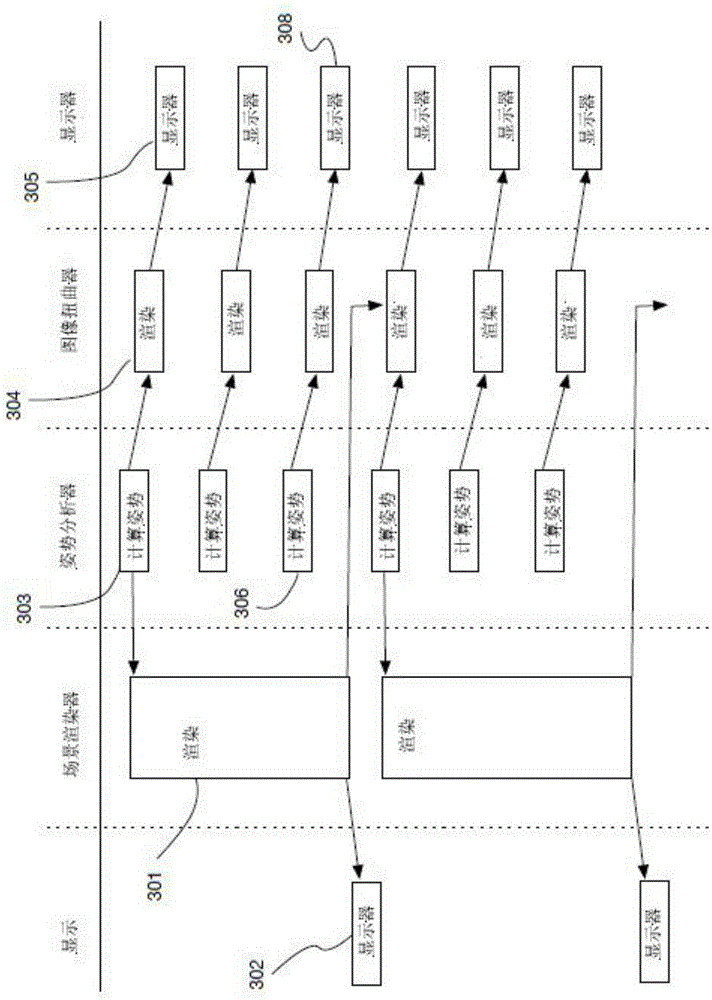

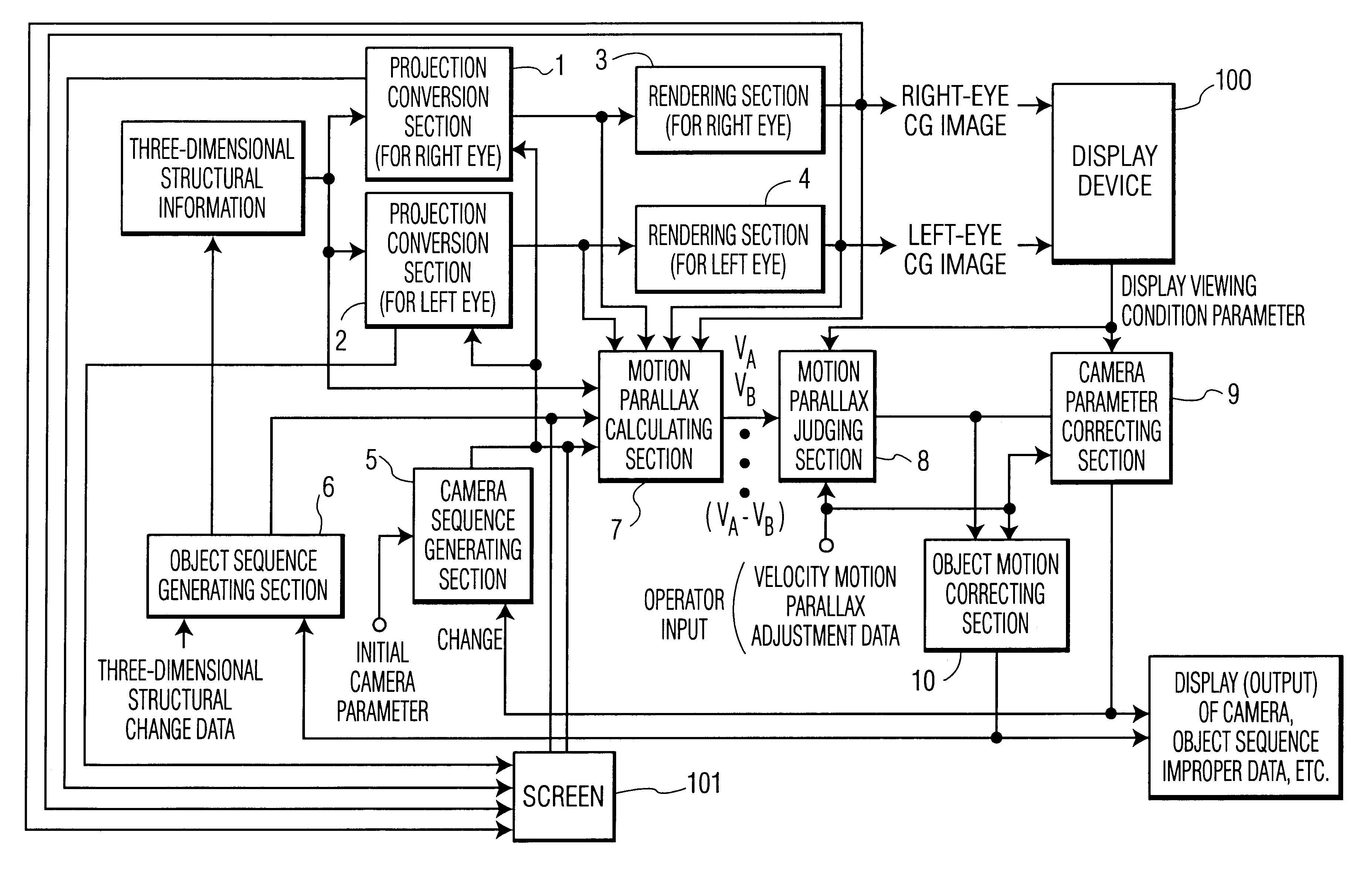

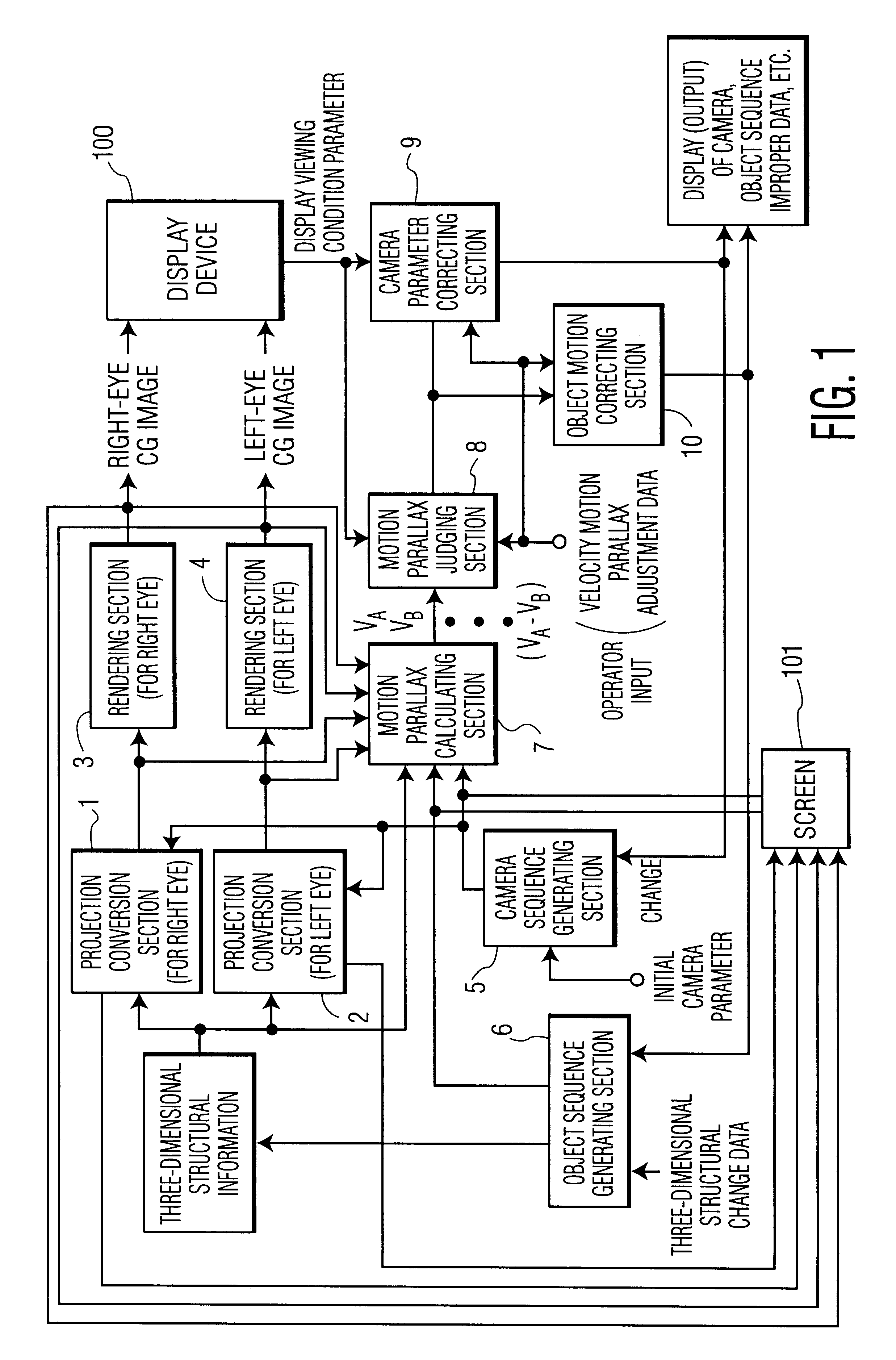

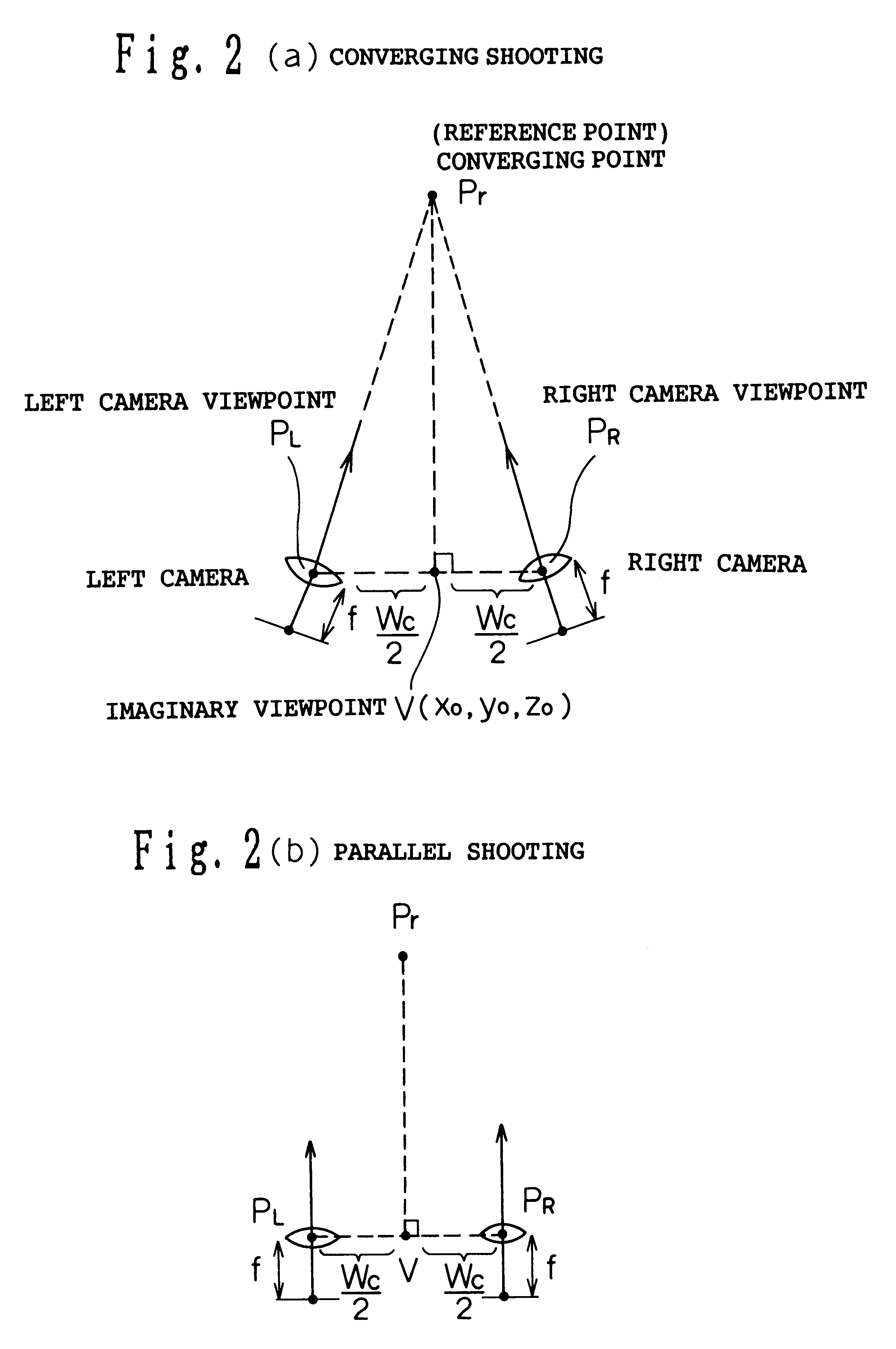

Stereoscopic computer graphics moving image generating apparatus

A stereoscopic computer graphics (CG) moving image generating apparatus includes a projection transformation section for generating a two-dimensional projection image as viewed from a camera using three-dimensional structural information of a subject; rendering sections for calculating a viewable image from the output of the projection transformation section; a camera sequence generating section for generating camera parameters defining projection transformation in the projection transformation section and capable of generating a moving image by varying the same; a motion calculating section for calculating the motion and / or motion parallax of a displayed subject using at least one of the three-dimensional structural information, the output of the projection transformation section, the output of the rendering sections, and the output of the camera sequence generating section; and a camera parameter correcting section for automatically or manually correcting the camera parameter so that the motion and / or motion parallax of the displayed subject do not exceed a tolerance range of a viewer, based at least on the output of the motion calculating section, the size of a screen for image display, and the viewing distance of the viewer.

Owner:PANASONIC CORP

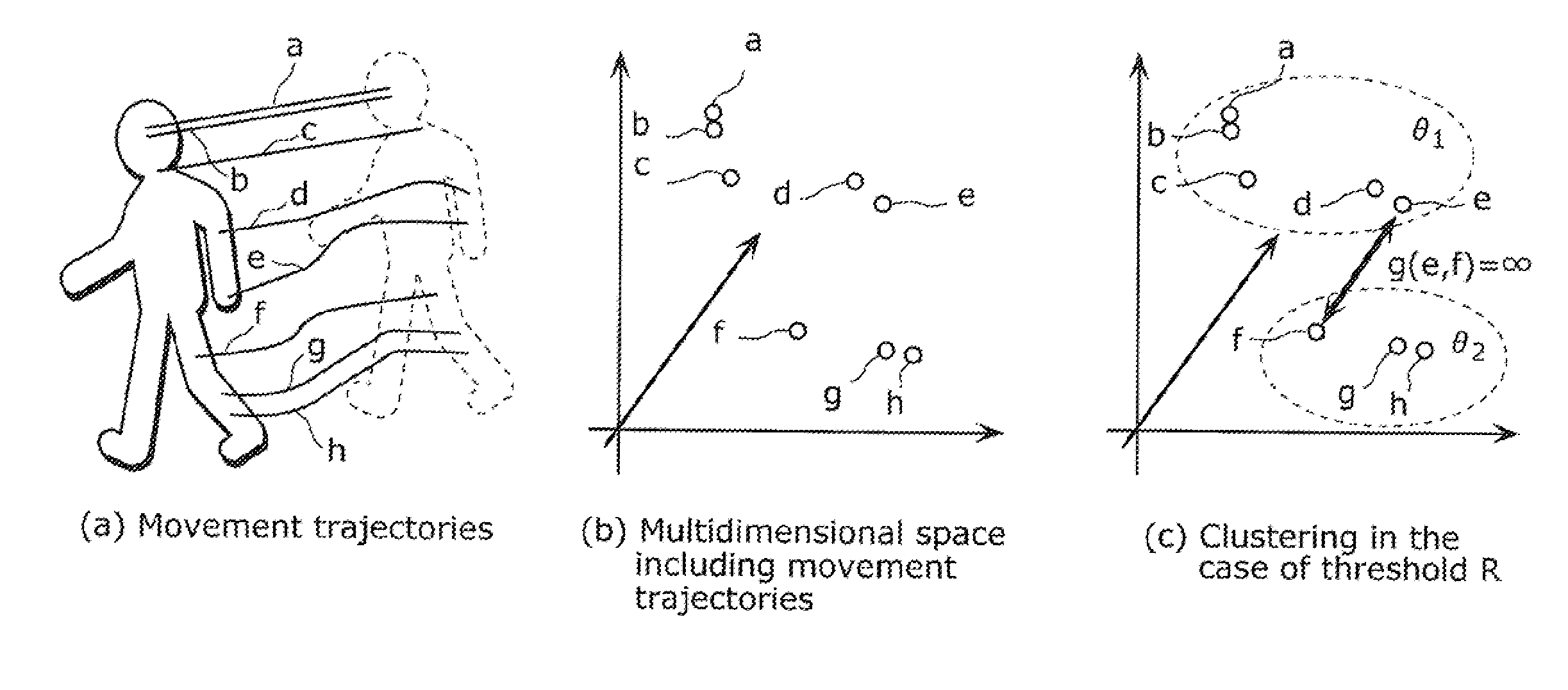

Moving object detection apparatus and moving object detection method

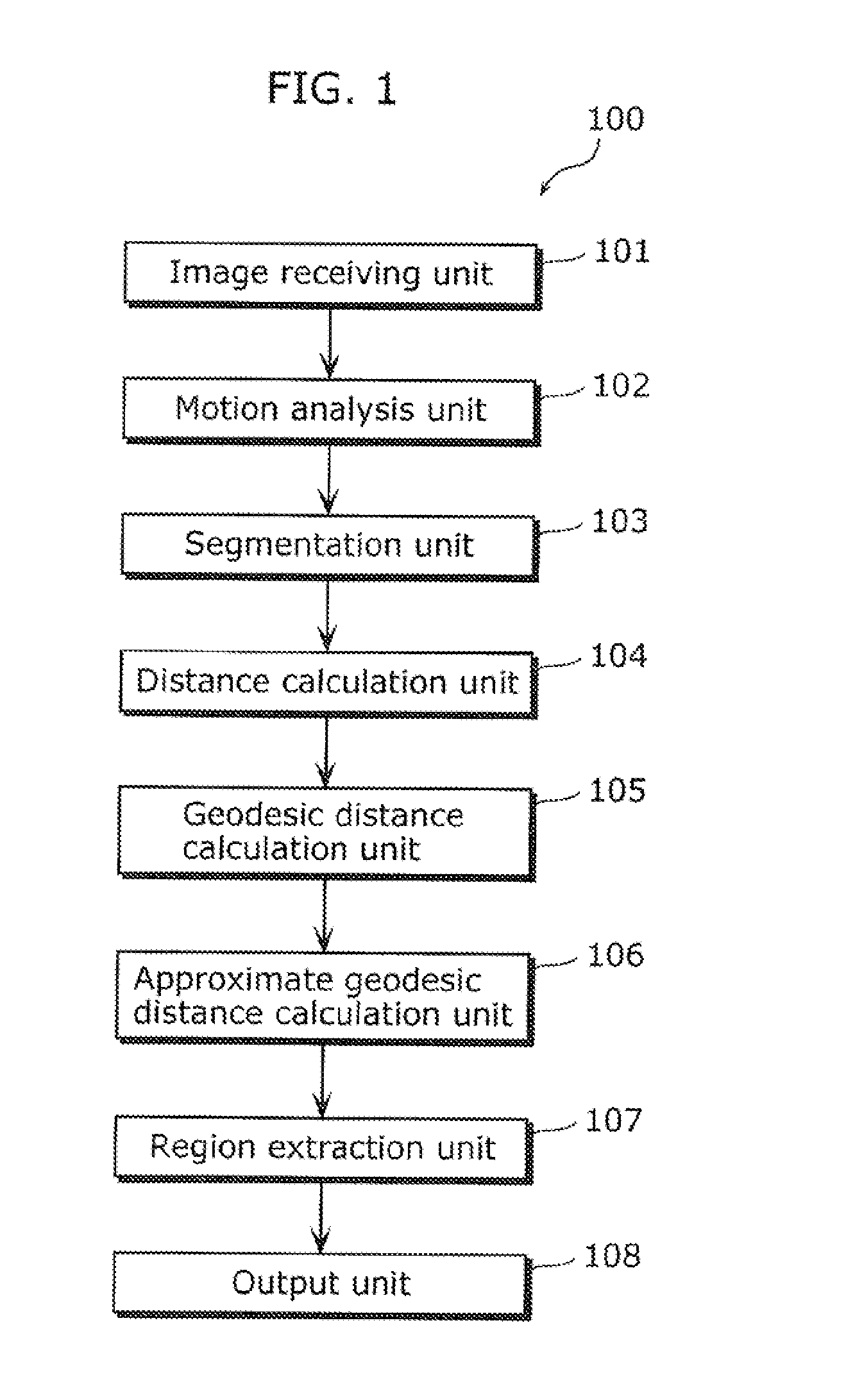

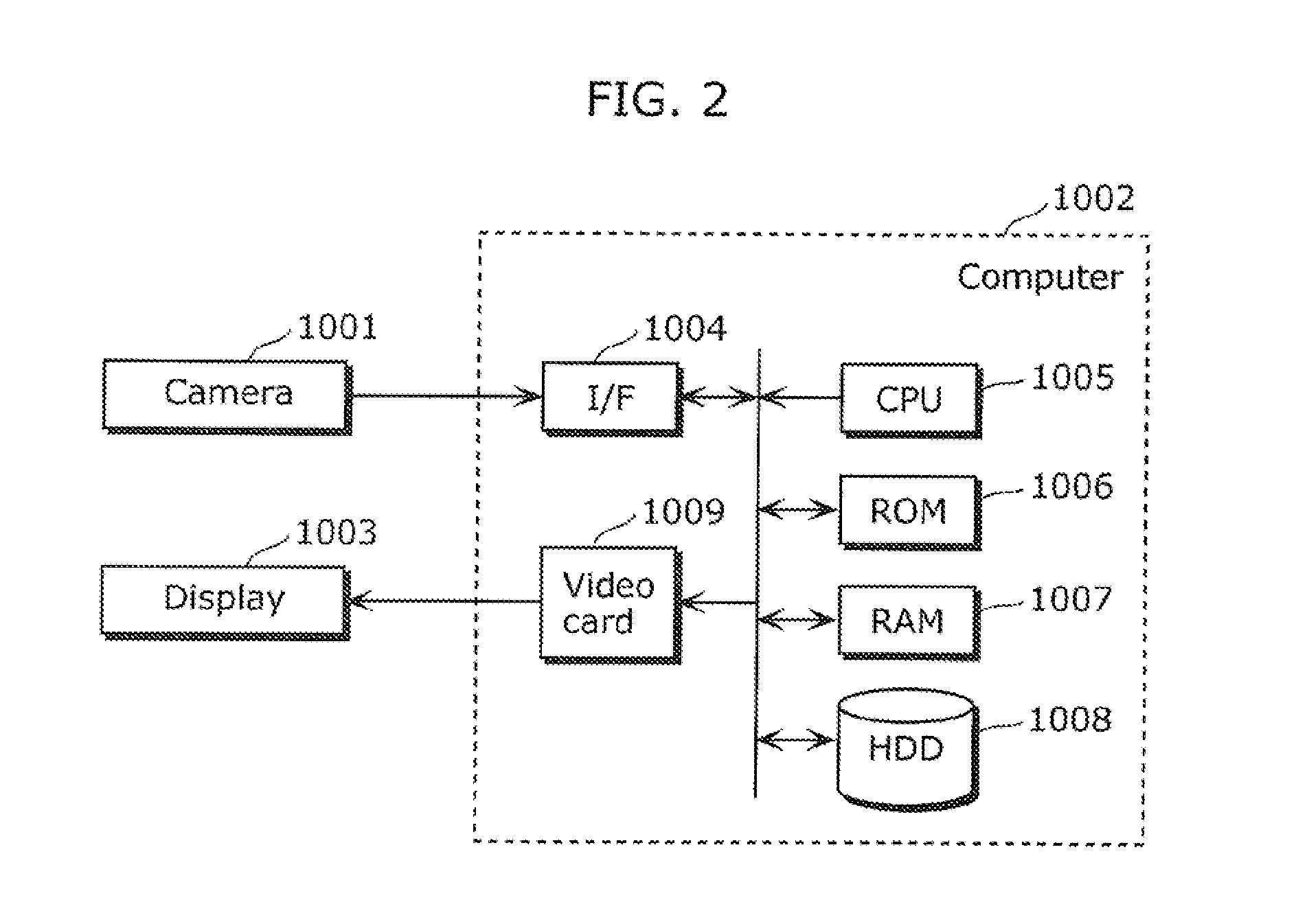

Inactive · US20110091073A1 · Accurately performs region extraction at high speed · Image enhancement · Image analysis · Video sequence · Object detection

Provided is a moving object detection apparatus that accurately performs region extraction regardless of the pose or size of a moving object. The moving object detection apparatus includes: an image receiving unit receiving a video sequence; a motion analysis unit calculating movement trajectories based on motions of the image; a segmentation unit performing segmentation so as to divide the movement trajectories into subsets, and setting a part of the movement trajectories as common points shared by the subsets; a distance calculation unit calculating a distance representing a similarity between a pair of movement trajectories, for each of the subsets; a geodesic distance calculation unit transforming the calculated distance into a geodesic distance; an approximate geodesic distance calculation unit calculating an approximate geodesic distance bridging over the subsets, by integrating geodesic distances including the common points; and a region extraction unit performing clustering on the calculated approximate geodesic distance.

Owner:SOVEREIGN PEAK VENTURES LLC

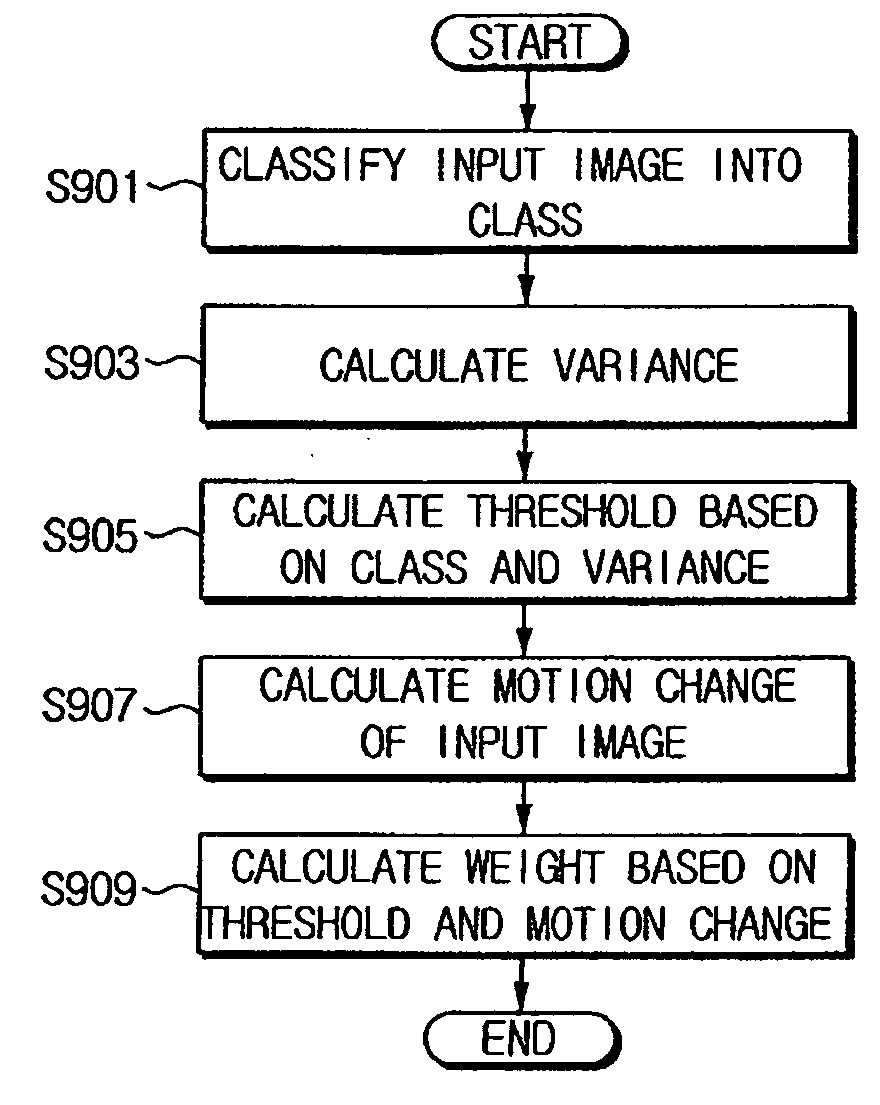

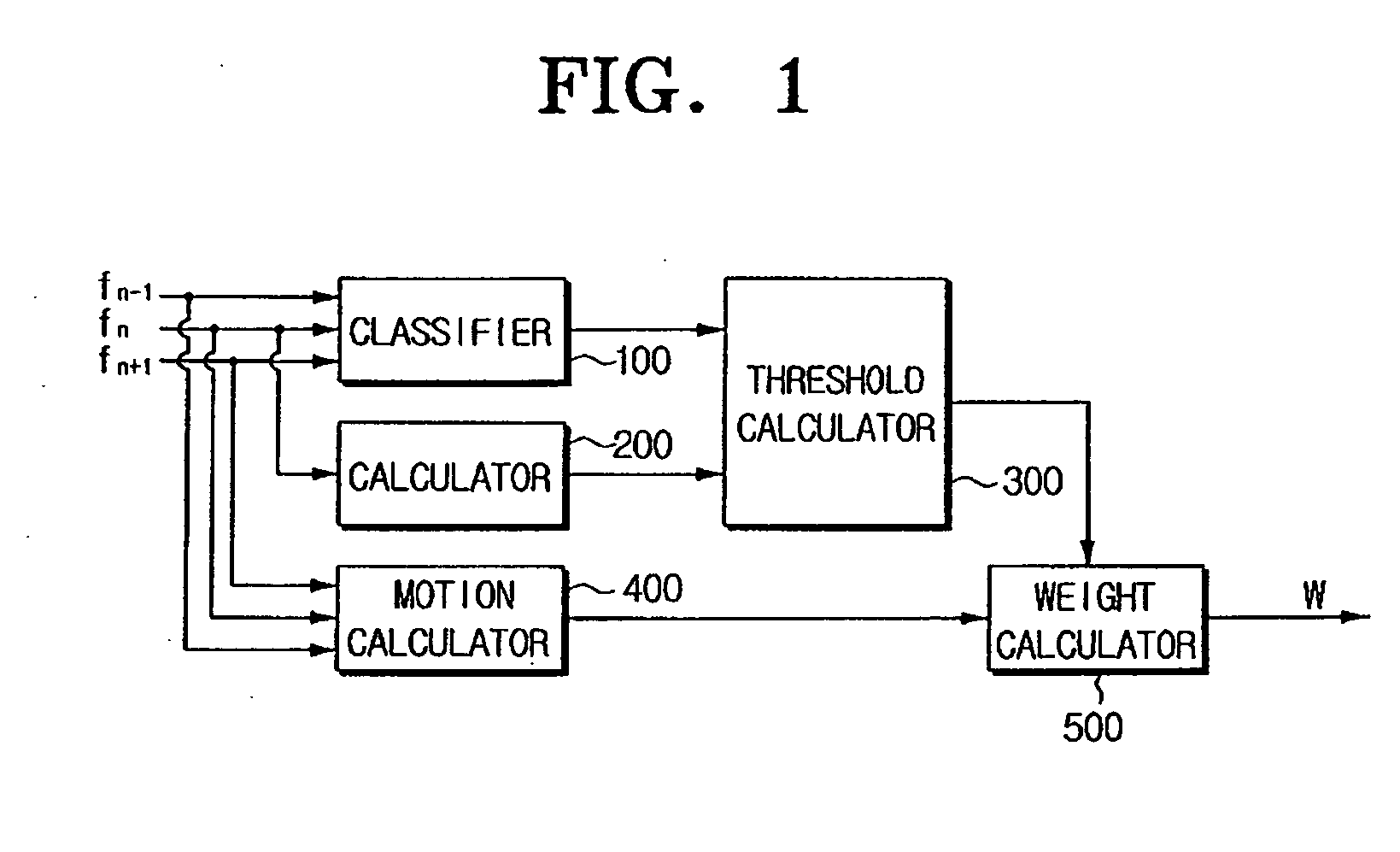

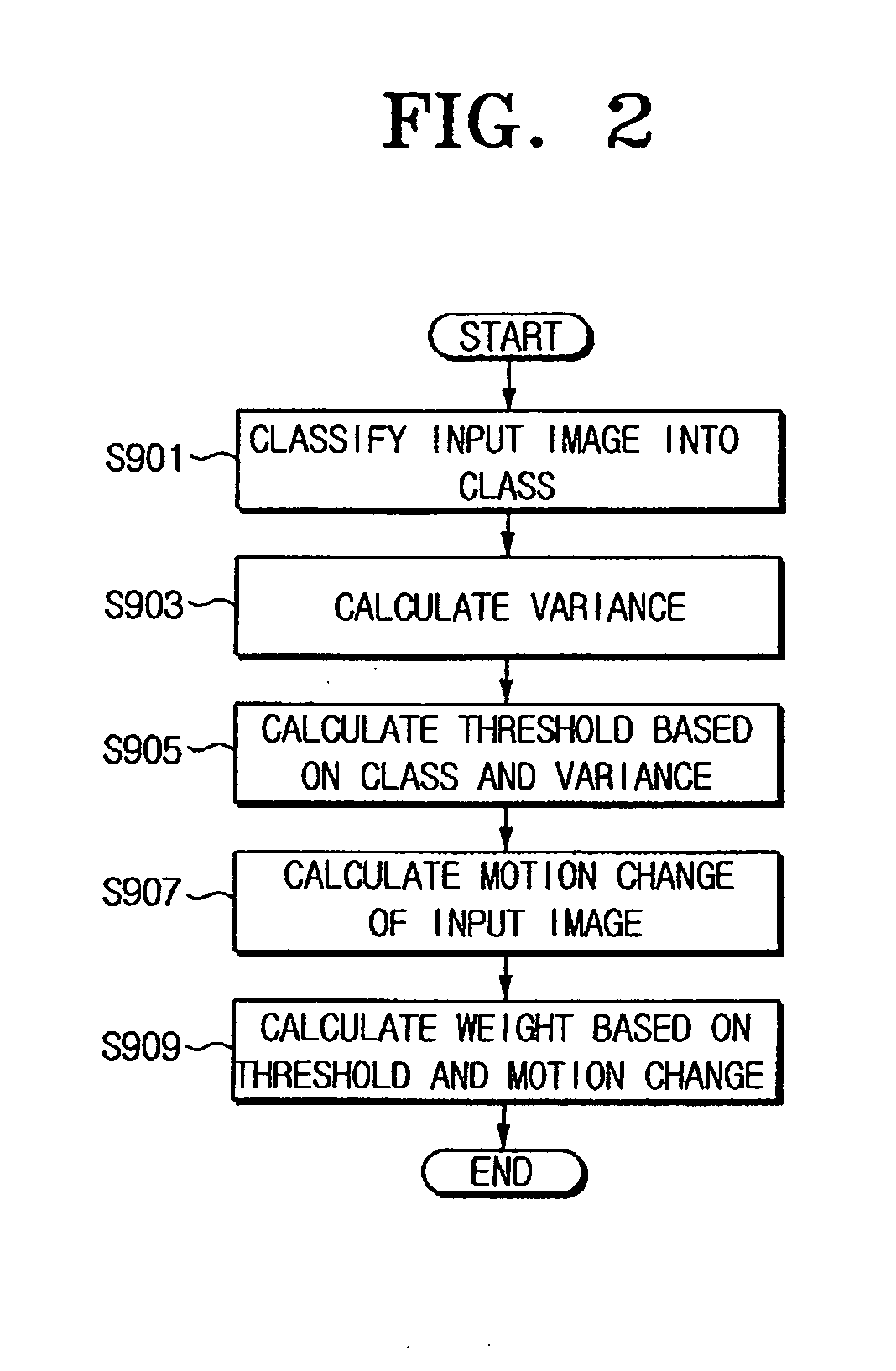

Motion adaptive image processing apparatus and method thereof

Inactive · US20060147090A1 · Accurate imaging · Television system details · Image analysis · Pattern recognition · Imaging processing

A motion adaptive image processing apparatus includes a classifier to classify a current field of a sequence of input fields into one or more class regions, a calculator to calculate a variance based on pixel values of pixels located in a predetermined region around a certain pixel of the current field, a threshold calculator to calculate a maximum variance and a minimum variance which are pre-set according to the one or more class regions, the threshold calculator calculating a threshold based on the calculated maximum variance and the minimum variance, a motion calculator to calculate a motion change of an image using a previous field and a next field of the current field in the sequence of the input fields, and a weight calculator to calculate the weight to be applied to the certain pixel based on the calculated threshold and the calculated motion change of the image.

Owner:SAMSUNG ELECTRONICS CO LTD

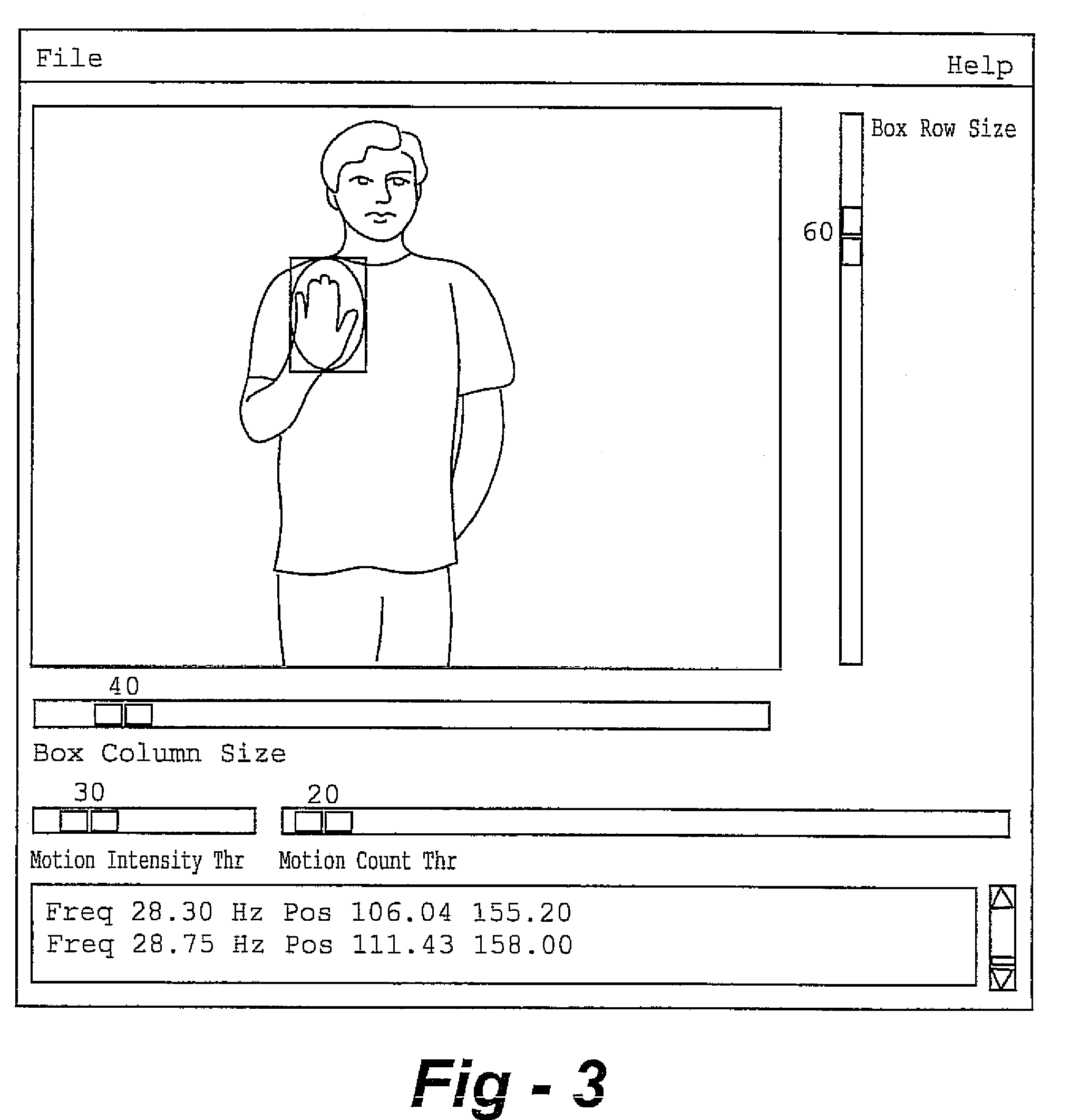

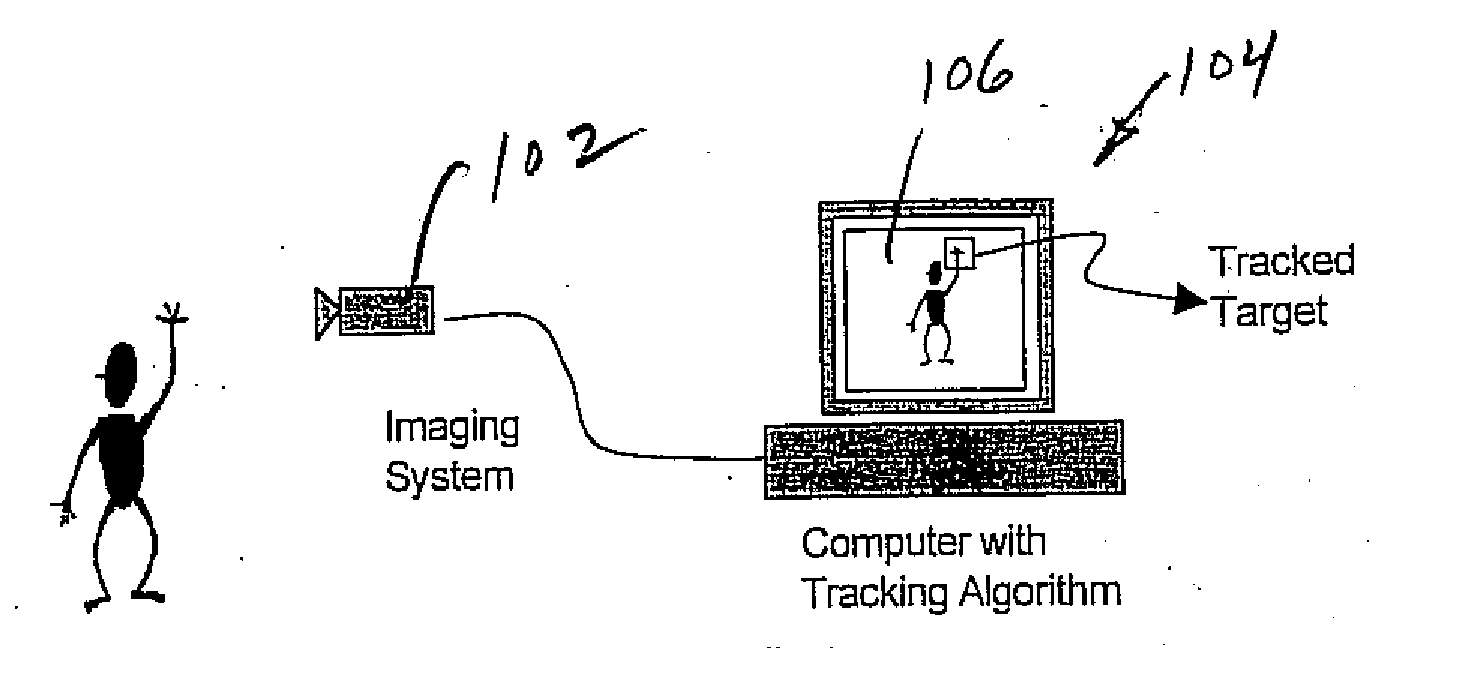

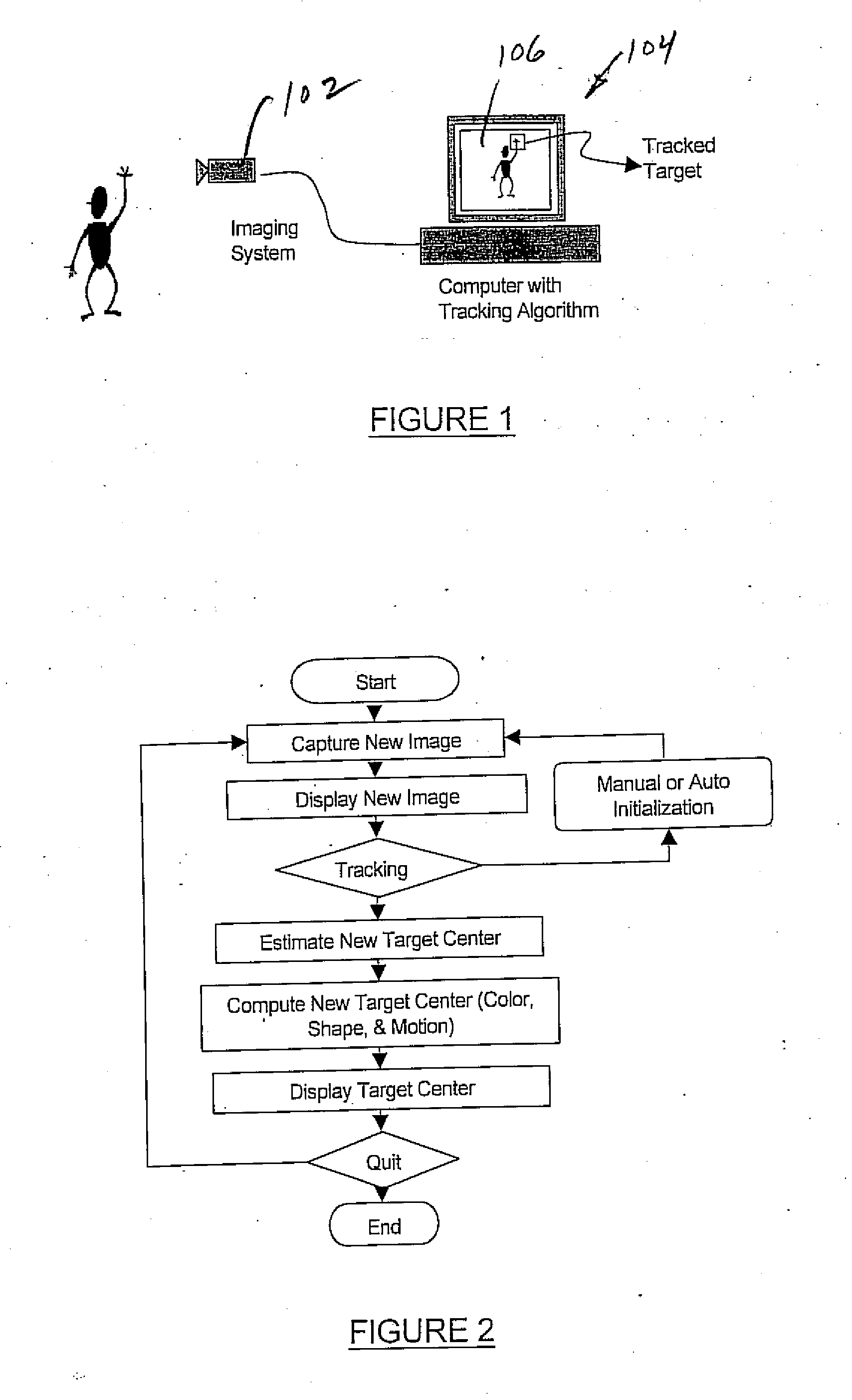

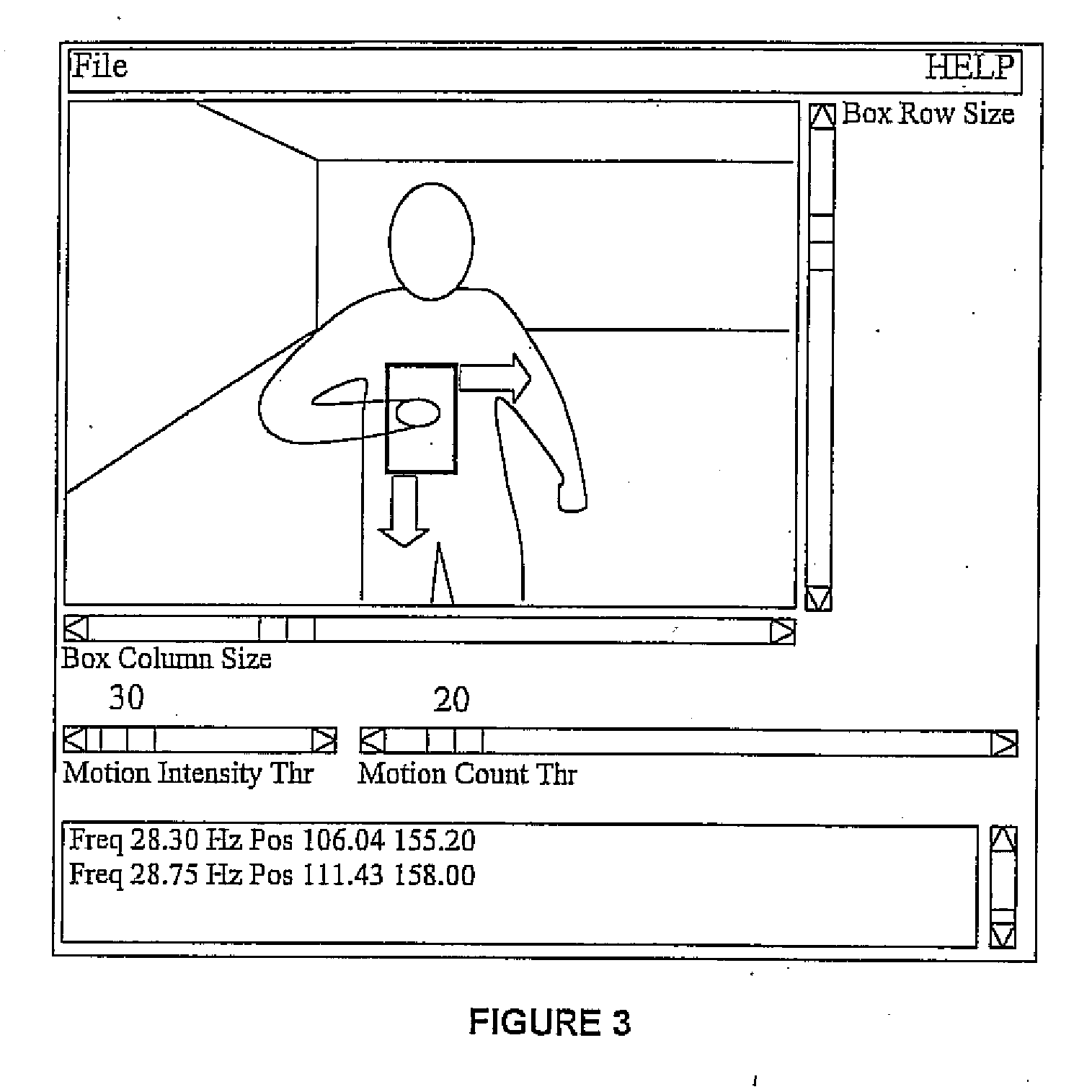

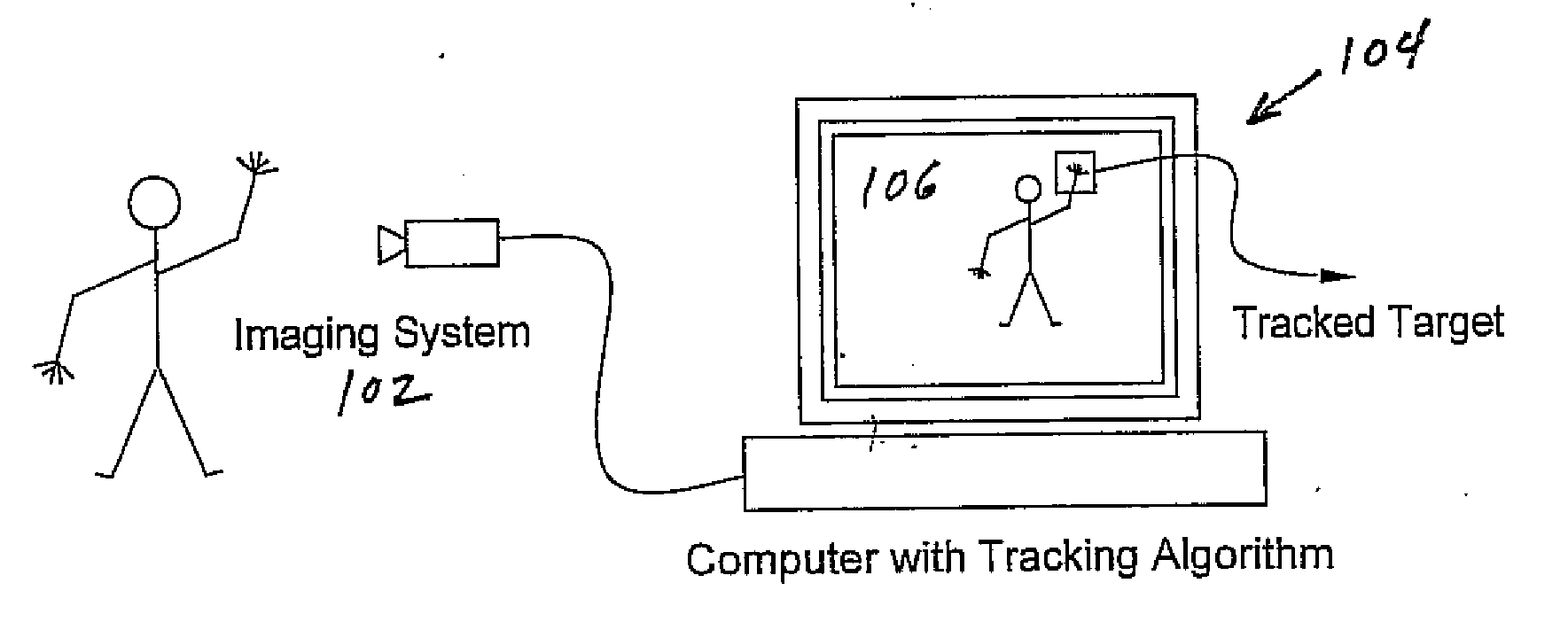

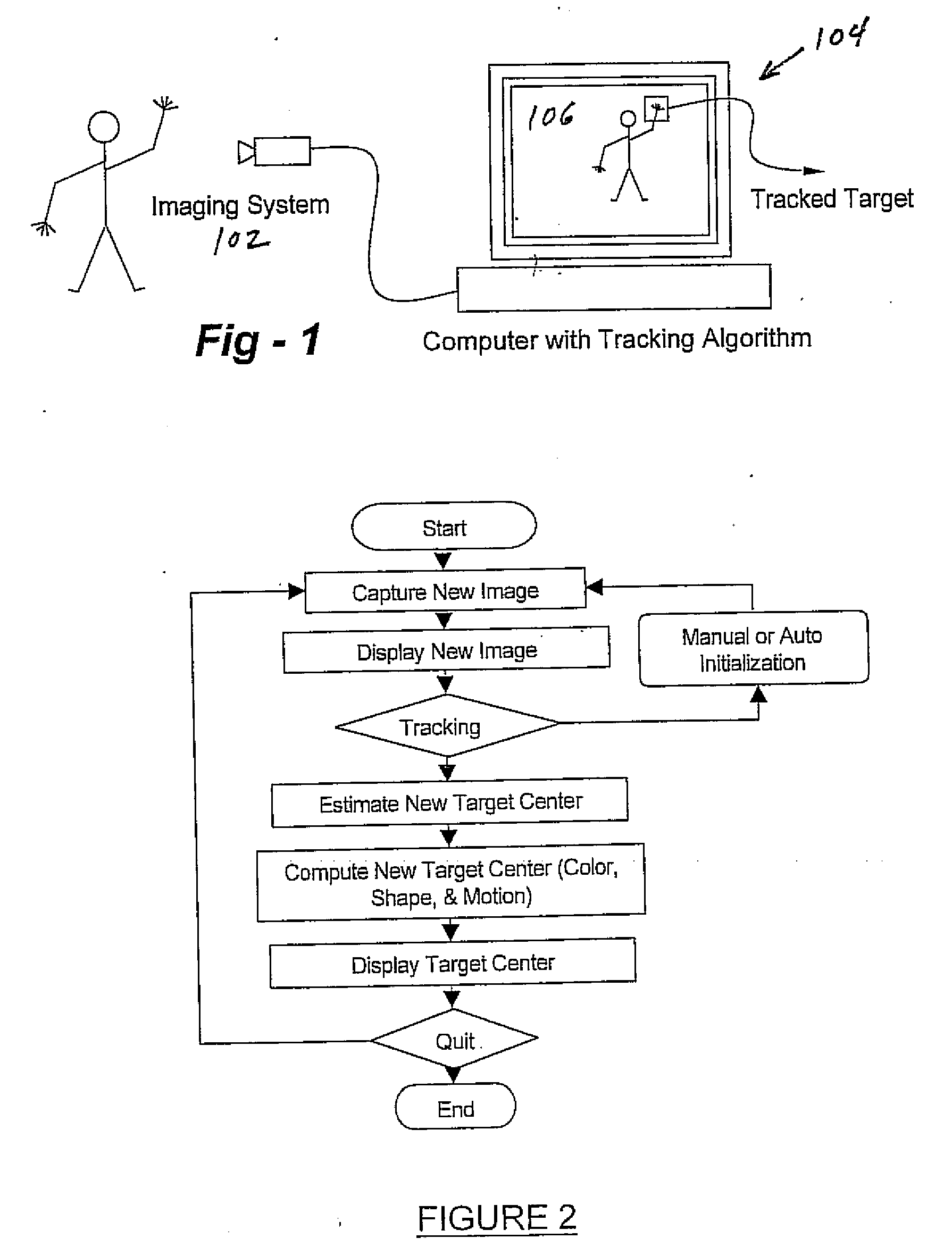

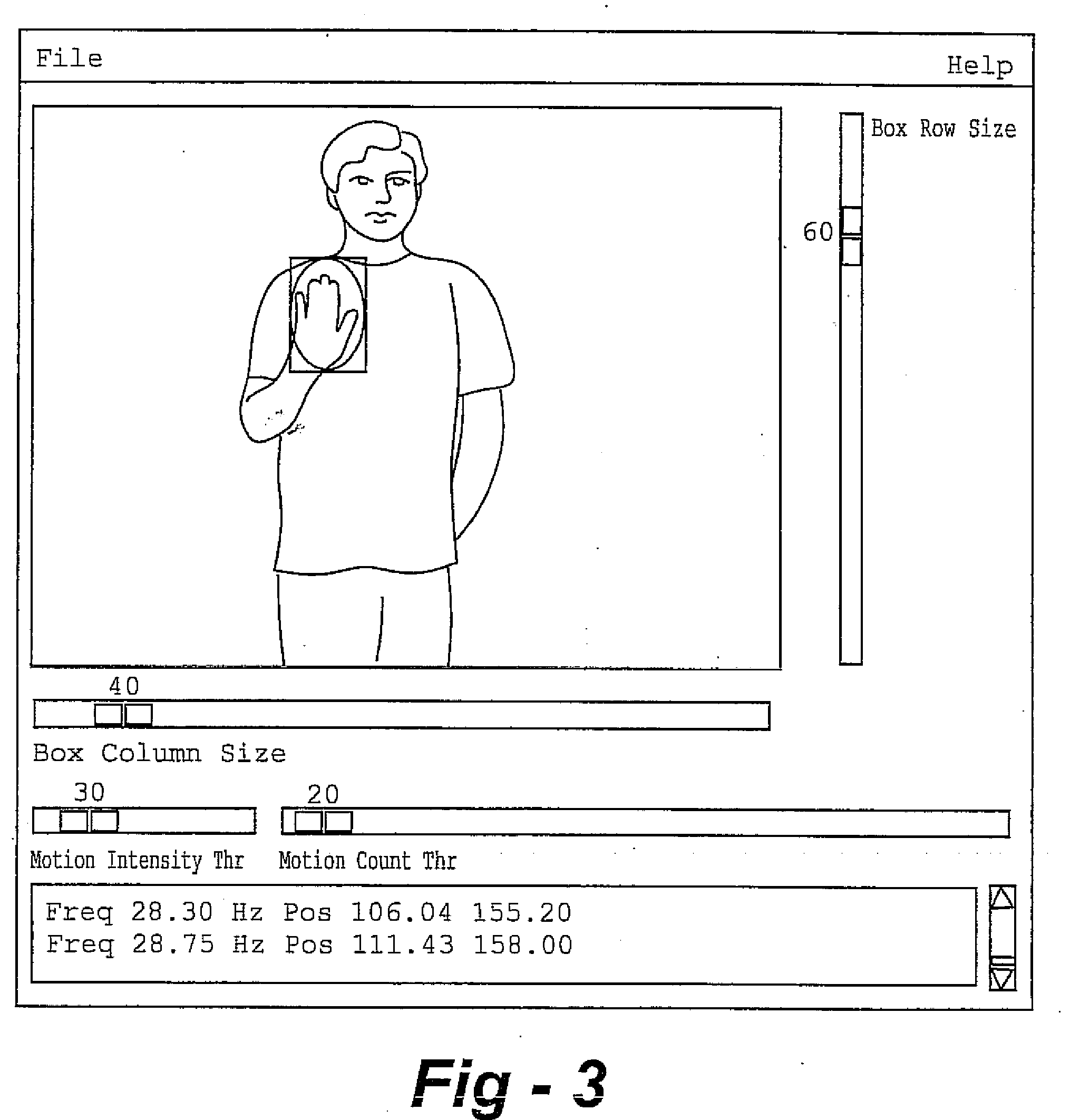

Real-time head tracking system for computer games and other applications

Inactive · US20070066393A1 · Computationally efficient · Image enhancement · Drawing from basic elements · Computer users · Object based

A real-time computer vision system tracks the head of a computer user to implement real-time control of games or other applications. The imaging hardware includes a color camera, frame grabber, and processor. The software consists of the low-level image grabbing software and a tracking algorithm. The system tracks objects based on the color, motion and / or shape of the object in the image. A color matching function is used to compute three measures of the target's probable location based on the target color, shape and motion. The method then computes the most probable location of the target using a weighting technique. Once the system is running, a graphical user interface displays the live image from the color camera on the computer screen. The operator can then use the mouse to select a target for tracking. The system will then keep track of the moving target in the scene in real-time.

Owner:JOLLY SEVEN SERIES 70 OF ALLIED SECURITY TRUST I

Tweening-based codec for scaleable encoders and decoders with varying motion computation capability

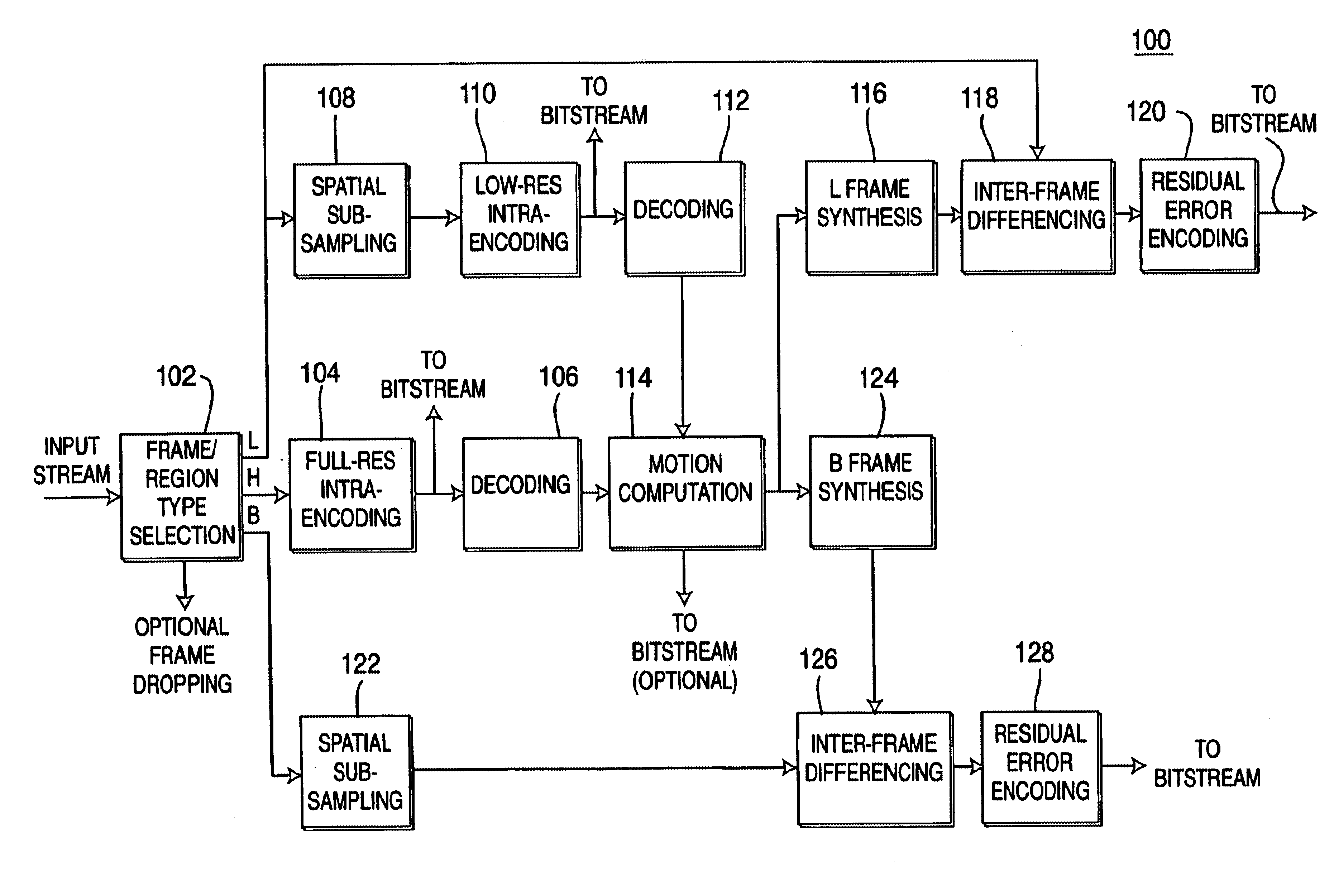

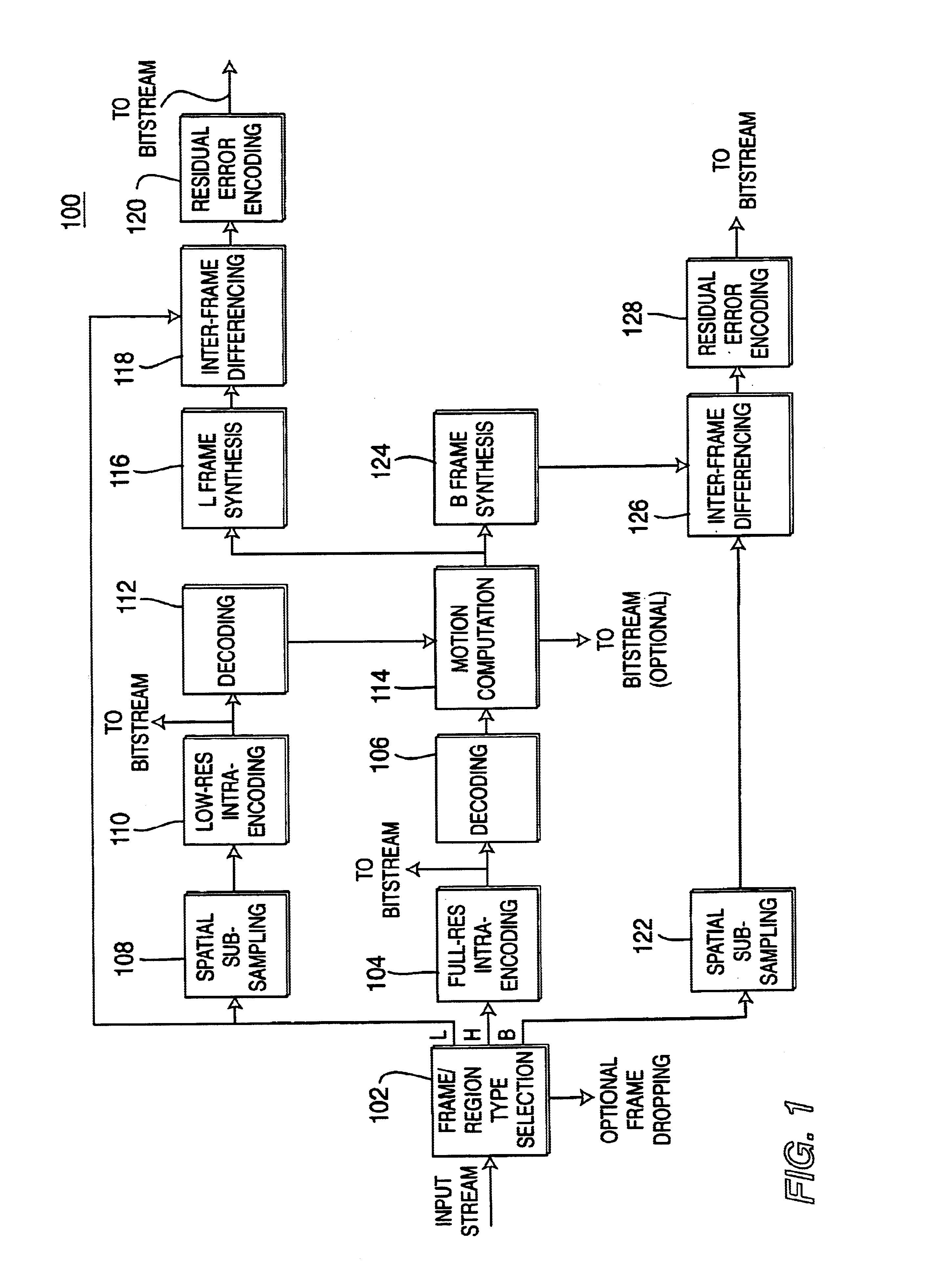

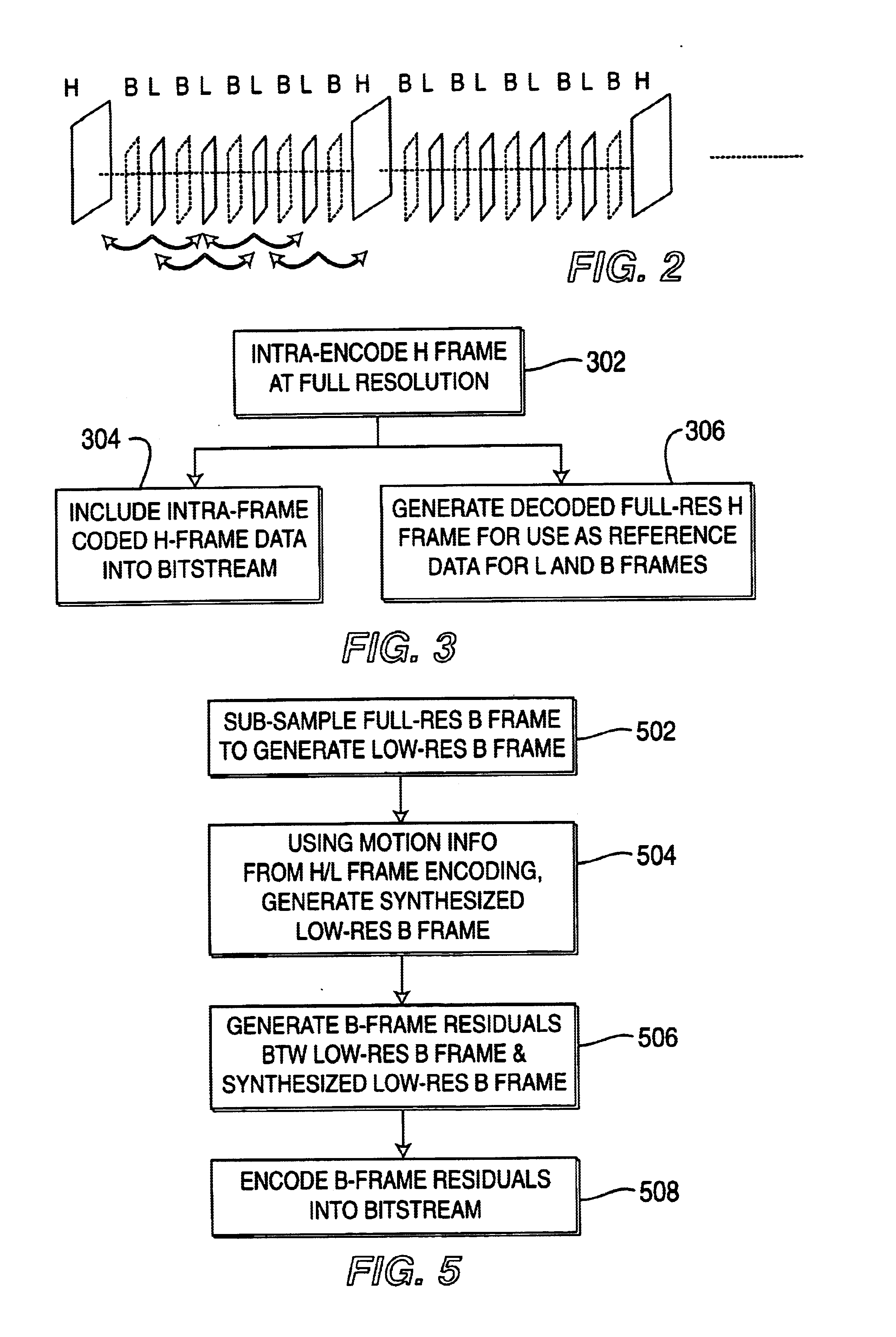

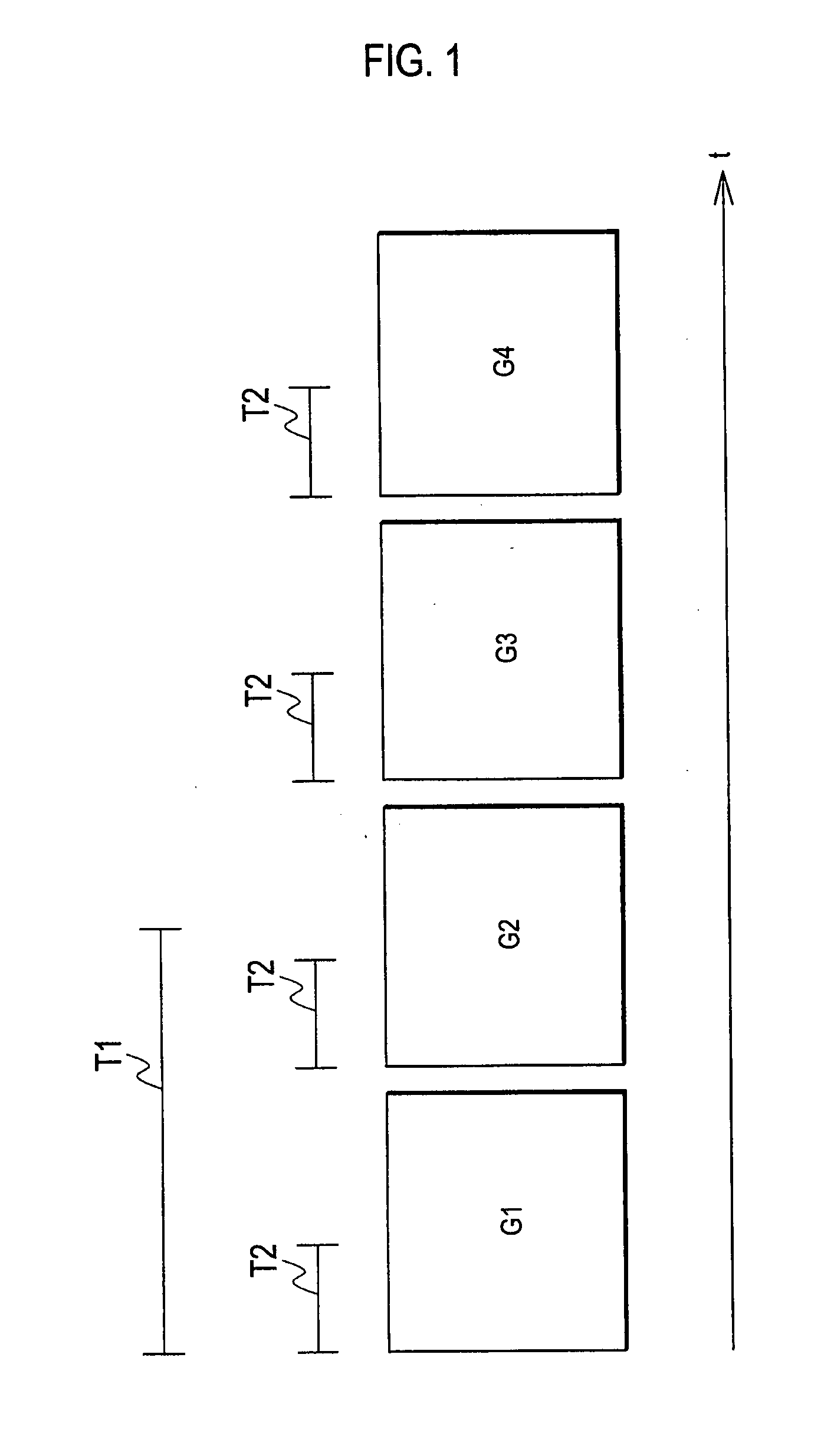

Inactive · US6907073B2 · Color television with pulse code modulation · Color television with bandwidth reduction · Video bitstream · Image resolution

A scaleable video encoder has one or more encoding modes in which at least some, and possibly all, of the motion information used during motion-based predictive encoding of a video stream is excluded from the resulting encoded video bitstream, where a corresponding video decoder is capable of performing its own motion computation to generate its own version of the motion information used to perform motion-based predictive decoding in order to decode the bitstream to generate a decoded video stream. All motion computation, whether at the encoder or the decoder, is preferably performed on decoded data. For example, frames may be encoded as either H, L, or B frames, where H frames are intra-coded at full resolution and L frames are intra-coded at low resolution. The motion information is generated by applying motion computation to decoded L and H frames and used to generate synthesized L frames. L-frame residual errors are generated by performing inter-frame differencing between the synthesized and original L frames and are encoded into the bitstream. In addition, synthesized B frames are generated by tweening between the decoded H and L frames and B-frame residual errors are generated by performing inter-frame differencing between the synthesized B frames and, depending on the implementation, either the original B frames or sub-sampled B frames. These B-frame residual errors are also encoded into the bitstream. The ability of the decoder to perform motion computation enables motion-based predictive encoding to be used to generate an encoded bitstream without having to expend bits for explicitly encoding any motion information.

Owner:SRI INTERNATIONAL

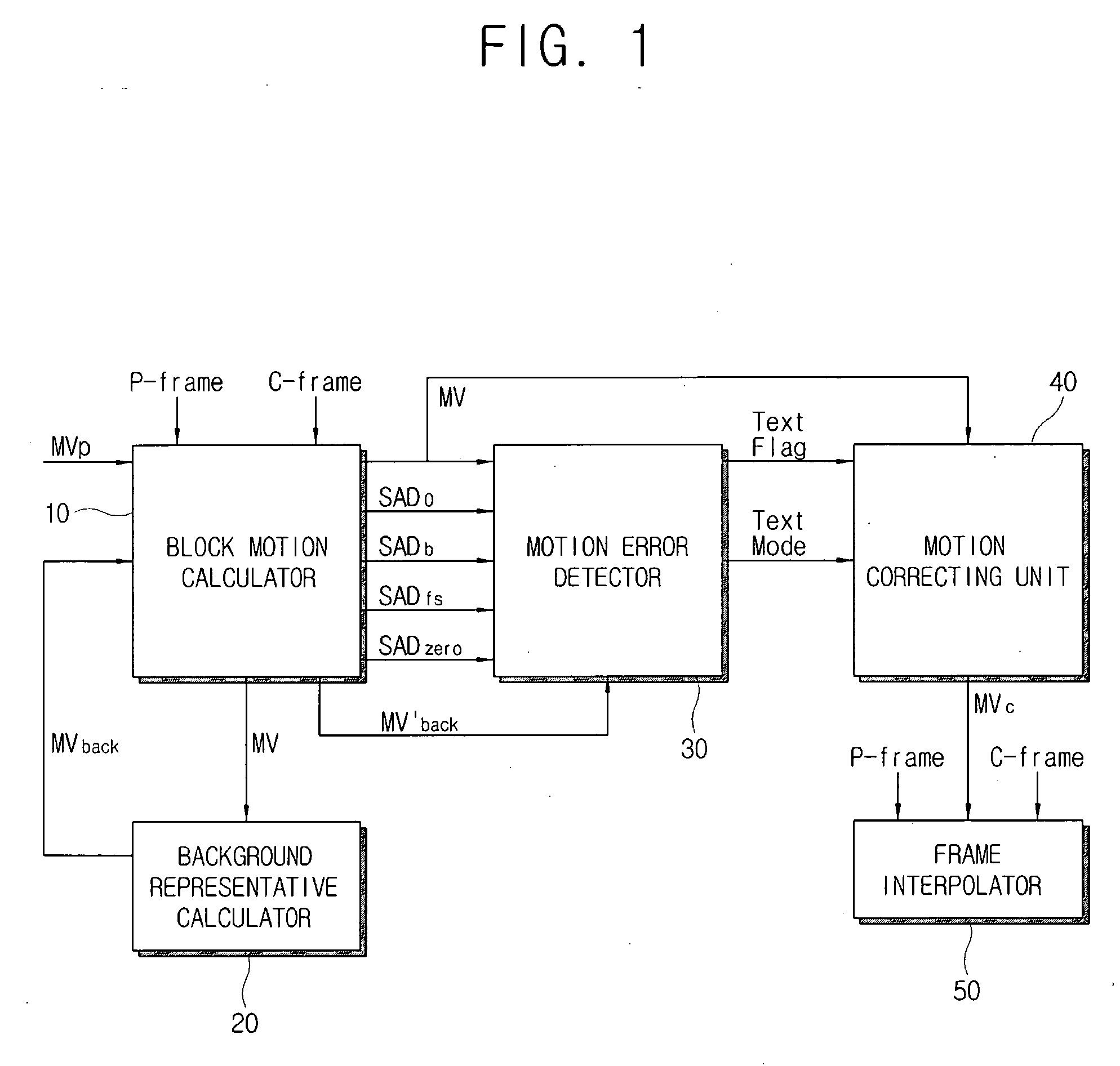

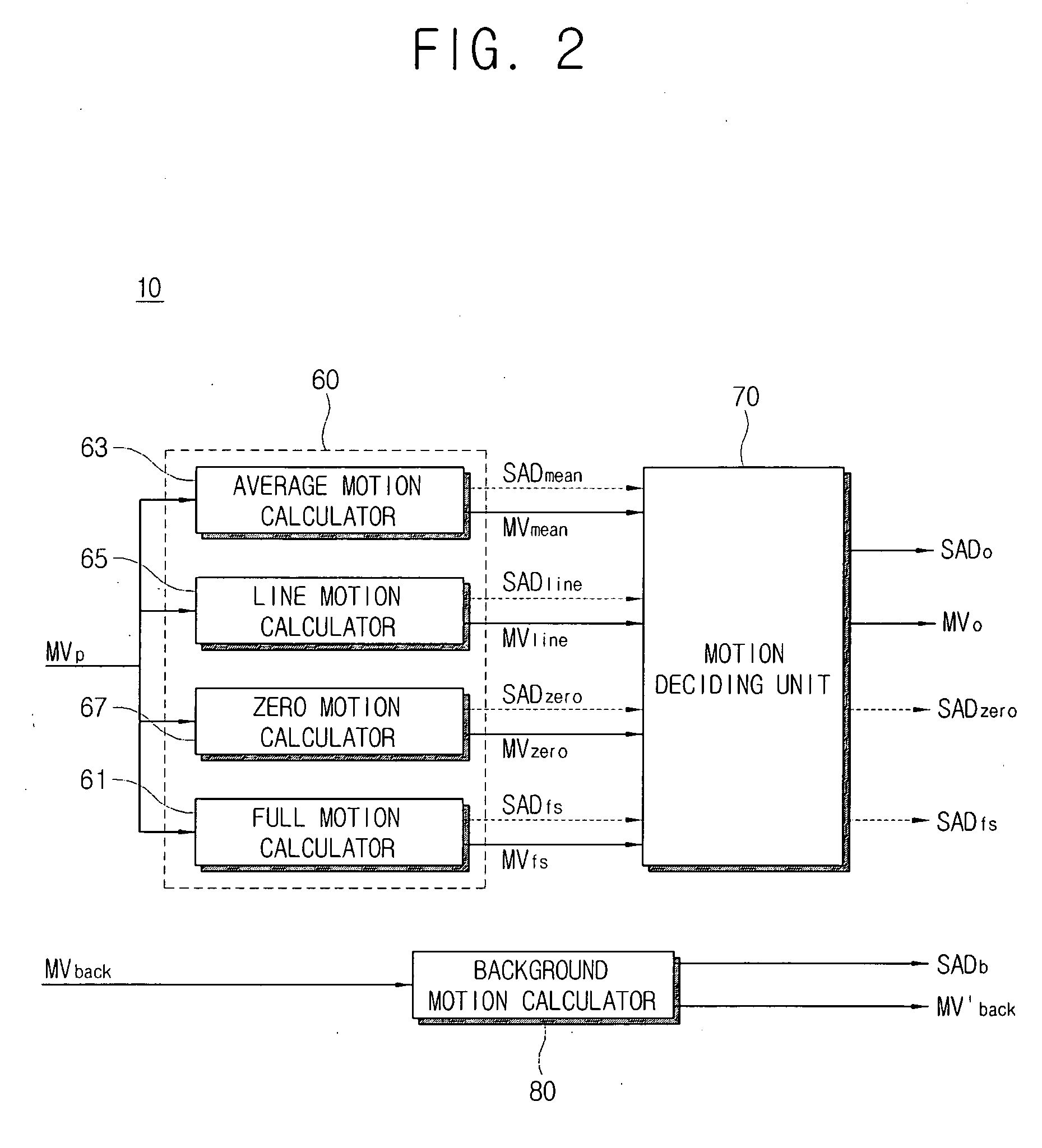

Motion estimating apparatus and motion estimating method

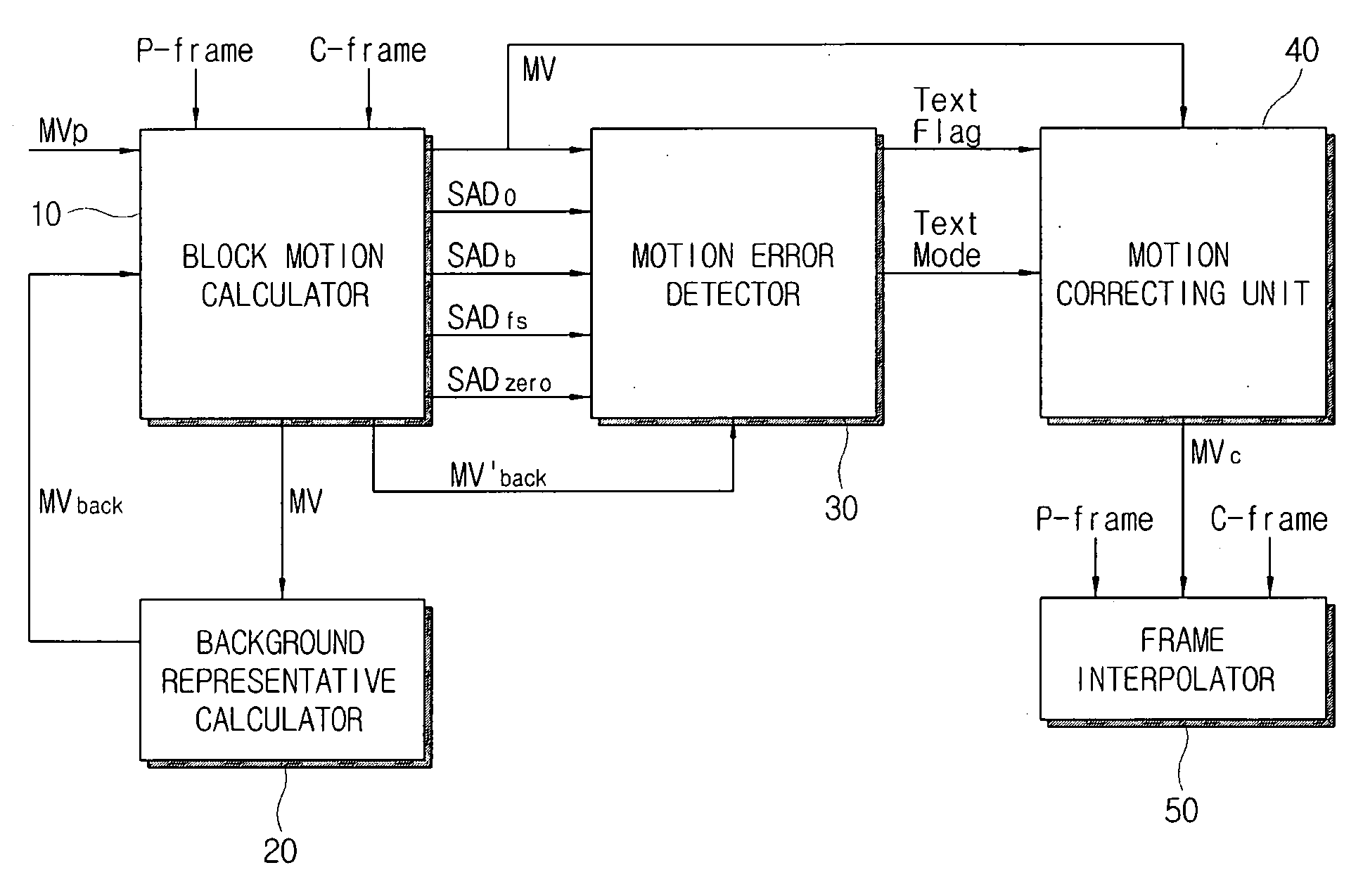

Inactive · US20070133685A1 · Reduce image distortion · Color television with pulse code modulation · Color television with bandwidth reduction · Motion vector · Motion error

An apparatus and method for estimating motion are provided. An exemplary motion estimating apparatus comprises a background representative calculator for calculating a background representative vector representing background motion of a frame to be interpolated on the basis of motion vectors of the frame to be interpolated, a block motion calculator for calculating motion vectors for respective blocks of the frame to be interpolated on the basis of a current frame and a previous frame, for providing the motion vectors to the background representative calculator, and for calculating background motion vectors for the respective blocks through local search on the basis of the background representative vector output from the background representative calculator, a motion error detector for determining whether each block is in a text area on the basis of the motion vectors and the background motion vectors output from the block motion calculator and a motion correcting unit for determining whether each block in the text area is in a boundary area on the basis of motion vectors of peripheral blocks of each block when each block is in the text area, and for correcting a motion vector of each block in the boundary area when each block in the text area is in the boundary area.

Owner:SAMSUNG ELECTRONICS CO LTD

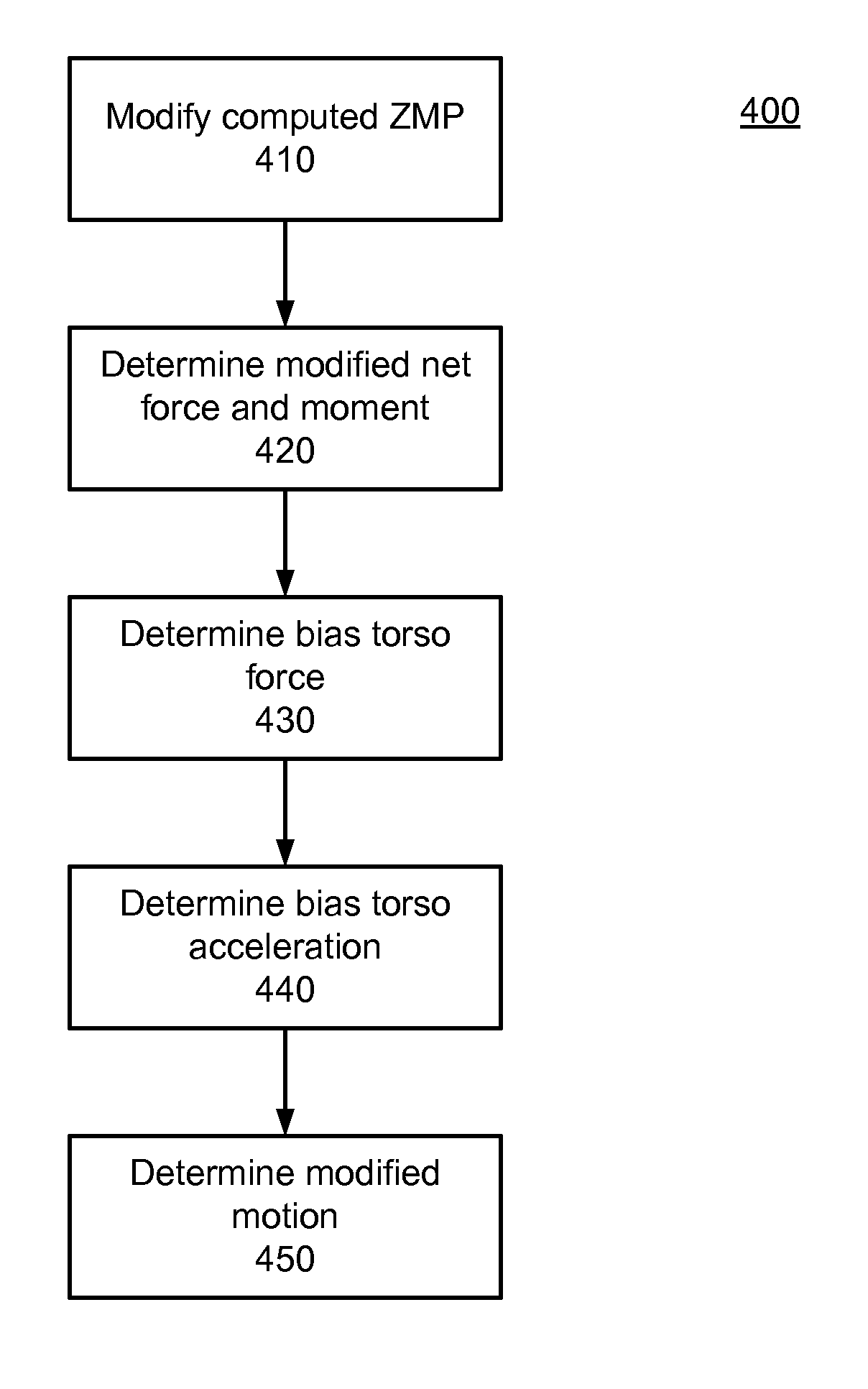

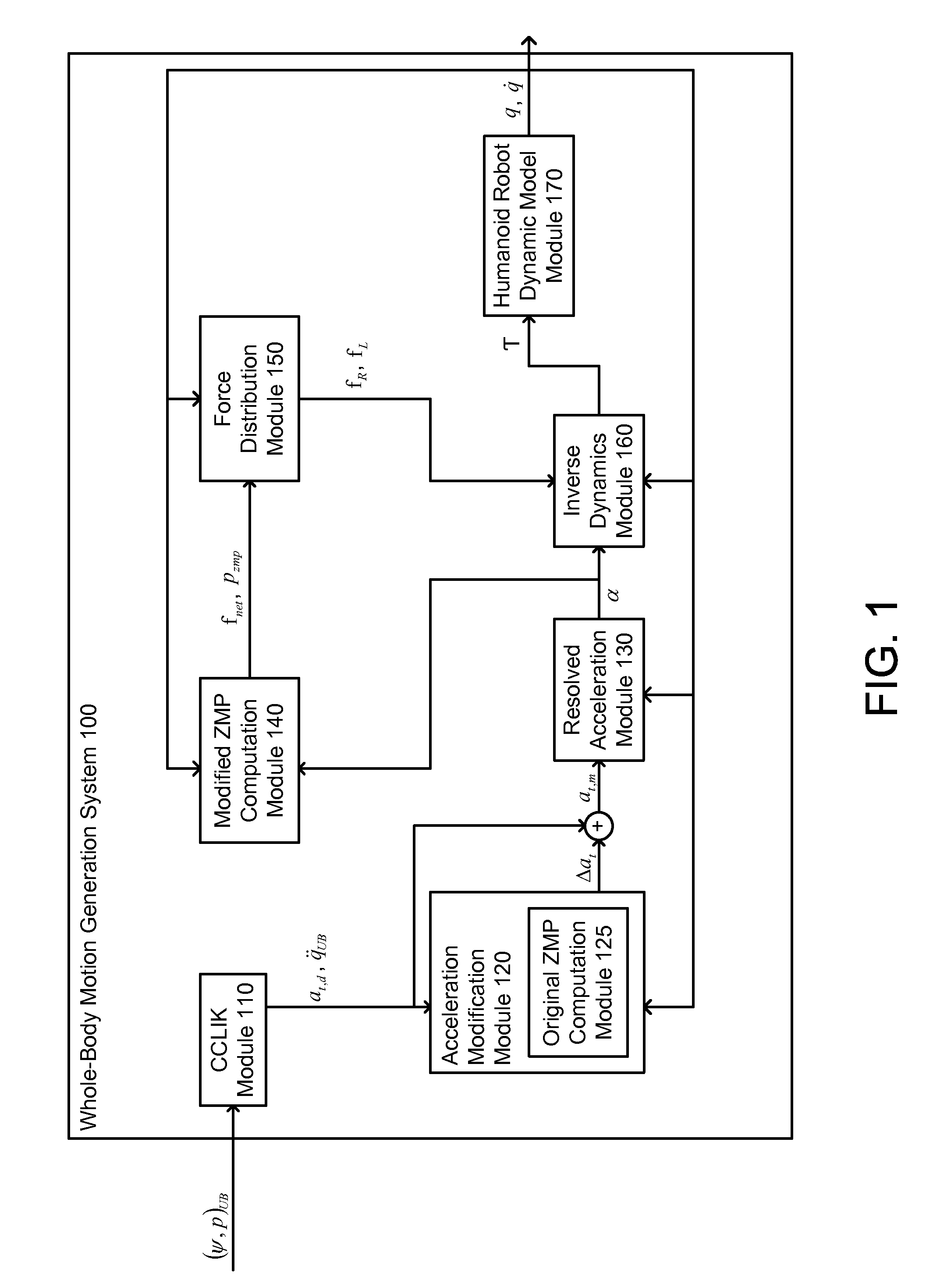

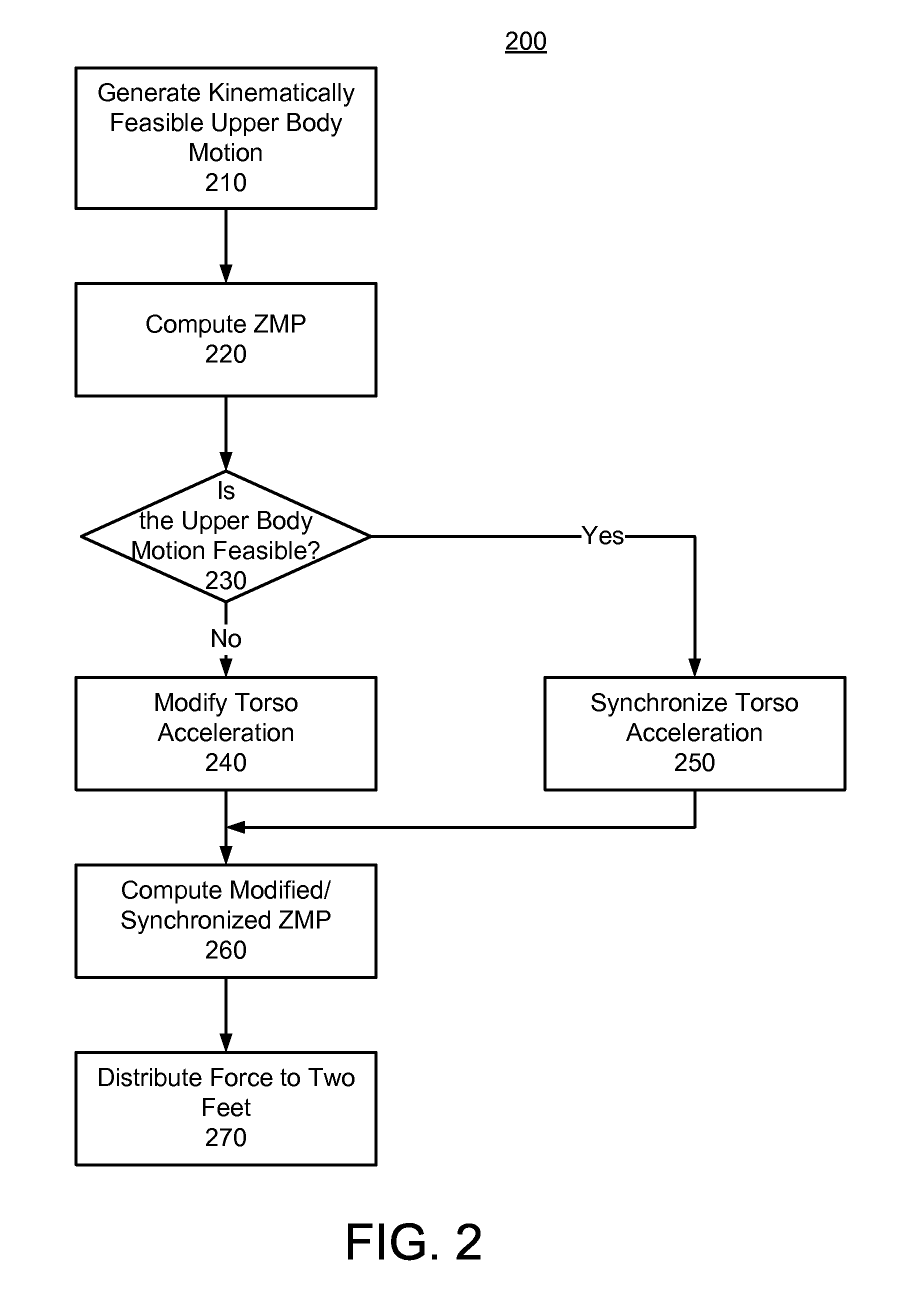

Whole-body humanoid control from upper-body task specifications

A system, method, and computer program product for generating dynamically feasible whole-body motion of a humanoid robot while realizing specified upper-body task motion are described. A kinematically feasible upper-body motion is generated based on the specified upper-body motion. A series of zero-moment points (ZMP) are computed for the generated motion and used to determine whether such motion is dynamically feasible. If the motion is not dynamically feasible, then the torso acceleration is modified to make the motion dynamically feasible, and otherwise synchronized as needed. A series of modified ZMP is determined based on the modified torso acceleration and used to distribute the resultant net ground reaction force and moment to the two feet.

Owner:HONDA MOTOR CO LTD
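
To make the zero-moment point (ZMP) feasibility check in the abstract above more concrete, here is a minimal Python sketch. It assumes the simplified one-dimensional cart-table model, in which the ZMP is the center-of-mass position minus (height / g) times the horizontal acceleration; the abstract does not state which dynamics model the patent uses, and all function names and numeric values here are hypothetical.

```python
G = 9.81  # gravitational acceleration in m/s^2


def zmp_x(com_x: float, com_height: float, com_acc_x: float) -> float:
    """ZMP along x under the cart-table model (an assumption of this sketch)."""
    return com_x - (com_height / G) * com_acc_x


def is_feasible(zmp: float, support_min: float, support_max: float) -> bool:
    """The motion is treated as feasible when the ZMP lies inside the support interval."""
    return support_min <= zmp <= support_max


def clamp_acceleration(com_x: float, com_height: float, com_acc_x: float,
                       support_min: float, support_max: float) -> float:
    """Limit the acceleration so that the resulting ZMP stays inside the support interval.

    Assumes com_height > 0.
    """
    k = G / com_height
    lowest = (com_x - support_max) * k   # acceleration that puts the ZMP at support_max
    highest = (com_x - support_min) * k  # acceleration that puts the ZMP at support_min
    return min(max(com_acc_x, lowest), highest)
```

In this toy model, modifying the acceleration is the only correction available; the abstract describes a richer scheme that also redistributes the ground reaction force and moment between the two feet.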

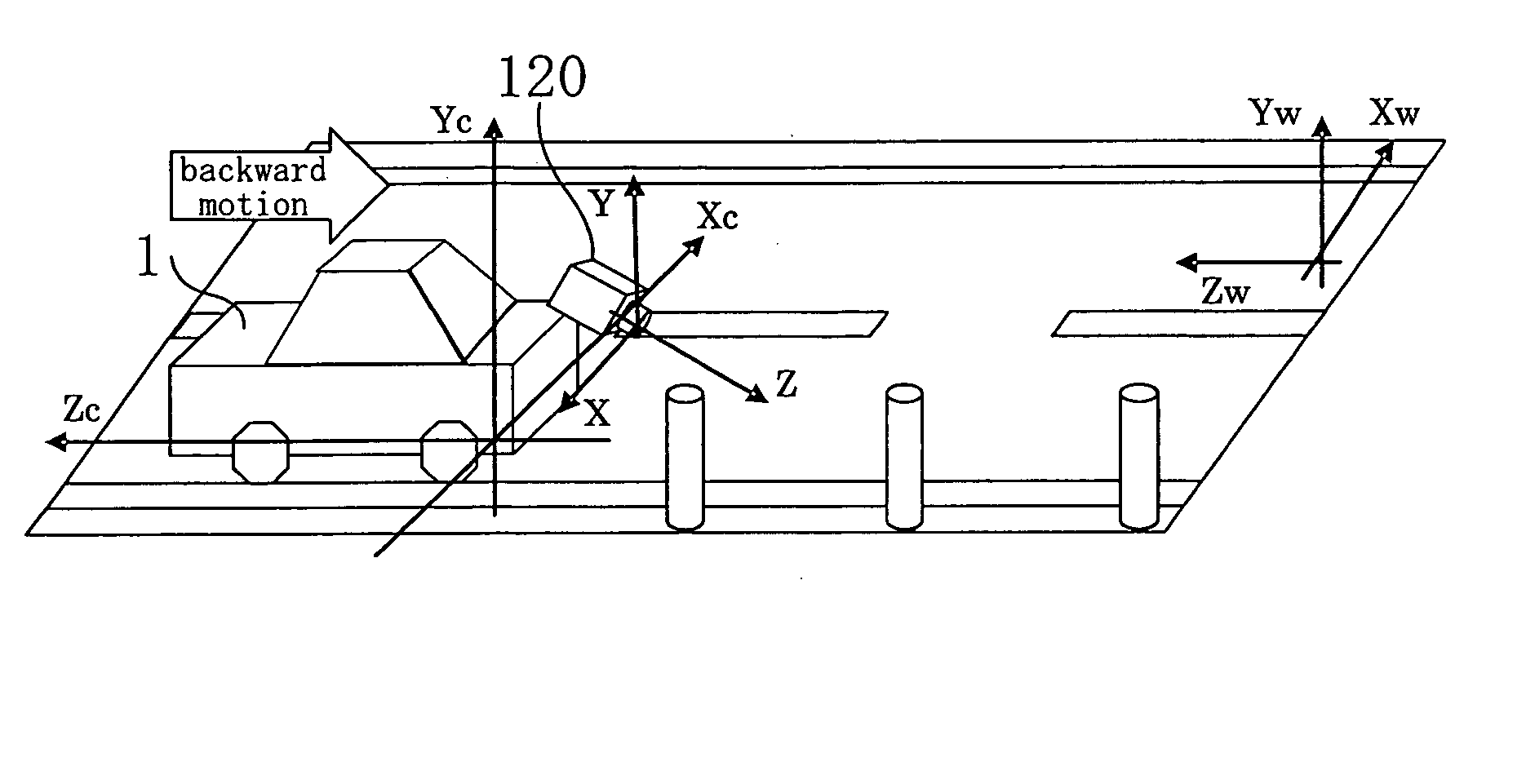

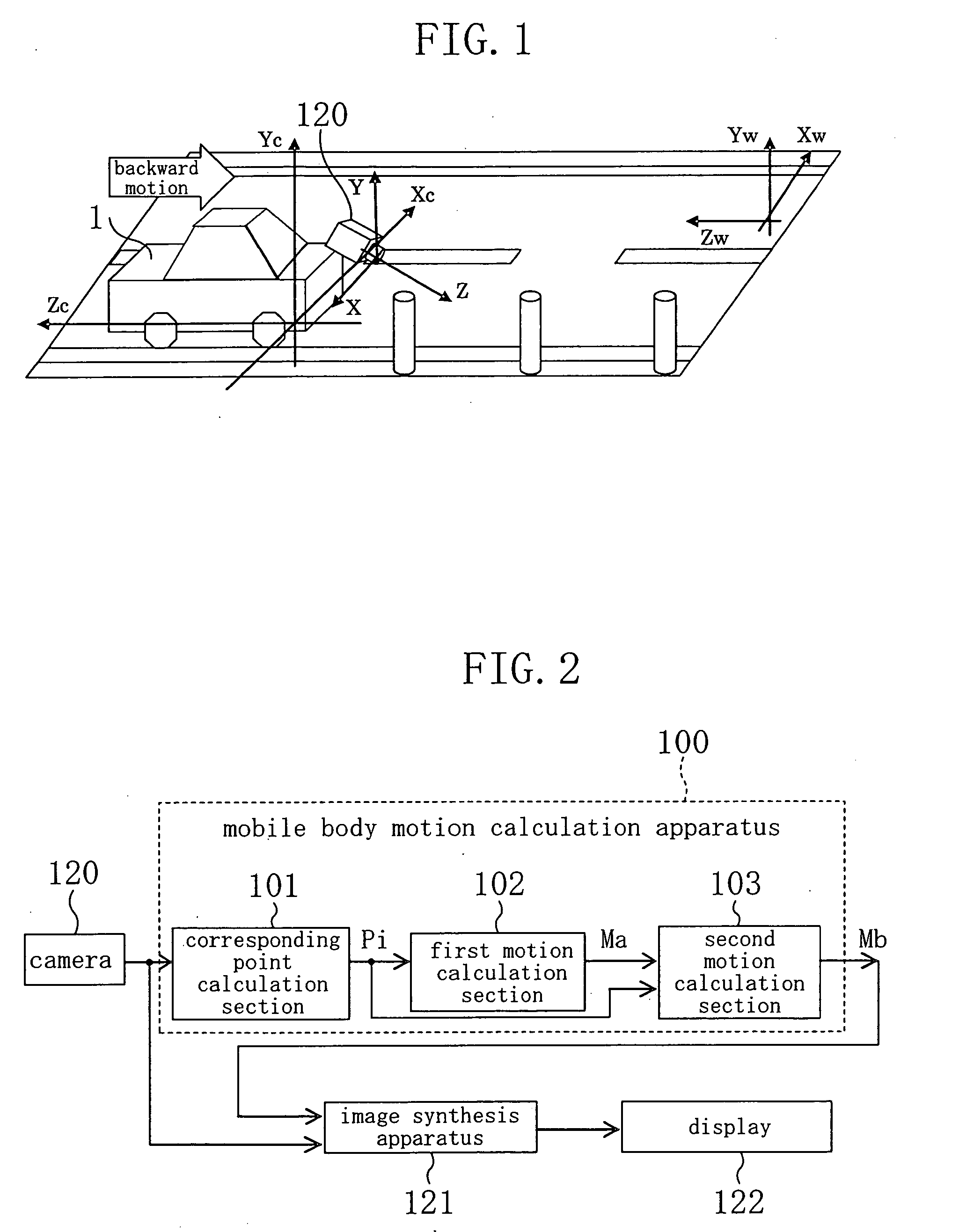

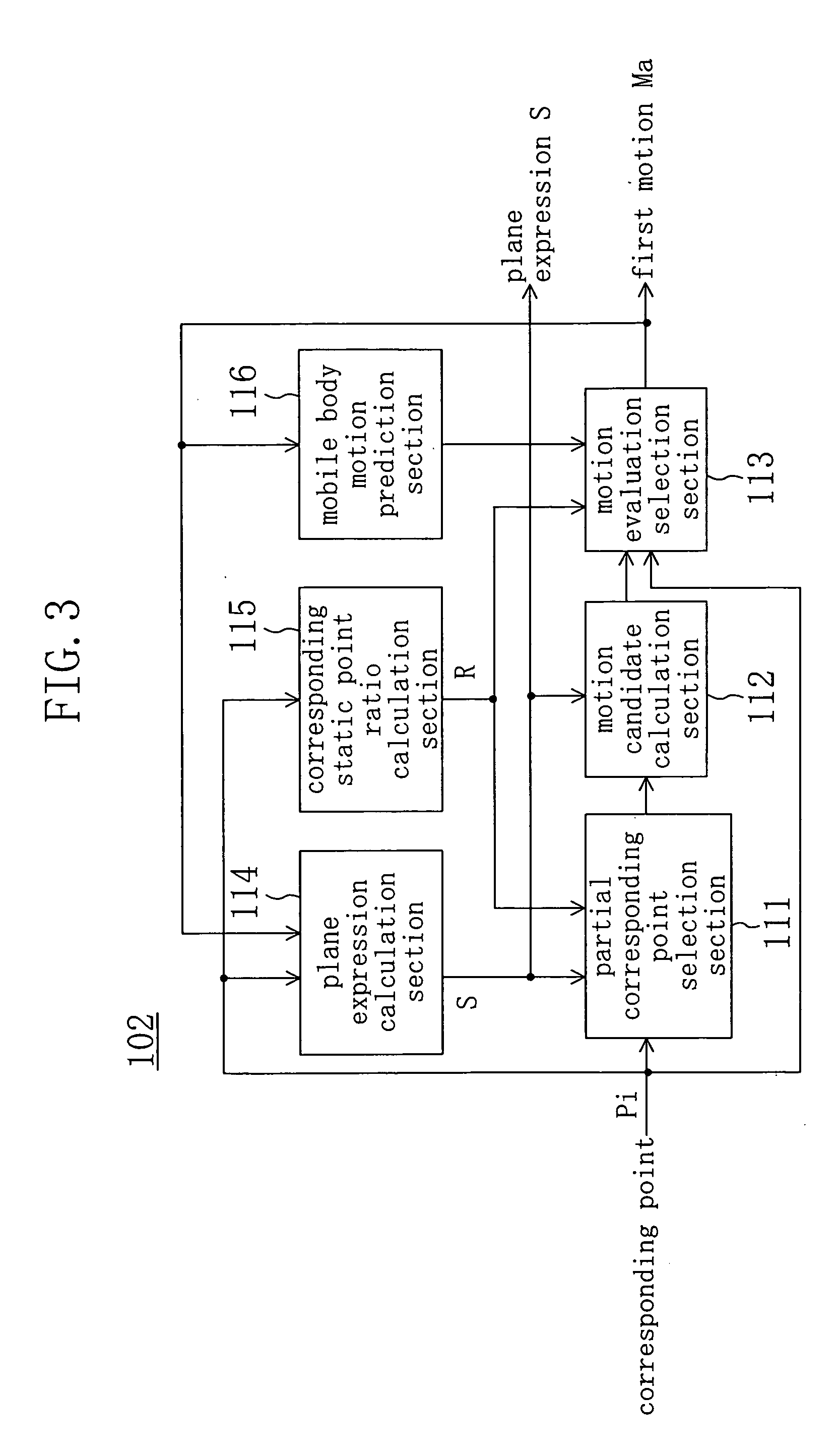

Mobile unit motion calculating method, apparatus and navigation system

Active · US20060055776A1 · Improve accuracy · Image analysis · Character and pattern recognition · Navigation system · Computer science

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA

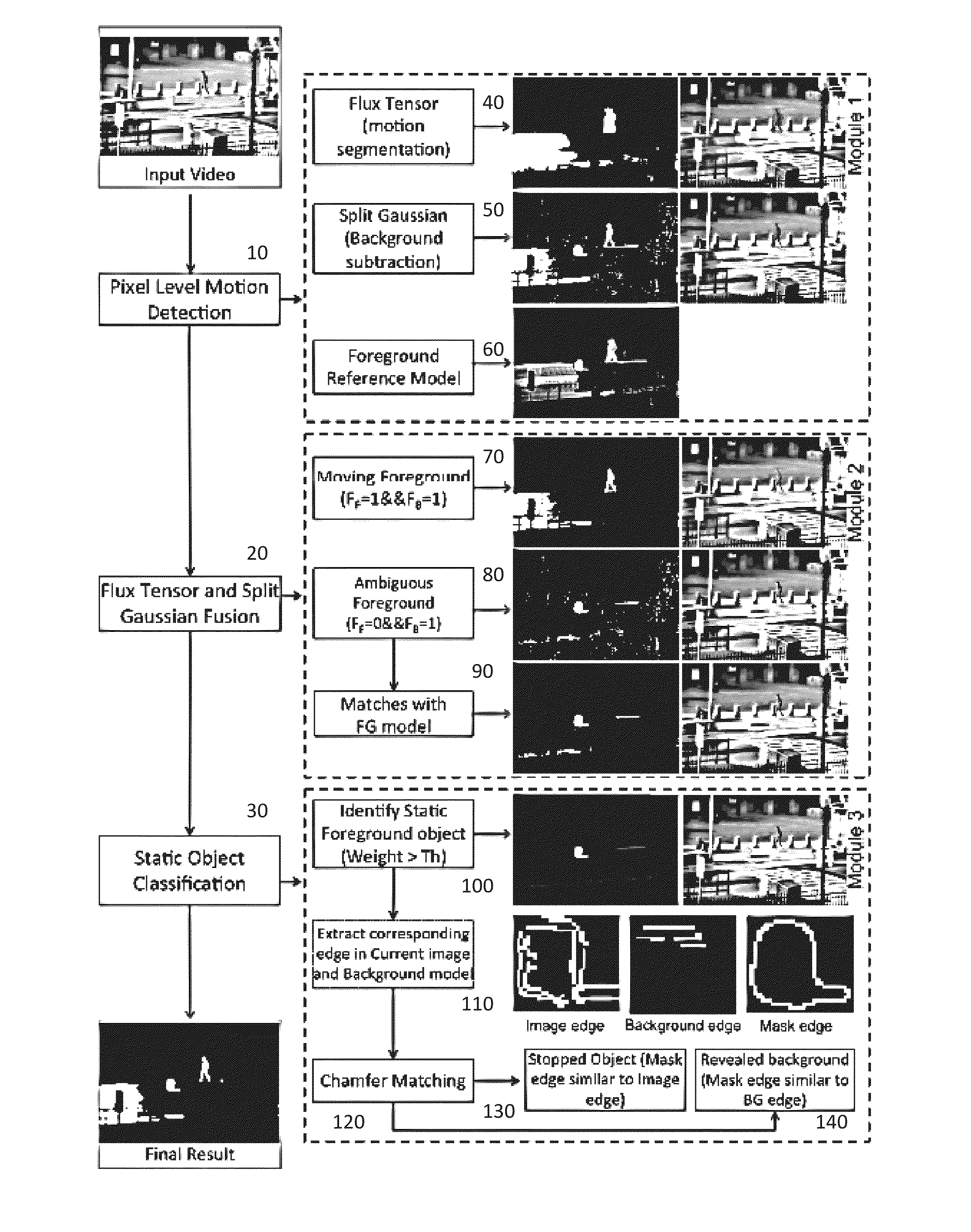

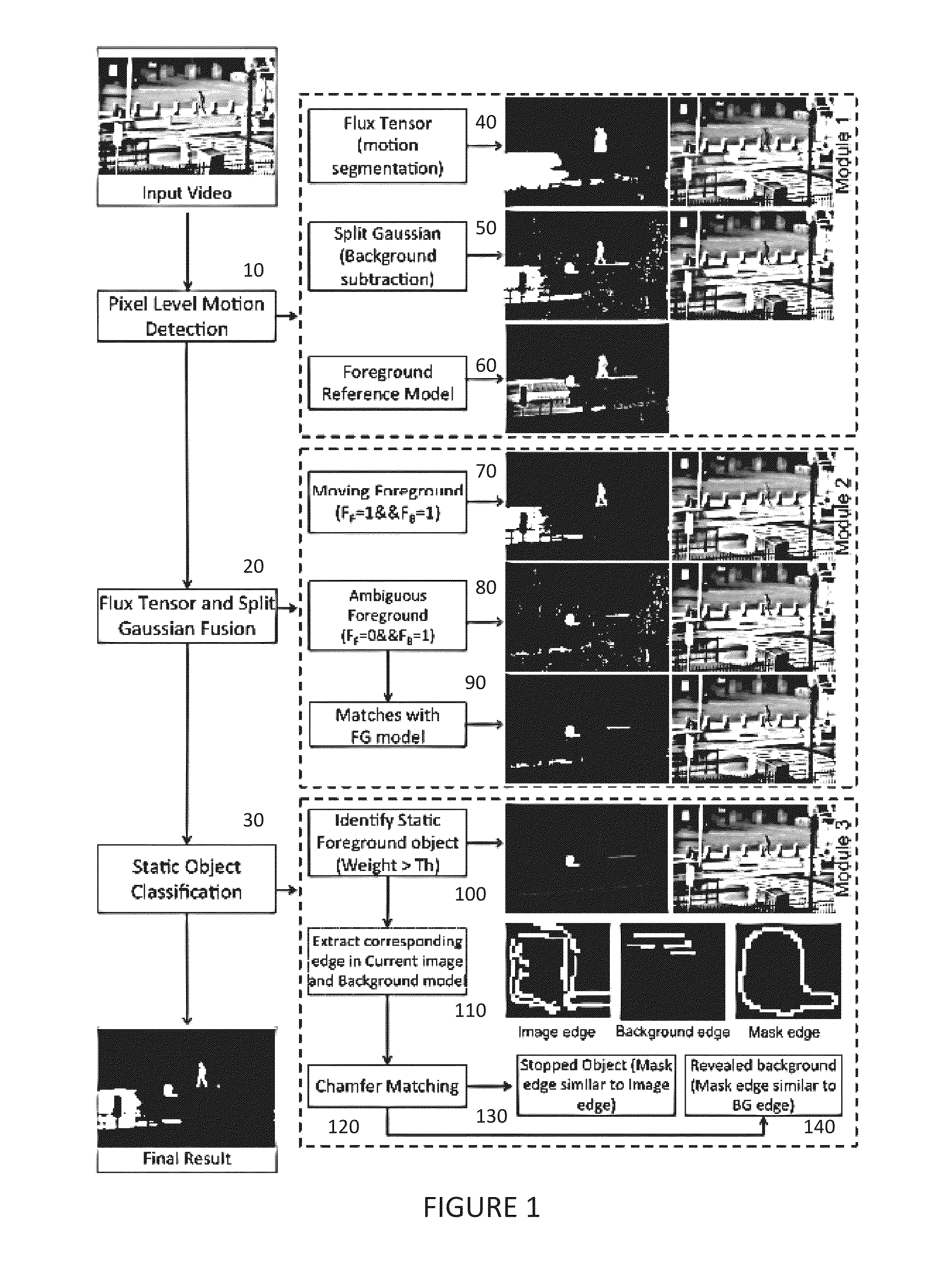

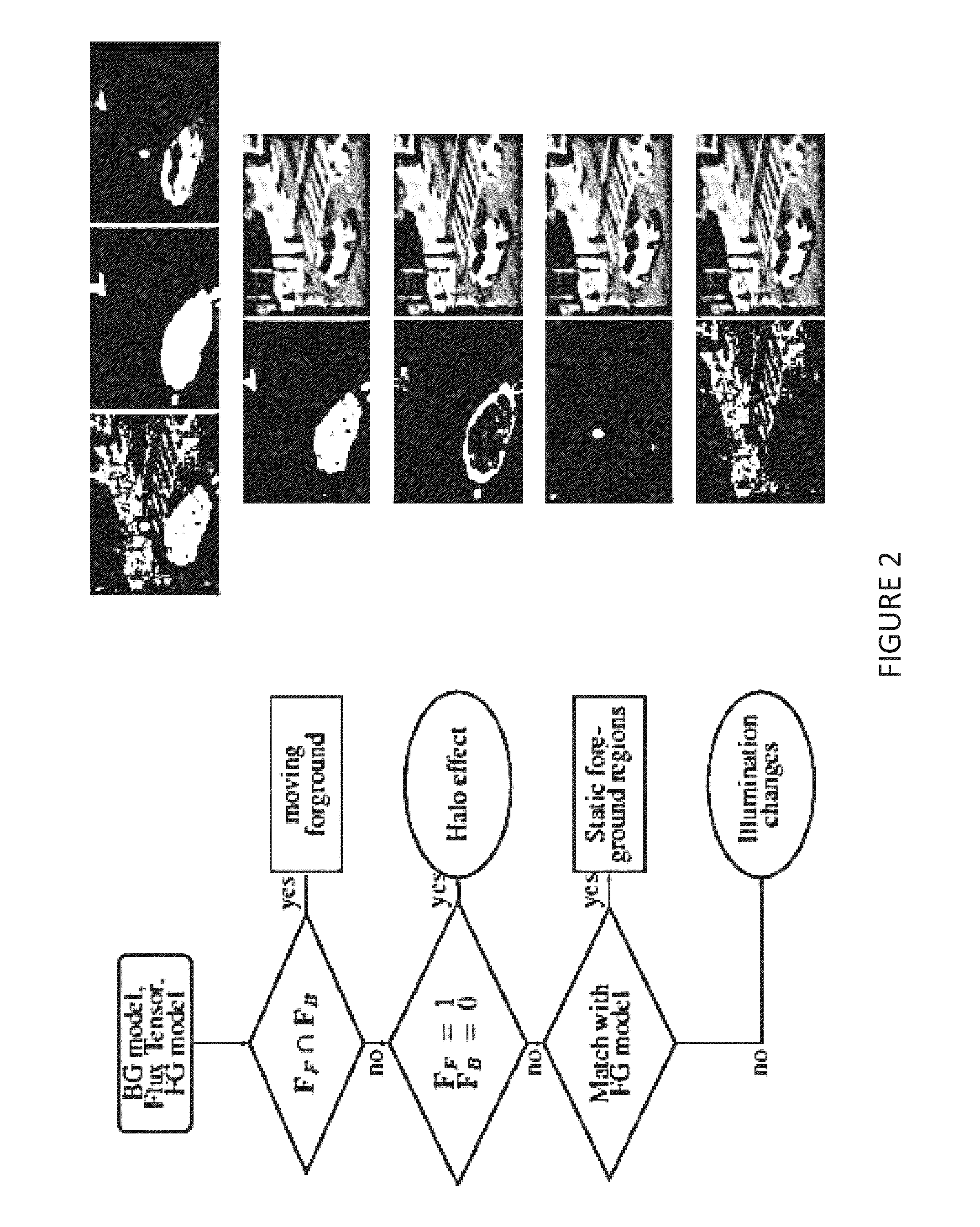

System and method for static and moving object detection

Active · US9454819B1 · Improve accuracy · Improve performance · Image enhancement · Image analysis · Motion computation · Object detection

A method for static and moving object detection employing a motion computation method based on spatio-temporal tensor formulation, a foreground and background modeling method, and a multi-cue appearance comparison method. The invention operates in the presence of shadows, illumination changes, dynamic background, and both stopped and removed objects.

Owner:THE DEPT OF THE AIR FORCE +1

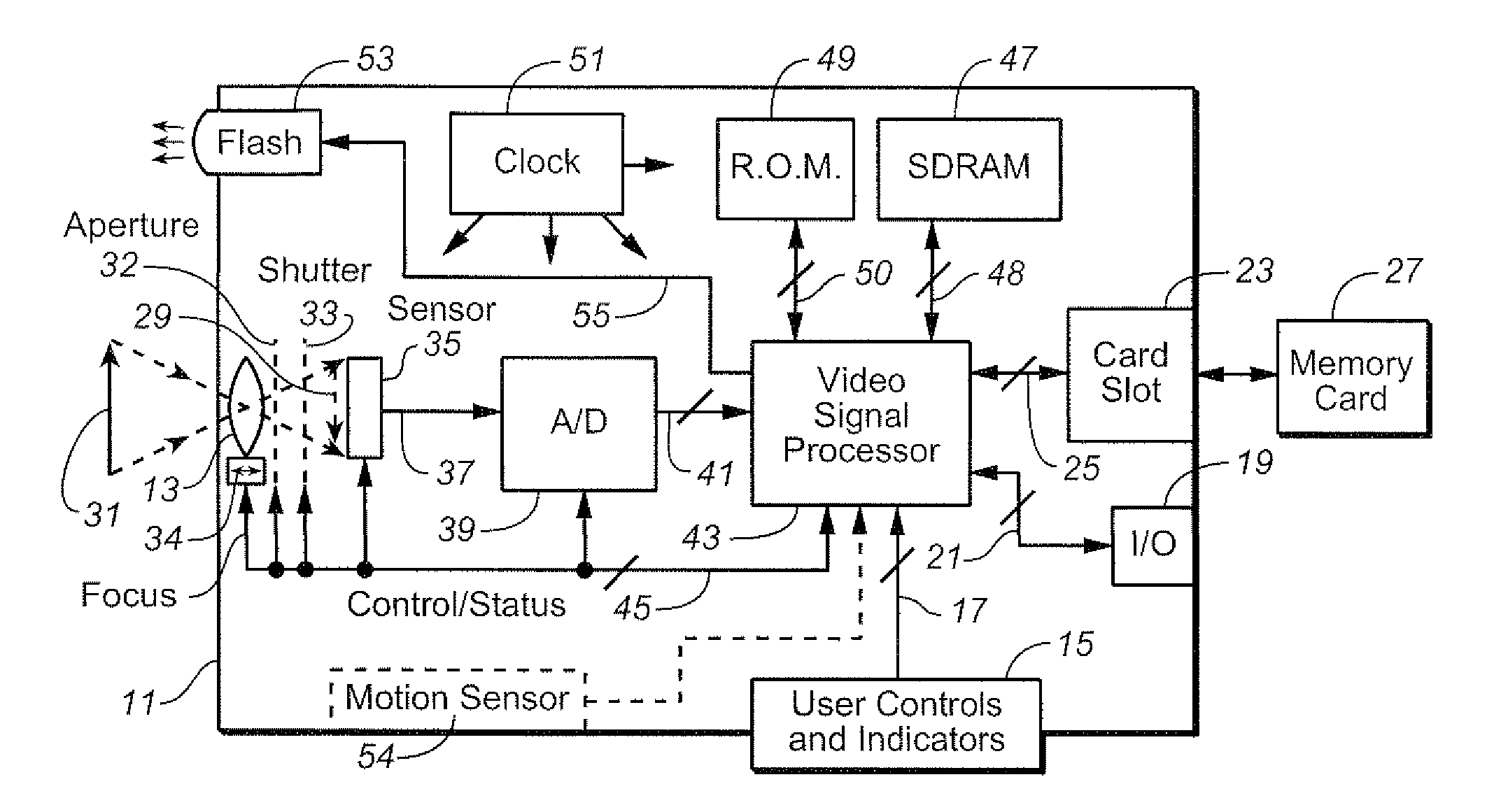

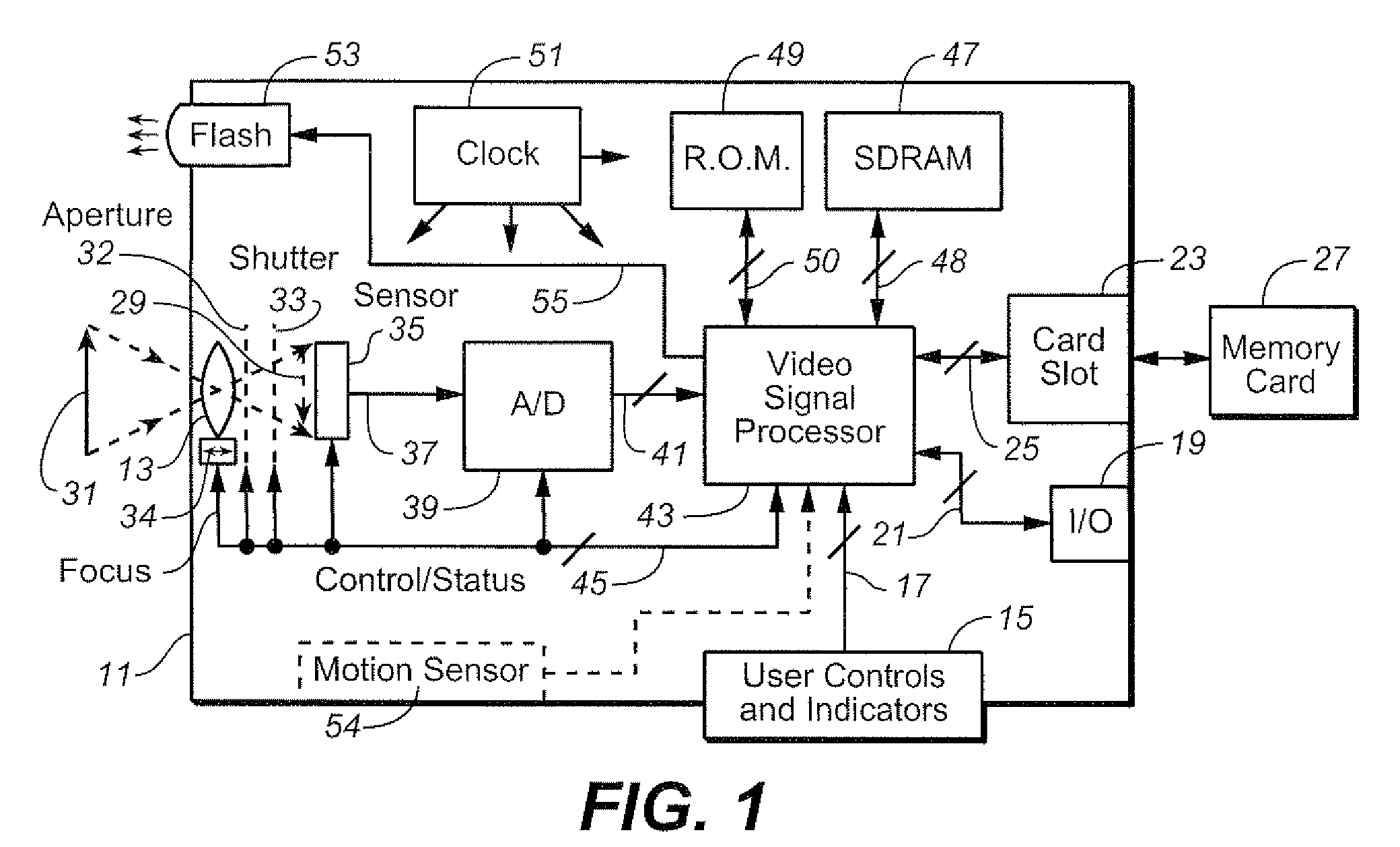

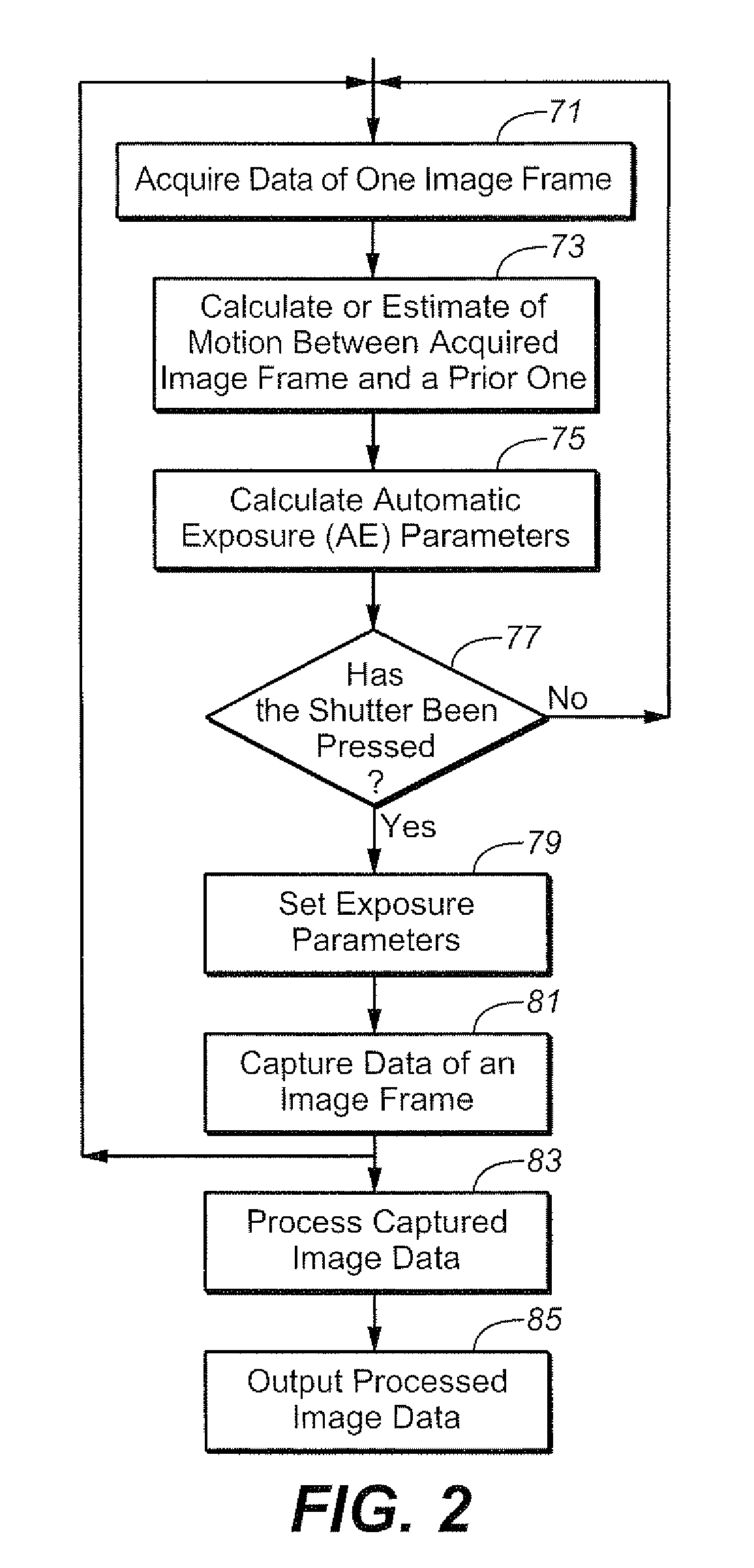

Technique of Motion Estimation When Acquiring An Image of A Scene That May Be Illuminated With A Time Varying Luminance

Active · US20080291288A1 · Reduce the impact · Reduce impact · Television system details · Television system scanning details · Motion vector · Pixel brightness

In a digital camera or other image acquisition device, motion vectors between successive image frames of an object scene are calculated from normalized values of pixel luminance in order to reduce or eliminate any effects on the motion calculation that might occur when the object scene is illuminated from a time varying source such as a fluorescent lamp. Calculated motion vectors are checked for accuracy by a robustness matrix.

Owner:QUALCOMM INC
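
The idea of computing motion vectors from normalized luminance, as described in the abstract above, can be sketched in Python as follows. This is only an illustration under stated assumptions: normalizing each block by its mean luminance and matching blocks with a sum of absolute differences (SAD) are choices made for this sketch, not details taken from the patent, and the function names are hypothetical.

```python
def normalize(block):
    """Scale a block (a list of pixel rows) so that its mean luminance is 1.0."""
    pixels = [p for row in block for p in row]
    mean = sum(pixels) / len(pixels)
    if mean == 0:
        return [[0.0 for _ in row] for row in block]
    return [[p / mean for p in row] for row in block]


def sad(a, b):
    """Sum of absolute differences between two equally sized blocks."""
    return sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def crop(frame, top, left, size):
    """Cut a size-by-size block out of a frame given as a list of rows."""
    return [row[left:left + size] for row in frame[top:top + size]]


def estimate_motion(prev, curr, top, left, size, radius):
    """Return the (dy, dx) shift whose normalized block in curr best matches prev."""
    ref = normalize(crop(prev, top, left, size))
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            t, l = top + dy, left + dx
            # Skip candidate blocks that fall outside the current frame.
            if t < 0 or l < 0 or t + size > len(curr) or l + size > len(curr[0]):
                continue
            cost = sad(ref, normalize(crop(curr, t, l, size)))
            if best is None or cost < best[0]:
                best = (cost, (dy, dx))
    return best[1]
```

Because both blocks are divided by their own mean, a uniform brightness change between frames (such as flicker from a fluorescent lamp) scales numerator and denominator alike and leaves the matching cost unchanged.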

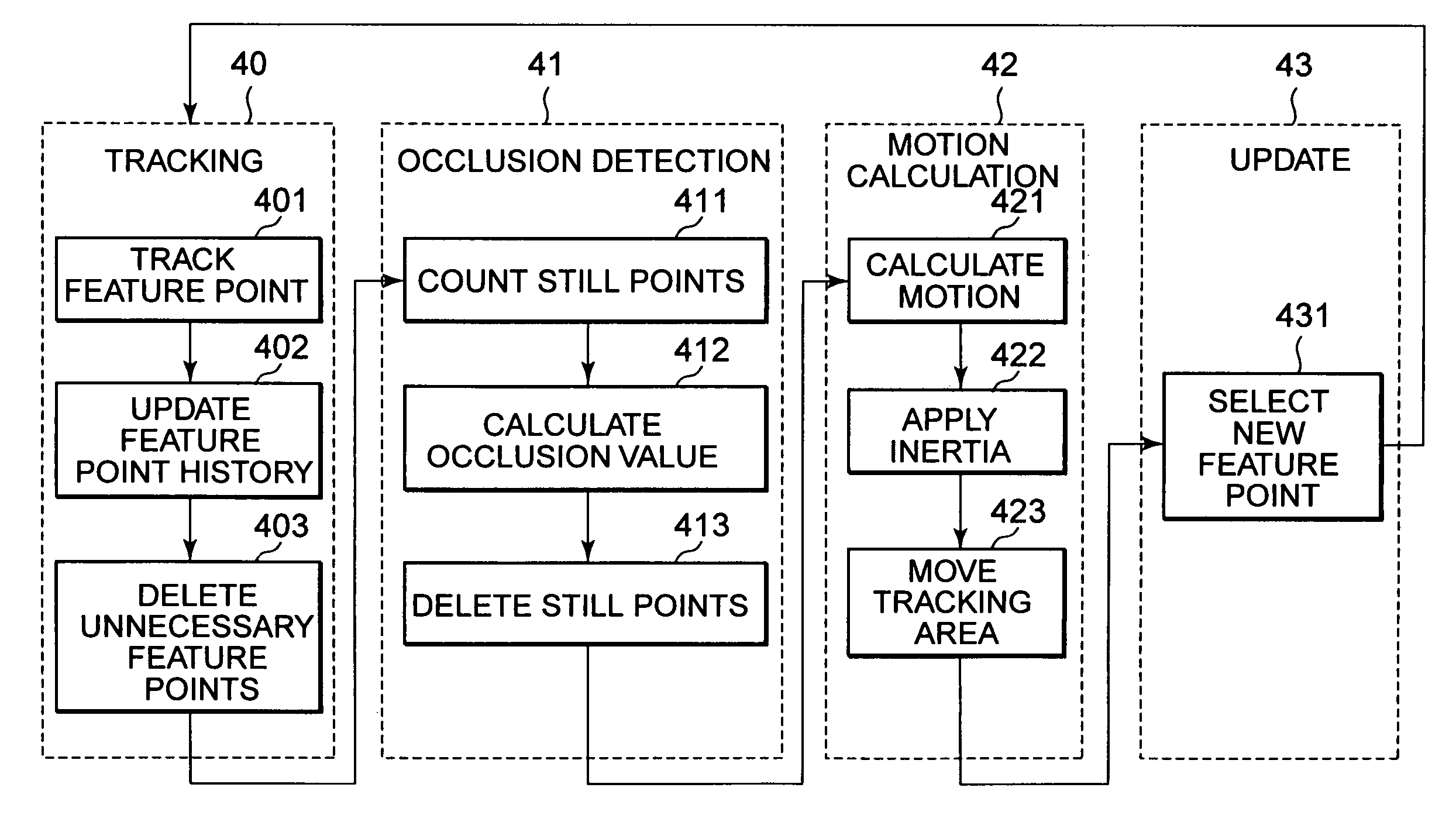

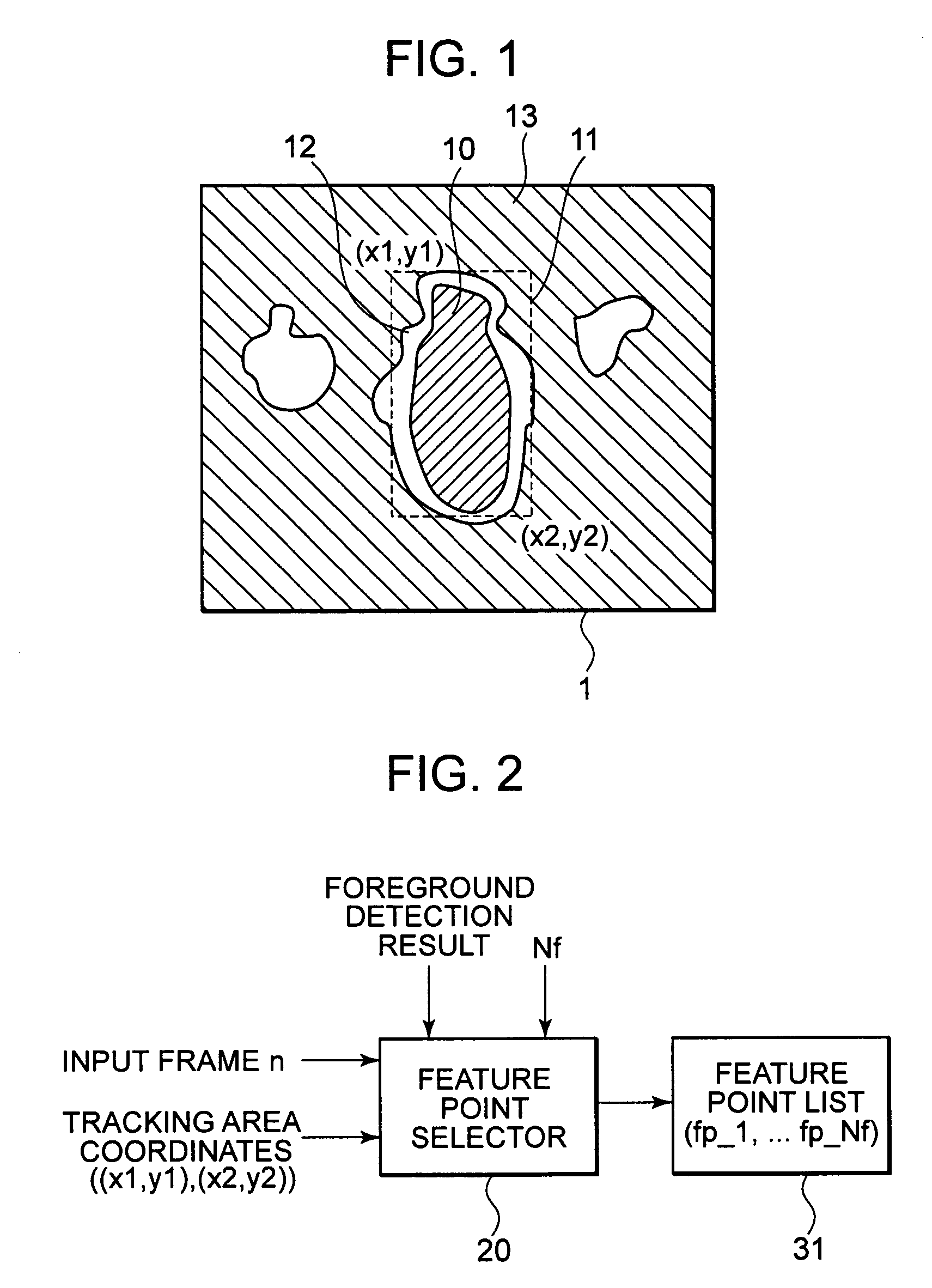

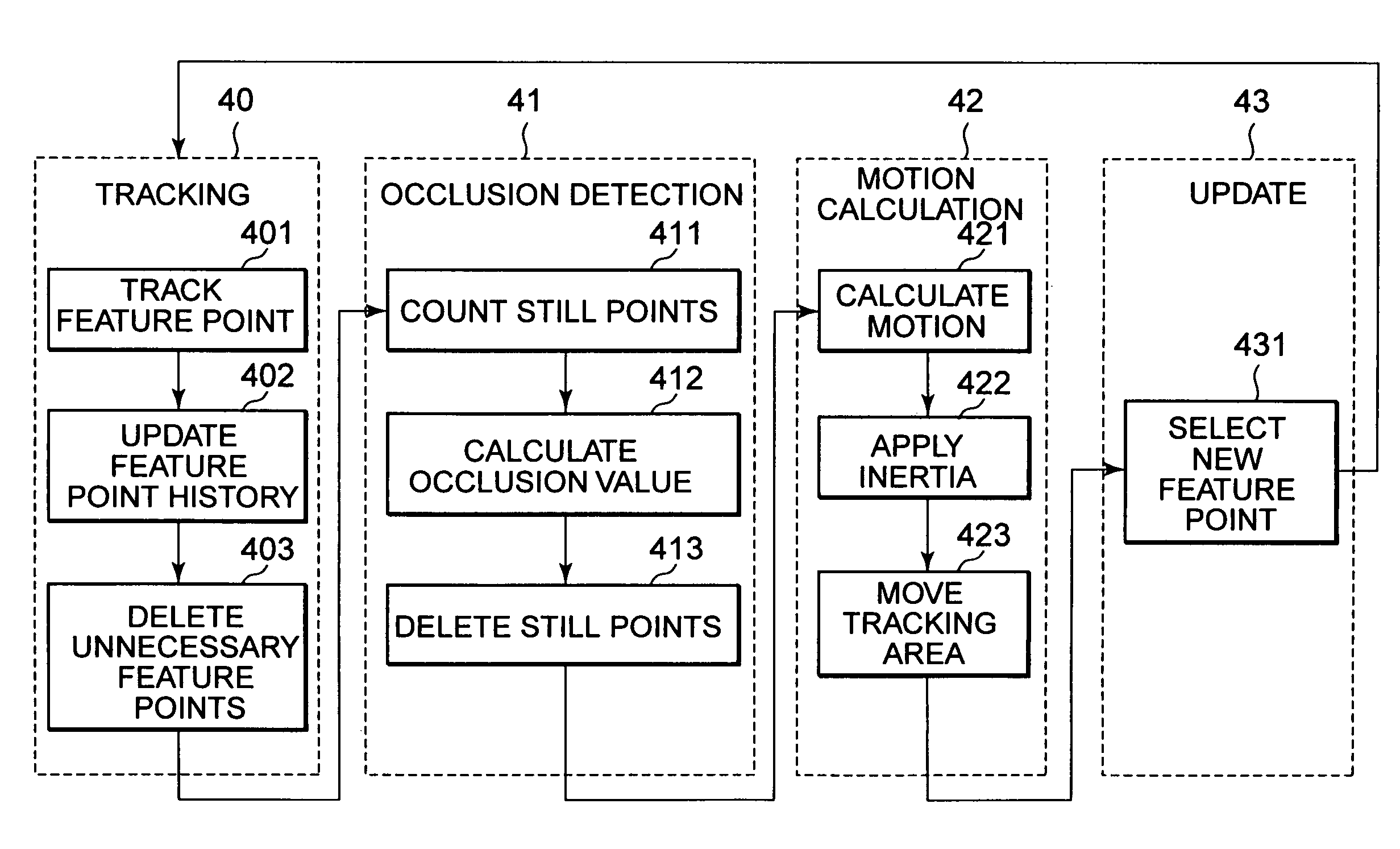

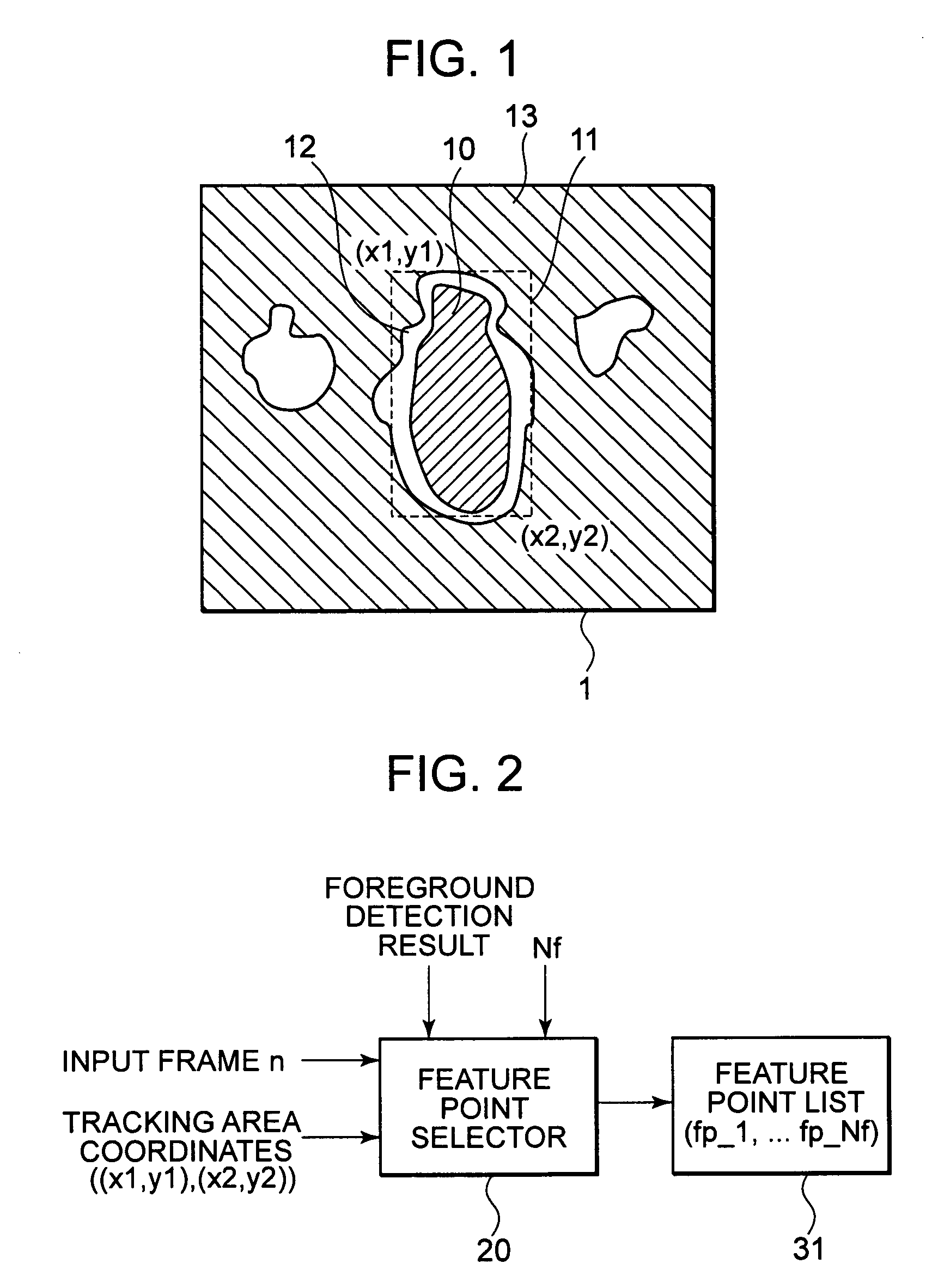

Moving object tracking method using occlusion detection of the tracked object, and image processing apparatus

An image processing apparatus tracks a moving object by setting a tracking area corresponding to a moving object appearing in a video image, setting a plurality of feature points in the tracking area, and tracking the plurality of feature points. The apparatus comprises a tracking processing unit for tracking each of the plurality of feature points from the preceding frame to the present frame, and a motion calculation processing unit for specifying a position of the tracking area in the present frame by estimating the motion of the tracking area based on the tracking result of each of the feature points. The tracking processing unit calculates, for each feature point, a reliability indicating how likely it is that the feature point lies on the moving object, and the motion calculation processing unit calculates the motion of the tracking area by using the reliability.

Owner:SONY CORP

Realtime object tracking system

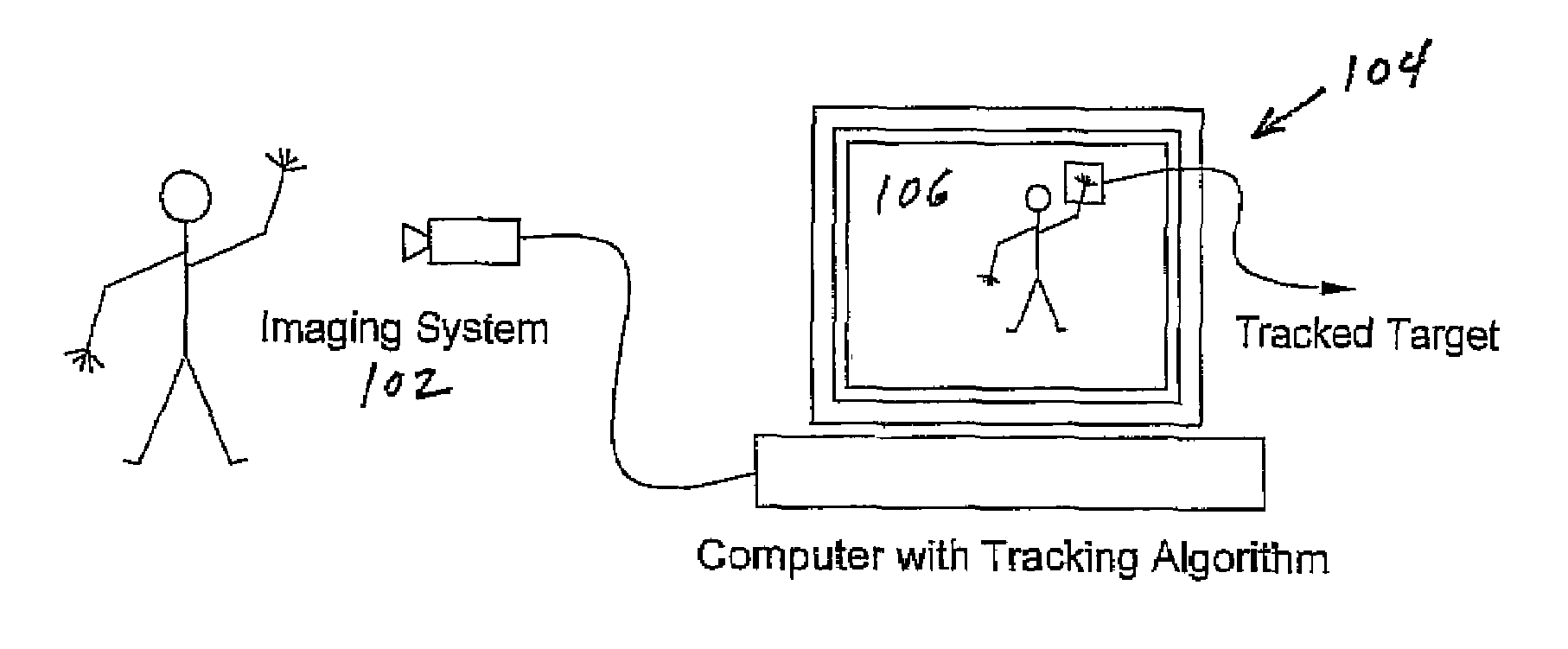

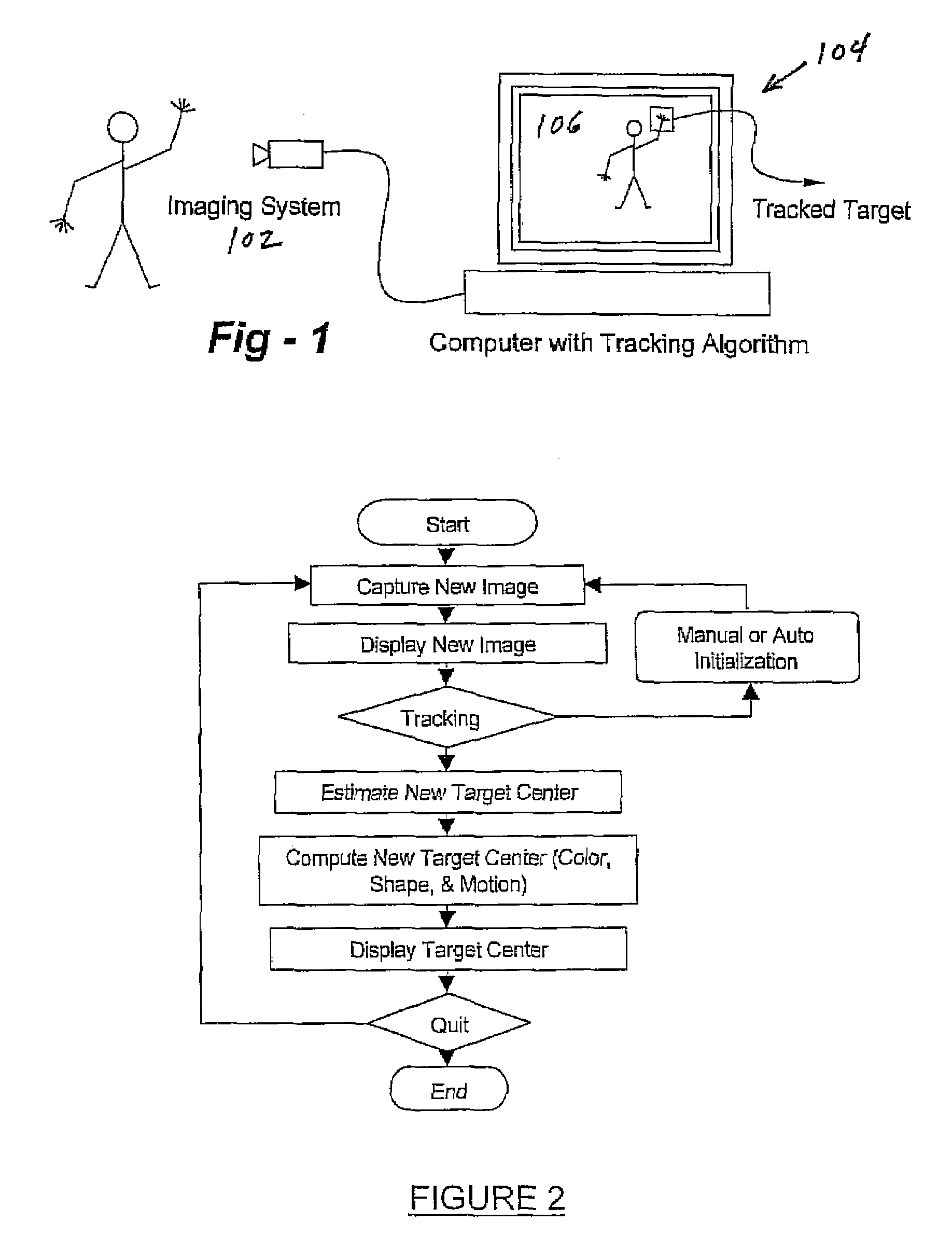

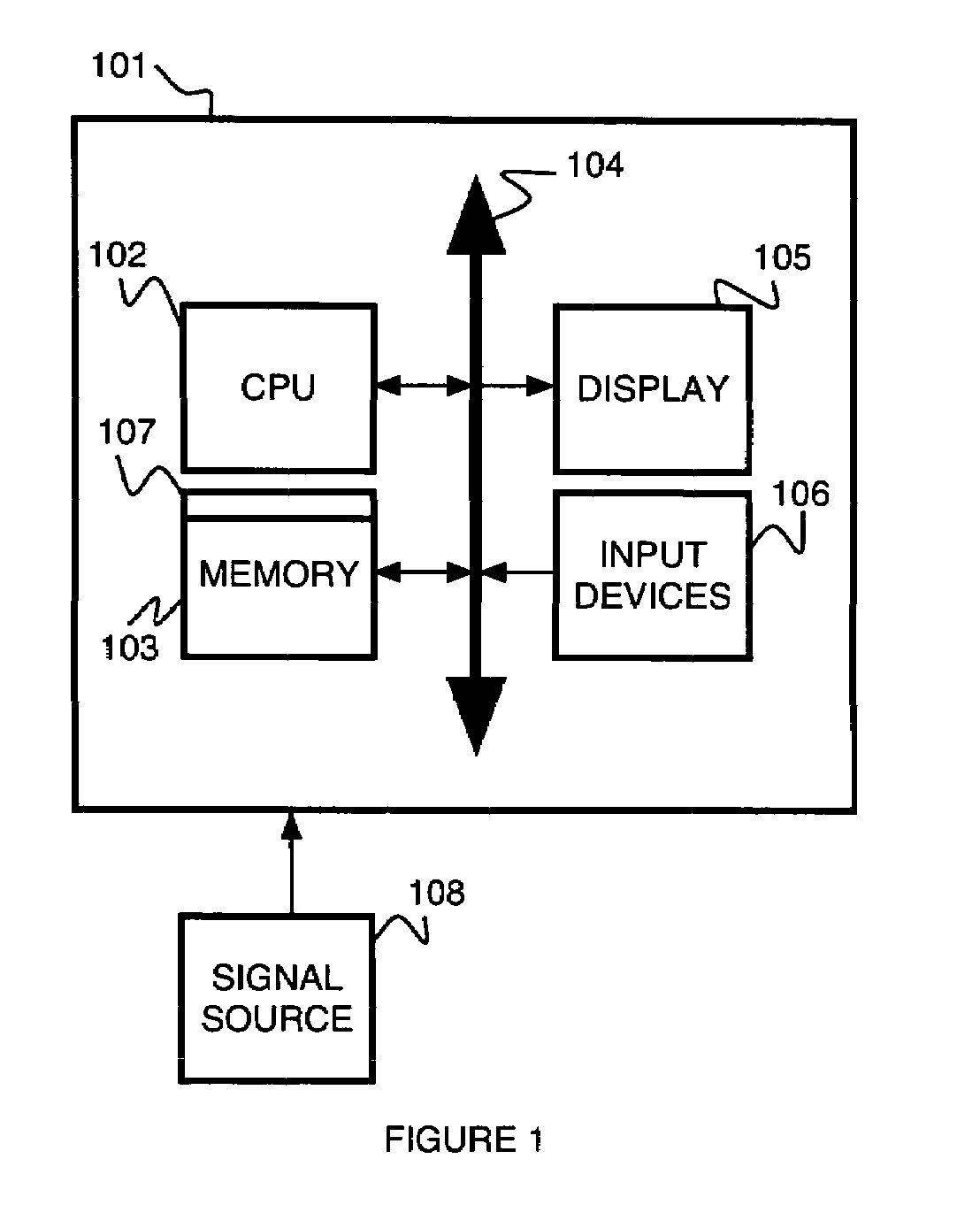

Inactive · US20090116692A1 · Computationally efficient · Minimal computation · Image analysis · Indoor games · Object based · Track algorithm

A real-time computer vision system tracks one or more objects moving in a scene using a target location technique which does not involve searching. The imaging hardware includes a color camera, frame grabber and processor. The software consists of the low-level image grabbing software and a tracking algorithm. The system tracks objects based on the color, motion and / or shape of the object in the image. A color matching function is used to compute three measures of the target's probable location based on the target color, shape and motion. The method then computes the most probable location of the target using a weighting technique. Once the system is running, a graphical user interface displays the live image from the color camera on the computer screen. The operator can then use the mouse to select a target for tracking. The system will then keep track of the moving target in the scene in real-time.

Owner:JOLLY SEVEN SERIES 70 OF ALLIED SECURITY TRUST I

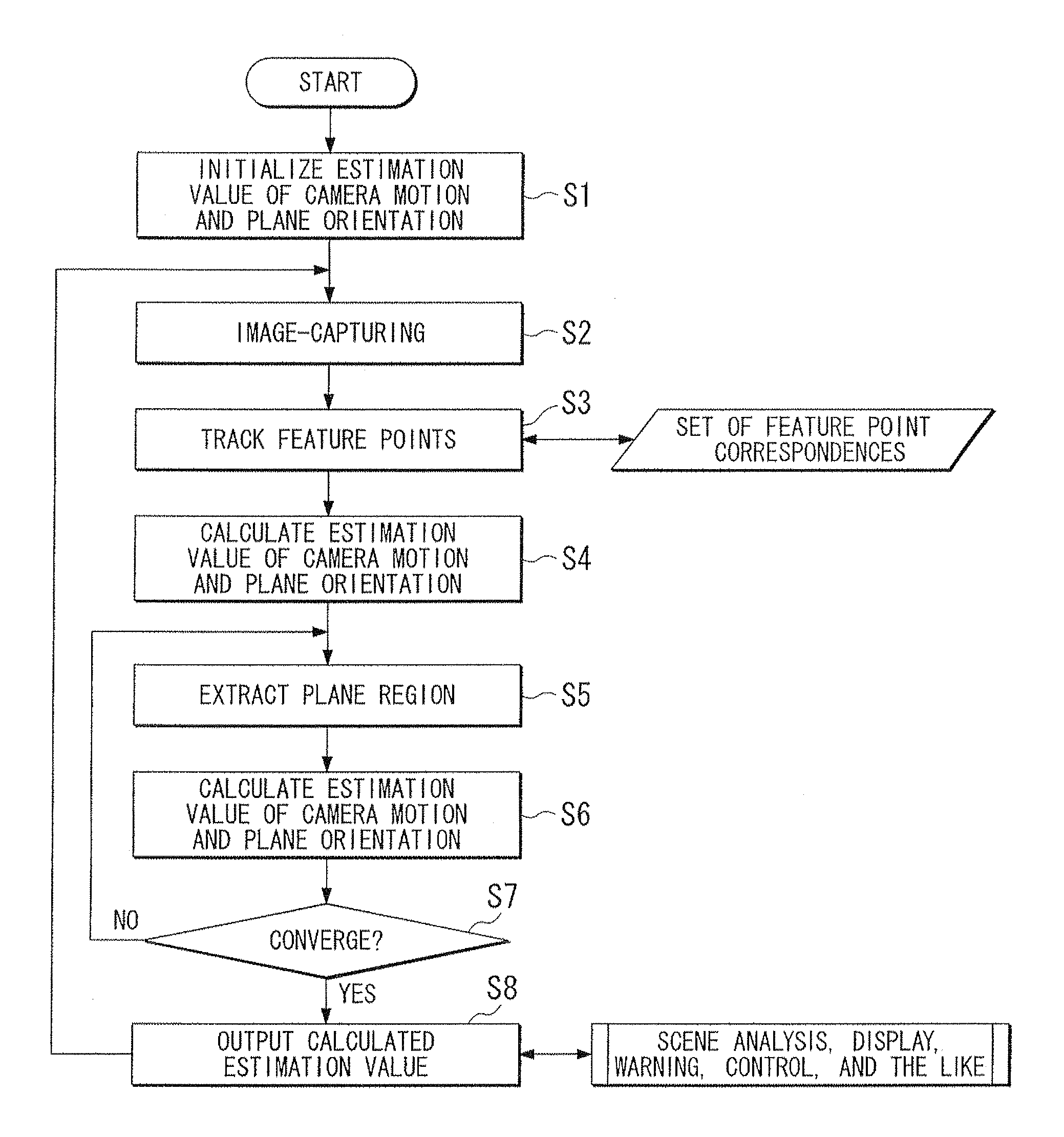

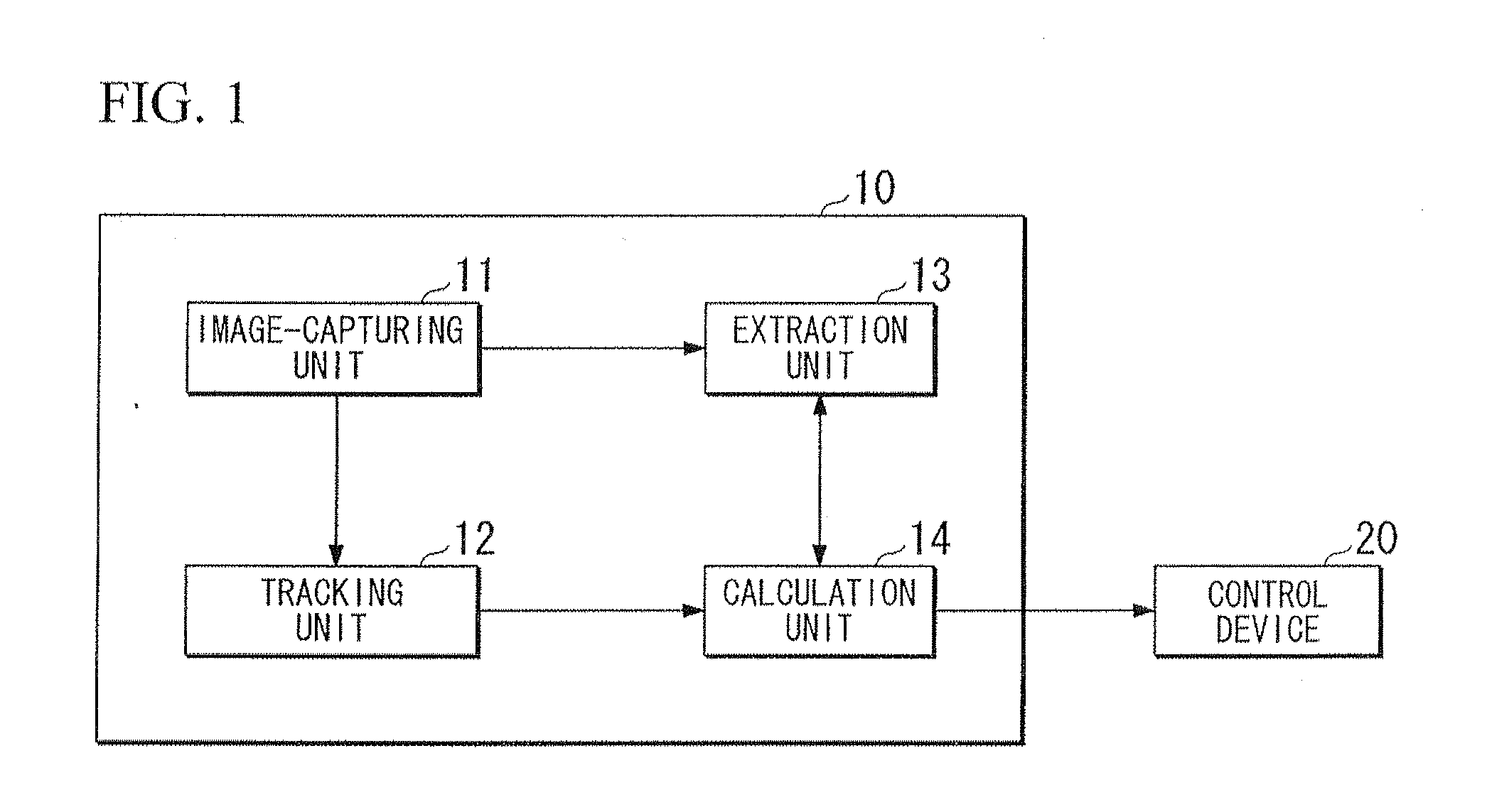

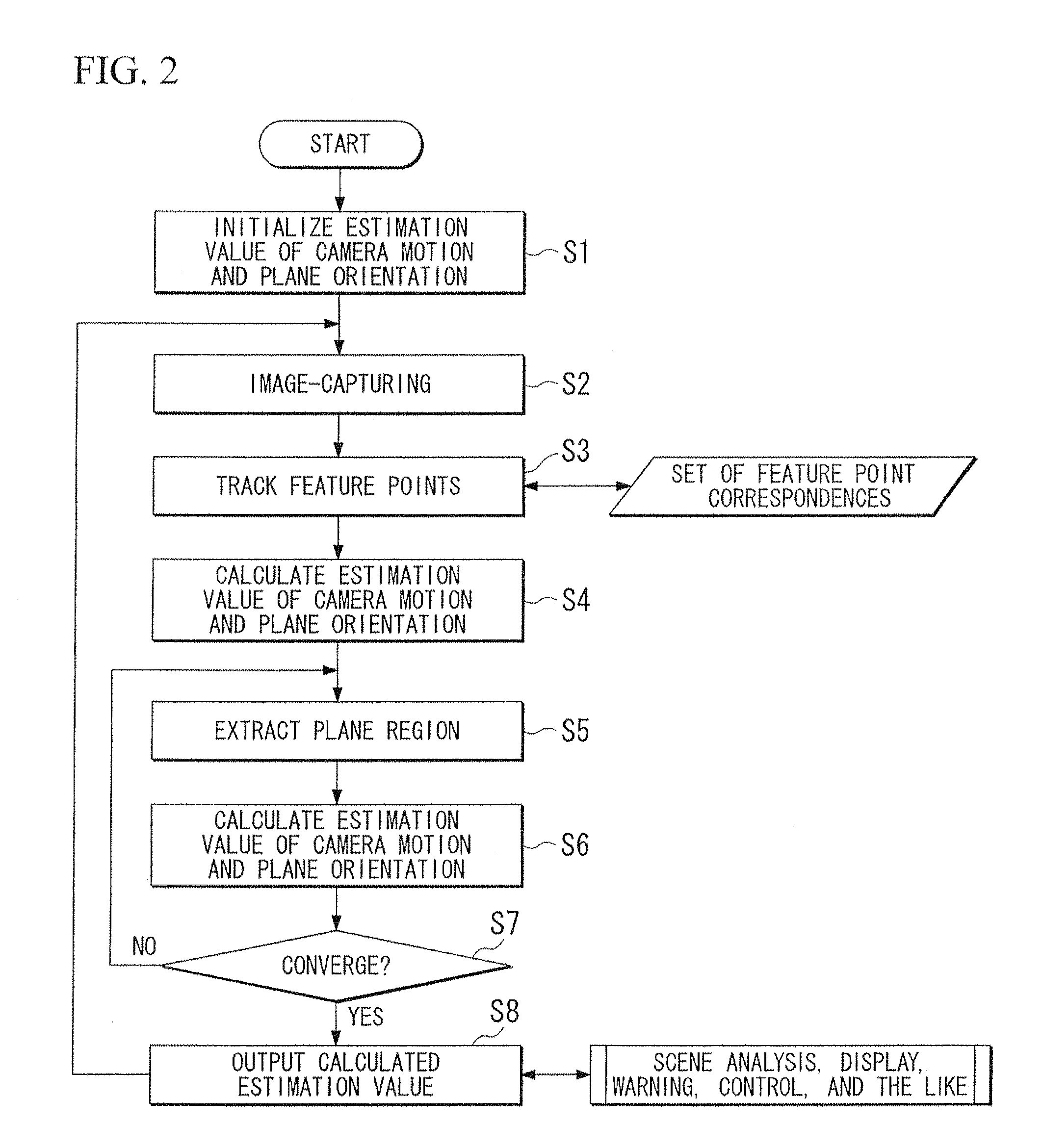

Motion calculation device and motion calculation method

Inactive · US20110175998A1 · Minimize cost function · Calculation stable · Image enhancement · Image analysis · Image extraction · Motion vector

A motion calculation device includes an image-capturing unit configured to capture an image of a range including a plane and output the captured image; an extraction unit configured to extract a region of the plane from the image; a detection unit configured to detect feature points and motion vectors of the feature points from a plurality of images captured by the image-capturing unit at a predetermined time interval; and a calculation unit configured to calculate the motion of the host device based on both an epipolar constraint relating to the feature points and a homography relating to the region.

Owner:HONDA ELESYS CO LTD

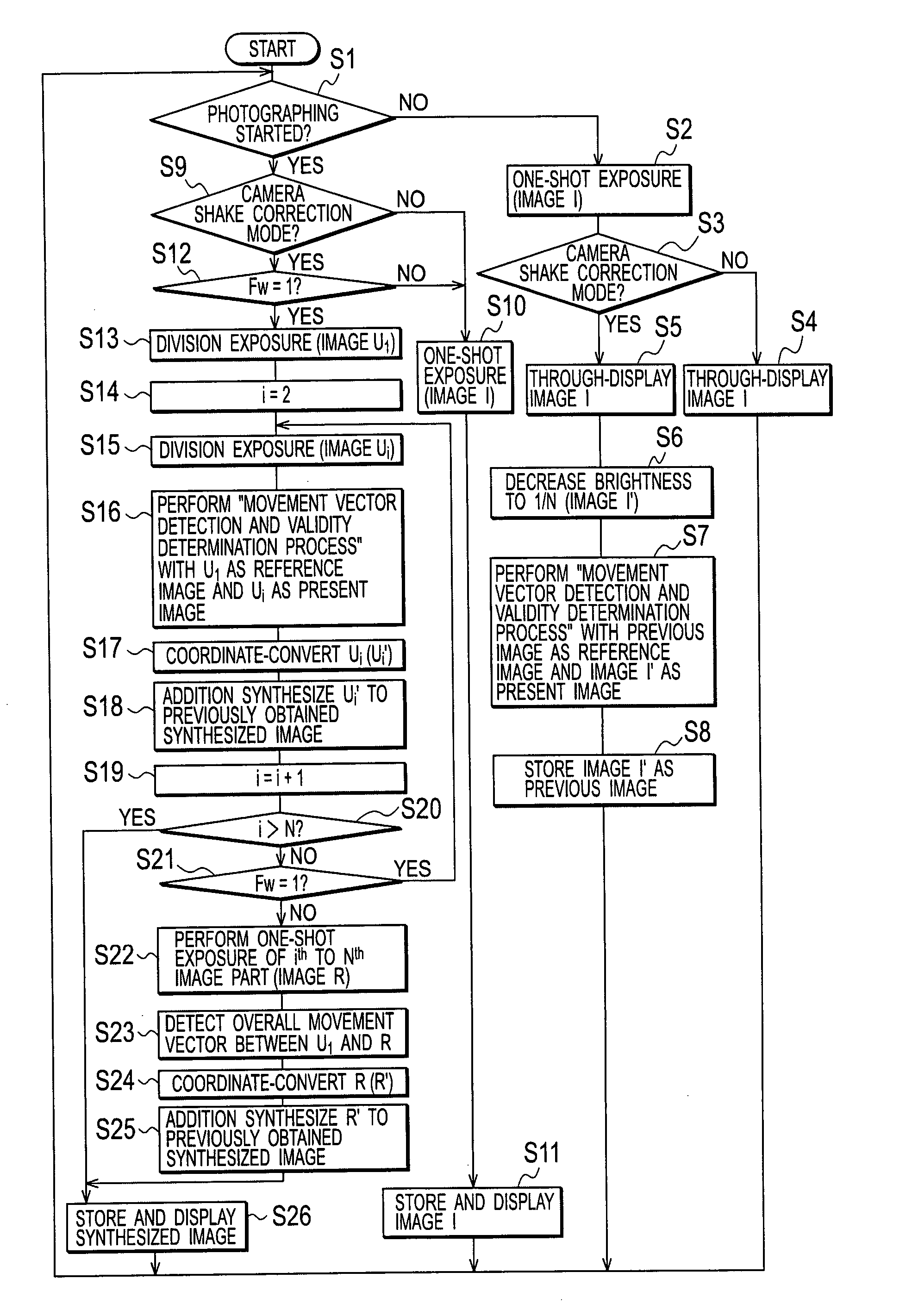

Imaging device

An imaging device is provided that predicts, before still image photographing, whether alignment of a plurality of images obtained by divided exposures in the still image photographing mode should be performed, and automatically switches photographing modes depending on the predicted result. The imaging device has a first movement computation unit that calculates image movement from input images when still image photographing is not performed, and a photographing mode switching unit that switches between a first still image photographing mode and a second still image photographing mode when starting photographing, based on image movement calculated by the first movement computation unit right before the photographing begins.

Owner:XACTI CORP

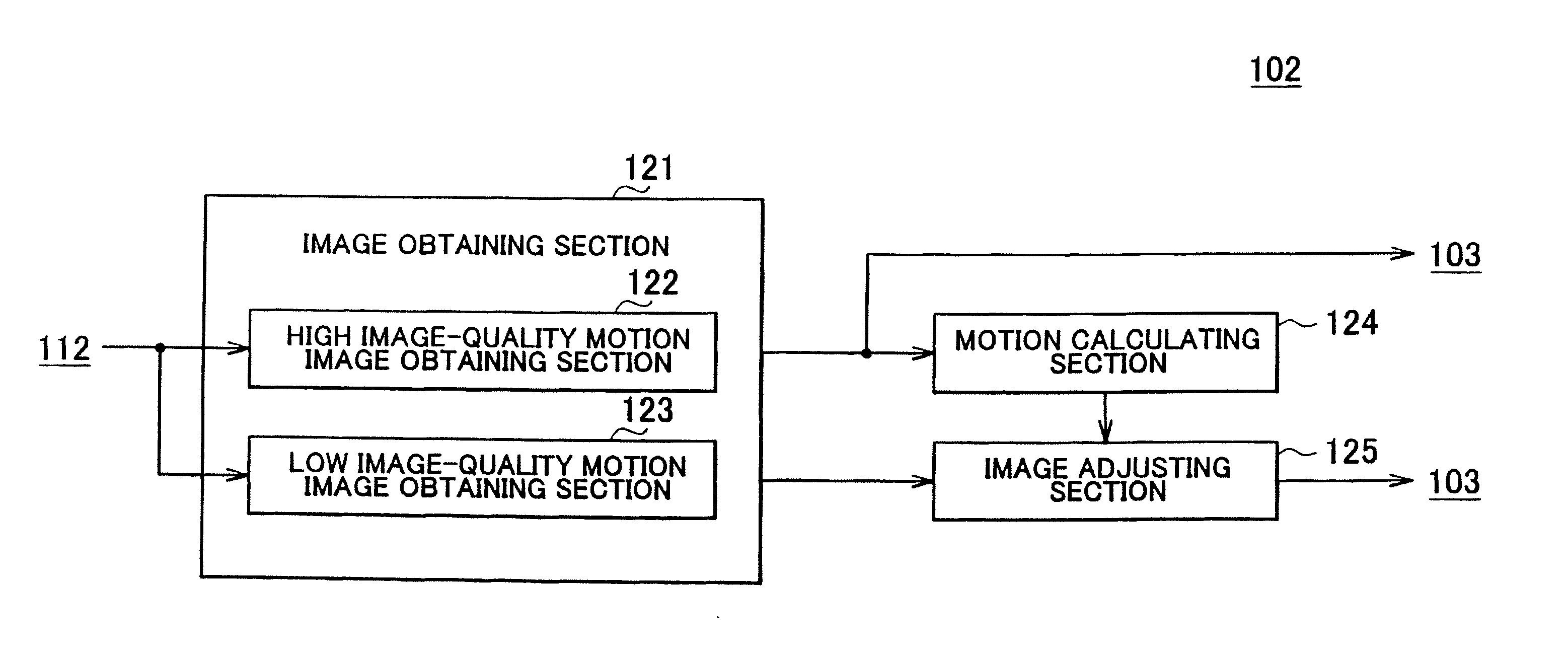

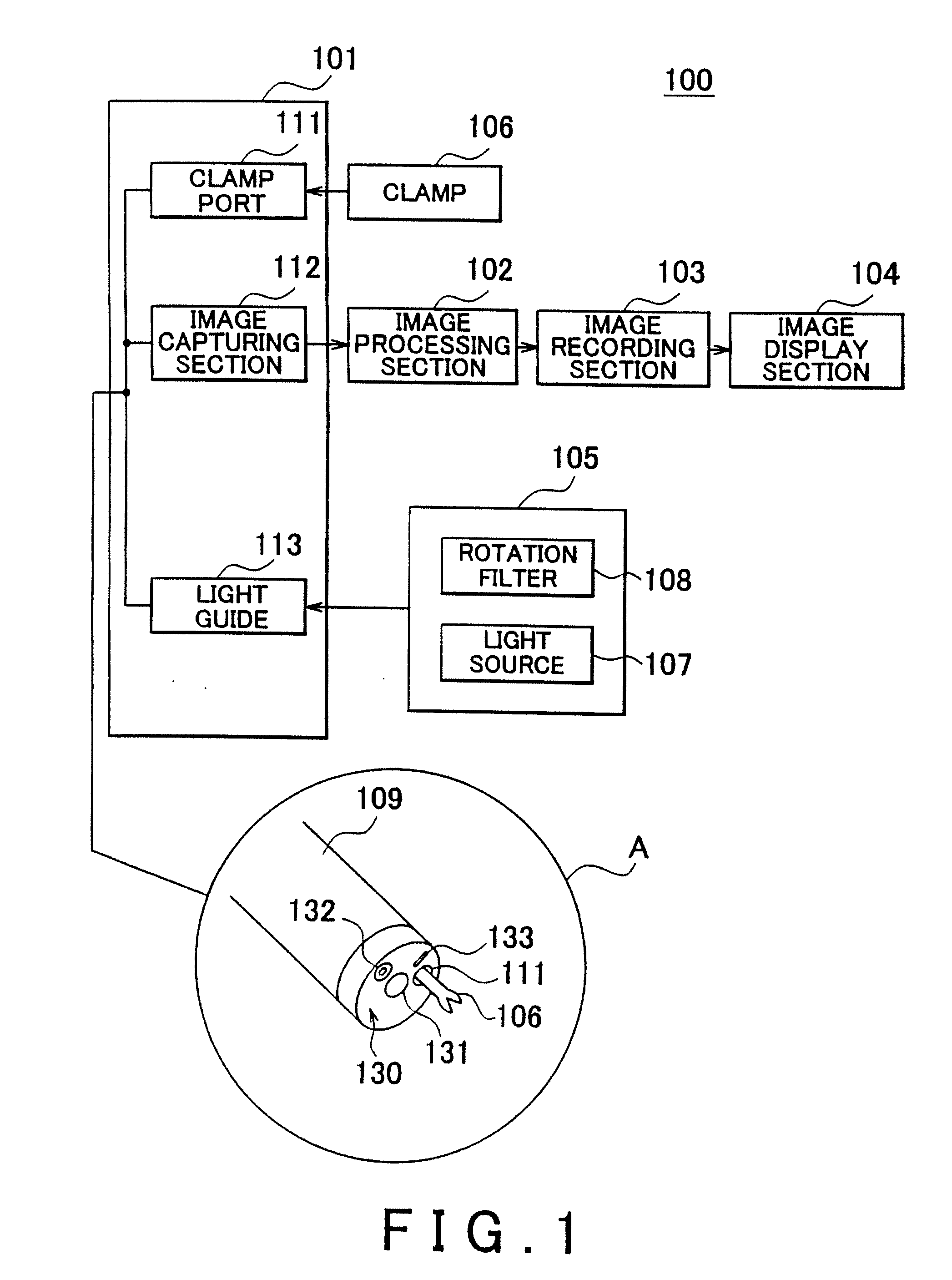

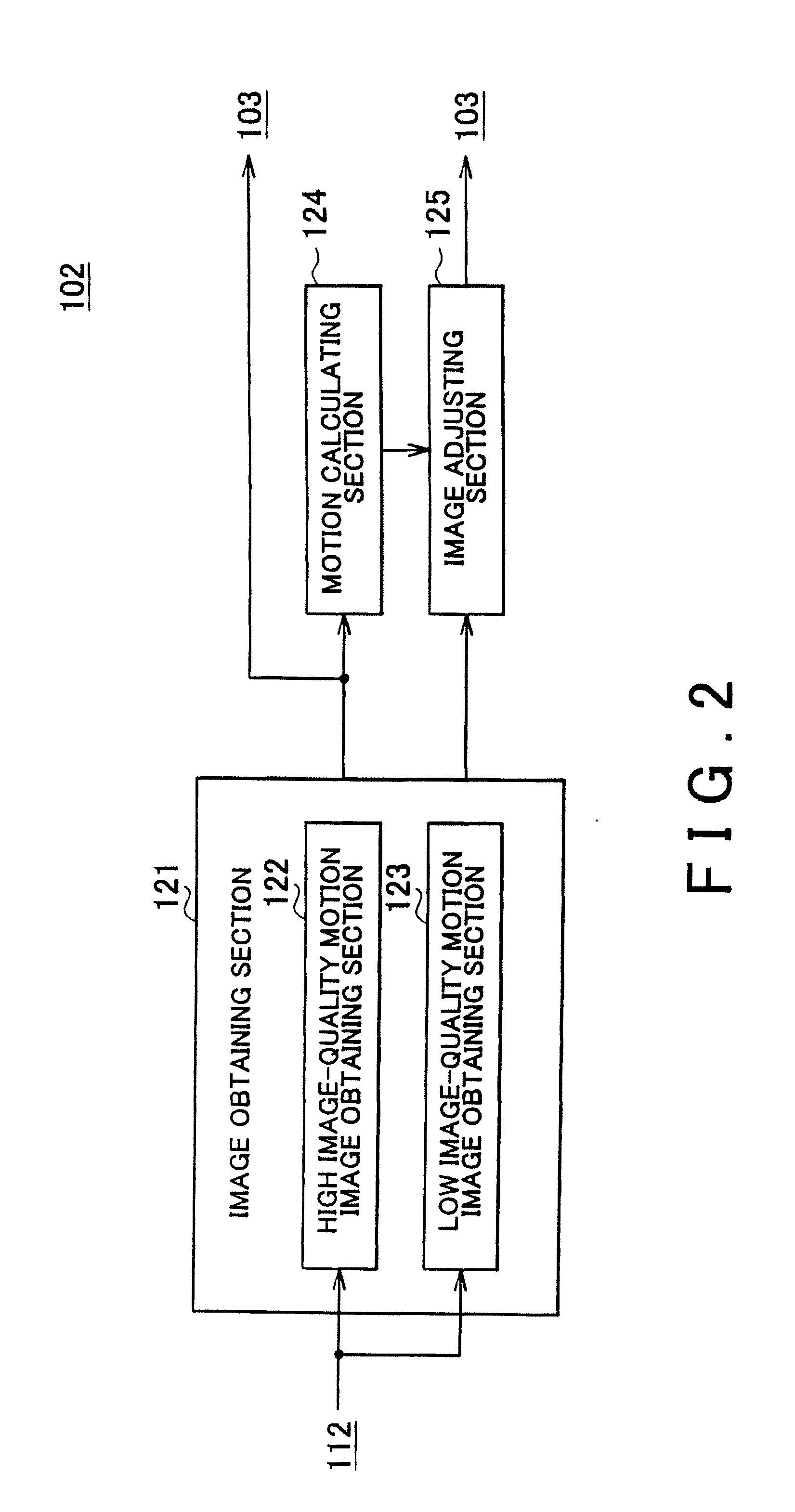

Image processing system, image processing method, and computer readable medium

Inactive · US20100177180A1 · High imaging · Reduce image quality · Image enhancement · Television system details · Imaging processing · Imaging quality

Provided are an image processing system, an image processing method, and a program for adjusting an image quality of a fluorescent light motion image. The system includes an image obtaining section that obtains a high image-quality motion image and a low image-quality motion image in which a same subject is captured simultaneously; a motion calculating section that calculates a motion of the subject from the high image-quality motion image; and an image adjusting section that generates a motion image by adjusting an image quality of the low image-quality motion image based on the motion calculated by the motion calculating section. Accordingly, the image quality of the low image-quality motion image can be improved.

Owner:FUJIFILM CORP

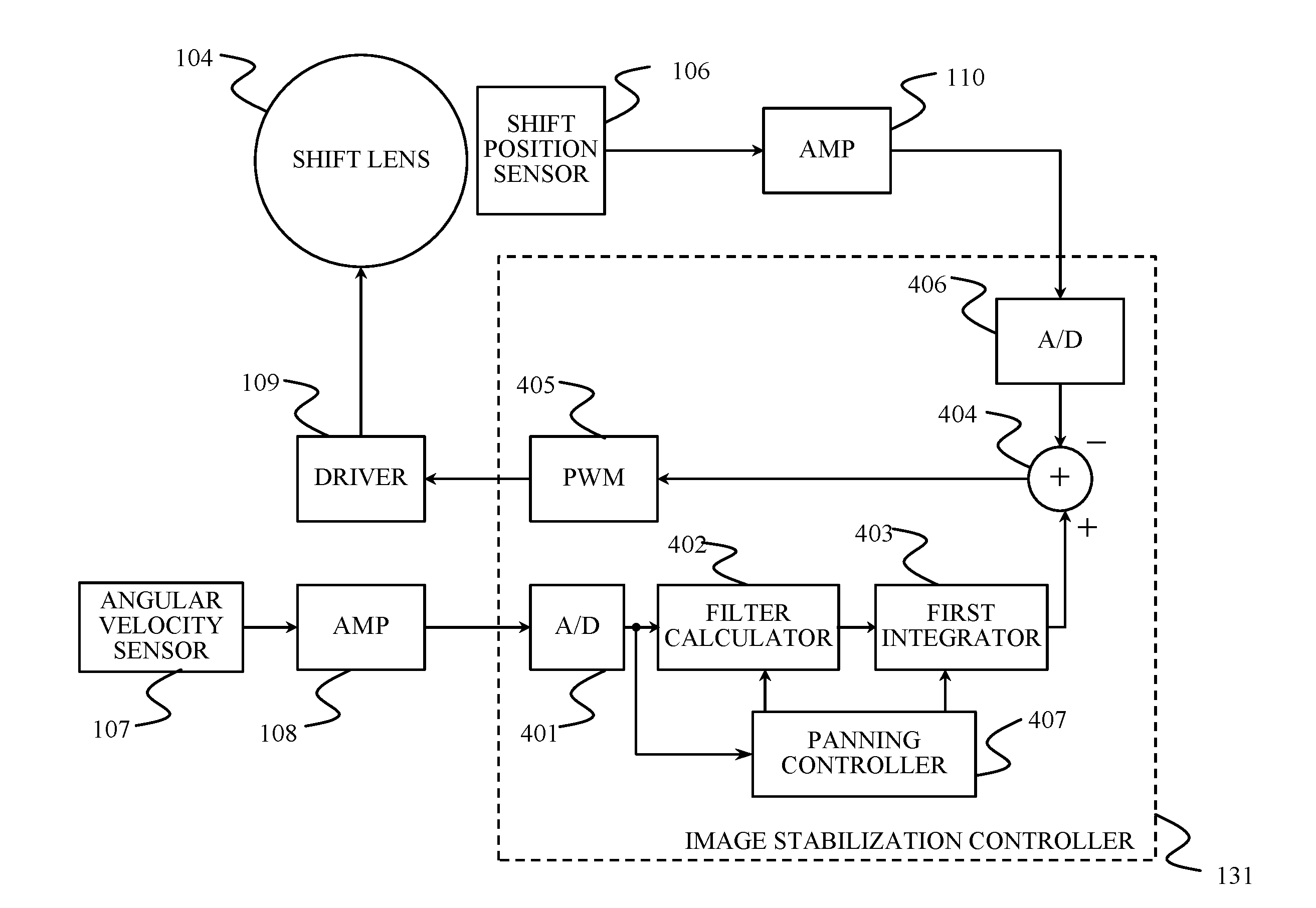

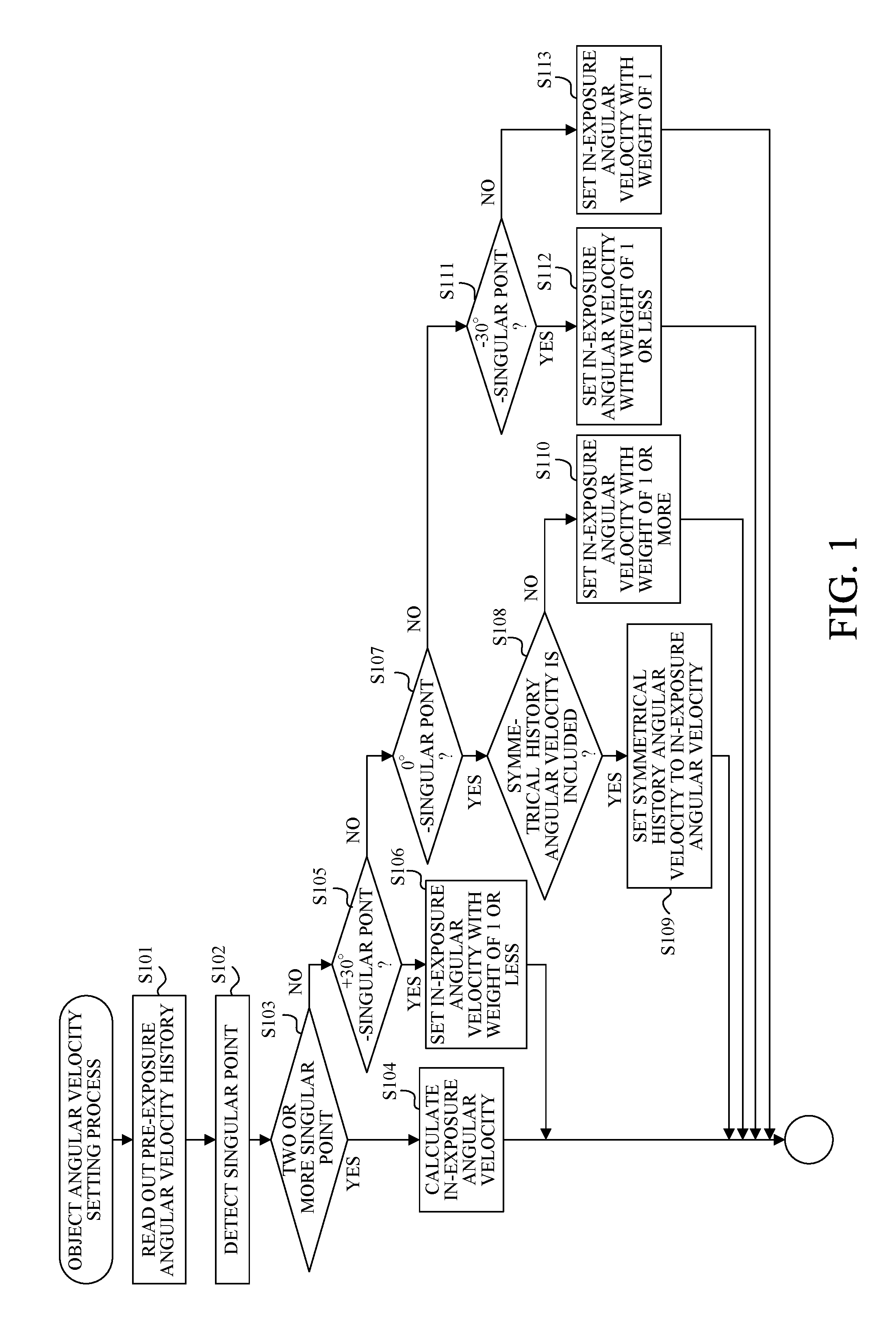

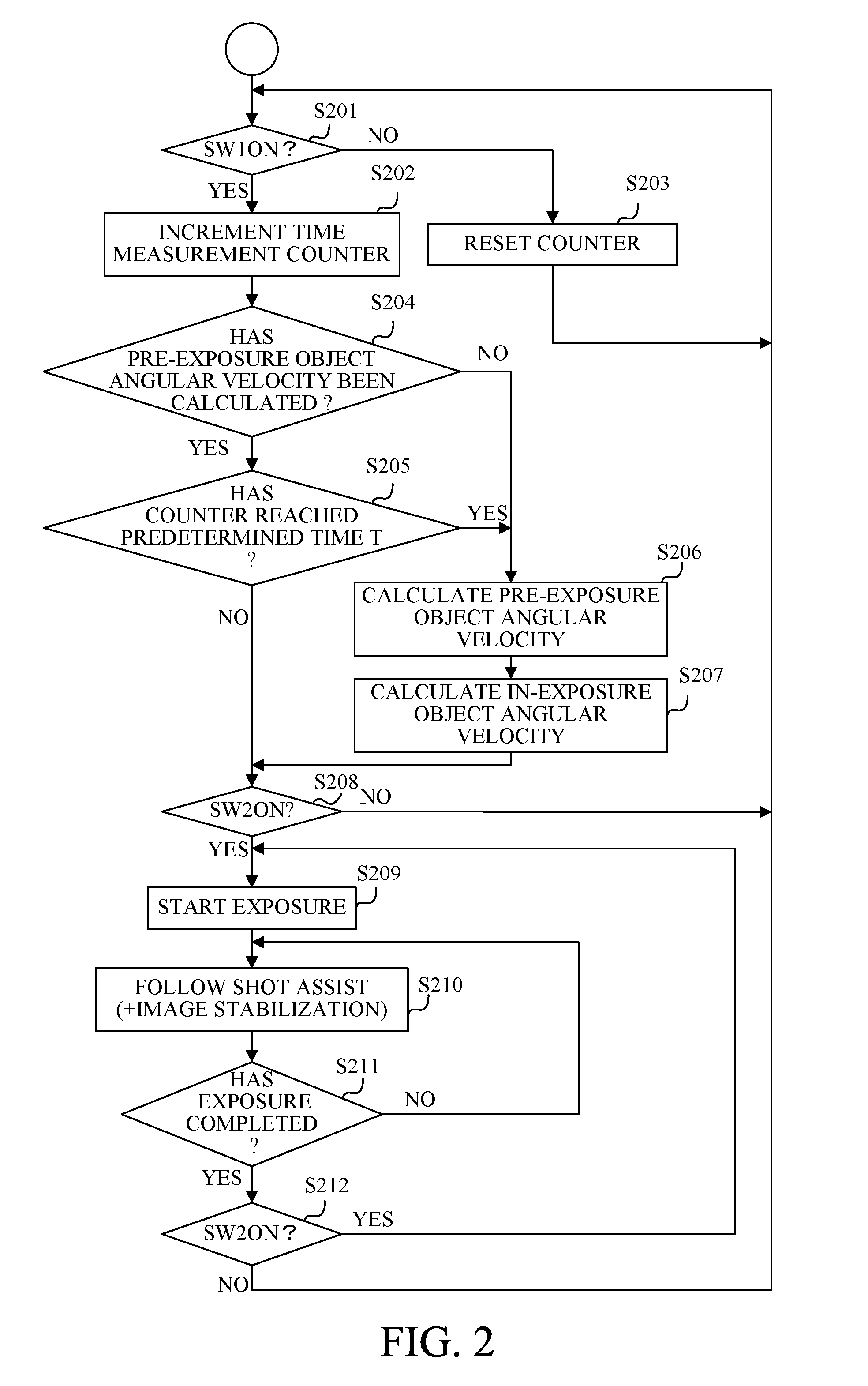

Image capturing apparatus, control method thereof and storage medium storing control program therefor

Active · US20160261784A1 · Good follow shot · Reduced object image blur · Television system details · Color television details · Computer graphics (images) · Radiology

In the image capturing apparatus, the controller controls an optical element, when a motion of the image capturing apparatus follows a motion of the object, by using first motion information obtained from a first detector to detect the motion of the image capturing apparatus and second motion information obtained from a second detector to detect the motion of the object. The calculator calculates prediction information on the motion of the object during an exposure time, by using the second motion information detected at multiple times before the exposure time. The controller uses the prediction information to control the optical element during the exposure time.

Owner:CANON KK

Real time monitoring and prediction of motion in MRI

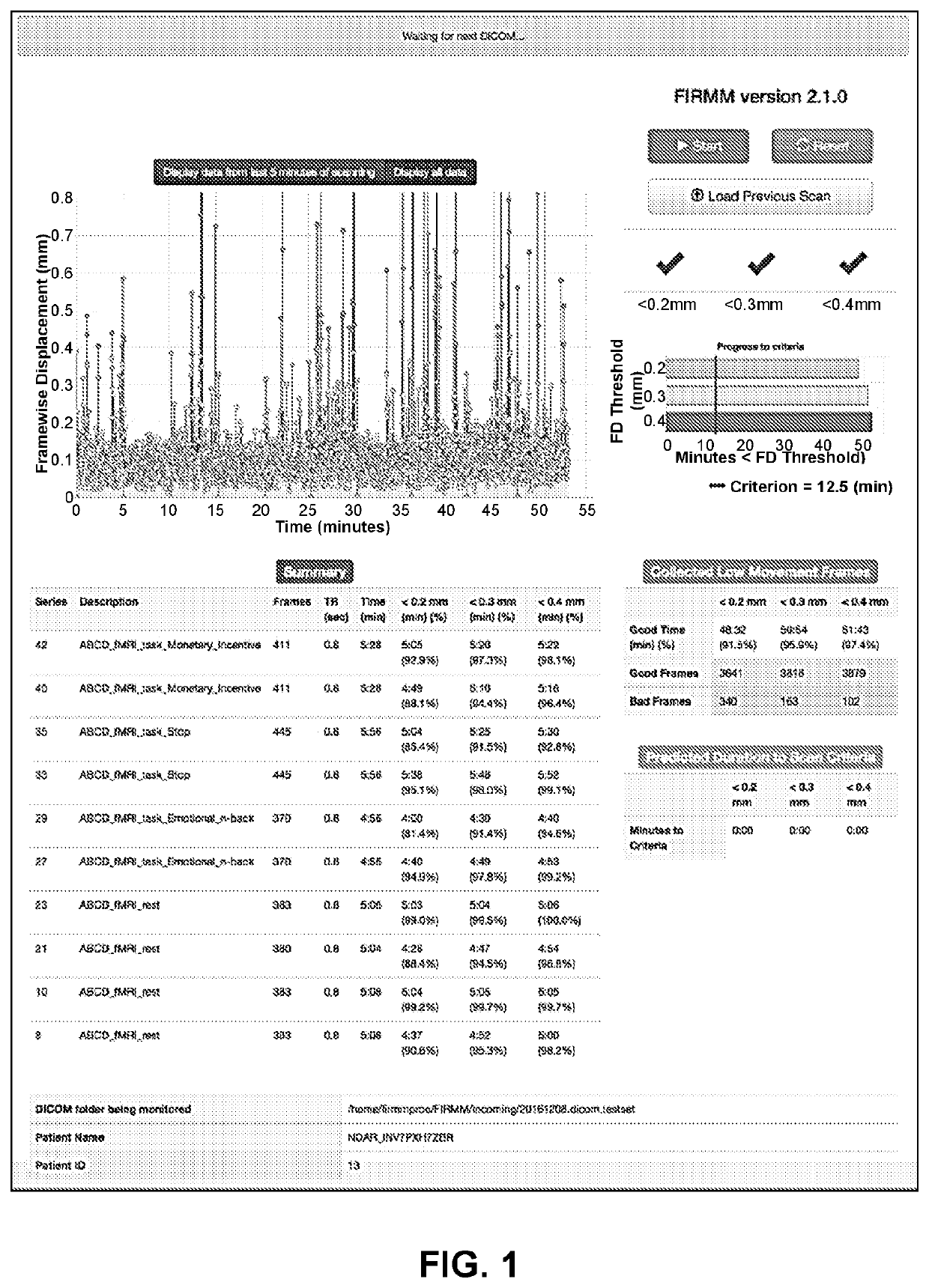

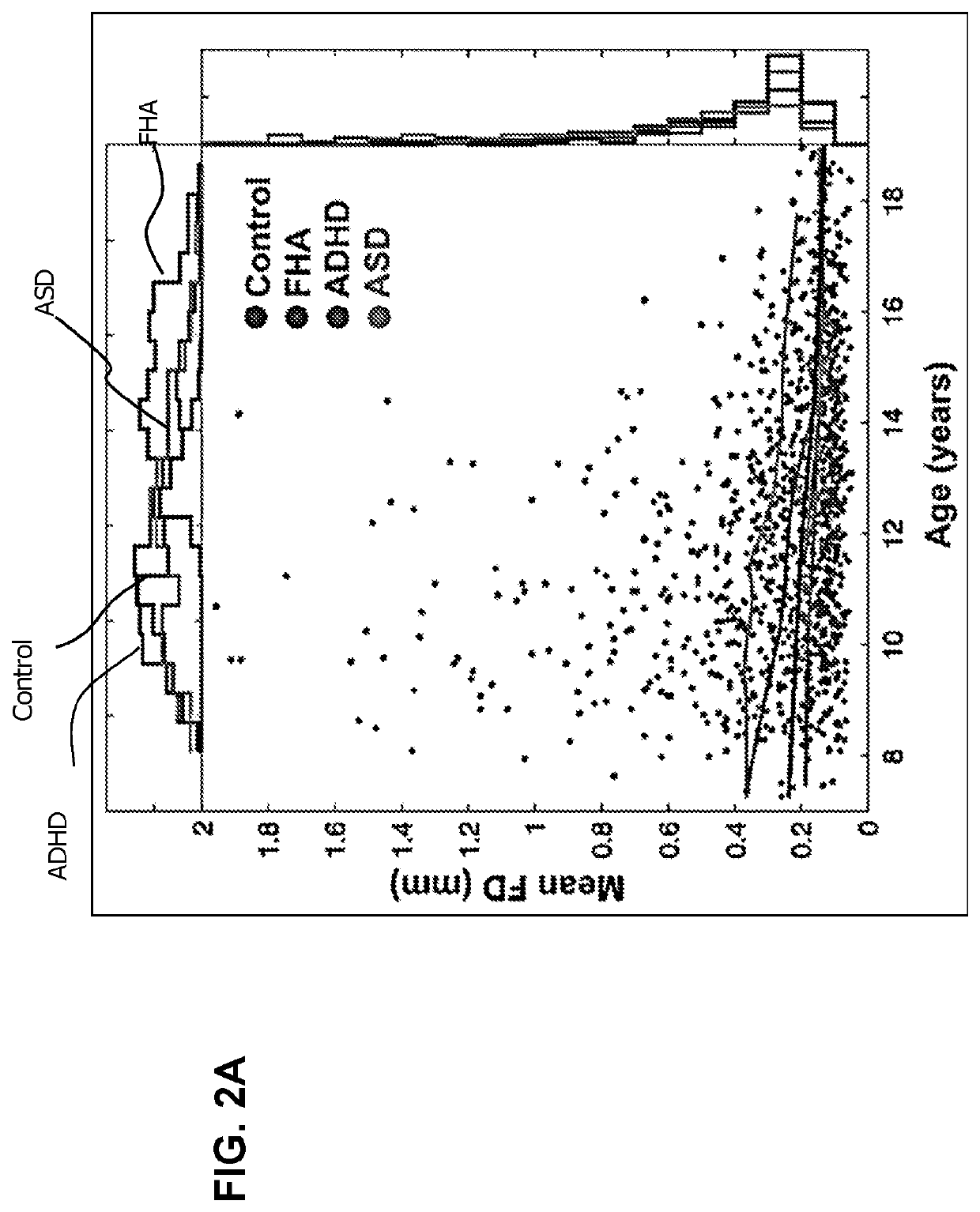

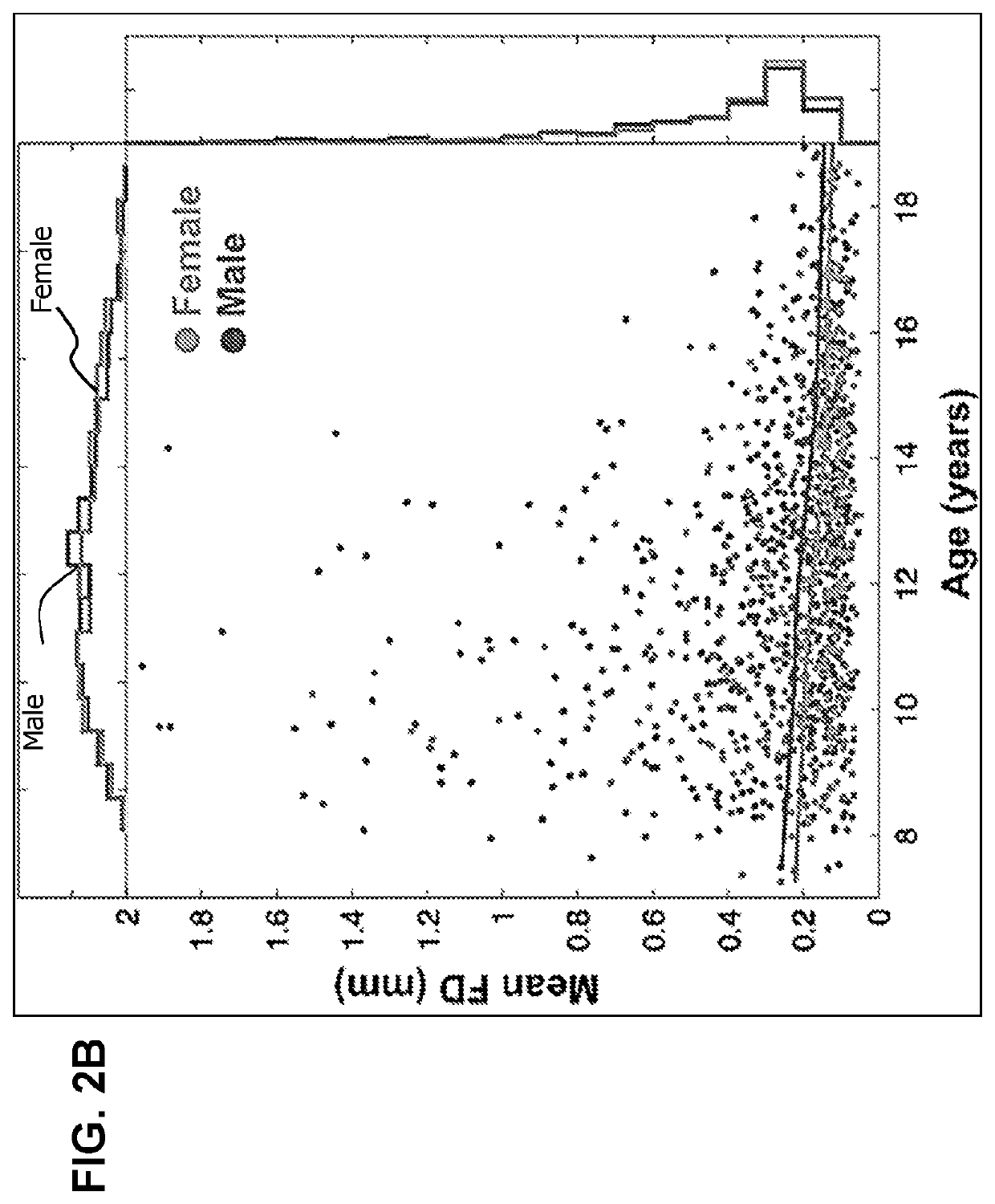

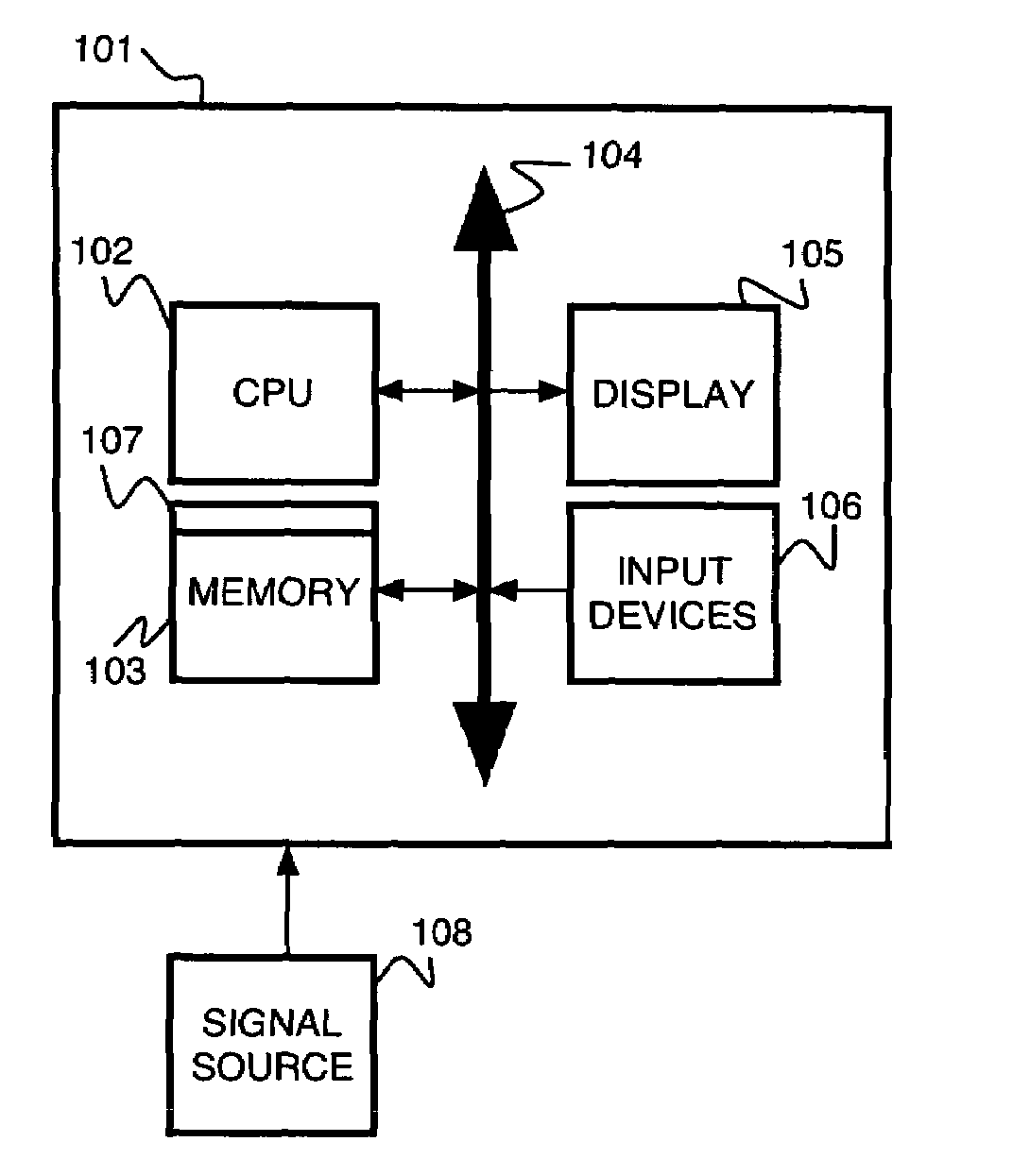

Active · US20200225308A1 · Medical data mining · Diagnostic recording/measuring · Medicine · Magnetic Resonance Imaging Scan

Methods, computer-readable storage devices, and systems are described for reducing movement of a patient undergoing a magnetic resonance imaging (MRI) scan by aligning MRI data, the method implemented on a Framewise Integrated Real-time MRI Monitoring (“FIRMM”) computing device including at least one processor in communication with at least one memory device. Aspects of the method comprise receiving a data frame from the MRI system, aligning the received data frame to a preceding data frame, calculating motion of a body part between the received data frame and the preceding data frame, calculating total frame displacement, and excluding data frames whose total frame displacement exceeds a pre-identified threshold.

Owner:OREGON HEALTH & SCI UNIV +1
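
The frame-displacement step in the abstract above can be sketched in Python. This is a minimal illustration, not the FIRMM implementation: it assumes each frame's alignment yields six rigid-body parameters (three translations in mm, three rotations in radians), converts rotations to millimetres on a sphere of assumed radius 50 mm, and uses an assumed 0.2 mm cutoff. The function names and the choice to always keep the first frame are likewise hypothetical.

```python
def frame_displacement(prev, curr, radius_mm=50.0):
    """Displacement between two frames' parameters (tx, ty, tz, rx, ry, rz).

    Translations are in mm and rotations in radians; rotations are converted
    to arc length on a sphere of radius_mm (an assumption of this sketch).
    """
    trans = sum(abs(c - p) for p, c in zip(prev[:3], curr[:3]))
    rot = sum(abs(c - p) for p, c in zip(prev[3:], curr[3:]))
    return trans + radius_mm * rot


def keep_frames(params, threshold_mm=0.2):
    """Return indices of frames whose displacement from the preceding frame is within the threshold."""
    kept = [0] if params else []  # the first frame has no predecessor; it is kept by assumption
    for i in range(1, len(params)):
        if frame_displacement(params[i - 1], params[i]) <= threshold_mm:
            kept.append(i)
    return kept
```

Computing the displacement frame by frame is what allows this kind of monitoring to run while the scan is still in progress, since each new frame only needs to be compared with the one before it.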

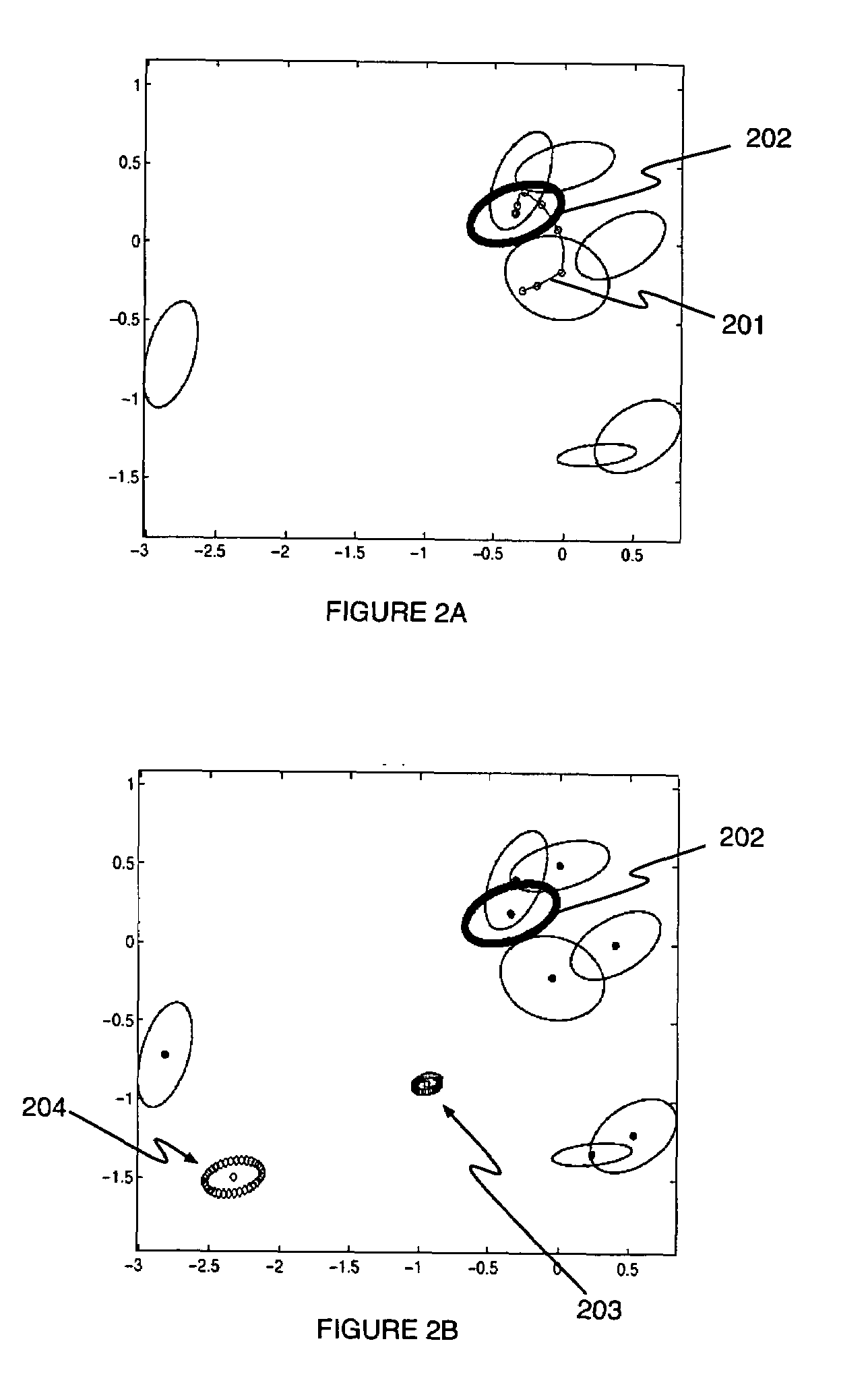

Density estimation-based information fusion for multiple motion computation

Inactive · US7298868B2 · Image analysis · Character and pattern recognition · Spectral density estimation · Pattern detection

A fusion estimator is determined as the location of the most significant mode of a density function, which takes into account the uncertainty of the estimates to be fused. A mode detection method relies on mode tracking across scales. The fusion estimator is consistent and conservative, while naturally handling outliers in the data and multiple source models. The new estimator is applied for multiple motion estimation. Numerous experiments validate the theory and provide very competitive results. Other applications include generic distributed fusion, robot localization, motion tracking, registration of medical data, and fusion for automotive tasks.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

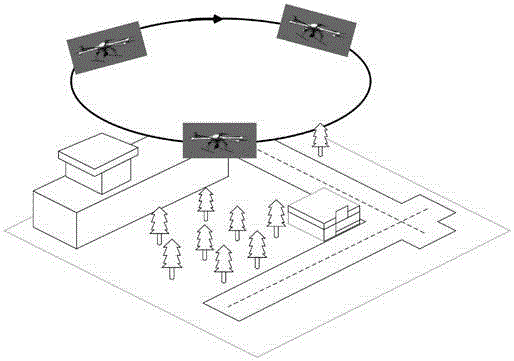

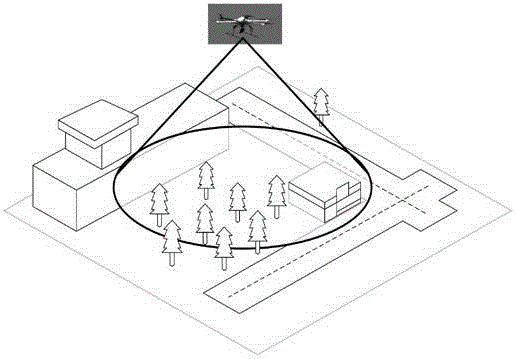

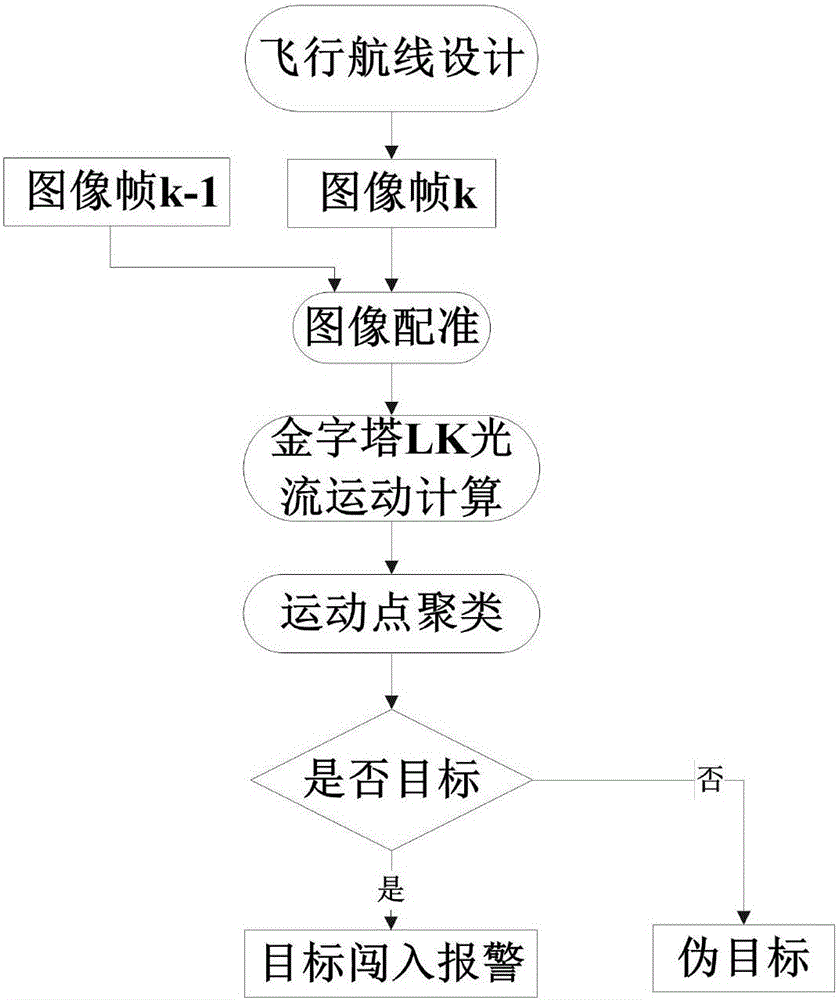

Quick and automatic target detection method

Active · CN106845364A · Reduce computational complexity · Control calculation time · Image enhancement · Image analysis · Computation complexity · Uncrewed vehicle

The invention mainly belongs to the technical field of target intrusion detection and particularly relates to an unmanned aerial vehicle image-based region intrusion target detection method. The method is used for target detection by an unmanned aerial vehicle. The method comprises the steps of performing Gaussian pyramid layering on an original image in an original video obtained by a vehicle-mounted camera of the unmanned aerial vehicle to lower the calculation complexity of feature point extraction; then extracting SIFT feature points of the image to perform image registration, capturing motion information in the image by adopting pyramidal LK sparse optical flow to realize target point motion calculation, performing motion point clustering and false target removal, and finally performing target judgment to realize target detection. According to the method, the search range of inter-frame feature points of the unmanned aerial vehicle video can be reduced, the large-displacement motion problem in the unmanned aerial vehicle image is solved, and the detection capability is improved; therefore, the manual detection intensity of region intrusion is reduced and the automatic perception capability of the unmanned aerial vehicle is improved.

Owner:中国航天电子技术研究院

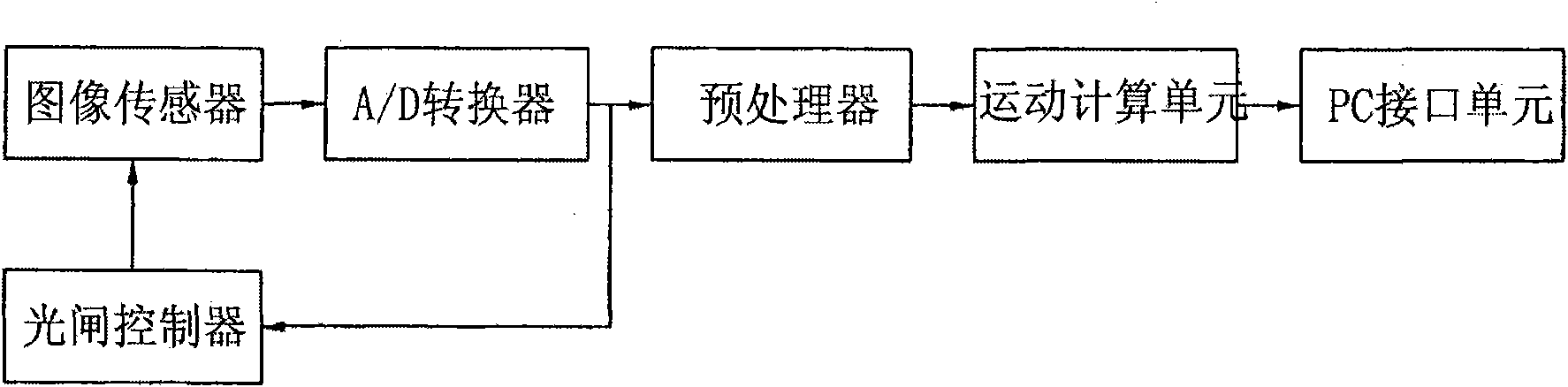

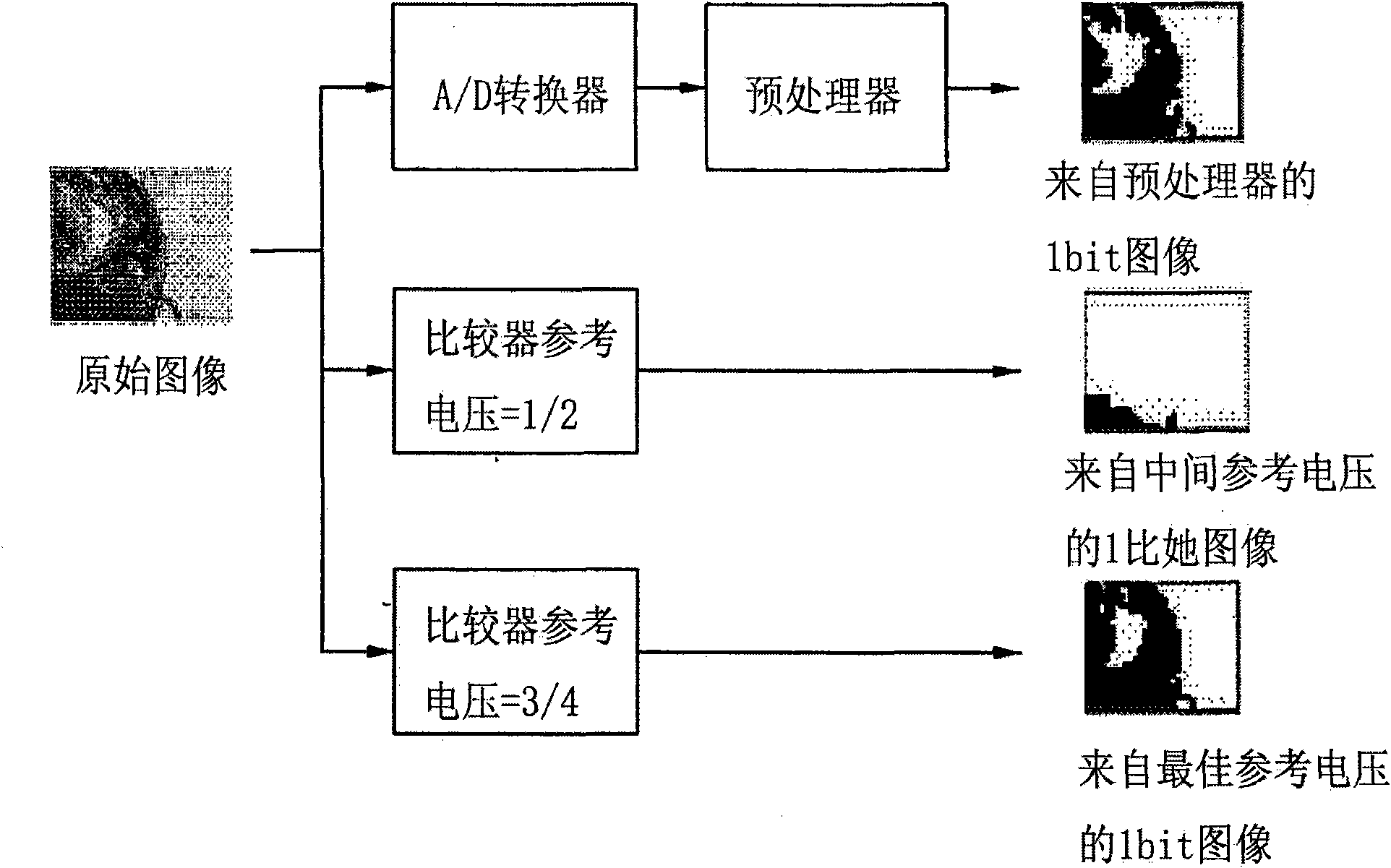

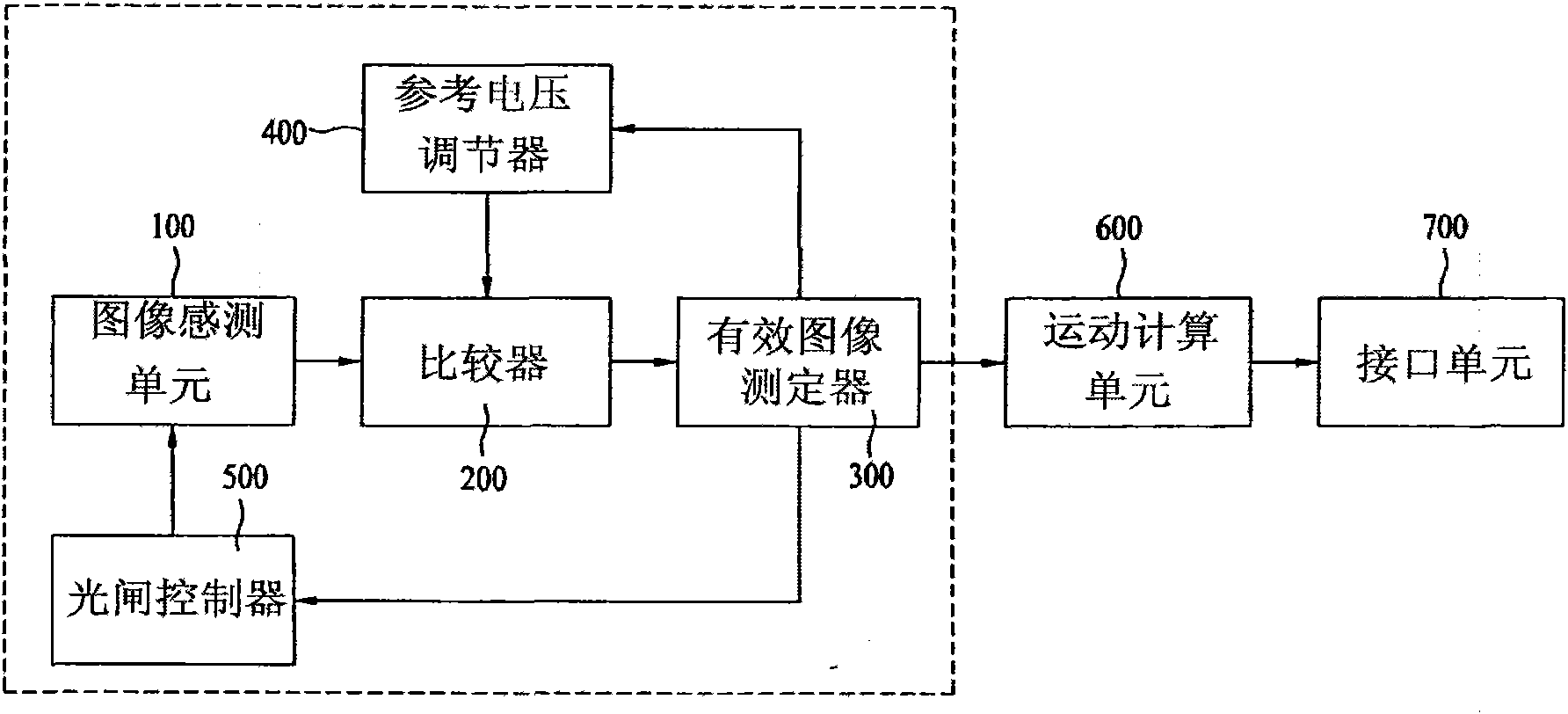

Low power image sensor adjusting reference voltage automatically and optical pointing device comprising the same

Inactive · CN101554038A · Low cost · Reduce power consumption · Television system details · Television system scanning details · Image sensor · Voltage reference

A low power image sensor sensing light from an object and outputting an image signal includes: an image sensing unit which senses light from an object, converts the light into an electric signal, and outputs the electric signal; a comparing unit which receives an electric signal from the image sensor, compares a voltage level of the electric signal with a reference voltage, and outputs an image signal as a 1-bit signal per pixel; and an effective image adjuster which compares bit value distribution of an image signal output from the comparator with a preset effective range, and adjusts the effective image to output an effective image. Further, an optical pointing device includes: an image sensor which senses light from an object and outputs an image signal; and a motion computing unit which receives the image signal and compares before and after images to calculate a motion vector, wherein the motion computing unit includes: a temporary storage unit to temporarily store image data output from the image sensor; a comparator to compare the image data output from the image sensor with the image data stored in the temporary storage unit; a direction selector; and a controller.

Owner:SILICON COMM TECH

Apparatus and method for controlling motion-based user interface

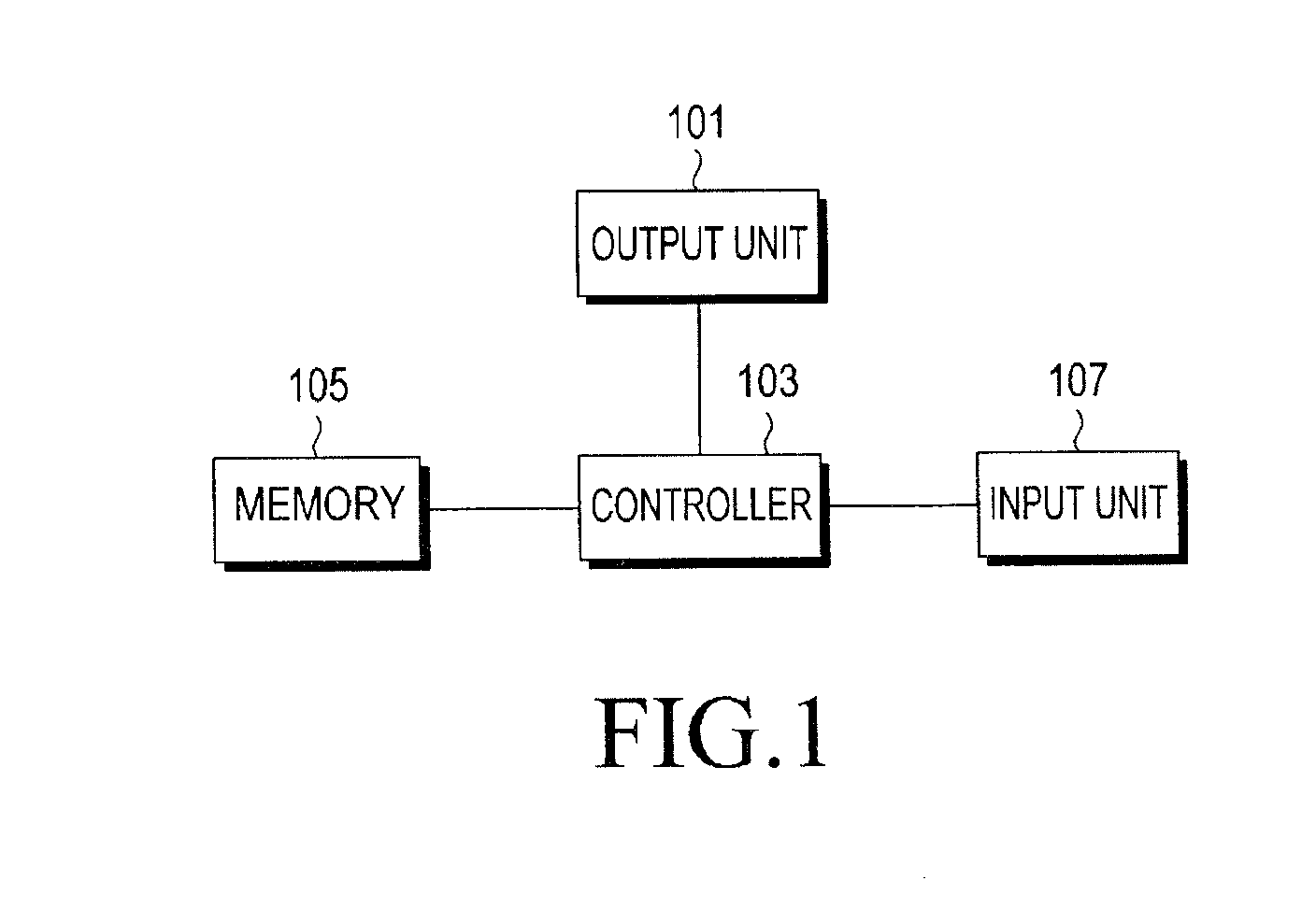

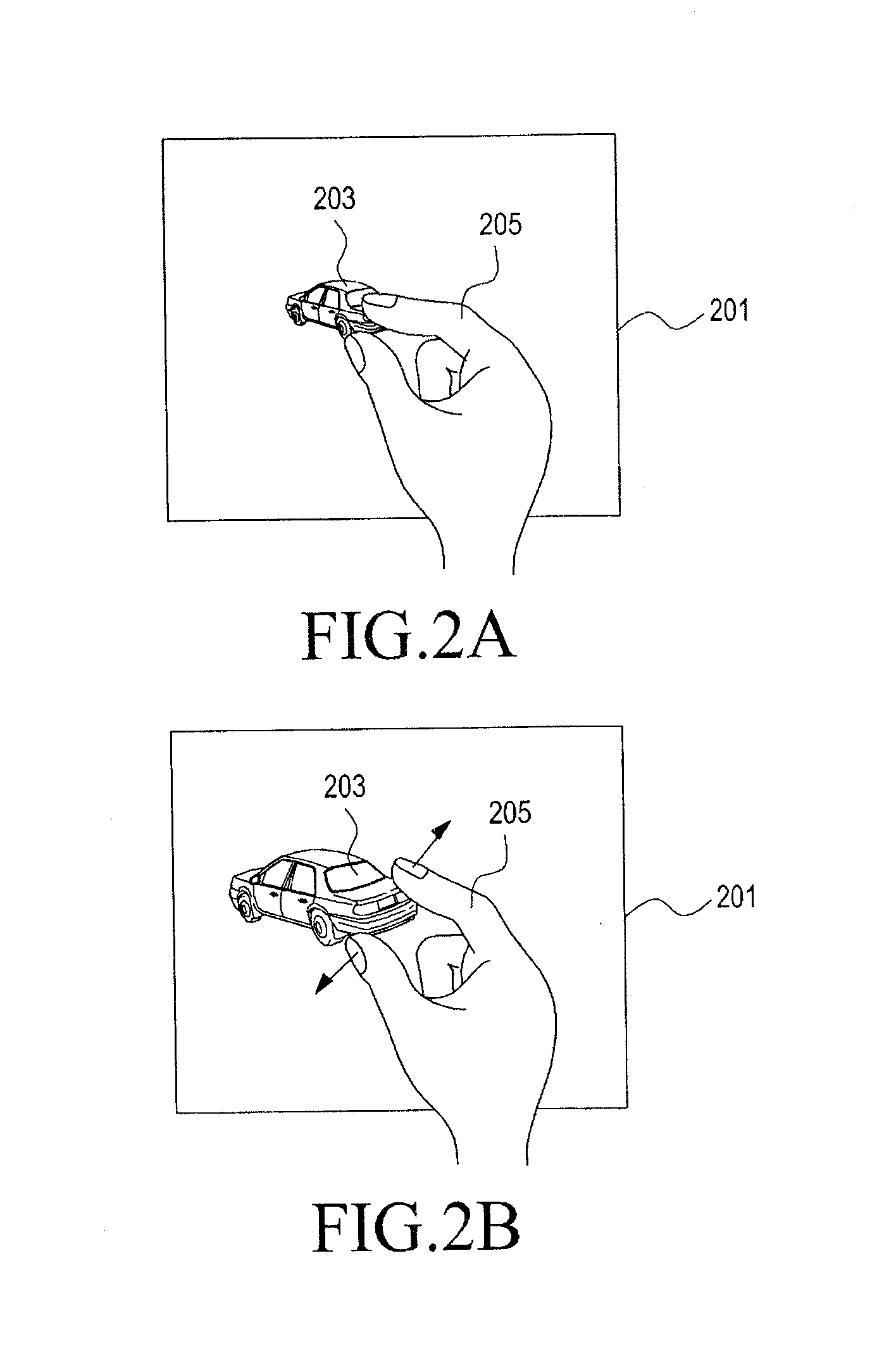

Active · US20130194222A1 · Easily enlarge · Shrink well · Digital data processing details · Geometric image transformation · Touchscreen · Computer science

A method and apparatus for controlling a motion-based user interface are provided. The apparatus includes a touch screen for displaying an image and for receiving input of a user touch on at least one spot of the touch screen, a sensor unit for sensing a motion of the apparatus, a motion calculator for calculating a degree of the motion of the apparatus, when sensing the motion of the apparatus at the sensor unit, and a controller for, when the at least one spot is touched on the touch screen, determining the number of touched spots, for receiving information about the degree of the motion of the apparatus from the motion calculator, and for determining whether to change the size of the image or the position of the image according to the number of touched spots.

Owner:SAMSUNG ELECTRONICS CO LTD

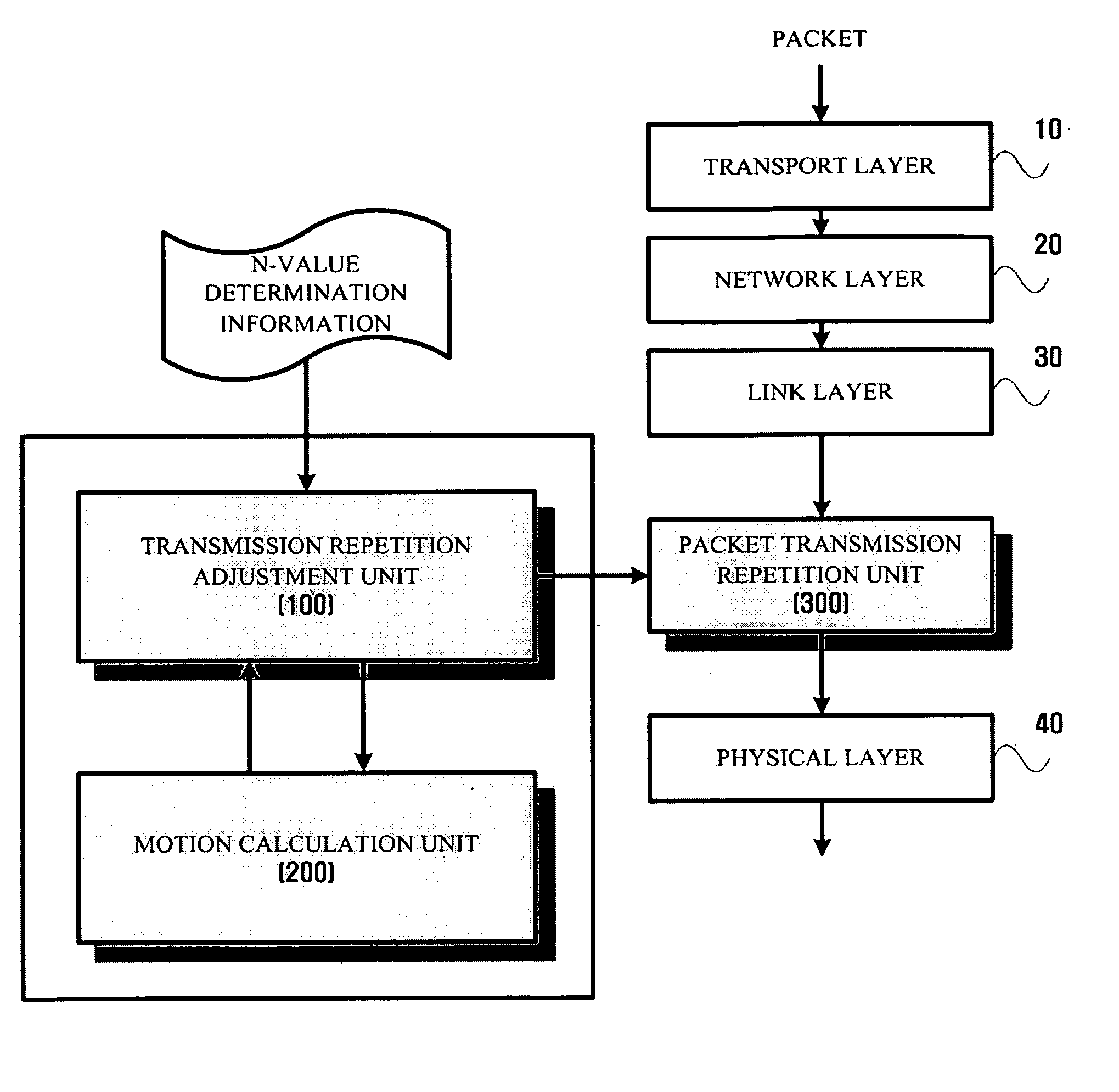

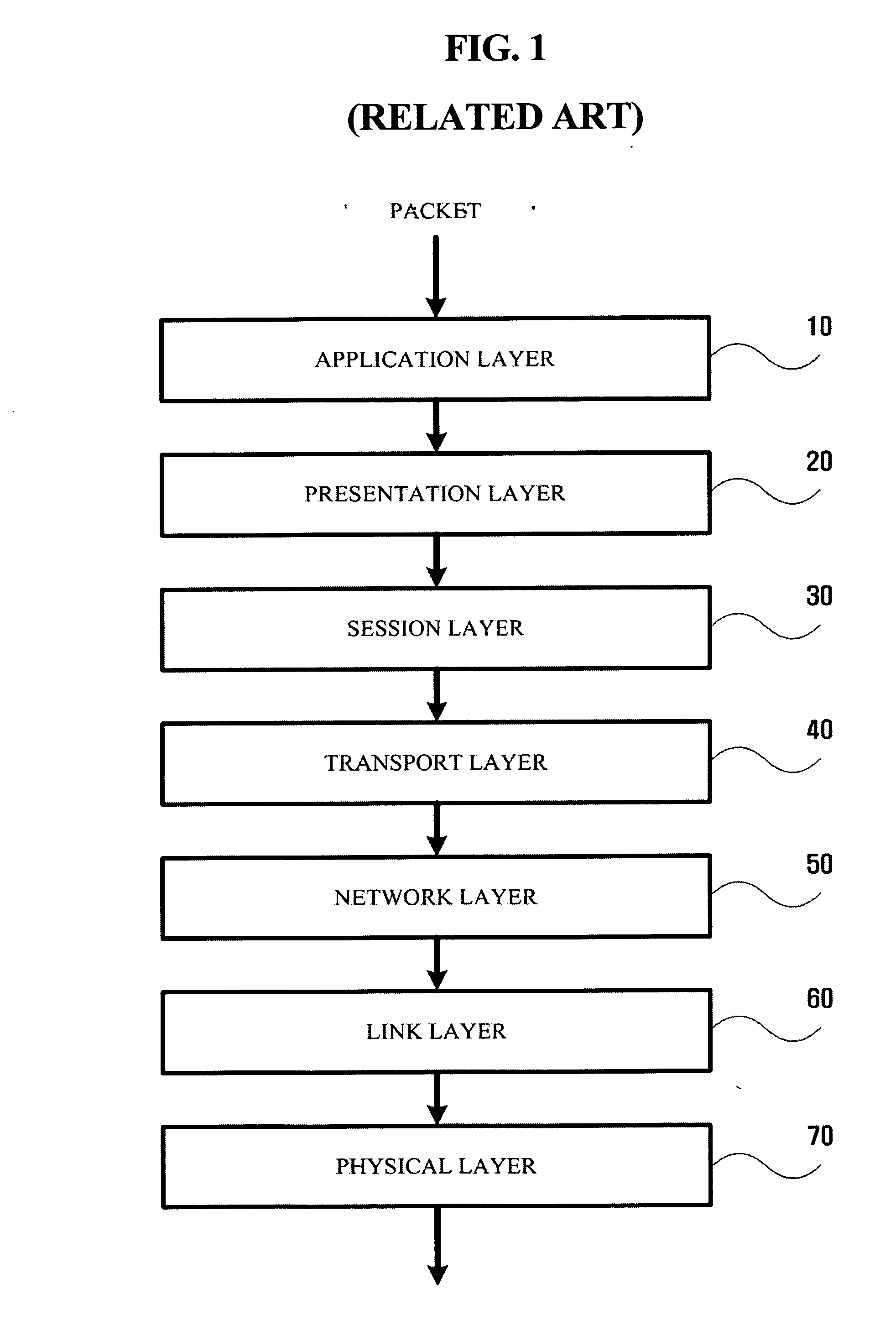

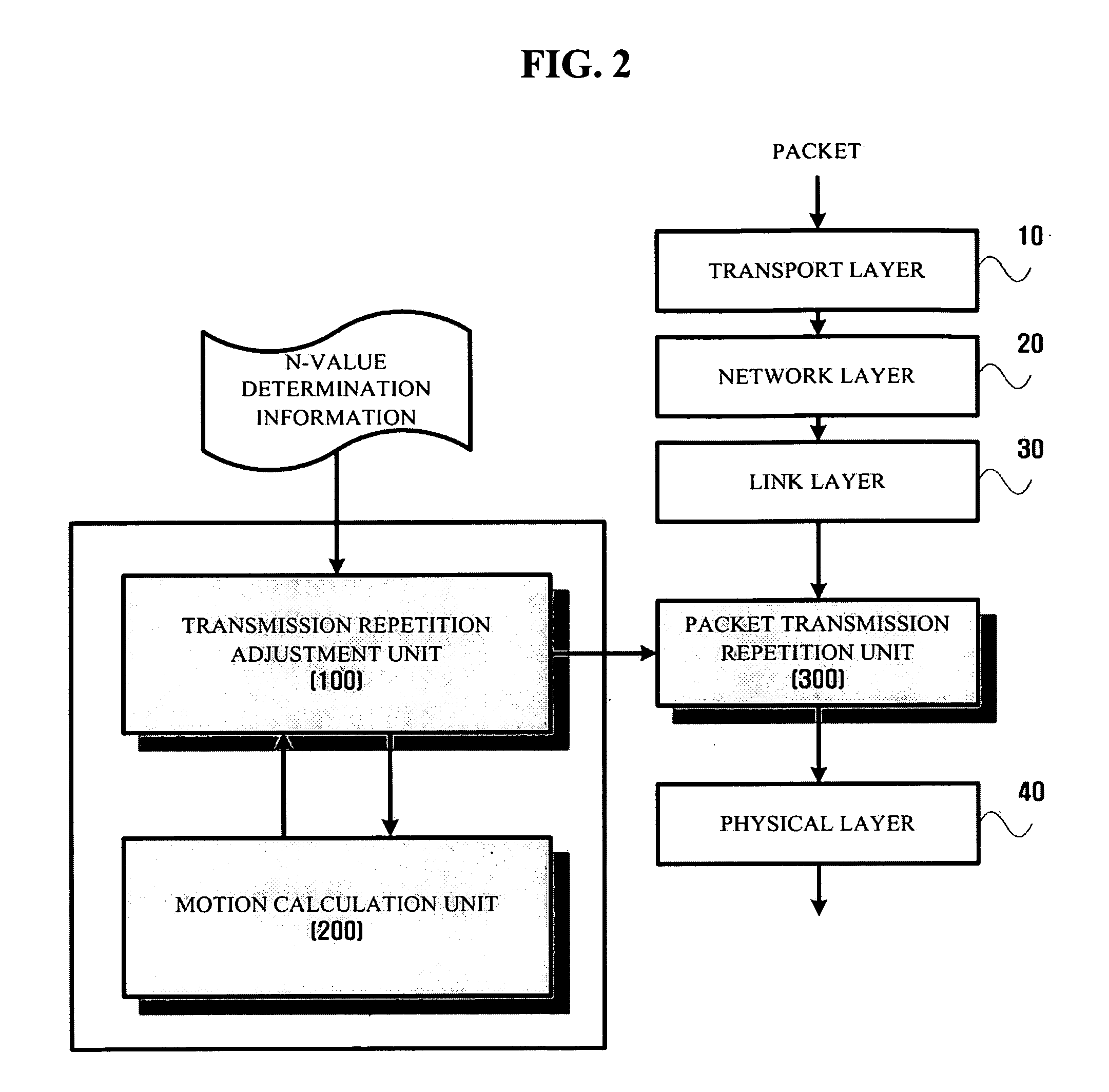

Apparatus and method for transmitting packets in wireless network

Active · US20070109996A1 · Reducing packet loss packet · Reducing packet packet transmission delay time · Error prevention/detection by using return channel · Transmission systems · Data link layer · Repetition Number

Provided is an apparatus and method for transmitting packets in a wireless network. The apparatus includes a motion calculation unit, a transmission repetition adjustment unit, and a packet transmission repetition unit. The motion calculation unit calculates the motion value of a mobile terminal. The transmission repetition adjustment unit receives N-value determination information and determines the number of repetitions (N value) of transmission of a packet transmitted and received by the mobile terminal based on the received N-value determination information and the motion value calculated by the motion calculation unit. The packet transmission repetition unit repeatedly transmits the packet, which is received from a data link layer, to a physical layer according to the N value determined by the transmission repetition adjustment unit.

Owner:SAMSUNG ELECTRONICS CO LTD

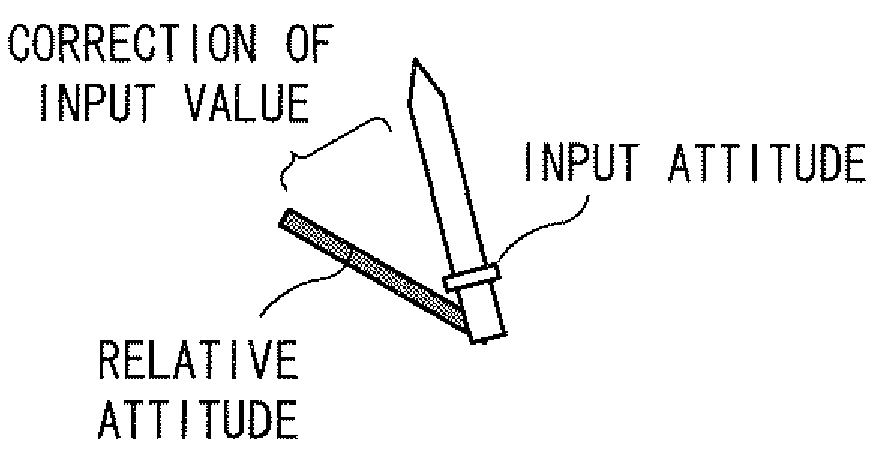

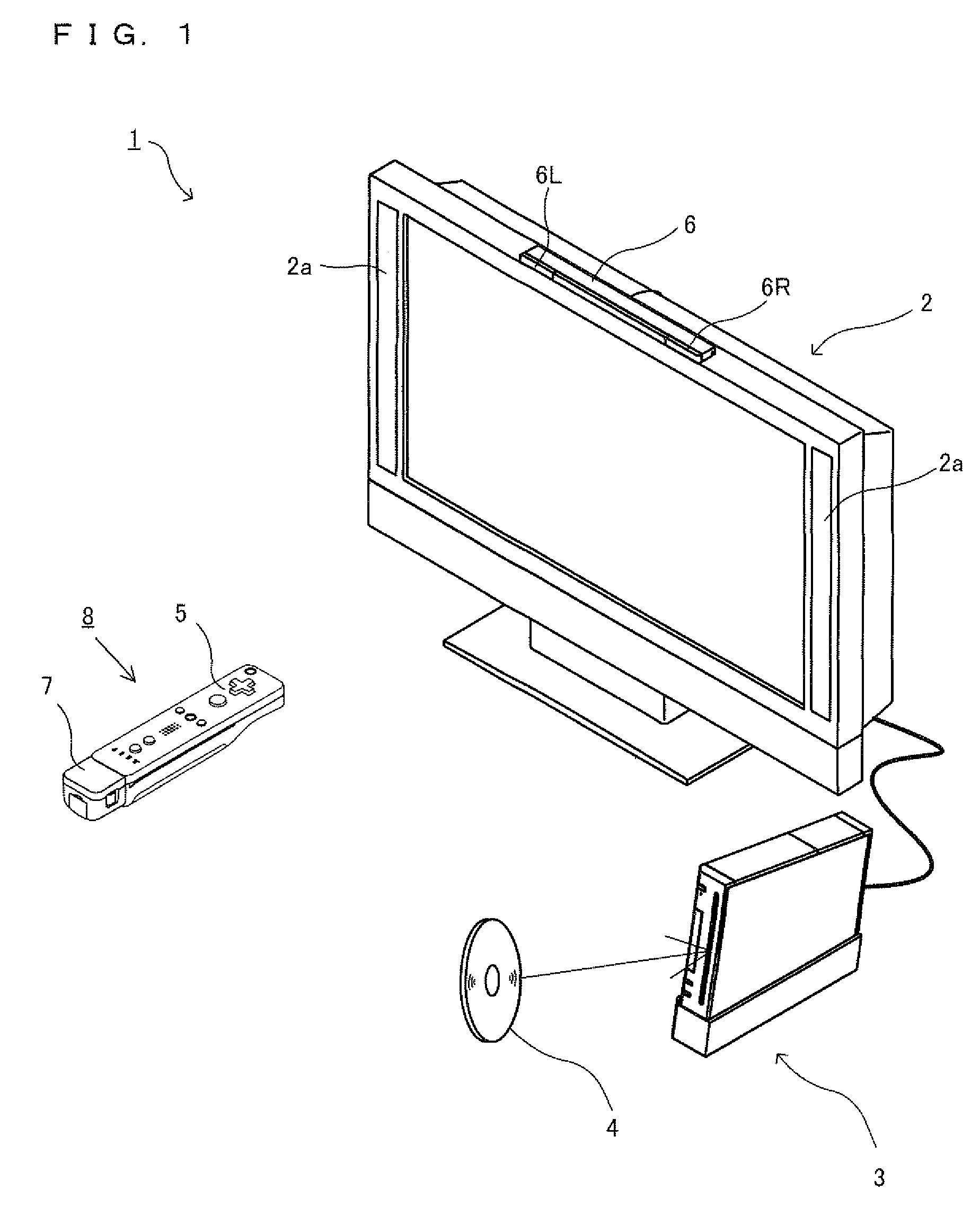

Input device attitude prediction

Active · US8000924B2 · Accurate calculation · Advantageous in terms of cost effectiveness · Digital computer details · Speed measurement using gyroscopic effects · Simulation · Control theory

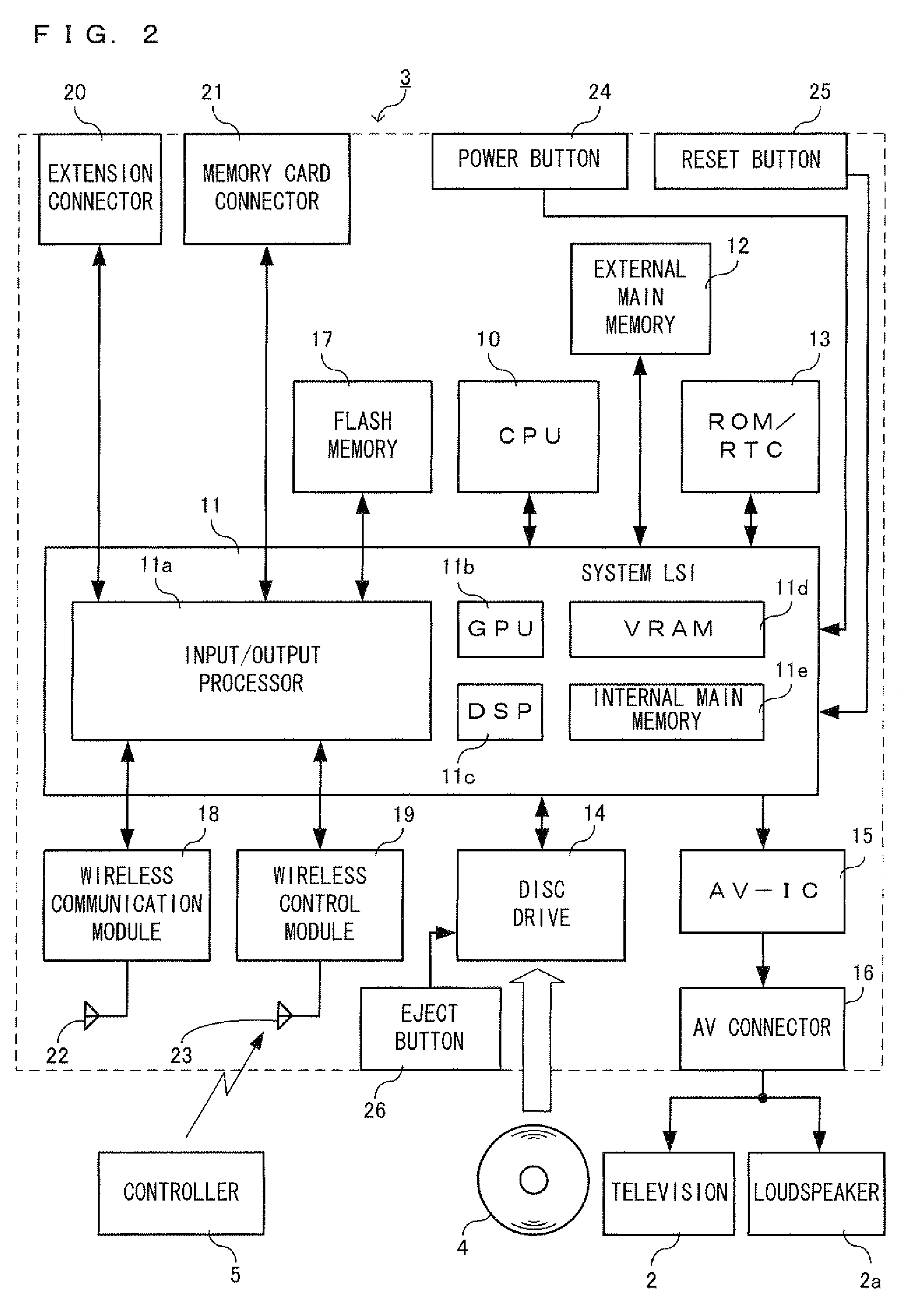

A first attitude calculation means S2 calculates a first attitude indicating an attitude of an input device itself based on a motion detection signal output from an input device equipped with a motion detection sensor. A motion calculation means S21 calculates a motion of the first attitude. An approaching operation determination means S22 to S24 determines whether or not the motion of the first attitude calculated by the motion calculation means is a motion of approaching a predetermined attitude. An input attitude setting means S27, S28, S4 sets an attitude obtained by correcting the first attitude as an input attitude if the motion of the first attitude is the motion of approaching the predetermined attitude, and sets the first attitude as an input attitude if the motion of the first attitude is not the motion of approaching the predetermined attitude. A process execution means S5 performs a predetermined information process based on the input attitude.

Owner:NINTENDO CO LTD

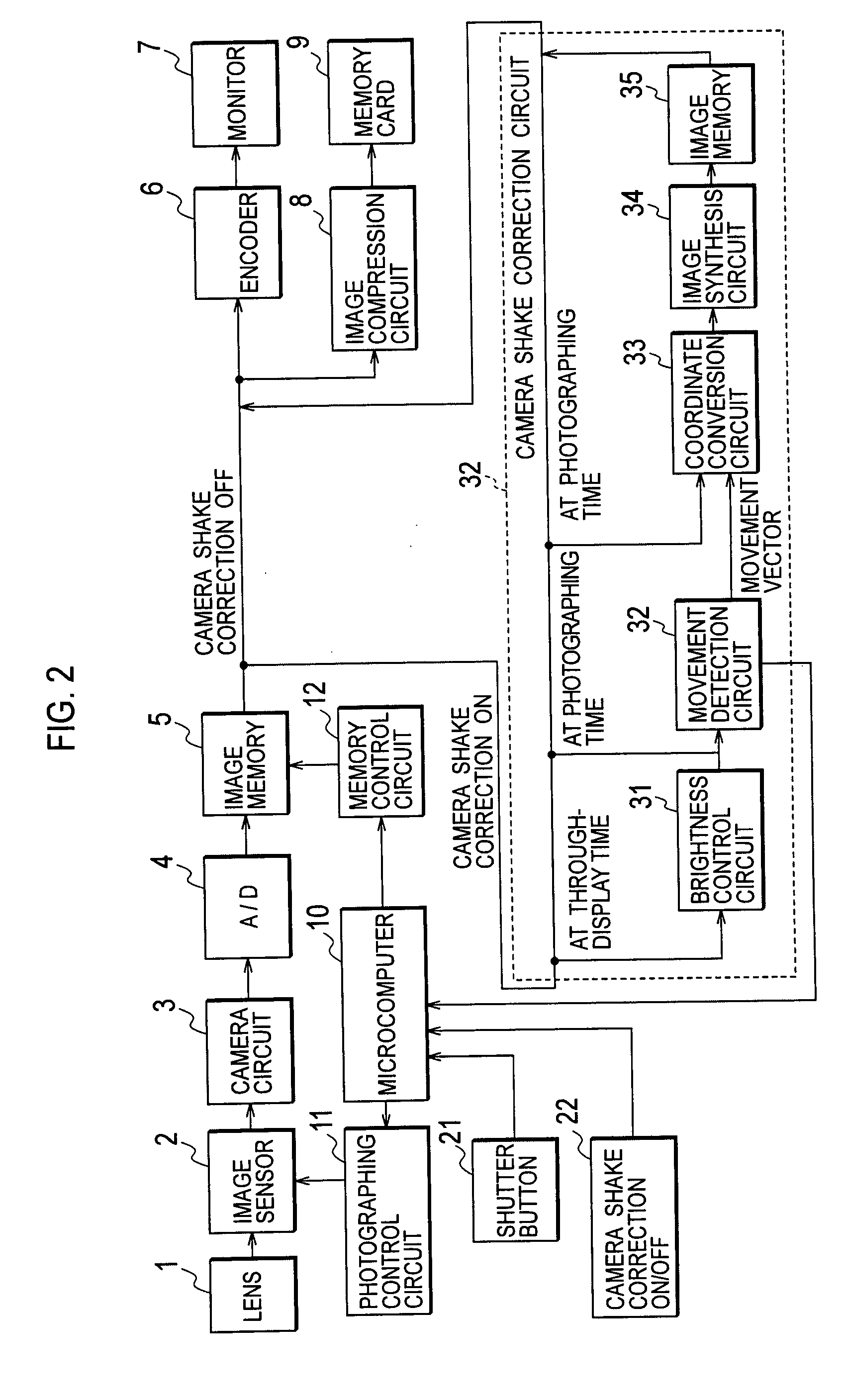

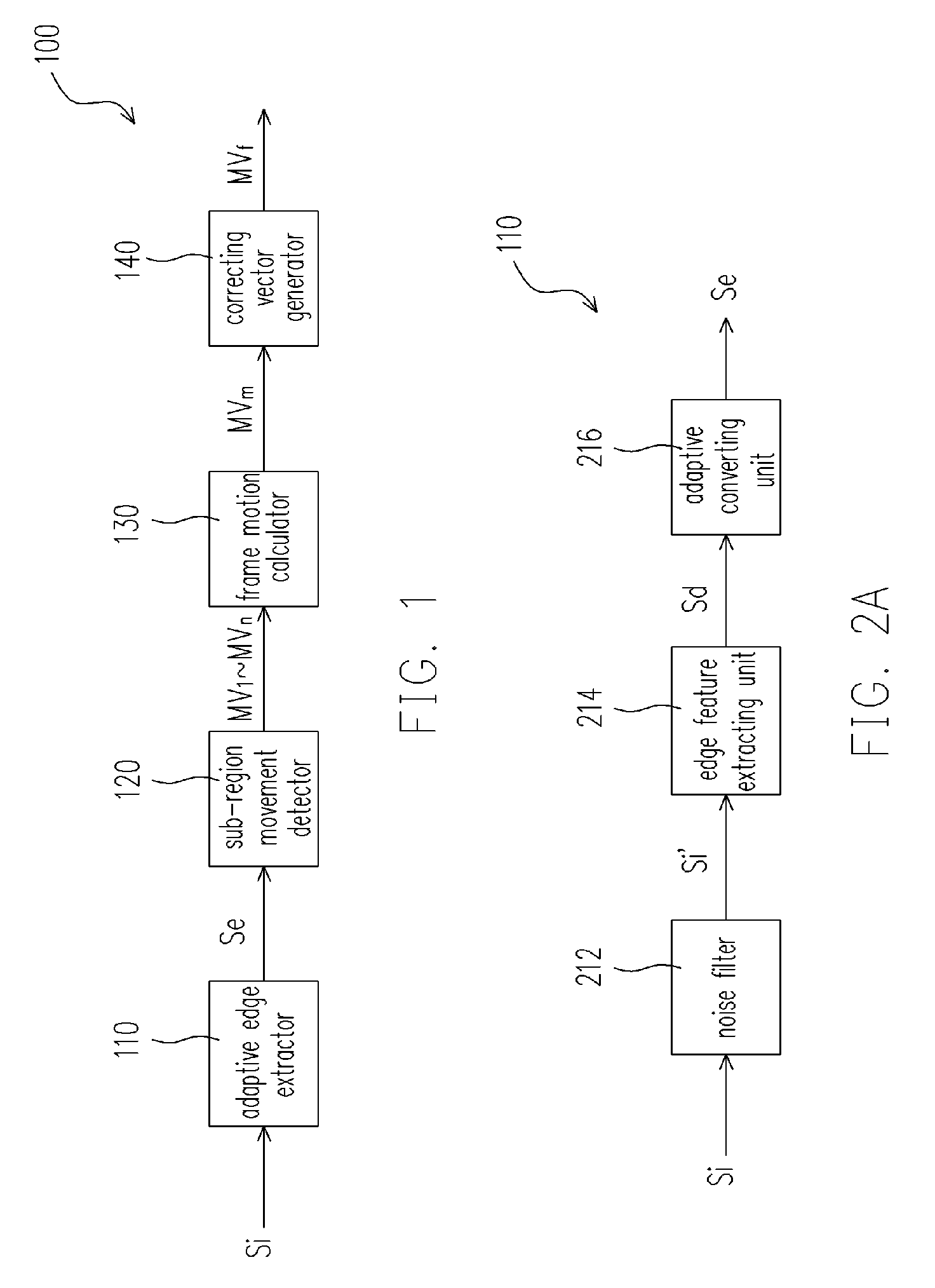

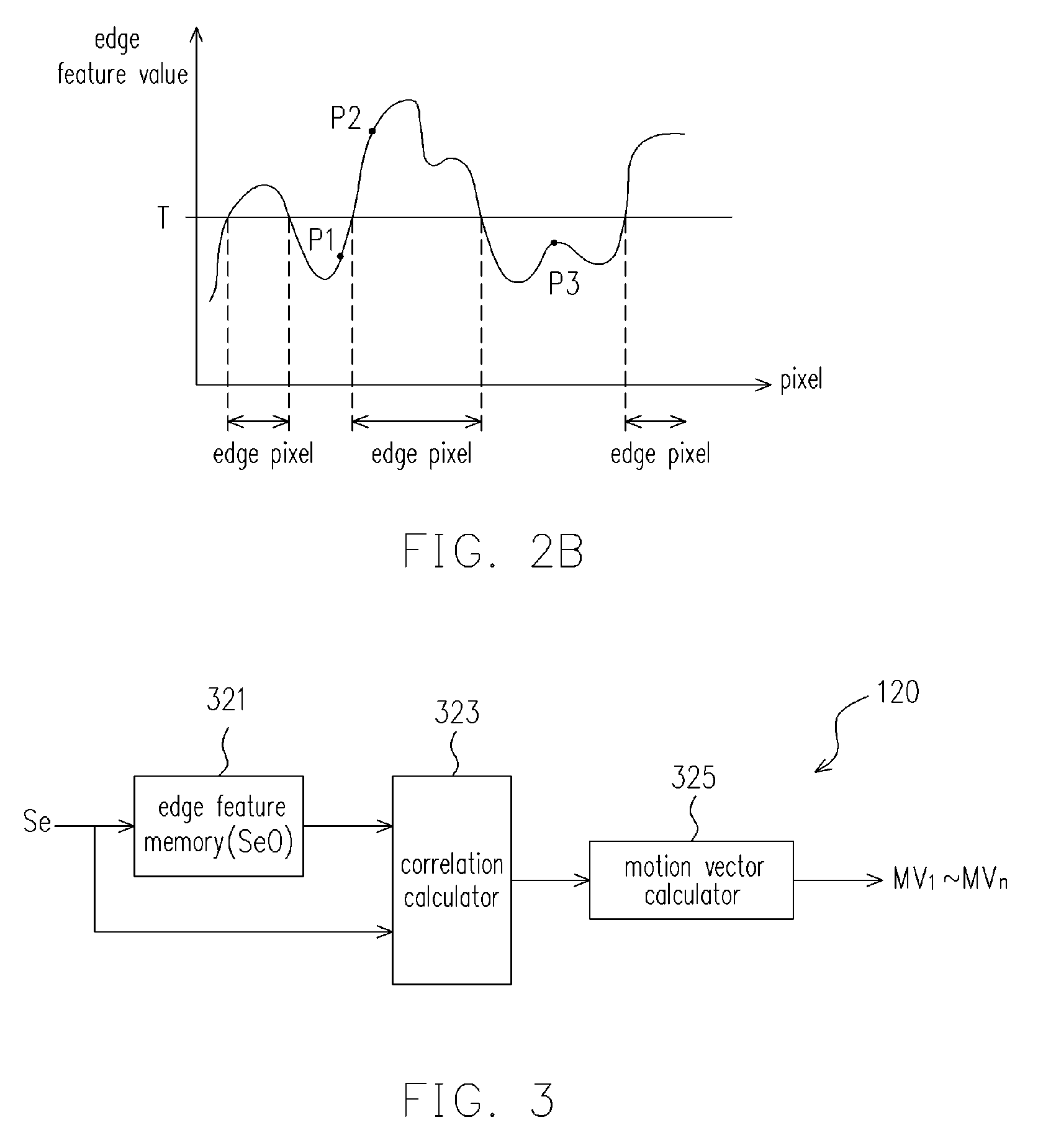

Image vibration-compensating apparatus and method thereof

Inactive · US7855731B2 · Reasonable price · Simple data · Television system details · Picture signal generators · Digital video · Motion vector

An image vibration-compensating apparatus and a method thereof are provided. The apparatus and the method are applied in an image-capturing apparatus, such as a digital still camera (DSC) or digital video recorder (DVR), for compensating image vibration when capturing an image. The image vibration-compensating apparatus comprises an adaptive edge extractor, a sub-region movement detector, a frame motion calculator, and a correcting vector generator. The image vibration-compensating apparatus analyses the difference between the current image and the previous image, and outputs the correcting motion vector to achieve the function of image vibration-compensation. In the image vibration-compensating method, an accumulate compensation mode is used to prevent fast motion of an image frame which will cause discomfort of the user's eyes when a relatively large image vibration occurs in a short period.

Owner:NOVATEK MICROELECTRONICS CORP

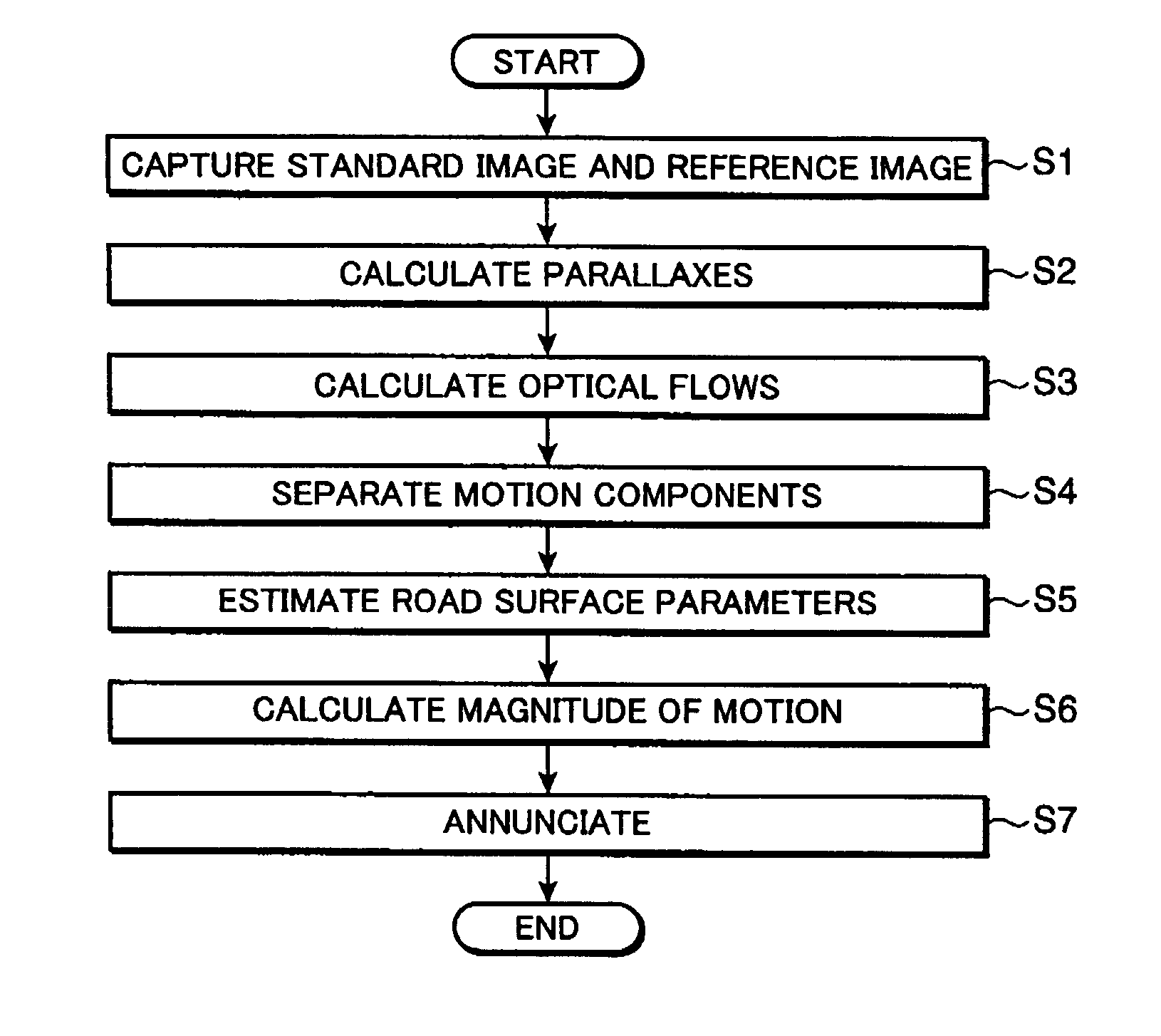

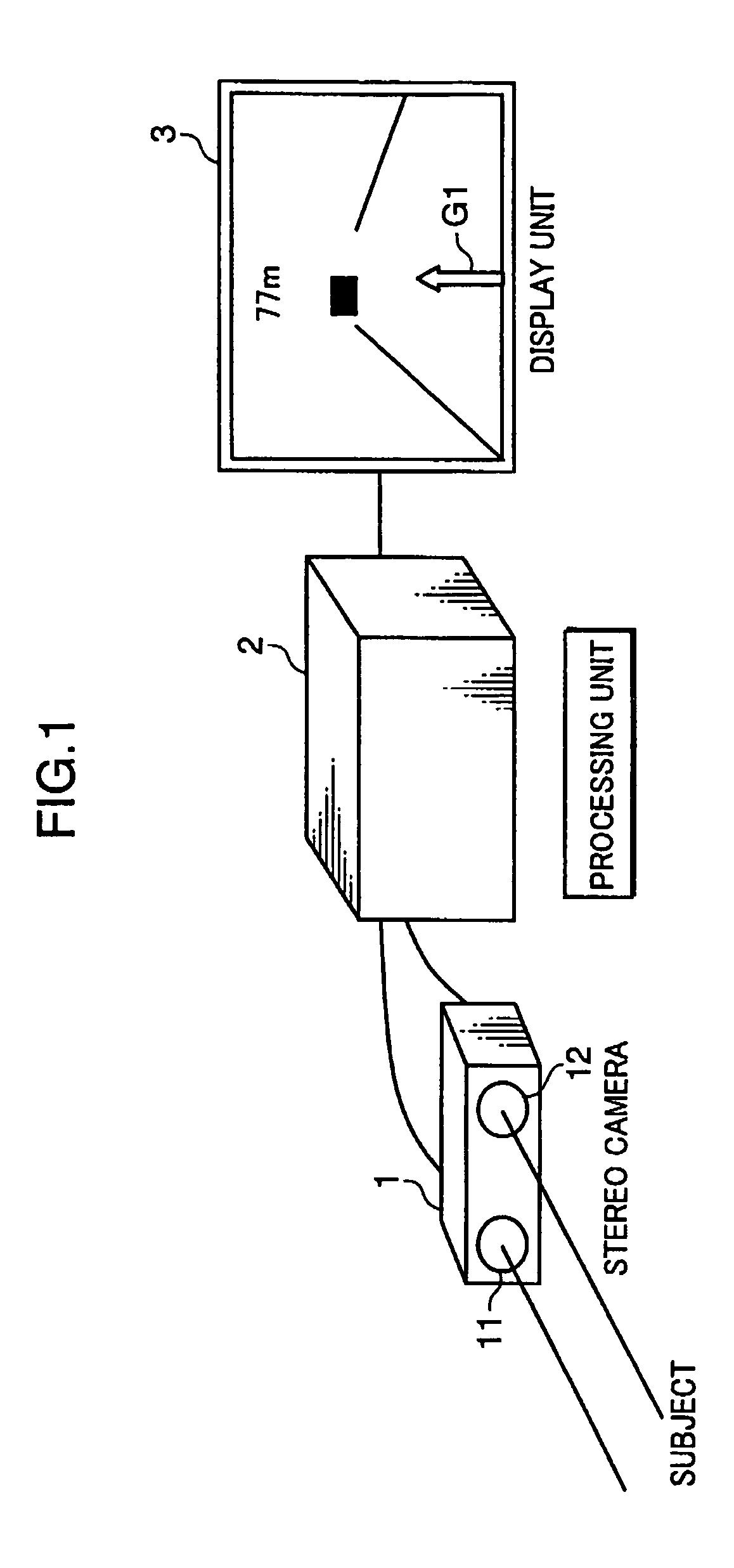

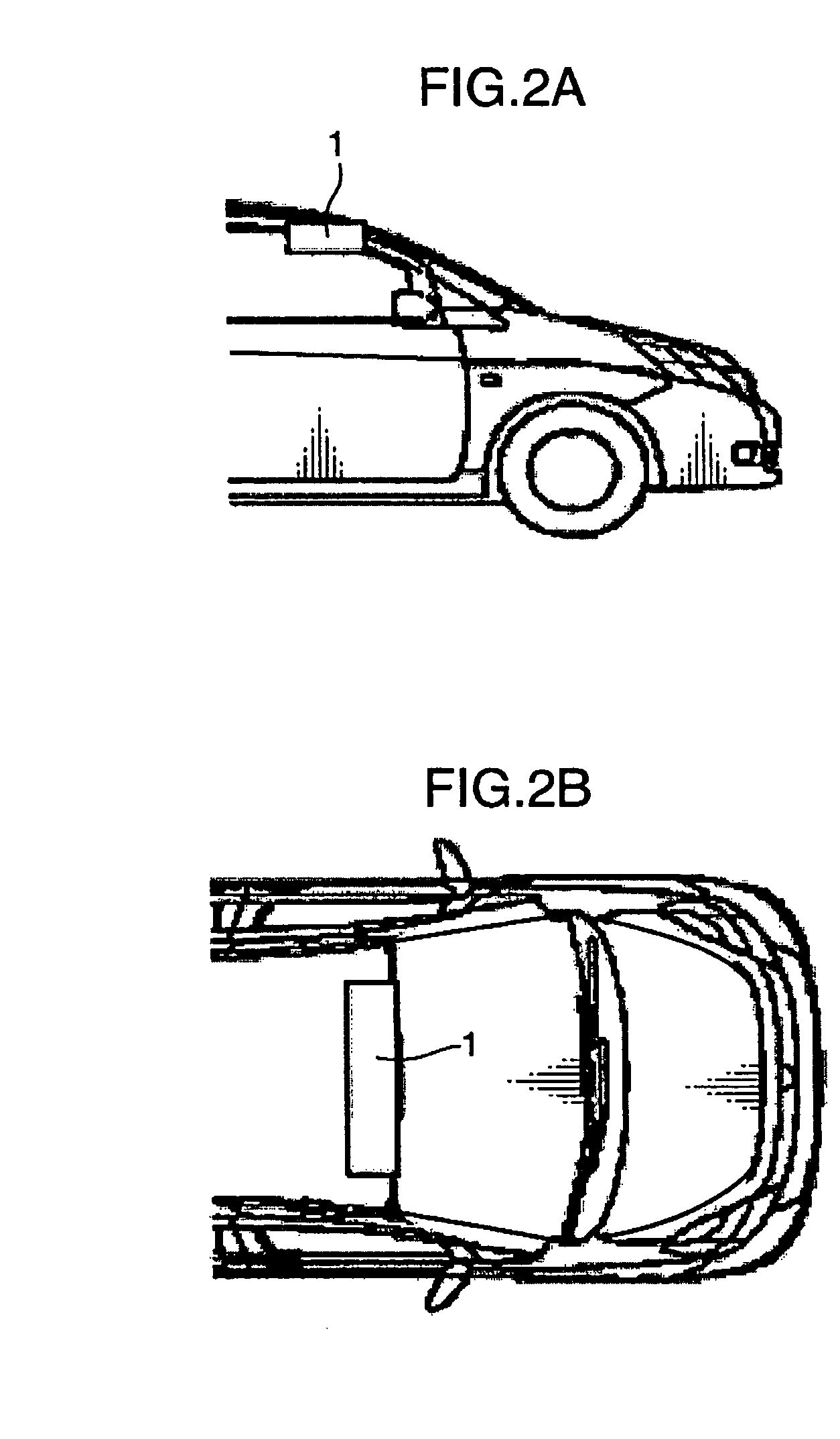

Analyzer

Active · US8229173B2 · Improve accuracy · High precision analysis · Image enhancement · Image analysis · Parallax · Angular velocity

A parallax calculator sets a plurality of target points in each standard image and calculates parallaxes of the individual target points. An optical flow calculator calculates two-dimensional optical flows of the individual target points by searching for points corresponding to the target points set in the standard image captured in a frame immediately preceding a current frame. A movement calculation block calculates a forward moving speed of the moving body and angular velocities thereof in both a pitch direction and a pan direction.

Owner:KONICA MINOLTA INC
