147 results about "Data contention" patented technology

In database management systems, block contention (or data contention) refers to multiple processes or instances competing for access to the same index or data block at the same time.

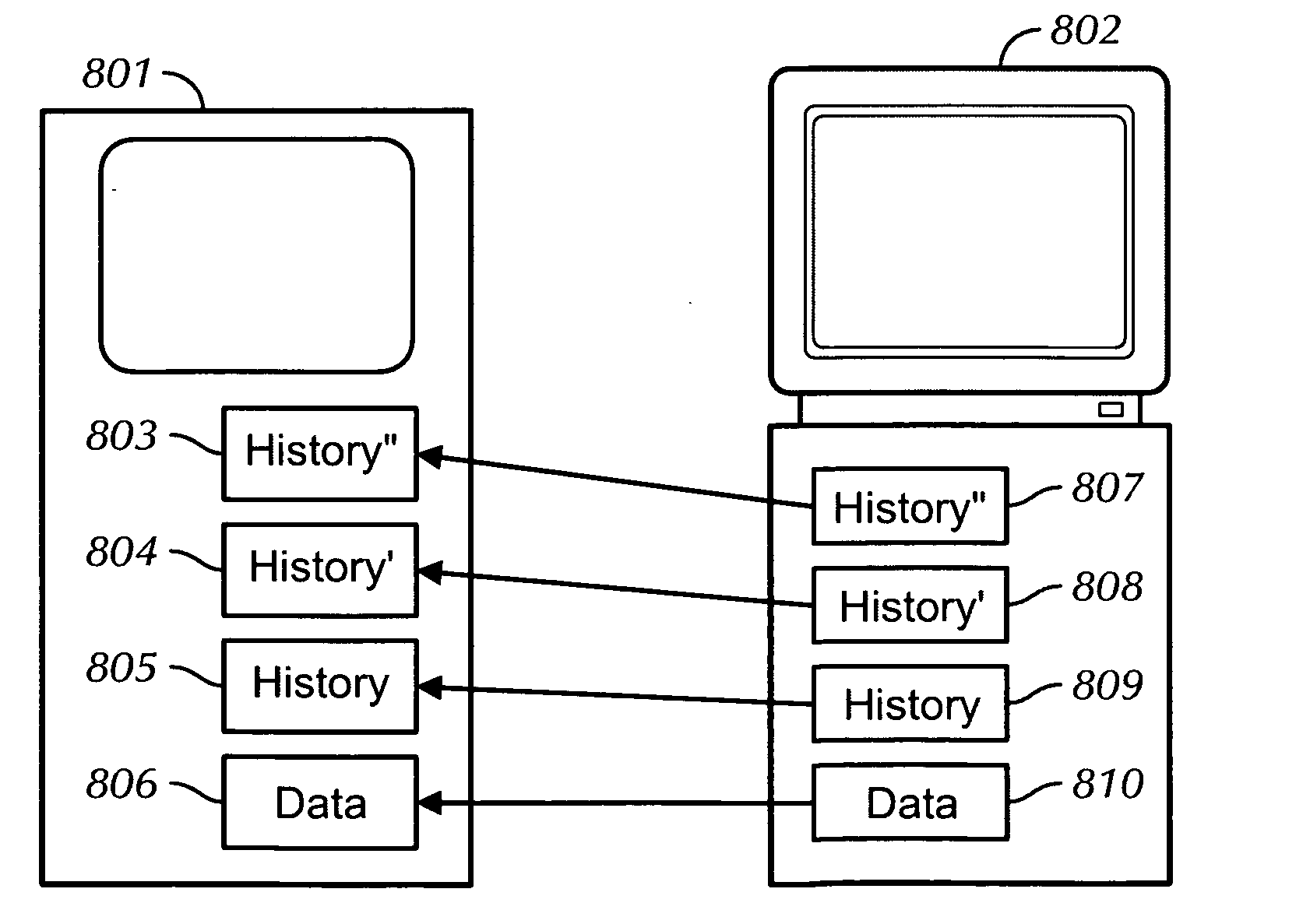

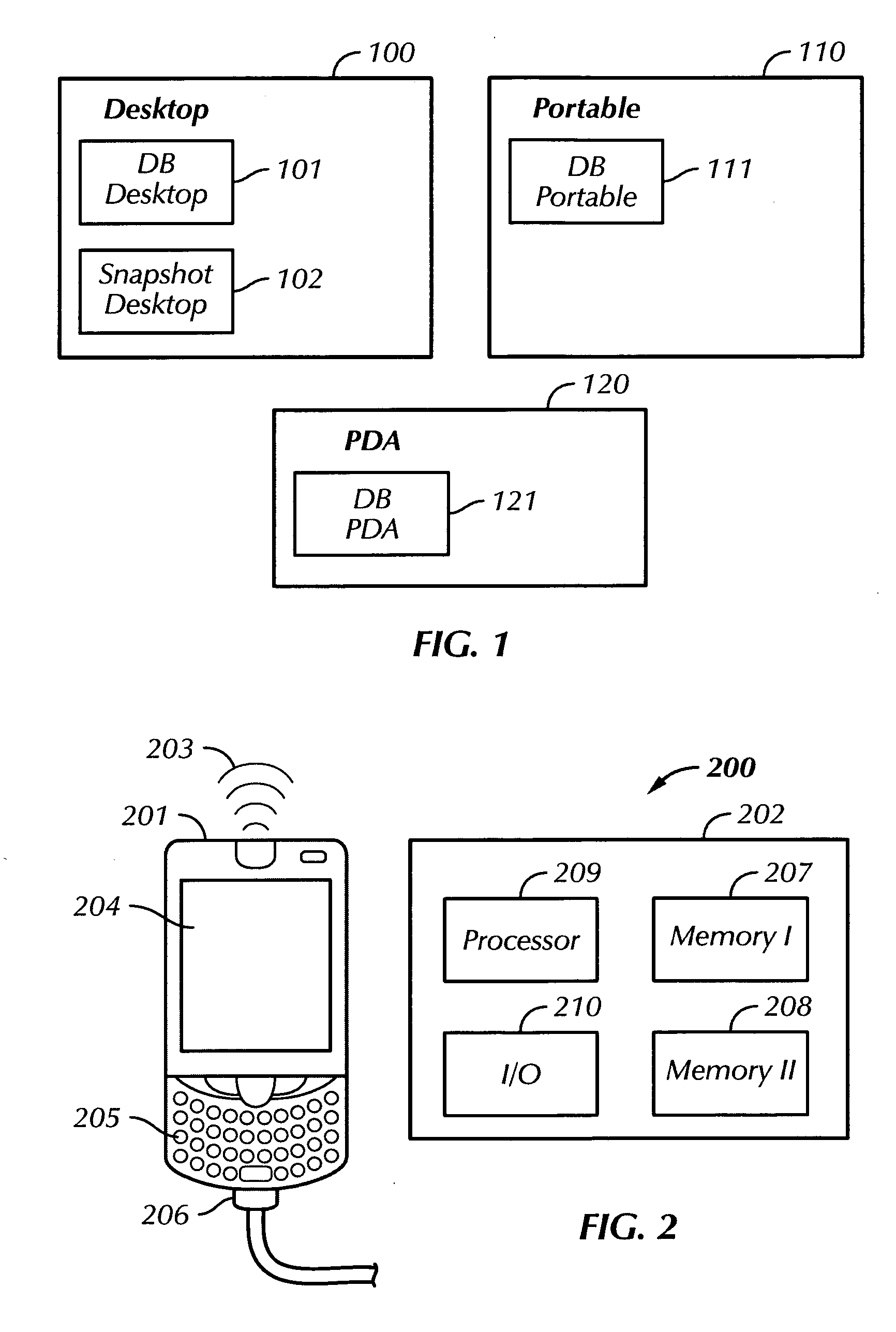

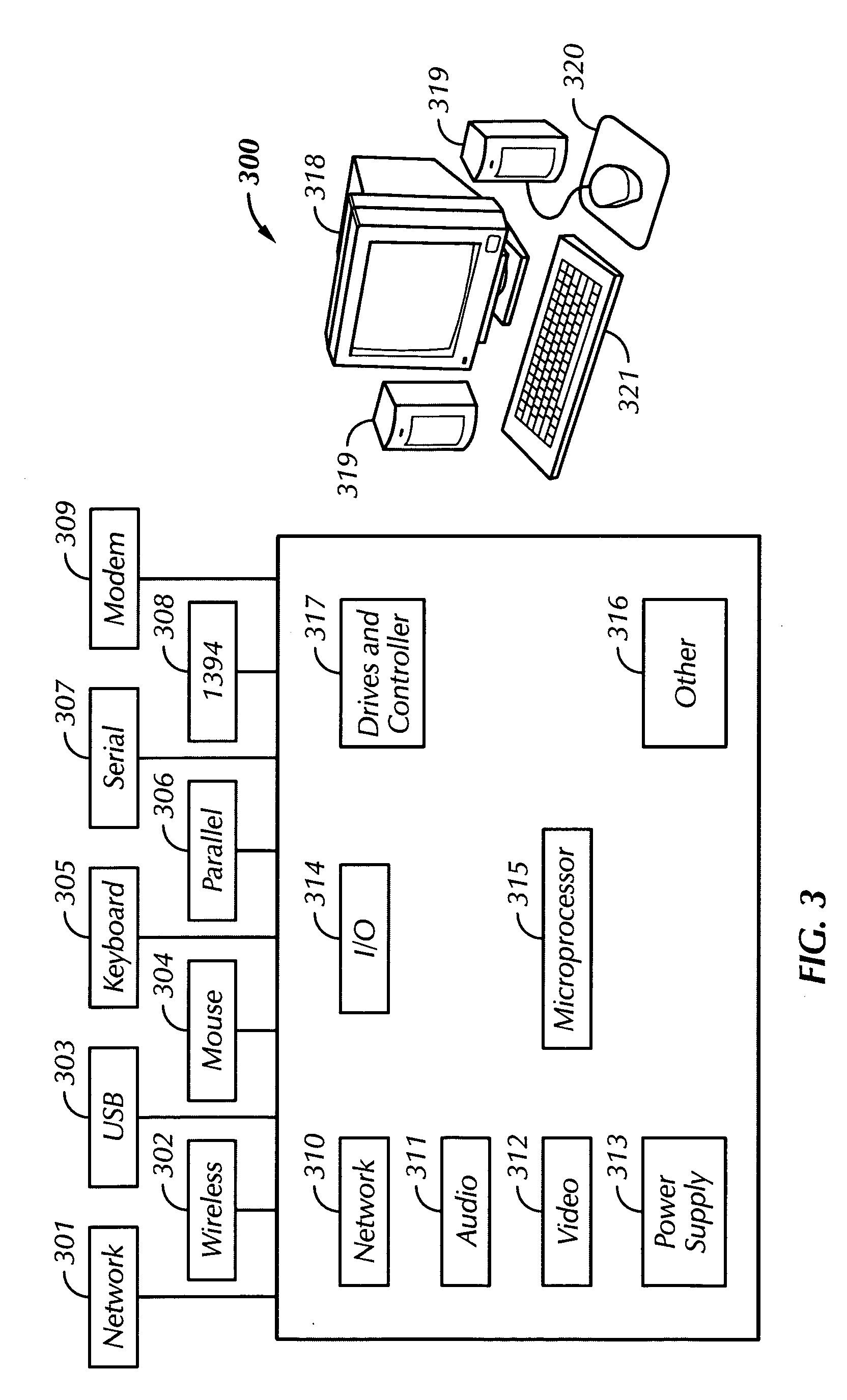

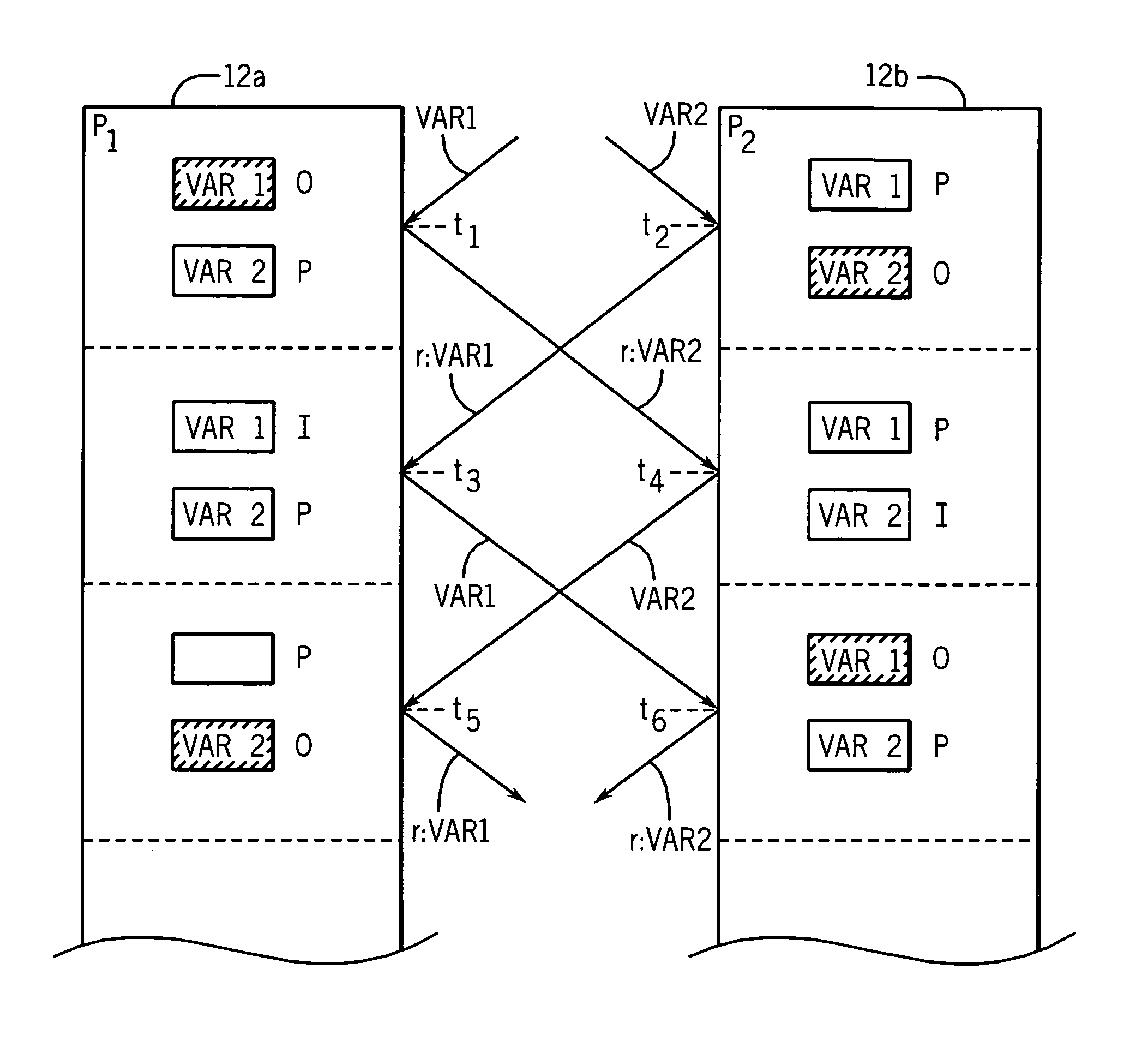

State based synchronization

Active | US20060069809A1 | Understanding over priority | Digital data processing details | Database distribution/replication | Metadata repository | State dependent

A system for synchronization whereby a metadata repository maintains information regarding the history and status of data items in a data repository. Data items are associated with states, and such states change (e.g., increment) in response to changes to the data items. History statements associated with the same states describe the changes in a generic enough fashion that multiple data items may be associated with a single state (e.g., if multiple data items share a common history, such as all having been edited by a user on the same device). The history repository is synchronized with other history repositories so as to reflect the states of data items on multiple devices. The synchronized history stores are used during synchronization to identify and resolve data conflicts through ancestry of data item history.

Owner:APPLE INC
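
The ancestry check mentioned in the abstract above can be illustrated with a minimal sketch. It assumes each state records a single parent state in a hypothetical `history` mapping; the names `ancestors` and `resolve` are illustrative and are not taken from the patent.

```python
def ancestors(history, state):
    """Return the state and all of its ancestors, following parent links."""
    seen = set()
    while state is not None and state not in seen:
        seen.add(state)
        state = history.get(state)
    return seen


def resolve(history, local, remote):
    """Pick the newer state if one descends from the other, else None."""
    if local in ancestors(history, remote):
        return remote  # remote was derived from local, so remote wins
    if remote in ancestors(history, local):
        return local   # local was derived from remote, so local wins
    return None        # divergent histories: a genuine conflict


# Hypothetical history: s3 and s4 were both derived from s2.
history = {"s1": None, "s2": "s1", "s3": "s2", "s4": "s2"}
print(resolve(history, "s2", "s3"))  # s3
print(resolve(history, "s3", "s4"))  # None
```

Only the divergent case needs user or policy intervention; when one state is an ancestor of the other, the descendant can be taken without a conflict.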

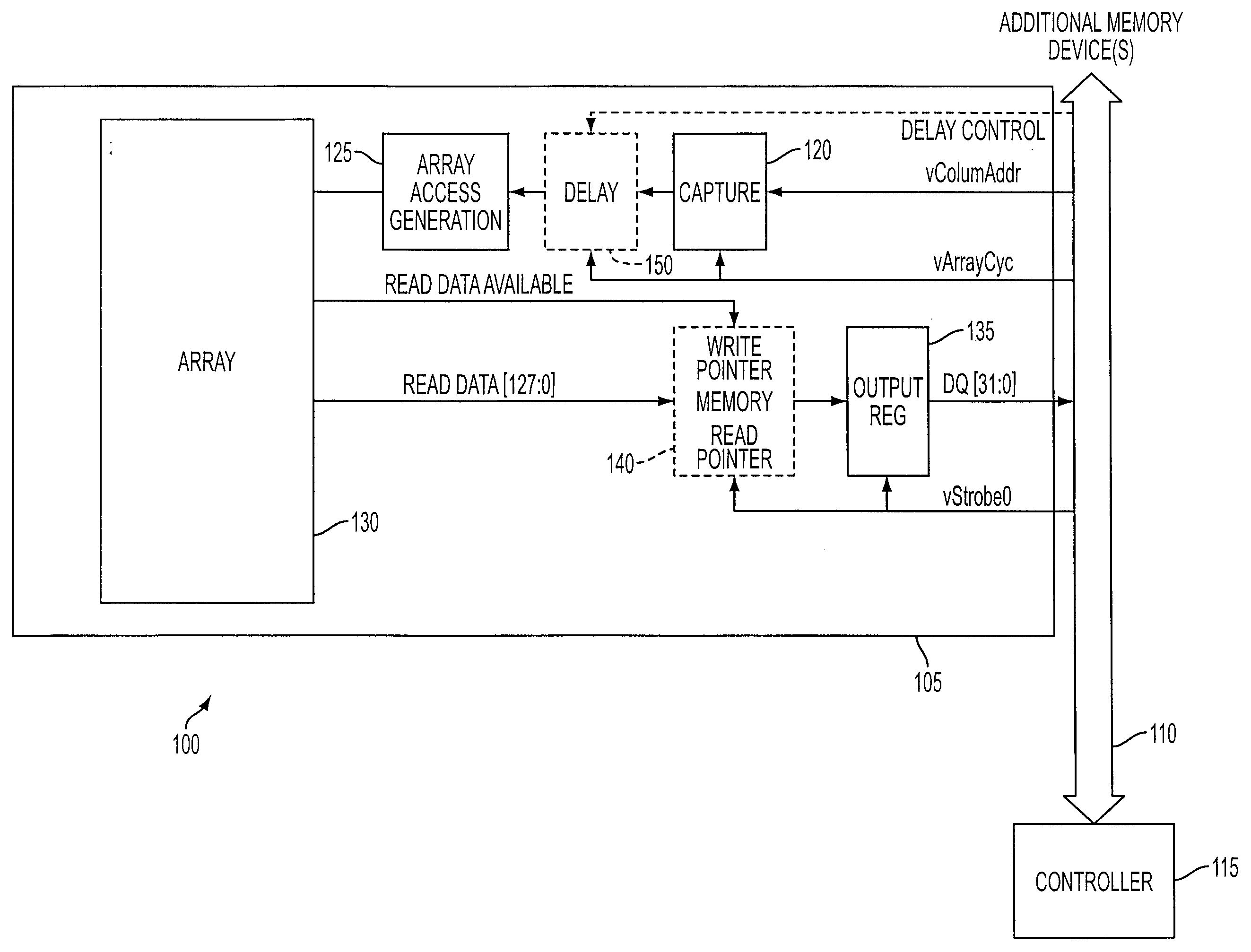

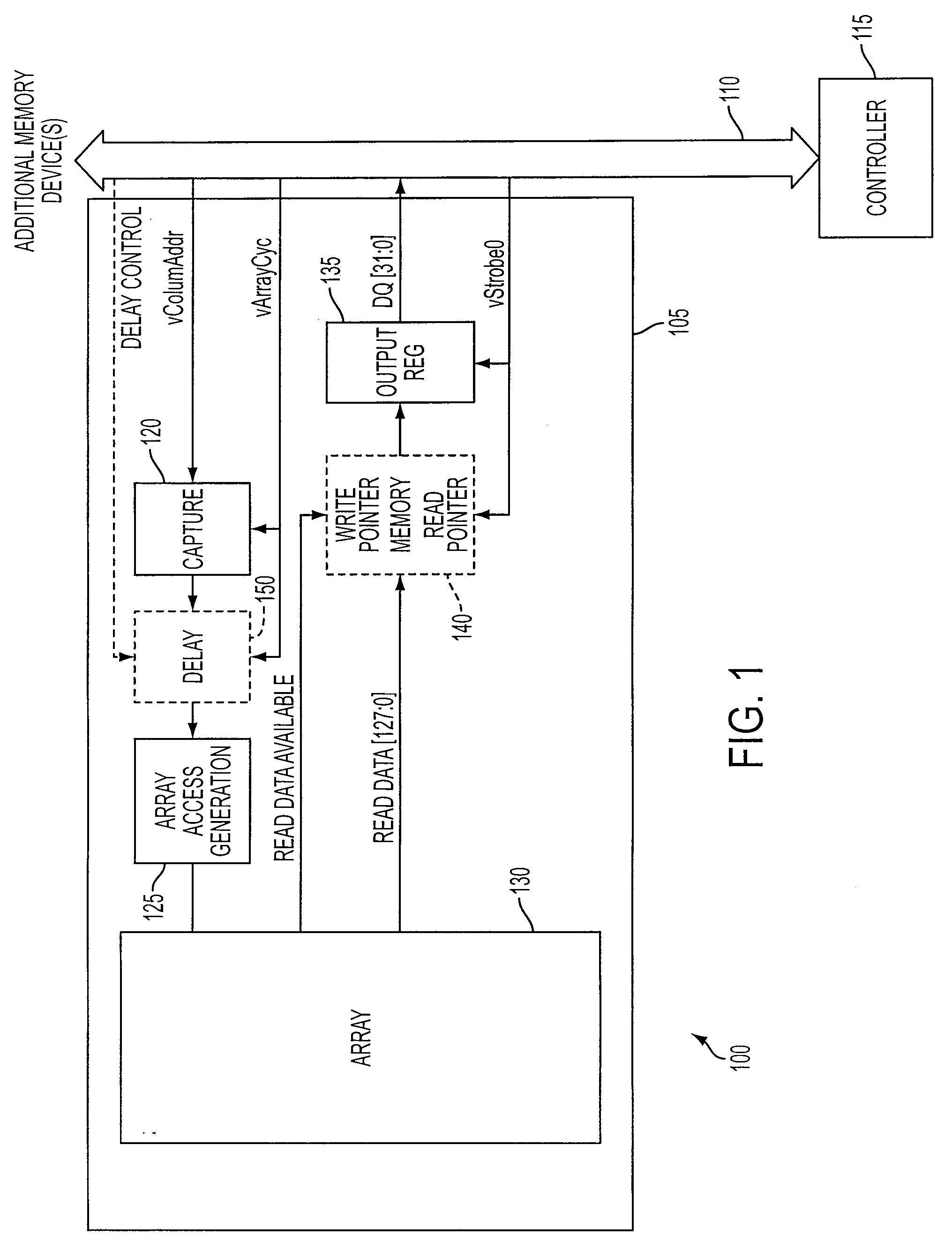

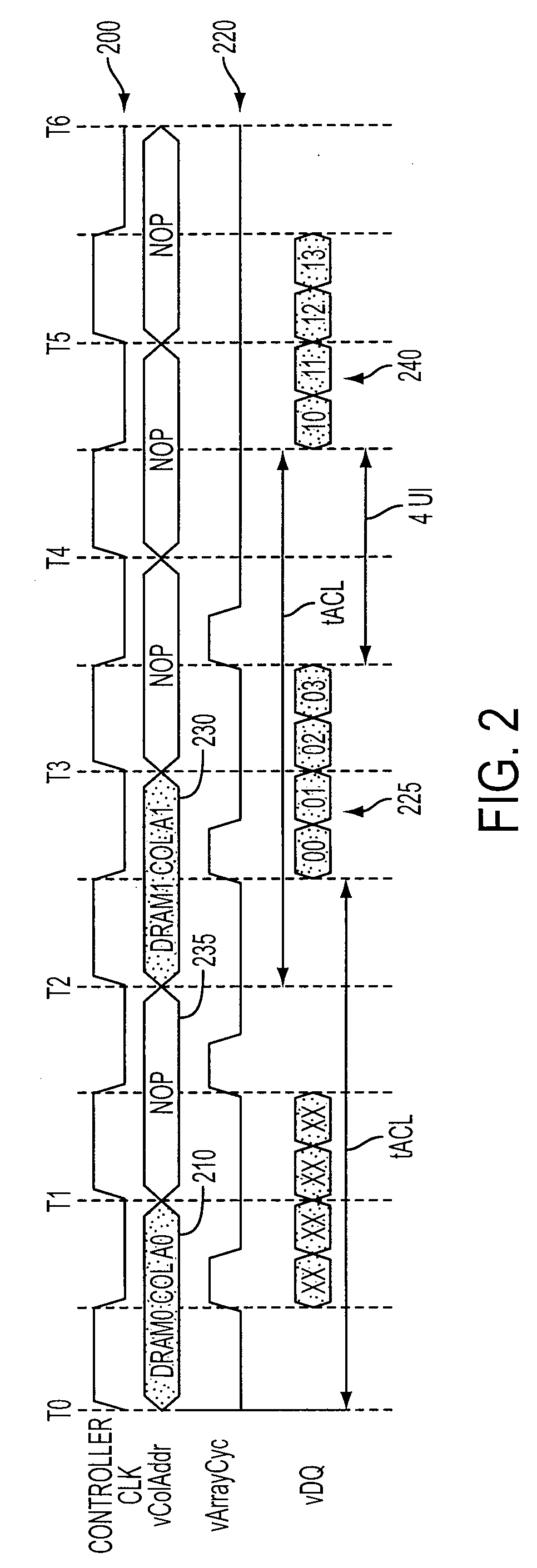

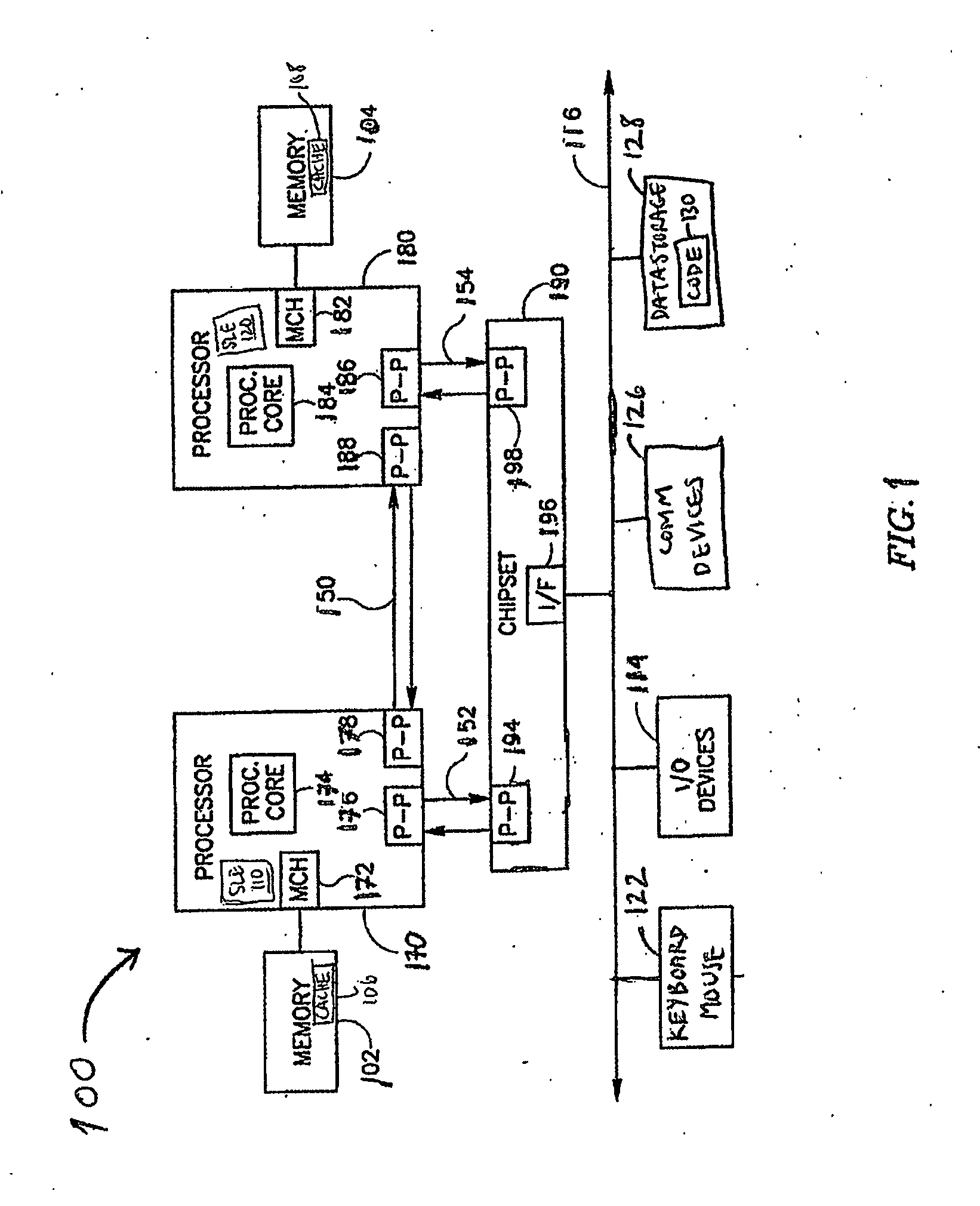

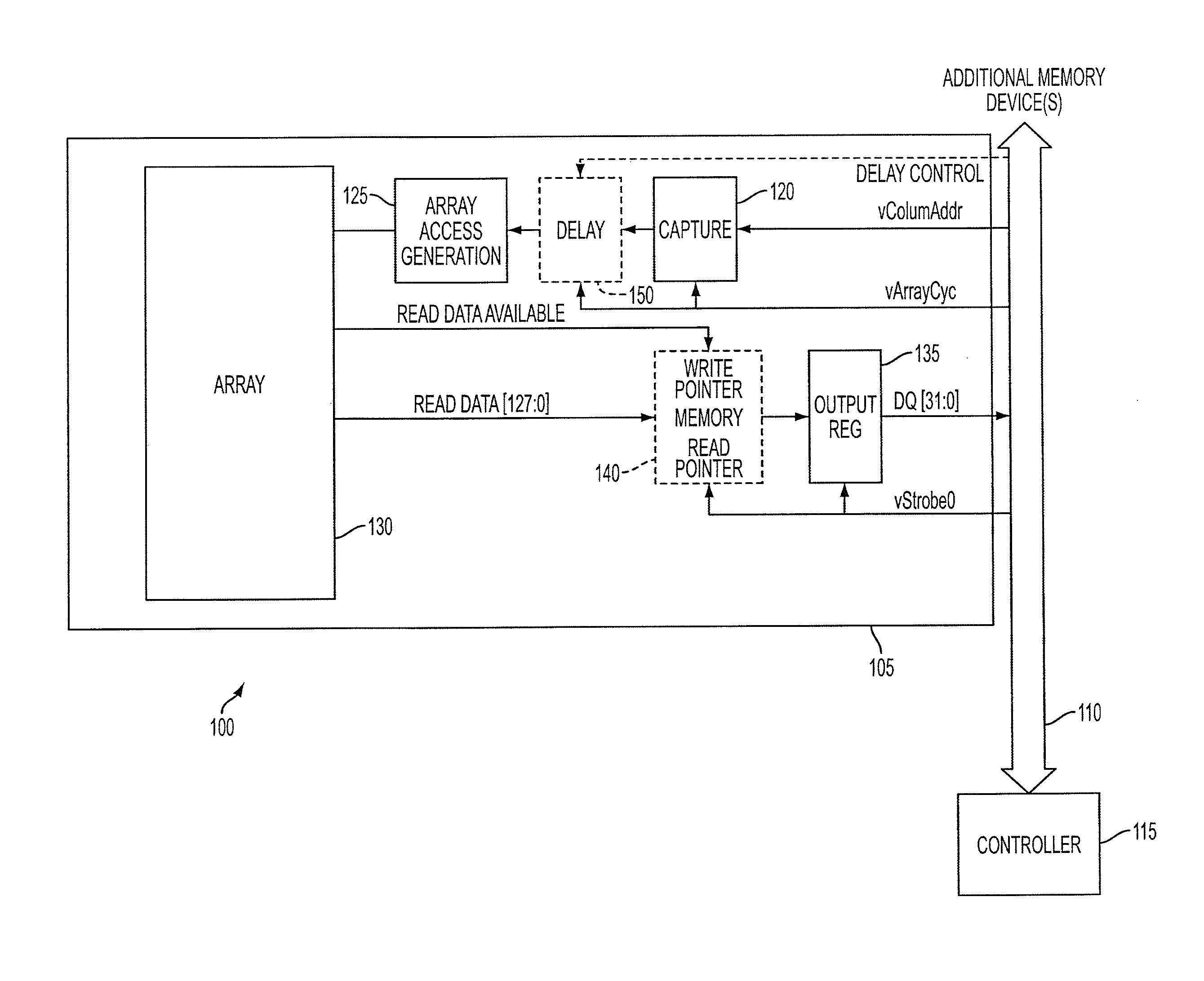

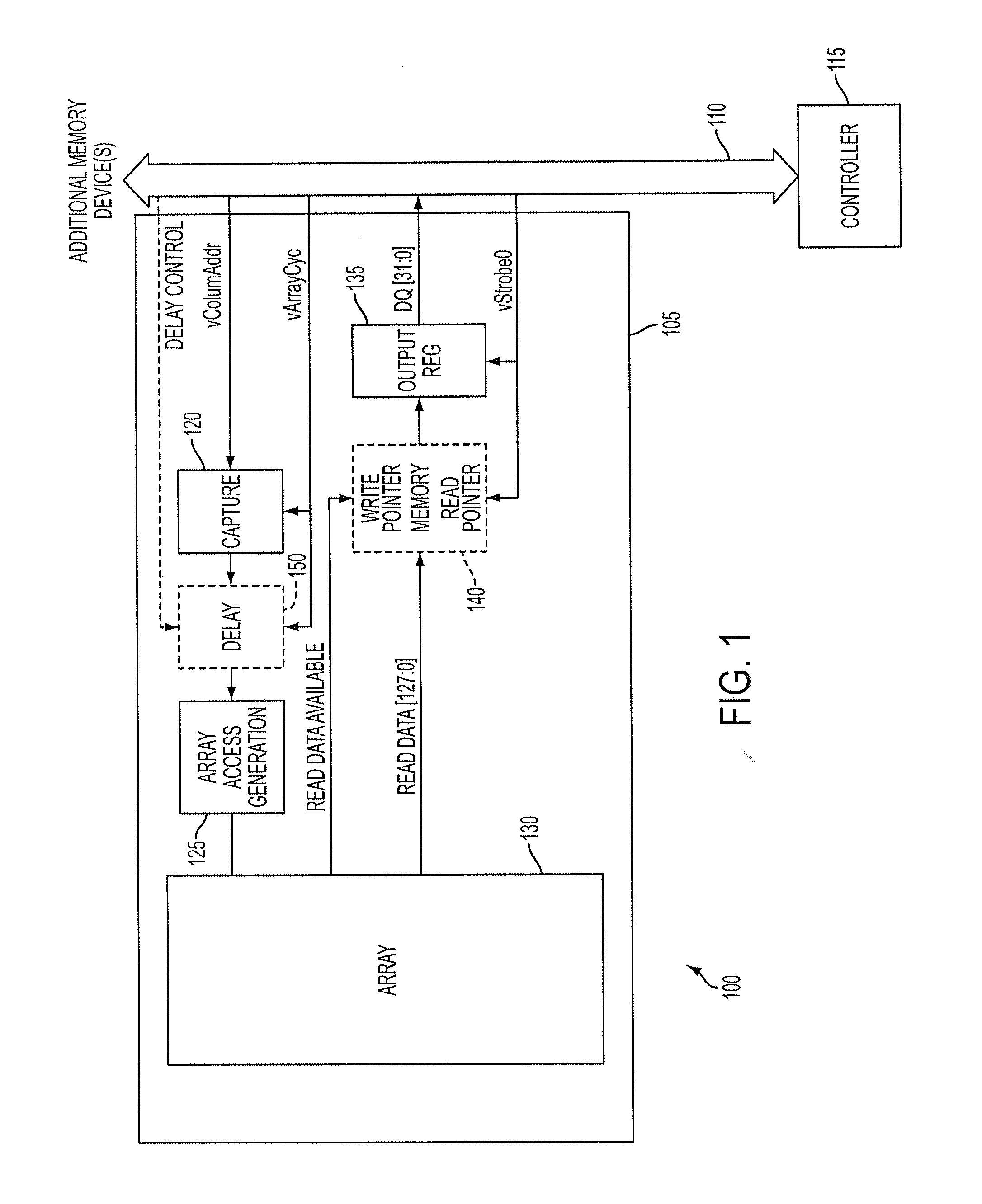

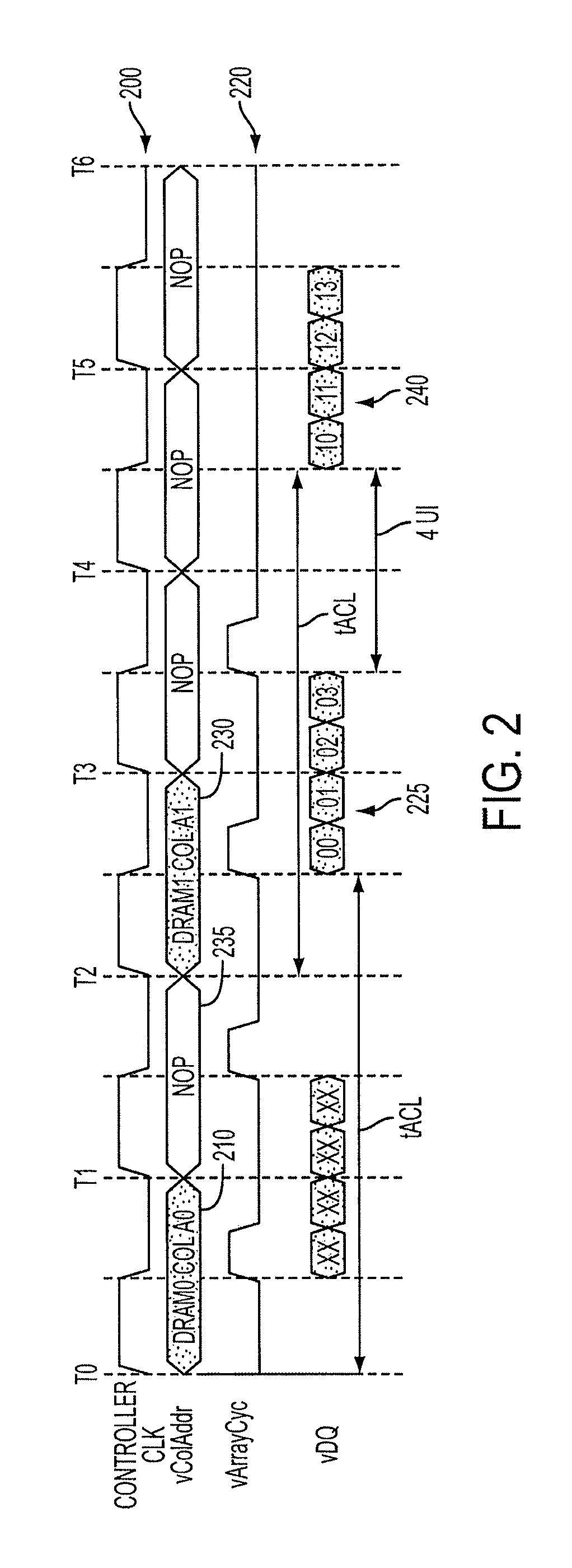

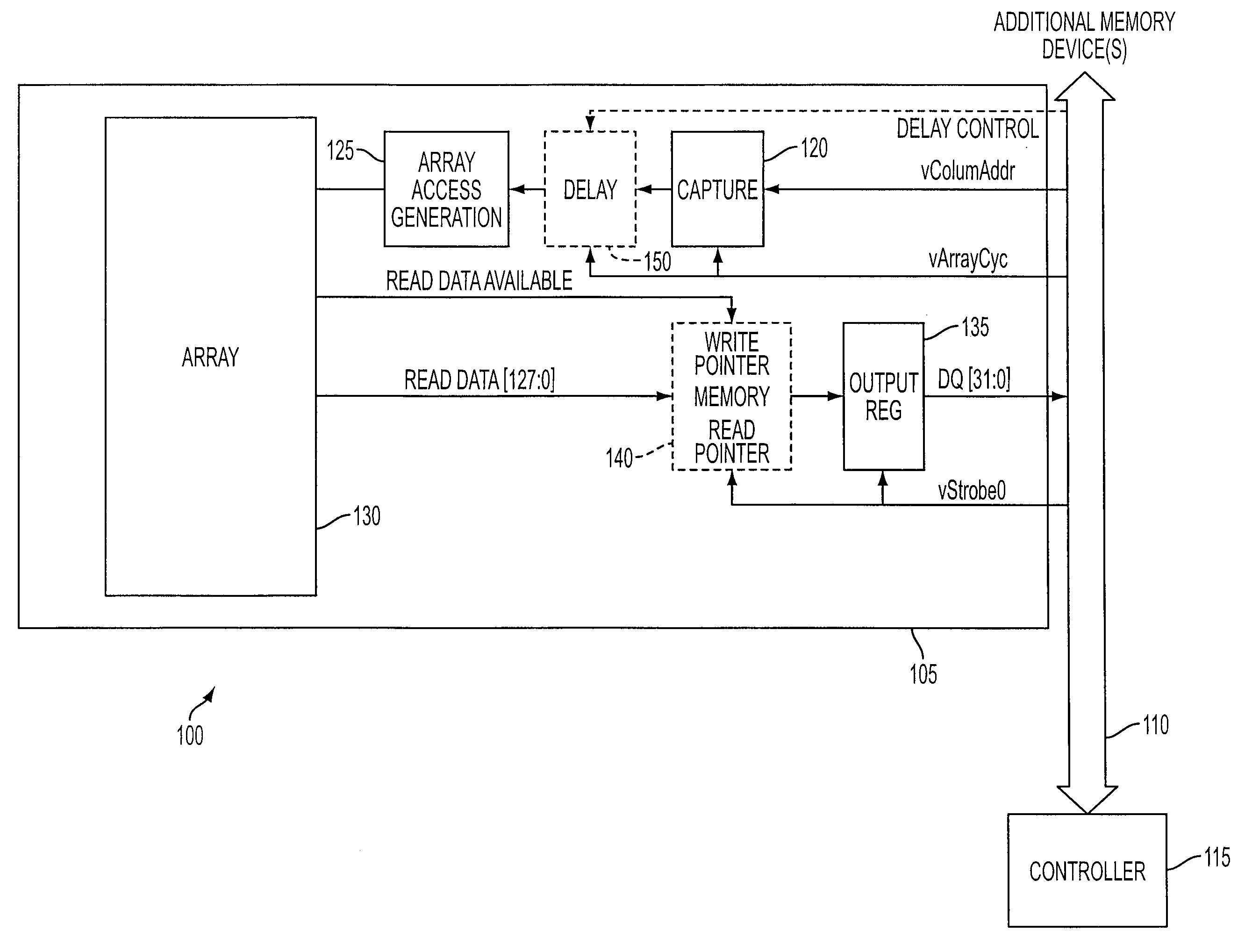

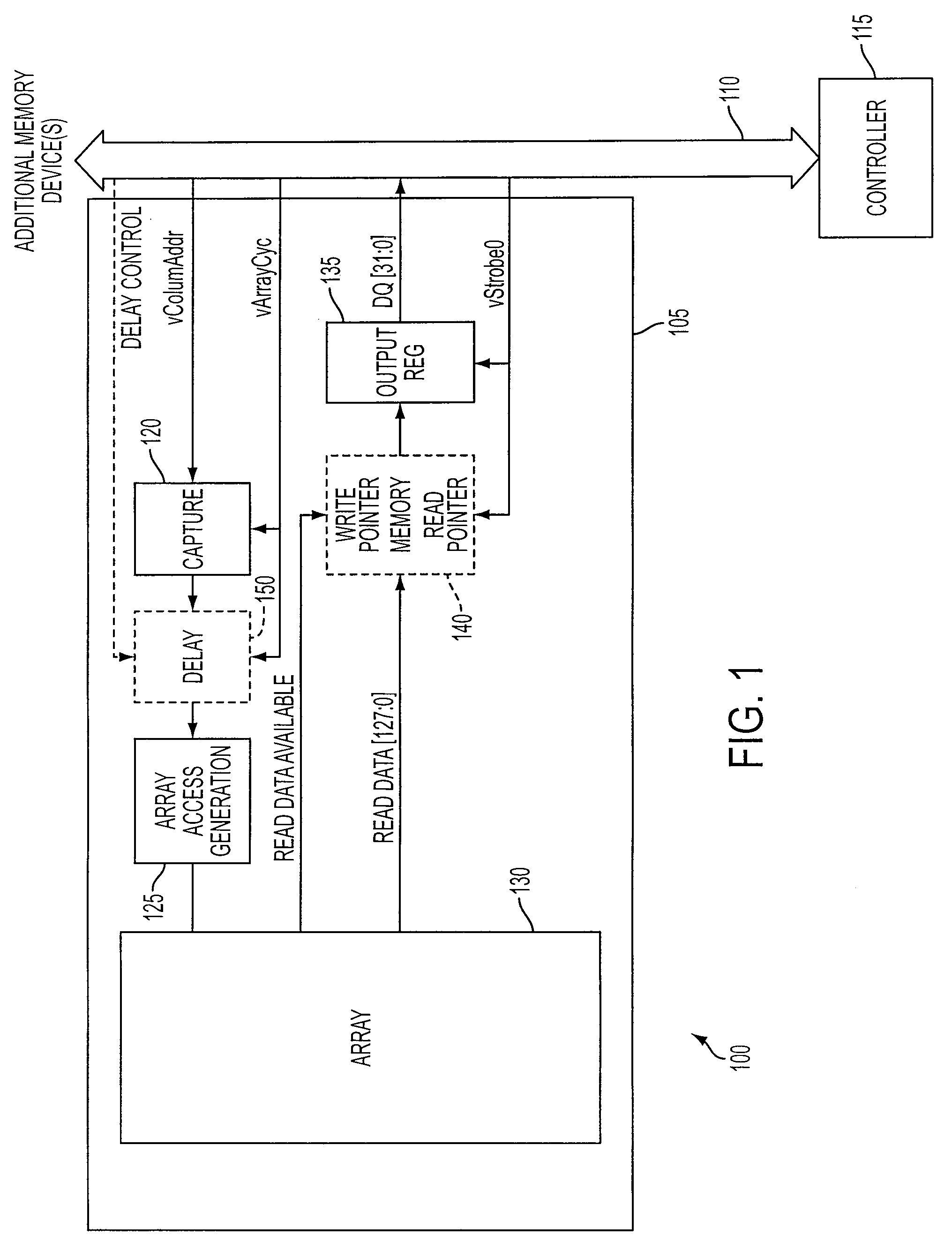

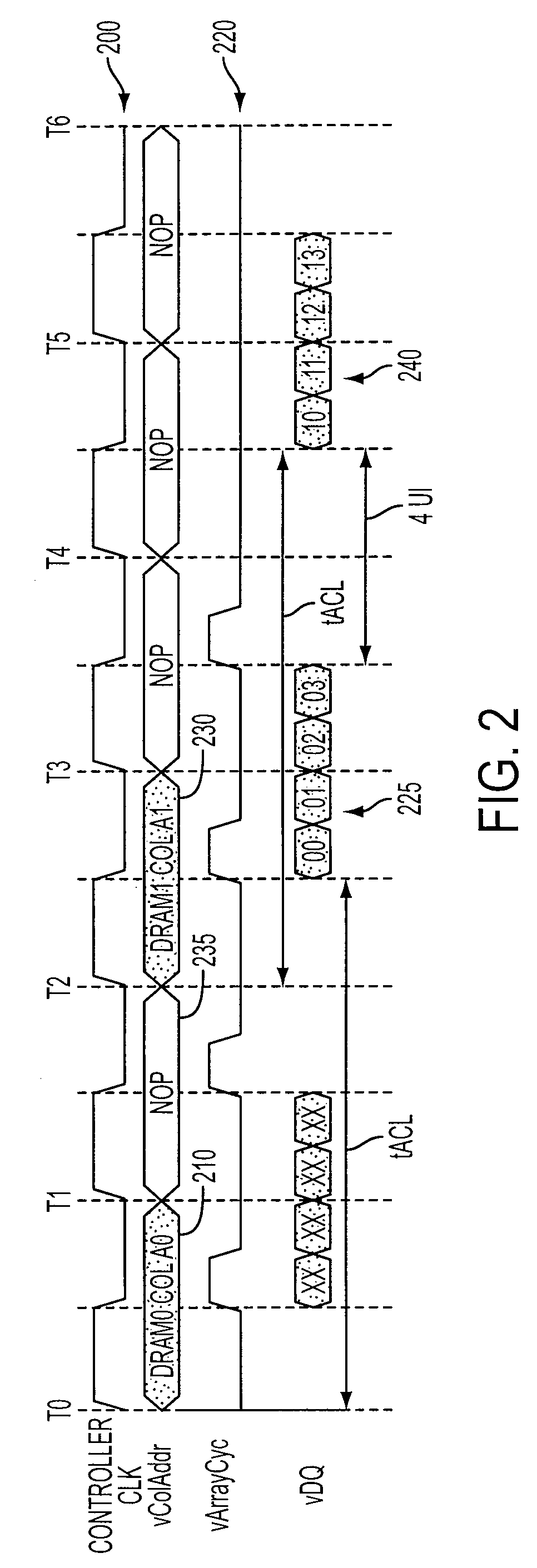

Memory systems and methods for controlling the timing of receiving read data

Embodiments of the present invention provide memory systems having a plurality of memory devices sharing an interface for the transmission of read data. A controller can identify consecutive read requests sent to different memory devices. To avoid data contention on the interface, for example, the controller can be configured to delay the time until read data corresponding to the second read request is placed on the interface.

Owner:MICRON TECH INC
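
As a rough illustration of the delay described above, the sketch below schedules read data on a shared interface and pushes back the second transfer when consecutive reads target different devices. The parameters `latency`, `burst`, and `gap` are assumptions made for this example and do not come from the patent.

```python
def schedule_read_data(requests, latency, burst, gap):
    """Schedule read data on a shared interface.

    requests: list of (issue_time, device) in issue order.
    Returns a list of (device, start, end) bus occupancy windows.
    """
    schedule = []
    prev_device, prev_end = None, 0
    for issue_time, device in requests:
        start = issue_time + latency  # nominal time the data would appear
        if prev_device is not None:
            # Assumption: switching devices needs an extra idle gap on the bus.
            extra = gap if device != prev_device else 0
            start = max(start, prev_end + extra)
        end = start + burst
        schedule.append((device, start, end))
        prev_device, prev_end = device, end
    return schedule


print(schedule_read_data([(0, "A"), (4, "B"), (8, "B")], latency=10, burst=4, gap=2))
# [('A', 10, 14), ('B', 16, 20), ('B', 20, 24)]
```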

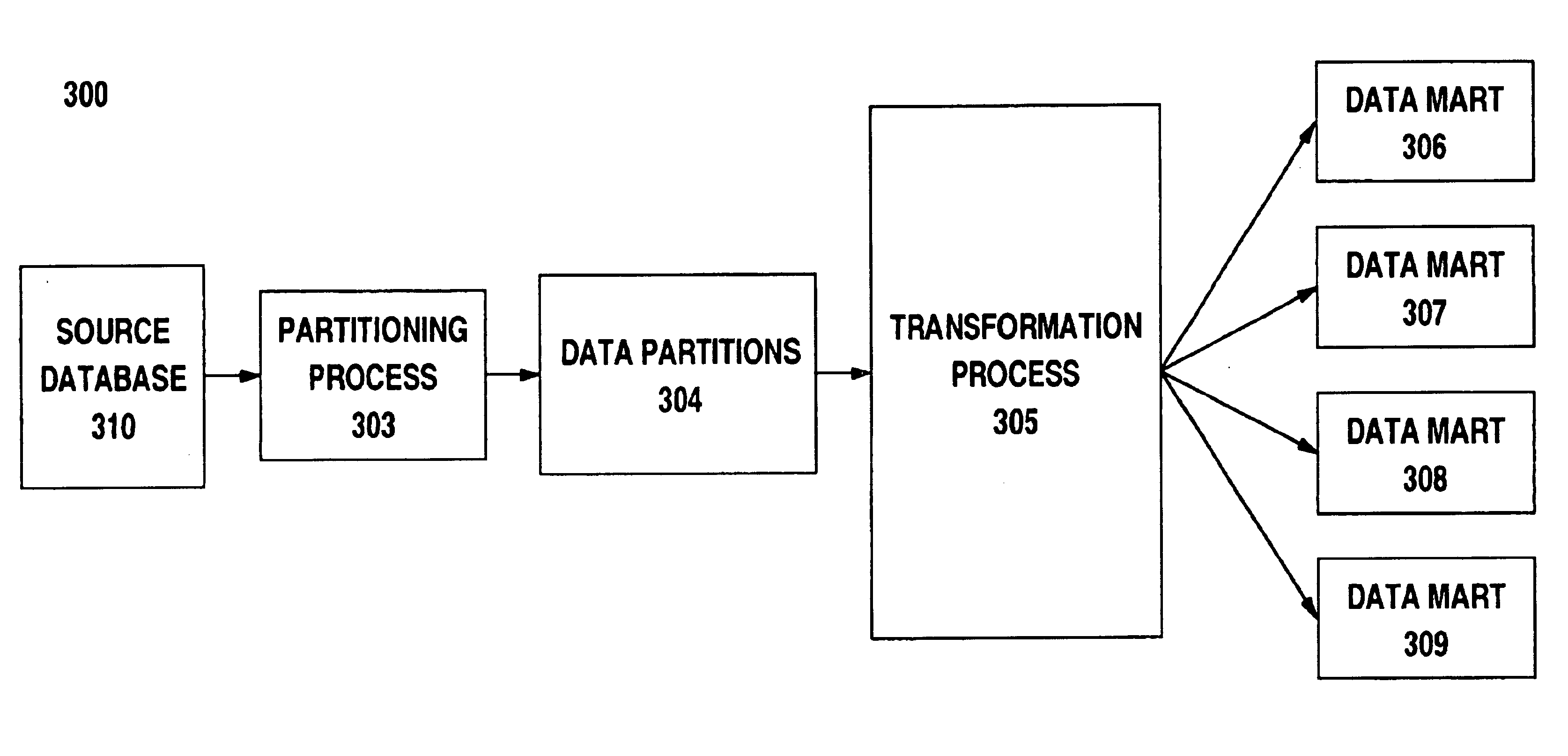

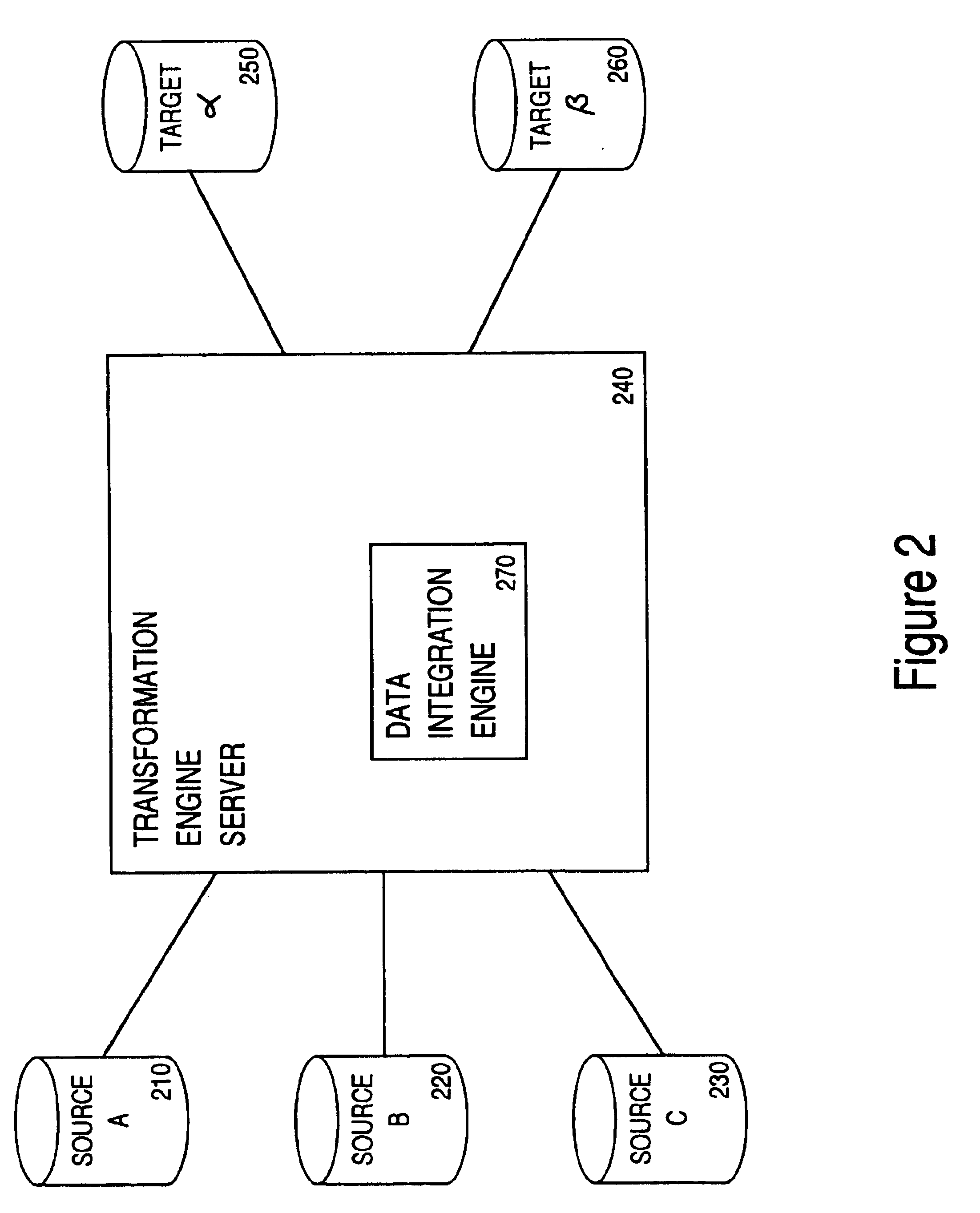

Method and apparatus with data partitioning and parallel processing for transporting data for data warehousing applications

Inactive | US6850947B1 | Improve throughput | Minimize data sharing | Digital data information retrieval | Digital data processing details | Application software | Operational data store

A method and apparatus for transporting data for a data warehouse application is described. The data from an operational data store (the source database) is organized in non-overlapping data partitions. Separate execution threads read the data from the operational data store concurrently. This is followed by concurrent transformation of the data in multiple execution threads. Finally, the data is loaded into the target data warehouse concurrently using multiple execution threads. By using multiple execution threads, the data contention is reduced. Thereby the apparatus and method of the present invention achieves increased throughput.

Owner:INFORMATICA CORP
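
A minimal sketch of the partition-then-process idea described above, assuming an in-memory list stands in for the operational data store and a hypothetical `transform` function stands in for the transformation step. For simplicity the results are merged in the calling thread, whereas the abstract describes a concurrent load.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(rows, n):
    """Split rows into n non-overlapping partitions."""
    return [rows[i::n] for i in range(n)]


def transform(row):
    # Hypothetical transformation: normalise the name column.
    return {"id": row["id"], "name": row["name"].upper()}


def run_pipeline(rows, workers=4):
    warehouse = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each worker thread reads and transforms only its own partition,
        # so no two threads touch the same row.
        parts = pool.map(lambda p: [transform(r) for r in p], partition(rows, workers))
        for part in parts:
            warehouse.extend(part)
    return warehouse


rows = [{"id": i, "name": f"n{i}"} for i in range(10)]
loaded = run_pipeline(rows, workers=3)
```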

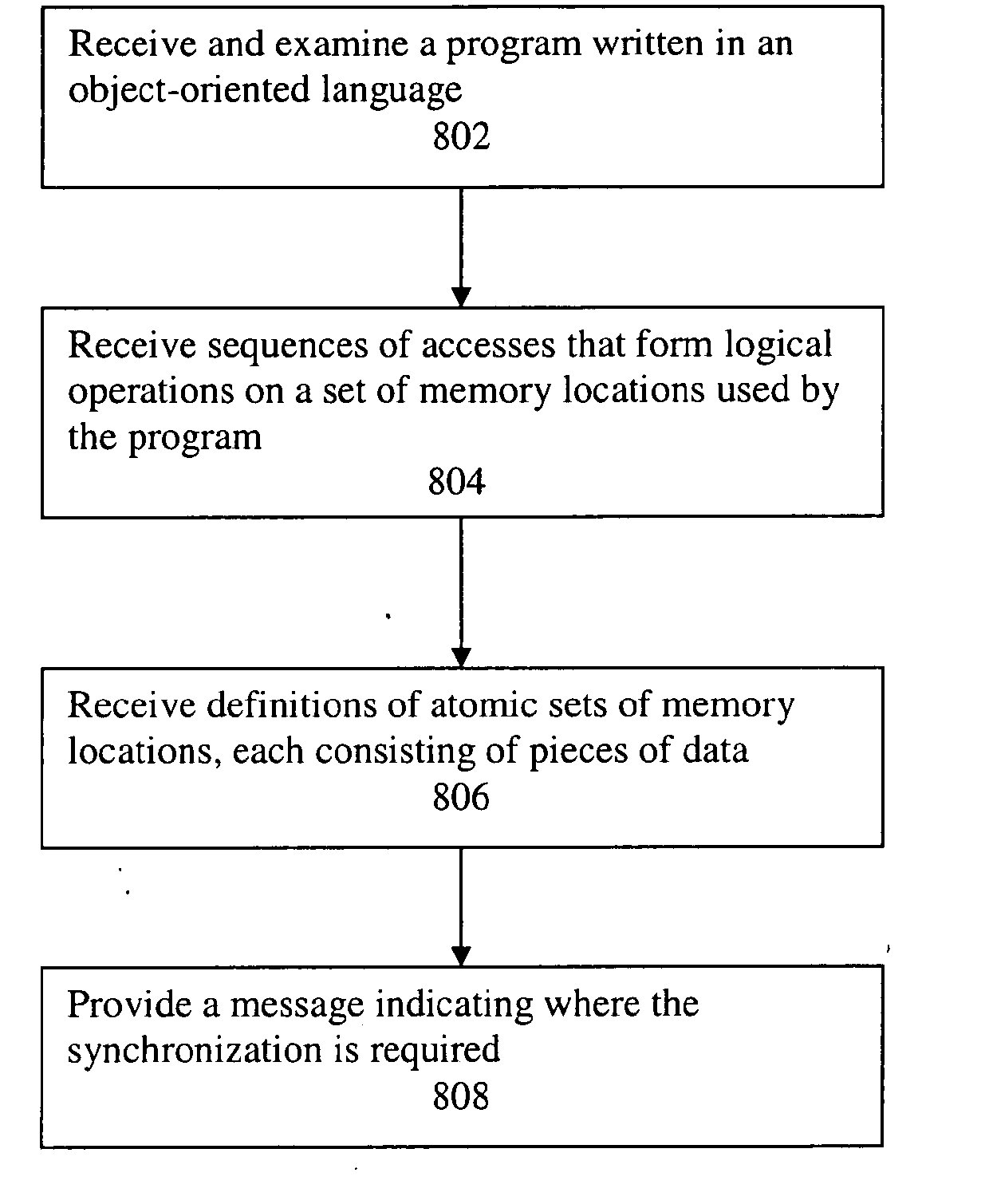

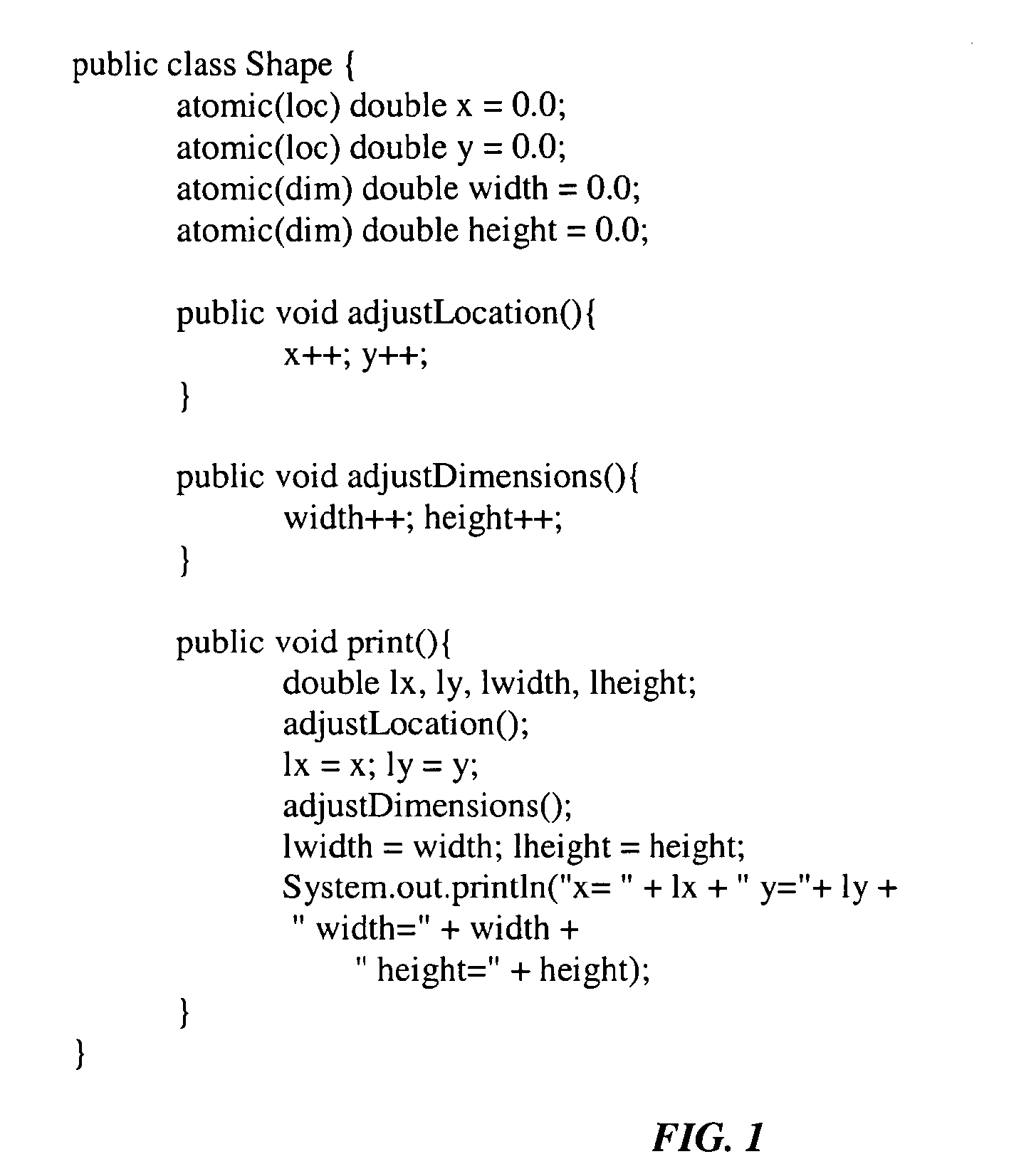

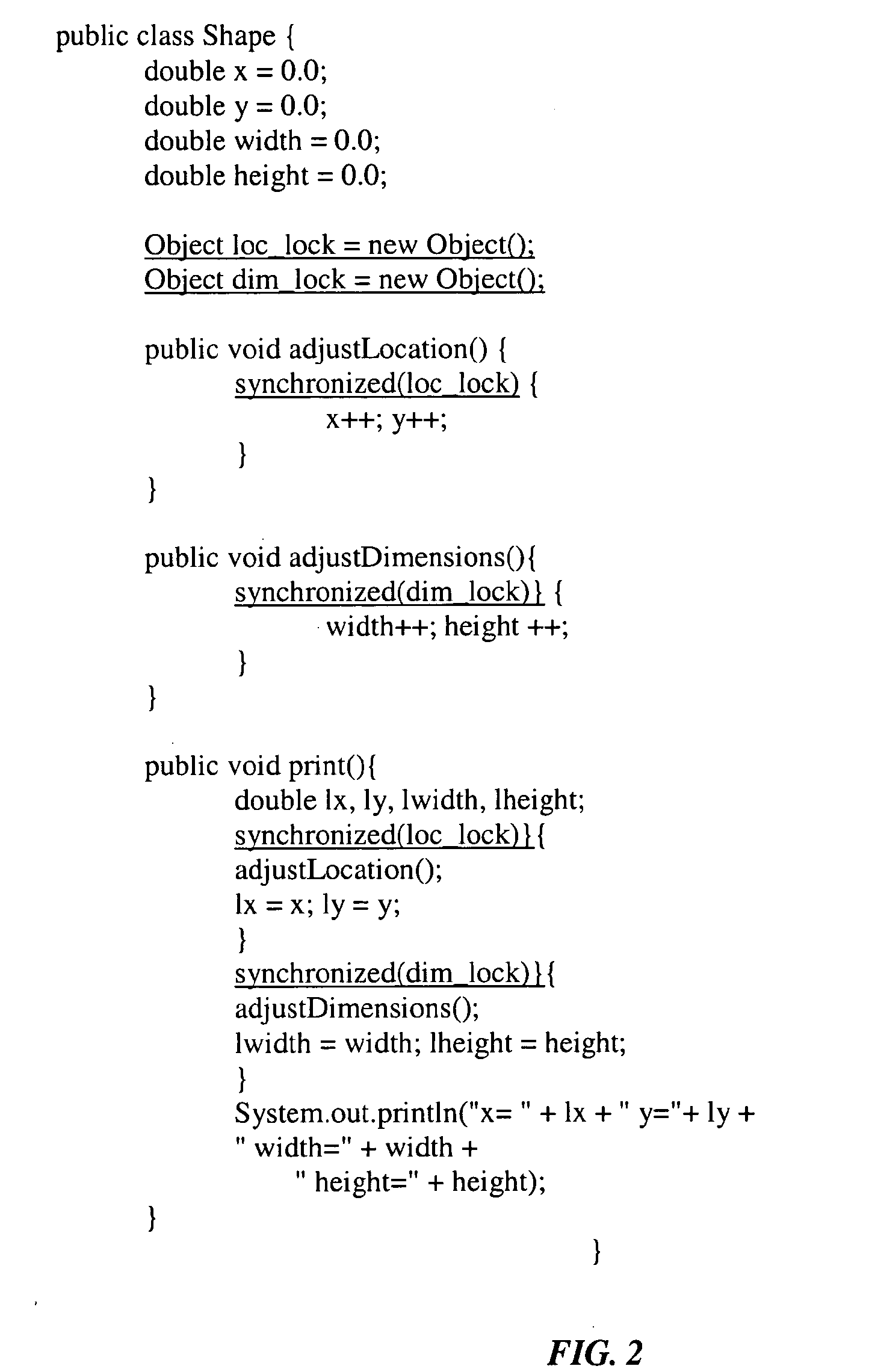

Using atomic sets of memory locations

Inactive | US20100192131A1 | Software engineering | Specific program execution arrangements | Coding block | Data set

A system and method for ensuring consistency of data and preventing data races, including steps of: receiving and examining a computer program written in an object-oriented language; receiving sequences of accesses that form logical operations on a set of memory locations used by the program; receiving definitions of atomic sets of data from the memory locations, wherein said atomic sets are sets of data that indicate an existence of a consistency property without requiring the consistency property itself; inferring which code blocks of the computer program must be synchronized in order to prevent one or more data races in the computer program, wherein synchronization is inferred by determining by analysis for each unit of work, what atomic sets are read and written by the unit of work; and providing a message indicating where synchronization is required.

Owner:INT BUSINESS MACHINES CORP

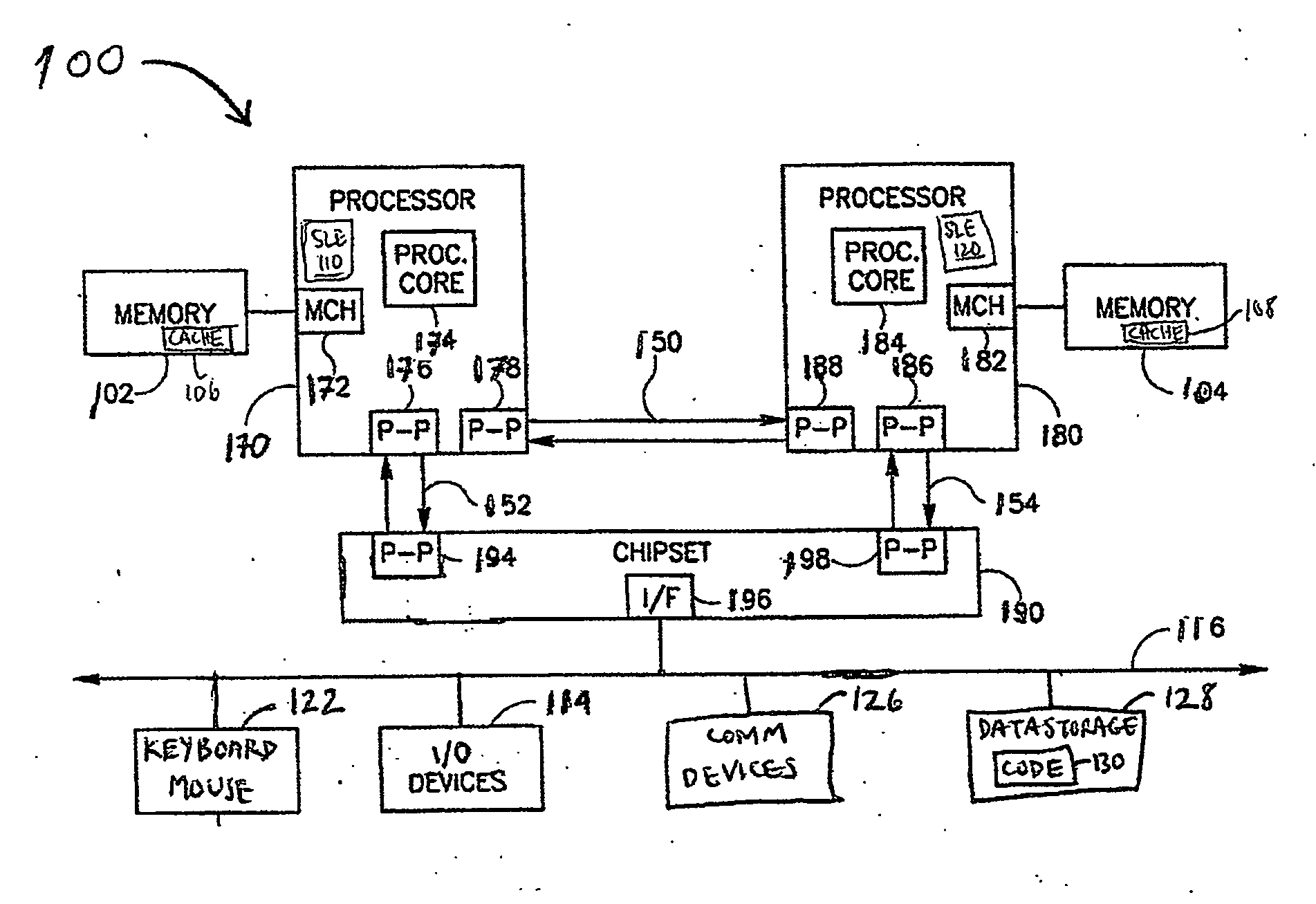

Computer architecture providing transactional, lock-free execution of lock-based programs

Active | US20050177831A1 | Efficient subsequent execution | Eliminate "live-lock" situation | Multiprogramming arrangements | Concurrent instruction execution | Critical section | Shared memory

Data conflicts in critical sections of programs executed in shared-memory computer architectures are resolved in hardware, using a hardware-based ordering system and without acquisition of the lock variable.

Owner:WISCONSIN ALUMNI RES FOUND

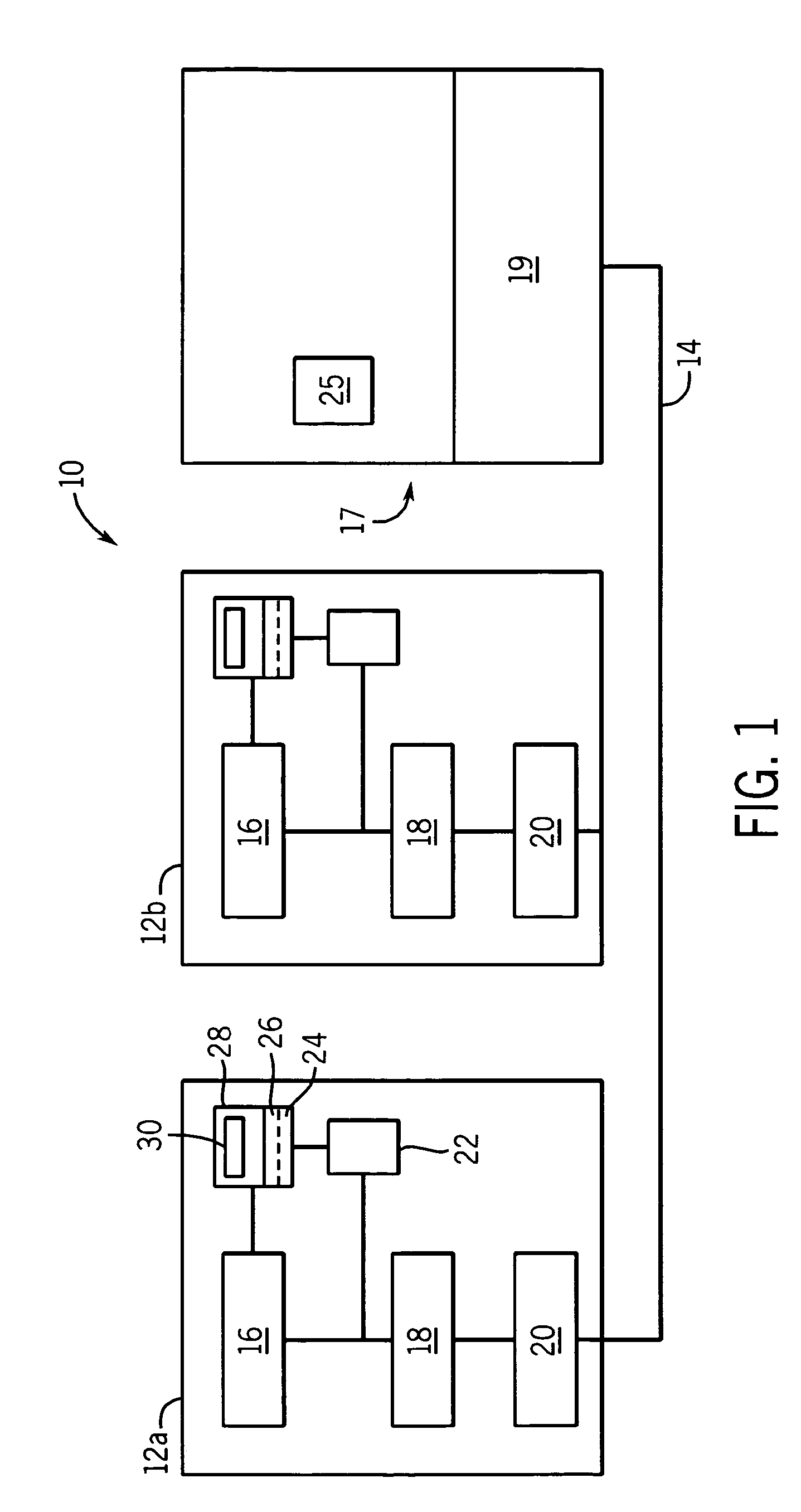

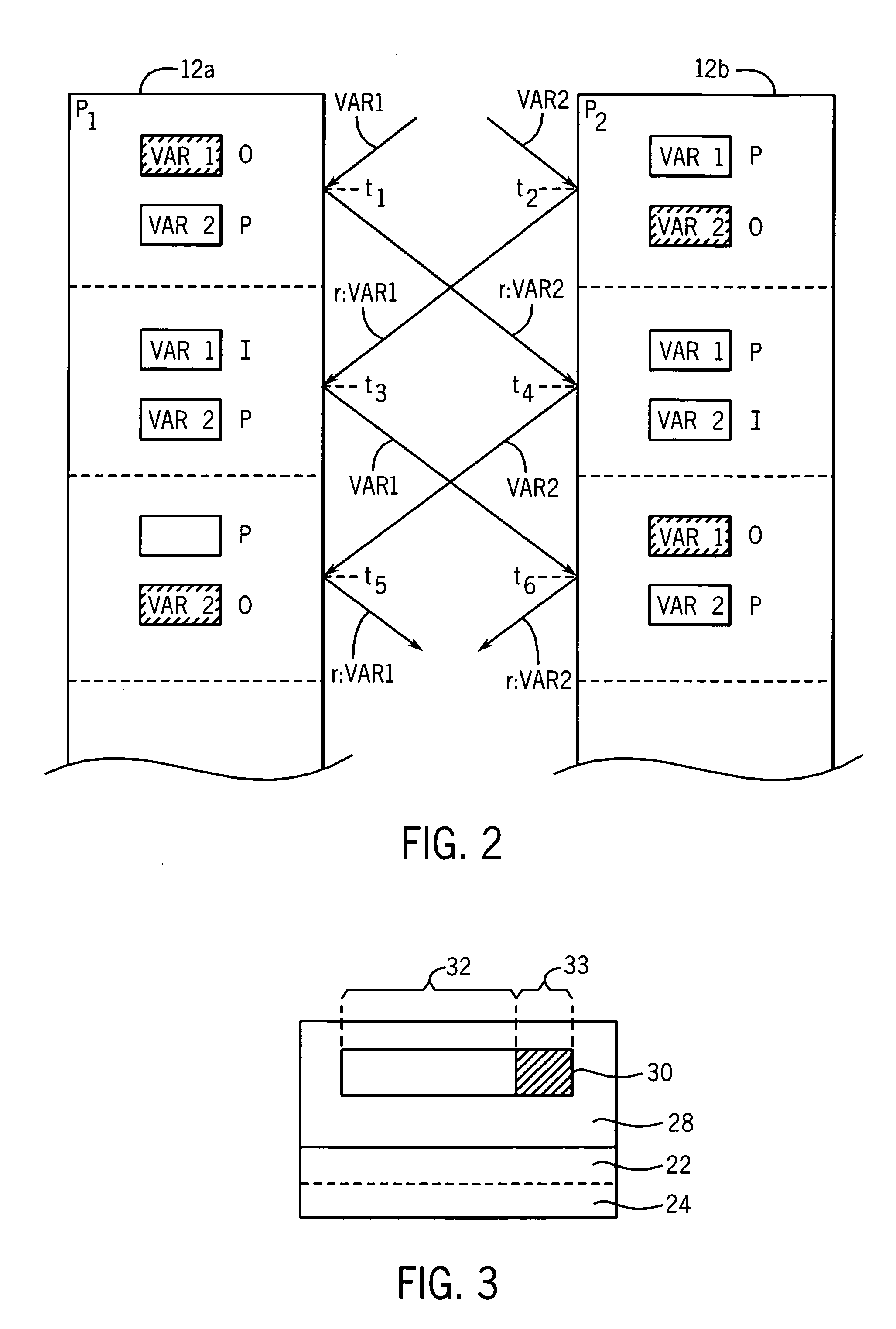

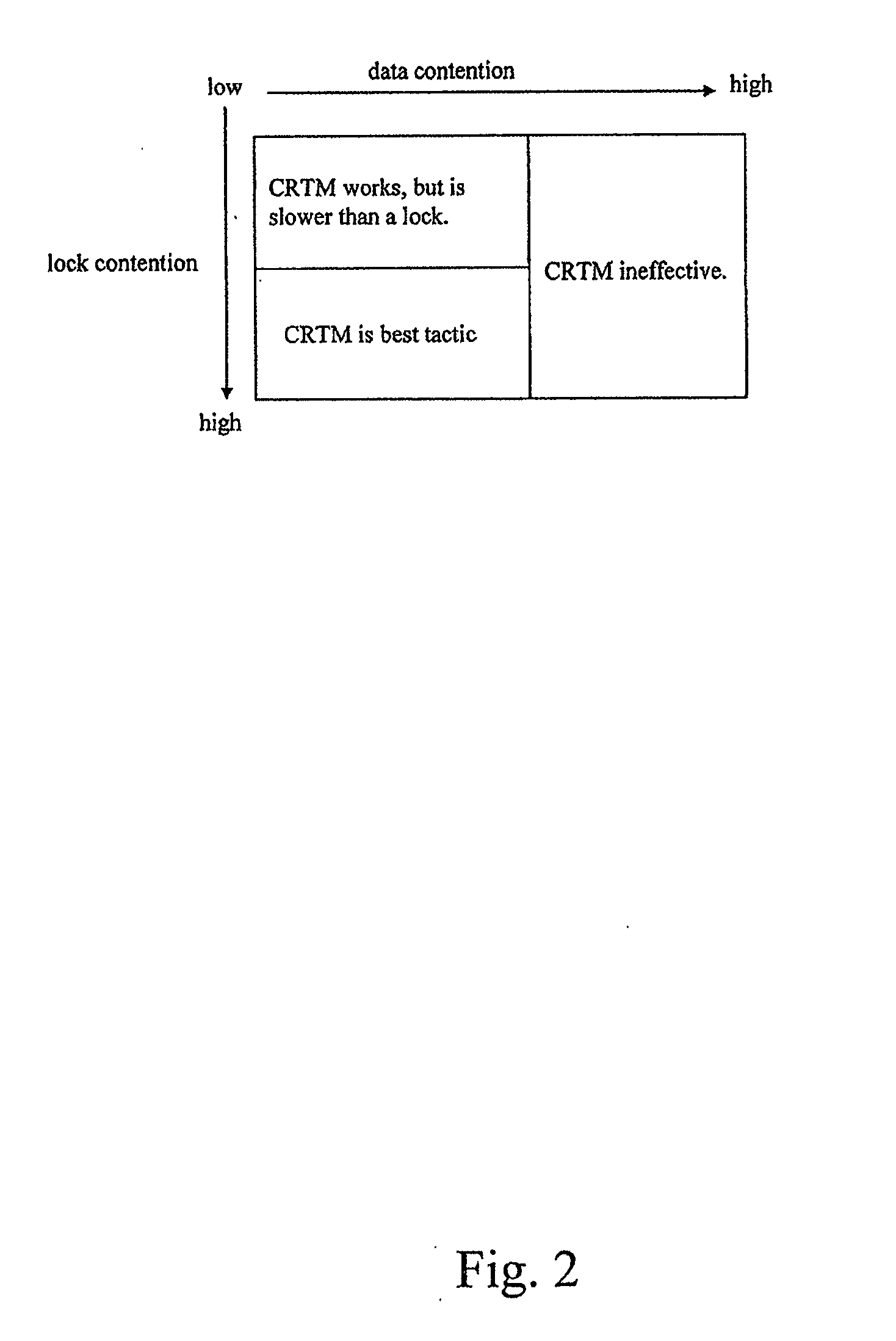

Device, system, and method for regulating software lock elision mechanisms

Inactive | US20090125519A1 | Digital data processing details | Transaction processing | Software lockout | Data contention

A method, apparatus and system for, in a computing apparatus, comparing a measure of data contention for a group of operations protected by a lock to a predetermined threshold for data contention, and comparing a measure of lock contention for the group of operations to a predetermined threshold for lock contention, eliding the lock for concurrently executing two or more of the operations of the group using two or more threads when the measure of data contention is approximately less than or equal to the predetermined threshold for data contention and the measure of lock contention is approximately greater than or equal to a predetermined threshold for lock contention, and acquiring the lock for executing two or more of the operations of the group in a serialized manner when the measure of data contention is approximately greater than or equal to the predetermined threshold for data contention and the measure of lock contention is approximately less than or equal to a predetermined threshold for lock contention. Other embodiments are described and claimed.

Owner:INTEL CORP
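
The two threshold comparisons described above can be read as a small decision function. The sketch below is one such reading; the `Mode` names, the `KEEP` fallback for cases the abstract does not cover, and the tie-breaking order are all assumptions.

```python
from enum import Enum


class Mode(Enum):
    ELIDE = "elide"      # run the operations concurrently without taking the lock
    ACQUIRE = "acquire"  # take the lock and run the operations serially
    KEEP = "keep"        # neither condition holds: keep the current mode (assumption)


def choose_mode(data_contention, lock_contention, data_threshold, lock_threshold):
    # Little data contention but a busy lock: elision is likely to pay off.
    if data_contention <= data_threshold and lock_contention >= lock_threshold:
        return Mode.ELIDE
    # Frequent data conflicts and an idle lock: serialise under the lock.
    if data_contention >= data_threshold and lock_contention <= lock_threshold:
        return Mode.ACQUIRE
    return Mode.KEEP


print(choose_mode(0.1, 0.9, data_threshold=0.3, lock_threshold=0.5))  # Mode.ELIDE
print(choose_mode(0.8, 0.2, data_threshold=0.3, lock_threshold=0.5))  # Mode.ACQUIRE
```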

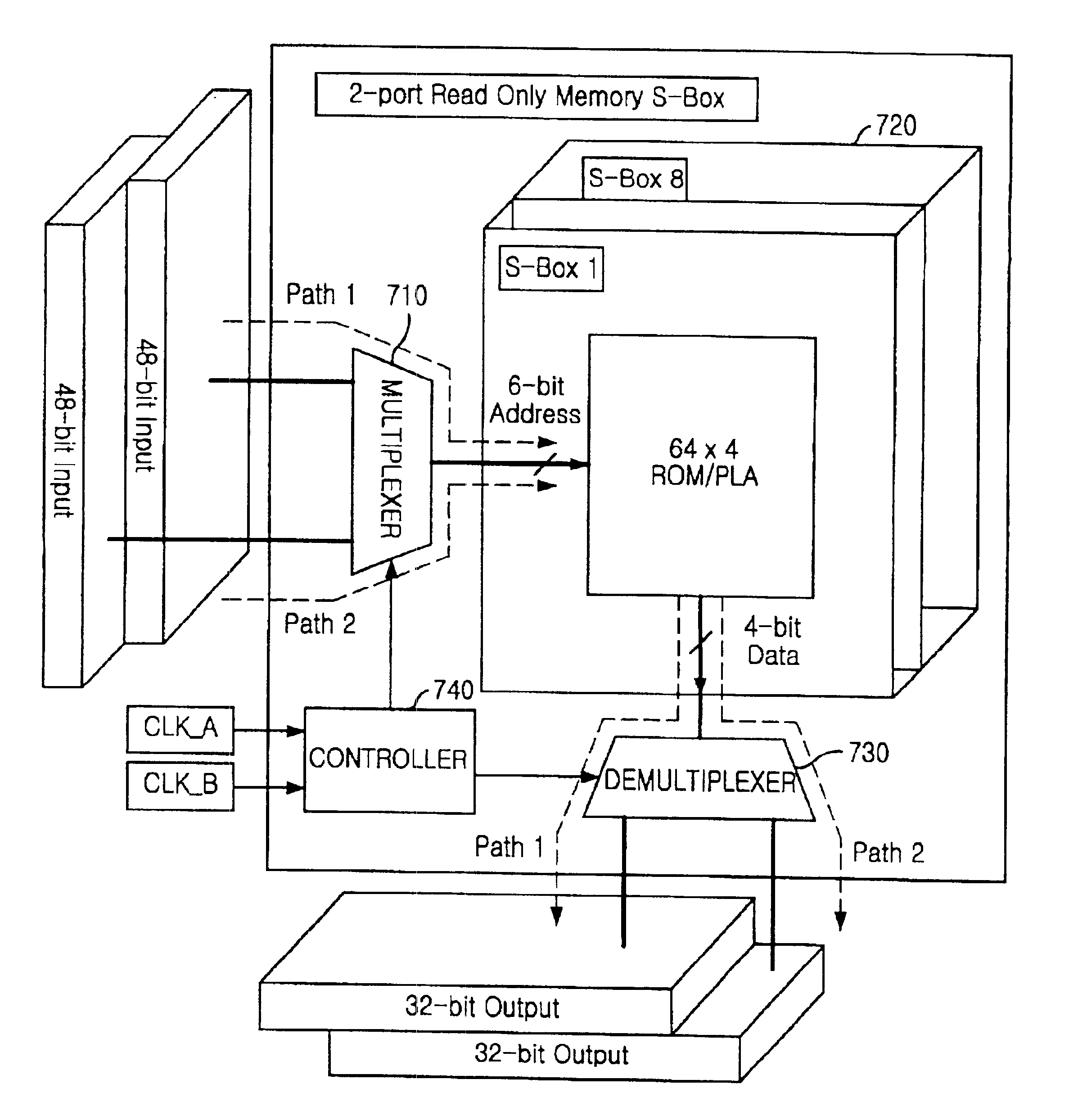

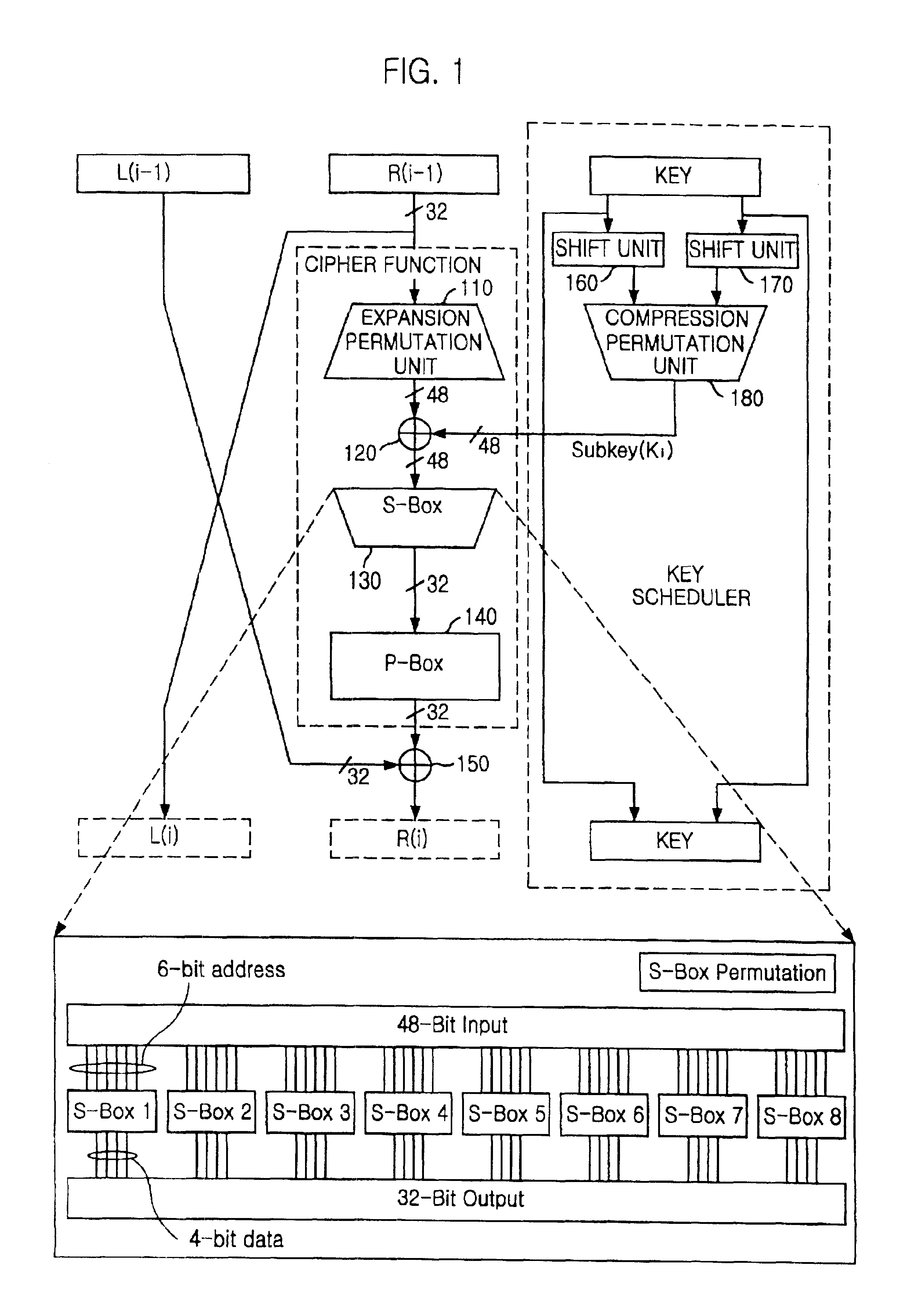

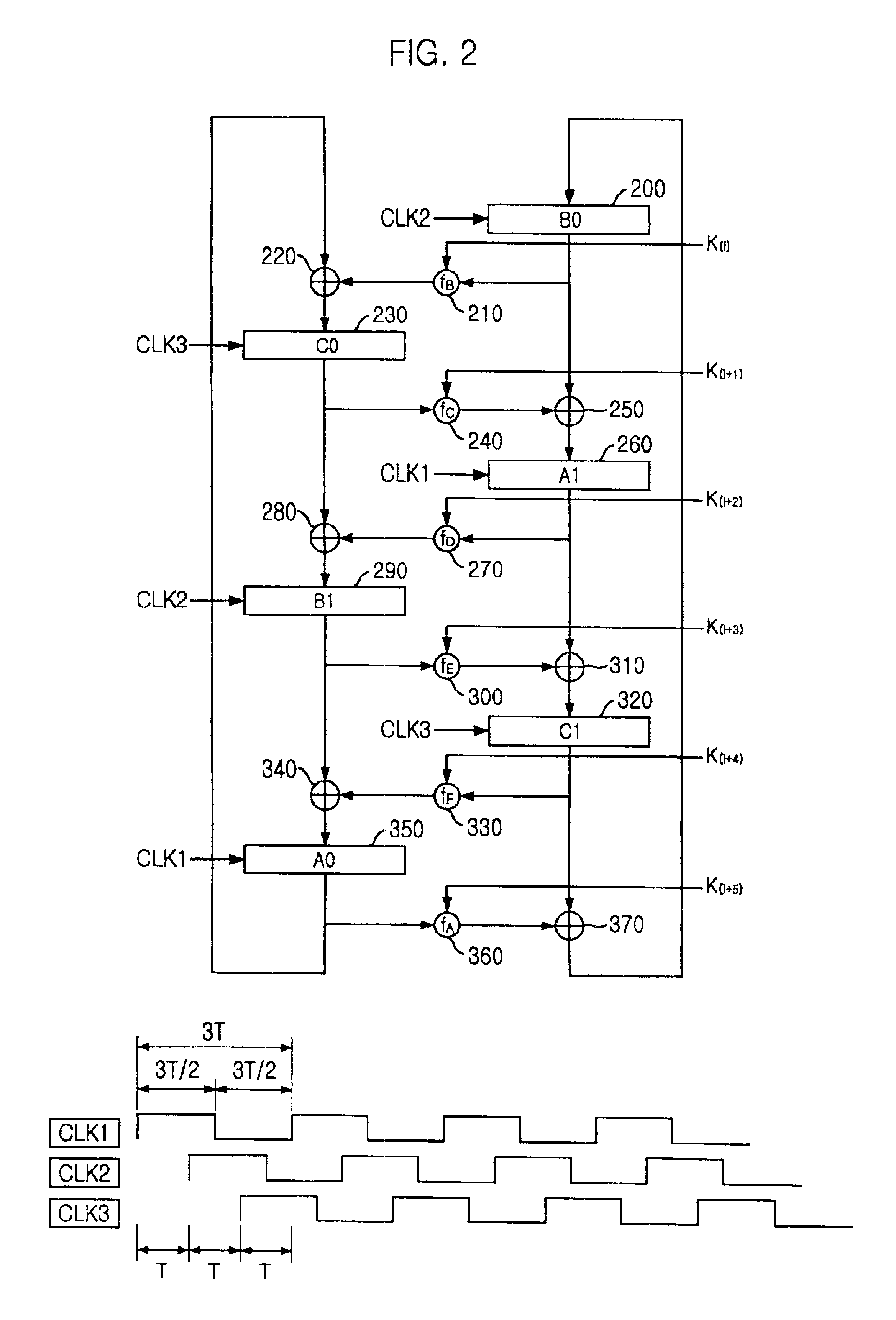

Encryption apparatus using data encryption standard algorithm

Inactive | US20020003876A1 | Improve process capability | Encryption apparatus with shift registers/memories | Secret communication | S-box | Multiplexer

An encryption device eliminates data contention and minimizes area by accessing data twice in a given time, using a memory device with an access time that is twice as fast. The encryption device performs encryption of plain text blocks using the Data Encryption Standard algorithm and includes an initial permutation unit, a data encryption unit having an n-stage (n is an even number) pipeline structure using a first clock, a second clock and a third clock, and an inverse initial permutation unit. The encryption device further includes: a multiplexer for selecting one of n / 3 48-bit inputs; 8 S-Boxes, each for receiving a 6-bit address among the selected 48 bits and outputting 4-bit data; a demultiplexer for distributing 32-bit data from the S-Boxes to n / 3 outputs; and a controller for controlling the multiplexer and the demultiplexer with a fourth clock and a fifth clock, wherein the fourth and the fifth clocks are faster than the first, the second and the third clocks by n / 3 times.

Owner:ABOV SEMICON

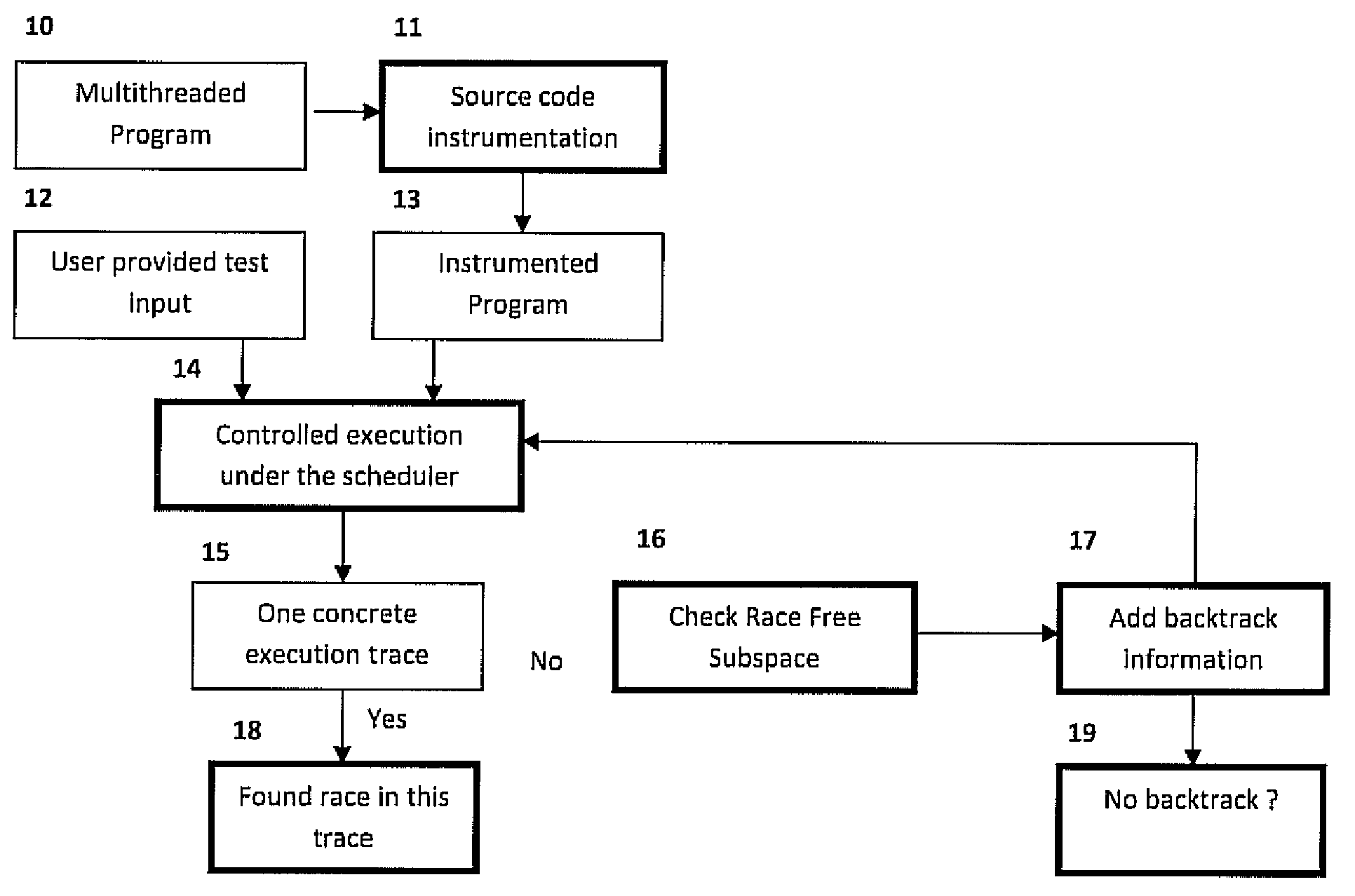

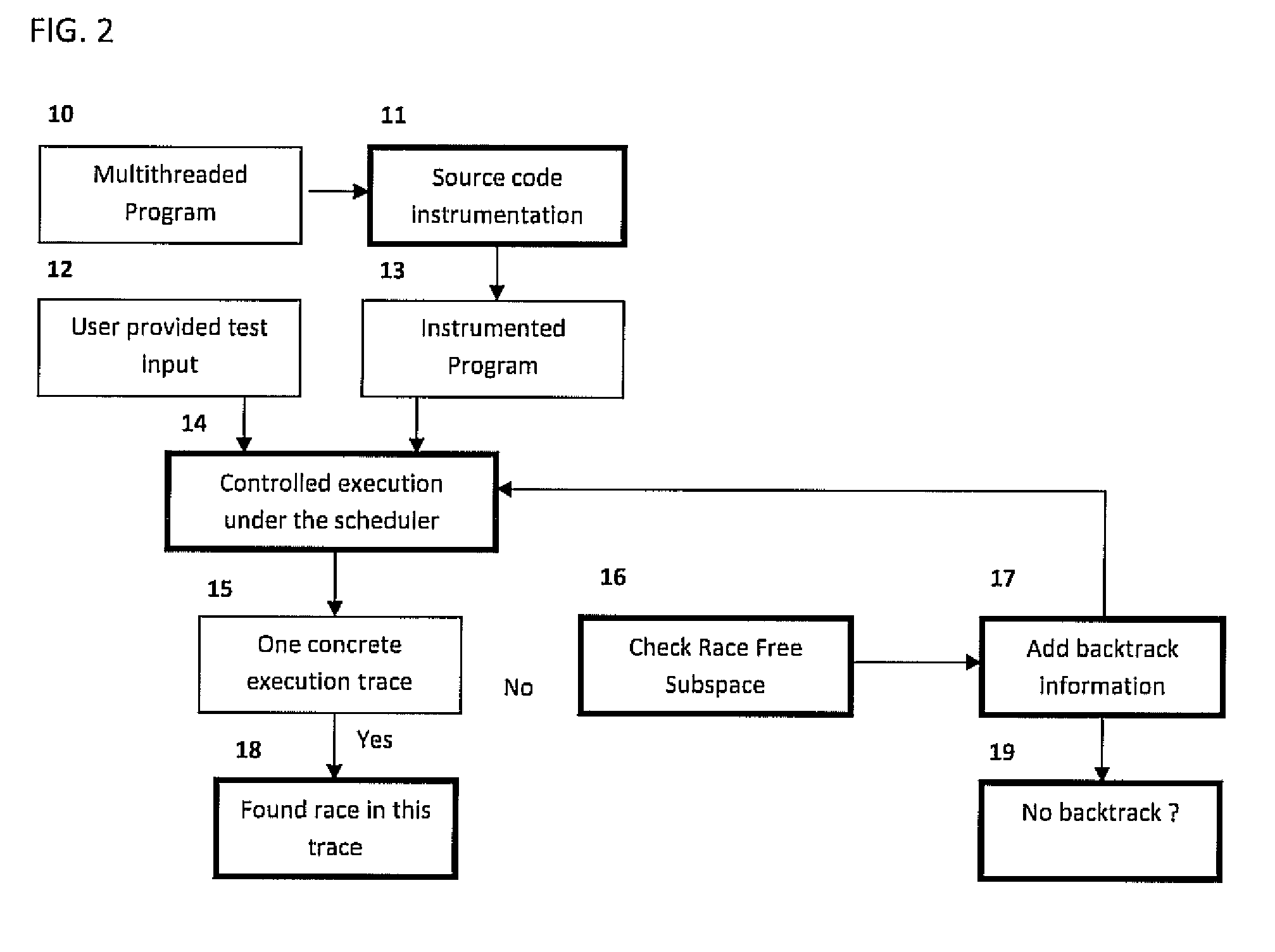

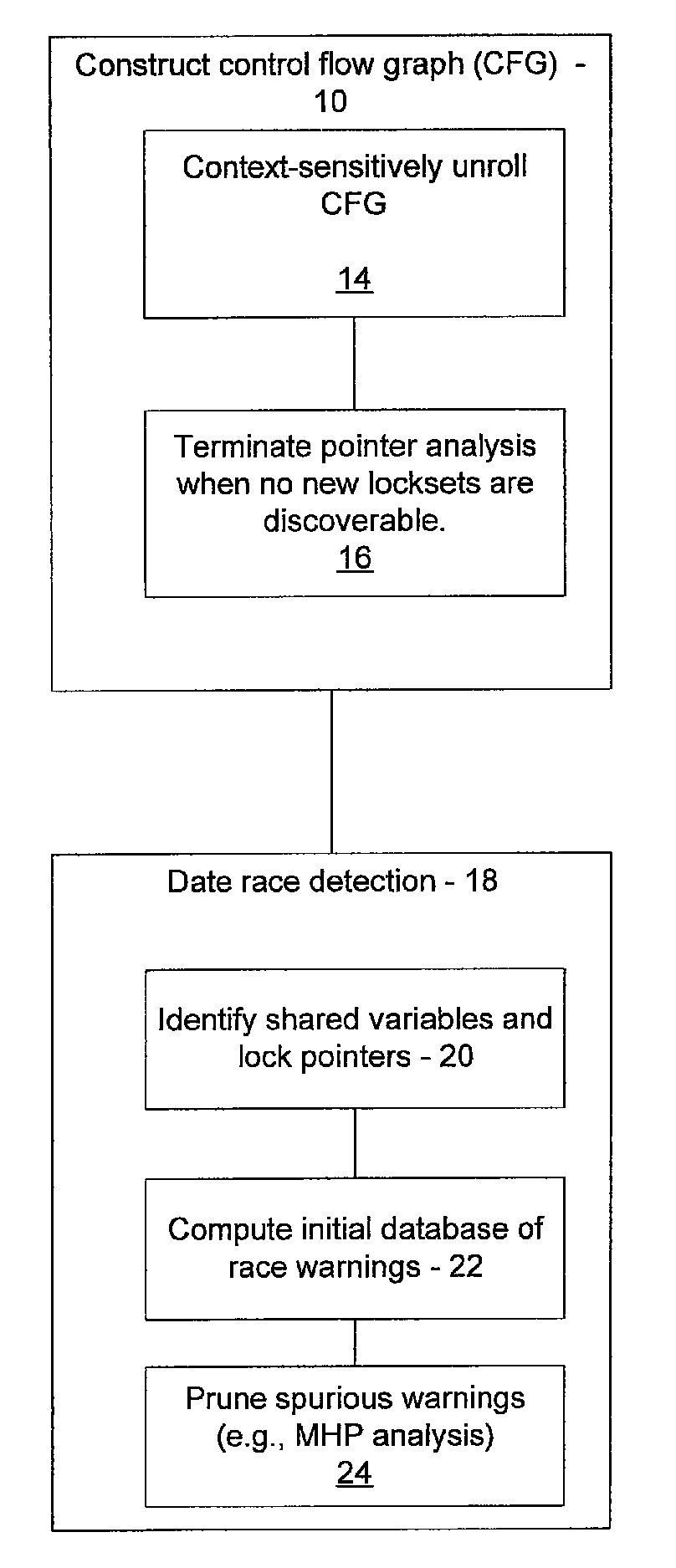

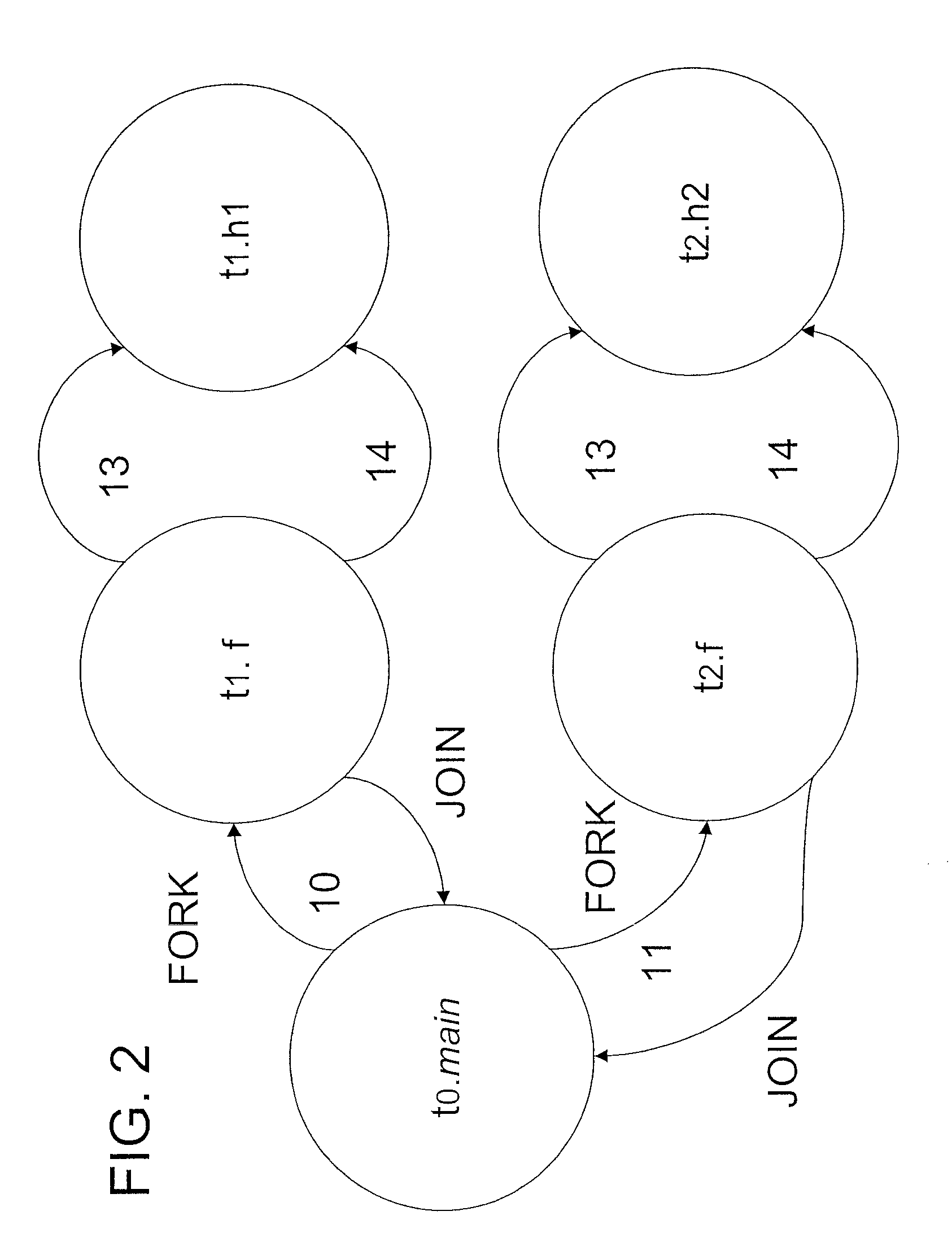

Dynamic model checking with property driven pruning to detect race conditions

Active | US20090282288A1 | Effective and practical | Easy to scale | Fault response | Program control | State dependent | Dynamic models

A system and method for dynamic data race detection for concurrent systems includes computing lockset information using a processor for different components of a concurrent system. A controlled execution of the system is performed where the controlled execution explores different interleavings of the concurrent components. The lockset information is used during the controlled execution to check whether a search subspace associated with a state in the execution is free of data races. A race-free search subspace is dynamically pruned to reduce resource usage.

Owner:NEC CORP
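
For readers unfamiliar with lockset information, the sketch below shows the classic lockset intersection check, not the property-driven pruning of the patent itself. It assumes a hypothetical trace of `(thread, variable, locks_held)` tuples and ignores the read/write distinction.

```python
def potential_races(accesses):
    """Flag variables touched by several threads with no common lock.

    accesses: iterable of (thread, variable, locks_held).
    """
    candidate = {}  # variable -> locks held on every access seen so far
    threads = {}    # variable -> threads that accessed it
    for thread, var, locks in accesses:
        held = frozenset(locks)
        candidate[var] = candidate[var] & held if var in candidate else held
        threads.setdefault(var, set()).add(thread)
    # An empty candidate set means no single lock protects every access.
    return sorted(v for v in candidate if not candidate[v] and len(threads[v]) > 1)


trace = [
    ("t1", "x", {"m"}),
    ("t2", "x", {"m"}),
    ("t1", "y", {"m"}),
    ("t2", "y", set()),
]
print(potential_races(trace))  # ['y']
```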

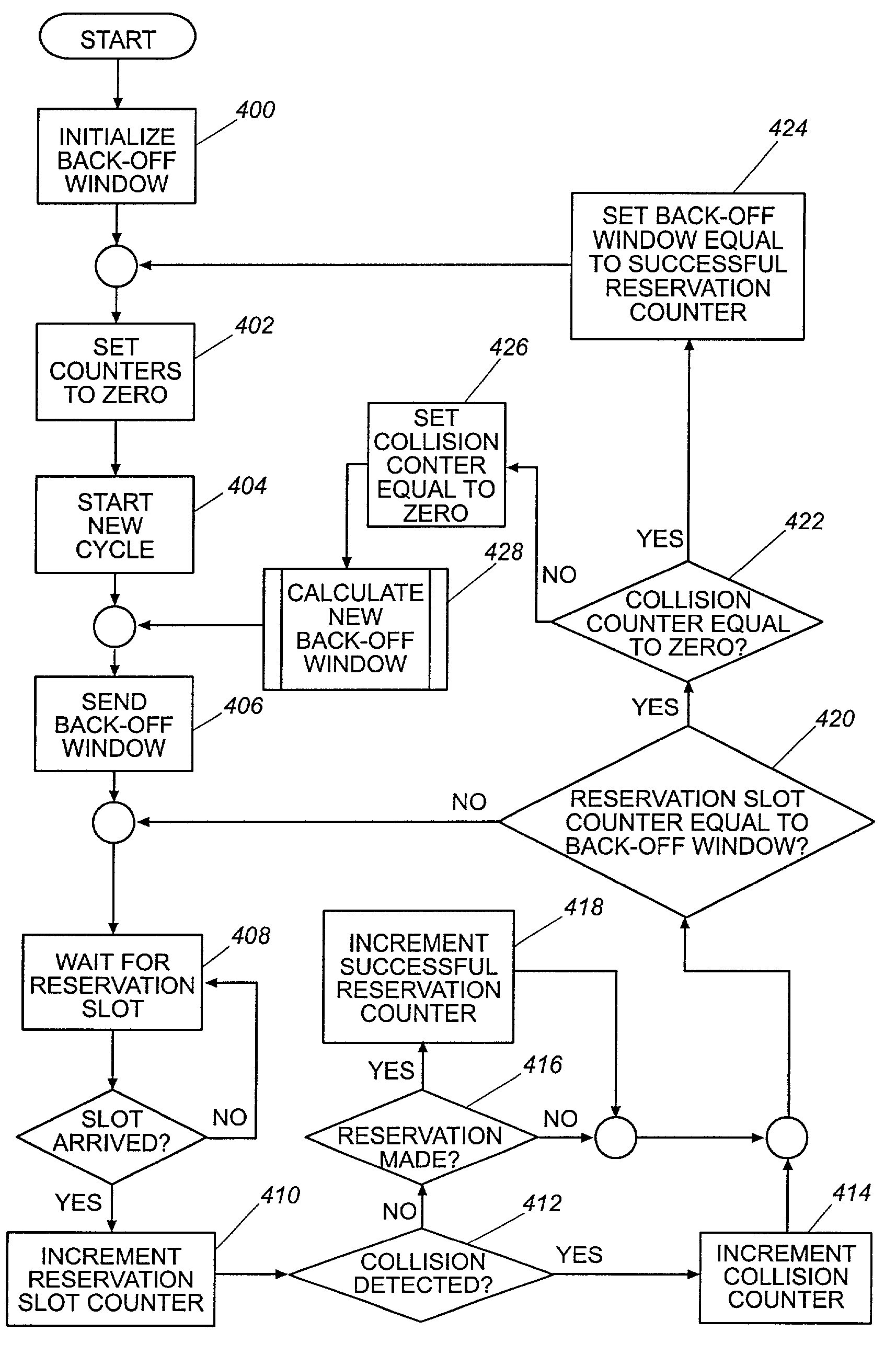

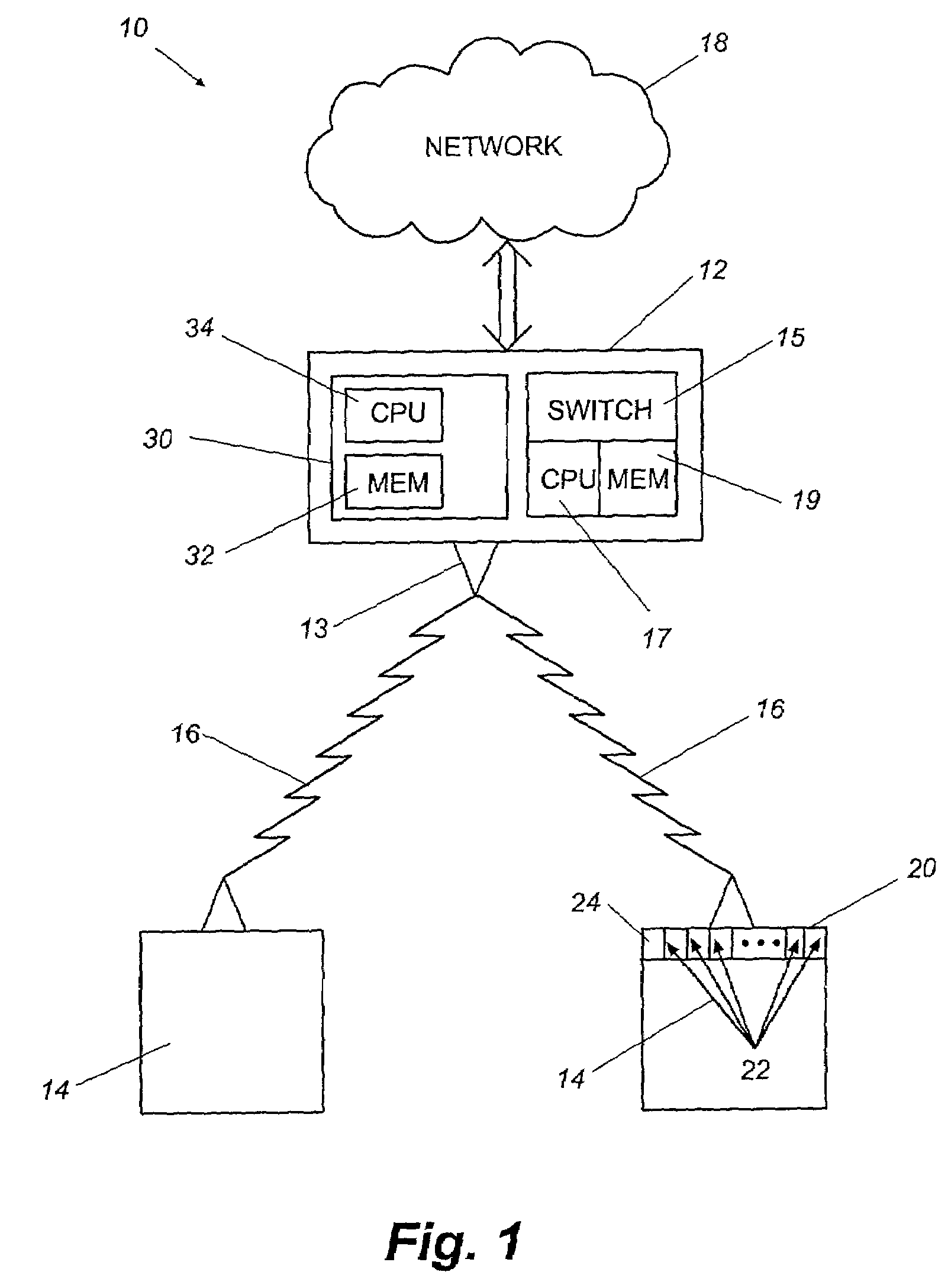

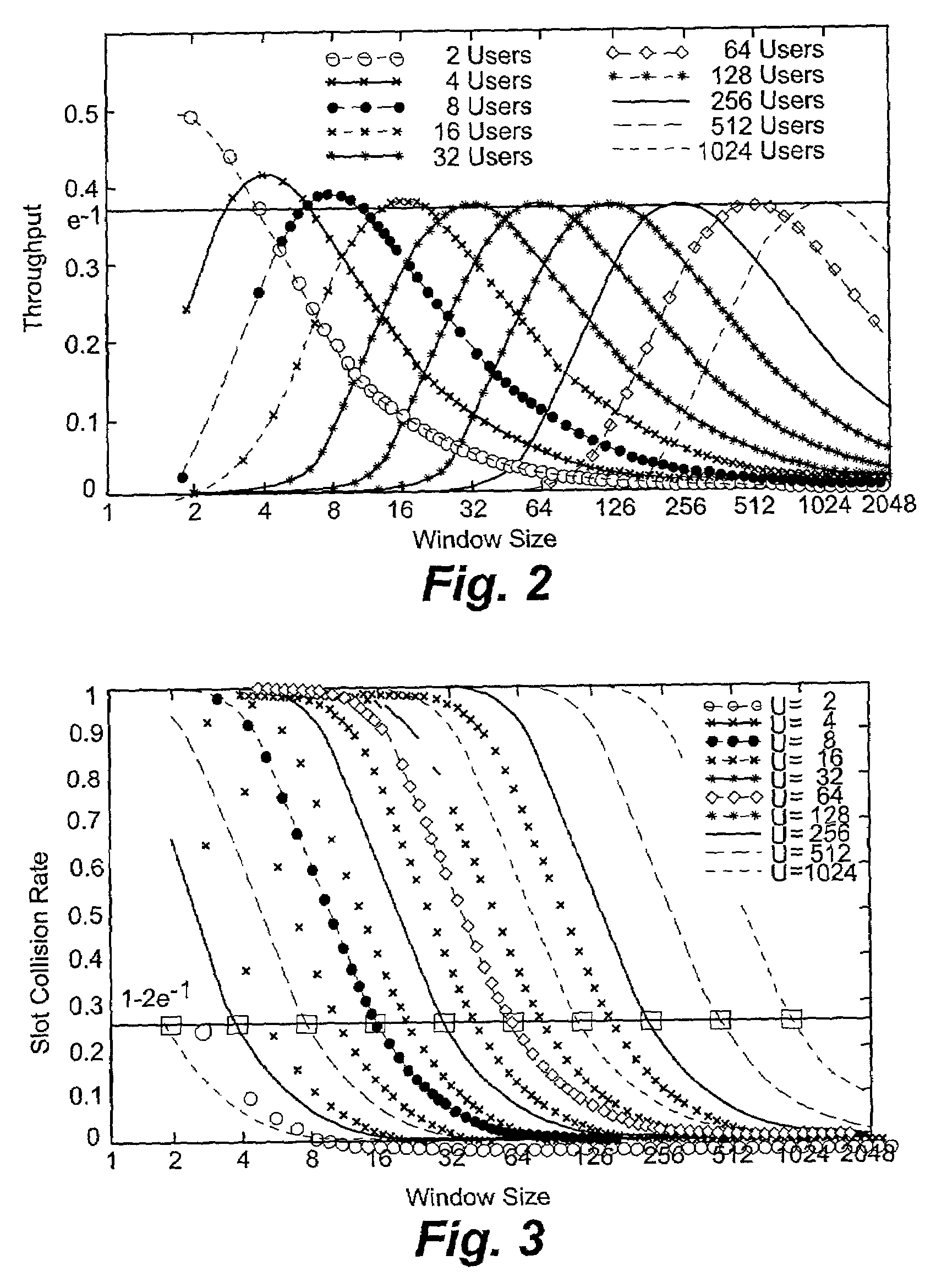

Back off methods and systems

A Near Optimal Fairness (NOF) algorithm is disclosed for resolving data collisions in a network shared by a plurality of users. The NOF algorithm calculates an optimal back-off or contention window which is broadcast to users competing for system bandwidth. The NOF algorithm handles data contention in cycles and guarantees that each user competing for system bandwidth within a cycle will make a successful reservation before the cycle ends and a new cycle begins. The size of the back-off window is preferably equal to the number of successful reservations in the previous cycle, and functions as an estimate of the number of competing users in the current cycle.

Owner:LUCENT TECH INC
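
The window-sizing rule stated above (next window equals the number of successful reservations in the previous cycle) can be simulated in a few lines. This is a simplified sketch, not the NOF algorithm itself: the retry rule inside a cycle, the initial window, and the names `run_cycle` and `simulate` are assumptions.

```python
import random


def run_cycle(num_users, window, rng):
    """Return the number of successful reservations in one cycle."""
    pending = num_users
    successes = 0
    while pending > 0:
        slots = [rng.randrange(window) for _ in range(pending)]
        # A reservation succeeds only if no other user picked the same slot.
        winners = sum(1 for s in slots if slots.count(s) == 1)
        successes += winners
        pending -= winners
        # Assumption: colliding users retry in a window sized to their count.
        window = max(pending, 1)
    return successes


def simulate(users_per_cycle, initial_window=4, seed=0):
    rng = random.Random(seed)
    window, history = initial_window, []
    for users in users_per_cycle:
        successes = run_cycle(users, window, rng)
        history.append((window, successes))
        # Next window = successes in the previous cycle (at least one slot).
        window = max(successes, 1)
    return history


print(simulate([5, 8, 3]))  # [(4, 5), (5, 8), (8, 3)]
```

Because every competing user reserves before the cycle ends, the success count of a cycle equals its number of competitors, which is why it serves as the estimate for the next cycle.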

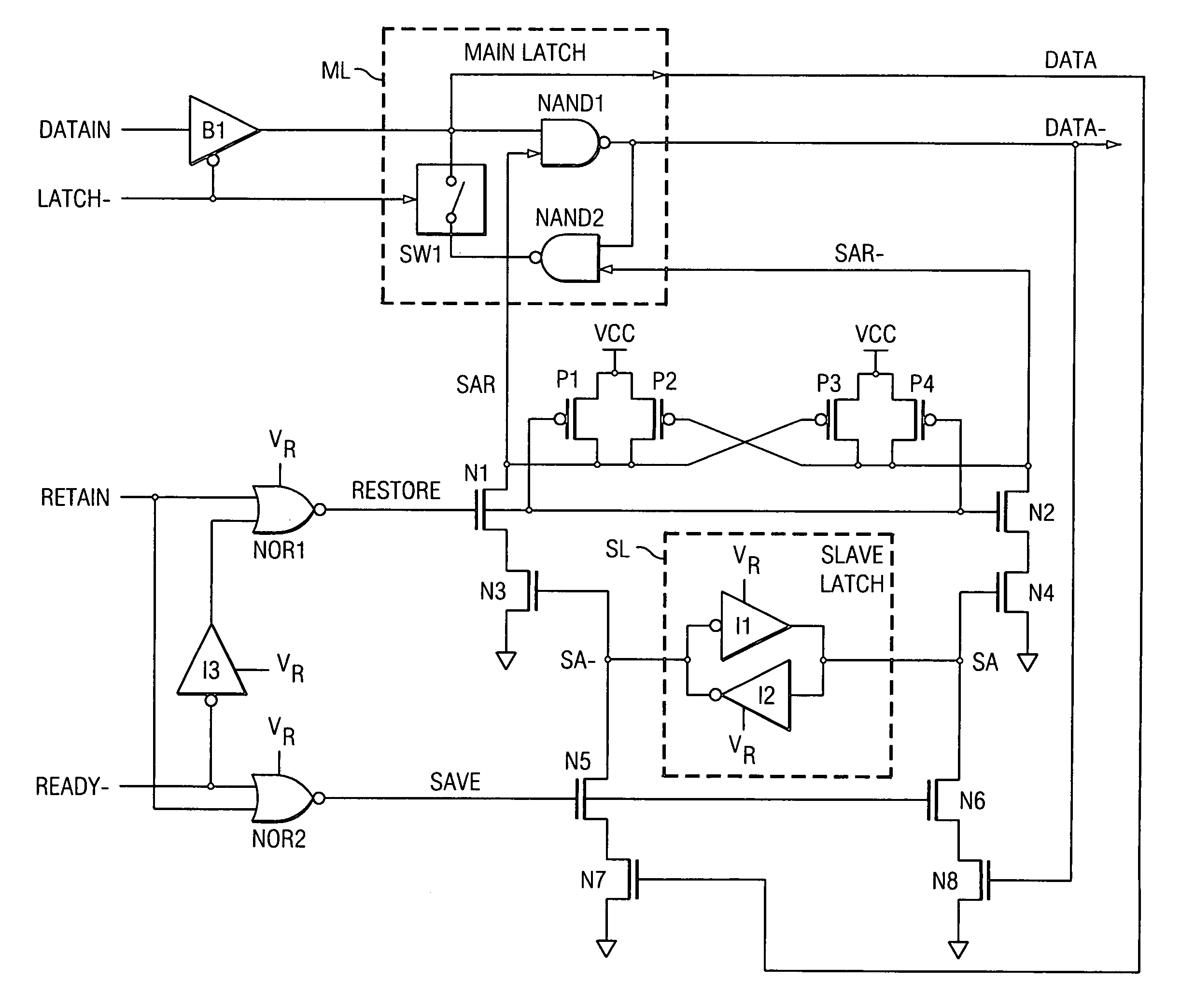

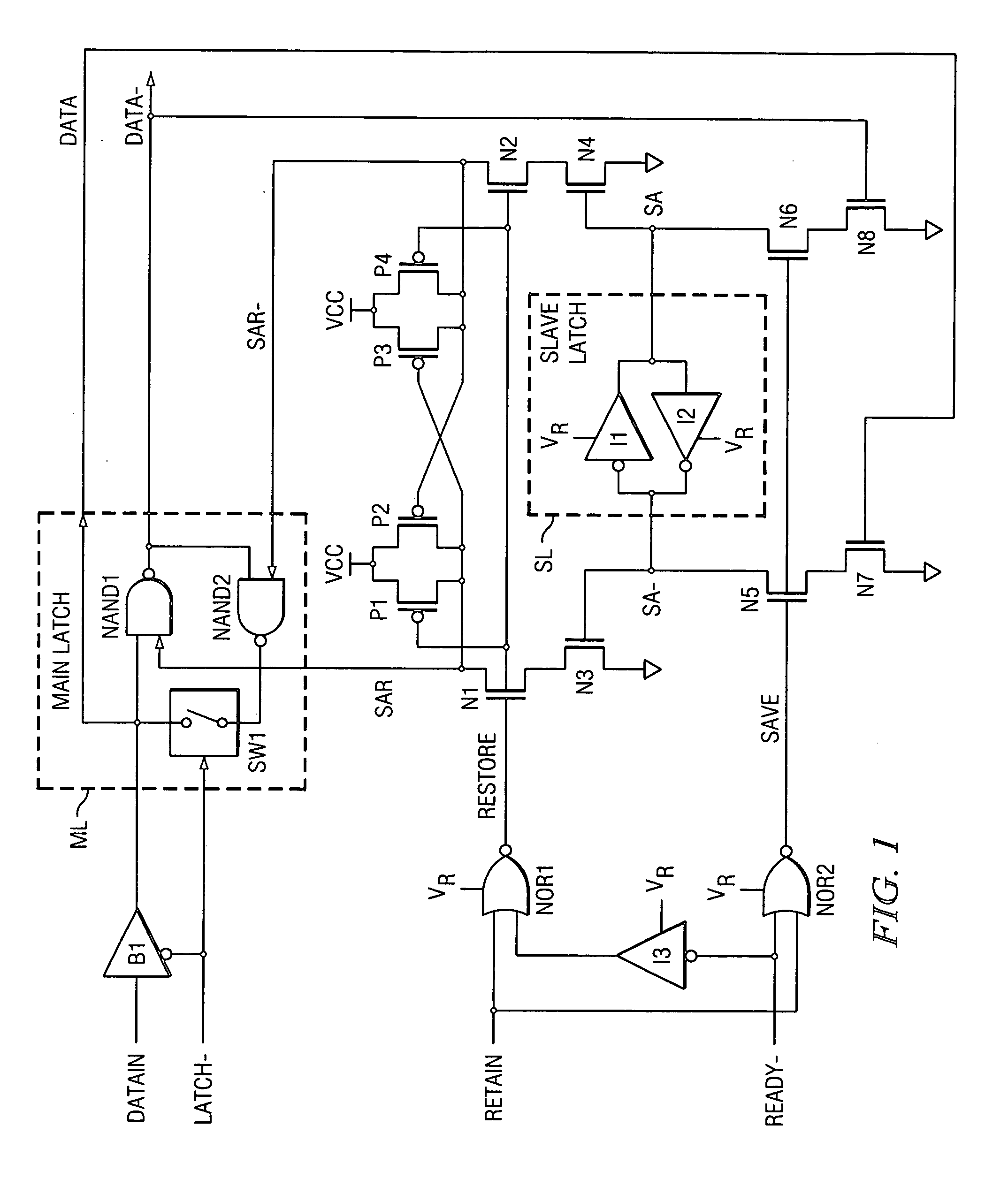

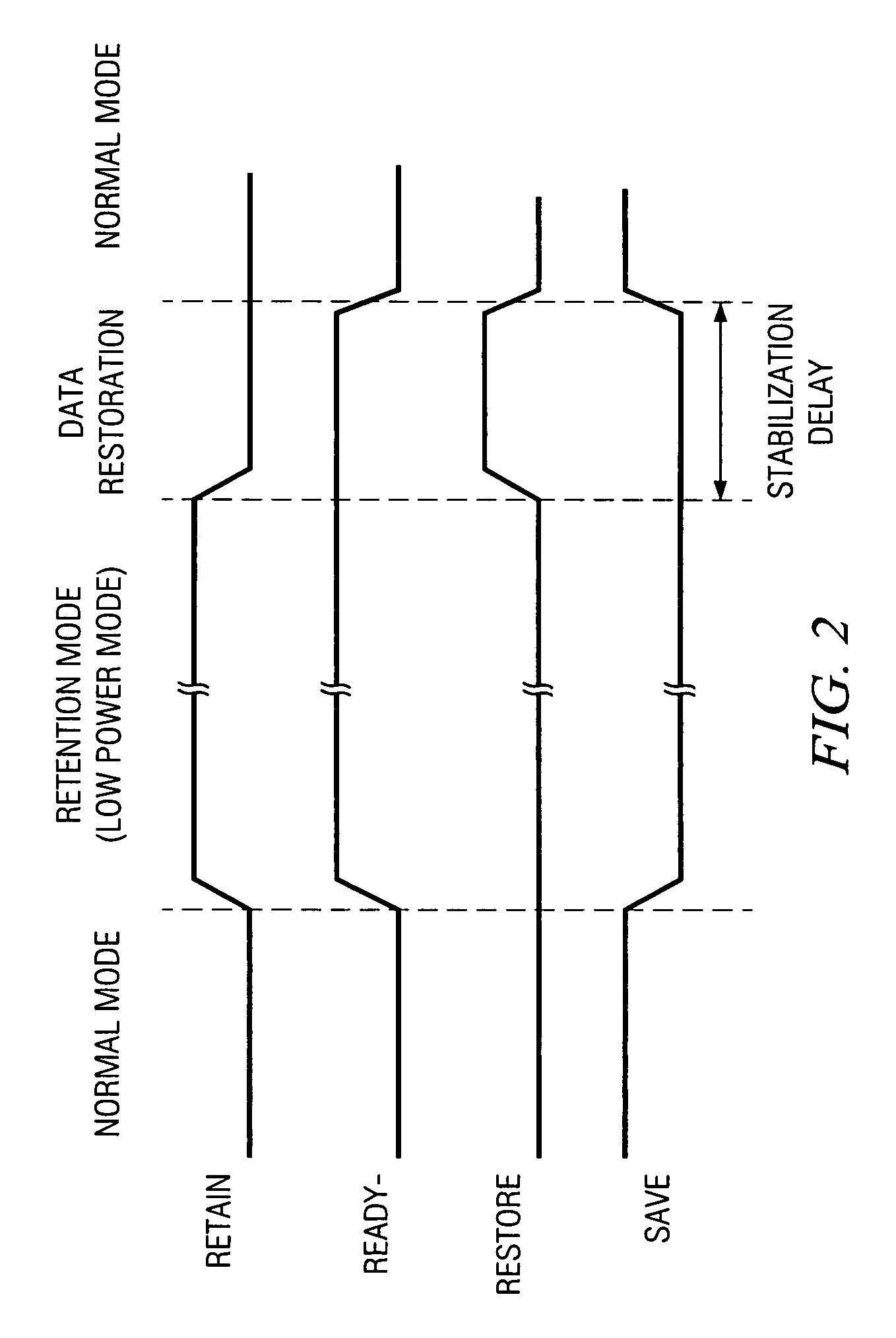

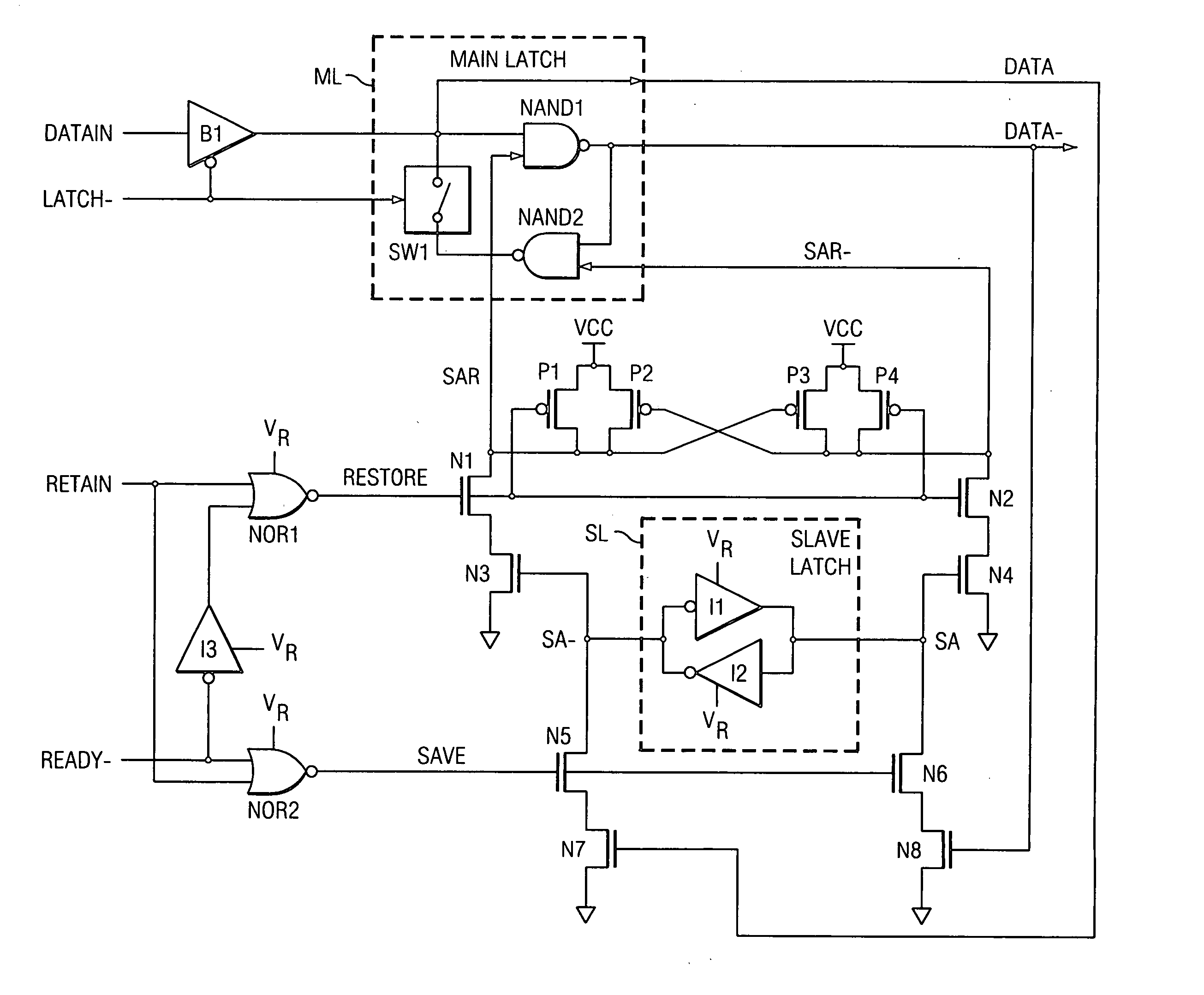

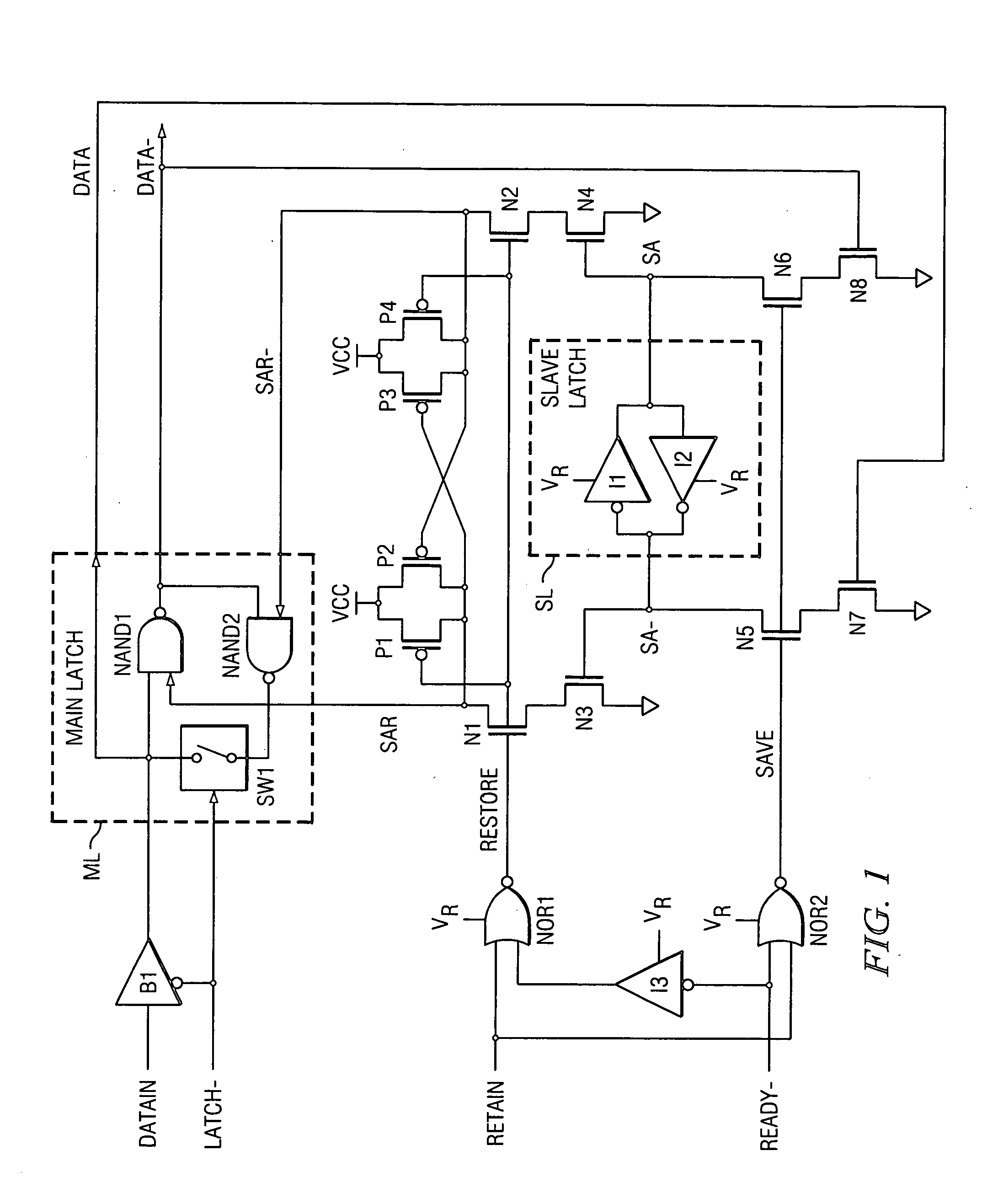

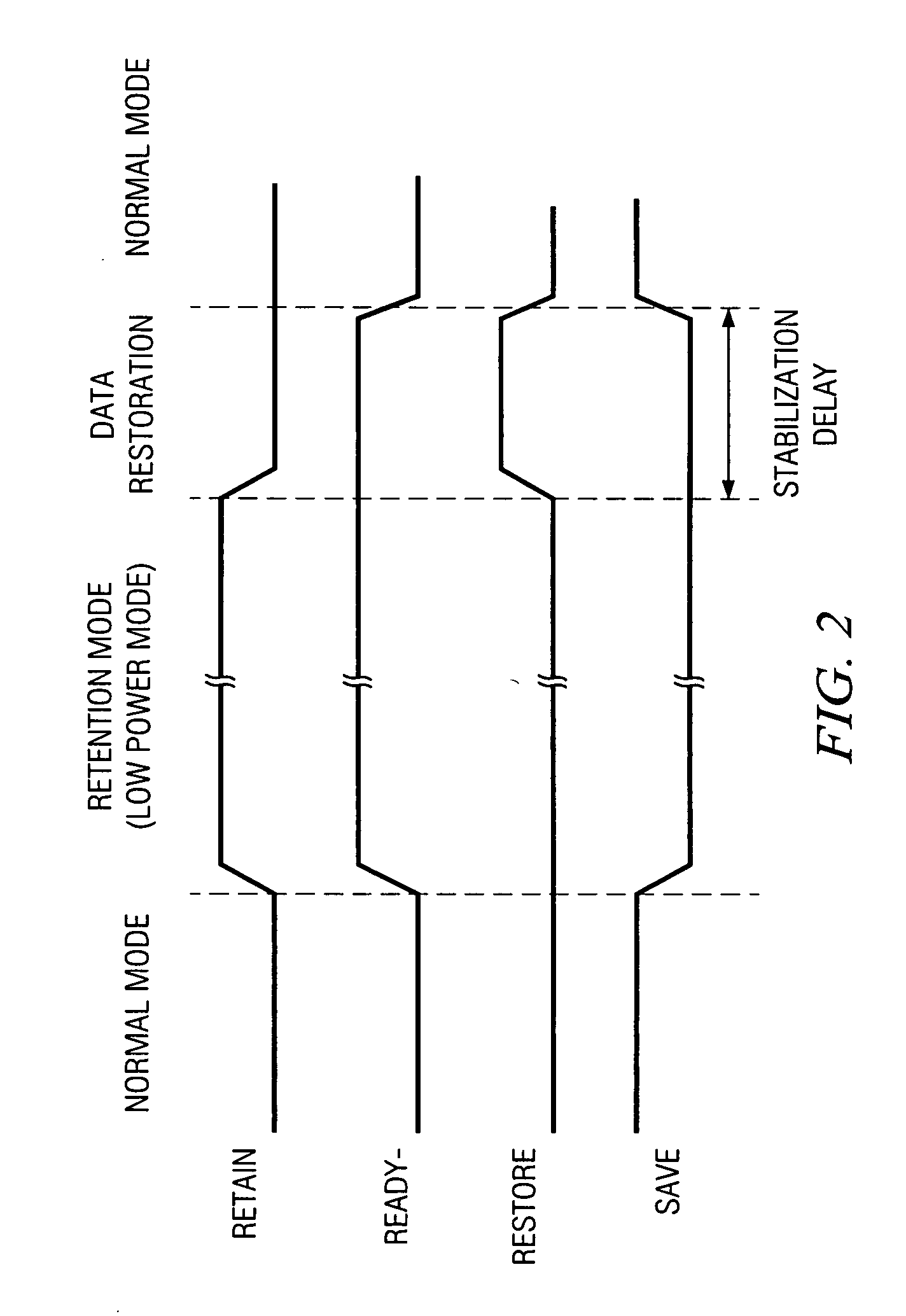

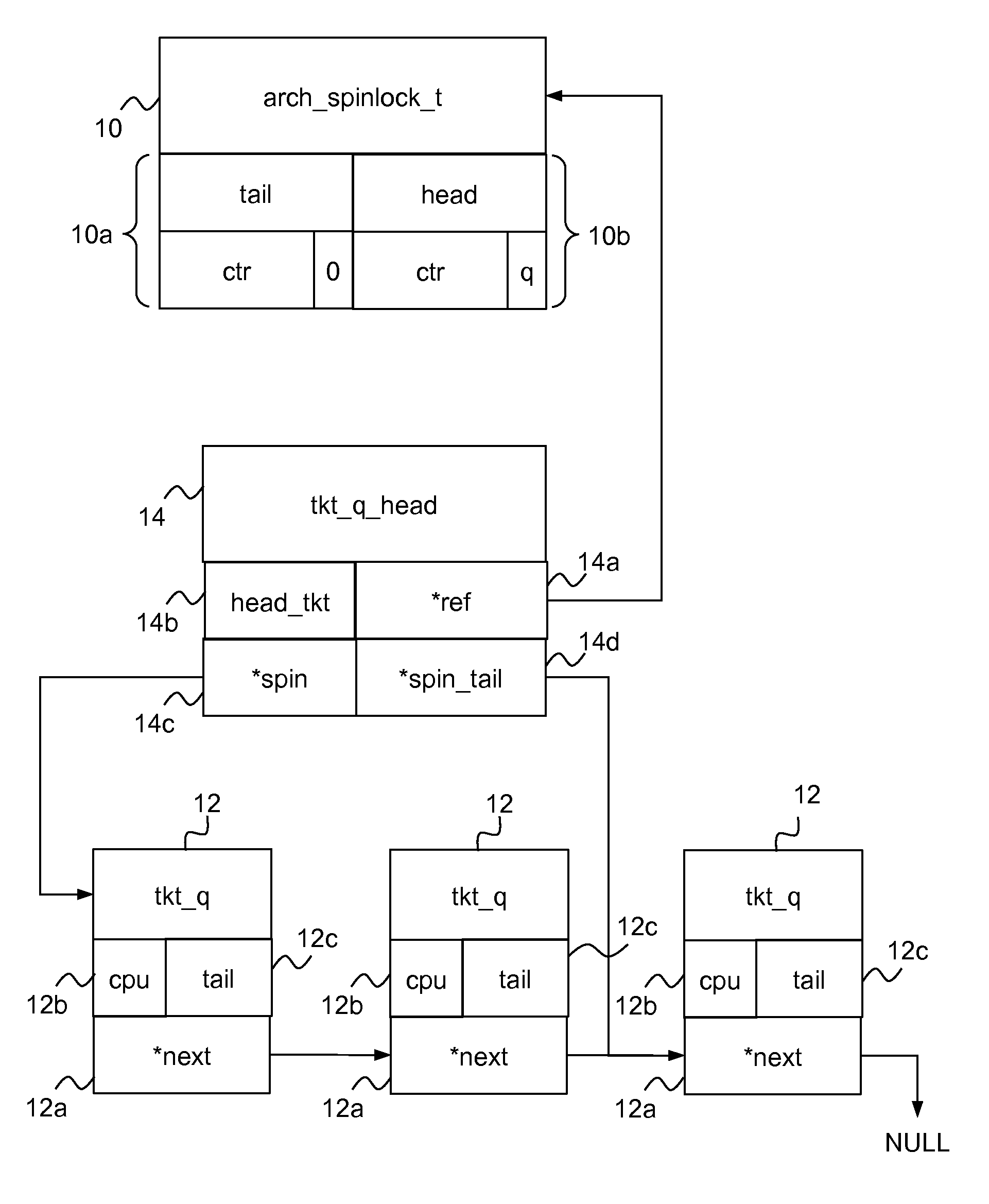

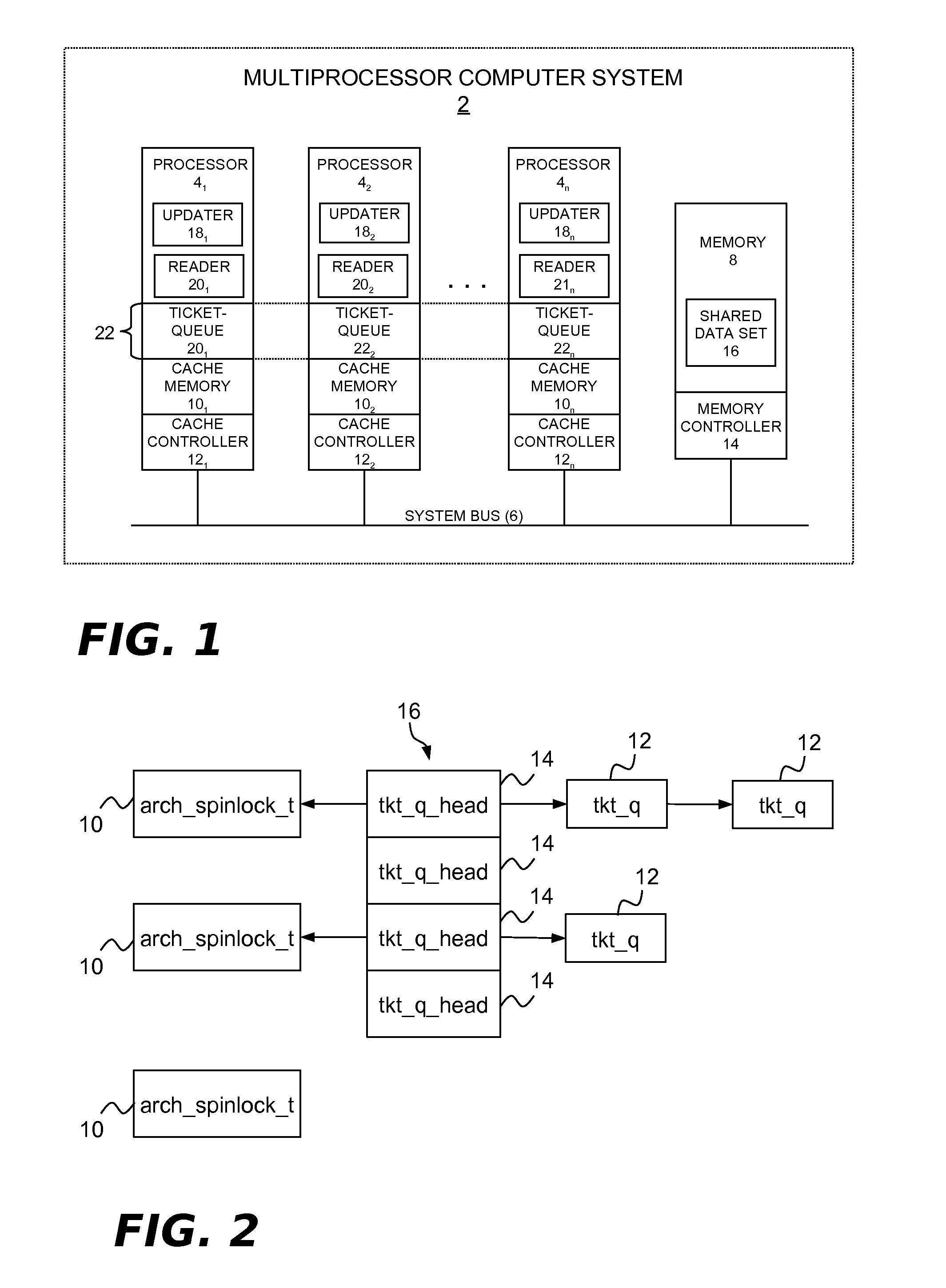

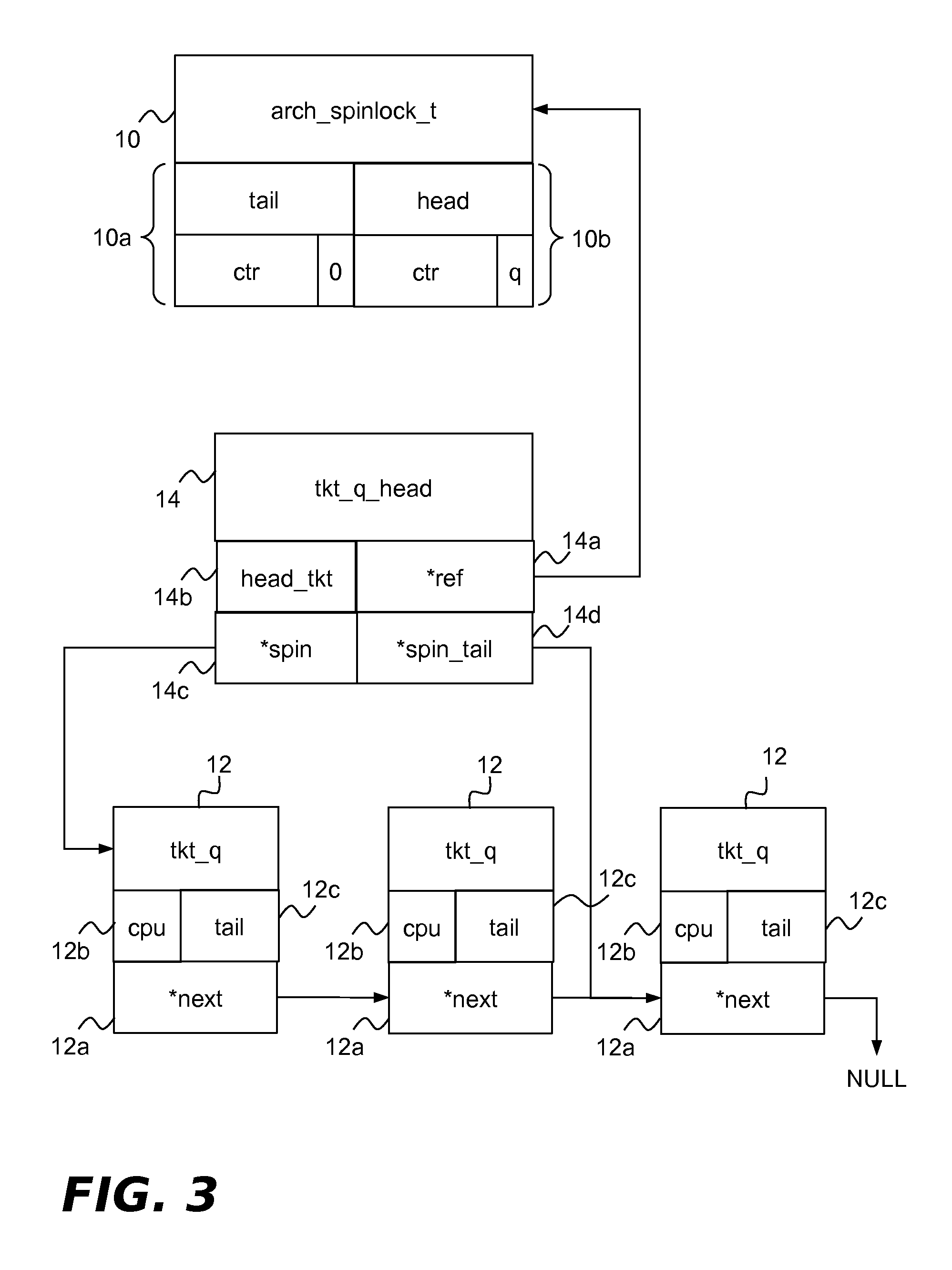

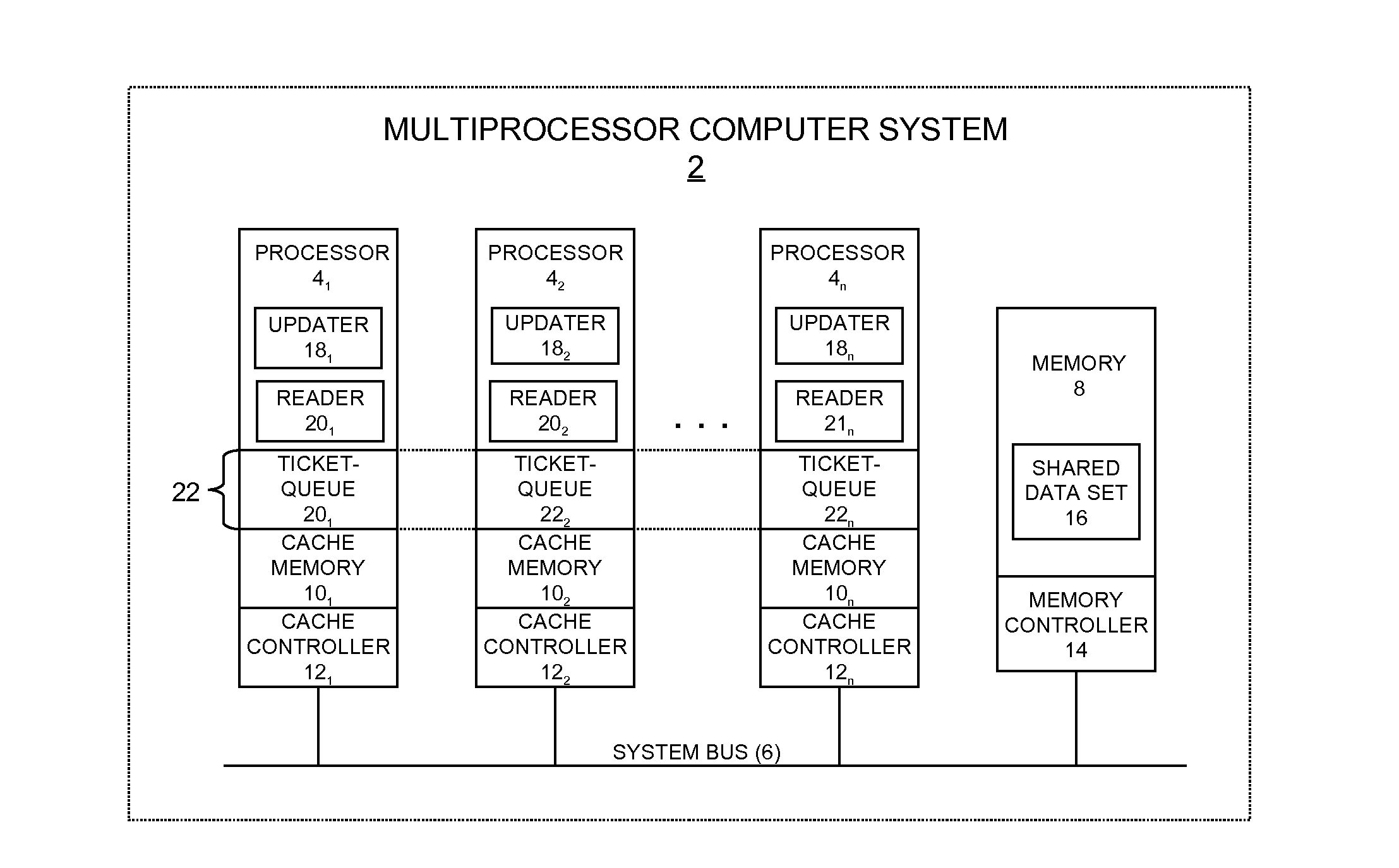

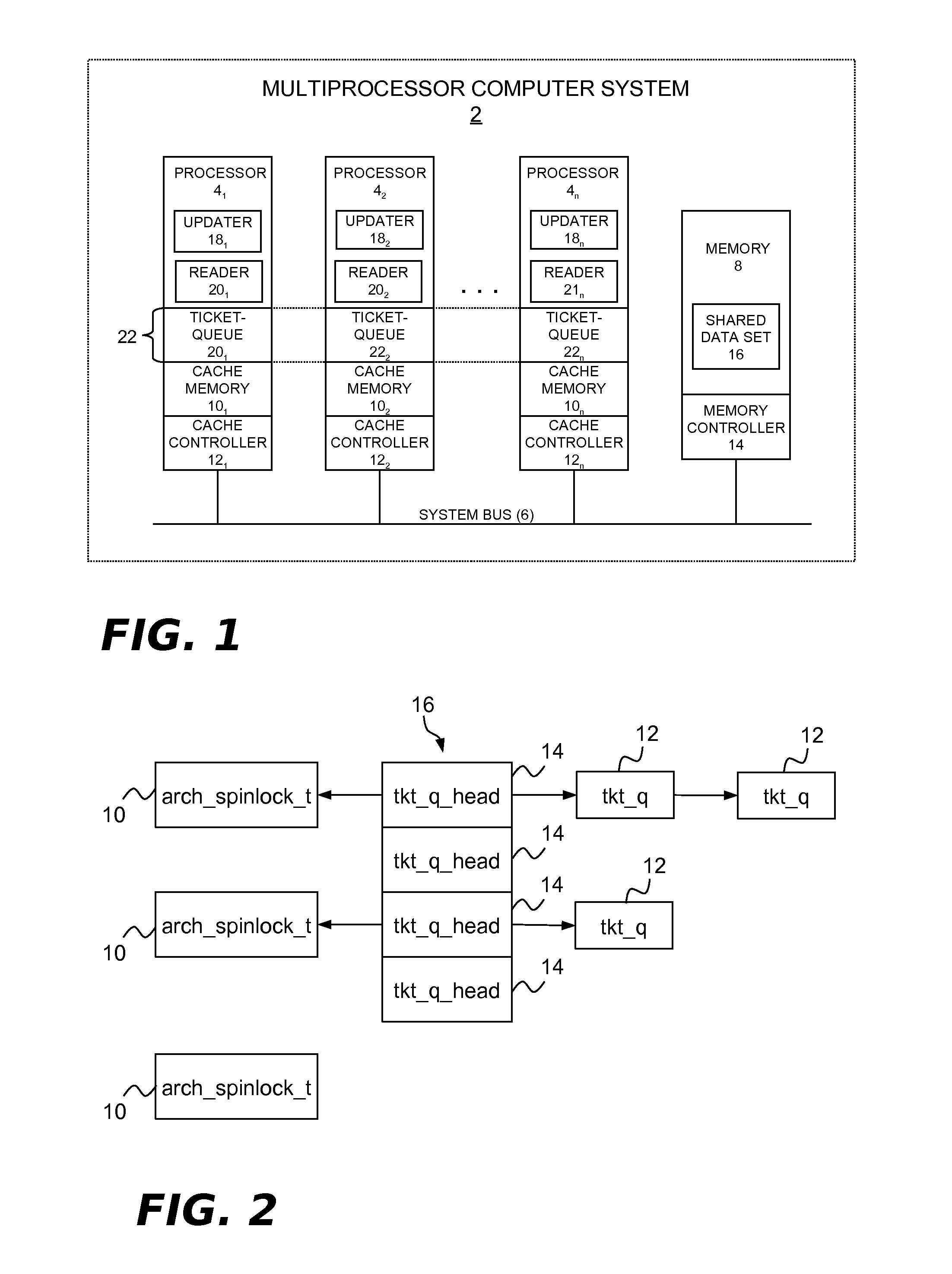

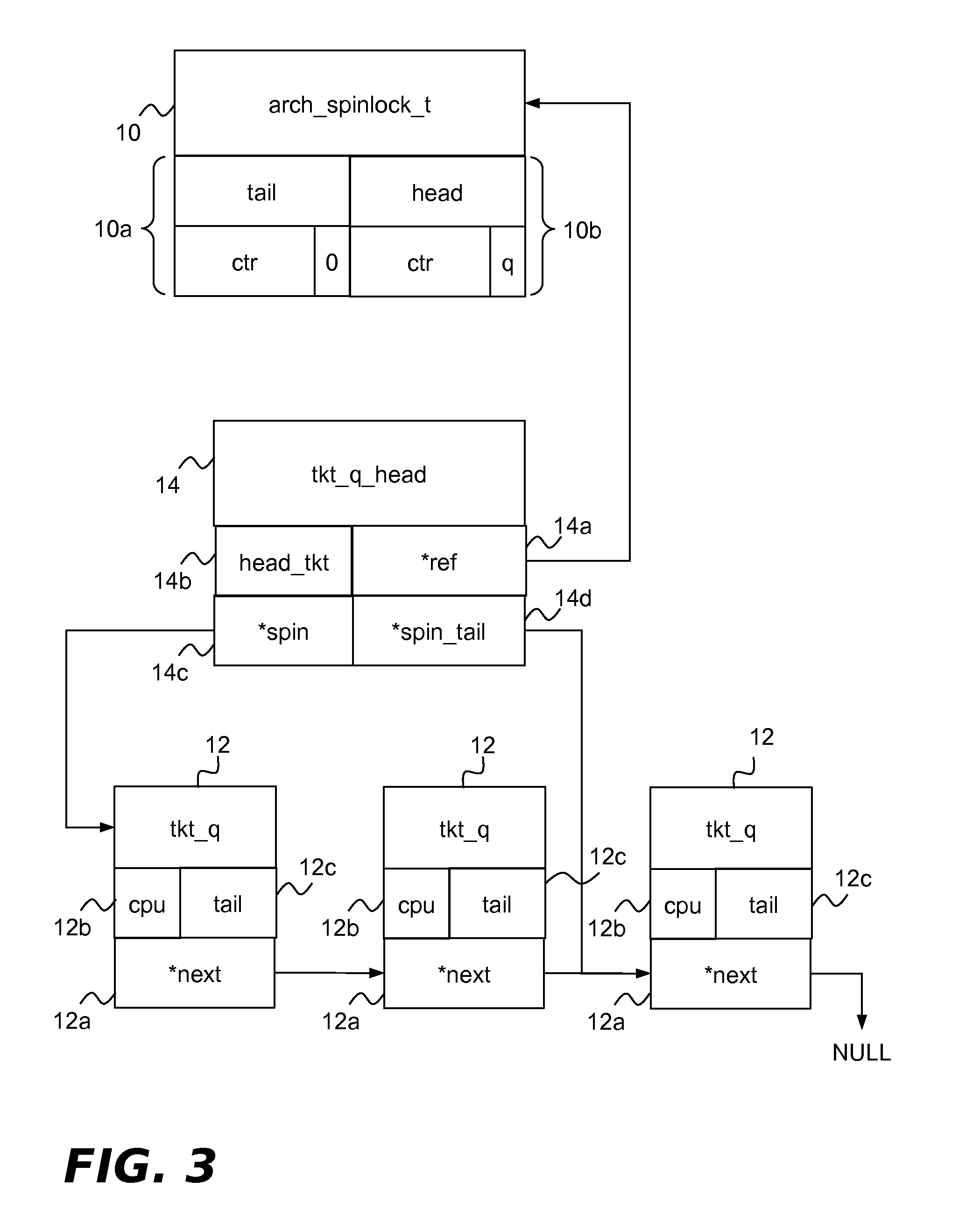

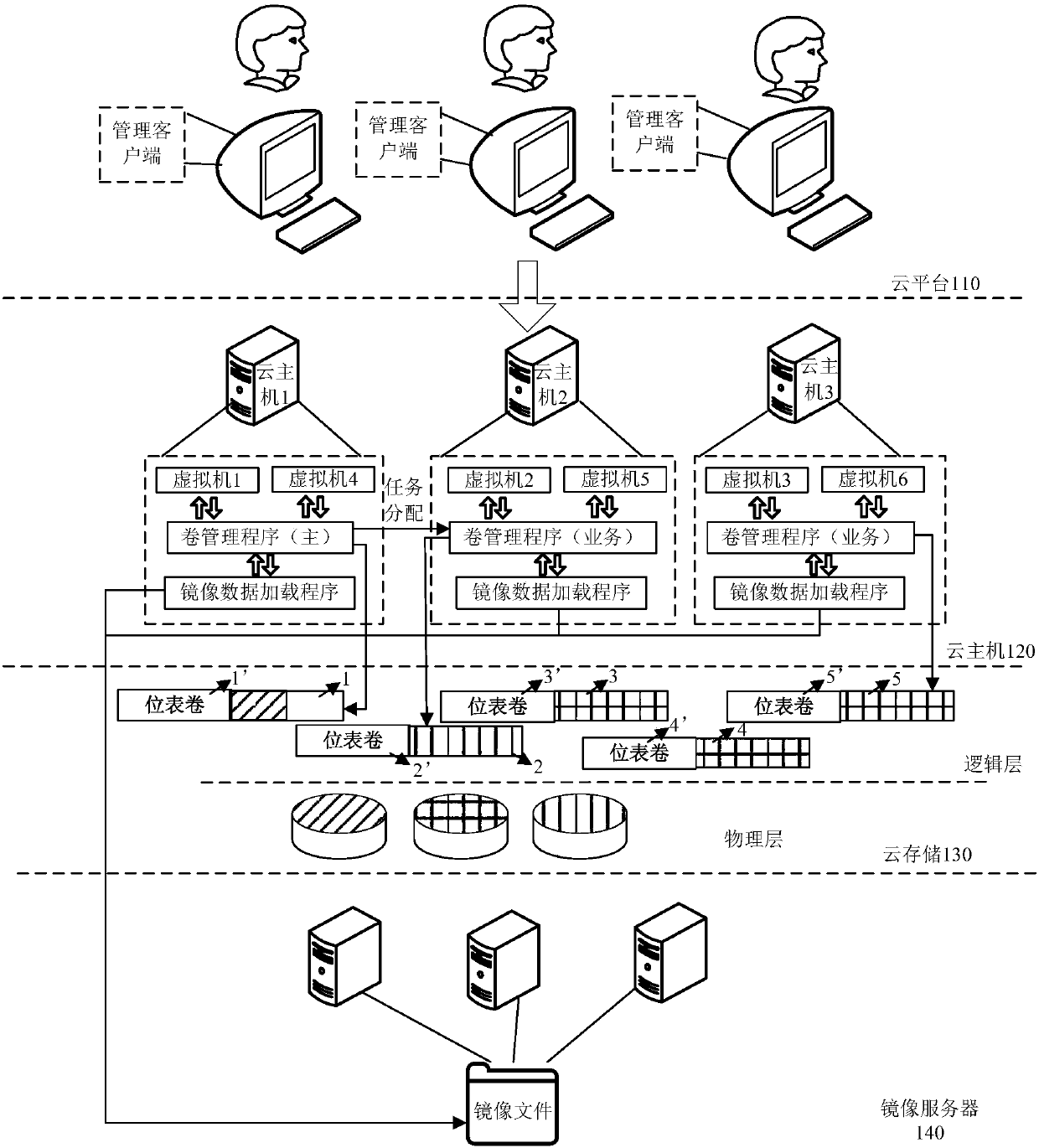

Low Overhead Contention-Based Switching Between Ticket Lock And Queued Lock

Inactive | US20140351231A1 | Low overhead contention-based | Digital data information retrieval | Digital data processing details | Ticket lock | Data contention

A technique for low overhead contention-based switching between ticket locking and queued locking to access shared data may include establishing a ticket lock, establishing a queue lock, operating in ticket lock mode using the ticket lock to access the shared data during periods of relatively low data contention, and operating in queue lock mode using the queue lock to access the shared data during periods of relatively high data contention.

Owner:IBM CORP
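
To make the ticket-lock half of the technique above concrete, here is a minimal ticket lock built on `threading.Condition`, together with a hypothetical `choose_lock_mode` helper. The queued lock and the actual switching protocol are not shown, and the waiter-count threshold is an assumption.

```python
import threading


class TicketLock:
    """FIFO lock: each thread takes a ticket and waits for its turn."""

    def __init__(self):
        self._cond = threading.Condition()
        self._next_ticket = 0
        self._now_serving = 0

    def acquire(self):
        with self._cond:
            ticket = self._next_ticket
            self._next_ticket += 1
            while self._now_serving != ticket:
                self._cond.wait()

    def release(self):
        with self._cond:
            self._now_serving += 1
            self._cond.notify_all()

    def waiters(self):
        """Tickets issued but not yet released (includes the current holder)."""
        with self._cond:
            return self._next_ticket - self._now_serving


def choose_lock_mode(waiters, threshold):
    # Assumption: many outstanding tickets signal high contention.
    return "queue" if waiters > threshold else "ticket"
```

A real implementation would spin on atomic counters rather than block on a condition variable; the condition variable is used here only to keep the sketch portable.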

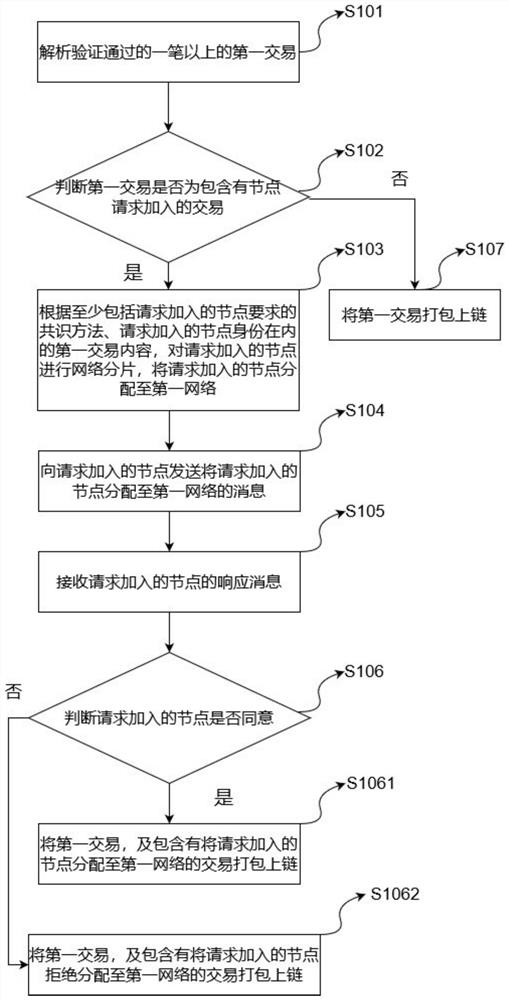

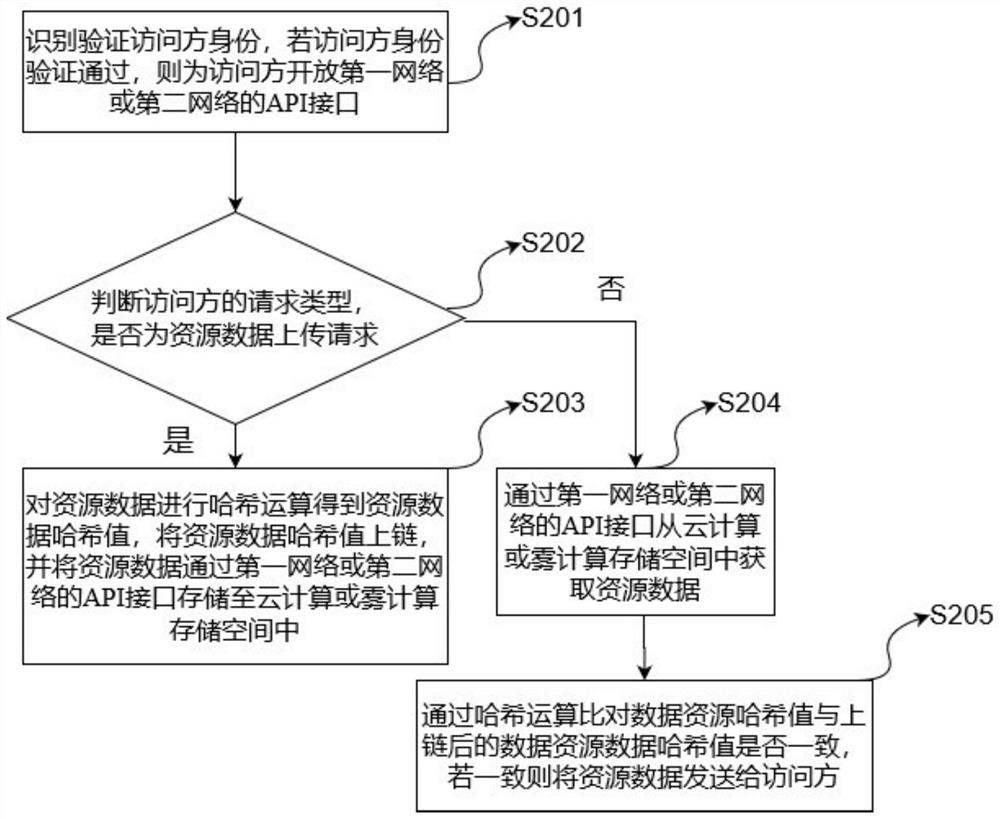

Consensus and resource transmission method and device, and storage medium

Active | CN111612466A | Guaranteed credibility | Ensure safety | User identity/authority verification | Payment circuits | Computer network | Engineering

The invention discloses a consensus and resource transmission method and device and a storage medium, which belong to the technical field of data sharing. The method comprises the steps of analyzing the more than one first transaction passing the verification; judging whether the first transaction contains a transaction requested to be added by the node or not; if yes, according to a consensus method at least including a node request for joining and a first transaction content including a node identity requested for joining, receiving a response message of the node requesting to join; judging whether the node requesting to join agrees, and if so, packaging and linking the first transaction and the transaction including the node requesting to join to the first network; if not, packaging and linking the first transaction and the transaction which contains the node which is requested to be added and refuses to be allocated to the first network; and if the first transaction does not contain the transaction requested to be added by the node, packaging and linking the first transaction. During resource transmission, the technical problems of remote multi-activity and data conflict can be solved.

Owner:XIAMEN TANHONG INFORMATION TECH CO LTD

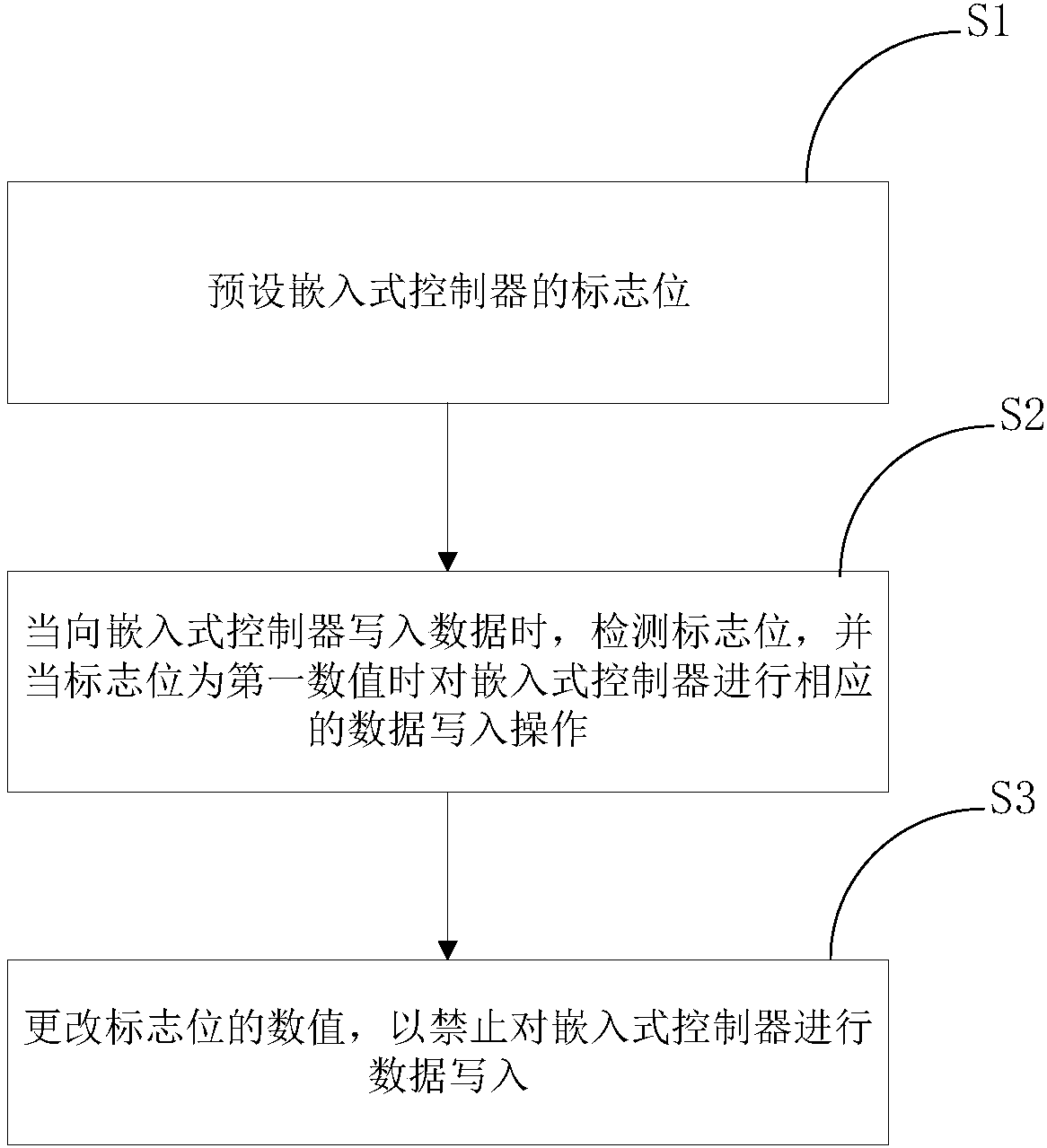

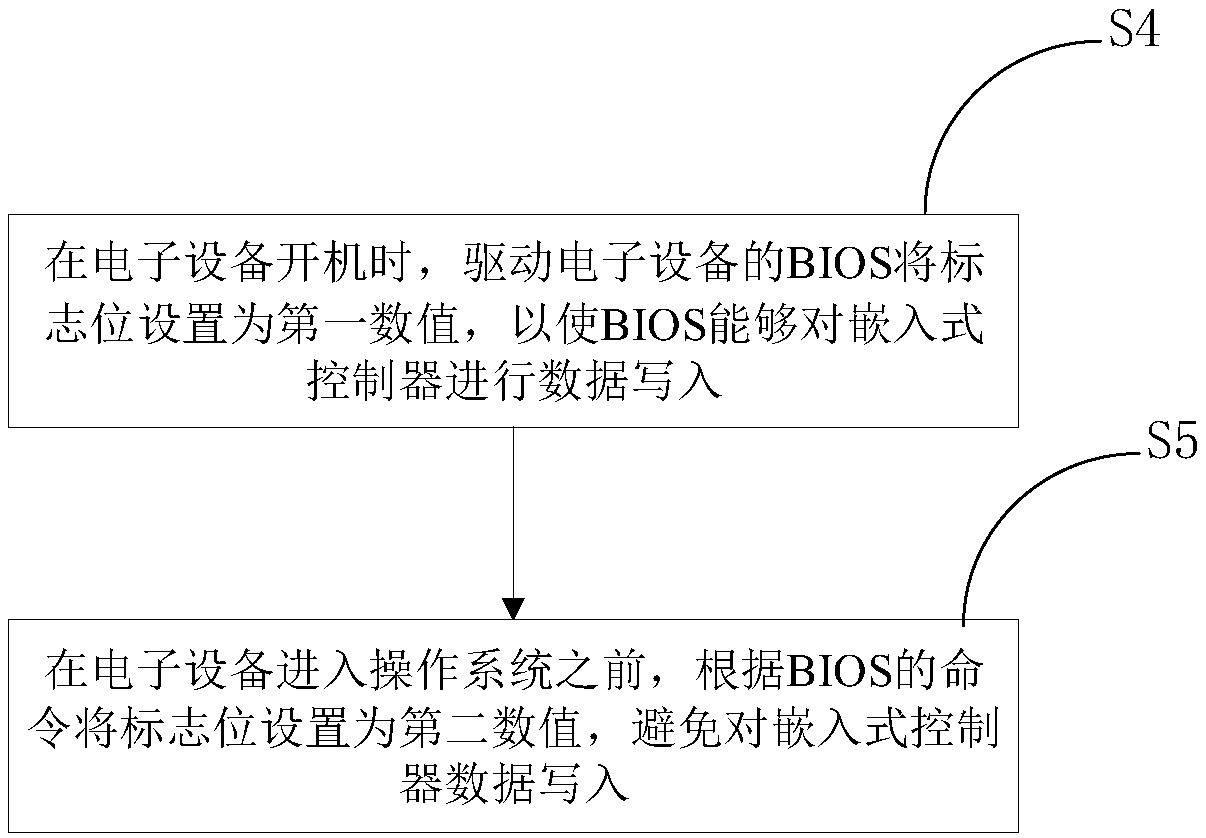

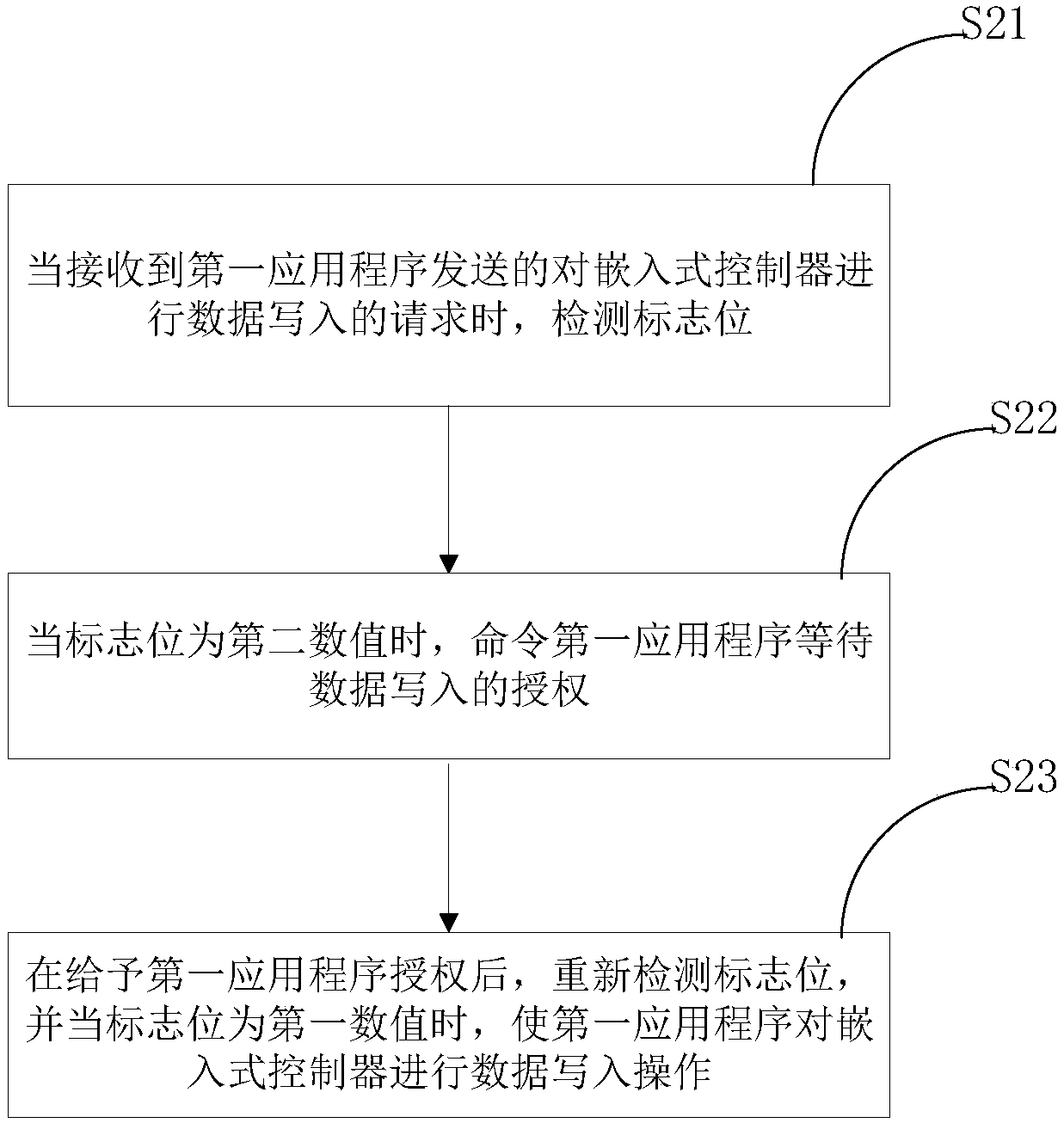

Control method for embedded controller and electronic equipment

Active | CN107817981A | Avoid illegal operation | Avoid error | Computer security arrangements | Software deployment | Disk controller | Application software

The invention discloses a control method for an embedded controller and electronic equipment. The method is applied to the electronic equipment. The method comprises the following steps: presetting a flag bit of the embedded controller; detecting the flag bit when data is written into the embedded controller, and performing a corresponding data writing operation on the embedded controller when the flag bit is a first numerical value; and changing the numerical value of the flag bit so as to prohibit data writing on the embedded controller. According to the method, the condition that the embedded controller can be subjected to the writing operation by multiple operators (such as multiple application programs) simultaneously can be avoided, particularly the embedded controller can be prevented from being illegally operated by a rogue program or equipment and the like, and the phenomenon that an error of the embedded controller occurs due to data conflict or hostile attack and the like can be avoided.

Owner:HEFEI LCFC INFORMATION TECH
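
The flag-bit steps above can be sketched as a small guard object. This is an illustration under assumptions: the class name, the `unlock` method that re-enables writing, and the mutex that makes the check-and-change atomic are not described in the abstract.

```python
import threading


class EmbeddedControllerGuard:
    WRITABLE, LOCKED = 1, 0  # assumed encoding of the flag bit

    def __init__(self):
        self._flag = self.WRITABLE       # preset flag bit
        self._mutex = threading.Lock()   # makes check-and-change atomic
        self.storage = []                # stands in for the controller's memory

    def write(self, data):
        with self._mutex:
            if self._flag != self.WRITABLE:
                return False             # another writer got there first
            self.storage.append(data)
            self._flag = self.LOCKED     # prohibit further writes
            return True

    def unlock(self):
        # Hypothetical step: an authorised caller re-enables writing.
        with self._mutex:
            self._flag = self.WRITABLE


guard = EmbeddedControllerGuard()
print(guard.write(b"fan=auto"))   # True
print(guard.write(b"fan=off"))    # False, flag is now LOCKED
```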

Fast and accurate data race detection for concurrent programs with asynchronous calls

Active | US8539450B2 | Error detection/correction | Software engineering | Pointer aliasing | Theoretical computer science

Owner:NEC CORP

Encryption apparatus using data encryption standard algorithm

Inactive | US6914984B2 | Area minimization | Eliminating data contention | Encryption apparatus with shift registers/memories | Telegraphic message interchanged in time | S-box | Multiplexer

Owner:ABOV SEMICON

Ultra low-power data retention latch

Active | US20050104643A1 | Electric pulse generator | Logic circuit coupling/interface arrangements | Engineering | Datapath

An embodiment of an ultra low-power data retention latch circuit involves a slave latch SL that concurrently latches the same data that is loaded into a main circuit (such as a main latch ML) during normal operation. When the circuit enters a low power (data retention) mode, power (VCC) to the main latch ML is removed and the slave latch SL retains the most recent data (retained data SA, SA-). When power is being restored to the main latch ML, the slave latch's retained data SA, SA- is quickly restored to the main latch ML through what constitute Set and Reset inputs SAR, SAR- of the ML. This arrangement ensures that data restoration is much quicker than conventional arrangements that require the output data path DATA- to be stabilized before power is re-applied to the main latch. Further, there is no need to wait for power to the ML to be stable before restoring data from the SL to the ML, providing an increase in data restoration speed over conventional data retention latches. Using retained data SA, SA- (as mirrored in SAR, SAR-) to control the Set and Reset inputs prevents data contention in the main latch ML. Moreover, compared to known arrangements, the arrangement provides minimal loading on the DATA, DATA- output paths (driving only N7, N8), thus not compromising speed on the data path (DATAIN . . . DATA / DATA-) through the main latch during normal operation.

Owner:TEXAS INSTR INC

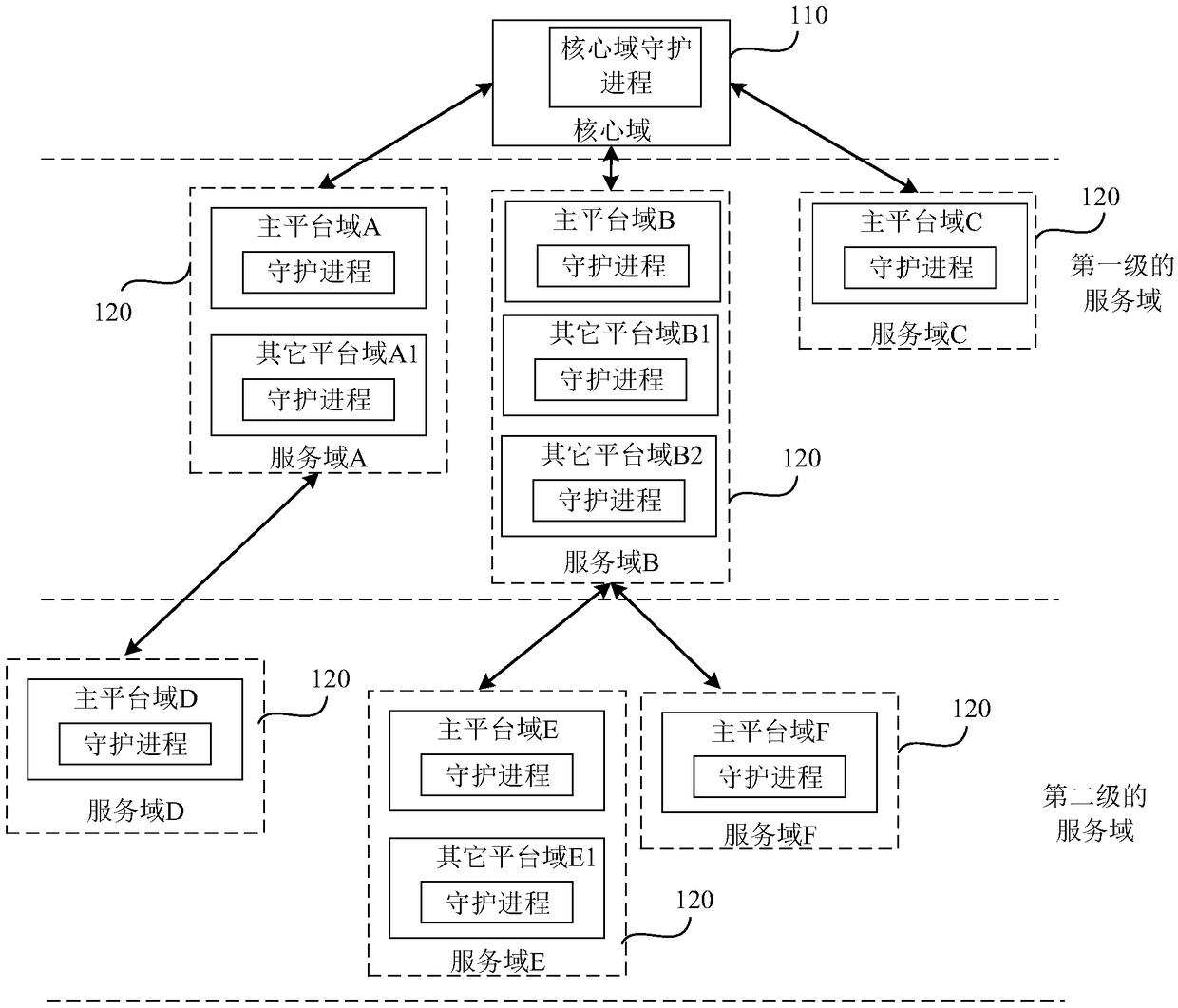

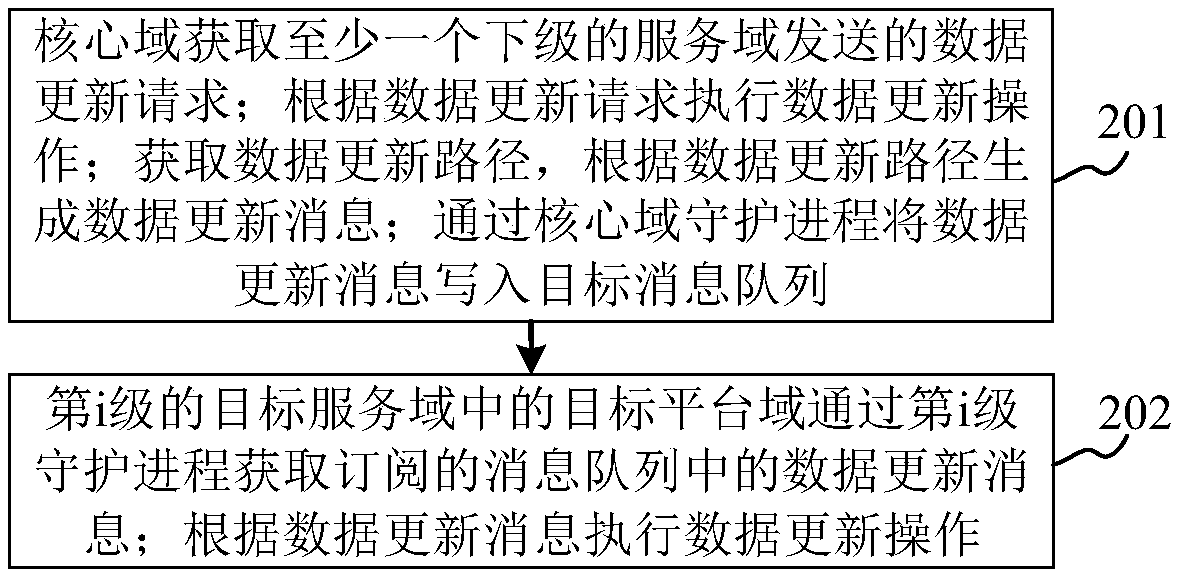

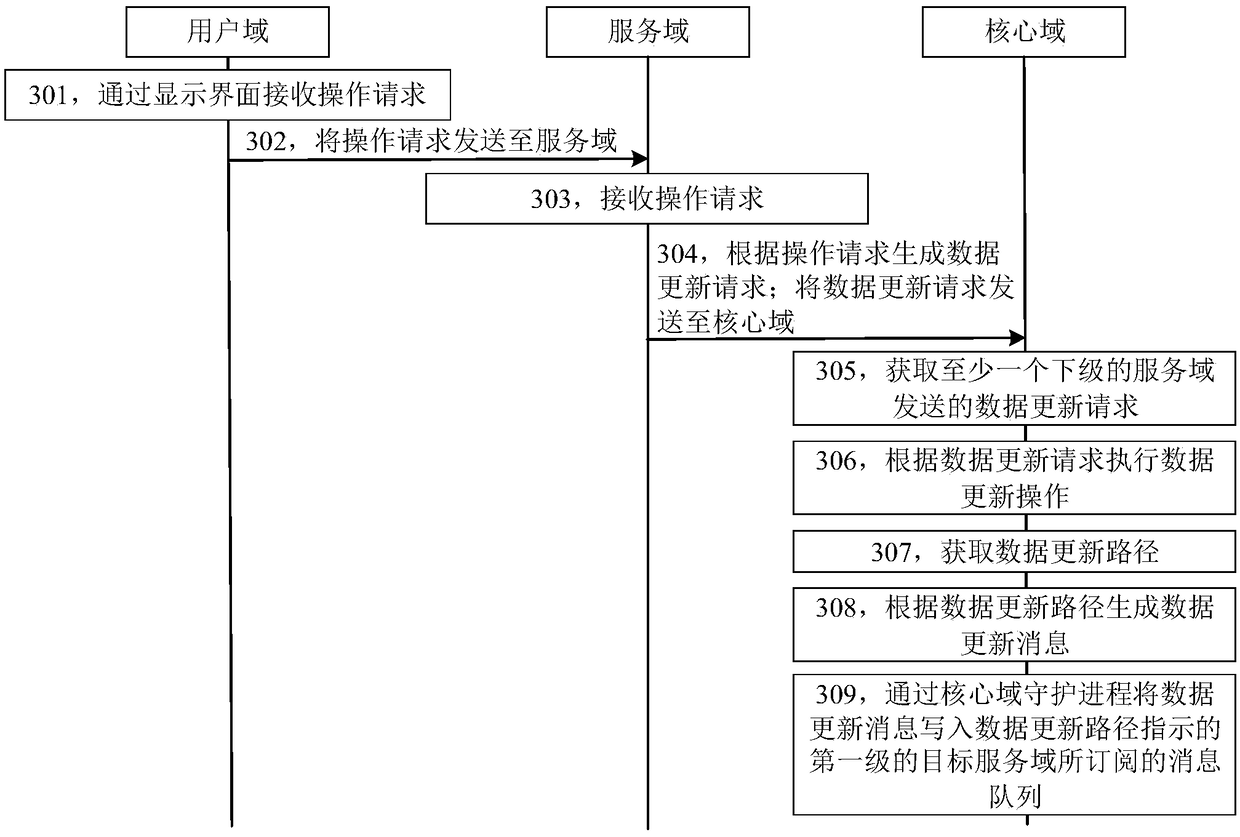

Data synchronization system, method, device and storage medium

Active | CN109167819A | Ensure consistency | Resolve data conflicts | Transmission | Data synchronization | Message queue

The invention relates to a data synchronization system, a method, a device and a storage medium, belonging to the technical field of data synchronization. The system comprises a core domain and an n-level service domain. At least one service domain comprises m platform domains, and the core domain is located at the upper level of the n-level service domain; the core domain is configured to obtain a data update request sent by at least one subordinate service domain; execute a data update operation according to the data update request; acquire a data update path and generate a data update message according to the data update path; and write the data update message into a message queue subscribed by a target service domain of a first level indicated by the data update path through a core domain daemon; a target platform domain in the target service domain of the i-th level is configured to obtain the data update message in a subscribed message queue through the i-th daemon and execute a data update operation according to the data update message. The data synchronization system, the method, the device and the storage medium can solve the problem of data conflict and ensure the consistency of the updated data.

Owner:SUZHOU KEDA TECH

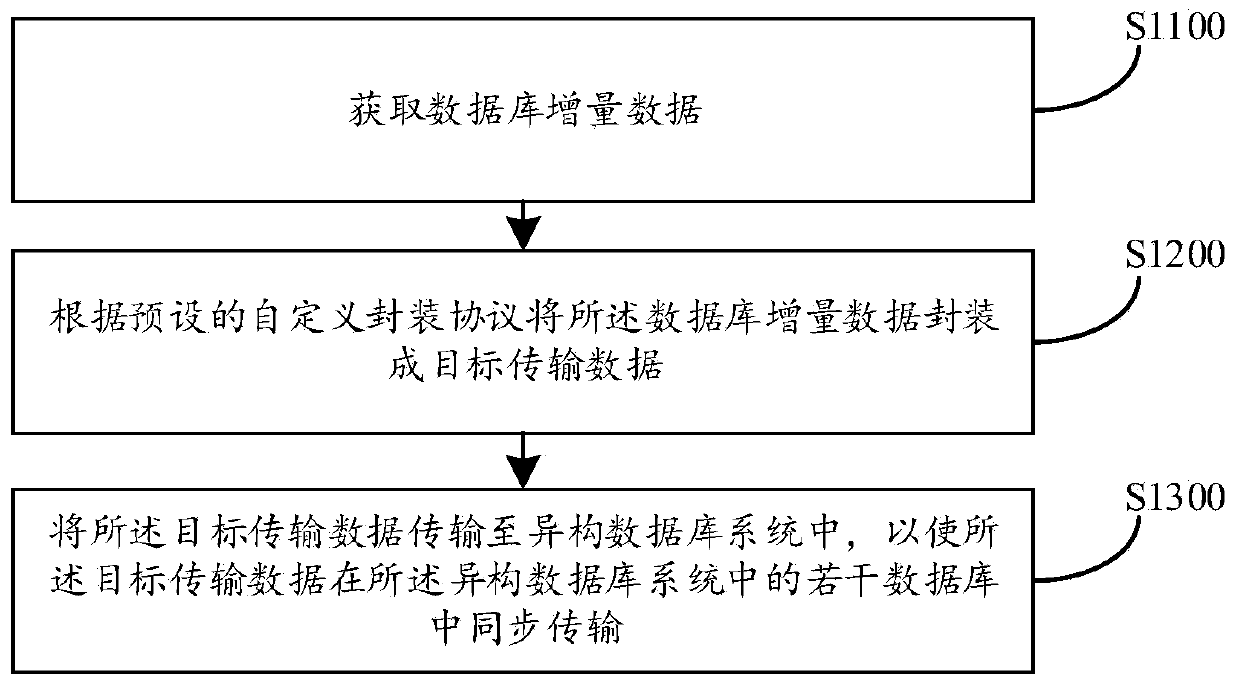

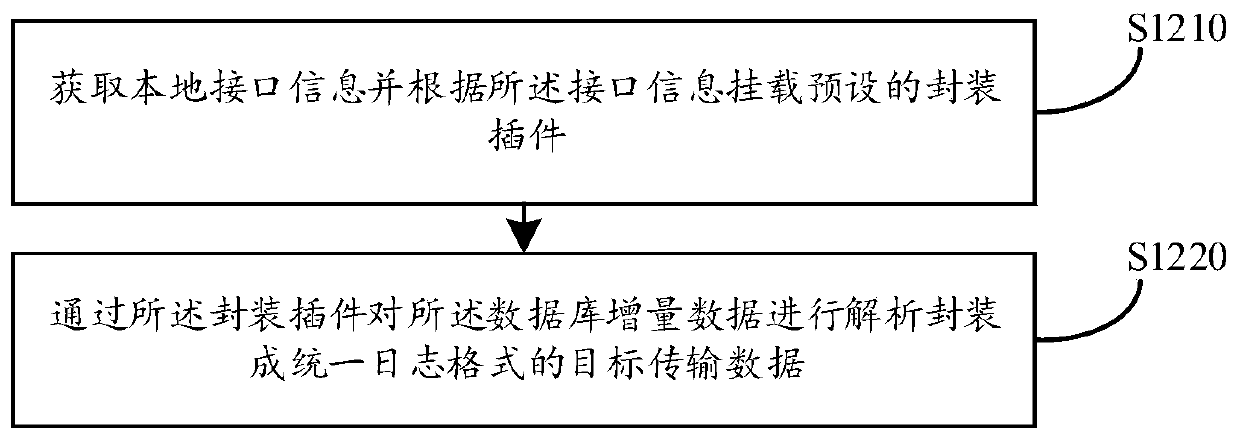

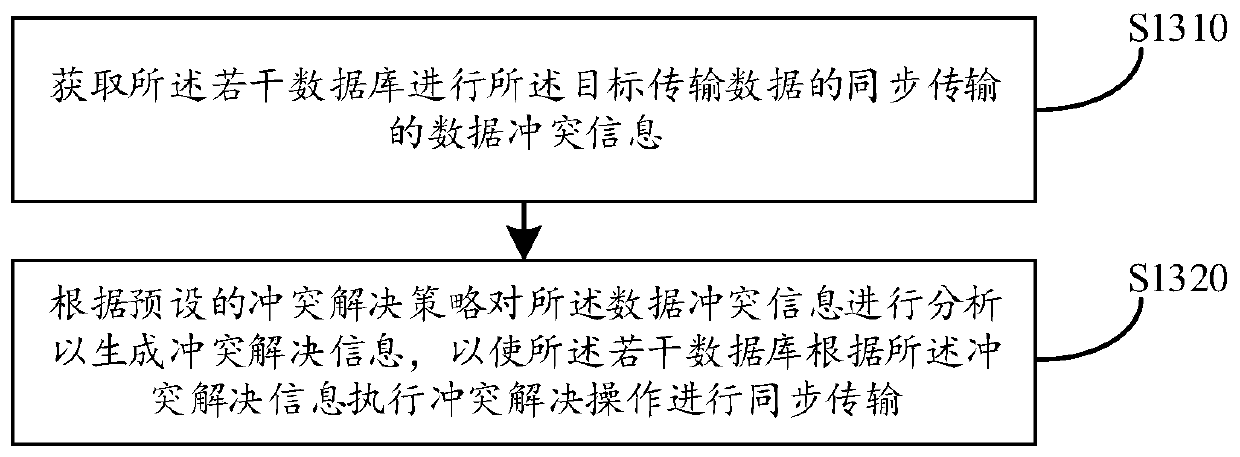

Heterogeneous database synchronization method and device, computer equipment and storage medium

Active | CN111382201A | Easy to replace and migrate | Achieve eventual consistency | Database updating | Database distribution/replication | Data transport | Data mining

Embodiments of the invention disclose a heterogeneous database synchronization method and apparatus, computer equipment and a storage medium. The method comprises the following steps: obtaining database incremental data; packaging the incremental data of the database into target transmission data according to a preset custom packaging protocol; and transmitting the target transmission data to a heterogeneous database system, so that the target transmission data is synchronously transmitted in a plurality of databases in the heterogeneous database system. As a result, data conflicts possibly occurring in the data transmission process can be effectively avoided, final consistency of the data is achieved, version iterability of the bottom-layer database is improved, and replacement and migration of the database are facilitated.

Owner:GUANGZHOU BAIGUOYUAN INFORMATION TECH CO LTD

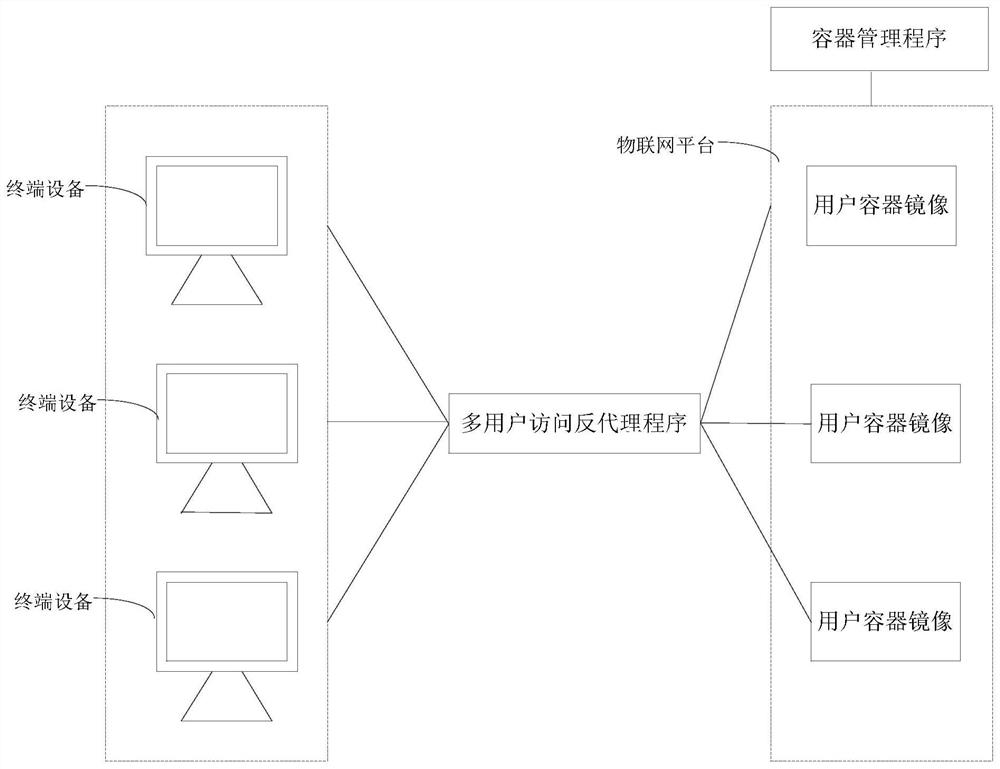

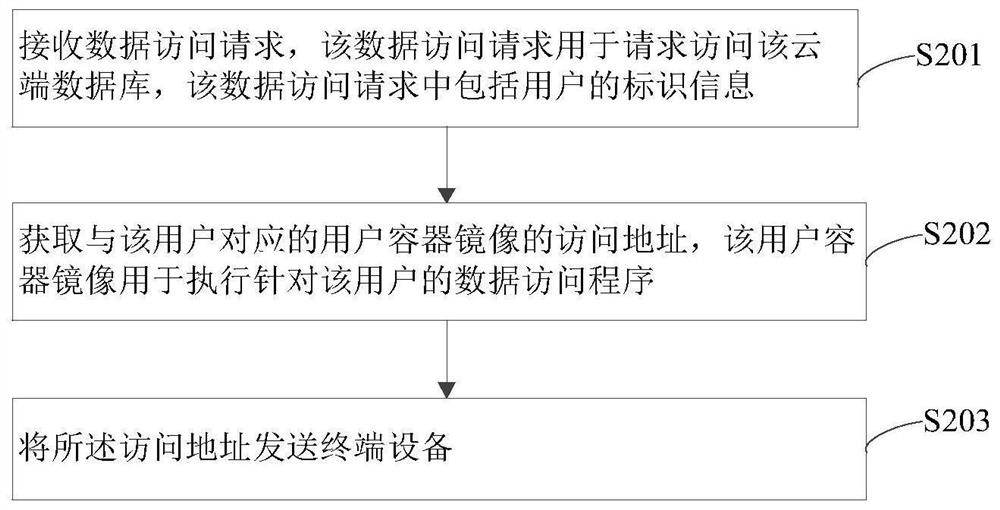

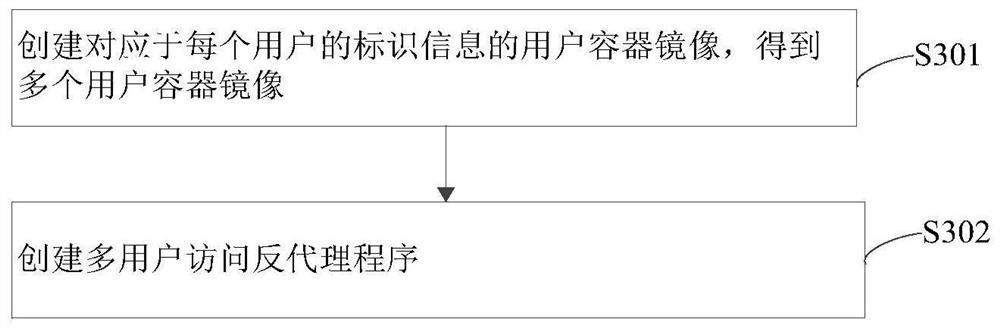

Data access method and device and computer readable storage medium

Pending | CN111881470A | No data access violation | Resolve access violations | Interprogram communication | Digital data protection | Data access | Terminal equipment

The invention provides a data access method and device and a computer readable storage medium, the data access method comprises the steps: receiving a data access request, wherein the data access request is used for requesting to access a cloud database, and the data access request comprises identification information of a user; obtaining an access address of a user container mirror image corresponding to the user, wherein the user container mirror image is used for executing a data access program for the user; and sending the access address to terminal equipment. According to the method and the apparatus, multi-user access isolation can be realized when multiple users access the cloud database in parallel, so that data conflicts generated when the users access the data are prevented.

Owner:易通星云(北京)科技发展有限公司

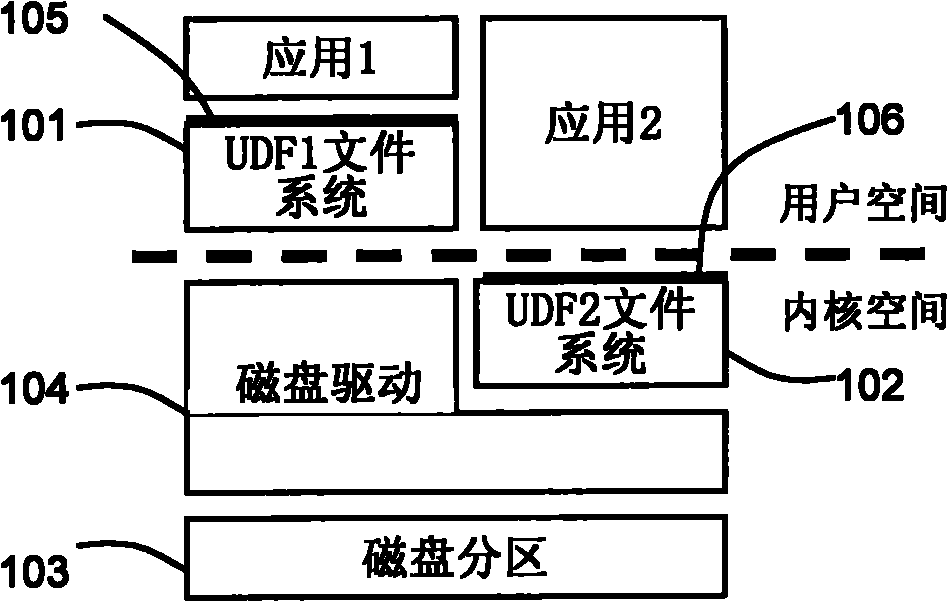

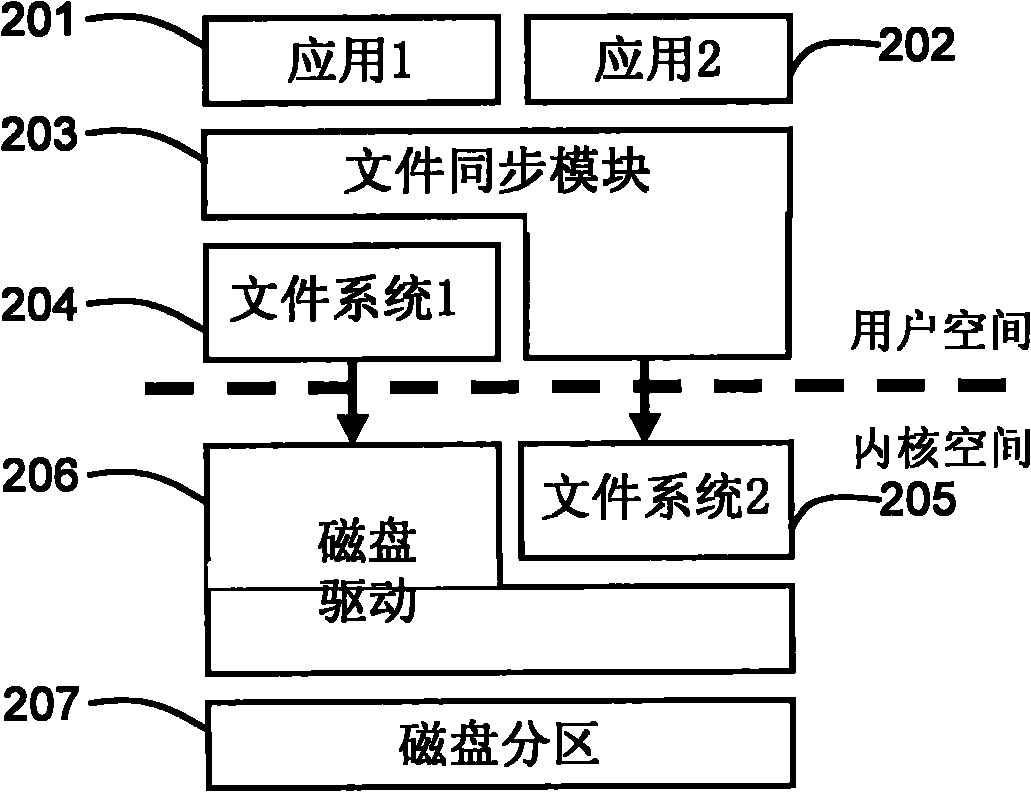

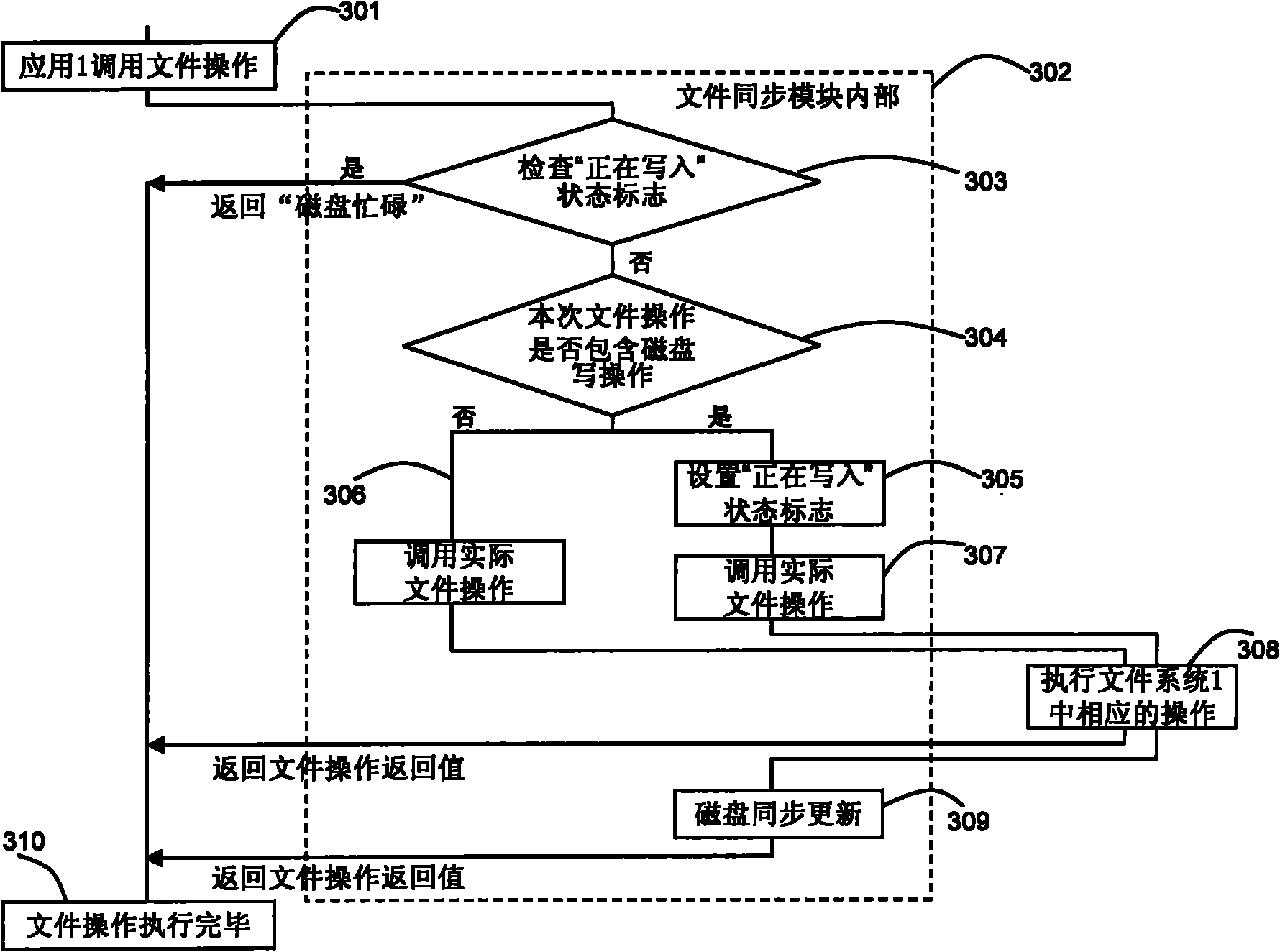

Device and method for regulating interface access of plurality of file systems on same disk partition

Inactive | CN102419757A | Resolve data conflicts | Guaranteed exclusivity | Special data processing applications | File system | Data contention

The invention provides a device and a method for regulating interface access of a plurality of file systems on the same disk partition. A plurality of file systems comprise a first file system and at least one second file system, wherein the first file system is a file system positioned on a kernel layer; and the second file system is a file system positioned on a user layer. The regulating device is provided with a synchronization module for coordinating the operation of each file system on the disk partition. When any one of the file systems is used, the following operations of a detection step, an allowance step, a protection step and a synchronization step are carried out by the synchronization module. The invention effectively solves the problem of data contention which is possibly generated when a plurality of file systems simultaneously work.

Owner:HITACHI LTD

Low Overhead Contention-Based Switching Between Ticket Lock And Queued Lock

Inactive | US20150355953A1 | Program synchronisation | Unauthorized memory use protection | Ticket lock | Data contention

Owner:INT BUSINESS MACHINES CORP

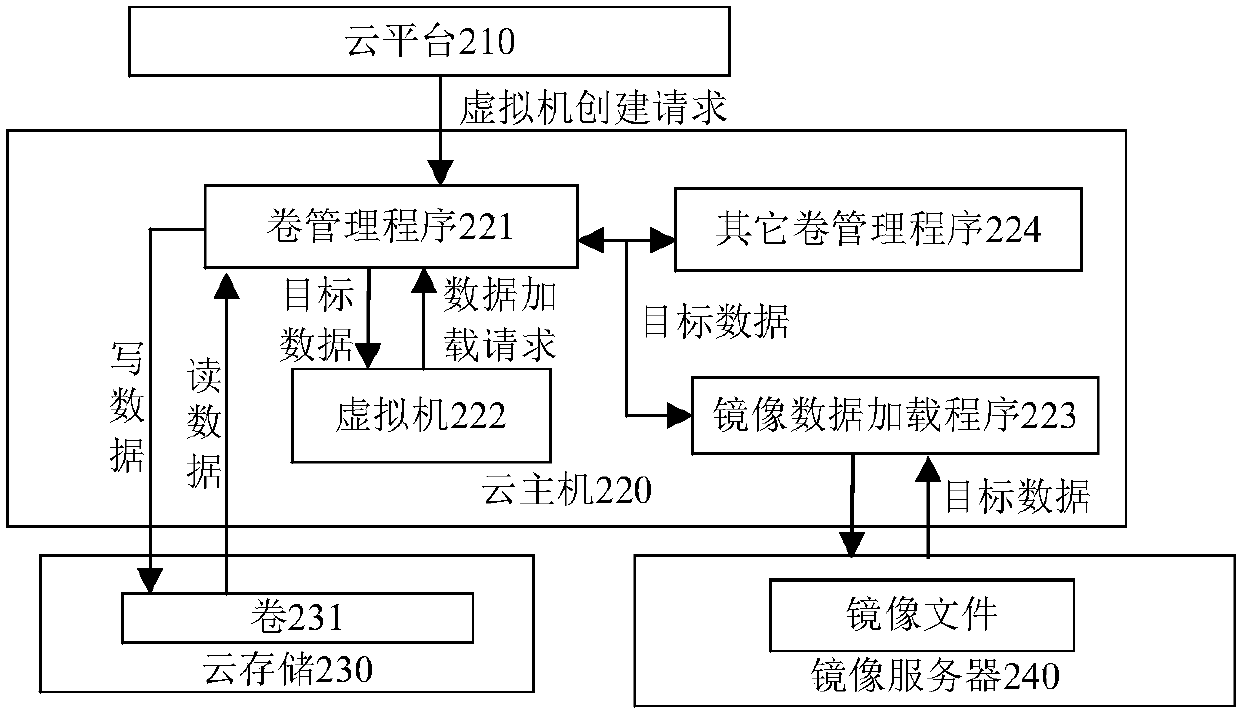

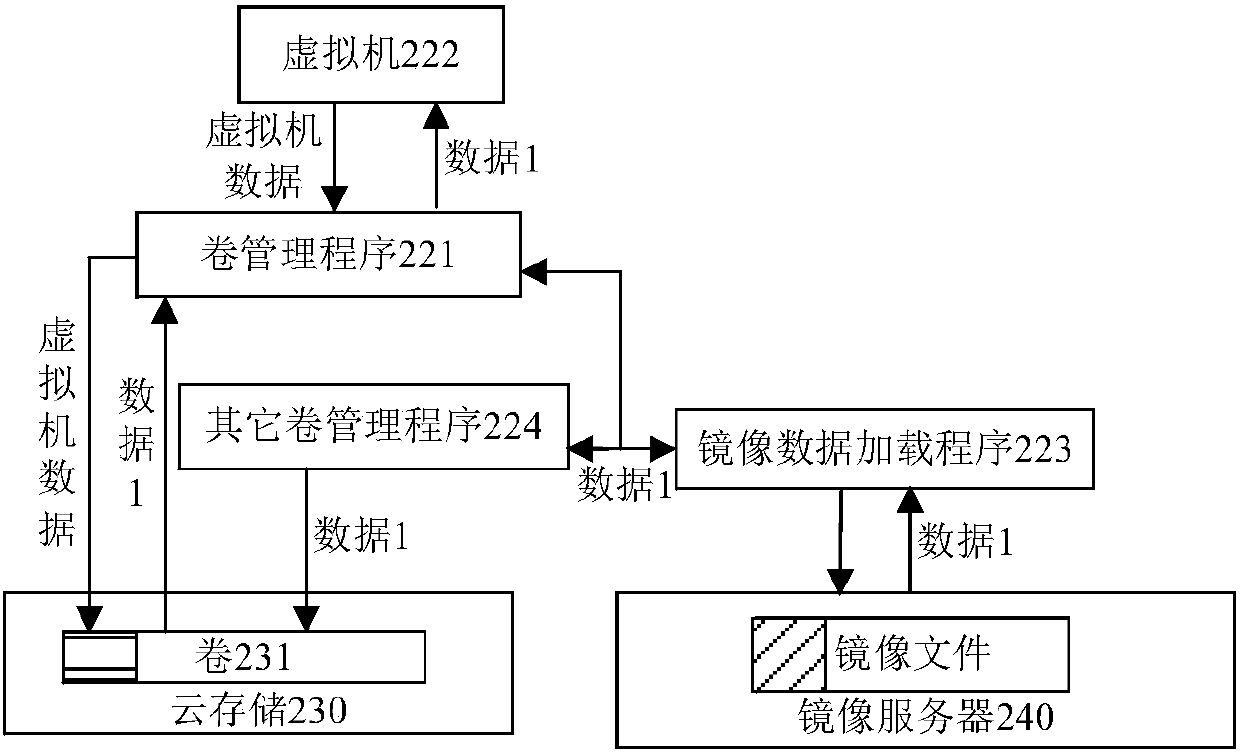

Data loading method and device

Active | CN107832097A | Solve conflicts | Improve accuracy | Program loading/initiating | Software simulation/interpretation/emulation | Software engineering | Virtual machine

The embodiment of the invention provides a data loading method and device, and relates to the field of computers. The method comprises the steps of obtaining a writing request of a virtual machine after the virtual machine is started, wherein the writing request is used for writing virtual machine data generated by the virtual machine to a target position in a volume of the virtual machine; when the attribute of the volume is a delay loading attribute, obtaining the loading state of the target position from a bit table volume, wherein the bit table volume is created when a blank volume is created and is used for indicating loading states of data on all positions in the volume, the loading states comprise not loaded, loading and loaded, and loading is used for indicating loading of data on the corresponding position from a mirror file; when the loading state of the target position is loading, pausing the response to the writing request until the loading state of the target position is loaded, and then writing the virtual machine data into the target position in the volume. The data loading method and device solve the problem of data conflicts in the data loading process, and the accuracy of the data loaded by the virtual machine is improved.

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD
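
The three loading states and the paused write described above can be sketched with a condition variable. The class and method names are hypothetical, and the handling of writes to positions that are not yet loaded is an assumption the abstract does not spell out.

```python
import threading
from enum import Enum


class State(Enum):
    NOT_LOADED = 0
    LOADING = 1
    LOADED = 2


class LazyVolume:
    def __init__(self, image):
        self.image = image                              # stands in for the mirror file
        self.blocks = [None] * len(image)               # the VM's volume
        self.states = [State.NOT_LOADED] * len(image)   # the "bit table"
        self._cond = threading.Condition()

    def begin_load(self, pos):
        with self._cond:
            if self.states[pos] is State.NOT_LOADED:
                self.states[pos] = State.LOADING

    def finish_load(self, pos):
        with self._cond:
            if self.states[pos] is State.LOADING:
                self.blocks[pos] = self.image[pos]
                self.states[pos] = State.LOADED
            self._cond.notify_all()                     # wake any paused writers

    def write(self, pos, data):
        with self._cond:
            # Pause the write while the image data for this position is in flight.
            while self.states[pos] is State.LOADING:
                self._cond.wait()
            self.blocks[pos] = data
            # Assumption: a written block counts as loaded, so a later
            # background load will not overwrite the VM's data.
            self.states[pos] = State.LOADED
```

Pausing the write means the VM's data always lands after the image data for the same position, so the newer write is never overwritten by the background load.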

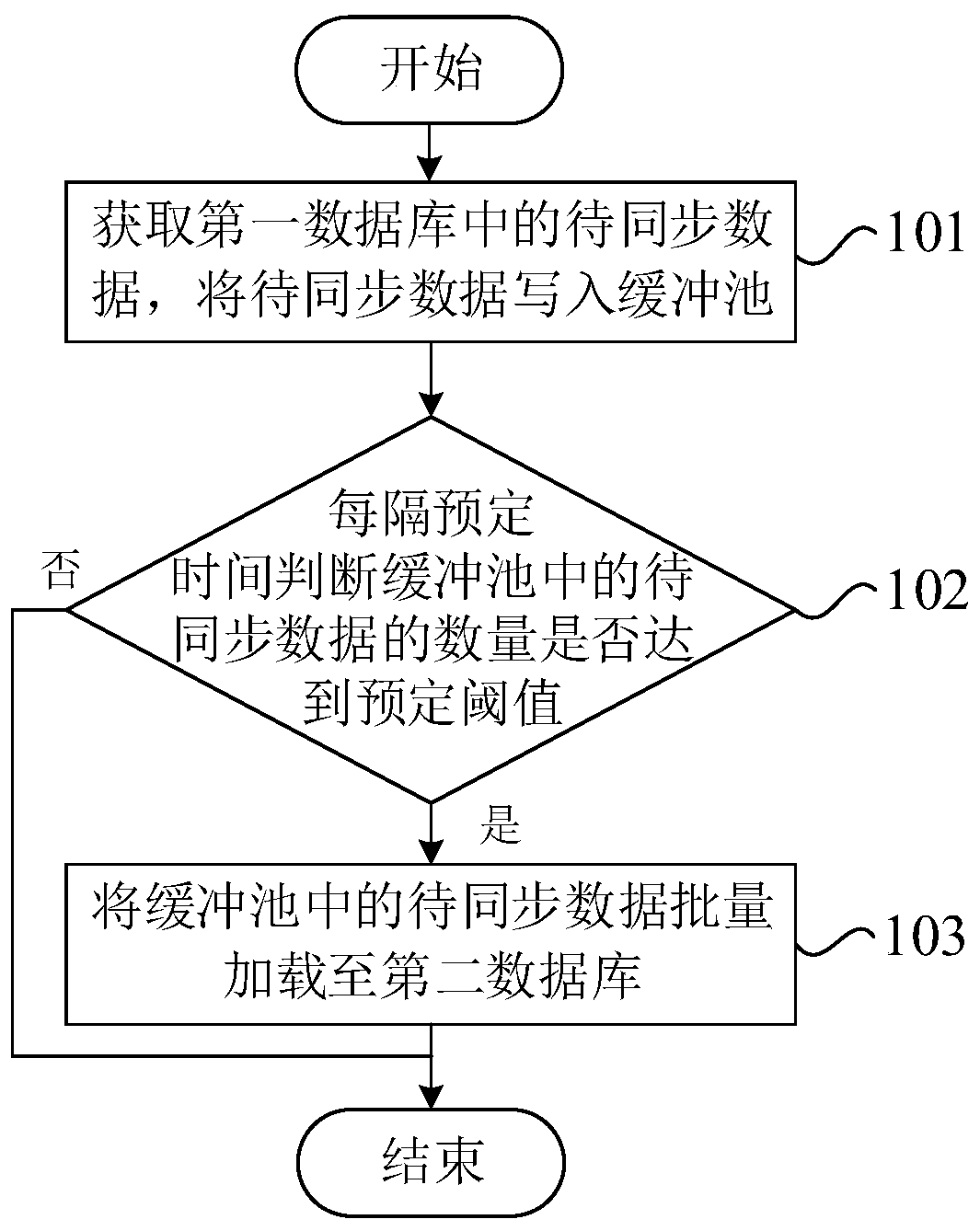

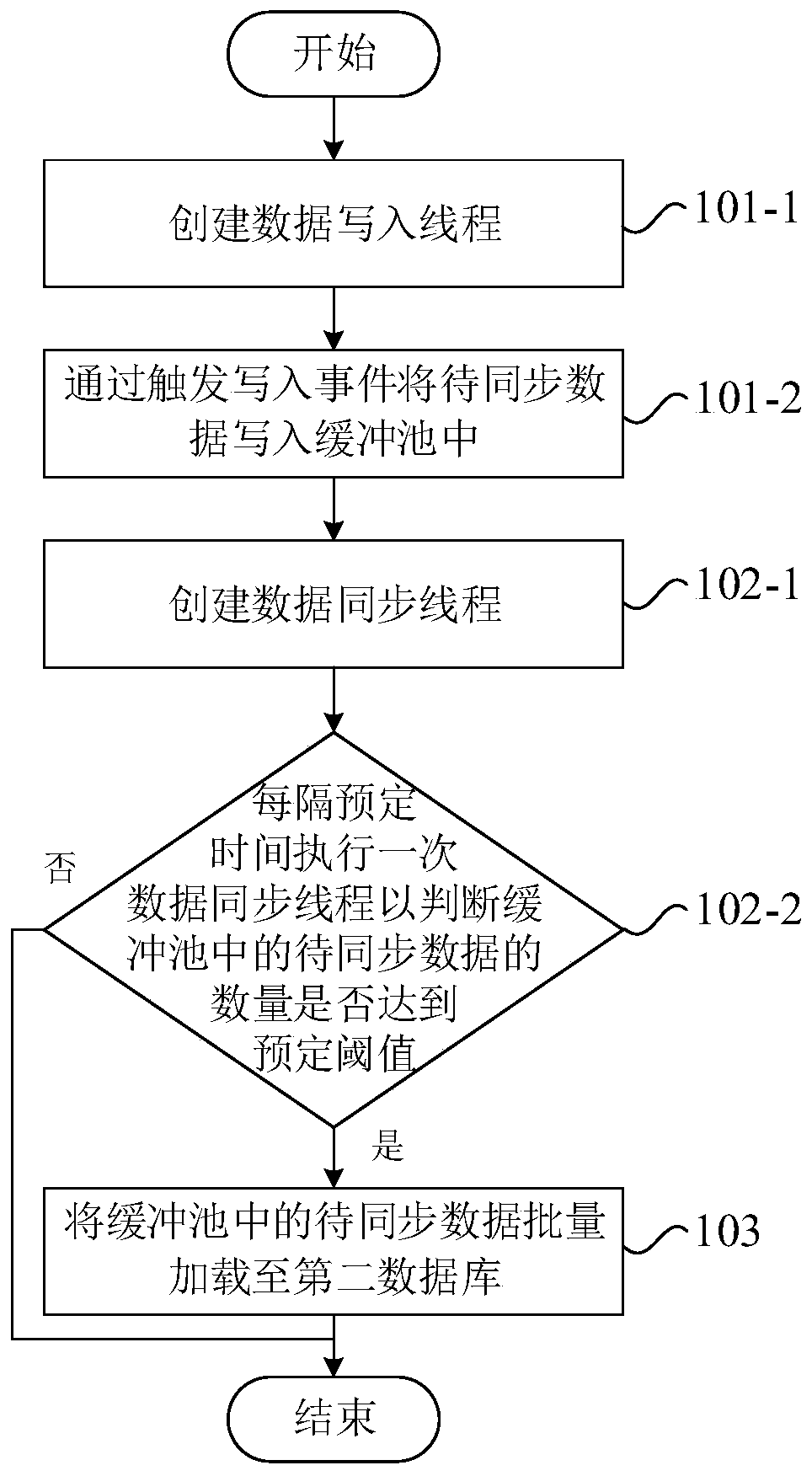

Data synchronization method, device and computer readable storage medium

Pending | CN110990435A | Ensure consistency | Guaranteed real-time | Database distribution/replication | Special data processing applications | Data synchronization | Coprocessor

The embodiment of the invention provides a data synchronization method, a data synchronization device and a computer readable storage medium, belongs to the field of databases and aims to synchronize data to two different types of databases. The method comprises the following steps: to-be-synchronized data in a first database are obtained and written into a buffer pool; whether the quantity of the to-be-synchronized data in the buffer pool reaches a preset threshold value is judged at every preset time interval; and if the quantity reaches the preset threshold value, the to-be-synchronized data in the buffer pool are loaded to a second database in batches. The data are temporarily stored in the buffer pool; when the quantity of the to-be-synchronized data in the buffer pool reaches the preset threshold value, a database coprocessor is used for loading the to-be-synchronized data to the second database in batches. The consistency of the data of different databases and the real-time performance of the synchronized data are therefore guaranteed, and meanwhile data loss or data conflicts are avoided.

Owner:MIAOZHEN INFORMATION TECH CO LTD
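
The buffer-pool steps above amount to a threshold-triggered batch flush. A minimal sketch follows, assuming a plain list stands in for the second database and that `tick` is called by a timer at every preset interval; the class and method names are illustrative.

```python
class BufferedSync:
    def __init__(self, target, threshold):
        self.target = target        # stands in for the second database
        self.threshold = threshold  # preset quantity that triggers a batch load
        self.buffer = []            # the buffer pool

    def capture(self, row):
        """Write one to-be-synchronized row into the buffer pool."""
        self.buffer.append(row)

    def tick(self):
        """Periodic check: flush the whole buffer once the threshold is reached."""
        if len(self.buffer) < self.threshold:
            return 0
        batch, self.buffer = self.buffer, []
        self.target.extend(batch)   # one batch load instead of row-by-row writes
        return len(batch)


second_db = []
sync = BufferedSync(second_db, threshold=3)
for row in ("r1", "r2"):
    sync.capture(row)
print(sync.tick())   # 0, below the threshold
sync.capture("r3")
print(sync.tick())   # 3
```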

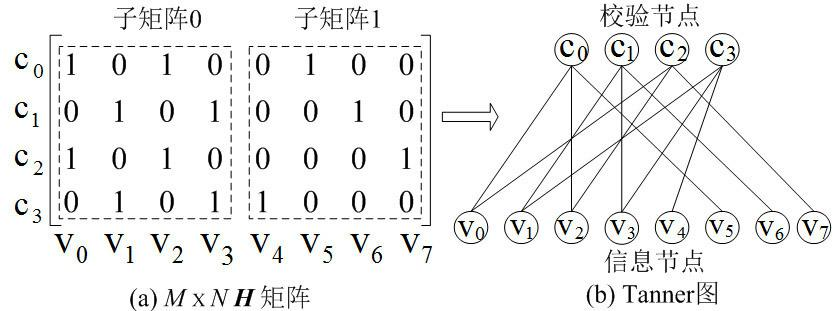

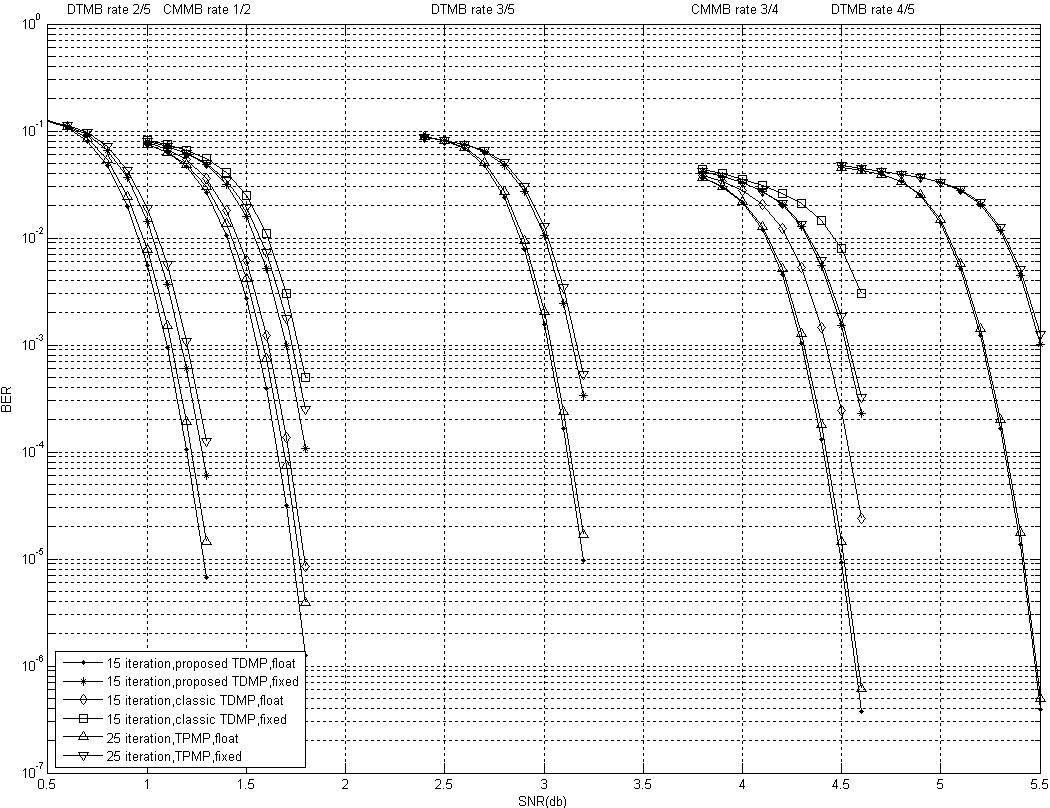

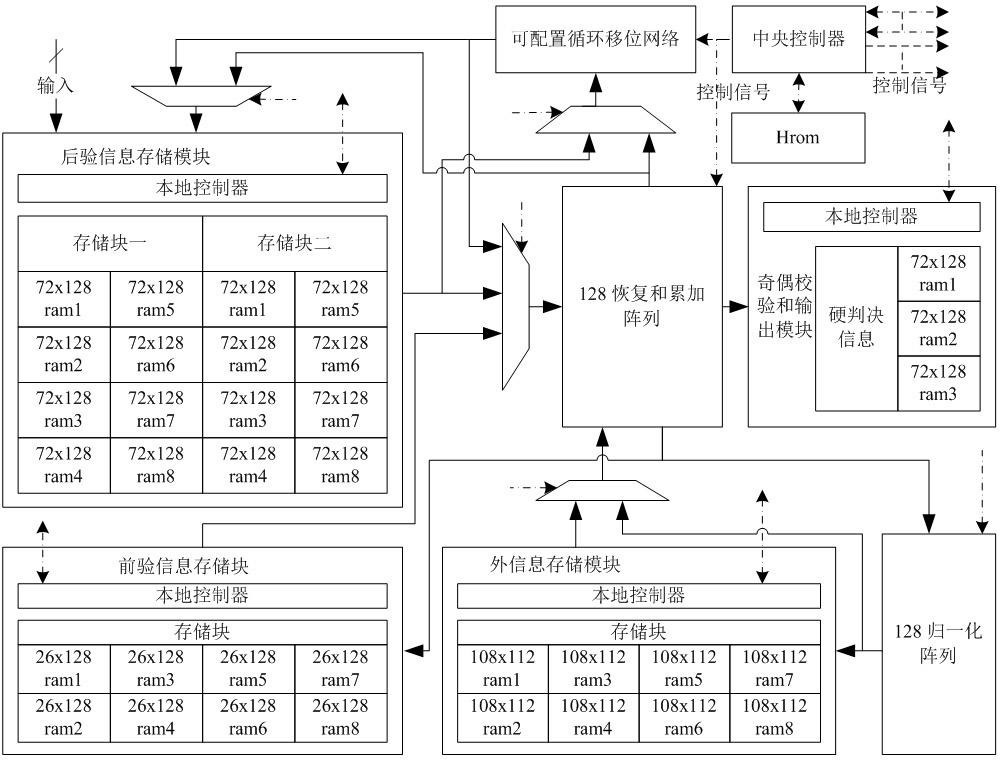

Compatible structure and unstructured low density parity check (LDPC) decoder and decoding algorithm

Inactive | CN102624401A | Fast convergence | Improve efficiency | Error correction/detection using multiple parity bits | Hardware architecture | Algorithm

The invention provides a high-efficiency low density parity check (LDPC) decoder structure and a data conflict solution. The decoder adopts a universal serial processing mode, and an LDPC decoding algorithm and a hardware architecture are specially optimized. The classical turbo decoding message passing (TDMP) algorithm cannot be applied to unstructured LDPC codes such as LDPC codes in digital video broadcasting-satellite-second generation (DVB-S2) and China mobile multimedia broadcasting (CMMB). If the TDMP algorithm is directly adopted, a data conflict can be caused, and the performance of LDPC codes can be lowered. The TDMP algorithm is optimized, so that the TDMP algorithm can be well applied to the unstructured LDPC codes. The conventional reading-writing of external information is finished at a time, and a large memory space is required; and improvement is made, so that the memory space required by the decoder is effectively reduced. In terms of a processing unit, the recovery of the external information and the update operation of prior information and posterior information are also optimized. Moreover, in order to achieve compatibility with structured and unstructured LDPC codes, a main decoding time sequence is optimized. By the optimization measures, the hardware utilization efficiency of the decoder is improved.

Owner:FUDAN UNIV

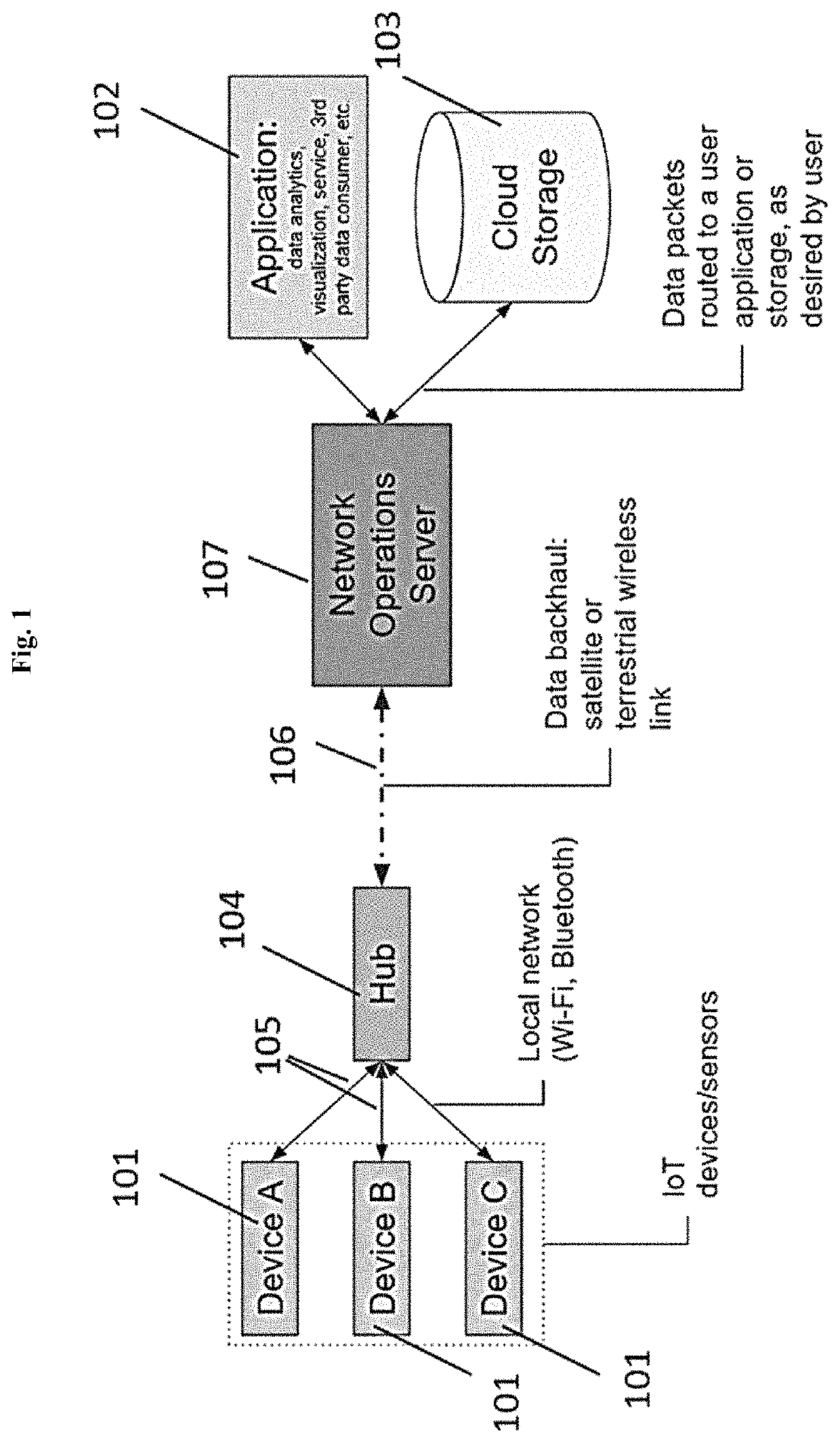

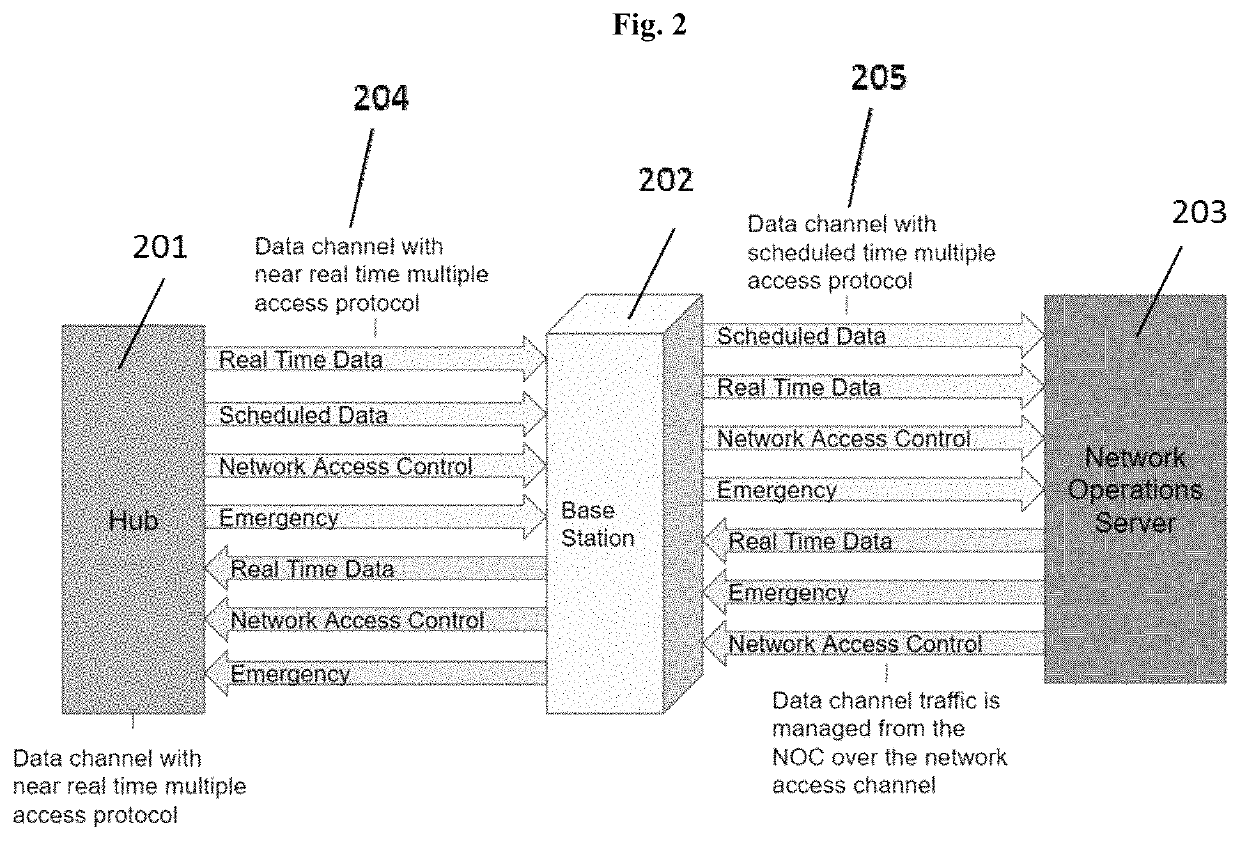

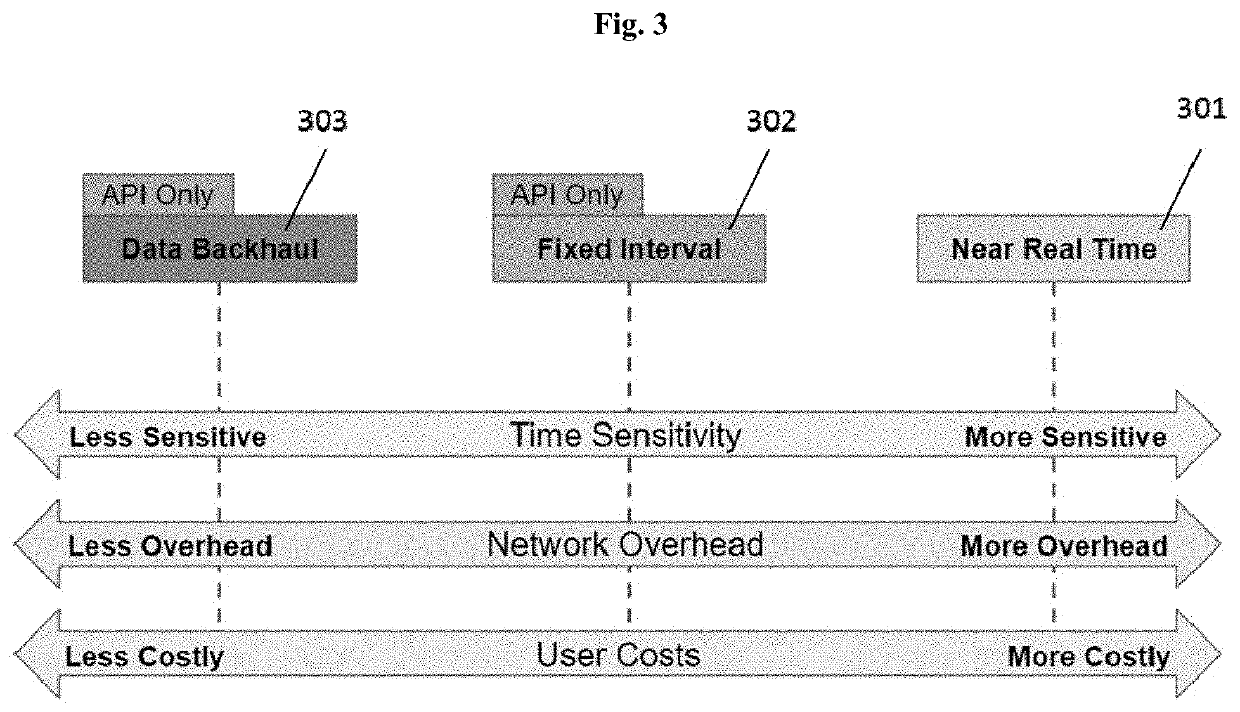

Dynamic multiple access for distributed device communication networks with scheduled and unscheduled transmissions

Active | US10833923B2 | Less sensitive to the timing and responsiveness of the transmission | Optimize network | Data switching networks | Wireless communication | Exchange network | Data source

Disclosed herein are systems and networks comprising a network operations server application for improving a packet-switched communications network, the application configured to: receive data from data source nodes; provide a management console allowing a user to configure a network multi-access protocol for: i) a node, ii) a type of node, iii) a group of nodes, iv) a type of data packet from a node, v) a type of data packet from a type of node, vi) a type of data packet from a group of nodes, or vii) a specific instance of a data packet from a node, the network multi-access protocol a scheduled or random access protocol; and dynamically create channel assignments to allocate bandwidth of the network among channels based on the configured network multi-access protocols to prevent network saturation and minimize data collisions in the packet-switched network.

Owner:SKYLO TECH INC

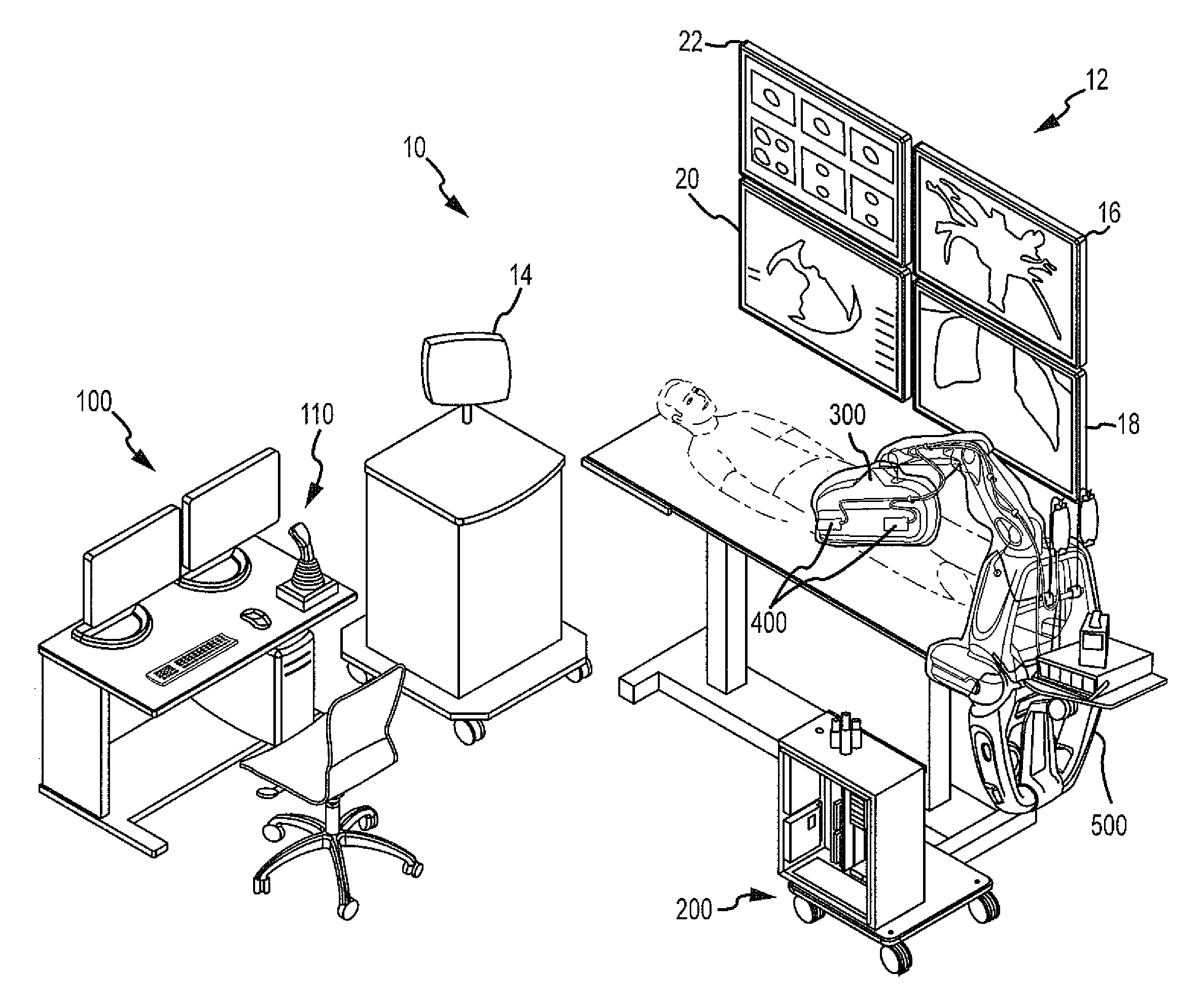

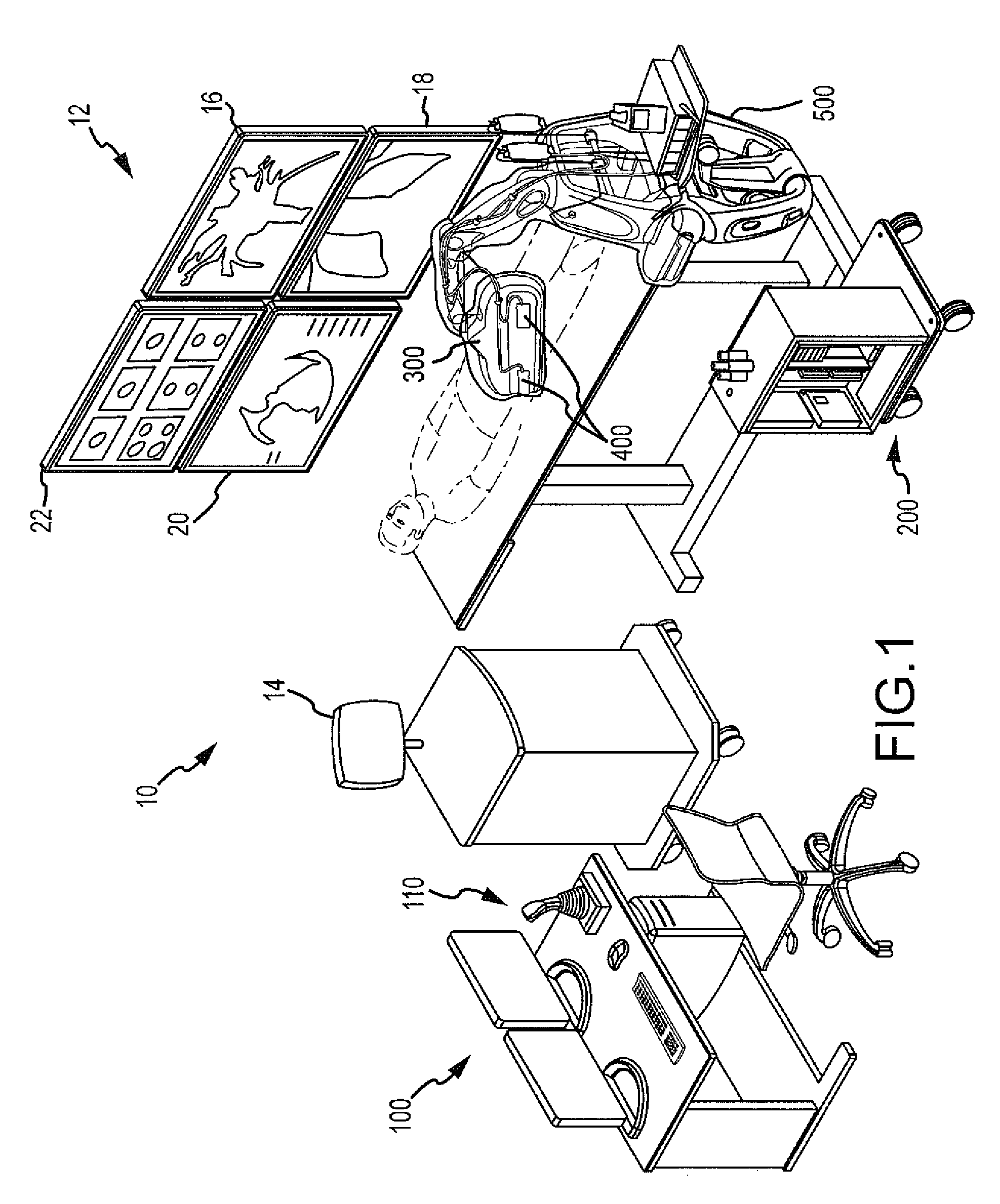

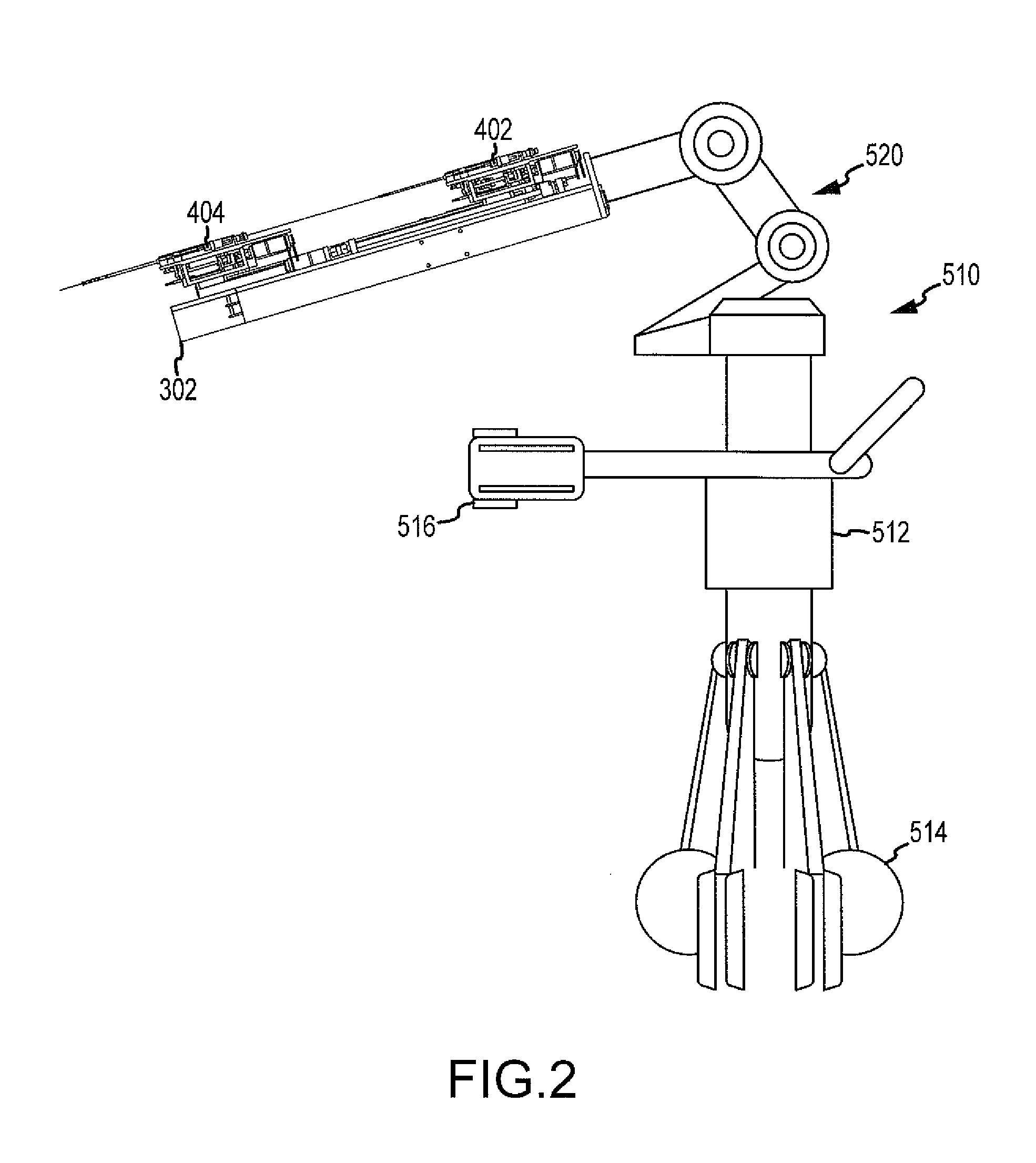

System and methods for avoiding data collisions over a data bus

Active | US8601185B2 | Reduce relative motion | Improve efficiency | Local control/monitoring | Medical equipment | Guidance system | Medical equipment

Owner:ST JUDE MEDICAL ATRIAL FIBRILLATION DIV

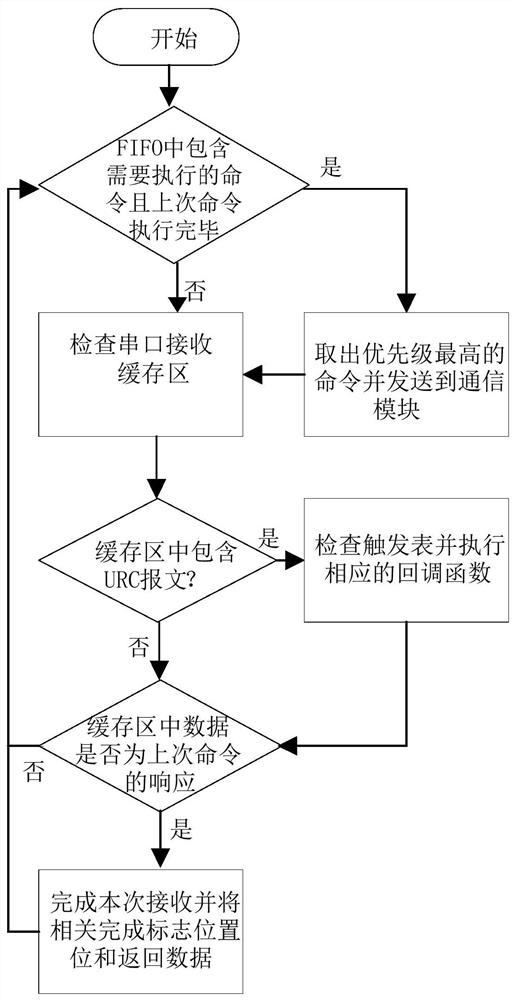

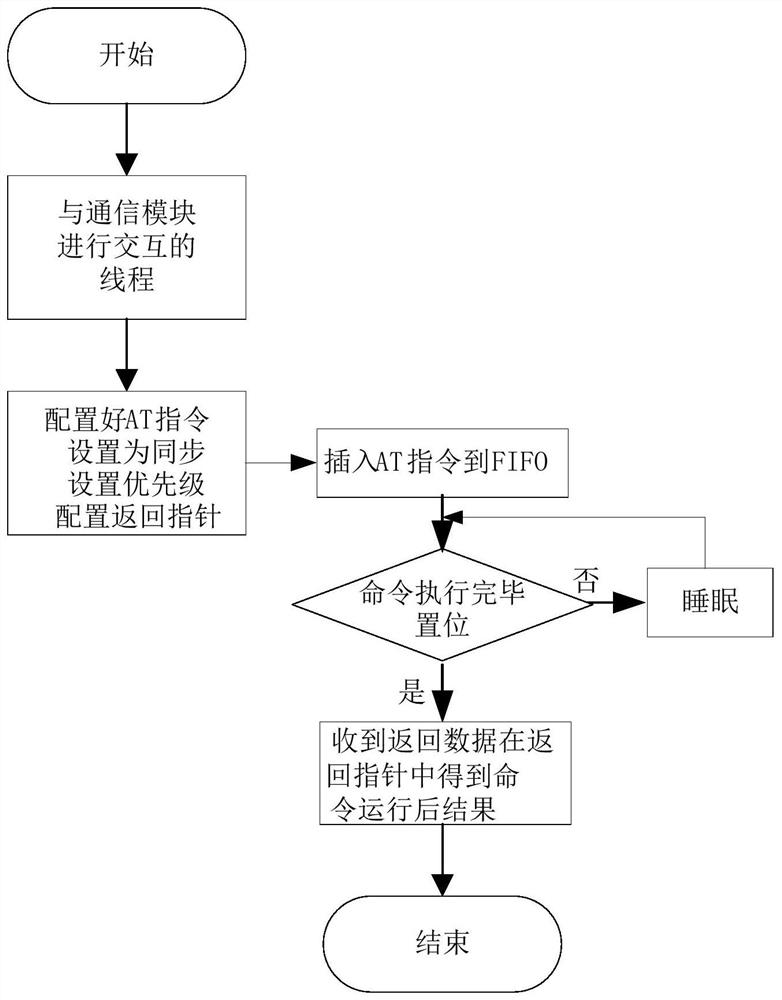

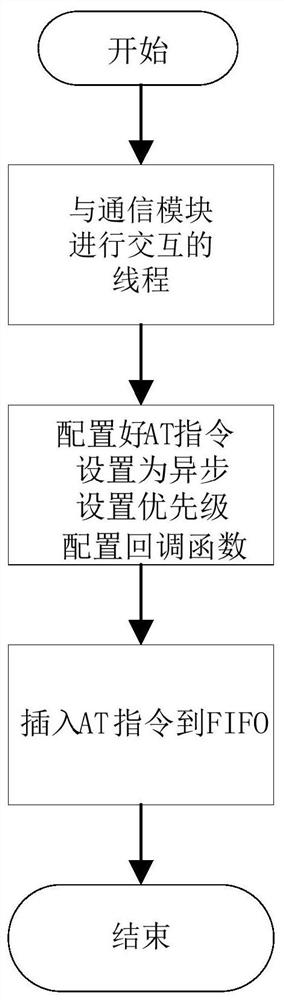

Queue-based AT instruction control method

Active | CN113179227A | Avoid Data Conflicts | Reliable results | Program synchronisation | Microcontrol arrangements | Computer architecture | Return function

The invention discloses a queue-based AT instruction control method, and belongs to the technical field of communication. The invention aims to solve the problems that in Beidou positioning data reporting equipment, a communication module faces the multi-task requirement of an MCU, data conflicts are generated, and task execution errors are caused. The queue-based AT instruction control method comprises the steps of: in a micro-control unit, configuring a priority and a command execution mode for each AT instruction in the thread, wherein the command execution mode comprises a synchronous mode or an asynchronous mode; for the AT instruction in the asynchronous mode, configuring a return function pointer after command execution is finished; then, adding the configured AT instruction into a first-in first-out queue through an interface function; and interacting the AT instructions in the first-in first-out queue with the communication module according to a priority sequence. According to the queue-based AT instruction control method, multi-task data conflicts in communication with the communication module can be avoided.

Owner:HARBIN VEIC TECH
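
The queueing scheme above (priority, synchronous or asynchronous mode, and a return function for asynchronous commands) can be sketched with a heap that preserves first-in first-out order within each priority. The class name, the injected `send` function, and the convention that a lower number means higher priority are assumptions; the AT commands are only sample strings.

```python
import heapq
import itertools


class ATCommandQueue:
    def __init__(self, send):
        self._send = send                # function that talks to the module
        self._heap = []
        self._seq = itertools.count()    # keeps FIFO order within a priority

    def submit(self, command, priority, callback=None):
        """Queue a command; a callback marks it as asynchronous."""
        heapq.heappush(self._heap, (priority, next(self._seq), command, callback))

    def run(self):
        """Send queued commands one at a time, highest priority first."""
        sync_results = []
        while self._heap:
            _, _, command, callback = heapq.heappop(self._heap)
            reply = self._send(command)  # only one command is in flight at a time
            if callback is not None:
                callback(command, reply)                # asynchronous mode
            else:
                sync_results.append((command, reply))   # synchronous mode
        return sync_results


async_log = []
queue = ATCommandQueue(send=lambda cmd: "OK")
queue.submit("AT+CSQ", priority=2)
queue.submit("AT+CGATT?", priority=1, callback=lambda c, r: async_log.append((c, r)))
queue.submit("AT", priority=2)
results = queue.run()
```

Because a single consumer drains the queue, tasks never interleave their traffic to the communication module, which is the conflict the abstract aims to avoid.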

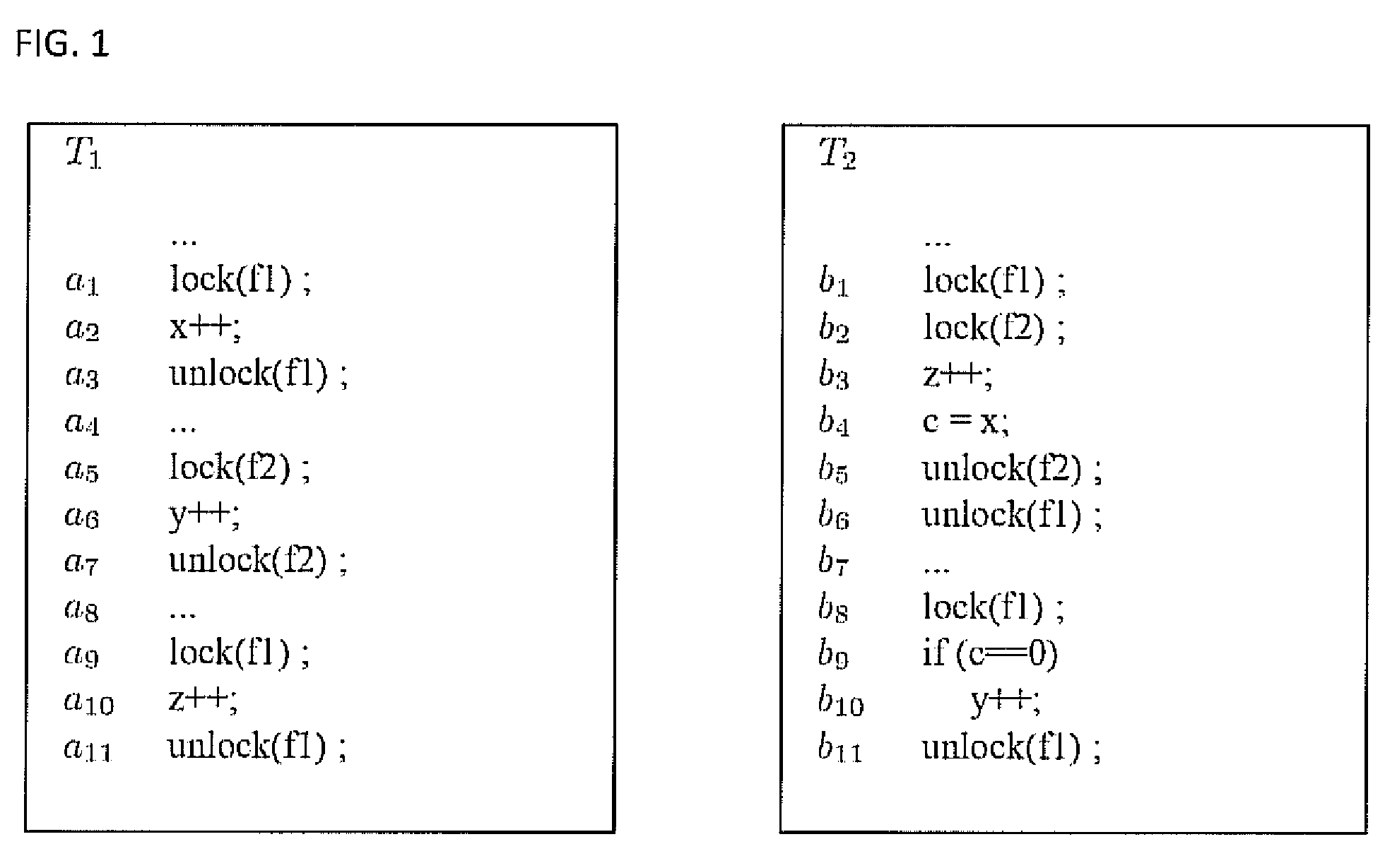

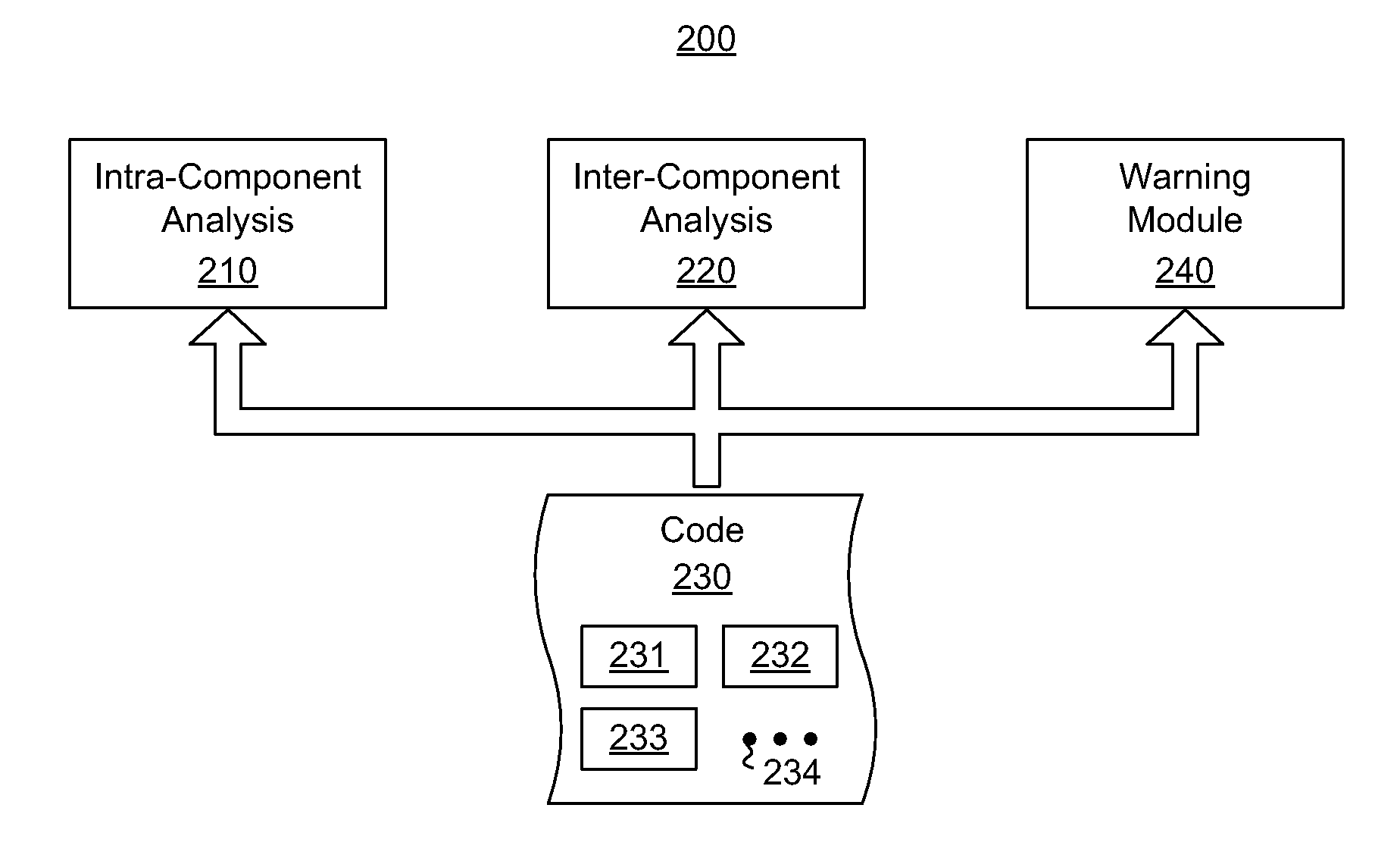

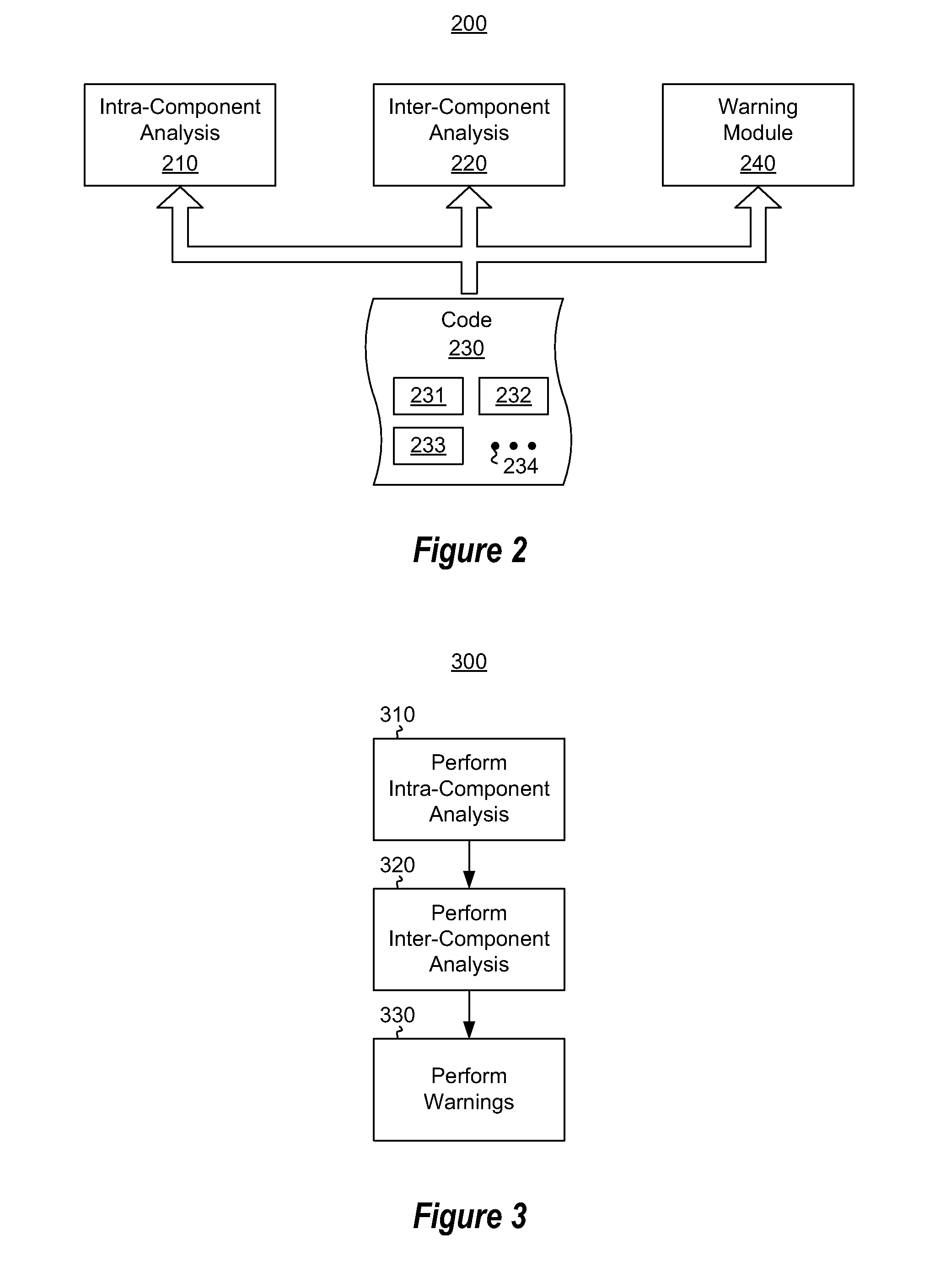

Static data race detection and analysis

Static data race analysis of at least a portion of a multi-threaded application is performed in order to identify potential data race defects in the multi-threaded application. The static data race analysis includes intra-component static analysis as well as inter-component static analysis. The intra-component static analysis for a given component involves identifying a set of memory access operations in the component. For each of at least one of the set of memory access operations, the analysis determines whether there is a data race protection element associated with the memory access command.

Owner:MICROSOFT TECH LICENSING LLC
