39 results about How to "Reduce the amount of model parameters" patented technology

Traffic sign recognition method based on YOLO v4-tiny

Pending | CN112464910A | Small recognition accuracy | The recognition of small complex traffic scenes is not intensive and the recognition accuracy is not high | Character and pattern recognition | Neural architectures | Traffic sign recognition | Data set

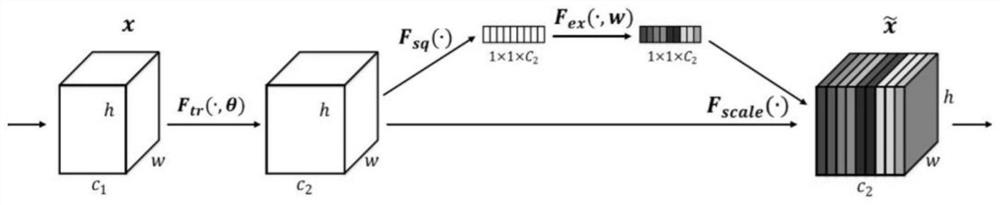

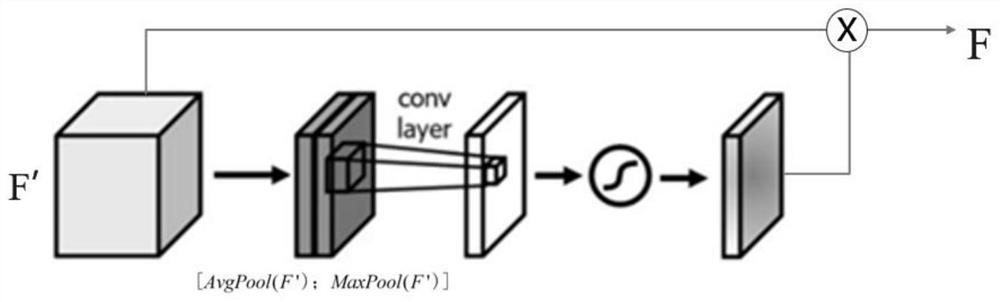

The invention discloses a traffic sign recognition method based on YOLO v4-tiny. The method comprises the steps of: collecting a traffic sign data set, applying data augmentation to the initial sample traffic sign data set, and dividing it into a training set, a validation set and a test set; for the ground-truth boxes in the training set, clustering six prior (anchor) box sizes using intersection over union (IoU) as the index, and embedding a channel attention mechanism and a spatial attention mechanism into the YOLO v4-tiny framework to obtain a YOLO v4-tiny-CBAM network model; and training the network model on the training set, validating it on the validation set, and finally testing its performance on the test set. By introducing channel and spatial attention into the lightweight YOLO v4-tiny network, the method achieves stronger generalization ability and higher recognition precision.

Owner:HANGZHOU DIANZI UNIV
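
The anchor clustering step in the abstract above can be sketched as k-means over box widths and heights, with IoU as the similarity measure. This is a minimal illustration under stated assumptions, not the patent's implementation: the names `wh_iou` and `kmeans_anchors`, the random initialisation, and the mean-based centroid update are all hypothetical choices.

```python
import random


def wh_iou(box, anchor):
    """IoU of two (width, height) boxes that share the same top-left corner."""
    inter = min(box[0], anchor[0]) * min(box[1], anchor[1])
    union = box[0] * box[1] + anchor[0] * anchor[1] - inter
    return inter / union


def kmeans_anchors(boxes, k=6, iters=50, seed=0):
    """Cluster (w, h) pairs into k anchors, assigning each box to the anchor with highest IoU."""
    rng = random.Random(seed)
    anchors = rng.sample(boxes, k)  # assumption: initialise from k random boxes
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for b in boxes:
            best = max(range(k), key=lambda j: wh_iou(b, anchors[j]))
            clusters[best].append(b)
        new_anchors = []
        for j, members in enumerate(clusters):
            if members:
                w = sum(m[0] for m in members) / len(members)
                h = sum(m[1] for m in members) / len(members)
                new_anchors.append((w, h))
            else:
                new_anchors.append(anchors[j])  # keep an empty cluster's anchor unchanged
        if new_anchors == anchors:
            break
        anchors = new_anchors
    return sorted(anchors, key=lambda a: a[0] * a[1])  # smallest area first
```

Using IoU rather than Euclidean distance keeps large boxes from dominating the clustering, which is the usual motivation for this choice in YOLO-style detectors.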

Traffic sign detection and identification method based on pruning and knowledge distillation

Active | CN111444760A | Effective pruning | Increase pruning rate | Character and pattern recognition | Neural architectures | Traffic sign detection | Data set

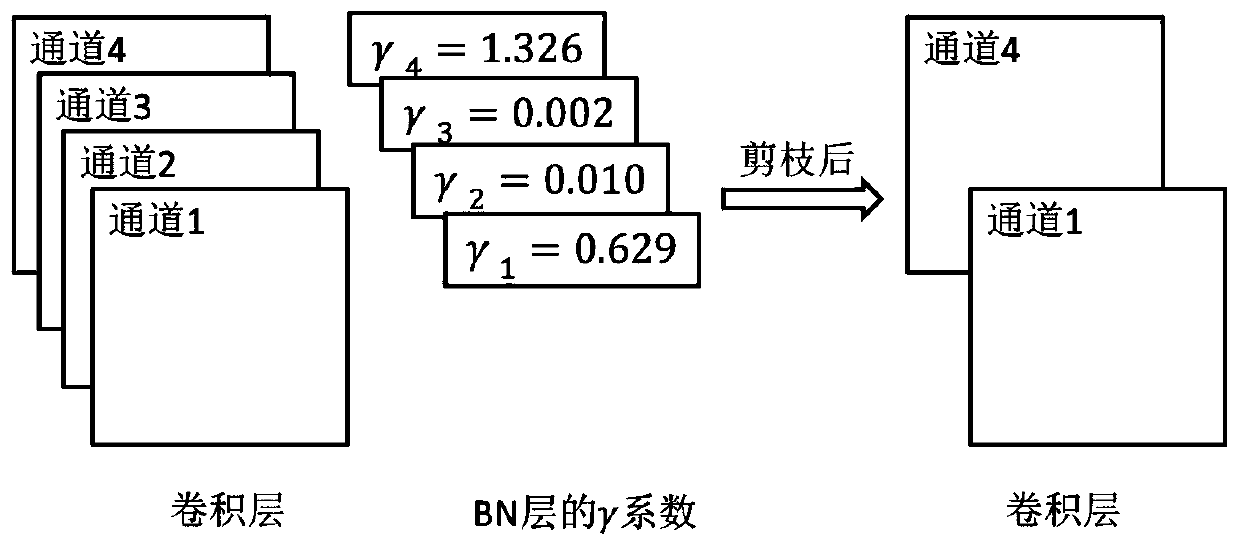

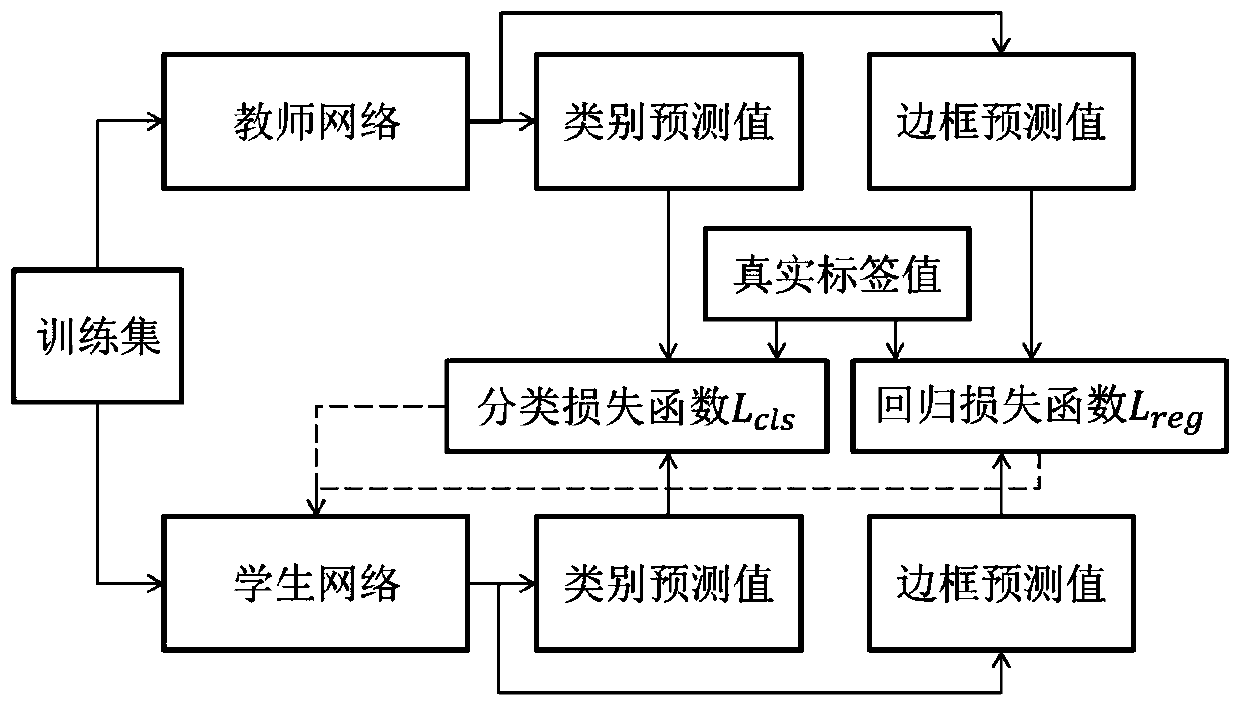

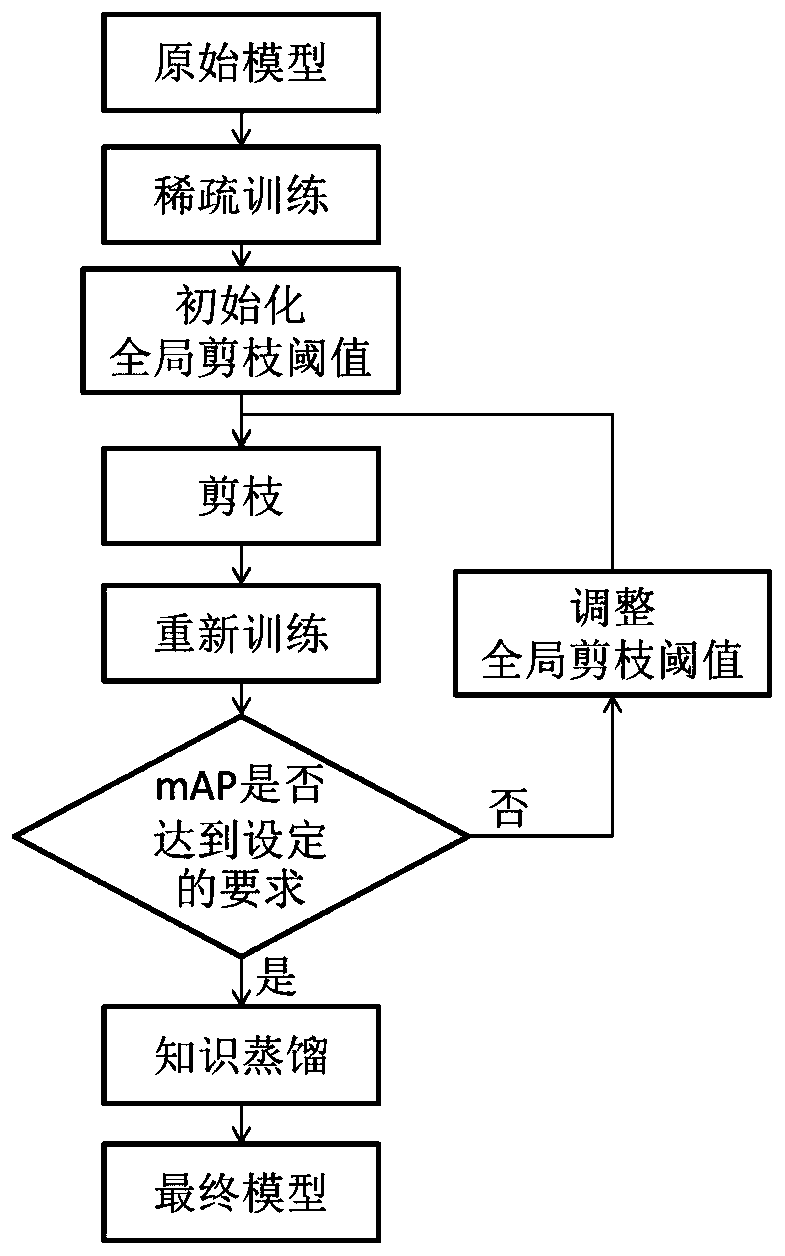

The invention relates to a traffic sign detection and identification method based on pruning and knowledge distillation. The method comprises the following steps: preparing a data set and carrying out data enhancement; establishing and training the network: establishing a YOLOV3-SPP network, loading the parameters of a model pre-trained on the ImageNet data set, and inputting the cropped, augmented training set images into the network in batches for forward propagation to obtain the original YOLOV3-SPP model; sparse training: using the scaling coefficient of each BN layer as the measure of channel importance, adding an L1 regularization term to the original objective function, and training again until the loss converges (this process is named sparse training); pruning according to a threshold; and obtaining the final model by knowledge distillation.

Owner:TIANJIN UNIV
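
The sparse training and threshold pruning steps described above can be illustrated with a small sketch. It assumes a single global threshold chosen from a target pruning ratio, which the abstract does not specify; the names `l1_penalty`, `prune_threshold` and `channel_masks` are hypothetical.

```python
def l1_penalty(gammas, lam):
    """L1 regularization term added to the loss during sparse training."""
    return lam * sum(abs(g) for g in gammas)


def prune_threshold(gammas, prune_ratio):
    """Global threshold: the |gamma| value below which `prune_ratio` of channels fall."""
    ranked = sorted(abs(g) for g in gammas)
    cut = int(len(ranked) * prune_ratio)
    return ranked[cut] if cut < len(ranked) else float("inf")


def channel_masks(layers, prune_ratio):
    """Map each layer name to a keep/drop mask over its channels.

    `layers` maps a layer name to the list of BN scaling coefficients (gamma)
    of that layer. Channels whose |gamma| is below the threshold are dropped.
    """
    all_gammas = [g for layer in layers.values() for g in layer]
    thr = prune_threshold(all_gammas, prune_ratio)
    return {name: [abs(g) >= thr for g in layer] for name, layer in layers.items()}
```

A global threshold can remove every channel of a layer, so practical implementations usually add a per-layer floor; that safeguard is omitted here for brevity.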

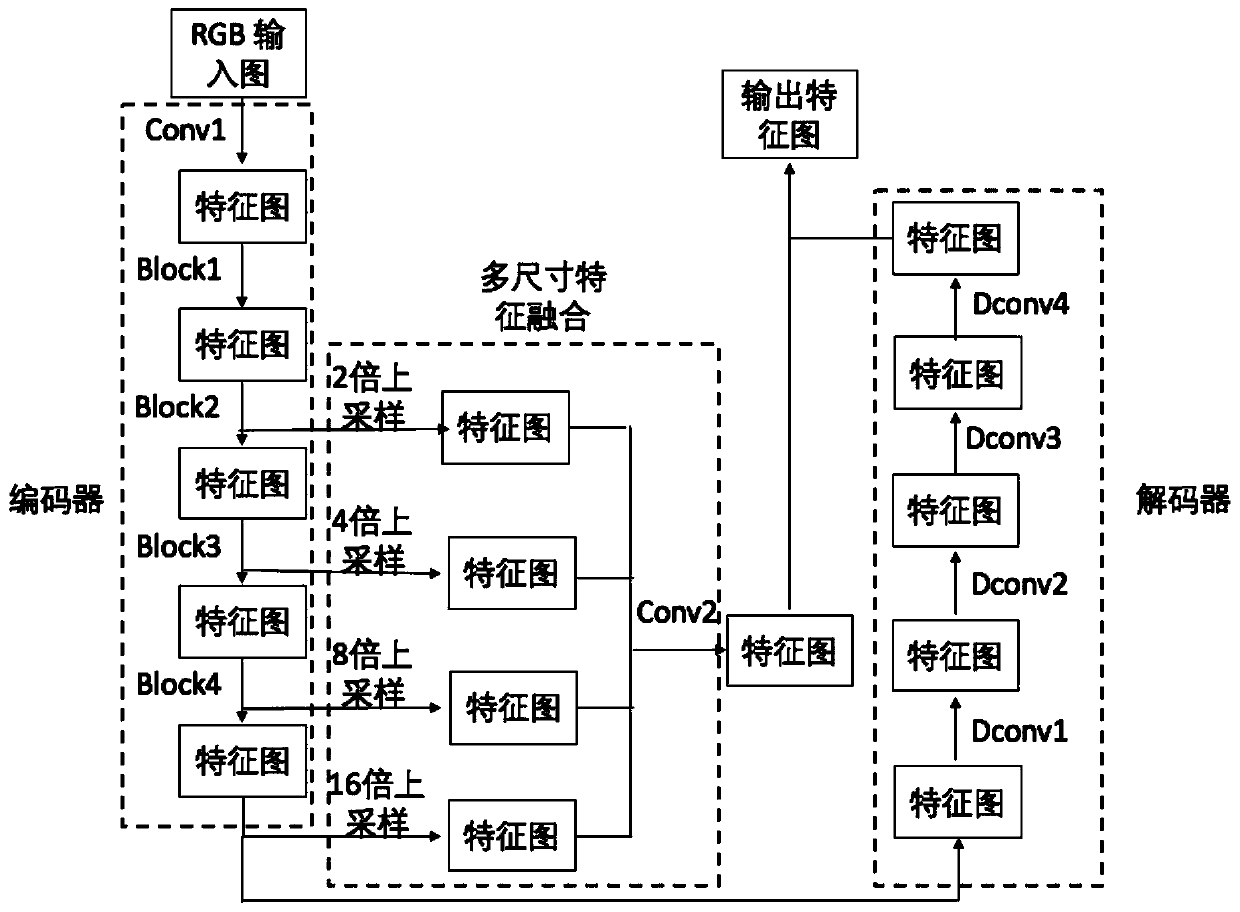

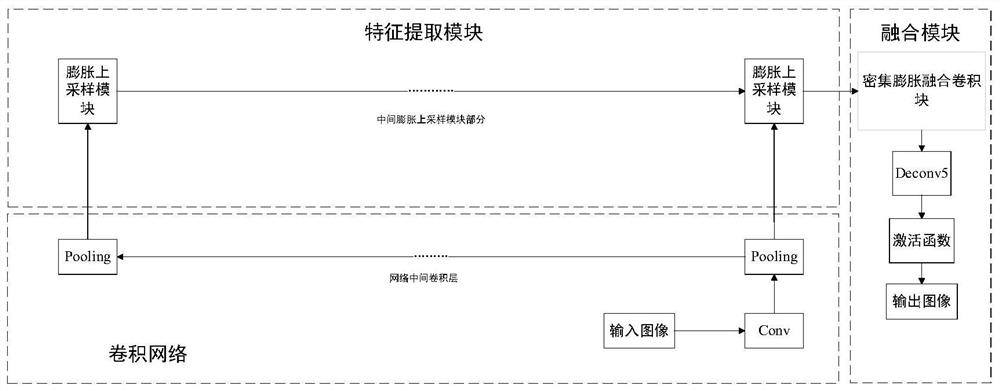

Expansion full-convolution neural network and construction method thereof

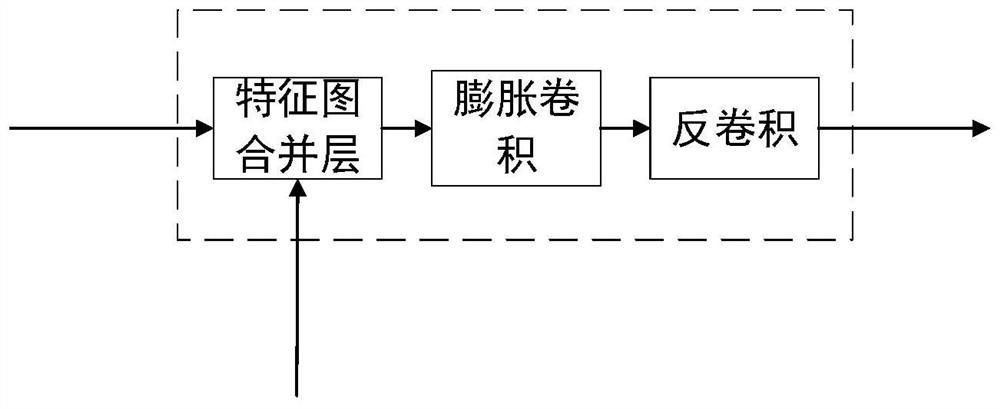

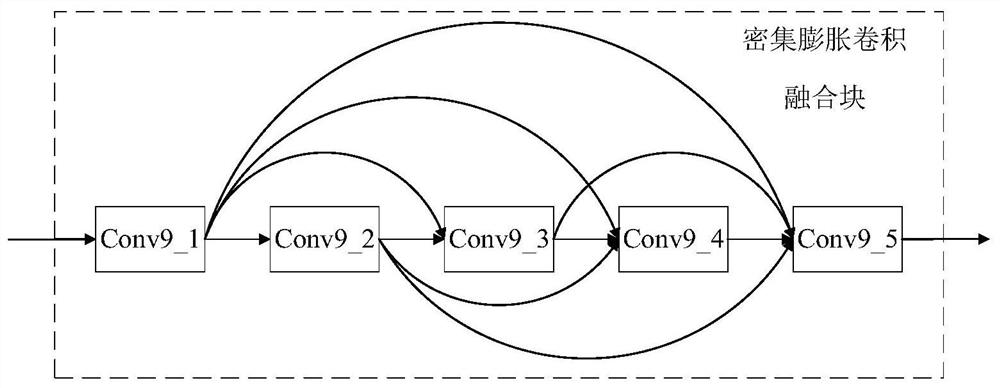

Active | CN108717569A | Solve the characteristics | Solve the fusion problem | Neural architectures | Physical realisation | Nerve network | Computer science

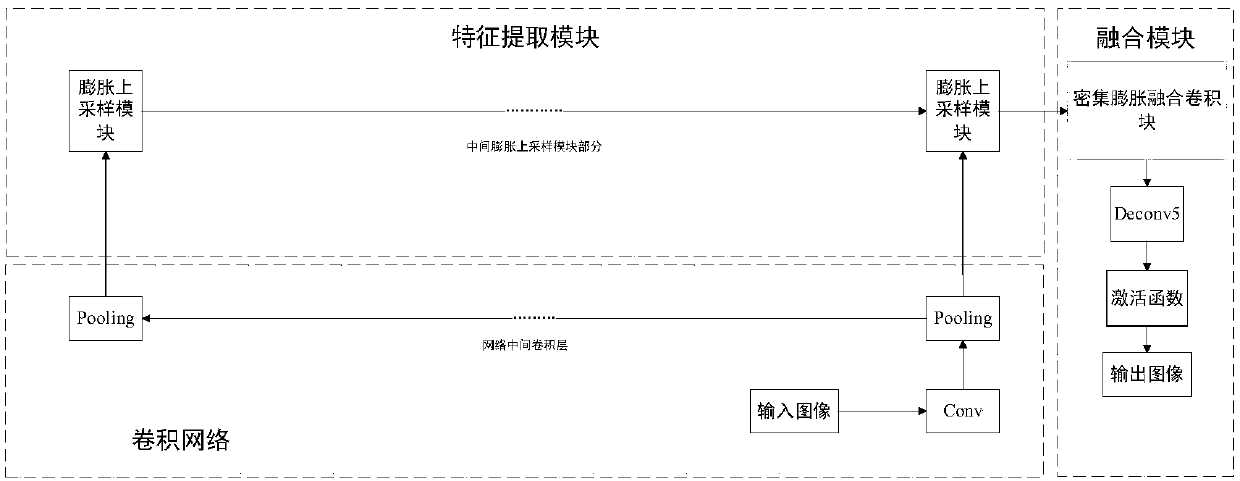

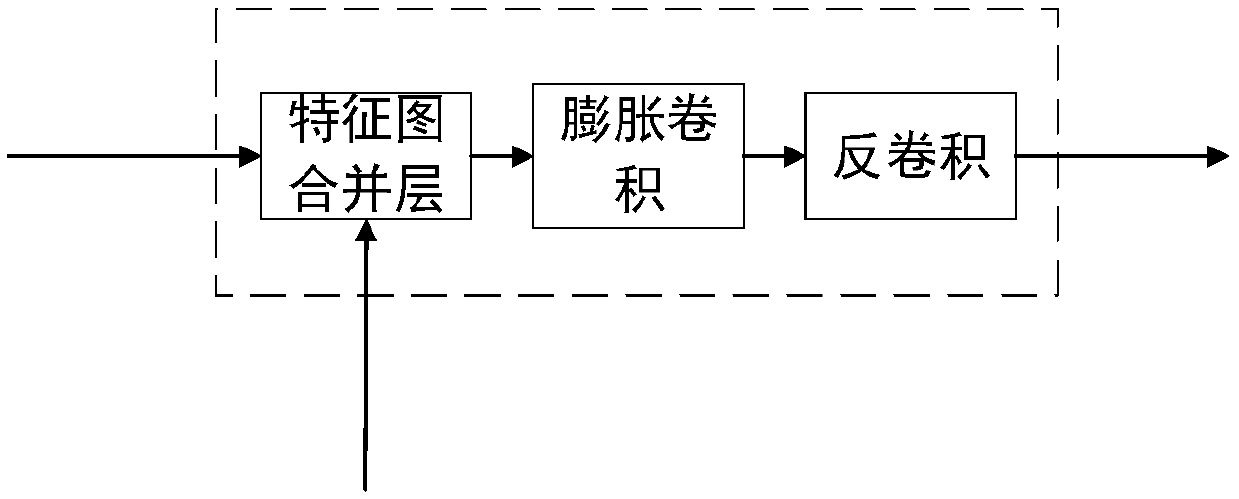

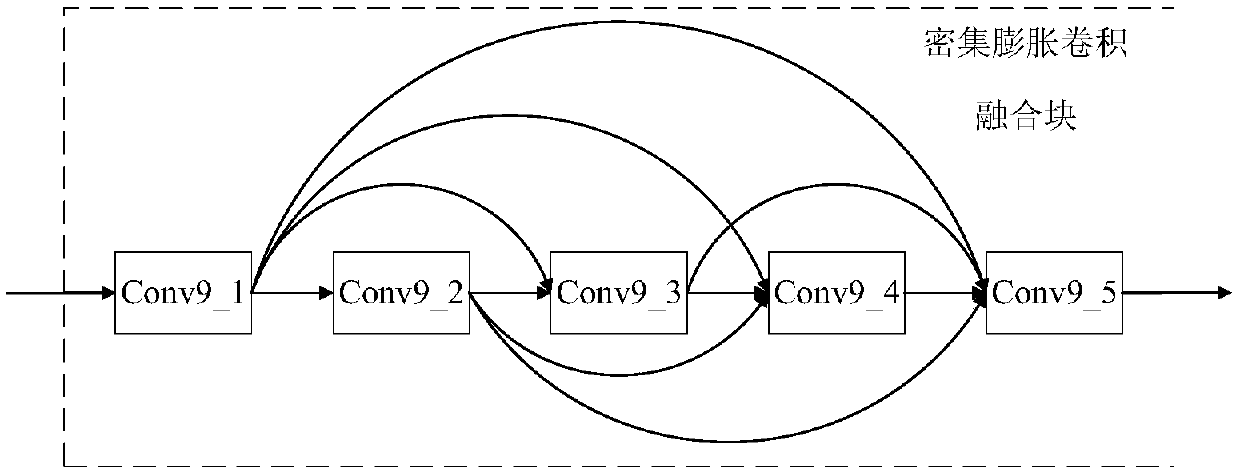

The invention discloses an expansion (dilated) full-convolution neural network and a construction method thereof. The neural network comprises a convolution neural network, a feature extraction module, and a feature fusion module connected in order. The construction method comprises the following steps: selecting the convolution neural network; removing the full-connection layer and the classification layer from the convolution neural network, leaving only the intermediate convolution layers and pooling layers, and extracting feature maps from those layers; constructing a feature extraction module, which comprises multiple expansion upsampling modules connected in series, each comprising a feature map merge layer, an expansion convolution layer and a deconvolution layer; and constructing a feature fusion module, which comprises a dense expansion convolution block and a deconvolution layer. The expansion full-convolution neural network disclosed by the invention effectively solves the feature extraction and fusion problem in convolution neural networks, and can be applied to pixel-level image labelling tasks.

Owner:ARMY ENG UNIV OF PLA

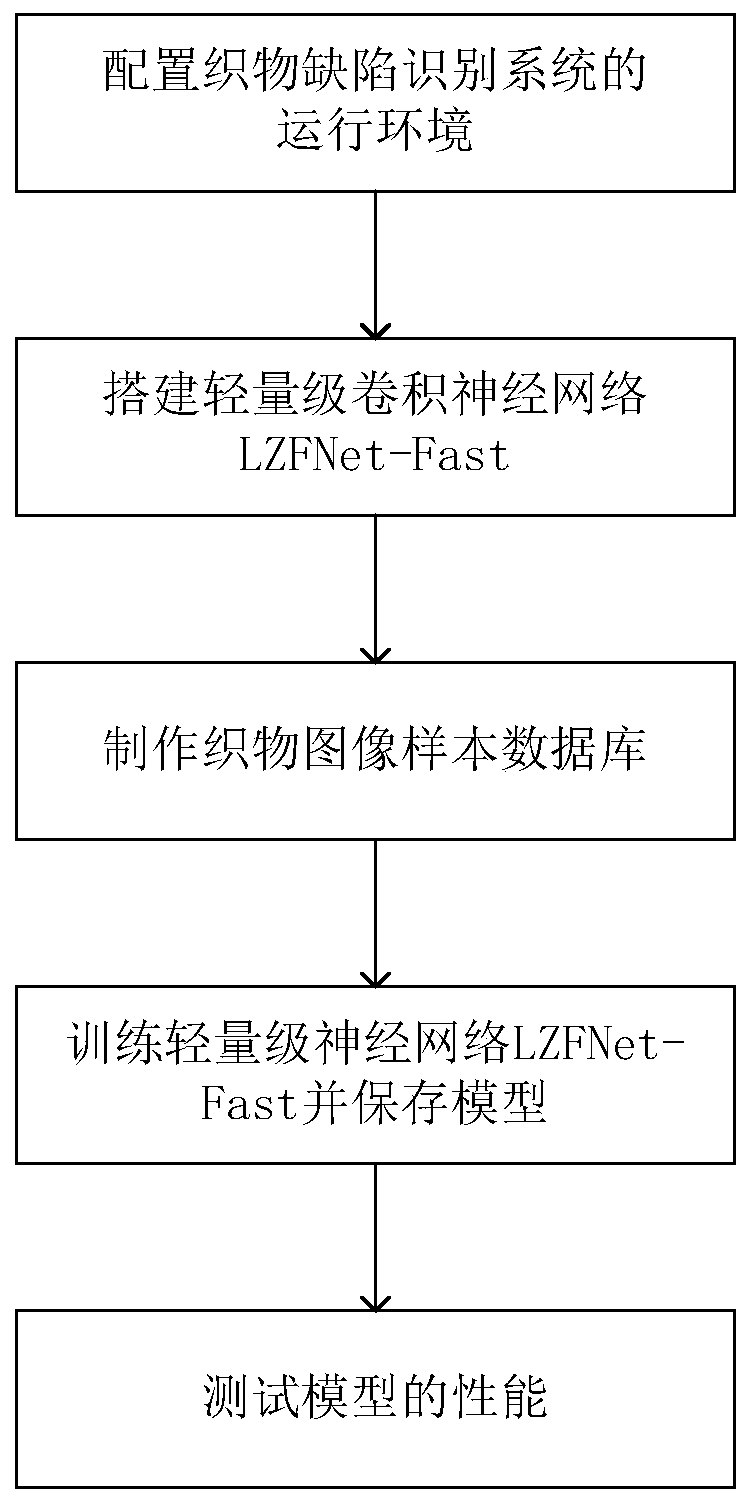

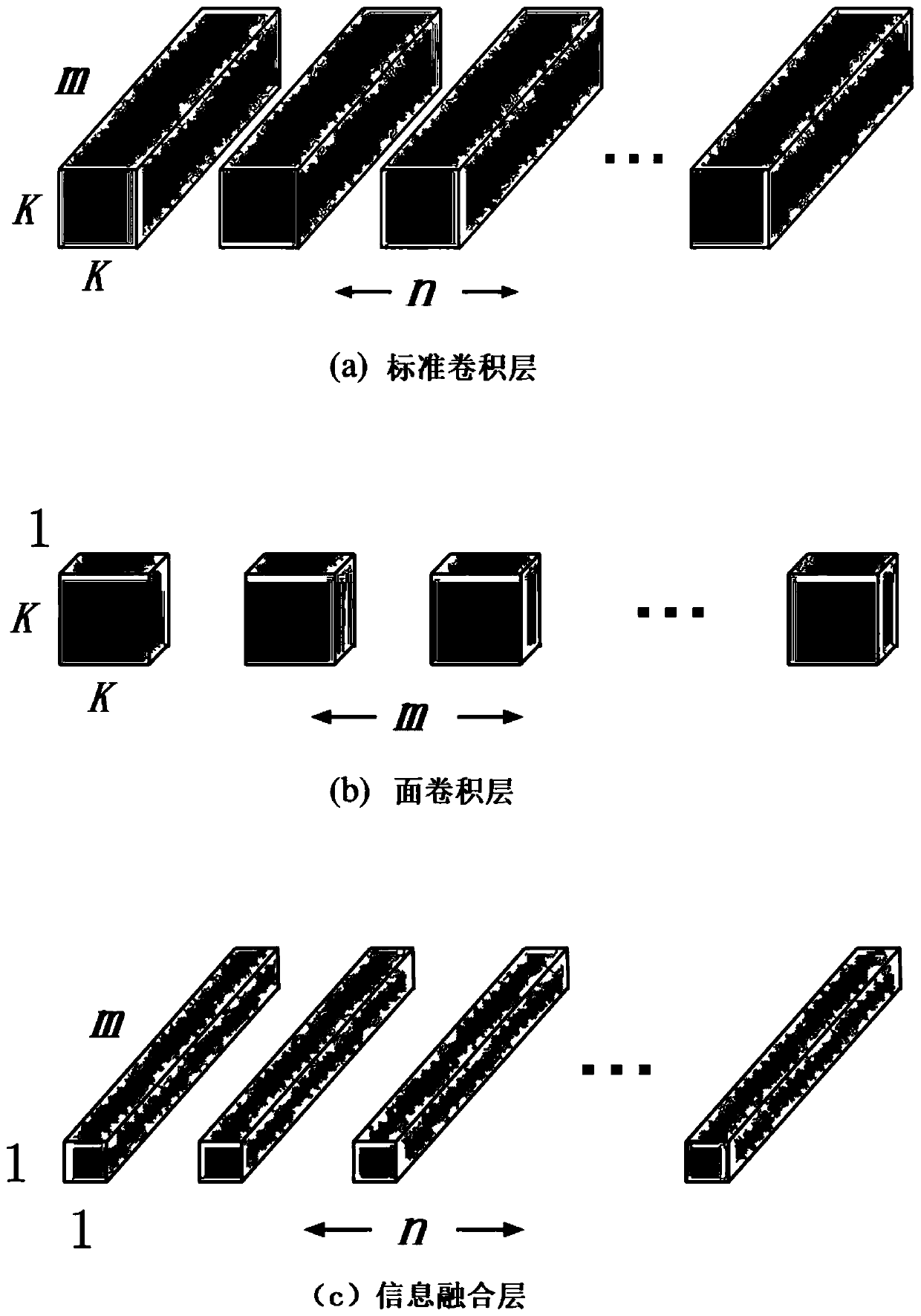

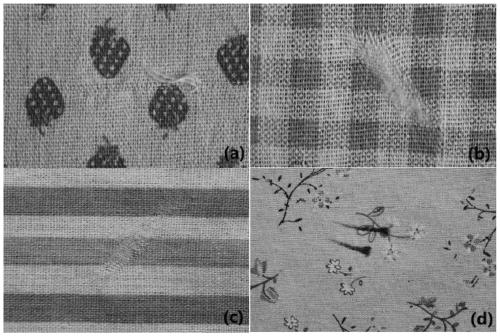

Construction method of fabric defect recognition system based on lightweight convolutional neural network

Active | CN110349146A | Reduce dependence | Guaranteed uptime | Image analysis | Character and pattern recognition | Standardization | Recognition system

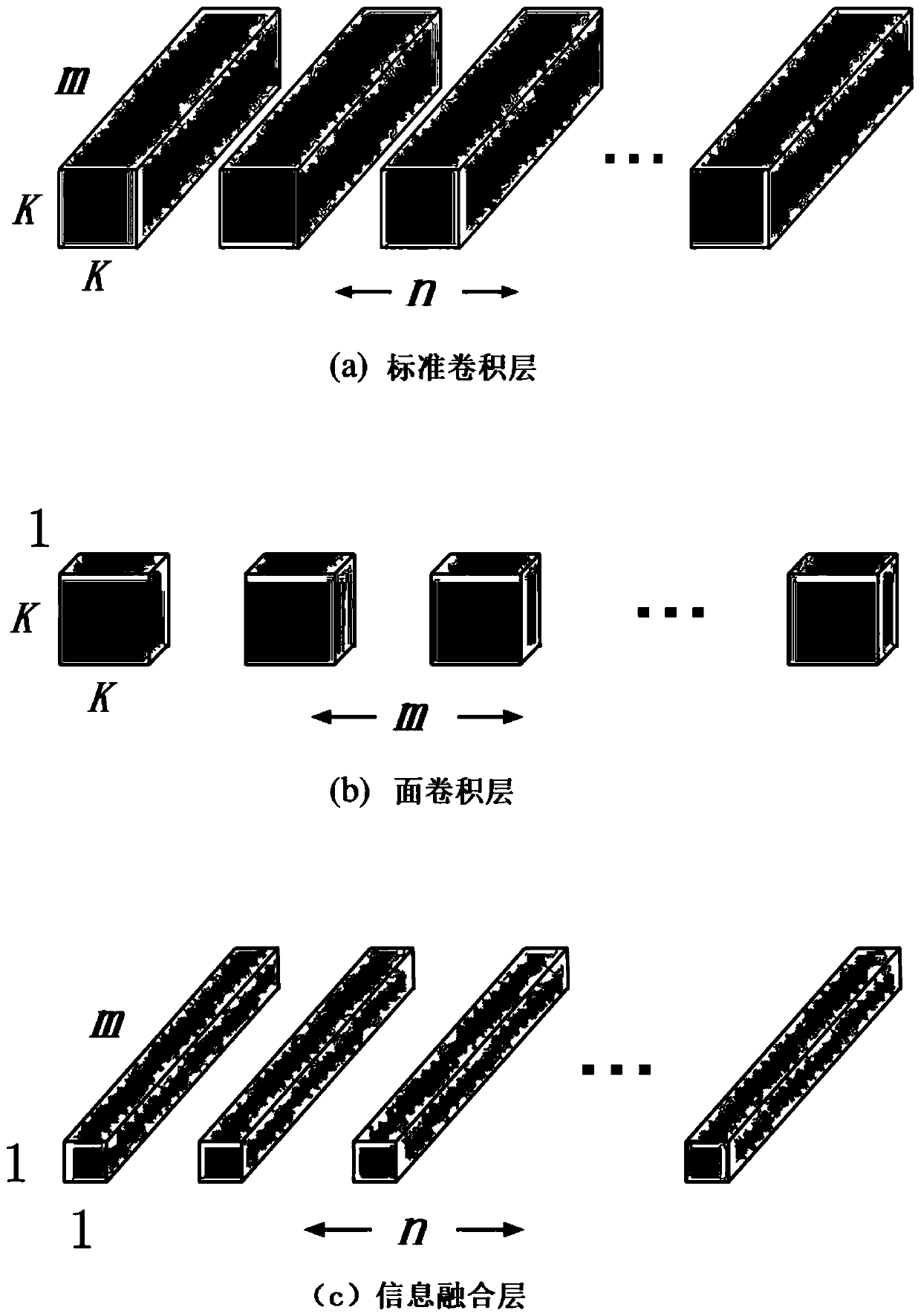

The invention provides a construction method of a fabric defect recognition system based on a lightweight convolutional neural network. The method comprises the following steps: firstly, configuring the operation environment of the fabric defect recognition system; obtaining a lightweight convolutional neural network according to factorized convolution; then collecting fabric image sample data; standardizing the fabric image sample data; dividing the standardized data into a training image set and a test image set; inputting the training image set into the lightweight convolutional neural network and training it with an asynchronous gradient descent strategy to obtain an LZFNet-Fast model; and finally inputting the test image set into the LZFNet-Fast model to verify its performance. In this method a factorized convolution structure replaces the standard convolution layer, so colored fabric with complex textures is effectively identified, the number of parameters and the amount of computation of the model are reduced, and the identification efficiency is greatly improved.

Owner:ZHONGYUAN ENGINEERING COLLEGE
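
The abstract does not say which factorization is used. As a hedged illustration of why replacing a standard convolution reduces parameters, the sketch below assumes a depthwise separable factorization (a k x k depthwise convolution followed by a 1 x 1 pointwise convolution) and ignores biases; the function names are hypothetical.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard k x k convolution (bias ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a k x k depthwise conv plus a 1 x 1 pointwise conv (bias ignored)."""
    return kernel * kernel * c_in + c_in * c_out


# Example: a 3 x 3 layer mapping 64 channels to 128 channels.
full = standard_conv_params(3, 64, 128)
factored = depthwise_separable_params(3, 64, 128)
ratio = factored / full  # fraction of the original weights that remain
```

Under these assumptions the factored layer keeps roughly 1/c_out + 1/k^2 of the original weights, which is where most of the saving in lightweight networks of this kind comes from.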

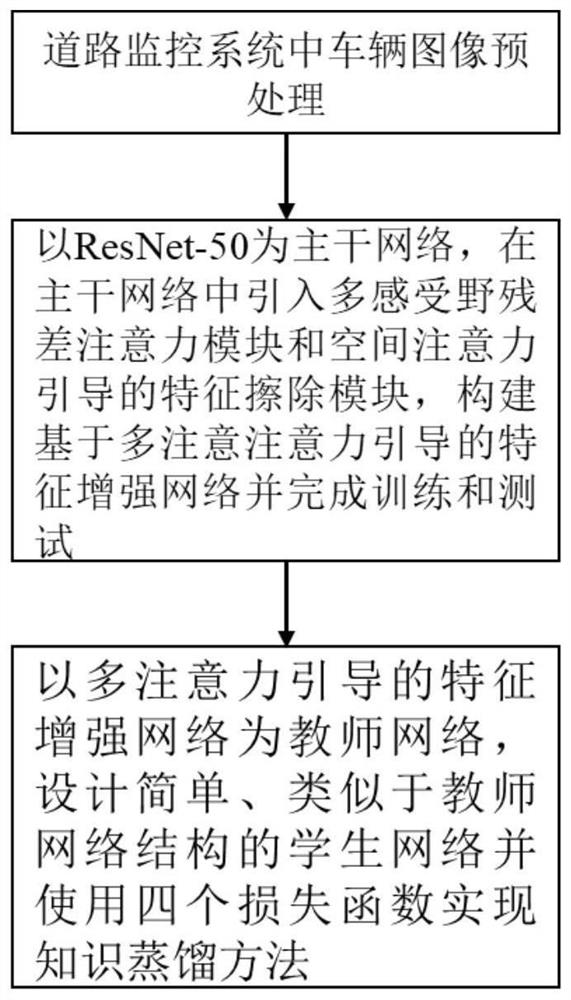

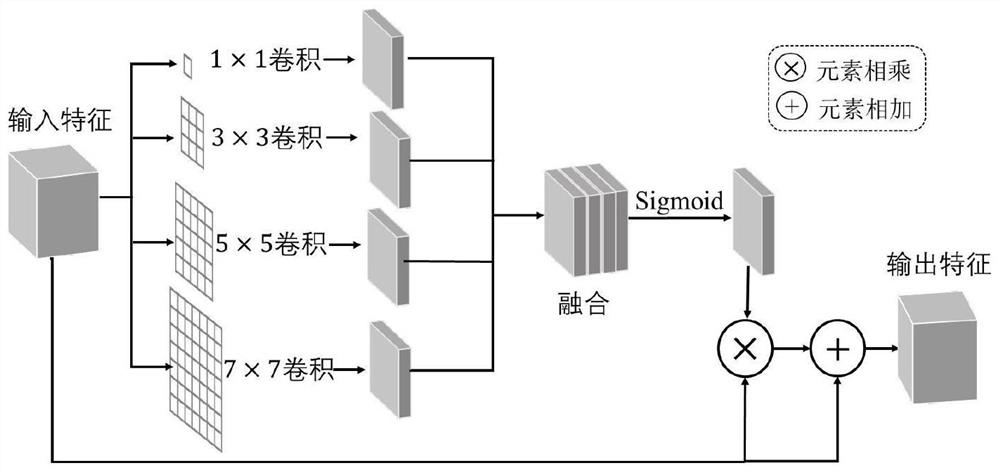

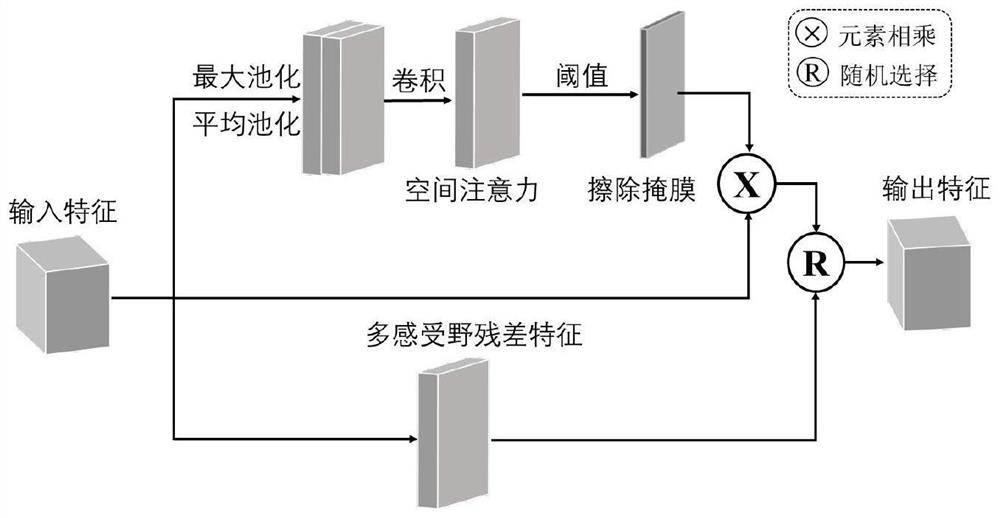

Vehicle re-identification method based on feature enhancement

Pending | CN114005096A | Improve learning effect | Improve discrimination | Character and pattern recognition | Neural architectures | Engineering | Computer vision

The invention discloses a vehicle re-identification method based on feature enhancement, and the method comprises the steps: constructing a feature enhancement network based on multi-attention guidance, wherein the feature enhancement network is provided with a self-adaptive feature erasing module with space attention guidance and a multi-receptive field residual attention module; helping a backbone network to obtain rich vehicle appearance features under receptive fields of different sizes through multi-receptive-field residual attention, and utilizing a self-adaptive feature erasing module guided by space attention to selectively erase the most significant features of a vehicle, so the local branches of the multi-attention-guided feature enhancement network can mine potential local features, and the global features of the global branches and the potential local features of the erasure branches are fused to complete the vehicle re-identification process. The method of the invention not only can overcome the problem of local significant information loss caused by complex environmental changes, such as violent illumination changes and barrier shielding, but also can meet the requirements of efficiently and quickly searching the target vehicle in safety supervision and intelligent traffic systems.

Owner:HEBEI UNIV OF TECH

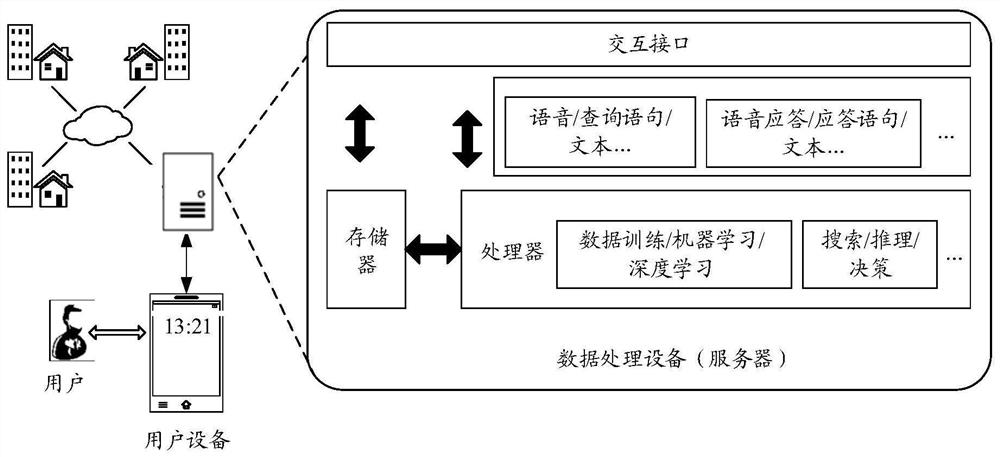

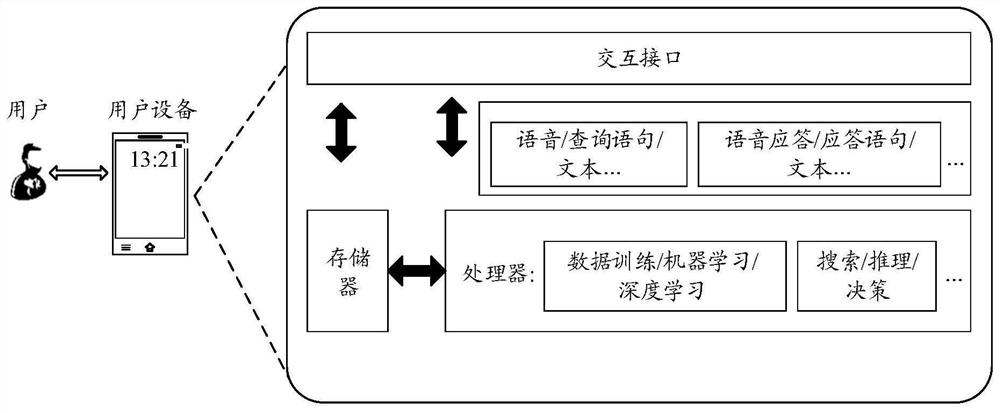

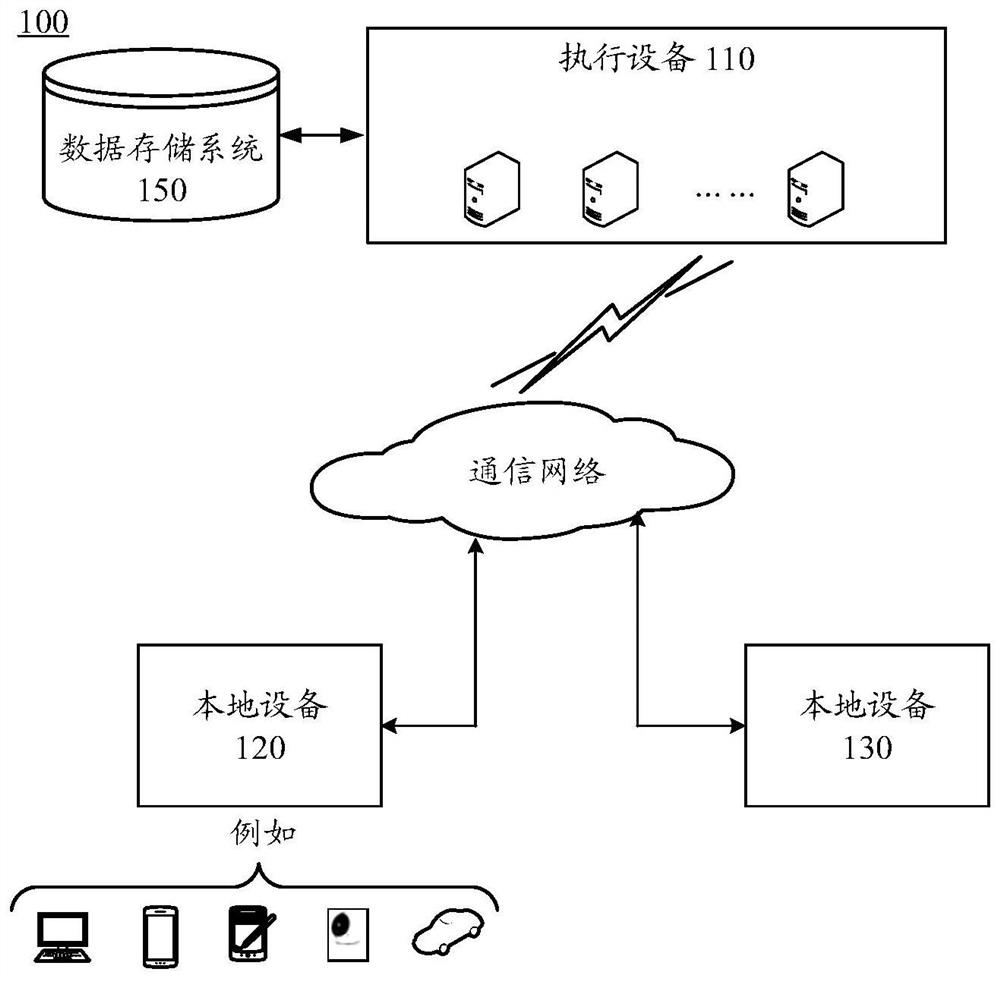

Text processing model training method and text processing method and device

Pending | CN112487182A | Avoid low accuracy | Improve accuracy | Semantic analysis | Character and pattern recognition | Data pack | Semantic feature

The invention discloses a text processing model training method, a text processing method and a device in the field of natural language processing within artificial intelligence. The training method comprises the steps of: obtaining a training text; inputting the training text into a teacher model and a student model respectively to obtain sample data output by the teacher model and prediction data output by the student model, the teacher model and the student model each comprising an input layer, one or more intermediate layers and an output layer, wherein the sample data comprises sample semantic features output by an intermediate layer of the teacher model and sample labels output by its output layer, and the prediction data comprises prediction semantic features output by an intermediate layer of the student model and prediction labels output by its output layer; and training the model parameters of the student model based on the sample data and the prediction data to obtain a target student model. According to the technical scheme, knowledge is effectively transferred to the student model, so that the accuracy of the student model's text processing results is improved.

Owner:HUAWEI TECH CO LTD
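
One common way to train a student on both intermediate features and output labels is to sum a feature-matching term and a soft-label term. The sketch below is an assumption about how such a loss could look, not the loss claimed in the patent: the mean squared error on features, the temperature-scaled KL divergence on labels, and the weight `alpha` are all illustrative choices.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def mse(a, b):
    """Mean squared error between two equal-length feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(t_feat, s_feat, t_logits, s_logits, alpha=0.5, temperature=2.0):
    """Weighted sum of feature MSE and KL(teacher || student) on softened labels."""
    feature_term = mse(t_feat, s_feat)
    p = softmax(t_logits, temperature)
    q = softmax(s_logits, temperature)
    label_term = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
    return alpha * feature_term + (1.0 - alpha) * label_term
```

The loss is zero when the student reproduces the teacher's features and logits exactly, and grows as either diverges.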

Light human body action recognition method based on deep learning

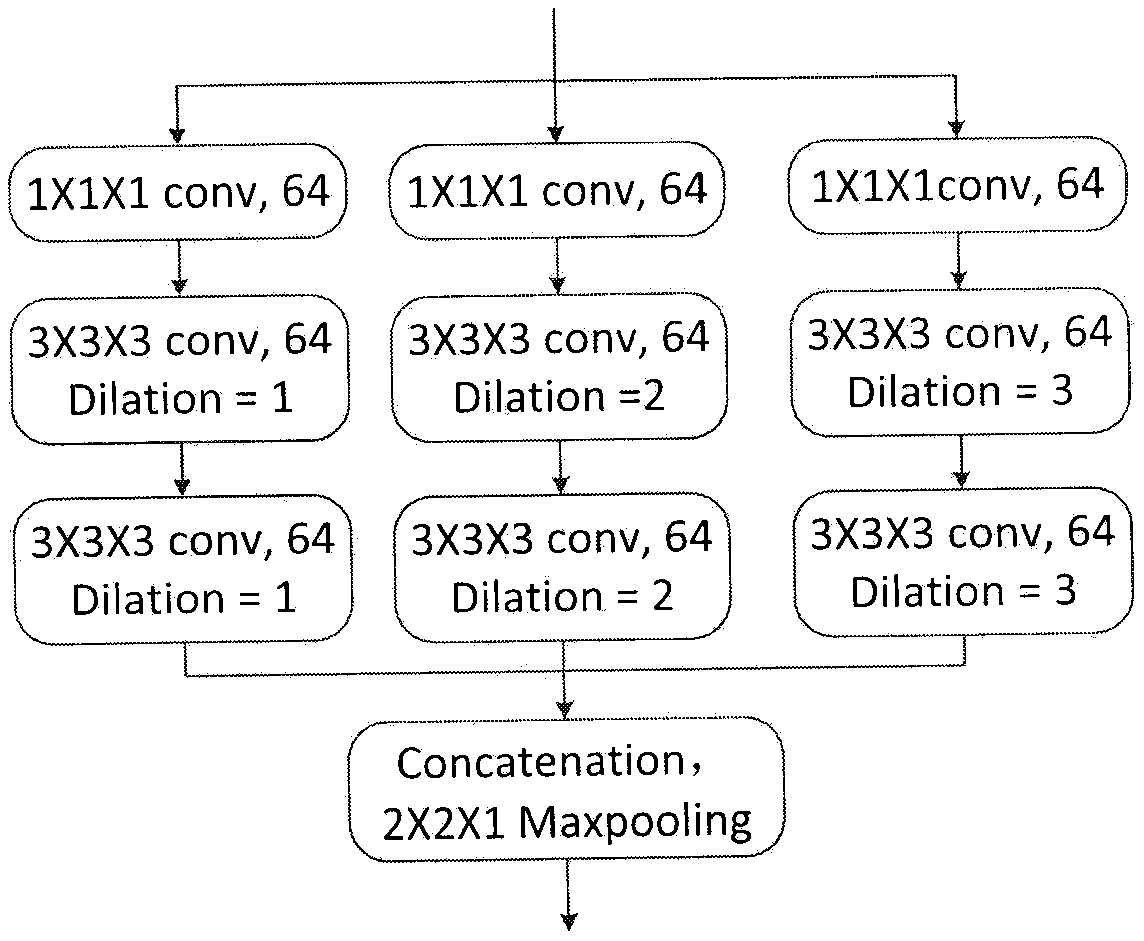

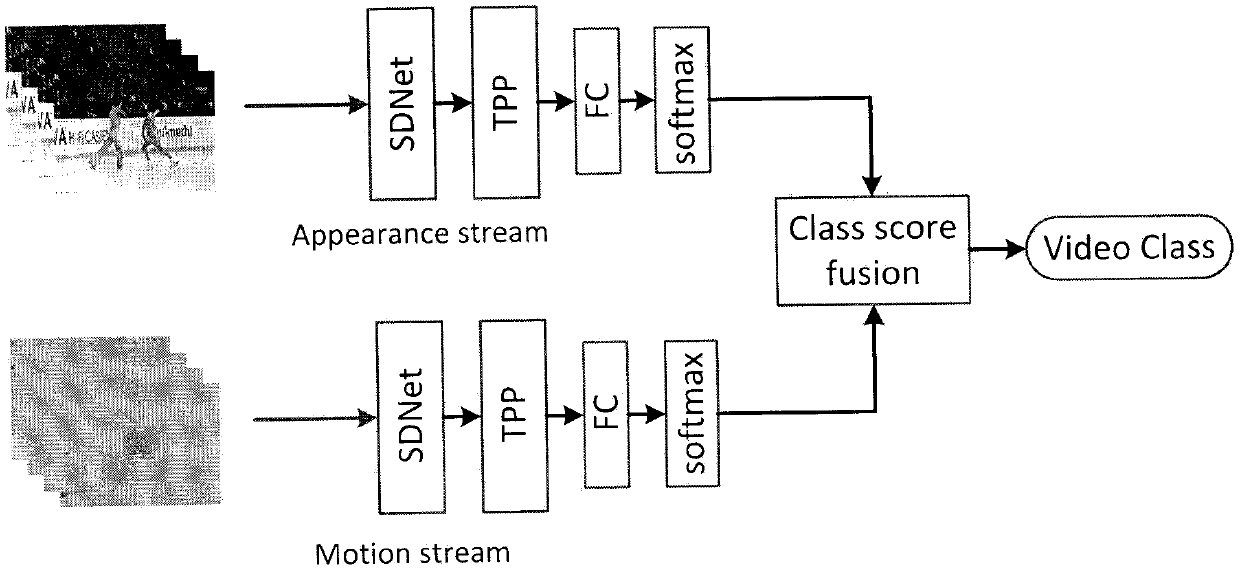

Inactive | CN109977904A | Avoid deep questions | Reduce the amount of model parameters | Character and pattern recognition | Neural architectures | Learning based | Human body

The invention discloses a light human body action recognition method based on deep learning. The method first constructs a lightweight deep learning network (SDNet) that combines a shallow network and a deep network, comprising a shallow multi-scale module and a deep network module, and builds a lightweight human body action recognition model on this network. In the model, SDNet first performs feature extraction and representation on the spatial and temporal streams; a time pyramid pooling layer then aggregates the frame-level features of the temporal stream and the spatial stream into a video-level representation; a full connection layer and a softmax layer produce each stream's recognition result for the input sequence; and finally the two-stream results are fused by weighted averaging to obtain the final recognition result. With this method, the number of model parameters can be greatly reduced without lowering the recognition precision.

Owner:CHENGDU UNIV OF INFORMATION TECH
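
The two aggregation steps named in the abstract, time pyramid pooling and weighted-average fusion, can be sketched as follows. The pyramid levels, the use of max pooling, and the fusion weight are assumptions made for illustration; the abstract does not give them.

```python
def temporal_pyramid_pool(frames, levels=(1, 2, 4)):
    """Aggregate frame-level feature vectors into one video-level vector.

    For each level n the frame sequence is cut into n contiguous segments,
    each segment is max-pooled element-wise, and all pooled vectors are
    concatenated. Requires len(frames) >= max(levels).
    """
    t = len(frames)
    if t < max(levels):
        raise ValueError("need at least as many frames as the finest level")
    pooled = []
    for n in levels:
        for i in range(n):
            segment = frames[i * t // n:(i + 1) * t // n]
            pooled.extend(max(col) for col in zip(*segment))
    return pooled


def fuse_streams(spatial_probs, temporal_probs, spatial_weight=0.5):
    """Weighted average of the class probabilities from the two streams."""
    w = spatial_weight
    return [w * s + (1.0 - w) * t for s, t in zip(spatial_probs, temporal_probs)]
```

The pooled vector has a fixed length of `feature_dim * sum(levels)` regardless of the number of frames, which is what lets a fully connected layer follow it.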

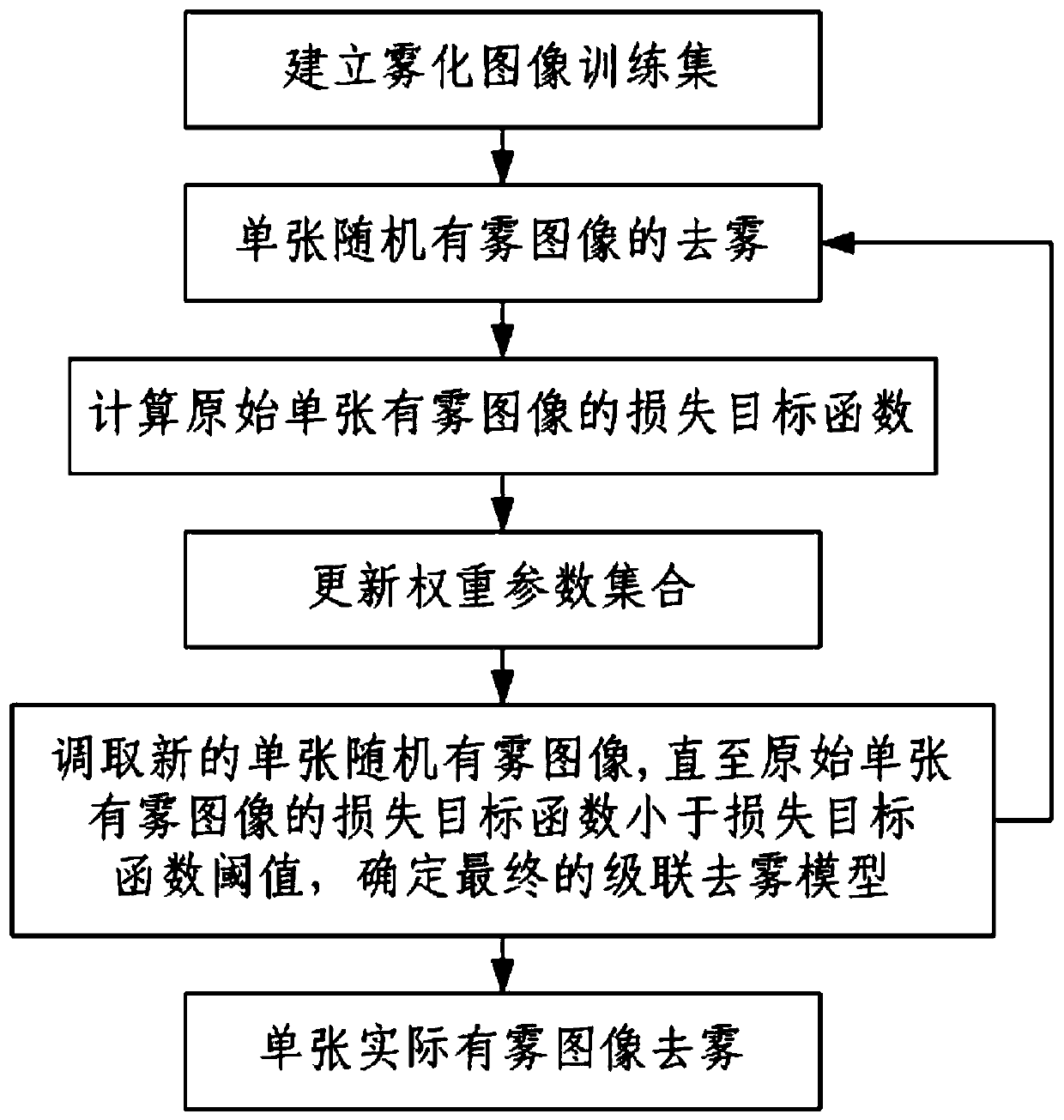

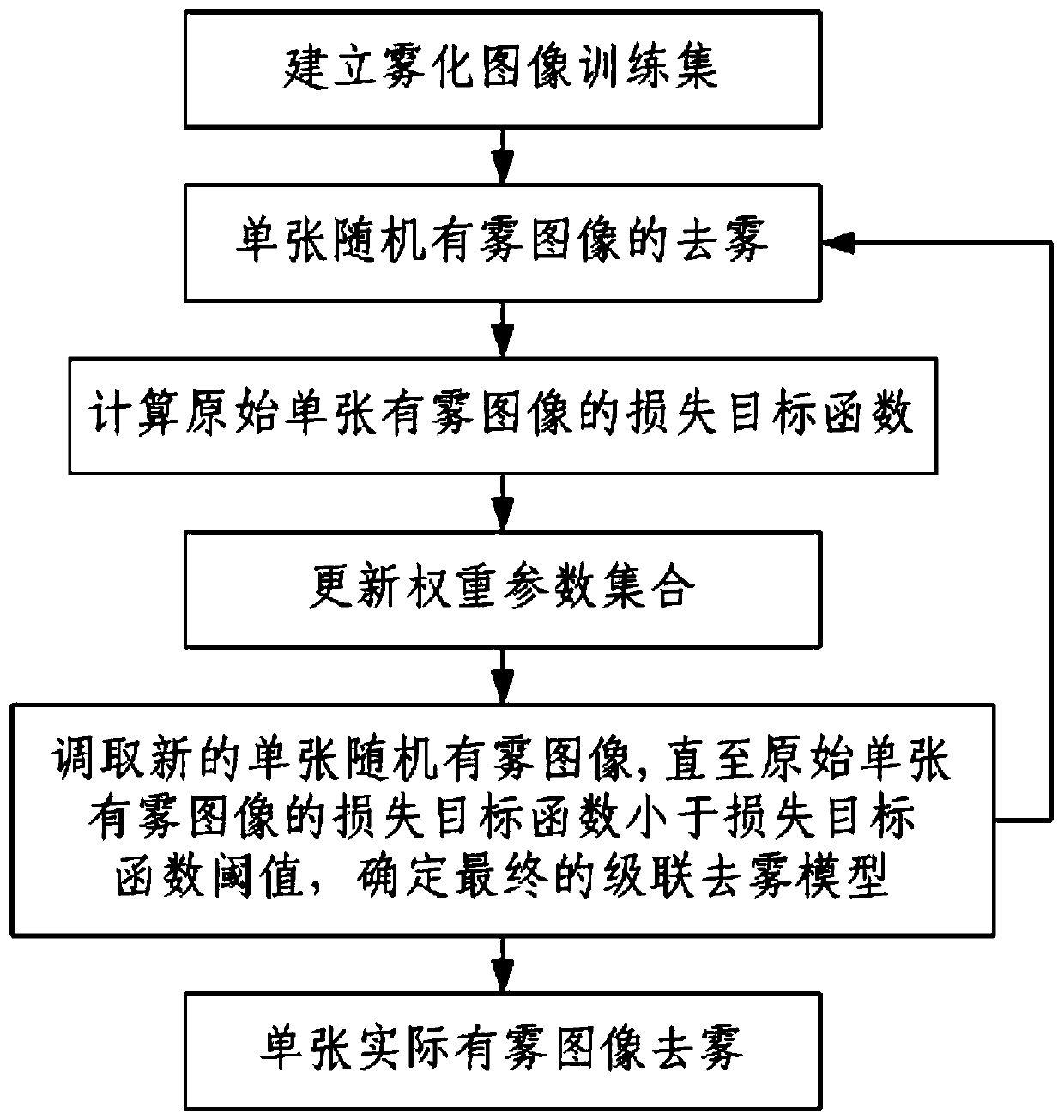

Image defogging method based on multi-scale dark channel prior cascade deep neural network

Active | CN110363727A | Improve accuracy | Improve real-time performance | Image enhancement | Image analysis | Model parameters | Global illumination

The invention discloses an image defogging method based on a multi-scale dark channel prior cascade deep neural network. The method comprises the following steps: 1, establishing a foggy image training set; 2, defogging a single random foggy image; 3, calculating the loss objective function of the original single foggy image; 4, updating the weight parameter set; 5, taking a new single random foggy image and repeating steps 2 to 4 until the loss objective function of the original single foggy image is smaller than the loss objective function threshold, thereby determining the final cascade defogging model; and 6, defogging a single actual foggy image. A convolutional neural network is used to estimate the dark channel and global illumination parameters on images of different scales; with the deep neural network as the model, the dark channel and the defogged image are then fused step by step, and the defogged image is finally obtained through supervised learning. The feature modeling capability of the deep neural network is effectively utilized, parameters of different scales are fused, and a high-resolution defogged image can be obtained with few model parameters.

Owner:ROCKET FORCE UNIV OF ENG
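
For context, the classical dark channel of an image is the minimum over color channels, taken again over a local patch. The patent estimates the dark channel with a neural network; the sketch below shows only the classical definition on a nested-list image, with a hypothetical function name and patch size.

```python
def dark_channel(image, patch=3):
    """Classical dark channel: min over RGB, then min over a patch x patch window.

    `image` is a list of rows, each row a list of (r, g, b) tuples in [0, 1].
    Windows are clipped at the image border.
    """
    h, w = len(image), len(image[0])
    r = patch // 2
    per_pixel_min = [[min(px) for px in row] for row in image]
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            out[y][x] = min(
                per_pixel_min[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            )
    return out
```

In haze-free outdoor regions this value tends toward zero, so larger values indicate denser haze; that observation is what the prior refers to.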

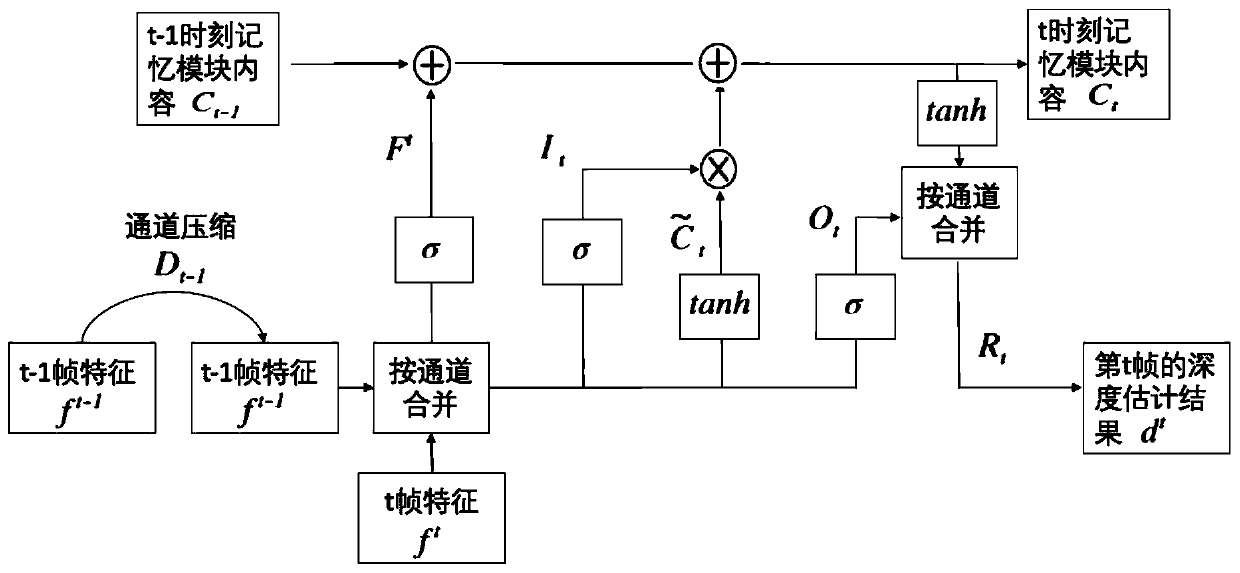

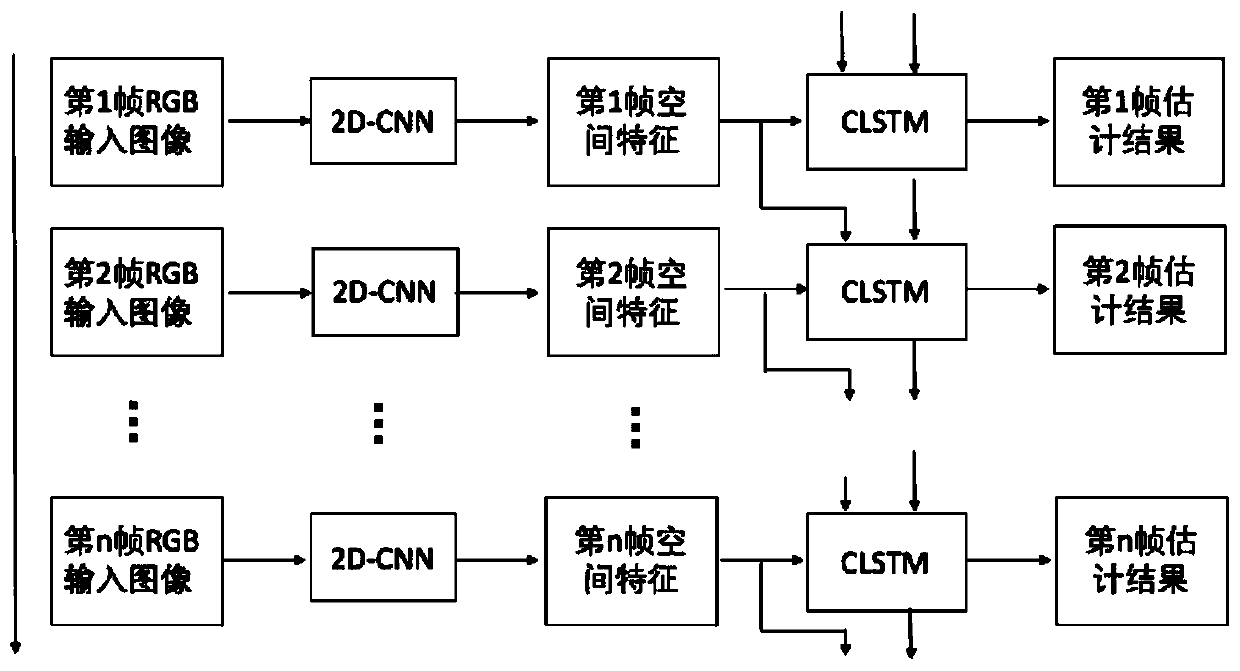

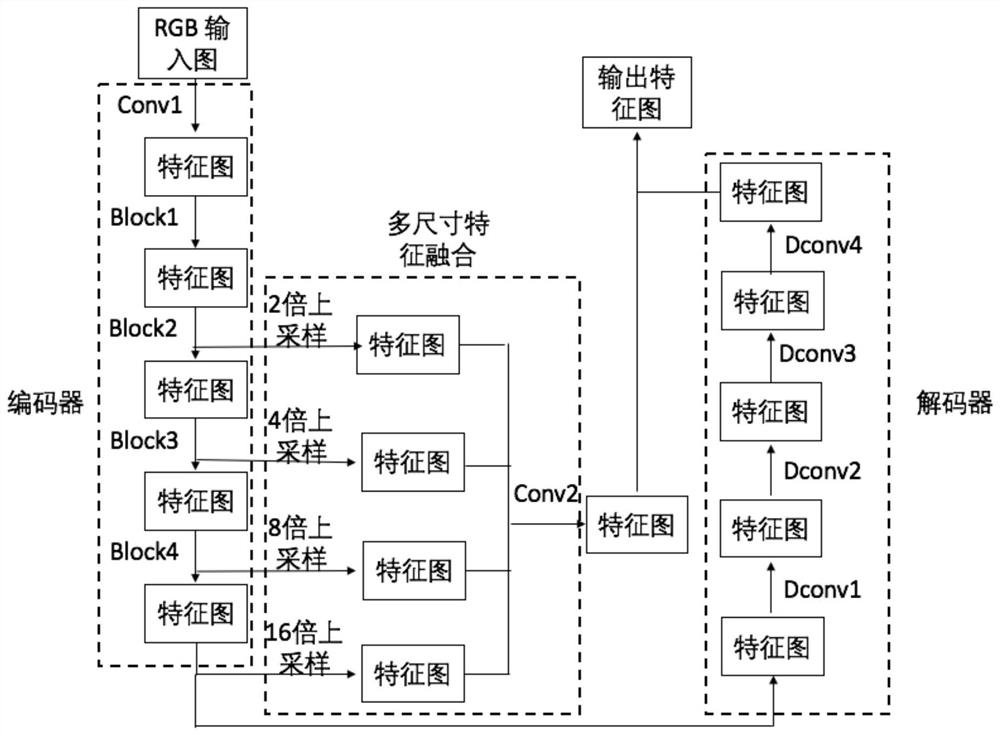

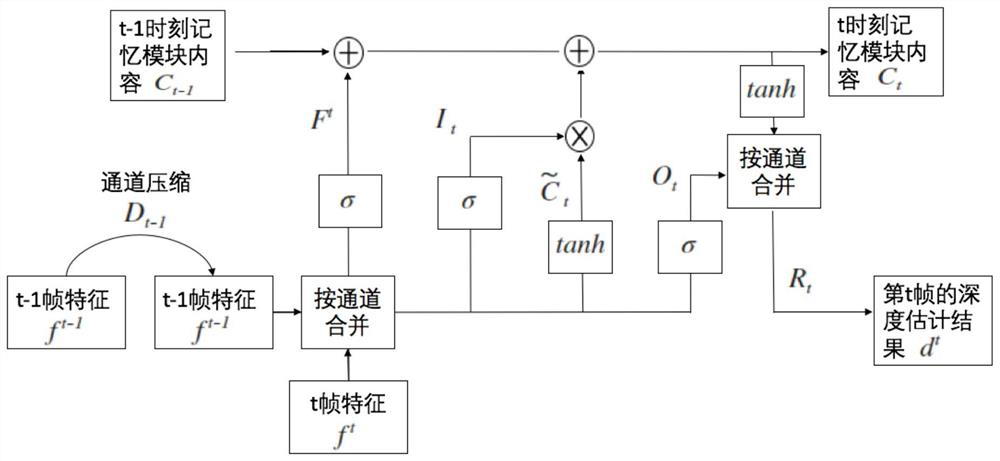

Real-time monocular video depth estimation method

Active | CN110246171A | Run fast | Reduce the amount of model parameters | Image enhancement | Image analysis | Rgb image | Generative adversarial network

The invention relates to a real-time monocular video depth estimation method, which combines a 2D-CNN (Two-Dimensional Convolutional Neural Network) and a convolutional long short-term memory network to construct a model capable of performing real-time depth estimation on monocular video data by using spatial and temporal information at the same time. A GAN (Generative Adversarial Network) is used to constrain the estimated result. In terms of evaluation precision, the method is comparable to current state-of-the-art models. In terms of overhead, the model runs faster, has fewer parameters, and needs fewer computing resources. The result estimated by the model has good temporal consistency: when depth estimation is carried out on multiple consecutive frames, the change in the resulting depth images is consistent with the change in the input RGB images, so that sudden changes and jitter are avoided.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

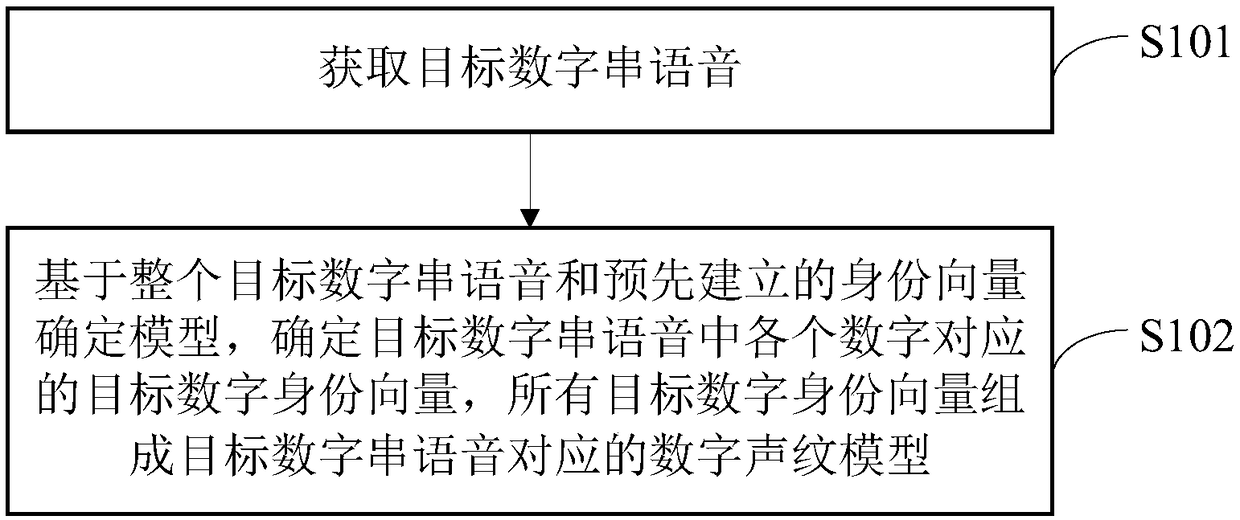

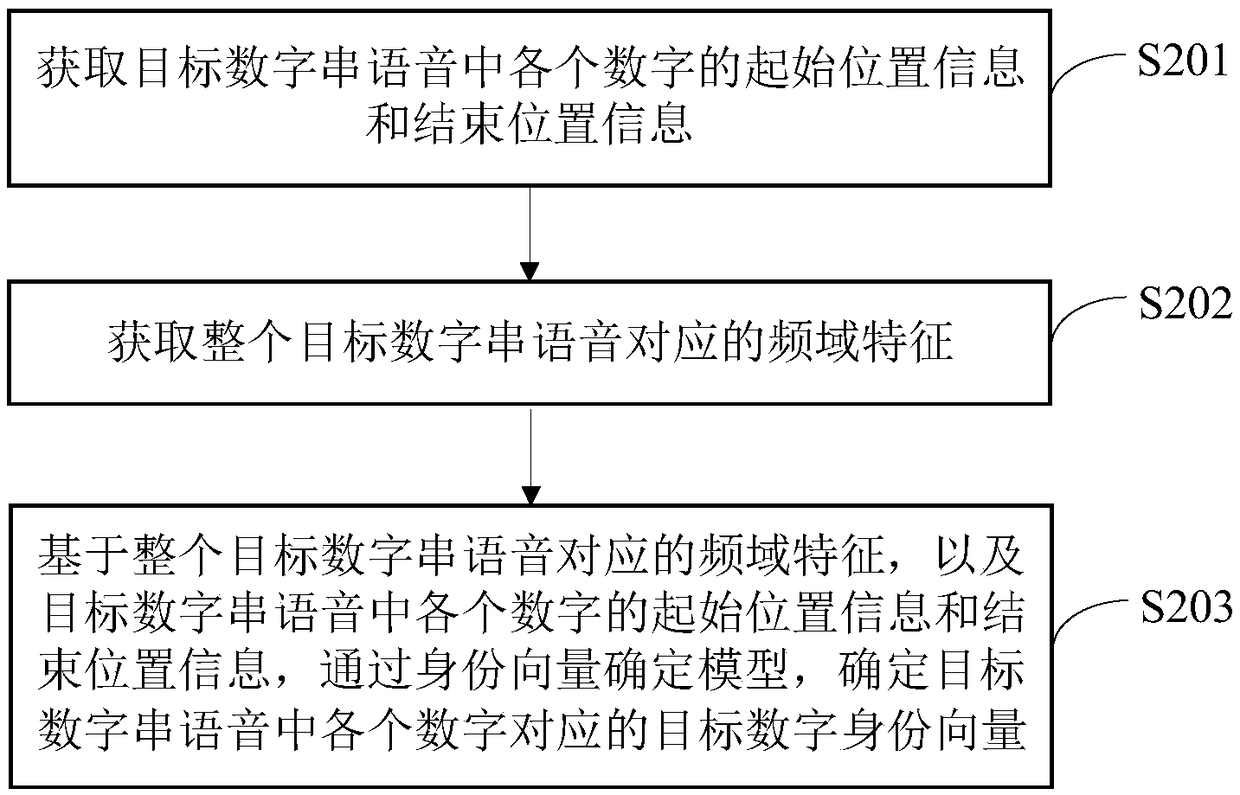

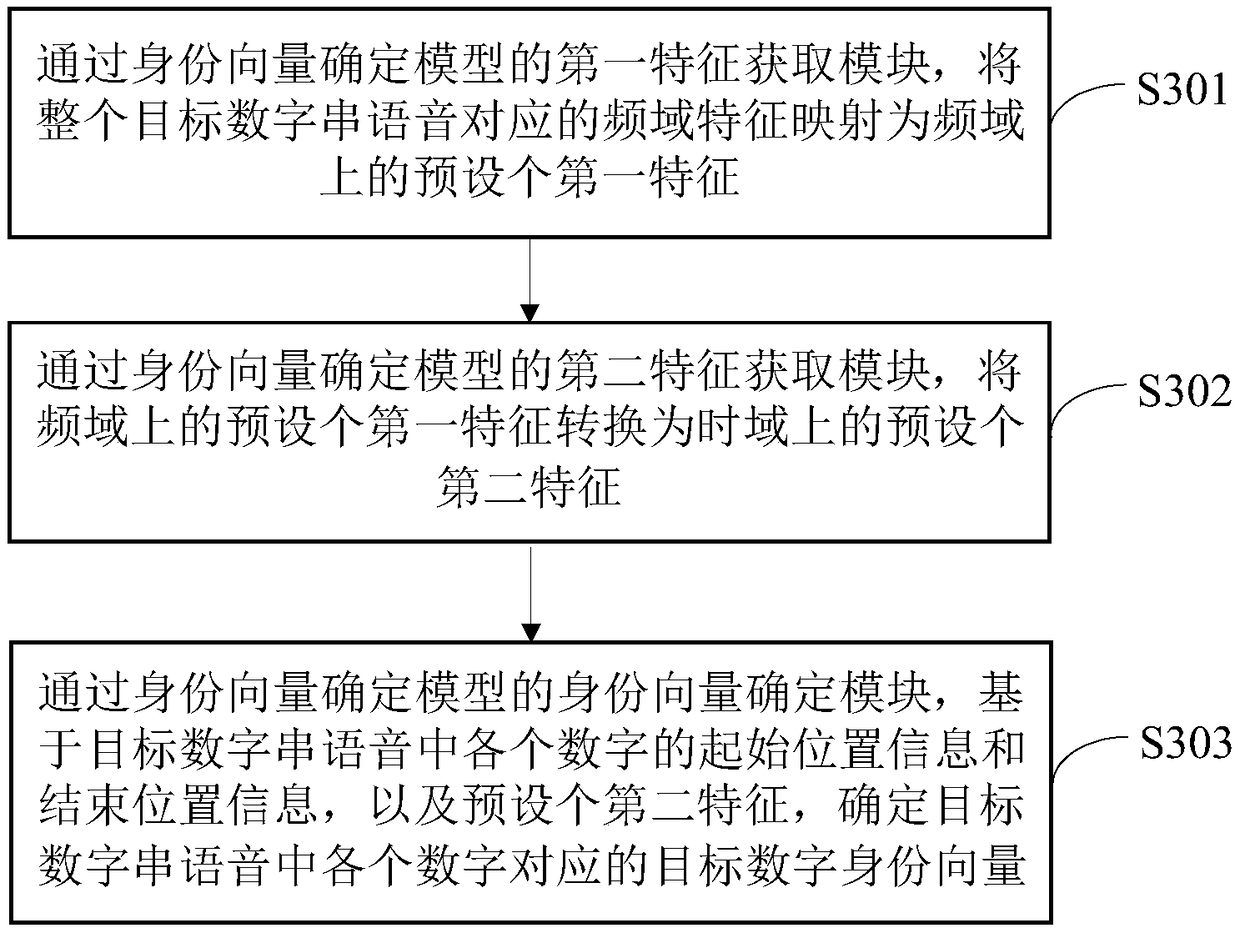

Digital string voice processing method and device thereof

Active | CN109448732A | Not easy to interfere | Less susceptible to interference | Speech analysis | Digital identity | Speech sound

The invention provides a digital string voice processing method and a device thereof. The method includes the following steps: acquiring a target digital string voice; determining, based on the entire target digital string voice and a pre-established identity vector determination model, a target digital identity vector corresponding to each digit in the target digital string voice; and forming a digital voiceprint model corresponding to the target digital string voice from all target digital identity vectors, wherein the identity vector determination model is obtained by training on digital string voice, and the digital voiceprint model contains the structured information of the digit strings in the training digital string voice. The digital string voice processing method can determine a stable and accurate digital voiceprint model.

Owner:IFLYTEK CO LTD

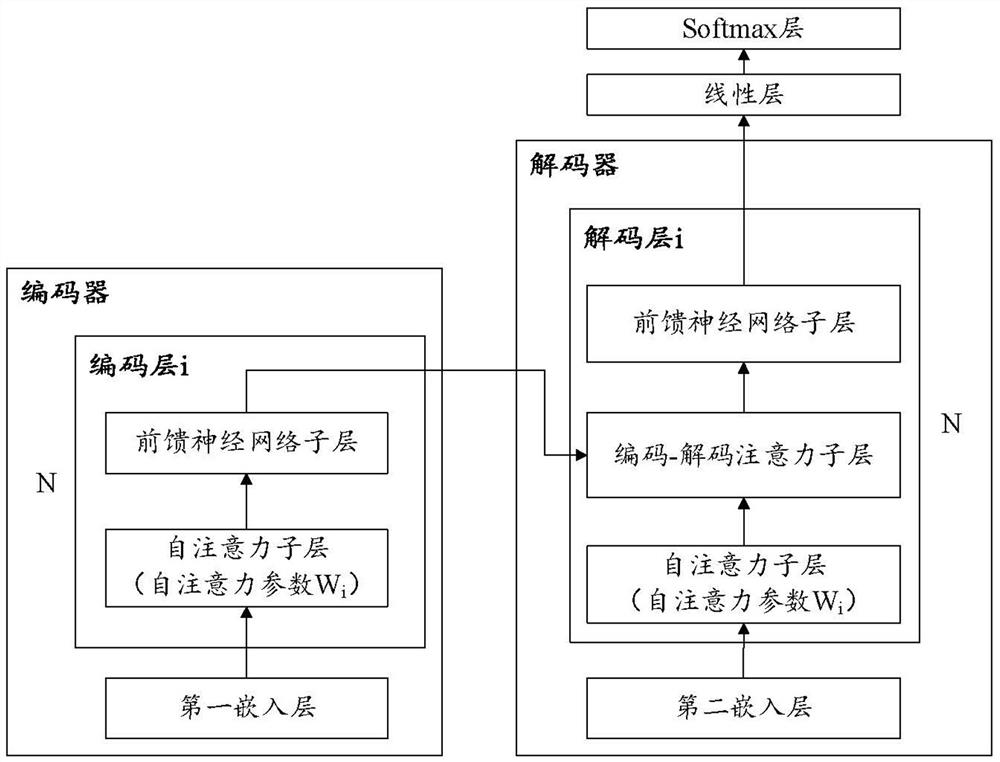

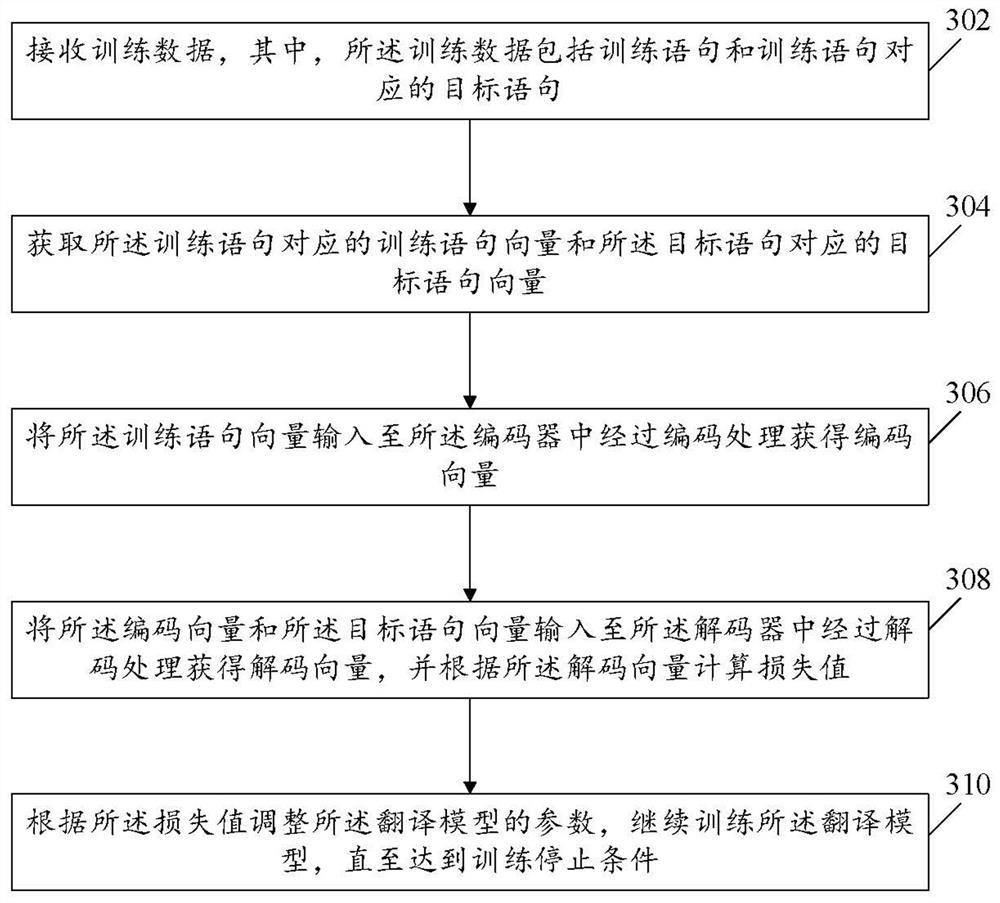

Translation model training method and device

Pending | CN111931518A | Guaranteed accuracy | Reduce the amount of model parameters | Natural language translation | Neural architectures | Algorithm | Theoretical computer science

The invention provides a translation model training method and device. The translation model comprises an encoder and a decoder. The encoder comprises n encoding layers which are connected in sequence, the decoder comprises n decoding layers which are connected in sequence, a self-attention sub-layer of the ith encoding layer and a self-attention sub-layer of the ith decoding layer share a self-attention parameter, n is greater than or equal to 1, and i is greater than or equal to 1 and less than or equal to n. The method comprises the following steps of: receiving a training statement and a target statement corresponding to the training statement; obtaining a training statement vector corresponding to the training statement and a target statement vector corresponding to the target statement; inputting the training statement vector into the encoder, and performing encoding processing to obtain an encoding vector; inputting the encoding vector and the target statement vector into the decoder, decoding the encoding vector and the target statement vector to obtain a decoding vector, and calculating a loss value according to the decoding vector; and adjusting parameters of the translation model according to the loss value.

Owner:BEIJING KINGSOFT DIGITAL ENTERTAINMENT CO LTD
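
The parameter saving from sharing self-attention weights between the ith encoding layer and the ith decoding layer can be estimated with simple arithmetic. The sketch assumes each self-attention sub-layer holds four `d_model x d_model` projections (query, key, value, output) with bias vectors, as in a standard Transformer; the abstract does not state the layer layout, and the function names are hypothetical.

```python
def self_attention_params(d_model):
    """Weights and biases of the Q, K, V and output projections of one sub-layer."""
    return 4 * (d_model * d_model + d_model)


def params_saved_by_sharing(n_layers, d_model):
    """Parameters removed when each decoder layer reuses its encoder layer's self-attention."""
    return n_layers * self_attention_params(d_model)


# Example: 6 layer pairs with a model width of 512.
saved = params_saved_by_sharing(6, 512)
```

Under these assumptions, a 6-layer model of width 512 drops about 6.3 million parameters, because one copy of each self-attention sub-layer serves both the encoder and the decoder.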

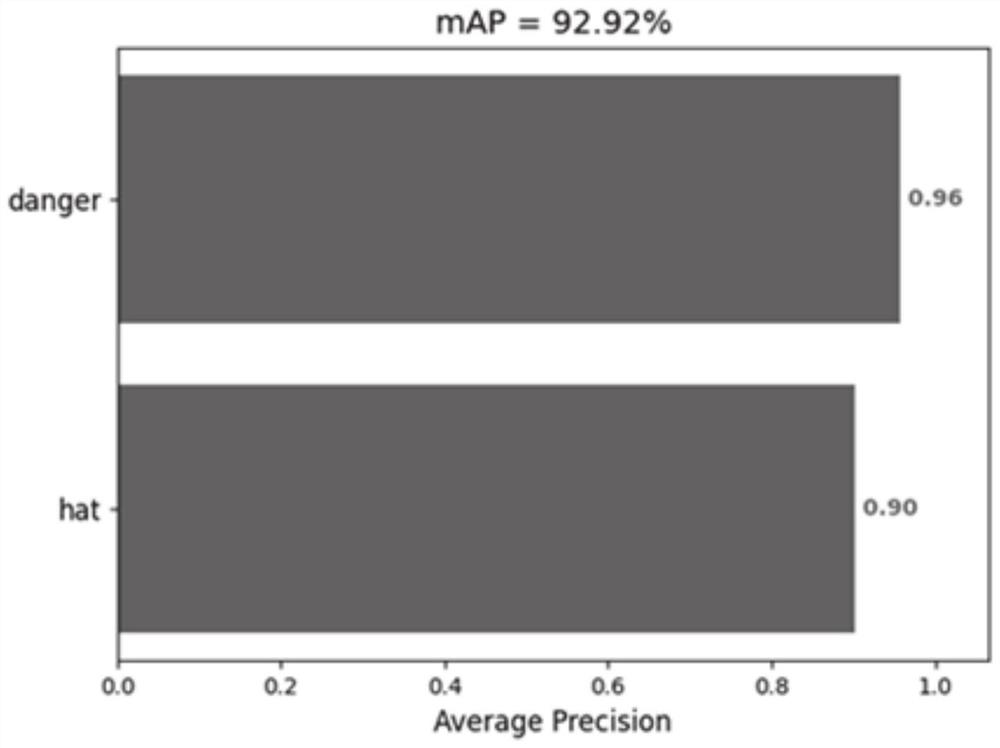

Lightweight safety helmet detection method and system for mobile terminal

Pending | CN114067211A | Obvious superiority | Simple structure | Character and pattern recognition | Neural architectures | Simulation | Network structure

The invention provides a lightweight safety helmet detection method and system for a mobile terminal. The method comprises steps of obtaining related image information; obtaining a detection result of the safety helmet according to the obtained related image information and a preset safety helmet detection model, wherein the safety helmet detection model is obtained by improving a Darknet53 residual network in a YOLOv3 network, specifically, the number of channels is divided into a first part and a second part according to a CspNet network architecture, and the first part is not subjected to any convolution operation; the second part is subjected to convolution and Concat operation twice, the feature map after convolution operation and the second part are superposed, and the superposed feature map and the first part are subjected to concat connection; according to the lightweight target detection algorithm provided by the invention, the network model structure is improved, the purposes of simple network structure and small parameter quantity are realized, the influence on the time delay problem is relatively small, and the lightweight target detection algorithm is suitable for mobile equipment or scenes which cannot be applied by the original algorithm.

Owner:QILU UNIV OF TECH

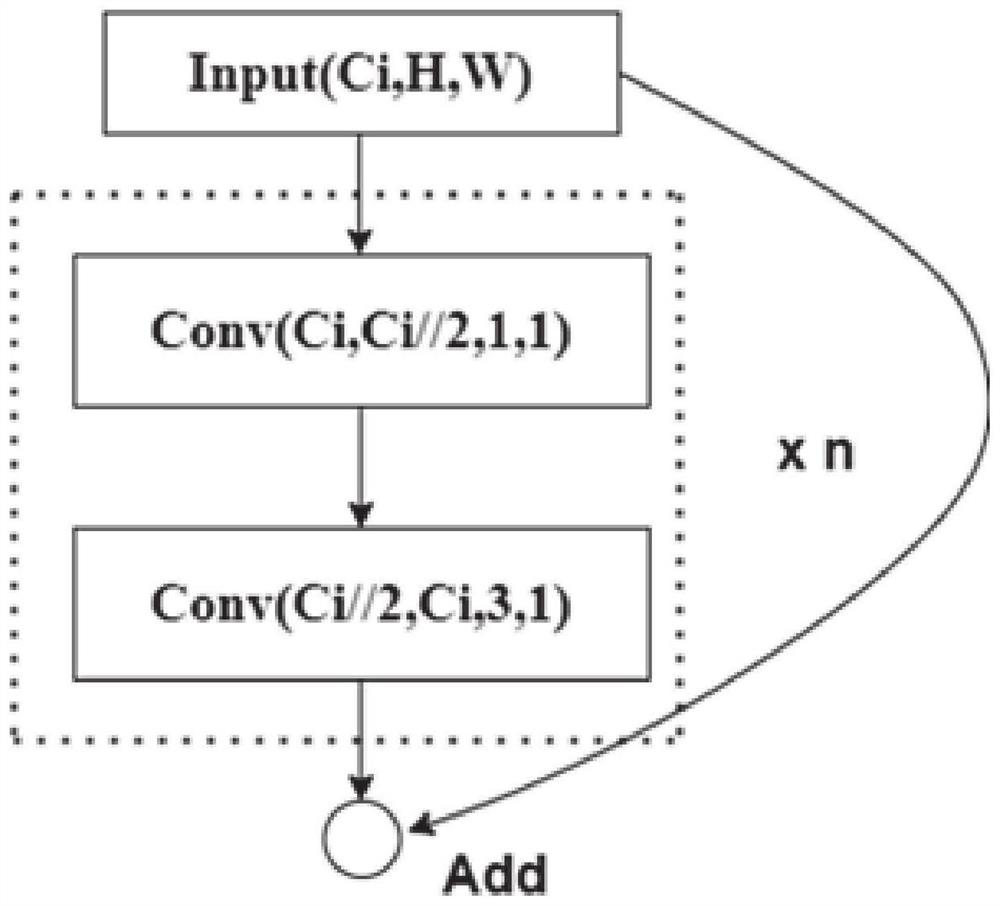

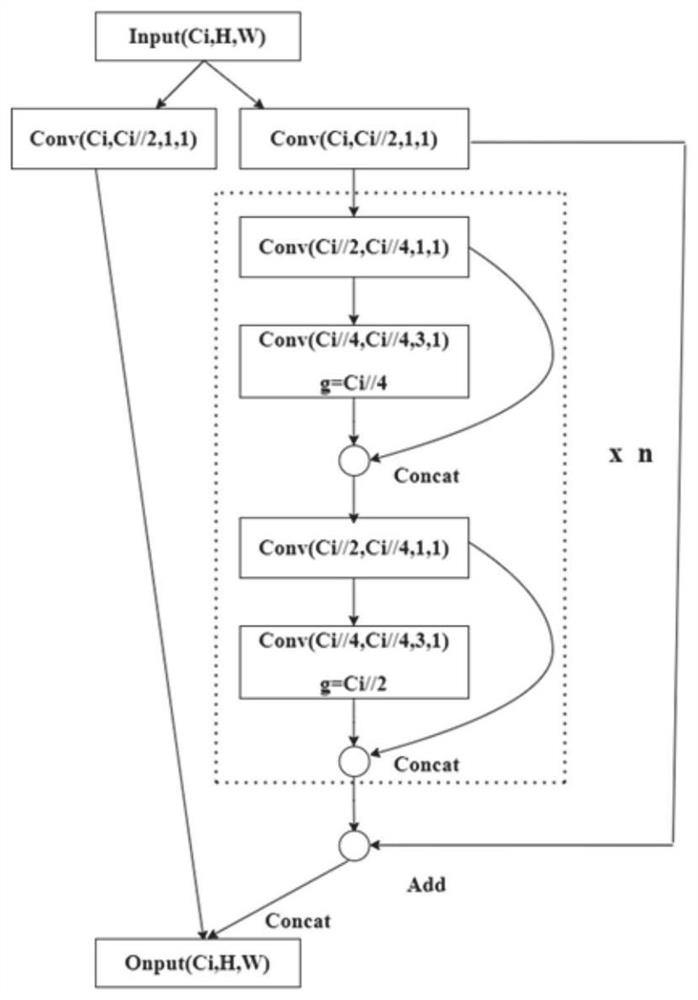

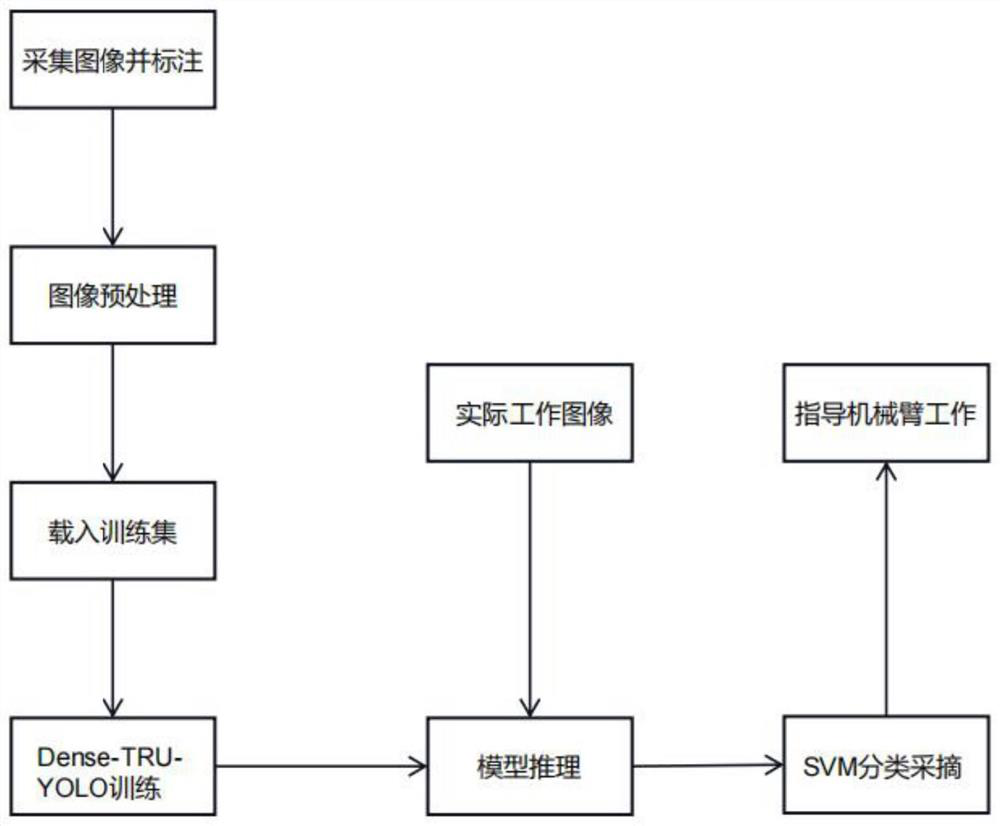

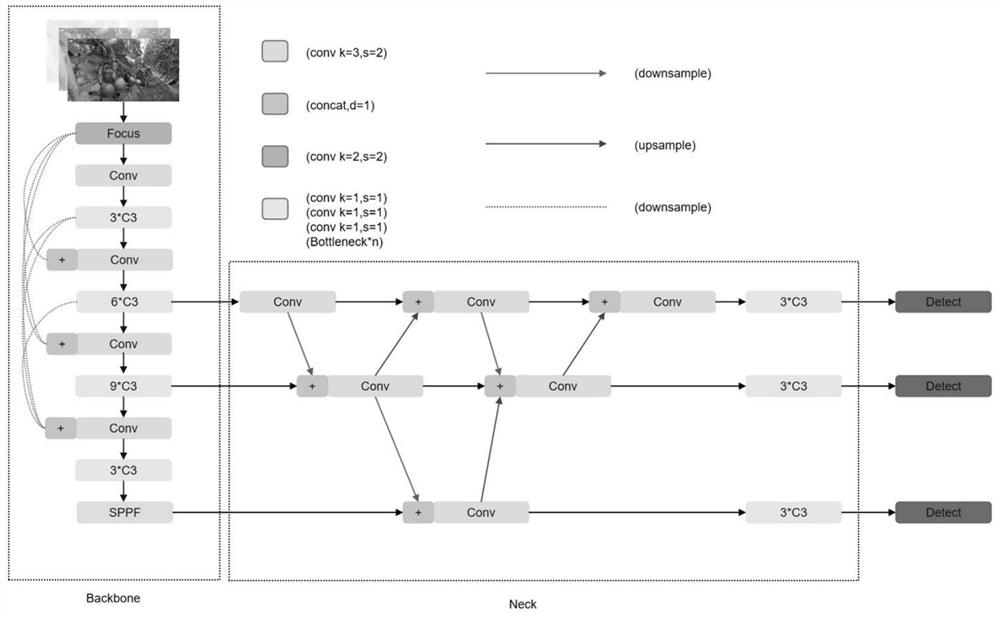

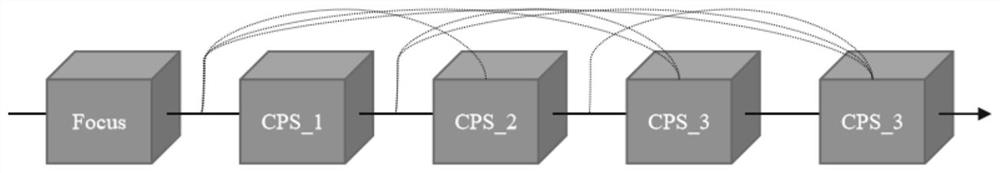

Method for identifying sheltered and overlapped fruits for picking robot

Pending | CN114882498A | Improve recognition accuracy | Improve detection accuracy | Image enhancement | Image analysis | Engineering | Imaging Feature

The invention relates to a method for identifying sheltered and overlapped fruits for a picking robot, and belongs to the field of image identification. It proposes a Dense-TRH-YOLO model which, on the basis of YOLOv5, fuses a Denseblock module into the backbone network, creates a segment path from the early layers to the later layers, and fuses a Transformer module into the model, thereby improving semantic distinguishability and reducing category confusion. The method comprises the following steps: firstly, extracting the image features of each layer through a UNet++-PAN neck structure; and finally, replacing the CIOU of the original model with an Efficient IOU Loss function for bounding-box regression so as to output the detection box position and classification confidence, wherein the width and height differences are calculated separately on the basis of the CIOU to replace the aspect ratio term, and Focal Loss is introduced to address the imbalance between hard and easy samples.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
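
The Efficient IOU loss mentioned above extends the IoU loss with a center-distance term and separate width and height terms, each normalized by the smallest enclosing box. The sketch below follows the commonly published form of that loss for axis-aligned boxes given as `(x1, y1, x2, y2)`; it is an illustration, not the patent's code, and it omits the Focal Loss weighting.

```python
def eiou_loss(pred, target):
    """EIoU loss for two axis-aligned boxes with positive area, as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Intersection over union.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    iou = inter / (pw * ph + tw * th - inter)

    # Width and height of the smallest box enclosing both boxes.
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)

    # Squared center distance, normalized by the enclosing box diagonal.
    dx = (px1 + px2) / 2 - (tx1 + tx2) / 2
    dy = (py1 + py2) / 2 - (ty1 + ty2) / 2
    center_term = (dx * dx + dy * dy) / (cw * cw + ch * ch)

    # Separate width and height penalties replace CIoU's aspect-ratio term.
    width_term = (pw - tw) ** 2 / (cw * cw)
    height_term = (ph - th) ** 2 / (ch * ch)
    return 1.0 - iou + center_term + width_term + height_term
```

Penalizing width and height directly gives a useful gradient even when the predicted box already has the right aspect ratio but the wrong size, which is the case the aspect-ratio term alone cannot distinguish.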

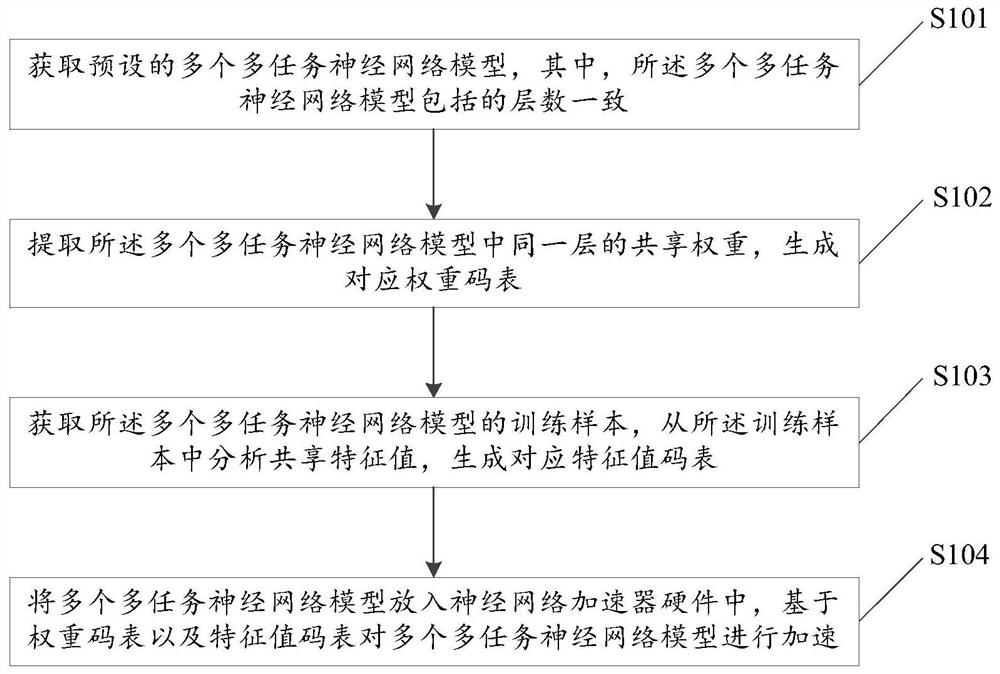

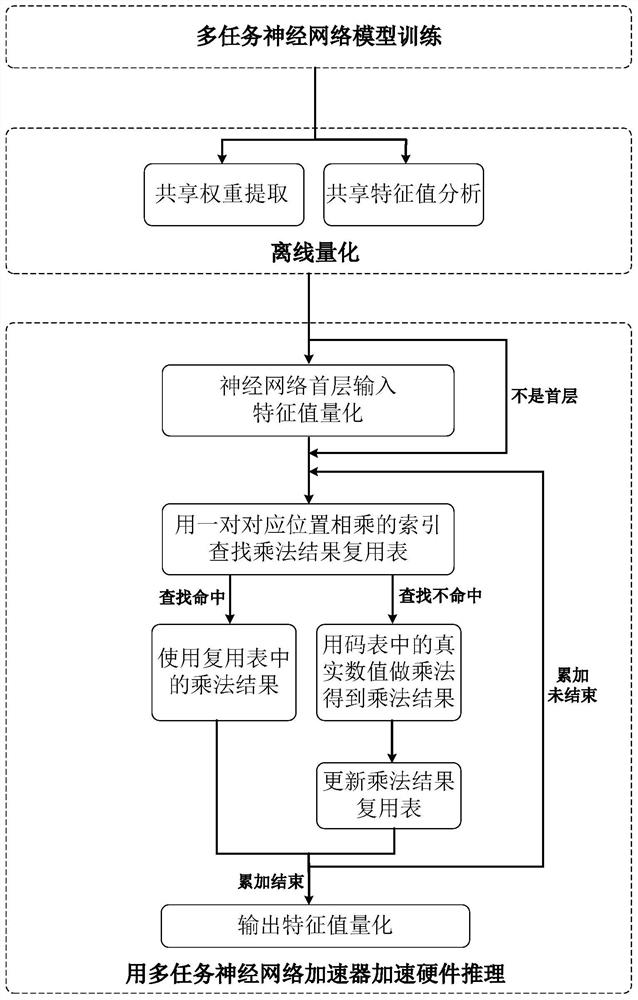

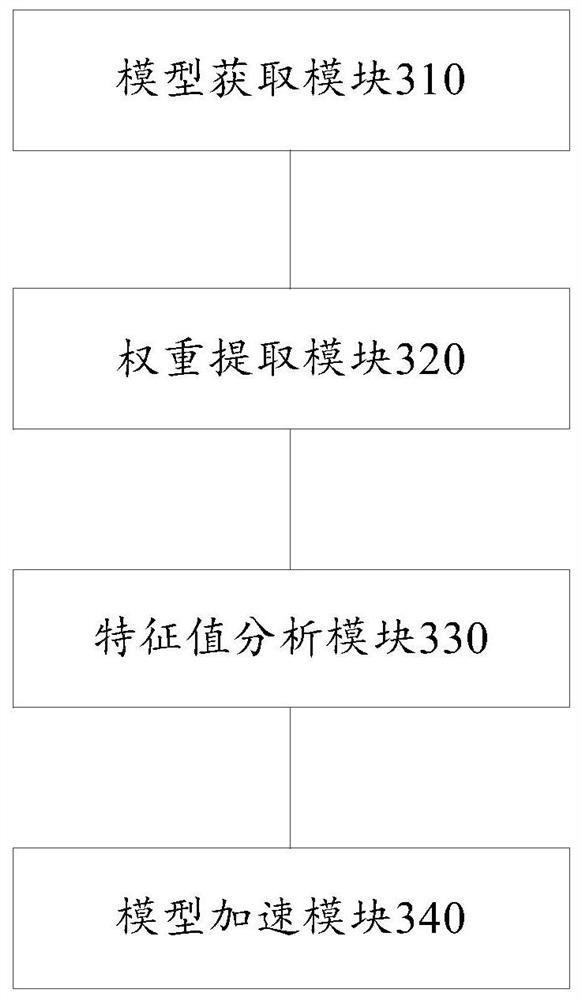

Quantification and hardware acceleration method and device for multi-task neural network

Pending | CN111797984A | Reduce the amount of model parameters | Reduce overhead | Neural architectures | Physical realisation | Algorithm | Engineering

The embodiment of the invention relates to a quantification and hardware acceleration method and device for a multi-task neural network. The method comprises the steps of: obtaining a plurality of preset multi-task neural network models whose numbers of layers are consistent; extracting the shared weights of the same layer across the plurality of multi-task neural network models and generating a corresponding weight code table that includes the shared weights; obtaining training samples of the multi-task neural network models, analyzing shared feature values from the training samples, and generating a corresponding feature value code table that includes the shared feature values; and putting the plurality of multi-task neural network models into neural network accelerator hardware and accelerating them based on the weight code table and the feature value code table.

Owner:宁波物栖科技有限公司

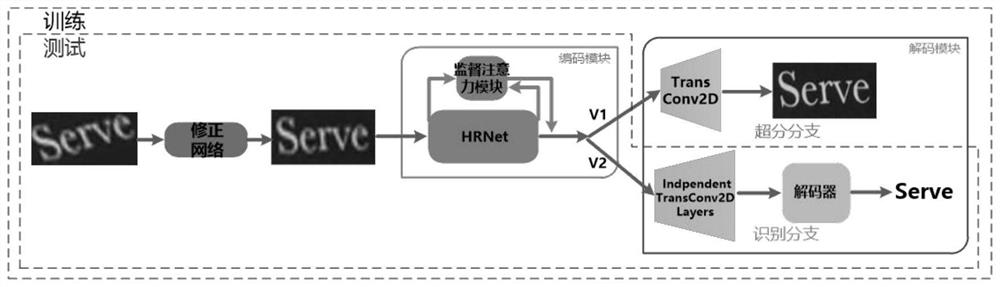

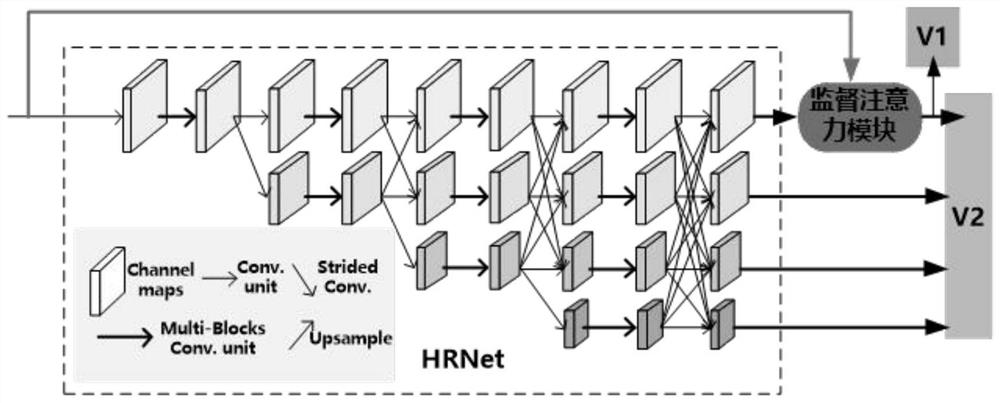

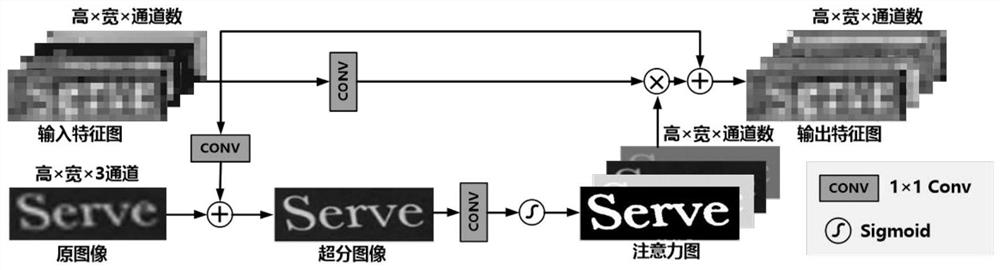

Scene text recognition method based on HRNet coding and double-branch decoding

Pending | CN114140786A | Easy to implement | Reduce the amount of model parameters | Character and pattern recognition | Neural learning methods | Computer vision | Linear network coding

The invention discloses a scene text recognition method based on HRNet coding and double-branch decoding. When a traditional deep learning method is used for scene text recognition, the recognition accuracy drops when text distortion, image blurring or low resolution is encountered. The method comprises the following steps: applying random Gaussian blur to an original single-scene text image to obtain a low-resolution image; and building a scene text recognition model based on HRNet coding and double-branch decoding, which comprises a TPS correction network, a coding module, a super-resolution branch and a recognition branch. By introducing HRNet coding and double-branch decoding, the method improves the model's recognition accuracy on blurred and low-resolution images, and reduces the number of model parameters and the time consumption by discarding the super-resolution branch during testing.

Owner:HANGZHOU NORMAL UNIVERSITY

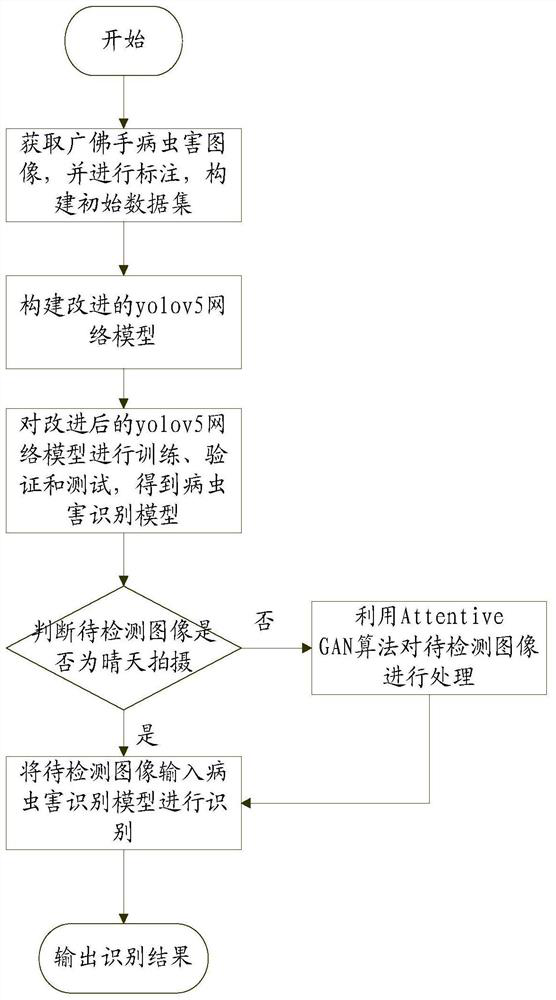

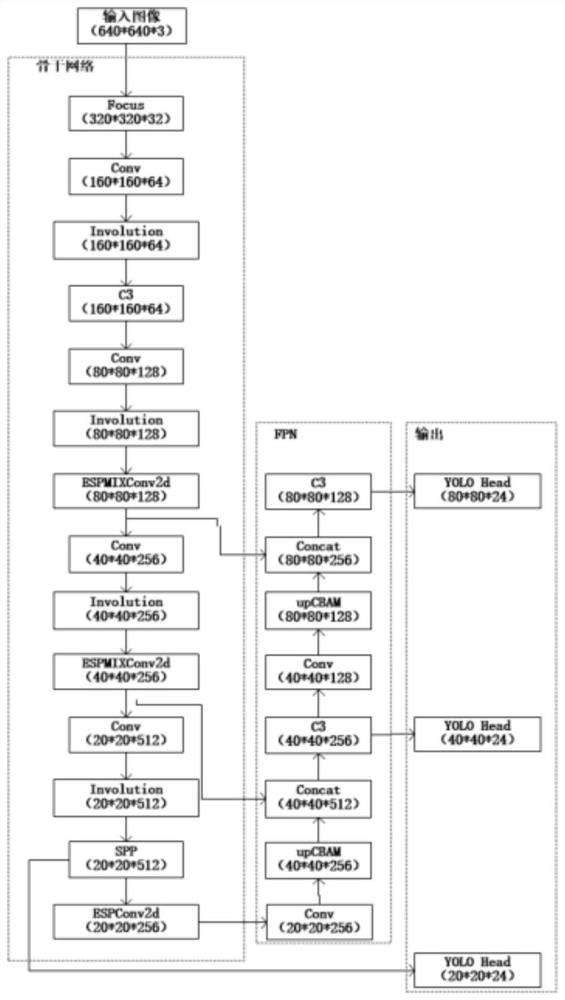

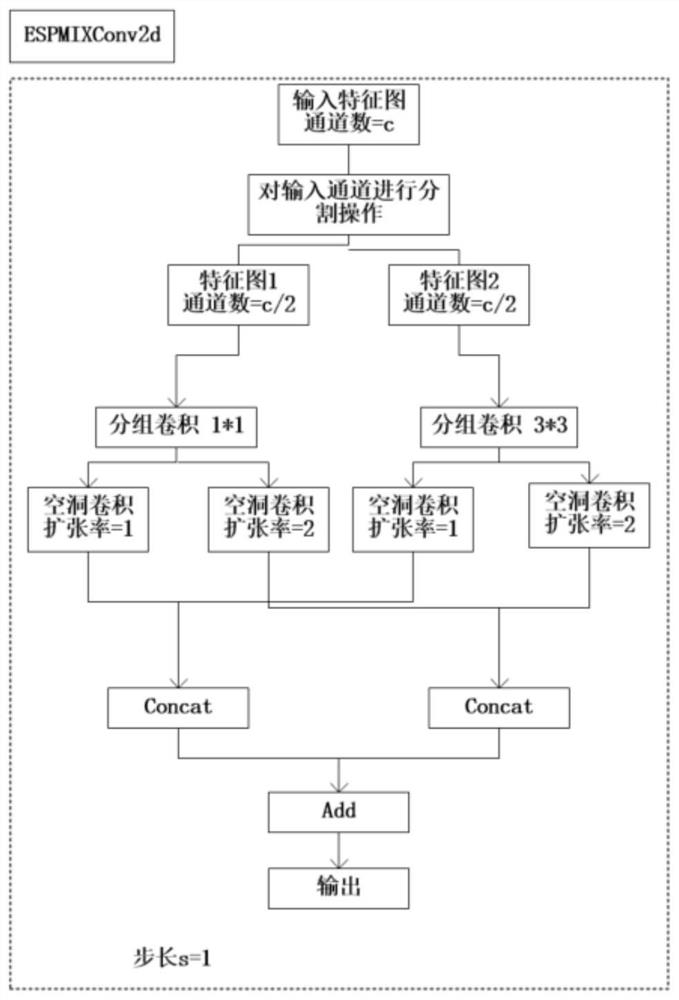

Method and system for identifying Citri medica diseases and insect pests based on improved yolov5 network

Pending | CN114005029A | Reduce the amount of model parameters | Expand the receptive field | Character and pattern recognition | Neural architectures | Image based | Insect

The invention discloses a method and system for identifying Citri medica diseases and insect pests based on an improved yolov5 network, and the method comprises the steps of obtaining a Citri medica diseases and insect pests image, marking, and constructing an initial data set; introducing a yolov5 network model, and improving a backbone network and a Neck module of the yolov5 network model; training, verifying and testing the improved yolov5 network model by using the initial data set to obtain a final diseases and insect pests identification model; pre-processing a to-be-detected image; judging whether the to-be-detected image is shot in a sunny day or a rainy day, and if the to-be-detected image is shot in the rainy day, processing the to-be-detected image by using an Attentive GAN algorithm; if the to-be-detected image is shot in sunny days, not processing; and carrying out diseases and insect pests identification on the pre-processed to-be-detected image based on the diseases and insect pests identification model. According to the method, the improved yolov5 network is combined with the Attentive GAN algorithm, so that the Citri medica diseases and insect pests can be identified under rainy weather conditions, the network parameter quantity and the size of a network model can be reduced, and the identification accuracy is improved.

Owner:SOUTH CHINA AGRI UNIV

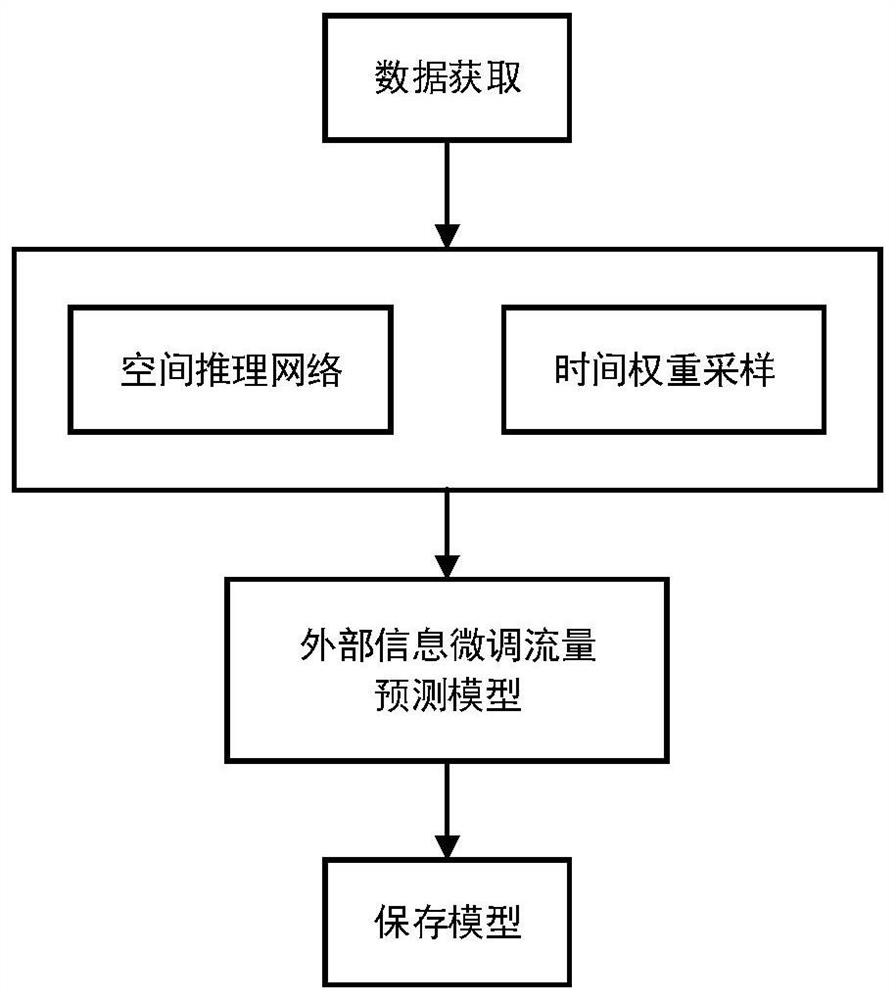

Urban fine-grained flow prediction method and system based on limited data resources

Pending | CN113947250A | Simplify the prediction problem | Guaranteed prediction accuracy | Forecasting | Resources | Traffic prediction | Algorithm

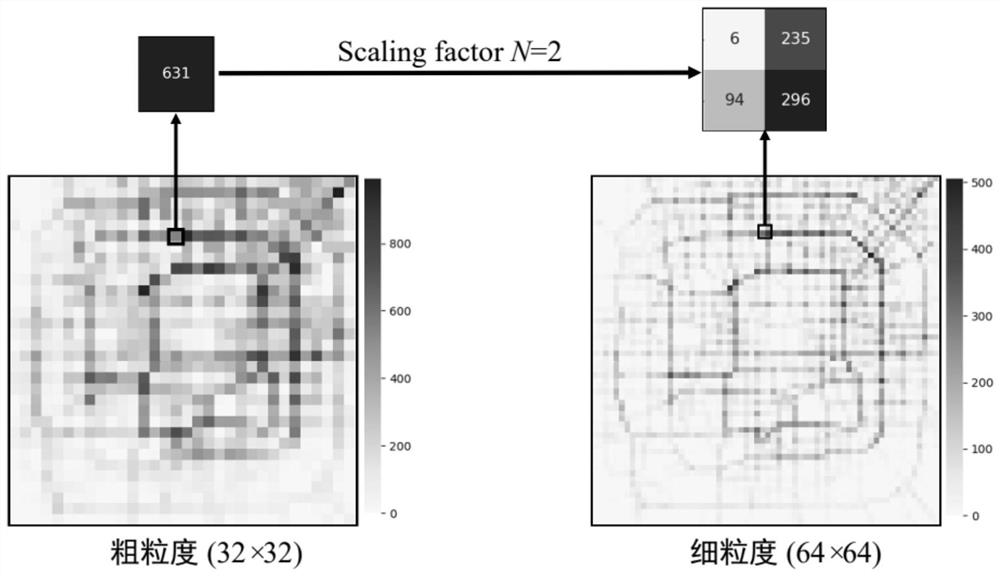

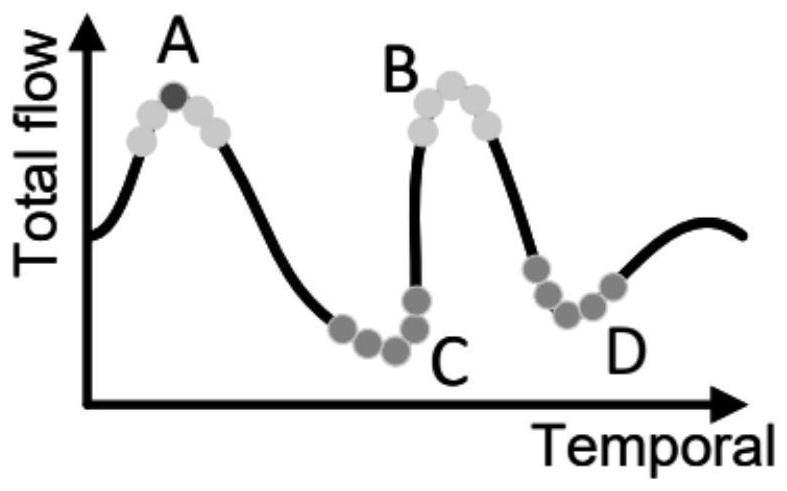

The invention discloses an urban fine-grained flow prediction method and system based on limited data resources. The method comprises the following steps: acquiring flow distribution data and corresponding external factor data of a to-be-predicted area within a certain time; obtaining a fine granularity flow distribution diagram and a coarse granularity flow distribution diagram according to a set coarse and fine granularity scaling factor; performing down-sampling on the coarse-grained flow distribution diagram to obtain a down-sampled coarse-grained flow diagram; and training a spatial inference encoder from a down-sampling coarse-grained flow diagram to a coarse-grained flow distribution diagram, wherein the spatial inference encoder is used for predicting the fine-grained flow of the region. Under the condition that training resources are limited, an inference network is provided to simplify the urban fine-grained traffic prediction problem, and the traffic prediction precision and reliability of the model are improved.

Owner:SHANDONG UNIV +1

Neural network design and training method for denoising lightweight real image

Active | CN113191972A | Improve efficiency | Improve filtering effect | Image enhancement | Image analysis | Machine learning | Engineering

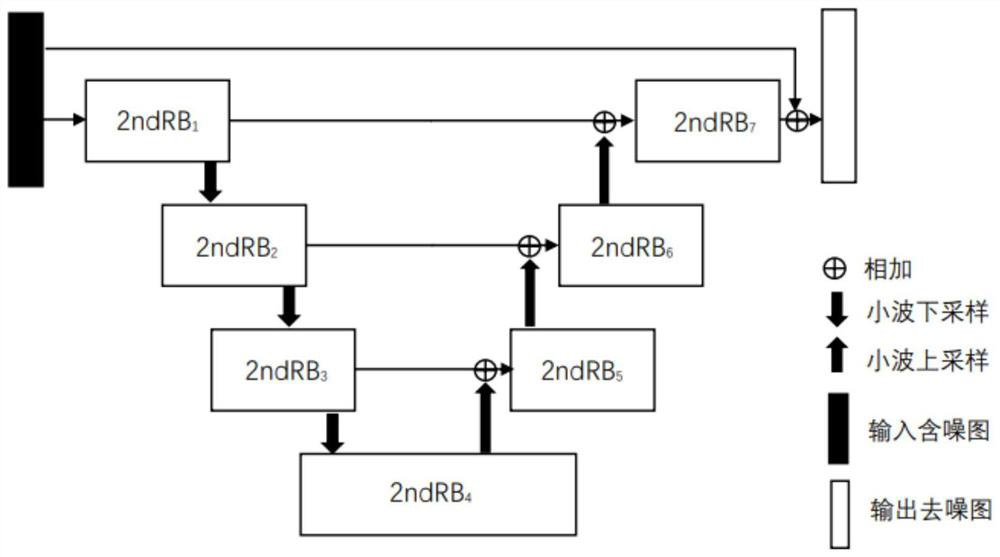

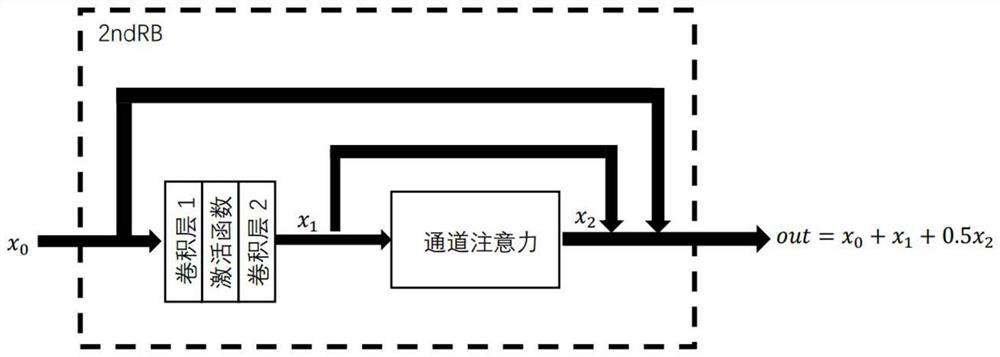

The invention discloses a neural network design and training method for denoising lightweight real image, the lightweight real image denoising neural network adopts a four-scale U-shaped network structure, and comprises seven second-order residual attention modules, three down-sampling modules and three up-sampling modules. According to the method, the real noisy image can be quickly denoised, and the denoised image is obtained.

Owner:XI AN JIAOTONG UNIV

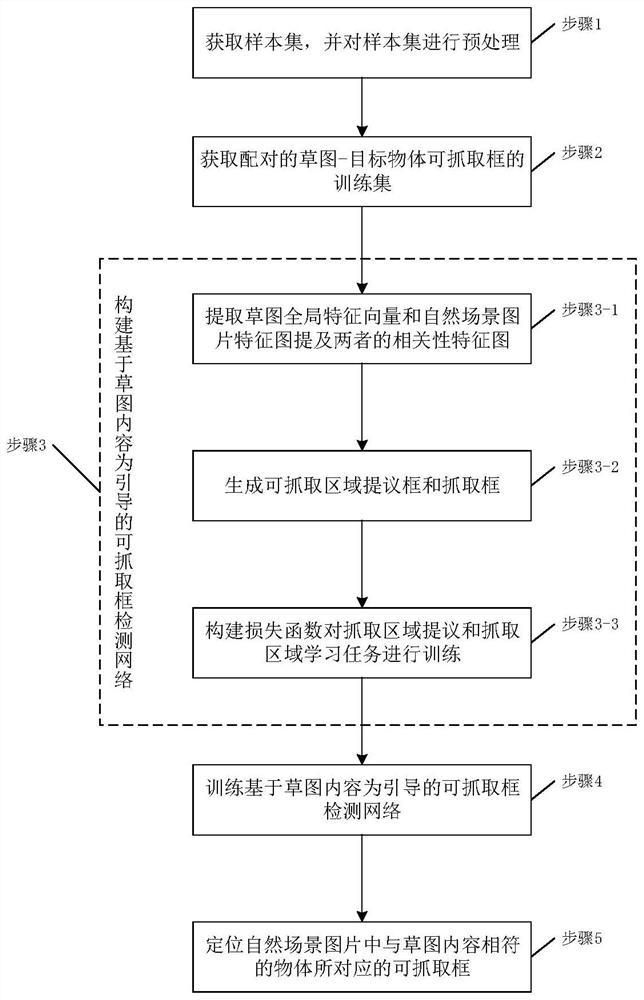

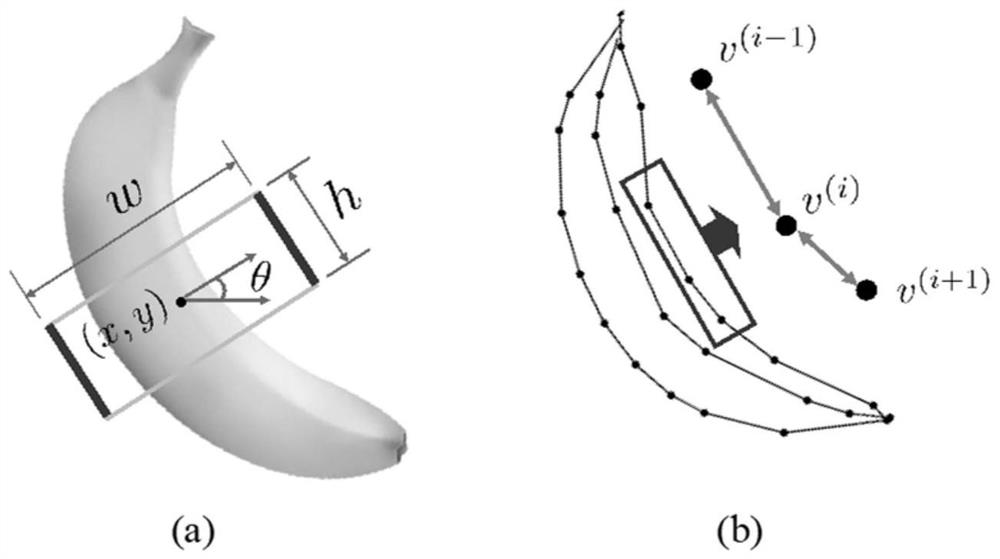

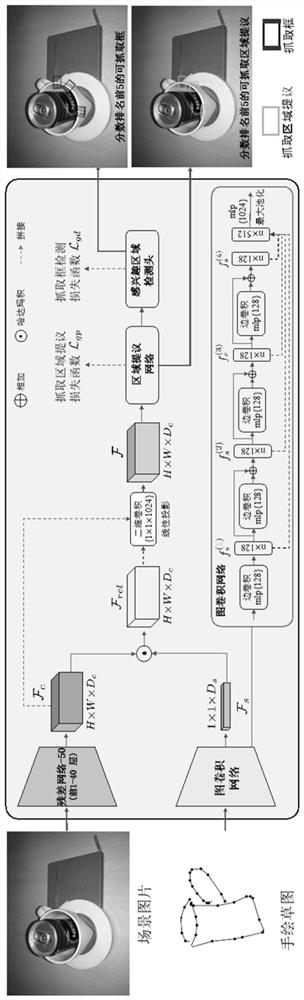

Method and system for detecting grabbable points of target object based on freehand sketch

Pending | CN114373127A | Fast convergence | Improve generalization ability | Character and pattern recognition | Neural architectures | Network Convergence | Computer graphics (images)

The invention relates to a method and system for detecting grabbable points of a target object based on a freehand sketch. The method comprises the steps of: obtaining a set of natural scene pictures marked with grabbing boxes and a set of freehand sketch samples corresponding to the objects in the scenes, and preprocessing the samples; matching each sketch with the grabbable box of its target object to construct a training set; constructing a grabbable box detection network guided by the sketch content; training that network; and using the trained network to locate, in a natural scene picture, the grabbable box of the object that conforms to the sketch content. Compared with the prior art, the method guides the robot to complete the target object grabbing task based on a sketch, the network converges quickly, and the generalization ability is high.

Owner:FUDAN UNIV

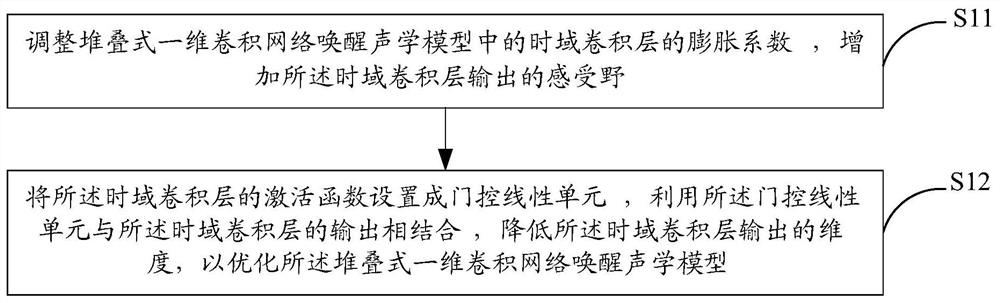

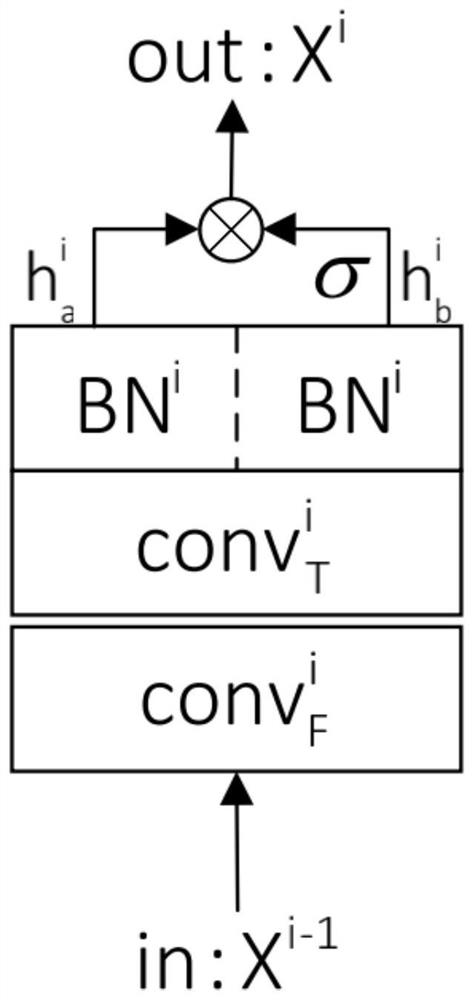

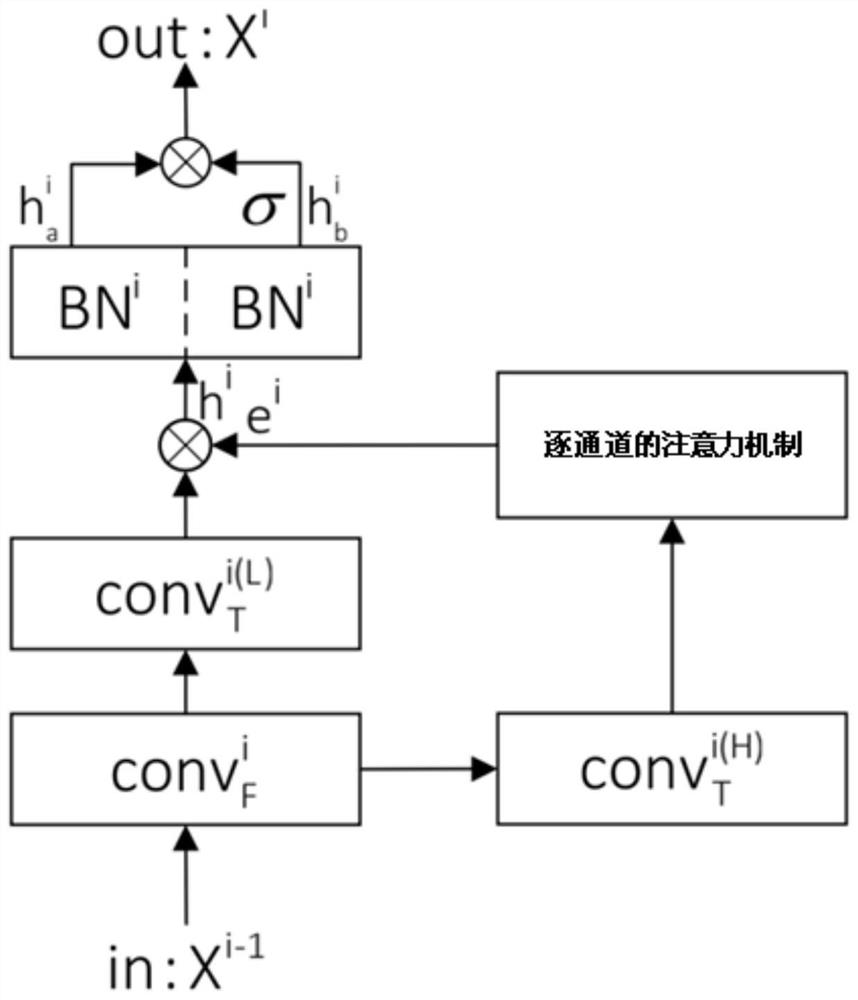

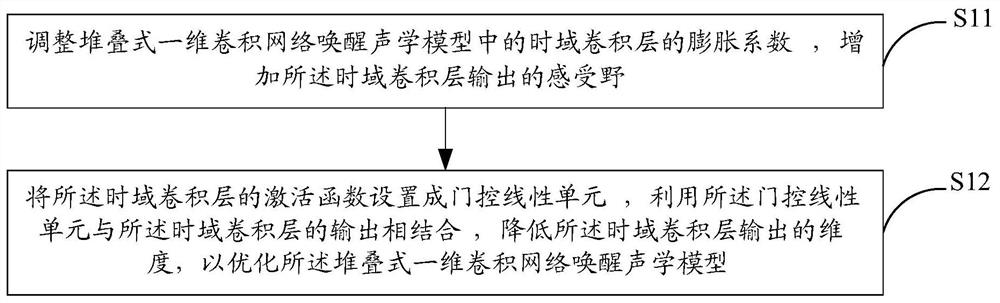

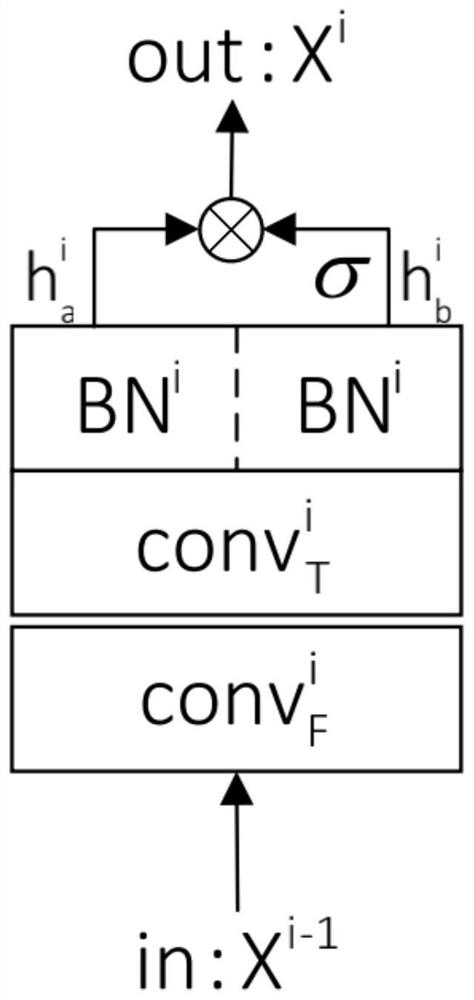

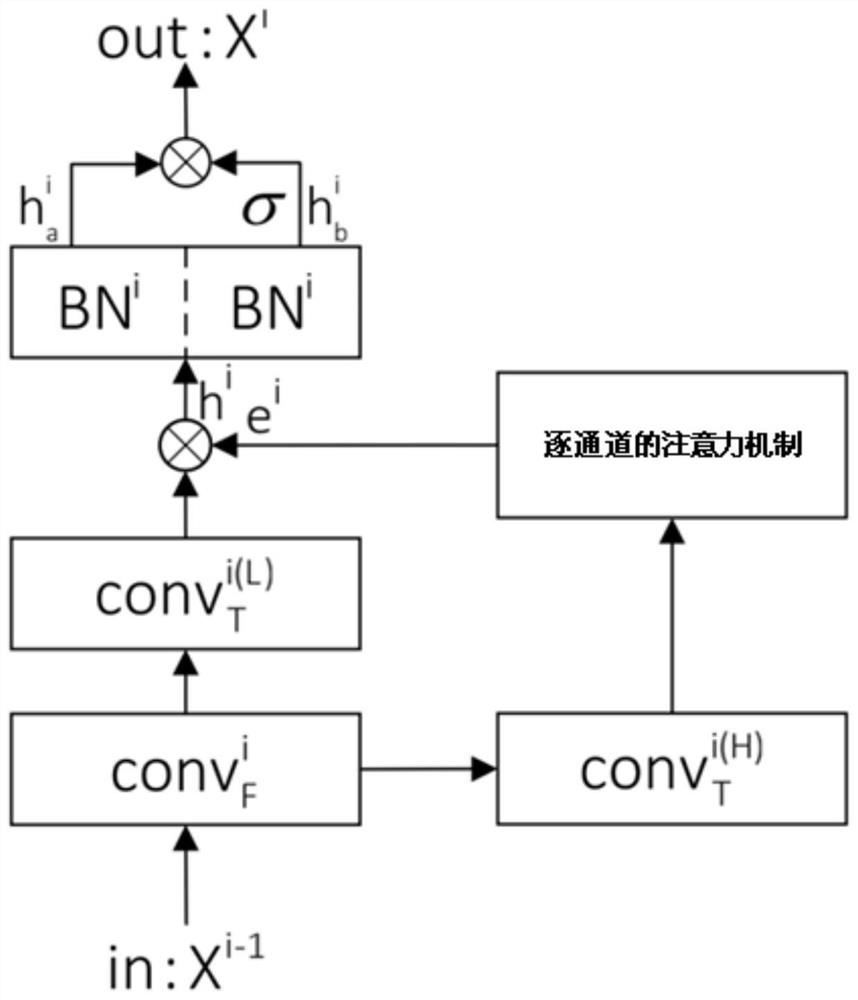

Optimization method and system for stacked one-dimensional convolutional network wake-up acoustic model

Active | CN113129873B | Expand the receptive field | Improve wake-up accuracy | Sustainable transportation | Speech recognition | Time domain | Activation function

An embodiment of the present invention provides an optimization method for a stacked one-dimensional convolutional network wake-up acoustic model. The method includes: adjusting the expansion (dilation) coefficient of the time-domain convolution layer in the stacked one-dimensional convolutional network wake-up acoustic model to increase the receptive field of the time-domain convolution layer's output; and setting the activation function of the time-domain convolution layer to a gated linear unit, which is combined with the output of the time-domain convolution layer to reduce the dimension of that output, thereby optimizing the stacked one-dimensional convolutional network wake-up acoustic model. The embodiment of the present invention also provides an optimization system for a stacked one-dimensional convolutional network wake-up acoustic model. In the embodiment, the spacing of the convolution kernels effectively increases the receptive field of the model and improves the wake-up accuracy. At the same time, after the gated linear unit is combined with the S1DCNN model, the output dimension is reduced to half of the original, so the model parameters are better compressed and a higher wake-up rate can be achieved under the same parameter amount.

Owner:AISPEECH CO LTD
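
The halving of the output dimension follows directly from how a gated linear unit works: the input is split into two equal halves, and one half gates the other through a sigmoid. A minimal sketch on a plain list, with a hypothetical function name:

```python
import math


def glu(x):
    """Gated linear unit: split x into halves (a, b) and return a * sigmoid(b)."""
    if len(x) % 2 != 0:
        raise ValueError("input length must be even")
    half = len(x) // 2
    values, gates = x[:half], x[half:]
    return [a * (1.0 / (1.0 + math.exp(-b))) for a, b in zip(values, gates)]
```

An input of length 2n always yields an output of length n, so the layer that follows needs half as many input weights as it would after an element-wise activation.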

Image Dehazing Method Based on Multi-scale Dark Channel Prior Cascade Deep Neural Network

Active | CN110363727B | Improve accuracy | Improve real-time performance | Image enhancement | Image analysis | Supervised learning | Global illumination

The invention discloses an image dehazing method based on a multi-scale dark channel prior cascade deep neural network, which includes the following steps: 1. Establish a hazy image training set; 2. Dehaze a single random hazy image; 3. Calculate the loss objective function of the original single hazy image; 4. Update the weight parameter set; 5. Retrieve a new single random hazy image and loop from step 2 to step 4 until the loss objective function of the original single hazy image is less than the loss objective function threshold, then determine the final cascade dehazing model; 6. Dehaze a single actual hazy image. The invention uses convolutional neural networks to estimate dark channels and global illumination parameters on images of different scales, then fuses the dark channels and dehazed images step by step, and finally obtains the dehazed image through supervised learning. It effectively utilizes the feature modeling capability of deep neural networks and fuses parameters at different scales, so that high-resolution dehazed images can be obtained with fewer model parameters.

Owner:ROCKET FORCE UNIV OF ENG

Optimization method and system of stacked one-dimensional convolutional network wake-up acoustic model

Active | CN113129873A | Expand the receptive field | Improve wake-up accuracy | Sustainable transportation | Speech recognition | Time domain | Activation function

The embodiment of the invention provides an optimization method of a stacked one-dimensional convolutional network wake-up acoustic model. The method comprises the following steps: adjusting an expansion coefficient of a time domain convolutional layer in a stacked one-dimensional convolutional network wake-up acoustic model, and increasing a receptive field output by the time domain convolutional layer; and setting an activation function of the time domain convolution layer as a gated linear unit, and combining the gated linear unit with the output of the time domain convolution layer to reduce the dimension of the output of the time domain convolution layer so as to optimize the stacked one-dimensional convolution network wake-up acoustic model. The embodiment of the invention further provides a system for optimizing the stacked one-dimensional convolutional network wake-up acoustic model. According to the embodiment of the invention, the interval of the convolution kernel causes the increase of the receptive field, so that the receptive field of the model is effectively increased, the wake-up precision is improved, meanwhile, after the gating linear unit is combined with the S1DCNN model, the output dimension can be reduced to half of the original dimension, the model parameter quantity is better compressed, and a higher wake-up rate can be achieved under the same parameter quantity.

Owner:AISPEECH CO LTD
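
The two effects described in the abstract above can be checked with a little arithmetic. The sketch below assumes stride-1 layers and the common gated linear unit convention of splitting the features in half and gating one half with the sigmoid of the other. Neither detail is stated in the abstract, and the function names are hypothetical.

```python
import math


def receptive_field(kernel_size, dilations):
    """Receptive field, in frames, of stacked stride-1 1-D convolutions."""
    field = 1
    for dilation in dilations:
        # Each layer widens the field by (kernel_size - 1) * dilation frames.
        field += (kernel_size - 1) * dilation
    return field


def glu(features):
    """Gated linear unit: first half gated by the sigmoid of the second half."""
    if len(features) % 2 != 0:
        raise ValueError("GLU needs an even number of features")
    half = len(features) // 2
    values, gates = features[:half], features[half:]
    # value * sigmoid(gate); the output has half as many features as the input.
    return [v / (1.0 + math.exp(-g)) for v, g in zip(values, gates)]


# Four layers with kernel size 3: dilation grows the field from 9 to 31 frames.
print(receptive_field(3, [1, 1, 1, 1]))  # 9
print(receptive_field(3, [1, 2, 4, 8]))  # 31
```

Because the gated output is half as wide as its input, every following layer sees fewer input channels, which is where the parameter saving in this sketch comes from.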

Construction Method of Fabric Defect Recognition System Based on Lightweight Convolutional Neural Network

Active | CN110349146B | Reduce dependence | Guaranteed uptime | Image analysis | Character and pattern recognition | Algorithm | Engineering

The invention proposes a method for constructing a fabric defect recognition system based on a lightweight convolutional neural network, with the following steps. First, the operating environment of the fabric defect recognition system is configured, and a lightweight convolutional neural network is built from factorable convolutions. Then fabric image sample data are collected and standardized, and the standardized data are divided into a training image set and a test image set. Next, the training image set is input into the lightweight convolutional neural network and trained with an asynchronous gradient descent strategy to obtain the LZFNet-Fast model. Finally, the test image set is input into the LZFNet-Fast model to verify its performance. The invention replaces the standard convolution layer with a factorable convolution structure, effectively recognizes colored fabrics with complex textures, reduces the model's parameter count and computation, and greatly improves recognition efficiency.

Owner:ZHONGYUAN ENGINEERING COLLEGE
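
To see why replacing a standard convolution with a factored one cuts parameters, the sketch below compares weight counts. It assumes the "factorable convolution" in the abstract above is a depthwise convolution followed by a 1x1 pointwise convolution. The abstract does not confirm that this is the exact factorization used in LZFNet-Fast. Bias terms are ignored and the function names are hypothetical.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard kernel x kernel convolution (no bias)."""
    return kernel * kernel * c_in * c_out


def separable_conv_params(kernel, c_in, c_out):
    """Weights in a depthwise + pointwise factorization (no bias)."""
    depthwise = kernel * kernel * c_in  # one spatial filter per input channel
    pointwise = c_in * c_out            # 1x1 convolution that mixes channels
    return depthwise + pointwise


# A 3x3 layer mapping 64 channels to 128 channels.
print(standard_conv_params(3, 64, 128))   # 73728
print(separable_conv_params(3, 64, 128))  # 8768
```

For this layer shape the factored form needs roughly one eighth of the weights, and the saving grows with the number of output channels.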

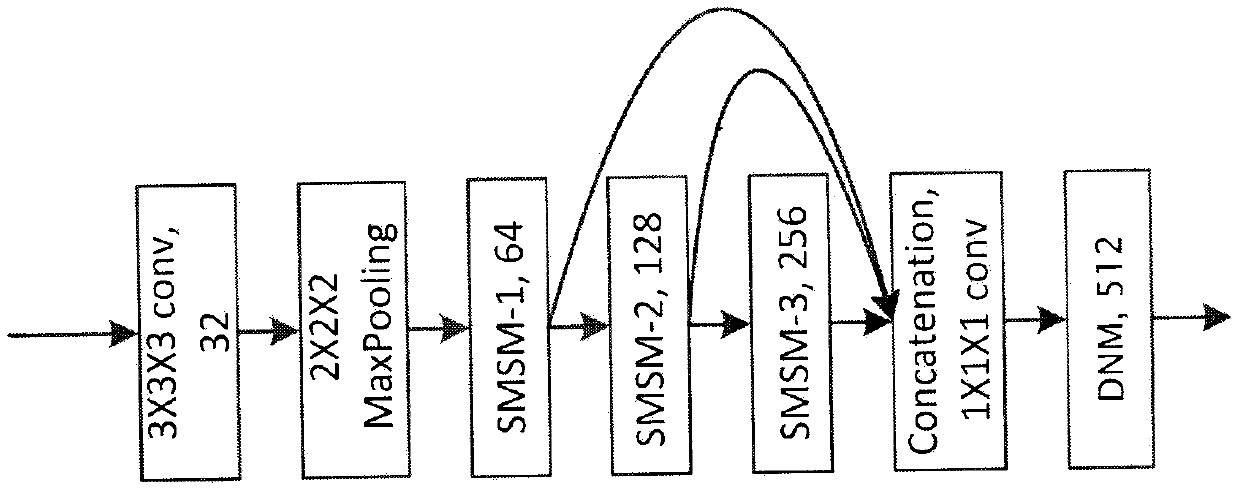

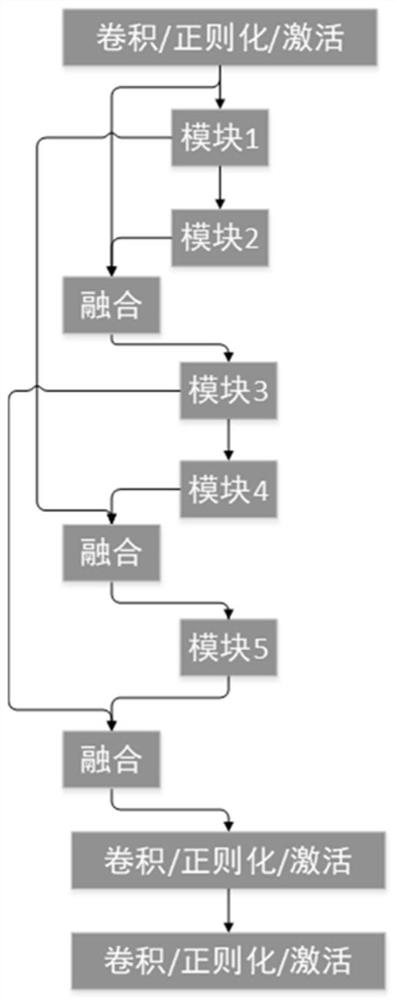

A Real-time Detection Method of Pedestrians and Vehicles Based on Lightweight Deep Network

Active | CN107578091B | Efficient detection | Easy to detect | Character and pattern recognition | Neural architectures | Feature extraction | Computation complexity

The present invention provides a real-time detection method for pedestrians and vehicles based on a lightweight deep network. The lightweight deep network uses 5 modules and 3 kinds of convolution operations, and the meta-module contains only 2 kinds of convolution operations to implement feature extraction. The skip connections between different modules and the more robust feature map fusion technique enable the network to detect pedestrians and vehicles well with few model parameters, and to effectively detect pedestrians and vehicles in images or video. The new deep network proposed by the invention has few model parameters, low computational complexity, and high detection accuracy; it can detect pedestrians and vehicles in real time on an embedded platform and is therefore practical.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

Image head counting method and device based on context attention

Pending | CN114581849A | Improve counting efficiency | Reduce the amount of model parameters | Character and pattern recognition | Neural architectures | Pattern recognition | Channel density

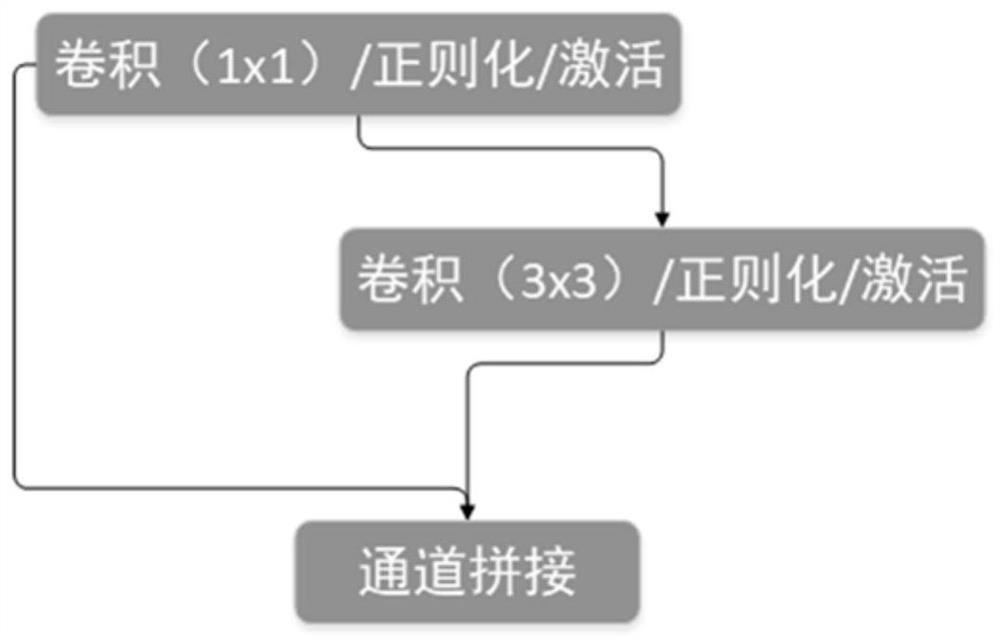

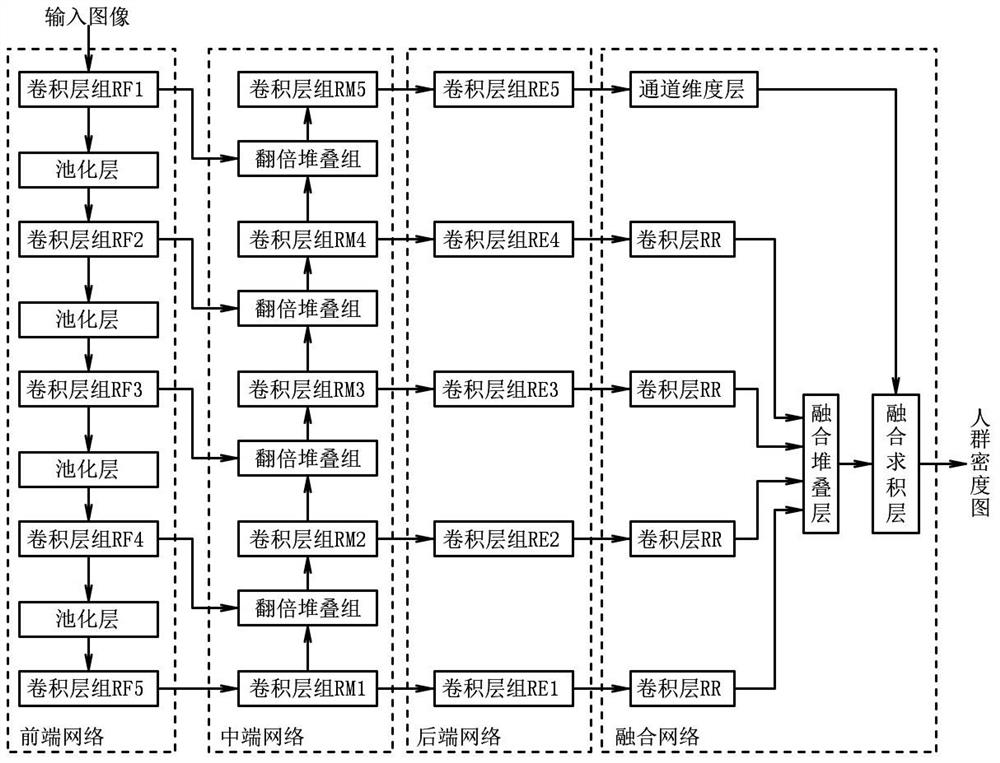

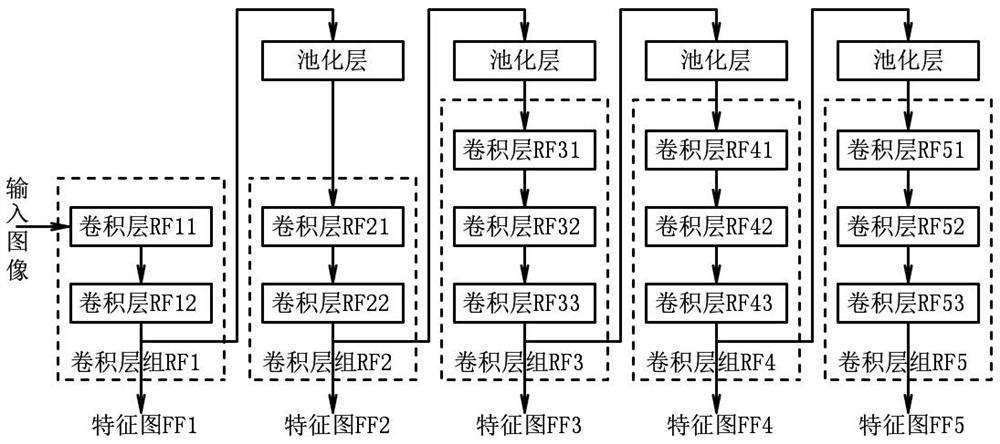

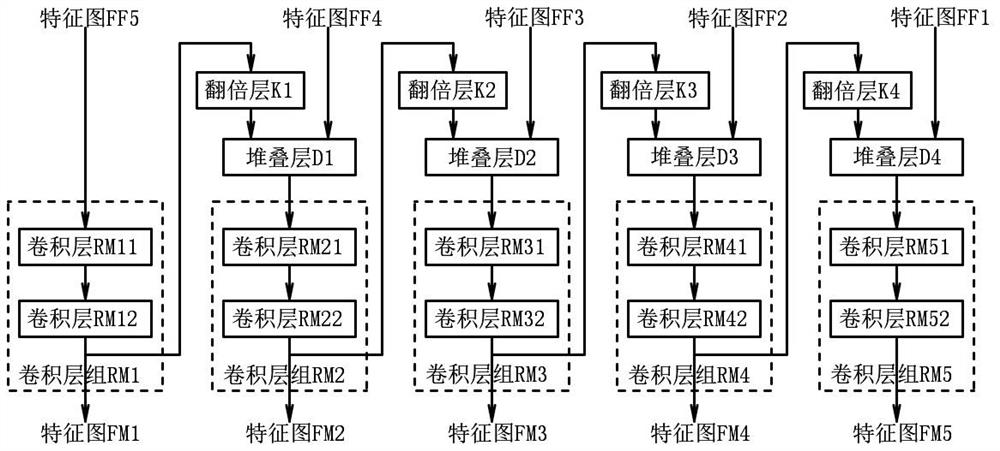

The invention discloses an image head counting method and device based on context attention. In the method, part of the convolutional layers of VGG16 serve as the front-end network; four 64-channel density maps are generated in the middle-end and back-end networks; and context feature sampling is introduced to form a four-channel coefficient feature map. The four 64-channel density maps are then convolved and stacked in the fusion network to obtain a four-channel intermediate density map. The intermediate density map is multiplied pixel by pixel with the coefficient feature map after a Sigmoid or Softmax operation and then fused to obtain the final crowd density map, and the head count is obtained by integrating (summing) the crowd density map. Compared with head counting based on a multi-column neural network, counting efficiency is improved to a certain extent, and compared with existing counting methods, accuracy is greatly improved.

Owner:JIANGSU WISEDU INFORMATION TECH
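
The final fusion and counting step in the abstract above can be sketched as follows. This assumes the Softmax variant, applied across channels at each pixel, and assumes that "fused" means summing the weighted channels. Both are readings of the abstract rather than confirmed details, and the function names are hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a short list of numbers."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_count(density_maps, coefficient_maps):
    """Weight K density channels by per-pixel attention, then sum.

    density_maps, coefficient_maps: lists of K 2-D lists with equal shapes.
    Returns the fused density map and the estimated head count.
    """
    height, width = len(density_maps[0]), len(density_maps[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Attention weights across the K channels at this pixel.
            weights = softmax([c[y][x] for c in coefficient_maps])
            fused[y][x] = sum(w * d[y][x] for w, d in zip(weights, density_maps))
    # The count is the integral (here, the sum) of the fused density map.
    return fused, sum(sum(row) for row in fused)
```

In the abstract K is four. The sketch works for any K because the weights are normalized per pixel.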

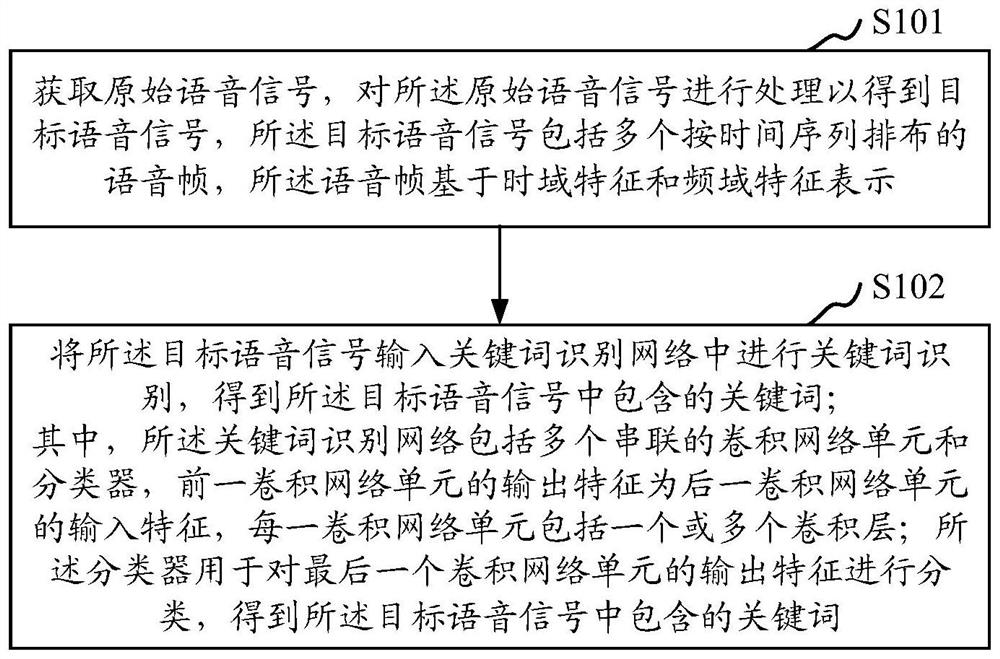

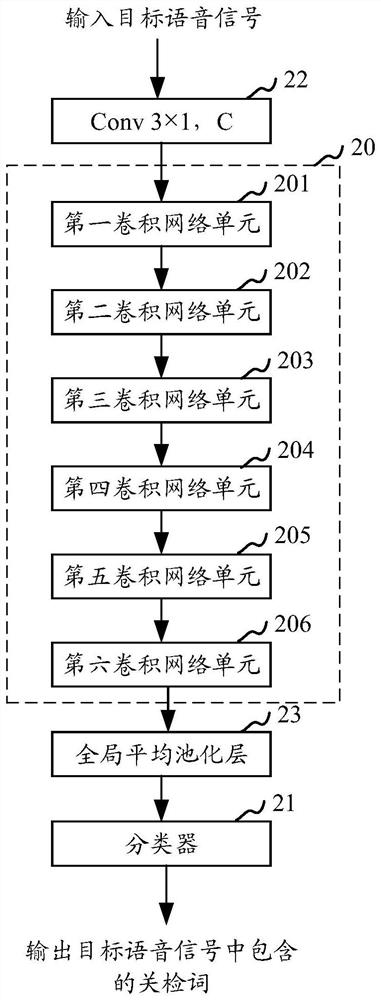

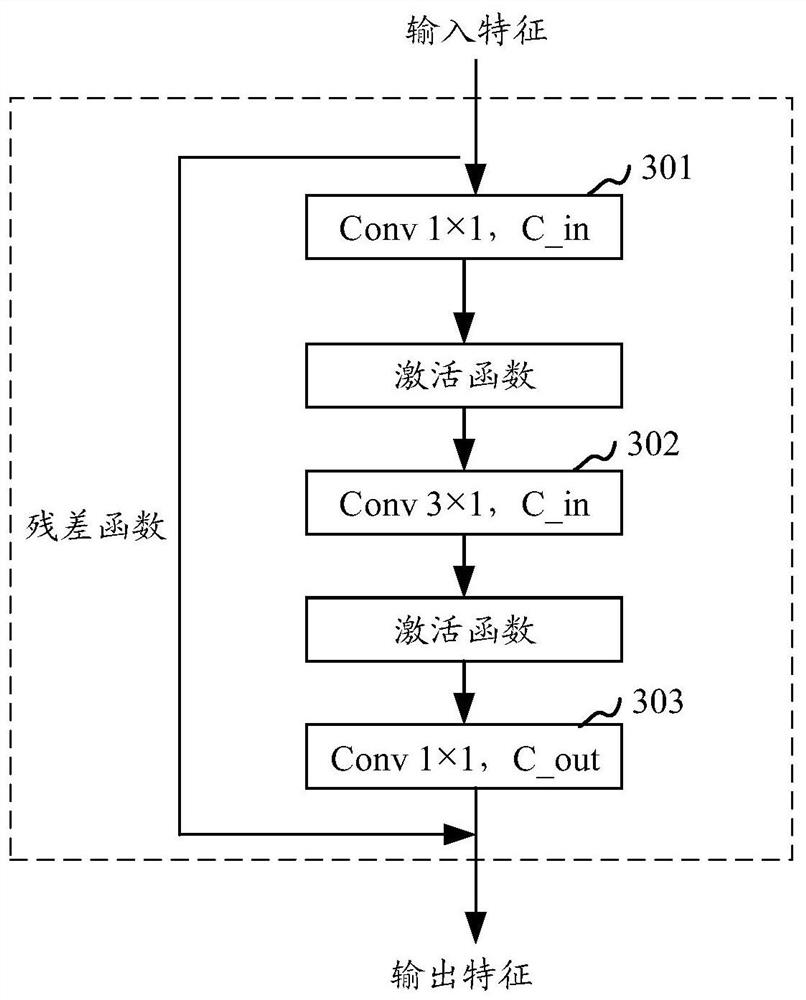

Keyword identification method and device, storage medium and computer equipment

The invention discloses a keyword identification method and device, a storage medium, and computer equipment. The method comprises: obtaining an original voice signal and framing it to obtain a target voice signal, wherein the target voice signal comprises a plurality of voice frames arranged in time order, and each voice frame is represented by time-domain and frequency-domain features; and inputting the target voice signal into a keyword identification network to obtain the keyword contained in the target voice signal. The keyword identification network comprises a classifier and a plurality of convolutional network units connected in series, wherein the output feature of each convolutional network unit is the input feature of the next, each convolutional network unit comprises one or more convolutional layers, and the classifier classifies the output features of the last convolutional network unit to obtain the keyword contained in the target voice signal. The method reduces the number of model parameters while maintaining identification accuracy.

Owner:SPREADTRUM COMM (SHANGHAI) CO LTD
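
The framing step in the abstract above can be sketched as a sliding window over the samples. The frame length, the hop length, and the choice to drop trailing samples that do not fill a frame are assumptions for illustration. The abstract does not specify them, and `frame_signal` is a hypothetical name.

```python
def frame_signal(samples, frame_length, hop_length):
    """Split a 1-D signal into overlapping frames in time order.

    Trailing samples that do not fill a whole frame are dropped.
    """
    frames = []
    for start in range(0, len(samples) - frame_length + 1, hop_length):
        frames.append(samples[start:start + frame_length])
    return frames


# Ten samples, frames of four samples, hop of two: four overlapping frames.
frames = frame_signal(list(range(10)), frame_length=4, hop_length=2)
print(len(frames))  # 4
print(frames[-1])   # [6, 7, 8, 9]
```

Each frame would then be turned into time-domain and frequency-domain features before entering the convolutional units. That feature extraction is not shown here.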

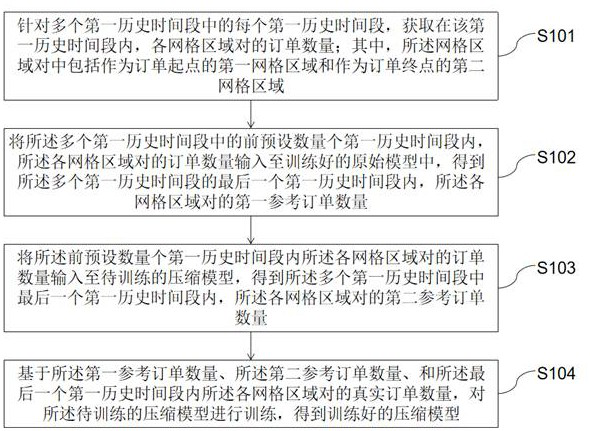

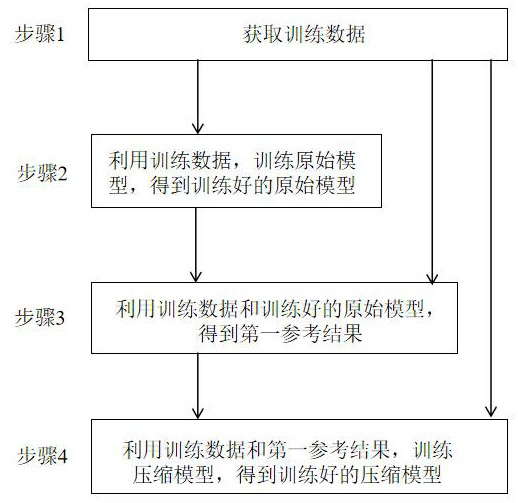

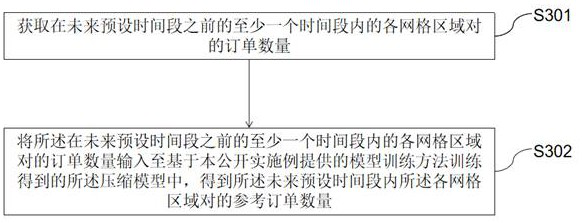

Model training method for determining order quantity, method and device for determining order quantity

Active | CN114386880B | Reduce consumption | Reduce the amount of model parameters | Resources | Machine learning | Time segment | Order form

The present disclosure provides a model training method, a demand determination method, and an apparatus. The model training method includes: for each of a plurality of first historical time periods, obtaining the number of orders for each grid-area pair within that period; inputting the order quantities of each grid-area pair in the preceding preset number of first historical time periods into the original model to obtain a first reference order quantity; inputting the order quantities of each grid-area pair in those first historical time periods into the compressed model to obtain a second reference order quantity; and training the compressed model based on the first reference order quantity, the second reference order quantity, and the true number of orders for each grid-area pair in the last first historical time period. The embodiments compress the original model through knowledge distillation; the resulting compressed model uses fewer parameters, which reduces the computing resources consumed when determining the number of orders.

Owner:BEIJING QISHENG SCIENCE AND TECHNOLOGY CO LTD +1
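
A distillation objective of the kind described in the abstract above combines two terms: how far the compressed model's prediction is from the original model's prediction, and how far it is from the true order counts. The sketch below uses mean squared error for both terms and a mixing weight `alpha`. Both choices are assumptions, since the abstract does not give the loss form, and the function name is hypothetical.

```python
def distillation_loss(teacher_pred, student_pred, true_orders, alpha=0.5):
    """Weighted sum of a soft (teacher) and a hard (ground truth) MSE term.

    teacher_pred: first reference order quantities (original model).
    student_pred: second reference order quantities (compressed model).
    true_orders:  true order counts for the last time period.
    """
    n = len(true_orders)
    soft = sum((s - t) ** 2 for s, t in zip(student_pred, teacher_pred)) / n
    hard = sum((s - y) ** 2 for s, y in zip(student_pred, true_orders)) / n
    return alpha * soft + (1 - alpha) * hard


# Two grid-area pairs: teacher, student, and true order counts.
print(distillation_loss([10, 20], [12, 18], [11, 21]))  # 4.5
```

Only the compressed model's weights would be updated against this loss. The original model acts as a fixed teacher.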

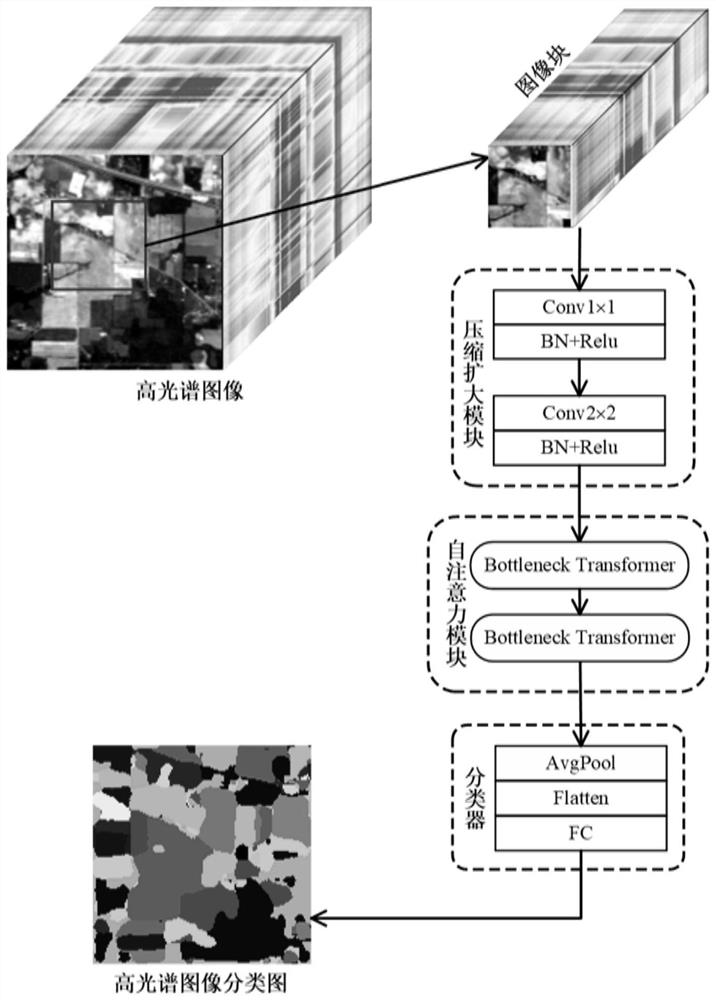

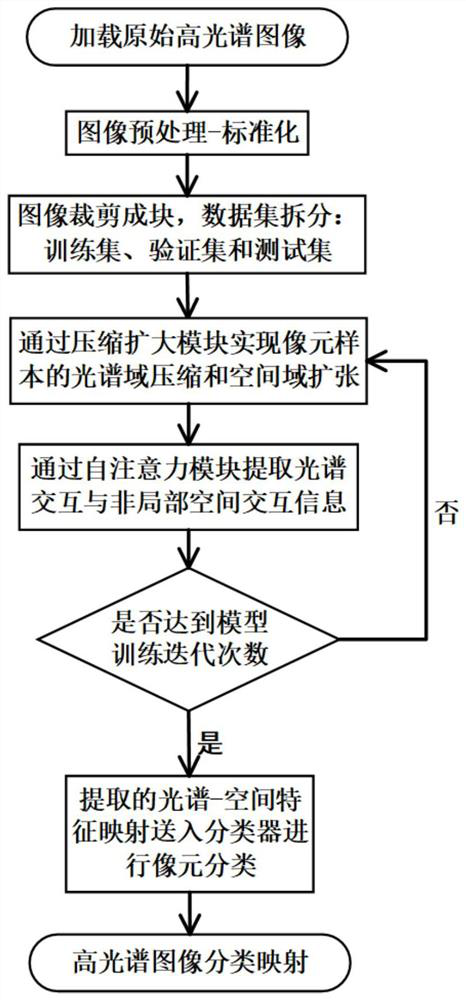

Hyperspectral image classification method and system

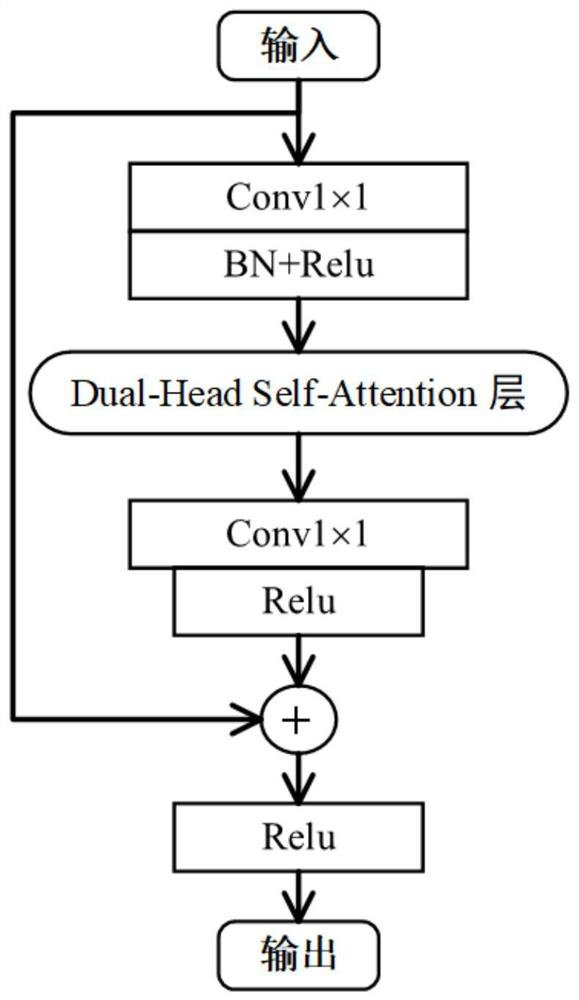

Pending | CN114581789A | Expand the receptive field | Improve performance | Scene recognition | Neural architectures | Pattern recognition | Radiology

The invention provides a hyperspectral image classification method and system. The method comprises: obtaining a hyperspectral image and preprocessing it; performing channel interaction and compression along the pixel spectral dimension, and spatial window expansion and alignment along the pixel sample spatial dimension, on the preprocessed hyperspectral image to obtain mapping features; performing information interaction twice in sequence on the mapping features to obtain spectral-spatial features; and applying a classifier to the spectral-spatial features to obtain the category of each pixel in the hyperspectral image. In each information interaction, the input features undergo spectral feature channel compression, non-local spatial information extraction, and spectral feature channel expansion in sequence, and the result is fused with the input features to obtain the output features. The receptive field for capturing spatial information is expanded, so richer and more robust spectral-spatial information can be captured to complete the hyperspectral image classification task efficiently.

Owner:山东锋士信息技术有限公司

A real-time monocular video depth estimation method

Active | CN110246171B | Run fast | Reduce the amount of model parameters | Image enhancement | Image analysis | Algorithm | Computer graphics (images)

The invention relates to a real-time monocular video depth estimation method. It combines a two-dimensional convolutional neural network (2D-CNN) with a convolutional long short-term memory network to build a model that uses both spatial and temporal information to estimate depth from monocular video in real time. A generative adversarial network (GAN) is also used to constrain the estimated results. In accuracy, the model is comparable to the current state of the art. In overhead, it runs faster, has fewer parameters, and requires fewer computing resources. Its estimates also have good temporal consistency: when depth is estimated over consecutive frames, the changes in the depth maps follow the changes in the input RGB frames, with no sudden jumps or jitter.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

A dilated fully convolutional neural network device and its construction method

Active | CN108717569B | Solve the fusion problem | Simple structure | Neural architectures | Physical realisation | Engineering | Deconvolution

The invention discloses a dilated fully convolutional neural network and a construction method thereof. The neural network comprises a convolutional neural network, a feature extraction module, and a feature fusion module connected in sequence. The construction method is as follows. Select the convolutional neural network: remove the fully connected layers and the classification layer used for classification, keep only the intermediate convolutional and pooling layers, and extract feature maps from those convolutional and pooling layers. Construct the feature extraction module: it comprises a plurality of dilated upsampling modules connected in series, each comprising a feature map merging layer, a dilated convolution layer, and a deconvolution layer. Construct the feature fusion module: it consists of a dense dilated fusion convolution block and a deconvolution layer. The invention effectively solves the problem of feature extraction and fusion in convolutional neural networks and can be applied to pixel-level image labeling tasks.

Owner:ARMY ENG UNIV OF PLA
