47 results about how to "optimize memory usage" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

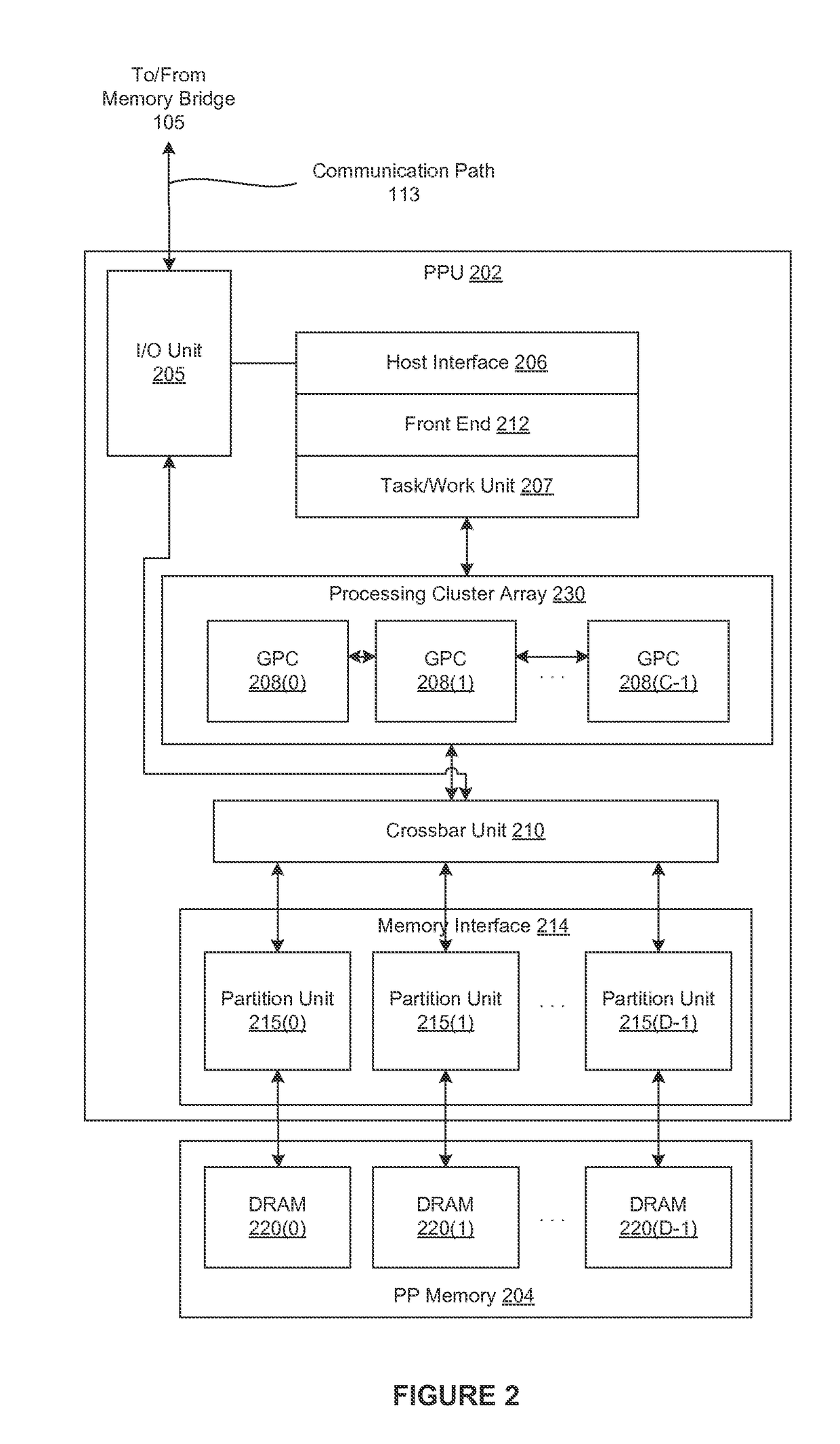

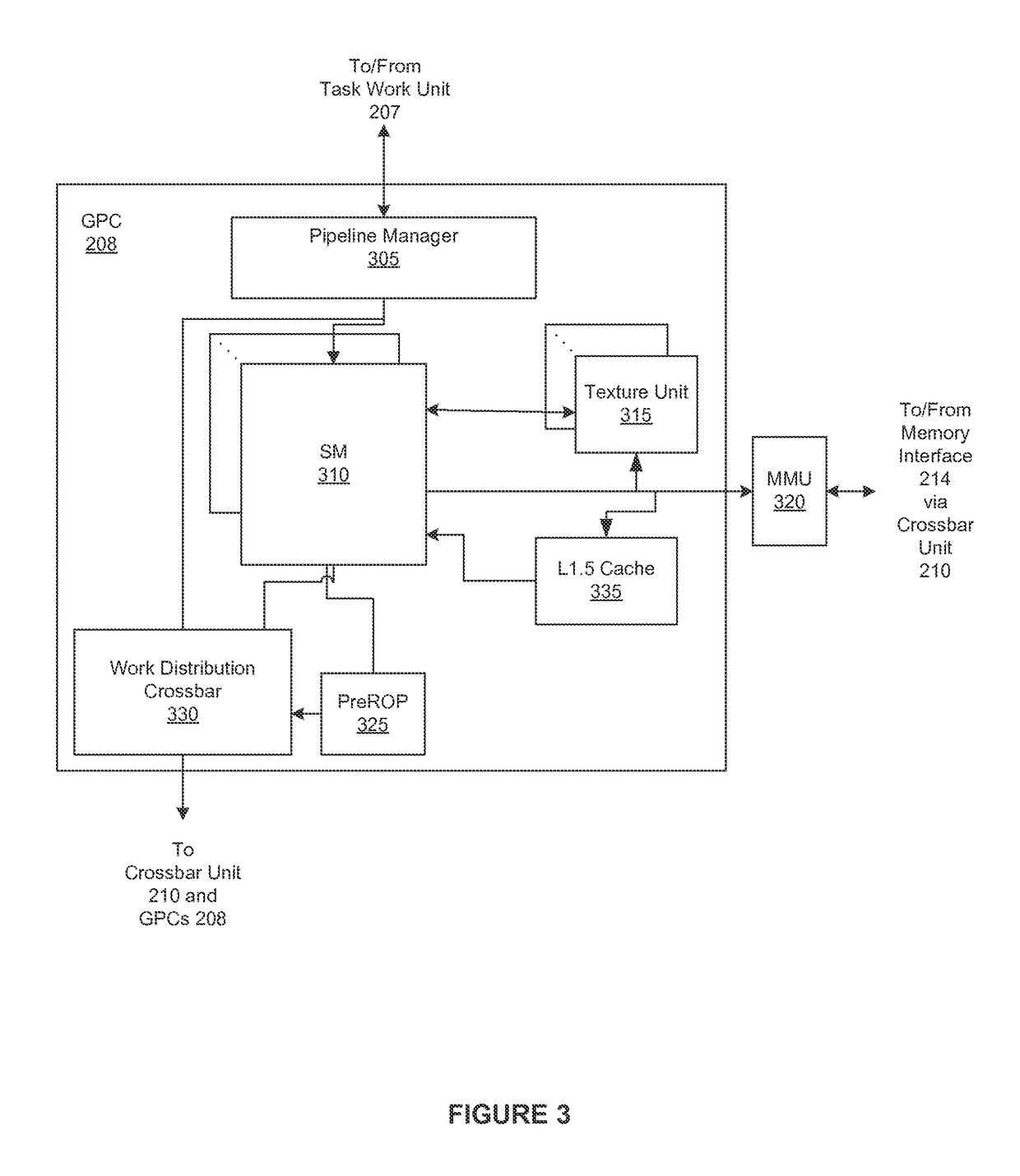

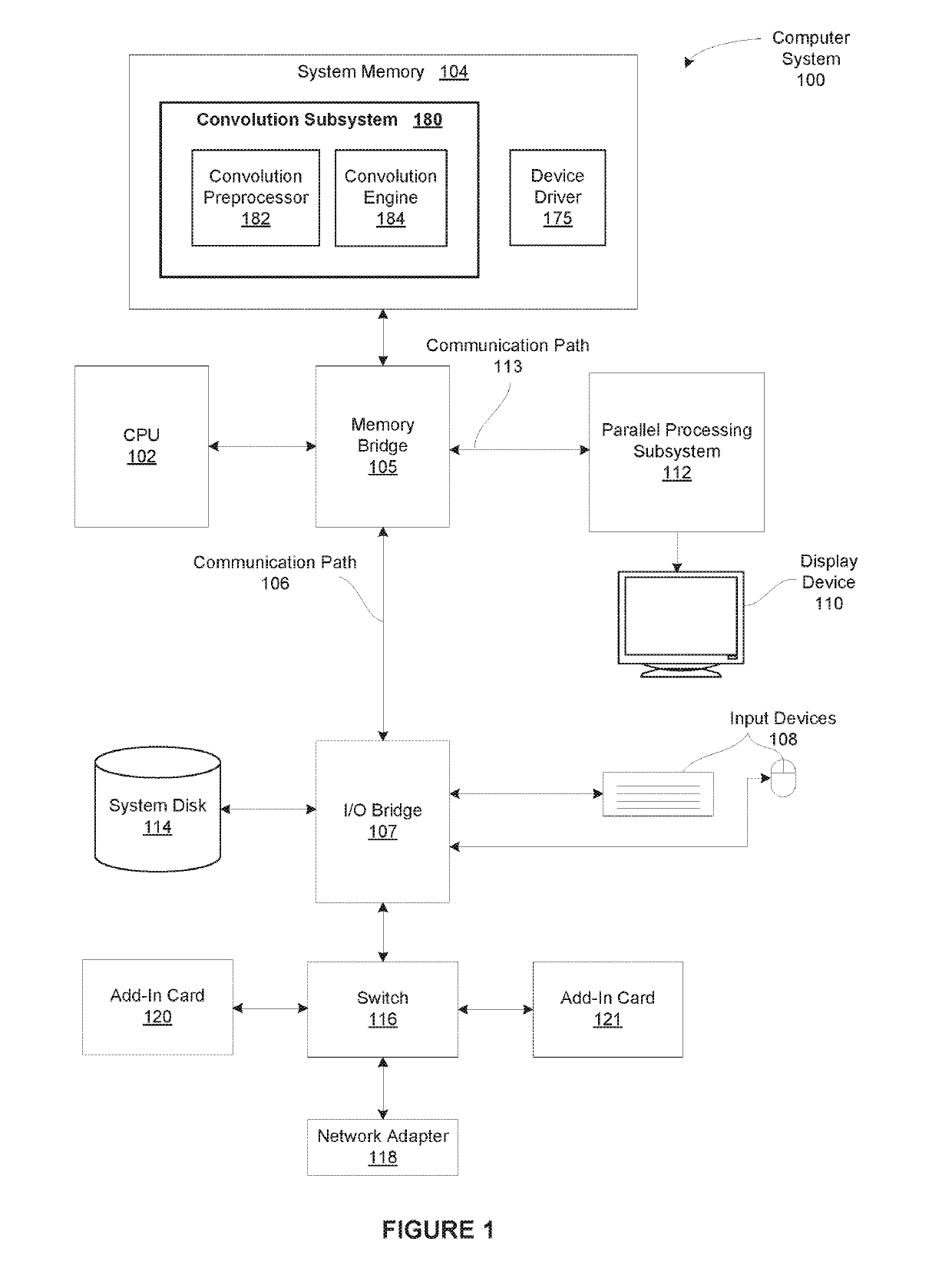

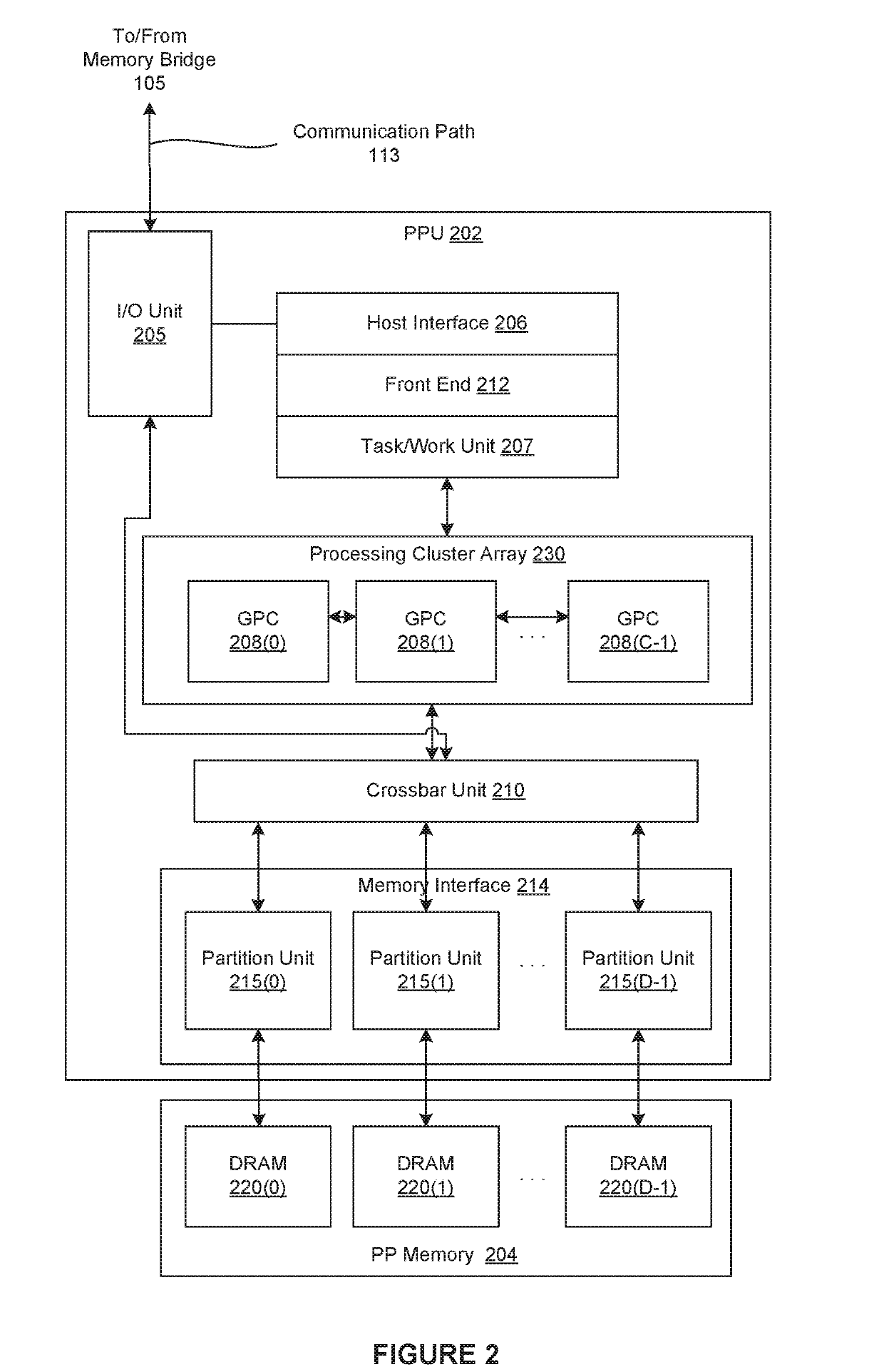

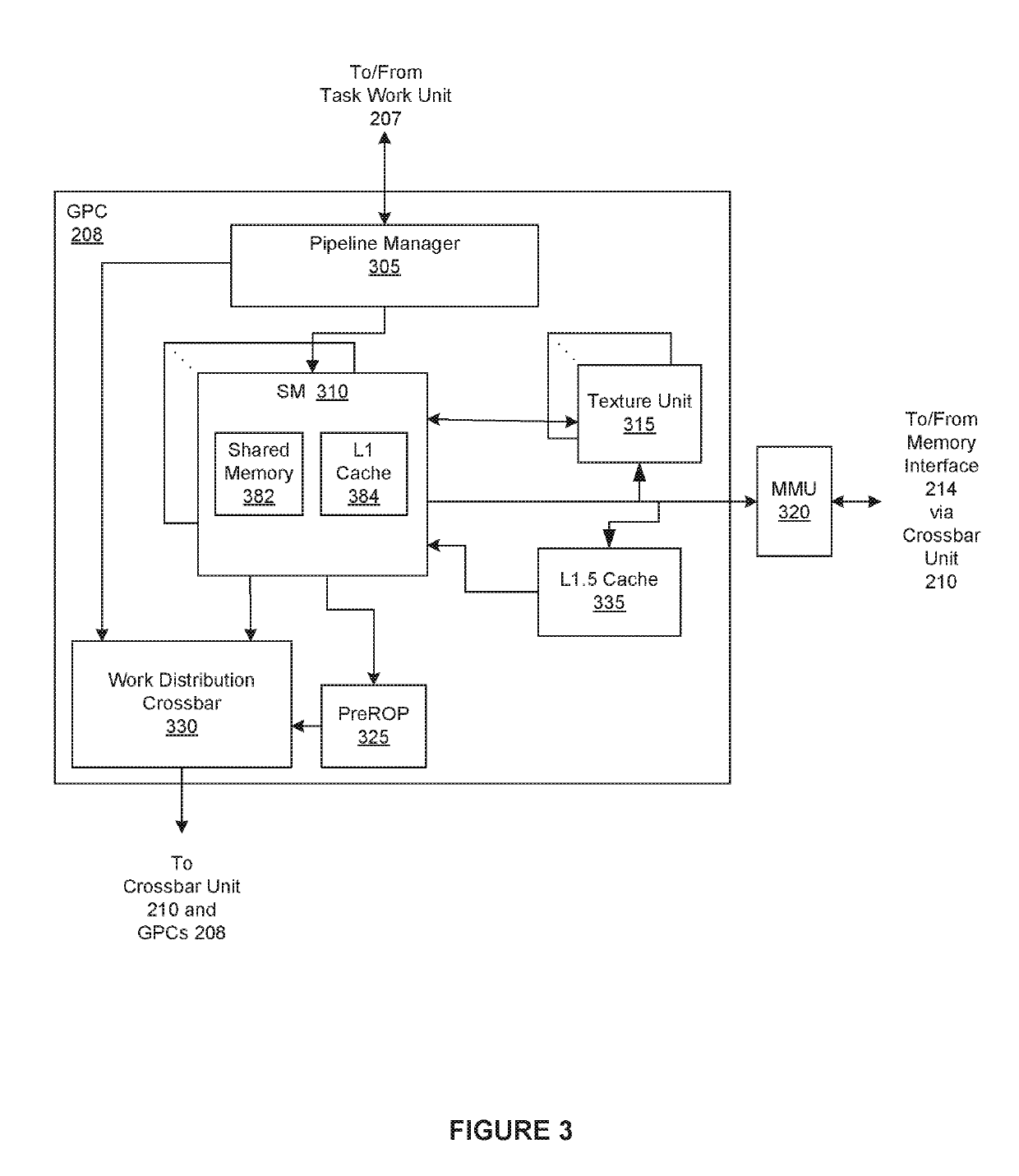

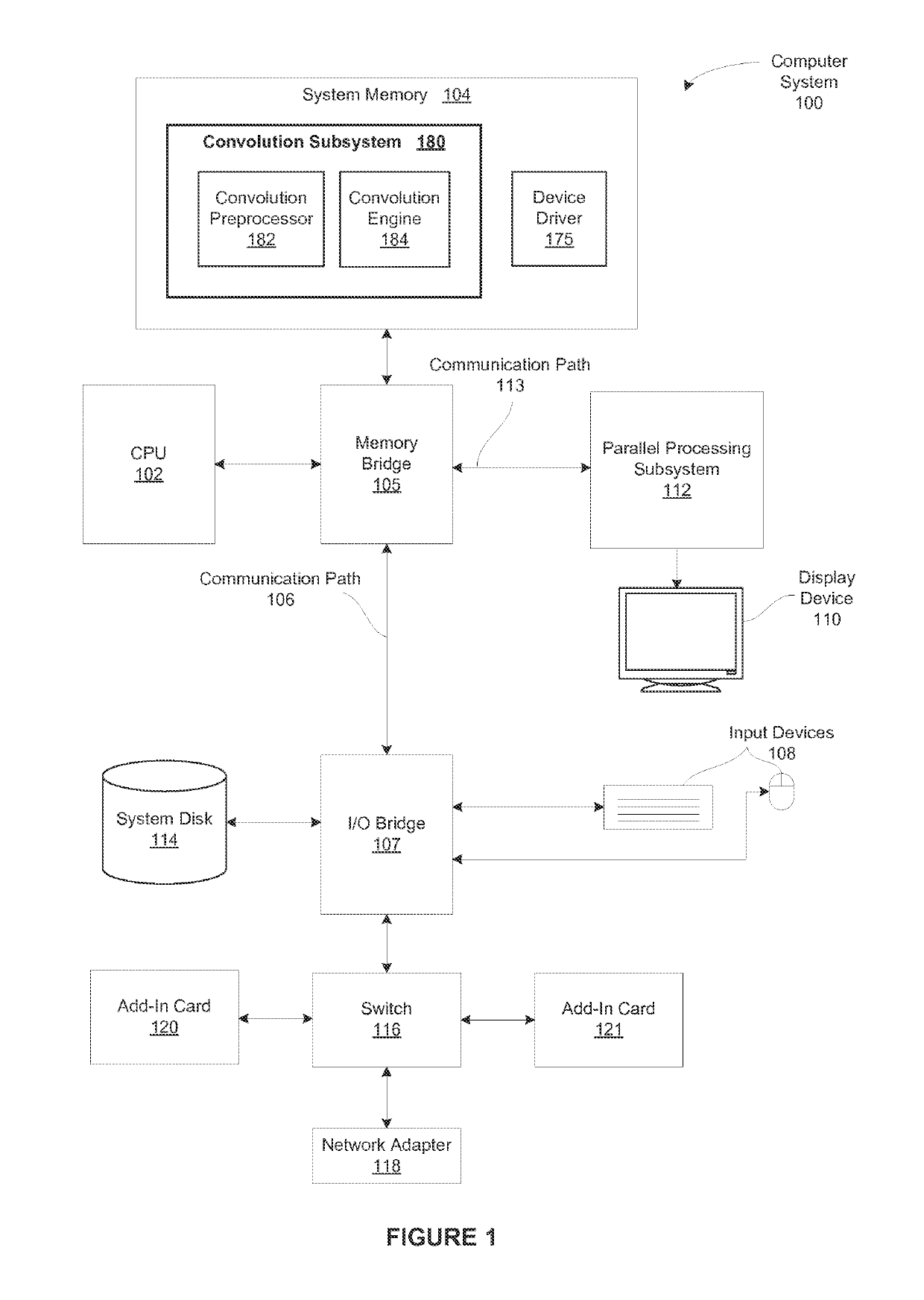

Performing multi-convolution operations in a parallel processing system

Active | US20160062947A1 | Easy to operate, Optimizing on-chip memory usage, Biological models, Complex mathematical operations, Line tubing, Parallel processing

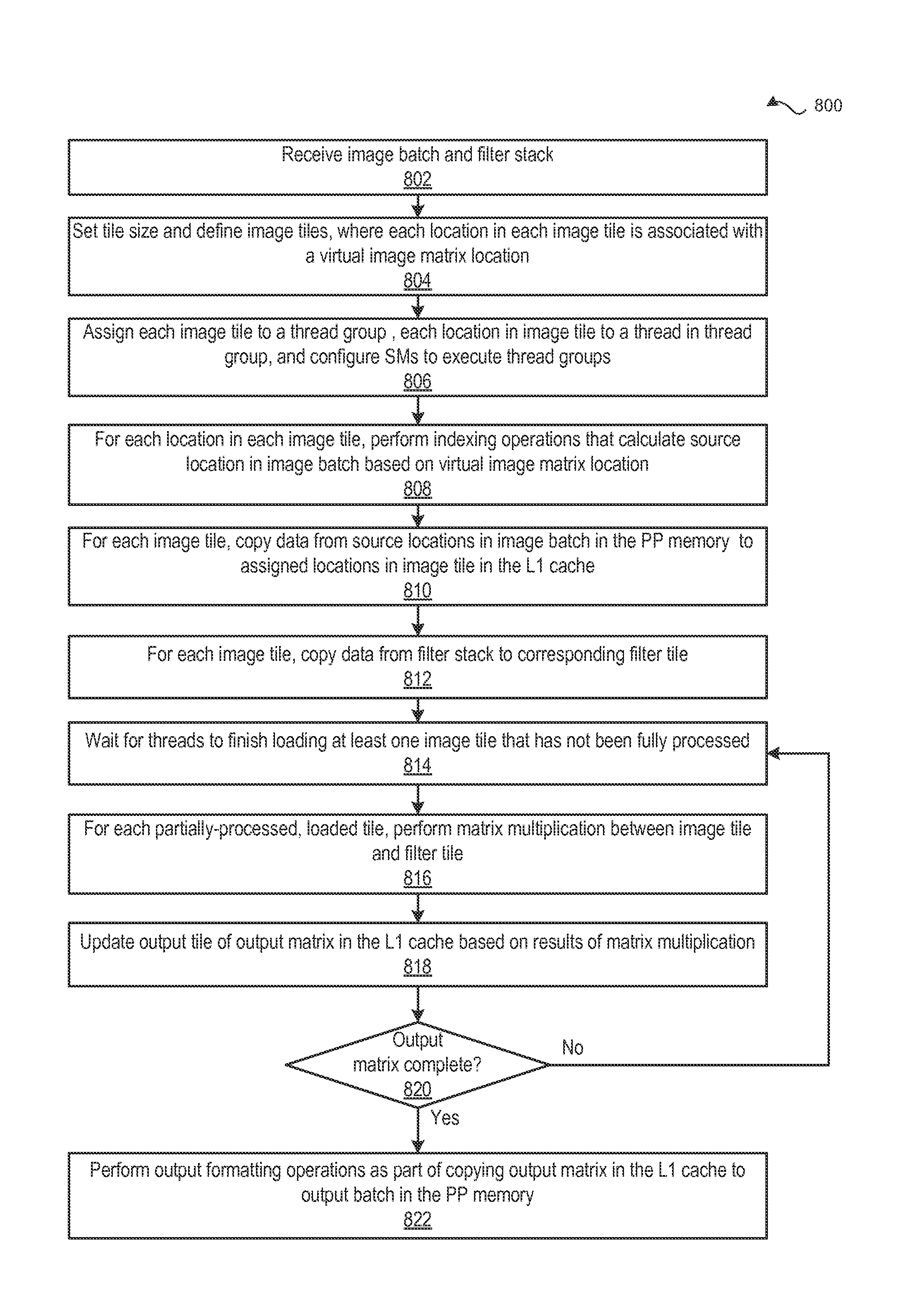

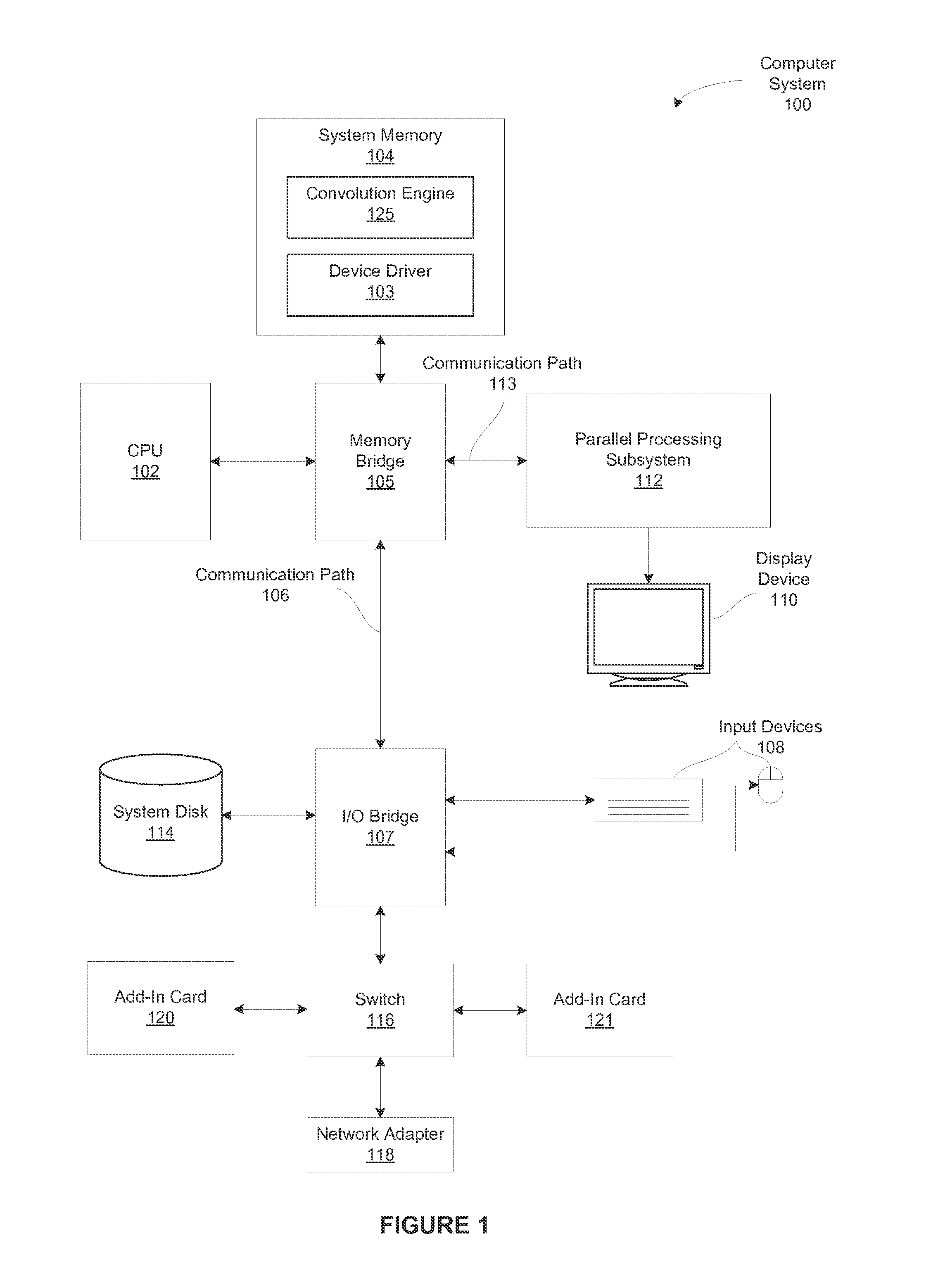

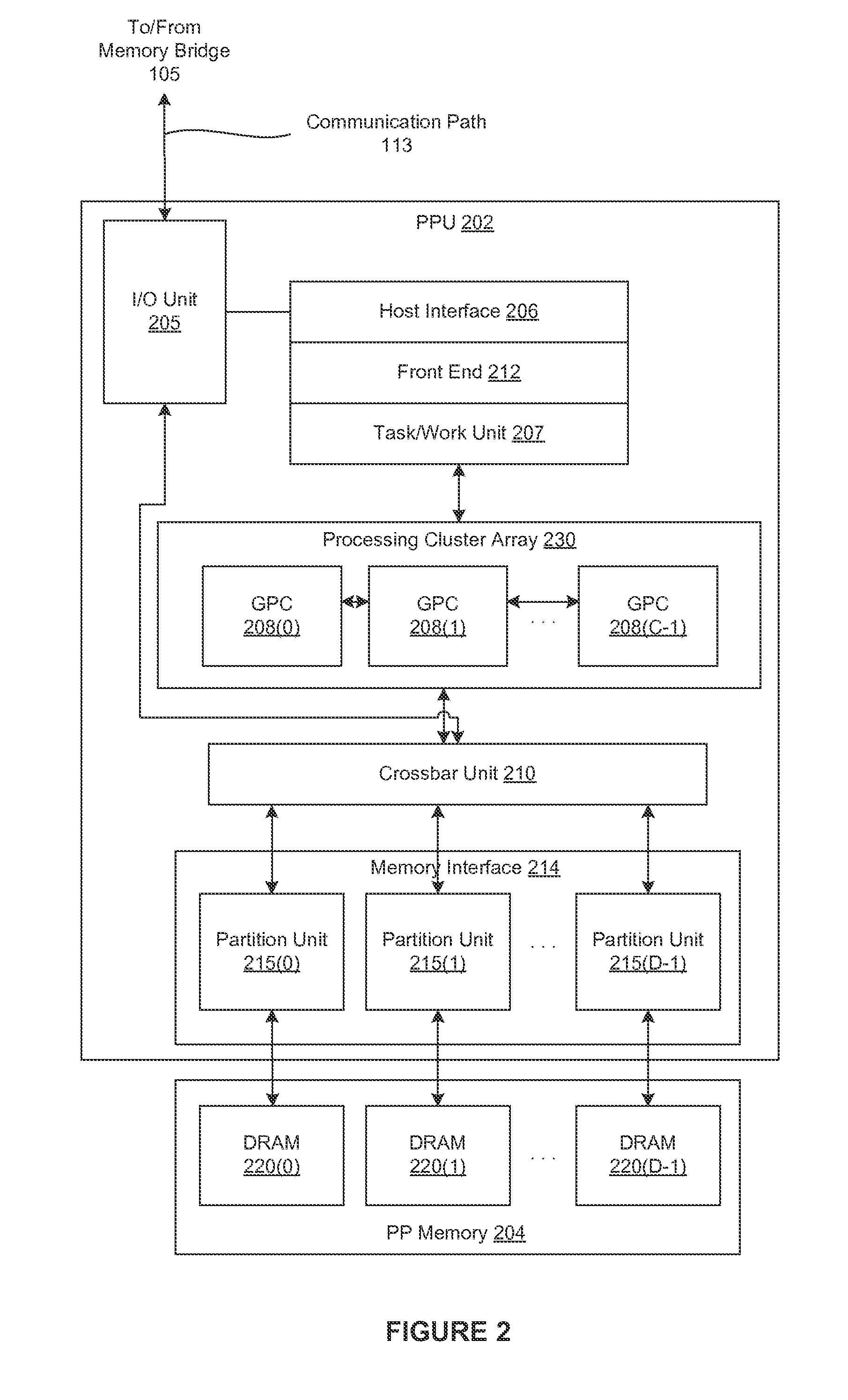

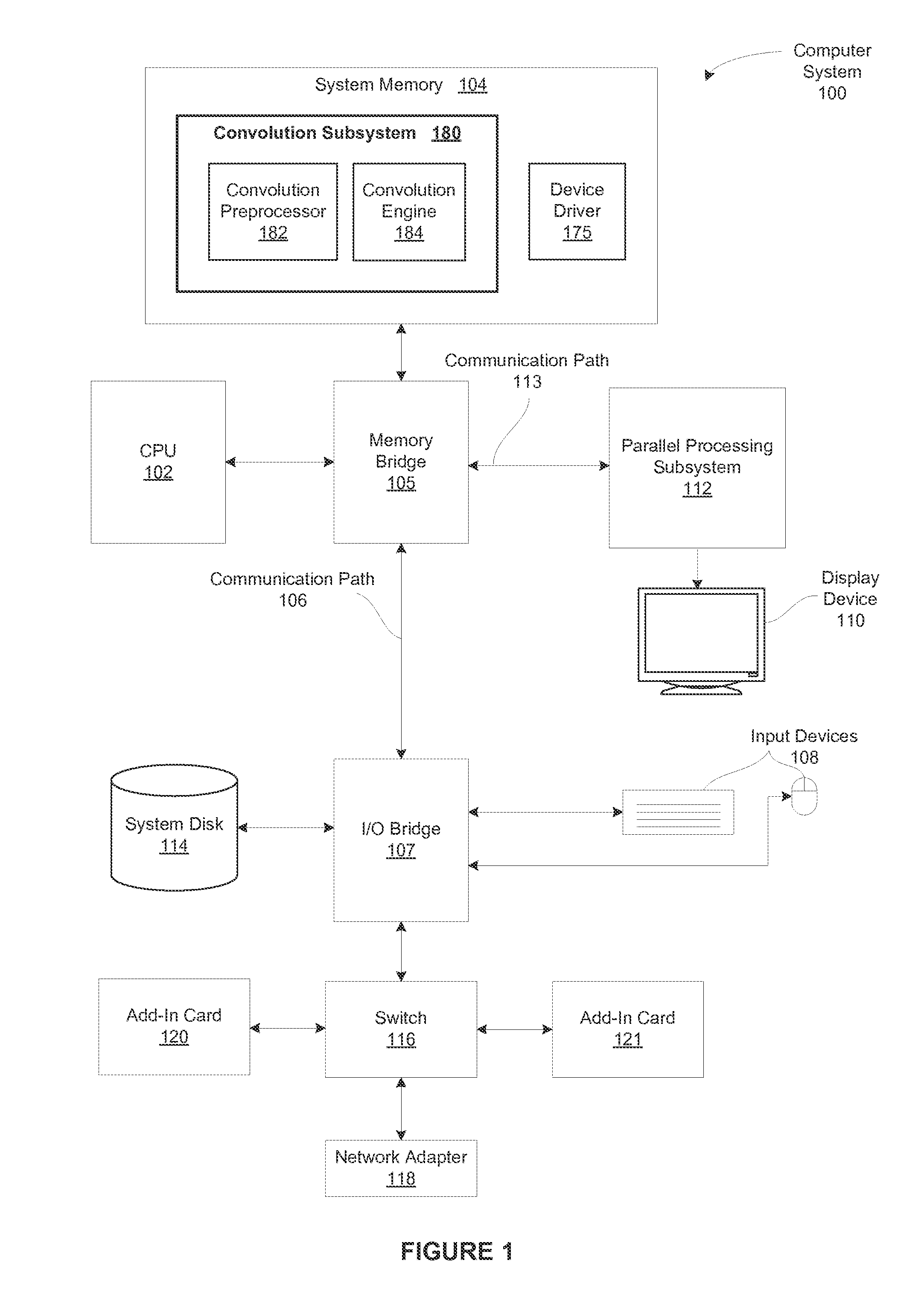

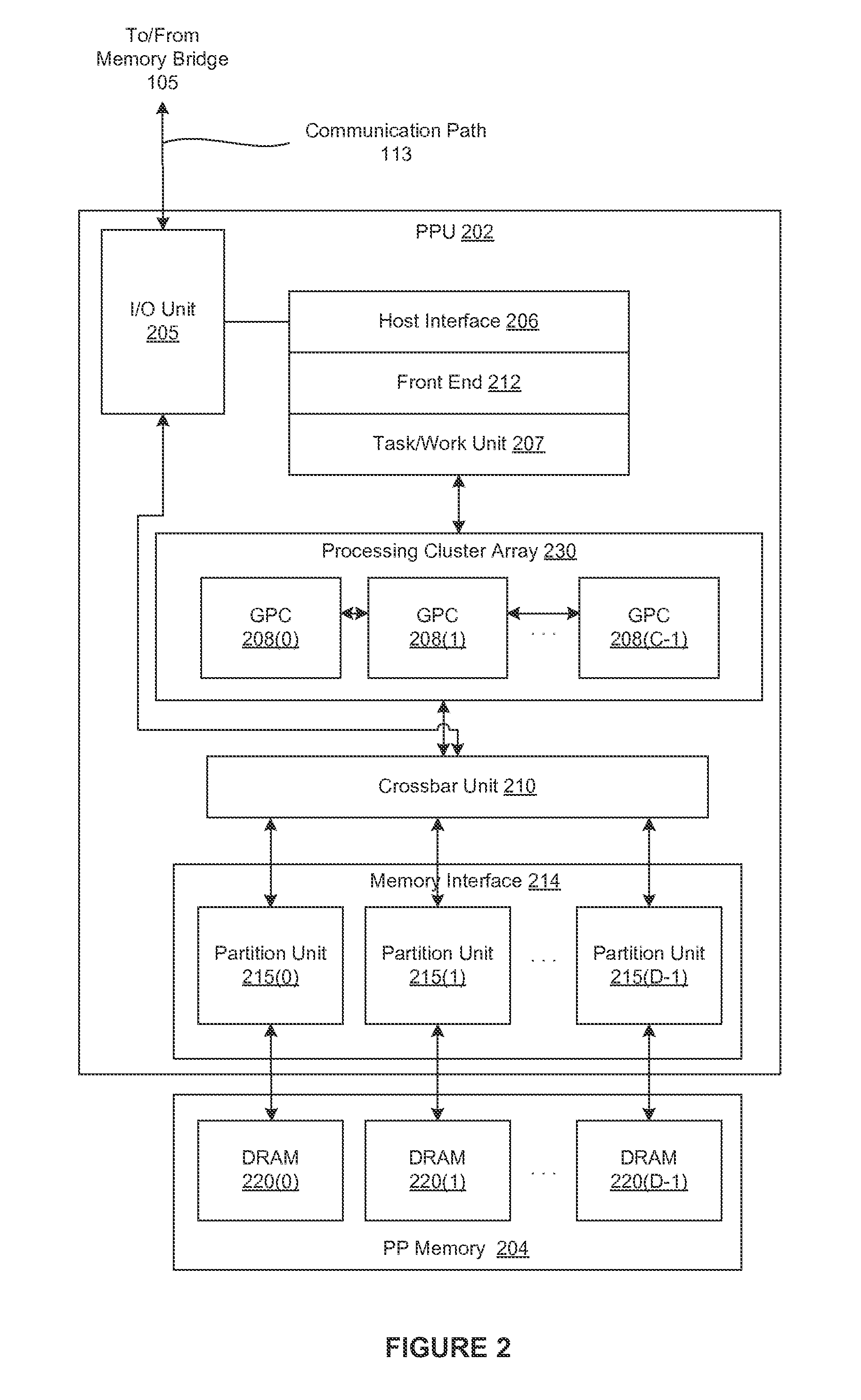

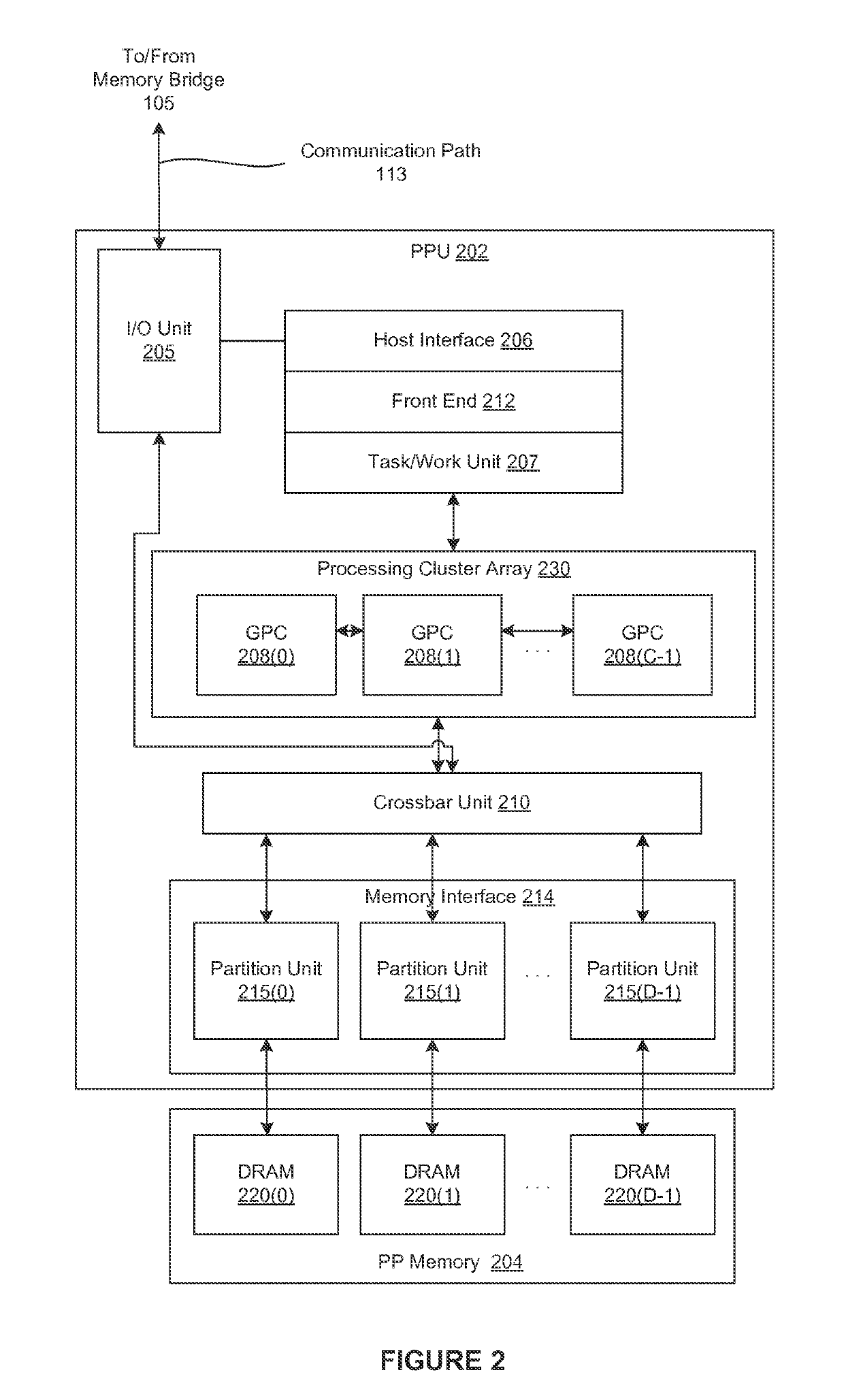

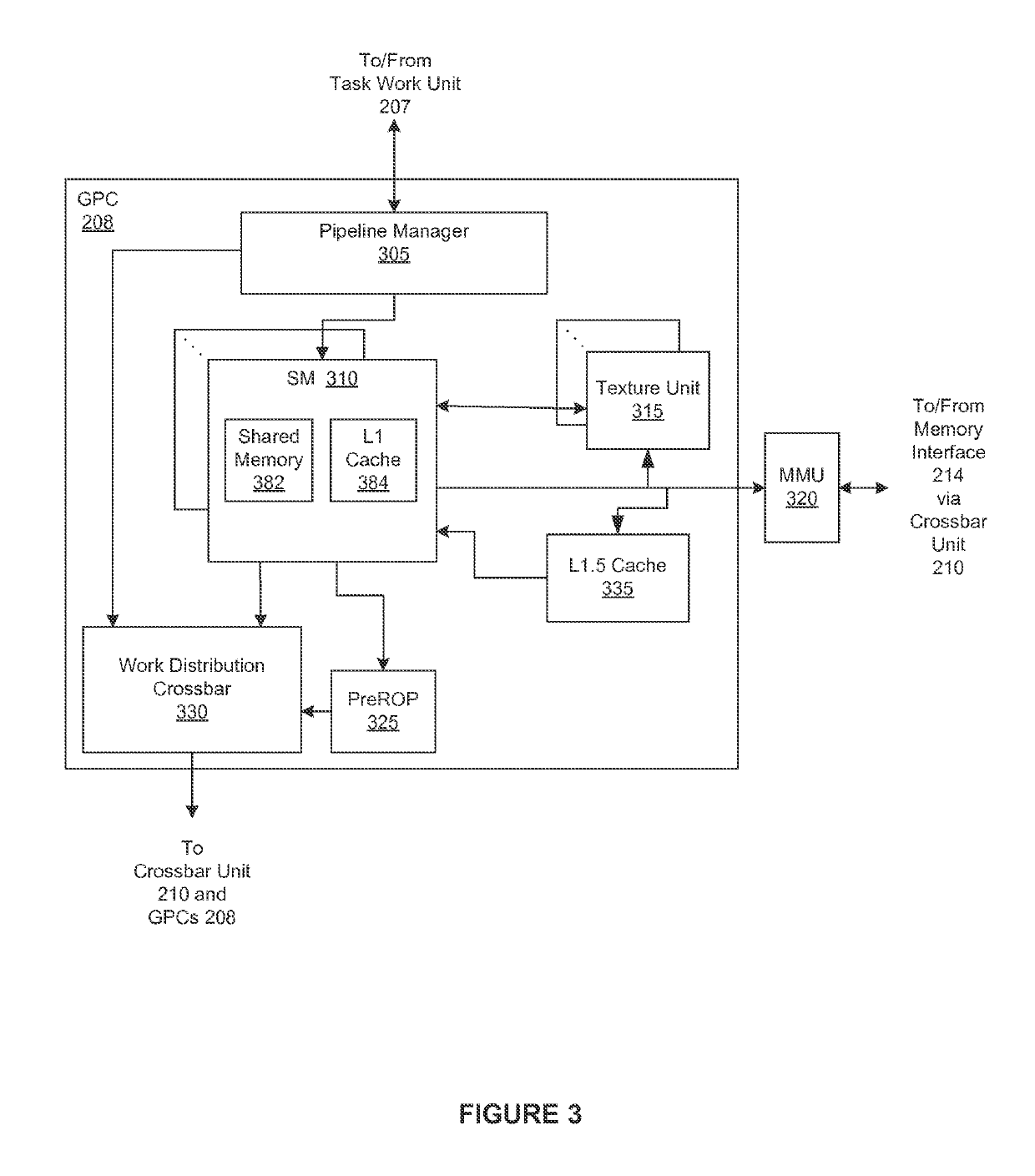

In one embodiment of the present invention, a convolution engine configures a parallel processing pipeline to perform multi-convolution operations. More specifically, the convolution engine configures the parallel processing pipeline to independently generate and process individual image tiles. In operation, for each image tile, the pipeline calculates source locations included in an input image batch. Notably, the source locations reflect the contribution of the image tile to an output tile of an output matrix, which is the result of the multi-convolution operation. Subsequently, the pipeline copies data from the source locations to the image tile. Similarly, the pipeline copies data from a filter stack to a filter tile. The pipeline then performs matrix multiplication operations between the image tile and the filter tile to generate data included in the corresponding output tile. To optimize both on-chip memory usage and execution time, the pipeline creates each image tile in on-chip memory as needed.

Owner:NVIDIA CORP
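
The tiled scheme above is essentially an implicit GEMM: rather than materializing a full im2col matrix, each image tile is assembled only when needed and immediately multiplied by the filter tile. The sketch below illustrates that idea in plain Python under stated assumptions (a single image instead of a batch, stride 1, no padding, and hypothetical names such as `conv2d_tiled` and `tile_pixels`). It is not NVIDIA's implementation, and an ordinary Python list stands in for on-chip memory.

```python
def conv2d_direct(image, filters):
    """Reference convolution (valid, stride 1). image[c][y][x], filters[k][c][r][s]."""
    C, H, W = len(image), len(image[0]), len(image[0][0])
    K, R, S = len(filters), len(filters[0][0]), len(filters[0][0][0])
    P, Q = H - R + 1, W - S + 1
    return [[[sum(image[c][p + r][q + s] * filters[k][c][r][s]
                  for c in range(C) for r in range(R) for s in range(S))
              for q in range(Q)] for p in range(P)] for k in range(K)]


def conv2d_tiled(image, filters, tile_pixels=3):
    """Same result, computed one on-demand image tile at a time (implicit GEMM)."""
    C, H, W = len(image), len(image[0]), len(image[0][0])
    K, R, S = len(filters), len(filters[0][0]), len(filters[0][0][0])
    P, Q = H - R + 1, W - S + 1
    # Reduction index shared by both tiles: one entry per (channel, filter row, filter col).
    reduction = [(c, r, s) for c in range(C) for r in range(R) for s in range(S)]
    # Filter tile: a (C*R*S) x K matrix copied from the filter stack.
    filter_tile = [[filters[k][c][r][s] for k in range(K)] for (c, r, s) in reduction]
    out = [[[0] * Q for _ in range(P)] for _ in range(K)]
    pixels = [(p, q) for p in range(P) for q in range(Q)]
    for start in range(0, len(pixels), tile_pixels):
        tile = pixels[start:start + tile_pixels]
        # Build the image tile on demand: for output pixel (p, q) and reduction
        # entry (c, r, s), the source location is image[c][p + r][q + s].
        image_tile = [[image[c][p + r][q + s] for (c, r, s) in reduction] for (p, q) in tile]
        # Multiply the image tile (T x CRS) by the filter tile (CRS x K) into the output tile.
        for row, (p, q) in zip(image_tile, tile):
            for k in range(K):
                out[k][p][q] = sum(a * f[k] for a, f in zip(row, filter_tile))
    return out
```

Because each tile is discarded after its multiplication, the temporary storage is bounded by one tile (at most `tile_pixels` rows of C x R x S values) instead of the full P*Q x C*R*S im2col matrix.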

Indirectly accessing sample data to perform multi-convolution operations in a parallel processing system

Active | US20160162402A1 | Optimizing parallel processing memory usage, Lower latency, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Parallel processing, Matrix multiplication

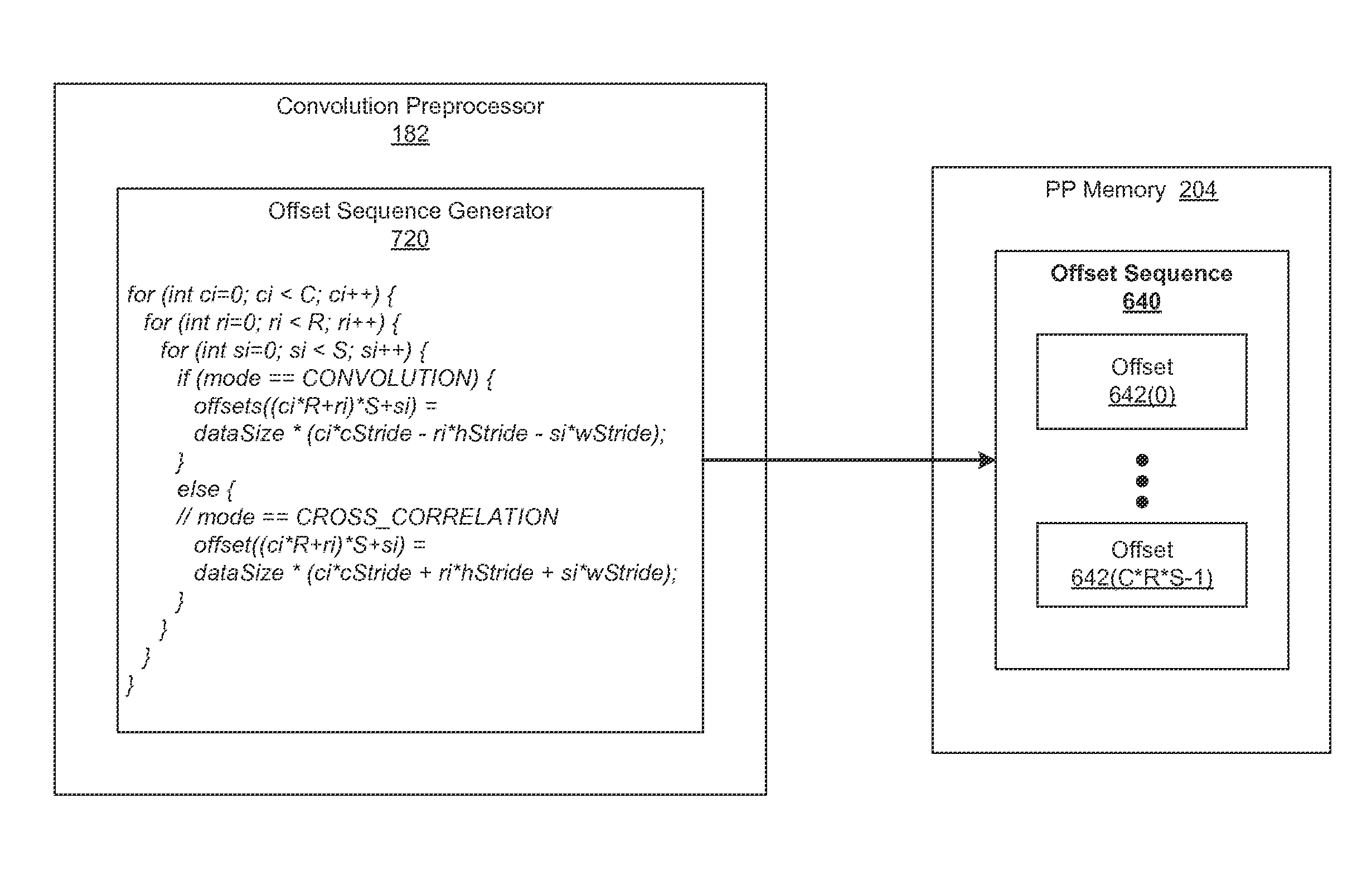

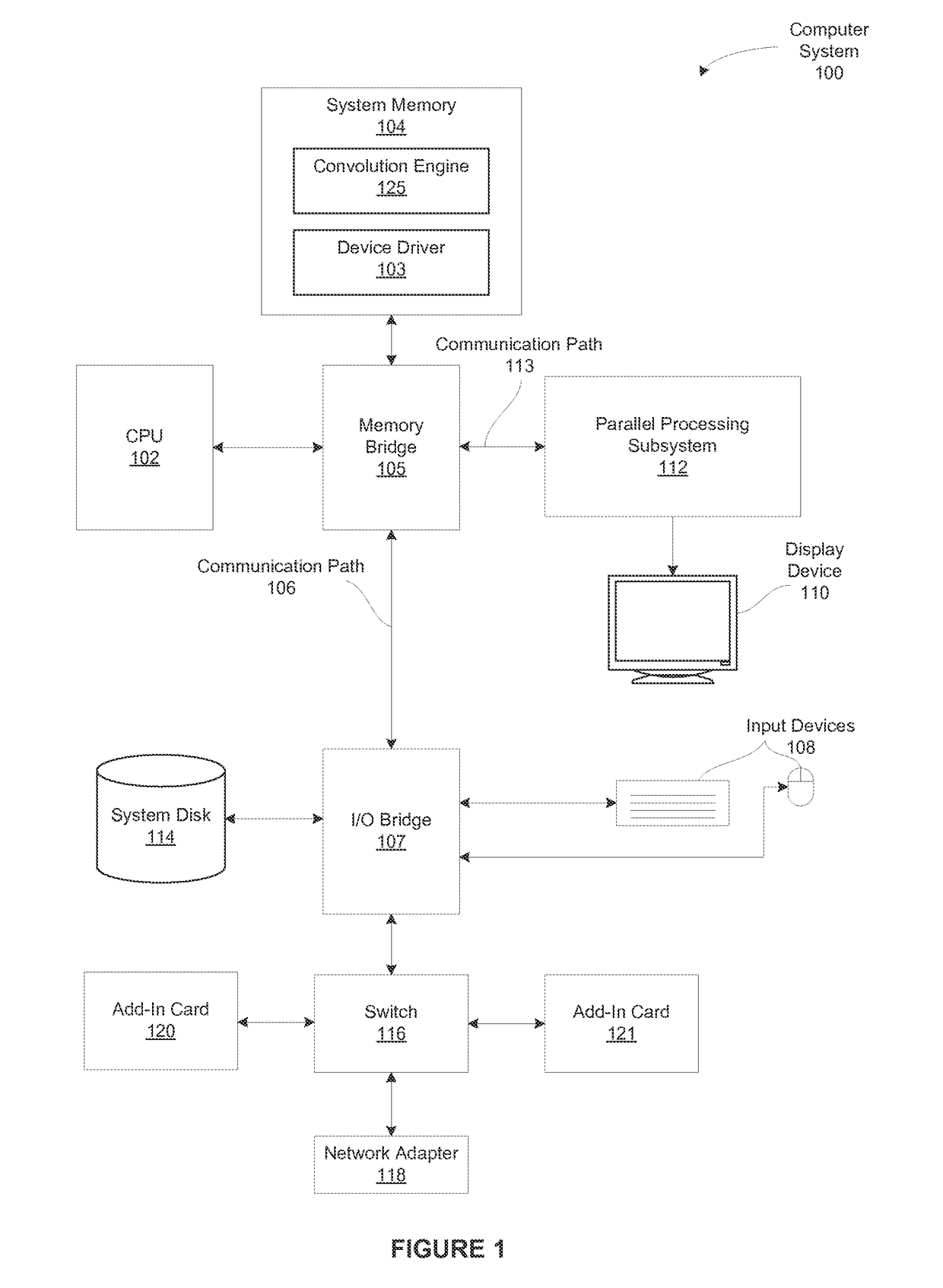

In one embodiment of the present invention, a convolution engine configures a parallel processing pipeline to perform multi-convolution operations. More specifically, the convolution engine configures the parallel processing pipeline to independently generate and process individual image tiles. In operation, for each image tile, the pipeline calculates source locations included in an input image batch based on one or more start addresses and one or more offsets. Subsequently, the pipeline copies data from the source locations to the image tile. The pipeline then performs matrix multiplication operations between the image tile and a filter tile to generate a contribution of the image tile to an output matrix. To optimize the amount of memory used, the pipeline creates each image tile in shared memory as needed. Further, to optimize the throughput of the matrix multiplication operations, the values of the offsets are precomputed by a convolution preprocessor.

Owner:NVIDIA CORP
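
The start-address-plus-offset indirection can be pictured with a flat, row-major NCHW buffer: each output pixel has a start address, and each filter tap (c, r, s) has a fixed offset that does not depend on the pixel, so the offsets can be computed once and reused for every tile. The following is a minimal sketch under those assumptions (stride 1, no padding, hypothetical function names); it is not the patented convolution preprocessor.

```python
def precompute_offsets(C, H, W, R, S):
    """Offset of each filter tap (c, r, s) relative to a window's start address."""
    return [c * H * W + r * W + s for c in range(C) for r in range(R) for s in range(S)]


def gather_image_tile(flat, n, pixels, C, H, W, offsets):
    """Copy one image tile out of a flat NCHW batch using start address + offset."""
    tile = []
    for p, q in pixels:
        start = n * C * H * W + p * W + q  # top-left corner of this pixel's window in image n
        tile.append([flat[start + off] for off in offsets])
    return tile


def tile_contribution(image_tile, filter_tile):
    """(pixels x taps) times (taps x K): this tile's contribution to the output matrix."""
    K = len(filter_tile[0])
    return [[sum(a * f[k] for a, f in zip(row, filter_tile)) for k in range(K)]
            for row in image_tile]
```

Since the offsets are pixel-independent, the inner gather loop is only an addition and a load, which is the property that makes precomputing them attractive for throughput.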

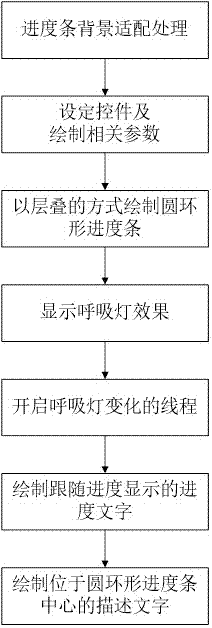

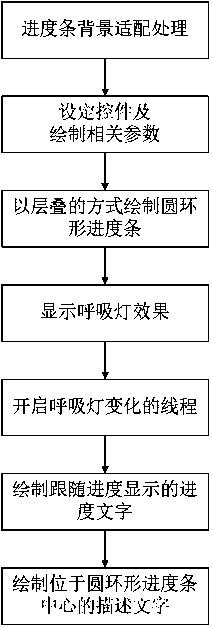

Stacked display algorithm of progress bar for breathing lamp effect

Active | CN107346250A | Improve efficiency, Take up less resources, Execution for user interfaces, Progress bar, Text display

The invention relates to a stacked display algorithm for a progress bar with a breathing lamp effect. The algorithm comprises the following steps: (1) performing adaptive background processing on the progress bar, where the progress bar is circular, its background is round, and the diameter of the circular progress bar is smaller than that of the background; (2) setting up the control and the related drawing parameters; (3) drawing the circular progress bar as a stacked layer within the background area; (4) displaying the breathing lamp effect; (5) starting a thread that drives the breathing lamp changes; (6) drawing progress text that updates with the progress; and (7) drawing description text at the center of the circular progress bar. Surrounding the circular progress bar with the breathing lamp effect yields an attractive, personalized, intuitive, and easy-to-understand progress display. The algorithm is efficient, uses few resources, is broadly applicable, and is suited to the Android system.

Owner:BEIJING KUWO TECH
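
Steps (1), (3), and (6) reduce to a little geometry: fit a round background to the view, keep the ring strictly inside it, and turn the progress fraction into a sweep angle and a label. The sketch below shows that arithmetic in Python; `margin`, `stroke_width`, `RingLayout`, and the `LAYERS` drawing order are hypothetical, and on Android the results would feed canvas drawing calls that are not shown here.

```python
from dataclasses import dataclass

# Hypothetical back-to-front drawing order for the stacked layers.
LAYERS = ("background", "ring_track", "progress_arc", "breathing_glow",
          "progress_text", "description_text")


@dataclass
class RingLayout:
    cx: float
    cy: float
    background_radius: float
    ring_radius: float
    sweep_degrees: float
    progress_text: str


def layout_progress_ring(width, height, progress, stroke_width, margin):
    progress = min(max(progress, 0.0), 1.0)  # clamp to [0, 1]
    background_radius = min(width, height) / 2
    # Ring centre-line radius: stay inside the background, leaving room for half the stroke.
    ring_radius = background_radius - margin - stroke_width / 2
    if ring_radius <= 0:
        raise ValueError("view too small for this stroke width and margin")
    return RingLayout(width / 2, height / 2, background_radius, ring_radius,
                      360.0 * progress, f"{round(progress * 100)}%")
```

For a 200 x 100 view at 25% progress with a 10-pixel stroke and a 4-pixel margin, this gives a 50-pixel background radius, a 41-pixel ring radius, and a 90-degree sweep labelled "25%".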

Performing multi-convolution operations in a parallel processing system

Active | US10223333B2 | Easy to operate, Optimize memory usage, Biological models, Complex mathematical operations, Line tubing, Parallel processing

In one embodiment of the present invention, a convolution engine configures a parallel processing pipeline to perform multi-convolution operations. More specifically, the convolution engine configures the parallel processing pipeline to independently generate and process individual image tiles. In operation, for each image tile, the pipeline calculates source locations included in an input image batch. Notably, the source locations reflect the contribution of the image tile to an output tile of an output matrix, which is the result of the multi-convolution operation. Subsequently, the pipeline copies data from the source locations to the image tile. Similarly, the pipeline copies data from a filter stack to a filter tile. The pipeline then performs matrix multiplication operations between the image tile and the filter tile to generate data included in the corresponding output tile. To optimize both on-chip memory usage and execution time, the pipeline creates each image tile in on-chip memory as needed.

Owner:NVIDIA CORP

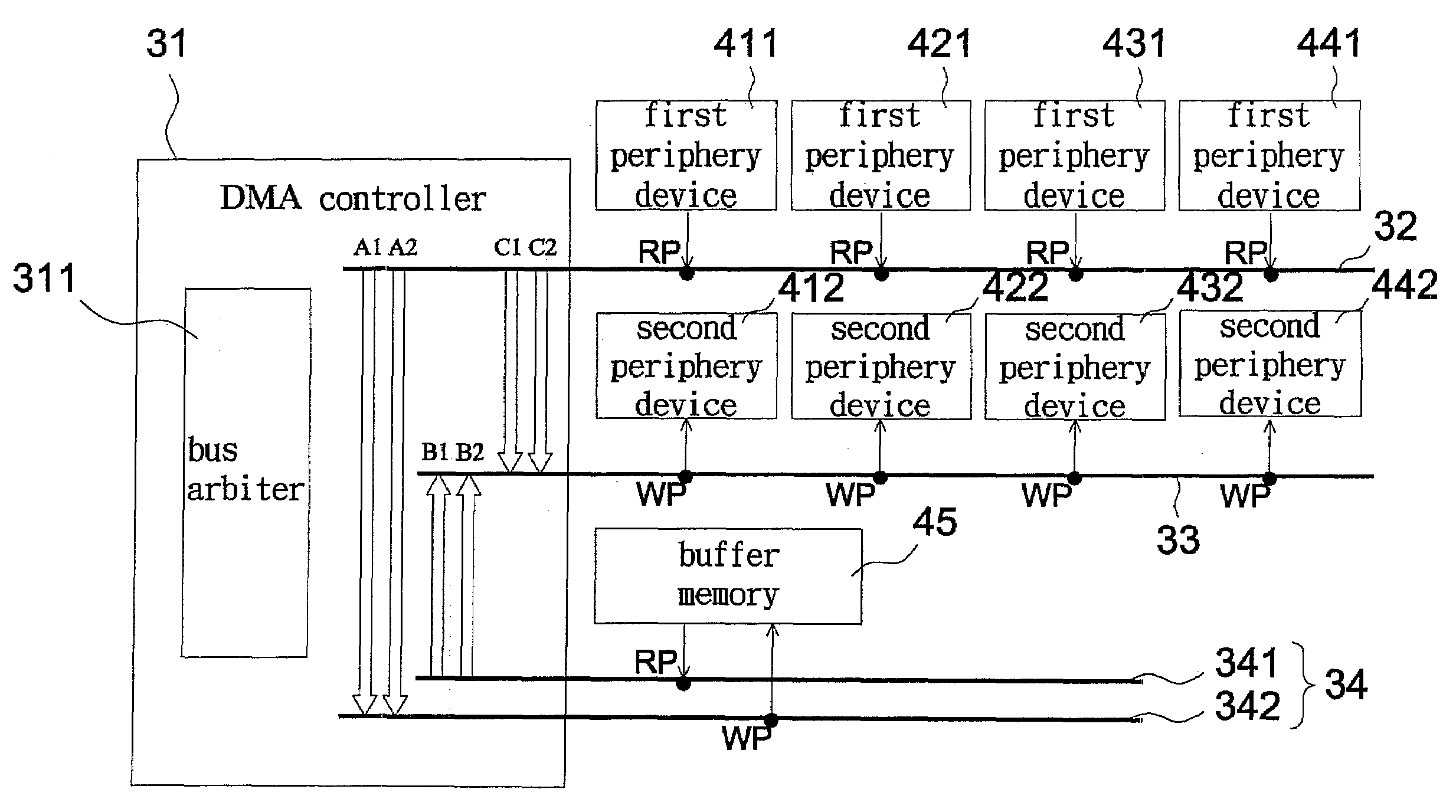

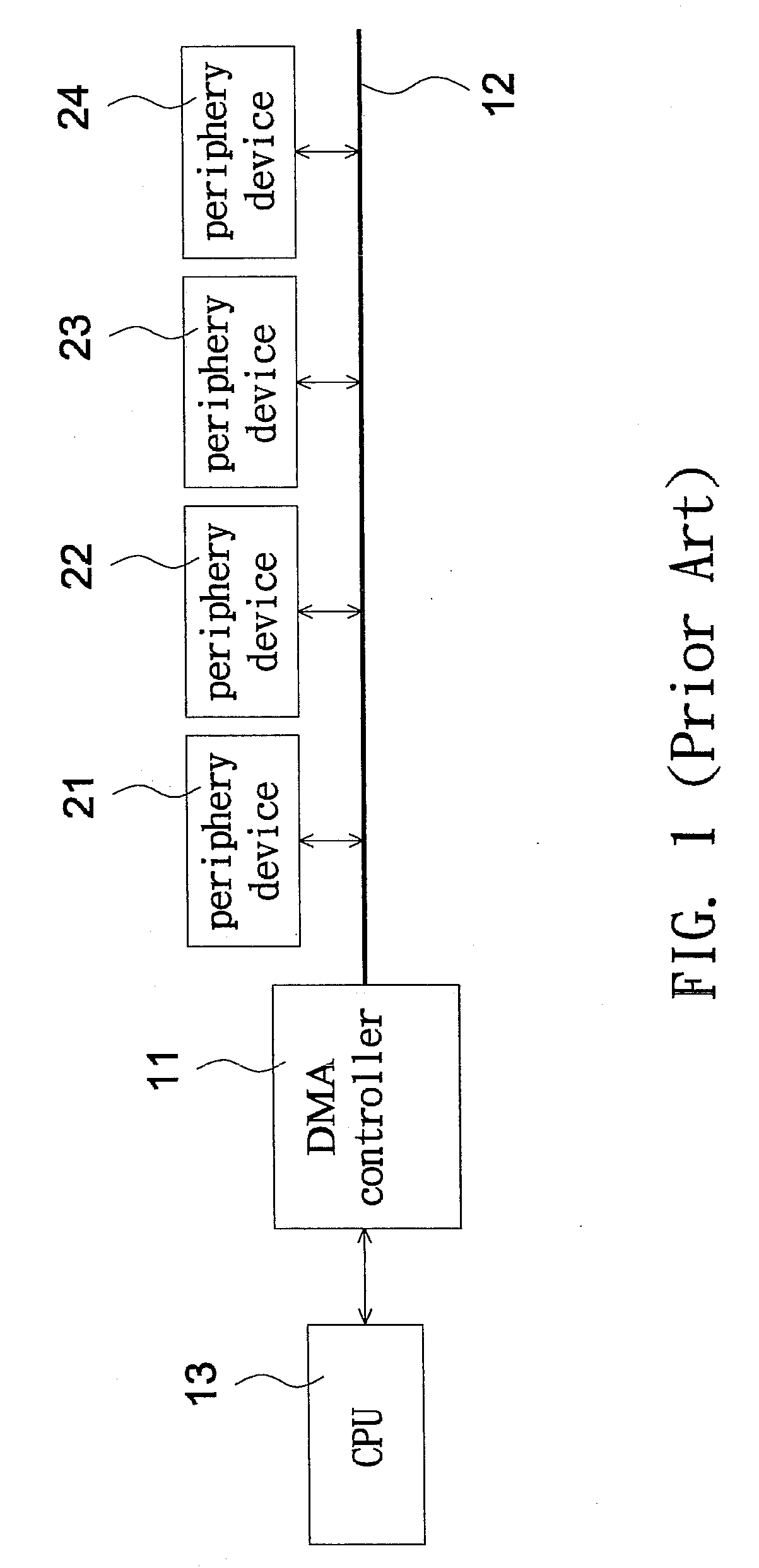

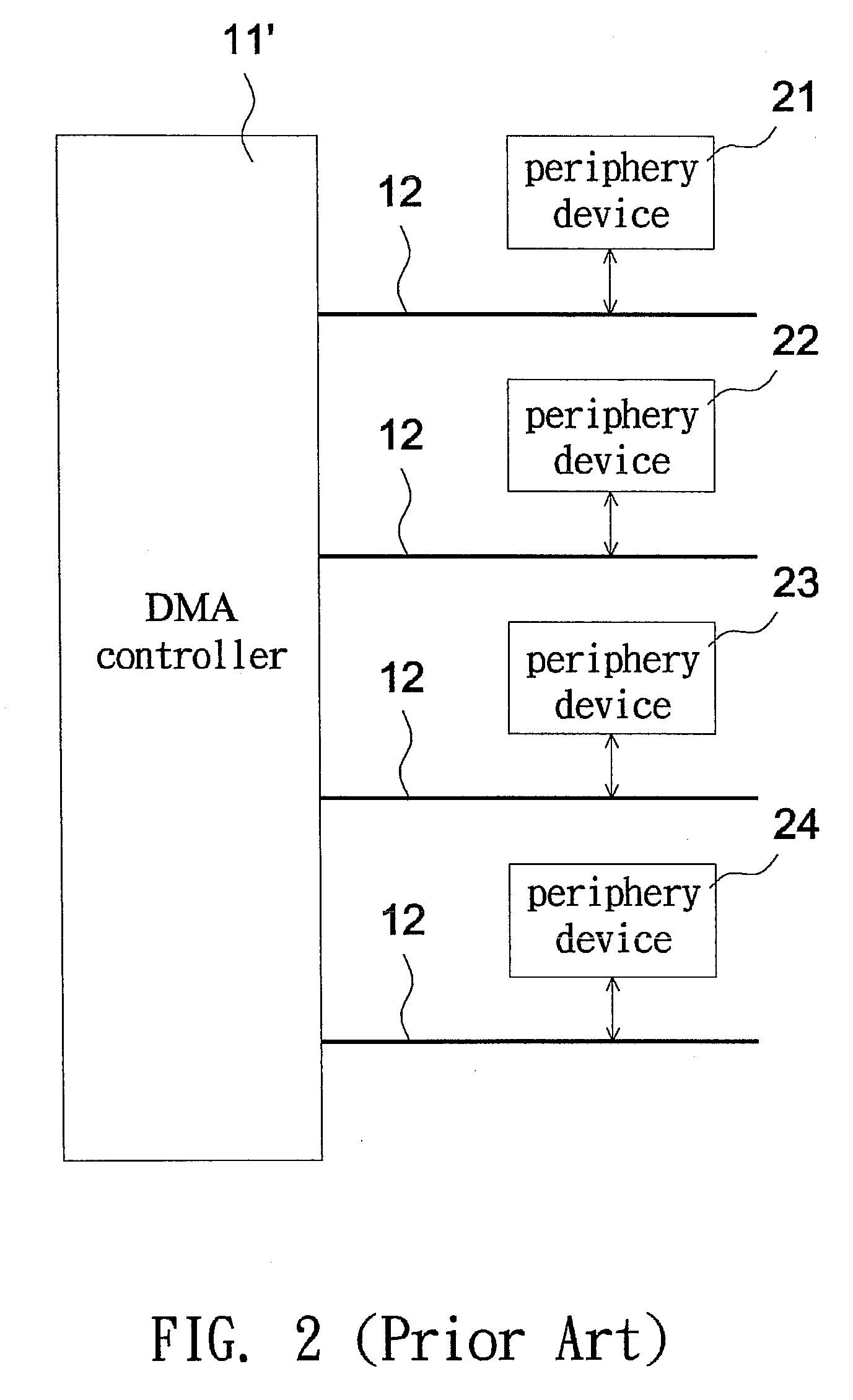

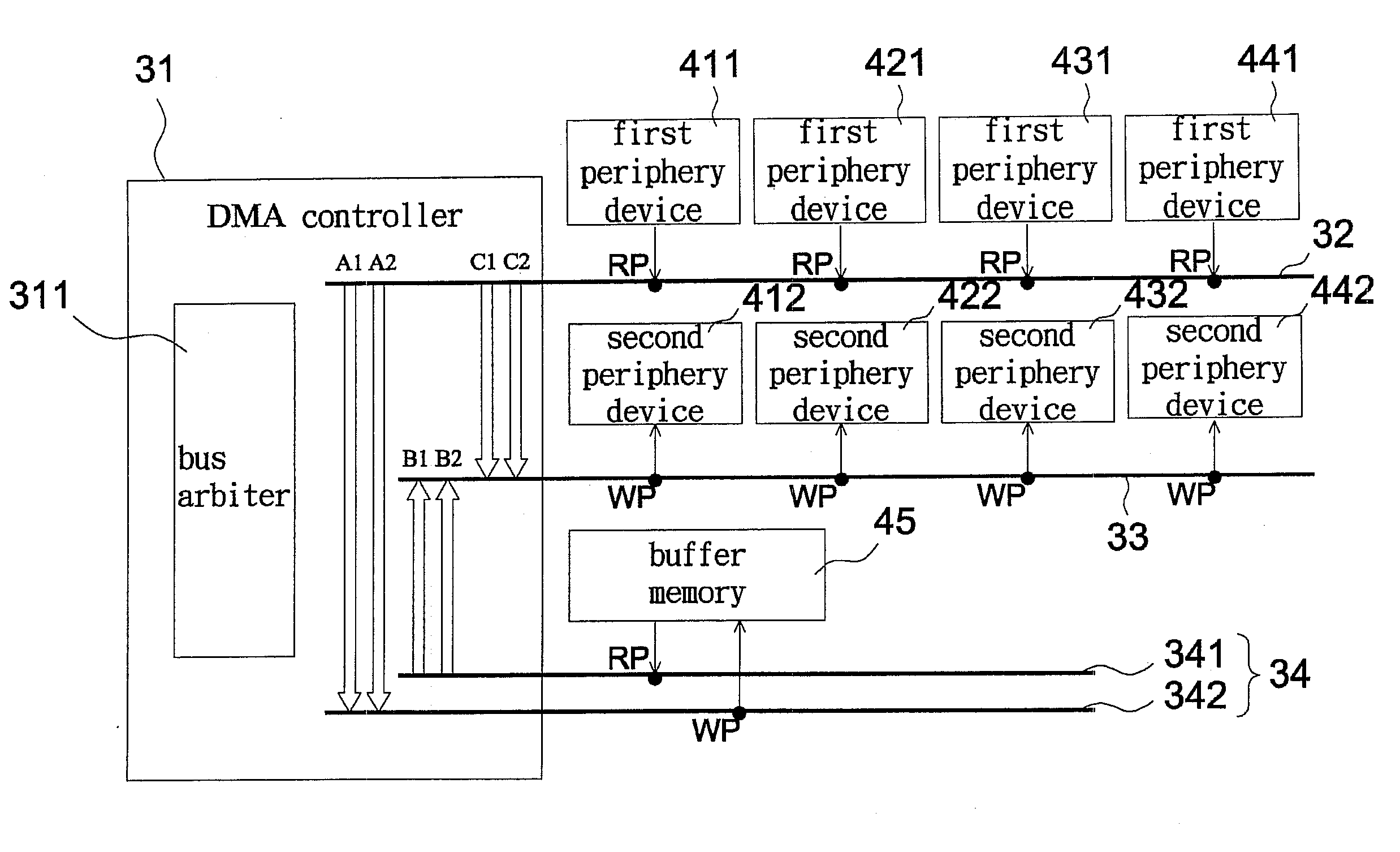

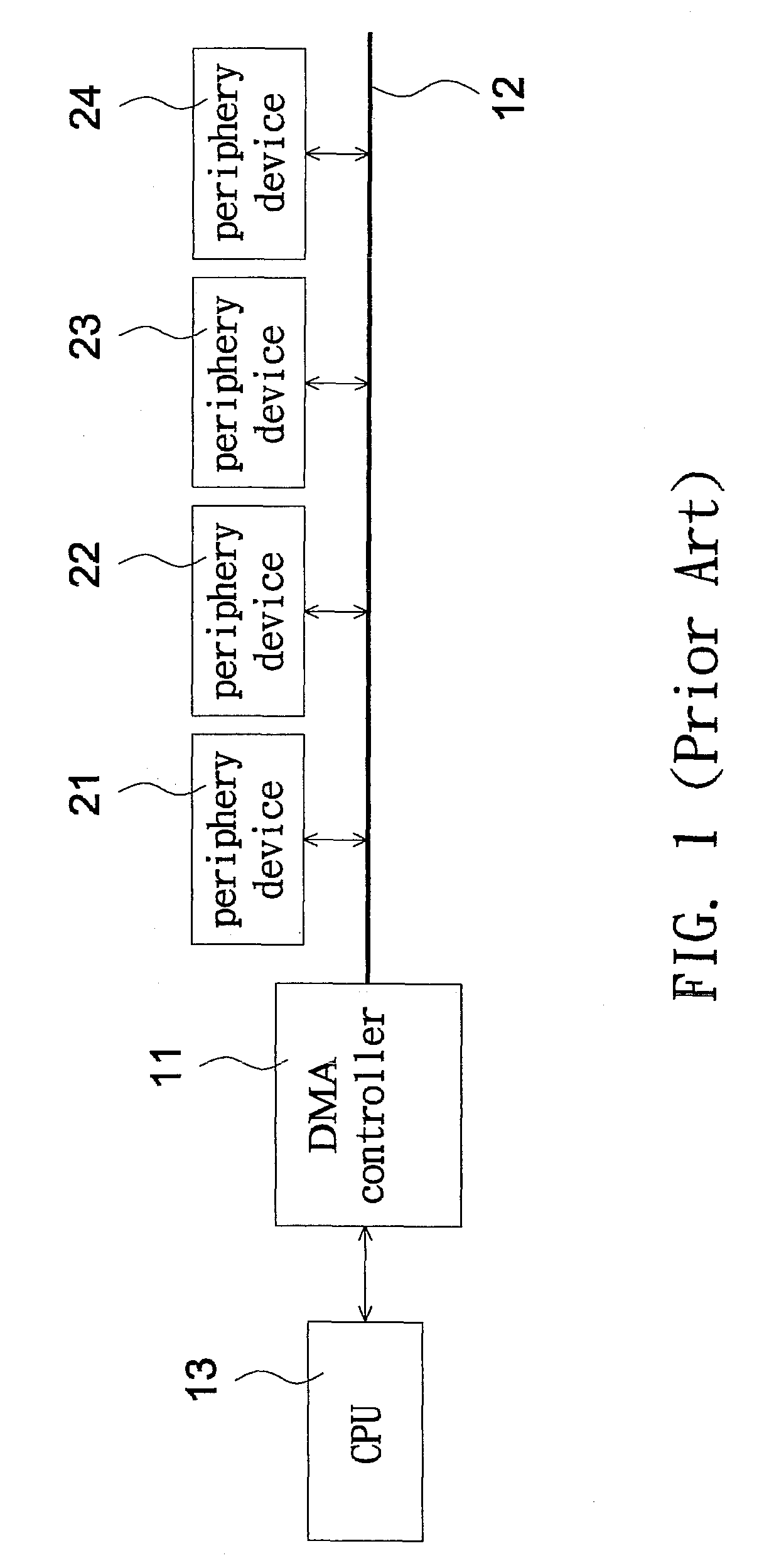

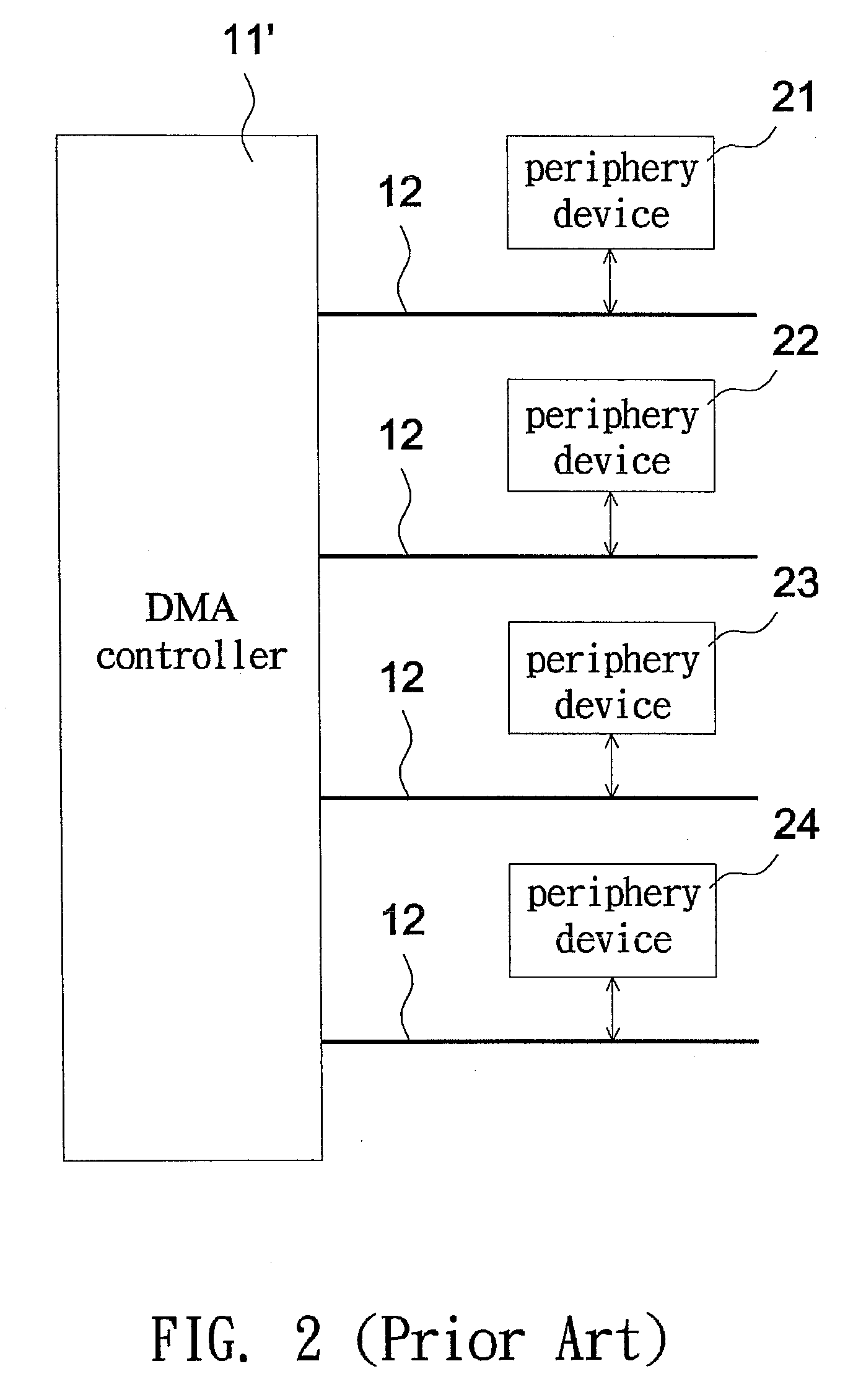

Direct memory access system and method

Inactive | US20090125648A1 | Improve acceleration performance, Improve transmission efficiency, Input/output processes for data processing, Data conversion, Direct memory access, Memory bus

A DMA system includes at least one read bus, at least one write bus, at least one buffer memory bus, and a DMA controller. The DMA controller comprises a plurality of channels and a bus arbiter. The channels are electrically connected to the read bus, the write bus, and the buffer memory bus. A control table assigns the source address and destination address of the data for each channel. The bus arbiter performs bus arbitration and prioritizes data access among the read bus, the write bus, and the buffer memory bus.

Owner:SONIX TECH
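
The interplay of control table, channels, and arbiter can be simulated cycle by cycle. In the hedged sketch below, each `Channel` is one control-table entry, every transfer is a read-bus fetch into a channel latch followed by a write-bus store, and the arbiter uses fixed priority (lowest channel number wins). The fixed-priority policy and all names are assumptions, and the buffer memory bus is omitted for brevity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Channel:
    """One control-table entry: where a channel copies from and to."""
    src: int
    dst: int
    length: int
    copied: int = 0
    latch: Optional[int] = None  # word fetched on the read bus, not yet written


def arbitrate(requesters):
    """Fixed-priority arbitration (assumption): the lowest channel number wins."""
    return min(requesters) if requesters else None


def run_dma(memory, table):
    """Simulate the channels until every transfer completes; returns the cycle count."""
    cycles = 0
    while any(ch.copied < ch.length for ch in table):
        readers = [i for i, ch in enumerate(table) if ch.latch is None and ch.copied < ch.length]
        writers = [i for i, ch in enumerate(table) if ch.latch is not None]
        w, r = arbitrate(writers), arbitrate(readers)
        if w is not None:  # write bus: one word from a channel latch to its destination
            ch = table[w]
            memory[ch.dst + ch.copied] = ch.latch
            ch.latch, ch.copied = None, ch.copied + 1
        if r is not None:  # read bus: one word from a source into a channel latch
            ch = table[r]
            ch.latch = memory[ch.src + ch.copied]
        cycles += 1
    return cycles
```

Because the read and write buses are granted independently in the same cycle, one channel's write can overlap another channel's read, which is where the multi-bus layout gains over a single shared bus.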

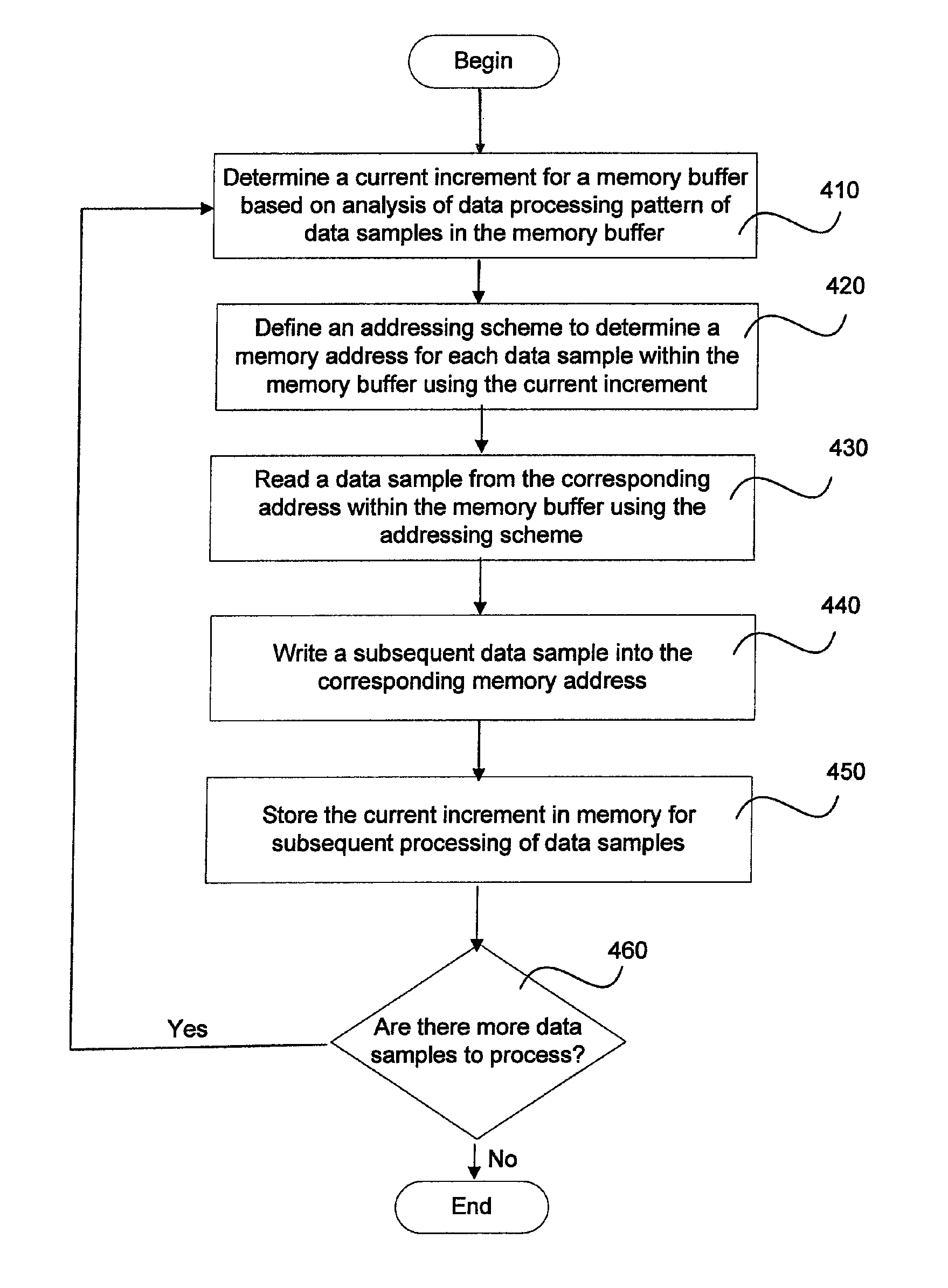

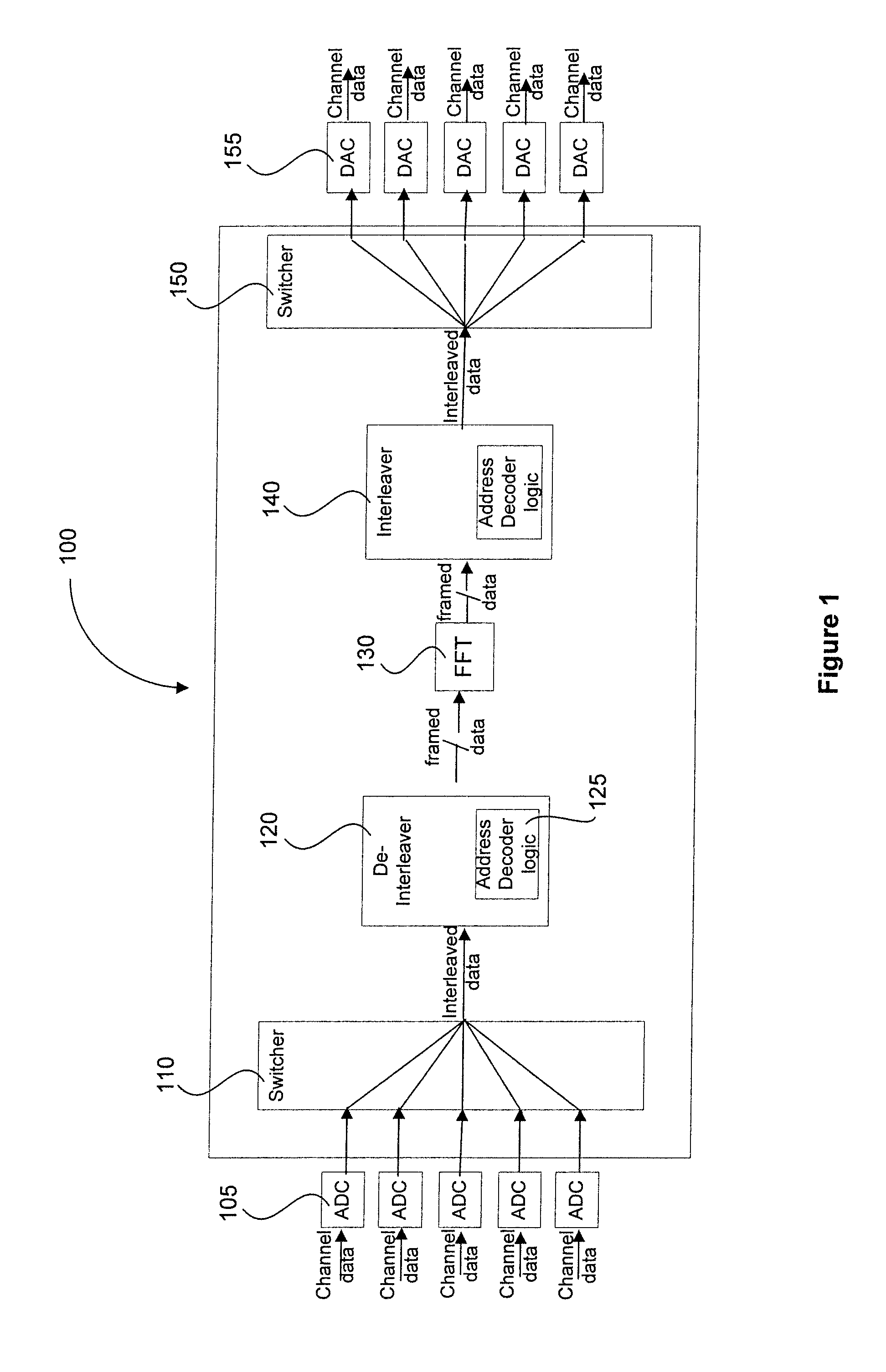

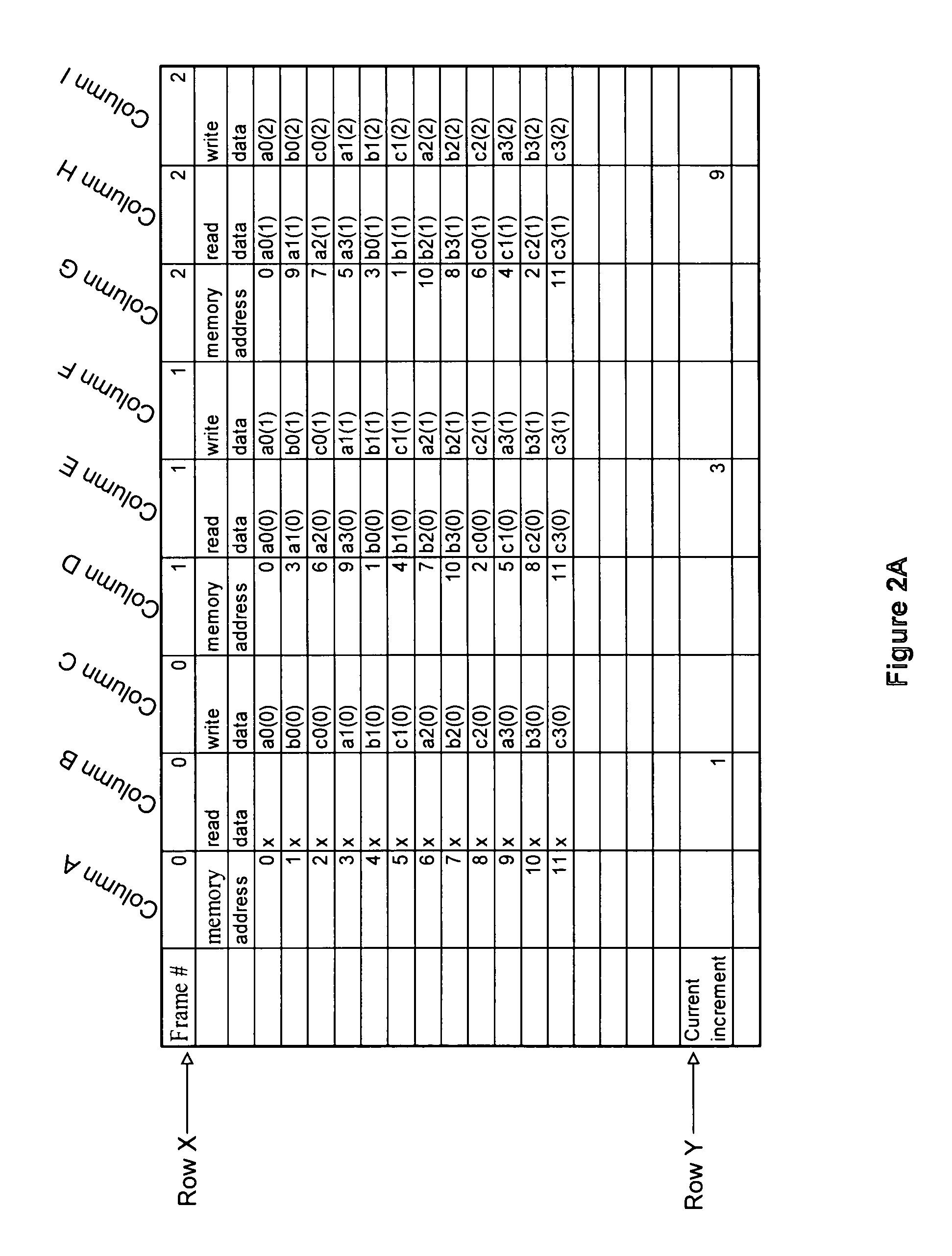

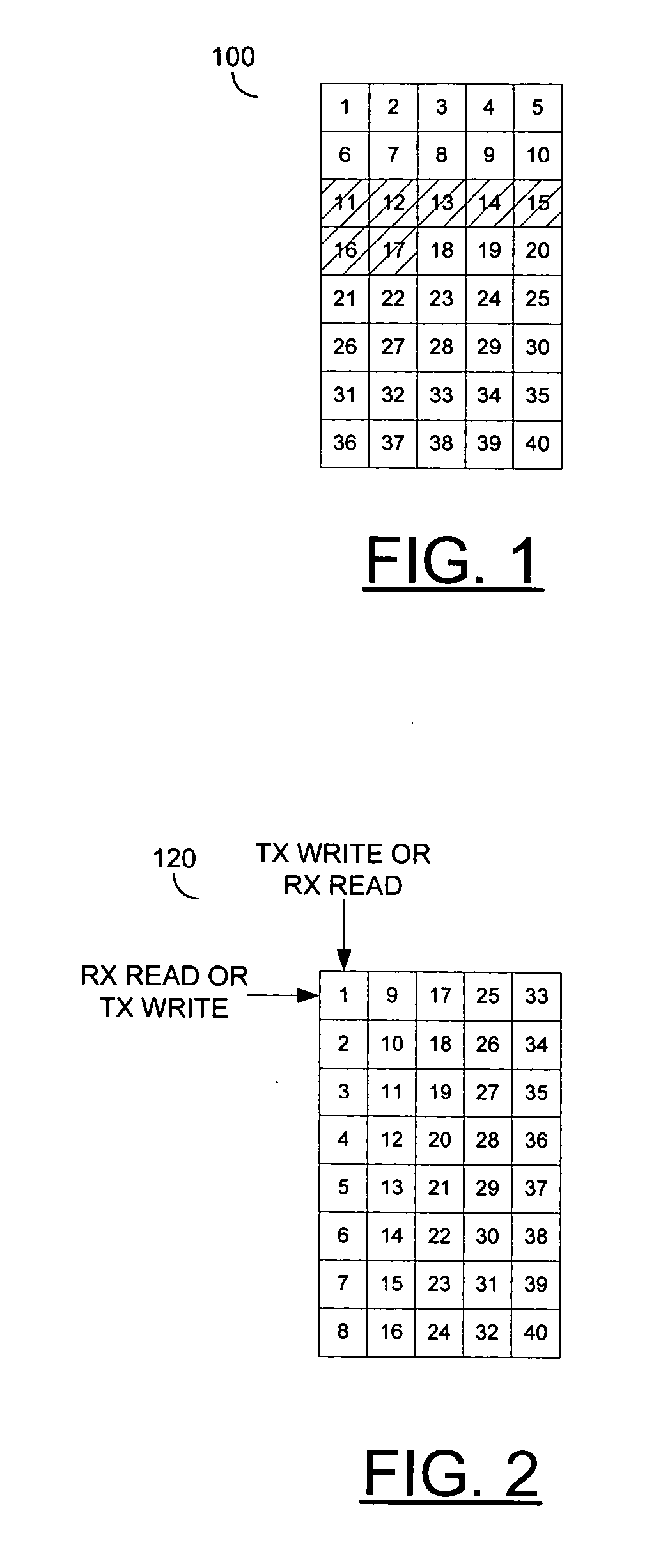

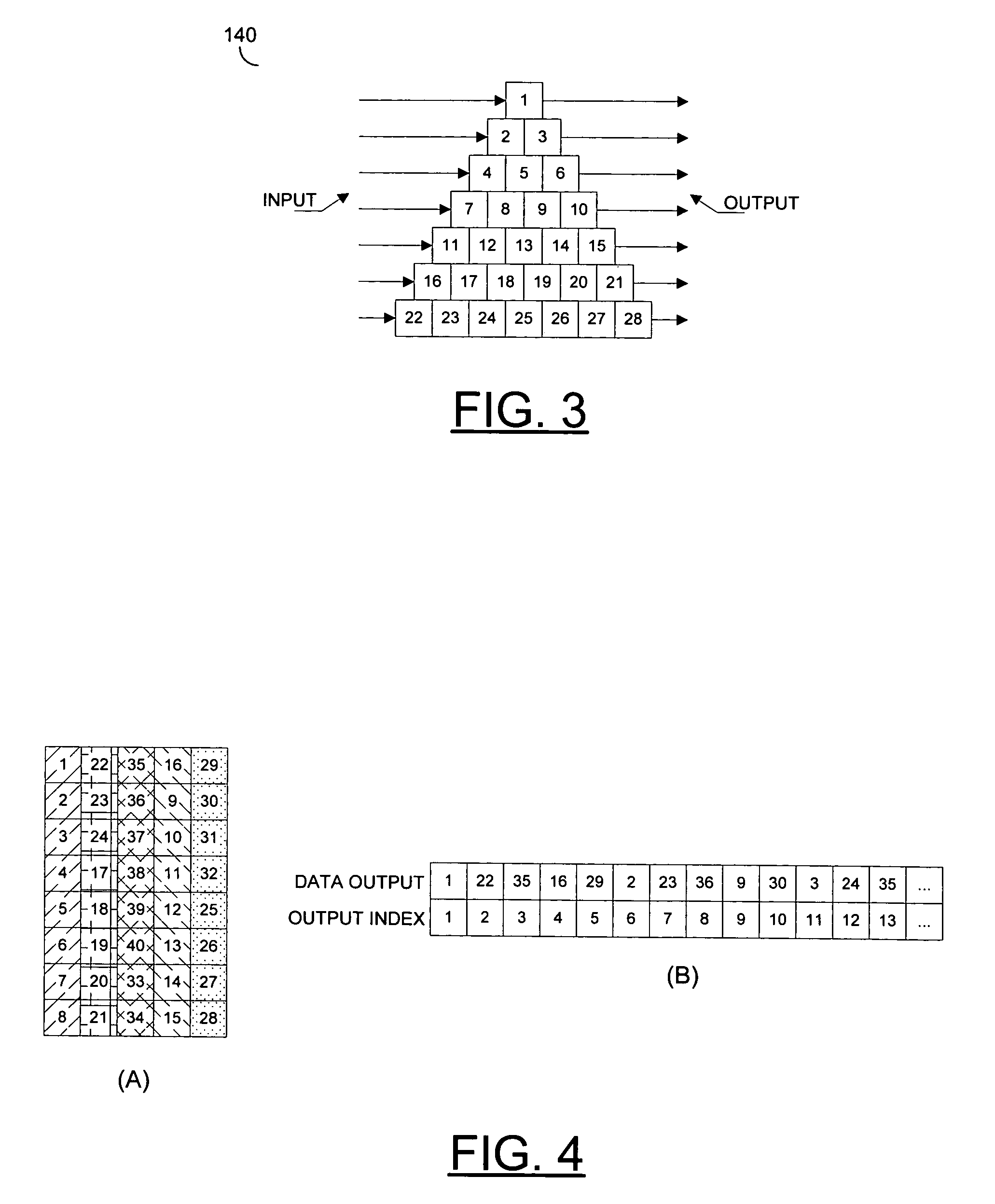

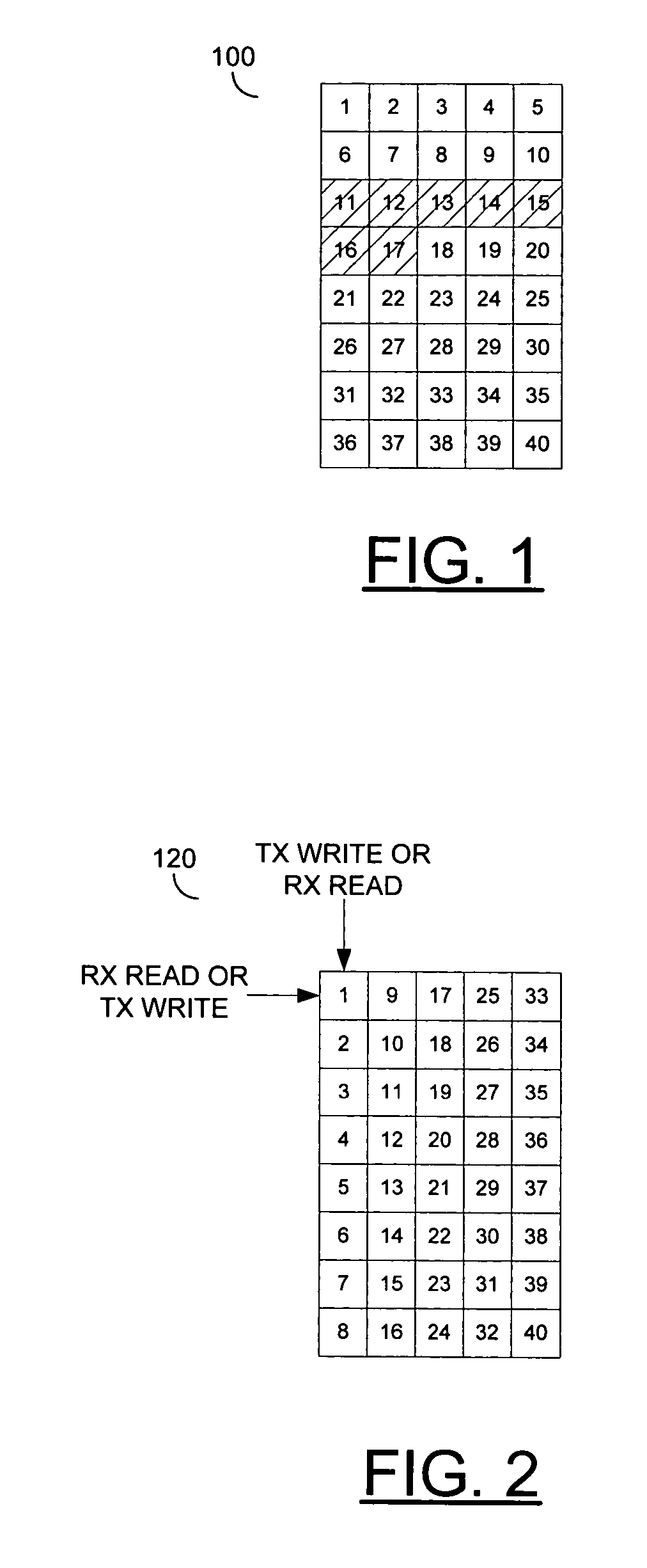

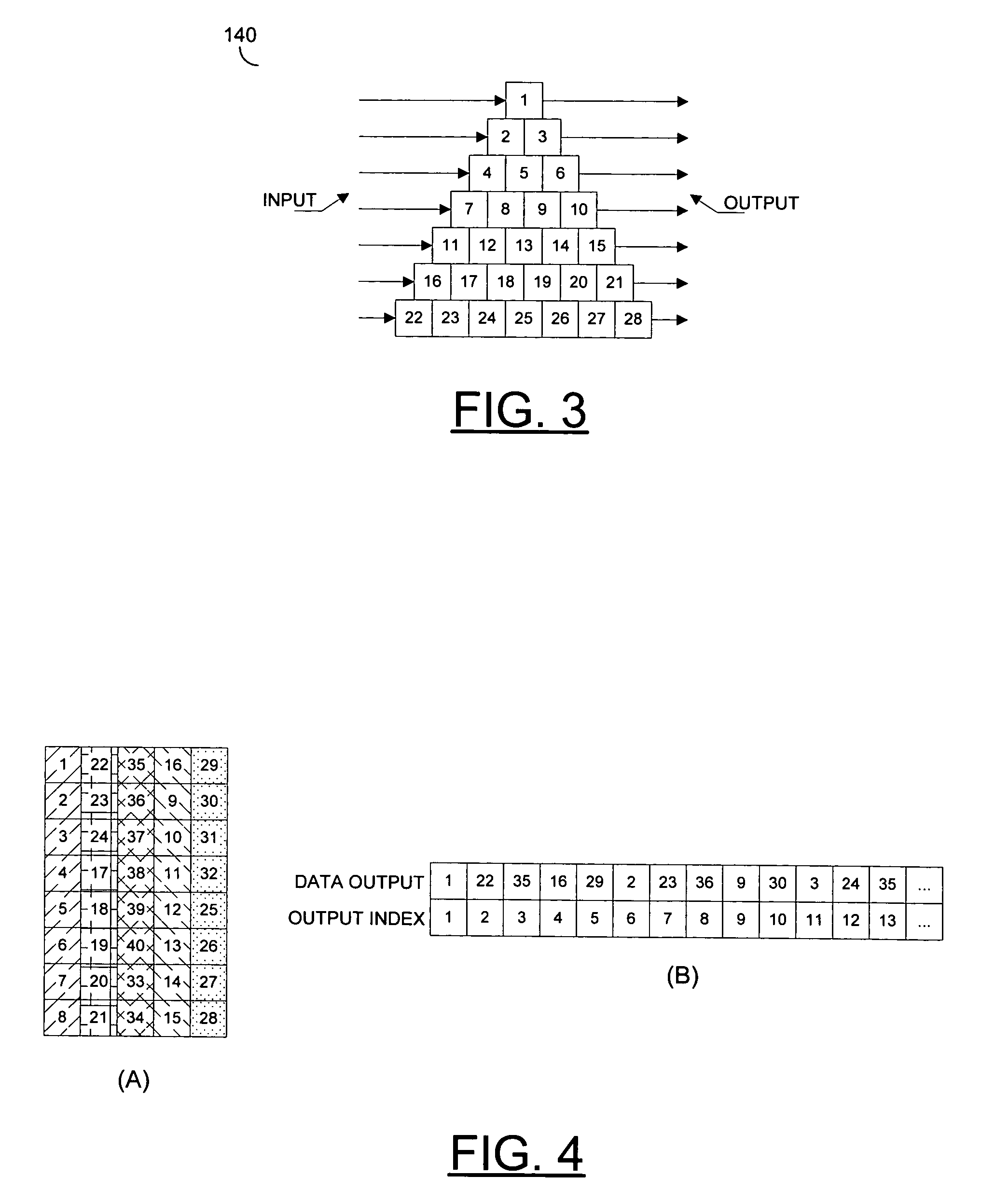

Single buffer multi-channel de-interleaver/interleaver

Inactive | US8732435B1 | Optimize memory usage, Easy to use, Memory architecture accessing/allocation, Memory systems, Memory address, Data stream

An input data stream is received for processing at an integrated circuit (IC) through multiple channels. The input data stream is interleaved and the interleaved input data stream is stored in a memory buffer associated with the IC. An addressing scheme is defined for reading and writing data samples from and to the memory buffer within the IC. The addressing scheme includes determining a current increment by analyzing a pattern associated with data samples within the memory buffer and determining the memory address for each data sample within the memory buffer using the current increment. A data sample for a frame is read from a corresponding address within the memory buffer using the addressing scheme and a subsequent data sample from the interleaved input data stream is written into the corresponding address of the memory buffer. The current increment and the addressing scheme are stored in the memory buffer. The current increment is dynamically determined by analyzing data samples in the memory buffer after processing of each frame of data and is used in redefining the addressing scheme for subsequent data processing.

Owner:ALTERA CORP
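
A well-known way to interleave with a single buffer is to read each sample and immediately write the next frame's sample into the freed address; the address pattern then changes every frame. The sketch below uses the standard multiplicative-increment technique for an R x C block interleaver (write row-wise, read column-wise), where the read increment for frame f is cols^(f+1) mod (n - 1). This is a swapped-in textbook method for a single channel, not the patent's pattern-analysis procedure, and the function names are hypothetical.

```python
def block_address(i, increment, n):
    """Address of the i-th access when stepping by `increment` modulo n - 1.

    The last cell of an R x C block never moves under a row/column transpose."""
    return n - 1 if i == n - 1 else (i * increment) % (n - 1)


def single_buffer_interleave(frames, rows, cols):
    """Write frames row-wise, read them column-wise, using one n-cell buffer (n >= 2).

    Each read address is immediately reused for the next frame's sample, so the
    read increment for frame f becomes cols ** (f + 1) mod (n - 1)."""
    n = rows * cols
    buffer = [None] * n
    increment = 1
    for i, sample in enumerate(frames[0]):  # first frame: plain sequential write
        buffer[block_address(i, increment, n)] = sample
    output = []
    for f in range(len(frames)):
        increment = (increment * cols) % (n - 1)  # addressing pattern for this frame
        following = frames[f + 1] if f + 1 < len(frames) else None
        block = []
        for i in range(n):
            addr = block_address(i, increment, n)
            block.append(buffer[addr])        # read the interleaved sample...
            if following is not None:
                buffer[addr] = following[i]   # ...and overwrite it in place
        output.append(block)
    return output
```

For a 2 x 3 block, the frame `[0, 1, 2, 3, 4, 5]` comes out as `[0, 3, 1, 4, 2, 5]`, and later frames keep the same column order even though they are stored in permuted positions.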

Algorithm of progress bar of breathing lamp effect

Active | CN107315594A | Improve efficiency, Intuitive and easy to understand, Software engineering, Specific program execution arrangements, Progress bar, Fuzzy filtering

The invention relates to an algorithm for a progress bar with a breathing lamp effect. The algorithm includes the following steps. First, a new custom control class, BurnProgressView, is defined on the basis of the layout control LinearLayout, and an initialization method is called from its constructor. Second, in the onSizeChanged callback, the size of the circular progress bar is initialized according to the control size, and an inner ring area and an outer ring area are determined according to the stroke width of the drawing paint, so that gradient arc lines can be drawn on both sides of the progress bar. Third, a thread for the breathing lamp changes is started; it cyclically switches the radius of the paint's blur filter and changes the height flag of the ripple lines to match the rhythm of the breathing lamp. Fourth, in the onDraw callback, the progress and the effects are drawn dynamically in sequence. By surrounding the ring-shaped progress bar with the breathing lamp effect, the algorithm achieves an attractive, personalized, and intuitive progress display; it is efficient, uses few resources, is broadly applicable, and is suitable for the Android system.

Owner:BEIJING KUWO TECH
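
The onSizeChanged geometry and the breathing thread can be approximated as follows: the inner and outer ring areas sit half a stroke width either side of the ring's centre line, and the breathing thread steps a blur radius up and down, raising the ripple-height flag during the upper half of each cycle. This is a hedged Python sketch; the reserved outer margin, the triangle-wave schedule, and all names are assumptions. On Android the radius would typically be applied through something like `BlurMaskFilter`, which takes a blur radius.

```python
from itertools import cycle


def ring_edges(view_size, stroke_width):
    """Inner and outer edge radii of the ring drawn with the given stroke width.

    Assumes one stroke width of margin is reserved outside the ring for the glow."""
    centre = view_size / 2 - stroke_width
    return centre - stroke_width / 2, centre + stroke_width / 2


def breathing_frames(min_radius, max_radius, step):
    """Endless (blur_radius, ripple_high) pairs following a triangle wave."""
    rising = list(range(min_radius, max_radius + 1, step))
    period = rising + rising[-2:0:-1]  # up, then back down without repeating the ends
    midpoint = (min_radius + max_radius) / 2
    for radius in cycle(period):
        yield radius, radius >= midpoint
```

A drawing thread would pull one pair per tick, apply the radius to the paint, and request a redraw so that onDraw renders the new frame.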

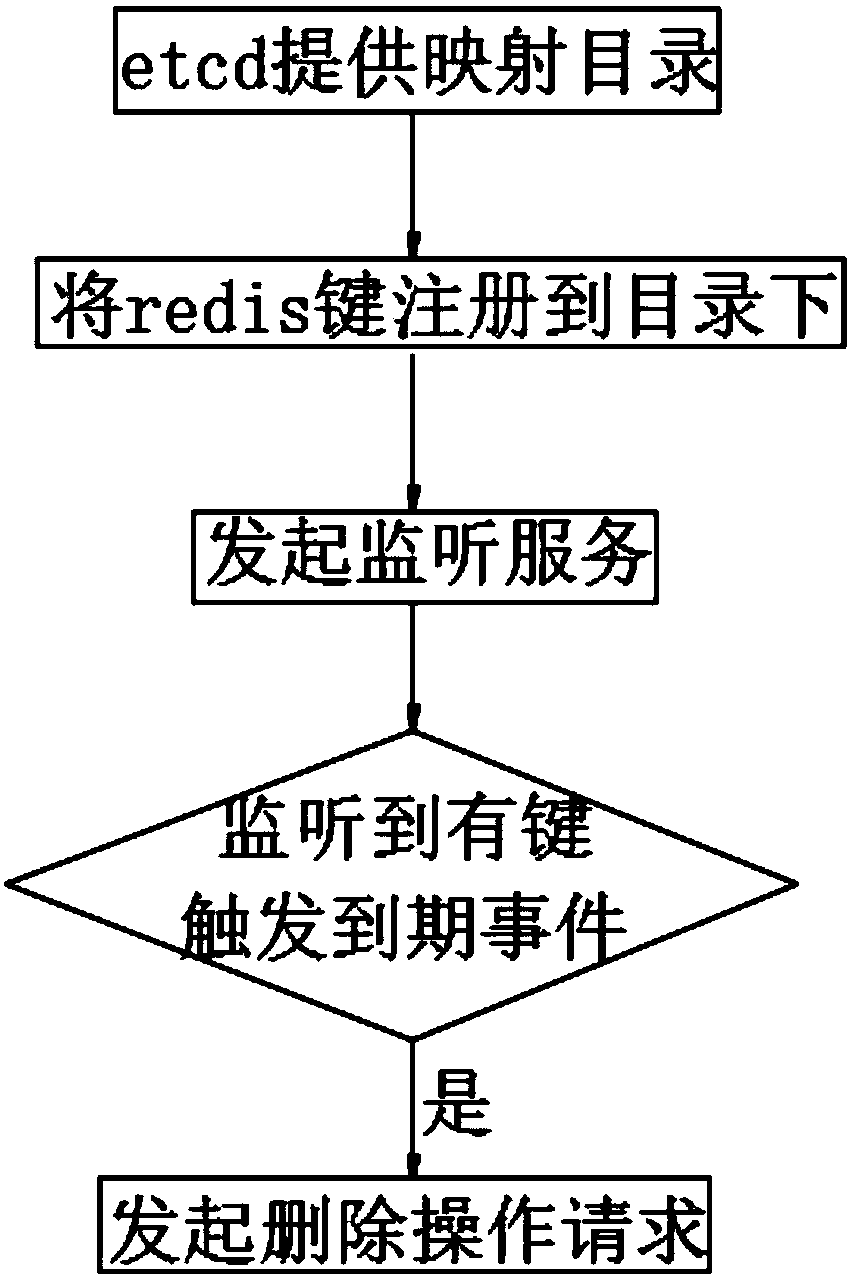

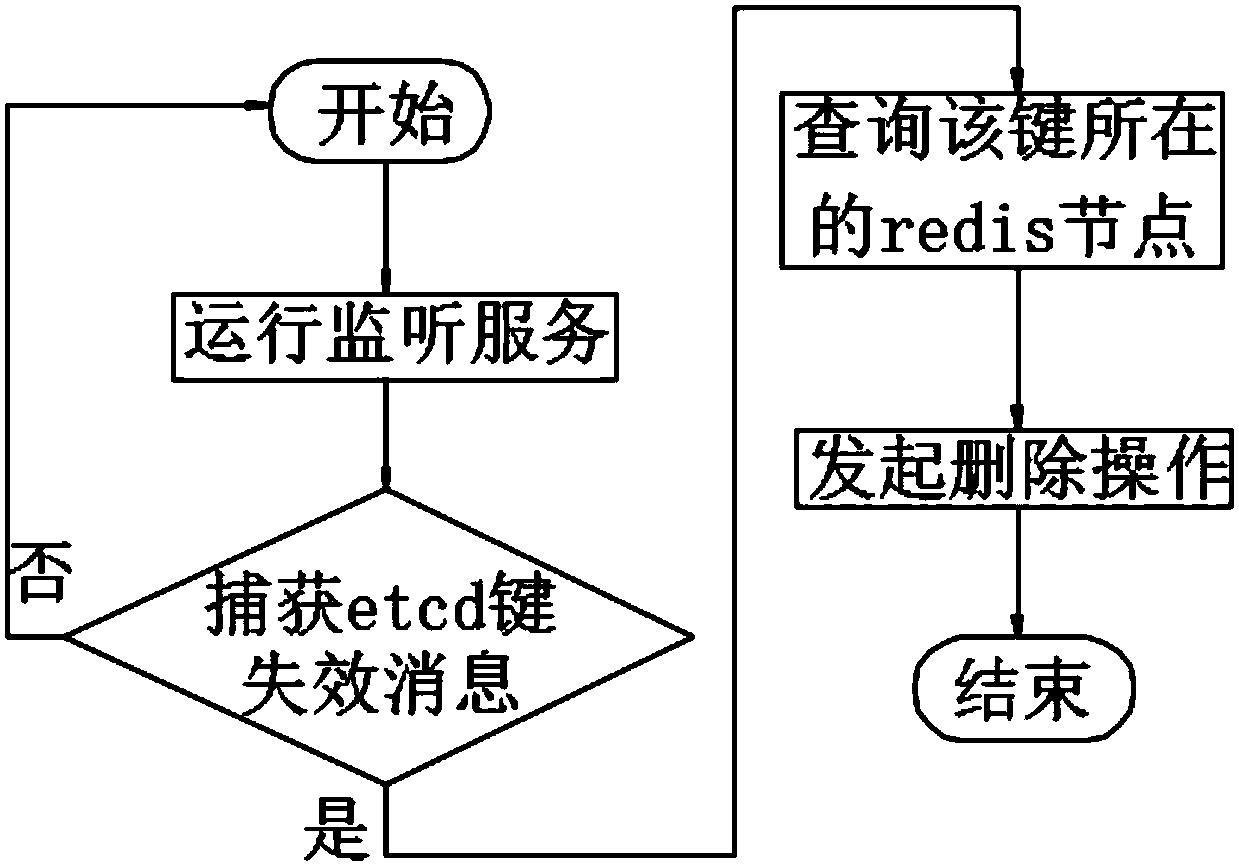

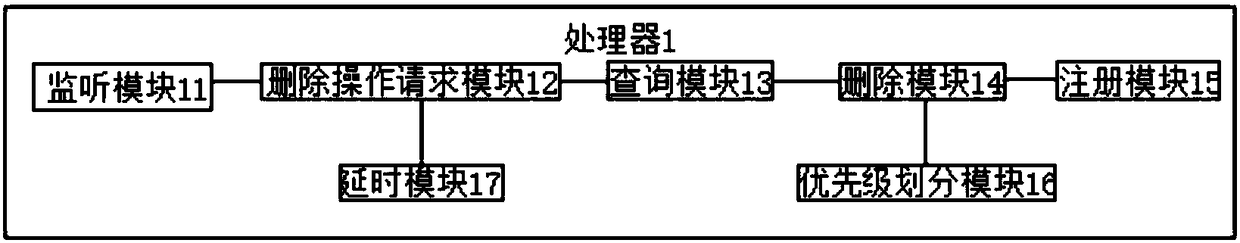

Redis data expiration processing method and apparatus

Inactive | CN108108416A | Improve concurrency, Reduce dependence, Special data processing applications, The Internet, Computer science

The present invention belongs to the technical field of the Internet and in particular relates to a Redis data expiration processing method and apparatus. The method comprises the following steps: S1: etcd provides a mapped directory, and the Redis keys are registered in that directory; S2: a monitoring service is started against etcd, and when it detects that at least one key has triggered an expiration event, a delete operation request is issued to Redis; and S3: the Redis node holding the key that triggered the expiration event is looked up, and a delete operation for that key is performed on the node. The technical scheme improves Redis performance and enhances the user experience, among other advantages.

Owner:Taizhou Jiji Intellectual Property Operation Co., Ltd. (台州市吉吉知识产权运营有限公司)
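
Steps S1 to S3 form a small event loop: a registry records which node holds each key and when it expires, a watcher collects expiry events, and each event is turned into a delete on the owning node. In the sketch below, in-memory dictionaries stand in for etcd (a real deployment would use etcd leases and watches) and for the Redis nodes; the class and function names are hypothetical and no client-library APIs are implied.

```python
class ExpiryRegistry:
    """Stand-in for the etcd directory: key -> (redis node, expiry time)."""

    def __init__(self):
        self.entries = {}

    def register(self, key, node, ttl, now):
        self.entries[key] = (node, now + ttl)

    def pop_expired(self, now):
        """Return (key, node) for every key whose expiry time has passed."""
        expired = [key for key, (_, deadline) in self.entries.items() if deadline <= now]
        return [(key, self.entries.pop(key)[0]) for key in expired]


def handle_expirations(registry, nodes, now):
    """Watcher step: locate the node holding each expired key and delete it there."""
    deleted = []
    for key, node in registry.pop_expired(now):
        nodes[node].pop(key, None)  # stands in for a DEL sent to that Redis node
        deleted.append((node, key))
    return deleted
```

Keeping the key-to-node mapping in the registry is what lets step S3 target the single node that owns the key instead of broadcasting the delete.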

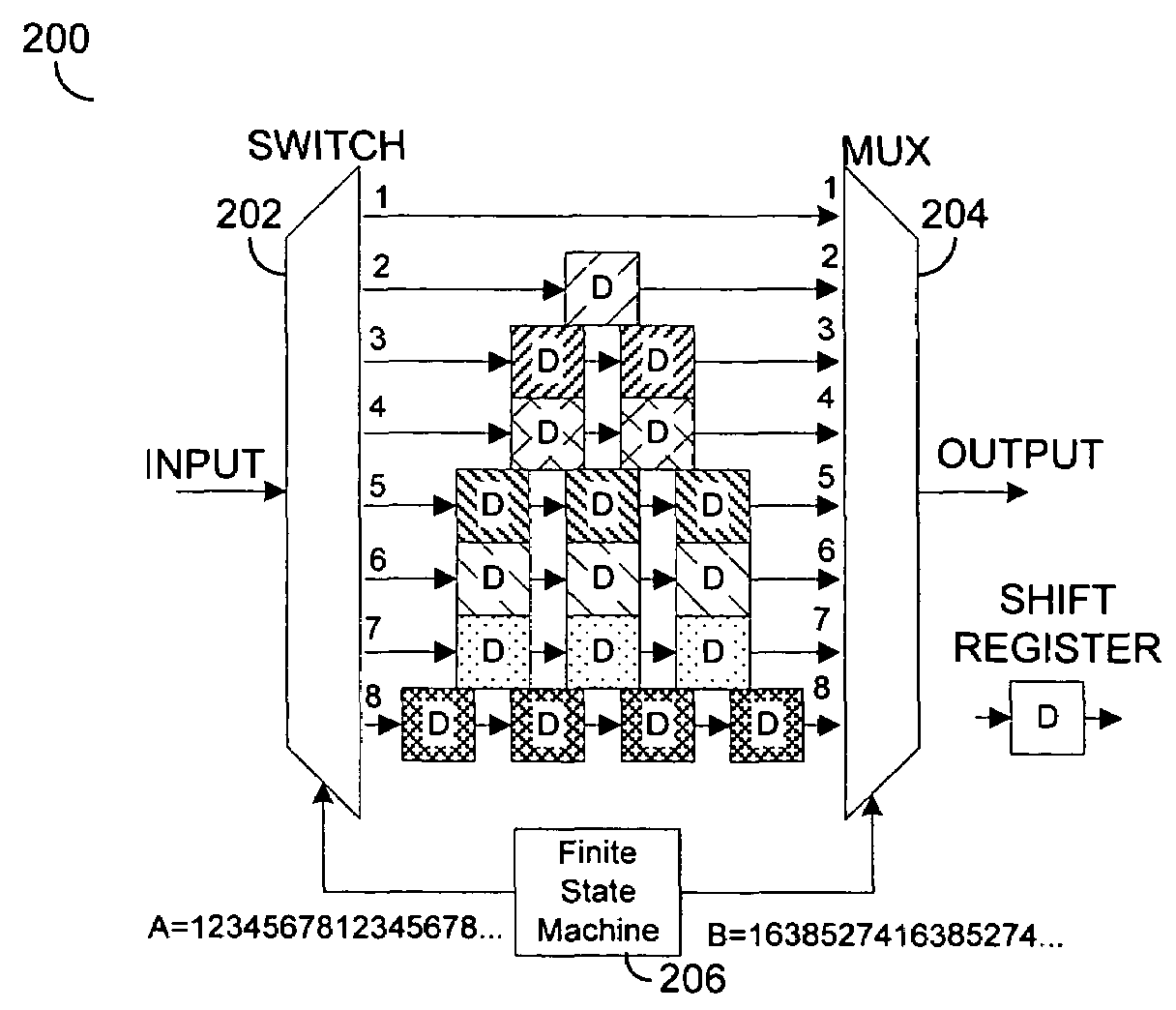

Optimized interleaver and/or deinterleaver design

Inactive | US20050094677A1 | Optimize memory usage, Simple design, Code conversion, Time-division multiplex, Shift register, Data signal

An apparatus comprising an input circuit, a storage circuit and an output circuit. The input circuit may be configured to generate a plurality of data paths in response to an input data signal having a plurality of data items sequentially presented in a first order. The storage circuit may be configured to store each of the data paths in a respective shift register chain. The output circuit may be configured to generate an output data signal in response to each of the shift register chains. The output data signal presents the data items in a second order different from said first order.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
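
One common structure matching this description is a convolutional (Forney) interleaver: a commutator distributes successive items across B data paths, and path j is a shift-register chain of j x M cells, so items leave in a different order. The abstract does not specify the chain lengths, so the j x M delays, the matching deinterleaver, and the class names below are assumptions for illustration.

```python
from collections import deque


class ShiftRegisterInterleaver:
    """Commutator over branches; branch j is a FIFO shift register of delays[j] cells."""

    def __init__(self, delays, fill=0):
        self.chains = [deque([fill] * d) for d in delays]
        self.branch = 0

    def push(self, item):
        chain = self.chains[self.branch]
        self.branch = (self.branch + 1) % len(self.chains)
        if not chain:  # a zero-length branch passes data straight through
            return item
        chain.append(item)
        return chain.popleft()


def make_pair(branches, unit):
    """Interleaver with delays j*unit and the matching deinterleaver with (B-1-j)*unit."""
    inter = ShiftRegisterInterleaver([j * unit for j in range(branches)])
    deinter = ShiftRegisterInterleaver([(branches - 1 - j) * unit for j in range(branches)])
    return inter, deinter
```

Every item passes through a total of (B - 1) x M register cells across the pair, so the deinterleaver restores the original order after a fixed end-to-end delay of (B - 1) x B x M items.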

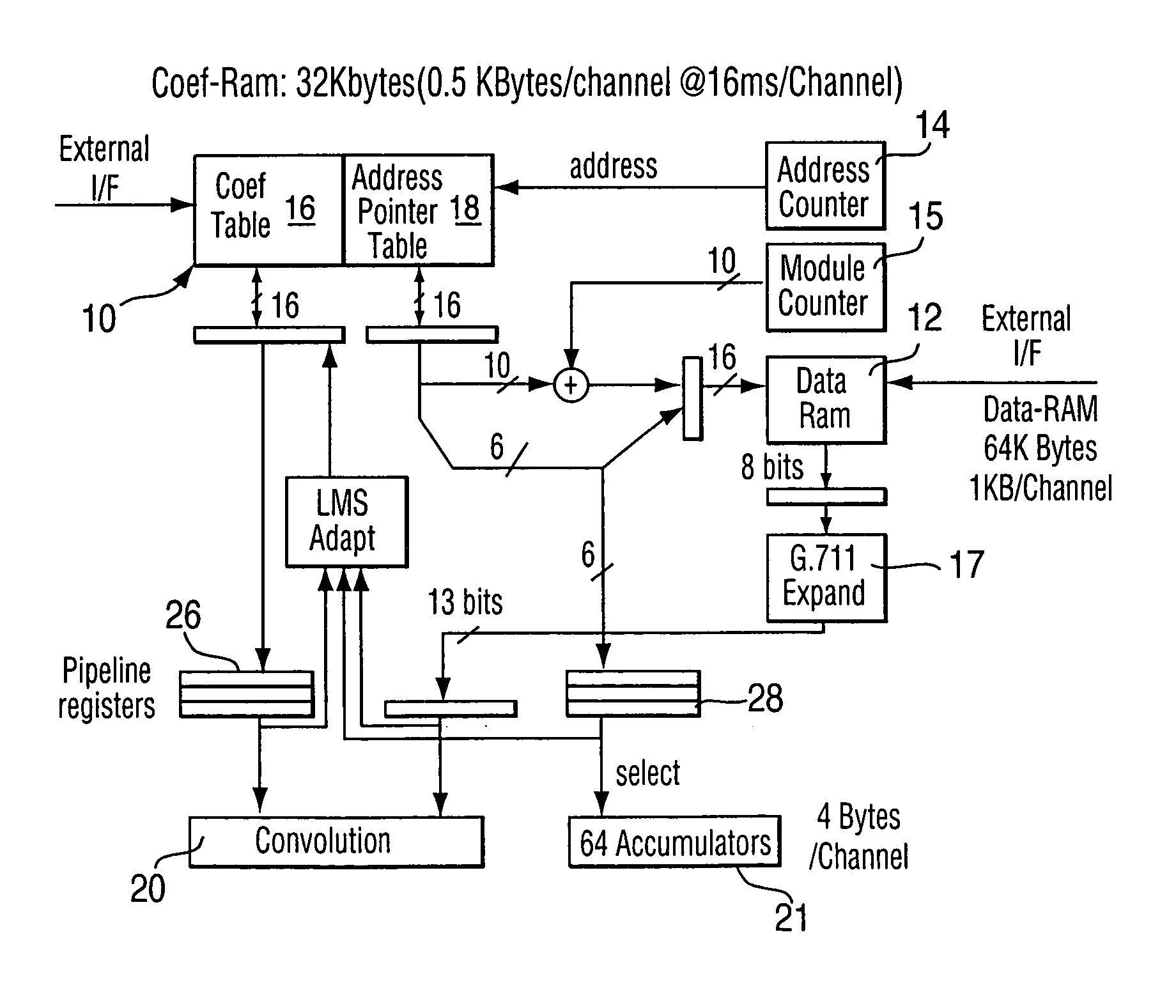

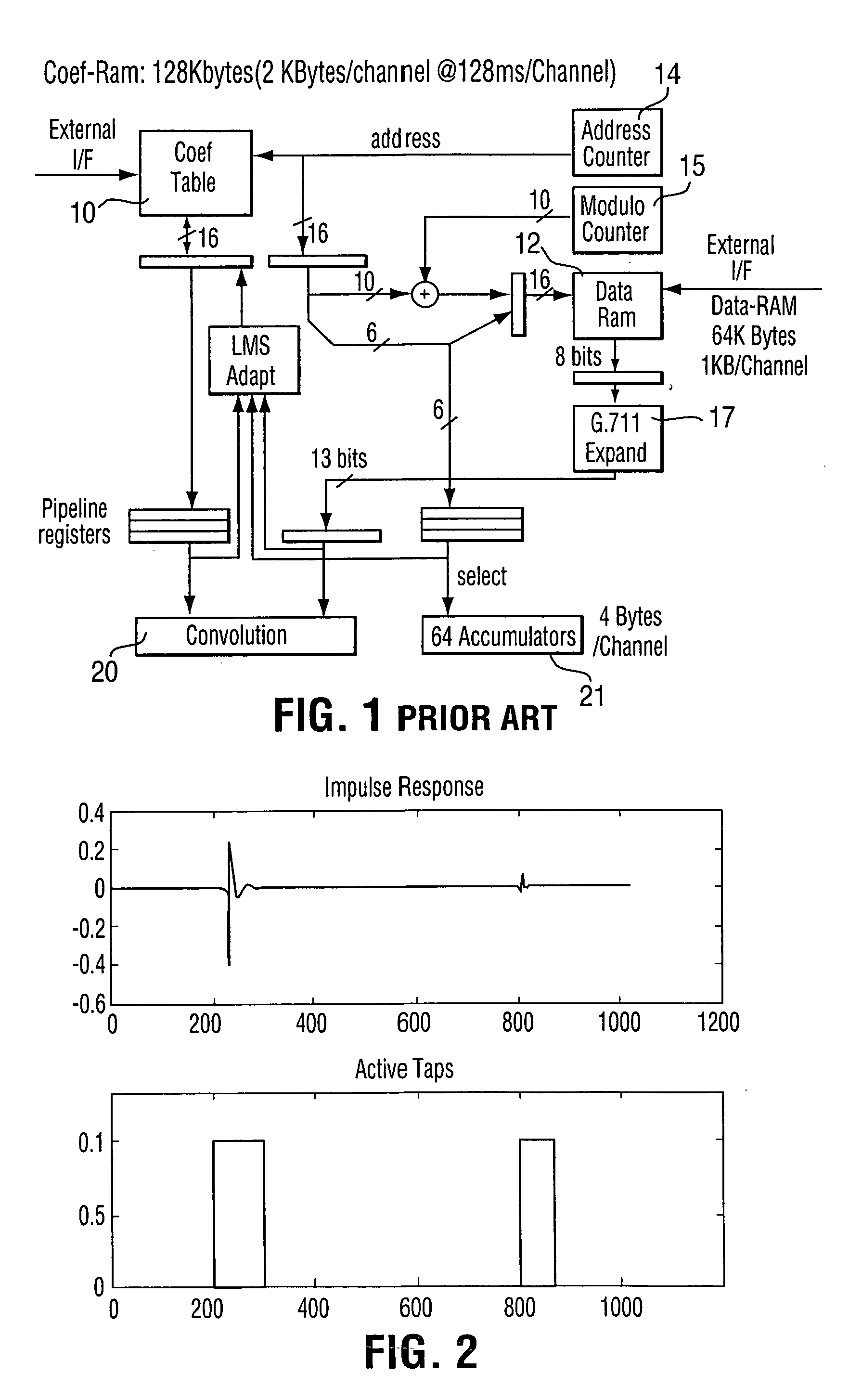

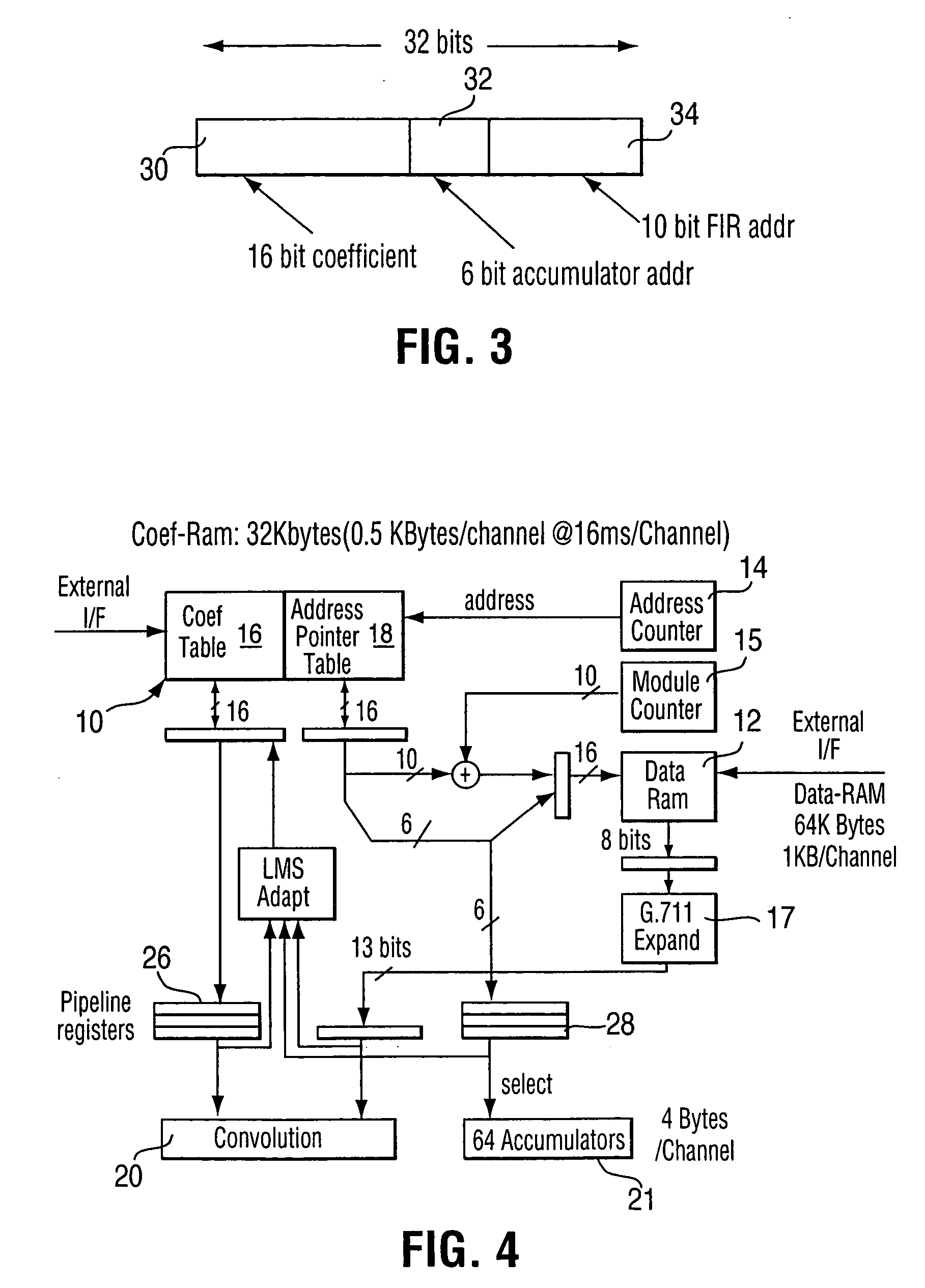

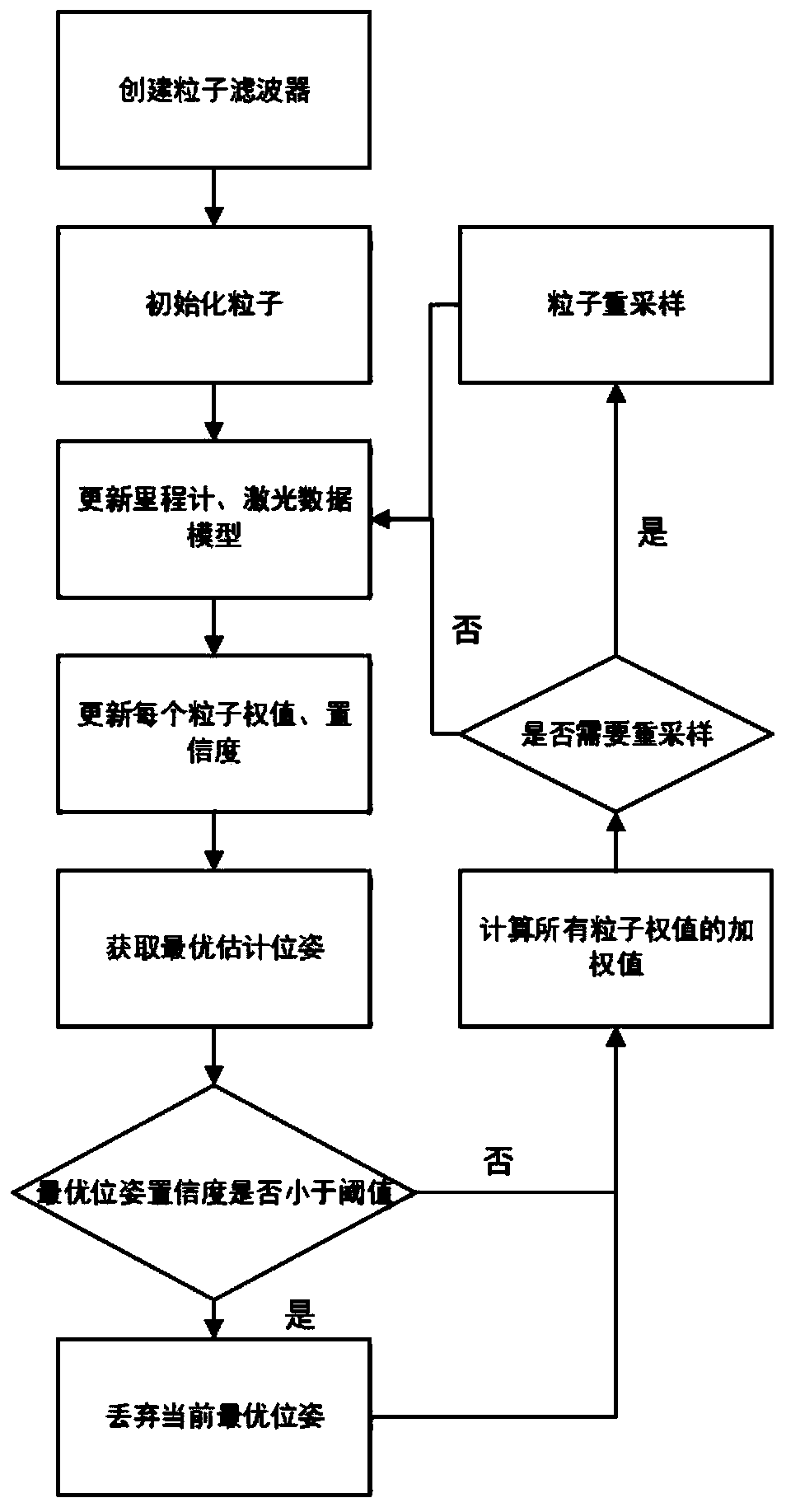

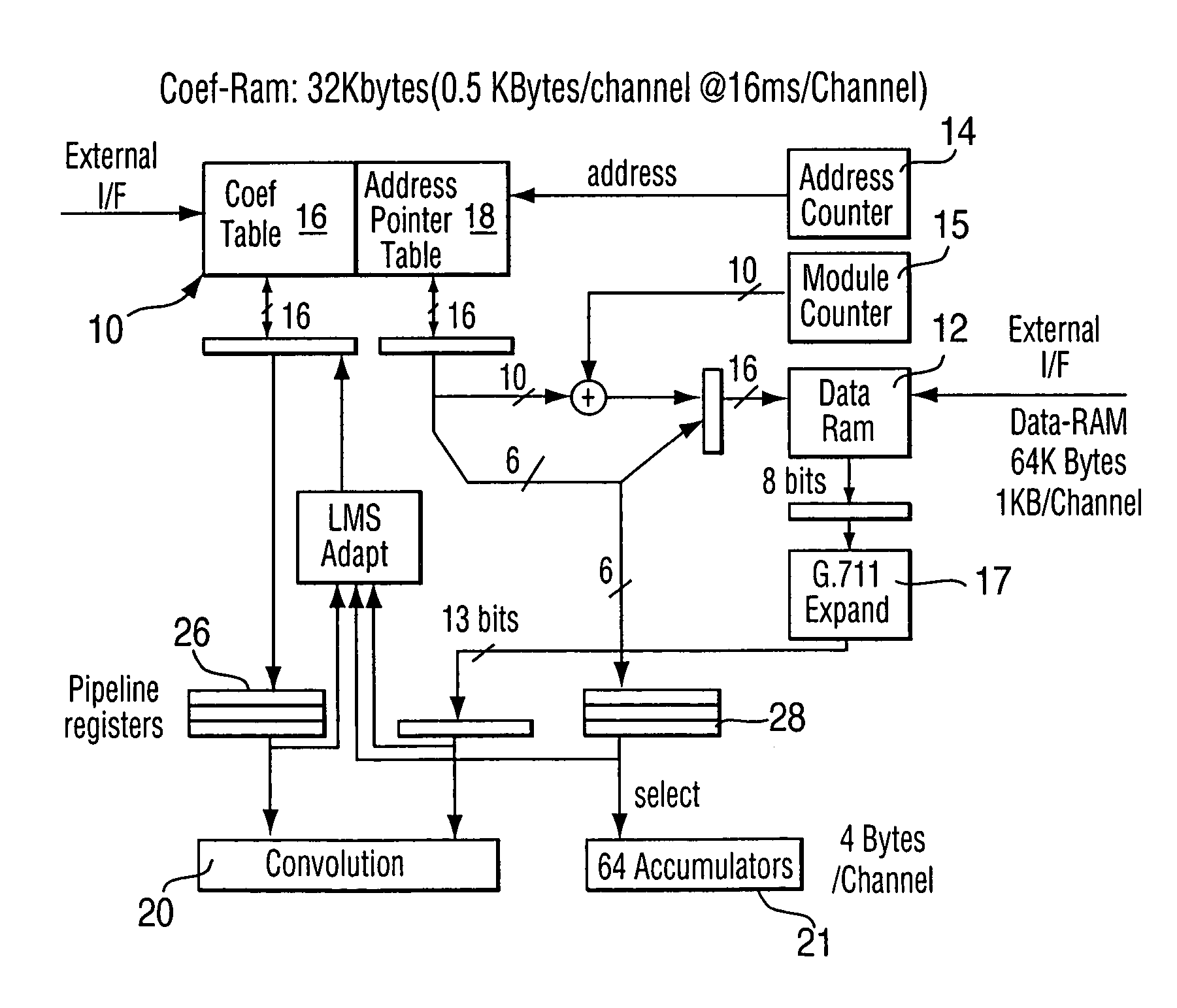

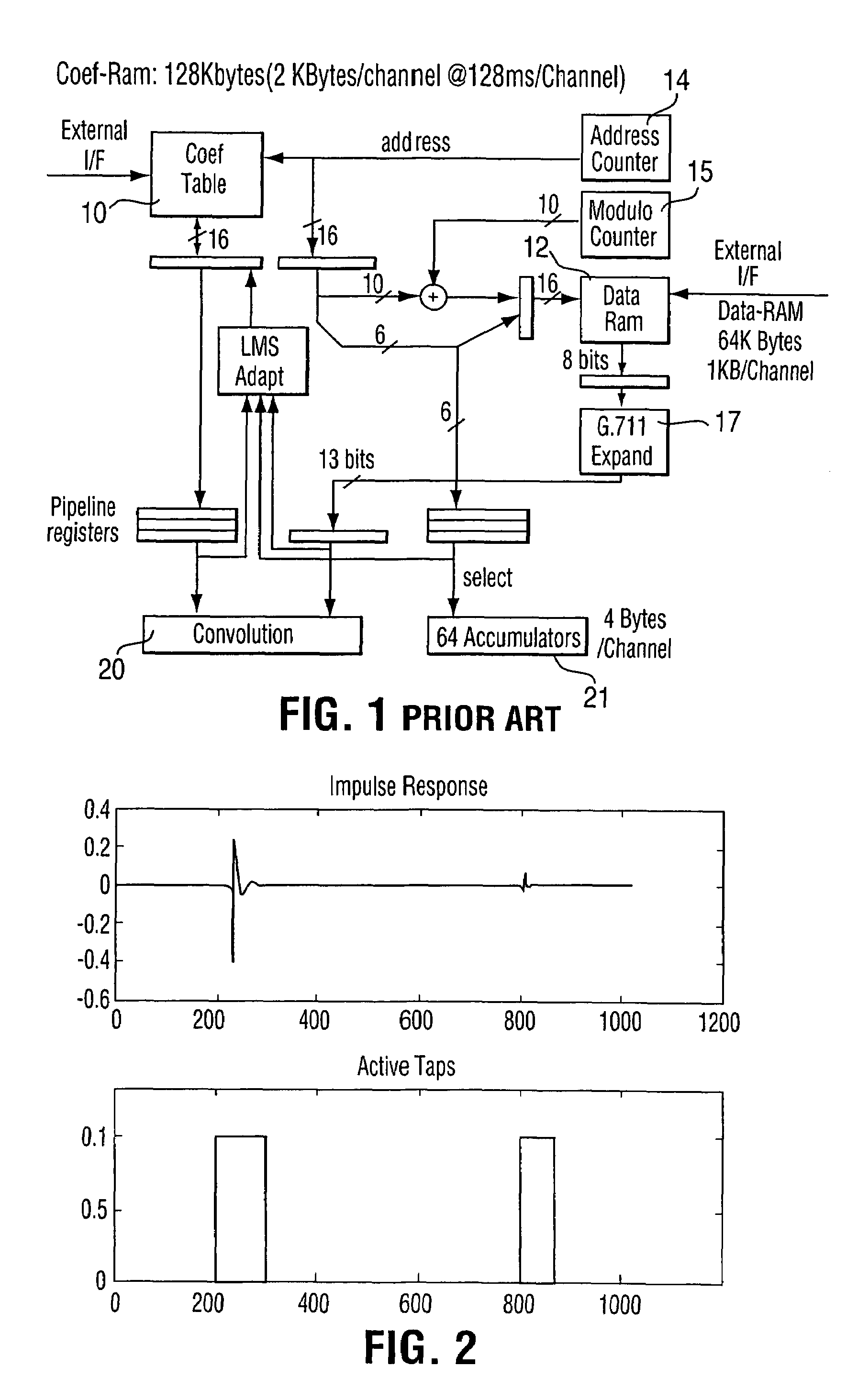

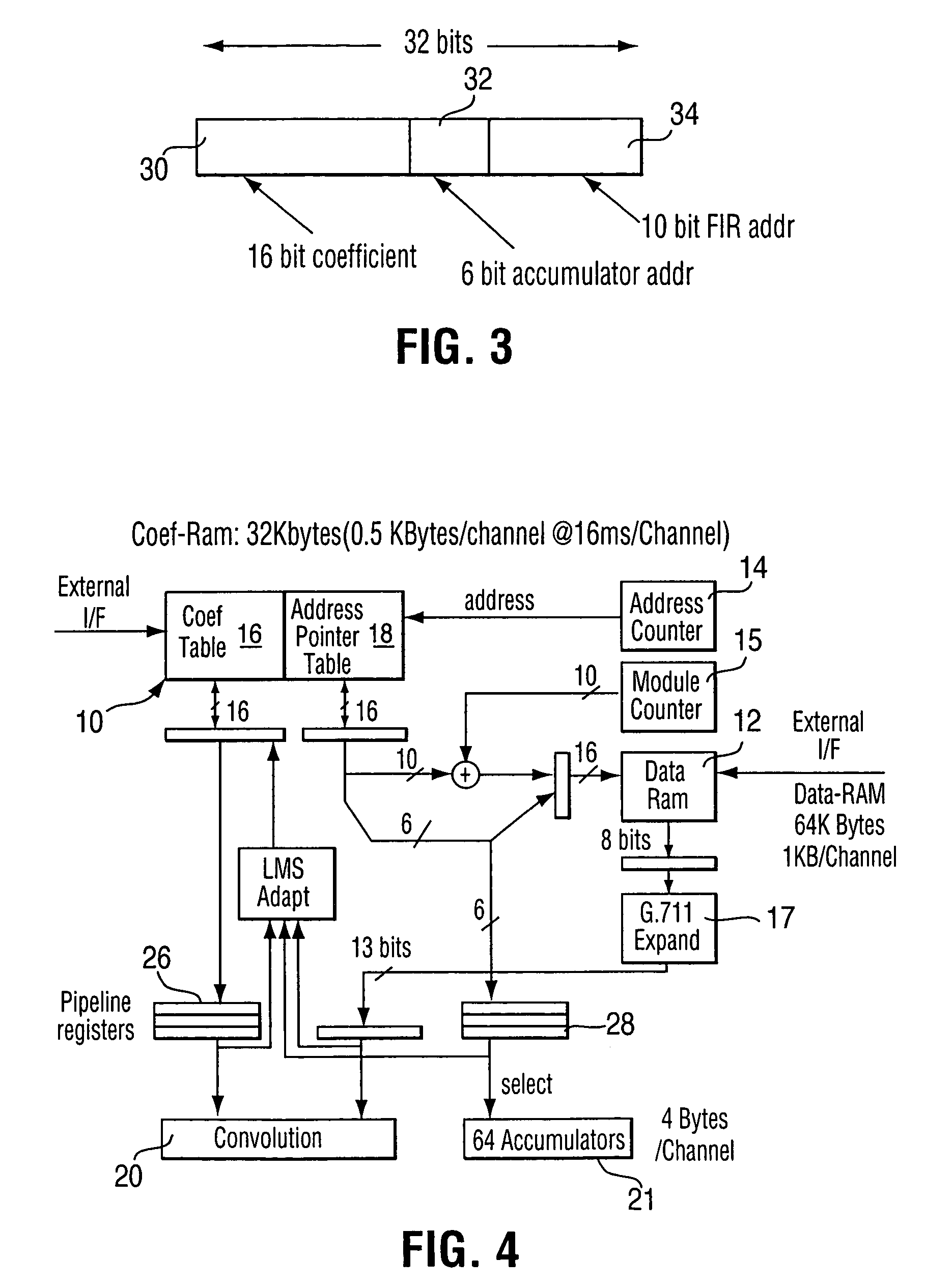

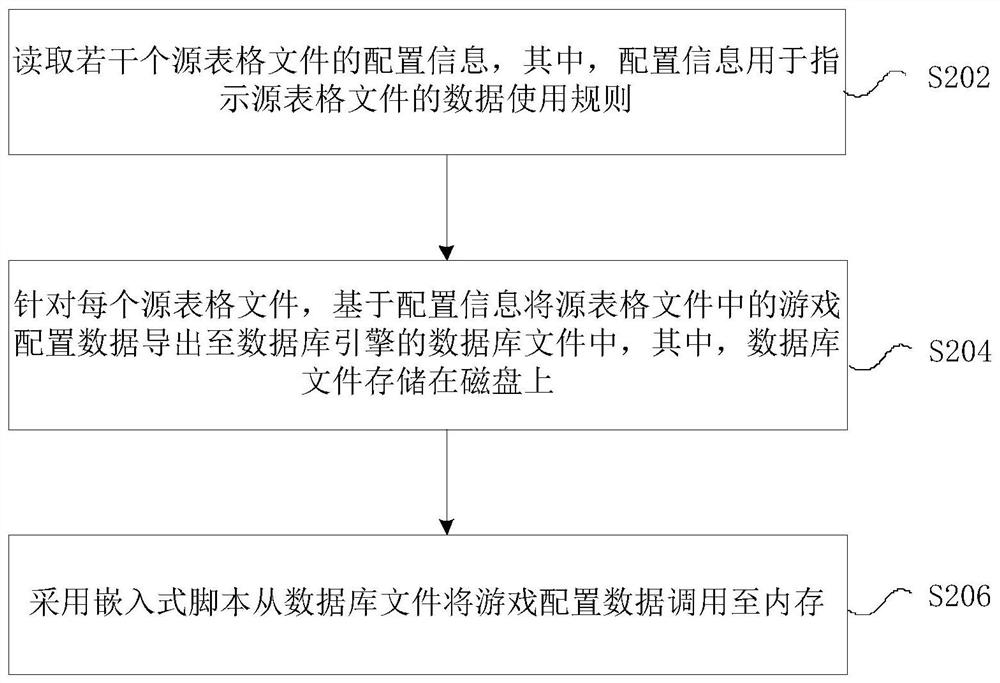

Reduced complexity adaptive filter

Active | US20050027768A1 | Reduce in quantity, Reduce storage, Multiple-port networks, Adaptive network, Adaptive filter, Parallel computing

An FIR filter for use in an adaptive multi-channel filtering system includes a first memory for storing data and a second memory for storing filter coefficients. The second memory stores only coefficients that are non-zero or above a predetermined magnitude threshold, so that the overall number of coefficients processed is significantly reduced.

Owner:IP GEM GRP LLC
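
Storing only significant coefficients means each stored tap must also carry its delay index. The sketch below keeps (index, value) pairs, which is an assumed layout for the second memory, and evaluates the filter over those taps only, for a single channel; function names are hypothetical.

```python
def sparse_taps(coefficients, threshold=0.0):
    """Keep only (index, value) pairs whose magnitude exceeds the threshold.

    With threshold=0.0 this keeps exactly the non-zero coefficients."""
    return [(i, c) for i, c in enumerate(coefficients) if abs(c) > threshold]


def fir_sparse(samples, taps):
    """y[n] = sum of c * x[n - i] over the stored taps only."""
    return [sum(c * samples[n - i] for i, c in taps if n >= i) for n in range(len(samples))]
```

The multiply count per output sample equals the number of stored taps rather than the full filter length, which is the saving the abstract describes.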

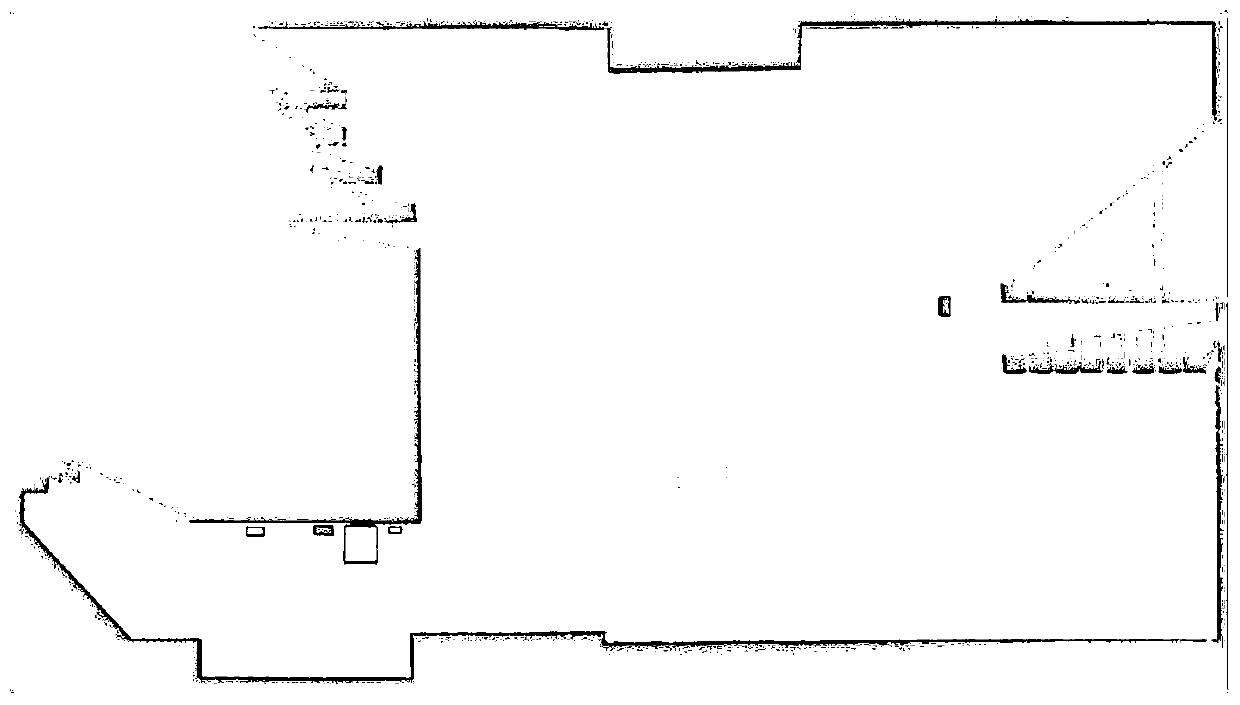

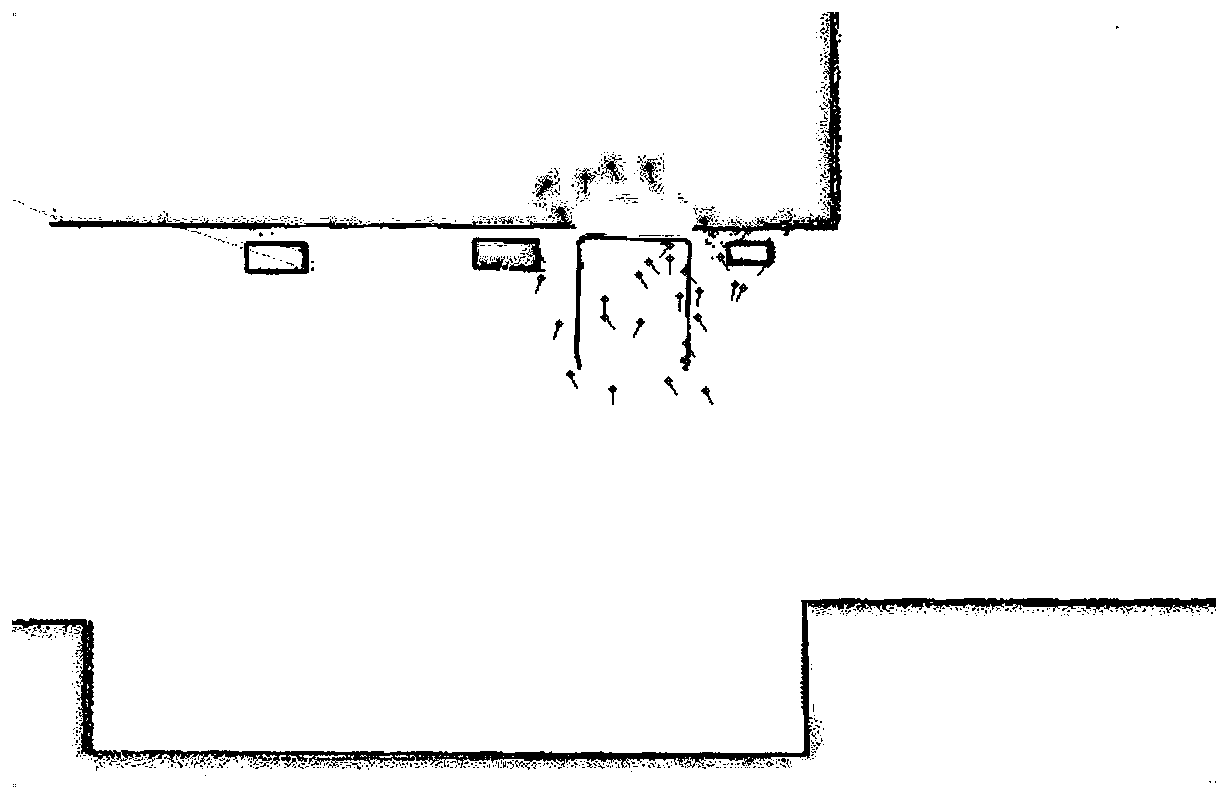

2D laser data-based real-time positioning method of robot

Active | CN110082776A | Improve real-time performance, Optimize memory usage, Internal combustion piston engines, Navigation instruments, Real-time computing, Laser data

The invention discloses a real-time robot positioning method based on 2D laser data, mainly intended to solve the low real-time performance and insufficient positioning accuracy of existing robot positioning algorithms. Working from 2D laser data and using an automatic Monte Carlo algorithm as its basis, the method updates the robot's pose in real time from the laser data according to the robot's speed, and filters out implausible poses according to the estimated pose confidence. When used to position the robot, the method achieves relatively high real-time performance, and the positioning accuracy can be kept within 1 centimeter.

Owner:GUIZHOU POWER GRID CO LTD
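
Monte Carlo localization is a particle filter: particles are moved according to the robot's speed, weighted by how well each explains the laser reading, pruned when implausible, and resampled. The toy below is one-dimensional (a robot driving toward a wall, one range reading per step), uses a seeded `random.Random`, and treats weights below 1% of the best weight as implausible poses. All of these are illustrative assumptions, not the patented method.

```python
import math
import random


def localize(ranges, velocity, wall=10.0, n_particles=500, sigma=0.05, seed=0):
    """1-D Monte Carlo localization: returns the final position estimate."""
    rng = random.Random(seed)
    particles = [rng.uniform(0.0, wall) for _ in range(n_particles)]
    estimate = None
    for z in ranges:
        # Motion update driven by the robot's speed (one time step per reading).
        particles = [p + velocity + rng.gauss(0.0, sigma) for p in particles]
        # Measurement update: the laser should read the distance to the wall.
        weights = [math.exp(-((z - (wall - p)) ** 2) / (2 * sigma ** 2)) for p in particles]
        best = max(weights)
        if best == 0.0:
            raise RuntimeError("all particles disagree with the laser reading")
        # Confidence filter: drop poses far less likely than the best one.
        kept = [(p, w) for p, w in zip(particles, weights) if w >= 0.01 * best]
        poses, pose_weights = zip(*kept)
        estimate = sum(p * w for p, w in kept) / sum(pose_weights)
        particles = rng.choices(poses, weights=pose_weights, k=n_particles)
    return estimate
```

A real 2D implementation would track (x, y, heading) and compare full laser scans against a map, but the update, prune, and resample cycle is the same.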

Indirectly accessing sample data to perform multi-convolution operations in a parallel processing system

Pending | US20190220731A1 | Optimize memory usage, Lower latency, Character and pattern recognition, Program control, Computational science, Engineering

In one embodiment of the present invention, a convolution engine configures a parallel processing pipeline to perform multi-convolution operations. More specifically, the convolution engine configures the parallel processing pipeline to independently generate and process individual image tiles. In operation, for each image tile, the pipeline calculates source locations included in an input image batch based on one or more start addresses and one or more offsets. Subsequently, the pipeline copies data from the source locations to the image tile. The pipeline then performs matrix multiplication operations between the image tile and a filter tile to generate a contribution of the image tile to an output matrix. To optimize the amount of memory used, the pipeline creates each image tile in shared memory as needed. Further, to optimize the throughput of the matrix multiplication operations, the values of the offsets are precomputed by a convolution preprocessor.

Owner:NVIDIA CORP

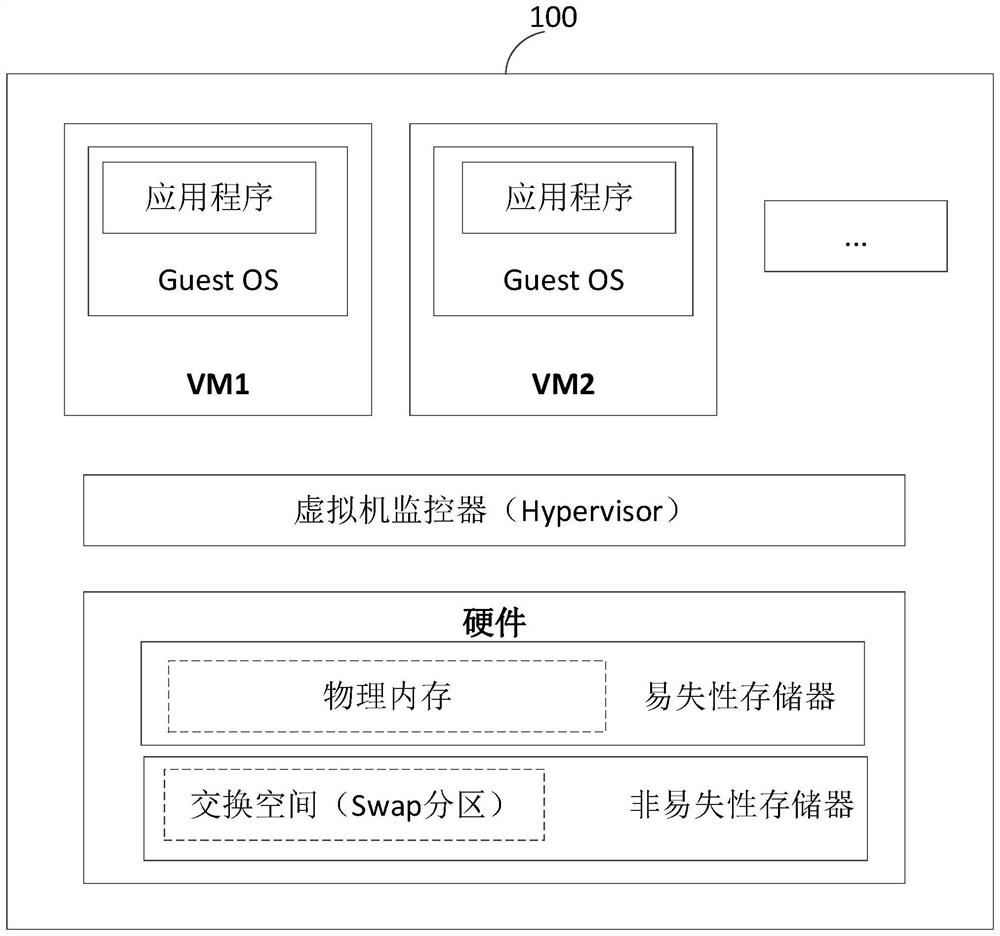

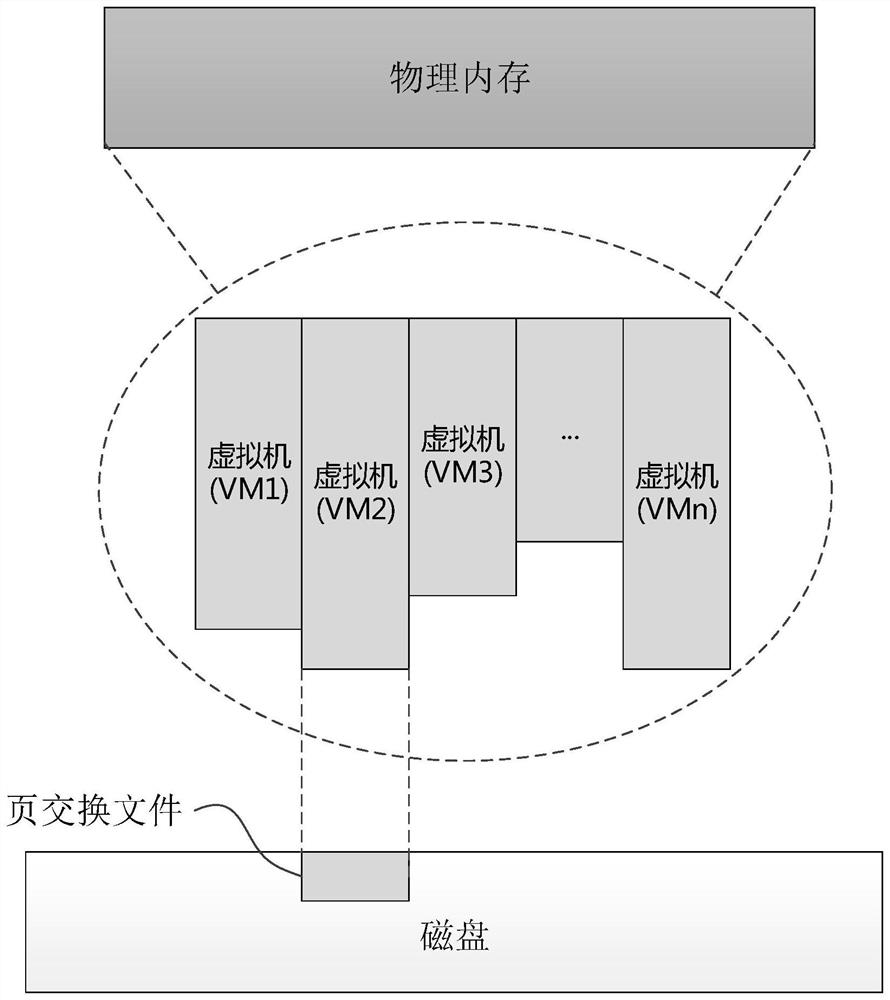

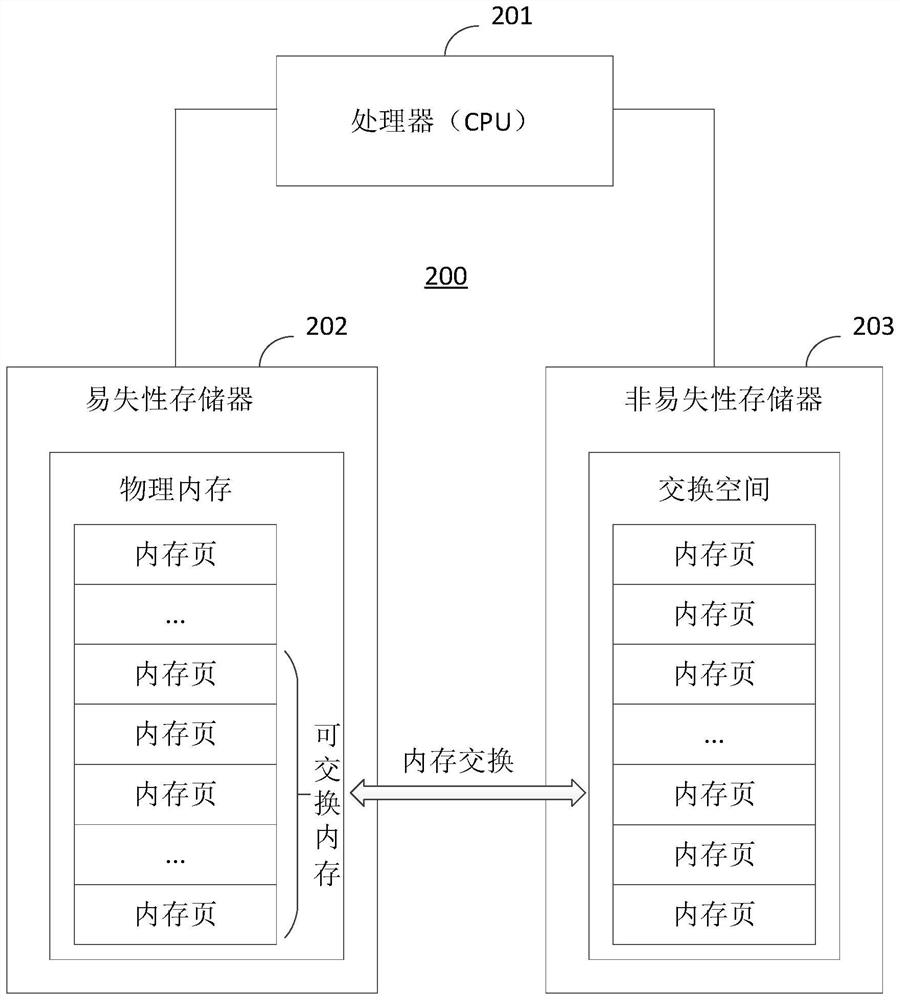

Virtual machine memory management method and equipment

Pending | CN112579251A | Improve stability, Avoid ability to decline, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Operational system, Term memory

The invention provides a virtual machine memory management method and equipment. The method comprises: identifying the non-operating-system memory of a virtual machine within the total memory allocated to it, where the total memory comprises the memory of the virtual machine and the management memory of the virtual machine monitor, and the memory of the virtual machine comprises the memory used by its operating system and the memory not used by its operating system; treating the virtual machine's non-operating-system memory as swappable memory; and storing the data in the swappable memory in nonvolatile memory. This avoids a marked drop in computer performance when virtual machine memory is overcommitted, improves the running stability of the virtual machine, and improves the user experience.

Owner:HUAWEI TECH CO LTD
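
The core rule is a classification: of the memory allocated to a VM, only the guest's non-OS portion is treated as swappable, while guest OS memory and the monitor's management memory stay resident. The sketch below models regions as tagged records and a dictionary as the nonvolatile store; the owner labels, `Region`, and `swap_out_non_os` are hypothetical names, and how regions are actually identified inside a hypervisor is not shown.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    owner: str  # hypothetical labels: "guest_os", "guest_other", or "vmm"
    data: bytes


def swap_out_non_os(regions, nonvolatile_store):
    """Move guest non-OS regions to nonvolatile storage and return what stays resident.

    Guest OS memory and the VMM's management memory are never treated as swappable."""
    resident = []
    for region in regions:
        if region.owner == "guest_other":
            nonvolatile_store[region.name] = region.data
        else:
            resident.append(region)
    return resident
```

Excluding guest OS memory from swapping avoids paging out pages the guest kernel needs to make progress, which is one plausible reason the scheme limits swapping to non-OS memory.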

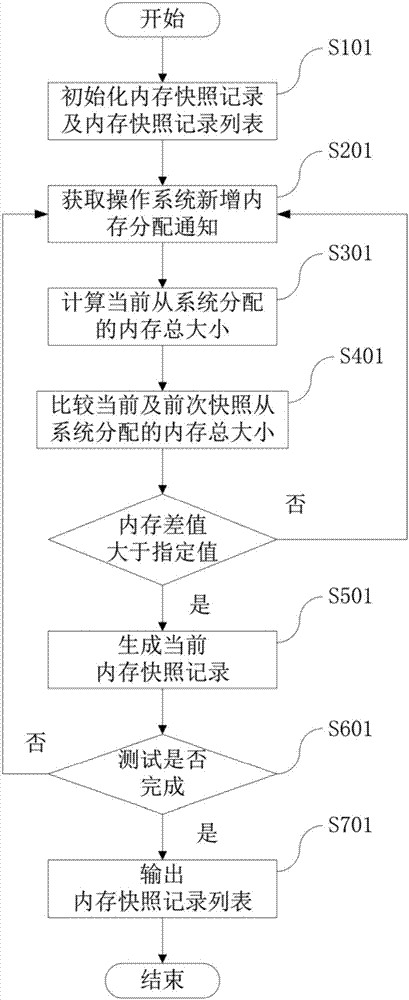

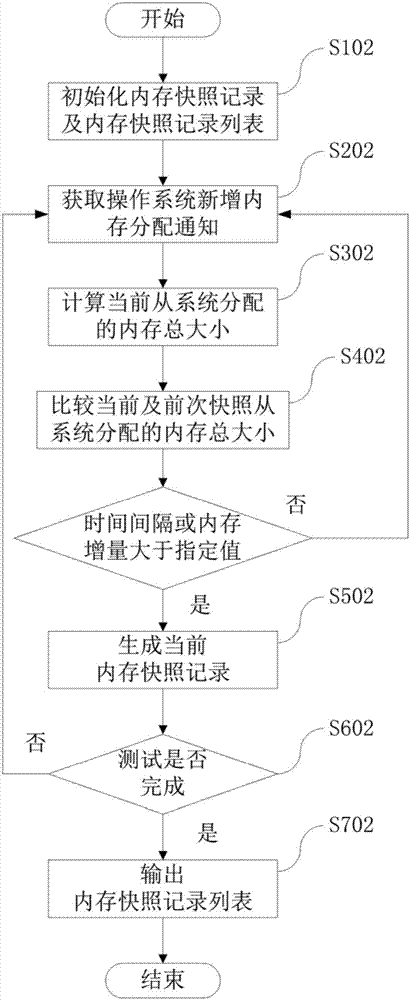

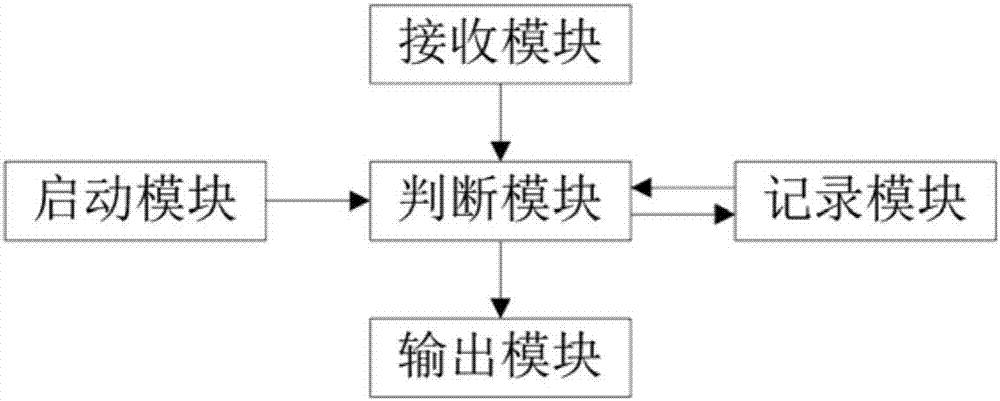

Method and device for automatic snapshot of inner memory

Inactive | CN107480047A | Monitor memory changes, Optimize memory usage, Hardware monitoring, Software testing/debugging, Operational system, Mobile phone

Disclosed is a method for automatically taking memory snapshots. The method comprises: initializing a memory snapshot record and a list of memory snapshot records, setting the total allocated memory size of the current snapshot record to zero, and storing the current record in the list; obtaining notifications of new memory allocations from the operating system by monitoring the function through which the Mono platform of the Unity engine allocates memory from the operating system; upon each notification, calculating the total size of memory currently allocated from the system; calculating the difference between the total allocated at the current moment and the total recorded in the previous snapshot record; and automatically generating a new snapshot record according to that difference and/or the interval since the previous snapshot record, and storing it in the list. The method makes it easy for mobile application developers to monitor how memory changes while a game is running.

Owner:ZHUHAI KINGSOFT ONLINE GAME TECH CO LTD +1
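
The snapshot rule compares the running allocation total with the previous record and takes a new snapshot when the growth or the elapsed time crosses a threshold. This minimal sketch implements the "and/or" condition as a logical OR; the thresholds, class names, and the `on_allocation` hook are hypothetical, and the interception of Mono's allocation function is not shown.

```python
from dataclasses import dataclass, field


@dataclass
class Snapshot:
    time: float
    total_allocated: int


@dataclass
class SnapshotRecorder:
    """Records a snapshot when allocated memory grows enough or enough time has passed."""
    size_threshold: int
    interval: float
    total: int = 0
    snapshots: list = field(default_factory=lambda: [Snapshot(0.0, 0)])

    def on_allocation(self, size, now):
        # Called from the (hypothetical) allocation hook with each new allocation.
        self.total += size
        last = self.snapshots[-1]
        if (self.total - last.total_allocated >= self.size_threshold
                or now - last.time >= self.interval):
            self.snapshots.append(Snapshot(now, self.total))
```

With a 1000-byte threshold and a 5-second interval, allocations of 400 bytes at t=1 and 700 bytes at t=2 trigger a snapshot at t=2 (growth of 1100 bytes), and a 100-byte allocation at t=8 triggers another purely because of elapsed time.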

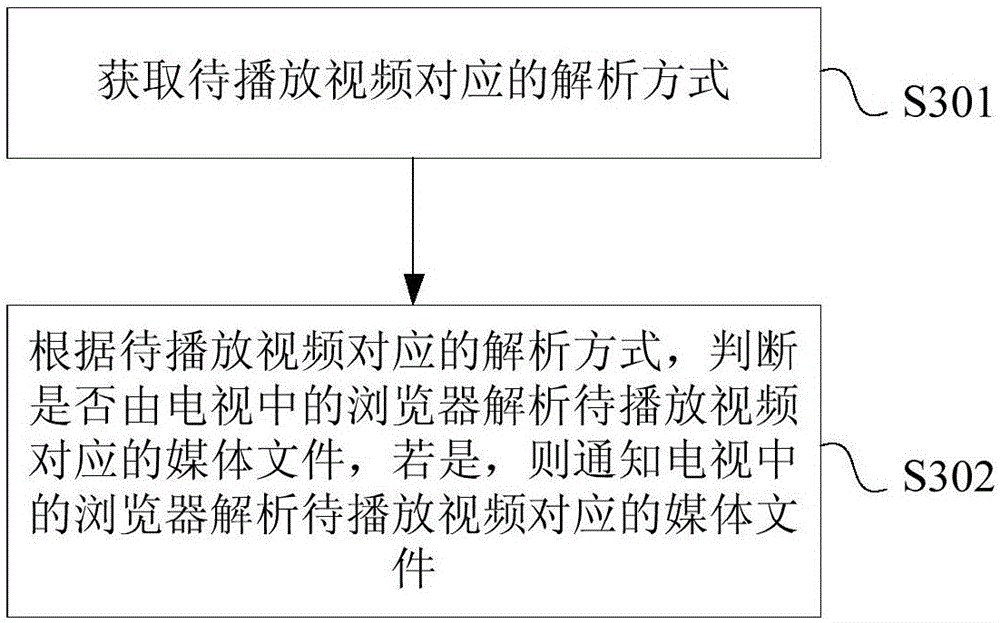

Video playing method in television and television

Active | CN106254961A | Improve processing efficiency, Optimize memory usage, Selective content distribution, Computer graphics (images), Decodes

The invention provides a video playing method in a television, and the television. The method comprises: acquiring the audio and video data of a video to be played, together with an identifier of the decoder for that audio and video data, where the data and the decoder identifier are obtained from a browser in the television that parses the media file of the video to be played; decoding the audio data and the video data separately according to the decoder identifier; and playing the decoded audio data and video data separately. Because the browser in the television parses the media file, the television's underlying player only needs to receive the already-parsed audio and video data directly, then decode, output, and display it. This ensures normal playback of network video.

Owner:HISENSE VISUAL TECH CO LTD
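
The division of labour is that the browser demuxes and names the decoders, while the underlying player only looks decoders up by identifier and runs them. The sketch below shows that dispatch with a registry dictionary; the key names (`audio_decoder`, `video_decoder`) and the decoder functions are hypothetical stand-ins, and real decoding is not shown.

```python
def play_parsed_media(parsed, decoders):
    """Decode browser-parsed streams with the decoders named by their identifiers.

    `parsed` holds the elementary streams and decoder identifiers produced by the
    browser's media parser; `decoders` maps an identifier to a decode function."""
    try:
        audio_decoder = decoders[parsed["audio_decoder"]]
        video_decoder = decoders[parsed["video_decoder"]]
    except KeyError as missing:
        raise ValueError(f"no decoder registered for {missing}") from None
    # Audio and video are decoded separately, then handed to output independently.
    return audio_decoder(parsed["audio"]), video_decoder(parsed["video"])
```

Keeping the player ignorant of container formats means new formats only require browser-side parsing support, as long as the named decoders already exist.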

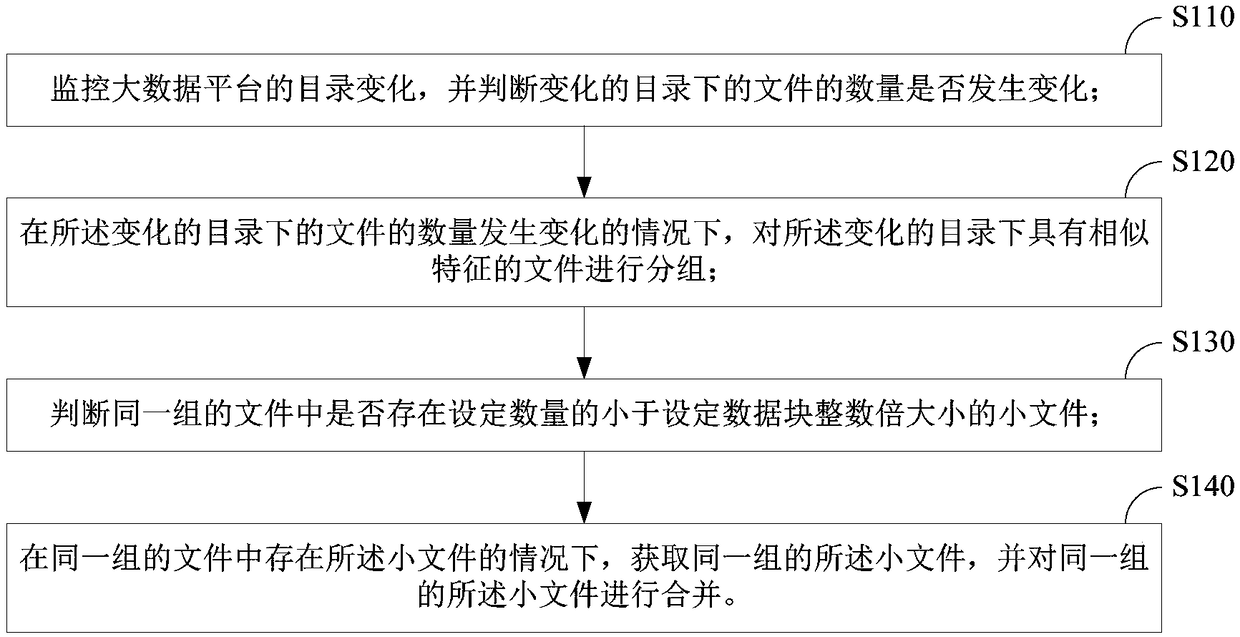

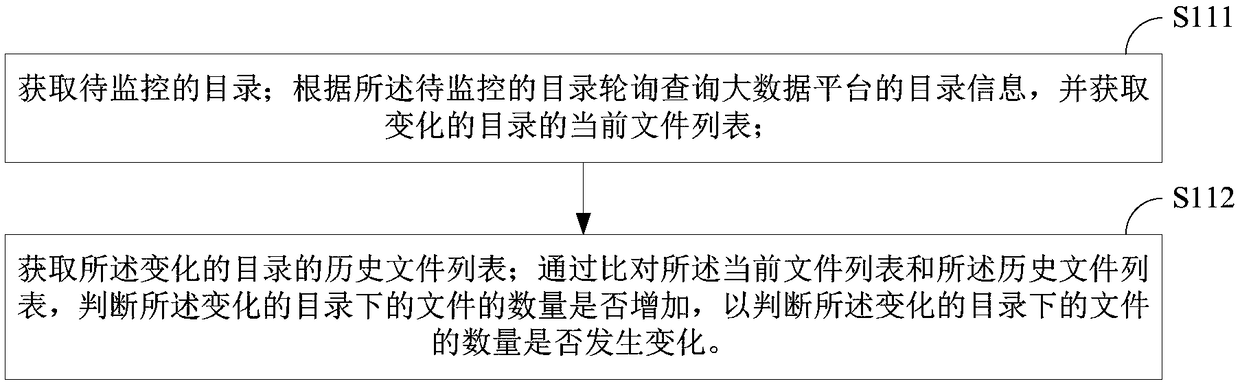

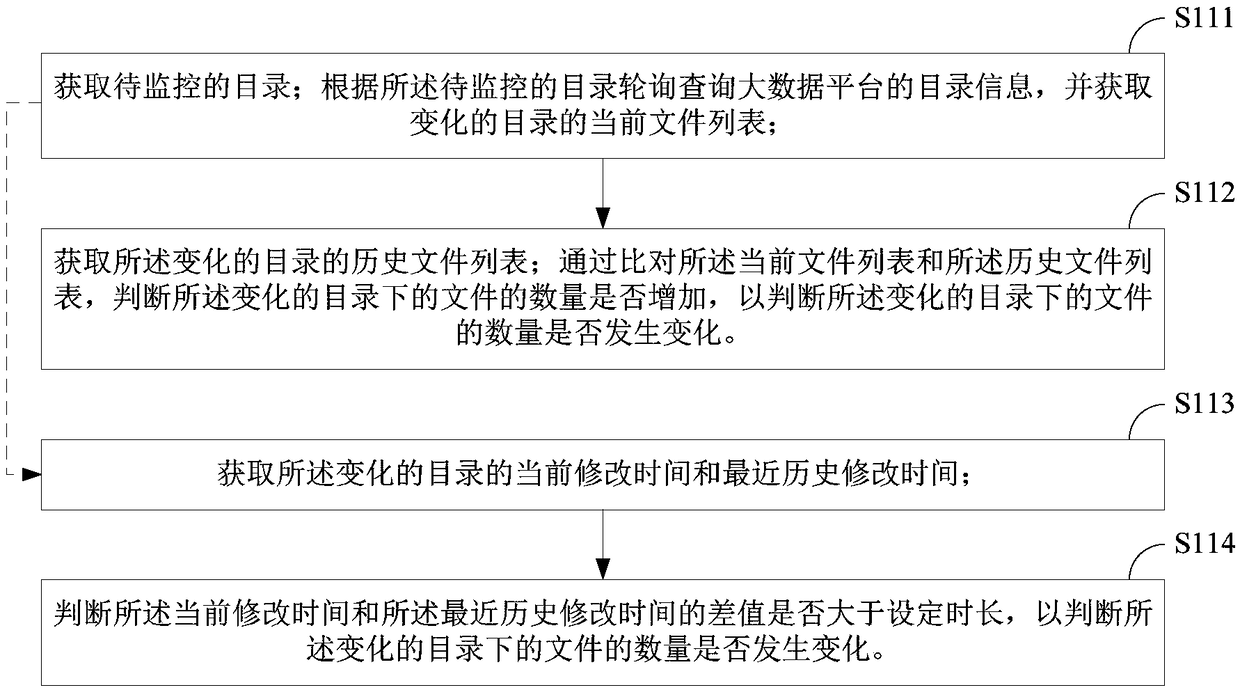

File merging method and device for big data platform

Active | CN109446165A | Save resources, Improve real-time performance, File/folder operations, File system types, Memory footprint, Data platform

The invention provides a file merging method and device for a big data platform. The method comprises: monitoring directory changes on the big data platform and determining whether the number of files under a changed directory has changed; if it has, grouping files with similar characteristics under that directory; determining whether a group contains small files, that is, files smaller than an integer multiple of a set number of data blocks; and, if so, collecting the small files of the group and merging them. This scheme reduces the number of small files, optimizes the memory usage of the NameNode, and lets the big data platform hold more files.

Owner:CHINA UNITECHS
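
The grouping and selection steps can be sketched as a pure planning function. Here "similar characteristics" is assumed to mean the same directory and file extension, and the small-file limit is `blocks * block_size`; both choices, and all names, are assumptions. Directory monitoring and the actual HDFS merge are not shown.

```python
import posixpath
from collections import defaultdict


def plan_small_file_merges(files, block_size, blocks=1, min_group=2):
    """Group files by (directory, extension) and list the small files worth merging.

    files: iterable of (path, size_in_bytes). A file is "small" when it is smaller
    than blocks * block_size."""
    limit = blocks * block_size
    groups = defaultdict(list)
    for path, size in files:
        directory, name = posixpath.split(path)
        groups[(directory, posixpath.splitext(name)[1])].append((path, size))
    plans = []
    for _, members in sorted(groups.items()):
        small = sorted(path for path, size in members if size < limit)
        if len(small) >= min_group:  # merging a single file gains nothing
            plans.append(small)
    return plans
```

Each merge replaces several NameNode entries with one, which is where the NameNode memory saving comes from.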

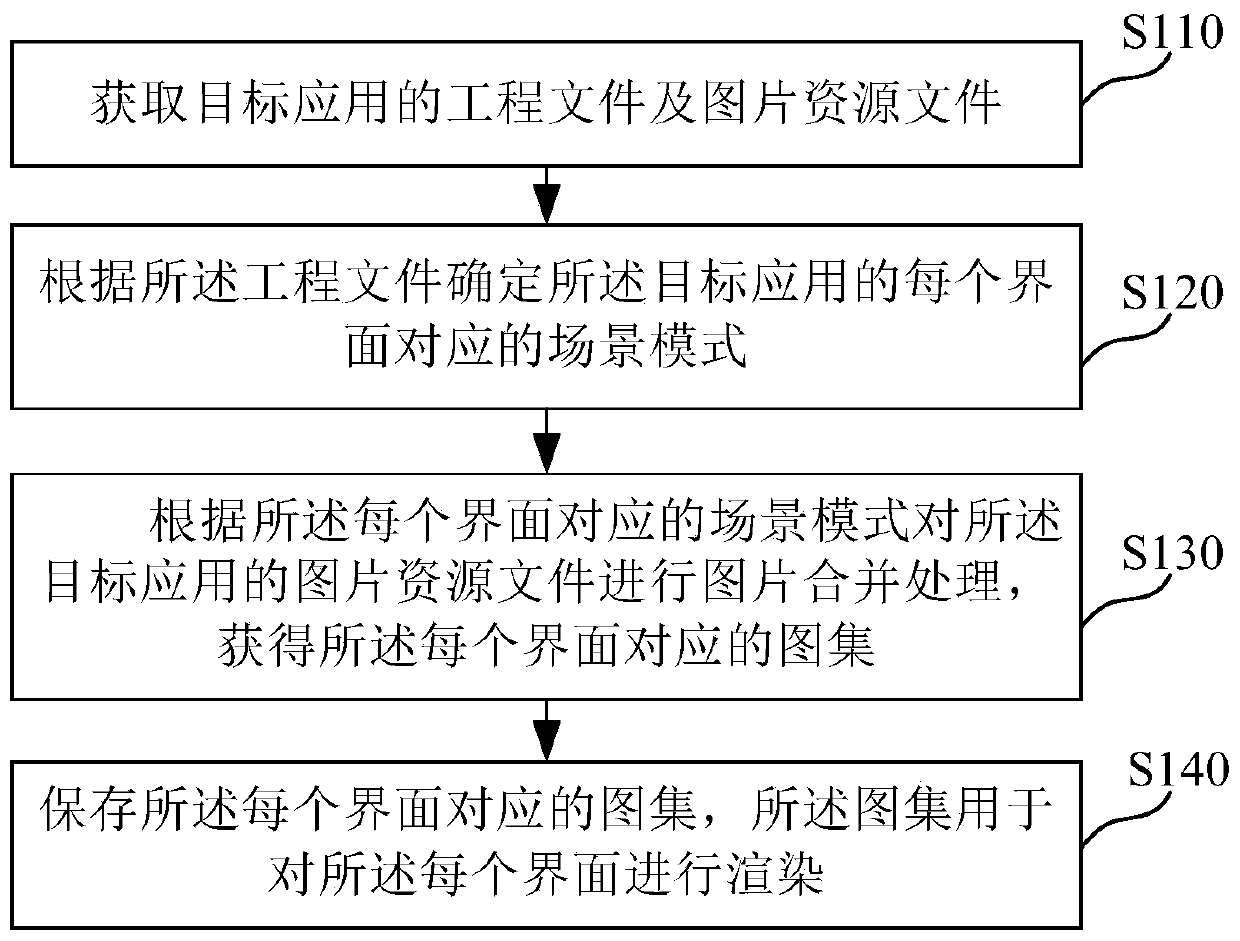

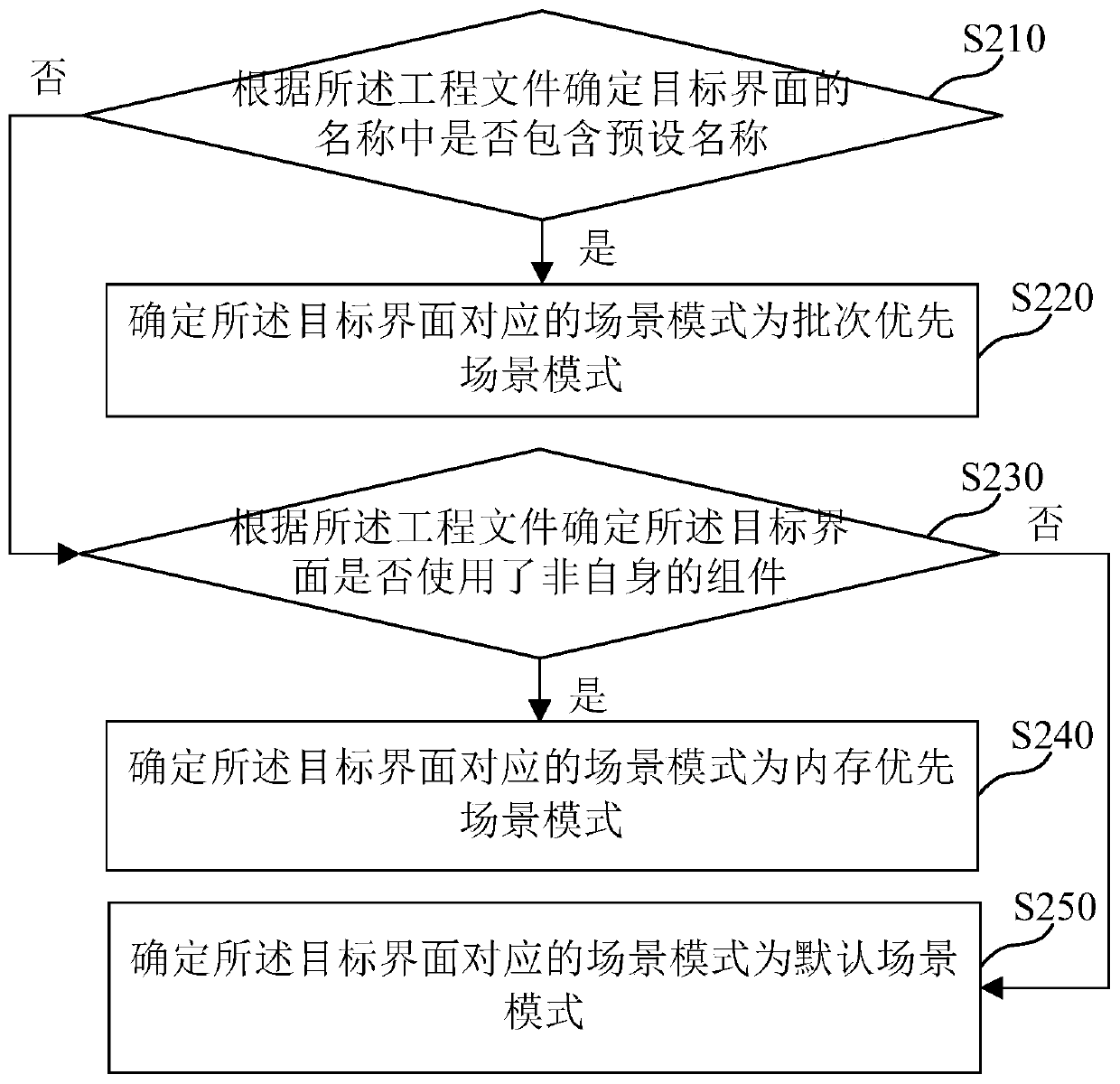

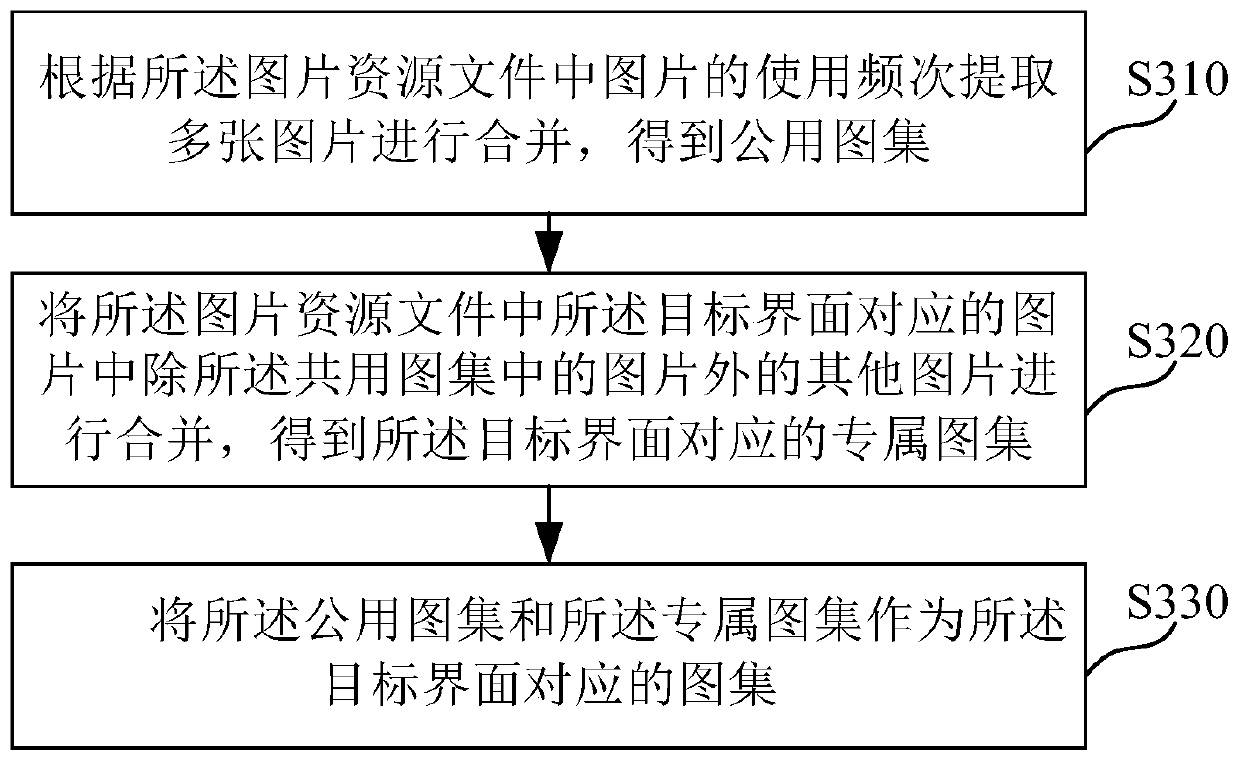

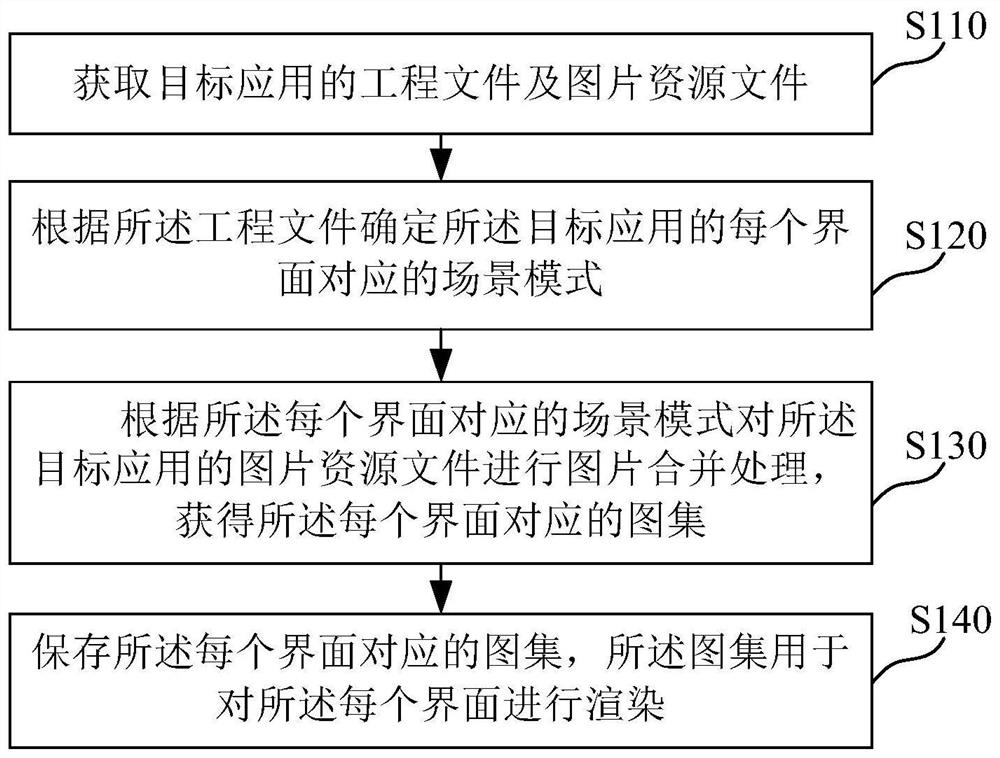

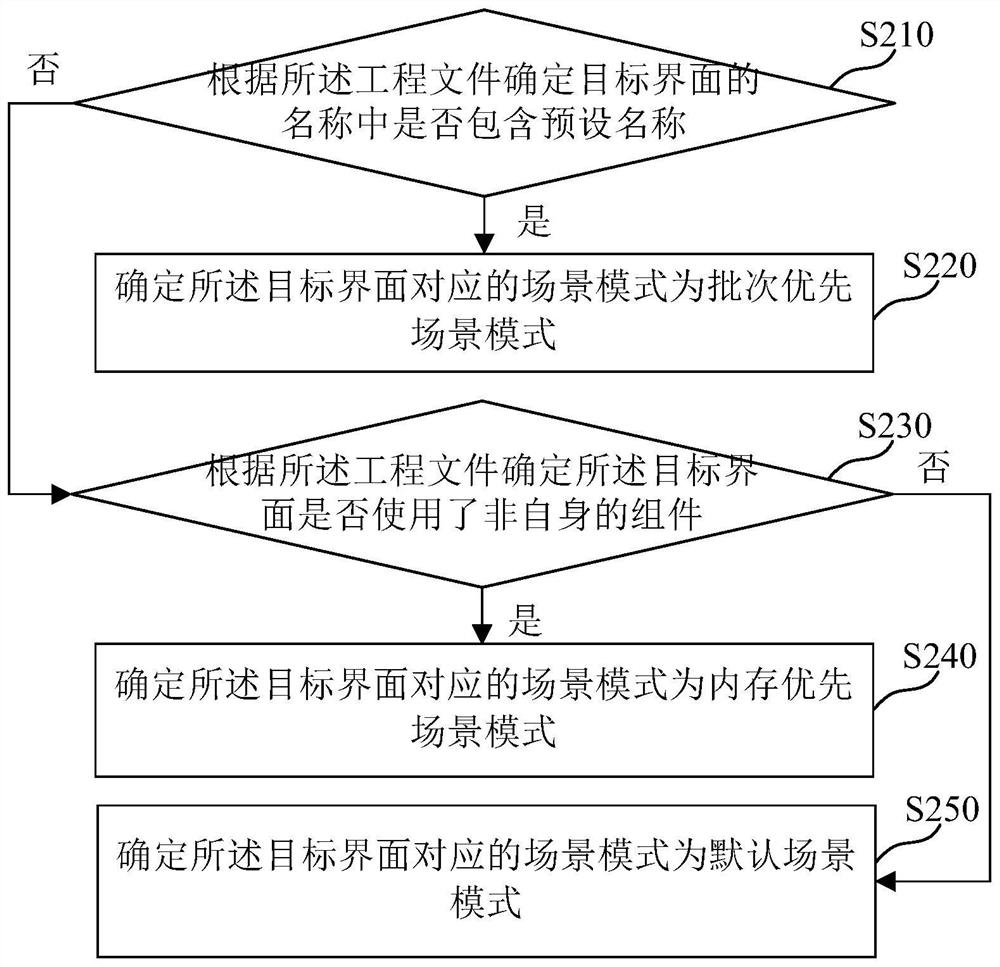

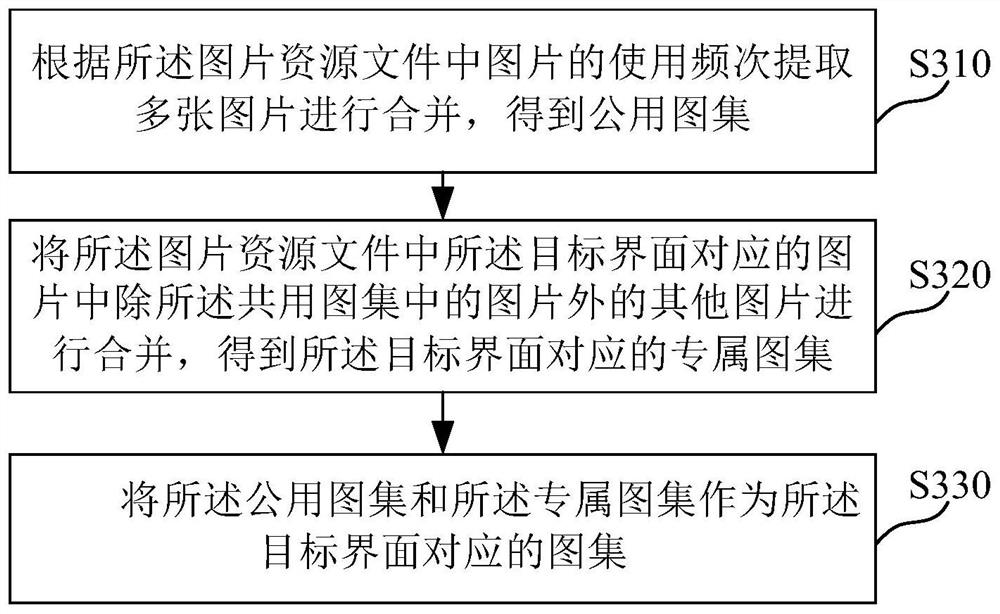

Interface rendering processing method and device

Active | CN111026493A | Improve performance, Reduce overhead, Execution for user interfaces, 3D-image rendering, Computer hardware, Computer graphics (images)

The invention relates to the field of computer technology and provides an interface rendering processing method and device, a computer-readable storage medium, and electronic equipment. The method comprises: obtaining the project file and the picture resource files of a target application; determining, from the project file, the scene mode of each interface of the target application; merging the picture resource files according to each interface's scene mode to obtain a picture set (atlas) for each interface; and storing the atlas for each interface, where the atlas is used to render that interface. This technical scheme effectively reduces the number of rendering batches and improves interface rendering efficiency.

Owner:NetEase (Shanghai) Network Co., Ltd. (网易(上海)网络有限公司)
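
Merging pictures into atlases reduces rendering batches because sprites sharing one texture can be drawn together. The sketch below takes one plausible reading of the abstract: interfaces with the same scene mode share an atlas, and each atlas is filled with naive shelf packing (tallest sprites first, left to right, new shelf when a row is full). The grouping rule, the packing strategy, and all names are assumptions.

```python
from collections import defaultdict


def shelf_pack(sprites, atlas_width):
    """Place (name, w, h) sprites left to right on shelves; returns {name: (x, y)}."""
    placements = {}
    x = y = shelf_height = 0
    for name, w, h in sorted(sprites, key=lambda s: (-s[2], s[0])):  # tallest first
        if w > atlas_width:
            raise ValueError(f"{name} is wider than the atlas")
        if x + w > atlas_width:  # start a new shelf below the current one
            x, y, shelf_height = 0, y + shelf_height, 0
        placements[name] = (x, y)
        x, shelf_height = x + w, max(shelf_height, h)
    return placements


def build_atlases(scene_mode_of, sprites, atlas_width):
    """One atlas per scene mode: interfaces sharing a mode share one texture."""
    by_mode = defaultdict(list)
    for interface, name, w, h in sprites:
        by_mode[scene_mode_of[interface]].append((name, w, h))
    return {mode: shelf_pack(items, atlas_width) for mode, items in by_mode.items()}
```

Production atlas tools use tighter packers (for example MaxRects) and respect texture-size limits, but the batching benefit comes from the grouping, not from the packing quality.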

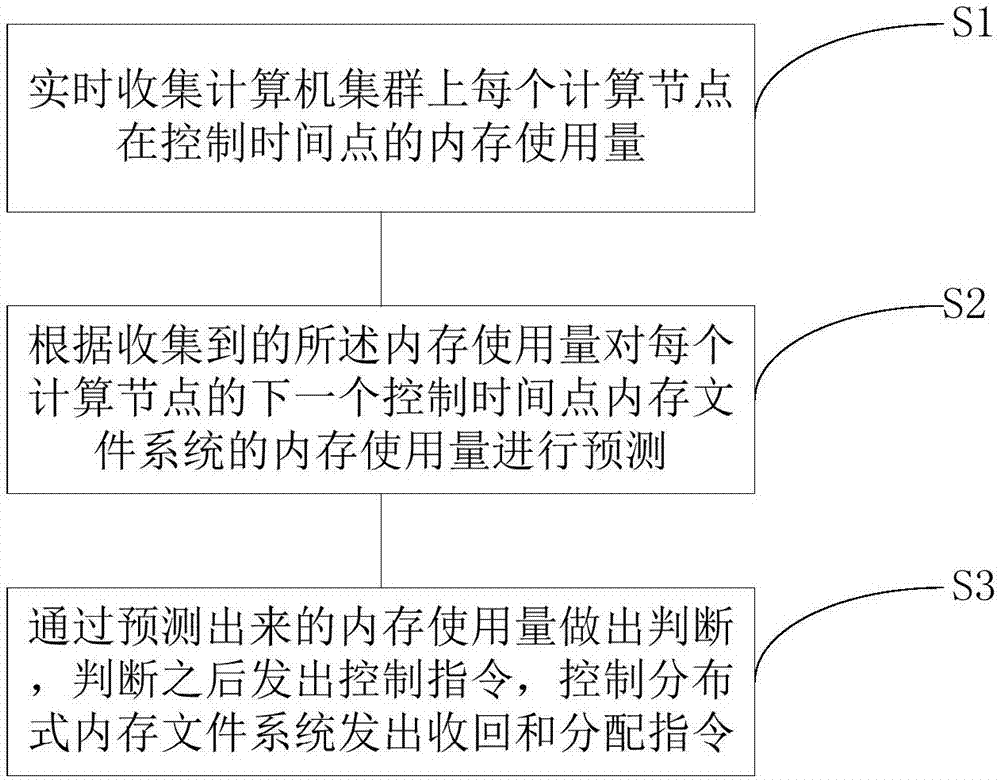

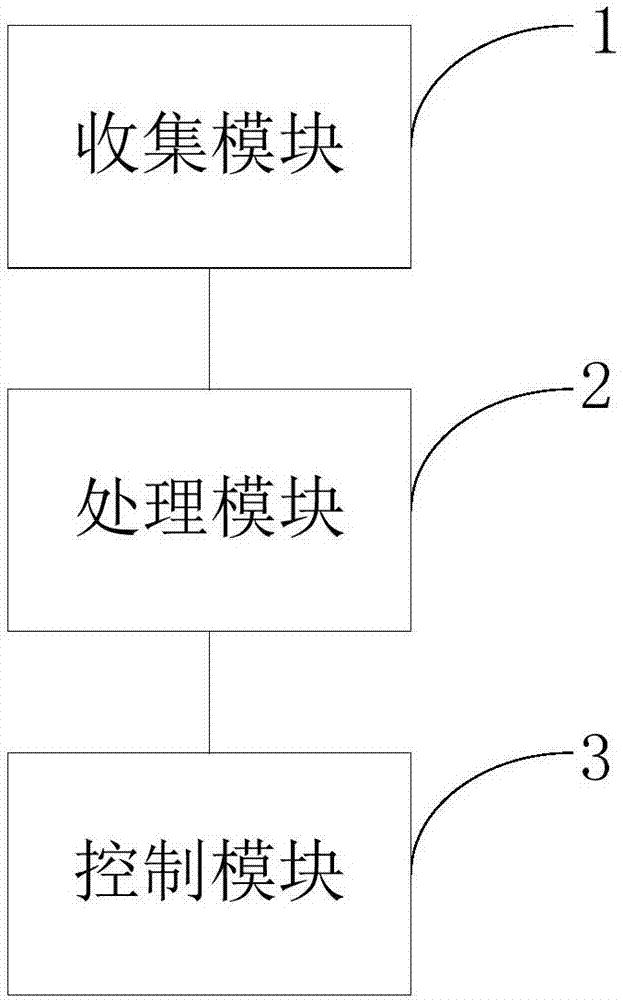

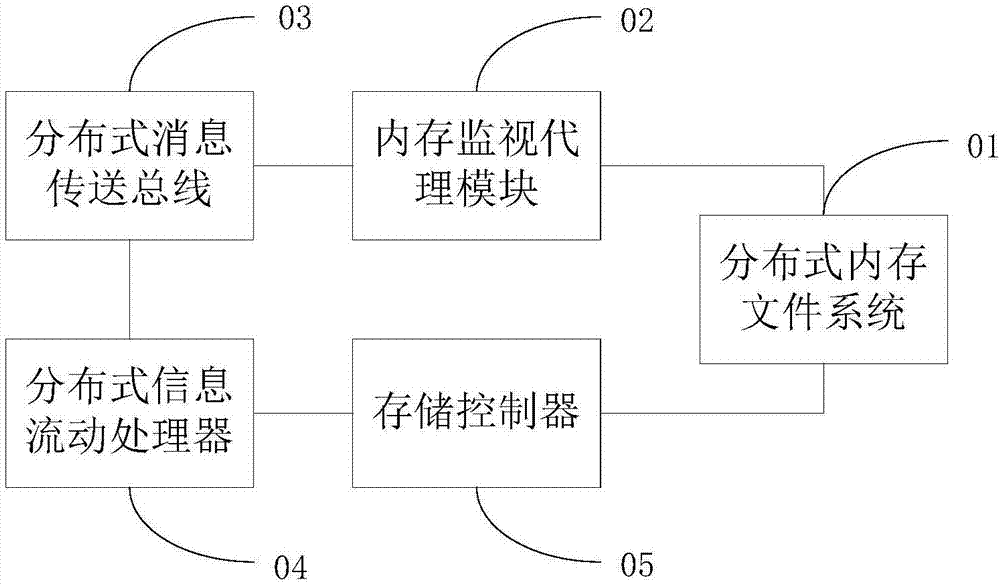

Real-time dynamic management method and system for distributed memory file system

Inactive | CN107341055A | Optimize memory usage, Improve computing efficiency, Resource allocation, Transmission, Distributed memory, Computer cluster

The invention provides a real-time dynamic management method and system for a distributed memory file system running on a computer cluster. The method dynamically adjusts the amount of memory used by the distributed memory file system on the cluster and comprises: collecting, in real time, the memory usage of each computing node in the cluster at each control time point; predicting, from the collected data, the memory usage of the memory file system on each computing node at the next control time point; and evaluating the predicted usage and then issuing control instructions that direct the distributed memory file system to reclaim or allocate memory. The dynamic control method and system optimize the cluster's memory use, improving the efficiency of data-intensive and compute-intensive workloads on the cluster.

Owner:Hangzhou Zhiwu Data Technology Co., Ltd. (杭州知物数据科技有限公司)
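
The control loop is collect, predict, decide. The abstract does not name a predictor or decision rule, so this sketch assumes exponential smoothing for the forecast and high/low watermarks on predicted utilization for the reclaim/allocate decision; the thresholds and names are hypothetical.

```python
def predict_next(history, alpha=0.5):
    """Exponentially smoothed forecast of the next memory reading (an assumed predictor)."""
    estimate = history[0]
    for value in history[1:]:
        estimate = alpha * value + (1 - alpha) * estimate
    return estimate


def control_actions(usage_by_node, capacity_by_node, high=0.85, low=0.60):
    """Per node: 'reclaim' file-system memory above the high watermark,
    'allocate' more below the low watermark, otherwise 'hold'."""
    actions = {}
    for node, history in usage_by_node.items():
        ratio = predict_next(history) / capacity_by_node[node]
        actions[node] = "reclaim" if ratio > high else "allocate" if ratio < low else "hold"
    return actions
```

The gap between the two watermarks acts as hysteresis, so a node hovering near one threshold does not flip between reclaiming and allocating at every control time point.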

Reduced complexity adaptive filter

Active | US7461113B2 | Optimize memory usage, Efficient implementation, Multiple-port networks, Adaptive network, Adaptive filter, Parallel computing

An FIR filter for use in an adaptive multi-channel filtering system includes a first memory for storing data and a second memory for storing filter coefficients. The second memory stores only coefficients that are non-zero or above a predetermined magnitude threshold, so that the overall number of coefficients processed is significantly reduced.

Owner:IP GEM GRP LLC

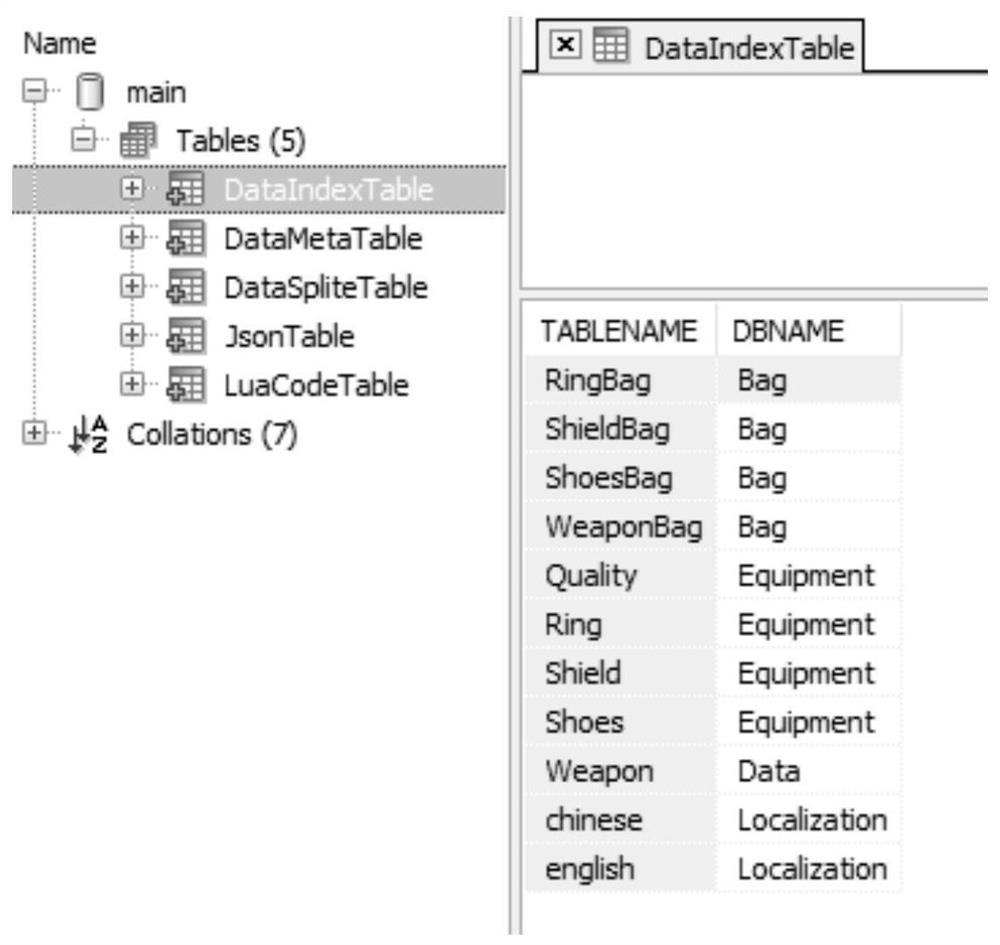

Game configuration data calling method, device, storage medium and electronic device

Pending | CN113900702A | Optimize memory usage, Solve high-occupancy technical issues, Database management systems, Version control, Computer hardware, Table (database)

The invention provides a game configuration data calling method and device, a storage medium, and an electronic device. The method comprises: reading configuration information for a plurality of source table files, where the configuration information indicates the data usage rules of each source table file; for each source table file, exporting its game configuration data, based on the configuration information, into a database file of a database engine and storing the database file on disk; and loading the game configuration data from the database file into memory using an embedded script. This solves the technical problem in related art of high memory occupation caused by loading game configuration data externally, and effectively reduces memory occupation at run time.

Owner:BEIJING PERFECT WORLD SOFTWARE TECH DEV CO LTD
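
The memory saving comes from keeping configuration tables on disk and pulling in only the rows a caller asks for. The sketch below uses Python's built-in `sqlite3` module as an example database engine; the patent does not say which engine or script language it uses, and `export_tables` and `ConfigReader` are hypothetical names.

```python
import sqlite3


def export_tables(db_path, tables):
    """Write {table: (columns, rows)} into an on-disk SQLite file.

    Table and column names are assumed to be trusted identifiers from the source tables."""
    conn = sqlite3.connect(db_path)
    try:
        with conn:  # commit on success, roll back on error
            for name, (columns, rows) in tables.items():
                column_list = ", ".join('"' + c + '"' for c in columns)
                placeholders = ", ".join("?" for _ in columns)
                conn.execute(f'DROP TABLE IF EXISTS "{name}"')
                conn.execute(f'CREATE TABLE "{name}" ({column_list})')
                conn.executemany(f'INSERT INTO "{name}" VALUES ({placeholders})', rows)
    finally:
        conn.close()


class ConfigReader:
    """Loads individual configuration rows on demand instead of whole tables."""

    def __init__(self, db_path):
        self.conn = sqlite3.connect(db_path)

    def get(self, table, key_column, key):
        cursor = self.conn.execute(
            f'SELECT * FROM "{table}" WHERE "{key_column}" = ?', (key,))
        row = cursor.fetchone()
        if row is None:
            return None
        return dict(zip([d[0] for d in cursor.description], row))

    def close(self):
        self.conn.close()
```

Only the requested row is materialized in memory; adding an index on the key column would keep lookups fast as tables grow.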

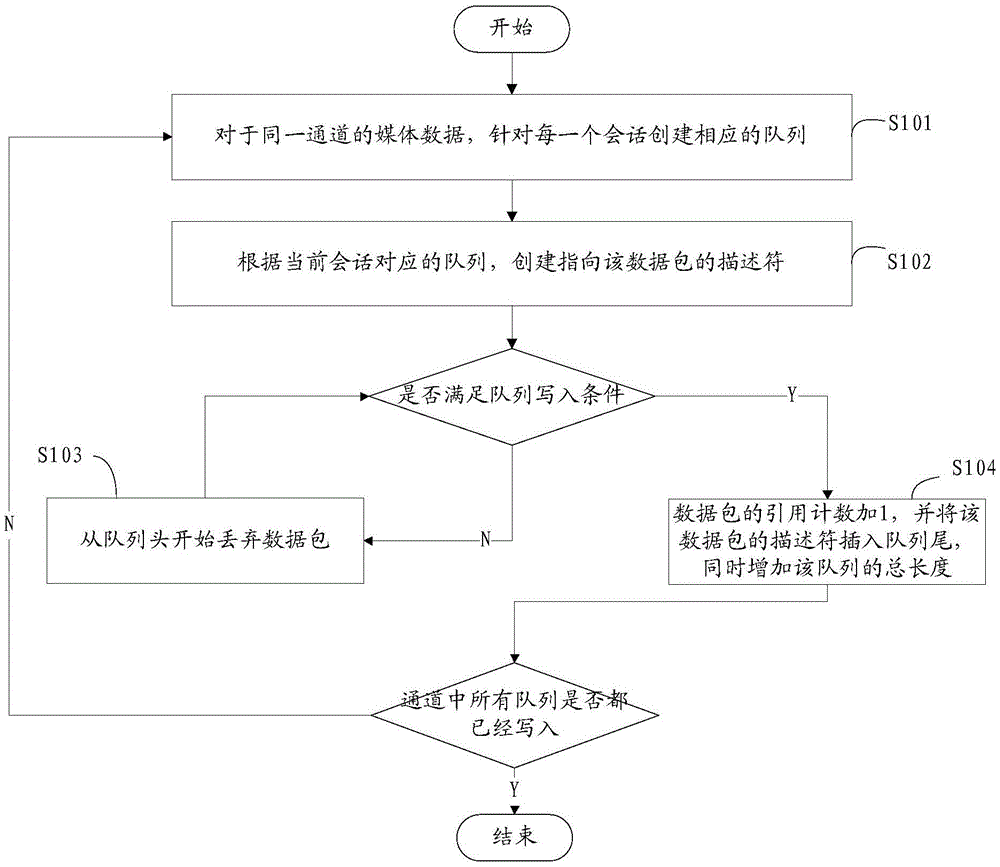

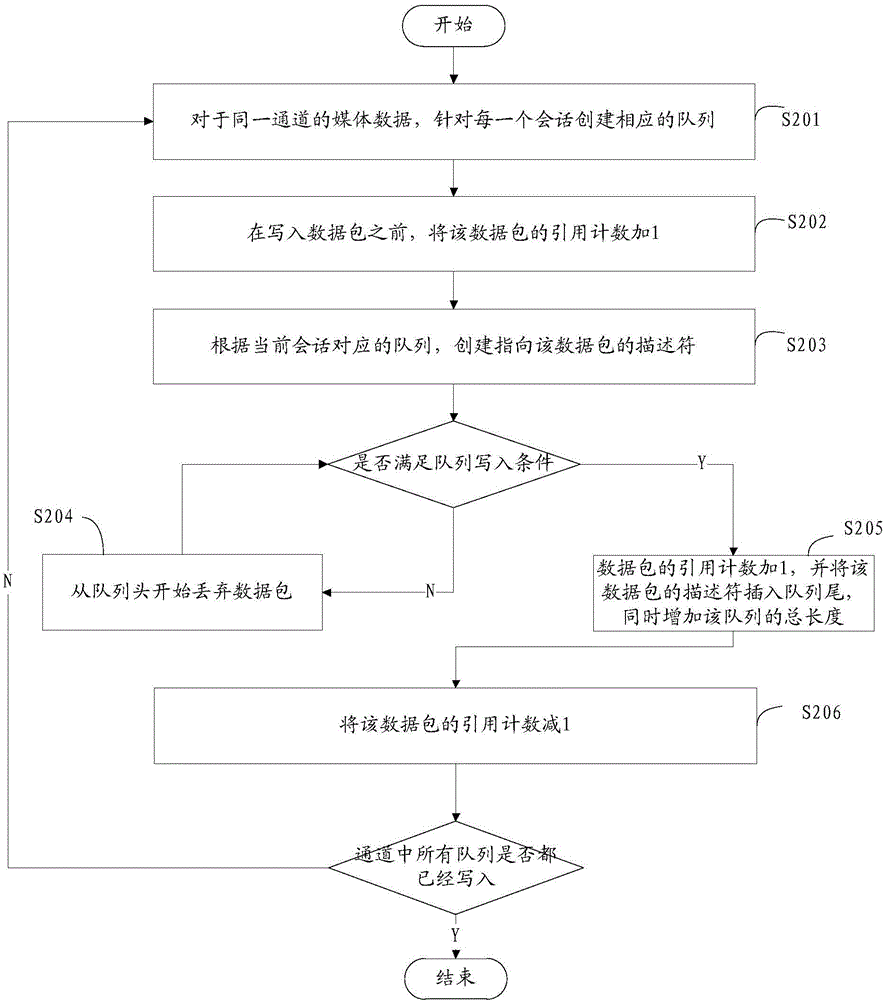

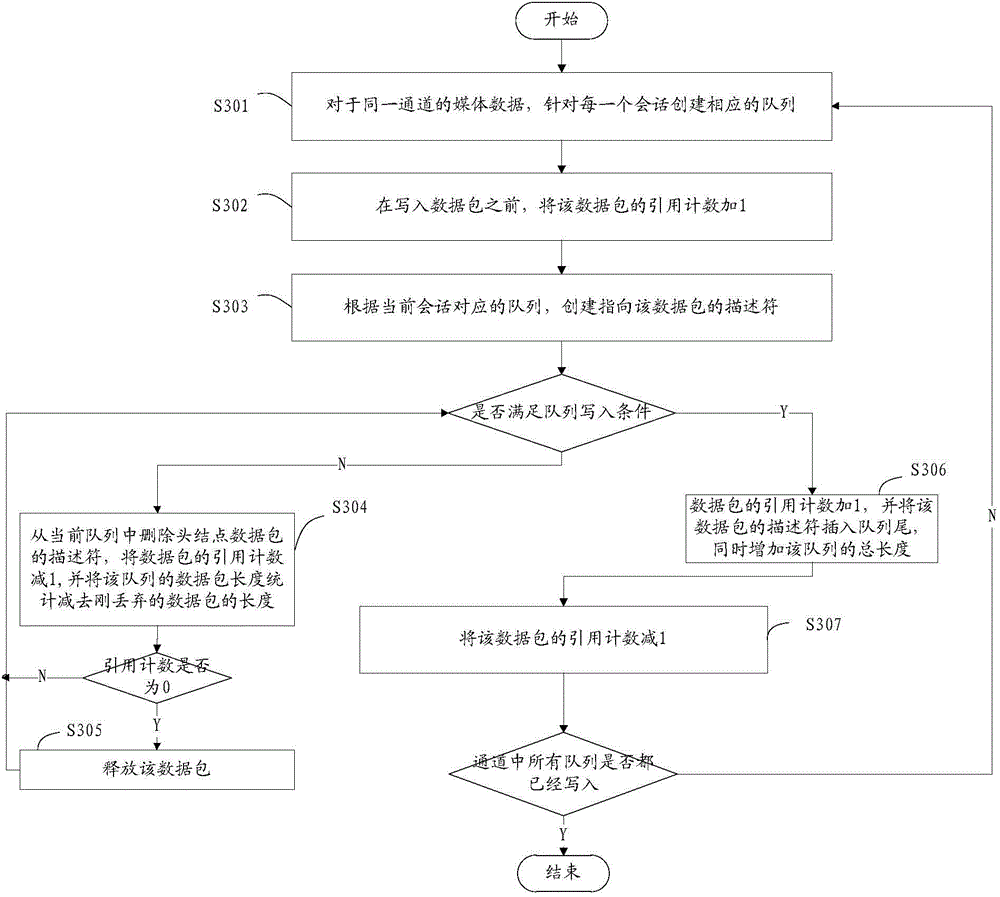

Read-write method and read-write device for shared queue

Inactive | CN104063452A | Optimize memory usage, Improve efficiency, Television systems, Special data processing applications, Network packet, Tail

The invention applies to the technical field of multichannel audio/video retransmission and provides a writing method and a writing device for a shared queue. The writing method comprises: for media data in the same channel, creating descriptors pointing to data packets in the queue of the current session; when the queue's writing conditions are not met, dropping data packets from the head of the queue until they are met; and when the writing conditions are met, incrementing the reference count of the data packet, inserting its descriptor at the tail of the queue, and increasing the total length of the queue. Each session in the same video channel owns its own queue; the queues store descriptors, and the use of a single data packet by several queues is managed by reference counting. Combining queue management with a shared cache lets one set of data be shared by multiple queues, which greatly optimizes memory usage in embedded systems and improves system efficiency and stability.

Owner:Wuhan Zhongzhi Digital Technology Co., Ltd. (武汉众智数字技术有限公司)
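
The write path can be sketched with one shared packet object referenced by several per-session queues. In this minimal version the "writing condition" is assumed to be a byte budget per queue; `Packet`, `SessionQueue`, and `max_bytes` are hypothetical names, and the cache that frees a packet when its count reaches zero is not shown.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(eq=False)
class Packet:
    payload: bytes
    refcount: int = 0  # number of session queues holding a descriptor to this packet


class SessionQueue:
    """Per-session queue of descriptors (references) into a shared packet cache."""

    def __init__(self, max_bytes):
        self.max_bytes = max_bytes  # assumed writing condition: total queued bytes
        self.descriptors = deque()
        self.total = 0

    def _drop_head(self):
        old = self.descriptors.popleft()
        old.refcount -= 1  # the shared cache may free the packet at zero
        self.total -= len(old.payload)

    def write(self, packet):
        size = len(packet.payload)
        if size > self.max_bytes:
            return False  # can never satisfy the writing condition
        while self.total + size > self.max_bytes:
            self._drop_head()  # drop from the head until the packet fits
        packet.refcount += 1
        self.descriptors.append(packet)  # descriptor goes to the tail
        self.total += size
        return True
```

Each payload is stored once no matter how many sessions queue it, so memory grows with the number of distinct packets rather than with packets times sessions.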

Indirectly accessing sample data to perform multi-convolution operations in a parallel processing system

Active | US10255547B2 | Optimize memory usage, Lower latency, Memory addressing/allocation/relocation, Character and pattern recognition, Line tubing, Parallel processing

In one embodiment of the present invention, a convolution engine configures a parallel processing pipeline to perform multi-convolution operations. More specifically, the convolution engine configures the parallel processing pipeline to independently generate and process individual image tiles. In operation, for each image tile, the pipeline calculates source locations included in an input image batch based on one or more start addresses and one or more offsets. Subsequently, the pipeline copies data from the source locations to the image tile. The pipeline then performs matrix multiplication operations between the image tile and a filter tile to generate a contribution of the image tile to an output matrix. To optimize the amount of memory used, the pipeline creates each image tile in shared memory as needed. Further, to optimize the throughput of the matrix multiplication operations, the values of the offsets are precomputed by a convolution preprocessor.

Owner:NVIDIA CORP

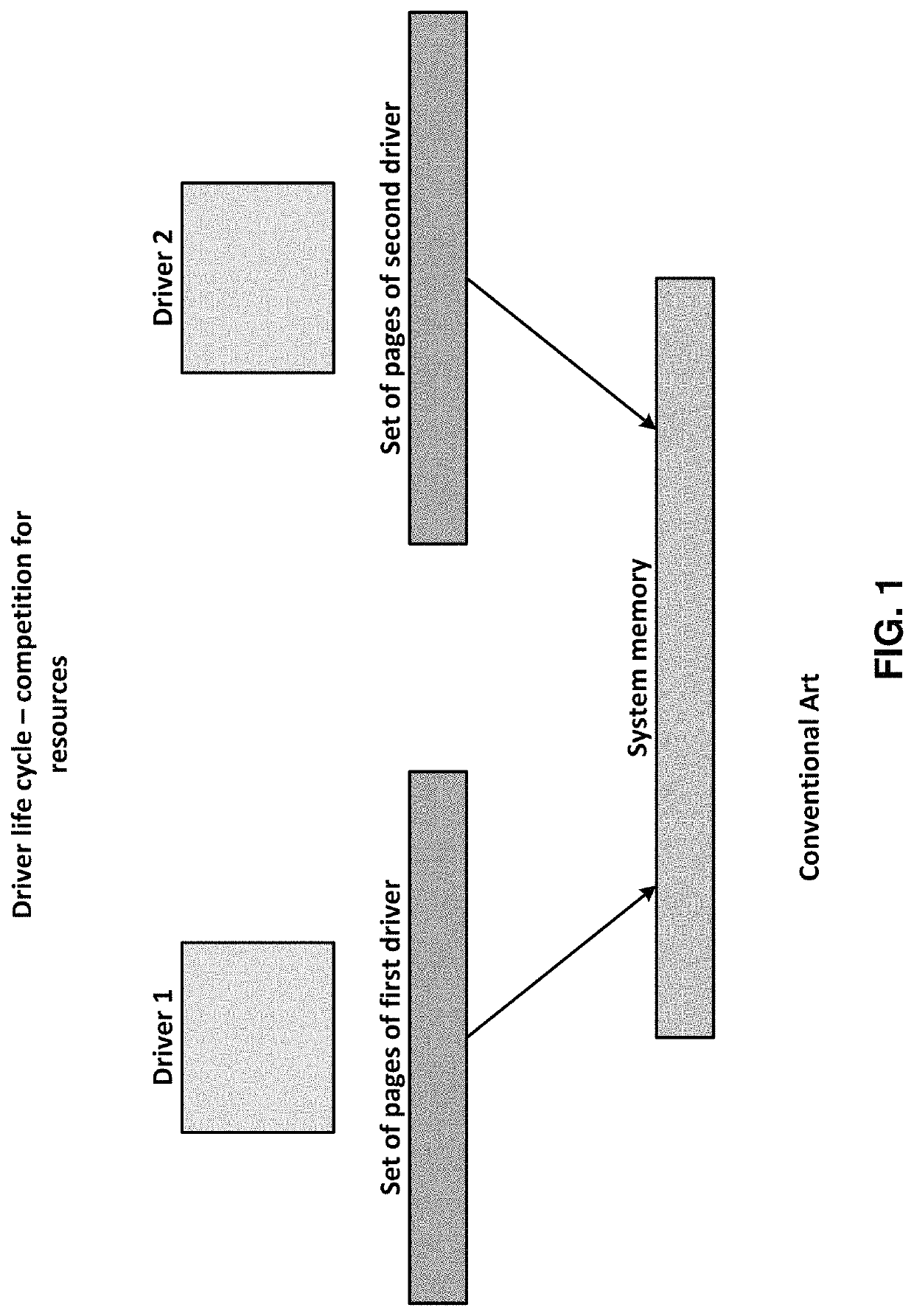

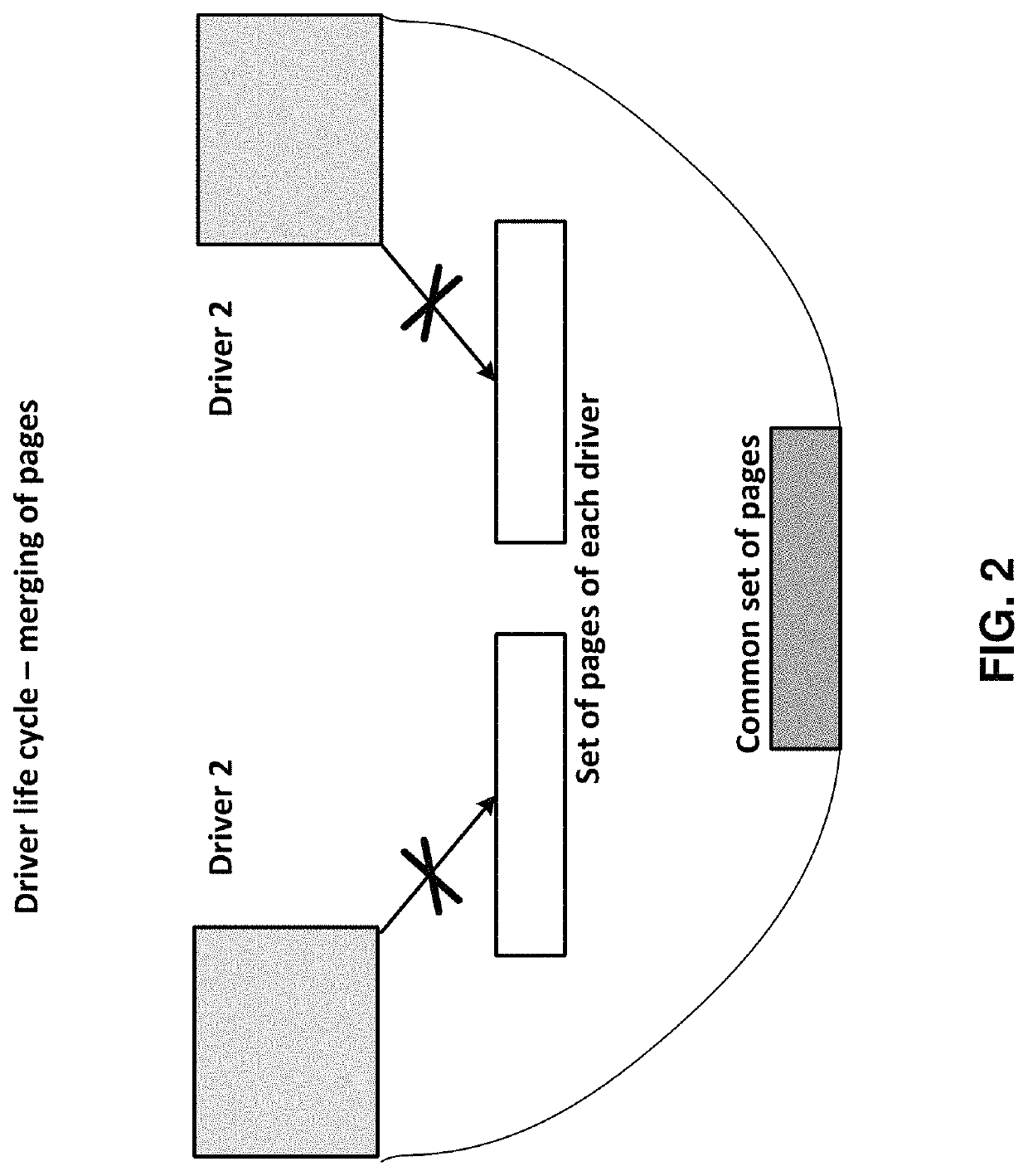

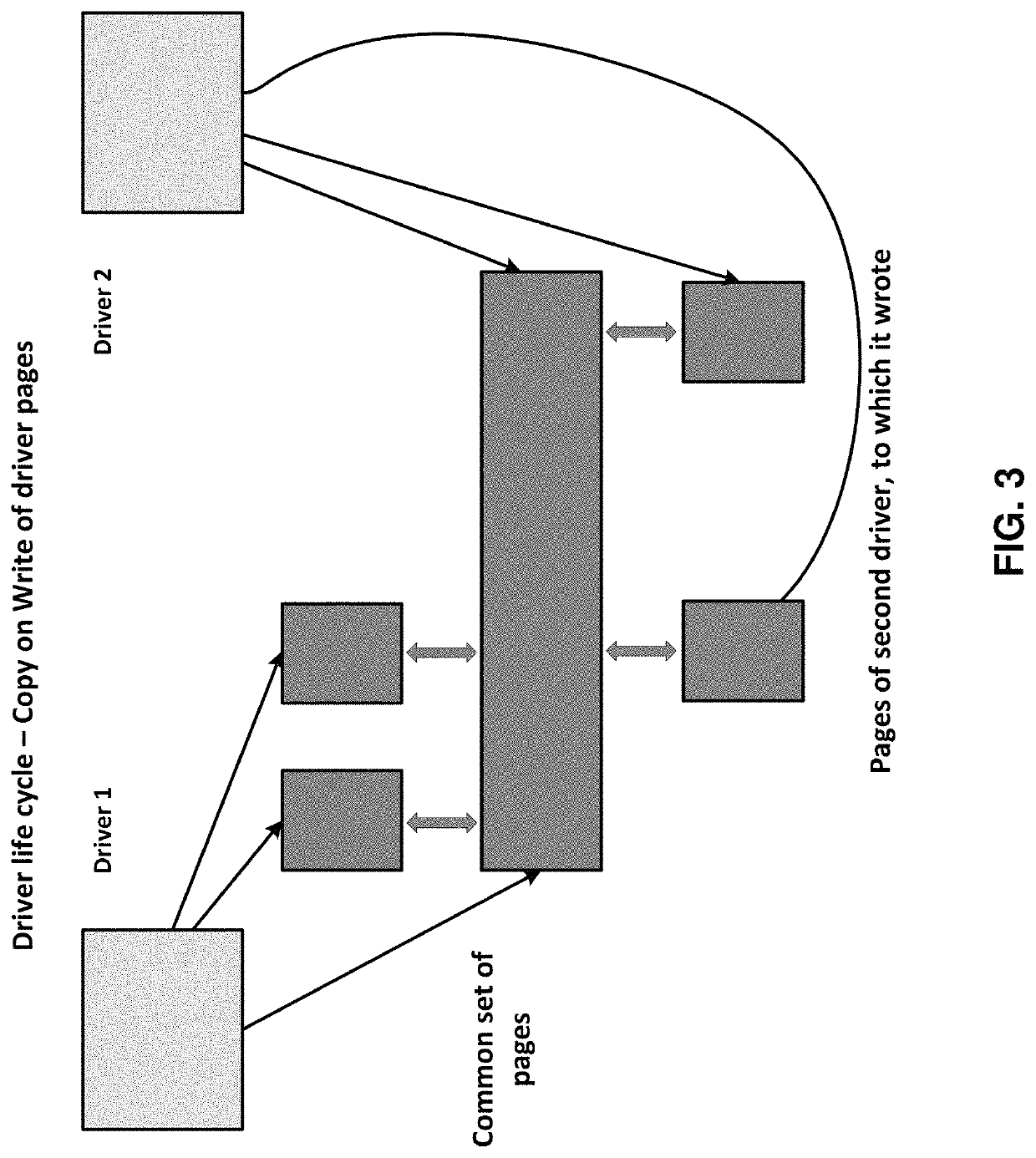

Method and system for sharing driver pages

Active | US10713181B1 | Optimize memory usage, Save resources, Memory architecture accessing/allocation, Bootstrapping, Virtual memory, Virtualization

On a computer system having a processor, a single OS, and a first instance of a system driver installed and performing system services, a method for sharing driver pages among Containers includes: instantiating a plurality of Containers that virtualize the OS, wherein the first instance is loaded from an image; and instantiating a second instance of the system driver upon a request for system services from a Container by: allocating virtual memory pages for the second instance and loading the second instance from the image into physical memory; acquiring the virtual addresses of pages of the second instance that are identical to the corresponding pages of the first instance; mapping the virtual addresses of those identical pages of the second instance to the physical pages to which the virtual addresses of the corresponding pages of the first instance are mapped, and protecting those physical pages from modification; and releasing the physical memory occupied by the identical pages of the second instance.

Owner:VIRTUOZZO INT GMBH
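
Because both driver instances come from the same image, page i of one instance can be compared directly with page i of the other. The sketch below models page tables as lists of physical frame numbers and physical memory as a dictionary; it remaps identical pages to the first instance's frames, marks those frames read-only, and frees the duplicates. All names are hypothetical, and real page-table manipulation and write protection are not shown.

```python
def share_identical_pages(first, second, physical):
    """Deduplicate a second driver instance against the first, page by page.

    first, second: physical frame numbers backing each instance's pages (same image,
    so page i of both instances comes from the same offset). physical: {frame: bytes}.
    Returns (new frame list for `second`, frames to mark read-only, frames freed)."""
    remapped, read_only, freed = [], set(), []
    for frame_a, frame_b in zip(first, second):
        if physical[frame_a] == physical[frame_b]:
            remapped.append(frame_a)  # point the second instance at the shared frame
            read_only.add(frame_a)    # shared frames must be protected from writes
            freed.append(frame_b)
        else:
            remapped.append(frame_b)  # pages that differ stay private to the instance
    for frame in freed:
        del physical[frame]           # release the duplicate physical memory
    return remapped, read_only, freed
```

Write protection matters because a later write through either instance would otherwise silently change the other; a real system would handle such a write with copy-on-write.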

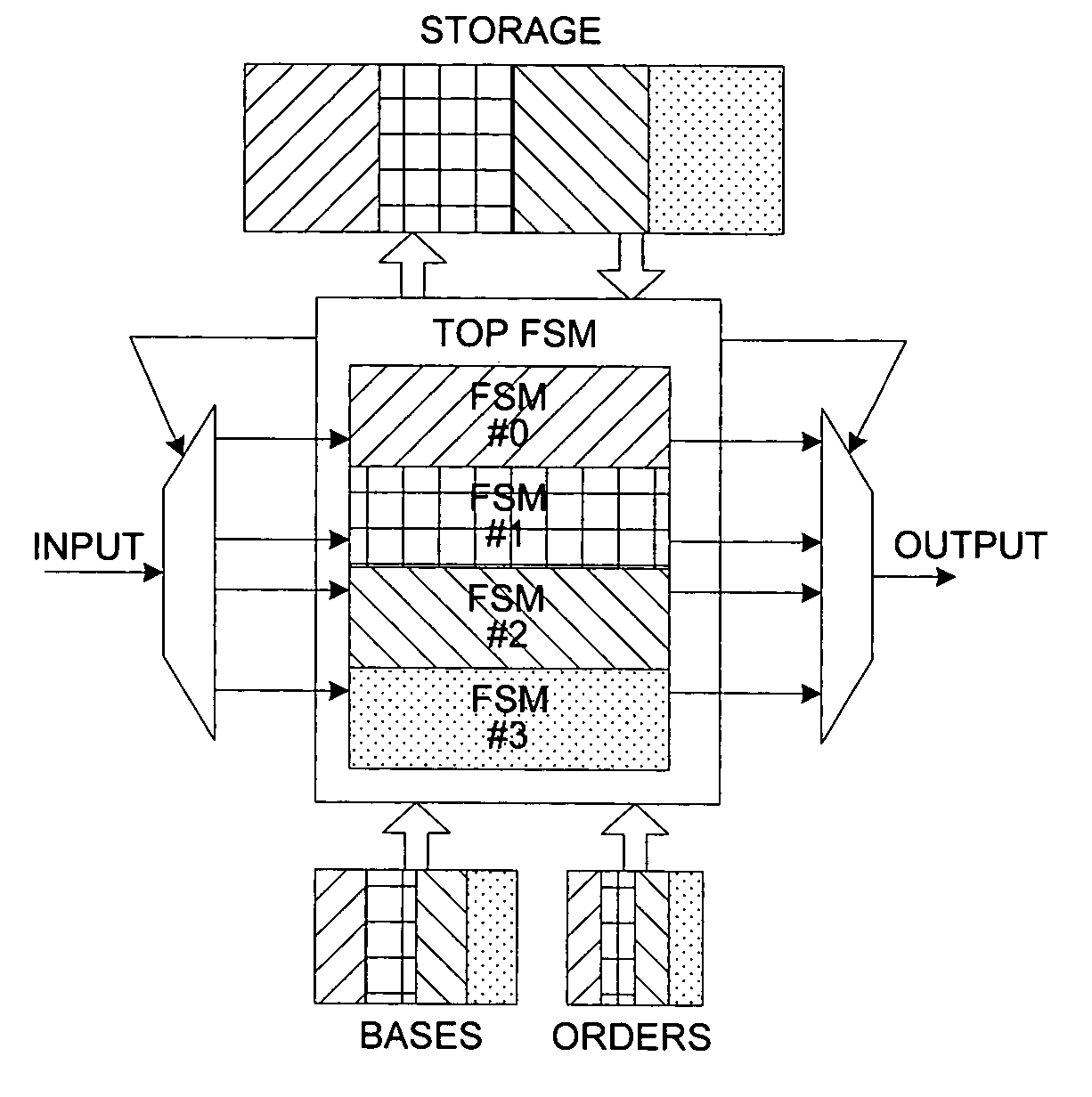

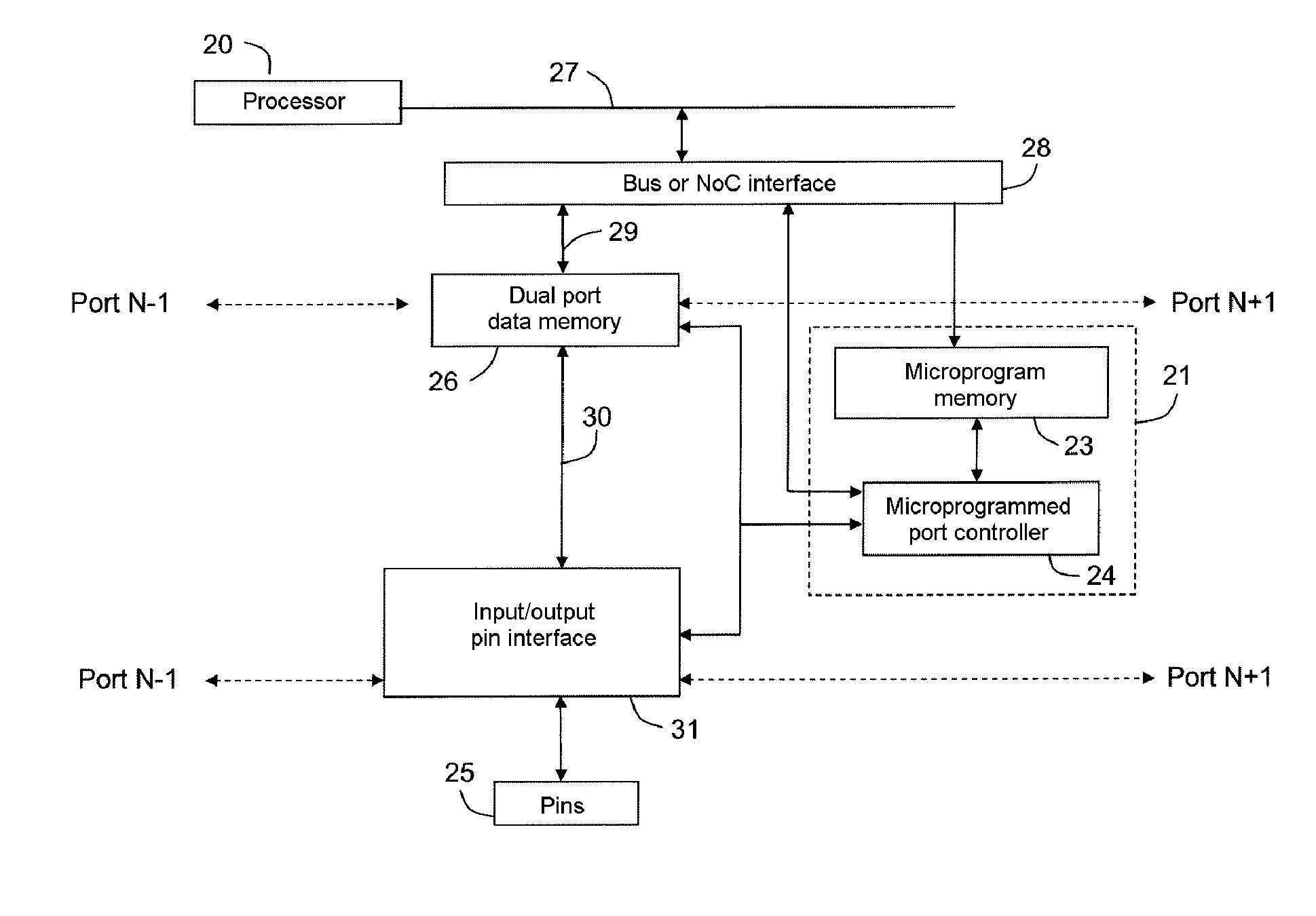

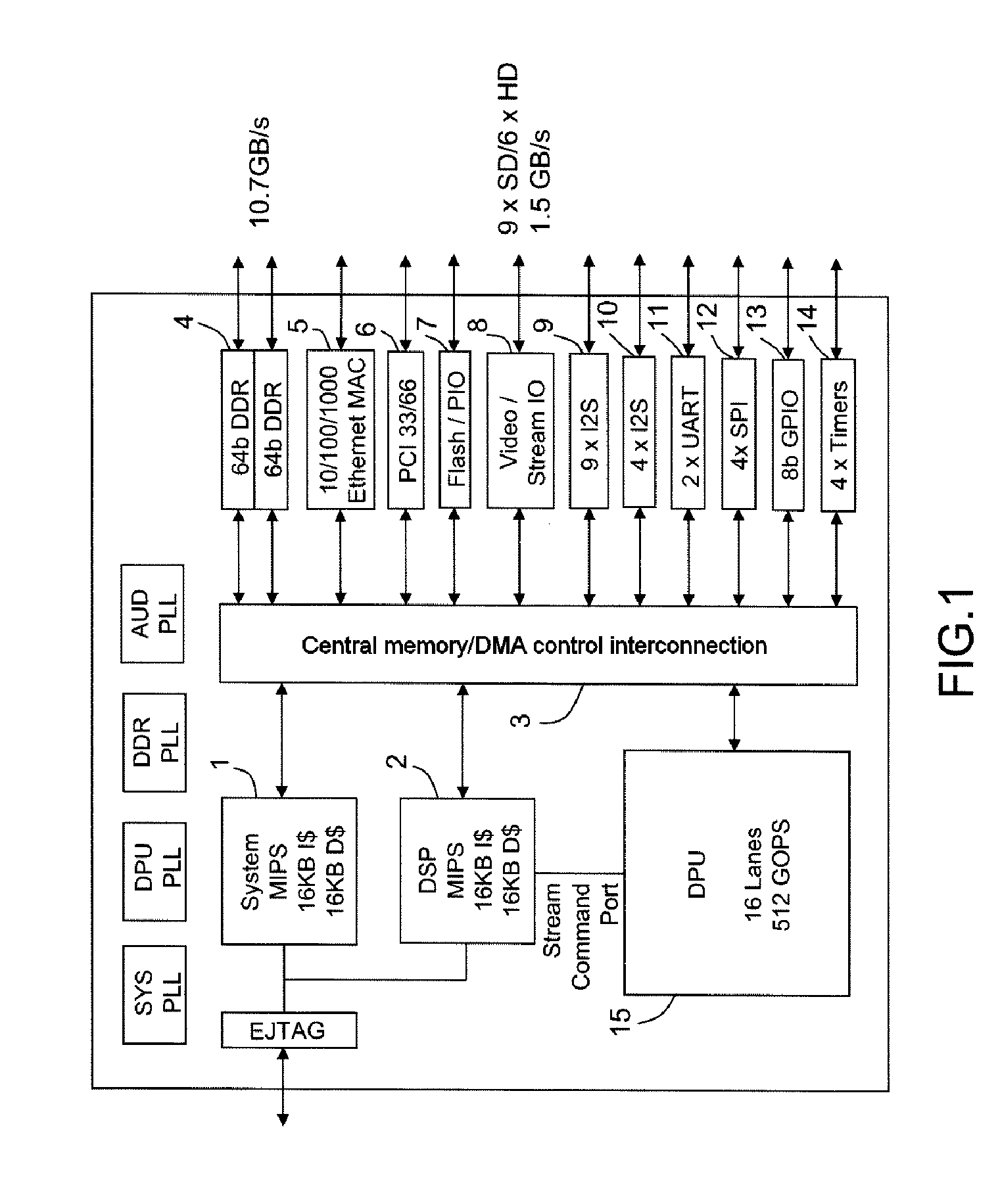

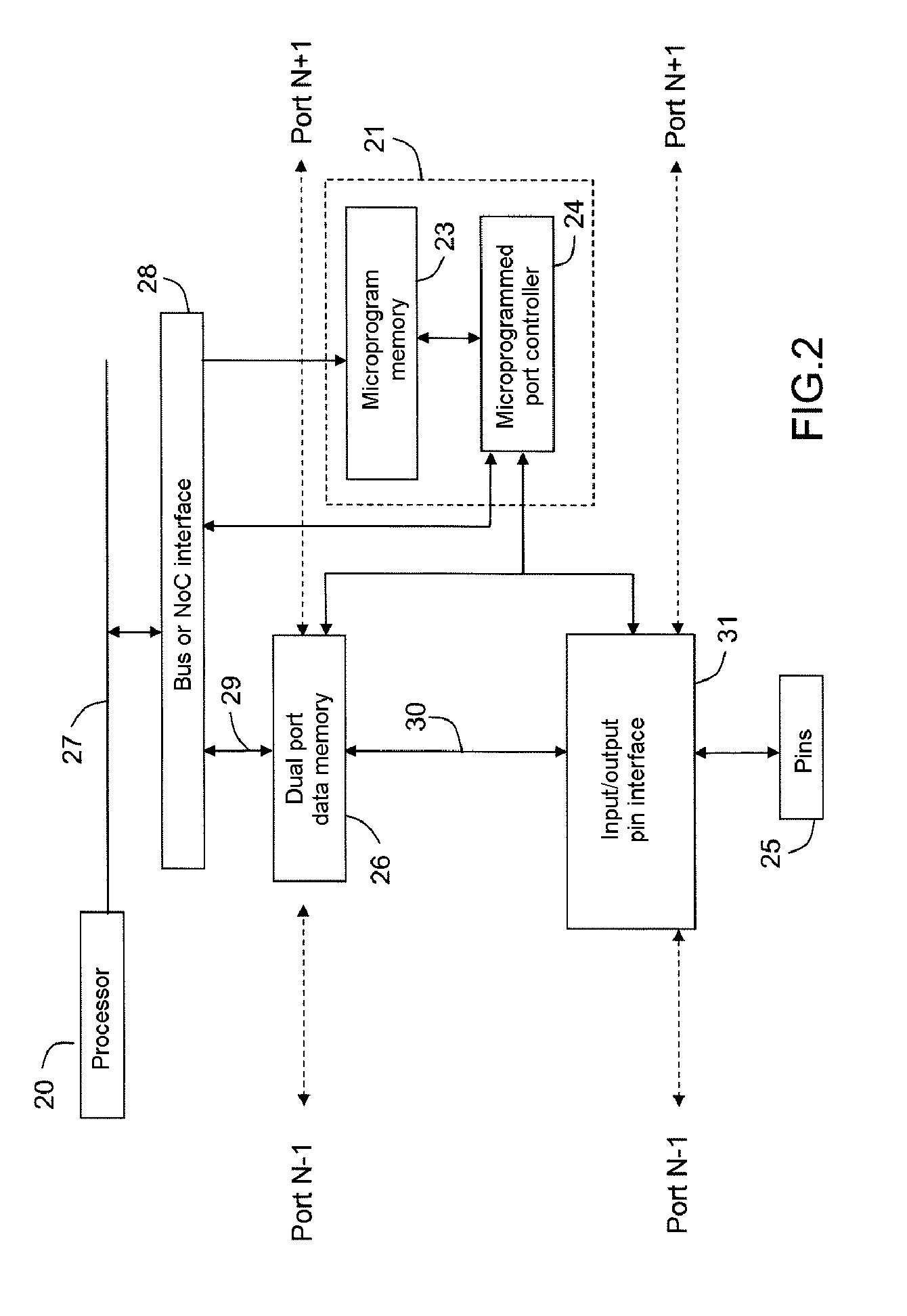

Circuit comprising a microprogrammed machine for processing the inputs or the outputs of a processor so as to enable them to enter or leave the circuit according to any communication protocol

Active | US20110060893A1 | Allow optimization, Resource sharing, Digital computer details, Transmission, Computer science

A circuit having at least one processor and a microprogrammed machine that processes the data entering or leaving the processor, in order to input the data into, or output it from, the circuit in compliance with a communication protocol.

Owner:COMMISSARIAT A LENERGIE ATOMIQUE ET AUX ENERGIES ALTERNATIVES

Optimized interleaver and/or deinterleaver design

Inactive | US7502390B2 | Optimize memory usage, Simple design, Code conversion, Time-division multiplex, Shift register, Data signal

An apparatus comprising an input circuit, a storage circuit and an output circuit. The input circuit may be configured to generate a plurality of data paths in response to an input data signal having a plurality of data items sequentially presented in a first order. The storage circuit may be configured to store each of the data paths in a respective shift register chain. The output circuit may be configured to generate an output data signal in response to each of the shift register chains. The output data signal presents the data items in a second order different from said first order.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Direct memory access system and method

Inactive | US7836221B2 | Improve acceleration performance, Improve transmission efficiency, Input/output processes for data processing, Data conversion, Direct memory access, Data access

Owner:SONIX TECH

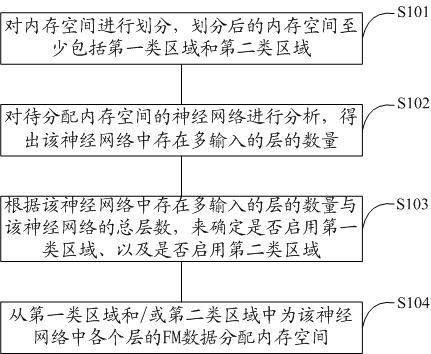

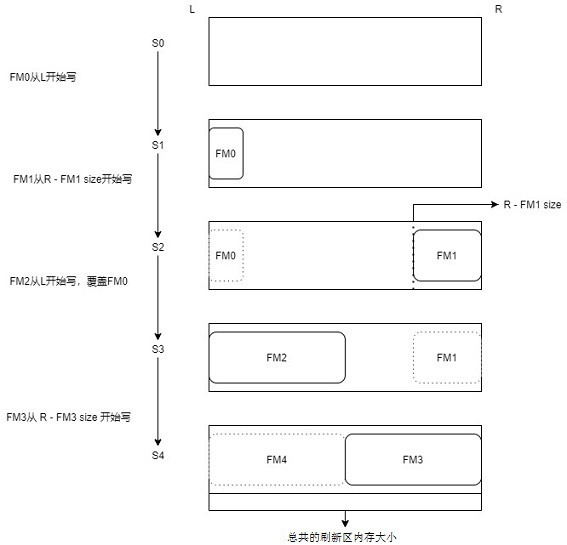

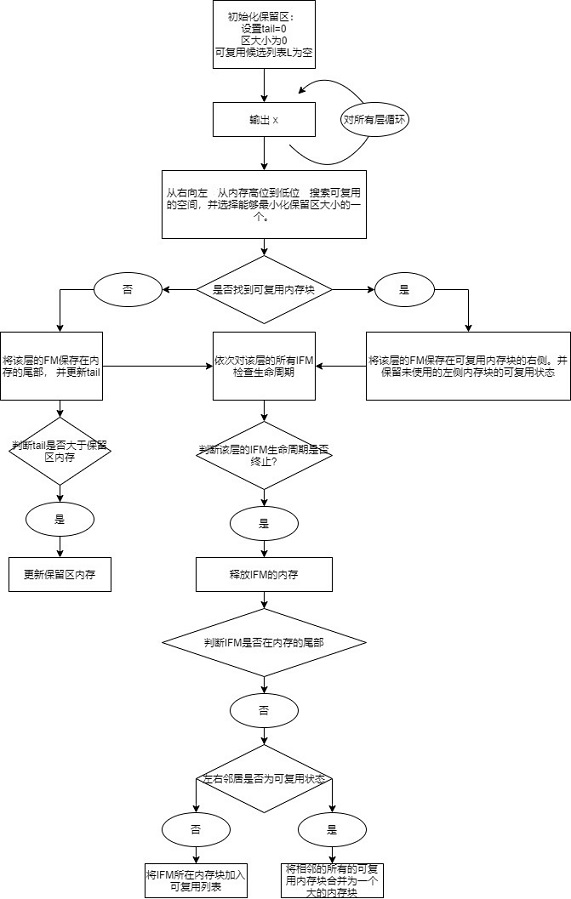

Memory management method and device for neural network reasoning

Active | CN112256440A | Reduce memory usage, Optimize memory usage, Resource allocation, Biological neural network models, Multi input, Memory footprint

The invention discloses a memory management method and device for neural network inference. The method comprises: dividing a memory space into a first type of region and a second type of region, where the first type is used only to store feature map (FM) data with a life cycle of 1 and the second type can store FM data with any life cycle; analyzing the neural network to which memory is to be allocated, and determining whether to enable the first and second types of region according to the number of layers with multiple inputs and the total number of layers in the network; and allocating memory to the FM data of each layer from the first type of region and/or the second type of region. The method adaptively selects a suitable memory management strategy according to the structure of the neural network, optimizing memory use. It uses a greedy algorithm to search layer by layer for the optimal memory allocation scheme, reducing the memory occupied by neural network inference and minimizing memory usage as far as possible.

Owner:SENSLAB INC
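The two-region allocation idea can be illustrated with a small Python sketch. It rests on several assumptions. Each layer produces one feature map with a known size and live range `[start, end]`. Life-cycle-1 feature maps are served from two alternating slots in the first-type region. Everything else goes to the second-type region through a greedy first-fit offset search. The abstract does not give the exact criterion for enabling each region (it depends on the number of multi-input layers and the total layer count), so that decision is reduced here to a hypothetical `use_region1` flag. `FeatureMap` and `allocate` are illustrative names only.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class FeatureMap:
    name: str
    size: int   # bytes
    start: int  # index of the layer that produces it
    end: int    # index of the last layer that reads it

    @property
    def life_cycle(self) -> int:
        return self.end - self.start


def overlaps(a: FeatureMap, b: FeatureMap) -> bool:
    # Two feature maps conflict if their live layer ranges intersect.
    return a.start <= b.end and b.start <= a.end


def allocate(fms: List[FeatureMap], use_region1: bool) -> Tuple[Dict[str, Tuple[int, int]], int, int]:
    """Return ({name: (region, offset)}, region1_size, region2_size)."""
    plan: Dict[str, Tuple[int, int]] = {}
    short = [f for f in fms if use_region1 and f.life_cycle == 1]
    slot = max((f.size for f in short), default=0)
    for f in short:
        # Life-cycle-1 maps born on layers of equal parity never coexist,
        # so two ping-pong slots are enough for all of them.
        plan[f.name] = (1, (f.start % 2) * slot)

    placed: List[Tuple[FeatureMap, int]] = []
    region2_size = 0
    for f in sorted((f for f in fms if f.name not in plan), key=lambda f: f.start):
        offset = 0
        # Greedy first fit: scan placed blocks by offset, skipping past live conflicts.
        for other, off in sorted(placed, key=lambda p: p[1]):
            if overlaps(f, other) and off < offset + f.size and offset < off + other.size:
                offset = off + other.size
        placed.append((f, offset))
        plan[f.name] = (2, offset)
        region2_size = max(region2_size, offset + f.size)
    return plan, 2 * slot, region2_size
```

First-fit is only a heuristic. Like the greedy search the abstract mentions, it does not guarantee the smallest possible footprint for every network.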

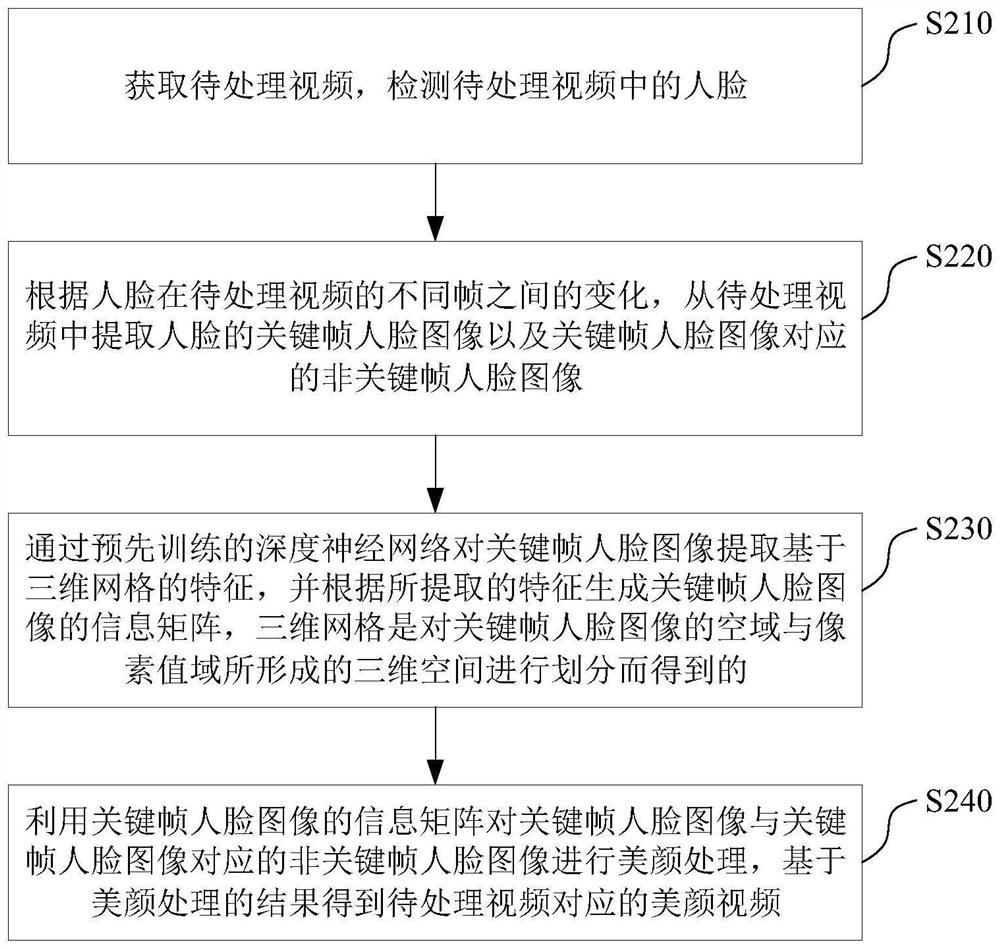

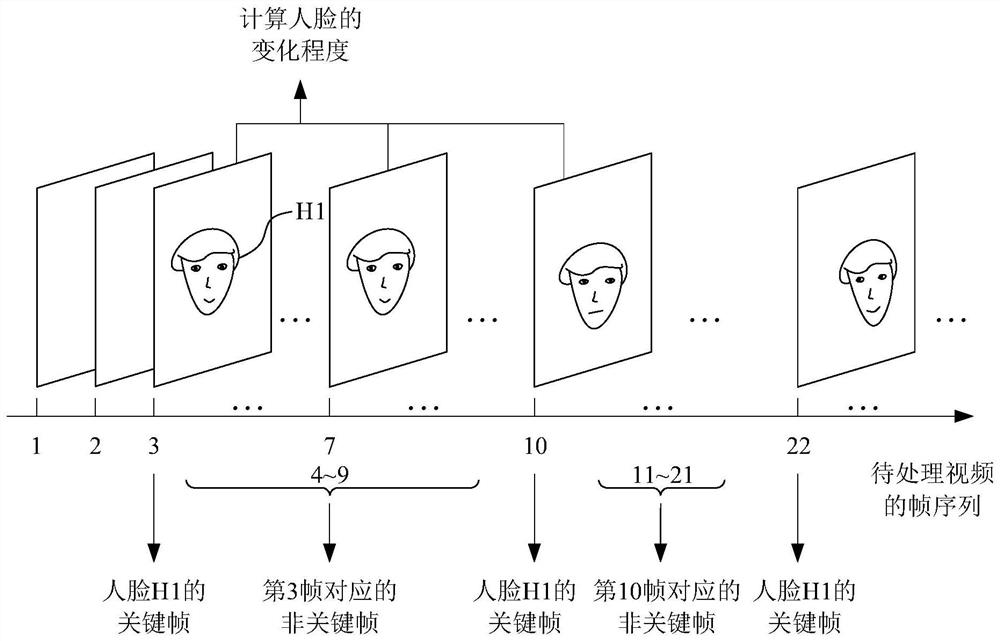

Video beautification processing method and device, storage medium and electronic equipment

Pending | CN114565532A | Simple process | Small amount of calculation | Image enhancement | Image analysis | Image extraction | Video processing

The invention provides a video beautification processing method and device, a storage medium, and electronic equipment, and relates to the technical field of image and video processing. The method comprises the following steps: acquiring a video to be processed and detecting a face in it; extracting from the video the key-frame face images of the face, together with the non-key-frame face images corresponding to each key-frame face image, according to how the face changes between frames; extracting features based on a three-dimensional mesh from each key-frame face image with a pre-trained deep neural network, and generating an information matrix for that key-frame face image from the extracted features; and performing facial beautification on each key-frame face image and its corresponding non-key-frame face images using the key frame's information matrix, then obtaining the beautified video from the beautification results. The invention reduces the computing resource overhead of video beautification.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
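The cost saving comes from running the expensive network only on key frames and reusing its output for the frames that follow. The Python sketch below is hypothetical. It assumes each frame's face is summarised by a numeric feature vector, and that a new key frame starts whenever the face moves more than `threshold` (Euclidean distance) from the current key frame. The abstract does not specify this criterion. `compute_info` and `apply` are placeholder callables standing in for the deep-network and beautification steps.

```python
import math
from typing import Callable, Dict, List, Sequence


def group_frames(face_feats: Sequence[Sequence[float]], threshold: float) -> Dict[int, List[int]]:
    """Map each key-frame index to the non-key frames that reuse its result."""
    groups: Dict[int, List[int]] = {}
    key = None
    for i, feat in enumerate(face_feats):
        if key is None or math.dist(feat, face_feats[key]) > threshold:
            key = i                # face changed enough: start a new key frame
            groups[key] = []
        else:
            groups[key].append(i)  # small change: reuse the key frame's information
    return groups


def beautify_video(
    face_feats: Sequence[Sequence[float]],
    threshold: float,
    compute_info: Callable[[int], object],
    apply: Callable[[int, object], object],
) -> list:
    out: list = [None] * len(face_feats)
    for key, followers in group_frames(face_feats, threshold).items():
        info = compute_info(key)  # expensive DNN step, run once per key frame
        for i in [key] + followers:
            out[i] = apply(i, info)
    return out
```

Because `compute_info` runs once per group rather than once per frame, a mostly steady face needs only one network pass for each significant change.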

A stacked display method of progress bar with breathing light effect

Active | CN107346250B | Improve efficiency | Take up fewer resources | Execution for user interfaces | Text display | Progress bar

The invention relates to a stacked display algorithm for a progress bar with a breathing-light effect. The algorithm comprises the following steps: (1) adapting the progress bar to its background, where the progress bar is circular, its background is round, and the diameter of the circular progress bar is smaller than that of the background; (2) setting up the control and its drawing parameters; (3) drawing the circular progress bar, stacked, within the background area; (4) displaying the breathing-light effect; (5) starting the thread that animates the breathing light; (6) drawing progress text that updates with the progress; and (7) drawing descriptive text at the center of the circular progress bar. Surrounding the circular progress bar with a breathing-light effect makes the progress display attractive, personalized, intuitive, and easy to understand. The algorithm is efficient, uses few resources, is broadly applicable, and suits the Android system.

Owner:BEIJING KUWO TECH
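Steps (1), (3), (4), (6) and (7) can be sketched as computing the ordered layers of a single frame. The patent does not publish its formulas, so this Python sketch makes several assumptions. It uses a cosine-eased alpha for the breathing light and starts the arc at the top (-90 degrees, following Android's `Canvas.drawArc` convention of measuring clockwise from 3 o'clock). `breathing_alpha`, `frame_layers`, and the layer names are all hypothetical.

```python
import math
from typing import List, Tuple


def breathing_alpha(t_ms: float, period_ms: float = 2000.0, lo: int = 60, hi: int = 255) -> int:
    """Alpha that rises and falls smoothly once per period (assumed cosine easing)."""
    phase = (t_ms % period_ms) / period_ms              # 0..1 through one breath
    level = 0.5 - 0.5 * math.cos(2 * math.pi * phase)   # 0 -> 1 -> 0
    return round(lo + (hi - lo) * level)


def frame_layers(progress: float, t_ms: float, bg_diameter: float,
                 ring_ratio: float = 0.8, label: str = "") -> List[Tuple[str, dict]]:
    """Draw order for one frame, bottom layer first (the 'stacked' display)."""
    if not 0 < ring_ratio < 1:
        raise ValueError("the ring must be smaller than the background")  # step (1)
    progress = min(max(progress, 0.0), 1.0)
    return [
        ("background", {"diameter": bg_diameter}),
        ("breathing_glow", {"diameter": bg_diameter, "alpha": breathing_alpha(t_ms)}),   # step (4)
        ("progress_arc", {"diameter": bg_diameter * ring_ratio,
                          "start_deg": -90.0, "sweep_deg": 360.0 * progress}),           # step (3)
        ("progress_text", {"text": f"{round(progress * 100)}%"}),                          # step (6)
        ("center_label", {"text": label}),                                                # step (7)
    ]
```

For step (5), a background thread (or an Android animator) would call `frame_layers` on each tick with the elapsed time and redraw the layers in the returned order.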

Processing method and device for interface rendering

Active | CN111026493B | Improve performance | Reduce overhead | Execution for user interfaces | 3D-image rendering | Computer graphics (images) | Computers technology

The present disclosure relates to the field of computer technology and provides a processing method and device for interface rendering, a computer-readable storage medium, and electronic equipment. The method includes: obtaining the project file and image resource files of a target application; determining, from the project file, the scene mode corresponding to each interface of the target application; merging the image resource files of the target application according to those scene modes to obtain an atlas for each interface; and saving the atlas for each interface, where the atlas is used to render that interface. The disclosed solution effectively reduces the number of rendering batches and helps improve interface rendering efficiency.

Owner:网易(上海)网络有限公司 (NetEase (Shanghai) Network Co., Ltd.)
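The image-merging step can be approximated with a simple shelf packer that builds one atlas per interface. This Python sketch assumes image sizes are already known from the resource files. It ignores the scene-mode logic, which the abstract does not describe. `shelf_pack` and `build_atlases` are hypothetical names, and shelf packing is a stand-in technique, not necessarily the one the patent uses.

```python
from typing import Dict, List, Tuple

Rect = Tuple[int, int, int, int]  # x, y, width, height inside the atlas


def shelf_pack(sizes: Dict[str, Tuple[int, int]], atlas_width: int) -> Tuple[Dict[str, Rect], int]:
    """Place images left to right on shelves, tallest first; return placements and atlas height."""
    placements: Dict[str, Rect] = {}
    x = y = shelf_h = 0
    for name, (w, h) in sorted(sizes.items(), key=lambda kv: -kv[1][1]):
        if w > atlas_width:
            raise ValueError(f"{name} is wider than the atlas")
        if x + w > atlas_width:  # current shelf is full: open a new one below it
            y += shelf_h
            x = shelf_h = 0
        placements[name] = (x, y, w, h)
        x += w
        shelf_h = max(shelf_h, h)
    return placements, y + shelf_h


def build_atlases(interfaces: Dict[str, List[str]], sizes: Dict[str, Tuple[int, int]],
                  atlas_width: int = 1024) -> Dict[str, Tuple[Dict[str, Rect], int]]:
    """One atlas per interface, containing only the images that interface uses."""
    return {ui: shelf_pack({n: sizes[n] for n in names}, atlas_width)
            for ui, names in interfaces.items()}
```

When an interface is drawn from a single atlas texture, a renderer can batch sprites that would otherwise each need a separate texture bind. That is the kind of batch reduction the abstract claims.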

© 2025 PatSnap. All rights reserved. Contact us: help@patsnap.com