37 results for "Score fusion" patented technology

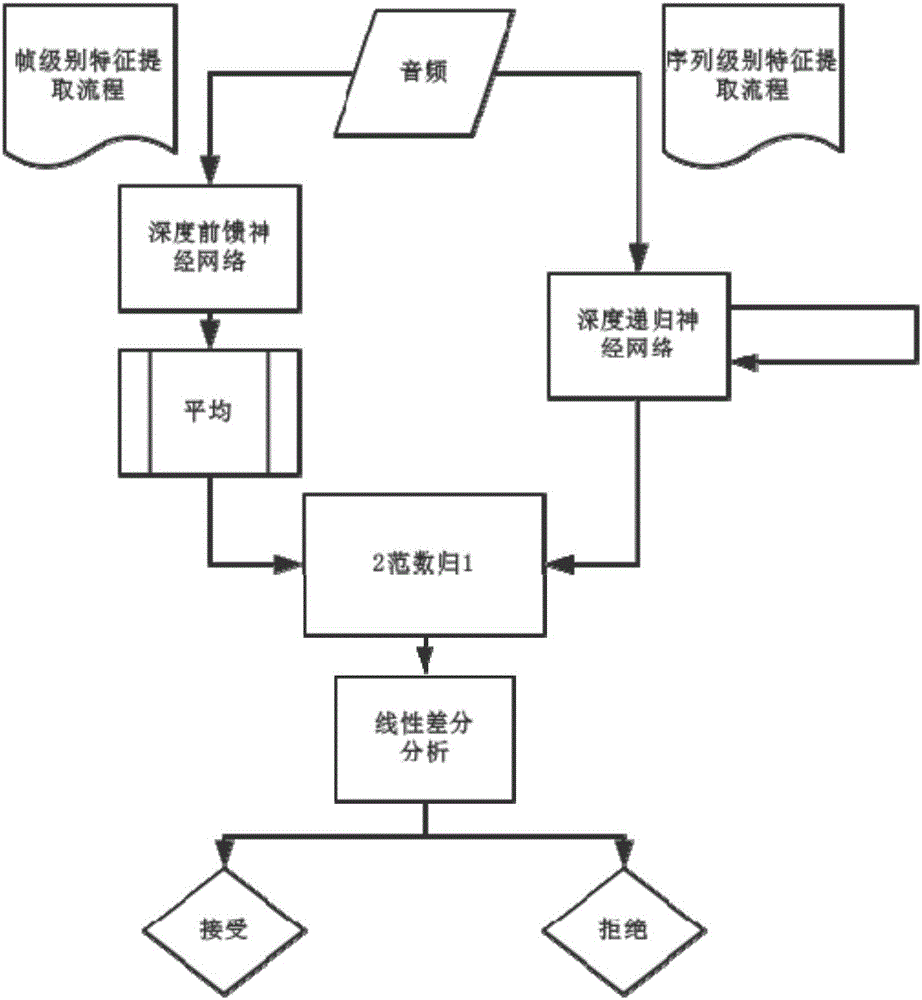

Method and system for detecting speaker voice spoofing attacks based on deep learning

The invention discloses a method and a system for detecting speaker voice spoofing attacks based on deep learning. The method includes constructing an audio training set; initializing a deep feed-forward neural network and a deep recurrent neural network, and training them on the multi-frame feature vectors and the single-frame vector sequences of the training set, respectively; and, in the test phase, feeding the frame-level and sequence-level feature vectors of the audio under test into two trained linear discriminant analysis (LDA) models, respectively, weighting the two resulting scores into a fused score, and comparing the fused score with a predefined threshold to detect voice spoofing. The method and system capture local features while also modeling global information; the LDA models serve as classifiers in the identification and verification phases, and spoofing attacks are judged by score fusion, which can substantially improve spoofing detection accuracy.

Owner:AISPEECH CO LTD
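
The test-phase decision above (weight two scores, compare with a threshold) can be sketched as follows. This is a minimal illustration, not the patent's implementation: it assumes each LDA model emits a scalar score where higher means "more likely genuine", and the function names and the default weight of 0.5 are hypothetical.

```python
def fuse_scores(frame_score: float, seq_score: float, weight: float = 0.5) -> float:
    """Convex combination of the frame-level and sequence-level LDA scores."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * frame_score + (1.0 - weight) * seq_score


def is_spoof(frame_score: float, seq_score: float,
             threshold: float, weight: float = 0.5) -> bool:
    """Flag the audio as spoofed when the fused score falls below the threshold.

    Assumption: higher scores mean "more likely genuine speech".
    """
    return fuse_scores(frame_score, seq_score, weight) < threshold


if __name__ == "__main__":
    print(is_spoof(0.2, 0.3, threshold=0.5))  # fused 0.25 -> True (spoof)
    print(is_spoof(0.9, 0.7, threshold=0.5))  # fused 0.8 -> False (genuine)
```

In practice the weight and threshold would be tuned on held-out data; a convex weight keeps the fused score on the same scale as its inputs, so one threshold stays meaningful.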

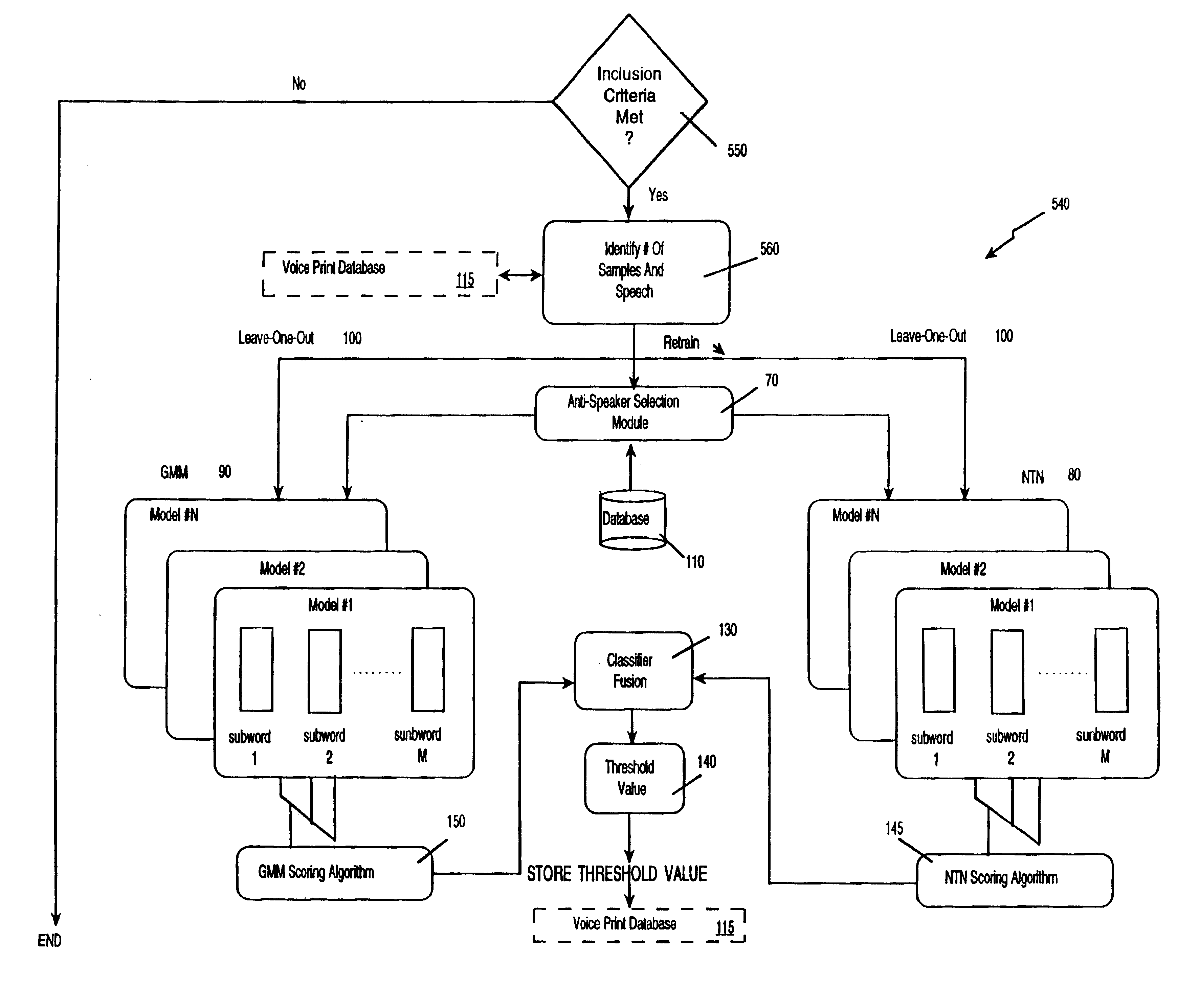

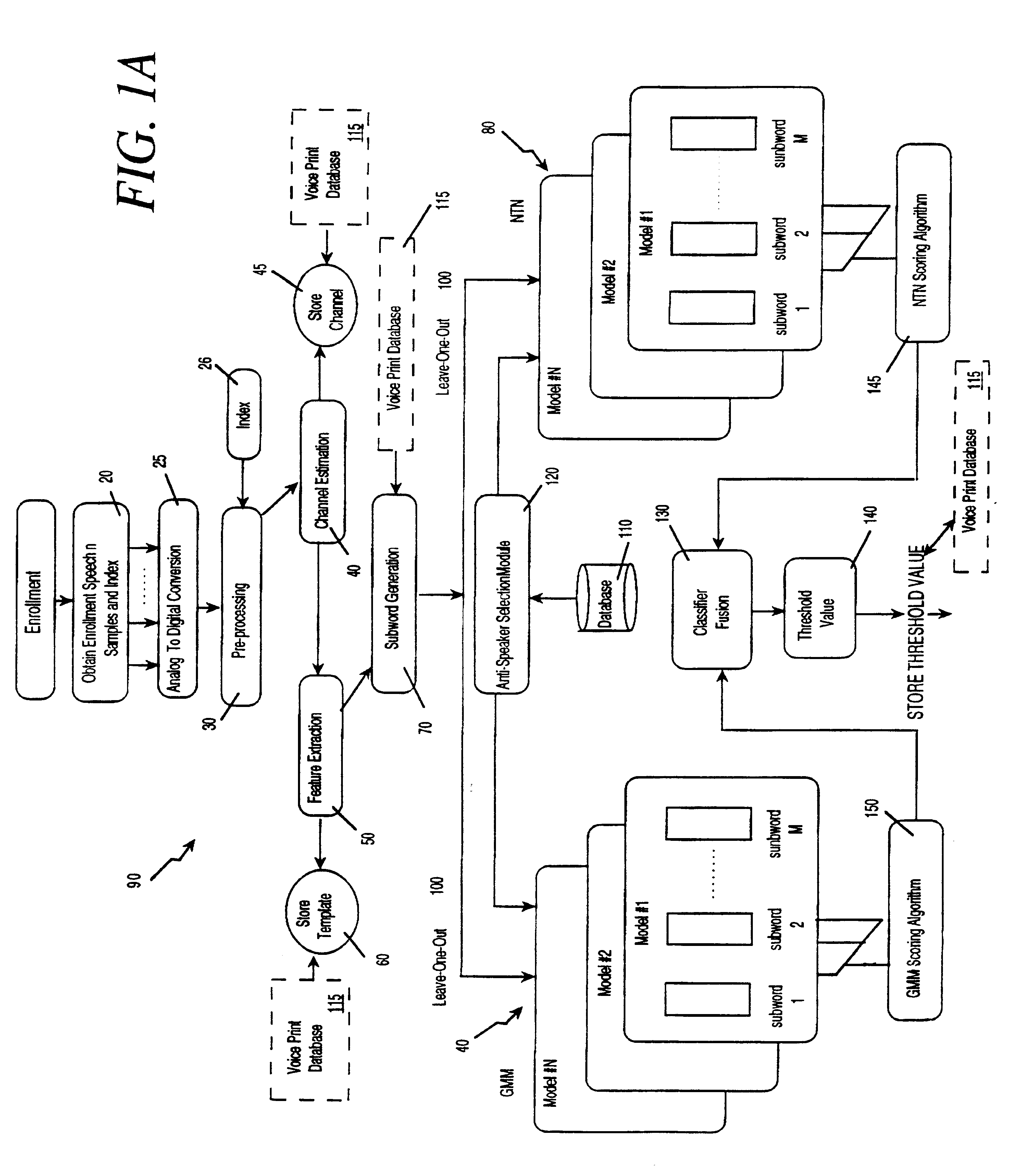

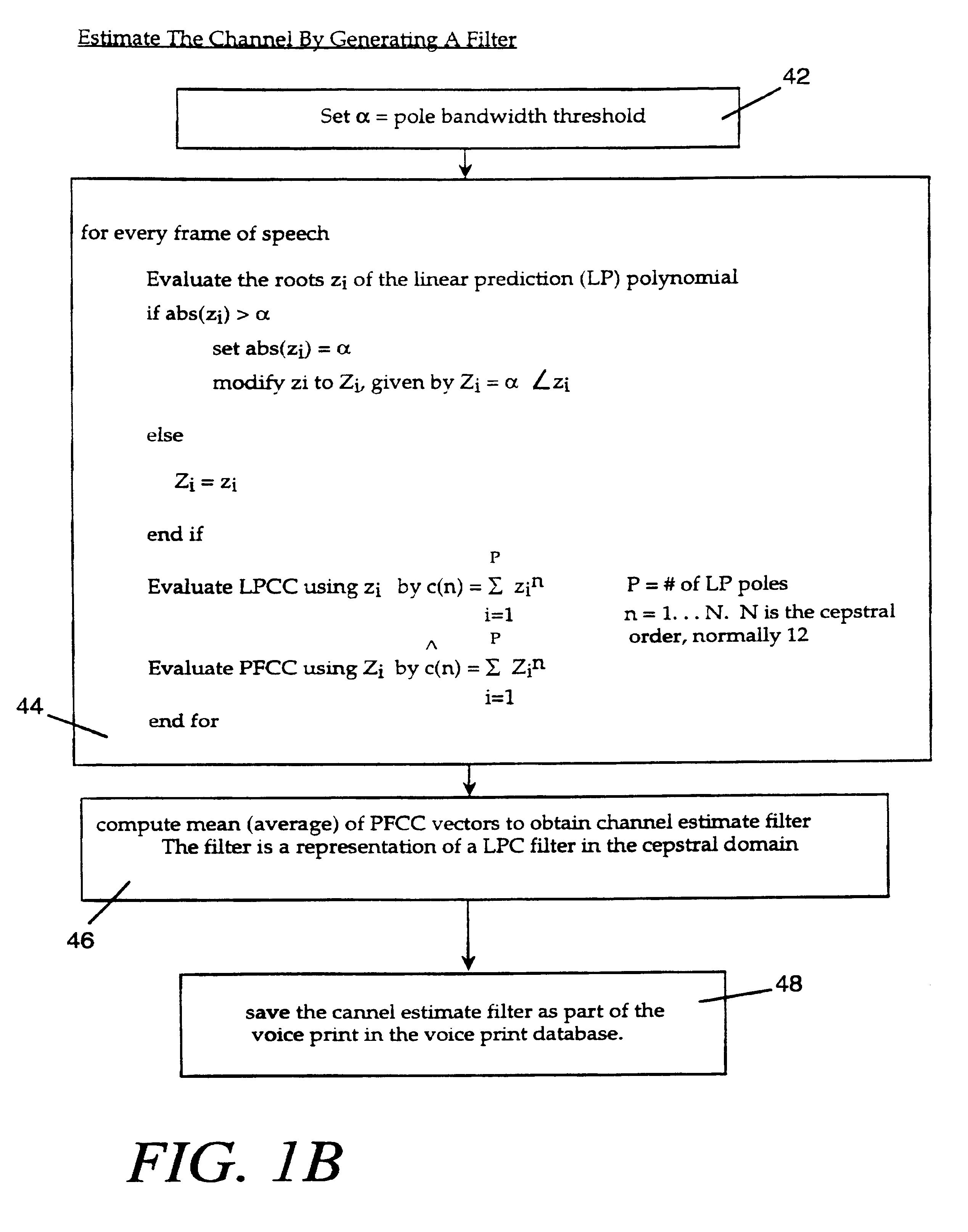

Subword-based speaker verification with multiple-classifier score fusion weight and threshold adaptation

Status: Inactive | Publication: US6539352B1 | Tags: Increase in size; Improve generalization ability; Speech recognition; Speech segmentation; Speaker verification

The voice print system of the present invention is a subword-based, text-dependent automatic speaker verification system that supports user-selectable passwords with no constraints on the choice of vocabulary words or the language. Automatic blind speech segmentation allows speech to be segmented into subword units without any linguistic knowledge of the password. Subword modeling is performed using multiple classifiers. The system also uses multiple-classifier fusion and data resampling to boost performance. Keyword/key-phrase spotting is used to optimally locate the password phrase. Numerous adaptation techniques increase the flexibility of the base system, including channel adaptation, fusion adaptation, model adaptation, and threshold adaptation.

Owner:BANK ONE COLORADO NA AS AGENT +1

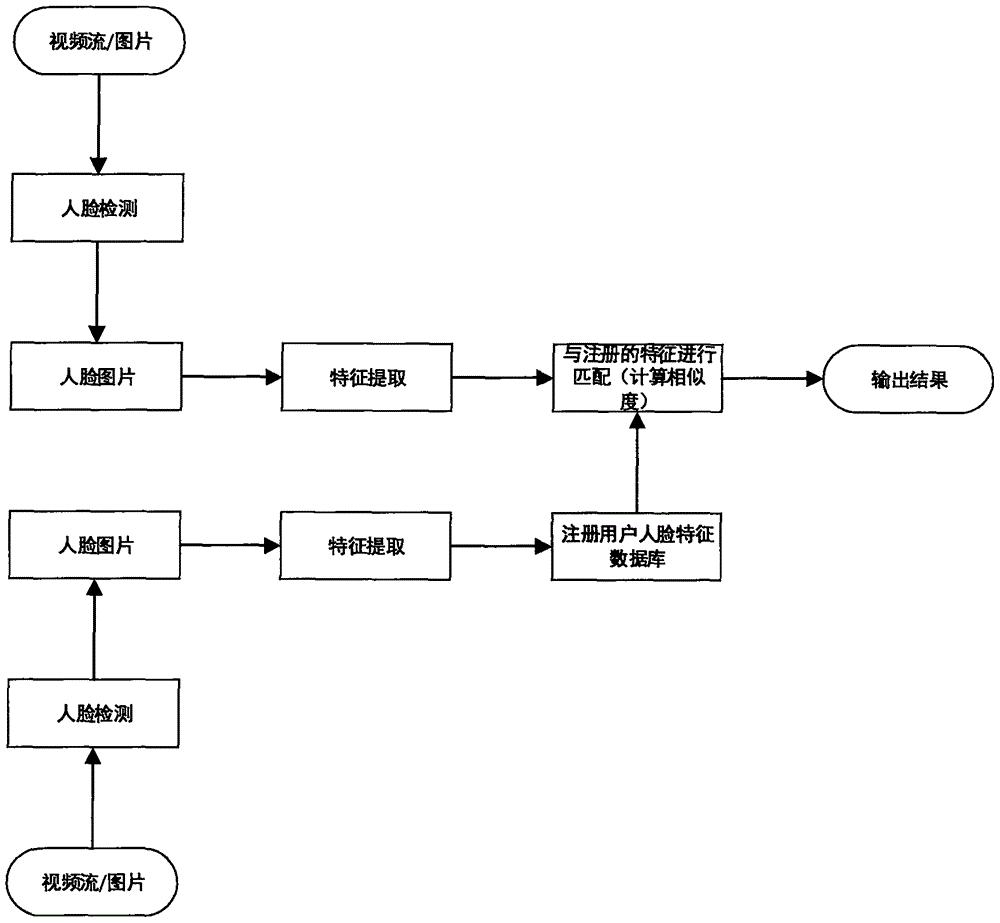

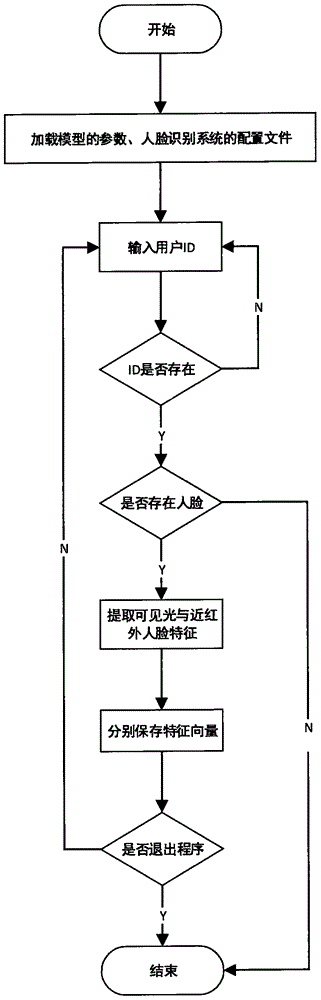

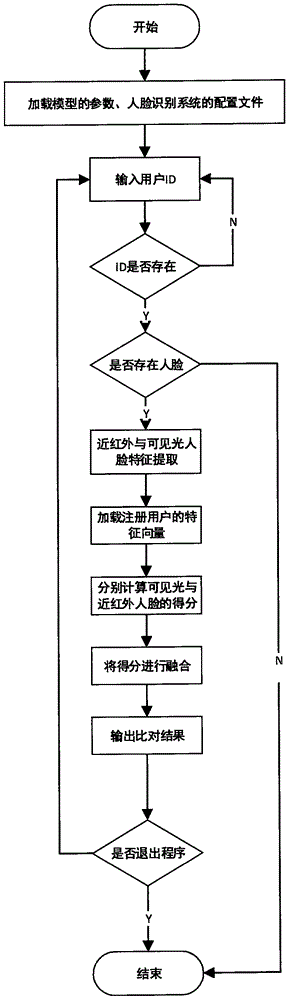

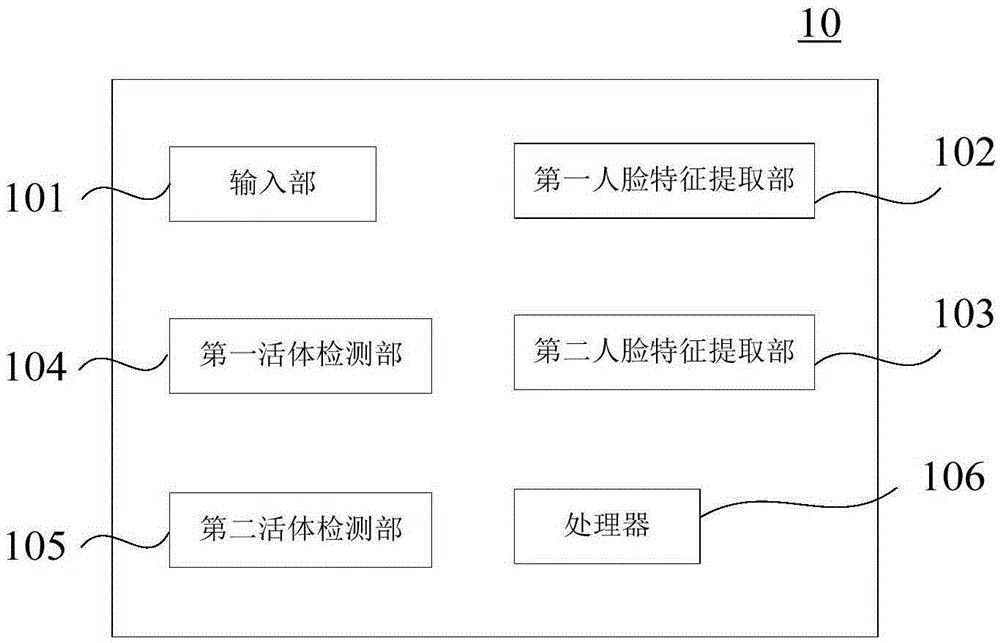

Face recognition method and system based on adaptive score fusion and deep learning

Status: Inactive | Publication: CN106709477A | Tags: Solve the problem of missing details; Play a complementary role; Character and pattern recognition; Neural learning methods; Self adaptive; Euclidean distance

The invention discloses a face recognition method and system based on adaptive score fusion and deep learning. The system comprises: a near-infrared and visible-light face acquisition unit, in which cameras of the two modalities cooperate to capture pictures of the same face; a face feature extraction unit, which extracts features from the captured near-infrared and visible-light face pictures using a deep convolutional model; an adaptive score fusion unit, which computes the similarity between two pictures (that is, their score) from the Euclidean distance between their features and then fuses the scores of the deep-learning features at the score level with an adaptive score fusion algorithm to obtain the user's final score; and a result output unit, which outputs the final score. The method and system reduce the influence of near-infrared light intensity on face imaging and use the visible-light picture to compensate for details lost in the near-infrared picture.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL

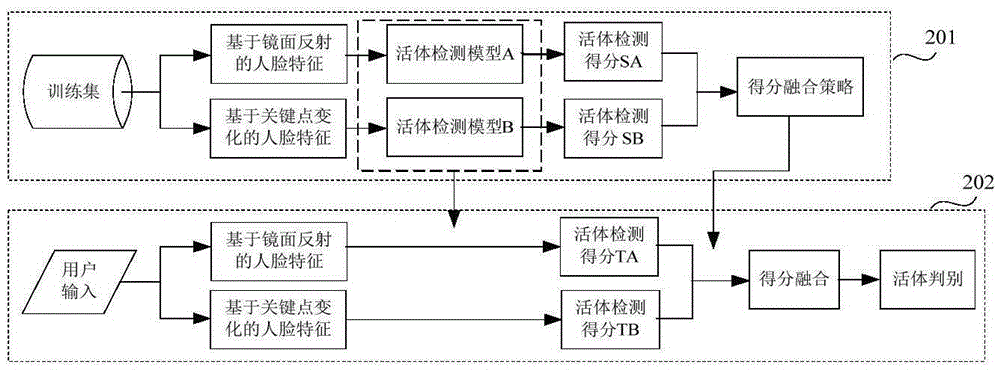

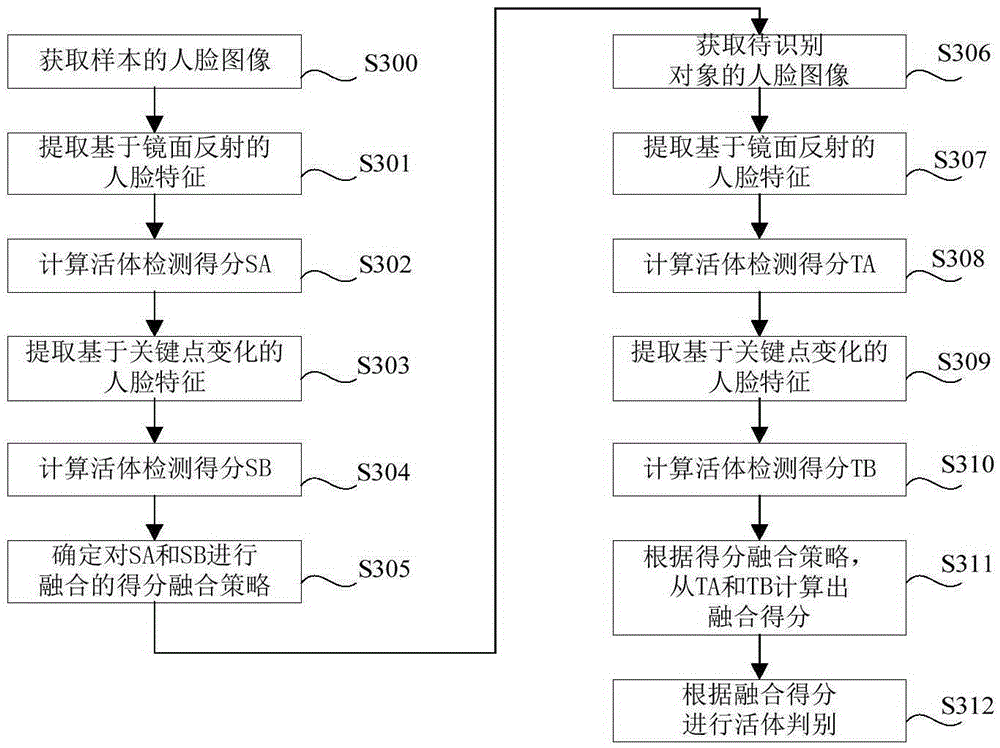

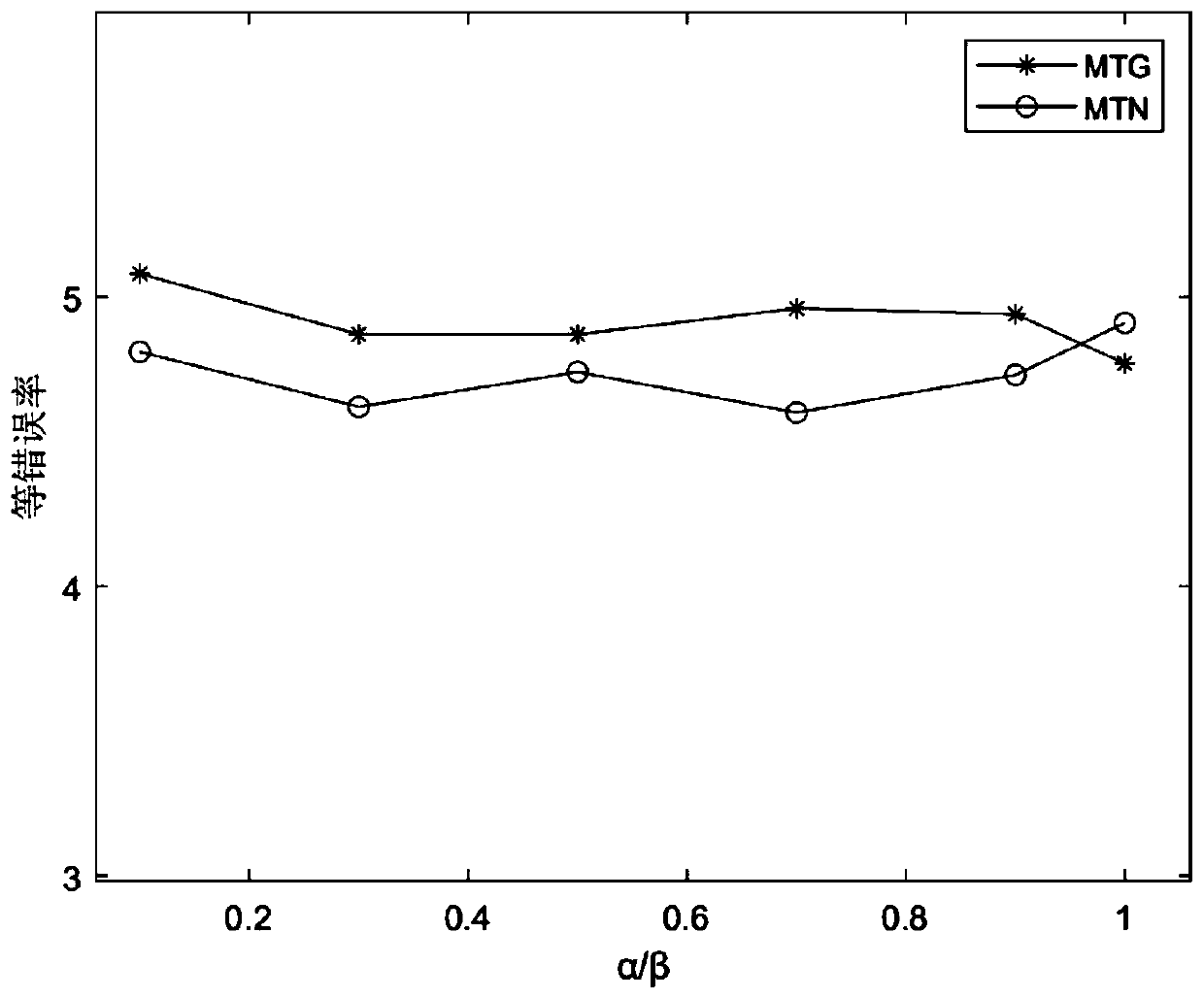

Face-identification-based living body determination method and equipment

The invention relates to a face-identification-based living body (liveness) determination method and equipment. The method comprises: obtaining a face image of a sample, performing a first and a second liveness detection on the face image, and calculating the sample's first and second liveness detection scores; determining a score fusion strategy for fusing the first and second liveness detection scores; obtaining a face image of an object to be identified, performing the first and second liveness detections on it, and calculating the object's first and second liveness detection scores; calculating a fusion score from the object's first and second liveness detection scores according to the score fusion strategy; and determining whether the object is a living body based on the fusion score. In addition, the first liveness detection extracts a first face feature based on the specular reflection characteristics of the face, and the second liveness detection extracts a second face feature based on changes in facial key points.

Owner:Beijing Hanwang Zhiyuan Technology Co., Ltd. (北京汉王智远科技有限公司)

Voiceprint recognition method based on gender, nationality and emotion information

Status: Active | Publication: CN111243602A | Tags: Improve accuracy; Easy to learn; Speech analysis; Energy efficient computing; Medicine; Test set

The invention discloses a voiceprint recognition method based on gender, nationality, and emotion information. The method comprises the following steps: first, data preprocessing; second, feature extraction; third, neural network parameter training, in which, according to the specific structure of the neural network, the order of the training utterances is shuffled during training, 128 utterances are randomly selected as a training batch, and the number of data iterations is 80. The training files required by the score fusion tool are the development-set results and test-set results of each system; the VoxCeleb1 test set is used as the test set; the development set is a trial file generated from the utterances of the 1,211 training speakers and comprises 40,000 test pairs; and the final test-set scores are obtained after 100 iterations. The invention improves the recognition rate.

Owner:TIANJIN UNIV

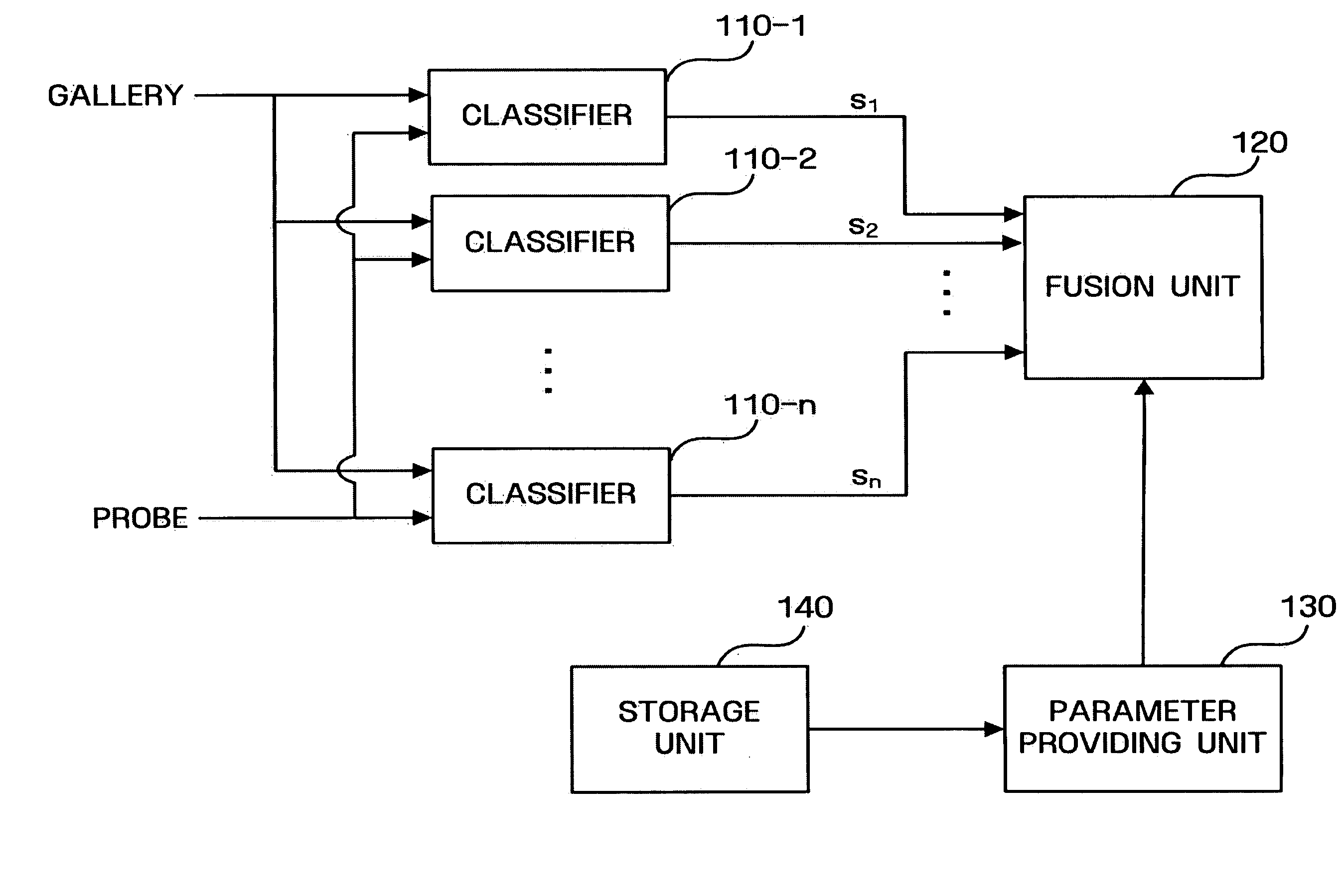

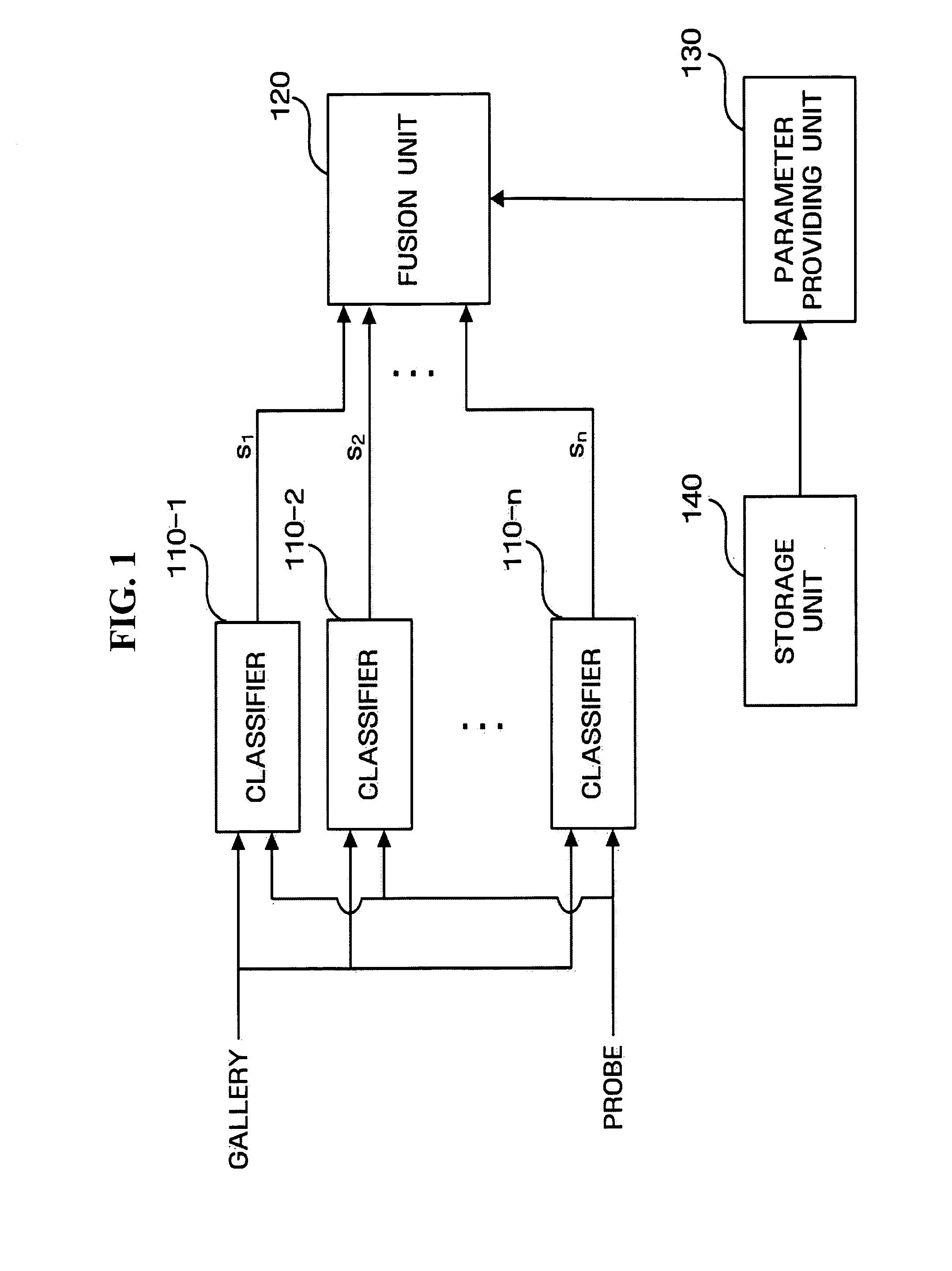

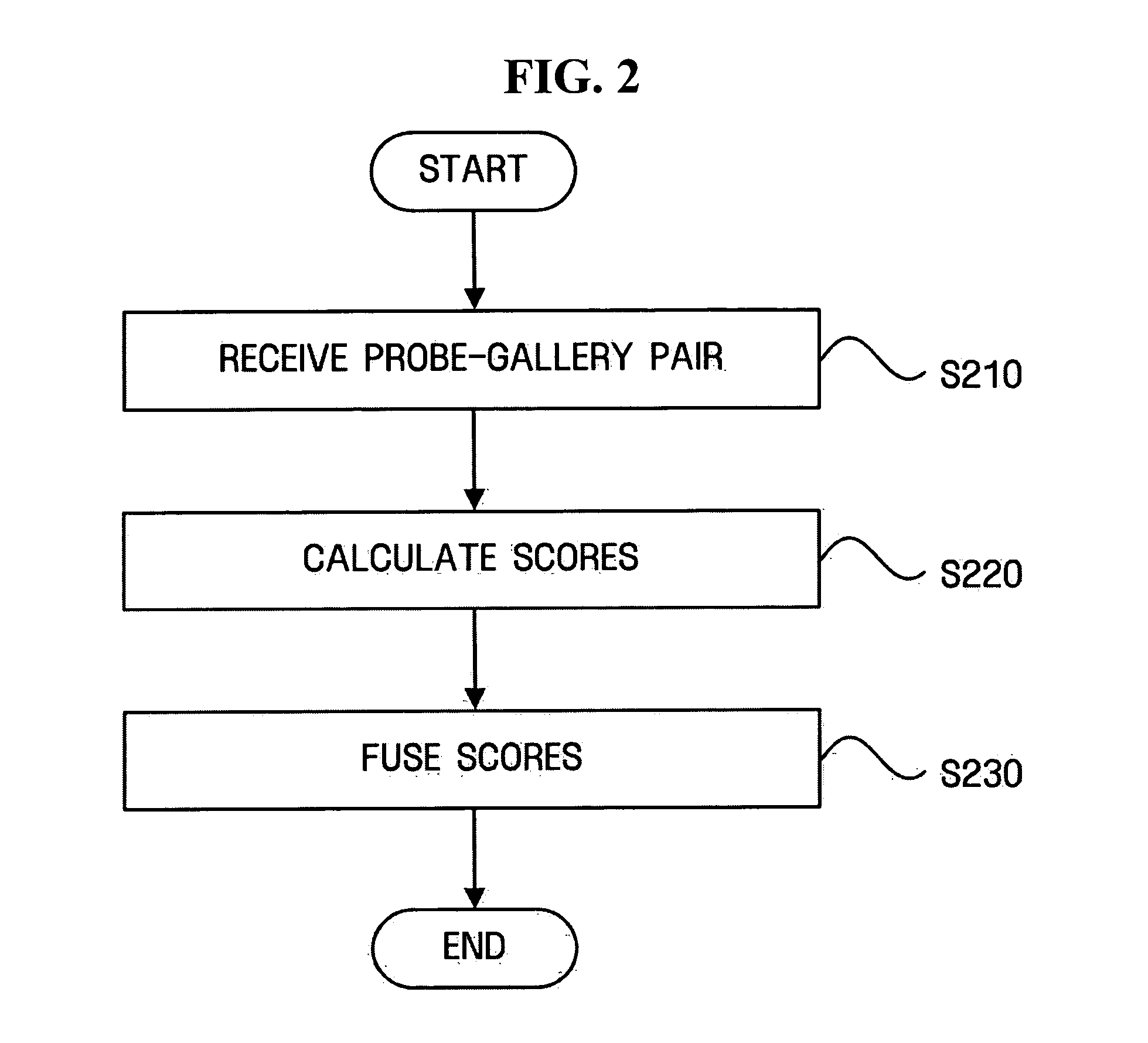

Score fusion method and apparatus

A score fusion method and apparatus. The score fusion method includes receiving a plurality of scores respectively obtained by a plurality of classifiers, and fusing the received scores using a likelihood ratio. The score fusion apparatus includes a fusion unit which receives a plurality of scores respectively obtained by a plurality of classifiers and fuses the received scores using a likelihood ratio, and a parameter providing unit which provides the fusion unit with a plurality of parameters needed for fusing the received scores.

Owner:SAMSUNG ELECTRONICS CO LTD
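
Likelihood-ratio fusion, as named in the abstract above, can be illustrated with a small sketch. The abstract does not say how the likelihoods are modeled, so this assumes (hypothetically) that each classifier's genuine and impostor scores are fitted with Gaussians, whose means and standard deviations play the role of the "parameters" the parameter providing unit supplies, and that classifiers are independent so their log-likelihood ratios add.

```python
import math
from dataclasses import dataclass


@dataclass
class ScoreModel:
    """Hypothetical per-classifier parameters: Gaussian fits of genuine/impostor scores."""
    genuine_mean: float
    genuine_std: float
    impostor_mean: float
    impostor_std: float


def log_normal_pdf(x: float, mean: float, std: float) -> float:
    """Log density of a normal distribution N(mean, std**2) at x."""
    return -0.5 * math.log(2.0 * math.pi * std ** 2) - (x - mean) ** 2 / (2.0 * std ** 2)


def log_likelihood_ratio(score: float, m: ScoreModel) -> float:
    """log p(score | genuine) - log p(score | impostor)."""
    return (log_normal_pdf(score, m.genuine_mean, m.genuine_std)
            - log_normal_pdf(score, m.impostor_mean, m.impostor_std))


def fuse_llr(scores: list[float], models: list[ScoreModel]) -> float:
    """Sum of per-classifier log-likelihood ratios (assumes independent classifiers)."""
    if len(scores) != len(models):
        raise ValueError("need one model per score")
    return sum(log_likelihood_ratio(s, m) for s, m in zip(scores, models))


if __name__ == "__main__":
    m = ScoreModel(genuine_mean=1.0, genuine_std=1.0, impostor_mean=-1.0, impostor_std=1.0)
    print(fuse_llr([1.0, 0.0], [m, m]))  # positive total favors "genuine"
```

A fused value above zero favors the genuine hypothesis; an operating threshold other than zero trades false accepts against false rejects.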

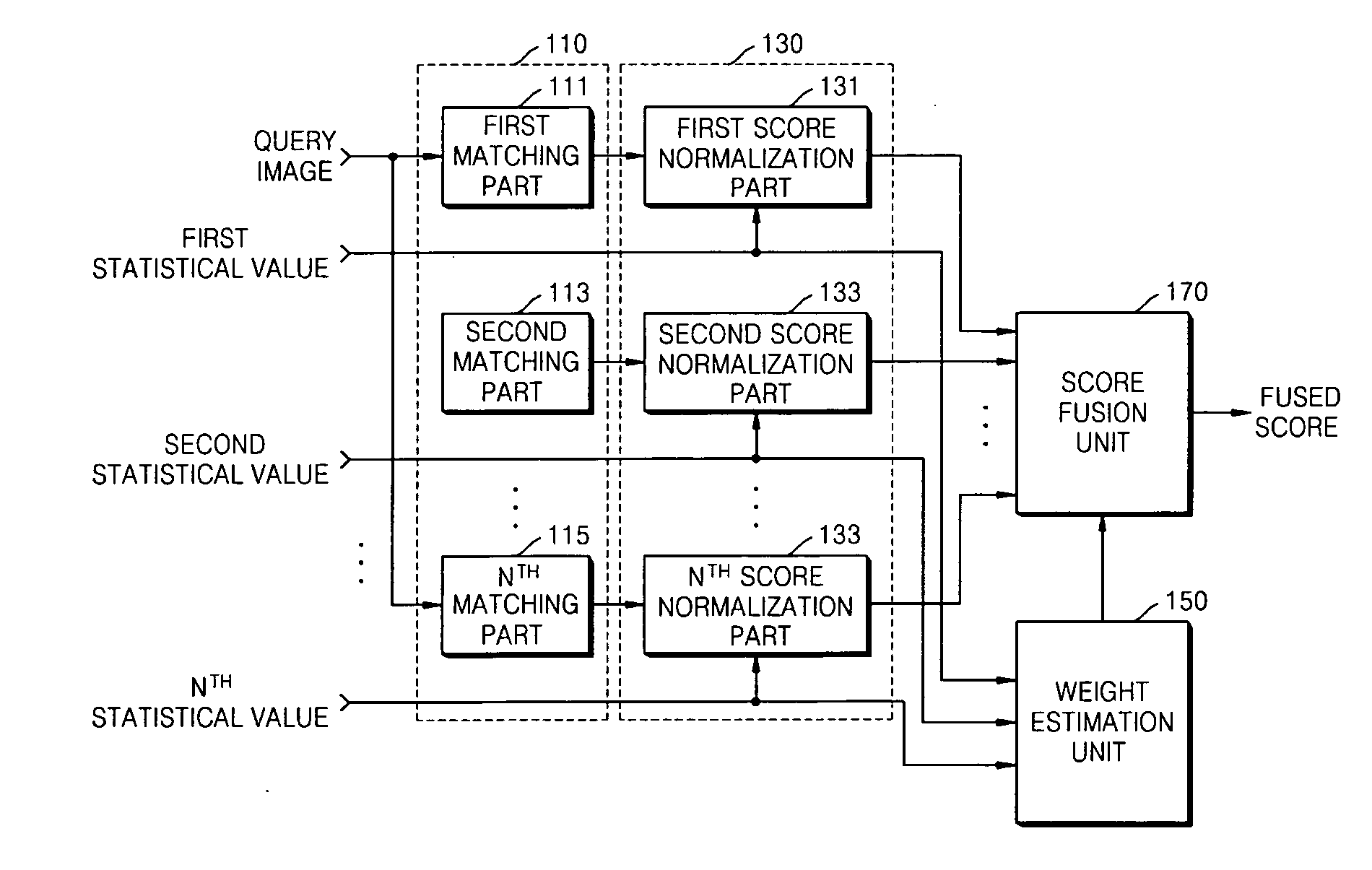

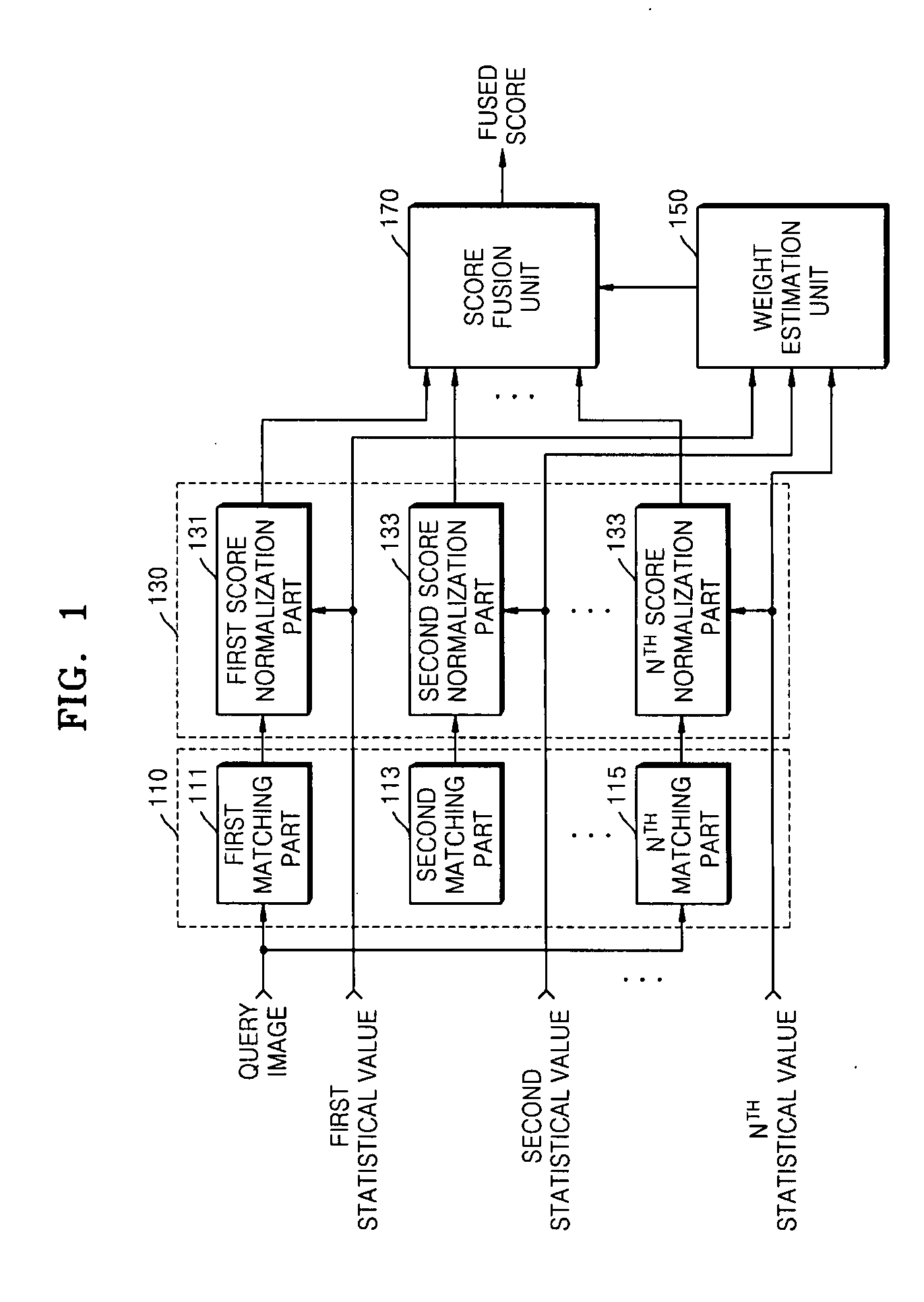

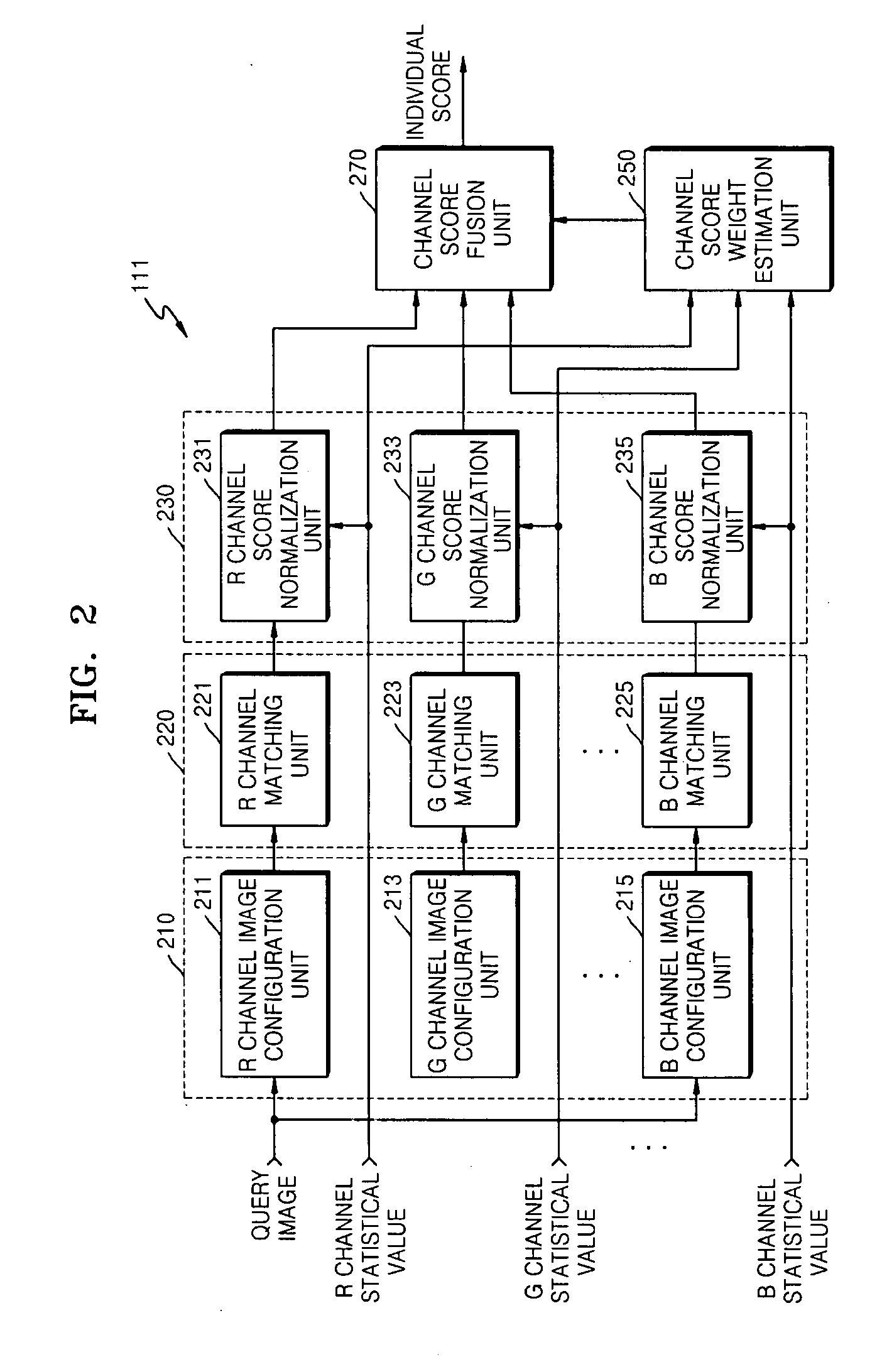

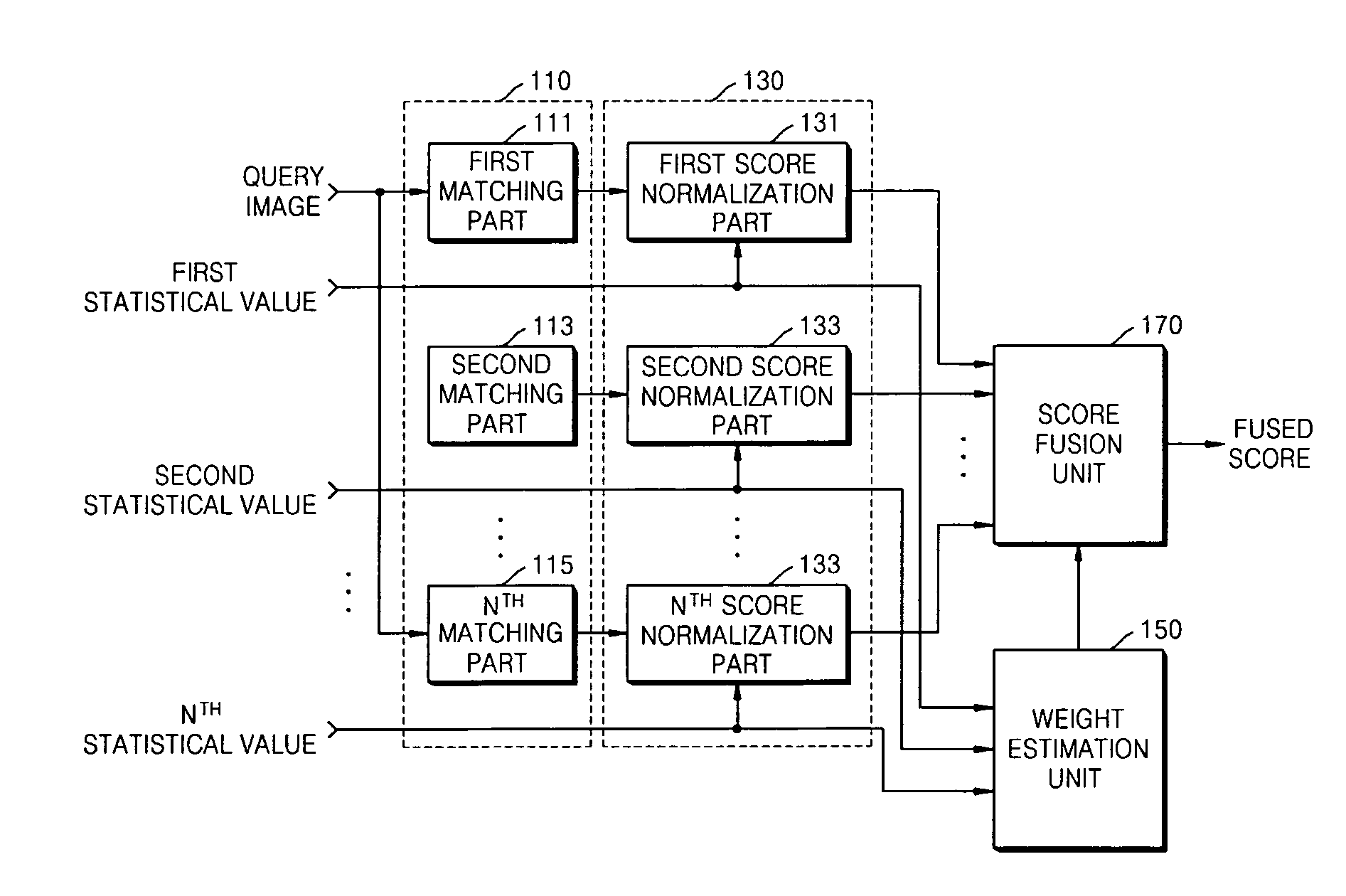

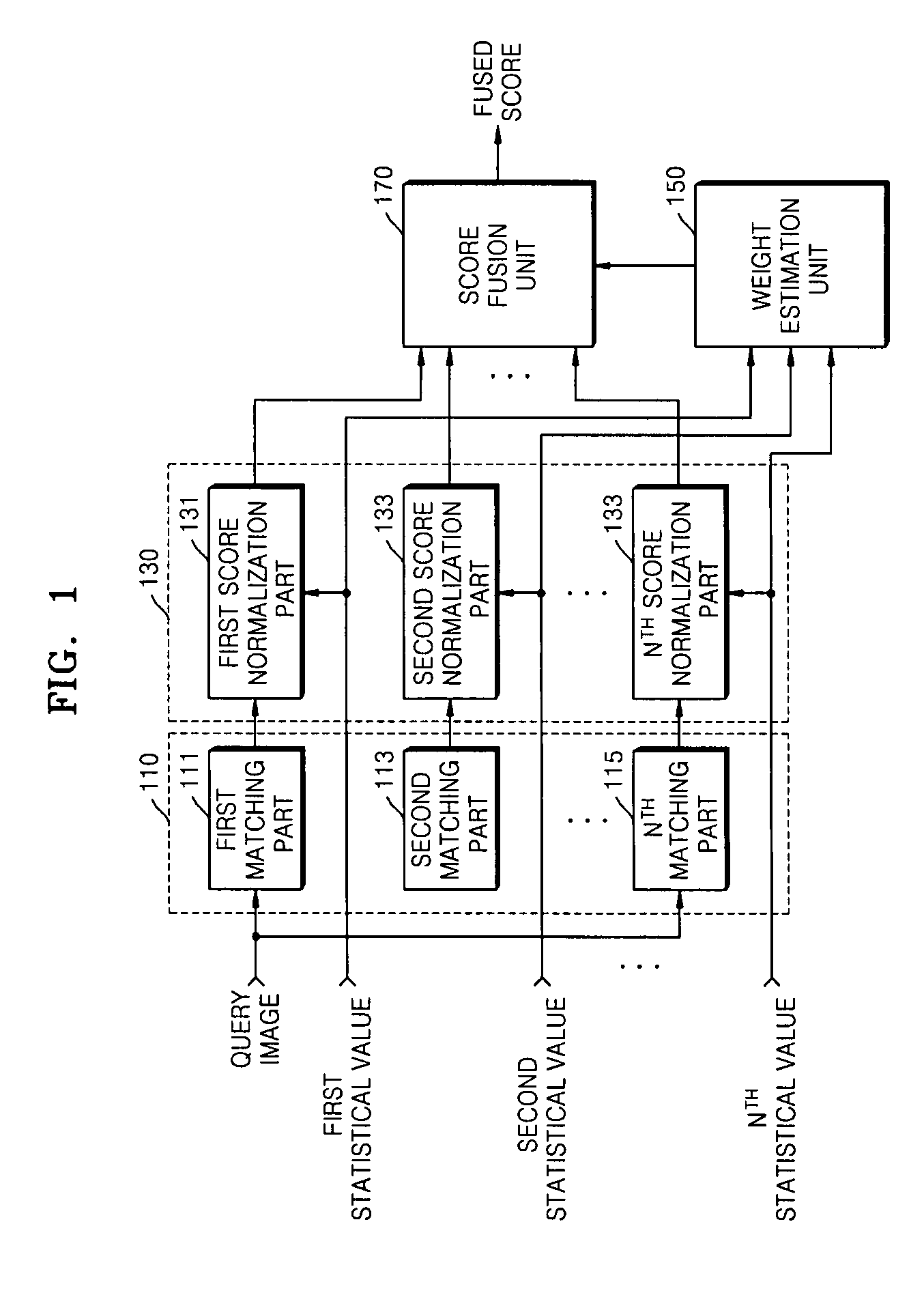

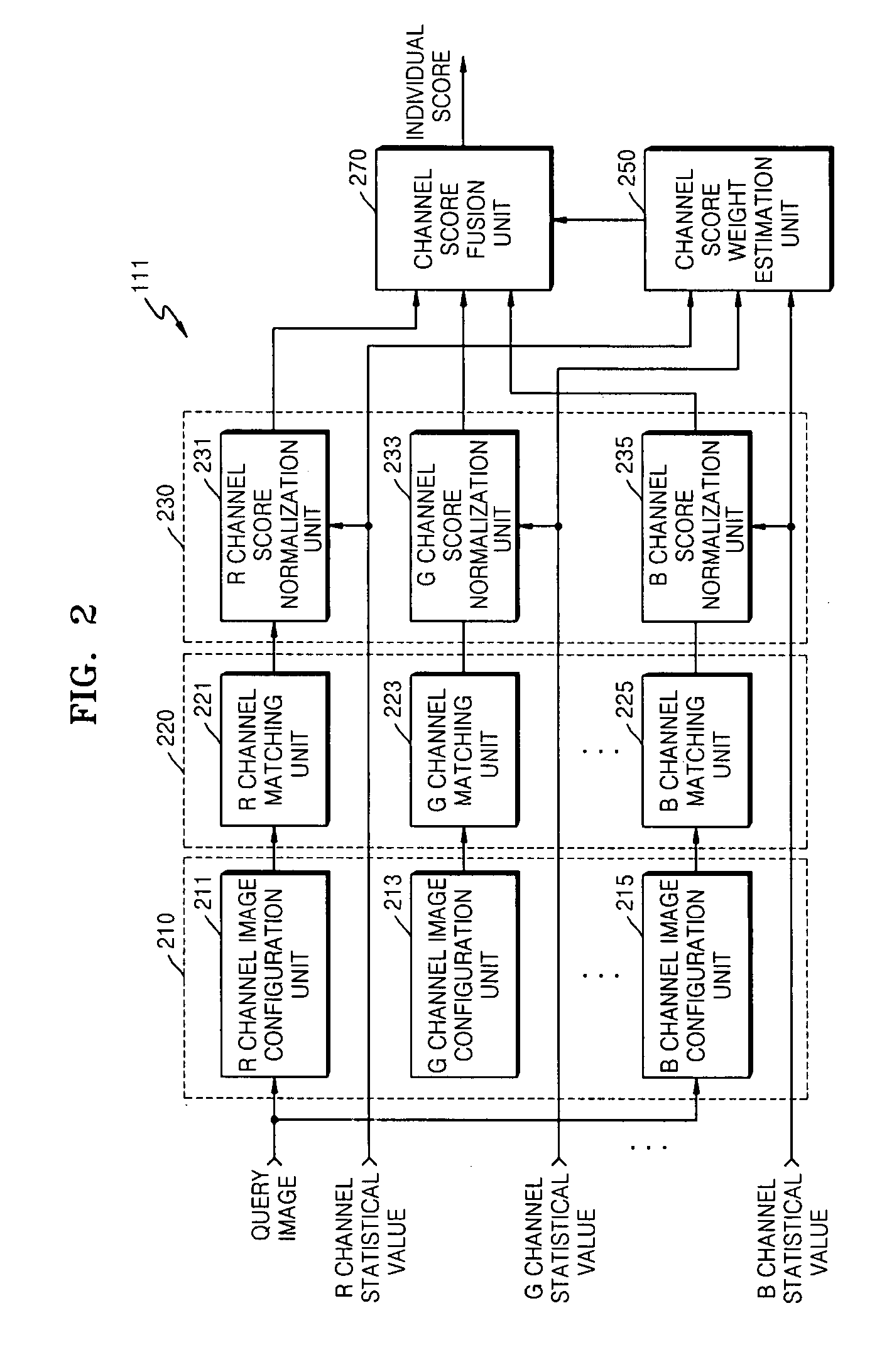

Object verification apparatus and method

Status: Inactive | Publication: US20070203904A1 | Tags: Improve performance; Image analysis; Digital data processing details; Pattern recognition; Estimated Weight

Provided are an object verification apparatus and method. The object verification apparatus includes a matching unit performing a plurality of different matching algorithms on a query image and generating a plurality of scores; a score normalization unit normalizing each of the generated scores to be adaptive to the query image; a weight estimation unit estimating weights of the normalized scores based on the respective matching algorithms applied; and a score fusion unit fusing the normalized scores by respectively applying the weights estimated by the weight estimation unit to the normalized scores.

Owner:SAMSUNG ELECTRONICS CO LTD
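
The normalize-then-weight pipeline above can be sketched as below. The abstract does not define "adaptive to the query image" or how weights are estimated, so this assumes a query-dependent z-normalization (each matcher's score is standardized against the same query's scores on a cohort set) and takes per-algorithm weights as given inputs; all names are hypothetical.

```python
from statistics import fmean, pstdev


def query_adaptive_znorm(score: float, cohort_scores: list[float]) -> float:
    """Z-normalize a score against the same query's scores on a cohort set."""
    mu = fmean(cohort_scores)
    sigma = pstdev(cohort_scores)
    if sigma == 0.0:
        raise ValueError("cohort scores have zero spread")
    return (score - mu) / sigma


def weighted_fusion(normalized: list[float], weights: list[float]) -> float:
    """Weighted average of normalized scores, one weight per matching algorithm."""
    if len(normalized) != len(weights) or sum(weights) <= 0:
        raise ValueError("need one weight per score, with a positive sum")
    return sum(w * s for w, s in zip(weights, normalized)) / sum(weights)


if __name__ == "__main__":
    a = query_adaptive_znorm(3.0, [0.0, 2.0])  # matcher A: (3 - 1) / 1 = 2.0
    b = query_adaptive_znorm(0.5, [0.0, 1.0])  # matcher B: (0.5 - 0.5) / 0.5 = 0.0
    print(weighted_fusion([a, b], [3.0, 1.0]))  # (3*2 + 1*0) / 4 = 1.5
```

Normalizing per query removes each matcher's query-specific offset and spread before weighting, so a matcher with naturally large raw scores does not dominate the fused result.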

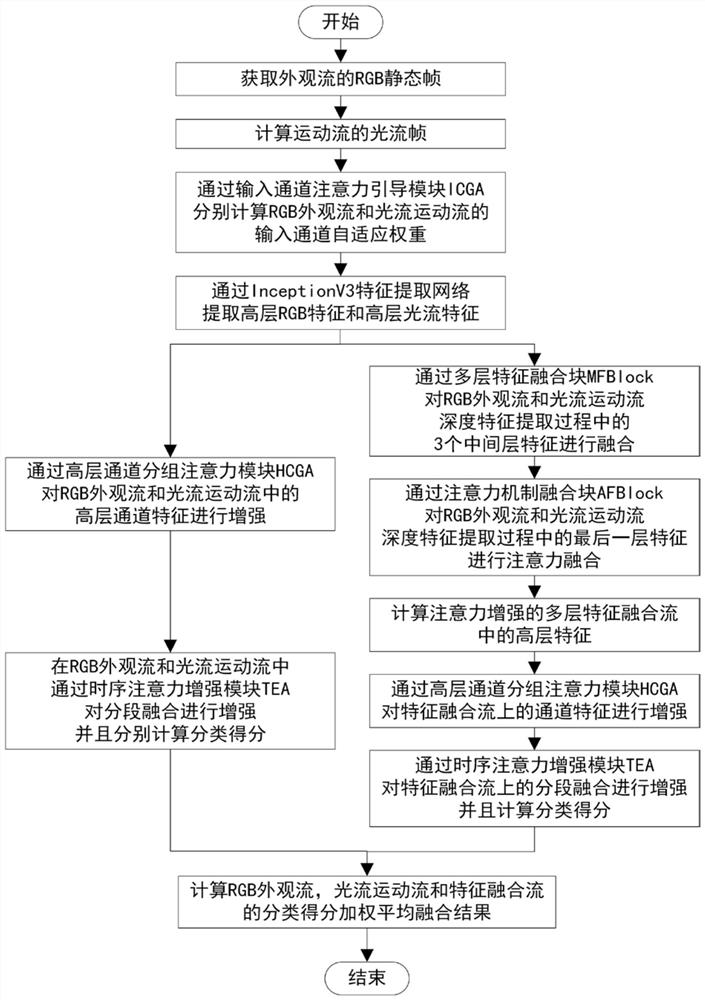

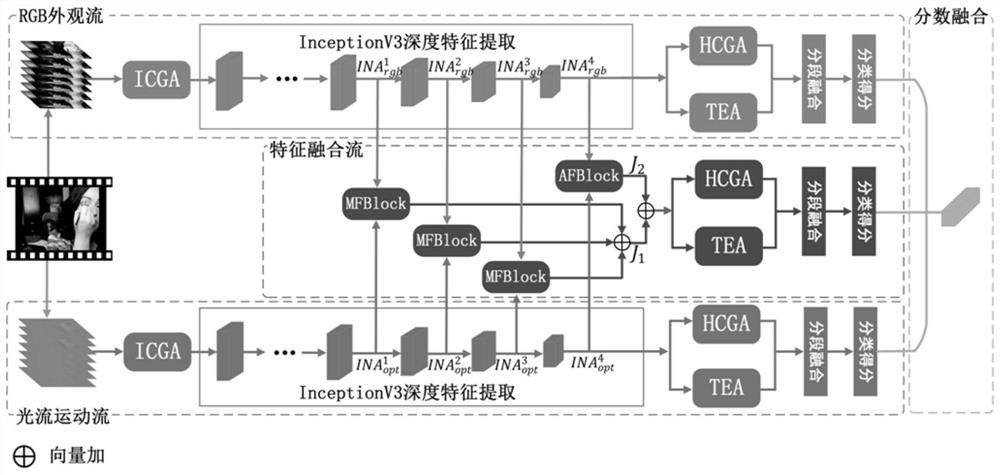

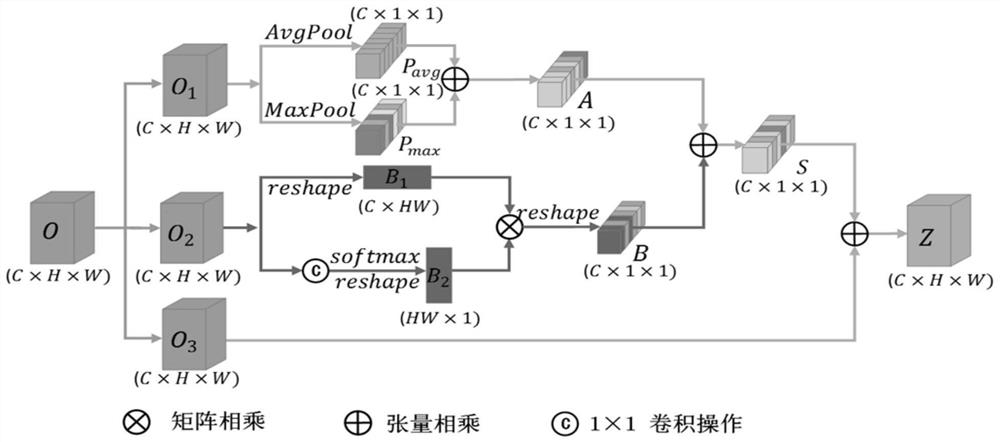

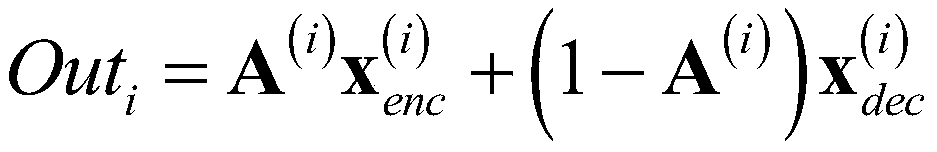

Behavior recognition method based on space-time attention enhancement feature fusion network

Status: Active | Publication: CN111709304A | Tags: Enhanced ability to extract valid channel features; Improve the problem of easy feature overfitting; Character and pattern recognition; Neural architectures; Frame sequence; Machine vision

The invention discloses a behavior recognition method based on a spatio-temporal attention-enhanced feature fusion network, in the field of machine vision. The method adopts a network architecture built on a two-stream network with an appearance stream and a motion stream, referred to as a spatio-temporal attention-enhanced feature fusion network. Whereas traditional two-stream networks combine their branches only by simple feature or score fusion, the method constructs an attention-enhanced multi-layer feature fusion stream as a third branch to supplement the two-stream structure. In addition, because traditional deep networks neglect channel-wise modeling and cannot fully exploit the interdependencies among channels, channel attention modules are introduced at different levels to model these interdependencies and strengthen the representational power of channel features. Temporal information also plays an important role in segment fusion, so temporal modeling is performed on the frame sequence to make important temporal features more representative. Finally, the classification scores of the different branches are combined by weighted fusion.

Owner:JIANGNAN UNIV
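
The final step above, weighted fusion of the branches' classification scores, might look like the sketch below. It assumes each branch outputs raw class logits that are first converted to probabilities with a softmax; the branch weights are hypothetical values, not taken from the patent.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert logits to probabilities (max subtracted for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_branches(branch_logits: list[list[float]], weights: list[float]) -> list[float]:
    """Weighted sum of per-branch class probabilities."""
    probs = [softmax(logits) for logits in branch_logits]
    n_classes = len(probs[0])
    return [sum(w * p[c] for w, p in zip(weights, probs)) for c in range(n_classes)]


def predict(branch_logits: list[list[float]], weights: list[float]) -> int:
    """Index of the class with the highest fused score."""
    fused = fuse_branches(branch_logits, weights)
    return max(range(len(fused)), key=fused.__getitem__)


if __name__ == "__main__":
    appearance = [2.0, 0.0, 0.0]
    motion = [0.0, 3.0, 0.0]
    fusion_stream = [0.0, 2.0, 0.0]
    print(predict([appearance, motion, fusion_stream], weights=[1.0, 1.5, 1.0]))
```

Here the appearance branch alone would pick class 0, but the motion and fusion-stream branches agree on class 1, so the weighted fusion selects class 1.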

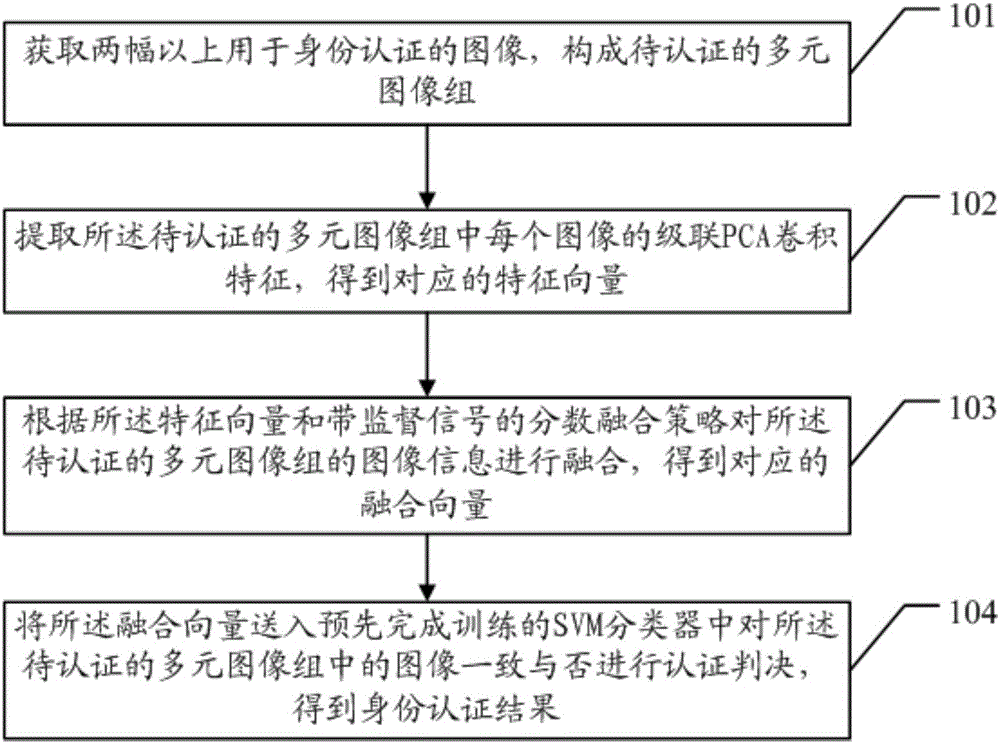

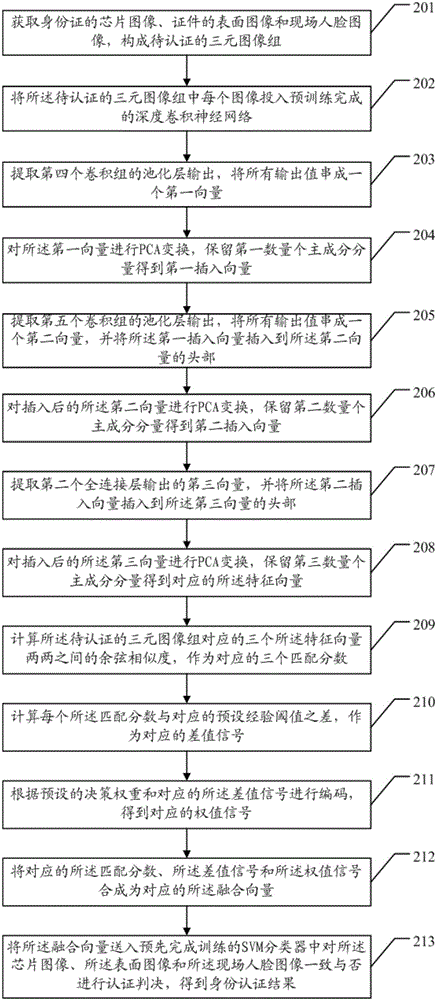

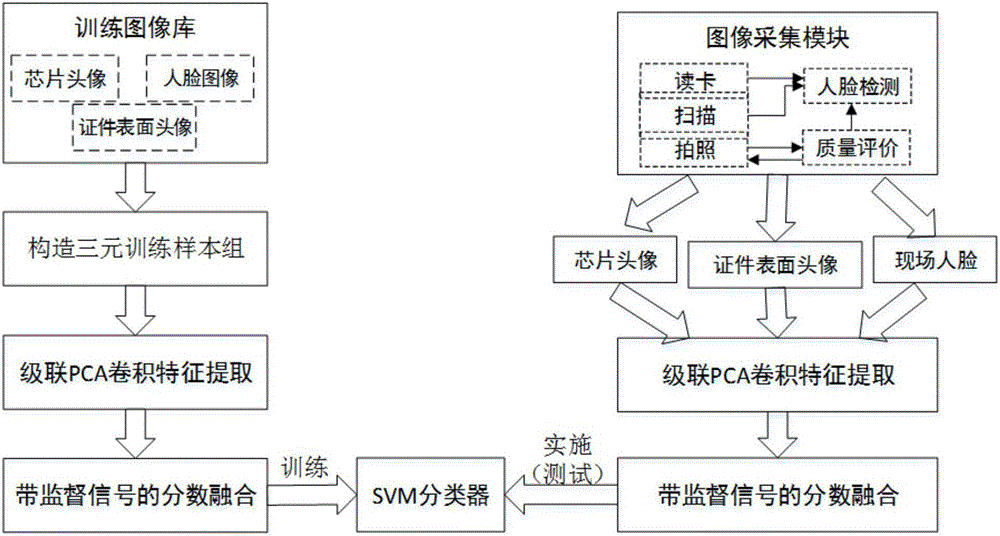

Offline identity authentication method and apparatus

Status: Active | Publication: CN106127103A | Tags: Reduce the burden on; Improve authentication efficiency; Kernel methods; Character and pattern recognition; Feature vector; SVM classifier

Embodiments of the invention disclose an offline identity authentication method and apparatus that address two problems in the prior art: dependence on the face database of the Ministry of Public Security, and the difficulty of verifying the consistency of the chip photo, the photo printed on the ID card, and the card holder's face. The method comprises: obtaining two or more images used for identity authentication to form a multivariate image group to be authenticated; extracting a concatenated PCA convolutional feature from each image in the group to obtain a corresponding eigenvector; fusing the image information of the group according to the eigenvectors and a score fusion policy with a supervisory signal to obtain a corresponding fusion vector; and inputting the fusion vector into a pre-trained SVM classifier, which judges whether the images in the group are consistent, to obtain the identity authentication result.

Owner:GRG BANKING EQUIP CO LTD +1

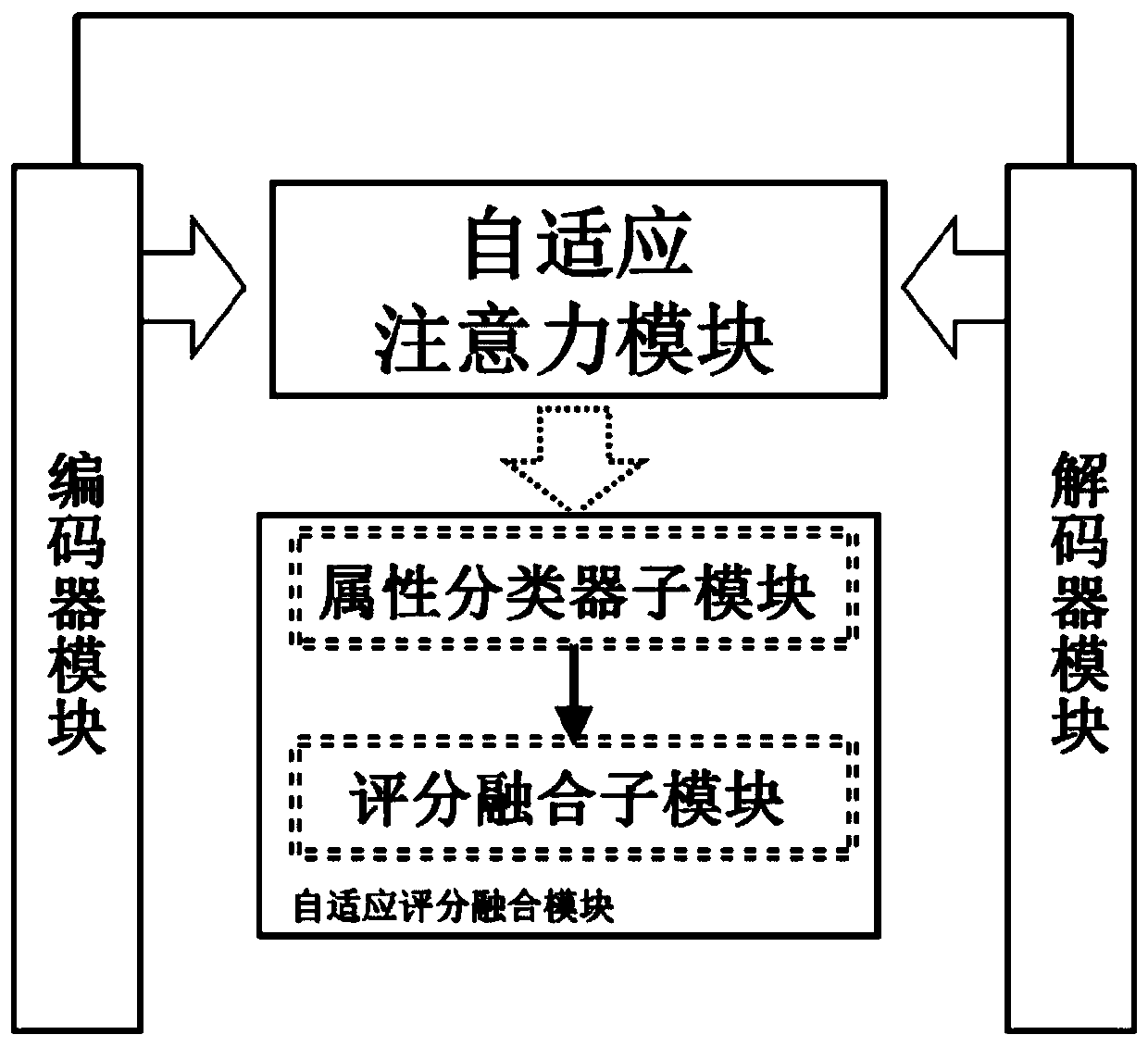

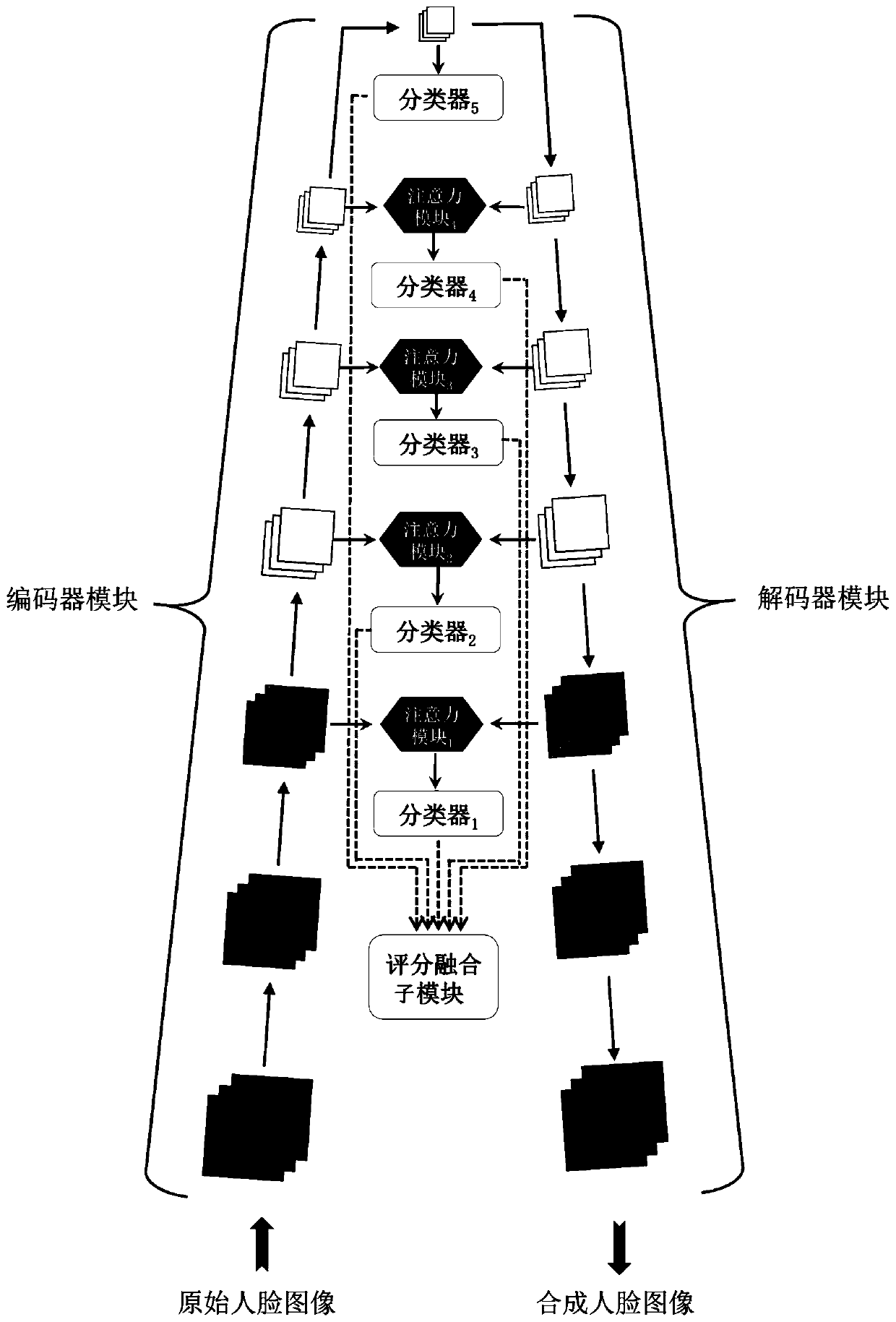

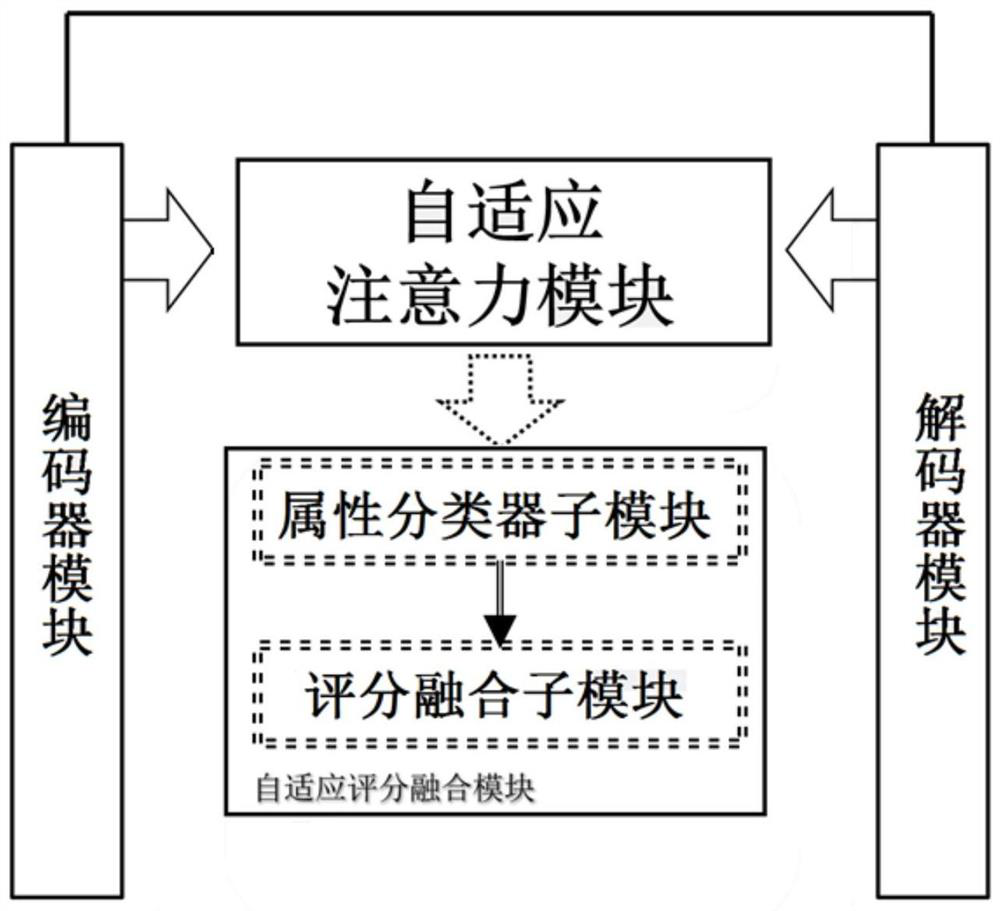

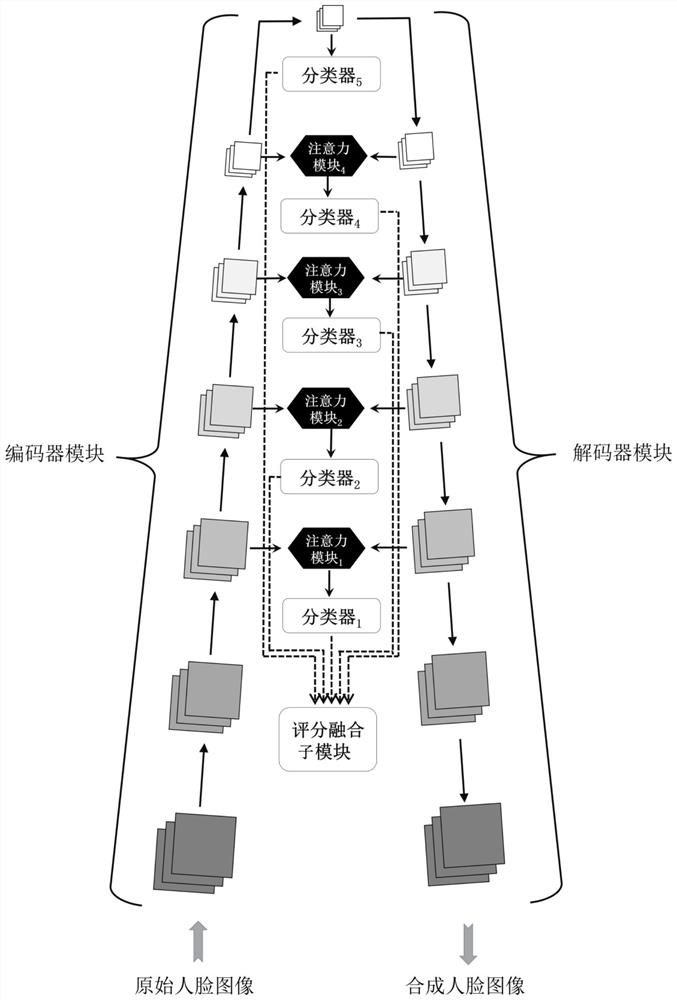

A face attribute classification system based on a bidirectional Ladder structure

The invention relates to the technical field of computer vision, in particular to a face attribute classification system based on a bidirectional Ladder structure, which aims to make full use of features at different levels of a deep network, and of the correspondence between those features and different face attributes, so as to improve the accuracy of face attribute classification. To this end, the face attribute classification system based on the bidirectional Ladder structure provided by the invention comprises a bidirectional Ladder autoencoder module, an adaptive attention module, and an adaptive score fusion module. The bidirectional Ladder autoencoder module comprises an encoder module and a decoder module; the adaptive attention module comprises a plurality of attention submodules; and the adaptive score fusion module is configured to obtain the face attribute classification result for the face image to be detected from the output of the encoder module and the outputs of the attention submodules. With this structure, encoding and decoding features at different levels can be fully utilized, improving the accuracy of face attribute classification.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

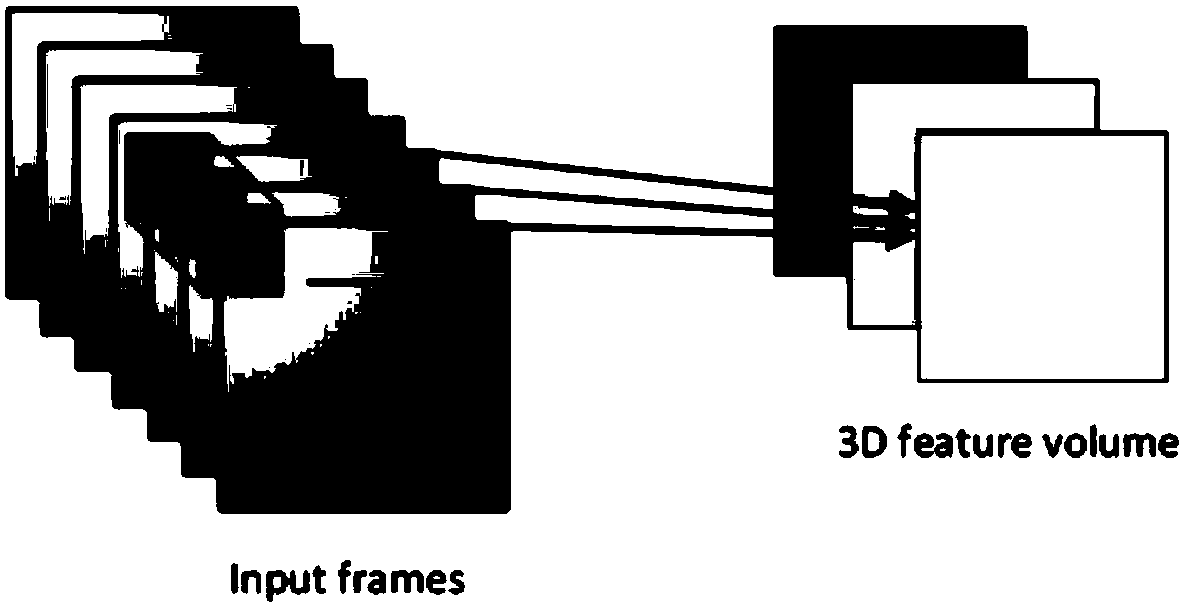

Stereoscopic video quality evaluation method based on 3D convolution neural network

Status: Active | Publication: CN108235003A | Tags: Valid training data; Effective establishment; Image enhancement; Image analysis; Stereoscopic video; Temporal information

The invention relates to a stereoscopic video quality evaluation method based on a 3D convolutional neural network. The method comprises the following steps: preprocessing the data; training the 3D convolutional neural network; and performing quality score fusion. The test videos are randomly divided into two parts, one used to train the 3D CNN model and the other used to test it, and after training, the 3D CNN model outputs a predicted score for each input video block of a test stereoscopic video. To obtain the overall evaluation score of the video, a quality score fusion strategy that accounts for global temporal information is adopted: first, the cube-level scores are aggregated over the spatial dimension by average pooling; next, the weight of each segment is defined from its motion intensity to model global temporal information, by calculating the share of each temporal position's motion intensity in the total motion intensity of the stereoscopic video; finally, the video-level prediction score is computed as the weighted sum over the temporal dimension, yielding the fused quality score of the stereoscopic video.

Owner:TIANJIN UNIV
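
The fusion strategy above (spatial average pooling, then motion-share weights over time) can be written out as a short sketch. It assumes the cube-level scores are already grouped by temporal segment and that a per-segment motion intensity is available; how motion intensity is measured is not specified in the abstract, so that input is hypothetical.

```python
from statistics import fmean


def video_quality(cube_scores: list[list[float]], motion: list[float]) -> float:
    """Fuse cube-level predictions into one video-level quality score.

    cube_scores[t] holds the cube-level scores of temporal segment t;
    motion[t] is that segment's motion intensity (hypothetical measure).
    """
    if len(cube_scores) != len(motion):
        raise ValueError("one motion value per temporal segment")
    total_motion = sum(motion)
    if total_motion <= 0:
        raise ValueError("total motion intensity must be positive")
    segment_scores = [fmean(s) for s in cube_scores]  # spatial average pooling
    weights = [m / total_motion for m in motion]      # share of total motion
    return sum(w * s for w, s in zip(weights, segment_scores))


if __name__ == "__main__":
    # Segment 0 averages 3.0 and carries 75% of the motion; segment 1 averages 1.0.
    print(video_quality([[4.0, 2.0], [1.0, 1.0]], [3.0, 1.0]))  # 0.75*3 + 0.25*1 = 2.5
```

Because the weights are shares of the total motion, they sum to 1 and the fused score stays on the same scale as the segment scores.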

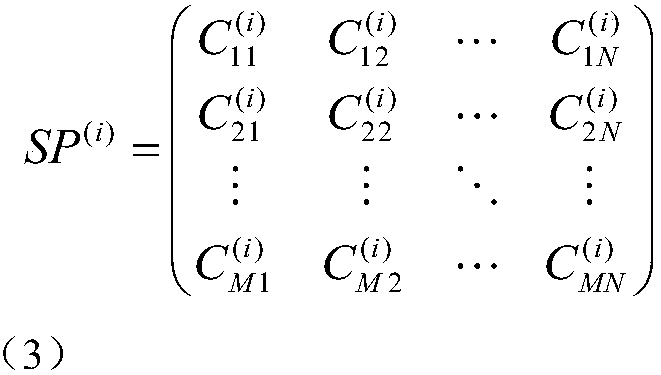

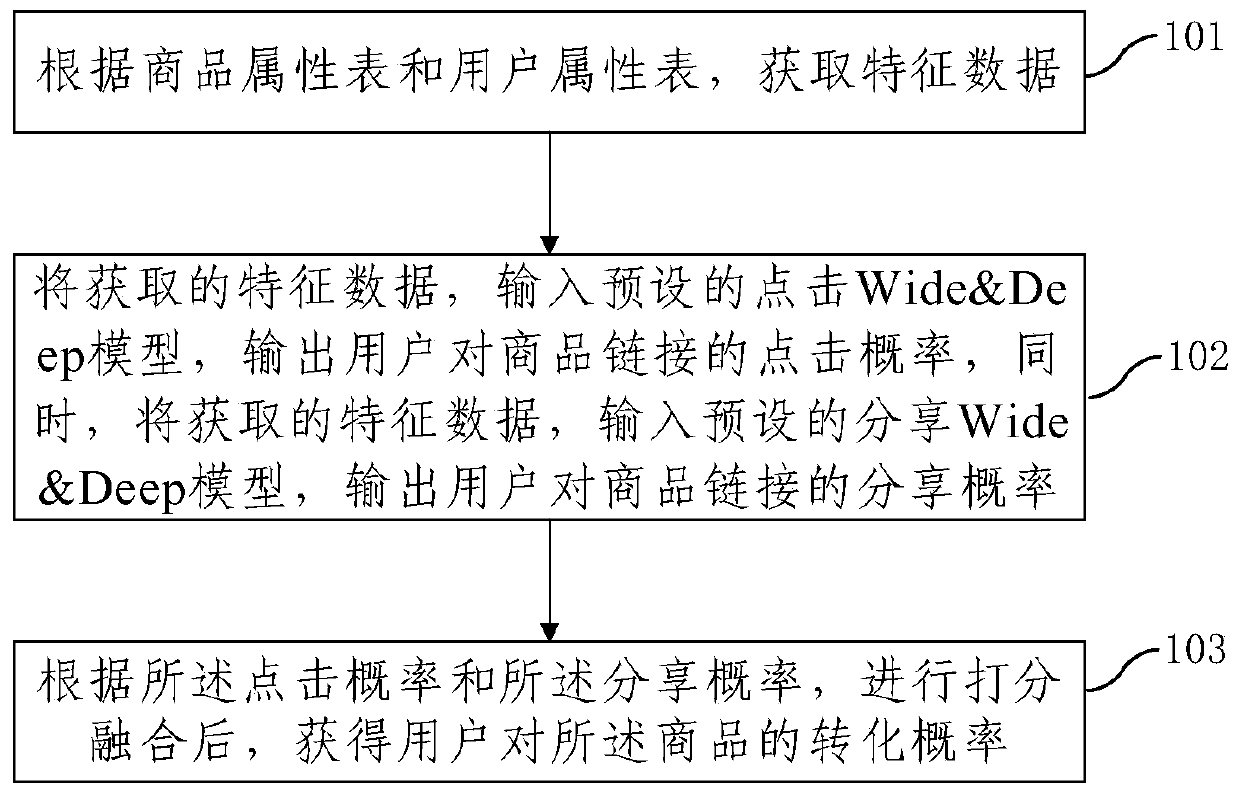

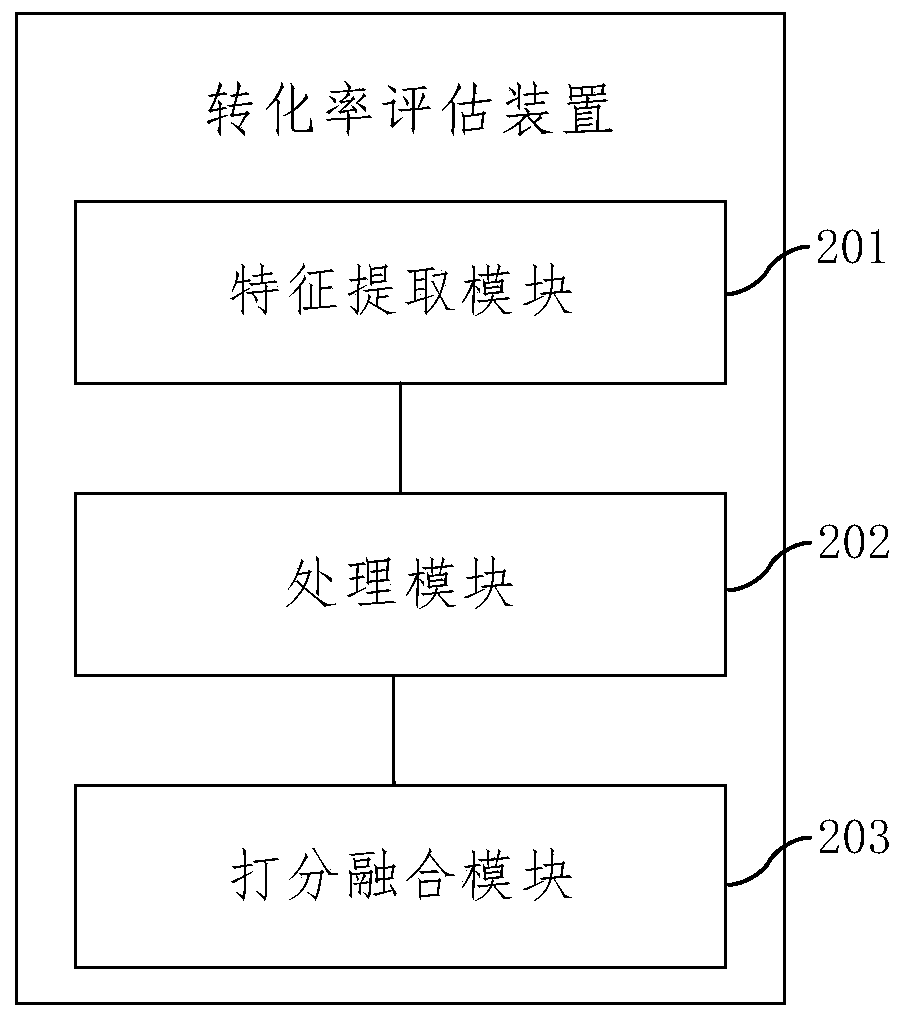

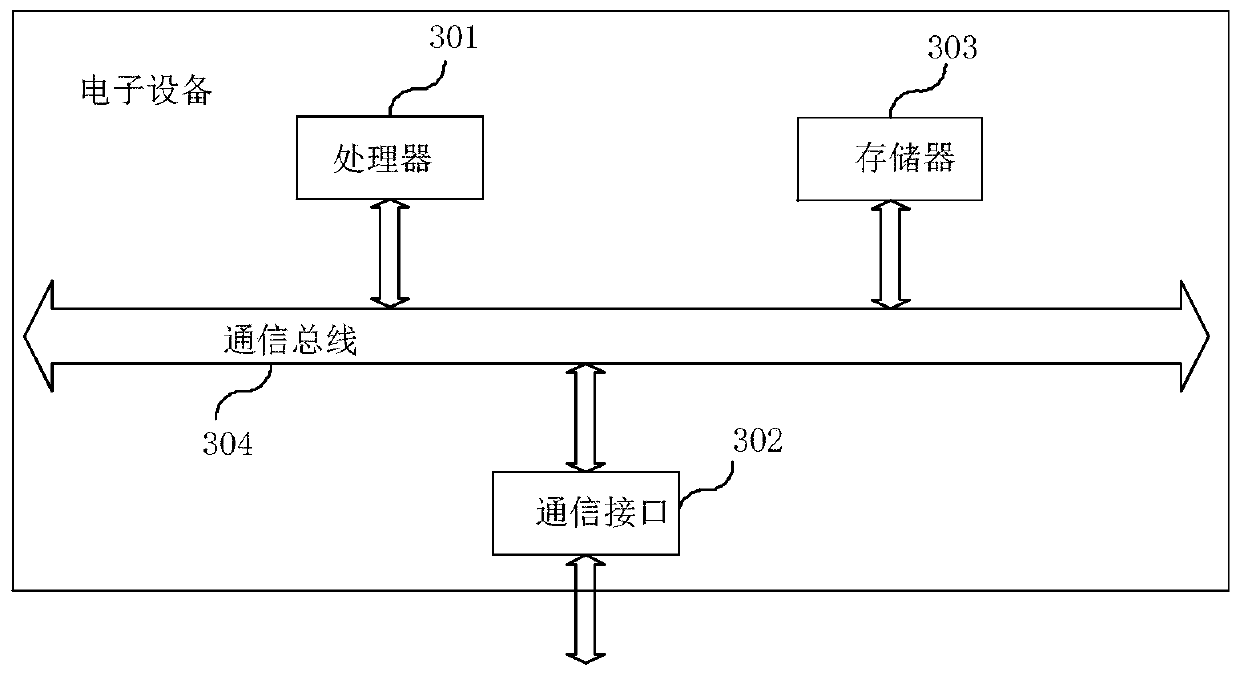

Conversion rate evaluation method and device

Status: Pending | Publication: CN110880124A | Tags: Accurate and real-time click probability; Accurate and share probabilities in real time; Marketing; Engineering; Feature data

The embodiment of the invention provides a conversion rate evaluation method and device. The method comprises: obtaining feature data from a commodity attribute table and a user attribute table; inputting the feature data into a preset click Wide & Deep model, which outputs the probability that the user clicks the commodity link, and into a sharing Wide & Deep model, which outputs the probability that the user shares the commodity link; and performing score fusion on the click probability and the sharing probability to obtain the user's conversion probability for the commodity. The click and share Wide & Deep models are trained on sample commodity attribute tables and sample user attribute tables with known click or share outcomes. The method obtains the user's click and share probabilities for a commodity accurately, and fusing the two fine-ranking scores with the recall score makes the resulting conversion probability more accurate.

Owner:TSINGHUA UNIV +1

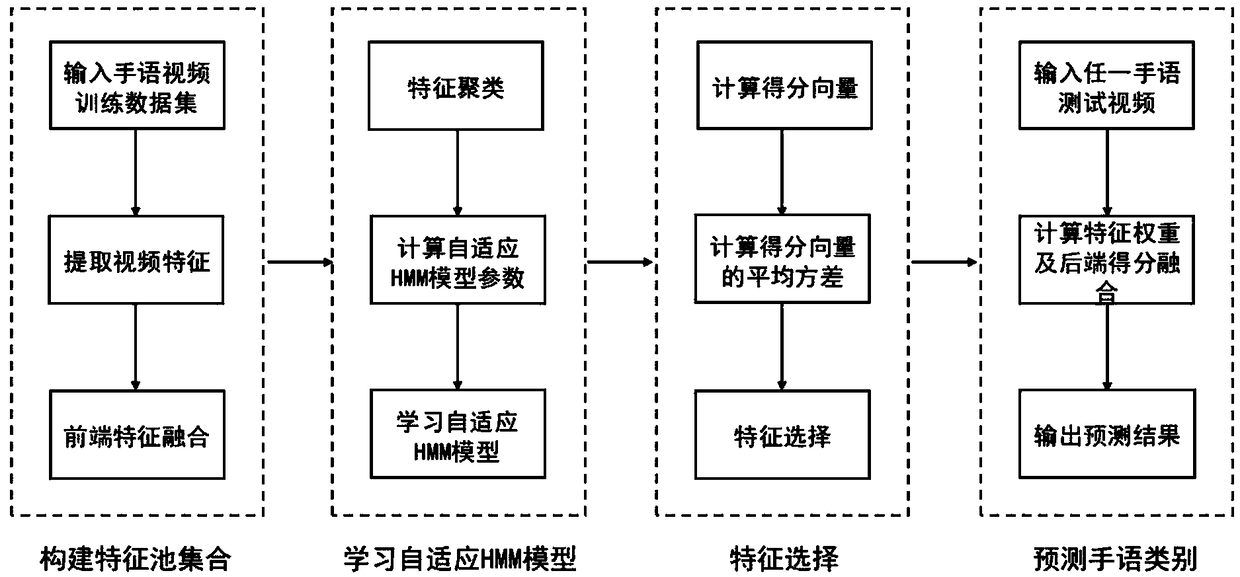

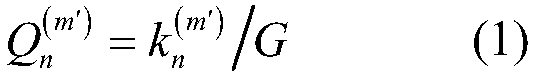

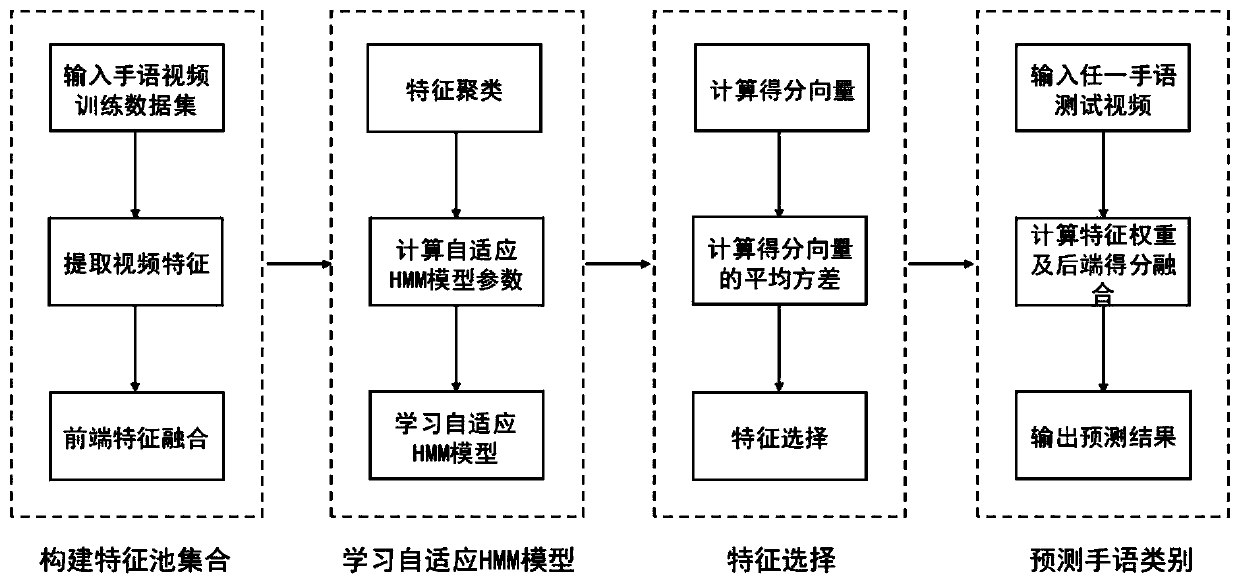

Multi-feature fusion sign language recognition method based on adaptive hidden Markov model

Status: Active | Publication: CN109409231A | Tags: High precision; Improve robustness; Character and pattern recognition; Hidden Markov model; Multi feature fusion

The invention discloses a multi-feature fusion sign language recognition method based on an adaptive hidden Markov model, comprising the following steps. First, multiple features are extracted from a sign language video database and fused at the front end to construct a feature pool. Then, an adaptive hidden Markov model of the sign language videos is constructed under each feature in the feature pool, and a feature selection strategy is applied to obtain suitable back-end score fusion features. After these features are selected, the score vector under each back-end score fusion feature is calculated and assigned its own weight, and back-end score fusion is performed to obtain the optimal fusion result. The invention can accurately recognize sign language categories in sign language videos and improves recognition robustness.

Owner:HEFEI UNIV OF TECH
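
The weighted back-end fusion described above can be sketched as follows. The abstract does not say how the per-feature score vectors are scaled, so this assumes (hypothetically) a min-max normalization of each feature's class scores before weighting, since HMM log-likelihoods under different features can differ widely in range; the weights are illustrative inputs.

```python
def min_max(v: list[float]) -> list[float]:
    """Rescale a score vector to [0, 1]; a constant vector maps to all zeros."""
    lo, hi = min(v), max(v)
    if hi == lo:
        return [0.0] * len(v)
    return [(x - lo) / (hi - lo) for x in v]


def backend_fusion(score_vectors: list[list[float]],
                   weights: list[float]) -> tuple[int, list[float]]:
    """Weighted back-end fusion of per-feature class score vectors.

    score_vectors[f][c] is the score (e.g. an HMM log-likelihood) of class c under feature f.
    Returns the winning class index and the fused score vector.
    """
    normed = [min_max(v) for v in score_vectors]
    n_classes = len(normed[0])
    fused = [sum(w * v[c] for w, v in zip(weights, normed)) for c in range(n_classes)]
    best = max(range(n_classes), key=fused.__getitem__)
    return best, fused


if __name__ == "__main__":
    hand_shape = [-10.0, -20.0, -30.0]  # log-likelihoods of 3 sign classes
    trajectory = [-5.0, -1.0, -9.0]
    print(backend_fusion([hand_shape, trajectory], [0.6, 0.4]))
```

With these illustrative numbers the two features disagree on the best class, and the weights decide the outcome (class 0 here).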

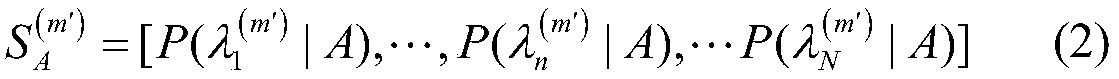

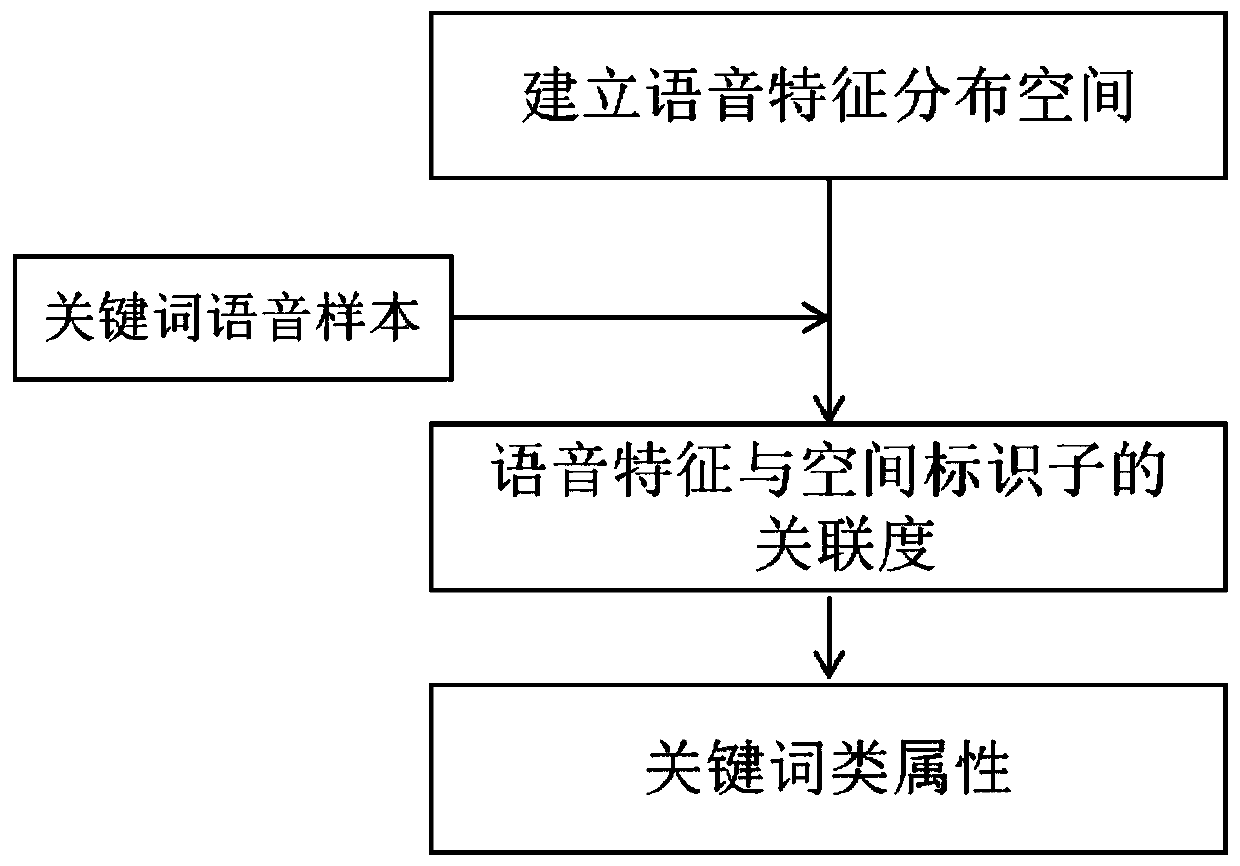

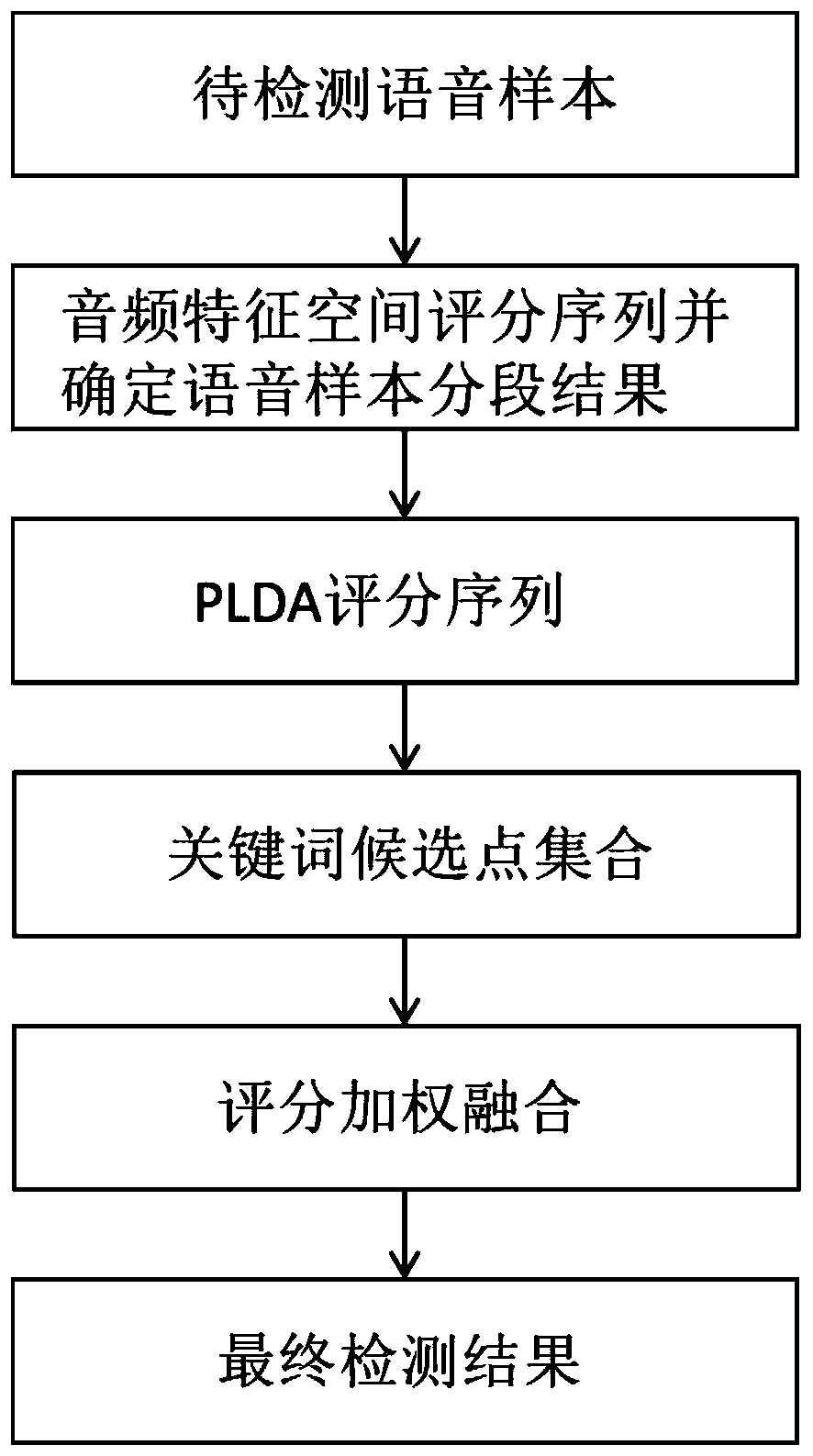

Voice keyword detection method based on complementary model score fusion

Status: Active | Publication: CN111128128A | Tags: Improve detection accuracy; Reduce confusion; Speech recognition; Energy efficient computing; Medicine; Algorithm

The invention provides a voice keyword detection method based on complementary model score fusion. The method comprises the following steps: (1) introducing i-vector-based keyword modeling on top of keyword modeling in the audio feature space; (2) adaptive segmented window shift: for a voice sample to be detected, a voice segment is intercepted starting from the beginning of the signal, the distribution representation of the current segment in the voice feature space is obtained, the similarity between this representation and the keyword class attributes is calculated to obtain a class score sequence for the current segment, and the window shift for the next segment is determined from the current segment's score; this is repeated segment by segment until the end of the signal, dividing the voice sample into K segments; and (3) performing score fusion using the positions of keyword candidate points. The method implements keyword detection with two different, mutually complementary models and fuses their score results, which addresses voice keyword detection when the amount of training data is small and improves keyword detection accuracy.

Owner:SOUTH CHINA UNIV OF TECH

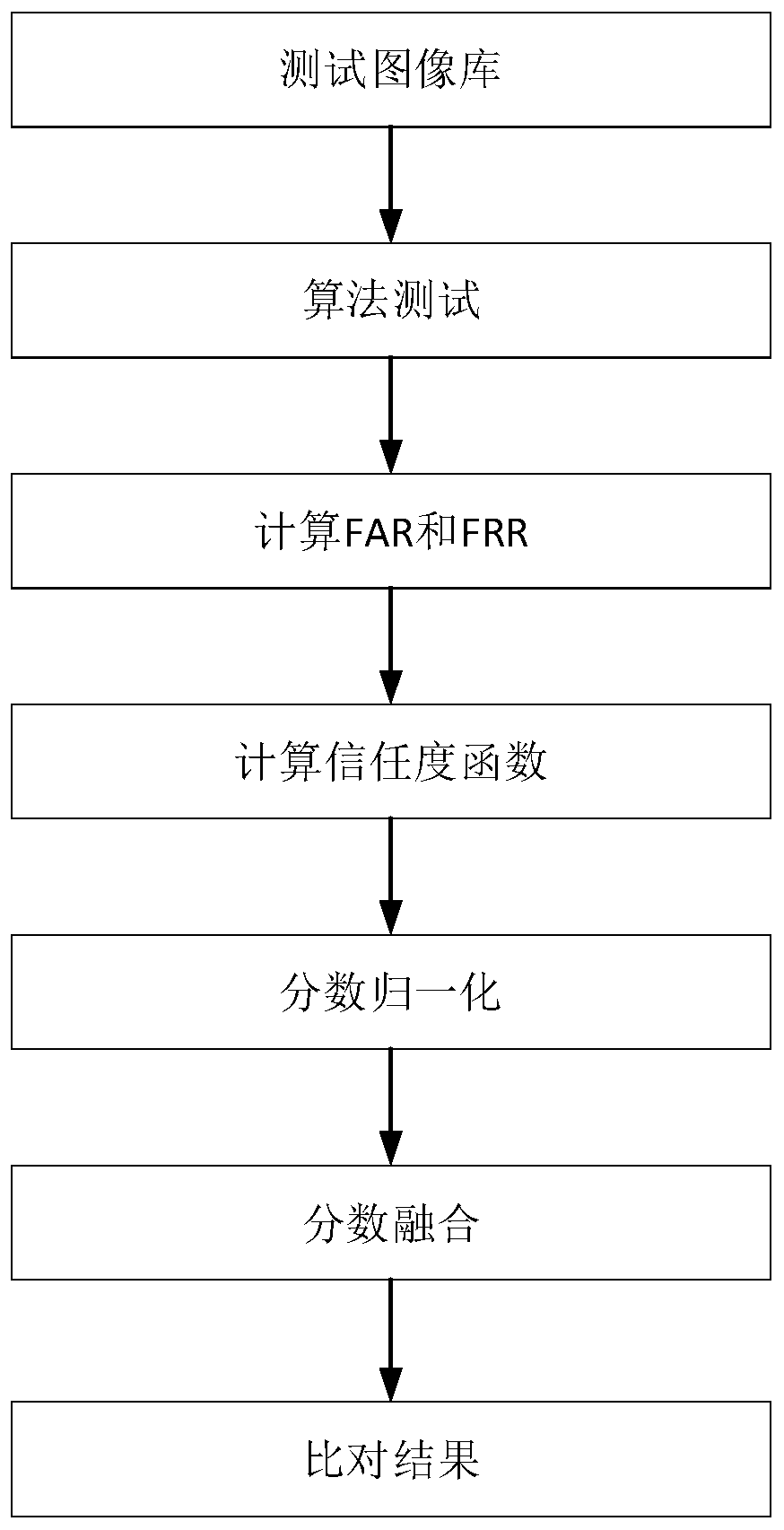

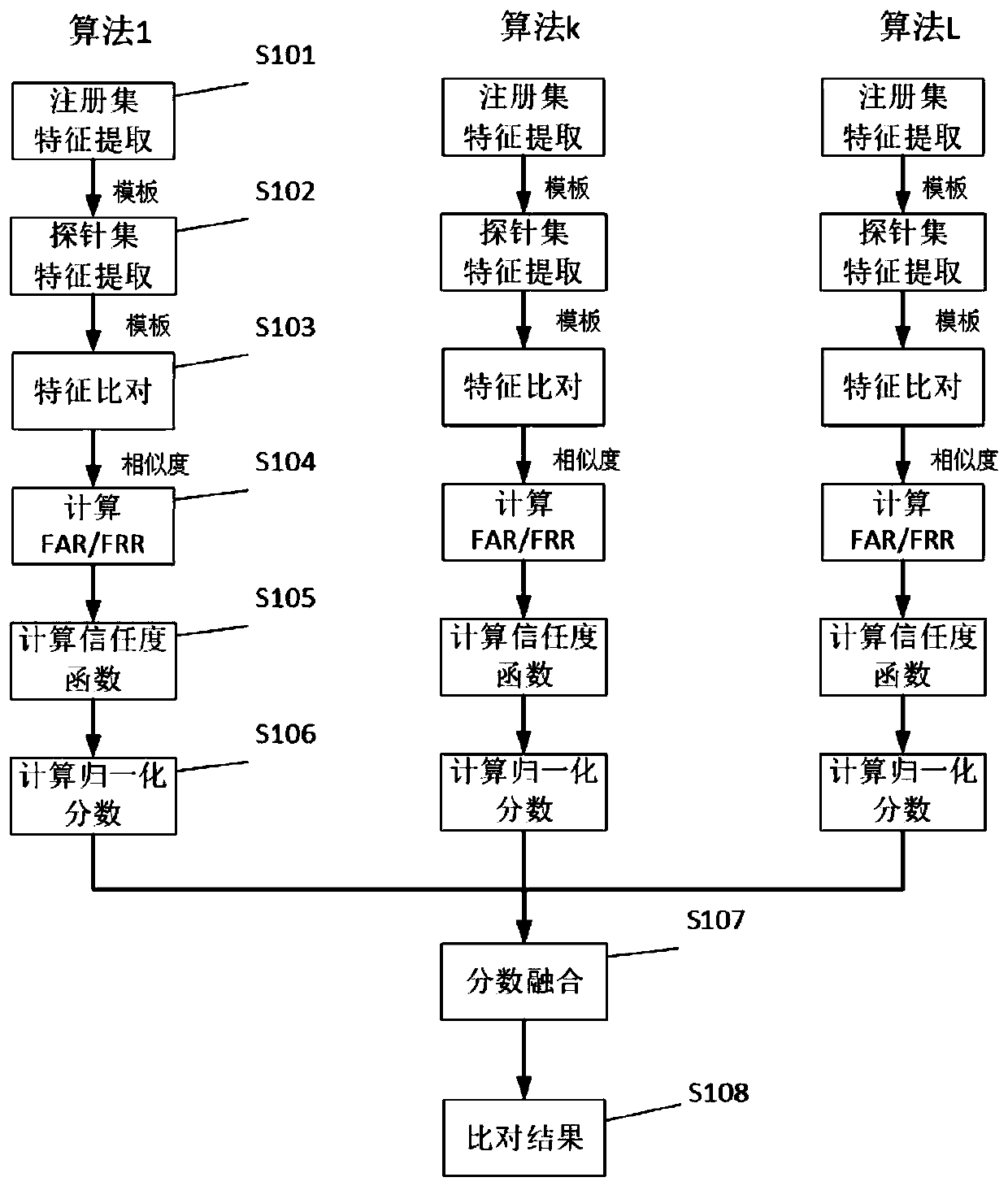

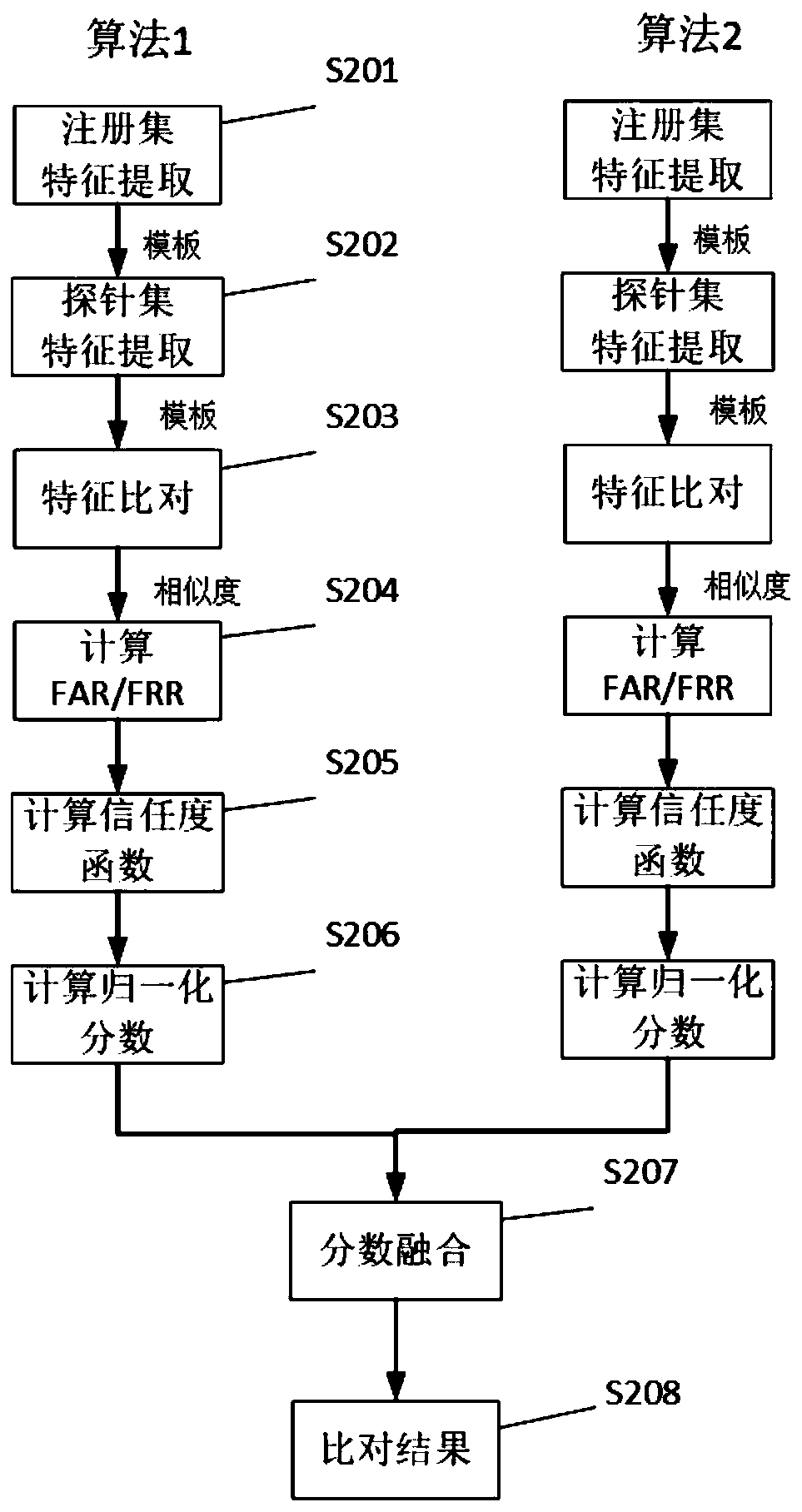

Multi-algorithm fused face recognition method

Status: Pending | Publication: CN110532856A | Tags: Improve recognition accuracy; Registration failure rate decreased; Character and pattern recognition; Recognition algorithm; Face perception

The invention discloses a multi-algorithm fusion face recognition method, comprising the following steps: constructing a unified benchmark test image library comprising a registration set and a probe set; testing each face recognition algorithm on the benchmark test image library; calculating the FAR (false accept rate) and FRR (false reject rate) of each algorithm on the library; calculating a credibility (trust degree) function for each algorithm; normalizing the comparison scores of each algorithm; performing score fusion with credibility-based weighting; and outputting a fused comparison result according to the fused comparison score. With a unified benchmark library established, the performance of several different face recognition algorithms is tested and their comparison similarity scores are converted into corresponding credibility values; the converted scores preserve the ordering of credibility, which makes scores from different algorithms comparable.

Owner:THE FIRST RES INST OF MIN OF PUBLIC SECURITY +1
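
The benchmark-based steps above (measure FAR/FRR, convert scores to credibility, fuse with weights) can be sketched as follows. The patent's credibility function is not given; this sketch assumes one plausible, order-preserving choice, credibility = 1 − FAR at the raw score, measured on each algorithm's own impostor comparisons from the benchmark library. The fusion weights are hypothetical inputs.

```python
def far(impostor_scores: list[float], threshold: float) -> float:
    """False accept rate: share of impostor comparisons scoring >= threshold."""
    return sum(s >= threshold for s in impostor_scores) / len(impostor_scores)


def frr(genuine_scores: list[float], threshold: float) -> float:
    """False reject rate: share of genuine comparisons scoring < threshold."""
    return sum(s < threshold for s in genuine_scores) / len(genuine_scores)


def credibility(score: float, impostor_scores: list[float]) -> float:
    """Map a raw score to 1 - FAR(score): non-decreasing in the score and on a
    common [0, 1] scale, so different algorithms become comparable."""
    return 1.0 - far(impostor_scores, score)


def fuse(scores: list[float], impostor_sets: list[list[float]],
         weights: list[float]) -> float:
    """Credibility-weighted average over algorithms."""
    creds = [credibility(s, imp) for s, imp in zip(scores, impostor_sets)]
    return sum(w * c for w, c in zip(weights, creds)) / sum(weights)


if __name__ == "__main__":
    imp_a = [0.1, 0.2, 0.3, 0.4]      # algorithm A scores in [0, 1]
    imp_b = [10.0, 20.0, 30.0, 40.0]  # algorithm B scores in [0, 100]
    print(fuse([0.35, 50.0], [imp_a, imp_b], [1.0, 1.0]))  # (0.75 + 1.0) / 2 = 0.875
```

The two algorithms report scores on very different raw scales, yet after conversion both land in [0, 1] and can be averaged directly.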

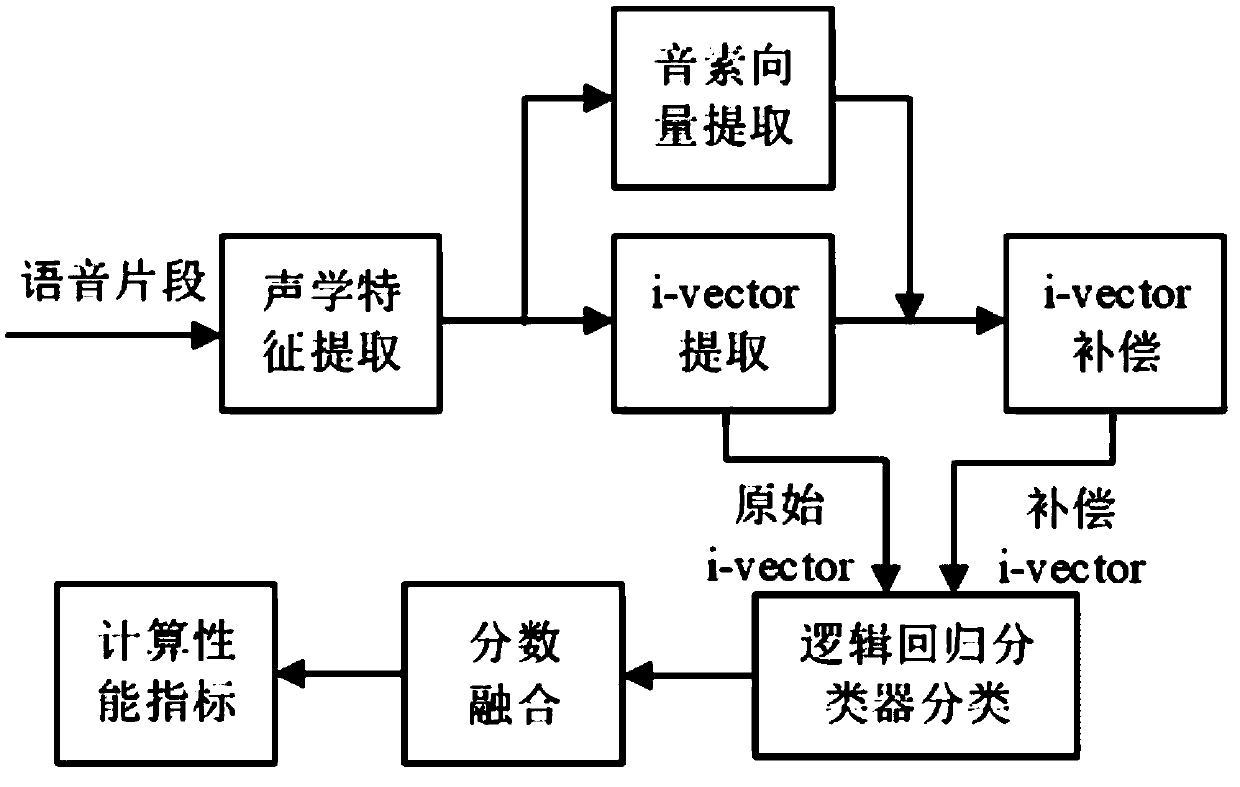

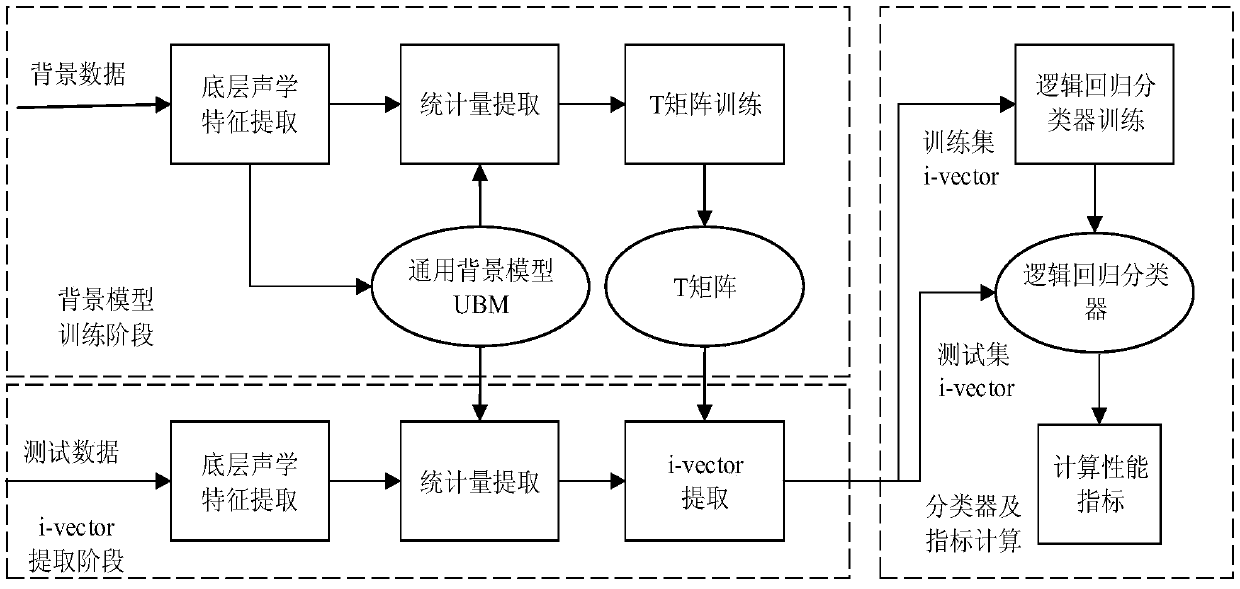

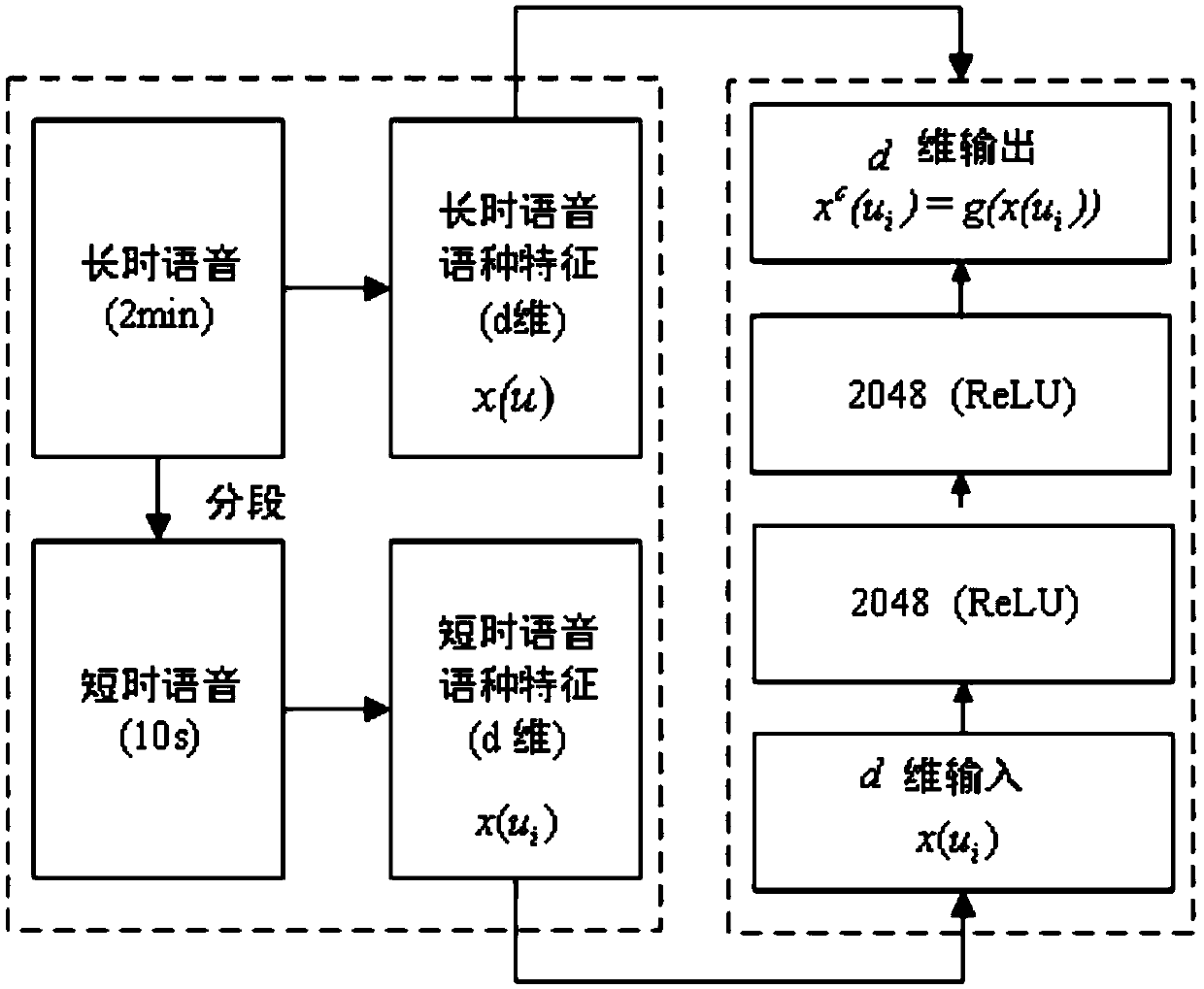

Language identification and classification method and device based on noise reduction automatic encoder

Status: Active | Publication: CN110858477A | Tags: Alleviate the problem of unbalanced distribution; Distribution balance; Speech recognition; Algorithm; Classification methods

The invention provides a language identification and classification method based on a denoising autoencoder (DAE). The method includes: step 1) extracting the speech signal to be identified from a speech segment to be identified and obtaining its low-level acoustic features; step 2) extracting an original i-vector from the low-level acoustic features obtained in step 1); step 3) calculating a phoneme vector pc(u); step 4) concatenating the original i-vector with the phoneme vector pc(u) and inputting the result into a DAE-based i-vector compensation network to obtain a compensated i-vector; step 5) inputting the original i-vector from step 2) and the compensated i-vector from step 4) separately into a pre-trained logistic regression classifier to obtain the corresponding score vectors; and step 6) performing score fusion on the score vectors from step 5) to obtain a final score vector, from which the probability of each language category is obtained and the language category of the speech is determined.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
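
Steps 5) and 6) above can be sketched as a linear fusion of the two score vectors followed by a softmax. This is an illustration under stated assumptions: it treats the logistic-regression outputs as log-domain scores, and the equal fusion weight `alpha = 0.5` is hypothetical.

```python
import math


def fuse_language_scores(scores_a: list[float], scores_b: list[float],
                         alpha: float = 0.5) -> list[float]:
    """Linearly fuse two per-language score vectors and convert to probabilities."""
    if len(scores_a) != len(scores_b):
        raise ValueError("score vectors must cover the same languages")
    fused = [alpha * a + (1.0 - alpha) * b for a, b in zip(scores_a, scores_b)]
    m = max(fused)  # subtract the max before exponentiating, for stability
    exps = [math.exp(x - m) for x in fused]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    original_ivec = [2.0, 0.5, -1.0]     # scores from the original i-vector
    compensated_ivec = [1.0, 1.5, -0.5]  # scores from the compensated i-vector
    probs = fuse_language_scores(original_ivec, compensated_ivec)
    best = max(range(len(probs)), key=probs.__getitem__)
    print(best, probs)
```

Fusing in the log domain and then applying a softmax amounts to a renormalized weighted geometric mean of the two classifiers' outputs.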

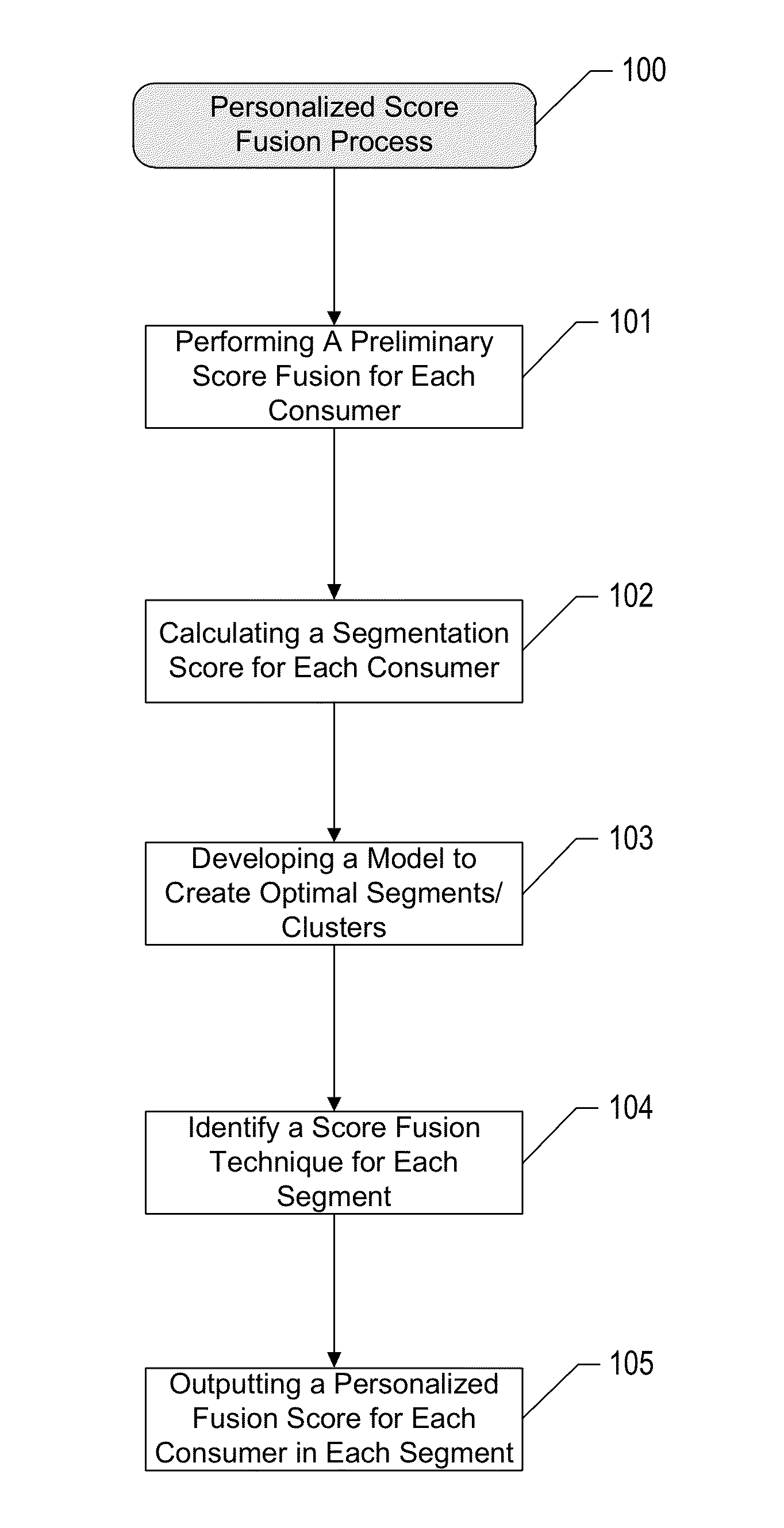

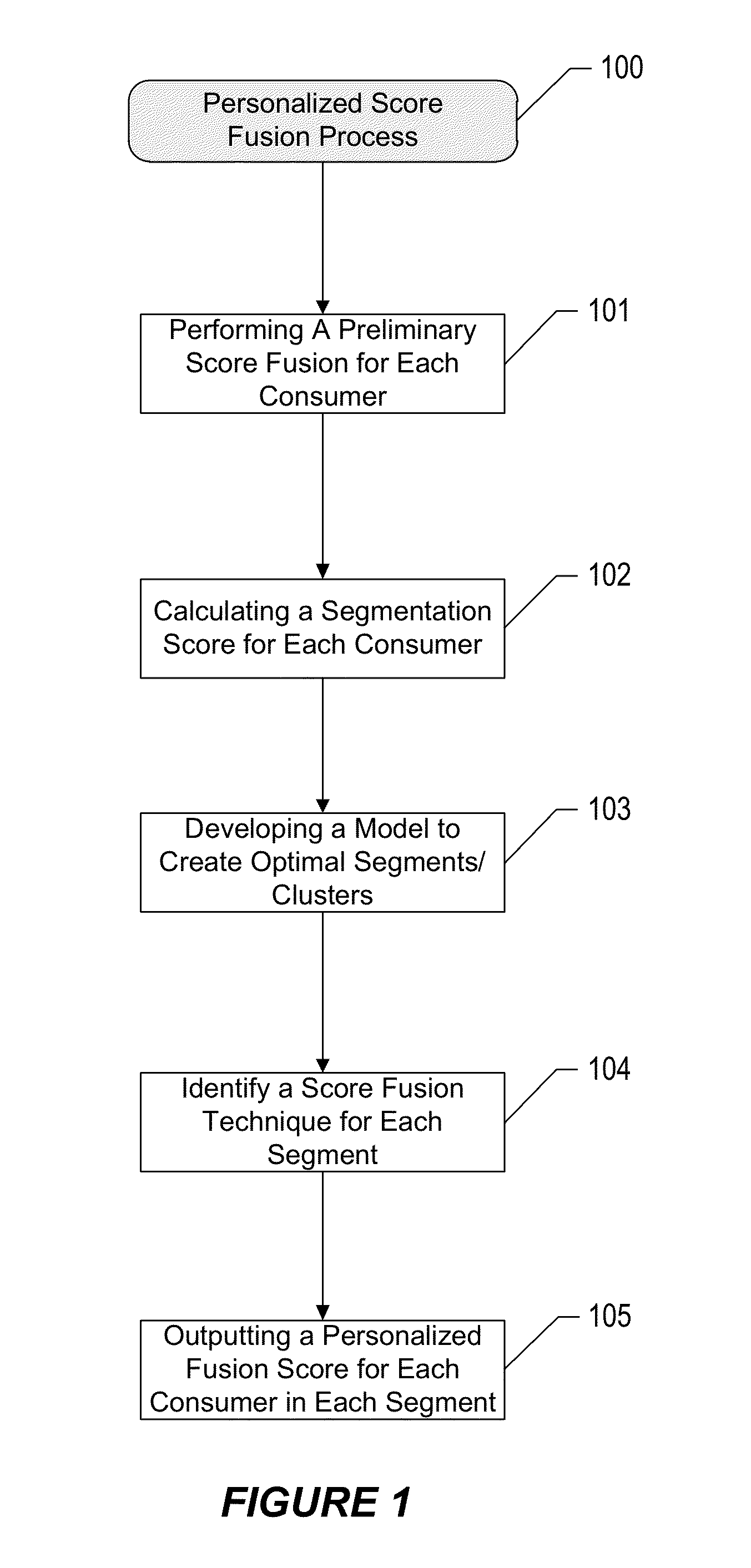

Determining a personalized fusion score

Status: Inactive | Publication: US20130173452A1 | Tags: Good flexibility; Improve accuracy; Finance; Design optimisation/simulation; Personalization; Data mining

Various embodiments of the present invention provide systems and methods for determining a personalized fusion score. In certain embodiments, the systems and methods are configured to calculate preliminary fused scores for consumers at least in part by applying a first score fusion technique across the sample of consumer data. Segmentation scores are then calculated based at least in part on the preliminary fused scores. In those and other embodiments, the segmentation scores enable the creation of a plurality of cluster subsets within the sample of consumer data. In certain embodiments, cluster subsets are defined at least in part by a particular score mix, while in other embodiments subsets are defined at least in part by the respective score fusion techniques that prove optimal for each subset. Further, in various embodiments, applying multiple score fusion techniques across the respective cluster subsets provides personalized fusion scores for the consumers in each cluster subset.

Owner:EQUIFAX INC

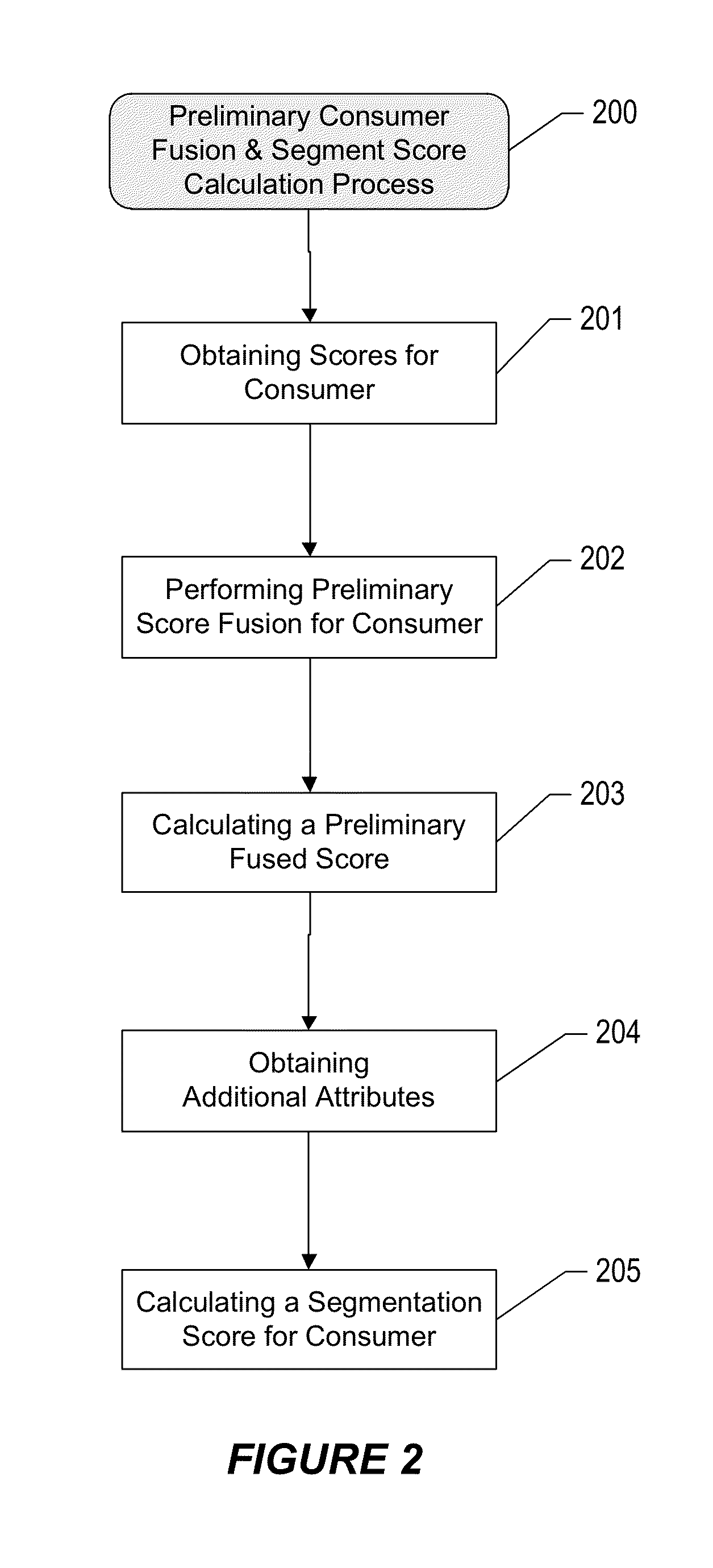

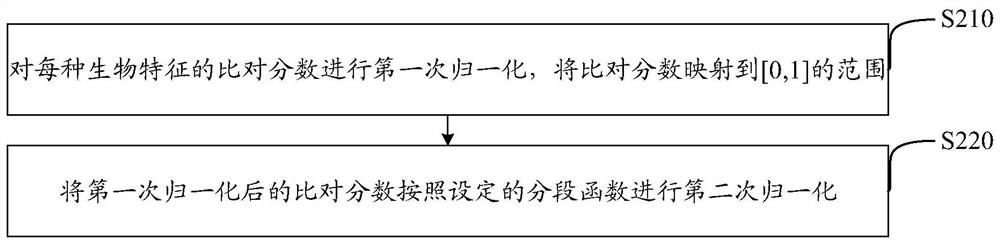

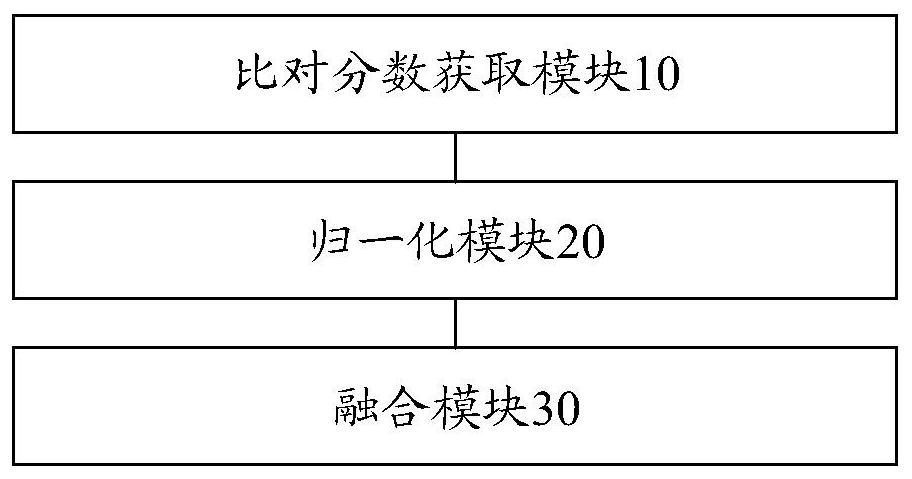

Comparison score fusion method and device for multi-modal biological recognition, medium and equipment

Status: Pending | Publication: CN113822308A | Tags: High precision; Improve recognition accuracy; Character and pattern recognition; Digital data authentication; Data set; Biology

The invention discloses a comparison score fusion method and device for multi-modal biometric recognition, a medium, and equipment, and belongs to the field of biometric recognition. The method comprises the following steps: acquiring comparison scores for various biometric features; normalizing the comparison score of each biometric feature according to several set thresholds, namely the comparison thresholds at which that feature's FAR on the training set takes several specific values; and performing a weighted summation of the normalized comparison scores to obtain a fused comparison score, where the weighting coefficient for each biometric feature's comparison score is calculated from that feature's FRR on the training set at those same FAR values. The method and device achieve high accuracy on datasets such as those of banks.

Owner:BEIJING TECHSHINO TECH +1
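
The multi-threshold normalization and FRR-based weighting described above might be realized as in the sketch below. Everything here beyond "thresholds at several FAR values" and "weights from FRR" is an assumption: the thresholds are mapped to shared target values (e.g. 0.5, 0.7, 0.9) with linear interpolation between them, and each modality's weight is taken as inversely proportional to its mean FRR at those FAR points.

```python
from bisect import bisect_right


def piecewise_linear(x: float, xs: list[float], ys: list[float]) -> float:
    """Linear interpolation through knots (xs strictly ascending), clamped at the ends."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x)  # xs[i - 1] <= x < xs[i]
    x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def normalize(score: float, raw_min: float, raw_max: float,
              far_thresholds: list[float], targets: list[float]) -> float:
    """Map a raw comparison score onto a shared [0, 1] scale.

    far_thresholds: raw thresholds at several FAR operating points (ascending).
    targets: the common values those thresholds map to (ascending, hypothetical).
    """
    xs = [raw_min, *far_thresholds, raw_max]
    ys = [0.0, *targets, 1.0]
    return piecewise_linear(score, xs, ys)


def frr_weights(mean_frrs: list[float]) -> list[float]:
    """Hypothetical rule: weight inversely proportional to mean FRR at the FAR points."""
    inv = [1.0 / f for f in mean_frrs]
    total = sum(inv)
    return [v / total for v in inv]


if __name__ == "__main__":
    targets = [0.5, 0.7, 0.9]  # values assigned to the three FAR operating points
    face = normalize(50.0, 0.0, 100.0, [40.0, 60.0, 80.0], targets)  # 0.6
    iris = normalize(0.85, 0.0, 1.0, [0.3, 0.5, 0.7], targets)       # 0.95
    w = frr_weights([0.02, 0.04])  # face has the lower FRR, so it gets 2/3
    print(w[0] * face + w[1] * iris)
```

Anchoring every modality's thresholds to the same target values means that a normalized score of, say, 0.7 corresponds to the same FAR operating point whatever the modality, which is what makes the weighted sum meaningful.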

Object verification apparatus and method

Status: Inactive | Publication: US8219571B2 | Tags: Improve performance; Image analysis; Digital data processing details; Estimated Weight; Pattern recognition

Provided are an object verification apparatus and method. The object verification apparatus includes a matching unit performing a plurality of different matching algorithms on a query image and generating a plurality of scores; a score normalization unit normalizing each of the generated scores to be adaptive to the query image; a weight estimation unit estimating weights of the normalized scores based on the respective matching algorithms applied; and a score fusion unit fusing the normalized scores by respectively applying the weights estimated by the weight estimation unit to the normalized scores.

Owner:SAMSUNG ELECTRONICS CO LTD

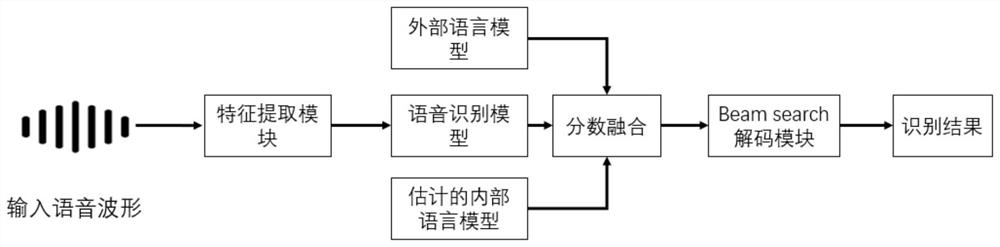

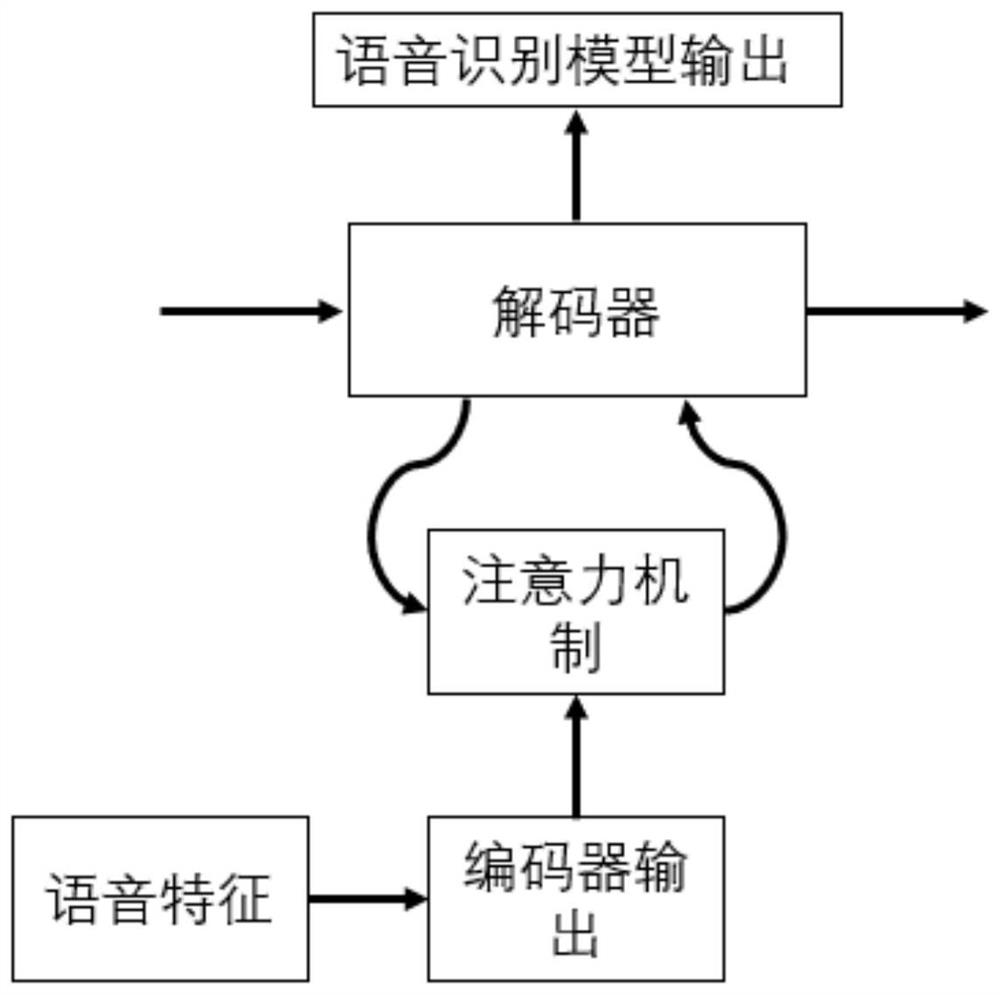

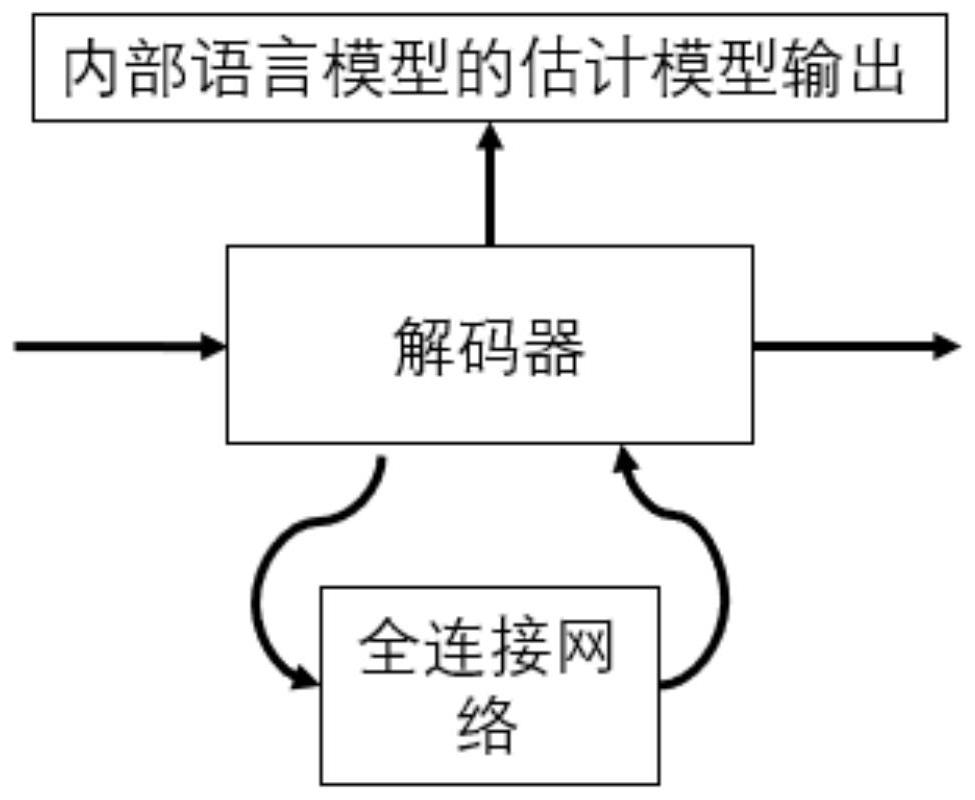

Fusion method based on end-to-end speech recognition model and language model

The invention belongs to the technical field of end-to-end speech recognition and discloses a fusion method based on an end-to-end speech recognition model and a language model. The method comprises the following steps: S1, training an end-to-end speech recognition model on speech-text pairs, and training an external language model on text data; S2, taking out the decoder part of the trained speech recognition model to form an independent model; S3, training the independent model separately on the text of the training data, and obtaining an estimation model of the internal language model after convergence; and S4, decoding with score fusion of the speech recognition model, the external language model, and the estimated internal language model to obtain the decoding result. The algorithm improves recognition accuracy after the speech recognition model and the language model are fused, and has broad application prospects in speech recognition.

Owner:SOUTH CHINA UNIV OF TECH
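
Step S4 above fuses three scores during decoding. A sketch follows, assuming the common internal-LM-estimation form in which the external LM log-probability is added and the internal LM estimate is subtracted; the abstract does not state the signs or weights, so `lam_ext`, `lam_int`, and the n-best rescoring setup are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    text: str
    log_p_asr: float     # log P(y | x) from the end-to-end model
    log_p_ext_lm: float  # log P(y) from the external language model
    log_p_int_lm: float  # log P(y) from the internal-LM estimate (decoder-only model)


def fused_score(h: Hypothesis, lam_ext: float = 0.5, lam_int: float = 0.3) -> float:
    """Add the weighted external LM score and subtract the weighted internal LM estimate."""
    return h.log_p_asr + lam_ext * h.log_p_ext_lm - lam_int * h.log_p_int_lm


def rescore(hyps: list[Hypothesis], lam_ext: float = 0.5,
            lam_int: float = 0.3) -> Hypothesis:
    """Return the hypothesis with the highest fused score."""
    return max(hyps, key=lambda h: fused_score(h, lam_ext, lam_int))


if __name__ == "__main__":
    hyps = [
        Hypothesis("a", log_p_asr=-5.0, log_p_ext_lm=-10.0, log_p_int_lm=-2.0),
        Hypothesis("b", log_p_asr=-6.0, log_p_ext_lm=-4.0, log_p_int_lm=-8.0),
    ]
    print(rescore(hyps).text)
```

Subtracting the internal LM estimate discounts the language prior the end-to-end decoder learned from its own training text, so the external LM's prior is not double-counted with it.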

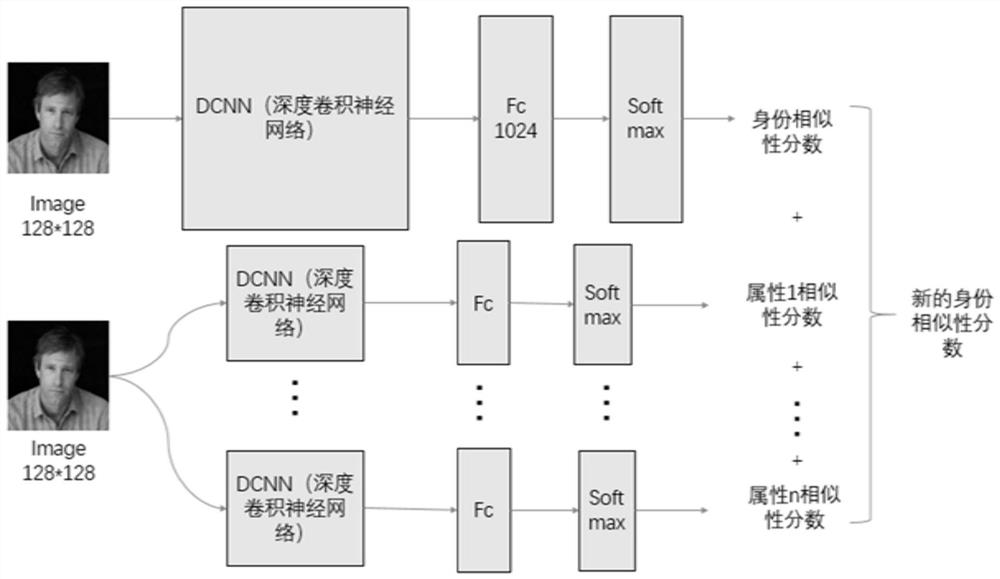

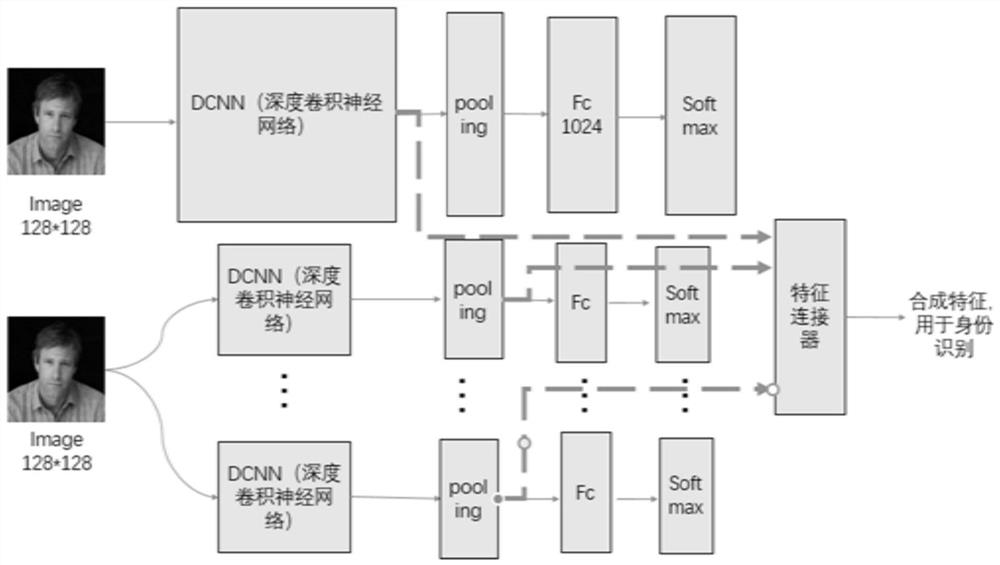

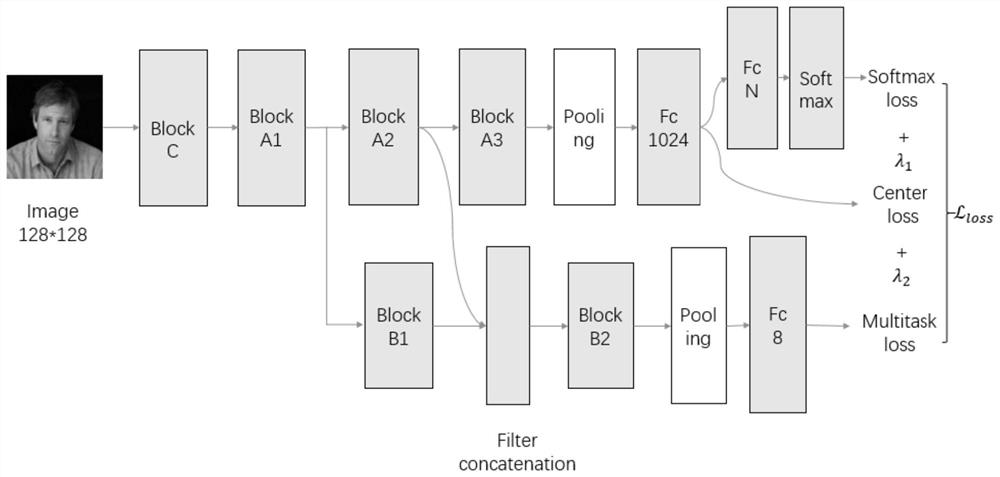

Face Recognition Method Based on Combining Face Attribute Information

Status: Active | Publication: CN107766850B | Tags: Improve accuracy; Predictive attributes; Character and pattern recognition; Neural architectures; Pattern recognition; Task network

The invention discloses a face recognition method based on combining face attribute information, and belongs to the technical field of digital image processing. Existing fusion methods must train multiple DCNNs and then perform score fusion or feature fusion with further training, which is laborious, complex, and ill-suited to practical application; to address this, the invention discloses a new way of fusing identity information and attribute information to improve the accuracy of face recognition. The invention fuses a face identity authentication network and an attribute recognition network into a single fusion network and uses joint learning to learn identity features and face attribute features simultaneously, which both improves face recognition accuracy and allows face attributes to be predicted, making it a multi-task network. It adopts a cost-sensitive weighting function so that it does not depend on the data distribution of the target domain and achieves balanced training in the source data domain; and the modified fusion framework adds only a small number of parameters, so the additional computational load is relatively small.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

Face attribute classification system based on bidirectional ladder structure

The present invention relates to the field of computer vision technology, in particular to a face attribute classification system based on a two-way Ladder structure, aiming to make full use of features at different levels of a deep network and of the correspondence between features at different levels and different face attributes, so as to improve the accuracy of face attribute classification. To this end, the face attribute classification system based on the two-way Ladder structure provided by the present invention includes a two-way Ladder autoencoder module, an adaptive attention module, and an adaptive score fusion module; the two-way Ladder autoencoder module includes an encoder module and a decoder module; the adaptive attention module includes a plurality of attention submodules; and the adaptive score fusion module is configured to obtain the face attribute classification result for the face image to be tested from the output of the encoder module and the outputs of the attention submodules. Based on this structure, encoding and decoding features at different levels can be fully utilized to improve the accuracy of face attribute classification.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

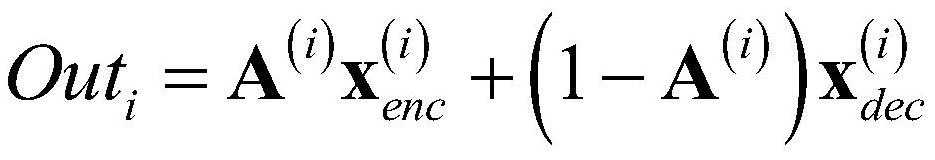

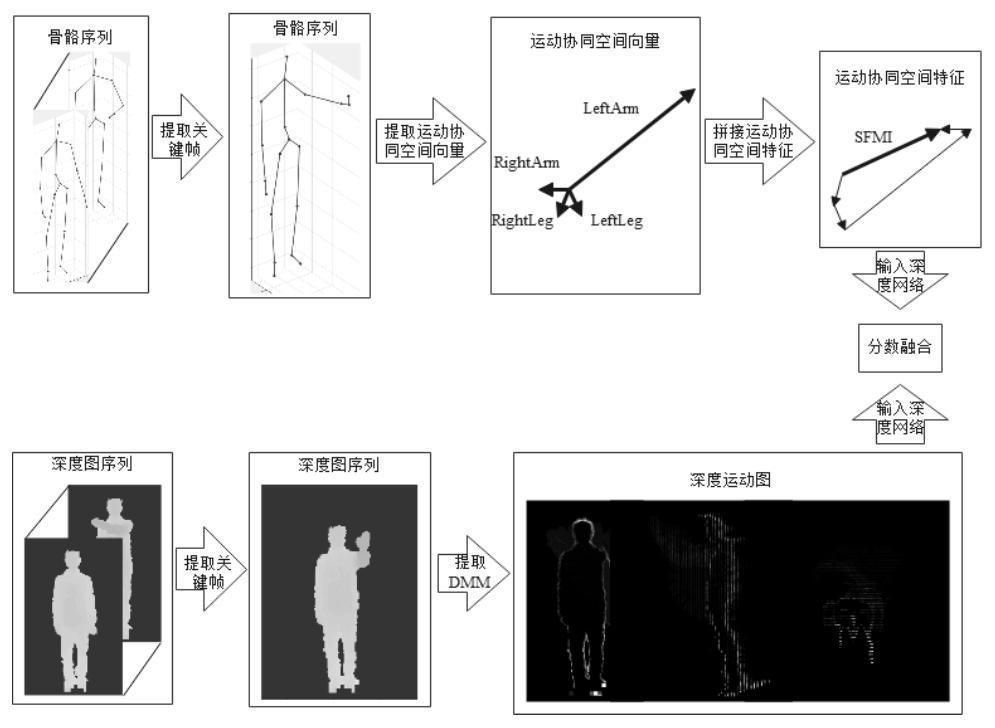

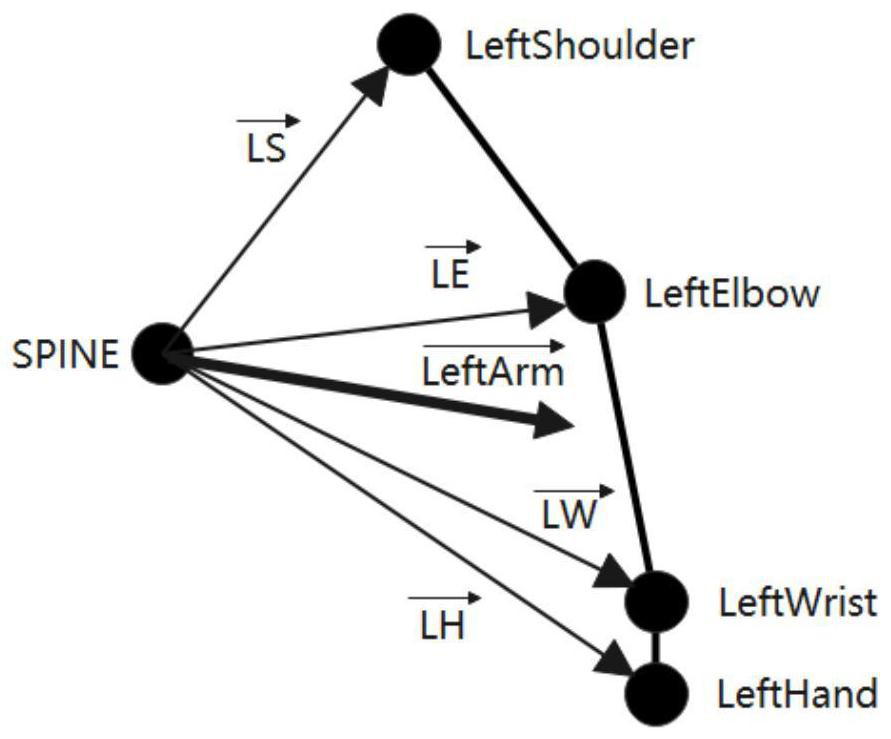

Human body behavior recognition method based on motion cooperation space

Status: Pending | Publication: CN114677621A | Tags: Improve computing efficiency; Strong complementarity; Biometric pattern recognition; Neural architectures; Human body; Motion coordination

The invention discloses a human body behavior recognition method based on a motion coordination space. The method comprises the following steps: S1, performing key frame extraction based on a motion state measurement coefficient on the initial skeleton sequence and on the depth map sequence, respectively; S2, extracting motion coordination space vectors from the skeleton sequence processed in step S1 and concatenating them into motion coordination space features, and extracting DMM (depth motion map) features from the depth map sequence processed in step S1 to obtain depth motion maps; and S3, inputting the depth motion maps and the motion coordination space features into a deep network simultaneously and performing score fusion. Based on the idea of multi-modal fusion, skeleton data and depth data are combined so that the data are more complete, heterogeneous information is mutually complemented, and redundancy between modalities is eliminated, establishing a new behavior recognition method and providing new ideas and a theoretical basis for research on and application of human behavior recognition.

Owner:CHANGZHOU UNIV

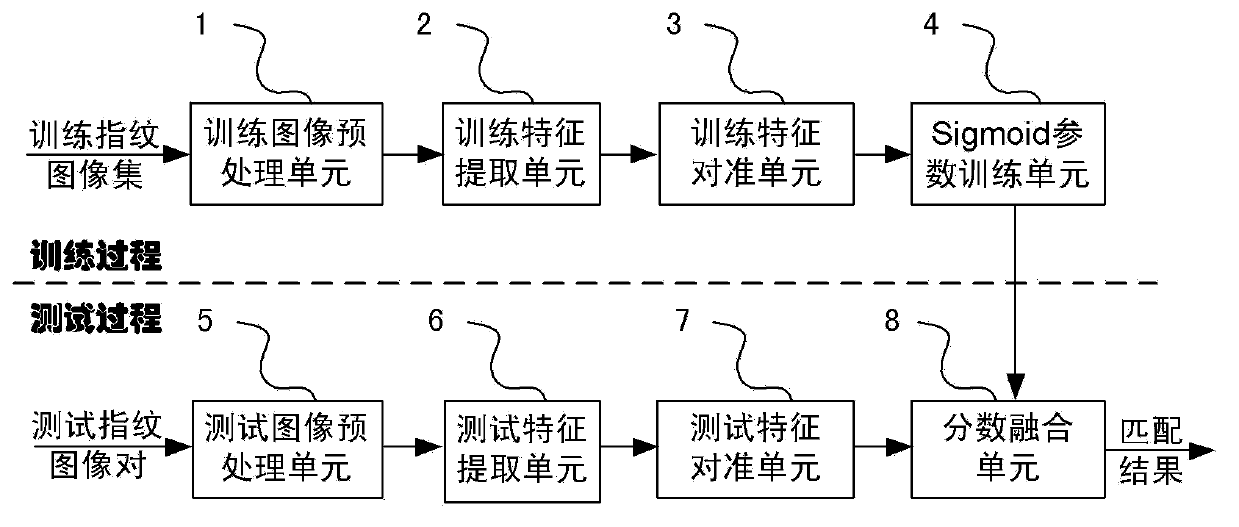

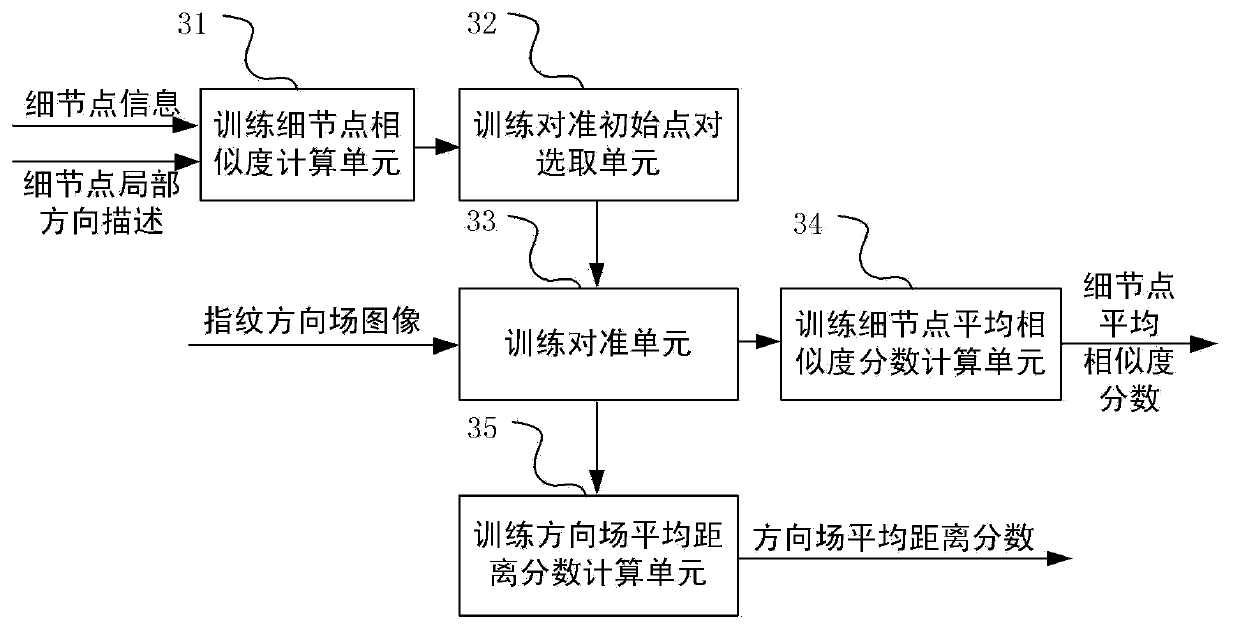

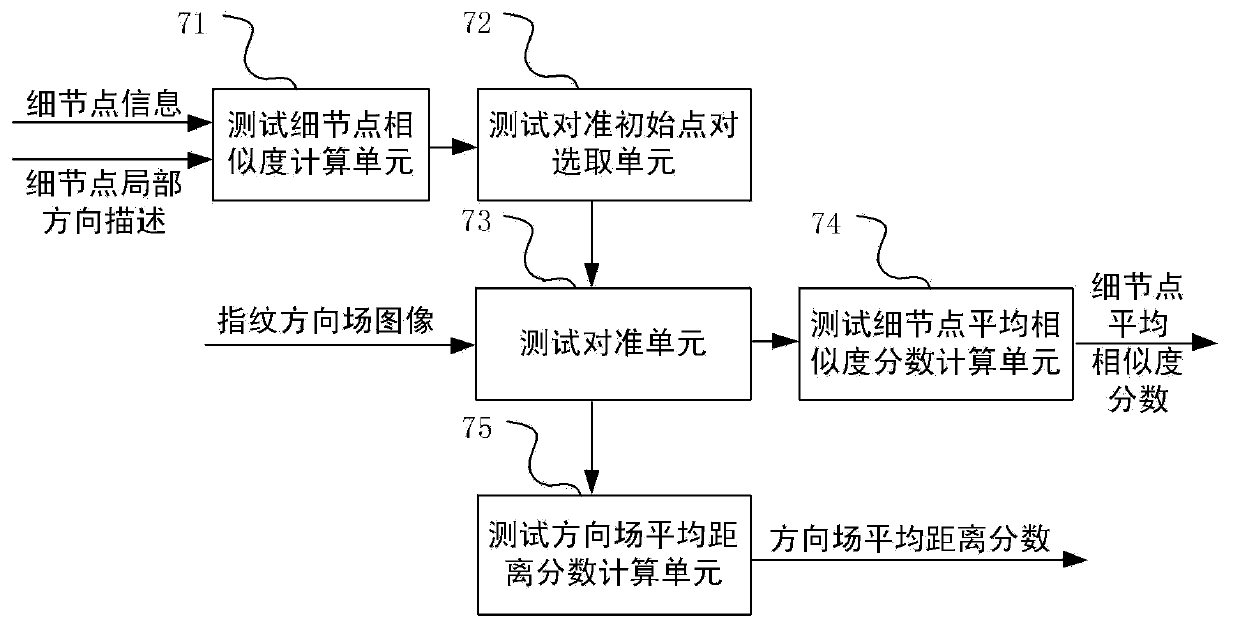

Fingerprint score fusion system and method based on Sigmoid expansion

Status: Active | Publication: CN102819754B | Tags: Improve matching accuracy performance; Improve performance; Character and pattern recognition; Fingerprint; Image pre processing

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

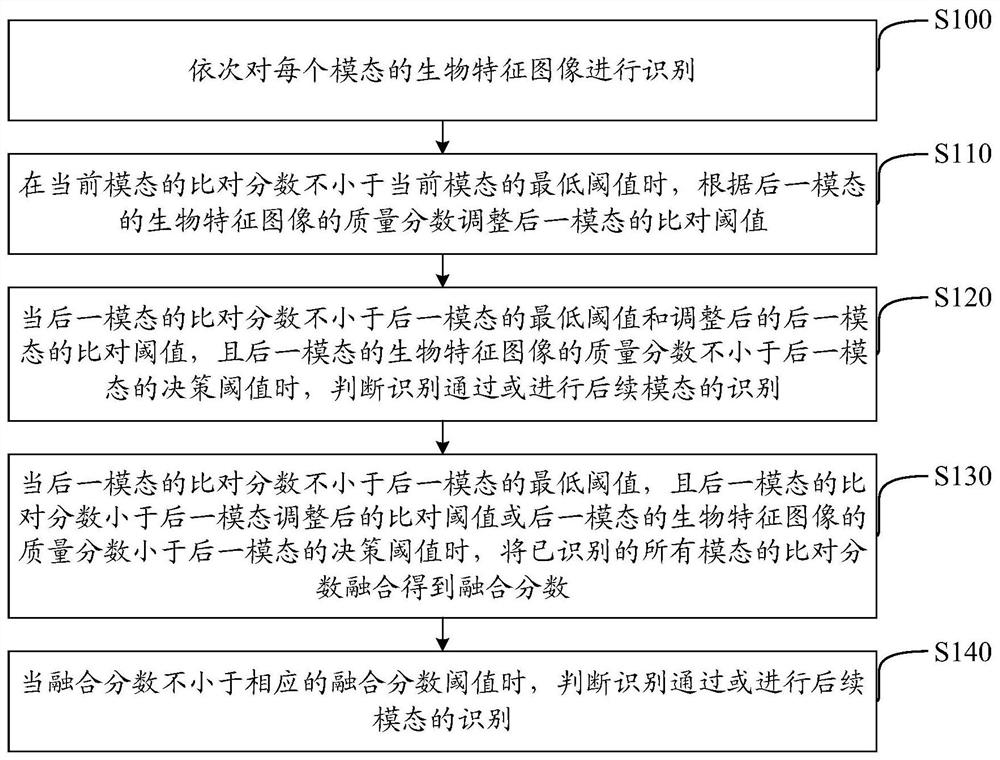

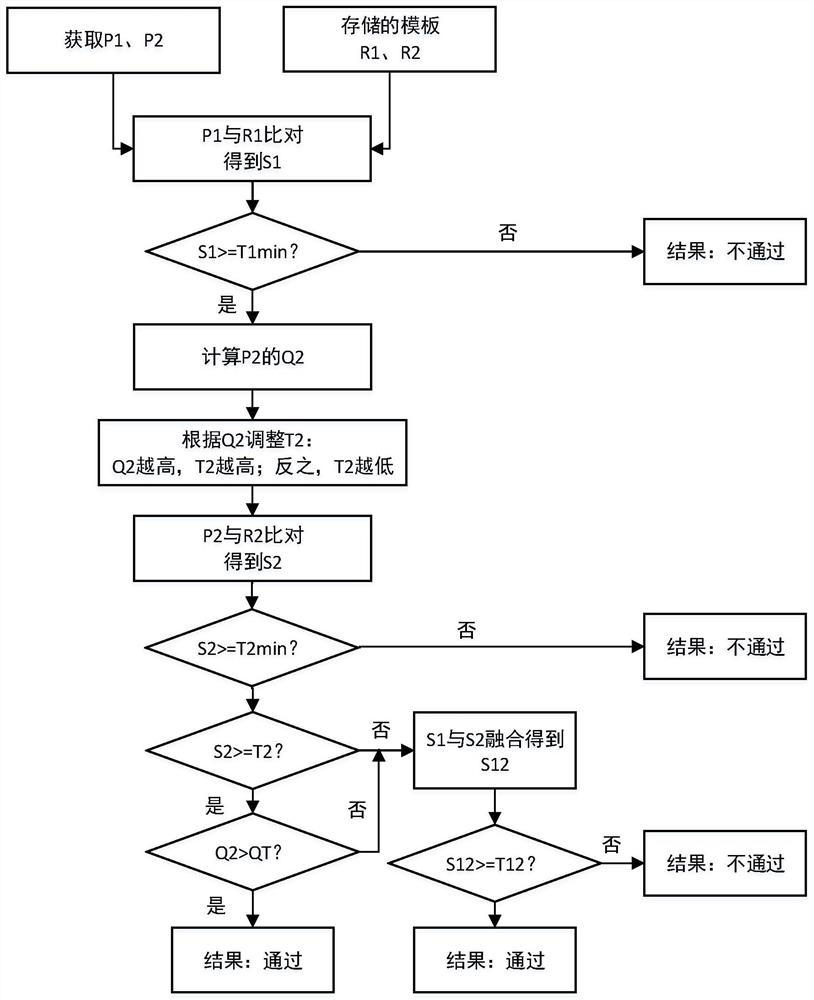

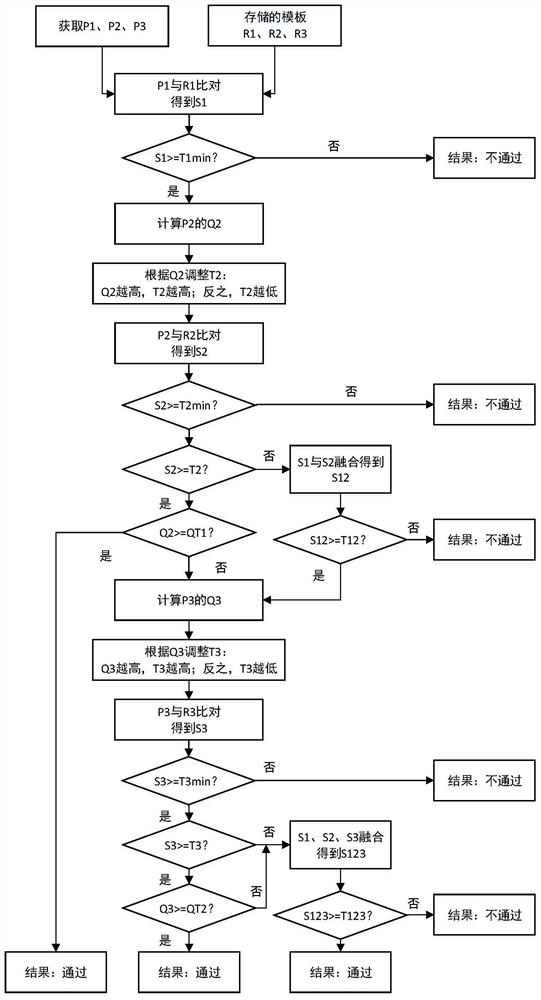

Biological characteristic multi-modal fusion identification method and device, storage medium and equipment

Status: Pending | Publication: CN114332905A | Tags: Increase the probability of authentication; Improve security; Biometric pattern recognition; Identity recognition; Engineering

The invention discloses a biometric multi-modal fusion recognition method and device, a storage medium, and equipment, and belongs to the field of biometric recognition. In multi-modal recognition, the comparison threshold of each subsequent modality is adjusted according to the quality score of that modality's biometric image. When the comparison score of the subsequent modality is not less than its adjusted comparison threshold and the quality score of its biometric image is not less than its decision threshold, the recognition is judged to pass, or recognition proceeds to the subsequent modalities; otherwise, the comparison scores of all modalities recognized so far are fused into a fused score, and based on the fused score the recognition is judged to pass or recognition proceeds to the next modality. The method dynamically adjusts the comparison threshold according to the quality score of the biometric image, uses that quality score to assist the decision on the recognition result, and, combined with comparison score fusion, progressively increases the probability of successful identity authentication, improving the security and reliability of biometric identity recognition.

Owner:BEIJING TECHSHINO TECH +1
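The quality-adaptive, sequential decision flow described above can be sketched in Python as follows. This is a minimal illustration, not the patented method: the abstract gives neither the threshold-adjustment formula nor the fusion rule, so the linear adjustment in `adjusted_threshold` (with its constant `k`), the mean-rule fusion, the 0-to-1 score scales, and all names here (`ModalityResult`, `sequential_decision`, `fused_threshold`) are hypothetical. For simplicity, a single-modality pass ends the process, and a failed fusion check always moves on to the next modality.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ModalityResult:
    name: str
    comparison_score: float    # similarity in [0, 1] (assumed scale)
    quality_score: float       # image quality in [0, 1] (assumed scale)
    base_threshold: float      # nominal comparison threshold for this modality
    decision_threshold: float  # minimum quality needed to decide on this modality alone


def adjusted_threshold(m: ModalityResult, k: float = 0.2) -> float:
    # Hypothetical adjustment rule: the lower the image quality,
    # the stricter (higher) the comparison threshold becomes.
    return m.base_threshold + k * (1.0 - m.quality_score)


def sequential_decision(modalities: List[ModalityResult], fused_threshold: float = 0.7) -> bool:
    seen_scores: List[float] = []
    for m in modalities:
        seen_scores.append(m.comparison_score)
        # Single-modality acceptance: the score clears the quality-adjusted
        # threshold and the image itself is good enough to trust.
        if m.comparison_score >= adjusted_threshold(m) and m.quality_score >= m.decision_threshold:
            return True
        # Otherwise fuse every modality seen so far (hypothetical mean rule).
        fused = sum(seen_scores) / len(seen_scores)
        if fused >= fused_threshold:
            return True
        # Neither test passed: move on to the next modality, if any.
    return False
```

With a high-quality face capture that clears its adjusted threshold, `sequential_decision([ModalityResult("face", 0.9, 0.9, 0.8, 0.5)])` accepts immediately; weaker captures fall through to fusion across the modalities seen so far.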

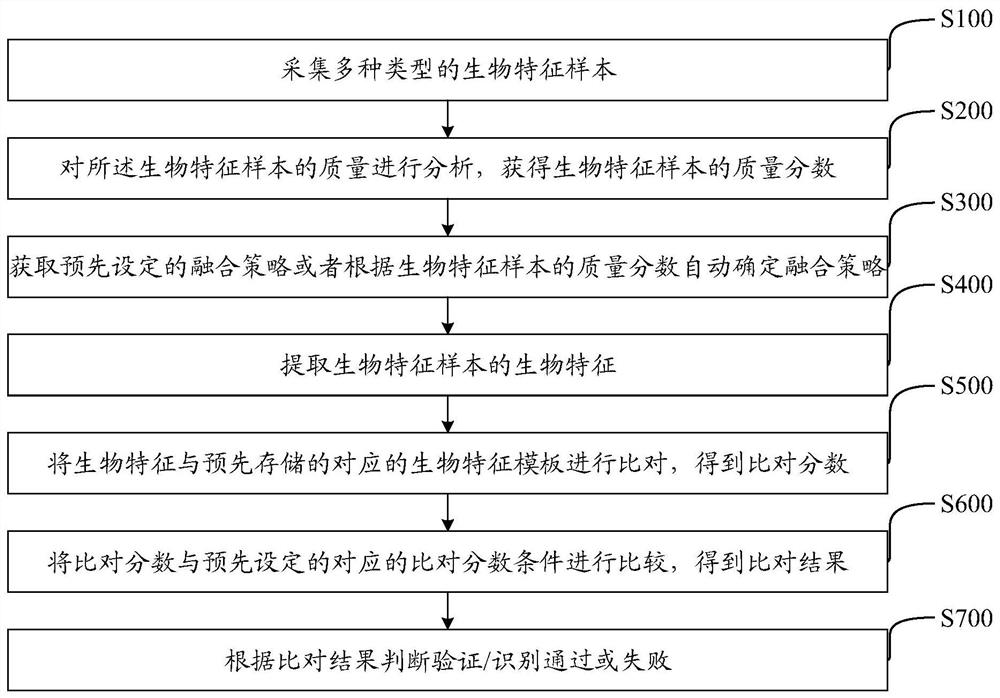

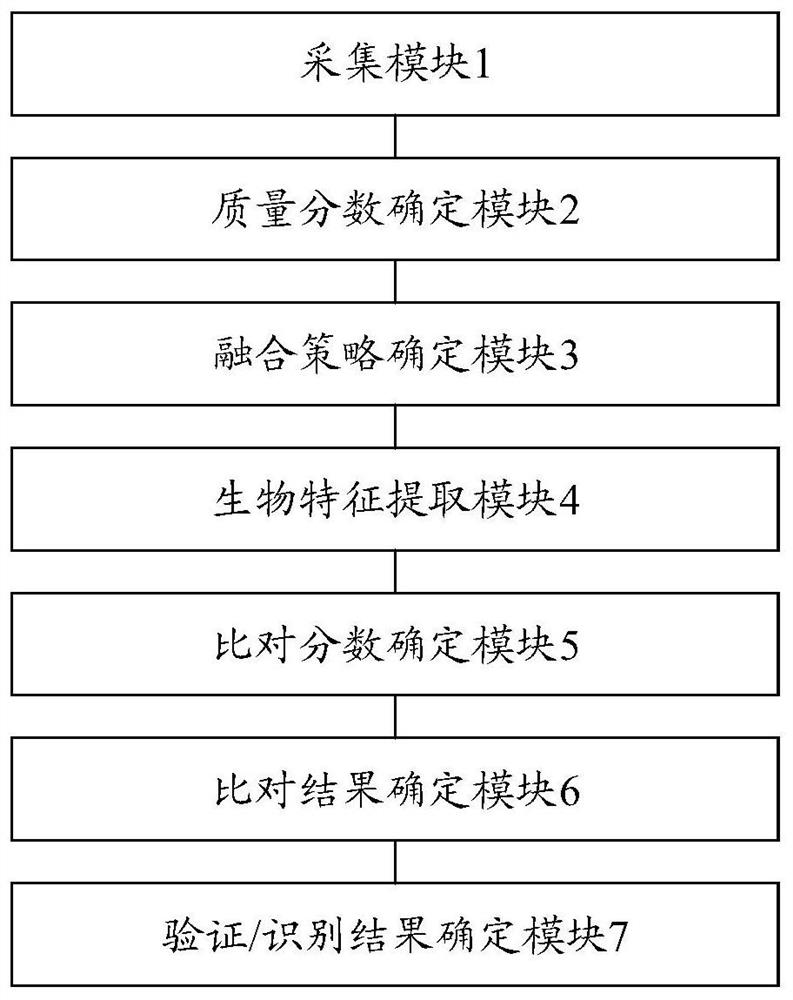

Biological feature recognition multi-modal fusion method and device, storage medium and equipment

Pending | CN113361554A | Achieve integration | Overcome limitations | Character and pattern recognition | Digital data authentication | Biometric fusion | Identity recognition

The invention discloses a multi-modal biometric recognition fusion method and device, a computer-readable storage medium, and equipment, in the field of biometric recognition. During biometric enrollment and verification/identification, samples of several biometric traits are collected, and a quality score is obtained for each sample through sample quality assessment. According to a fusion strategy, the quality scores are compared with preset fusion threshold control parameters, and fusion processing is performed if the fusion conditions are met. Fusion can take place at the sample-data, feature-data, comparison-score, or comparison-result level. Deciding whether the fusion conditions are met from the quality scores of the multiple biometric samples achieves fusion of multiple biometric traits and overcomes the limitations of traditional identity authentication based on a single biometric trait. This makes biometric fusion more reasonable and effective and provides more comprehensive and secure identity identification and authentication.

Owner:BEIJING TECHSHINO TECH +1
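A score-level variant of the quality-gated fusion described above might look like the sketch below. It assumes comparison and quality scores on a 0-to-1 scale and treats the "fusion threshold control parameters" as a minimum quality (`min_quality`) plus a minimum number of usable modalities (`min_modalities`). Both parameters, the quality-weighted mean, and the function name are hypothetical choices, not taken from the patent; sample-, feature-, and result-level fusion are not shown.

```python
from typing import Dict, Optional, Tuple


def quality_gated_score_fusion(
    samples: Dict[str, Tuple[float, float]],  # modality -> (comparison score, quality score)
    min_quality: float = 0.5,                 # hypothetical fusion threshold control parameter
    min_modalities: int = 2,                  # hypothetical: fuse only if >= 2 usable samples
) -> Optional[float]:
    # Keep only samples whose quality score clears the control parameter.
    usable = {name: sq for name, sq in samples.items() if sq[1] >= min_quality}
    if len(usable) < min_modalities:
        return None  # fusion condition not met; caller falls back to single-modality logic
    # Quality-weighted mean of comparison scores: one possible score-level fusion rule.
    total_quality = sum(q for _, q in usable.values())
    return sum(s * q for s, q in usable.values()) / total_quality
```

Weighting by quality lets a sharp face image count for more than a marginal iris image, while the gate keeps a very poor sample (here, quality below 0.5) out of the fusion entirely.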

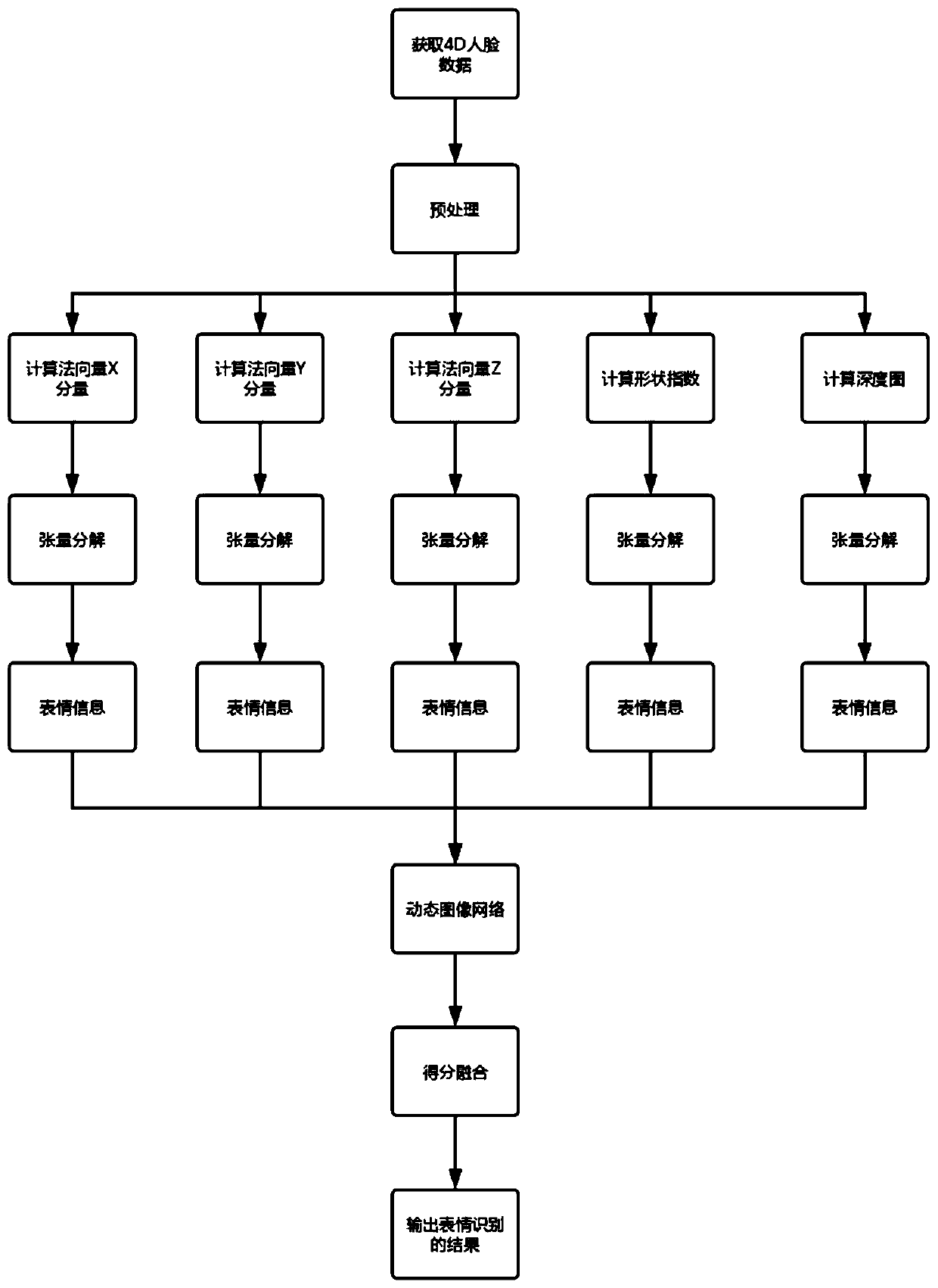

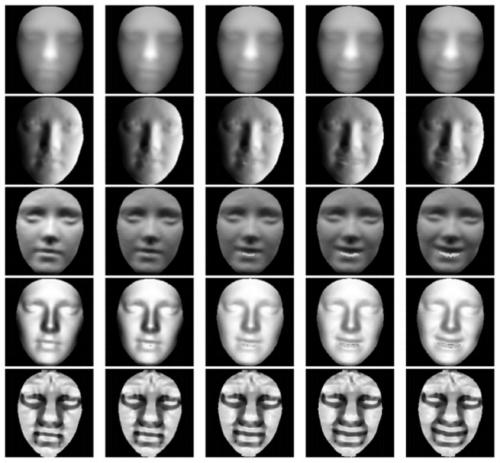

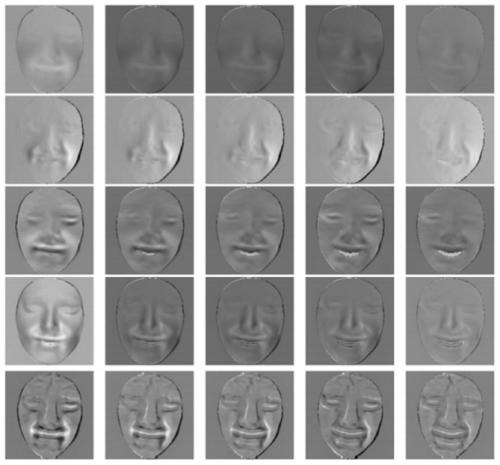

Tensor decomposition-based multi-feature fusion 4D expression recognition method

Active | CN111178255A | Overcome the disadvantages of being greatly affected by factors such as lighting and pose | Good effect | Neural architectures | Acquiring/recognising facial features | Tensor decomposition | Radiology

The invention discloses a tensor decomposition-based multi-feature fusion 4D expression recognition method. The method comprises: obtaining 4D facial expression data; preprocessing the 4D facial expression data and computing three normal-vector components, a shape index, and a depth map of the 4D face data; performing tensor decomposition on the three normal-vector components, the shape index, and the depth map to extract dynamic facial expression information; and classifying the dynamic facial expression information with a dynamic image network, then performing score fusion on the classification results to obtain the final classification result. The method makes full use of the 4D face data: computing the three normal-vector components, the shape index, and the depth map for the face sequence exploits the 3D geometric information of the face, so the facial features are more representative and discriminative across different people, and the accuracy of face recognition and expression recognition is higher.

Owner:XI AN JIAOTONG UNIV
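The final step, score fusion over the classification results, is commonly done as late fusion: each input channel (for example, the three normal-vector components, the shape index, and the depth map) gets its own classifier, and their class scores are combined. The sketch below assumes each dynamic image network outputs raw logits per expression class and fuses them by a weighted average of softmax probabilities. The equal default weights and the function names are hypothetical, and the patent may use a different fusion rule.

```python
import math
from typing import List, Optional, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def late_score_fusion(
    per_channel_logits: List[Sequence[float]],
    weights: Optional[List[float]] = None,
) -> Tuple[int, List[float]]:
    n = len(per_channel_logits)
    if weights is None:
        weights = [1.0 / n] * n  # equal weights unless told otherwise
    probs = [softmax(logits) for logits in per_channel_logits]
    num_classes = len(probs[0])
    # Weighted sum of per-channel class probabilities.
    fused = [sum(w * p[c] for w, p in zip(weights, probs)) for c in range(num_classes)]
    label = max(range(num_classes), key=lambda c: fused[c])
    return label, fused
```

Averaging probabilities rather than taking a majority vote lets a confident channel outweigh a hesitant one, which matters when some channels (say, the depth map) are noisier for a given subject.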

Multi-feature Fusion Sign Language Recognition Method Based on Adaptive Hidden Markov

Active | CN109409231B | High precision | Improve robustness | Character and pattern recognition | Hidden Markov model | Multi feature fusion

The invention discloses a multi-feature fusion sign language recognition method based on an adaptive hidden Markov model. First, multiple features are extracted from a sign language video database and fused at the front end to construct a feature pool set. An adaptive hidden Markov model is then built for each sign language video under each feature in the feature pool set, and a feature selection strategy is used to choose suitable back-end score fusion features. After these features are selected, the score vector under each back-end score fusion feature is computed and assigned its own weight, and back-end score fusion is performed to obtain the best fusion result. The invention accurately identifies the sign language category of a sign language video and improves recognition robustness.

Owner:HEFEI UNIV OF TECH
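The weighted back-end score fusion can be illustrated with a small sketch. It assumes each selected feature yields a score vector of per-class HMM log-likelihoods, min-max normalizes each vector so the features are comparable, and sums them with per-feature weights before taking the best class. The normalization choice, the weights, and the feature names in the example are hypothetical; the abstract does not say how the weights are obtained.

```python
from typing import Dict, List


def min_max(v: List[float]) -> List[float]:
    # Rescale one feature's score vector to [0, 1] so features are comparable.
    lo, hi = min(v), max(v)
    if hi == lo:
        return [0.0] * len(v)
    return [(x - lo) / (hi - lo) for x in v]


def backend_score_fusion(score_vectors: Dict[str, List[float]], weights: Dict[str, float]) -> int:
    # score_vectors: feature name -> per-class HMM log-likelihoods (assumed input)
    num_classes = len(next(iter(score_vectors.values())))
    fused = [0.0] * num_classes
    for feature, vec in score_vectors.items():
        for c, s in enumerate(min_max(vec)):
            fused[c] += weights[feature] * s
    # Predicted sign class = index of the highest fused score.
    return max(range(num_classes), key=lambda c: fused[c])
```

For example, with `{"hand_shape": [-120.0, -100.0, -130.0], "trajectory": [-80.0, -95.0, -70.0]}` and weights `{"hand_shape": 0.7, "trajectory": 0.3}`, the fused vector is roughly `[0.41, 0.70, 0.30]`, so class 1 wins; swapping the weights to 0.3/0.7 makes class 2 win, which shows how strongly the weights steer the decision.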

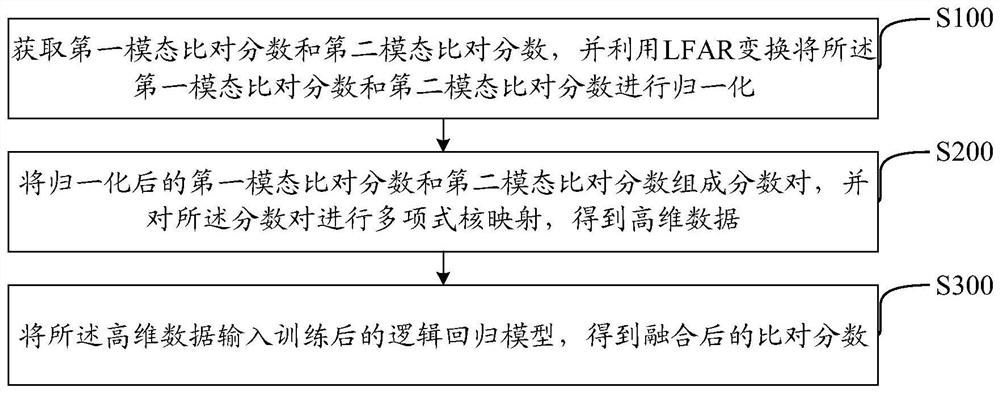

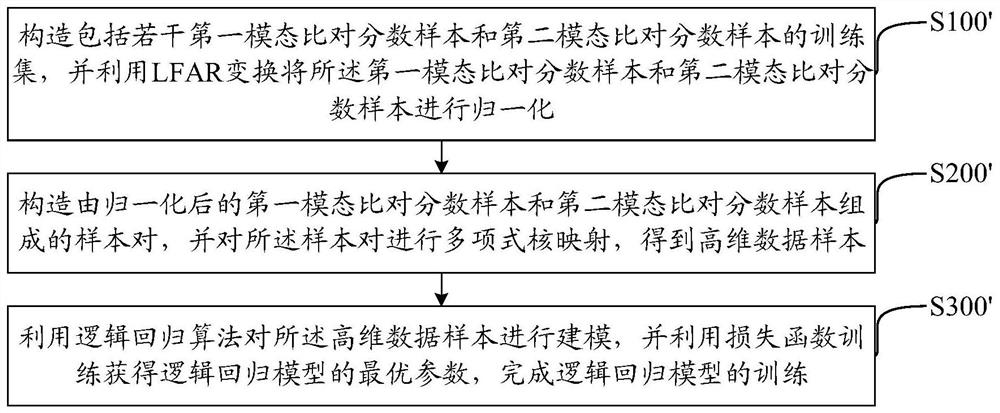

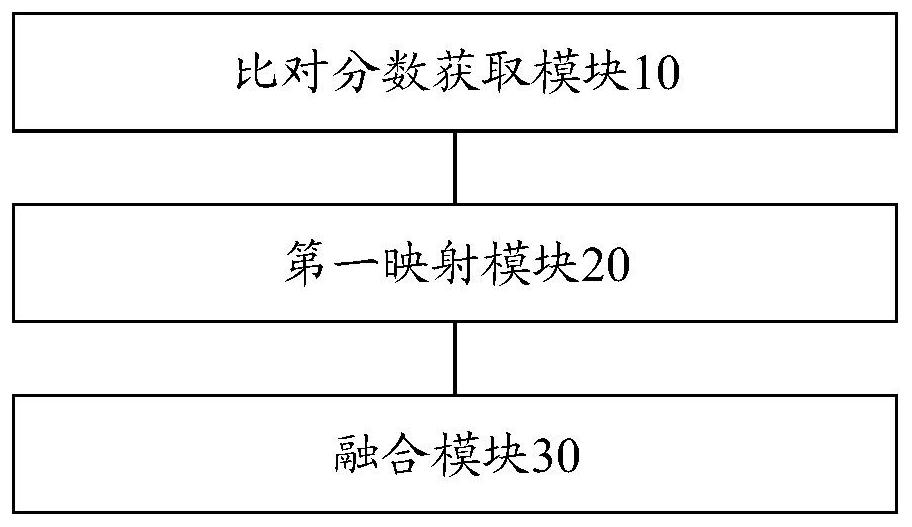

Multi-modal score fusion method and device, computer readable storage medium and equipment

The invention discloses a multi-modal score fusion method and device, a computer-readable storage medium, and equipment, in the field of biometric recognition. The method comprises the following steps: acquiring a first-modality comparison score and a second-modality comparison score and normalizing both with the LFAR transformation; forming a score pair from the two normalized comparison scores and applying polynomial kernel mapping to the score pair to obtain high-dimensional data; and inputting the high-dimensional data into a trained logistic regression model to obtain a fused comparison score. The method exploits the complementary strengths of the different modalities and achieves good recognition accuracy.

Owner:BEIJING TECHSHINO TECH +1
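The three stages of this pipeline (normalize, map, classify) can be sketched as follows. The abstract does not define "LFAR"; here it is assumed to mean the negative base-10 logarithm of the empirical false accept rate at a given score, estimated from a set of impostor scores. The polynomial kernel mapping is written as the explicit feature map of the degree-2 kernel (1 + x·y)², and the logistic regression weights are placeholders that would in practice be learned from genuine and impostor score pairs. All function names and parameter values are hypothetical.

```python
import math
from typing import List, Sequence


def lfar_normalize(score: float, impostor_scores: Sequence[float], floor: float = 1e-6) -> float:
    # Assumed reading of "LFAR": -log10 of the empirical false accept rate,
    # i.e. the fraction of impostor scores at or above this score.
    far = sum(1 for s in impostor_scores if s >= score) / len(impostor_scores)
    return -math.log10(max(far, floor))  # floor avoids log10(0) beyond the impostor range


def poly2_map(x1: float, x2: float) -> List[float]:
    # Explicit feature map of the degree-2 polynomial kernel (1 + x.y)^2,
    # so that dot(poly2_map(x), poly2_map(y)) == (1 + x.y)^2.
    r2 = math.sqrt(2.0)
    return [1.0, r2 * x1, r2 * x2, x1 * x1, r2 * x1 * x2, x2 * x2]


def fused_score(x1: float, x2: float, weights: Sequence[float]) -> float:
    # Logistic regression on the mapped score pair; weights[0] multiplies the
    # constant feature and therefore acts as the bias term.
    z = sum(w * f for w, f in zip(weights, poly2_map(x1, x2)))
    return 1.0 / (1.0 + math.exp(-z))
```

The log-FAR step puts both modalities on the same "how unlikely is an impostor to score this high" scale before fusion, and the quadratic terms let the logistic model learn a curved decision boundary in the score plane rather than a straight line.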

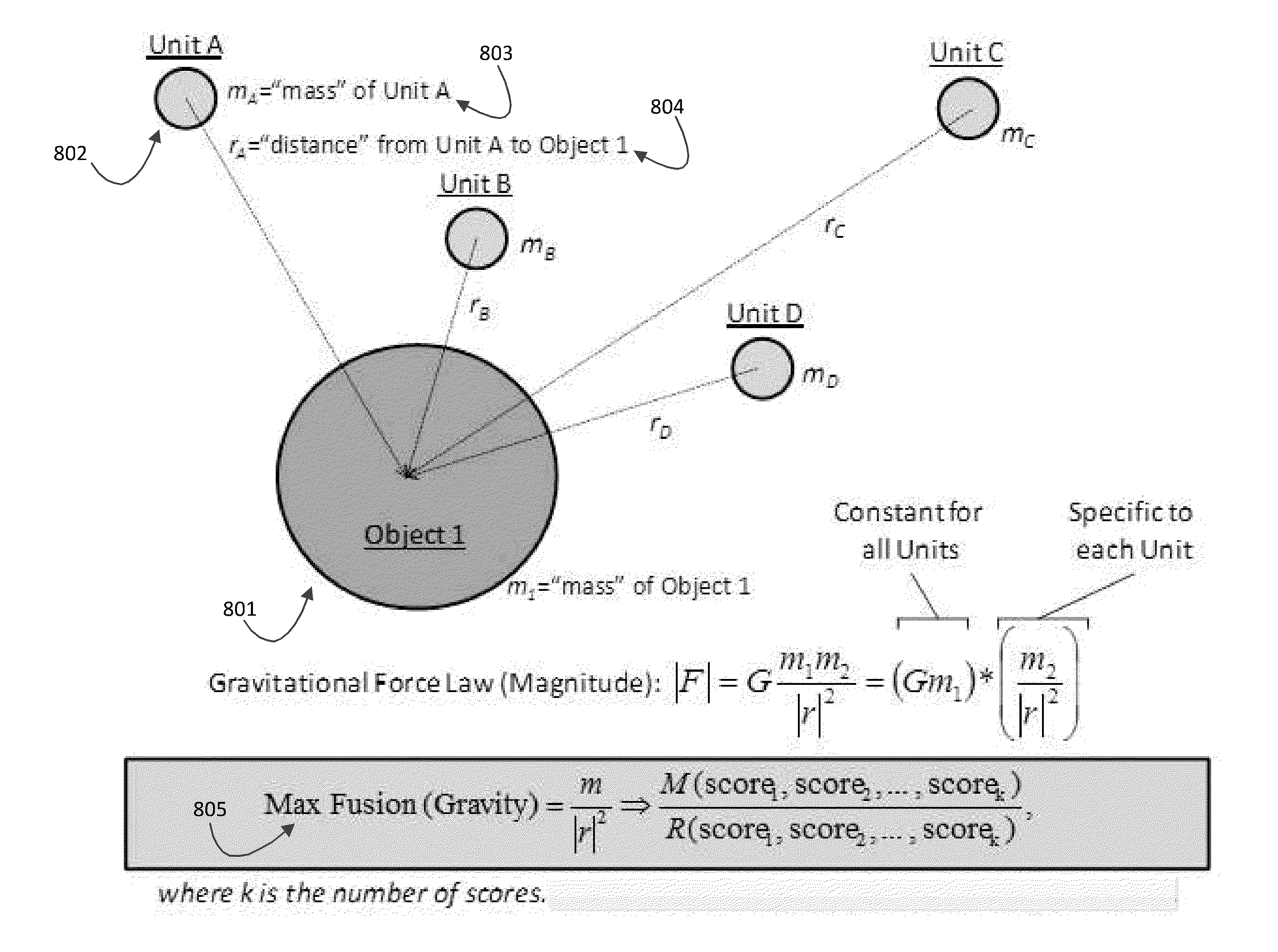

Score fusion based on the gravitational force between two objects

Inactive | US20130173237A1 | Finance | Computation using non-denominational number representation | Massive gravity | Object based

Various embodiments of the present invention provide systems, methods, and computer program products for fusing at least two scores. In various embodiments, at least two scores are received, each predicting the probability of an outcome associated with a particular unit. In particular embodiments, a mass and a distance are calculated for two objects based on the at least two scores, where the first object is a constant and the second object comprises one or more characteristics of the particular unit. In particular embodiments, a gravitational force between the two objects is then calculated from the mass and the distance, and this gravitational force is used as the fused score for the at least two scores.

Owner:EQUIFAX INC
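Newton's law of universal gravitation, F = G·m₁·m₂/d², is the core of this fusion idea. The abstract does not say how the mass and distance are derived from the scores, so the sketch below uses purely hypothetical mappings: the unit's mass is the sum of the scores, the distance grows with their disagreement, and both the constant object's mass and G are set to 1. It only illustrates the shape of the computation, not the patent's actual formulas.

```python
from typing import Sequence

G = 1.0               # hypothetical gravitational constant
REFERENCE_MASS = 1.0  # hypothetical mass of the constant first object


def gravitational_fusion(scores: Sequence[float]) -> float:
    if len(scores) < 2:
        raise ValueError("need at least two scores to fuse")
    # Hypothetical mapping: the unit's mass grows with the predicted probabilities.
    mass = sum(scores)
    # Hypothetical mapping: distance grows with disagreement between the scores
    # (kept >= 1 so the force never blows up through division by a tiny distance).
    distance = 1.0 + (max(scores) - min(scores))
    # Newton's law of universal gravitation: F = G * m1 * m2 / d^2
    return G * REFERENCE_MASS * mass / distance ** 2
```

Under these assumptions, two agreeing scores of 0.5 give a fused score of 1.0, while 0.9 and 0.1 (same total, larger disagreement) give about 0.31.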

© 2025 PatSnap. All rights reserved.