Stereoscopic video quality evaluation method based on 3D convolutional neural network

This technology combines convolutional neural networks with stereoscopic video processing. Applied in the field of video processing, it addresses the problem of reference videos being unavailable and achieves high computational efficiency.


Embodiment Construction

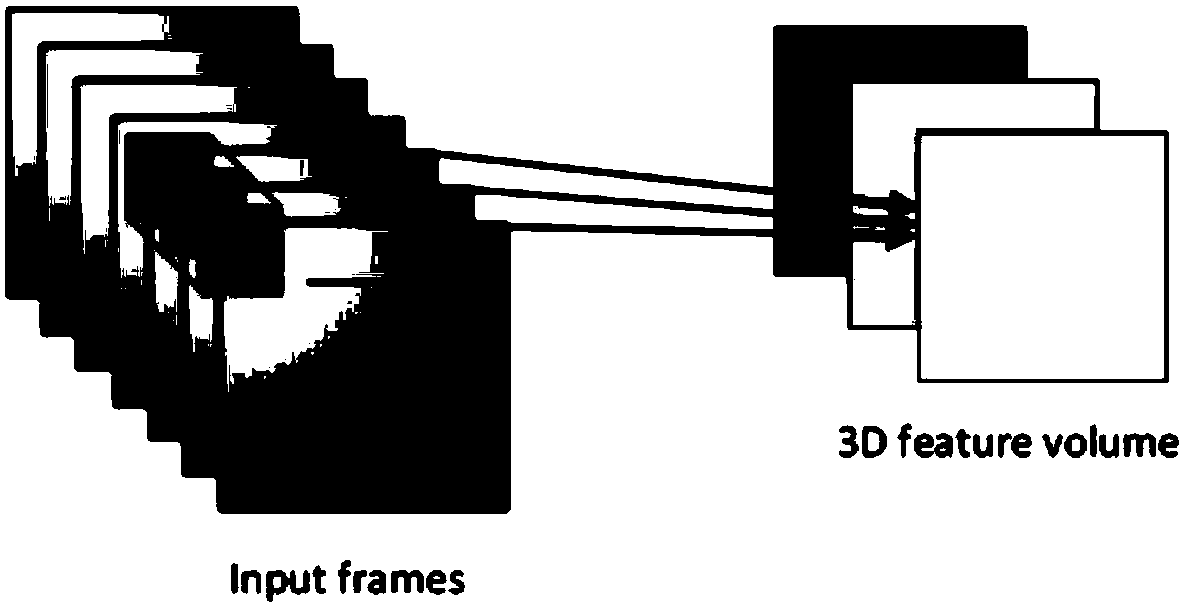

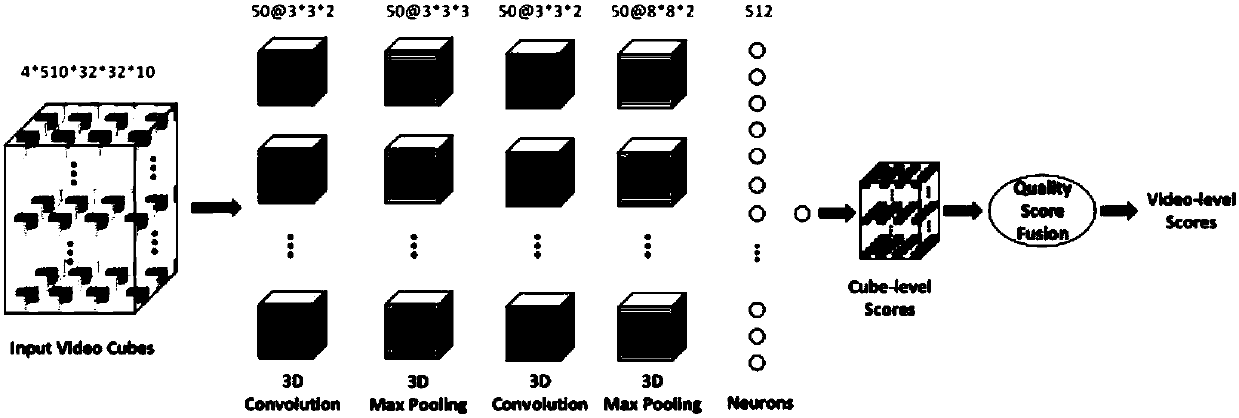

[0019] 1. Data preprocessing

[0020] (1) Difference video:

[0021] Calculate the difference video between the left view and the right view at stereoscopic video position (x, y, z) as follows:

[0022] $$D_L(x,y,z) = \left|V_L(x,y,z) - V_R(x,y,z)\right| \qquad (1)$$

[0023] where $V_L$ and $V_R$ denote the left and right views at stereoscopic video position (x, y, z), respectively, and $D_L$ denotes the difference video.
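As an illustration of Eq. (1), the following NumPy sketch computes the difference video. It assumes both views are grayscale arrays of identical shape indexed as (frame, row, column); the function name `difference_video` is hypothetical and not taken from the patent.

```python
import numpy as np


def difference_video(left: np.ndarray, right: np.ndarray) -> np.ndarray:
    """Return D_L = |V_L - V_R| element-wise, following Eq. (1).

    Hypothetical helper: assumes `left` and `right` are grayscale videos
    of identical shape, indexed as (frame, row, column).
    """
    if left.shape != right.shape:
        raise ValueError("left and right views must have the same shape")
    # Convert to float before subtracting so unsigned integer frames
    # (e.g. uint8) cannot wrap around when right > left.
    return np.abs(left.astype(np.float64) - right.astype(np.float64))
```

Casting before subtraction matters for 8-bit video: computing `10 - 30` directly on `uint8` values wraps to 236 instead of giving the intended absolute difference of 20.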

[0024] (2) Dataset augmentation:

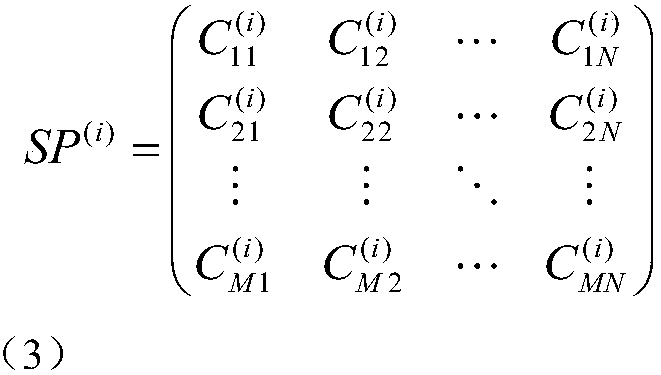

[0025] We slide a 32×32 box with a stride of 32 to crop the entire video in the spatial dimension, and select frames with a stride of 8 in the temporal dimension. Dividing the original video in both the spatial and temporal dimensions in this way yields many low-resolution short video cubes. Each video cube is 10×32×32, that is, 10 frames with a resolution of 32×32 per frame. In this scheme, 32×32 rectangular boxes are cropped at the same position in 10 consecutive frames to gene...
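The cropping step can be sketched as follows. This is a minimal sketch, not the patent's implementation: it assumes a grayscale video indexed as (frame, row, column), reads "a stride of 8 in the temporal dimension" as starting a new 10-frame cube every 8 frames, and discards incomplete cubes at the video borders. The function name `extract_cubes` and its parameter names are hypothetical.

```python
import numpy as np


def extract_cubes(video: np.ndarray, depth: int = 10, size: int = 32,
                  spatial_stride: int = 32, temporal_stride: int = 8) -> np.ndarray:
    """Split a (frames, rows, cols) video into depth x size x size cubes.

    Hypothetical helper. Assumptions: a cube starts every `temporal_stride`
    frames, and partial cubes at the borders are dropped.
    """
    if video.ndim != 3:
        raise ValueError("expected a (frames, rows, cols) array")
    t, h, w = video.shape
    cubes = []
    for z in range(0, t - depth + 1, temporal_stride):
        for y in range(0, h - size + 1, spatial_stride):
            for x in range(0, w - size + 1, spatial_stride):
                # Same spatial box cropped from `depth` consecutive frames.
                cubes.append(video[z:z + depth, y:y + size, x:x + size])
    if not cubes:
        return np.empty((0, depth, size, size), dtype=video.dtype)
    return np.stack(cubes)
```

With a 26-frame 64×96 video, for example, this yields 3 temporal × 2 × 3 spatial positions, i.e. 18 cubes of shape 10×32×32. Because the temporal stride (8) is smaller than the cube depth (10), consecutive cubes in time share 2 frames under this reading.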
